2181 results about "Pose" patented technology

In computer vision and robotics, a typical task is to identify specific objects in an image and to determine each object's position and orientation relative to some coordinate system. This information can then be used, for example, to allow a robot to manipulate an object or to avoid moving into the object. The combination of position and orientation is referred to as the pose of an object, even though this concept is sometimes used only to describe the orientation. Exterior orientation and translation are also used as synonyms of pose.
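The definition above (pose = position plus orientation relative to a coordinate system) can be sketched in the planar case. This is a minimal illustration, not taken from any of the listed patents; the class name `Pose2D` and its methods are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position (x, y) plus orientation theta in radians."""
    x: float
    y: float
    theta: float

    def transform(self, px, py):
        """Map a point from the object frame into the reference frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return (self.x + c * px - s * py, self.y + s * px + c * py)

    def compose(self, other):
        """Express `other` (given relative to this pose) in the reference frame."""
        ox, oy = self.transform(other.x, other.y)
        return Pose2D(ox, oy, self.theta + other.theta)

    def inverse(self):
        """Pose of the reference frame as seen from the object frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-(c * self.x + s * self.y),
                      s * self.x - c * self.y,
                      -self.theta)


# An object at (1, 2) rotated by 90 degrees.
object_pose = Pose2D(1.0, 2.0, math.pi / 2)
# A point one unit along the object's own x-axis, in reference coordinates.
tip = object_pose.transform(1.0, 0.0)
```

A robot avoiding or grasping the object needs both parts: the position says where the object is, and the orientation says which way its features (such as `tip`) point.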

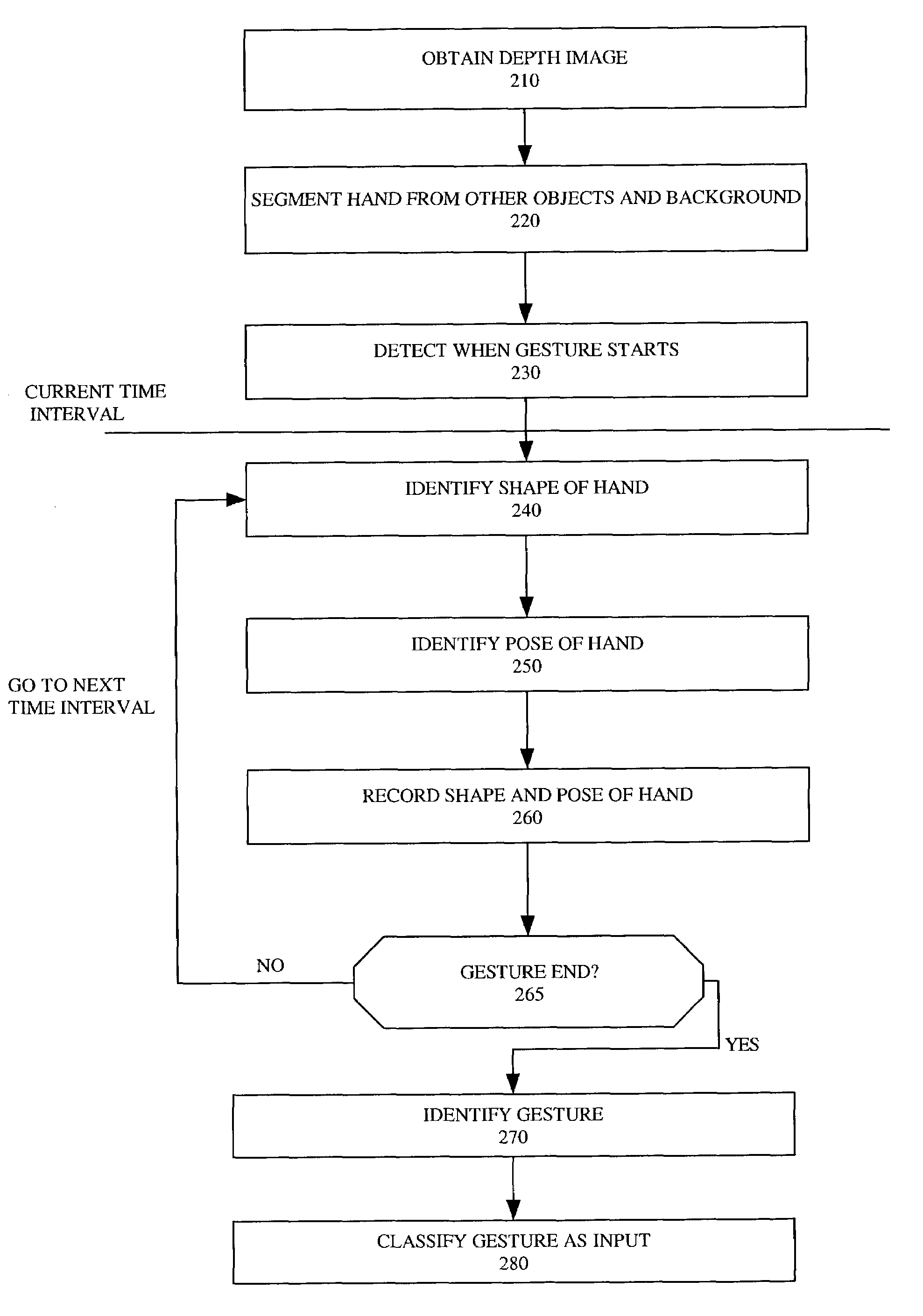

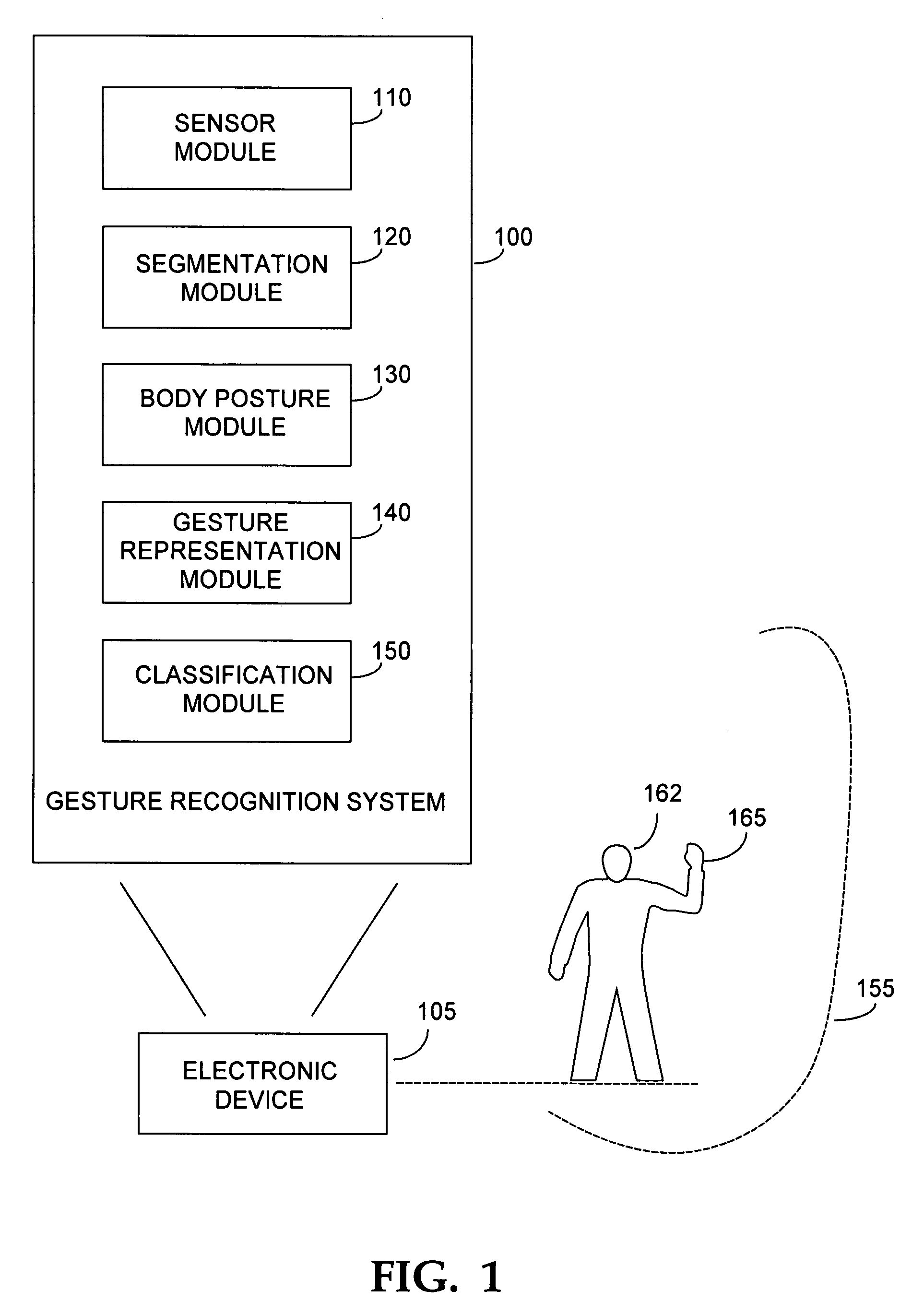

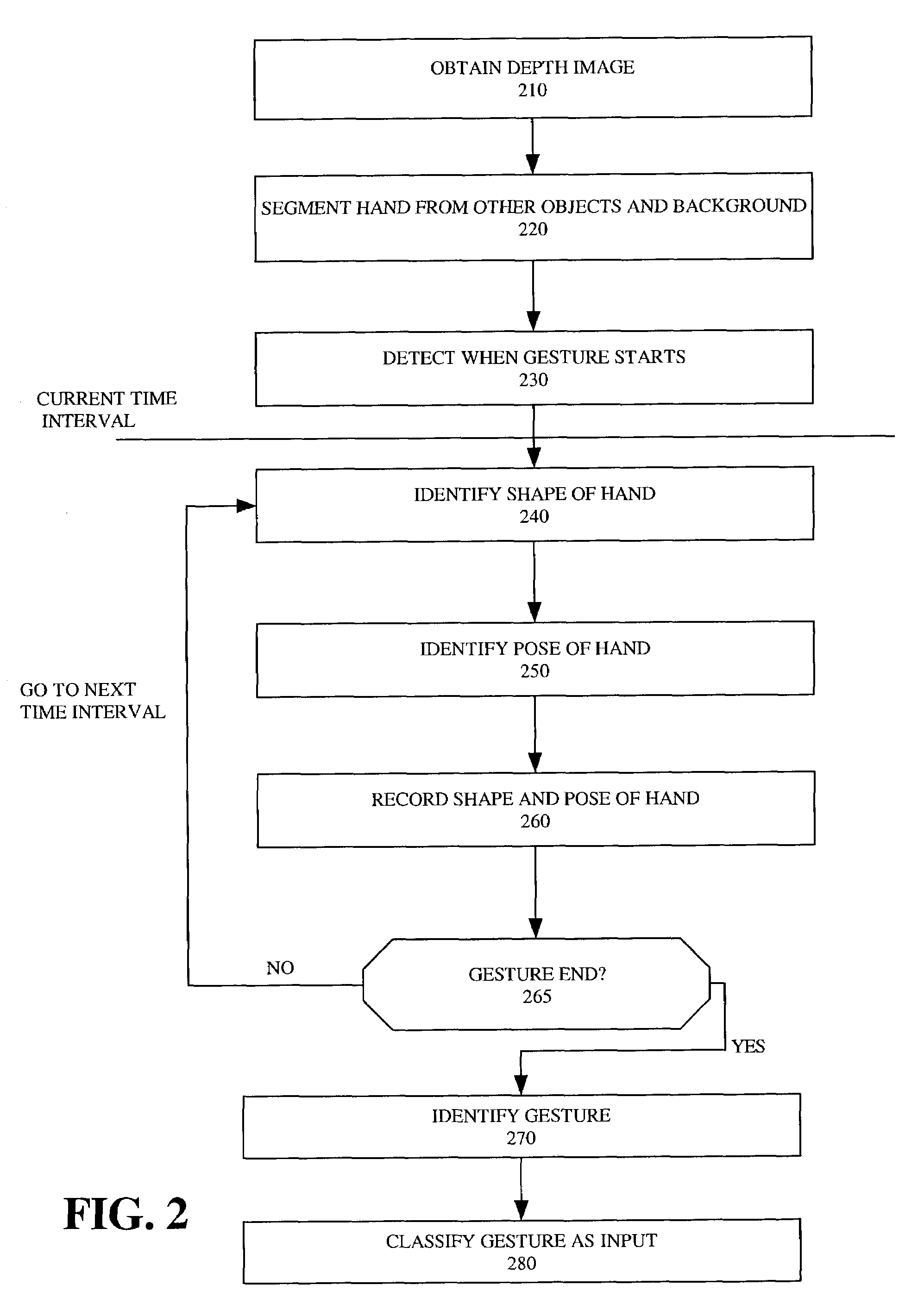

Gesture recognition system using depth perceptive sensors

Active | US7340077B2 | Input/output for user-computer interaction | Indoor games | Physical medicine and rehabilitation | Physical therapy

Owner:MICROSOFT TECH LICENSING LLC

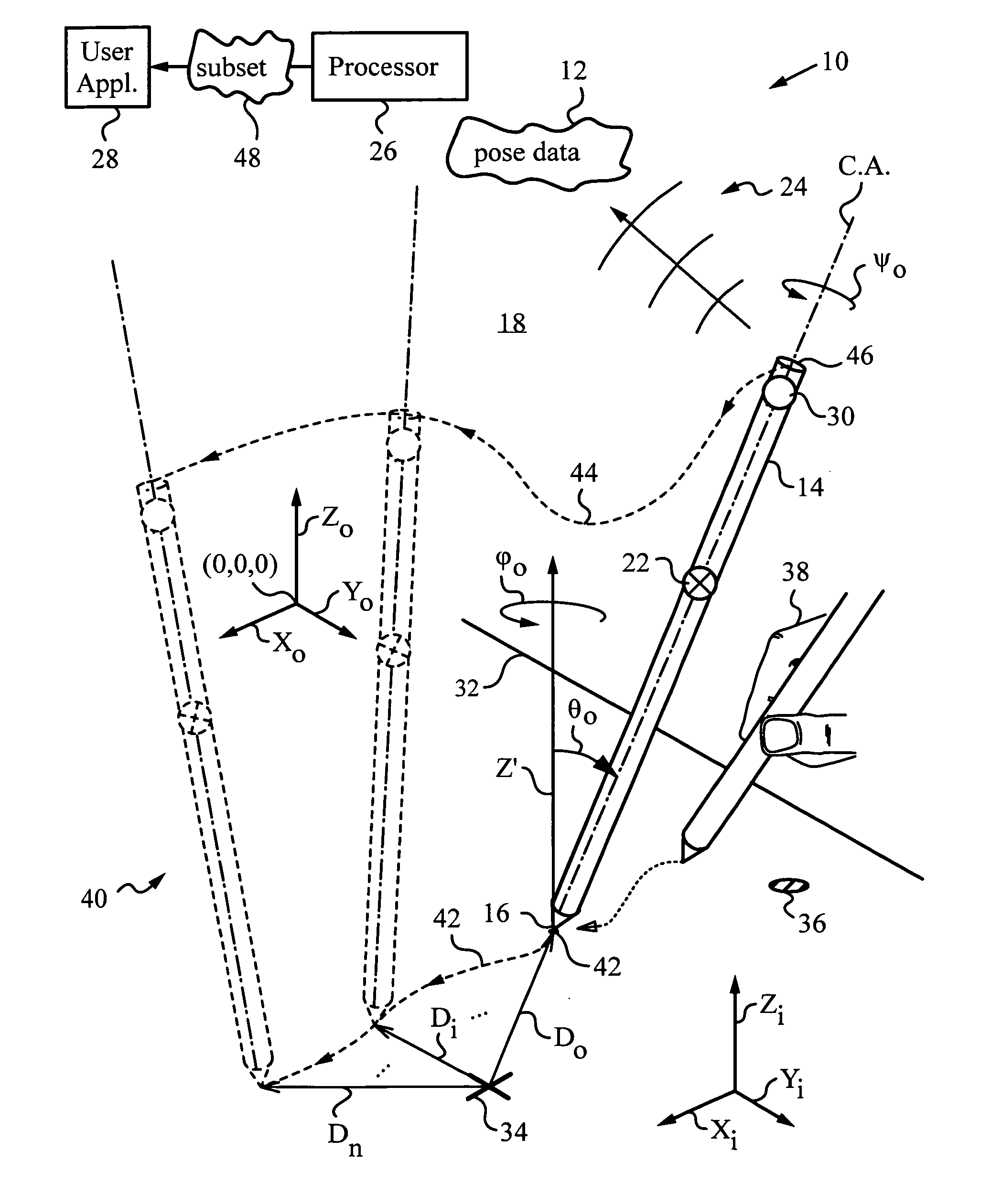

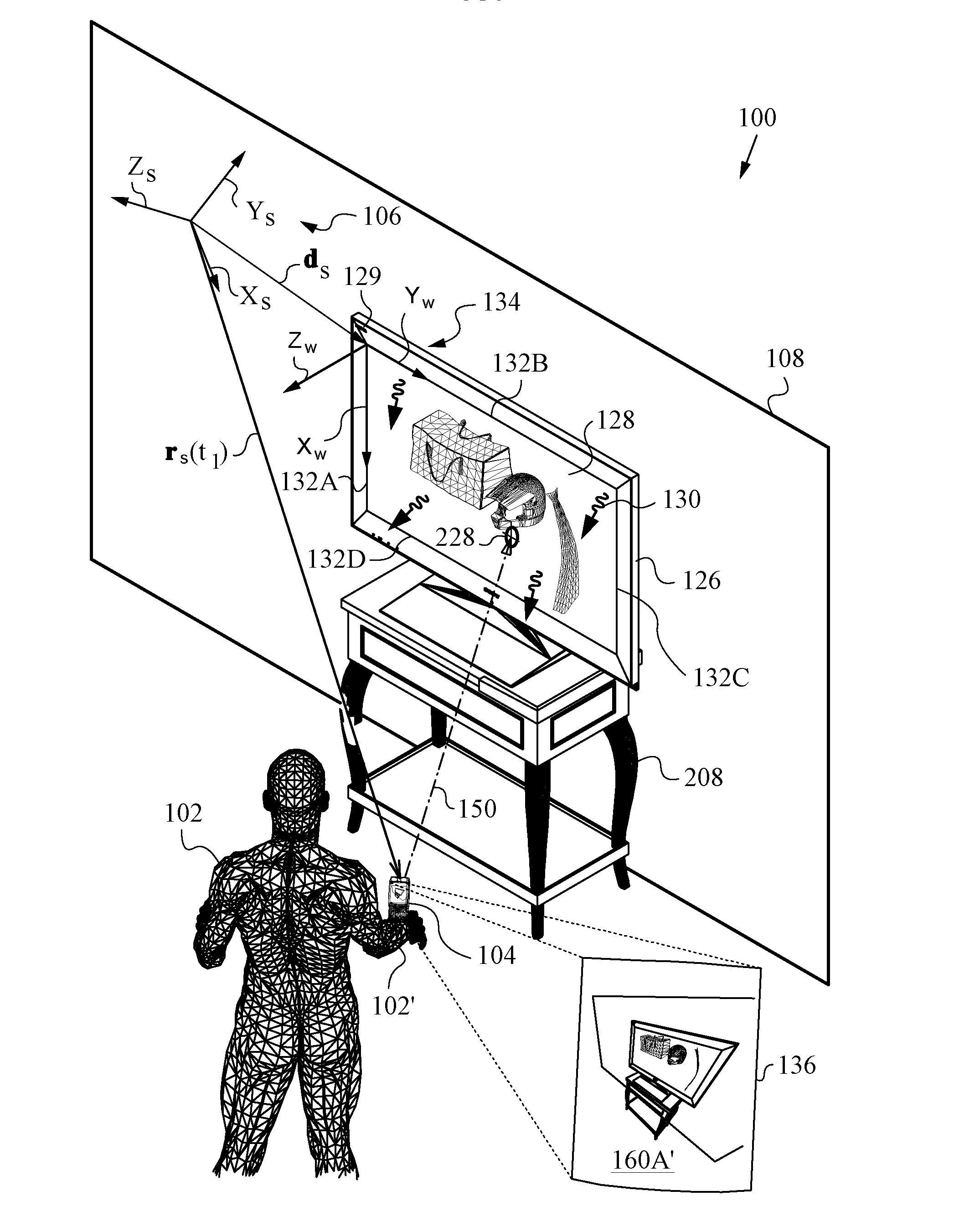

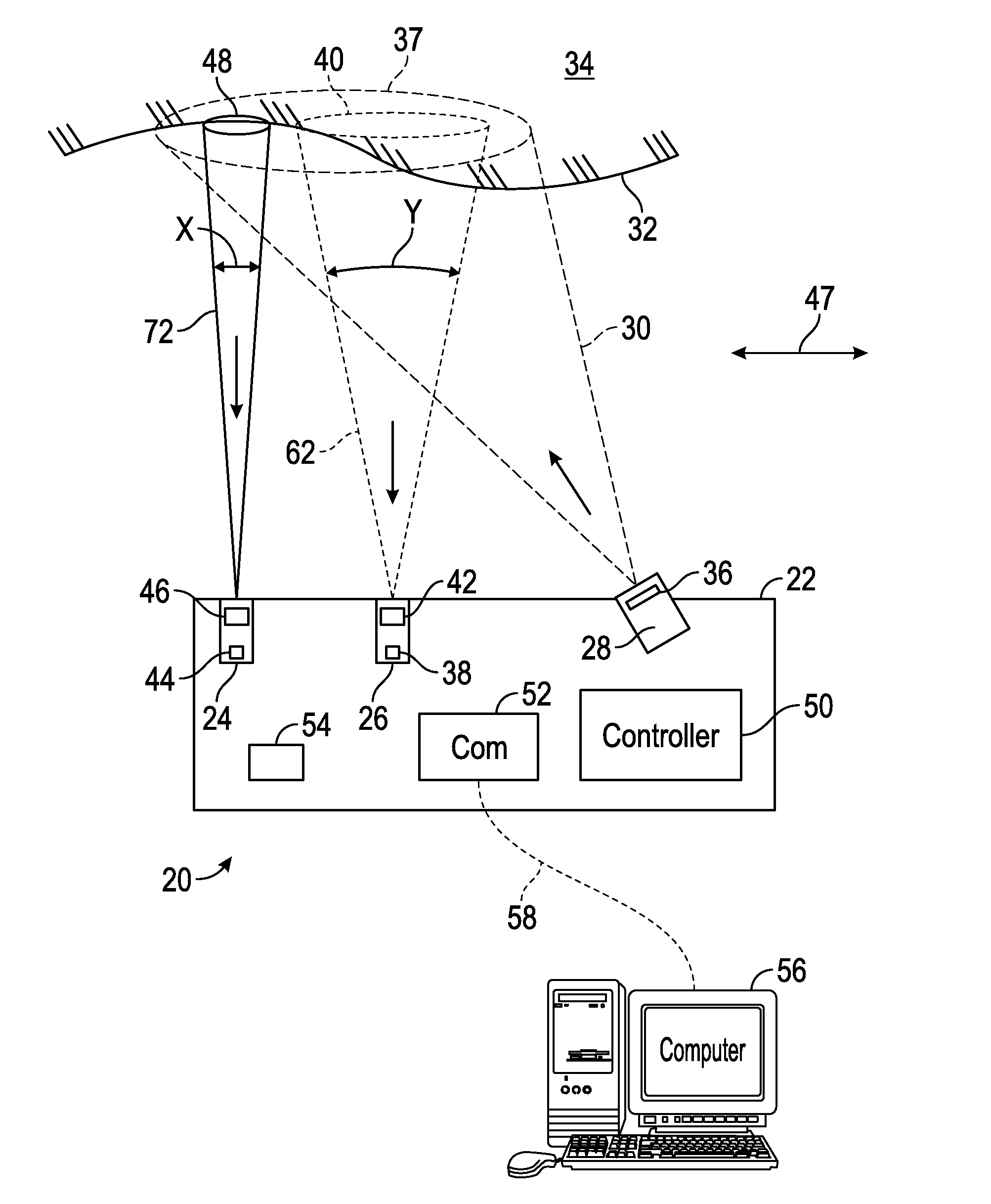

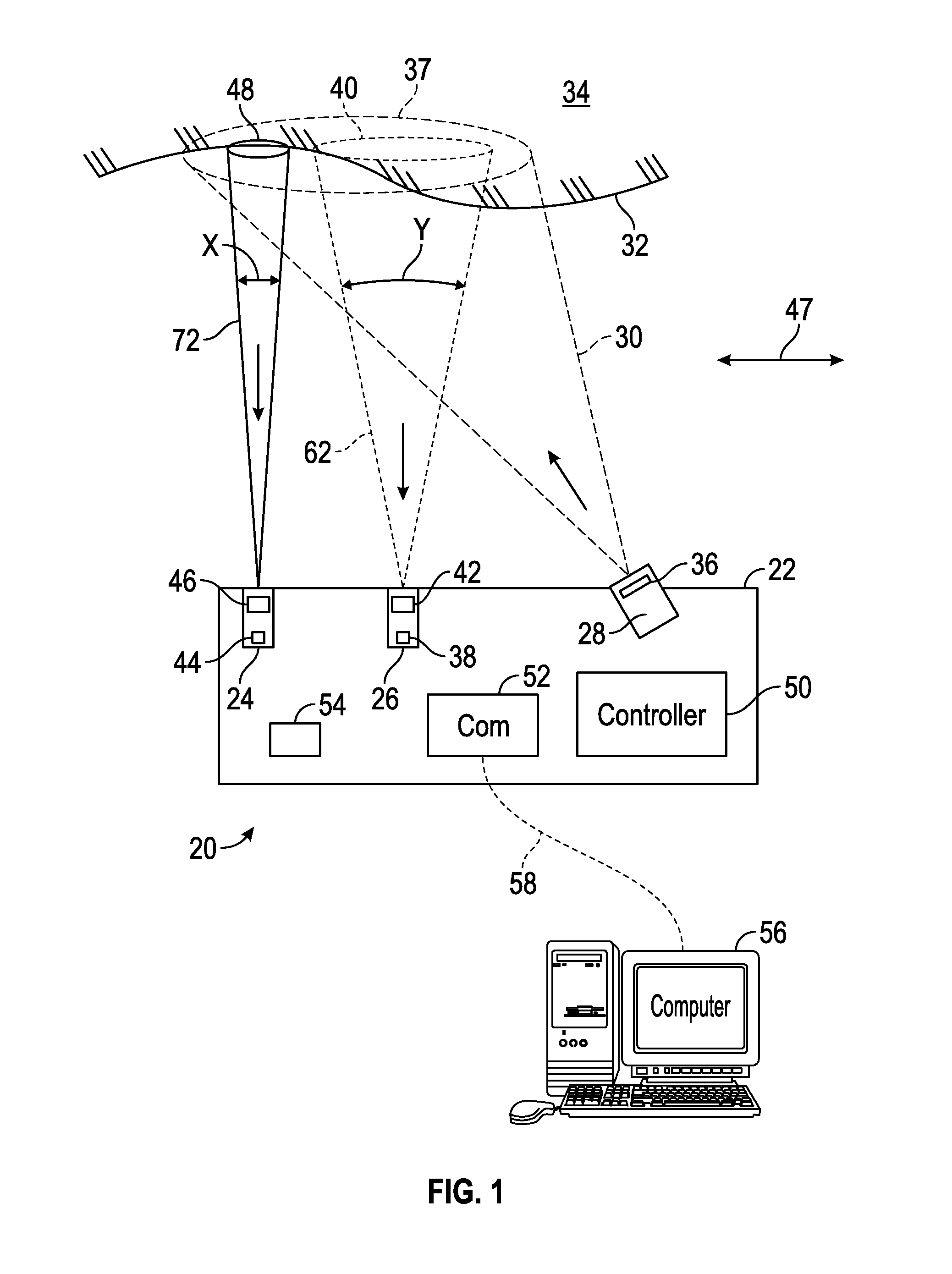

Apparatus and method for determining an absolute pose of a manipulated object in a real three-dimensional environment with invariant features

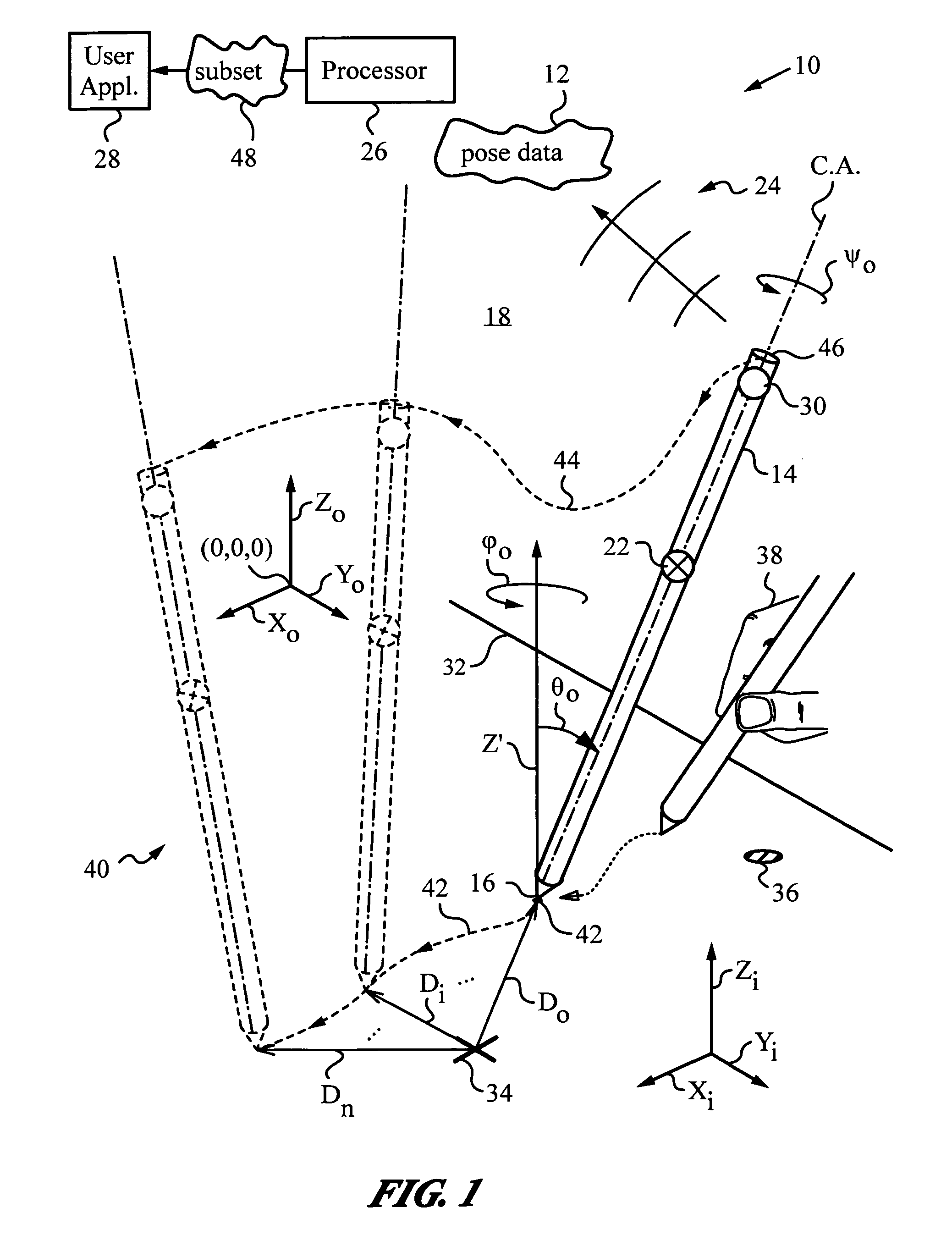

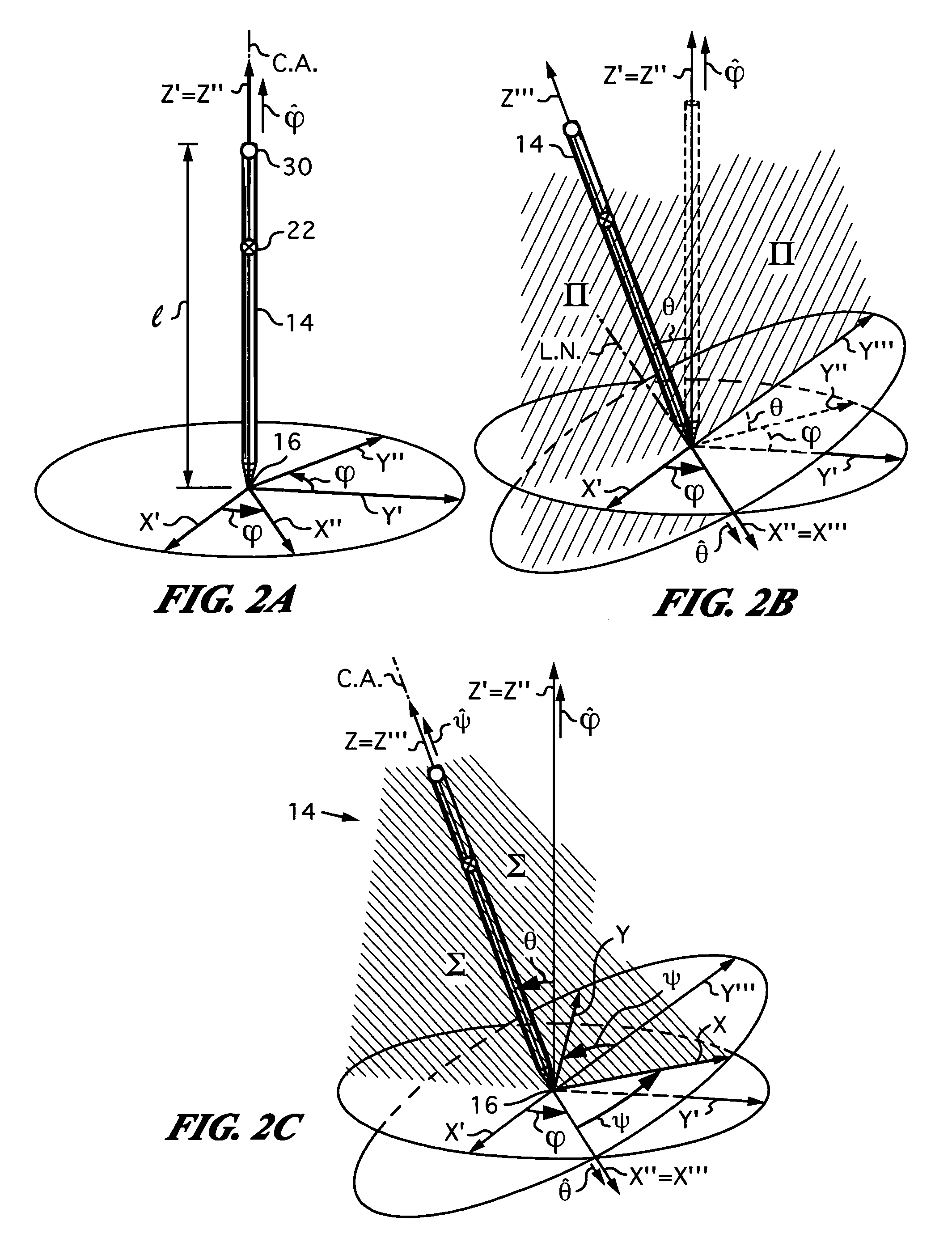

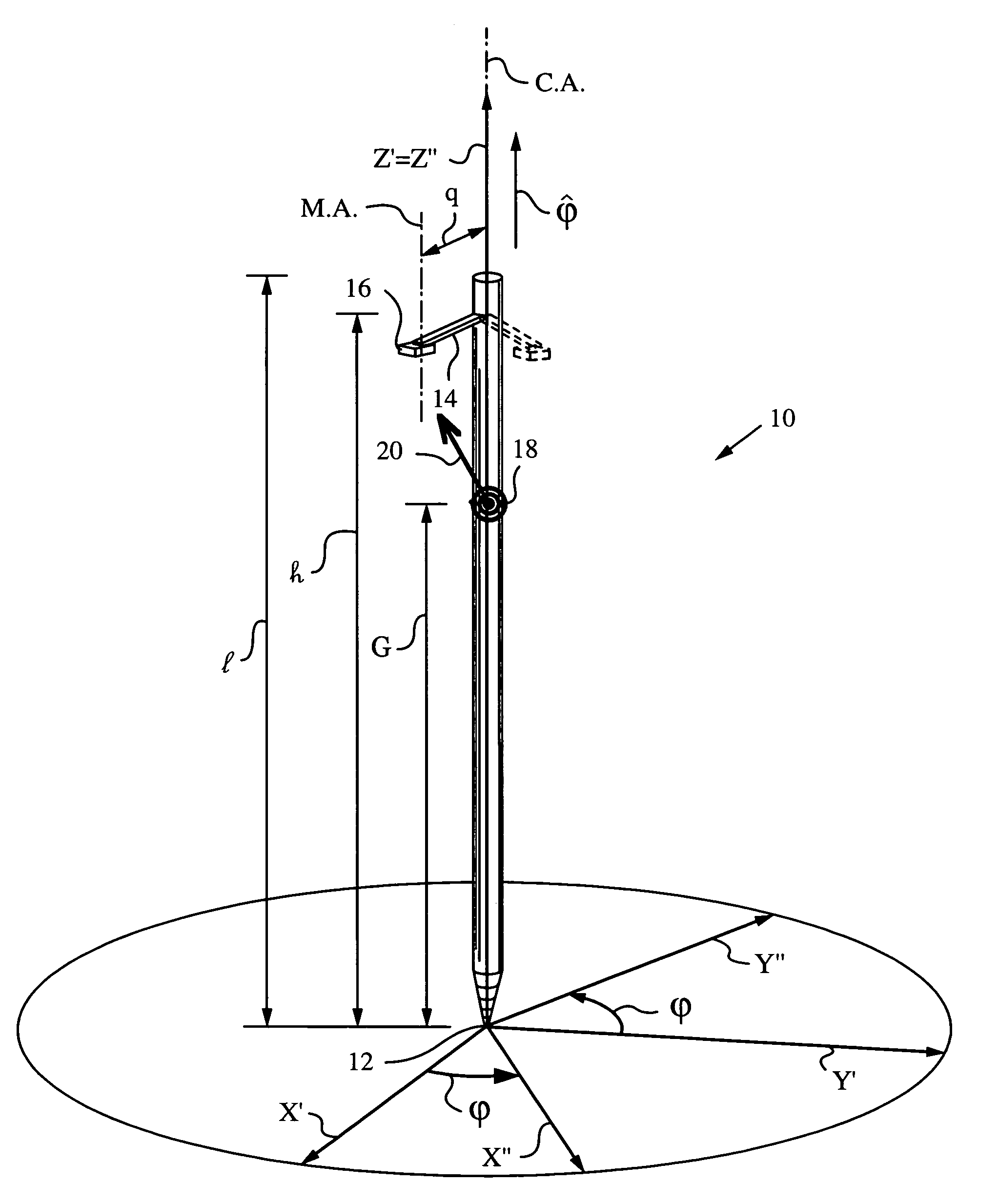

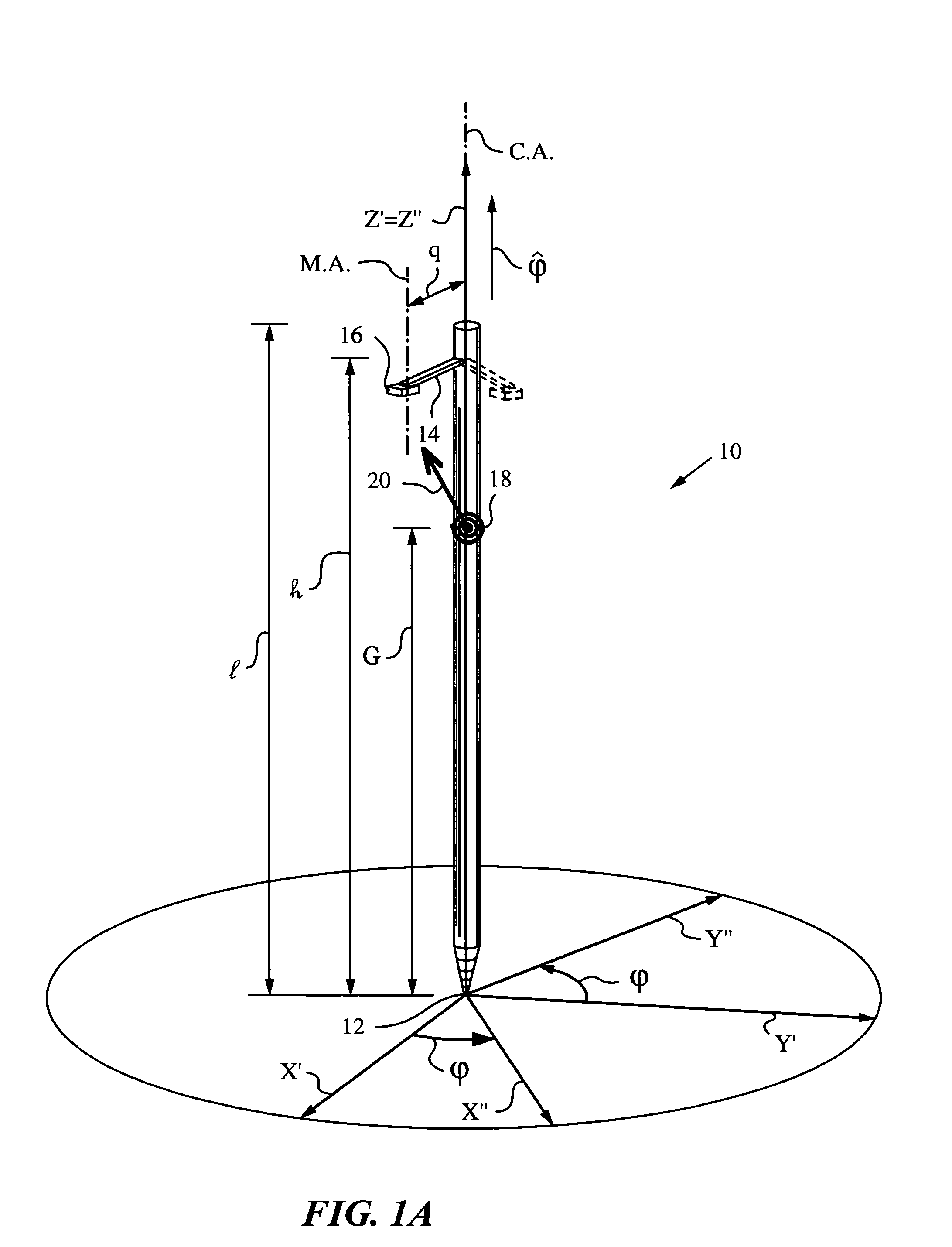

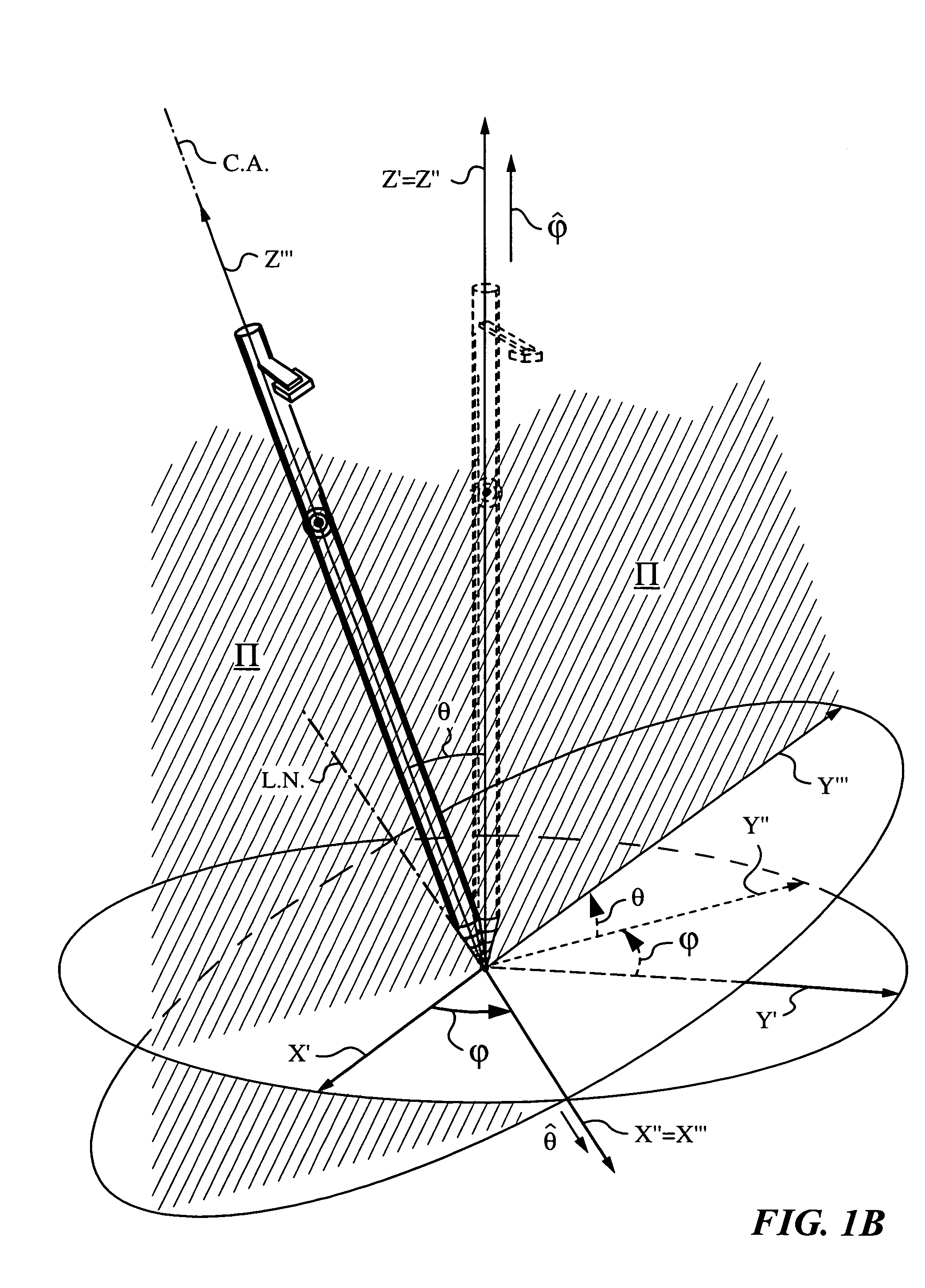

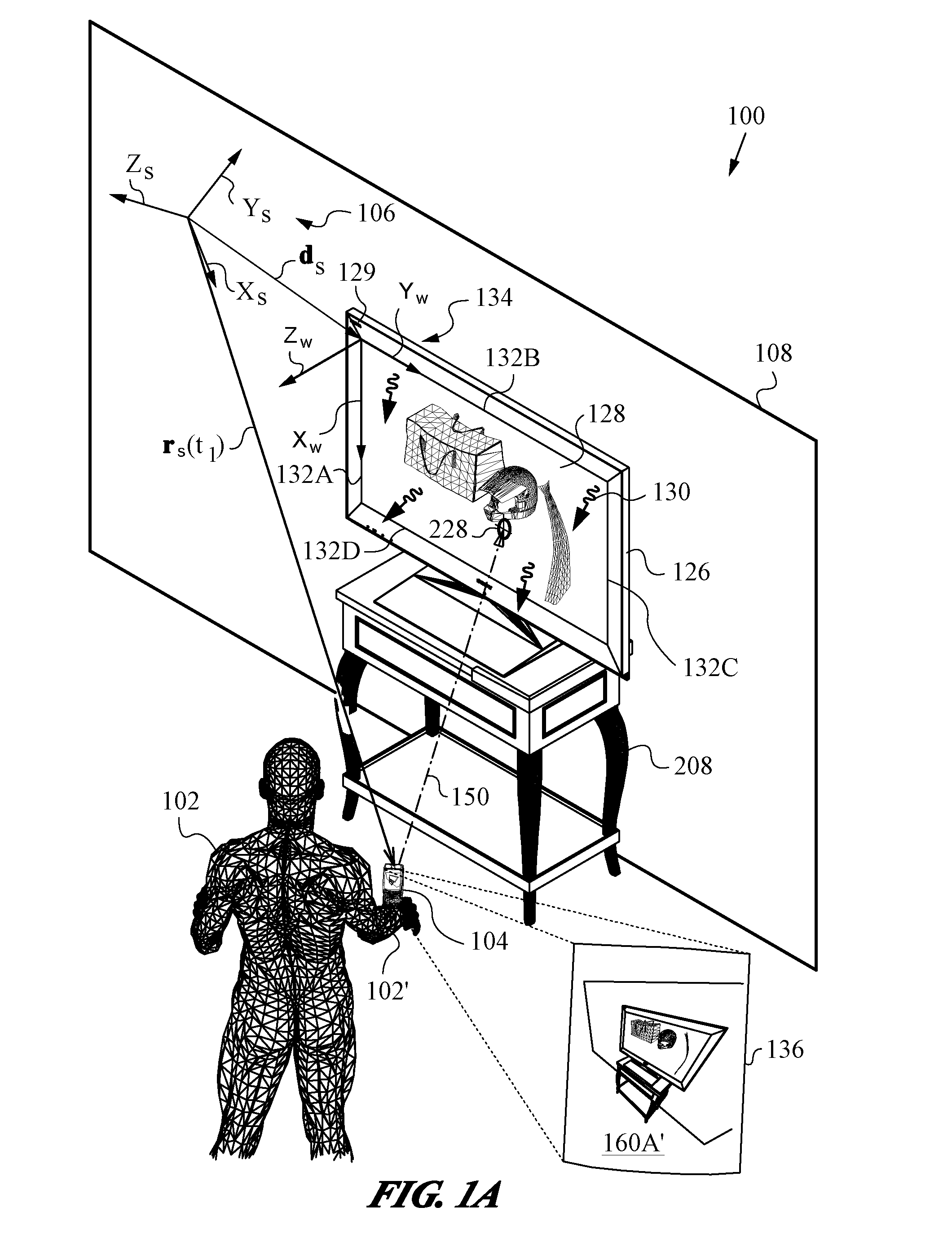

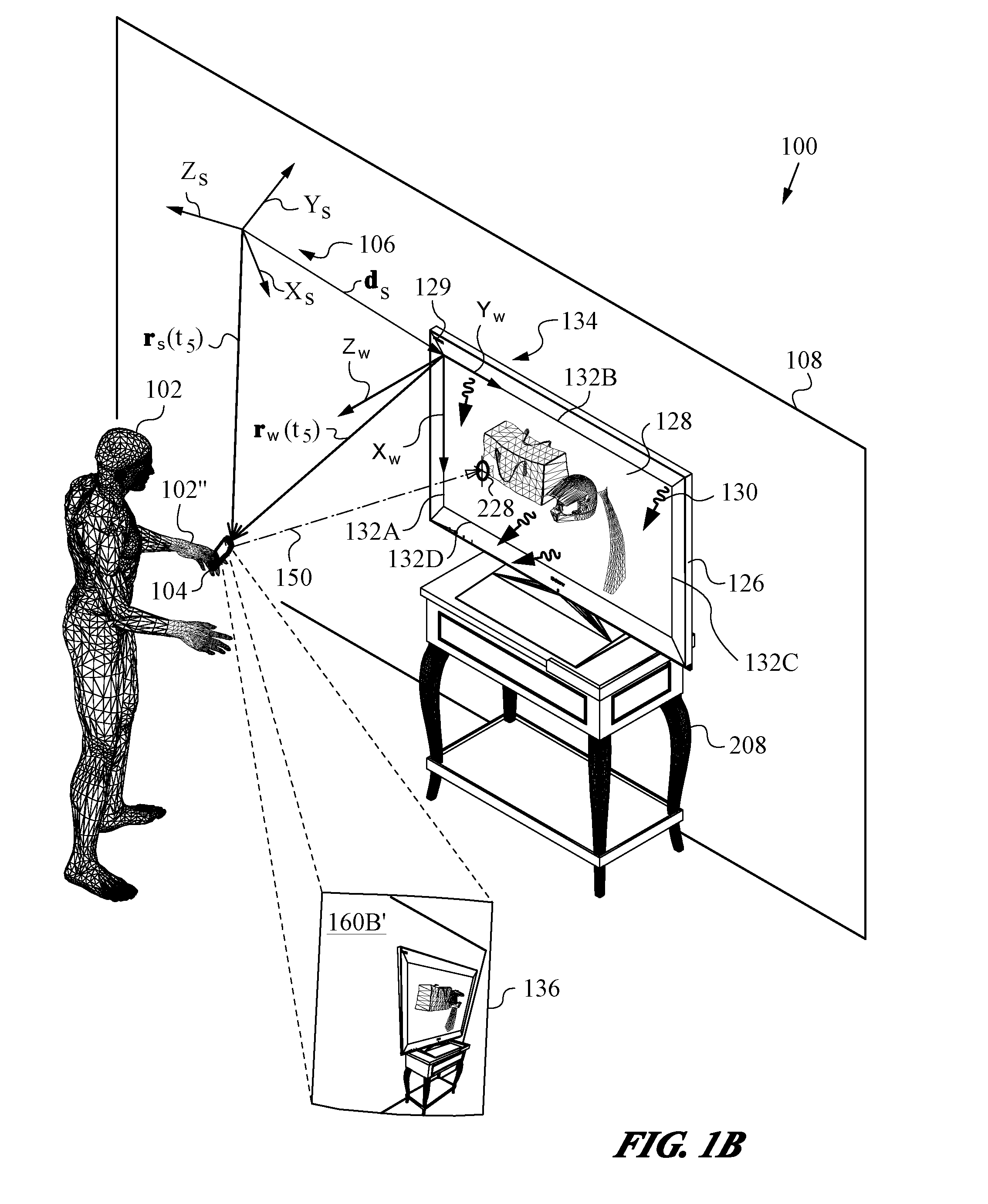

An apparatus and method for optically inferring an absolute pose of a manipulated object in a real three-dimensional environment from on-board the object with the aid of an on-board optical measuring arrangement. At least one invariant feature located in the environment is used by the arrangement for inferring the absolute pose. The inferred absolute pose is expressed with absolute pose data (φ, θ, ψ, x, y, z) that represents Euler rotated object coordinates expressed in world coordinates (Xo, Yo, Zo) with respect to a reference location, such as, for example, the world origin. Other conventions for expressing absolute pose data in three-dimensional space and representing all six degrees of freedom (three translational degrees of freedom and three rotational degrees of freedom) are also supported. Irrespective of format, a processor prepares the absolute pose data and identifies a subset that may contain all or fewer than all absolute pose parameters. This subset is transmitted to an application via a communication link, where it is treated as input that allows a user of the manipulated object to interact with the application and its output.

Owner:ELECTRONICS SCRIPTING PRODS
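The absolute pose data (φ, θ, ψ, x, y, z) described in the abstract above, and the idea of sending only a subset of it to an application, can be sketched as follows. The rotation order used here (R = Rz(ψ)·Ry(θ)·Rx(φ)) is an assumption chosen for illustration; the abstract does not state which Euler convention the patent uses. The function names are hypothetical.

```python
import math


def matmul3(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def euler_to_matrix(phi, theta, psi):
    """Rotation matrix R = Rz(psi) @ Ry(theta) @ Rx(phi) (assumed order)."""
    cx, sx = math.cos(phi), math.sin(phi)
    cy, sy = math.cos(theta), math.sin(theta)
    cz, sz = math.cos(psi), math.sin(psi)
    rx = [[1, 0, 0], [0, cx, -sx], [0, sx, cx]]
    ry = [[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]]
    rz = [[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]]
    return matmul3(rz, matmul3(ry, rx))


def select_subset(pose, keys):
    """Keep only the pose parameters an application asked for."""
    return {k: pose[k] for k in keys}


pose = {"phi": 0.0, "theta": 0.0, "psi": math.pi / 2,
        "x": 0.2, "y": 0.1, "z": 1.5}
rotation = euler_to_matrix(pose["phi"], pose["theta"], pose["psi"])
# A 2D cursor application might only need the in-plane position.
cursor_input = select_subset(pose, ("x", "y"))
```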

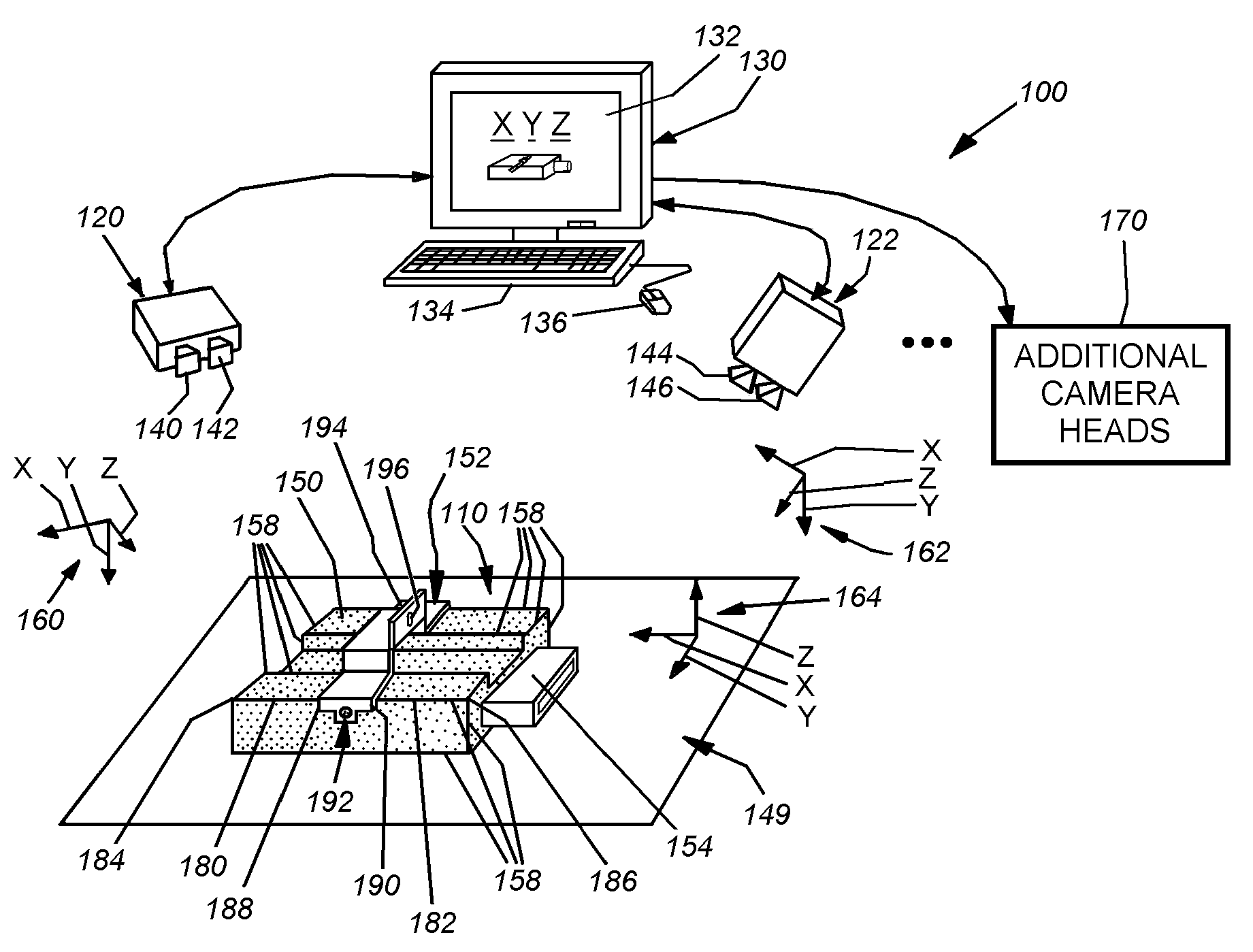

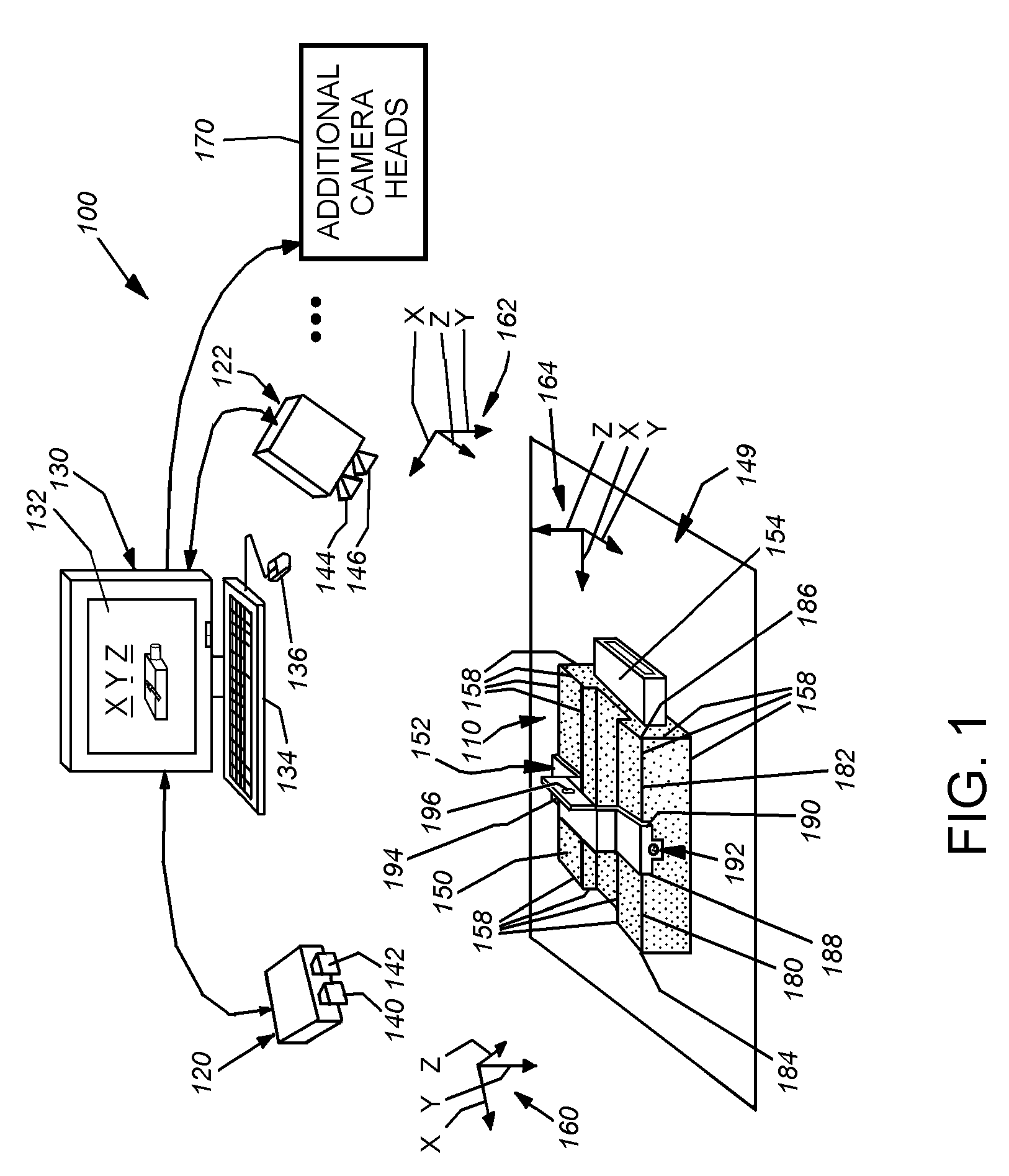

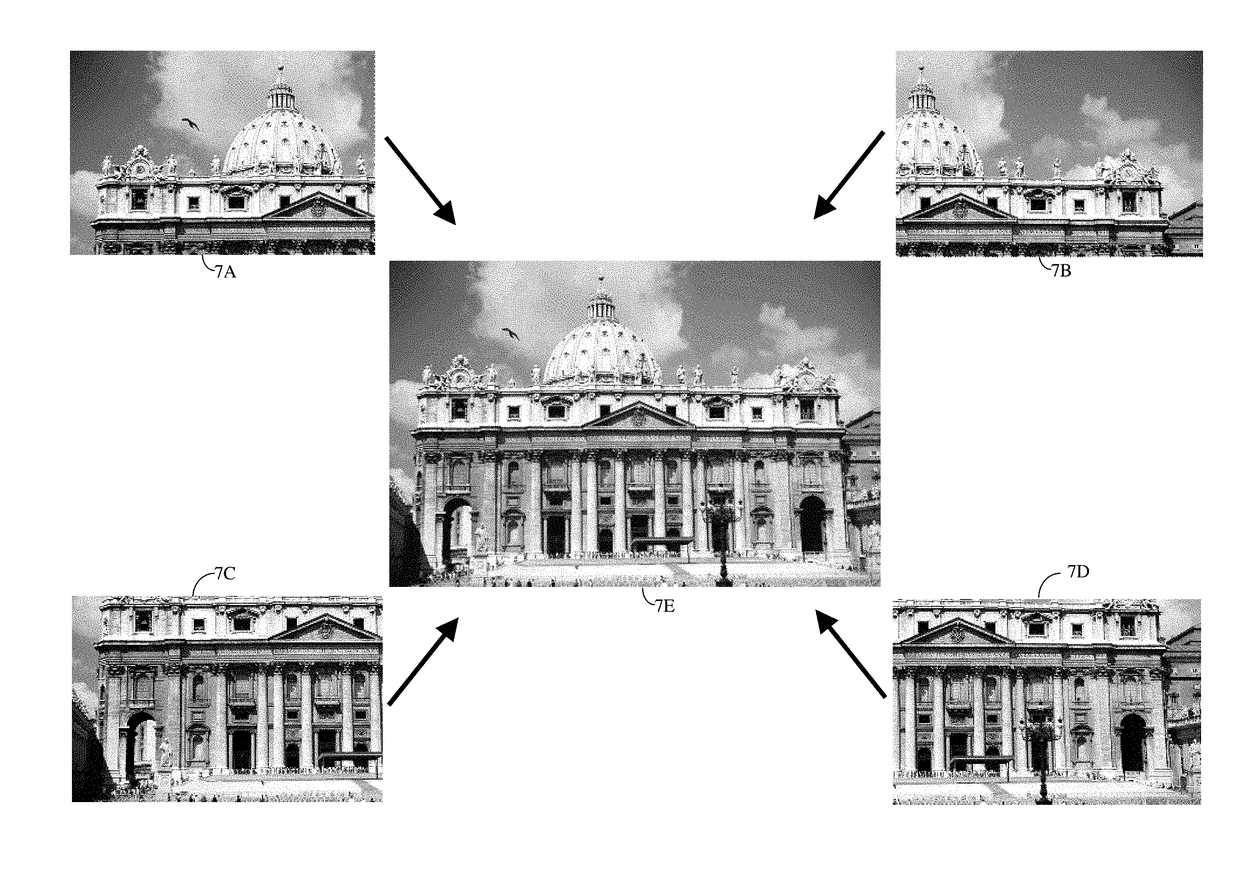

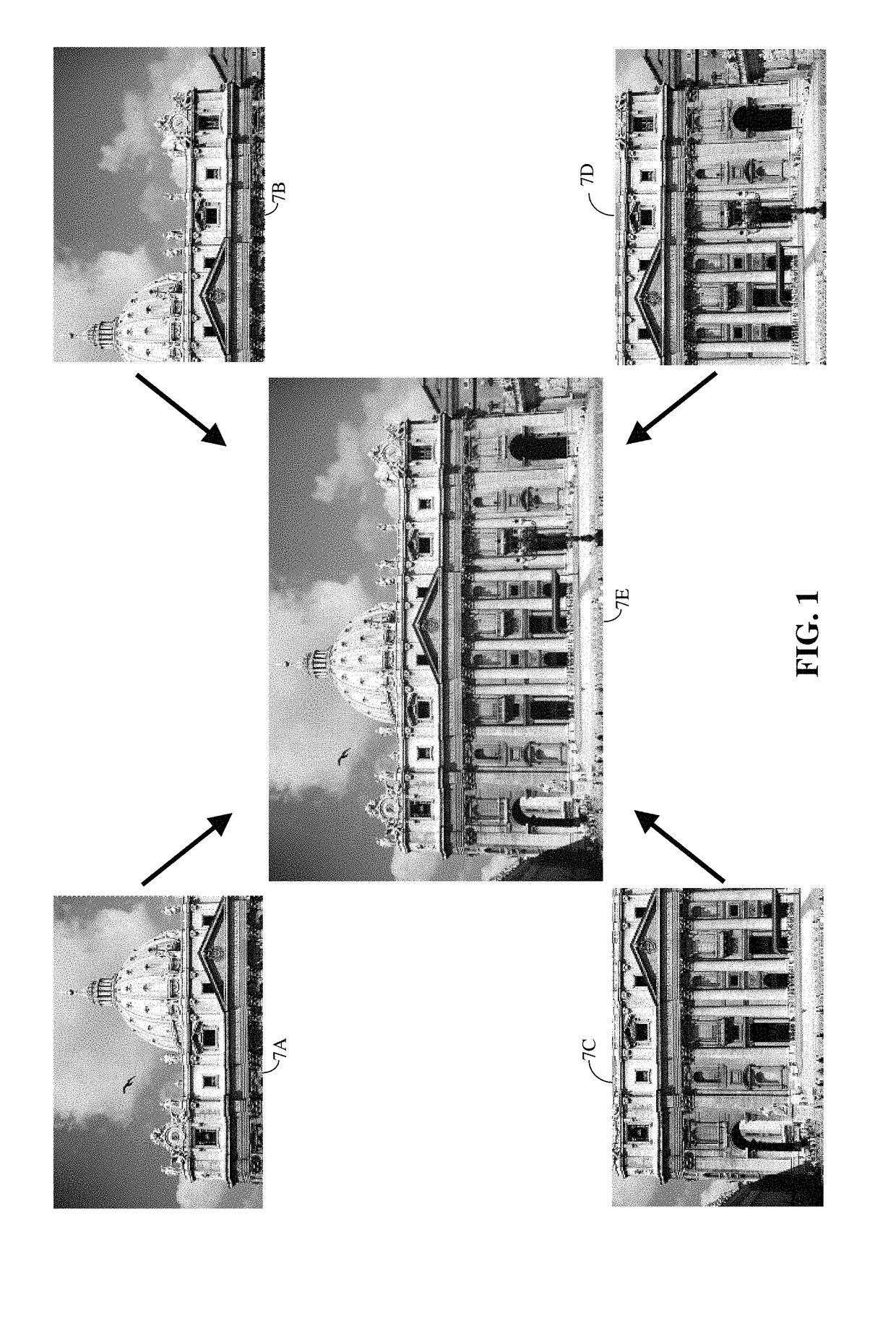

System and method for three-dimensional alignment of objects using machine vision

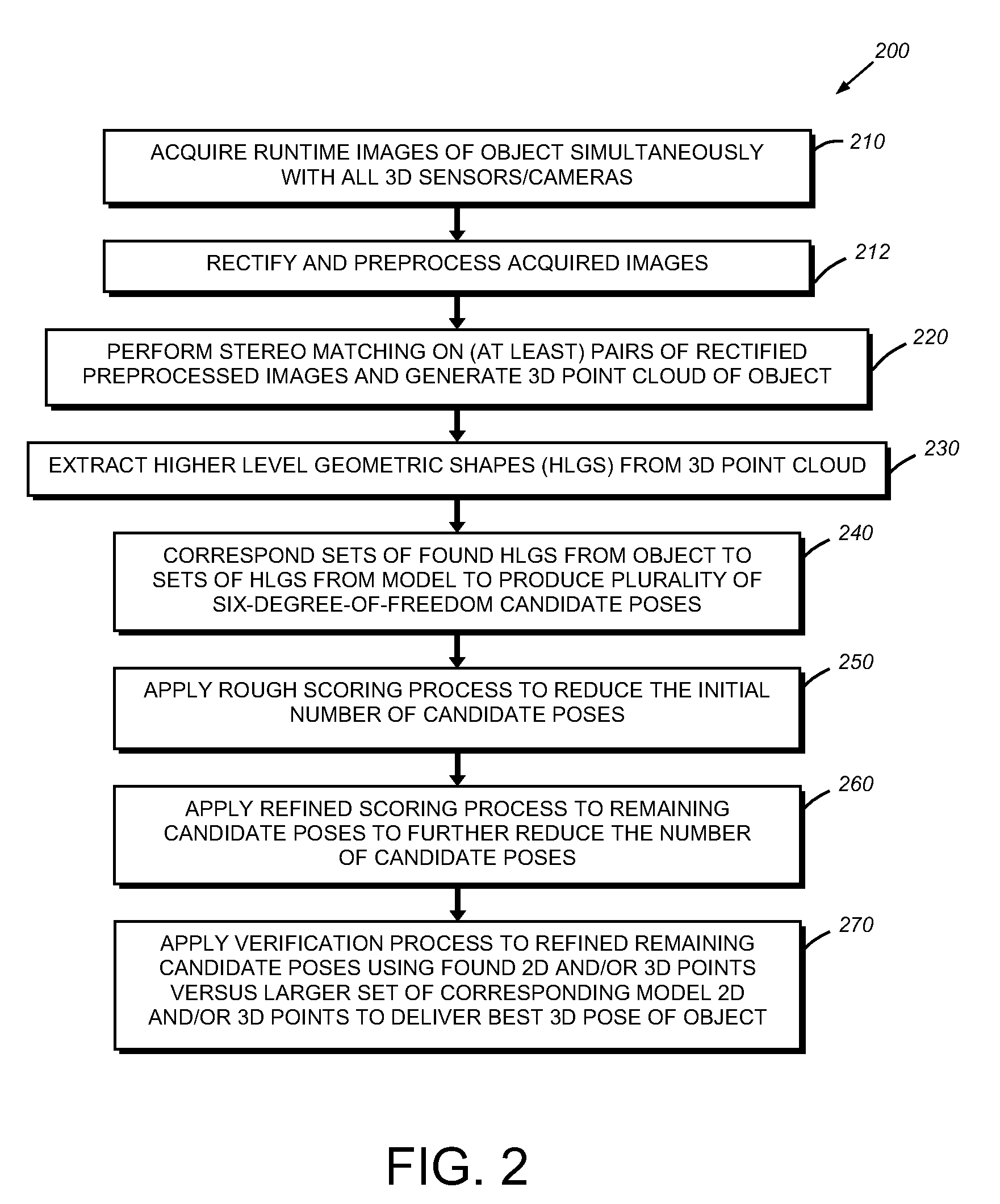

This invention provides a system and method for determining the three-dimensional alignment of a modeled object or scene. After calibration, a 3D (stereo) sensor system views the object to derive a runtime 3D representation of the scene containing the object. Rectified images from each stereo head are preprocessed to enhance their edge features. A stereo matching process is then performed on at least two (a pair of) rectified, preprocessed images at a time by locating a predetermined feature in a first image and then locating the same feature in the other image. 3D points are computed for each pair of cameras to derive a 3D point cloud. The 3D point cloud is generated by transforming the 3D points of each camera pair into the world 3D space from the world calibration. The amount of 3D data from the point cloud is reduced by extracting higher-level geometric shapes (HLGS), such as line segments. HLGS found at runtime are matched to HLGS on the model to produce candidate 3D poses. A coarse scoring process prunes the number of poses. The remaining candidate poses are then subjected to a further, more refined scoring process. The surviving candidate poses are then verified by, for example, fitting found 3D or 2D points of the candidate poses to a larger set of corresponding three-dimensional or two-dimensional model points, whereby the closest match is the best refined three-dimensional pose.

Owner:COGNEX CORP
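The stereo step in the abstract above (find the same feature in two rectified images, then compute a 3D point) can be illustrated with the standard pinhole relation for a rectified pair, Z = f·B/d. This is a textbook sketch, not the patent's implementation; the function name and all parameter values are hypothetical.

```python
def triangulate_rectified(u_left, u_right, v, focal_px, baseline_m, cx, cy):
    """3D point (in the left camera frame) from a matched feature in a
    rectified stereo pair.

    u_left, u_right: column of the feature in the left and right image
    v:               row of the feature (equal in both rectified images)
    focal_px:        focal length in pixels
    baseline_m:      distance between the two cameras in metres
    cx, cy:          principal point in pixels
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("feature must have positive disparity")
    z = focal_px * baseline_m / disparity   # depth along the optical axis
    x = (u_left - cx) * z / focal_px        # lateral offset
    y = (v - cy) * z / focal_px             # vertical offset
    return (x, y, z)


point = triangulate_rectified(u_left=340, u_right=320, v=240,
                              focal_px=500.0, baseline_m=0.1,
                              cx=320.0, cy=240.0)
```

Repeating this for every matched feature yields the per-pair 3D points that the abstract then transforms into world space to form the point cloud.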

Method and apparatus for determining absolute position of a tip of an elongate object on a plane surface with invariant features

Active | US7088440B2 | Input/output for user-computer interaction | Angle measurement | Micro structure | Robotic arm

A method and apparatus for determining a pose of an elongate object and an absolute position of its tip while the tip is in contact with a plane surface having invariant features. The surface and features are illuminated with a probe radiation and a scattered portion, e.g., the back-scattered portion, of the probe radiation returning from the plane surface and the feature to the elongate object at an angle τ with respect to an axis of the object is detected. The pose is derived from a response of the scattered portion to the surface and the features and the absolute position of the tip on the surface is obtained from the pose and knowledge about the feature. The probe radiation can be directed from the object to the surface at an angle σ to the axis of the object in the form of a scan beam. The scan beam can be made to follow a scan pattern with the aid of a scanning arrangement with one or more arms and one or more uniaxial or biaxial scanners. Angle τ can also be varied, e.g., with the aid of a separate or the same scanning arrangement as used to direct probe radiation to the surface. The object can be a pointer, a robotic arm, a cane or a jotting implement such as a pen, and the features can be edges, micro-structure or macro-structure belonging to, deposited on or attached to the surface which the tip of the object is contacting.

Owner:ELECTRONICS SCRIPTING PRODS

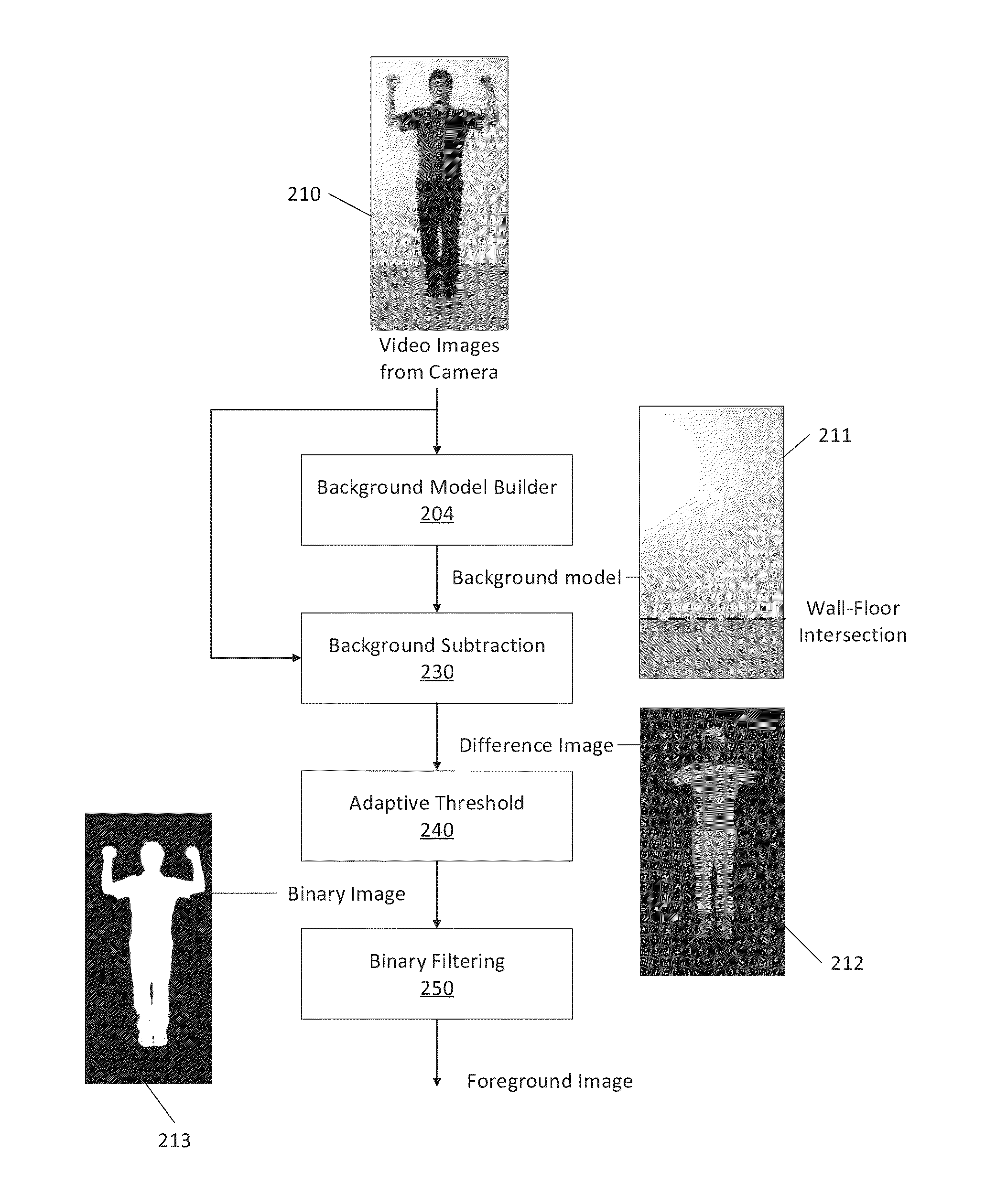

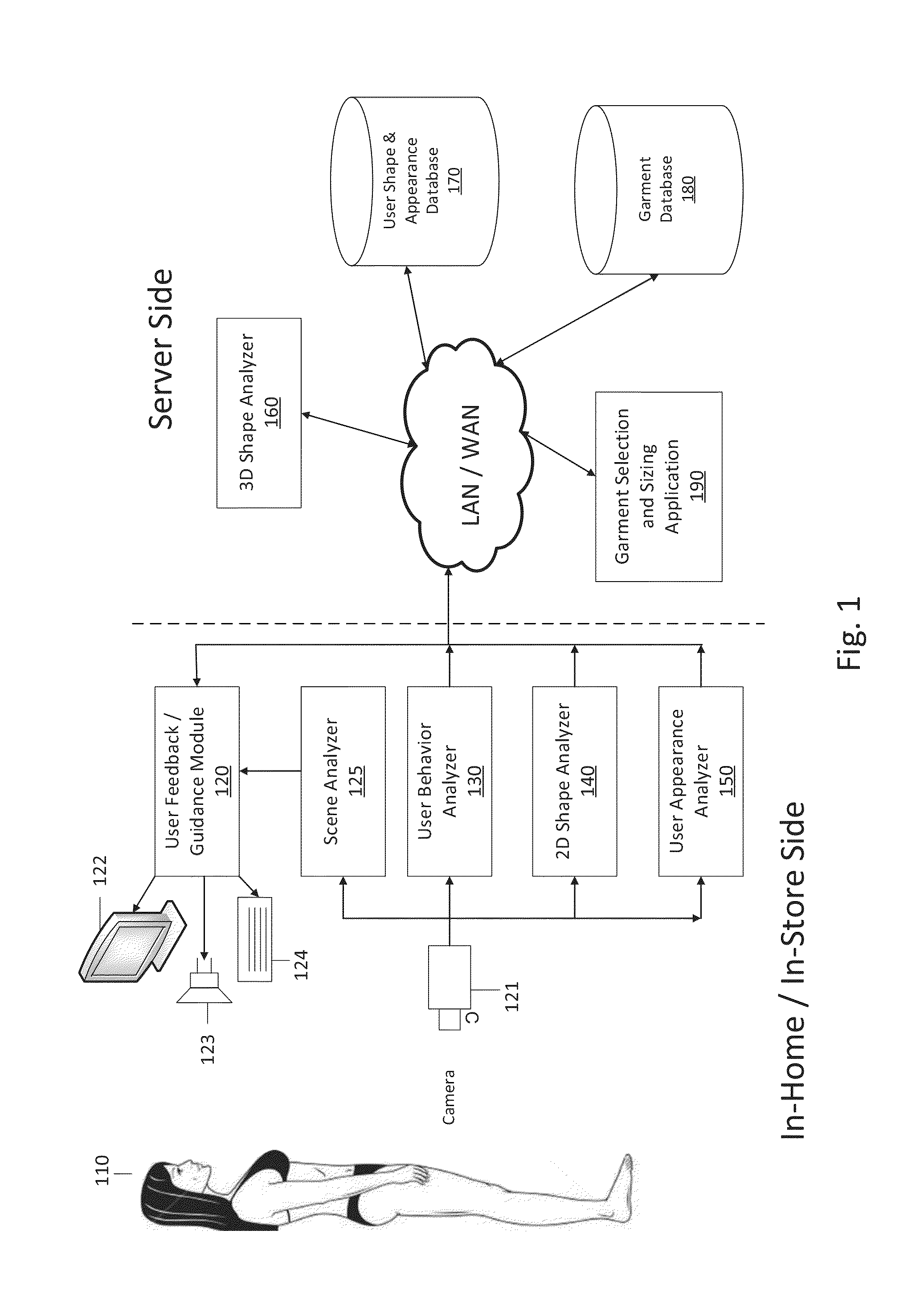

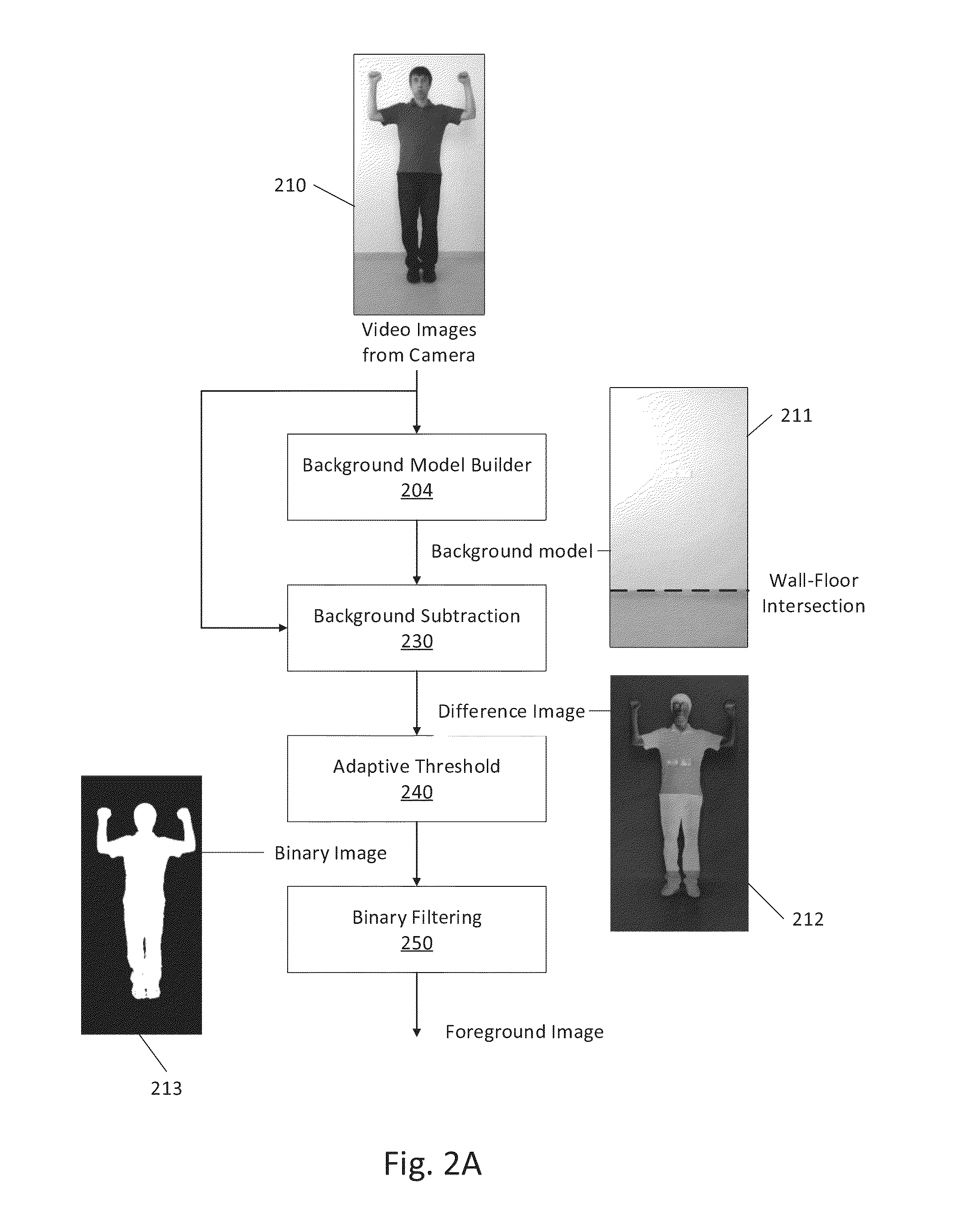

System and method for deriving accurate body size measures from a sequence of 2d images

A method for deriving accurate body size measures of a user from a sequence of 2D images includes: a) automatically guiding the user through a sequence of body poses and motions; b) scanning the body of said user by obtaining a sequence of raw 2D images of said user as captured by at least one camera during said guided sequence of poses and motions; c) analyzing the behavior of said user to ensure that the user follows the provided instructions; d) extracting and encoding 2D shape data descriptors from said sequence of images by using a 2D shape analyzer (2DSA); and e) integrating said 2D shape descriptors and data representing the user's position, pose and rotation into a 3D shape model.

Owner:SIZER TECH LTD

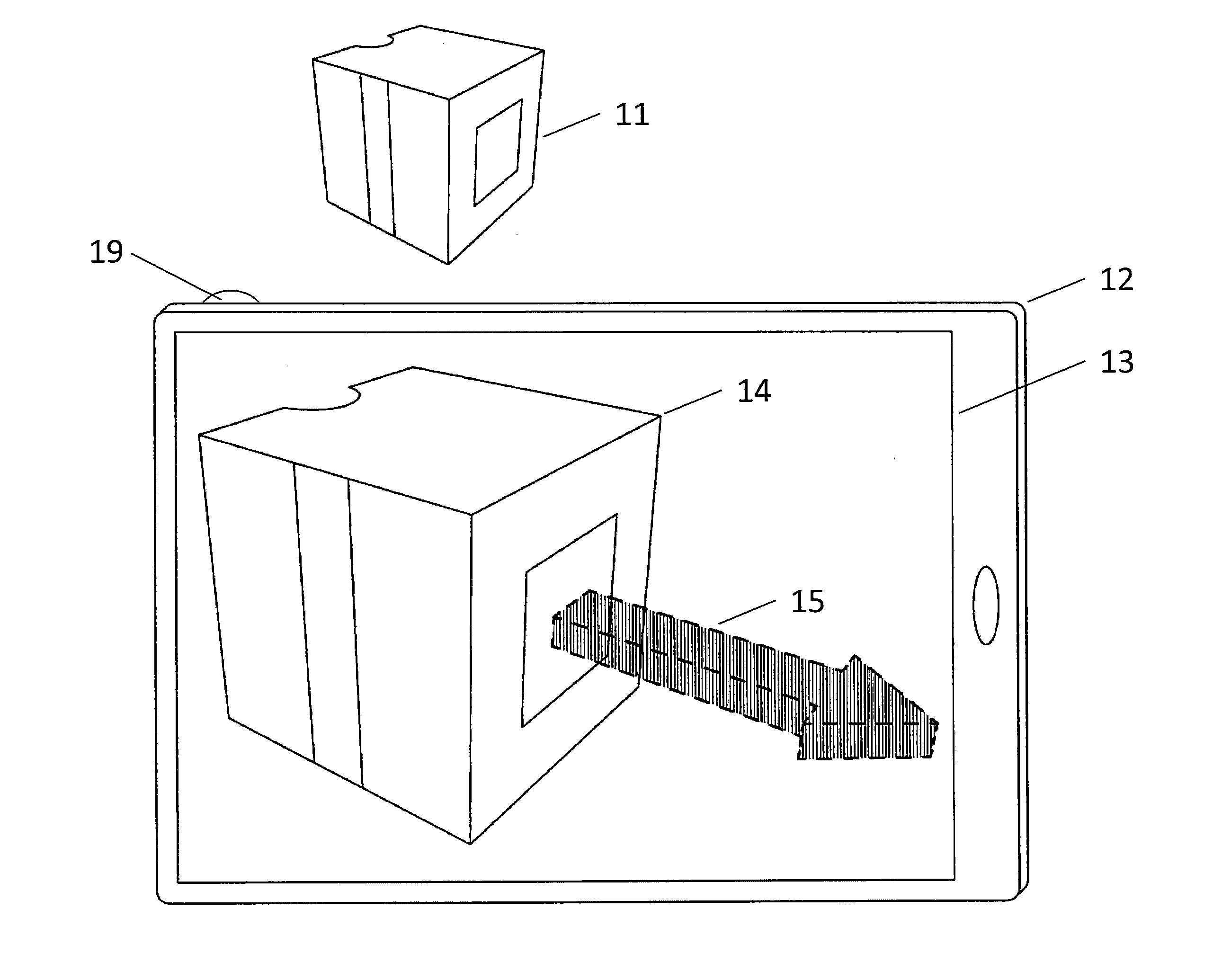

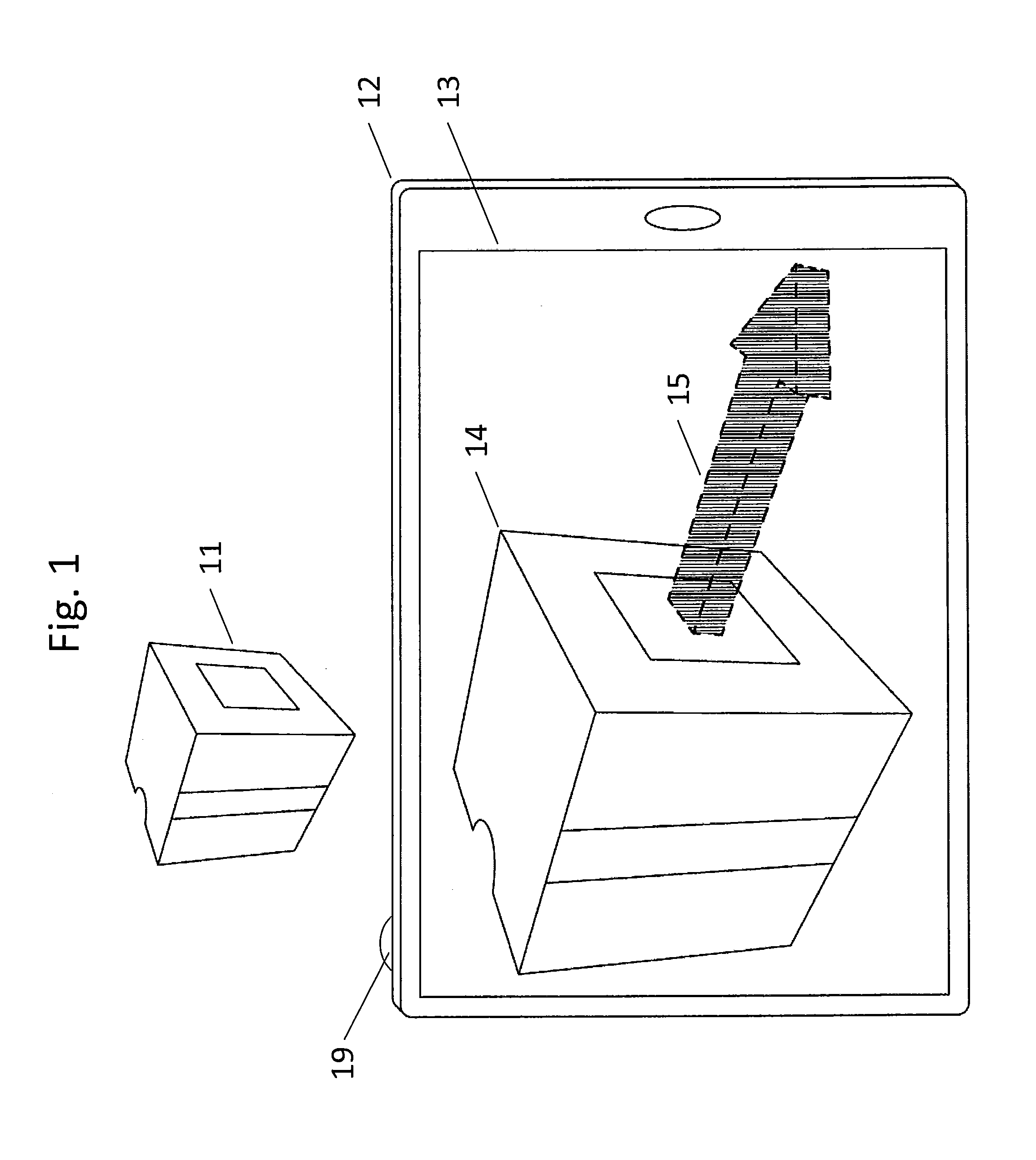

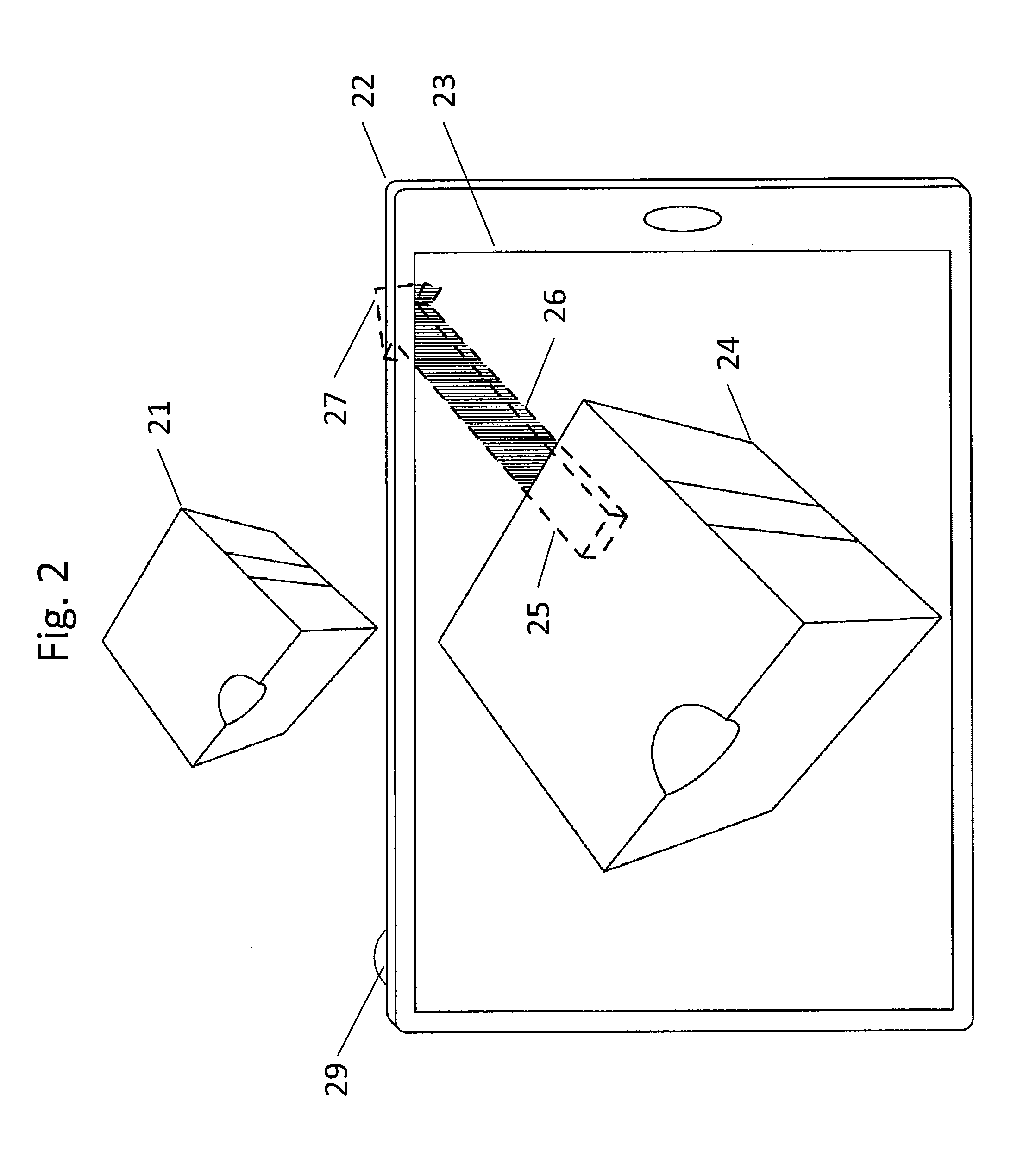

Deriving Input from Six Degrees of Freedom Interfaces

Inactive | US20160098095A1 | Apparent advantage | Input/output for user-computer interaction | Cathode-ray tube indicators | Six degrees of freedom | Virtual space

The present invention relates to interfaces and methods for producing input for software applications based on the absolute pose of an item manipulated or worn by a user in a three-dimensional environment. Absolute pose in the sense of the present invention means both the position and the orientation of the item as described in a stable frame defined in that three-dimensional environment. The invention describes how to recover the absolute pose with optical hardware and methods, and how to map at least one of the recovered absolute pose parameters to the three translational and three rotational degrees of freedom available to the item to generate useful input. The applications that can most benefit from the interfaces and methods of the invention involve 3D virtual spaces including augmented reality and mixed reality environments.

Owner:ELECTRONICS SCRIPTING PRODS

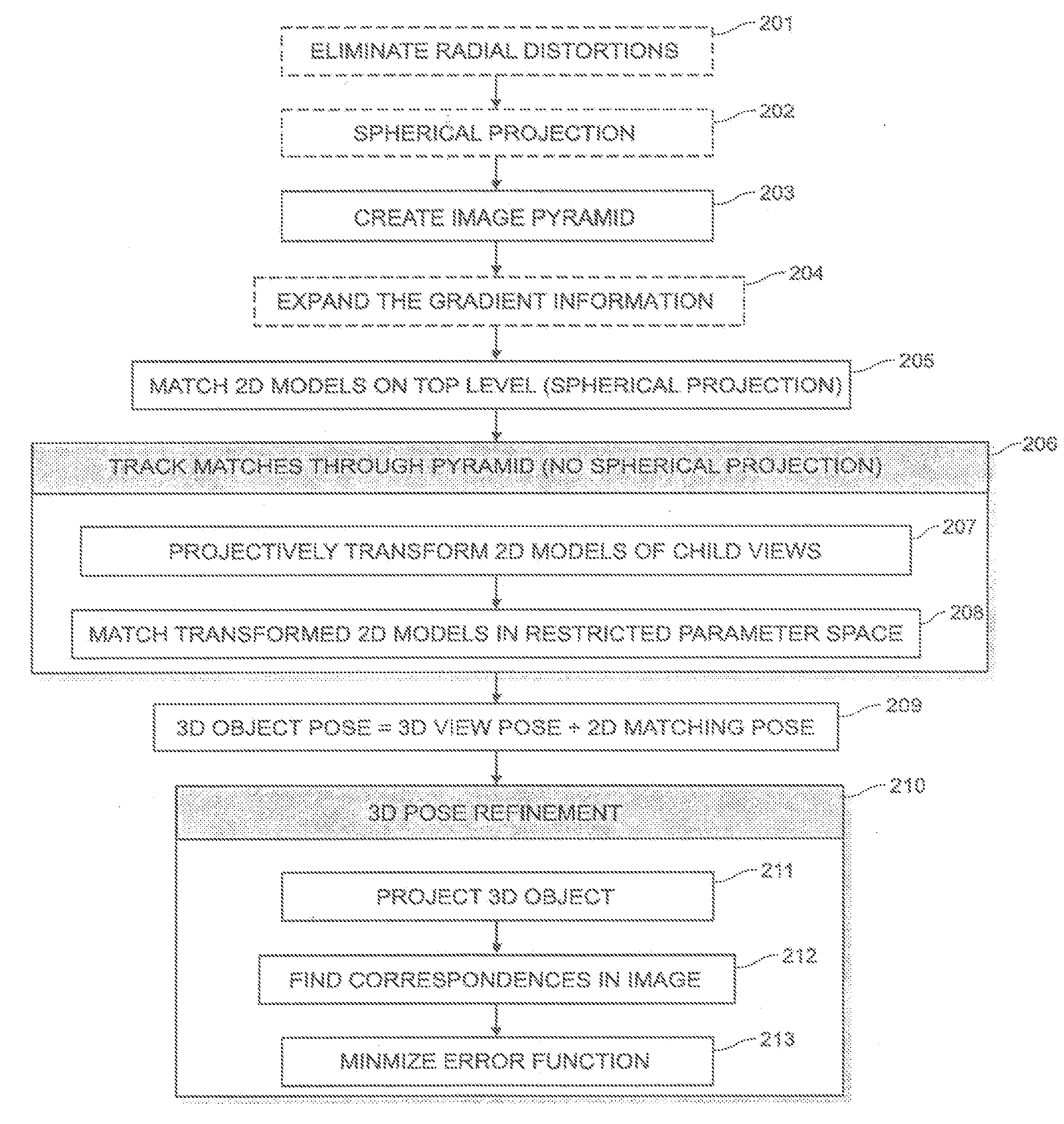

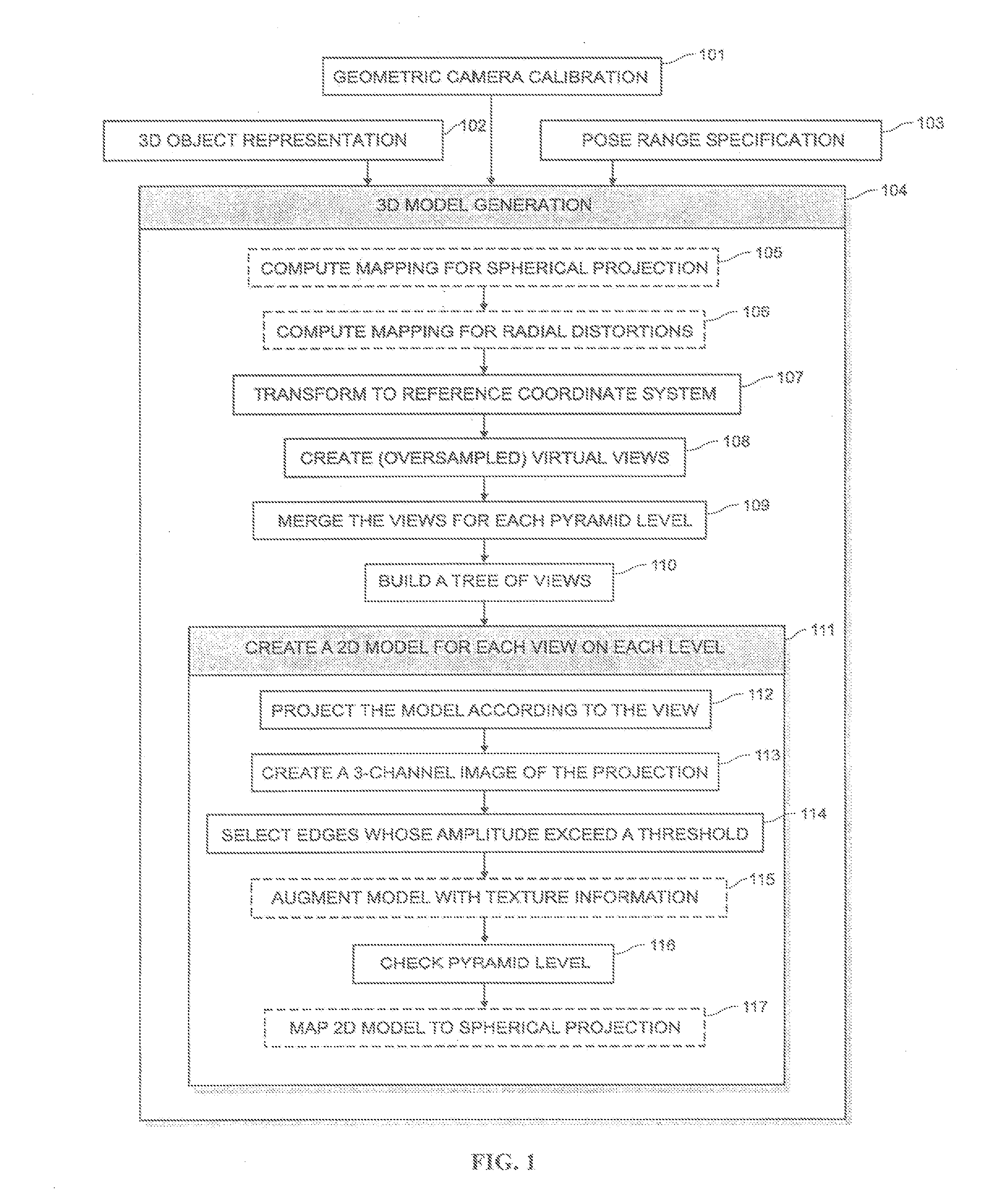

System and method for 3D object recognition

Active | US20090096790A1 | Significant distortion | Reduce the impact | Programme-controlled manipulator | 3D-image rendering | Present method | View based

The present invention provides a system and method for recognizing a 3D object in a single camera image and for determining the 3D pose of the object with respect to the camera coordinate system. In one typical application, the 3D pose is used to make a robot pick up the object. A view-based approach is presented that does not show the drawbacks of previous methods because it is robust to image noise, object occlusions, clutter, and contrast changes. Furthermore, the 3D pose is determined with a high accuracy. Finally, the presented method allows the recognition of the 3D object as well as the determination of its 3D pose in a very short computation time, making it also suitable for real-time applications. These improvements are achieved by the methods disclosed herein.

Owner:MVTEC SOFTWARE

Method for estimating a pose of an articulated object model

Active | US20110267344A1 | Eliminate visible discontinuity | Minimize ghosting artifact | Image enhancement | Image analysis | Source image | Computer based

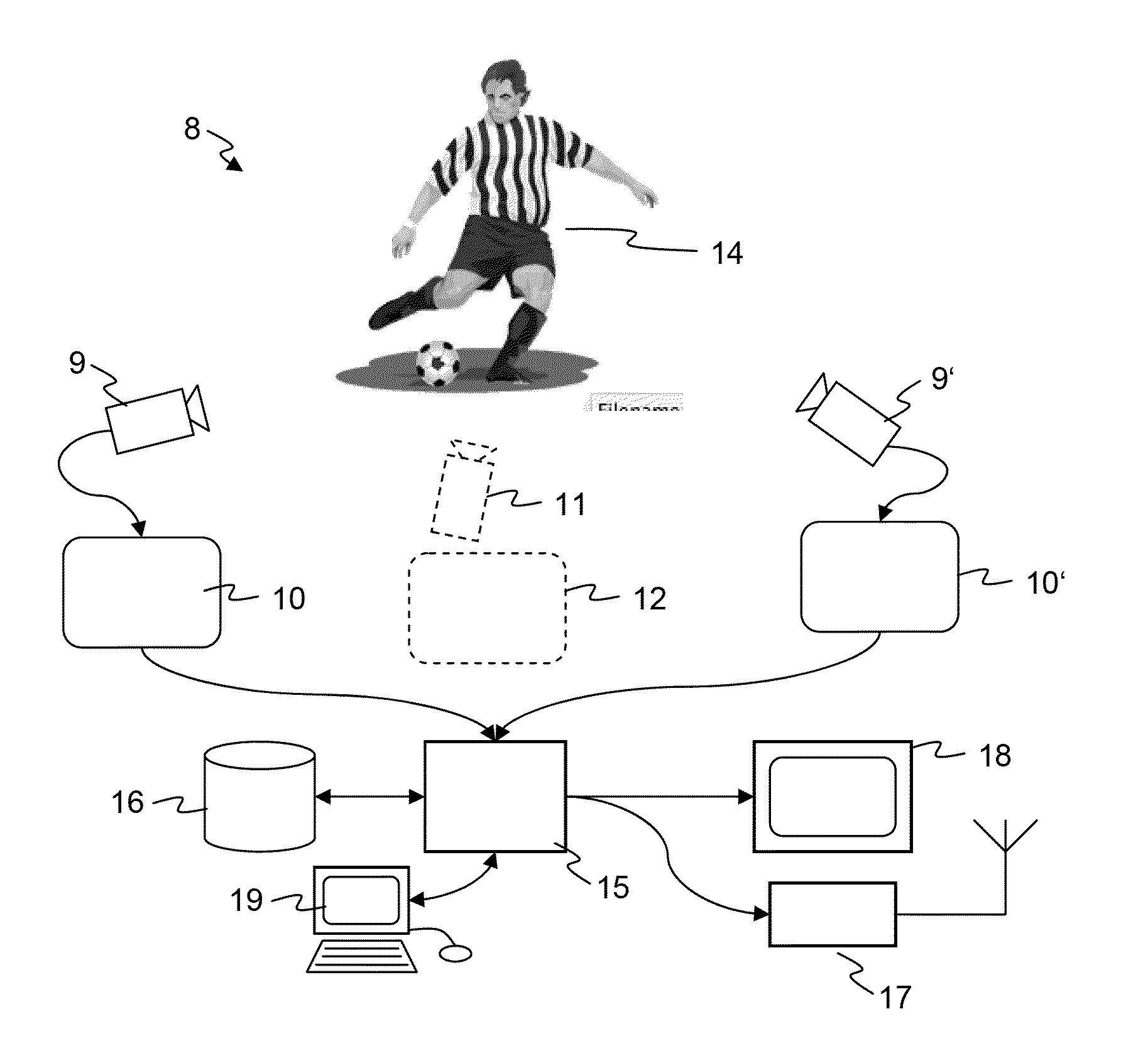

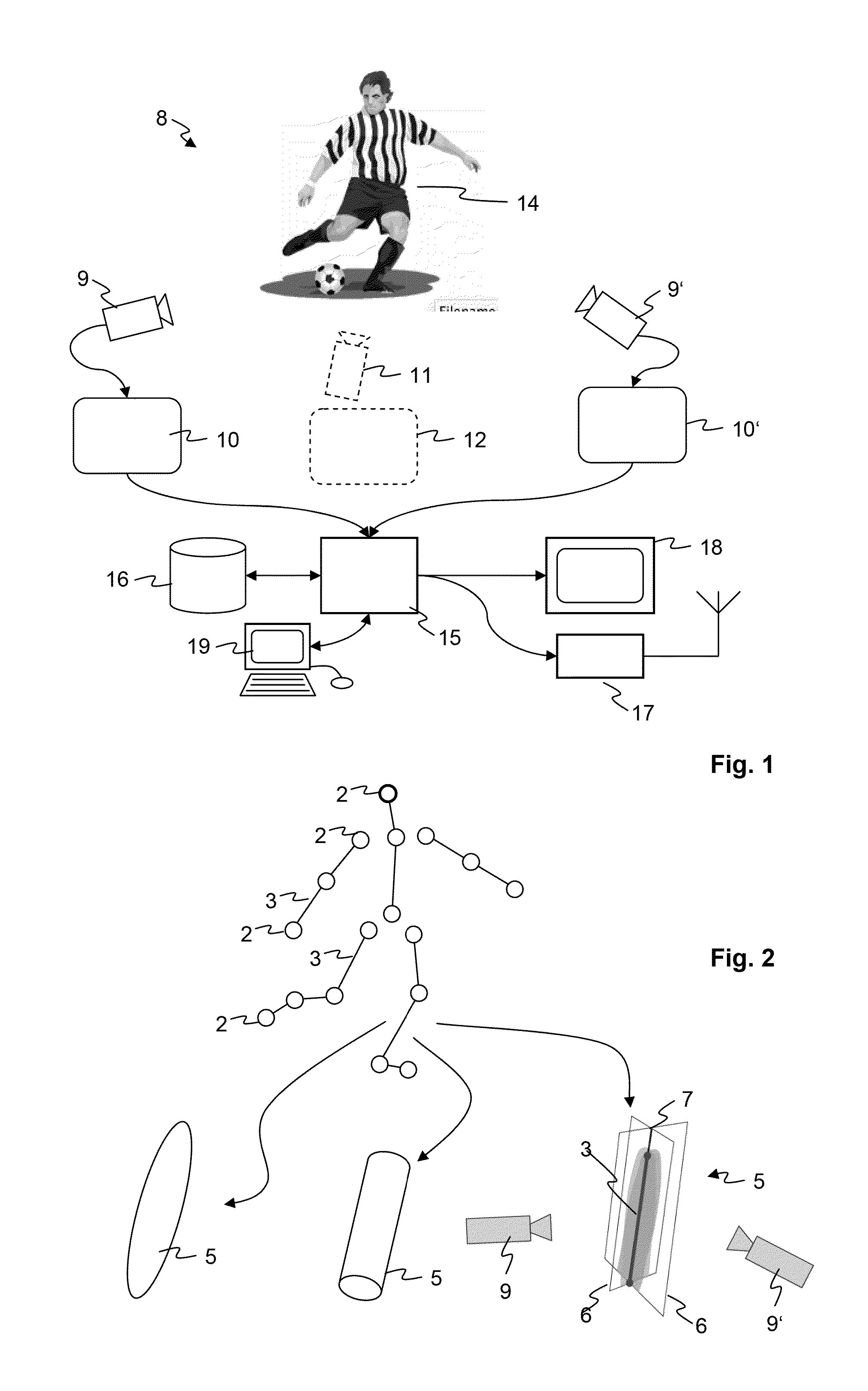

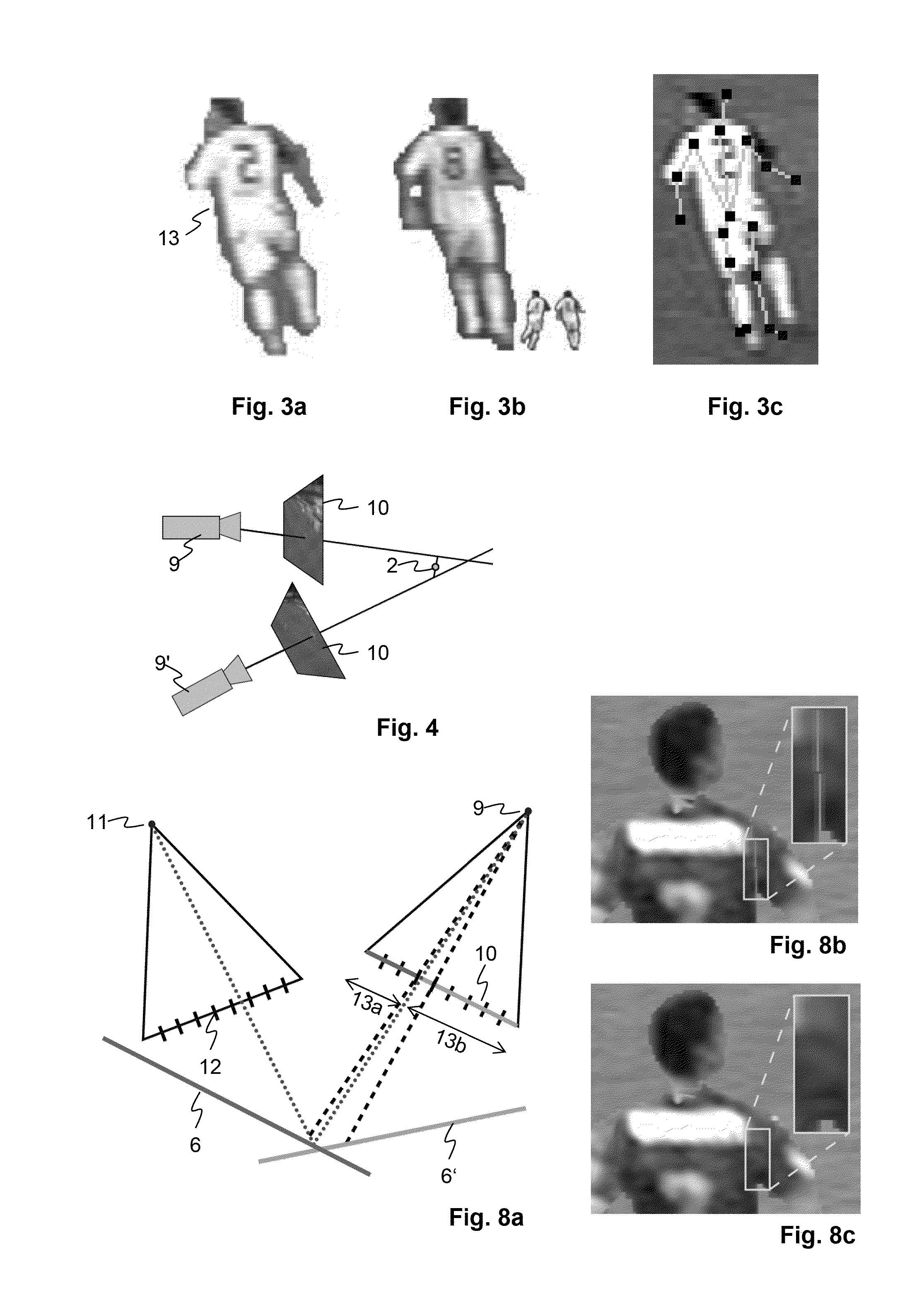

A computer-implemented method for estimating a pose of an articulated object model (4), wherein the articulated object model (4) is a computer based 3D model (1) of a real world object (14) observed by one or more source cameras (9), and wherein the pose of the articulated object model (4) is defined by the spatial location of joints (2) of the articulated object model (4), comprises the steps of: obtaining a source image (10) from a video stream; processing the source image (10) to extract a source image segment (13); maintaining, in a database, a set of reference silhouettes, each being associated with an articulated object model (4) and a corresponding reference pose; comparing the source image segment (13) to the reference silhouettes and selecting reference silhouettes by taking into account, for each reference silhouette, a matching error that indicates how closely the reference silhouette matches the source image segment (13) and / or a coherence error that indicates how much the reference pose is consistent with the pose of the same real world object (14) as estimated from a preceding source image (10); retrieving the corresponding reference poses of the articulated object models (4); and computing an estimate of the pose of the articulated object model (4) from the reference poses of the selected reference silhouettes.

Owner:VIZRT AG

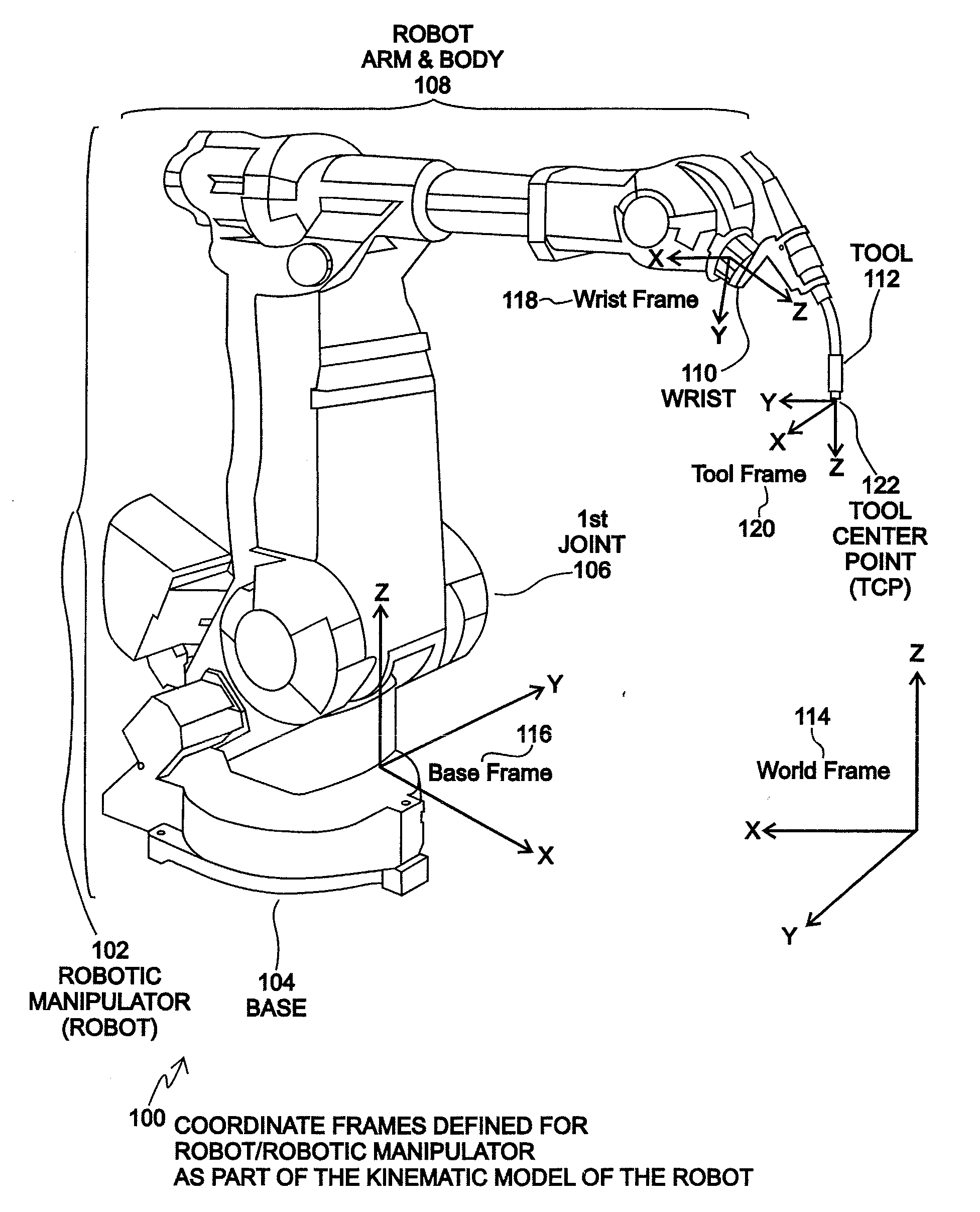

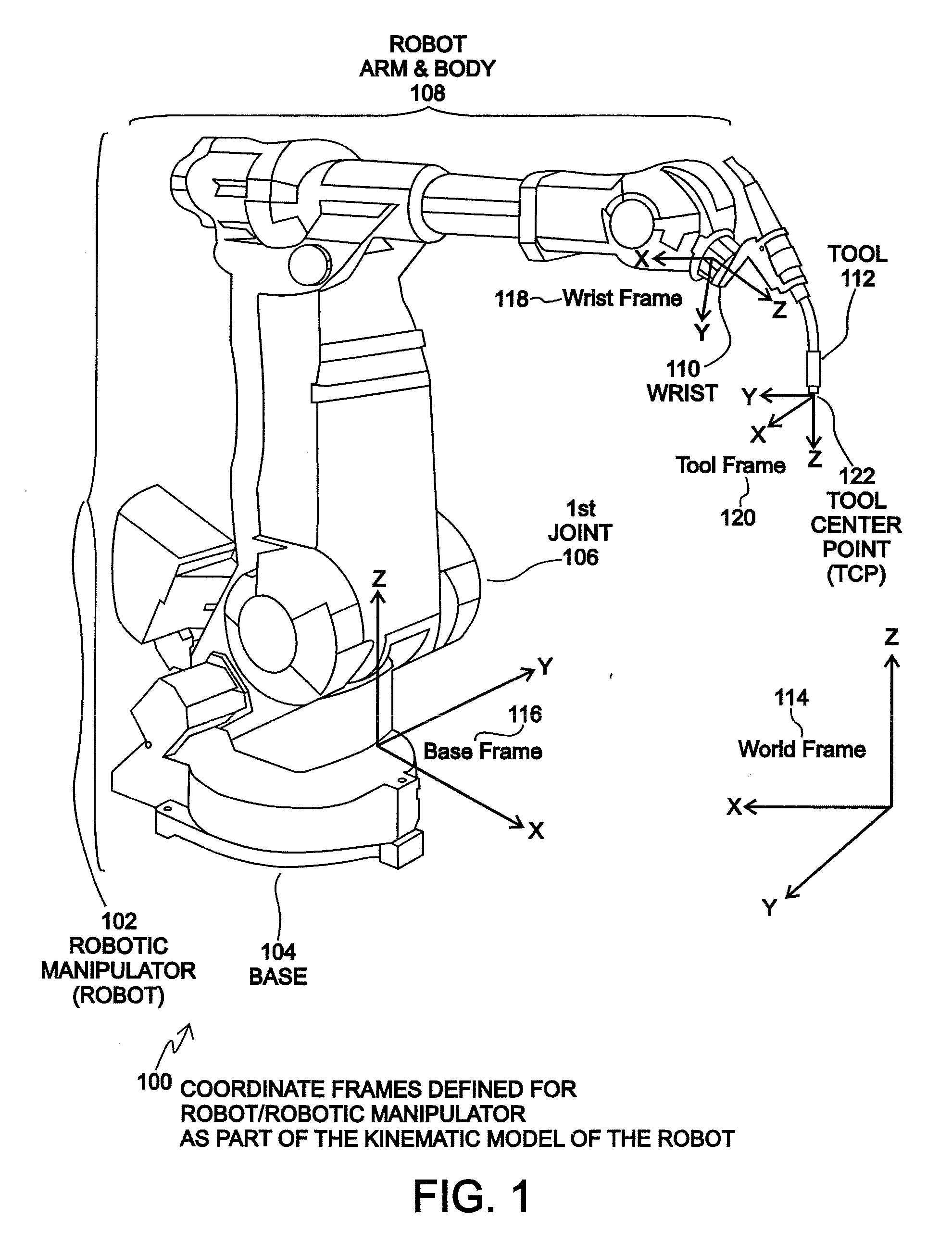

Method and system for finding a tool center point for a robot using an external camera

Disclosed is a method and system for finding a relationship between a tool-frame of a tool attached at a wrist of a robot and robot kinematics of the robot using an external camera. The position and orientation of the wrist of the robot define a wrist-frame for the robot that is known. The relationship of the tool-frame and / or the Tool Center Point (TCP) of the tool is initially unknown. For an embodiment, the camera captures an image of the tool. An appropriate point on the image is designated as the TCP of the tool. The robot is moved such that the wrist is placed into a plurality of poses. Each pose of the plurality of poses is constrained such that the TCP point on the image falls within a specified geometric constraint (e.g. a point or a line). A TCP of the tool relative to the wrist frame of the robot is calculated as a function of the specified geometric constraint and as a function of the position and orientation of the wrist for each pose of the plurality of poses. An embodiment may define the tool-frame relative to the wrist frame as the calculated TCP relative to the wrist frame. Other embodiments may further refine the calibration of the tool-frame to account for tool orientation and possibly for a tool operation direction. An embodiment may calibrate the camera using a simplified extrinsic technique that obtains the extrinsic parameters of the calibration, but not other calibration parameters.

Owner:RIMROCK AUTOMATION

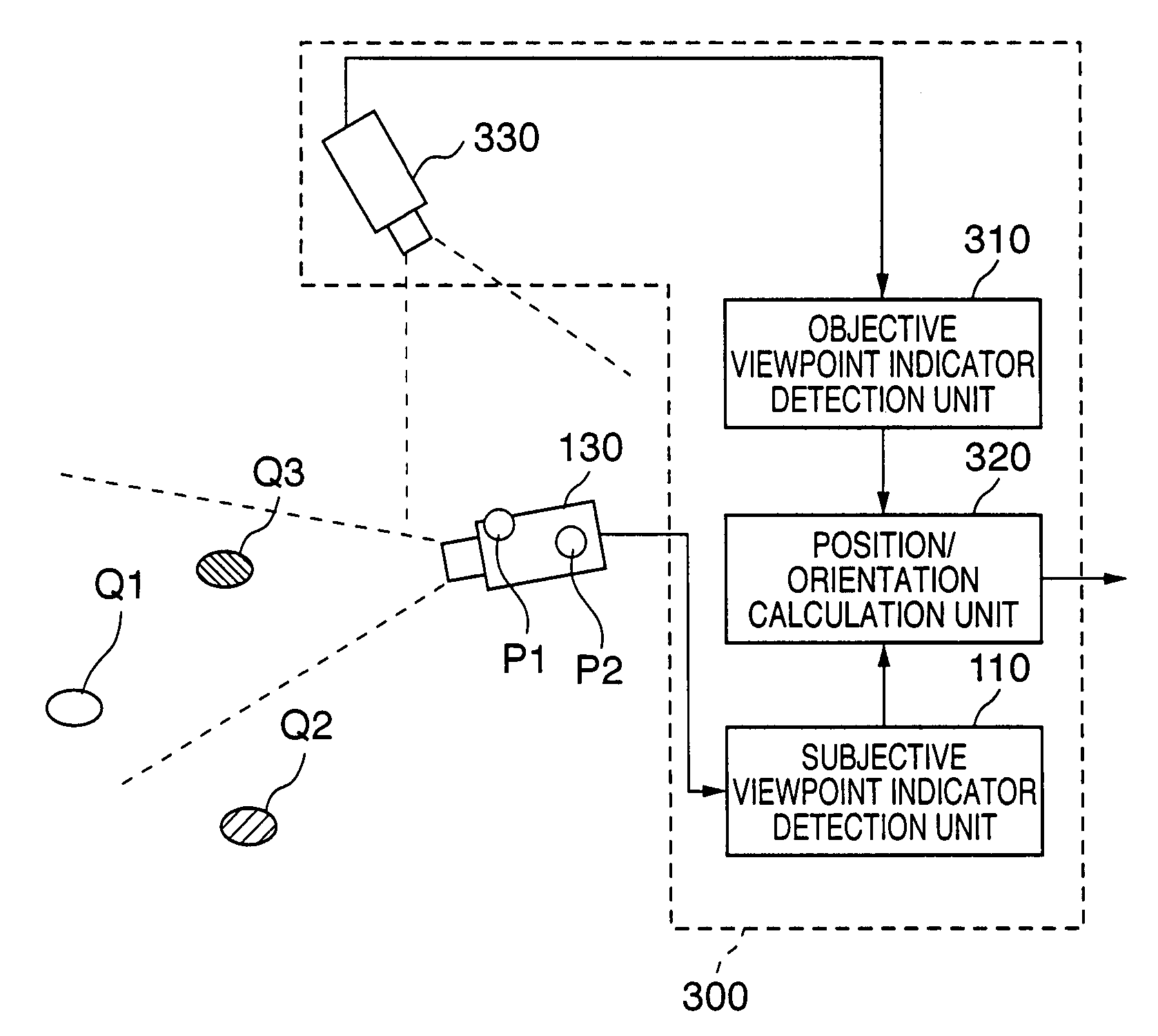

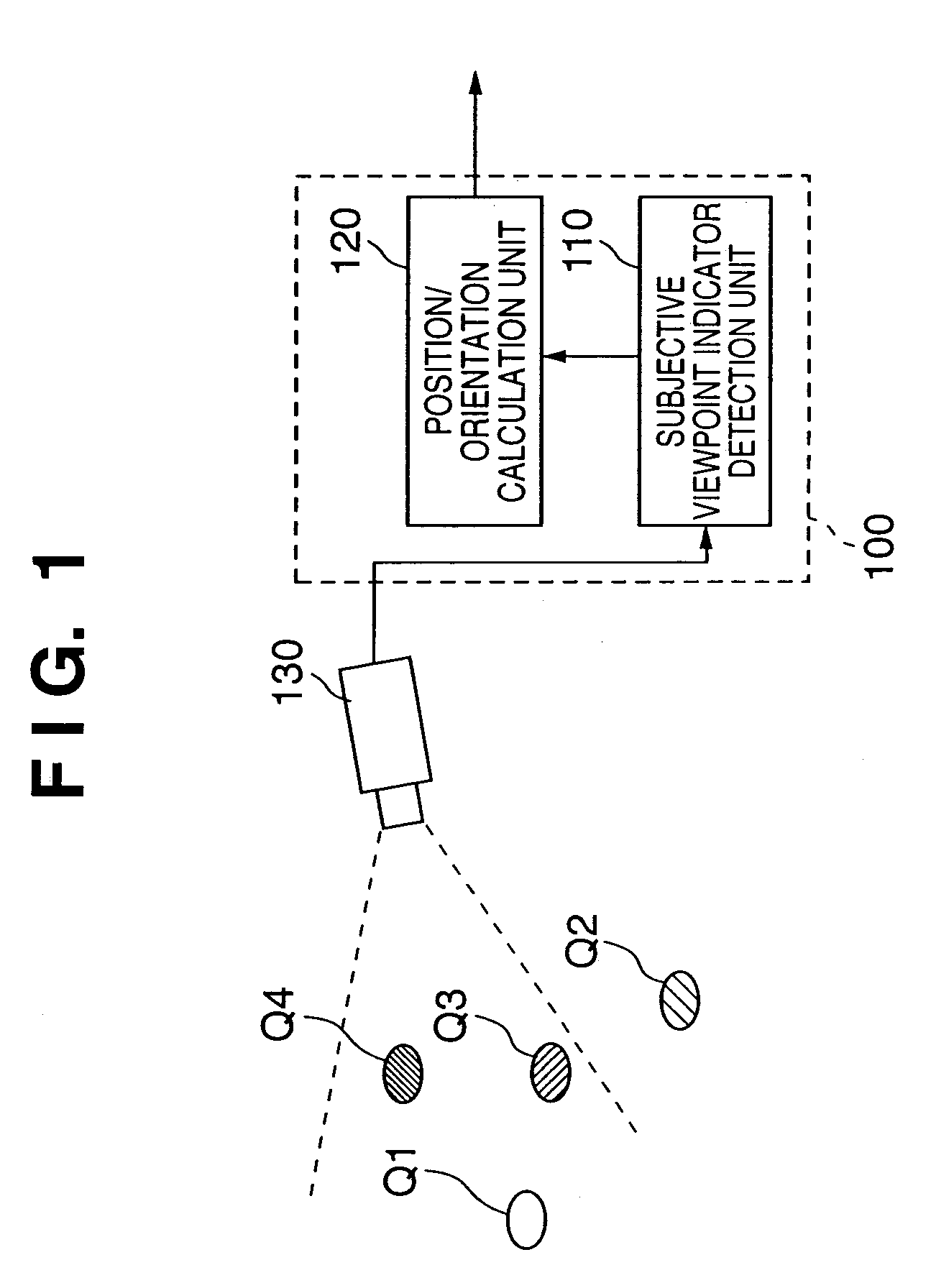

Position/orientation measurement method, and position/orientation measurement apparatus

Active | US7092109B2 | Improve accuracy | High precision measurement | Television system details | Image analysis | Orientation measurement | Computer science

A first error coordinate between the image coordinate of a first indicator, which is arranged on the real space and detected on a first image captured by a first image sensing unit, and the estimated image coordinate of the first indicator, which is estimated to be located on the first image in accordance with the position / orientation relationship between the first image sensing unit (with the position and orientation according to a previously calculated position / orientation parameter) and the first indicator, is calculated. On the other hand, a second error coordinate between the image coordinate of a second indicator, which is arranged on the first image sensing unit and detected on a second image that includes the first image sensing unit, and the estimated image coordinate of the second indicator, which is estimated to be located on the second image in accordance with the position / orientation relationship between the first image sensing unit (with the position and orientation according to the position / orientation parameter) and the second indicator, is calculated. Using the first and second error coordinates, the position / orientation parameter is corrected.

Owner:CANON KK

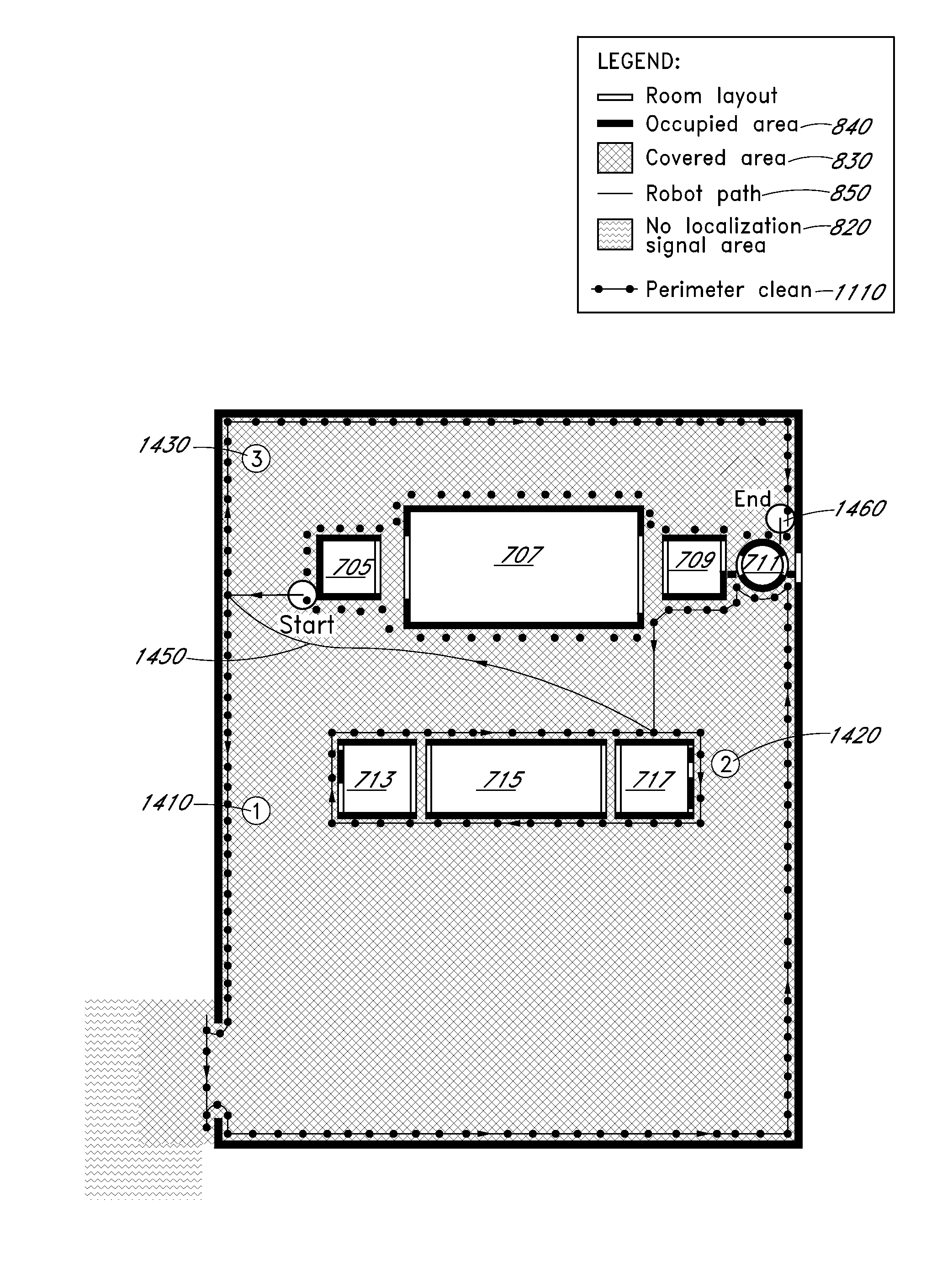

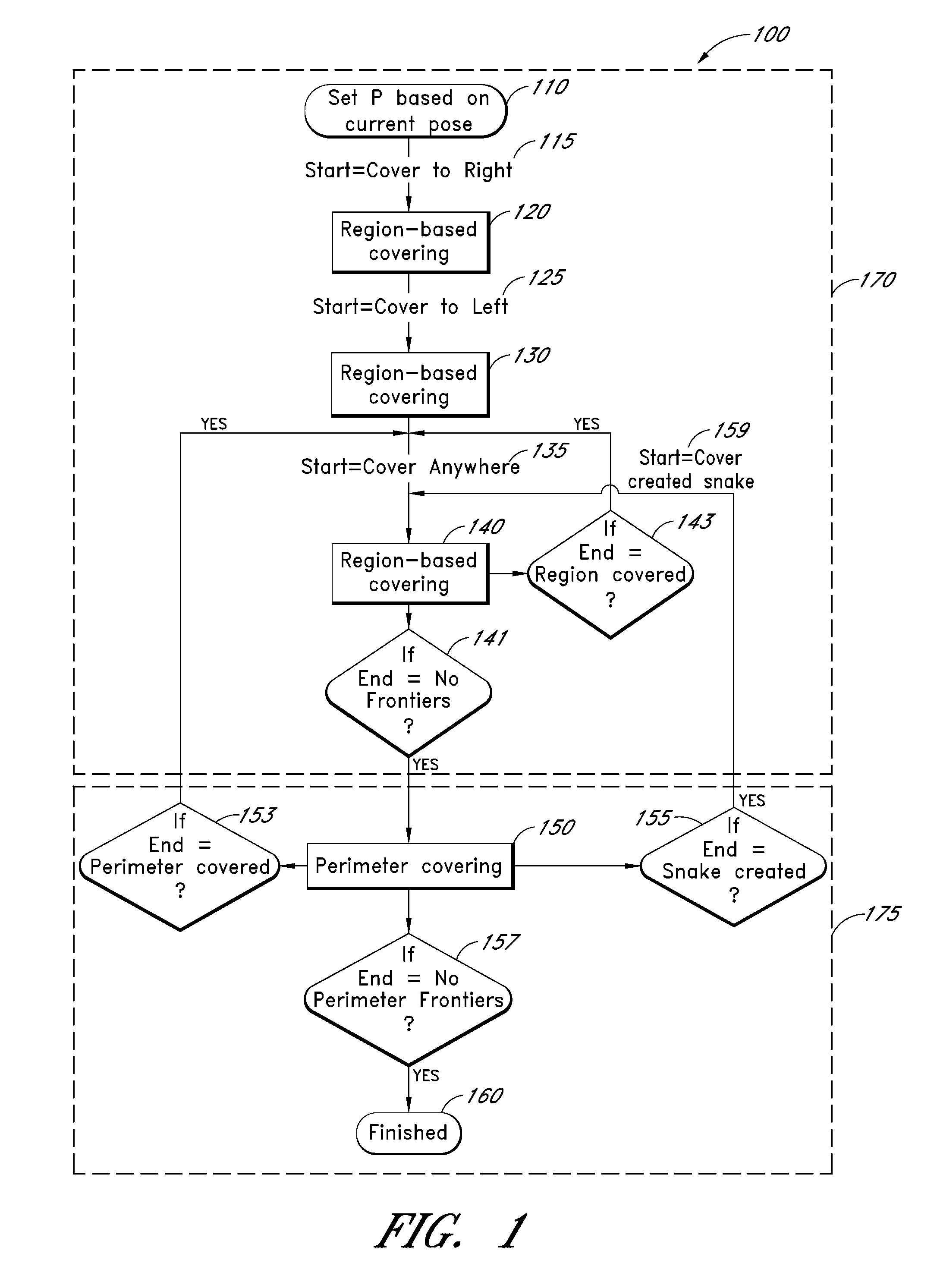

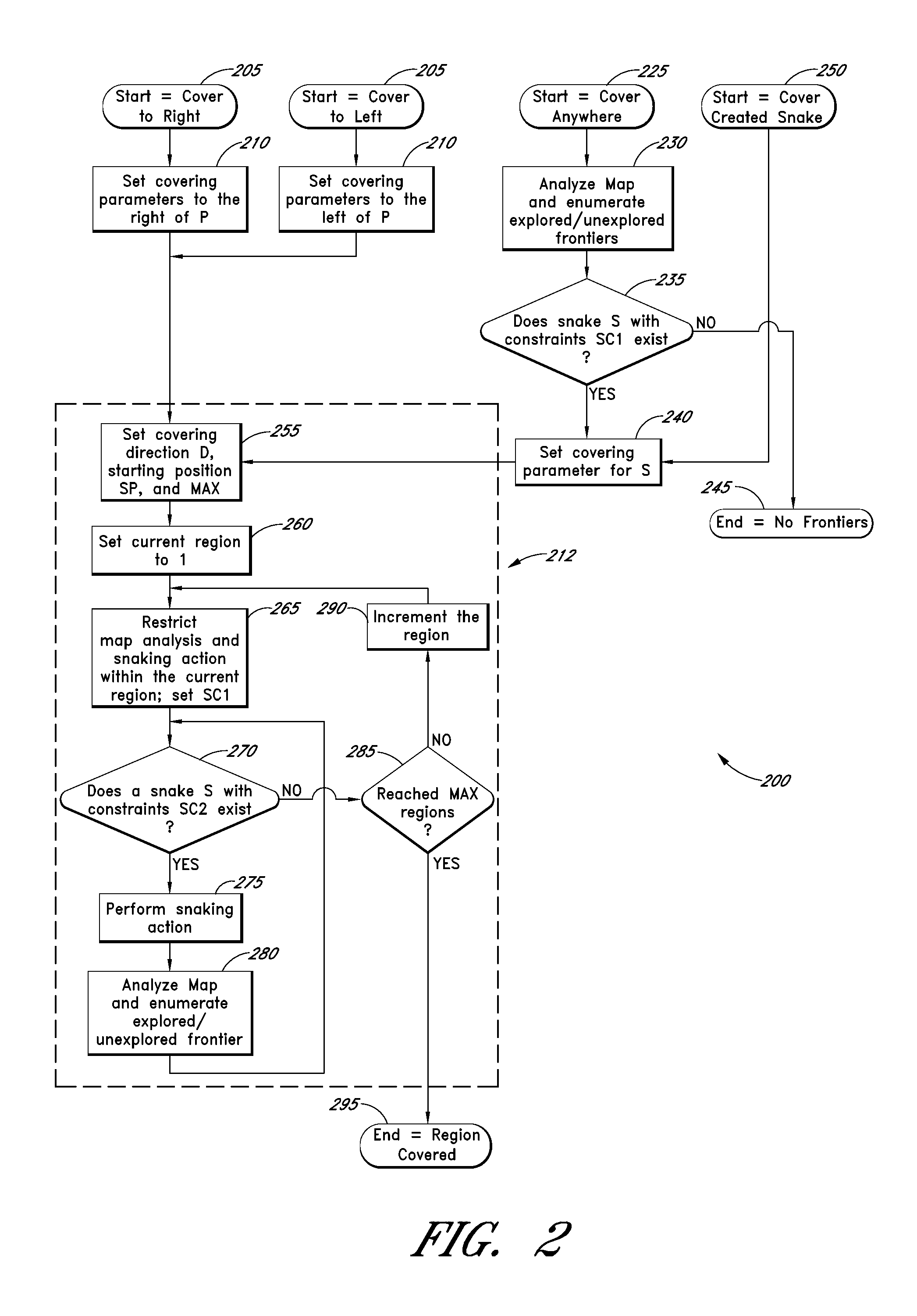

Methods and systems for complete coverage of a surface by an autonomous robot

A robot configured to navigate a surface, the robot comprising a movement mechanism; a logical map representing data about the surface and associating locations with one or more properties observed during navigation; an initialization module configured to establish an initial pose comprising an initial location and an initial orientation; a region covering module configured to cause the robot to move so as to cover a region; an edge-following module configured to cause the robot to follow unfollowed edges; and a control module configured to invoke region covering on a first region defined at least in part based on at least part of the initial pose, to invoke region covering on at least one additional region, to invoke edge-following, to cause the mapping module to mark followed edges as followed, and to cause a third region covering on regions discovered during edge-following.

Owner:IROBOT CORP

Workpiece apparent defect detection method based on machine vision

Active | CN106204614A | Fast positioning | High precision | Image enhancement | Image analysis | Template matching | Visual system

The invention discloses a workpiece apparent defect detection method based on machine vision. First, a visual system guides a robot, and the pose of a target workpiece is precisely located with a gray-value-based template matching algorithm. Workpiece apparent defect detection is then carried out in four steps: first, a workpiece image is acquired and preprocessed through median filtering; second, the target workpiece is segmented with a global threshold, and workpiece pose correction is carried out; third, burr interference at the edge of the workpiece is removed through a mathematical morphology opening operation; fourth, notches, material sticking, cracking, indentation, pinholes, scratches and foaming apparent defects are detected. The method addresses the slow speed, low efficiency and poor precision of manual inspection, as well as the limited range of defect types, poor imaging quality and high false detection rate of current vision inspection, and it improves the automation degree of precise workpiece production and product quality.

Owner:XIANGTAN UNIV
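The first two preprocessing steps named in the abstract above (median filtering, then segmentation with a global threshold) can be sketched in plain Python on a small grayscale image stored as nested lists. This is a generic illustration of those two standard operations, not the patented pipeline; the function names and the threshold value are hypothetical.

```python
from statistics import median


def median_filter3(img):
    """3x3 median filter; border pixels are copied unchanged."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = [img[r + dr][c + dc]
                      for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            out[r][c] = median(window)
    return out


def global_threshold(img, t):
    """Binary mask: 1 where the pixel is brighter than t, else 0."""
    return [[1 if p > t else 0 for p in row] for row in img]


# A dark 3x3 patch with one salt-noise pixel in the middle.
noisy = [[10, 10, 10],
         [10, 255, 10],
         [10, 10, 10]]
smoothed = median_filter3(noisy)
mask = global_threshold(smoothed, t=100)
```

The median filter removes the isolated bright pixel before thresholding, so the noise does not survive into the segmentation mask.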

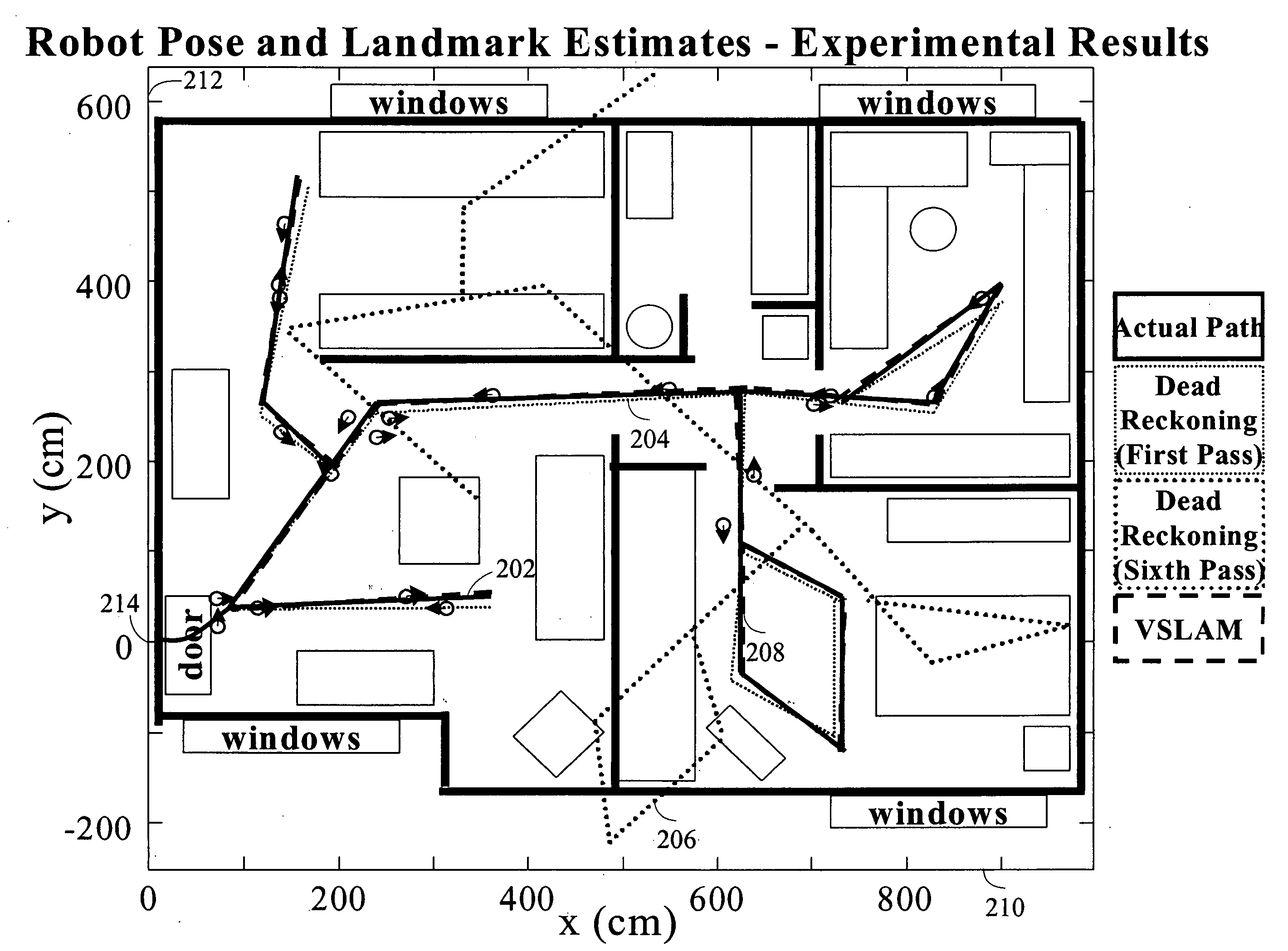

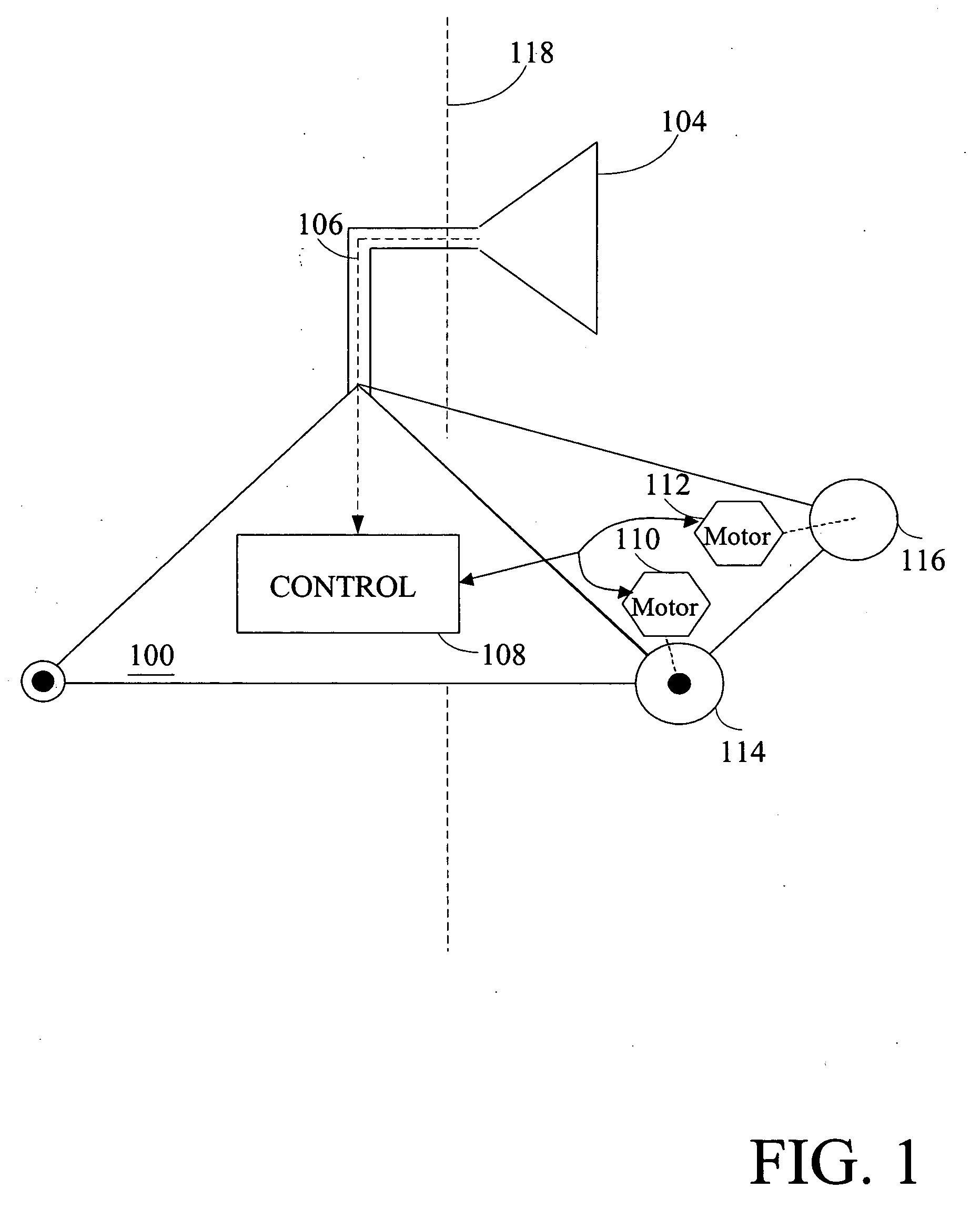

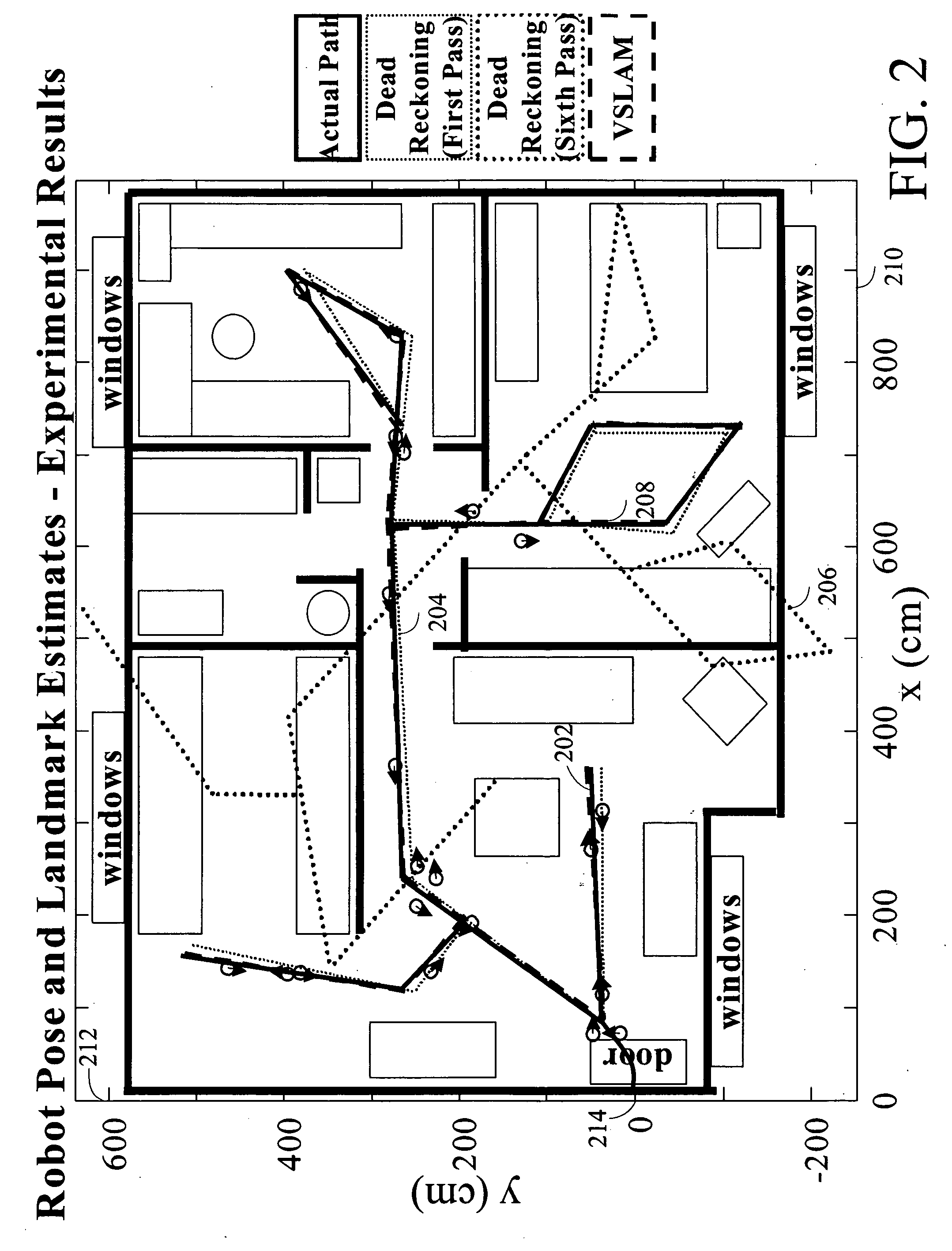

Systems and methods for incrementally updating a pose of a mobile device calculated by visual simultaneous localization and mapping techniques

Active | US20060012493A1 | Image enhancement | Instruments for road network navigation | Pattern recognition | Simultaneous localization and mapping

The invention is related to methods and apparatus that use a visual sensor and dead reckoning sensors to process Simultaneous Localization and Mapping (SLAM). These techniques can be used in robot navigation. Advantageously, such visual techniques can be used to autonomously generate and update a map. Unlike with laser rangefinders, the visual techniques are economically practical in a wide range of applications and can be used in relatively dynamic environments such as environments in which people move. One embodiment further advantageously uses multiple particles to maintain multiple hypotheses with respect to localization and mapping. Further advantageously, one embodiment maintains the particles in a relatively computationally-efficient manner, thereby permitting the SLAM processes to be performed in software using relatively inexpensive microprocessor-based computer systems.

Owner:IROBOT CORP

Multiple Hypotheses Segmentation-Guided 3D Object Detection and Pose Estimation

A machine vision system and method uses captured depth data to improve the identification of a target object in a cluttered scene. A 3D-based object detection and pose estimation (ODPE) process is used to determine pose information of the target object. The system uses three different segmentation processes in sequence, where each subsequent segmentation process produces larger segments, in order to produce a plurality of segment hypotheses, each of which is expected to contain a large portion of the target object in the cluttered scene. Each segmentation hypothesis is used to mask 3D point clouds of the captured depth data, and each masked region is individually submitted to the 3D-based ODPE.

Owner:SEIKO EPSON CORP

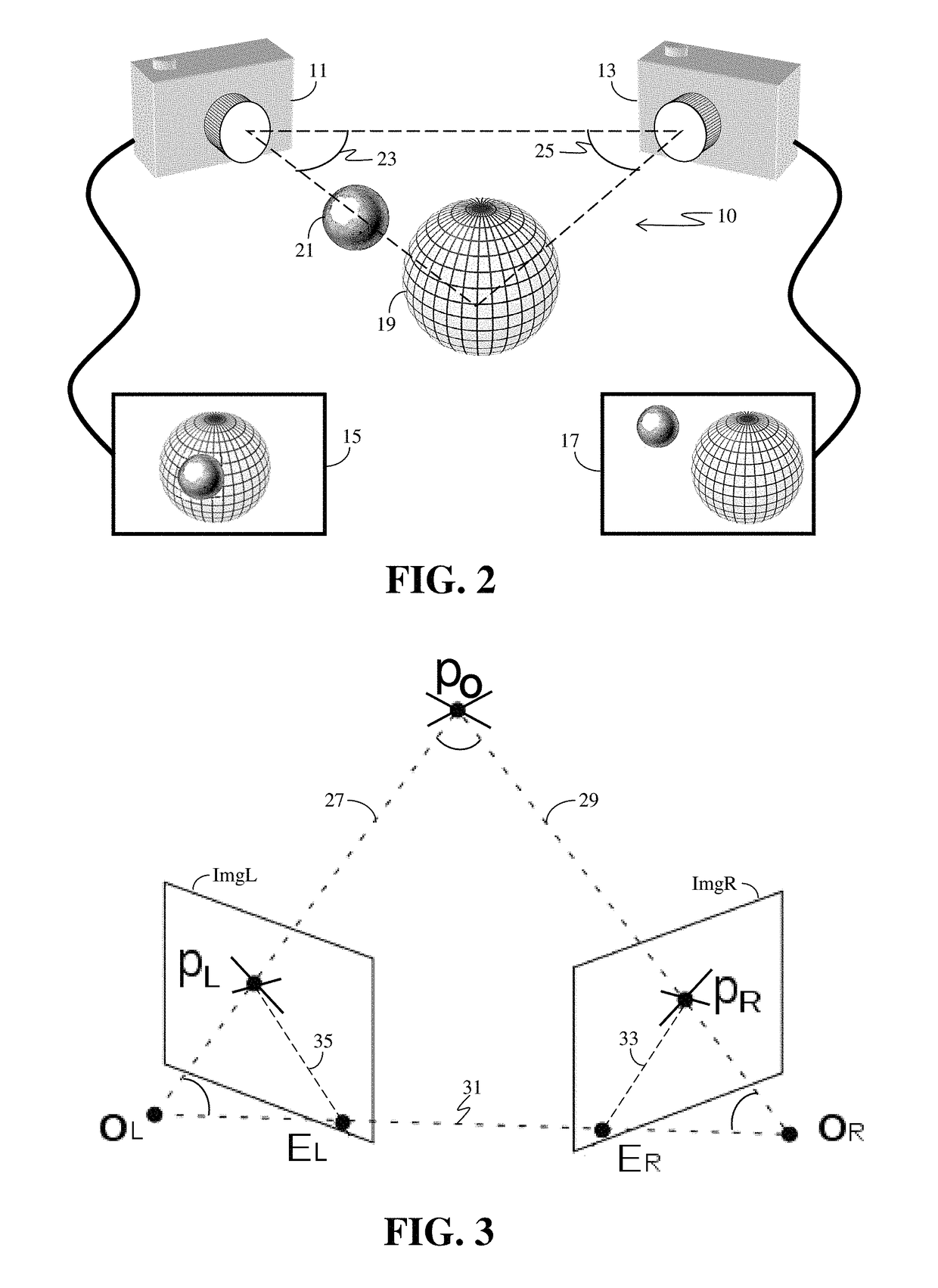

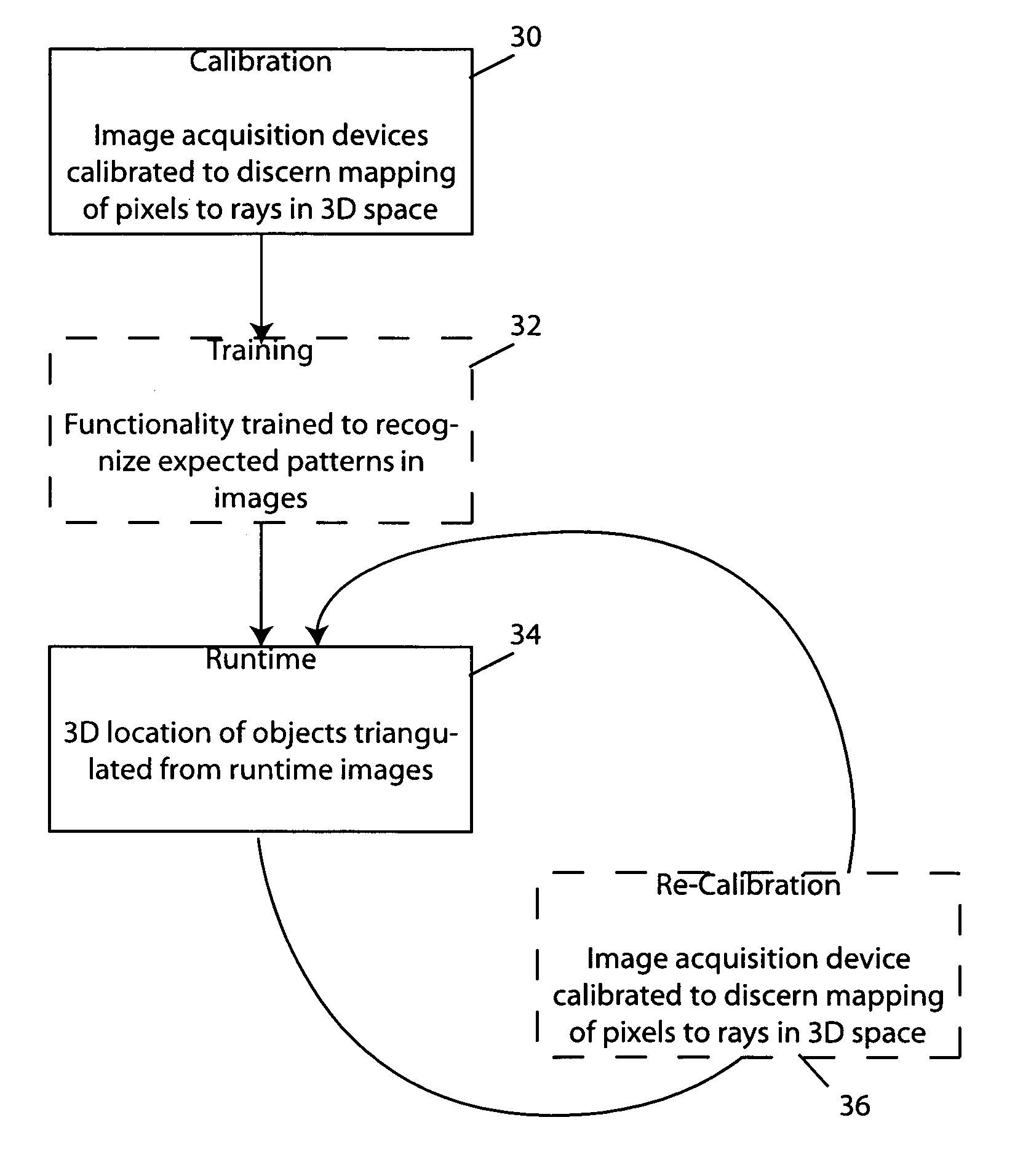

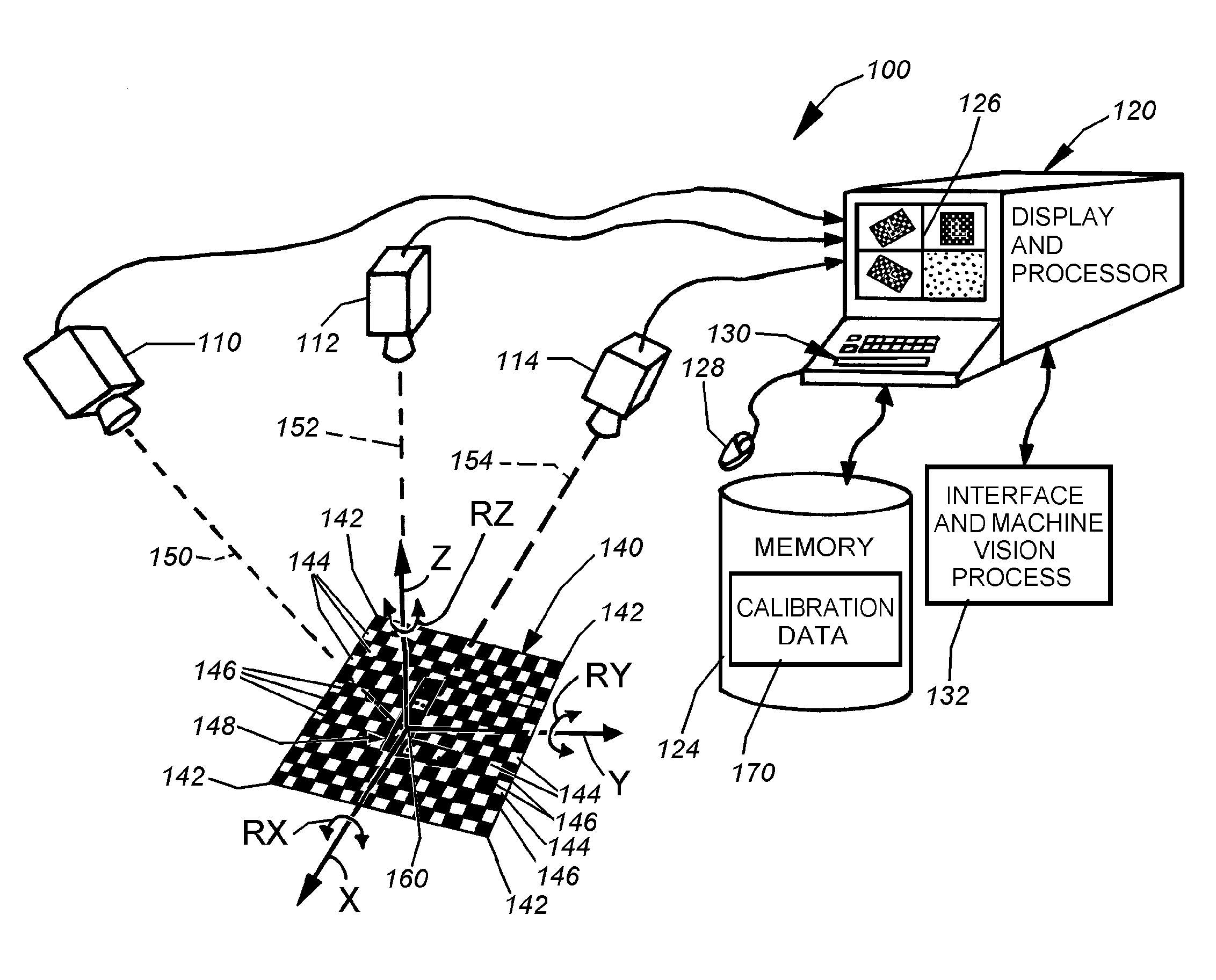

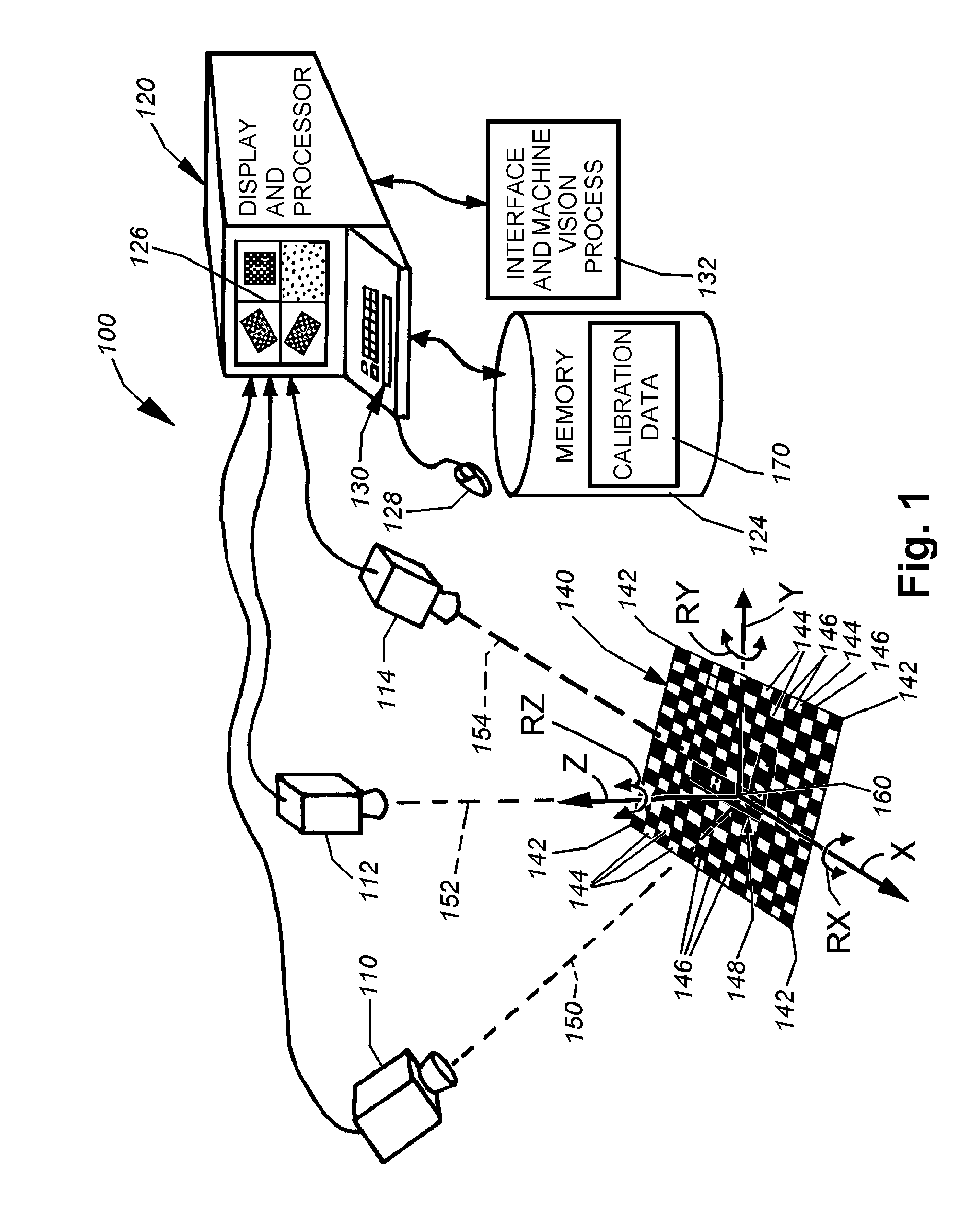

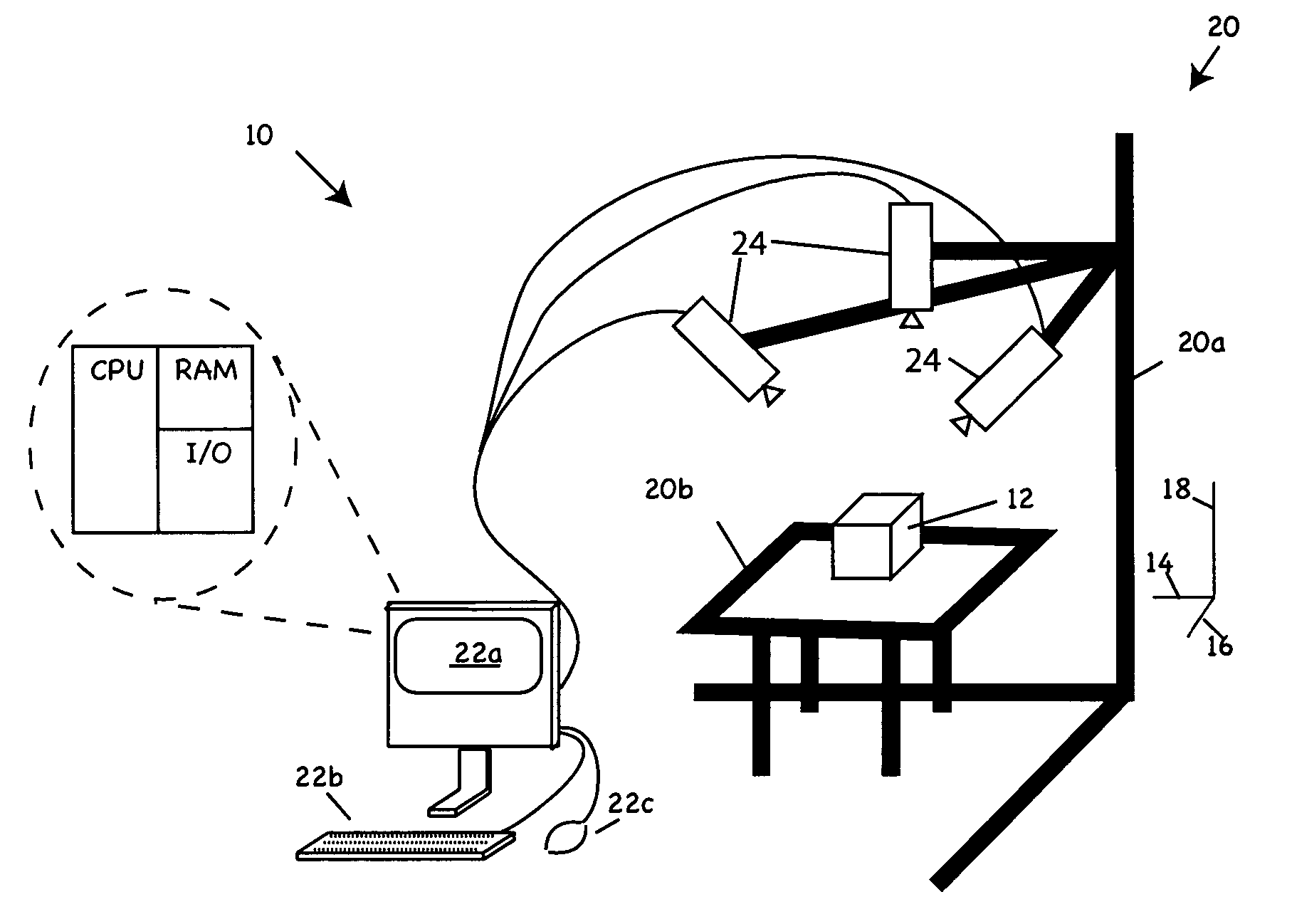

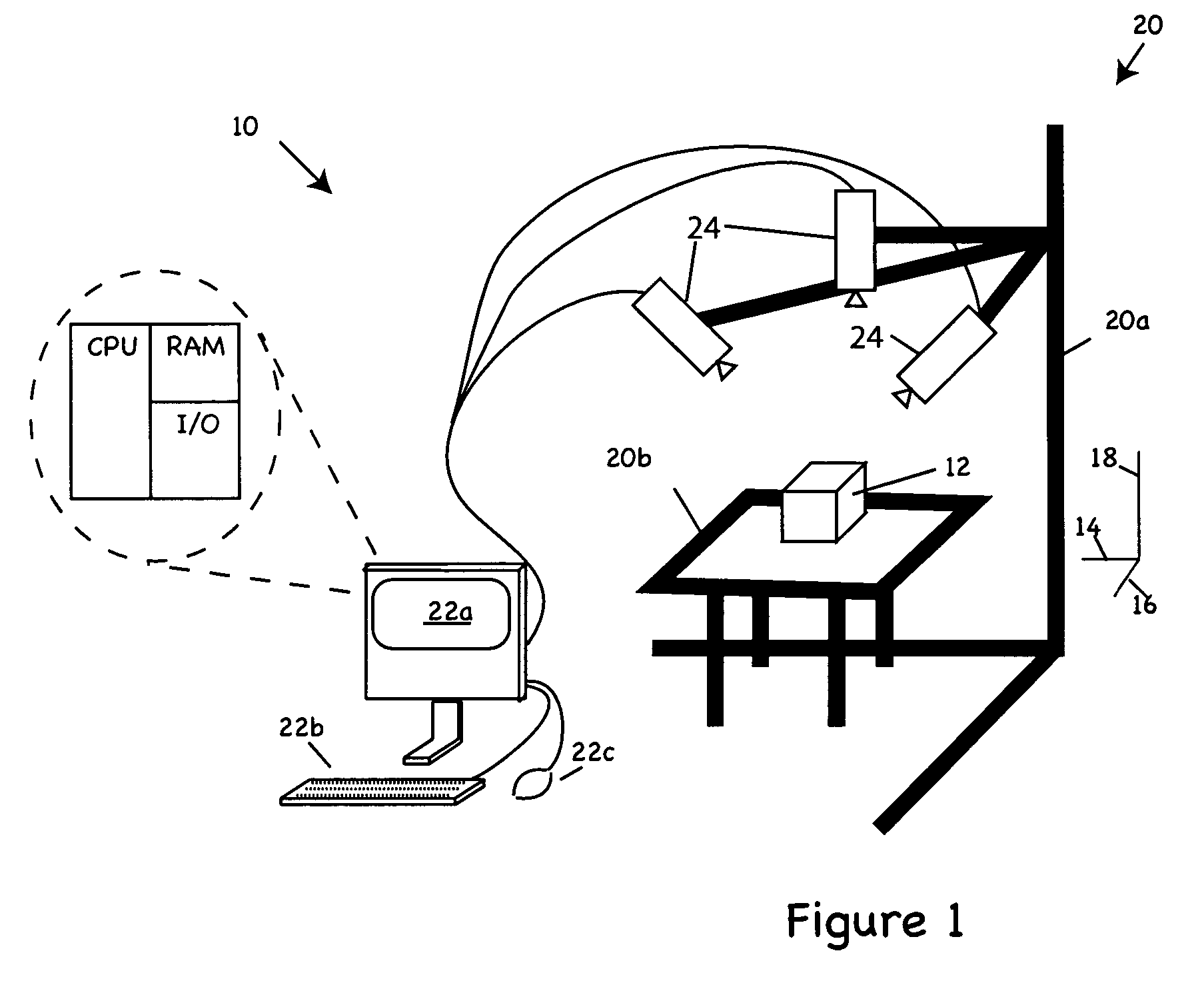

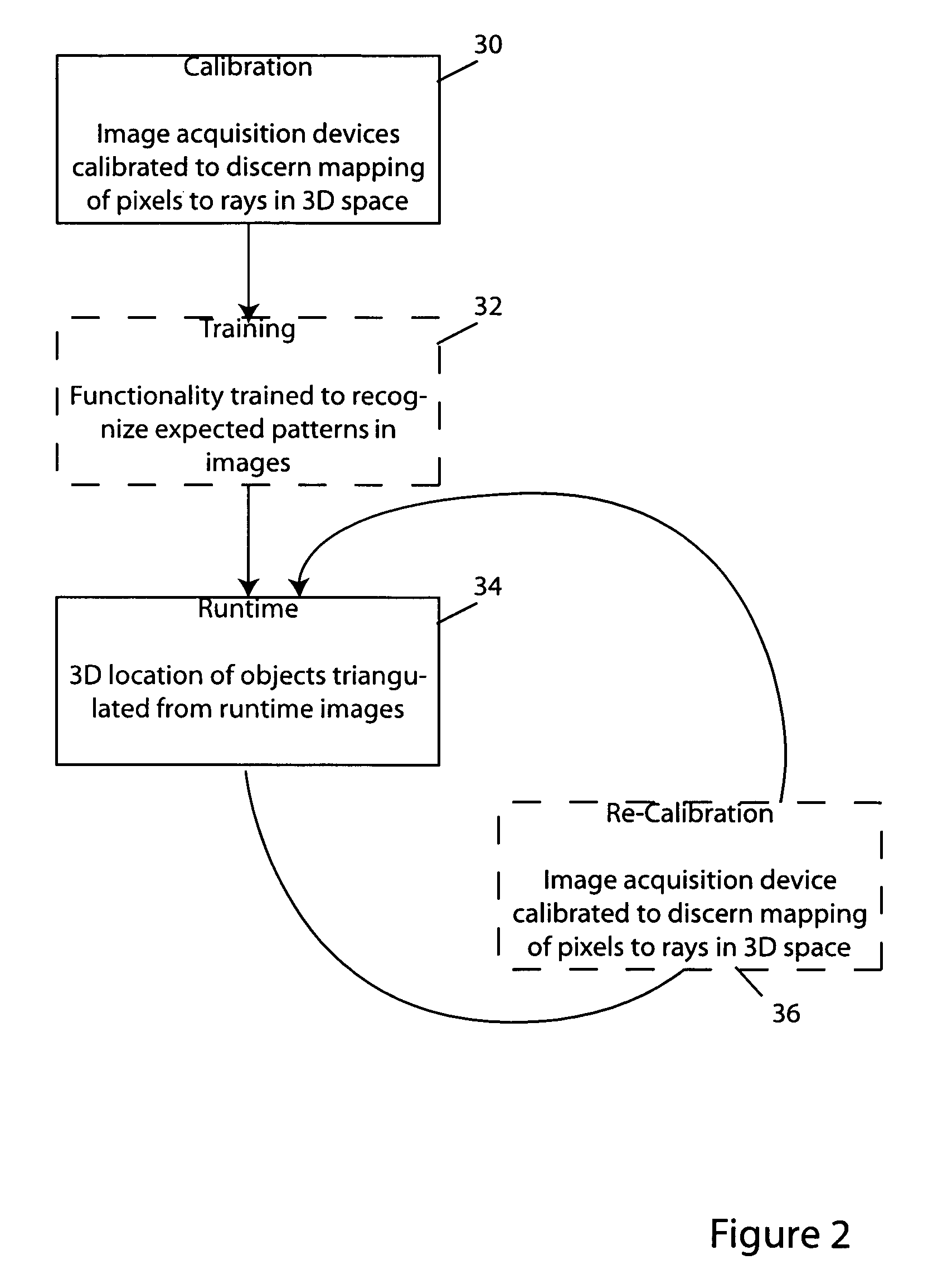

Methods and apparatus for practical 3D vision system

Active | US20070081714A1 | Facilitates finding patterns | High match score | Character and pattern recognition | Camera lens | Machine vision

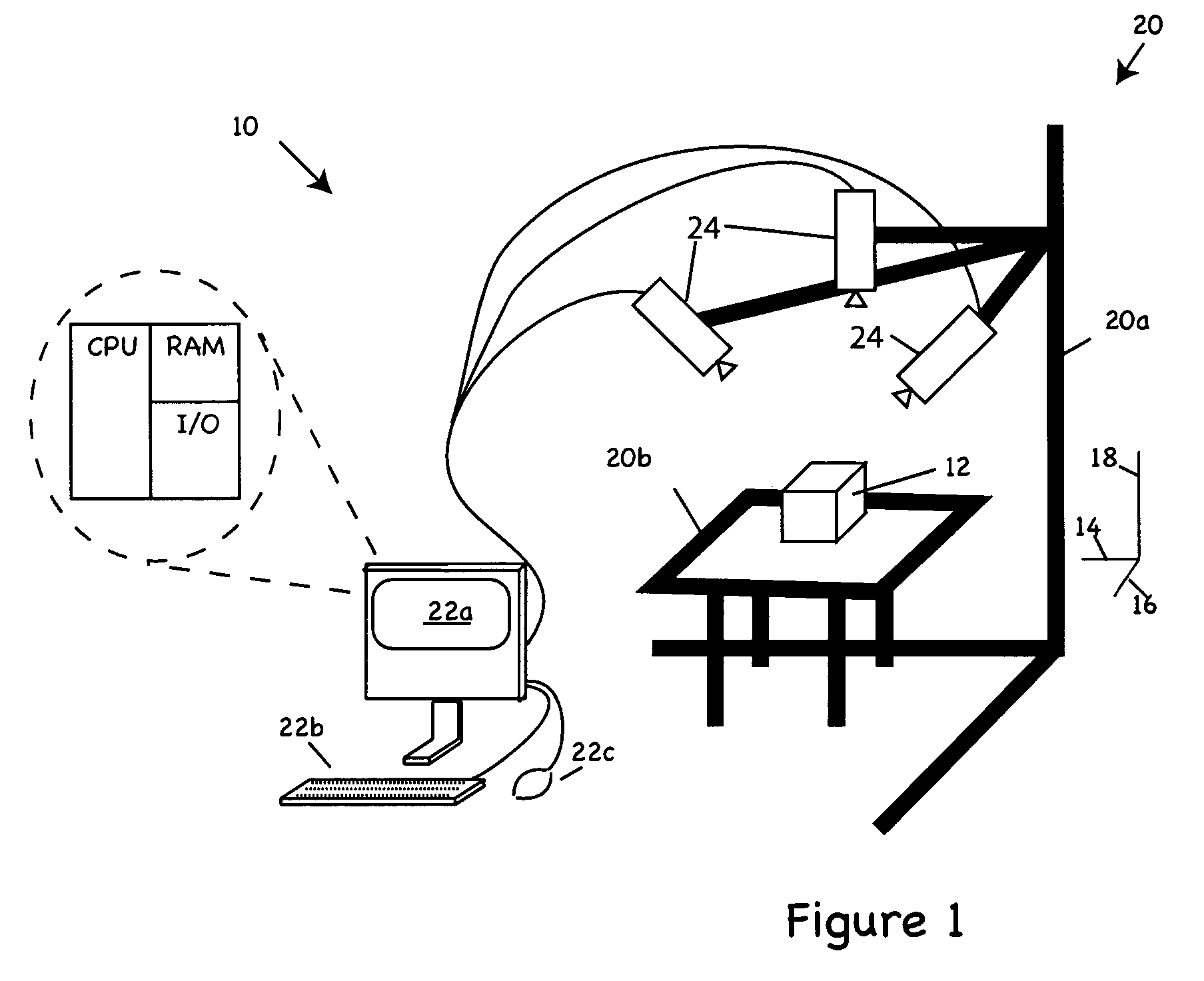

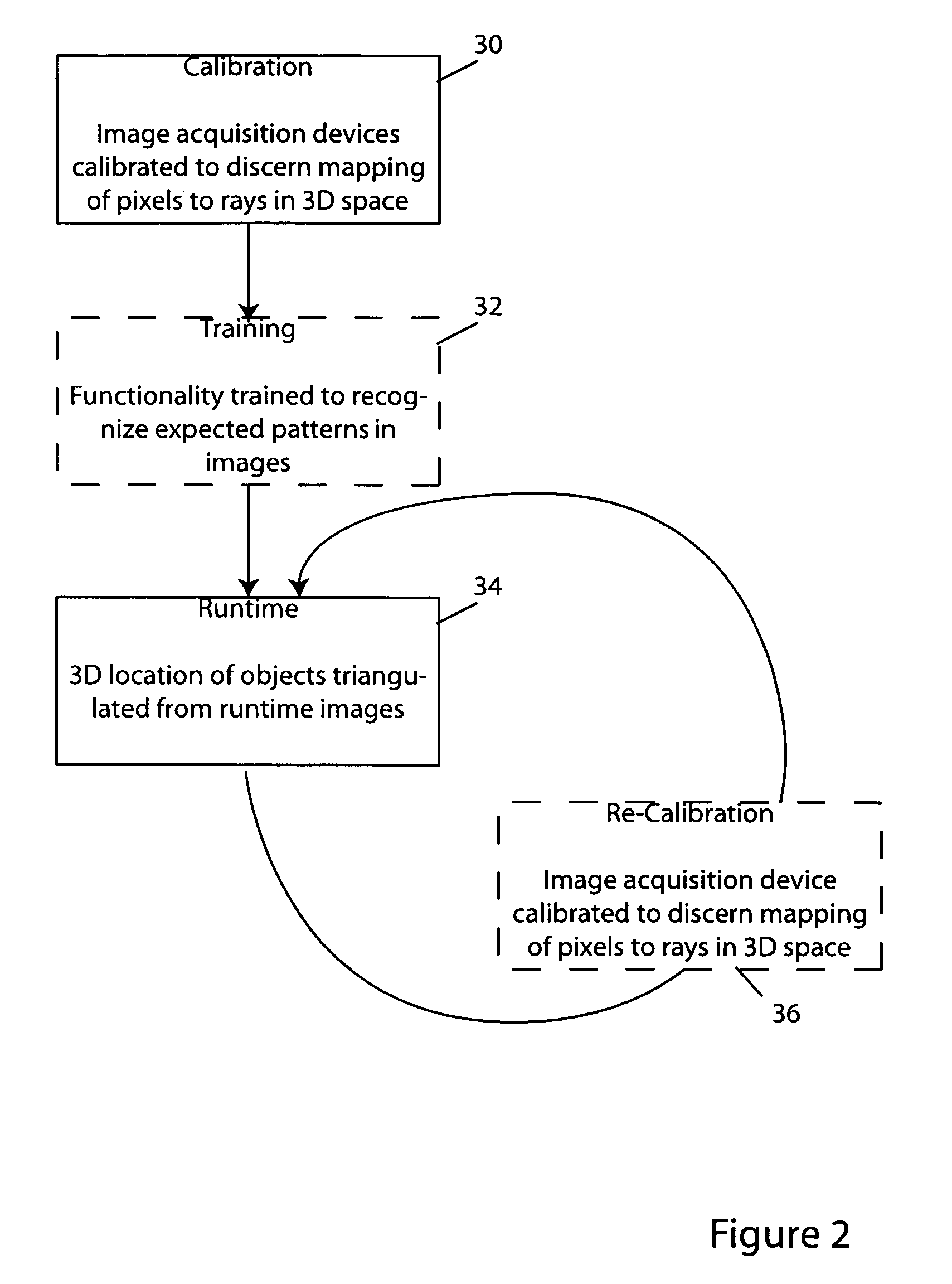

The invention provides, inter alia, methods and apparatus for determining the pose, e.g., position along x-, y- and z-axes, pitch, roll and yaw (or one or more characteristics of that pose), of an object in three dimensions by triangulation of data gleaned from multiple images of the object. Thus, for example, in one aspect, the invention provides a method for 3D machine vision in which, during a calibration step, multiple cameras disposed to acquire images of the object from different respective viewpoints are calibrated to discern a mapping function that identifies rays in 3D space emanating from each respective camera's lens that correspond to pixel locations in that camera's field of view. In a training step, functionality associated with the cameras is trained to recognize expected patterns in images to be acquired of the object. A runtime step triangulates locations in 3D space of one or more of those patterns from pixel-wise positions of those patterns in images of the object and from the mappings discerned during the calibration step.

Owner:COGNEX TECH & INVESTMENT
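The runtime triangulation described in the abstract above starts from rays in 3D space, one per camera, that correspond to the pixel where a pattern was found. A common way to triangulate two such rays, shown here as a sketch and not as the patent's method, is to take the midpoint of their closest approach. The function name and the example rays are hypothetical.

```python
def triangulate_rays(o1, d1, o2, d2):
    """Midpoint of the shortest segment between two 3D rays.

    o1, o2: ray origins (camera centres); d1, d2: ray directions.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w = [p - q for p, q in zip(o1, o2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique closest point")
    s = (b * e - c * d) / denom   # parameter along ray 1
    t = (a * e - b * d) / denom   # parameter along ray 2
    p1 = [o + s * k for o, k in zip(o1, d1)]
    p2 = [o + t * k for o, k in zip(o2, d2)]
    return tuple((u + v) / 2 for u, v in zip(p1, p2))


# Two cameras 2 units apart, both seeing a pattern located at (1, 1, 5).
location = triangulate_rays((0, 0, 0), (1, 1, 5), (2, 0, 0), (-1, 1, 5))
```

With noisy pixel measurements the two rays rarely intersect exactly, which is why the midpoint of closest approach (or a least-squares variant over more than two cameras) is used rather than an exact intersection.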

Camera calibration

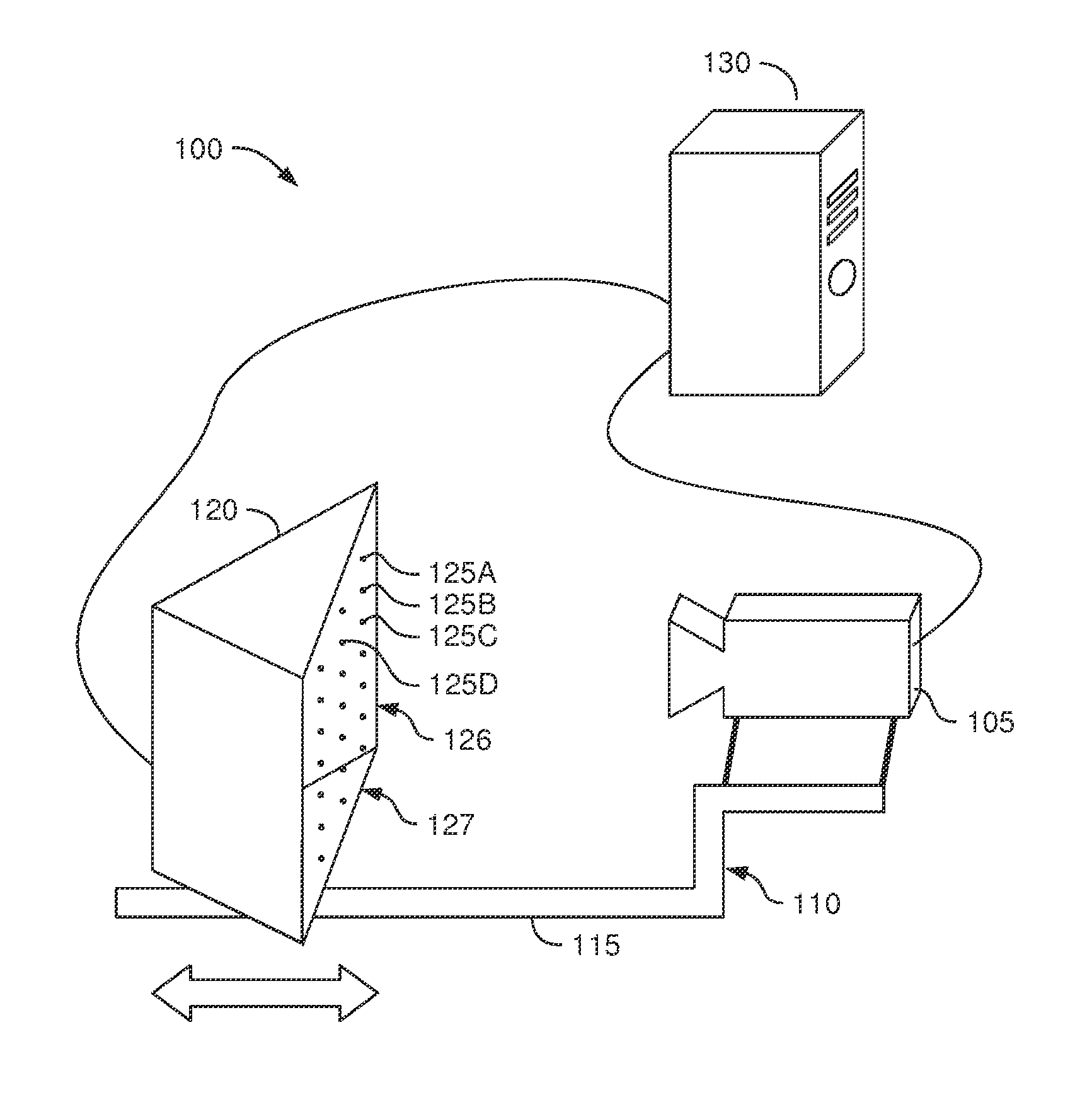

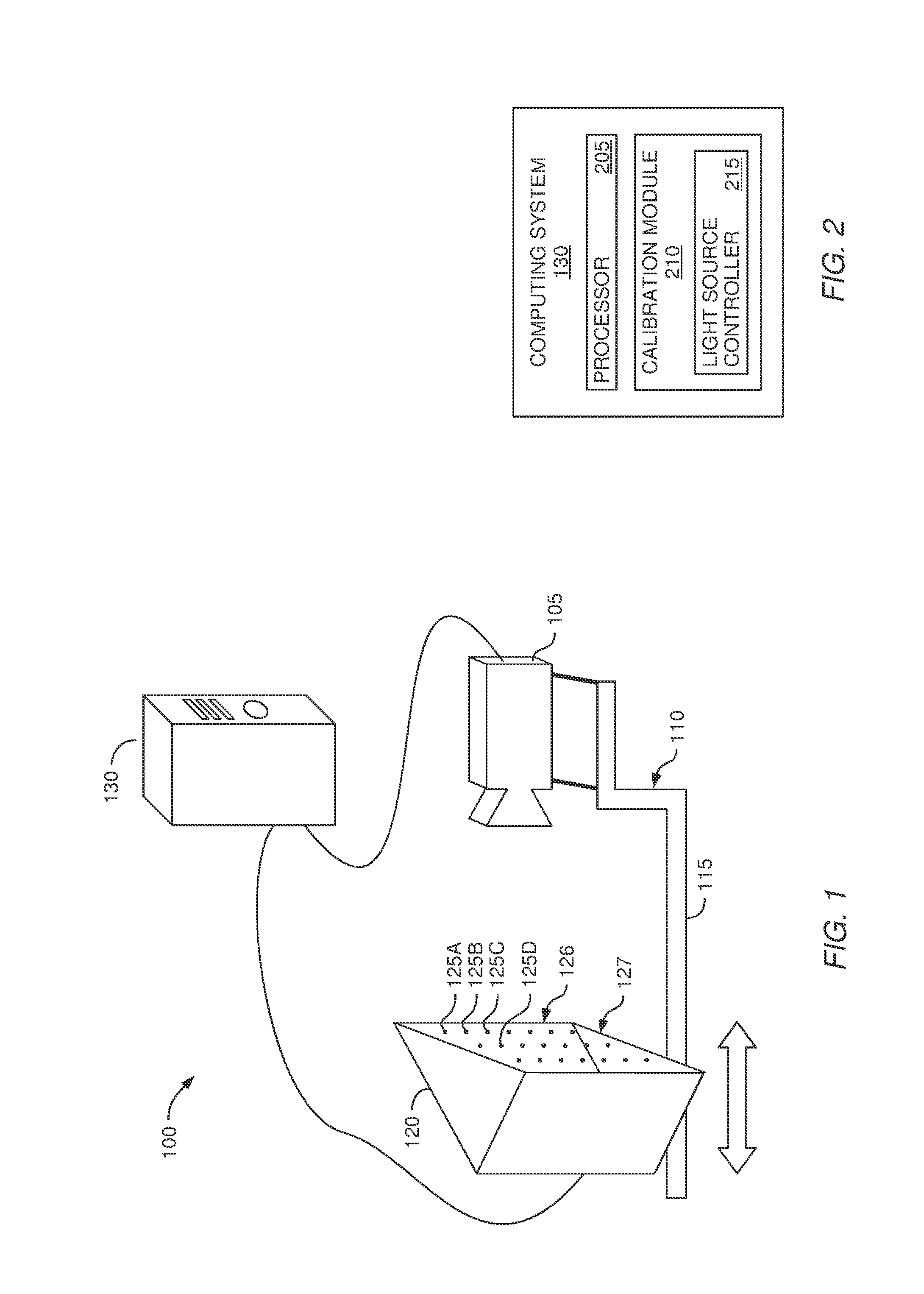

Embodiments herein include systems, methods and articles of manufacture for calibrating a camera. In one embodiment, a computing system may be coupled to a calibration apparatus and the camera to programmatically identify the intrinsic properties of the camera. The calibration apparatus includes a plurality of light sources which are controlled by the computer system. By selectively activating one or more of the light sources, the computer system identifies correspondences that relate the 3D position of the light sources to the 2D image captured by the camera. The computer system then calculates the intrinsics of the camera using the 3D to 2D correspondences. After the intrinsics are measured, cameras may be further calibrated in order to identify 3D locations of objects within their field of view in a presentation area. Using passive markers in a presentation area, the computing system may use an iterative process that estimates the actual pose of the camera.

Owner:DISNEY ENTERPRISES INC

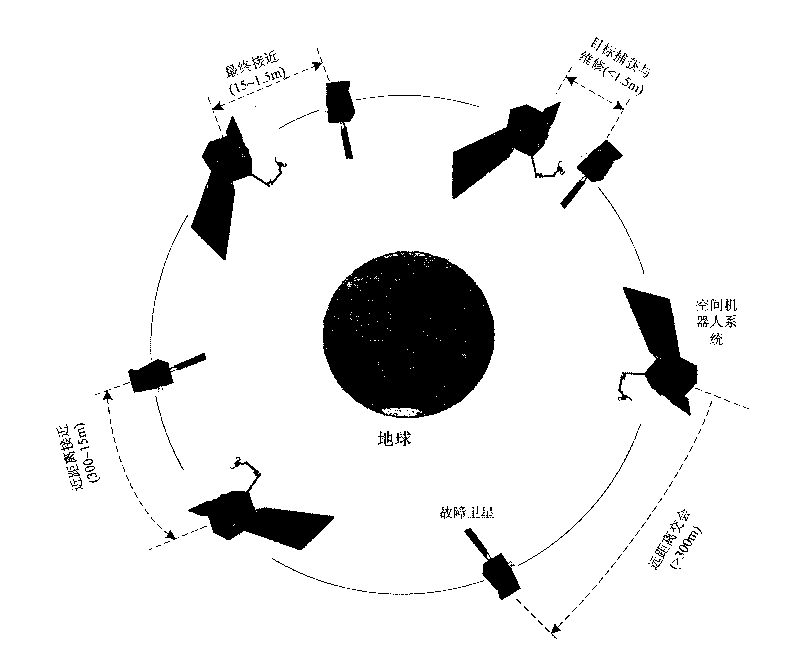

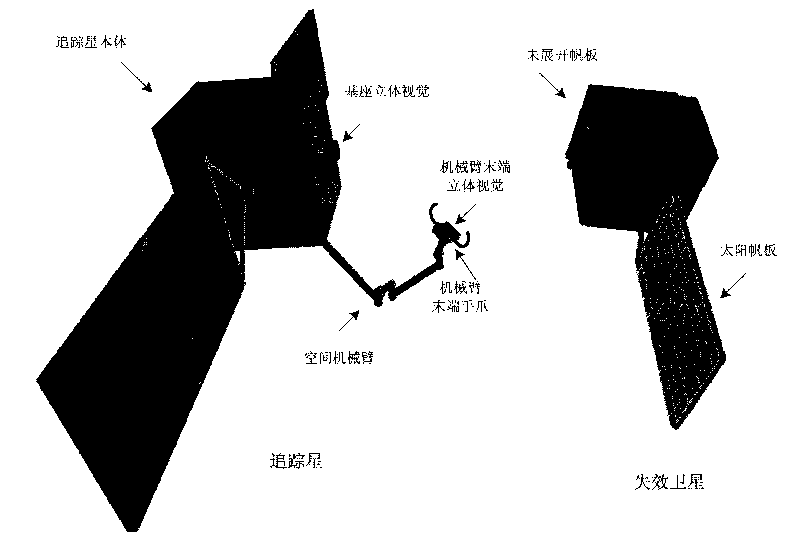

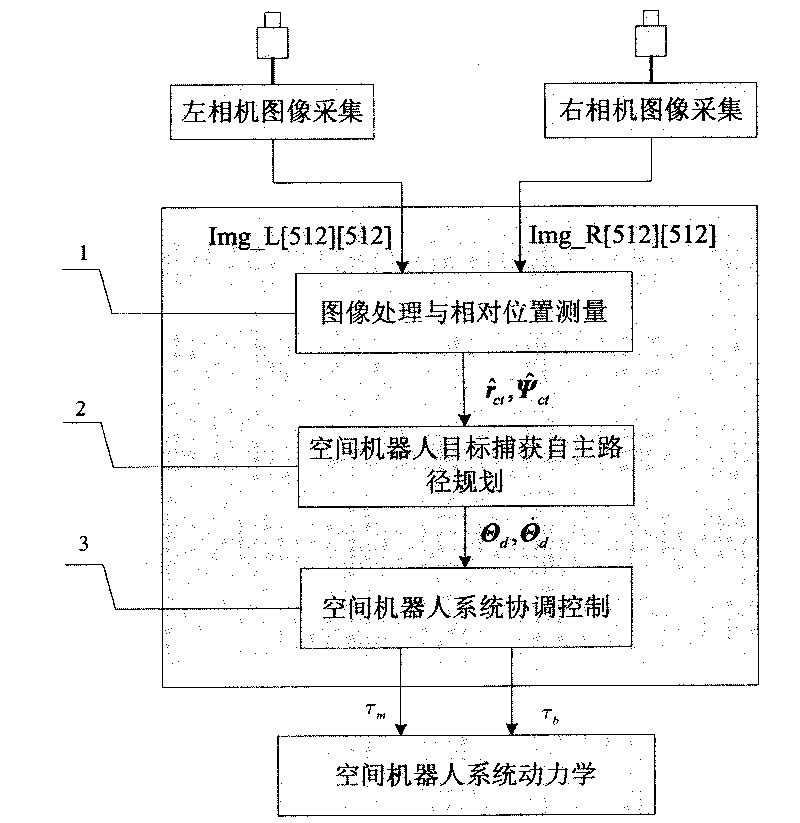

Autonomously identifying and capturing method of non-cooperative target of space robot

Inactive | CN101733746A | Real-time prediction of motion status | Predict interference in real time | Programme-controlled manipulator | Tools | Kinematics | Target capture

The invention relates to a method for autonomously identifying and capturing a non-cooperative target of a space robot, comprising the main steps of (1) pose measurement based on stereoscopic vision, (2) autonomous path planning of the target capture of the space robot and (3) coordinated control of the space robot system. The pose measurement based on stereoscopic vision is realized by processing images of a left camera and a right camera in real time and computing the pose of the non-cooperative target satellite relative to a base and an end effector, wherein the processing comprises smoothing filtering, edge detection, line extraction, and the like. The autonomous path planning of the target capture is realized by planning the motion trajectories of the joints in real time according to the pose measurement results. The coordinated control of the space robot system is realized by controlling the mechanical arms and the base in coordination to achieve optimal control performance for the whole system. In this method, a part of the spacecraft itself is directly used as the object to identify and capture, without installing a marker or a corner reflector on the target satellite or knowing the geometric dimensions of the object, and the planned path can effectively avoid dynamic and kinematic singularities.

Owner:HARBIN INST OF TECH

Method and system for providing information associated with a view of a real environment superimposed with a virtual object

Inactive | US20160307374A1 | Satisfy the user experience | Input/output for user-computer interaction | Graph reading | Computer graphics (images) | Radiology

Providing information associated with a view of a real environment superimposed with a virtual object, includes obtaining a model of a real object located in a real environment and a model of a virtual object, determining a spatial relationship between the real object and the virtual object, determining a pose of a device displaying the view relative to the real object, determining a visibility of the virtual object in the view according to the pose of the device, the spatial relationship and the models of the real object and the virtual object, and if the virtual object is not visible in the view, determining a movement for at least one of the device and at least part of the real object such that the virtual object is visible in the view in response to the at least one movement, and providing information indicative of the at least one movement.

Owner:APPLE INC

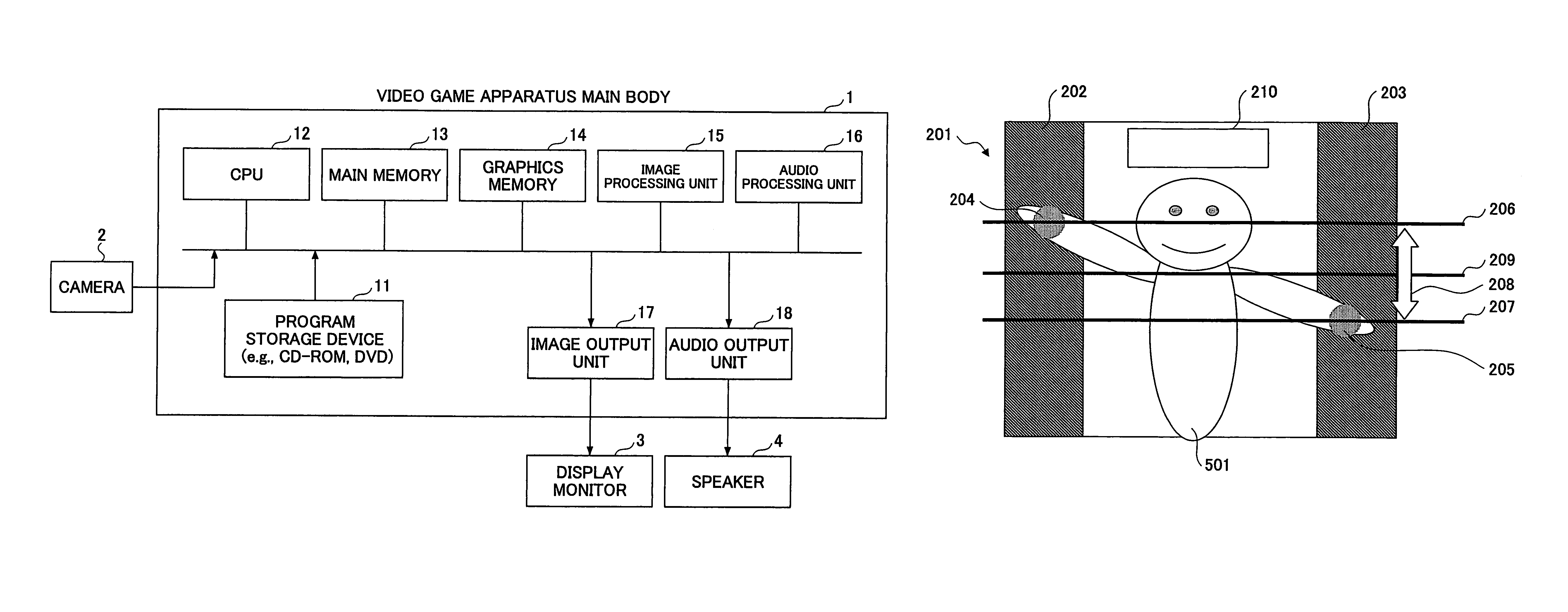

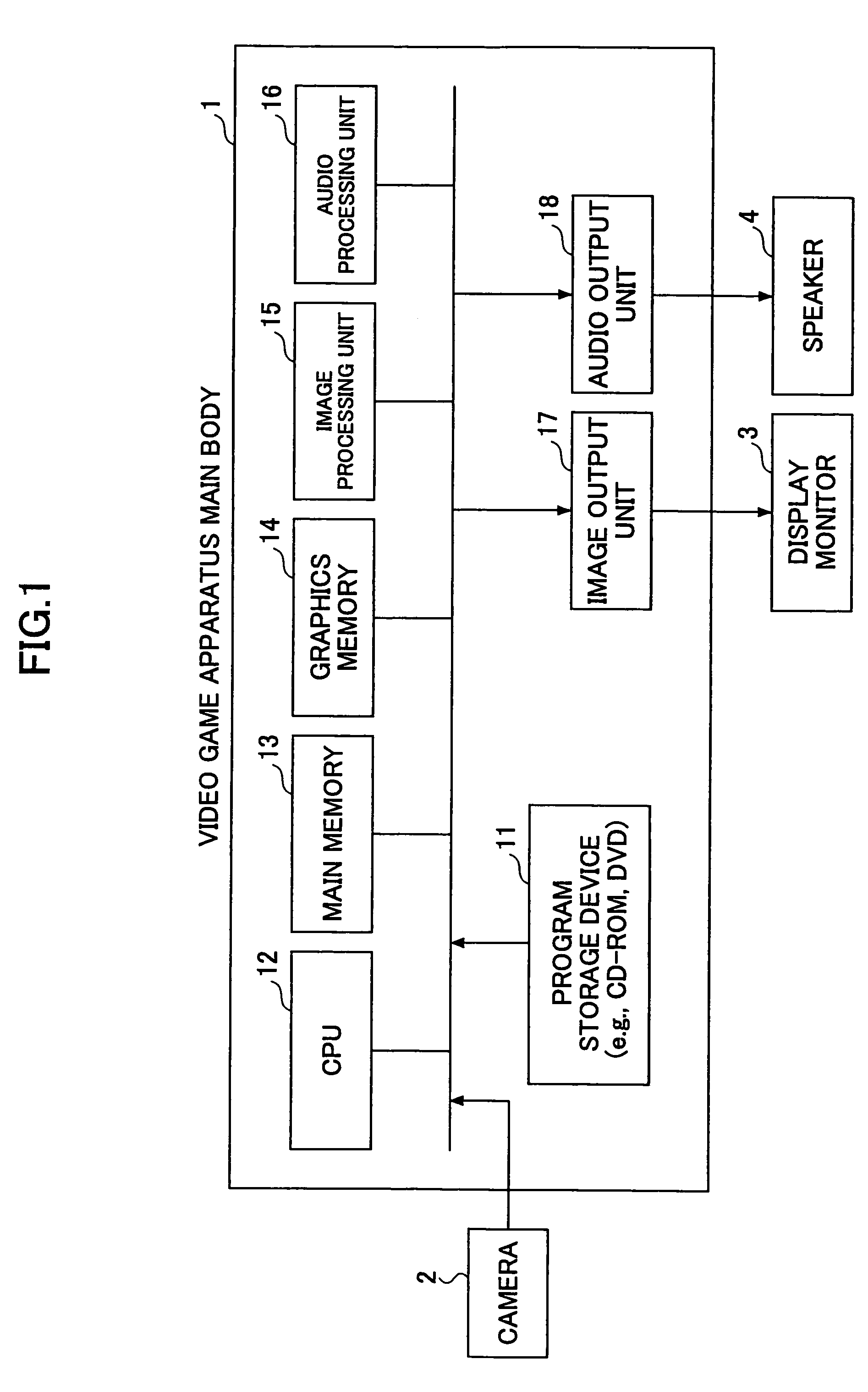

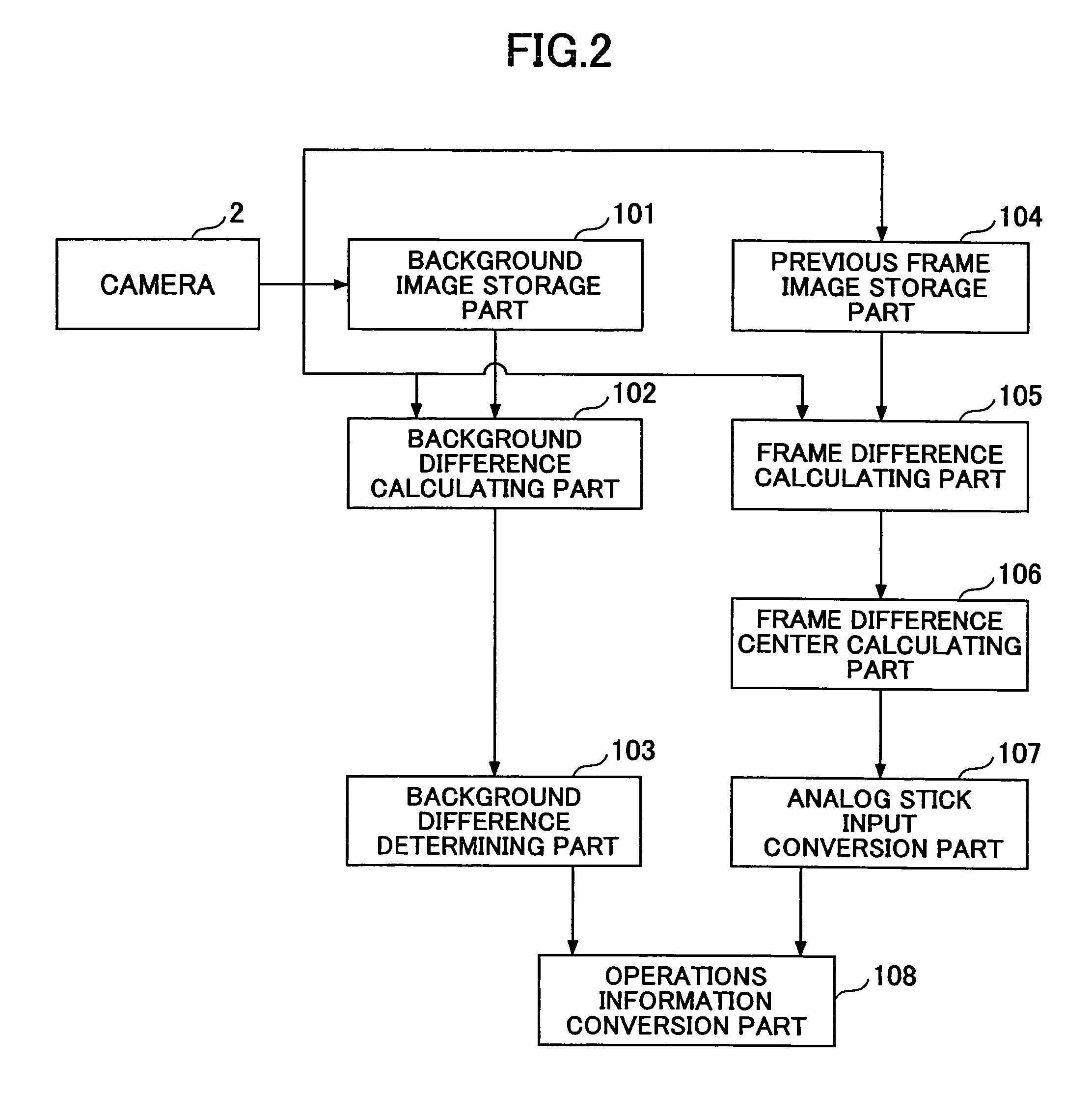

Pose detection method, video game apparatus, pose detection program, and computer-readable medium containing computer program

A technique is disclosed for detecting a pose of a player in a video game using an input image supplied by a camera. The technique involves calculating a frame difference and / or background difference of an image of a predetermined region of an image captured by the camera which captured image includes a player image representing the pose of the player, and generating operations information corresponding to the pose of the player based on the calculated frame difference and / or background difference.

Owner:SEGA CORP
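The frame-difference idea in the abstract above (compare a predetermined region of the current camera image against the previous frame and turn the result into operations information) can be sketched as follows. Images are nested lists of grayscale values; the function name, the region layout and both thresholds are hypothetical choices for illustration, not values from the patent.

```python
def frame_difference_ratio(prev, curr, region, threshold=30):
    """Fraction of pixels inside `region` that changed between two frames.

    region is (top, left, bottom, right) with bottom/right exclusive.
    """
    top, left, bottom, right = region
    changed = total = 0
    for r in range(top, bottom):
        for c in range(left, right):
            total += 1
            if abs(curr[r][c] - prev[r][c]) > threshold:
                changed += 1
    return changed / total if total else 0.0


prev_frame = [[0] * 4 for _ in range(4)]
curr_frame = [row[:] for row in prev_frame]
curr_frame[0][0] = curr_frame[0][1] = 200   # motion in the top-left corner

left_hand_region = (0, 0, 2, 2)
right_hand_region = (2, 2, 4, 4)
# Treat a region as "active" when at least a quarter of its pixels changed.
left_active = frame_difference_ratio(prev_frame, curr_frame, left_hand_region) >= 0.25
right_active = frame_difference_ratio(prev_frame, curr_frame, right_hand_region) >= 0.25
```

A background difference works the same way, with `prev` replaced by a stored image of the empty scene.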

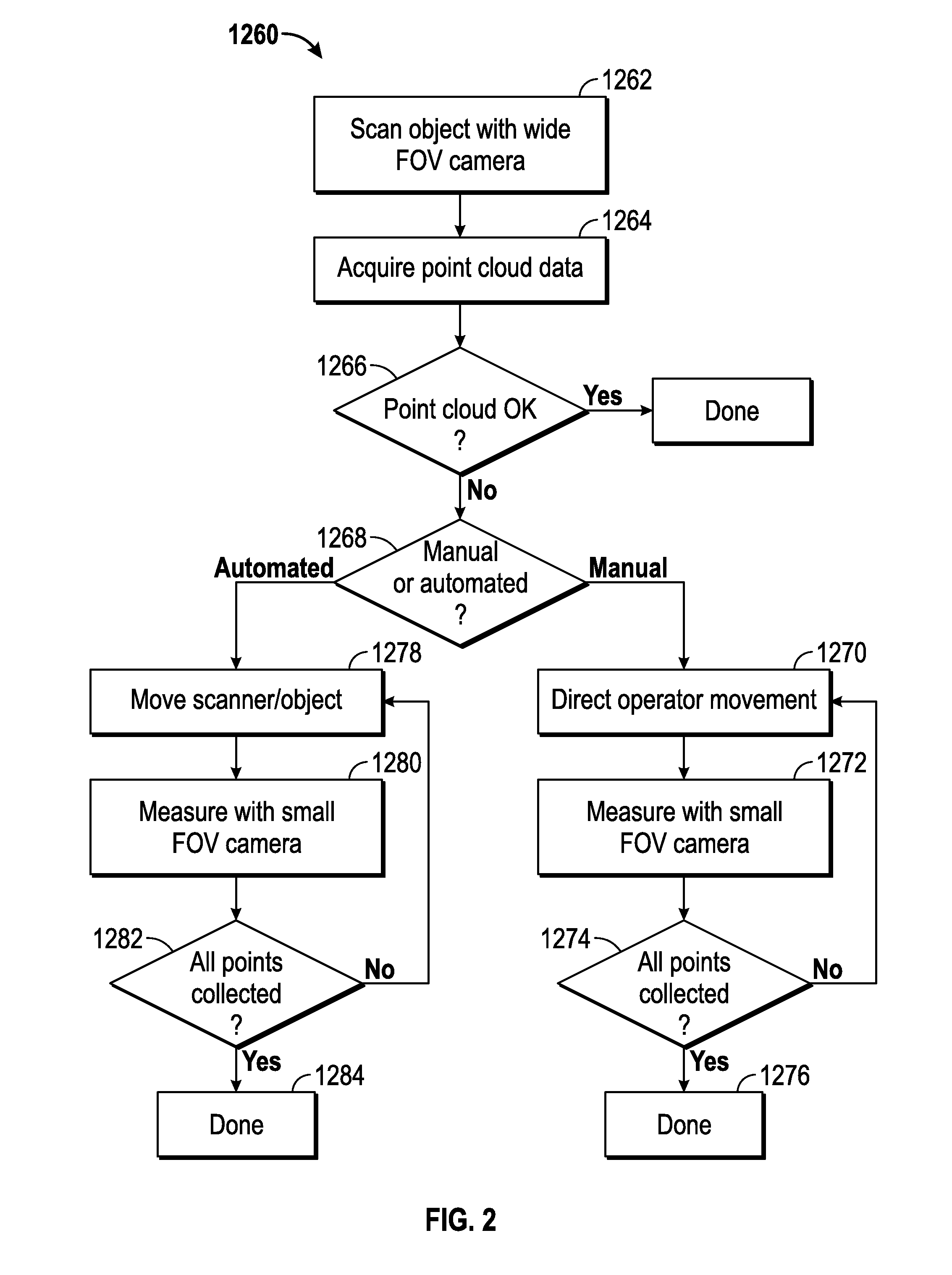

Three-Dimensional Scanner With External Tactical Probe and Illuminated Guidance

Active | US20140267623A1 | Eliminate the problem | Image enhancement | Image analysis | Goodness of fit | Postural orientation

An assembly that includes a projector and camera is used with a processor to determine three-dimensional (3D) coordinates of an object surface. The processor fits collected 3D coordinates to a mathematical representation provided for a shape of a surface feature. The processor fits the measured 3D coordinates to the shape and, if the goodness of fit is not acceptable, selects and performs at least one of: changing a pose of the assembly, changing an illumination level of the light source, changing a pattern of the transmitted With the changes in place, another scan is made to obtain 3D coordinates.

Owner:FARO TECH INC

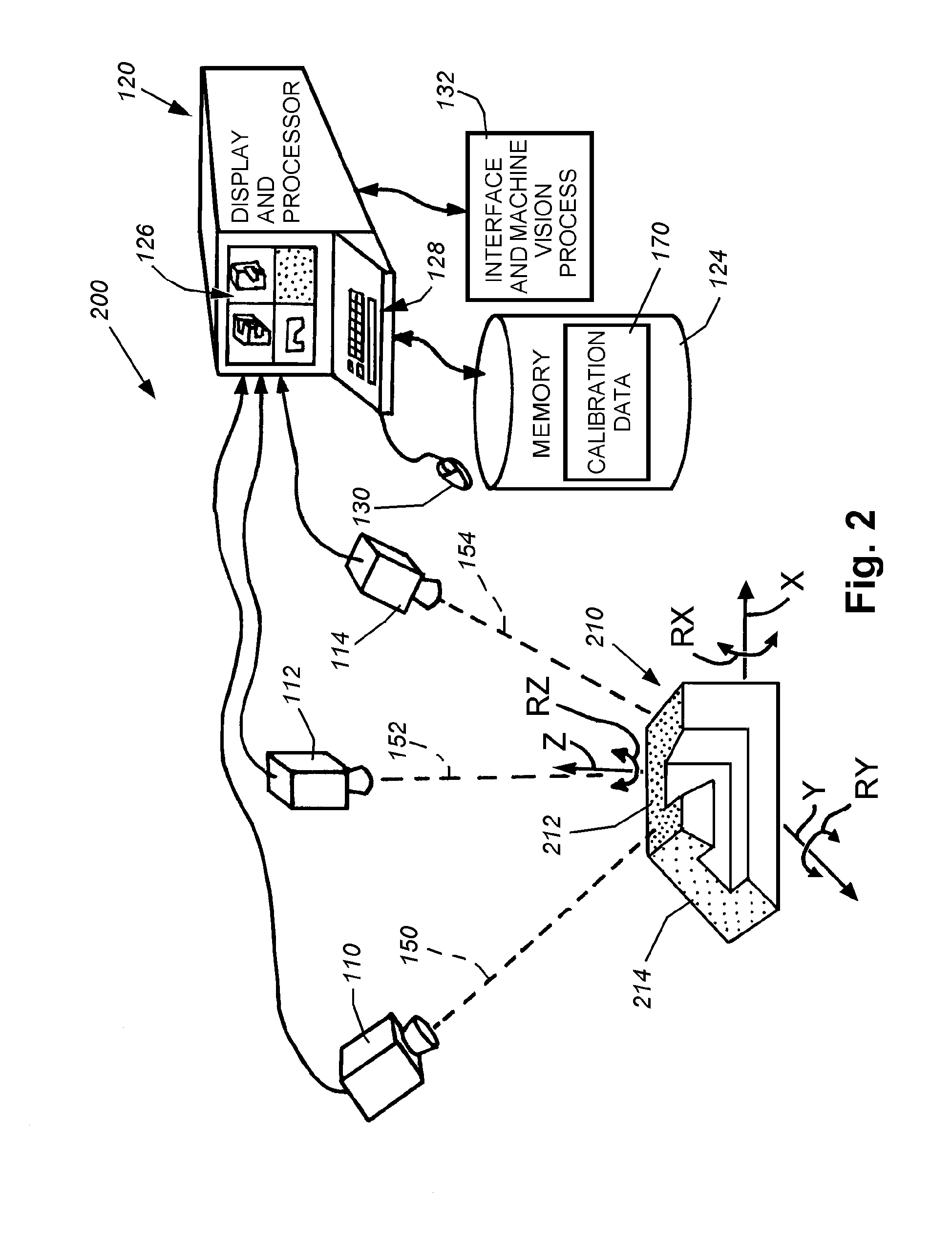

System and method for locating a three-dimensional object using machine vision

Active | US20080298672A1 | Error minimization | Overcome disadvantages | Image enhancement | Image analysis | Pattern recognition | Vision processing

This invention provides a system and method for determining the position of a viewed object in three dimensions by employing 2D machine vision processes on each of a plurality of planar faces of the object, and thereby refining the location of the object. First, a rough pose estimate of the object is derived. This rough pose estimate can be based upon predetermined pose data, or can be derived by acquiring a plurality of planar face poses of the object (using, for example, multiple cameras) and correlating the corners of the trained image pattern, which have known coordinates relative to the origin, to the acquired patterns. Once the rough pose is achieved, it is refined by defining the pose as a quaternion (a, b, c and d) for rotation and three variables (x, y, z) for translation and employing an iterative weighted least-squares error calculation to minimize the error between the edgelets of the trained model image and the acquired runtime edgelets. The overall refined / optimized pose estimate incorporates data from each of the cameras' acquired images. Thereby, the estimate minimizes the total error between the edgelets of each camera's / view's trained model image and the associated camera's / view's acquired runtime edgelets. A final transformation of trained features relative to the runtime features is derived from the iterative error computation.

Owner:COGNEX CORP
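The pose parameterization named in the abstract above, a quaternion (a, b, c, d) for rotation plus three variables (x, y, z) for translation, can be applied to a point as sketched below. The sketch assumes that `a` is the scalar part of the quaternion and normalizes it before use; the abstract does not state either detail, and the function name is hypothetical.

```python
import math


def apply_pose(quat, translation, point):
    """Rotate `point` by quaternion (a, b, c, d), then translate it.

    Assumes a is the scalar part; the quaternion is normalized first.
    """
    a, b, c, d = quat
    n = math.sqrt(a * a + b * b + c * c + d * d)
    a, b, c, d = a / n, b / n, c / n, d / n
    # Standard rotation matrix of a unit quaternion.
    rot = [
        [1 - 2 * (c * c + d * d), 2 * (b * c - a * d), 2 * (b * d + a * c)],
        [2 * (b * c + a * d), 1 - 2 * (b * b + d * d), 2 * (c * d - a * b)],
        [2 * (b * d - a * c), 2 * (c * d + a * b), 1 - 2 * (b * b + c * c)],
    ]
    return tuple(sum(rot[i][k] * point[k] for k in range(3)) + translation[i]
                 for i in range(3))


# 90-degree rotation about the z-axis, then a shift by (1, 2, 3).
half = math.pi / 4
moved = apply_pose((math.cos(half), 0.0, 0.0, math.sin(half)),
                   (1.0, 2.0, 3.0),
                   (1.0, 0.0, 0.0))
```

An iterative least-squares refinement would adjust these seven numbers to reduce the edgelet error; a quaternion avoids the gimbal-lock singularities of Euler angles during that search.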

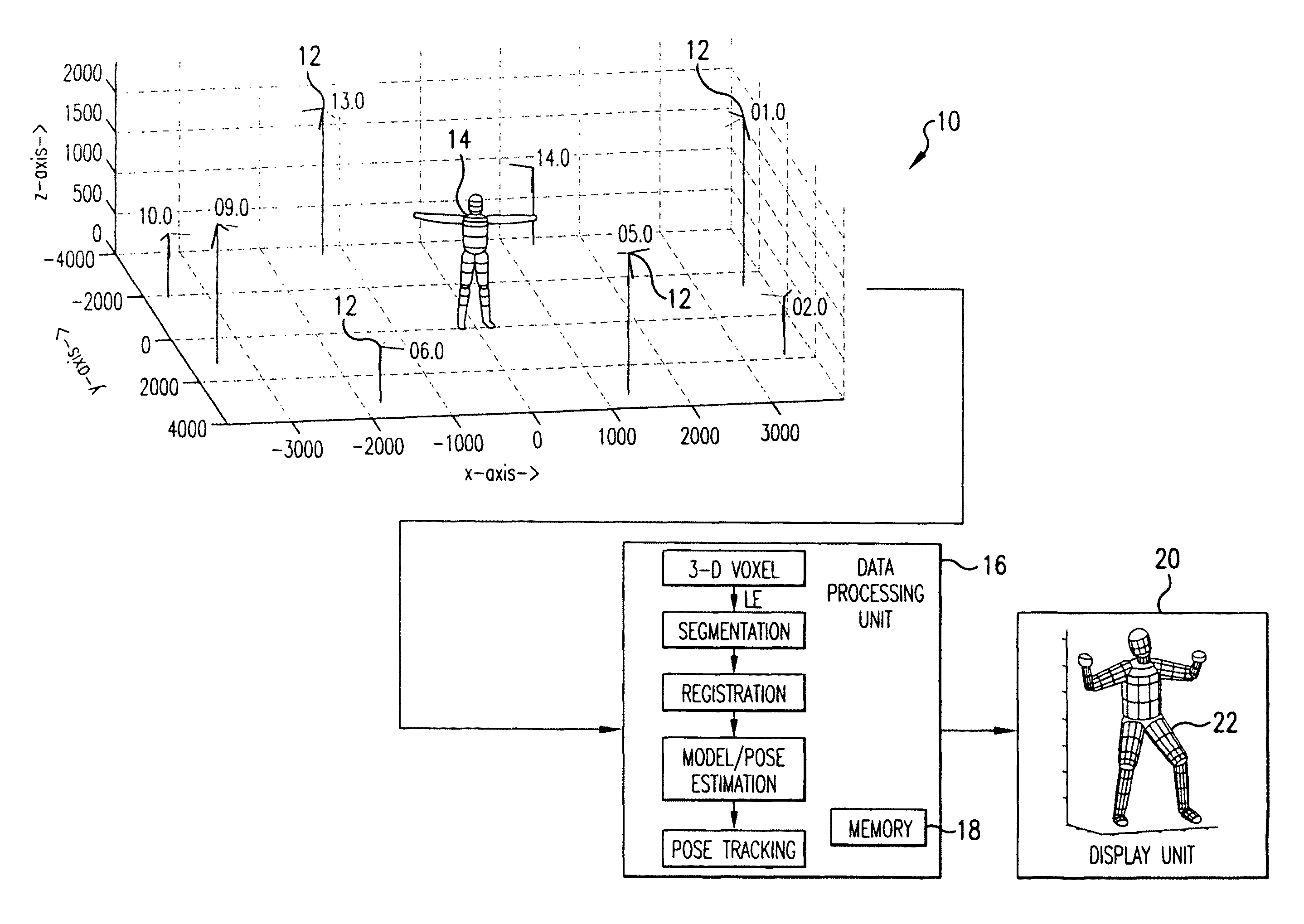

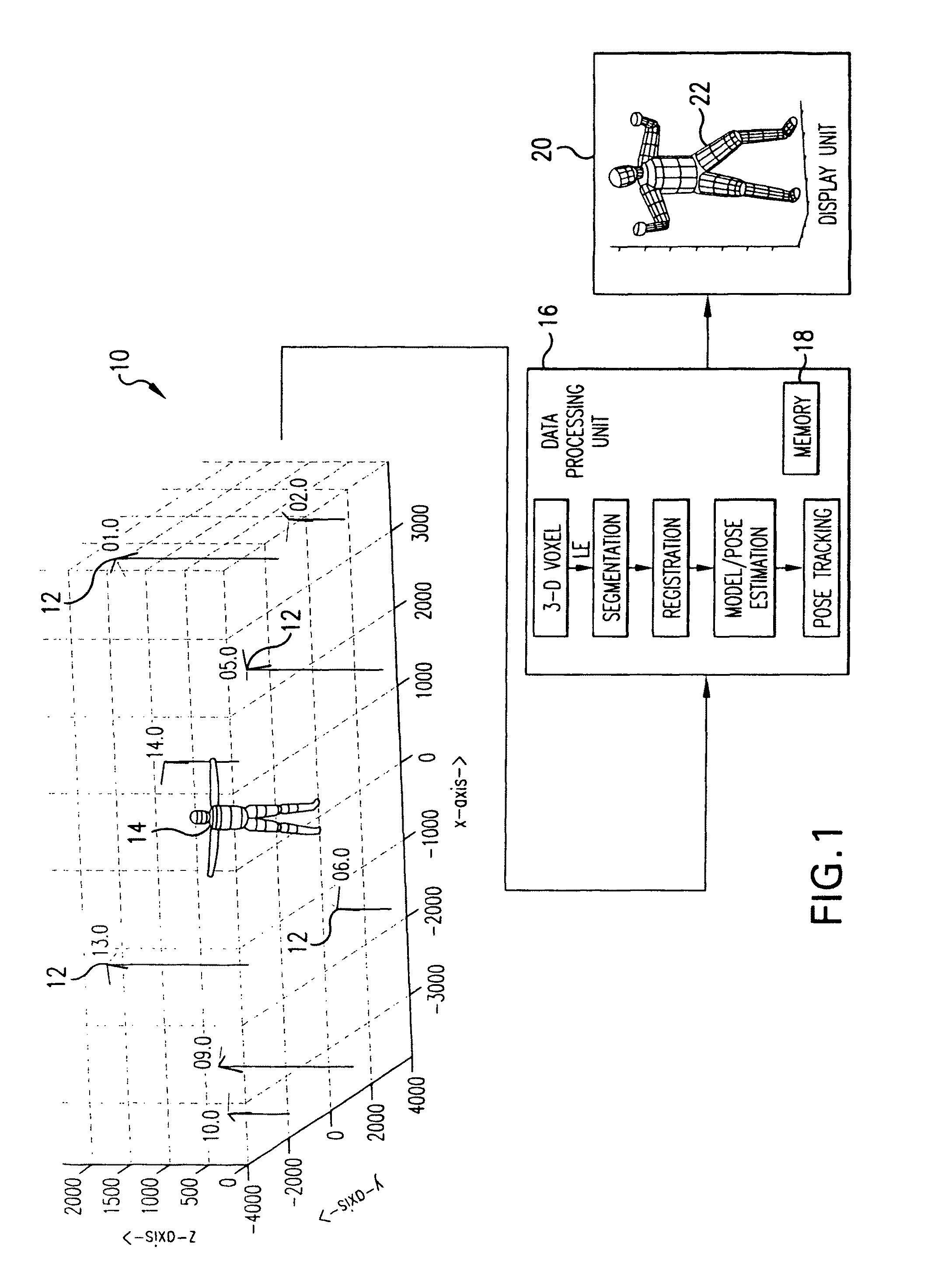

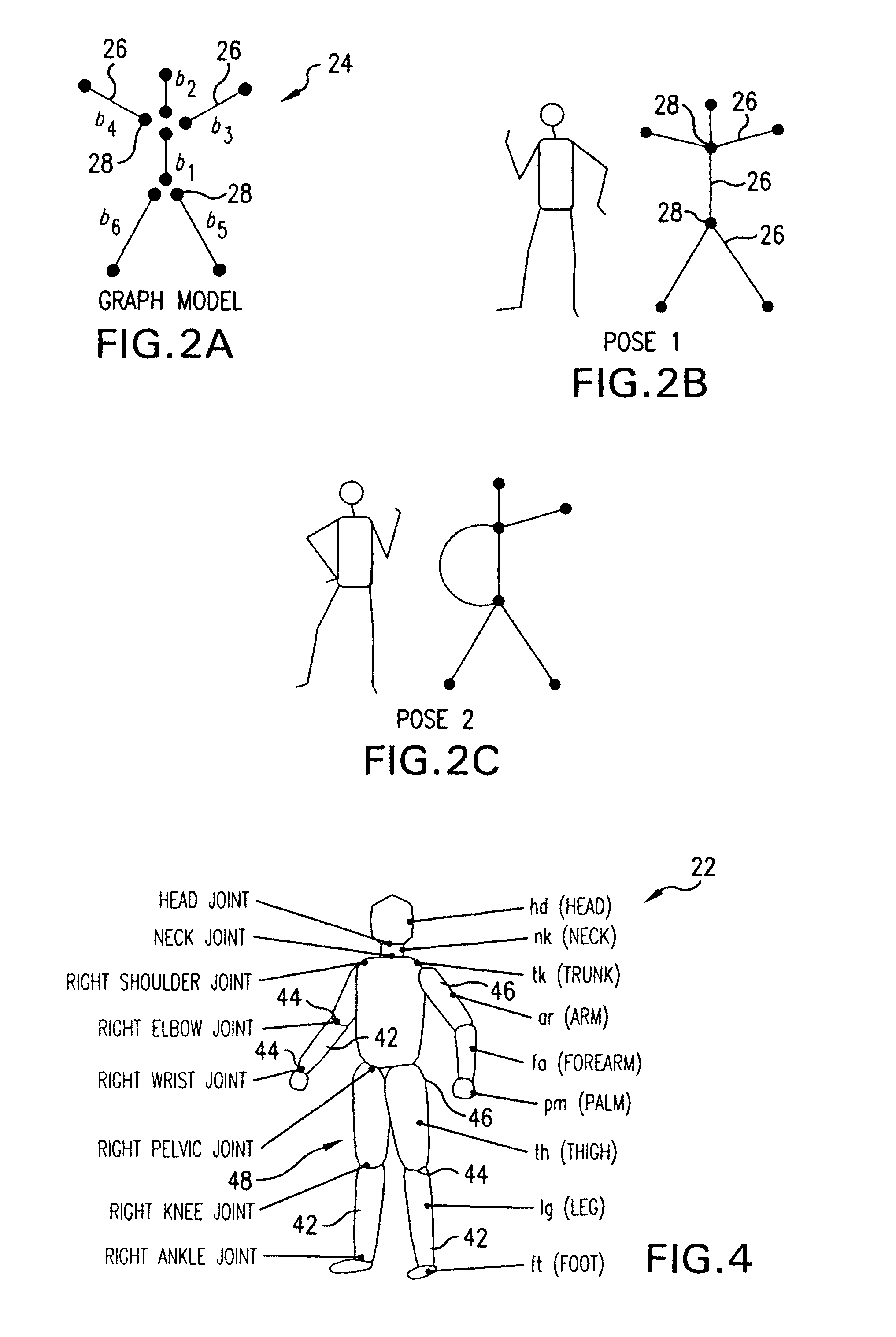

Method and system for markerless motion capture using multiple cameras

Inactive | US8023726B2 | Accurate estimate | Accurate presentation | Image enhancement | Details involving processing steps | Pattern recognition | Voxel

A completely automated end-to-end method and system for markerless motion capture performs segmentation of articulating objects in Laplacian Eigenspace and is applicable to handling poses of some complexity. 3D voxel representations of acquired images are mapped to a higher dimensional space (k), where k depends on the number of articulated chains of the subject body, so as to extract the 1-D representations of the articulating chains. A bottom-up approach is suggested in order to build a parametric (spline-based) representation of a general articulated body in the high dimensional space, followed by a top-down probabilistic approach that registers the segments to an average human body model. The parameters of the model are further optimized using the segmented and registered voxels.

Owner:UNIV OF MARYLAND

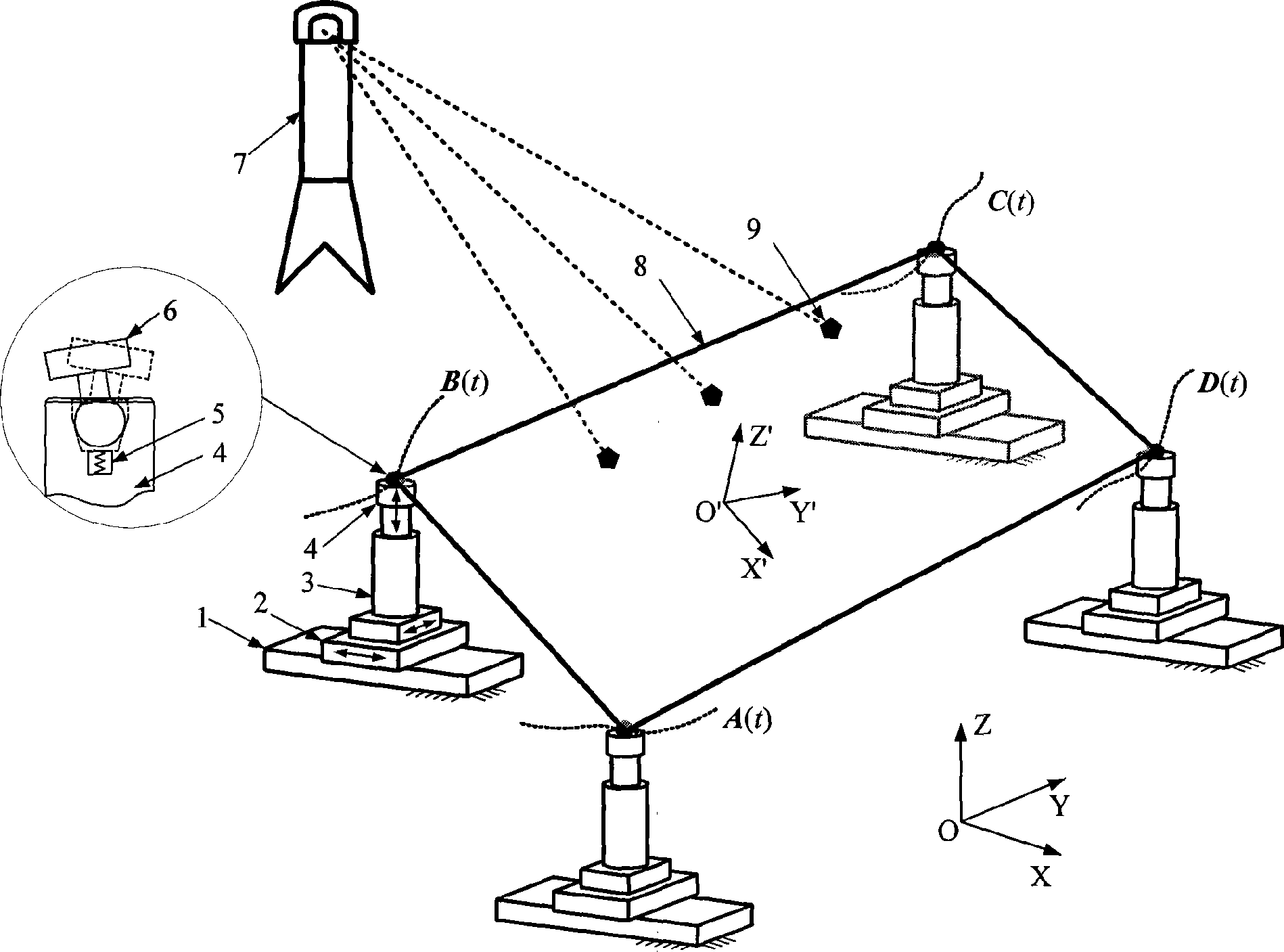

Pose alignment system and method of aircraft part based on four locater

Inactive | CN101362512A | Good dynamic properties | Realize automatic adjustment | Aircraft assembly | Global coordinate system | Airplane

The invention discloses an aircraft component position and pose adjusting system based on four locators and a method thereof. The position and pose adjusting system comprises four three-coordinate locators, a spherical technical connector, an aircraft component to be adjusted, a laser tracker and a target reflecting sphere; each three-coordinate locator comprises a bottom plate and, arranged from bottom to top in sequence, an X-direction motion mechanism, a Y-direction motion mechanism, a Z-direction motion mechanism and a displacement sensor. The position and pose adjusting method comprises the following steps: firstly, a global coordinate system OXYZ is established, and the current position and pose and the target position and pose of the aircraft component to be adjusted are calculated; secondly, the path of the aircraft component to be adjusted from the current position and pose to the target position and pose is planned; thirdly, the tracks of motion mechanisms in all the directions are formed according to the path; and fourthly, the three locators are synergetically moved, and the position adjusting is realized. The method has the following advantages: firstly, the supporting to the aircraft component to be adjusted can be realized; secondly, the automatic adjusting to position and pose of the aircraft component to be adjusted can be realized; and thirdly, the inch adjusting of position and pose of the aircraft component to be adjusted can be realized.

Owner:ZHEJIANG UNIV +1

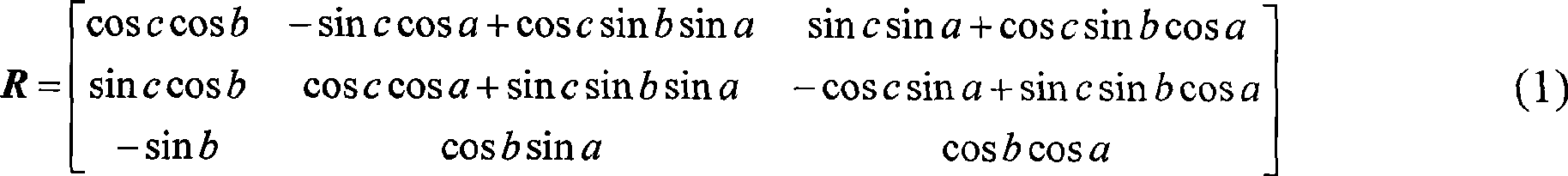

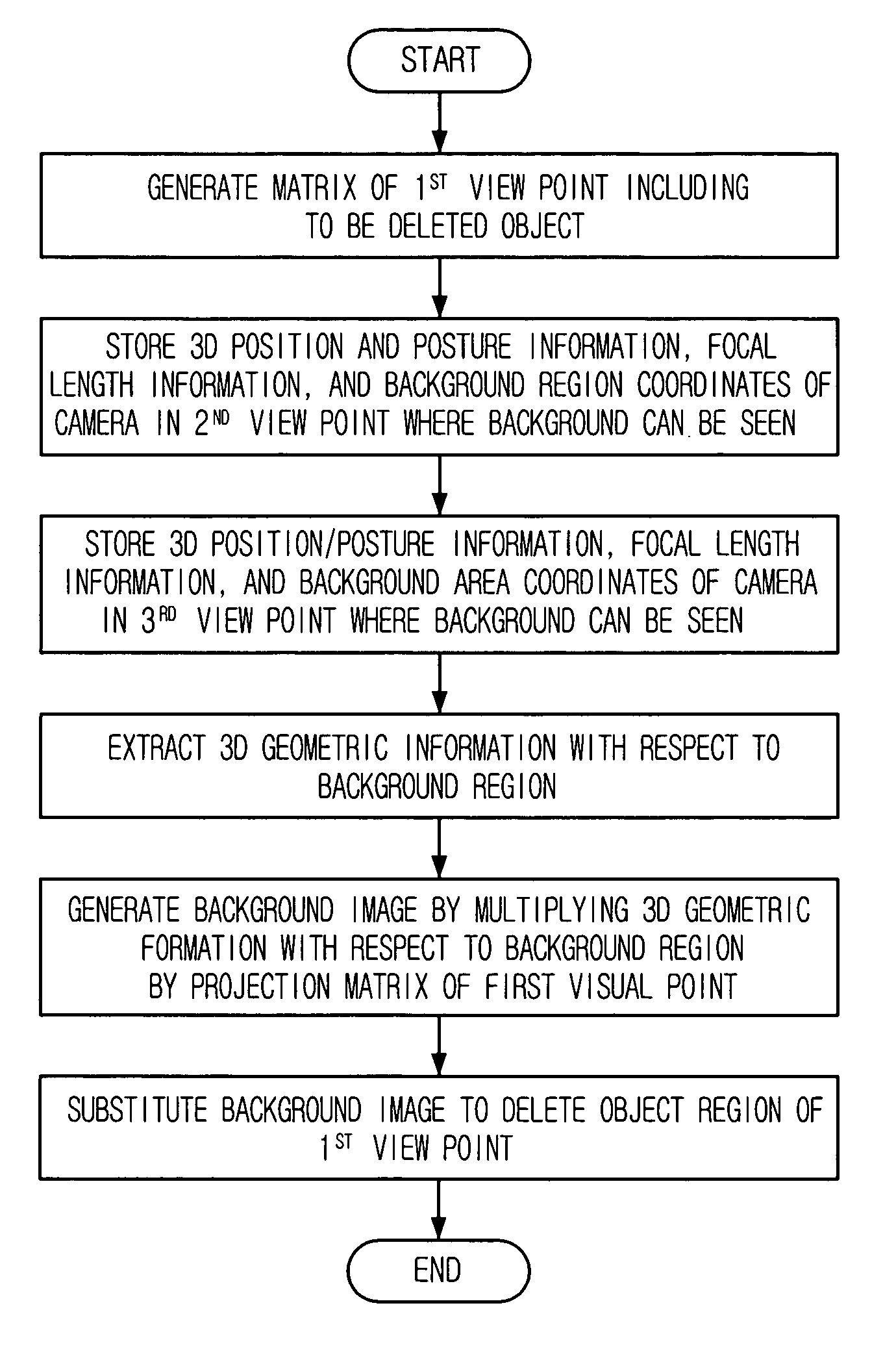

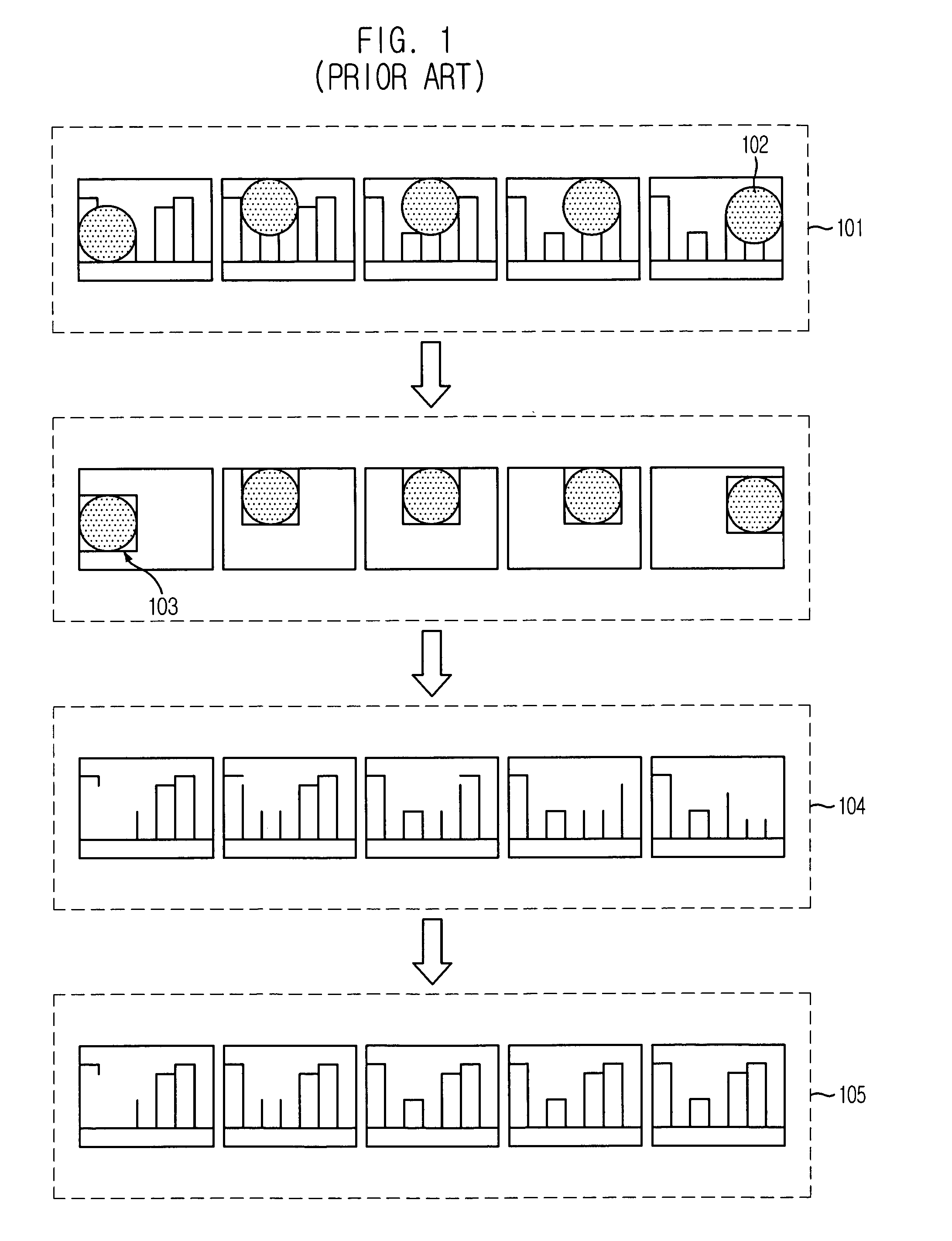

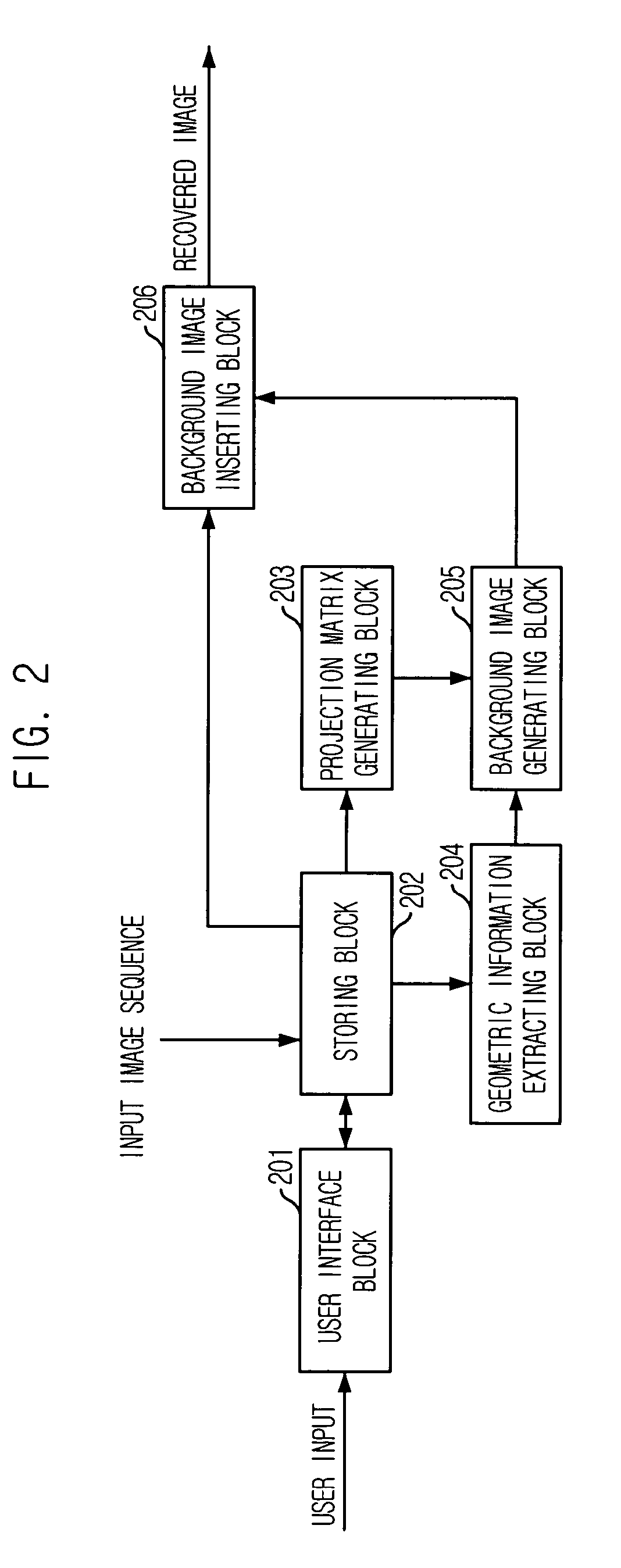

Apparatus for recovering background in image sequence and method thereof

Active | US7555158B2 | Television system details | Character and pattern recognition | Background image | Image sequence

Owner:ELECTRONICS & TELECOMM RES INST

Methods and apparatus for practical 3D vision system

Active | US8111904B2 | Facilitates finding patterns | High match score | Character and pattern recognition | Color television details | Machine vision | Computer graphics (images)

Owner:COGNEX TECH & INVESTMENT

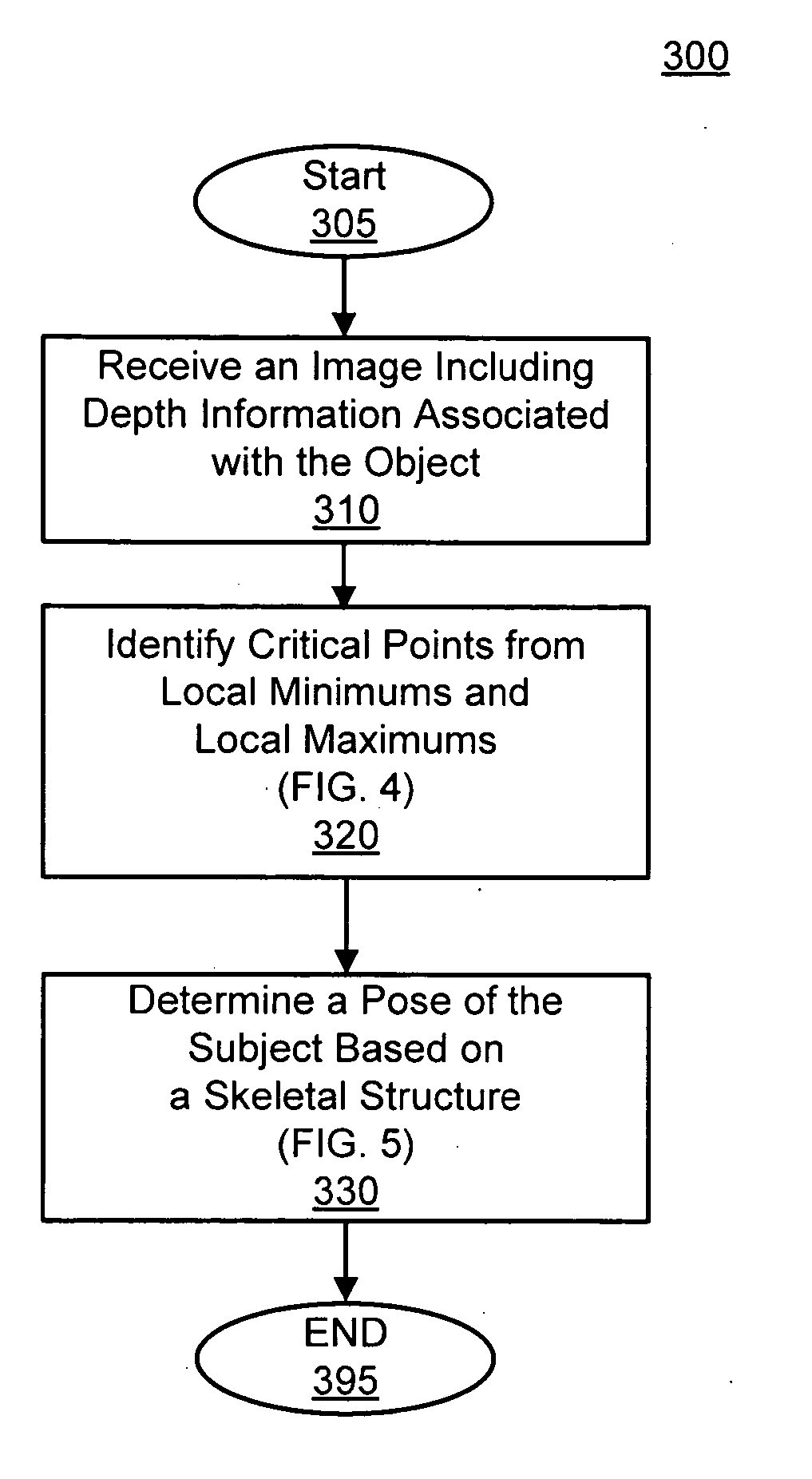

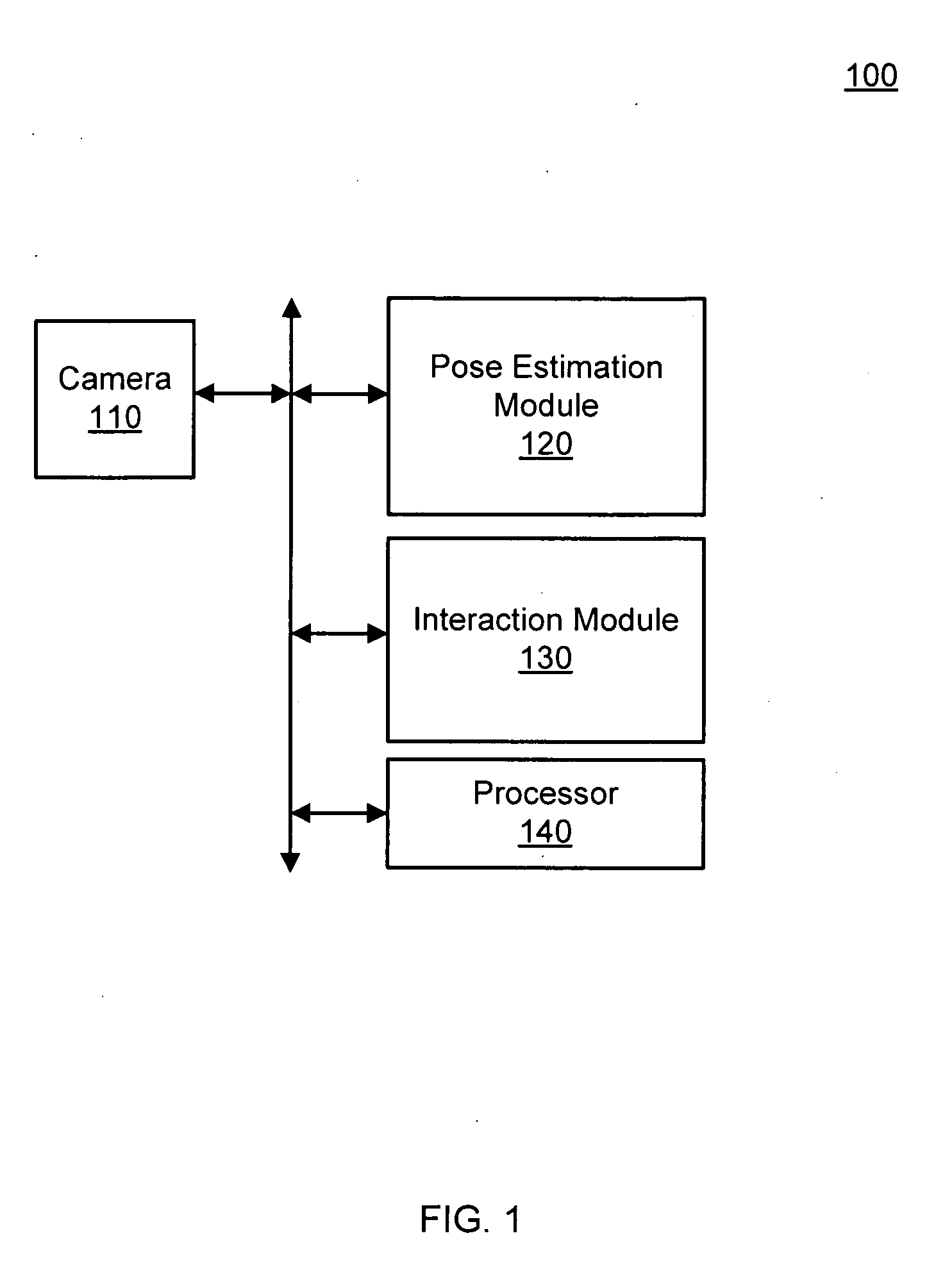

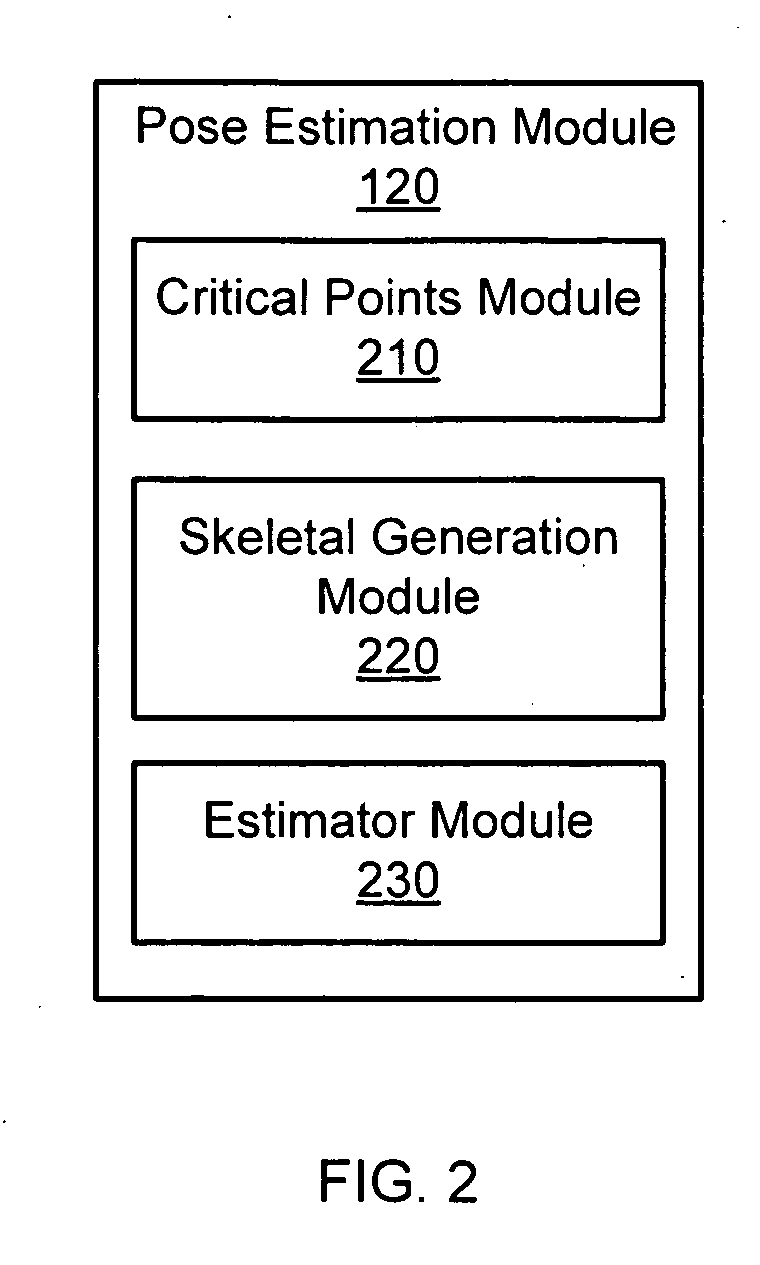

Pose estimation based on critical point analysis

Methods and systems for estimating a pose of a subject. The subject can be a human, an animal, a robot, or the like. A camera receives depth information associated with a subject, a pose estimation module determines a pose or action of the subject from images, and an interaction module outputs a response to the perceived pose or action. The pose estimation module separates portions of the image containing the subject into classified and unclassified portions. The portions can be segmented using k-means clustering. The classified portions can be known objects, such as a head and a torso, that are tracked across the images. The unclassified portions are swept across an x and y axis to identify local minimums and local maximums. The critical points are derived from the local minimums and local maximums. Potential joint sections are identified by connecting various critical points, and the joint sections having sufficient probability of corresponding to an object on the subject are selected.

Owner:THE OHIO STATE UNIV RES FOUND +1
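The sweep for local minimums and local maximums described in the abstract above can be illustrated on a single 1D profile, for example the depth values along one image row. This is a simplified sketch with a strict-inequality test; the patent's actual critical-point criteria are not given in the abstract, and the function name is hypothetical.

```python
def critical_points(profile):
    """Indices of strict local minima and maxima in a 1D sequence.

    End points are skipped because they have only one neighbour.
    """
    minima, maxima = [], []
    for i in range(1, len(profile) - 1):
        left, mid, right = profile[i - 1], profile[i], profile[i + 1]
        if mid < left and mid < right:
            minima.append(i)
        elif mid > left and mid > right:
            maxima.append(i)
    return minima, maxima


row_profile = [0, 2, 1, 3, 0]
minima, maxima = critical_points(row_profile)
```

Running the same sweep along columns gives the y-axis critical points; connecting nearby critical points then yields the candidate joint sections mentioned in the abstract.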

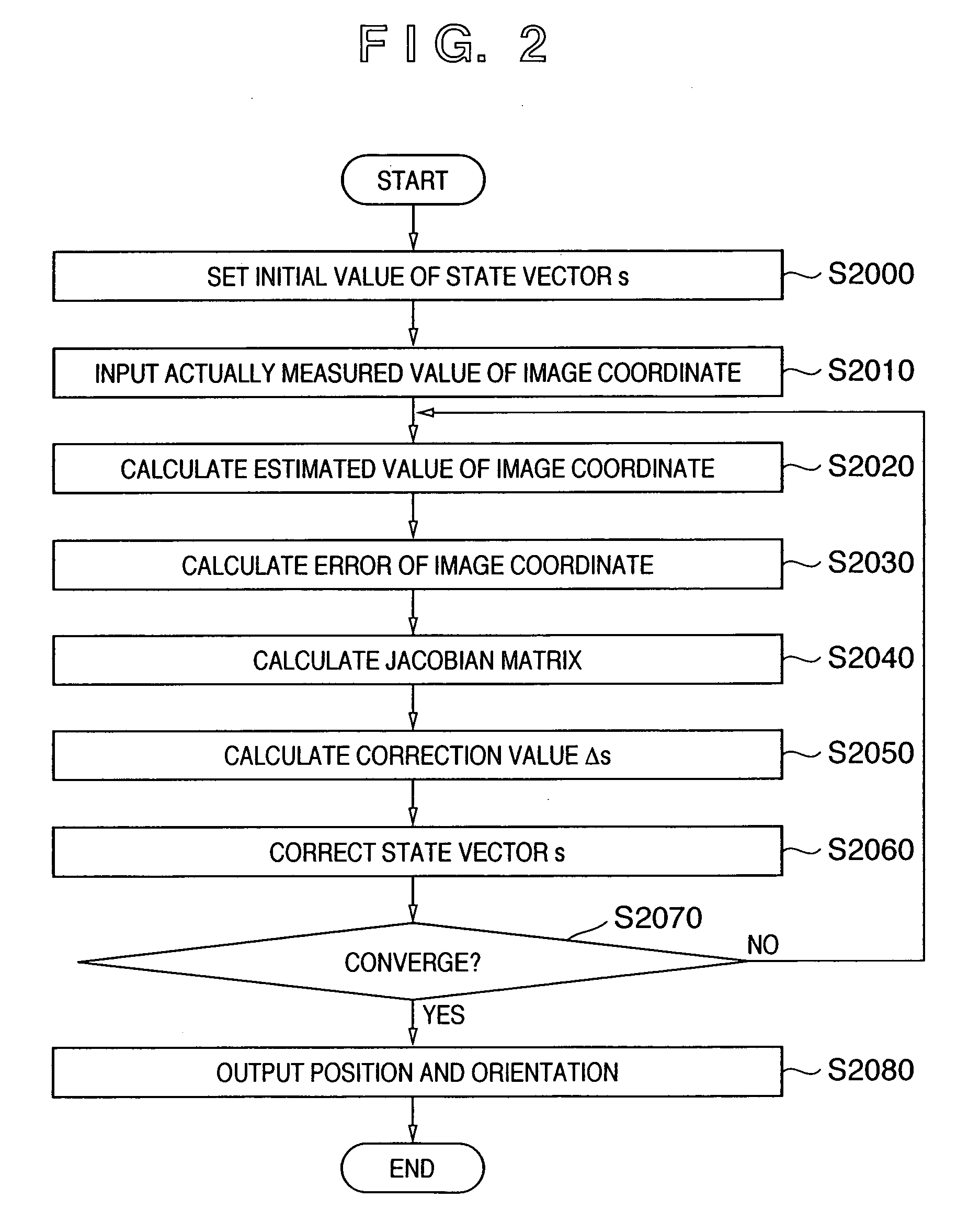

Method for determining the pose of a camera with respect to at least one real object

Active | US20120120199A1 | Reduce computing time | Image enhancement | Image analysis | Iteration loop | Computer vision

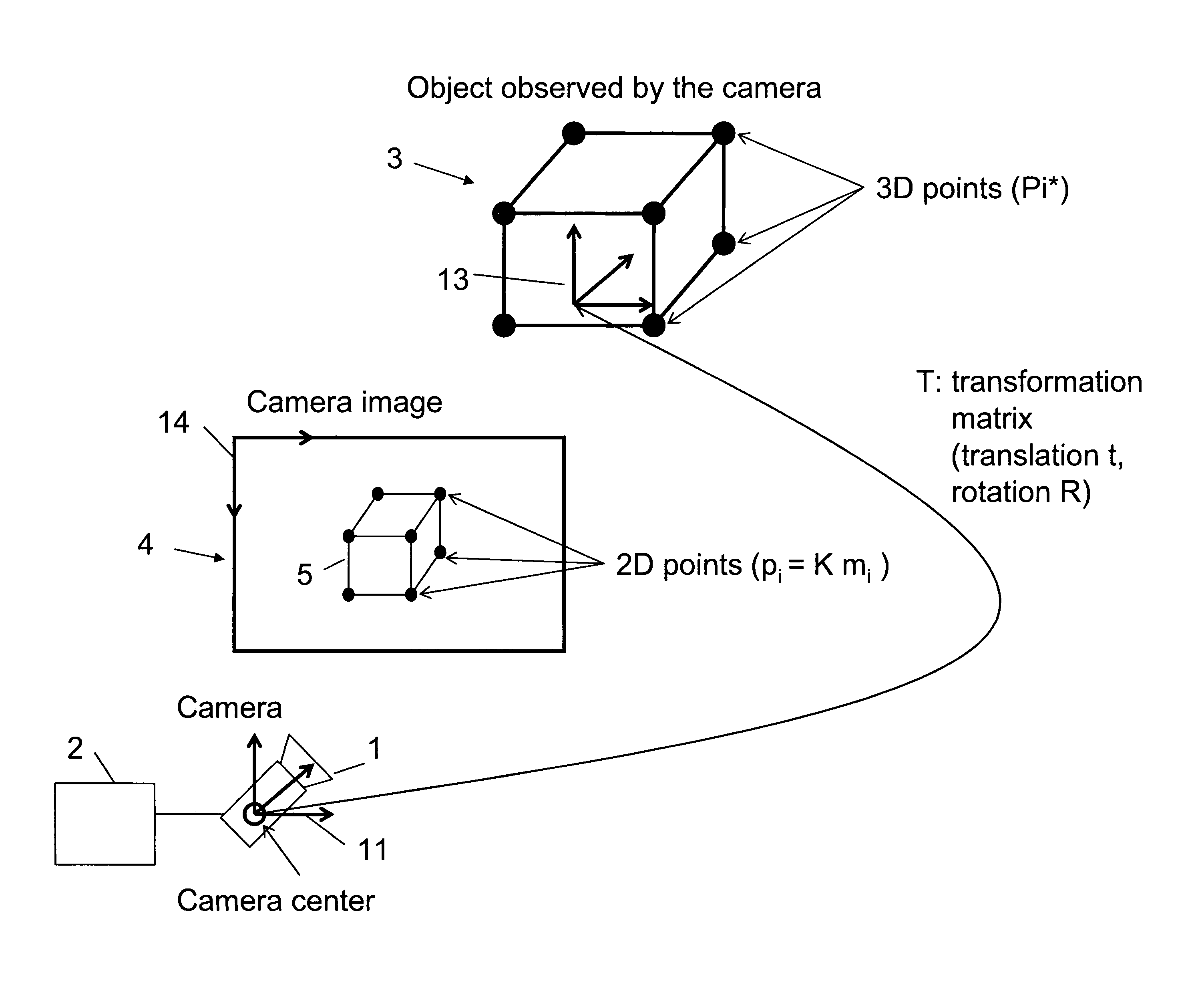

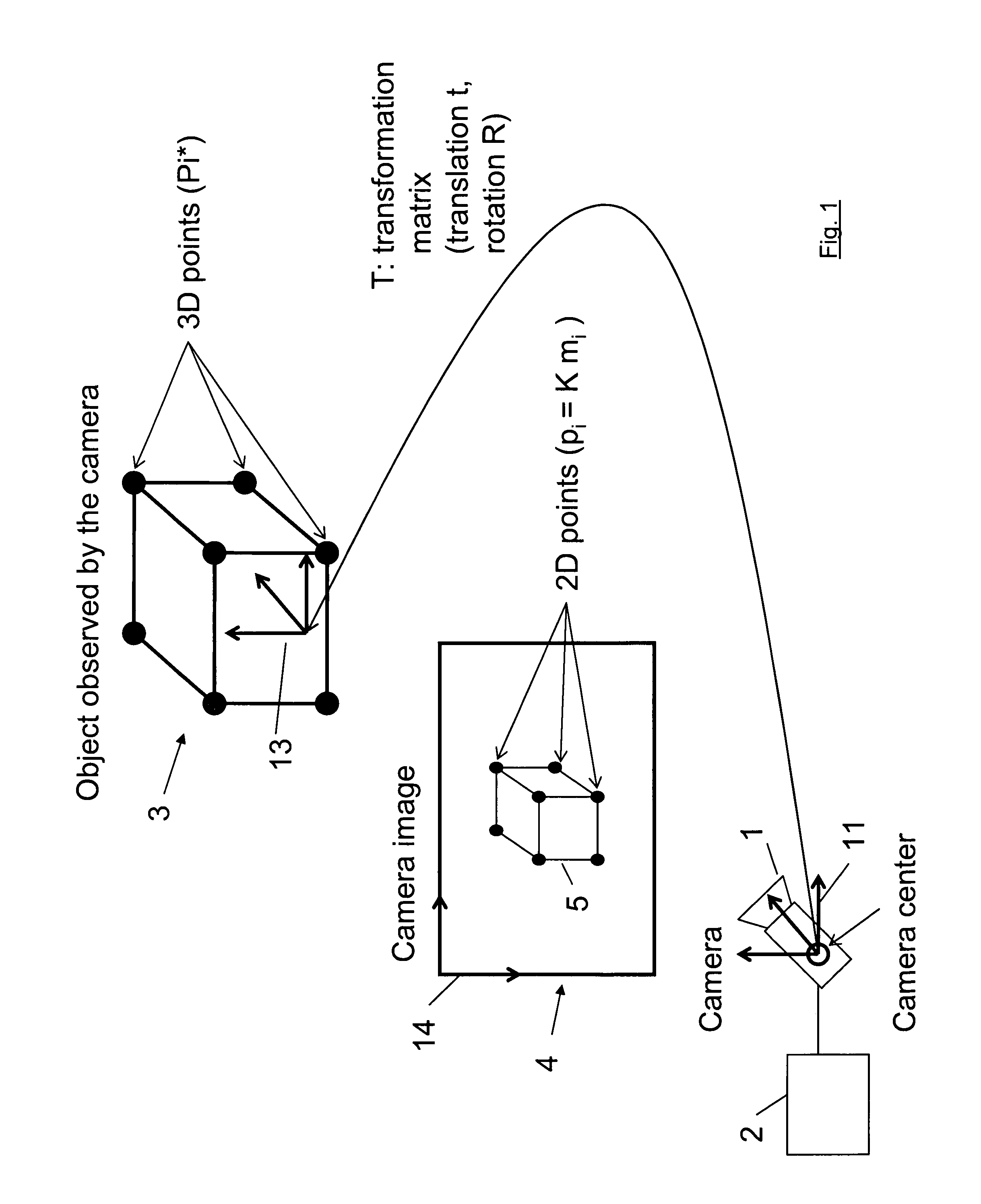

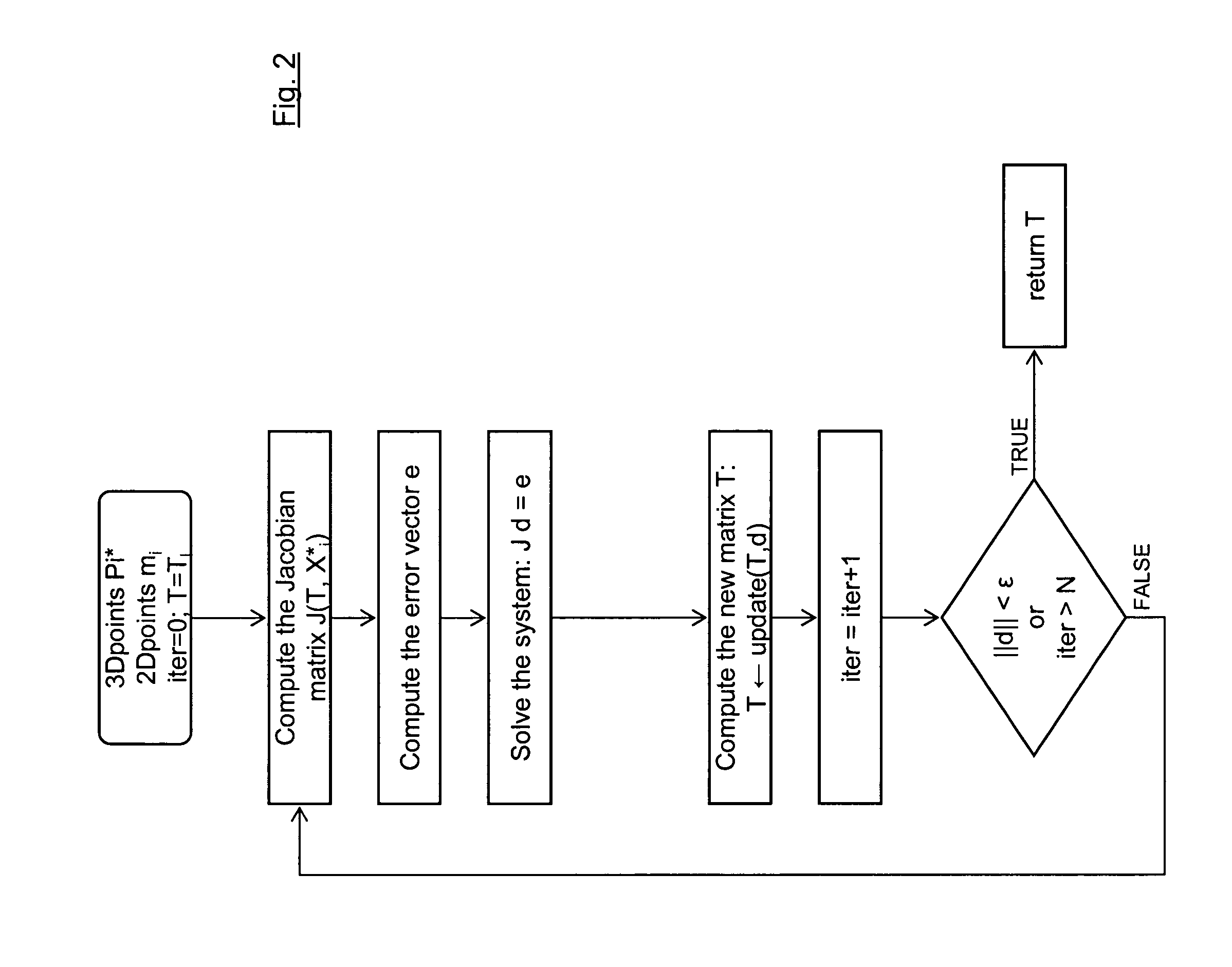

A method for determining the pose of a camera with respect to at least one real object comprises the following steps: operating the camera (1) for capturing a 2-dimensional (or 3-dimensional) image (4) including at least a part of the real object (3), providing a transformation matrix (T) which includes information regarding a correspondence between 3-dimensional points (Pi*) associated with the real object (3) and corresponding 2-dimensional points (or 3-dimensional points) (pi) of the real object (5) as included in the 2-dimensional (or 3-dimensional) image (4), and determining an initial estimate of the transformation matrix (Tl) as an initial basis for an iterative minimization process used for iteratively refining the transformation matrix, determining a Jacobian matrix (J) which includes information regarding the initial estimate of the transformation matrix (Tl) and reference values of 3-dimensional points (Pi*) associated with the real object (3). Further, in the iterative minimization process, in each one of multiple iteration loops determining a respective updated version of the transformation matrix (T) based on a respective previous version of the transformation matrix (T) and based on the Jacobian matrix (J), wherein the Jacobian matrix is not updated during the iterative minimization process, and determining the pose of the camera (1) with respect to the real object (3) using the transformation matrix (T) determined at the end of the iterative minimization process. As a result, the camera pose can be calculated with rather low computational time.

Owner:APPLE INC
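The idea of refining an estimate iteratively while reusing a Jacobian computed only once can be illustrated with a deliberately simplified sketch. It is not the patented method: instead of a full transformation matrix, it estimates a single 2D rotation angle from point correspondences. The names `rotate` and `refine_angle` and all numeric values are assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def rotate(theta: float, p: Point) -> Point:
    """Rotate a 2D point about the origin by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1], s * p[0] + c * p[1])


def refine_angle(ref: List[Point], obs: List[Point],
                 theta0: float, iters: int = 20) -> float:
    """Gauss-Newton style refinement with a Jacobian frozen at theta0."""
    # Derivative of rotate(theta, p) with respect to theta,
    # evaluated once at the initial estimate and never updated.
    c0, s0 = math.cos(theta0), math.sin(theta0)
    jac = [(-s0 * x - c0 * y, c0 * x - s0 * y) for x, y in ref]
    jtj = sum(jx * jx + jy * jy for jx, jy in jac)

    theta = theta0
    for _ in range(iters):
        # Only the residuals are recomputed in each loop.
        jtr = 0.0
        for p, q, (jx, jy) in zip(ref, obs, jac):
            px, py = rotate(theta, p)
            jtr += jx * (px - q[0]) + jy * (py - q[1])
        theta -= jtr / jtj
    return theta


# Hypothetical reference points and their observations after a 0.3 rad turn.
ref_points = [(1.0, 0.0), (0.0, 2.0), (-1.0, 1.0)]
obs_points = [rotate(0.3, p) for p in ref_points]
estimate = refine_angle(ref_points, obs_points, theta0=0.0)
print(round(estimate, 6))
```

Freezing the Jacobian avoids recomputing derivatives in every loop. The trade-off is that convergence is only linear and depends on the initial estimate being reasonably close to the solution.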

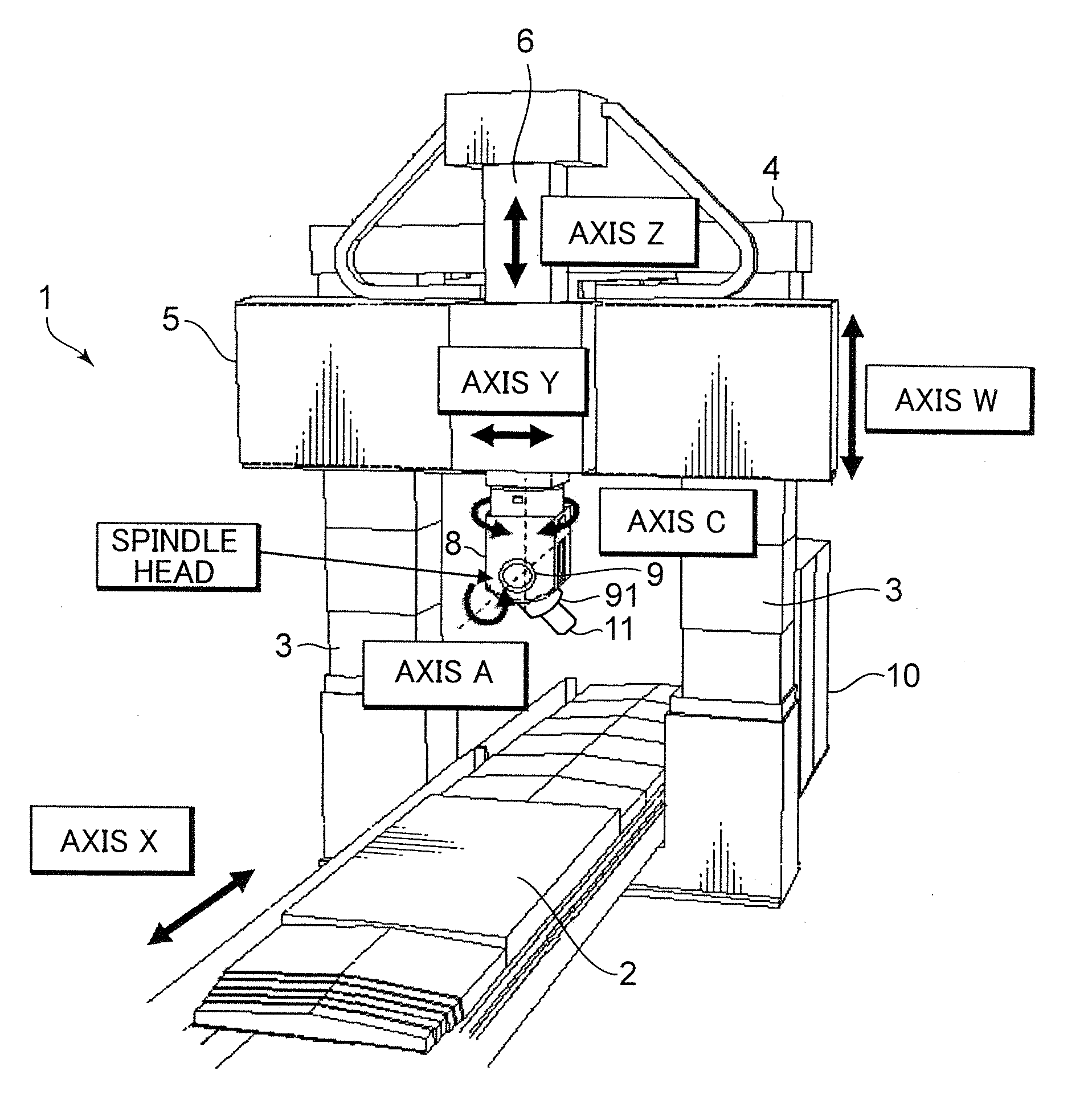

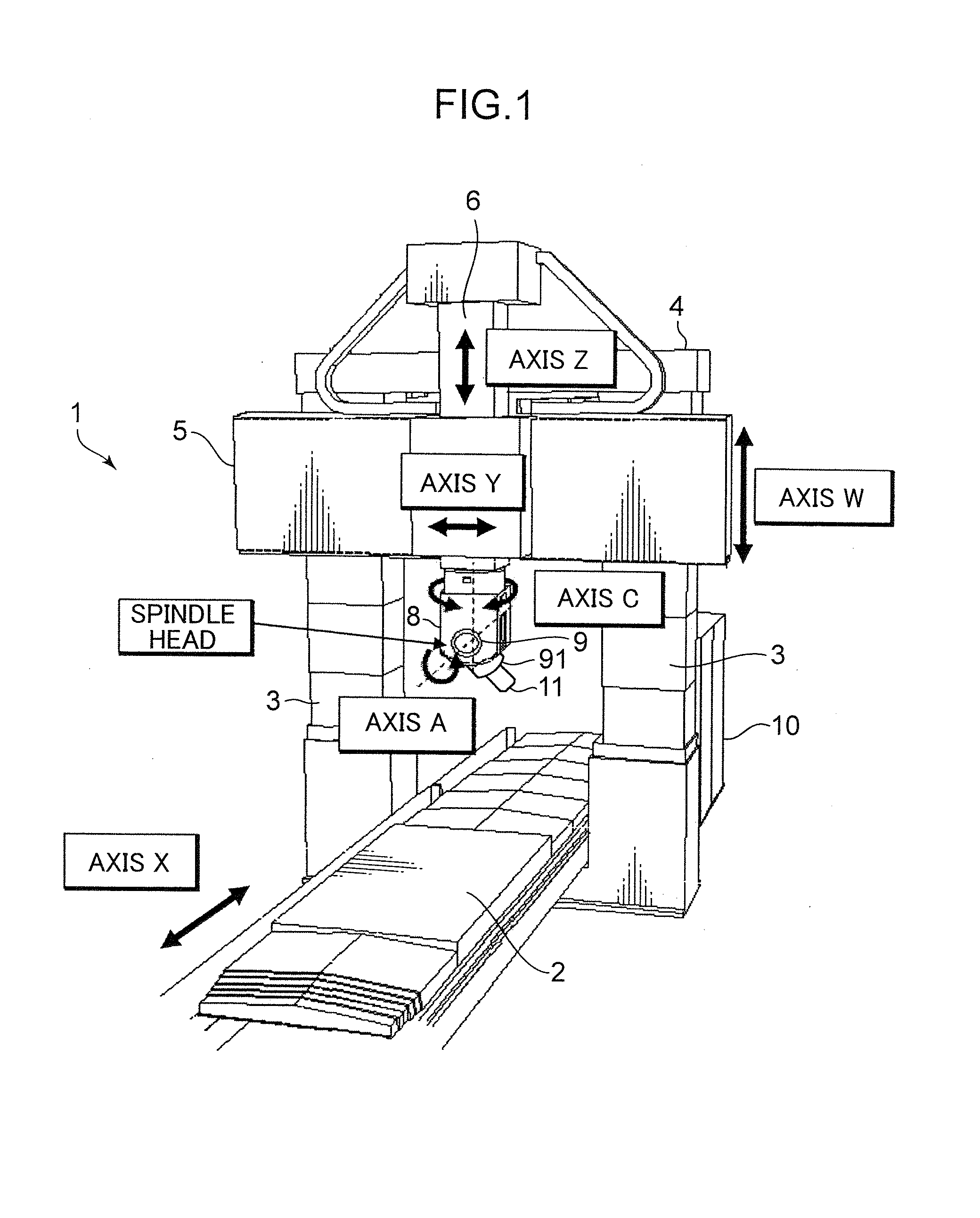

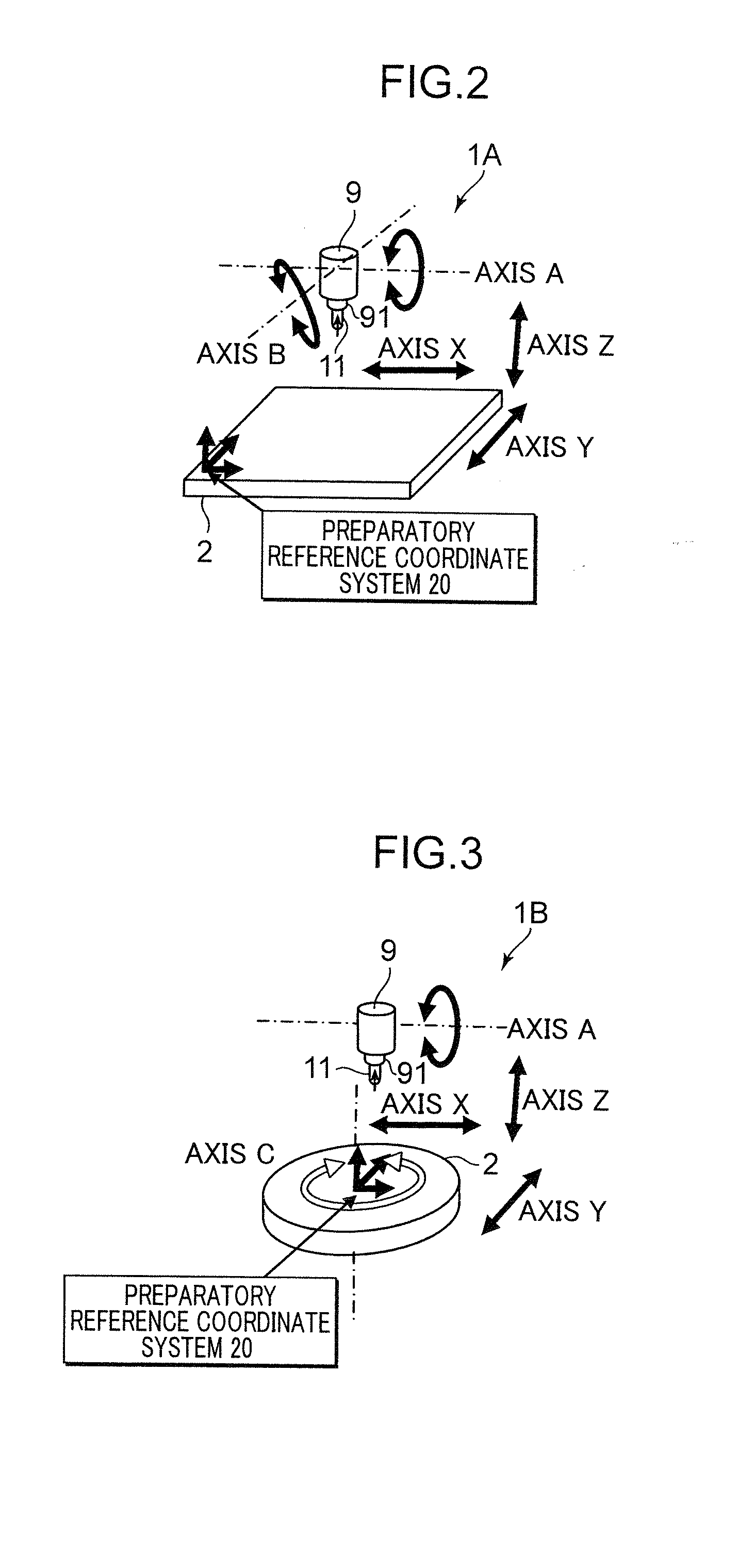

Numerical control device

A main control process is made common to all machine tools by describing in an NC program a tool trajectory, including changes in posture, in a coordinate system (30) fixed to a machining object (W); fixedly arranging a preparatory reference coordinate system (20) on a machine table (2); representing an installation position of the machining object (W) and a position of a spindle (91) on which a tool (11) is mounted in the preparatory reference coordinate system (20); and containing the portions relating to the configuration of axes in a conversion function group that correlates the position (q) of the spindle (91) with an axis coordinate (r). Thus, the processes of reading the NC program, correcting the tool trajectory, and converting it into the trajectory of a spindle position based on the installation position of the machining object, the tool shape, and the tool dimensions are made completely common.

Owner:SHINNIPPON KOKI

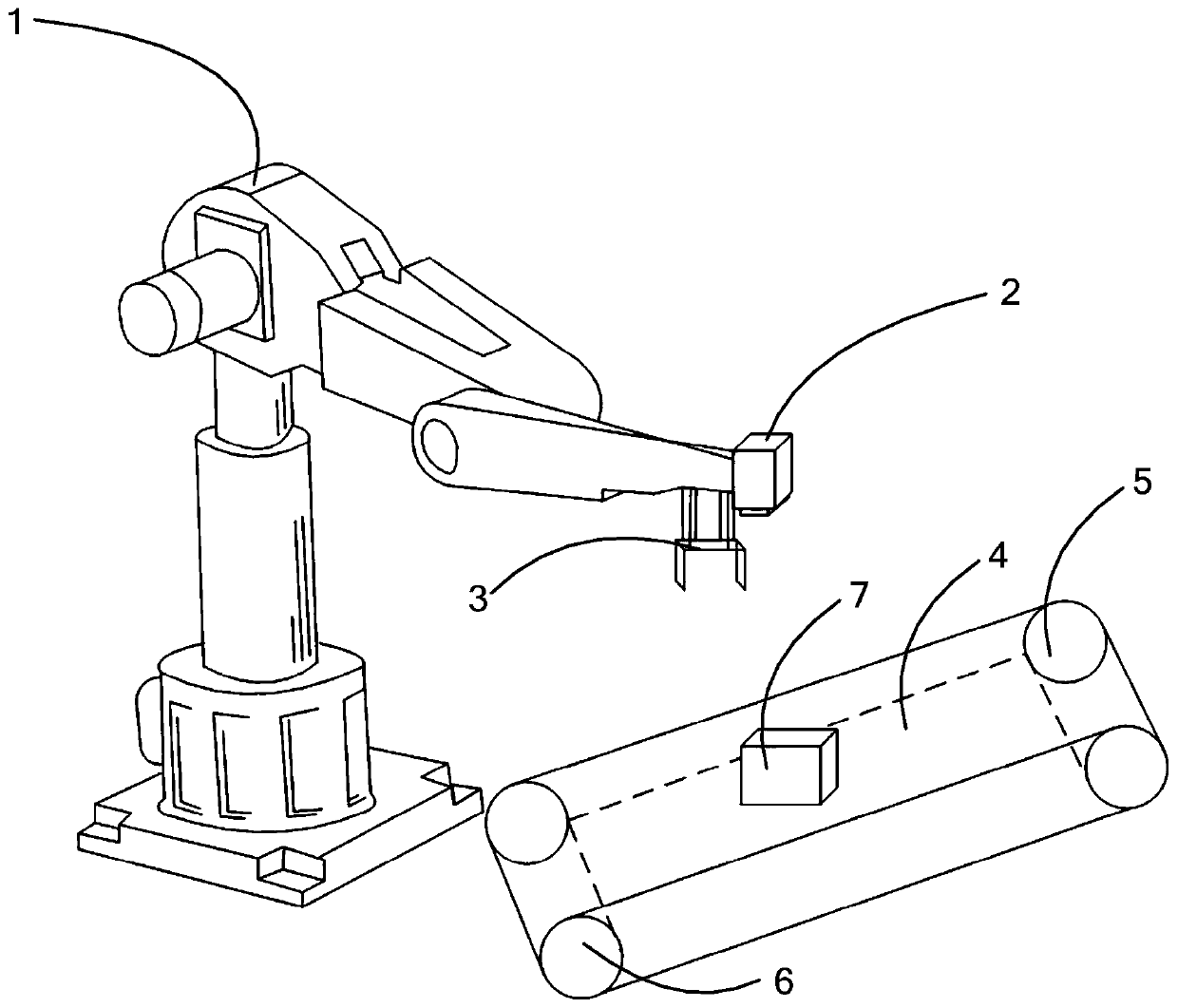

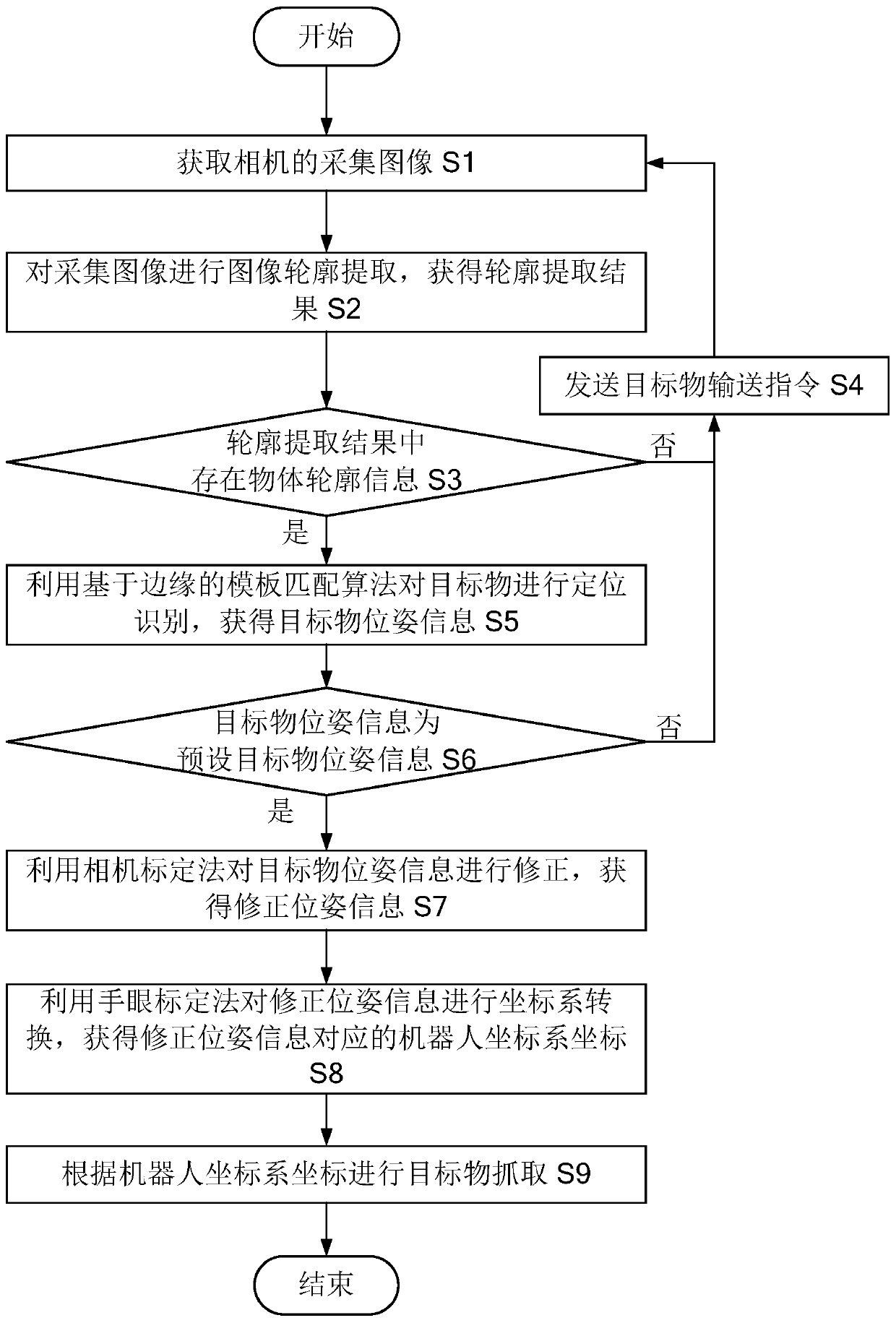

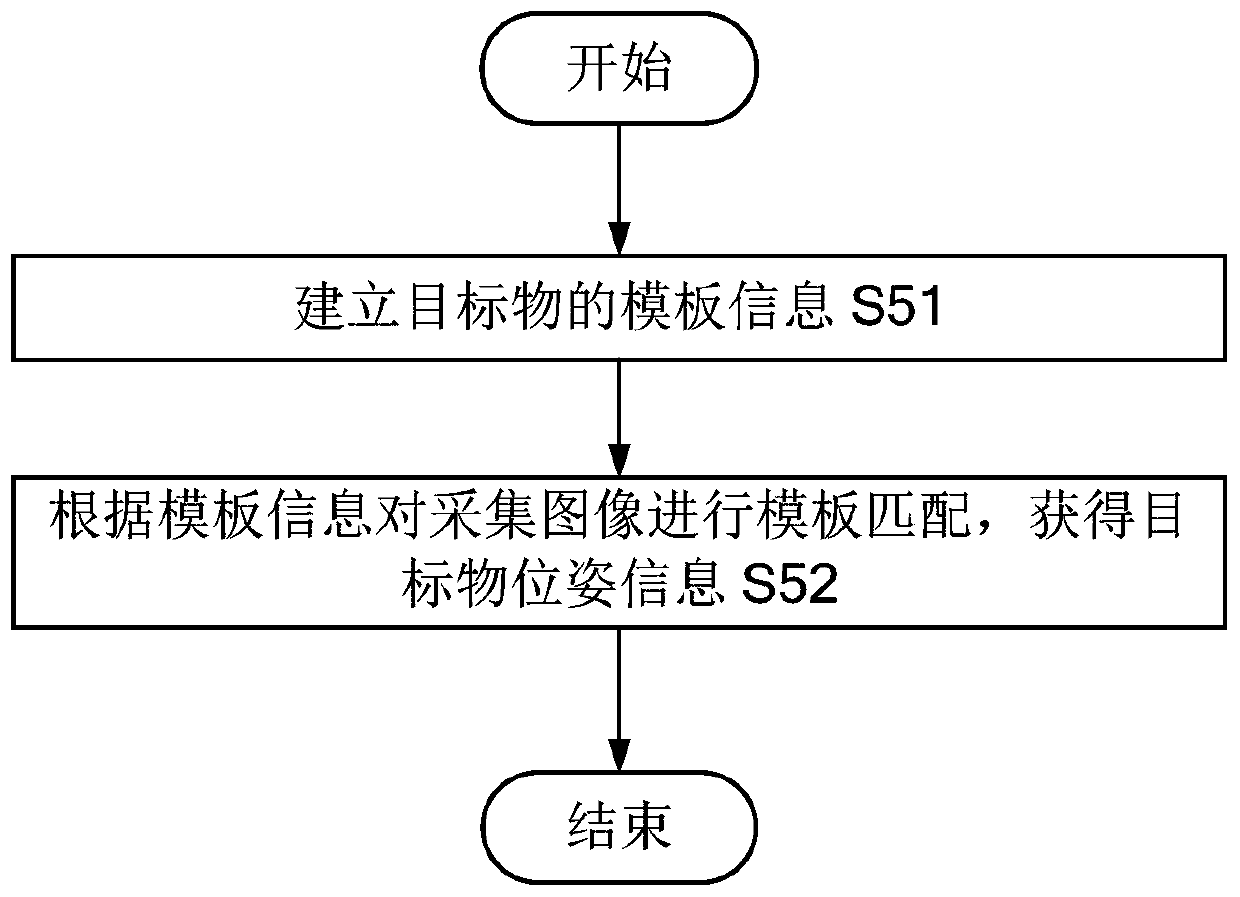

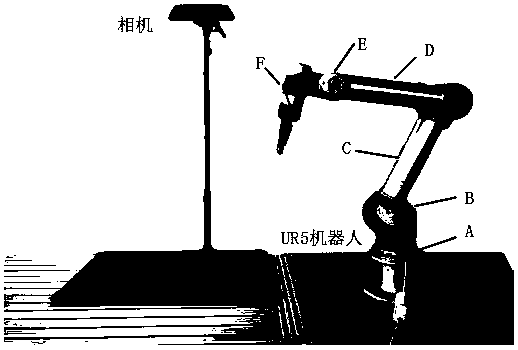

Industrial robot visual recognition positioning grabbing method, computer device and computer readable storage medium

Inactive · CN110660104A · Improve stability · High precision · Image enhancement · Programme-controlled manipulator · Computer graphics (images) · Engineering

The invention provides an industrial robot visual recognition positioning grabbing method, a computer device and a computer readable storage medium. The method comprises the following steps: performing image contour extraction on an acquired image; when object contour information exists in the contour extraction result, positioning and identifying the target object by using an edge-based template matching algorithm; when the target object pose information is preset target object pose information, correcting the target object pose information by using a camera calibration method; and performing coordinate system conversion on the corrected pose information by using a hand-eye calibration method. The computer device comprises a controller, and the controller implements the industrial robot visual recognition positioning grabbing method when executing the computer program stored in the memory. A computer program is stored in the computer readable storage medium, and when the computer program is executed by the controller, the industrial robot visual recognition positioning grabbing method is carried out. The method provided by the invention achieves higher recognition and positioning stability and precision.

Owner:GREE ELECTRIC APPLIANCES INC
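The final coordinate system conversion step mentioned in the abstract above can be sketched as follows, assuming a planar setup in which hand-eye calibration has already produced the pose of the camera frame in the robot base frame. The `Pose2D` type, the `compose` function and all numbers are hypothetical. A real cell would normally use full 3D homogeneous transforms.

```python
import math
from typing import Tuple

Pose2D = Tuple[float, float, float]  # x, y, heading in radians


def compose(parent_from_a: Pose2D, b_in_a: Pose2D) -> Pose2D:
    """Express a pose given in frame a in the parent frame of a."""
    ax, ay, at = parent_from_a
    bx, by, bt = b_in_a
    c, s = math.cos(at), math.sin(at)
    # Rotate the offset into the parent frame, then translate.
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)


# Assumed hand-eye calibration result: camera frame seen from the robot base.
base_from_camera: Pose2D = (0.5, 0.2, math.pi / 2)
# Assumed corrected object pose reported by the vision stage, in camera frame.
object_in_camera: Pose2D = (0.1, 0.0, 0.0)

object_in_base = compose(base_from_camera, object_in_camera)
print(tuple(round(v, 4) for v in object_in_base))
```

Keeping the calibration result as a single pose that is composed with each detection means the vision code never needs to know where the camera is mounted.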

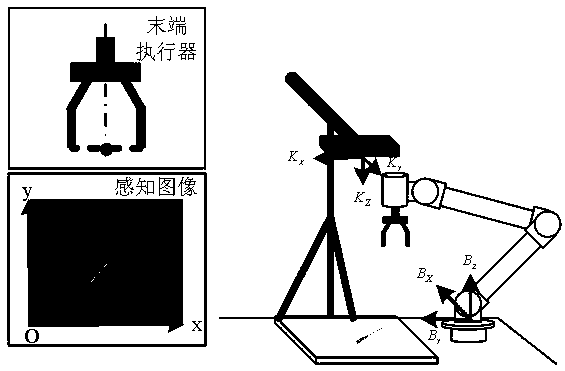

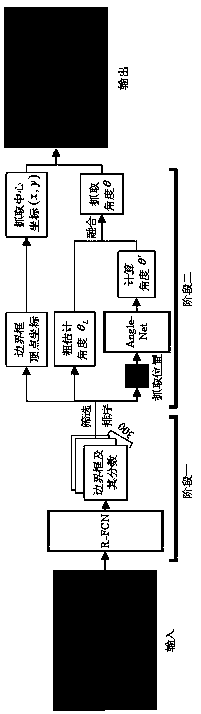

Cascaded convolutional neural network-based quick detection method of irregular-object grasping pose of robot

Inactive · CN108510062A · Improve real-time performance · Guaranteed to be dynamically scalable · Image enhancement · Image analysis · Color image · Nerve network

The invention relates to a cascaded convolutional neural network-based quick detection method of an irregular-object grasping pose of a robot. First, a cascaded two-stage convolutional neural network model that estimates position and attitude in a coarse-to-fine manner is constructed. In the first stage, a region-based fully convolutional network (R-FCN) is adopted to realize grasp positioning and a rough estimate of the grasping angle. In the second stage, the grasping angle is calculated accurately by constructing a new Angle-Net model. Current scene images containing the objects to be grasped are then collected as original on-site image samples for training, and the two-stage convolutional neural network model is trained by means of a transfer learning mechanism. During online running, each collected monocular color image is input to the cascaded two-stage model, and finally the end effector of the robot is driven by the obtained grasping position and attitude to perform object grasping control. According to the method, grasping detection accuracy is high, the detection speed of the irregular-object grasping pose of the robot is effectively increased, and the real-time performance of the grasping attitude detection algorithm is improved.

Owner:SOUTHEAST UNIV


© 2025 PatSnap. All rights reserved.