2427 results about "Visual recognition" patented technology

Visual or image recognition is a popular research area and technology that is widely used across many disciplines, in both industrial and consumer-focused applications. It is part of a broader domain called computer vision and benefits from applying Machine Learning (ML) and Deep Learning (DL) algorithms.

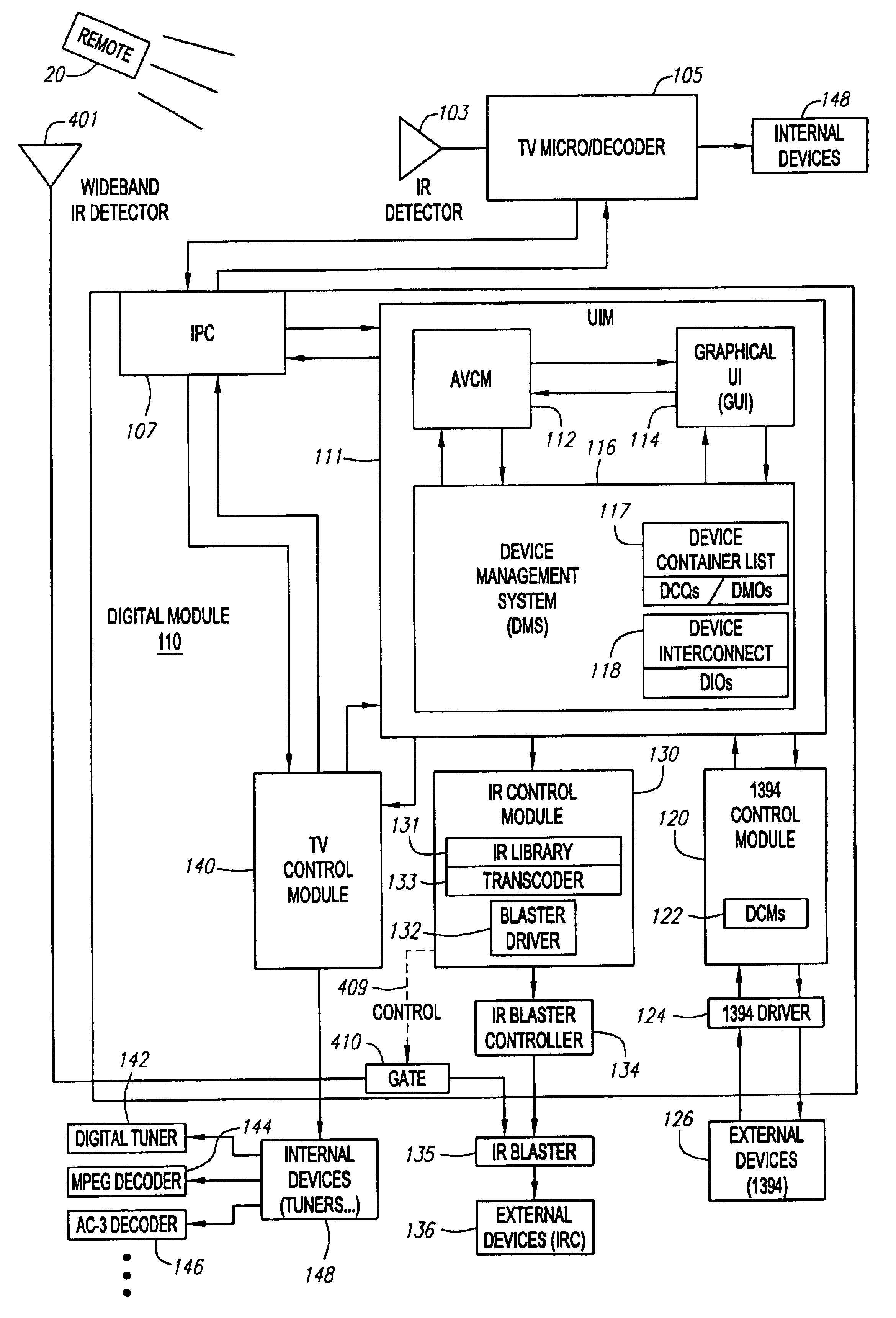

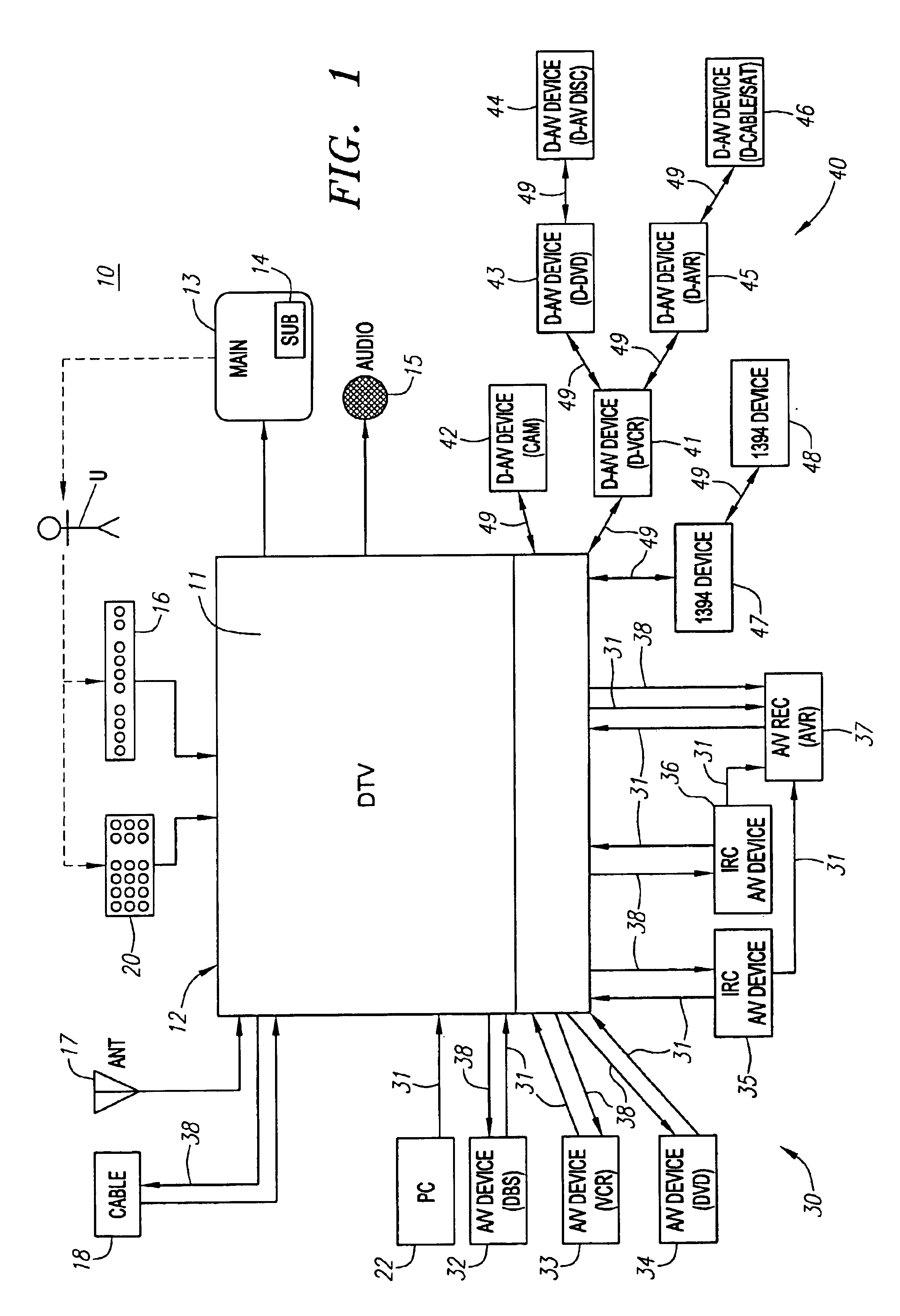

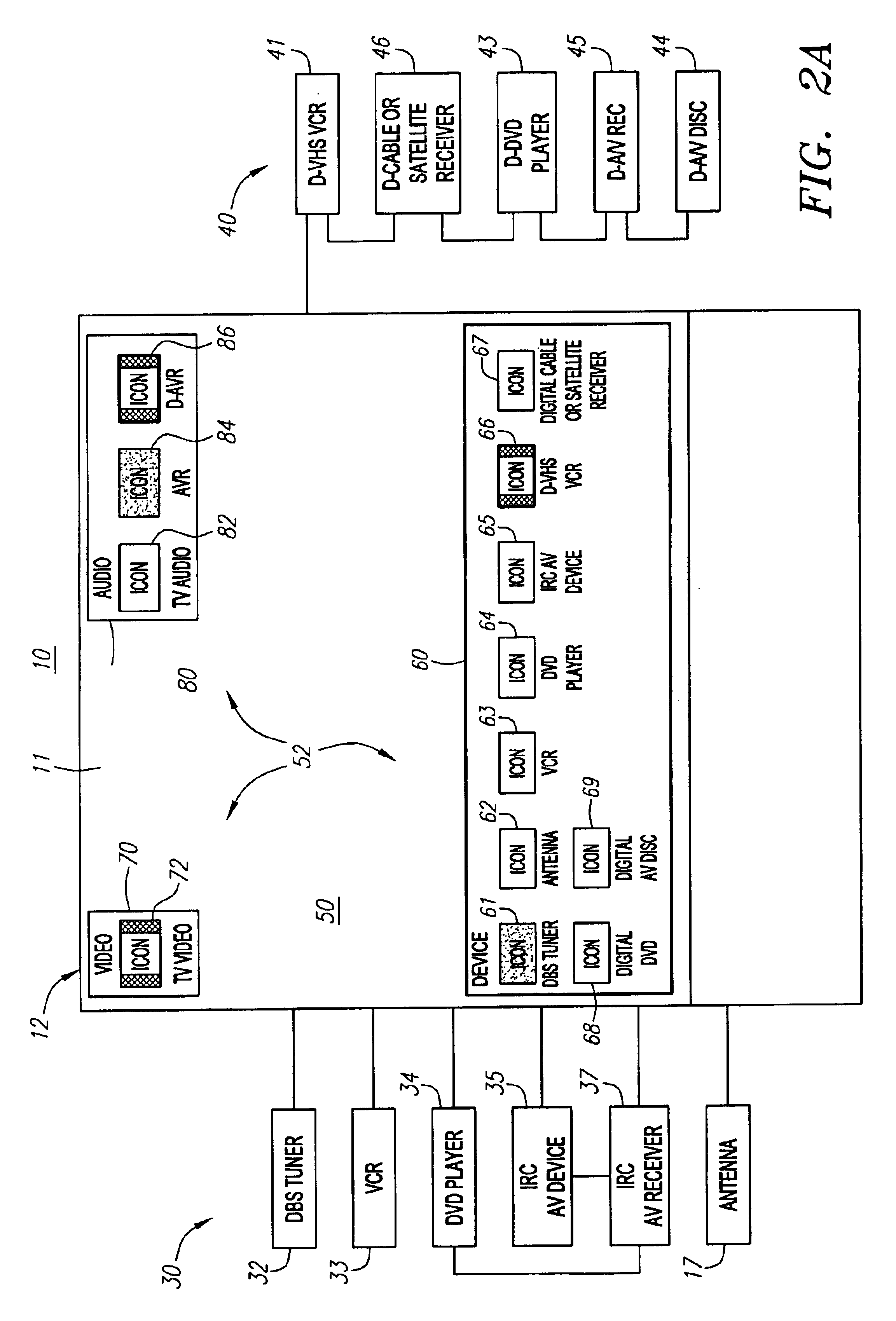

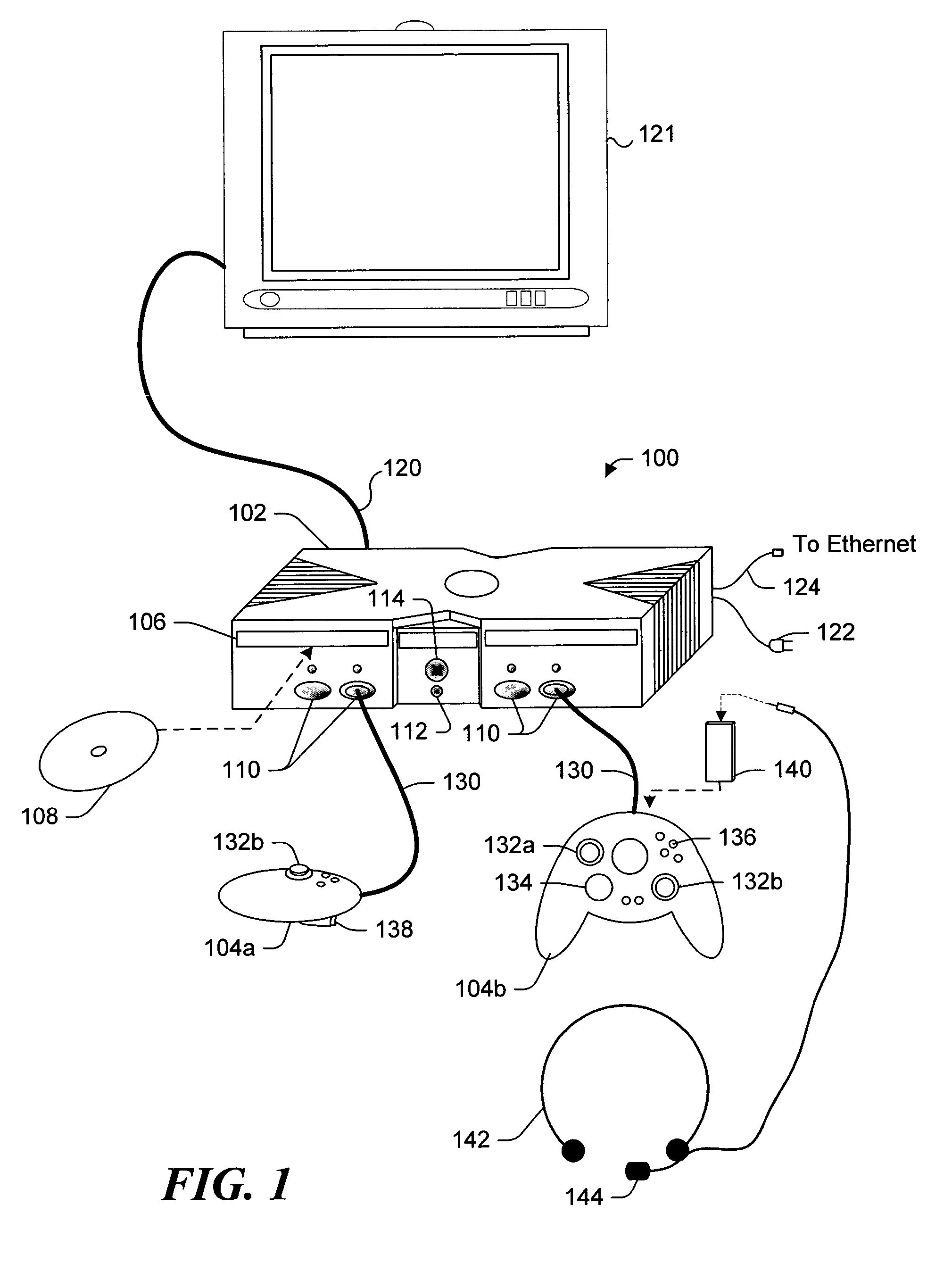

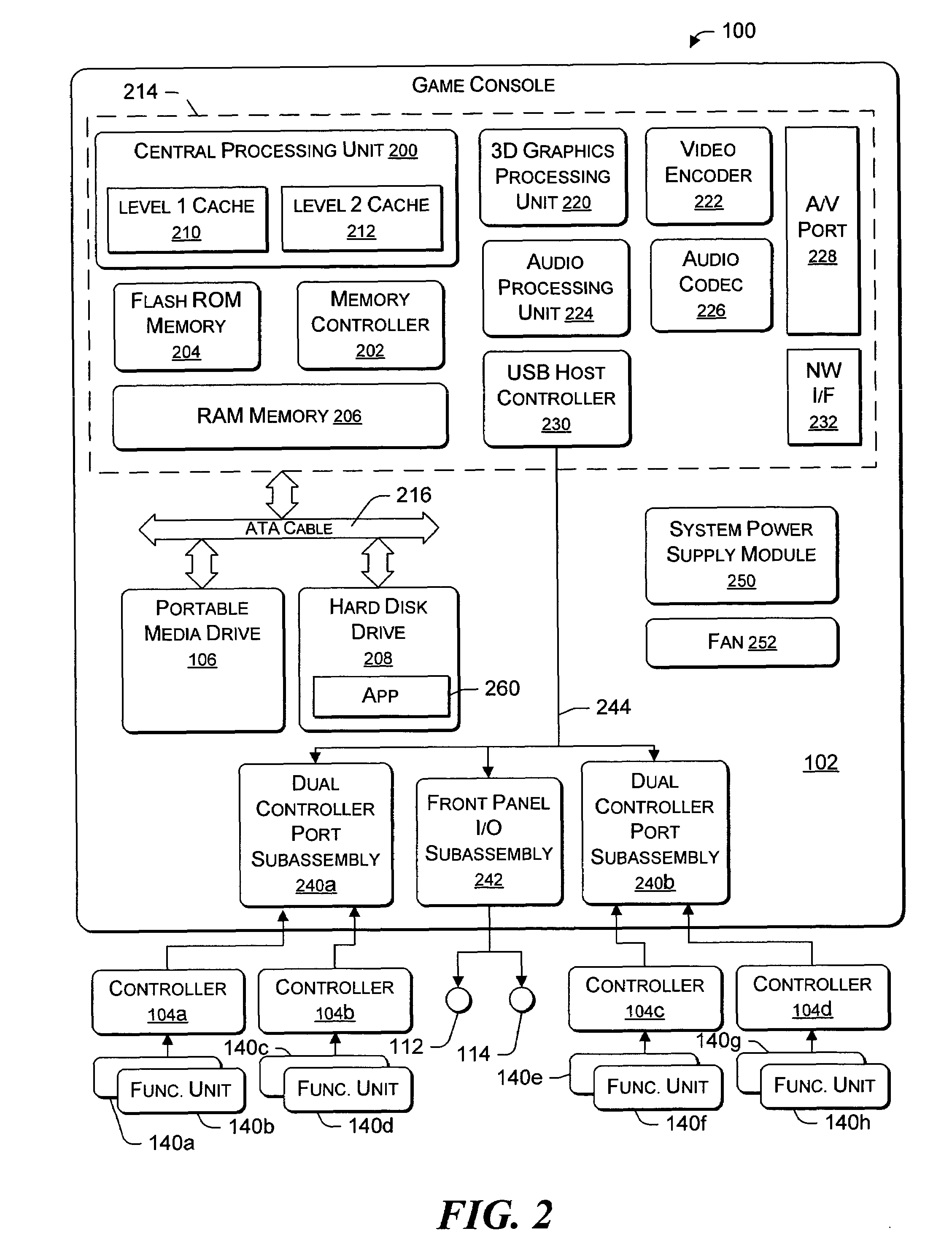

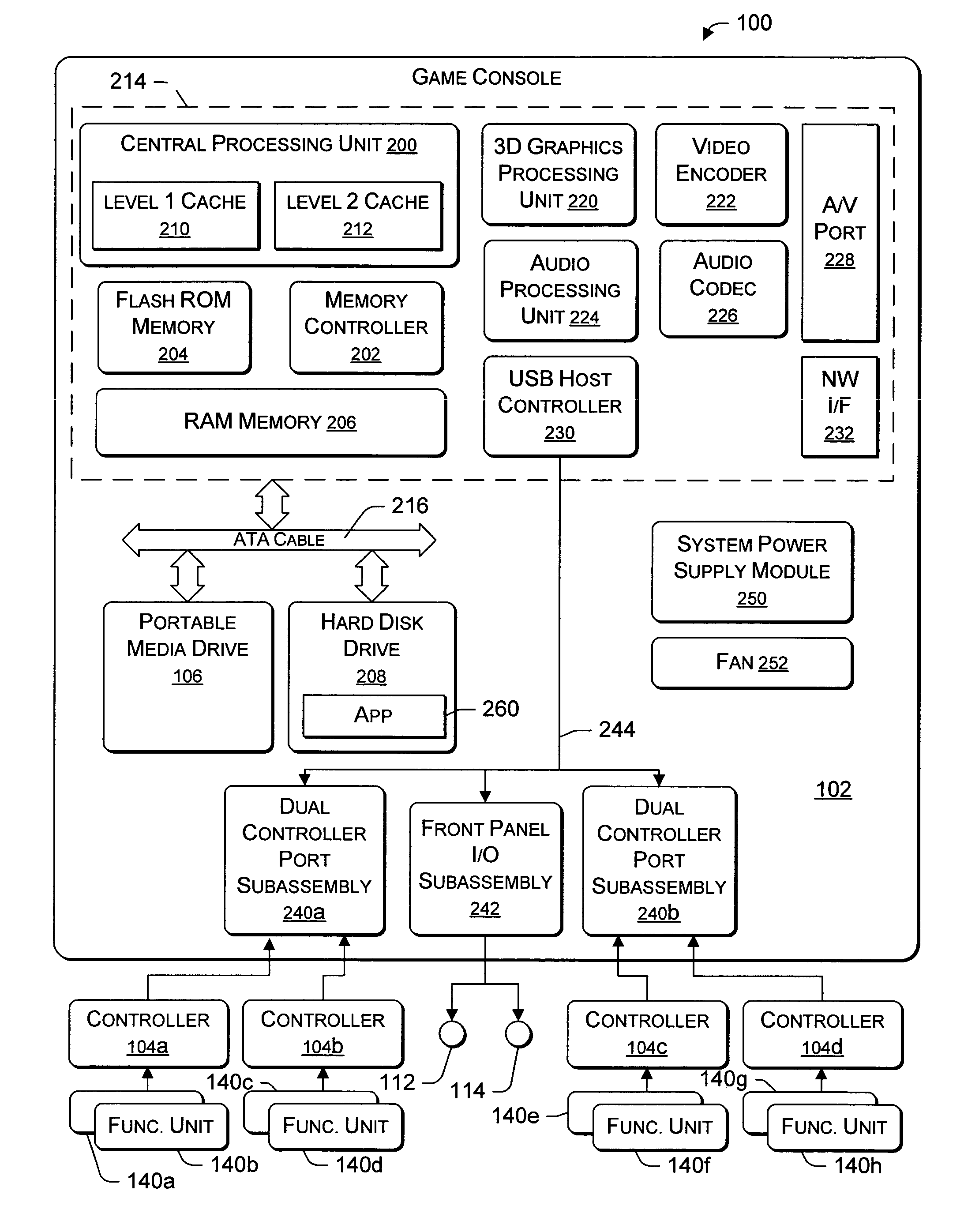

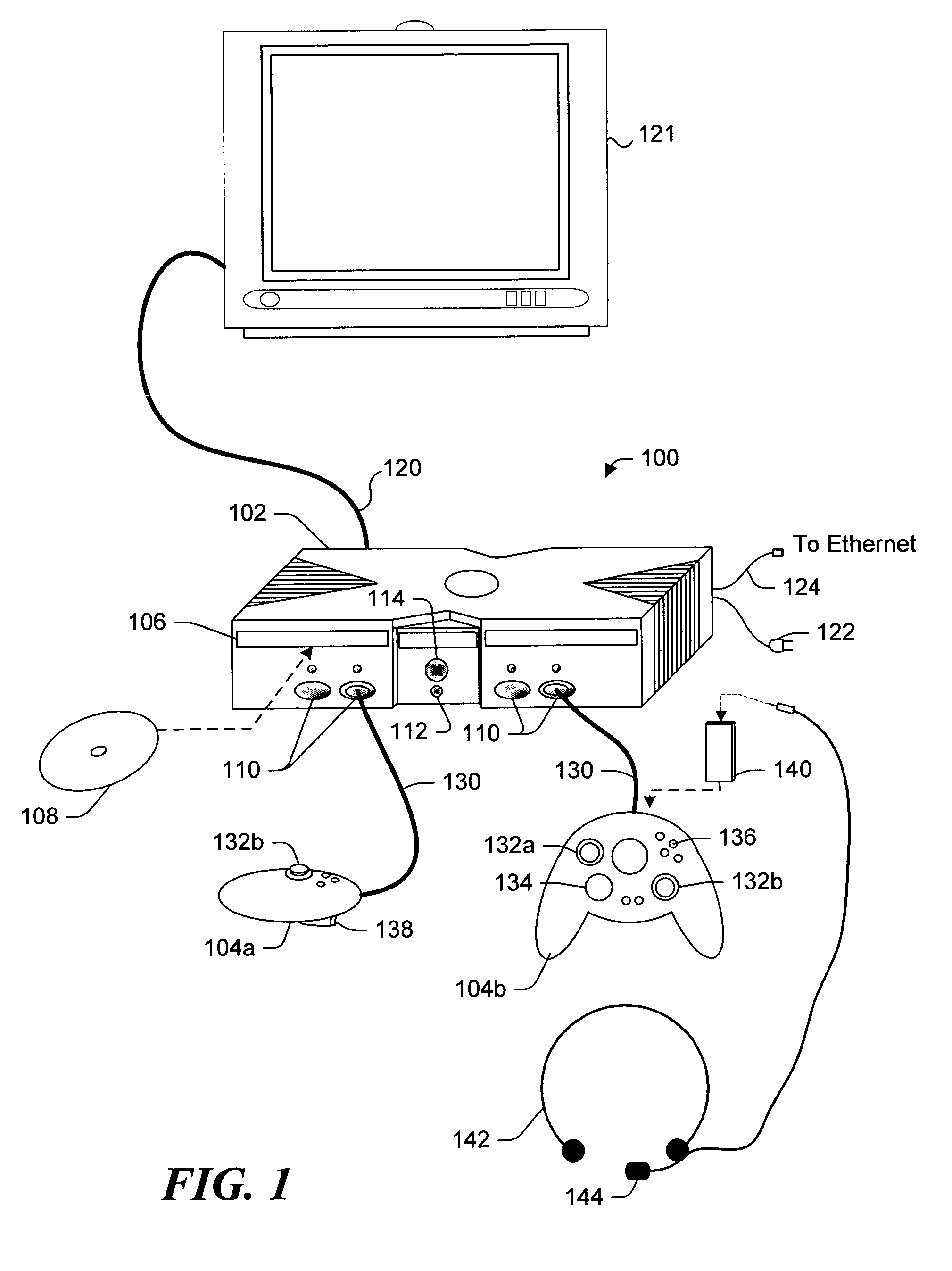

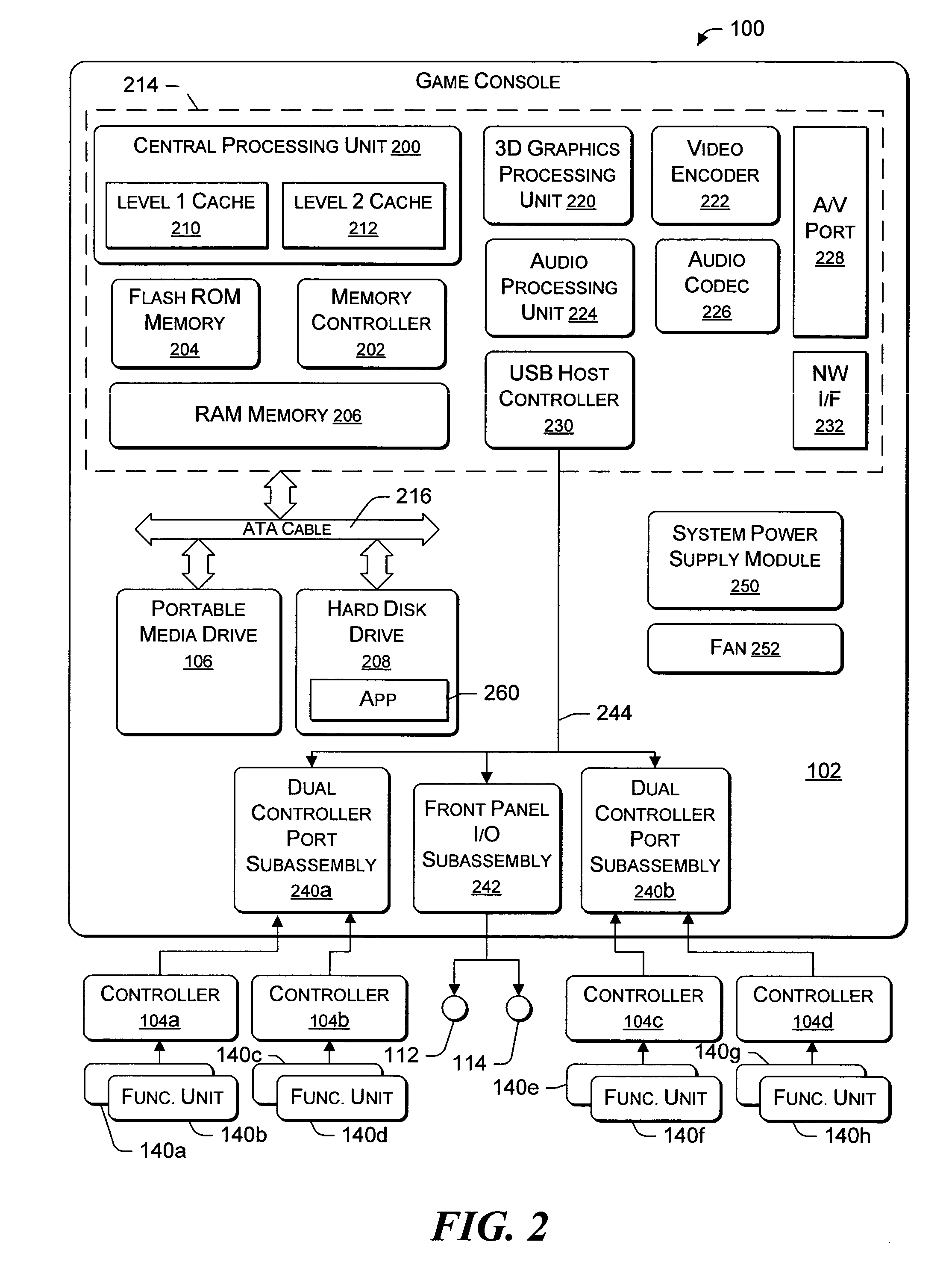

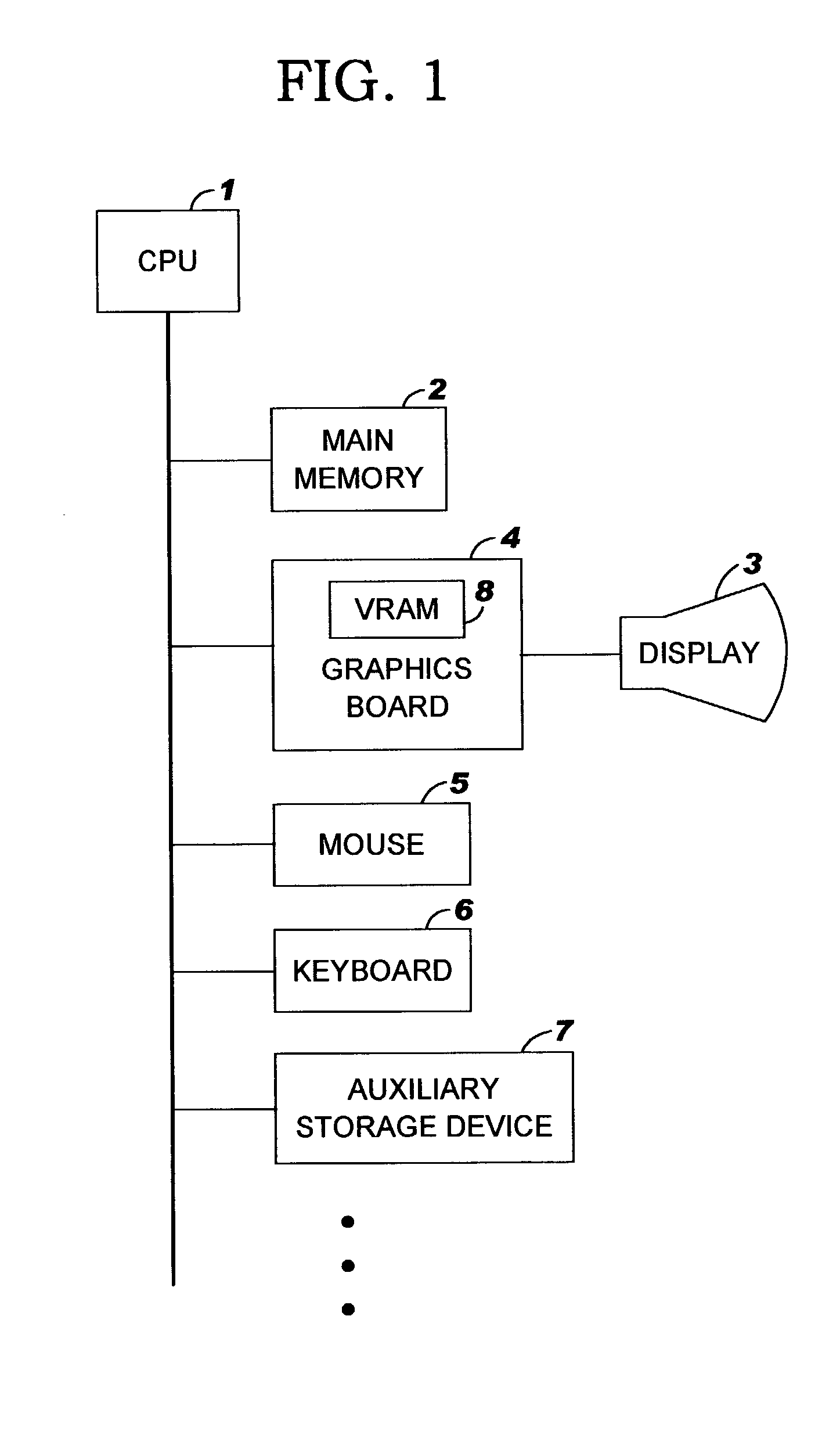

Control system and user interface for network of input devices

Status: Inactive; Patent: US6930730B2; Tags: Avoid signaling, Television system details, Electric signal transmission systems, Graphics, Control layer

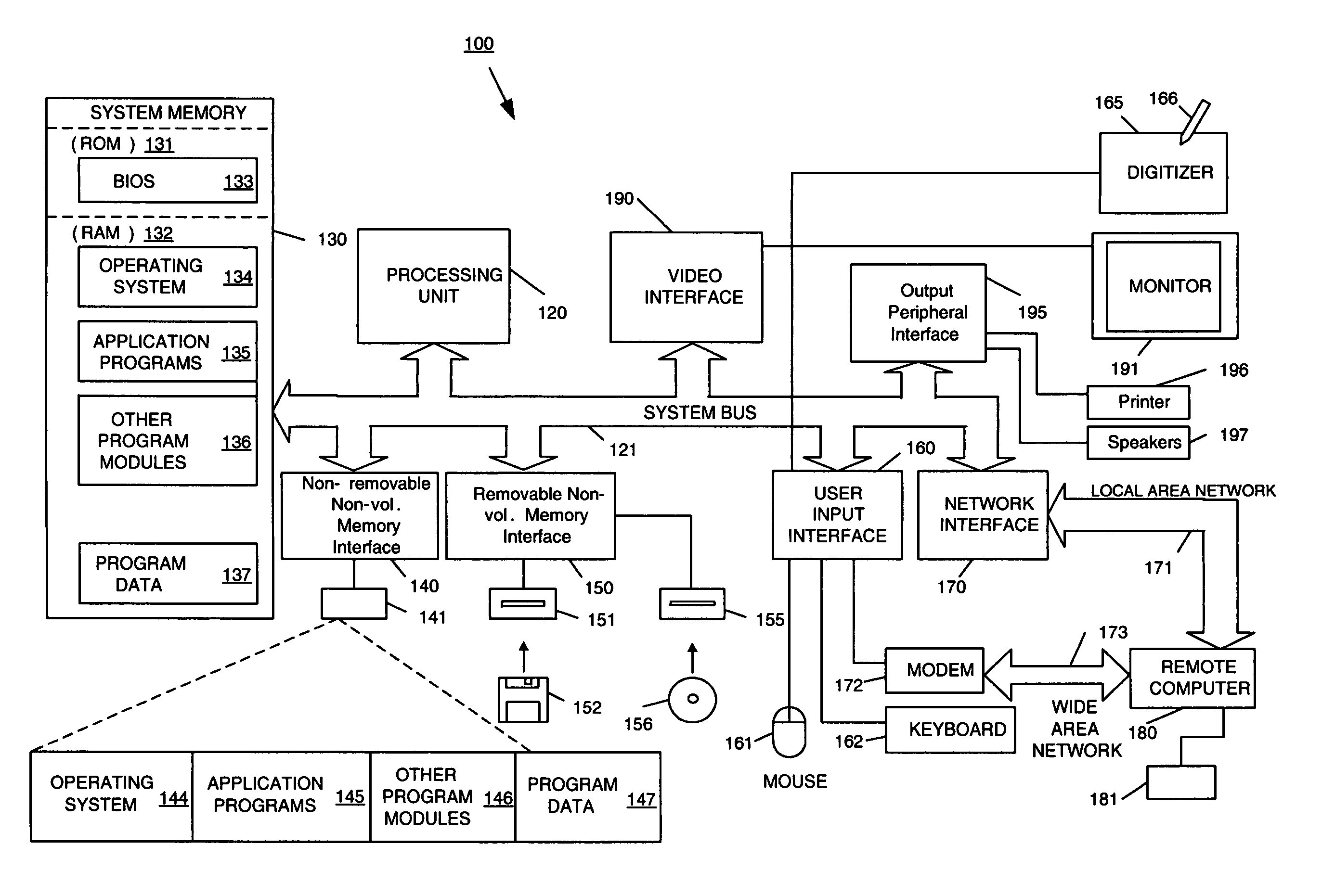

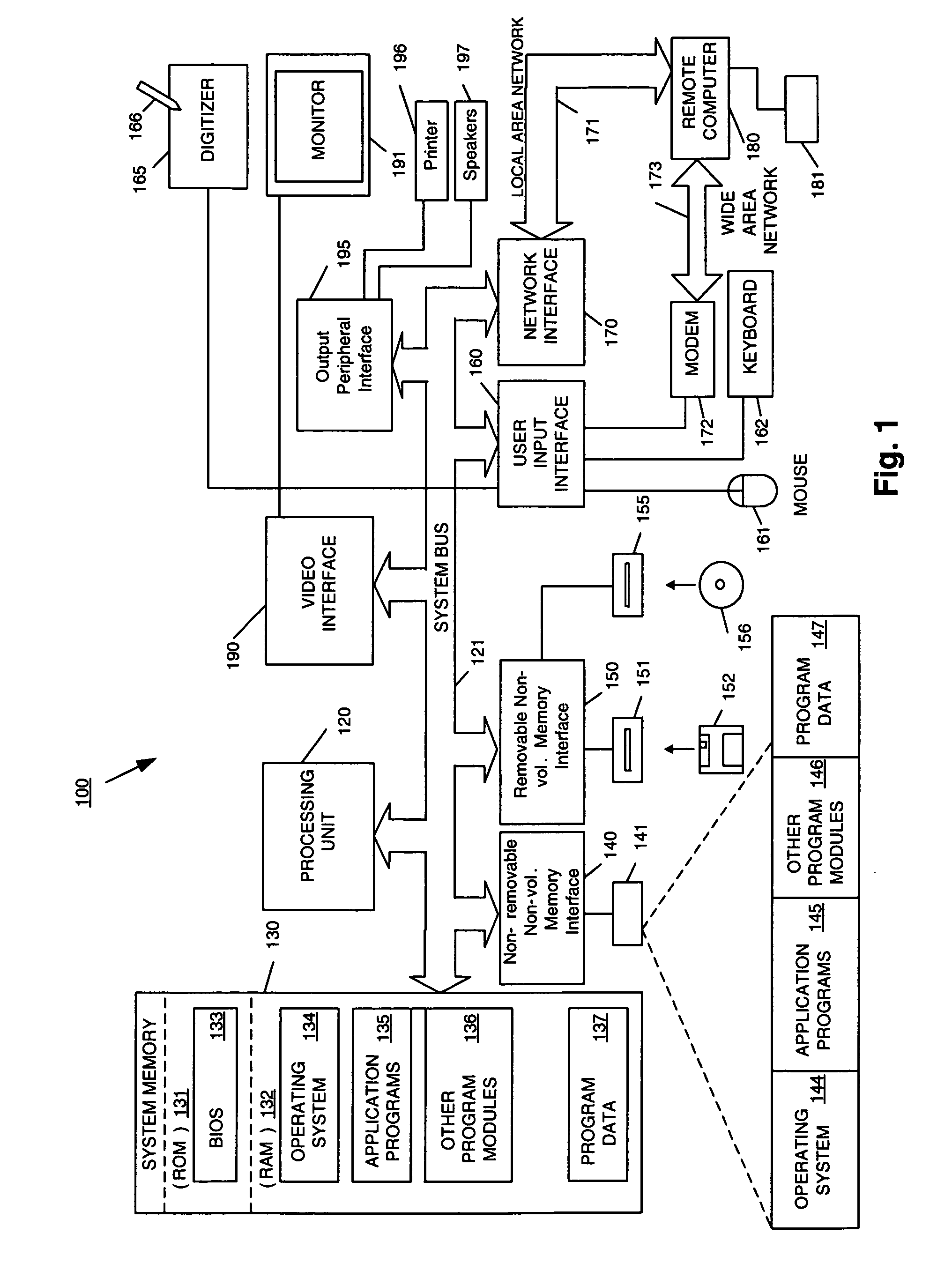

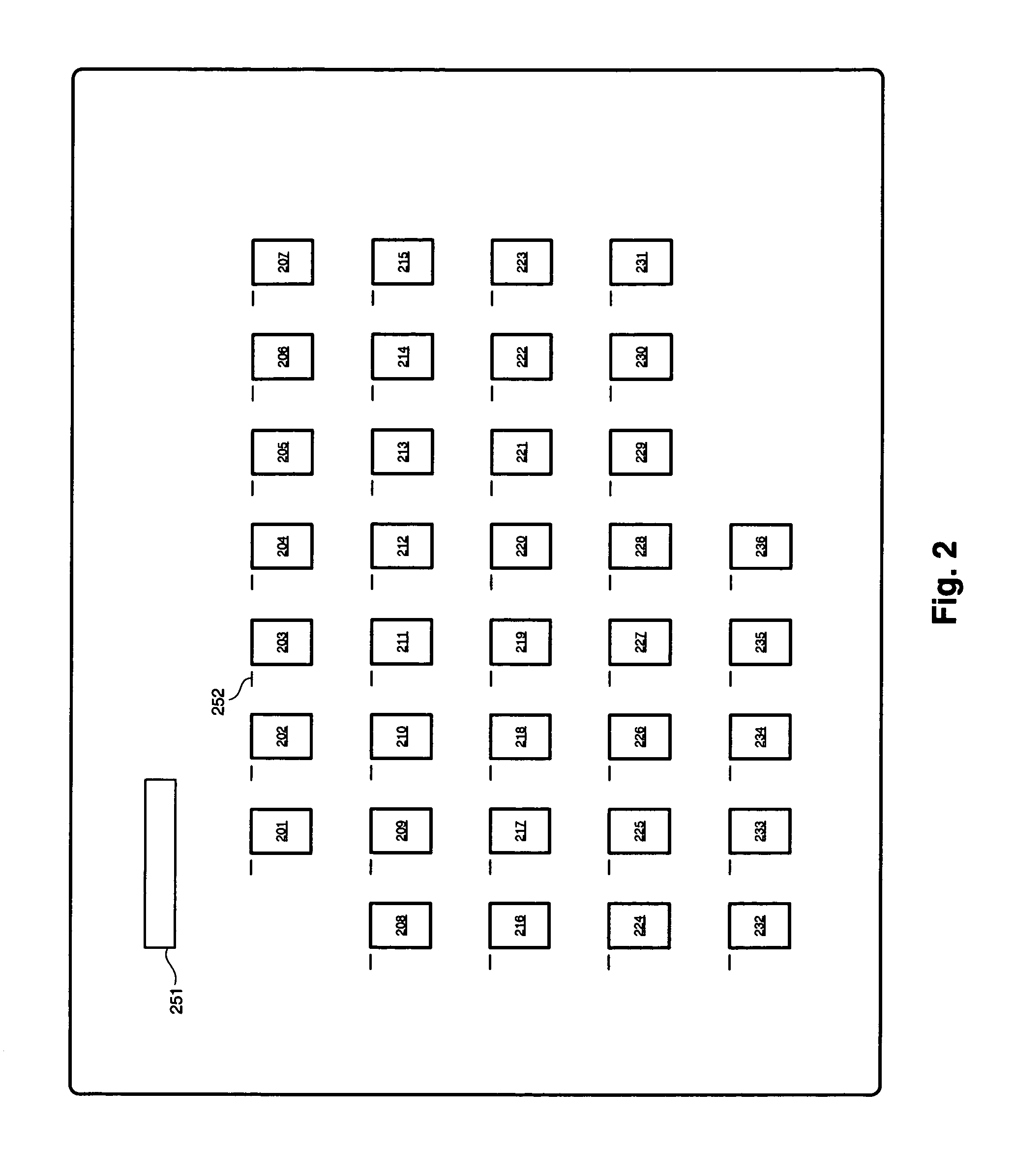

Apparatus, methods, and systems for centrally and uniformly controlling the operation of a variety of devices, such as communication, consumer electronic, audio-video, analog, digital, 1394, and the like, over a variety of protocols within a network system and, more particularly, a control system and uniform user interface for centrally controlling these devices in a manner that appears seamless and transparent to the user. In a preferred embodiment, a command center or hub of a network system includes a context and connection permutation sensitive control system that enables centralized and seamless integrated control of all types of input devices. The control system preferably includes a versatile icon based graphical user interface that provides a uniform, on-screen centralized control system for the network system. The user interface, which includes a visual recognition system, enables the user to transparently control multiple input devices over a variety of protocols while operating on a single control layer of an input command device. In an alternative embodiment, the control system also enables gated signal pass-through control while avoiding signal jamming.

Owner:MITSUBISHI ELECTRIC US

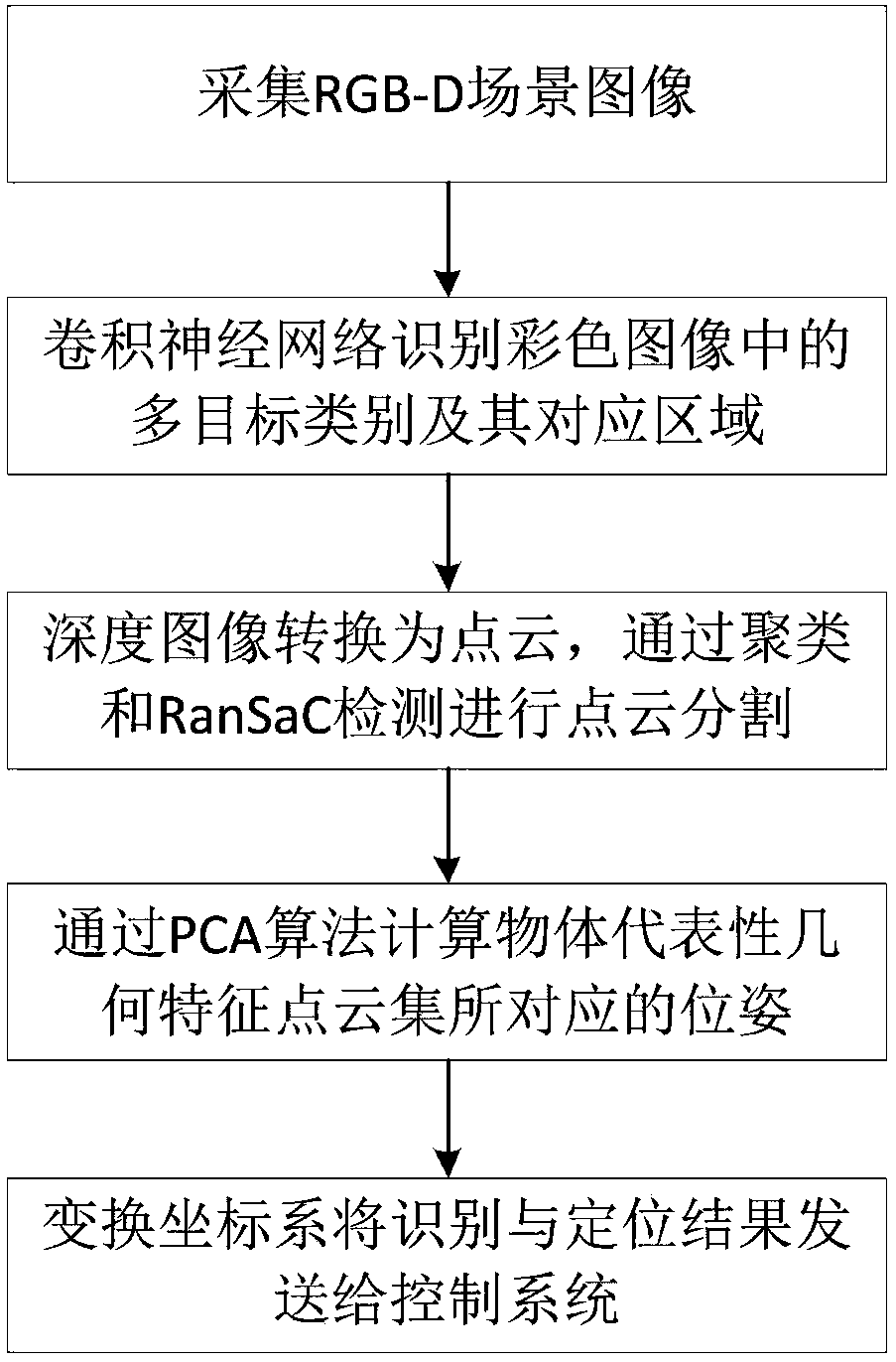

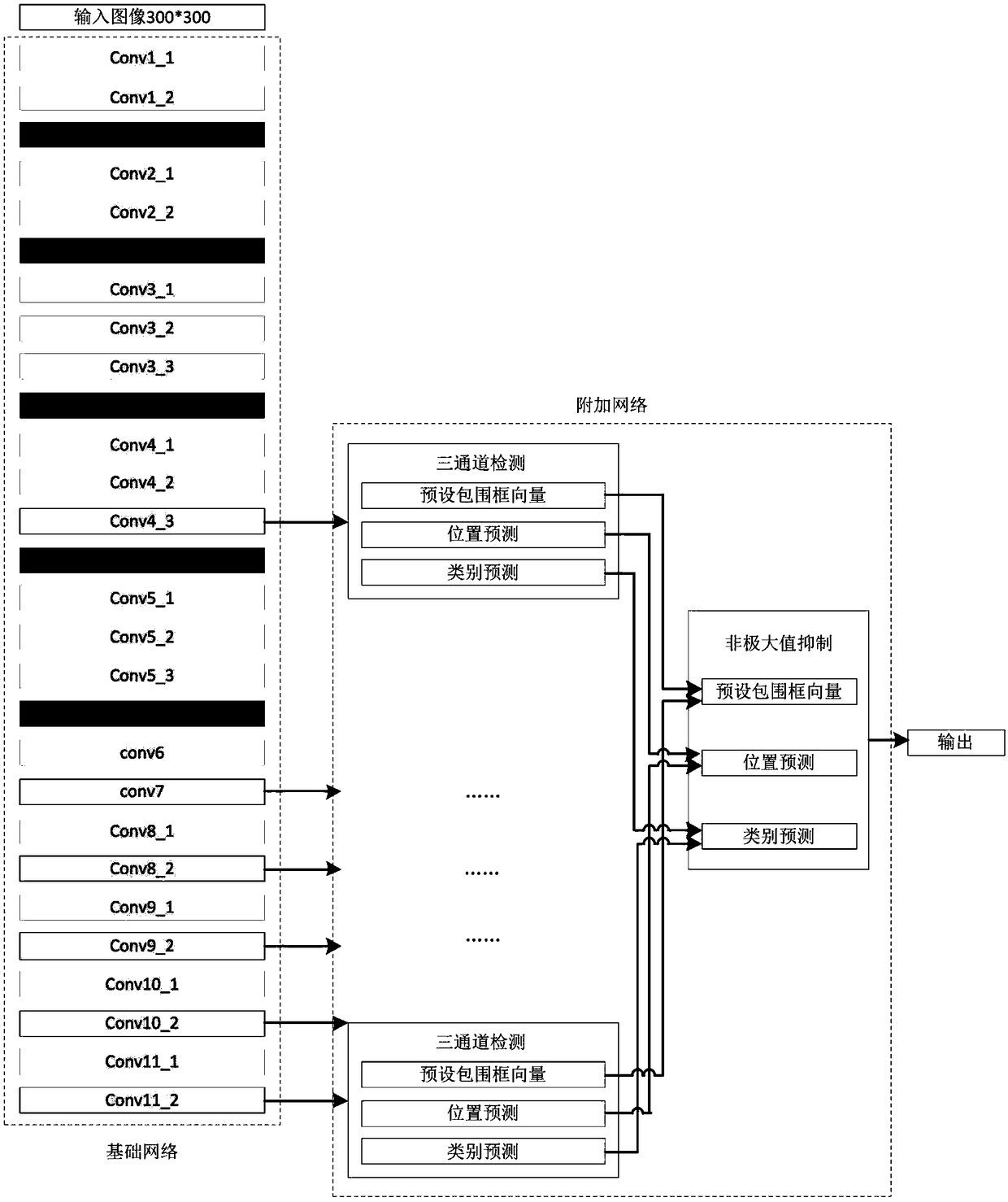

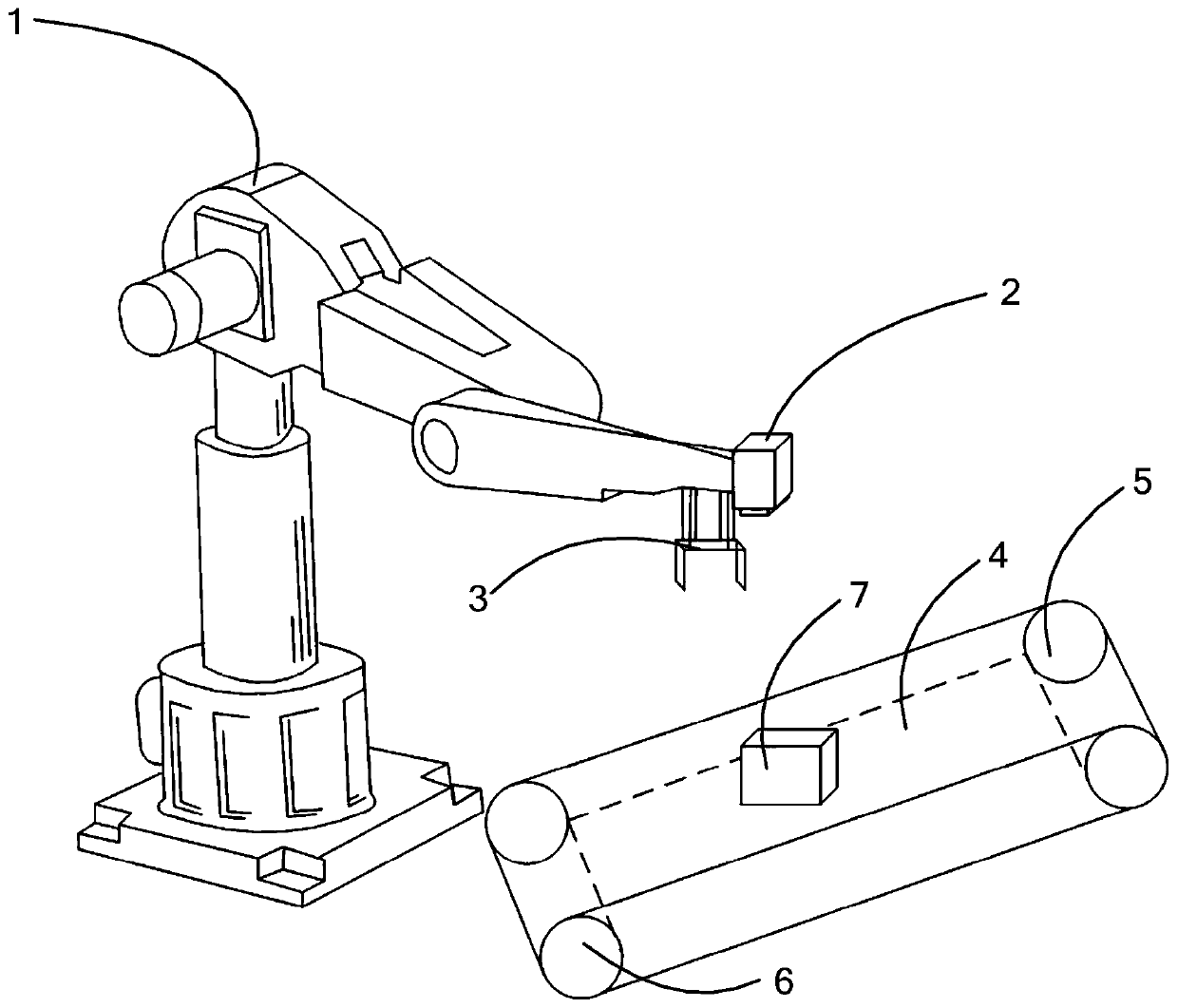

Visual recognition and positioning method for robot intelligent capture application

The invention relates to a visual recognition and positioning method for robot intelligent capture application. According to the method, an RGB-D scene image is collected, a deep convolutional neural network trained with supervision is used to recognize the category of a target contained in the color image and its corresponding position region, the pose state of the target is analyzed in combination with the depth image, pose information needed by a controller is obtained through coordinate transformation, and visual recognition and positioning are completed. Through the method, the double functions of recognition and positioning can be achieved with just a single visual sensor, the existing target detection process is simplified, and application cost is saved. Meanwhile, because a deep convolutional neural network is adopted to obtain image features through learning, the method is highly robust to multiple kinds of environmental interference such as random target placement, changes in image viewing angle, and illumination and background interference, and recognition and positioning accuracy under complicated working conditions is improved. Besides, through the positioning method, exact pose information can be further obtained on the basis of determining the spatial position distribution of objects, which supports strategy planning for intelligent capture.

Owner:合肥哈工慧拣智能科技有限公司
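The coordinate transformation step mentioned in the abstract above can be illustrated with a minimal Python sketch. It assumes a pinhole camera model and a known camera-to-robot-base transform; the abstract does not state the camera model, so the intrinsics (`FX`, `FY`, `CX`, `CY`), the matrix `T_BASE_CAM`, and both function names are hypothetical.

```python
def pixel_to_camera(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in metres into camera coordinates."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def camera_to_base(point_cam, t_base_cam):
    """Apply a 4x4 homogeneous transform (nested lists, row-major) to a 3D point."""
    x, y, z = point_cam
    p = (x, y, z, 1.0)
    return tuple(sum(t_base_cam[r][c] * p[c] for c in range(4)) for r in range(3))


# Hypothetical intrinsics and extrinsics, for illustration only.
FX = FY = 600.0
CX, CY = 320.0, 240.0
# Camera frame shifted 0.5 m along x and 1.0 m along z from the base, no rotation.
T_BASE_CAM = [
    [1.0, 0.0, 0.0, 0.5],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Centre of a detected bounding box and the depth value read at that pixel.
target_cam = pixel_to_camera(380.0, 240.0, 1.2, FX, FY, CX, CY)
target_base = camera_to_base(target_cam, T_BASE_CAM)
```

In practice the transform would come from a calibration procedure, and a full pose would include orientation as well as position; this sketch covers position only.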

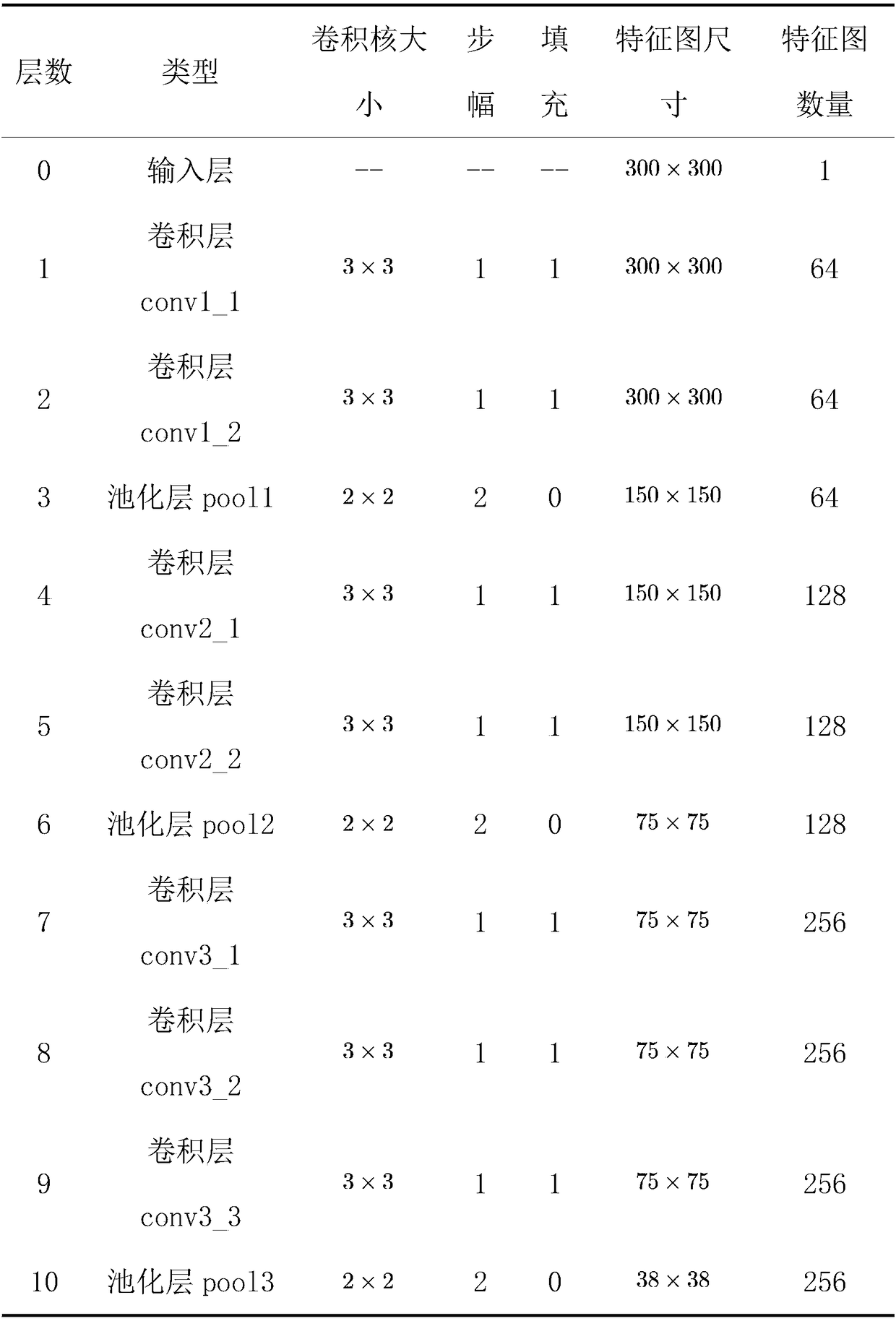

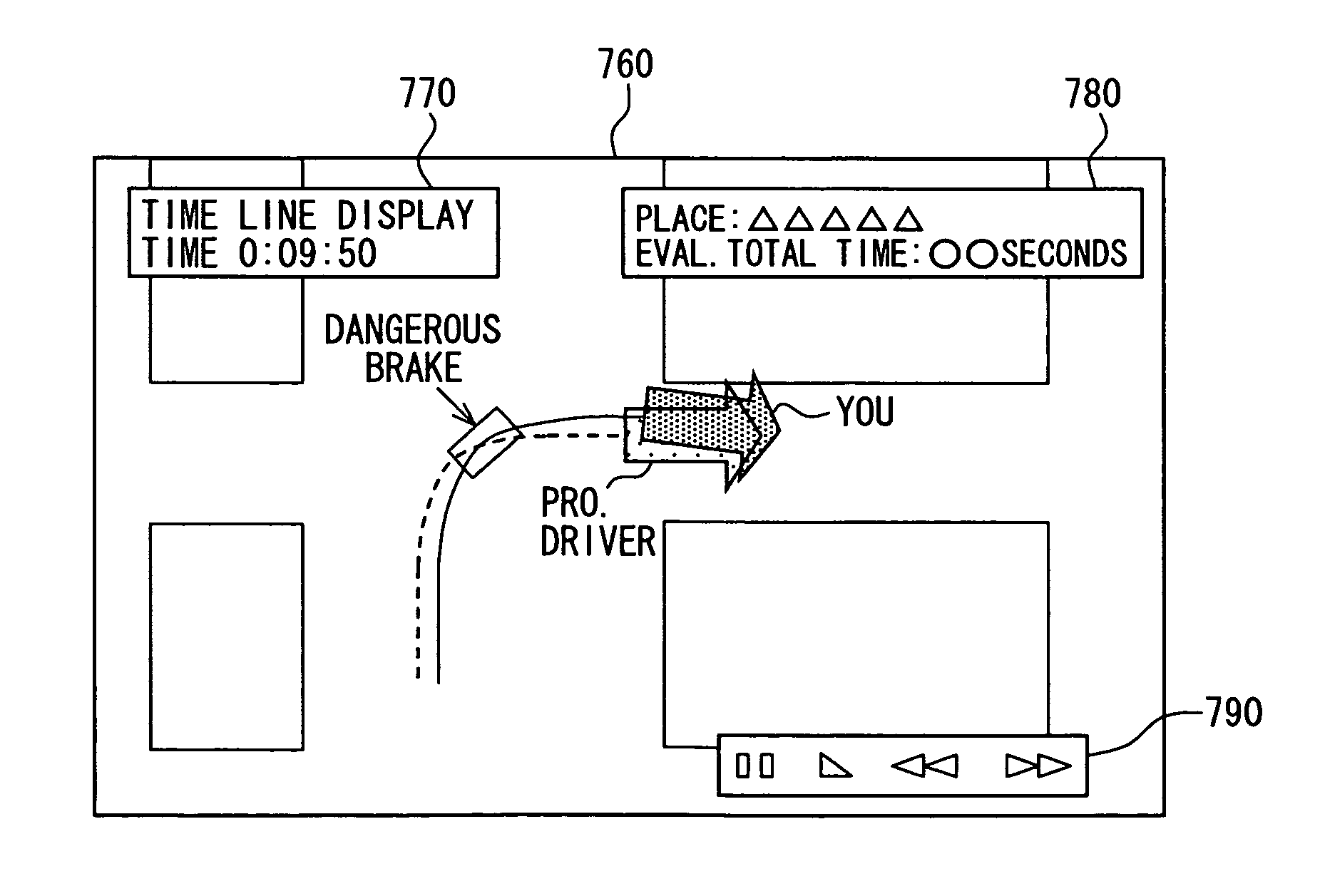

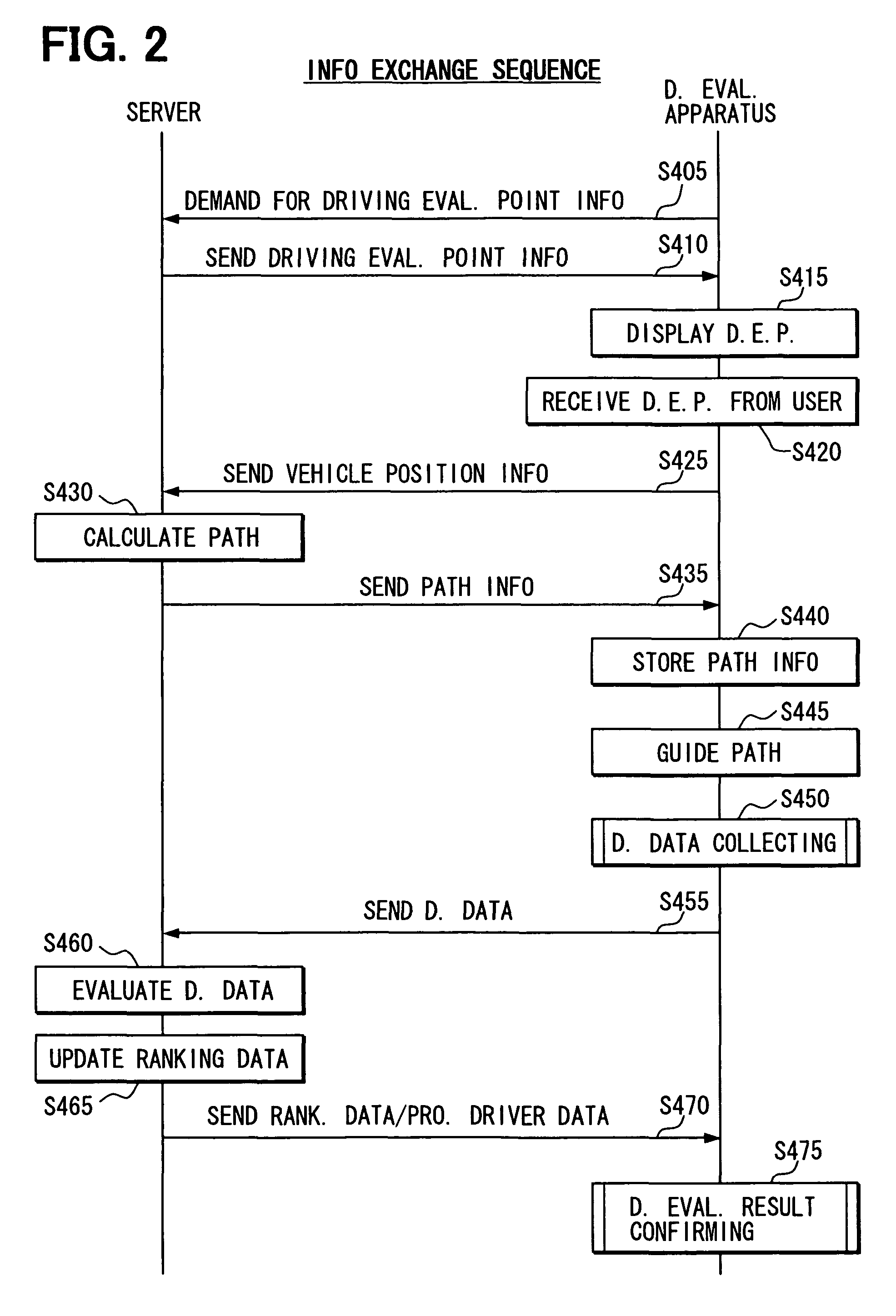

Driving evaluation system and server

Status: Inactive; Patent: US8010283B2; Tags: Easy to identify, Reduce the necessary time, Cosmonautic condition simulations, Instruments for road network navigation, Driver/operator, Animation

Travel information of a target vehicle is stored in time sequence and, on the basis of the travel information and pre-stored travel information of a professional driver's vehicle, a travel position of the target vehicle and a travel position of the professional driver's vehicle are displayed in animation. In such a manner, the driver can visually recognize the travel positions of the displayed target vehicle and the reference vehicle and understand how much the target vehicle is deviated from the ideal travel position.

Owner:DENSO CORP
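As a rough illustration of comparing a target vehicle's stored travel positions with a reference track, the Python sketch below computes the distance between the two at each time step. It assumes both tracks are sampled at identical times as `(t, x, y)` tuples; the data layout, the sample values, and the function name `deviations` are hypothetical and not taken from the patent.

```python
import math


def deviations(target_track, reference_track):
    """Return (time, distance) pairs for two tracks sampled at the same times."""
    result = []
    for (t, x, y), (t_ref, x_ref, y_ref) in zip(target_track, reference_track):
        if t != t_ref:
            raise ValueError("tracks must share sample times")
        result.append((t, math.hypot(x - x_ref, y - y_ref)))
    return result


# Made-up positions in metres, one sample per second.
target = [(0, 0.0, 0.0), (1, 10.0, 0.5), (2, 20.0, 3.0)]
reference = [(0, 0.0, 0.0), (1, 10.0, 0.0), (2, 20.0, -1.0)]
devs = deviations(target, reference)
```

Each pair in `devs` could drive the animated display, for example by highlighting the time steps where the deviation is largest.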

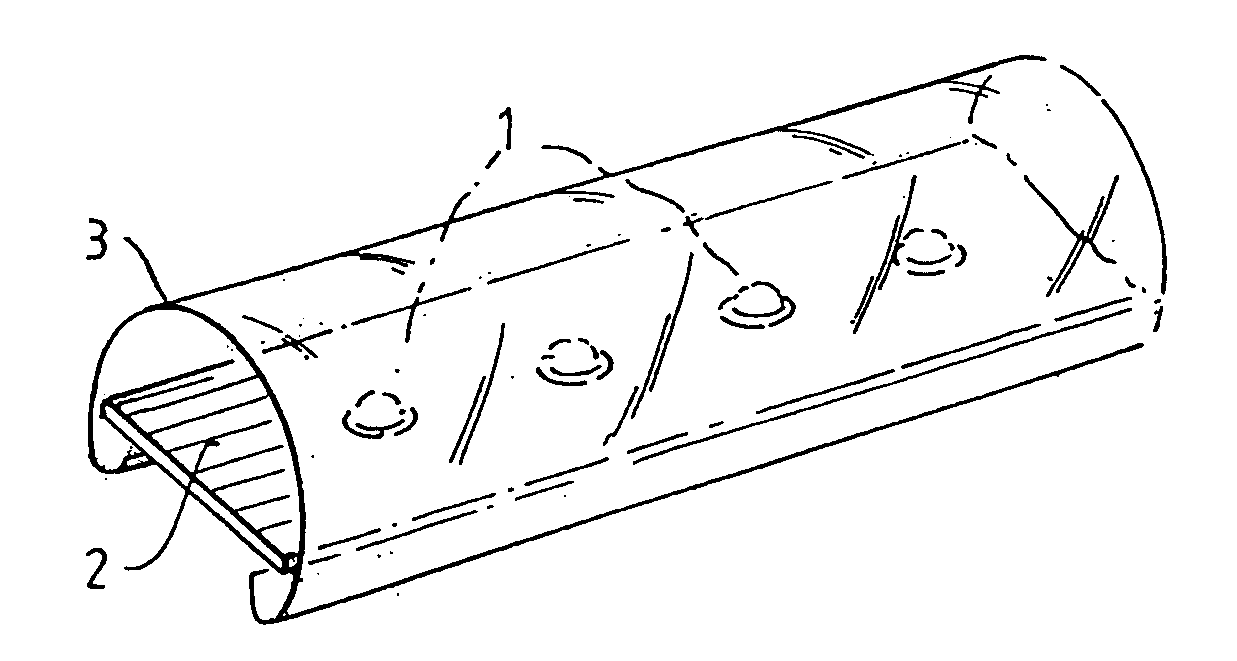

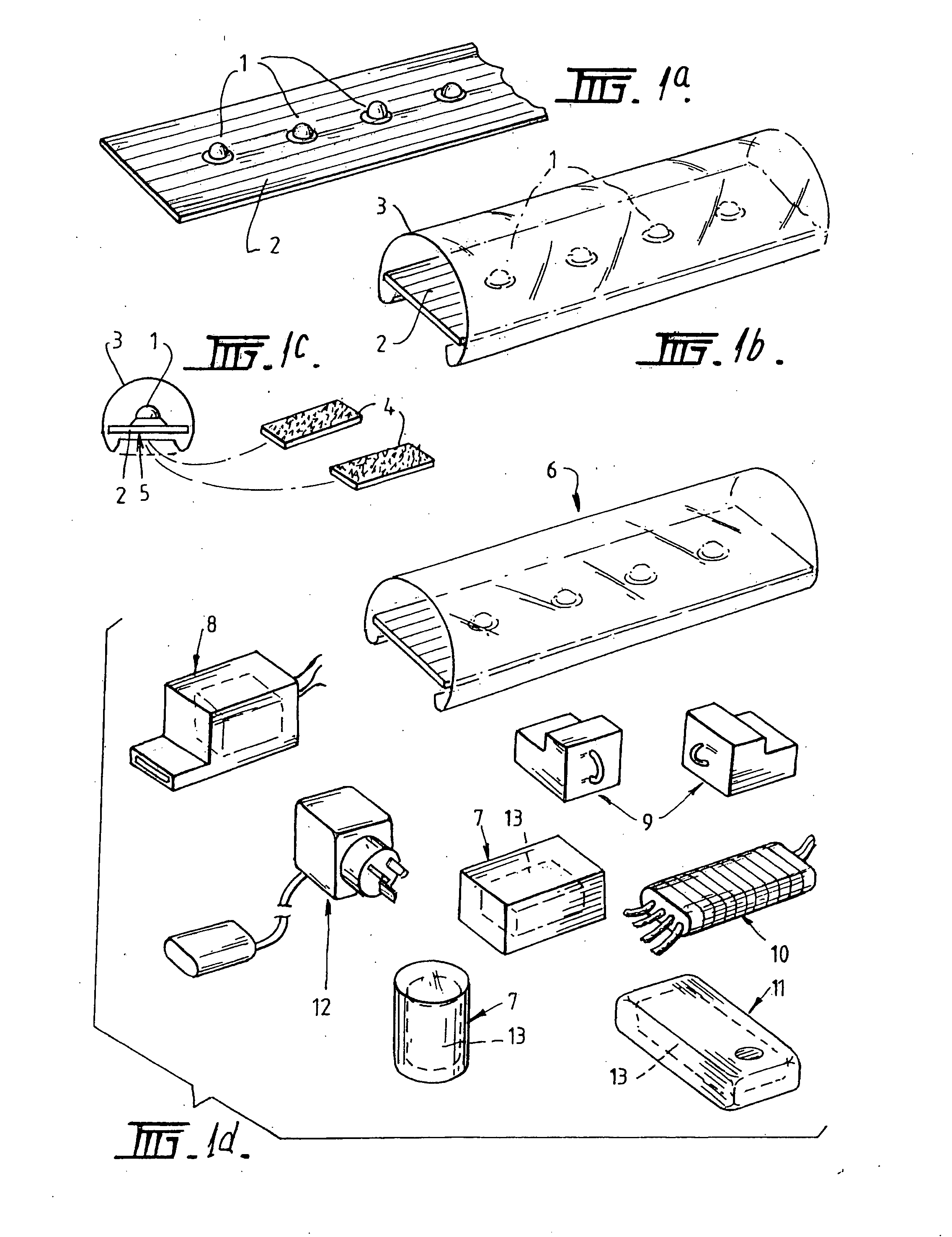

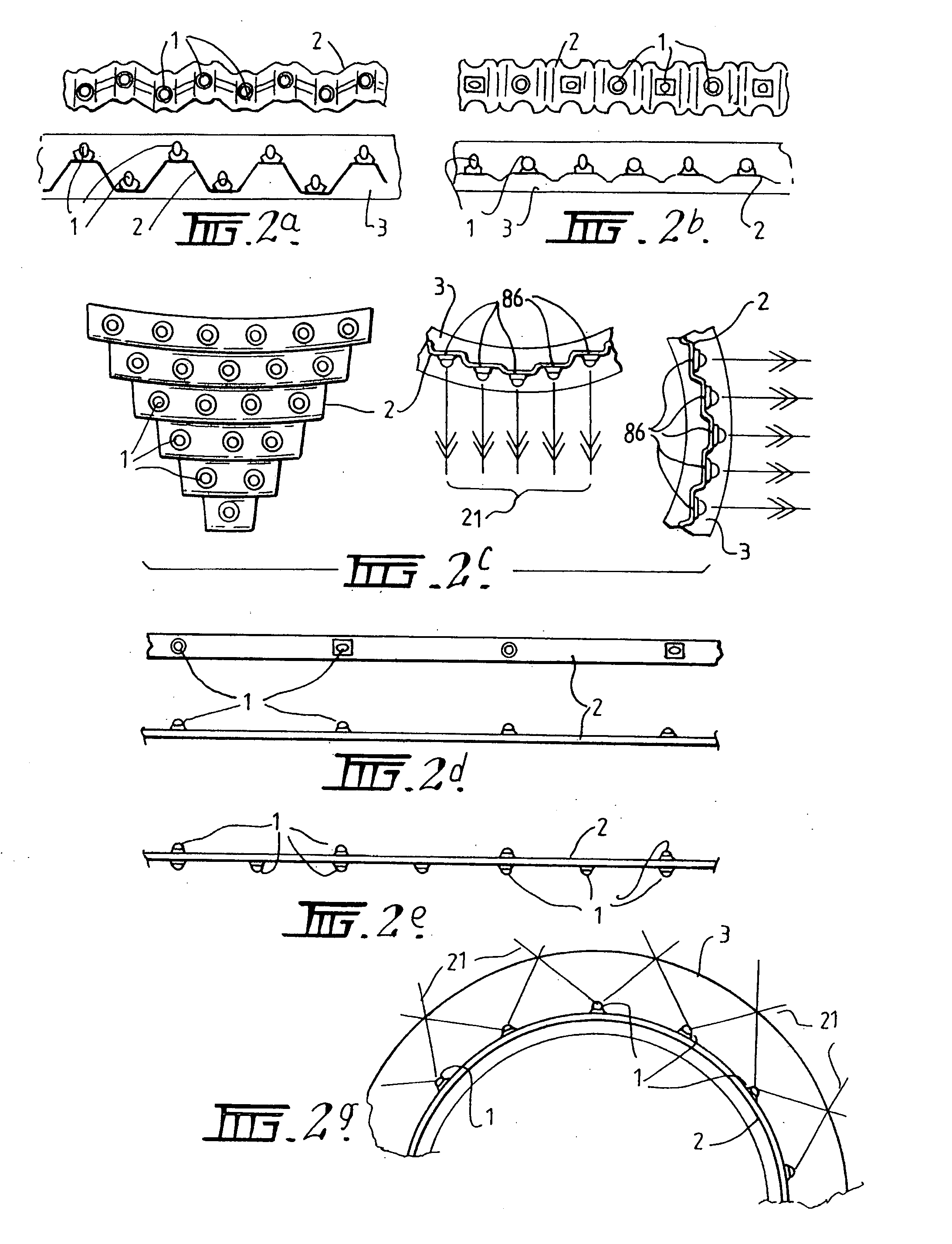

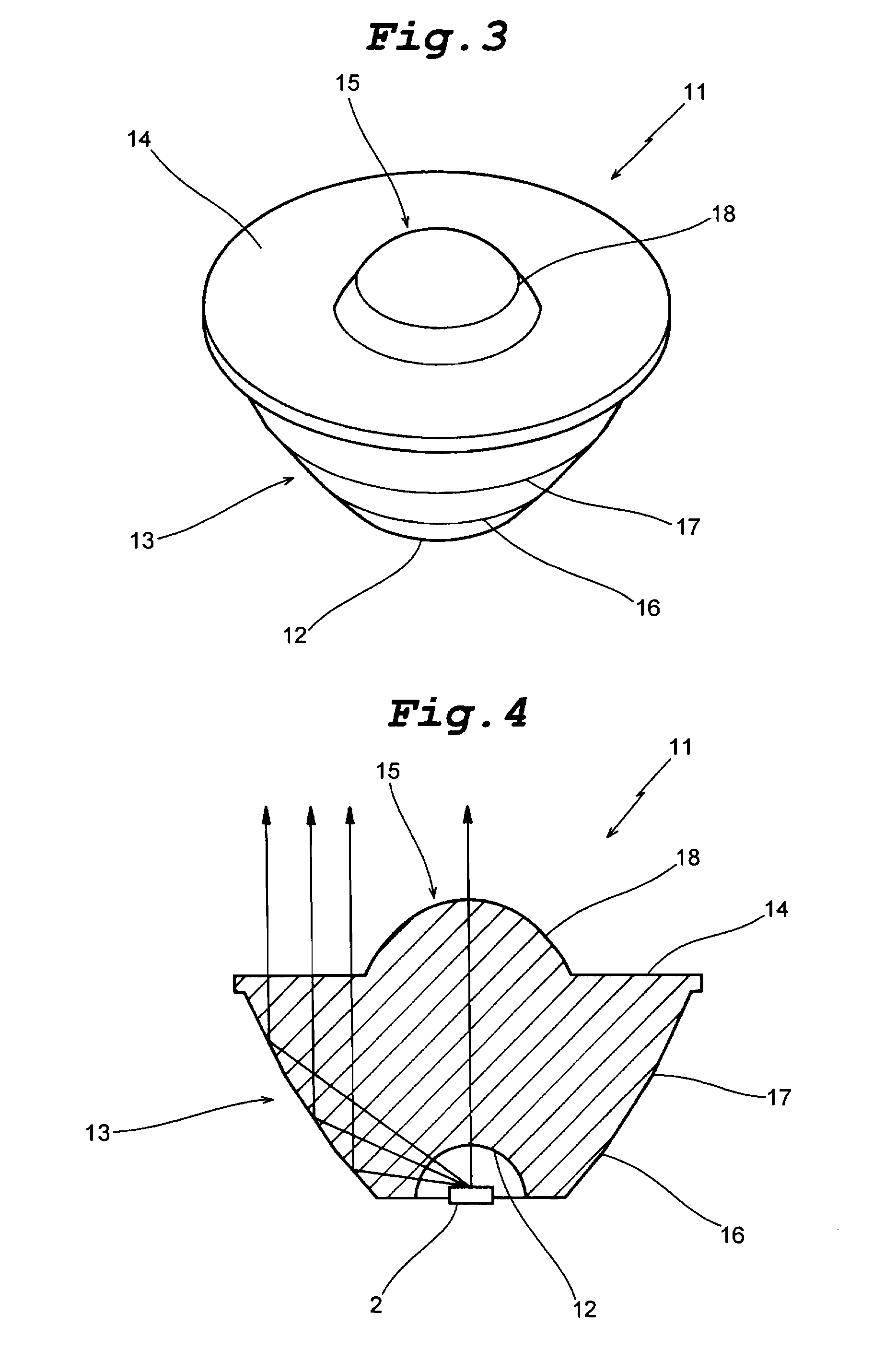

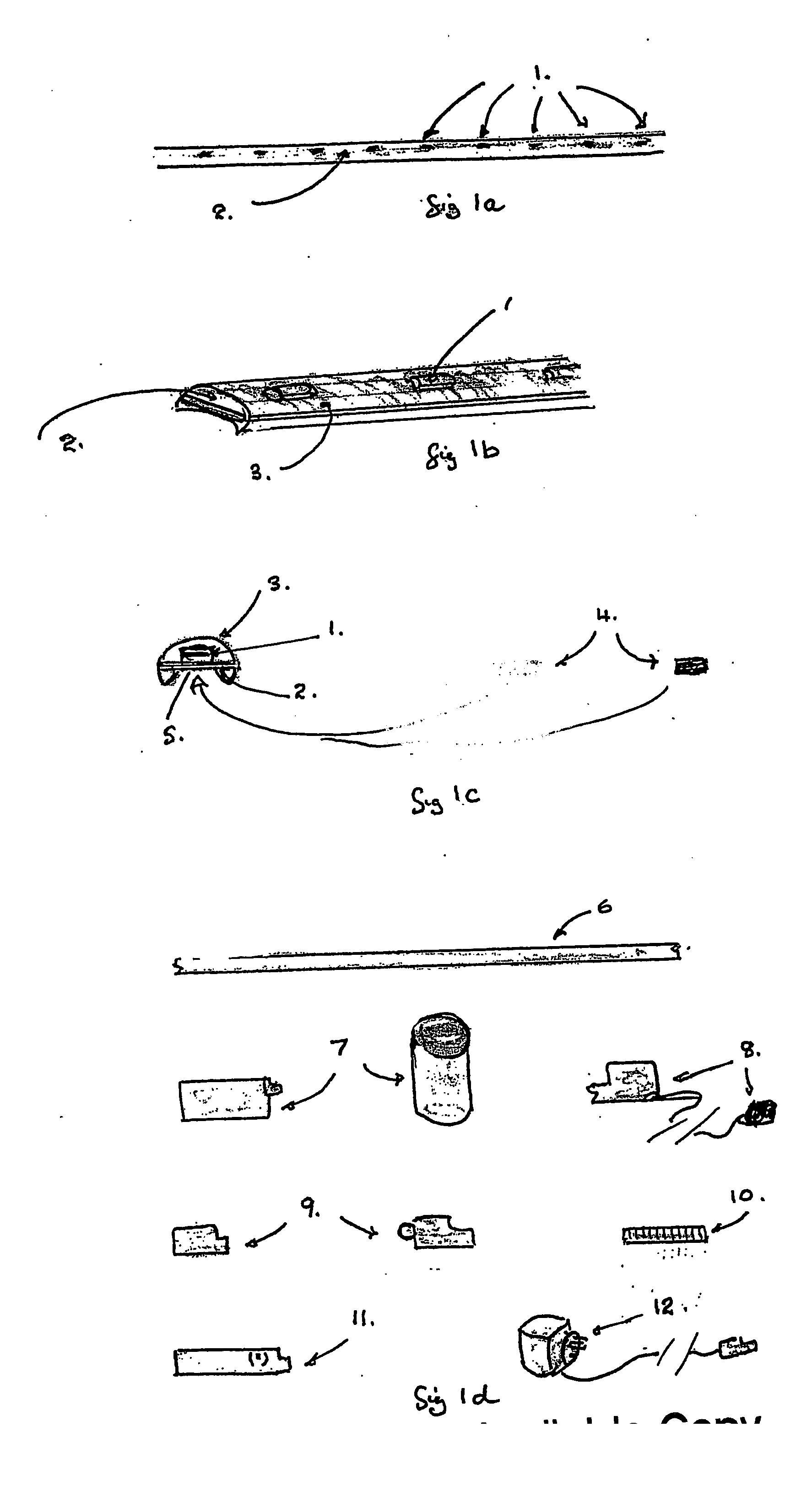

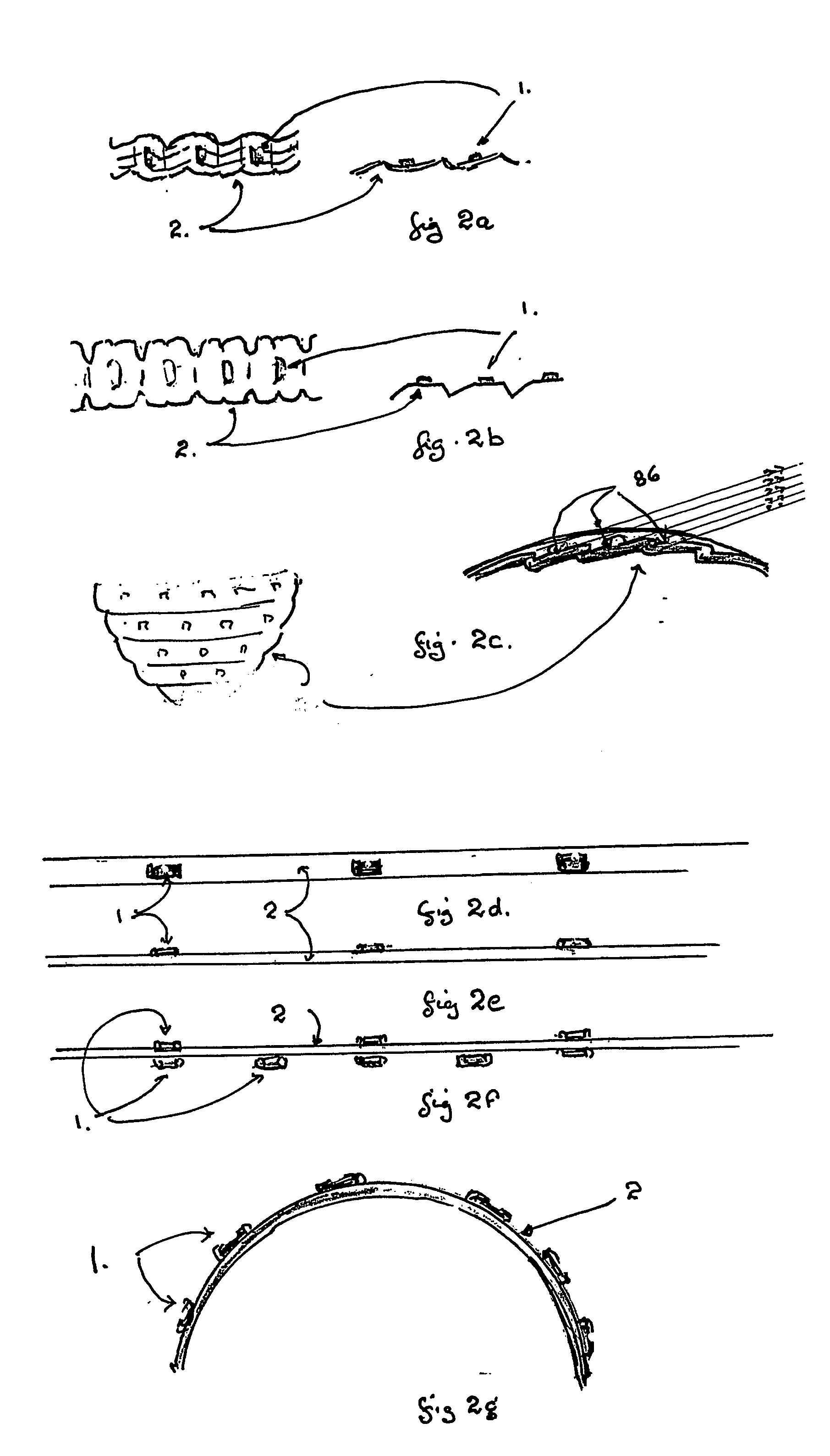

Methods and apparatus relating to improved visual recognition and safety

Status: Inactive; Patent: US20070291473A1; Tags: Increase flexibility, Easy to identify, Lighting support devices, Point-like light source, Visual recognition, Identification device

A visual recognition and identification apparatus comprising a mounting means adapted for placement on an object wherein said mounting means incorporates one or a plurality of light emitting diodes adapted to provide a visual signal characterised in that said LEDs are mounted in, on, or connected to a printed circuit board, wherein said printed circuit board is surface modified to provide a distinct angle of mounting for one or a plurality of LEDs to provide a highly defined focused viewing angle for said apparatus, wherein said mounting angle results in the focusing of said LEDs at a defined focal point.

Owner:TRAYNOR NEIL

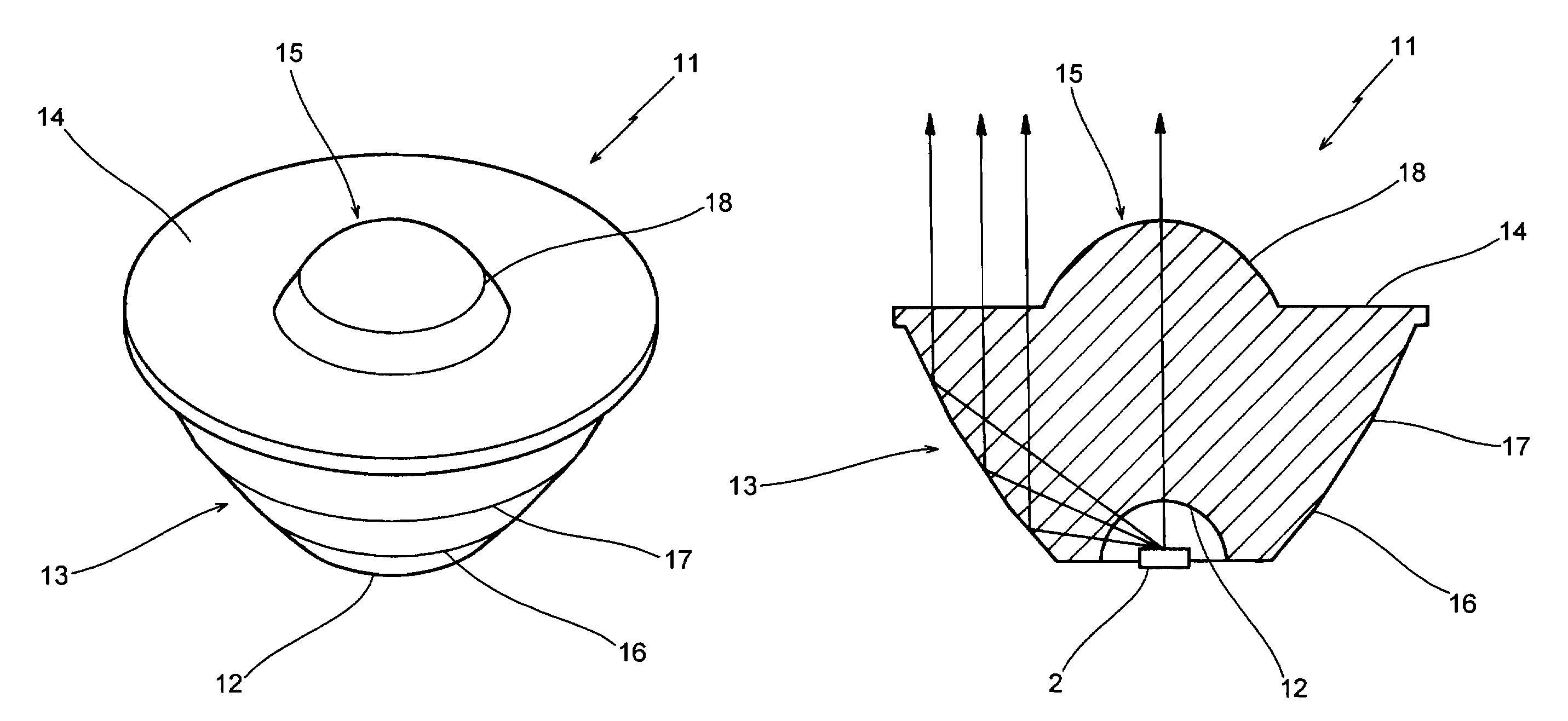

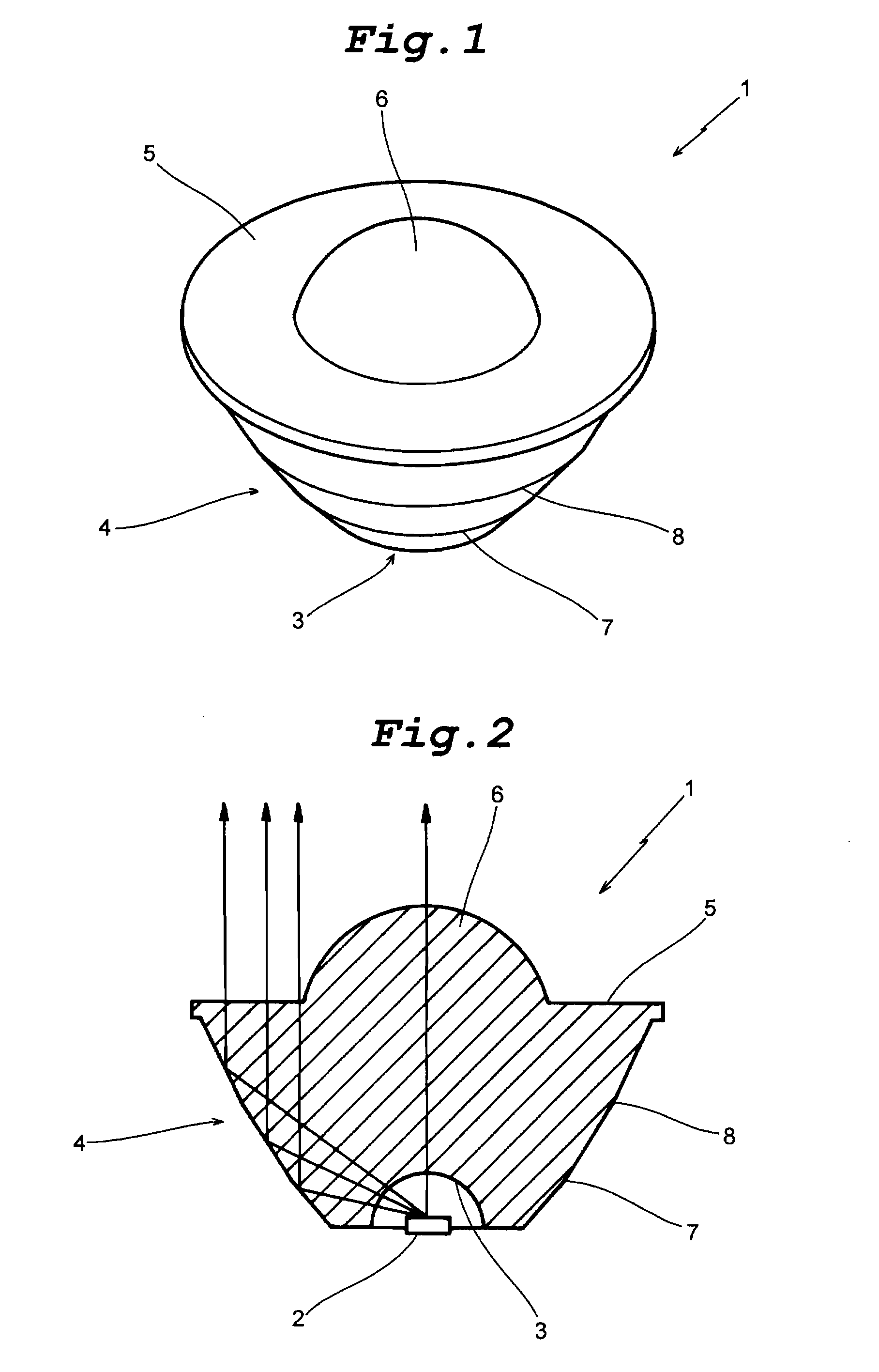

Indicator lamp having a converging lens

It is sought to provide an indicator lamp, which is excellent not only in short distance visual recognition property but also in long distance one, as well as being further excellent in sight field angle property. An indicator lamp includes a light-emitting element 1 and a light-emitting element lens 2 having a light-emitting element mounting cavity 3 formed at the bottom, in which the light-emitting element disposed in the cavity 3 emits light to be fully reflected by the peripheral surface of the lens 1 and proceeds as emission light flux forwardly of the lens 1. The slope angle of the peripheral surface with respect to the lens axis is reduced progressively from the bottom toward the lens front surface 5 in three stages, thus forming circumferential corners 7 and 8 as boundaries between adjacent ones of the three stages. The circumferential corners scatter light emitted from the light emitting element 2 to provide concentric emission light fluxes as viewed from the side of the lens front.

Owner:OKAYA ELECTRIC INDS

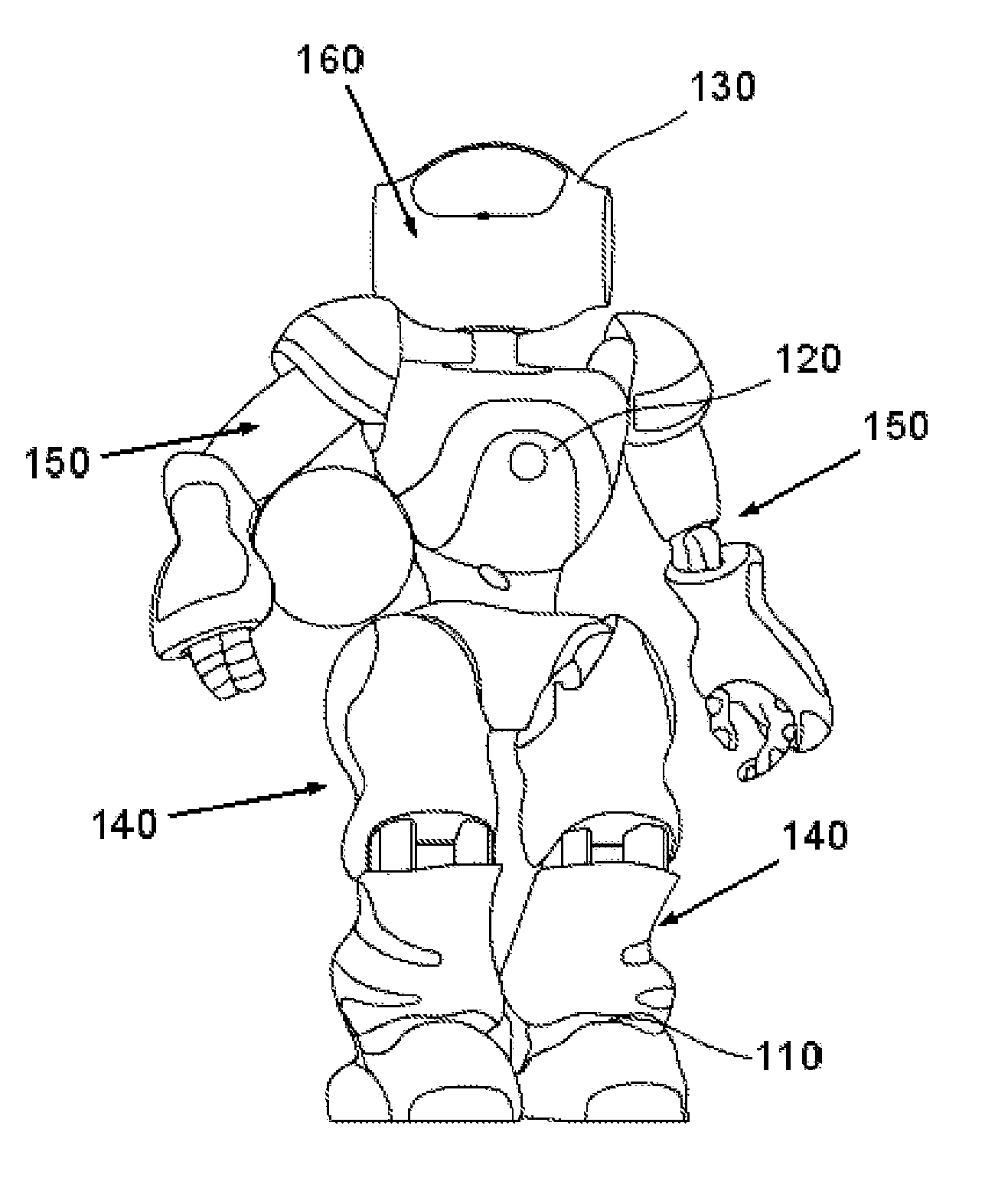

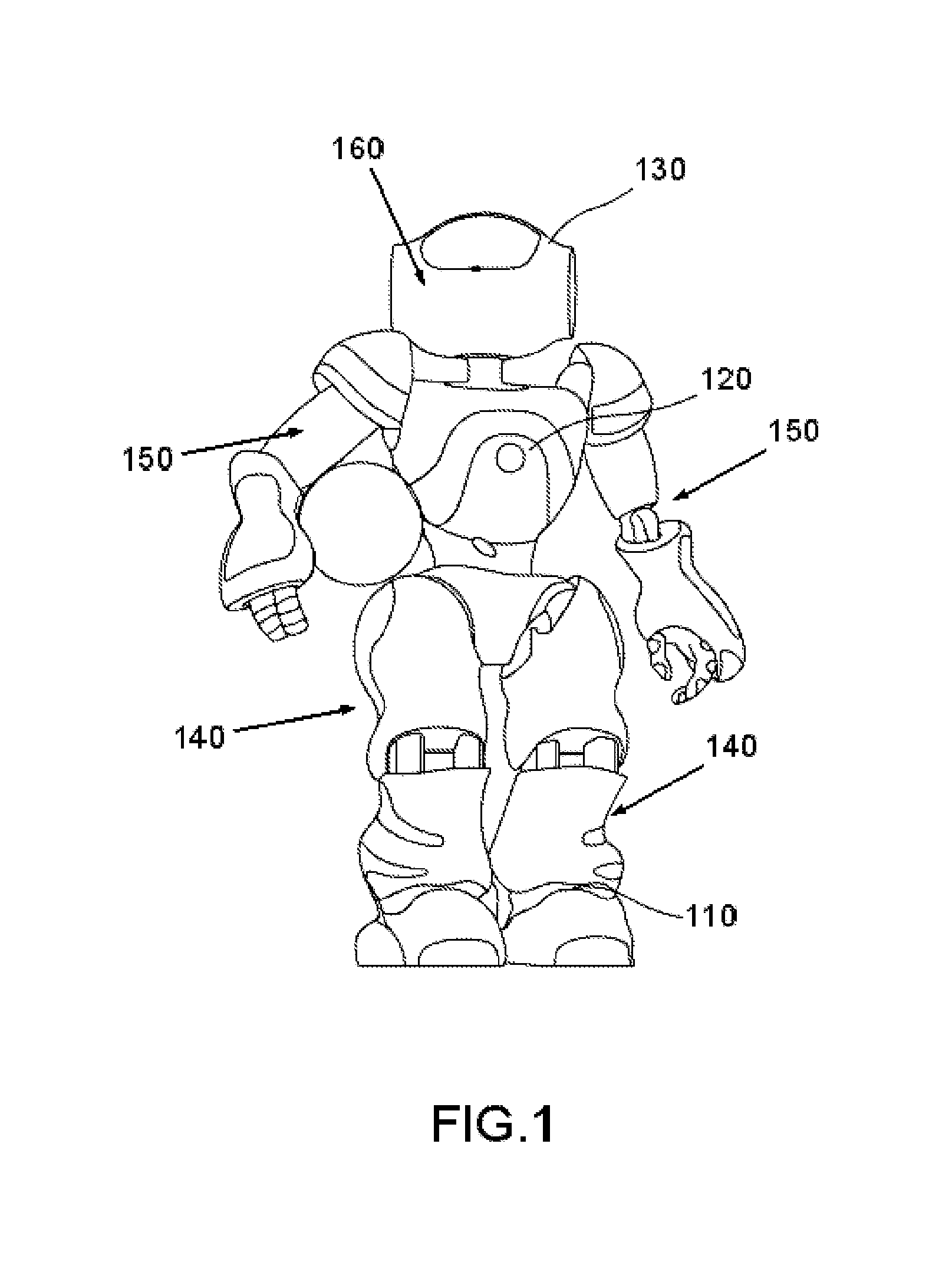

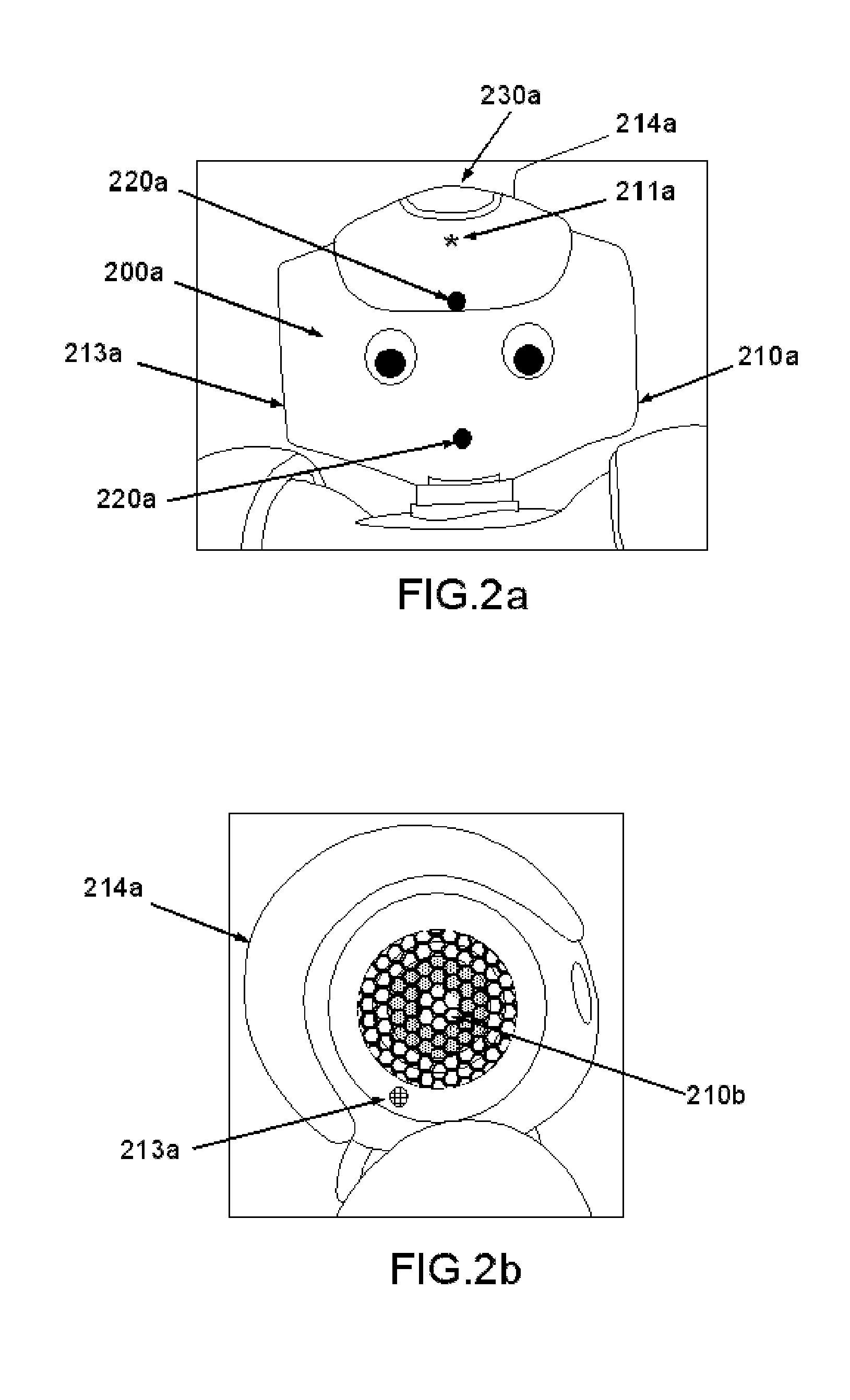

"humanoid robot equipped with a natural dialogue interface, method for controlling the robot and corresponding program"

Status: Inactive; Patent: US20130218339A1; Tags: Easy to adapt, Promote results, Computer control, Simulator control, Diagnostic Radiology Modality, Humanoid robot nao

A humanoid robot equipped with an interface for natural dialog with an interlocutor is provided. Previously, the modalities of dialog between human beings and humanoid robots that also have evolved displacement functionalities were limited notably by the capabilities for voice and visual recognition processing that can be embedded onboard said robots. The present disclosure provides robots equipped with capabilities to resolve doubt on several modalities of communication of the messages that they receive and to combine these various modalities, which makes it possible to greatly improve the quality and the natural character of dialogs with the robots' interlocutors. This affords simple and user-friendly means for programming the functions that ensure the fluidity of these multimodal dialogs.

Owner:SOFTBANK ROBOTICS EURO

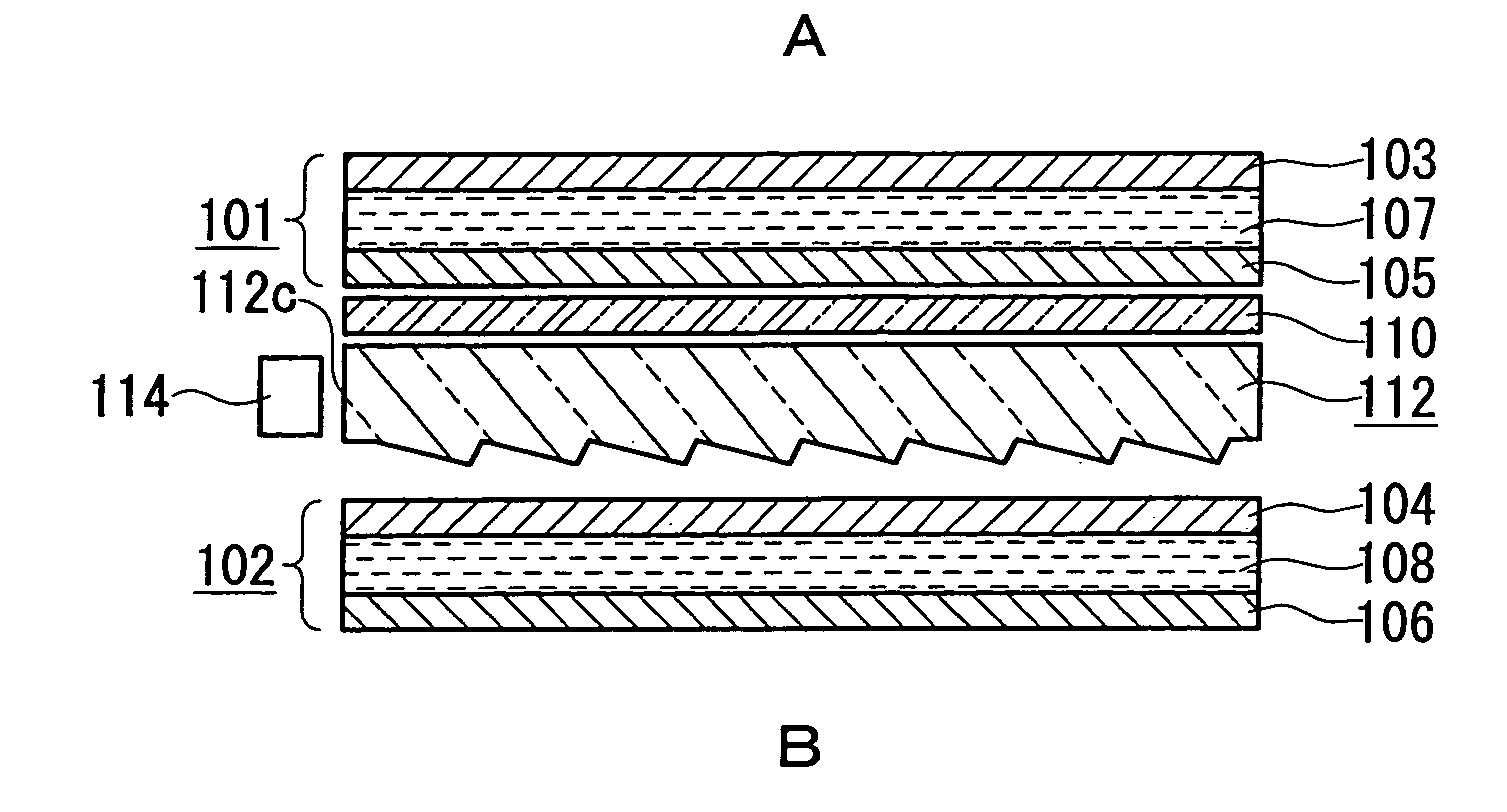

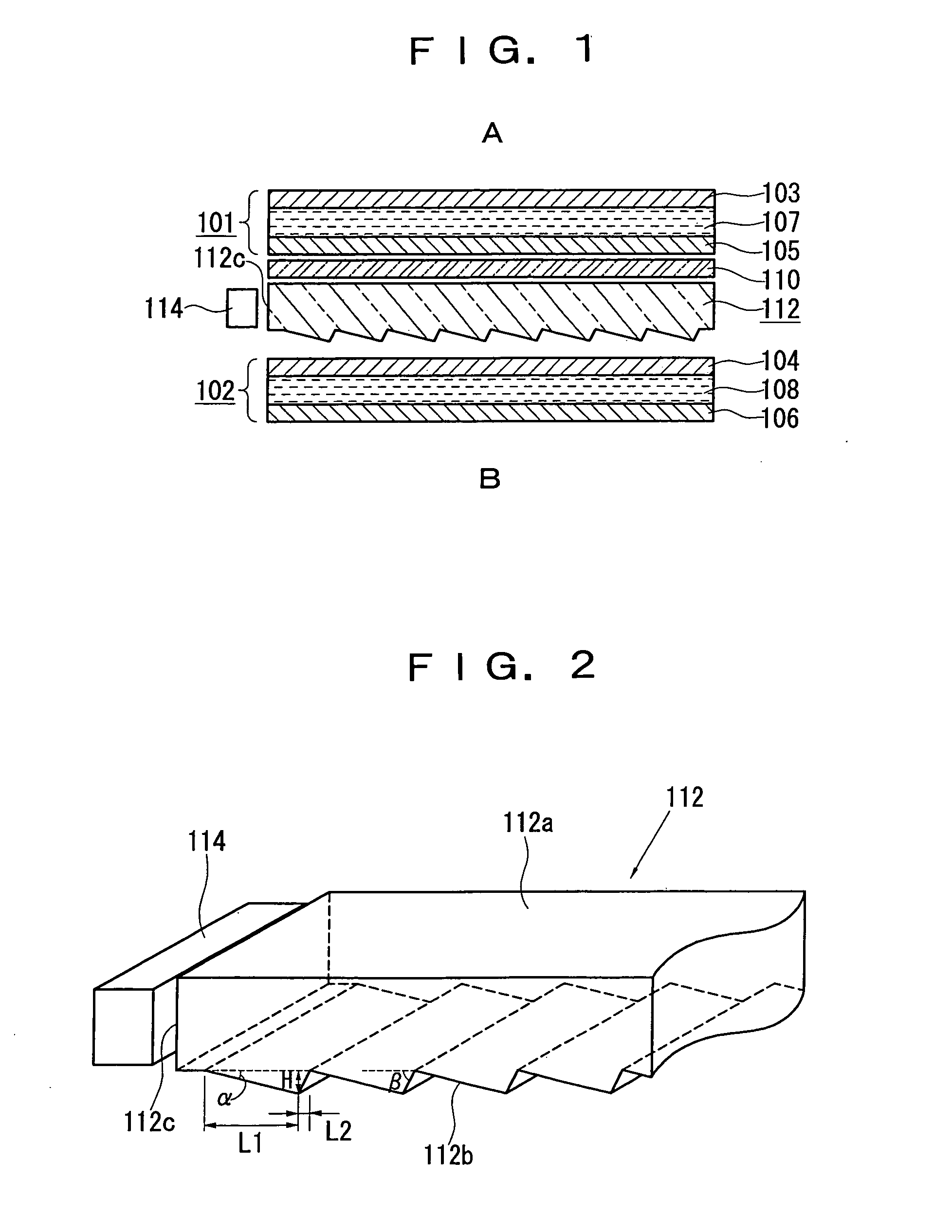

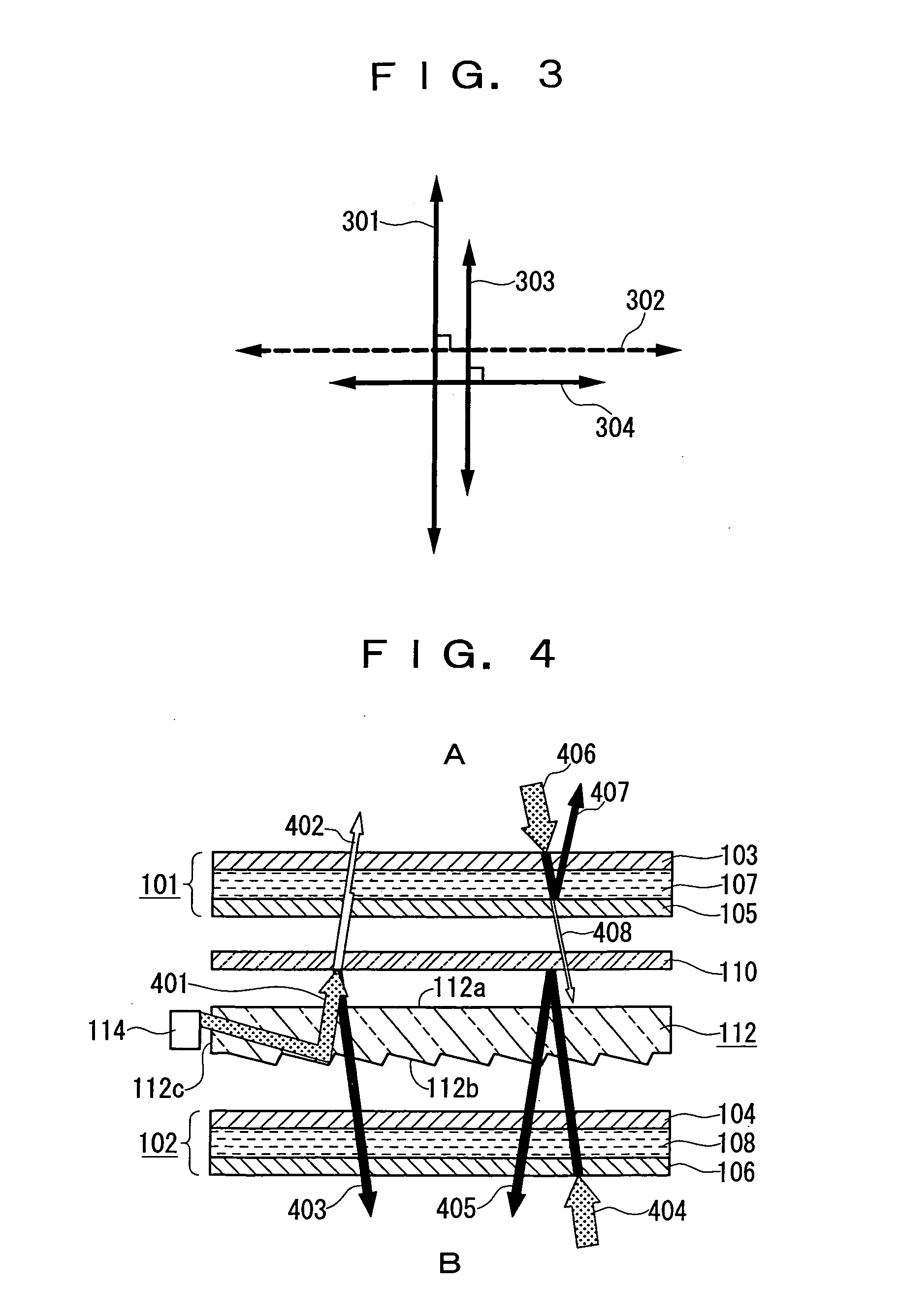

Liquid crystal display device

Status: Inactive; Patent: US20050073627A1; Tags: Improve portability, Reduce power consumption, Non-linear optics, Reflectors, Light guide, Engineering

A liquid crystal display device has first and second liquid crystal panels (101, 102) mainly comprising liquid crystal cells (107, 108), respectively, disposed back to back to enable the visual recognition of the liquid crystal panels (101, 102). A light guide plate (112) is disposed between the first liquid crystal panel (101) and the second liquid crystal panel (102) with a light source (114) located adjacent to its one end face (112c), and a polarization separator (110) located between the first liquid crystal panel (101) and the light guide plate (112). Light emitted from the light guide plate (112) is split into two beams of polarized light: one is emitted to the first liquid crystal panel (101), and the other to the second liquid crystal panel (102) through the light guide plate (112). This constitution thins down a both-sided display liquid crystal display device and reduces power consumption.

Owner:CITIZEN WATCH CO LTD

Coded surgical aids

The present invention can provide a coded surgical suture, which is capable of affording a visual identification effect for determining the direction or end portions of the surgical suture. The surgical suture can have an elongated body member having first and second end portions. A coding member can be provided on one of the two end portions of the body member to afford a visual identification effect. In an exemplary embodiment, the coding member can have a color element. The present invention can also provide a coding system for identifying the direction or the end portions of a surgical suture or for distinguishing between different surgical sutures.

Owner:THE UNIVERSITY OF HONG KONG

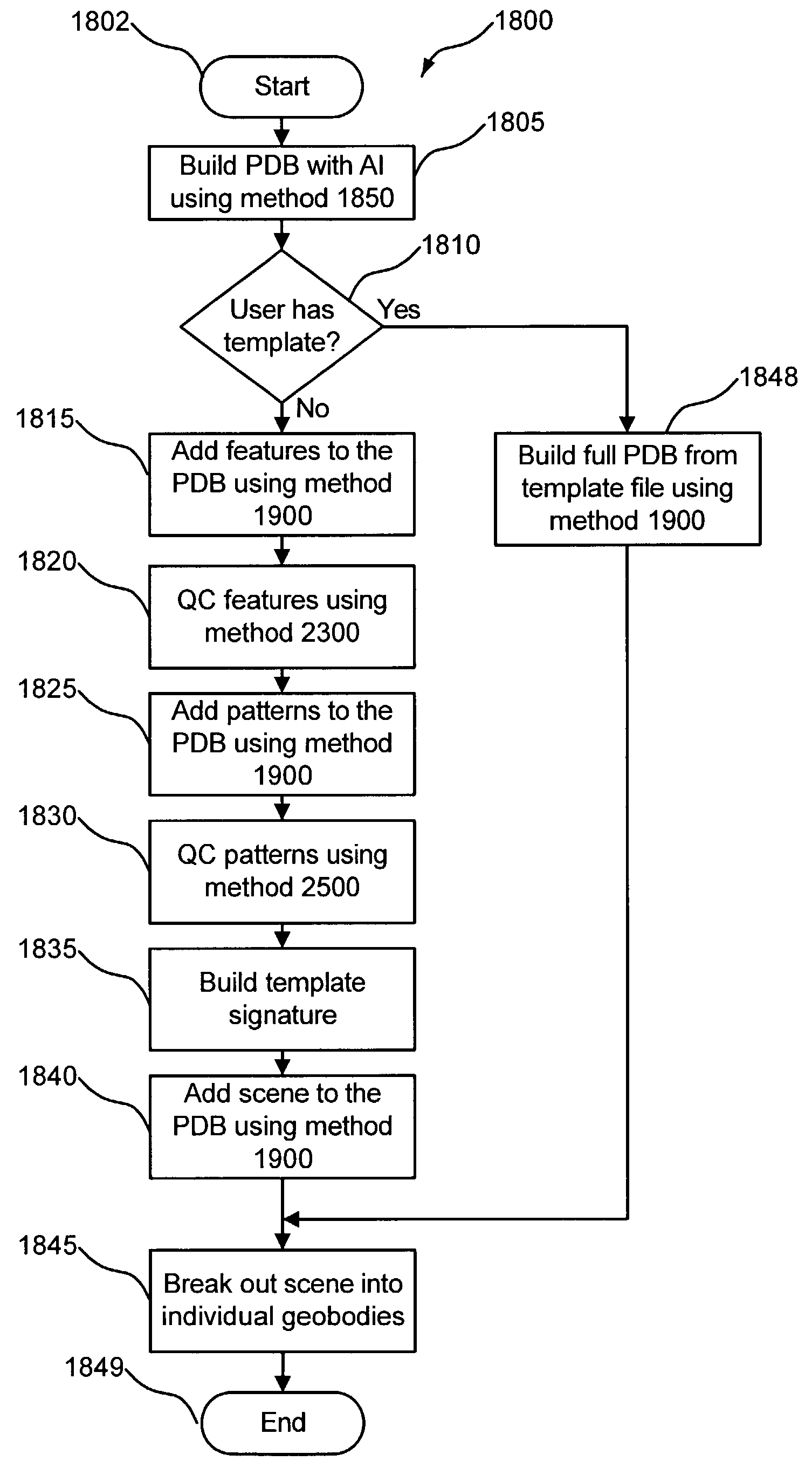

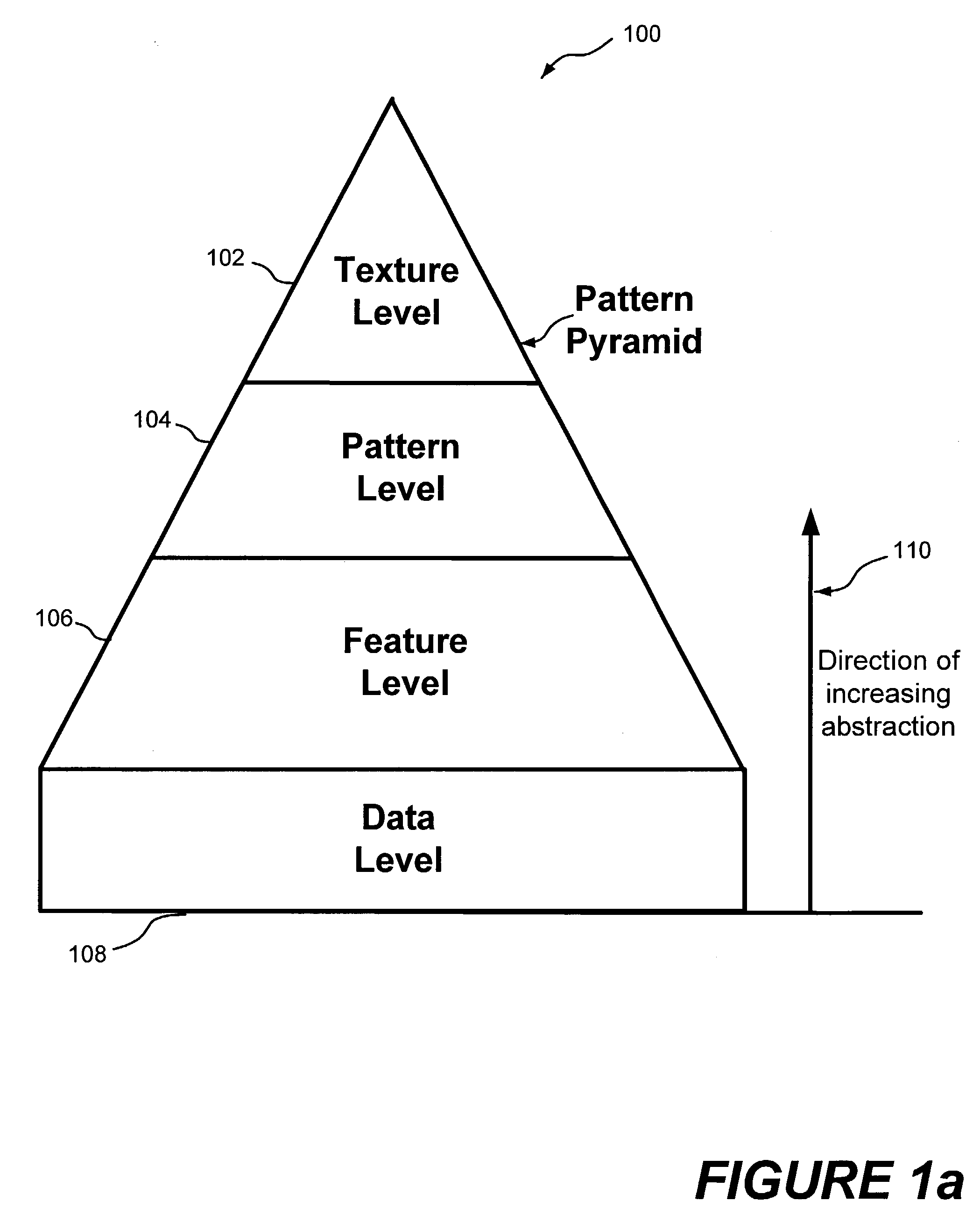

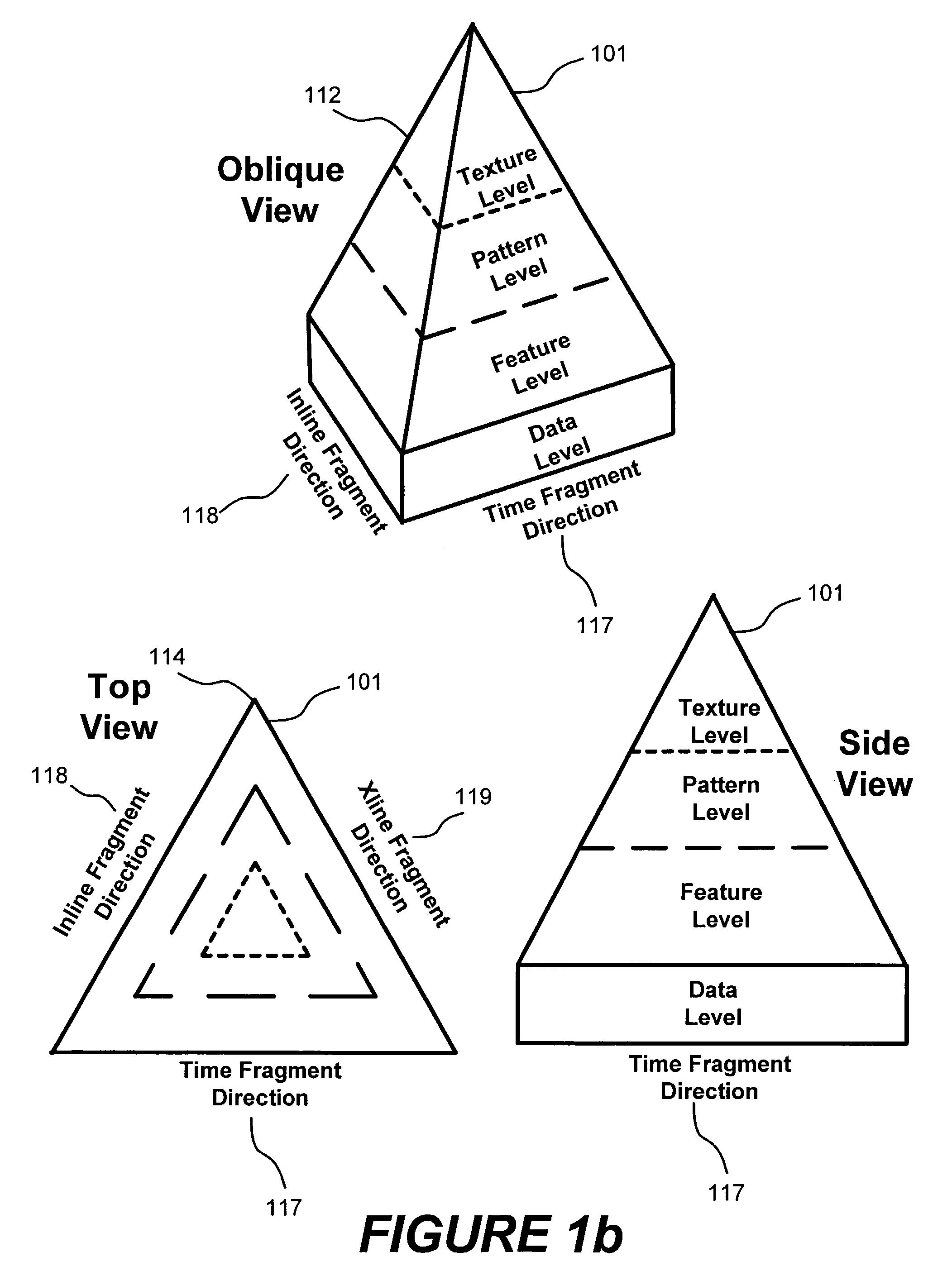

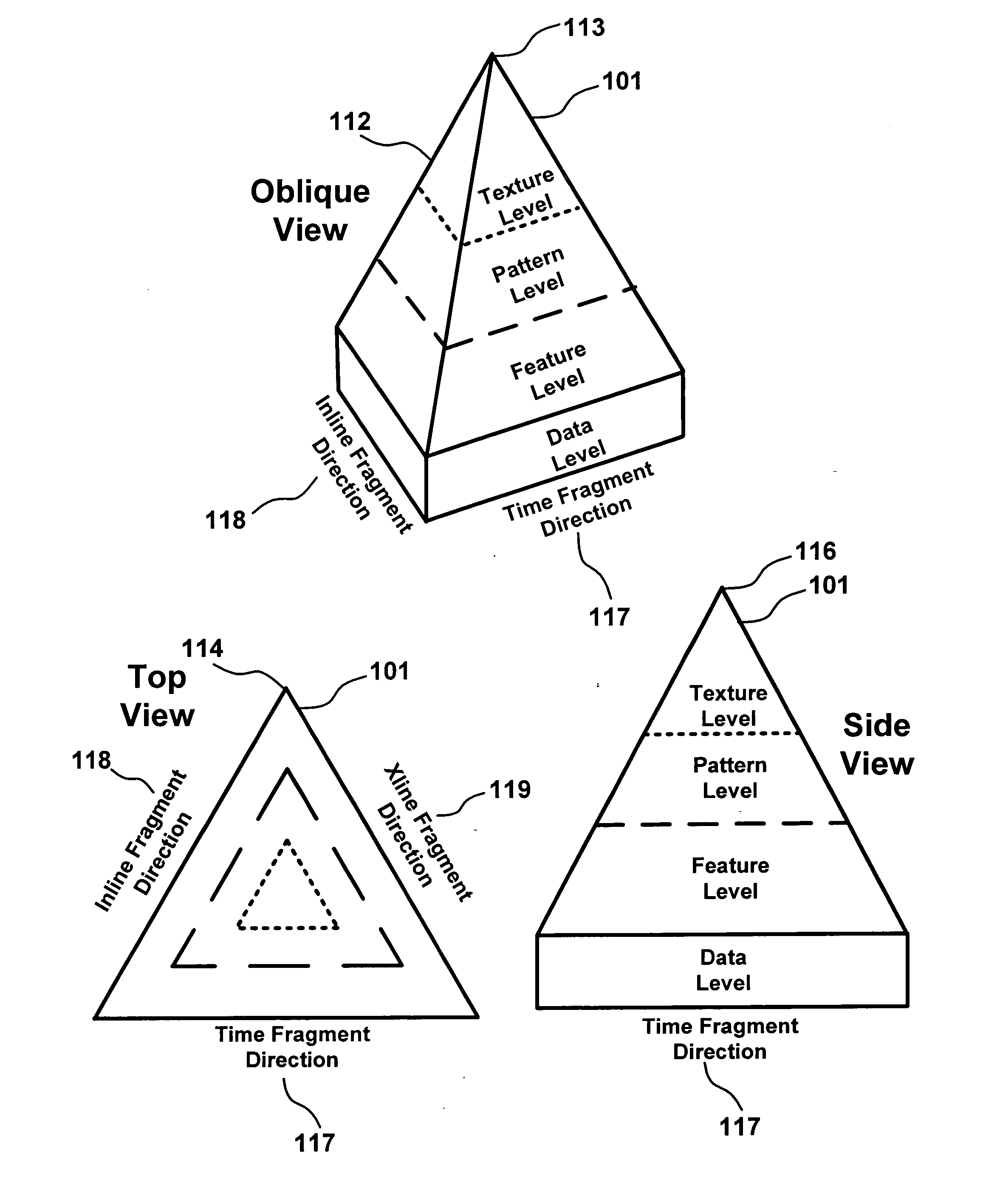

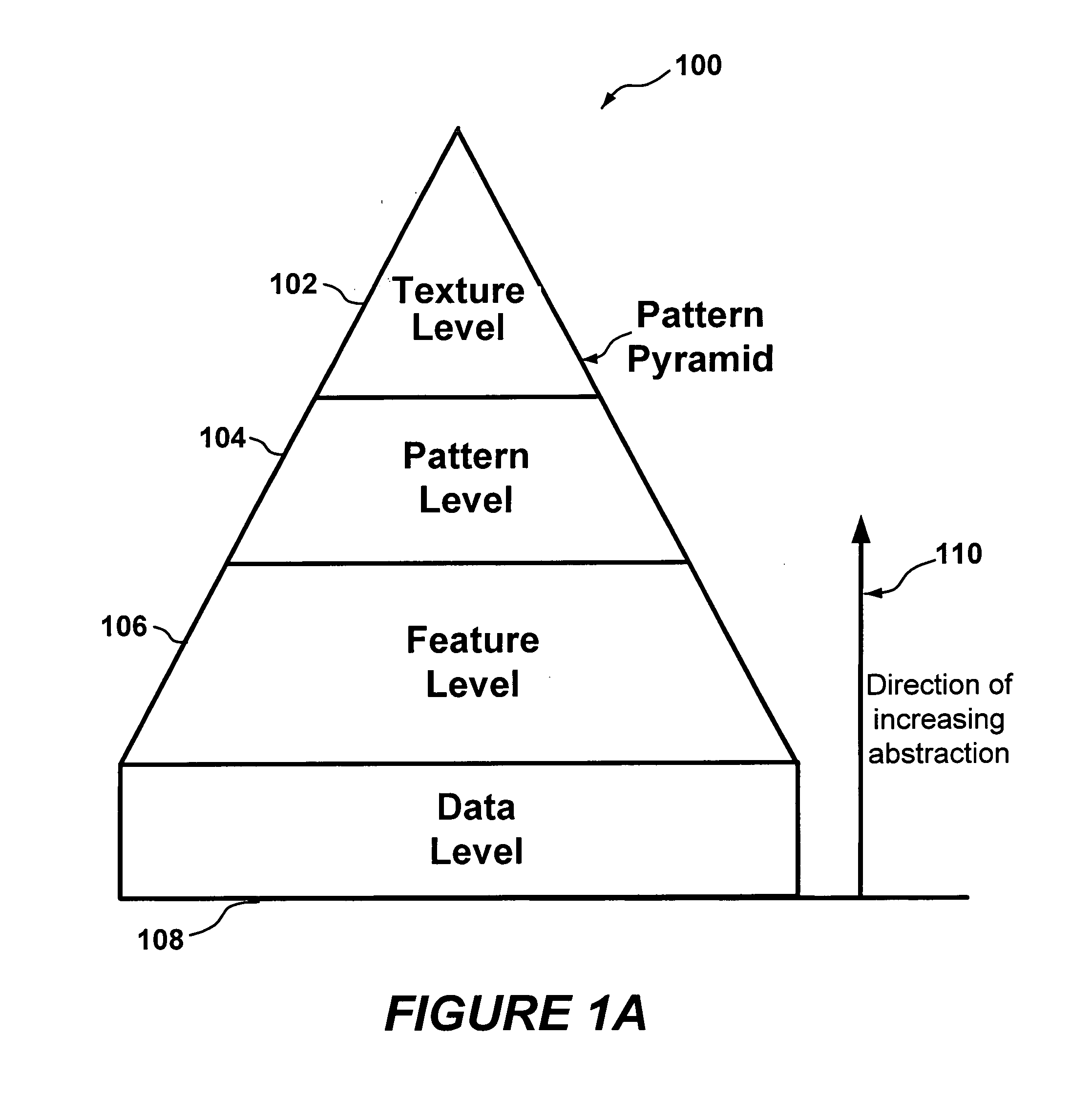

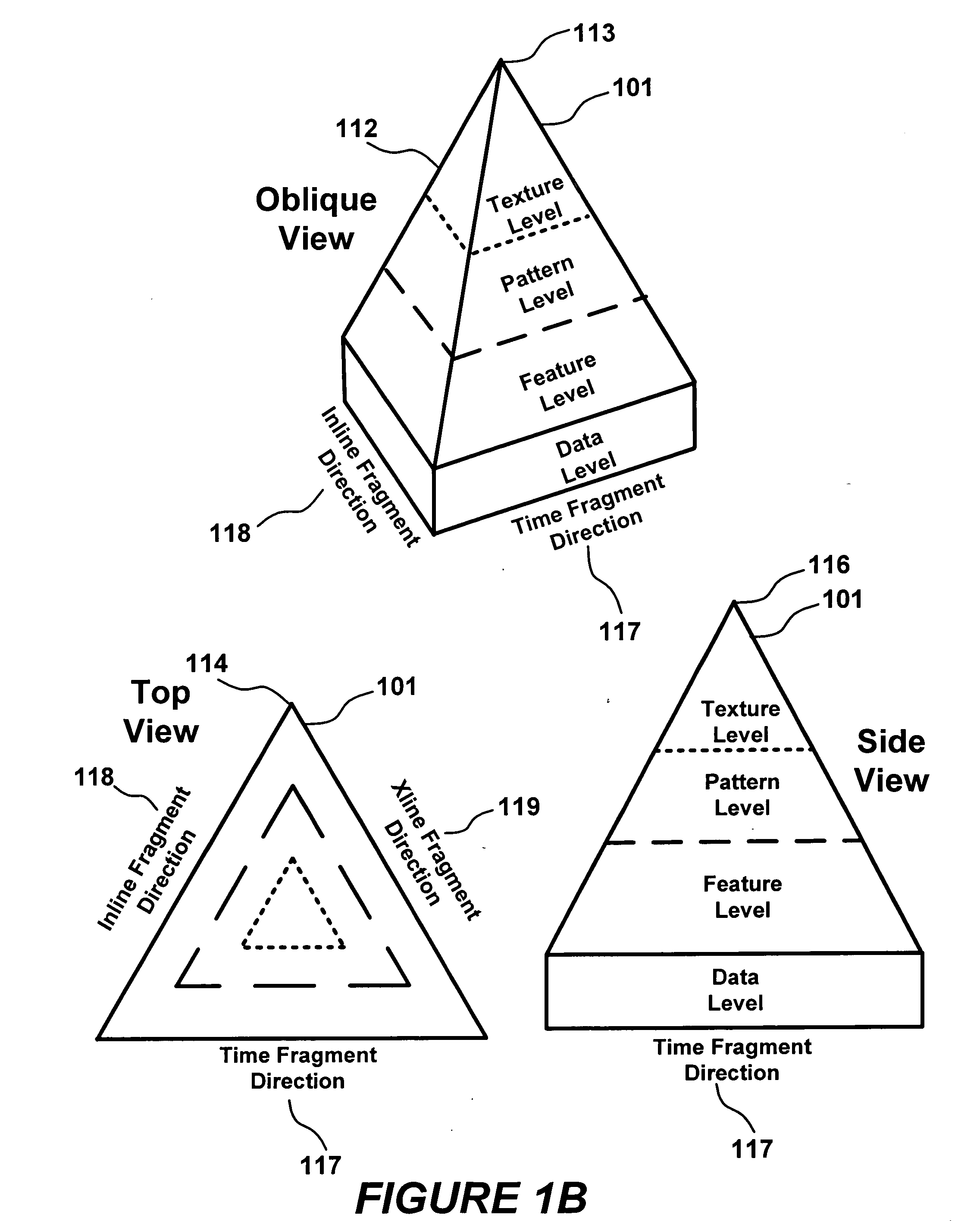

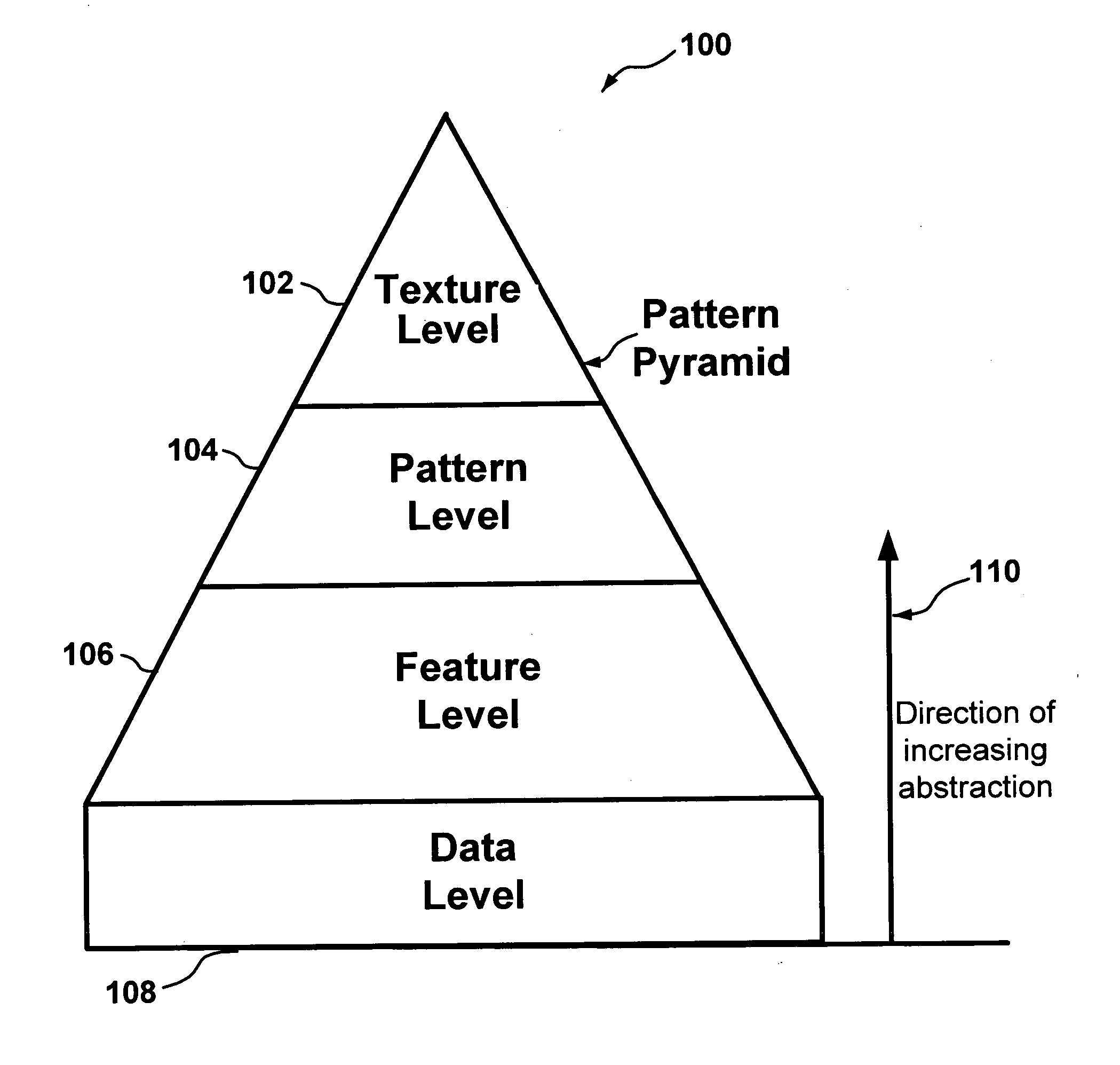

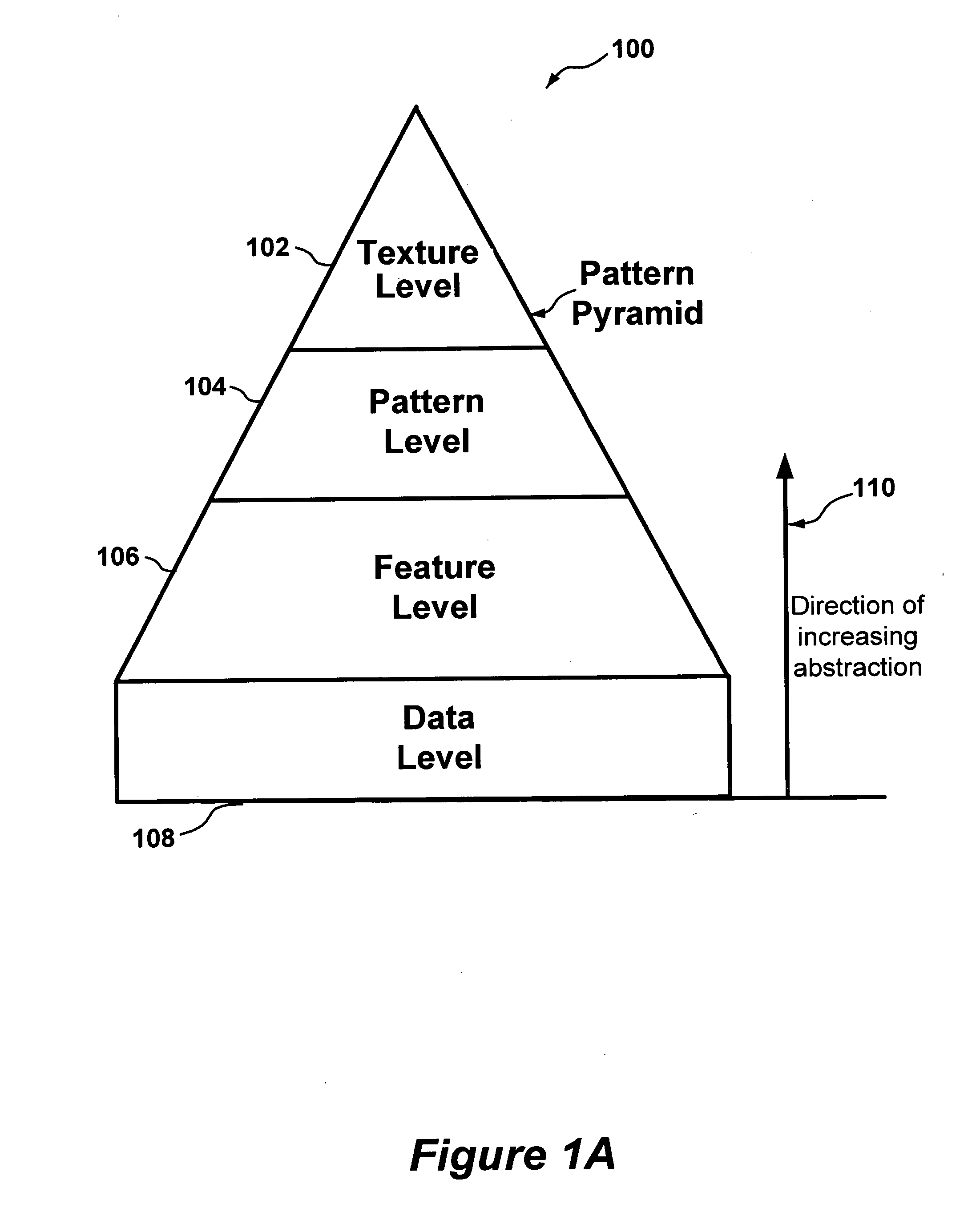

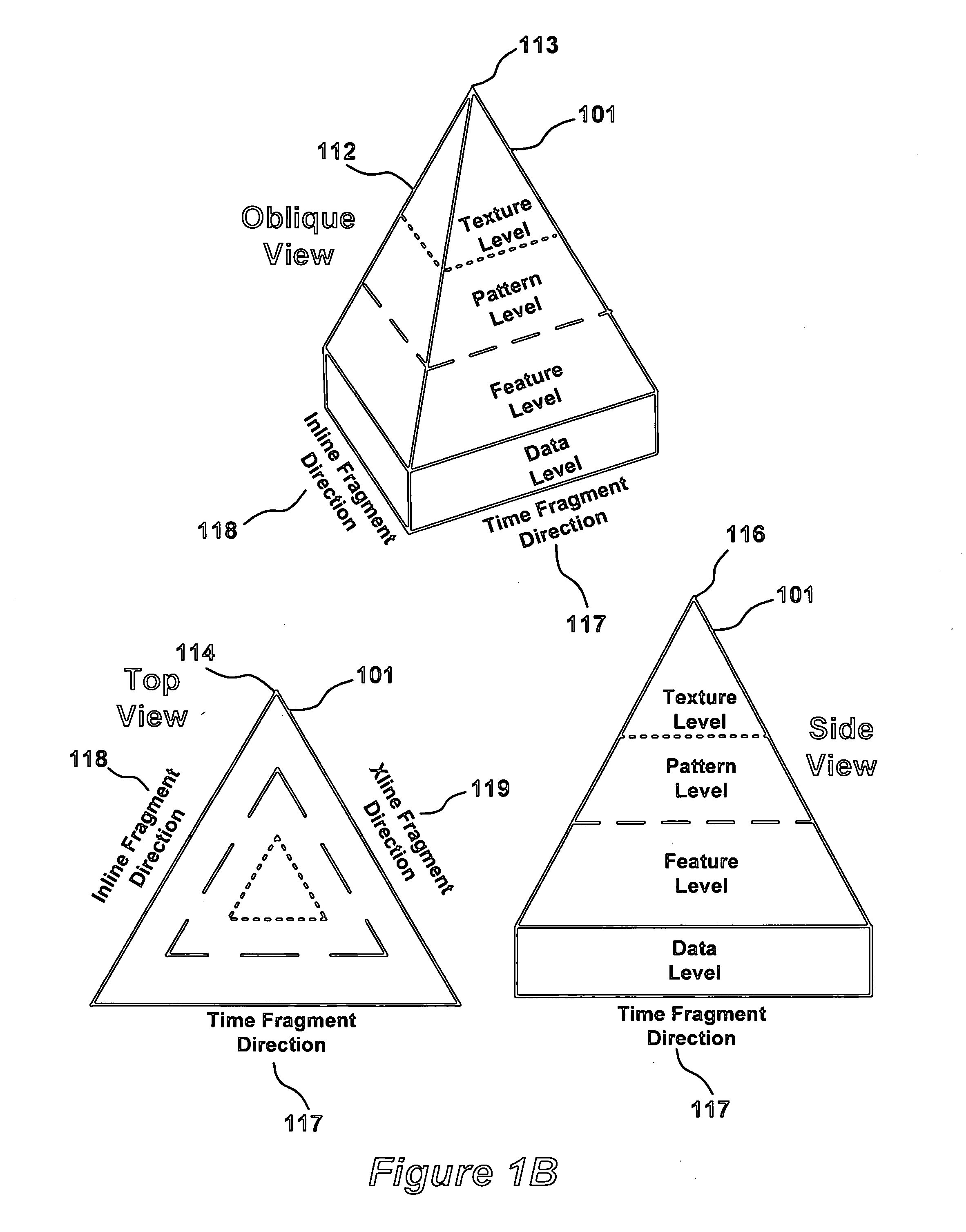

Pattern recognition applied to oil exploration and production

Status: Active; Patent: US7184991B1; Tags: Facilitates visual recognition, Comparison process is enhanced, Digital computer details, Character and pattern recognition, Pattern recognition, Visual recognition

An apparatus and method for analyzing known data, storing the known data in a pattern database (“PDB”) as a template is provided. Additional methods are provided for comparing new data against the templates in the PDB. The data is stored in such a way as to facilitate the visual recognition of desired patterns or indicia indicating the presence of a desired or undesired feature within the new data. The apparatus and method is applicable to a variety of applications where large amounts of information are generated, and / or if the data exhibits fractal or chaotic attributes.

Owner:IKON SCI AMERICAS

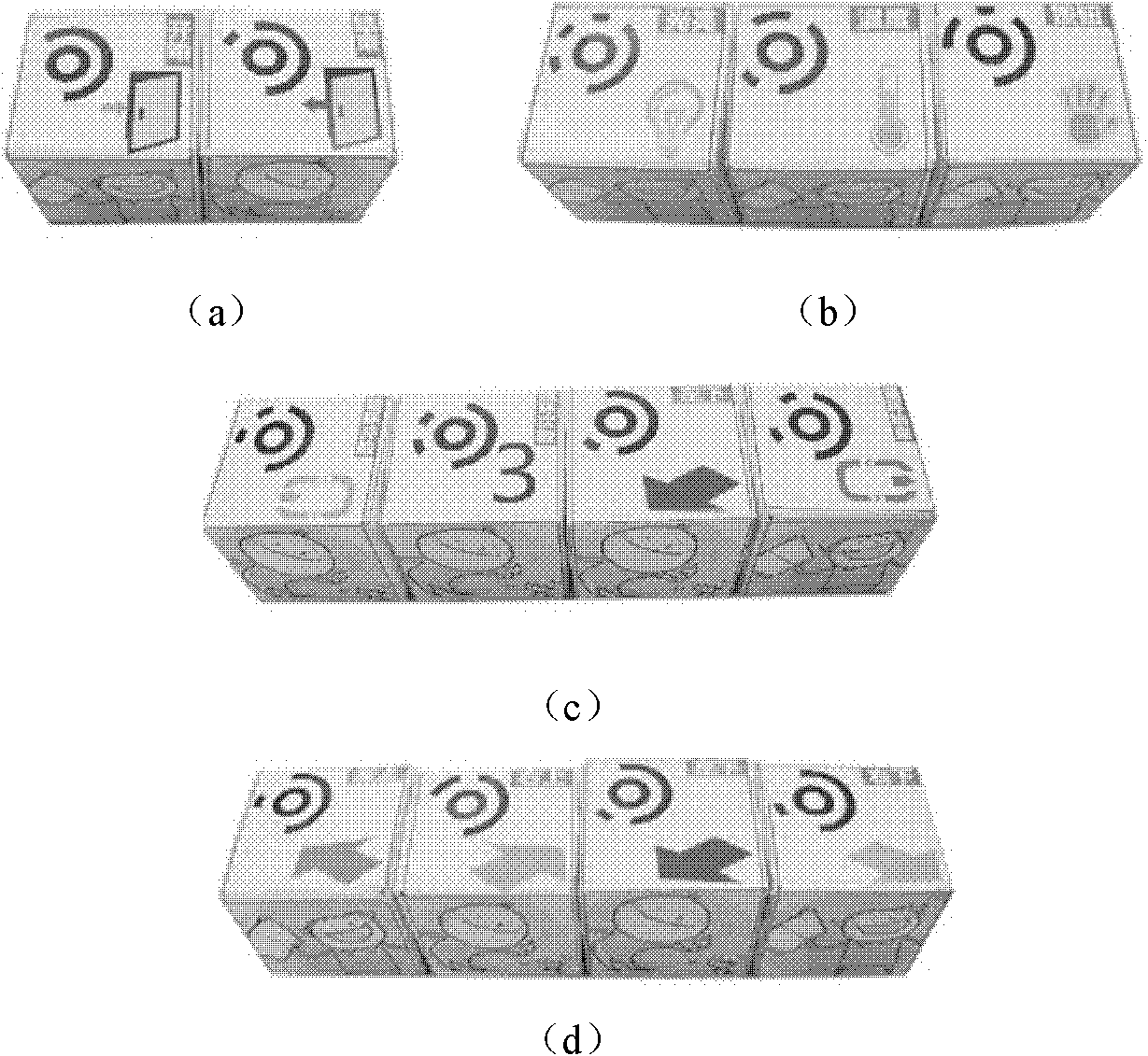

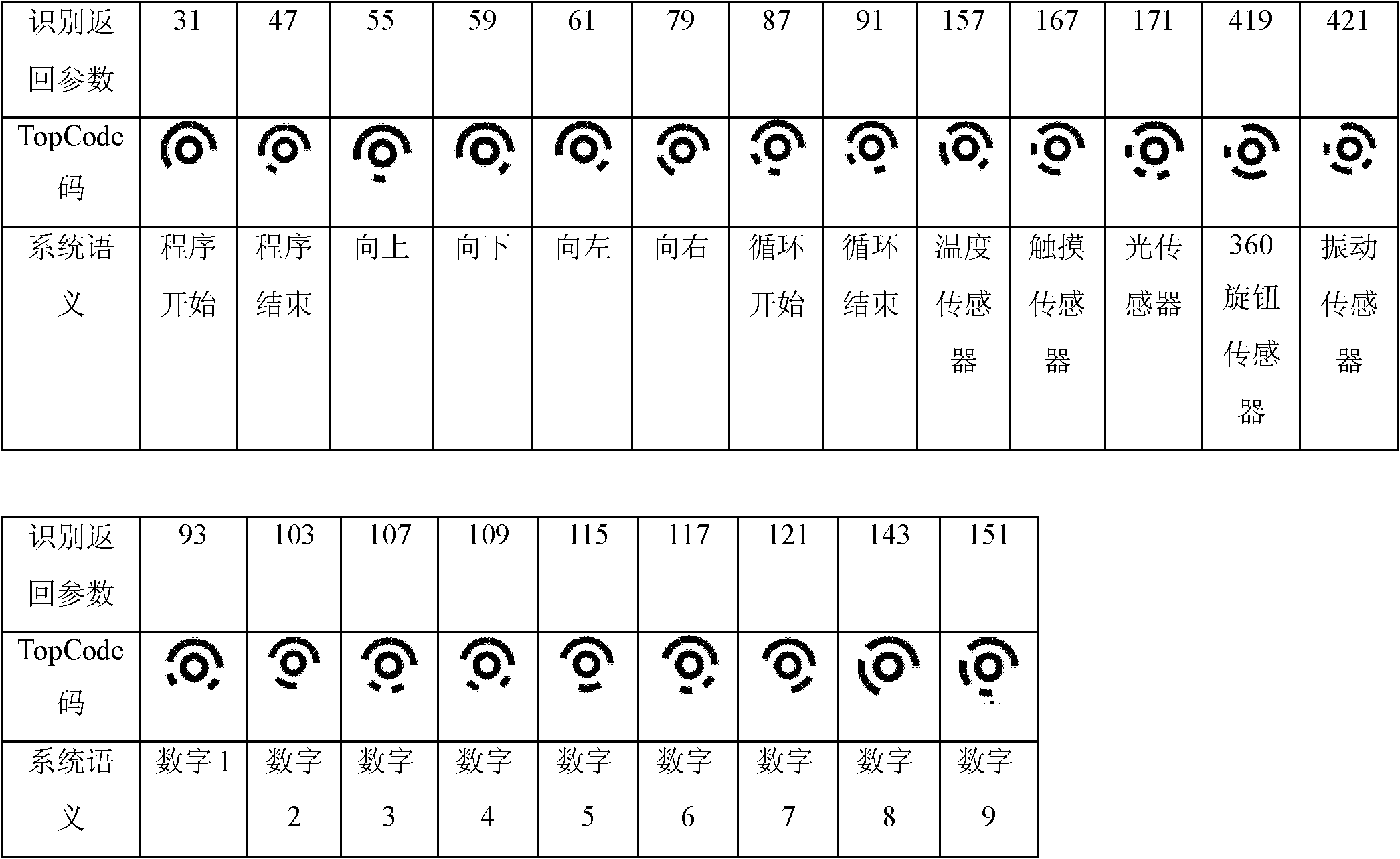

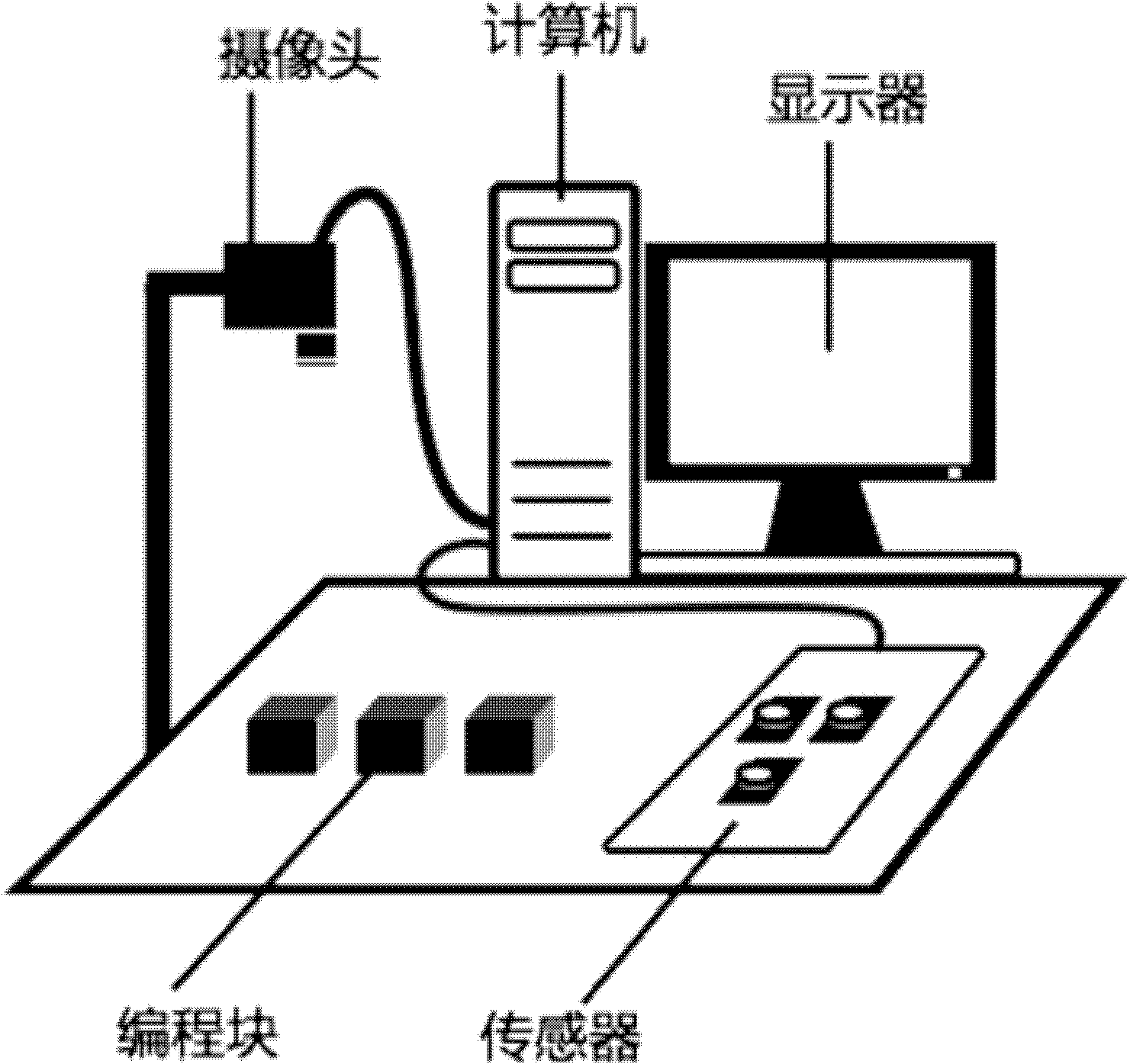

Material object programming method and system

The invention discloses a material object programming method and a material object programming system, which belong to the field of human-machine interaction. The method comprises the following steps: 1) establishing a material object programming display environment; 2) photographing the sequence of material object programming blocks placed by a user and uploading the captured image to a material object programming processing module by using an image acquisition unit; 3) converting the sequence of the material object blocks into a corresponding functional semantic sequence by using the material object programming processing module according to the computer vision identification modes and the position information of the material object programming blocks; 4) determining whether the current functional semantic sequence meets the grammatical and semantic rules of the material object display environment, and if it does not, feeding back a corresponding error prompt; 5) having the user replace the corresponding material object programming blocks according to the prompt information; and 6) repeating steps 2) to 5) until the functional semantic sequence corresponding to the sequence of placed material object programming blocks meets the grammatical and semantic rules of the material object display environment, which completes the programming task. The method and system address the difficulty that children and beginners have in learning programming, and the system is low in cost and easy to popularize.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI
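Steps 3) and 4) of the method above can be sketched in Python as follows. The block vocabulary, the left-to-right reading order, and the three grammar rules are assumptions made for illustration; the abstract does not specify any of them.

```python
# Hypothetical mapping from recognized block marker IDs to semantic tokens.
BLOCK_SEMANTICS = {
    1: "START",
    2: "FORWARD",
    3: "TURN_LEFT",
    4: "REPEAT",
    5: "NUMBER",
    6: "END",
}


def to_semantic_sequence(detections):
    """detections: list of (marker_id, x, y); blocks are read left to right."""
    ordered = sorted(detections, key=lambda d: d[1])
    return [BLOCK_SEMANTICS[marker_id] for marker_id, _, _ in ordered]


def check_sequence(tokens):
    """Return a list of error prompts; an empty list means the program is valid."""
    errors = []
    if not tokens or tokens[0] != "START":
        errors.append("program must begin with a START block")
    if not tokens or tokens[-1] != "END":
        errors.append("program must finish with an END block")
    for i, token in enumerate(tokens):
        if token == "REPEAT" and (i + 1 >= len(tokens) or tokens[i + 1] != "NUMBER"):
            errors.append(f"REPEAT at position {i} needs a NUMBER block after it")
    return errors


# Detections arrive unordered; x positions decide the reading order.
detected = [(6, 400, 52), (1, 10, 50), (4, 140, 49), (2, 270, 51)]
tokens = to_semantic_sequence(detected)
errors = check_sequence(tokens)
```

Here `errors` holds one prompt about the `REPEAT` block, which corresponds to the feedback the user would act on in step 5) before the image is captured again.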

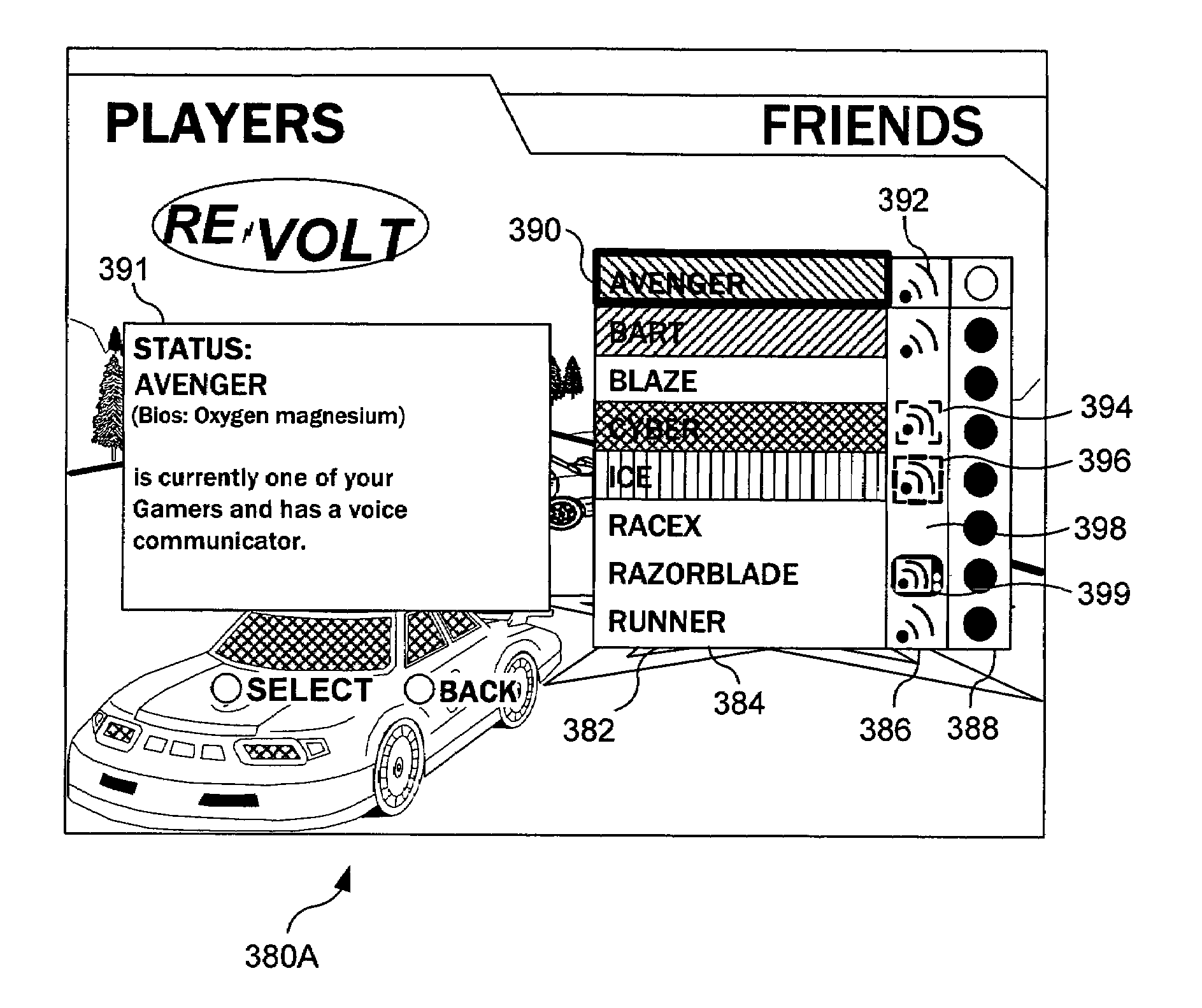

Visual indication of current voice speaker

Visually identifying one or more known or anonymous voice speakers to a listener in a computing session. For each voice speaker, voice data include a speaker identifier that is associated with a visual indicator displayed to indicate the voice speaker who is currently speaking. The speaker identifier is first used to determine voice privileges before the visual indicator is displayed. The visual indicator is preferably associated with a visual element controlled by the voice speaker, such as an animated game character. Visually identifying a voice speaker enables the listener and / or a moderator of the computing session to control voice communications, such as muting an abusive voice speaker. The visual indicator can take various forms, such as an icon displayed adjacent to the voice speaker's animated character, or a different icon displayed in a predetermined location if the voice speaker's animated character is not currently visible to the listener.

Owner:MICROSOFT TECH LICENSING LLC

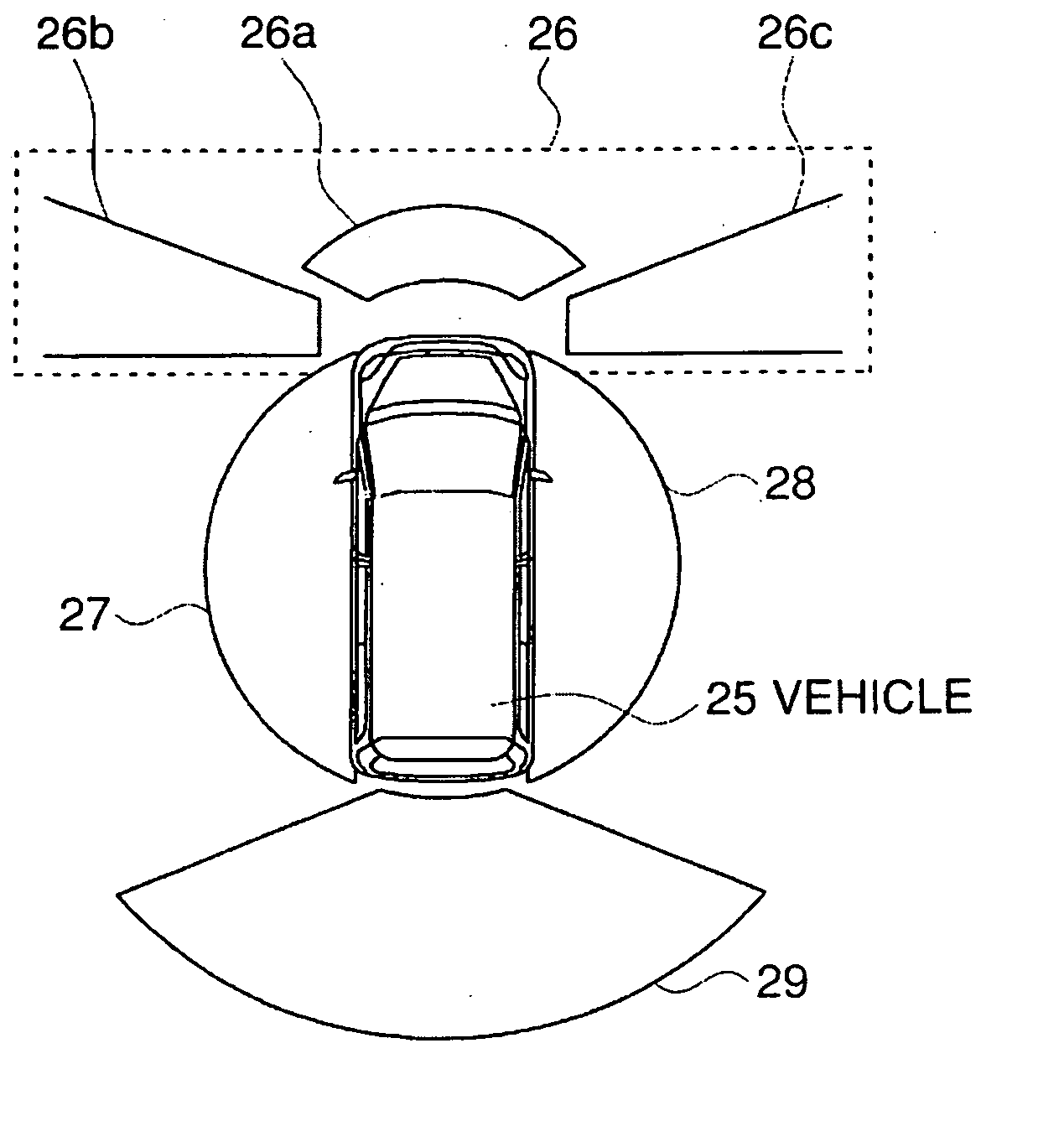

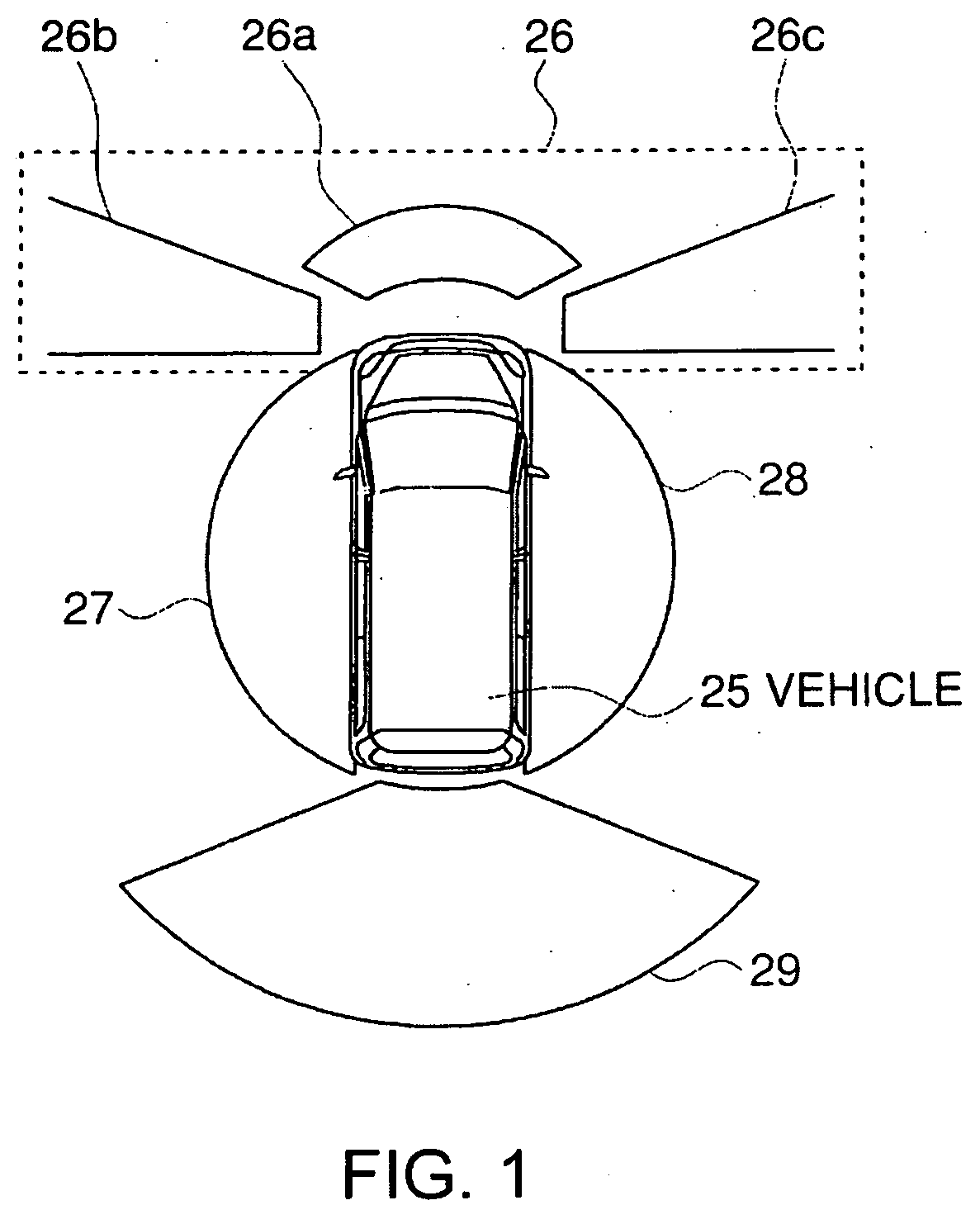

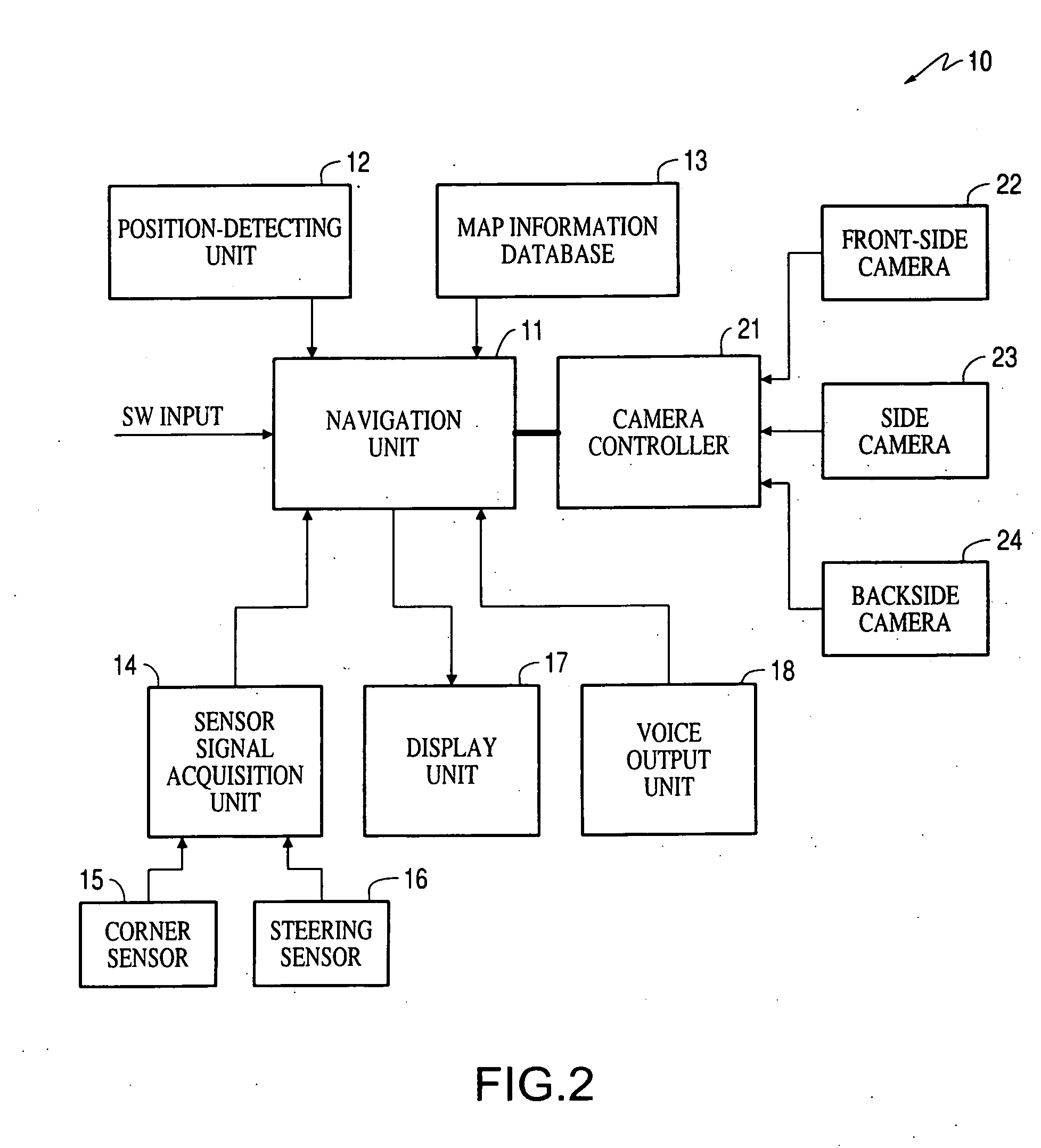

Visual recognition apparatus, methods, and programs for vehicles

Status: Inactive; Patent: US20060215020A1; Tags: Simple and safe process, View accurately, Anti-collision systems, Color television details, Driver/operator, Display device

Around-vehicle visual recognition apparatus, methods, and programs acquire circumstances of a vehicle, including the vehicle's location and cause a camera to take images around the vehicle. The apparatus, methods, and programs determine at least one first area around the vehicle that is more relevant to a driver based on the circumstances of the vehicle than another area around the vehicle and display an image of the at least one first area on a display.

Owner:AISIN AW CO LTD

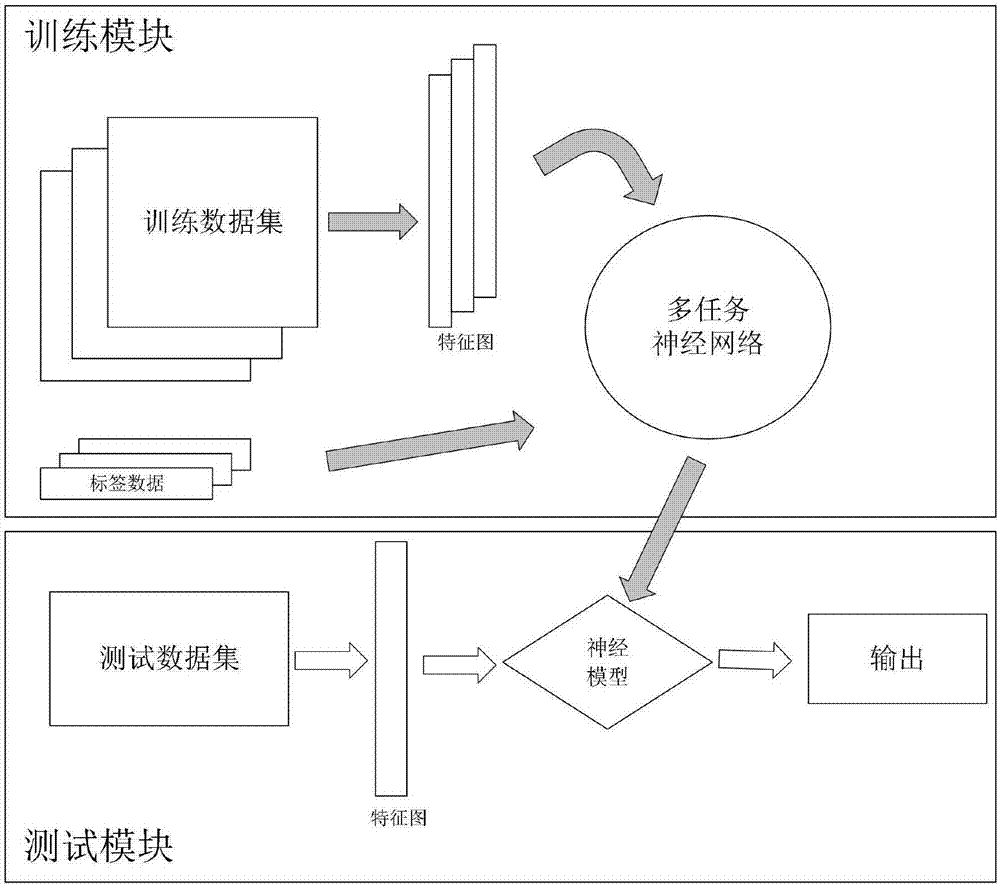

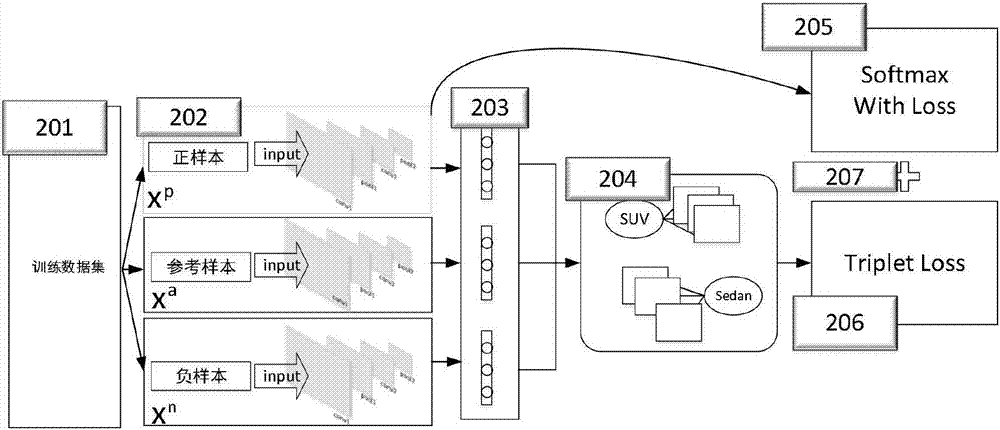

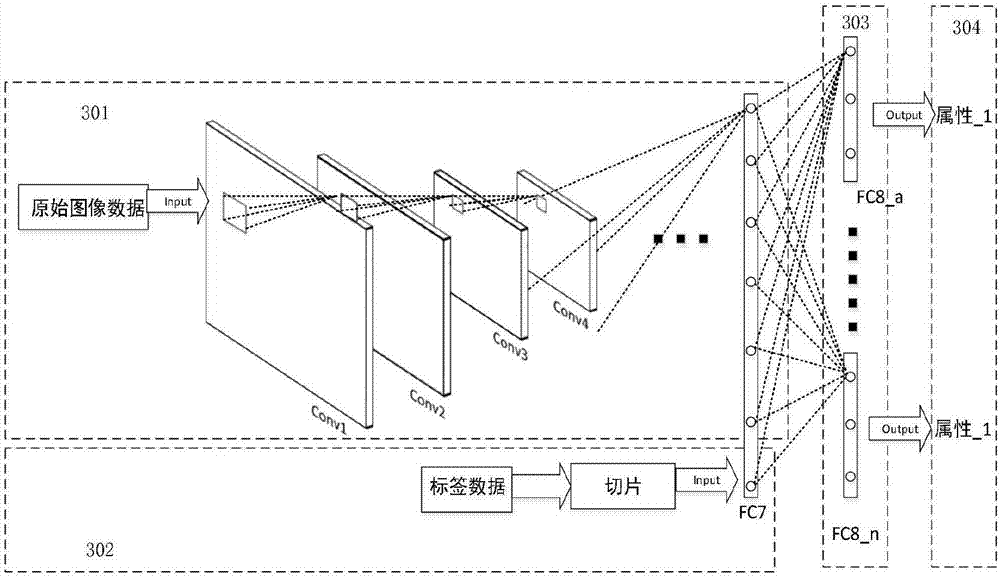

Fine granularity vehicle multi-property recognition method based on convolutional neural network

Status: Active; Patent: CN107886073A; Tags: Reduce human intervention, Character and pattern recognition, Neural architectures, Extensibility, Data set

The invention relates to a fine granularity vehicle multi-property recognition method based on a convolutional neural network and belongs to the technical field of computer visual recognition. The method comprises the following steps: a neural network structure is designed, including a convolution layer, a pooling layer and a fully connected layer, wherein the convolution layer and the pooling layer are responsible for feature extraction, and a classification result is output by calculating an objective loss function on the last fully connected layer; a fine granularity vehicle dataset and a tag dataset are used to train the neural network, the training mode is supervised learning, and a stochastic gradient descent algorithm is used to adjust the weight matrix and offset; and the trained neural network model is used to perform vehicle property recognition. The method can be applied to multi-property recognition of a vehicle: the fine granularity vehicle dataset and the multi-property tag dataset are used to obtain a more abstract high-level expression of the vehicle through the convolutional neural network, and invisible characteristics reflecting the nature of the to-be-recognized vehicle are learnt from a large quantity of training samples, so extensibility and recognition precision are both higher.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
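One common way to train a network on several properties at once is to give each property its own output head and sum the per-head cross-entropy losses. The Python sketch below shows only that loss computation. It is an assumption about how a multi-property objective could be formed, not the patent's stated loss, and the head names `color` and `body_type` are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Negative log-probability assigned to the true class index."""
    return -math.log(softmax(logits)[label])


def multi_property_loss(head_logits, labels):
    """Sum the per-property losses over all labelled heads."""
    return sum(cross_entropy(head_logits[name], labels[name]) for name in labels)


# Made-up outputs of two classification heads for one training image.
head_logits = {"color": [2.0, 0.5, 0.1], "body_type": [0.0, 0.0]}
labels = {"color": 0, "body_type": 1}
loss = multi_property_loss(head_logits, labels)
```

A stochastic gradient descent step would then move the weights and offsets against the gradient of `loss`; the gradient computation is omitted here.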

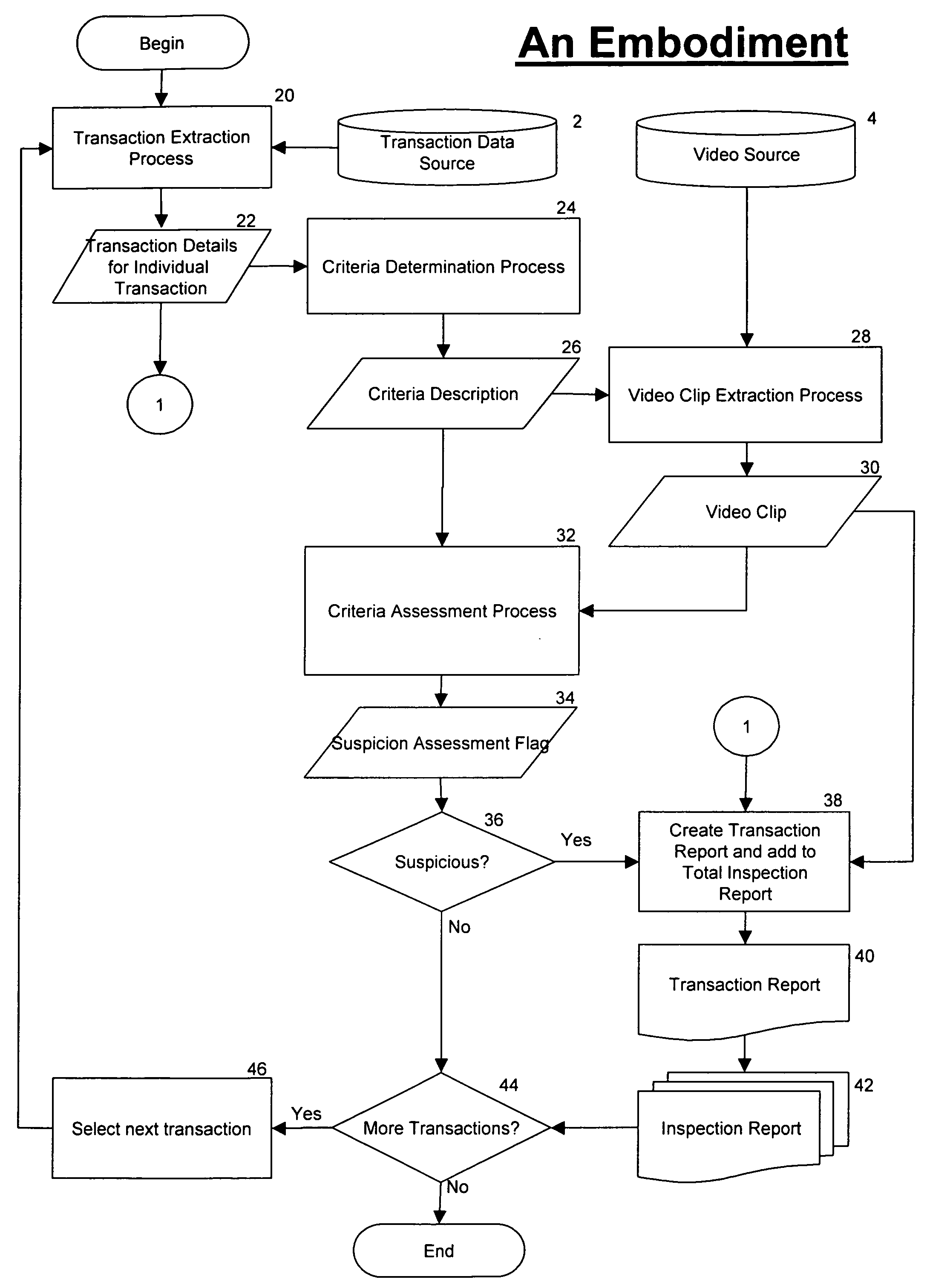

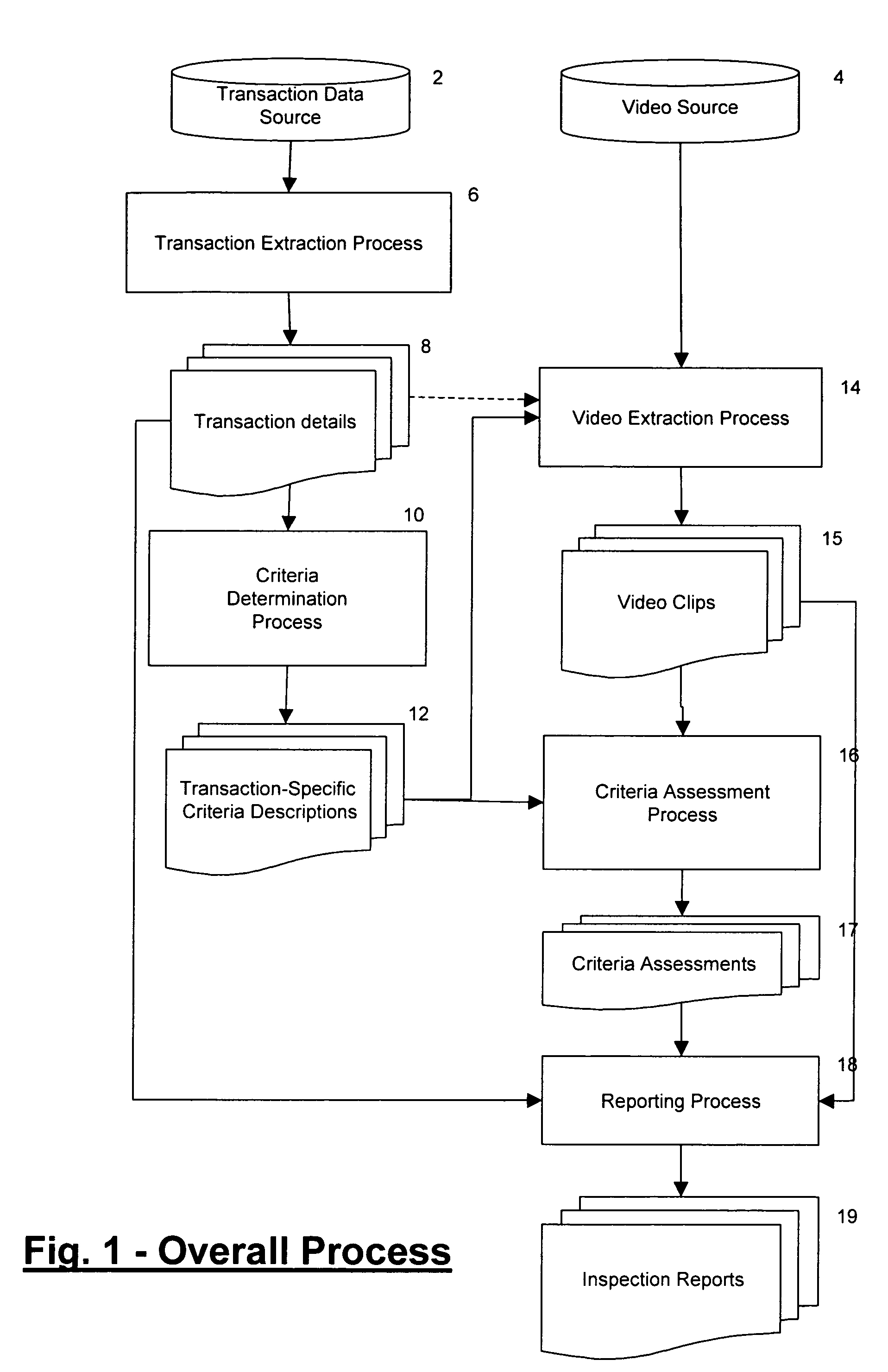

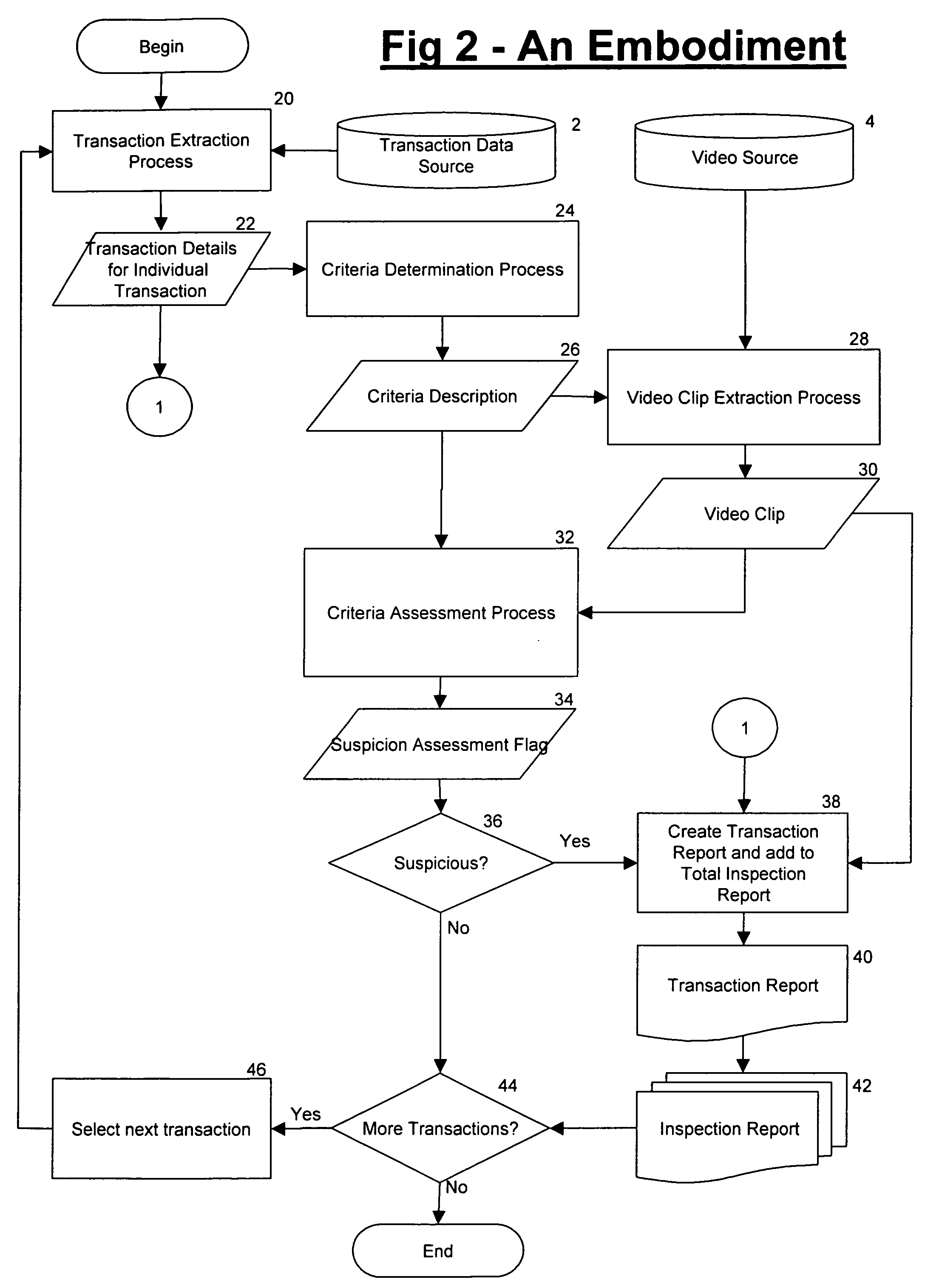

Method and apparatus for auditing transaction activity in retail and other environments using visual recognition

Status: Active; Patent: US7516888B1; Tags: Quick identification, Shorten the time, Payment architecture, Apparatus for meter-controlled dispensing, Transaction data, Financial transaction

A system detects a transaction outcome by obtaining video data associated with a transaction area and by obtaining transaction data concerning at least one transaction that occurs at the transaction area. The system correlates the video data associated with the transaction area to the transaction data to identify specific video data captured during occurrence of that at least one transaction at the transaction area. Based on a transaction classification indicated by the transaction data, the system processes the video data to identify appropriate visual indicators within the video data that correspond to the transaction classification.

Owner:NCR CORP
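The correlation between video data and transaction data described above could, in the simplest case, be done by timestamp. The Python sketch below assumes frames and transactions carry comparable timestamps in seconds; the record layout, the `EXPECTED_INDICATORS` table, and the indicator names are hypothetical and not drawn from the patent.

```python
# Hypothetical visual indicators to look for, keyed by transaction classification.
EXPECTED_INDICATORS = {
    "refund": ["customer_present", "item_on_counter"],
    "sale": ["item_scanned"],
}


def frames_for_transaction(frames, start, end):
    """frames: list of (timestamp_seconds, frame_id); keep ids captured in [start, end]."""
    return [frame_id for ts, frame_id in frames if start <= ts <= end]


# Made-up frame log and transaction record.
frames = [(100.0, "f0"), (100.5, "f1"), (101.0, "f2"), (105.0, "f3")]
transaction = {"id": "T1", "type": "refund", "start": 100.4, "end": 101.2}

clip = frames_for_transaction(frames, transaction["start"], transaction["end"])
indicators_to_check = EXPECTED_INDICATORS[transaction["type"]]
```

The selected `clip` would then be passed to whatever visual analysis looks for each entry in `indicators_to_check`.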

Methods and apparatus relating to improved visual recognition and safety

Status: Inactive; Patent: US20050152142A1; Tags: Improve road safety, Increase flexibility, Lighting support devices, Point-like light source, Visual recognition, Printed circuit board

A visual recognition and identification apparatus comprising a mounting means (2, 18) adapted for placement in a physical environment wherein the mounting means incorporates one or a plurality of light emitting diodes (1) adapted to provide a visual signal characterised in that the light emitting diodes (1) are mounted on and physically associated with the printed circuit board (2, 18) wherein the printed circuit board (2, 18) has been modified to provide a distinct angle mounting for the light emitting diodes (1) to provide a defined viewing angle and wherein the printed circuit board (2, 18) is manufactured from a compliant material so as to allow the visual recognition and identification apparatus to be moulded and adapted to a number of environments including fixture to non flat surfaces, incorporation into clothing (19, 21), caps and badges by way of sewing or incorporation into a range of devices.

Owner:TRAYNOR NEIL

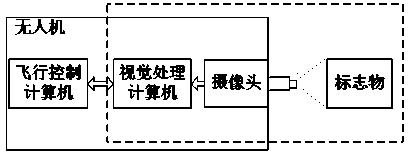

Accurate visual positioning and orienting method for rotor wing unmanned aerial vehicle

Status: Inactive; Patent: CN104298248A; Tags: Precision hover, Flexible and convenient hovering, Position/course control in three dimensions, Visual field loss, Visual recognition

The invention discloses an accurate visual positioning and orienting method for a rotor wing unmanned aerial vehicle on the basis of an artificial marker. The method includes the following steps: a marker with a special pattern is installed on the surface of an artificial facility or of a natural object; a camera is calibrated; the proportional mapping relation among the actual size of the marker, the relative distance between the marker and the camera, and the size of the marker in the camera image is set up, and the distance to be kept between the unmanned aerial vehicle and the marker is set; the unmanned aerial vehicle is guided to fly to the position where it is to hover, it is adjusted so that the marker pattern enters the visual field of the camera, and a visual recognition function is started; a visual processing computer compares the geometrical characteristics of the currently captured pattern with a standard pattern through visual analysis to obtain the difference and transmits the difference to a flight control computer, which generates a control law so that the unmanned aerial vehicle can be adjusted to eliminate the deviation in position, height and course, and accurately positioned and oriented hovering is achieved. The method is highly independent, stable and reliable, and is beneficial to safe operation of the unmanned aerial vehicle near the artificial facility or the natural object.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
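The proportional mapping among marker size, distance, and image size described above matches the standard pinhole relation, distance = focal length in pixels × real size / size in pixels. The Python sketch below applies it to estimate range and offset errors. It assumes a calibrated focal length and a marker facing the camera; all numeric values and function names are hypothetical.

```python
def distance_to_marker(focal_px, marker_size_m, marker_size_px):
    """Pinhole relation: range grows as the marker appears smaller in the image."""
    return focal_px * marker_size_m / marker_size_px


def hover_errors(center_px, marker_size_px, image_size_px,
                 focal_px, marker_size_m, keep_distance_m):
    """Return range, lateral, and vertical errors in metres relative to the marker."""
    distance = distance_to_marker(focal_px, marker_size_m, marker_size_px)
    cx = image_size_px[0] / 2.0
    cy = image_size_px[1] / 2.0
    # Convert pixel offsets from the image centre into metres at the marker's range.
    lateral = (center_px[0] - cx) * distance / focal_px
    vertical = (center_px[1] - cy) * distance / focal_px
    return {
        "range_error_m": distance - keep_distance_m,
        "lateral_error_m": lateral,
        "vertical_error_m": vertical,
    }


# Made-up observation: a 0.4 m marker seen 80 px wide by an 800 px focal-length camera.
errors = hover_errors(
    center_px=(440.0, 300.0),
    marker_size_px=80.0,
    image_size_px=(640, 480),
    focal_px=800.0,
    marker_size_m=0.4,
    keep_distance_m=3.0,
)
```

These error terms stand in for the difference that the visual processing computer would pass to the flight controller; course (heading) error, which needs the marker's orientation in the image, is not covered.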

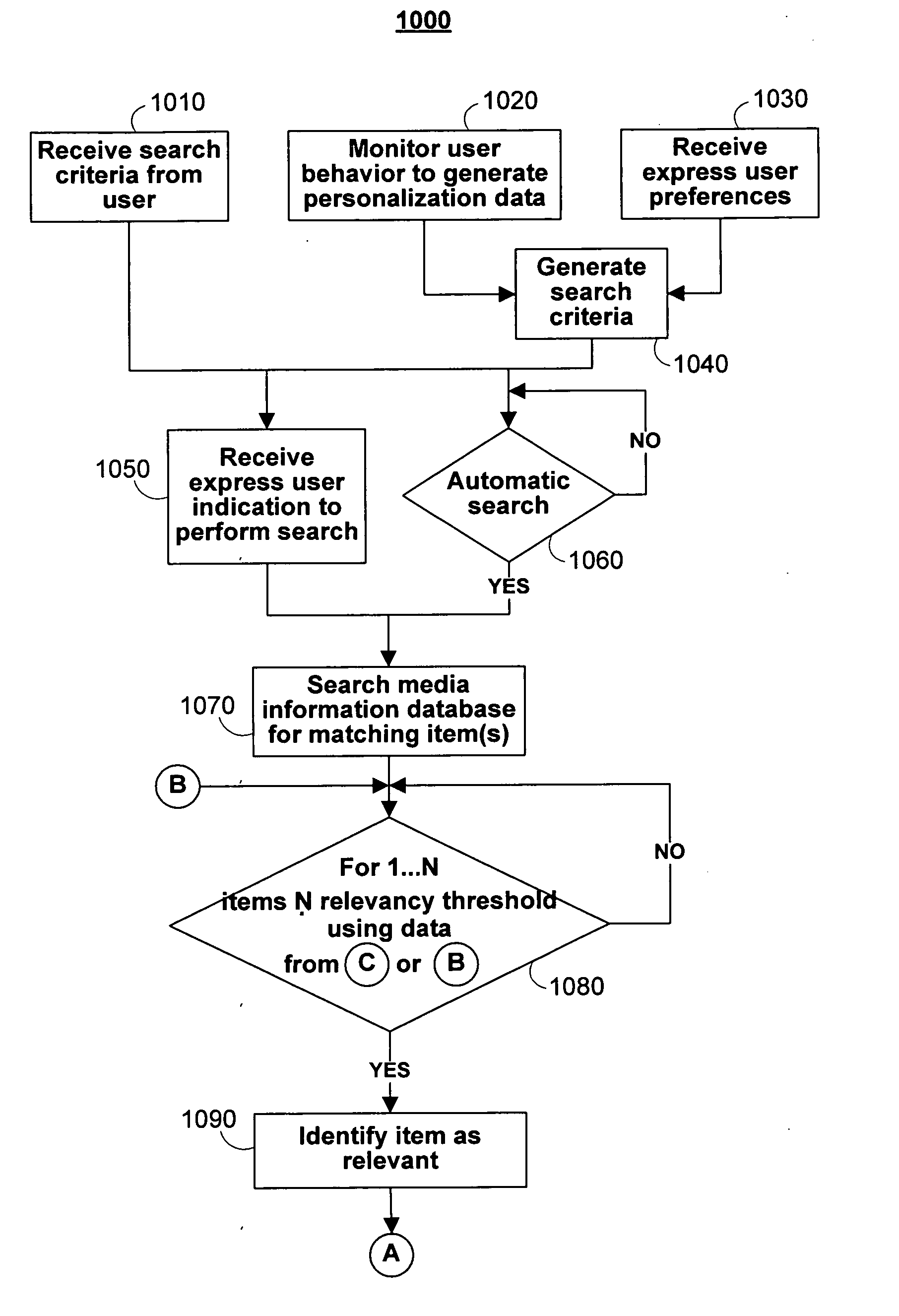

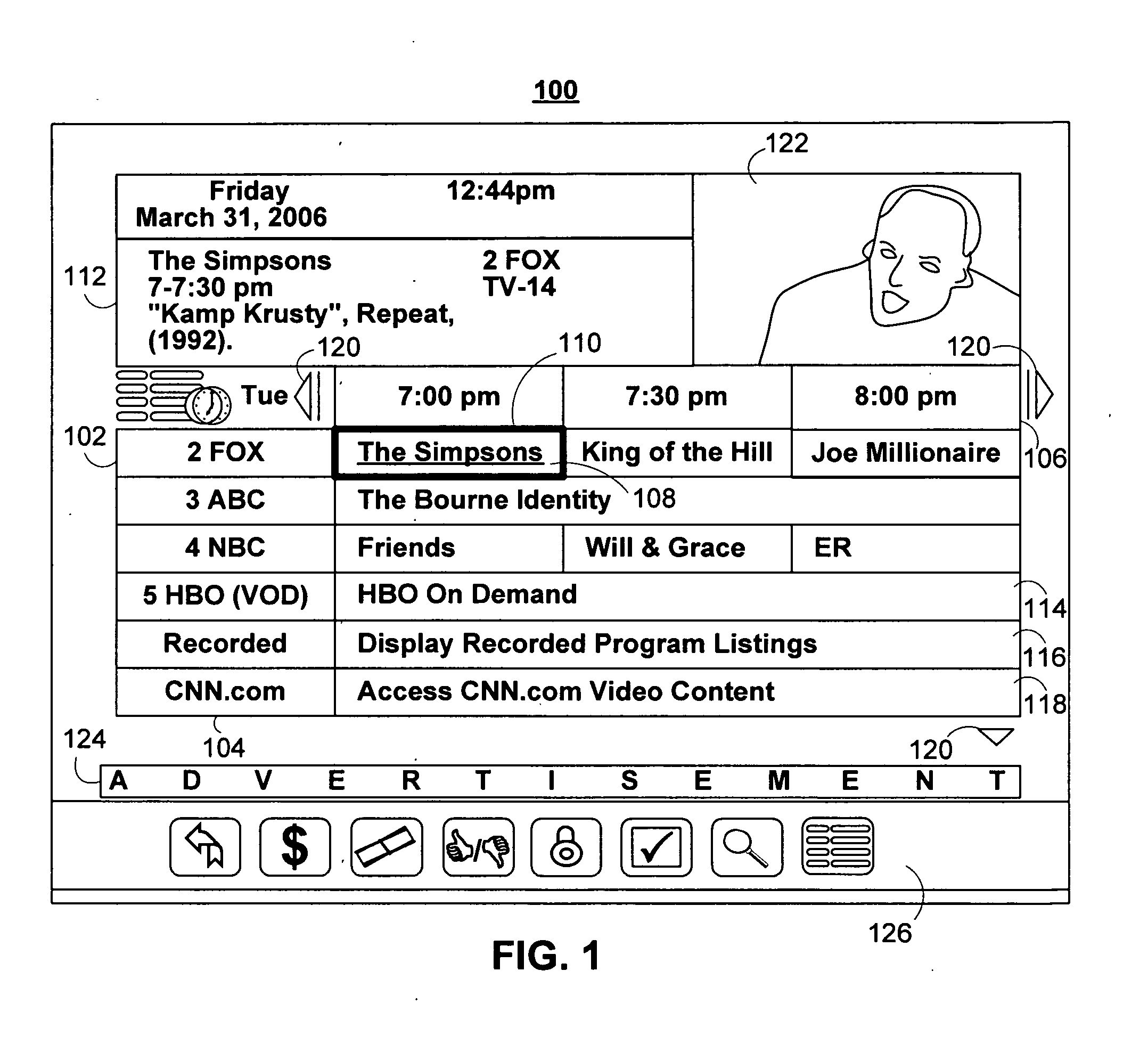

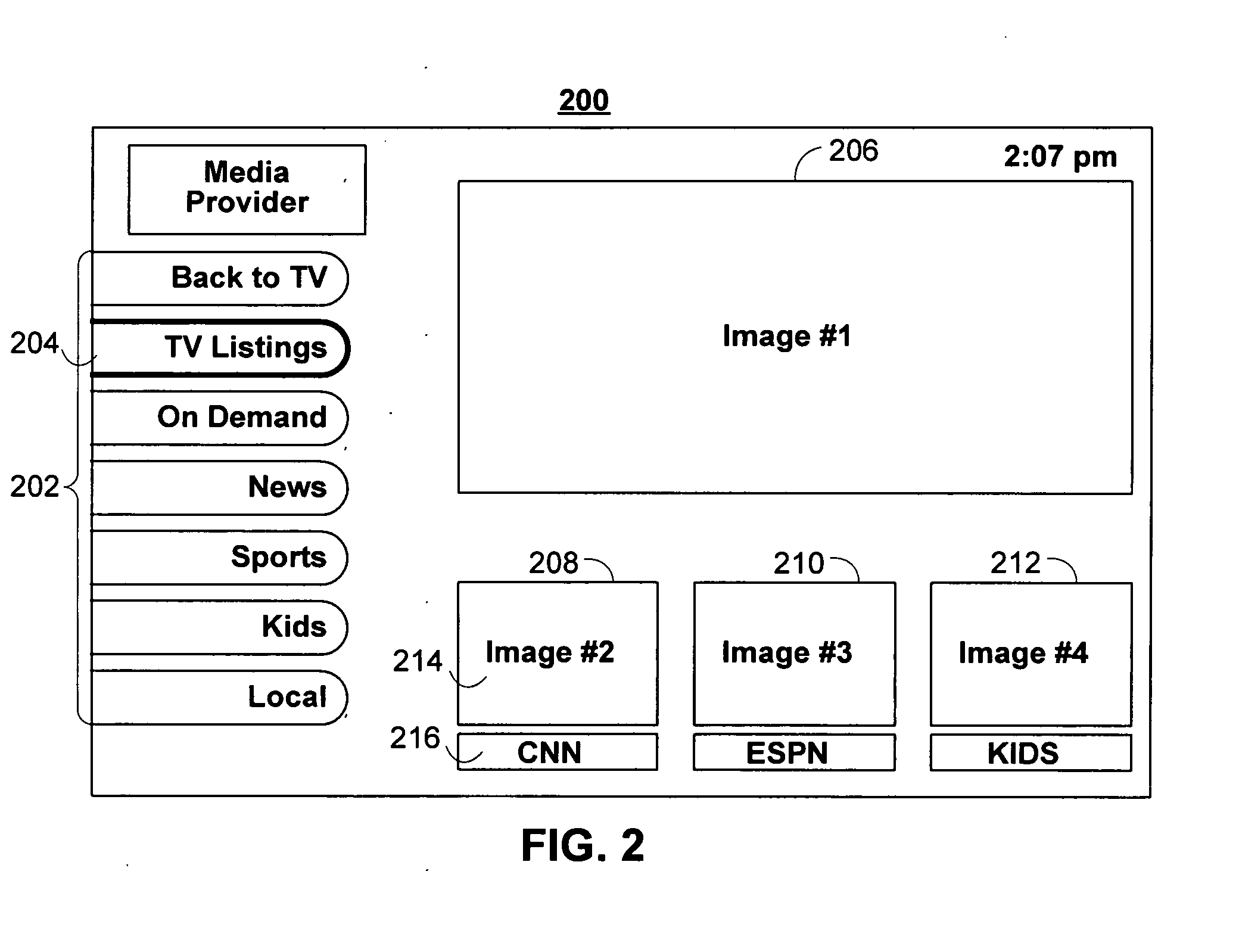

Presenting media guidance search results based on relevancy

Status: Inactive; Patent: US20080104058A1; Tags: Television system details, Digital data processing details, MediaFLO, Visual recognition

Systems and methods for presenting search results based on relevancy in an interactive media guidance application are disclosed. After performing a user-initiated or automatic search for media content, the interactive media guidance application determines which of the hits are most relevant to the user. The guidance application then displays, or visually identifies, the relevant items. Some embodiments employ using different display arrangements based on the number of relevant items. Some embodiments display the relevant items in recommendation lists or hot lists.

Owner:UNITED VIDEO PROPERTIES

Method and system for utilizing string-length ratio in seismic analysis

Status: Inactive; Patent: US20050288863A1; Tags: Facilitates visual recognition, Simple process, Character and pattern recognition, Seismic signal processing, Hydrocotyle bowlesioides, Visual recognition

An apparatus and method for analyzing known data, storing the known data in a pattern database (“PDB”) as a template is provided. Additional methods are provided for comparing new data against the templates in the PDB. The data is stored in such a way as to facilitate the visual recognition of desired patterns or indicia indicating the presence of a desired or undesired feature within the new data. Data may be analyzed as fragments, and the characteristics of various fragments, such as string length, may be calculated and compared to other indicia to indicate the presence or absence of a particular substance, such as a hydrocarbon. The apparatus and method is applicable to a variety of applications where large amounts of information are generated, and / or if the data exhibits fractal or chaotic attributes.

Owner:CHROMA ENERGY

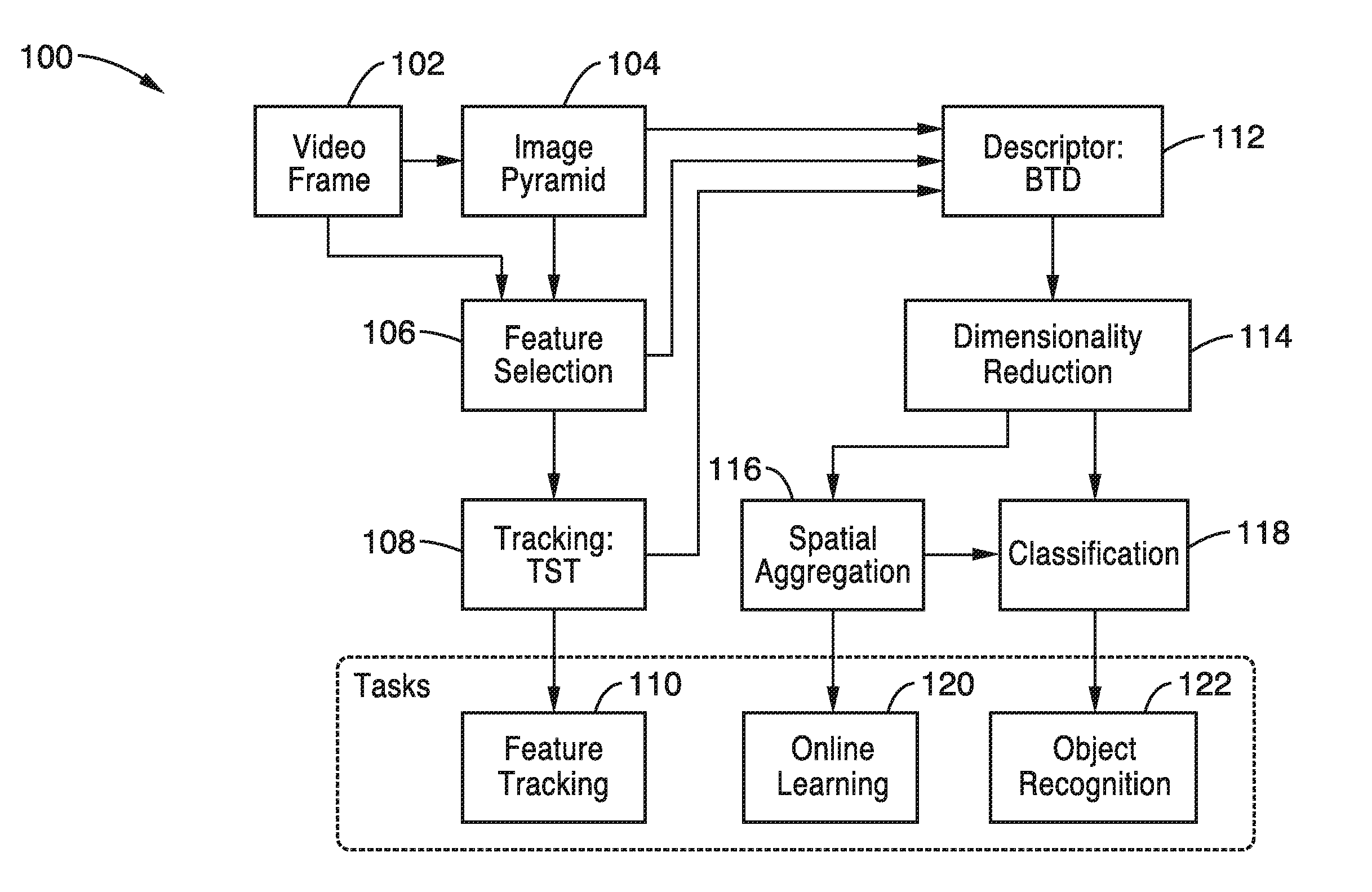

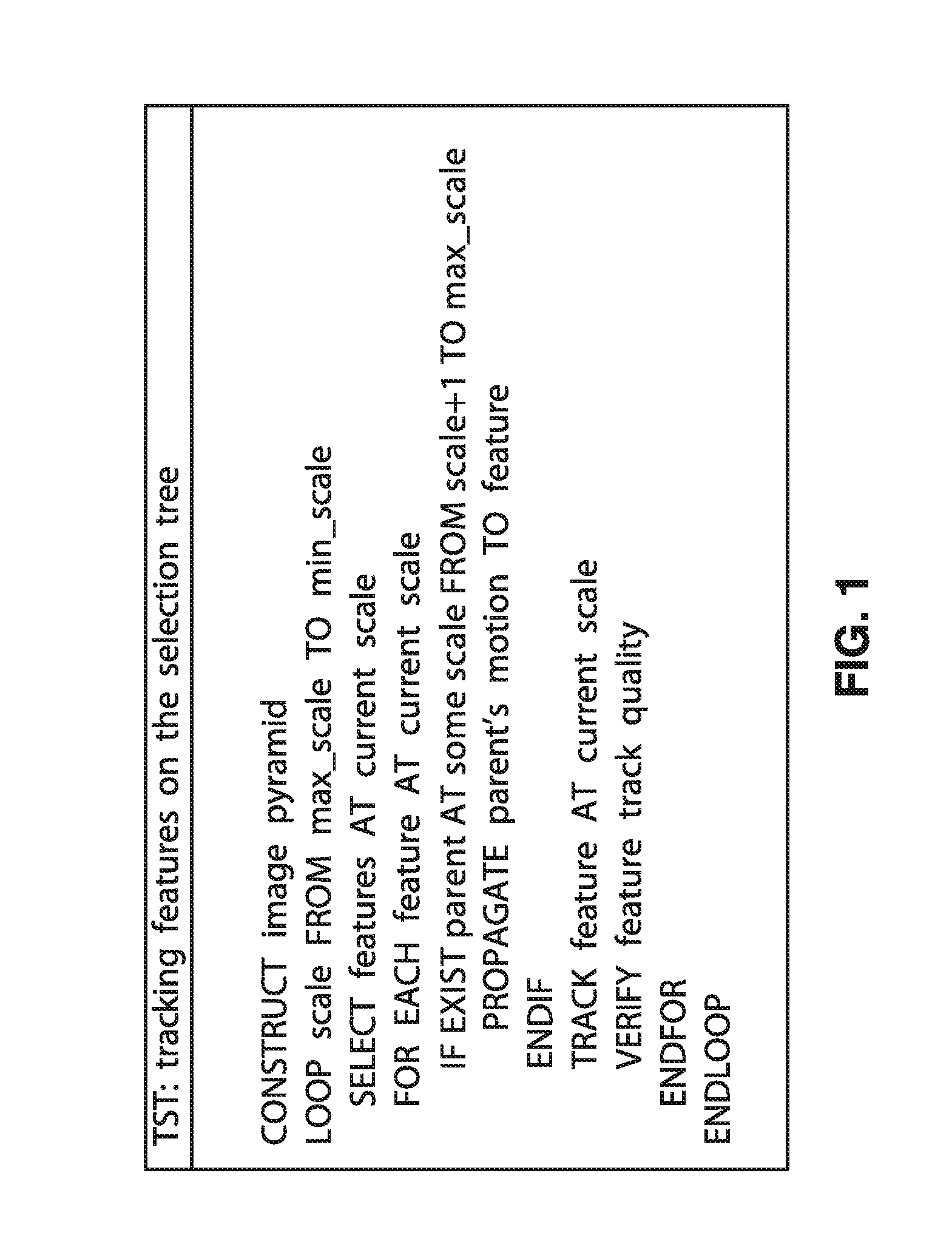

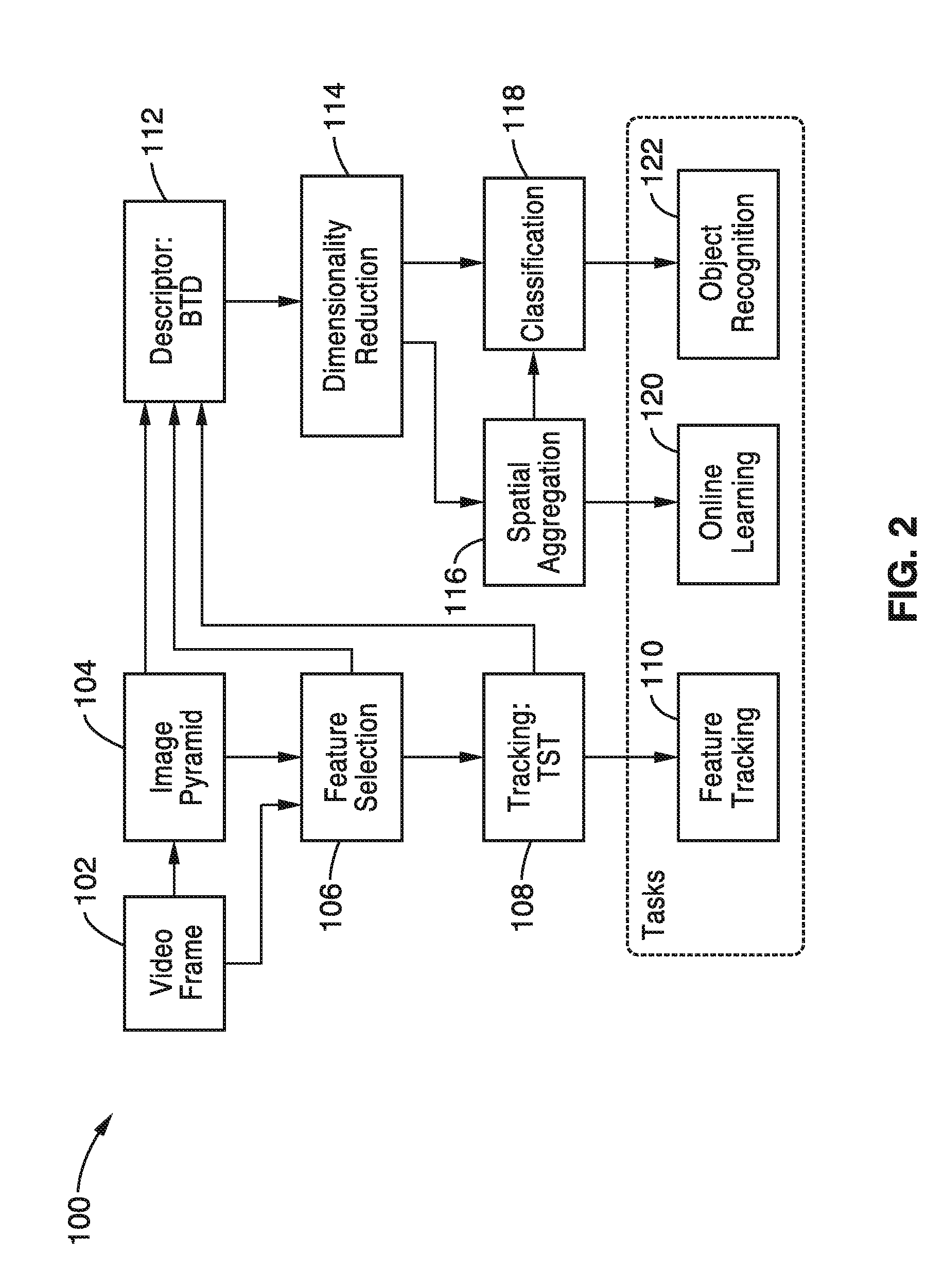

End-to-end visual recognition system and methods

We describe an end-to-end visual recognition system, where “end-to-end” refers to the system's ability to perform every stage, from the construction of “maps” of scenes, or “models” of objects from training data, to the determination of the class, identity, location and other inferred parameters from test data. Our visual recognition system is capable of operating on a mobile hand-held device, such as a mobile phone, tablet or other portable device equipped with sensing and computing power. Our system employs a video based feature descriptor, and we characterize its invariance and discriminative properties. Feature selection and tracking are performed in real-time, and used to train a template-based classifier during a capture phase prompted by the user. During normal operation, the system scores objects in the field of view based on their ranking.

Owner:RGT UNIV OF CALIFORNIA

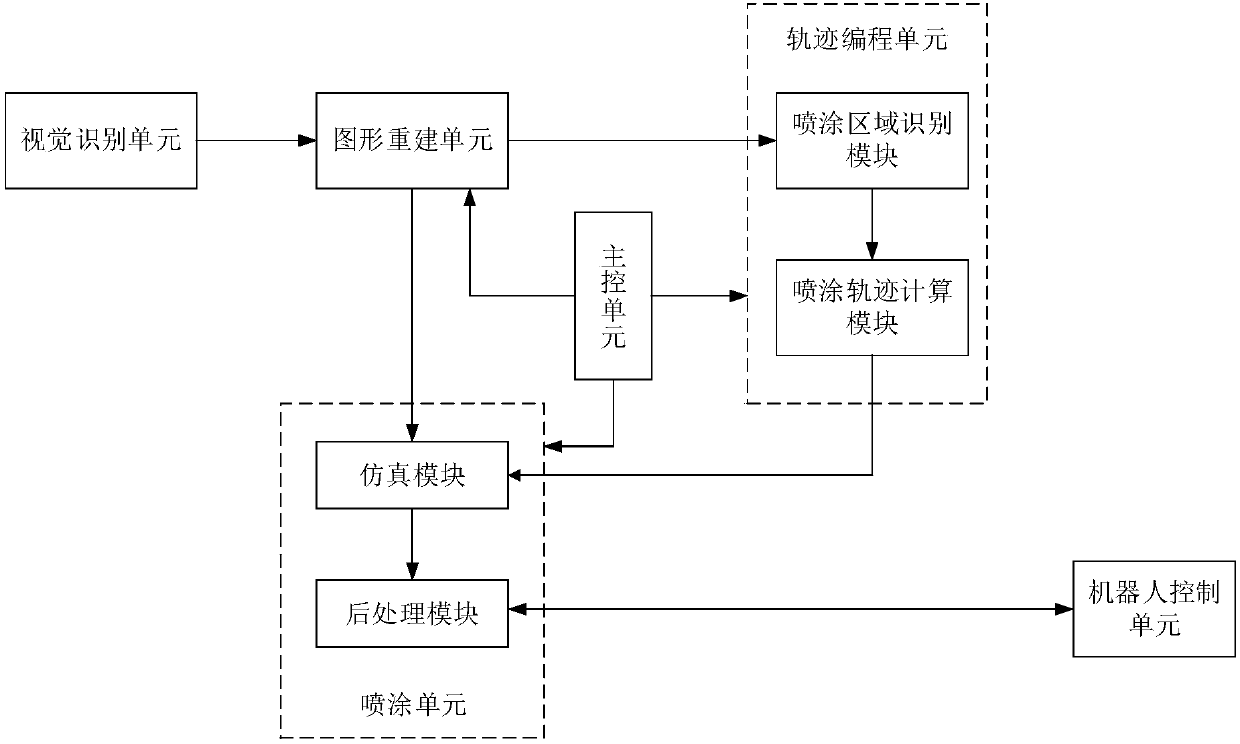

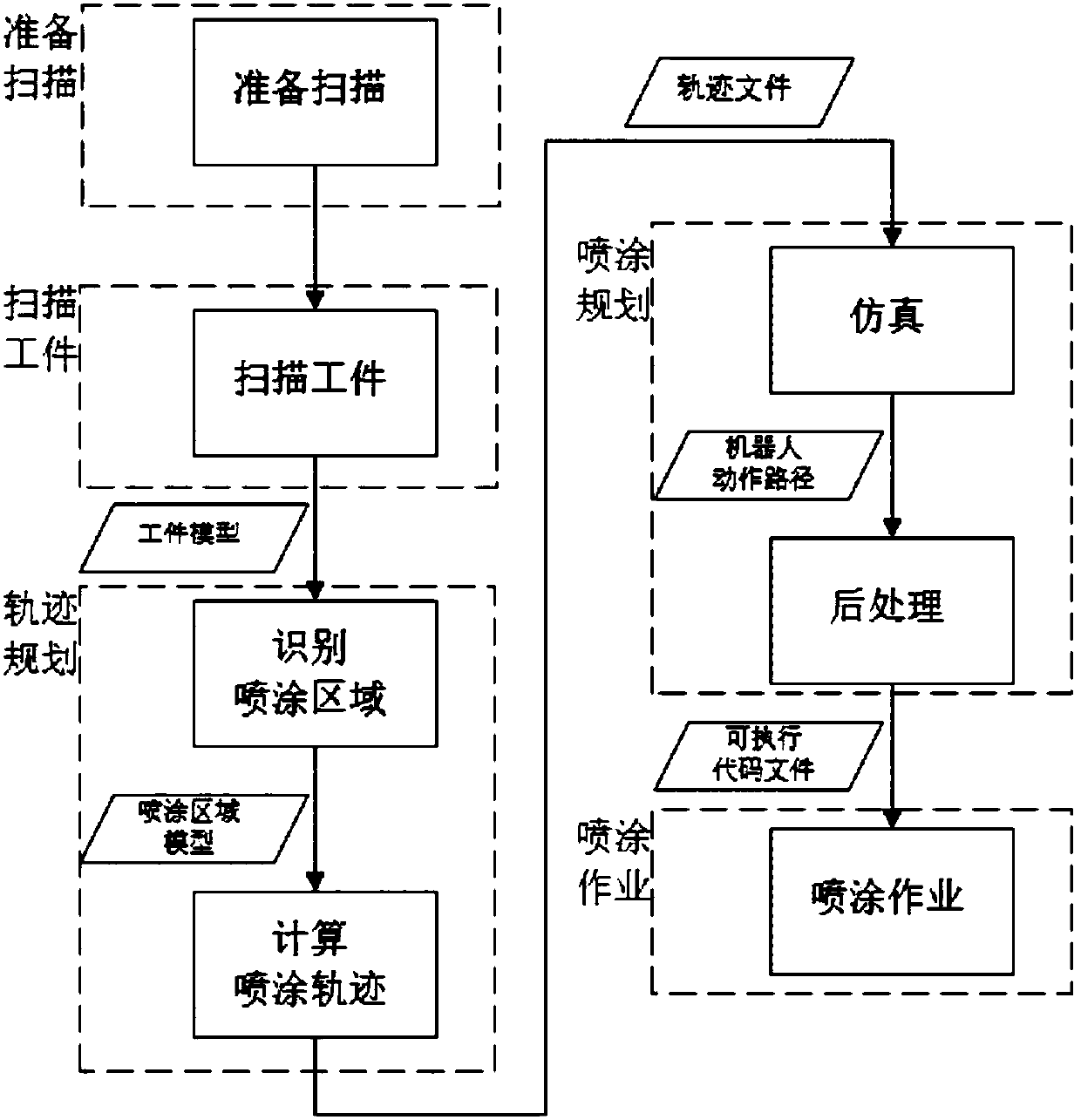

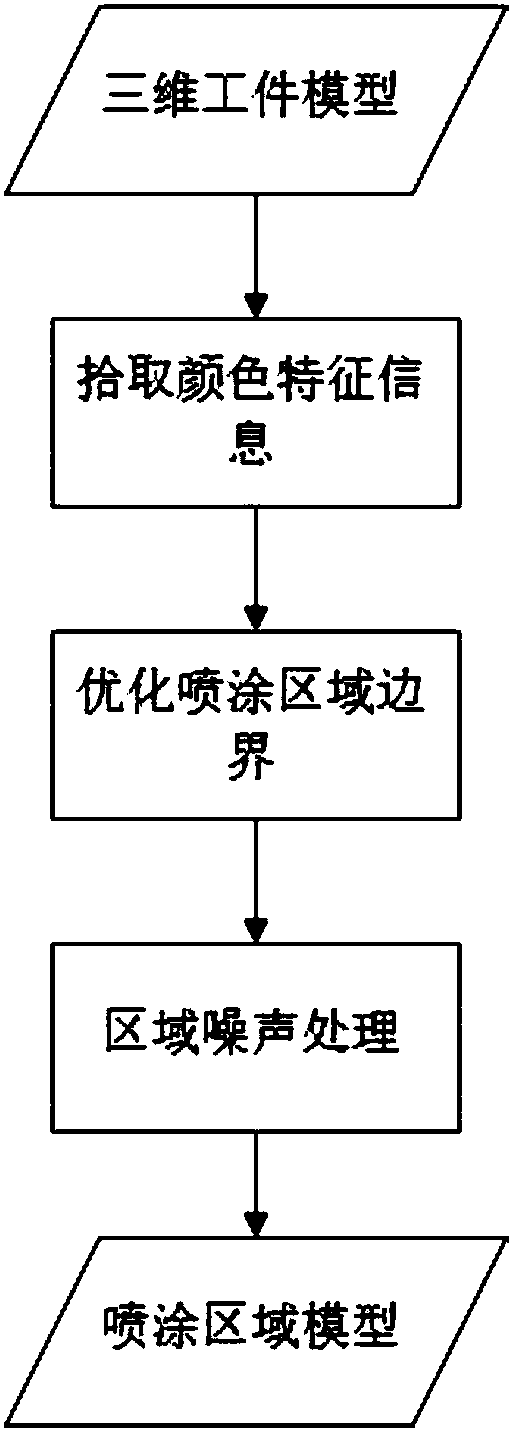

Mobile robot automatic spraying device and mobile robot automatic spraying control system and method

Status: Pending; Patent: CN107908152A; Tags: Improve adaptability, Less programming effort, Numerical control, Simulation, Visual perception

The invention discloses a mobile robot automatic spraying device and a mobile robot automatic spraying control system and method. The mobile robot automatic spraying control system comprises a visual recognition unit, a main control unit, a graphic reconstruction unit, a trajectory programming unit, a spraying unit and a robot control unit, wherein the visual recognition unit processes collected image information of a workpiece to obtain geometric information; the graphic reconstruction unit performs optimization processing on the geometric information of the workpiece to obtain a gridded three-dimensional model of the workpiece; the trajectory programming unit automatically recognizes a spraying area and calculates a spraying trajectory strategy to obtain a spraying trajectory file; the spraying unit plans a spraying path according to the positional relation between the robot and the workpiece and the spraying trajectory file, and generates codes that can be executed by the robot; and the robot control unit controls the industrial robot to execute a spraying action according to the code file. According to the invention, a visual recognition based trajectory planning method is adopted, the spraying trajectory is automatically planned for the workpiece to be sprayed, and the robot automatically completes the paint spraying work, so work flexibility is increased and the programming workload is reduced.

Owner:苏州瀚华智造智能技术有限公司

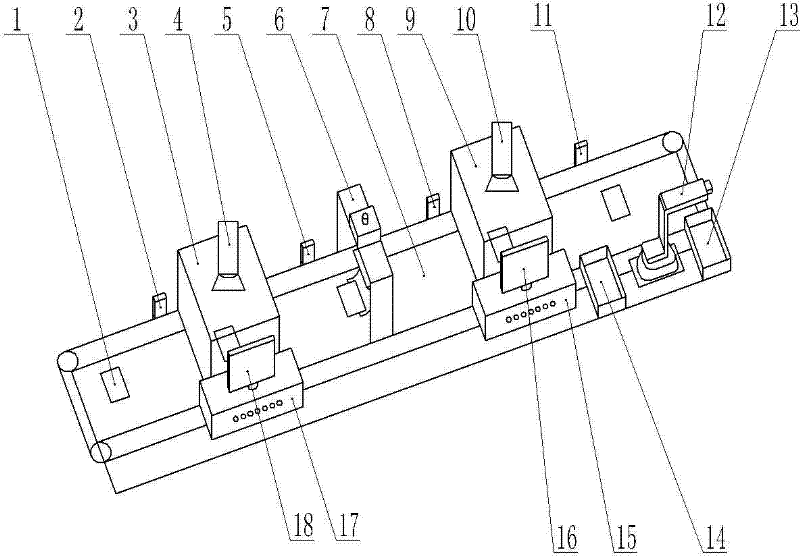

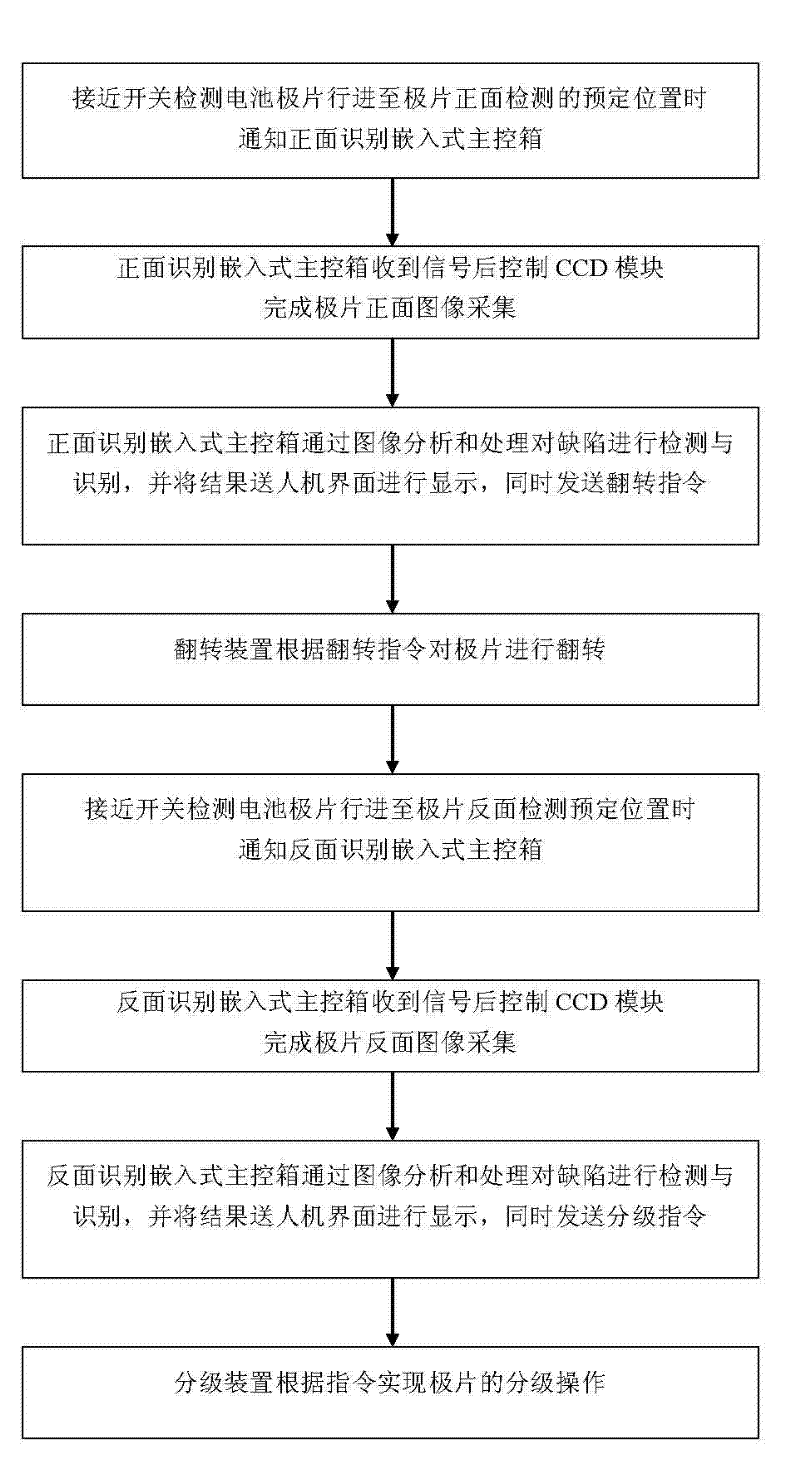

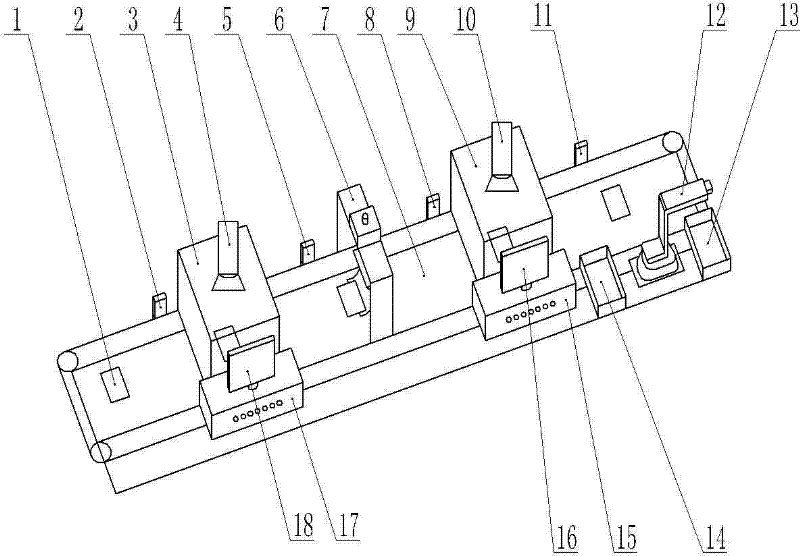

An online detection and classification device and method for lithium-ion battery pole pieces

Status: Inactive; Patent: CN102284431A; Tags: Realize online detection, The detection process is fast, Optically investigating flaws/contamination, Sorting, Production rate, Visual recognition

The invention relates to an on-line detection grading device for a lithium ion battery pole piece and a method thereof. In the device, image data of the lithium ion battery pole piece are acquired by an embedded visual recognition device and are analyzed and processed to judge whether the appearance quality of the pole piece meets requirements, so that the actions of a turnover part and a grading part are controlled to realize real-time on-line double-side detection and grading of the electrode pole piece. The method has high detection speed: generally, detection of the front side and the back side of one electrode pole piece can be finished within 1 second, which increases production efficiency compared with the longer time that manual detection requires. The detection accuracy is high: the embedded visual detection technology is both accurate and stable, and detection results are highly uniform. The running time is long: the detection system can run uninterruptedly for 24 hours, which improves productivity and saves labor cost. On-line detection of the electrode pole piece is realized, which reduces interference from manual operation and improves productivity.

Owner:HENAN UNIV OF SCI & TECH

Method and system for trace aligned and trace non-aligned pattern statistical calculation in seismic analysis

Status: Inactive; Patent: US20060184488A1; Tags: Facilitates visual recognition, Comparison process is enhanced, Character and pattern recognition, Seismic signal processing, Hydrocotyle bowlesioides, Visual recognition

An apparatus and method for analyzing known data, storing the known data in a pattern database (“PDB”) as a template is provided. Additional methods are provided for comparing new data against the templates in the PDB. The data is stored in such a way as to facilitate the visual recognition of desired patterns or indicia indicating the presence of a desired or undesired feature within the new data. Data may be analyzed as fragments, and the characteristics of various fragments, such as string length, may be calculated and compared to other indicia to indicate the presence or absence of a particular substance, such as a hydrocarbon. The length and / or character of each fragment are a product of the cutting criteria in both horizontal and vertical orientations. Modifying the cutting criteria can have a beneficial effect on the results of the analysis and / or in the amount of time necessary to achieve useful results. The apparatus and method is applicable to a variety of applications where large amounts of information are generated, and / or if the data exhibits fractal or chaotic attributes.

Owner:IKON SCI AMERICAS

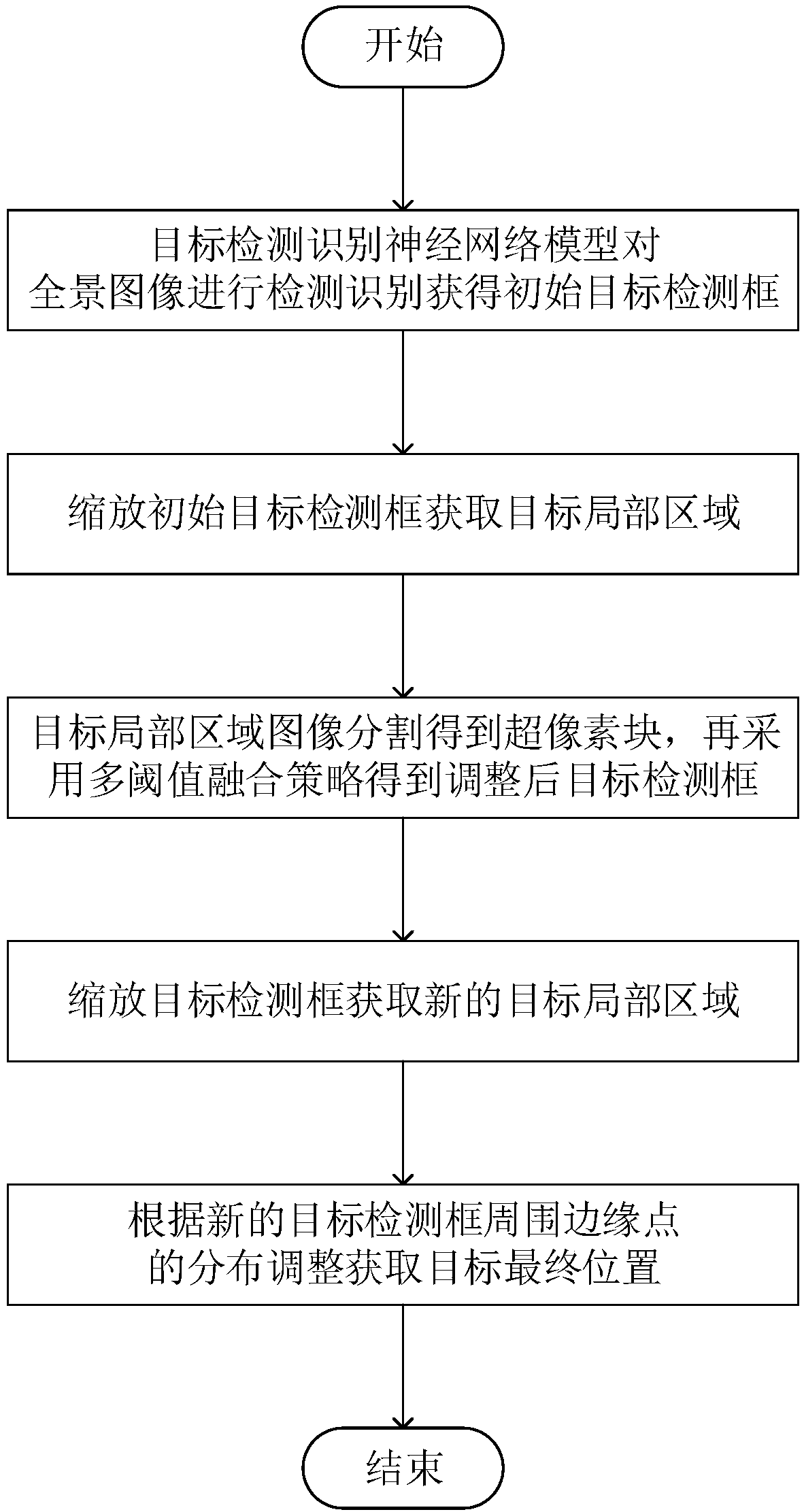

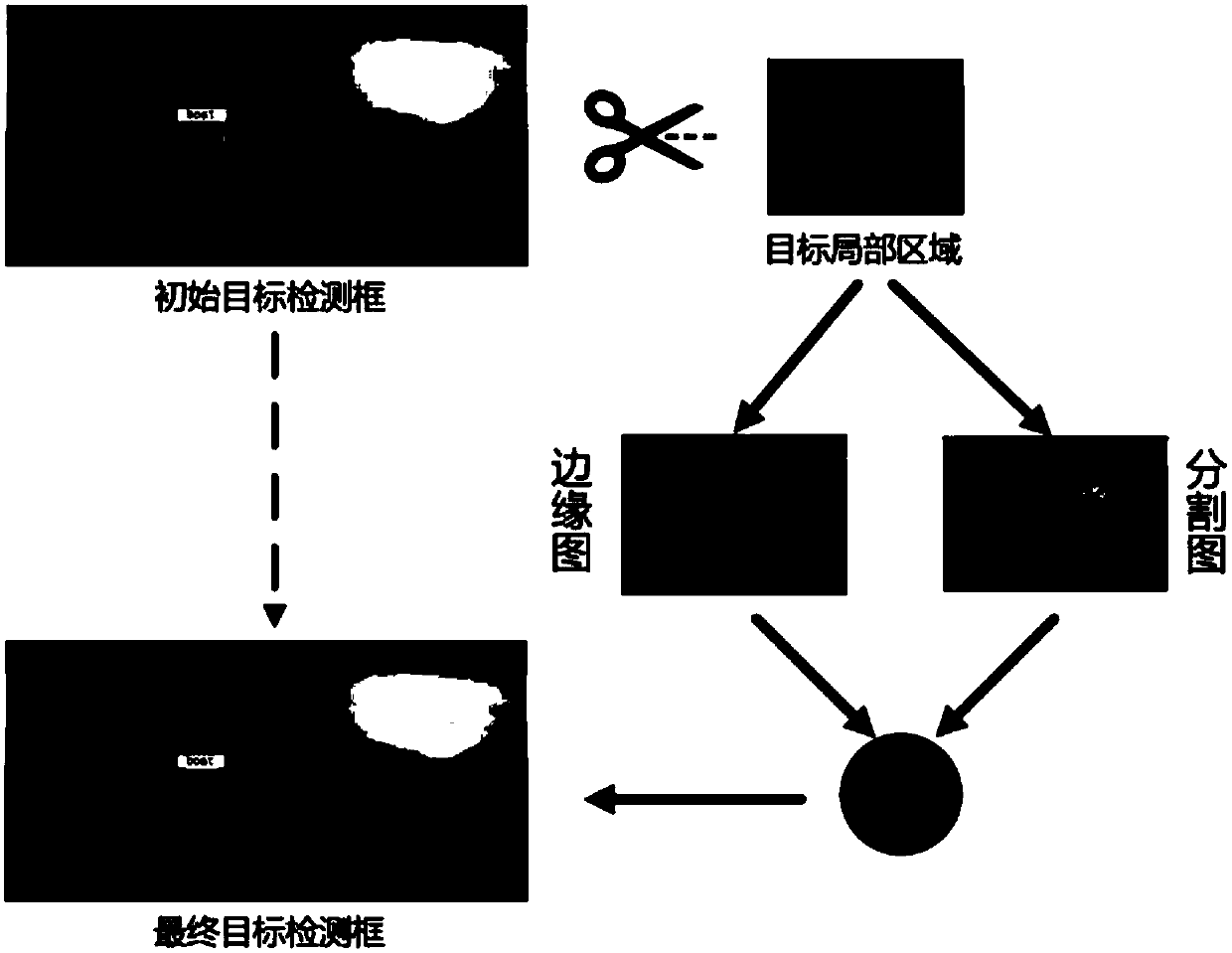

Target detection and recognition method for water surface panoramic images

Status: Active; Patent: CN107844750A; Tags: Overcoming distortion effects, Character and pattern recognition, Pattern recognition, Edge maps

The invention discloses a target detection and recognition method for water surface panoramic images, and belongs to the technical field of computer visual recognition. The method comprises the following steps: first, carrying out target detection and recognition on a panoramic image by using a target detection and recognition neural network model, and obtaining a target type and an initial position of a detection box; carrying out image segmentation on the target local area to obtain a plurality of superpixel blocks, and combining the superpixel blocks using a multi-threshold fusion strategy to obtain an adjusted target detection box; calculating an edge map of the new target local area, and adjusting the target detection box according to the peripheral edge points of the target detection box to obtain a final target detection box; and finally, converting the position of the final target detection box into the actual position of the target. According to the method, the distortion effect in panoramic images can be effectively overcome, and target positions can be correctly recognized from the panoramic images.

Owner:HUAZHONG UNIV OF SCI & TECH

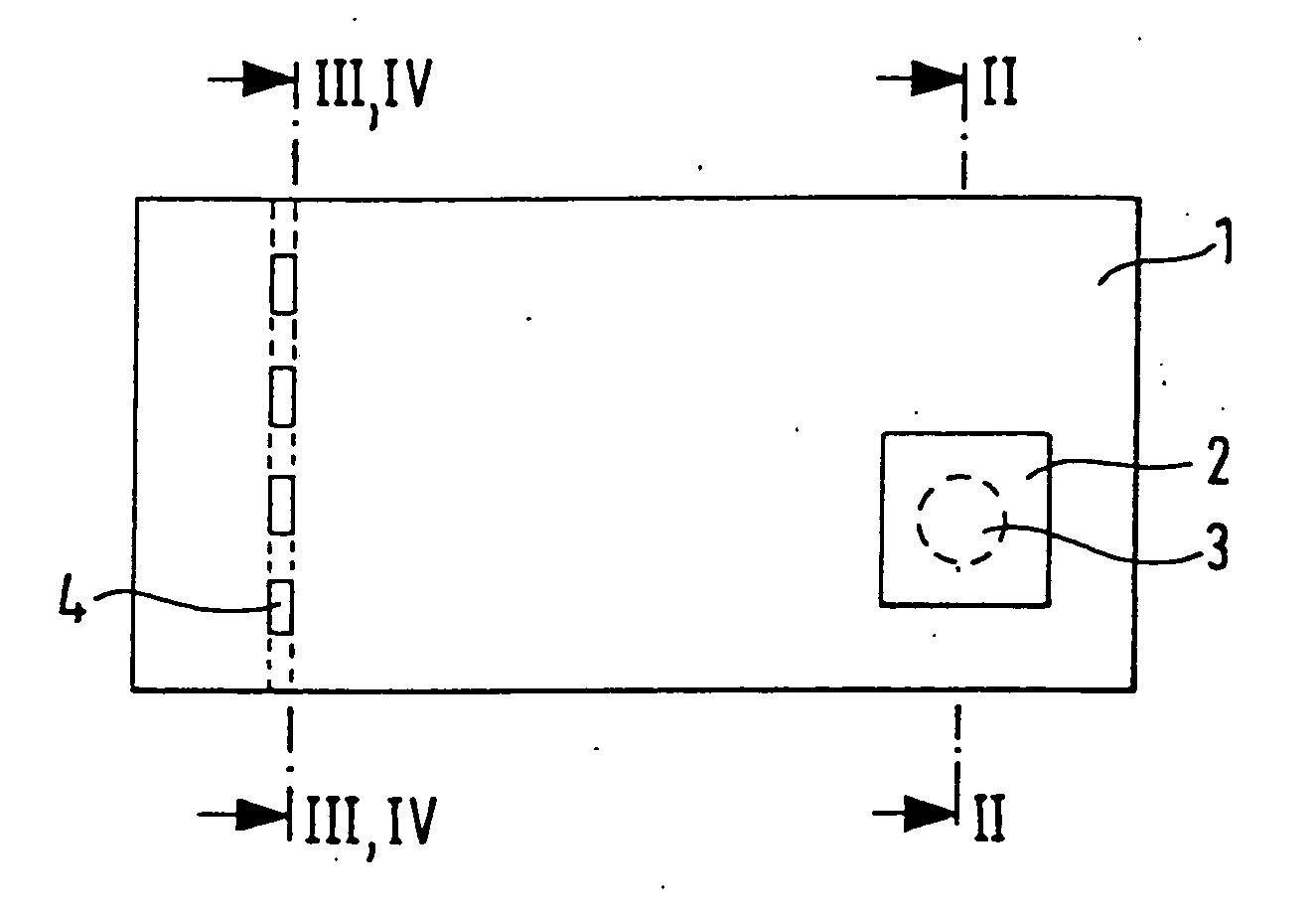

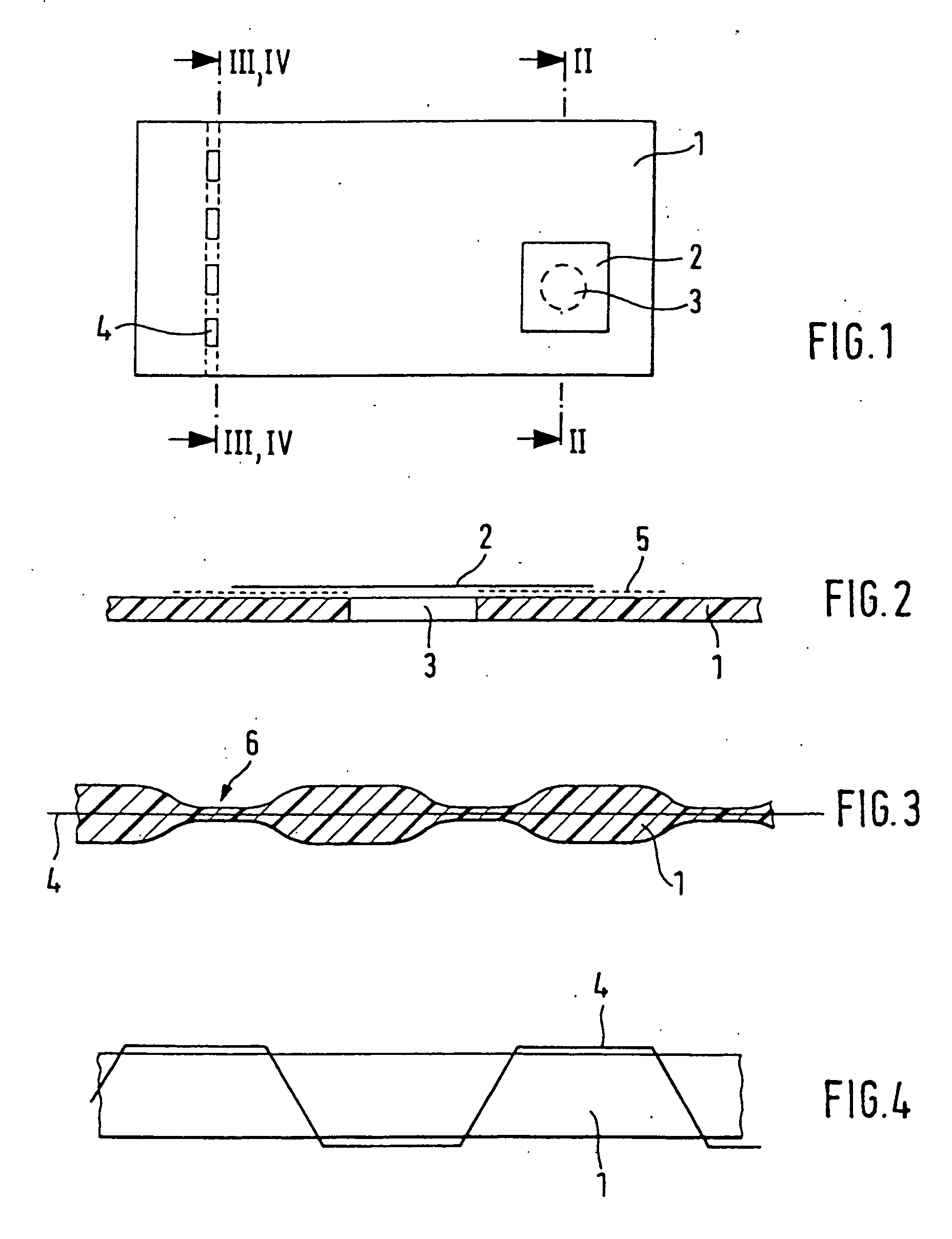

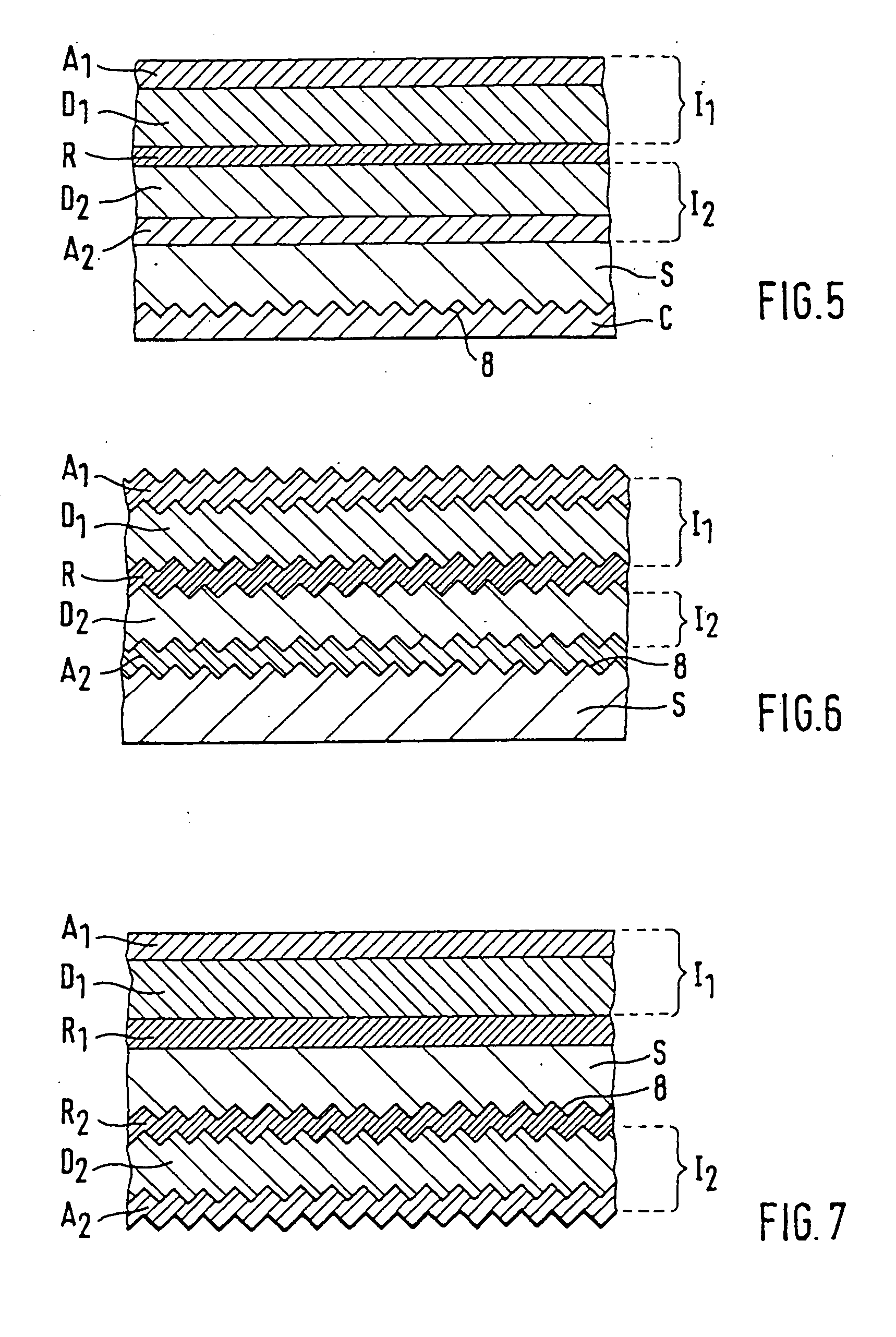

Security element and security document with one such security element

Status: Inactive; Patent: US20050127663A1; Tags: Improve anti-counterfeiting performance, Improve reflective effect, Other printing matter, Holographic optical components, Color shift, Computer science

A security element 2, 4 for embedding in or application to a security document in such a way that it is visually recognizable from both sides of the security document 1, is structured in a multilayer fashion and includes two interference elements I1, I2 with color shift effect, a metallic reflection layer R located in between as well as, optionally, diffraction structures 8. Depending on the disposition of the layers I1, R, I2 and the optionally present diffraction structures 8 on a transparent substrate S, the color shift effect and / or the diffractive effects are perceptible from one or from both sides of the security element 2, 4. The security element is particularly suitable as a two-sided window thread 4 and as a label or transfer element 2 above a hole 3 in the security document 1.

Owner:GIESECKE & DEVRIENT CURRENCY TECHNOLOGY GMBH

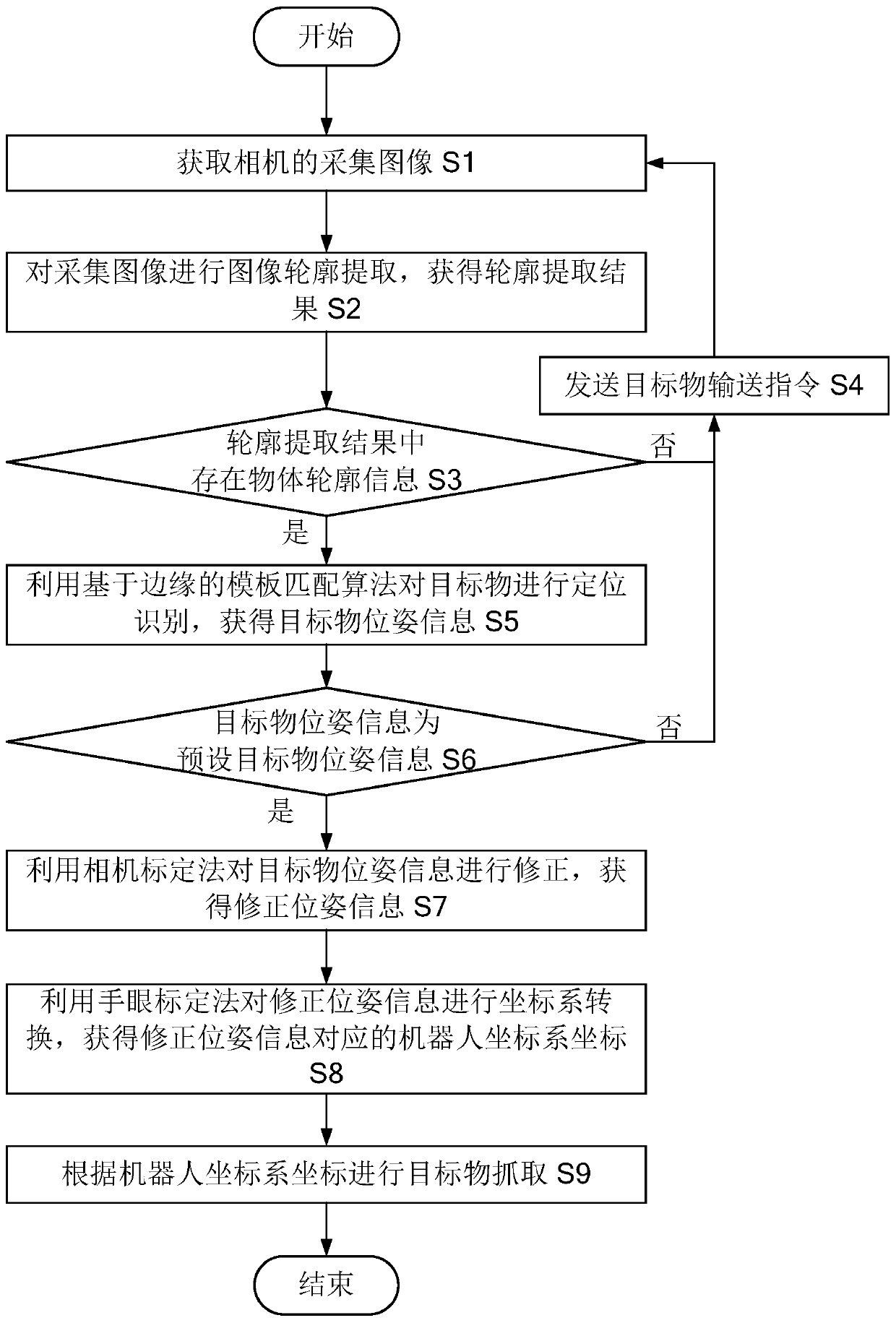

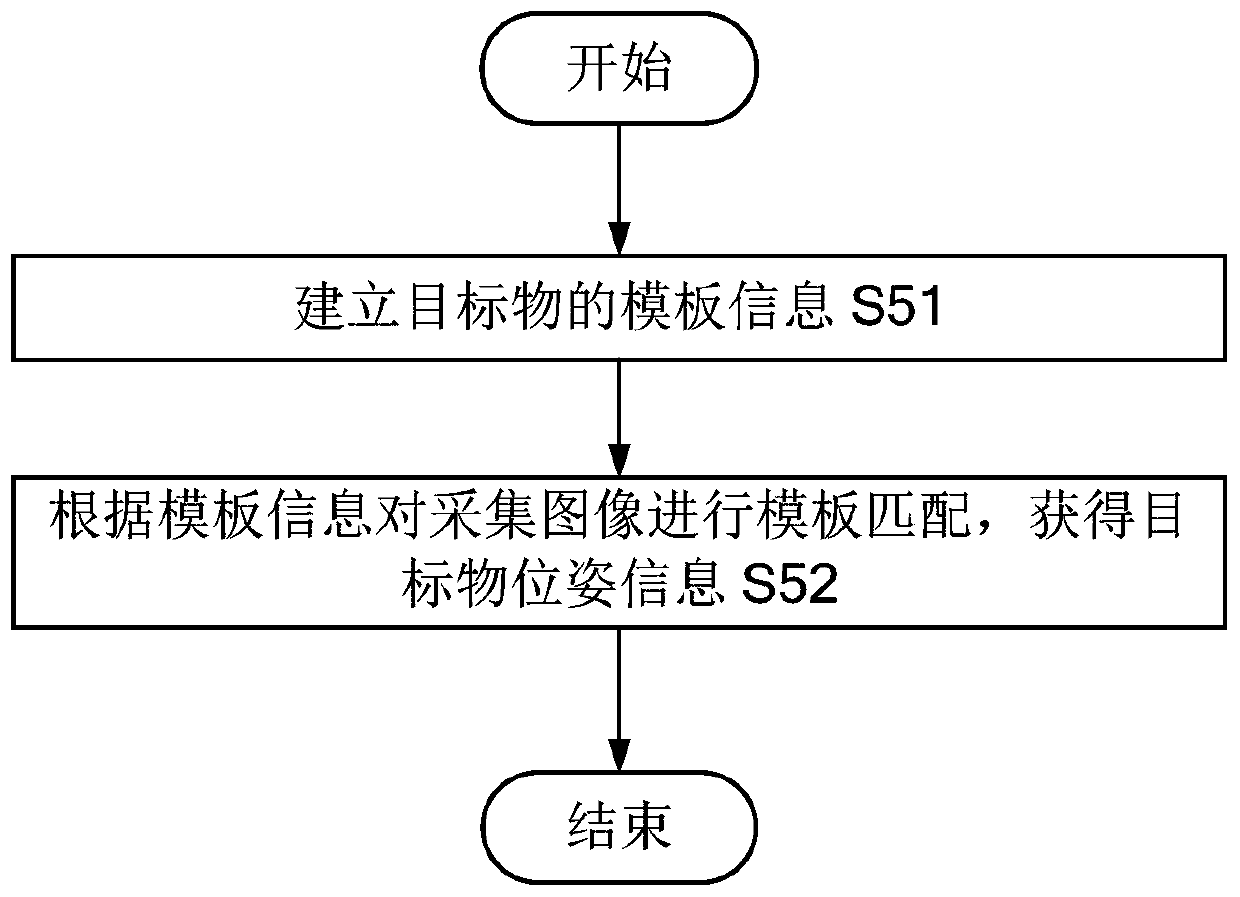

Industrial robot visual recognition positioning grabbing method, computer device and computer readable storage medium

Status: Inactive; Patent: CN110660104A; Tags: Improve stability, High precision, Image enhancement, Programme-controlled manipulator, Computer graphics (images), Engineering

The invention provides an industrial robot visual recognition positioning grabbing method, a computer device and a computer readable storage medium. The method comprises the following steps: image contour extraction is performed on an acquired image; when object contour information exists in the contour extraction result, the target object is positioned and identified by using an edge-based template matching algorithm; when the target object pose information is preset target object pose information, the target object pose information is corrected by using a camera calibration method; and coordinate system conversion is performed on the corrected pose information by using a hand-eye calibration method. The computer device comprises a controller, and the controller implements the industrial robot visual recognition positioning grabbing method when executing the computer program stored in the memory. A computer program is stored in the computer readable storage medium, and when the computer program is executed by the controller, the industrial robot visual recognition positioning grabbing method is carried out. The method provided by the invention achieves higher stability and precision in recognition and positioning.

Owner:GREE ELECTRIC APPLIANCES INC
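The last step of the method above, converting a corrected pose from the camera frame to the robot frame, can be illustrated with a minimal sketch. The abstract does not give the math, so this assumes a planar case in which hand-eye calibration has already produced the camera frame's offset and rotation relative to the robot base. The function name `camera_to_base` and its parameters are hypothetical and are not taken from the patent.

```python
import math

def camera_to_base(pose_cam, base_from_cam):
    """Convert a planar pose (x, y, theta) from the camera frame to the robot base frame.

    base_from_cam is (tx, ty, phi): the camera frame's origin (tx, ty) and
    rotation phi expressed in the base frame. Assumed to come from a prior
    hand-eye calibration; the values used below are illustrative only.
    """
    x, y, theta = pose_cam
    tx, ty, phi = base_from_cam
    c, s = math.cos(phi), math.sin(phi)
    # Rotate the camera-frame position into the base frame, then translate.
    xb = c * x - s * y + tx
    yb = s * x + c * y + ty
    # Orientations add for planar rigid transforms.
    return (xb, yb, theta + phi)

if __name__ == "__main__":
    # Hypothetical calibration: camera rotated 90 degrees, offset by (2, 3).
    print(camera_to_base((1.0, 0.0, 0.0), (2.0, 3.0, math.pi / 2)))
```

A full 3D system would typically use 4x4 homogeneous matrices instead; the planar form is used here only to keep the idea visible.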

Visual indication of current voice speaker

Visually identifying one or more known or anonymous voice speakers to a listener in a computing session. For each voice speaker, voice data include a speaker identifier that is associated with a visual indicator displayed to indicate the voice speaker who is currently speaking. The speaker identifier is first used to determine voice privileges before the visual indicator is displayed. The visual indicator is preferably associated with a visual element controlled by the voice speaker, such as an animated game character. Visually identifying a voice speaker enables the listener and / or a moderator of the computing session to control voice communications, such as muting an abusive voice speaker. The visual indicator can take various forms, such as an icon displayed adjacent to the voice speaker's animated character, or a different icon displayed in a predetermined location if the voice speaker's animated character is not currently visible to the listener.

Owner:MICROSOFT TECH LICENSING LLC

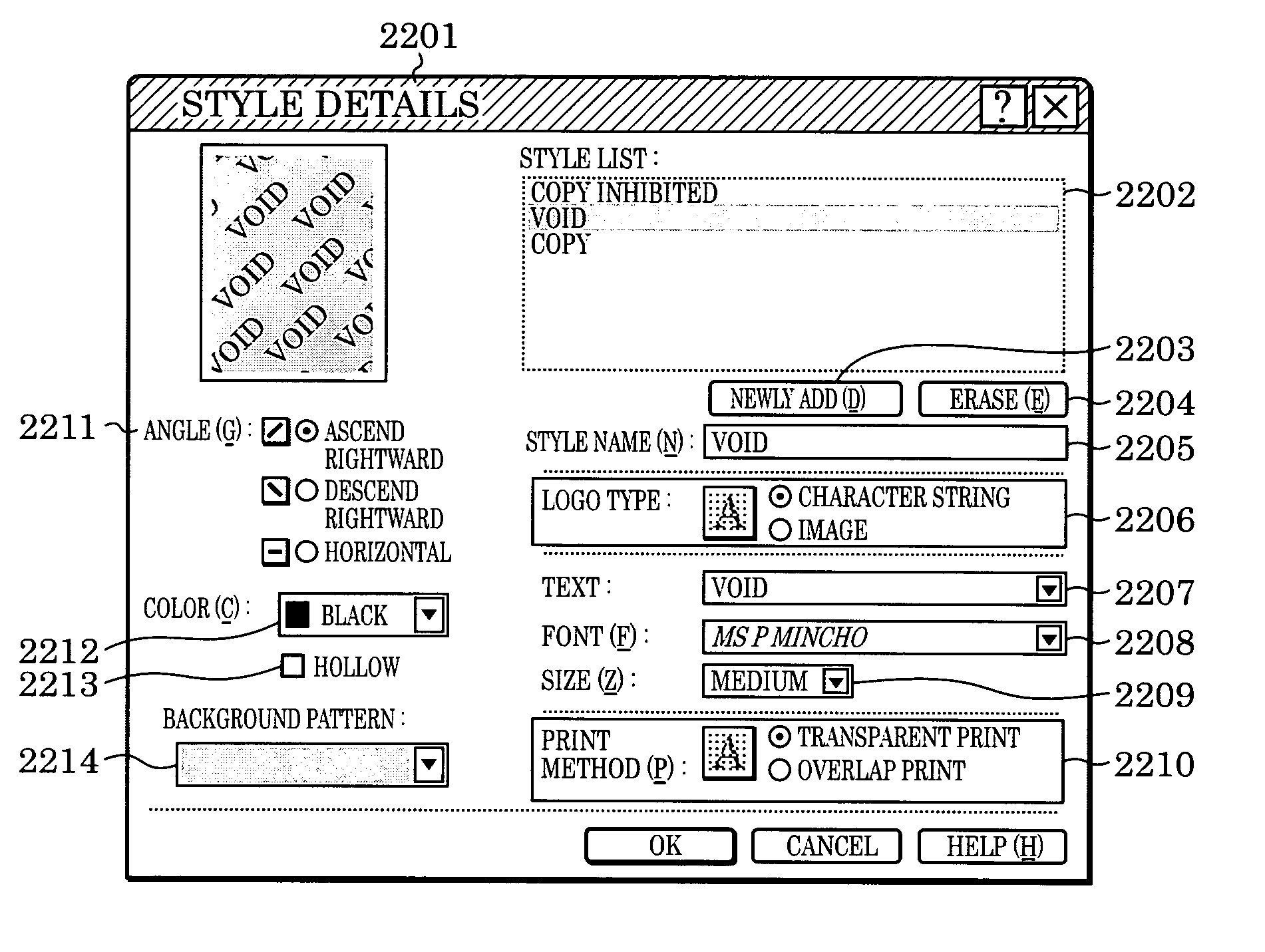

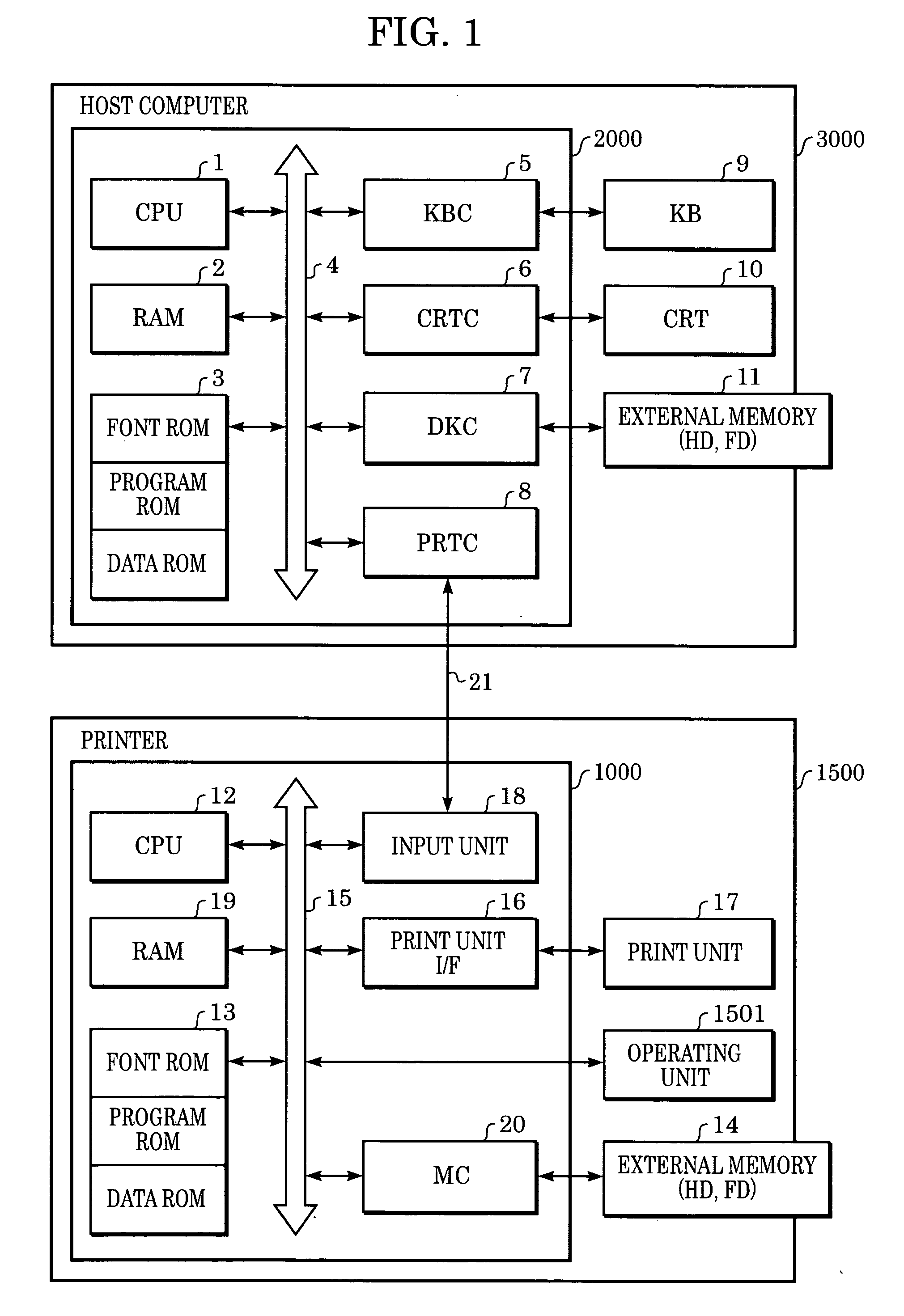

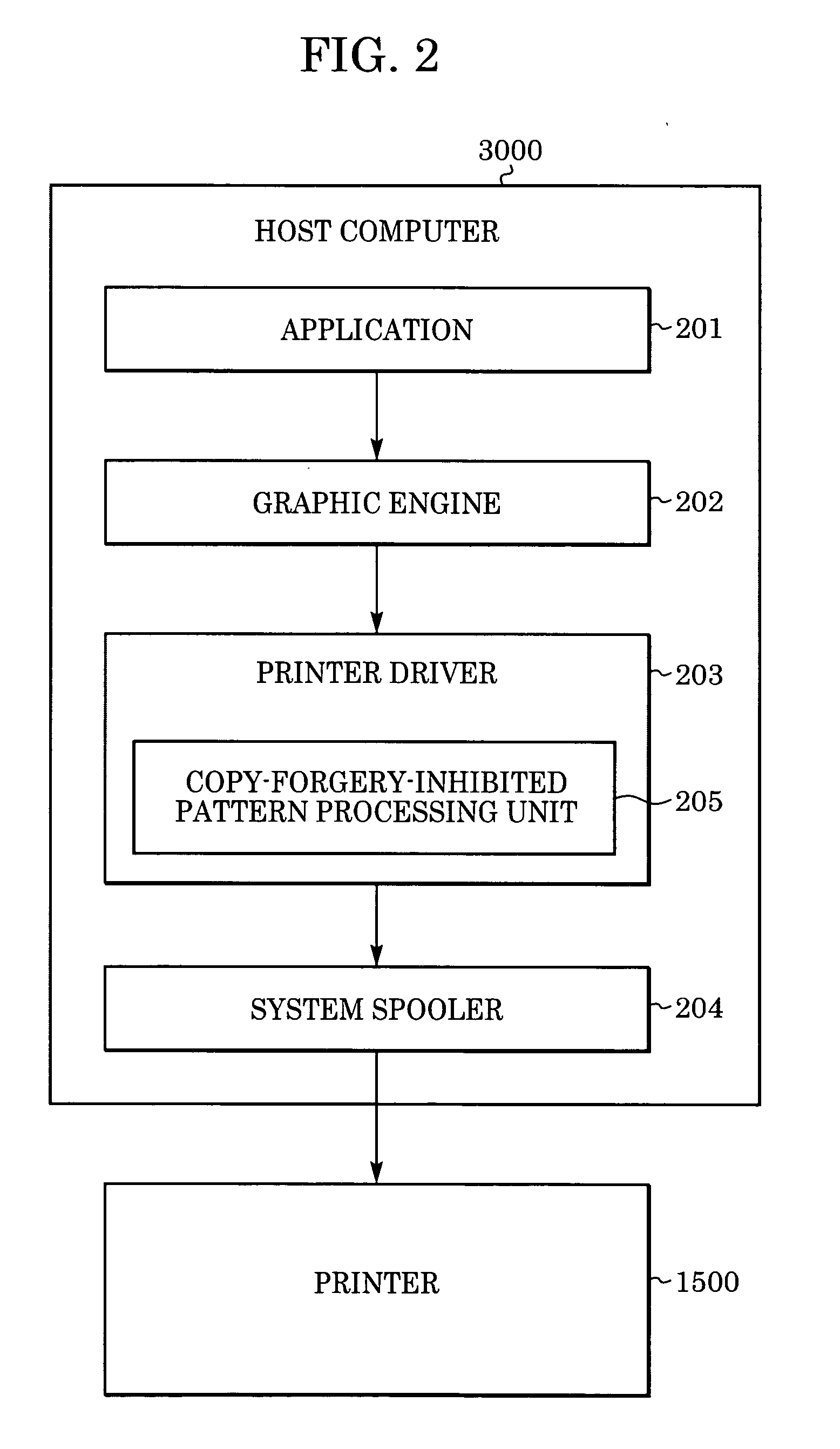

Image processing apparatus and image processing method

ActiveUS20050078974A1Electrographic process apparatusImage data processing detailsImaging processingComputer graphics (images)

Viewability is improved, giving more reliable visual recognition of an image that includes a copy-forgery-inhibited pattern image, so that a copy and an original can be clearly distinguished when displayed for preview. An image processing apparatus produces data of a copy-forgery-inhibited pattern image that is added to an image to be output for printing and that comprises a latent image and a background image. The apparatus includes: a display unit for displaying an image; and a display control unit for distinctively displaying images, on the display unit, in a first display state displaying the copy-forgery-inhibited pattern image and in a second display state in which a display mode of at least one of the latent image and the background image of the copy-forgery-inhibited pattern image differs from a display mode of the image displayed in the first display state.

Owner:CANON KK

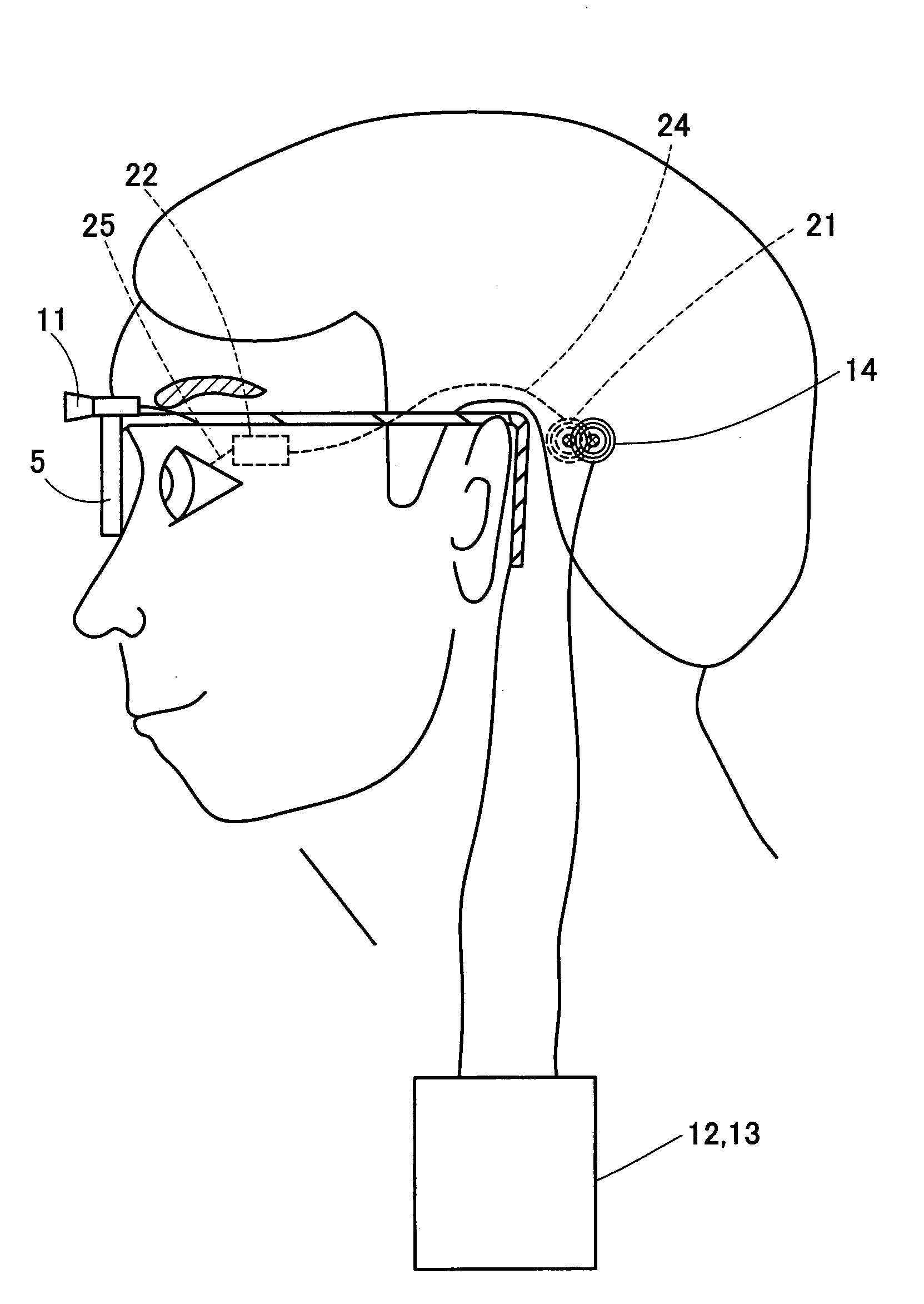

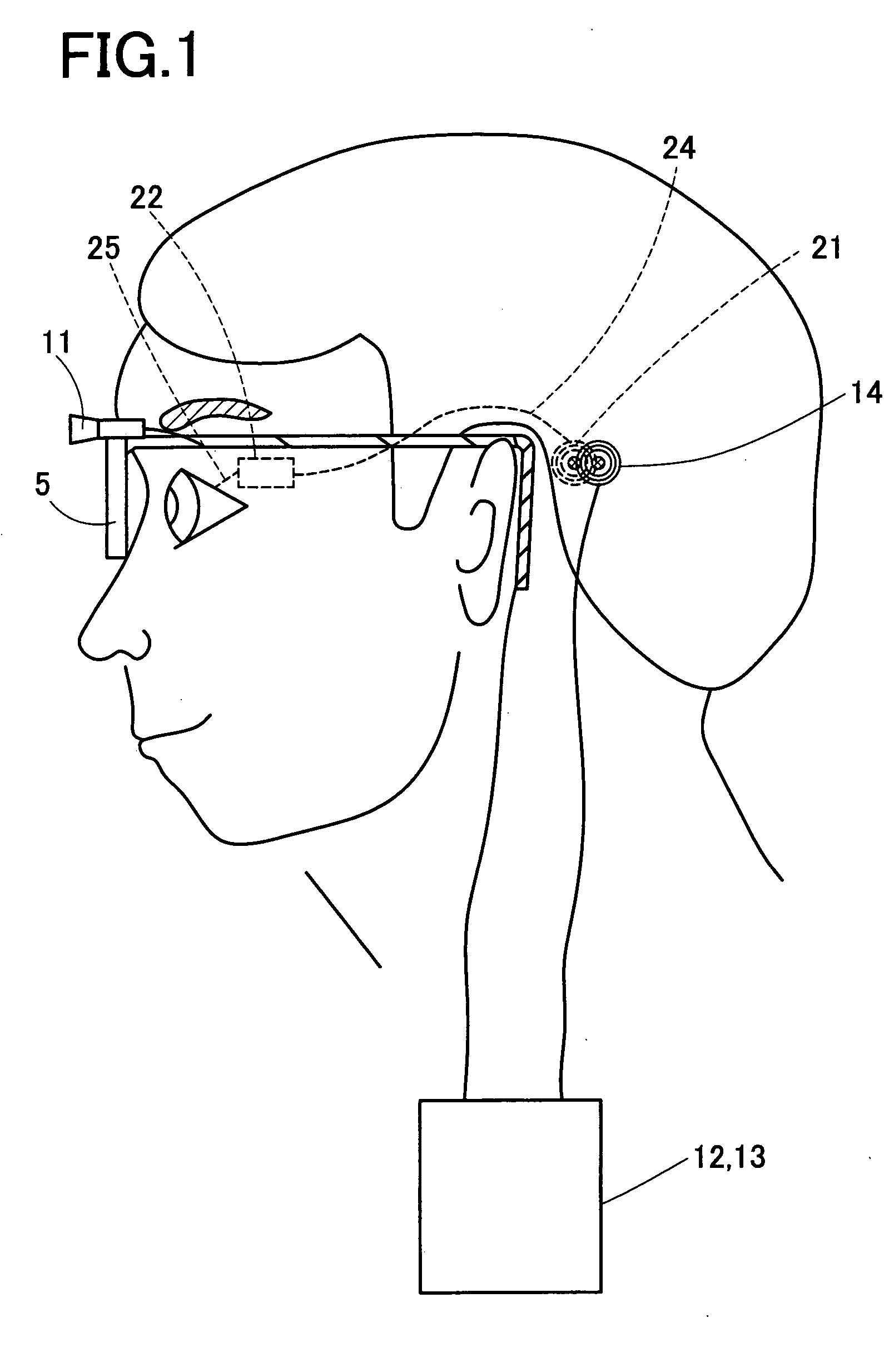

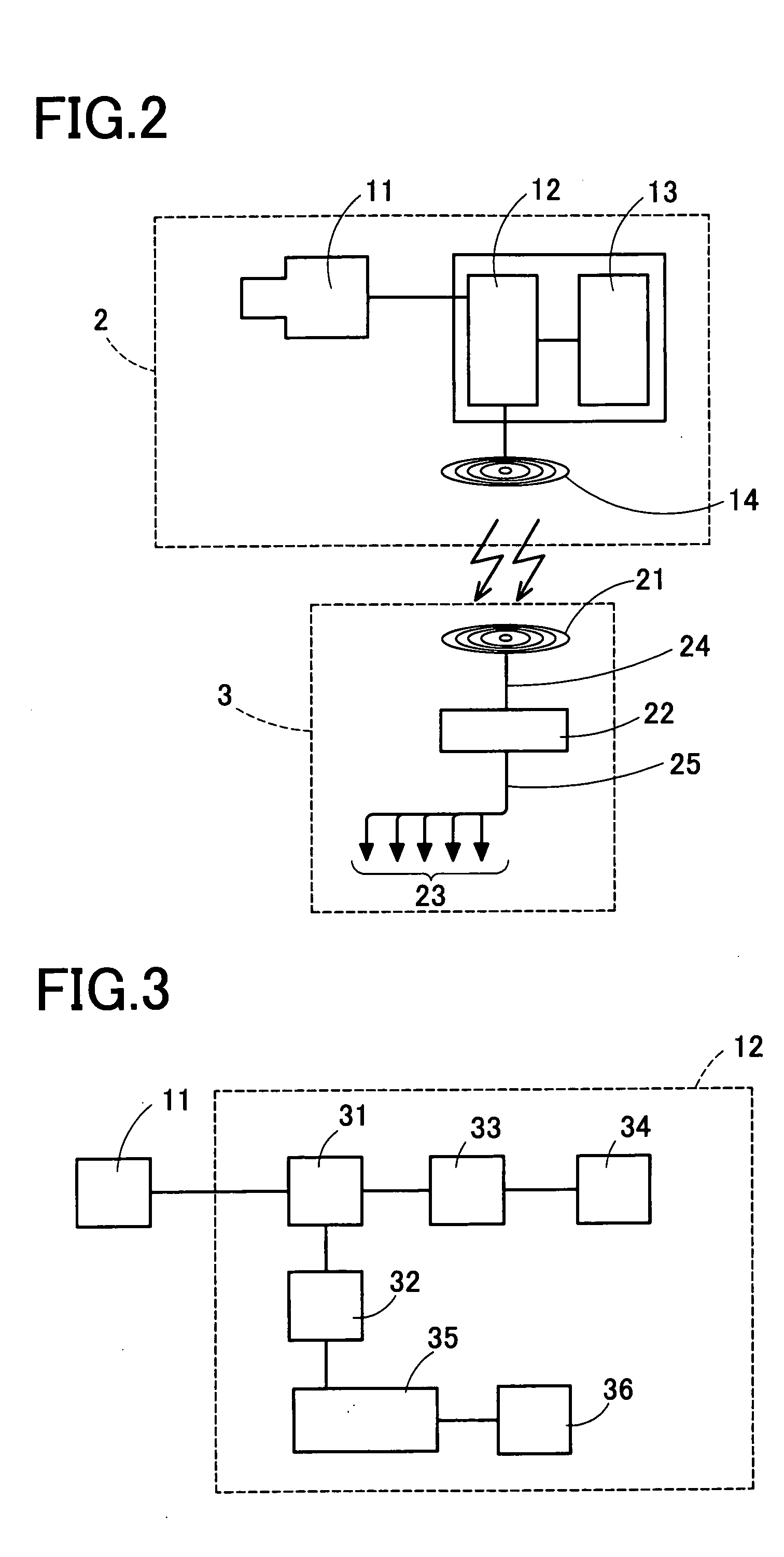

Artificial vision system

ActiveUS20060058857A1Improve power supply capacityStable long-term useHead electrodesEye implantsVisual recognitionOptic nerve

An object is to provide an artificial vision system ensuring a wide field of view without damaging a retina. In the artificial vision system, a plurality of electrodes (23) are to be implanted so as to stick in an optic papilla of an eye of a patient. A signal for a stimulation pulse is generated based on an image captured by an image pickup device (11) to be disposed outside a body of the patient. The electrical stimulation signals outputted from the electrodes (23) based on the signals for the stimulation pulse stimulate an optic nerve of the eye, thereby enabling the patient to visually recognize the image from the image pickup device (11).

Owner:NIDEK CO LTD +1

Filtering a collection of items

InactiveUS20060190817A1Easily visualizedData processing applicationsSpecial data processing applicationsThumbnailUser interface

A user interface is provided wherein a set of items is displayed as a set of item representations (such as icons or thumbnails), and wherein a filtered subset of those items is visually identified in accordance with a user-defined criterion. All of the item representations are displayed on the screen in some form, regardless of which of the items have been filtered out. Further, the item representations may be displayed in various formats such as collected together in arrays or carousels as appropriate. This may allow the user interface to visually distinguish between those items that have been filtered out and those that are considered relevant.

Owner:MICROSOFT TECH LICENSING LLC
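The key idea in the abstract above is that filtering changes how items are shown rather than whether they are shown. A minimal sketch of that idea follows, assuming the user-defined criterion is a simple predicate. The name `mark_filtered` and the sample file names are hypothetical and do not come from the patent.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

T = TypeVar("T")

def mark_filtered(items: Iterable[T], criterion: Callable[[T], bool]) -> List[Tuple[T, bool]]:
    """Return every item paired with a flag saying whether it matches the criterion.

    Nothing is dropped: a renderer could draw flagged items normally and
    dim or shrink the rest, so all representations stay on screen.
    """
    return [(item, criterion(item)) for item in items]

if __name__ == "__main__":
    files = ["a.png", "b.txt", "c.png"]
    for name, relevant in mark_filtered(files, lambda n: n.endswith(".png")):
        print(name, "highlighted" if relevant else "dimmed")
```

Returning flags instead of a shortened list leaves the visual treatment (dimming, resizing, grouping into arrays or carousels) to the display layer.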

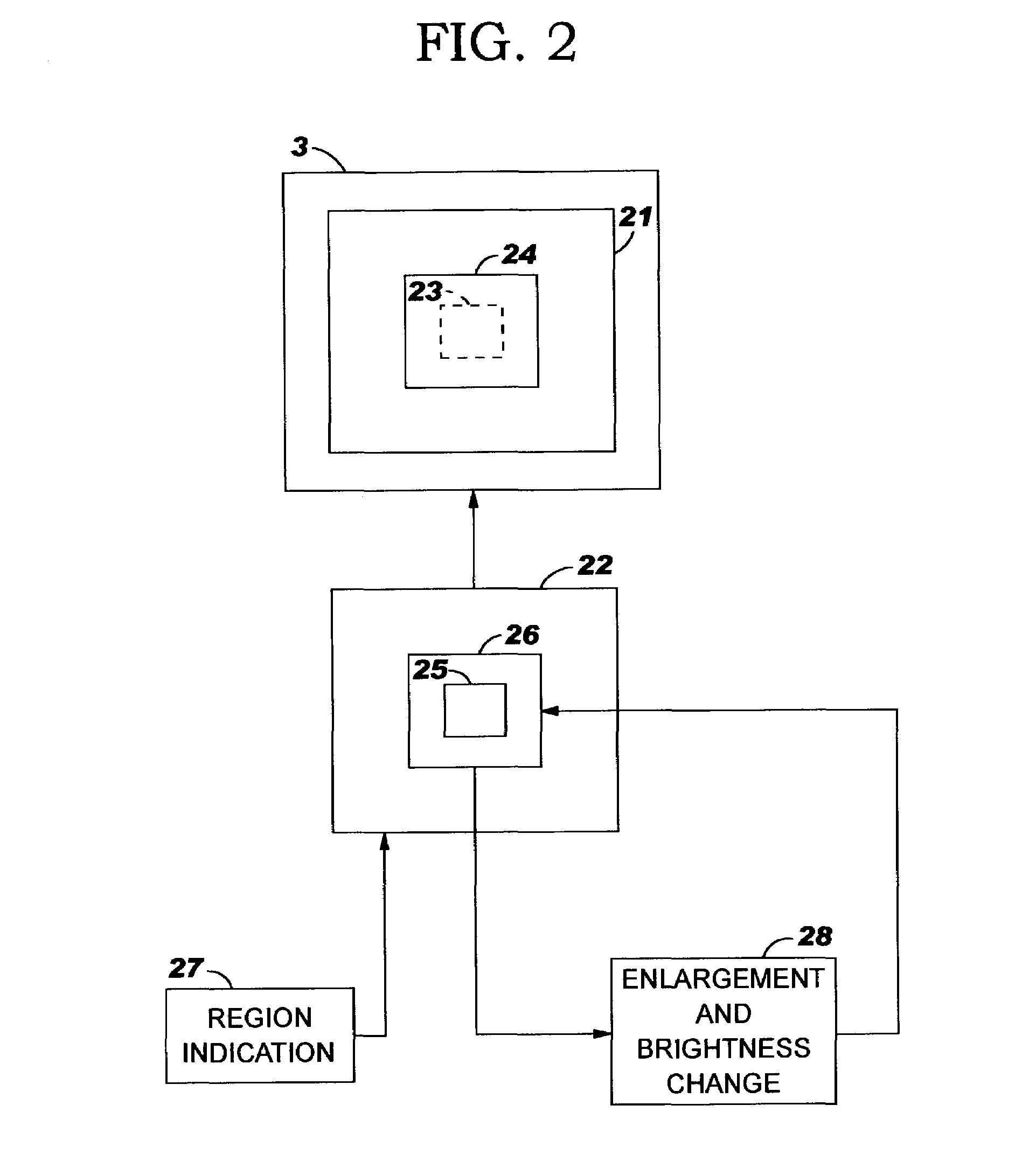

Image display device, image display method, and image display program

InactiveUS6972771B2Increase contrastEasy to identifyCathode-ray tube indicatorsInput/output processes for data processingGraphicsDisplay device

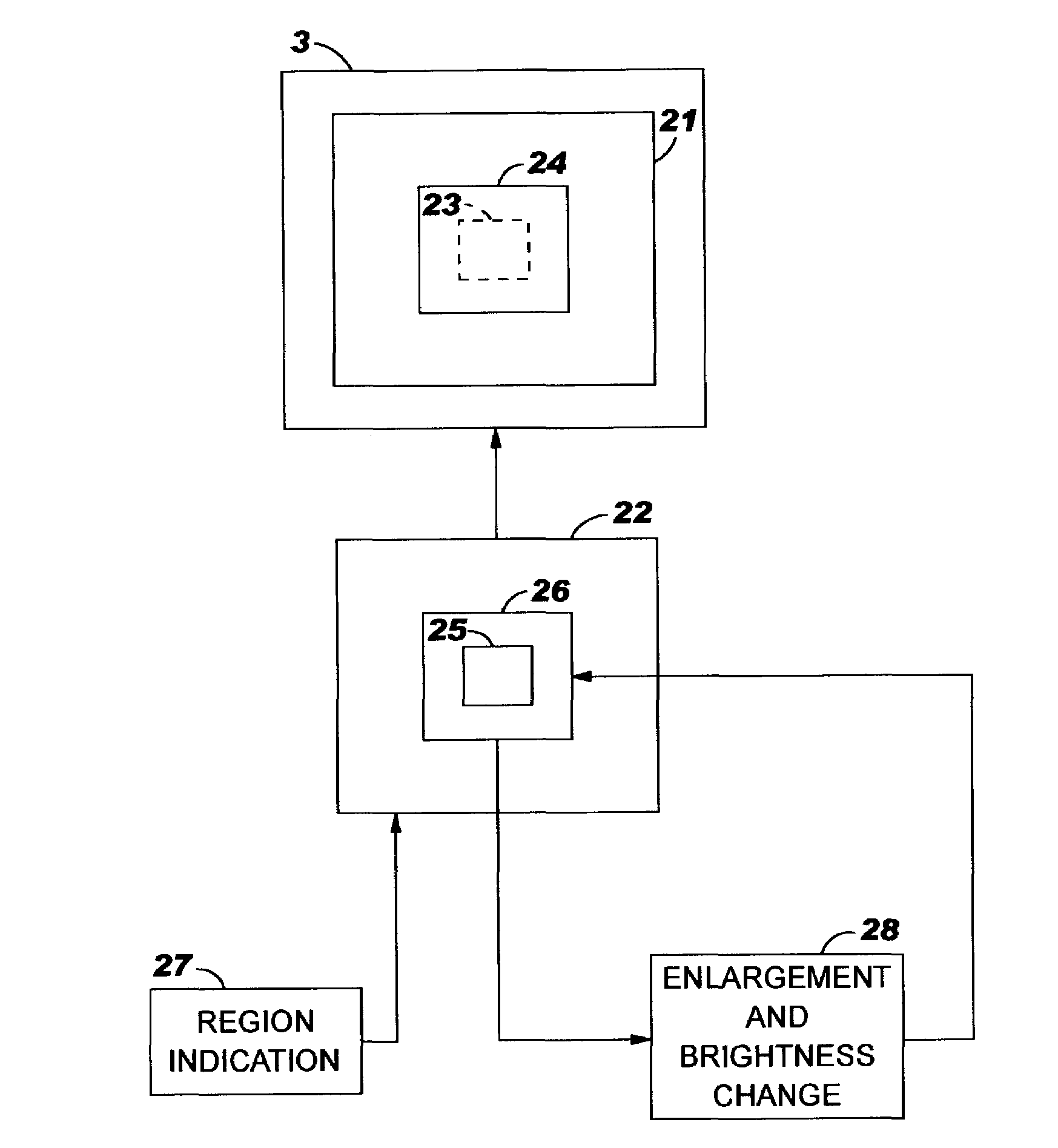

A technique is provided for improving visual recognition of letters, graphics, etc., in a portion of a displayed image that has been enlarged. An image display system includes an image display component for displaying on a screen an image based on image data, a region indicator component for permitting a region to be enlarged from the image to be selected and identified, a data modification component for modifying the portion of the image data corresponding to an enlarged display region so that the selected image is enlarged and displayed within the enlarged display region on the screen, and a brightness adjusting component for adjusting values instructing brightness of the corresponding image data part such that a contrast of the image within the enlarged display is higher than a contrast of the original image within the enlarged display region.

Owner:IBM CORP
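The brightness adjusting component described above raises contrast inside the enlarged region. The abstract does not say how the values are adjusted, so the following is only a sketch of one common approach: scaling each brightness value away from the region's mean. The function `boost_contrast`, the `gain` parameter, and the 0 to 255 range are assumptions made for illustration.

```python
def boost_contrast(pixels, gain=1.5, lo=0, hi=255):
    """Increase contrast of a region given as a flat list of brightness values.

    Each value is pushed away from the region mean by the factor `gain`
    (gain > 1 raises contrast), then clamped to the range [lo, hi].
    """
    if not pixels:
        return []
    mean = sum(pixels) / len(pixels)
    return [max(lo, min(hi, round(mean + gain * (p - mean)))) for p in pixels]

if __name__ == "__main__":
    region = [100, 120, 140]  # hypothetical brightness values in the enlarged region
    print(boost_contrast(region, gain=2.0))
```

With `gain=2.0` the spread between the darkest and brightest values doubles unless clamping intervenes, which is one simple way to make enlarged letters and graphics easier to recognize.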
