12734 results about "Classification result" patented technology

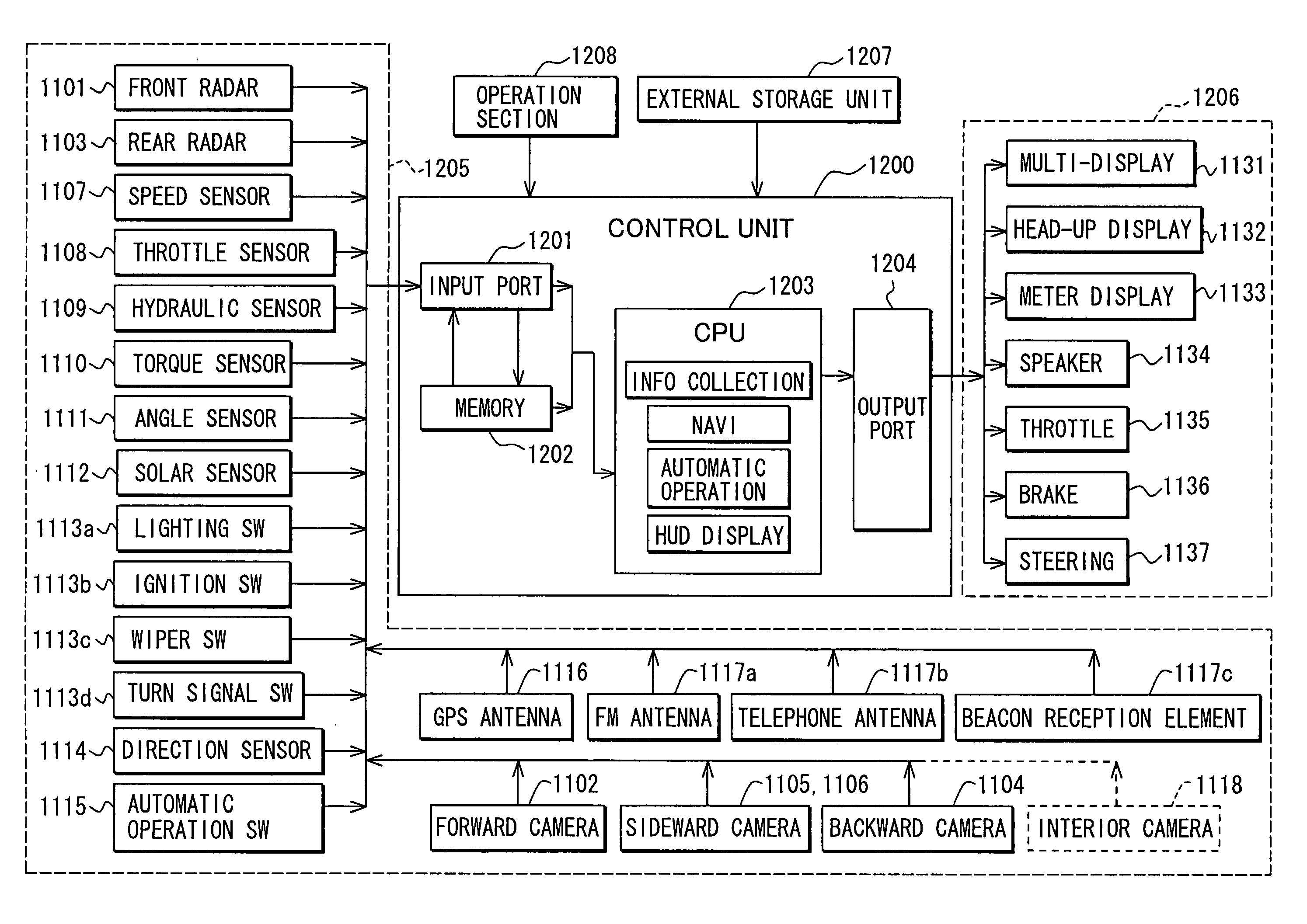

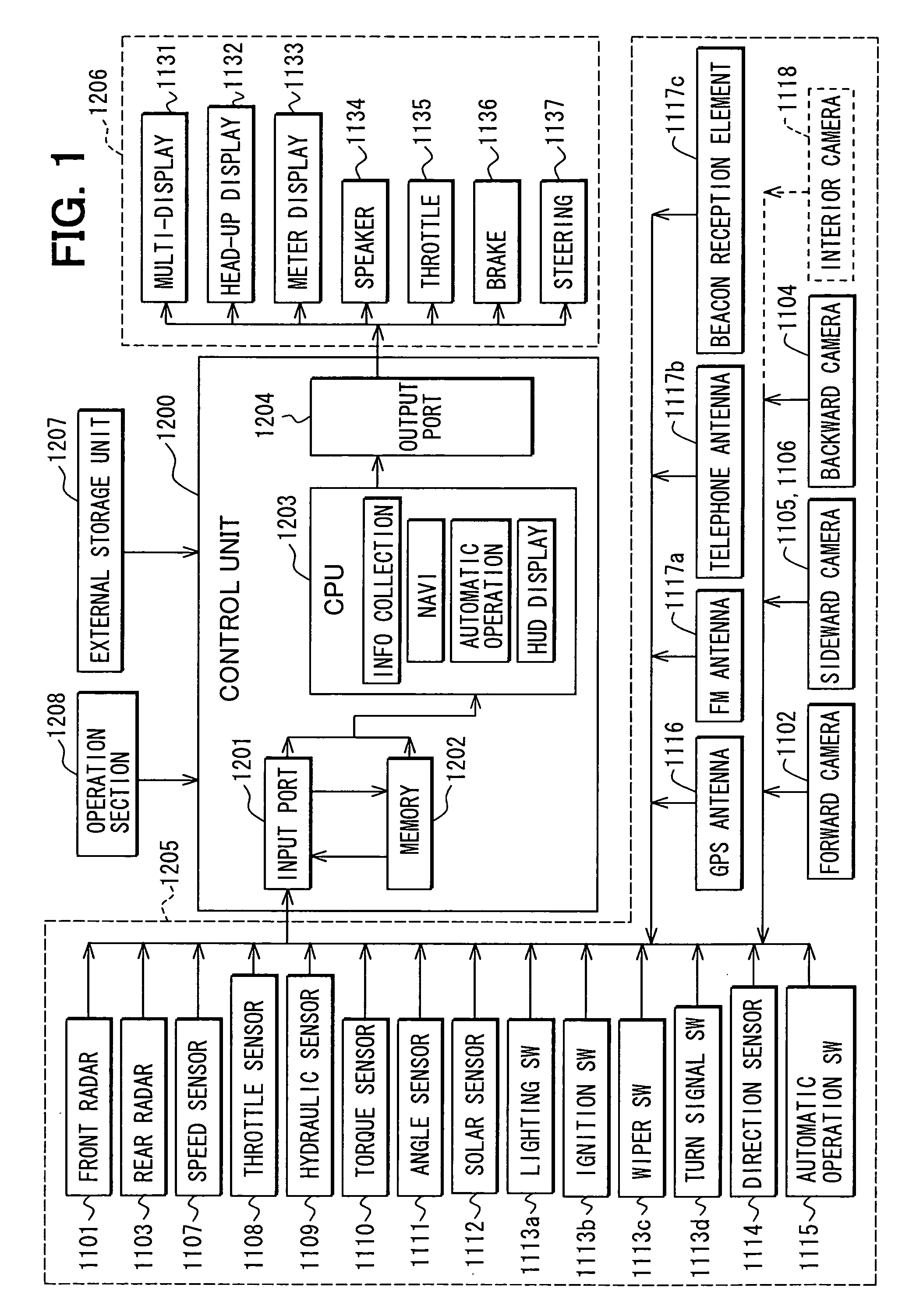

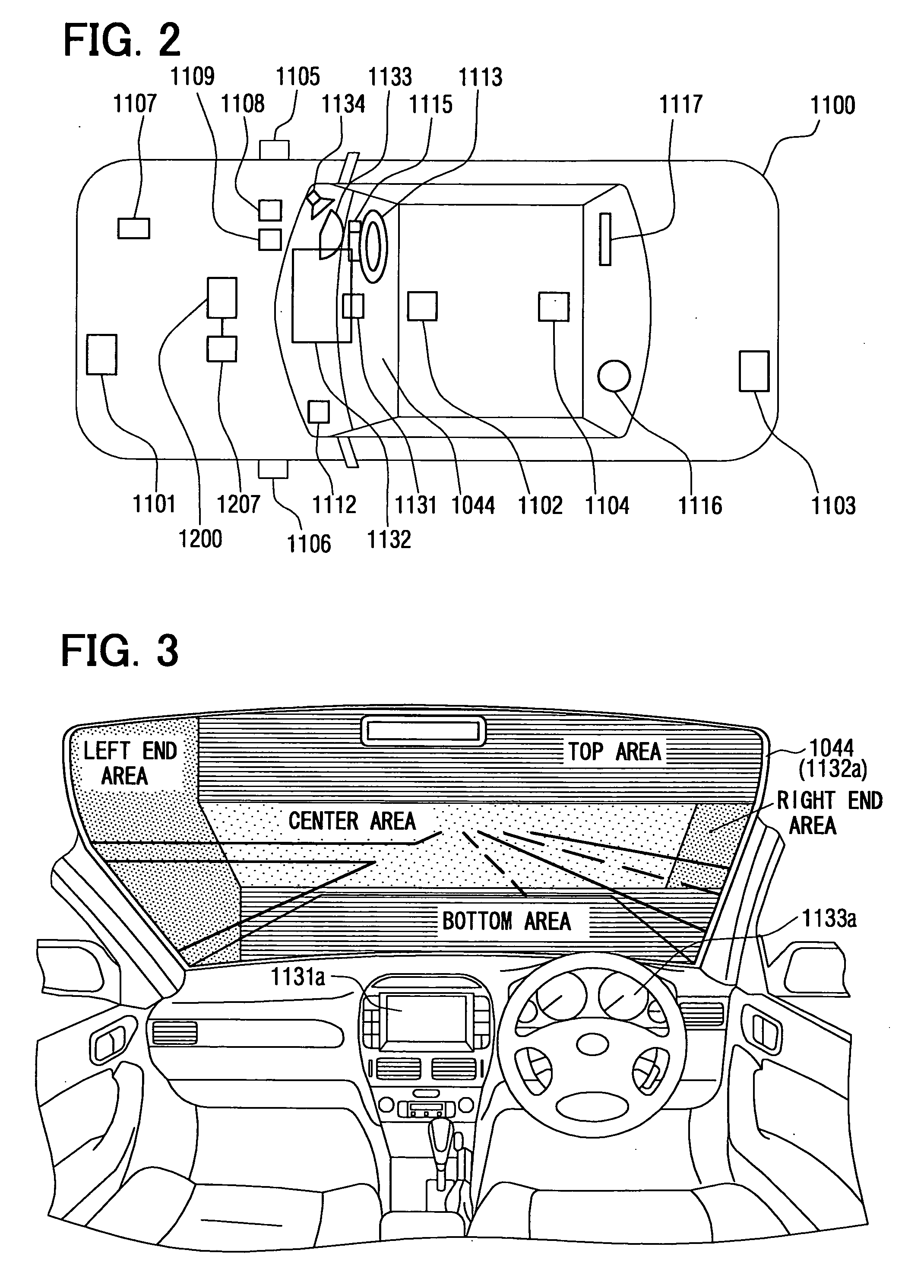

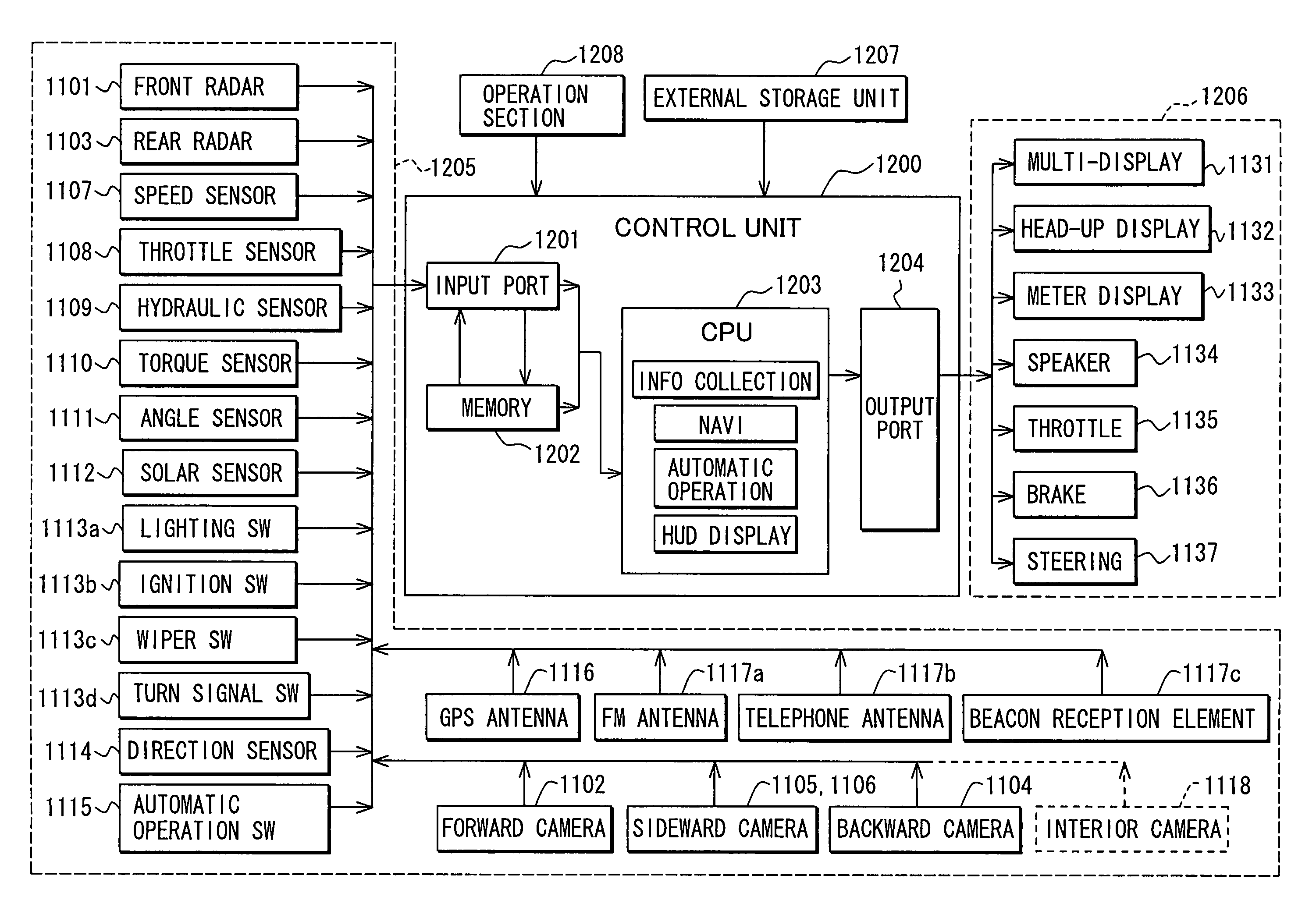

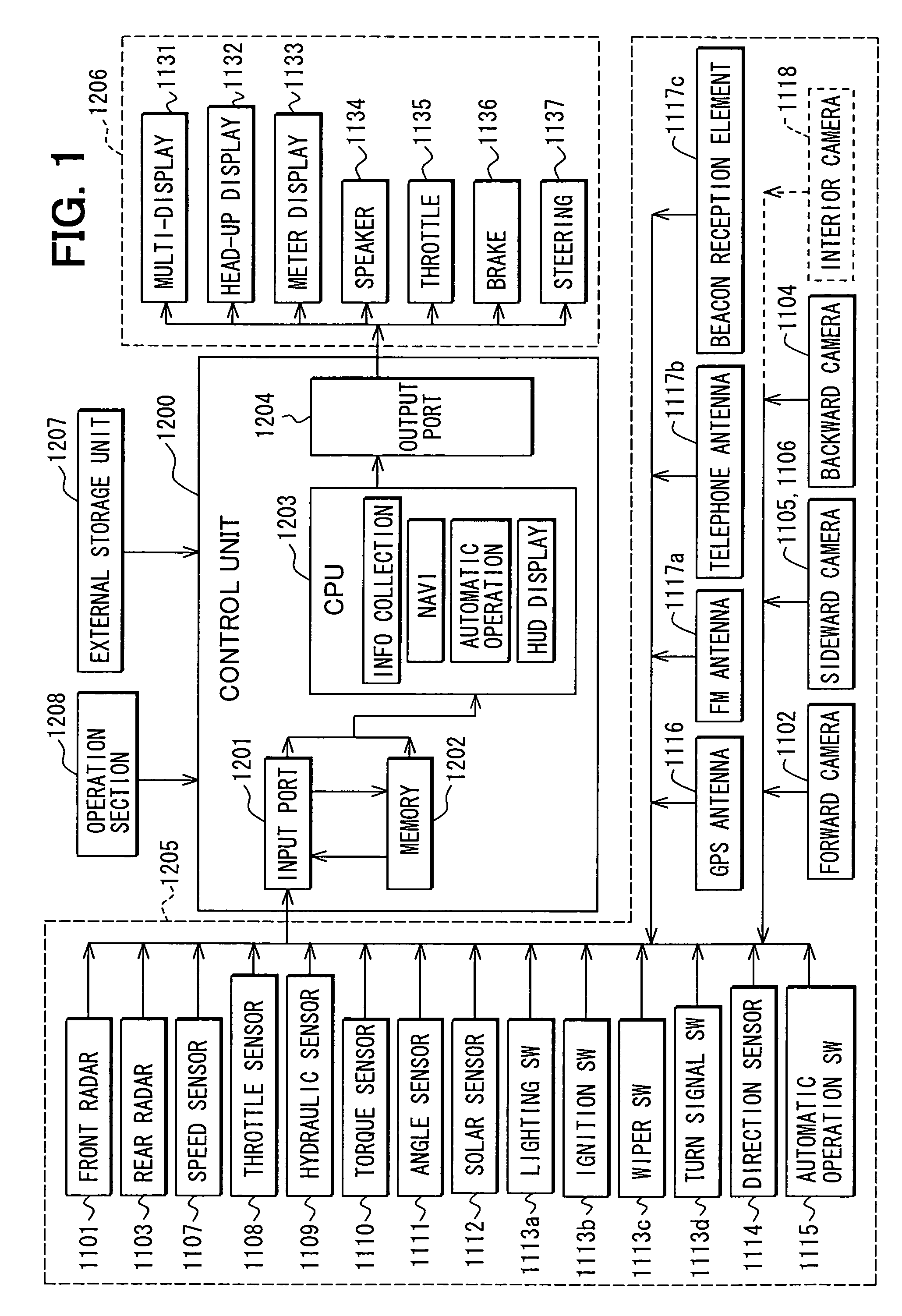

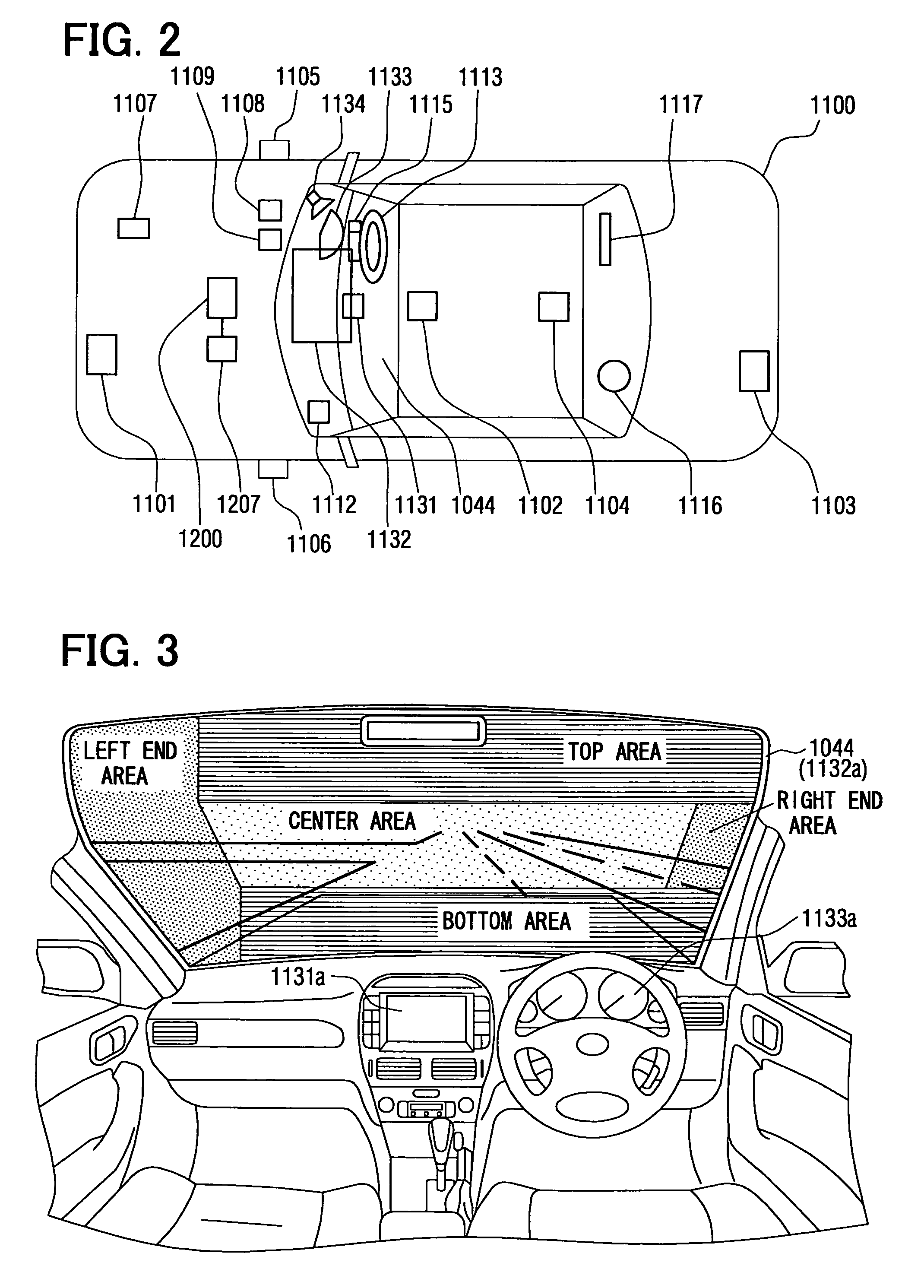

Vehicle information display system

Active | US20050154505A1 | Easy to understand | Much information without hindering a driver's visual field | Instruments for road network navigation | Road vehicles traffic control | Head-up display | Information display systems

A vehicle information display system includes a head-up display that reflects an image on the windshield of a vehicle so that the driver perceives the image as a virtual image. Information to be displayed by the head-up display is collected. A circumstance of the vehicle, of the vehicle's surroundings, or of the driver is detected. The collected information is classified in accordance with the detection result, and the display contents of the head-up display are then controlled in accordance with the classification result.

Owner:DENSO CORP

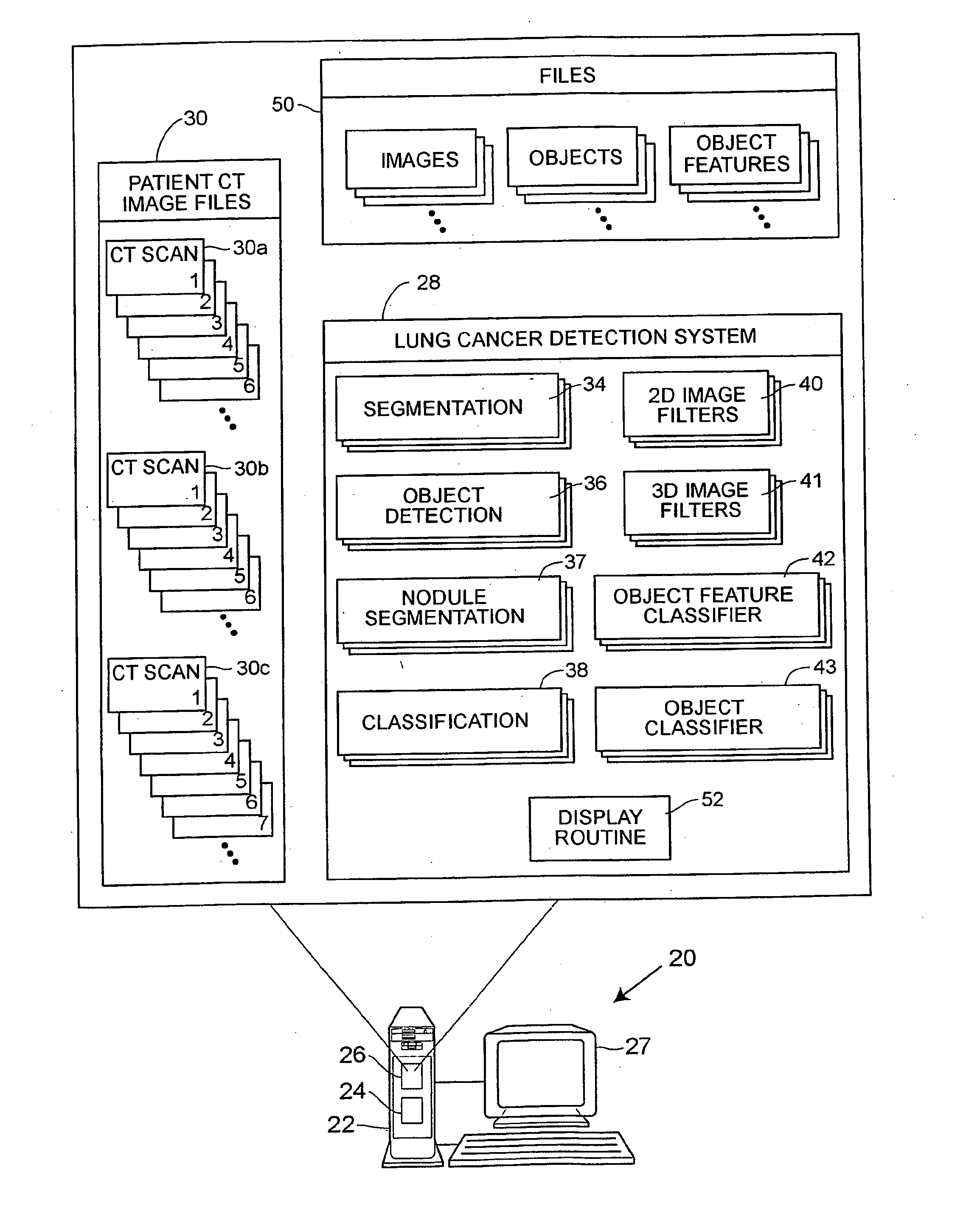

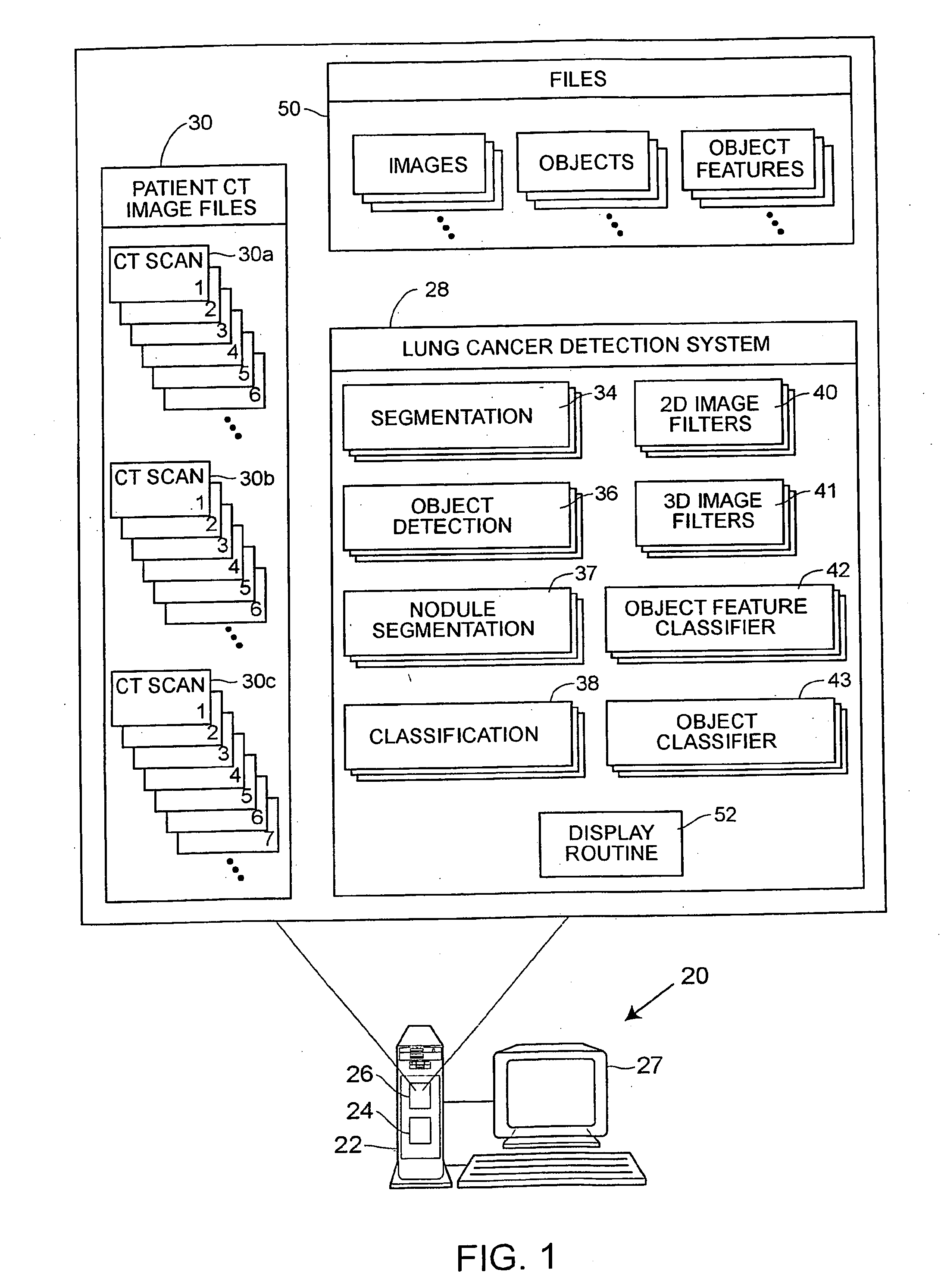

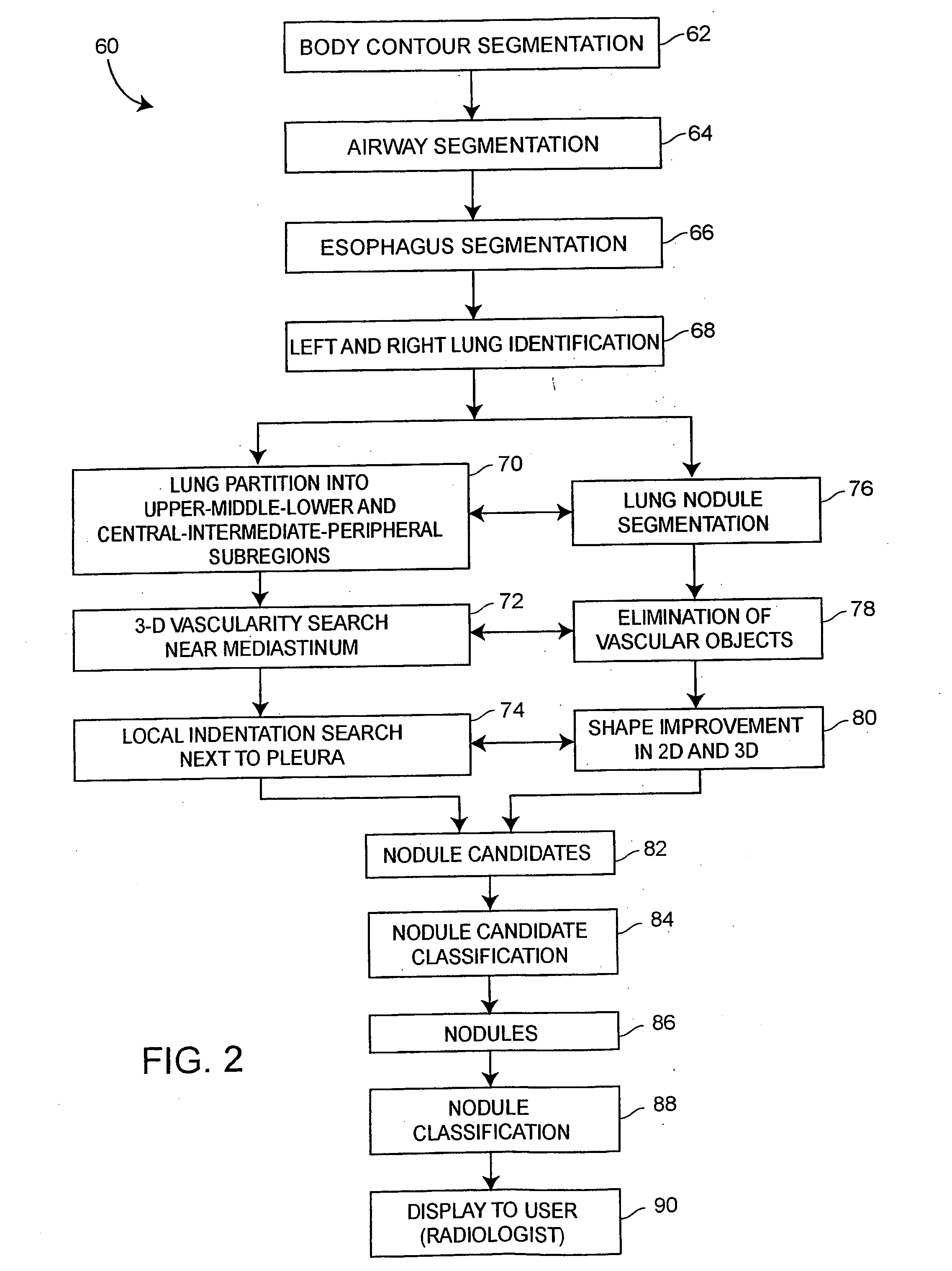

Lung nodule detection and classification

A computer-assisted method of detecting and classifying lung nodules within a set of CT images includes performing body contour, airway, lung and esophagus segmentation to identify the regions of the CT images in which to search for potential lung nodules. The lungs are processed to identify the left and right sides of the lungs, and each side of the lung is divided into subregions including upper, middle and lower subregions and central, intermediate and peripheral subregions. The computer analyzes each of the lung regions to detect and identify a three-dimensional vessel tree representing the blood vessels at or near the mediastinum. The computer then detects objects that are attached to the lung wall or to the vessel tree to ensure that these objects are not eliminated from consideration as potential nodules. Thereafter, the computer performs a pixel similarity analysis on the appropriate regions within the CT images to detect potential nodules and performs one or more expert analysis techniques using the features of the potential nodules to determine whether each of the potential nodules is or is not a lung nodule. Thereafter, the computer uses further features, such as spiculation features, growth features, etc., in one or more expert analysis techniques to classify each detected nodule as being either benign or malignant. The computer then displays the detection and classification results to the radiologist to assist the radiologist in interpreting the CT exam for the patient.

Owner:RGT UNIV OF MICHIGAN

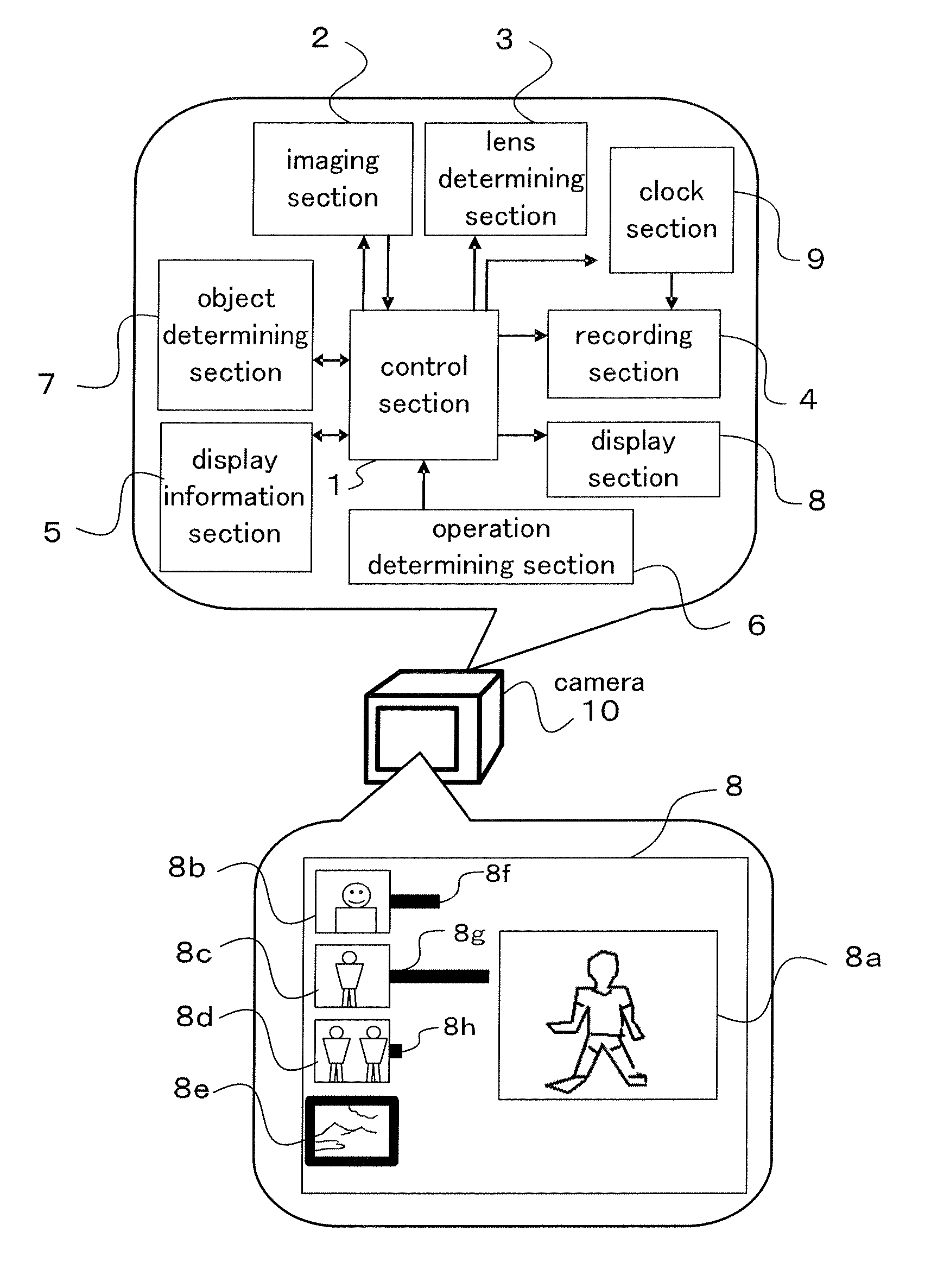

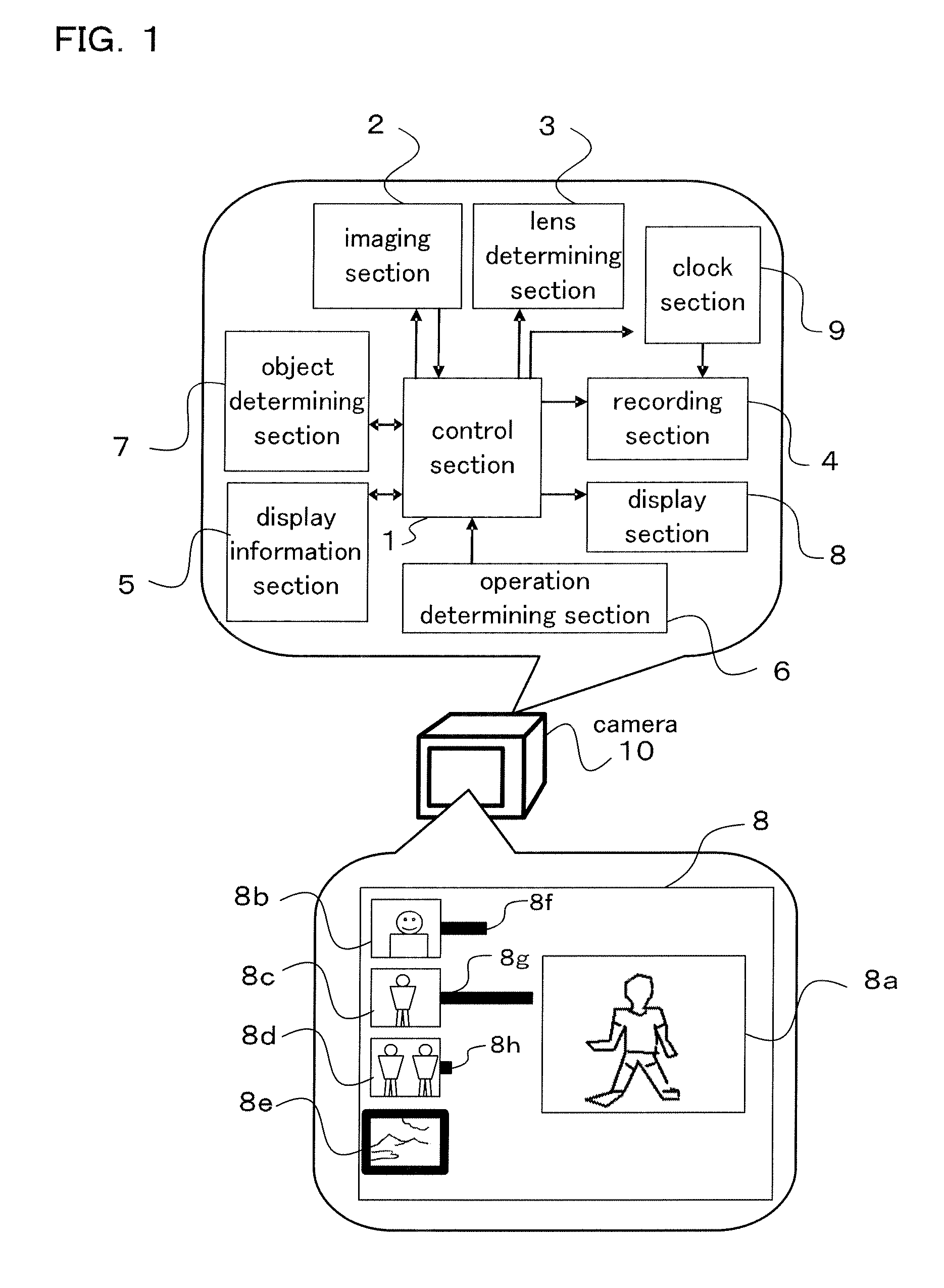

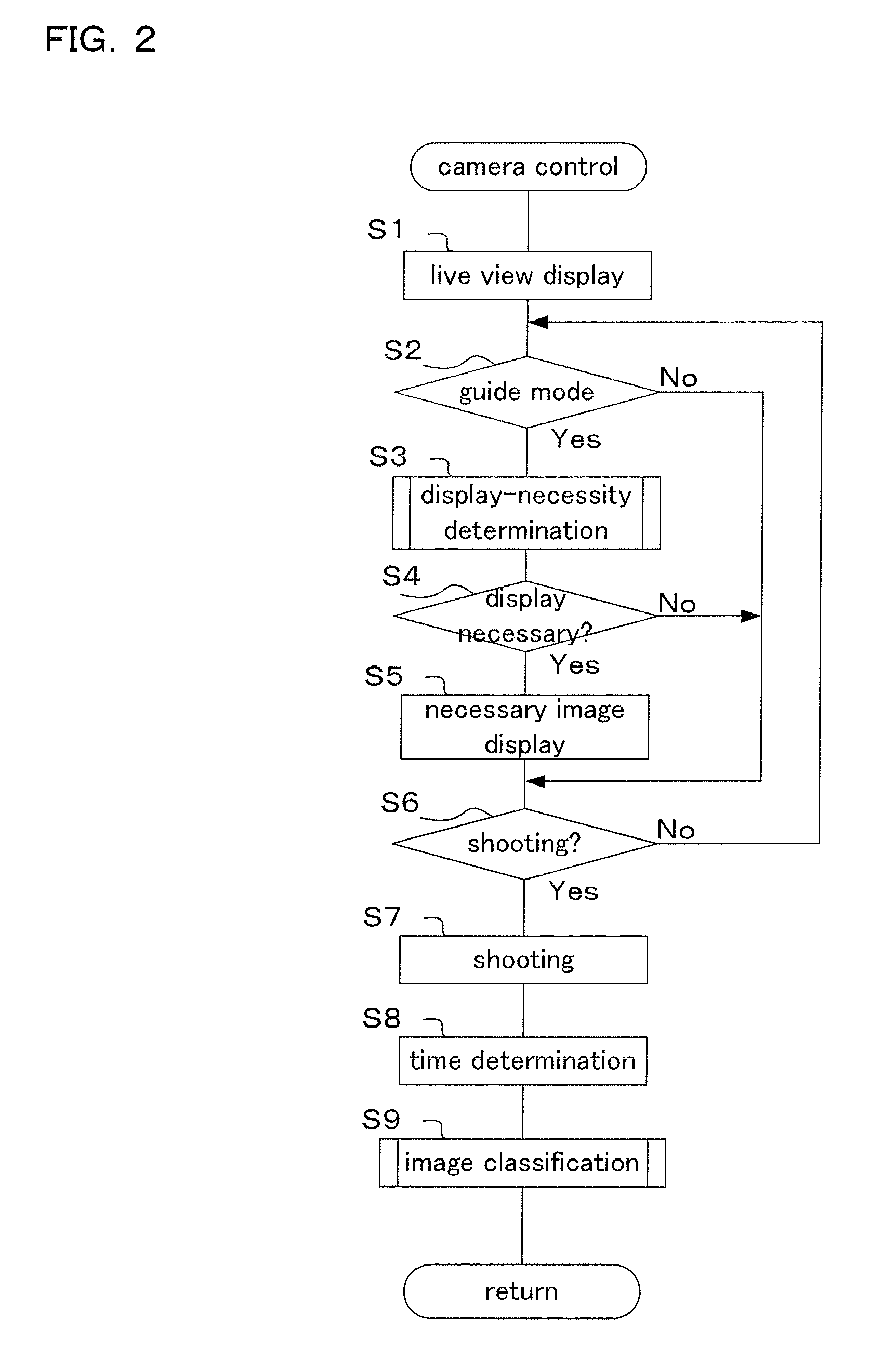

Imaging apparatus, imaging system, and imaging method

Inactive | US20090040324A1 | Television system details | Character and pattern recognition | Object based | Image conversion

An imaging apparatus includes an imaging section converting an image into image data, an image classifying section classifying the image data, and a display section for displaying information regarding a recommended image as a shooting object based on a classification result by the image classifying section. Further, a server having a shooting assist function receives image data from an imaging apparatus that has an imaging section converting an image into the image data and includes a scene determining section classifying the received image data and determining whether a typical image has been taken repeatedly, a guide information section outputting information regarding a recommended image as a shooting object based on a determination result by the scene determining section, and a communication section outputting the information to the imaging apparatus.

Owner:OLYMPUS CORP

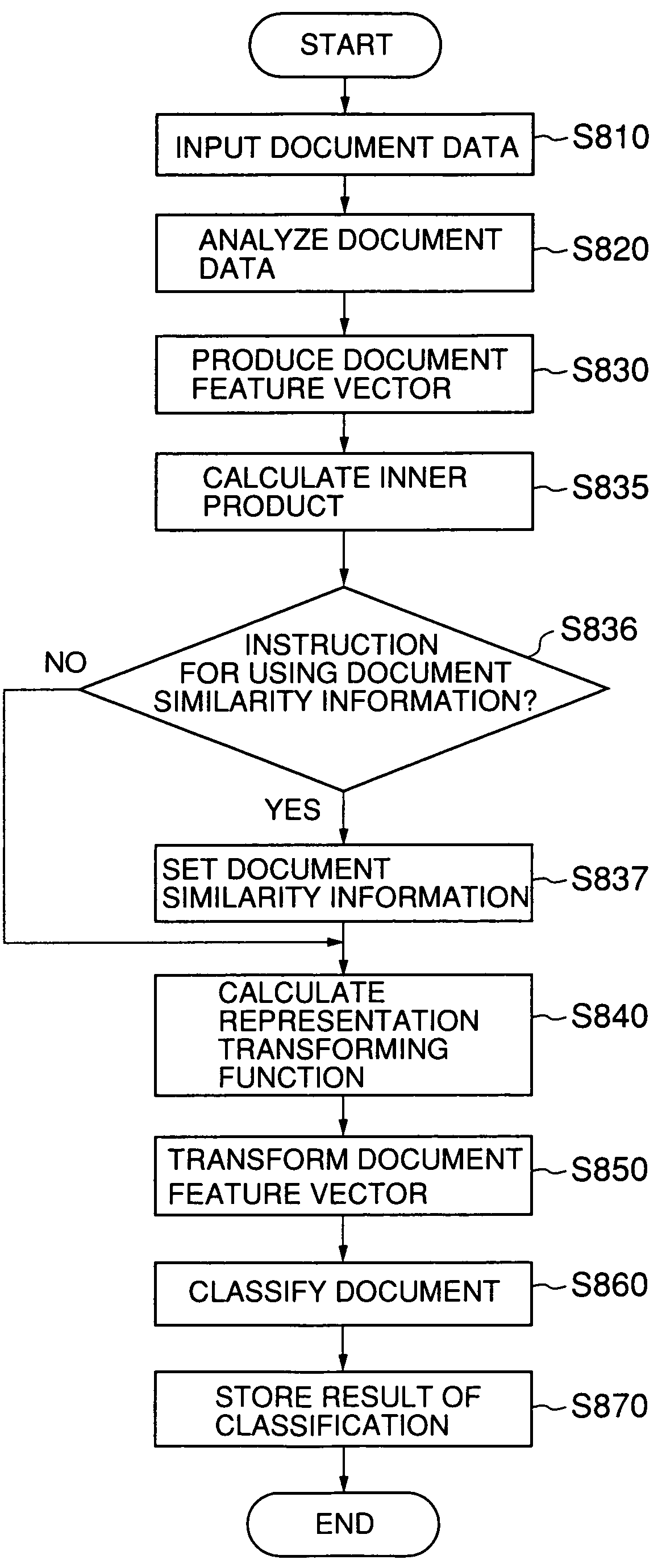

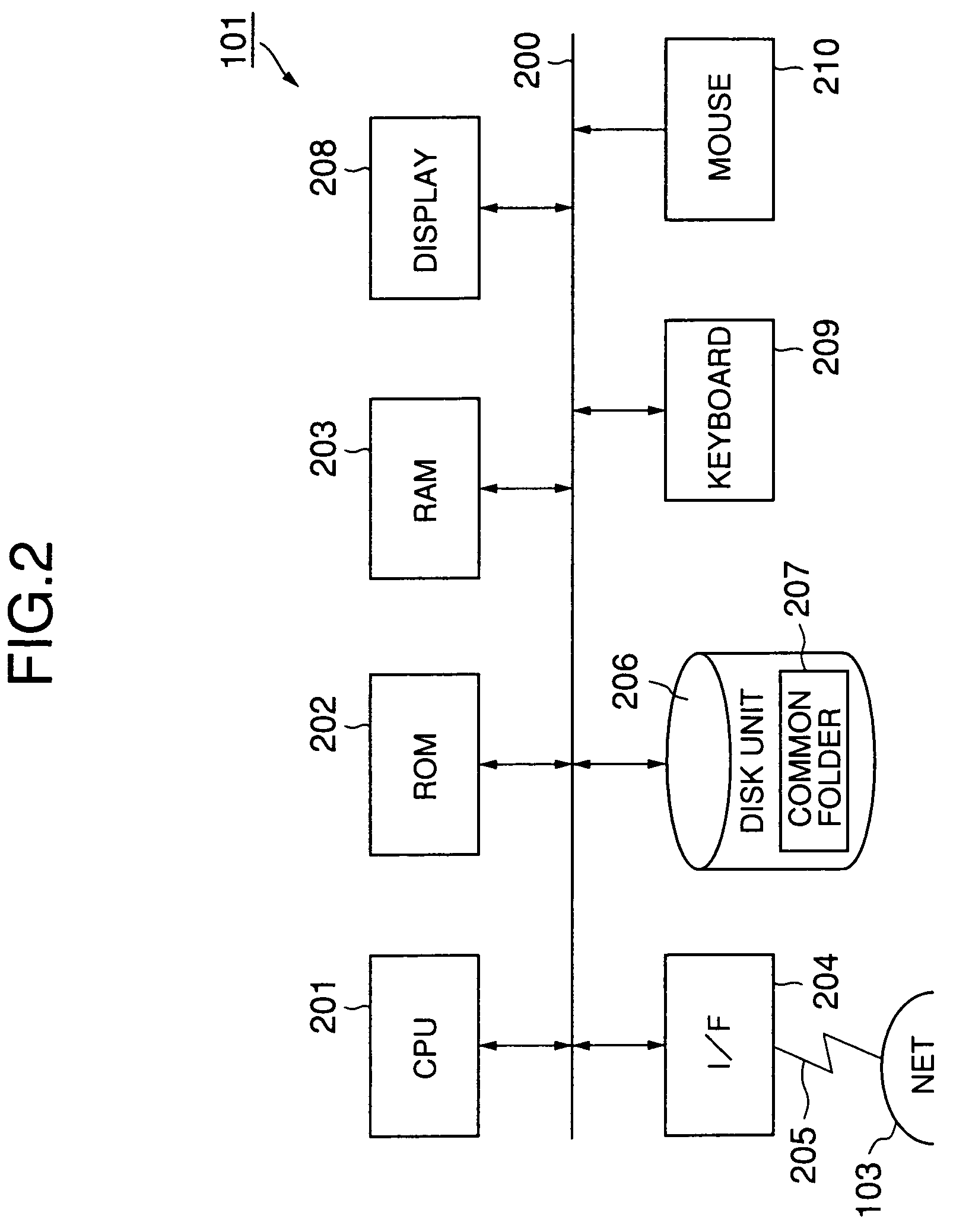

Document classification system and method for classifying a document according to contents of the document

Inactive | US7194471B1 | Eliminate the problem | The result is accurate | Data processing applications | Digital data information retrieval | Document preparation | Documentation

A document classification system and method reflect the operator's intention in the classification result so that an accurate classification can be achieved. The document to be classified has contents containing a plurality of items. At least one of the items contained in the document is designated. The document data is converted so that the converted data contains only data corresponding to the designated item. The document is then classified using the converted data.

Owner:RICOH KK

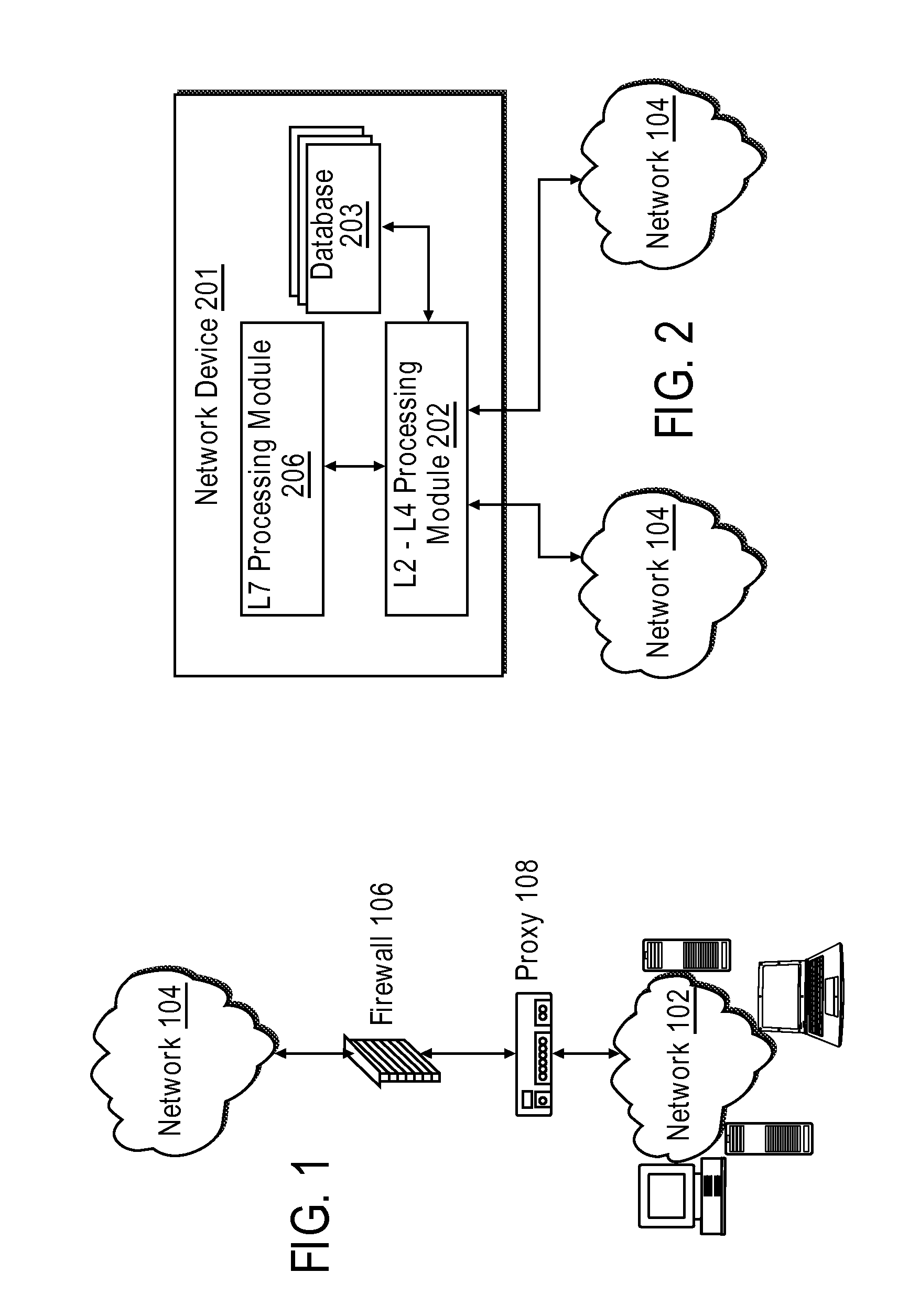

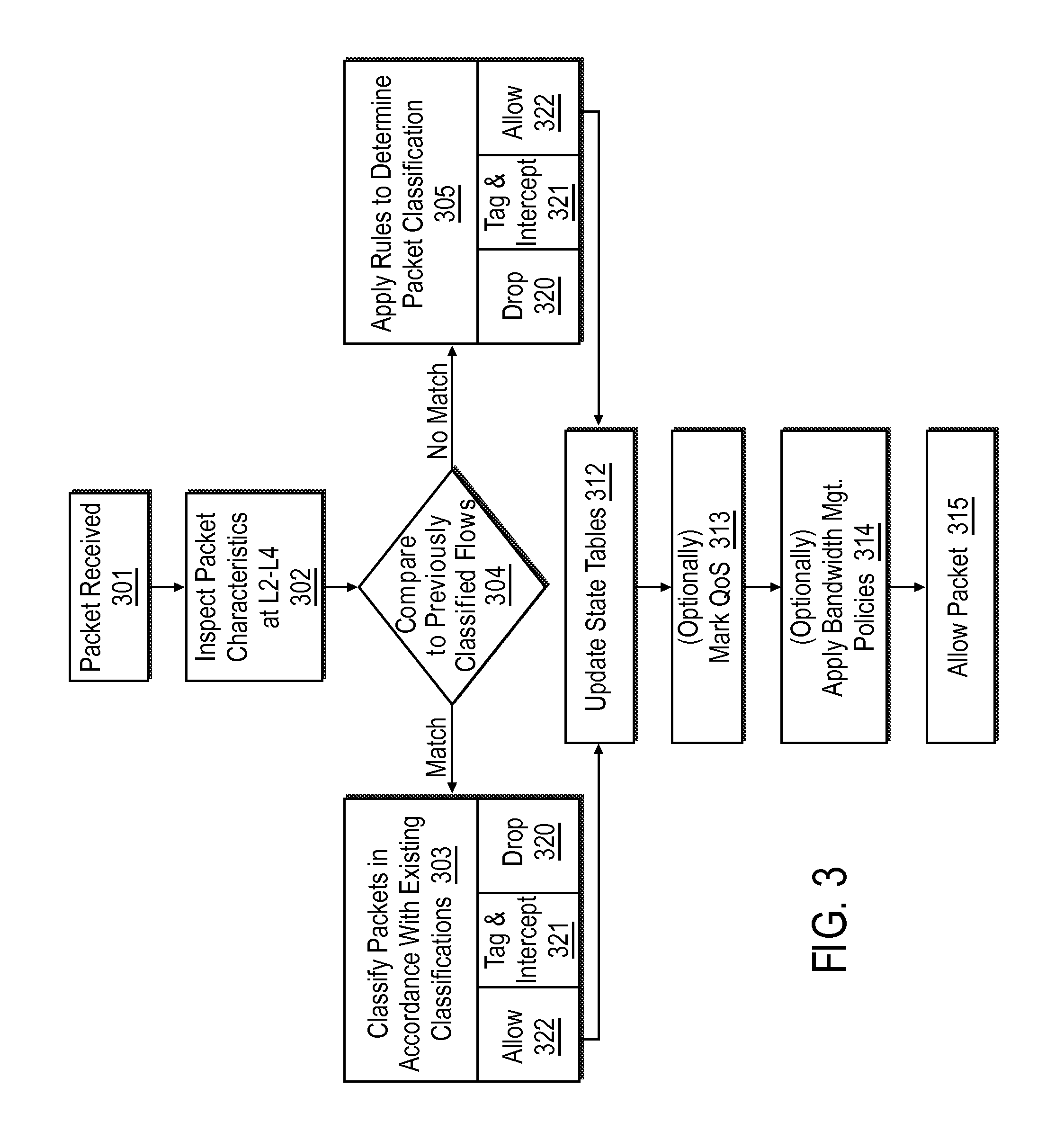

System and method of traffic inspection and classification for purposes of implementing session and content control

Active | US20080077705A1 | Accurate representation | Multiple digital computer combinations | Knowledge representation | Traffic capacity | Real-time computing

Packets received at a network appliance are classified according to packet classification rules based on flow state information maintained by the network appliance. The rules are evaluated for each packet as it is received at the appliance, on the basis of OSI Layer 2 to Layer 4 (L2-L4) information retrieved from the packet. The received packets are acted upon according to the outcomes of the classification, and the flow state information is updated according to the actions taken on the received packets. The updated flow state information is then made available to modules performing additional processing of one or more of the packets at OSI Layer 7 (L7).

Owner:CA TECH INC
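
The per-packet classification loop described in the abstract above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the patented design: the names `FlowKey`, `FlowState`, `FlowClassifier`, the action labels, and the rule set (a blocked-port list plus "inspect the first packet of a flow") are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowKey:
    """L3/L4 fields that identify a flow (hypothetical 5-tuple)."""
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: str


@dataclass
class FlowState:
    """State kept per flow and updated after every packet."""
    packets_seen: int = 0
    last_action: str = "none"


class FlowClassifier:
    def __init__(self, blocked_ports):
        self.blocked_ports = set(blocked_ports)
        self.flows = {}  # FlowKey -> FlowState, readable by later (L7) stages

    def classify(self, key):
        """Classify one packet from its L2-L4 fields and the current flow state."""
        state = self.flows.setdefault(key, FlowState())
        if key.dst_port in self.blocked_ports:
            action = "drop"
        elif state.packets_seen == 0:
            action = "inspect"  # first packet of a flow: hand over for deeper inspection
        else:
            action = "forward"
        # Update the flow state according to the action taken.
        state.packets_seen += 1
        state.last_action = action
        return action
```

The `flows` dictionary stands in for the shared flow state that later processing stages would read.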

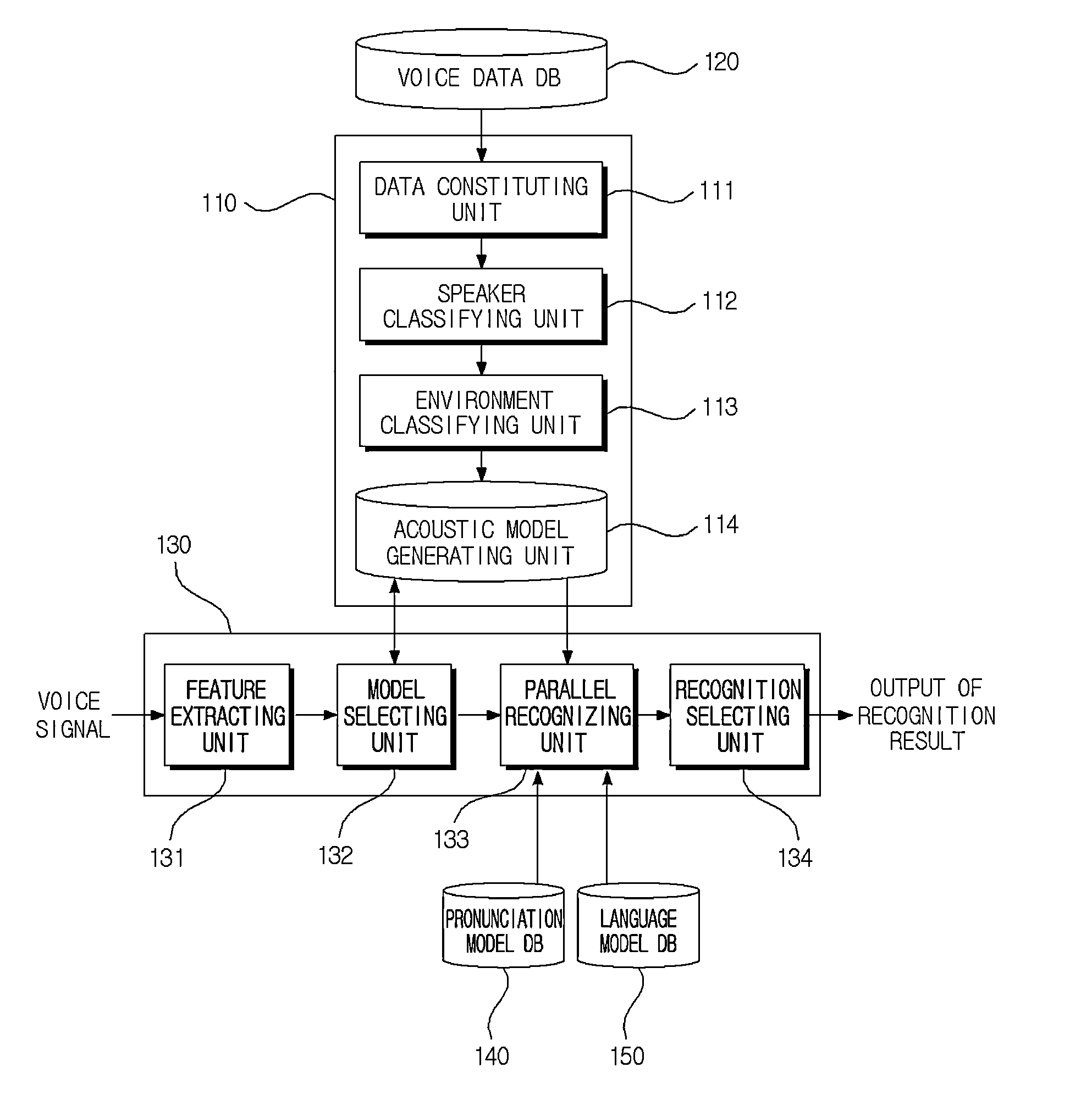

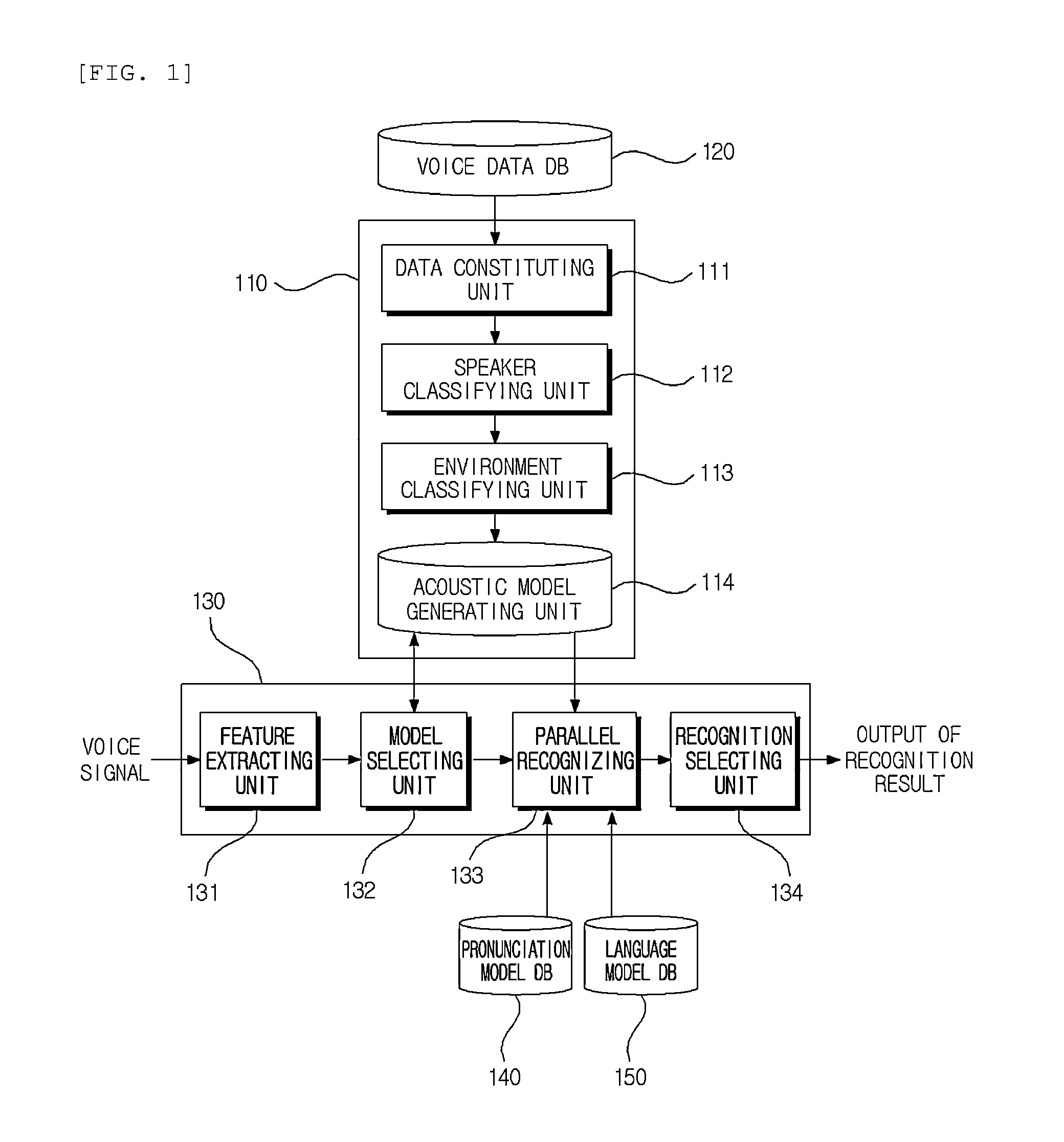

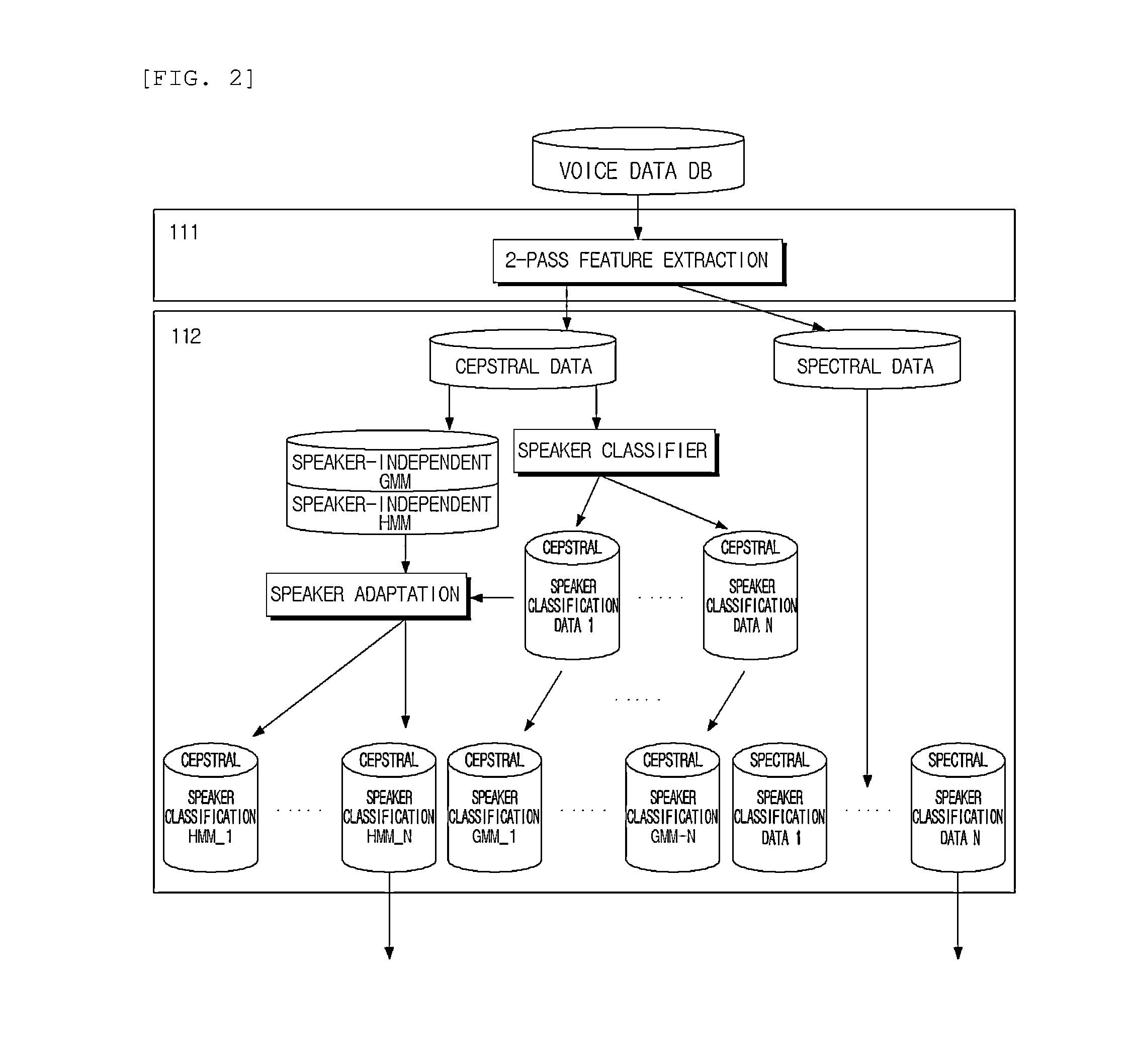

Apparatus for speech recognition using multiple acoustic model and method thereof

Disclosed are an apparatus for recognizing voice using multiple acoustic models and a method thereof. The apparatus includes a voice data database (DB) configured to store voice data collected in various noise environments; a model generating means configured to perform classification for each speaker and environment based on the collected voice data, and to generate an acoustic model with a binary tree structure as the classification result; and a voice recognizing means configured to extract feature data from voice data received from a user, to select multiple models from the generated acoustic model based on the extracted feature data, to recognize the voice data in parallel based on the selected models, and to output a word string corresponding to the voice data as the recognition result.

Owner:ELECTRONICS & TELECOMM RES INST

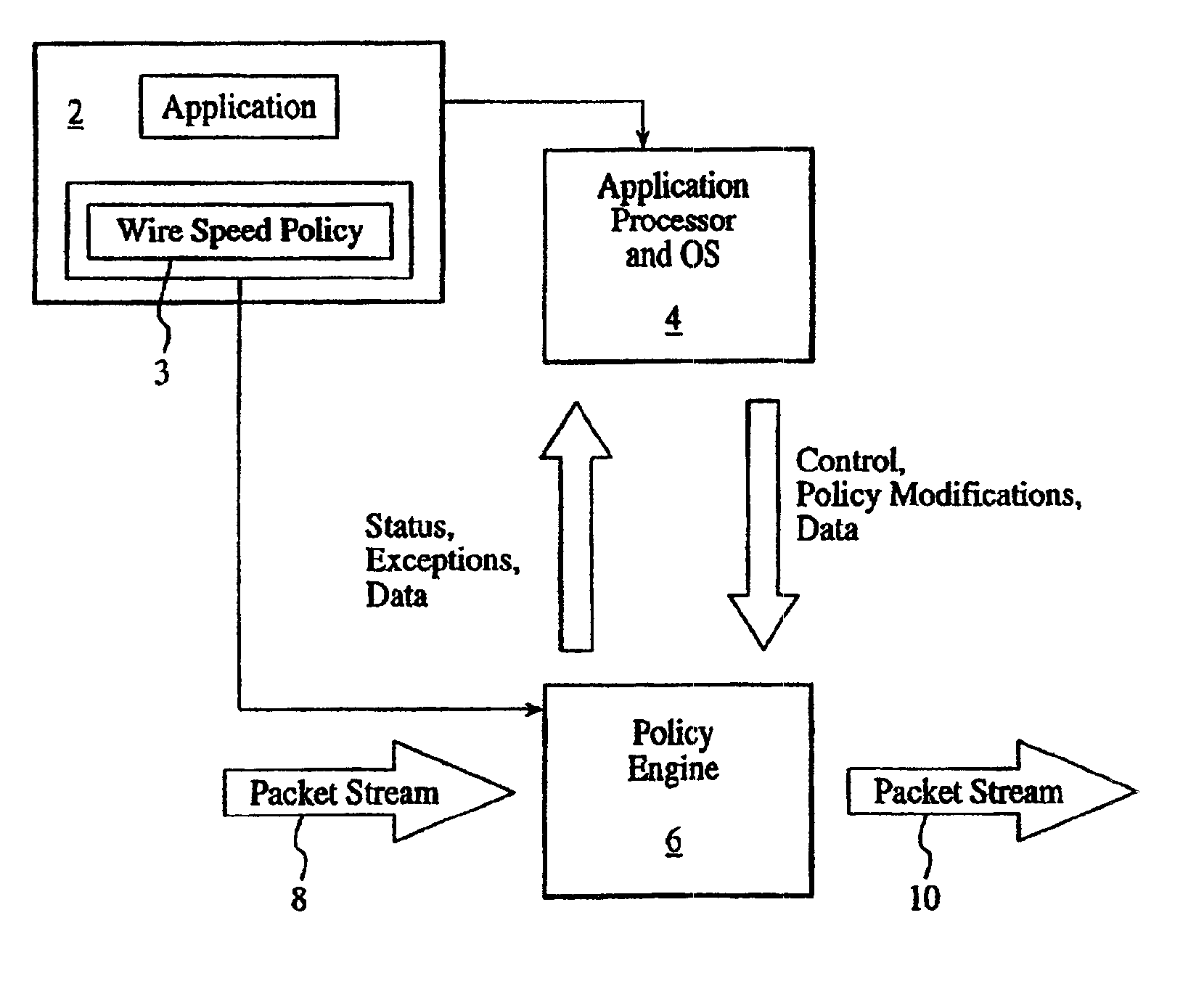

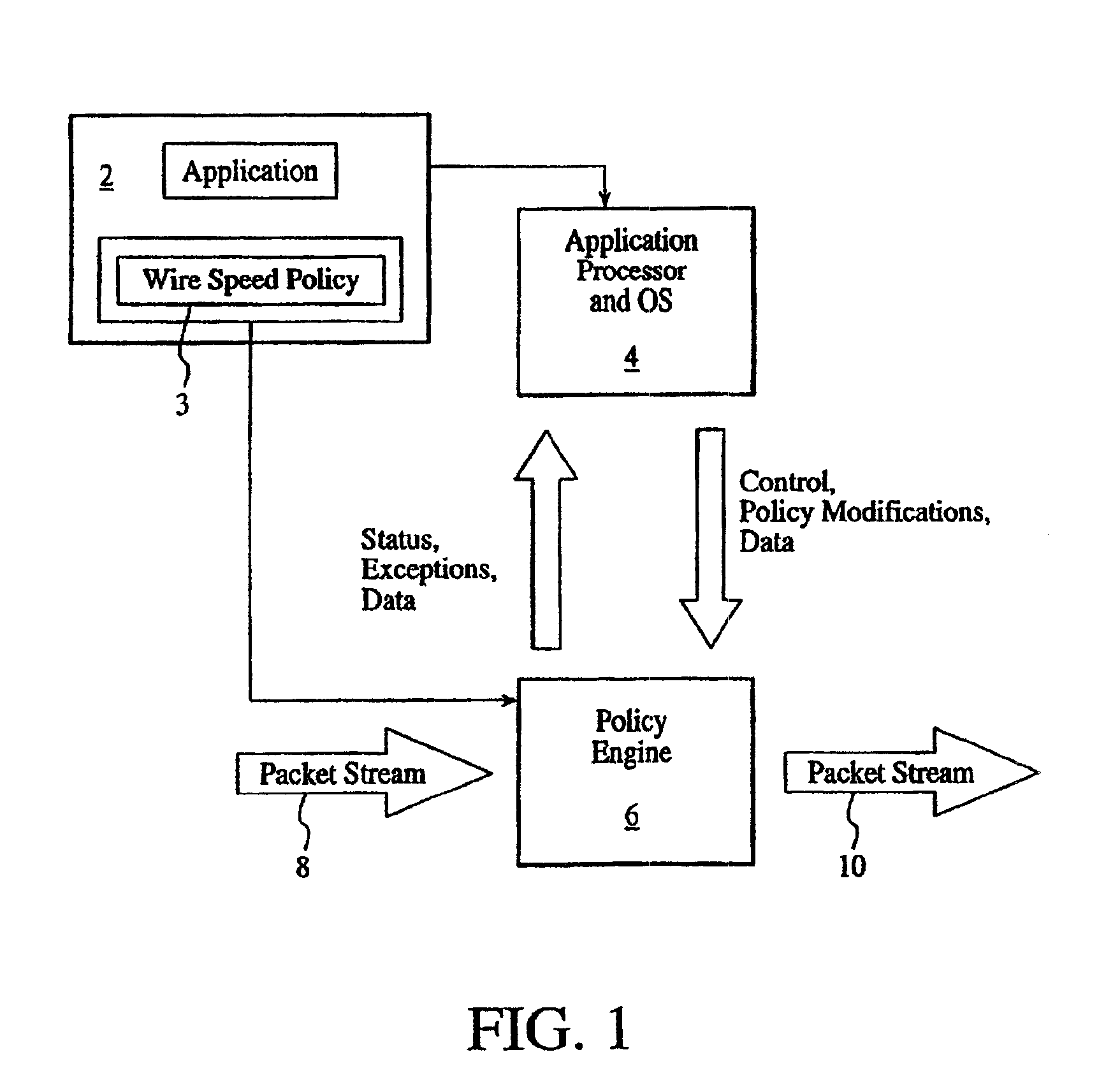

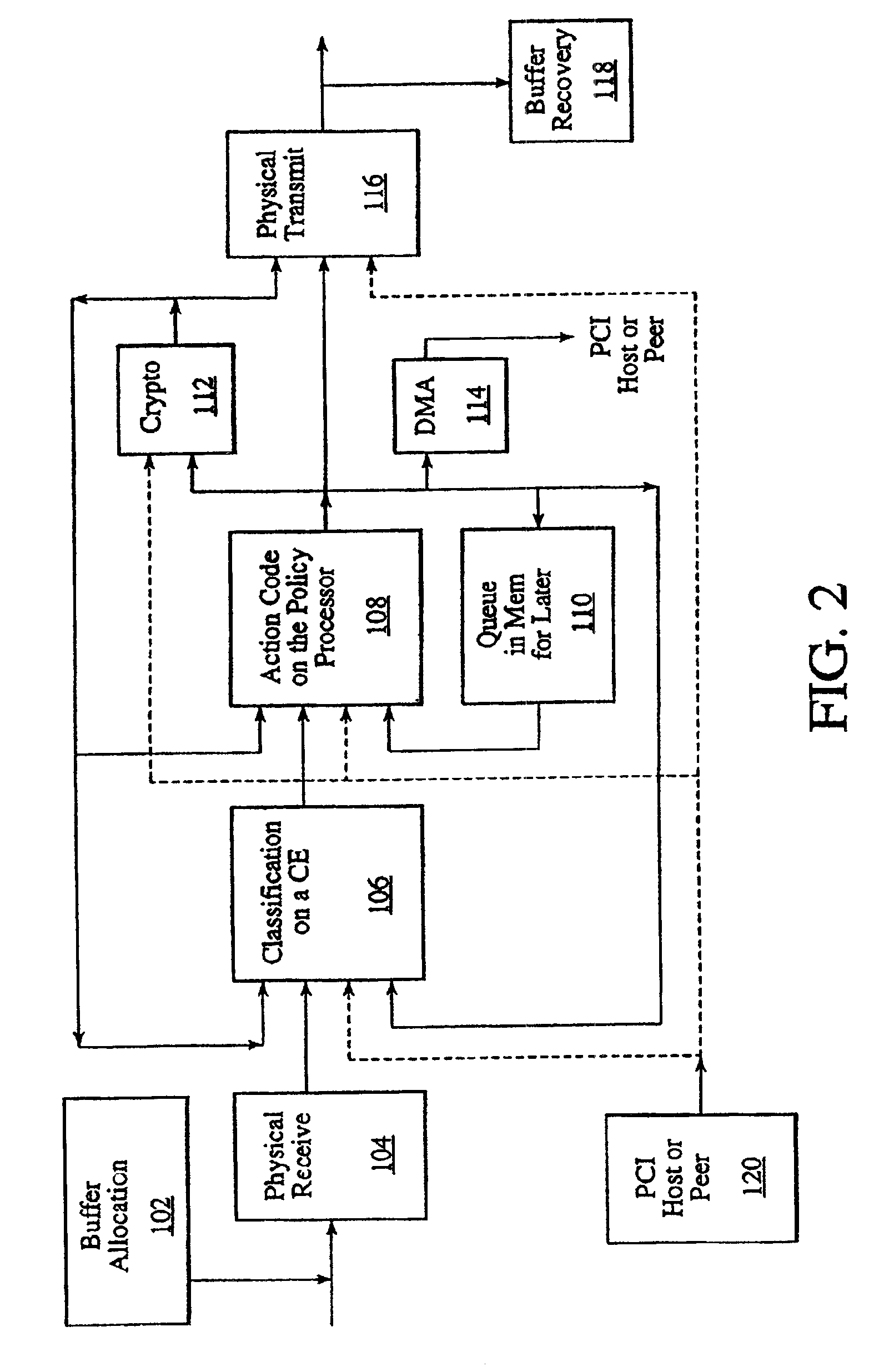

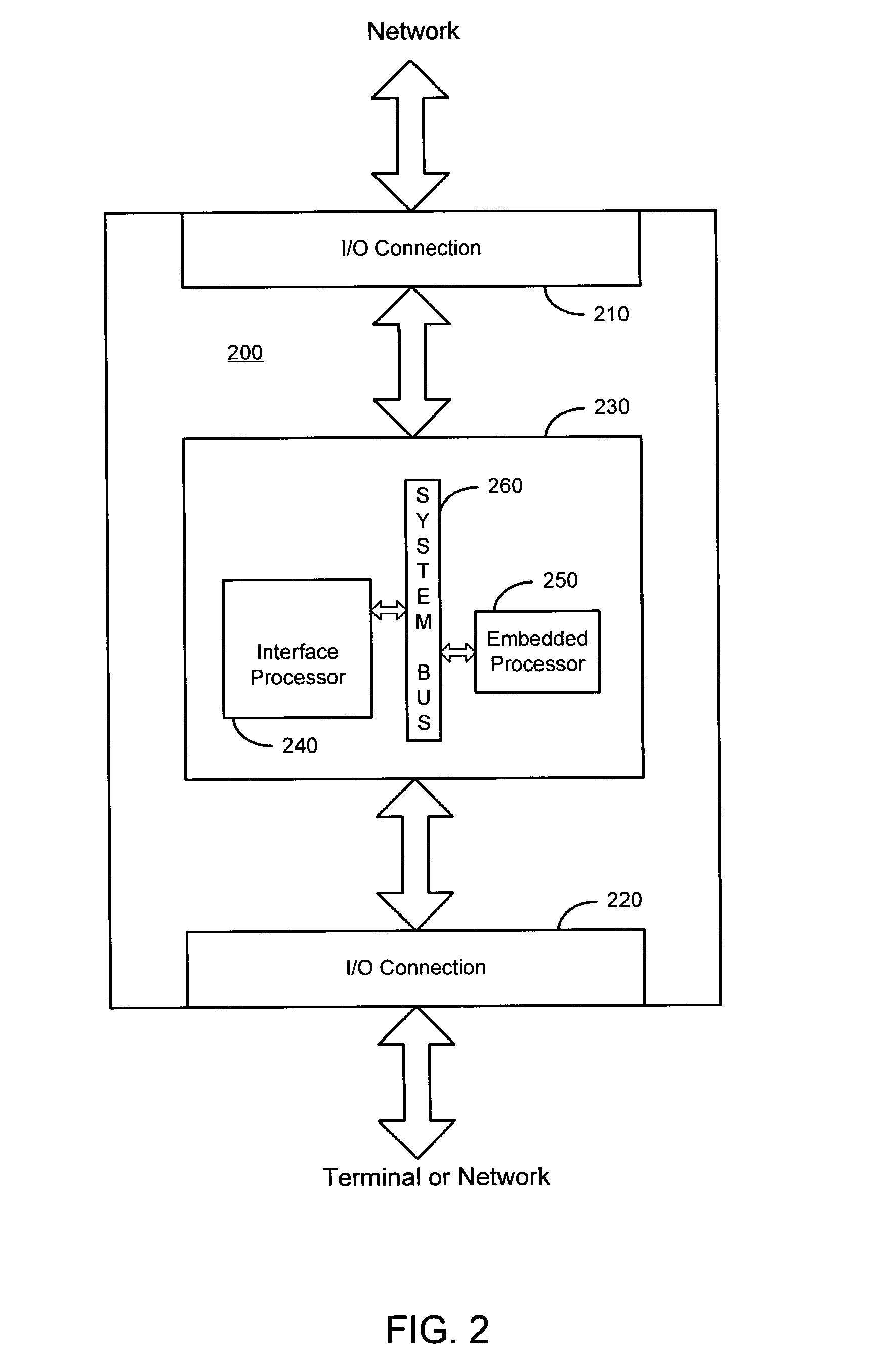

Programmable system for processing a partitioned network infrastructure

Inactive | US6859841B2 | Many times | Minimize movement | Conditional code generation | Instruction analysis | General purpose | Network interface controller

The present invention relates to a general-purpose programmable packet-processing platform for accelerating network infrastructure applications which have been structured so as to separate the stages of classification and action. Network packet classification, execution of actions upon those packets, management of buffer flow, encryption services, and management of Network Interface Controllers are accelerated through the use of a multiplicity of specialized modules. A language interface is defined for specifying both stateless and stateful classification of packets and to associate actions with classification results in order to efficiently utilize these specialized modules.

Owner:INTEL CORP

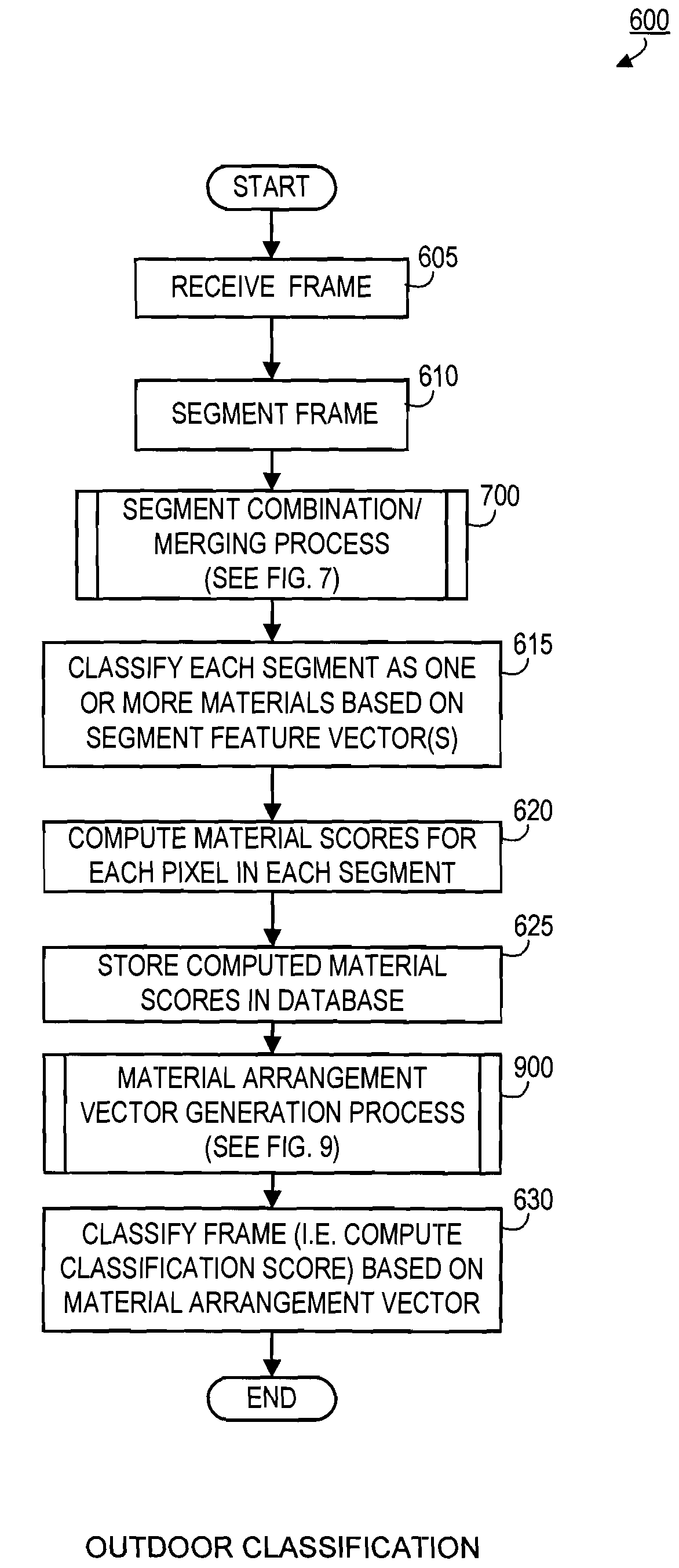

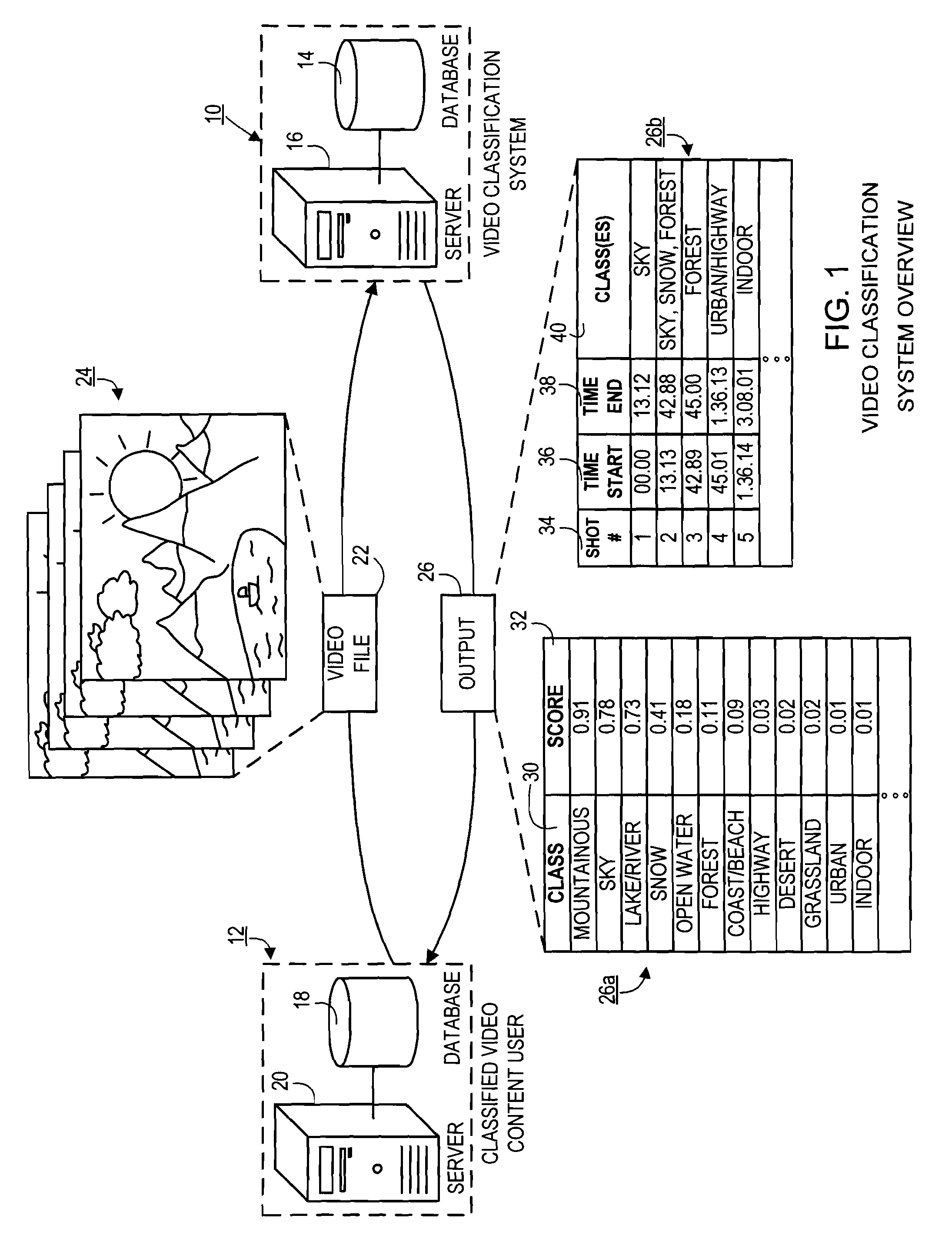

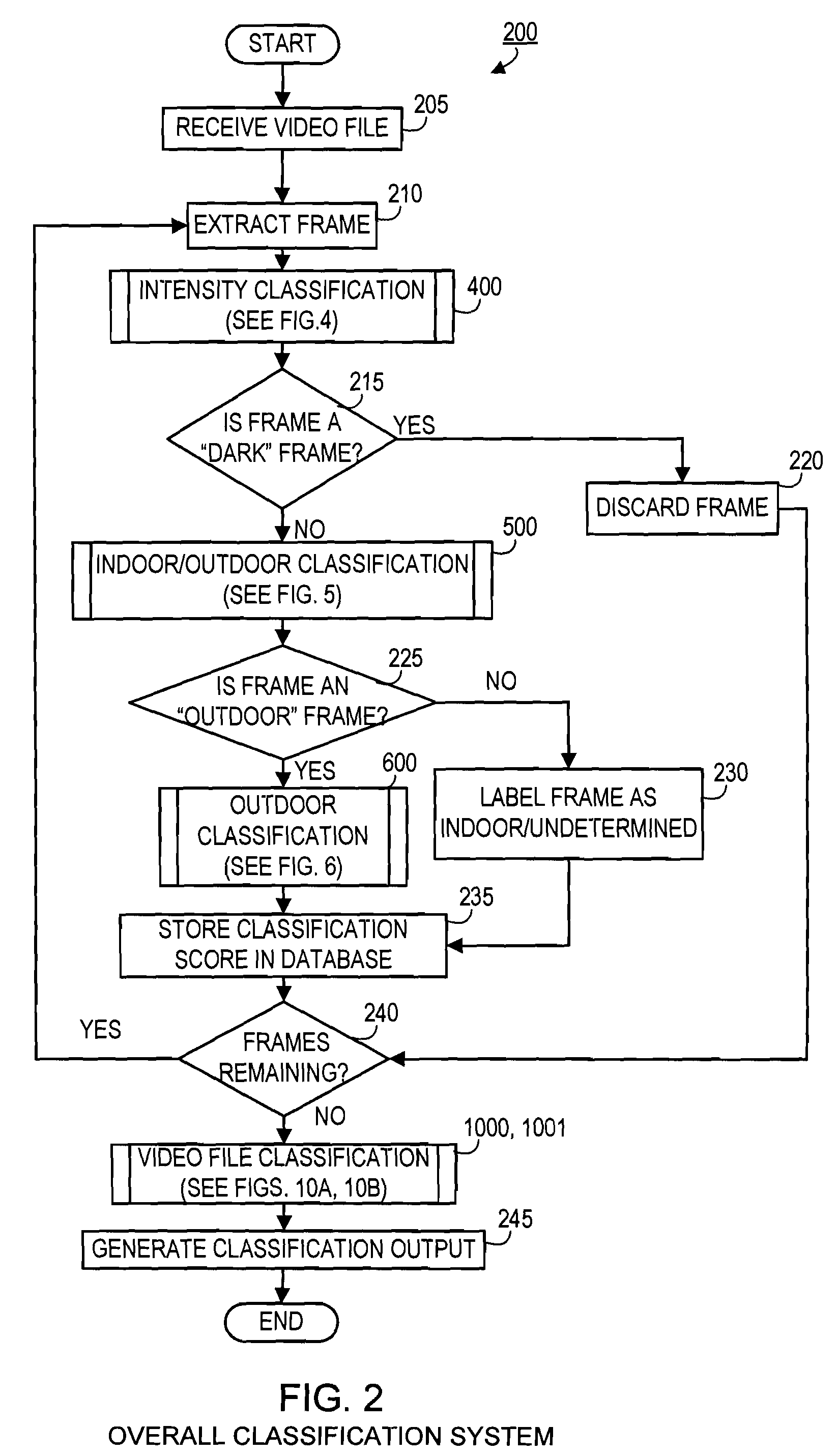

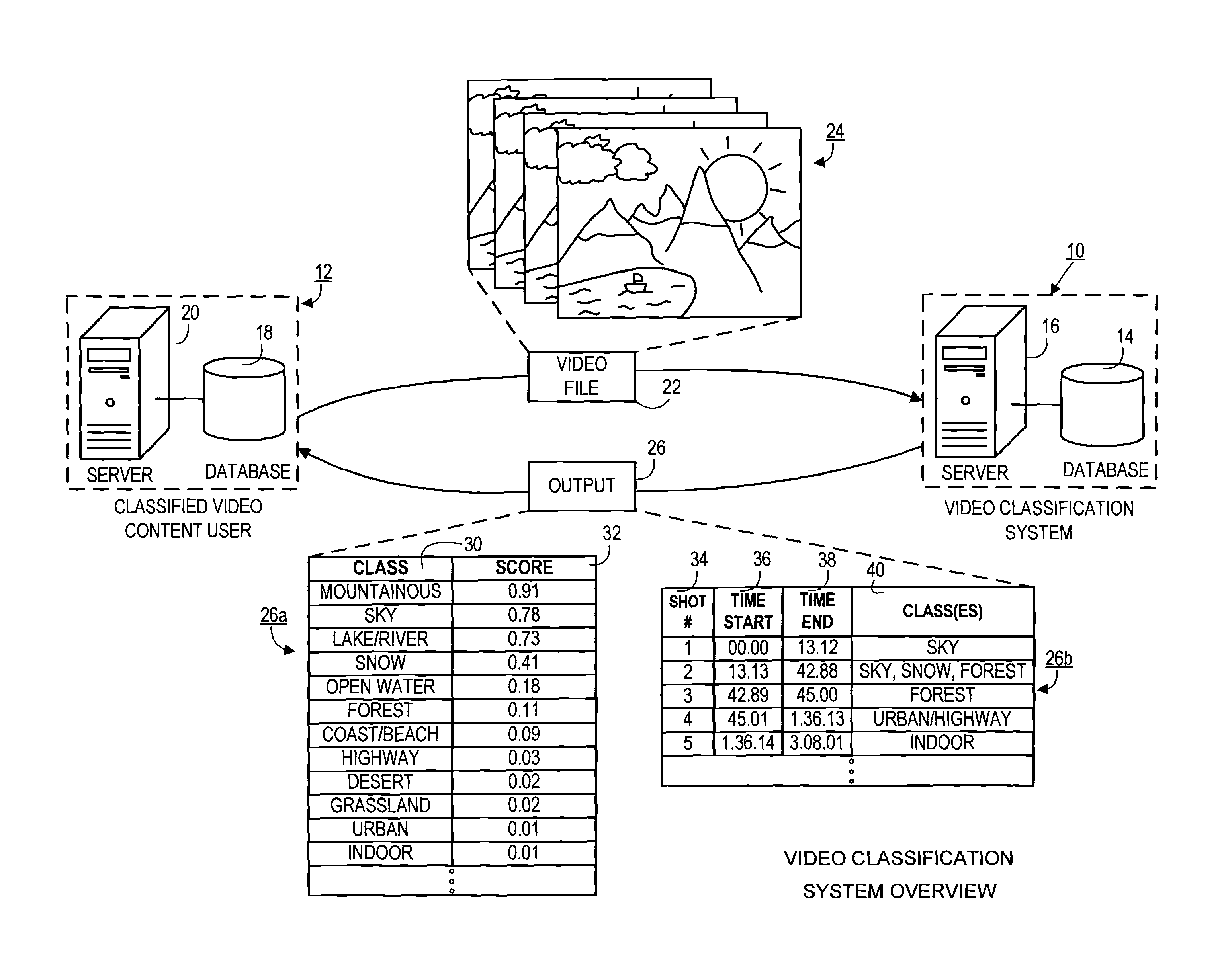

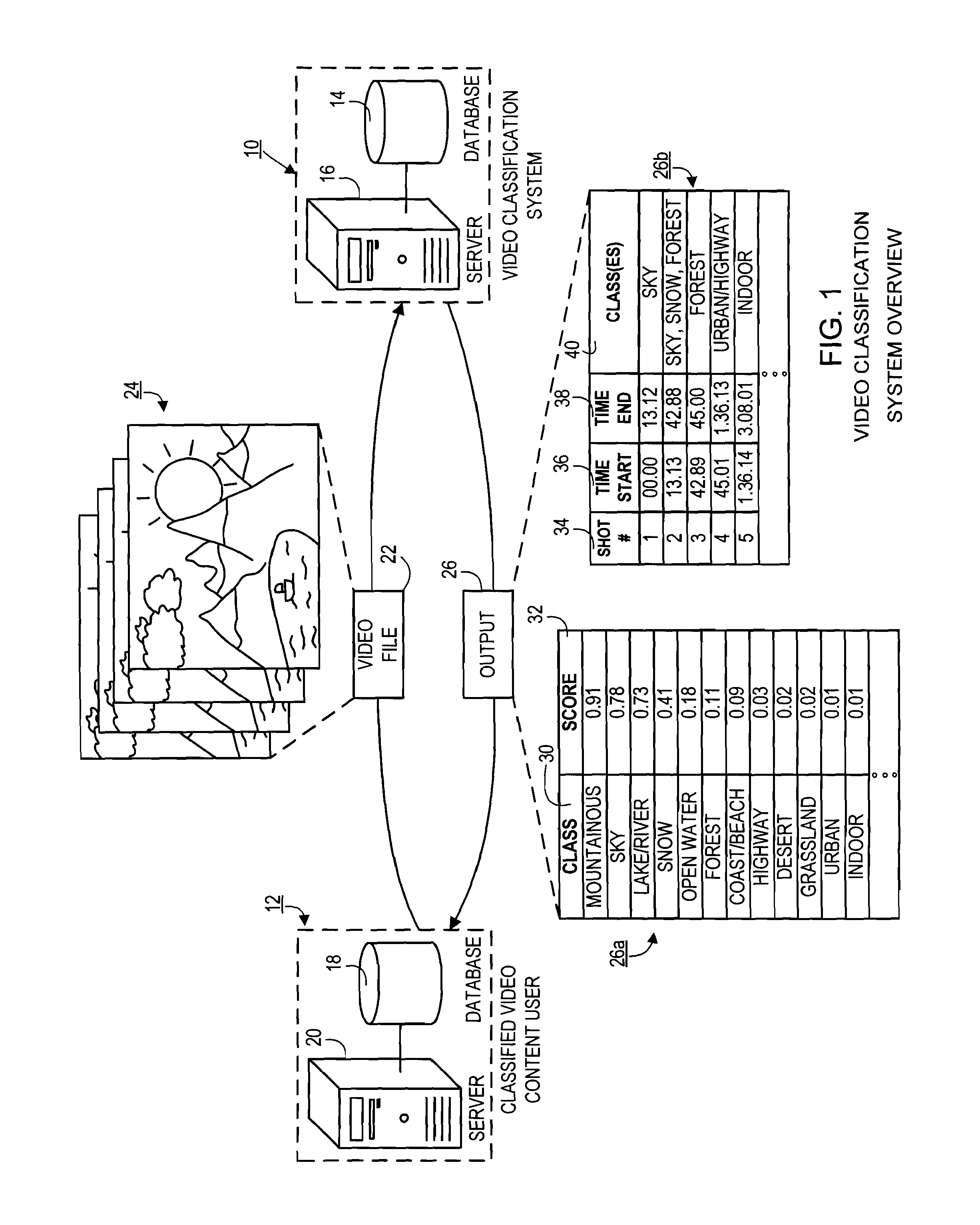

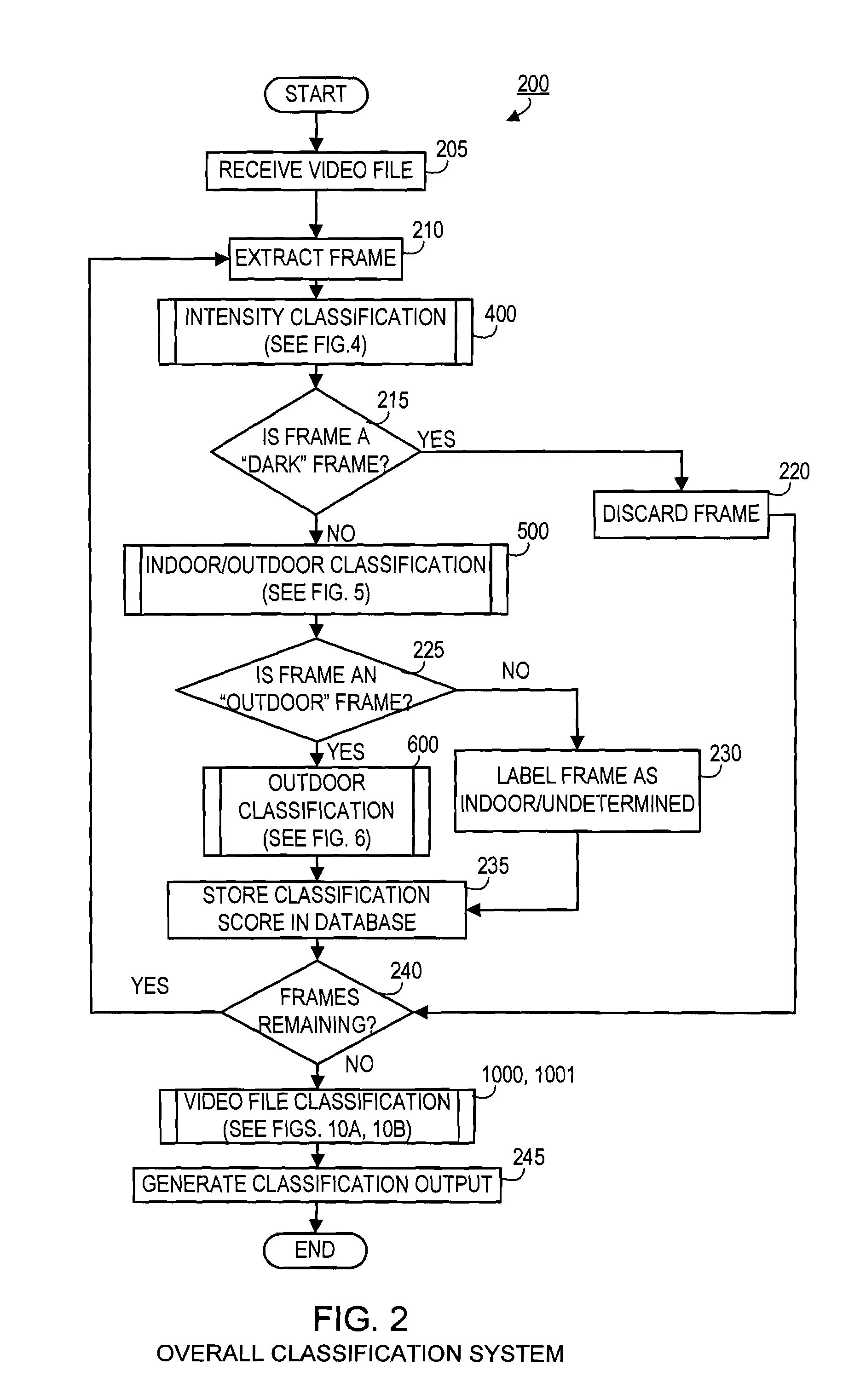

Systems and methods for semantically classifying shots in video

The present disclosure relates to systems and methods for classifying videos based on video content. For a given video file including a plurality of frames, a subset of frames is extracted for processing. Frames that are too dark, blurry, or otherwise poor classification candidates are discarded from the subset. Generally, material classification scores that describe the type of material content likely included in each frame are calculated for the remaining frames in the subset. The material classification scores are used to generate material arrangement vectors that represent the spatial arrangement of material content in each frame. The material arrangement vectors are subsequently classified to generate a scene classification score vector for each frame. The scene classification results are averaged (or otherwise processed) across all frames in the subset to associate the video file with one or more predefined scene categories related to the overall types of scene content of the video file.

Owner:TIVO SOLUTIONS INC
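
The final aggregation step in the abstract above (averaging per-frame scene scores to label the whole video) can be illustrated as follows. This is only a sketch: the function name `average_scene_scores`, the dictionary layout, and the 0.5 threshold are assumptions, and the earlier stages (frame filtering, material scores, arrangement vectors) are not modeled.

```python
def average_scene_scores(frame_scores, threshold=0.5):
    """Average per-frame scene scores and keep categories above a threshold.

    frame_scores: list of dicts mapping scene category -> score in [0, 1],
                  one dict per retained frame (all dicts share the same keys).
    Returns (mean score per category, sorted list of assigned categories).
    """
    if not frame_scores:
        raise ValueError("at least one frame is required")
    n = len(frame_scores)
    mean = {
        category: sum(frame[category] for frame in frame_scores) / n
        for category in frame_scores[0]
    }
    labels = sorted(c for c, score in mean.items() if score >= threshold)
    return mean, labels
```

A video can end up with several categories, or none, depending on the threshold chosen.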

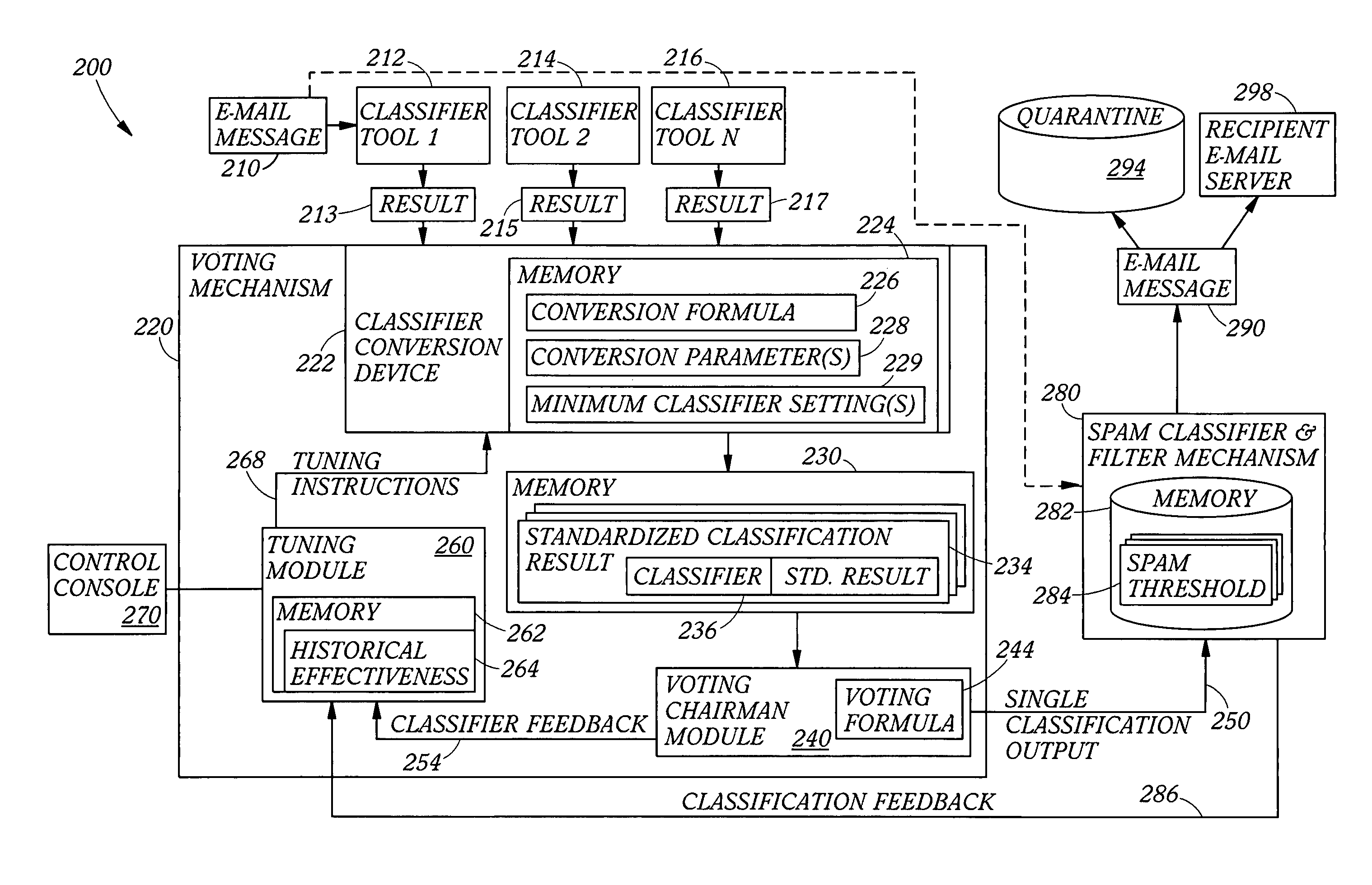

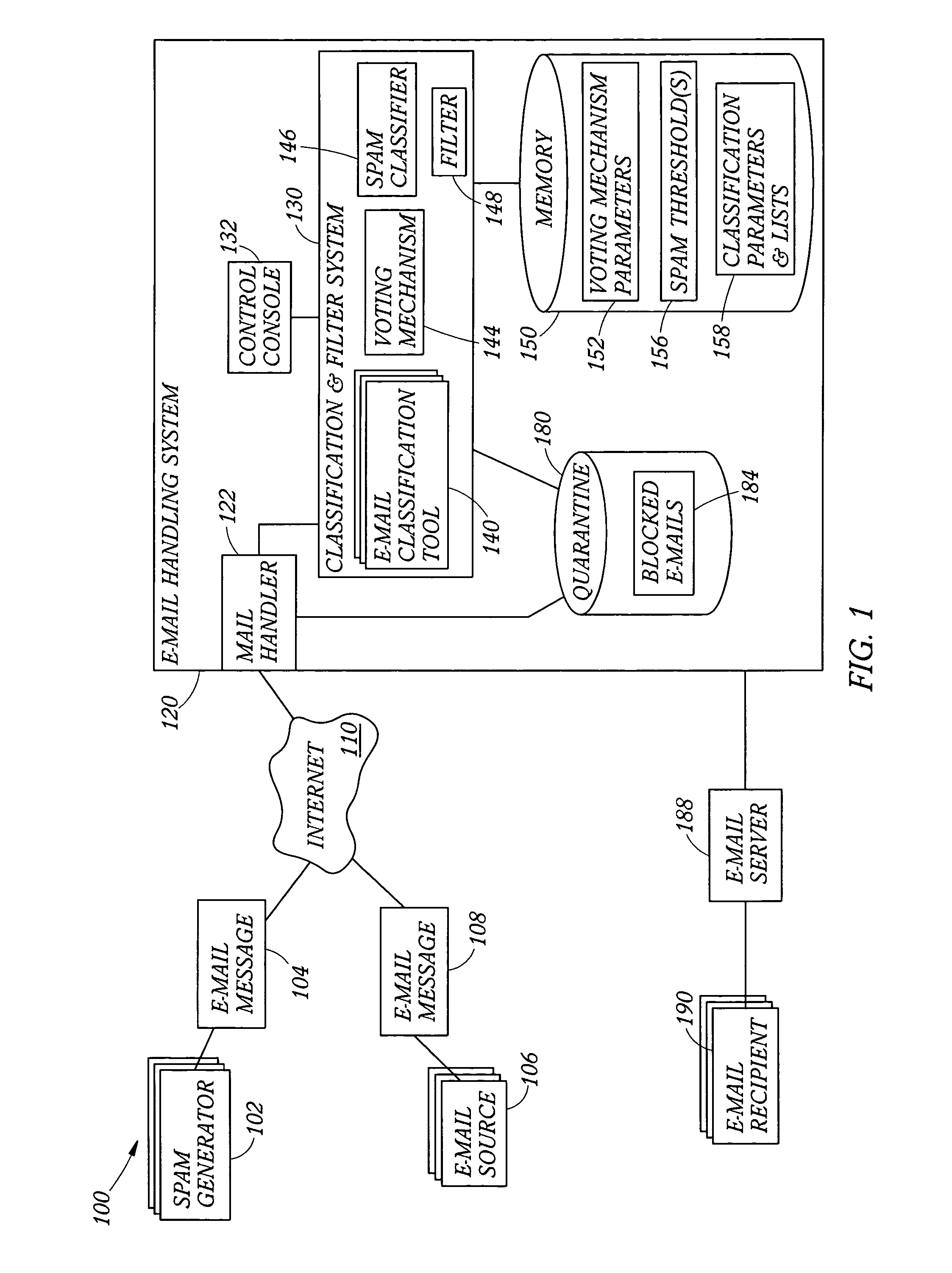

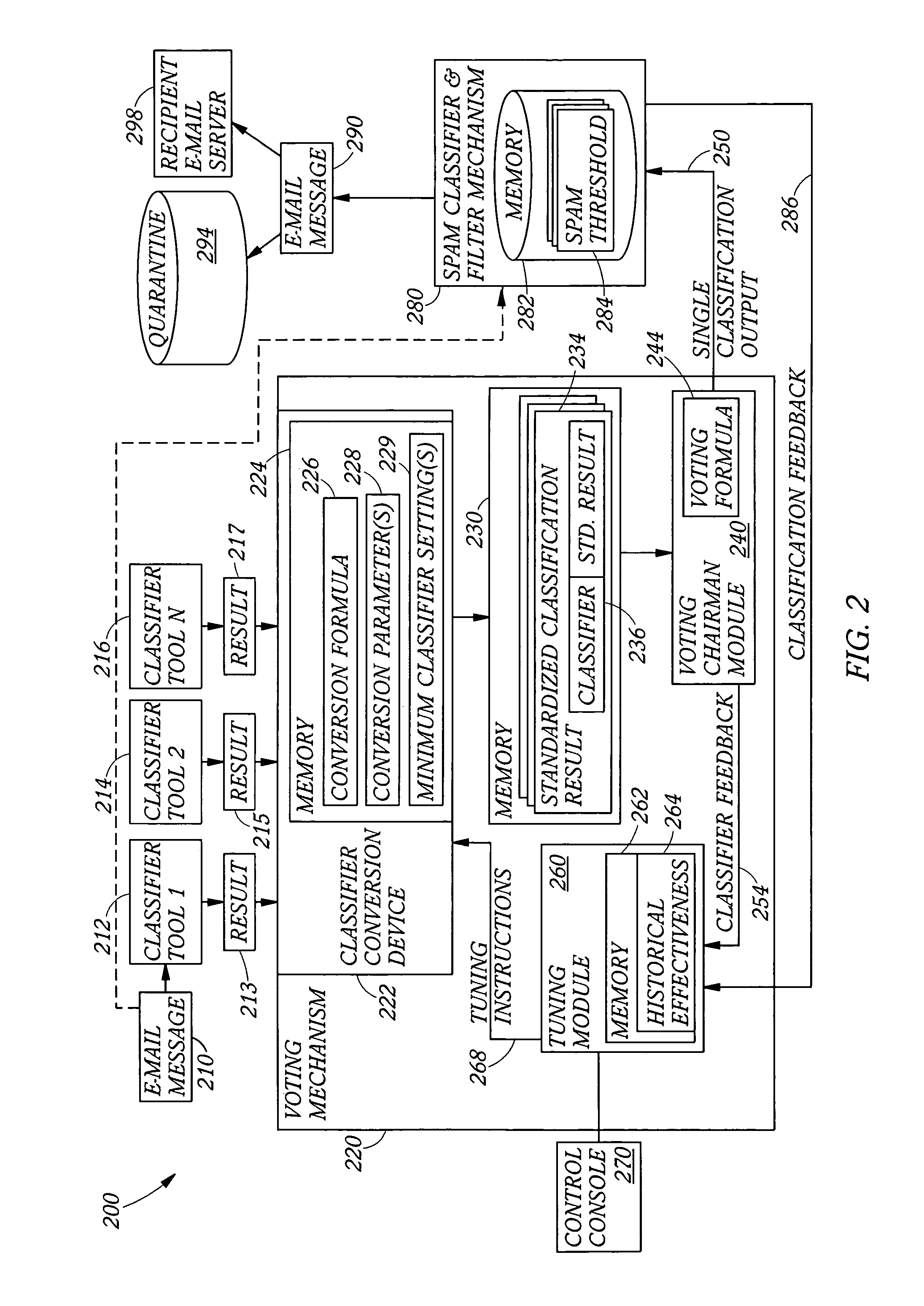

Fuzzy logic voting method and system for classifying e-mail using inputs from multiple spam classifiers

Active | US7051077B2 | Efficient identification | Effective control | Multiple digital computer combinations | Data switching networks | Email classification | Machine learning

A method, and corresponding system, for identifying e-mail messages as being unwanted junk or spam. The method includes converting the outputs of a set of e-mail classification tools into a standardized format, such as a probability having a value between zero and one. The standardized outputs of the classification tools are then input to a voting mechanism which uses a voting algorithm based on fuzzy logic to combine the standardized outputs into a single classification result. The use of a fuzzy logic algorithm creates a more useful result as the classifier results are not merely averaged. In one embodiment, the single classification result is itself a probability that is provided to a spam classifier or comparator that functions to compare the single classification result to a spam threshold value and based on the comparison to classify the e-mail message as spam or not spam.

Owner:MUSARUBRA US LLC
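
The abstract above does not give the voting algorithm itself, so the following sketch substitutes a simple compensatory fuzzy aggregation: a geometric blend of a fuzzy AND (product) and a fuzzy OR (probabilistic sum). The function names, the `gamma` blend parameter, and the 0.5 spam threshold are assumptions made for illustration.

```python
import math


def to_probability(score, low, high):
    """Standardize a raw tool score into [0, 1] by clamped linear scaling."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (score - low) / (high - low)))


def fuzzy_vote(probabilities, gamma=0.5):
    """Combine standardized classifier outputs into one value in [0, 1].

    gamma = 0 gives a pure fuzzy AND (product); gamma = 1 gives a pure
    fuzzy OR (probabilistic sum); values in between compensate.
    """
    if not probabilities:
        raise ValueError("at least one classifier output is required")
    and_part = math.prod(probabilities)
    or_part = 1.0 - math.prod(1.0 - p for p in probabilities)
    return (and_part ** (1.0 - gamma)) * (or_part ** gamma)


def is_spam(probabilities, threshold=0.5, gamma=0.5):
    """Compare the single combined result with a spam threshold."""
    return fuzzy_vote(probabilities, gamma) >= threshold
```

Unlike a plain average, this combination lets strong agreement among the tools push the result toward 0 or 1.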

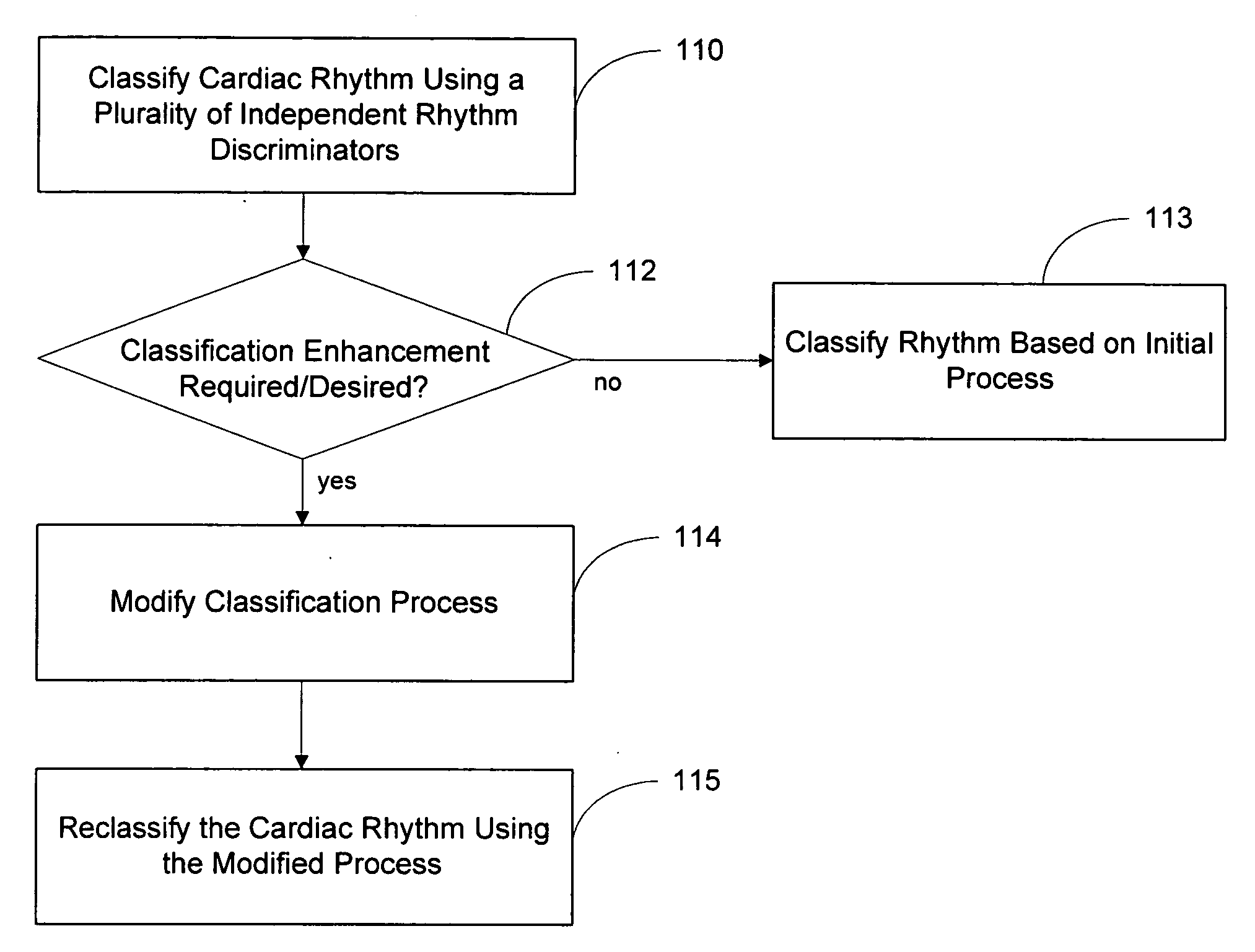

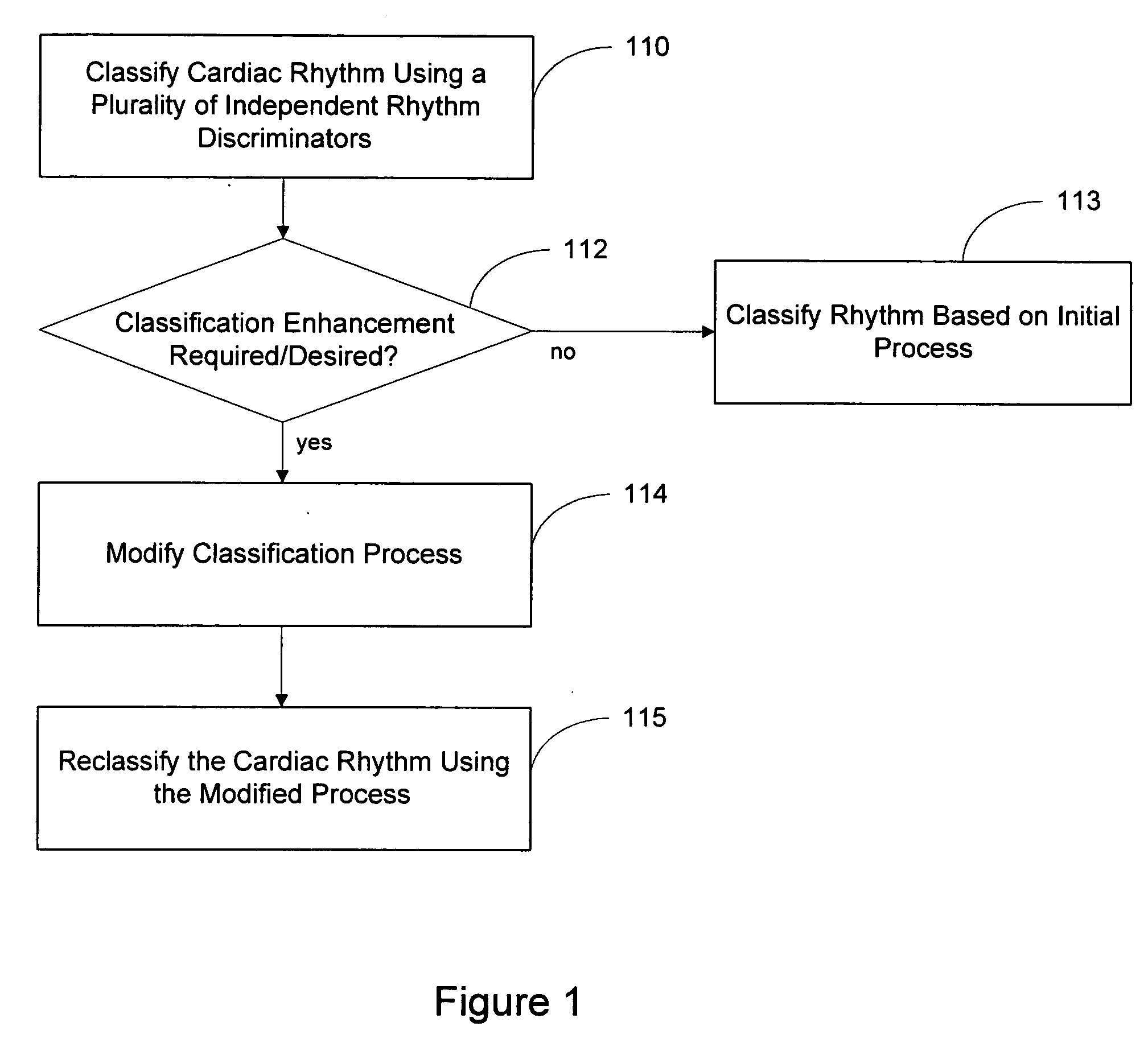

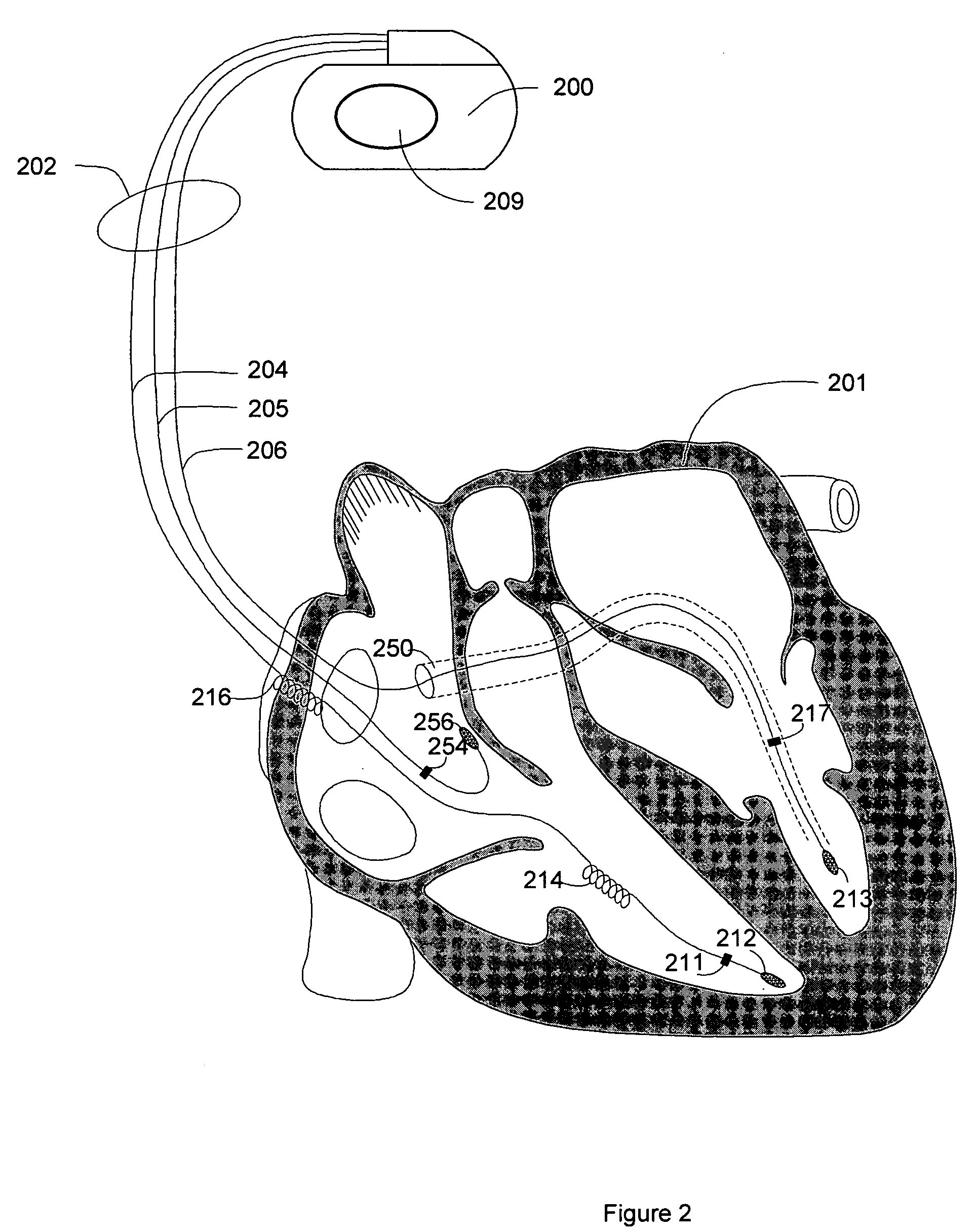

Blending cardiac rhythm detection processes

Active | US20060217621A1 | Easy to classify | Rhythm classification | Electrotherapy | Electrocardiography | Process systems | Discriminator

Systems and methods are described for classifying a cardiac rhythm. A cardiac rhythm is classified using a classification process that includes a plurality of cardiac rhythm discriminators. Each rhythm discriminator provides an independent classification of the cardiac rhythm. The classification process is modified if the modification is likely to produce enhanced classification results. The rhythm is reclassified using the modified classification process.

Owner:CARDIAC PACEMAKERS INC

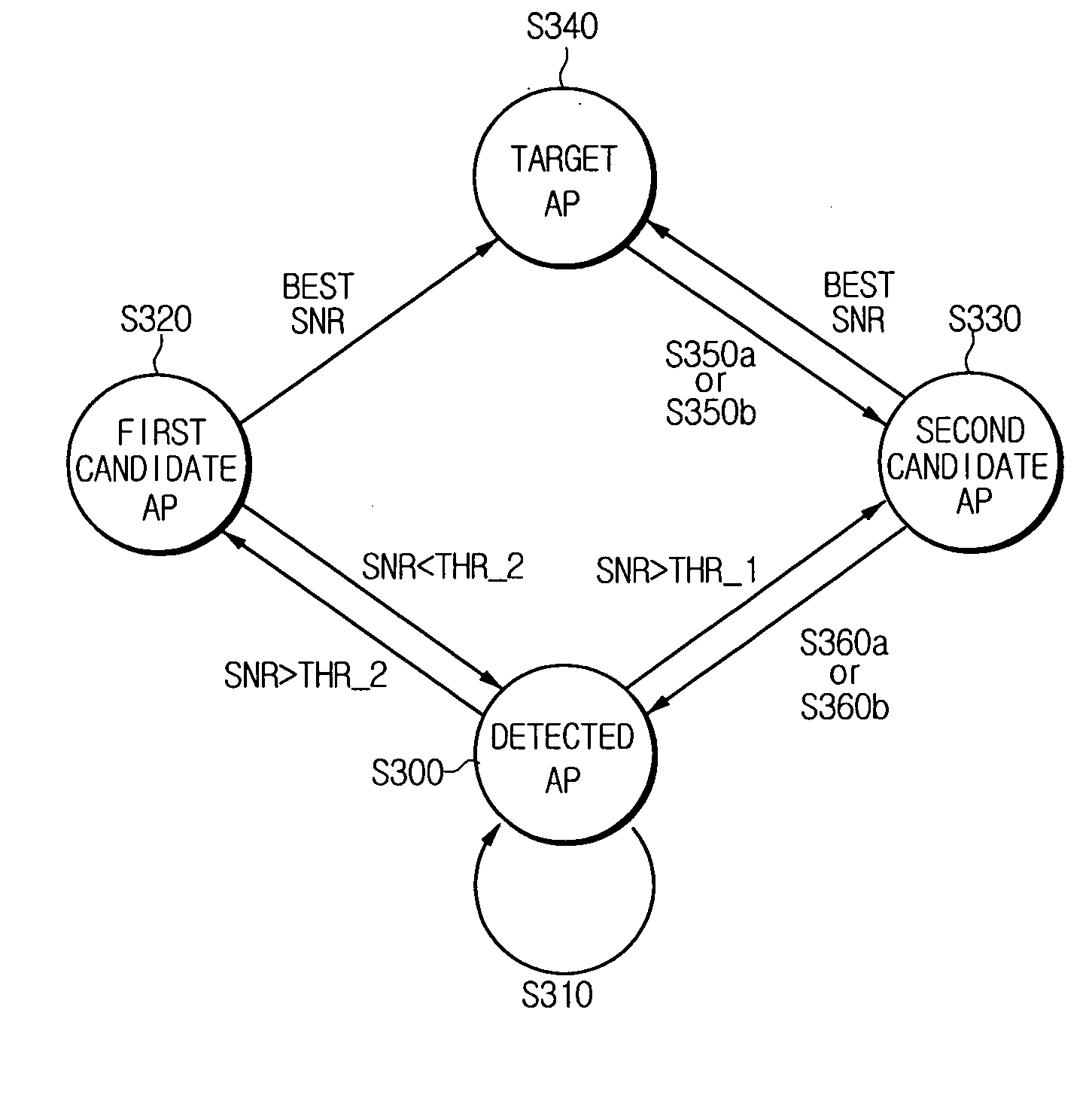

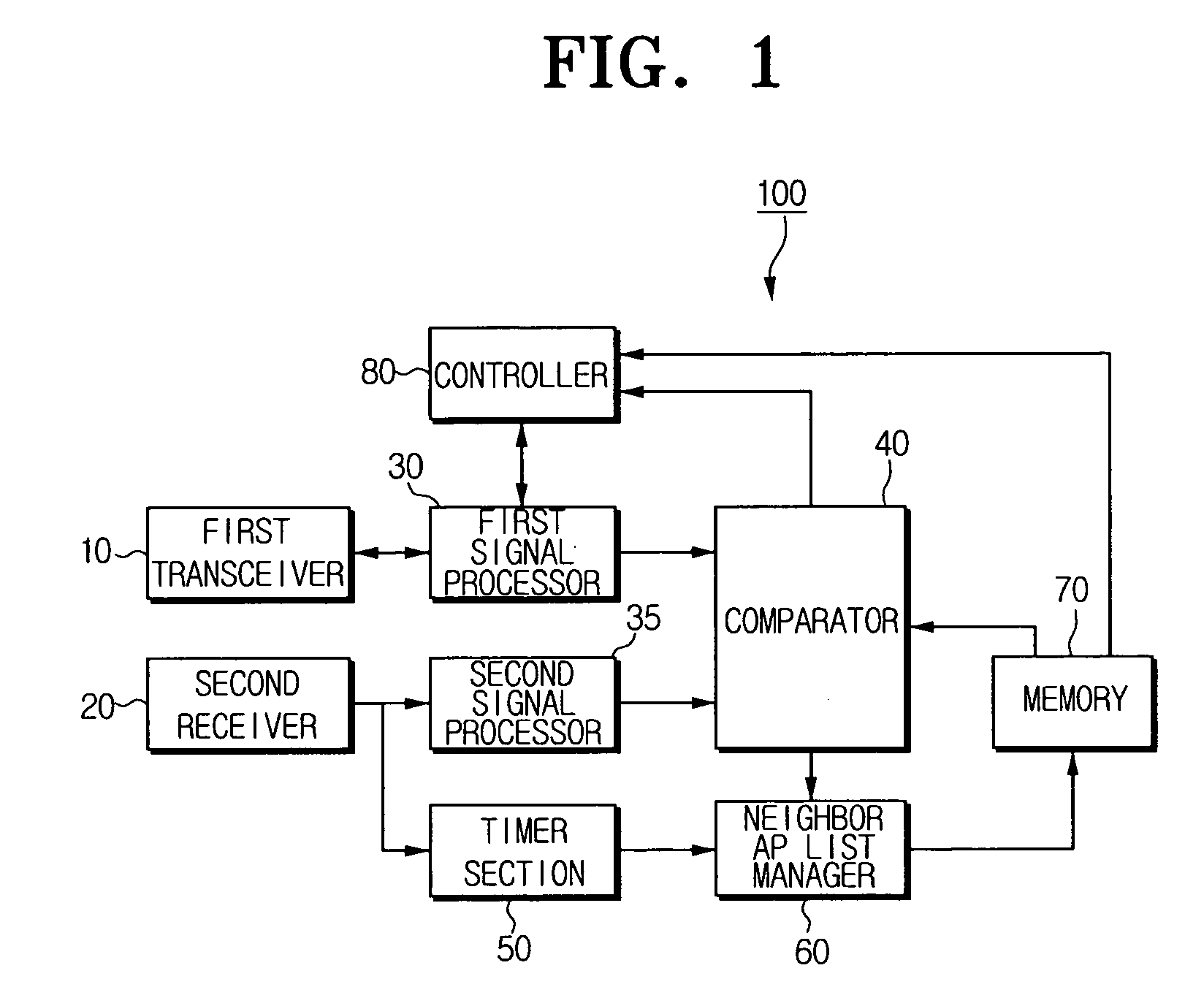

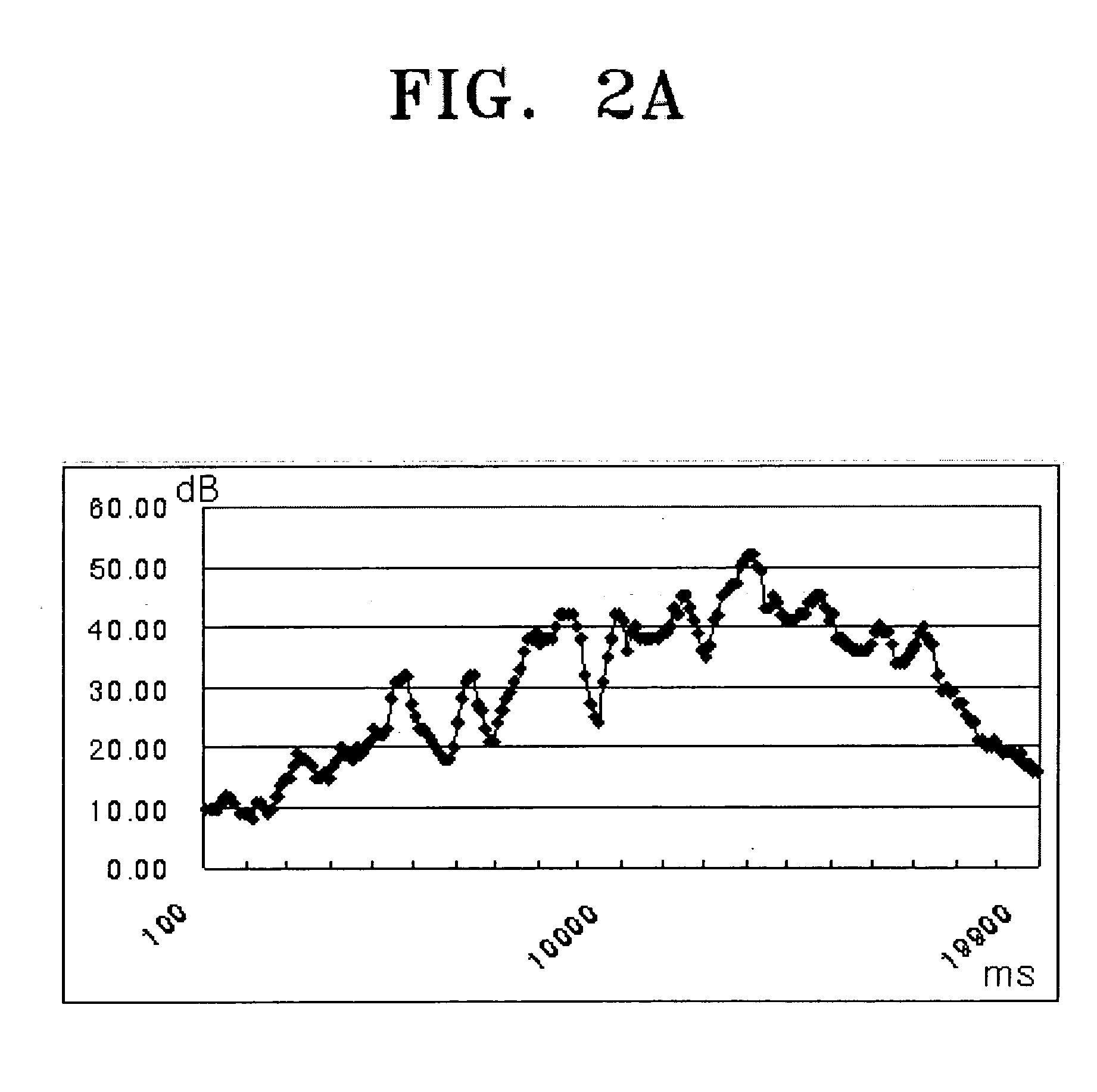

Fast handover method optimized for IEEE 802.11 Networks

Active | US20050255847A1 | Method is fast | Quick service | Network topologies | Data switching by path configuration | Beacon frame | Radio channel

A fast handover method optimized for IEEE 802.11 networks. In a wireless local area system including a mobile terminal and at least two wireless access points (APs) that communicate with the mobile terminal over a unique radio channel, the fast handover method includes receiving a beacon frame signal from the serving AP and the neighbor APs of the mobile terminal; generating a first signal to determine a state of each of the neighbor APs based on the beacon frame signal received from each of the neighbor APs; comparing the first signal with predefined thresholds, classifying the neighbor APs into a detected AP, a candidate AP, and a target AP according to a result of the comparison, and storing the classification result in a neighbor AP list; and selecting an AP for the handover based on the classification result in the neighbor AP list.

Owner:SAMSUNG ELECTRONICS CO LTD
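
The threshold-based classification of neighbor APs in the abstract above can be sketched as below. The abstract does not say what the "first signal" is or give threshold values, so this example assumes a signal-strength-like number in dBm and two hypothetical thresholds; all function names are illustrative.

```python
def classify_ap(signal, candidate_threshold, target_threshold):
    """Map one AP's signal value to 'detected', 'candidate', or 'target'."""
    if signal >= target_threshold:
        return "target"
    if signal >= candidate_threshold:
        return "candidate"
    return "detected"


def build_neighbor_list(beacon_signals, candidate_threshold=-75.0,
                        target_threshold=-60.0):
    """Classify every neighbor AP and store the result in a neighbor AP list."""
    return {
        ap: classify_ap(signal, candidate_threshold, target_threshold)
        for ap, signal in beacon_signals.items()
    }


def select_handover_ap(neighbor_list, beacon_signals):
    """Pick the strongest AP classified as 'target', or None if there is none."""
    targets = [ap for ap, cls in neighbor_list.items() if cls == "target"]
    if not targets:
        return None
    return max(targets, key=lambda ap: beacon_signals[ap])
```

Because the list is maintained ahead of time from beacon frames, the handover decision itself reduces to a lookup.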

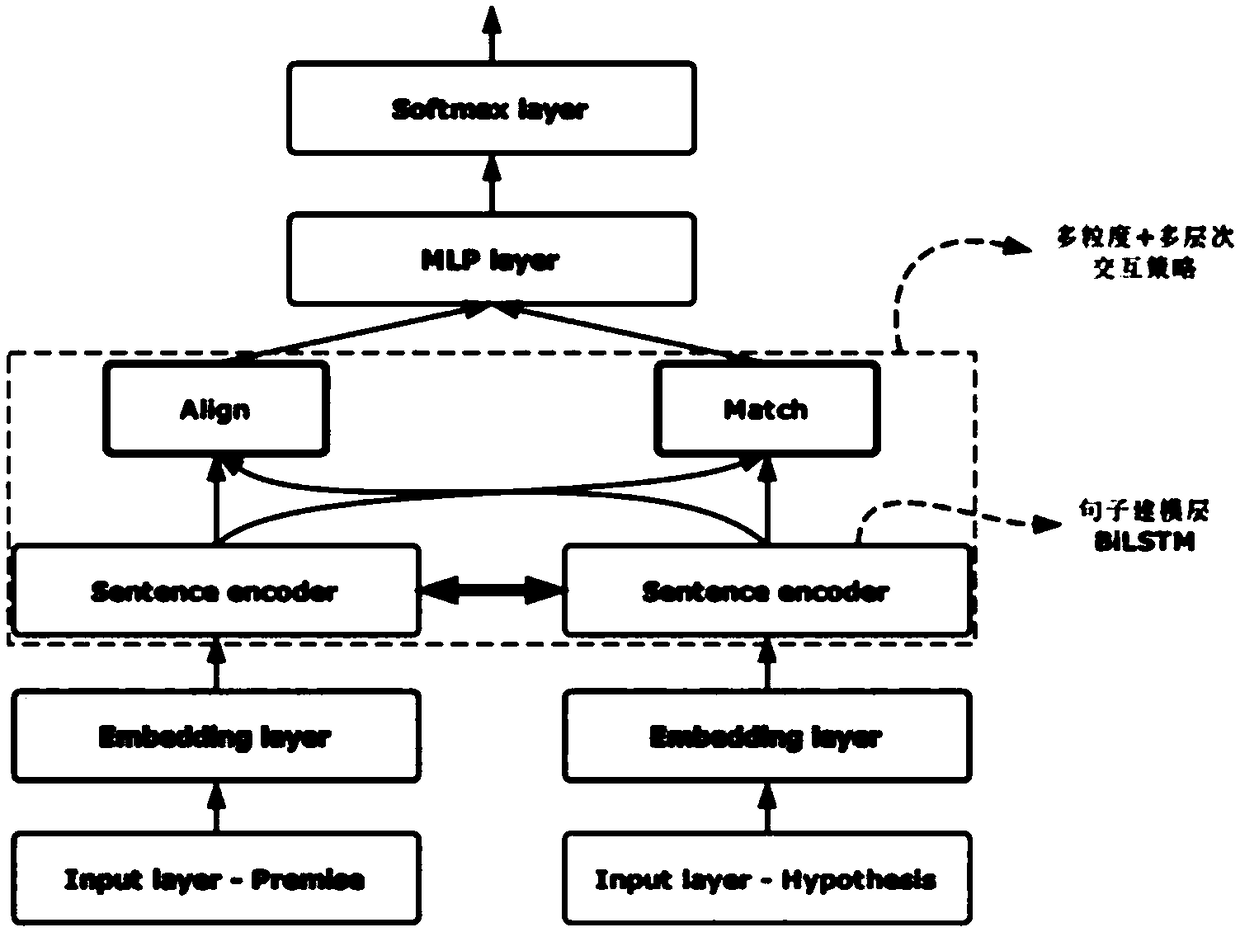

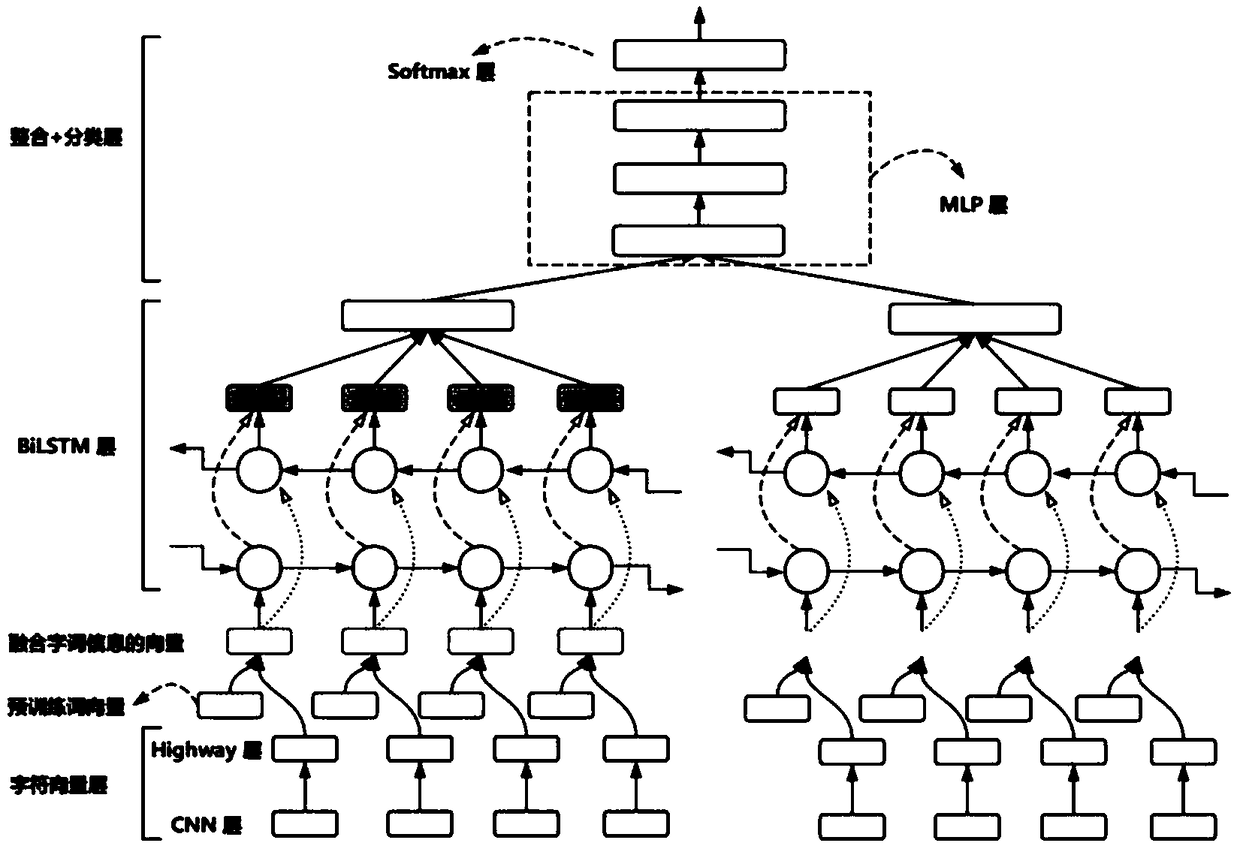

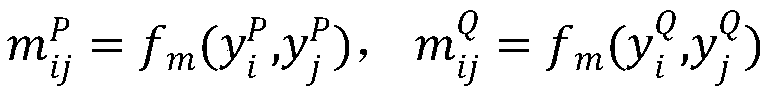

A text implication relation recognition method based on multi-granularity information fusion

Active | CN109299262A | Small granularity | Quality improvement | Semantic analysis | Character and pattern recognition | Feature learning | Granularity

The present invention provides a text implication relation recognition method that fuses multi-granularity information, and proposes a modeling method that fuses multi-granularity information and models the interaction between characters, between words, and between words and sentences. The invention first uses a convolutional neural network and a Highway network layer in the character vector layer to build a character-level word vector model, and concatenates its output with word vectors pre-trained by GloVe. The sentence modeling layer then uses a bidirectional long short-term memory network to model the word vectors that fuse character- and word-level granularity. Next, the sentence matching layer, which incorporates an attention mechanism, performs interaction and matching on the text pairs, and the integration classification layer finally produces the category. After the model is built, it is trained and tested to obtain the text implication recognition and classification results for the test samples. This hierarchical method, which combines the multi-granularity information of characters, words and sentences, brings together the advantages of shallow feature location and deep feature learning in the model, and thereby further improves the accuracy of text implication relation recognition.

Owner:SUN YAT SEN UNIV +1

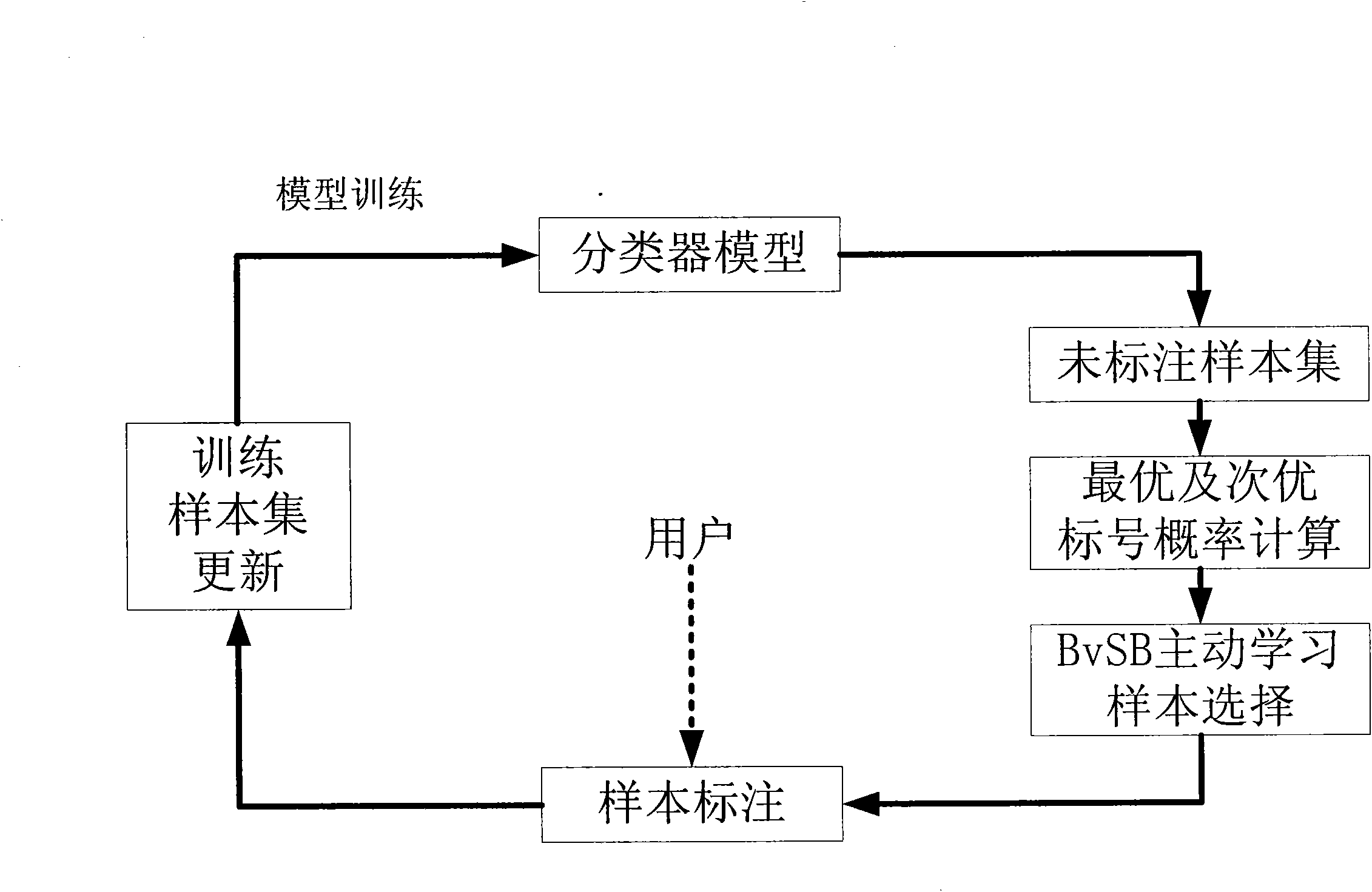

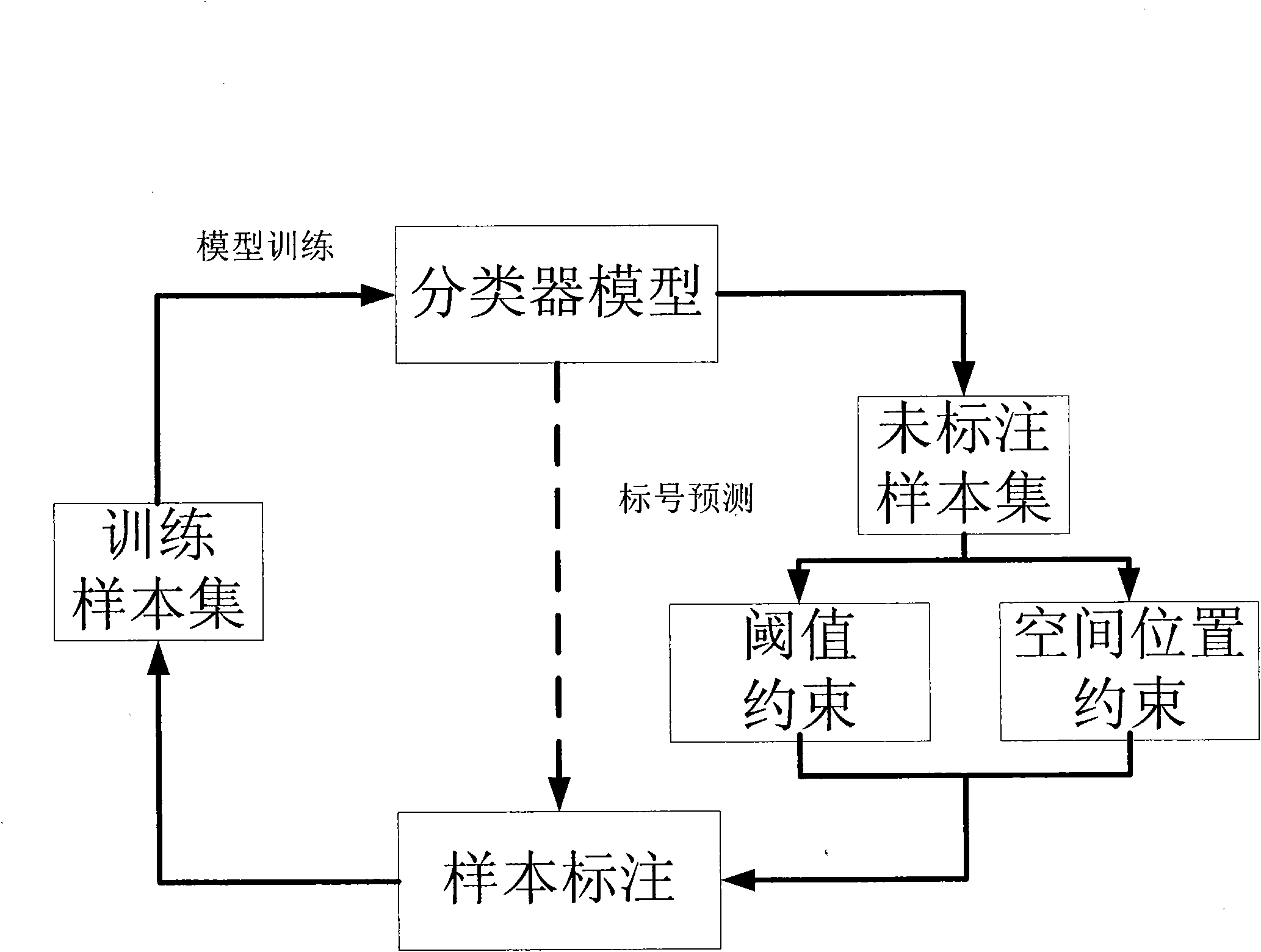

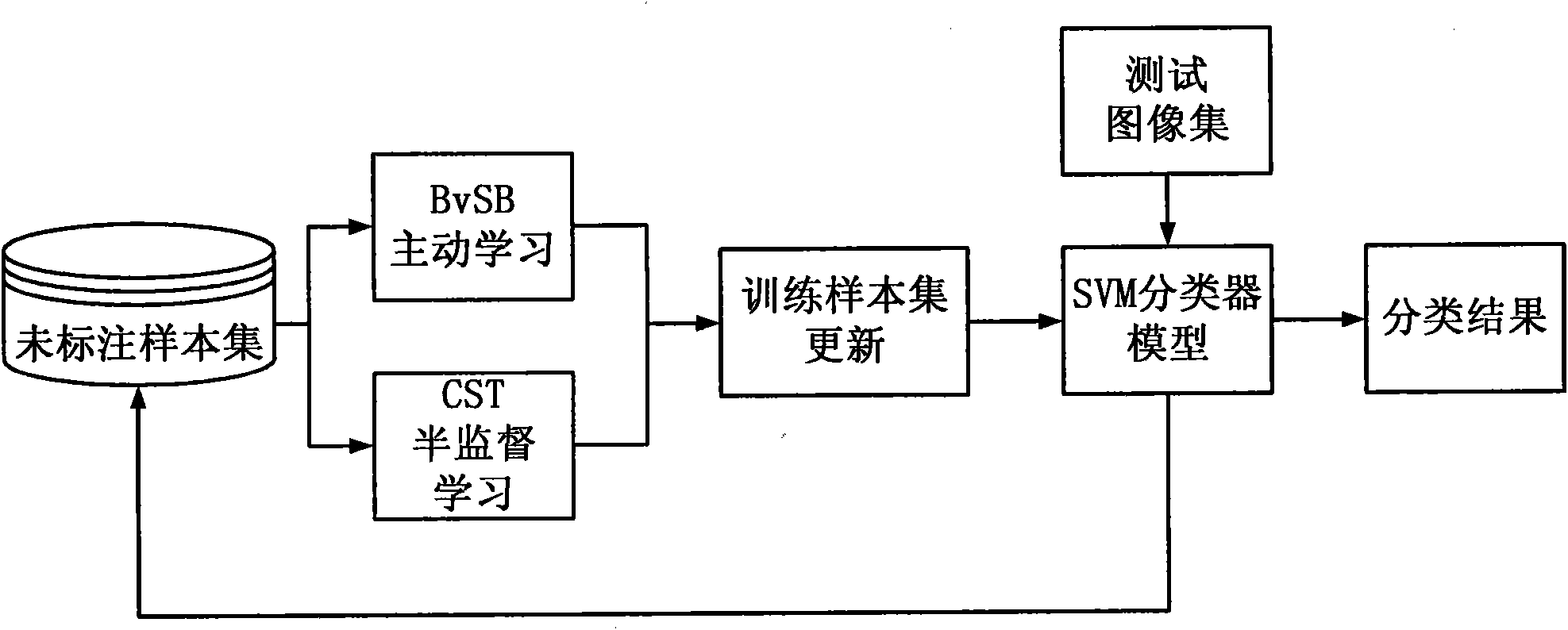

Multiclass image classification method based on active learning and semi-supervised learning

Inactive | CN101853400A | Efficient image classification effect | Does not increase computational burden | Character and pattern recognition | Information processing | Computation complexity

The invention relates to the technical field of image information processing, in particular to a multiclass image classification method based on active learning and semi-supervised learning. The method comprises five steps: initial sample selection and classifier model training; BvSB active learning sample selection; CST semi-supervised learning; updating of the training sample set and classifier model; and iteration of the classification process. Through BvSB active learning sample selection, CST semi-supervised learning and SVM classification, the invention classifies images efficiently with little manual labeling, does not add an excessive computational burden, provides classification results quickly, and also accommodates the classification system's requirements on computational complexity.

Owner:WUHAN UNIV
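
The abstract above does not expand "BvSB". Assuming it refers to the best-versus-second-best margin criterion used in active learning, the sample selection step could look like the sketch below: the samples whose two highest class probabilities are closest are the most ambiguous and are sent for manual labeling. The function names and data layout are hypothetical.

```python
def bvsb_margin(class_probabilities):
    """Difference between the best and second-best class probability."""
    best, second = sorted(class_probabilities, reverse=True)[:2]
    return best - second


def select_for_labeling(unlabeled_probabilities, k=1):
    """Return the ids of the k samples with the smallest BvSB margin.

    unlabeled_probabilities: dict mapping sample id -> list of class
    probabilities produced by the current classifier (at least two classes).
    """
    ranked = sorted(unlabeled_probabilities,
                    key=lambda sid: bvsb_margin(unlabeled_probabilities[sid]))
    return ranked[:k]
```

Samples with a large margin are the ones the classifier is already confident about, so labeling them manually would add little.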

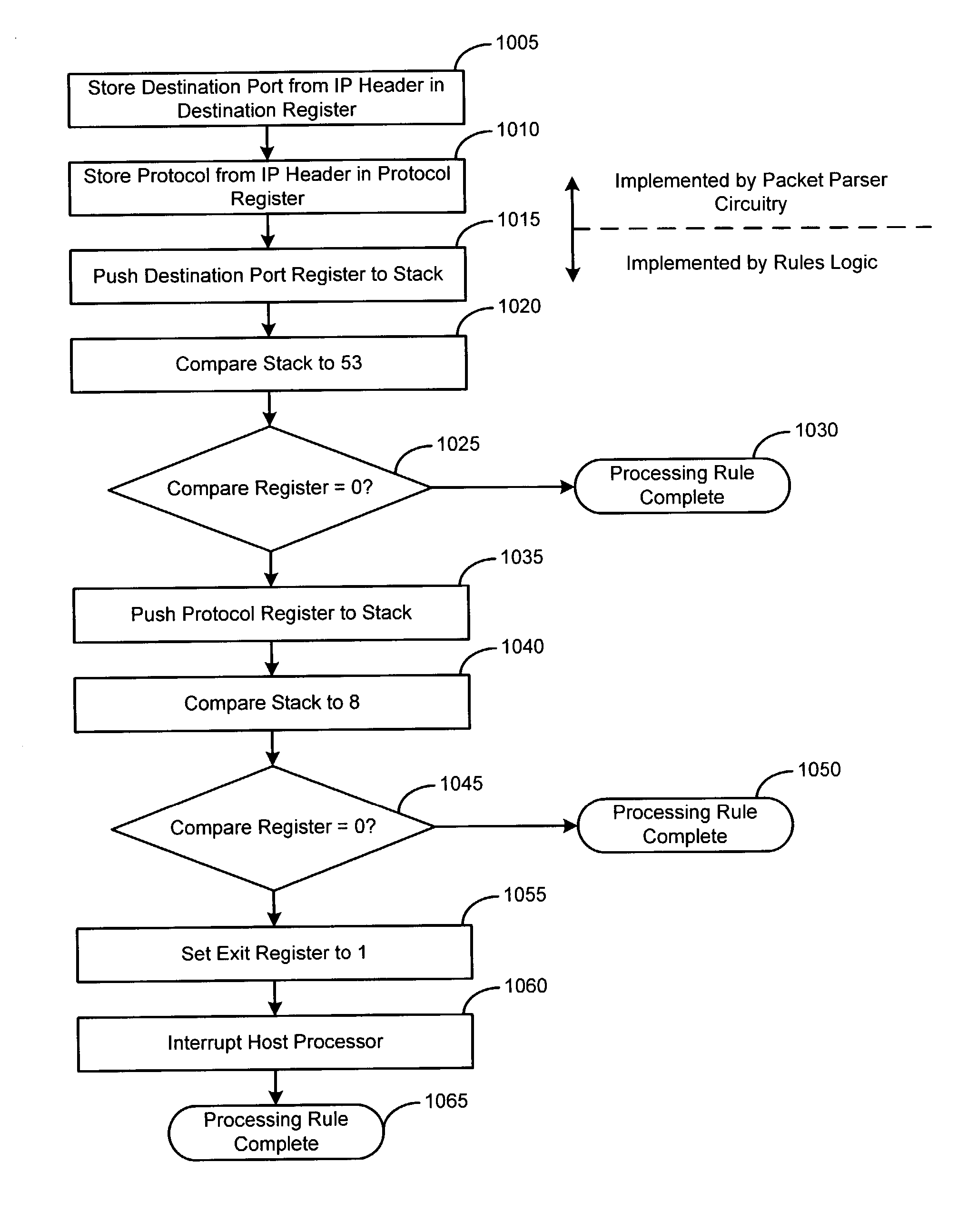

Hardware-based packet filtering accelerator

Inactive | US20040039940A1 | Digital computer details | Concurrent instruction execution | Processor register | Parallel computing

A data packet filtering accelerator processor operates in parallel with a host processor and is arranged on an integrated circuit with the host processor. The accelerator processor classifies data packets by executing a sequence of machine code instructions converted directly from a set of rules. Portions of data packets are passed to the accelerator processor from the host processor. The accelerator processor includes a packet parser circuit for parsing the data packets into relevant data units and storing the relevant data units in memory. A packet analysis circuit executes the sequence of machine code instructions converted directly from the set of rules. The machine code instruction sequence operates on the relevant data units to classify the data packet. The packet analysis circuit returns the results of the classification to the host processor by storing the classification results in a register accessible by the host processor.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

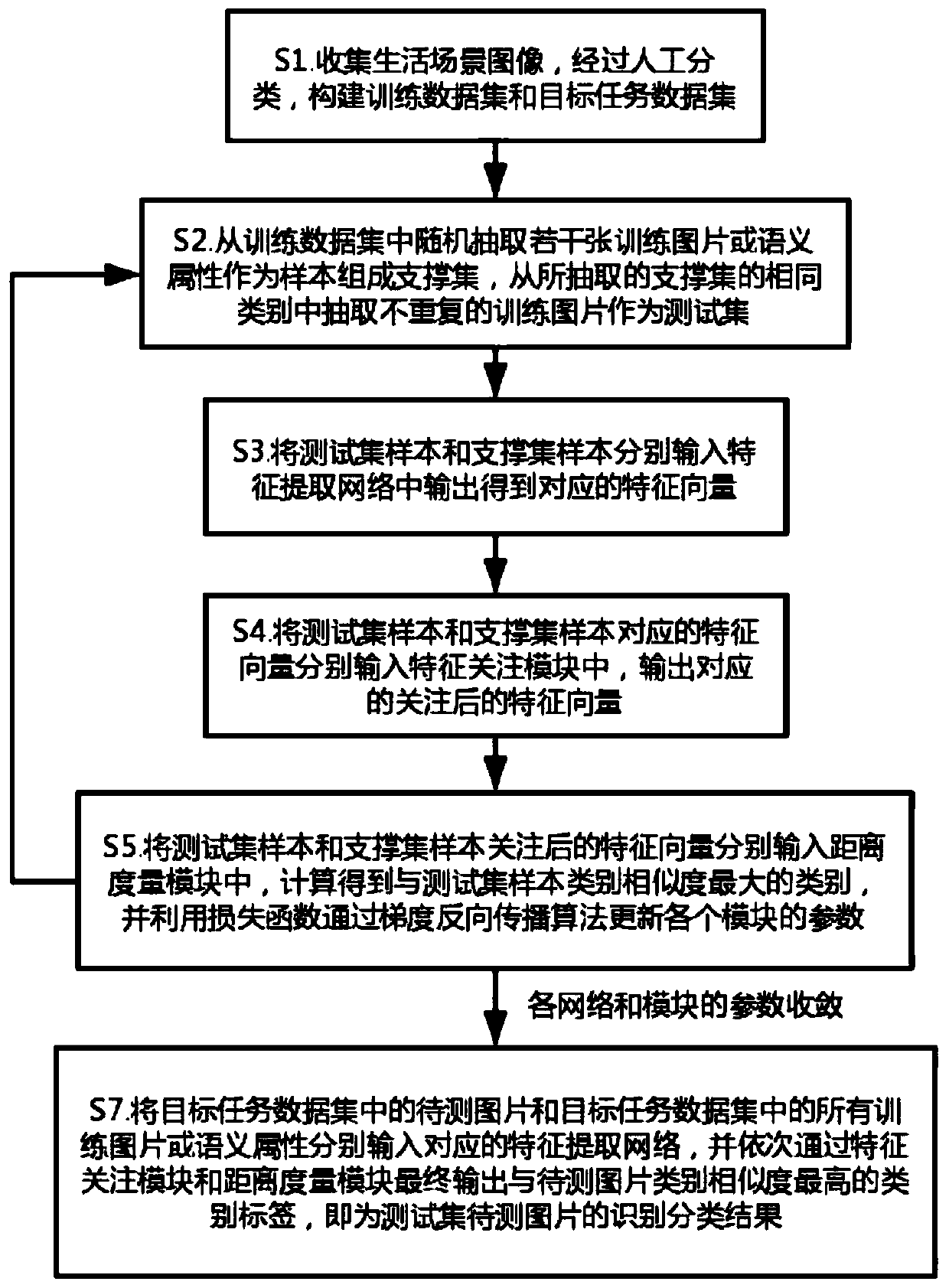

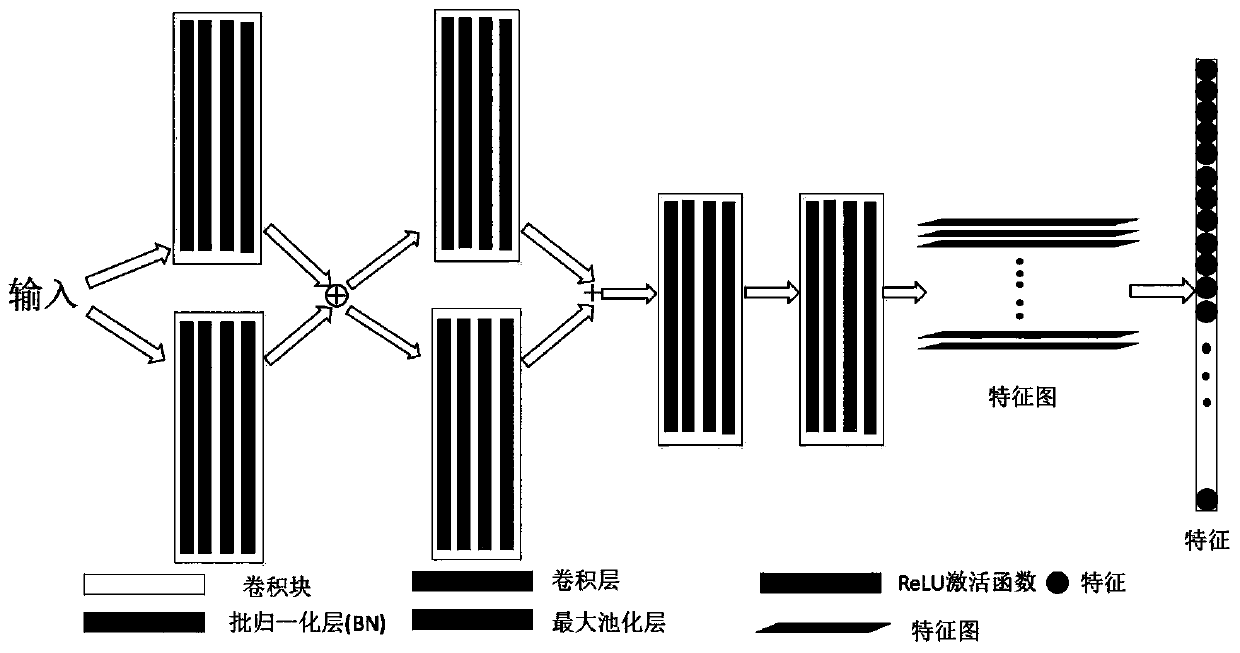

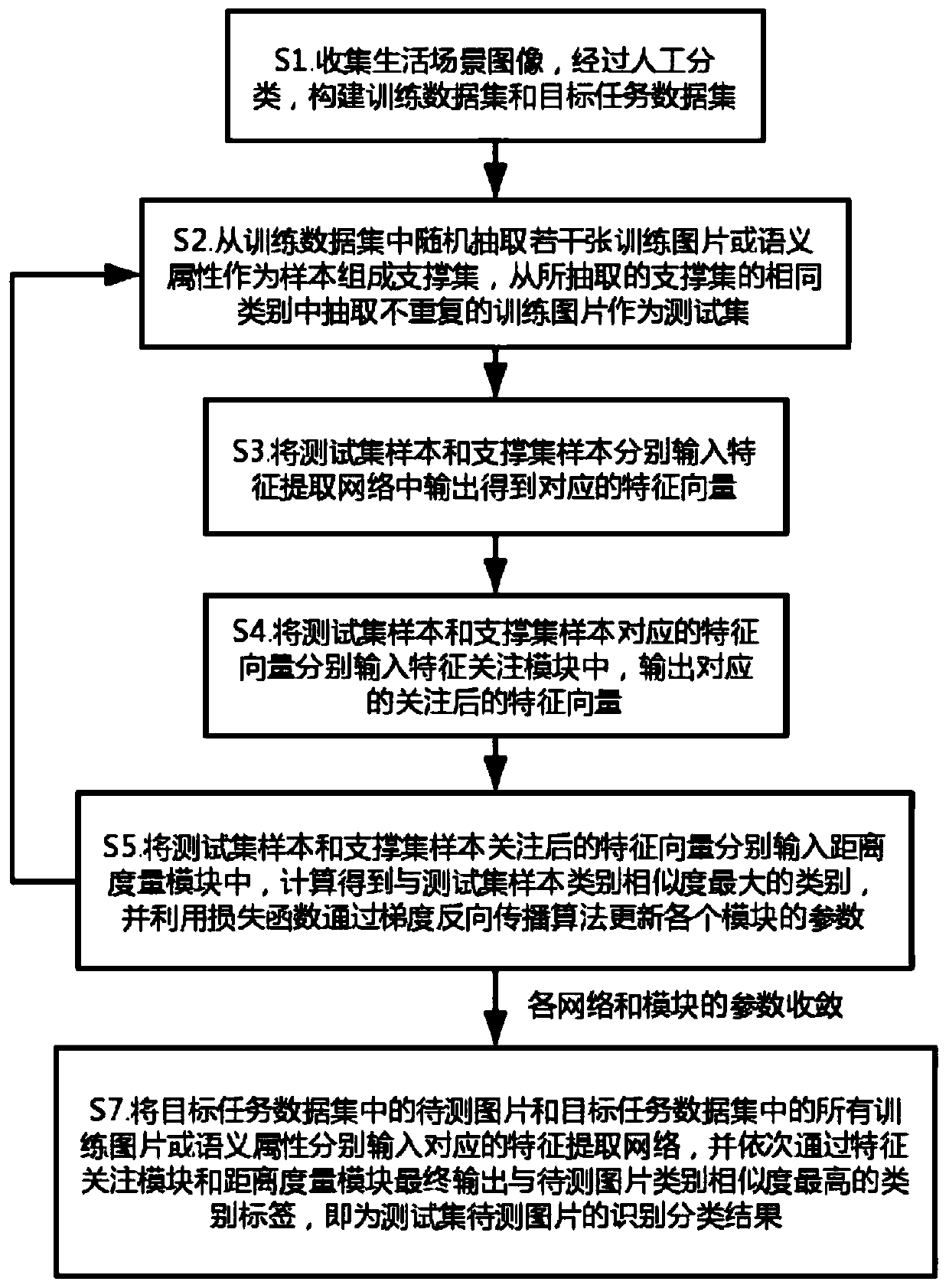

Small sample and zero sample image classification method based on metric learning and meta-learning

Active | CN109961089A | Solve the recognition classification problem | Accurate classification | Character and pattern recognition | Energy efficient computing | Small sample | Data set

The invention relates to the field of computer vision recognition and transfer learning, and provides a small sample and zero sample image classification method based on metric learning and meta-learning, which comprises the following steps: constructing a training data set and a target task data set; selecting a support set and a test set from the training data set; respectively inputting samples of the test set and the support set into a feature extraction network to obtain feature vectors; sequentially inputting the feature vectors of the test set and the support set into a feature attention module and a distance measurement module, calculating the category similarity of the test set sample and the support set sample, and updating the parameters of each module by utilizing a loss function; repeating the above steps until the parameters of the networks of the modules converge, thereby completing the training of the modules; and passing the to-be-tested picture and the training picture in the target task data set sequentially through the feature extraction network, the feature attention module and the distance measurement module, and outputting the category label with the highest category similarity with the test set to obtain a classification result of the to-be-tested picture.

Owner:SUN YAT SEN UNIV
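
The inference step in the abstract above (pick the category whose support samples are most similar to the query) can be illustrated with a deliberately simplified stand-in. The learned feature extraction, attention, and distance modules are replaced here by precomputed feature vectors, a per-class mean vector, and cosine similarity; these substitutions and all names are assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def classify_by_similarity(query, support):
    """Return the label whose mean support vector is most similar to the query.

    support: dict mapping label -> non-empty list of feature vectors.
    """
    best_label, best_score = None, -math.inf
    for label, vectors in support.items():
        dim = len(vectors[0])
        prototype = [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]
        score = cosine_similarity(query, prototype)
        if score > best_score:
            best_label, best_score = label, score
    return best_label
```

With only a handful of support vectors per class, no classifier has to be retrained when a new category is added; a new entry in `support` is enough.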

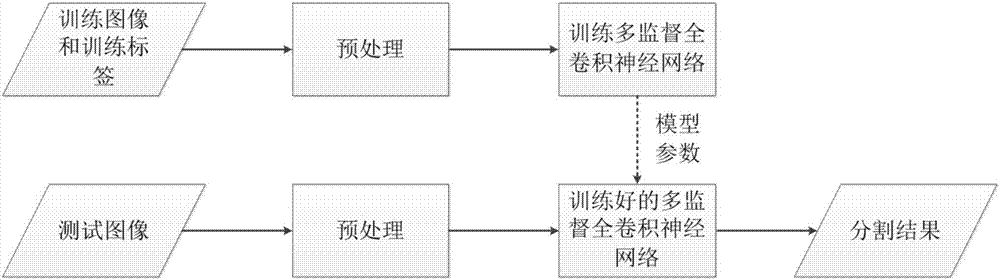

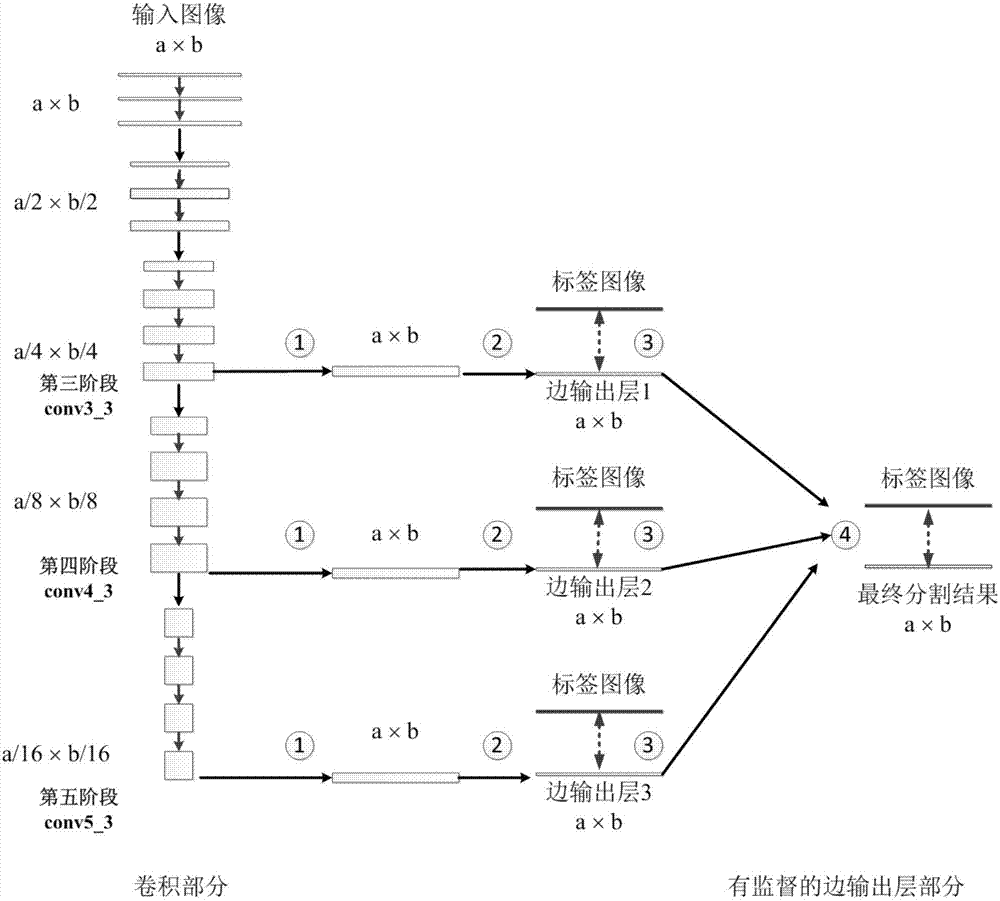

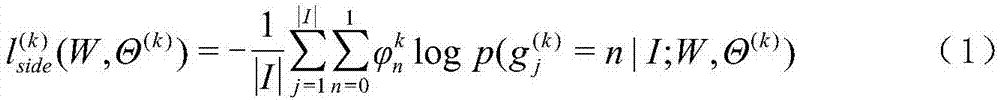

Image segmentation method based on multi-supervision full-convolution neural network

Inactive | CN107169974A | Increase training speed | Improve Segmentation Accuracy | Image enhancement | Image analysis | Multiple edges | Network structure

The invention relates to an image segmentation method based on a multi-supervision fully convolutional neural network. The invention further improves on the fully convolutional neural network and provides a novel network structure. The network structure has three supervised edge output layers, which guide the network to learn multi-scale features and allow it to capture both local and global features of images. To preserve context information in the images, the upsampling parts of the network upsample the output feature maps using multiple feature channels. Finally, a weighted fusion layer fuses the classification results of the multiple edge output layers to obtain the final image segmentation result. The method offers high segmentation precision and fast segmentation speed. In osteosarcoma CT data segmentation, the DSC coefficient of the segmentation result obtained with the provided algorithm reaches 86.88%, which is higher than that of a traditional FCN algorithm.

Owner:UNIV OF SCI & TECH OF CHINA
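
The DSC figure quoted in the abstract above is the Dice similarity coefficient, which for a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|). A minimal sketch of the computation on flattened binary masks follows; the function name and the convention of returning 1.0 for two empty masks are assumptions.

```python
def dice_coefficient(predicted, reference):
    """Dice similarity coefficient of two flattened binary masks."""
    if len(predicted) != len(reference):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, r in zip(predicted, reference) if p and r)
    total = sum(1 for p in predicted if p) + sum(1 for r in reference if r)
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return 2.0 * intersection / total
```

A value of 1.0 means the two masks coincide exactly and 0.0 means they do not overlap at all.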

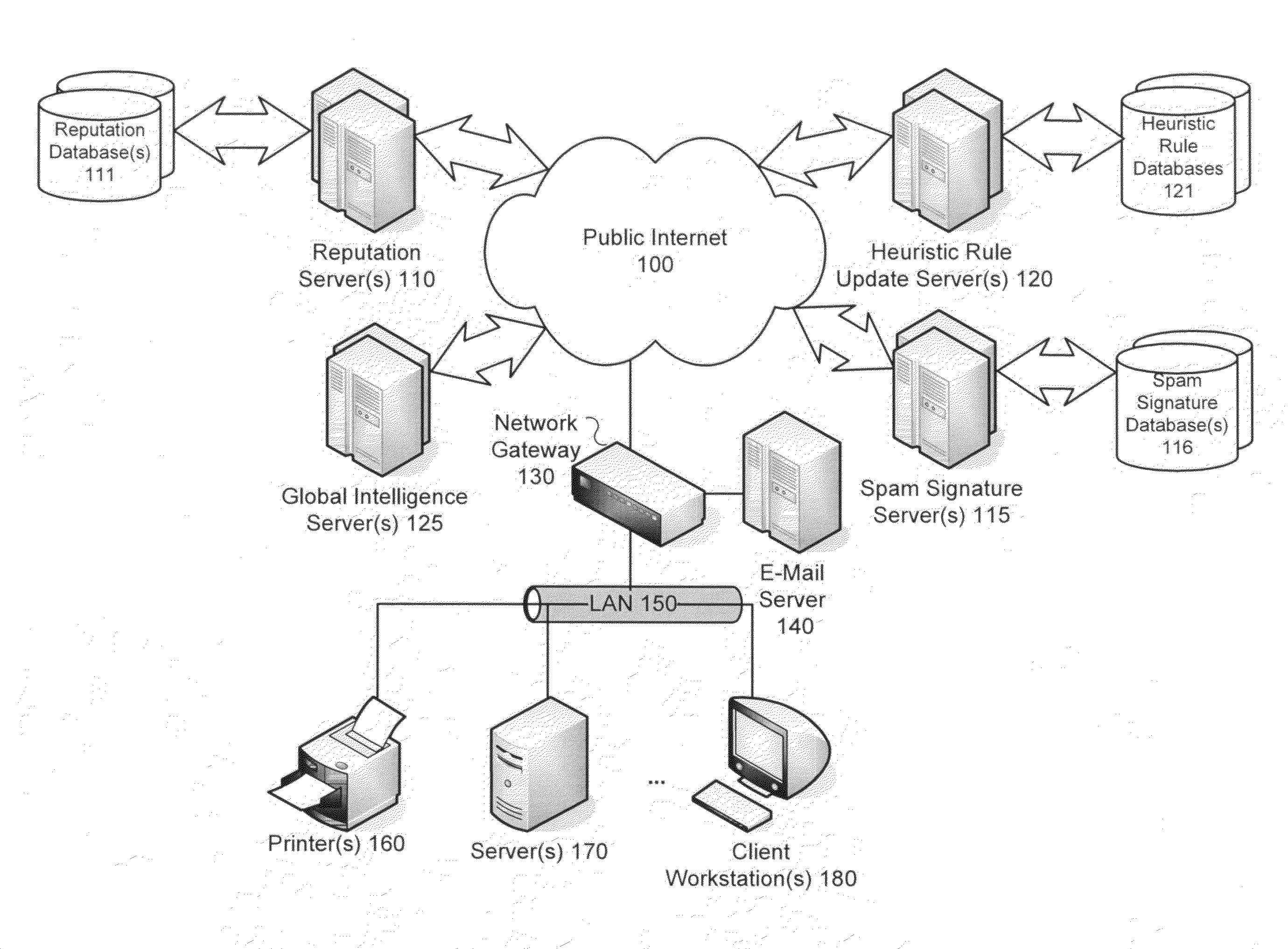

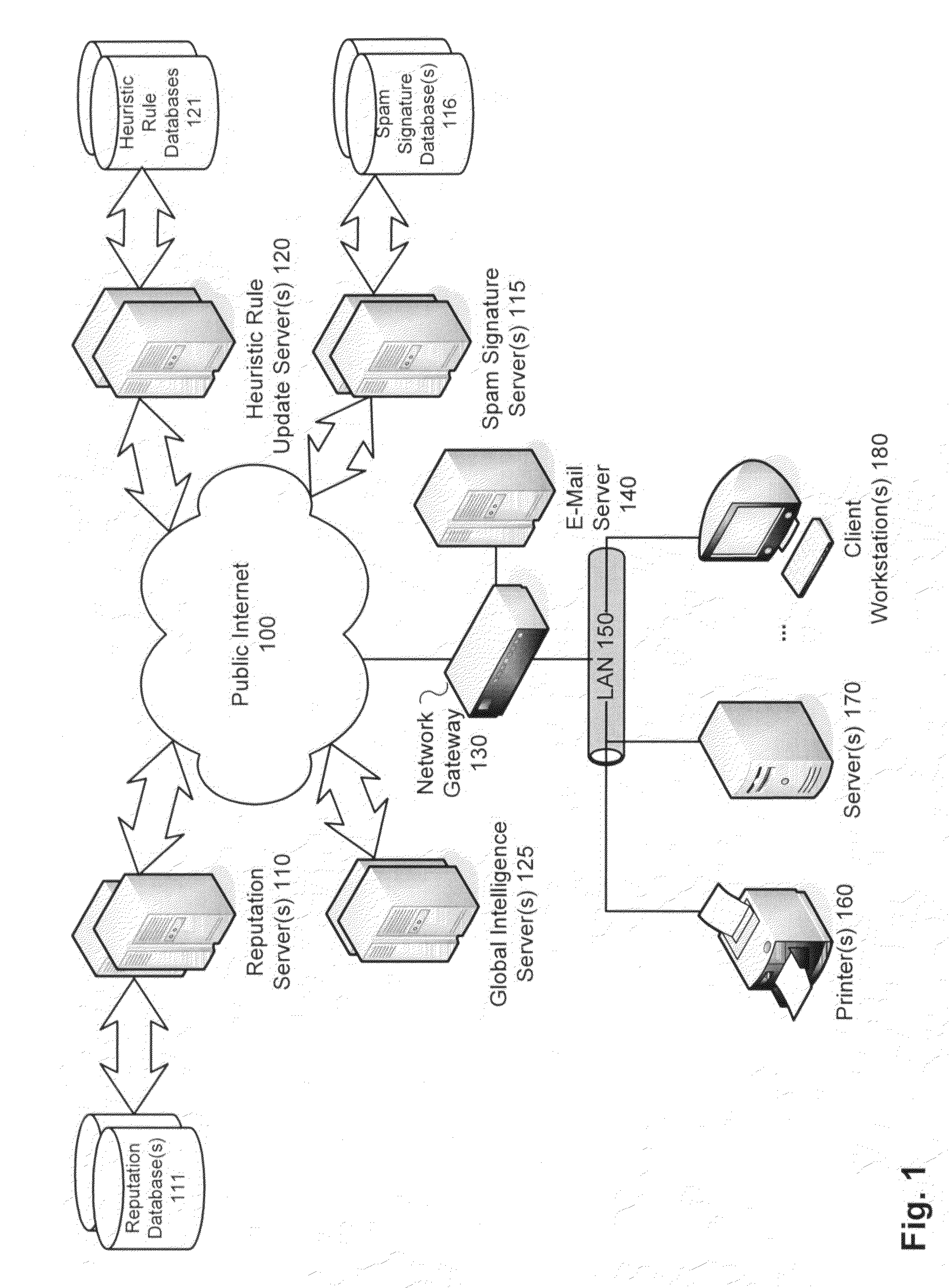

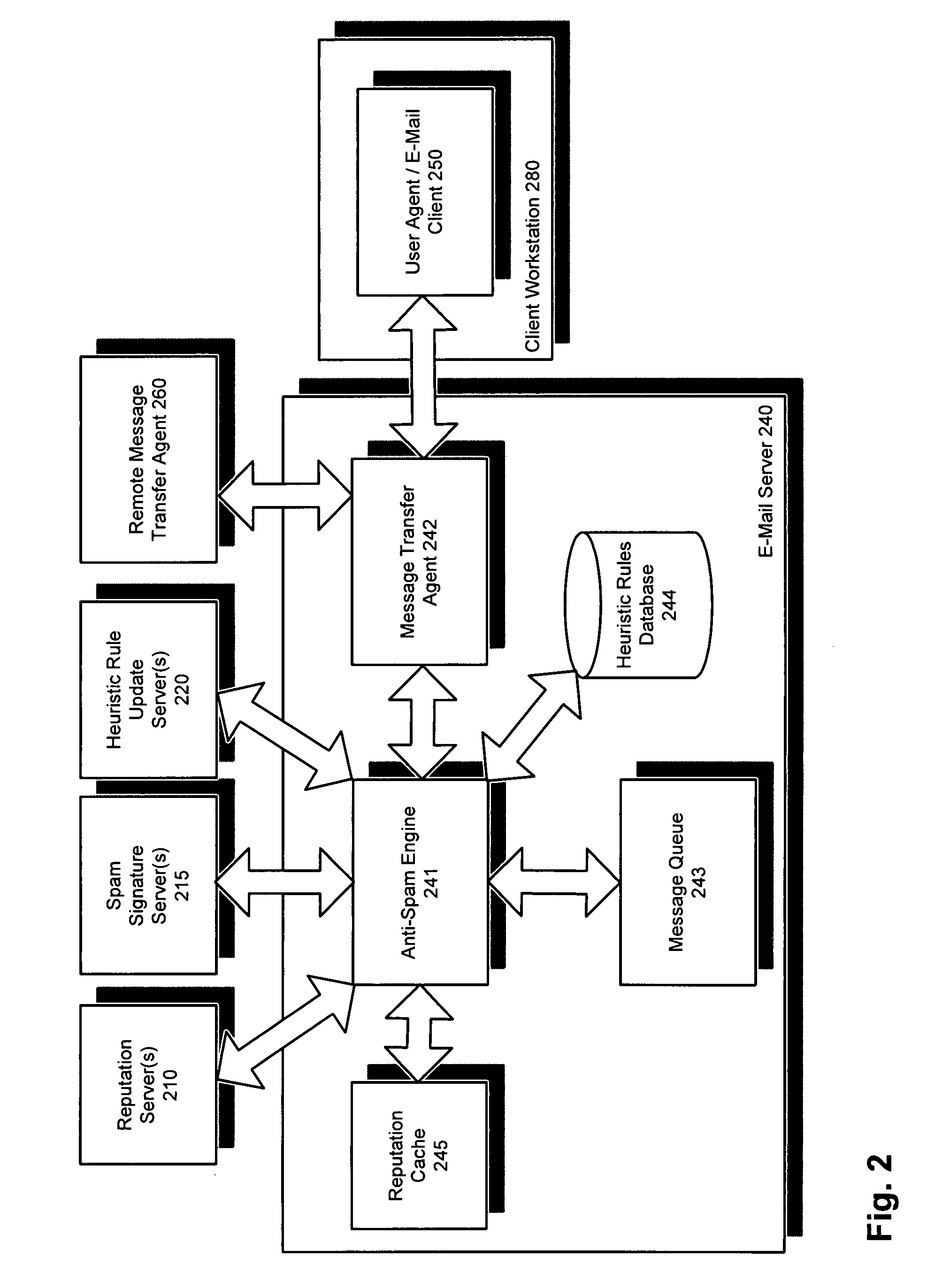

Use of global intelligence to make local information classification decisions

Active | US20090064323A1 | Content analysis accurate | Accurate analysis | Memory loss protection | Unauthorized memory use protection | Decision methods | Electronic information

Methods and systems are provided for delaying local information classification until global intelligence has an opportunity to be gathered. According to one embodiment, an initial information identification process, e.g., an initial spam detection, is performed on received electronic information, e.g., an e-mail message. Based on the initial information identification process, classification of the received electronic information is attempted. If the received electronic information cannot be unambiguously classified as being within one of a set of predetermined categories (e.g., spam or clean), then an opportunity is provided for global intelligence to be gathered regarding the received electronic information by queuing the received electronic information for re-evaluation. The electronic information is subsequently classified by performing a re-evaluation information identification process, e.g., re-evaluation spam detection, which provides a more accurate categorization result than the initial information identification process. The electronic information is then handled in accordance with a policy associated with the categorization result.

Owner:FORTINET
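
The queue-and-re-evaluate flow described in the abstract above can be sketched as follows. The class name `DeferredClassifier`, the two scoring callables, and the numeric thresholds are hypothetical; in particular, how global intelligence is gathered between the two passes is outside this sketch.

```python
from collections import deque


class DeferredClassifier:
    """Classify clear cases immediately and queue ambiguous ones for later."""

    def __init__(self, initial_score, reevaluate_score, low=0.2, high=0.8):
        self.initial_score = initial_score        # callable: message -> score in [0, 1]
        self.reevaluate_score = reevaluate_score  # callable used on the second pass
        self.low = low
        self.high = high
        self.pending = deque()

    def receive(self, message):
        """Return 'spam' or 'clean', or None if the message was queued."""
        score = self.initial_score(message)
        if score >= self.high:
            return "spam"
        if score <= self.low:
            return "clean"
        self.pending.append(message)
        return None

    def reevaluate(self):
        """Classify every queued message with the second-pass scorer."""
        results = []
        while self.pending:
            message = self.pending.popleft()
            label = "spam" if self.reevaluate_score(message) >= 0.5 else "clean"
            results.append((message, label))
        return results
```

The second pass always commits to a label, so nothing stays in the queue after `reevaluate` returns.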

Industrial character identification method based on convolution neural network

Active | CN106650721A | Quick identification | Efficient identification | Neural architectures | Character recognition | Production line | Graphics

The invention provides an industrial character identification method based on a convolutional neural network. The method comprises: establishing character data sets; performing data enhancement and preprocessing on the character data sets; and establishing a CNN (convolutional neural network) integrated model comprising three different individual classifiers. The model is trained in two steps: first, offline training yields an offline training model; second, online training, initialized from the offline training model, is performed on the character data set of a specific production line to obtain an online training model. Preprocessing, character positioning and single-character image segmentation are then performed on a target image. The segmented character images are sent to the trained online training model, which yields the probability values with which each of the three classifiers in the CNN integrated model assigns each single target image to each class. The final decision is made by voting, thereby obtaining the classification result of the test data. With this method, characters on different production lines can be identified rapidly and efficiently.

Owner:吴晓军
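
The final voting step in the abstract above can be sketched as a majority vote over each classifier's most probable class. The abstract does not say how ties are broken, so breaking them by the highest summed probability is an assumption, as are the function name and data layout.

```python
from collections import Counter


def vote(classifier_outputs):
    """Majority vote over per-classifier class probabilities.

    classifier_outputs: list of dicts mapping class label -> probability,
    one dict per individual classifier.
    """
    if not classifier_outputs:
        raise ValueError("at least one classifier output is required")
    # Each classifier votes for its most probable class.
    votes = Counter(max(probs, key=probs.get) for probs in classifier_outputs)
    top_count = max(votes.values())
    tied = [label for label, count in votes.items() if count == top_count]
    if len(tied) == 1:
        return tied[0]
    # Tie: fall back to the class with the highest summed probability.
    return max(tied, key=lambda label: sum(p.get(label, 0.0)
                                           for p in classifier_outputs))
```

With three classifiers and more than two character classes, a three-way split is possible, which is why the tie-break is needed.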

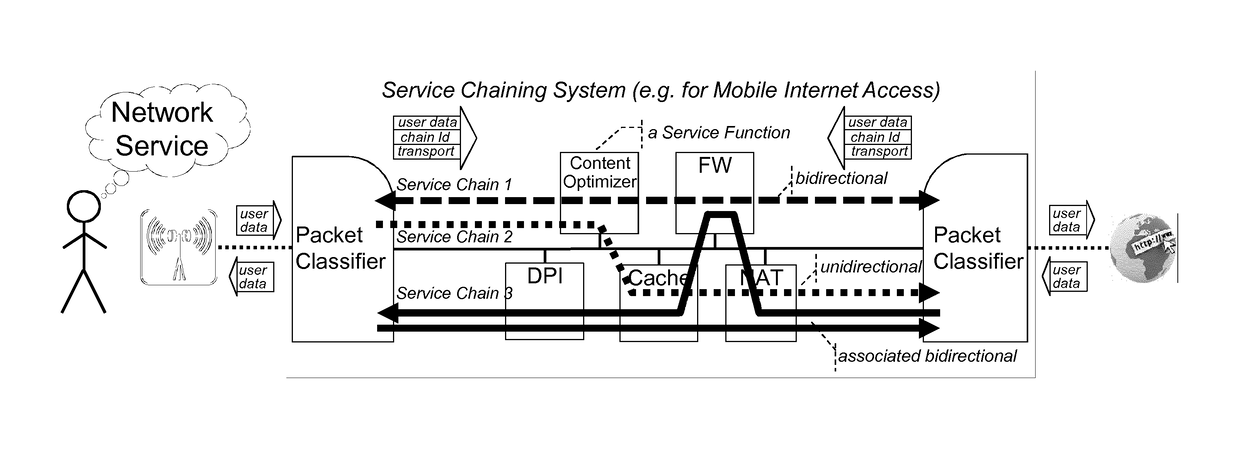

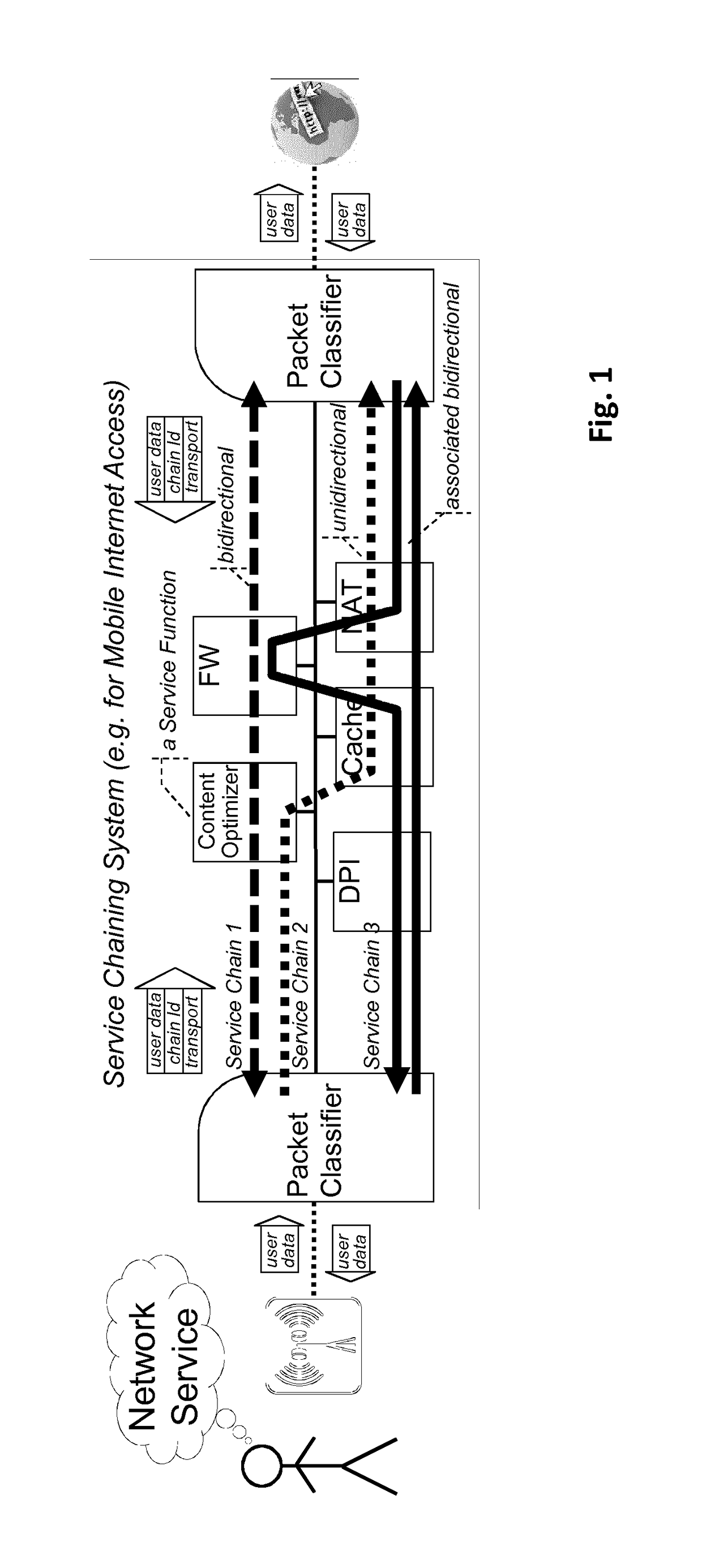

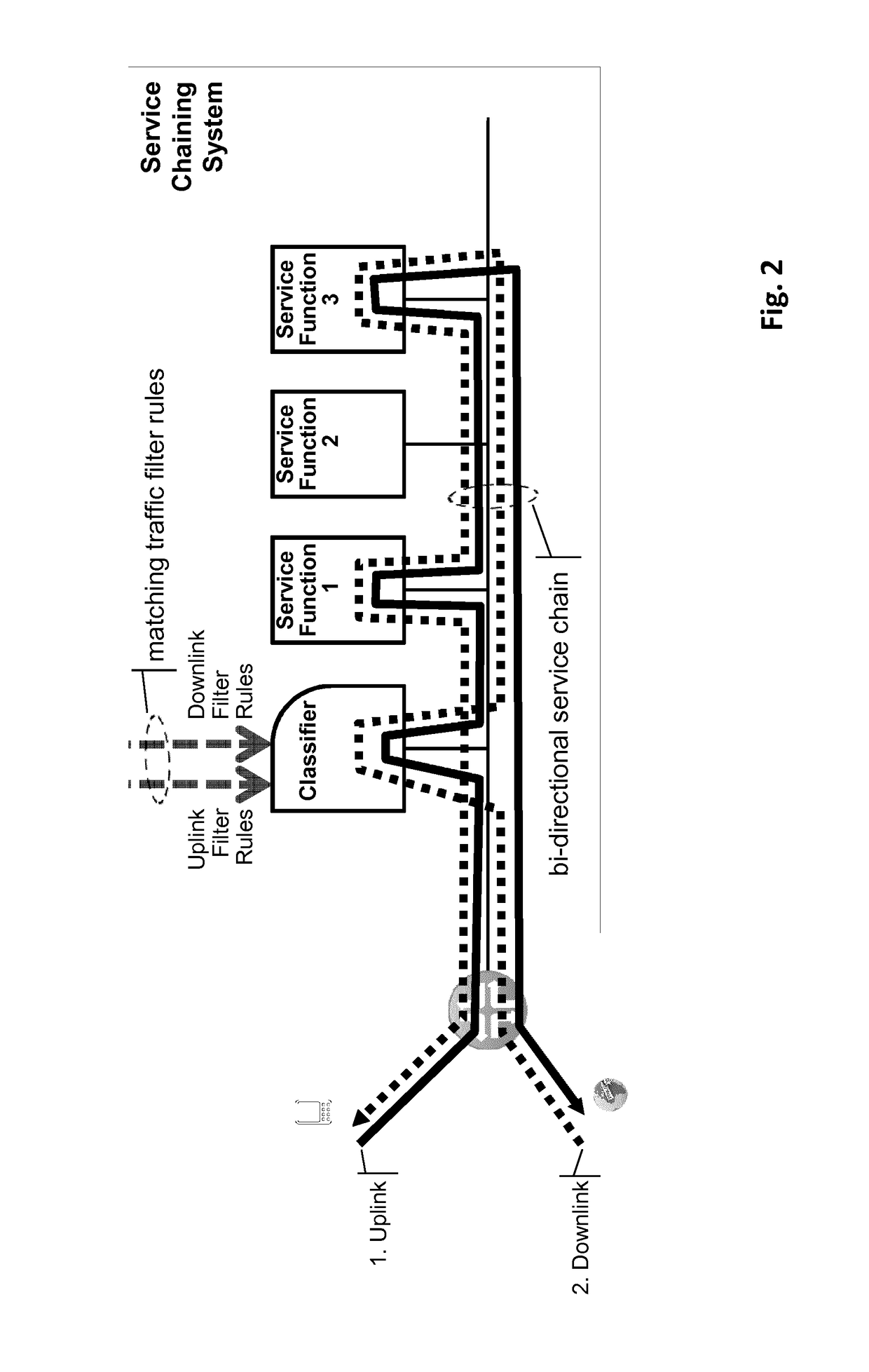

Chaining of network service functions in a communication network

In an apparatus of a communication network first packets of a data flow in a first direction are acquired, each having a first service chain identifier identifying a first chain of services which have been applied to the first packets in the first direction of the data flow. The first service chain identifier represents a classification result of classification functions used for selecting the first chain of services. Based on the first service chain identifier, a packet filter is calculated, which is associated with a second chain of services to be applied to second packets of the data flow in a second direction of the data flow when the second packets enter the communication network in the second direction.

Owner:NOKIA SOLUTIONS & NETWORKS OY

Vehicle information display system

Active | US7561966B2 | Much information without hindering a driver's visual field | Safe and reliable | Instruments for road network navigation | Analogue computers for traffic | Head-up display | Driver/operator

Owner:DENSO CORP

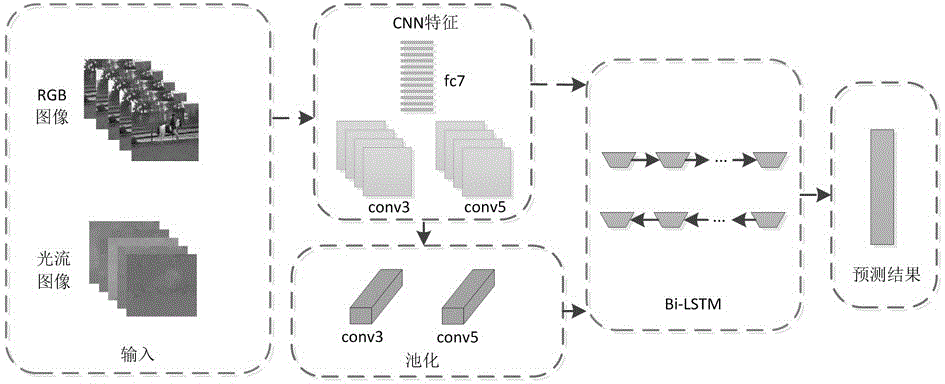

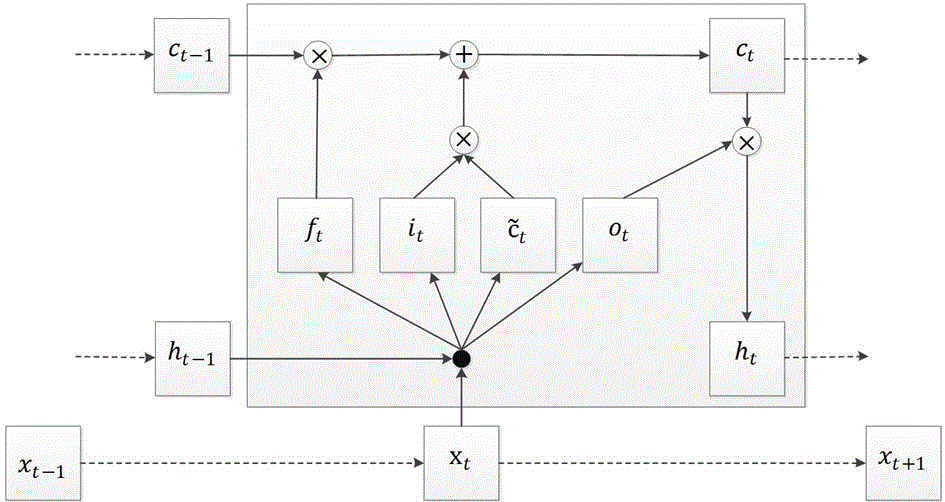

Bidirectional long short-term memory unit-based behavior identification method for video

Inactive | CN106845351A | Improve accuracy | Guaranteed accuracy | Character and pattern recognition | Neural architectures | Time domain | Temporal information

Owner:SUZHOU UNIV

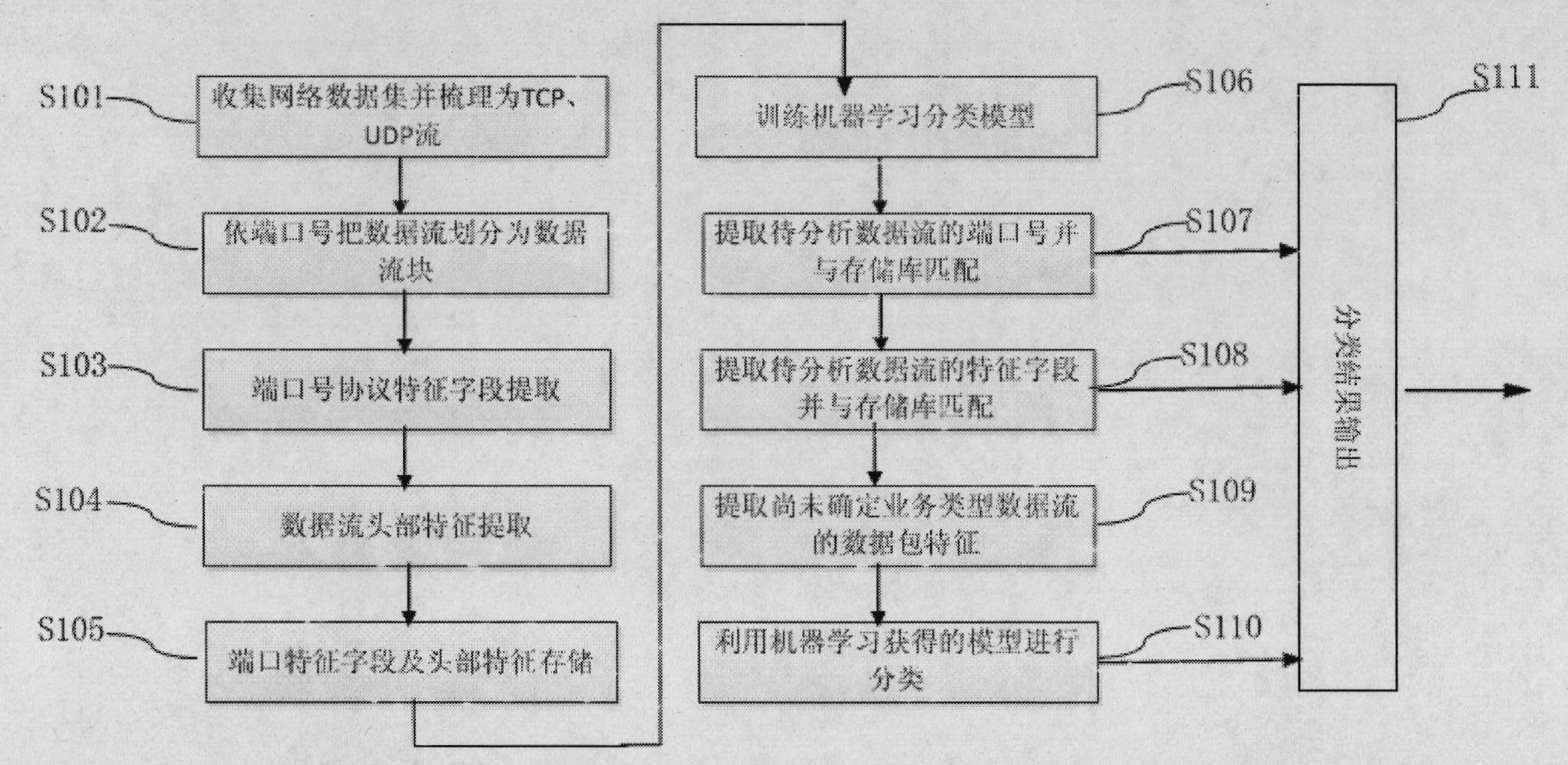

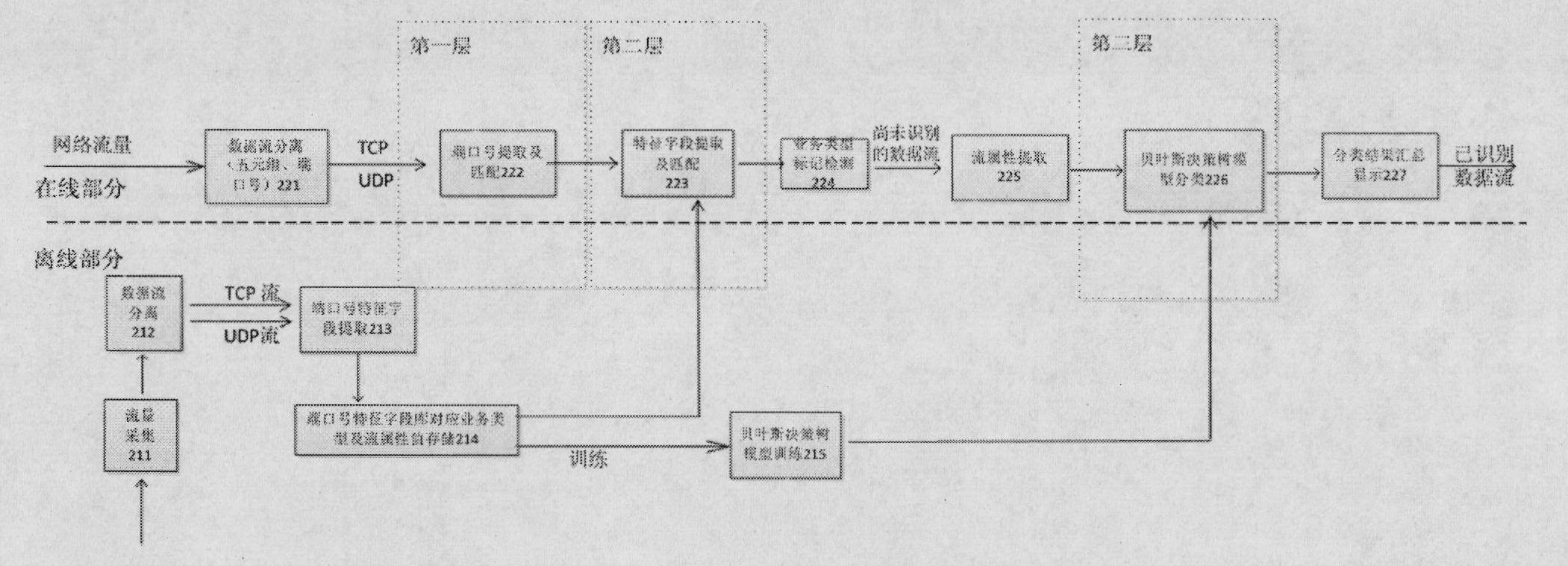

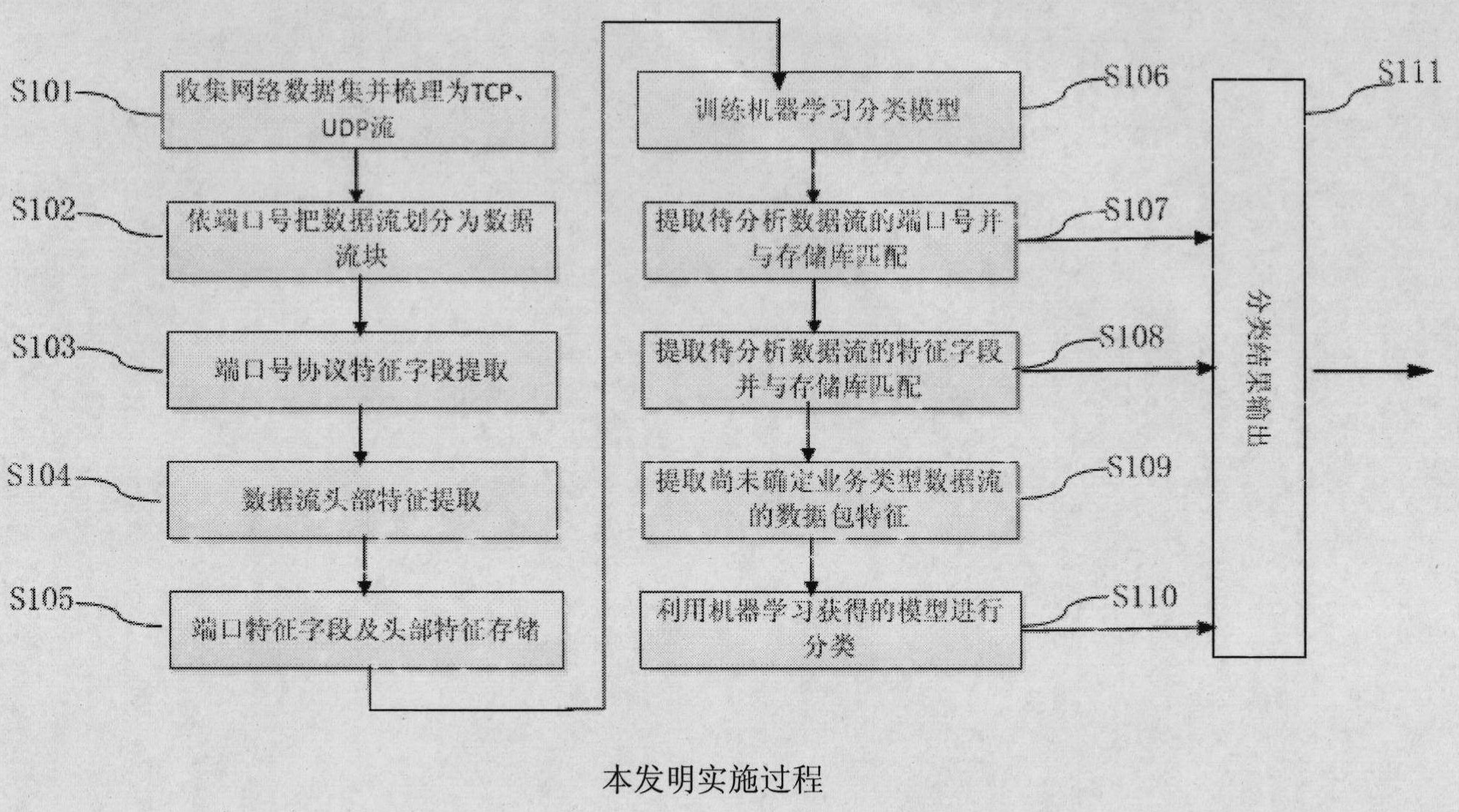

Stratification characteristic analysis-based method and apparatus thereof for on-line identification for TCP, UDP flows

The invention relates to a method and an apparatus, based on stratified characteristic analysis, for on-line identification of TCP and UDP flows. In the method, an off-line phase determines, through protocol analysis, the common port numbers of the service types to be identified (first layer) and the characteristic fields of the service data flows to be identified (second layer), and constructs a port number and characteristic field database; meanwhile, a third-layer Bayesian decision tree model is obtained by training with a machine learning method. In the on-line classification phase, service type identification of a flow is completed using the characteristic database and the learned model. The apparatus provided in the invention comprises a data flow separating module, a characteristic extraction module, a characteristic storage module, a characteristic matching module, an attribute extraction module, a model construction and classification module and a classification result display module. According to the embodiment of the invention, various application layer services based on TCP and UDP are accurately identified, and the identification process is simple and highly efficient; the method and the apparatus are therefore suitable for hardware implementation and can be applied to equipment and systems that require on-line flow identification in high-speed backbone and access networks.

Owner:BEIJING UNIV OF POSTS & TELECOMM
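
One way to read the three layers in the abstract above is as a fallback chain: try the port table first, then the characteristic fields, and only then the trained model. That ordering is an assumption, as are the function name, the prefix-matching of payload signatures, and the use of a plain callable in place of the Bayesian decision tree.

```python
def identify_flow(dst_port, payload, port_table, signatures, model):
    """Identify a flow's service type with a three-layer fallback.

    port_table: dict mapping port number -> service name (layer 1)
    signatures: dict mapping payload prefix (bytes) -> service name (layer 2)
    model:      callable payload -> service name (layer 3 stand-in)
    Returns (service name, name of the layer that decided).
    """
    if dst_port in port_table:
        return port_table[dst_port], "port"
    for prefix, service in signatures.items():
        if payload.startswith(prefix):
            return service, "signature"
    return model(payload), "model"
```

Cheap lookups handle the common cases, so the statistical model only runs on flows that the first two layers cannot resolve.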

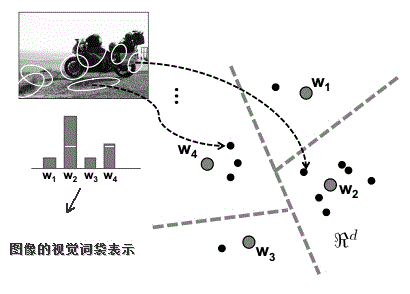

Remote sensing image classification method based on multi-feature fusion

Active | CN102622607A | Improve classification accuracy | Enhanced Feature Representation | Character and pattern recognition | Synthesis methods | Classification methods

The invention discloses a remote sensing image classification method based on multi-feature fusion, which includes the following steps: A, extracting the bag-of-visual-words features, color histogram features and texture features of the training set remote sensing images; B, using the bag-of-visual-words features, the color histogram features and the texture features of the training remote sensing images, respectively, to train support vector machines, obtaining three different support vector machine classifiers; and C, extracting the bag-of-visual-words features, color histogram features and texture features of unknown test samples, using the corresponding support vector machine classifiers obtained in step B to predict categories, obtaining three groups of category prediction results, and combining the three groups of results by a weighted synthesis method to obtain the final classification result. The method further adopts an improved bag-of-words model to extract the bag-of-visual-words features. Compared with the prior art, the method can obtain a more accurate classification result.

Owner:HOHAI UNIV
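
Step C of the abstract above combines three groups of predictions by weighting. A minimal sketch of such a weighted fusion is shown below; it assumes each classifier emits a per-class score and that the weights are given, neither of which the abstract specifies, and the function name is hypothetical.

```python
def fuse_predictions(predictions, weights):
    """Weighted sum of per-class scores from several classifiers.

    predictions: list of dicts mapping class label -> score, one per classifier
    weights:     list of weights, one per classifier
    Returns (winning label, fused score per label).
    """
    if len(predictions) != len(weights):
        raise ValueError("one weight is required per classifier")
    fused = {}
    for scores, weight in zip(predictions, weights):
        for label, score in scores.items():
            fused[label] = fused.get(label, 0.0) + weight * score
    return max(fused, key=fused.get), fused
```

A heavily weighted classifier can outvote the other two, which is the practical difference from an unweighted majority vote.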

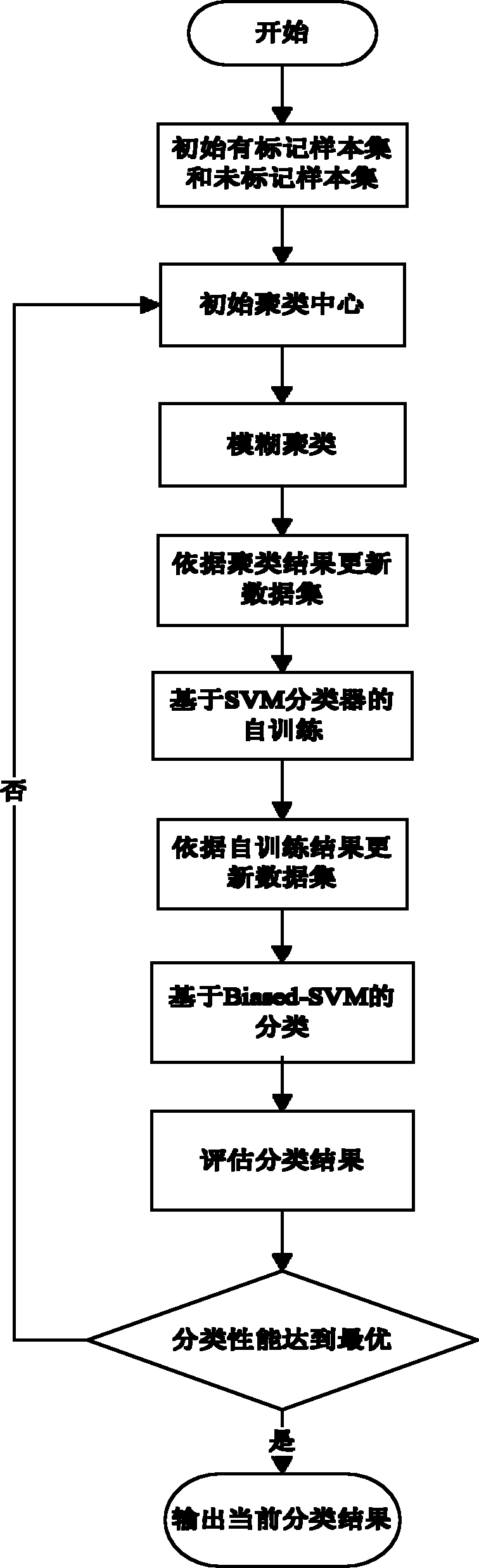

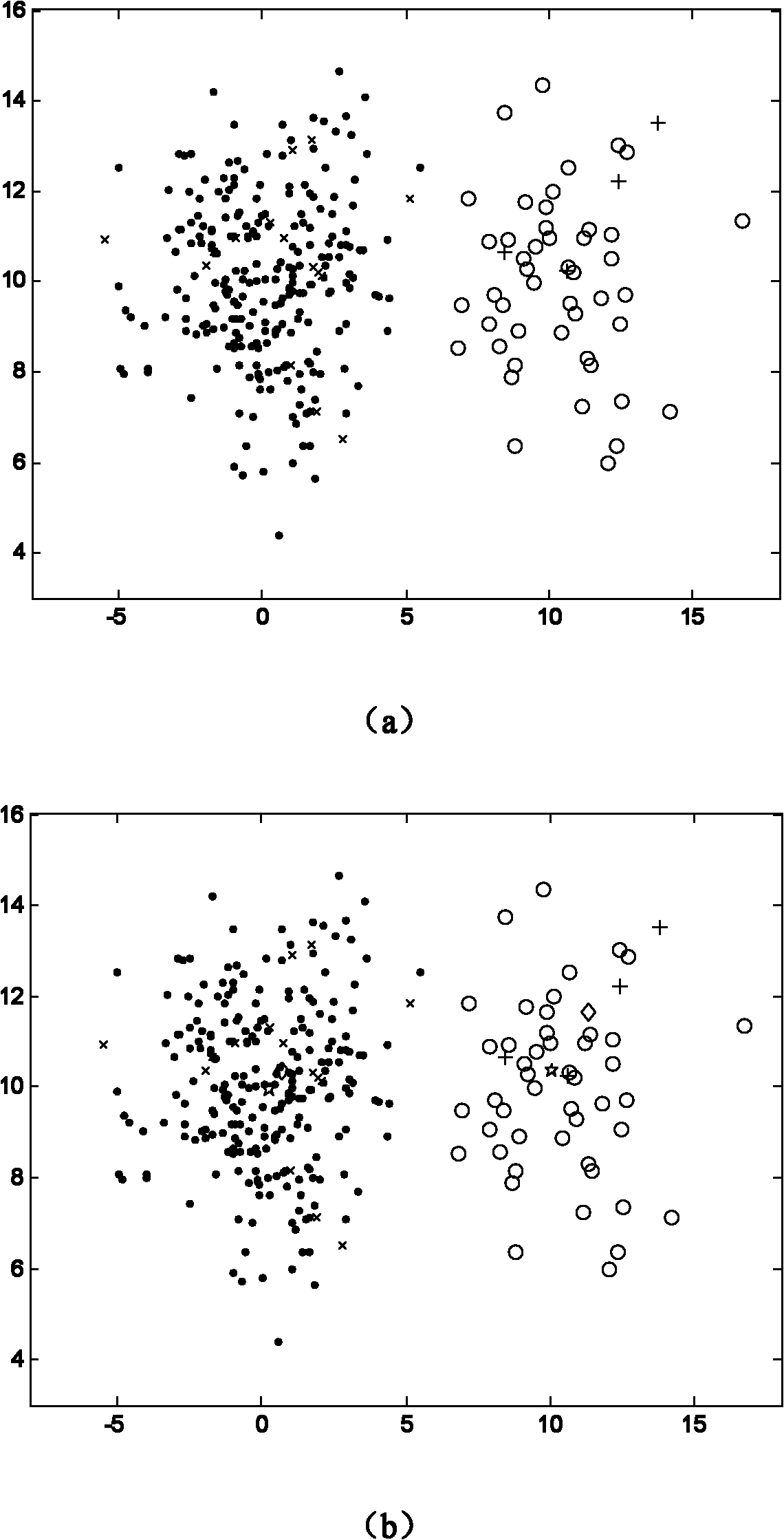

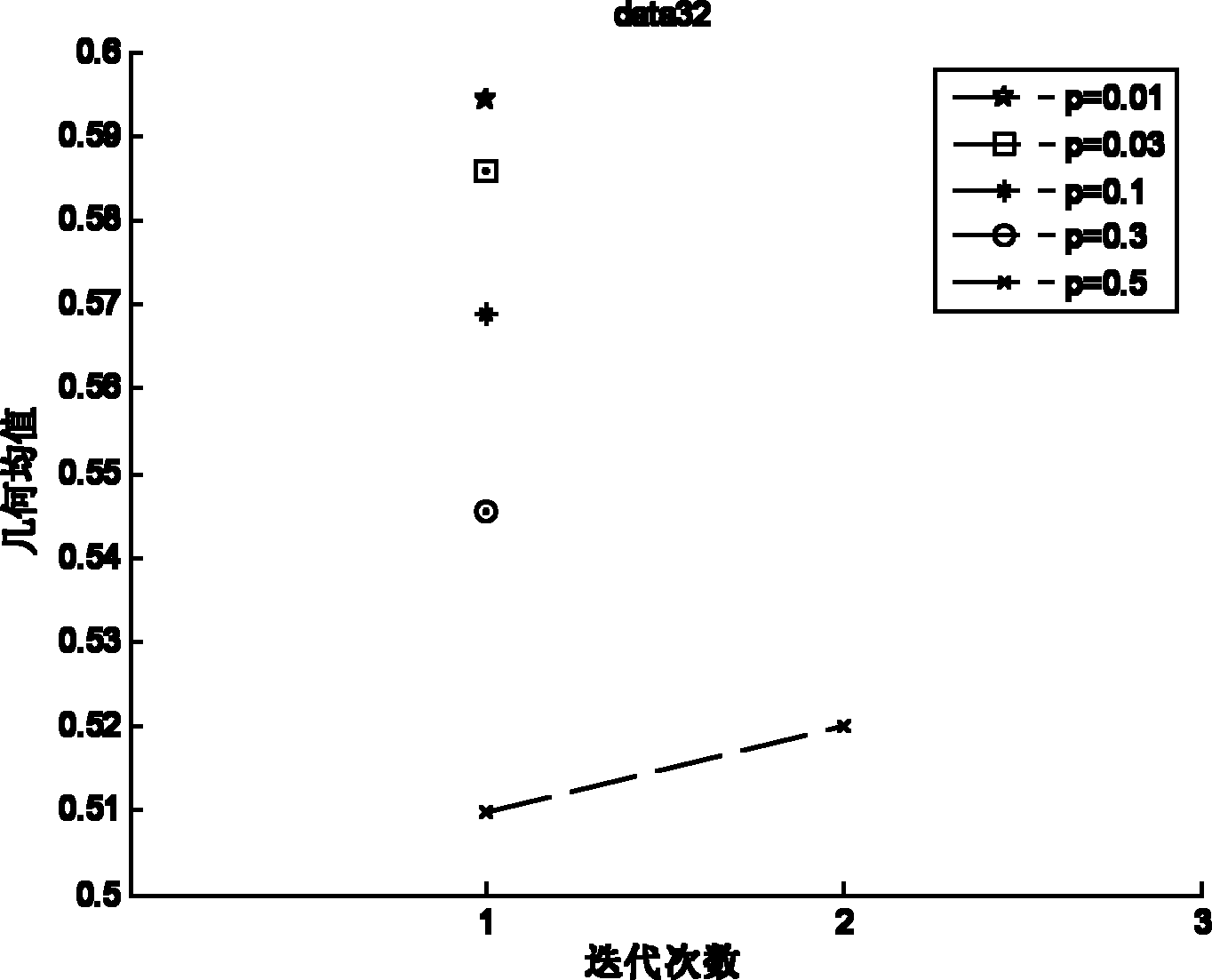

Semi-supervised classification method of unbalance data

Inactive | CN101980202A | Improve generalization ability | Tedious and time-consuming labeling work | Special data processing applications | Self training | Algorithm

The invention discloses a semi-supervised classification method for imbalanced data, which mainly addresses the low classification precision that the prior art achieves on minority-class data when labeled samples are few and the degree of imbalance is high. The method is implemented by the following steps: (1) initializing a labeled sample set and an unlabeled sample set; (2) initializing the cluster centers; (3) performing fuzzy clustering; (4) updating the labeled and unlabeled sample sets according to the clustering result; (5) performing self-training based on a support vector machine (SVM) classifier; (6) updating the labeled and unlabeled sample sets according to the self-training result; (7) performing classification with a Biased-SVM, a support vector machine based on penalty parameters; and (8) evaluating the classification result and outputting it. For imbalanced data with few labeled samples, the method improves the classification precision of minority-class data, and it can be used for classifying and identifying imbalanced data with few training samples.

Owner:XIDIAN UNIV

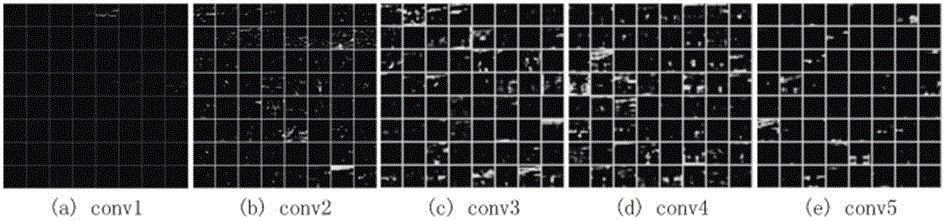

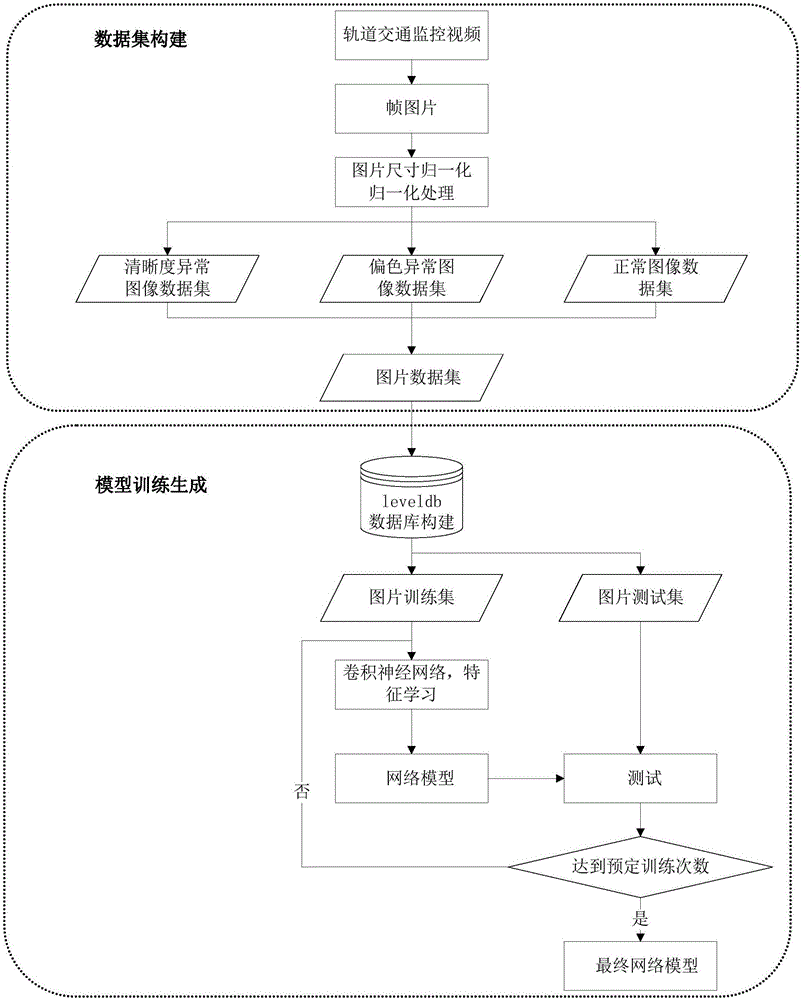

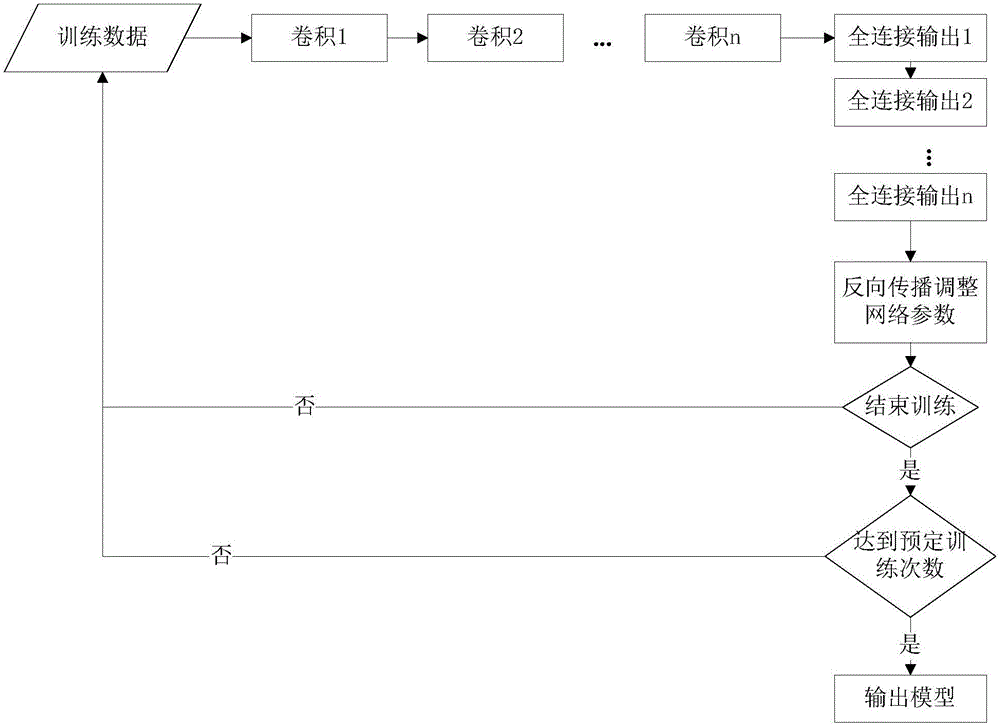

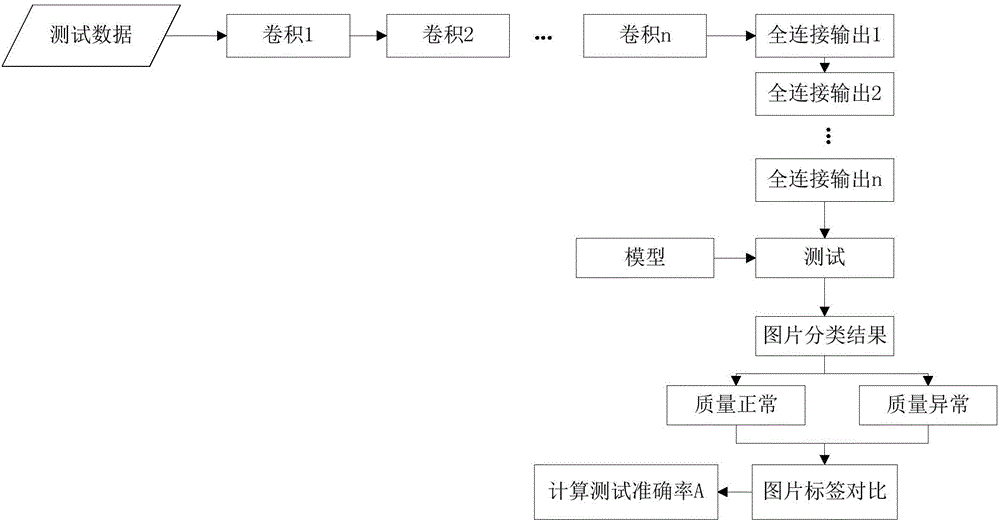

Urban rail transit panoramic monitoring video fault detection method based on depth learning

Inactive | CN106709511A | Improve robustness | Improve generalization ability | Character and pattern recognition | Neural architectures | Data set | Model testing

The invention provides an urban rail transit panoramic monitoring video fault detection method based on deep learning. The method comprises a data set construction process, a model training generation process and an image classification recognition process. The data set construction process processes videos with abnormal clarity, videos with abnormal color cast and normal videos from the urban rail transit panoramic monitoring video, and divides them into a training set and a test set. The model training generation process comprises model training and model testing. Model training trains a fault video image recognition model based on a convolutional neural network that comprises a plurality of convolution layers and a plurality of fully connected layers. Model testing calculates the test accuracy; if the accuracy does not meet expectations, the fault video image recognition model is optimized. In the image classification recognition process, a single-frame image to be recognized is input into the model, and the fault video image recognition model outputs an image classification result, completing the fault image detection for the urban rail transit panoramic monitoring video.

Owner:HUAZHONG NORMAL UNIV +1

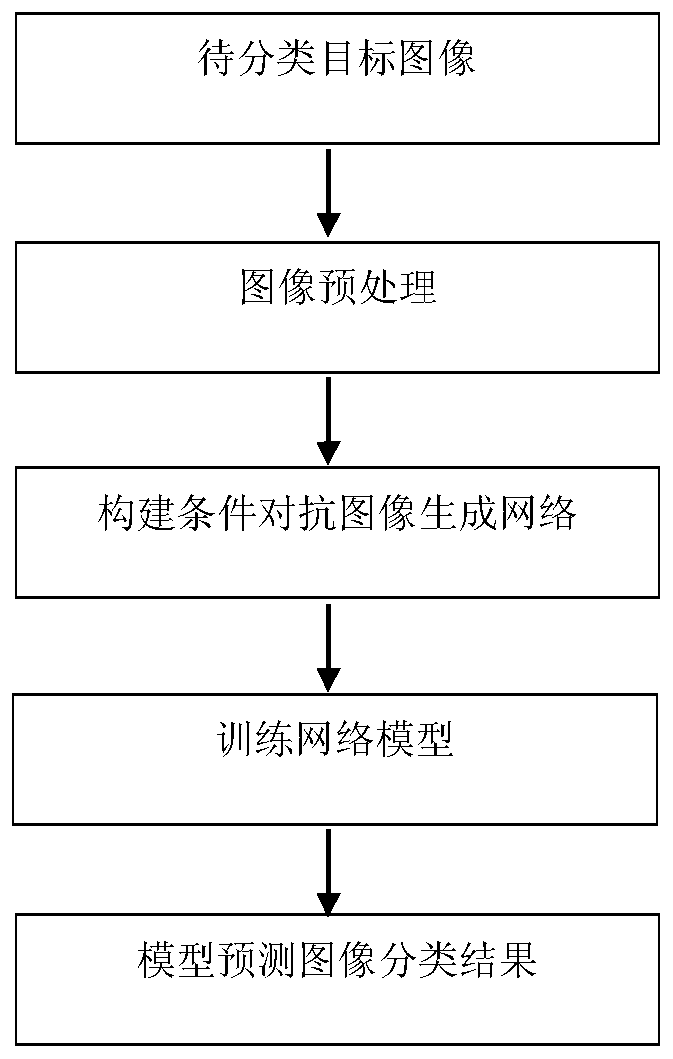

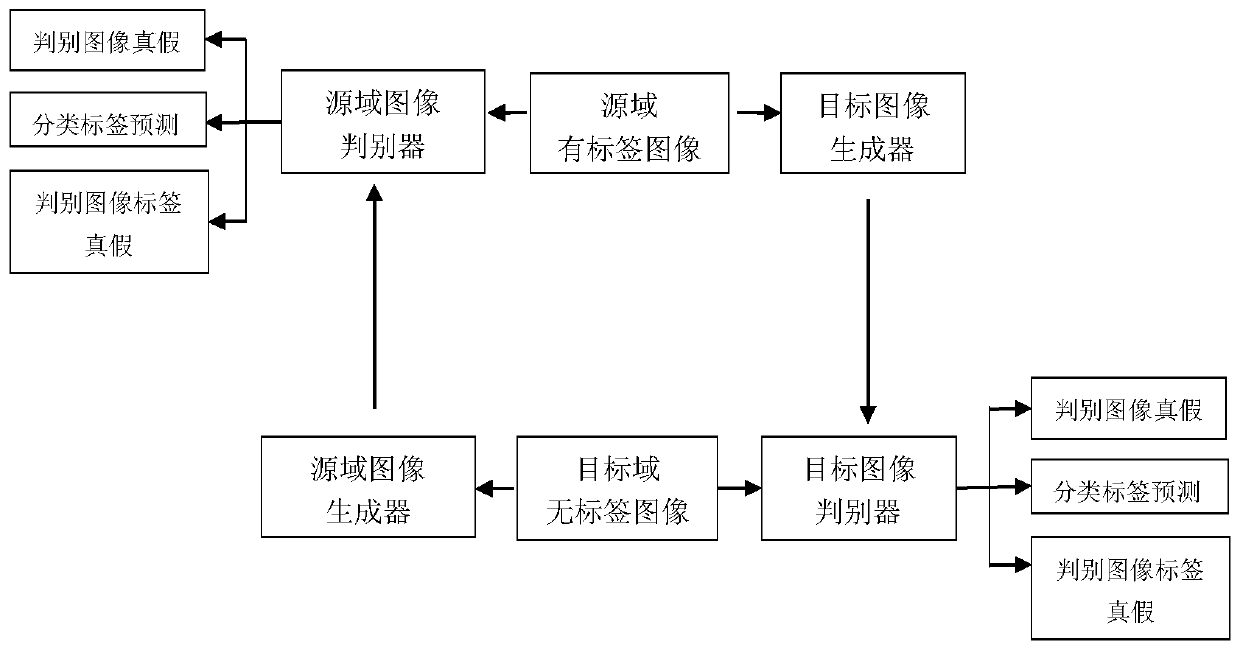

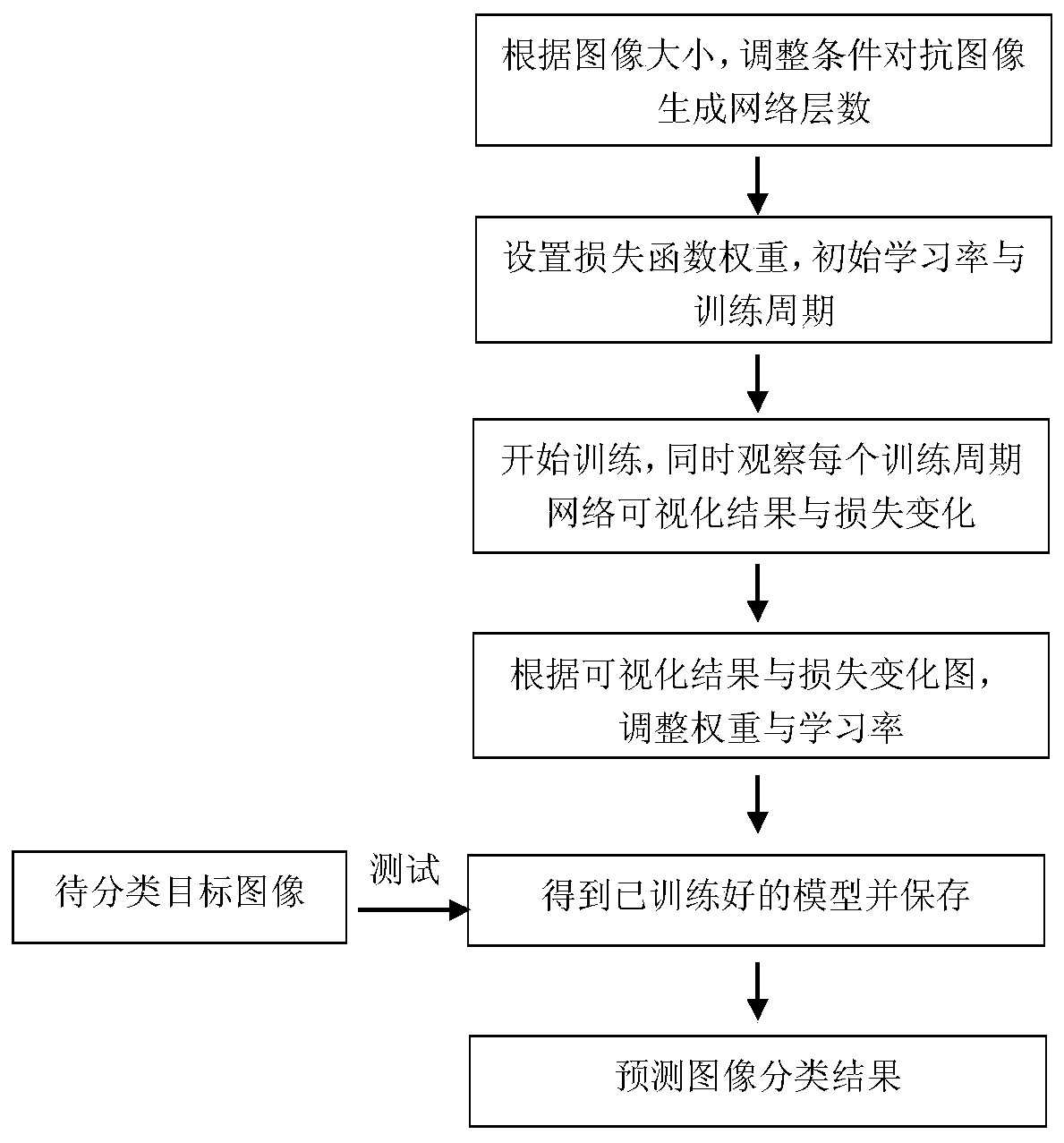

Unsupervised domain adaptive image classification method based on conditional generative adversarial network

Active | CN109753992A | Realize mutual conversion | Improve domain adaptability | Character and pattern recognition | Neural architectures | Data set | Classification methods

The invention discloses an unsupervised domain adaptive image classification method based on a conditional generative adversarial network. The method comprises the following steps: preprocessing an image data set; constructing a cross-domain conditional adversarial image generation network by adopting a cycle-consistent generative adversarial network and applying a constraint loss function; using the preprocessed image data set to train the constructed conditional adversarial image generation network; and testing the to-be-classified target image with the trained network model to obtain a final classification result. According to the method, a conditional adversarial cross-domain image migration algorithm is adopted to convert source domain image samples and target domain image samples into each other, and a consistency loss function constraint is applied to the classification predictions of target images before and after conversion. Meanwhile, discriminative classification labels are used in conditional adversarial learning to align the joint distributions of source domain image labels and target domain image labels, so that the labeled source domain images can be used to train on the target domain images; classification of the target images is thereby achieved and classification precision is improved.

Owner:NANJING NORMAL UNIVERSITY
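
The consistency constraint on classification predictions before and after image conversion, mentioned in the domain adaptation abstract above, might look roughly like the following. The abstract does not state the form of the loss, so the mean absolute difference between softmax probabilities used here is an assumption, as are the function names `softmax` and `consistency_loss`.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def consistency_loss(logits_before, logits_after):
    """Mean absolute difference between the class probabilities predicted
    for a target image before and after cross-domain conversion."""
    p = softmax(logits_before)
    q = softmax(logits_after)
    return sum(abs(a - b) for a, b in zip(p, q)) / len(p)


# Identical predictions give zero loss; opposite predictions give a large one.
SAME = consistency_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
FLIPPED = consistency_loss([5.0, 0.0], [0.0, 5.0])
```

During training a term like this would be added to the adversarial and cycle-consistency losses so that converting an image does not change its predicted class.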

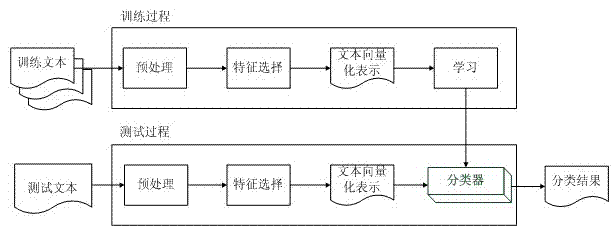

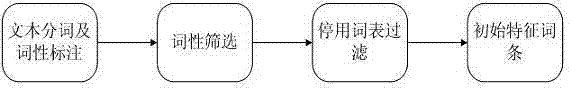

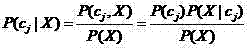

Text sentiment classification method facing Chinese Web comments

Inactive | CN103116637A | Effective data mining | Effective classification | Special data processing applications | Feature vector | Classification methods

The invention belongs to the field of data processing technology and discloses a text sentiment classification method for Chinese Web comments. The method includes a training process and a classification process. The training process comprises preprocessing the training text, selecting features, representing the text as a vector, and training a classifier. The classification process comprises preprocessing the test text, selecting features, classifying with the trained classifier, and outputting a classification result. Building on an existing document classification method, the invention uses document frequency (DF) and information gain (IG), and builds a sentiment dictionary of negation words, degree adverbs, and dynamic sentiment words, to determine the sentiment tendency of Chinese feature words, select feature words, calculate feature weights, and build feature vectors. A naive Bayes classification algorithm is then trained to obtain the classifier, which performs sentiment classification on the text, providing effective data mining for users ahead of further analysis.

Owner:WUXI NANLIGONG TECH DEV +1
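
The training and classification flow in the sentiment classification abstract above can be sketched with a small multinomial naive Bayes classifier. This is a simplified illustration under stated assumptions: only document-frequency selection is shown (information gain and the sentiment dictionary are omitted), the tokens are English stand-ins for segmented Chinese words, and all function names and the toy data are hypothetical.

```python
import math
from collections import Counter, defaultdict


def select_by_document_frequency(docs, min_df=2):
    """Keep words that occur in at least `min_df` documents."""
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))
    return {word for word, count in df.items() if count >= min_df}


def train_naive_bayes(docs, labels, vocab):
    """Return log priors and Laplace-smoothed log likelihoods per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    for tokens, label in zip(docs, labels):
        word_counts[label].update(t for t in tokens if t in vocab)
    log_prior = {y: math.log(n / len(labels)) for y, n in class_counts.items()}
    log_like = {}
    for y in class_counts:
        total = sum(word_counts[y].values()) + len(vocab)
        log_like[y] = {w: math.log((word_counts[y][w] + 1) / total) for w in vocab}
    return log_prior, log_like


def classify(tokens, log_prior, log_like):
    """Pick the class with the highest posterior log score."""
    scores = {
        y: log_prior[y] + sum(log_like[y][t] for t in tokens if t in log_like[y])
        for y in log_prior
    }
    return max(scores, key=scores.get)


# Toy training data (hypothetical).
TRAIN_DOCS = [
    ["good", "fast", "delivery"],
    ["good", "quality"],
    ["bad", "slow", "delivery"],
    ["bad", "quality"],
]
TRAIN_LABELS = ["pos", "pos", "neg", "neg"]

VOCAB = select_by_document_frequency(TRAIN_DOCS, min_df=2)
LOG_PRIOR, LOG_LIKE = train_naive_bayes(TRAIN_DOCS, TRAIN_LABELS, VOCAB)
PREDICTION = classify(["good", "delivery"], LOG_PRIOR, LOG_LIKE)
```

Words outside the selected vocabulary are ignored at classification time, which mirrors the abstract's requirement that the test text pass through the same feature selection as the training text.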

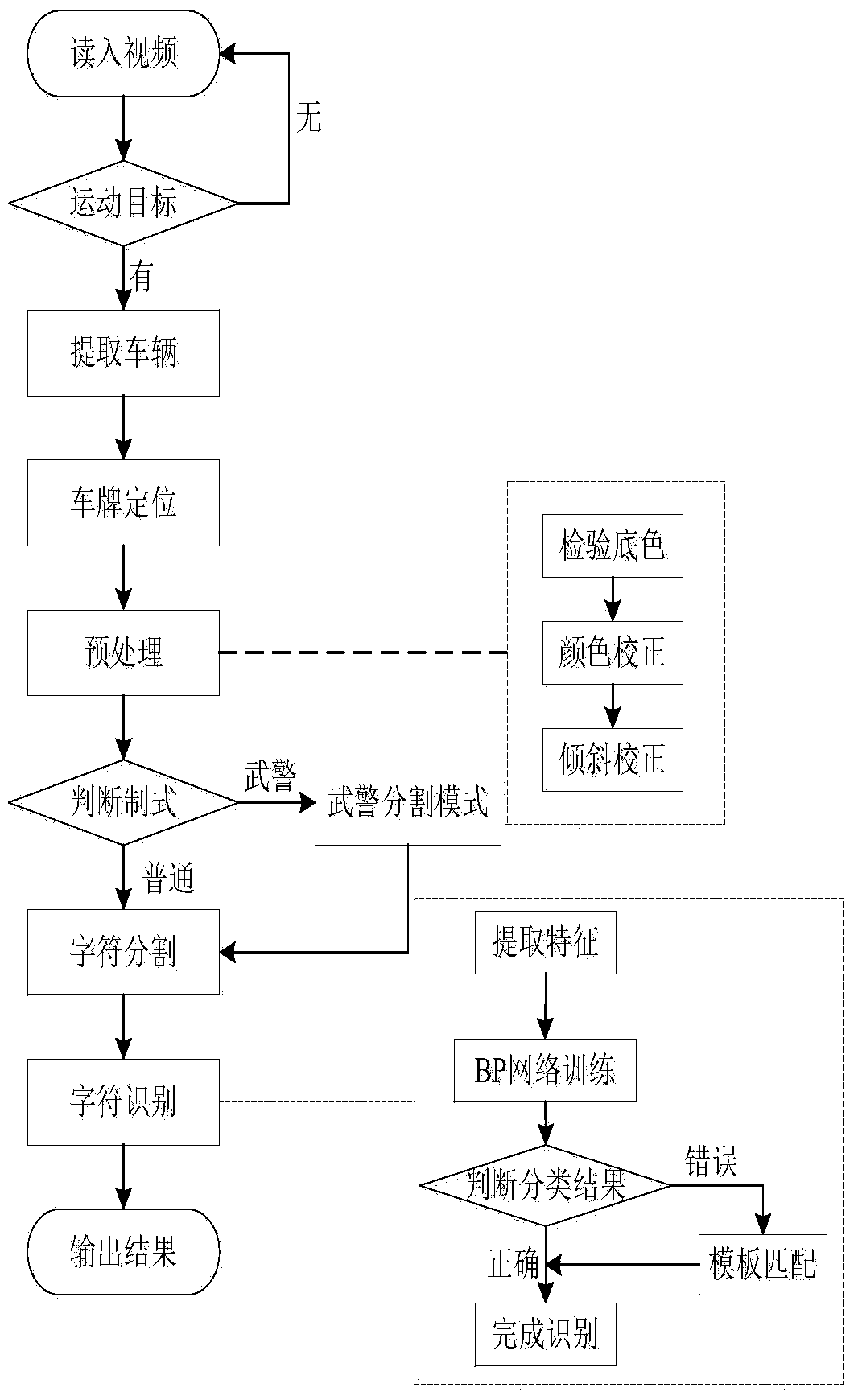

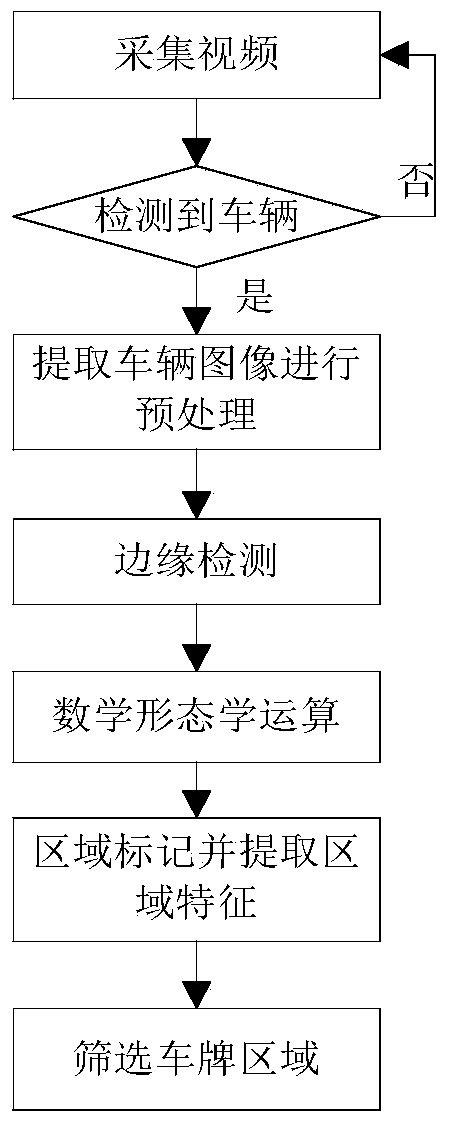

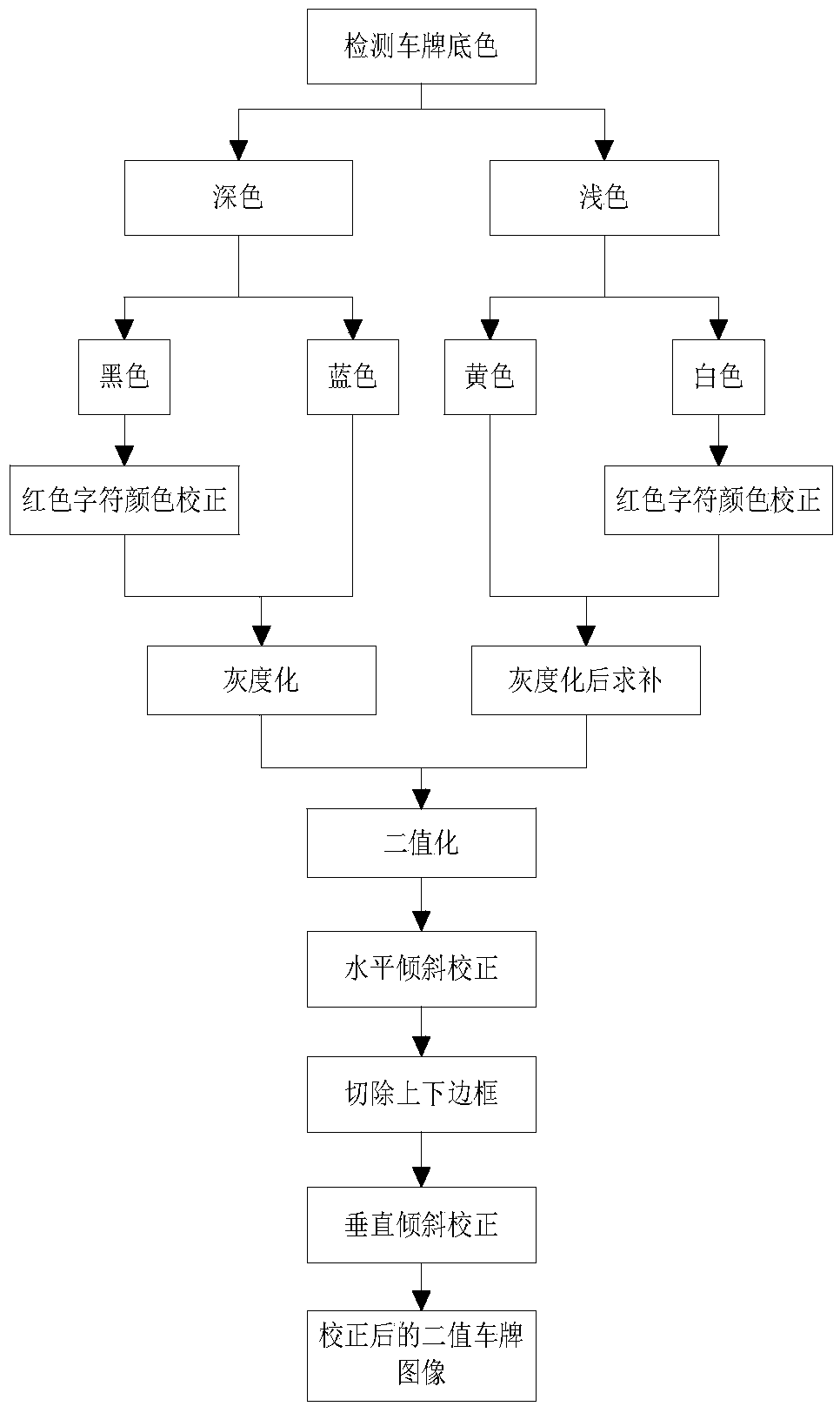

Vehicle license plate recognition method based on video

Inactive | CN104050450A | Reduce size | Low cost | Character and pattern recognition | Feature vector | Template matching

The invention provides a video-based vehicle license plate recognition method. Taking as input vehicle video captured by a camera, the method detects and segments moving vehicles, locates the license plate area precisely by extracting vertical edges from the preprocessed target vehicle image, and segments out the license plate image. Color correction, binarization, and tilt correction are then applied to the license plate image, and the characters in the located plate area are separated into individual characters. Features are extracted from each character, and the resulting feature vectors are classified by a pre-trained classifier; the classification result serves as a preliminary recognition result. Stained license plate characters then undergo a second recognition pass using a template matching algorithm that imitates the visual characteristics of the human eye, yielding the final license plate recognition result. The method reduces hardware cost, improves the management efficiency of intelligent transportation systems, offers strong interference resistance and robustness, and achieves high recognition efficiency and speed.

Owner:XIAN TONGRUI NEW MATERIAL DEV
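
The secondary recognition step for stained characters in the license plate abstract above relies on template matching. The sketch below uses plain pixel agreement between binarized images, which is an assumption: the abstract's matcher "imitating the visual characteristics of human eyes" is not specified. The 3x3 glyphs and the names `match_score` and `recognise` are hypothetical.

```python
def match_score(glyph, template):
    """Fraction of pixels on which two equal-sized binary images agree."""
    total = 0
    same = 0
    for glyph_row, template_row in zip(glyph, template):
        for g, t in zip(glyph_row, template_row):
            total += 1
            same += int(g == t)
    return same / total


def recognise(glyph, templates):
    """Return the character whose template agrees best with the glyph."""
    return max(templates, key=lambda ch: match_score(glyph, templates[ch]))


# Tiny binary templates (1 = stroke pixel), purely illustrative.
TEMPLATES = {
    "I": [[0, 1, 0],
          [0, 1, 0],
          [0, 1, 0]],
    "L": [[1, 0, 0],
          [1, 0, 0],
          [1, 1, 1]],
}

# An "I" with one extra stained pixel in the middle row.
STAINED = [[0, 1, 0],
           [0, 1, 1],
           [0, 1, 0]]

RESULT = recognise(STAINED, TEMPLATES)
```

Real plate characters would be normalised to a common size before matching, and a low best score could be used to flag a character as unreadable.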

Systems and Methods for Semantically Classifying and Normalizing Shots in Video

The present disclosure relates to systems and methods for classifying videos based on video content. For a given video file including a plurality of frames, a subset of frames is extracted for processing. Frames that are too dark, too blurry, or otherwise poor classification candidates are discarded from the subset. For the remaining frames in the subset, material classification scores are calculated that describe the type of material content likely included in each frame. The material classification scores are used to generate material arrangement vectors that represent the spatial arrangement of material content in each frame. The material arrangement vectors are subsequently classified to generate a scene classification score vector for each frame. The scene classification results are averaged (or otherwise processed) across all frames in the subset to associate the video file with one or more predefined scene categories related to overall types of scene content of the video file.

Owner:TIVO SOLUTIONS INC
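
The frame filtering and score averaging steps from the video scene classification abstract above can be sketched as follows. This covers only the outer loop: the material classification and arrangement vector stages are collapsed into a hypothetical `score_frame` callable, and the brightness cut-off, the 0.5 decision threshold, and the toy scorer are assumptions.

```python
def mean_brightness(frame):
    """Average pixel value of a grayscale frame given as rows of 0-255 ints."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


def classify_video(frames, score_frame, categories,
                   min_brightness=40.0, threshold=0.5):
    """Drop dark frames, average per-frame scene scores, and return the
    categories whose averaged score reaches the threshold."""
    usable = [f for f in frames if mean_brightness(f) >= min_brightness]
    if not usable:
        return []
    per_frame = [score_frame(f) for f in usable]
    averaged = [sum(column) / len(per_frame) for column in zip(*per_frame)]
    return [c for c, s in zip(categories, averaged) if s >= threshold]


def toy_scorer(frame):
    """Stand-in scene scorer: brighter frames look more like 'outdoor'."""
    b = mean_brightness(frame) / 255.0
    return [b, 1.0 - b]  # scores for [outdoor, indoor]


FRAMES = [
    [[200, 200], [200, 200]],  # bright frame, kept
    [[10, 10], [10, 10]],      # too dark, discarded
    [[180, 180], [180, 180]],  # bright frame, kept
]
SCENES = classify_video(FRAMES, toy_scorer, ["outdoor", "indoor"])
```

Averaging is only one option; the abstract allows the per-frame results to be "otherwise processed", so a median or a vote across frames would fit the same structure.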

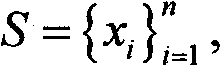

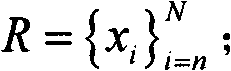

Graph-based semi-supervised high-spectral remote sensing image classification method

Active | CN102096825A | Reduce restrictions | Reduce complexity | Character and pattern recognition | Computation complexity | Partition of unity

The invention relates to a graph-based semi-supervised hyperspectral remote sensing image classification method. The method comprises the following steps: extracting the features of an input image; randomly sampling M points from the unlabeled samples and constructing a set S from them together with the L labeled points, and constructing a set R from the remaining points; calculating, for each point in S and R, its K nearest neighbors within S using a class probability distance; constructing two sparse matrices $W_{SS}$ and $W_{SR}$ by a linear representation method; and using label propagation to obtain the label function $F_S^*$, then calculating the label prediction function $F_R^*$ of the sample points in R to determine the labels of all pixels of the input image. Because the neighbors of the sample points are calculated with the class probability distance, and hyperspectral images are classified accurately by semi-supervised transduction, the computational complexity is greatly reduced. In addition, the method solves the problem that graph-based semi-supervised learning algorithms cannot be applied to large-scale data, computational efficiency improves by at least 20 to 50 times, and the classification result maps have good visual quality.

Owner:XIDIAN UNIV
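
The two-stage labelling in the hyperspectral classification abstract above (propagate labels within S, then predict labels for R from S) can be sketched in plain Python. This is a simplified illustration: the hand-written weights stand in for those the patent derives from the class probability distance and linear representation, the iterative clamped update is one common form of label propagation rather than the patent's stated solver, and the names `propagate_labels`, `predict_remaining`, and `W_RS` (rows indexed by points in R) are assumptions.

```python
def propagate_labels(weights_ss, labels, num_classes, iterations=100):
    """Iteratively spread class scores over the graph on S.

    weights_ss: row-normalised n x n weights; labels: class index or None.
    Labelled points are clamped to their known class after every step.
    """
    n = len(weights_ss)

    def clamp(scores):
        for i, y in enumerate(labels):
            if y is not None:
                scores[i] = [1.0 if c == y else 0.0 for c in range(num_classes)]
        return scores

    scores = clamp([[0.0] * num_classes for _ in range(n)])
    for _ in range(iterations):
        scores = clamp([
            [sum(weights_ss[i][j] * scores[j][c] for j in range(n))
             for c in range(num_classes)]
            for i in range(n)
        ])
    return scores


def predict_remaining(weights_rs, scores_s):
    """Label each point in R by a weighted combination of the scores in S."""
    num_classes = len(scores_s[0])
    predictions = []
    for row in weights_rs:
        combined = [sum(w * s[c] for w, s in zip(row, scores_s))
                    for c in range(num_classes)]
        predictions.append(combined.index(max(combined)))
    return predictions


# S is a chain of four points; the two end points carry known labels.
W_SS = [
    [0.0, 1.0, 0.0, 0.0],
    [0.7, 0.0, 0.3, 0.0],
    [0.0, 0.3, 0.0, 0.7],
    [0.0, 0.0, 1.0, 0.0],
]
LABELS_S = [0, None, None, 1]
W_RS = [[0.1, 0.6, 0.3, 0.0]]  # one point in R, described by neighbours in S

SCORES_S = propagate_labels(W_SS, LABELS_S, num_classes=2)
LABELS_R = predict_remaining(W_RS, SCORES_S)
```

Because the propagation runs only over the small set S and the points in R are labelled with a single weighted sum each, the cost grows with the size of S rather than with the full image, which is the source of the efficiency gain the abstract claims.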

