224 results about "Visual Word" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

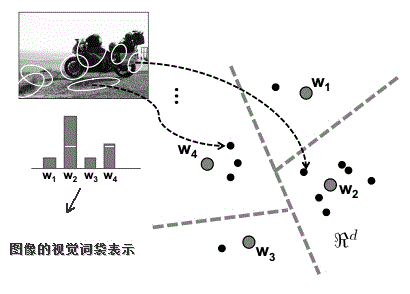

Visual words, as used in image retrieval systems, are small parts of an image that carry information about its features (such as color, shape, or texture) or about changes in its pixel values. These changes are captured by filtering or by low-level feature descriptors such as SIFT and SURF.
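
The quantization step that turns local descriptors into visual words can be sketched as follows. The sketch assumes the vocabulary has already been learned, for example by k-means clustering of training descriptors. Each descriptor is assigned to its nearest word by Euclidean distance. The function names are illustrative, and production systems usually replace this linear scan with approximate nearest-neighbor search.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def nearest_word(descriptor: Vector, vocabulary: List[Vector]) -> int:
    """Index of the visual word closest to the descriptor (squared Euclidean distance)."""
    best_index, best_dist = -1, math.inf
    for index, word in enumerate(vocabulary):
        dist = sum((d - w) ** 2 for d, w in zip(descriptor, word))
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index


def bovw_histogram(descriptors: List[Vector], vocabulary: List[Vector]) -> List[int]:
    """Bag-of-visual-words histogram: how often each visual word occurs in one image."""
    histogram = [0] * len(vocabulary)
    for descriptor in descriptors:
        histogram[nearest_word(descriptor, vocabulary)] += 1
    return histogram


# Toy 2-D vocabulary; real SIFT descriptors have 128 dimensions.
vocabulary = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
descriptors = [(0.1, -0.2), (0.9, 1.2), (1.1, 0.8), (4.8, 5.1)]
print(bovw_histogram(descriptors, vocabulary))  # [1, 2, 1]
```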

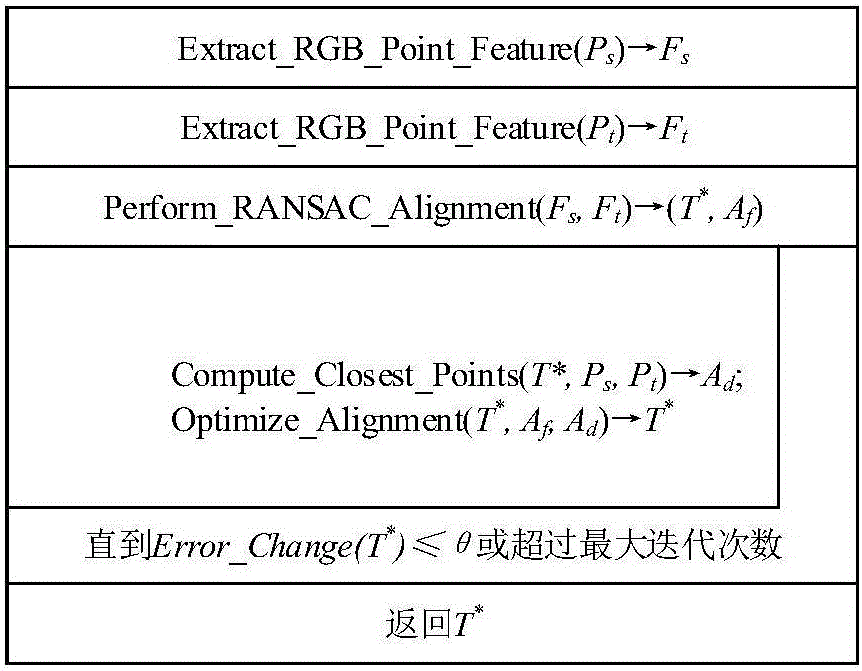

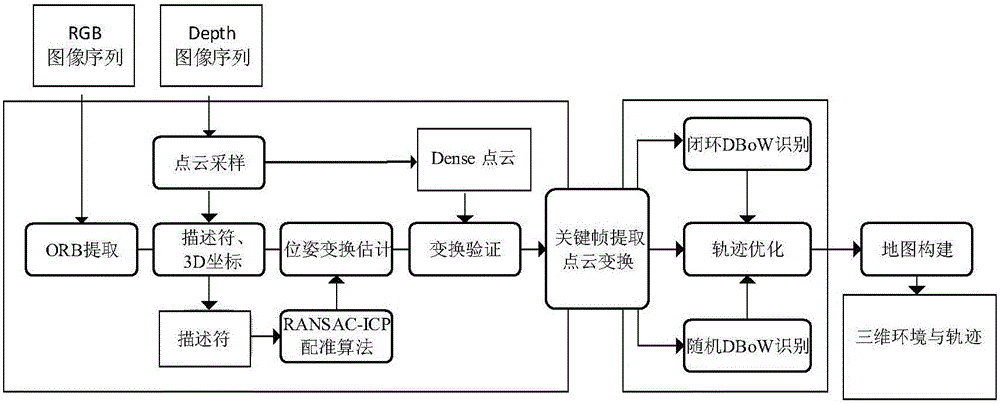

ORB key frame closed-loop detection SLAM method capable of improving consistency of position and pose of robot

Inactive | CN105856230A | Guaranteed positioning accuracy, Guaranteed accuracy, Programme control, Programme-controlled manipulator, Closed loop, Imaging Feature

The invention discloses an ORB key-frame closed-loop detection SLAM method capable of improving the consistency of a robot's estimated position and pose. The method comprises the following steps:

- First, an RGB-D sensor acquires color and depth information of the environment, and image features are extracted as ORB features.
- Then, the robot's position and pose are estimated with an algorithm based on RANSAC-ICP inter-frame registration, and an initial pose graph is constructed.
- Finally, a BoVW (bag of visual words) is built from the ORB features extracted in key frames. The current key frame is compared for similarity against the words in the BoVW to detect closed-loop key frames. Constraints are added to the pose graph through inter-frame registration of the detected key frames, and the globally optimal position and pose of the robot are obtained.

The method improves the consistency of the robot's position and pose, yields a higher-quality environment map, and optimizes efficiently.

Owner:简燕梅
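
The abstract of the ORB key-frame SLAM method above does not say how the current key frame is scored against the vocabulary. One common choice for BoVW loop closure is an L1-based score between normalized word vectors, s(a, b) = 1 - 0.5 * ||a/|a| - b/|b|||_1. This score is 1 for identical bags and 0 for disjoint ones. The sketch below assumes that score and a hypothetical threshold of 0.3. It is an illustration, not the patented method.

```python
from typing import Dict, List, Tuple

BowVector = Dict[int, float]  # visual-word id -> weight (e.g. a count)


def l1_normalize(vector: BowVector) -> BowVector:
    total = sum(abs(value) for value in vector.values())
    return {word: value / total for word, value in vector.items()} if total else {}


def l1_score(a: BowVector, b: BowVector) -> float:
    """Similarity in [0, 1]: 1 - 0.5 * || a/|a| - b/|b| ||_1 for non-negative vectors."""
    a, b = l1_normalize(a), l1_normalize(b)
    if not a or not b:
        return 0.0
    diff = sum(abs(a.get(w, 0.0) - b.get(w, 0.0)) for w in set(a) | set(b))
    return 1.0 - 0.5 * diff


def loop_candidates(current: BowVector, keyframes: List[BowVector],
                    min_score: float = 0.3) -> List[Tuple[int, float]]:
    """(key-frame index, score) pairs at or above a hypothetical threshold, best first."""
    scored = [(i, l1_score(current, kf)) for i, kf in enumerate(keyframes)]
    return sorted((s for s in scored if s[1] >= min_score), key=lambda s: -s[1])


current = {1: 2, 4: 1, 7: 1}
keyframes = [{1: 2, 4: 1, 7: 1}, {2: 3, 5: 1}, {1: 1, 4: 1, 9: 2}]
print(loop_candidates(current, keyframes))  # [(0, 1.0), (2, 0.5)]
```

In practice, the most recent key frames are usually excluded from the candidate set, because they are trivially similar to the current frame.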

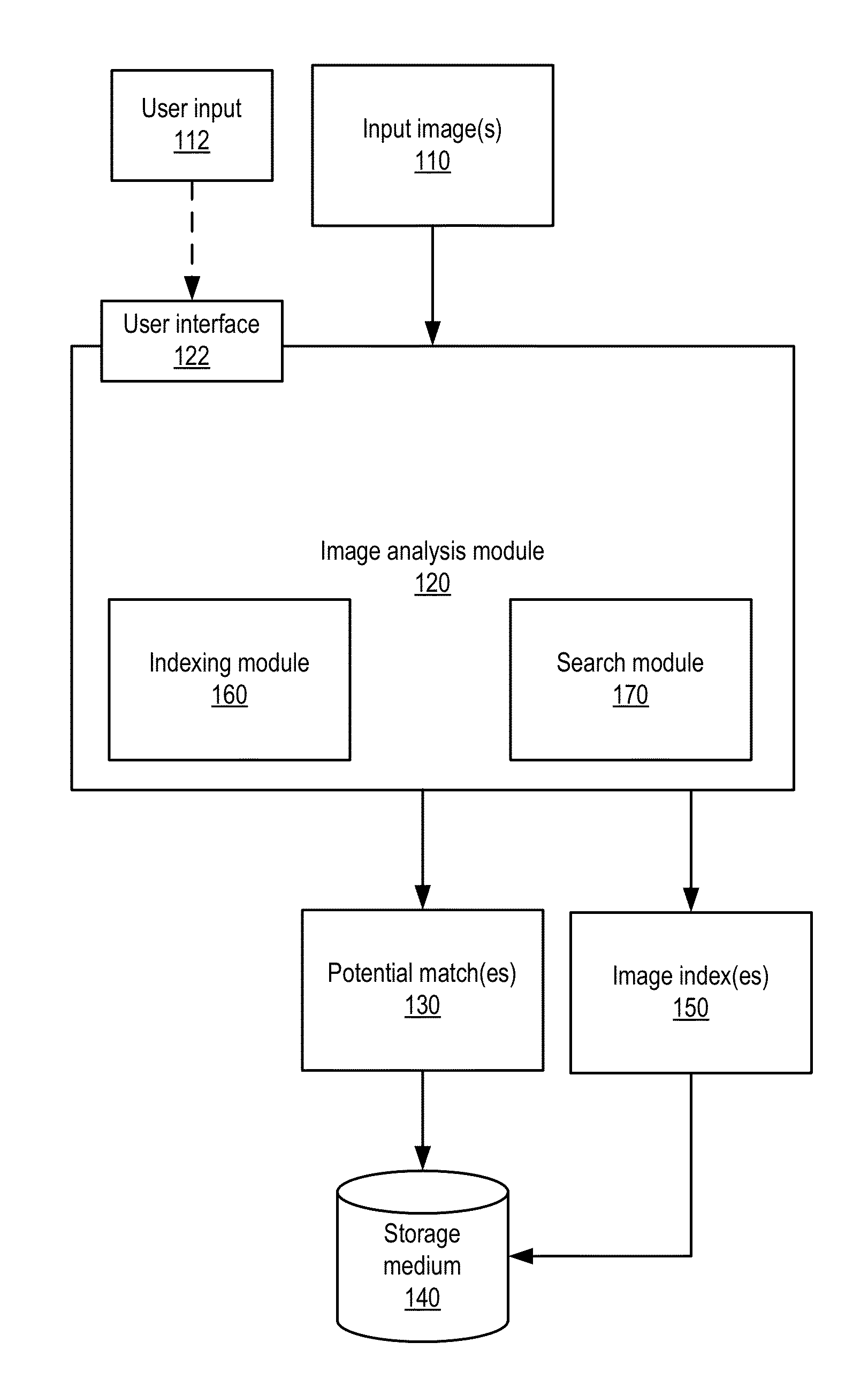

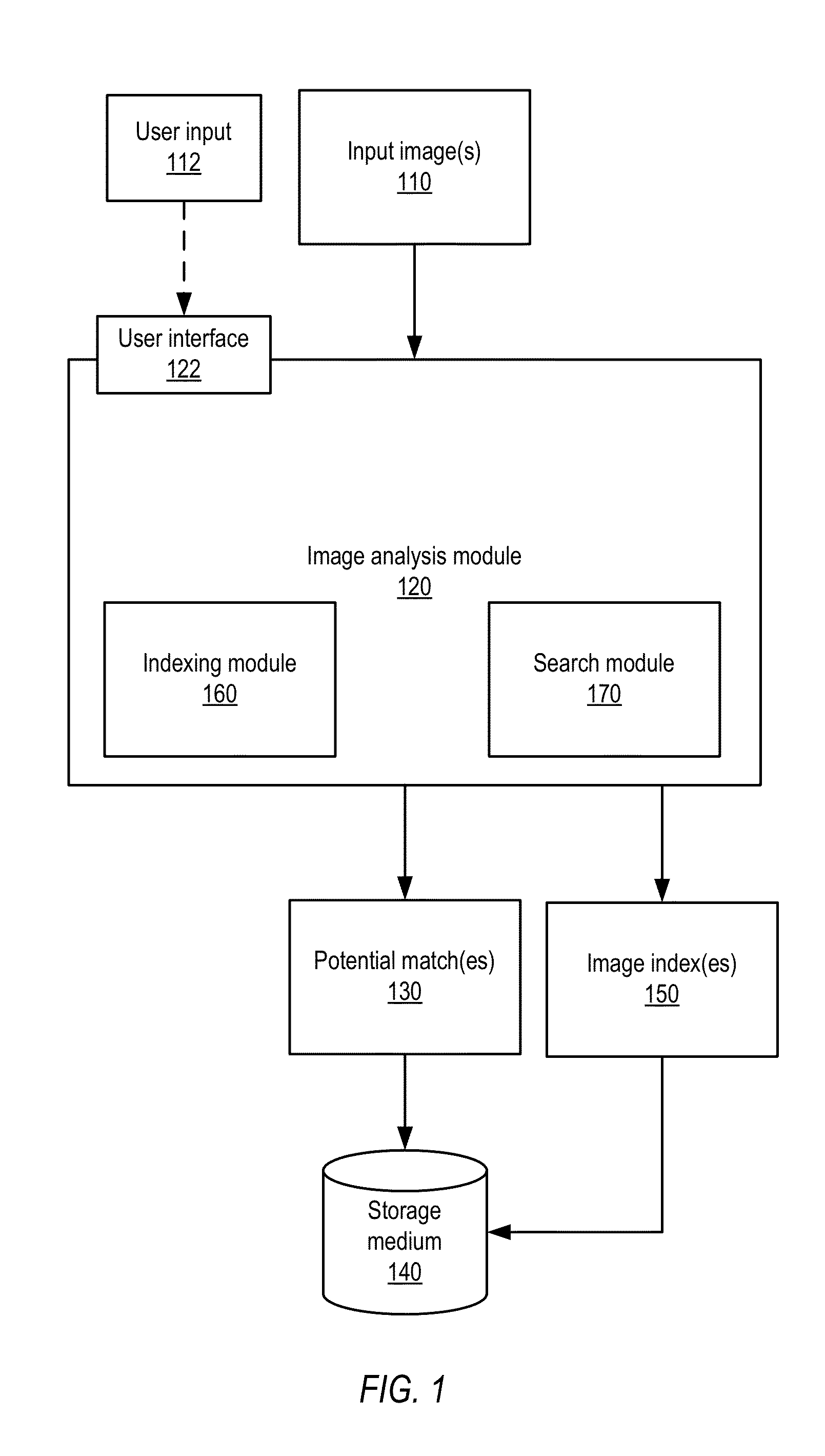

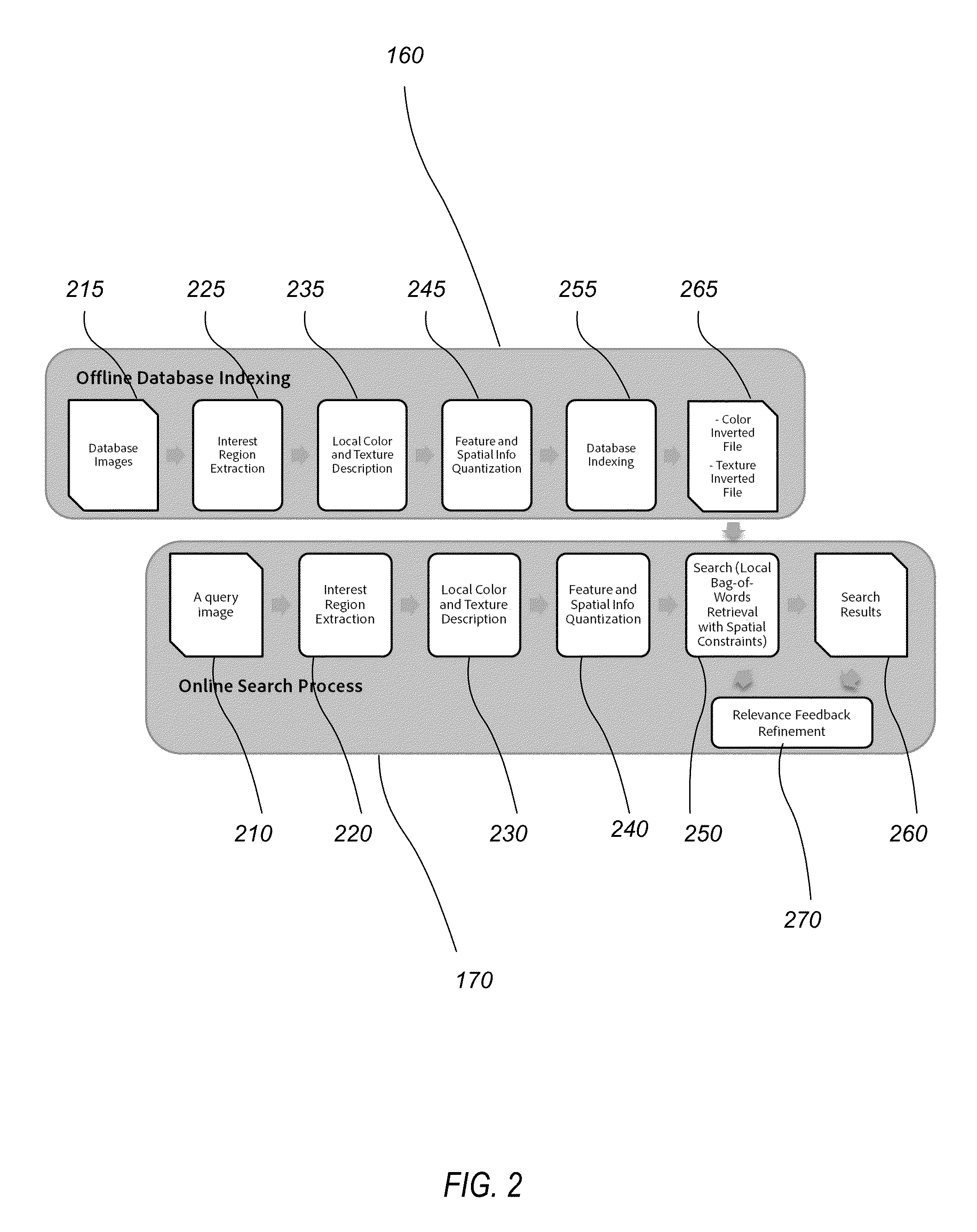

Methods and Apparatus for Visual Search

Active | US20130121600A1 | Digital data information retrieval, Character and pattern recognition, Pattern recognition, Bag of features

Each image of a set of images is characterized with a set of sparse feature descriptors and a set of dense feature descriptors. In some embodiments, both the set of sparse feature descriptors and the set of dense feature descriptors are calculated based on a fixed rotation for computing texture descriptors, while color descriptors are rotation invariant. In some embodiments, the descriptors of both sparse and dense features are then quantized into visual words. Each database image is represented by a feature index including the visual words computed from both sparse and dense features. A query image is characterized with the visual words computed from both sparse and dense features of the query image. A rotated local Bag-of-Features (BoF) operation is performed upon a set of rotated query images against the set of database images. Each of the set of images is ranked based on the rotated local Bag-of-Features operation.

Owner:ADOBE SYST INC
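
The combined sparse-plus-dense feature index described above can be sketched in simplified form. The sketch assumes each image's sparse and dense descriptors have already been quantized against two separate vocabularies. It merges both into a single bag and ranks database images by histogram intersection. The rotated-query step and the patent's actual similarity function are not modeled, and all names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def combined_bag(sparse_words: Iterable[int], dense_words: Iterable[int],
                 sparse_vocab_size: int) -> Counter:
    """One bag of visual words per image. Dense-word ids are shifted by the sparse
    vocabulary size so the two vocabularies occupy disjoint id ranges."""
    bag = Counter(sparse_words)
    bag.update(word + sparse_vocab_size for word in dense_words)
    return bag


def intersection(a: Counter, b: Counter) -> int:
    """Histogram intersection: number of word occurrences shared by two bags."""
    return sum(min(count, b[word]) for word, count in a.items())


def rank(query: Counter, database: Dict[str, Counter]) -> List[Tuple[str, int]]:
    scores = [(name, intersection(query, bag)) for name, bag in database.items()]
    return sorted(scores, key=lambda item: -item[1])


V = 100  # hypothetical size of the sparse vocabulary
query = combined_bag([3, 3, 7], [1, 2], V)
database = {"a": combined_bag([3, 7], [1], V), "b": combined_bag([5], [2, 2], V)}
print(rank(query, database))  # [('a', 3), ('b', 1)]
```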

Remote sensing image classification method based on multi-feature fusion

Active | CN102622607A | Improve classification accuracy, Enhanced Feature Representation, Character and pattern recognition, Synthesis methods, Classification methods

The invention discloses a remote sensing image classification method based on multi-feature fusion, comprising the following steps:

- A: extracting bag-of-visual-words features, color histogram features, and texture features from the training-set remote sensing images.
- B: training a support vector machine separately on each of the three feature types to obtain three different SVM classifiers.
- C: extracting the same three feature types from unknown test samples, predicting their categories with the corresponding classifiers from step B to obtain three groups of category predictions, and combining the three groups with a weighted synthesis method to obtain the final classification result.

The method also uses an improved bag-of-words model to extract the bag-of-visual-words features. Compared with the prior art, it yields more accurate classification results.

Owner:HOHAI UNIV
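
Step C's weighted synthesis in the multi-feature remote sensing method above might look like the following minimal sketch. It assumes each of the three classifiers outputs a per-class score (for example, a probability estimate) and that the fusion is a weighted sum. The abstract gives neither the weights nor the score format, so both are hypothetical here.

```python
from typing import Dict, Sequence


def fuse_predictions(score_sets: Sequence[Dict[str, float]],
                     weights: Sequence[float]) -> str:
    """Weighted sum of per-class scores from several classifiers; returns the best class."""
    if len(score_sets) != len(weights):
        raise ValueError("expected one weight per classifier")
    fused: Dict[str, float] = {}
    for scores, weight in zip(score_sets, weights):
        for label, score in scores.items():
            fused[label] = fused.get(label, 0.0) + weight * score
    return max(fused, key=fused.get)


# Hypothetical per-class scores from the bag-of-visual-words, color and texture classifiers.
bovw = {"farmland": 0.6, "forest": 0.3, "urban": 0.1}
color = {"farmland": 0.2, "forest": 0.7, "urban": 0.1}
texture = {"farmland": 0.5, "forest": 0.4, "urban": 0.1}
print(fuse_predictions([bovw, color, texture], [0.5, 0.2, 0.3]))  # farmland
print(fuse_predictions([bovw, color, texture], [1.0, 1.0, 1.0]))  # forest
```

The two calls show how the choice of weights alone can change the fused decision.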

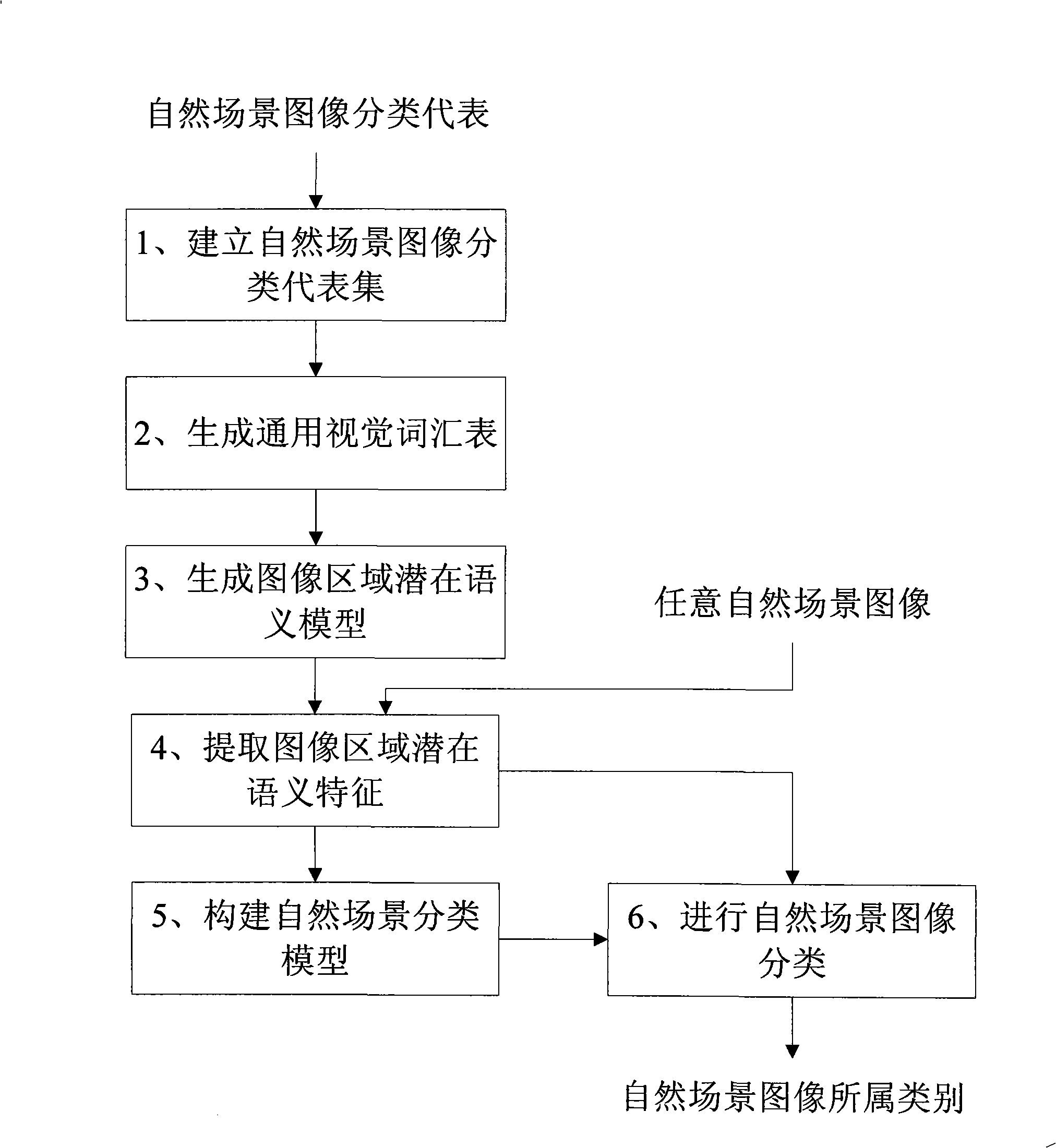

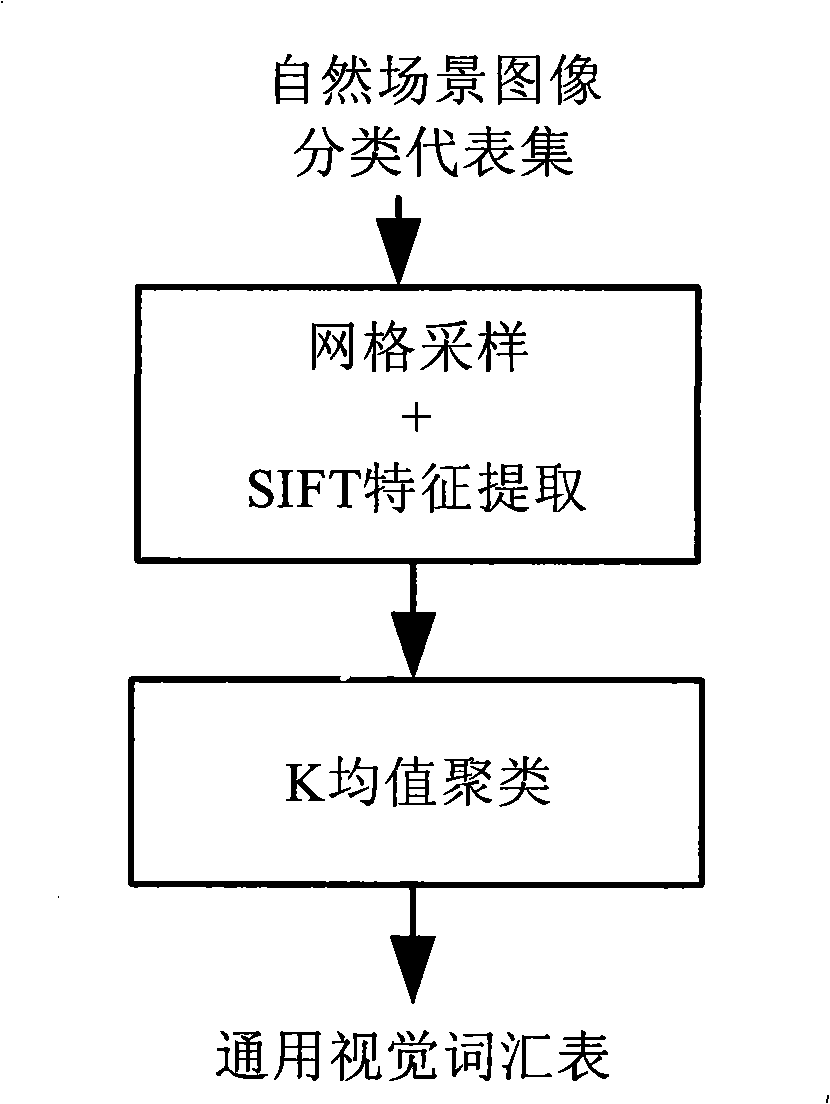

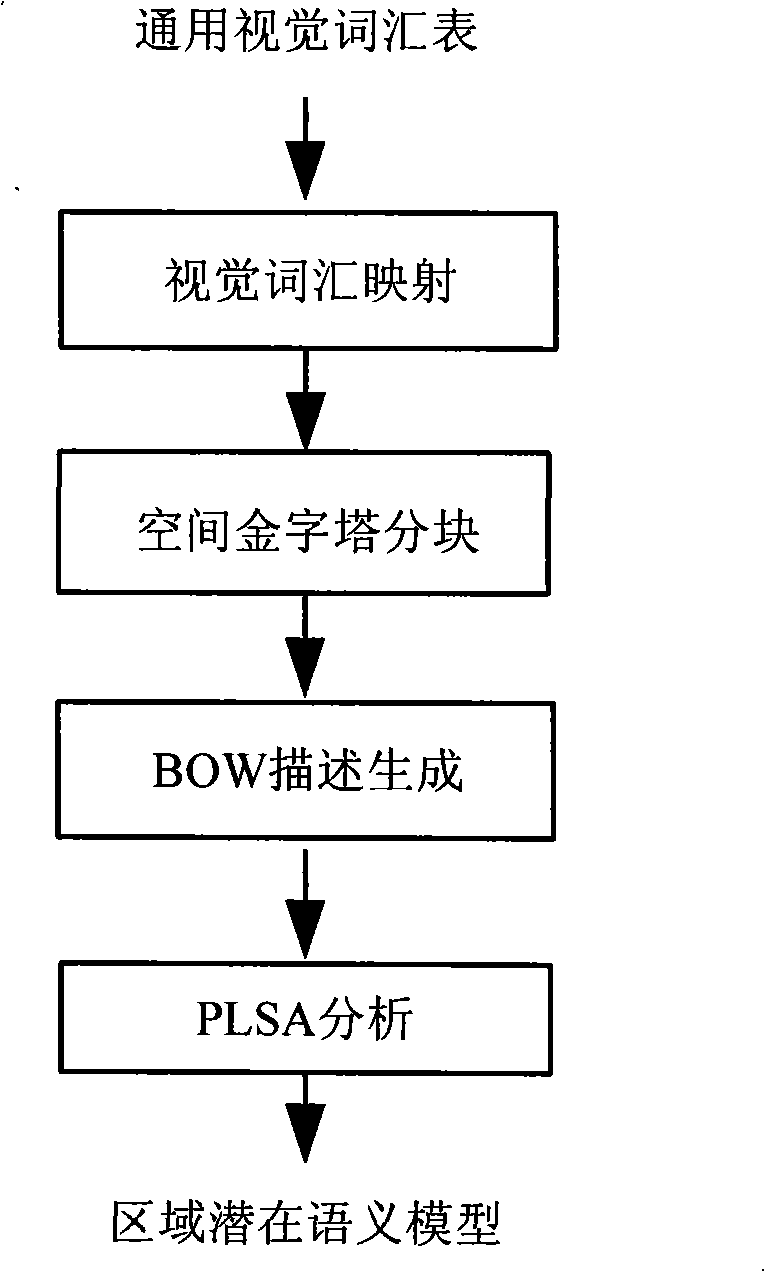

Natural scene image classification method based on regional latent semantic features

Inactive | CN101315663A | Improve accuracy, High degree of automation, Character and pattern recognition, Special data processing applications, Image extraction, Feature extraction

The invention discloses a method for classifying natural scene images on the basis of regional latent semantic features. It uses the regional latent semantic information of images and the spatial distribution of that information. The technical solution comprises the following steps:

- First, a representative set of natural scene images is established for classification.
- Second, SIFT features are extracted at sampling points of the images in the representative set to generate a universal visual word list.
- Third, a regional latent semantic model of the images is produced on the representative set.
- Fourth, regional latent semantic features are extracted for any given image.
- Finally, a natural scene classification model is generated, and images are classified by their regional latent semantic features according to that model.

Because the method introduces regional latent semantic features, it describes not only the regional information of image sub-blocks but also their spatial distribution. Compared with other methods, it achieves higher accuracy and requires no manual labeling, which gives it a high degree of automation.

Owner:NAT UNIV OF DEFENSE TECH

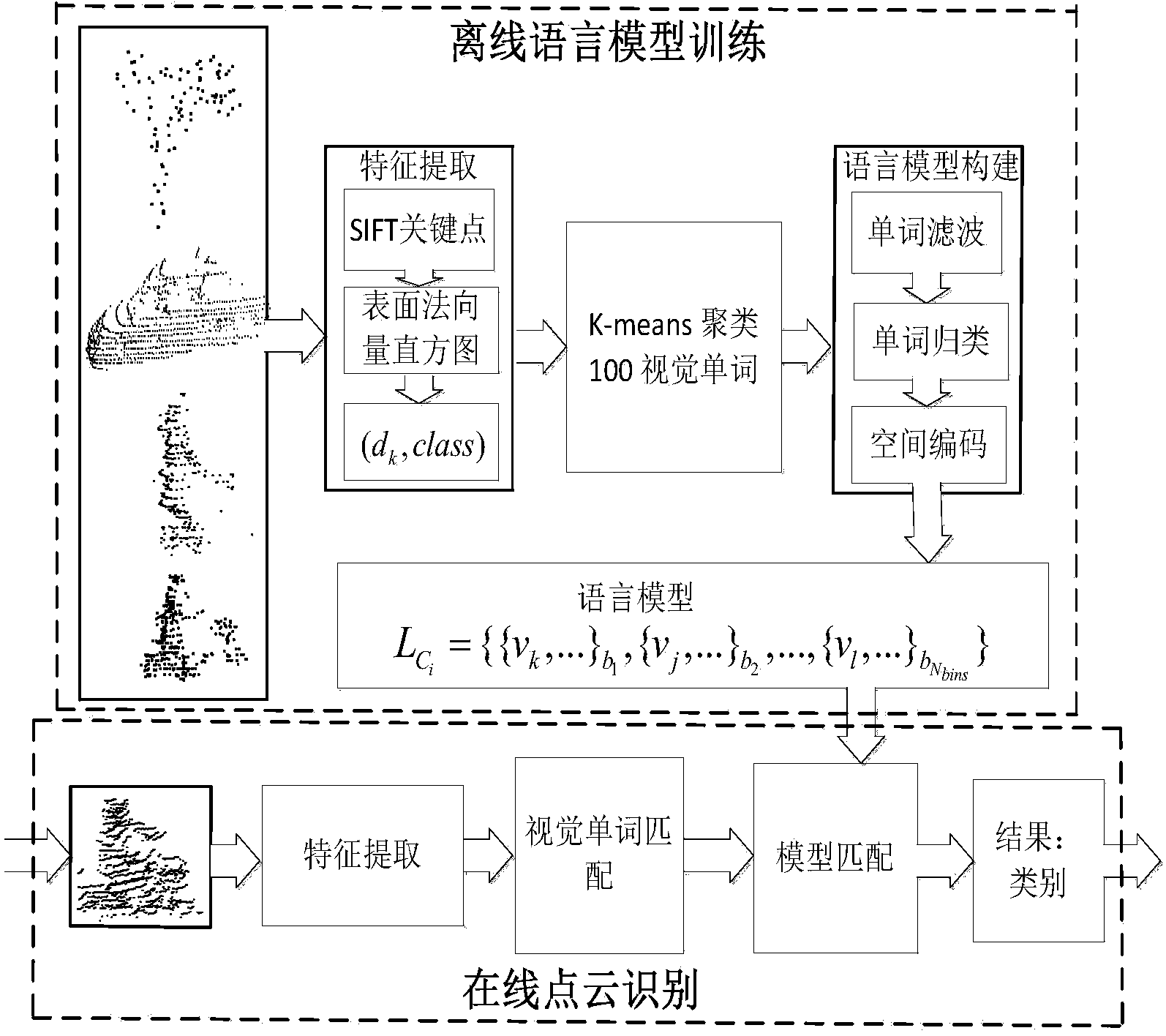

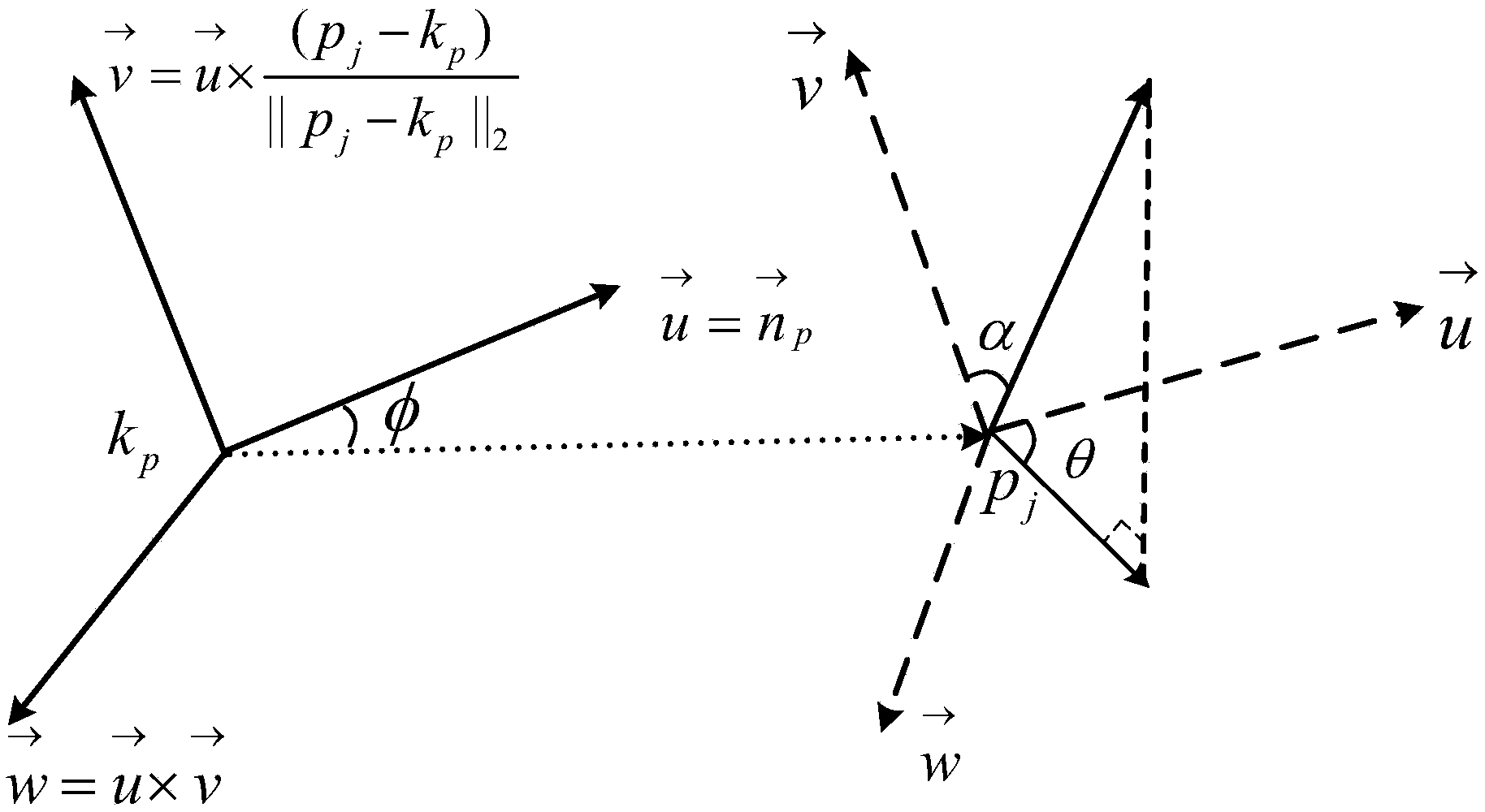

Method for identifying objects in 3D point cloud data

Active | CN104298971A | Features are stable and reliable, Accurate modeling, Character and pattern recognition, Point cloud, Crucial point

The invention discloses a method for identifying objects in 3D point cloud data. 2D SIFT features are extended to 3D scenes: SIFT key points are combined with a histogram of surface normal vectors to extract scale-invariant local features from 3D depth data, and these features are stable and reliable. The traditional bag-of-visual-words model is inaccurate and easily affected by noise when local features are used to describe global features. A proposed language model overcomes this shortcoming and greatly improves the accuracy of global target descriptions built from local features. The resulting model is accurate, and identification is accurate and reliable. The method can be applied to target identification in a wide range of outdoor scenes, both complex and simple.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

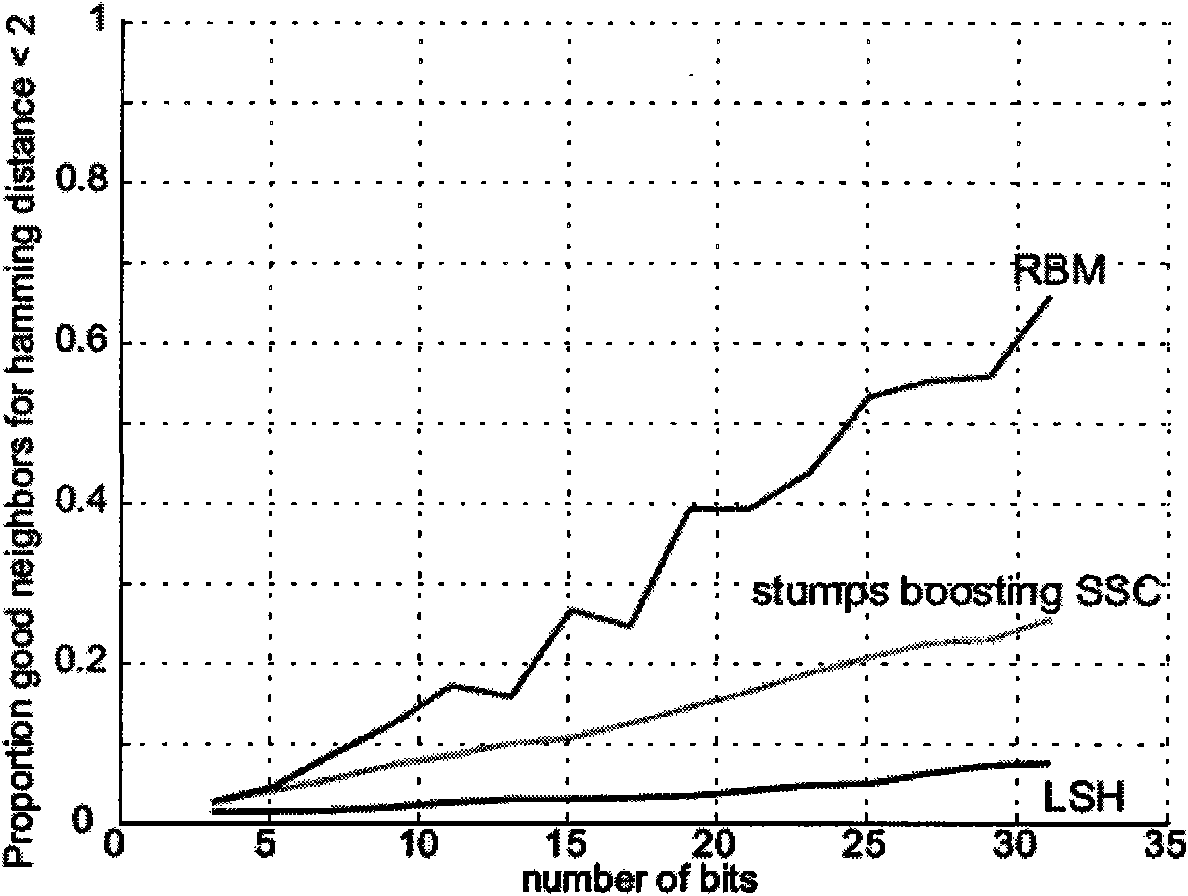

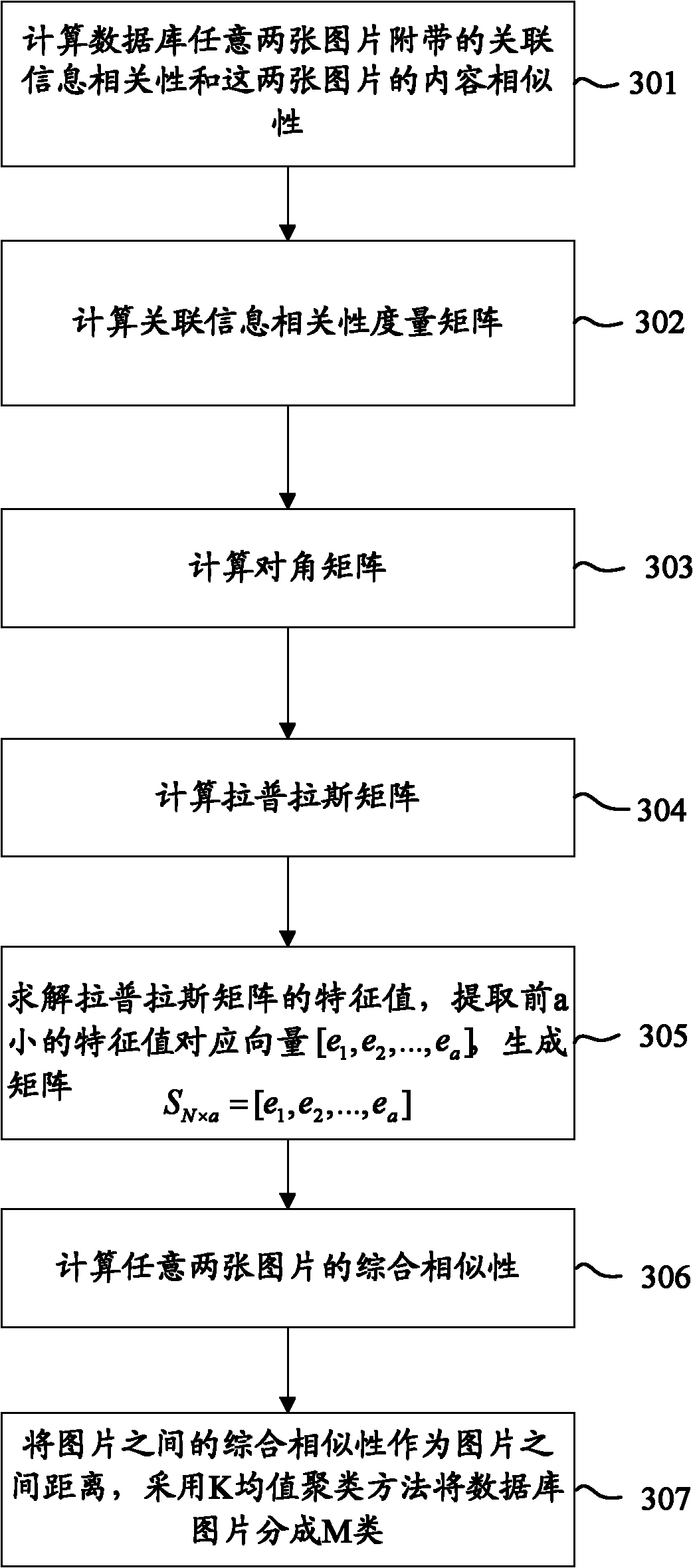

Sparse dimension reduction-based spectral hash indexing method

Inactive | CN101894130A | Improve interpretability, Improve search efficiency, Character and pattern recognition, Special data processing applications, Search problem, Principal component analysis

The invention discloses a sparse dimension reduction-based spectral hash indexing method, which comprises the following steps:

1) Extracting low-level features from the original images with SIFT.
2) Clustering the low-level features with k-means and using each cluster center as a visual word.
3) Directly reducing the dimensionality of the visual-word vectors with sparse component analysis, which also makes the vectors sparse.
4) Solving for a Euclidean-to-Hamming space mapping function from the eigen-equation and eigenvalues of a weighted Laplace-Beltrami operator, yielding low-dimensional Hamming-space vectors.
5) For an image to be searched, computing the Hamming distance between it and each original image in the low-dimensional Hamming space, and using that distance as the image similarity.

Because the invention replaces the principal component analysis dimension reduction used in spectral hashing with sparse dimension reduction, the results are more interpretable. Because search in Euclidean space is mapped into Hamming space, search efficiency is also improved.

Owner:ZHEJIANG UNIV
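
Steps 4 and 5 of the spectral hash indexing method above end in a Hamming-space search. The sketch below assumes the low-dimensional projections have already been computed; the Laplace-Beltrami eigenfunctions are not reproduced. It thresholds each dimension at zero to get a binary code and ranks database images by Hamming distance to the query code. The zero threshold and all names are assumptions.

```python
from typing import List, Sequence, Tuple


def binarize(projection: Sequence[float]) -> int:
    """Pack one bit per dimension: bit i is set when projection[i] > 0."""
    code = 0
    for i, value in enumerate(projection):
        if value > 0:
            code |= 1 << i
    return code


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


def search(query_code: int, database_codes: Sequence[int], k: int = 3) -> List[Tuple[int, int]]:
    """(image index, Hamming distance) for the k database codes nearest the query."""
    distances = [(i, hamming(query_code, code)) for i, code in enumerate(database_codes)]
    return sorted(distances, key=lambda item: item[1])[:k]


# Hypothetical low-dimensional projections (step 4 is assumed already done).
database = [binarize(p) for p in ([0.5, -0.2, 0.9, -0.1],
                                  [-0.3, -0.4, 0.2, 0.7],
                                  [0.1, 0.3, 0.8, -0.5])]
query = binarize([0.4, -0.1, 0.6, -0.2])
print(search(query, database, k=2))  # [(0, 0), (2, 1)]
```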

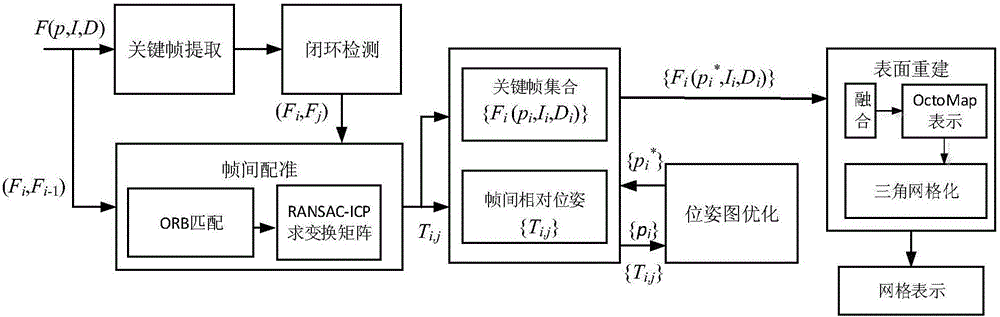

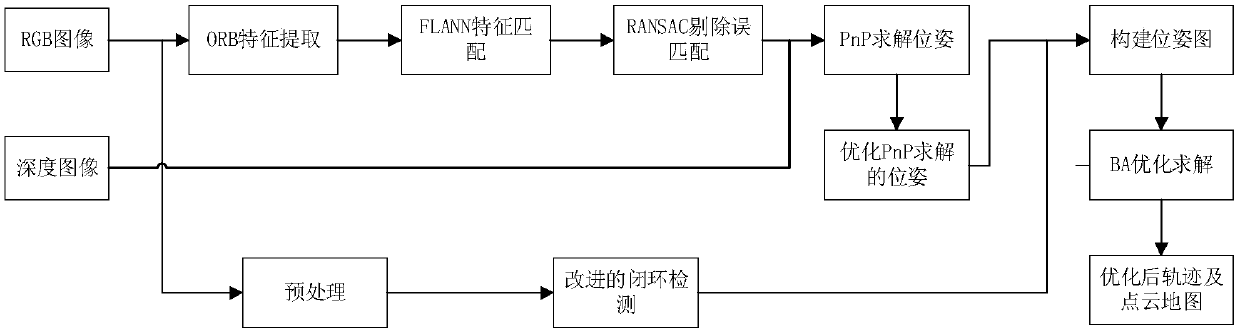

Improved closed-loop detection algorithm-based mobile robot vision SLAM (Simultaneous Localization and Mapping) method

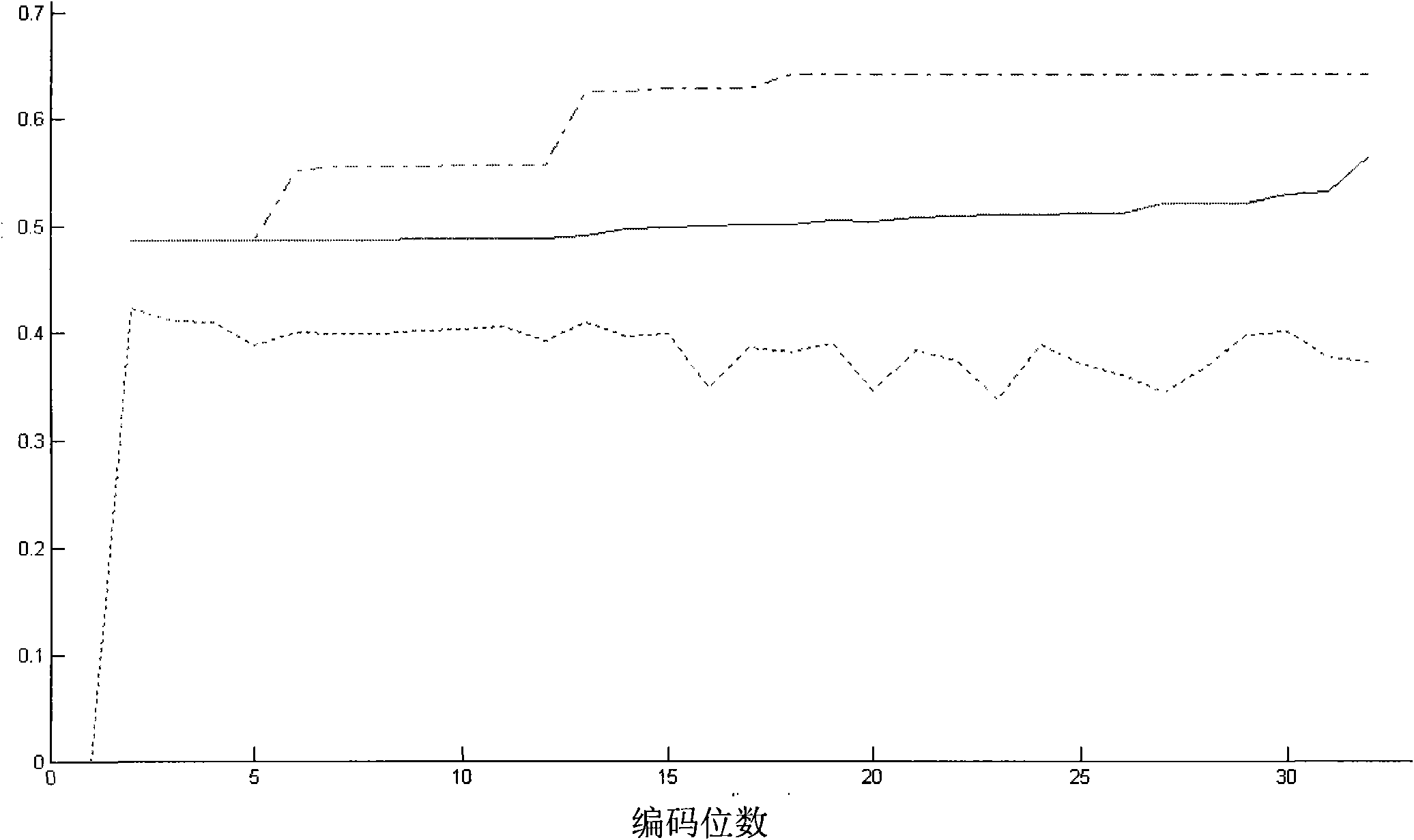

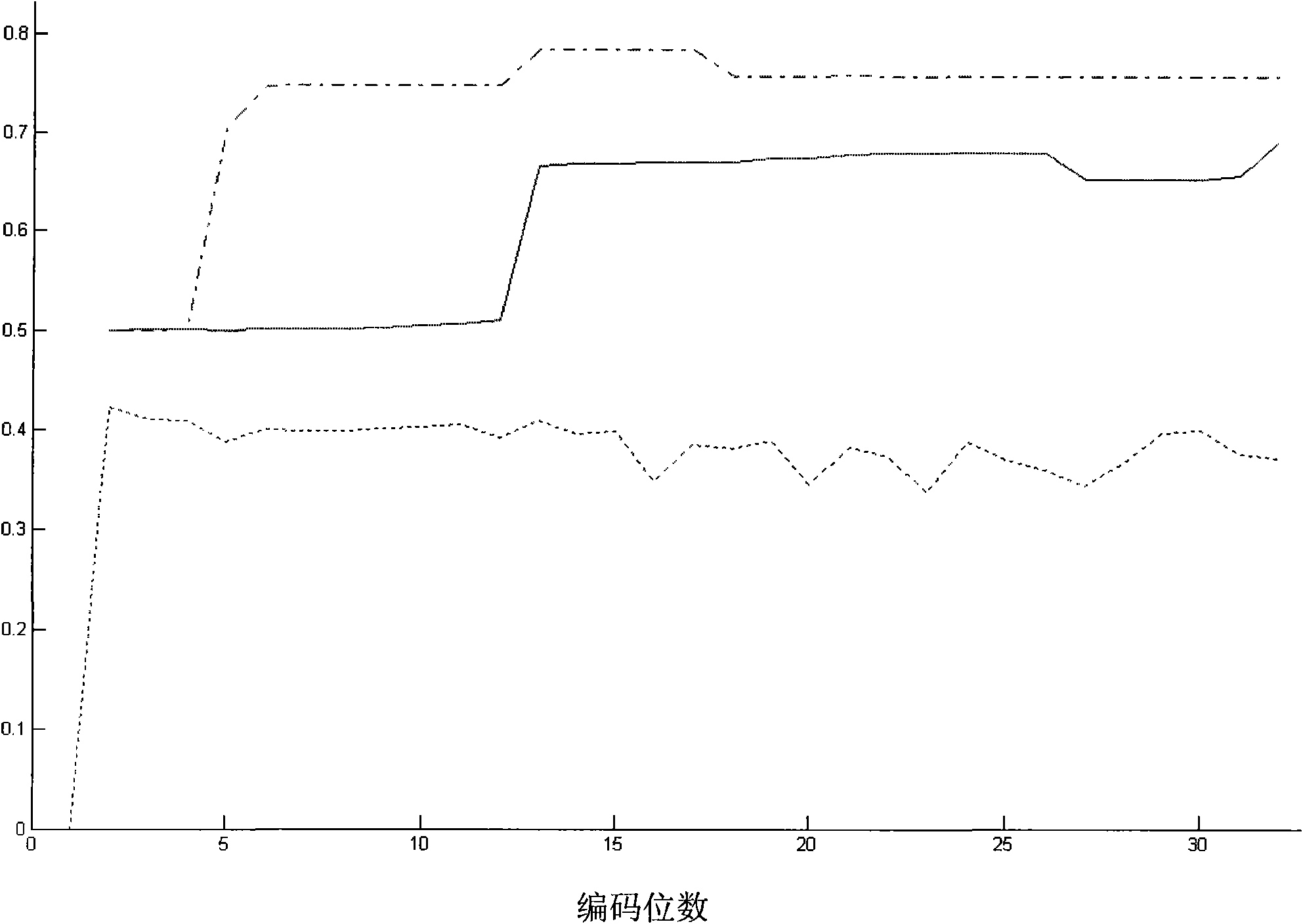

Inactive | CN107680133A | Improve extraction speed, Improve matching speed, Image enhancement, Image analysis, RGB image, Closed loop

The present invention provides a mobile robot visual SLAM (Simultaneous Localization and Mapping) method based on an improved closed-loop detection algorithm. The method includes the following steps:

- S1: the Kinect is calibrated using Zhang Zhengyou's calibration method.
- S2: ORB features are extracted from the acquired RGB images, and features are matched using FLANN (Fast Library for Approximate Nearest Neighbors).
- S3: mismatches are removed, the spatial coordinates of the matched points are obtained, and the inter-frame pose transformation (R, t) is estimated with the PnP algorithm.
- S4: structureless iterative optimization is performed on the pose transformation solved by PnP.
- S5: the image frames are preprocessed, and the images are described with a bag of visual words. An improved similarity-score matching method is used to match images and obtain closed-loop candidates, and the correct closed loops are selected.
- S6: a graph optimization method centered on bundle adjustment is used to optimize the poses and landmarks. More accurate camera poses and landmarks are obtained through continued iterative optimization.

The method yields more accurate pose estimates and better three-dimensional reconstruction in indoor environments.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
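
In the visual SLAM method above, the matching and mismatch-removal steps (S2 and S3) operate on binary ORB descriptors compared by Hamming distance. As a stand-in for FLANN, this sketch uses exhaustive matching. It removes ambiguous matches with a nearest/second-nearest ratio test. The ratio test and the 0.8 threshold are assumptions, since the abstract does not say how mismatches are deleted.

```python
from typing import List, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


def match_descriptors(query: Sequence[int], train: Sequence[int],
                      ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Brute-force matching of binary descriptors with a nearest/second-nearest
    ratio test; ambiguous matches are discarded as likely mismatches."""
    if len(train) < 2:
        raise ValueError("the ratio test needs at least two train descriptors")
    matches = []
    for qi, q in enumerate(query):
        ranked = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        (best, best_ti), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            matches.append((qi, best_ti))
    return matches


# Toy 8-bit descriptors; real ORB descriptors are 256 bits.
query = [0b10110010, 0b00001111, 0b10111110]
train = [0b10110011, 0b11110000, 0b00001110]
print(match_descriptors(query, train))  # [(0, 0), (1, 2)]
```

The third query descriptor is equally close to two train descriptors (distance 3 to each), so the ratio test rejects it.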

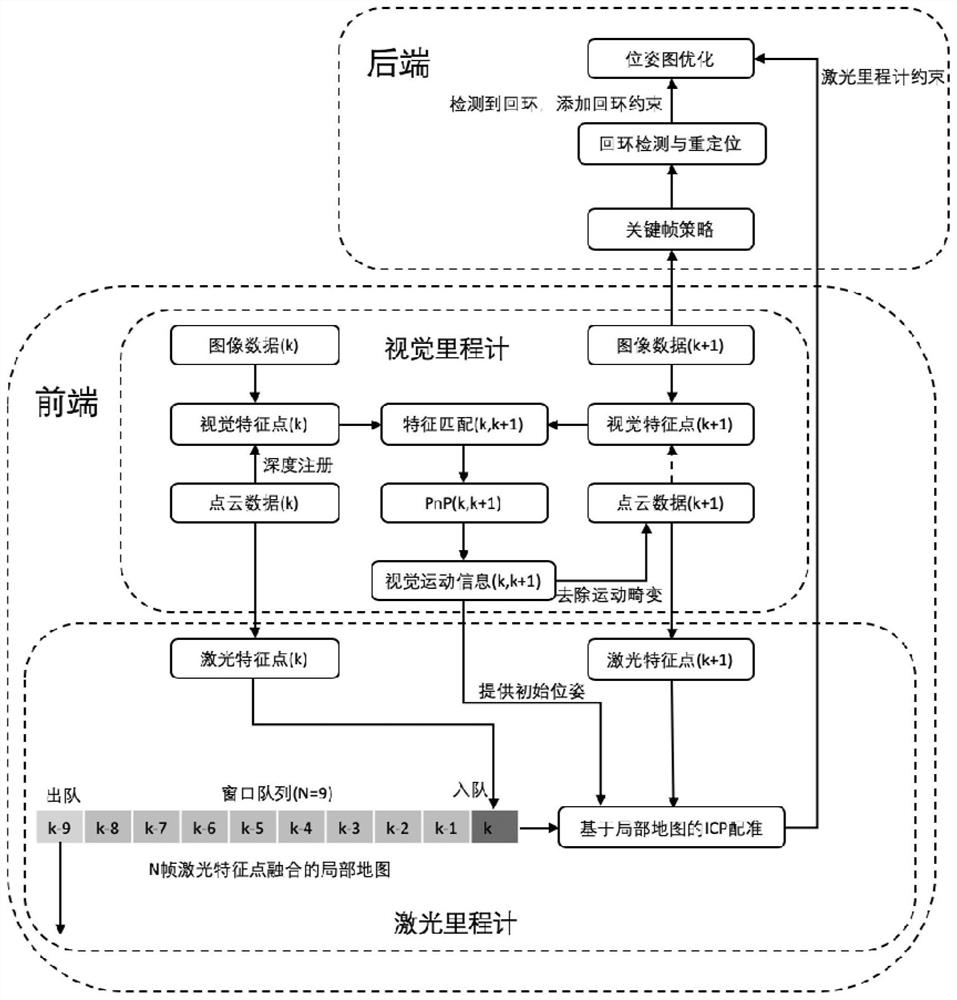

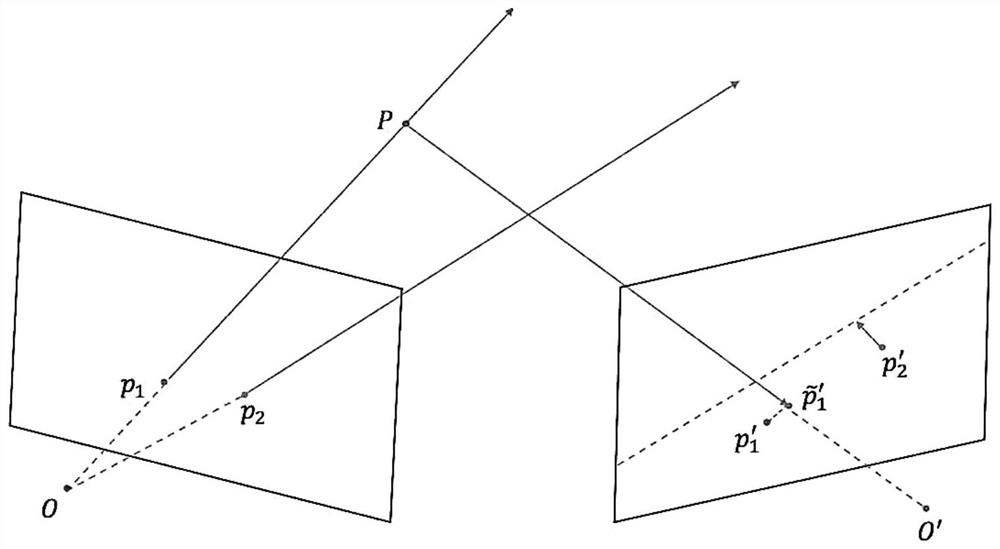

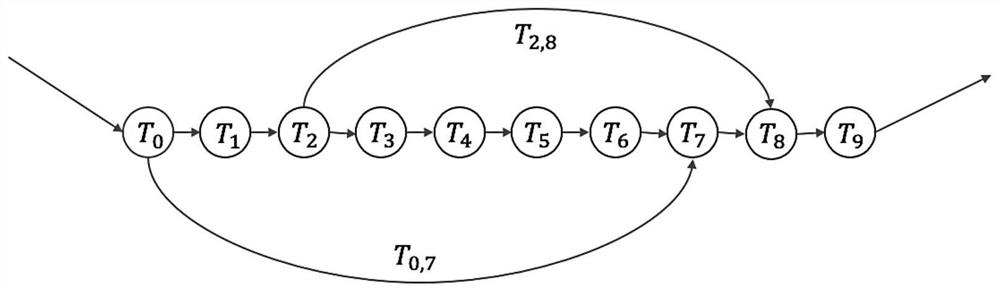

Simultaneous localization and mapping method based on vision and laser radar

Inactive | CN112258600A | Accurate initial pose, Iterative convergence is fast, Image enhancement, Reconstruction from projection, Simultaneous localization and mapping, Point cloud

The invention discloses a simultaneous localization and mapping method based on vision and lidar, belonging to the field of SLAM. The lidar odometry and the visual odometry run simultaneously. The visual odometry helps the lidar point cloud remove motion distortion more effectively. In turn, the undistorted point cloud can be projected onto the image to provide depth information for motion estimation in the next frame. With a good initial value, the lidar odometry avoids the degenerate scenes caused by the limitations of a single sensor, and the added visual information gives the odometry higher positioning accuracy. A loop detection and relocalization module is implemented with a visual bag of words, forming a complete visual-lidar SLAM system. After a loop is detected, the system performs pose graph optimization using the loop constraints. This eliminates, to a certain extent, the error accumulated over long-term motion, so that high-precision localization and point cloud mapping can be completed in complex environments.

Owner:ZHEJIANG UNIV
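
In the vision-and-lidar SLAM method above, the undistorted point cloud is projected onto the image. This can be sketched with a pinhole camera model, u = f_x X / Z + c_x and v = f_y Y / Z + c_y. The sketch assumes the points are already expressed in the camera frame, meaning the lidar-to-camera extrinsic transform and motion compensation have been applied. It keeps the nearest depth per pixel. The intrinsic values in the example are hypothetical.

```python
from typing import Dict, Iterable, Tuple

Point = Tuple[float, float, float]


def project_to_depth(points: Iterable[Point], fx: float, fy: float,
                     cx: float, cy: float, width: int, height: int) -> Dict[Tuple[int, int], float]:
    """Project camera-frame 3-D points with a pinhole model and return a sparse
    depth map {(u, v): z}, keeping the nearest point that lands on each pixel."""
    depth: Dict[Tuple[int, int], float] = {}
    for x, y, z in points:
        if z <= 0:  # behind the camera
            continue
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            if (u, v) not in depth or z < depth[(u, v)]:
                depth[(u, v)] = z
    return depth


points = [(0.0, 0.0, 2.0), (0.0, 0.0, 4.0), (0.5, -0.5, 1.0), (0.2, 0.1, 2.0), (1.0, 1.0, -1.0)]
print(project_to_depth(points, fx=100, fy=100, cx=50, cy=50, width=100, height=100))
# {(50, 50): 2.0, (60, 55): 2.0}
```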

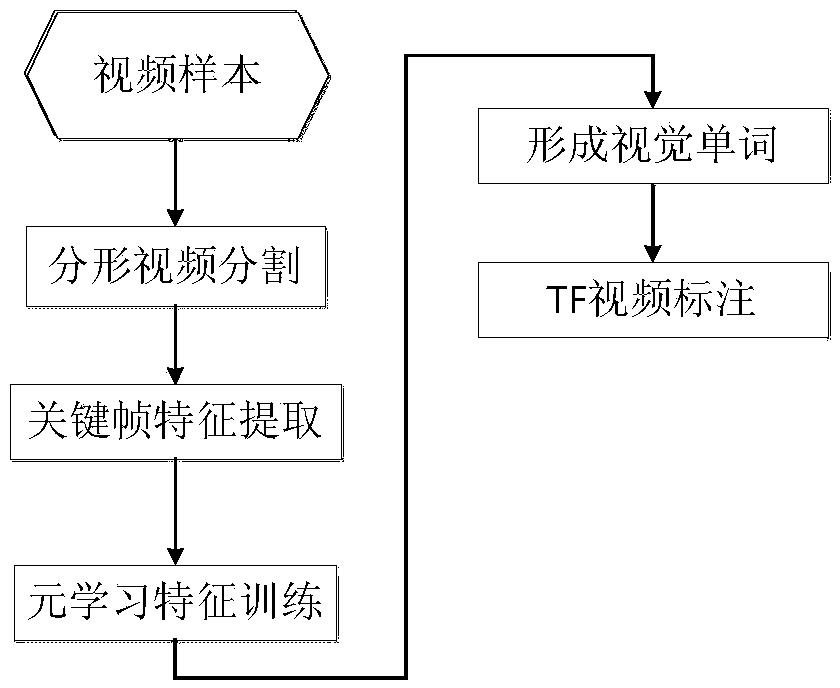

Mass video semantic annotation method based on Spark

Active | CN104239501A | Avoid constraints, Realize analysis, Image analysis, Character and pattern recognition, Feature extraction, Video annotation

The invention provides a Spark-based semantic annotation method for massive video collections. The method relies on elastic distributed storage of the videos in a Hadoop big-data cluster environment and uses the Spark computation model to annotate them. It mainly comprises:

- a video segmentation method based on fractal theory, and its implementation on Spark;
- a Spark-based video feature extraction method, and a visual word formation method based on a meta-learning strategy;
- a Spark-based video annotation generation method.

Compared with traditional single-machine, parallel, or distributed computation, the method can increase computation speed by more than a hundredfold. It also provides complete annotation content and a low error rate.

Owner:THE 28TH RES INST OF CHINA ELECTRONICS TECH GROUP CORP

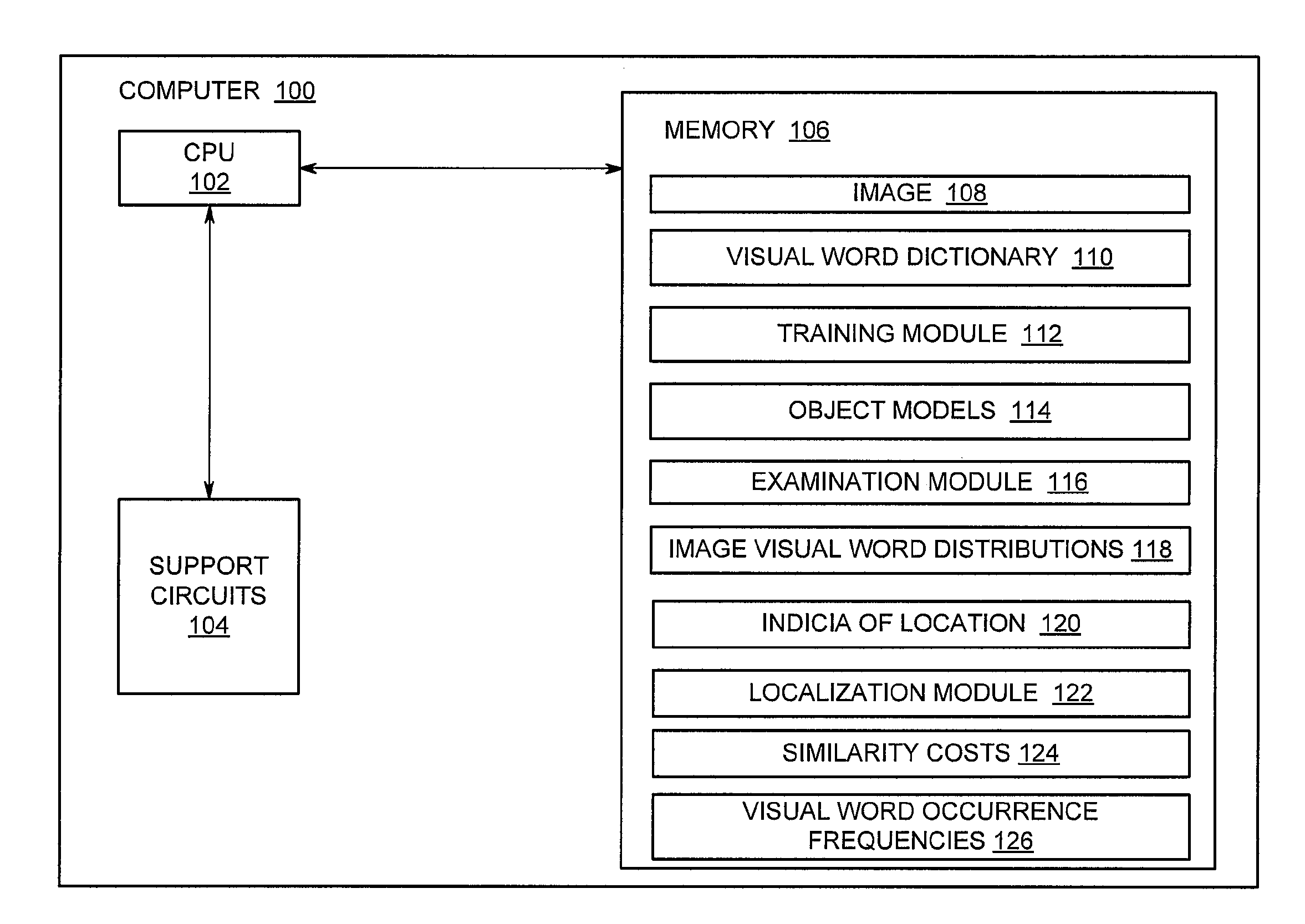

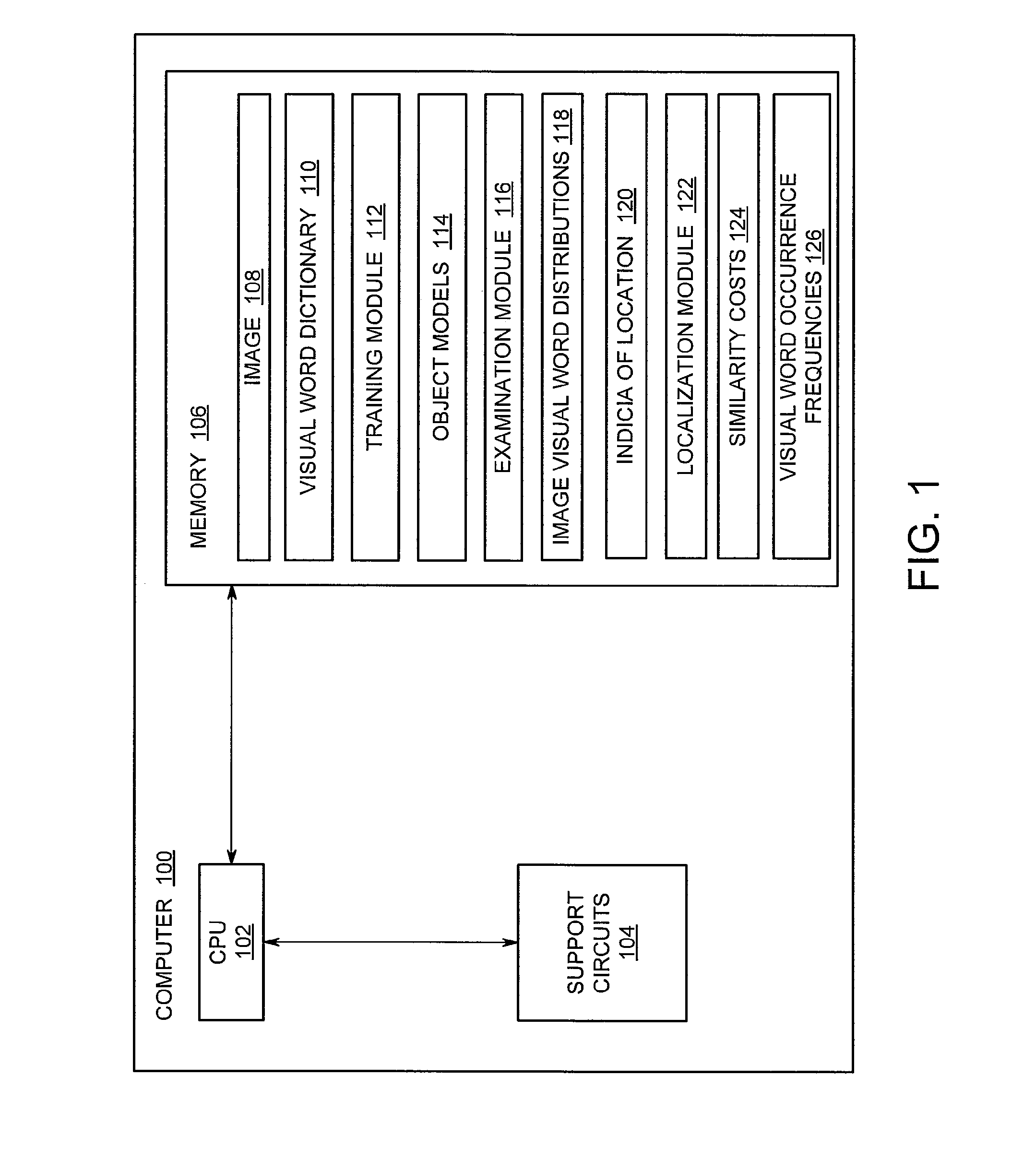

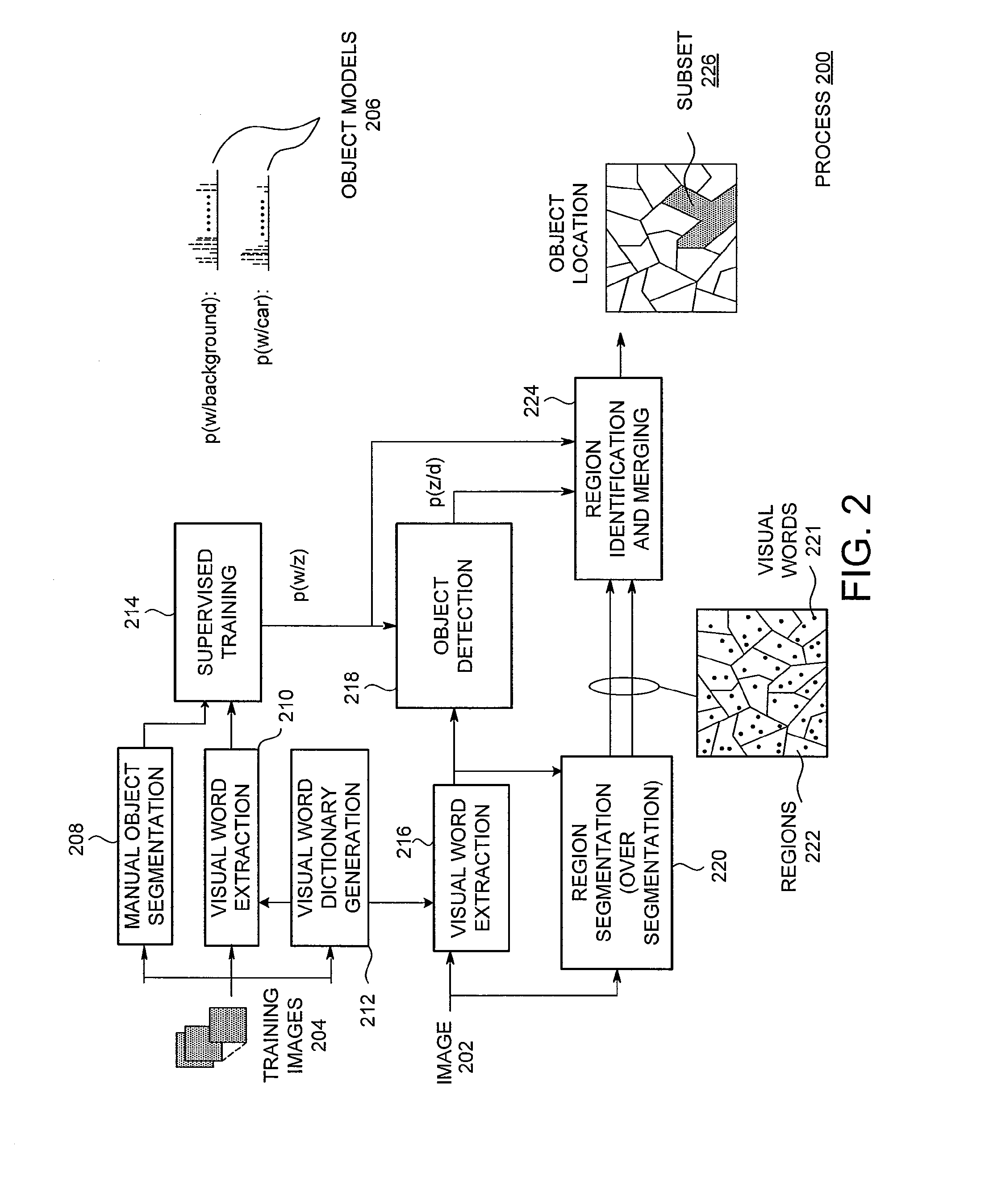

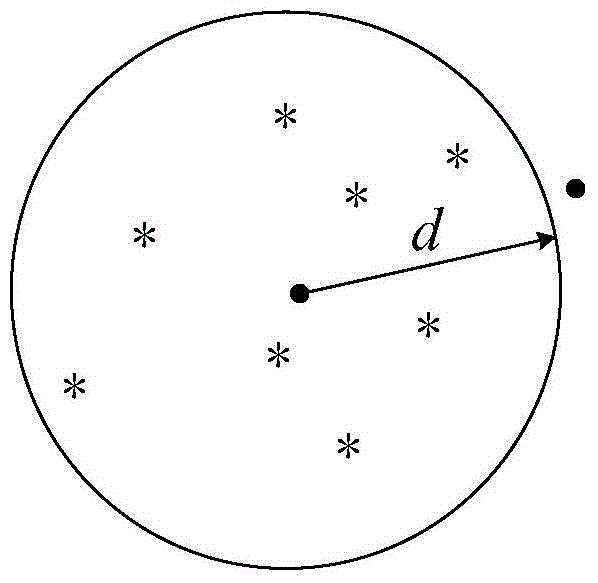

Method and apparatus for localizing an object within an image

An improved method and apparatus for localizing objects within an image is disclosed. In one embodiment, the method comprises accessing at least one object model representing visual word distributions of at least one training object within training images, detecting whether an image comprises at least one object based on the at least one object model, identifying at least one region of the image that corresponds with the at least one detected object and is associated with a minimal dissimilarity between the visual word distribution of the at least one detected object and a visual word distribution of the at least one region and coupling the at least one region with indicia of location of the at least one detected object.

Owner:SONY CORP
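
The region search in the object-localization method above can be illustrated with a brute-force sketch. It assumes the image has been reduced to a grid of visual-word labels and the object model is a normalized word distribution. The abstract does not name the dissimilarity measure or the search strategy. The chi-squared distance and the exhaustive fixed-size sliding window used here are therefore assumptions, as are all names.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def normalize(counts: Counter) -> Dict[int, float]:
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def chi_square(p: Dict[int, float], q: Dict[int, float]) -> float:
    """Symmetric chi-squared distance between two visual-word distributions."""
    dist = 0.0
    for word in set(p) | set(q):
        a, b = p.get(word, 0.0), q.get(word, 0.0)
        if a + b > 0:
            dist += (a - b) ** 2 / (a + b)
    return 0.5 * dist


def localize(word_grid: List[List[int]], model: Dict[int, float],
             win_h: int, win_w: int) -> Tuple[Optional[Box], float]:
    """Slide a win_h x win_w window over a grid of visual-word labels and return
    the window whose word distribution is least dissimilar to the object model."""
    best_box: Optional[Box] = None
    best_dist = float("inf")
    rows, cols = len(word_grid), len(word_grid[0])
    for top in range(rows - win_h + 1):
        for left in range(cols - win_w + 1):
            counts = Counter(word_grid[r][c]
                             for r in range(top, top + win_h)
                             for c in range(left, left + win_w))
            dist = chi_square(normalize(counts), model)
            if dist < best_dist:
                best_box, best_dist = (top, left, top + win_h, left + win_w), dist
    return best_box, best_dist


grid = [[0, 0, 0, 0],
        [0, 5, 6, 0],
        [0, 6, 5, 0],
        [0, 0, 0, 0]]
model = {5: 0.5, 6: 0.5}  # hypothetical trained object model
print(localize(grid, model, 2, 2))  # ((1, 1, 3, 3), 0.0)
```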

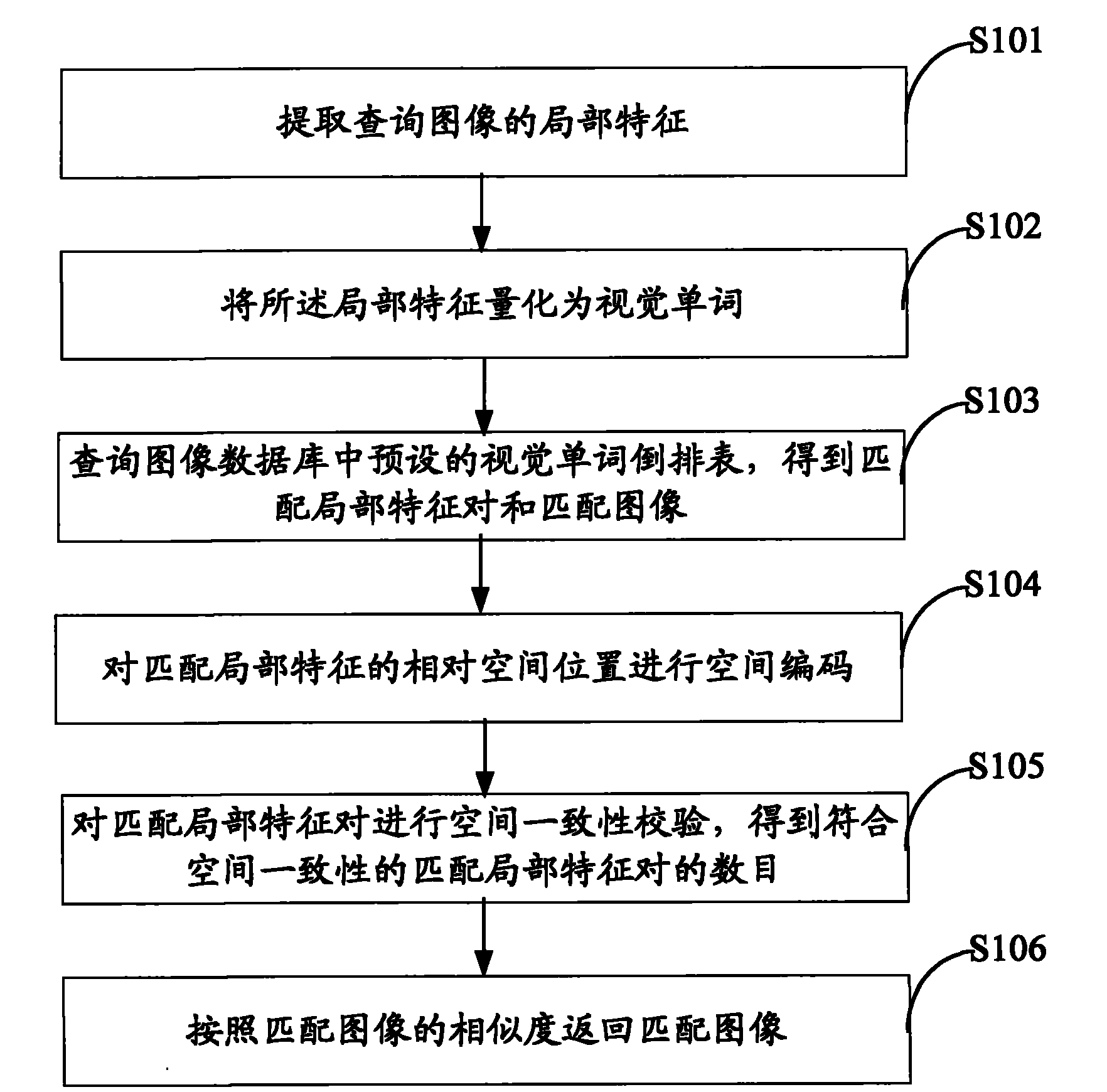

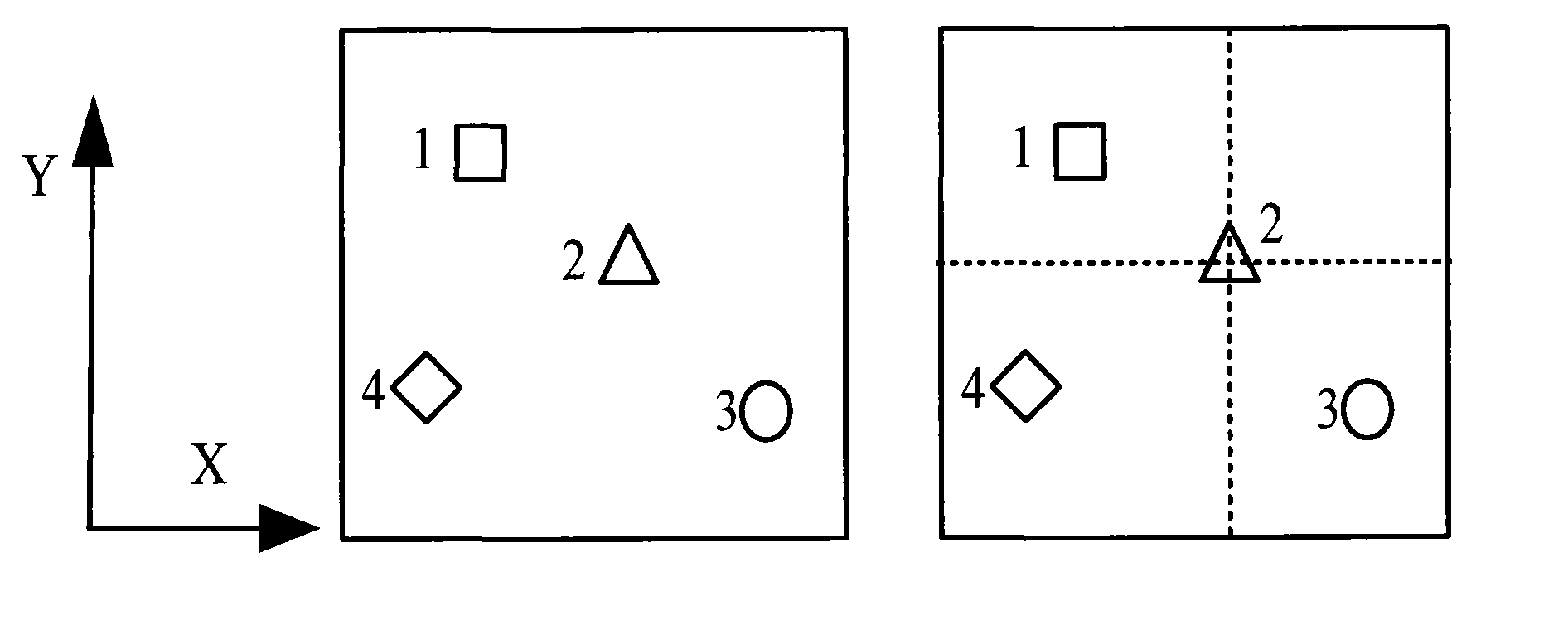

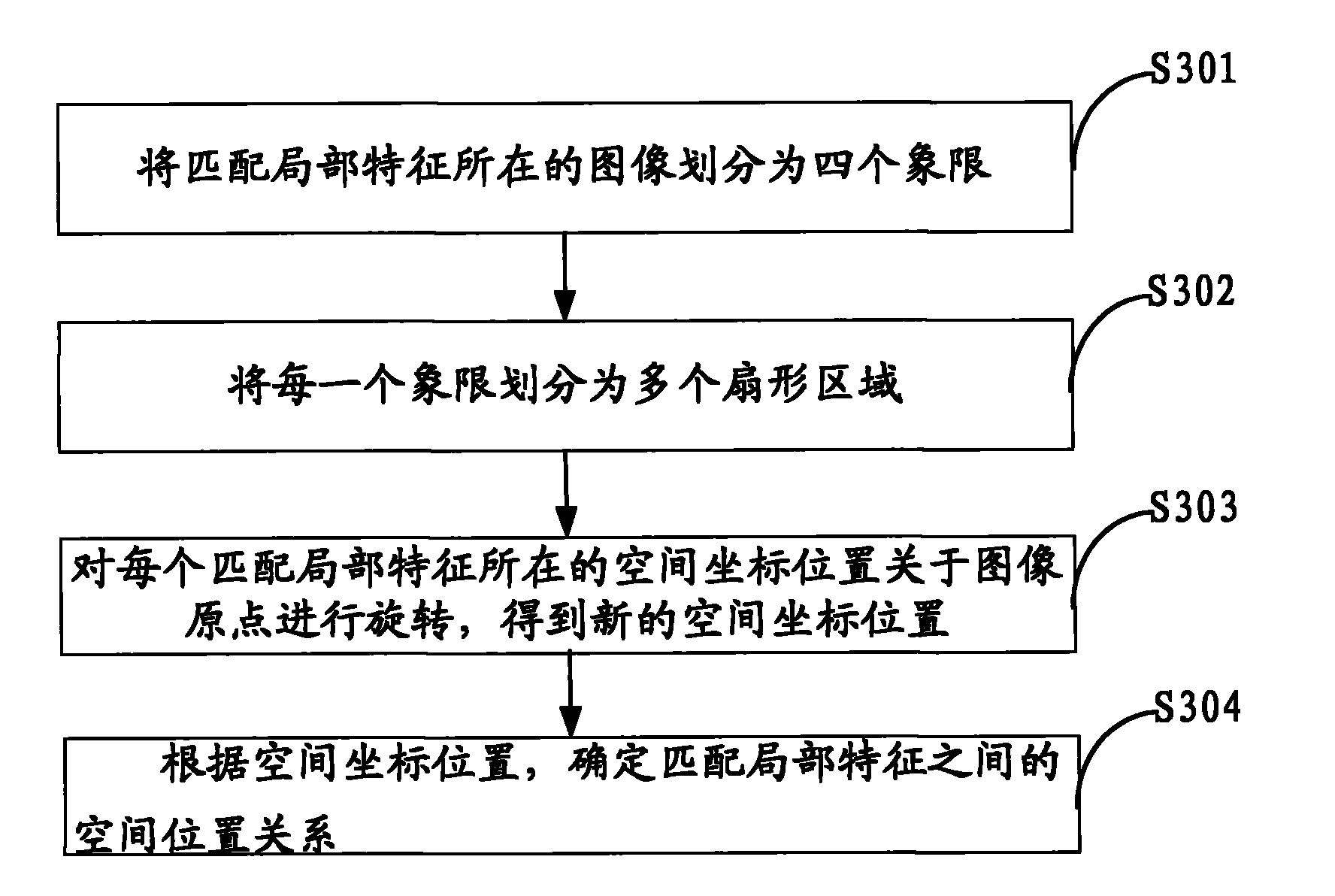

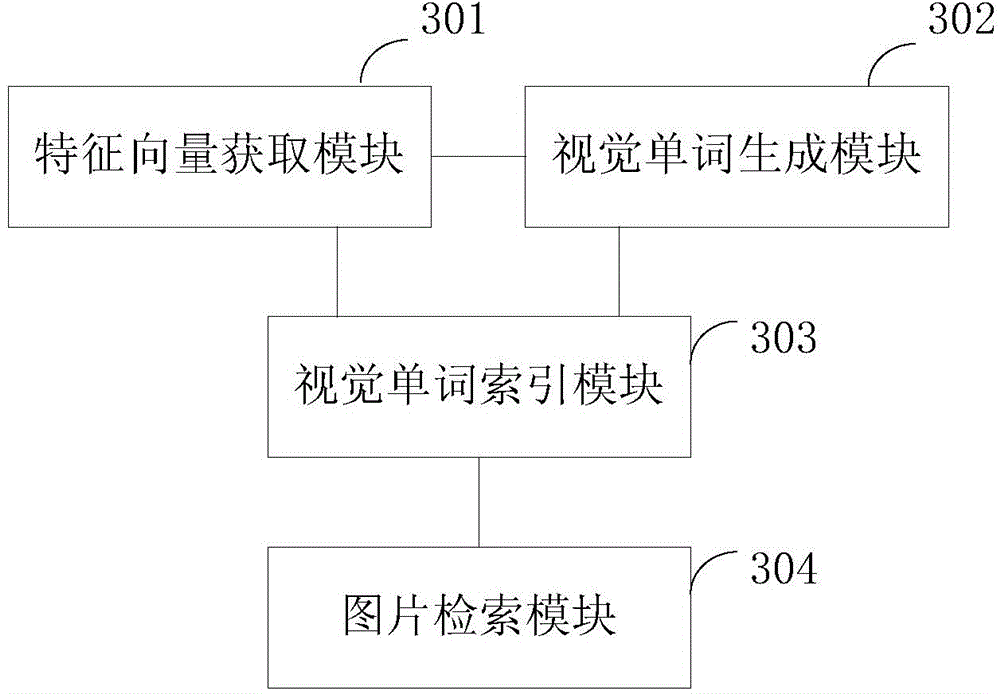

Image retrieval method, device and system

Active | CN102368237A | Reduce computational complexity, Exclude False Local Feature Matches, Special data processing applications, Spatial consistency, Visual perception

The invention discloses an image retrieval method, device, and system. The image retrieval method comprises the following steps:

- extracting local features of a query image and quantizing them into visual words;
- querying a preset visual-word inverted list of an image database with these visual words to obtain matched local-feature pairs and matched images;
- spatially encoding the relative positions of the matched local features in the query image and in each matched image, to obtain spatial code maps of the query image and of the matched images;
- performing a spatial consistency check between the spatial code map of the query image and that of each matched image, to obtain the number of matched local-feature pairs that satisfy spatial consistency;
- returning the matched images ranked by their numbers of spatially consistent matched pairs.

The method improves retrieval accuracy and efficiency and reduces retrieval time.

Owner:UNIV OF SCI & TECH OF CHINA
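
The spatial consistency check in the image retrieval method above can be illustrated with a minimal sketch. The abstract does not specify the encoding, so this sketch assumes binary relative-position maps. For every pair of matched features, one bit records whether the second lies to the right of the first, and another records whether it lies below. Pairs whose bits disagree between the query and the matched image are inconsistent. The match involved in the most disagreements is removed repeatedly until none remain. The number of surviving pairs can then serve as the image's score. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def spatial_maps(points: Sequence[Point]) -> Tuple[List[List[int]], List[List[int]]]:
    """Binary relative-position maps: xmap[i][j] = 1 if point j lies to the right of
    point i, ymap[i][j] = 1 if point j lies below point i (image y grows downward)."""
    n = len(points)
    xmap = [[int(points[j][0] > points[i][0]) for j in range(n)] for i in range(n)]
    ymap = [[int(points[j][1] > points[i][1]) for j in range(n)] for i in range(n)]
    return xmap, ymap


def consistent_matches(query_pts: Sequence[Point], db_pts: Sequence[Point]) -> List[int]:
    """Indices of matches that survive the check; match k links query_pts[k] to db_pts[k].
    The match involved in the most relative-position disagreements is dropped
    repeatedly until the query and database maps agree everywhere."""
    keep = list(range(len(query_pts)))
    while keep:
        qx, qy = spatial_maps([query_pts[k] for k in keep])
        dx, dy = spatial_maps([db_pts[k] for k in keep])
        n = len(keep)
        errors = [sum((qx[i][j] ^ dx[i][j]) + (qy[i][j] ^ dy[i][j]) for j in range(n))
                  for i in range(n)]
        worst = max(range(n), key=lambda i: errors[i])
        if errors[worst] == 0:
            break
        del keep[worst]
    return keep


query_pts = [(0, 0), (1, 0), (0, 1), (1, 1), (0.5, 0.5)]
db_pts = [(10, 10), (11, 10), (10, 11), (11, 11), (9, 12)]  # the last match is wrong
print(consistent_matches(query_pts, db_pts))  # [0, 1, 2, 3]
```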

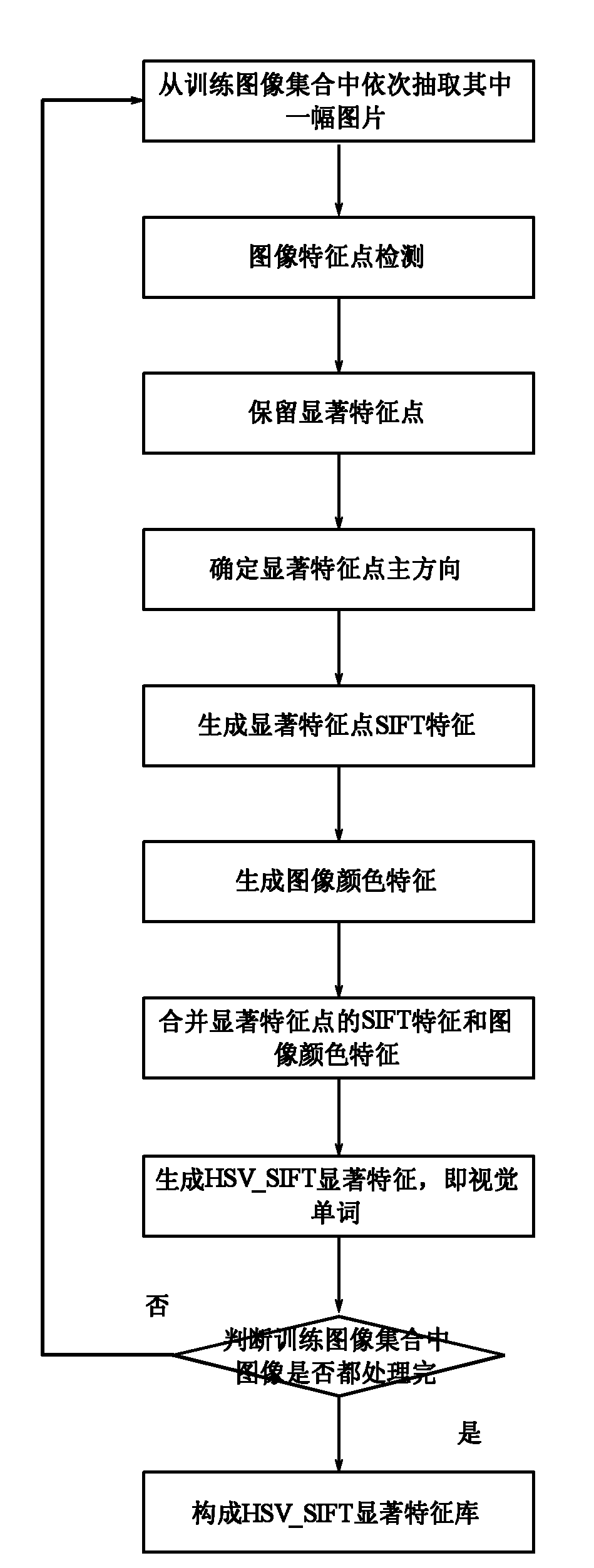

Probabilistic latent semantic model object image recognition method fusing salient color features

Active | CN102629328A | Bridging the Semantic Gap, Easy to solve identification problems, Character and pattern recognition, Pattern recognition, Near neighbor

The invention provides a probabilistic latent semantic model object image recognition method that fuses salient color features, belonging to the field of image recognition technology. The method proceeds as follows:

- It uses the SIFT algorithm to extract local salient features of an image while adding color features, generating HSV_SIFT features.
- It introduces TF_IDF weighting to reconstruct the features, so that the local salient features become more discriminative.
- It uses a latent semantic model to obtain latent semantic features of the image.
- Finally, it classifies these features with a k-nearest-neighbor (KNN) classifier.

The method considers both the color information of the image and the distribution of each visual word over the whole image set. As a result, the local salient features of an object become more discriminative, and recognition ability is improved.

Owner:猫窝科技(天津)有限公司
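
TF-IDF weighting, as mentioned in the object recognition abstract above, down-weights visual words that occur in many images. The minimal sketch below uses the standard form tf(w, d) * log(N / n_w). The patent does not state which weighting variant it uses, so this form is an assumption. In the example, word 1 appears in every image and therefore receives a weight of 0.

```python
import math
from collections import Counter
from typing import Dict, List


def tfidf_vectors(images: List[List[int]]) -> List[Dict[int, float]]:
    """Weight each image's visual words by tf(w, d) * log(N / n_w), where tf is the
    word's share of the image's words, N the number of images and n_w the number
    of images containing w."""
    n_images = len(images)
    doc_freq = Counter(word for words in images for word in set(words))
    vectors = []
    for words in images:
        counts = Counter(words)
        vectors.append({word: (count / len(words)) * math.log(n_images / doc_freq[word])
                        for word, count in counts.items()})
    return vectors


images = [[1, 1, 2], [1, 3], [1, 2, 2, 4]]  # visual-word ids per image
for vector in tfidf_vectors(images):
    print(vector)
```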

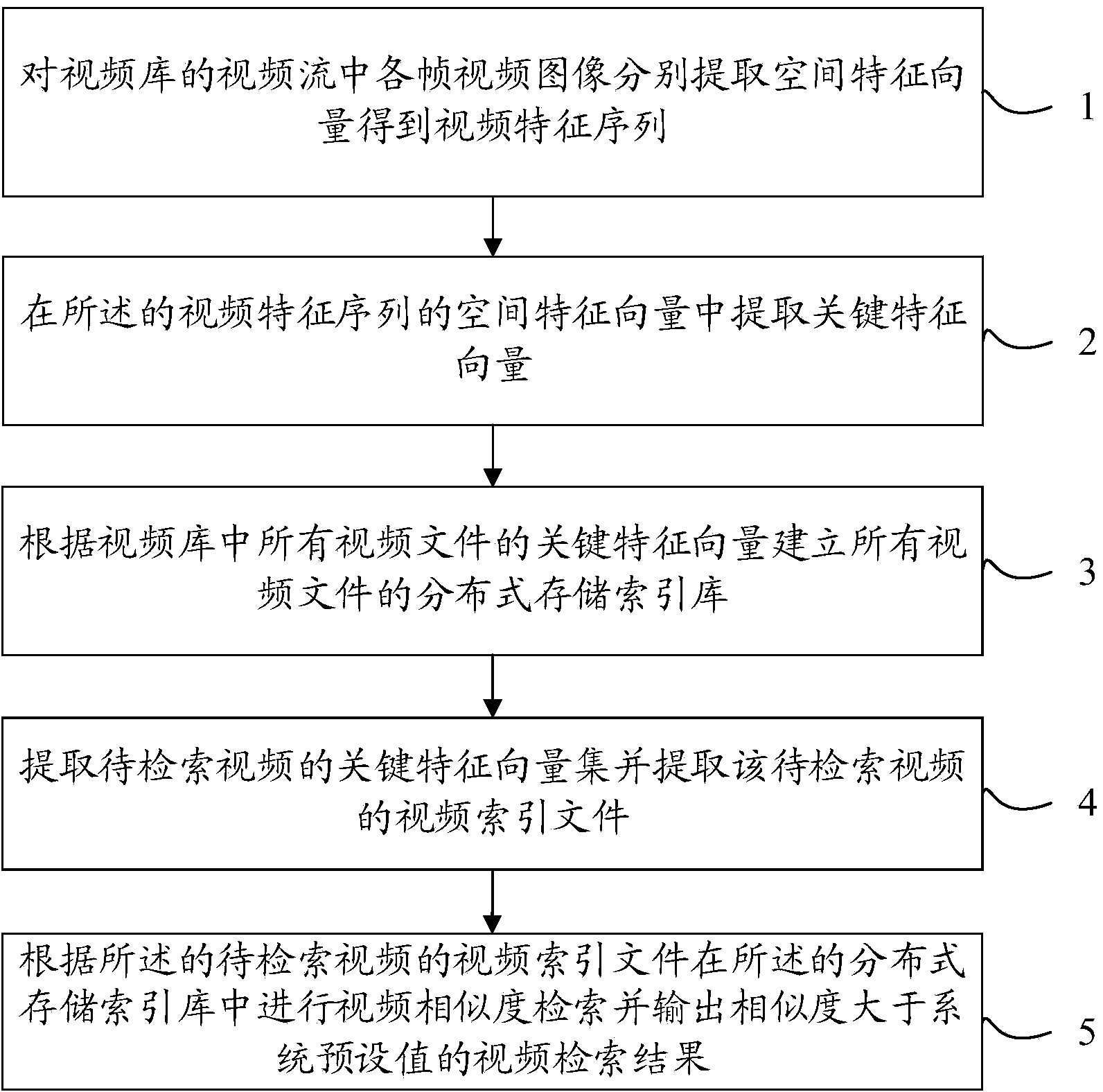

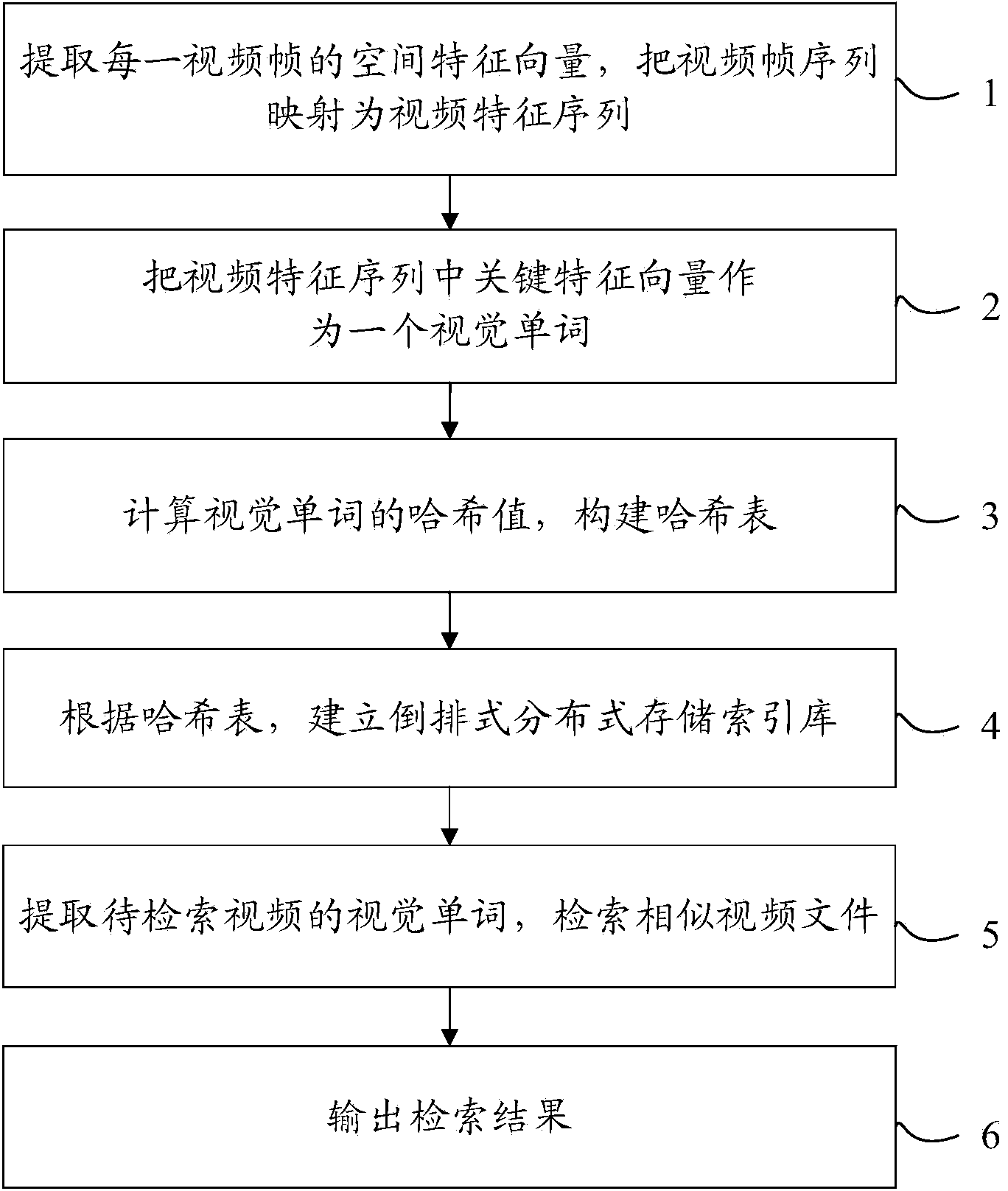

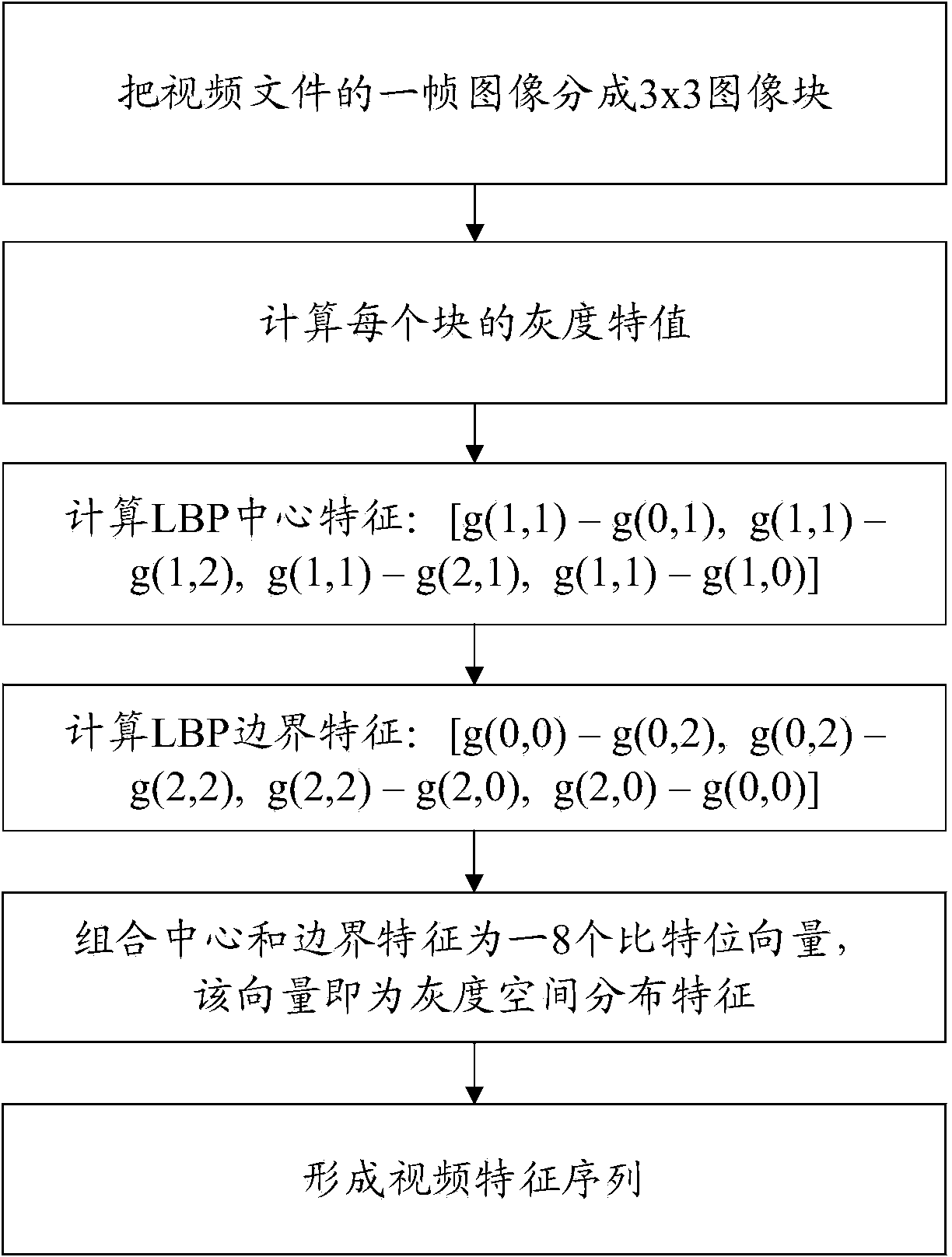

Method for realizing quick retrieval of mass videos

Active | CN104050247A | Overcoming Extraction Parameter Selection Problems, Improve retrieval speed, Video data indexing, Character and pattern recognition, Video retrieval, Feature vector

The invention relates to a method for fast retrieval of massive video collections. The method comprises the following steps:

- extracting spatial feature vectors from every frame in the video streams of a video library, to obtain video feature sequences;
- extracting key feature vectors from the spatial feature vectors;
- building a distributed-storage index database from the key feature vectors of all video files in the library;
- extracting the key feature vector sets and video index files of the videos to be retrieved;
- performing similarity retrieval in the distributed-storage index database using those index files, and outputting as results the video files whose similarity exceeds a preset system value.

Representative visual words replace key frames, so the video information is represented completely and compactly, without large amounts of redundancy. As a result, retrieval is faster, the method supports concurrent processing of massive data, and it has a wide range of applications.

Owner:SHANGHAI MEIQI PUYUE COMM TECH

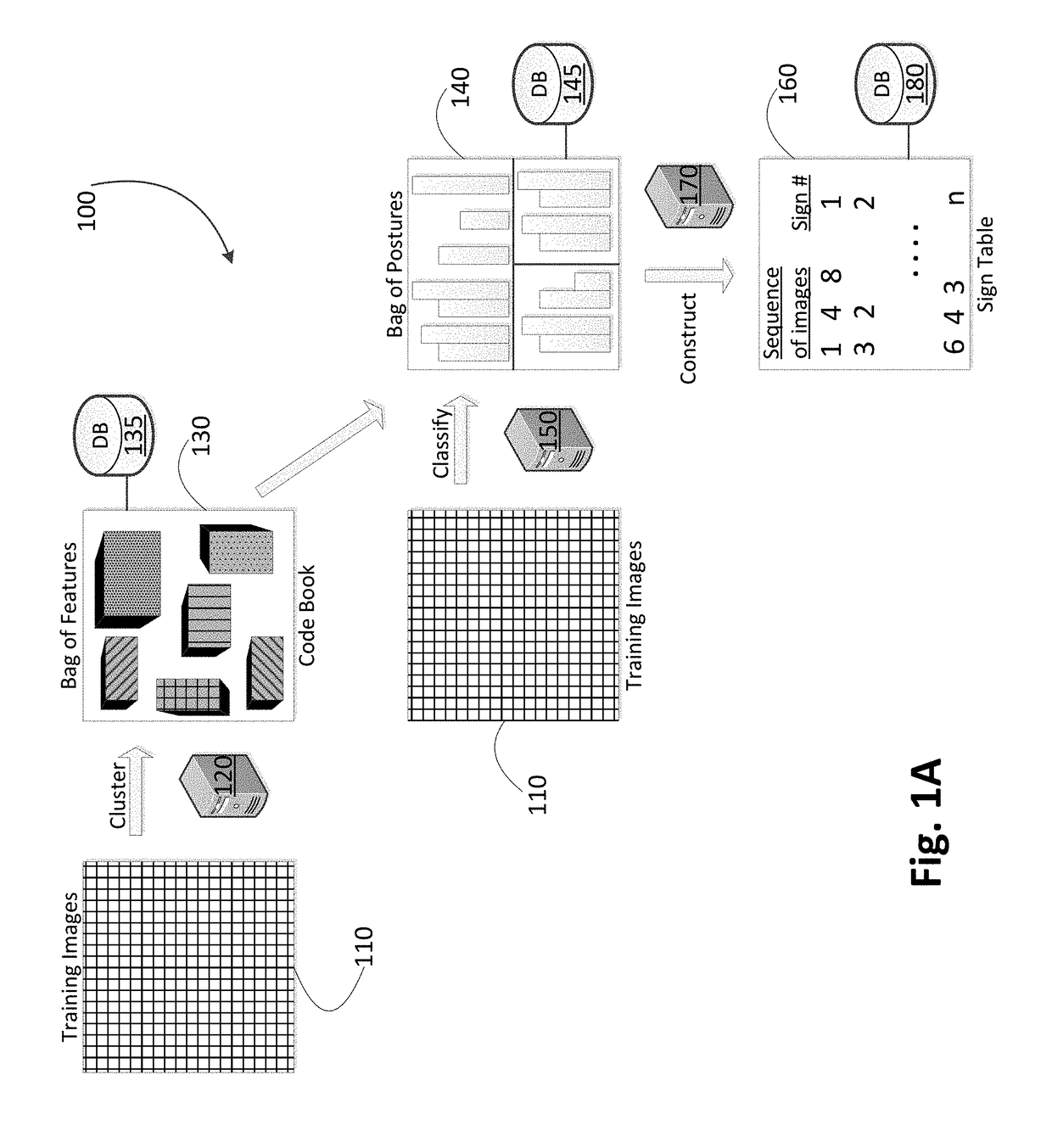

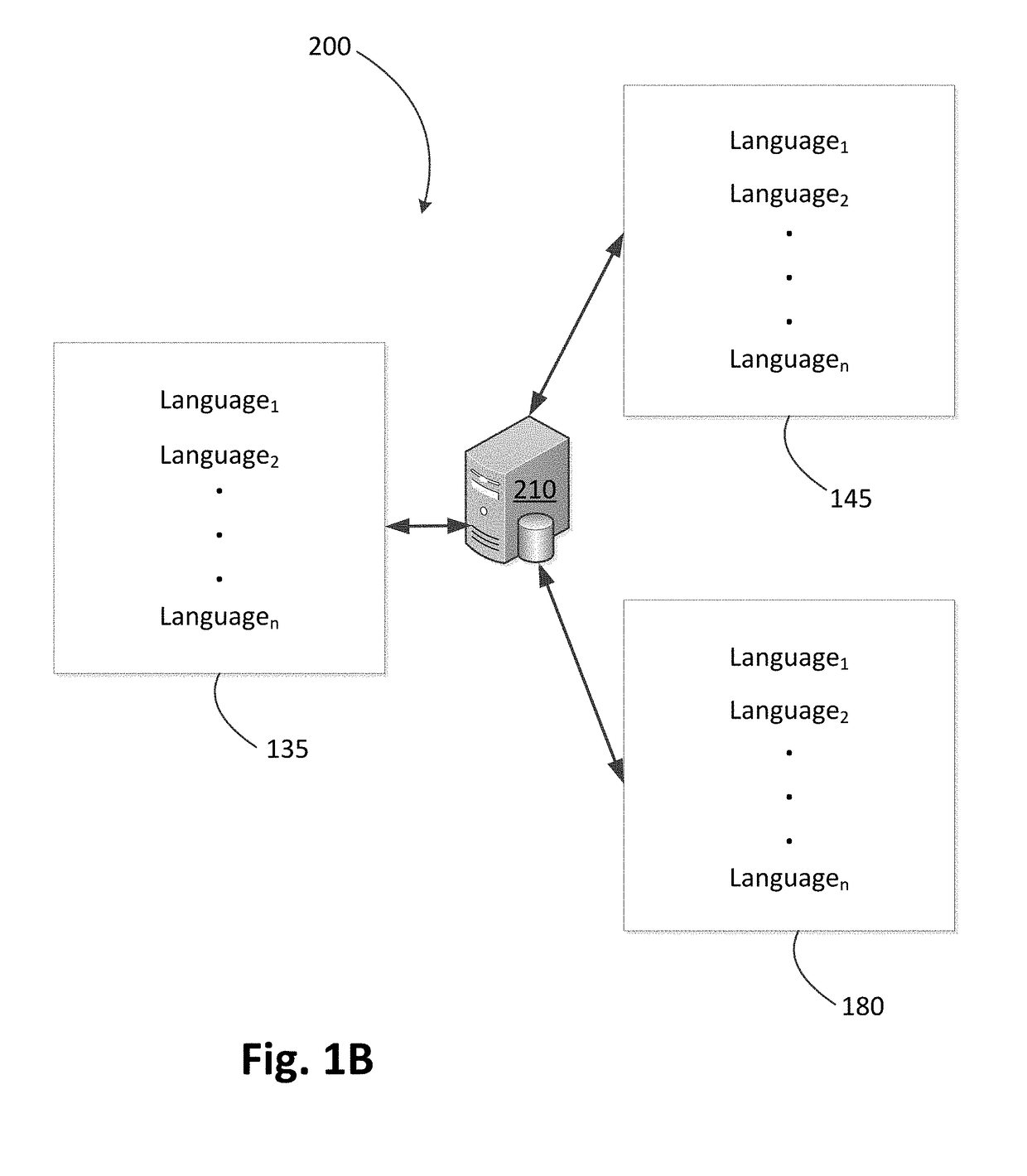

Automated sign language recognition

Inactive | US10037458B1 | Input/output for user-computer interaction, Speech analysis, Kalispel language, Code book

A sign language recognizer is configured to detect interest points in an extracted sign language feature, wherein the interest points are localized in space and time in each image acquired from a plurality of frames of a sign language video; apply a filter to determine one or more extrema of a central region of the interest points; associate features with each interest point using a neighboring pixel function; cluster a group of extracted sign language features from the images based on a similarity between the extracted sign language features; represent each image by a histogram of visual words corresponding to the respective image to generate a code book; train a classifier to classify each extracted sign language feature using the code book; detect a posture in each frame of the sign language video using the trained classifier; and construct a sign gesture based on the detected postures.

Owner:KING FAHD UNIVERSITY OF PETROLEUM AND MINERALS

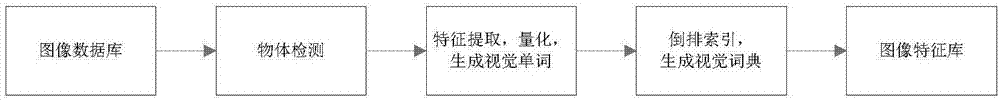

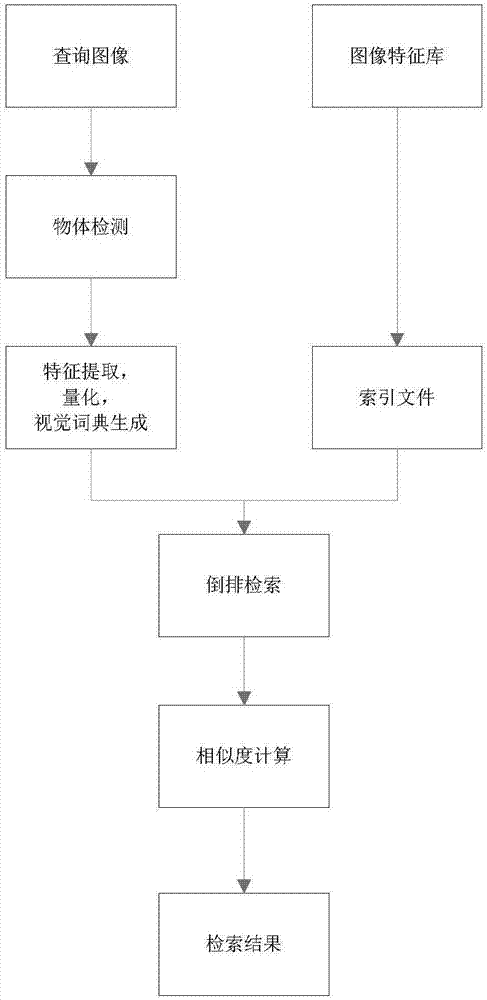

Image retrieval method based on object detection

Active | CN107256262A | Reduce distractions, Improve accuracy, Character and pattern recognition, Special data processing applications, Semantic gap, Imaging Feature

The invention discloses an image retrieval method based on object detection. It addresses the problem that conventional image retrieval does not retrieve the multiple objects in an image individually. The method works as follows:

- Object detection is performed on each image in an image database to detect one or more objects.
- SIFT and MSER features of the detected objects are extracted and combined into feature bundles.
- k-means and a k-d tree are used to turn the feature bundles into visual words.
- Visual-word indexes of the objects in the database are built by inverted indexing, producing an image feature library.
- The objects in a query image are likewise detected and turned into visual words. Their similarity to the visual words in the image feature library is compared, and the highest-scoring image is output as the retrieval result.

The method retrieves the objects in an image individually, reduces background interference and the semantic gap, and improves accuracy, retrieval speed, and efficiency. It can be used to retrieve images containing a specific object, including a person.

Owner:XIDIAN UNIV
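
The inverted index built over detected objects in the method above can be sketched as a map from each visual word to the objects containing it. At query time, each object that shares a query word receives a vote weighted by that word's inverse document frequency. The idf weighting and the function names are assumptions. The abstract only states that similarity scores are compared and the best-scoring image is returned.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def build_inverted_index(objects: Dict[str, Set[int]]) -> Dict[int, List[str]]:
    """Map each visual word to the list of database objects that contain it."""
    index: Dict[int, List[str]] = defaultdict(list)
    for object_id, words in objects.items():
        for word in words:
            index[word].append(object_id)
    return dict(index)


def query_index(index: Dict[int, List[str]], n_objects: int,
                query_words: Iterable[int]) -> List[Tuple[str, float]]:
    """Vote for every object sharing a query word, weighting rare words higher (idf)."""
    scores: Dict[str, float] = defaultdict(float)
    for word in set(query_words):
        postings = index.get(word, [])
        if postings:
            idf = math.log(n_objects / len(postings))
            for object_id in postings:
                scores[object_id] += idf
    return sorted(scores.items(), key=lambda item: -item[1])


objects = {"car_1": {1, 2, 3}, "person_1": {3, 4, 5}, "tree_1": {3, 6}}
index = build_inverted_index(objects)
print(query_index(index, len(objects), [1, 2, 3])[0][0])  # car_1
```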

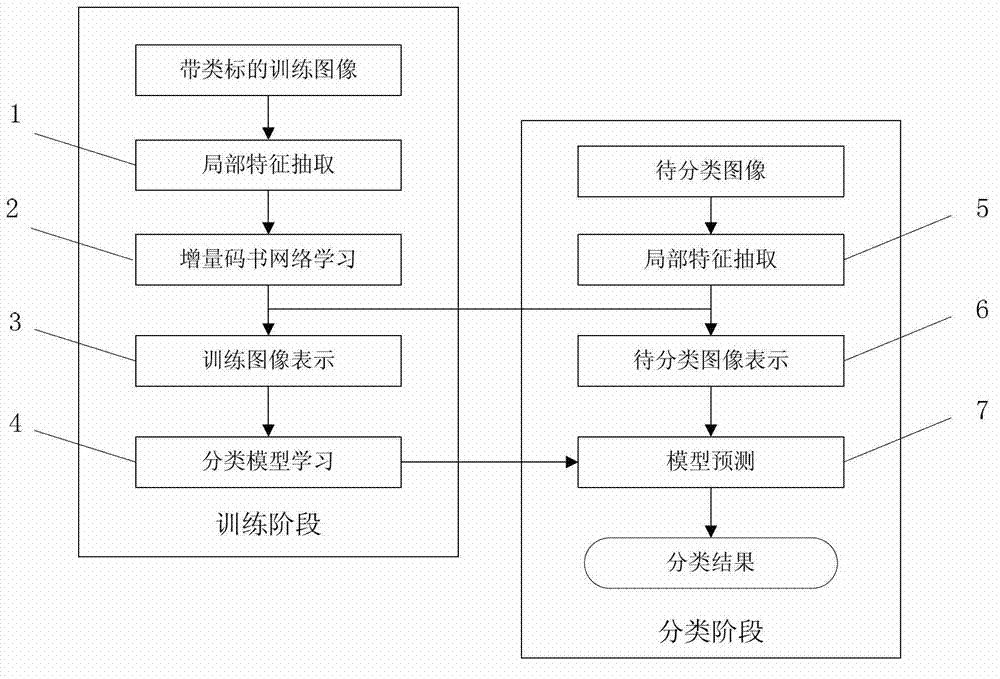

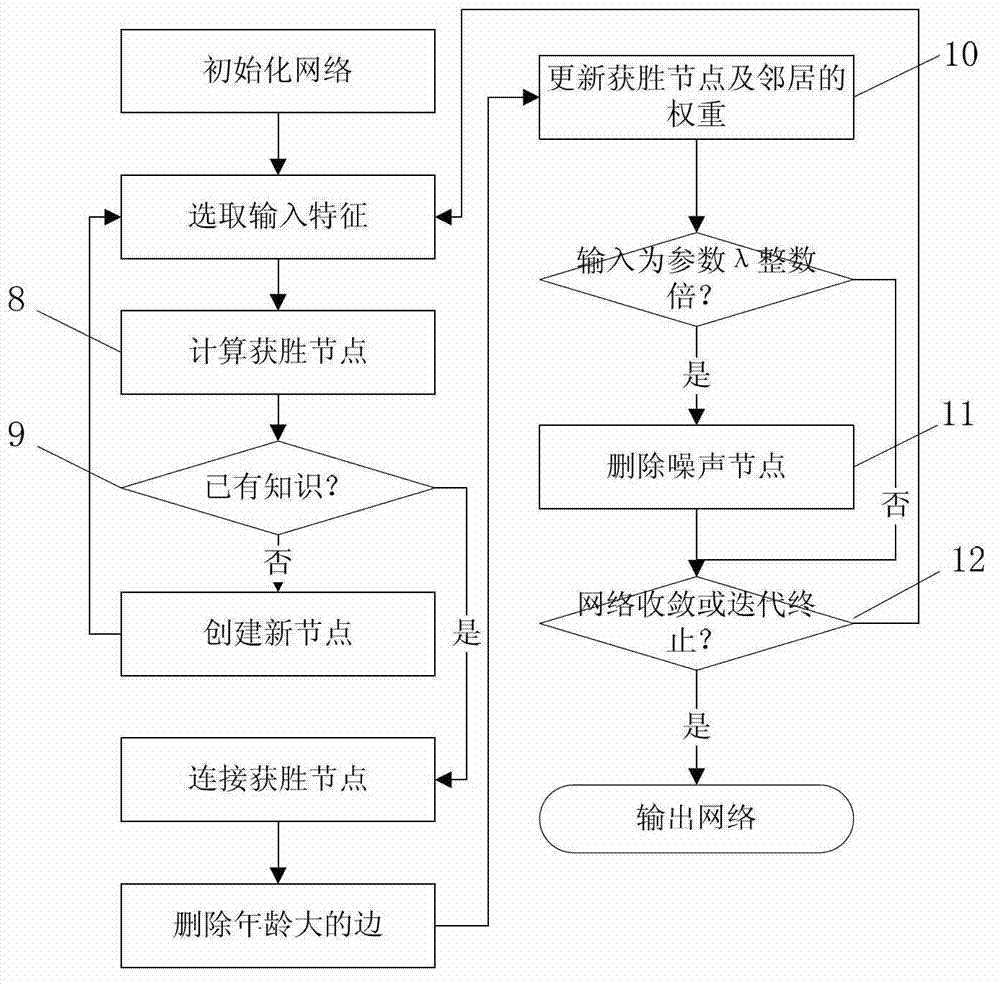

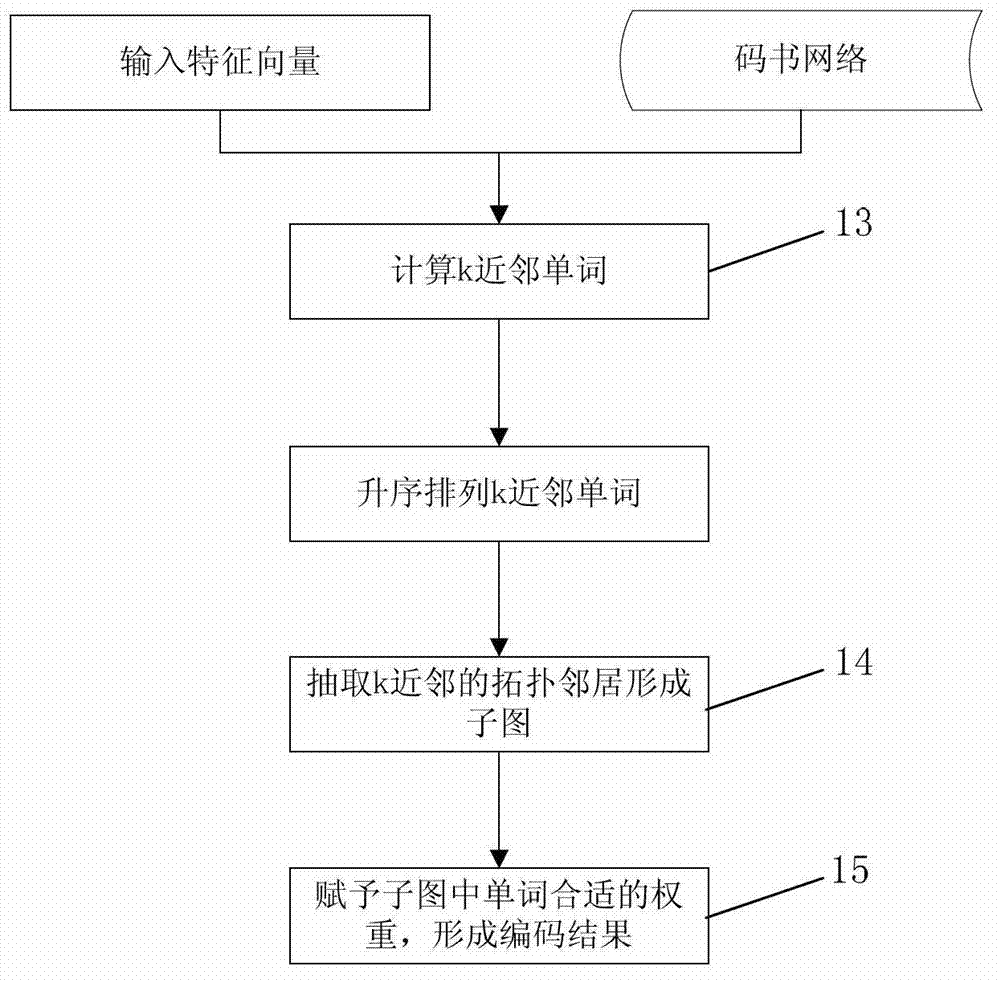

Image classification method based on an incremental neural network and sub-graph coding

Inactive | CN103116766A | Overcome the disadvantages of not being able to directly handle large-scale data, Rich semantic information, Character and pattern recognition, Neural network, Code book

The invention discloses an image classification method based on an incremental neural network and sub-graph coding. It comprises the following steps:

- extracting local features;
- learning incremental codebooks with the network;
- encoding features based on sub-graphs;
- spatially pooling the image;
- learning a classifier and predicting with the model.

Because the codebook can be learned efficiently, the method greatly reduces the time complexity of traditional algorithms while preserving the spatial relationships between visual words. In addition, sub-graph-based feature coding makes full use of these spatial relationships, so richer semantic information can be extracted. The result is excellent classification performance together with higher computational efficiency of the classification system, which gives the method considerable practical value.

Owner:NANJING UNIV

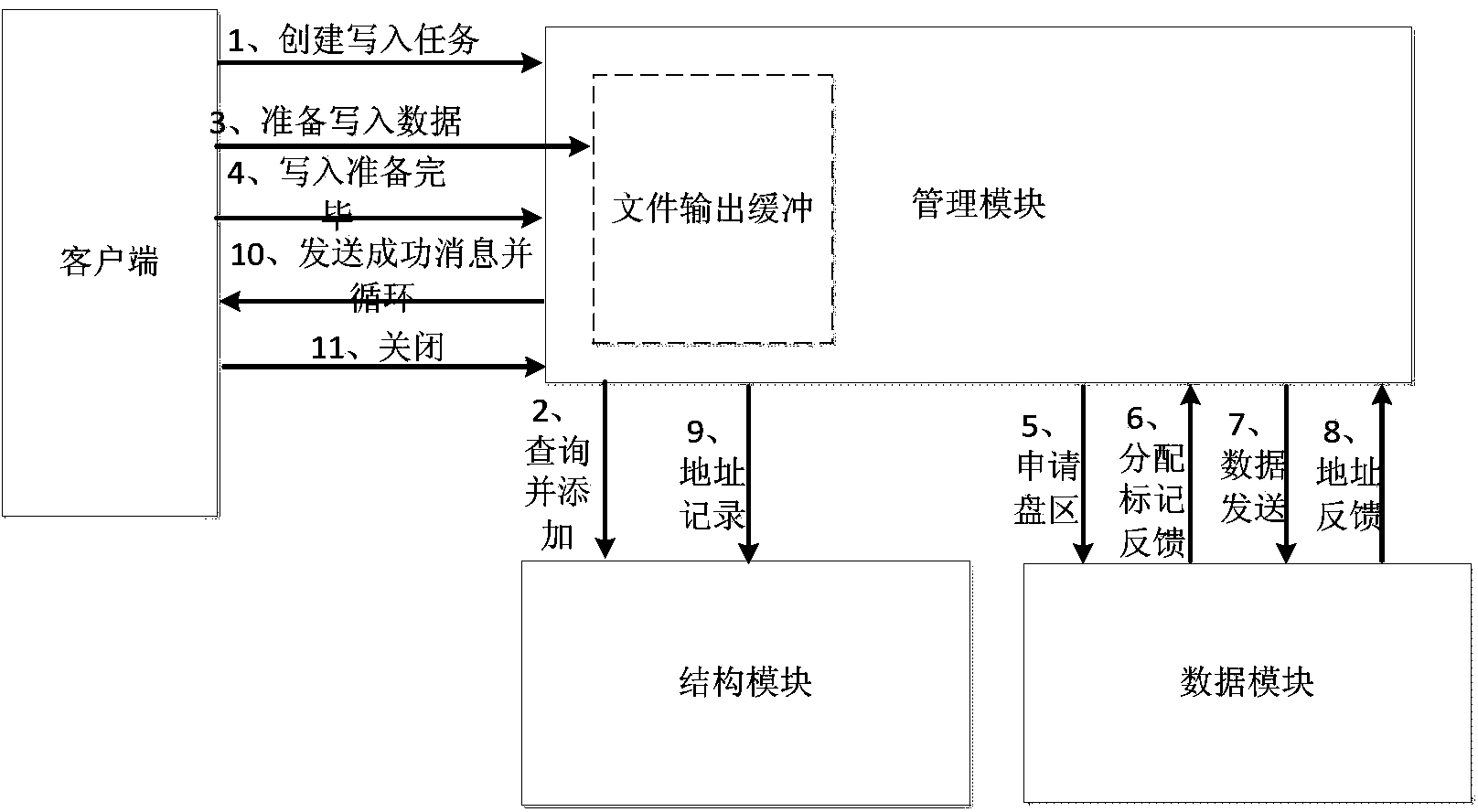

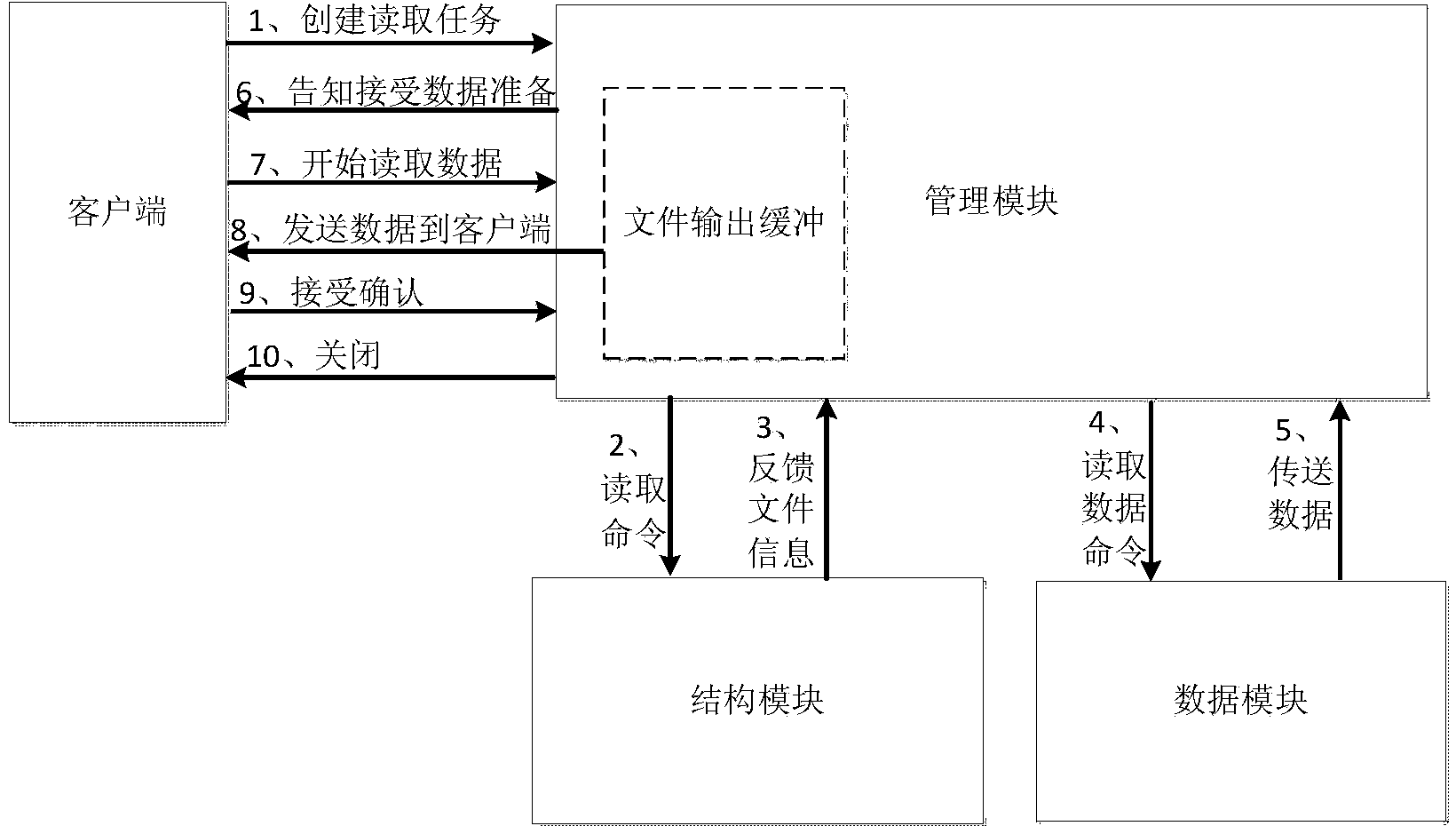

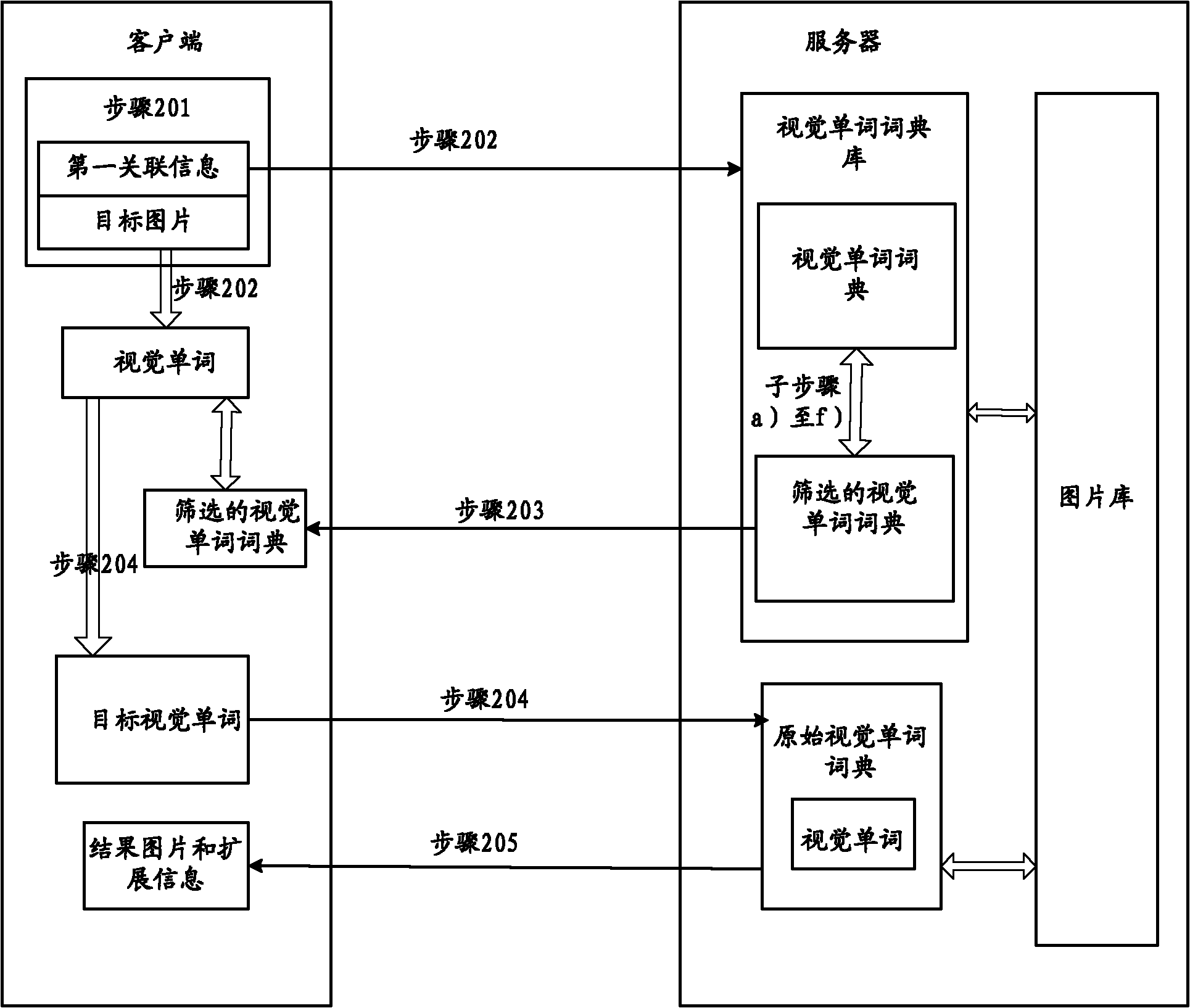

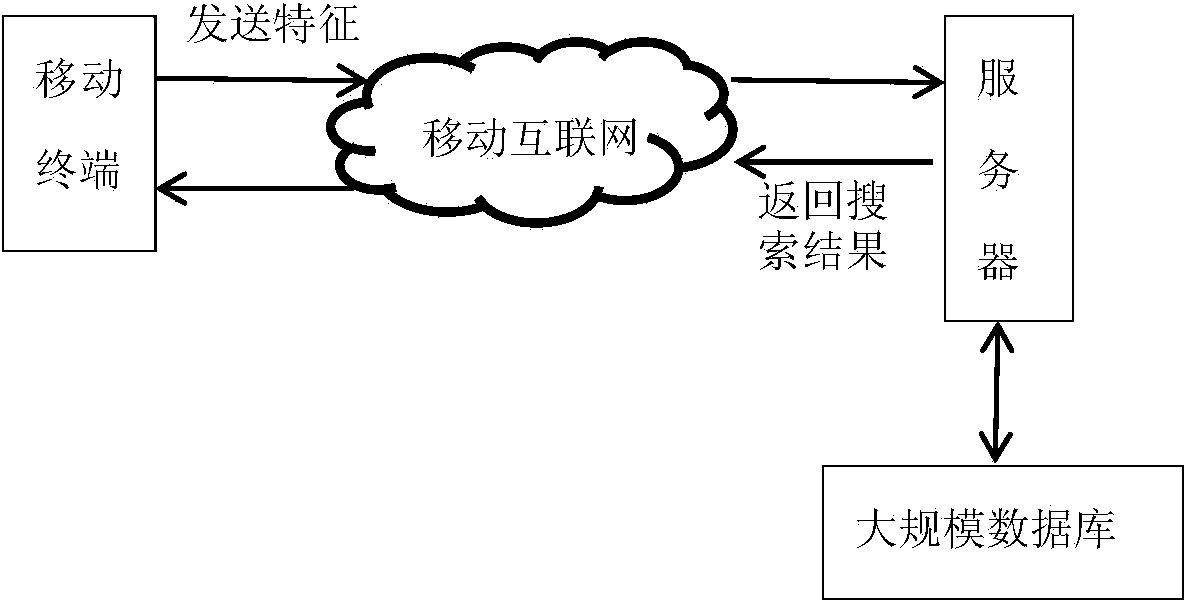

Image searching method and system, client side and server

Active | CN102063472A | Improve efficiency, Fast response time, Special data processing applications, Client-side, Computer science

The invention provides an image searching method and system, a client side, and a server. The image searching method works as follows:

1. The client side obtains a target image to be searched and first relevance information for the target image. It sends the first relevance information to the server and obtains the visual words of the target image.
2. The server looks up a visual word dictionary in its visual word dictionary library based on the first relevance information, and sends that dictionary to the client side.
3. The client side obtains target visual words based on the dictionary sent by the server, and sends them to the server.
4. The server receives the target visual words and searches for one or more result images corresponding to them, together with related extended information. It then sends the result images and the extended information to the client side.

The method shortens the client's search waiting time, improves search performance and efficiency, and is applicable to many fields.

Owner:PEKING UNIV

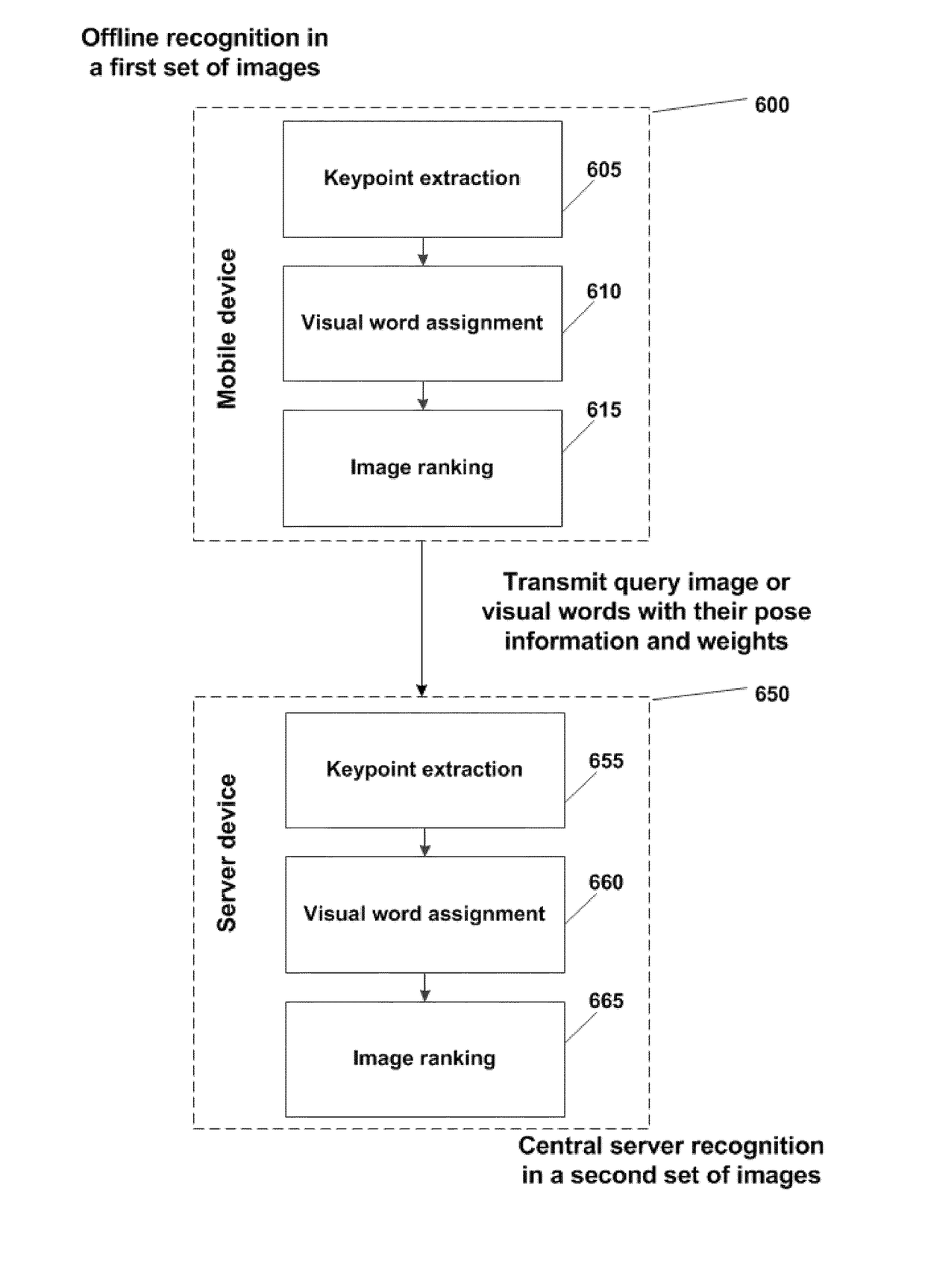

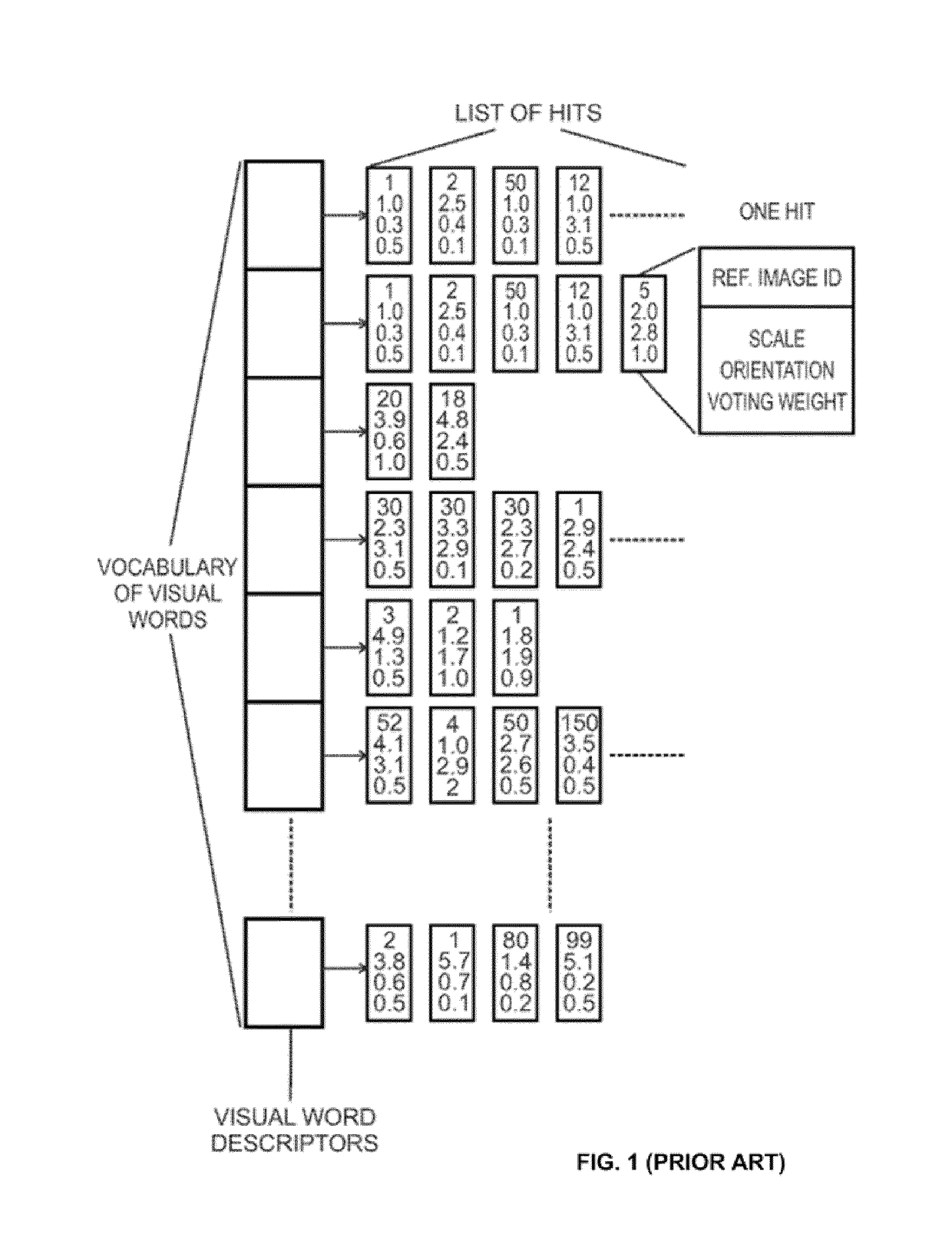

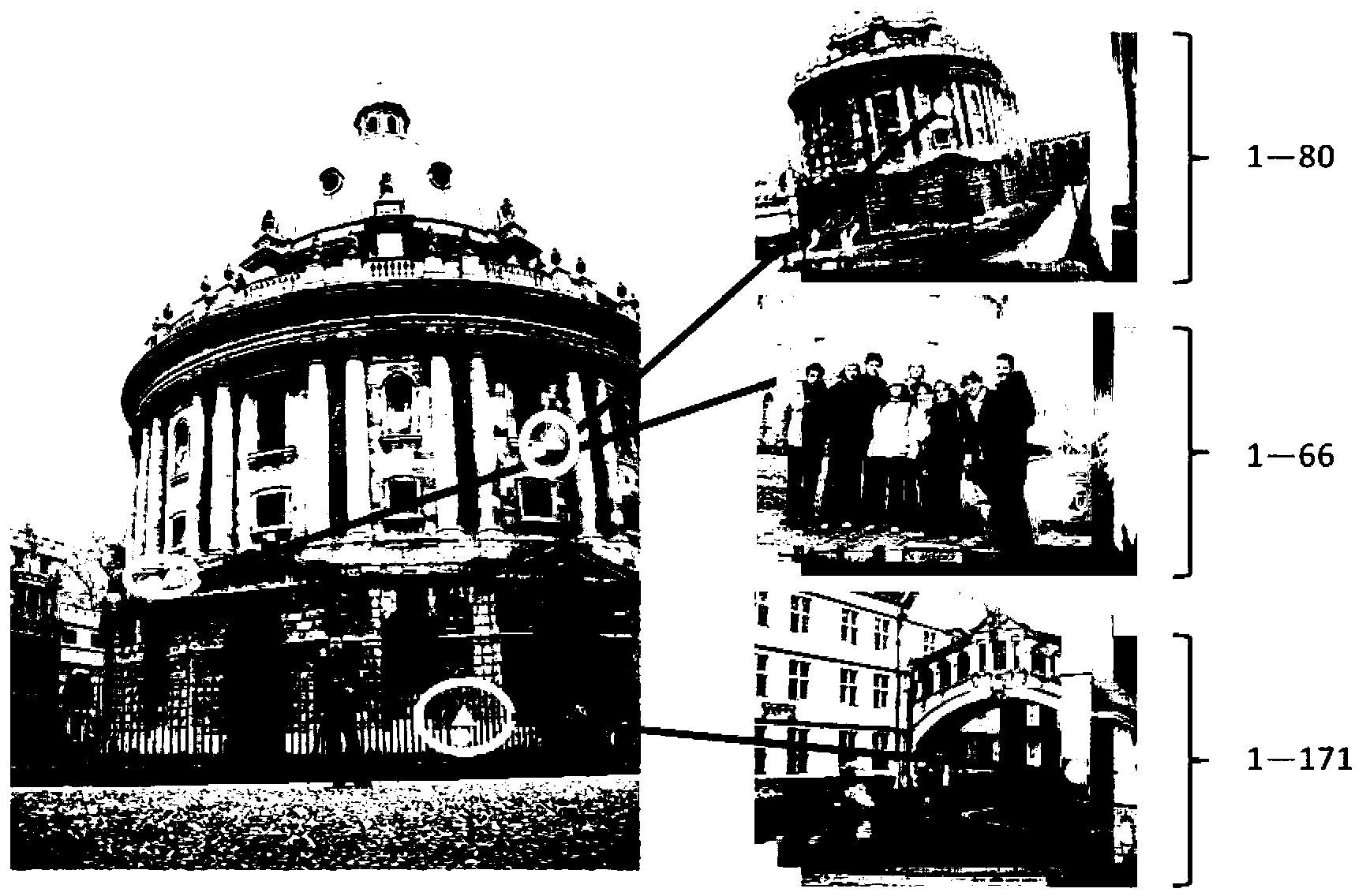

Offline, hybrid and hybrid with offline image recognition

Active | US20170185857A1 | Digital data information retrieval, Character and pattern recognition, Pattern recognition, Hybrid system

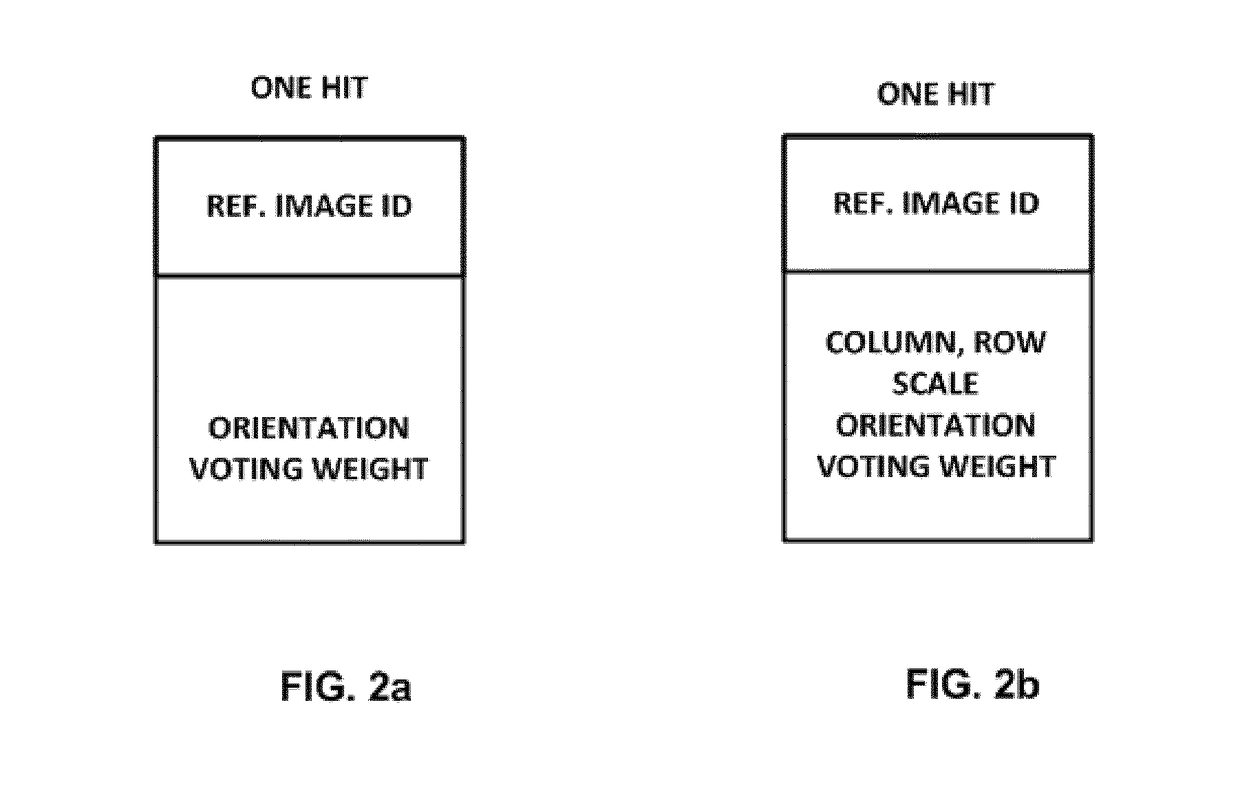

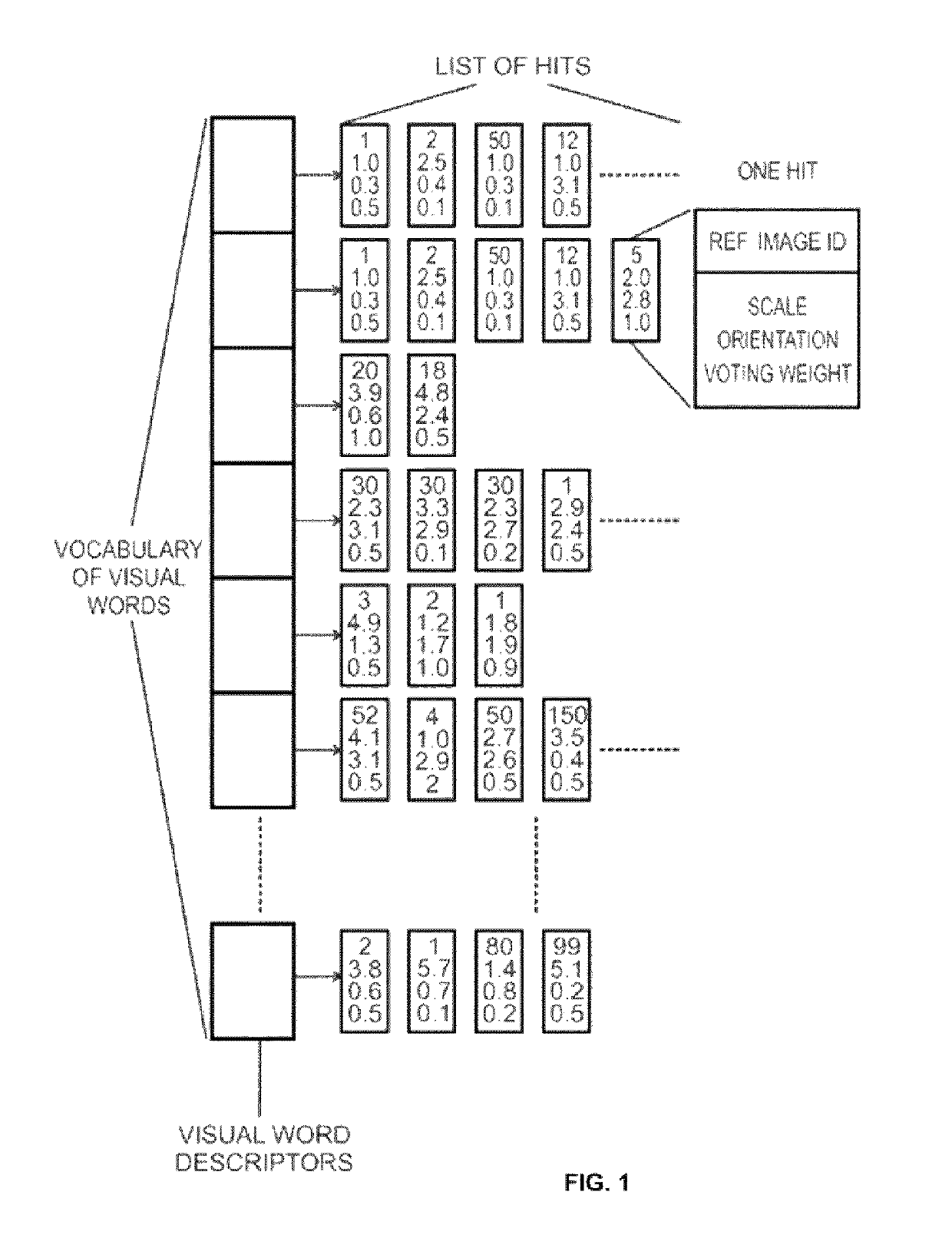

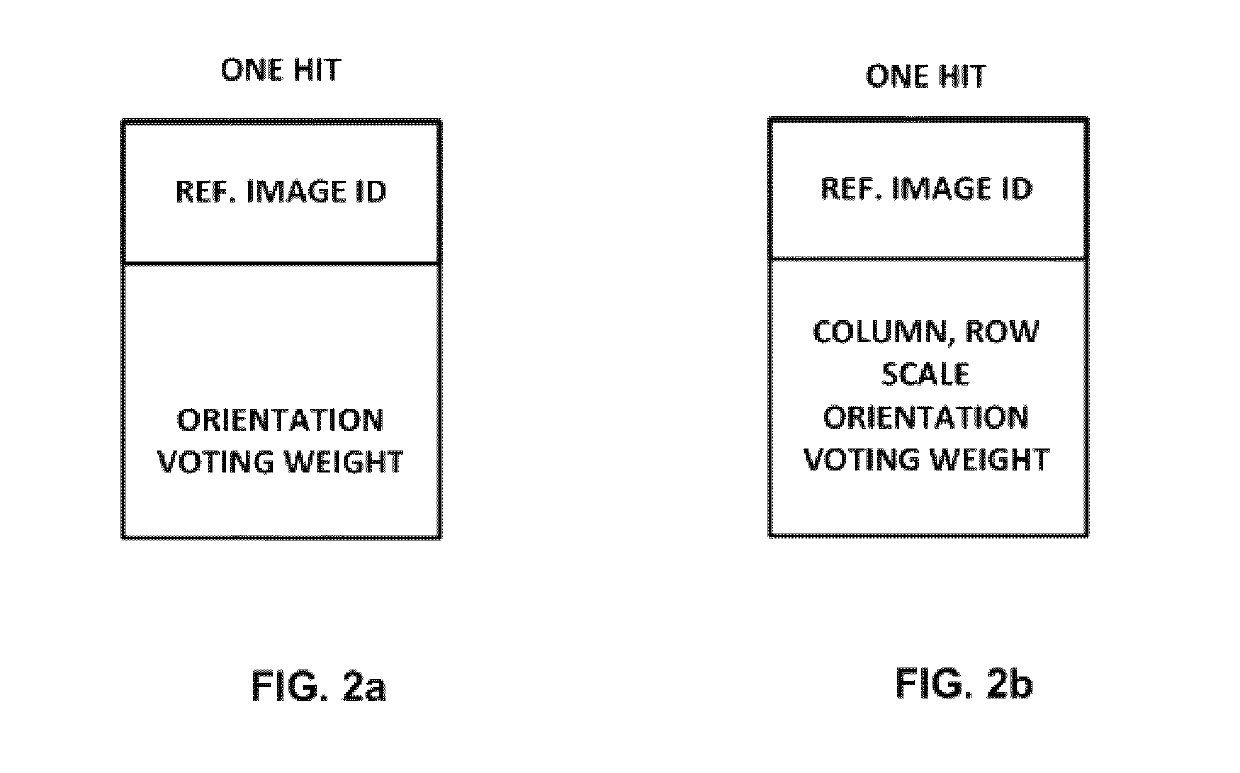

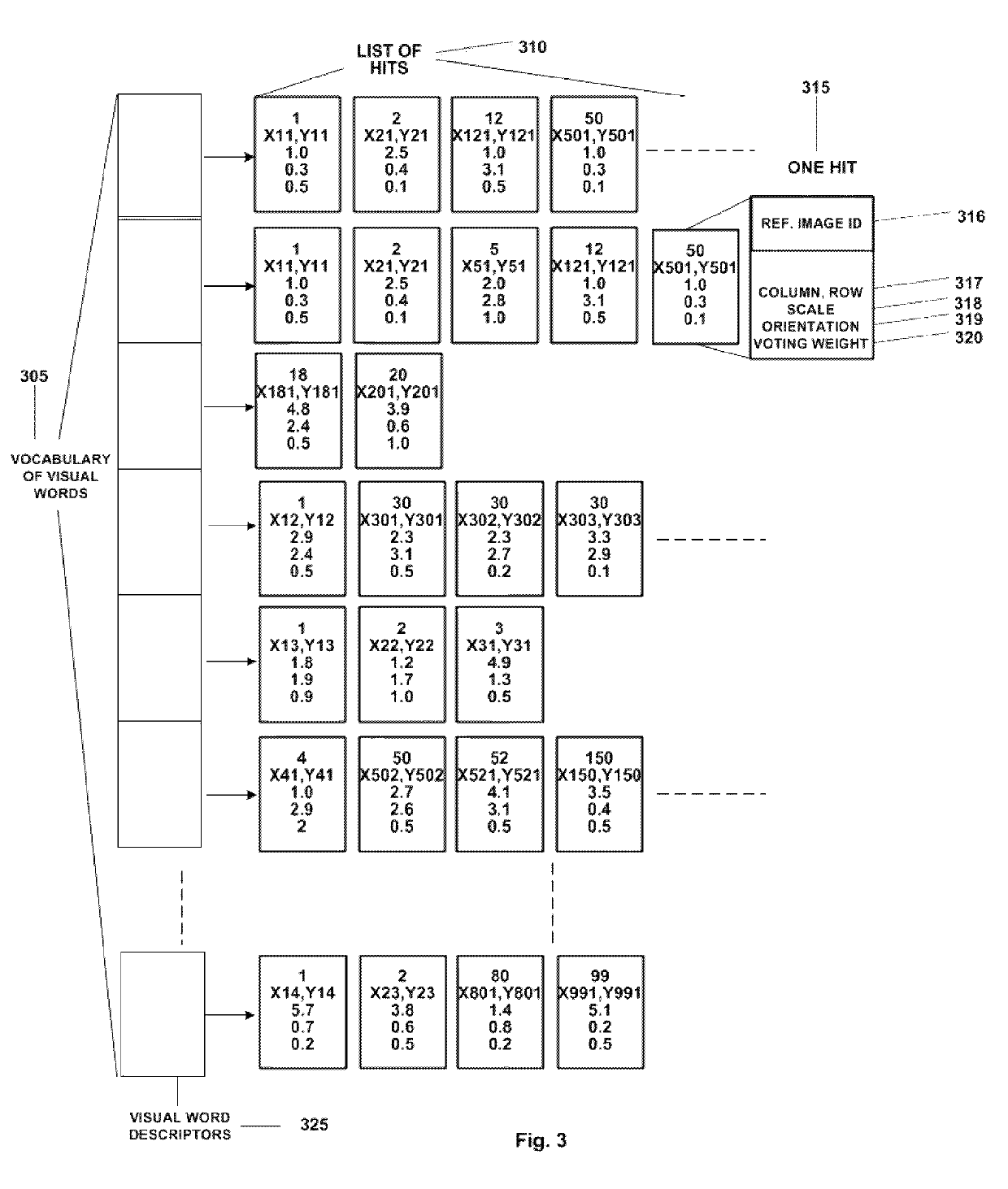

Methods and systems of identification of objects in query images are disclosed. The method proceeds as follows:

- Keypoints in the query images are identified corresponding to objects to be identified.
- Visual words are identified in a dictionary of visual words for the identified keypoints.
- A set of hits is identified corresponding to reference images comprising the identified keypoints.
- Reference images corresponding to the identified set of hits are ranked using clustering of matches in a limited pose space. The limited pose space comprises a one-dimensional table corresponding to the rotation of the object to be identified with respect to the reference image.
- A first subset of M reference images that obtained a rank above a predetermined threshold is then selected.

Offline, hybrid and combined offline and hybrid systems for performing the proposed methods are disclosed.

Owner:CATCHOOM TECH

Offline, hybrid and hybrid with offline image recognition

Active | US10268912B2 | Digital data information retrieval, Character and pattern recognition, Pattern recognition, Hybrid system

Methods and systems of identification of objects in query images are disclosed. The method proceeds as follows:

- Keypoints in the query images are identified corresponding to objects to be identified.
- Visual words are identified in a dictionary of visual words for the identified keypoints.
- A set of hits is identified corresponding to reference images comprising the identified keypoints.
- Reference images corresponding to the identified set of hits are ranked using clustering of matches in a limited pose space. The limited pose space comprises a one-dimensional table corresponding to the rotation of the object to be identified with respect to the reference image.
- A first subset of M reference images that obtained a rank above a predetermined threshold is then selected.

Offline, hybrid and combined offline and hybrid systems for performing the proposed methods are disclosed.

Owner:CATCHOOM TECH
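
In the two offline/hybrid image recognition records above, matches are clustered in a one-dimensional rotation table, which resembles a Hough vote over relative orientation. The minimal sketch below assumes each match supplies the keypoint orientations in the query and reference images, in degrees. It uses a hypothetical 30-degree bin width, and a reference image's score is the size of its largest rotation bin. The threshold and M in the example are also hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

AnglePair = Tuple[float, float]  # (query keypoint orientation, reference keypoint orientation), degrees


def rotation_score(pairs: Sequence[AnglePair], bin_deg: float = 30.0) -> int:
    """Vote each match's relative rotation into a one-dimensional table and return
    the size of the largest bin, i.e. the biggest rotation-consistent cluster."""
    n_bins = int(round(360.0 / bin_deg))
    table = Counter(int(((ref - qry) % 360.0) // bin_deg) % n_bins for qry, ref in pairs)
    return max(table.values(), default=0)


def select_references(hits: Dict[str, Sequence[AnglePair]], threshold: int,
                      m: int) -> List[Tuple[str, int]]:
    """Rank reference images by rotation_score and keep at most m above threshold."""
    ranked = sorted(((ref_id, rotation_score(pairs)) for ref_id, pairs in hits.items()),
                    key=lambda item: -item[1])
    return [item for item in ranked if item[1] > threshold][:m]


hits = {"poster": [(10, 105), (50, 148), (200, 300), (300, 40)],
        "logo": [(10, 40), (50, 200), (200, 300)]}
print(select_references(hits, threshold=2, m=5))  # [('poster', 4)]
```

Matches that fall just either side of a bin edge are split between bins. Real systems often also vote into neighboring bins to soften this effect.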

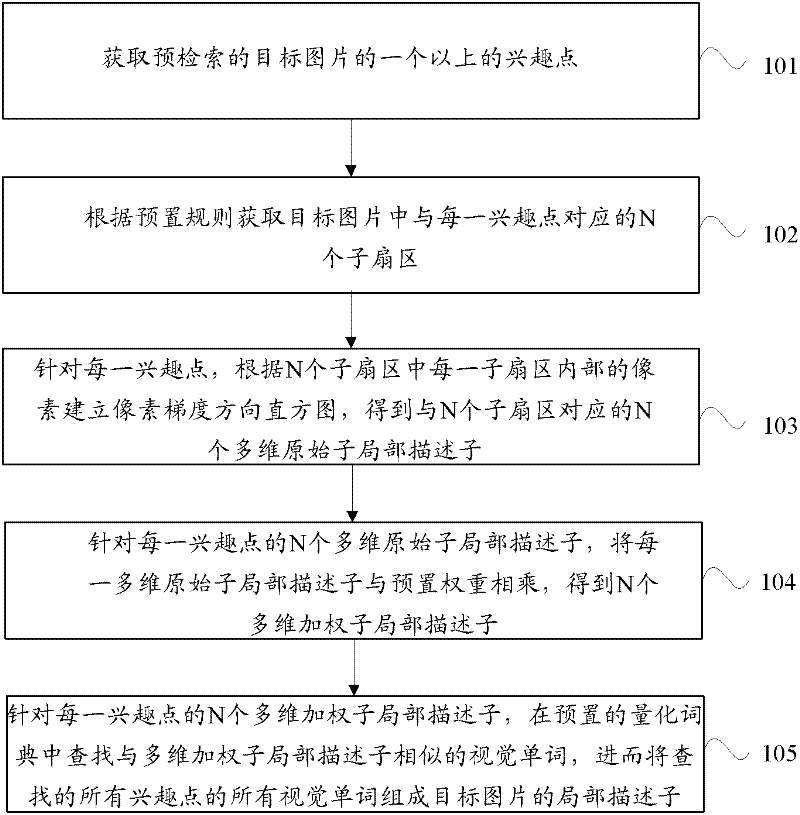

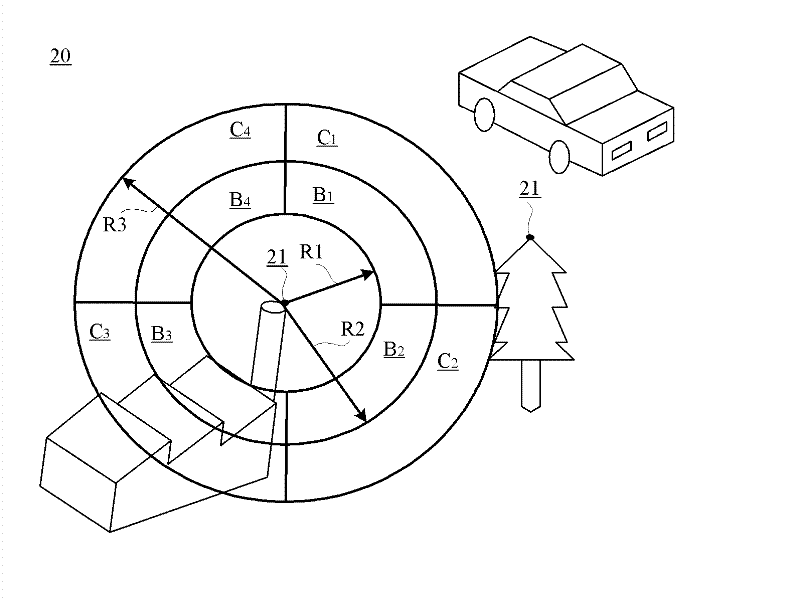

Extracting method for local descriptor, image searching method and image matching method

Active | CN102521618A | Improve the ability to distinguish, Reduce query response time, Character and pattern recognition, Multi dimensional, Image matching

The invention discloses an extraction method for a local descriptor, an image searching method, and an image matching method. The extraction method comprises the following steps:

- obtaining one or more interest points in a target image to be searched;
- obtaining N fan-shaped sub-regions around each interest point in the target image;
- for each interest point, building a histogram of pixel gradient orientations from the pixels in each of the N sub-regions, which yields N multi-dimensional original sub-local descriptors corresponding to the N sub-regions;
- multiplying each original sub-local descriptor by a preset weight to obtain N multi-dimensional weighted sub-local descriptors;
- searching a preset quantized dictionary for the visual words most similar to the weighted sub-local descriptors, which yields N visual words for each interest point;
- forming the local descriptor of the target image from all the visual words found for its interest points.

Local descriptors obtained with this extraction method are more discriminative.

Owner:PEKING UNIV

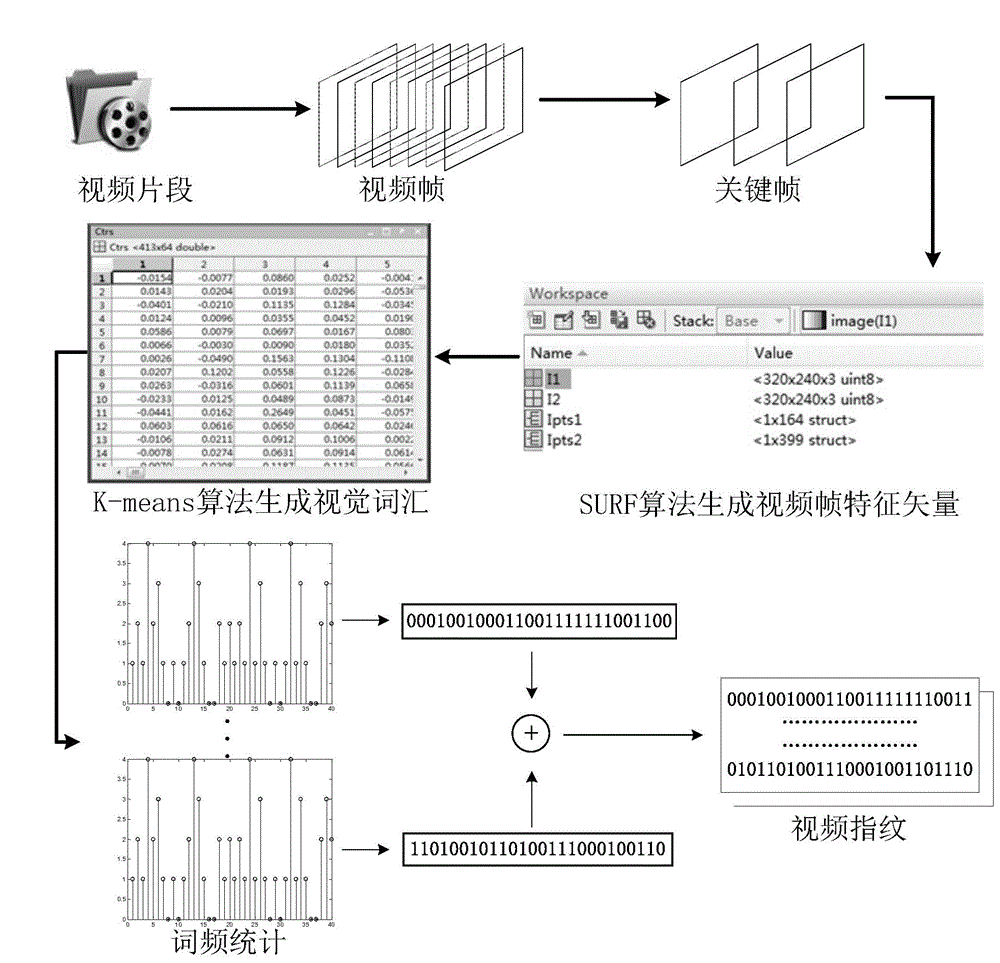

Video fingerprint extraction method based on SURF algorithm

Active | CN104063706A | Improve performance, Small amount of calculation, Character and pattern recognition, Special data processing applications, Feature vector, Visual perception

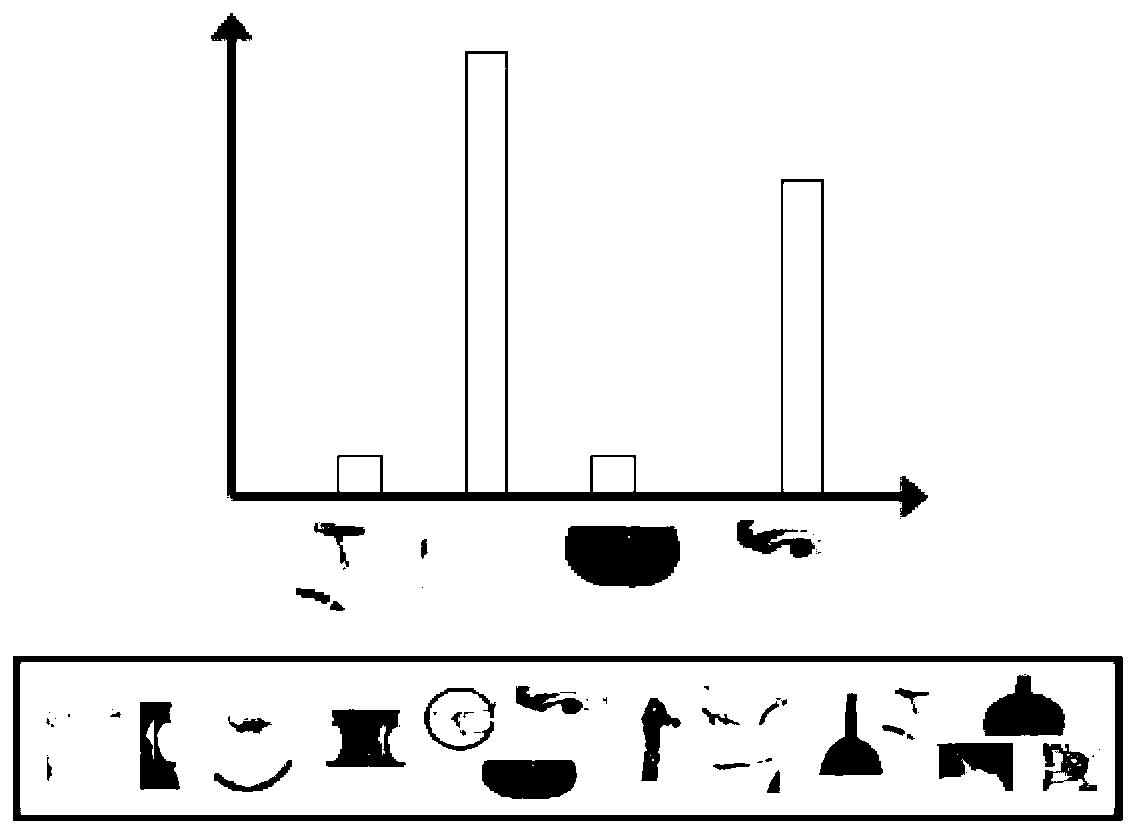

The invention discloses a video fingerprint extraction method based on the SURF algorithm. The method proceeds as follows:

1. Feature points of video key frames are extracted with the SURF algorithm, and their feature vectors are collected.
2. The feature point set is clustered with the K-means algorithm. Each cluster center is taken as a visual word of the video, generating the video's visual word set.
3. The visual words replace the feature vectors of the original feature points in each key frame, and word-frequency statistics of the visual words are computed.
4. The word-frequency information of each key frame is quantized and represented as a binary sequence, which is the key frame's fingerprint.
5. The fingerprints of all key frames are concatenated in temporal order to form the fingerprint of the video.

The method offers good accuracy and robustness while striking a balance with real-time performance.

Owner:成都星亿年智慧科技有限公司
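
The fingerprinting pipeline of the SURF-based method above, after clustering, can be sketched as follows. The sketch assumes key frames have already been mapped to visual-word ids. The abstract does not state how word frequencies are quantized to bits, so this sketch uses a hypothetical rule: a bit is 1 when a word's frequency exceeds the key frame's mean word frequency.

```python
from collections import Counter
from typing import List, Sequence


def keyframe_bits(words: Sequence[int], vocab_size: int) -> str:
    """One bit per visual word: '1' when the word's frequency in this key frame
    exceeds the frame's mean word frequency (a hypothetical quantization rule)."""
    if not words:
        return "0" * vocab_size
    counts = Counter(words)
    freqs = [counts[w] / len(words) for w in range(vocab_size)]
    mean = sum(freqs) / vocab_size
    return "".join("1" if f > mean else "0" for f in freqs)


def video_fingerprint(keyframes: List[Sequence[int]], vocab_size: int) -> str:
    """Concatenate key-frame fingerprints in temporal order."""
    return "".join(keyframe_bits(words, vocab_size) for words in keyframes)


keyframes = [[0, 0, 1, 3], [2, 2, 2, 1]]  # visual-word ids per key frame
print(video_fingerprint(keyframes, vocab_size=4))  # 10000010
```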

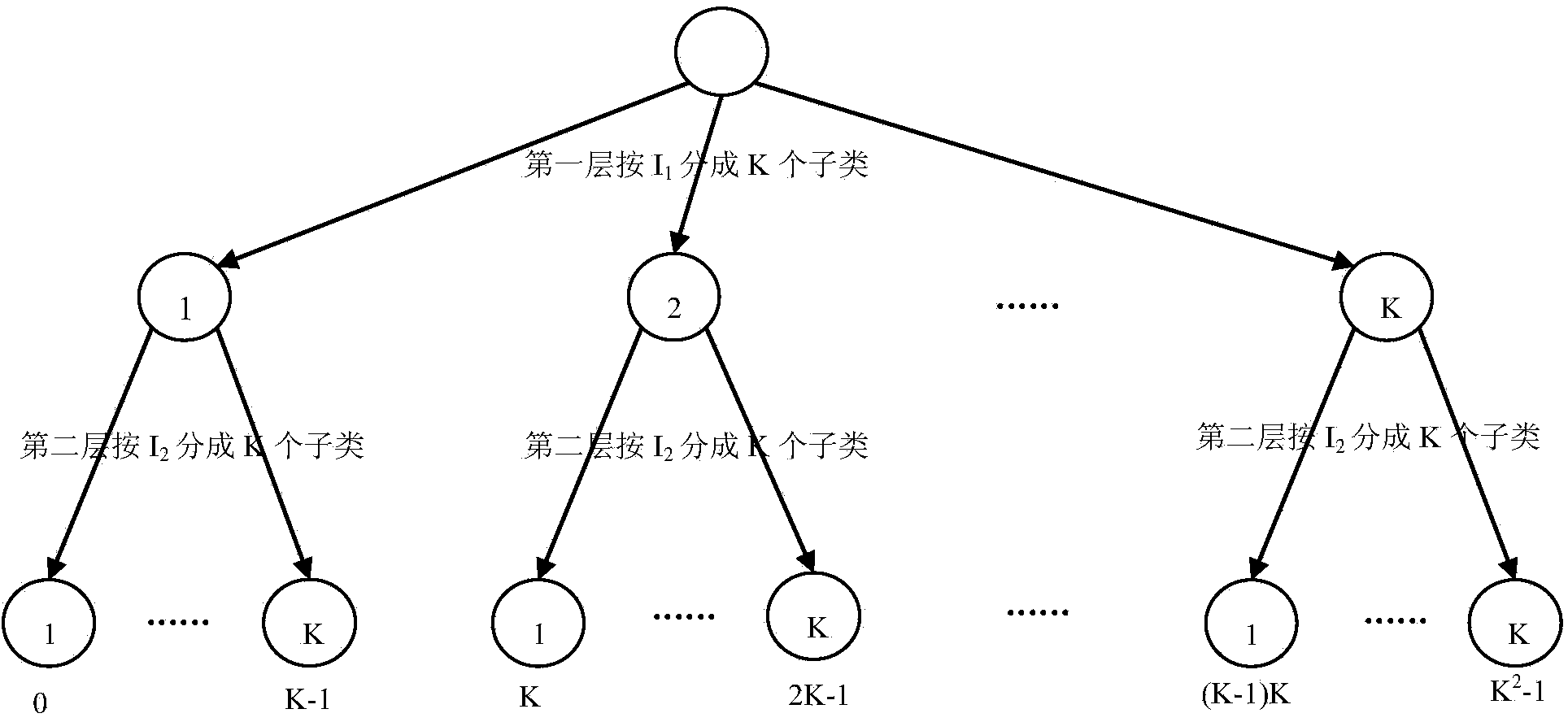

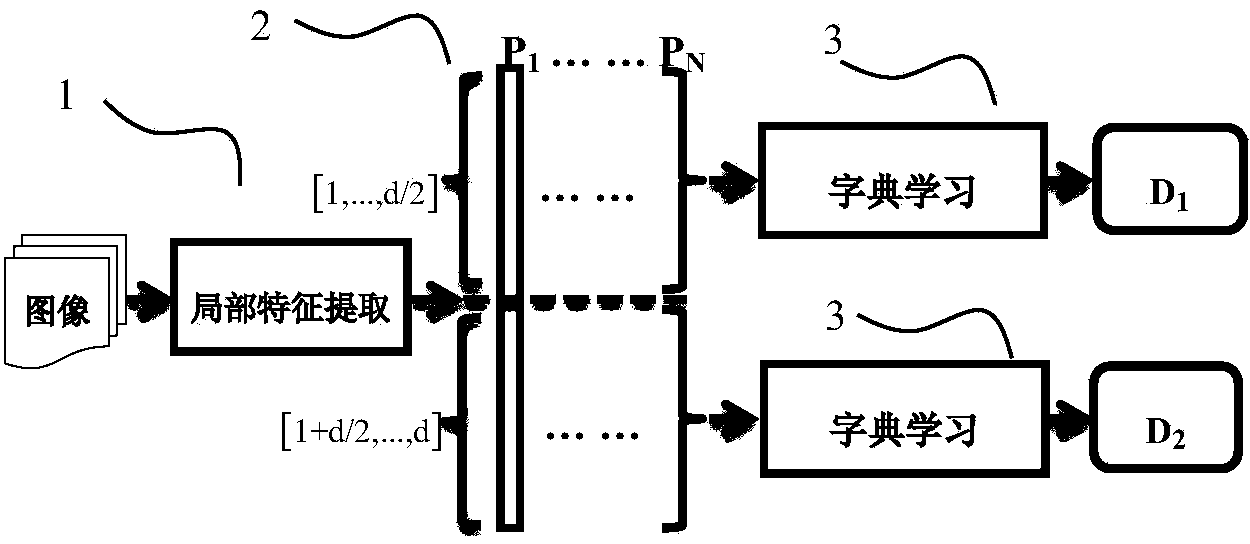

Dictionary learning method, bag-of-visual-words feature extraction method and retrieval system

Active | CN104036012A | Reduce training time, Reduce memory usage, Special data processing applications, Dictionary learning, Feature extraction

The invention provides a dictionary learning method comprising the following steps:

1) Dividing the local feature vectors of images into first and second segments by dimension.
2) Building a first data matrix from the first segments of multiple local feature vectors, and a second data matrix from their second segments.
3) Applying sparse non-negative matrix factorization to the first data matrix, to obtain a first dictionary that sparsely encodes the first segments of the local feature vectors; and applying it to the second data matrix, to obtain a second dictionary that sparsely encodes the second segments.

The invention further provides a bag-of-visual-words feature extraction method, which uses these dictionaries to sparsely represent the local feature vectors of images segment by segment, and a corresponding retrieval system. Memory usage is greatly reduced, and vocabulary training and feature extraction times are shortened. The method is particularly suitable for mobile terminals.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

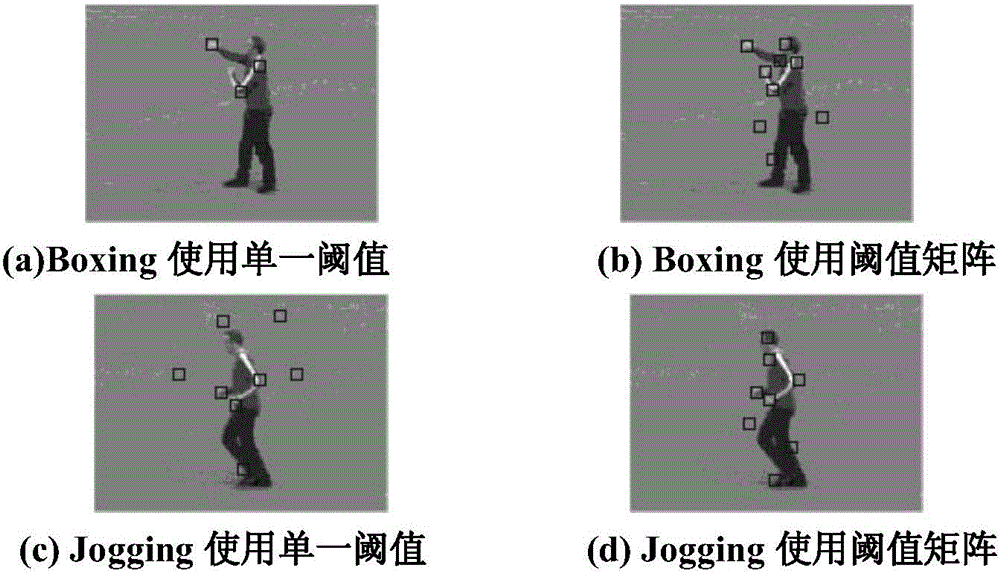

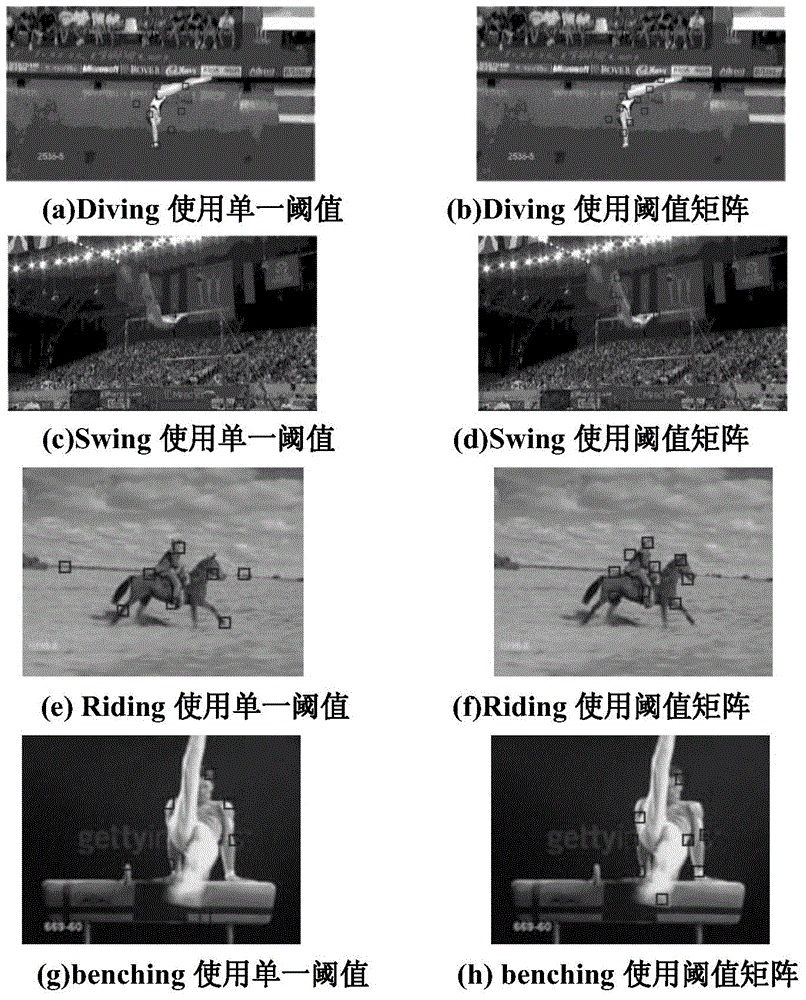

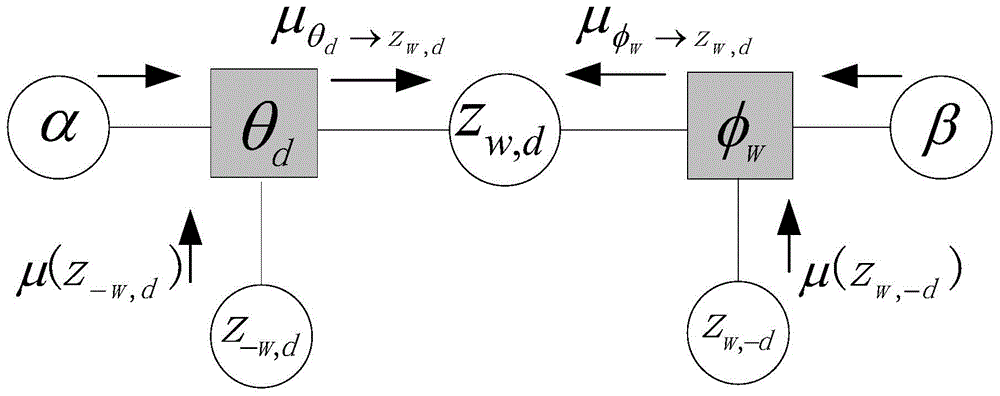

Method for recognizing human behavior based on a threshold matrix and feature-fused visual words

Active | CN104616316A | Solve the problem of global increase and decrease | Reduce the number | Image analysis | Character and pattern recognition | Classification result | Visual Word

The invention discloses a method for recognizing human behavior based on a threshold matrix and feature-fused visual words. The method comprises: extracting visual words through saliency computation, i.e., performing saliency computation on a training video frame to locate the region containing the human, detecting interest points inside and outside that region with different thresholds, and computing visual words from the detected interest points; modeling and analyzing the resulting visual words to construct an action model; once the model is constructed, extracting visual words from a test video frame with the same saliency computation; classifying those visual words with the action model; and returning the action classification result as the human behavior label of the test video, which completes the recognition. The method effectively maintains the accuracy of human behavior recognition in complex scenes.

Owner:SUZHOU UNIV
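The region-dependent thresholding step in the abstract above can be illustrated as follows. This is a hypothetical sketch: the saliency-derived human region is assumed to be an axis-aligned bounding box, each interest-point candidate is assumed to carry a detector response, and the threshold values, the direction of the threshold difference and all names are illustrative, since the abstract gives none of them.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Candidate:
    x: int
    y: int
    response: float  # detector response strength at (x, y)


Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1); x0/y0 inclusive, x1/y1 exclusive


def inside(c: Candidate, box: Box) -> bool:
    x0, y0, x1, y1 = box
    return x0 <= c.x < x1 and y0 <= c.y < y1


def select_interest_points(cands: List[Candidate], human_box: Box,
                           t_inside: float, t_outside: float) -> List[Candidate]:
    """Keep candidates whose response exceeds the threshold of their region.

    Assumed usage: a lower threshold inside the salient human region keeps
    more detail there, a higher one outside suppresses background clutter.
    """
    kept = []
    for c in cands:
        threshold = t_inside if inside(c, human_box) else t_outside
        if c.response > threshold:
            kept.append(c)
    return kept
```

The surviving points would then be described and quantized into visual words for the action model.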

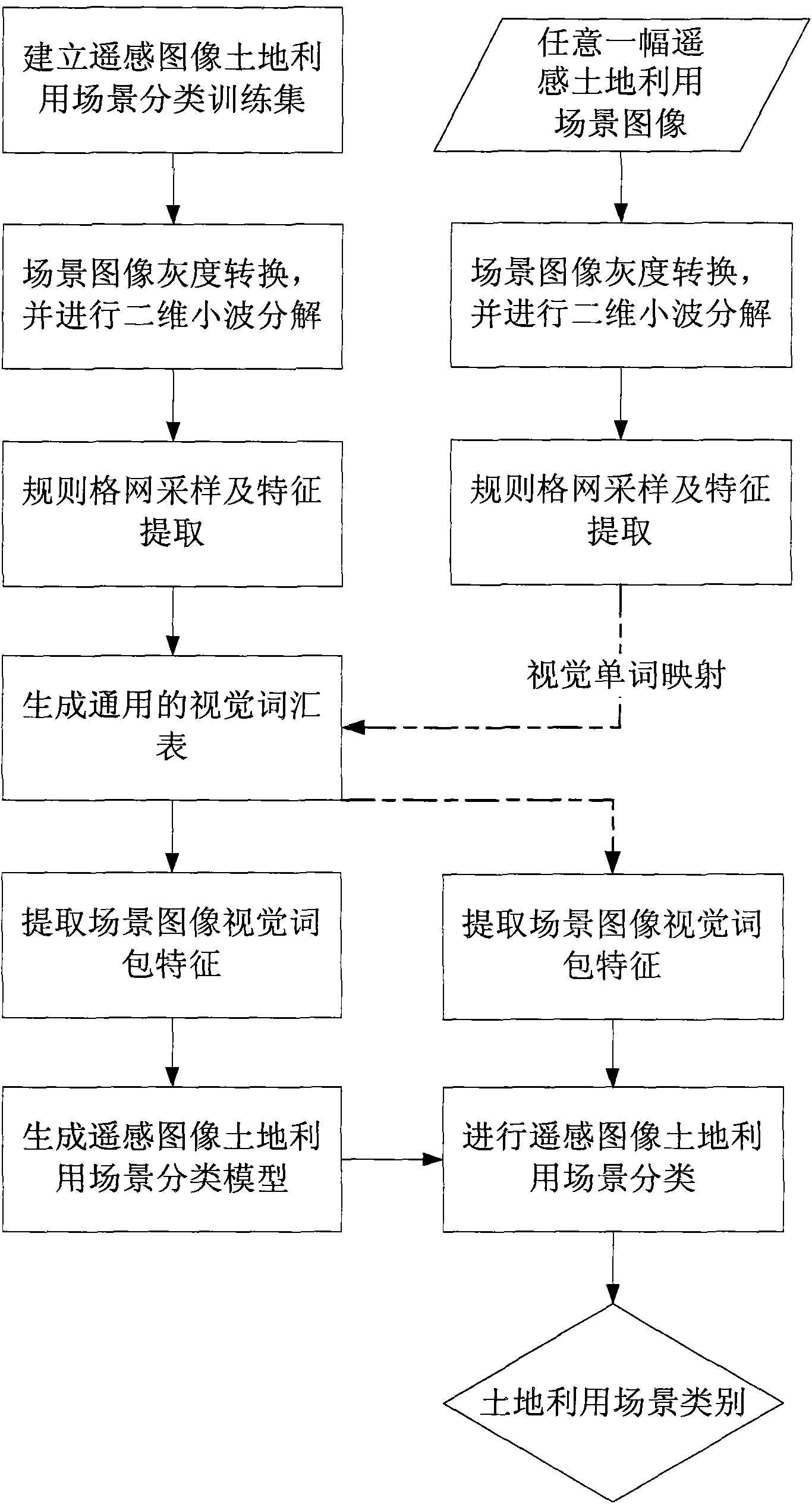

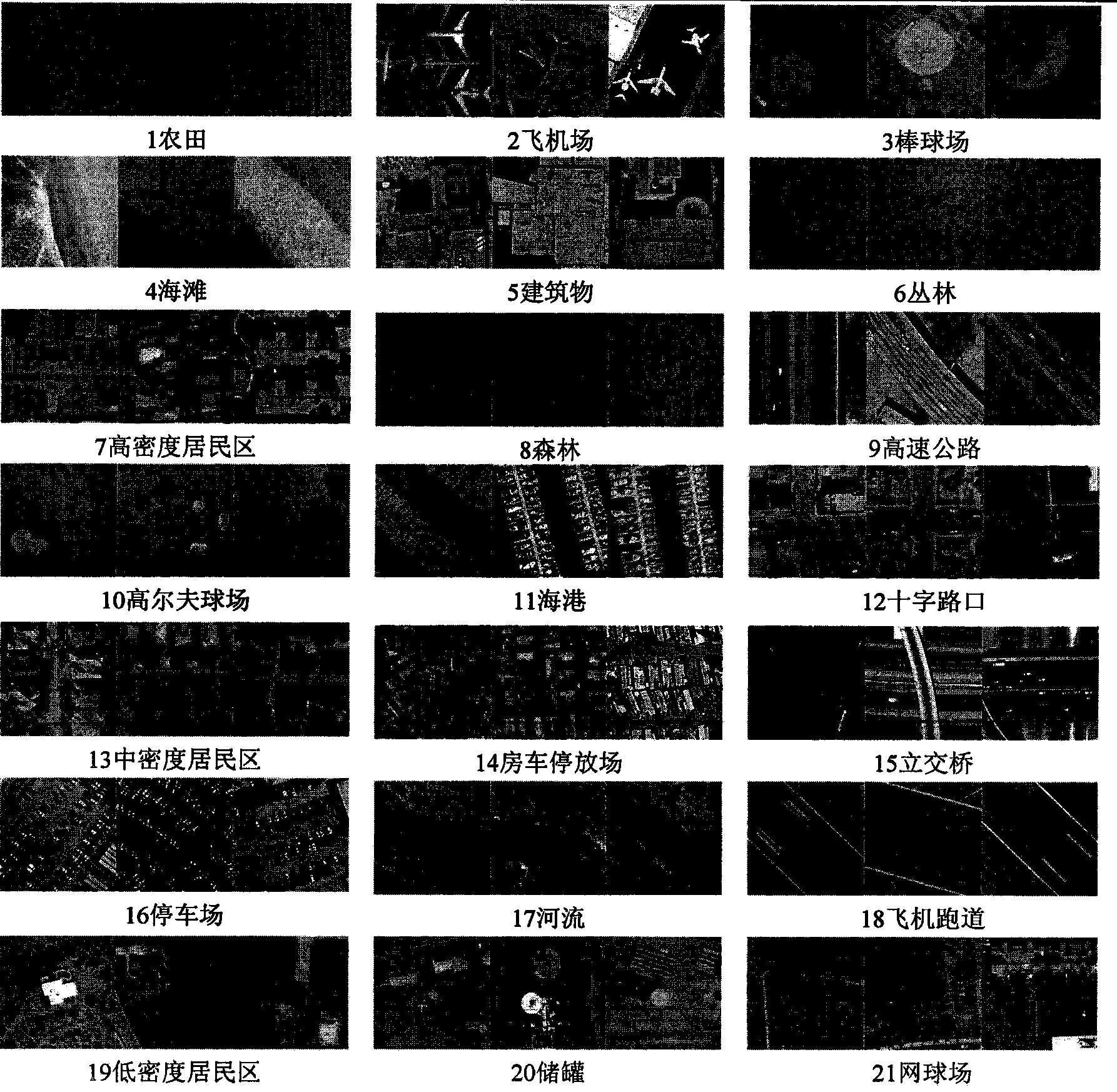

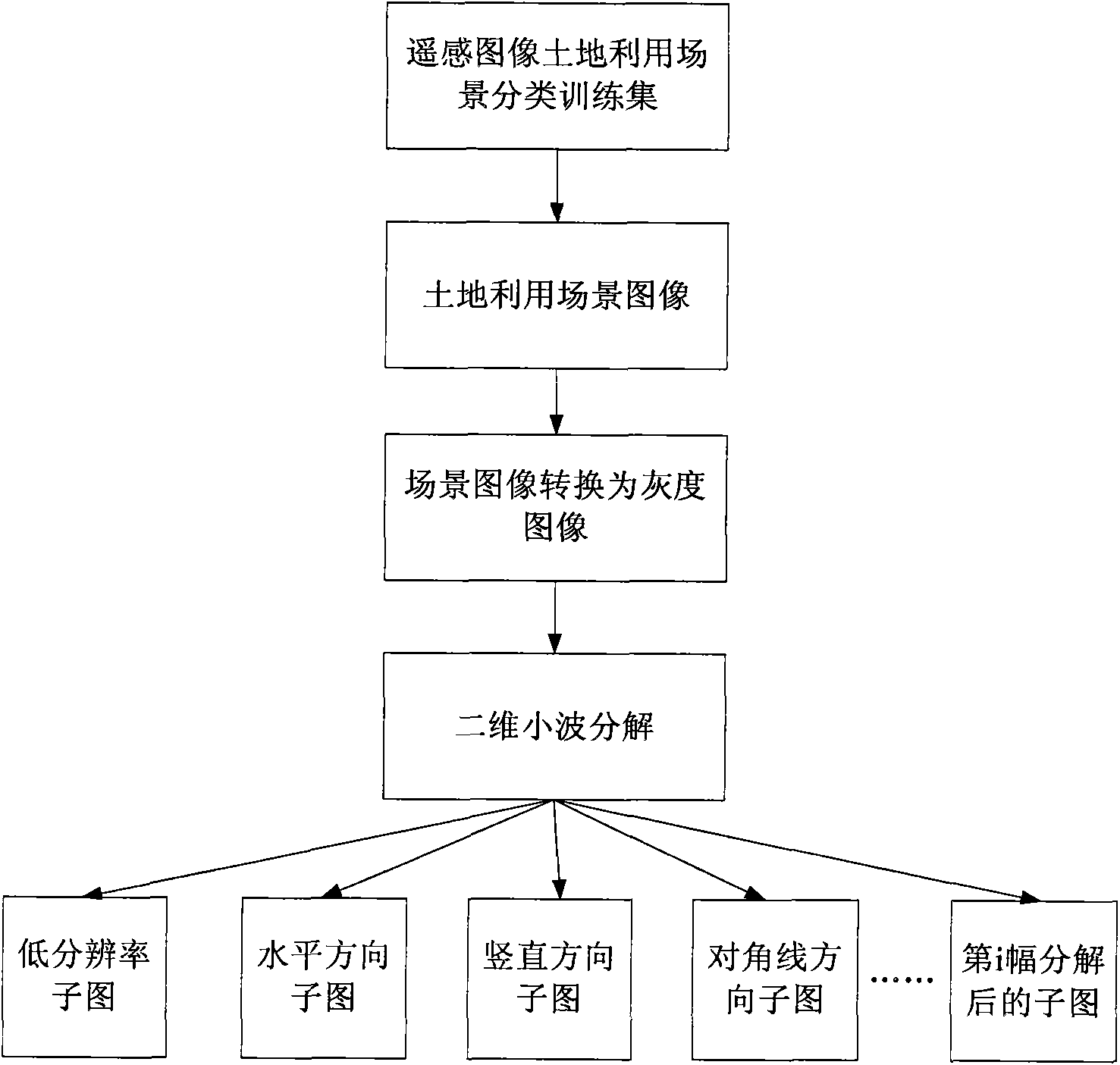

Remote sensing image land-use scene classification method based on two-dimensional wavelet decomposition and the bag-of-visual-words model

Active | CN103413142A | Solve the lack of consideration | Improve utilization | Character and pattern recognition | Decomposition | Classification methods

The invention relates to a land-use scene classification method for remote sensing images based on two-dimensional wavelet decomposition and the bag-of-visual-words model. The method comprises: building a training set for remote sensing land-use scene classification; converting the scene images in the training set to grayscale and applying a two-dimensional wavelet decomposition to each grayscale image; performing regular-grid sampling and SIFT extraction on the grayscale images and on the sub-images produced by the decomposition, and generating separate universal visual vocabularies for the grayscale images and for the sub-images by clustering; mapping each training image to visual words to obtain its bag-of-words features; using the bag-of-words features of each training image together with its scene category label as training data for generating a classification model with an SVM; and classifying the scene images with that model. The method addresses the insufficient use of texture information in existing bag-of-visual-words scene classification methods for remote sensing images and can effectively improve scene classification accuracy.

Owner:INST OF REMOTE SENSING & DIGITAL EARTH CHINESE ACADEMY OF SCI
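The two-dimensional wavelet decomposition step in the abstract above can be sketched with a single-level Haar transform, which splits a grayscale image into one approximation sub-image and three detail sub-images. The Haar wavelet, the averaging normalization and the single decomposition level are assumptions for illustration; the abstract does not name the wavelet.

```python
from typing import Dict, List

Image = List[List[float]]


def haar_2d(img: Image) -> Dict[str, Image]:
    """Single-level 2-D Haar decomposition (averaging convention).

    Assumes even height and width. For each 2x2 block
        a b
        c d
    it returns LL=(a+b+c+d)/4, LH=(a+b-c-d)/4, HL=(a-b+c-d)/4, HH=(a-b-c+d)/4.
    LH/HL naming conventions differ between libraries.
    """
    h, w = len(img), len(img[0])
    if h % 2 or w % 2:
        raise ValueError("image height and width must be even")
    bands: Dict[str, Image] = {k: [] for k in ("LL", "LH", "HL", "HH")}
    for r in range(0, h, 2):
        rows: Dict[str, List[float]] = {k: [] for k in bands}
        for col in range(0, w, 2):
            a, b = img[r][col], img[r][col + 1]
            c, d = img[r + 1][col], img[r + 1][col + 1]
            rows["LL"].append((a + b + c + d) / 4)  # approximation
            rows["LH"].append((a + b - c - d) / 4)  # top minus bottom: horizontal edges
            rows["HL"].append((a - b + c - d) / 4)  # left minus right: vertical edges
            rows["HH"].append((a - b - c + d) / 4)  # diagonal detail
        for k in bands:
            bands[k].append(rows[k])
    return bands
```

Each returned sub-image, like the grayscale image itself, would then be grid-sampled for SIFT and quantized against its own vocabulary, and the resulting bag-of-words histograms would form the SVM input.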

Indoor common object identification method based on machine vision

Inactive | CN102708380A | Mitigate the impact of object recognition | Character and pattern recognition | Machine vision | Multi dimensional

The invention discloses an indoor common object identification method based on machine vision. The method comprises the following steps: (1) building a visual vocabulary for a class of objects, with the vocabulary size determined by K-means clustering; (2) preprocessing images, representing each image with words from the vocabulary, and roughly separating background from foreground using a similarity threshold; (3) describing the images: the information contained in an image is mapped to a 1×(P+Q) multi-dimensional vector $(x_0, x_1, \ldots, x_{P-1}, y_0, y_1, \ldots, y_{Q-1})$, which vectorizes both the features and the spatial relations in the image, where the P components $x_i$ record the occurrences of the words of the visual vocabulary and the Q components $y_j$ are statistics of the spatial relations; and (4) performing classification and recognition with a support vector machine classifier. The method enables the machine to identify objects more accurately.

Owner:SOUTHEAST UNIV
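The 1×(P+Q) descriptor in step (3) of the abstract above can be sketched as a word histogram of length P followed by Q spatial-relation statistics. The abstract does not define the spatial statistics, so this sketch assumes a hypothetical choice: a Q-bin histogram of pairwise distances between the image locations of the visual words, with a fixed maximum distance `max_dist`. All names and parameters are illustrative.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def describe_image(words: Sequence[int], points: Sequence[Point],
                   p: int, q: int, max_dist: float) -> List[float]:
    """Build the vector (x_0..x_{P-1}, y_0..y_{Q-1}).

    x_i: number of occurrences of visual word i (P = vocabulary size).
    y_j: hypothetical spatial statistic, the number of word-location pairs whose
         distance falls into bin j of q equal-width bins on [0, max_dist].
    """
    if len(words) != len(points):
        raise ValueError("each visual word needs exactly one location")
    x = [0.0] * p
    for wd in words:
        x[wd] += 1
    y = [0.0] * q
    for a, b in combinations(points, 2):
        j = min(int(math.dist(a, b) / max_dist * q), q - 1)  # clamp far pairs into the last bin
        y[j] += 1
    return x + y
```

The fixed length P+Q is what allows every image to be fed to the same SVM classifier.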

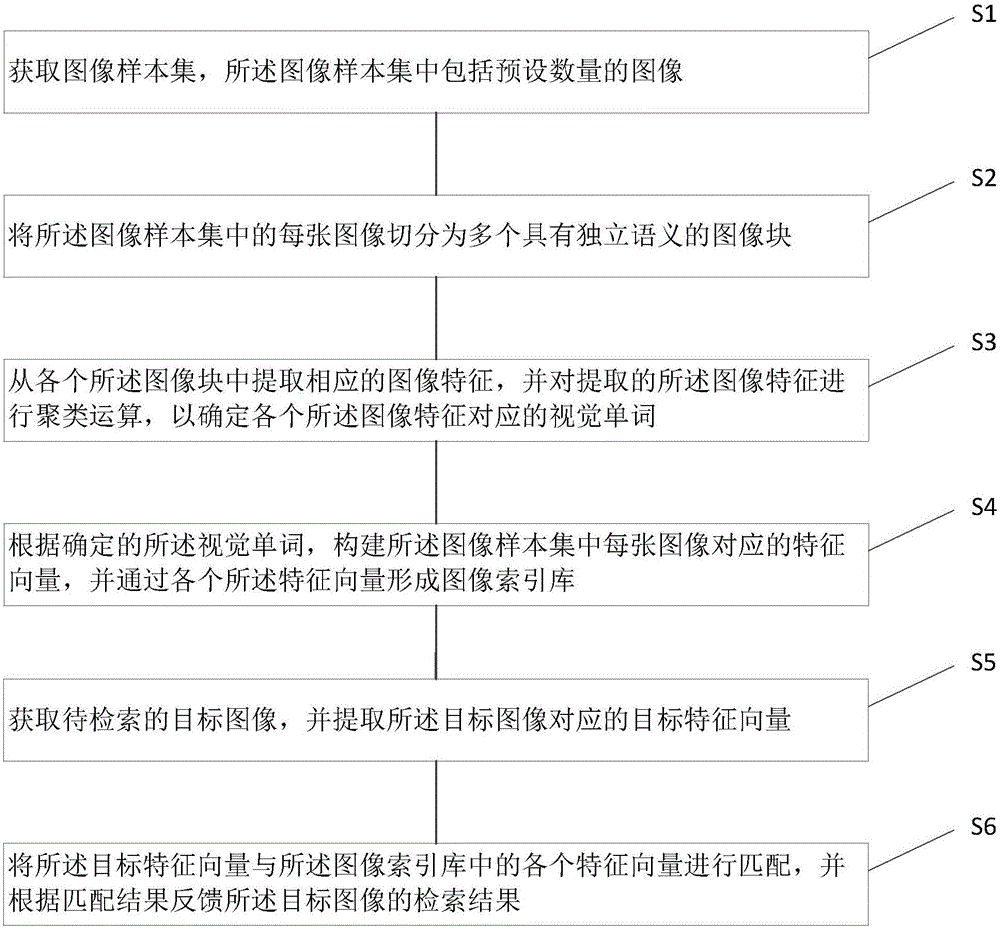

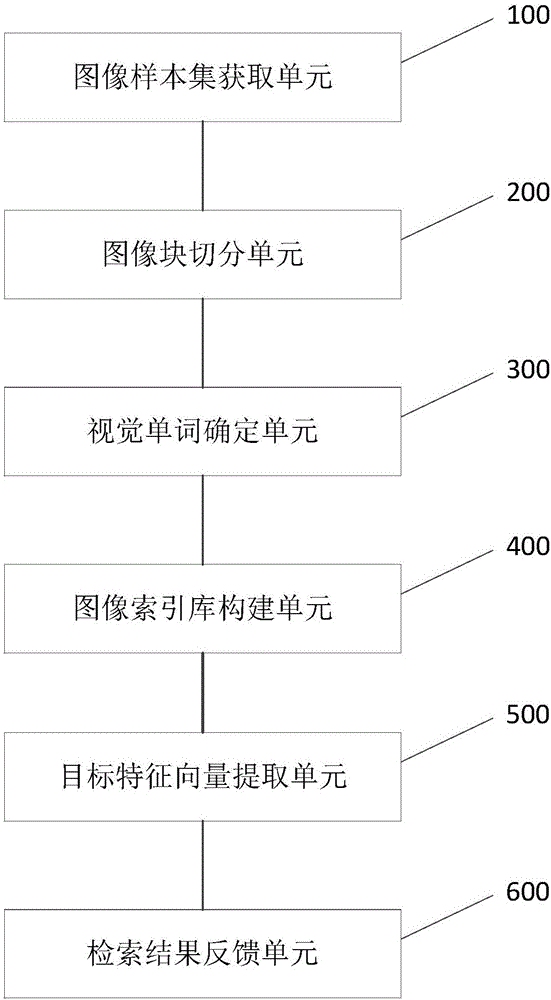

Depth feature-based image retrieval method and apparatus

The invention provides a depth feature-based image retrieval method and apparatus. The method comprises: obtaining an image sample set; cutting each image in the set into several image blocks, each carrying independent semantics; extracting image features from the blocks and clustering the extracted features to determine the visual word corresponding to each feature; constructing a feature vector for each image in the sample set from the determined visual words, and forming an image index library from these feature vectors; obtaining a target image to be retrieved and extracting its target feature vector; and matching the target feature vector against the feature vectors in the image index library, then returning the retrieval result for the target image according to the matching result. The method and apparatus can improve image retrieval precision.

Owner:PLA UNIV OF SCI & TECH
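The index-and-match stage in the abstract above can be sketched as follows, assuming each image's feature vector is a histogram of its visual words and that matching uses cosine similarity. The abstract specifies neither the vector construction nor the similarity measure, so both are assumptions, as are the function names.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def bow_vector(word_ids: Sequence[int], vocab_size: int) -> List[float]:
    """Histogram of visual-word occurrences (assumed feature vector)."""
    counts = Counter(word_ids)
    return [float(counts[i]) for i in range(vocab_size)]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def build_index(images: Dict[str, Sequence[int]], vocab_size: int) -> Dict[str, List[float]]:
    """Image index library: image id -> feature vector built from its visual words."""
    return {name: bow_vector(words, vocab_size) for name, words in images.items()}


def search(query_words: Sequence[int], index: Dict[str, List[float]],
           vocab_size: int, top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank indexed images by cosine similarity to the target image's vector."""
    q = bow_vector(query_words, vocab_size)
    scored = [(name, cosine(q, vec)) for name, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]
```

A linear scan is used here for clarity; a large index library would normally use an inverted index or approximate nearest-neighbor search instead.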

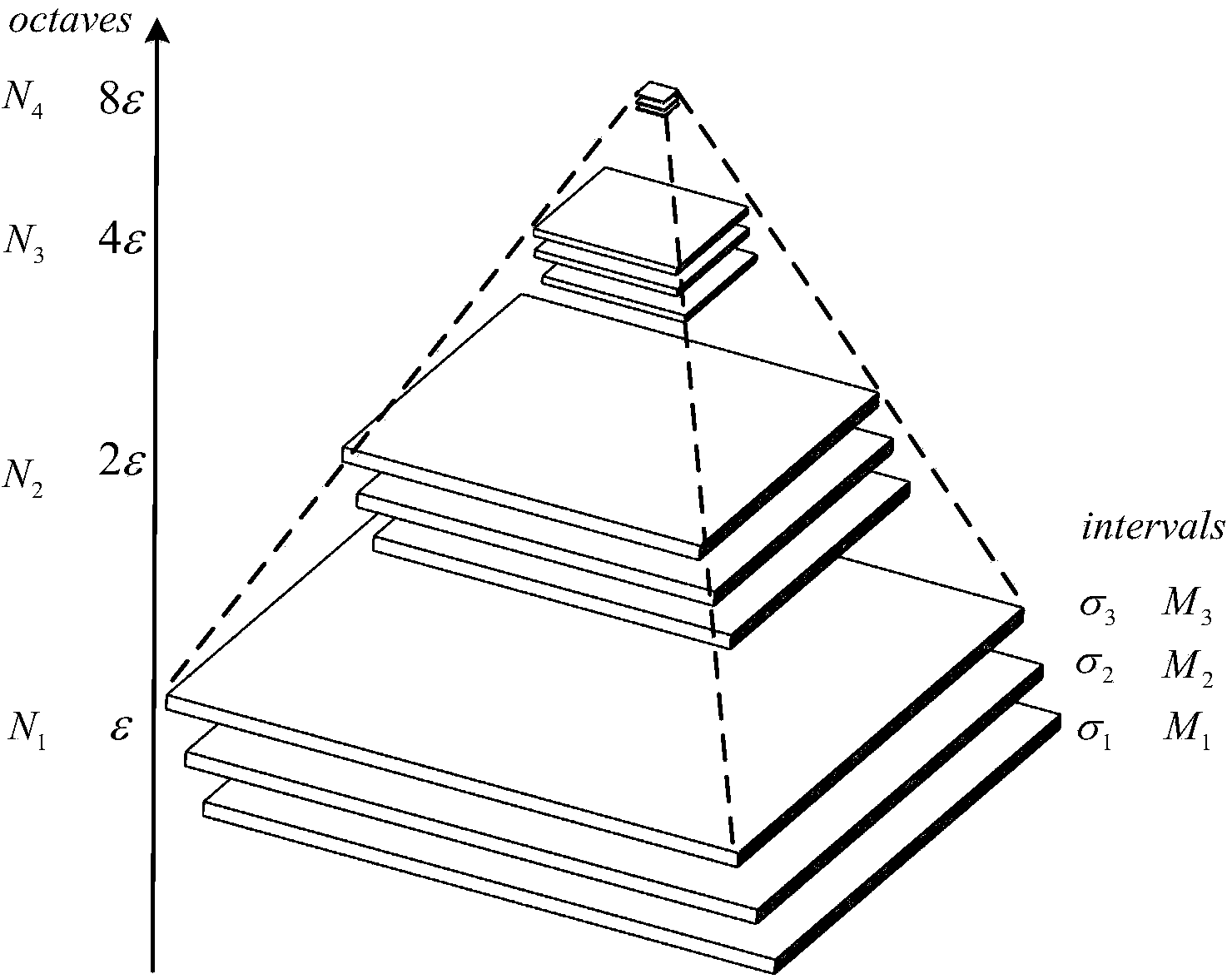

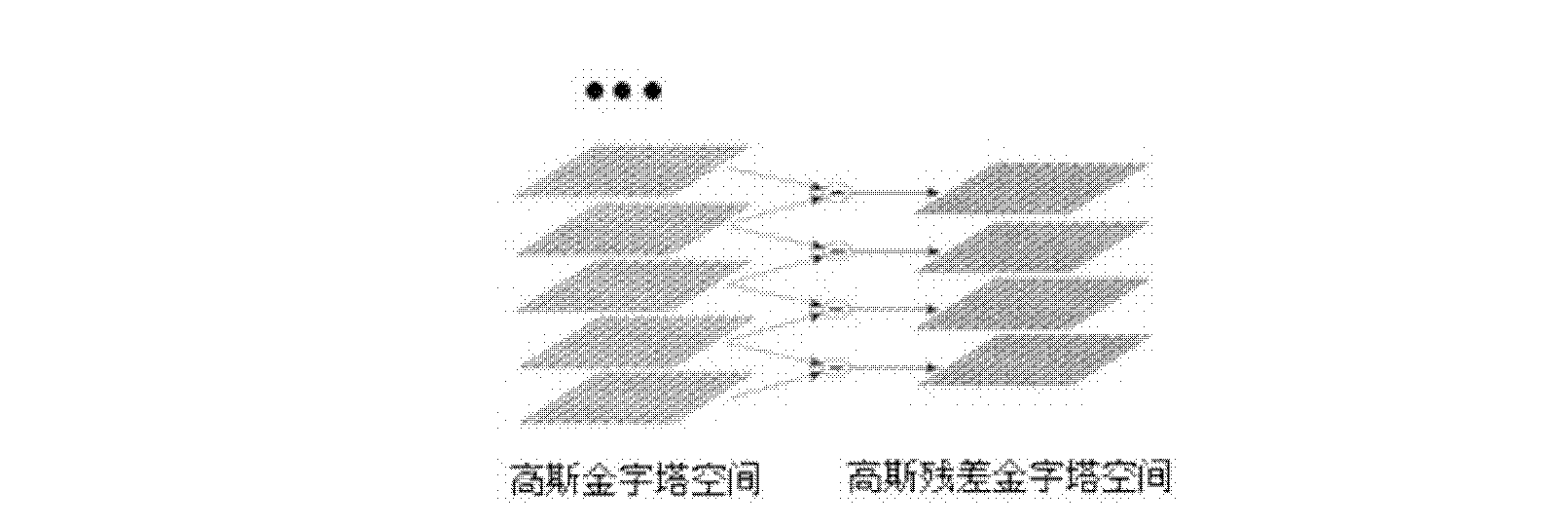

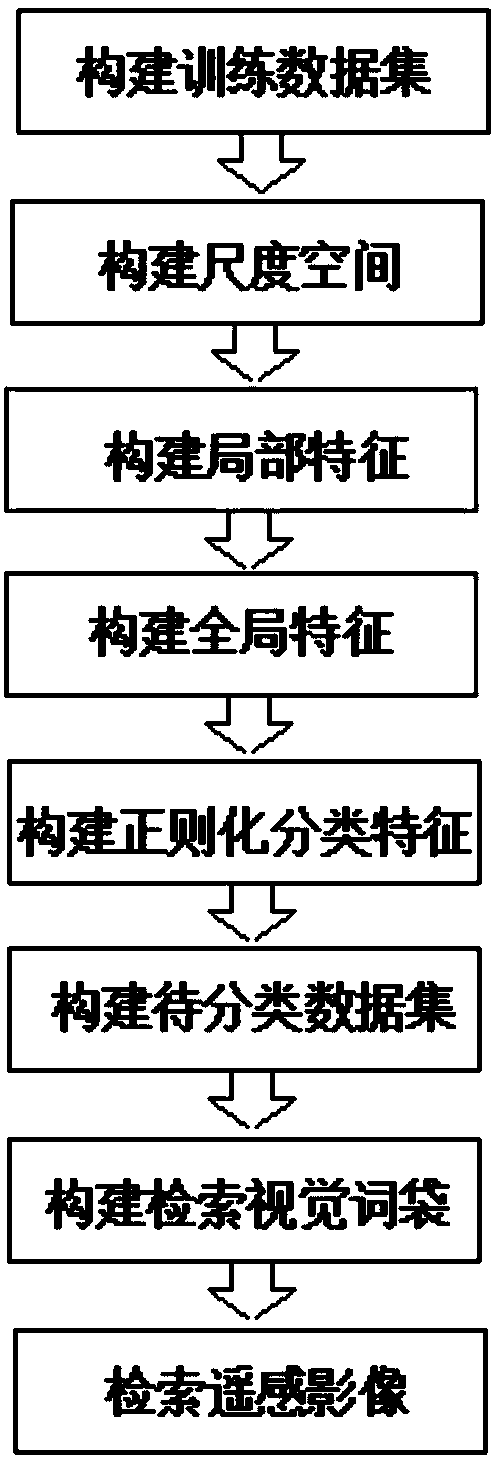

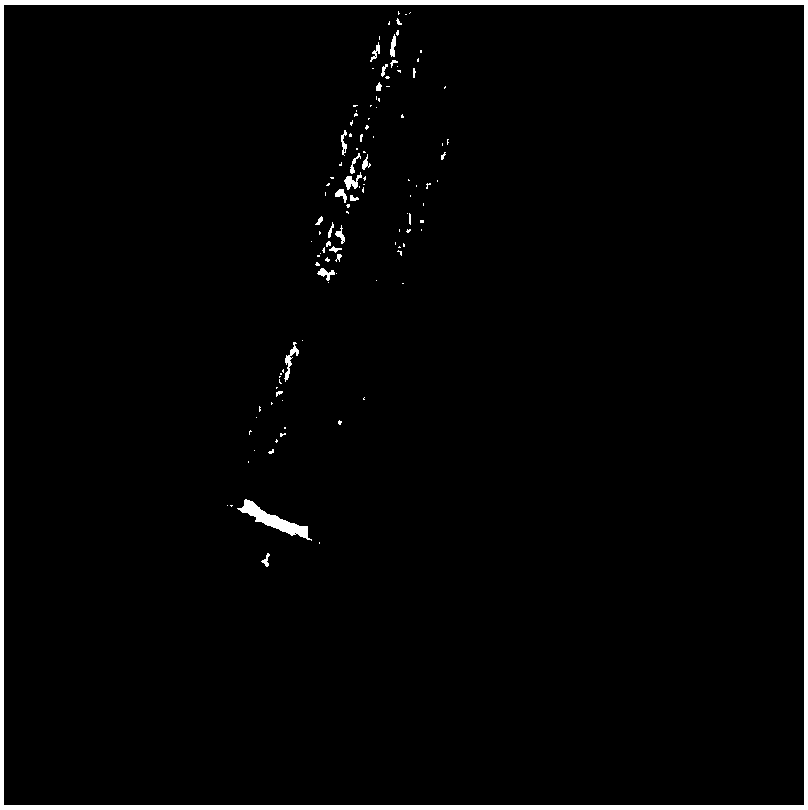

Remote sensing image classification and retrieval method

Inactive | CN108537238A | Achieve retrieval | High precision | Image analysis | Character and pattern recognition | Data set | Scale-invariant feature transform

The invention belongs to the technical field of digital image processing and relates in particular to a remote sensing image classification and retrieval method. The method comprises the following stages: constructing a training data set, constructing a scale space, constructing local features, constructing global features, constructing regularized classification features, constructing the data set to be classified, constructing a visual word bag for retrieval, and retrieving remote sensing images. Its benefits are as follows: local features for classification are found in different scale spaces of the remote sensing images using the scale-invariant feature transform (SIFT); global features of the images are constructed using a generalized search tree, and a Gaussian weight function is introduced for the regularized fusion of the local and global features; classification is performed on the basis of this regularized fusion, which in turn enables image retrieval. The principle is scientific and reasonable, and fusing the local and global features characterizes the multi-scale, spatial and textural properties of remote sensing images comprehensively, so that ground objects of different sizes and orientations can be classified completely.

Owner:崔植源

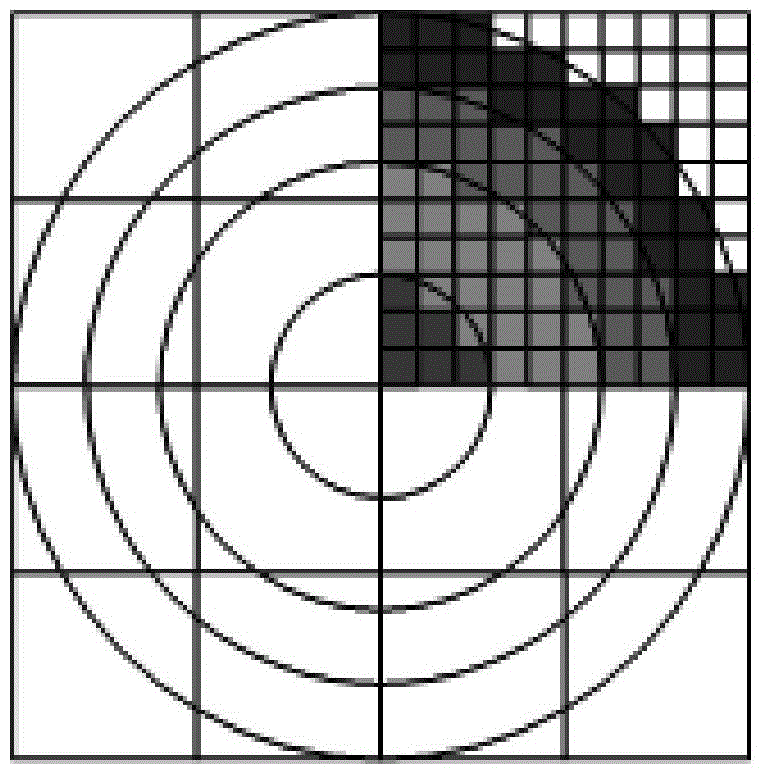

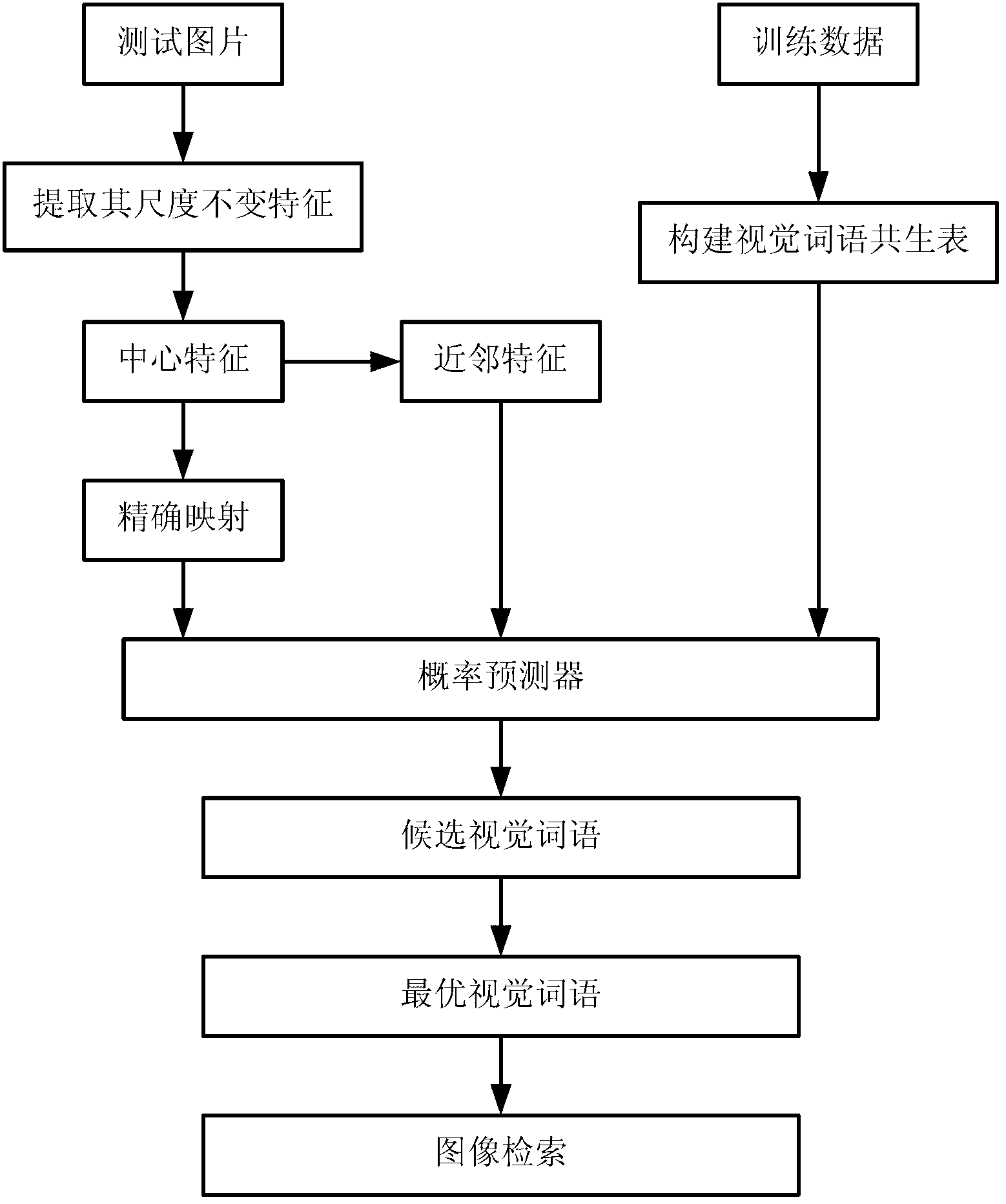

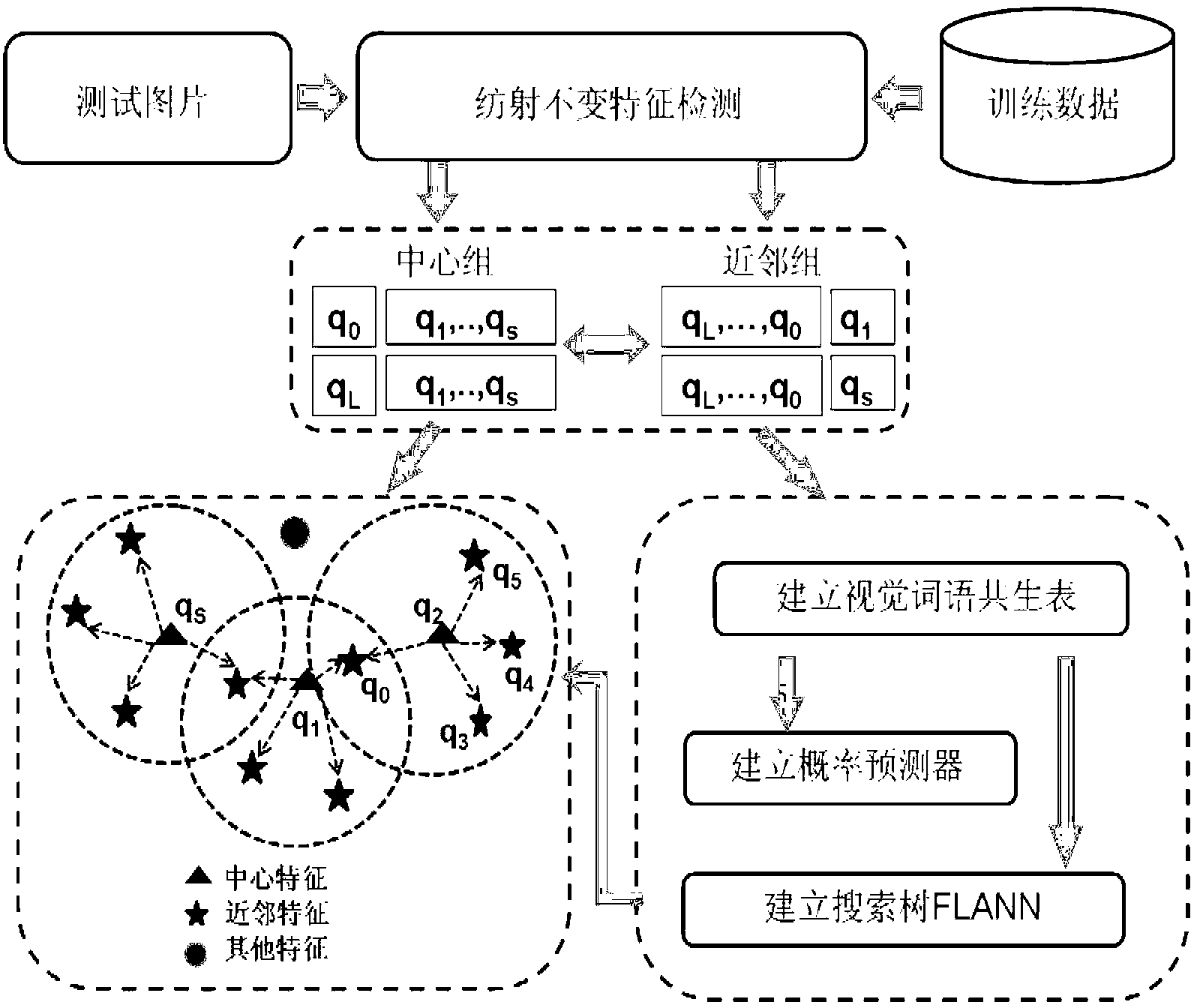

Image search method based on spatial co-occurrence of visual words

Inactive | CN102799614A | Effective and rapid generation of | Reduce time complexity | Special data processing applications | Pattern recognition | Near neighbor

The invention provides an image search method based on the spatial co-occurrence of visual words. The method comprises: computing the co-occurrence probability of every pair of visual words in a training database and building a visual word co-occurrence table; extracting the scale-invariant features of an input query image; randomly selecting some of these features as central features and mapping each central feature precisely to a visual word; collecting the neighboring features within the affine-invariant region of each central feature; predicting candidate visual words for the neighboring features with a high-order probabilistic predictor, based on the co-occurrence table and the precise mapping result; and comparing the distances between the candidate words and each scale-invariant feature to determine the optimal visual word, then performing the image search. Exploiting the co-occurrence of visual words allows visual words to be generated effectively and quickly for image search.

Owner:PEKING UNIV
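The co-occurrence table and candidate prediction in the abstract above can be sketched as below. This is a simplified, first-order stand-in for the high-order probabilistic predictor: co-occurrence is estimated as the fraction of training images in which two words appear together, the candidates for a neighboring feature are the words that most often co-occur with the central feature's word, and the final word is the nearest candidate centroid. Function names and the top-k rule are illustrative assumptions.

```python
import math
from collections import defaultdict
from itertools import combinations
from typing import Dict, Iterable, List, Sequence, Set, Tuple

Pair = Tuple[int, int]


def cooccurrence_table(images: Iterable[Set[int]]) -> Dict[Pair, float]:
    """P(i, j): fraction of training images containing both word i and word j (i < j)."""
    counts: Dict[Pair, int] = defaultdict(int)
    n_images = 0
    for words in images:
        n_images += 1
        for i, j in combinations(sorted(words), 2):
            counts[(i, j)] += 1
    return {pair: c / n_images for pair, c in counts.items()} if n_images else {}


def predict_candidates(center_word: int, table: Dict[Pair, float], k: int = 2) -> List[int]:
    """Return the k words that co-occur most often with center_word (ties by word id)."""
    scores: Dict[int, float] = {}
    for (i, j), p in table.items():
        if i == center_word:
            scores[j] = p
        elif j == center_word:
            scores[i] = p
    return sorted(scores, key=lambda w: (-scores[w], w))[:k]


def assign_word(feature: Sequence[float], candidates: List[int],
                codebook: Dict[int, Sequence[float]]) -> int:
    """Pick the candidate whose centroid is closest to the feature."""
    return min(candidates, key=lambda w: math.dist(feature, codebook[w]))
```

The speed-up comes from `assign_word` comparing a neighboring feature against only a few predicted candidates rather than the whole vocabulary.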

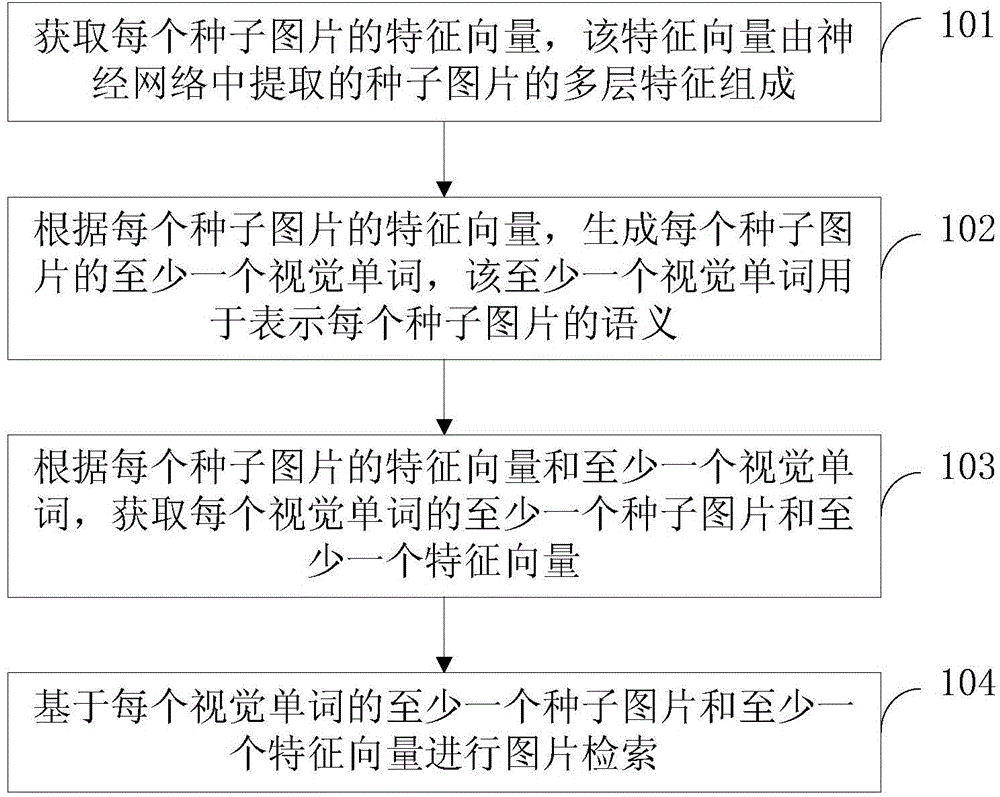

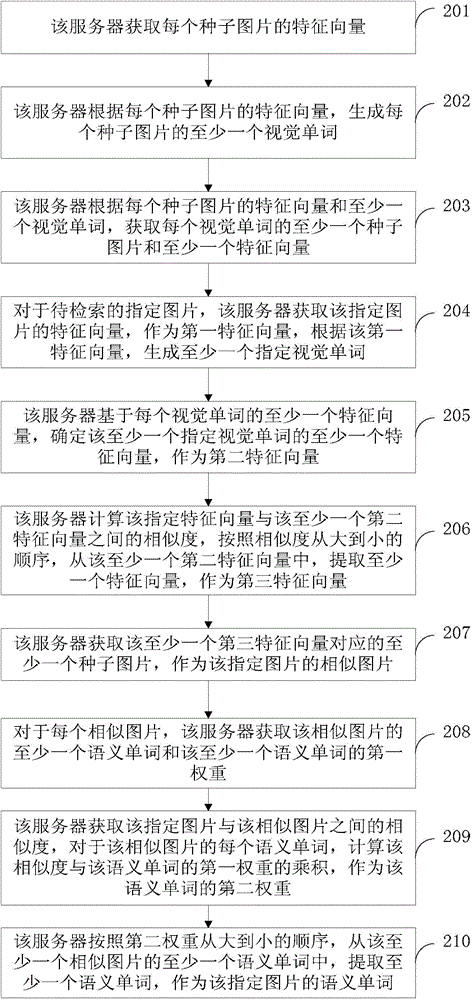

Image retrieval method and device

Active | CN105468596A | Meet search needs | Improve search accuracy | Special data processing applications | Feature vector | Imaging processing

The invention discloses an image retrieval method and device in the field of image processing. The method comprises: obtaining a feature vector for each seed image, the feature vector being composed of multi-level features of the seed image extracted from a neural network; generating at least one visual word for each seed image from its feature vector, the visual words expressing the semantics of the seed image; and, from the feature vectors and visual words of the seed images, obtaining for each visual word the seed images and feature vectors associated with it, and performing image retrieval on that basis. By treating each seed image as a set of visual words generated from its feature vector, the method improves retrieval accuracy when searching by visual words and can satisfy users' retrieval needs.

Owner:TENCENT TECH (SHENZHEN) CO LTD
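The last step of the abstract above, collecting for each visual word the seed images and feature vectors associated with it, can be read as an inverted index. This is a sketch under assumptions: how the visual words are generated from the neural-network feature vectors is not described, so the words are taken as given, candidates are ranked by cosine similarity of feature vectors, and the class and method names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Set, Tuple


def _cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


class InvertedIndex:
    """Maps each visual word to the seed images (and feature vectors) that contain it."""

    def __init__(self) -> None:
        self.postings: Dict[int, Set[str]] = defaultdict(set)
        self.vectors: Dict[str, List[float]] = {}

    def add(self, image_id: str, words: Sequence[int], vector: Sequence[float]) -> None:
        self.vectors[image_id] = list(vector)
        for w in words:
            self.postings[w].add(image_id)

    def query(self, words: Sequence[int], vector: Sequence[float],
              top_k: int = 5) -> List[Tuple[str, float]]:
        # Only seed images sharing at least one visual word with the query are scored.
        candidates: Set[str] = set()
        for w in words:
            candidates |= self.postings.get(w, set())
        scored = [(img, _cosine(vector, self.vectors[img])) for img in candidates]
        scored.sort(key=lambda t: (-t[1], t[0]))
        return scored[:top_k]
```

Restricting scoring to images that share a visual word with the query is the main benefit of the inverted layout; images with no shared word are never compared.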

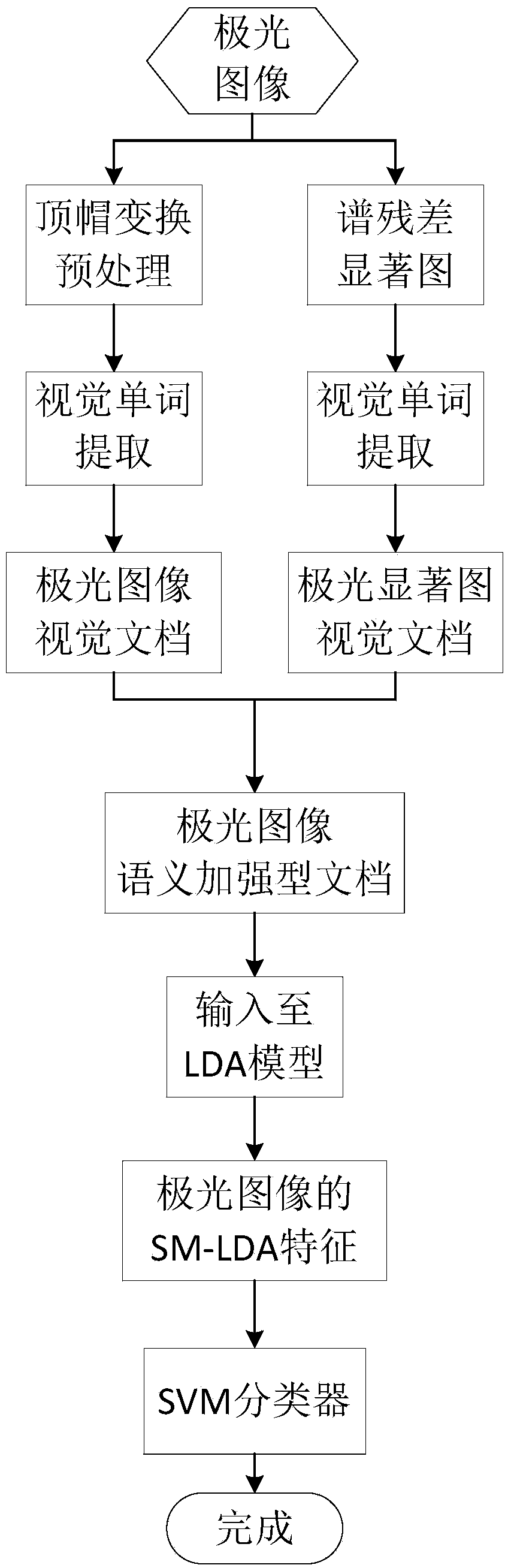

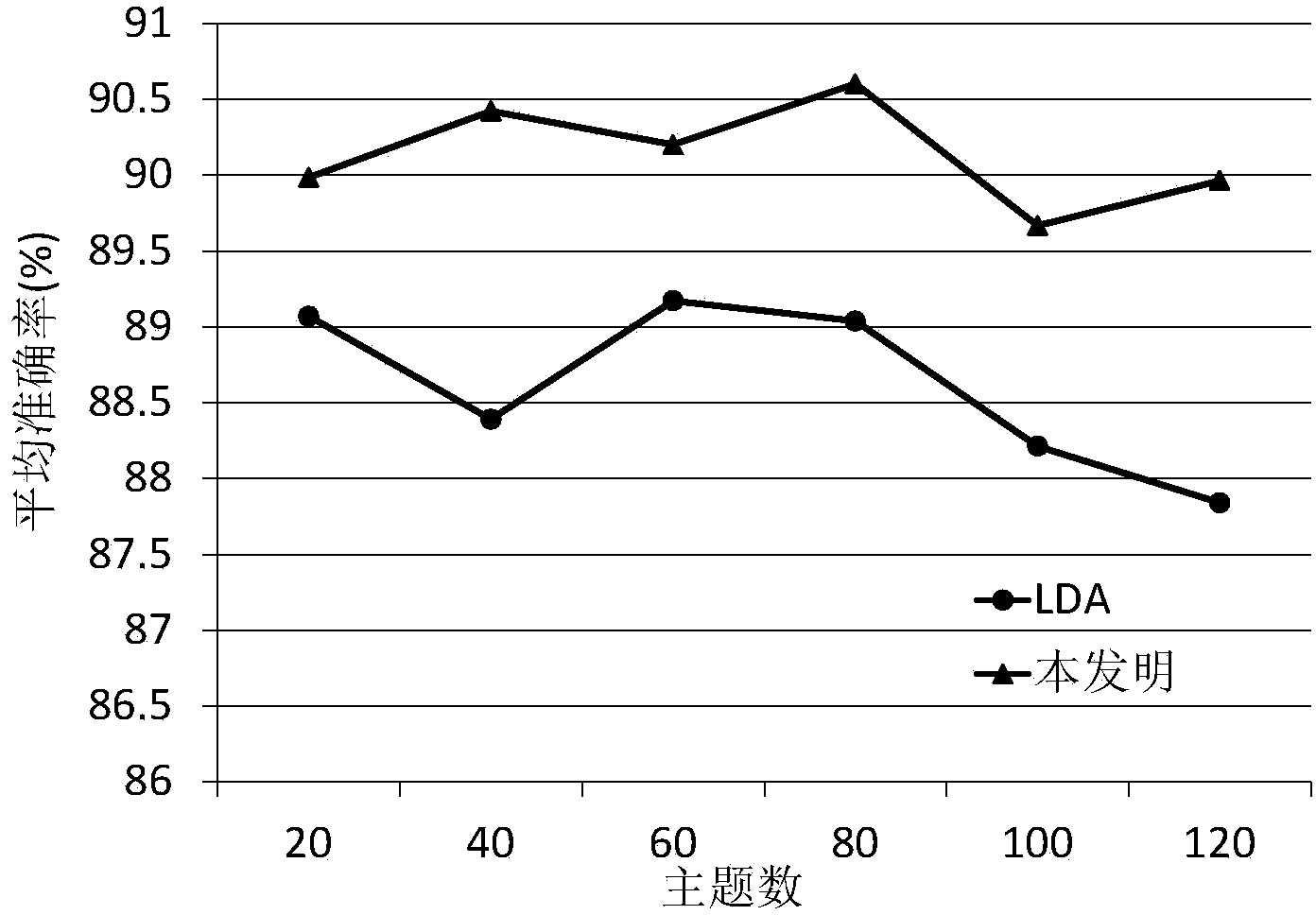

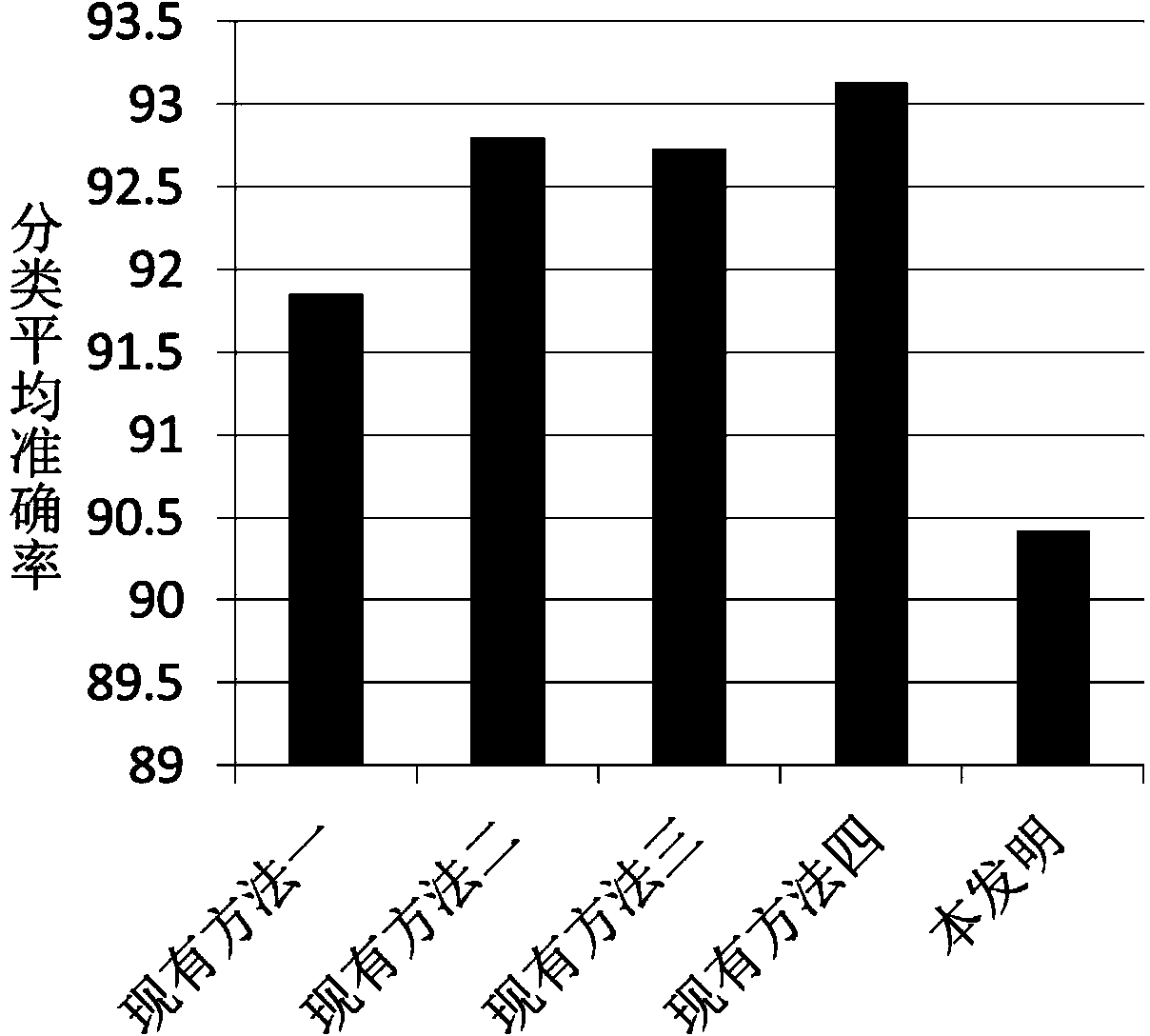

Aurora image classification method based on latent topics combined with saliency information

Inactive | CN103632166A | Improve uniformity | Avoid the pitfall of extracting its features | Character and pattern recognition | Support vector machine | Documentation procedure

The invention discloses an aurora image classification method based on latent topics combined with saliency information, mainly addressing the low classification accuracy, low classification efficiency and narrow applicability of existing techniques. The implementation steps are: (1) preprocessing an aurora image, extracting visual words from the preprocessed image and generating a visual document; (2) obtaining a saliency map of the input aurora image with the spectral residual algorithm, extracting visual words from the saliency map and generating its visual document; (3) concatenating the visual documents from steps (1) and (2) into a semantically enhanced document of the aurora image, and feeding this document into a Latent Dirichlet Allocation (LDA) model to obtain the saliency-informed latent semantic distribution features (SM-LDA) of the image; (4) classifying the SM-LDA features with a support vector machine to obtain the final classification result. The method is applicable to scene classification and target recognition; it maintains high classification accuracy while shortening classification time and improving classification efficiency.

Owner:XIDIAN UNIV
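Step (3) of the aurora abstract above can be sketched as joining two token documents, one from the image and one from its saliency map. Offsetting the saliency-map word IDs by the image vocabulary size, so that the two vocabularies stay distinct before LDA, is an assumption made for this illustration; the abstract only says the documents are connected. Function names are hypothetical, and the spectral residual, LDA and SVM stages are not shown.

```python
from collections import Counter
from typing import List, Sequence


def visual_document(word_ids: Sequence[int]) -> List[int]:
    """A visual document is the sequence of visual-word tokens of one image."""
    return list(word_ids)


def enhanced_document(image_words: Sequence[int], saliency_words: Sequence[int],
                      image_vocab_size: int) -> List[int]:
    """Concatenate the image document with the saliency-map document.

    Saliency-map word IDs are shifted by image_vocab_size (assumed) so that
    word k of the image and word k of the saliency map remain distinct tokens.
    """
    return visual_document(image_words) + [image_vocab_size + w for w in saliency_words]


def term_counts(doc: Sequence[int], total_vocab: int) -> List[int]:
    """Bag-of-words counts over the joint vocabulary, a common input format for LDA."""
    c = Counter(doc)
    return [c[i] for i in range(total_vocab)]
```

Without the offset, a word shared by both vocabularies would be merged into one LDA term; with it, the topic model can weight image and saliency evidence separately.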

© 2025 PatSnap. All rights reserved.