Dictionary learning method, visual word bag characteristic extracting method and retrieval system

A visual bag-of-words and dictionary learning technology, applied in fields such as electronic digital data processing, special data processing applications, and instruments. It addresses the limited processor performance, memory, and power resources of mobile terminals, the large amount of memory taken up by the vocabulary, and the differentiation of descriptors, with the effect of shortening training time and feature extraction time, reducing memory usage, and improving retrieval performance.

Embodiment Construction

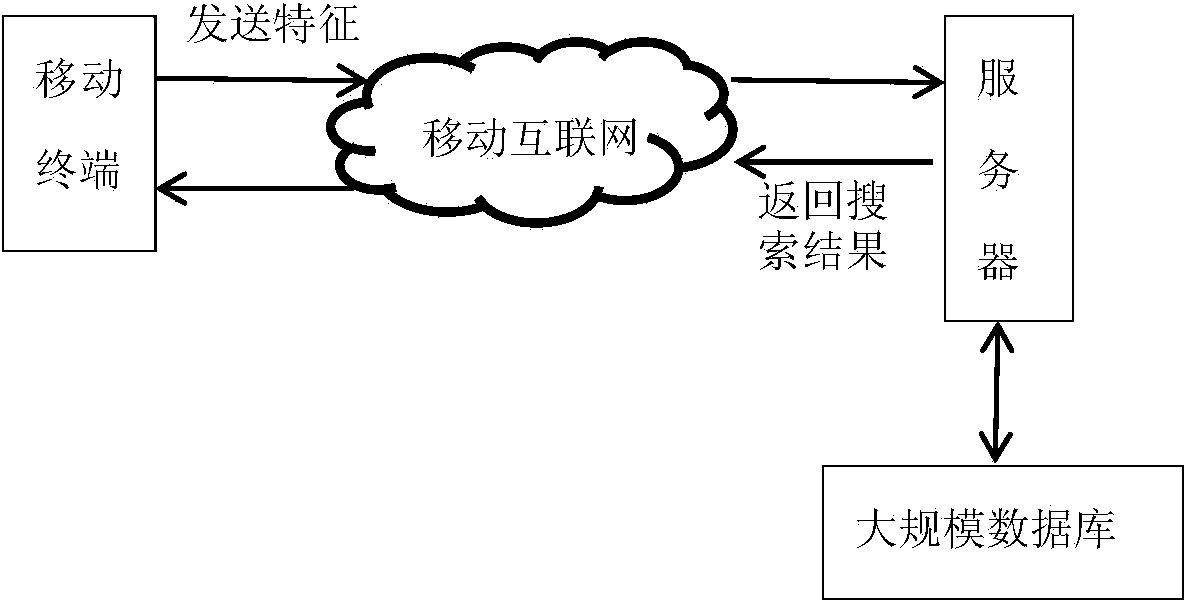

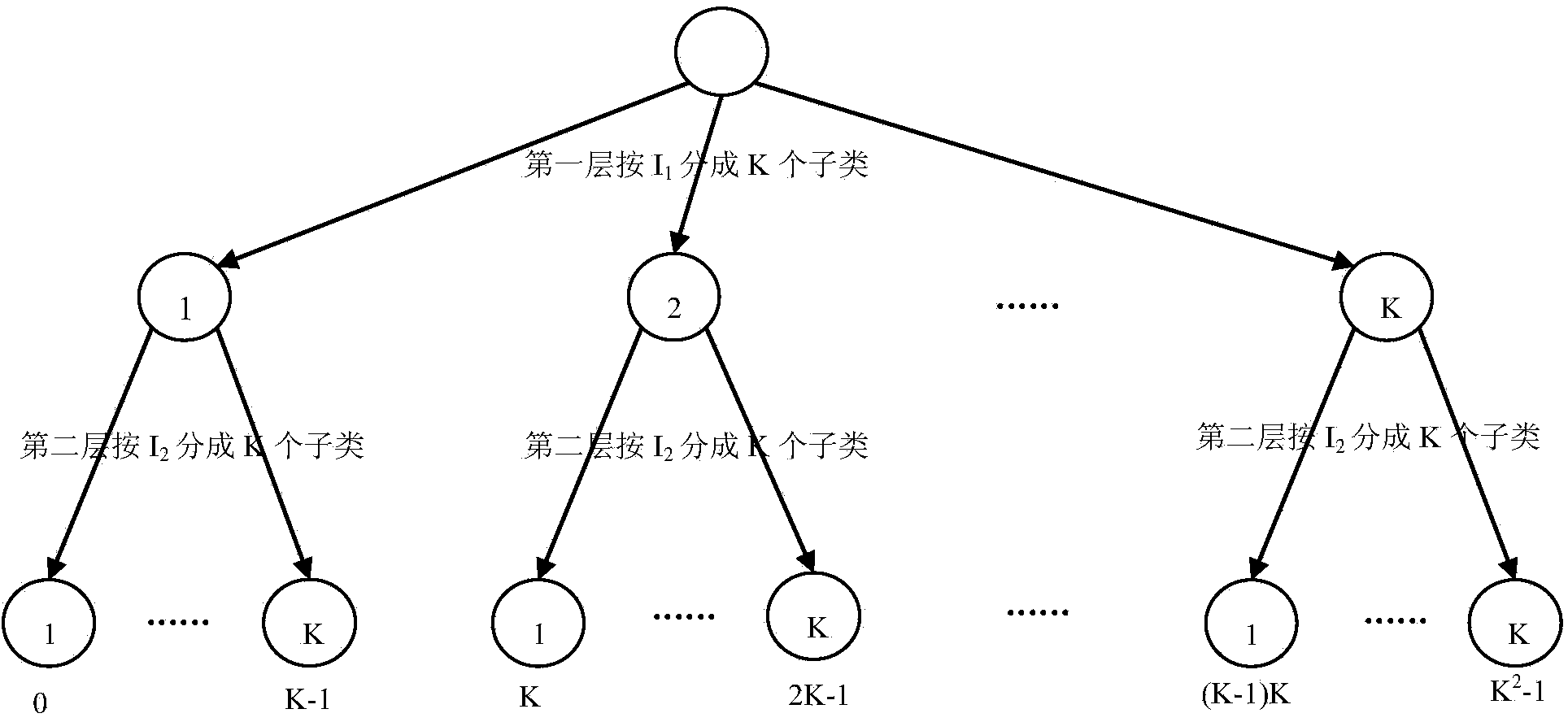

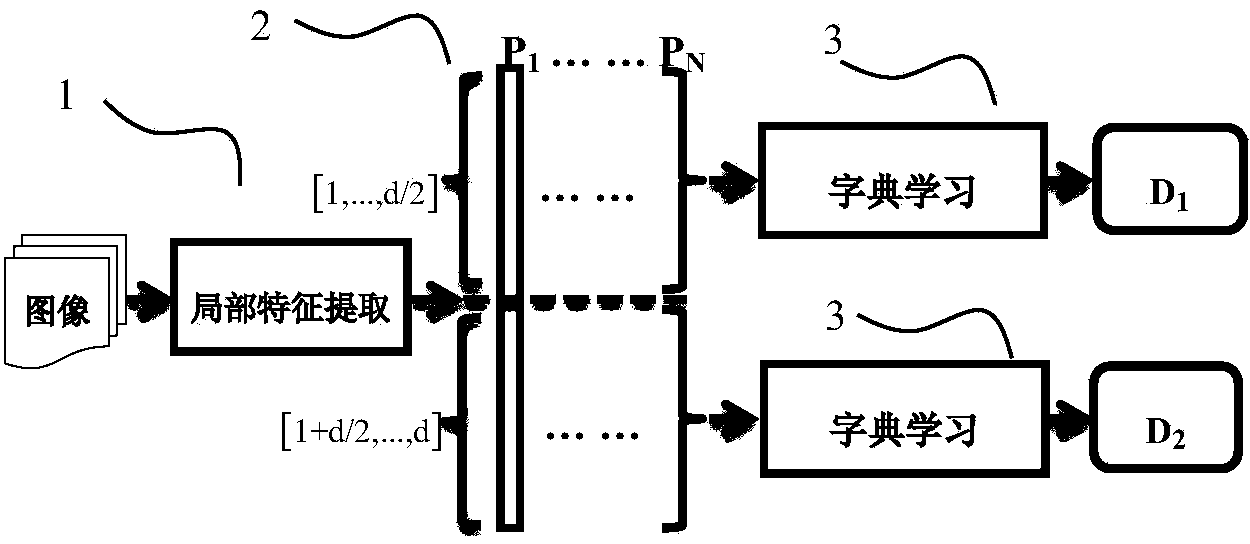

[0054] According to an embodiment of the present invention, a high-dimensional visual bag-of-words feature representation method based on segmented sparse coding is proposed. Visual bag-of-words feature representation uses vector quantization to map the high-dimensional local feature vectors of an image to visual keywords in a large-vocabulary BoW, thereby reducing terminal-to-server transmission traffic, network delay, and server-side feature storage. The high-dimensional visual bag-of-words feature quantization method of this embodiment pioneers the idea of "small code table and big vocabulary", which greatly reduces the memory occupation and time consumption of feature quantization on the terminal. It addresses the problem that existing methods cannot be used on mobile terminals because they occupy too much memory, which makes it possible for BoW to be widely used in mobi...
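The "small code table and big vocabulary" idea can be illustrated with a minimal sketch. The excerpt does not give the details of the segmented sparse coding step, so the code below swaps in a simpler technique: each descriptor segment is hard-assigned to its nearest codeword, in the style of product quantization. All names and sizes (`NUM_SEGMENTS`, `SEG_LEN`, `CODES_PER_SEGMENT`, `quantize`, `bow_histogram`) and the random codebooks are hypothetical and not taken from the patent.

```python
import random


def nearest_code(segment, codebook):
    """Return the index of the codeword closest to the segment (squared L2)."""
    best_index, best_dist = 0, float("inf")
    for index, codeword in enumerate(codebook):
        dist = sum((a - b) ** 2 for a, b in zip(segment, codeword))
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index


def quantize(descriptor, codebooks):
    """Map one descriptor to a single visual-word ID.

    The descriptor is split into len(codebooks) equal segments. Each segment
    is matched against its own small codebook, and the per-segment indices
    are combined into one ID in a mixed-radix fashion.
    """
    seg_len = len(descriptor) // len(codebooks)
    word_id = 0
    for s, codebook in enumerate(codebooks):
        segment = descriptor[s * seg_len:(s + 1) * seg_len]
        word_id = word_id * len(codebook) + nearest_code(segment, codebook)
    return word_id


def bow_histogram(descriptors, codebooks):
    """Count visual-word occurrences as a sparse histogram (dict)."""
    histogram = {}
    for descriptor in descriptors:
        word_id = quantize(descriptor, codebooks)
        histogram[word_id] = histogram.get(word_id, 0) + 1
    return histogram


# Hypothetical sizes: a 128-dimensional descriptor split into 4 segments,
# with 16 codewords per segment. Random codebooks stand in for learned ones.
NUM_SEGMENTS, SEG_LEN, CODES_PER_SEGMENT = 4, 32, 16
rng = random.Random(0)
codebooks = [
    [[rng.random() for _ in range(SEG_LEN)] for _ in range(CODES_PER_SEGMENT)]
    for _ in range(NUM_SEGMENTS)
]

stored_codewords = NUM_SEGMENTS * CODES_PER_SEGMENT   # codewords held in memory
vocabulary_size = CODES_PER_SEGMENT ** NUM_SEGMENTS   # distinct visual words

descriptors = [
    [rng.random() for _ in range(NUM_SEGMENTS * SEG_LEN)] for _ in range(10)
]
histogram = bow_histogram(descriptors, codebooks)
```

With these assumed sizes, only 64 short codewords are stored, yet the combined indices address 16^4 = 65,536 visual words. This is the kind of memory saving the paragraph attributes to a small code table paired with a large vocabulary.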
