80 results about how to achieve "Bridging the Semantic Gap" with patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

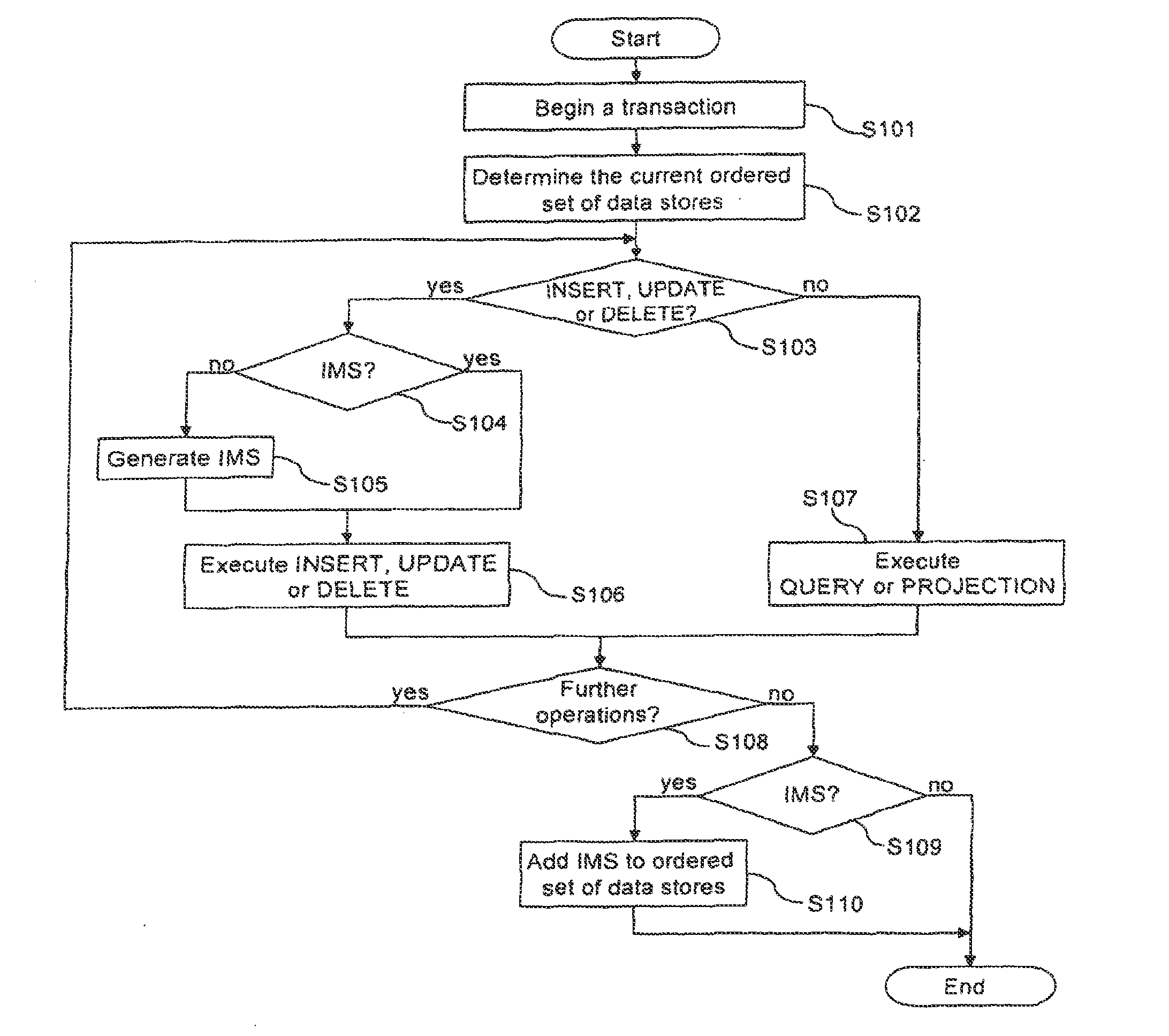

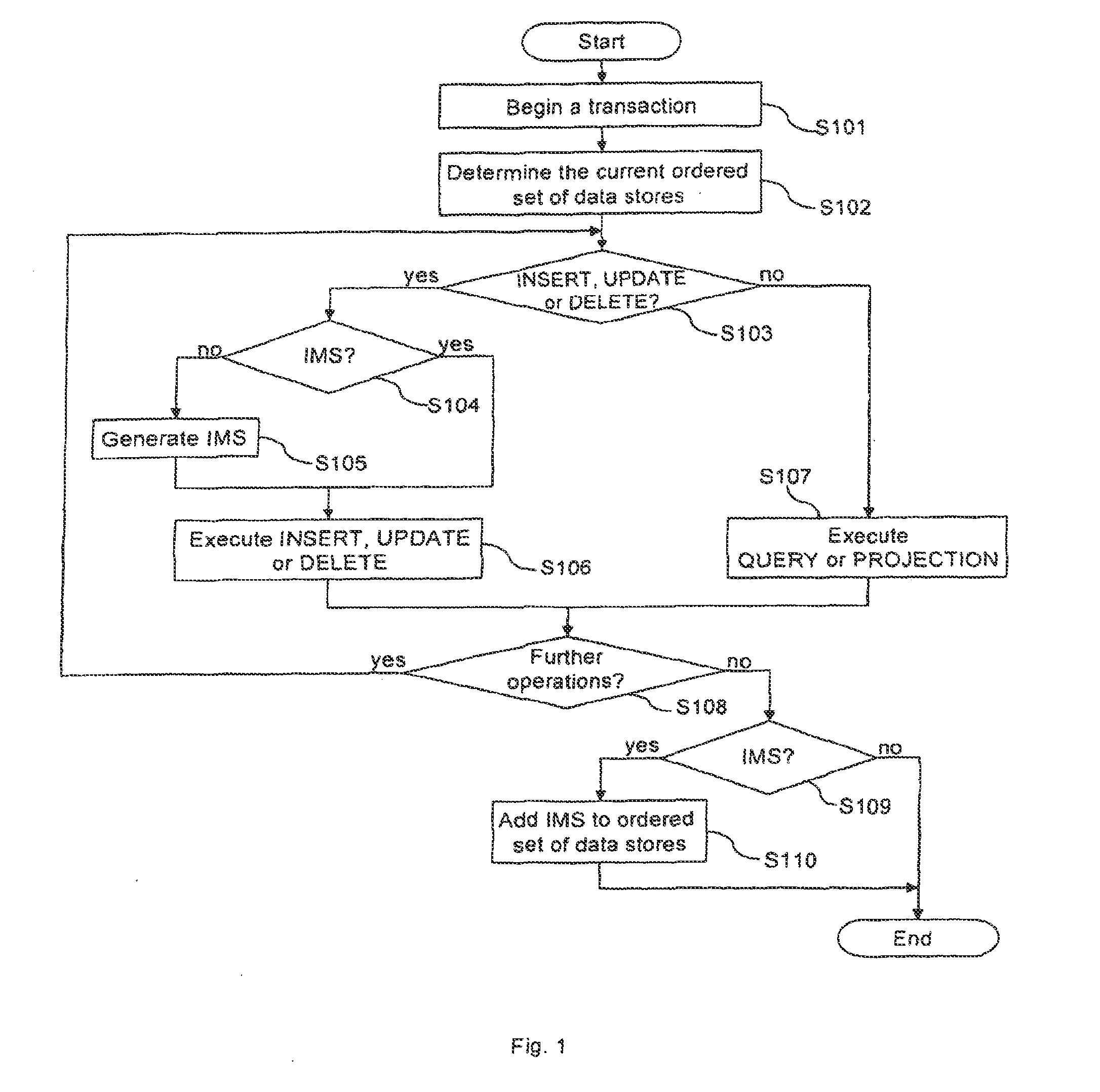

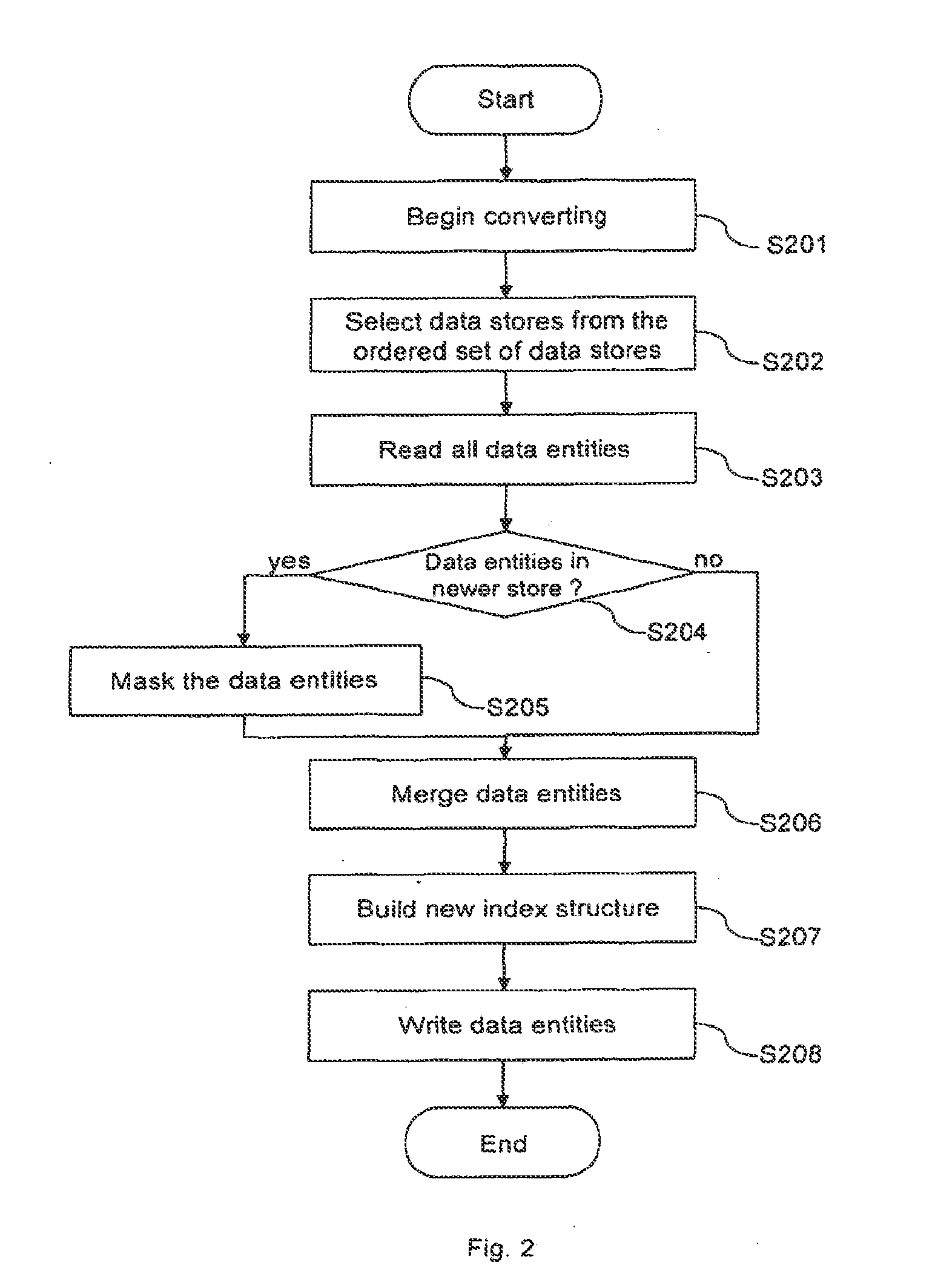

Method for performing transactions on data and a transactional database

Active | US20130110766A1 | Improve performance; Low cost; Digital data information retrieval; Digital data processing details; Data storing; Order set

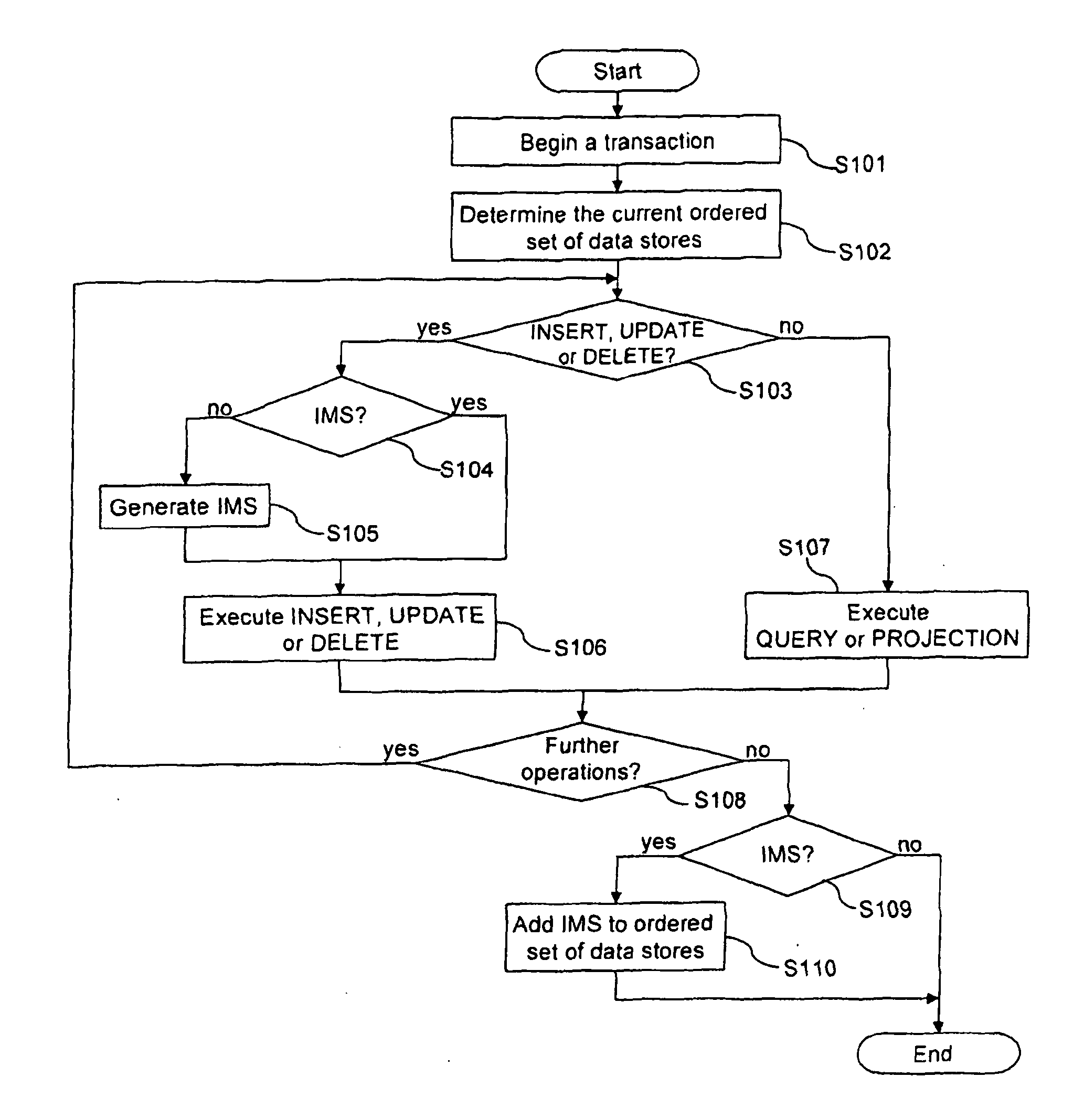

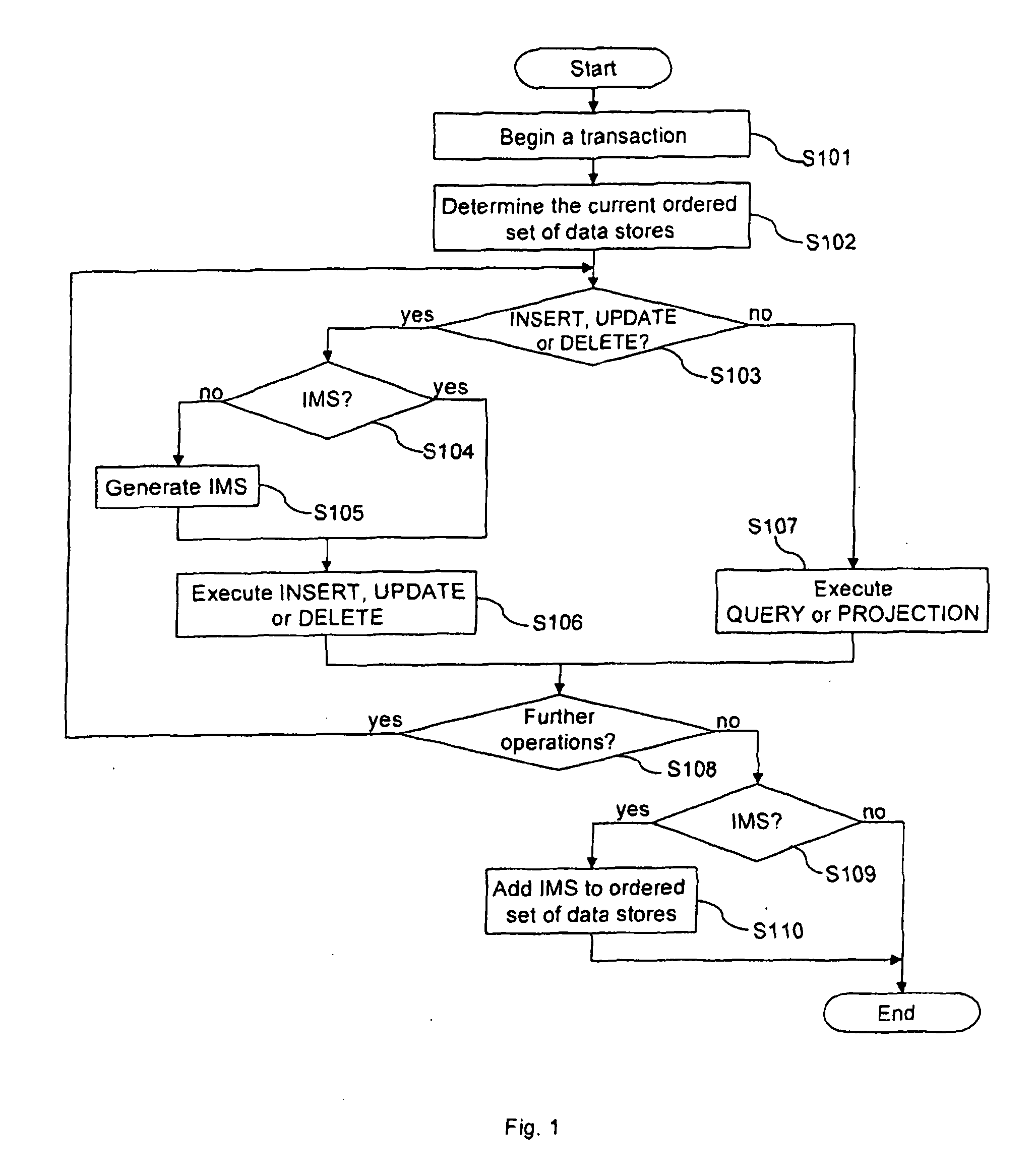

The present invention provides a method for performing transactions on data entities in a database, and a transactional database. The database comprises an ordered set of data stores with at least one static data store, wherein the static data store uses an index structure based on a non-updatable representation of an ordered set of integers according to the principle of compressed inverted indices. The method generates a modifiable data store when the transaction being performed comprises an insert, update or delete operation; executes the operations of the transaction on the ordered set of data stores present at the time the transaction started and, if present, on the modifiable data store; and converts data stores into a new static data store. The insert, update or delete operations are executed on the modifiable data store, which is the only data store modifiable by the transaction.

Owner:OPEN TEXT SA ULC
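
The layered design in the abstract above can be illustrated with a small sketch. It assumes that document IDs are non-negative integers and uses gap (delta) encoding with variable-byte codes as a stand-in for the unspecified compressed inverted index; the `LayeredStore` class and its method names are hypothetical. Writes go only to the modifiable store, reads combine it with the immutable static store, and `merge` converts everything into a new static store. Transaction isolation (reading only the stores present when a transaction started) is not modeled.

```python
from typing import Iterable, List, Set


def encode_postings(doc_ids: Iterable[int]) -> bytes:
    """Gap-encode a sorted, de-duplicated set of non-negative ints with variable-byte codes."""
    out = bytearray()
    prev = 0
    for doc_id in sorted(set(doc_ids)):
        if doc_id < 0:
            raise ValueError("IDs must be non-negative")
        gap = doc_id - prev  # the first gap is the ID itself, since prev starts at 0
        prev = doc_id
        # 7 payload bits per byte; the high bit marks "more bytes follow".
        while gap >= 0x80:
            out.append((gap & 0x7F) | 0x80)
            gap >>= 7
        out.append(gap)
    return bytes(out)


def decode_postings(data: bytes) -> List[int]:
    """Invert encode_postings: rebuild gaps from varints, then prefix-sum them."""
    ids: List[int] = []
    value = shift = prev = 0
    for byte in data:
        value |= (byte & 0x7F) << shift
        if byte & 0x80:
            shift += 7
        else:
            prev += value
            ids.append(prev)
            value = shift = 0
    return ids


class LayeredStore:
    """Hypothetical: read-only compressed static stores plus one modifiable store."""

    def __init__(self, static_ids: Iterable[int]) -> None:
        self.static: List[bytes] = [encode_postings(static_ids)]
        self.inserted: Set[int] = set()
        self.deleted: Set[int] = set()

    def insert(self, doc_id: int) -> None:
        # Writes never touch the static stores, only the modifiable one.
        self.deleted.discard(doc_id)
        self.inserted.add(doc_id)

    def delete(self, doc_id: int) -> None:
        self.inserted.discard(doc_id)
        self.deleted.add(doc_id)

    def snapshot(self) -> List[int]:
        """Combine every static store with the modifiable store's changes."""
        ids: Set[int] = set()
        for blob in self.static:
            ids.update(decode_postings(blob))
        return sorted((ids | self.inserted) - self.deleted)

    def merge(self) -> None:
        """Convert all stores into a single new static store."""
        self.static = [encode_postings(self.snapshot())]
        self.inserted.clear()
        self.deleted.clear()
```

For example, `LayeredStore([1, 2, 3])` followed by `insert(10)` and `delete(2)` yields `[1, 3, 10]` from `snapshot()`, while the encoded static blob stays untouched until `merge()` runs.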

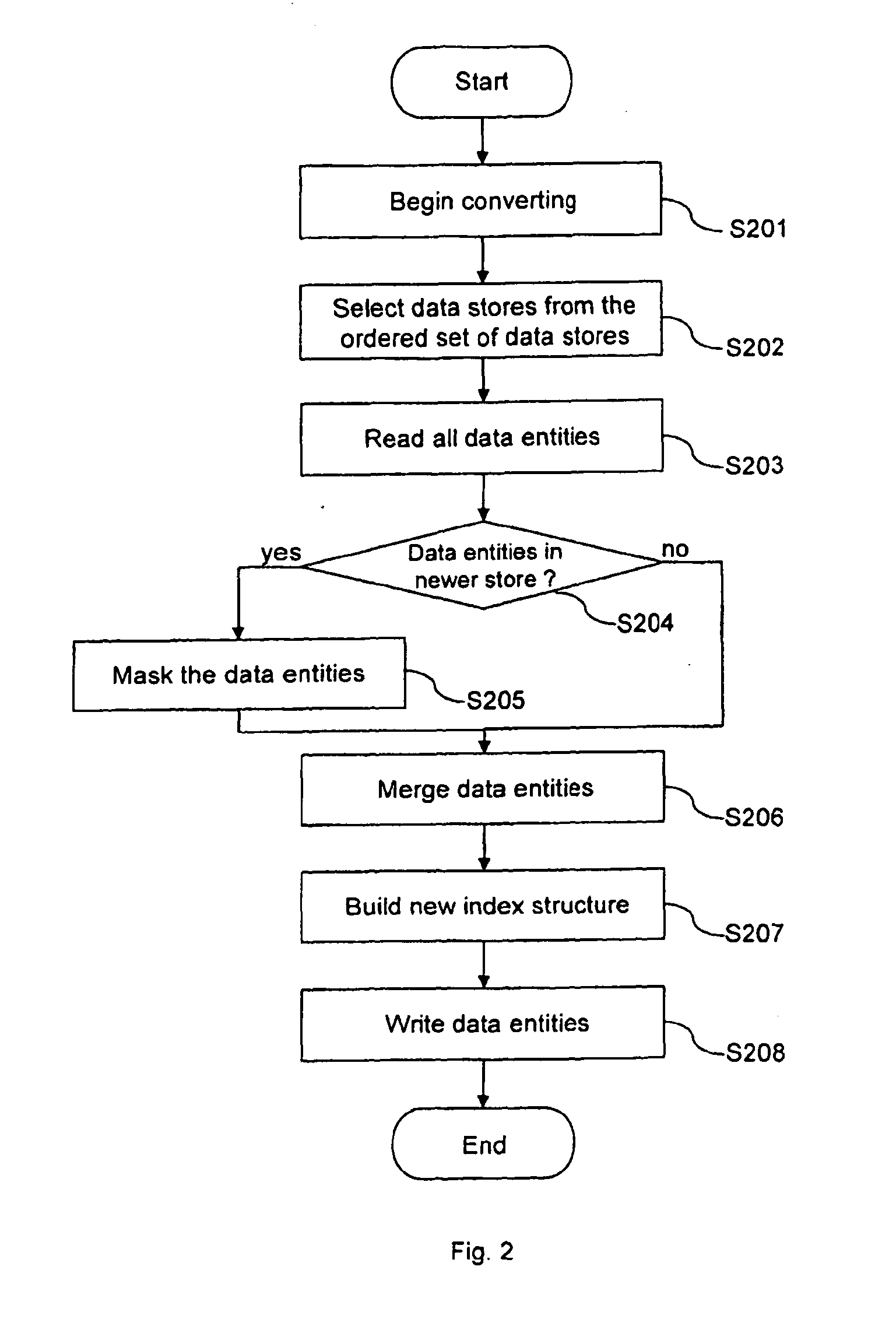

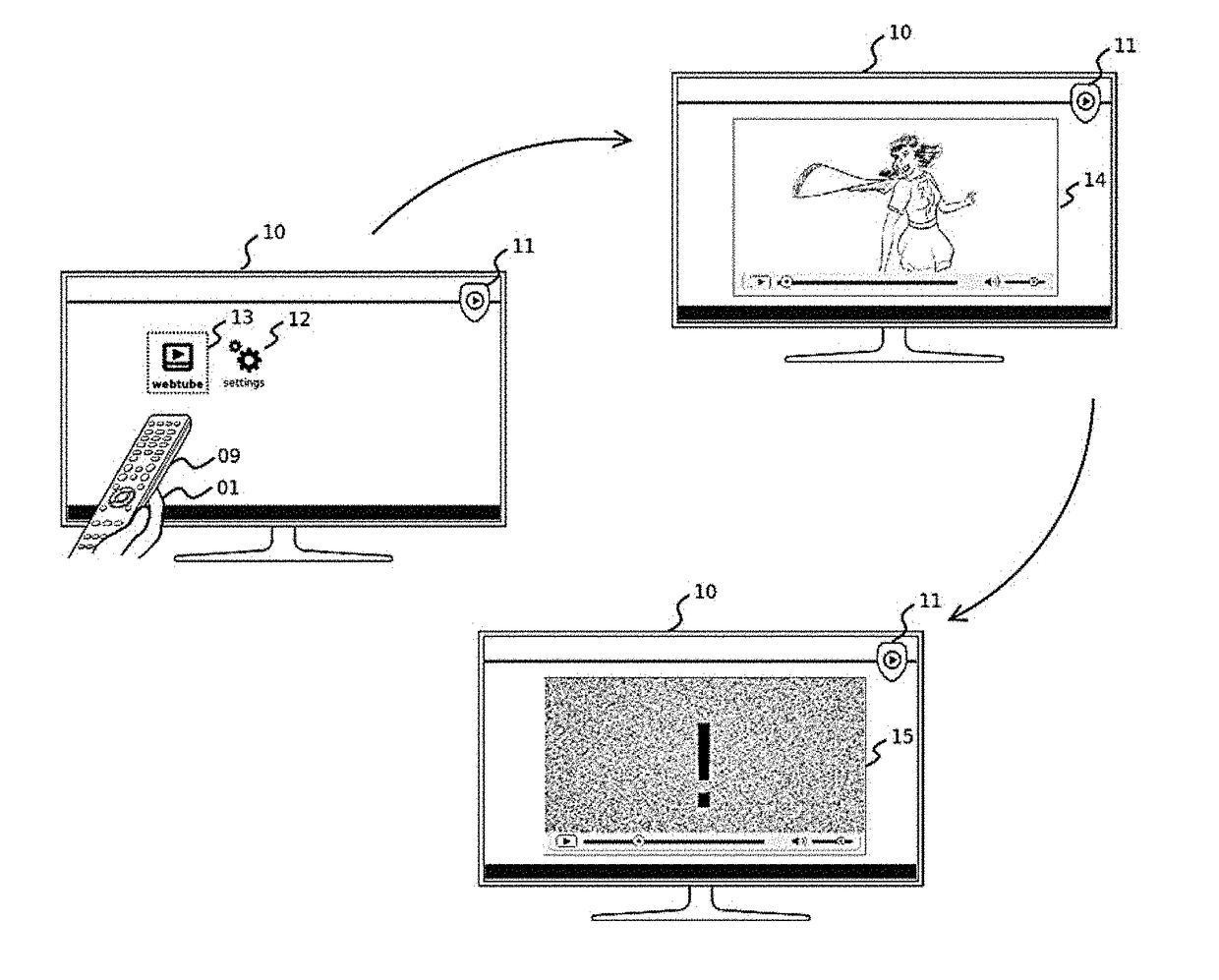

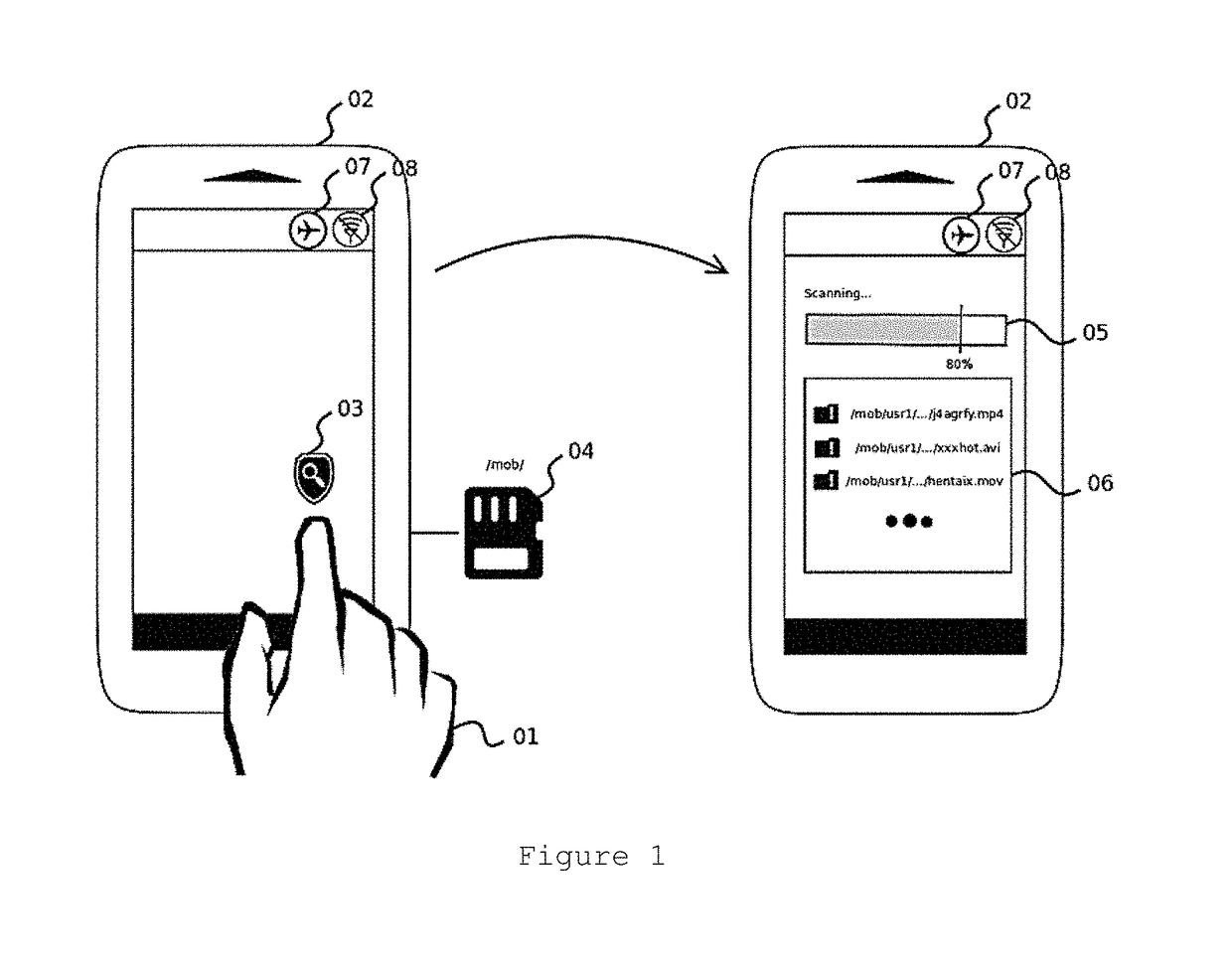

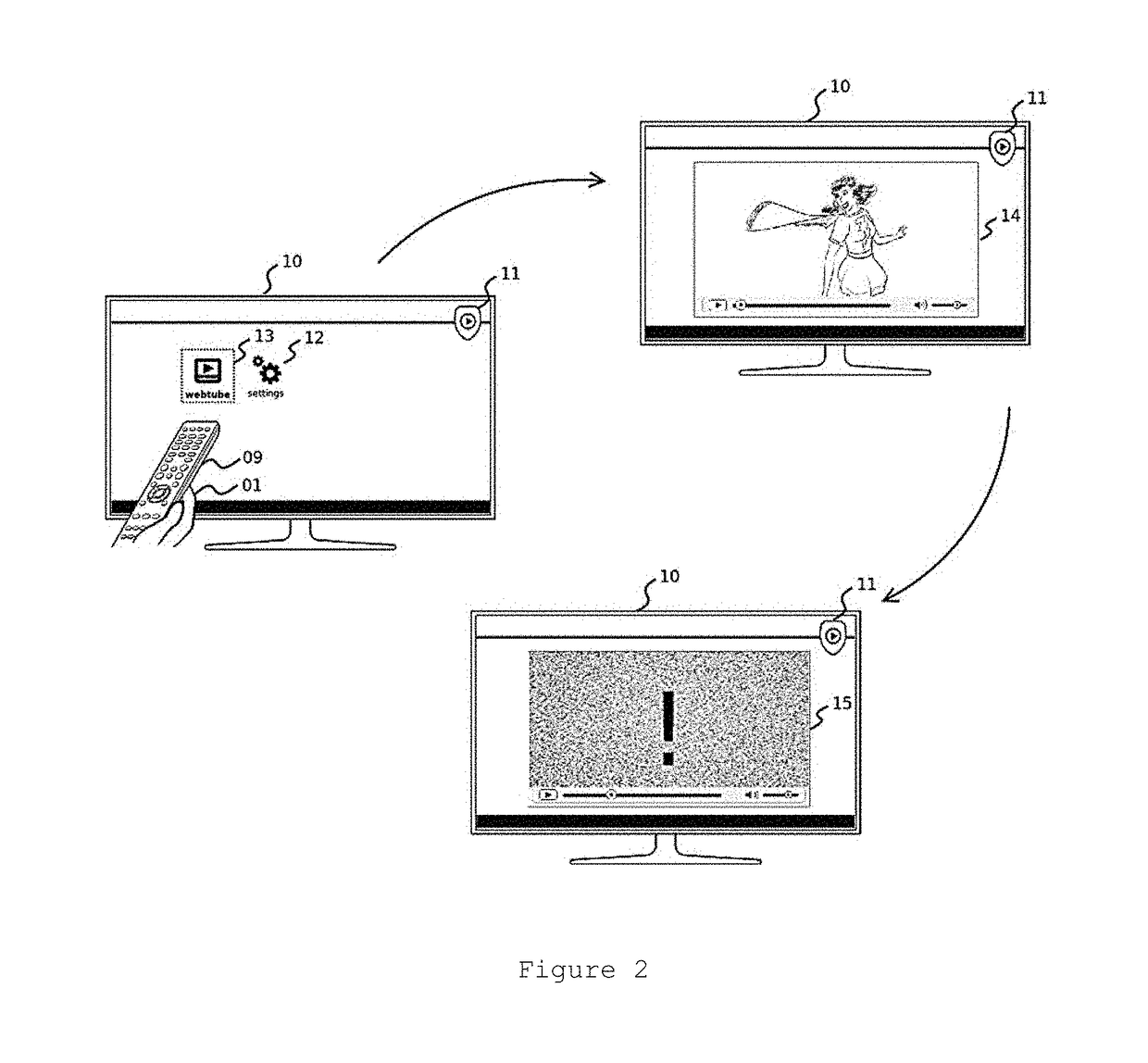

Multimodal and real-time method for filtering sensitive media

Active | US20170289624A1 | Bridging the Semantic Gap; Efficient and effective; Metadata video data retrieval; Speech analysis; Pattern recognition; Digital video

A multimodal, real-time method for filtering sensitive content that receives a digital video stream as input. The method includes: segmenting the digital video into fragments along the video timeline; extracting features containing significant information on sensitive media from the digital video input; reducing the semantic difference between each of the low-level video features and the high-level sensitive concept; classifying the video fragments, generating a high-level label (positive or negative) with a confidence score for each fragment representation; performing high-level fusion to properly combine the possible high-level labels and confidence scores for each fragment; and predicting when the content is sensitive by combining the fragment labels along the video timeline, indicating the moments at which the content becomes sensitive.

Owner:SAMSUNG ELECTRONICSA AMAZONIA LTDA +1
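
The final two steps (fusing per-fragment decisions and locating sensitive moments on the timeline) can be sketched as below. This is an illustrative assumption, not the patented fusion scheme: each modality is assumed to output a confidence in [0, 1] per fragment, fusion is a weighted mean, and a fragment counts as sensitive when the fused score reaches a hypothetical `threshold`.

```python
from typing import Dict, List, Sequence, Tuple


def fuse_scores(modal_scores: Dict[str, Sequence[float]],
                weights: Dict[str, float]) -> List[float]:
    """Late fusion: weighted mean of each modality's per-fragment confidence score."""
    n = len(next(iter(modal_scores.values())))
    total = sum(weights[m] for m in modal_scores)
    return [sum(weights[m] * modal_scores[m][i] for m in modal_scores) / total
            for i in range(n)]


def sensitive_intervals(scores: Sequence[float], fragment_seconds: float,
                        threshold: float = 0.5) -> List[Tuple[float, float]]:
    """Merge consecutive positive fragments into (start, end) times in seconds."""
    intervals: List[Tuple[float, float]] = []
    start = None
    for i, score in enumerate(scores):
        if score >= threshold and start is None:
            start = i  # a sensitive run begins
        elif score < threshold and start is not None:
            intervals.append((start * fragment_seconds, i * fragment_seconds))
            start = None
    if start is not None:  # the run continues to the end of the video
        intervals.append((start * fragment_seconds, len(scores) * fragment_seconds))
    return intervals
```

With 2-second fragments, fused scores of roughly `[0.8, 0.3, 0.7, 0.4]` produce the intervals `(0.0, 2.0)` and `(4.0, 6.0)`.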

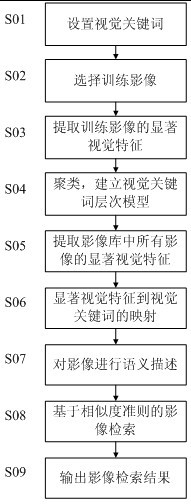

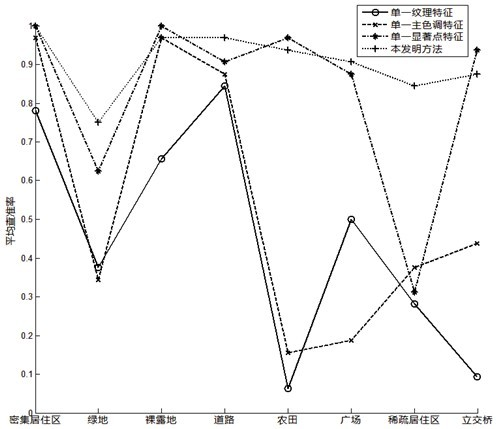

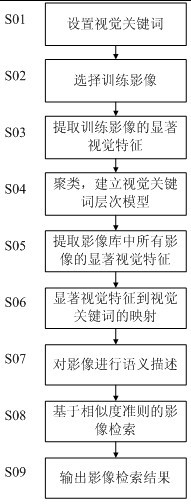

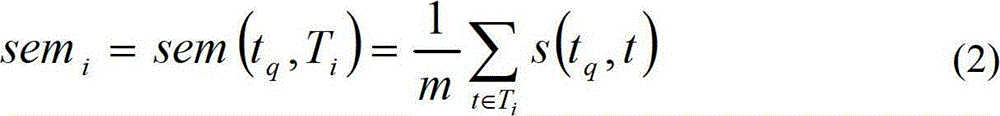

Visual keyword based remote sensing image semantic searching method

Inactive | CN102073748A | Improve recall; Improve precision; Character and pattern recognition; Special data processing applications; Cluster algorithm; Feature vector

The invention relates to a visual-keyword-based semantic search method for remote sensing images. The method comprises the following steps: defining visual keywords that describe the image contents in an image library; selecting training images from the image library; extracting salient visual features from each training image, including salient points, the dominant color tone and texture; obtaining key patterns from the cluster centers of a clustering algorithm; building a hierarchical visual keyword model using a Gaussian mixture model; extracting the salient visual features of all images in the image library, setting weight parameters, and constructing a visual keyword feature vector that describes the semantics of each image; and computing the similarity between the query image and every image according to a similarity criterion, then outputting the search results in descending order of similarity. By using visual keywords to link low-level salient visual features with high-level semantic information, the method can effectively improve both the recall and the precision of image search, and the technical scheme is highly extensible.

Owner:WUHAN UNIV

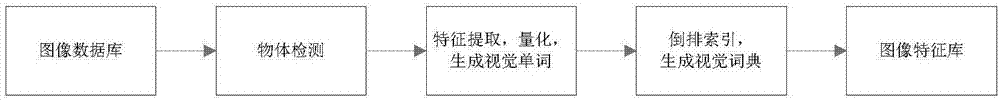

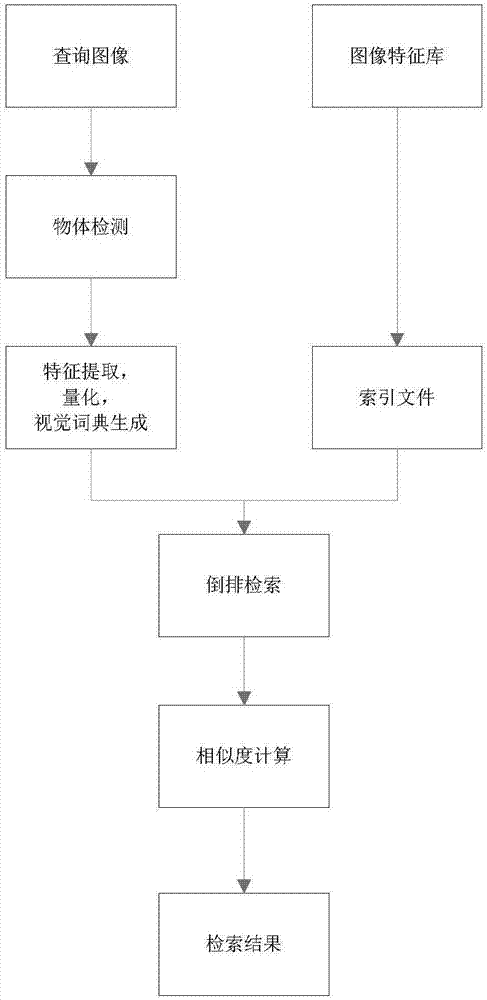

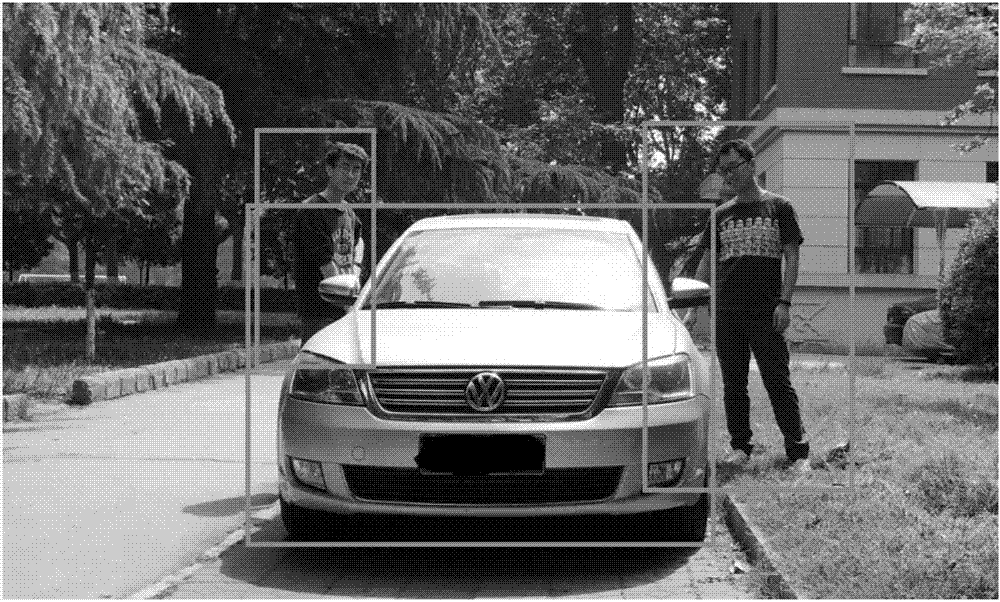

Image retrieval method based on object detection

Active | CN107256262A | Reduce distractions; Improve accuracy; Character and pattern recognition; Special data processing applications; Semantic gap; Imaging Feature

The invention discloses an image retrieval method based on object detection, which addresses the problem that the multiple objects in an image are not retrieved individually. In this method, object detection is performed on each image in an image database to detect one or more objects; SIFT and MSER features of the detected objects are extracted and combined into feature bundles; k-means clustering and a k-d tree are used to quantize the feature bundles into visual words; visual-word indexes of the objects in the image database are built with an inverted index, producing an image feature library; finally, the same object detection method converts the objects in a query image into visual words, the visual words of the query image are compared for similarity with those of the image feature library, and the image with the highest score is output as the retrieval result. With this method, the objects in an image can be retrieved individually, background interference and the image semantic gap are reduced, and accuracy, retrieval speed and efficiency are improved; the method can be used to retrieve a specific object in an image, including a person.

Owner:XIDIAN UNIV
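
The indexing and query steps can be sketched with an inverted index over already-quantized visual words. The sketch assumes the k-means/k-d tree quantization has already turned each detected object into a list of integer word IDs, and it uses an IDF-weighted vote as a hypothetical similarity score; the abstract does not specify the scoring function.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def build_inverted_index(db: Dict[str, List[int]]) -> Dict[int, Counter]:
    """Map each visual word to a Counter of {image_id: occurrences}."""
    index: Dict[int, Counter] = defaultdict(Counter)
    for image_id, words in db.items():
        for word in words:
            index[word][image_id] += 1
    return index


def search(index: Dict[int, Counter], n_images: int, query_words: List[int],
           top_k: int = 3) -> List[Tuple[str, float]]:
    """Score only images that share a word with the query, weighting rare words higher."""
    scores: Counter = Counter()
    for word, q_count in Counter(query_words).items():
        postings = index.get(word)
        if not postings:
            continue  # word never seen in the database
        idf = math.log(n_images / len(postings))
        for image_id, count in postings.items():
            scores[image_id] += min(q_count, count) * idf
    return scores.most_common(top_k)
```

Because only the posting lists of the query's words are visited, images with no words in common with the query are never scored.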

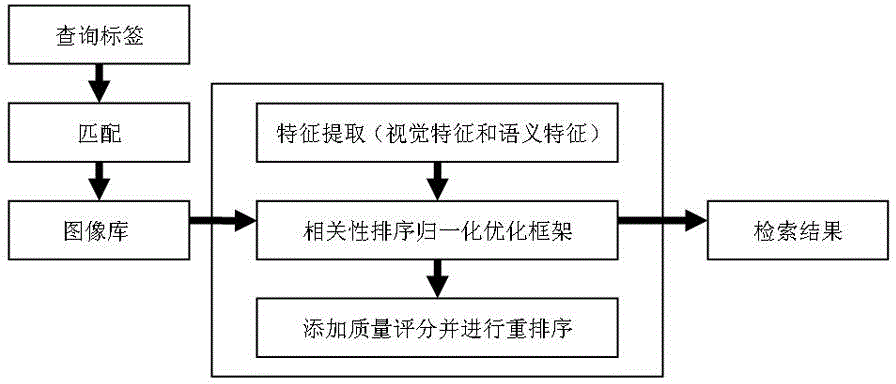

Correlation-quality sequencing image retrieval method based on tag retrieval

Inactive | CN102750385A | Bridging the Semantic Gap; Attract attention; Special data processing applications; Re sequencing; Sorting algorithm

The invention discloses a correlation-quality ranking image retrieval method based on tag retrieval. The method comprises the following steps: first, automatically ranking social images by the correlation between images and tags, fusing the visual consistency among the images and the semantic correlation between images and tags in a normalization framework, and solving the resulting optimization problem with an iterative algorithm to obtain a correlation ranking; then selecting the brightness, contrast and color variety of the images as their quality features to assess the top-returned images, combining the correlation scores and quality scores with a linear model, and re-ranking the images by the final total score, thereby realizing a correlation-quality ranking algorithm.

Owner:BEIJING YINGPU TECH CO LTD
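
The linear combination of relevance and quality scores can be sketched as follows. The equal weights for brightness, contrast and colorfulness, the `alpha` value, and the assumption that every cue is already normalized to [0, 1] are illustrative choices, not values taken from the patent.

```python
from typing import List, Sequence, Tuple


def quality_score(brightness: float, contrast: float, colorfulness: float,
                  weights: Tuple[float, float, float] = (1 / 3, 1 / 3, 1 / 3)) -> float:
    """Weighted sum of quality cues, each assumed pre-normalized to [0, 1]."""
    return (weights[0] * brightness + weights[1] * contrast
            + weights[2] * colorfulness)


def rerank(items: Sequence[Tuple[str, float, Tuple[float, float, float]]],
           alpha: float = 0.7) -> List[Tuple[str, float]]:
    """Linear model: final = alpha * relevance + (1 - alpha) * quality."""
    scored = [(image_id, alpha * relevance + (1 - alpha) * quality_score(*cues))
              for image_id, relevance, cues in items]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

Lowering `alpha` gives more weight to quality, so a slightly less relevant but much better-exposed image can move to the top; with `alpha=1.0` the ranking falls back to relevance alone.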

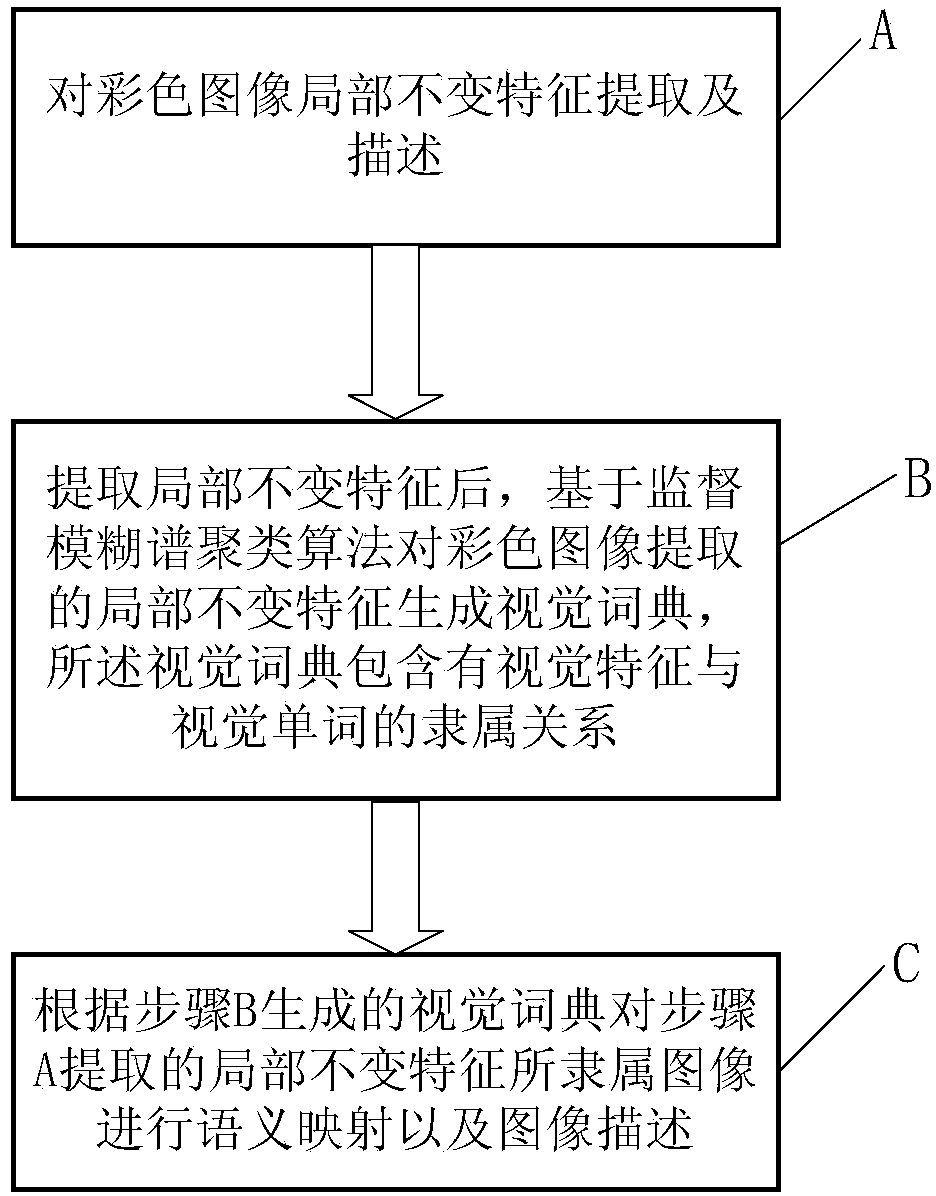

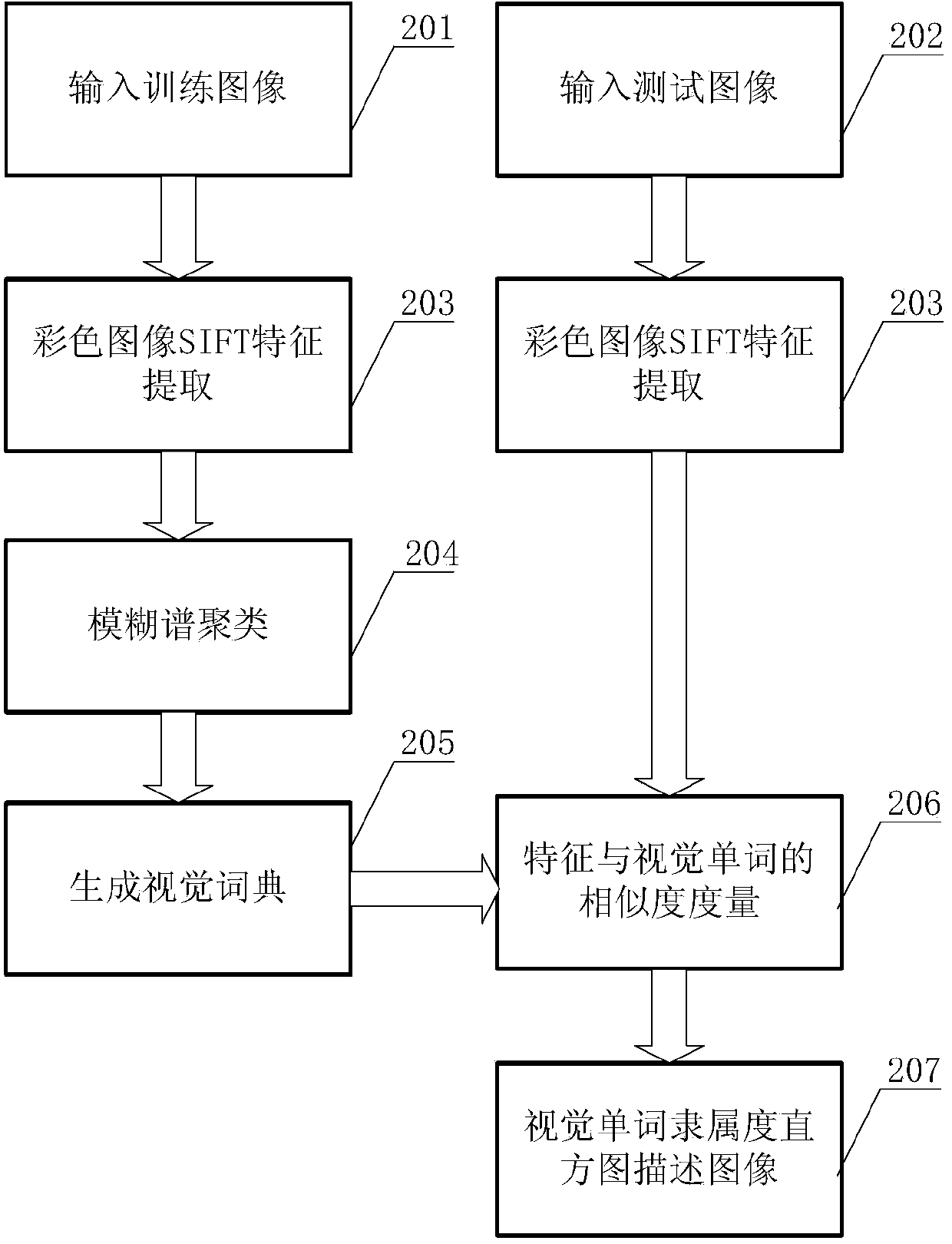

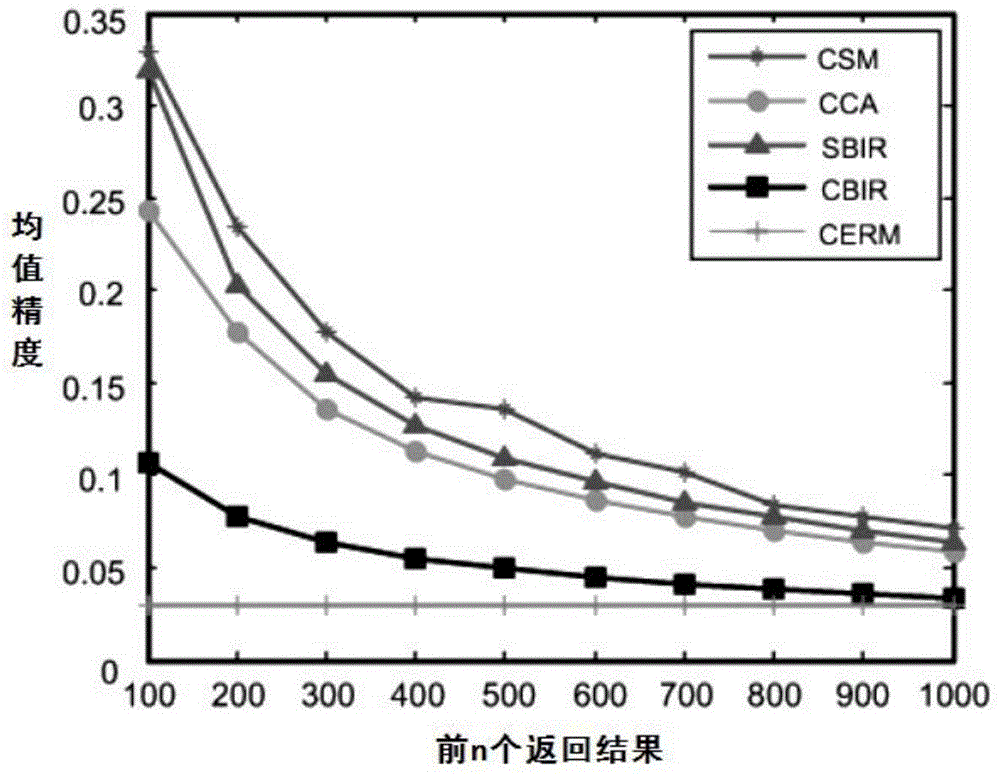

Semantic mapping method of local invariant feature of image and semantic mapping system

Active | CN103530633A | Improve accuracy; Bridging the Semantic Gap; Character and pattern recognition; Special data processing applications; Spectral clustering algorithm; Semantic gap

The invention is applicable to the technical field of image processing and provides a semantic mapping method for the local invariant features of an image. The method comprises: step A, extracting and describing the local invariant features of a color image; step B, generating a visual dictionary from the extracted local invariant features using a supervised fuzzy spectral clustering algorithm, wherein the visual dictionary contains the membership relations between visual features and visual words; step C, performing semantic mapping and image description on the image whose local invariant features were extracted in step A, according to the visual dictionary generated in step B. The method can eliminate the semantic gap problem, improves the accuracy of image classification, image search and object recognition, and can advance the theory and methods of machine vision.

Owner:湖南植保无人机技术有限公司 (Hunan Plant Protection UAV Technology Co., Ltd.)

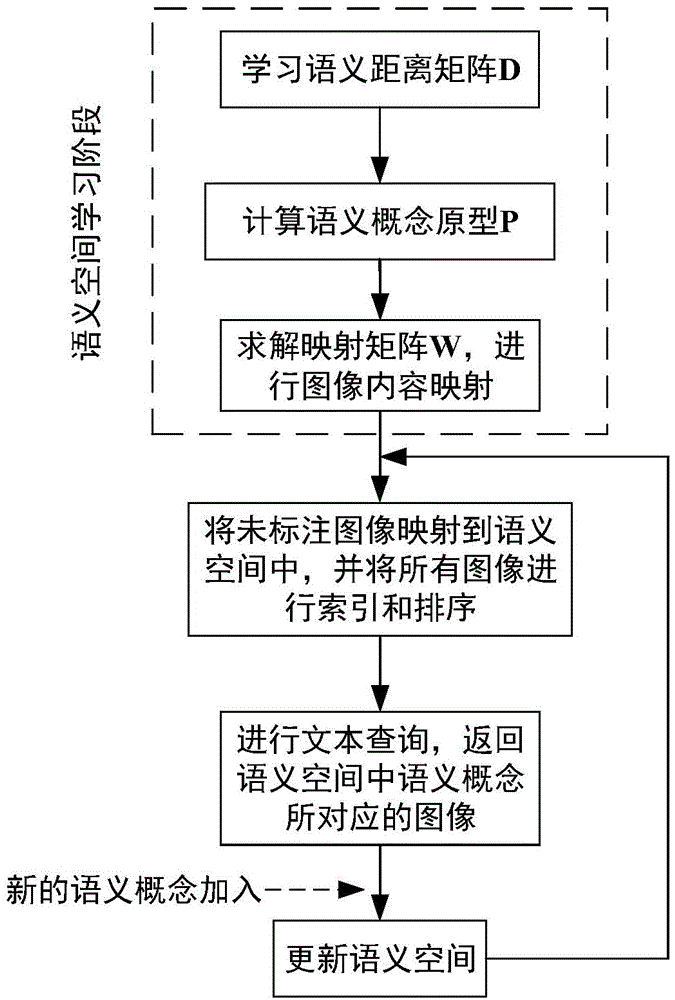

Image retrieval method based on semantic mapping space construction

Active | CN104156433A | Valid set; Fast update; Still image data retrieval; Special data processing applications; Text searching; Semantic space

The invention discloses an image retrieval method based on semantic mapping space construction. The method comprises the steps of (1) learning a semantic mapping space, (2) estimating the semantic concept of each unlabeled image, (3) sorting the images corresponding to each semantic concept in the semantic space in ascending order, and (4) inputting the text search terms to be retrieved and returning the images corresponding to the matching semantic concepts. The method can effectively improve image retrieval accuracy.

Owner:HEFEI UNIV OF TECH

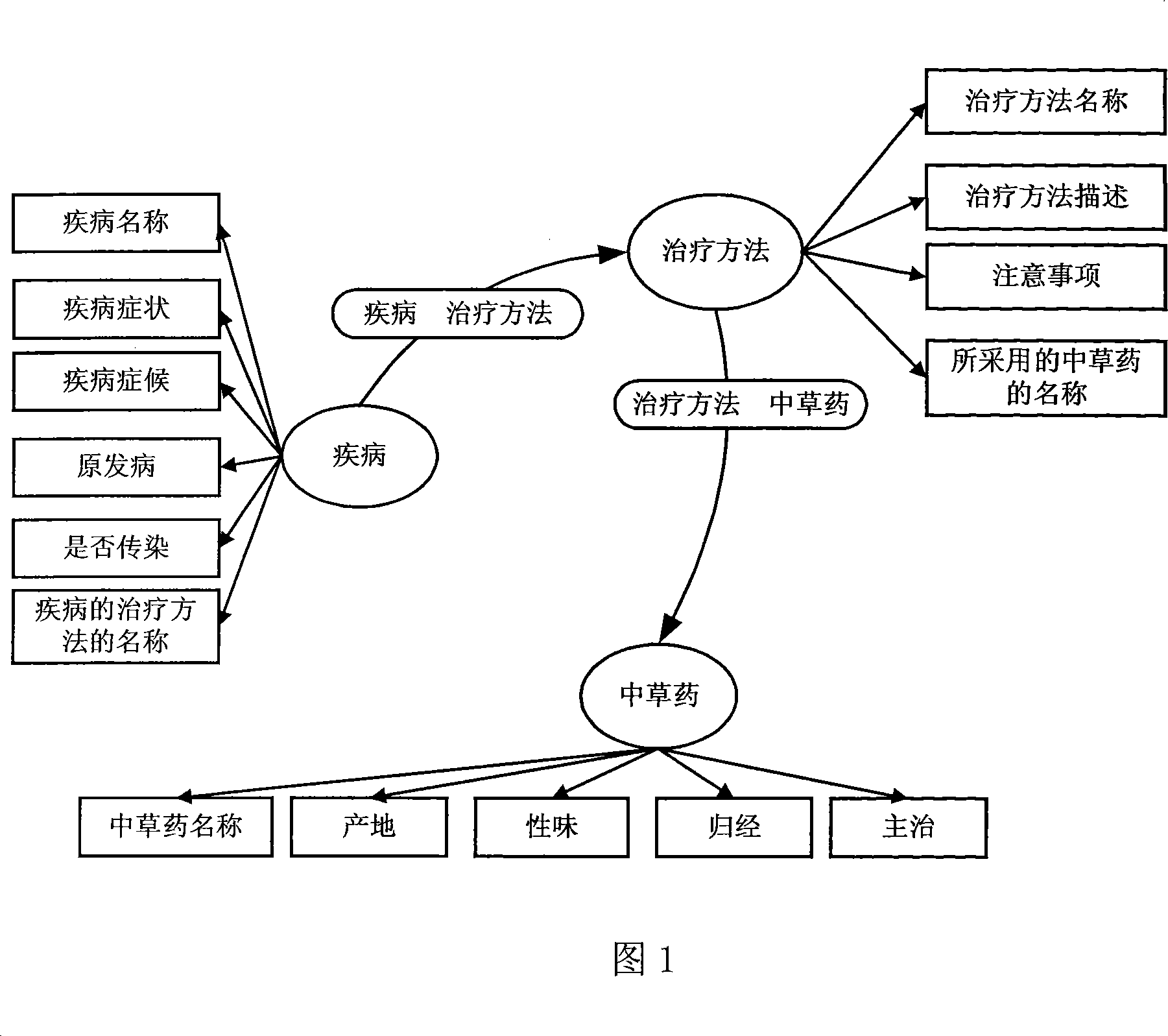

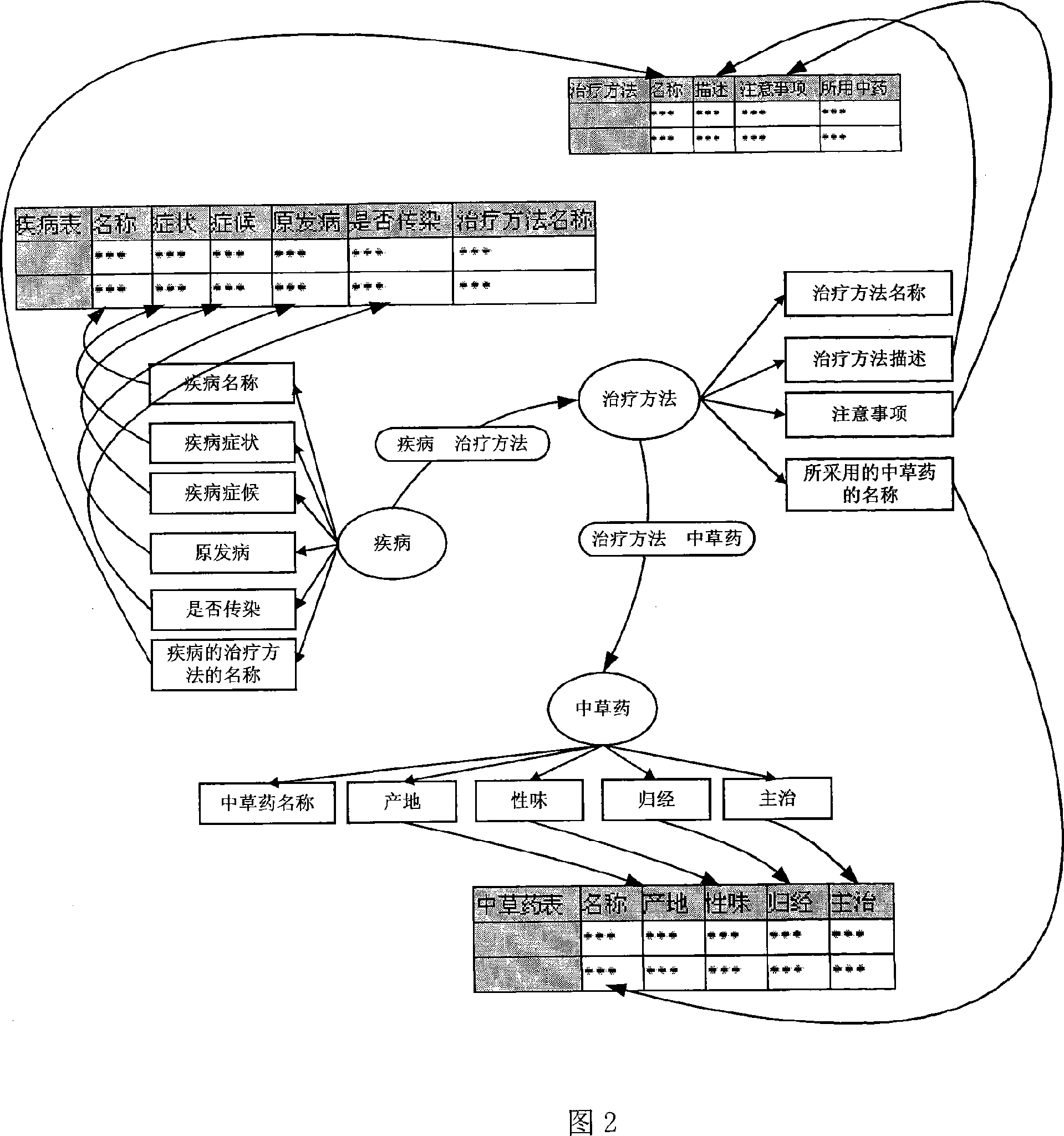

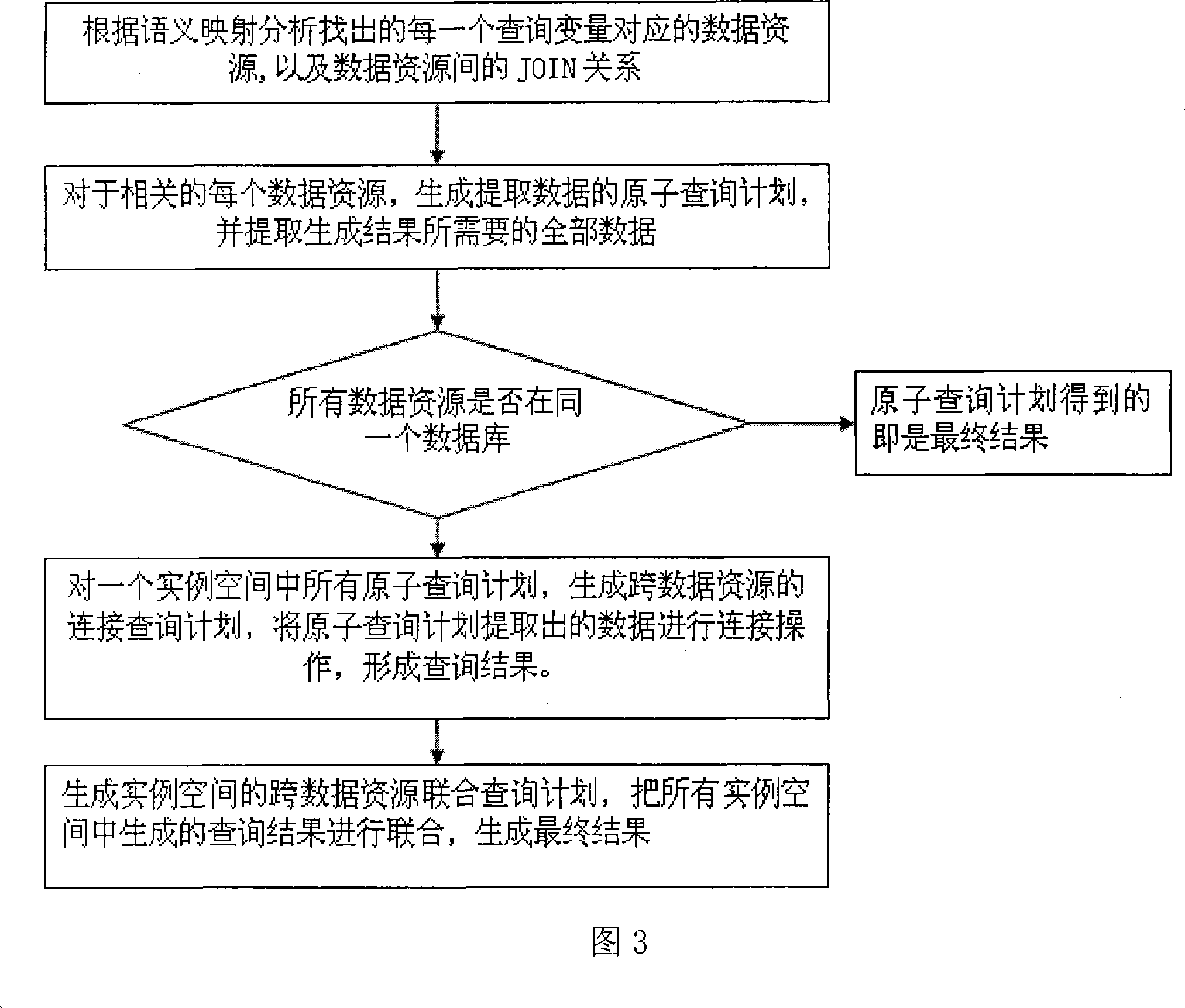

Heterogeneous relational database data integration method based on meaning

Inactive | CN101149749A | High feasibility; Implement dynamic join; Special data processing applications; Relational database; Data integration

The invention discloses a semantics-based data integration method for heterogeneous relational databases. It enables the sharing of massive relational data by constructing a domain ontology repository and registering the various relational databases to the ontology model. The invention effectively masks the schema differences among relational databases of different media and structures; compared with other data integration methods for heterogeneous relational databases, it performs well in feasibility, dynamic extensibility, universality and transparency.

Owner:ZHEJIANG UNIV

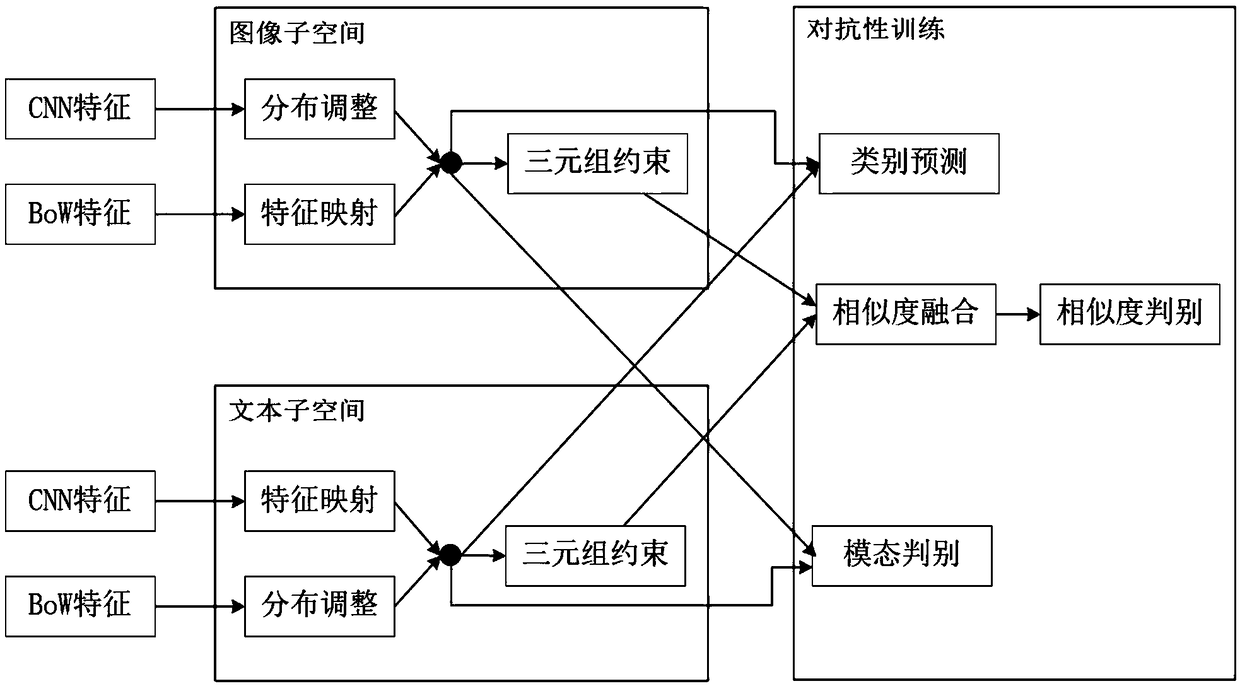

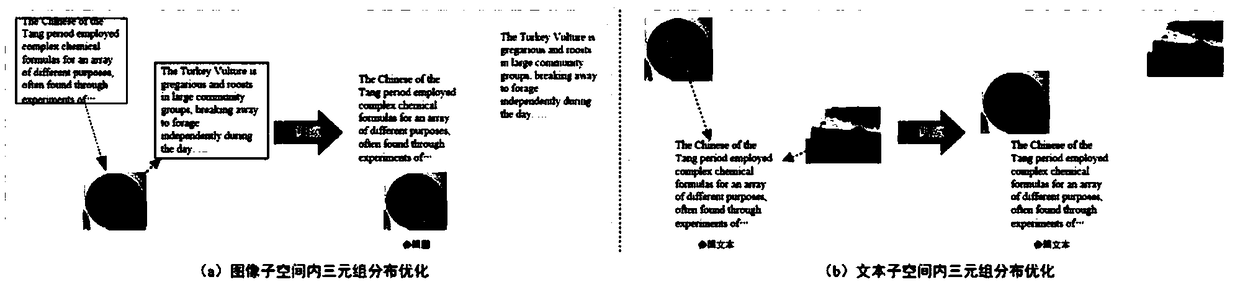

An antagonistic cross-media retrieval method based on bilingual semantic space

Active | CN109344266A | Strong complementarity; Achieve balance; Metadata multimedia retrieval; Special data processing applications; Semantic gap; Vector distribution

The invention discloses an adversarial cross-media retrieval method based on a bilingual semantic space, relating to the technical fields of pattern recognition, natural language processing, multimedia retrieval and the like. It includes a feature generation process, a bilingual semantic space construction process and an adversarial semantic space optimization process. By establishing an isomorphic bilingual semantic space, namely a text subspace and an image subspace, the invention eliminates the semantic gap while preserving the original image and text information to the greatest extent. Adversarial training is used to optimize the distribution of the isomorphic subspace data, mine the rich semantic information in multimedia data, and fit the vector distributions of different modalities in the semantic space, under the condition that categories remain unchanged and modalities remain distinguishable. The method can effectively eliminate the heterogeneity of information from different modalities, achieve effective cross-media retrieval, and has broad market demand and application prospects in image-text retrieval, pattern recognition and related fields.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL

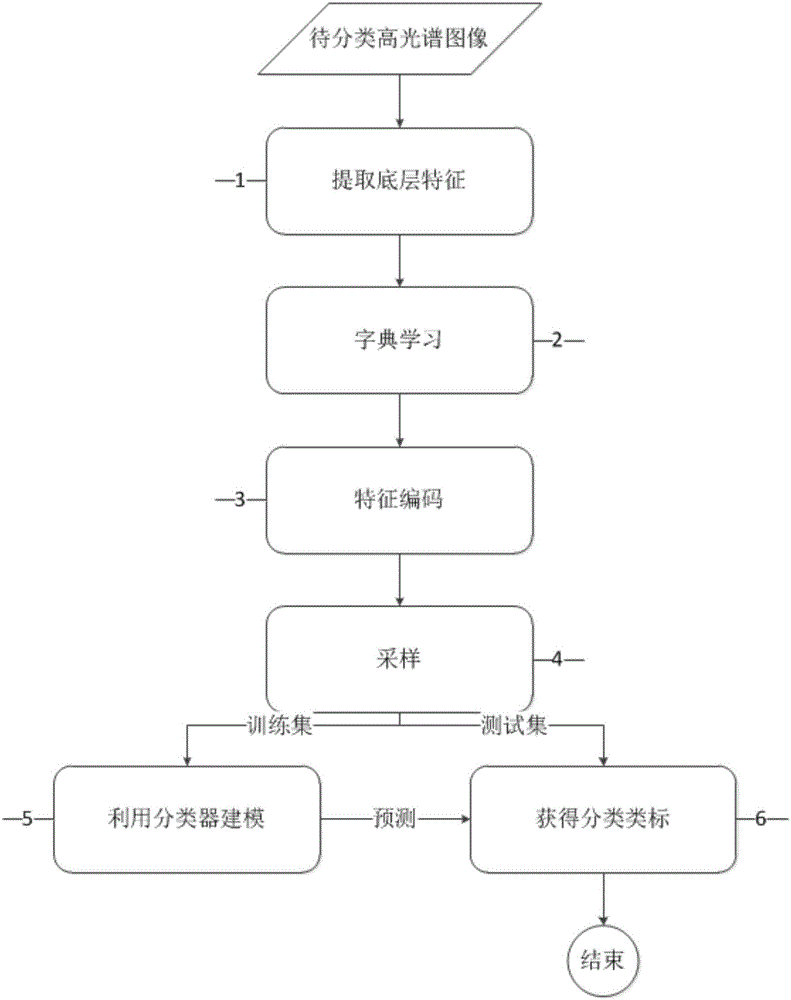

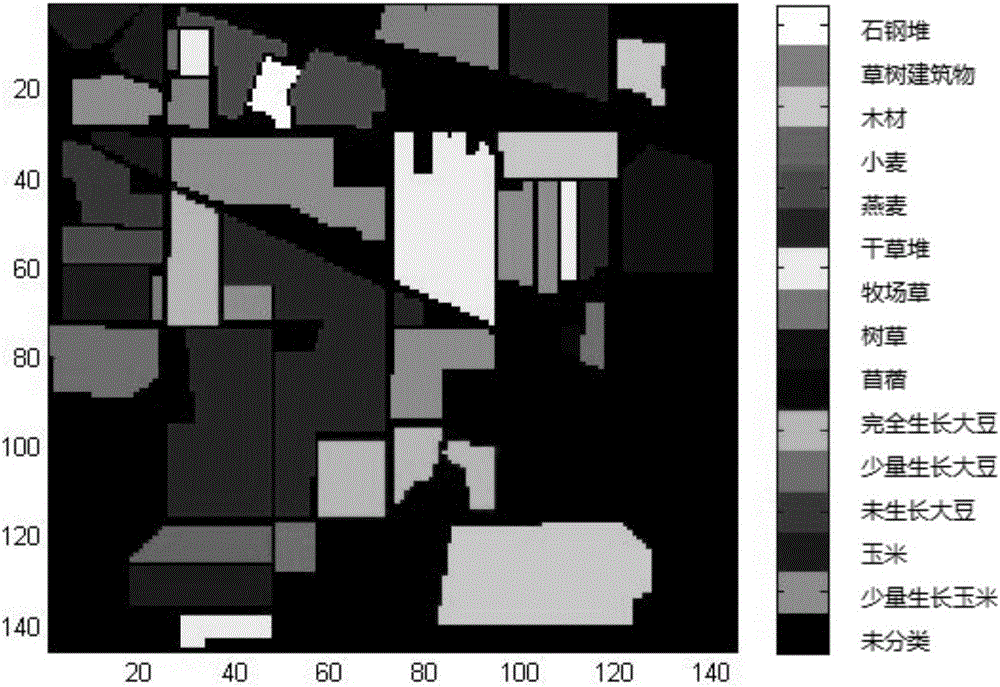

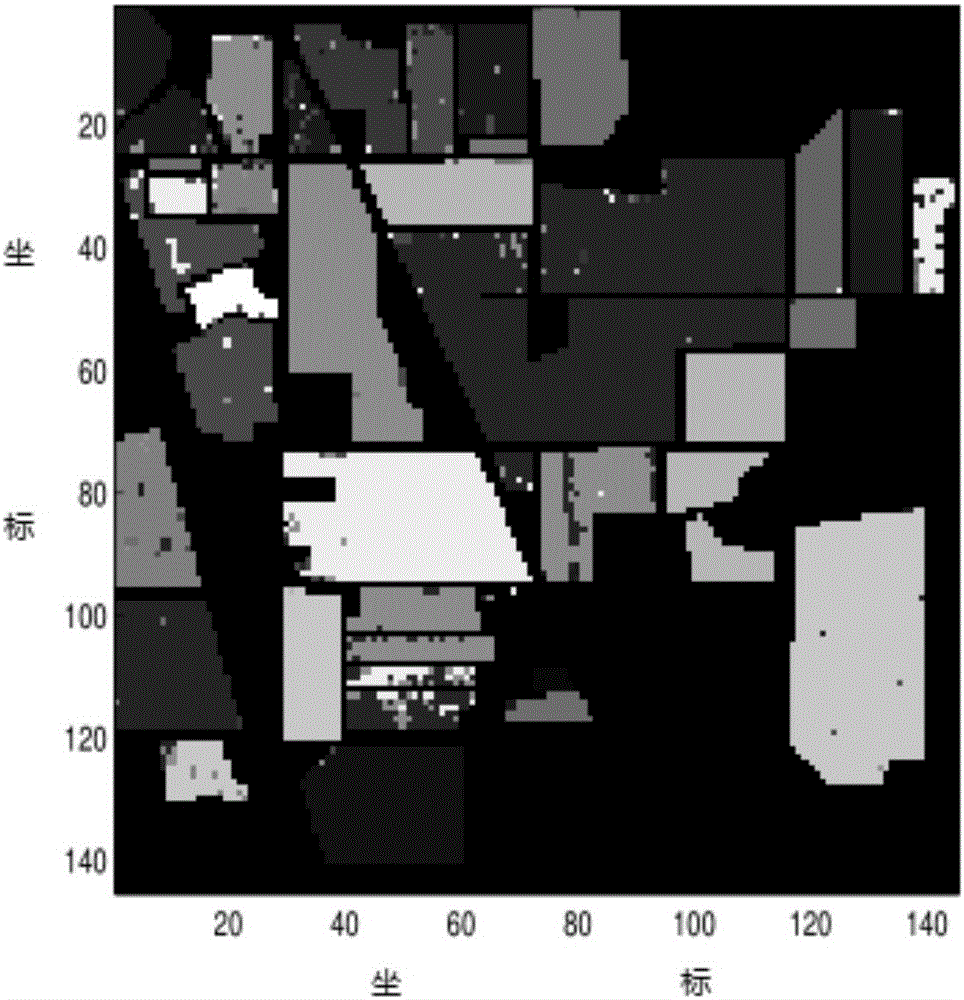

High spectral image classification method based on morphological characteristics and dictionary learning

Inactive | CN106203510A | Bridging the "semantic gap"; Improve use value; Character and pattern recognition; Dictionary learning; Semantic gap

The invention discloses a hyperspectral image classification method based on morphological features and dictionary learning. The method comprises extracting morphological features from hyperspectral images, dictionary learning, feature coding, and image classification. Applied to hyperspectral image classification, it takes full account of the structural relations of spatial information in hyperspectral images, constructs a high-level semantic mapping on the basis of this spatial relation information, and obtains high-level semantic codes that effectively preserve the structure of the feature space for the classification task, thereby overcoming the semantic gap between the high-level meaning and the low-level features of hyperspectral images. The method is highly effective for hyperspectral image classification and has high application value.

Owner:NANJING UNIV

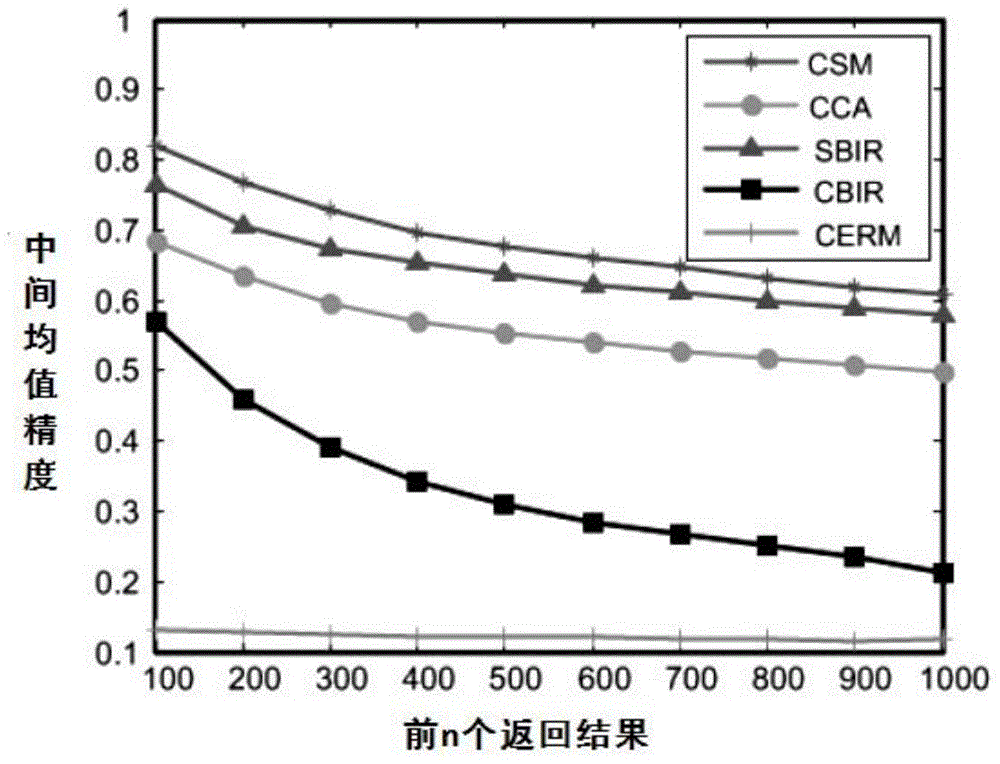

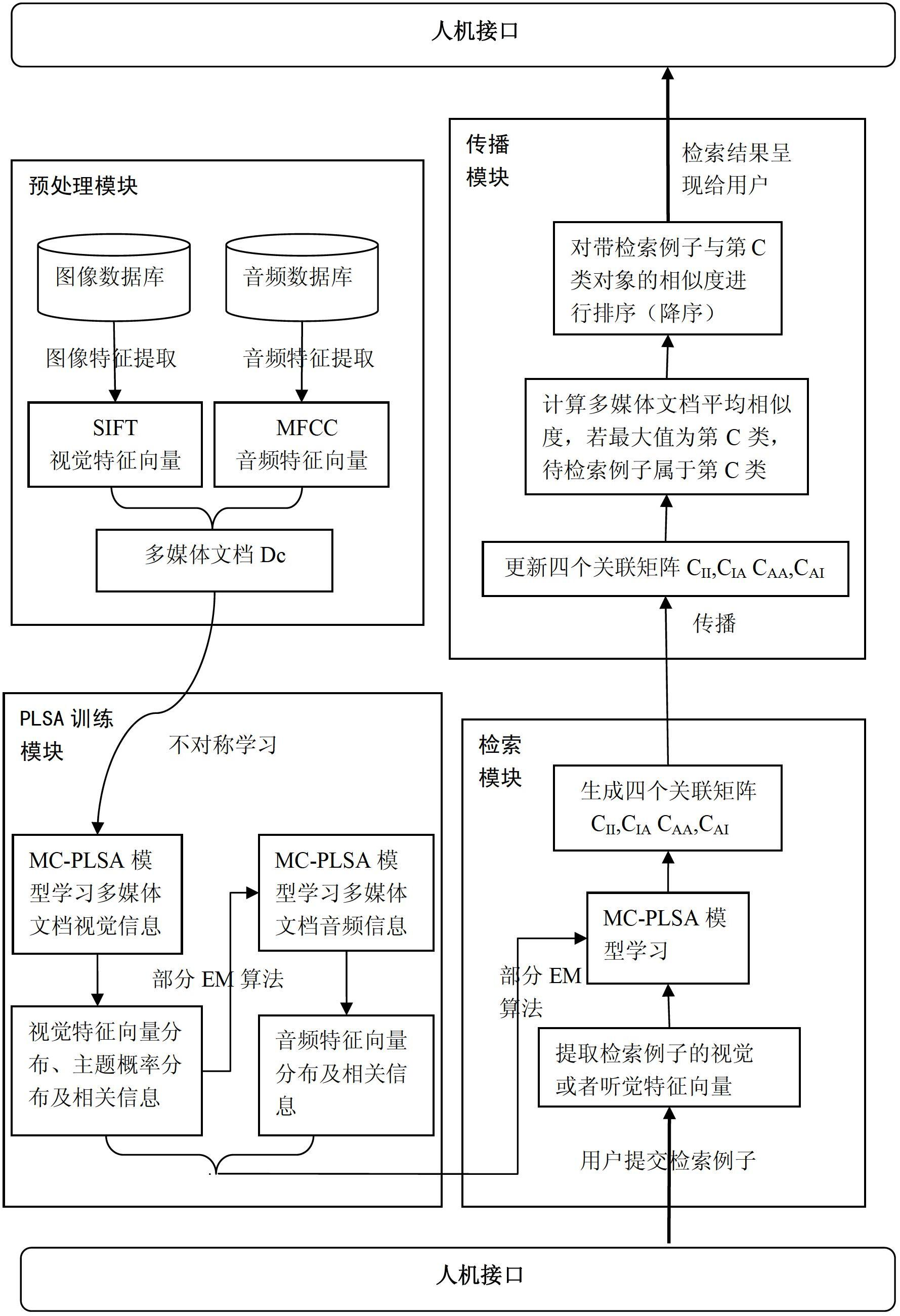

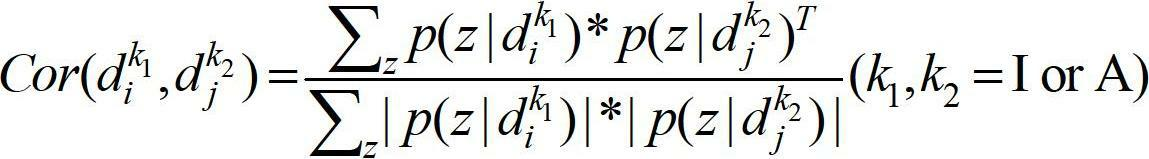

Cross-media information analysis and retrieval method

Inactive | CN102693321A | Alleviate the problem of excessive space complexity; Solve the problem of feature heterogeneity; Special data processing applications; Feature vector; Information analysis

The invention provides a cross-media information analysis and retrieval method comprising the following steps: performing semantic integration on multimodal information; extending the probabilistic latent semantic analysis (PLSA) model into a multilayer continuous PLSA model that handles continuous feature vectors; learning the multilayer continuous PLSA model with an asymmetric learning method and computing the visual feature vector distribution of images, the feature vector distribution of audio, and the topic probability distribution; having the user submit a training set and a test media object that serves as the query example, and computing the initial intra-modal and inter-modal similarity values of the image and audio in the query example; constructing a propagation model and updating the intra-modal and inter-modal similarity values according to it; and performing a second round of retrieval based on the updated similarity values.

Owner:CHANGZHOU HIGH TECH RES INST OF NANJING UNIV

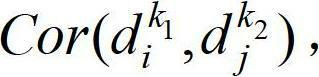

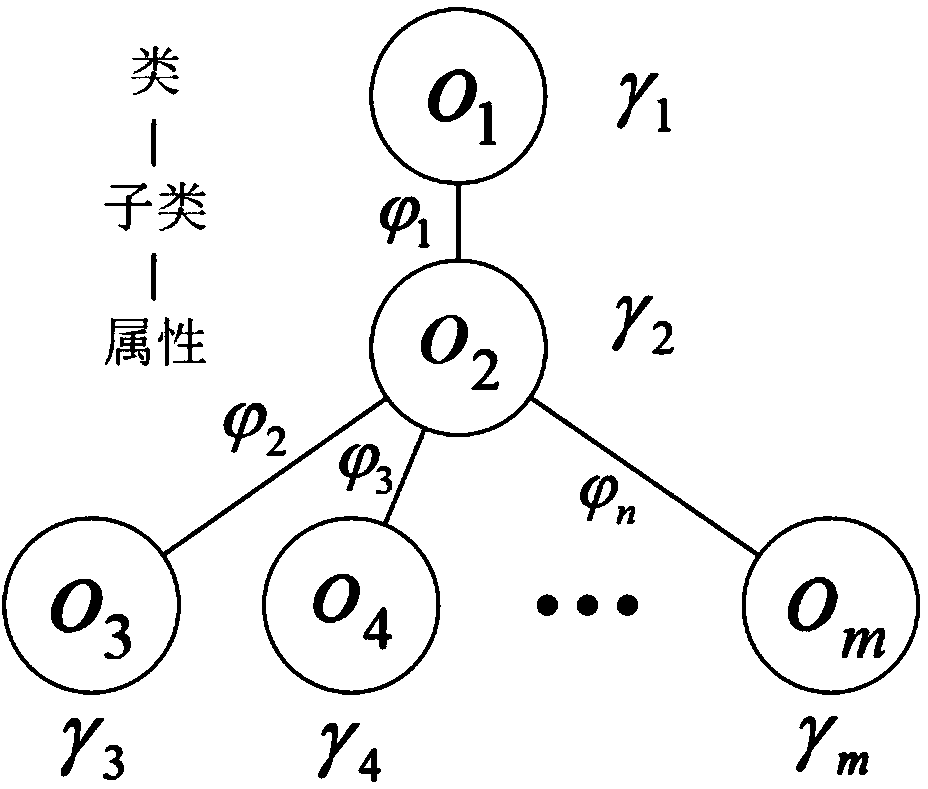

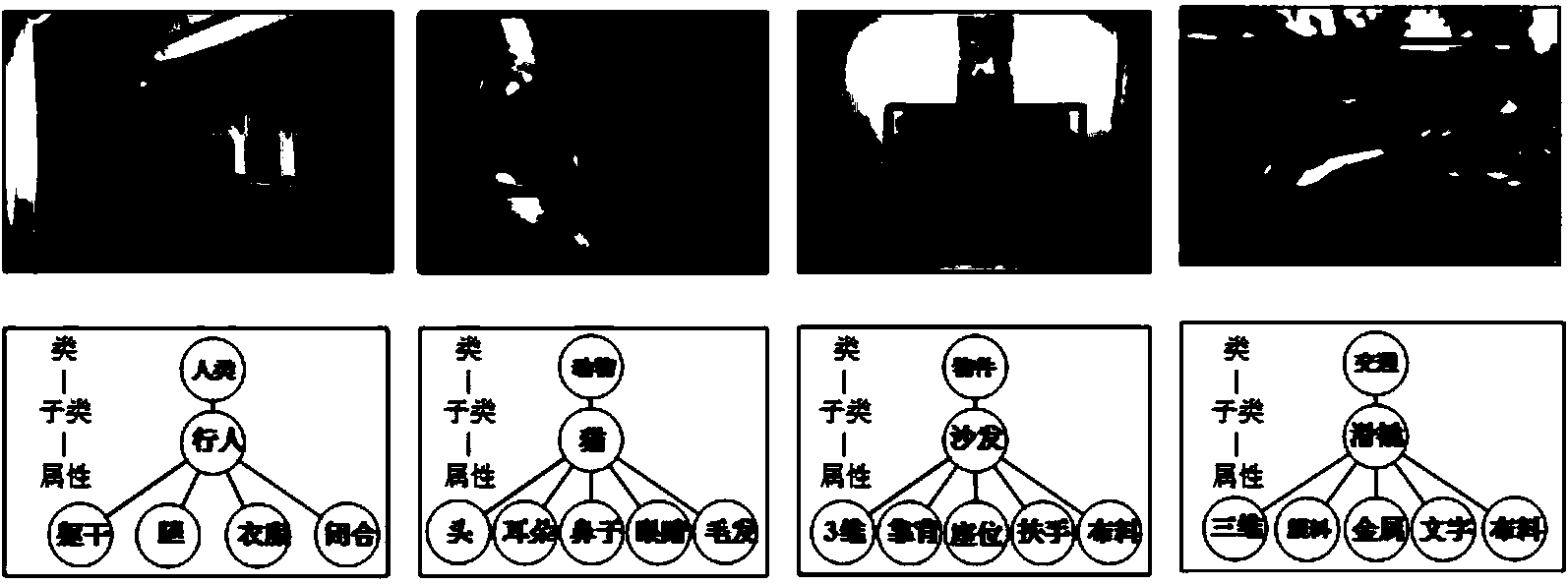

Structured image description method

Inactive | CN103530403A | Easy to understand; Comprehensive description; Character and pattern recognition; Special data processing applications; Conditional random field; Image description

The invention belongs to the technical field of image retrieval and in particular relates to a structured image description method. The method comprises the following steps: obtaining training images and establishing a three-layer tree-structured label for each object in each image to form a training set; extracting the low-level features of each object in the training images and training classifiers for all candidate classes, subclasses and attributes, thereby producing the intermediate data required for the next modeling step; building a conditional random field (CRF) model and obtaining its parameters through training; segmenting the image to be described into the objects it contains and extracting the low-level features of each object; and predicting the tree-structured label of each object in the image to be described with the trained CRF model and its parameters, using the max-product belief propagation algorithm. The method improves the discrimination between images and produces good retrieval results.

Owner:TIANJIN UNIV
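
Max-product belief propagation is exact on tree-structured models, and on the simplest tree, a chain, it reduces to Viterbi-style dynamic programming. The sketch below decodes one object's class, subclass and attribute labels along such a chain; it is not the patent's CRF, and the labels and potentials in the usage example are hypothetical.

```python
from typing import Dict, List, Tuple


def max_product_chain(unary: List[Dict[str, float]],
                      pairwise: List[Dict[Tuple[str, str], float]]) -> Tuple[List[str], float]:
    """Exact MAP labeling of a chain by max-product message passing.

    unary[i][label] is node i's potential; pairwise[i][(a, b)] couples label a at
    node i with label b at node i + 1 (missing pairs are treated as 0).
    """
    best: List[Dict[str, float]] = [dict(unary[0])]
    back: List[Dict[str, str]] = []
    for i in range(1, len(unary)):
        best_i: Dict[str, float] = {}
        back_i: Dict[str, str] = {}
        for b, potential in unary[i].items():
            # Max over predecessor labels replaces the sum used in sum-product BP.
            prev_label, prev_score = max(
                ((a, best[i - 1][a] * pairwise[i - 1].get((a, b), 0.0)) for a in best[i - 1]),
                key=lambda pair: pair[1],
            )
            best_i[b] = prev_score * potential
            back_i[b] = prev_label
        best.append(best_i)
        back.append(back_i)
    # Backtrack from the best final label.
    last = max(best[-1], key=best[-1].get)
    labels = [last]
    for back_i in reversed(back):
        labels.append(back_i[labels[-1]])
    labels.reverse()
    return labels, best[-1][last]
```

With a class node favoring "animal", strong animal/dog and dog/furry couplings, and neutral subclass and attribute potentials, the decoded labeling is `["animal", "dog", "furry"]`.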

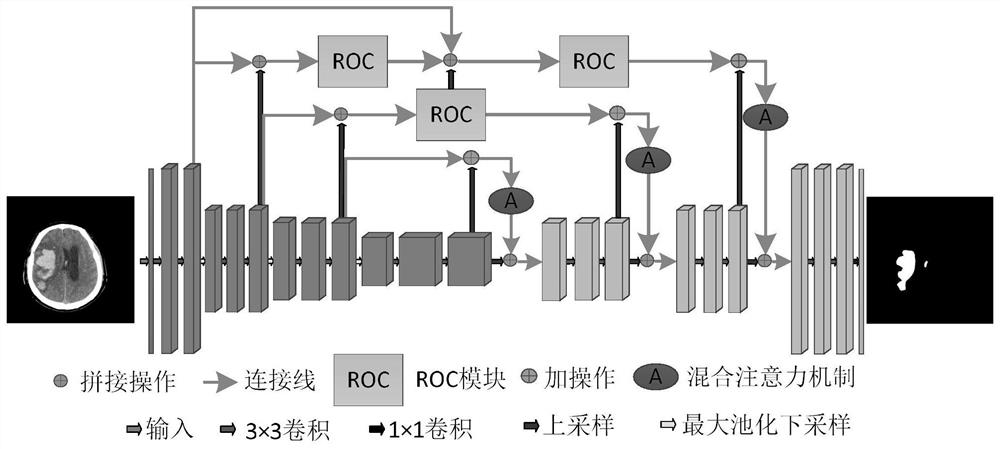

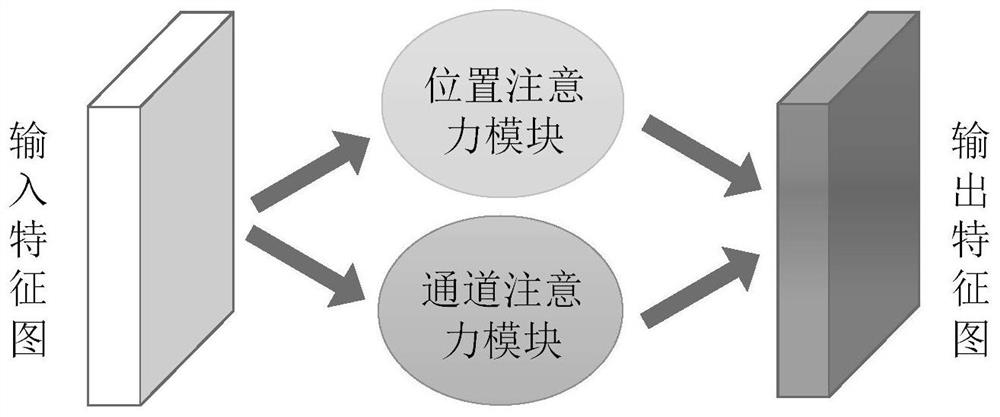

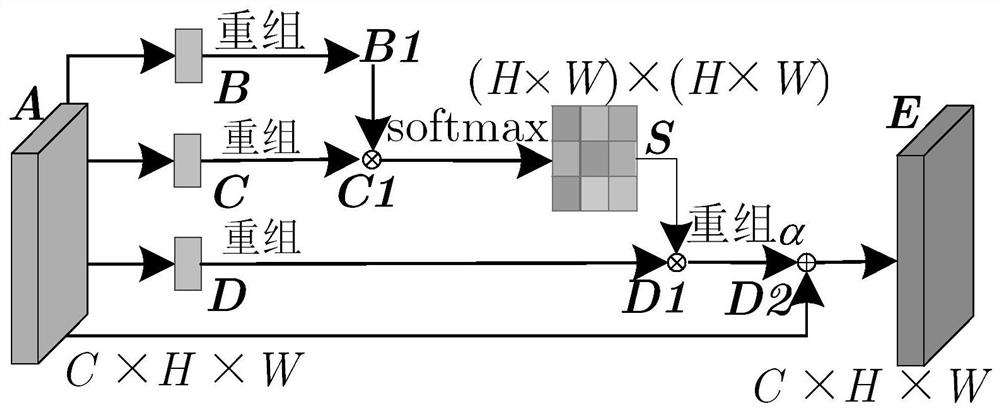

CT image segmentation method based on improved AU-Net network

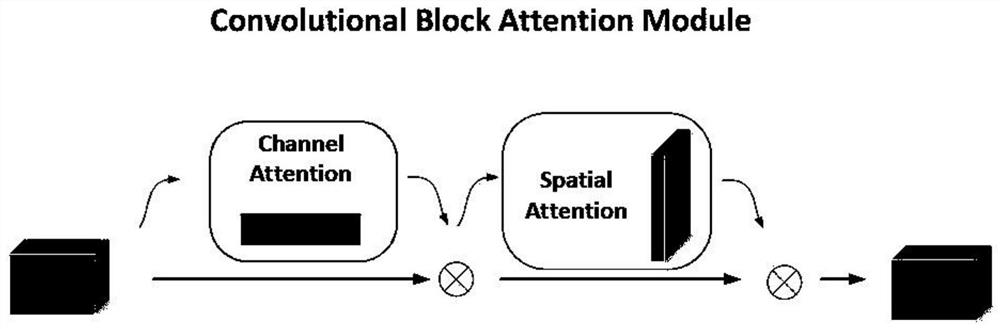

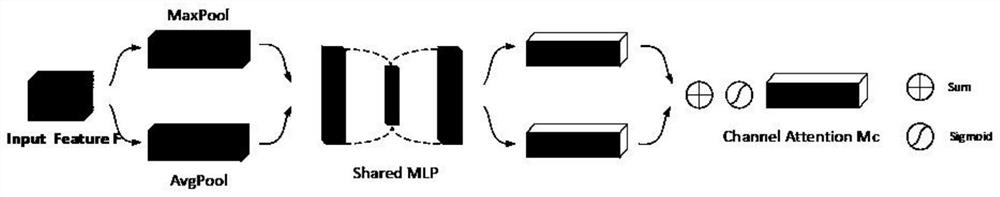

Active | CN112927240A | Bridging the Semantic Gap; Enhanced Feature Learning; Image enhancement; Image analysis; Brain CT; Imaging processing

The invention belongs to the field of image processing and in particular relates to a CT image segmentation method based on an improved AU-Net network. The method comprises: obtaining a brain CT image to be segmented and preprocessing it; inputting the preprocessed image into the trained improved AU-Net network for recognition and segmentation to obtain a segmented CT image; and identifying the cerebral hemorrhage region from the segmented brain CT image. The improved AU-Net network comprises an encoder, a decoder and a skip-connection part. To address the low segmentation precision caused by the large variation in size and shape of hemorrhage regions in cerebral hemorrhage CT images, the invention provides an encoder-decoder structure in which a residual octave convolution module is designed, allowing the model to segment and identify the image more accurately.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

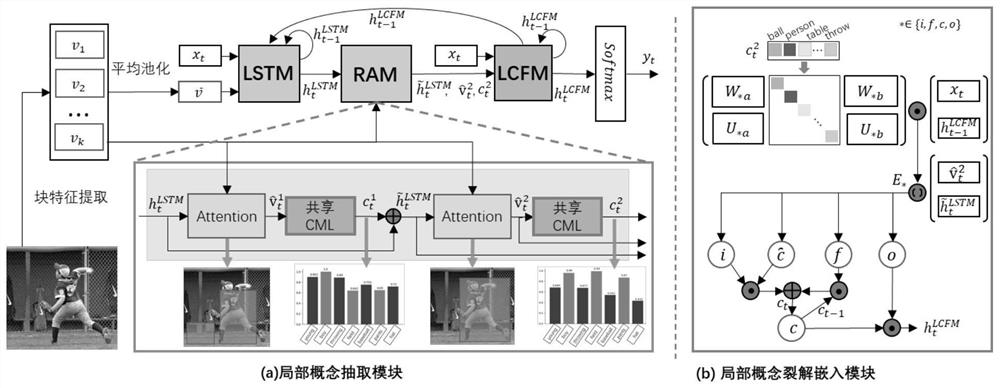

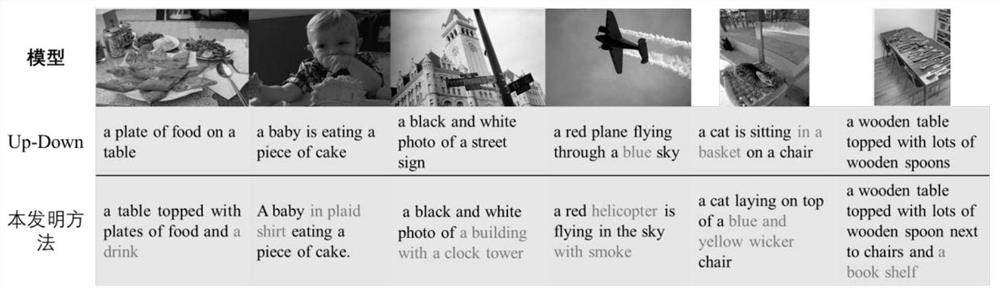

Image description method based on adaptive local concept embedding

Active | CN111737511A | Accurate connection; Bridging the Semantic Gap; Character and pattern recognition; Natural language data processing; Image description; Self adaptive

The invention discloses an image description method based on adaptive local concept embedding, in the technical field of artificial intelligence. The method comprises the following steps: 1, extracting a plurality of candidate regions of the image to be described, together with their features, using an object detector; 2, inputting the features extracted in step 1 into a trained neural network to output a description of the image. To address the shortcoming that traditional attention-based image description methods do not explicitly model the relationship between local regions and concepts, the invention adaptively generates visual regions through a context mechanism and derives visual concepts from them, strengthening the connection from vision to language and thereby improving the accuracy of the generated descriptions.

Owner:南强智视(厦门)科技有限公司 (Nanqiang Zhishi (Xiamen) Technology Co., Ltd.)

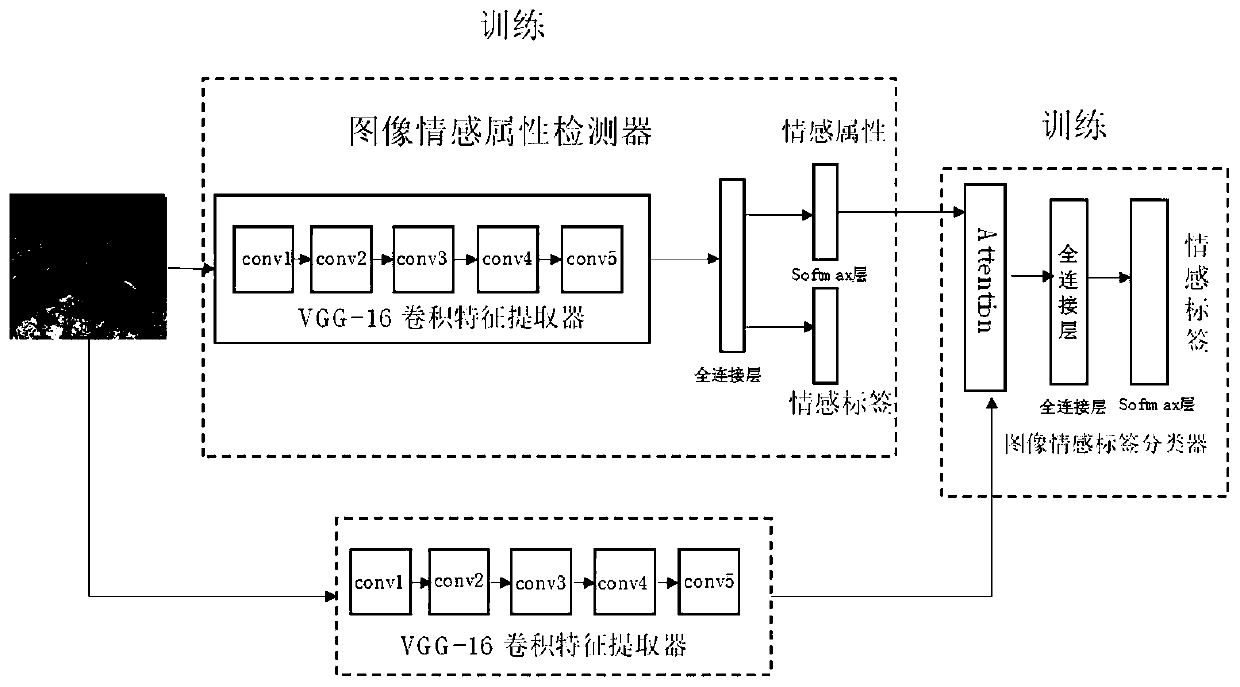

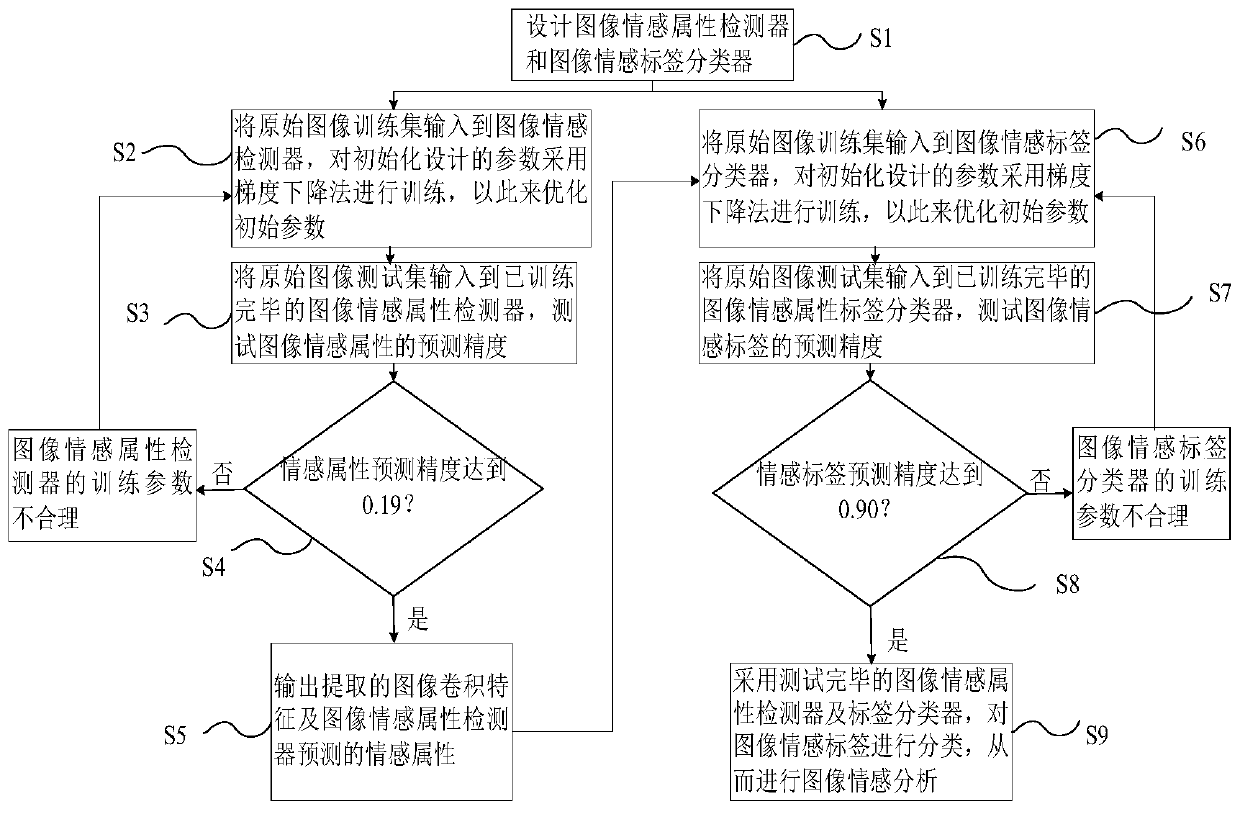

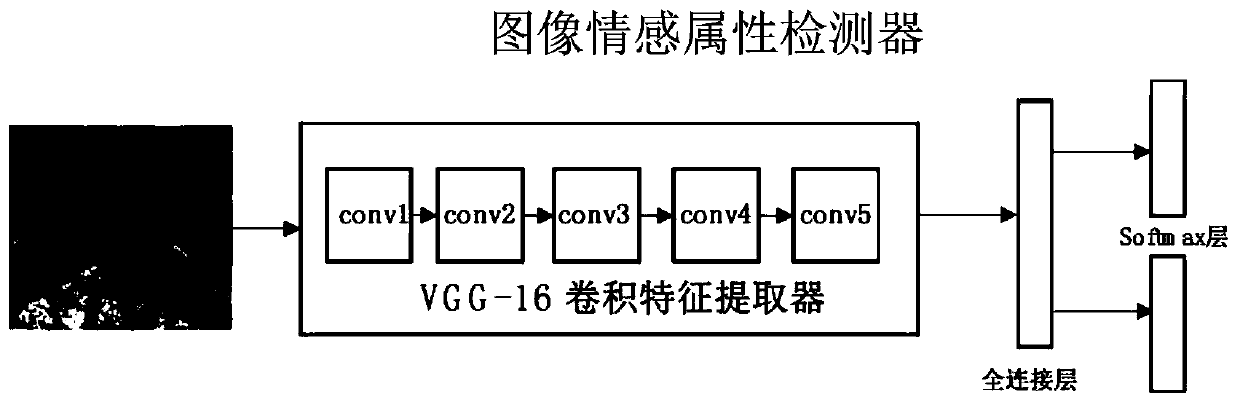

Image sentiment analysis method based on multi-task learning mode

Active | CN110263822A | Improve classification accuracy; Accurate prediction; Character and pattern recognition; Neural architectures; Pattern recognition; Semantic gap

The invention discloses an image sentiment analysis method based on multi-task learning. The method comprises the following steps: constructing an image sentiment attribute detector and an image sentiment label classifier; training the initialization parameters of the sentiment attribute detector by gradient descent; testing the detector's prediction precision on image sentiment attributes and checking whether it meets the standard, and if so, finalizing the detector's training parameters, otherwise retraining; using the output of the sentiment attribute detector together with the convolutional features of the original image as the input of the sentiment label classifier, and training the classifier's initialization parameters by gradient descent; testing the prediction precision of the label classifier and checking whether it meets the standard, and if so, finalizing the classifier's training parameters, otherwise retraining; and classifying the image sentiment labels to analyze the image sentiment. The method reduces the influence of the semantic gap, predicts image sentiment more accurately, and is better suited to large-scale image sentiment classification tasks.

Owner:GUANGDONG UNIV OF TECH

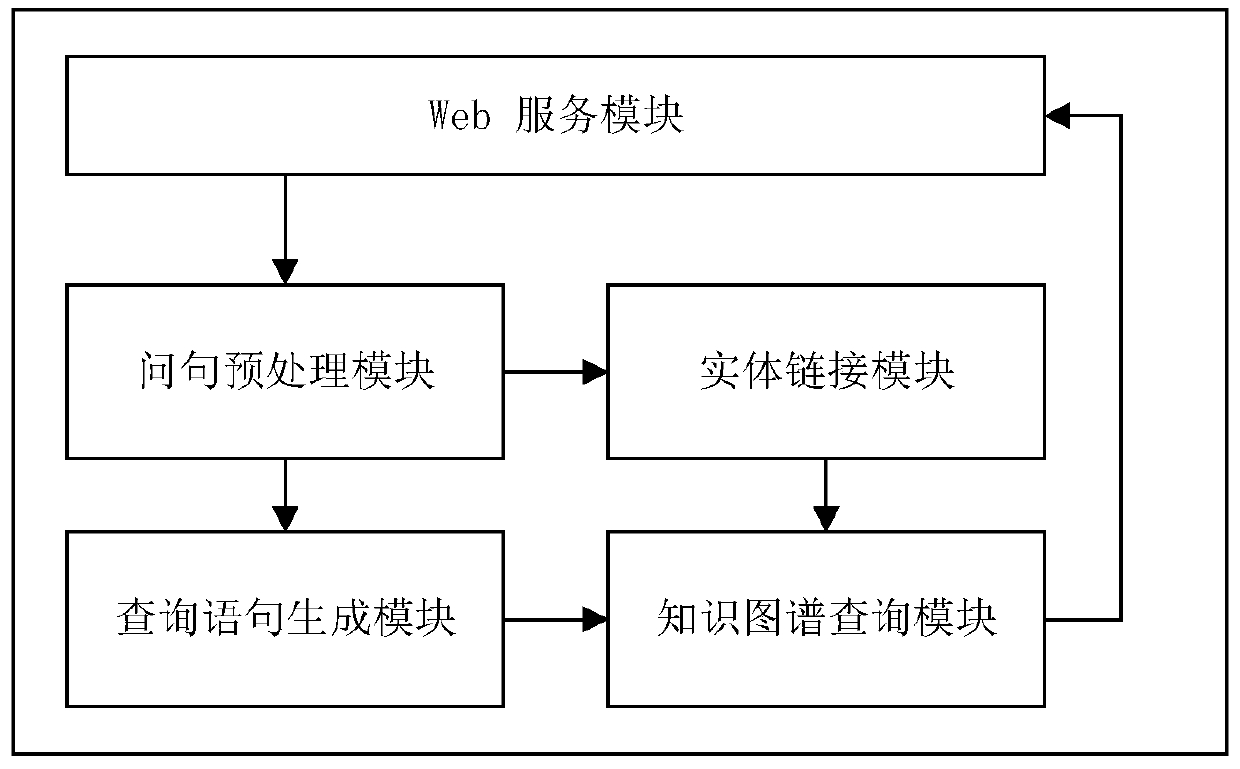

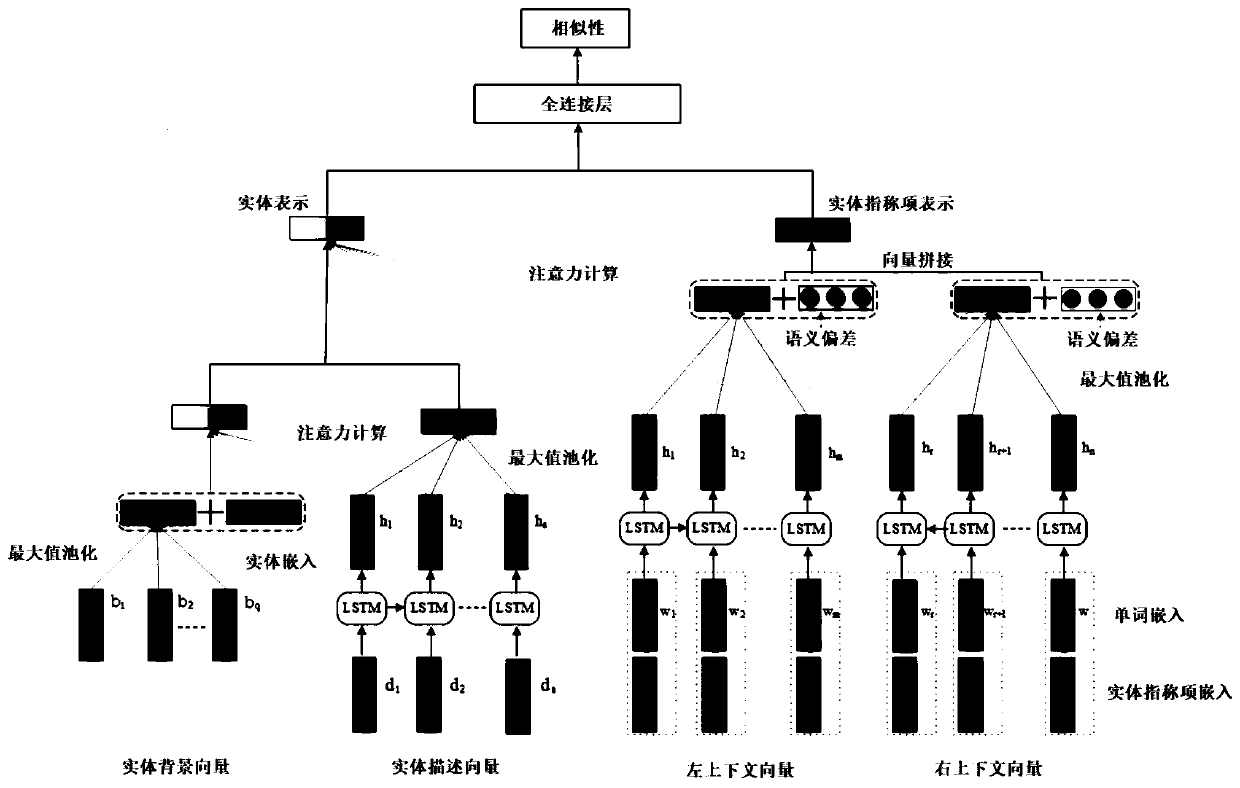

Entity linking method for Chinese knowledge graph question-answering system

Active | CN111563149A | Improve accuracy; Bridging the Semantic Gap; Neural architectures; Special data processing applications; Entity linking; Link model

The invention provides an entity linking method for a Chinese knowledge-graph question-answering system. The method comprises the following steps: first, jointly embedding the words and entities in a training corpus to obtain their joint embedding vectors; for an input text of the question-answering system, recognizing the entity mentions in the text and determining a candidate entity list from them; and building an LSTM-based entity linking model that concatenates the entity representation vector with the mention representation vector to obtain a similarity score between the mention and each candidate entity, then ranking the candidates and selecting the highest-scoring candidate as the target entity for the mention. The method effectively addresses the redundancy in linking-model training data caused by the diversity of ways users phrase questions, and allows semantically similar words to substitute for one another in context, improving linking effectiveness and the accuracy of the question-answering system.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

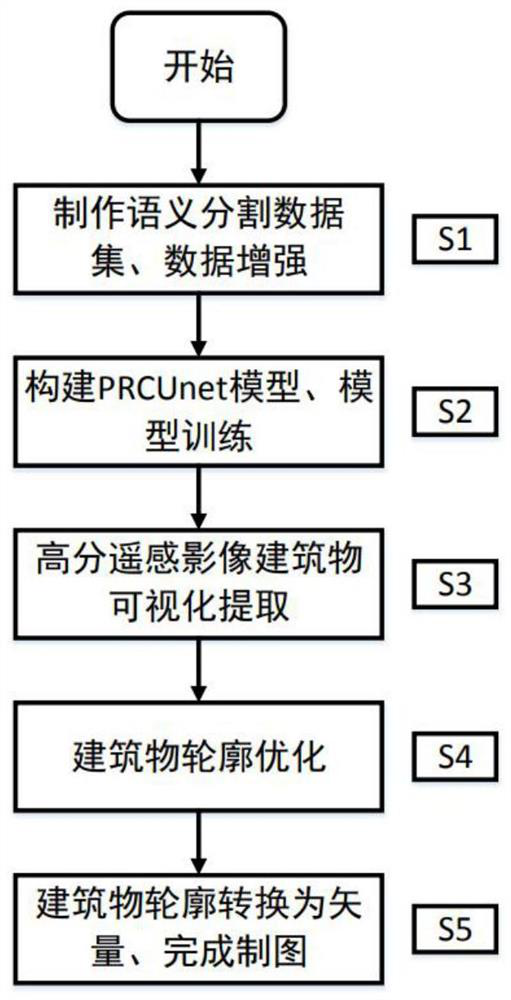

Remote sensing image building extraction and contour optimization method based on deep learning

Active | CN113516135A | Eliminate the effects of; Reduce the amount of parameters; Image enhancement; Image analysis; Boundary contour; Data set

The invention provides a deep-learning-based method for building extraction and contour optimization in remote sensing images, belonging to the field of environmental measurement. The idea of semantic segmentation is applied to building extraction, and the building contours are optimized by incorporating the Hausdorff distance. Exploiting the feature extraction capability of residual modules, the ability of a convolutional attention module to balance spatial and channel information, and the multi-scale scene parsing of a pyramid pooling module, the method introduces a residual structure, a convolutional attention module and pyramid pooling into the Unet model to build a PRCUnet model that attends to both semantic and detail information and compensates for Unet's weakness in detecting small targets. On the dataset used, both IoU and recall reach 85% or above, the precision is markedly better than that of the Unet model, the extracted buildings are more accurate, and the optimized building boundaries are closer to the true building contours.

Owner:XUZHOU NORMAL UNIVERSITY
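
The evaluation metrics reported above, and the Hausdorff distance used for contour optimization, can be computed as in this sketch. Masks are assumed to be binary 2-D lists and contours lists of (x, y) points; how the patent folds the Hausdorff distance into its optimization step is not modeled here.

```python
import math
from typing import Sequence, Tuple

Mask = Sequence[Sequence[int]]
Point = Tuple[float, float]


def iou_and_recall(pred: Mask, truth: Mask) -> Tuple[float, float]:
    """Pixel-wise IoU and recall for binary masks (1 = building, 0 = background)."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    union = tp + fp + fn
    iou = tp / union if union else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return iou, recall


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance between two contour point sets."""
    def directed(src: Sequence[Point], dst: Sequence[Point]) -> float:
        # Worst-case distance from a point in src to its nearest point in dst.
        return max(min(math.dist(p, q) for q in dst) for p in src)
    return max(directed(a, b), directed(b, a))
```

The Hausdorff distance is driven by the single worst-matched point, which is why it is a natural penalty for boundary outliers when comparing a predicted contour with a reference one.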

Method for performing transactions on data and a transactional database

Active | US20150081623A1 | Improve performance; Low cost; Digital data information retrieval; Digital data processing details; Theoretical computer science; Client-side

Embodiments include an evaluator that can receive a query containing a predicate from an application executing on a client device. The evaluator can process the predicate using a tree structure containing nodes that represent objects and edges that represent the relationships between them. The processing can include applying filters to attributes of the relationships to identify a first set of objects relevant to the predicate, and navigating along each incoming role of a relationship and from there via an outgoing role to other objects to identify a second set of objects relevant to the predicate. An object is relevant to the predicate if the value of at least one of its fields is equal or similar to a value of the predicate. In response to the query, the evaluator can return to the application the identifiers associated with the union of the first and second sets of objects.

Owner:OPEN TEXT SA ULC
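
One possible reading of the evaluator is sketched below, with hypothetical data types. To keep it short, it finds the first set by filtering object fields rather than relationship attributes, treats case-insensitive equality as a stand-in for "equal or similar", and follows a single incoming-to-outgoing hop to build the second set.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class Relationship:
    incoming: str  # hypothetical: object bound to the relationship's incoming role
    outgoing: str  # hypothetical: object bound to the relationship's outgoing role


def matches(value: Optional[str], wanted: str) -> bool:
    """Stand-in for "equal or similar": case-insensitive equality."""
    return value is not None and value.casefold() == wanted.casefold()


def evaluate(objects: Dict[str, Dict[str, str]], relationships: List[Relationship],
             field_name: str, wanted: str) -> Set[str]:
    # First set: objects whose own field satisfies the predicate.
    first = {oid for oid, fields in objects.items()
             if matches(fields.get(field_name), wanted)}
    # Second set: enter each relationship through its incoming role and
    # leave through the outgoing role to reach related objects.
    second = {rel.outgoing for rel in relationships if rel.incoming in first}
    # Return the union of both sets, as the abstract describes.
    return first | second
```

A real evaluator would also need a similarity measure and probably multi-hop navigation; both are left out here.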

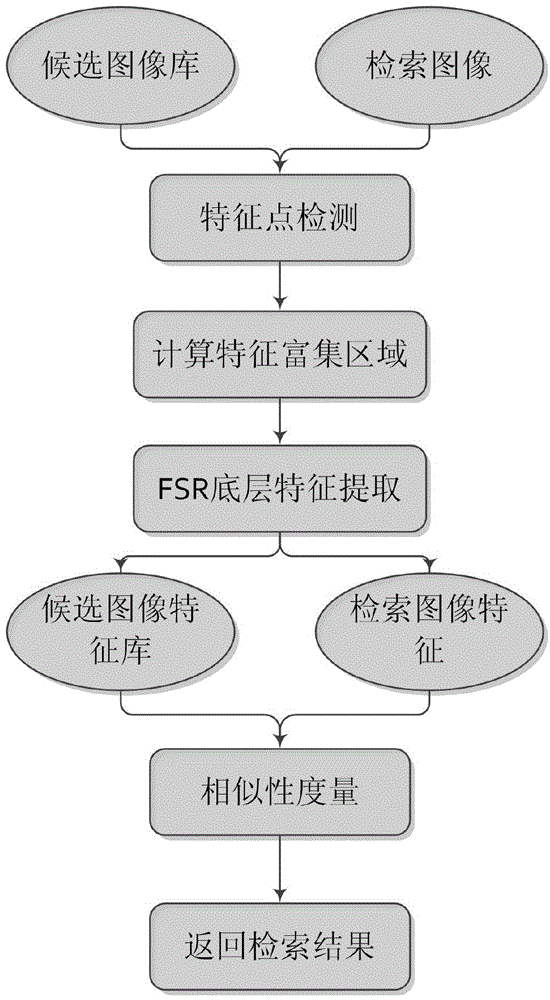

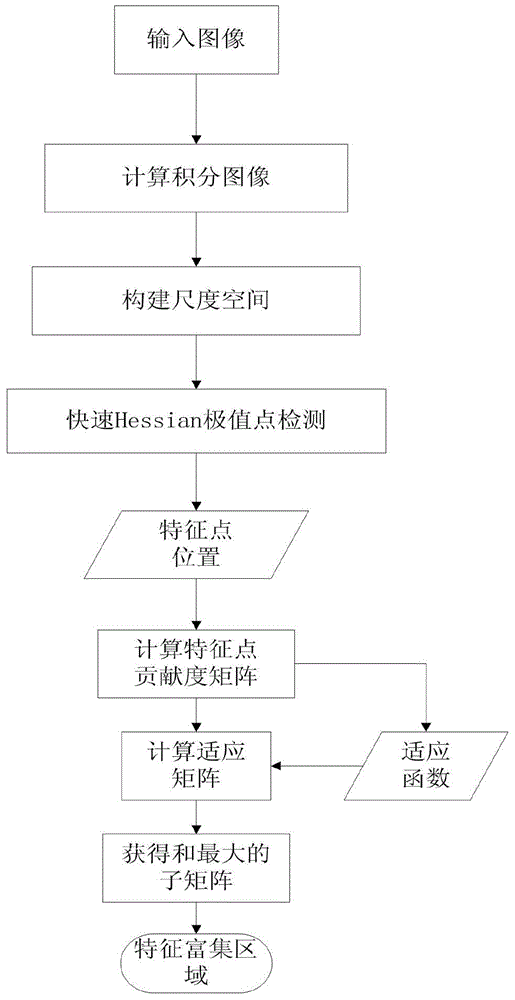

Image retrieval method based on characteristic enrichment area set

Active | CN104361096A | Bridging the Semantic Gap; Improve search accuracy; Character and pattern recognition; Special data processing applications; Distribution matrix; Computation complexity

The invention discloses an image retrieval method based on a feature-enriched region. The method comprises the following steps: first, obtaining a candidate feature point set by computing the Hessian matrix and applying non-maximum suppression, and obtaining a sub-pixel-level feature point set by three-dimensional linear interpolation; second, computing a distribution matrix and an adaptation matrix of the feature points from their coordinates in the image, and using a maximum sub-matrix algorithm to find the sub-matrix of the adaptation matrix, i.e. the region where feature points are most densely distributed, which is taken as the feature-enriched region of the image; third, selecting low-level shape, texture and color features for the feature-enriched region; finally, measuring similarity with a Gaussian non-linear distance function and quickly retrieving images in ascending order of this similarity measure. The method effectively reduces the computational complexity of image retrieval and improves its efficiency and accuracy.

Owner:HEFEI HUIZHONG INTPROP MANAGEMENT
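
The "most dense distribution area" step can be sketched as a maximum-sum submatrix search (2-D Kadane, O(rows² × cols)). The abstract does not define the adaptation matrix, so this sketch assumes it is the per-cell feature-point count minus the mean count, which makes sparse cells negative; `density_matrix` and its grid size are hypothetical.

```python
from typing import List, Sequence, Tuple


def density_matrix(points: Sequence[Tuple[float, float]], width: float,
                   height: float, grid: int) -> List[List[float]]:
    """Count feature points per grid cell, then subtract the mean count,
    so sparse cells become negative and dense cells positive."""
    counts = [[0] * grid for _ in range(grid)]
    for x, y in points:
        r = min(int(y * grid / height), grid - 1)
        c = min(int(x * grid / width), grid - 1)
        counts[r][c] += 1
    mean = len(points) / (grid * grid)
    return [[v - mean for v in row] for row in counts]


def max_sum_submatrix(m: List[List[float]]) -> Tuple[float, Tuple[int, int, int, int]]:
    """2-D Kadane: returns (sum, (top, bottom, left, right)), all bounds inclusive."""
    rows, cols = len(m), len(m[0])
    best = (float("-inf"), (0, 0, 0, 0))
    for top in range(rows):
        col_sums = [0.0] * cols
        for bottom in range(top, rows):
            for c in range(cols):
                col_sums[c] += m[bottom][c]  # collapse rows top..bottom into one row
            cur, start = 0.0, 0
            for c in range(cols):  # 1-D Kadane over the collapsed row
                if cur <= 0:
                    cur, start = col_sums[c], c
                else:
                    cur += col_sums[c]
                if cur > best[0]:
                    best = (cur, (top, bottom, start, c))
    return best
```

The returned bounds are grid-cell indices; mapping them back to pixel coordinates only requires multiplying by the cell size.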

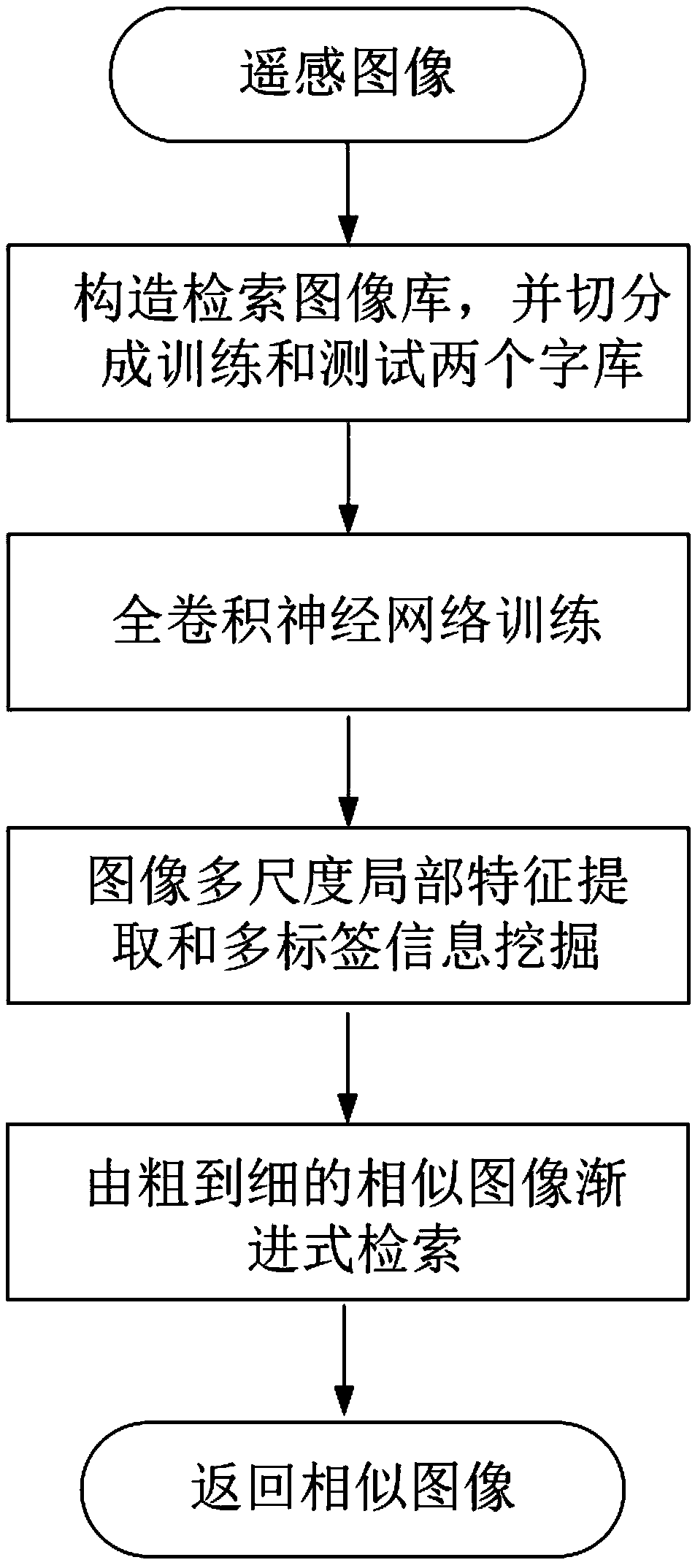

A remote sensing image multi-label retrieval method and system based on a full convolutional neural network

Active | CN109657082A | Bridging the "Semantic Gap"; Improve image retrieval results; Still image data querying; Special data processing applications; Feature vector; Single label

The invention provides a multi-label remote sensing image retrieval method and system based on a fully convolutional network. Multi-label image retrieval is achieved by taking the multi-category information of remote sensing images into account. The method comprises the following steps: inputting a retrieval image library and dividing it into a training set and a validation set; constructing a fully convolutional network (FCN) model and training it on the training set; using the FCN to predict multi-class labels for each image in the validation set and obtain a segmentation result; upsampling the feature map of each convolutional layer; extracting local features of each image in the validation set to obtain feature vectors for retrieval; and finally performing a coarse-to-fine two-step retrieval based on the extracted multi-scale features and the multi-label information. The method uses the fully convolutional network to learn multi-scale local features of the image and fully mines the multi-label information hidden in it; compared with existing single-label remote sensing image retrieval methods, it effectively improves retrieval accuracy.

Owner:WUHAN UNIV
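
The coarse-to-fine retrieval step can be sketched as a label filter followed by feature ranking. The Jaccard overlap, the `min_overlap` threshold and the use of Euclidean distance are assumptions made for illustration; the abstract only states that multi-label information and multi-scale features are both used.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def coarse_to_fine(query_labels: Set[str], query_feat: Sequence[float],
                   db: Dict[str, Tuple[Set[str], Sequence[float]]],
                   min_overlap: float = 0.5, top_k: int = 2) -> List[str]:
    # Coarse step: keep only images whose label sets overlap the query's enough.
    candidates = [(image_id, feat) for image_id, (labels, feat) in db.items()
                  if jaccard(query_labels, labels) >= min_overlap]
    # Fine step: rank the survivors by Euclidean distance between feature vectors.
    candidates.sort(key=lambda pair: math.dist(query_feat, pair[1]))
    return [image_id for image_id, _ in candidates[:top_k]]
```

An image with identical features but no shared labels is discarded in the coarse step, which is the intended effect of filtering by labels before comparing features.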

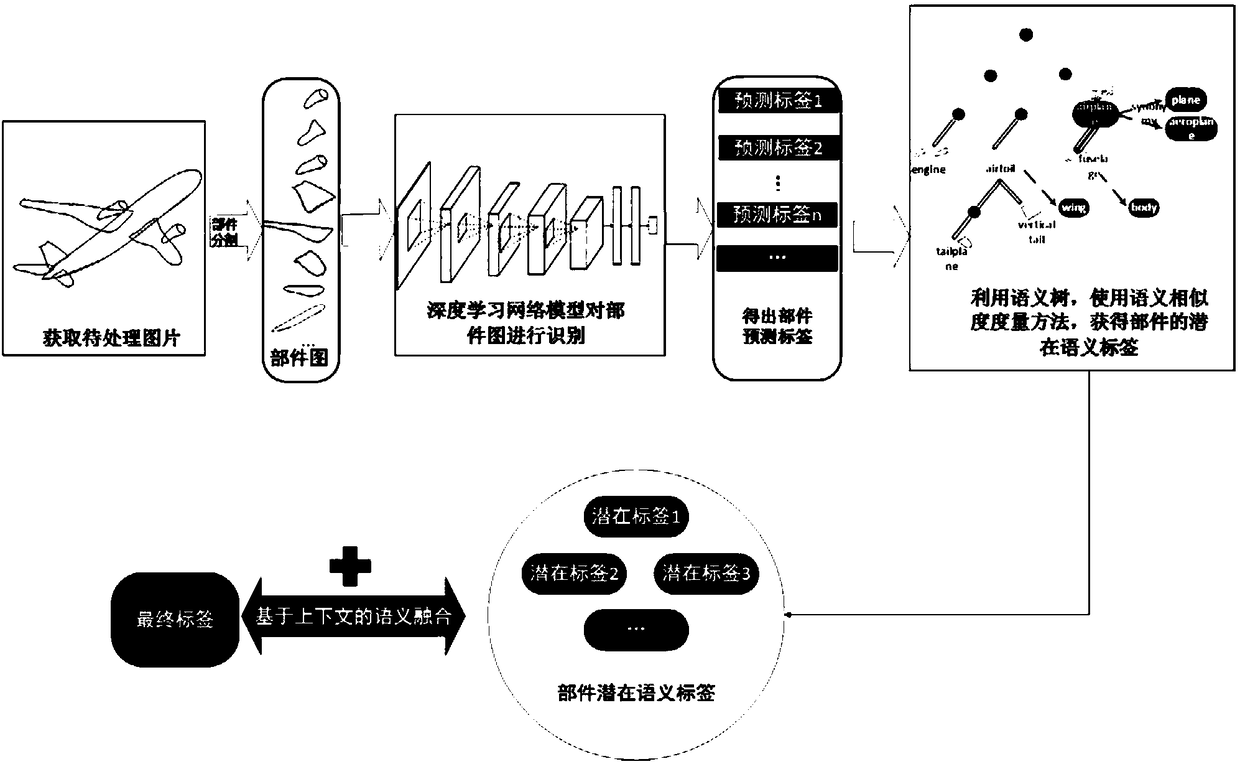

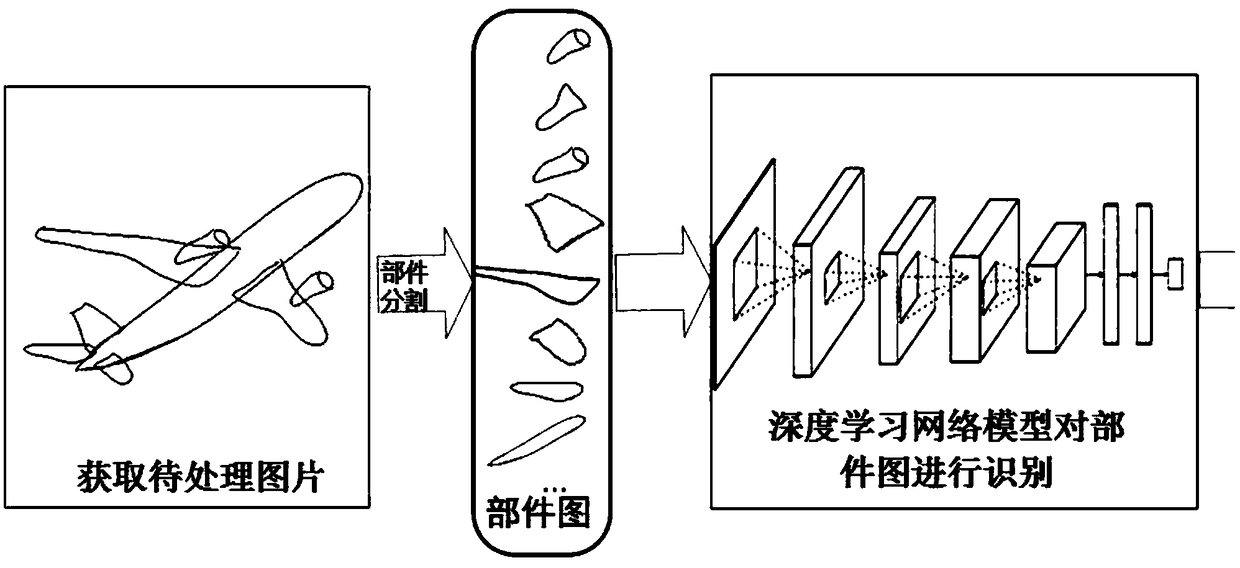

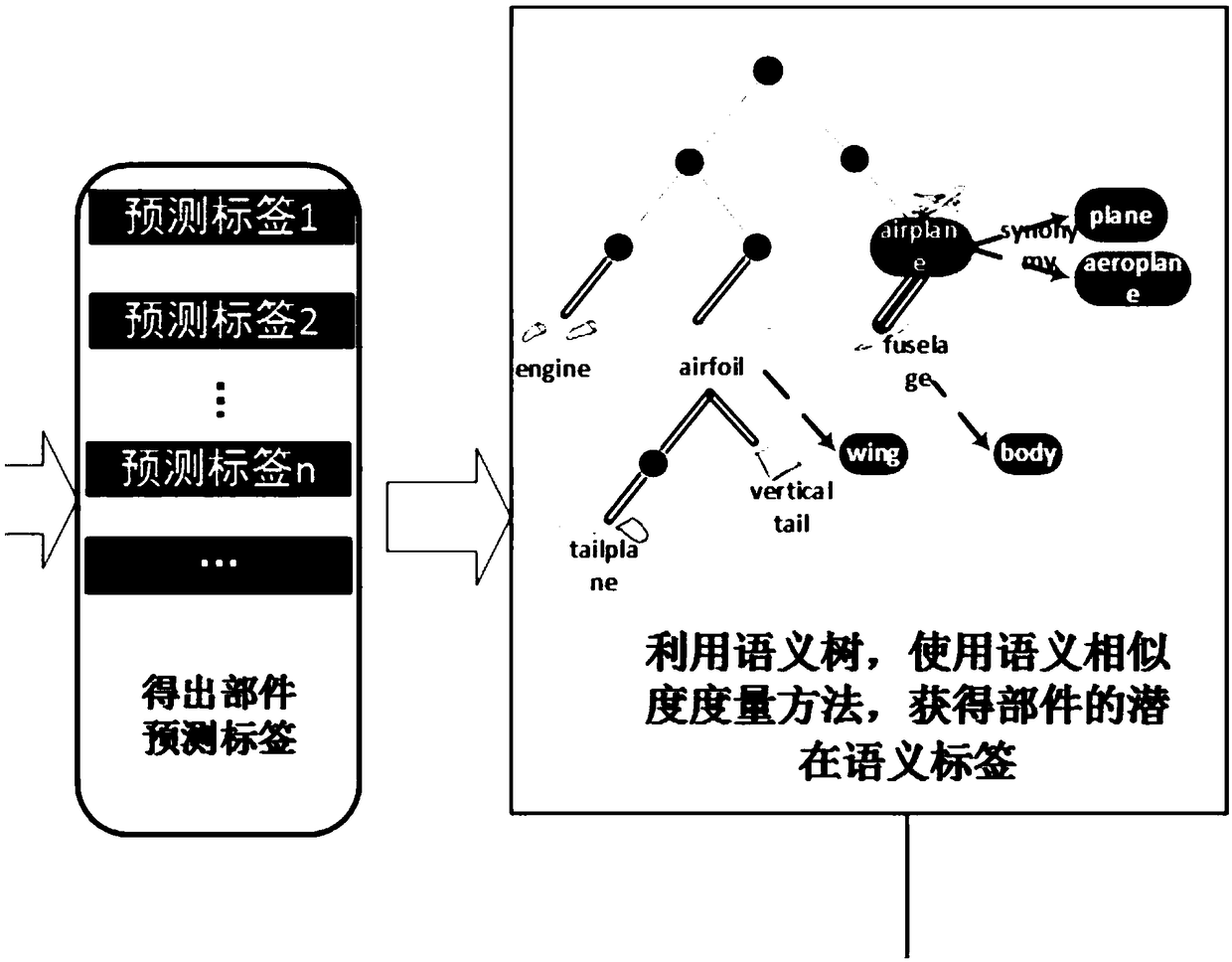

A sketch recognition method and an application of the method in commodity retrieval

Active | CN109325529A | Simple composition; Improve accuracy; Image enhancement; Image analysis; Semantic tree; Engineering

The invention discloses a sketch recognition method comprising the following steps: S1, obtaining the picture to be processed; S2, segmenting the collected picture into parts carrying semantic information to obtain the part diagrams of the sketch; S3, obtaining the label of each part by recognizing its part diagram with a deep learning network model; S4, associating the semantic information of each part with the semantic information of the object to which it belongs; S5, outputting the label of the object to which the parts belong, obtained through the semantic tree. The application of the method in commodity retrieval is characterized by the following steps: 1) obtaining picture information; 2) using a retrieval system that applies the sketch recognition method to obtain, from the picture, the label of the item the user wants to find; 3) recommending corresponding commodities to the user according to the recognized label. The method and its application improve the recognition accuracy for complete sketches, save users time when selecting commodities, and enhance the user experience.

Owner:ANHUI UNIVERSITY

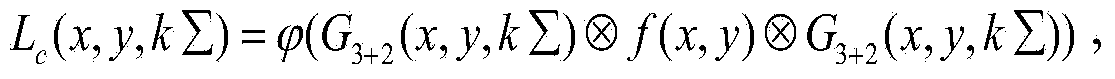

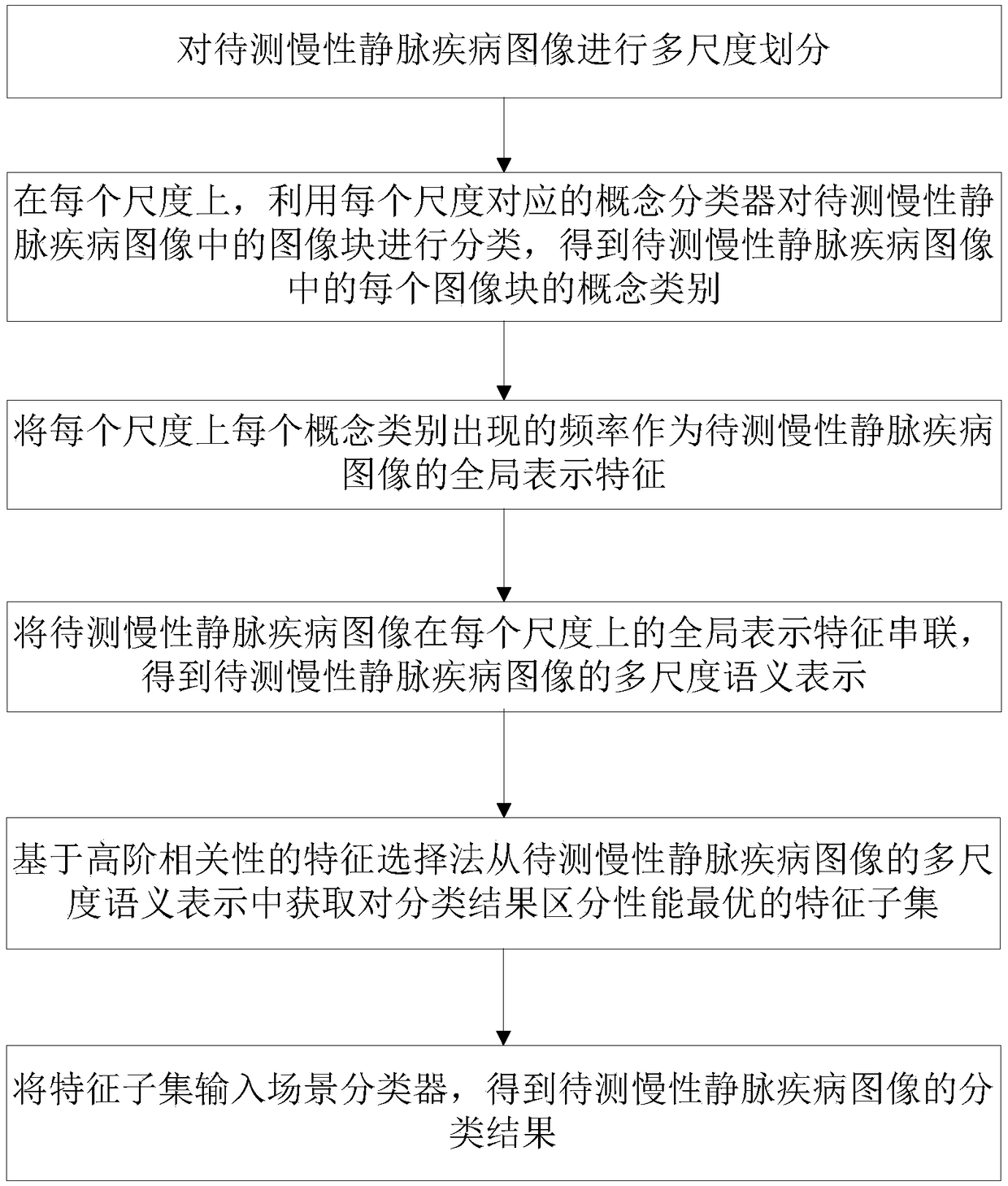

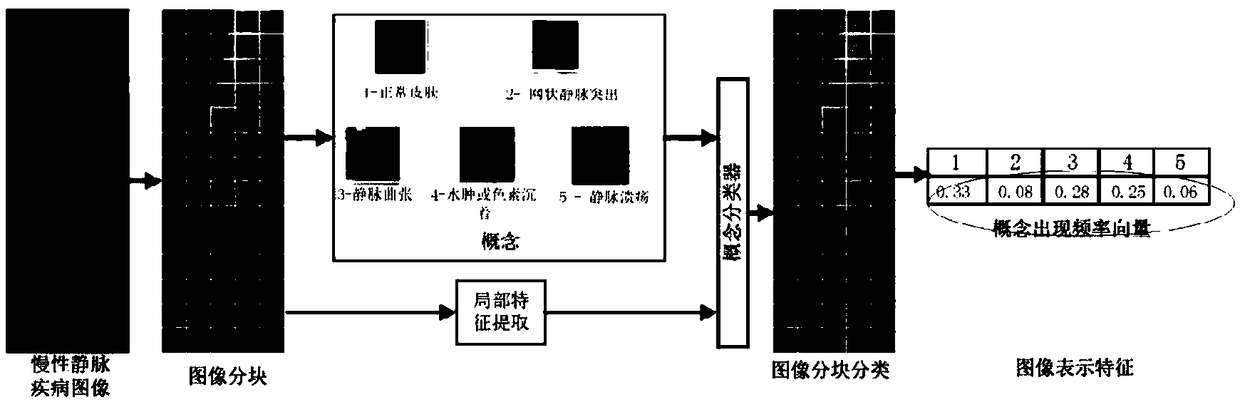

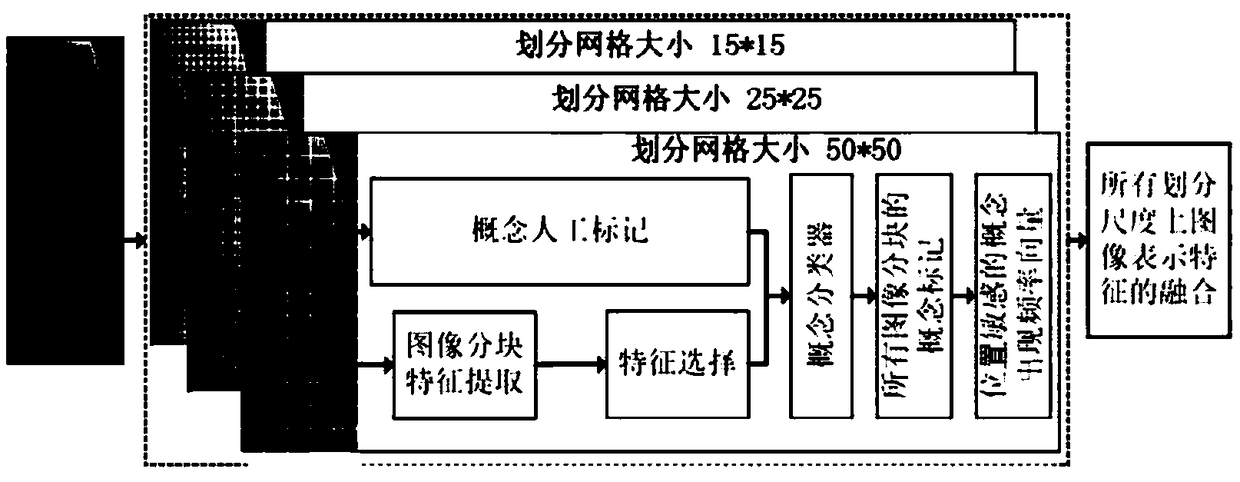

Image classification method for chronic venous disease based on multi-scale semantic features

Active | CN109034253A | Bridging the "Semantic Gap"; Narrowing the "Semantic Gap"; Recognition of medical/anatomical patterns; Disease; Semantic representation

The invention discloses an image classification method for chronic venous disease based on multi-scale semantic features. The method divides the chronic venous disease image under test at multiple scales, classifies the image blocks at each scale with the concept classifier corresponding to that scale, and obtains the concept class of each image block. The frequency of each concept class at each scale is taken as the global representation feature, and the global representation features of all scales are concatenated to obtain the multi-scale semantic representation of the image under test. A feature selection method based on high-order correlation obtains the best feature subset from the multi-scale semantic representation, and this subset is input into a scene classifier to obtain the classification result for the image. The classification results of the invention are highly accurate and reliable.

Owner:HUAZHONG UNIV OF SCI & TECH
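
Building the multi-scale semantic representation from per-block concept labels can be sketched as concatenated frequency histograms. The concept classifiers are stubbed out: the sketch assumes each scale has already produced one concept label per image block, normalizes counts to frequencies, and uses hypothetical vocabulary names in the usage example.

```python
from collections import Counter
from typing import List, Sequence


def multiscale_semantic_feature(concepts_per_scale: Sequence[Sequence[str]],
                                vocabulary: Sequence[str]) -> List[float]:
    """Concatenate one normalized concept-frequency histogram per scale."""
    feature: List[float] = []
    for block_labels in concepts_per_scale:
        counts = Counter(block_labels)
        n = len(block_labels)
        # Same vocabulary order at every scale keeps feature positions aligned.
        feature.extend(counts[c] / n if n else 0.0 for c in vocabulary)
    return feature
```

For a 2x2 split labeled `skin, skin, vein, skin` and a 4x4 split with eight `vein` and eight `ulcer` blocks, the feature is `[0.75, 0.25, 0.0, 0.0, 0.5, 0.5]`; the feature-selection and scene-classifier stages would then operate on this vector.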

Cloud image retrieval method based on shape feature

Active | CN105447100A | Bridging the Semantic Gap; Effective combination; Image analysis; Special data processing applications; Computation complexity; Semantic gap

The present invention discloses a cloud image retrieval method based on shape features. Making effective use of the cloud-top brightness temperature information in a cloud image, the method segments cloud systems with an iterative threshold segmentation method and extracts the shape features of each cloud system with geometric invariant moments, which have relatively low computational complexity and strong robustness. This overcomes the difficulty conventional shape-based cloud image retrieval methods have in analyzing cloud images, particularly with respect to universality, computational complexity and robustness. In addition, the method transforms low-level cloud image features from Euclidean space to a sparse space and retrieves the cloud image database using the distribution rules of the sparse space, effectively alleviating the semantic gap problem of conventional retrieval methods.

Owner:NINGBO UNIV
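The first two steps of the cloud image method above can be illustrated with a short sketch: the classic iterative (intermeans) threshold on brightness temperatures, followed by the first Hu invariant moment of the resulting shape. Assumptions, not taken from the abstract: the threshold starts at the global mean, pixels colder than the threshold are treated as cloud, and only the first of the seven Hu moments is computed. The helper names are hypothetical, and the sparse-space retrieval step is not shown.

```python
from typing import List, Sequence


def iterative_threshold(values: Sequence[float], tol: float = 0.5, max_iter: int = 100) -> float:
    """Iterative (intermeans) threshold: start at the global mean, then repeatedly
    move the threshold to the midpoint of the two class means until it settles."""
    t = sum(values) / len(values)
    for _ in range(max_iter):
        low = [v for v in values if v <= t]
        high = [v for v in values if v > t]
        if not low or not high:
            break
        new_t = (sum(low) / len(low) + sum(high) / len(high)) / 2
        converged = abs(new_t - t) < tol
        t = new_t
        if converged:
            break
    return t


def cloud_mask(bt: List[List[float]], t: float) -> List[List[int]]:
    """Assumption: cloud tops are colder, so pixels below the threshold are cloud (1)."""
    return [[1 if v < t else 0 for v in row] for row in bt]


def hu_phi1(mask: List[List[int]]) -> float:
    """First Hu invariant moment, phi1 = eta20 + eta02, of a binary shape."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    m00 = len(pts)
    xc = sum(x for x, _ in pts) / m00
    yc = sum(y for _, y in pts) / m00
    mu20 = sum((x - xc) ** 2 for x, _ in pts)
    mu02 = sum((y - yc) ** 2 for _, y in pts)
    # Normalised central moments: eta_pq = mu_pq / m00 ** (1 + (p + q) / 2), here p + q = 2.
    return (mu20 + mu02) / m00 ** 2


bt = [[200.0, 202.0, 280.0], [204.0, 282.0, 284.0]]  # toy brightness temperatures (K)
t = iterative_threshold([v for row in bt for v in row])
mask = cloud_mask(bt, t)
print(t, mask, hu_phi1(mask))
```

Because phi1 is built from central moments normalised by area, the same shape gives the same value wherever it sits in the image, which is the property that makes such moments usable as retrieval features.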

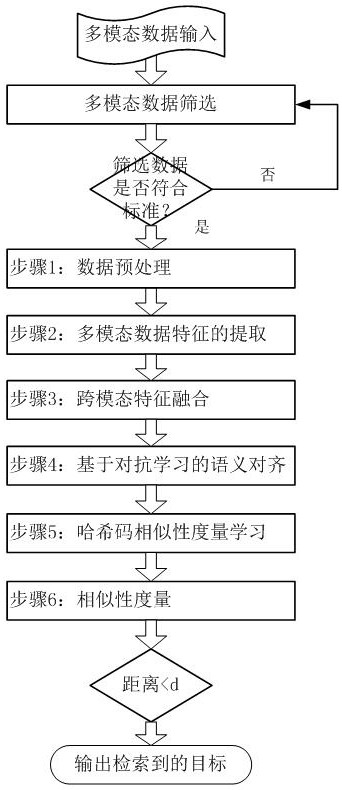

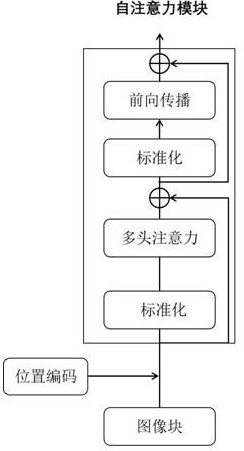

Unsupervised cross-modal retrieval method based on attention mechanism enhancement

Active | CN113971209A | Rich Visual Semantic Information, Enhance expressive ability, Character and pattern recognition, Still image data indexing, Semantic gap, Generative adversarial network

The invention belongs to the field of artificial intelligence applications for smart communities and relates to an unsupervised cross-modal retrieval method enhanced by an attention mechanism. The method comprises the following steps: enhancing the visual semantic features of an image; aggregating feature information from the different modalities and mapping the fused multi-modal features into a common semantic feature space; performing adversarial learning, based on a generative adversarial network, between the image-modality and text-modality features and the fused common semantic features, so as to align the semantic features of the different modalities; and finally generating hash codes from the aligned features of each modality. Similarity metric learning is performed on features and hash codes both within each modality and across modalities. This reduces the heterogeneous semantic gap between modalities, strengthens the dependency between features of different modalities, and allows the semantic characteristics shared across modalities to be represented more robustly.

Owner:QINGDAO SONLI SOFTWARE INFORMATION TECH
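The abstract above does not give its similarity metric learning objective. A common form in unsupervised cross-modal hashing, used here purely as an assumed stand-in, asks hash-code similarity to match feature similarity for intra-modal pairs (image-image, text-text) and inter-modal pairs (image-text). The sketch only evaluates that loss on fixed vectors; real training would replace `sign` with a smooth relaxation such as `tanh`, and the attention enhancement and adversarial alignment are not shown. All names are hypothetical, and image and text features are assumed to already live in a common space of the same dimension.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def cosine(a: Vec, b: Vec) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def to_hash(v: Vec) -> List[int]:
    """Binarise a feature vector into a +/-1 hash code with sign()."""
    return [1 if x >= 0 else -1 for x in v]


def code_sim(a: Sequence[int], b: Sequence[int]) -> float:
    """Normalised inner product of two +/-1 codes, in [-1, 1]."""
    return sum(x * y for x, y in zip(a, b)) / len(a)


def similarity_consistency_loss(img: List[Vec], txt: List[Vec]) -> float:
    """Mean squared gap between feature similarity and hash-code similarity,
    over intra-modal (image-image, text-text) and inter-modal (image-text) pairs."""
    img_codes = [to_hash(v) for v in img]
    txt_codes = [to_hash(v) for v in txt]
    groups = [
        (img, img_codes, img, img_codes),  # intra-modal: image
        (txt, txt_codes, txt, txt_codes),  # intra-modal: text
        (img, img_codes, txt, txt_codes),  # inter-modal: image vs text
    ]
    total, count = 0.0, 0
    for feats_a, codes_a, feats_b, codes_b in groups:
        for i in range(len(feats_a)):
            for j in range(len(feats_b)):
                gap = cosine(feats_a[i], feats_b[j]) - code_sim(codes_a[i], codes_b[j])
                total += gap * gap
                count += 1
    return total / count


img = [[0.9, -0.2, 0.4], [-0.5, -0.7, 0.1]]
txt = [[0.8, -0.1, 0.6], [0.3, 0.9, -0.4]]
print(similarity_consistency_loss(img, txt))
```

When every feature already equals its own +/-1 code (up to scale), cosine similarity and code similarity coincide and the loss is zero, which is the ideal the objective pushes towards.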

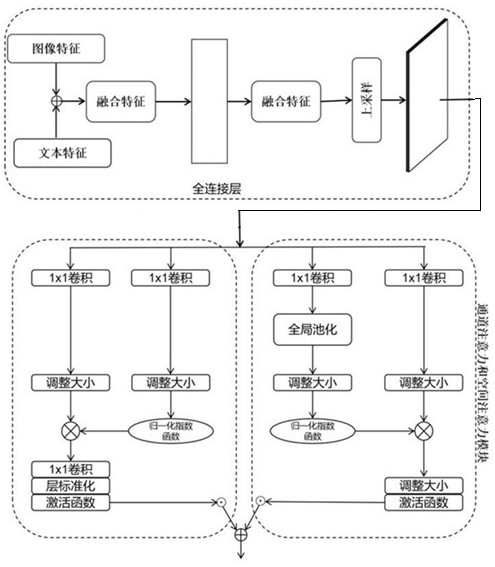

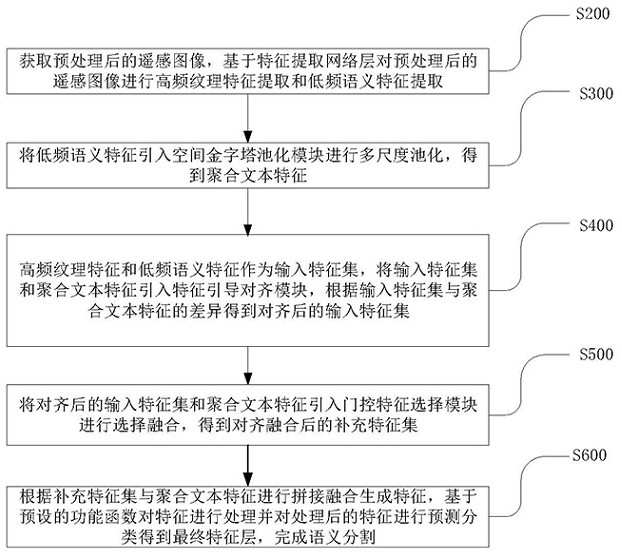

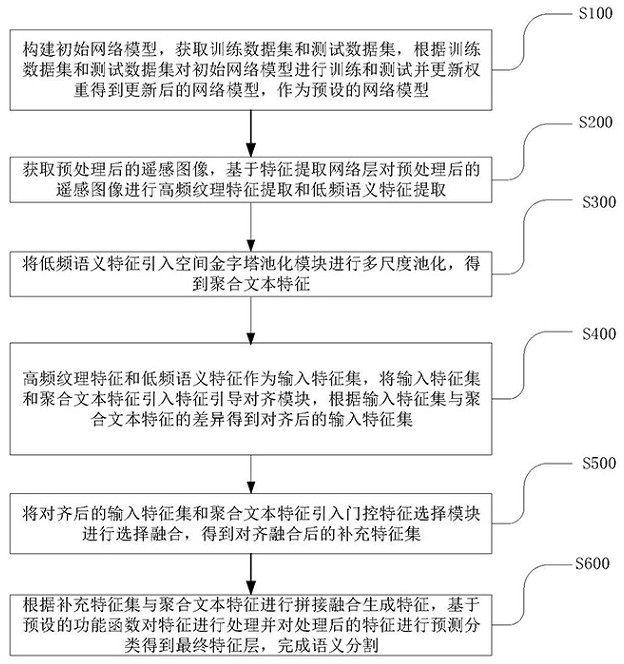

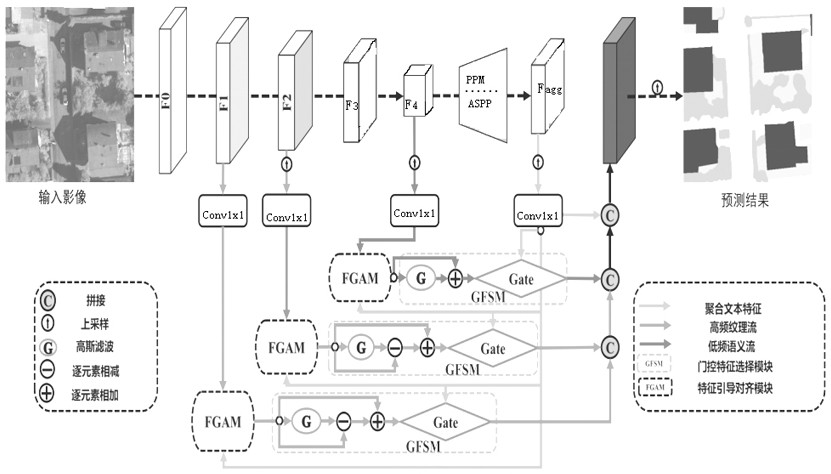

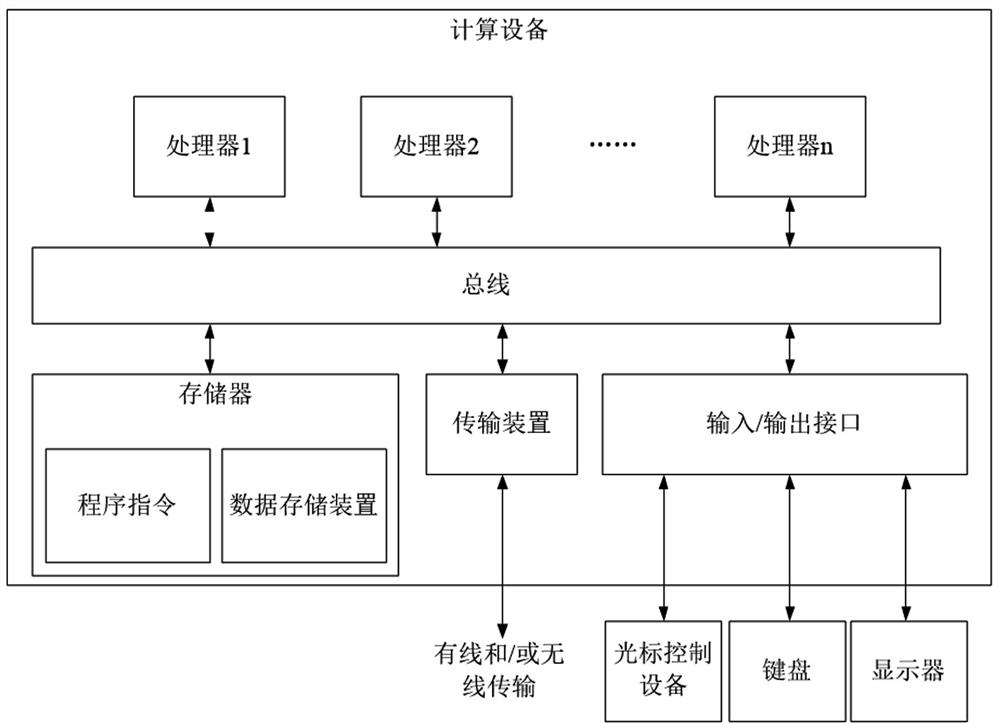

Remote sensing image semantic segmentation method and device, computer equipment and storage medium

Active | CN113034506A | High precision, Bridging the Semantic Gap, Image enhancement, Image analysis, Feature set, Engineering

The invention discloses a remote sensing image semantic segmentation method and device, computer equipment and a storage medium. The method comprises: obtaining a preprocessed remote sensing image; extracting high-frequency texture features and low-frequency semantic features from it with a feature extraction network layer, and taking the extracted features as an input feature set; feeding the low-frequency semantic features into a spatial pyramid pooling module for multi-scale pooling to obtain aggregated context features; feeding the input feature set and the aggregated context features into a feature-guided alignment module, which produces an aligned input feature set according to the difference between the two; feeding the aligned input feature set and the aggregated context features into a gated feature selection module for selective fusion, which yields an aligned and fused supplementary feature set; and concatenating the supplementary feature set with the aggregated context features, processing the fused features with a preset performance function, and performing prediction classification on the processed features to obtain the final feature layer. The method is reported to improve segmentation precision effectively.

Owner:HUNAN UNIV
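The gated feature selection step in the segmentation method above can be pictured with a minimal sketch. Assumptions: the gate is a per-channel sigmoid of a weighted sum of the two inputs (real modules would use learned convolutions over whole feature maps), the fused output is a convex mix of the aligned input feature and the context feature, and the final "splicing" fusion is read as channel concatenation. The names `gated_fusion` and `concat_fusion` are hypothetical.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(
    aligned: Sequence[float],
    context: Sequence[float],
    w_a: Sequence[float],
    w_c: Sequence[float],
    bias: Sequence[float],
) -> List[float]:
    """Per-channel gated selection between two aligned feature vectors.

    g = sigmoid(w_a * a + w_c * c + b) decides, per channel, how much of the
    aligned input feature to keep; the remainder comes from the context feature.
    """
    fused = []
    for a, c, wa, wc, b in zip(aligned, context, w_a, w_c, bias):
        g = sigmoid(wa * a + wc * c + b)
        fused.append(g * a + (1.0 - g) * c)
    return fused


def concat_fusion(supplementary: Sequence[float], context: Sequence[float]) -> List[float]:
    """Final fusion step read as channel concatenation."""
    return list(supplementary) + list(context)


aligned = [2.0, 4.0]
context = [0.0, 1.0]
supp = gated_fusion(aligned, context, w_a=[0.5, -0.5], w_c=[0.1, 0.1], bias=[0.0, 0.0])
print(concat_fusion(supp, context))
```

With all weights and biases at zero the gate sits at 0.5 and the module simply averages its inputs; training moves each gate towards whichever source is more useful for that channel.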

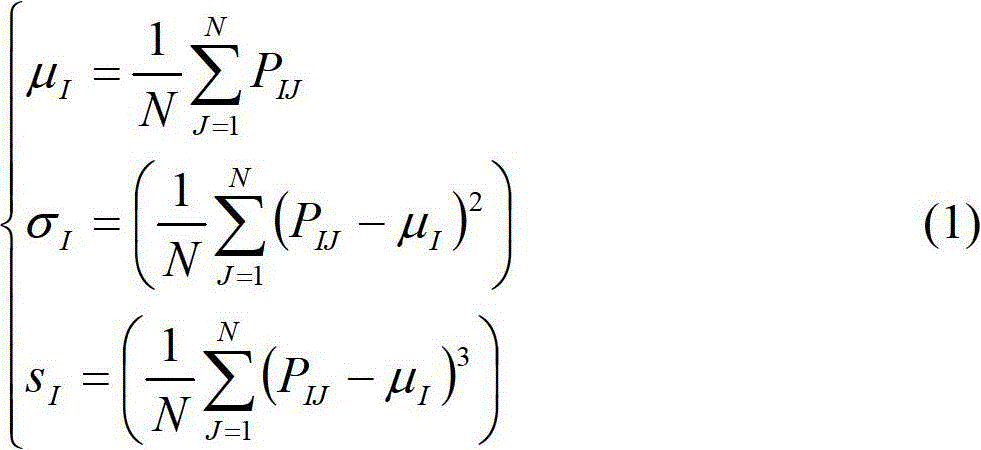

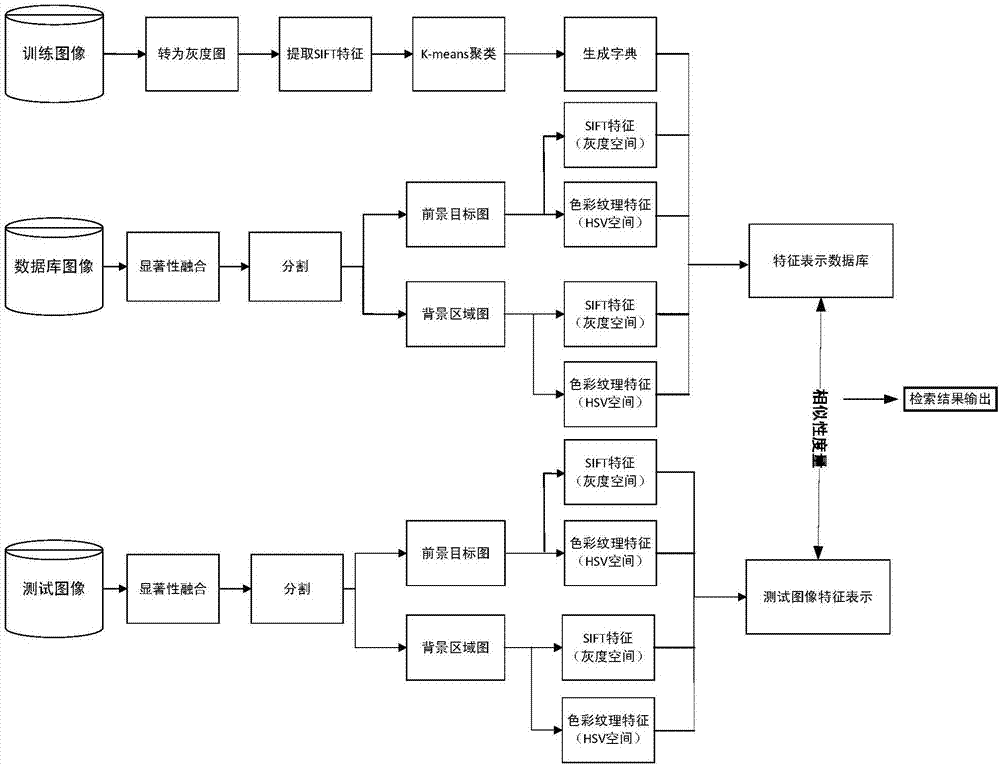

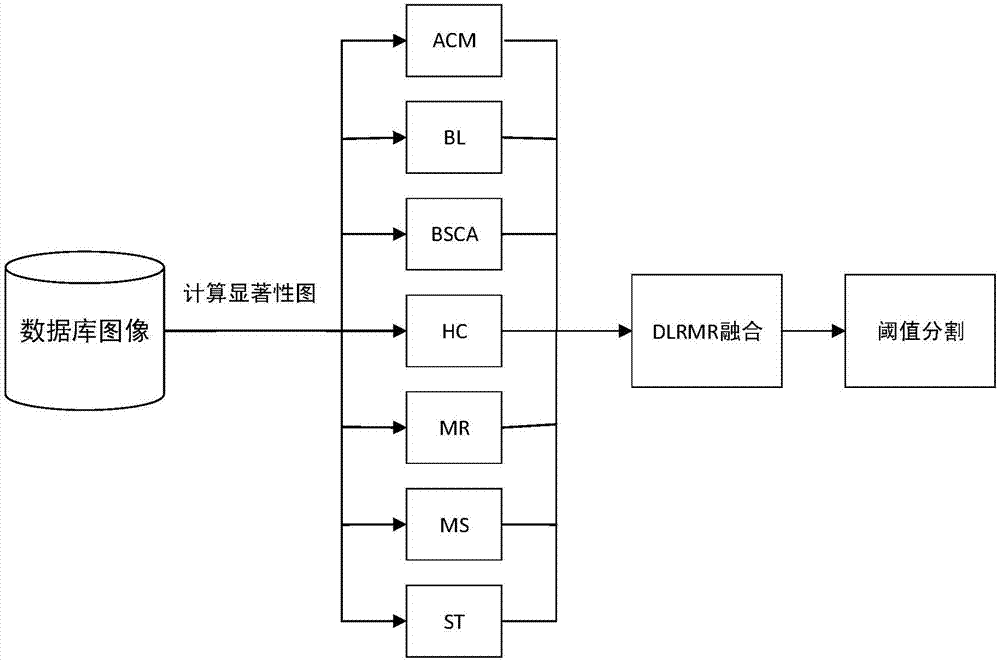

Image retrieval method based on visual saliency fusion

Inactive | CN107357834A | Bridging the Semantic Gap, Image analysis, Character and pattern recognition, Vision based, Image texture

Provided is an image retrieval method based on visual saliency fusion, in which the retrieval objects are color images. The method comprises a training process and a testing process. The training process comprises: step 1, building a visual word dictionary by analyzing every image in the training image set in turn, for use in subsequent retrieval; step 2, applying a fusion algorithm over image visual saliency features to obtain a visual saliency map, and segmenting the saliency map into a foreground target image and a background region image; step 3, extracting color features and texture features separately from the foreground target image and the background region image; and step 4, computing, on the basis of the dictionary from step 1, a visual word distribution histogram for every image in the database. The testing process comprises step 5, retrieving the test images. The method is reported to segment images well and to achieve high retrieval performance.

Owner:ZHEJIANG UNIV OF TECH
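Steps 1, 4 and 5 of the saliency-based retrieval method above follow the usual bag-of-visual-words pattern, sketched minimally below. Assumptions not in the abstract: descriptors are quantised to the nearest visual word by Euclidean distance, histograms are normalised, and retrieval ranks by histogram intersection. In the patented method one would presumably build separate histograms for the foreground and background regions; that split, the saliency fusion and the feature extractors are not shown, and all names are hypothetical.

```python
import math
from typing import List, Sequence

Vec = Sequence[float]


def nearest_word(descriptor: Vec, dictionary: List[Vec]) -> int:
    """Index of the visual word closest (Euclidean) to a local descriptor."""
    return min(range(len(dictionary)), key=lambda k: math.dist(descriptor, dictionary[k]))


def bovw_histogram(descriptors: List[Vec], dictionary: List[Vec]) -> List[float]:
    """Normalised visual word distribution histogram of one image or region."""
    hist = [0.0] * len(dictionary)
    for d in descriptors:
        hist[nearest_word(d, dictionary)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist


def histogram_intersection(h1: Sequence[float], h2: Sequence[float]) -> float:
    return sum(min(a, b) for a, b in zip(h1, h2))


def retrieve(query: Sequence[float], database: List[List[float]]) -> List[int]:
    """Rank database images by histogram intersection with the query (best first)."""
    return sorted(range(len(database)), key=lambda i: -histogram_intersection(query, database[i]))


dictionary = [[0.0, 0.0], [10.0, 10.0]]  # toy 2-word dictionary
query = bovw_histogram([[1.0, 1.0], [9.0, 9.0], [0.0, 1.0]], dictionary)
database = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
print(query, retrieve(query, database))
```

`math.dist` requires Python 3.8 or later.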

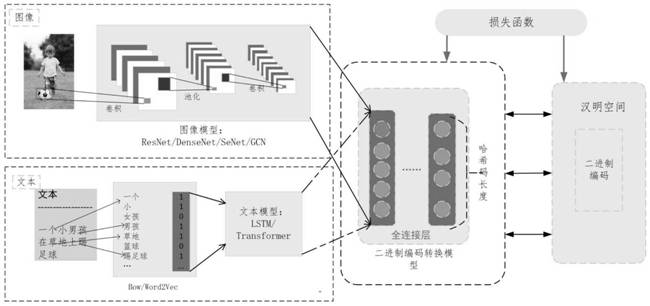

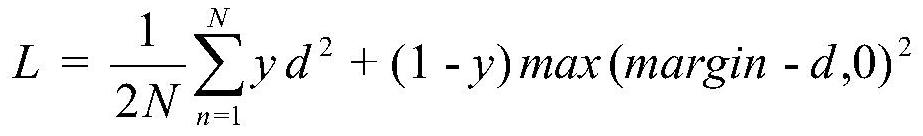

Universal cross-modal retrieval model based on deep hash

Pending | CN113076465A | Quick build, Bridging the Semantic Gap, Semantic analysis, Character and pattern recognition, Original data, Theoretical computer science

The invention discloses a universal cross-modal retrieval model based on deep hashing. The model comprises an image model, a text model, a binary code conversion model and a Hamming space. The image model extracts features and semantics from image data; the text model extracts features and semantics from text data; the binary code conversion model converts the original features into binary codes; and the Hamming space is a common subspace shared by image and text data, in which the similarity of cross-modal data can be computed directly. By combining deep learning with hash learning, this universal model maps data points in the original feature space to binary codes in the common Hamming space and ranks results by the Hamming distance between the code of the query and the codes of the stored data, which is reported to greatly improve retrieval efficiency. Because binary codes are stored in place of the original data, the storage required by retrieval tasks is also greatly reduced.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)
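The retrieval step of the deep-hashing model above (binary codes in a shared Hamming space, ranked by Hamming distance) can be sketched as follows. This is an illustration under stated assumptions, not the patented model: codes come from thresholding already-learned features at zero, are packed into Python integers, and distance is the popcount of the XOR. The deep image and text networks are not shown, and the function names are hypothetical.

```python
from typing import List, Sequence


def binarize(features: Sequence[float]) -> int:
    """Pack a real-valued feature vector into an int code (bit i set when features[i] > 0)."""
    code = 0
    for i, x in enumerate(features):
        if x > 0:
            code |= 1 << i
    return code


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two packed binary codes."""
    return bin(a ^ b).count("1")


def rank_by_hamming(query_code: int, db_codes: List[int]) -> List[int]:
    """Indices of stored items sorted by Hamming distance to the query (nearest first)."""
    return sorted(range(len(db_codes)), key=lambda i: hamming(query_code, db_codes[i]))


# Toy usage: an image query against three stored text codes.
q = binarize([0.3, -1.2, 0.8, -0.1])  # 0b0101
db = [0b1010, 0b0101, 0b0111]
print(rank_by_hamming(q, db))  # [1, 2, 0]
```

XOR plus a bit count is what makes Hamming ranking cheap: each comparison is a couple of integer operations, and each item costs only as many bits as the code length to store.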

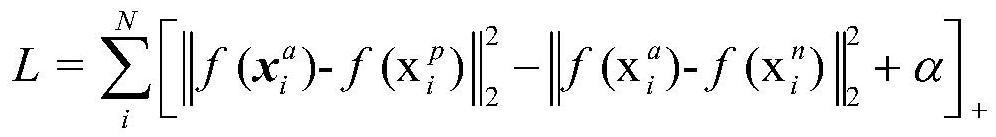

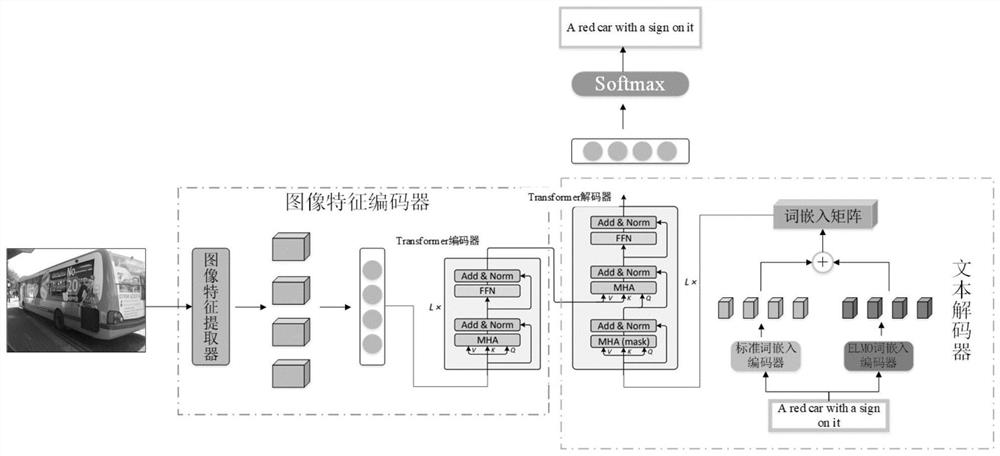

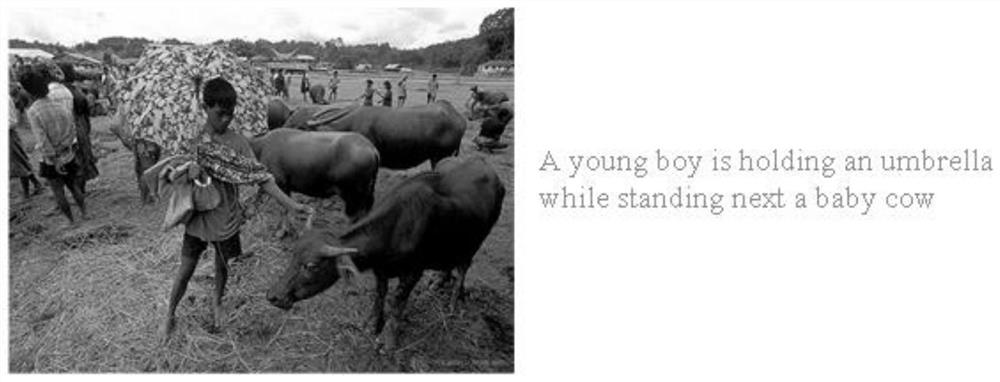

Multi-modal Transformer image description method based on dynamic word embedding

Pending | CN113344036A | Improve semantic description ability, Improve understanding, Semantic analysis, Character and pattern recognition, Semantic gap, Image description

The invention discloses a multi-modal Transformer image description (captioning) method based on dynamic word embedding, in the field of artificial intelligence. The invention constructs a model that performs intra-modal and inter-modal attention simultaneously to fuse multi-modal information: it bridges a convolutional neural network with a Transformer and fuses image and text information in the same vector space, which improves the accuracy of the model's language descriptions and reduces the semantic gap problem in image description. Compared with a baseline model using Bottom-up features and an LSTM, the invention is reported to improve BLEU-1, BLEU-2, BLEU-3, BLEU-4, ROUGE-L and CIDEr-D.

Owner:KUNMING UNIV OF SCI & TECH
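The combination of intra-modal and inter-modal attention described in the captioning method above can be sketched with plain scaled dot-product attention. Simplifying assumptions: no learned query/key/value projections, no multiple heads, and image-region and word vectors already share one dimension. The ordering (self-attention within each modality, then words attending to image regions) is one plausible arrangement, not necessarily the patented one, and `multimodal_layer` is a hypothetical name.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        w = softmax(scores)
        out.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return out


def multimodal_layer(regions: Matrix, words: Matrix) -> Matrix:
    """Intra-modal self-attention on each modality, then inter-modal attention
    in which each word queries the image regions."""
    regions = attention(regions, regions, regions)  # intra-modal (image)
    words = attention(words, words, words)  # intra-modal (text)
    return attention(words, regions, regions)  # inter-modal: text attends to image


regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy image-region features
words = [[0.5, 0.5], [1.0, 0.0]]  # toy word embeddings
print(multimodal_layer(regions, words))
```

Each output row is a word representation rebuilt from image-region content, which is the sense in which the two modalities end up in the same vector space.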

Cross-modal retrieval method and device and storage medium

Inactive | CN114861016A | Bridging the Semantic Gap, Improve accuracy, Character and pattern recognition, Other databases querying, Feature extraction, Similarity computation

The invention discloses a cross-modal retrieval method, a device and a storage medium. The method comprises: receiving retrieval data and determining its modality; inputting the retrieval data into a feature extraction model that has at least two feature extraction units, and extracting a feature representation vector of the retrieval data with the unit that corresponds to its modality; traversing an index database with the feature representation vector to find a plurality of candidate results related to the retrieval data; and inputting the retrieval data and the candidate results into a similarity calculation model that has a multi-modal fusion feature extraction unit, computing the similarities, and sorting the candidate results by similarity.

Owner:Renmin Zhongke (Beijing) Intelligent Technology Co., Ltd. (人民中科(北京)智能技术有限公司)
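The two-stage flow of the cross-modal retrieval method above (modality-specific encoding, candidate lookup in an index, re-ranking with a fusion similarity model) can be sketched as below. Assumptions: the encoders and the re-ranking model are passed in as callables (stand-ins for the patent's feature extraction units and fusion similarity model), the index is a plain list scanned by cosine similarity rather than a real vector index, and `cross_modal_search` is a hypothetical name.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vec = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def cross_modal_search(
    query: object,
    modality: str,
    encoders: Dict[str, Callable[[object], Vec]],
    index: List[Tuple[str, Vec]],
    rerank: Callable[[object, str], float],
    k: int = 3,
) -> List[str]:
    """Encode with the modality-specific unit, take the top-k candidates from the
    index by cosine similarity, then re-sort them with the fusion similarity model."""
    q_vec = encoders[modality](query)  # hypothetical per-modality encoder
    candidates = sorted(index, key=lambda item: -cosine(q_vec, item[1]))[:k]
    scored = [(rerank(query, item_id), item_id) for item_id, _ in candidates]
    return [item_id for _, item_id in sorted(scored, key=lambda p: -p[0])]


# Toy usage with stand-in encoder, index and re-ranker.
encoders = {"text": lambda s: [1.0, 0.0] if "cat" in s else [0.0, 1.0]}
index = [("img_cat", [0.9, 0.1]), ("img_dog", [0.1, 0.9]), ("img_cat2", [0.8, 0.3])]
fusion_scores = {"img_cat": 0.2, "img_cat2": 0.9, "img_dog": 0.0}
print(cross_modal_search("a cat", "text", encoders, index, lambda q, i: fusion_scores[i], k=2))
# ['img_cat2', 'img_cat']
```

Splitting the work this way lets a cheap vector comparison prune the database before the more expensive fusion model scores only a handful of candidates.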

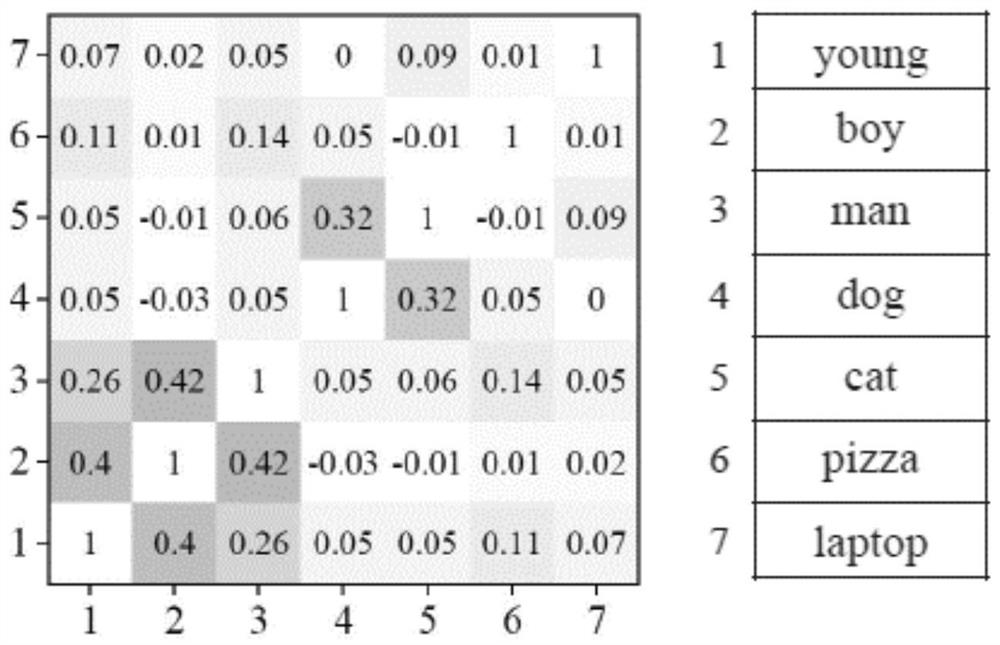

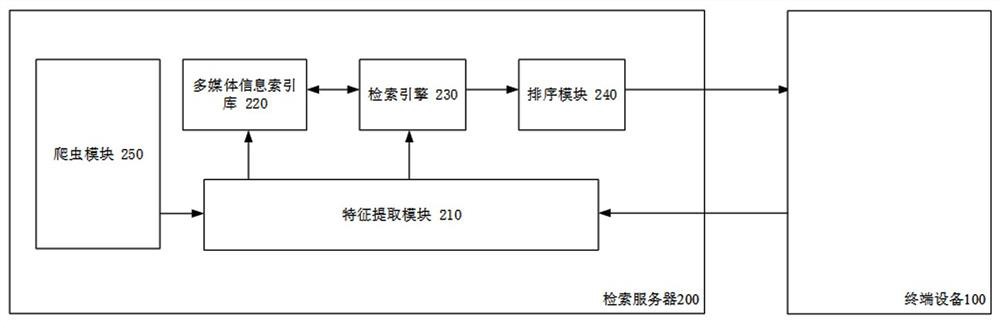

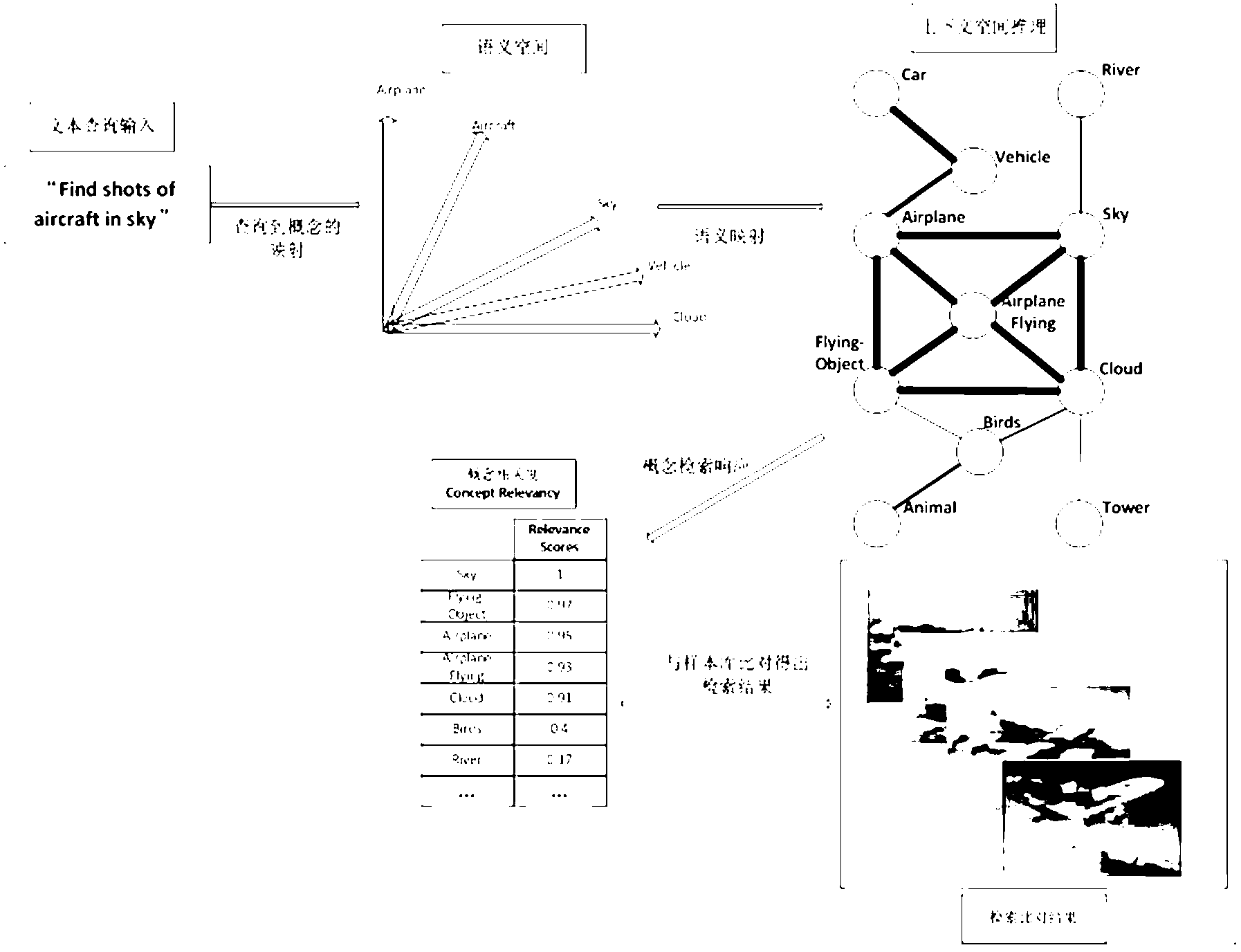

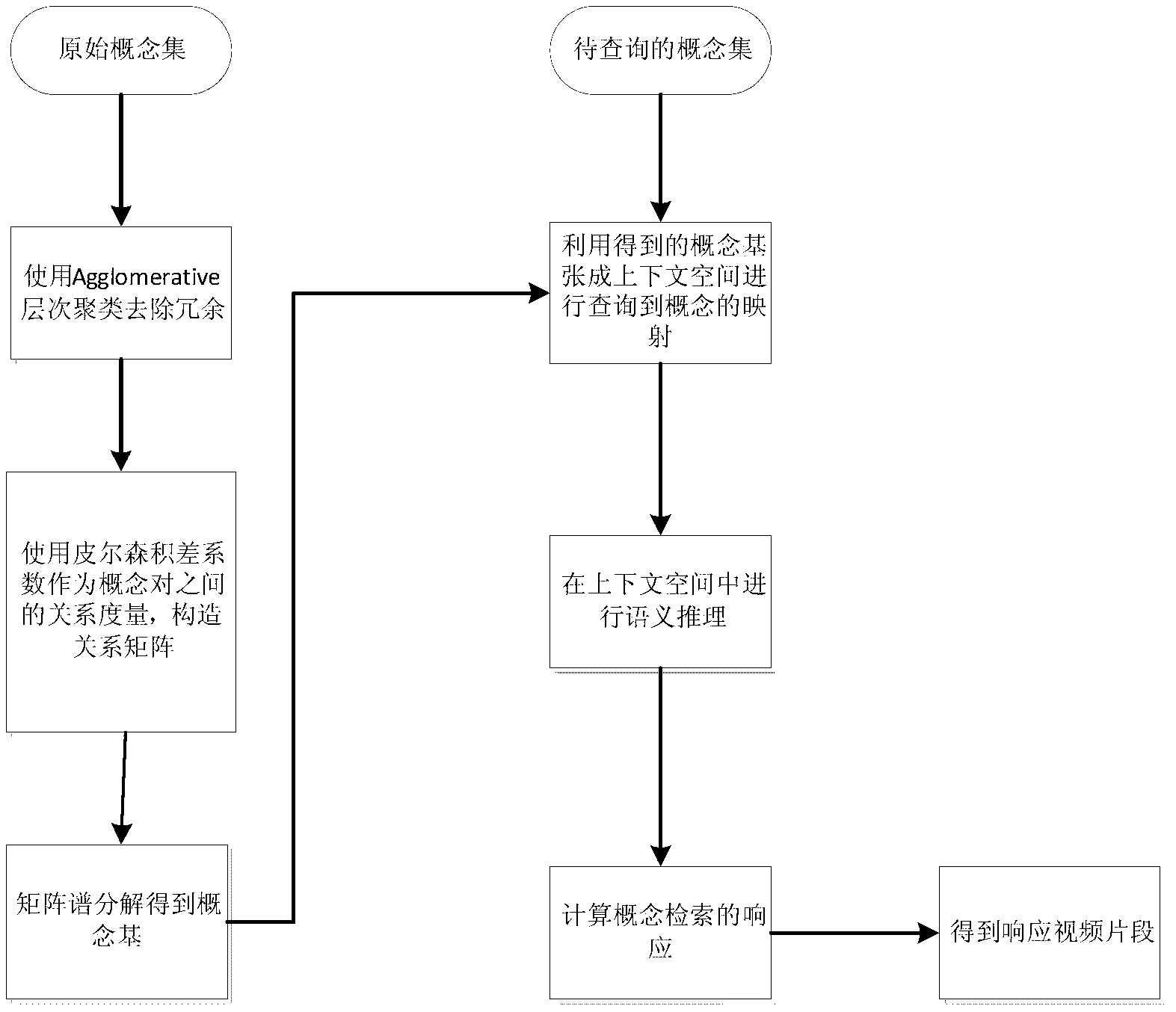

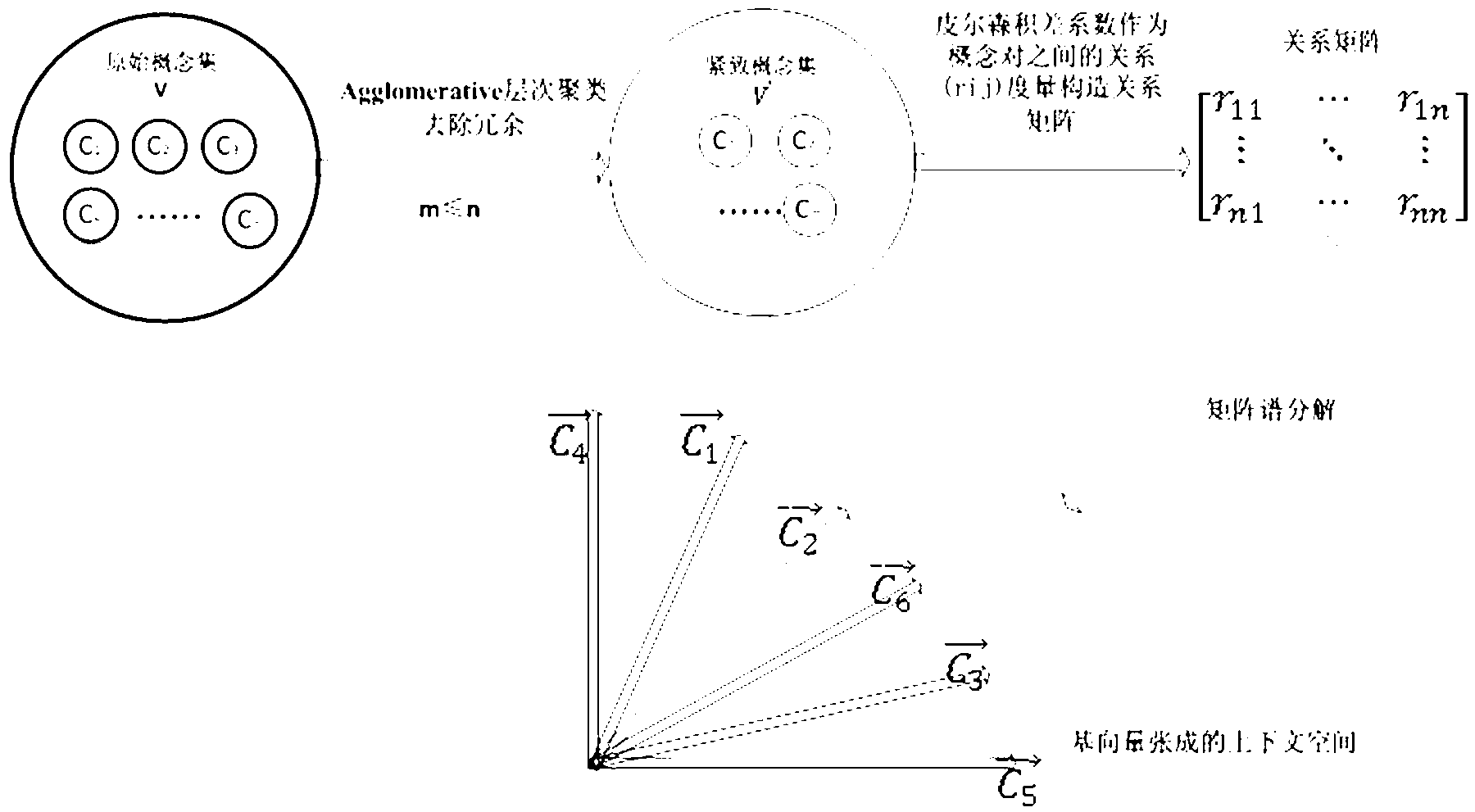

Video retrieval method based on context space

Active | CN103279578A | Bridging the Semantic Gap, High precision, Special data processing applications, Video retrieval, Effective solution

The invention provides a video retrieval method based on a context space. A concept similarity matrix is constructed by analyzing the basic concept-space objects; spectral decomposition of this matrix yields a set of basic concepts; known concepts are then mapped into the space spanned by these basic concepts, and the similarity between concept objects is measured in that space. By constructing this concept space, the method avoids the locality and inconsistency problems caused by the similarity measures used with traditional ontology concept sets, so that similarity measures among the concept objects mapped into the space are uniform and globally consistent. The method thus offers an effective approach to video retrieval, brings related abstract concepts closer together, and is reported to improve video retrieval accuracy.

Owner:Wei Xiaoyong (魏骁勇)
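One way to read the spectral step of the context-space video retrieval method above, offered as an interpretation rather than the patent's stated formulas: assume a symmetric, positive semidefinite concept similarity matrix $S \in \mathbb{R}^{n \times n}$ over $n$ basic concepts, keep its $k$ largest (positive) eigenpairs, and give each concept coordinates in the spanned space. A concept outside the basic set, described by its similarity vector $\mathbf{s} \in \mathbb{R}^{n}$ to the basic concepts, is projected the same way; for a basic concept ($\mathbf{s} = S\mathbf{e}_i$) the projection reduces to $\mathbf{x}_i$. Cosine similarity in this space then gives one uniform scale for every pair.

```latex
\begin{aligned}
S &= U \Lambda U^{\top} \approx U_k \Lambda_k U_k^{\top}, \\
\mathbf{x}_i &= \Lambda_k^{1/2} U_k^{\top} \mathbf{e}_i \in \mathbb{R}^{k},
  \qquad \mathbf{x}_i^{\top}\mathbf{x}_j = \mathbf{e}_i^{\top} U_k \Lambda_k U_k^{\top} \mathbf{e}_j \approx S_{ij}, \\
\mathbf{x}_{c} &= \Lambda_k^{-1/2} U_k^{\top} \mathbf{s}
  \quad\text{(a concept } c \text{ with similarity vector } \mathbf{s}\text{)}, \\
\operatorname{sim}(c_i, c_j) &= \frac{\mathbf{x}_i^{\top}\mathbf{x}_j}{\lVert \mathbf{x}_i \rVert \, \lVert \mathbf{x}_j \rVert}.
\end{aligned}
```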


© 2025 PatSnap. All rights reserved.