Image description method based on adaptive local concept embedding

An image description and adaptive technology, applied in neural learning methods, still image data retrieval, metadata-based still image retrieval, etc., that can solve problems such as visual features and concepts being modeled without explicitly considering the relationship between them.


Embodiment Construction

[0081] The technical solutions and beneficial effects of the present invention will be described in detail below in conjunction with the accompanying drawings.

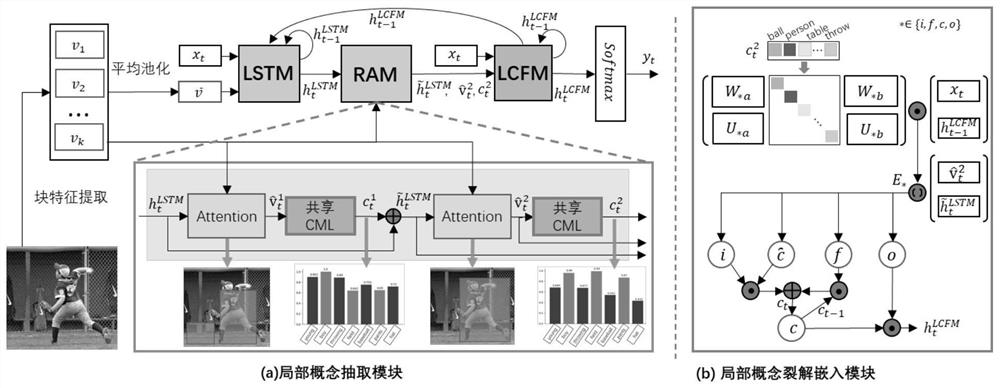

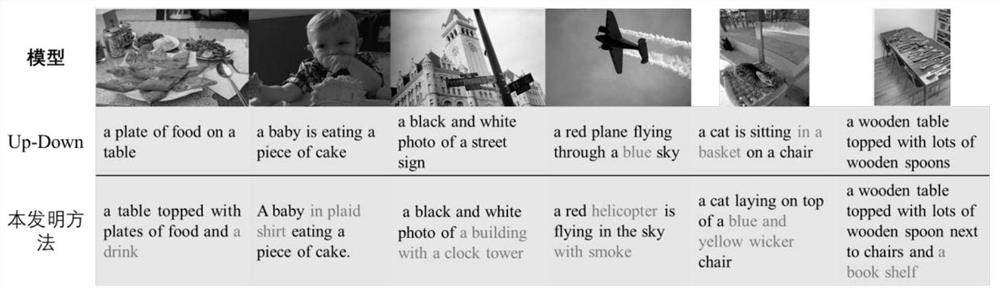

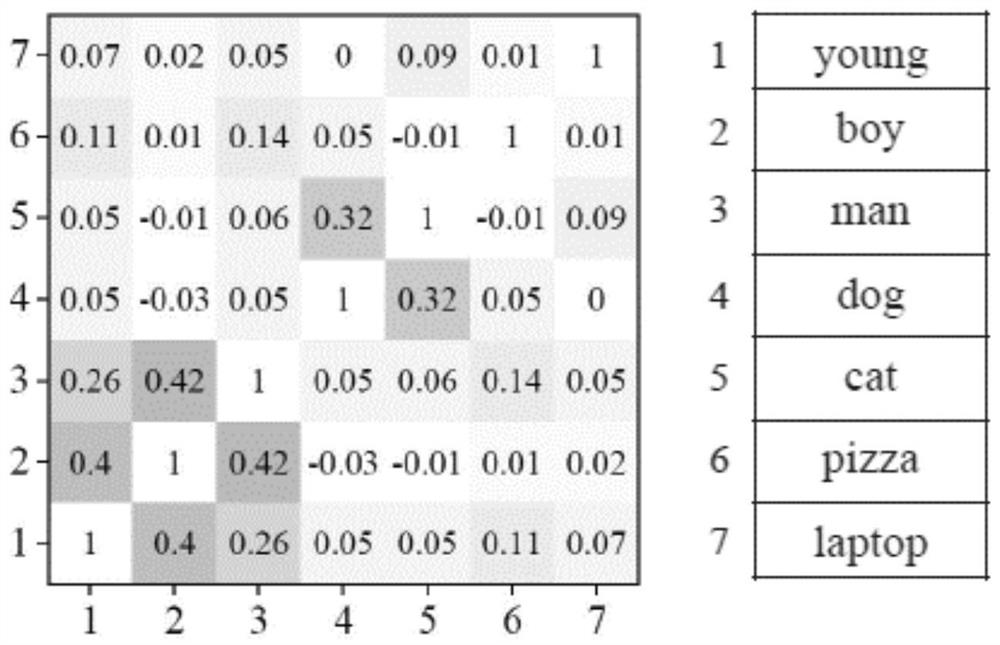

[0082] The purpose of the present invention is to address a shortcoming of traditional attention-based image description methods: they do not explicitly model the relationship between local regions and concepts. The invention proposes a scheme that uses a context mechanism to adaptively generate visual regions and, from those regions, visual concepts, thereby strengthening the connection between vision and language and improving description accuracy. The result is an image description method based on adaptive local concept embedding. The specific algorithm flow is shown in Figure 1.
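The region-to-concept idea can be illustrated with a generic soft-attention sketch. This is not the patent's method: the dot-product scoring, the toy region features, the concept embeddings, and the helper names `attend_regions` and `concept_scores` are all hypothetical assumptions. A context vector (standing in for the decoder state) weights the region features, and the resulting local feature is scored against candidate concept embeddings.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend_regions(regions, context):
    """Soft attention (hypothetical helper): weight each region by its
    dot-product similarity to the context vector.

    regions: list of region feature vectors, one per spatial location
    context: query vector of the same size (assumed to be a decoder state)
    Returns (attention weights, attended local feature).
    """
    weights = softmax([dot(r, context) for r in regions])
    dim = len(regions[0])
    local = [sum(w * r[d] for w, r in zip(weights, regions)) for d in range(dim)]
    return weights, local

def concept_scores(local, concept_embeddings):
    """Score each candidate concept (hypothetical embeddings) against the
    attended local feature and normalise the scores with softmax."""
    names = list(concept_embeddings)
    probs = softmax([dot(local, concept_embeddings[n]) for n in names])
    return dict(zip(names, probs))

# Toy example with hypothetical 2-D features.
regions = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
context = [2.0, 0.0]
weights, local = attend_regions(regions, context)
concepts = {"dog": [1.0, 0.0], "grass": [0.0, 1.0]}
scores = concept_scores(local, concepts)
```

Because the weights come from a softmax, regions that match the context more strongly dominate the local feature, which in turn shifts the concept distribution; with these toy values the first region and the concept `dog` receive the highest scores.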

[0083] The present invention comprises the following steps:

[0084] 1) For each image in the image library, first use a convolutional neural network to extract the corresponding image features;

[0085] 2) Use a recurrent neural network to map the current input word and global...
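Steps 1) and 2) can be sketched in plain Python under stated assumptions. A tiny single-layer convolution stands in for a real CNN, and each spatial position of its output is treated as a region feature. The truncated "global..." input is assumed here to be a mean-pooled global image feature, and a plain tanh (Elman) RNN cell replaces whatever recurrent unit the patent actually uses. All function names, kernels, embeddings, and weights are hypothetical.

```python
import math

def conv2d_relu(image, kernels):
    """Valid 2-D convolution of a single-channel image with each kernel, then ReLU.

    All kernels are assumed to share one size. Returns a grid where grid[i][j]
    holds one activation per kernel; each position is treated as a region feature.
    """
    kh, kw = len(kernels[0]), len(kernels[0][0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    grid = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            feats = []
            for k in kernels:
                s = sum(k[a][b] * image[i + a][j + b]
                        for a in range(kh) for b in range(kw))
                feats.append(max(0.0, s))  # ReLU
            row.append(feats)
        grid.append(row)
    return grid

def mean_pool(regions):
    """Average the region vectors into one global image feature (assumption)."""
    n = len(regions)
    return [sum(r[d] for r in regions) / n for d in range(len(regions[0]))]

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def rnn_step(word_vec, global_feat, h_prev, W_x, W_h):
    """One tanh RNN step: h_t = tanh(W_x [word; global] + W_h h_{t-1})."""
    x = word_vec + global_feat  # list concatenation = vector concatenation
    pre = [a + b for a, b in zip(matvec(W_x, x), matvec(W_h, h_prev))]
    return [math.tanh(p) for p in pre]

# Step 1: toy 4x4 image with a vertical edge, two hypothetical 2x2 kernels.
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
kernels = [
    [[-1.0, 1.0], [-1.0, 1.0]],    # vertical-edge detector
    [[0.25, 0.25], [0.25, 0.25]],  # local average
]
grid = conv2d_relu(image, kernels)
regions = [feat for row in grid for feat in row]  # 3x3 grid -> 9 regions

# Step 2: map a hypothetical word embedding and the global feature to a hidden state.
global_feat = mean_pool(regions)
word_vec = [1.0, 0.0]
W_x = [[0.1, 0.2, 0.3, 0.4], [0.4, 0.3, 0.2, 0.1]]
W_h = [[0.5, 0.0], [0.0, 0.5]]
h1 = rnn_step(word_vec, global_feat, [0.0, 0.0], W_x, W_h)
```

Keeping the spatial grid rather than pooling it away is what lets a later attention step select local regions; only the global summary is fed to the recurrent cell here.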
