56714 results about "Neural network nn" patented technology

Neural network drug dosage estimation

Inactive | US6658396B1 | Improve accuracy | Good precision | Drug and medications | Biological neural network models | Nerve network | Patient characteristics

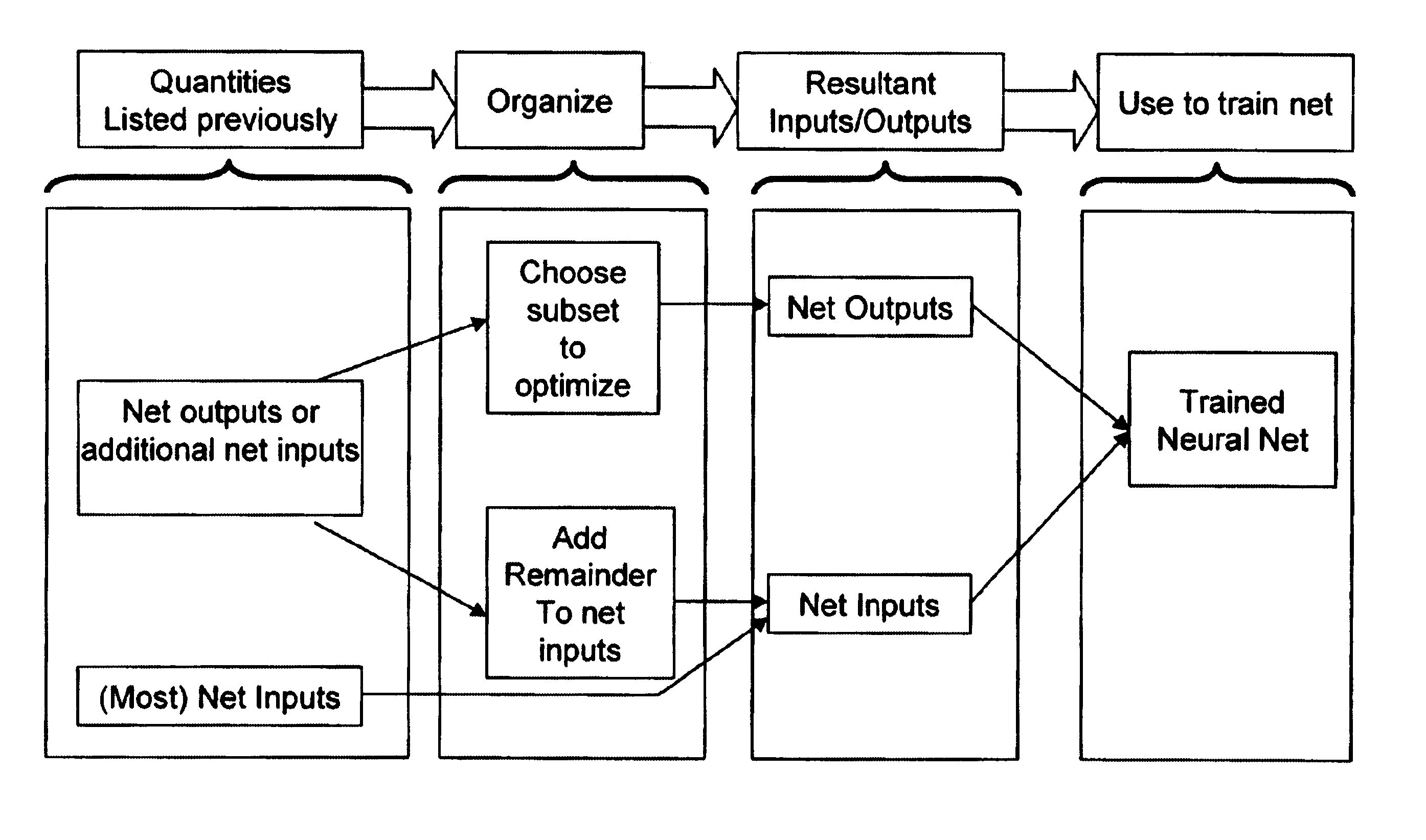

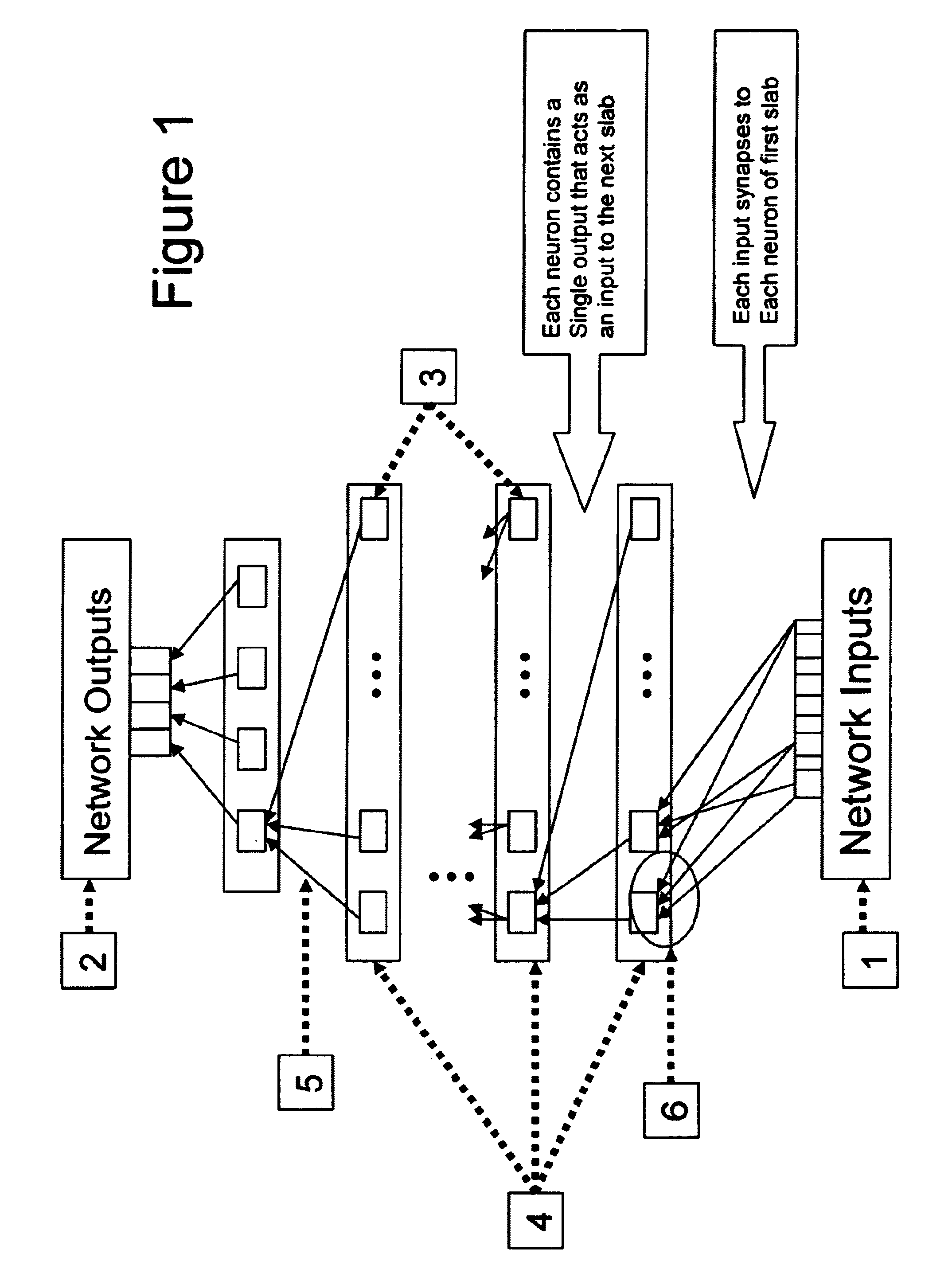

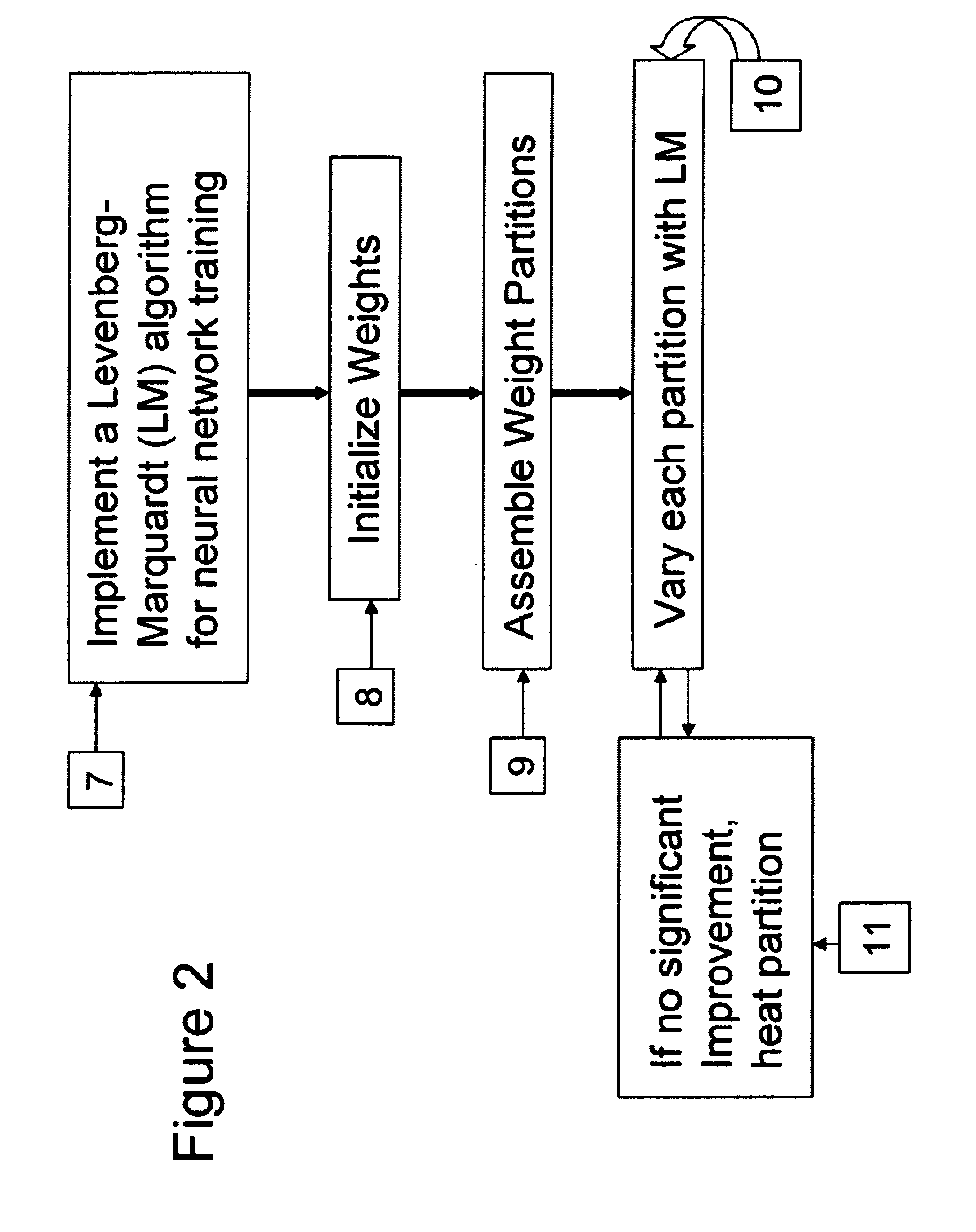

Neural networks are constructed (programmed), trained on historical data, and used to predict any of (1) optimal patient dosage of a single drug, (2) optimal patient dosage of one drug in respect of the patient's concurrent usage of another drug, (3a) optimal patient drug dosage in respect of diverse patient characteristics, (3b) sensitivity of recommended patient drug dosage to the patient characteristics, (4a) expected outcome versus patient drug dosage, (4b) sensitivity of the expected outcome to variant drug dosage(s), and (5) expected outcome(s) from drug dosage(s) other than the projected optimal dosage. Both human and economic costs of both optimal and sub-optimal drug therapies may be extrapolated from the exercise of various optimized and trained neural networks. Heretofore little-recognized sensitivities (such as, for example, patient race in the administration of psychotropic drugs) are made manifest. Individual prescribing physicians employing deviant patterns of drug therapy may be recognized. Although not intended to prescribe drugs, nor even to set prescription drug dosage, the neural networks are very sophisticated and authoritative "helps" to physicians, and to physician reviewers, in answering "what if" questions.

Owner:PREDICTION SCI

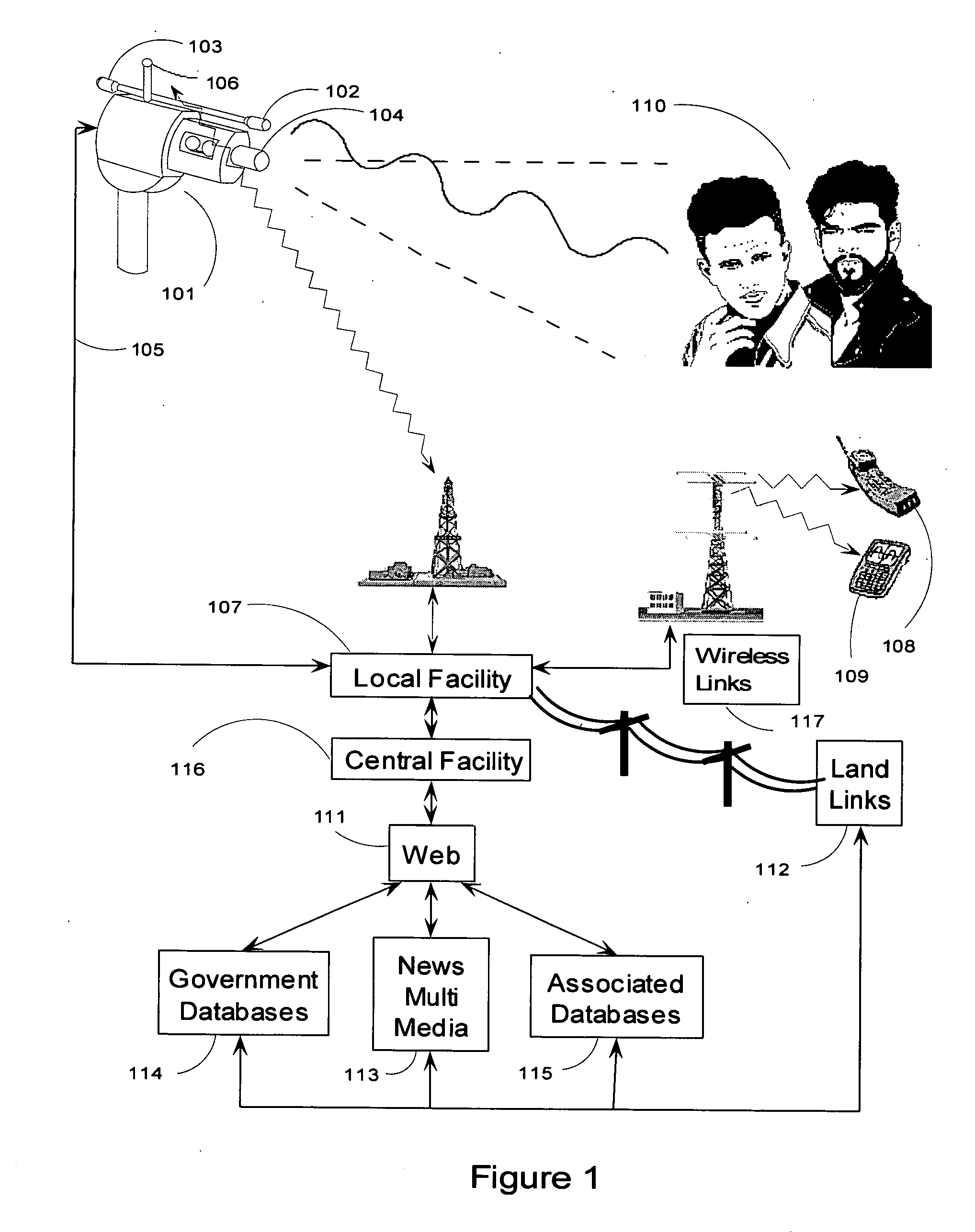

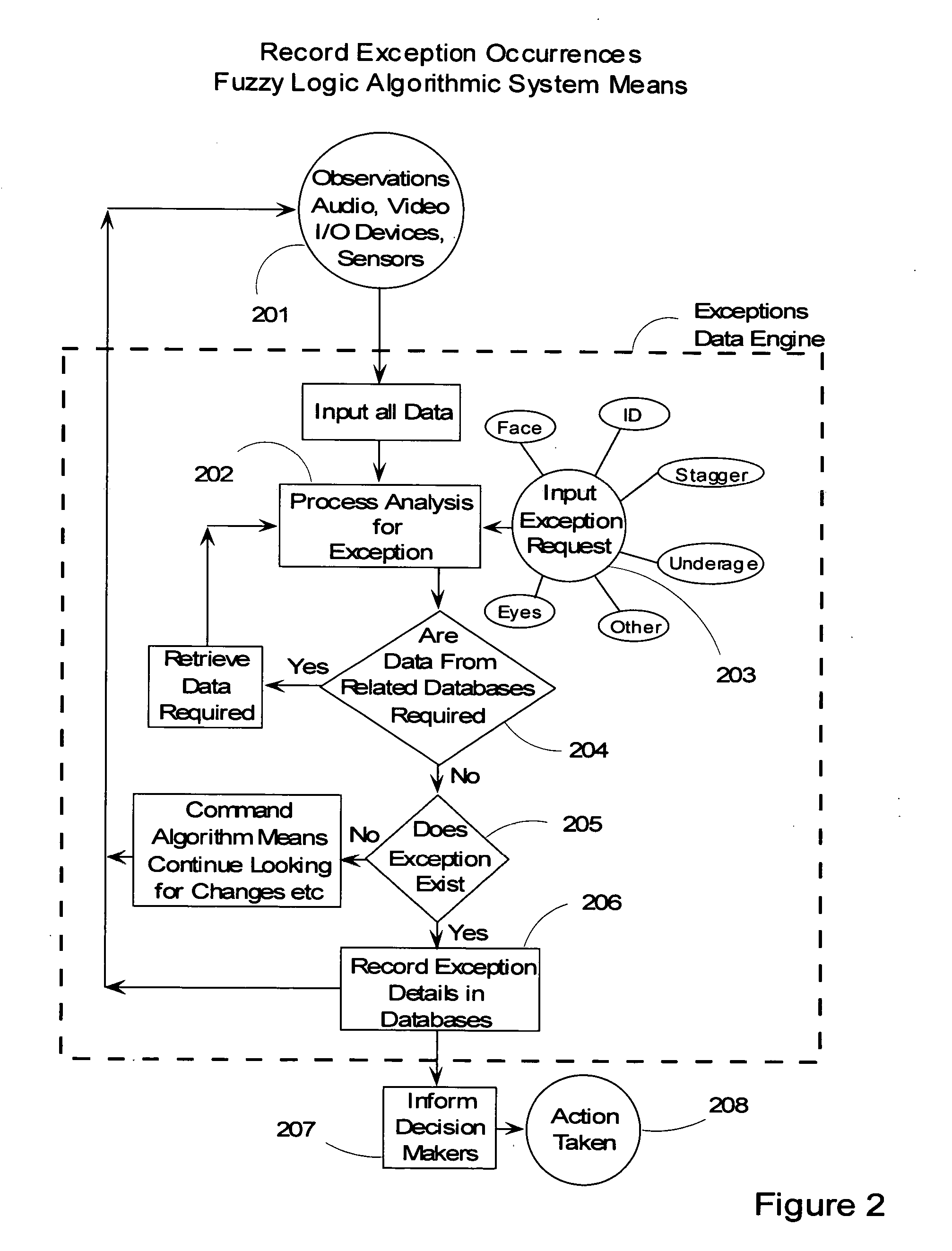

Video surveillance data analysis algorithms, with local and network-shared communications for facial, physical condition, and intoxication recognition, fuzzy logic intelligent camera system

Inactive | US20060190419A1 | Minimum storage level | Reduce data storage | Digital computer details | Character and pattern recognition | Pattern recognition | Video monitoring

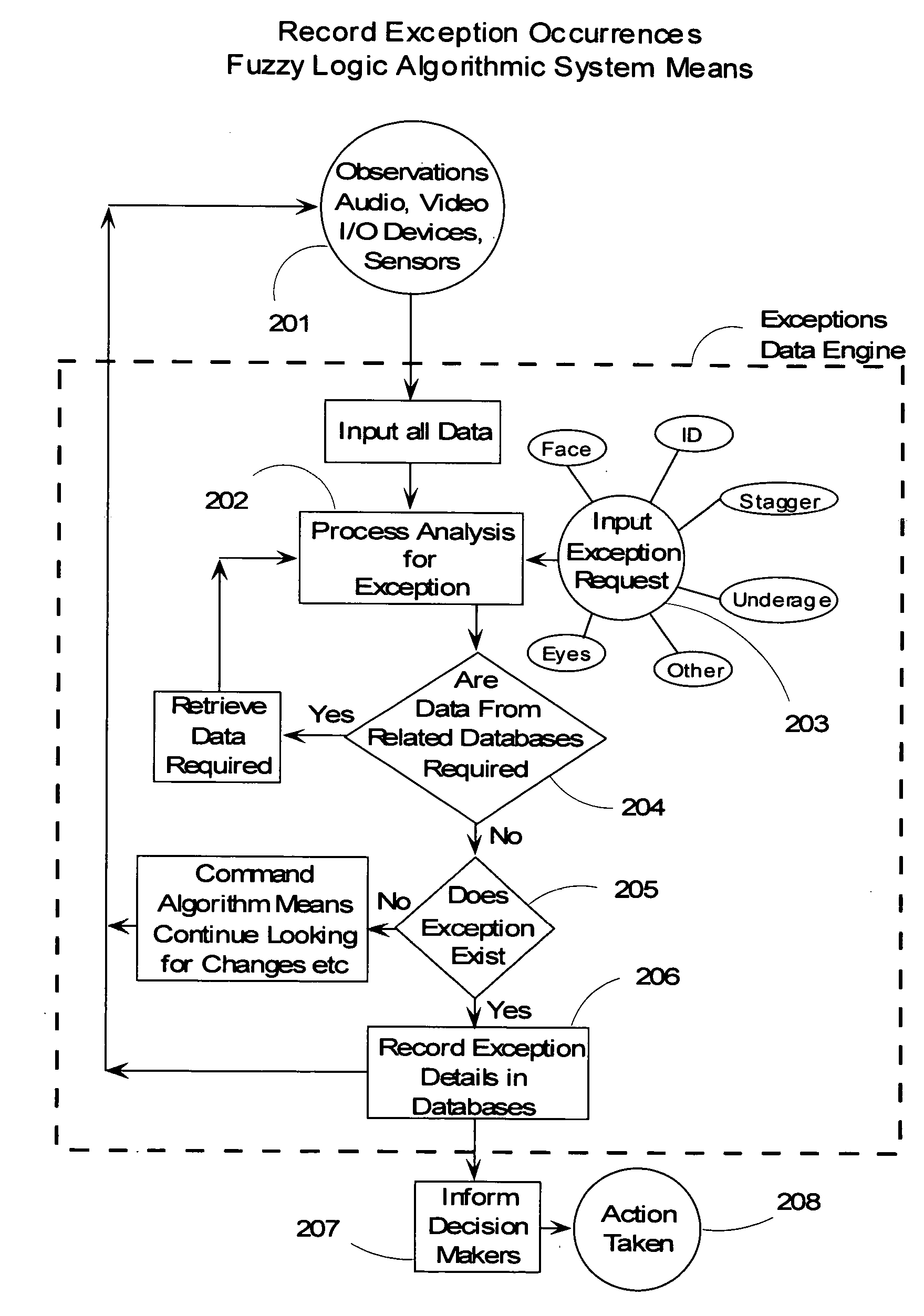

This invention relates to intelligent video surveillance fuzzy logic neural networks and camera systems with local and network-shared communications for facial, physical condition and intoxication recognition. The device we reveal helps reduce underage drinking by detecting and refusing entrance or service to subjects under legal drinking age. The device we reveal can estimate attention of viewers of advertising, entertainment, displays and the like. The invention also relates to methods and to vision, image, and related-data database systems that reduce the volume of surveillance data by automatically recognizing and recording only occurrences of exceptions and eliminating non-events, thereby achieving a reduction factor of up to 60,000. This invention permits members of the LastCall™ Network to share their databases of the facial recognition and identification of subjects recorded in the exception occurrences with participating members' databases: locally, citywide, nationally and internationally, depending upon level of sharing permission.

Owner:BUNN FR E +1

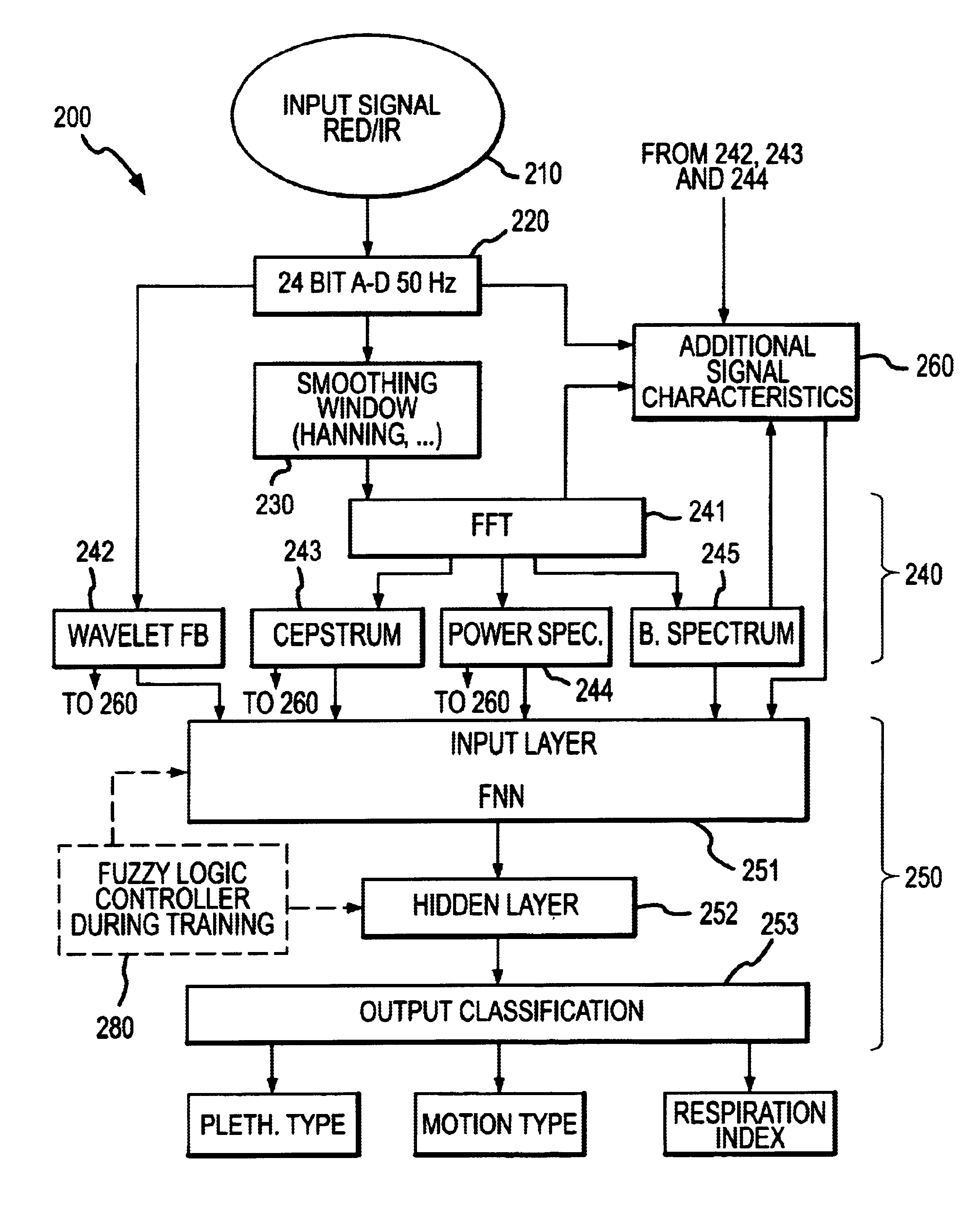

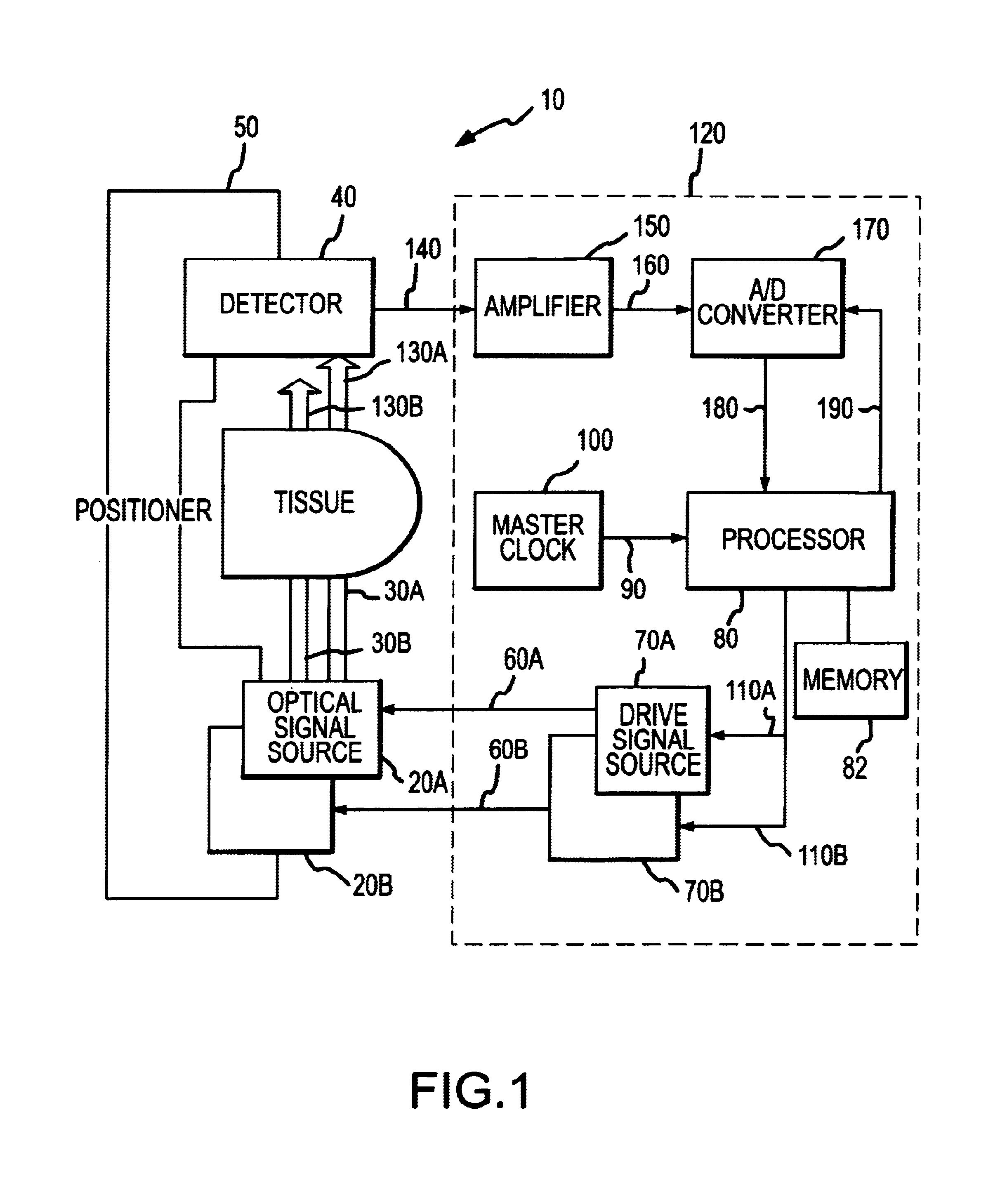

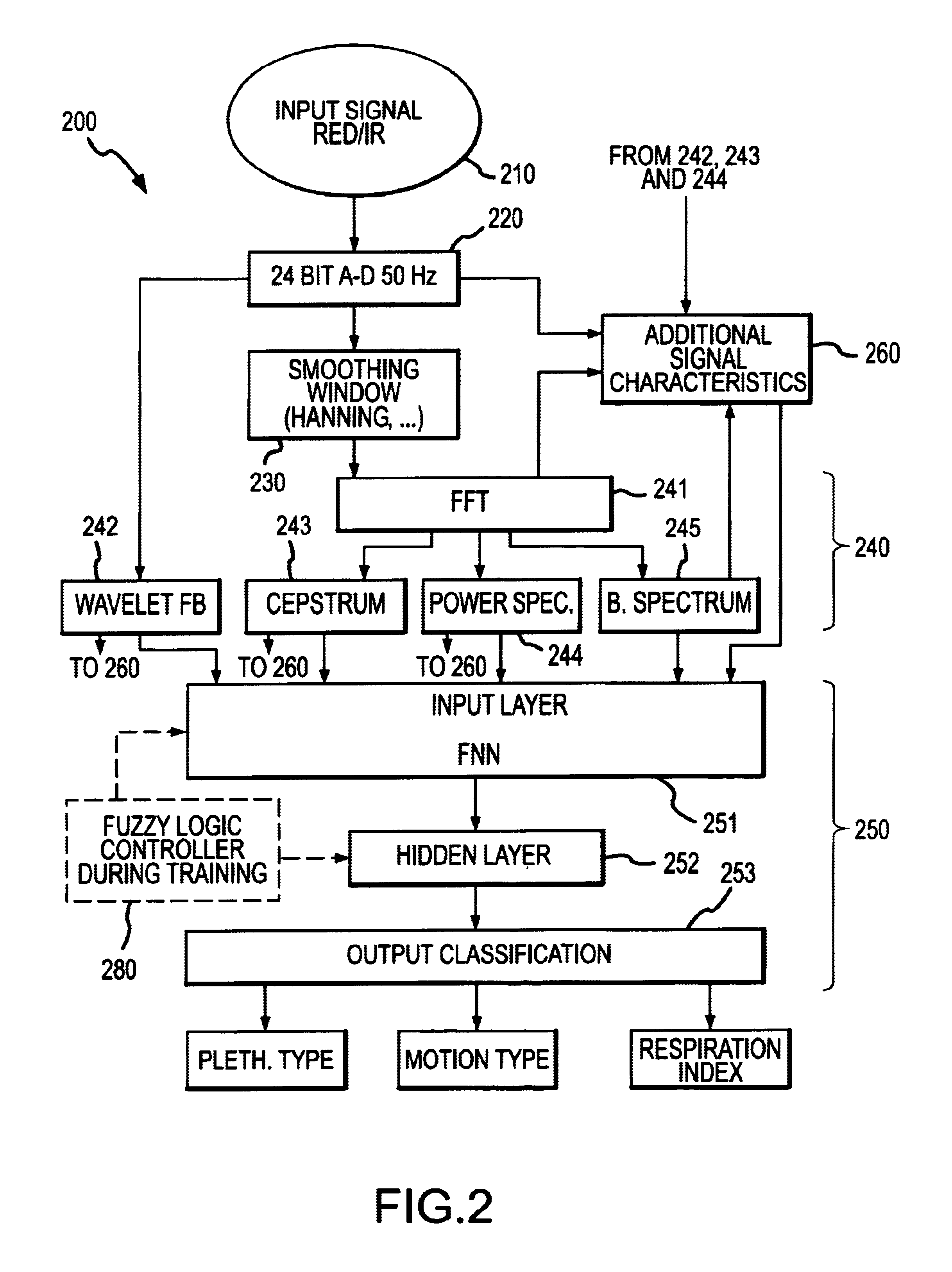

Multi-domain motion estimation and plethysmographic recognition using fuzzy neural-nets

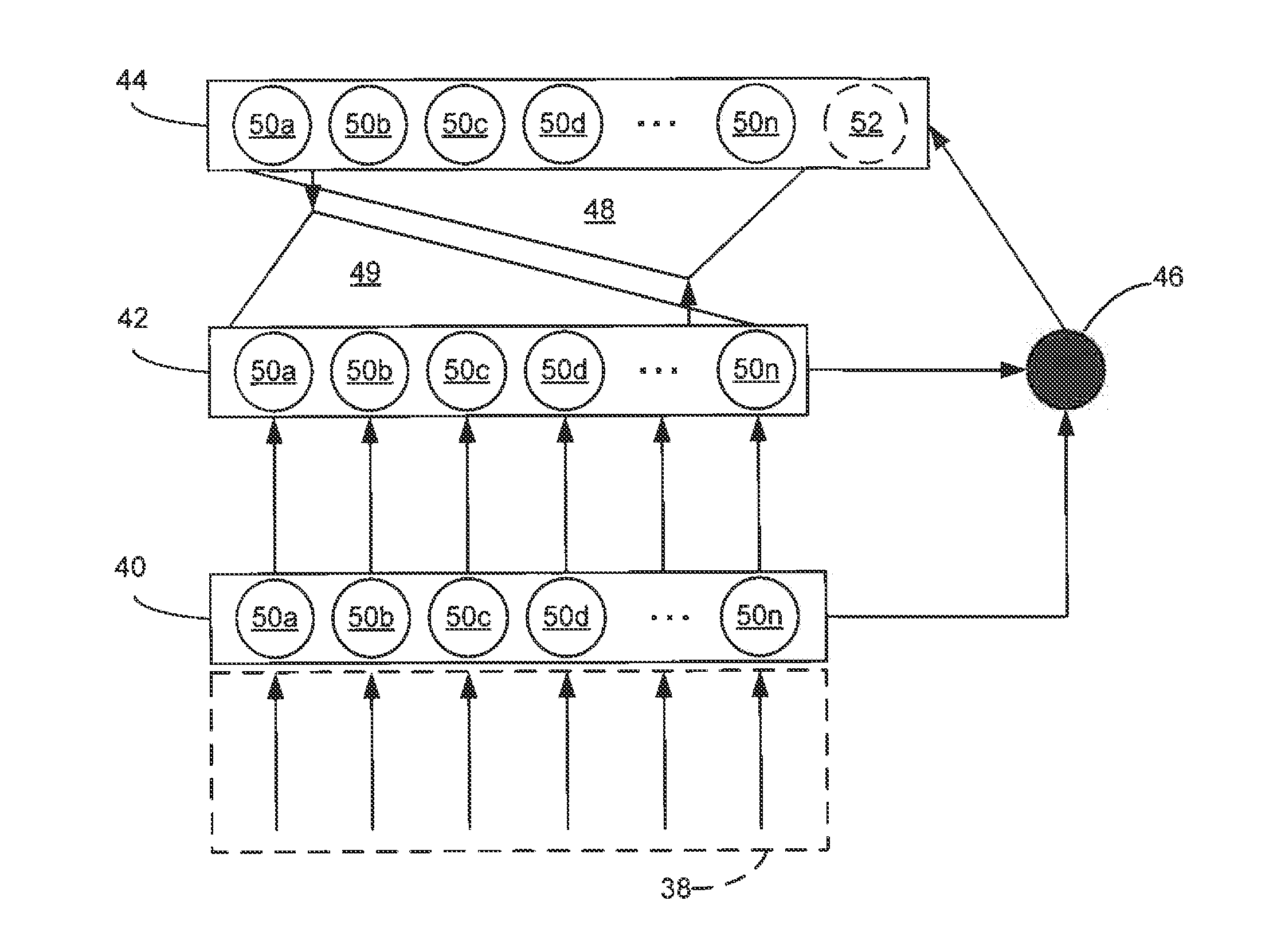

Active | US6931269B2 | Enhanced signal | Improve filtering effect | Surgery | Catheter | Pattern recognition | Hidden layer

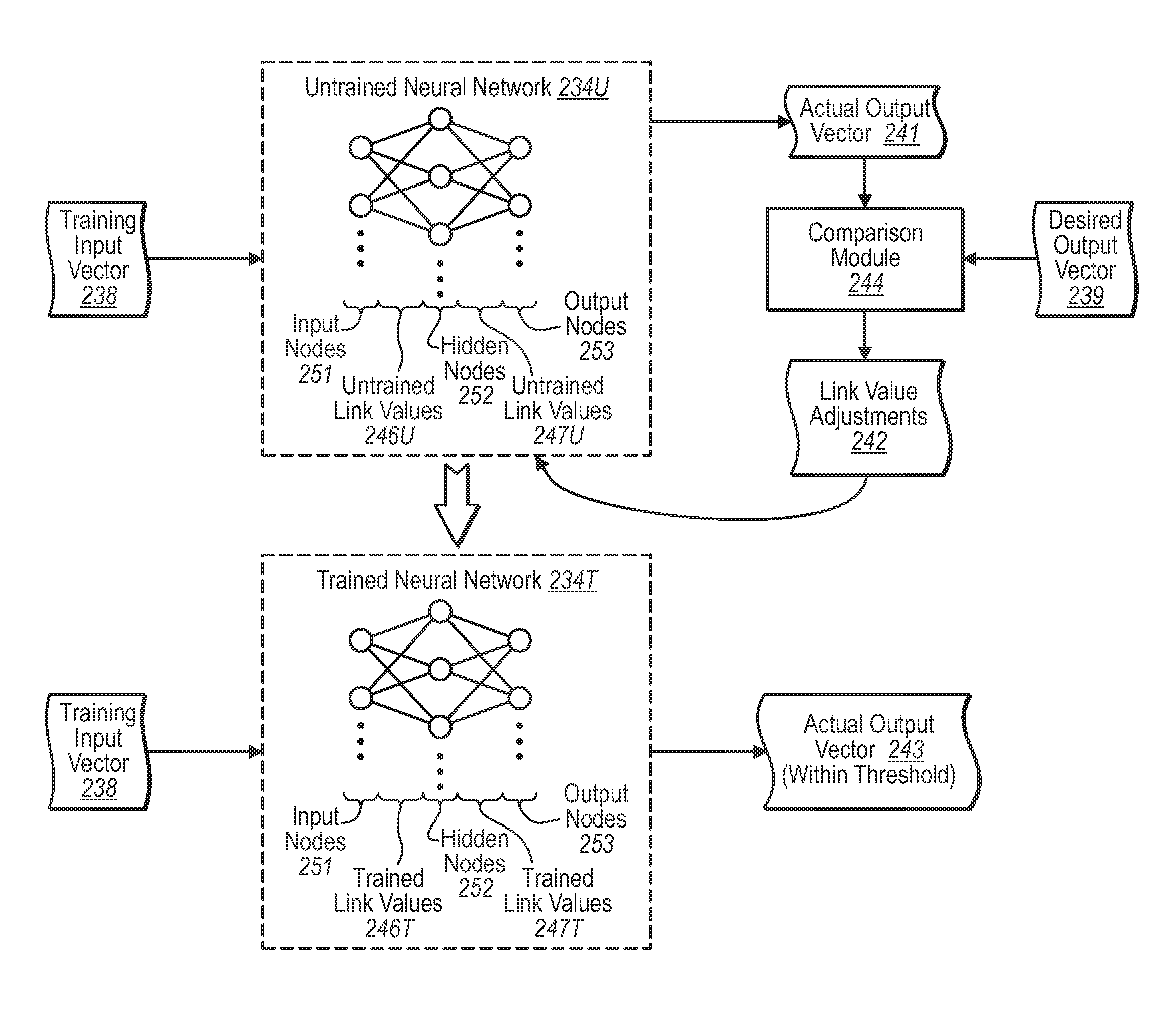

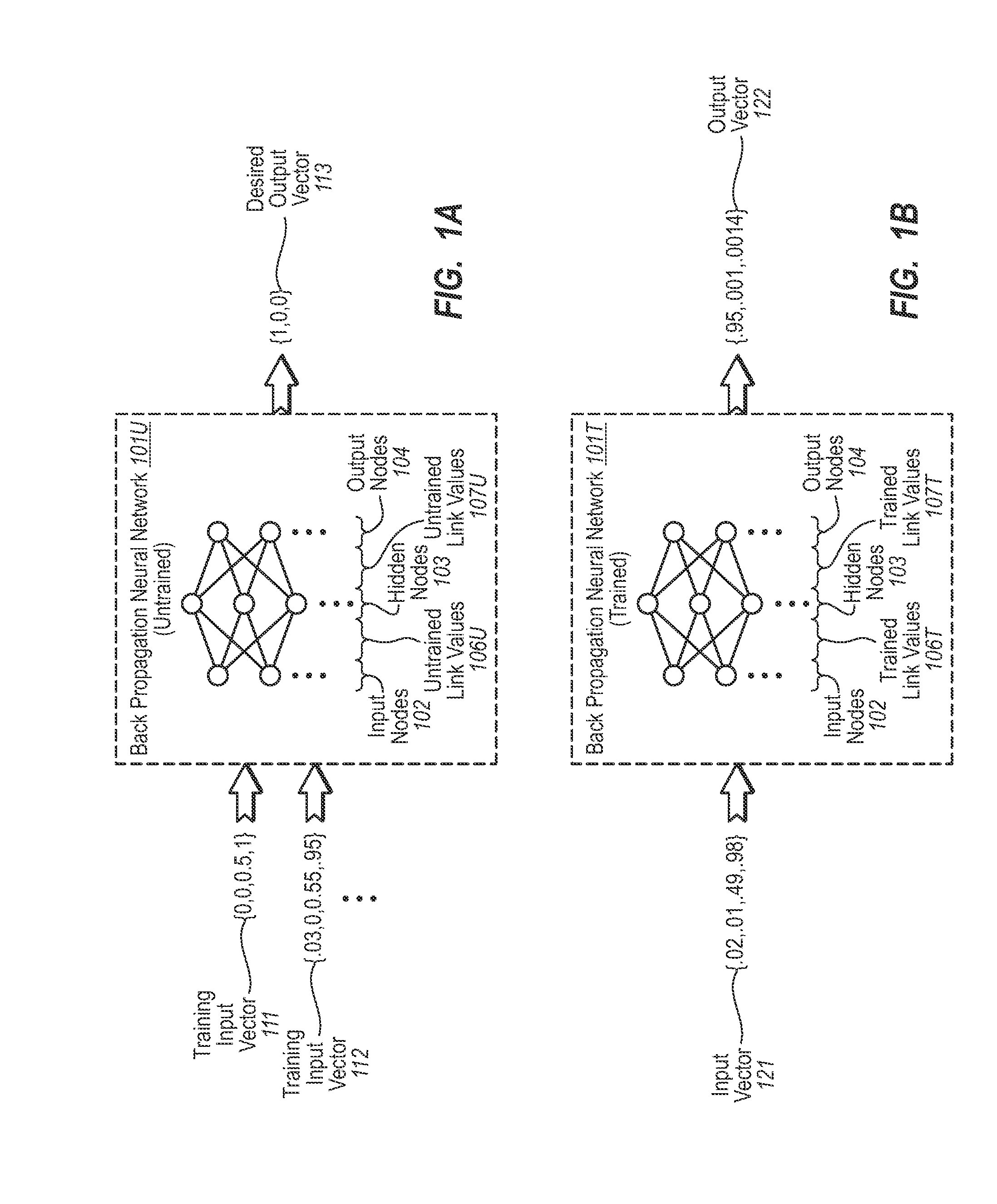

Pulse oximetry is improved through classification of plethysmographic signals by processing the plethysmographic signals using a neural network that receives input coefficients from multiple signal domains including, for example, spectral, bispectral, cepstral and Wavelet filtered signal domains. In one embodiment, a plethysmographic signal obtained from a patient is transformed (240) from a first domain to a plurality of different signal domains (242, 243, 244, 245) to obtain a corresponding plurality of transformed plethysmographic signals. A plurality of sets of coefficients derived from the transformed plethysmographic signals are selected and directed to an input layer (251) of a neural network (250). The plethysmographic signal is classified by an output layer (253) of the neural network (250) that is connected to the input layer (251) by one or more hidden layers (252).

Owner:DATEX OHMEDA

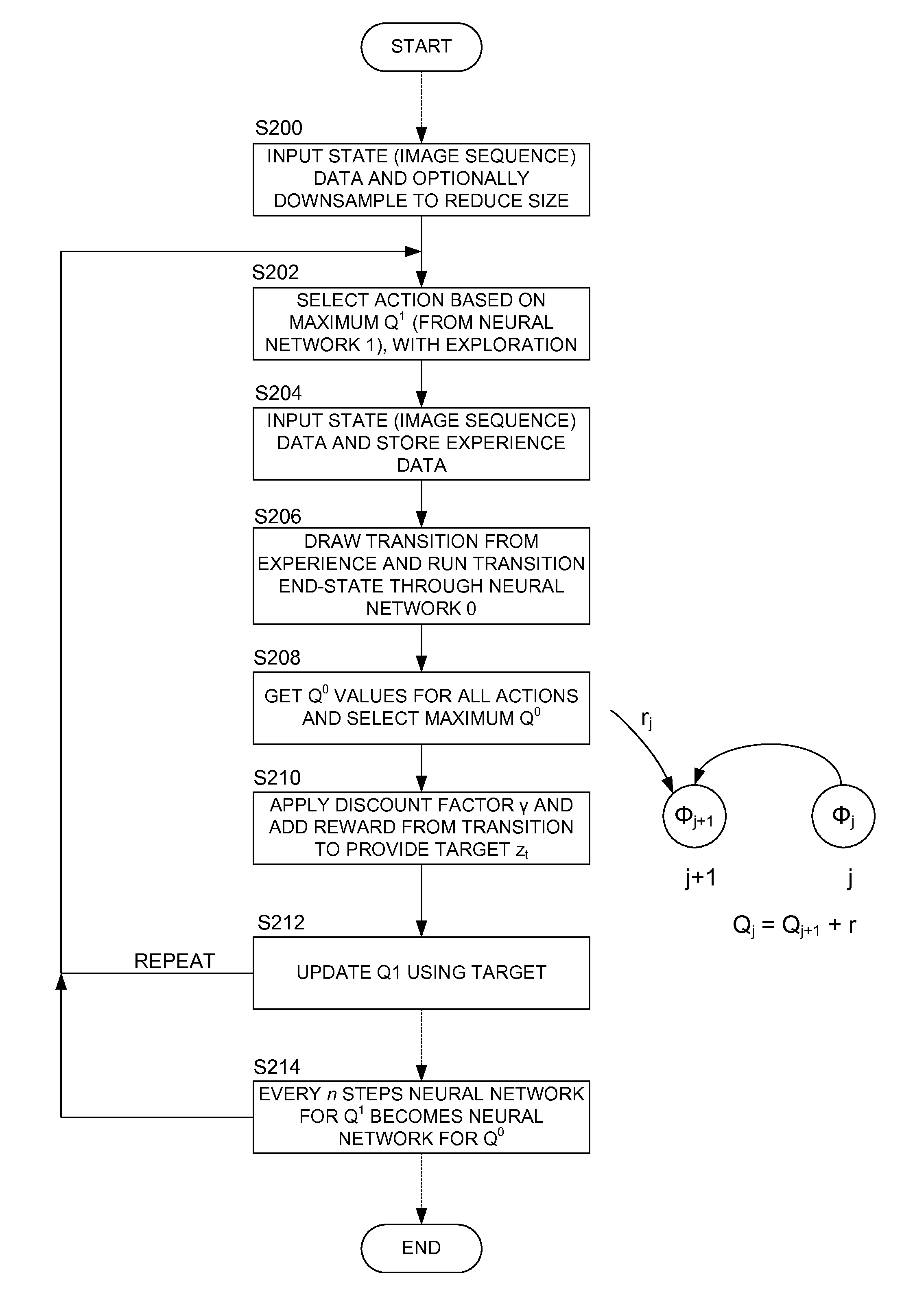

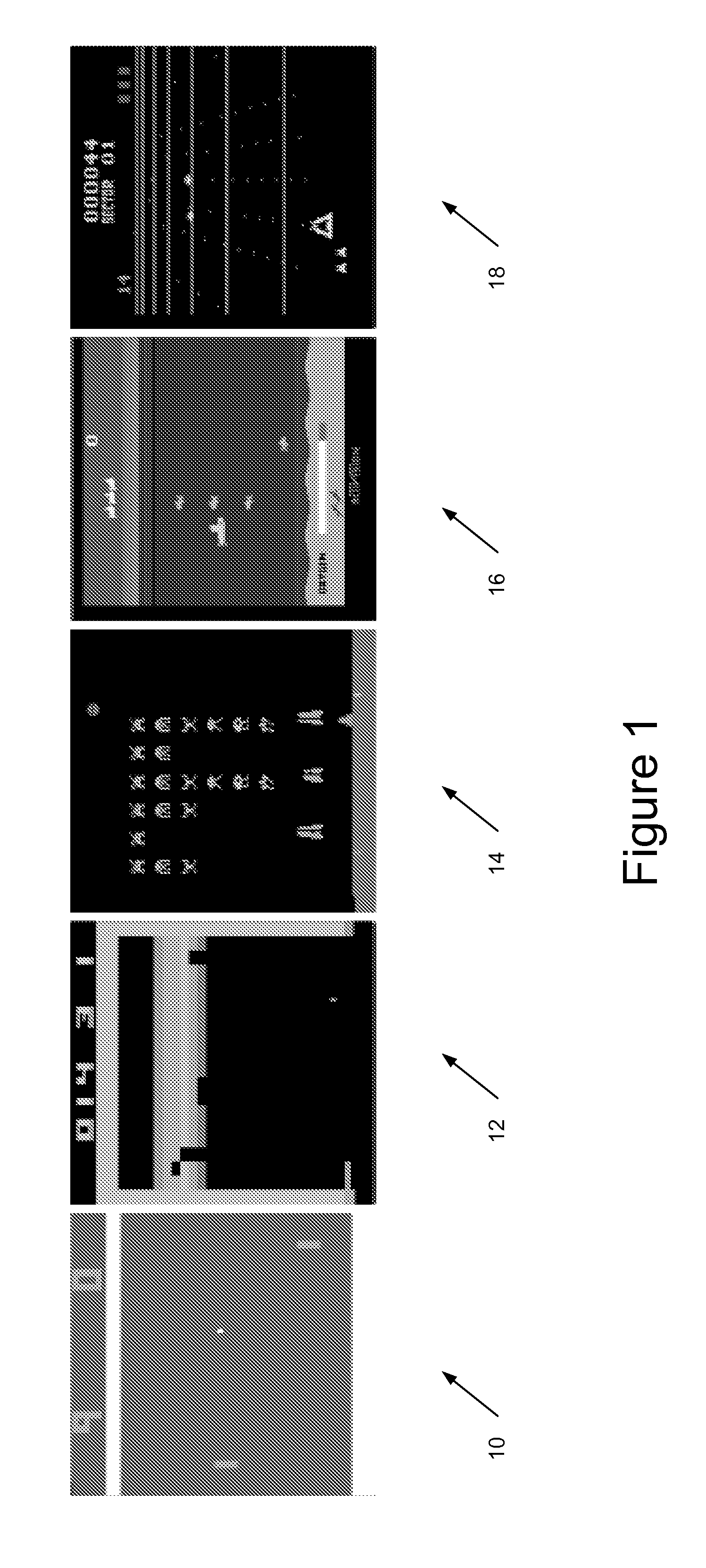

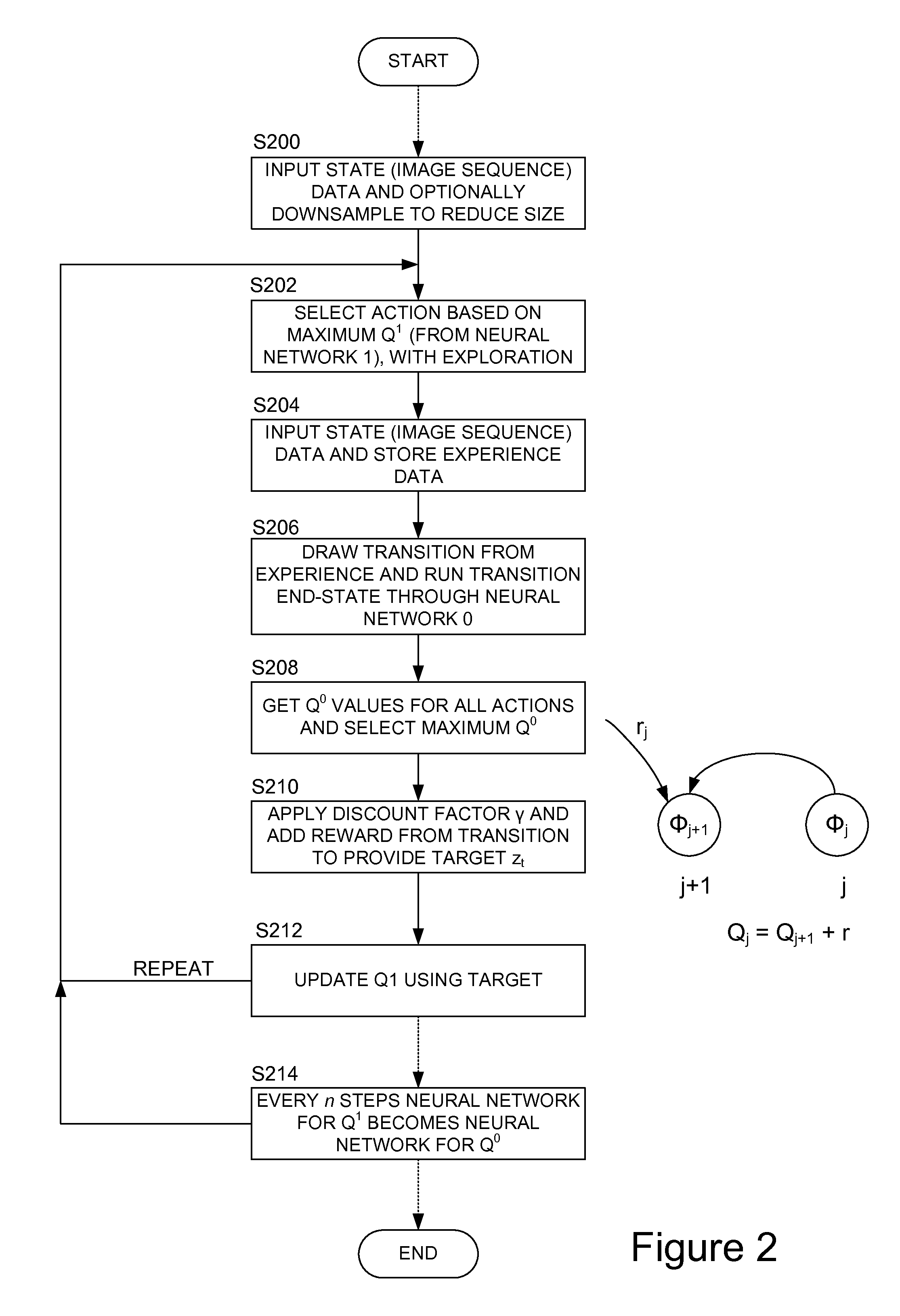

Methods and apparatus for reinforcement learning

Active | US20150100530A1 | Reduce computing cost | Large data set | Digital computer details | Artificial life | Algorithm | Reinforcement learning

Owner:DEEPMIND TECH LTD

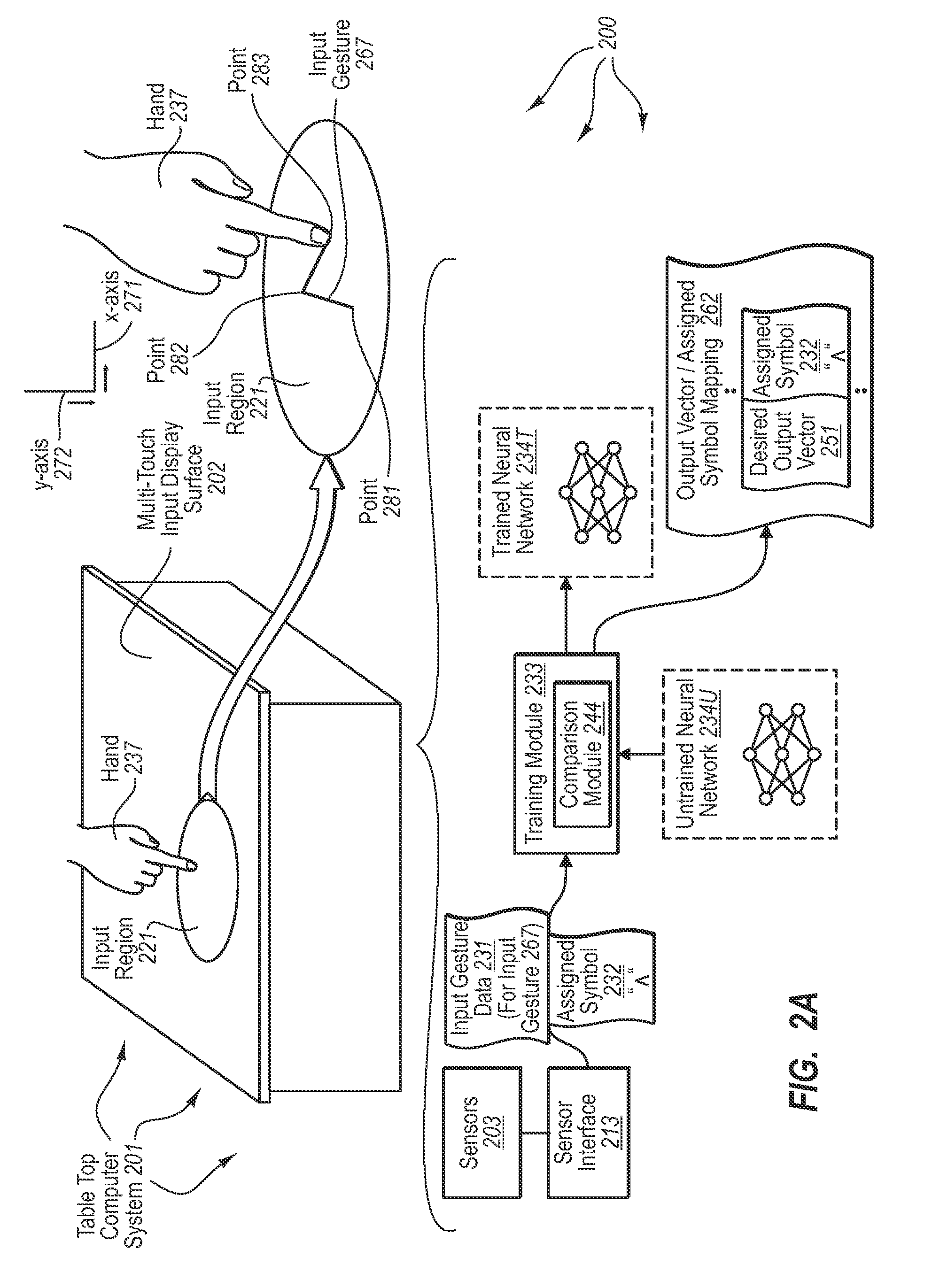

Recognizing input gestures

The present invention extends to methods, systems, and computer program products for recognizing input gestures. A neural network is trained using example inputs and backpropagation to recognize specified input patterns. Input gesture data is representative of movements in contact on a multi-touch input display surface relative to one or more axes over time. Example inputs used for training the neural network to recognize a specified input pattern can be created from sampling input gesture data for example input gestures known to represent the specified input pattern. Trained neural networks can subsequently be used to recognize input gestures that are similar to known input gestures as the specified input pattern corresponding to the known input gestures.

Owner:MICROSOFT TECH LICENSING LLC

Color printer characterization using optimization theory and neural networks

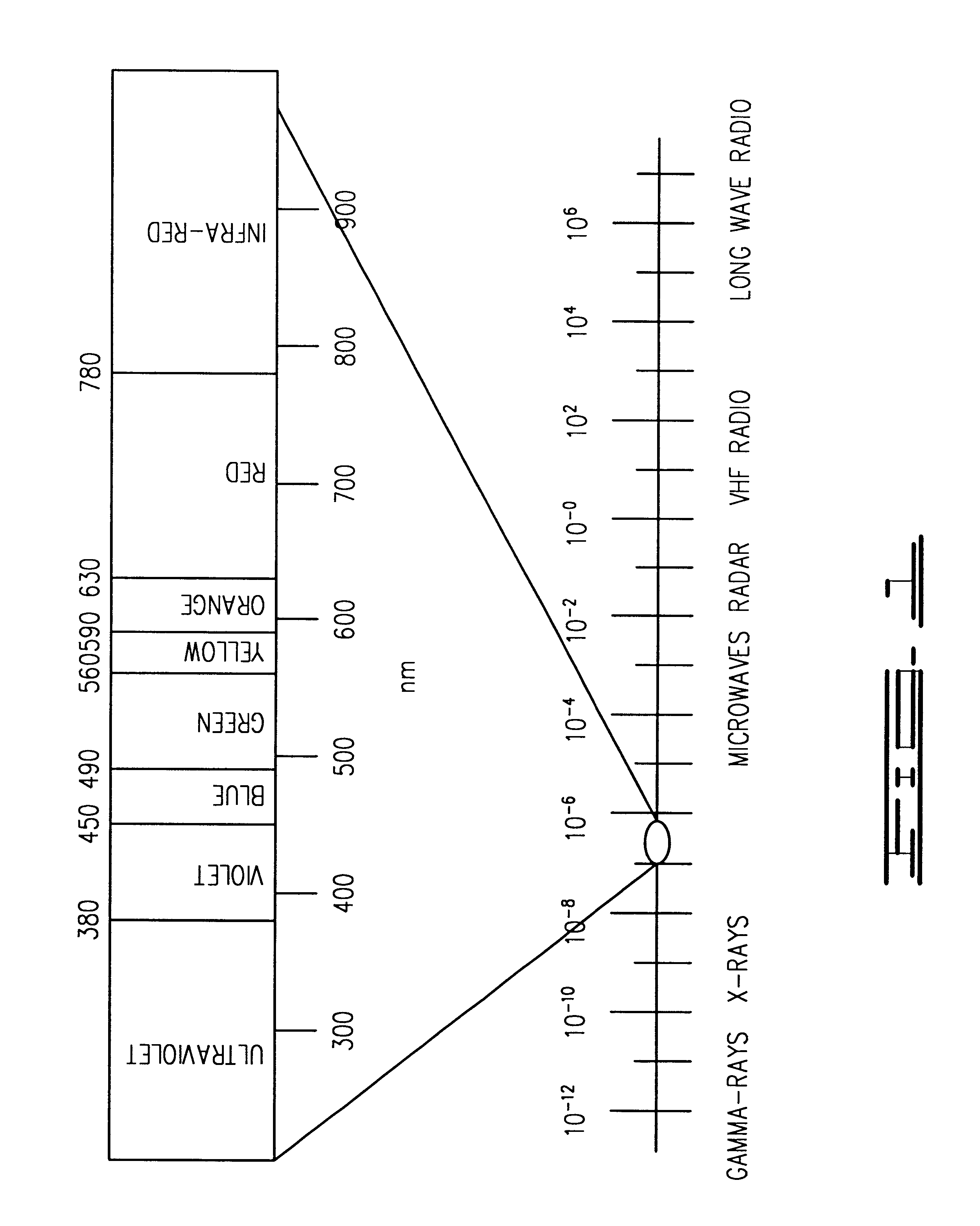

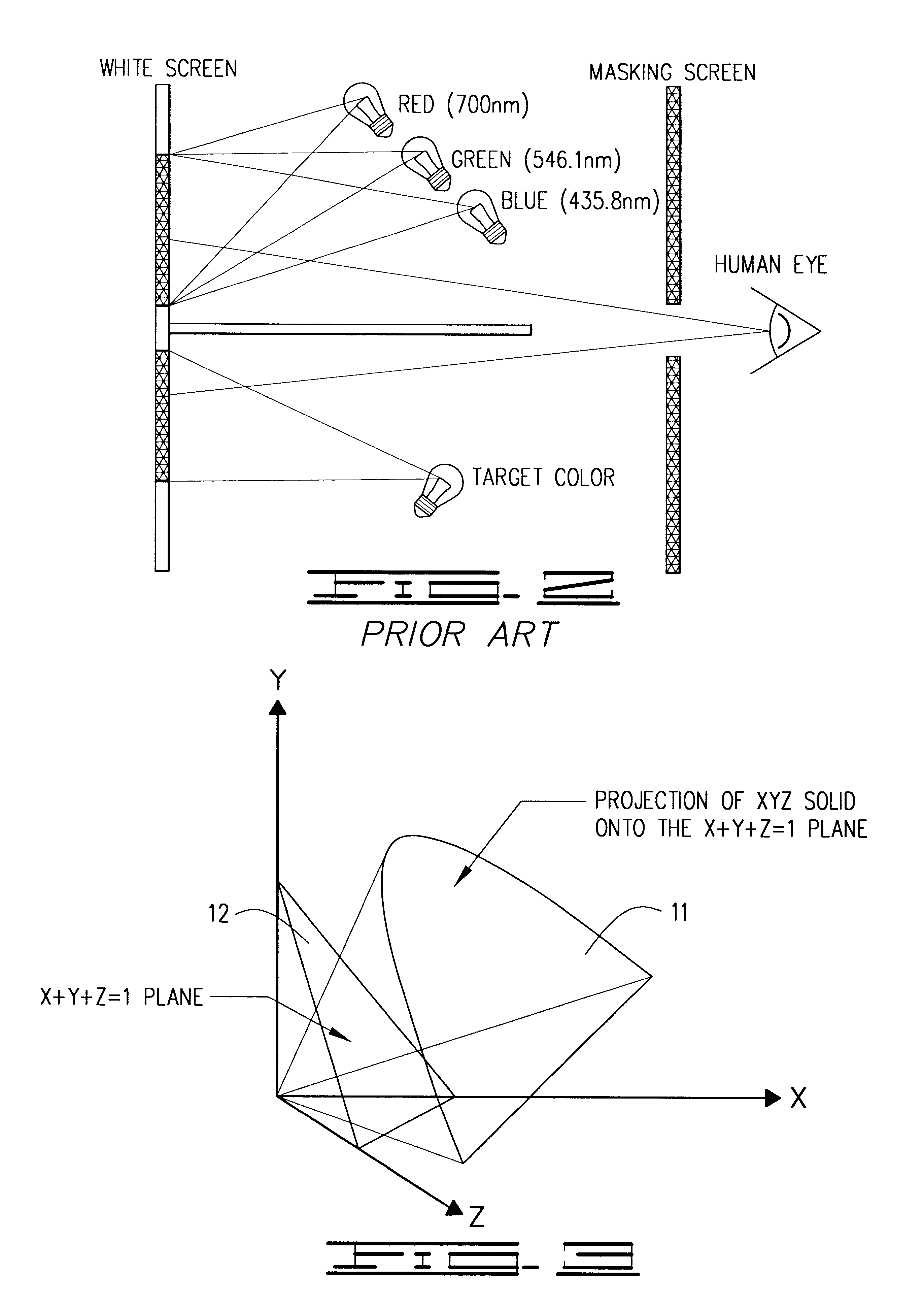

Inactive | US6480299B1 | Digitally marking record carriers | Digital computer details | Pattern recognition | ICC profile

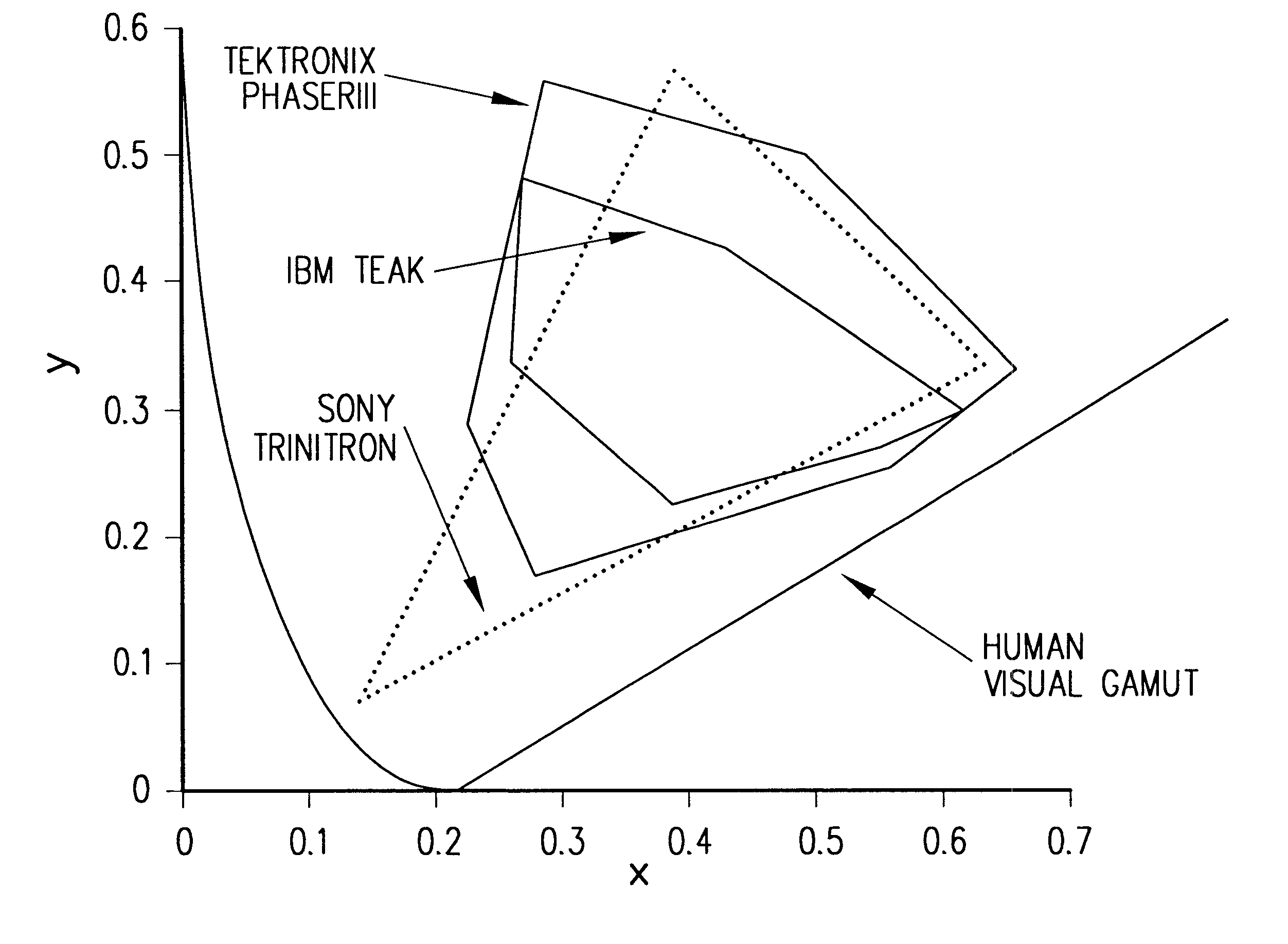

A color management method/apparatus generates image color matching and International Color Consortium (ICC) color printer profiles using a reduced number of color patch measurements. Color printer characterization and the generation of ICC profiles usually require a large number of measured data points or color patches and complex interpolation techniques. This invention provides an optimization method/apparatus for performing LAB to CMYK color space conversion, gamut mapping, and gray component replacement. A gamut trained network architecture performs LAB to CMYK color space conversion to generate a color profile lookup table for a color printer, or alternatively, to directly control the color printer in accordance with a plurality of color patches that accurately represent the gamut of the color printer. More specifically, a feed forward neural network is trained using an ANSI/IT-8 basic data set consisting of 182 data points or color patches, or using a lesser number of data points such as 150 or 101 data points when redundant data points within linear regions of the 182 data point set are removed. A 5-to-7 neuron neural network architecture is preferred to perform the LAB to CMYK color space conversion as the profile lookup table is built, or as the printer is directly controlled. For each CMYK signal, an ink optimization criterion is applied, to thereby control ink parameters such as the total quantity of ink in each CMYK ink printed pixel, and/or to control the total quantity of black ink in each CMYK ink printed pixel.

Owner:UNIV OF COLORADO THE REGENTS OF

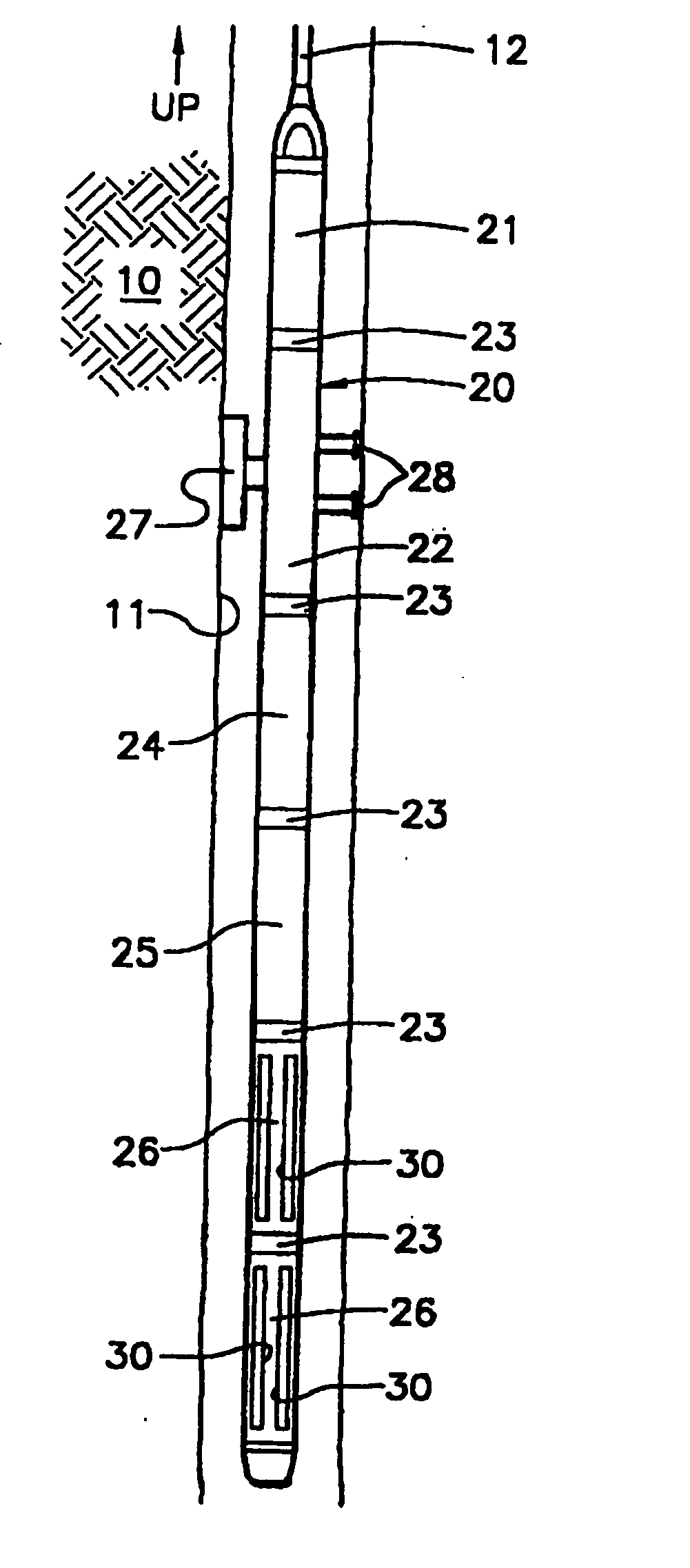

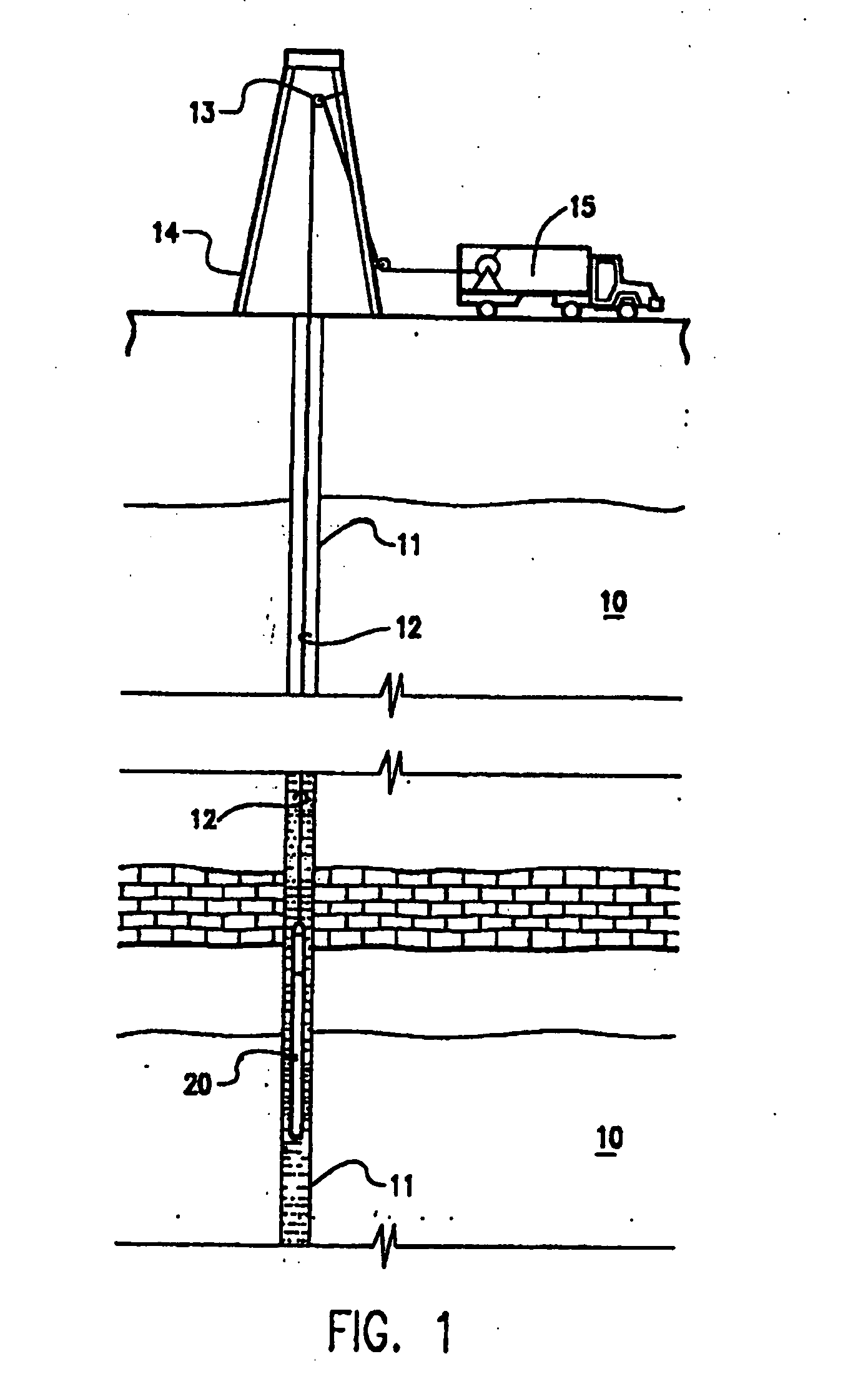

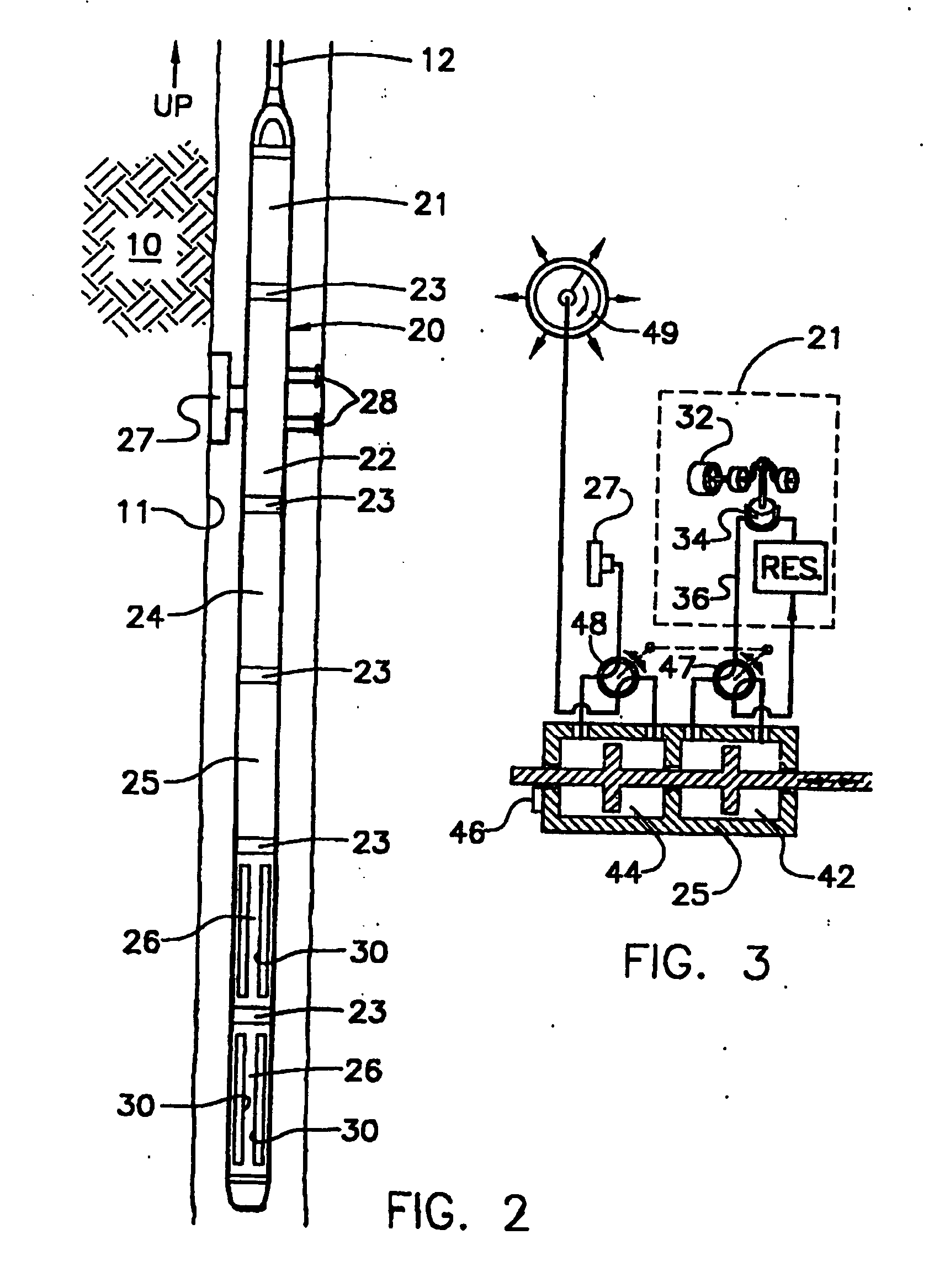

Method and apparatus for a downhole spectrometer based on electronically tunable optical filters

Active | US20050099618A1 | High resolution spectroscopy | Easy to analyze and use | Radiation pyrometry | Survey | Gas oil ratio | API gravity

The present invention provides an apparatus and method for high resolution spectroscopy (approximately 10 picometer wavelength resolution) using a tunable optical filter (TOF) for analyzing a formation fluid sample downhole and at the surface to determine formation fluid parameters. The analysis comprises determination of gas oil ratio, API gravity and various other fluid parameters which can be estimated after developing correlations to a training set of samples using a neural network or a chemometric equation.

Owner:BAKER HUGHES INC

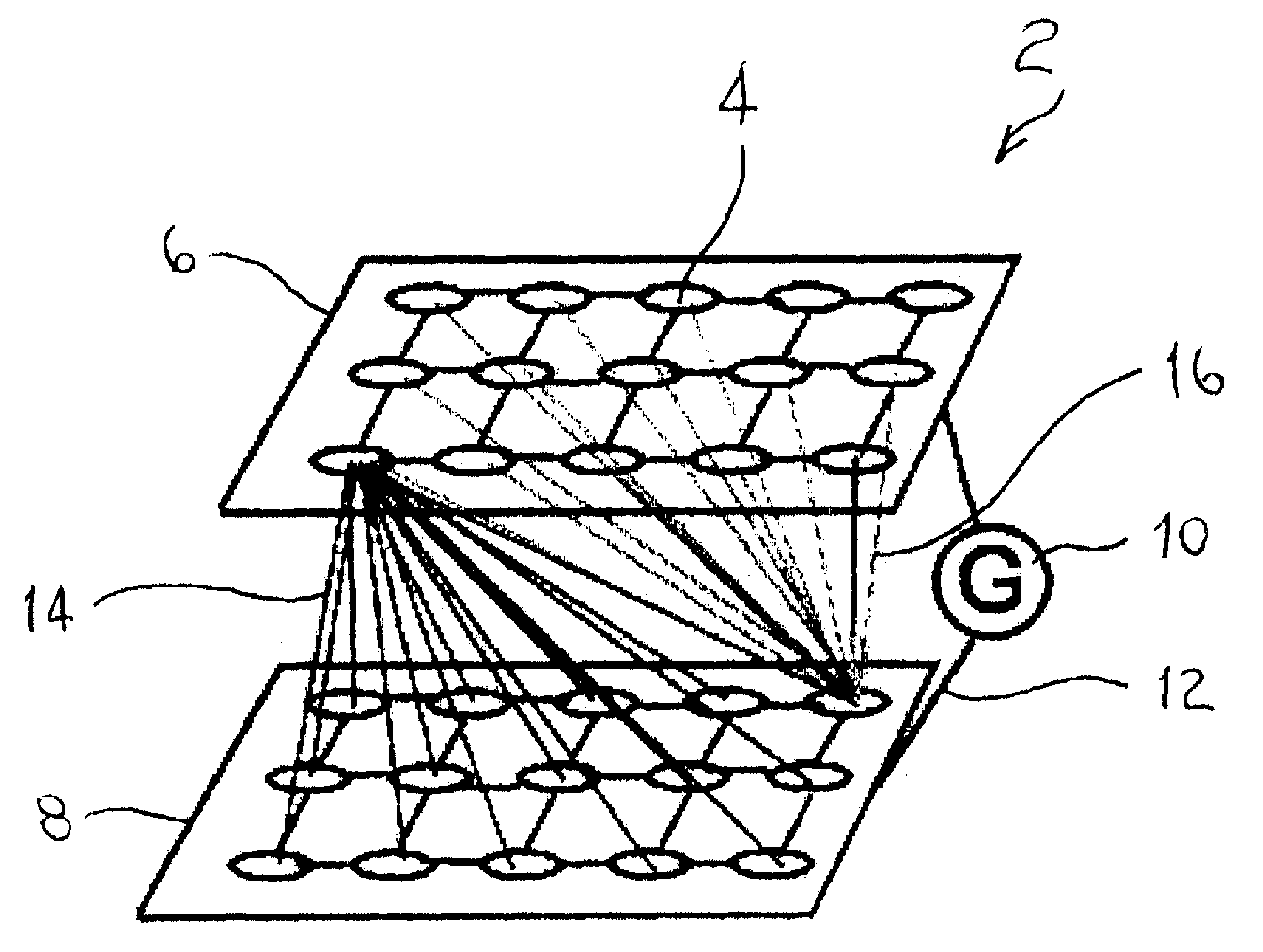

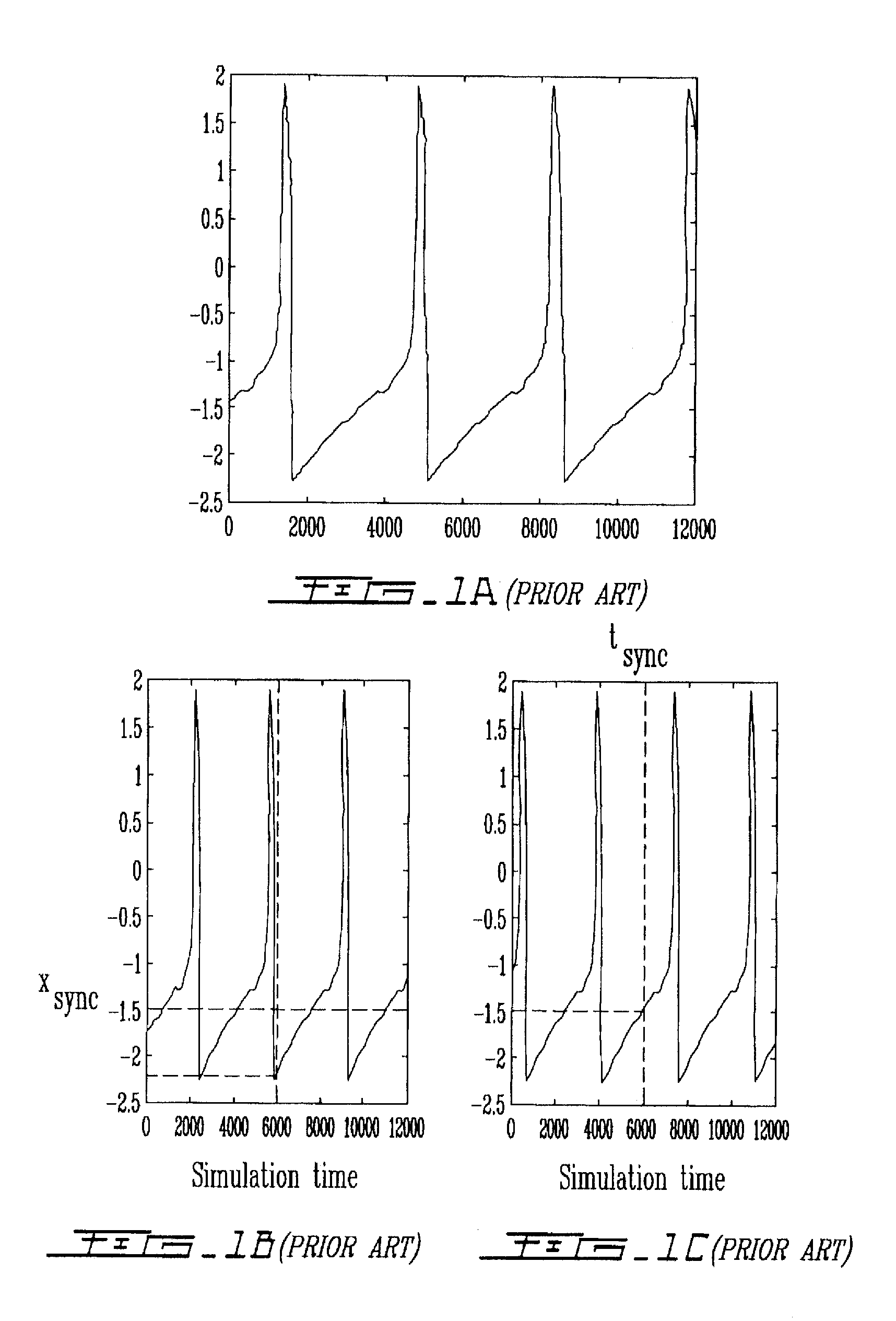

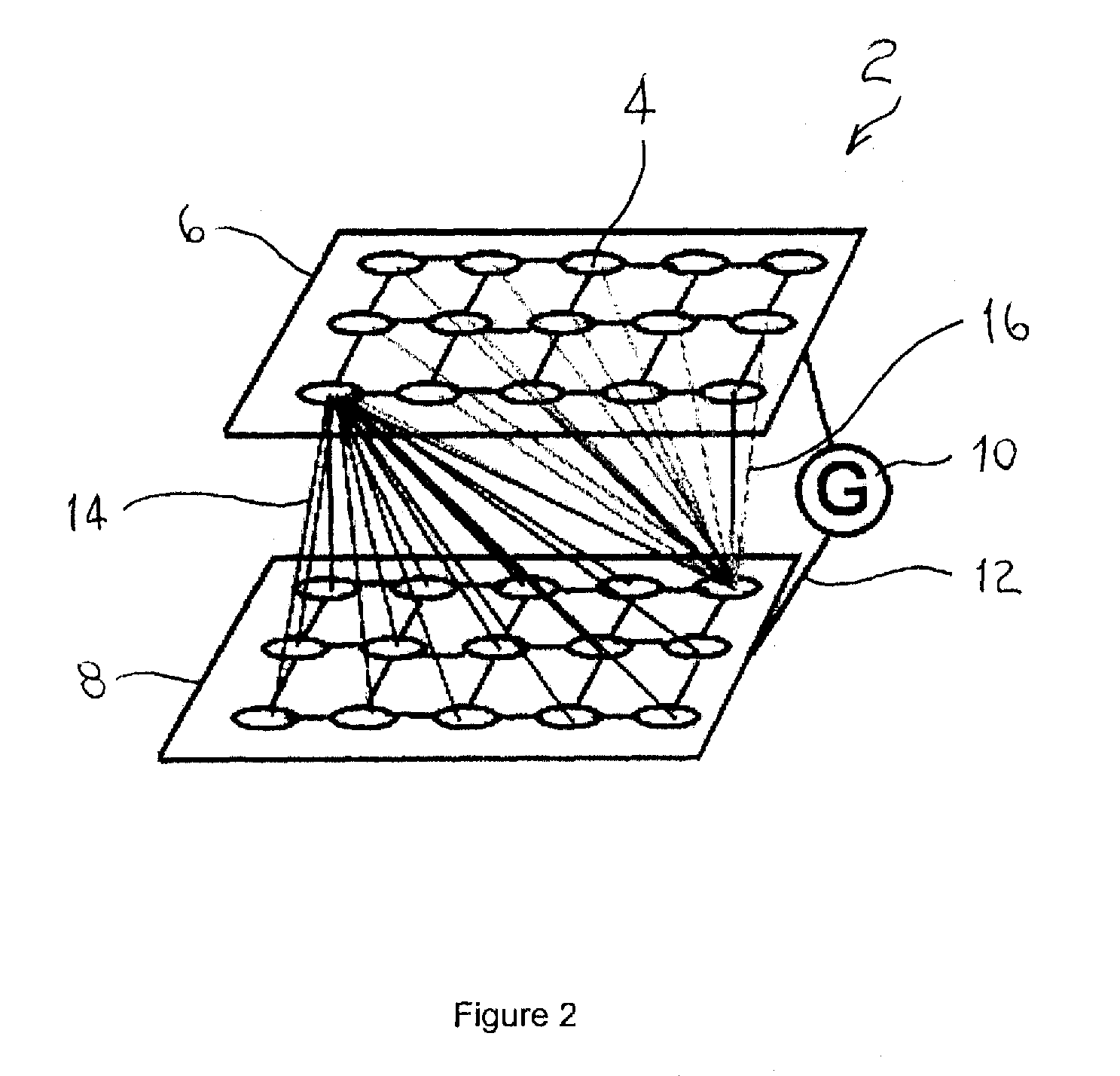

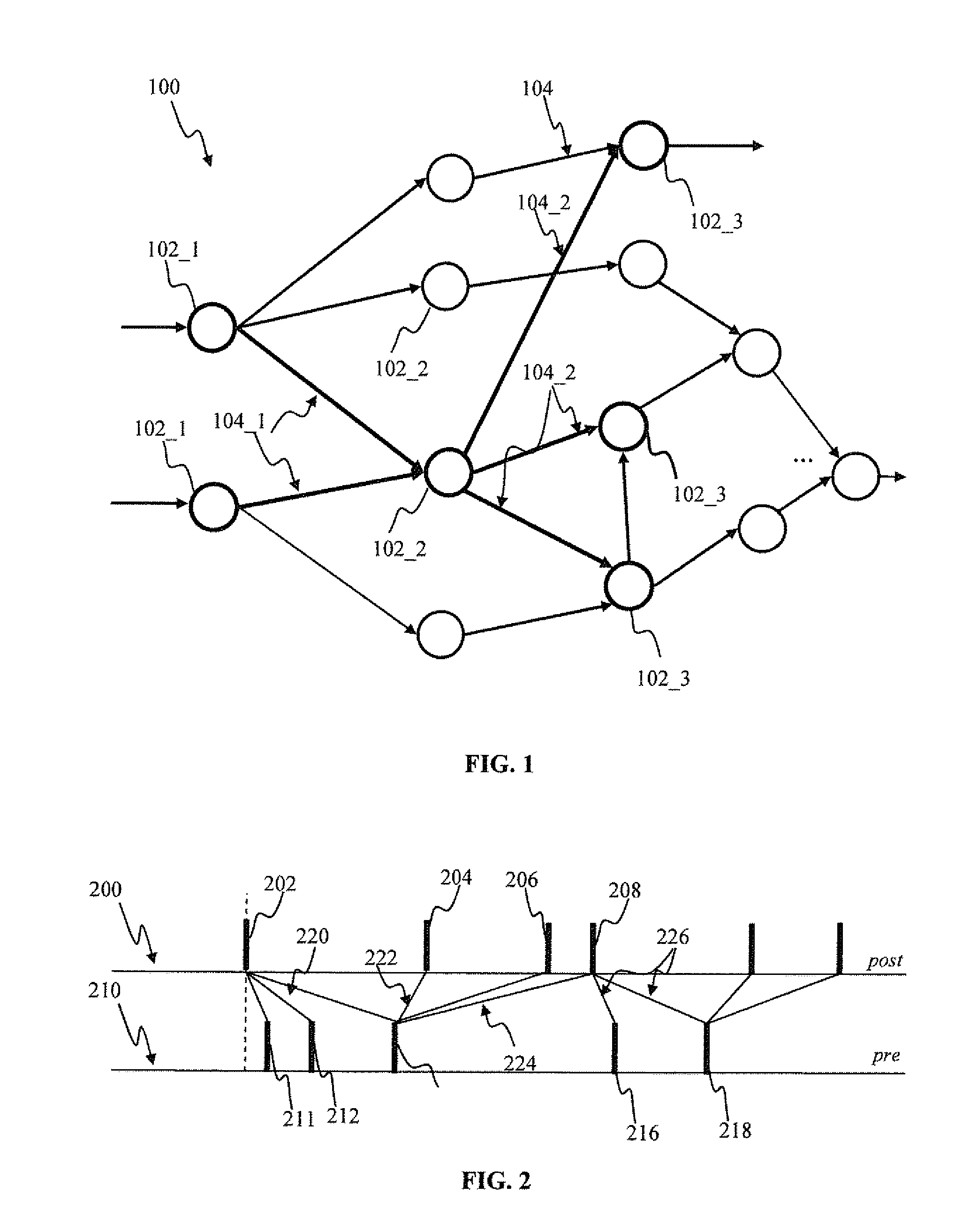

Spatio-temporal pattern recognition using a spiking neural network and processing thereof on a portable and/or distributed computer

Active | US20090287624A1 | Digital computer details | Character and pattern recognition | Spiking neural network | Neuron

A system and method for characterizing a pattern, in which a spiking neural network having at least one layer of neurons is provided. The spiking neural network has a plurality of connected neurons for transmitting signals between the connected neurons. A model for inducing spiking in the neurons is specified. Each neuron is connected to a global regulating unit for transmitting signals between the neuron and the global regulating unit. Each neuron is connected to at least one other neuron for transmitting signals from this neuron to the at least one other neuron, this neuron and the at least one other neuron being on the same layer. Spiking of each neuron is synchronized according to a number of active neurons connected to the neuron. At least one pattern is submitted to the spiking neural network for generating sequences of spikes in the spiking neural network, the sequences of spikes (i) being modulated over time by the synchronization of the spiking and (ii) being regulated by the global regulating unit. The at least one pattern is characterized according to the sequences of spikes generated in the spiking neural network.

Owner:ROUAT JEAN +2

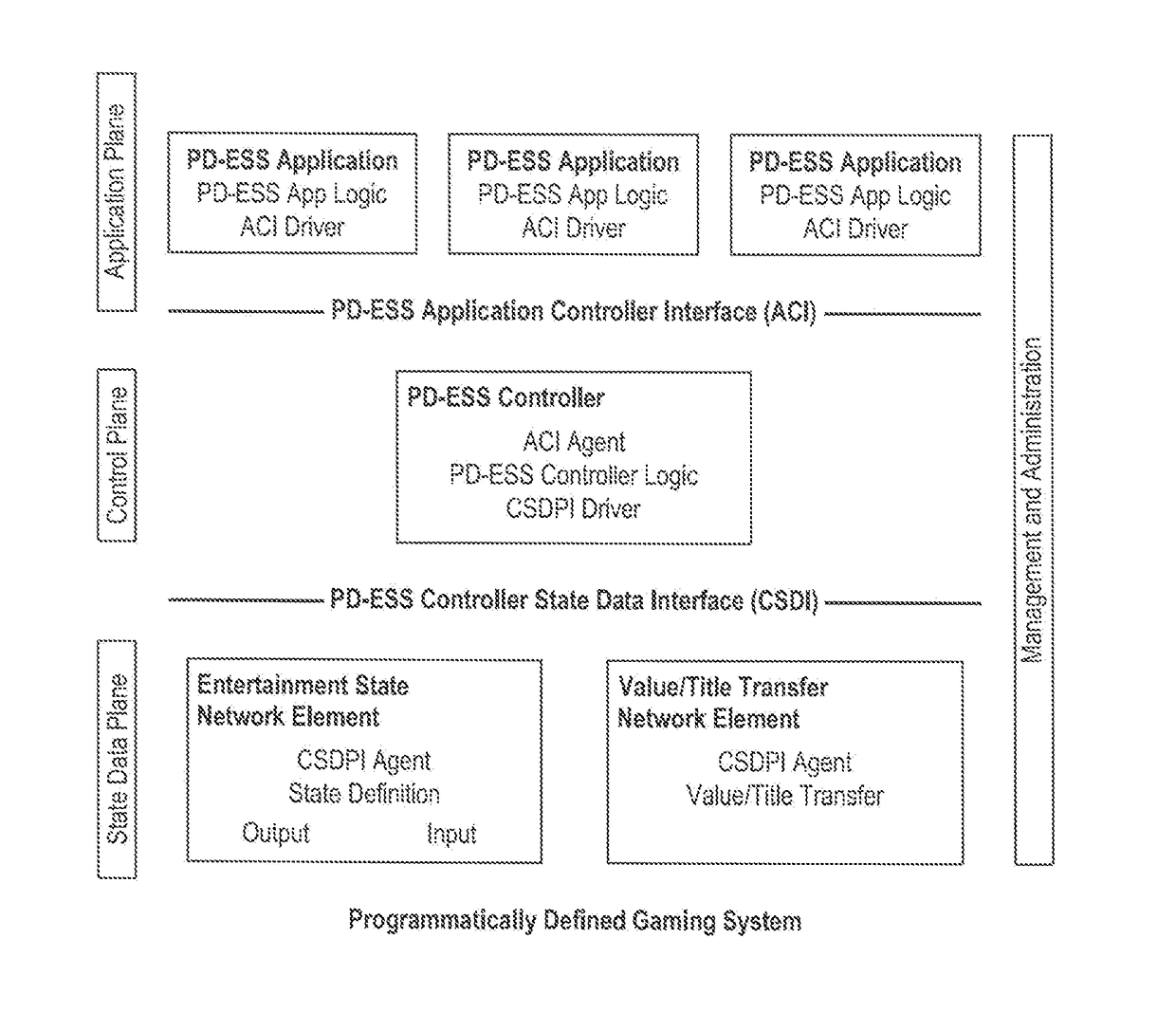

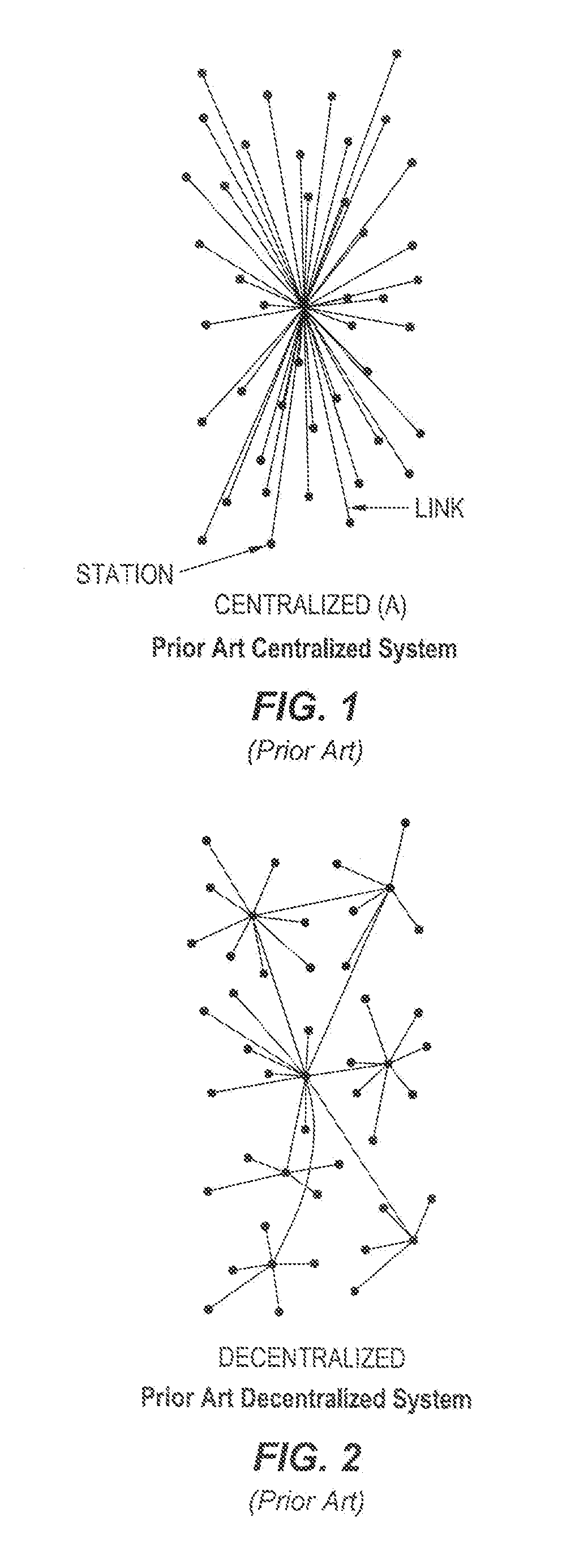

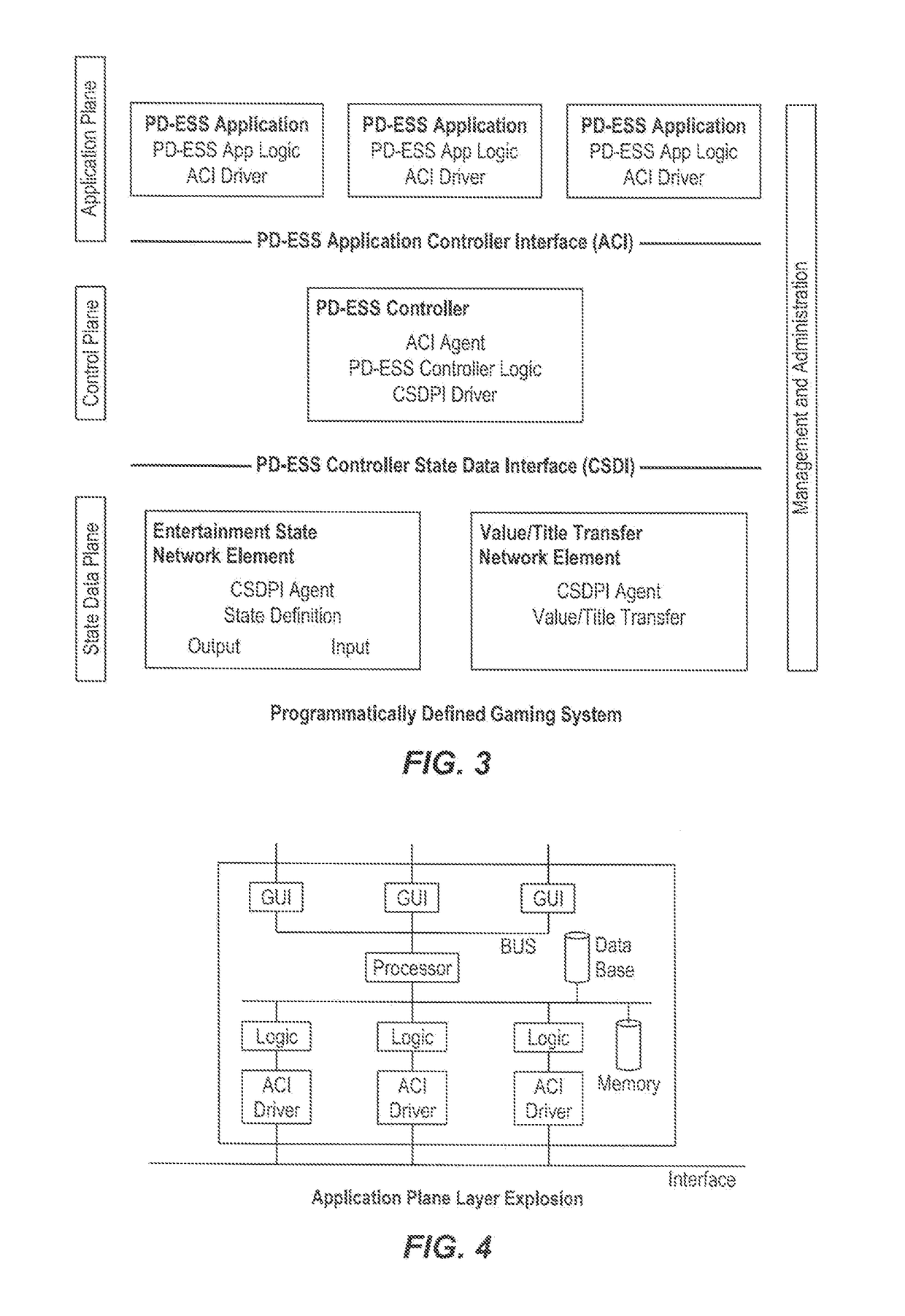

Architectures, systems and methods for program defined entertainment state system, decentralized cryptocurrency system and system with segregated secure functions and public functions

Pending | US20180247191A1 | Cryptography processing | Interprogram communication | Motion detector | Display device

Systems and methods are provided for training an artificial intelligence system including the use of one or more human subject responses to stimuli as input to the artificial intelligence system. Displays are oriented to the human subjects to present the stimuli to the human subjects. Detectors monitor the reaction of the human subjects to the stimuli, the detectors including at least motion detectors, the detectors providing an output. An analysis system is coupled to receive the output of the detectors, the analysis system provides an output corresponding to whether the reaction of the human subjects was positive or negative. A neural network utilizes the output of the analysis system, generating a positive weighting for training of the neural network when the output of the analysis system was positive, and a negative weighting for training of the neural network when the output of the analysis system was negative.

Owner:MILESTONE ENTERTAINMENT LLC

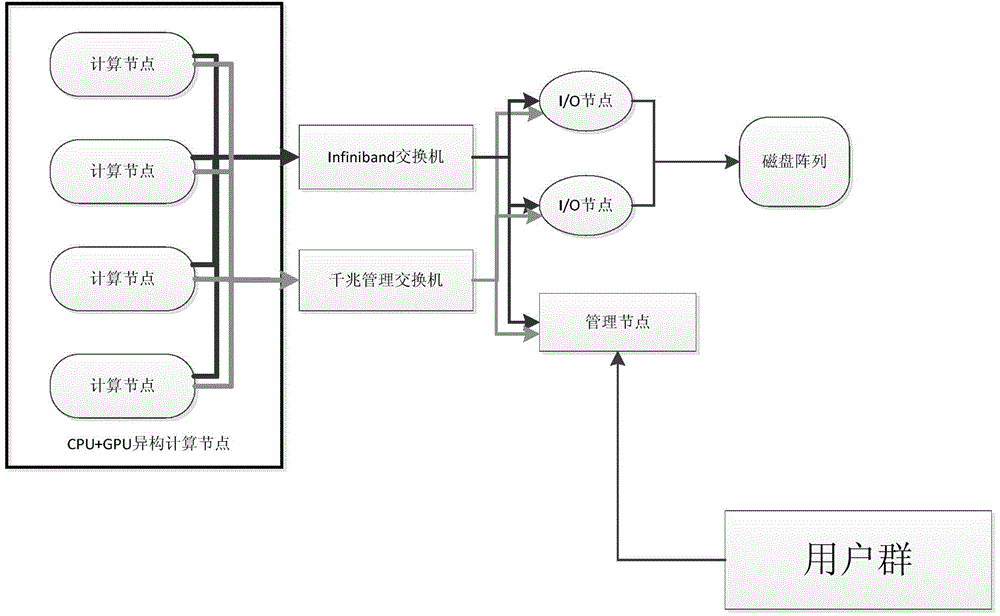

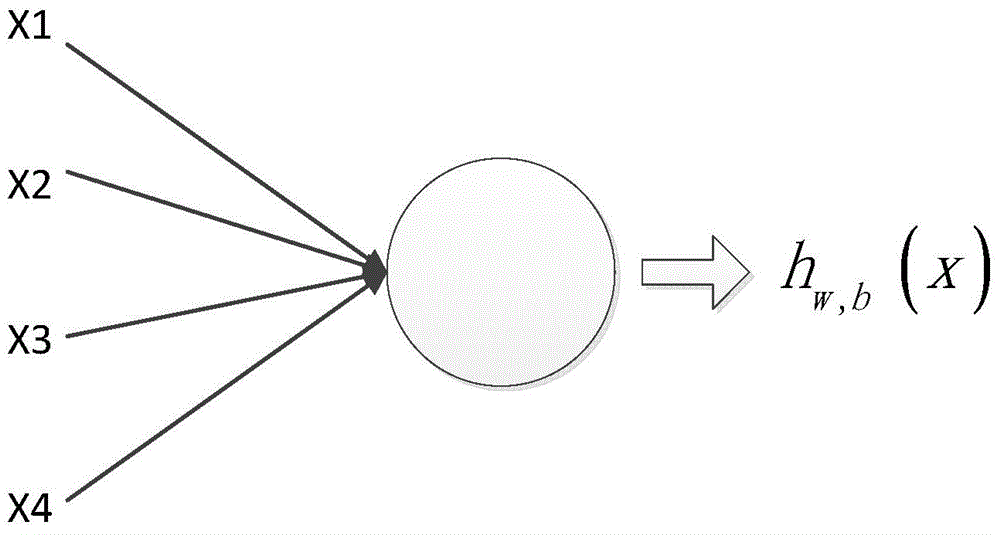

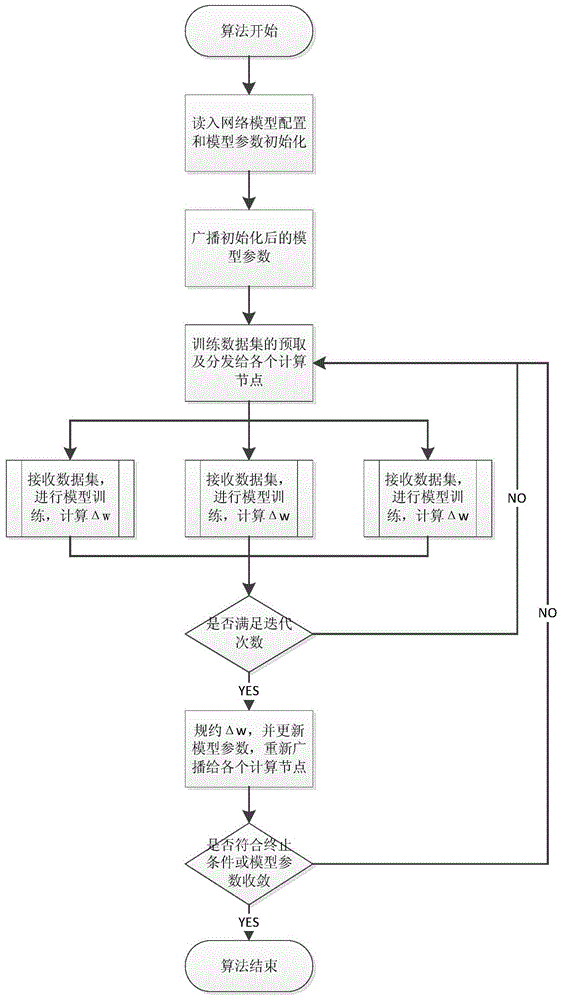

Convolution neural network parallel processing method based on large-scale high-performance cluster

Inactive | CN104463324A | Reduce training time | Improve computing efficiency | Biological neural network models | Concurrent instruction execution | NODAL | Algorithm

The invention discloses a convolution neural network parallel processing method based on a large-scale high-performance cluster. The method comprises the steps that (1) a plurality of copies are constructed for a network model to be trained, model parameters of all the copies are identical, the number of the copies is identical with the number of nodes of the high-performance cluster, each node is provided with one model copy, one node is selected to serve as a main node, and the main node is responsible for broadcasting and collecting the model parameters; (2) a training set is divided into a plurality of subsets, the training subsets are issued each time to the sub nodes (all nodes except the main node) to conduct parameter gradient calculation together, gradient values are accumulated, the accumulated value is used for updating the model parameters of the main node, and the updated model parameters are broadcast to all the sub nodes until model training is ended. The convolution neural network parallel processing method has the advantages of being capable of achieving parallelization, improving the efficiency of model training, shortening the training time and the like.

Owner:CHANGSHA MASHA ELECTRONICS TECH
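
The two steps in the abstract above (identical model copies on every node, then gradient accumulation and broadcast by a main node) can be illustrated with a single-process simulation. This is only a sketch under stated assumptions: the one-parameter linear model, the learning rate, and the helper names `gradient`, `split`, and `train` are hypothetical and are not taken from the patent, which targets convolutional networks on a real cluster.

```python
# Single-process simulation of synchronous data-parallel training.
# Assumptions (not from the patent): a one-parameter linear model y = w * x,
# squared-error loss, and round-robin splitting of the training set.

def gradient(w, subset):
    """Gradient of the summed squared error for y = w * x on one subset."""
    return sum(2.0 * (w * x - y) * x for x, y in subset)

def split(data, n_nodes):
    """Divide the training set into one subset per sub node."""
    return [data[i::n_nodes] for i in range(n_nodes)]

def train(data, n_nodes=4, lr=0.01, steps=200):
    w_main = 0.0                                   # parameters held by the main node
    subsets = split(data, n_nodes)
    for _ in range(steps):
        replicas = [w_main] * n_nodes              # broadcast: every copy is identical
        grads = [gradient(w, s) for w, s in zip(replicas, subsets)]  # per-node work
        accumulated = sum(grads)                   # main node accumulates gradients
        w_main -= lr * accumulated / len(data)     # main node updates its parameters
    return w_main

data = [(float(x), 3.0 * x) for x in range(1, 9)]  # samples of y = 3x
w = train(data)
print(round(w, 6))
```

Because every replica starts each step from the same broadcast parameters, the accumulated gradient equals the gradient over the full training set, so the result matches single-node training on the same data.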

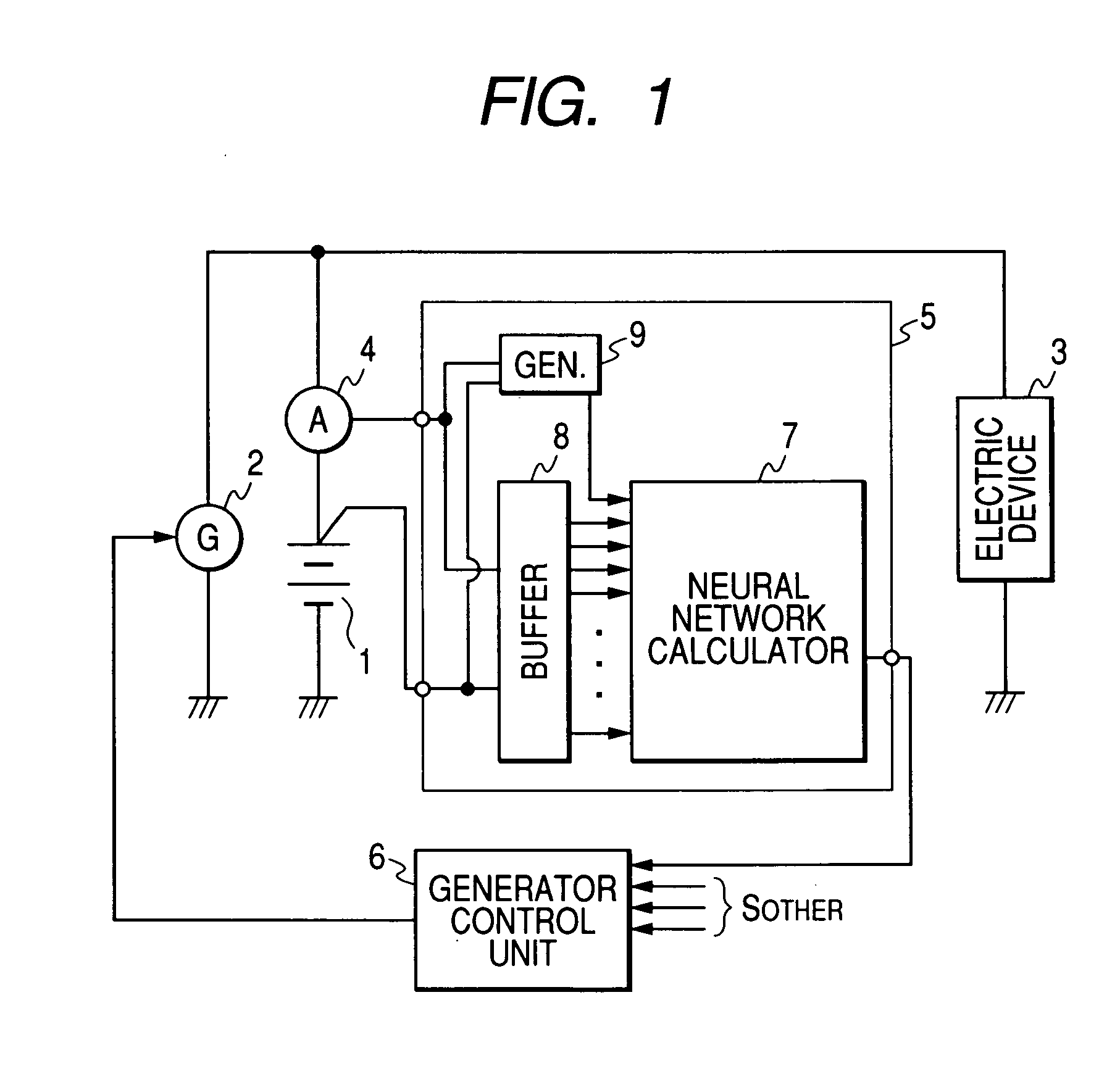

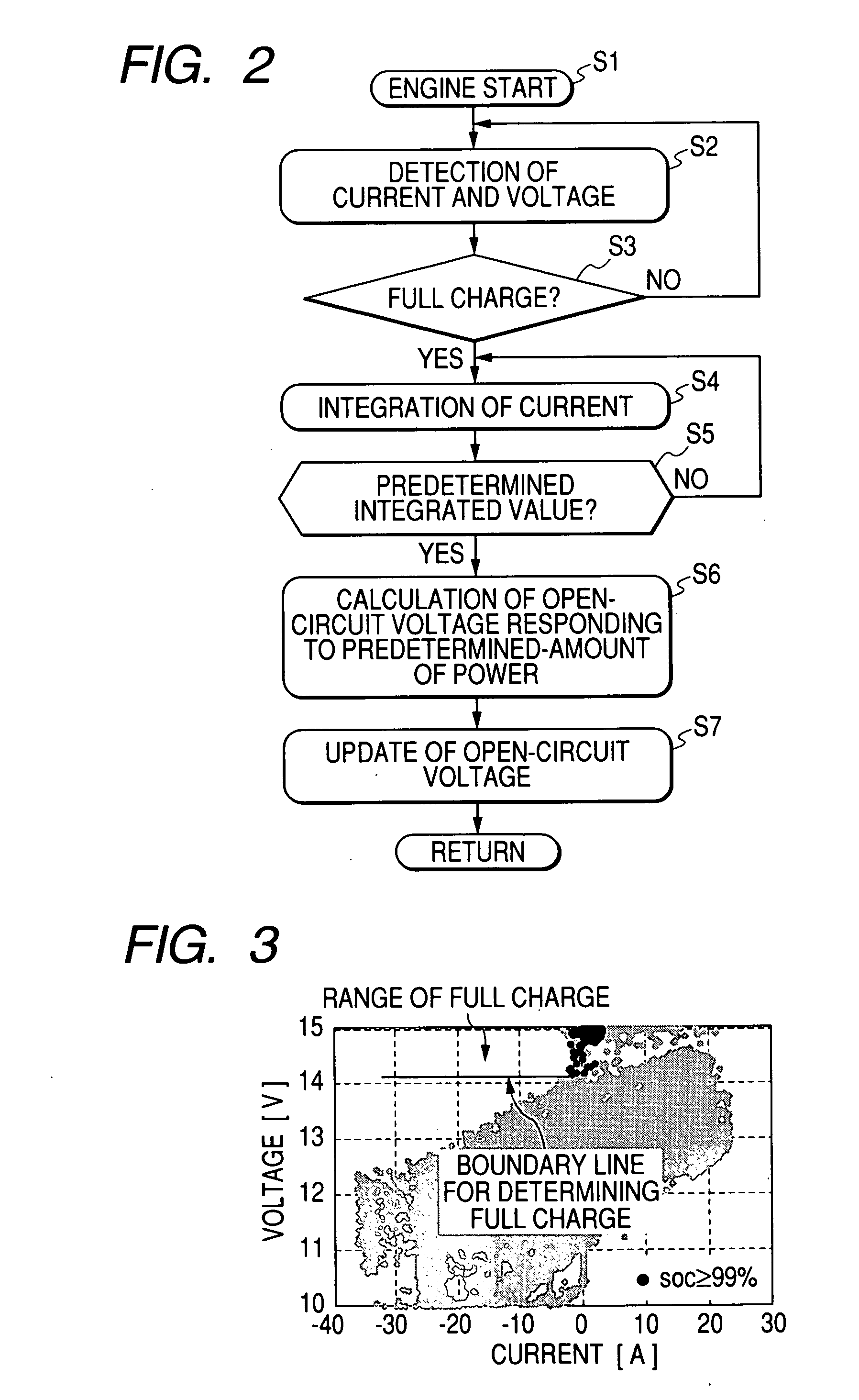

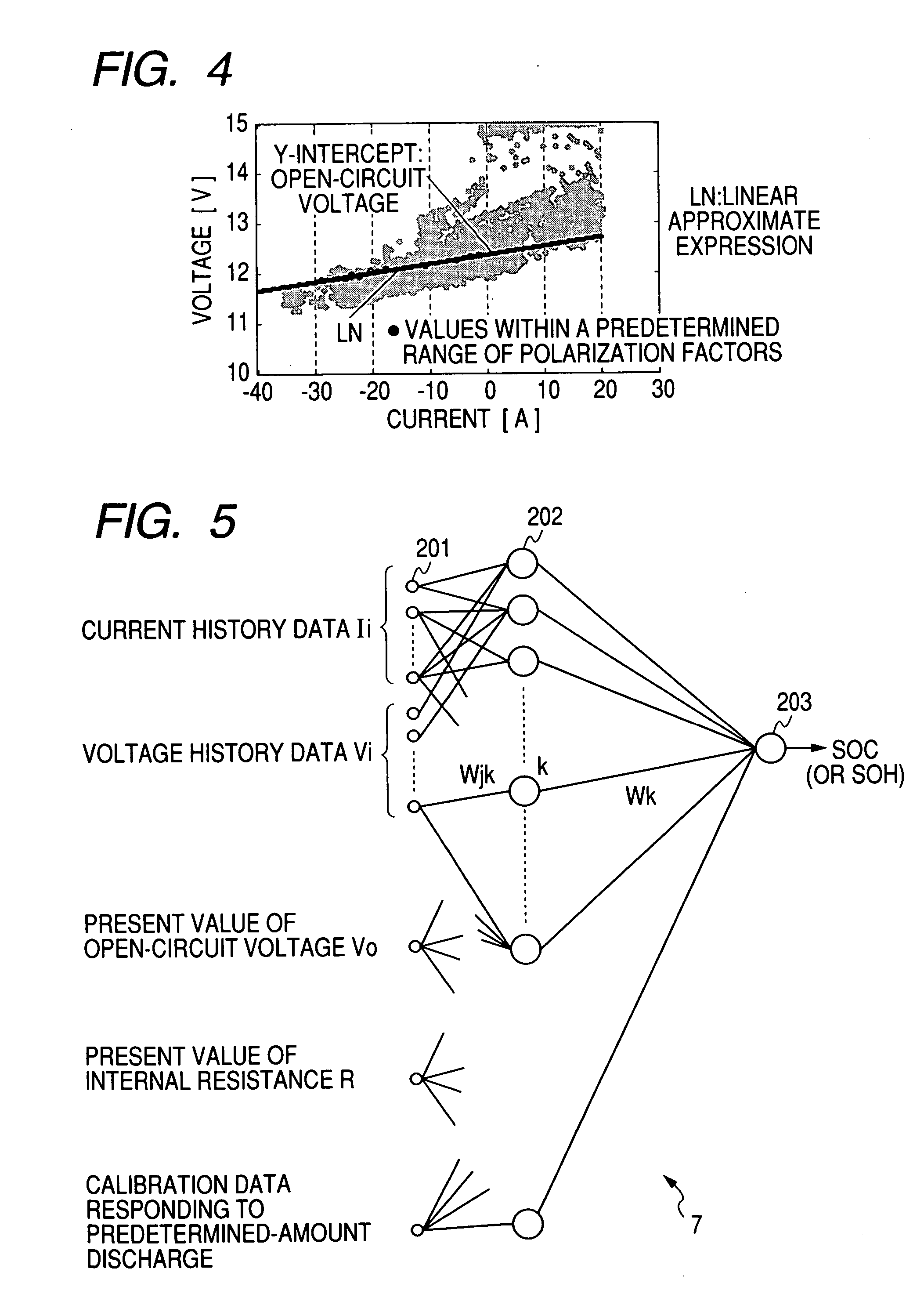

Method and apparatus for detecting charged state of secondary battery based on neural network calculation

Inactive | US20060181245A1 | Improve accuracy | Accurate calculation | Batteries circuit arrangements | Lighting and heating apparatus | Battery state of charge | Engineering

A neural network type of apparatus is provided to detect an internal state of a secondary battery implemented in a battery system. The apparatus comprises a detecting unit, producing unit and estimating unit. The detecting unit detects electric signals indicating an operating state of the battery. The producing unit produces, using the electric signals, an input parameter required for estimating the internal state of the battery. The input parameter reflects calibration of a present charged state of the battery which is attributable to at least one of a present degraded state of the battery and a difference in types of the battery. The estimating unit estimates an output parameter indicating the charged state of the battery by applying the input parameter to neural network calculation.

Owner:DENSO CORP +2

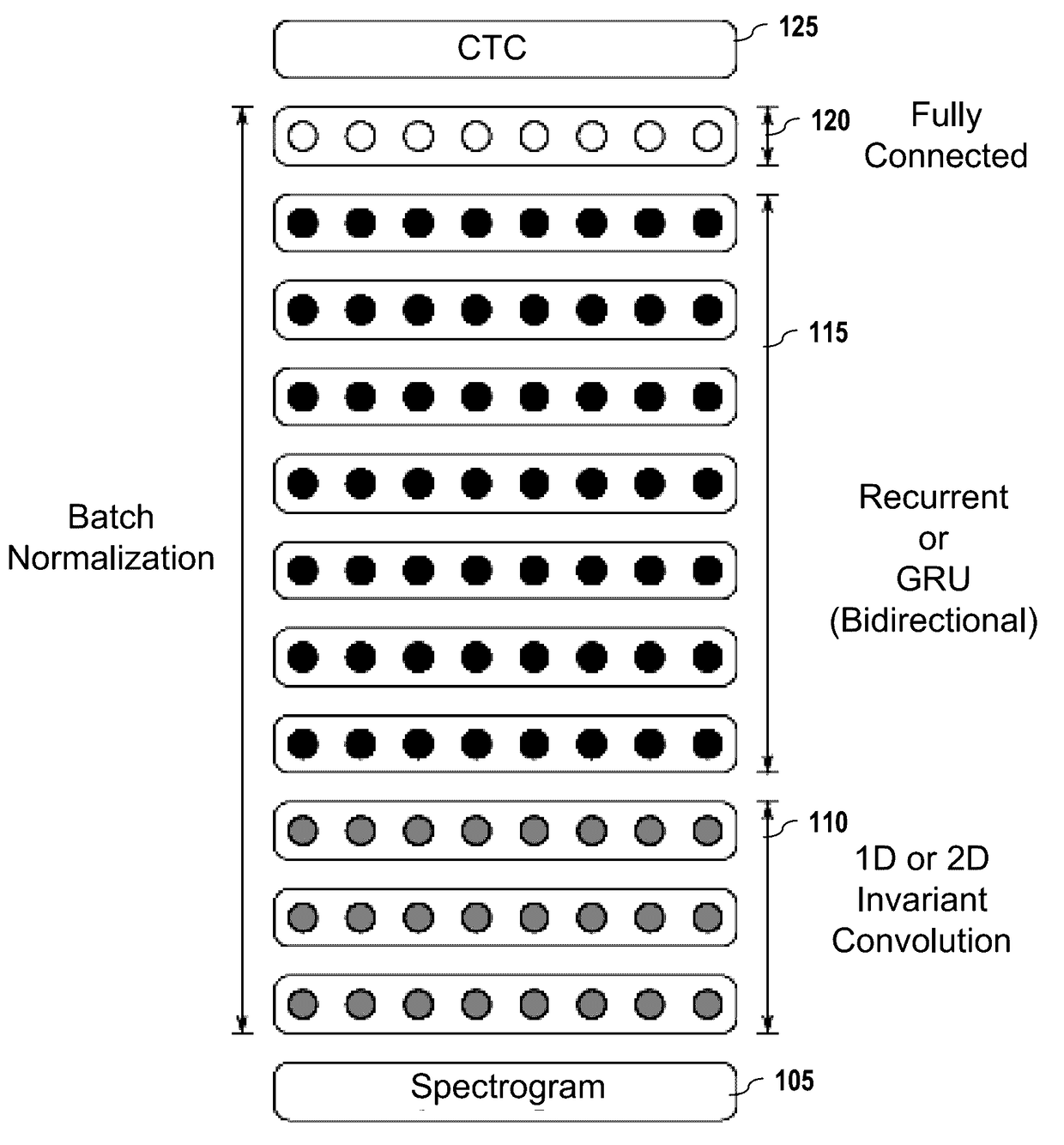

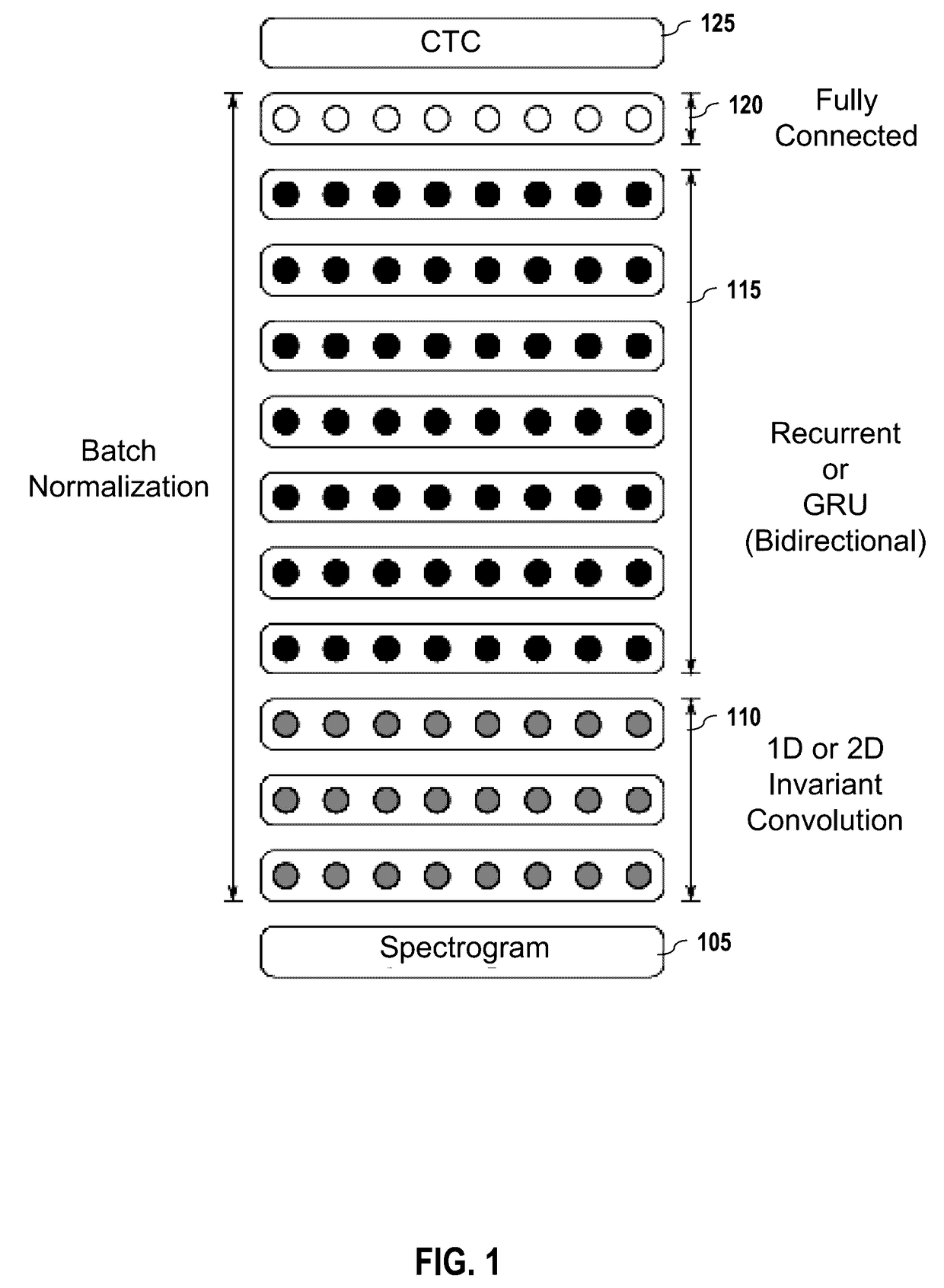

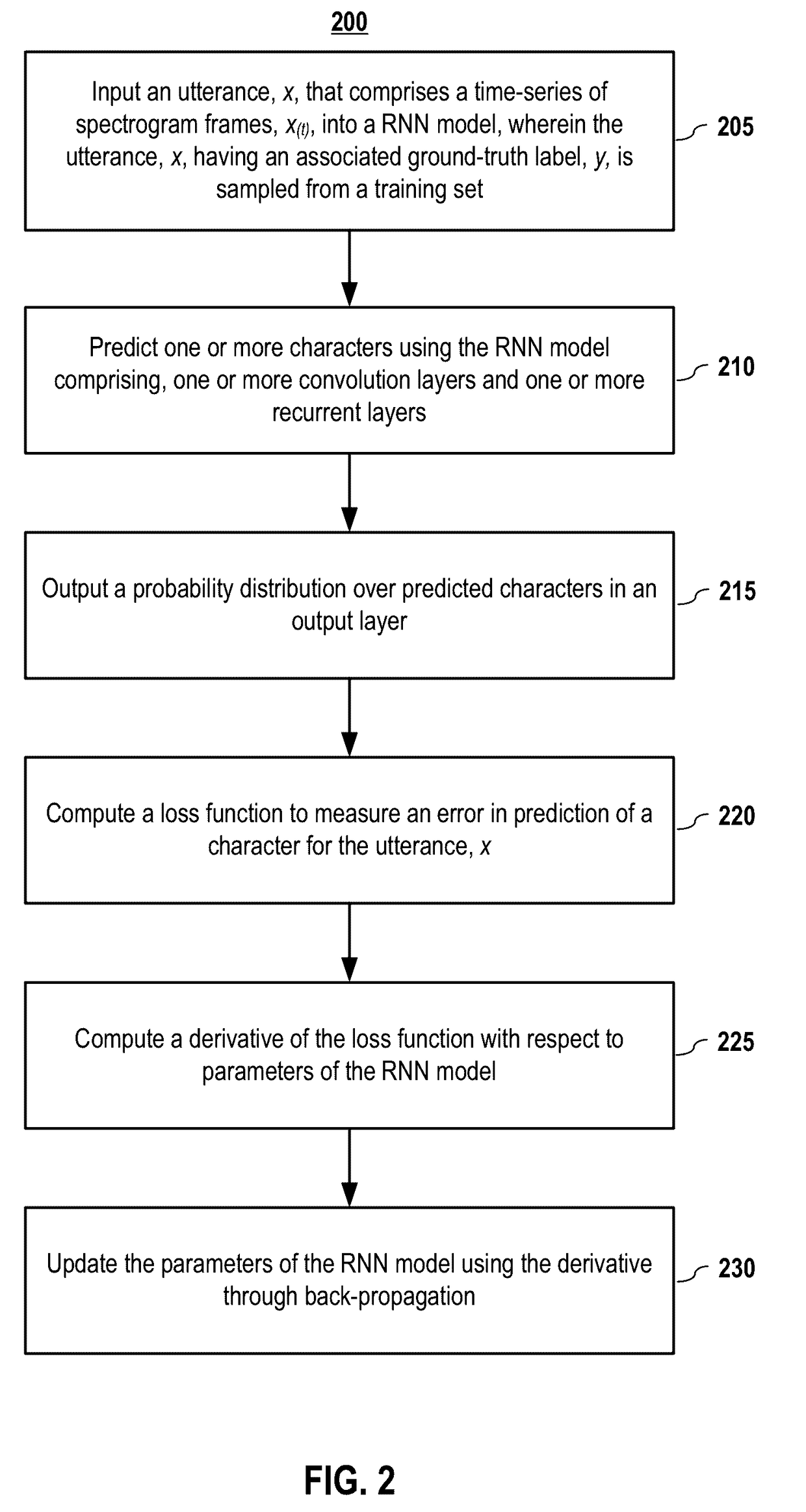

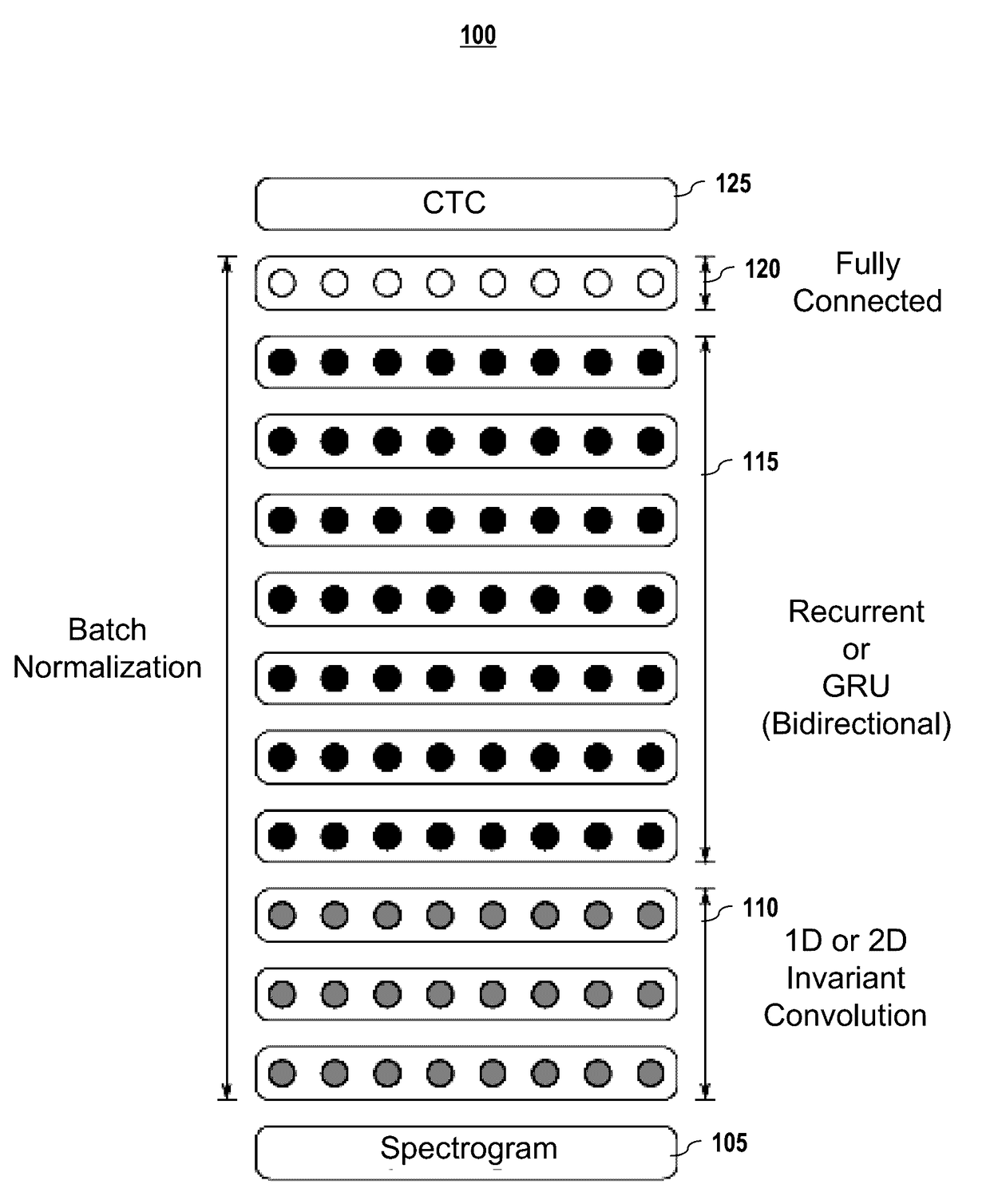

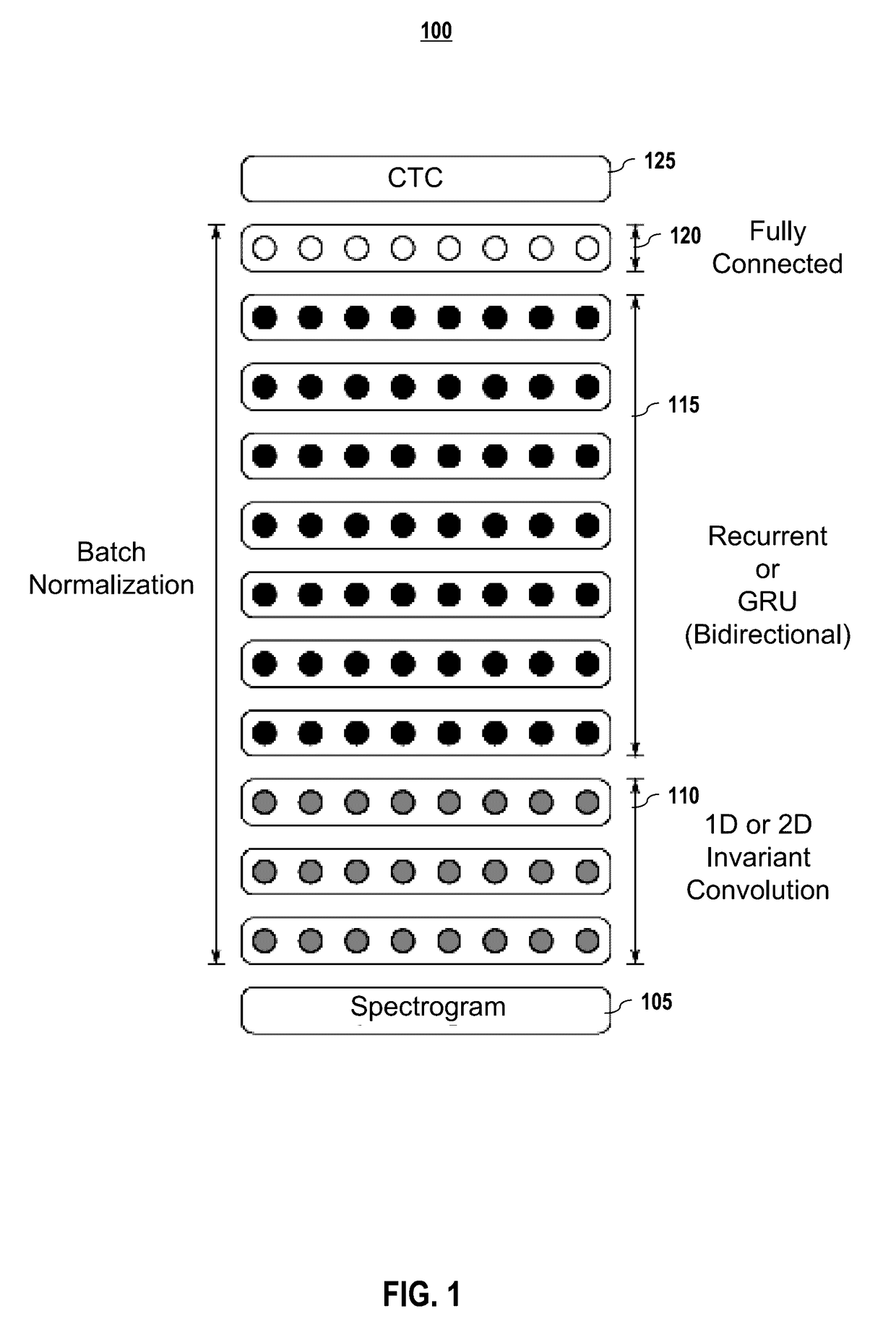

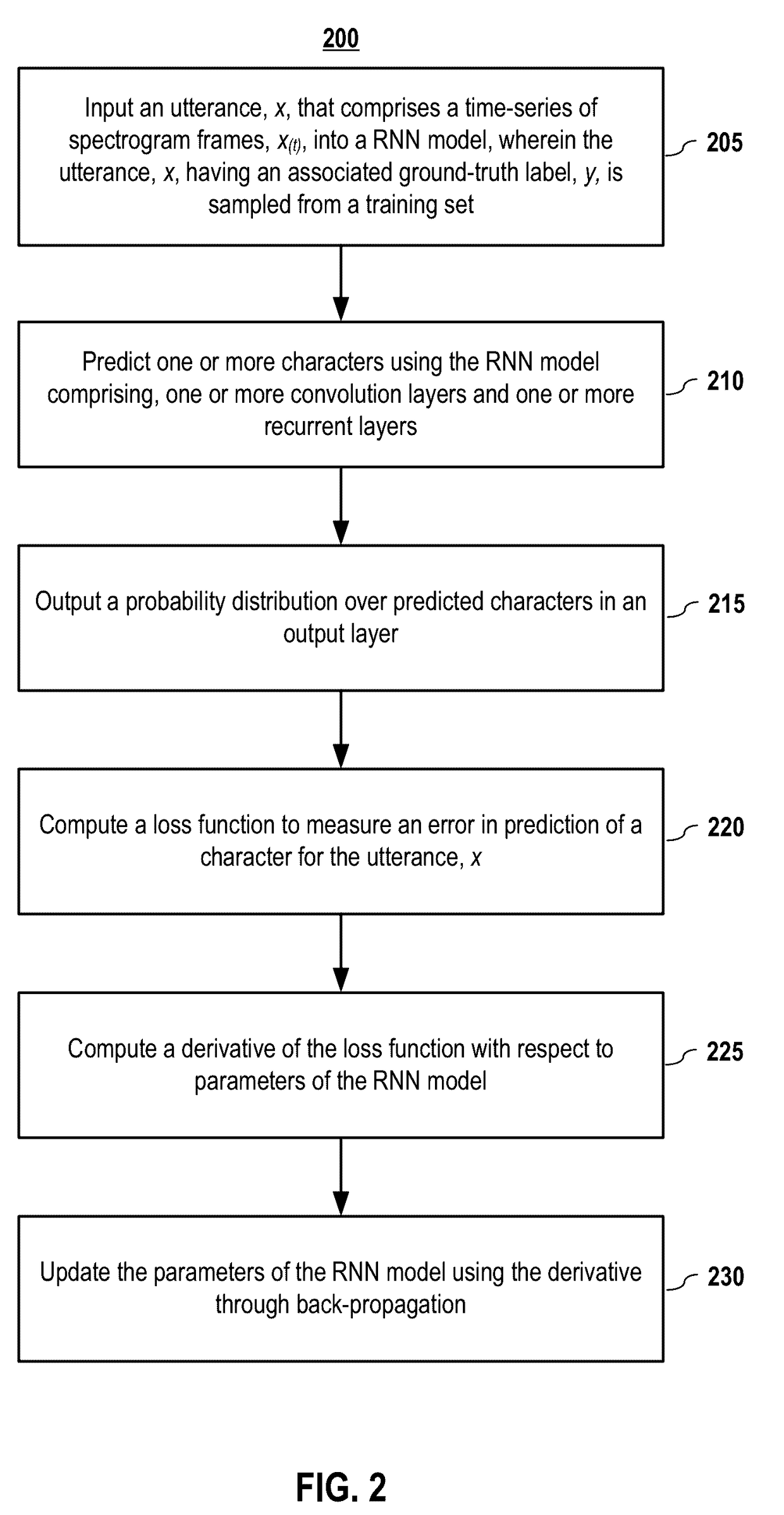

Deployed end-to-end speech recognition

Embodiments of end-to-end deep learning systems and methods are disclosed to recognize speech of vastly different languages, such as English or Mandarin Chinese. In embodiments, the entire pipelines of hand-engineered components are replaced with neural networks, and the end-to-end learning allows handling a diverse variety of speech including noisy environments, accents, and different languages. Using a trained embodiment and an embodiment of a batch dispatch technique with GPUs in a data center, an end-to-end deep learning system can be inexpensively deployed in an online setting, delivering low latency when serving users at scale.

Owner:BAIDU USA LLC

Tag-based apparatus and methods for neural networks

Owner:QUALCOMM INC

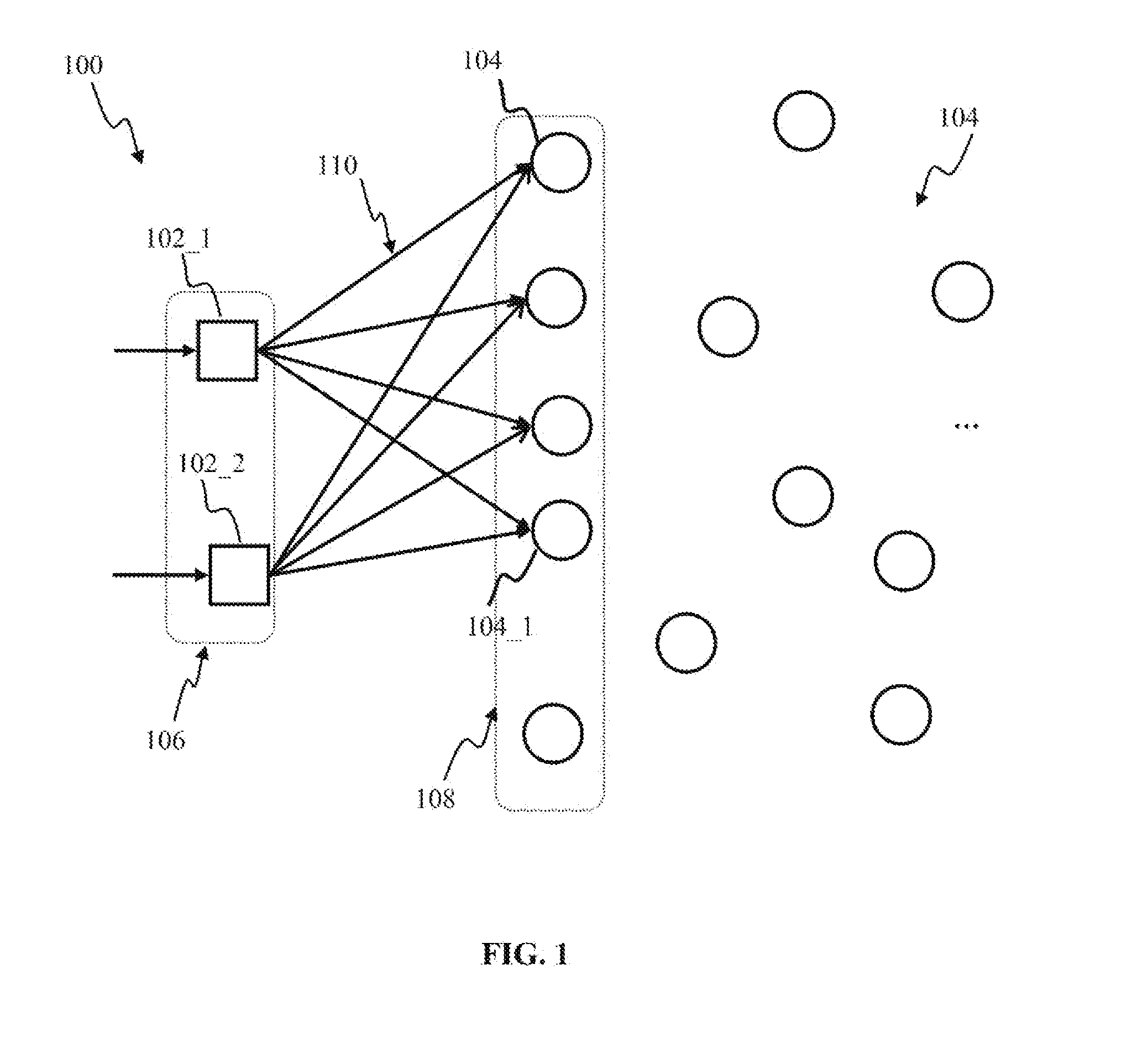

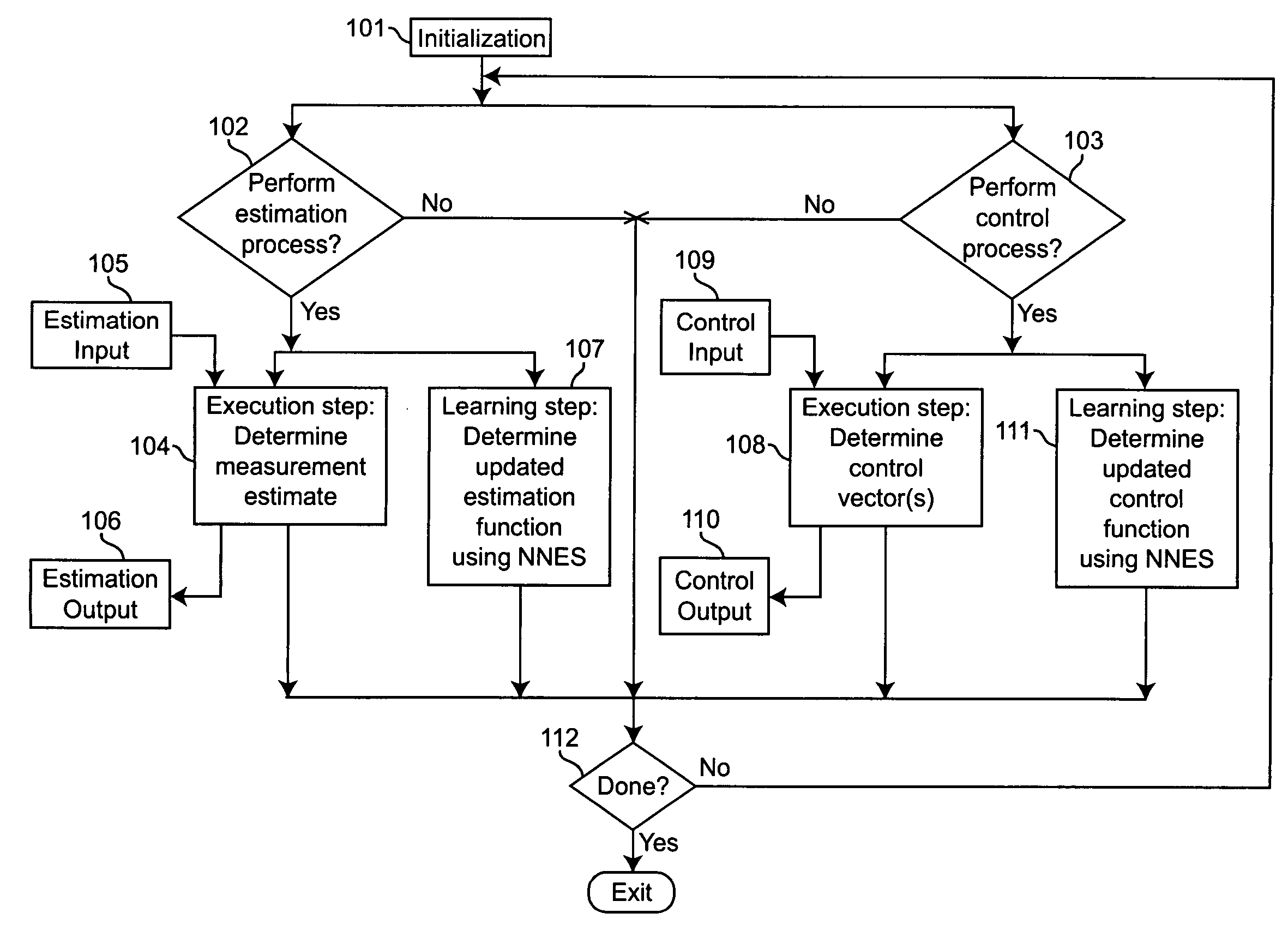

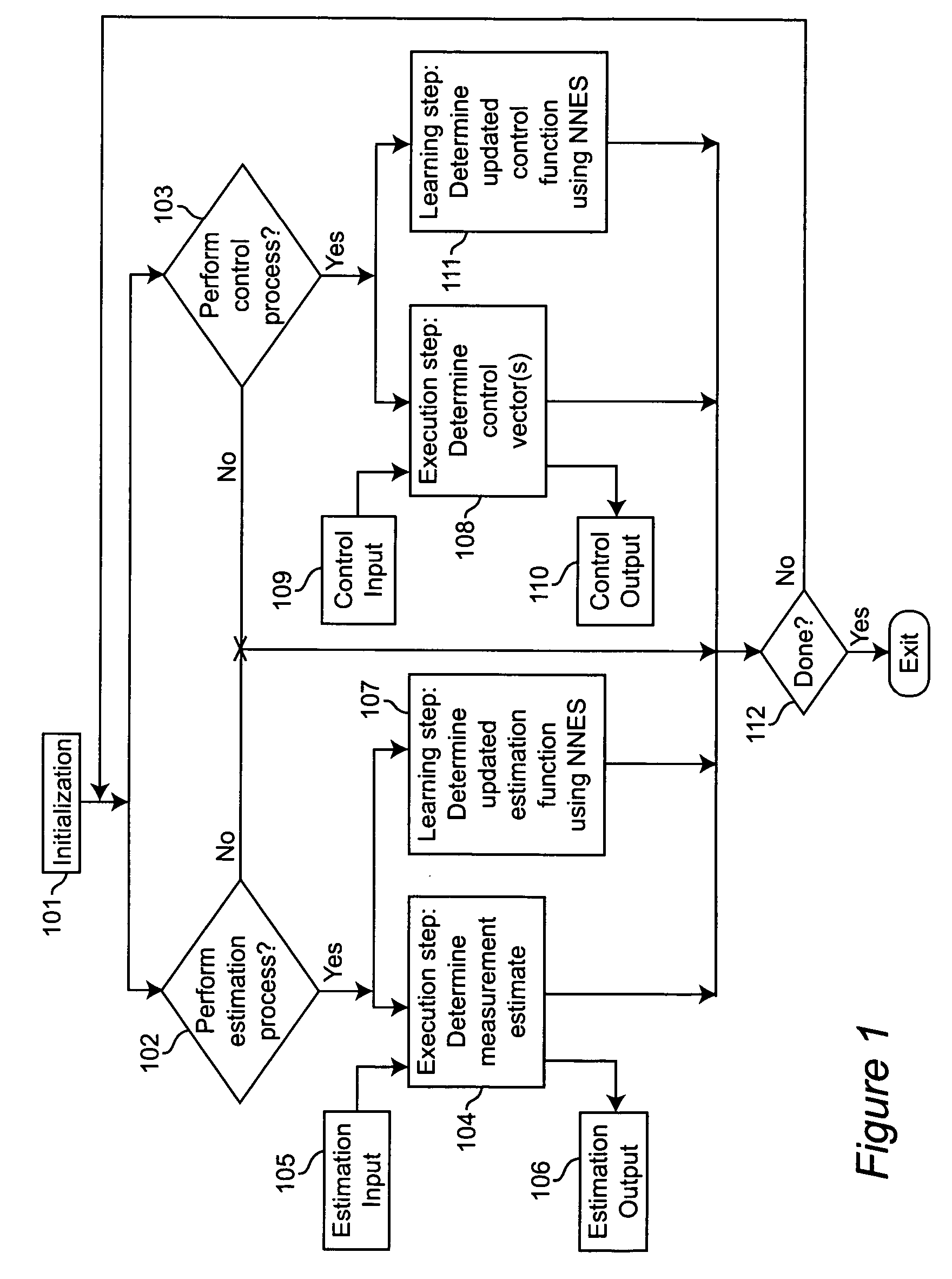

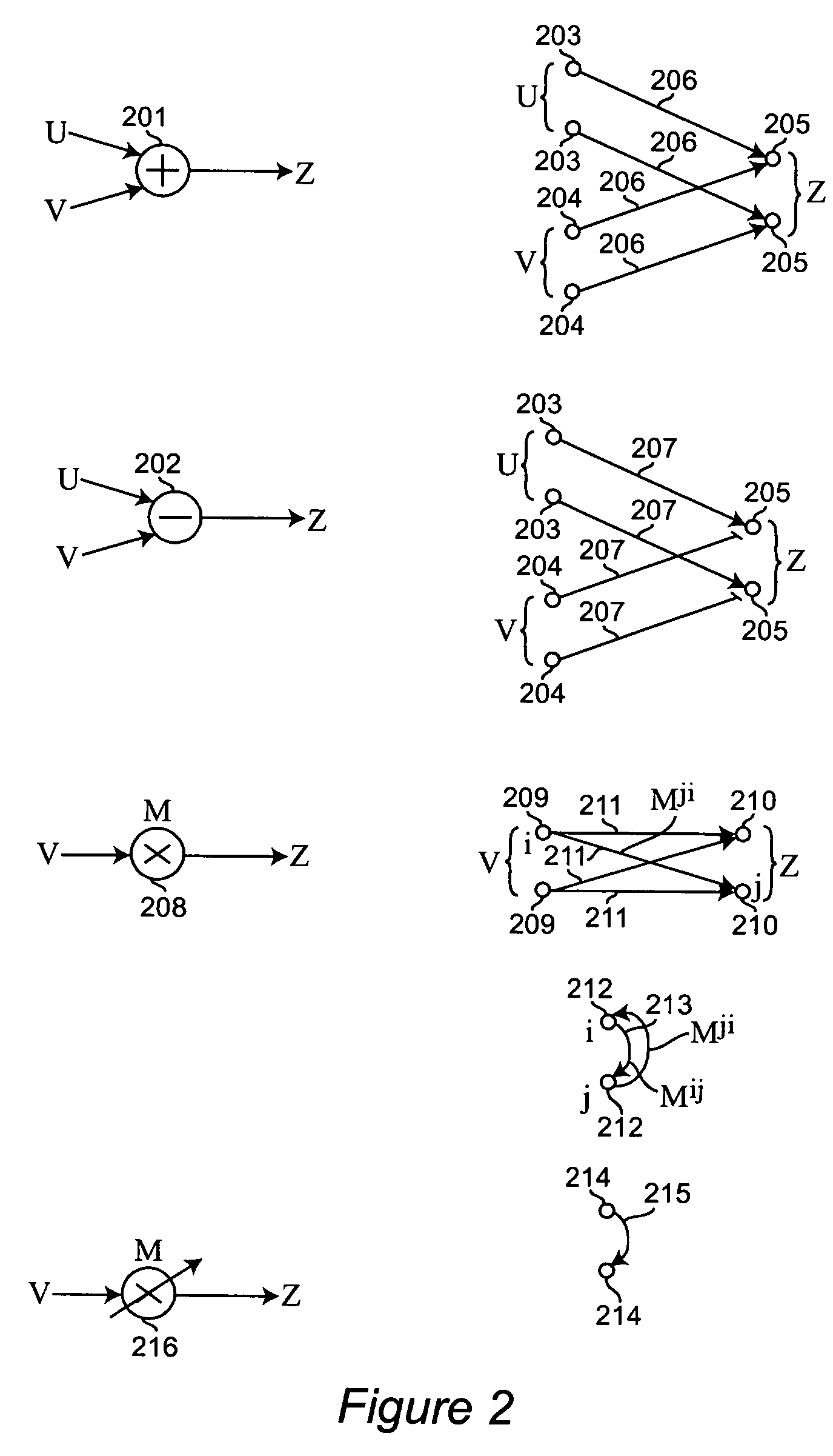

Neural networks for prediction and control

Neural networks for optimal estimation (including prediction) and/or control involve an execution step and a learning step, and are characterized by the learning step being performed by neural computations. The set of learning rules causes the circuit's connection strengths to learn to approximate the optimal estimation and/or control function that minimizes estimation error and/or a measure of control cost. The classical Kalman filter and the classical Kalman optimal controller are important examples of such an optimal estimation and/or control function. The circuit uses only a stream of noisy measurements to infer relevant properties of the external dynamical system, learn the optimal estimation and/or control function, and apply its learning of this optimal function to input data streams in an online manner. In this way, the circuit simultaneously learns and generates estimates and/or control output signals that are optimal, given the network's current state of learning.

Owner:GOOGLE LLC
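
For readers unfamiliar with the classical Kalman filter that the abstract above cites as a target function, a scalar version is sketched below. It is the textbook filter, not the patent's neural learning procedure, and the noise variances `q` and `r`, the initial values, and the function name `kalman_1d` are illustrative assumptions.

```python
# Textbook scalar Kalman filter for a (nearly) constant hidden state.
# Assumptions: process noise variance q, measurement noise variance r,
# initial estimate x0 with variance p0. None of these come from the patent.

def kalman_1d(measurements, q=1e-4, r=0.25, x0=0.0, p0=1.0):
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p = p + q                 # predict: uncertainty grows by the process noise
        k = p / (p + r)           # Kalman gain: trust in the new measurement
        x = x + k * (z - x)       # correct the estimate toward the measurement
        p = (1.0 - k) * p         # uncertainty shrinks after the correction
        estimates.append(x)
    return estimates

estimates = kalman_1d([5.0] * 50)
print(estimates[0], estimates[-1])
```

The gain `k` starts high while the estimate is uncertain and falls as measurements accumulate, which is the behavior a learned estimator of this kind would be expected to approximate.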

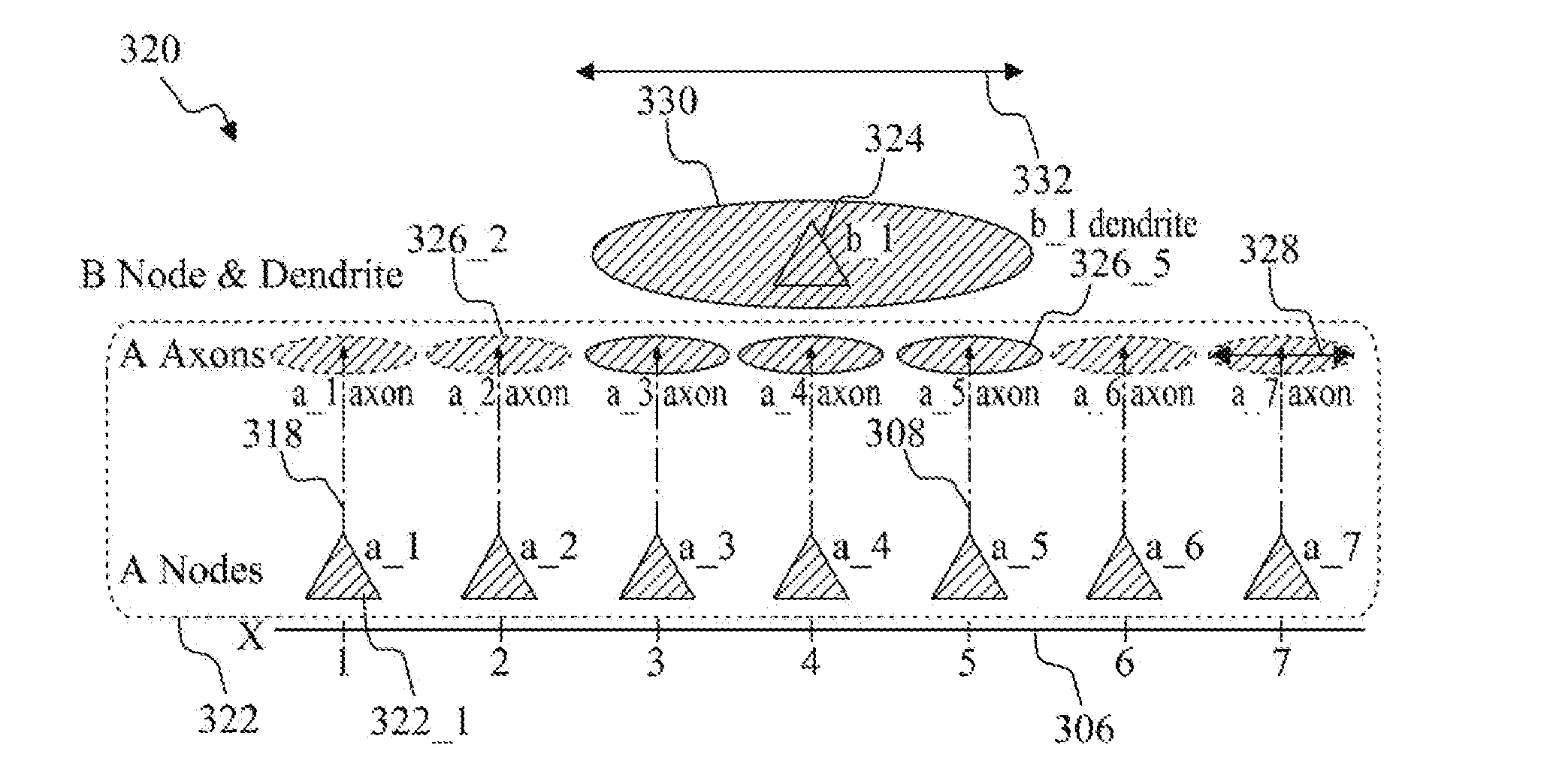

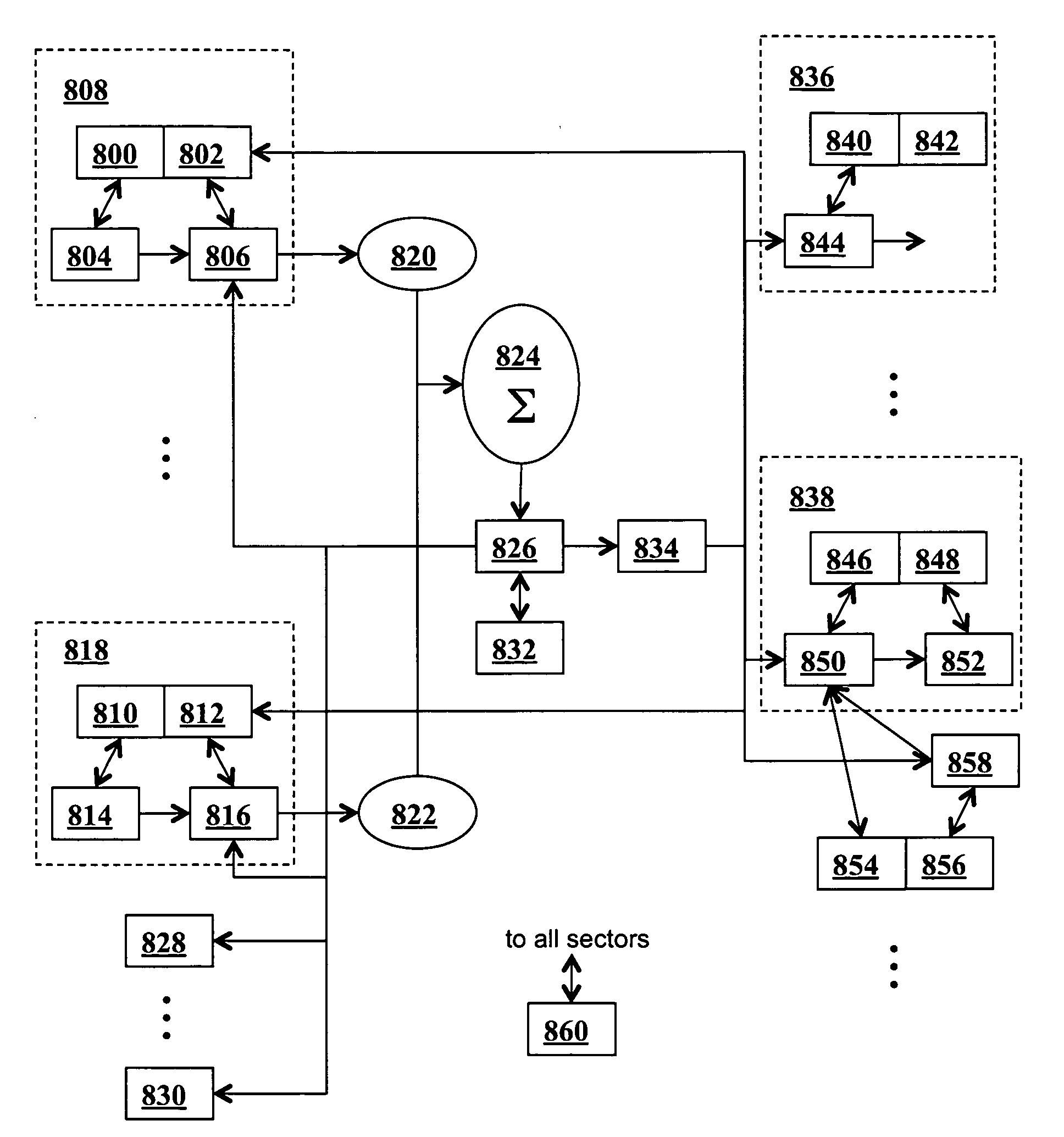

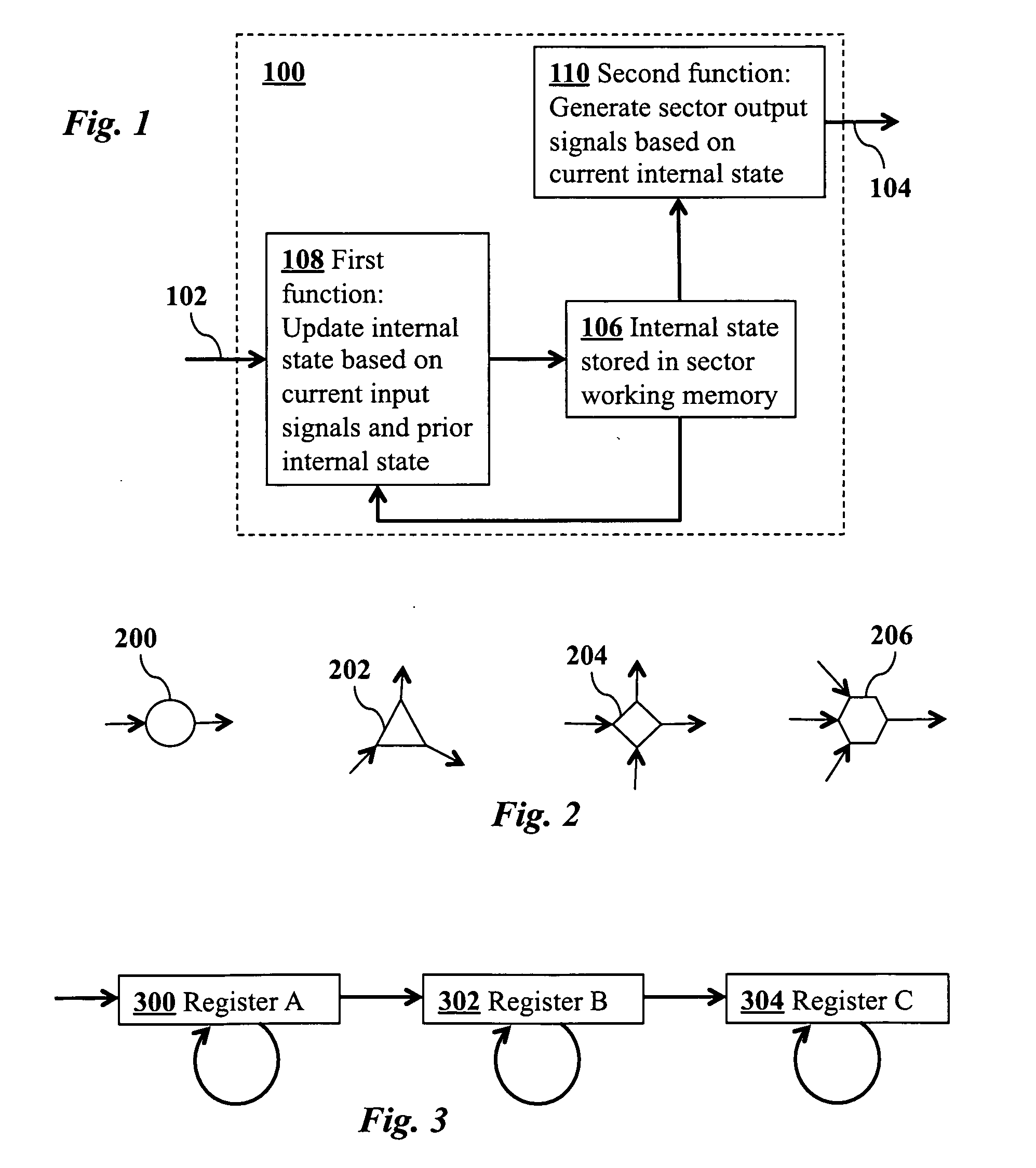

Method for efficiently simulating the information processing in cells and tissues of the nervous system with a temporal series compressed encoding neural network

Active | US20110016071A1 | Effective simulation | Exact reproduction | Digital computer details | Digital data | Information processing | Nervous system

A neural network simulation represents components of neurons by finite state machines, called sectors, implemented using look-up tables. Each sector has an internal state represented by a compressed history of data input to the sector and is factorized into distinct historical time intervals of the data input. The compressed history of data input to the sector may be computed by compressing the data input to the sector during a time interval, storing the compressed history of data input to the sector in memory, and computing from the stored compressed history of data input to the sector the data output from the sector.

Owner:CORTICAL DATABASE

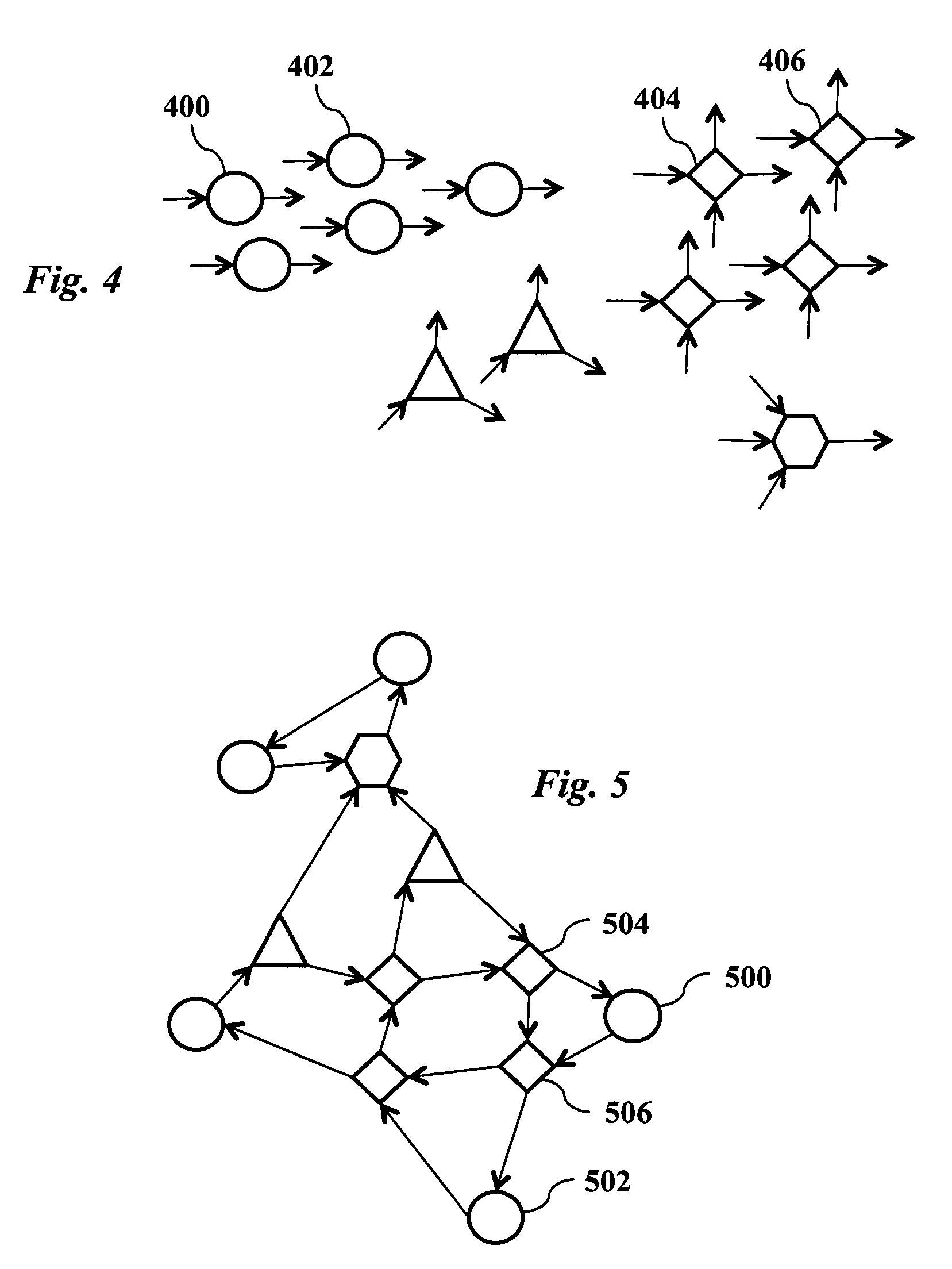

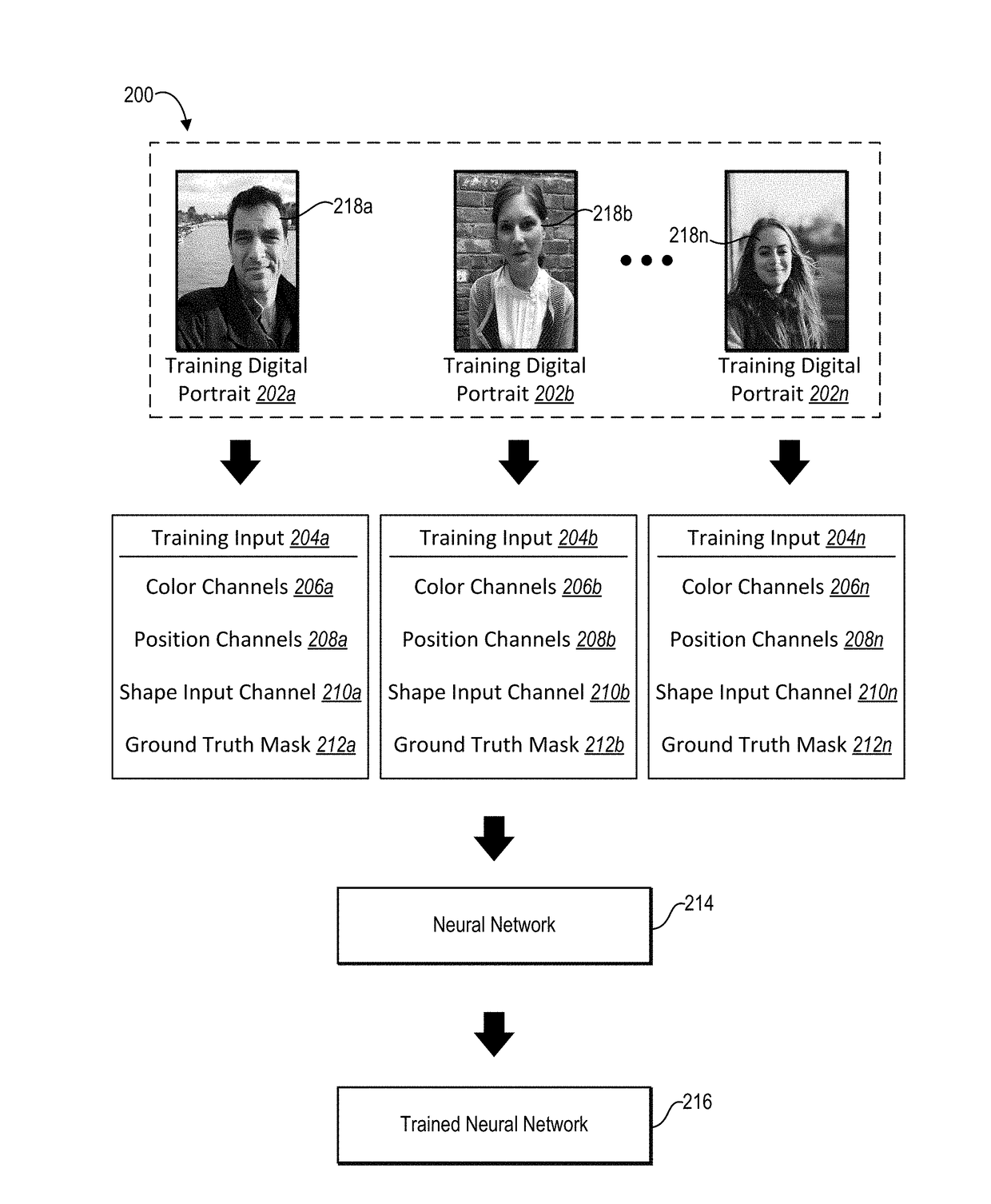

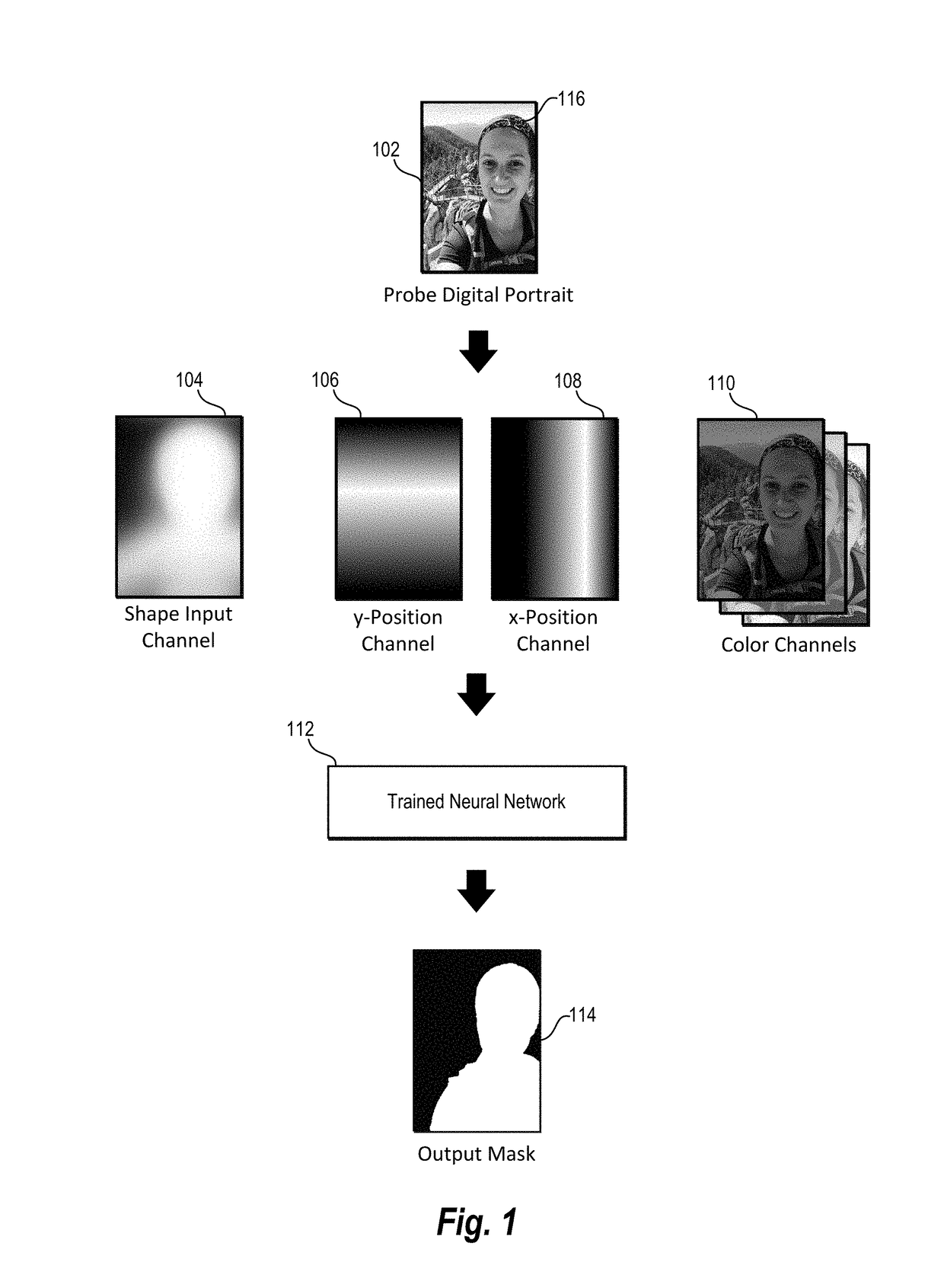

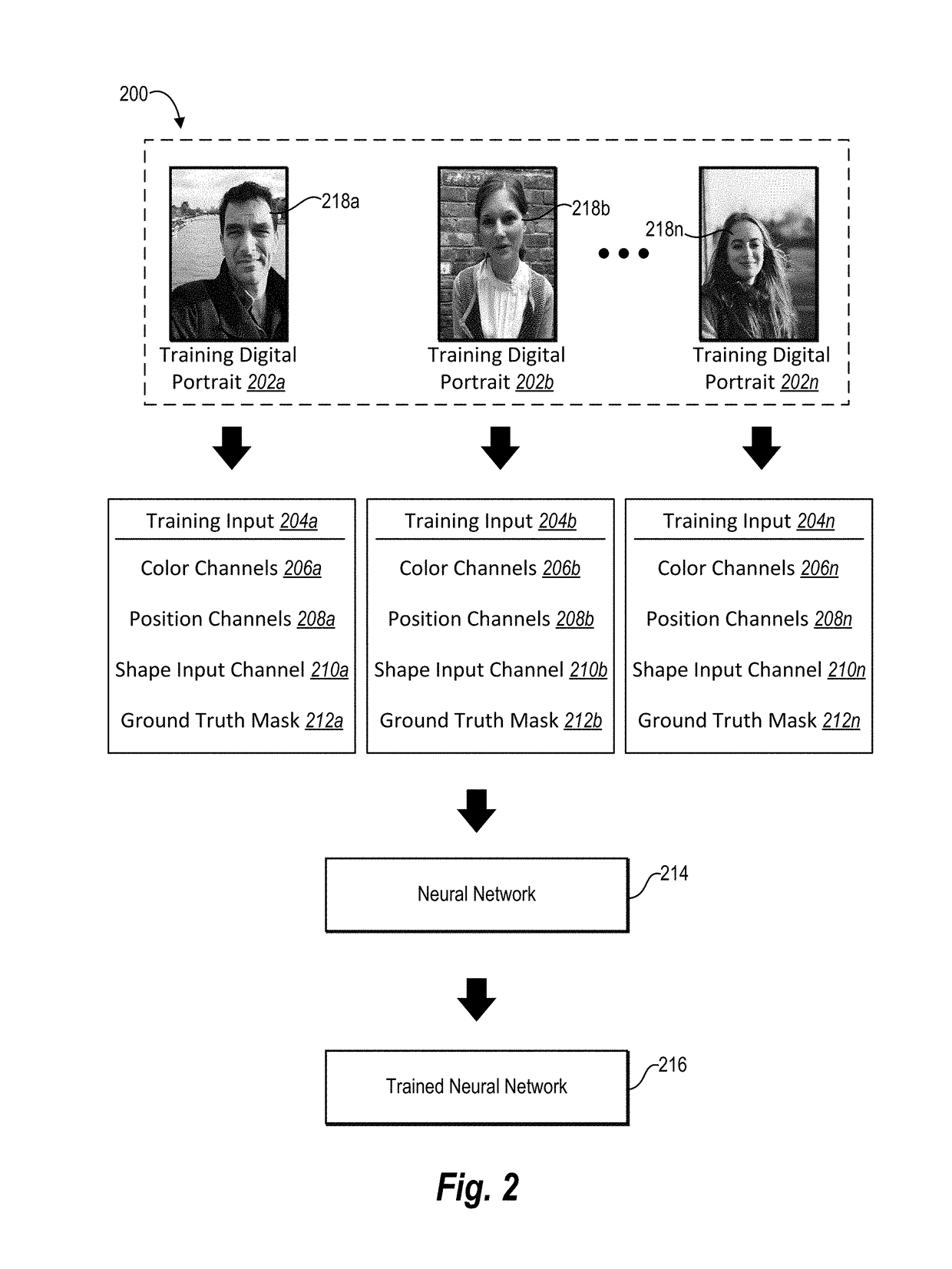

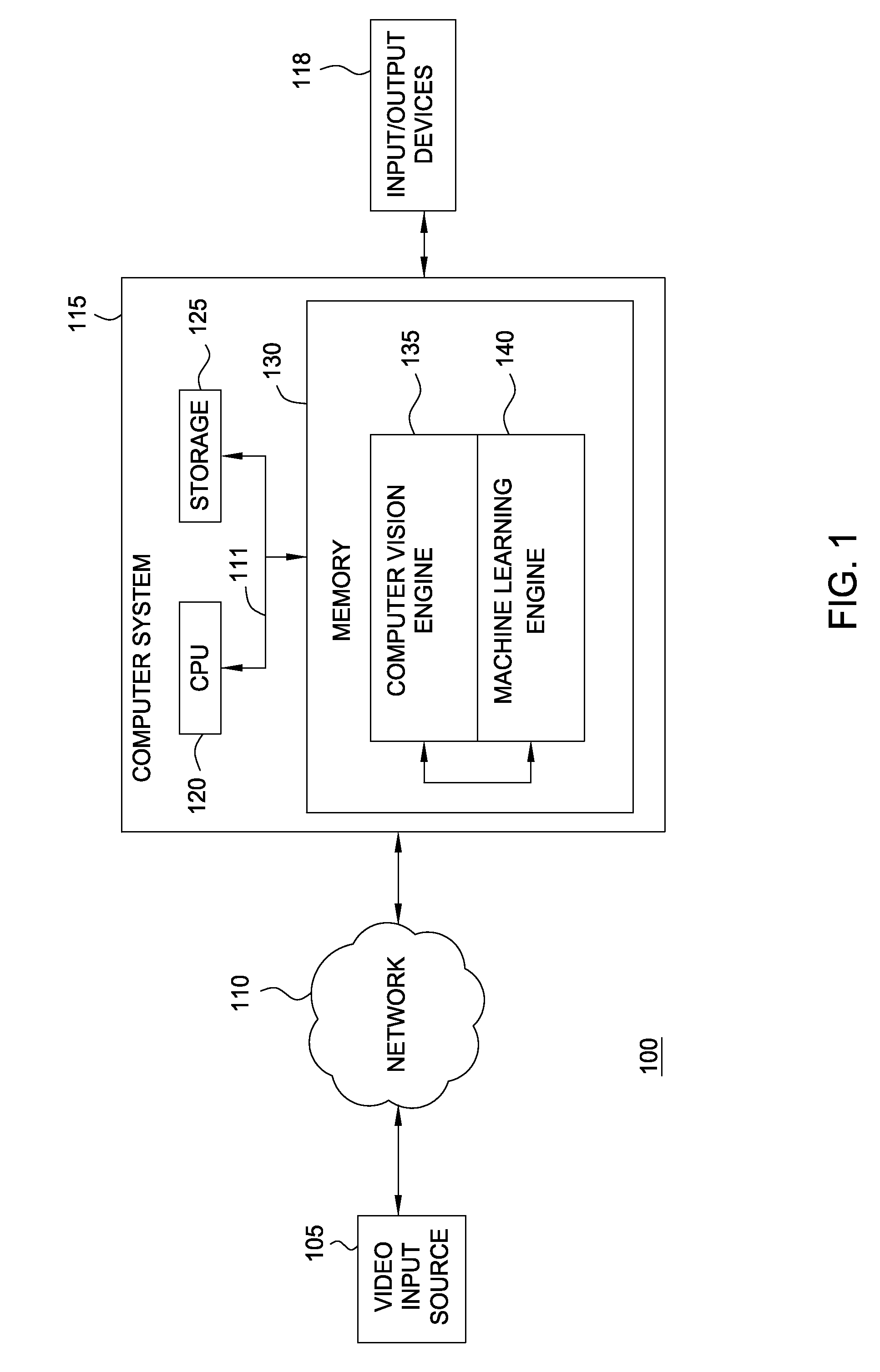

Utilizing deep learning for automatic digital image segmentation and stylization

Active | US20170213112A1 | Improve accuracy | Less interaction | Image enhancement | Image analysis | Computer vision | Deep learning

Systems and methods are disclosed for segregating target individuals represented in a probe digital image from background pixels in the probe digital image. In particular, in one or more embodiments, the disclosed systems and methods train a neural network based on two or more of training position channels, training shape input channels, training color channels, or training object data. Moreover, in one or more embodiments, the disclosed systems and methods utilize the trained neural network to select a target individual in a probe digital image. Specifically, in one or more embodiments, the disclosed systems and methods generate position channels, training shape input channels, and color channels corresponding to the probe digital image, and utilize the generated channels in conjunction with the trained neural network to select the target individual.

Owner:ADOBE SYST INC

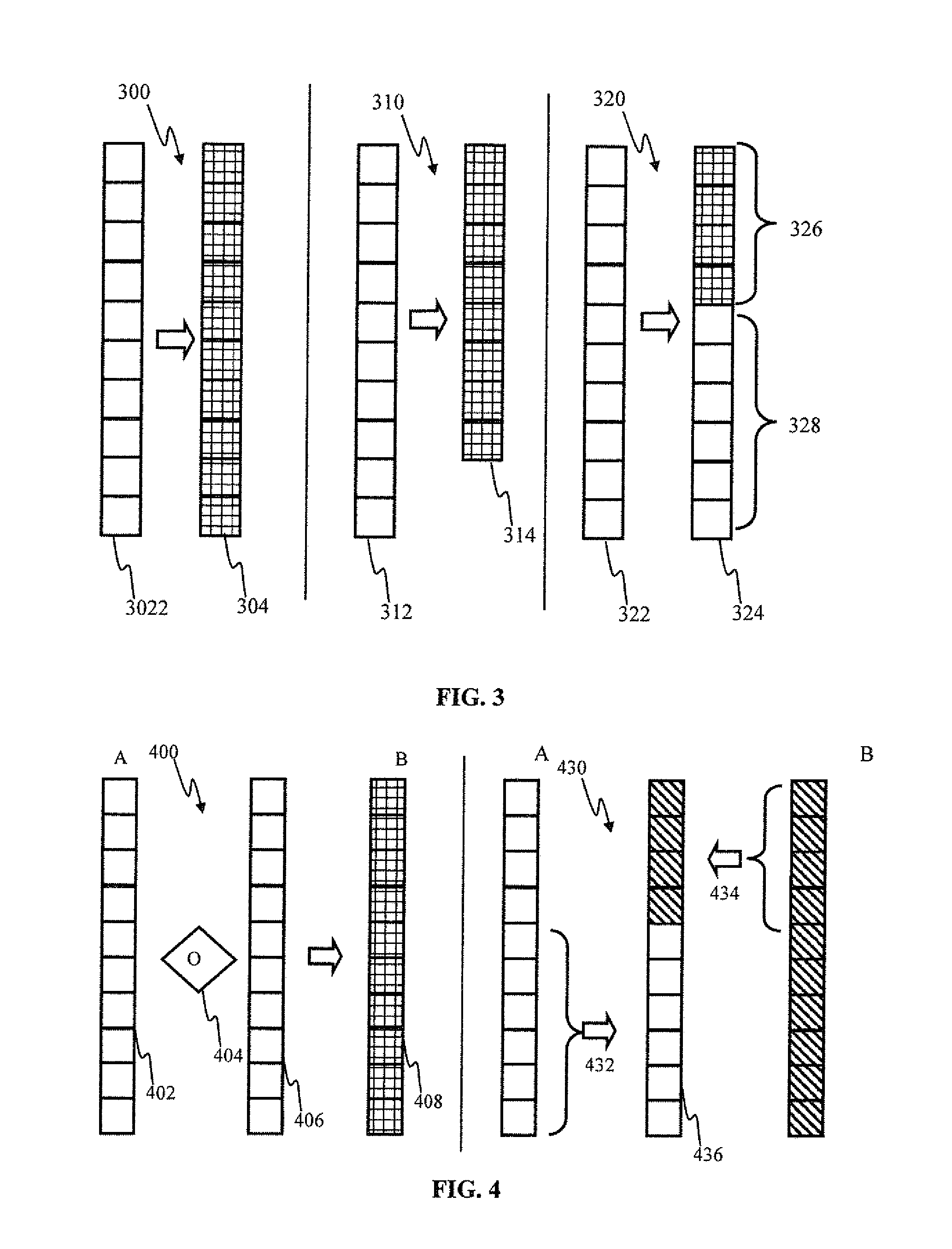

Spiking neural network feedback apparatus and methods

Inactive | US20130297541A1 | Low efficacy | Good curative effect | Digital computer details | Digital data | Spiking neural network | Artificial intelligence

Apparatus and methods for feedback in a spiking neural network. In one approach, spiking neurons receive a sensory stimulus and a context signal that correspond to the same context. When the stimulus provides sufficient excitation, neurons generate a response. Context connections are adjusted according to inverse spike-timing dependent plasticity (STDP). When the context signal precedes the post-synaptic spike, context synaptic connections are depressed. Conversely, whenever the context signal follows the post-synaptic spike, the connections are potentiated. The inverse STDP connection adjustment ensures precise control of feedback-induced firing, eliminates runaway positive feedback loops, and enables self-stabilizing network operation. In another aspect of the invention, the connection adjustment methodology facilitates robust context switching when processing visual information. When a context (such as an object) becomes intermittently absent, prior context connection potentiation enables firing for a period of time. If the object remains absent, the connection becomes depressed, thereby preventing further firing.

Owner:BRAIN CORP
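
The sign convention of the inverse STDP rule in the abstract above can be made concrete with a small sketch. The abstract specifies only the direction of the change (depress when the context signal precedes the post-synaptic spike, potentiate when it follows); the exponential timing window, the amplitudes `a_plus` and `a_minus`, the time constant `tau`, and the function name are assumptions borrowed from common STDP formulations.

```python
import math

# Weight change for one (context spike, post-synaptic spike) pair under an
# inverse STDP rule. Only the sign of the change follows the abstract; the
# exponential window and all parameter values are illustrative assumptions.

def inverse_stdp_delta(t_context, t_post, a_plus=0.05, a_minus=0.05, tau=20.0):
    """Return the change in a context connection weight (times in ms)."""
    dt = t_post - t_context
    if dt > 0:
        # Context arrived before the post-synaptic spike: depress.
        return -a_minus * math.exp(-dt / tau)
    if dt < 0:
        # Context arrived after the post-synaptic spike: potentiate.
        return a_plus * math.exp(dt / tau)
    return 0.0

print(inverse_stdp_delta(0.0, 10.0))   # context first: negative change
print(inverse_stdp_delta(10.0, 0.0))   # context second: positive change
```

Under this convention a context input that would drive firing is weakened, which is consistent with the abstract's claim that the rule prevents runaway positive feedback.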

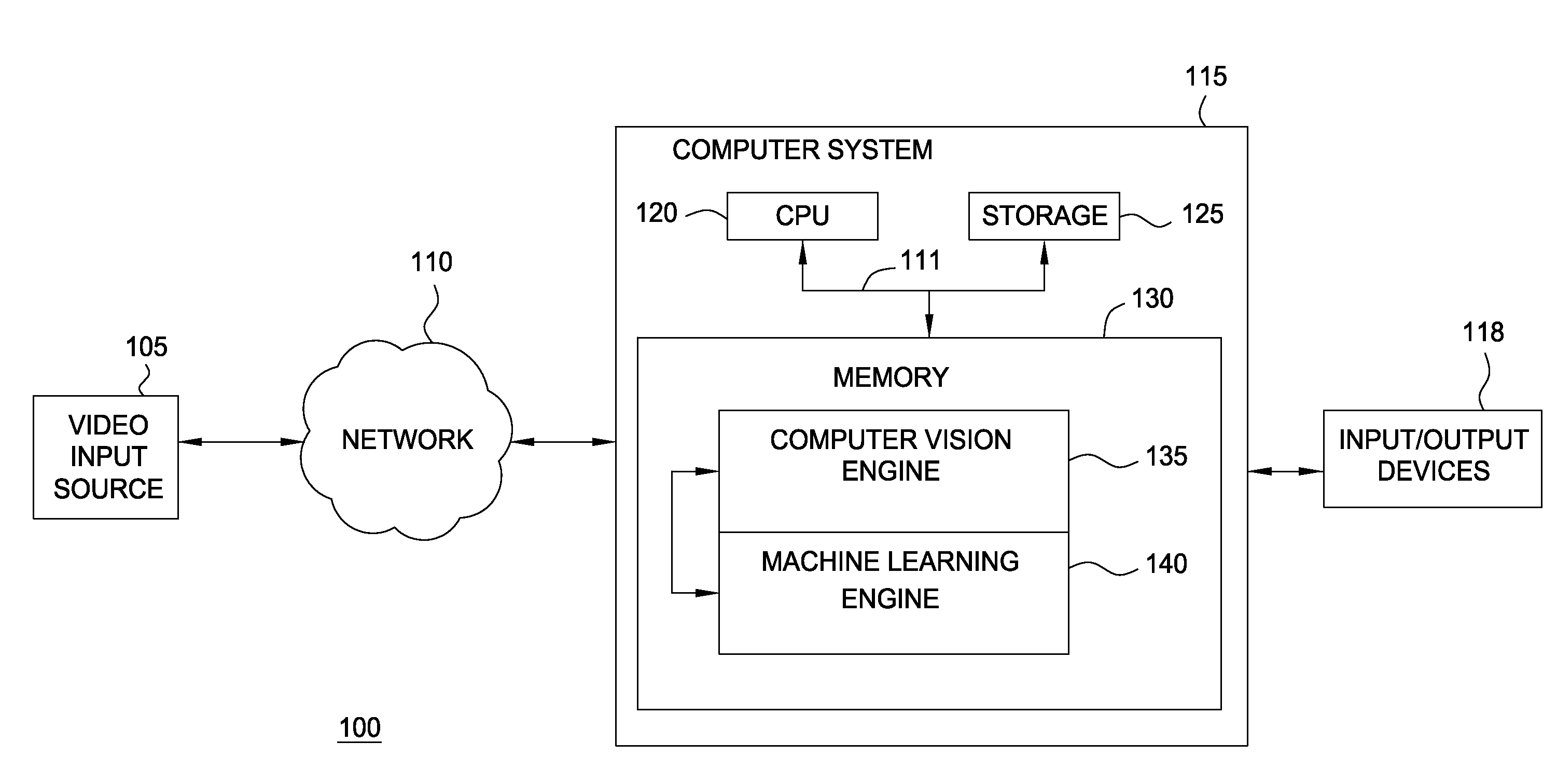

Neural network learning and collaboration apparatus and methods

Active | US20140089232A1 | Reinforce similar learning experience | Increase macro | Neural architectures | Special data processing applications | Neural network learning | Artificial intelligence

Apparatus and methods for learning and training in neural network-based devices. In one implementation, the devices each comprise multiple spiking neurons, configured to process sensory input. In one approach, alternate heterosynaptic plasticity mechanisms are used to enhance learning and field diversity within the devices. The selection of alternate plasticity rules is based on recent post-synaptic activity of neighboring neurons. Apparatus and methods for simplifying training of the devices are also disclosed, including a computer-based application. A data representation of the neural network may be imaged and transferred to another computational environment, effectively copying the brain. Techniques and architectures for achieving this training, storing, and distributing of these data representations are also disclosed.

Owner:BRAIN CORP

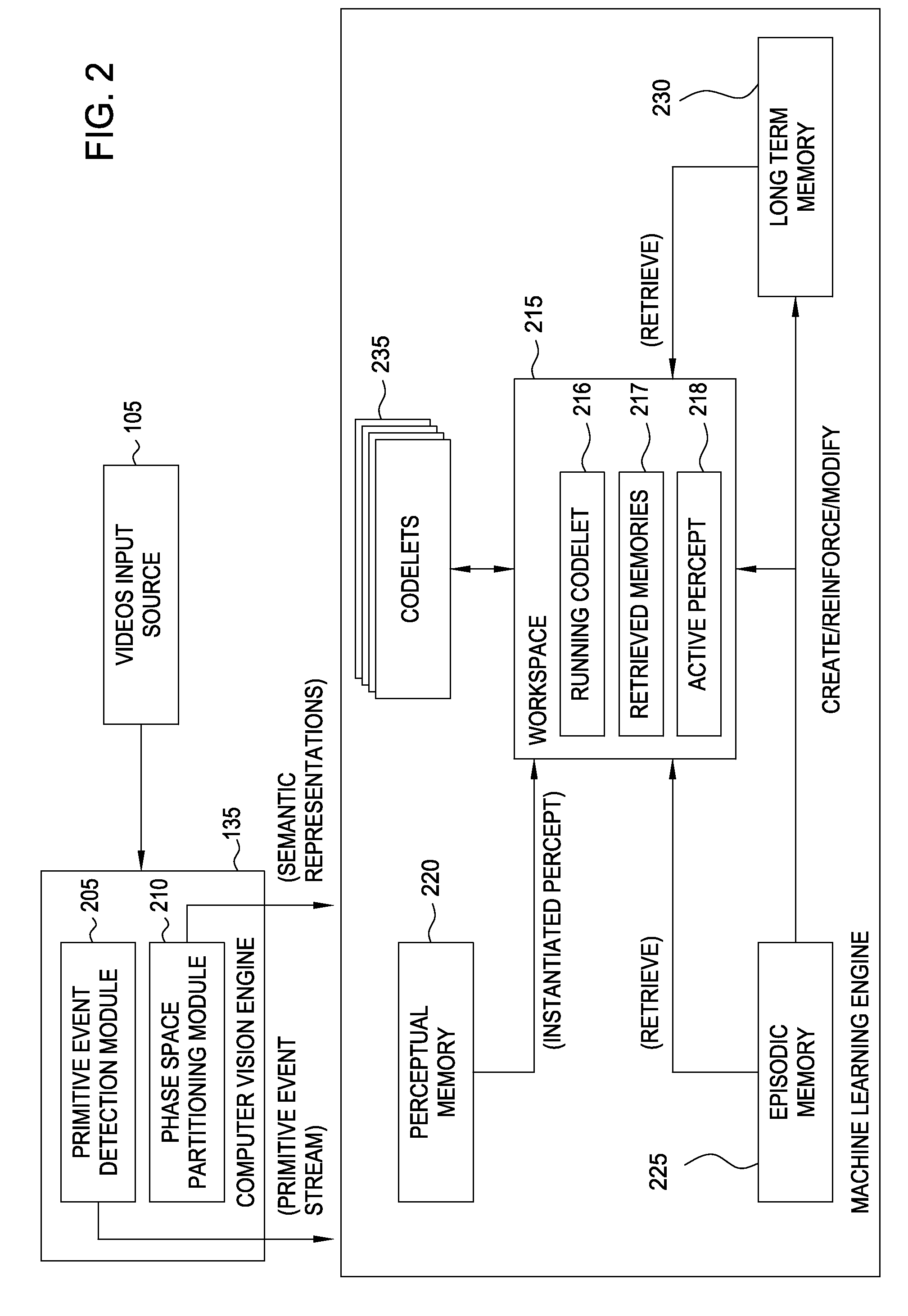

Long-term memory in a video analysis system

Active | US20100063949A1 | Digital computer details | Character and pattern recognition | Long-term memory | Nerve network

A long-term memory used to store and retrieve information learned while a video analysis system observes a stream of video frames is disclosed. The long-term memory provides a memory with a capacity that grows in size gracefully, as events are observed over time. Additionally, the long-term memory may encode events, represented by sub-graphs of a neural network. Further, rather than predefining a number of patterns recognized and manipulated by the long-term memory, embodiments of the invention provide a long-term memory where the size of a feature dimension (used to determine the similarity between different observed events) may grow dynamically as necessary, depending on the actual events observed in a sequence of video frames.

Owner:MOTOROLA SOLUTIONS INC

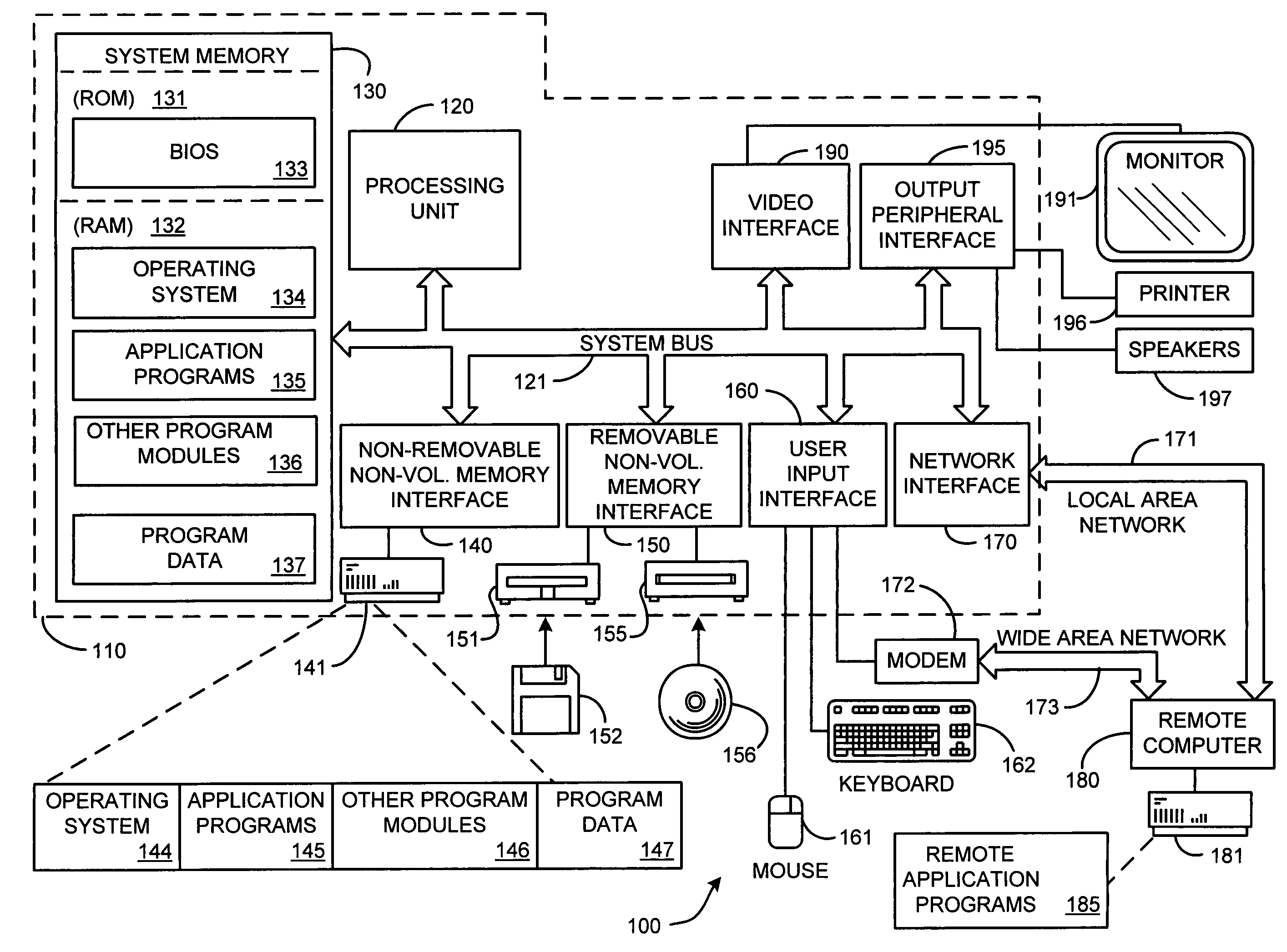

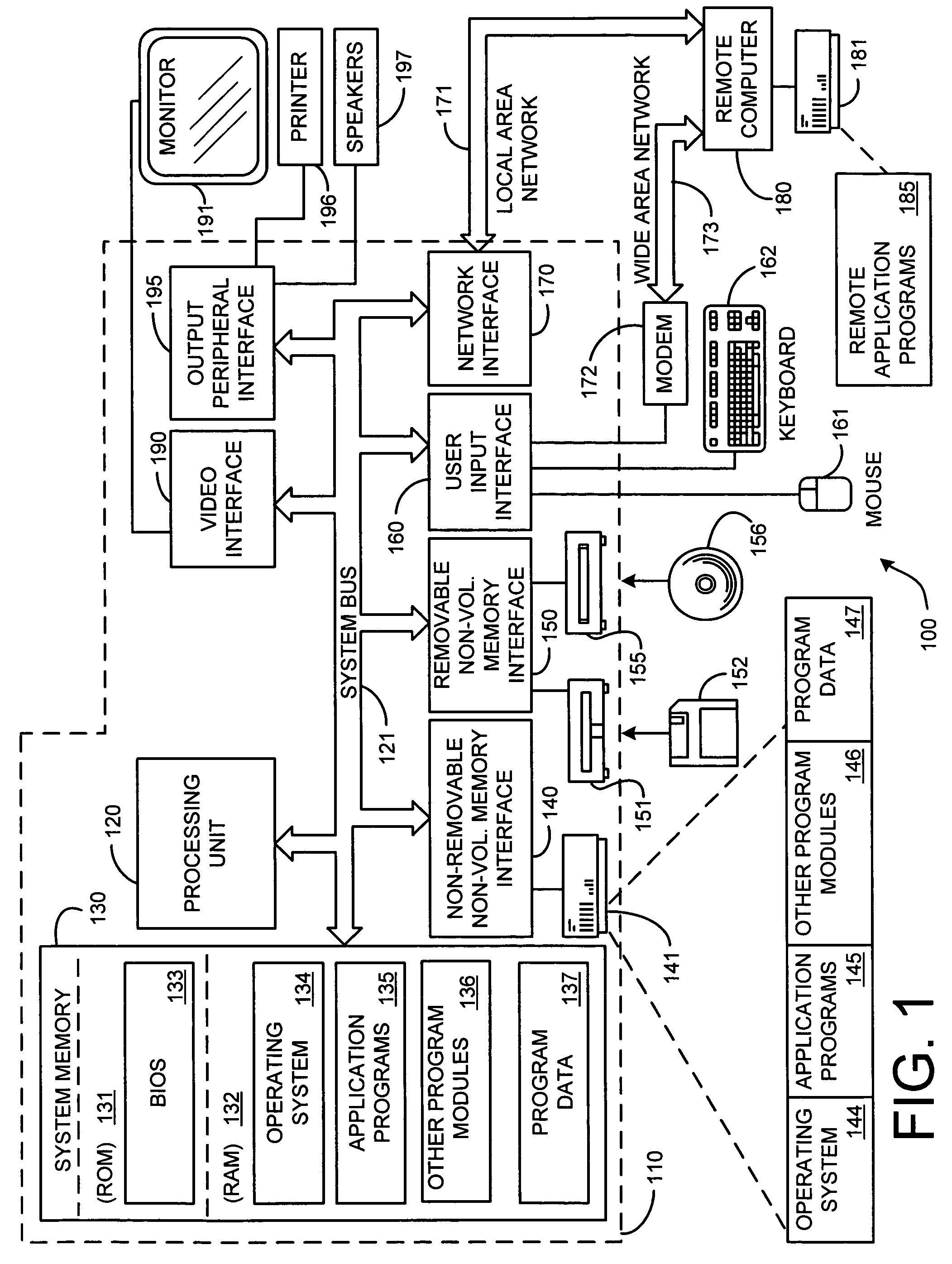

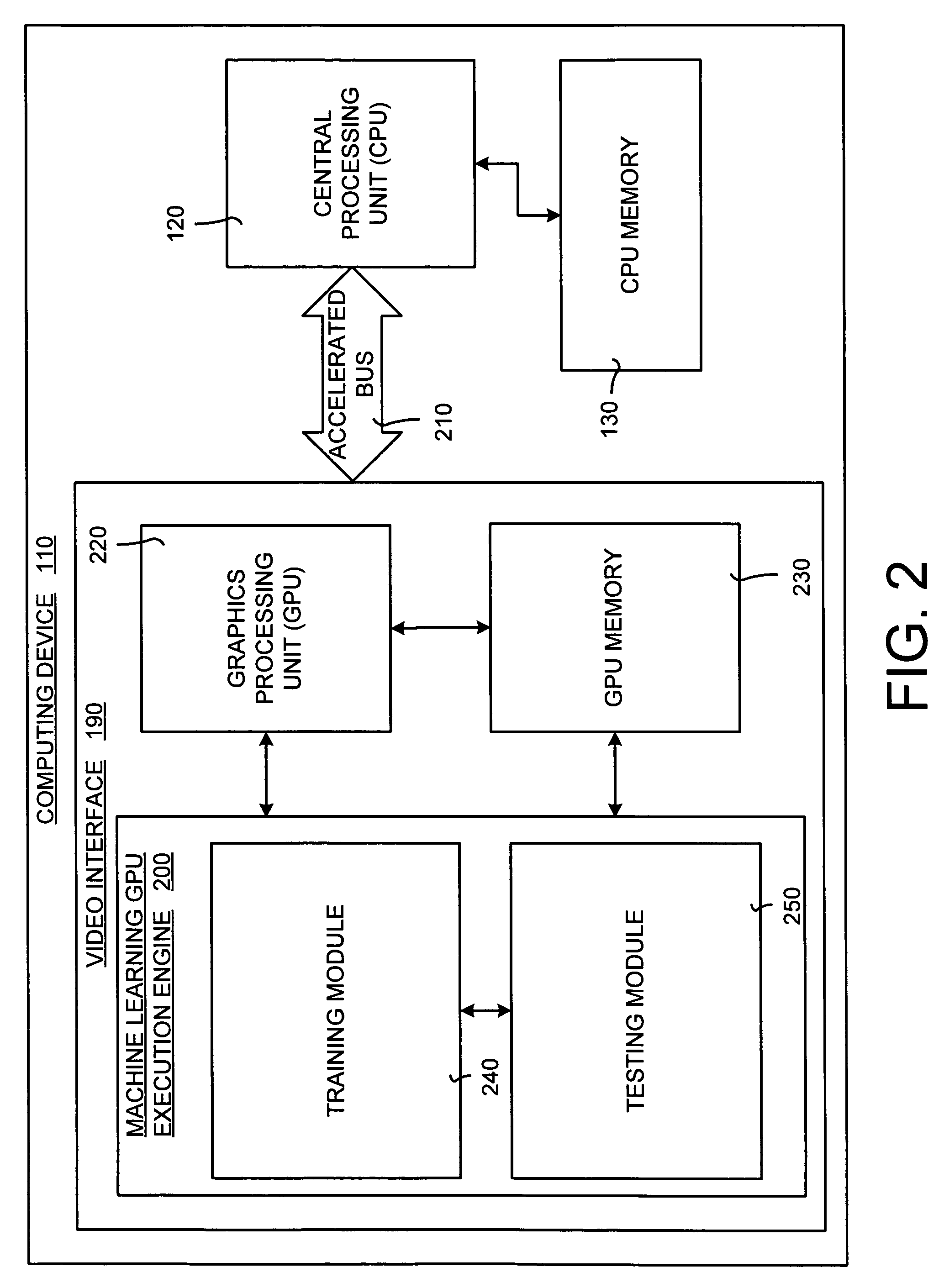

System and method for accelerating and optimizing the processing of machine learning techniques using a graphics processing unit

Active | US7219085B2 | Alleviates computational limitation | More computation | Character and pattern recognition | Knowledge representation | Graphics | Theoretical computer science

A system and method for processing machine learning techniques (such as neural networks) and other non-graphics applications using a graphics processing unit (GPU) to accelerate and optimize the processing. The system and method transfer an architecture that can be used for a wide variety of machine learning techniques from the CPU to the GPU. The transfer of processing to the GPU is accomplished using several novel techniques that overcome the limitations of, and work well within the framework of, the GPU architecture. With these limitations overcome, machine learning techniques are particularly well suited for processing on the GPU because the GPU is typically much more powerful than the typical CPU. Moreover, similar to graphics processing, processing of machine learning techniques involves problems with non-trivial solutions and large amounts of data.

Owner:MICROSOFT TECH LICENSING LLC

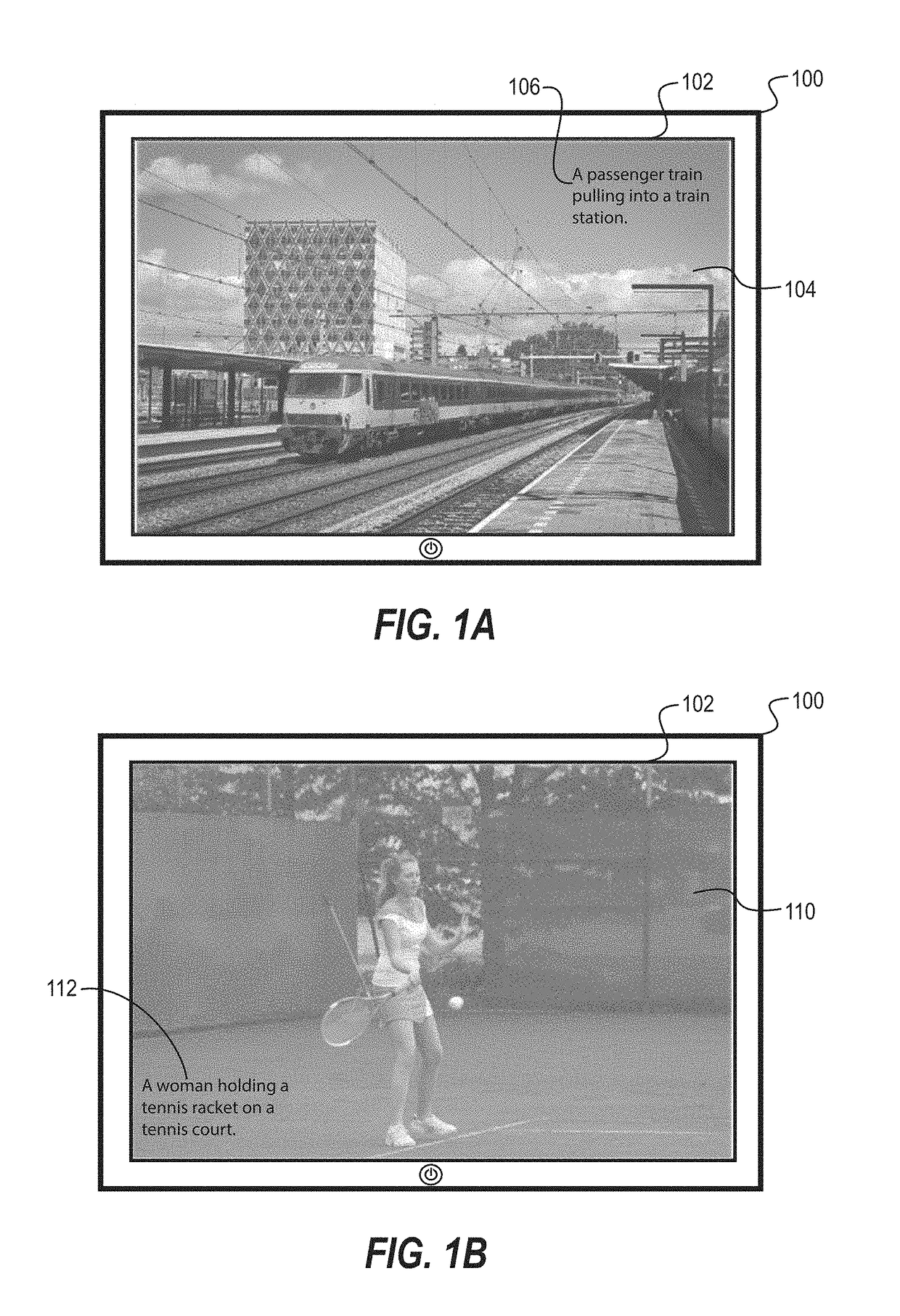

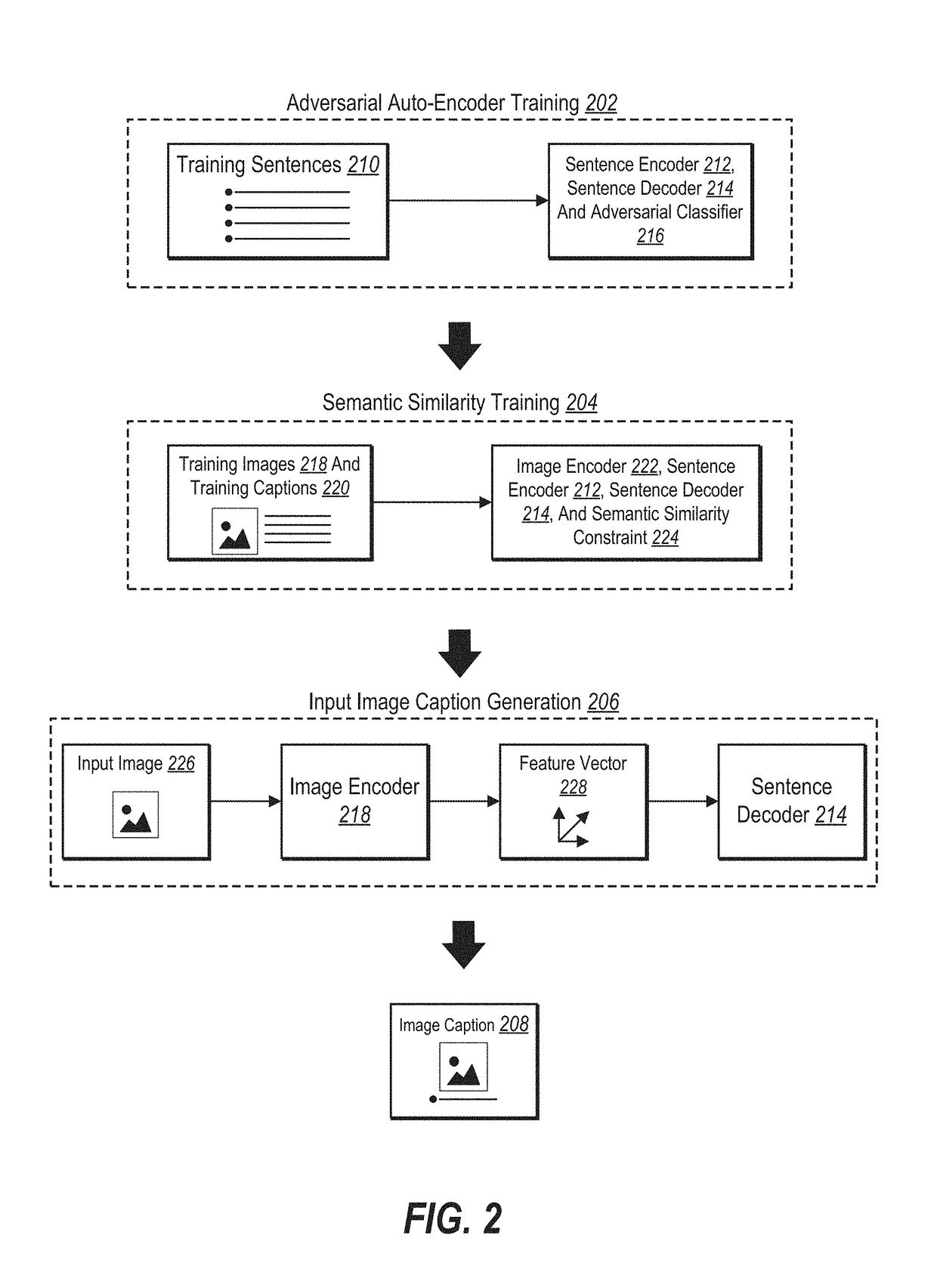

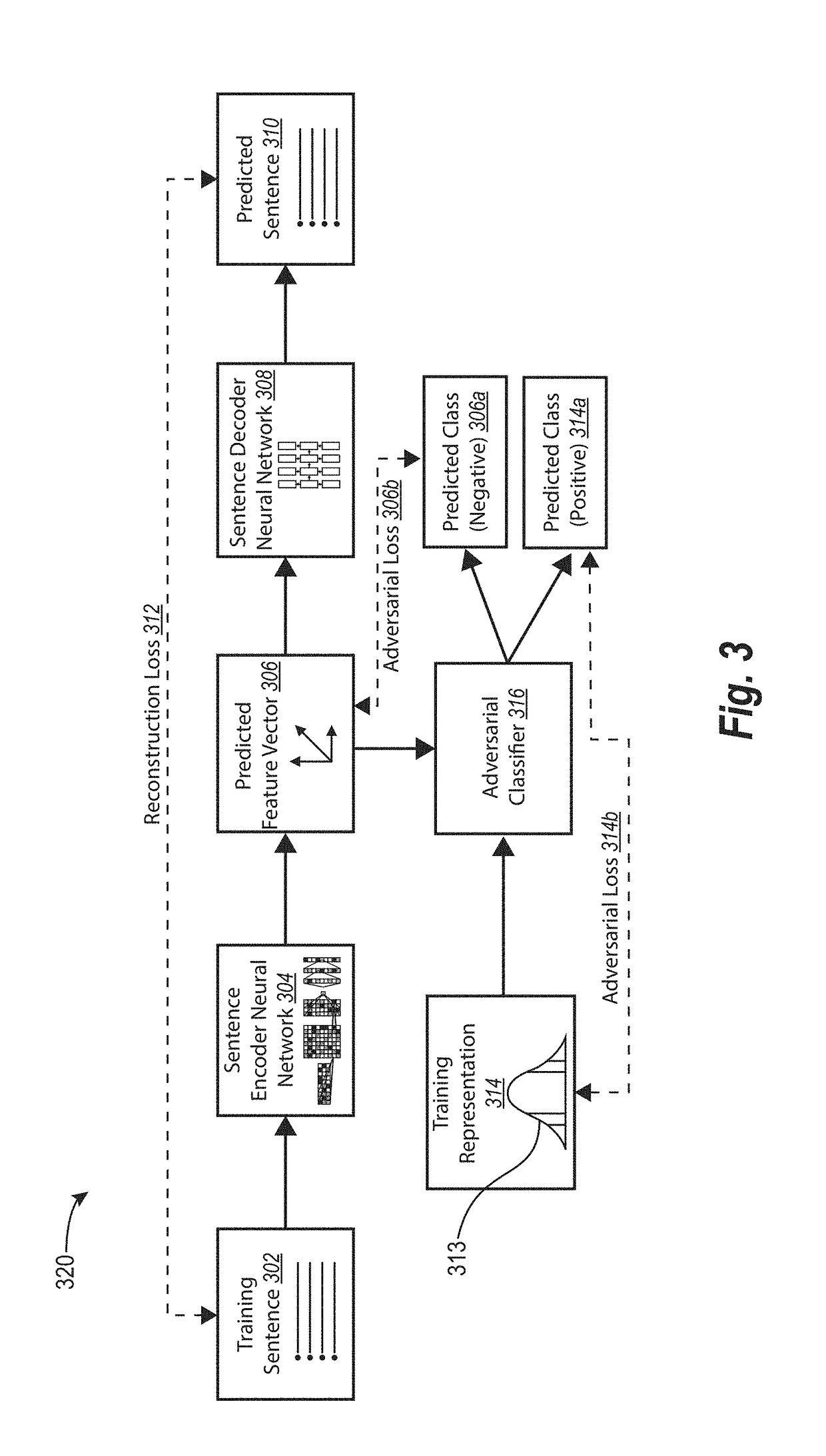

Image captioning utilizing semantic text modeling and adversarial learning

Active | US20180373979A1 | Accurately reflect | Character and pattern recognition | Machine learning | Text modeling | Digital image

The present disclosure includes methods and systems for generating captions for digital images. In particular, the disclosed systems and methods can train an image encoder neural network and a sentence decoder neural network to generate a caption from an input digital image. For instance, in one or more embodiments, the disclosed systems and methods train an image encoder neural network (e.g., a character-level convolutional neural network) utilizing a semantic similarity constraint, training images, and training captions. Moreover, the disclosed systems and methods can train a sentence decoder neural network (e.g., a character-level recurrent neural network) utilizing training sentences and an adversarial classifier.

Owner:ADOBE SYST INC

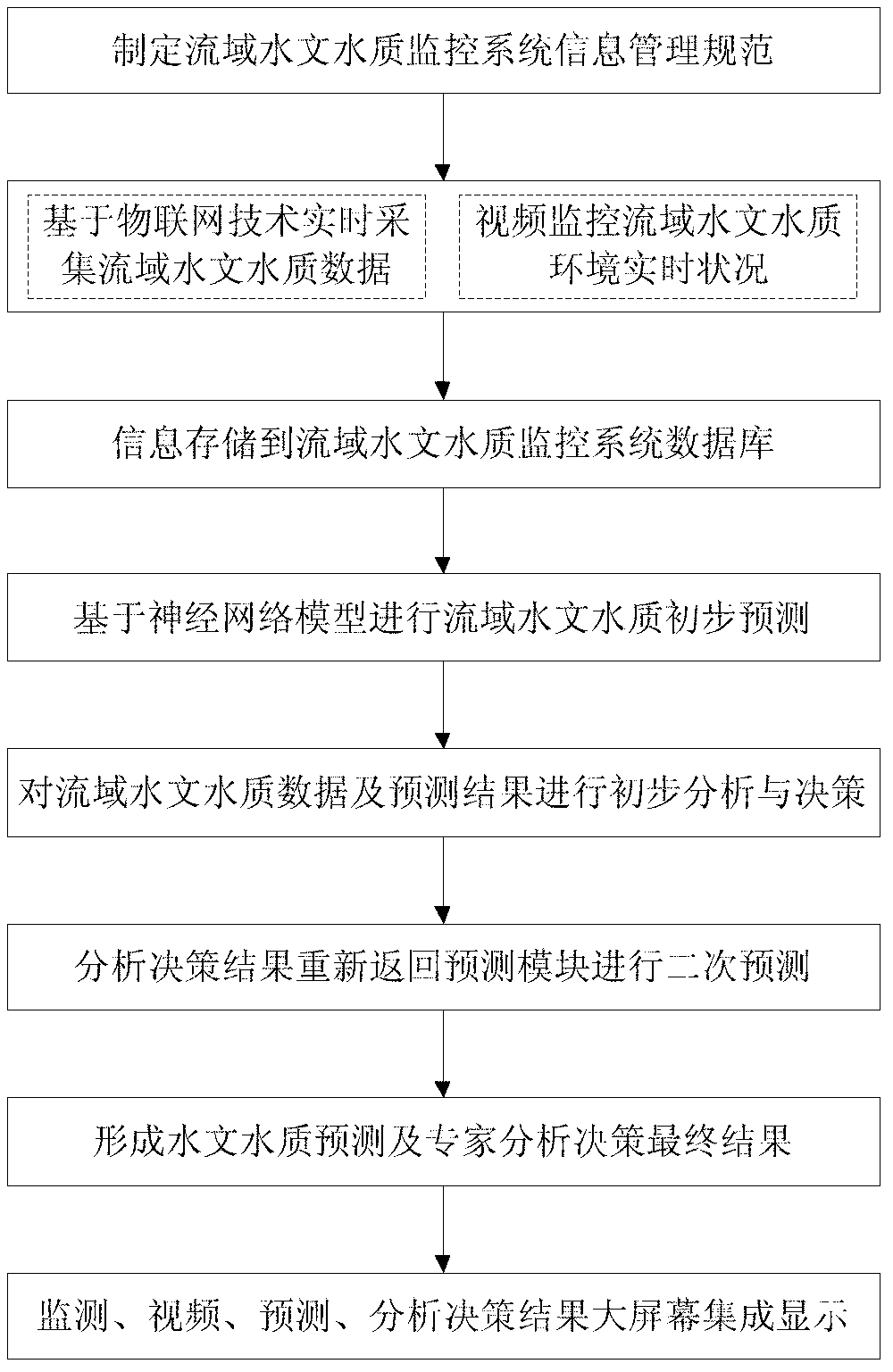

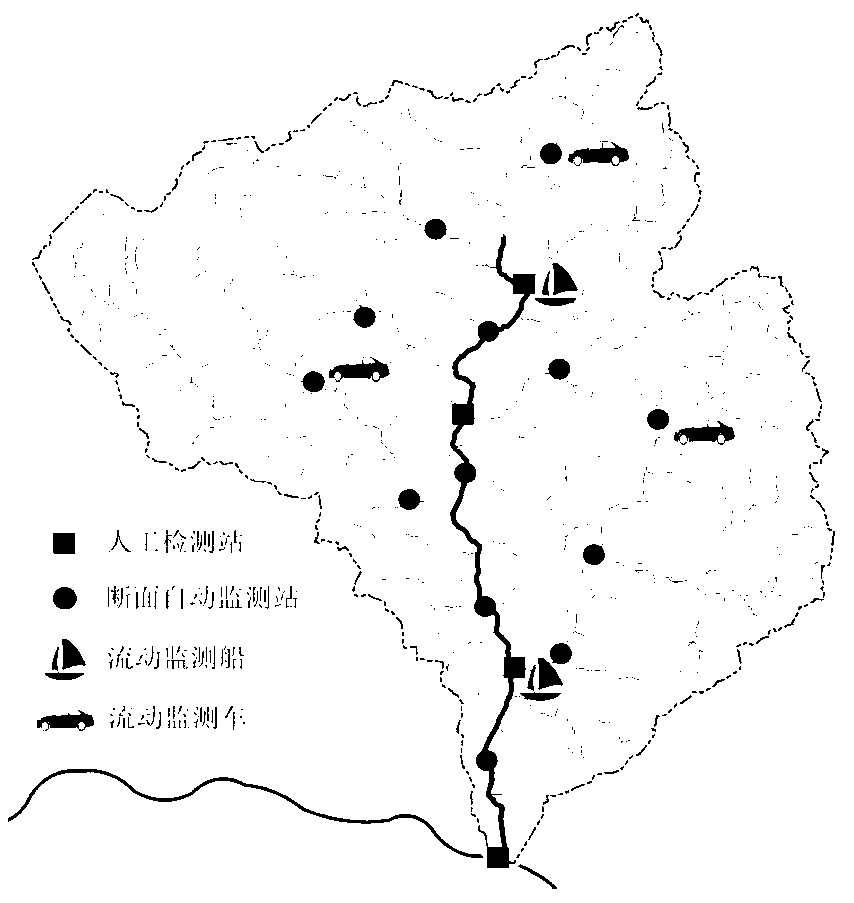

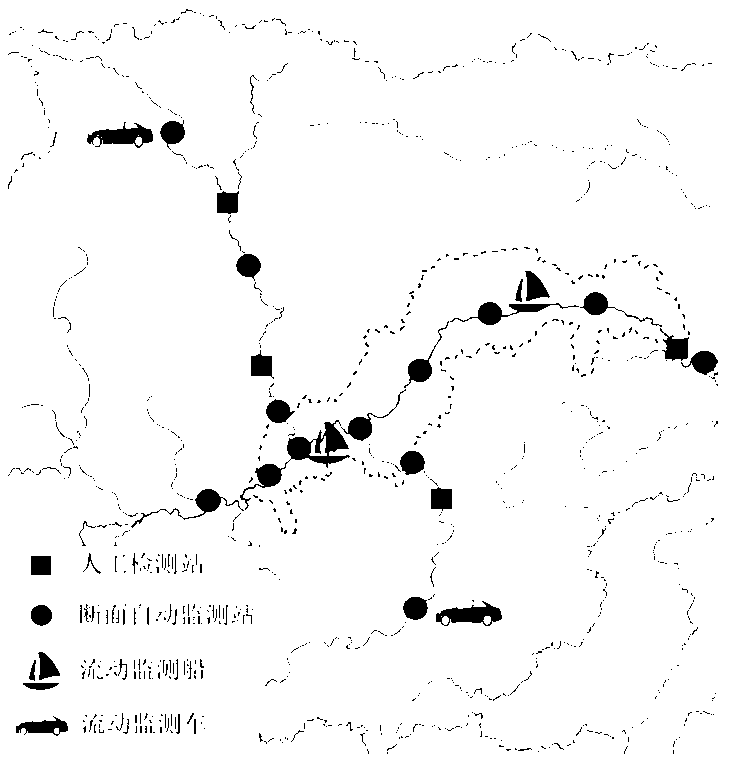

System and method for monitoring hydrology and water quality of river basin under influence of water projects based on Internet of Things

Active | CN103175513A | Real-time | Achieving processing power | Chemical analysis using titration | Open water survey | Hydrometry | Water quality

The invention provides a method for monitoring hydrology and water quality of a river basin under influence of water projects based on Internet of Things. According to the method, various fixed and flowing sensors are additionally mounted so as to obtain real-time hydrology and water quality data of important water areas, in combination with a video technology, monitoring on a spot environment is achieved, the monitoring information is transmitted through the Internet of Things, intelligent prediction for hydrology and water quality of the river basin is carried out through a neural network, and finally, critical applications, such as current situation assessment, tendency estimation, implementation effect estimation and extreme event handling, are achieved through an expert system. The method provided by the invention can provide real-time, reliable and complete hydrology and water quality information for the river basin under influence of water projects, and can achieve cross-regional and multi-machine type integrated remote protection for the river basin.

Owner:CHINA THREE GORGES CORPORATION

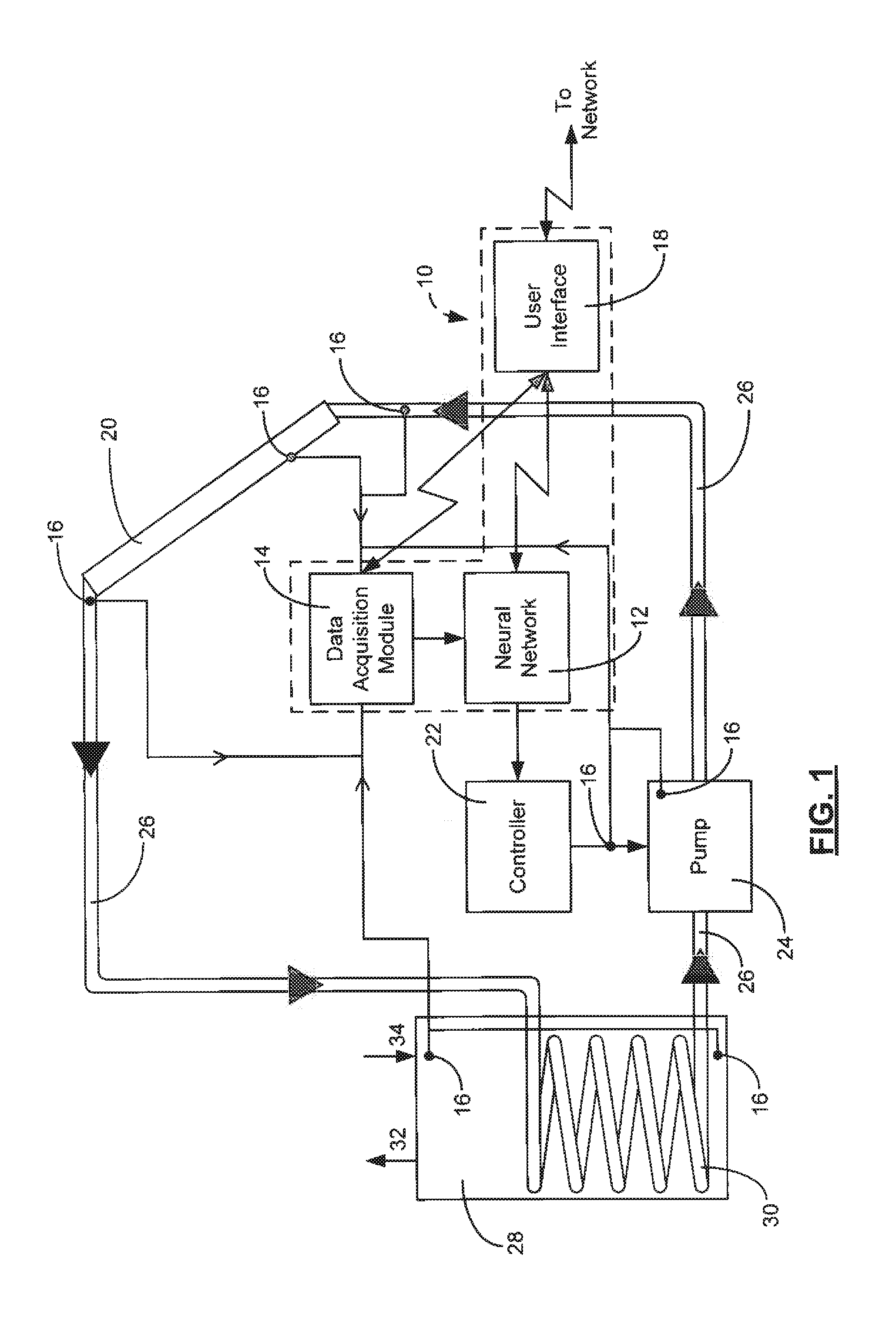

Neural network fault detection system and associated methods

Inactive | US20120166363A1 | Testing/monitoring control systems | Digital computer details | Solar water heating system | Computer science

A fault detection system for use with a solar hot water system may include a data acquisition module which may, in turn, include a plurality of sensors. Input data may include a sensed condition. The system may also include a neural network to receive the input data which may be a multi-layer hierarchical adaptive resonance theory (ART) neural network. The neural network may perform an analysis on the input data to determine existence of a fault or a condition indicative of a potential fault. The fault and the condition indicative of the potential fault are prioritized according to the analysis performed by the neural network. A warning output relating to the fault and the condition indicative of the potential fault is generated responsive to the analysis, and is displayed on the user interface.

Owner:STC UNM

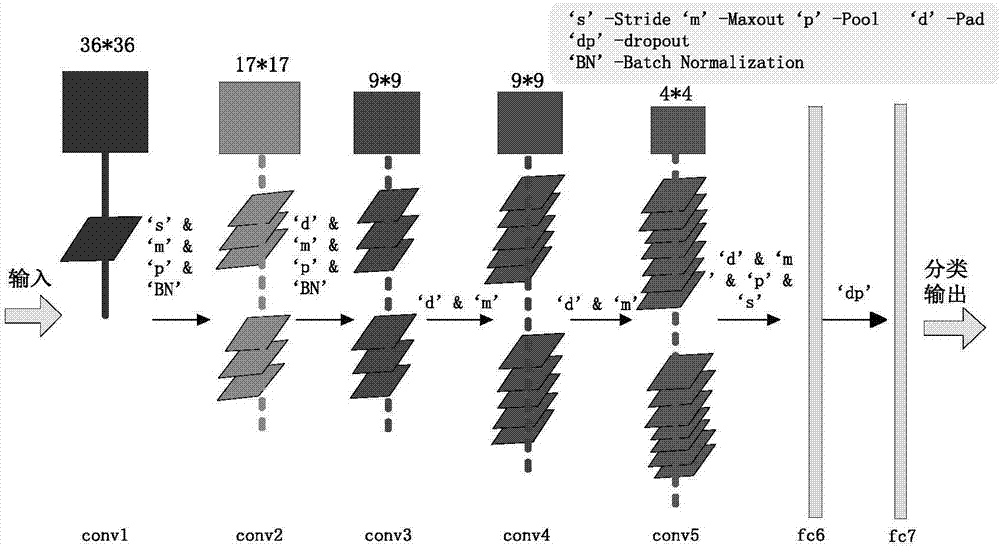

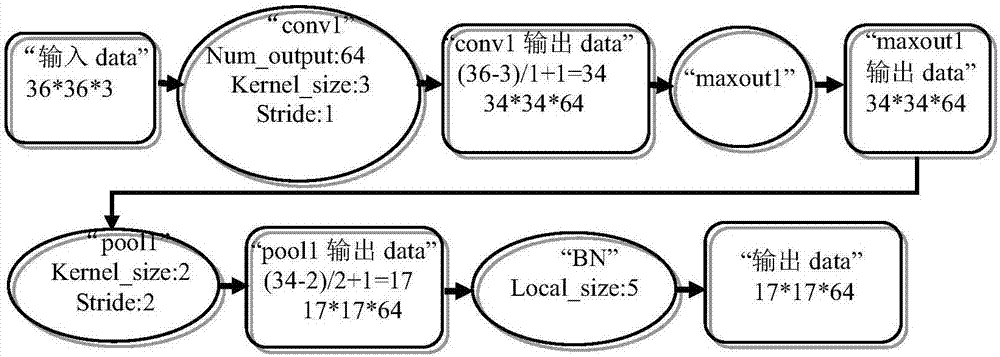

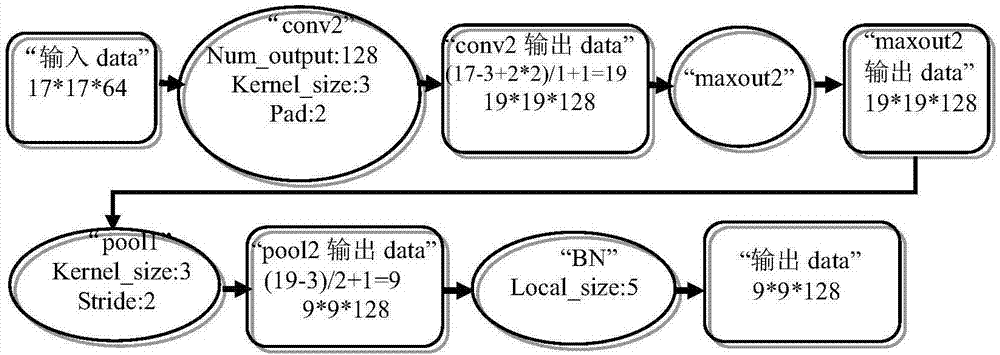

Image classification method based on convolution neural network

Pending | CN107341518A | Improve accuracy | Improve recognition rate | Character and pattern recognition | Neural learning methods | Classification methods | Network structure

The invention discloses an image classification method based on a convolution neural network. The method comprises the following steps: constructing a deep convolution neural network; improving the deep convolution neural network; training and testing the deep convolution neural network; and optimizing the network parameter. By using the image classification method disclosed by the invention, the improvement and the optimization are respectively performed on the network structure and multiple parameters of the convolution neural network, the recognition rate of the deep convolution neural network can be effectively improved, and the accuracy of the image classification is improved.

Owner:EAST CHINA UNIV OF TECH

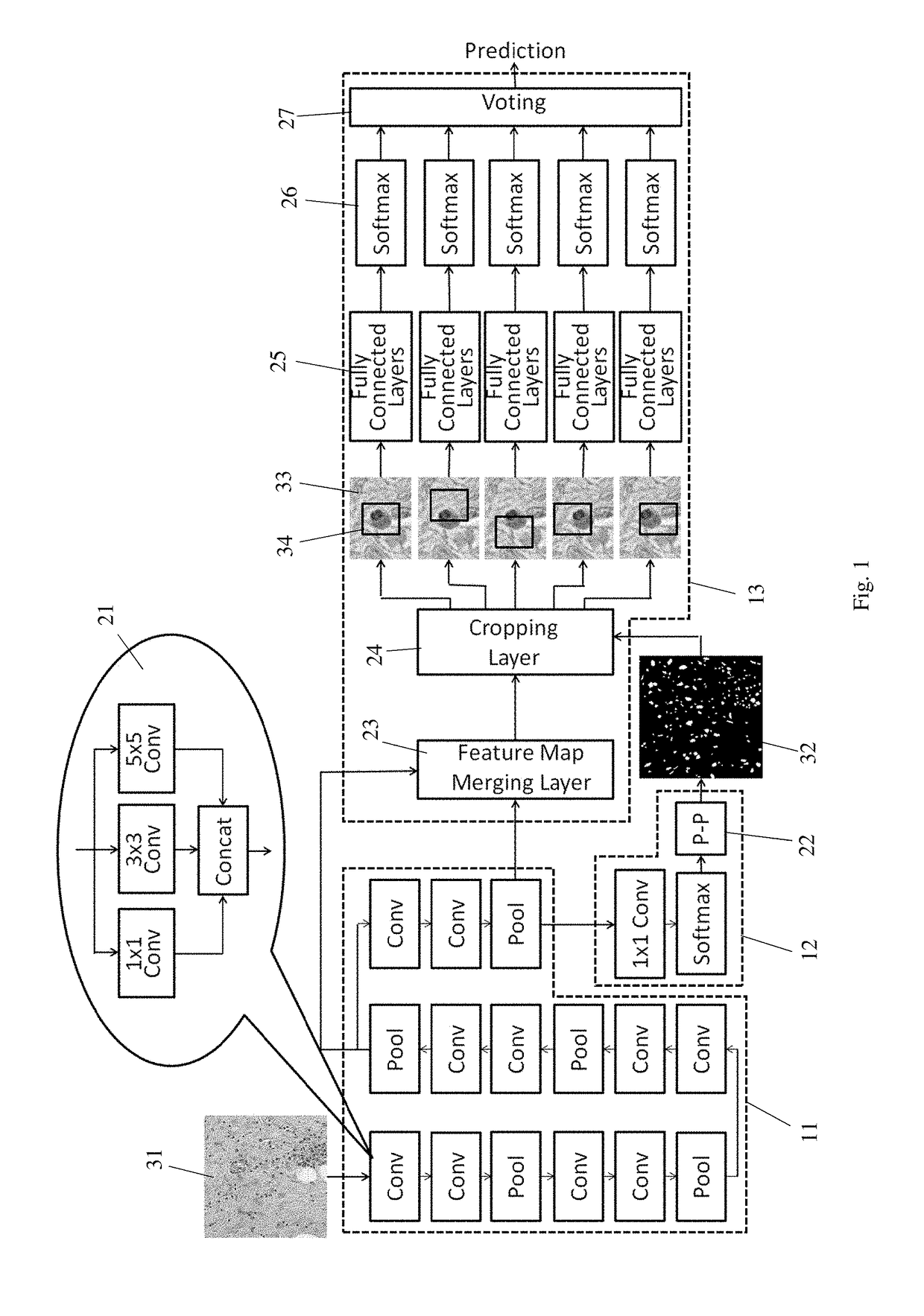

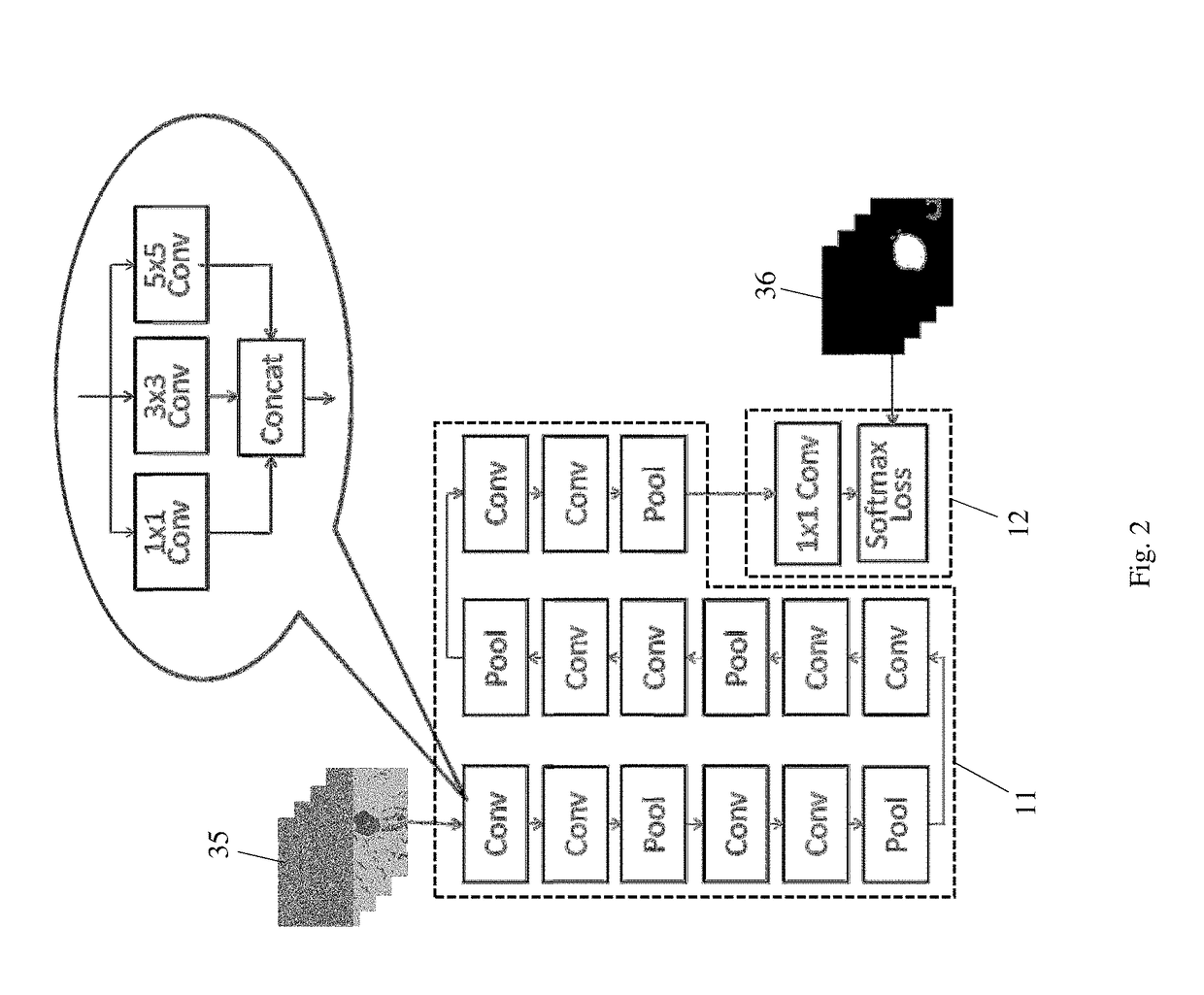

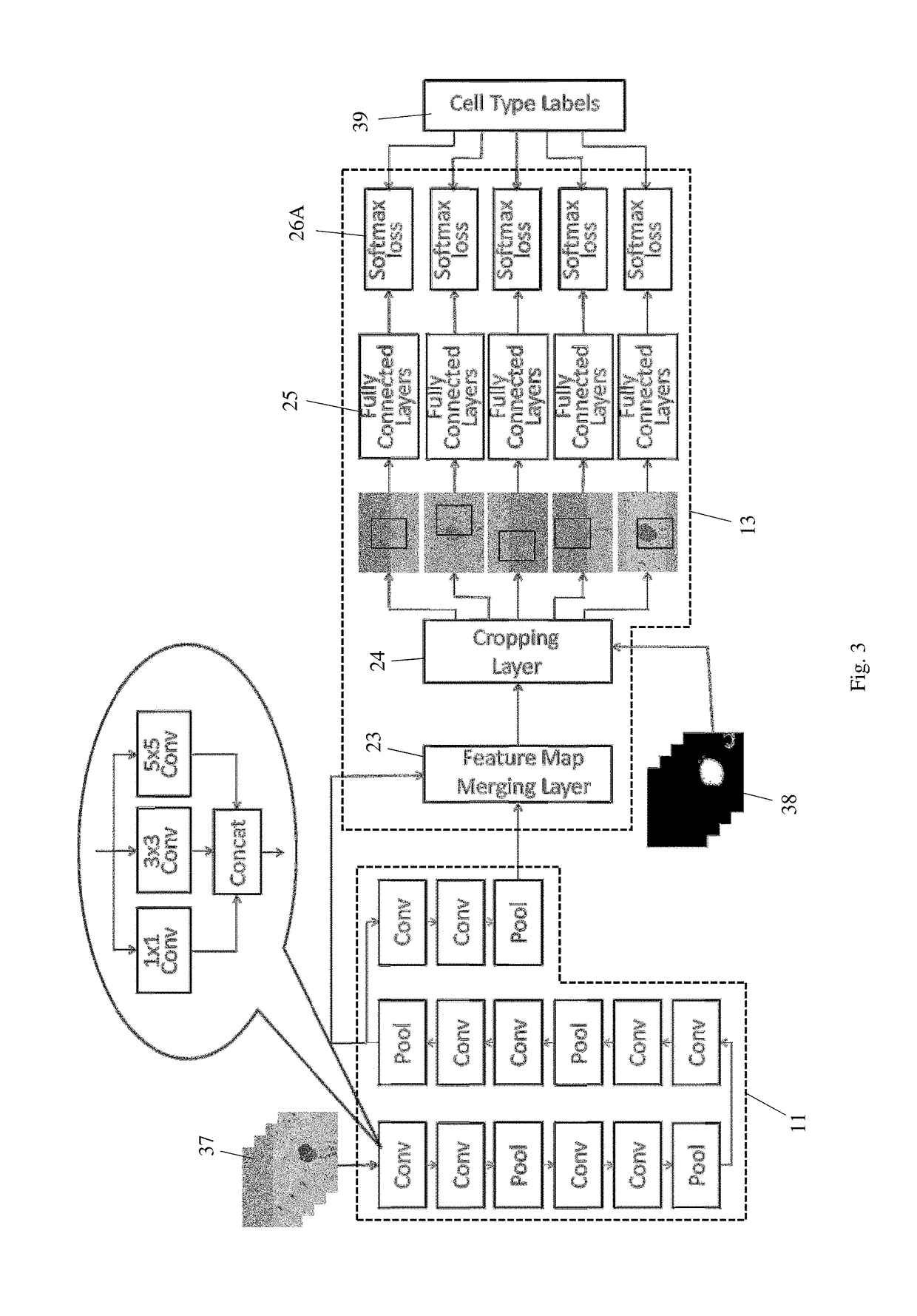

Method and system for detection and classification of cells using convolutional neural networks

Active | US20190065817A1 | Accurate cell segmentation | Accurate classification | Image enhancement | Image analysis | Feature mapping | Artificial neural network

An artificial neural network system implemented on a computer for cell segmentation and classification of biological images. It includes a deep convolutional neural network as a feature extraction network, a first branch network connected to the feature extraction network to perform cell segmentation, and a second branch network connected to the feature extraction network to perform cell classification using the cell segmentation map generated by the first branch network. The feature extraction network is a modified VGG network where each convolutional layer uses multiple kernels of different sizes. The second branch network takes feature maps from two levels of the feature extraction network, and has multiple fully connected layers to independently process multiple cropped patches of the feature maps, the cropped patches being located at a centered and multiple shifted positions relative to the cell being classified; a voting method is used to determine the final cell classification.

Owner:KONICA MINOLTA LAB U S A INC
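
The final voting step mentioned in the abstract above can be sketched as follows. The abstract does not say which voting method is used, so plain majority voting is an assumption here, and the label strings and the function name `vote` are hypothetical.

```python
from collections import Counter

# Majority vote over the class predicted for each cropped patch (one centered
# patch plus several shifted ones). Majority voting is an assumed choice; the
# abstract only states that "a voting method" decides the final class.

def vote(patch_labels):
    """Return the most frequent label; ties go to the label seen first."""
    return Counter(patch_labels).most_common(1)[0][0]

# Hypothetical per-patch predictions for a single cell.
patch_predictions = ["type_a", "type_b", "type_a", "type_a", "type_b"]
final_label = vote(patch_predictions)
print(final_label)
```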

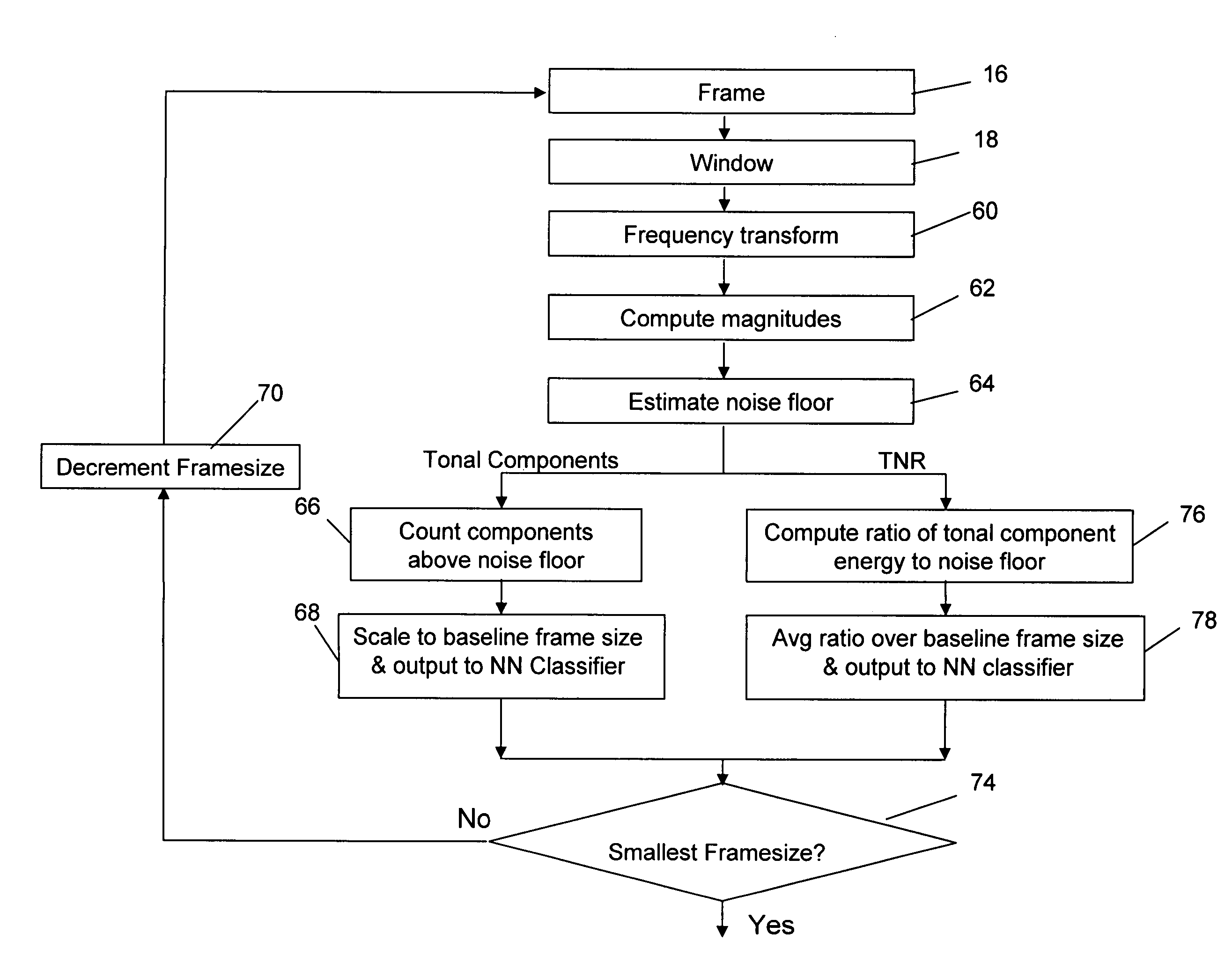

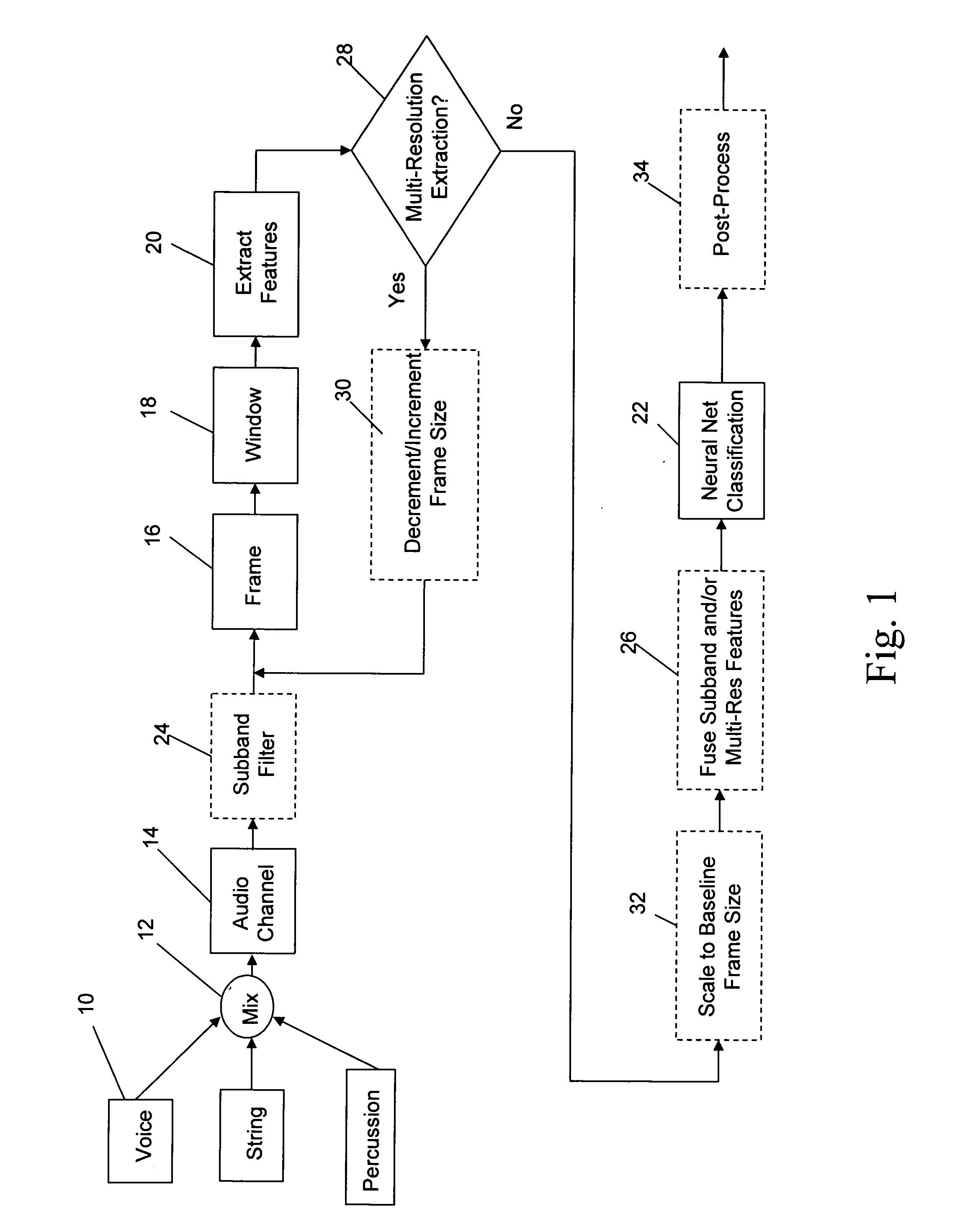

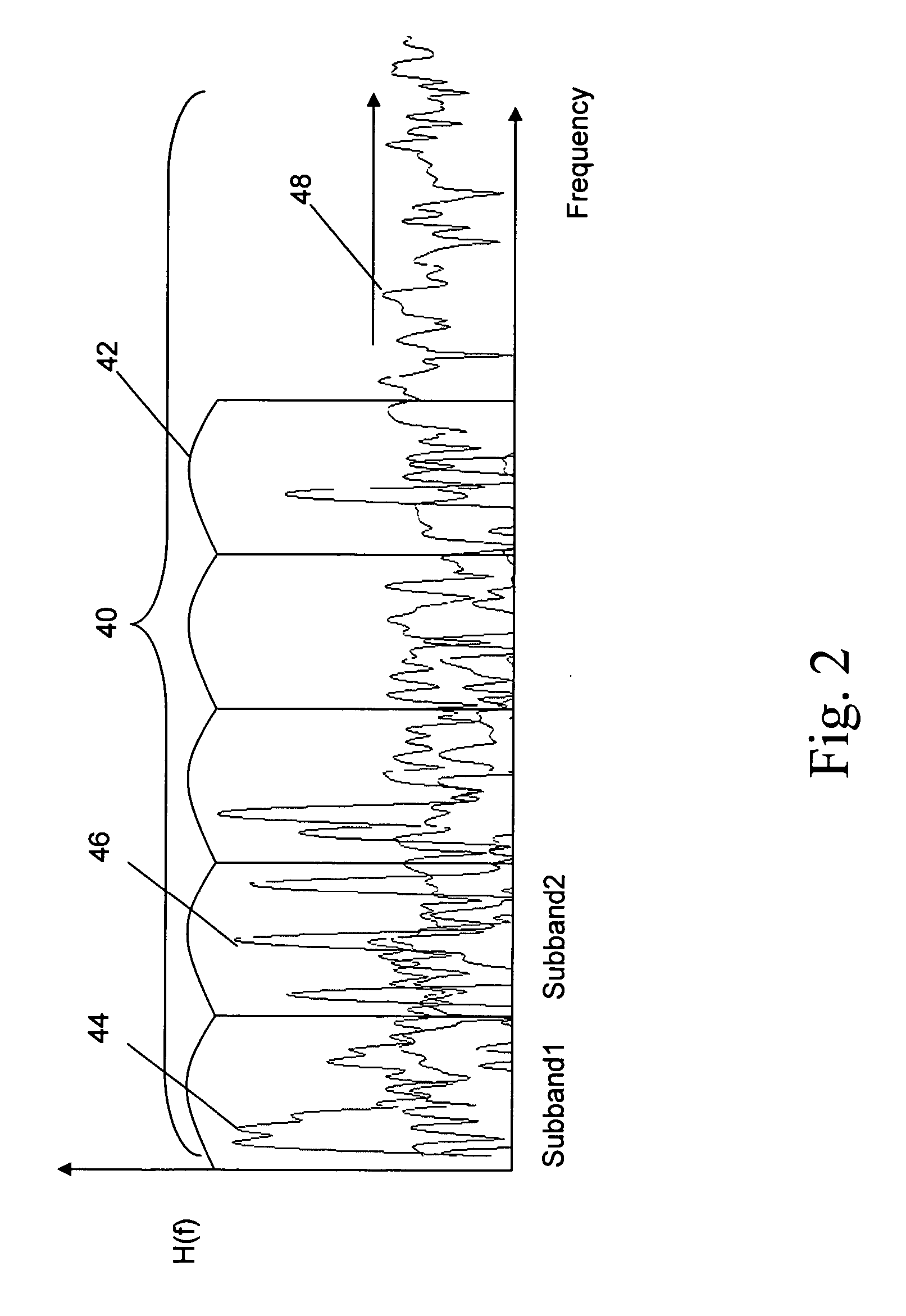

Neural network classifier for separating audio sources from a monophonic audio signal

Inactive | US20070083365A1 | Improve robustness | Easy to separate | Speech recognition | Network output | Audio signal

A neural network classifier provides the ability to separate and categorize multiple arbitrary and previously unknown audio sources down-mixed to a single monophonic audio signal. This is accomplished by breaking the monophonic audio signal into baseline frames (possibly overlapping), windowing the frames, extracting a number of descriptive features in each frame, and employing a pre-trained nonlinear neural network as a classifier. Each neural network output manifests the presence of a pre-determined type of audio source in each baseline frame of the monophonic audio signal. The neural network classifier is well suited to address widely changing parameters of the signal and sources, time and frequency domain overlapping of the sources, and reverberation and occlusions in real-life signals. The classifier outputs can be used as a front-end to create multiple audio channels for a source separation algorithm (e.g., ICA) or as parameters in a post-processing algorithm (e.g. categorize music, track sources, generate audio indexes for the purposes of navigation, re-mixing, security and surveillance, telephone and wireless communications, and teleconferencing).

Owner:DTS
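
The front end described in the abstract above (split the mono signal into possibly overlapping frames, window each frame, extract per-frame features for a classifier) can be sketched in plain Python. The Hann window and the two features (mean energy and zero-crossing rate) are assumptions chosen for illustration; the abstract does not name the window or the features, and the pre-trained classifier itself is not shown.

```python
import math

# Framing, windowing, and simple per-frame features for a mono signal.
# The Hann window, frame size, hop, and feature choice are assumptions.

def frames(signal, size, hop):
    """Split the signal into frames of `size` samples, advancing by `hop`."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, hop)]

def hann(size):
    """Hann window coefficients for a frame of `size` samples."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * n / (size - 1)) for n in range(size)]

def features(frame, window):
    """Return (mean energy of the windowed frame, zero-crossing rate)."""
    windowed = [s * c for s, c in zip(frame, window)]
    energy = sum(v * v for v in windowed) / len(windowed)
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return energy, crossings / (len(frame) - 1)

signal = [math.sin(2.0 * math.pi * n / 8.0) for n in range(16)]  # toy mono signal
window = hann(8)
feats = [features(f, window) for f in frames(signal, size=8, hop=4)]  # 50% overlap
print(len(feats))
```

Each tuple in `feats` would be one input vector for the classifier, so the network produces one set of source-type outputs per baseline frame.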

End-to-end speech recognition

Embodiments of end-to-end deep learning systems and methods are disclosed to recognize speech of vastly different languages, such as English or Mandarin Chinese. In embodiments, the entire pipelines of hand-engineered components are replaced with neural networks, and the end-to-end learning allows handling a diverse variety of speech including noisy environments, accents, and different languages. Using a trained embodiment and an embodiment of a batch dispatch technique with GPUs in a data center, an end-to-end deep learning system can be inexpensively deployed in an online setting, delivering low latency when serving users at scale.

Owner:BAIDU USA LLC

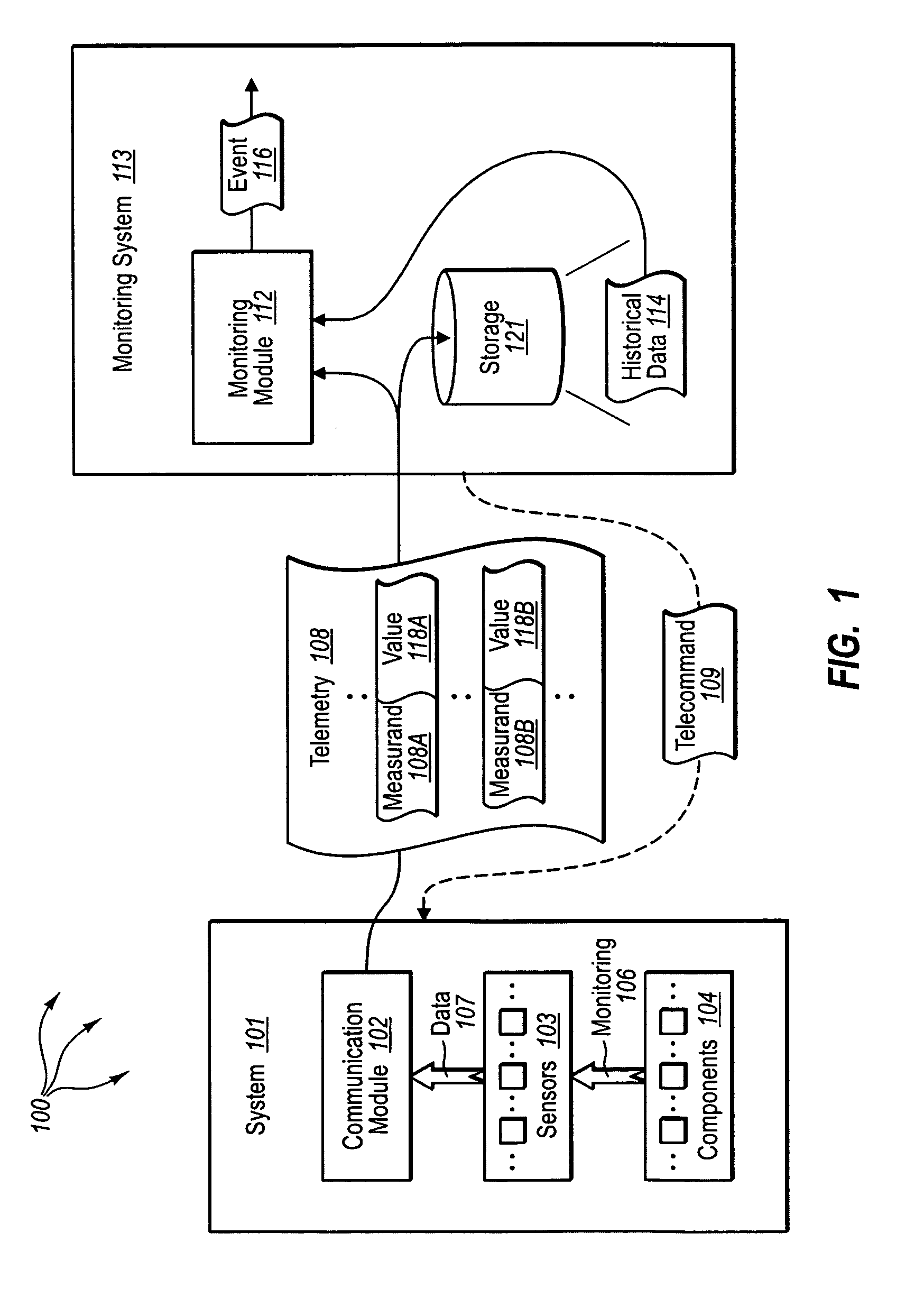

Detecting, classifying, and tracking abnormal data in a data stream

Active | US8306931B1 | Efficiently determine | Efficiently determined | Digital computer details | Digital data | Neural net architecture | Self adaptive

The present invention extends to methods, systems, and computer program products for detecting, classifying, and tracking abnormal data in a data stream. Embodiments include an integrated set of algorithms that enable an analyst to detect, characterize, and track abnormalities in real-time data streams based upon historical data labeled as predominantly normal or abnormal. Embodiments of the invention can detect, identify relevant historical contextual similarity, and fuse unexpected and unknown abnormal signatures with other possibly related sensor and source information. The number, size, and connections of the neural networks are all automatically adapted to the data. Further, adaption appropriately and automatically integrates unknown and known abnormal signature training within one neural network architecture solution. Algorithms and neural network architecture are data driven, resulting in more affordable processing. Expert knowledge can be incorporated to enhance the process, but sufficient performance is achievable without any system domain or neural networks expertise.

Owner:DATA FUSION & NEURAL NETWORKS

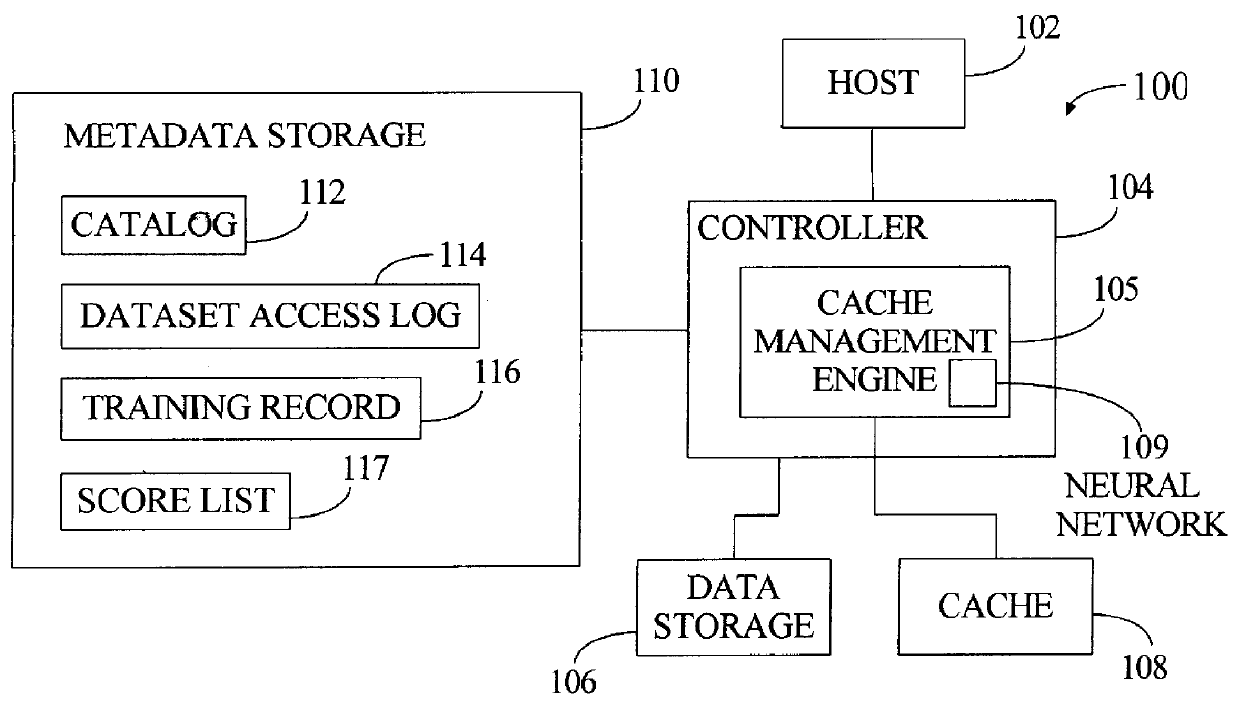

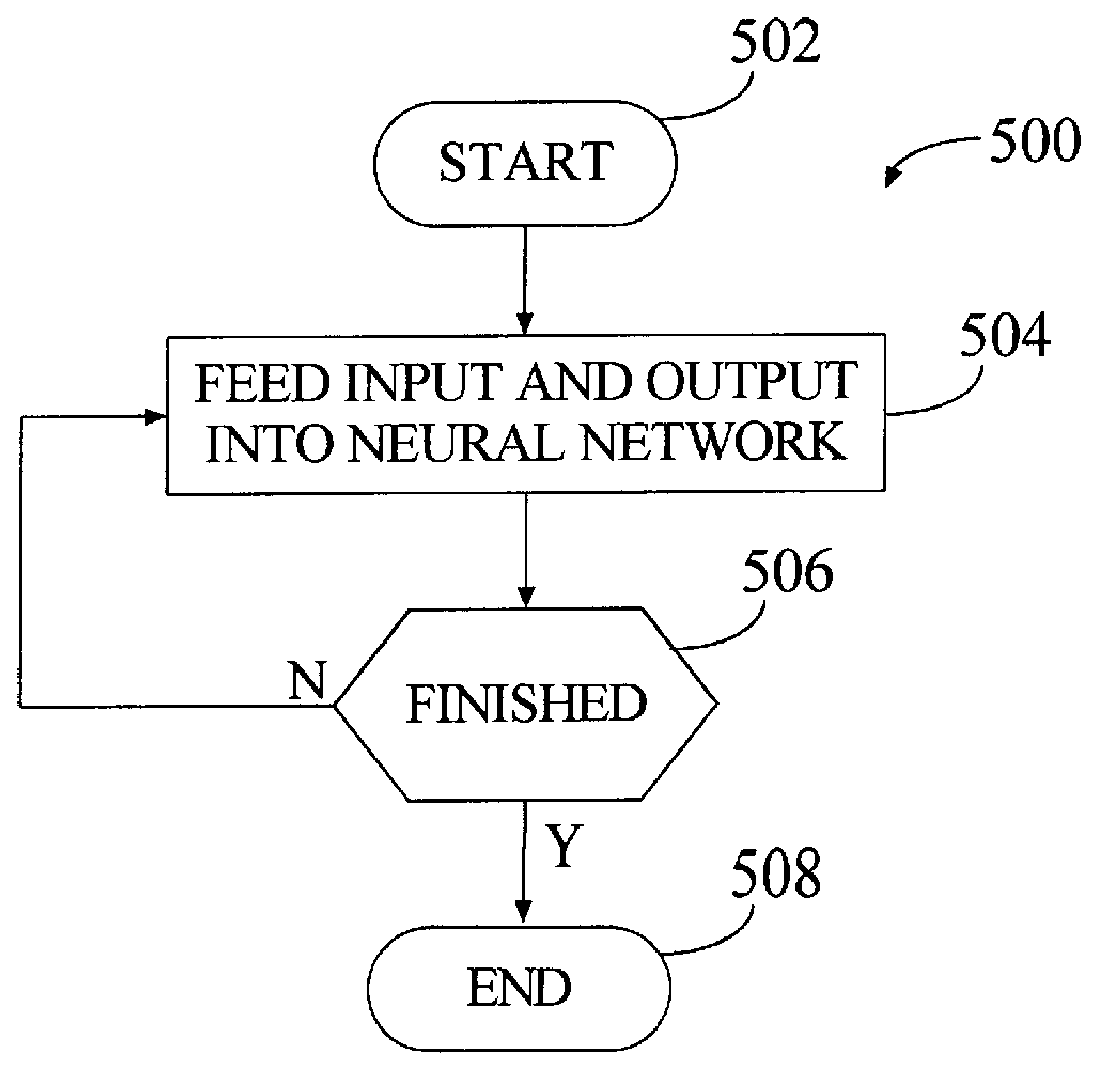

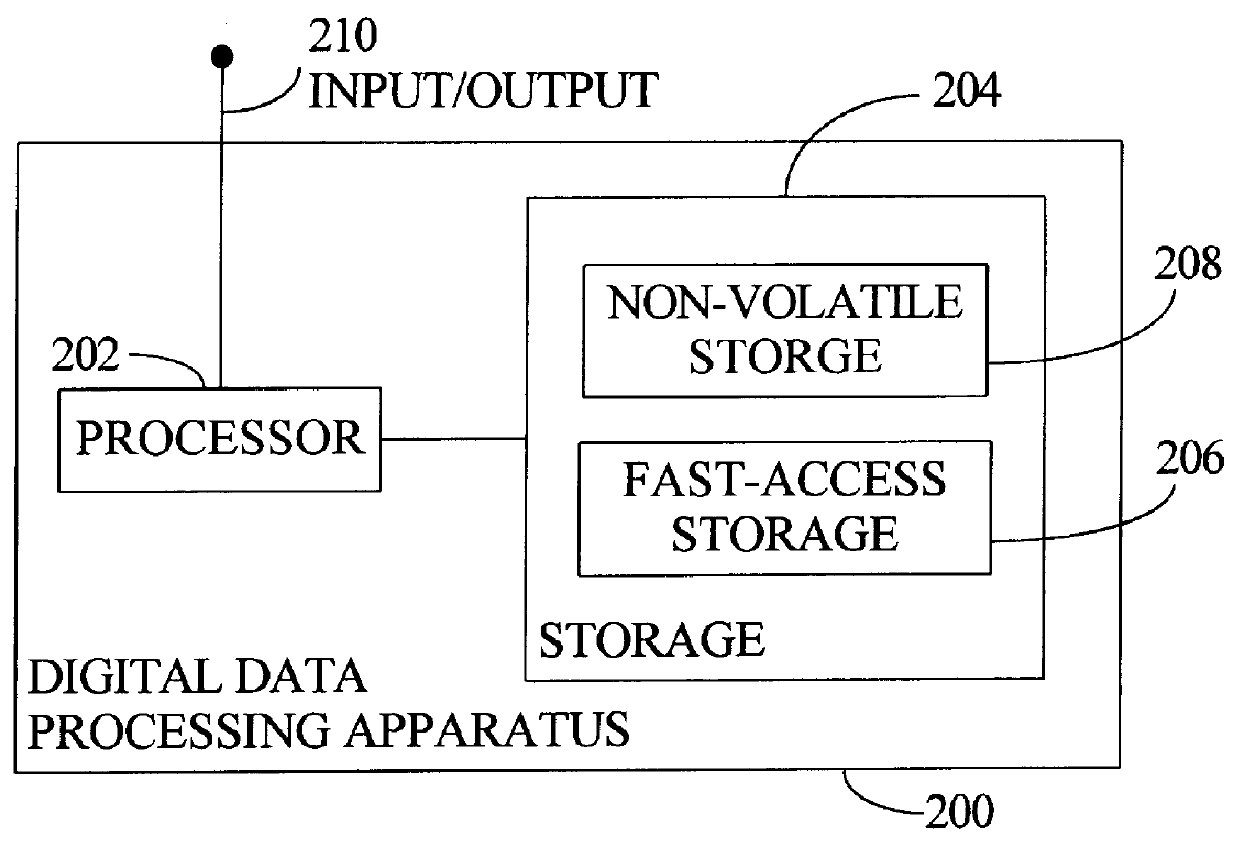

Data storage system with trained predictive cache management engine

Inactive | US6163773A | Memory addressing/allocation/relocation | Digital computer details | Time schedule | Program planning

In a data storage system, a cache is managed by a predictive cache management engine that evaluates cache contents and purges entries unlikely to receive sufficient future cache hits. The engine includes a single output back propagation neural network that is trained in response to various event triggers. Accesses to stored datasets are logged in a data access log; conversely, log entries are removed according to a predefined expiration criterion. In response to access of a cached dataset or expiration of its log entry, the cache management engine prepares training data. This is achieved by determining characteristics of the dataset at various past times between the time of the access/expiration and a time of last access, and providing these characteristics and the times of access as input to train the neural network. As another part of training, the cache management engine provides the neural network with output representing the expiration or access of the dataset. According to a predefined schedule, the cache management engine operates the trained neural network to generate scores for cached datasets, these scores ranking the datasets relative to each other. According to this or a different schedule, the cache management engine reviews the scores, identifies one or more datasets with the lowest scores, and purges the identified datasets from the cache.

Owner:IBM CORP
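
The score-then-purge step at the end of the abstract above can be sketched as follows. The trained neural network is replaced by a hypothetical stand-in, `toy_score`, and the metadata fields `hits` and `age_s` are invented for illustration; only the idea of ranking cached datasets by score and evicting the lowest comes from the abstract.

```python
import heapq

# Purge the lowest-scoring entries from a cache. In the patent the scores come
# from a trained neural network; toy_score below is a hypothetical stand-in.

def purge_lowest(cache, score_fn, n_purge):
    """Remove the n_purge entries with the lowest scores and return their keys."""
    victims = heapq.nsmallest(n_purge, cache, key=lambda k: score_fn(cache[k]))
    for key in victims:
        del cache[key]
    return victims

def toy_score(meta):
    """Stand-in for the network output: frequent, recent datasets score higher."""
    return meta["hits"] / (1.0 + meta["age_s"])

cache = {
    "a": {"hits": 9, "age_s": 10.0},
    "b": {"hits": 1, "age_s": 500.0},
    "c": {"hits": 4, "age_s": 60.0},
}
purged = purge_lowest(cache, toy_score, n_purge=1)
print(purged, sorted(cache))
```

Keeping the scorer behind a function argument mirrors the abstract's separation between the schedule that generates scores and the schedule that reviews them and purges.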

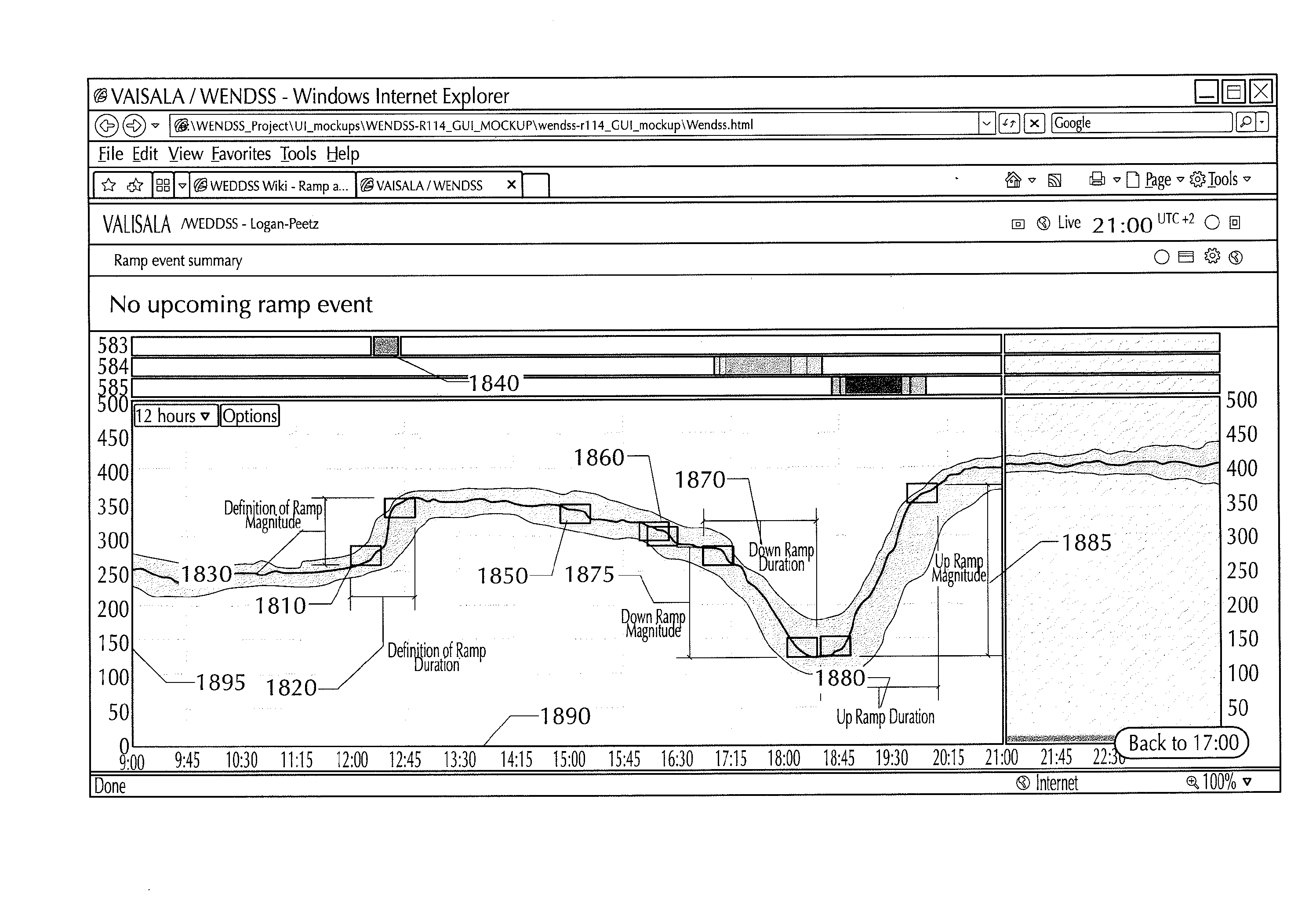

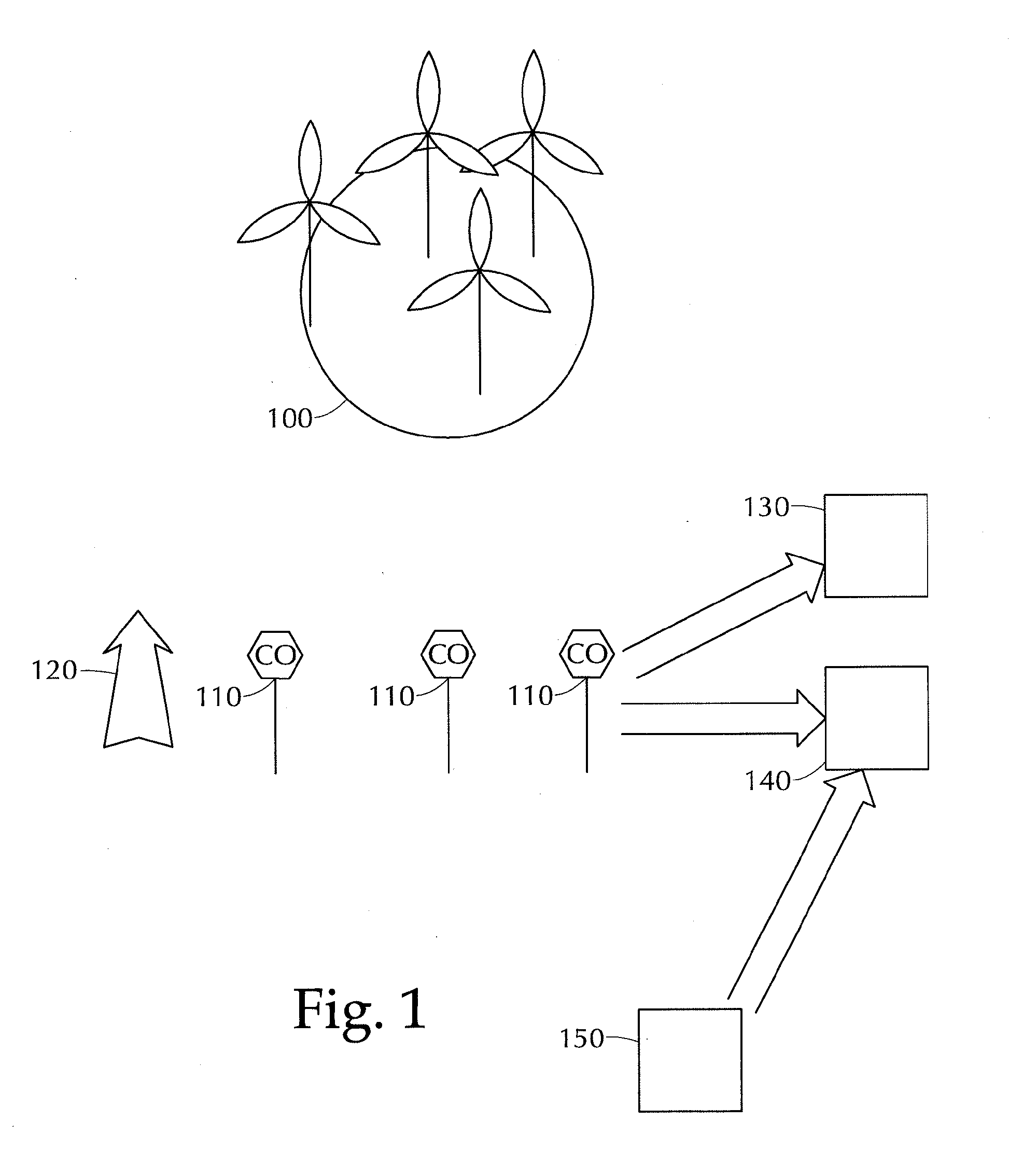

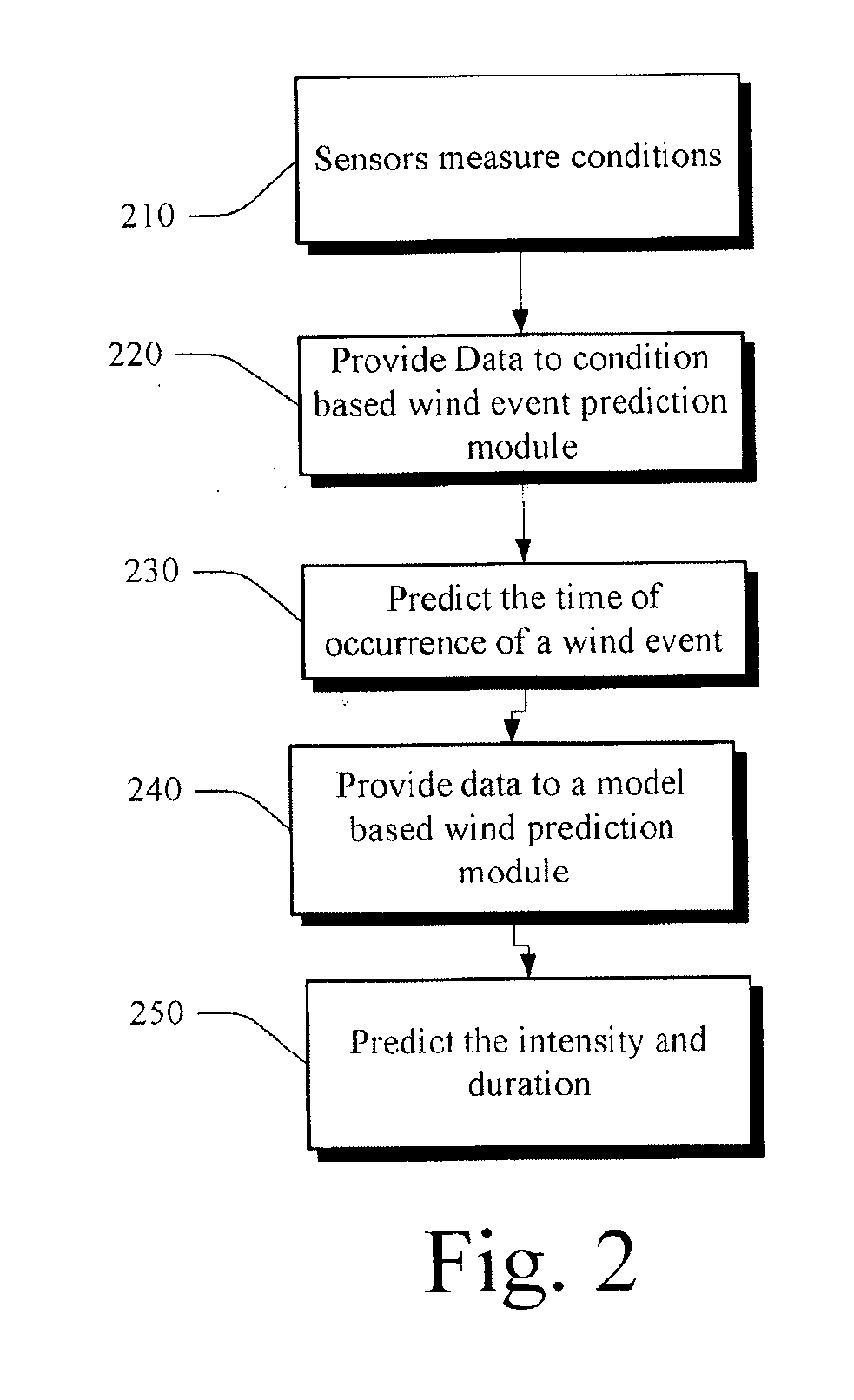

Systems and methods for wind forecasting and grid management

In one embodiment, a wind power ramp event nowcasting system includes a wind condition analyzer for detecting a wind power ramp signal; a sensor array, situated in an area relative to a wind farm, the sensor array providing data to the wind condition analyzer; a mesoscale numerical model; a neural network pattern recognizer; and a statistical forecast model, wherein the statistical model receives input from the wind condition analyzer, the mesoscale numerical model, and the neural network pattern recognizer; and the statistical forecast model outputs a time and duration for the wind power ramp event (WPRE) for the wind farm.

Owner:VAISALA


© 2025 PatSnap. All rights reserved.