
7064 results about "Model parameters" patented technology


A model parameter is a configuration variable that is internal to the model and whose value can be estimated from data. Model parameters are required by the model when making predictions, their values define the skill of the model on your problem, and they are estimated or learned from data.
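As a minimal illustration of this definition (an illustrative assumption, not taken from any patent listed here), the slope and intercept of a straight-line model are model parameters: they are estimated from data by ordinary least squares and are then needed to make predictions. The function names `fit_line` and `predict` are hypothetical.

```python
def fit_line(xs, ys):
    """Estimate the parameters (slope, intercept) of y = slope * x + intercept by least squares."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(params, x):
    """Use the learned parameters to make a prediction for a new input."""
    slope, intercept = params
    return slope * x + intercept


# Noise-free data generated from y = 2x + 1; the parameters are recovered from it.
params = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print(params)               # (2.0, 1.0)
print(predict(params, 10))  # 21.0
```

The model's behaviour is fixed by the code; only the two learned numbers change from one data set to the next.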

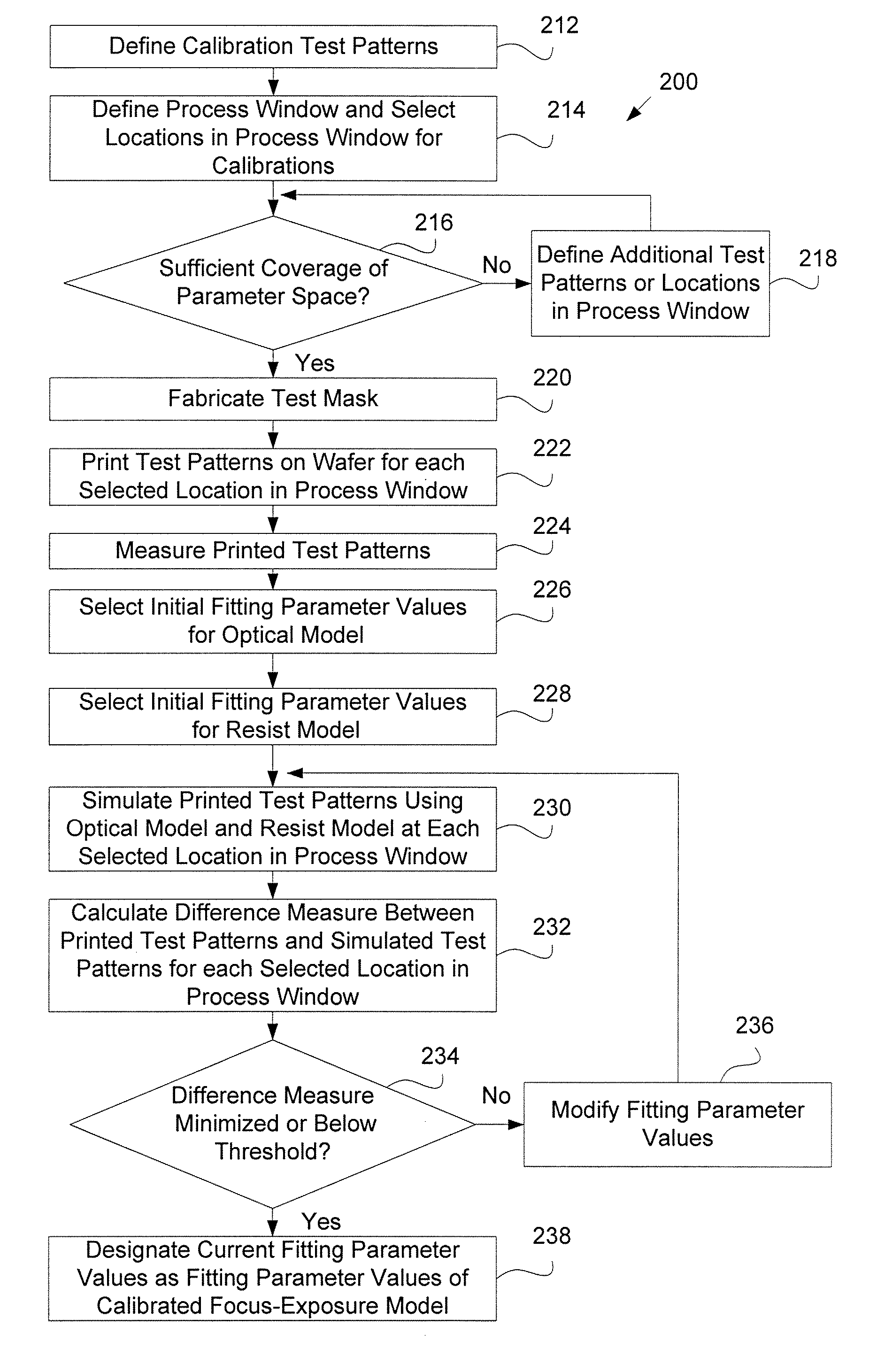

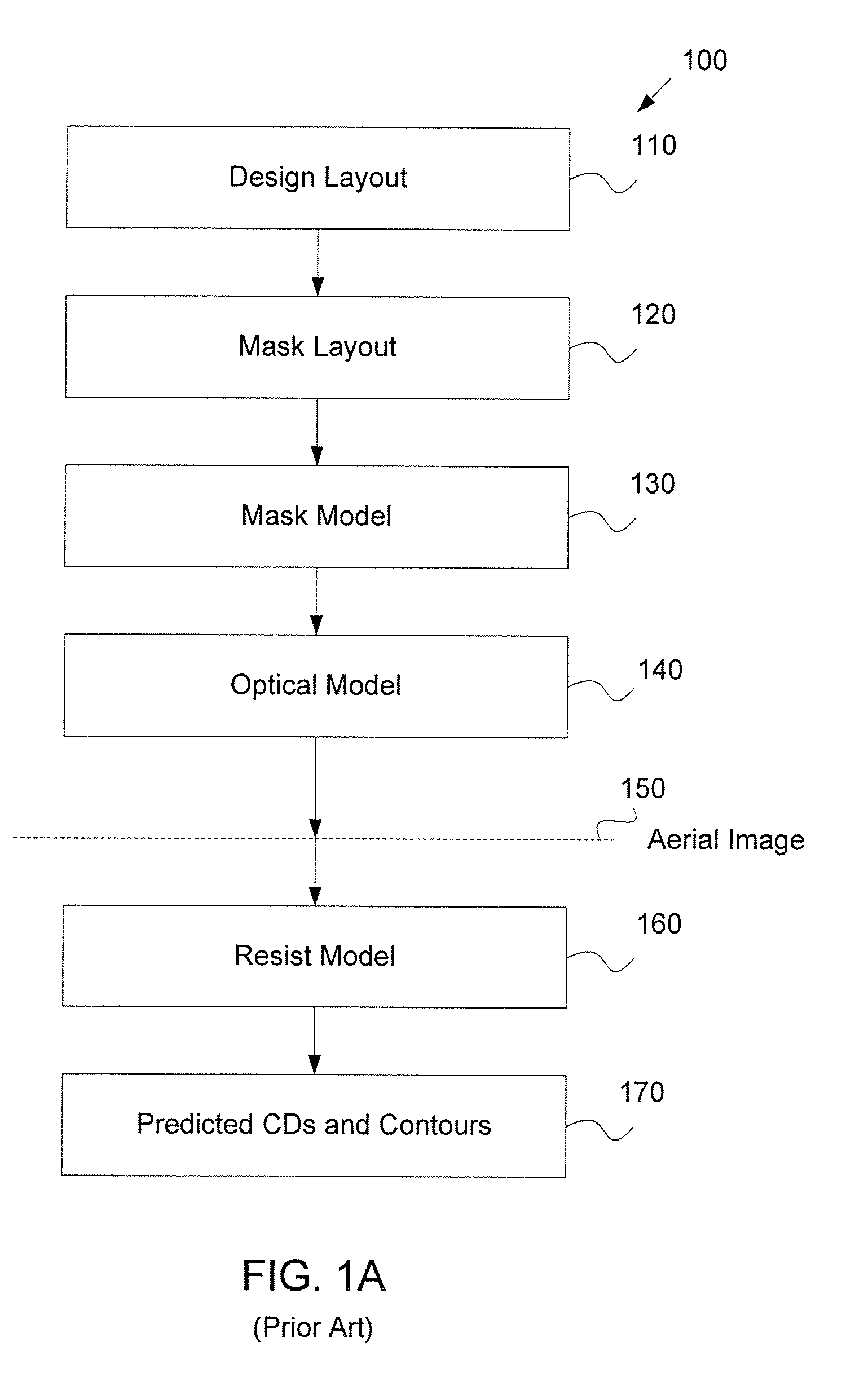

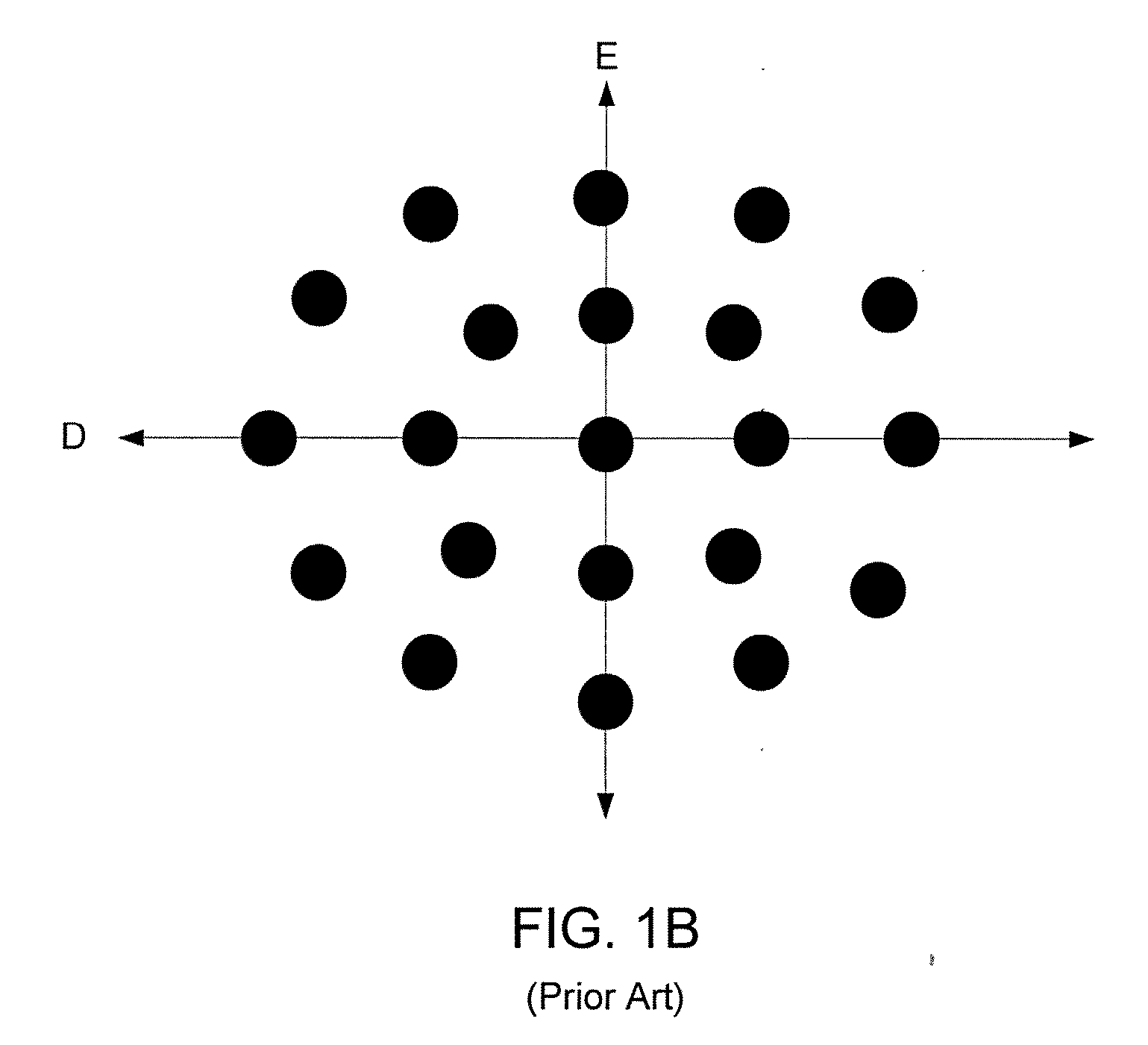

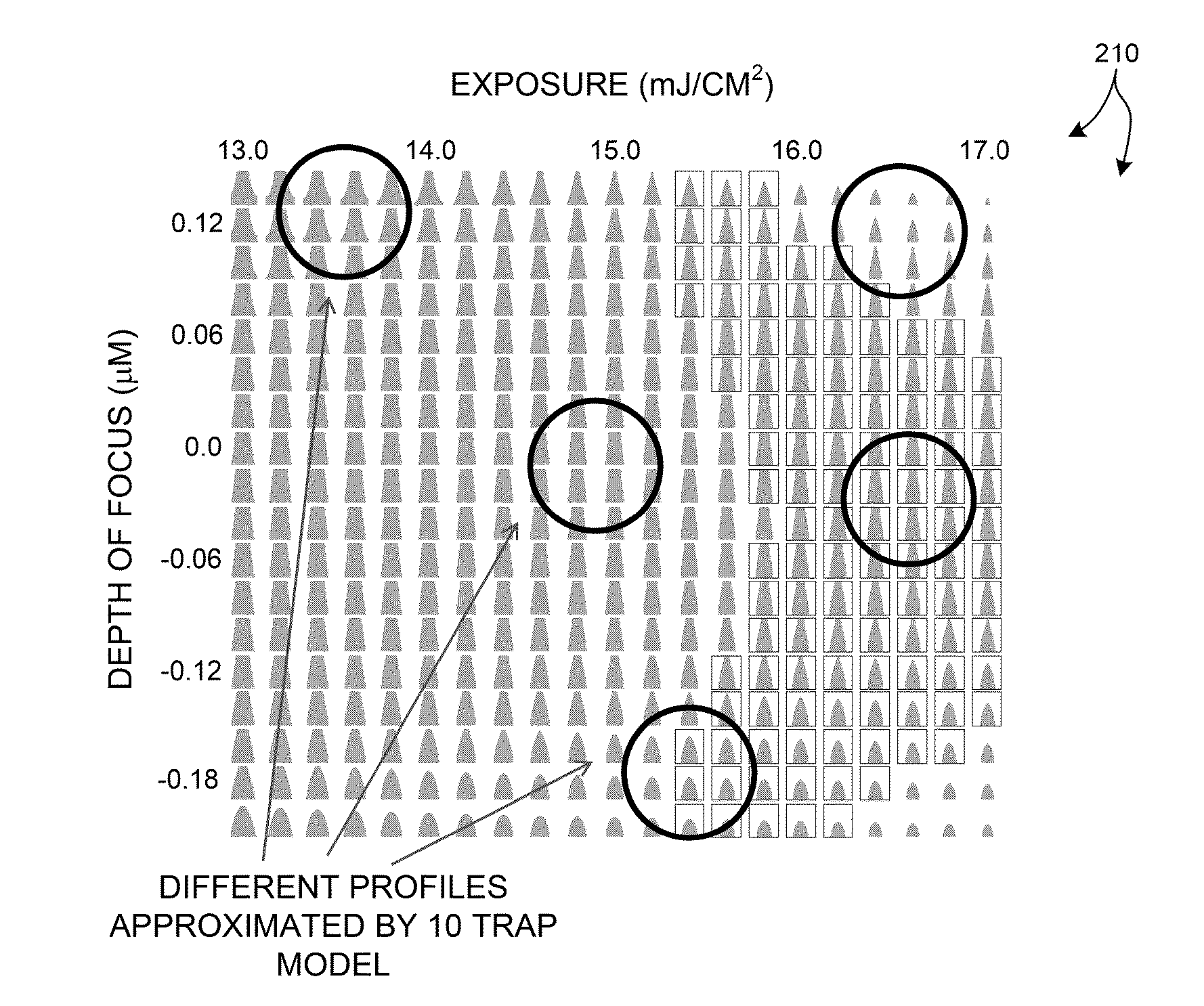

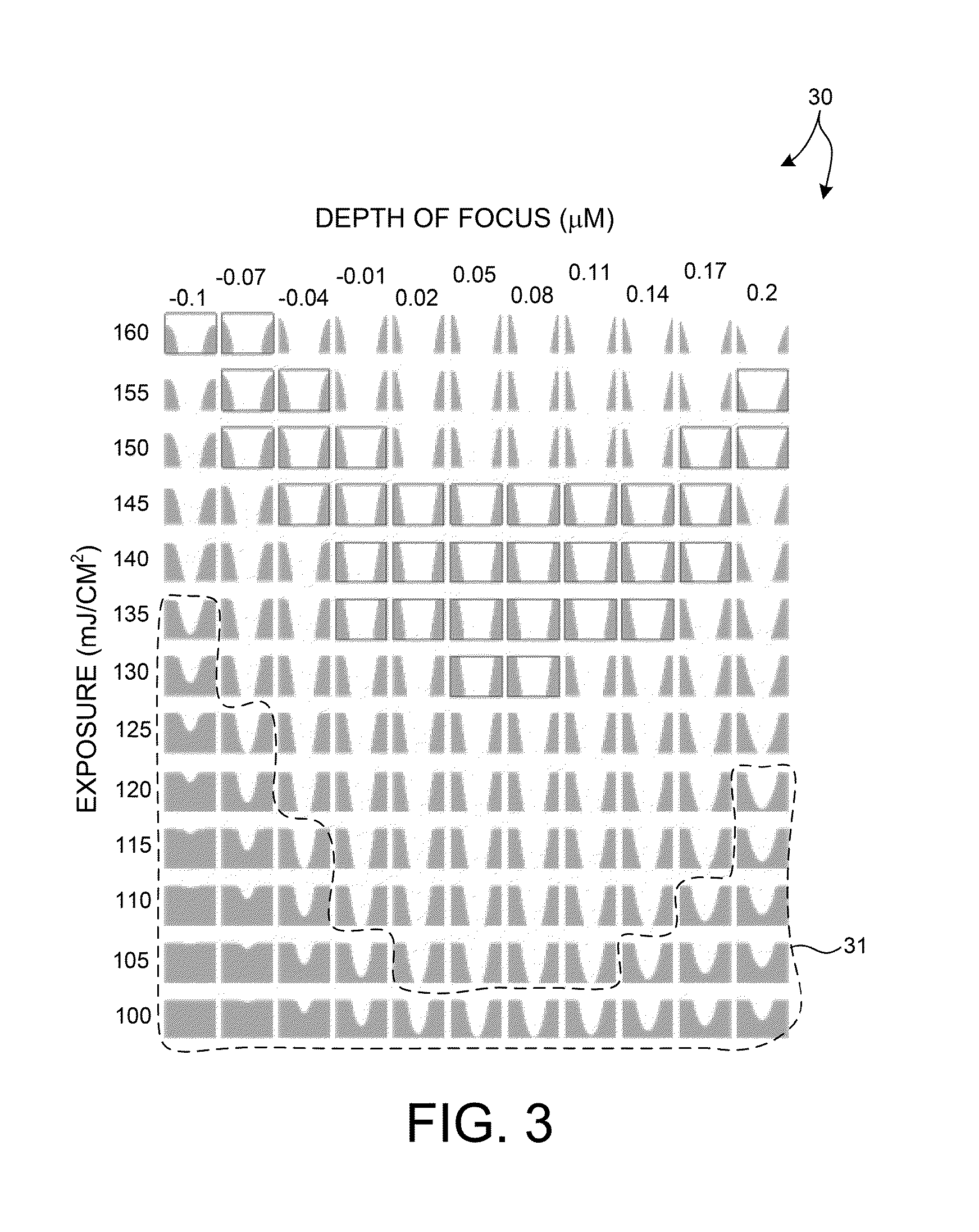

System and method for creating a focus-exposure model of a lithography process

Active · US20070031745A1 · Good accuracy and robustness · Photomechanical apparatus · Originals for photomechanical treatment · Lithography process · Algorithm

A system and a method for creating a focus-exposure model of a lithography process are disclosed. The system and the method utilize calibration data along multiple dimensions of parameter variations, in particular within an exposure-defocus process window space. The system and the method provide a unified set of model parameter values that result in better accuracy and robustness of simulations at nominal process conditions, as well as the ability to predict lithographic performance at any point continuously throughout a complete process window area without a need for recalibration at different settings. While requiring fewer measurements than prior-art multiple-model calibration, the focus-exposure model provides more predictive and more robust model parameter values that can be used at any location in the process window.

Owner:ASML NETHERLANDS BV

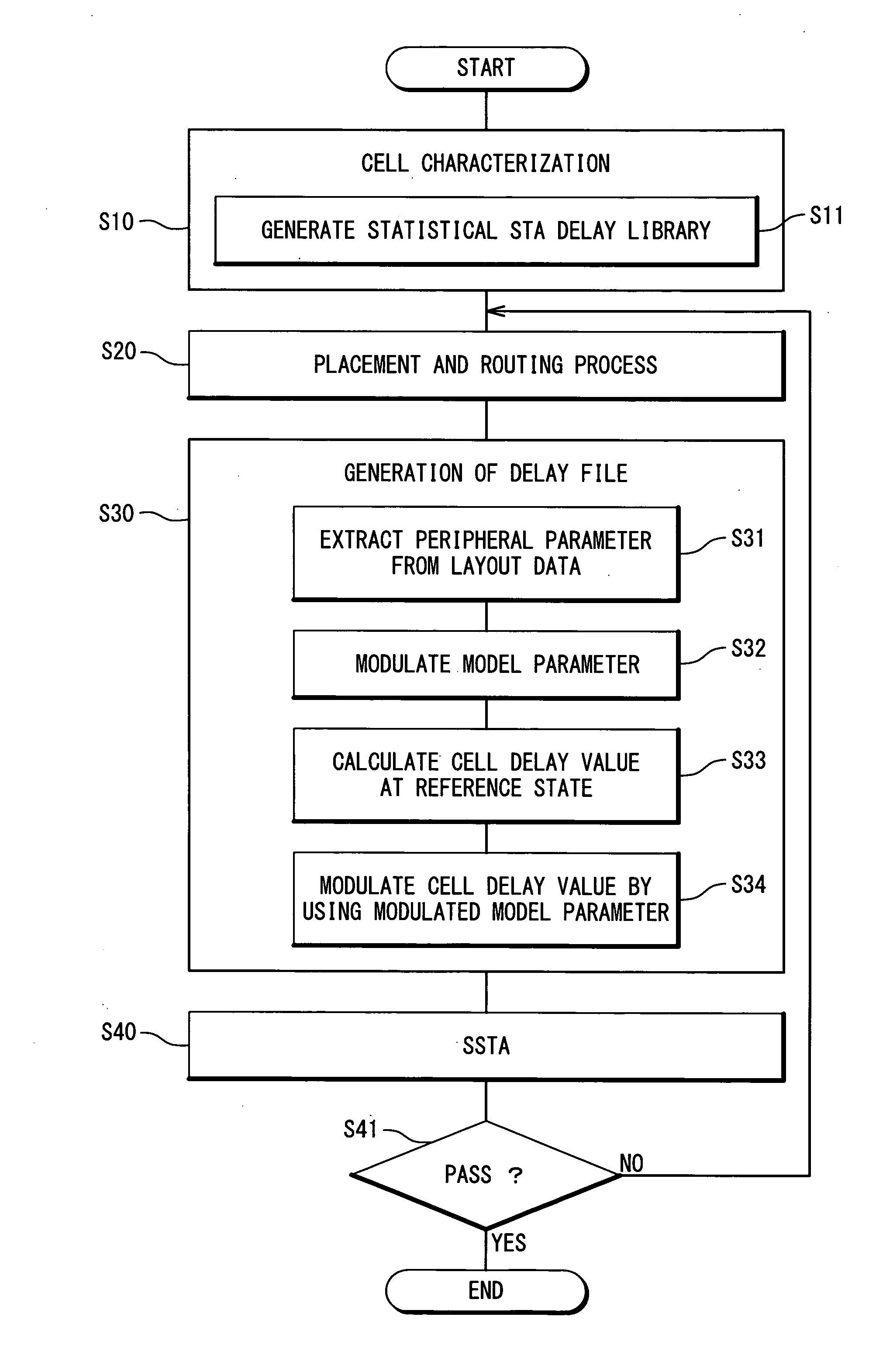

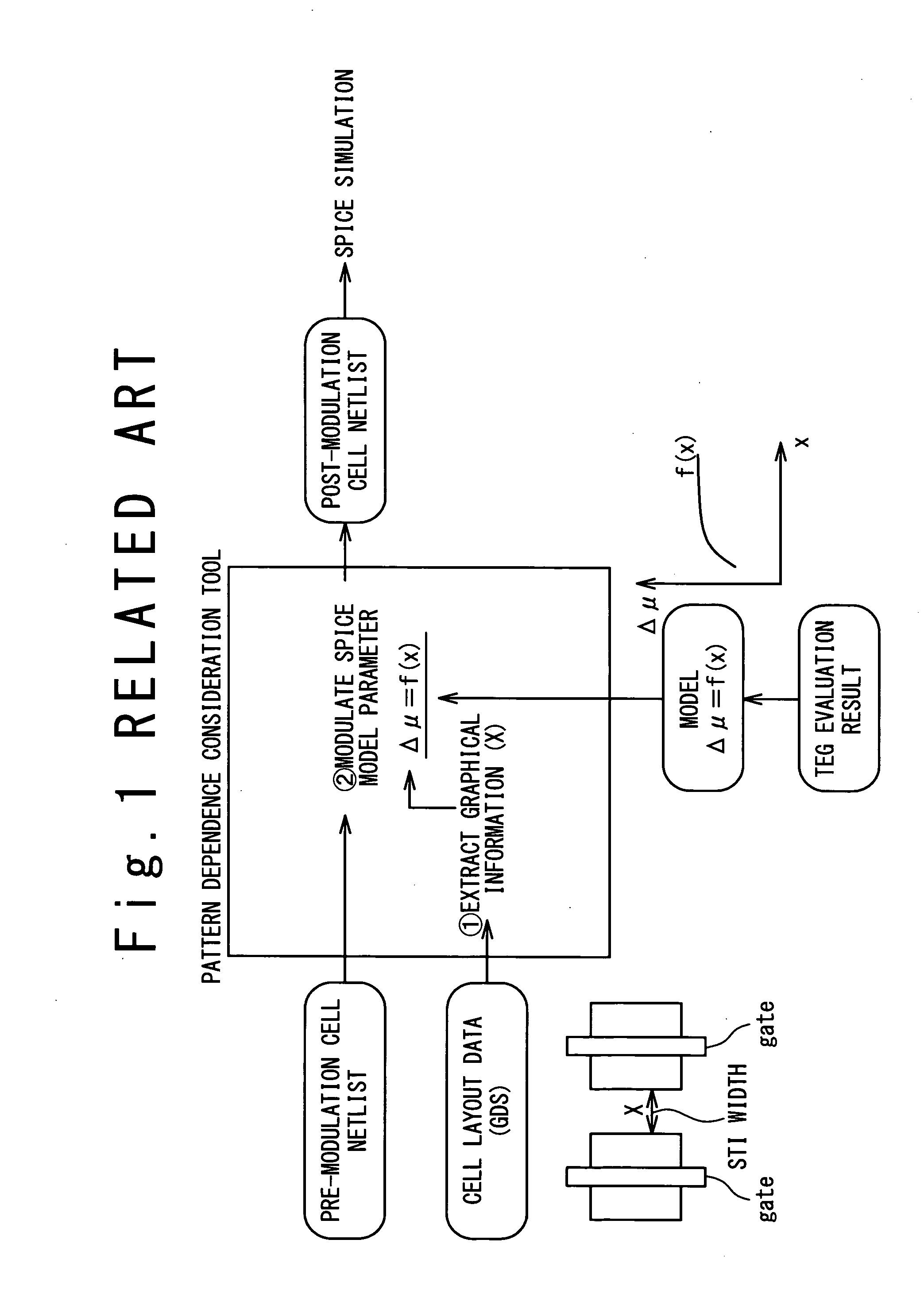

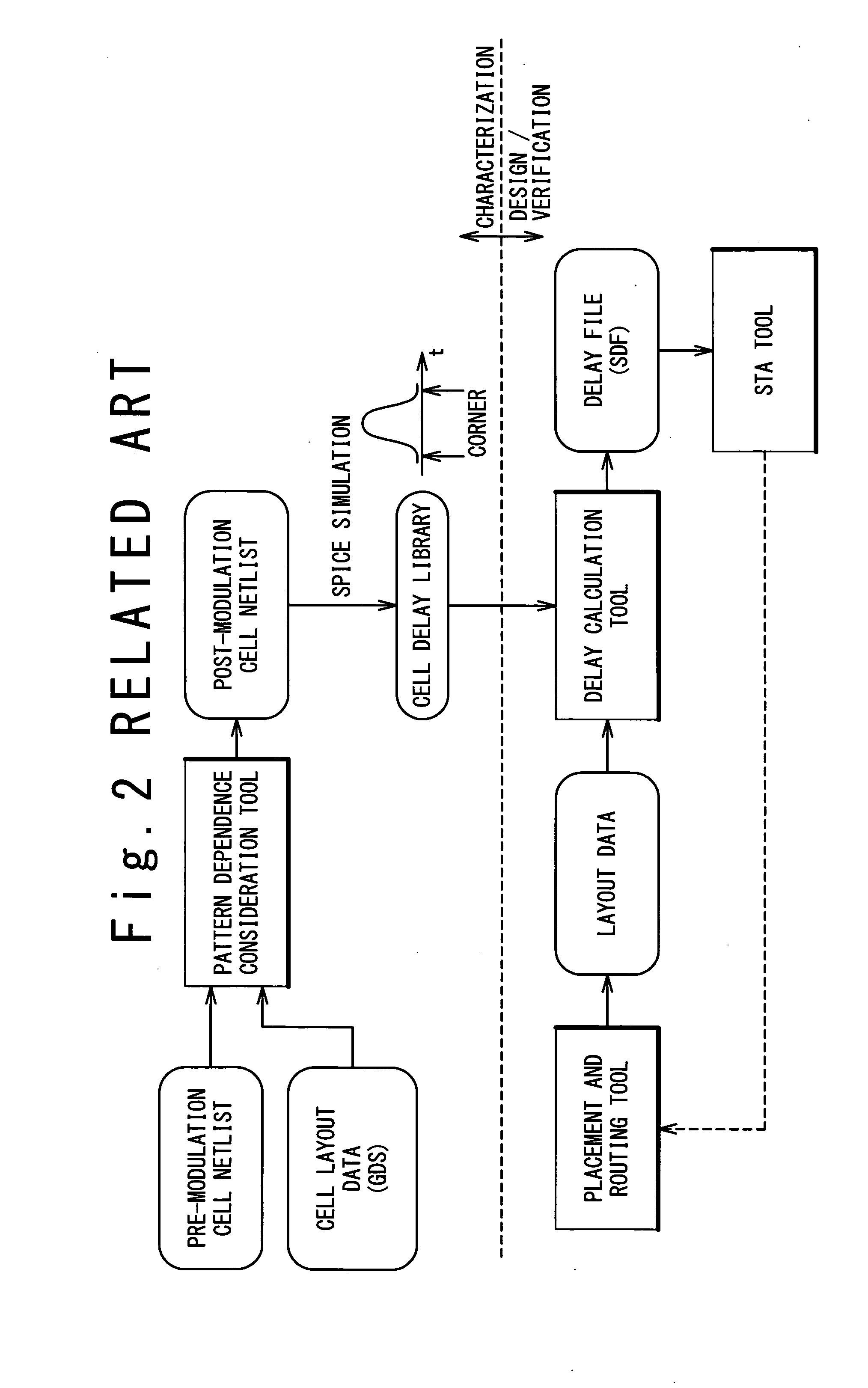

Method and program for designing semiconductor integrated circuit

Inactive · US20090024974A1 · Increase in design/verification TAT can be prevented · High delay accuracy · CAD circuit design · Software simulation/interpretation/emulation · Model parameters · Cell based

A design method for an LSI includes: generating a delay library for use in a statistical STA, wherein the delay library provides a delay function that expresses a cell delay value as a function of model parameters of a transistor; generating layout data; and calculating a delay value of a target cell based on the delay library and the layout data. The calculating includes: referring to the layout data to extract a parameter specifying a layout pattern around a target transistor; modulating model parameters of the target transistor such that the characteristics corresponding to the extracted parameter are obtained in a circuit simulation; calculating, by using the delay function, a reference delay value of the target cell; and calculating, by using the delay function and the modulation amount of the model parameters, a delay variation from the reference delay value depending on the modulation amount.

Owner:RENESAS ELECTRONICS CORP

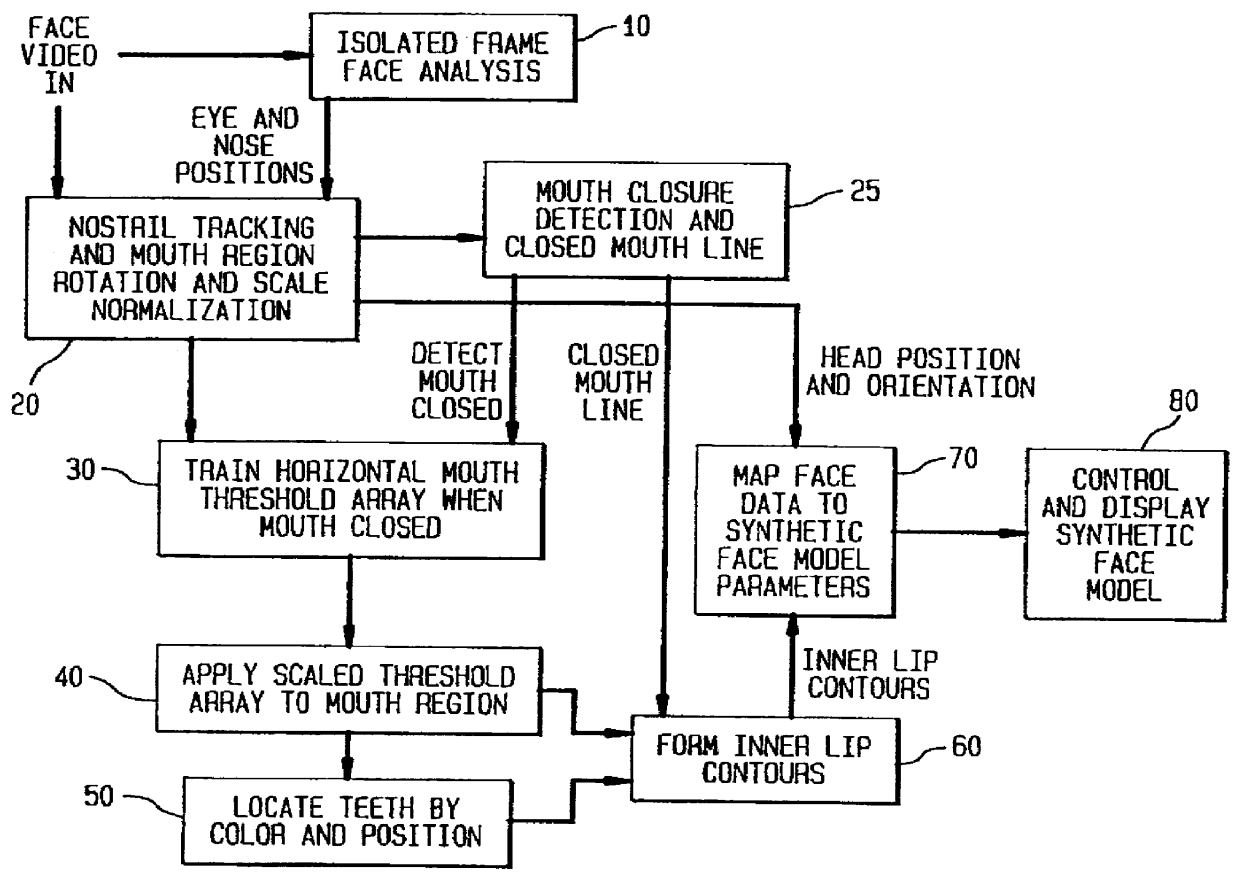

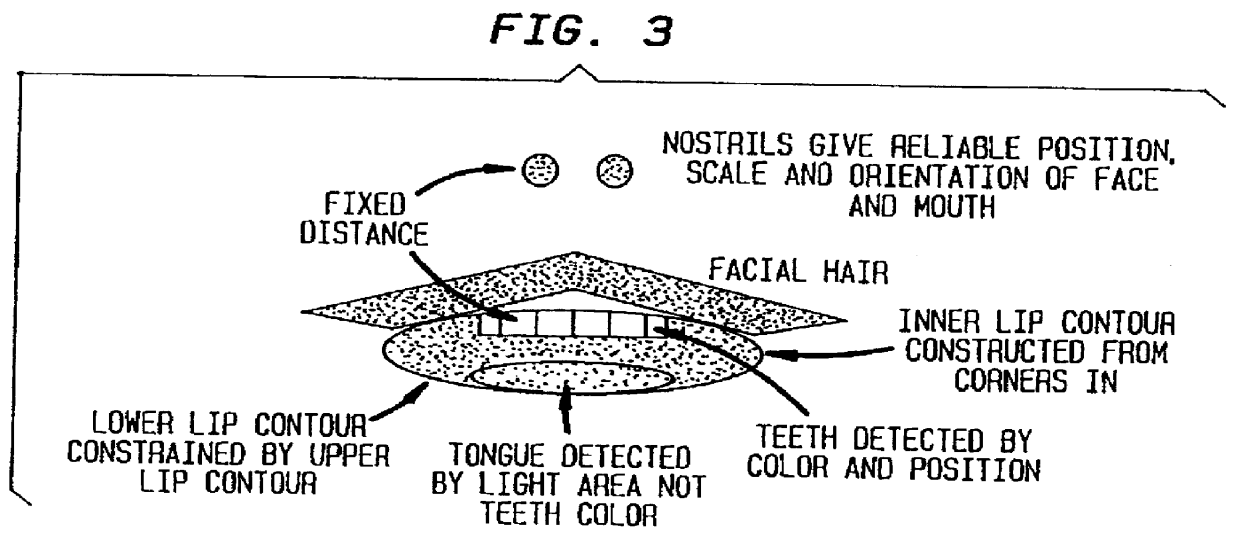

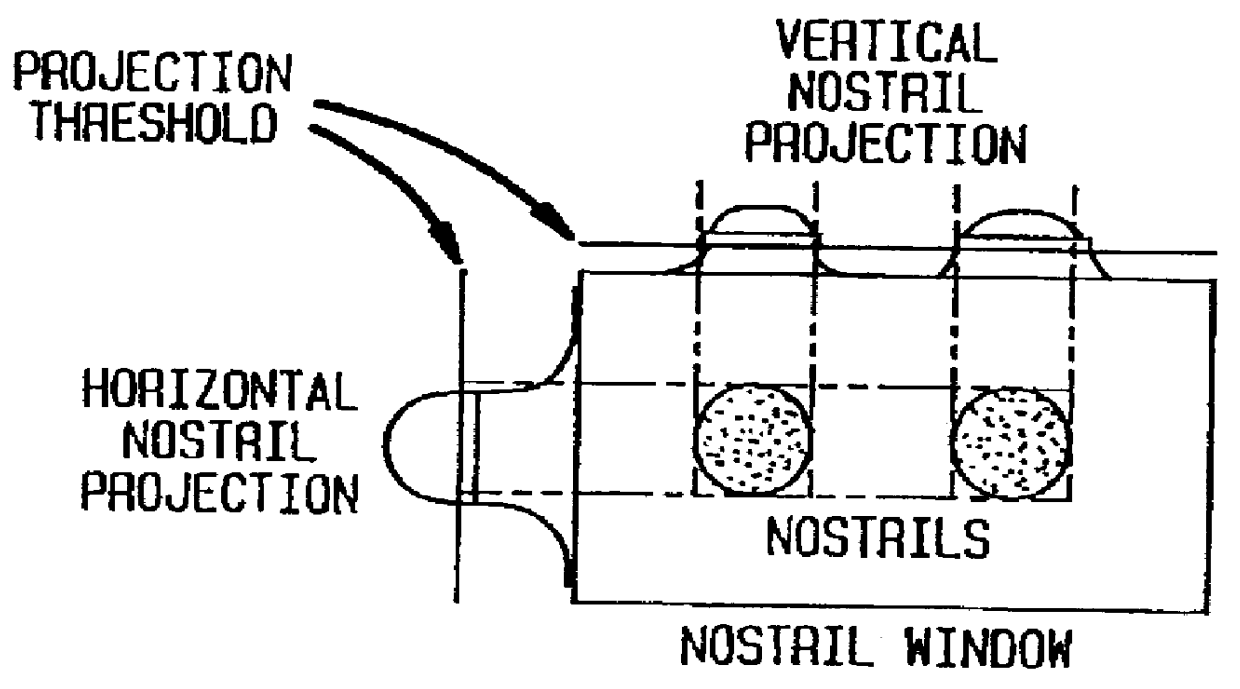

Face feature analysis for automatic lipreading and character animation

A face feature analysis begins by generating multiple face feature candidates, e.g., eye and nose positions, using an isolated-frame face analysis. A nostril tracking window is then defined around a nose candidate, and tests based on the percentages of skin-color-area pixels and nostril-area pixels are applied to the pixels in the window to determine whether the nose candidate represents an actual nose. Once actual nostrils are identified, their size, separation and contiguity are determined by projecting the nostril pixels within the nostril tracking window. A mouth window is defined around the mouth region, and mouth detail analysis is applied to the pixels within the mouth window to identify inner-mouth and teeth pixels and, from them, generate an inner mouth contour. The nostril position and inner mouth contour are used to generate a synthetic model head. The generated inner mouth contour is compared directly with that of the synthetic model head, and the synthetic model head is adjusted accordingly. Vector quantization algorithms may be used to develop a codebook of face model parameters to improve processing efficiency. The face feature analysis remains suitable regardless of noise, illumination variations, head tilt, scale variations and nostril shape.

Owner:ALCATEL-LUCENT USA INC
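The Alcatel-Lucent abstract mentions building a vector-quantization codebook of face model parameters. A minimal sketch, assuming Lloyd's k-means as the codebook-training algorithm (one common choice; the abstract does not name one) and hypothetical two-dimensional (mouth width, mouth opening) vectors:

```python
import math
import random


def build_codebook(vectors, size, iterations=20, seed=0):
    """Lloyd's k-means: return `size` codewords that quantize the input vectors."""
    rng = random.Random(seed)
    codebook = rng.sample(vectors, size)  # start from randomly chosen input vectors
    for _ in range(iterations):
        clusters = [[] for _ in range(size)]
        for v in vectors:
            clusters[encode(codebook, v)].append(v)
        # Move each codeword to the mean of its cluster (keep it if the cluster is empty).
        codebook = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster)) if cluster else codebook[i]
            for i, cluster in enumerate(clusters)
        ]
    return codebook


def encode(codebook, v):
    """Index of the nearest codeword (Euclidean distance)."""
    return min(range(len(codebook)), key=lambda i: math.dist(codebook[i], v))


# Hypothetical (mouth width, mouth opening) parameter vectors, arbitrary units.
frames = [(1.00, 0.10), (1.02, 0.12), (0.98, 0.09), (1.01, 0.80), (0.99, 0.78), (1.03, 0.82)]
codebook = build_codebook(frames, size=2)
codes = [encode(codebook, f) for f in frames]  # each frame stored as a small codeword index
print(codes)
```

After training, each frame's parameters can be replaced by a codeword index, which is where the processing saving comes from.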

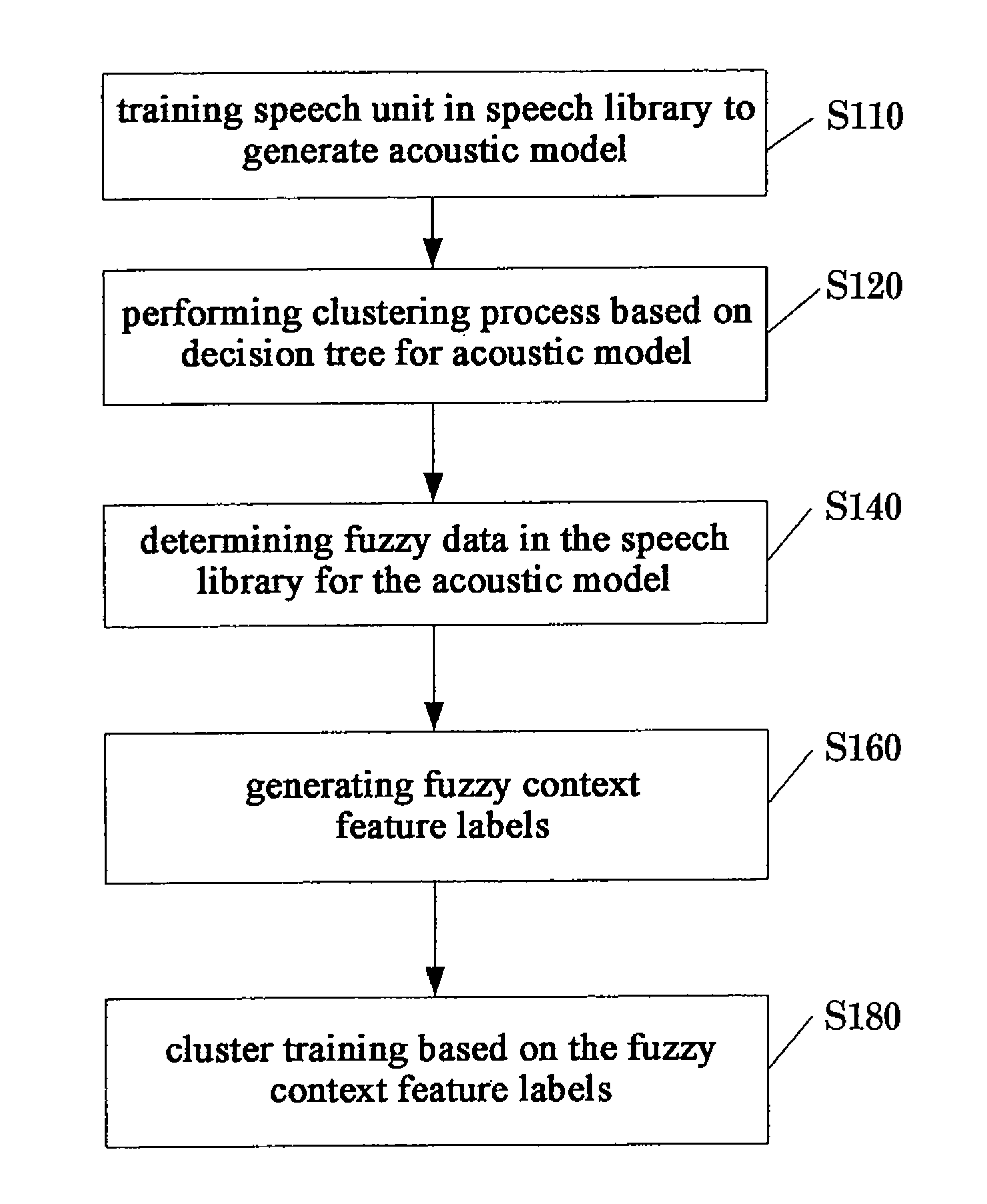

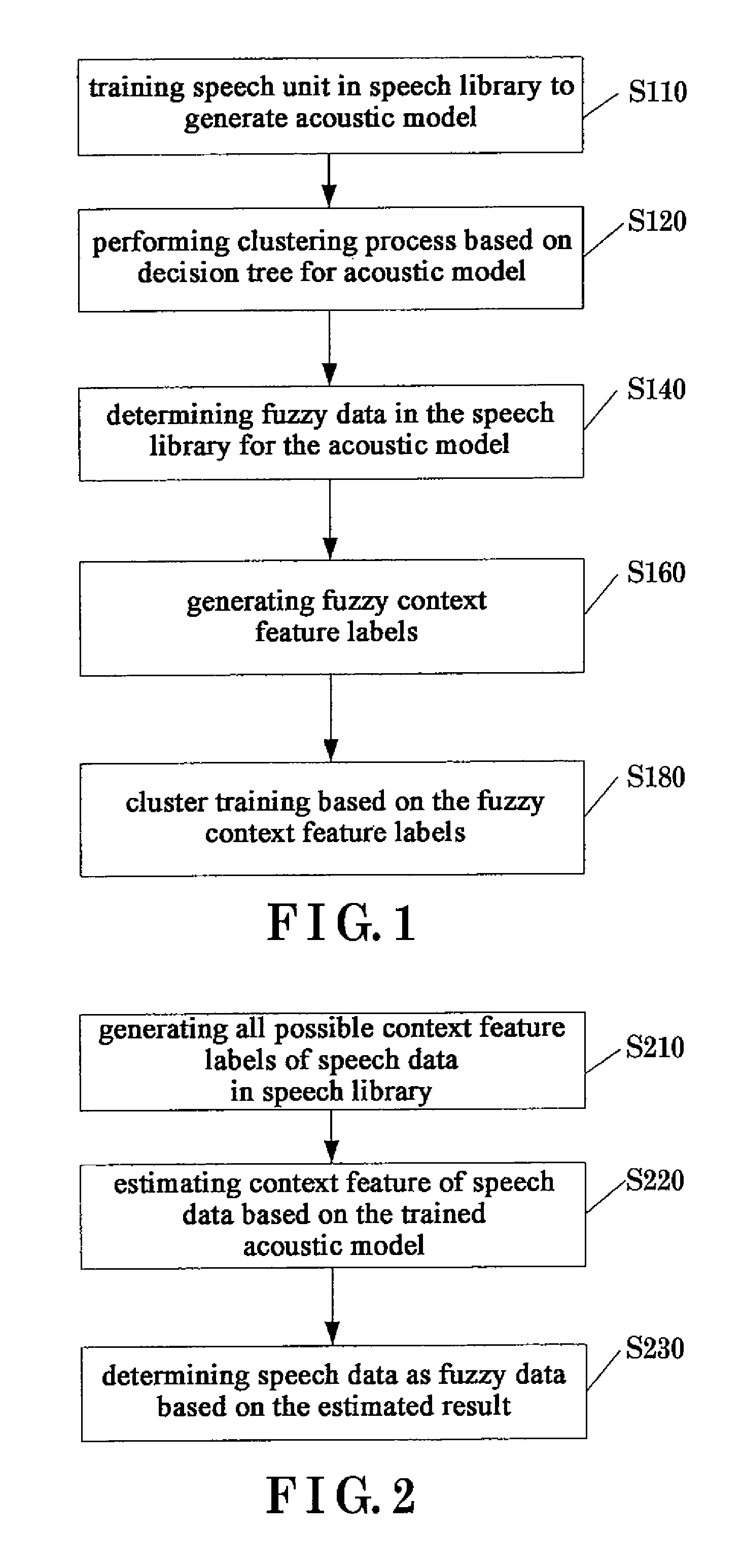

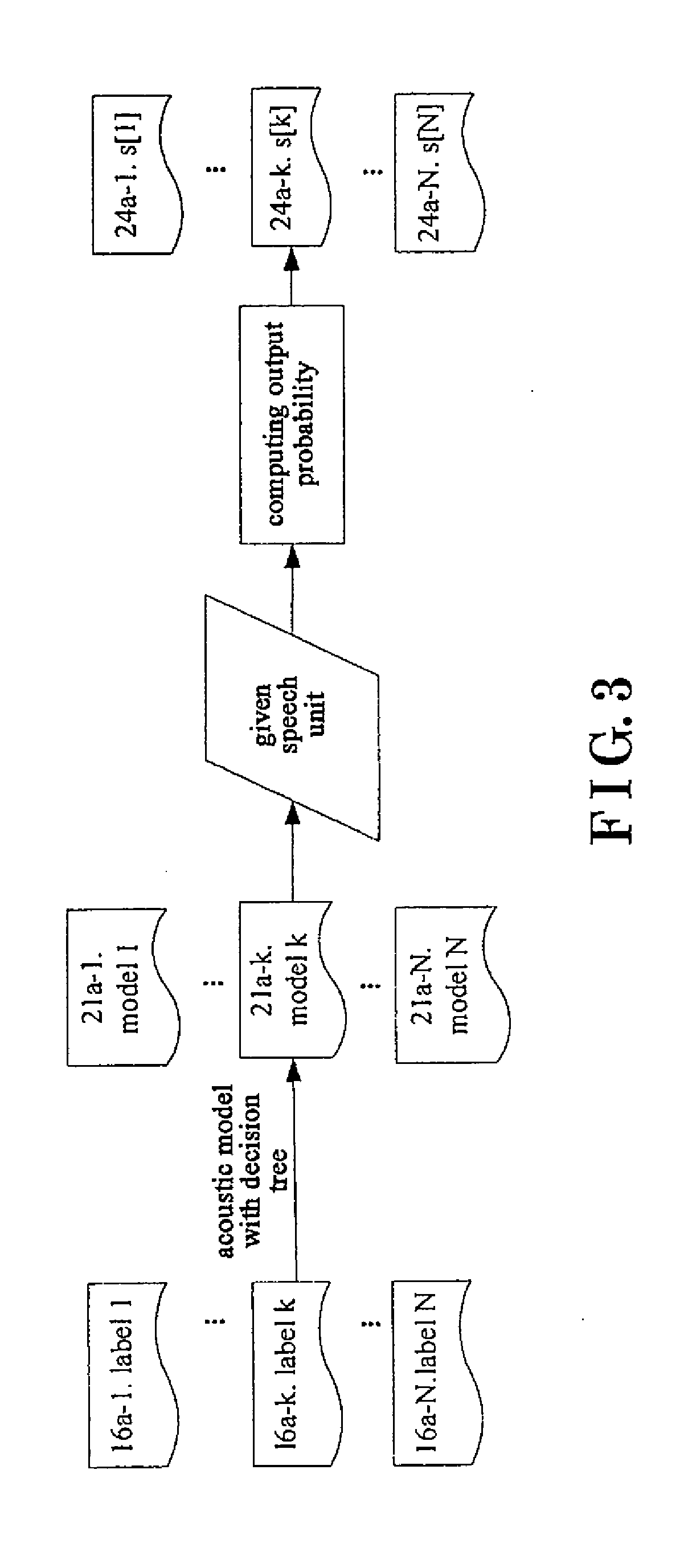

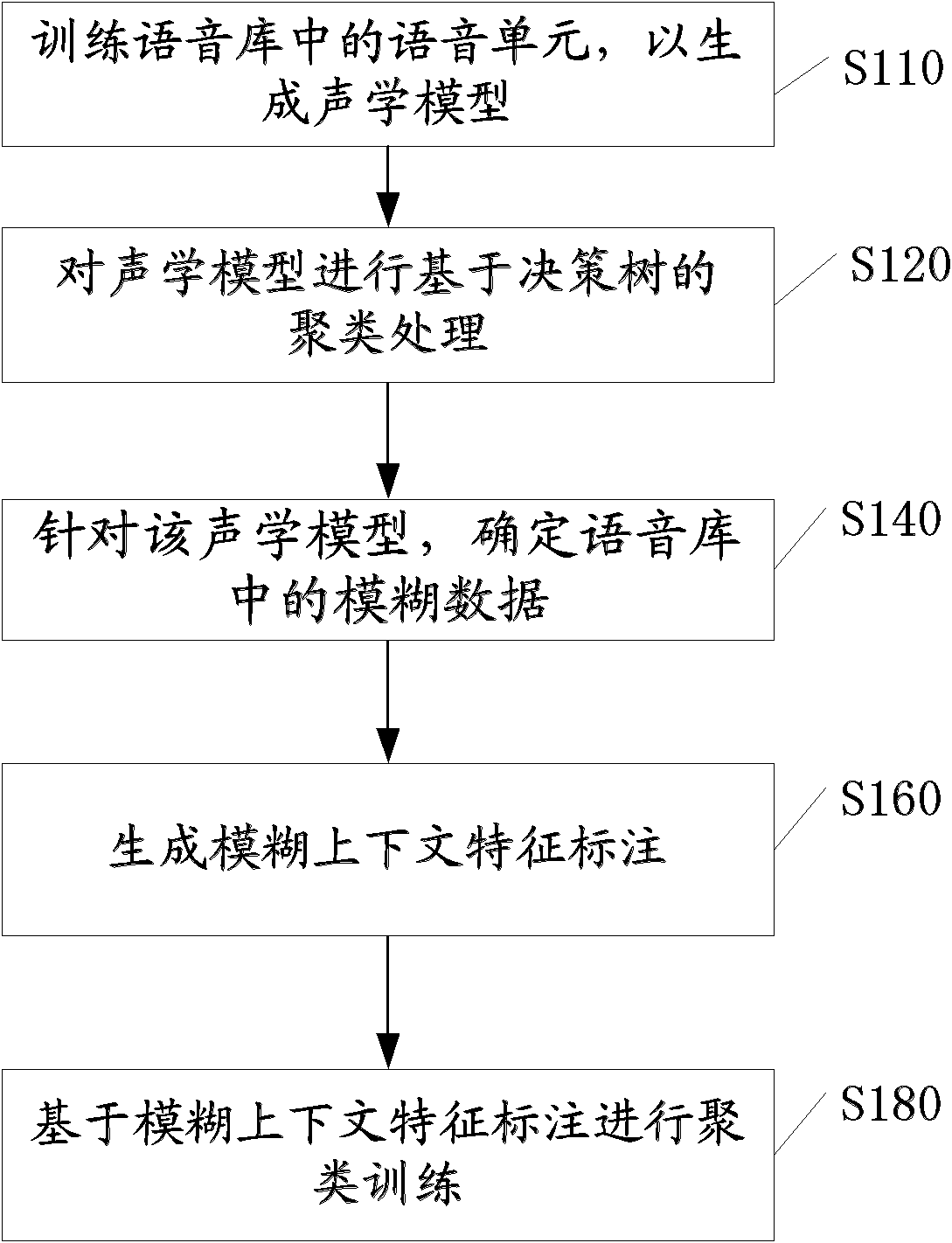

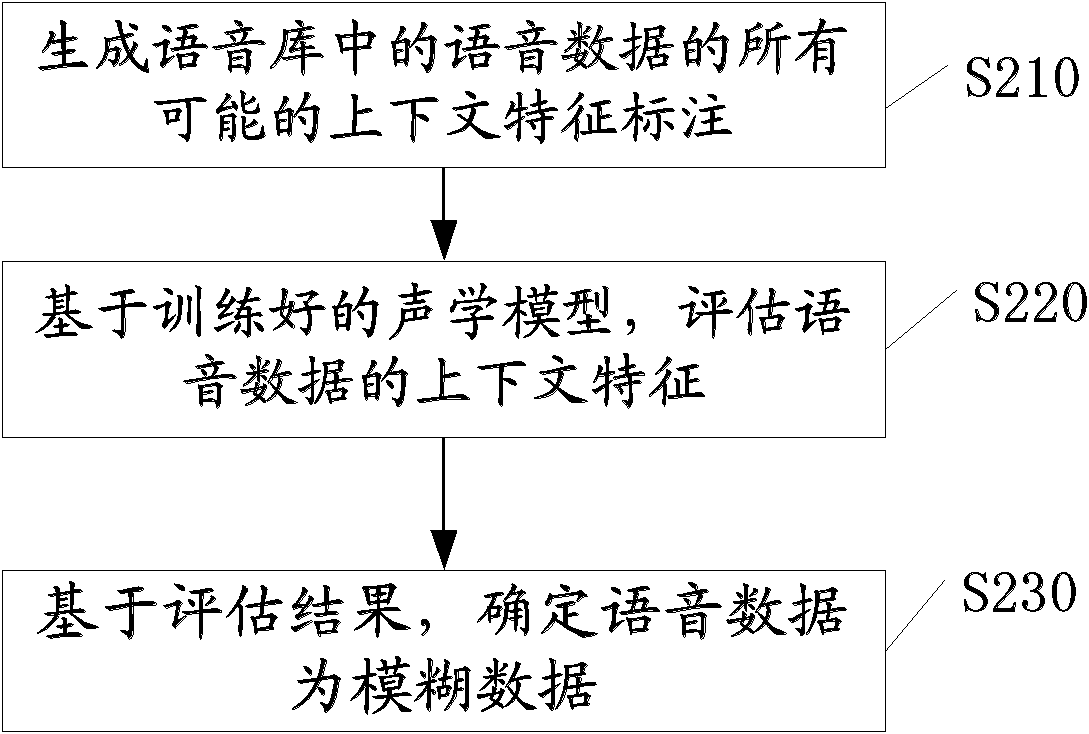

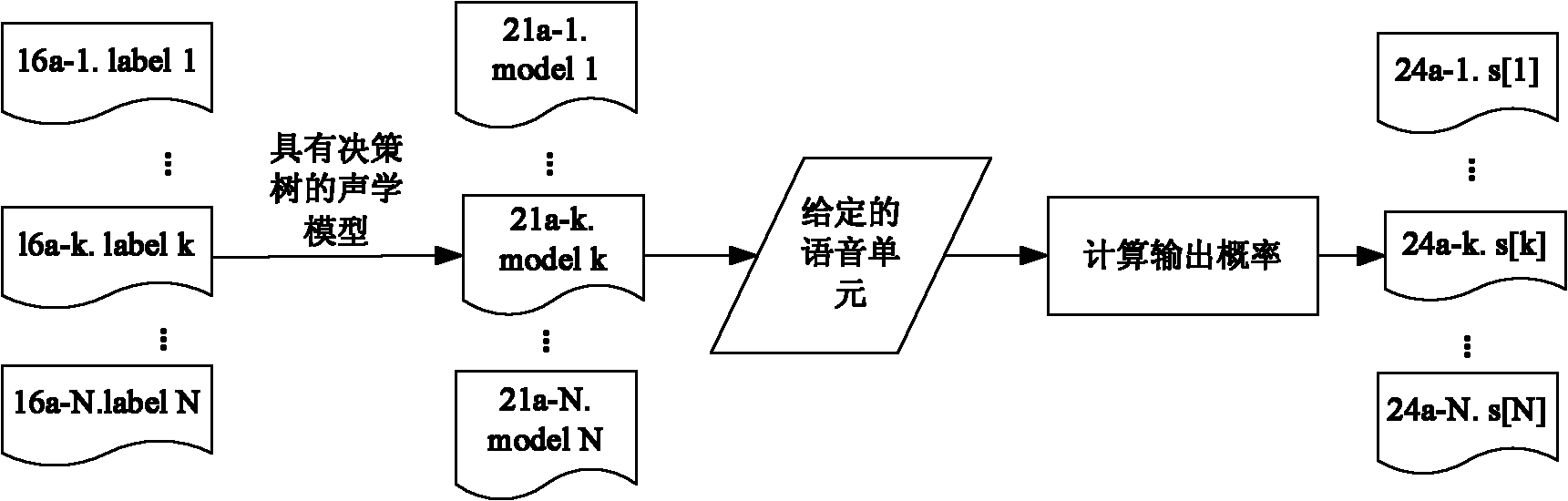

Method, apparatus for synthesizing speech and acoustic model training method for speech synthesis

According to one embodiment, a method and apparatus for synthesizing speech and a method for training an acoustic model used in speech synthesis are provided. The method for synthesizing speech may include: determining data generated by text analysis as fuzzy heteronym data; performing fuzzy heteronym prediction on the fuzzy heteronym data to output a plurality of candidate pronunciations of the fuzzy heteronym data and their probabilities; generating fuzzy context feature labels based on the candidate pronunciations and their probabilities; determining model parameters for the fuzzy context feature labels based on an acoustic model with a fuzzy decision tree; generating speech parameters from the model parameters; and synthesizing the speech parameters into speech via a synthesizer.

Owner:KK TOSHIBA

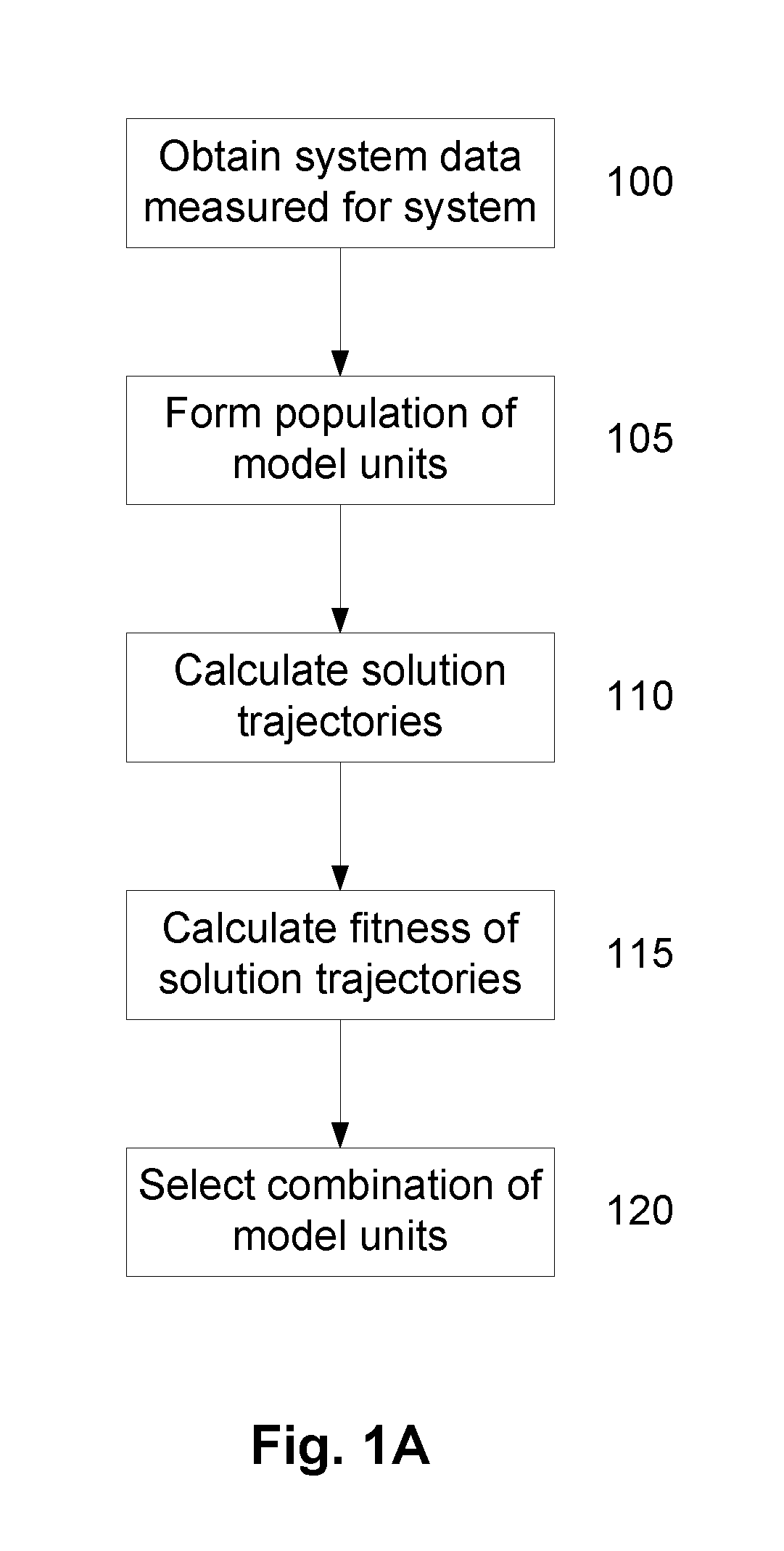

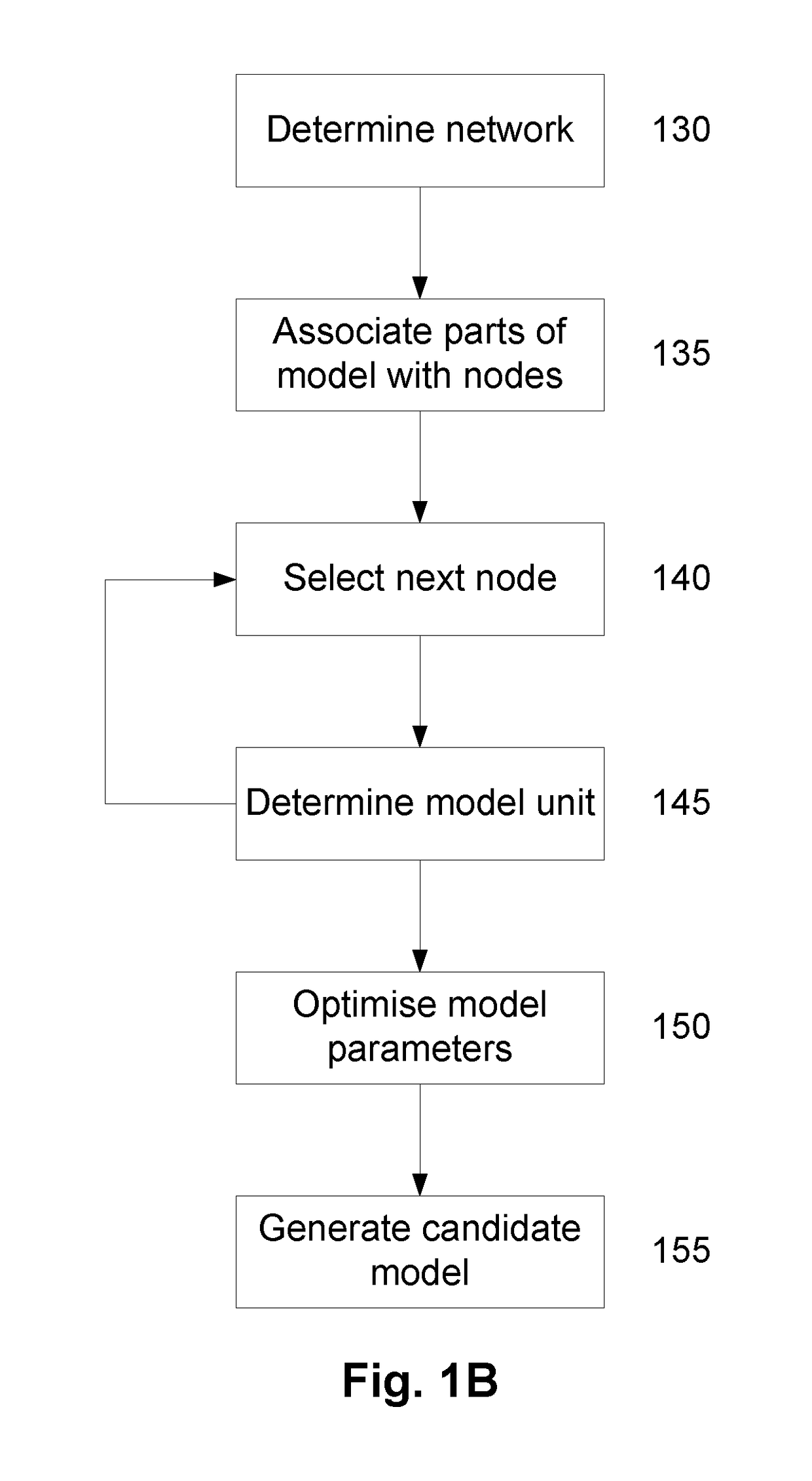

A System and Method for Modelling System Behaviour

Active · US20170147722A1 · Reduce the impact · Reduce impact · Medical simulation · Design optimisation/simulation · Collective model · Model system

A method of modelling the behaviour of a physical system includes, in one or more electronic processing devices: obtaining quantified system data measured for the physical system, the quantified system data being at least partially indicative of the system behaviour for at least one time period; forming at least one population of model units, each model unit including model parameters and at least part of a model, the model parameters being at least partially based on the quantified system data, and each model including one or more mathematical equations for modelling system behaviour; for each model unit, calculating at least one solution trajectory for at least part of the at least one time period and determining a fitness value based at least in part on the at least one solution trajectory; and selecting a combination of model units using the fitness values of each model unit, the combination of model units representing a collective model that models the system behaviour.

Owner:EVOLVING MACHINE INTELLIGENCE
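The population, fitness and selection steps in the Evolving Machine Intelligence abstract can be sketched as follows. This is a simplified illustration under stated assumptions, not the patented method: every model unit shares one hypothetical equation, dx/dt = -k·x, and differs only in its parameter k; fitness is the negative squared error of the solution trajectory; and the "collective model" is taken to be the average of the best units' trajectories.

```python
import math
import random


def trajectory(k, x0, times):
    """Solution trajectory of the model dx/dt = -k * x with x(0) = x0."""
    return [x0 * math.exp(-k * t) for t in times]


def fitness(k, x0, times, observed):
    """Higher is better: negative sum of squared errors against the measured data."""
    return -sum((p - o) ** 2 for p, o in zip(trajectory(k, x0, times), observed))


def collective_model(x0, times, observed, population_size=50, keep=5, seed=0):
    """Rank a random population of model units by fitness and average the best ones."""
    rng = random.Random(seed)
    # Each model unit here is just one parameter value k for the same decay equation.
    population = [rng.uniform(0.01, 2.0) for _ in range(population_size)]
    ranked = sorted(population, key=lambda k: fitness(k, x0, times, observed), reverse=True)
    chosen = ranked[:keep]
    # The collective model's prediction is the mean of the selected units' trajectories.
    trajectories = [trajectory(k, x0, times) for k in chosen]
    prediction = [sum(values) / len(chosen) for values in zip(*trajectories)]
    return chosen, prediction


times = [0, 1, 2, 3, 4]
observed = trajectory(0.5, 10.0, times)  # synthetic "measurements" generated with k = 0.5
chosen, prediction = collective_model(10.0, times, observed)
print([round(k, 2) for k in chosen])
```

A real implementation would typically also evolve the population (mutation, recombination) over several generations rather than sampling it once.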

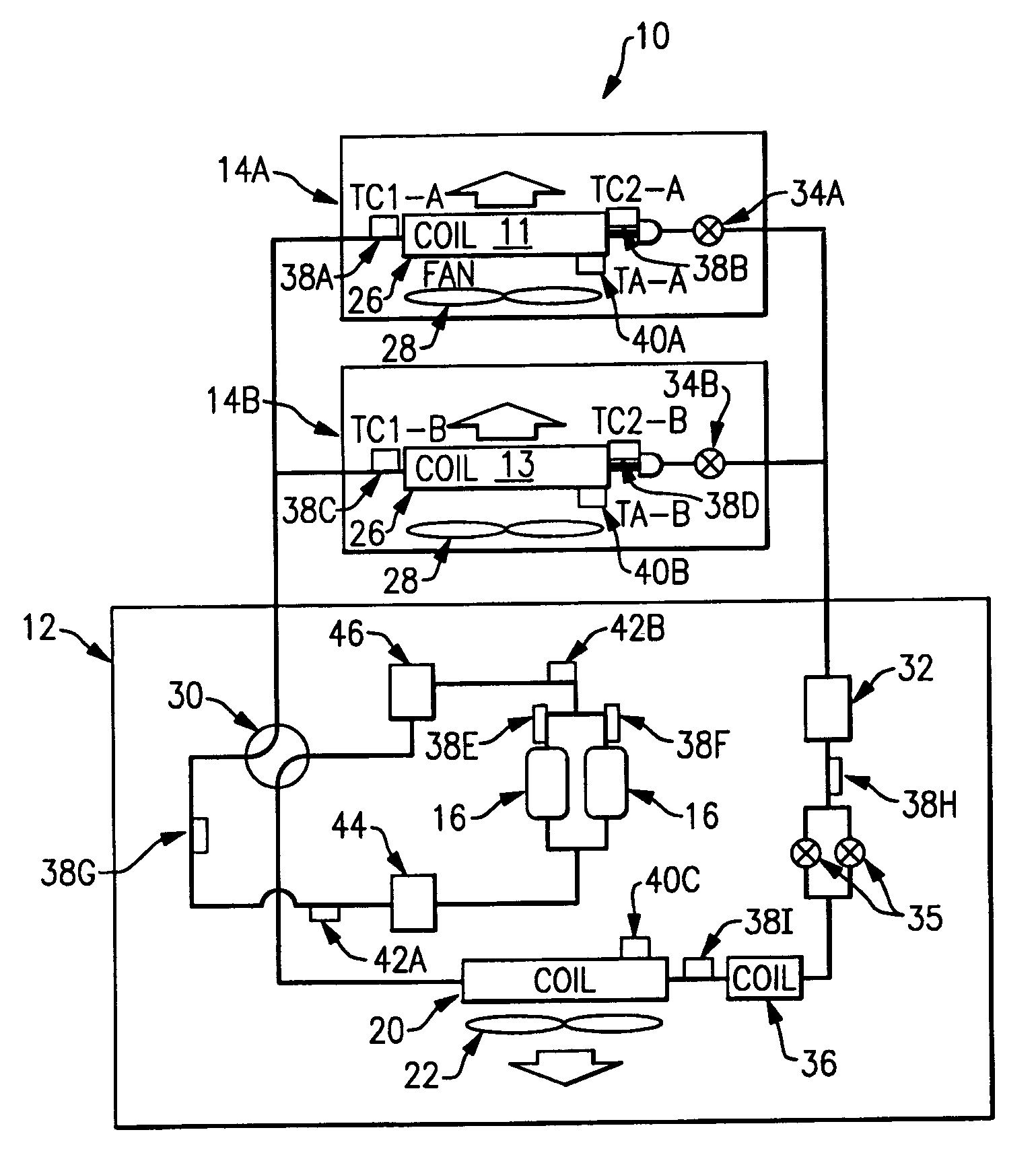

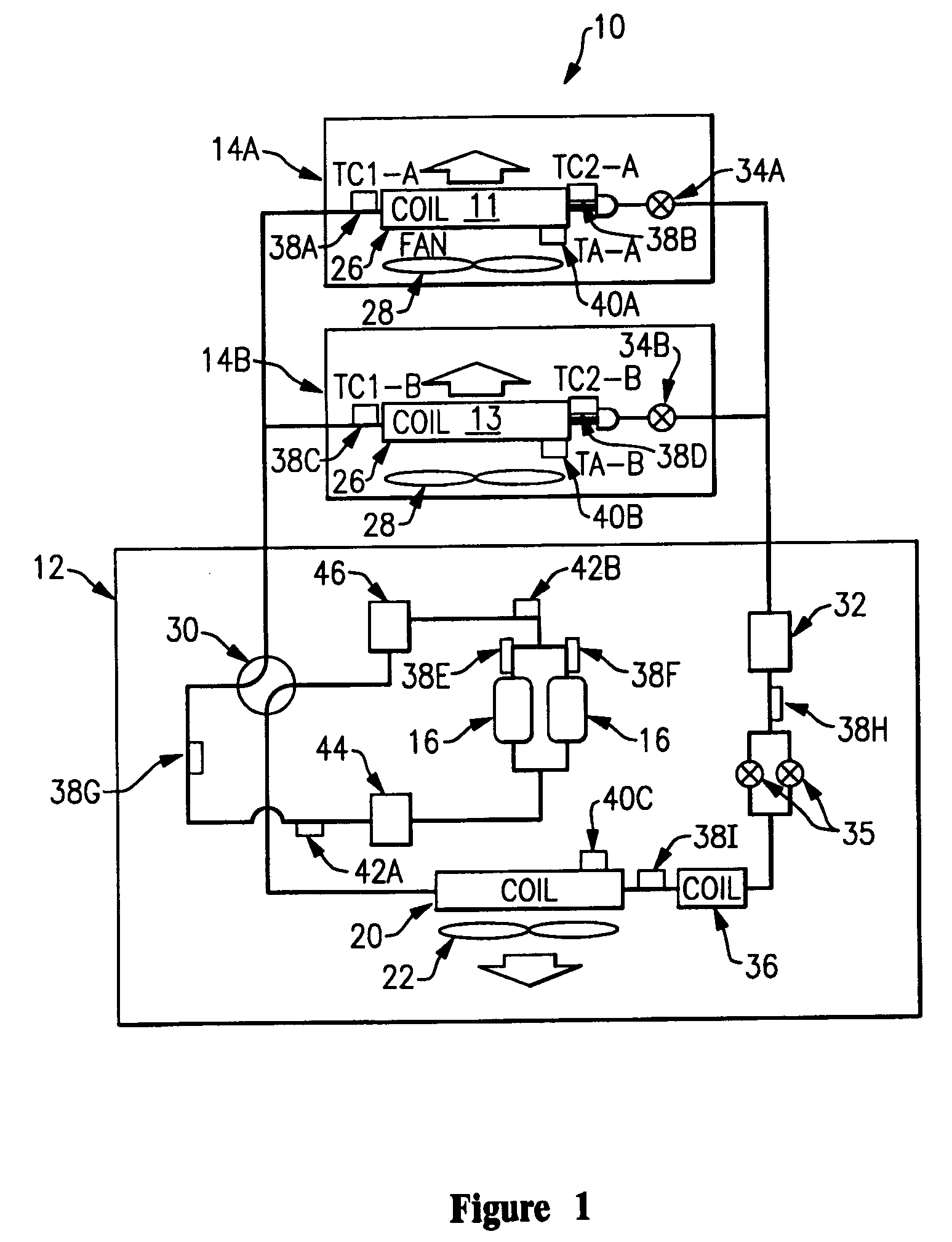

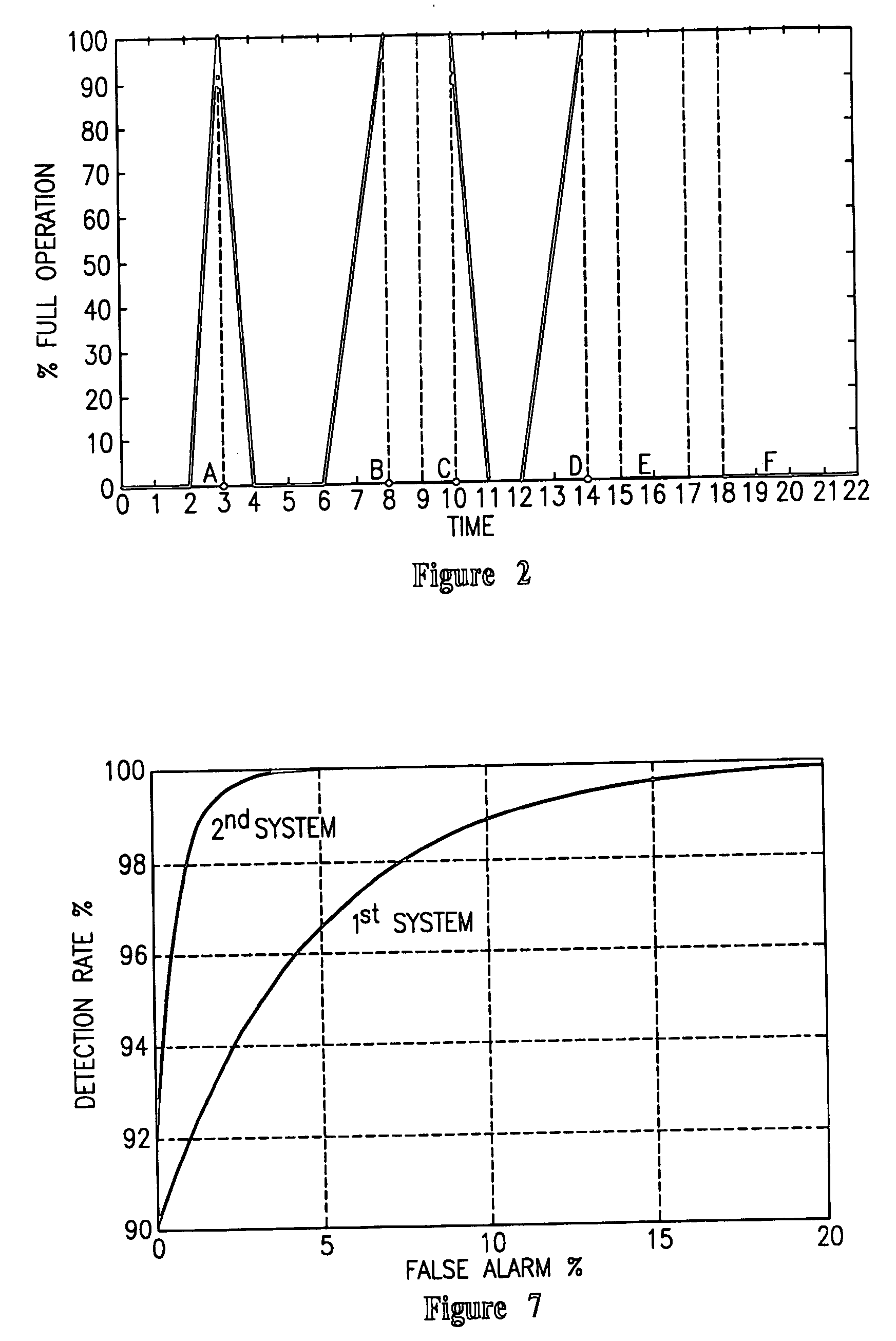

Fault diagnostics and prognostics based on distance fault classifiers

Inactive · US7188482B2 · Easy to interpret, calibrate and implement · Maximize distance · Air-treating devices · Space heating and ventilation · Online algorithm · Air filter

The present invention is directed to a mathematical approach to detect faults by reconciling known data-driven techniques with a physical understanding of the HVAC system and providing a direct linkage between model parameters and physical system quantities to arrive at classification rules that are easy to interpret, calibrate and implement. The fault modes of interest are low system refrigerant charge and air filter plugging. System data from standard sensors is analyzed under no-fault and full-fault conditions. The data is screened to uncover patterns through which the faults of interest manifest in sensor data, and the patterns are analyzed and combined with available physical system information to develop an underlying principle that links failures to measured sensor responses. These principles are then translated into online algorithms for failure detection.

Owner:CARRIER CORP
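A distance-based fault classifier of the kind named in the Carrier title can be sketched as a nearest-centroid rule: each fault mode is summarised by the mean of its training feature vectors, and a new observation is assigned to the closest one. The two features and all numbers below are hypothetical placeholders, not real HVAC sensor data, and the patent's actual distance measure may differ.

```python
import math


def centroid(samples):
    """Mean feature vector of a list of equal-length samples."""
    n = len(samples)
    return tuple(sum(col) / n for col in zip(*samples))


def train(labelled):
    """Map each fault label to the centroid of its training samples."""
    return {label: centroid(samples) for label, samples in labelled.items()}


def classify(centroids, x):
    """Return the label whose centroid is nearest to x (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


# Hypothetical two-feature sensor summaries (arbitrary units) under each condition.
training = {
    "no_fault":        [(0.0, 0.0), (0.2, -0.1), (-0.1, 0.1)],
    "low_charge":      [(3.0, 0.2), (2.8, -0.1), (3.2, 0.1)],
    "filter_plugging": [(0.1, 2.9), (-0.2, 3.1), (0.0, 3.0)],
}
centroids = train(training)
print(classify(centroids, (2.5, 0.3)))  # low_charge
```

Because the decision depends only on a few stored centroids, the rule is easy to inspect and recalibrate, which matches the abstract's stated goal.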

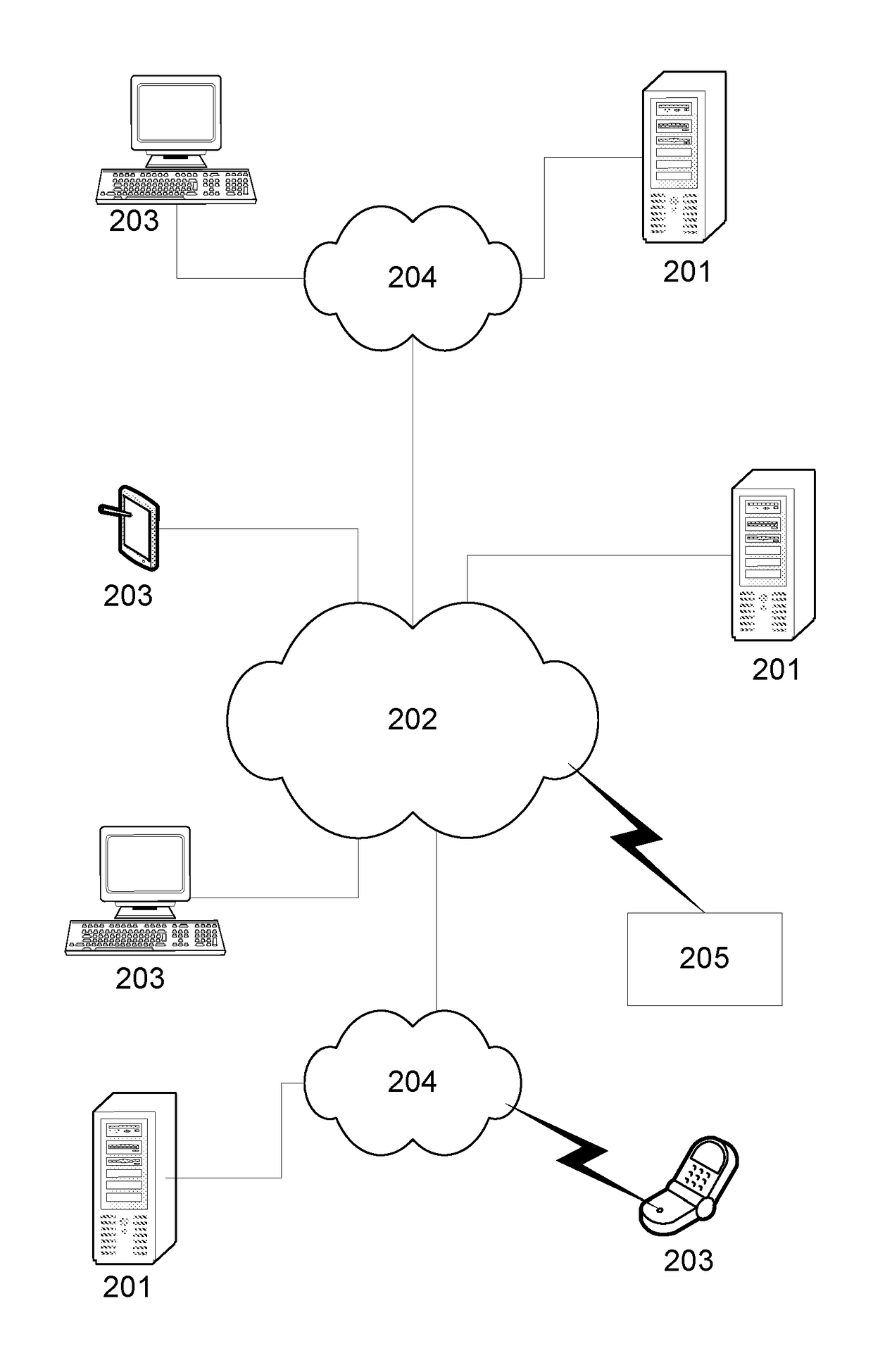

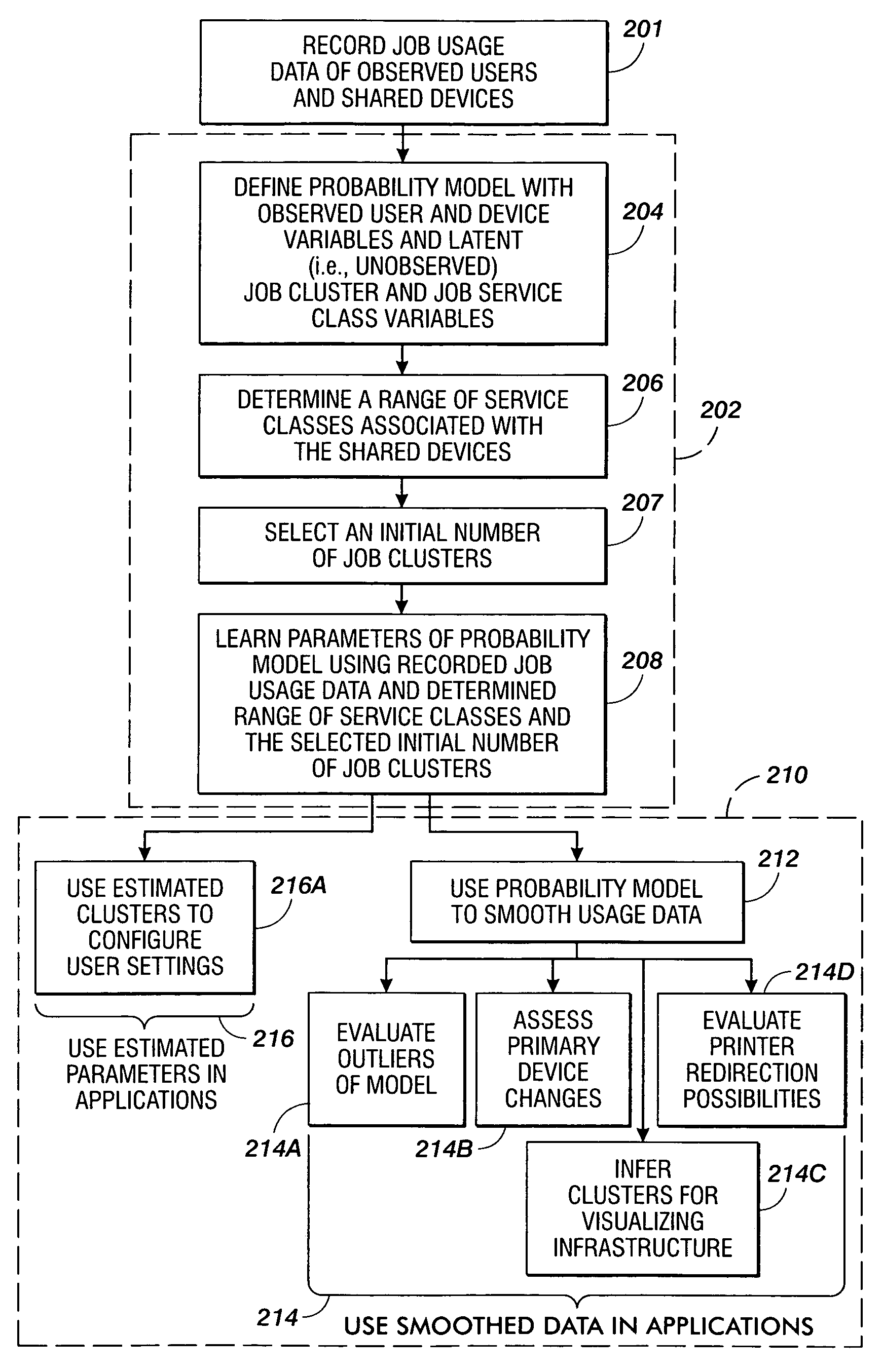

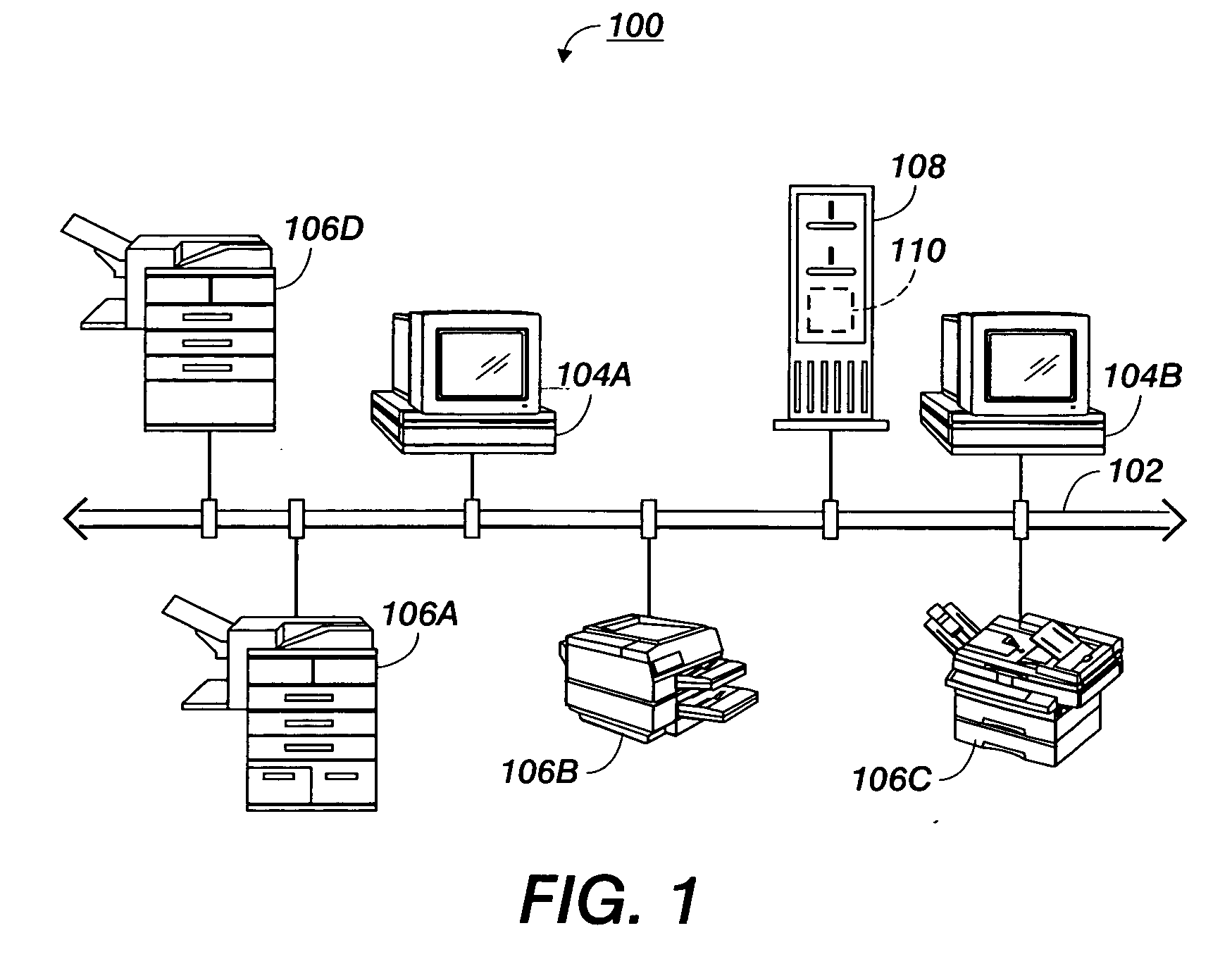

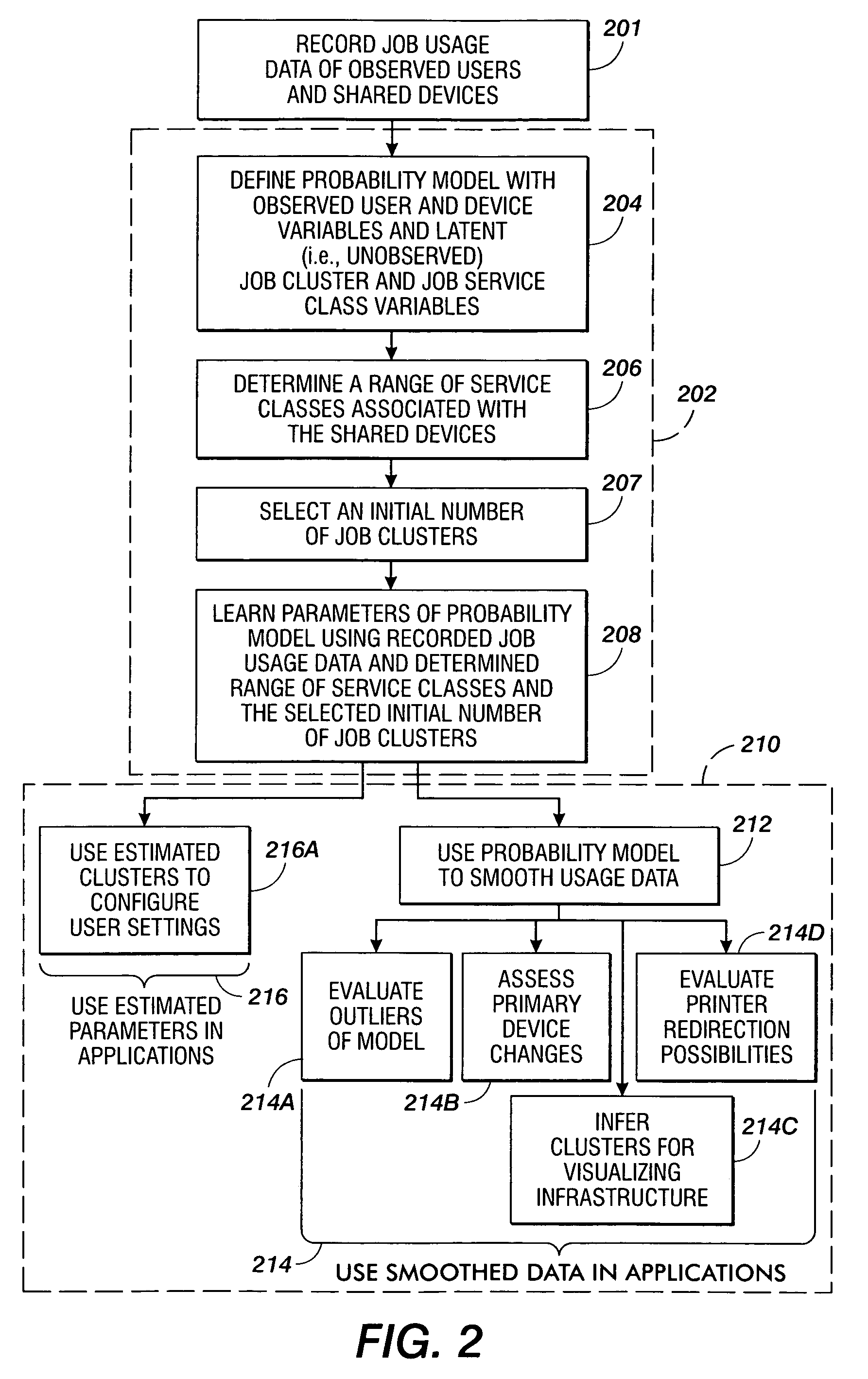

Probabilistic modeling of shared device usage

Active · US20060206445A1 · Wide range · Digital computer details · Character and pattern recognition · Class of service · Model parameters

Methods are disclosed for estimating parameters of a probability model that models user behavior of shared devices offering different classes of service for carrying out jobs. In operation, usage job data of observed users and devices carrying out the jobs is recorded. A probability model is defined with an observed user variable, an observed device variable, a latent job cluster variable, and a latent job service class variable. A range of job service classes associated with the shared devices is determined, and an initial number of job clusters is selected. Parameters of the probability model are learned using the recorded job usage data, the determined range of service classes, and the selected initial number of job clusters. The learned parameters of the probability model are applied to evaluate one or more of: configuration of the shared devices, use of the shared devices, and job redirection between the shared devices.

Owner:XEROX CORP

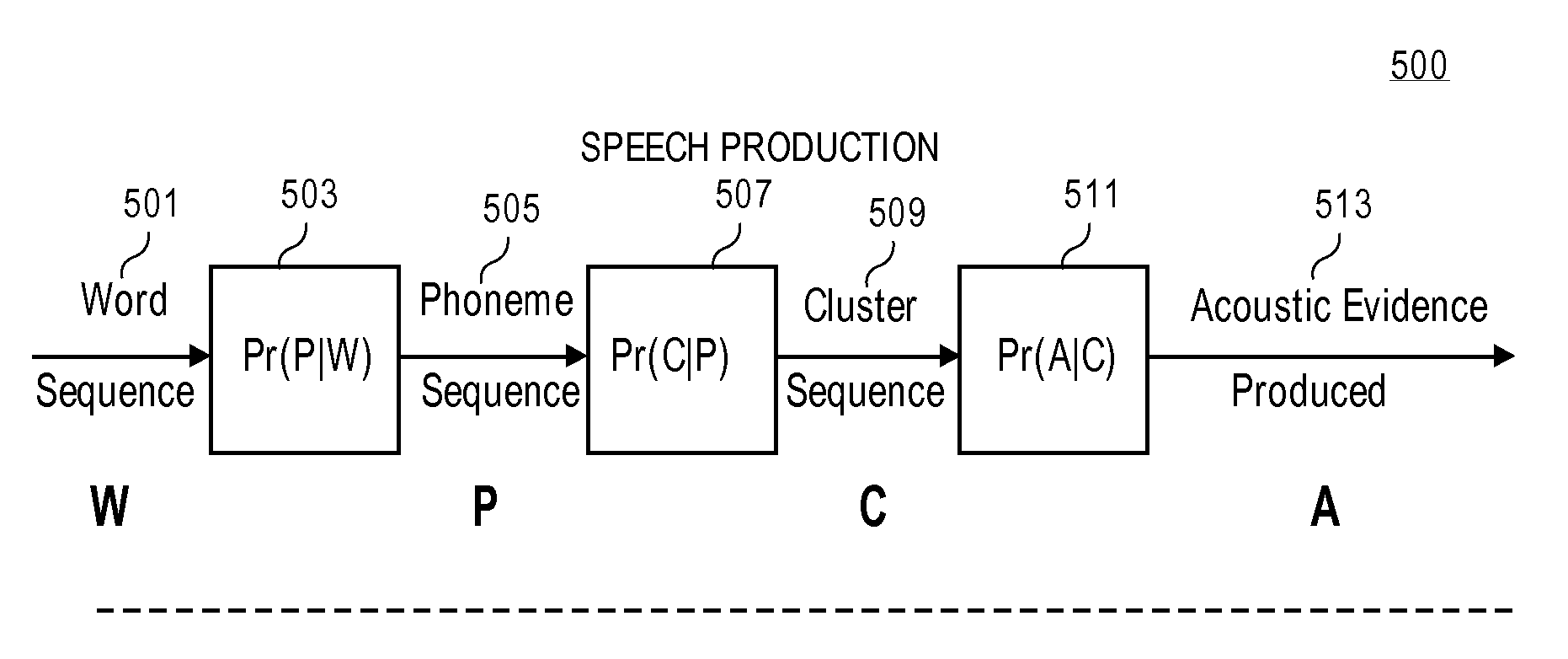

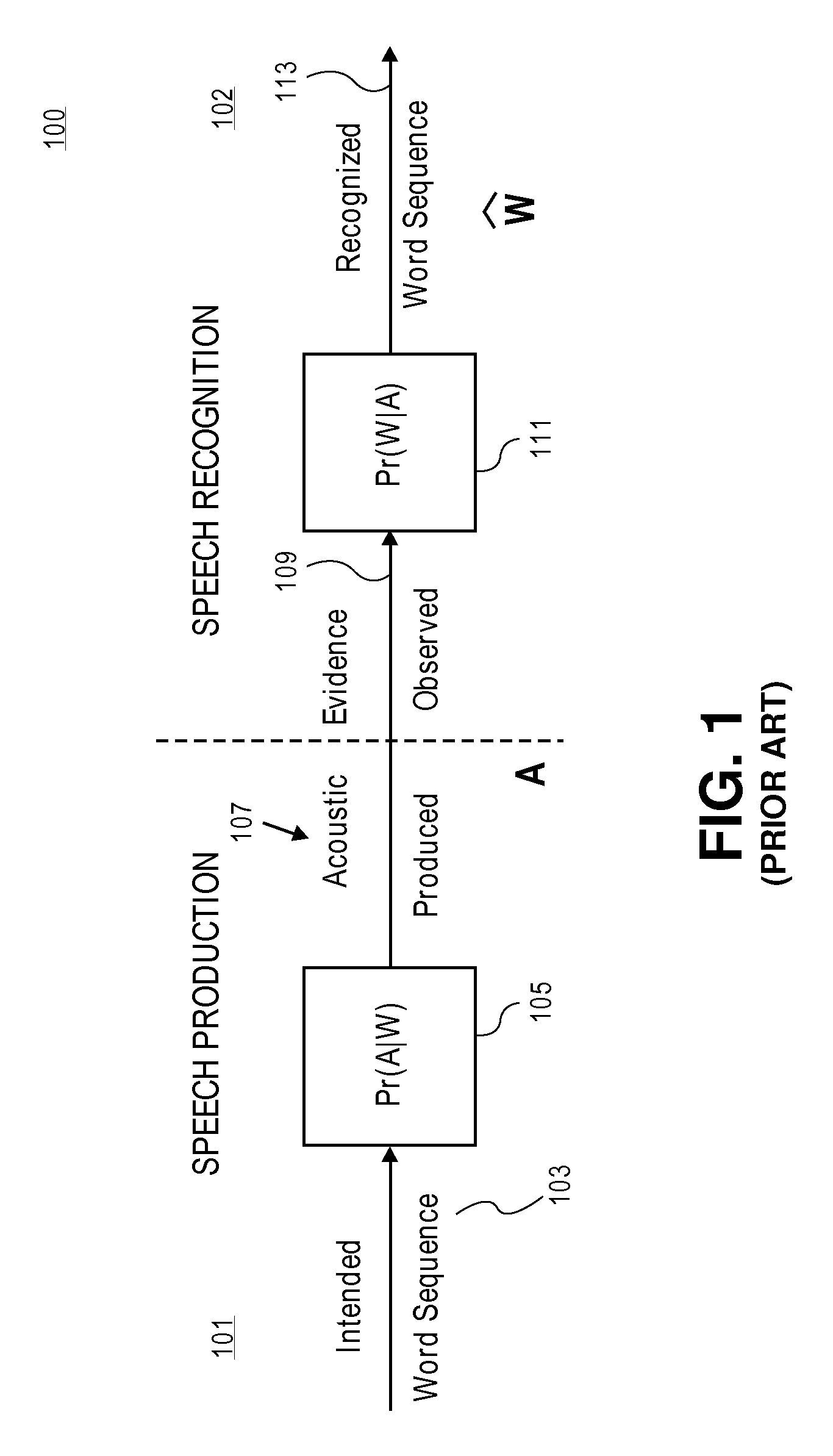

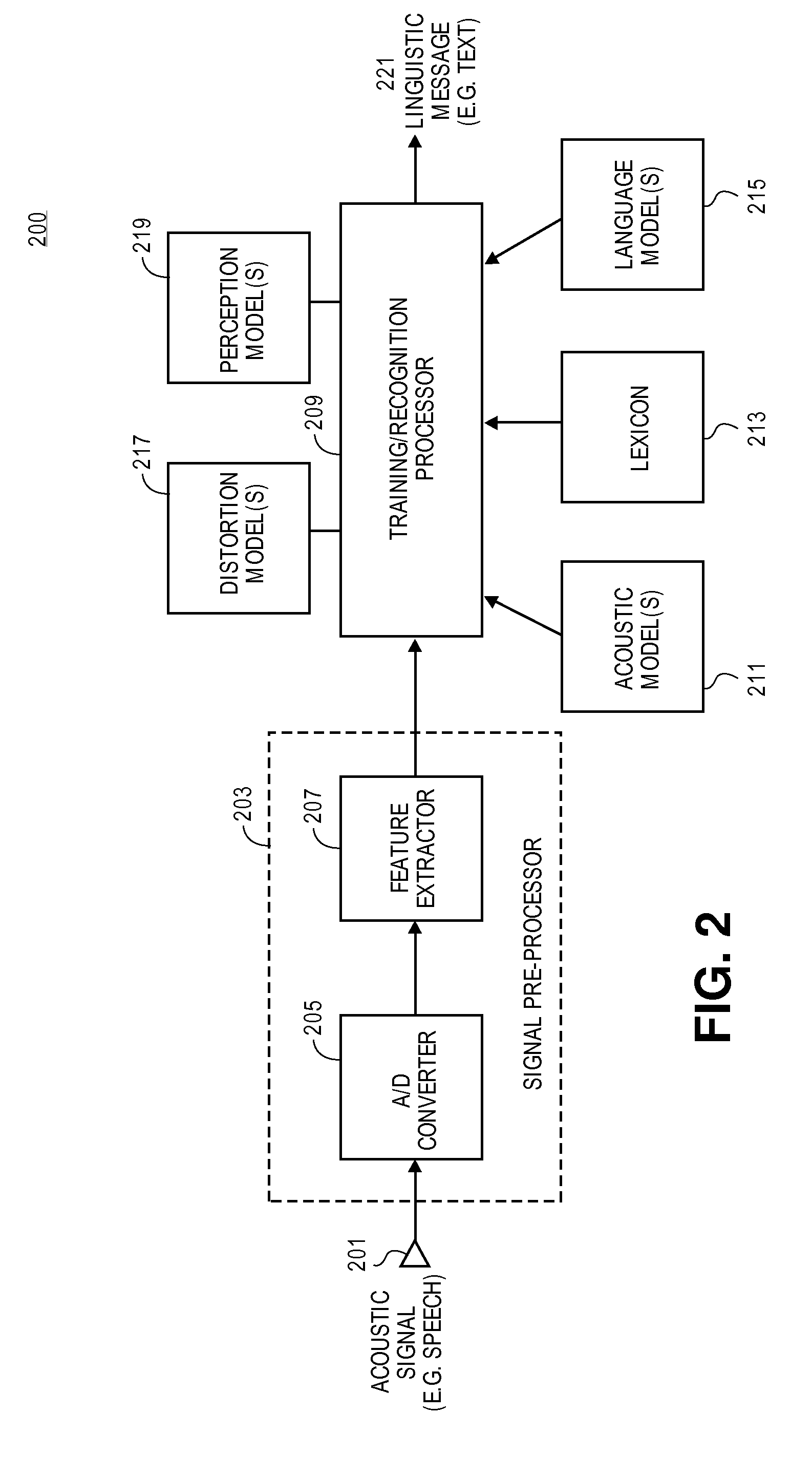

Methods and apparatuses for automatic speech recognition

Active · US20110004475A1 · Reduce the amount required · Speech recognition · Model parameters · Continuous parameter

Exemplary embodiments of methods and apparatuses for automatic speech recognition are described. First model parameters associated with a first representation of an input signal are generated. The first representation of the input signal is a discrete parameter representation. Second model parameters associated with a second representation of the input signal are generated. The second representation of the input signal includes a continuous parameter representation of residuals of the input signal. The first representation of the input signal includes discrete parameters representing first portions of the input signal. The second representation includes discrete parameters representing second portions of the input signal that are smaller than the first portions. Third model parameters are generated to couple the first representation of the input signal with the second representation of the input signal. The first representation and the second representation of the input signal are mapped into a vector space.

Owner:APPLE INC

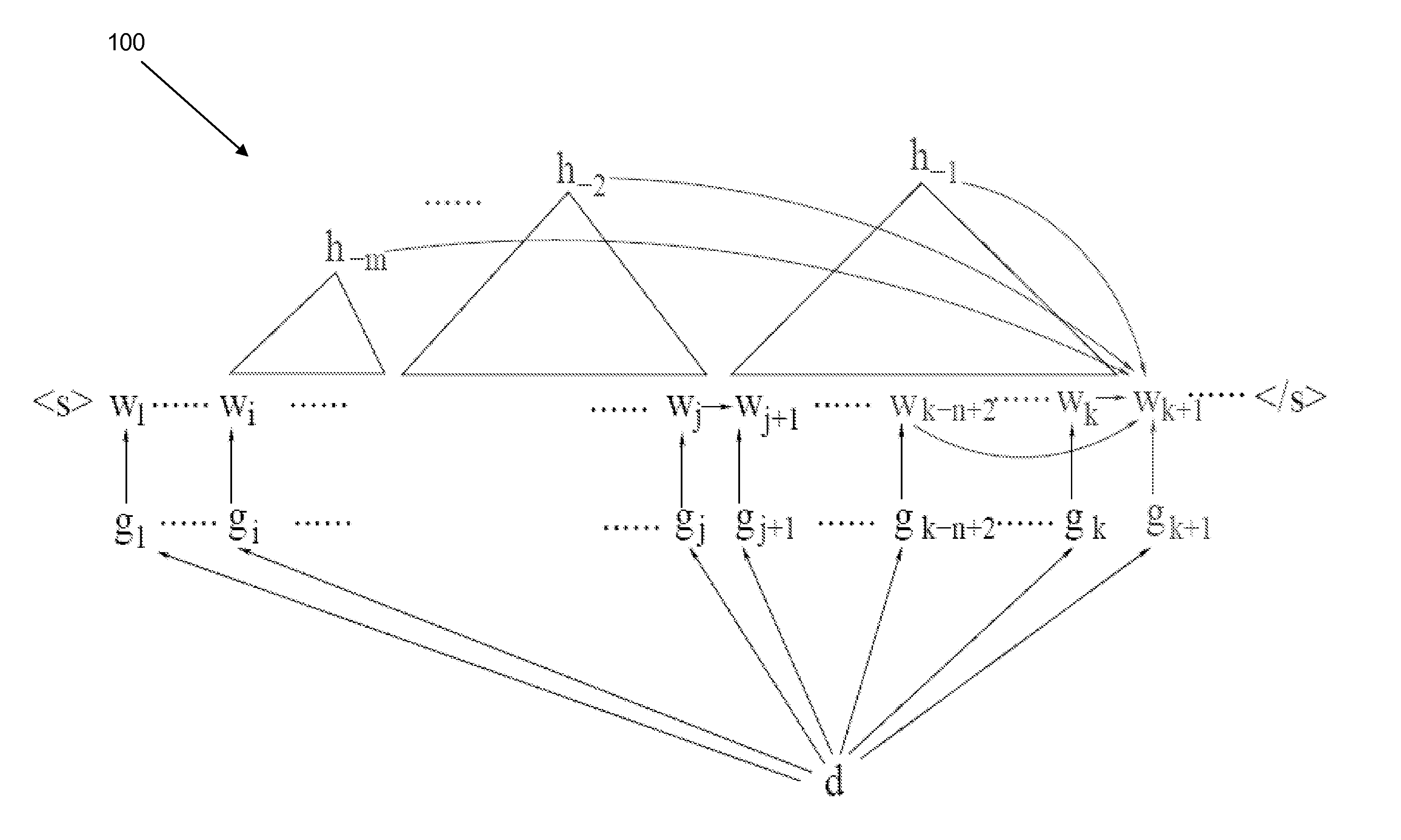

Large Scale Distributed Syntactic, Semantic and Lexical Language Models

Inactive · US20130325436A1 · Semantic analysis · Speech recognition · Expectation–maximization algorithm · Model parameters

A composite language model may include a composite word predictor. The composite word predictor may include a first language model and a second language model that are combined according to a directed Markov random field. The composite word predictor can predict a next word based upon a first set of contexts and a second set of contexts. The first language model may include a first word predictor that is dependent upon the first set of contexts. The second language model may include a second word predictor that is dependent upon the second set of contexts. Composite model parameters can be determined by multiple iterations of a convergent N-best list approximate Expectation-Maximization algorithm and a follow-up Expectation-Maximization algorithm applied in sequence, wherein the convergent N-best list approximate Expectation-Maximization algorithm and the follow-up Expectation-Maximization algorithm extract the first set of contexts and the second set of contexts from a training corpus.

Owner:WRIGHT STATE UNIVERSITY

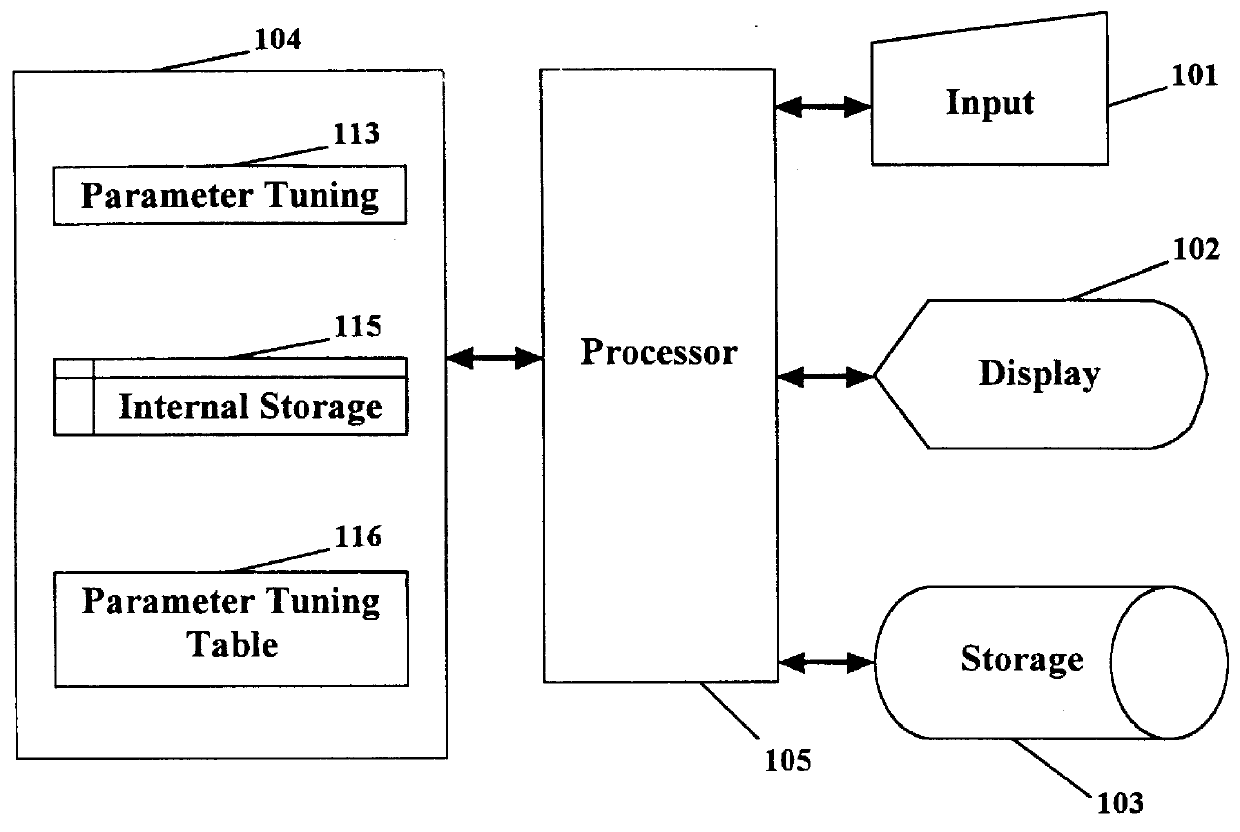

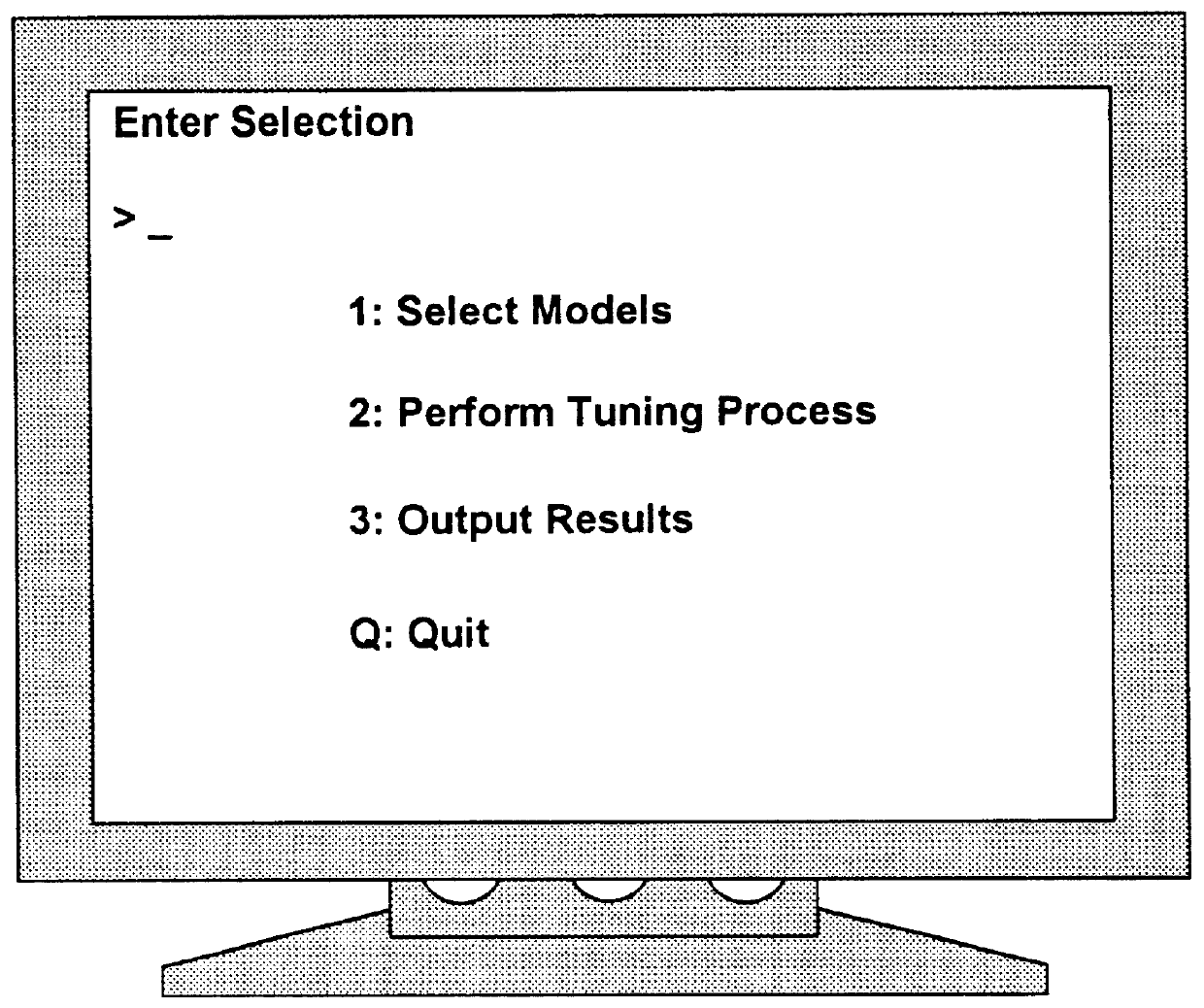

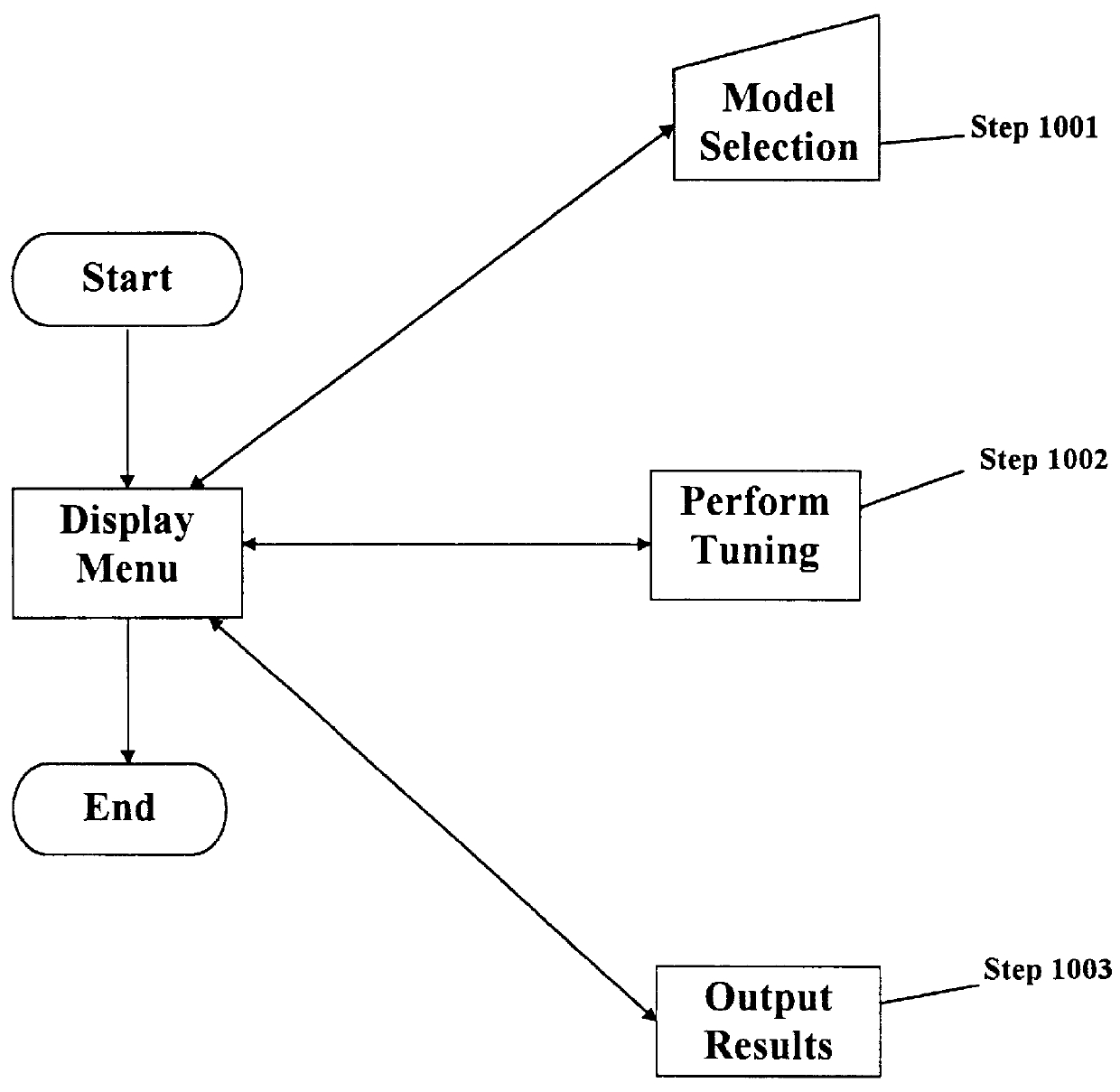

Method for stabilized tuning of demand models

Inactive · US6078893A · Stabilize tuning · Great numerical stability · Marketing · Special data processing applications · Requirements model · Model parameters

A method for tuning a demand model in a manner that is stable with respect to fluctuations in the sales history used for the tuning is provided. A market model is selected, which predicts how a subset of the parameters in the demand model depends upon information external to the sales history; this model may itself have a number of parameters. An effective figure-of-merit function is defined, consisting of a standard figure-of-merit function based upon the demand model and the sales history, plus a function that attains a minimum value when the parameters of the demand model are closest to the predictions of the market model. This effective figure-of-merit function is minimized with respect to the demand model and market model parameters. The resulting demand model parameters conform to the portions of the sales history data that show a strong trend, and conform to the external market information when the corresponding portions of the sales history data show noise.

Owner:SAP AG
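The effective figure-of-merit idea in the SAP abstract can be illustrated with a one-parameter toy case. Assumptions, for illustration only: demand is modelled as `a * x` for a single hypothetical driver `x`, the standard figure of merit is the squared error, the added function is a quadratic penalty `weight * (a - market_slope)**2`, and the market model's prediction is held fixed (the patent also minimizes over market model parameters). Under these assumptions the minimizer has a closed form.

```python
def tune_demand_slope(drivers, sales, market_slope, weight):
    """Minimise sum((s - a*x)**2) + weight * (a - market_slope)**2 over the parameter a.

    Setting the derivative with respect to a to zero gives the closed form
    a = (sum(x*s) + weight*market_slope) / (sum(x*x) + weight).
    """
    sxx = sum(x * x for x in drivers)
    sxs = sum(x * s for x, s in zip(drivers, sales))
    return (sxs + weight * market_slope) / (sxx + weight)


drivers = [1.0, 2.0, 3.0]  # hypothetical demand driver values
sales = [2.0, 4.0, 6.0]    # hypothetical sales history
print(tune_demand_slope(drivers, sales, market_slope=1.0, weight=0.0))   # 2.0 (data only)
print(tune_demand_slope(drivers, sales, market_slope=1.0, weight=14.0))  # 1.5 (pulled toward market model)
```

With little or noisy data (small `sum(x*x)` relative to `weight`), the estimate stays near the market model's prediction; with a strong trend it follows the sales history.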

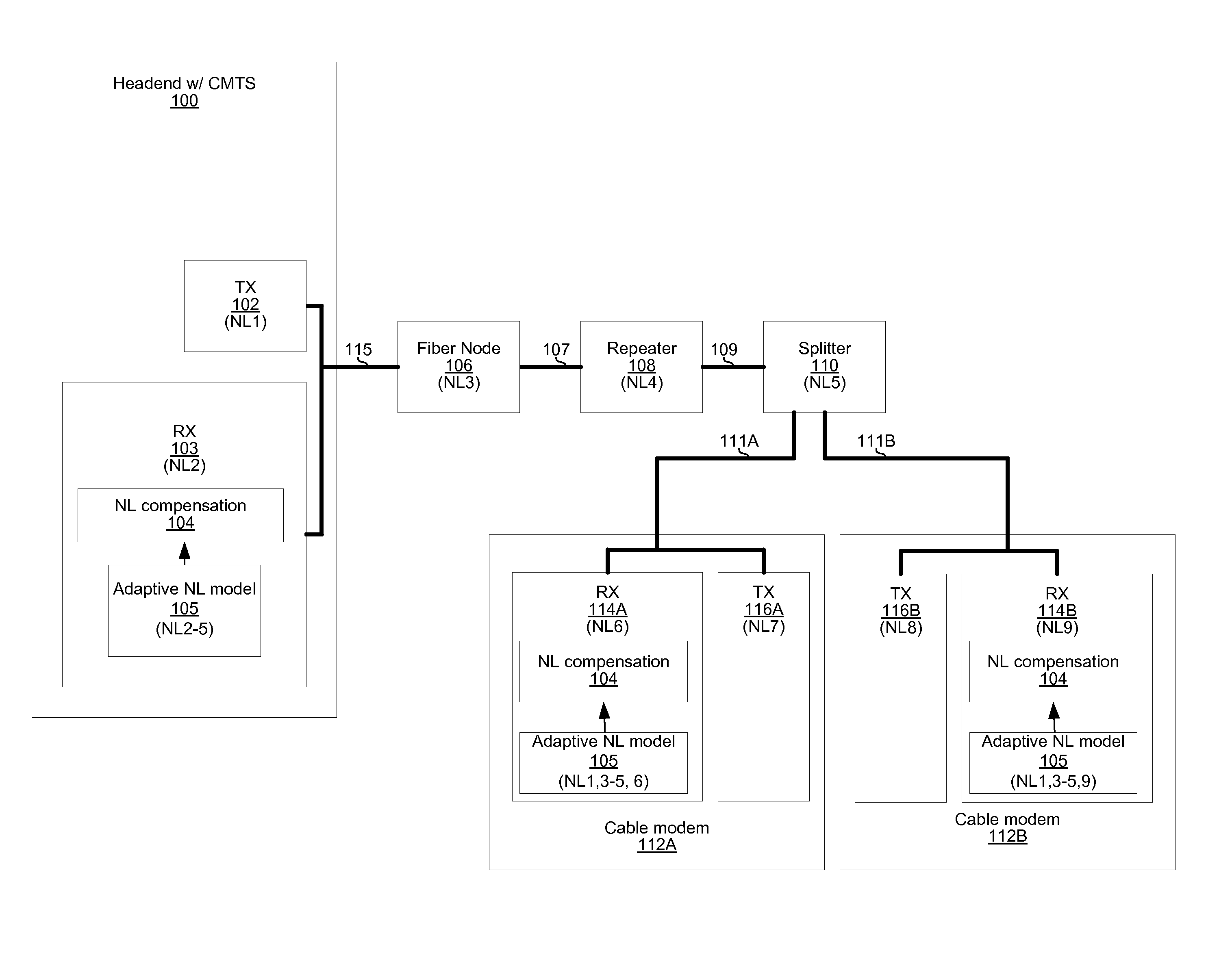

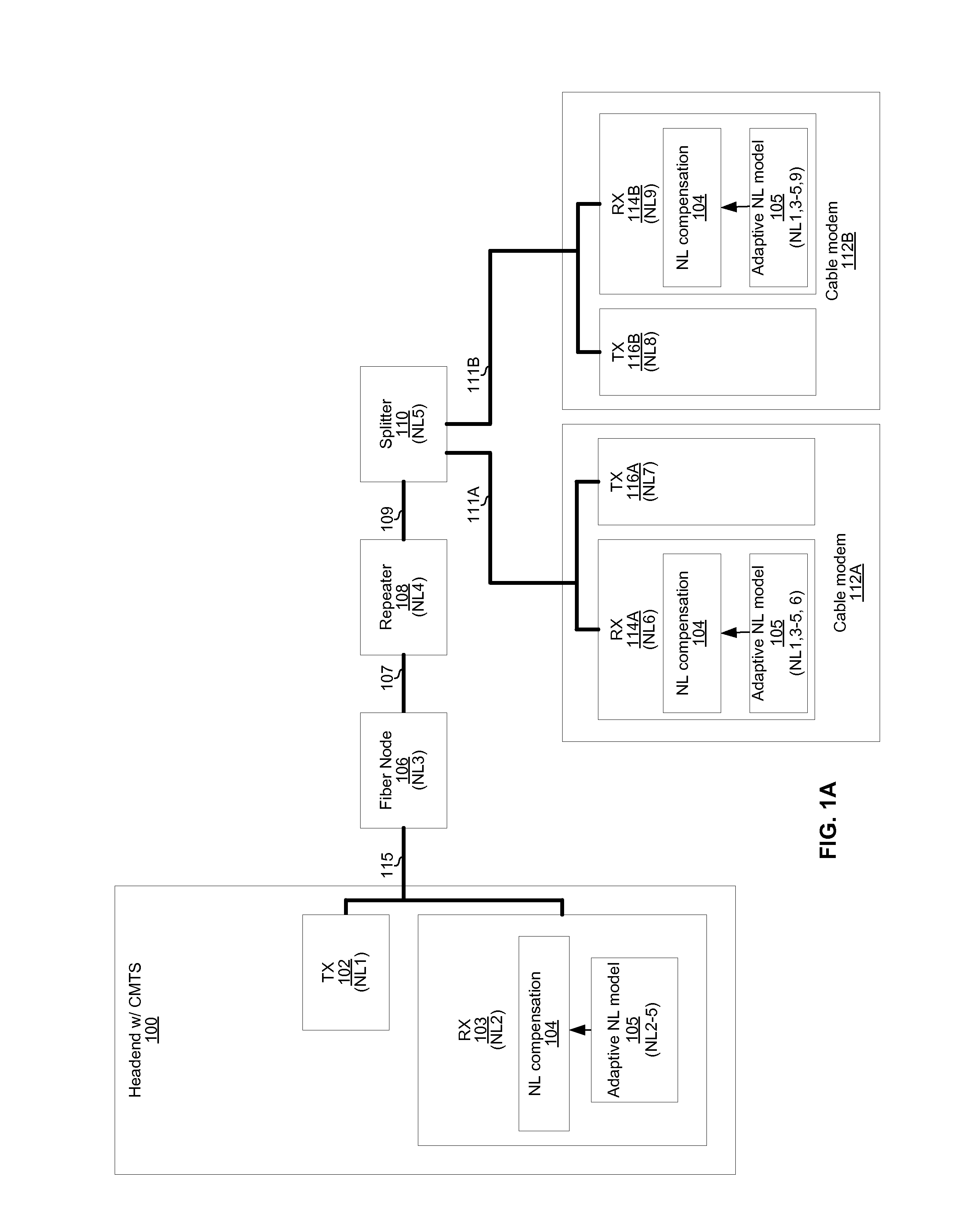

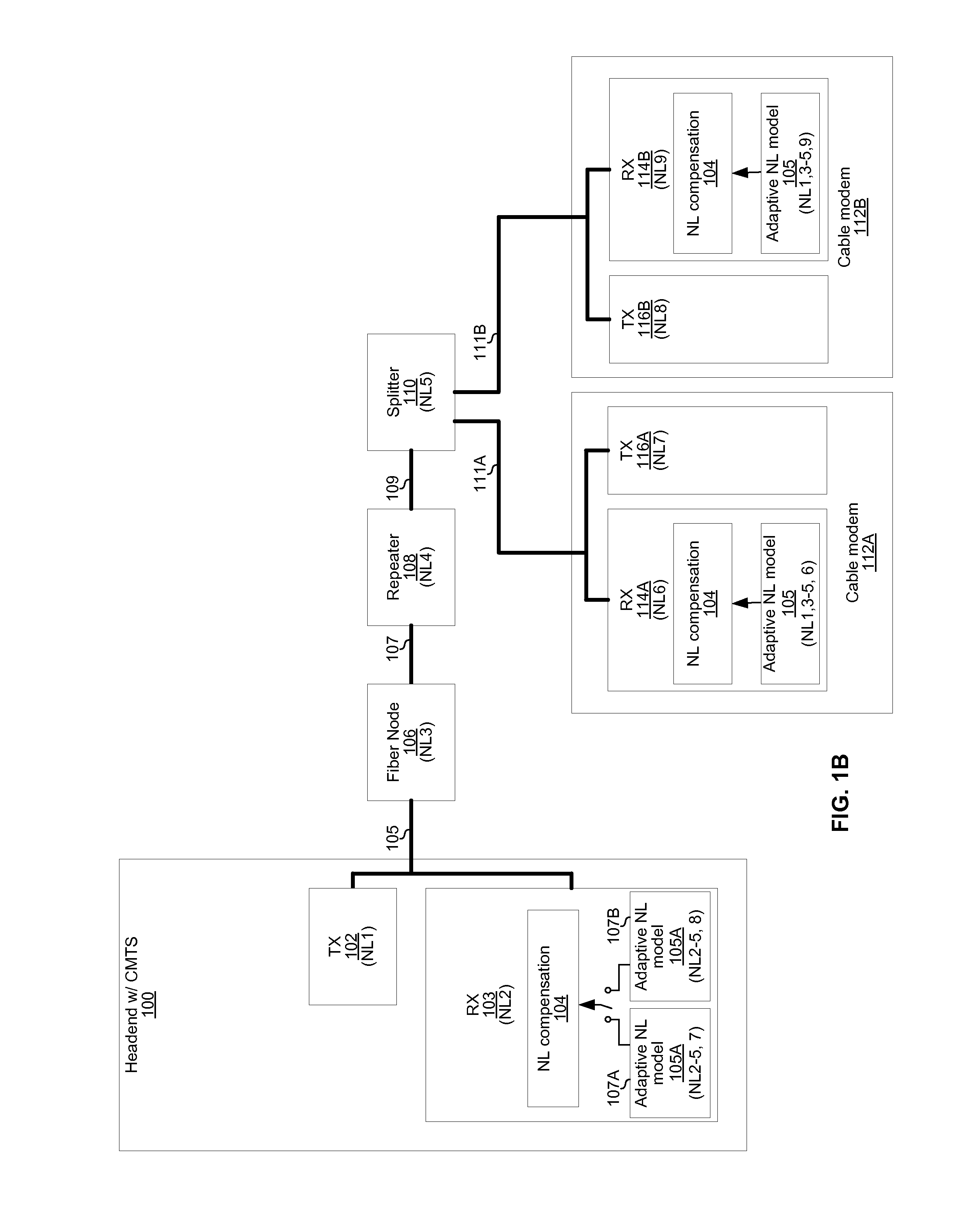

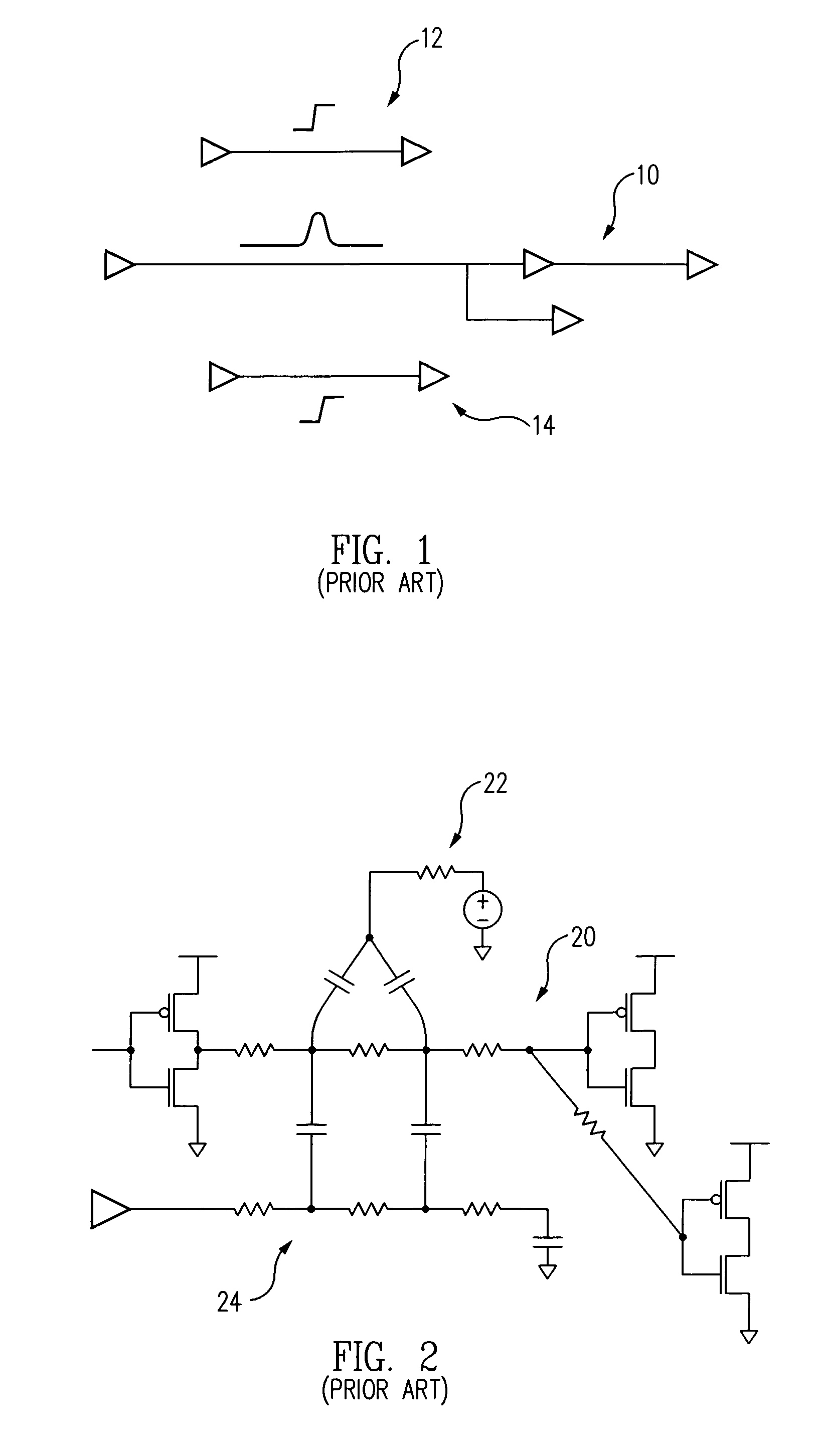

Communication methods and systems for nonlinear multi-user environments

Inactive · US20150207527A1 · Error prevention · Modulated-carrier systems · Nonlinear distortion · Multi user environment

An electronic receiver comprises a nonlinear distortion modeling circuit and a nonlinear distortion compensation circuit. The nonlinear distortion modeling circuit is operable to determine a plurality of sets of nonlinear distortion model parameter values, each set representing nonlinear distortion experienced by signals received by the electronic receiver from a respective one of a plurality of communication partners. The nonlinear distortion compensation circuit is operable to use the sets of nonlinear distortion model parameter values to process signals from the plurality of communication partners. Each set of nonlinear distortion model parameter values may comprise a plurality of values corresponding to a plurality of signal powers. The sets of nonlinear distortion model parameters may be stored in a lookup table indexed by a signal strength parameter.

Owner:AVAGO TECH INT SALES PTE LTD
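The per-partner lookup table described in the Avago abstract can be sketched as below. The class name `DistortionTable`, the dBm thresholds, and the two-coefficient parameter tuples are hypothetical; the rule assumed here selects the entry with the largest power threshold not exceeding the received power, clamping to the lowest entry.

```python
import bisect


class DistortionTable:
    """Per-partner nonlinear distortion model parameters indexed by signal strength."""

    def __init__(self):
        self._tables = {}

    def set_partner(self, partner, entries):
        """entries: iterable of (power_threshold_dbm, params) pairs, in any order."""
        entries = sorted(entries, key=lambda e: e[0])
        self._tables[partner] = ([e[0] for e in entries], [e[1] for e in entries])

    def lookup(self, partner, power_dbm):
        """Parameters for the largest threshold <= power_dbm (lowest entry if below all)."""
        thresholds, params = self._tables[partner]
        i = bisect.bisect_right(thresholds, power_dbm) - 1
        return params[max(i, 0)]


table = DistortionTable()
# Hypothetical (linear gain, cubic coefficient) pairs for partner "A" at three power levels.
table.set_partner("A", [(-80, (1.0, 0.0)), (-60, (1.0, -0.05)), (-40, (1.0, -0.12))])
print(table.lookup("A", -55))  # (1.0, -0.05)
```

Keeping one sorted threshold list per partner makes each lookup a binary search, which suits per-packet compensation.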

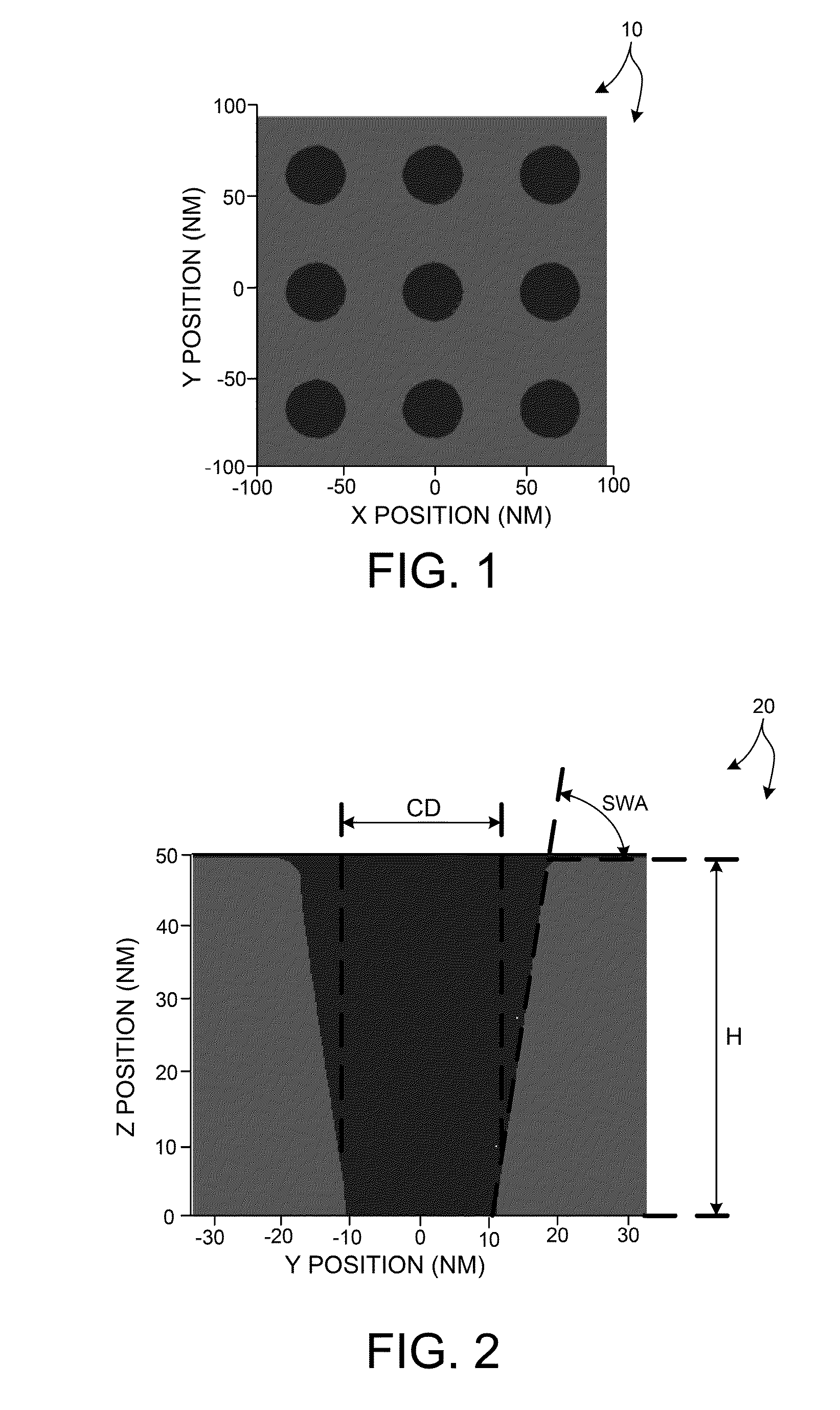

Integrated use of model-based metrology and a process model

Active · US20140172394A1 · Predictive result is improved · Simple process · Photomechanical apparatus · Design optimisation/simulation · Metrology · Model method

Methods and systems for performing measurements based on a measurement model integrating a metrology-based target model with a process-based target model. Systems employing integrated measurement models may be used to measure structural and material characteristics of one or more targets and may also be used to measure process parameter values. A process-based target model may be integrated with a metrology-based target model in a number of different ways. In some examples, constraints on ranges of values of metrology model parameters are determined based on the process-based target model. In some other examples, the integrated measurement model includes the metrology-based target model constrained by the process-based target model. In some other examples, one or more metrology model parameters are expressed in terms of other metrology model parameters based on the process model. In some other examples, process parameters are substituted into the metrology model.

Owner:KLA TENCOR TECH CORP

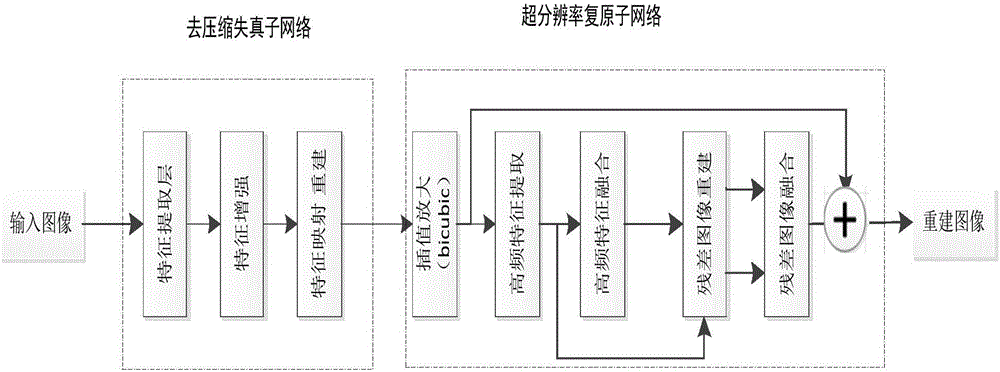

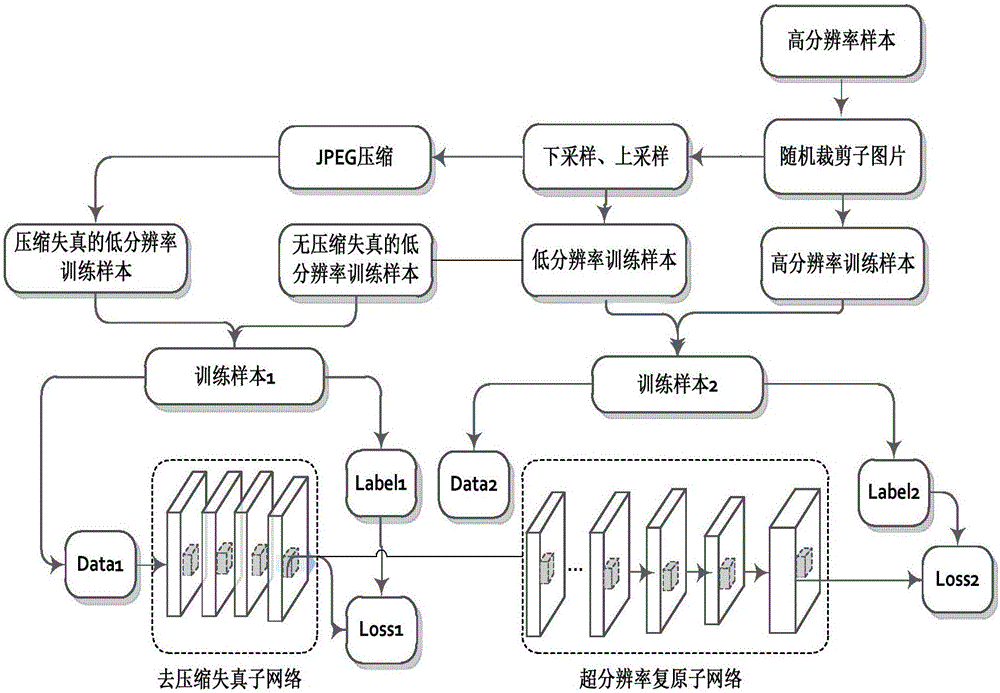

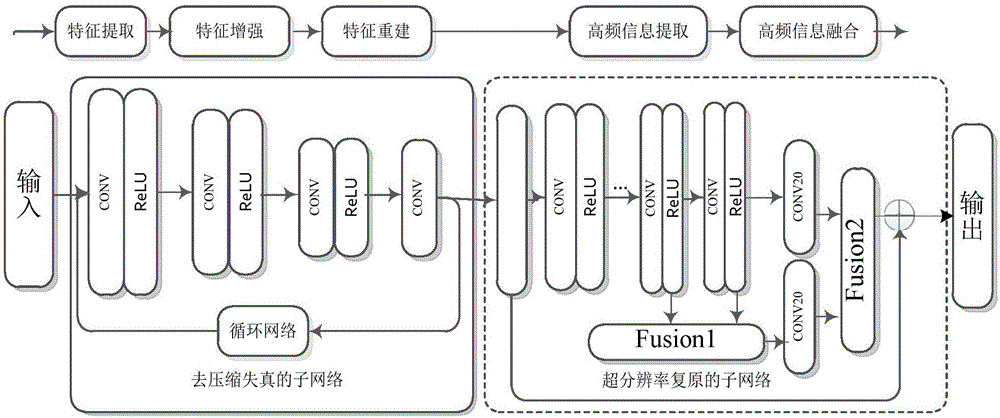

Compressed low-resolution image restoration method based on combined deep network

The present invention provides a compressed low-resolution image restoration method based on a combined deep network, belonging to the field of digital image/video signal processing. The method handles compression artifacts and downsampling jointly in order to restore degraded images that contain an arbitrary combination of compression artifacts and low resolution. The network provided by the invention comprises 28 convolutional layers arranged in a slender (deep, narrow) structure. Following the idea of transfer learning, a pre-trained model is fine-tuned so that training of the very deep network converges, which addresses the problems of vanishing and exploding gradients. The network model parameters are set with the aid of feature visualization, and end-to-end learning of the relation between degraded features and ideal features removes the need for pre-processing and post-processing. Finally, three important fusions are performed: fusion of feature maps of the same size, fusion of residual images, and fusion of the high-frequency information with the initial high-frequency estimate map. The method can thereby solve the super-resolution restoration problem for low-resolution images with compression artifacts.

Owner:BEIJING UNIV OF TECH

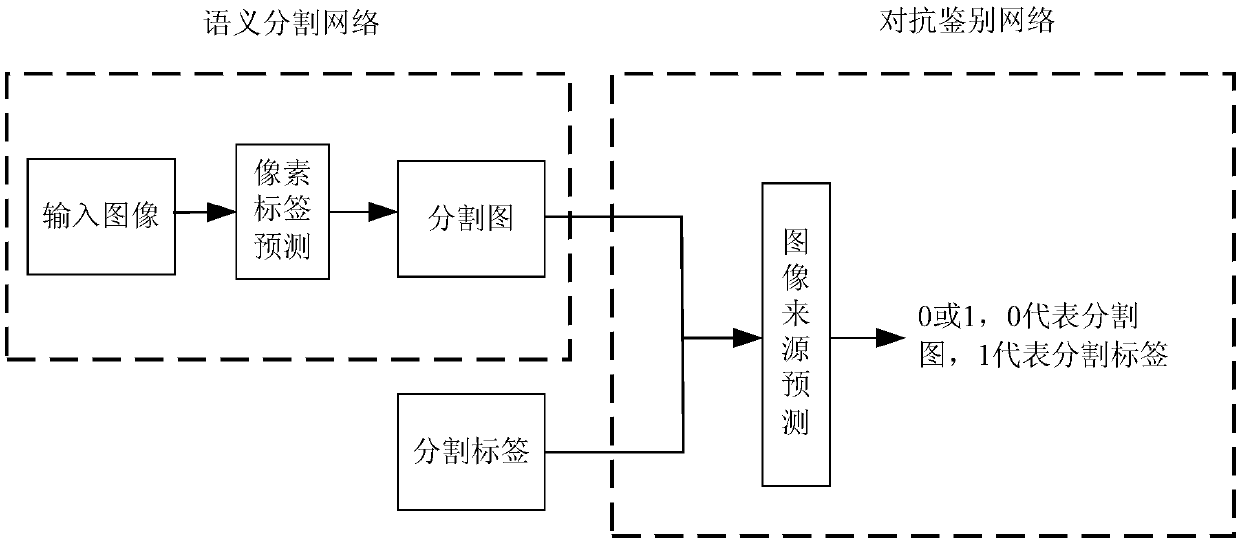

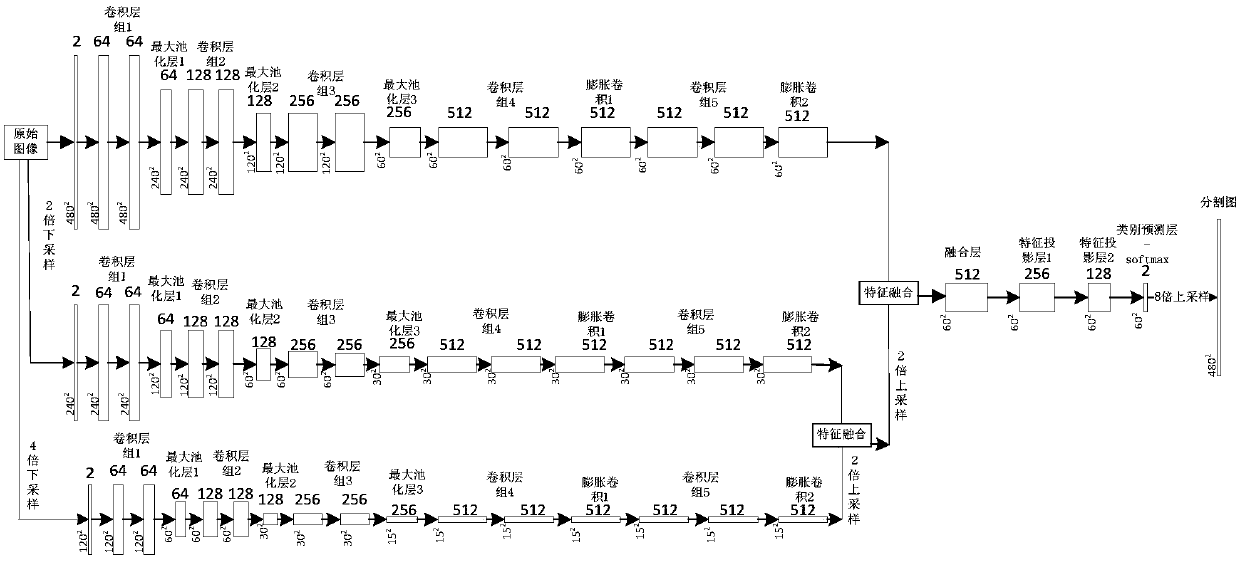

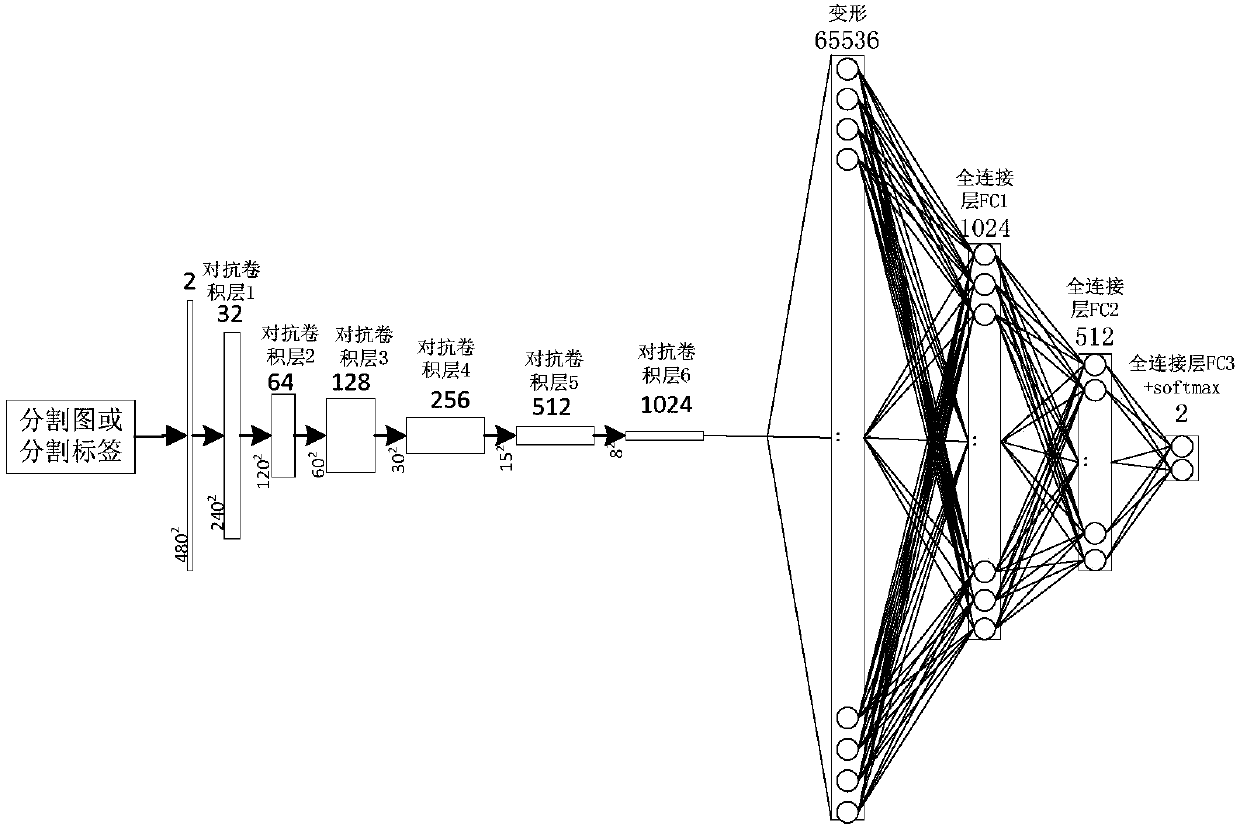

Multi-scale feature fusion ultrasonic image semantic segmentation method based on adversarial learning

Active · CN108268870A · Improve forecast accuracy · Few parameters · Neural architectures · Recognition of medical/anatomical patterns · Pattern recognition · Automatic segmentation

The invention provides a multi-scale feature fusion ultrasonic image semantic segmentation method based on adversarial learning. The method comprises the following steps: building a multi-scale feature fusion semantic segmentation network model, building an adversarial discrimination network model, carrying out adversarial training and model parameter learning, and automatically segmenting breast lesions. The method predicts pixel classes from the multi-scale features of input images at different resolutions, which improves pixel class label prediction accuracy; uses dilated convolution in place of some pooling layers to improve the resolution of the segmented image; and, by guiding the segmentation network with the adversarial discrimination network, makes the segmented image it generates indistinguishable from the segmentation label, ensuring good appearance and spatial continuity of the segmented image. The result is a more precise, high-resolution segmentation of breast lesions in ultrasound images.

Owner:CHONGQING NORMAL UNIVERSITY

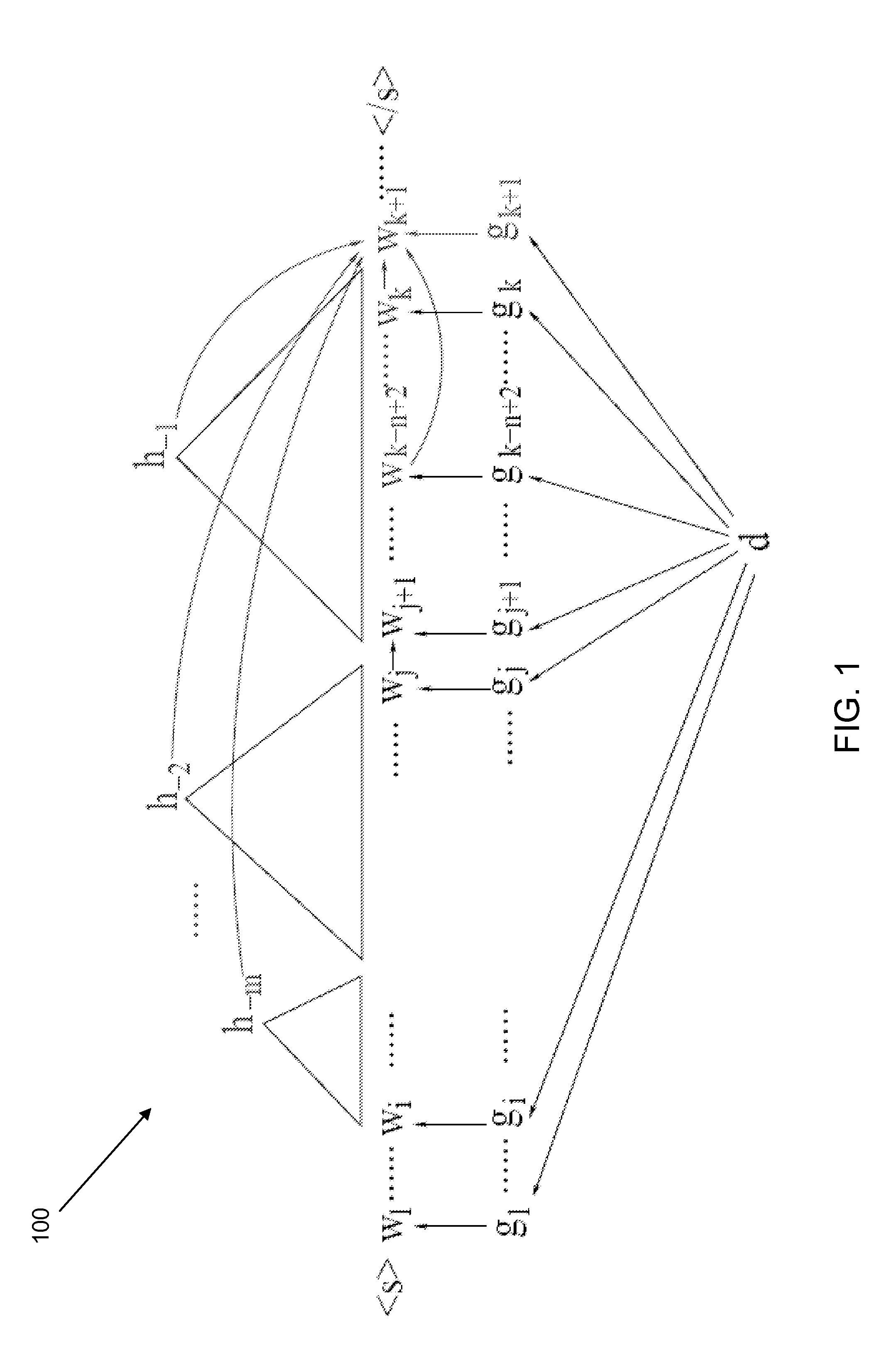

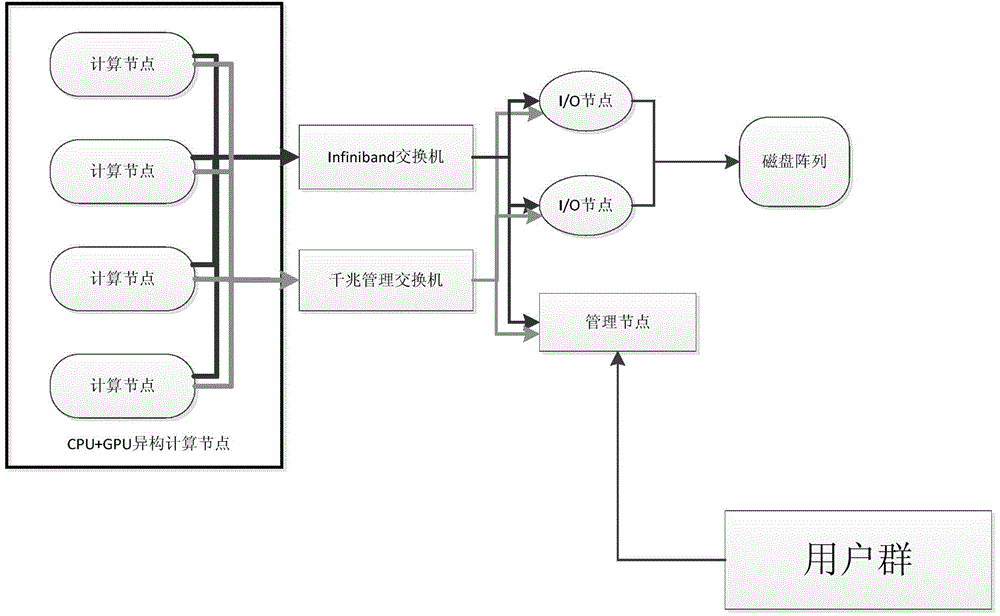

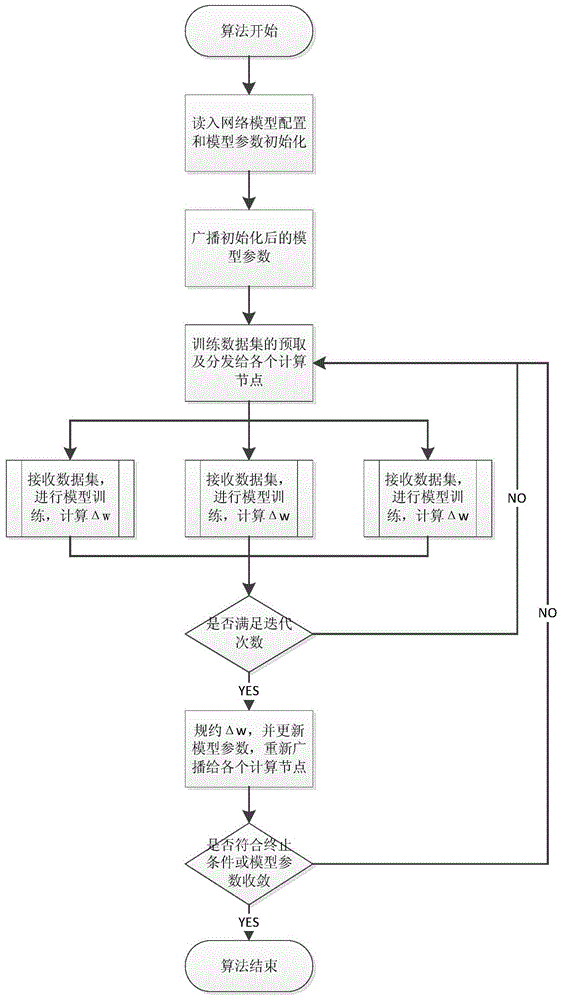

Convolution neural network parallel processing method based on large-scale high-performance cluster

Inactive · CN104463324A · Reduce training time · Improve computing efficiency · Biological neural network models · Concurrent instruction execution · NODAL · Algorithm

The invention discloses a convolutional neural network parallel processing method based on a large-scale high-performance cluster. The method comprises the following steps: (1) multiple copies of the network model to be trained are constructed, all with identical model parameters; the number of copies equals the number of nodes in the high-performance cluster, and each node holds one model copy. One node is selected as the main node, which is responsible for broadcasting and collecting the model parameters. (2) The training set is divided into multiple subsets; in each round, the training subsets are distributed to the sub-nodes other than the main node, which together compute parameter gradients. The gradient values are accumulated, the accumulated value is used to update the model parameters on the main node, and the updated model parameters are broadcast to all sub-nodes; this repeats until model training ends. The method achieves parallelization, improves the efficiency of model training, and shortens training time.

Owner:CHANGSHA MASHA ELECTRONICS TECH
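The broadcast, gradient, accumulate, update loop in the Changsha Masha abstract is synchronous data-parallel training. A minimal single-process sketch, assuming a one-weight linear model `y = w * x` with squared-error loss in place of a CNN, and simulating the worker nodes sequentially:

```python
def gradient(w, batch):
    """Gradient of the summed squared error sum((w*x - y)**2) with respect to w on one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch)


def train_data_parallel(data, num_workers, lr, steps):
    # Split the training set into one subset per worker (sub-node).
    subsets = [data[i::num_workers] for i in range(num_workers)]
    w_main = 0.0  # parameters held by the main node
    for _ in range(steps):
        # Broadcast: every worker receives an identical copy of the current parameters.
        replicas = [w_main] * num_workers
        # Each worker computes a gradient on its own subset (sequential here, parallel in practice).
        grads = [gradient(w, subset) for w, subset in zip(replicas, subsets)]
        # The main node accumulates the gradients and updates its parameters.
        w_main -= lr * sum(grads) / len(data)
    return w_main


data = [(x, 3.0 * x) for x in range(1, 9)]  # noise-free samples of y = 3x
print(round(train_data_parallel(data, num_workers=4, lr=0.01, steps=200), 4))  # 3.0
```

Because the accumulated gradient equals the full-batch gradient, this produces the same updates as single-node training; the speed-up in a real cluster comes from computing the per-subset gradients concurrently.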

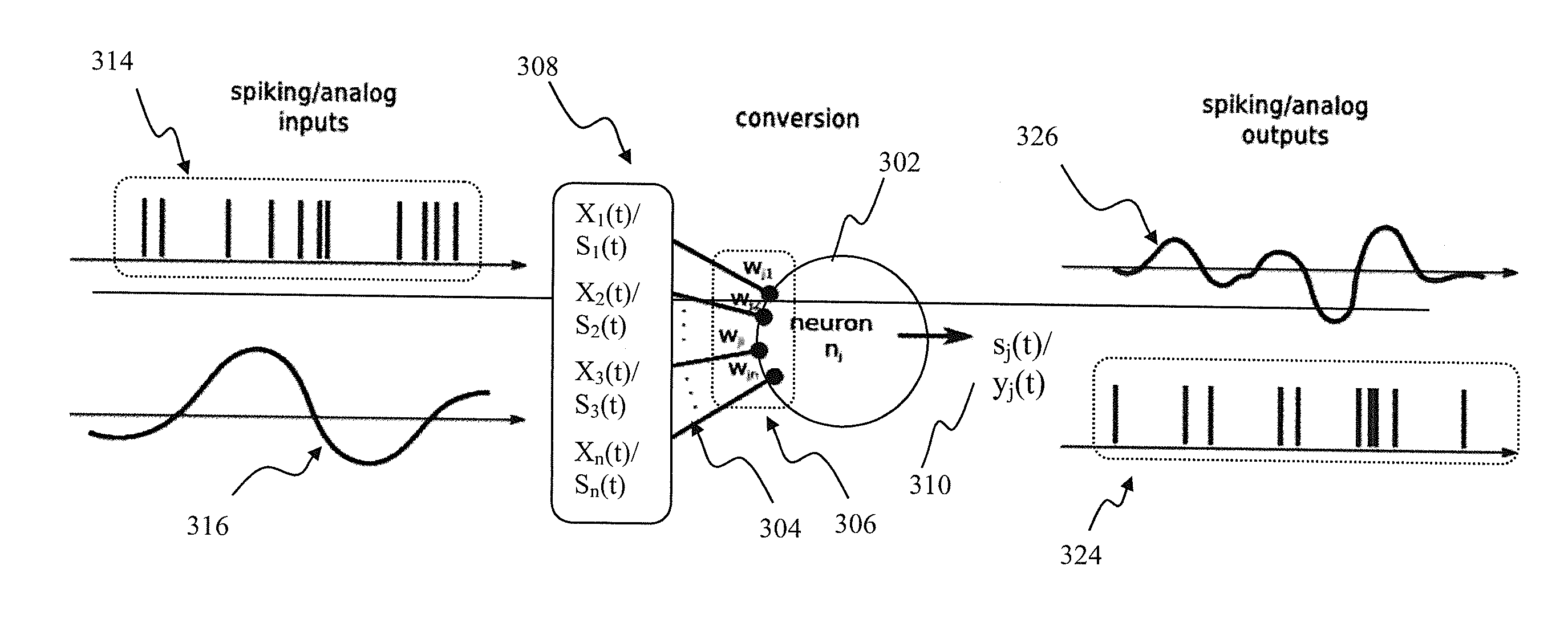

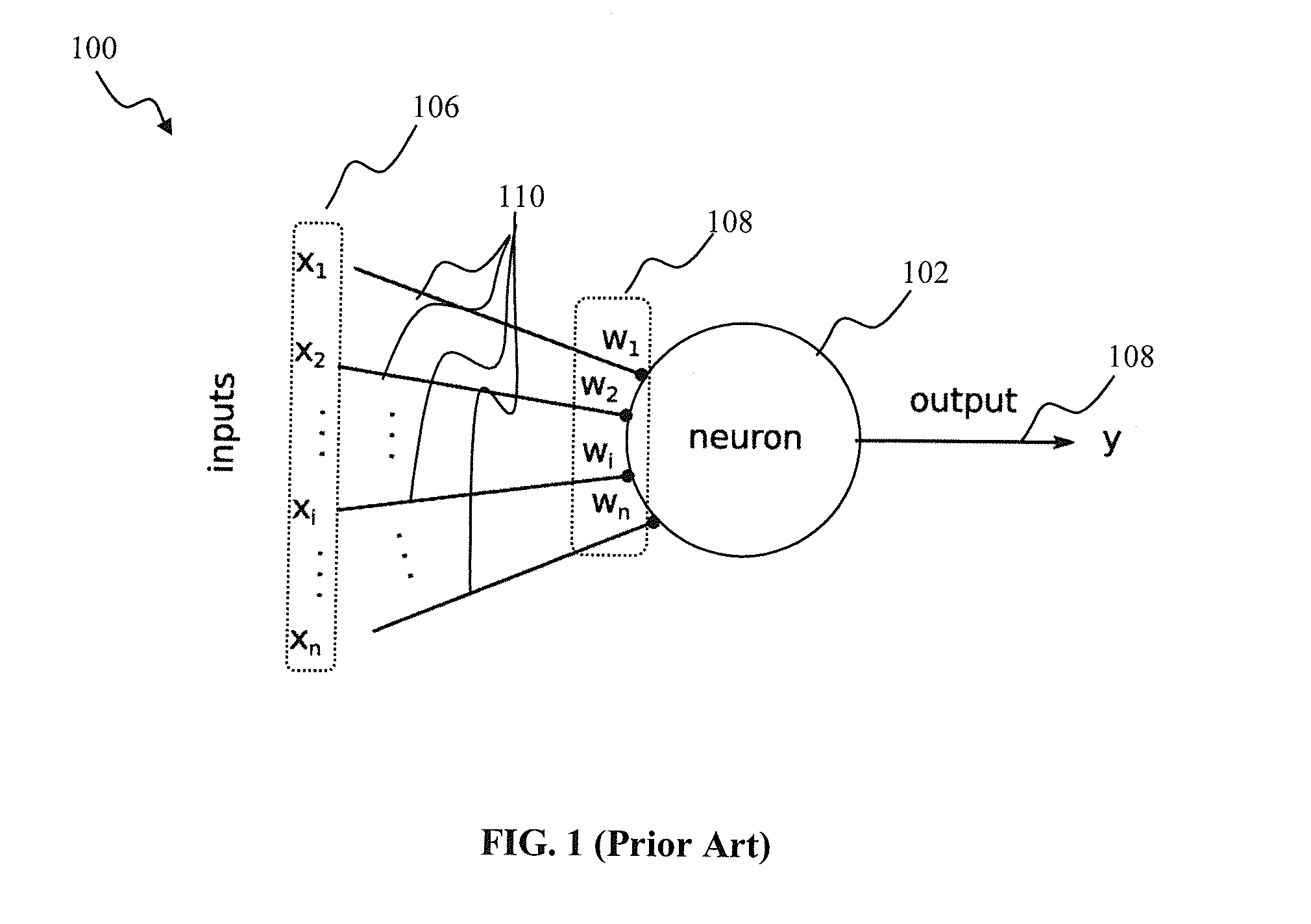

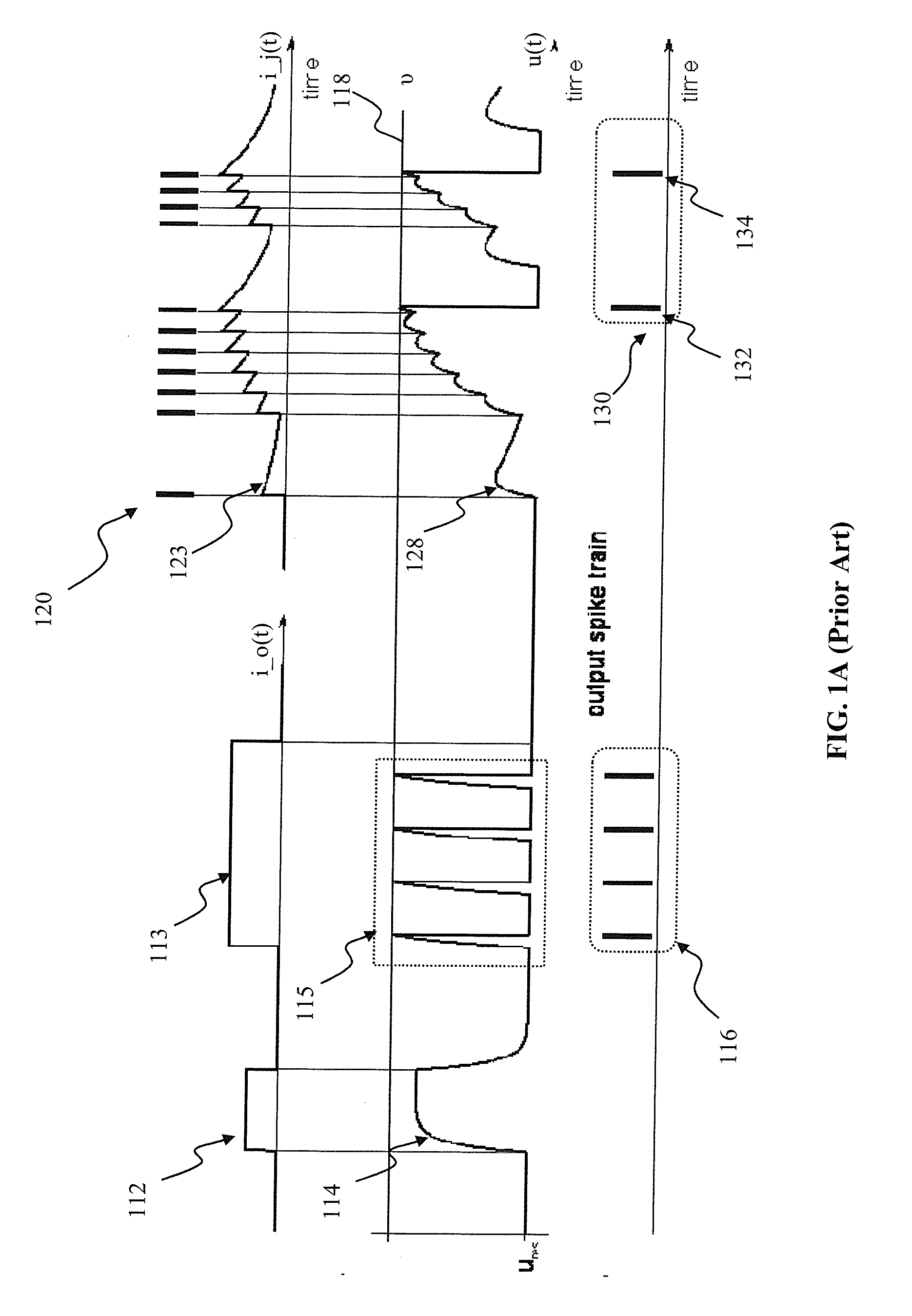

Neural network apparatus and methods for signal conversion

Inactive · US20130151450A1 · Digital computer details · Neural architectures · Nerve network · Spiking neural network

Apparatus and methods for universal node design implementing a universal learning rule in a mixed signal spiking neural network. In one implementation, at one instance, the node apparatus, operable according to the parameterized universal learning model, receives a mixture of analog and spiking inputs, and generates a spiking output based on the model parameter for that node that is selected by the parameterized model for that specific mix of inputs. At another instance, the same node receives a different mix of inputs, that also may comprise only analog or only spiking inputs and generates an analog output based on a different value of the node parameter that is selected by the model for the second mix of inputs. In another implementation, the node apparatus may change its output from analog to spiking responsive to a training input for the same inputs.

Owner:PONULAK FILIP

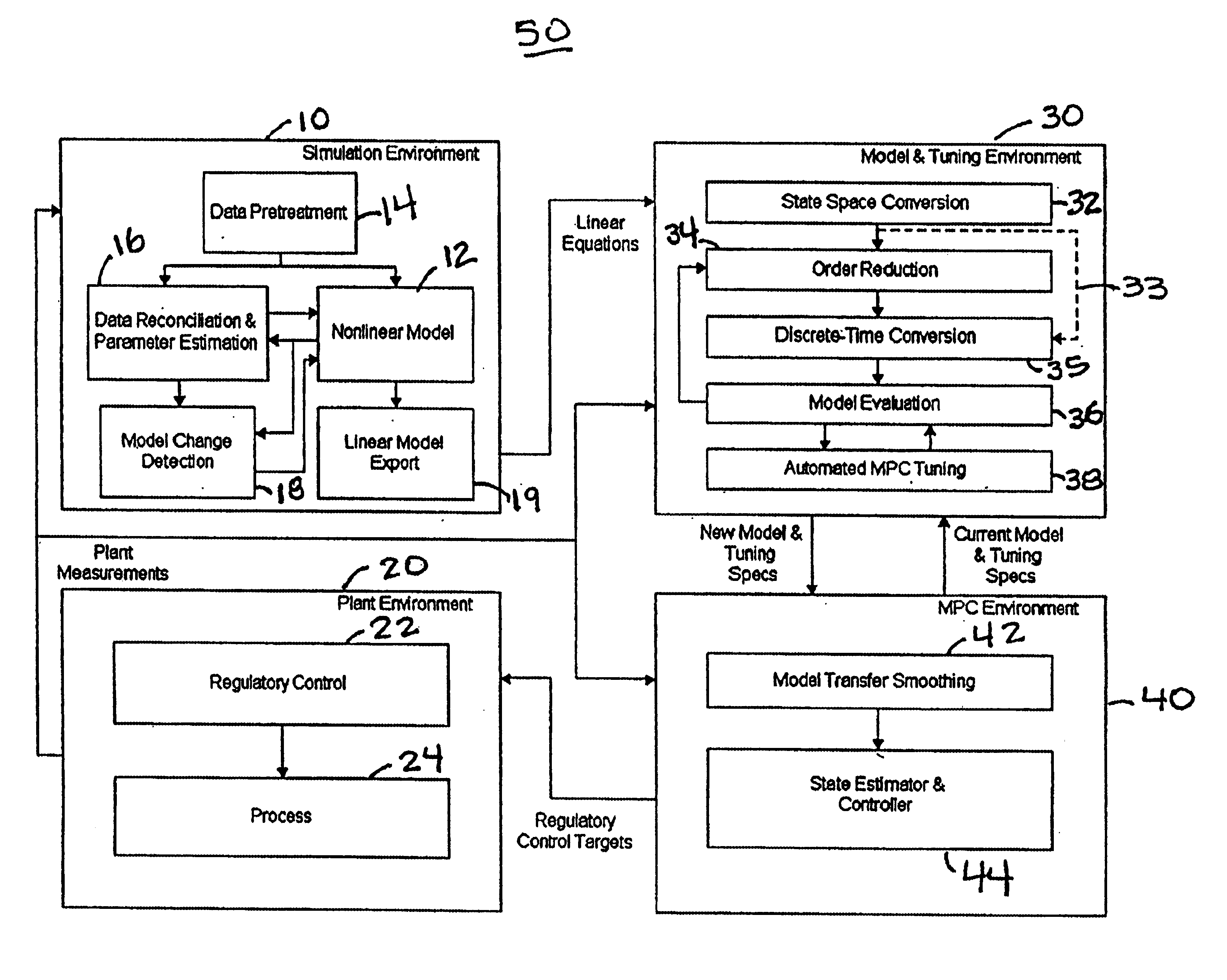

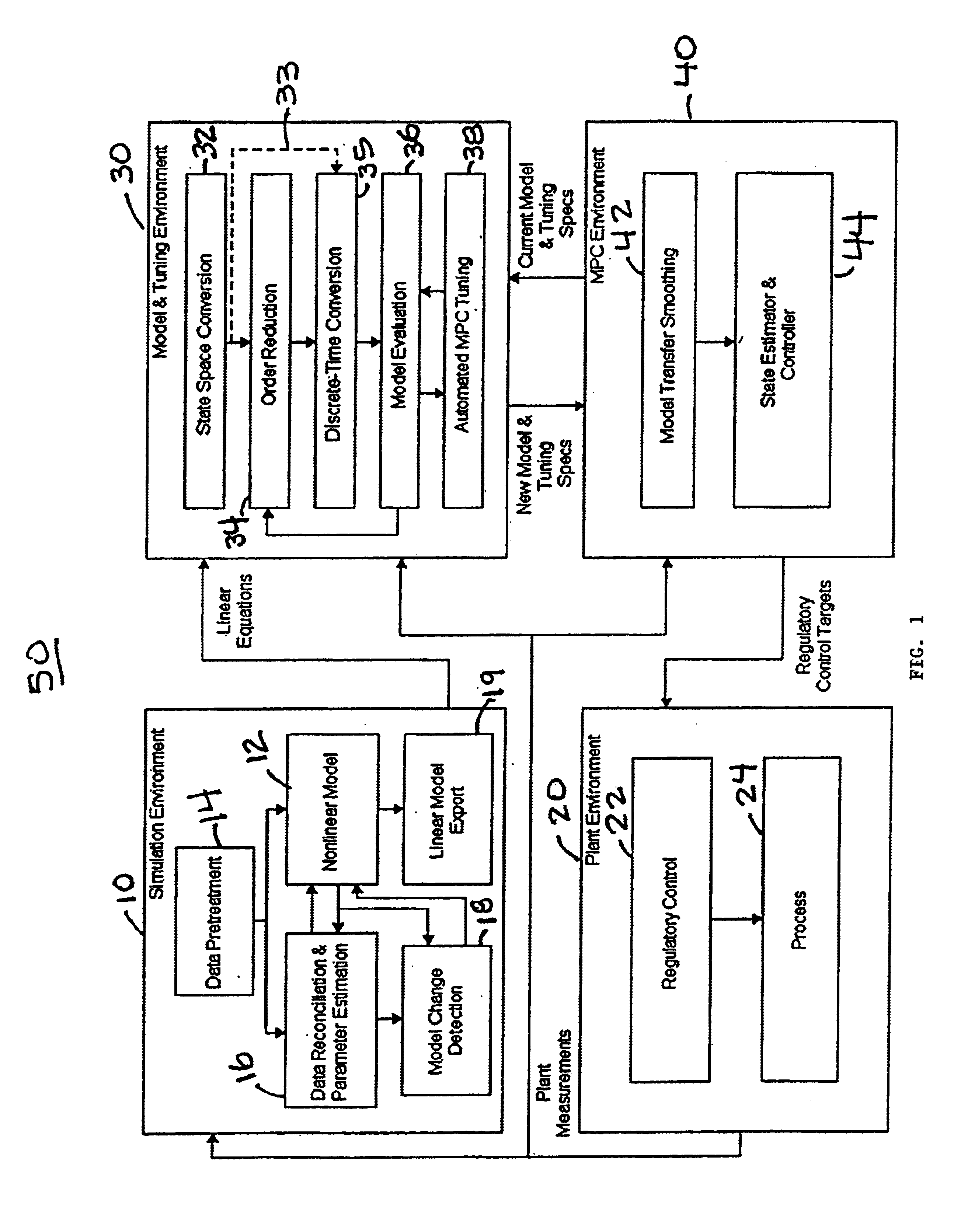

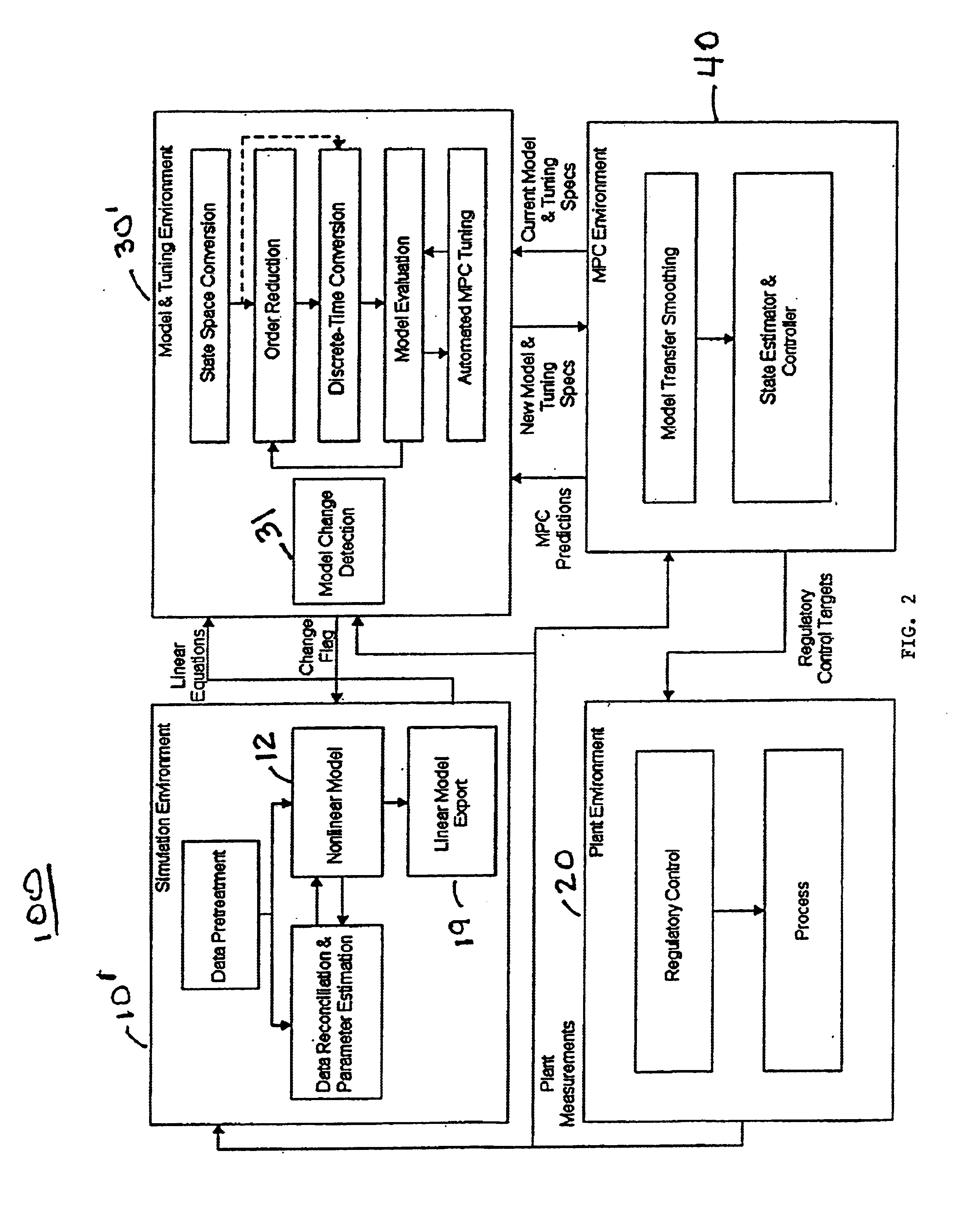

System and methodology and adaptive, linear model predictive control based on rigorous, nonlinear process model

Inactive · US6826521B1 · Analogue computers for chemical processes · Adaptive control · Software system · Predictive controller

A methodology for process modeling and control and the software system implementation of this methodology, which includes a rigorous, nonlinear process simulation model, the generation of appropriate linear models derived from the rigorous model, and an adaptive, linear model predictive controller (MPC) that utilizes the derived linear models. A state space, multivariable, model predictive controller (MPC) is the preferred choice for the MPC since the nonlinear simulation model is analytically translated into a set of linear state equations and thus simplifies the translation of the linearized simulation equations to the modeling format required by the controller. Various other MPC modeling forms such as transfer functions, impulse response coefficients, and step response coefficients may also be used. The methodology is very general in that any model predictive controller using one of the above modeling forms can be used as the controller. The methodology also includes various modules that improve reliability and performance. For example, there is a data pretreatment module used to pre-process the plant measurements for gross error detection. A data reconciliation and parameter estimation module is then used to correct for instrumentation errors and to adjust model parameters based on current operating conditions. The full-order state space model can be reduced by the order reduction module to obtain fewer states for the controller model. Automated MPC tuning is also provided to improve control performance.

Owner:ABB AUTOMATION INC

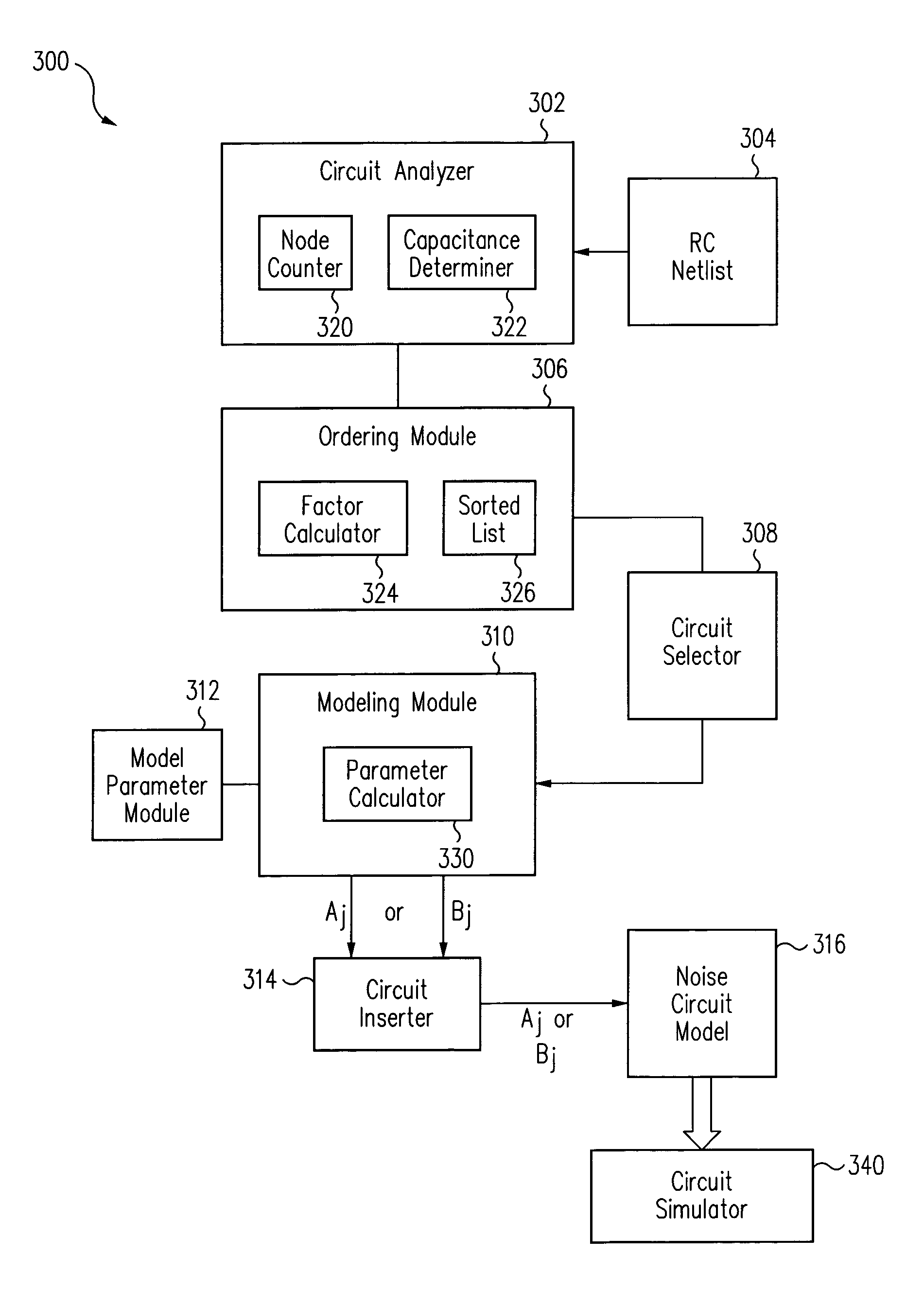

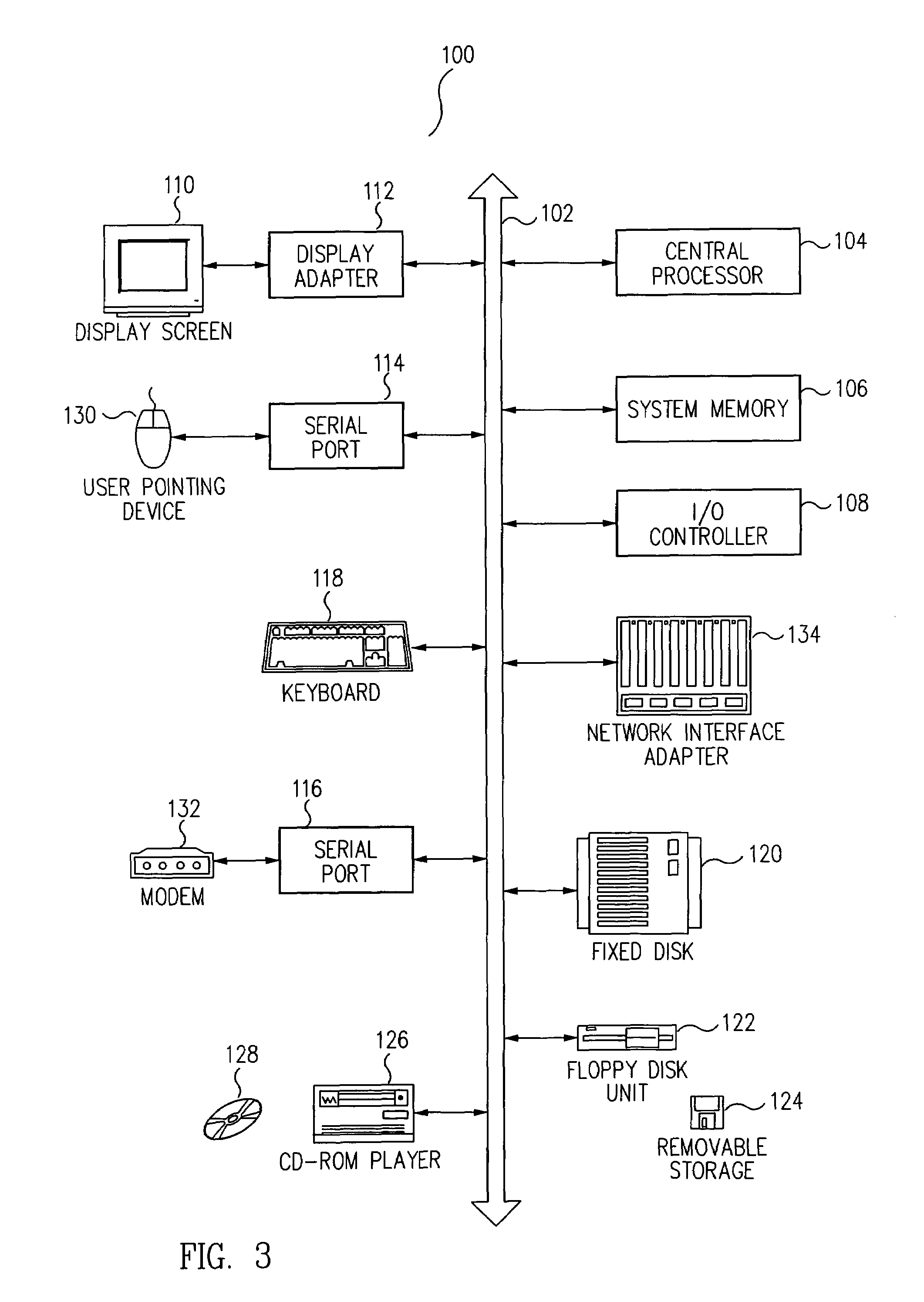

Method and apparatus for generating circuit model for static noise analysis

Active · US7181716B1 · Computer aided design · Special data processing applications · Attacker model · Model parameters

Owner:ORACLE INT CORP

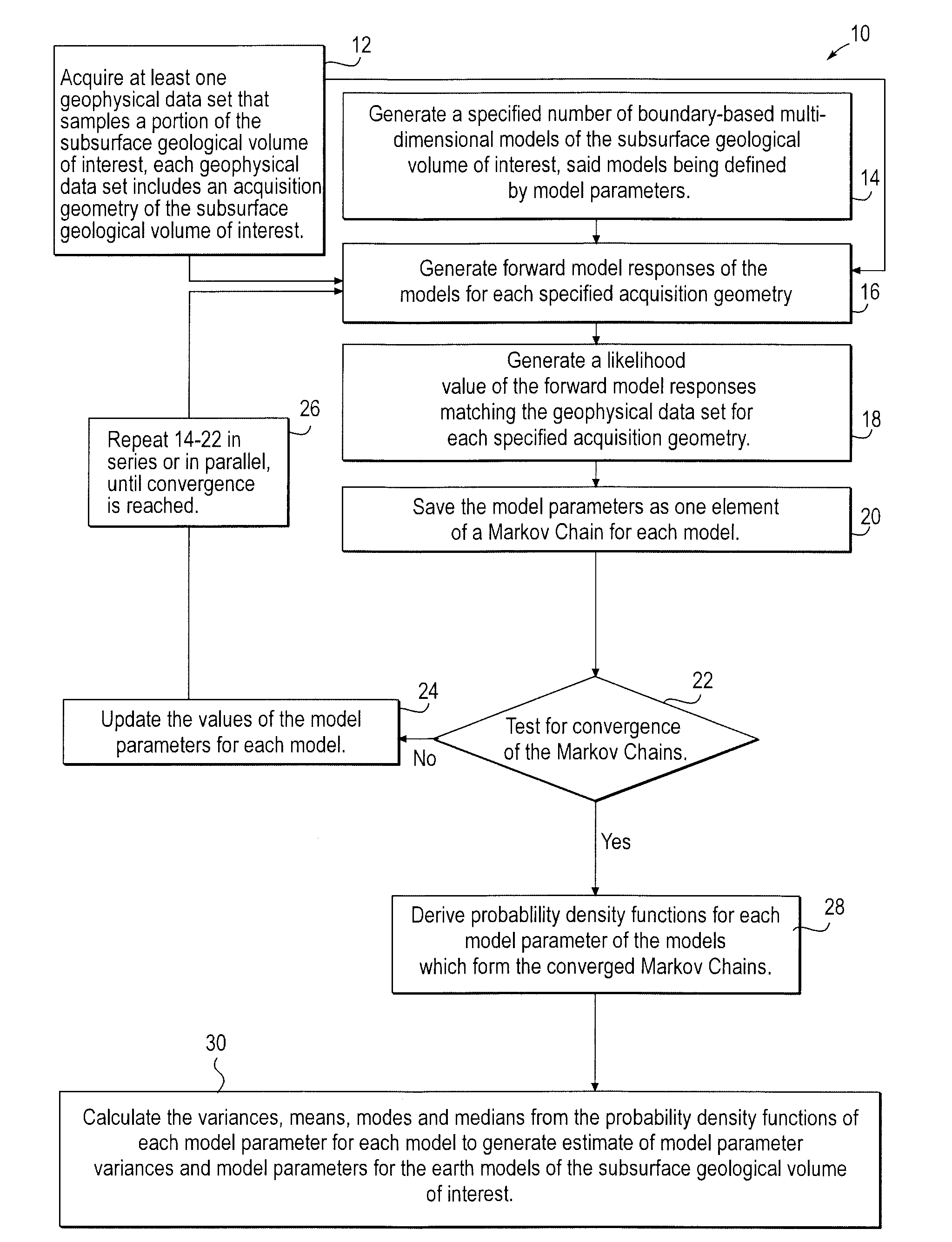

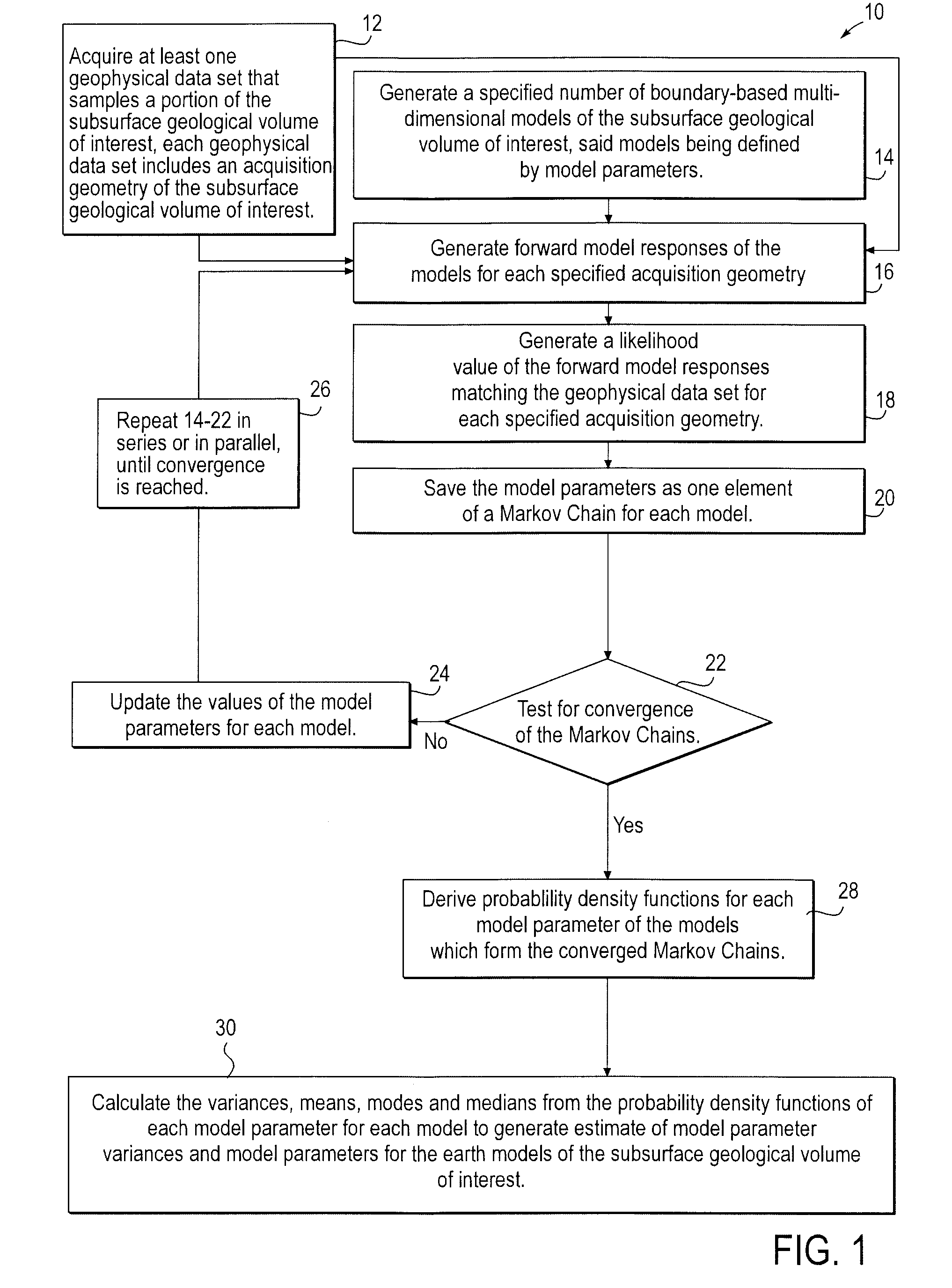

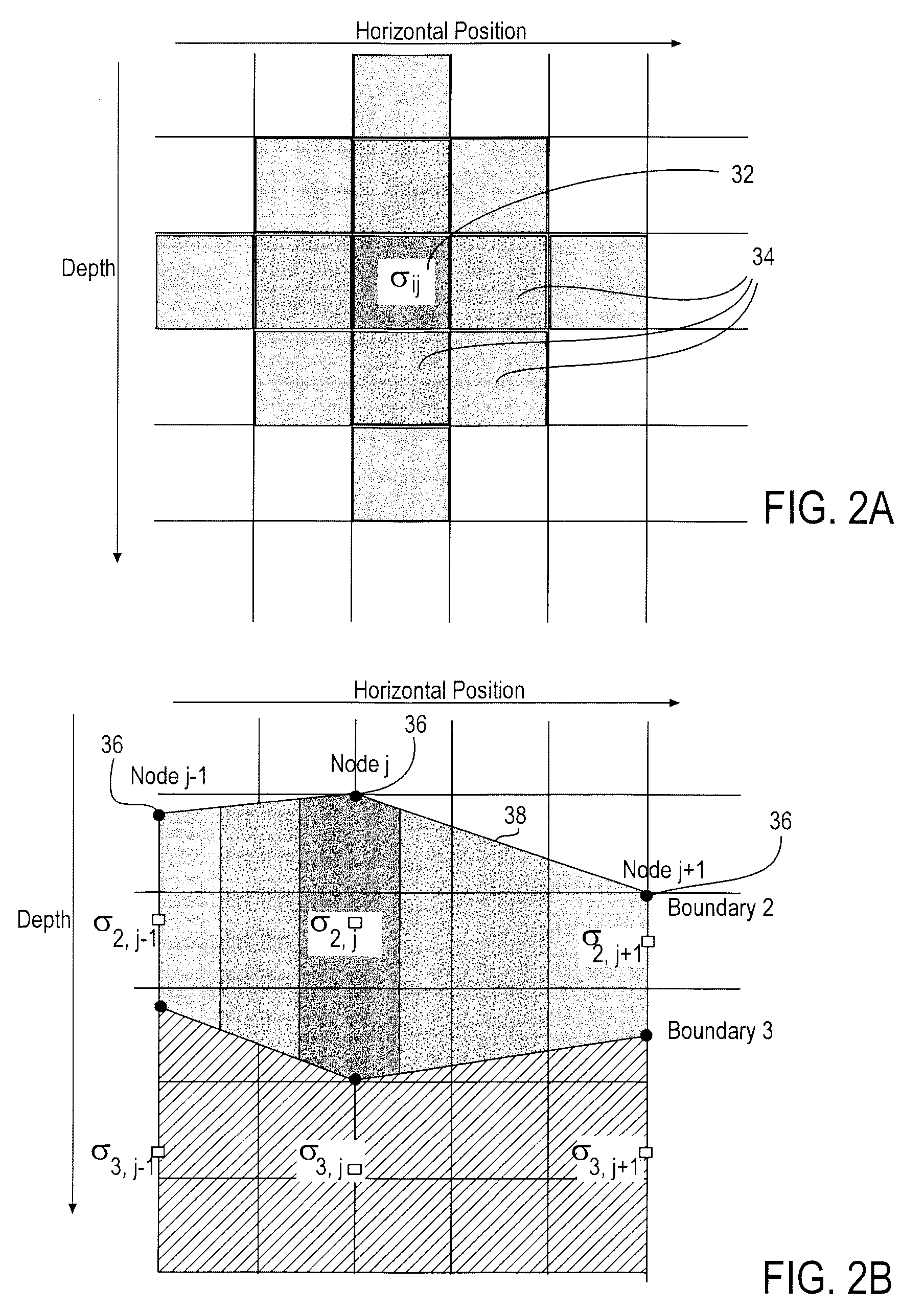

Stochastic inversion of geophysical data for estimating earth model parameters

A computer implemented stochastic inversion method for estimating model parameters of an earth model. In an embodiment, the method utilizes a sampling-based stochastic technique to determine the probability density functions (PDF) of the model parameters that define a boundary-based multi-dimensional model of the subsurface. In some embodiments a sampling technique known as Markov Chain Monte Carlo (MCMC) is utilized. MCMC techniques fall into the class of “importance sampling” techniques, in which the posterior probability distribution is sampled in proportion to the model's ability to fit or match the specified acquisition geometry. In another embodiment, the inversion includes the joint inversion of multiple geophysical data sets. Embodiments of the invention also relate to a computer system configured to perform a method for estimating model parameters for accurate interpretation of the earth's subsurface.

Owner:CHEVRON USA INC
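The Chevron abstract estimates probability density functions of model parameters with Markov Chain Monte Carlo. A minimal random-walk Metropolis sketch for a single parameter, assuming a uniform prior on `[lo, hi]` and a Gaussian likelihood for repeated noisy observations `data = theta + noise`; a real earth-model inversion would replace this likelihood with a forward geophysical simulation and sample many parameters at once.

```python
import math
import random


def log_posterior(theta, data, sigma, lo, hi):
    """Uniform prior on [lo, hi] plus a Gaussian likelihood for data = theta + noise."""
    if not lo <= theta <= hi:
        return -math.inf
    return -sum((d - theta) ** 2 for d in data) / (2 * sigma ** 2)


def metropolis(data, sigma, lo, hi, n_samples, step, seed=0):
    """Random-walk Metropolis sampler for a single model parameter theta."""
    rng = random.Random(seed)
    theta = (lo + hi) / 2
    lp = log_posterior(theta, data, sigma, lo, hi)
    samples = []
    for _ in range(n_samples):
        proposal = theta + rng.gauss(0.0, step)
        lp_new = log_posterior(proposal, data, sigma, lo, hi)
        # Accept with probability min(1, posterior ratio); otherwise keep the current value.
        if rng.random() < math.exp(min(0.0, lp_new - lp)):
            theta, lp = proposal, lp_new
        samples.append(theta)
    return samples


data = [2.9, 3.1, 3.0, 2.95, 3.05]  # hypothetical repeated observations of one property
samples = metropolis(data, sigma=0.1, lo=0.0, hi=10.0, n_samples=20000, step=0.05)
kept = samples[2000:]  # discard burn-in while the chain moves away from its start
mean = sum(kept) / len(kept)
sd = math.sqrt(sum((s - mean) ** 2 for s in kept) / len(kept))
print(f"posterior mean ~ {mean:.2f}, posterior sd ~ {sd:.3f}")
```

A histogram of `kept` approximates the posterior PDF of the parameter, which is the quantity the abstract says the method determines.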

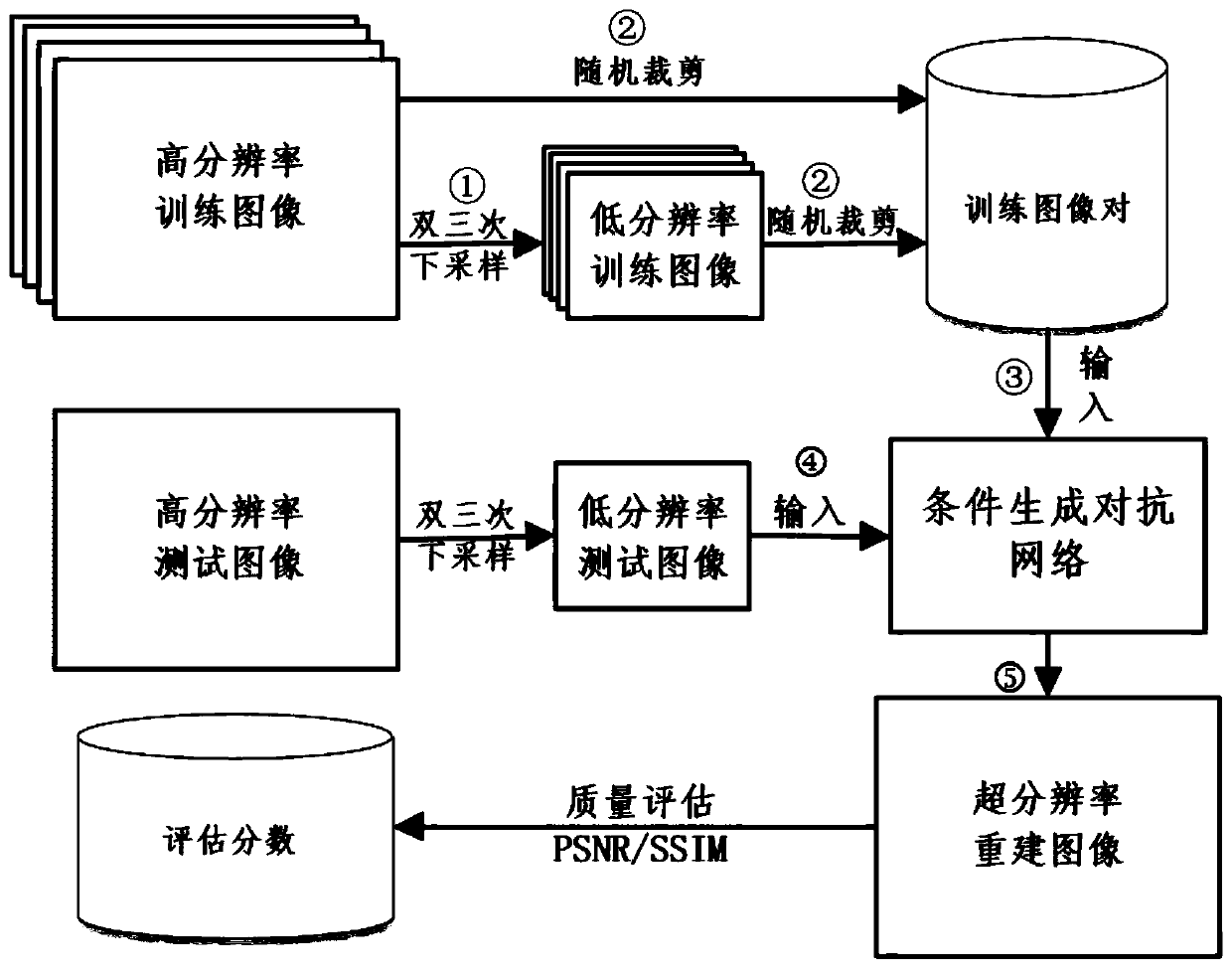

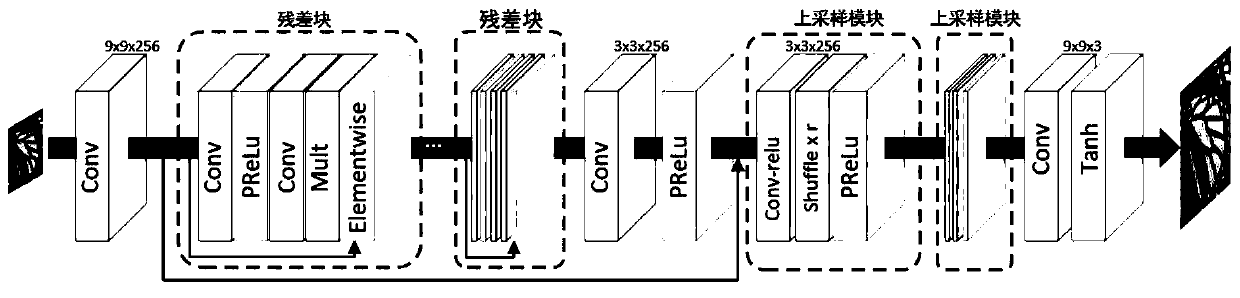

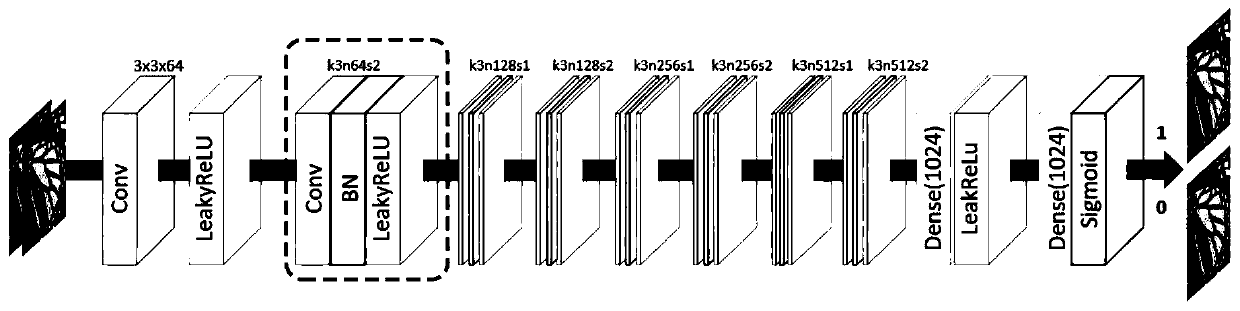

Single image super-resolution reconstruction method based on conditional generative adversarial network

Active · CN110136063A · Improve discrimination accuracy · Improve performance · Geometric image transformation · Neural architectures · Generative adversarial network · Reconstruction method

The invention discloses a single image super-resolution reconstruction method based on a conditional generative adversarial network. A judgment condition, namely the original real image, is added to the discriminator network of the generative adversarial network. A deep residual learning module is added to the generator network to learn high-frequency information and alleviate the vanishing-gradient problem. A single low-resolution image to be reconstructed is input into the pre-trained conditional generative adversarial network, and super-resolution reconstruction is performed to obtain a reconstructed high-resolution image. The learning steps of the conditional generative adversarial network model include: inputting the high-resolution training set and the low-resolution training set into the conditional generative adversarial network model, using pre-trained model parameters as the initialization parameters for training, judging the convergence of the whole network through a loss function, obtaining the finally trained conditional generative adversarial network model when the loss function converges, and storing the model parameters.

Owner:NANJING UNIV OF INFORMATION SCI & TECH

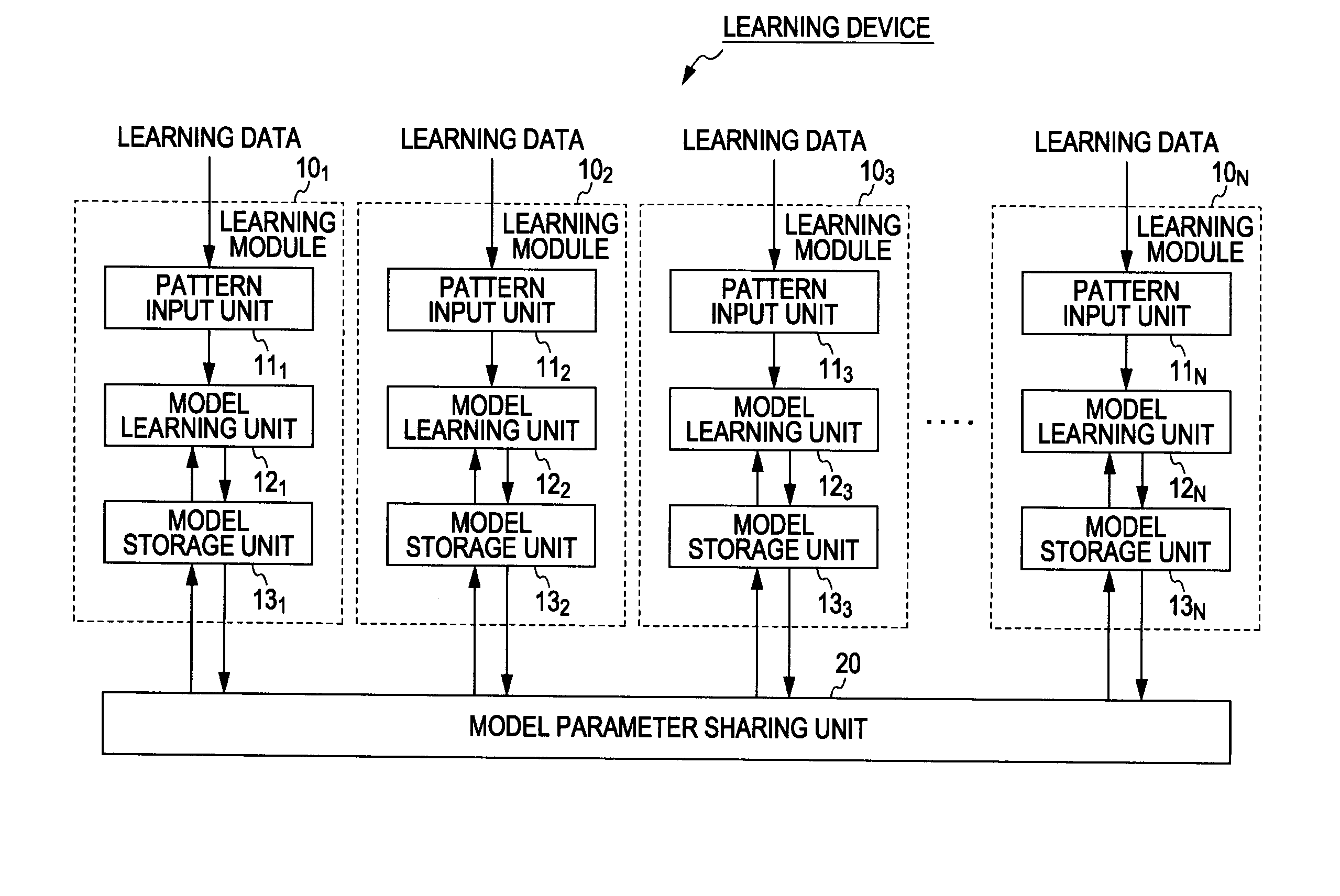

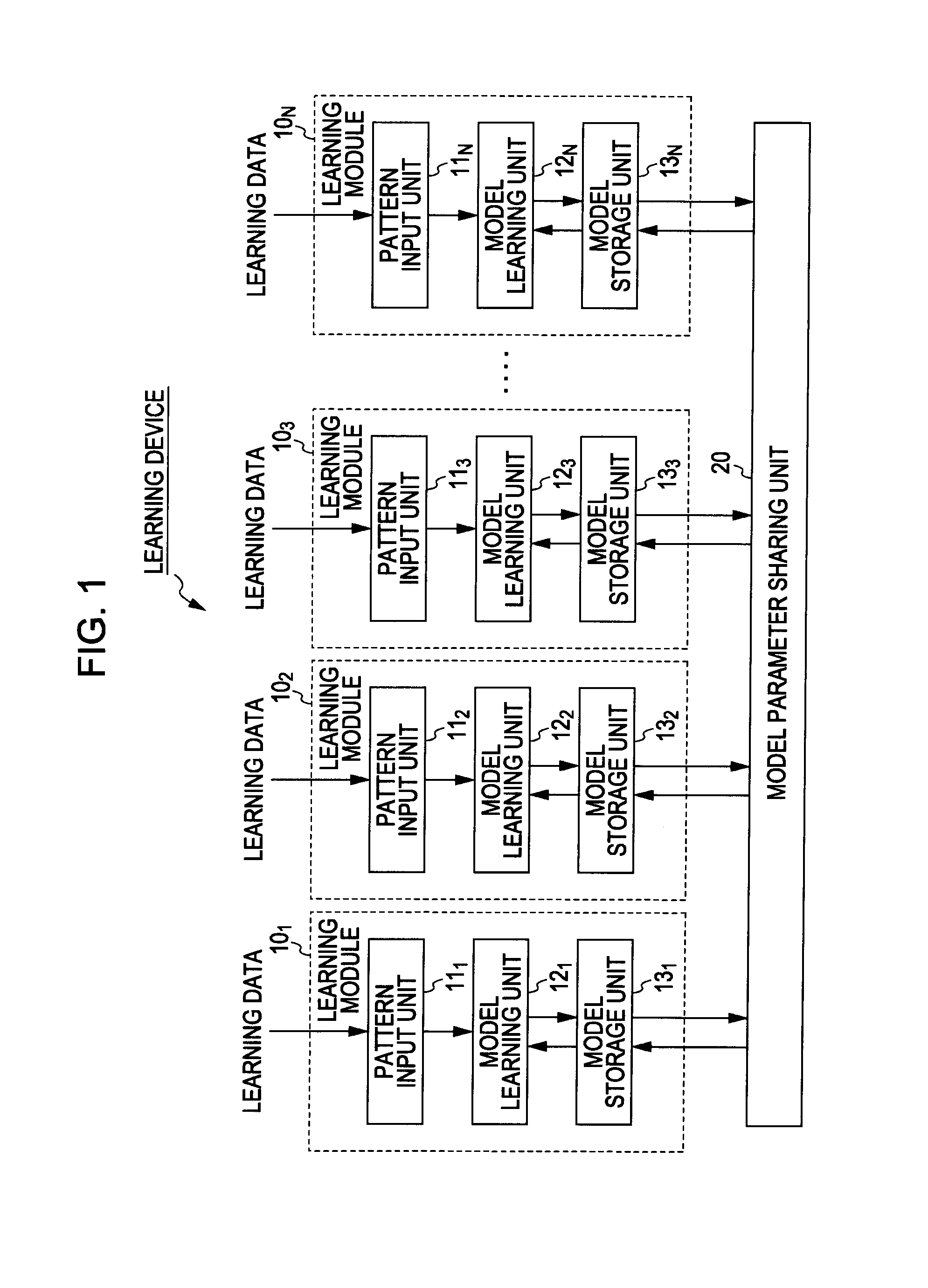

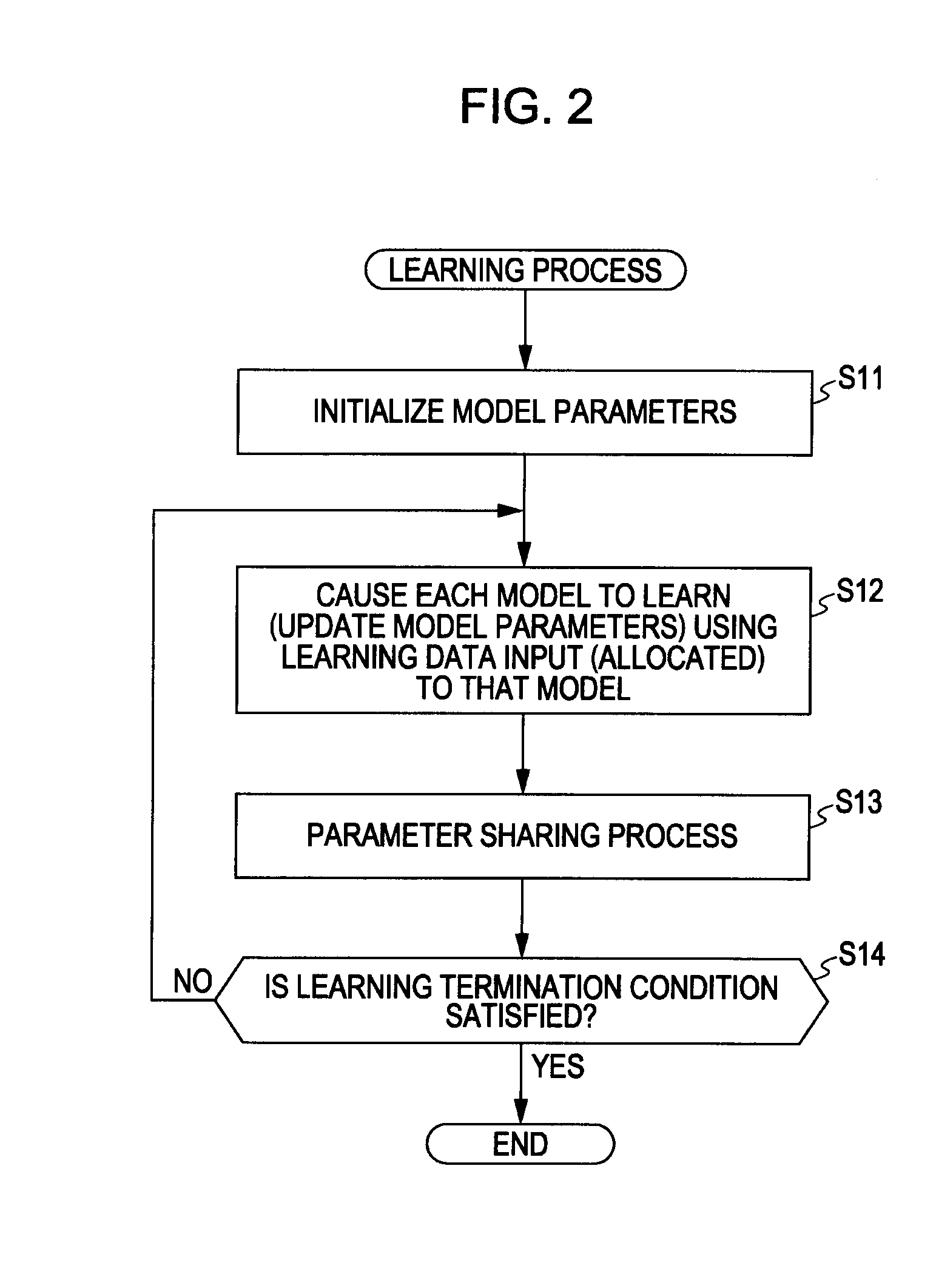

Learning Device, Learning Method, and Program

Inactive · US20100010948A1 · Error minimization · Digital computer details · Digital data · Pattern recognition · Pattern learning

A learning device includes: a plurality of learning modules, each of which performs update learning to update a plurality of model parameters of a pattern learning model that learns a pattern using input data; model parameter sharing means for causing two or more learning modules from among the plurality of learning modules to share the model parameters; and sharing strength updating means for updating sharing strengths between the learning modules so as to minimize learning errors when the plurality of model parameters are updated by the update learning.

Owner:SONY CORP

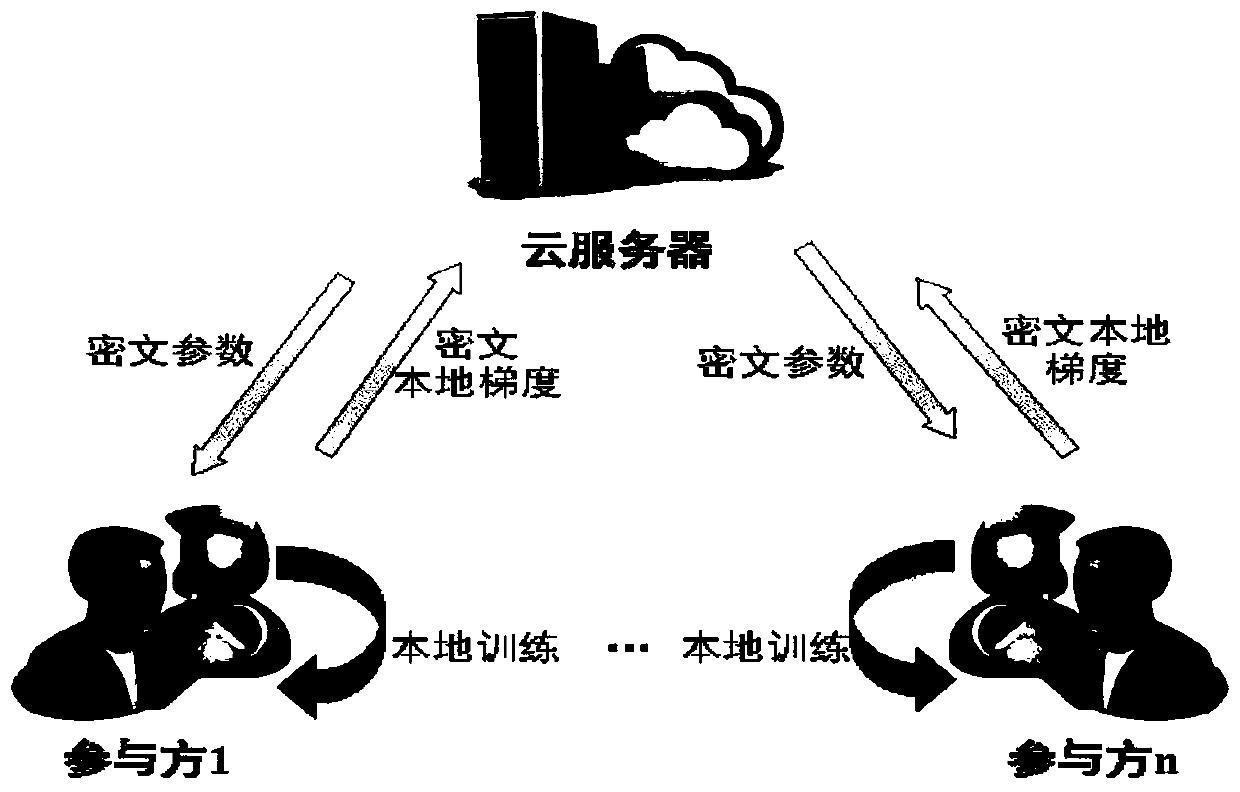

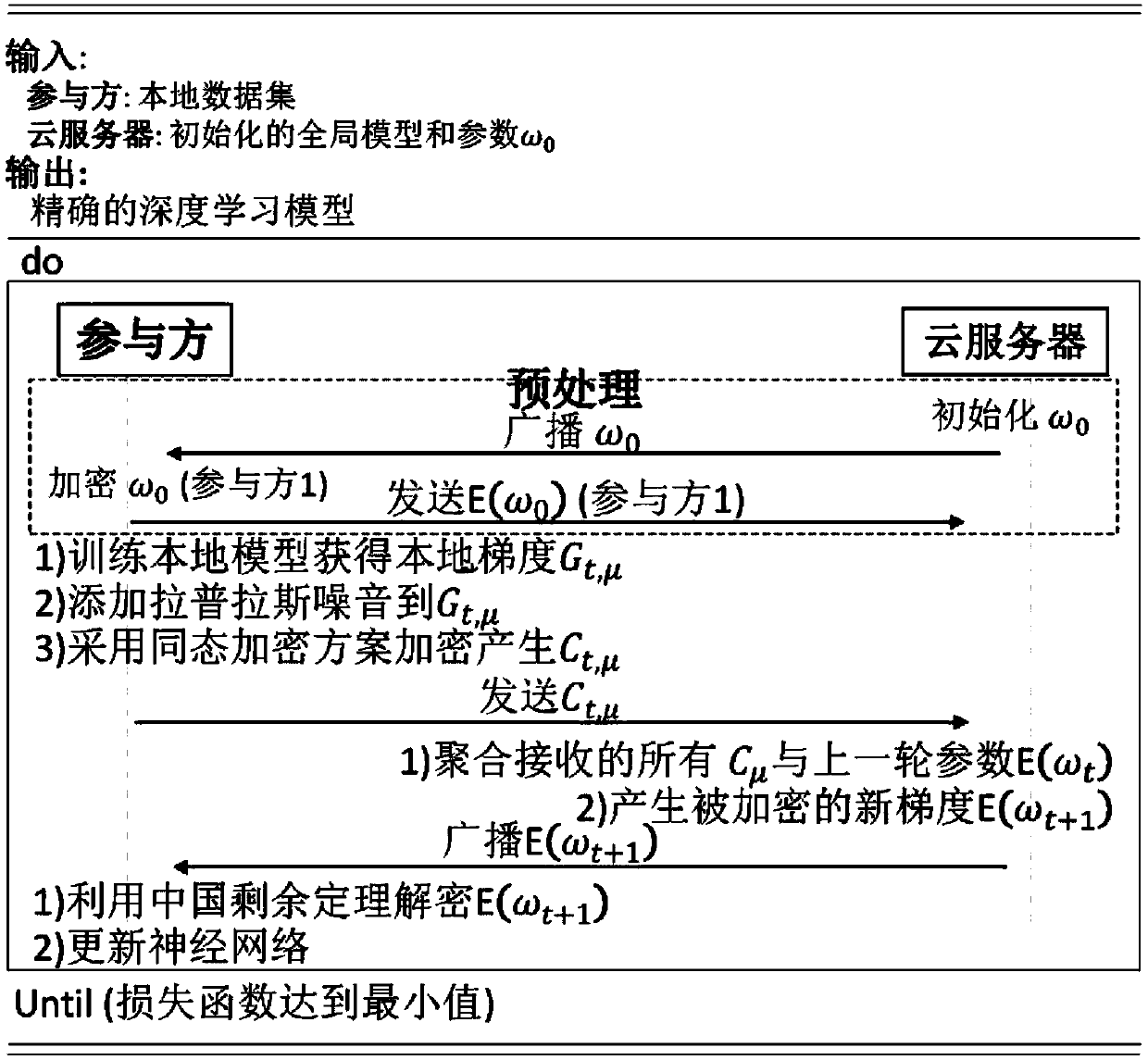

A combined deep learning training method based on a privacy protection technology

Active · CN109684855A · Avoid getting · Safe and efficient deep learning training method · Digital data protection · Communication with homomorphic encryption · Pattern recognition · Data set

The invention belongs to the technical field of artificial intelligence and relates to an efficient combined deep learning training method based on privacy protection technology. Each participant first trains a local model on its private data set to obtain a local gradient, perturbs the local gradient with Laplace noise, encrypts it, and sends the encrypted local gradient to a cloud server. The cloud server aggregates all the received local gradients with the ciphertext parameters of the previous round and broadcasts the resulting ciphertext parameters. Finally, each participant decrypts the received ciphertext parameters and updates its local model for subsequent training. By combining a homomorphic encryption scheme with differential privacy, the method provides safe and efficient deep learning training that preserves the accuracy of the trained model while preventing the server from inferring the model parameters or private training data, and preventing internal attacks from obtaining private information.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
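The differential-privacy half of the UESTC scheme (Laplace noise on each participant's gradient, followed by server-side aggregation) can be sketched as below. This sketch deliberately omits the homomorphic encryption step, so here the server sees the noisy gradients in plaintext. The noise scale `sensitivity / epsilon` is the standard Laplace mechanism; the sensitivity value and the gradients are hypothetical, and in practice sensitivity is usually bounded by clipping gradients first.

```python
import random


def laplace(rng, scale):
    """Draw from Laplace(0, scale): the difference of two Exp(1) draws, times scale."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def perturb(gradient, sensitivity, epsilon, rng):
    """Add Laplace noise with scale sensitivity / epsilon to each gradient component."""
    scale = sensitivity / epsilon
    return [g + laplace(rng, scale) for g in gradient]


def aggregate(gradients):
    """Server-side component-wise average of the participants' noisy gradients."""
    n = len(gradients)
    return [sum(column) / n for column in zip(*gradients)]


rng = random.Random(42)
local_gradients = [[0.5, -1.0], [0.7, -0.8], [0.6, -1.2]]  # hypothetical per-participant values
noisy = [perturb(g, sensitivity=1.0, epsilon=5.0, rng=rng) for g in local_gradients]
global_gradient = aggregate(noisy)
weights = [0.0, 0.0]
learning_rate = 0.1
weights = [w - learning_rate * g for w, g in zip(weights, global_gradient)]
print(weights)
```

Smaller `epsilon` gives stronger privacy but noisier updates; averaging over more participants reduces the noise in the aggregated gradient.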

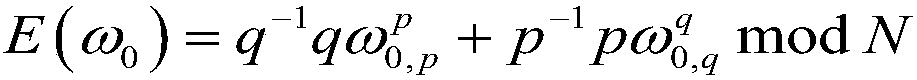

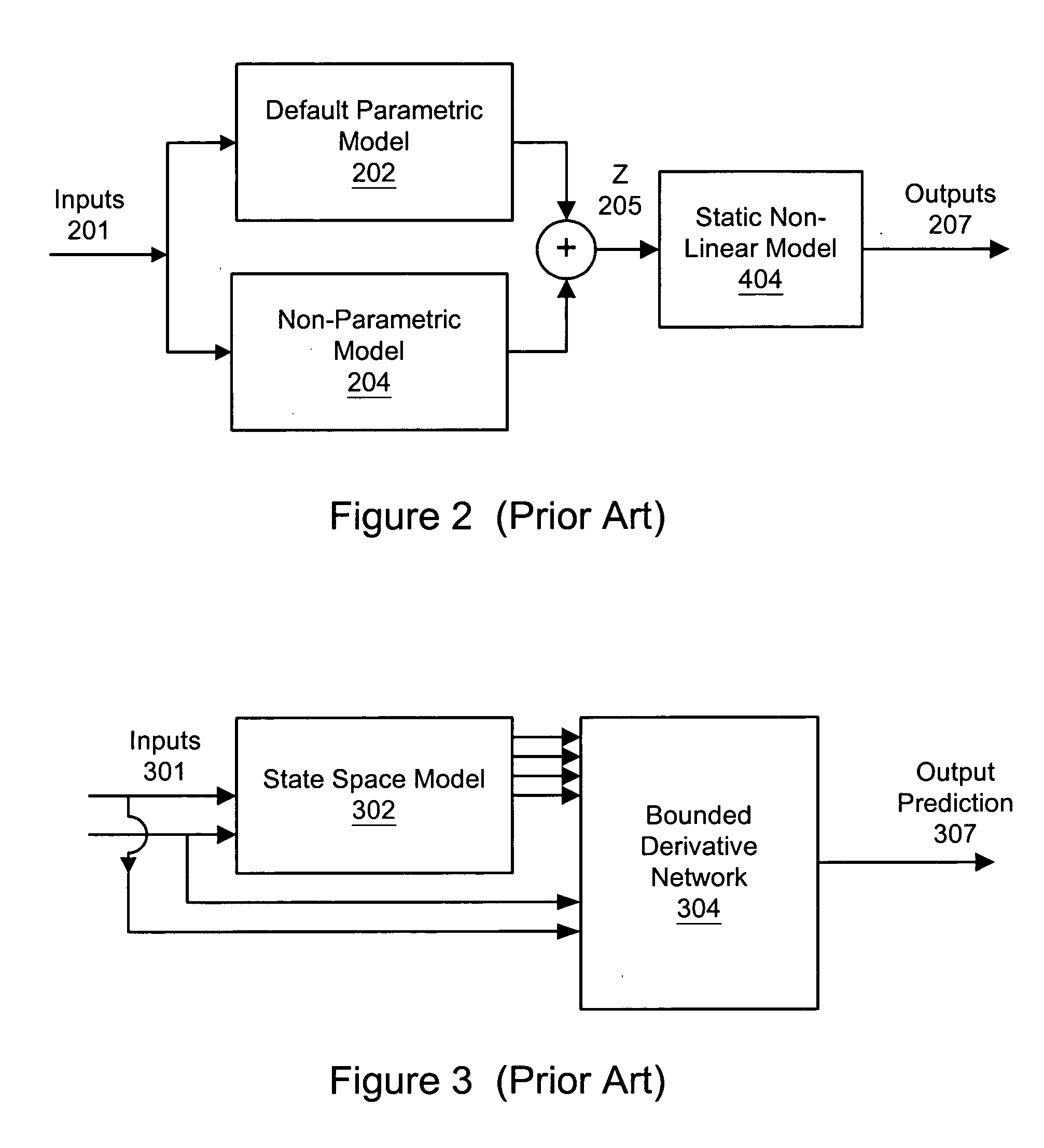

Parametric universal nonlinear dynamics approximator and use

Inactive · US20050187643A1 · Simulator control · Digital computer details · Nonlinear approximation · Dynamic models

Owner:ROCKWELL AUTOMATION TECH

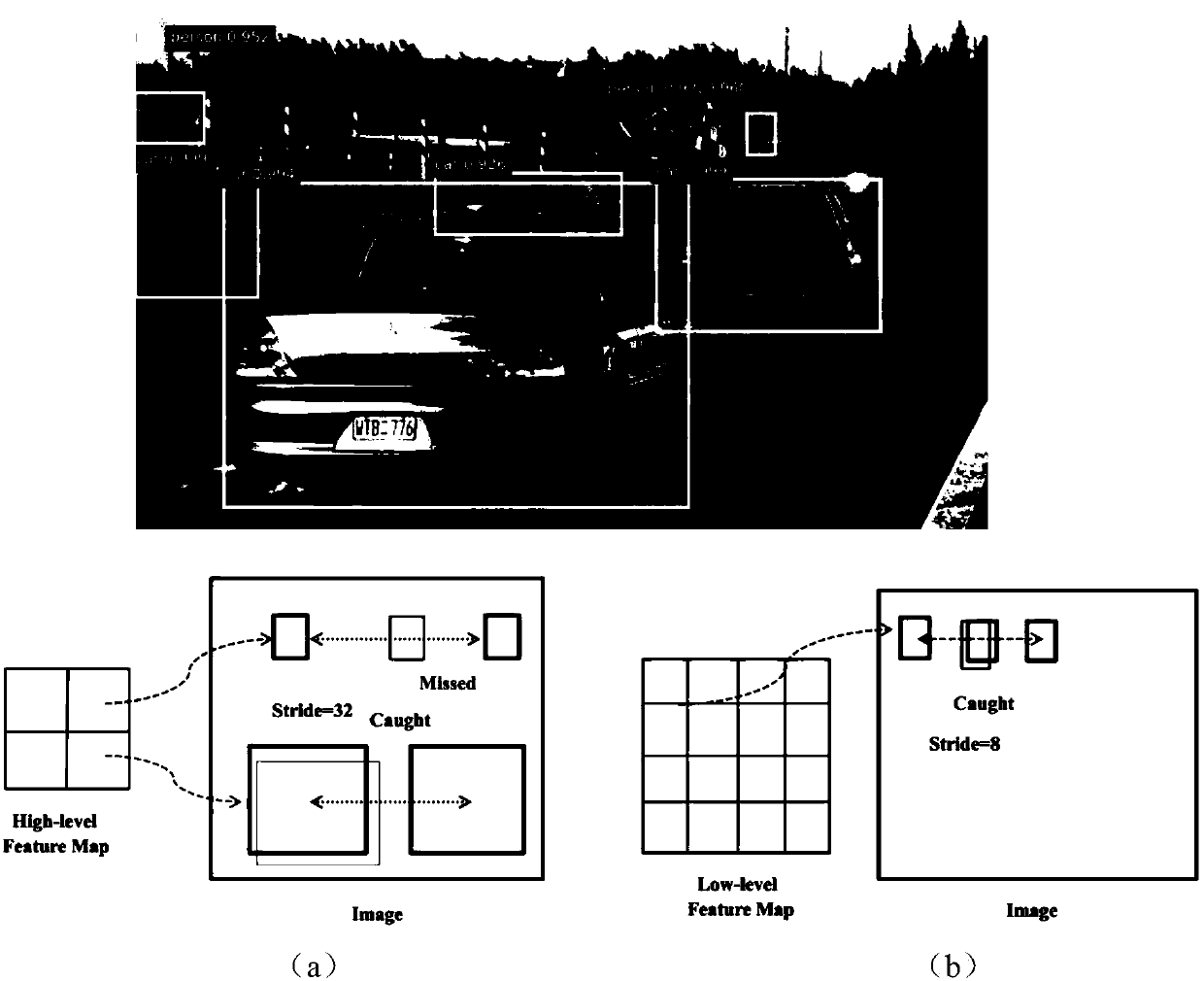

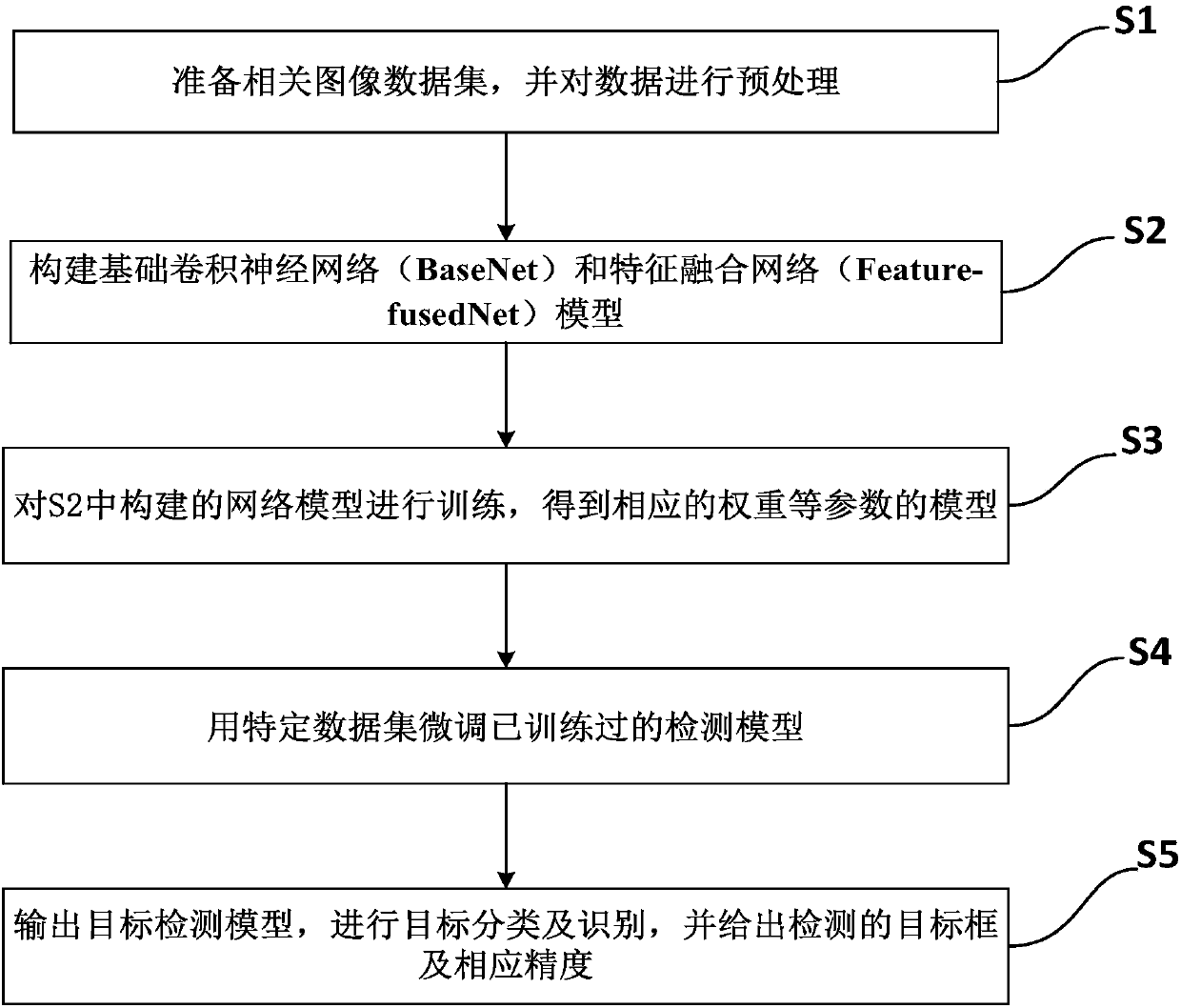

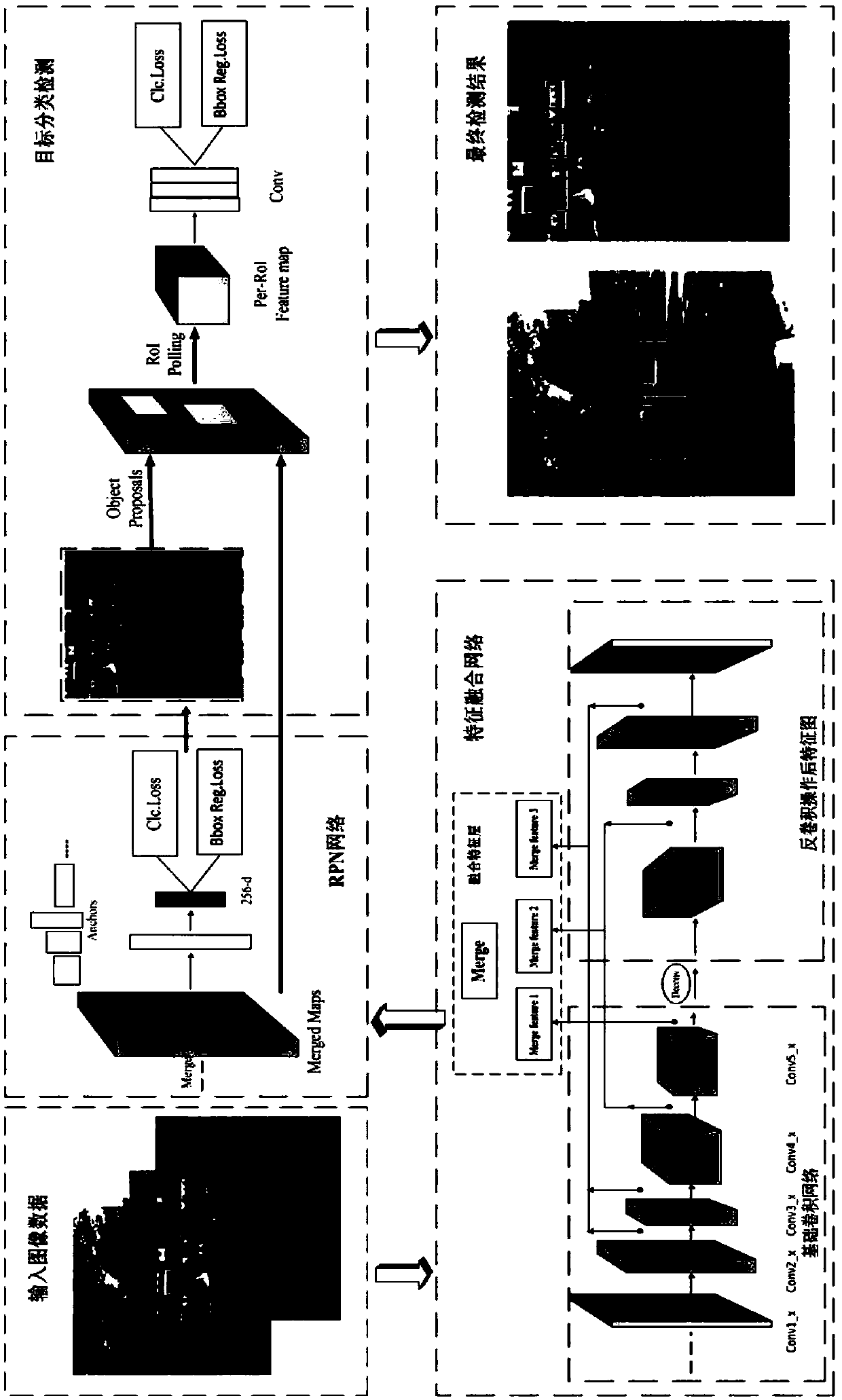

Multistage target detection method and model based on CNN multistage feature fusion

Active · CN108509978A · Improve accuracy · Optimize network structure · Character and pattern recognition · Neural architectures · Data set · Nerve network

The invention discloses a multi-stage target detection method and model based on CNN multi-stage feature fusion. The method mainly comprises the following steps: preparing a relevant image data set and processing the data; building a base convolutional neural network (BaseNet) and a Feature-fused Net model; training the network model built in the previous step to obtain the corresponding weight parameters; fine-tuning the trained detection model on a dedicated data set; and outputting the target detection model, which performs target classification and identification and provides the detection bounding boxes with their corresponding precision. In addition, the invention provides a multi-class target detection structure model based on CNN multi-stage feature fusion, which improves overall detection accuracy, optimizes model parameter values, and provides a more reasonable model structure.

Owner:CENT SOUTH UNIV

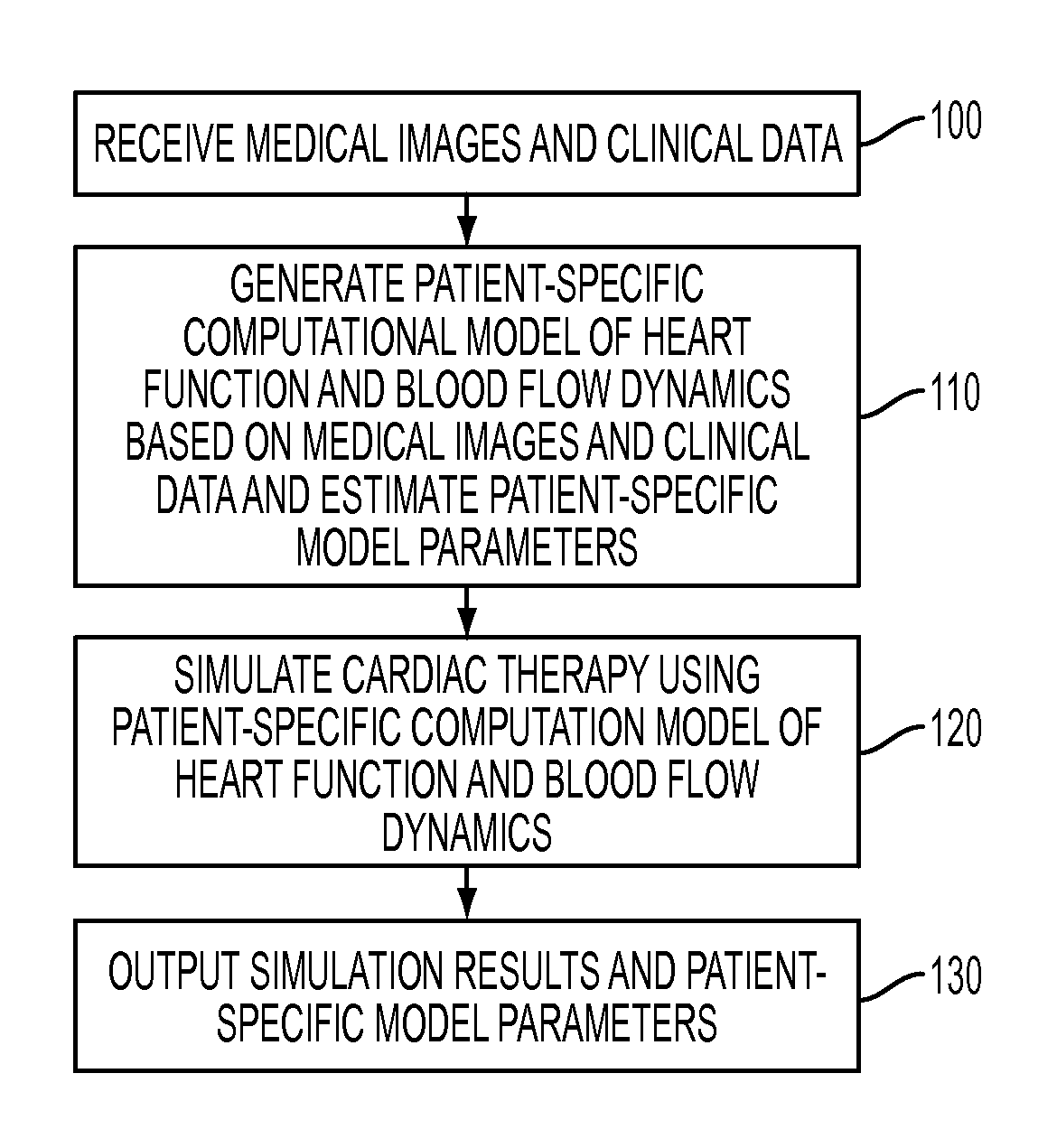

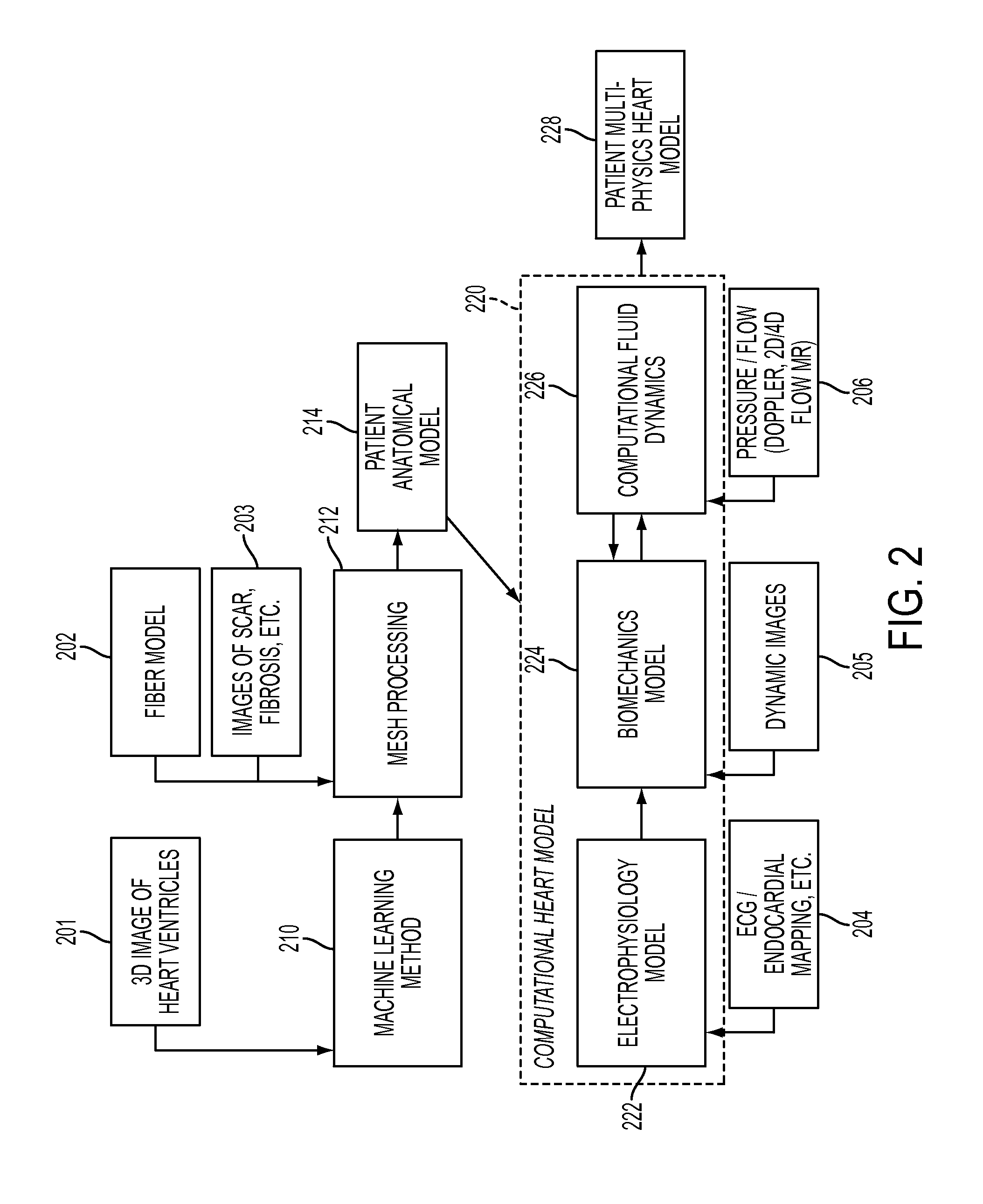

Method and System for Advanced Measurements Computation and Therapy Planning from Medical Data and Images Using a Multi-Physics Fluid-Solid Heart Model

Active · US20130197884A1 · Exact reproduction · Medical simulation · Ultrasonic/sonic/infrasonic diagnostics · Computational model · Patient data

Method and system for computation of advanced heart measurements from medical images and data; and therapy planning using a patient-specific multi-physics fluid-solid heart model is disclosed. A patient-specific anatomical model of the left and right ventricles is generated from medical image patient data. A patient-specific computational heart model is generated based on the patient-specific anatomical model of the left and right ventricles and patient-specific clinical data. The computational model includes biomechanics, electrophysiology and hemodynamics. To generate the patient-specific computational heart model, initial patient-specific parameters of an electrophysiology model, initial patient-specific parameters of a biomechanics model, and initial patient-specific computational fluid dynamics (CFD) boundary conditions are marginally estimated. A coupled fluid-structure interaction (FSI) simulation is performed using the initial patient-specific parameters, and the initial patient-specific parameters are refined based on the coupled FSI simulation. The estimated model parameters then constitute new advanced measurements that can be used for decision making.

Owner:SIEMENS HEALTHCARE GMBH

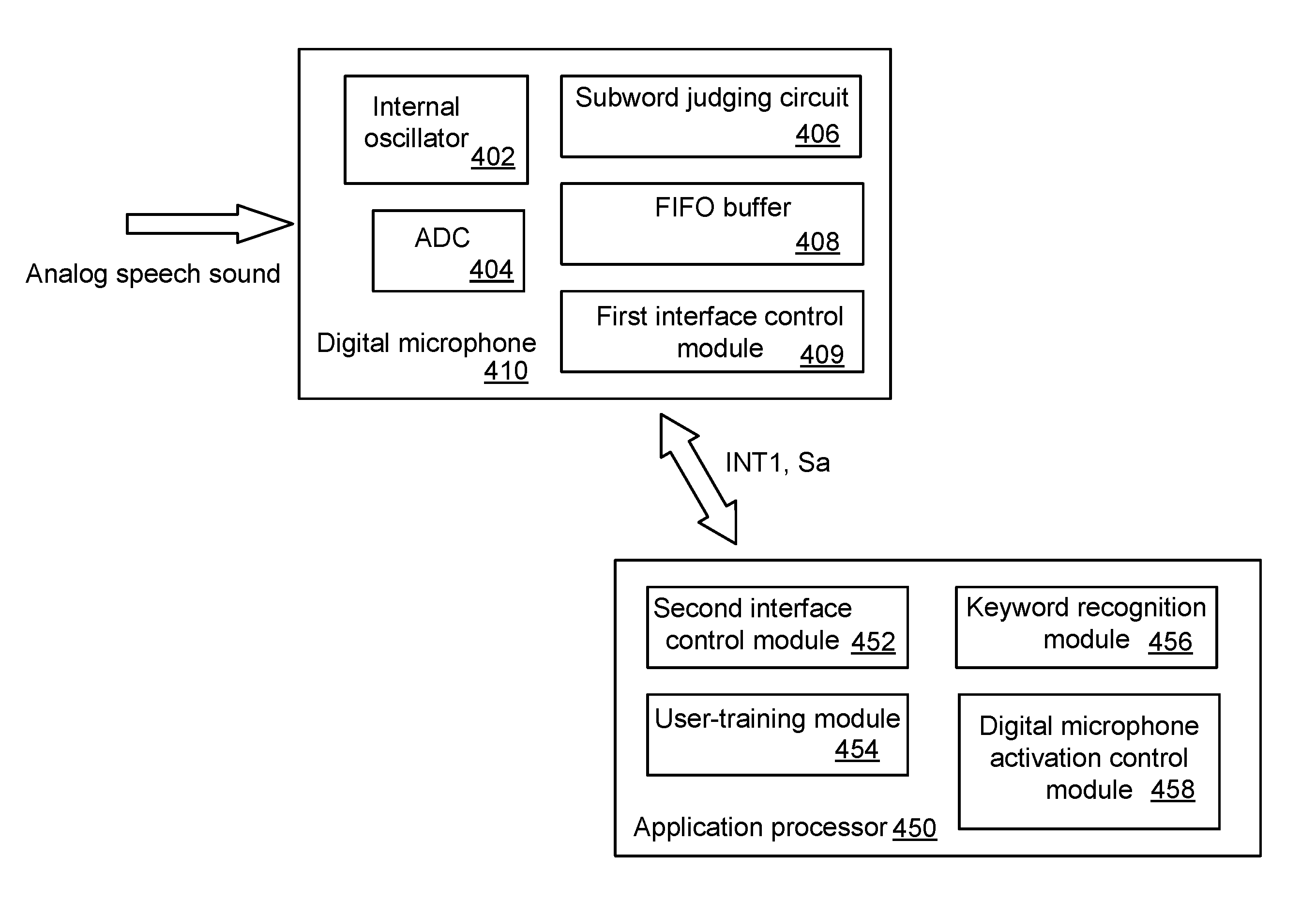

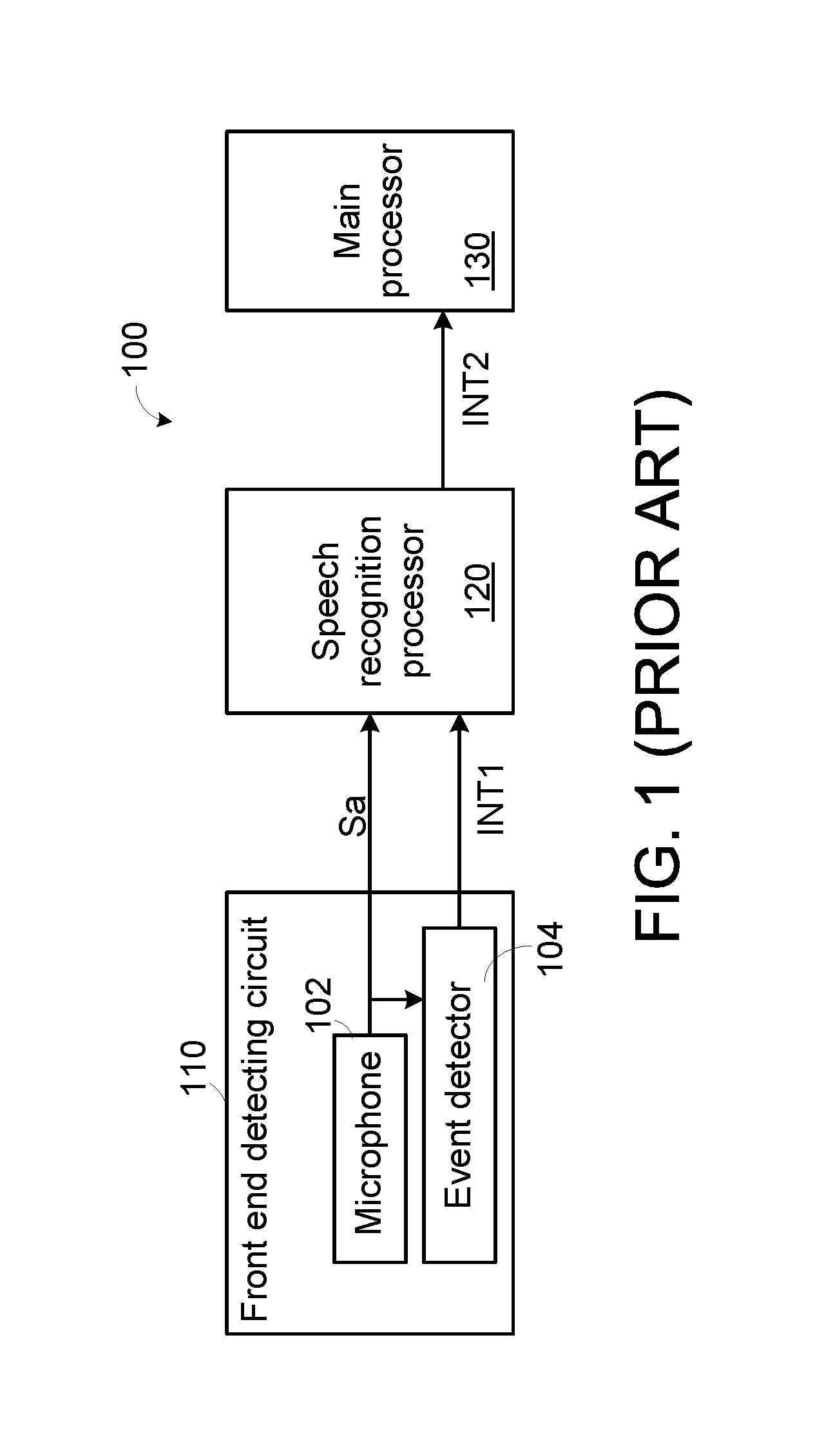

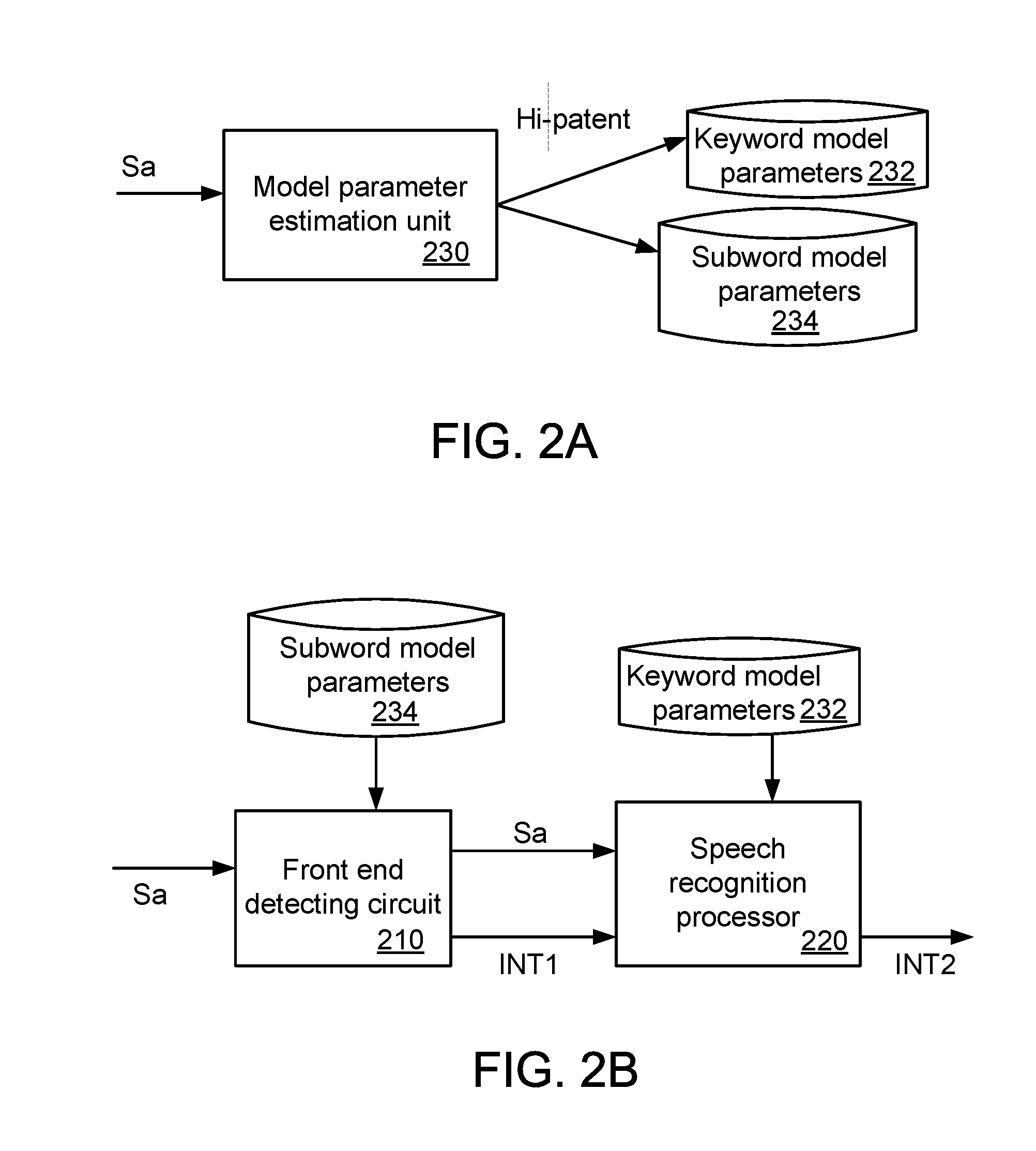

Voice wakeup detecting device with digital microphone and associated method

Active · US20160171976A1 · Accurate identification · Power management · Substation equipment · Sleep state · Model parameters

A voice wakeup detecting device for an electronic product includes a digital microphone and an application processor. The digital microphone judges whether a digital voice signal contains a subword according to subword model parameters. If the digital microphone confirms that the digital voice signal contains the subword, it generates a first interrupt signal and outputs the digital voice signal. The application processor is enabled in response to the first interrupt signal and judges whether the digital voice signal contains a keyword according to keyword model parameters. If the application processor confirms that the digital voice signal contains the keyword, the electronic product is woken up from a sleep state to a normal working state under the control of the application processor.

Owner:MEDIATEK INC
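The two-stage gating can be summarized in a few lines. This is a hedged sketch: `subword_detector` and `keyword_detector` are hypothetical placeholders (simple amplitude thresholds) standing in for the subword and keyword models, and `wakeup_cascade` only models the control flow, not audio processing or hardware interrupts.

```python
from typing import Callable, List, Tuple

Signal = List[float]
Detector = Callable[[Signal], bool]


def wakeup_cascade(
    signal: Signal, contains_subword: Detector, contains_keyword: Detector
) -> Tuple[bool, bool]:
    """Return (application_processor_enabled, device_woken_up)."""
    # Stage 1 runs on the digital microphone with the (cheap) subword model.
    if not contains_subword(signal):
        return False, False  # no interrupt: the application processor stays asleep
    # First interrupt: the application processor is enabled and receives the signal.
    # Stage 2 runs on the application processor with the keyword model.
    return True, contains_keyword(signal)


# Hypothetical placeholder detectors standing in for the subword and keyword models.
def subword_detector(signal: Signal) -> bool:
    return max(signal) > 0.5


def keyword_detector(signal: Signal) -> bool:
    return sum(signal) / len(signal) > 0.3
```

The point of the cascade is power: the application processor is enabled only when the microphone's cheaper subword check passes.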

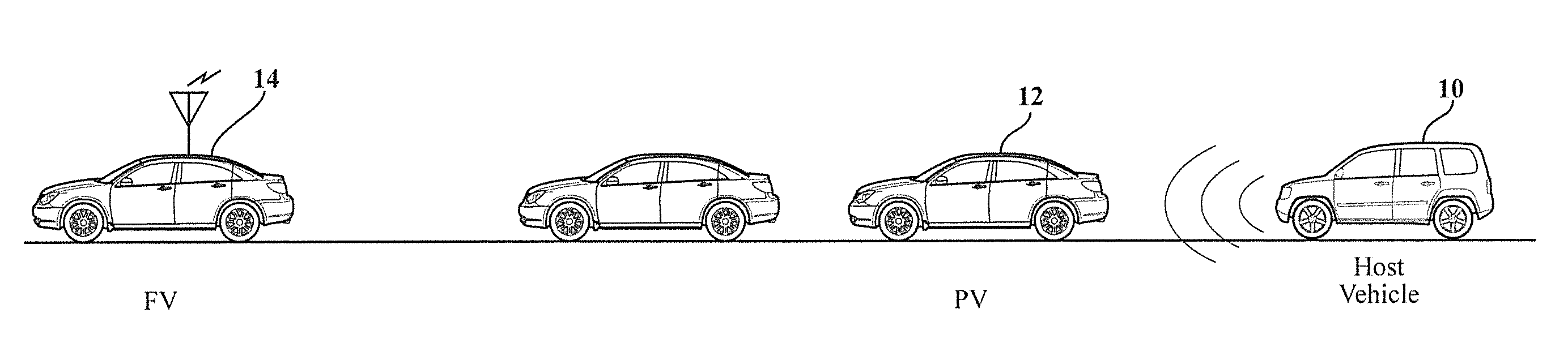

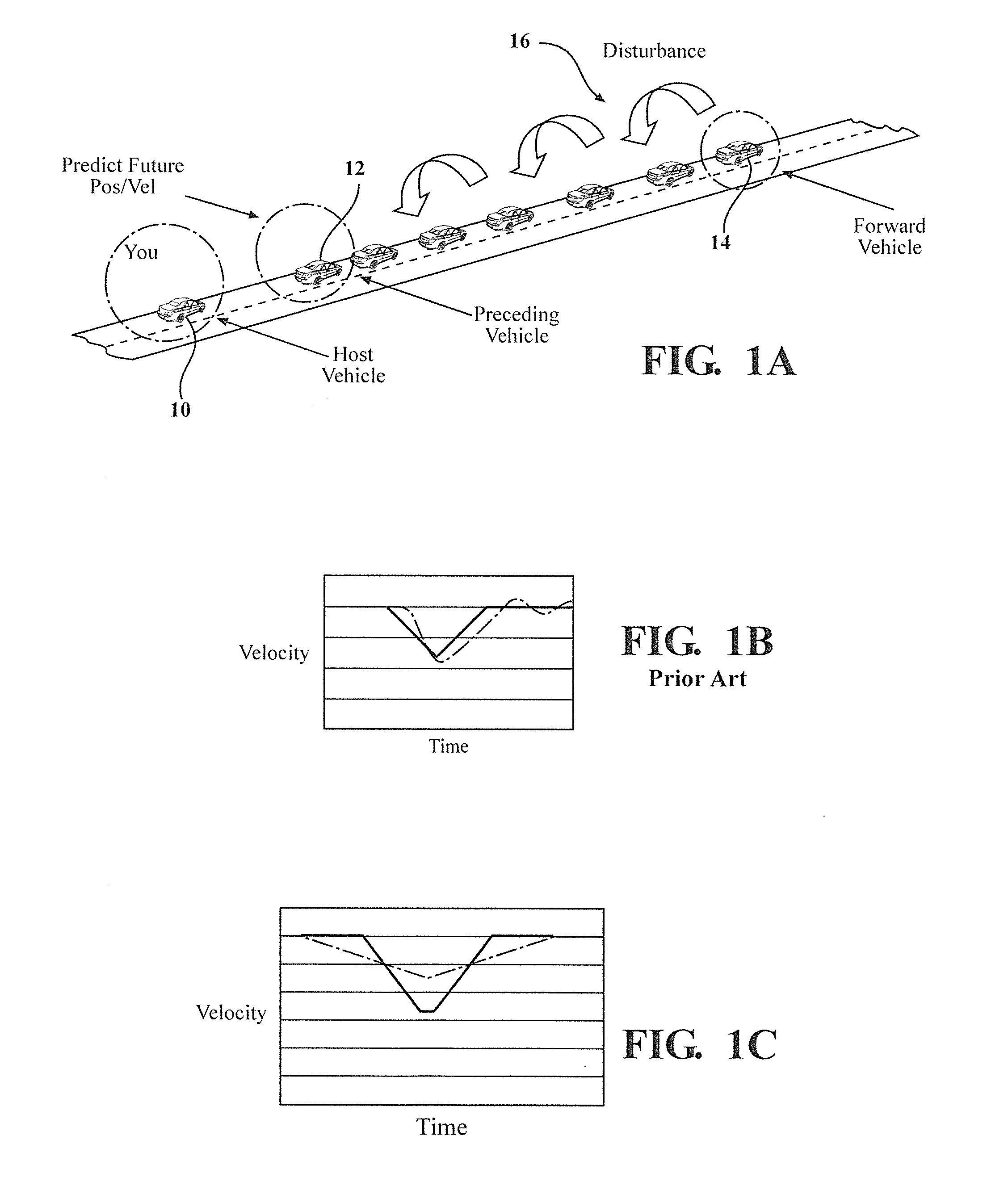

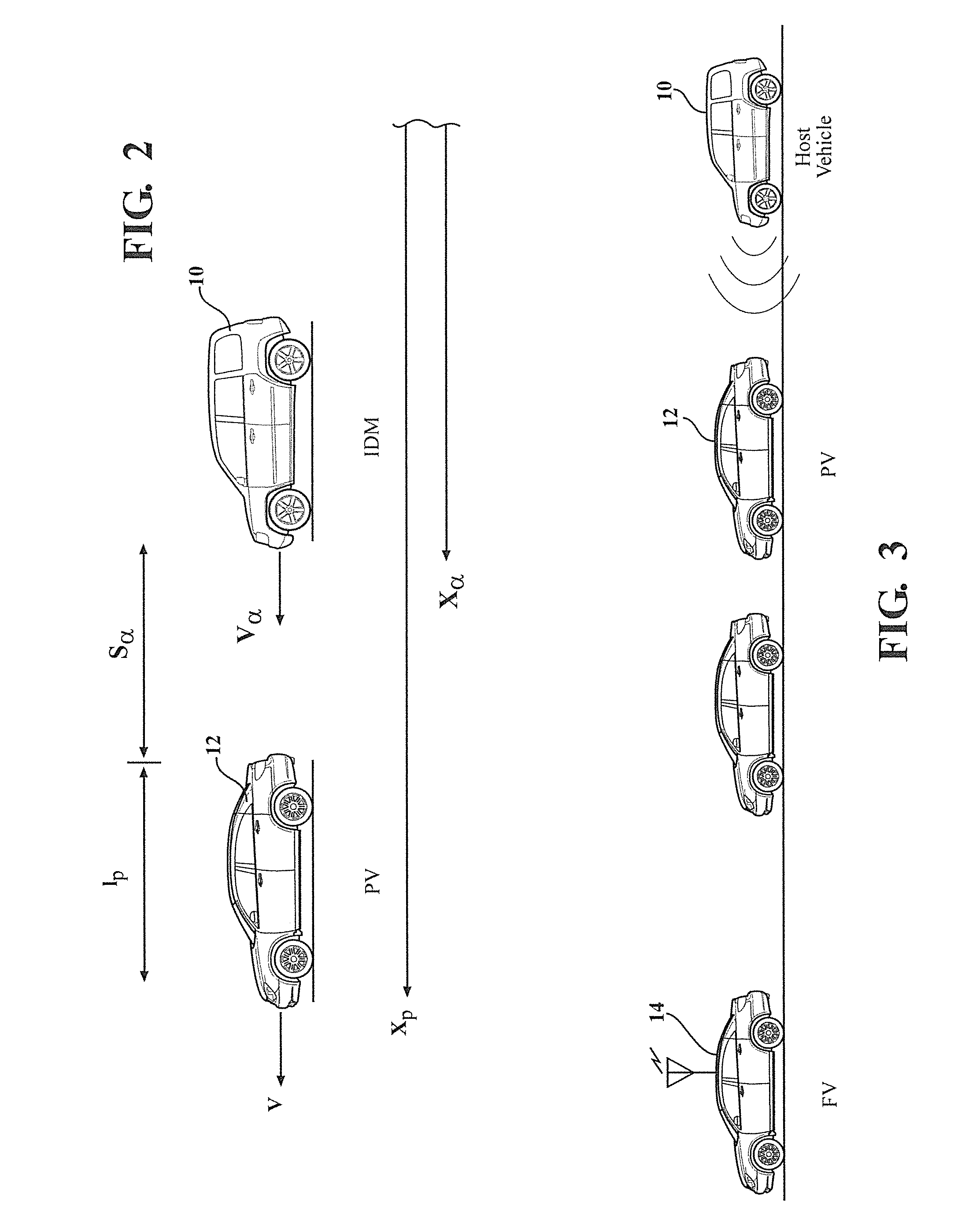

Preceding vehicle state prediction

Active | US20140005906A1 | Reduce fuel consumption; Smoothing out traffic flow; Vehicle fittings; Digital data processing details; State prediction; Intelligent driver model

A platoon model allows improved prediction of the future state of the preceding vehicle. In this context, the preceding vehicle is the vehicle immediately ahead of the host vehicle, and its dynamic state is predicted based on data received from one or more vehicles in the platoon. The intelligent driver model (IDM) is extended to model car-following dynamics within a platoon. A parameter estimation approach may be used to estimate the model parameters, for example to adapt to different driver types. An integrated approach combining state prediction and parameter estimation proved highly effective.

Owner:TOYOTA JIDOSHA KK
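For reference, the standard intelligent driver model computes a follower's acceleration from its speed v, desired speed v0, gap s and approach rate Δv as a·[1 - (v/v0)^δ - (s*/s)²], with desired gap s* = s0 + max(0, vT + vΔv / (2√(ab))). The sketch below implements that widely used form and applies it to predict the preceding vehicle's next state from its own leader's broadcast position and speed. The patent's platoon extension and parameter-estimation step are not reproduced; the parameter defaults, the 5 m vehicle length, and the names `IDMParams`, `idm_acceleration` and `predict_preceding` are illustrative assumptions.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class IDMParams:
    desired_speed: float = 30.0   # v0 [m/s]
    time_headway: float = 1.5     # T [s]
    max_accel: float = 1.0        # a [m/s^2]
    comfort_decel: float = 1.5    # b [m/s^2]
    min_gap: float = 2.0          # s0 [m]
    exponent: float = 4.0         # delta


def idm_acceleration(speed: float, leader_speed: float, gap: float, p: IDMParams) -> float:
    """IDM acceleration a * [1 - (v/v0)^delta - (s*/s)^2]."""
    approach_rate = speed - leader_speed  # positive when closing in on the leader
    dynamic_term = speed * p.time_headway + (
        speed * approach_rate / (2.0 * math.sqrt(p.max_accel * p.comfort_decel))
    )
    desired_gap = p.min_gap + max(0.0, dynamic_term)
    return p.max_accel * (
        1.0 - (speed / p.desired_speed) ** p.exponent - (desired_gap / gap) ** 2
    )


def predict_preceding(
    position: float,
    speed: float,
    leader_position: float,
    leader_speed: float,
    p: IDMParams,
    dt: float = 0.1,
    vehicle_length: float = 5.0,
) -> Tuple[float, float]:
    """One explicit-Euler step for the preceding vehicle, driven by its leader's broadcast state."""
    gap = leader_position - position - vehicle_length
    accel = idm_acceleration(speed, leader_speed, gap, p)
    new_speed = max(0.0, speed + accel * dt)  # vehicles are not allowed to reverse
    new_position = position + speed * dt
    return new_position, new_speed
```

Estimating `IDMParams` per driver, as the abstract suggests, would amount to fitting these six values to observed trajectories.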

Method and equipment for voice synthesis and method for training acoustic model used in voice synthesis

The invention relates to a method and equipment for voice synthesis and a method for training an acoustic model used in voice synthesis. The voice synthesis method includes the following steps: determining that data generated by text analysis is fuzzy polyphone data; performing fuzzy polyphone prediction on the fuzzy polyphone data to output a plurality of candidate pronunciations and their probabilities; generating fuzzy context feature labels based on the candidate pronunciations and their probabilities; determining, with an acoustic model that has a fuzzy decision tree, the model parameters for the fuzzy context feature labels; generating voice parameters based on the model parameters; and synthesizing voice from the voice parameters. With the method and equipment of the embodiments of the invention, polyphonic words in Chinese text that are difficult to predict can be given fuzzy treatment, improving the synthesis quality of Chinese polyphones.

Owner:KK TOSHIBA
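The abstract resolves ambiguous polyphones through a fuzzy decision tree whose details are not given. As a loose illustration only, the sketch below swaps in a simpler technique: keep the most probable candidate pronunciations, renormalize their probabilities into a fuzzy label, and take a probability-weighted average of per-pronunciation parameter vectors. The function names, candidate probabilities and parameter values are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

FuzzyLabel = List[Tuple[str, float]]


def fuzzy_context_label(candidates: Dict[str, float], keep: int = 2) -> FuzzyLabel:
    """Keep the `keep` most probable pronunciations and renormalize their probabilities."""
    top = sorted(candidates.items(), key=lambda item: item[1], reverse=True)[:keep]
    total = sum(prob for _, prob in top)
    return [(pron, prob / total) for pron, prob in top]


def blend_parameters(
    label: FuzzyLabel, params_by_pron: Dict[str, Sequence[float]]
) -> List[float]:
    """Probability-weighted average of per-pronunciation acoustic parameter vectors."""
    dim = len(next(iter(params_by_pron.values())))
    blended = [0.0] * dim
    for pron, weight in label:
        for i, value in enumerate(params_by_pron[pron]):
            blended[i] += weight * value
    return blended


# Hypothetical example: the polyphone 行 with candidate readings xing2, hang2 and hang4.
label = fuzzy_context_label({"xing2": 0.6, "hang2": 0.3, "hang4": 0.1})
params = blend_parameters(
    label, {"xing2": [1.0, 2.0], "hang2": [4.0, 5.0], "hang4": [9.0, 9.0]}
)
```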

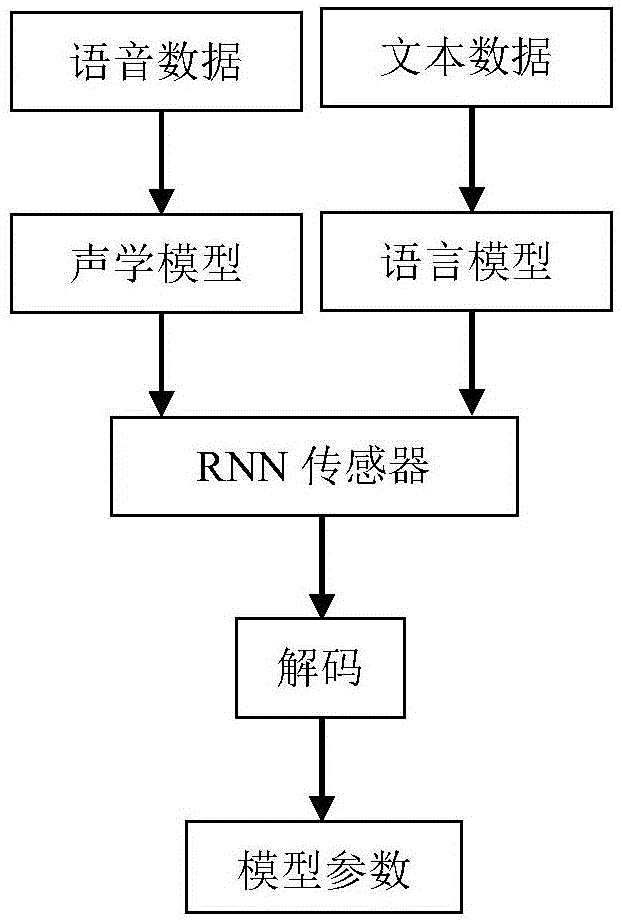

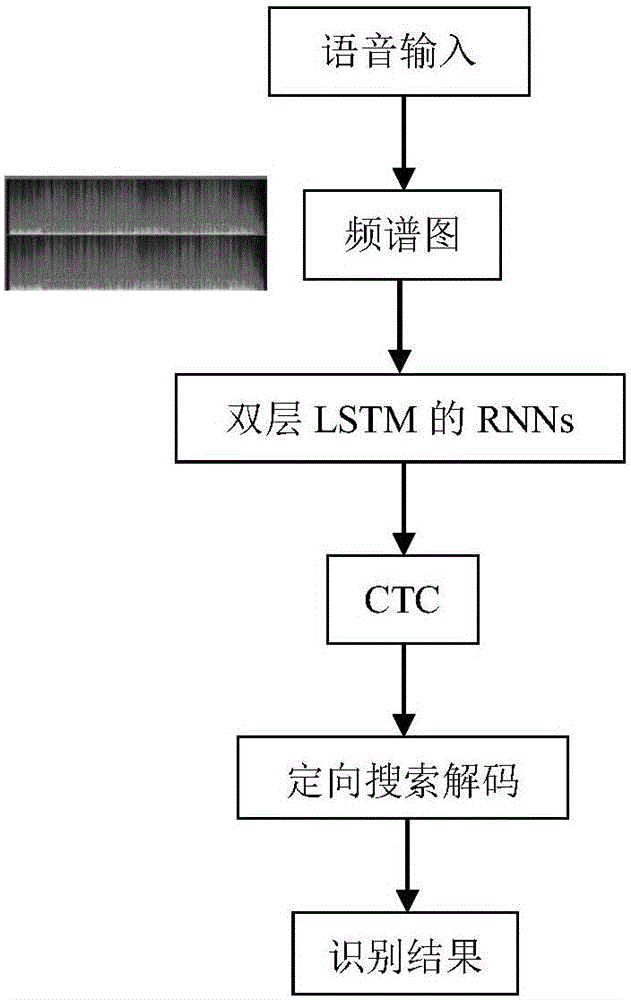

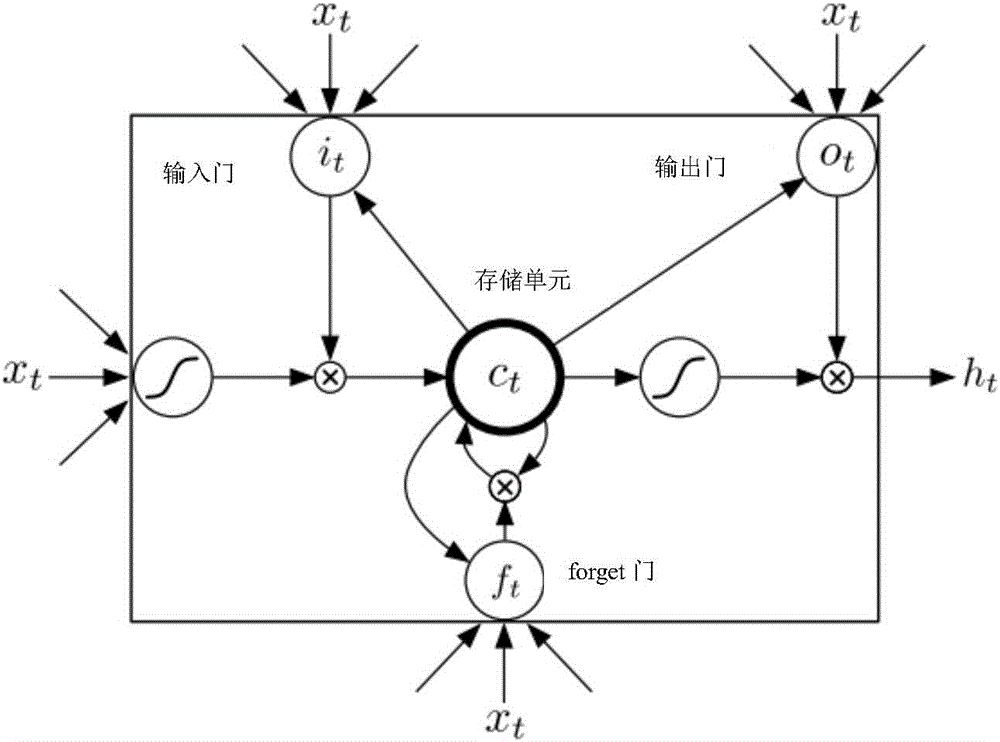

Voice identification method using long-short term memory model recurrent neural network

The invention discloses a voice identification method using a long short-term memory (LSTM) recurrent neural network. The method comprises a training process and an identification process. The training process comprises inputting voice data and text data to generate a jointly trained acoustic and language model, and using an RNN transducer to perform decoding to form the model parameters. The identification process comprises converting the voice input into a spectrogram via a Fourier transform, performing beam search decoding with the LSTM recurrent neural network, and finally generating an identification result. The method adopts recurrent neural networks (RNNs) and trains them end to end with connectionist temporal classification (CTC). LSTM units work well and, combined with multi-level representations, have proven effective in deep networks. A single neural network model (an end-to-end model) maps the voice features (the input end) to a character string (the output end), and the network can be trained directly with an objective function that serves as a proxy for word error rate (WER), which avoids the wasted effort of optimizing separate individual objective functions.

Owner:SHENZHEN WEITESHI TECH
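A CTC output is decoded by merging repeated labels and then removing blanks. The patent describes beam search decoding; the sketch below instead uses the simpler best-path (greedy) decoding to show that collapse rule, and assumes index 0 of `alphabet` is the blank symbol. The function name `ctc_greedy_decode` is hypothetical.

```python
from typing import List, Sequence


def ctc_greedy_decode(
    frame_probs: Sequence[Sequence[float]], alphabet: str, blank: int = 0
) -> str:
    """Best-path CTC decoding: argmax label per frame, merge repeats, then drop blanks."""
    best_path = [max(range(len(probs)), key=lambda k: probs[k]) for probs in frame_probs]
    chars: List[str] = []
    previous = None
    for index in best_path:
        # A label is emitted only when it differs from the previous frame and is not blank,
        # so the path "a a _ a" decodes to "aa" while "a a a" decodes to "a".
        if index != previous and index != blank:
            chars.append(alphabet[index])
        previous = index
    return "".join(chars)


# Usage: per-frame probabilities over ["_" (blank), "a", "b", "c"].
frames = [
    [0.1, 0.7, 0.1, 0.1],  # a
    [0.2, 0.6, 0.1, 0.1],  # a (repeat, merged)
    [0.8, 0.1, 0.05, 0.05],  # blank
    [0.1, 0.1, 0.7, 0.1],  # b
]
decoded = ctc_greedy_decode(frames, "_abc")
```

Beam search keeps several partial hypotheses per frame instead of only the argmax, but applies the same merge-then-drop rule.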

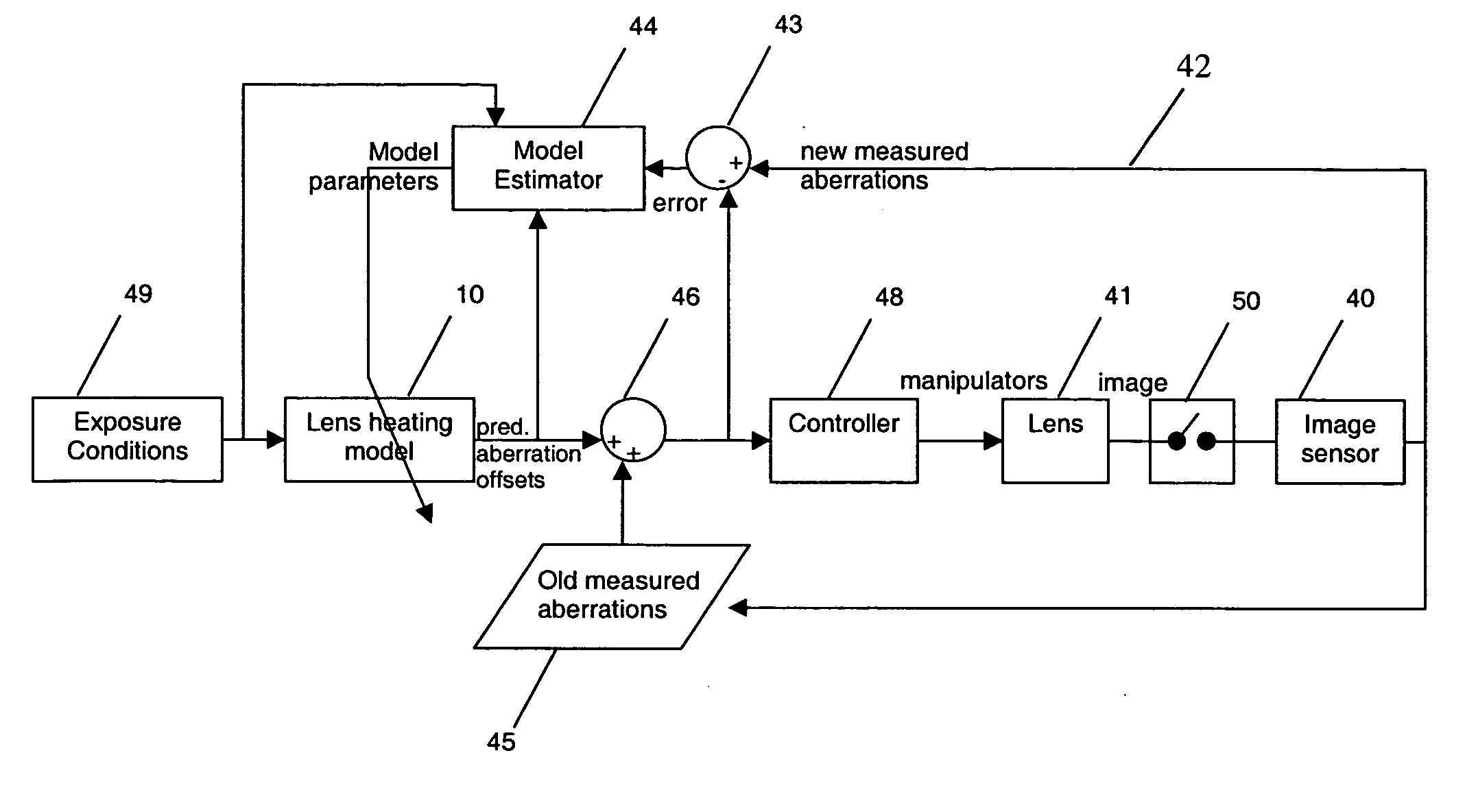

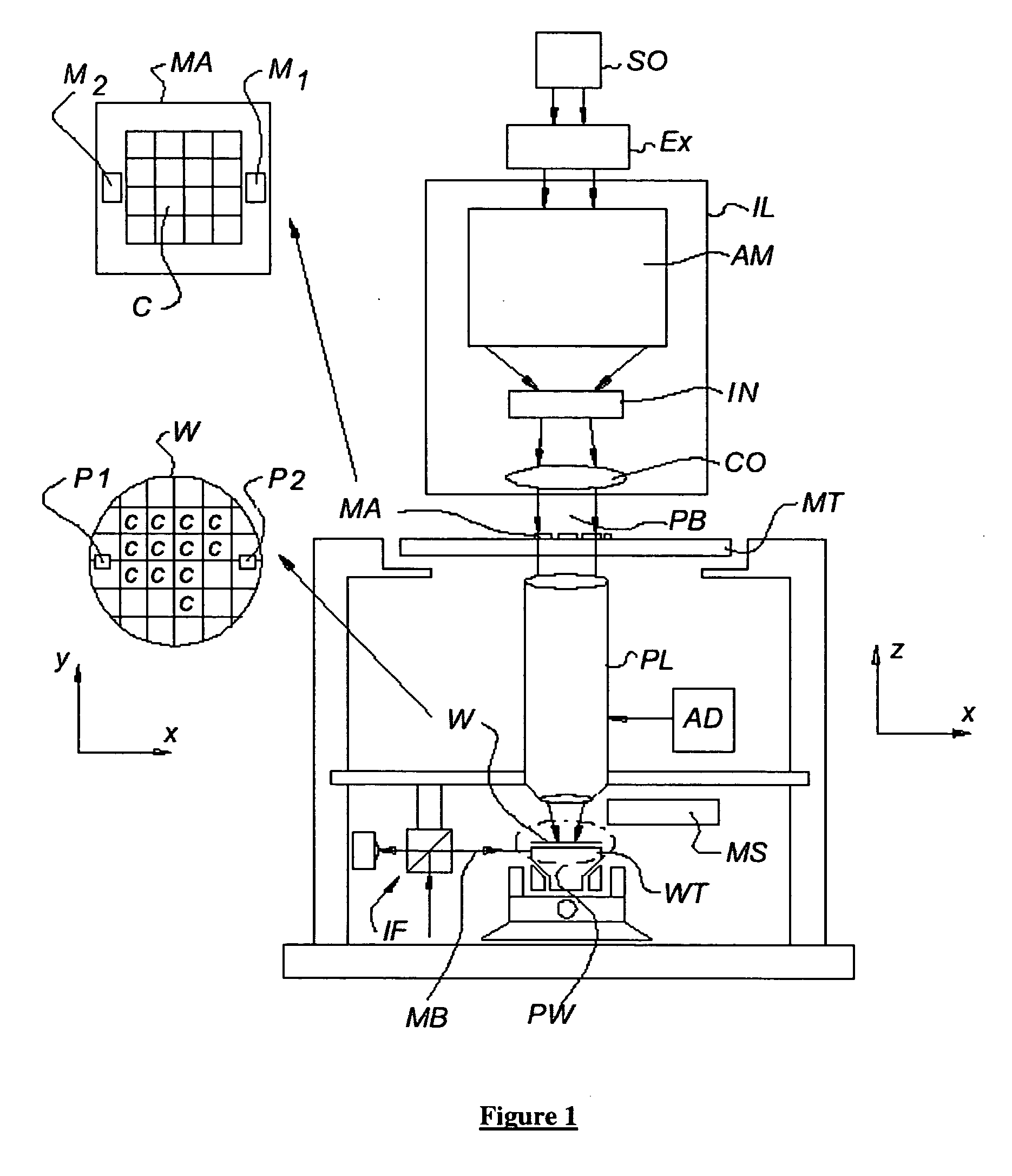

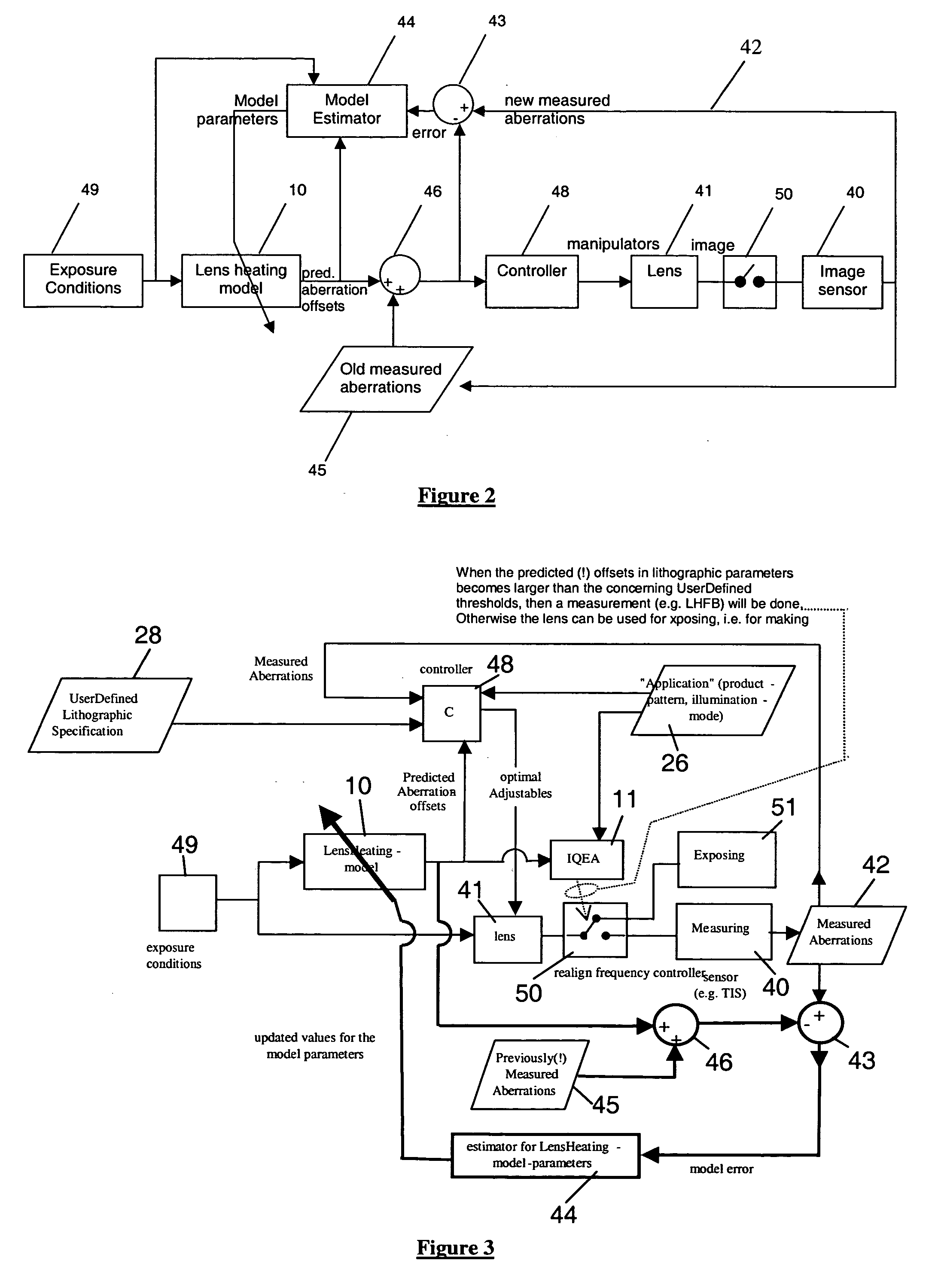

Lithographic projection apparatus and device manufacturing method using such lithographic projection apparatus

Active | US20060114437A1 | Reduce throughput; Availability is not compromised; Photomechanical apparatus; Photographic printing; Control system; Control signal

A lithographic projection apparatus includes a measurement system for measuring changes in projection system aberrations over time, and a predictive control system for predicting the variation of projection system aberrations over time on the basis of model parameters and for generating a control signal that compensates a time-varying property of the apparatus, such as the OVL values (X-Y adjustment) and FOC values (Z adjustment) of a lens of the projection system. An inline model identification system estimates model parameter errors on the basis of the projection system aberration values provided by the predictive control system and the measured projection system aberration values provided by the measurement system, and an updating system uses the model parameter errors to update the model parameters of the predictive control system so as to keep the time-varying property within acceptable performance criteria.

Owner:ASML NETHERLANDS BV
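A minimal sketch of the predict-measure-update loop, under stated assumptions: the aberration model is linear in its parameters with a hypothetical basis (a constant offset plus a saturating exponential heating term), and the model-parameter errors are corrected with a normalized least-mean-squares (NLMS) update. Neither the basis nor the update rule comes from the patent; `drift_features` and `PredictiveCompensator` are illustrative stand-ins for its predictive control and inline model identification systems.

```python
import math
from typing import List, Sequence


def drift_features(t: float, time_constant: float = 50.0) -> List[float]:
    """Hypothetical basis: a constant offset plus a saturating lens-heating term."""
    return [1.0, 1.0 - math.exp(-t / time_constant)]


class PredictiveCompensator:
    """Predicts a time-varying aberration and adapts its model parameters from prediction errors."""

    def __init__(self, params: Sequence[float], step: float = 0.5) -> None:
        self.params = list(params)
        self.step = step  # NLMS step size; 0 < step < 2 for stability

    def predict(self, t: float) -> float:
        return sum(w * f for w, f in zip(self.params, drift_features(t)))

    def control_signal(self, t: float) -> float:
        # Feed-forward correction that cancels the predicted aberration.
        return -self.predict(t)

    def update(self, t: float, measured: float) -> float:
        """Estimate the model error from a measurement and apply one NLMS parameter update."""
        phi = drift_features(t)
        error = measured - self.predict(t)
        norm = sum(f * f for f in phi)  # >= 1 because the offset feature is always 1
        self.params = [w + self.step * error * f / norm for w, f in zip(self.params, phi)]
        return error


# Usage: adapt a zero-initialized model to a hypothetical "true" drift with parameters [0.2, 1.0].
true_model = PredictiveCompensator([0.2, 1.0])
model = PredictiveCompensator([0.0, 0.0])
errors = [model.update(t, true_model.predict(t)) for t in range(0, 500, 5)]
```

Because the correction is normalized by the feature energy, any step size between 0 and 2 keeps the update stable; smaller steps trade tracking speed for noise rejection.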

© 2025 PatSnap. All rights reserved.