8452 results about "Speech synthesis" patented technology


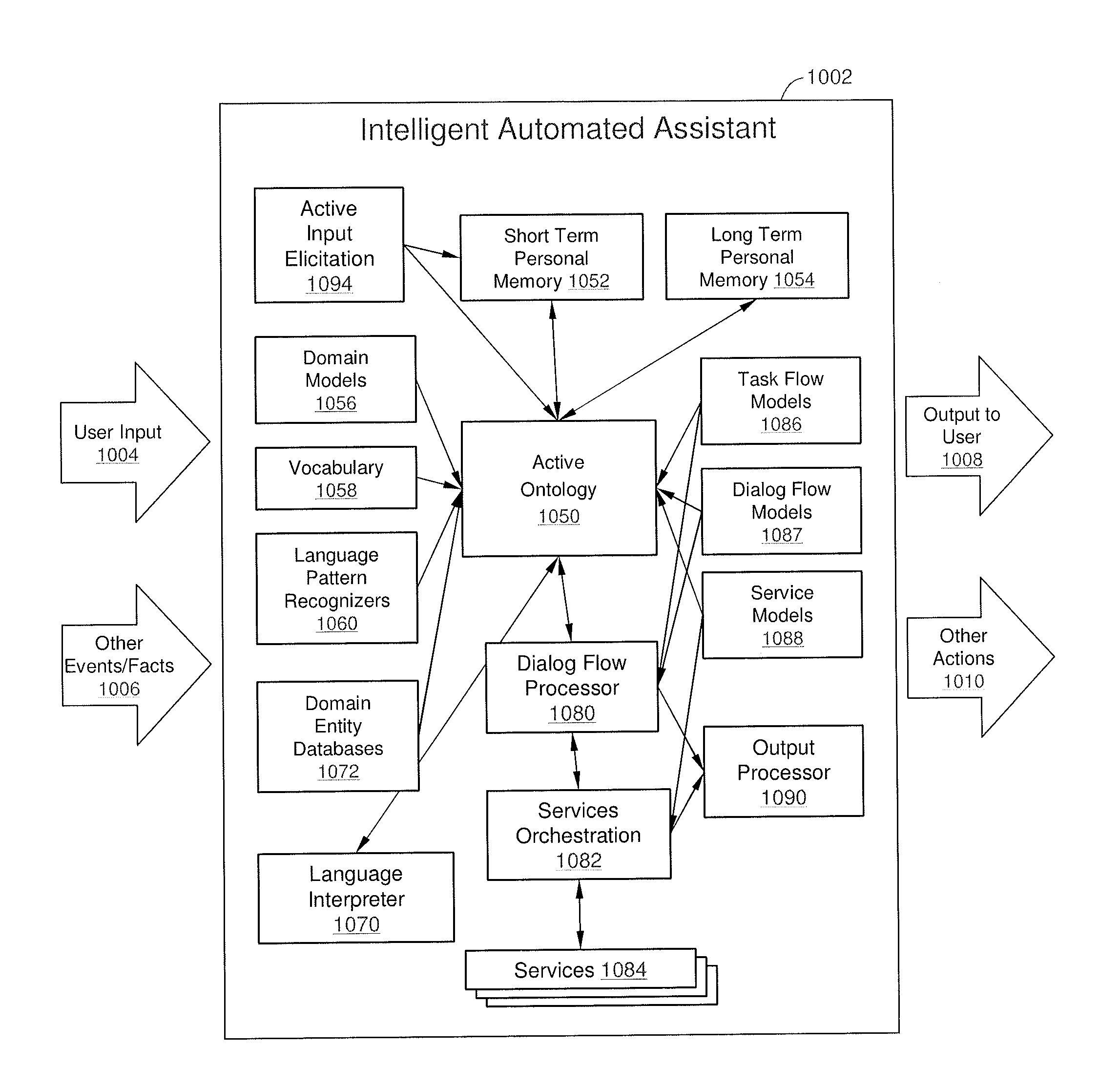

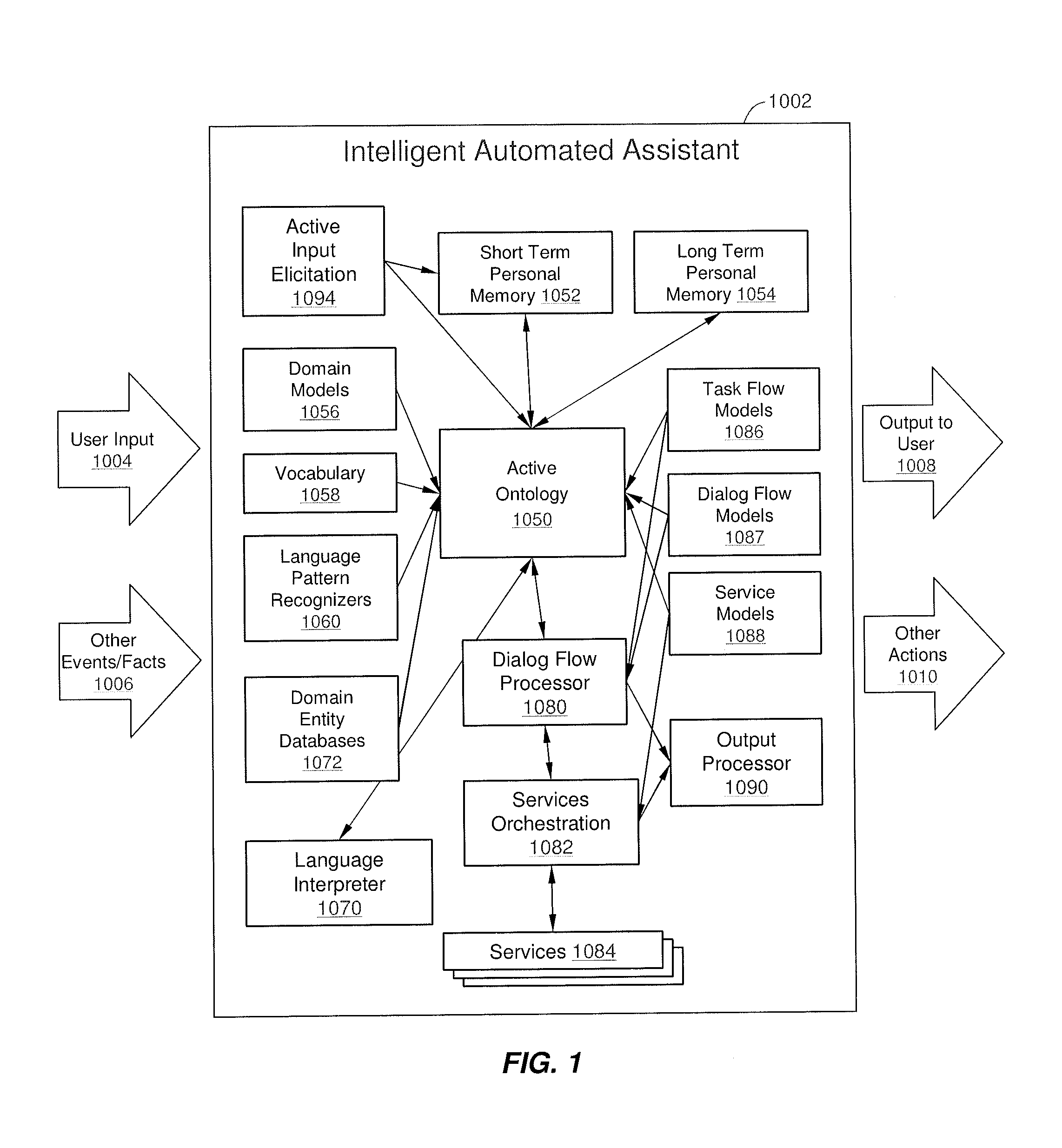

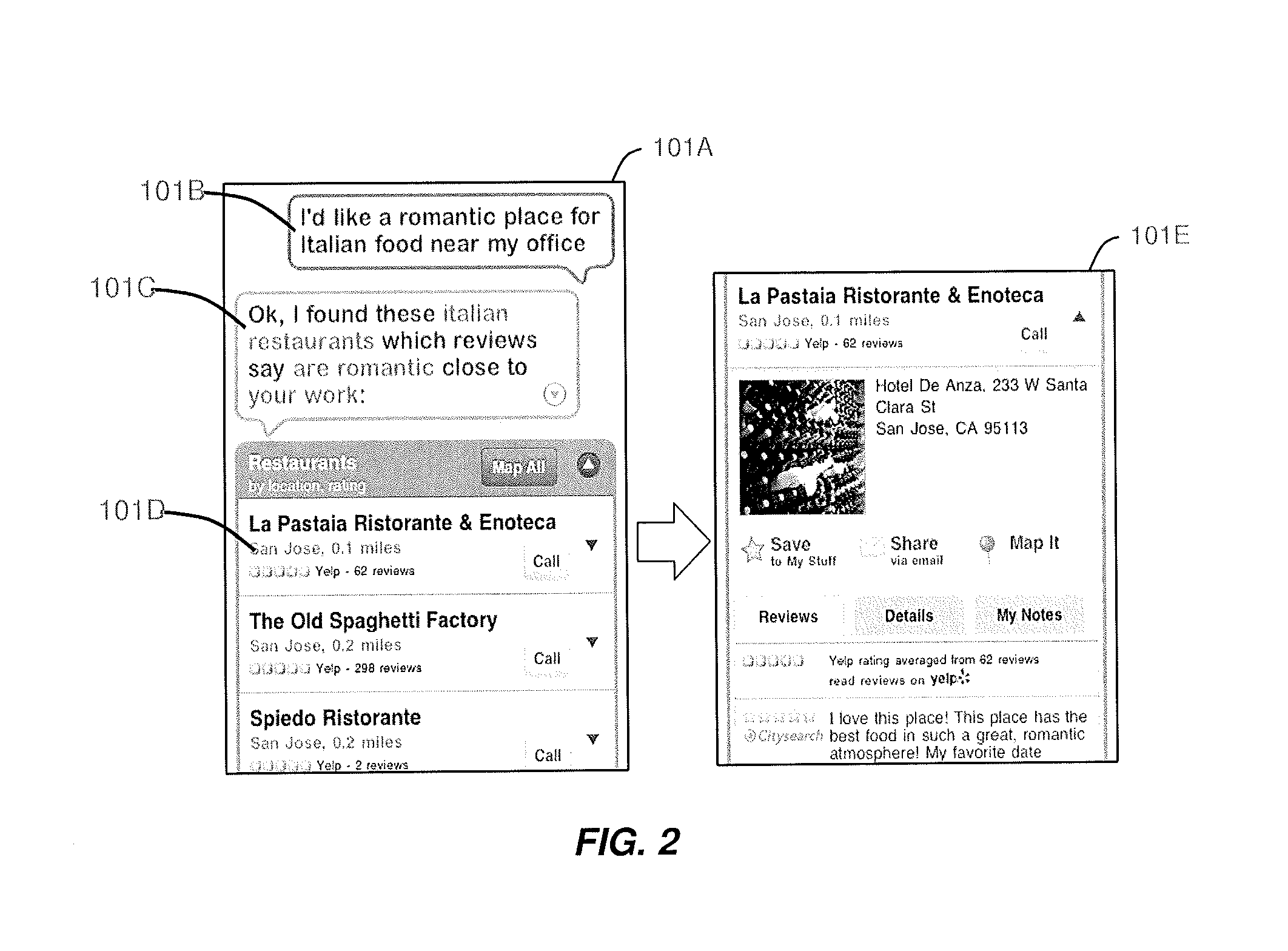

Intelligent Automated Assistant

Active · US20120016678A1 · Improve user interaction · Promote effective engagement · Natural language translation · Semantic analysis · Service provision · System usage

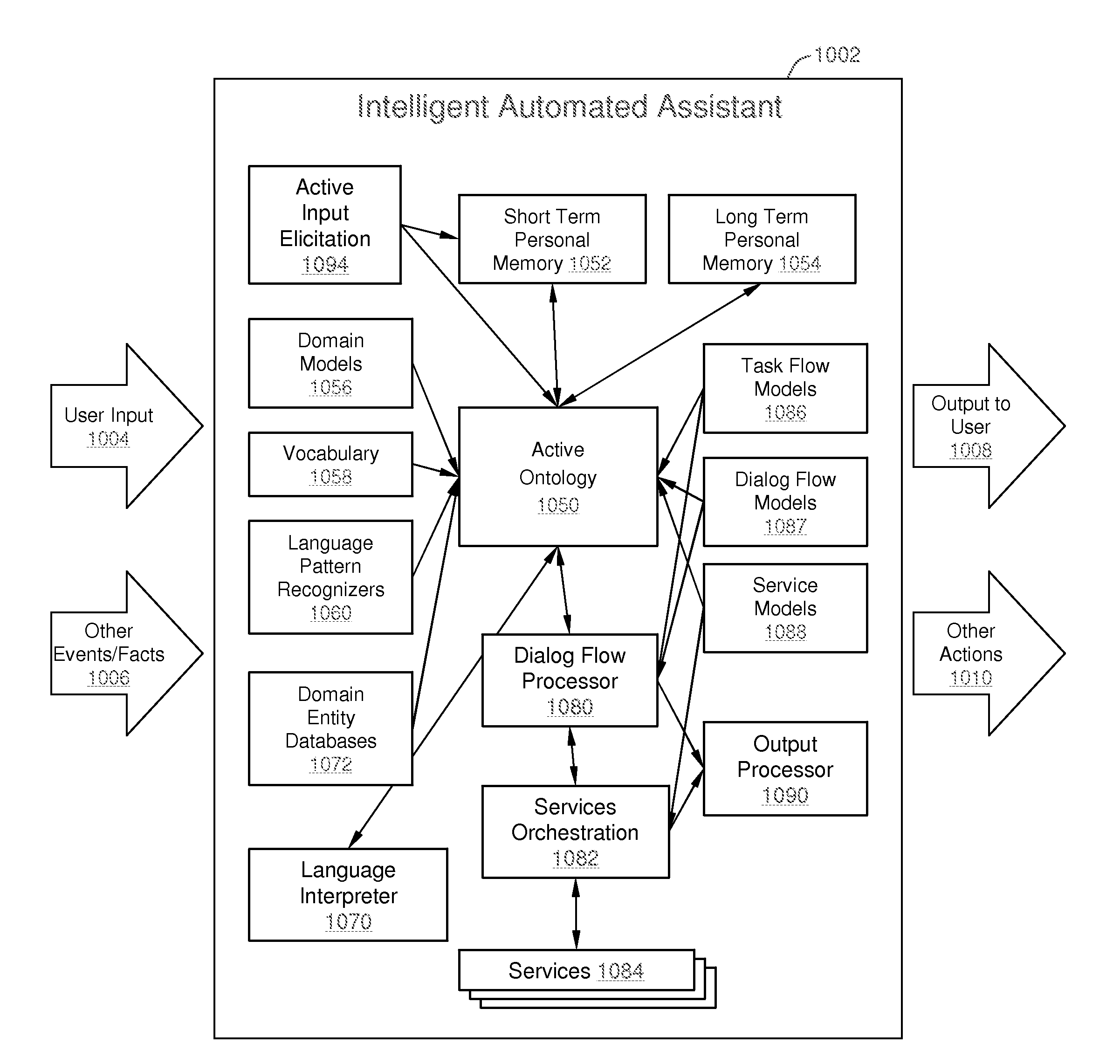

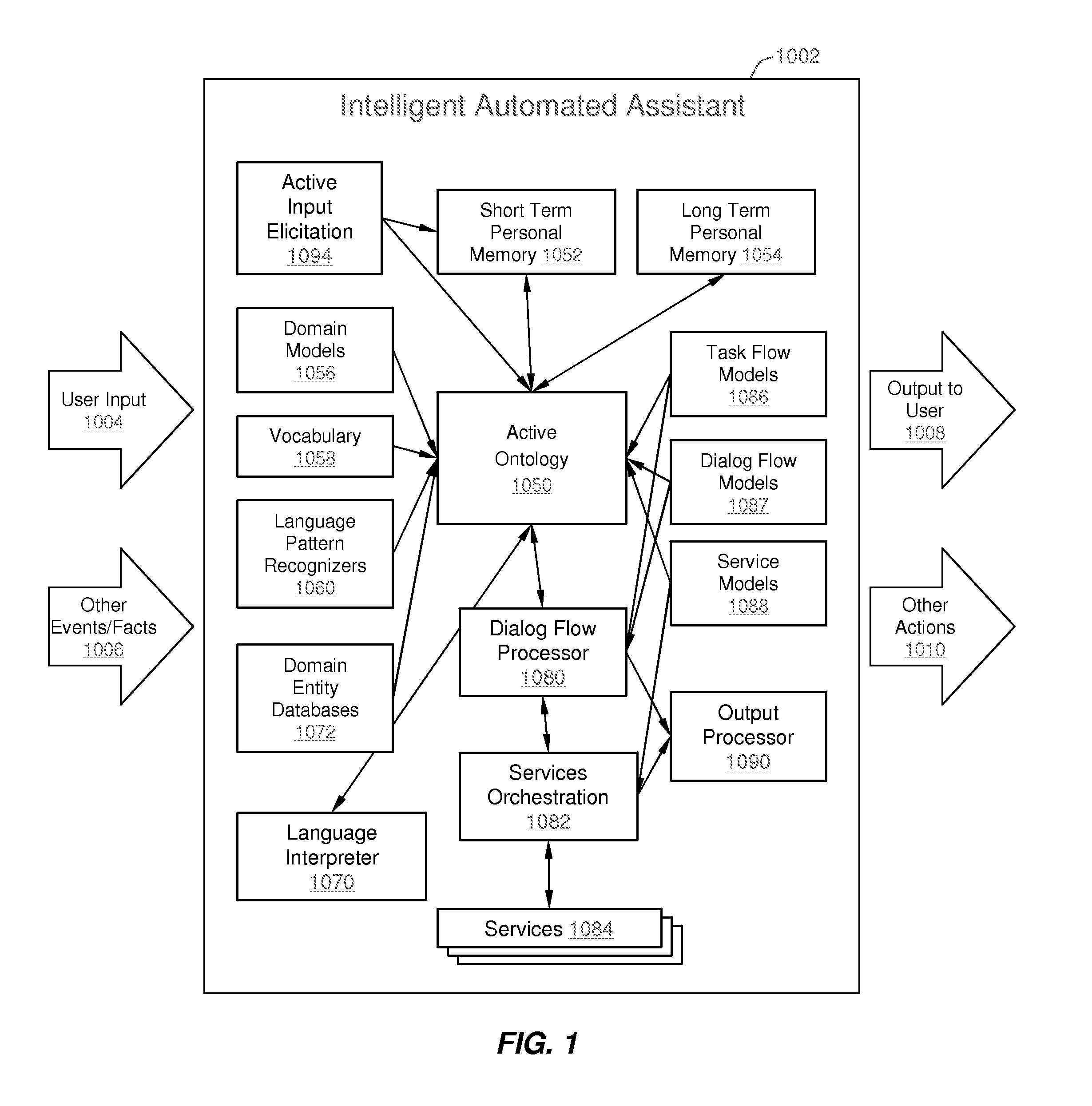

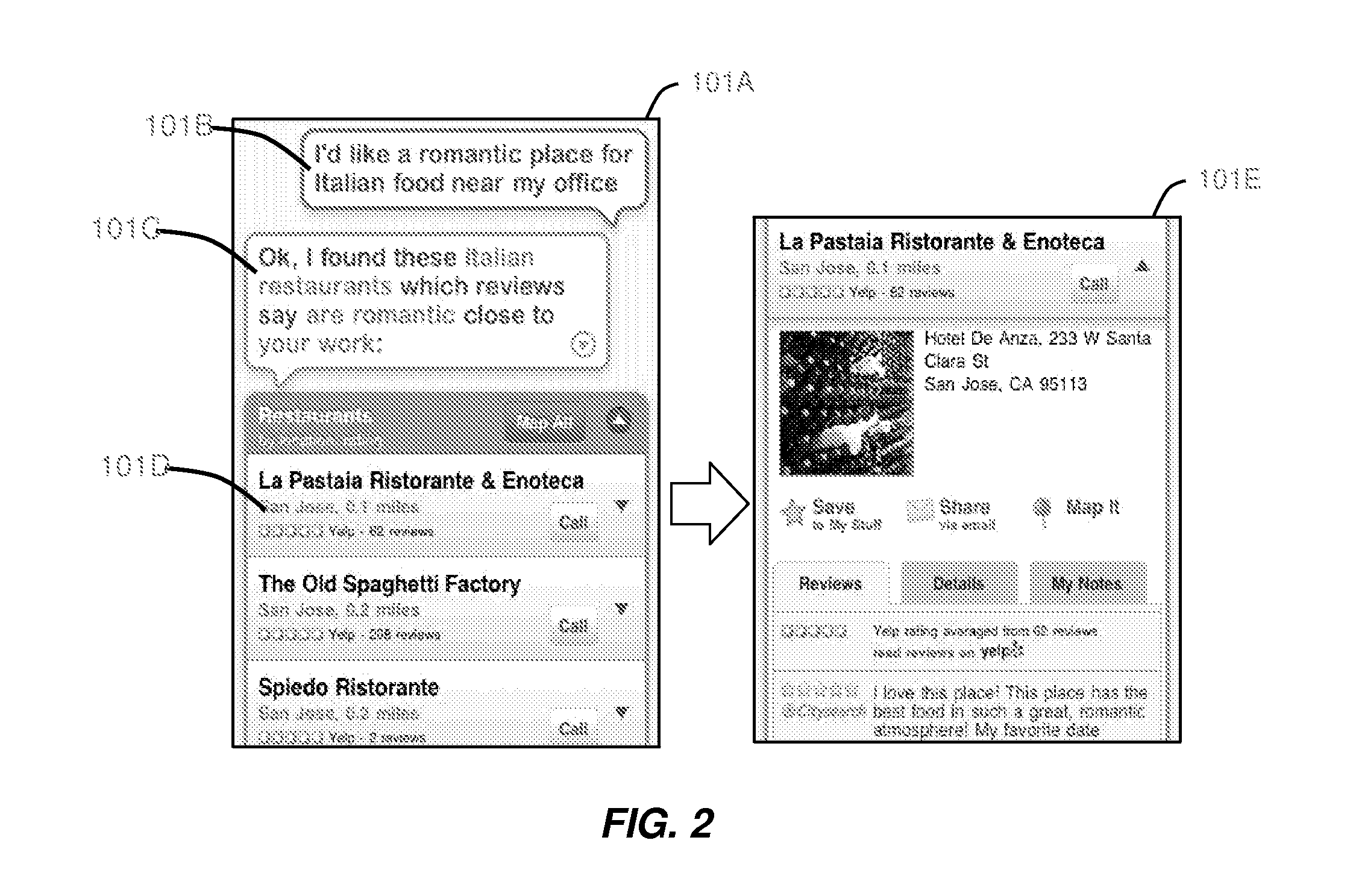

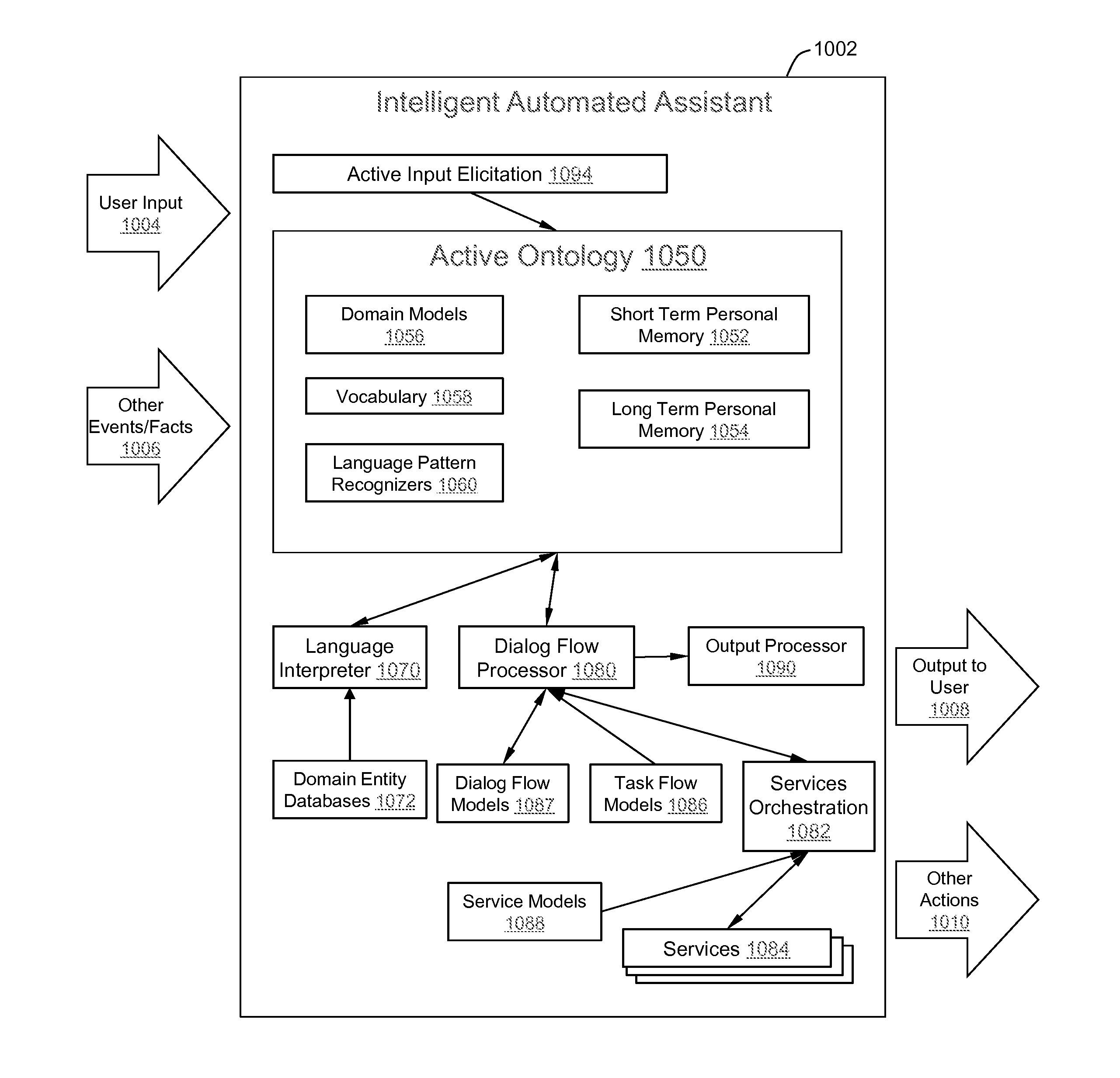

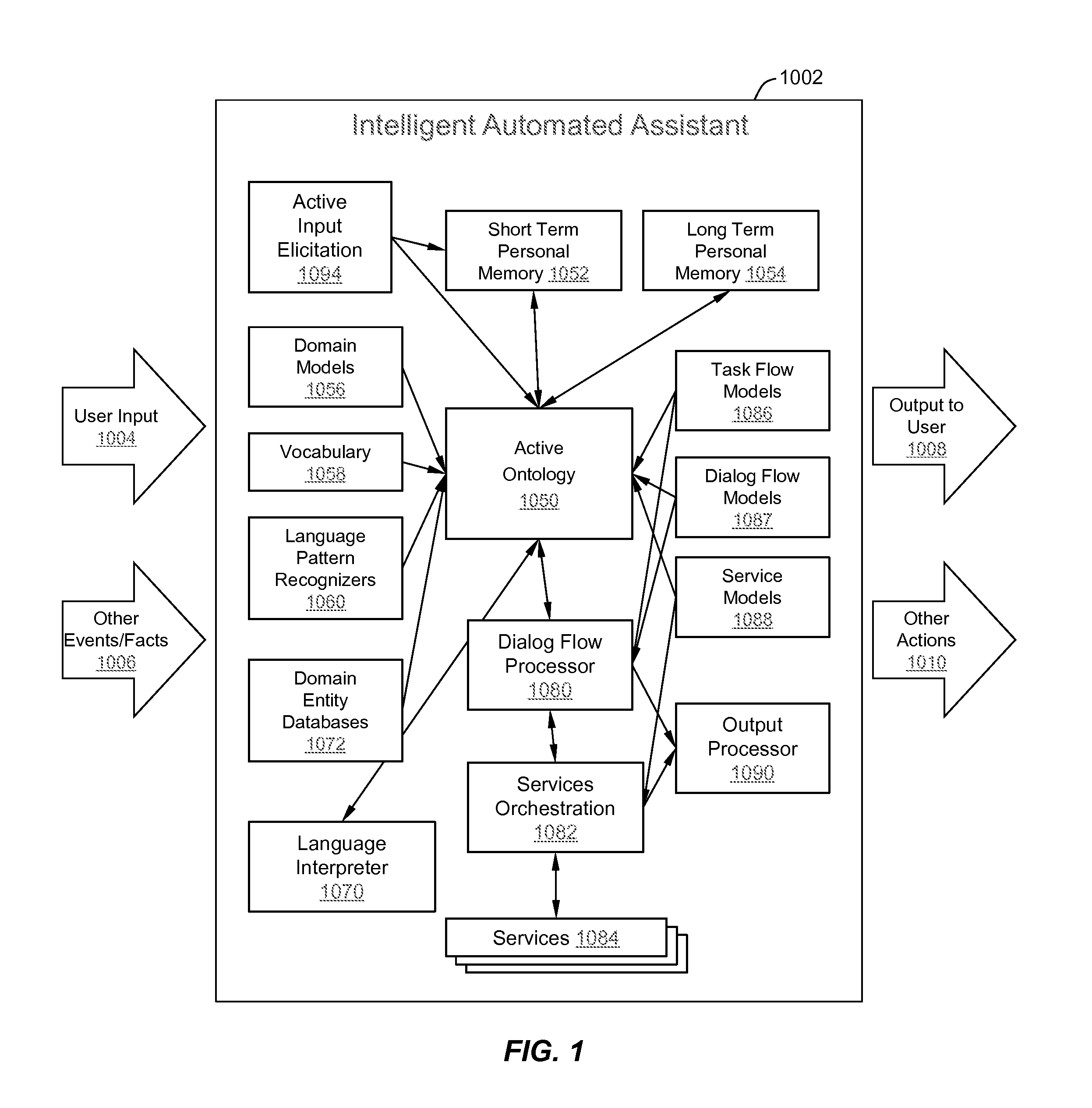

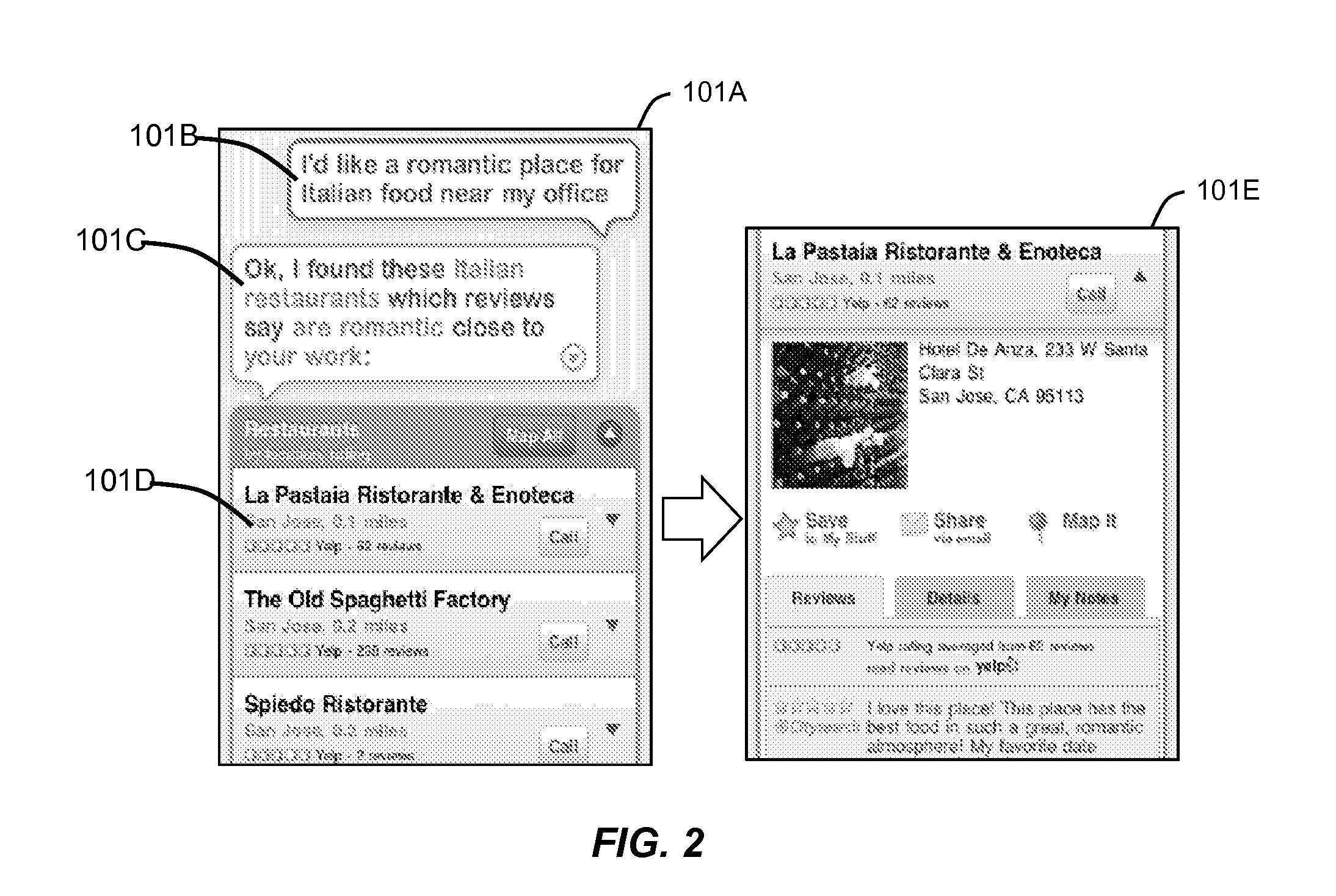

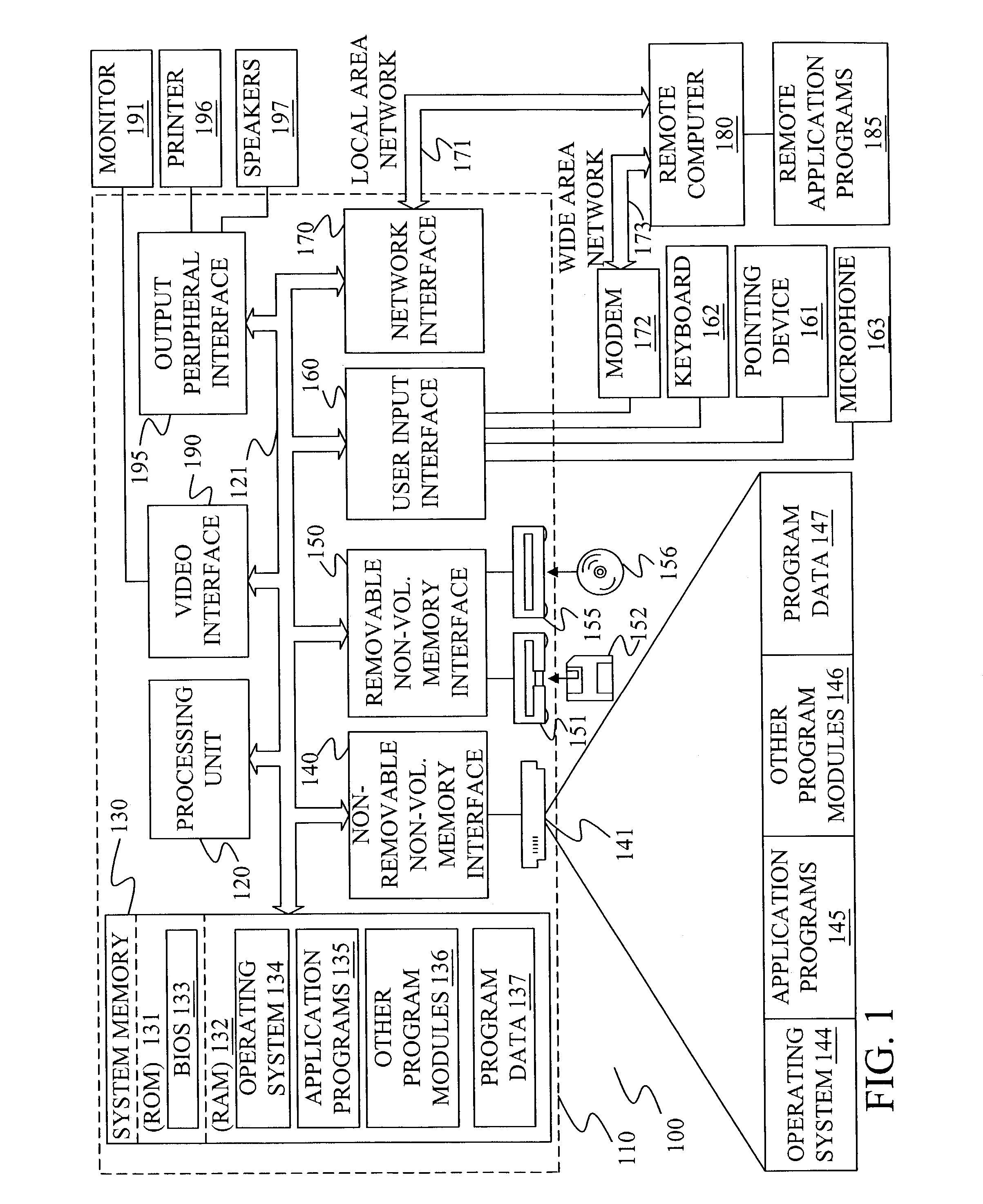

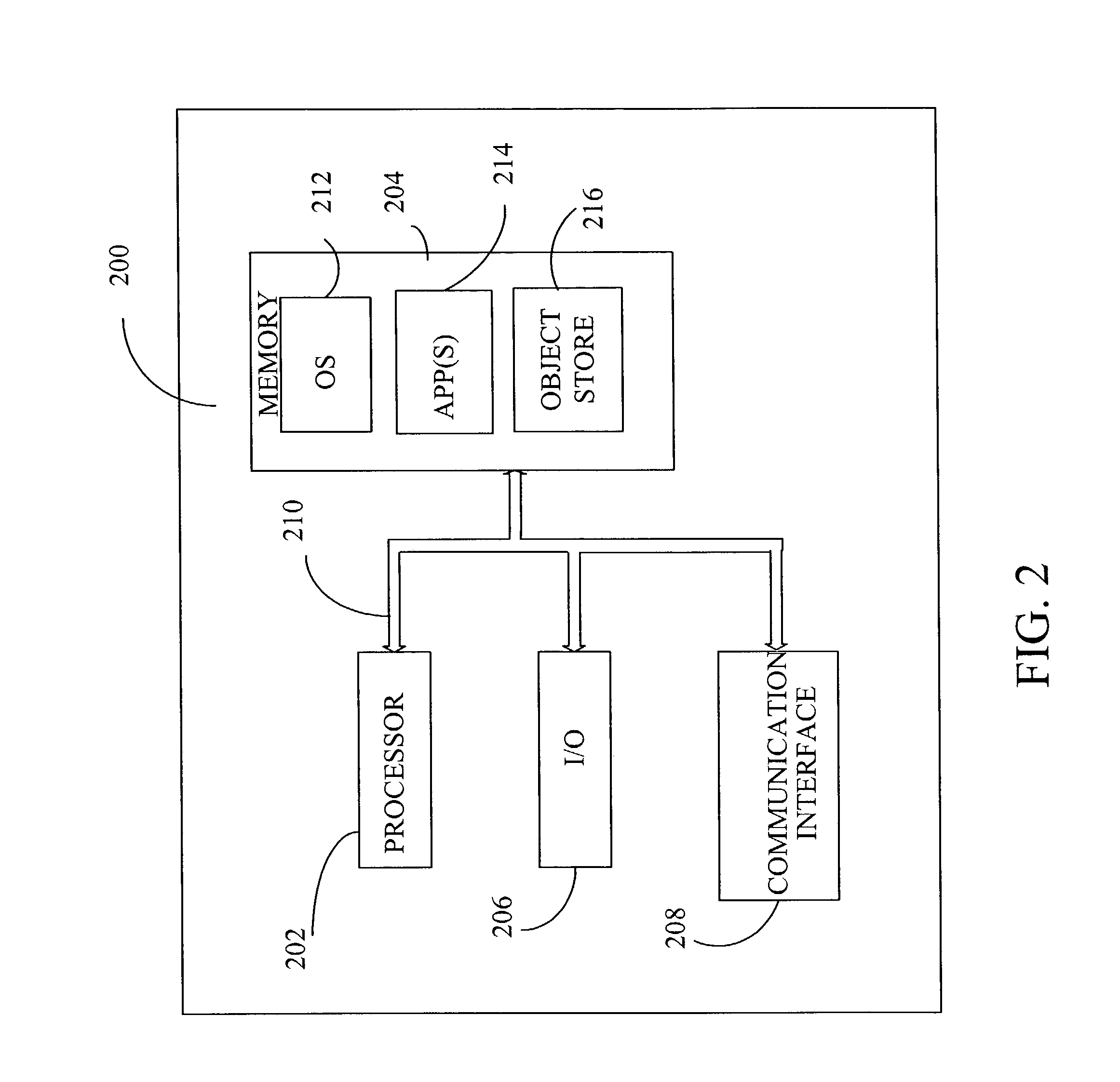

An intelligent automated assistant system engages with the user in an integrated, conversational manner using natural language dialog, and invokes external services when appropriate to obtain information or perform various actions. The system can be implemented using any of a number of different platforms, such as the web, email, smartphone, and the like, or any combination thereof. In one embodiment, the system is based on sets of interrelated domains and tasks, and employs additional functionality powered by external services with which the system can interact.

Owner:APPLE INC

Mobile systems and methods for responding to natural language speech utterance

Active · US7693720B2 · Promotes feeling of natural · Overcome deficiencies · Digital data information retrieval · Speech recognition · Remote system · Telematics

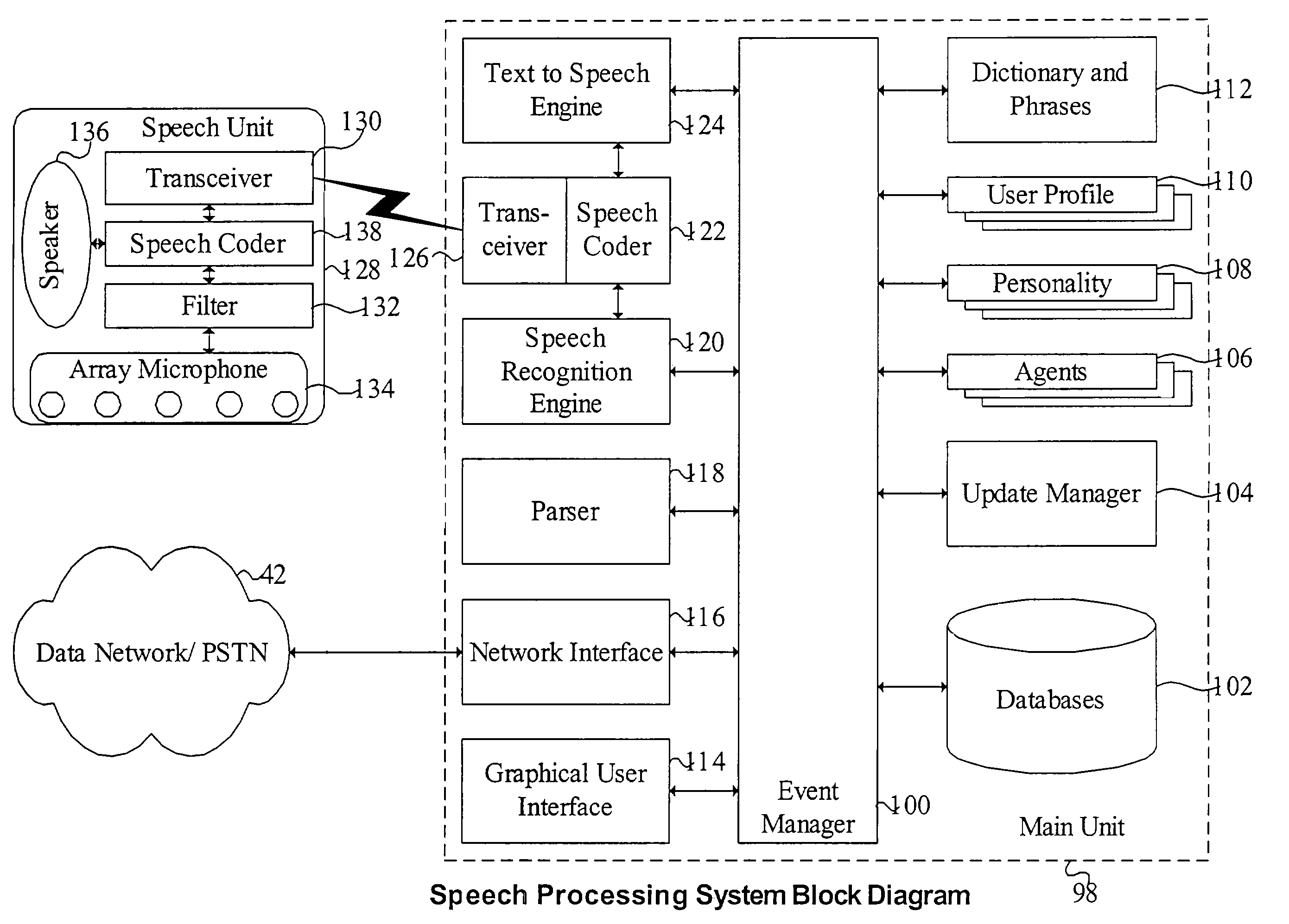

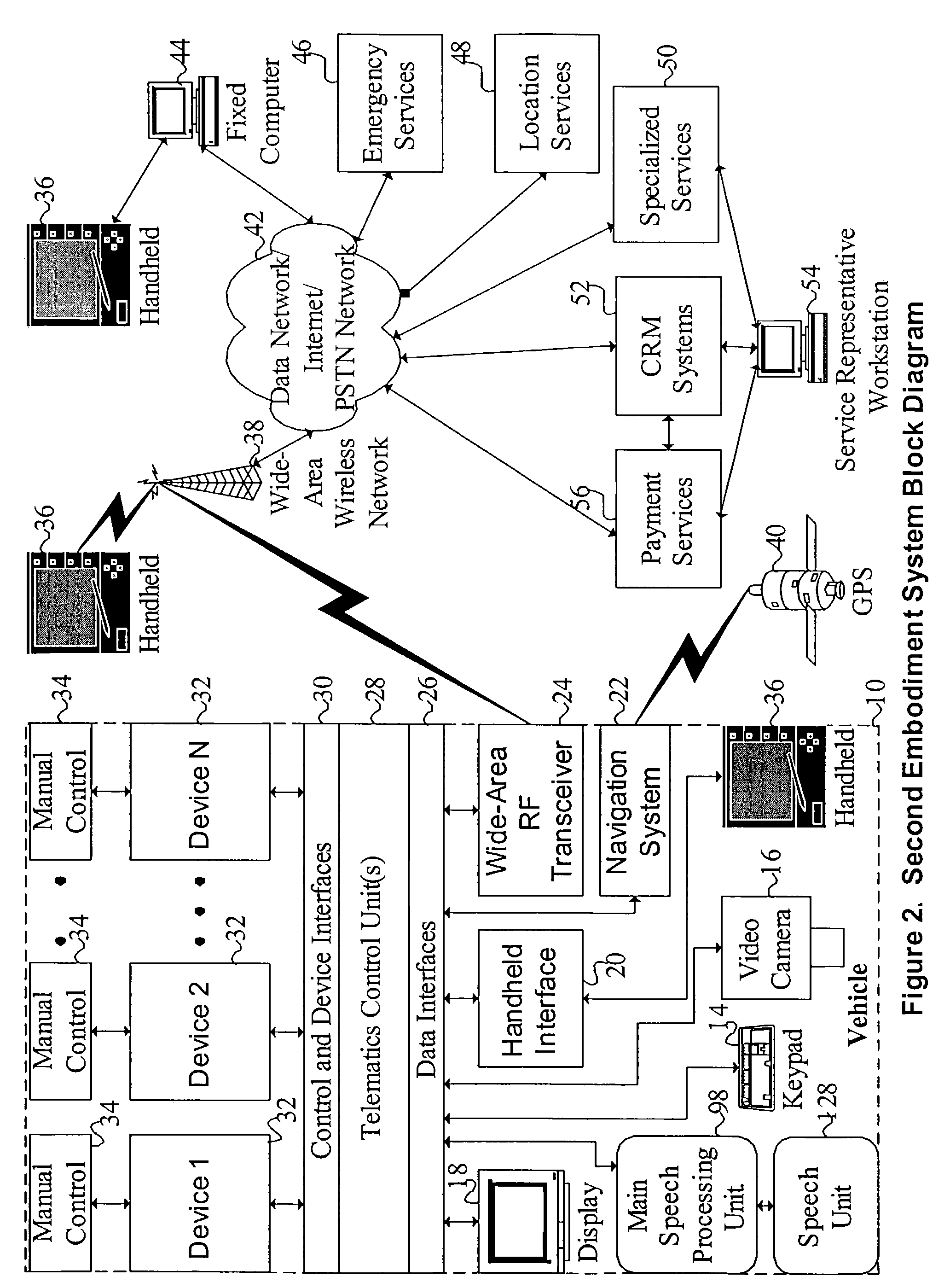

Mobile systems and methods that overcome the deficiencies of prior art speech-based interfaces for telematics applications through the use of a complete speech-based information query, retrieval, presentation and local or remote command environment. This environment makes significant use of context, prior information, domain knowledge, and user-specific profile data to achieve a natural environment for one or more users making queries or commands in multiple domains. Through this integrated approach, a complete speech-based natural language query and response environment can be created. The invention creates, stores and uses extensive personal profile information for each user, thereby improving the reliability of determining the context and presenting the expected results for a particular question or command. The invention may organize domain-specific behavior and information into agents that are distributable or updateable over a wide area network. The invention can be used in dynamic environments such as those of mobile vehicles to control and communicate with both vehicle systems and remote systems and devices.

Owner:DIALECT LLC

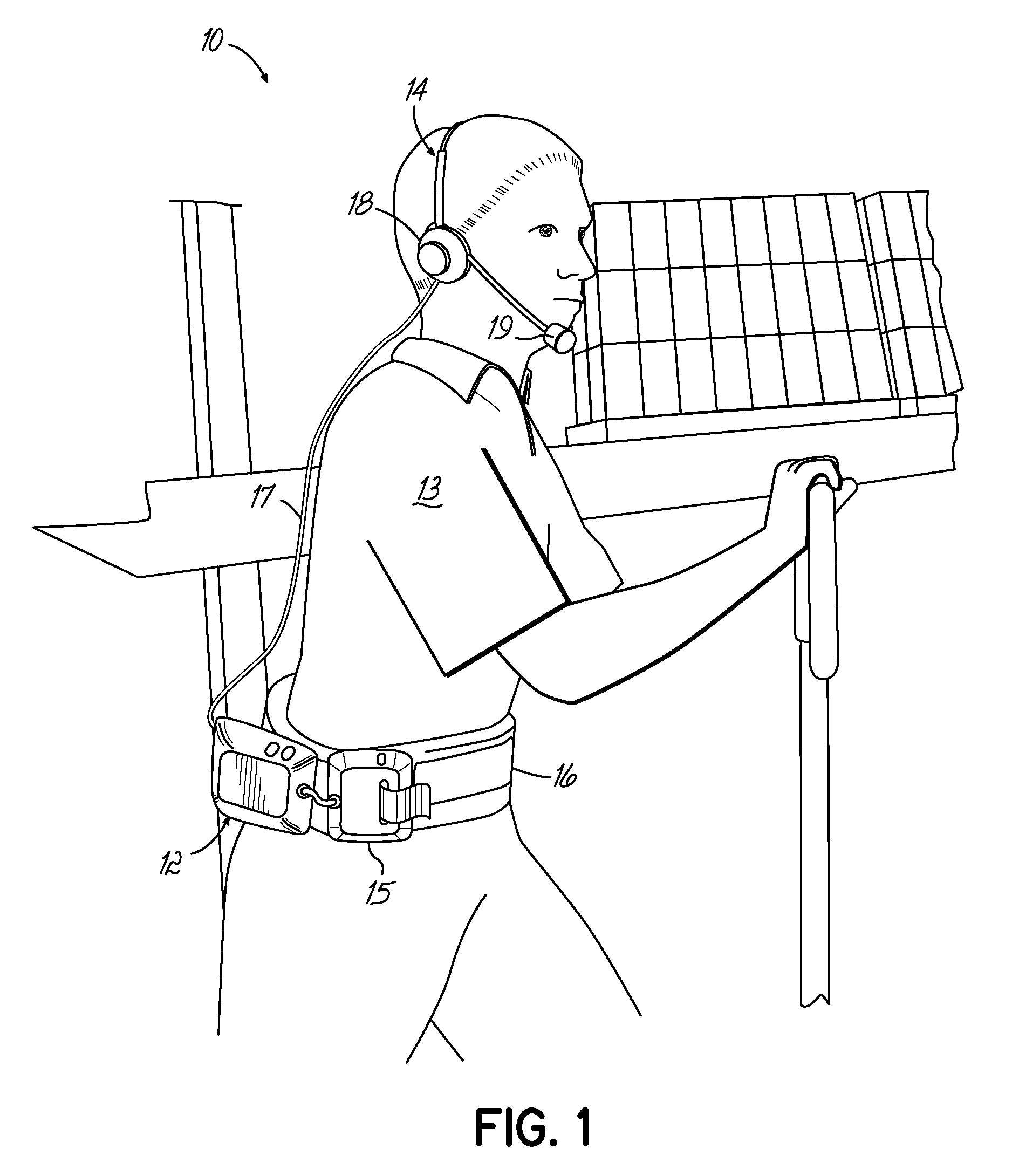

Voice-enabled documents for facilitating operational procedures

Inactive · US20140108010A1 · Increase productivity · Improve efficiency · Character and pattern recognition · Automatic exchanges · Client-side · Field service

A voice-enabled document system facilitates execution of service delivery operations by eliminating the need for manual or visual interaction during information retrieval by an operator. Access to voice-enabled documents can facilitate operations for mobile vendors, on-site or field-service repairs, medical service providers, food service providers, and the like. Service providers can access the voice-enabled documents by using a client device to retrieve the document, display it on a screen, and, via voice commands, initiate playback of selected audio files containing information derived from text data objects selected from the document. Data structures that are components of a voice-enabled document include audio playback files and a logical association that links the audio playback files to user-selectable fields and to a set of voice commands.

Owner:INTERMEC IP

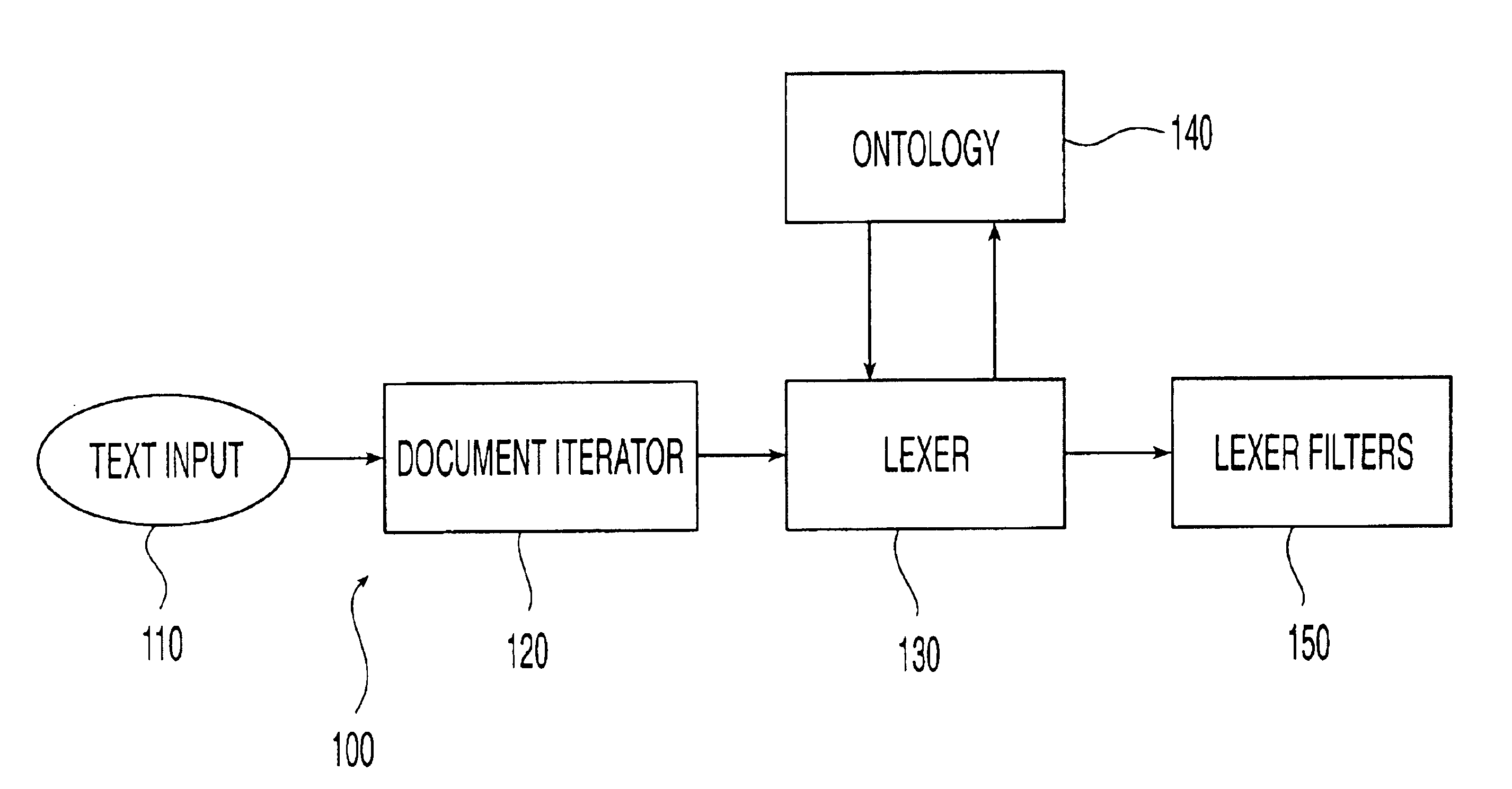

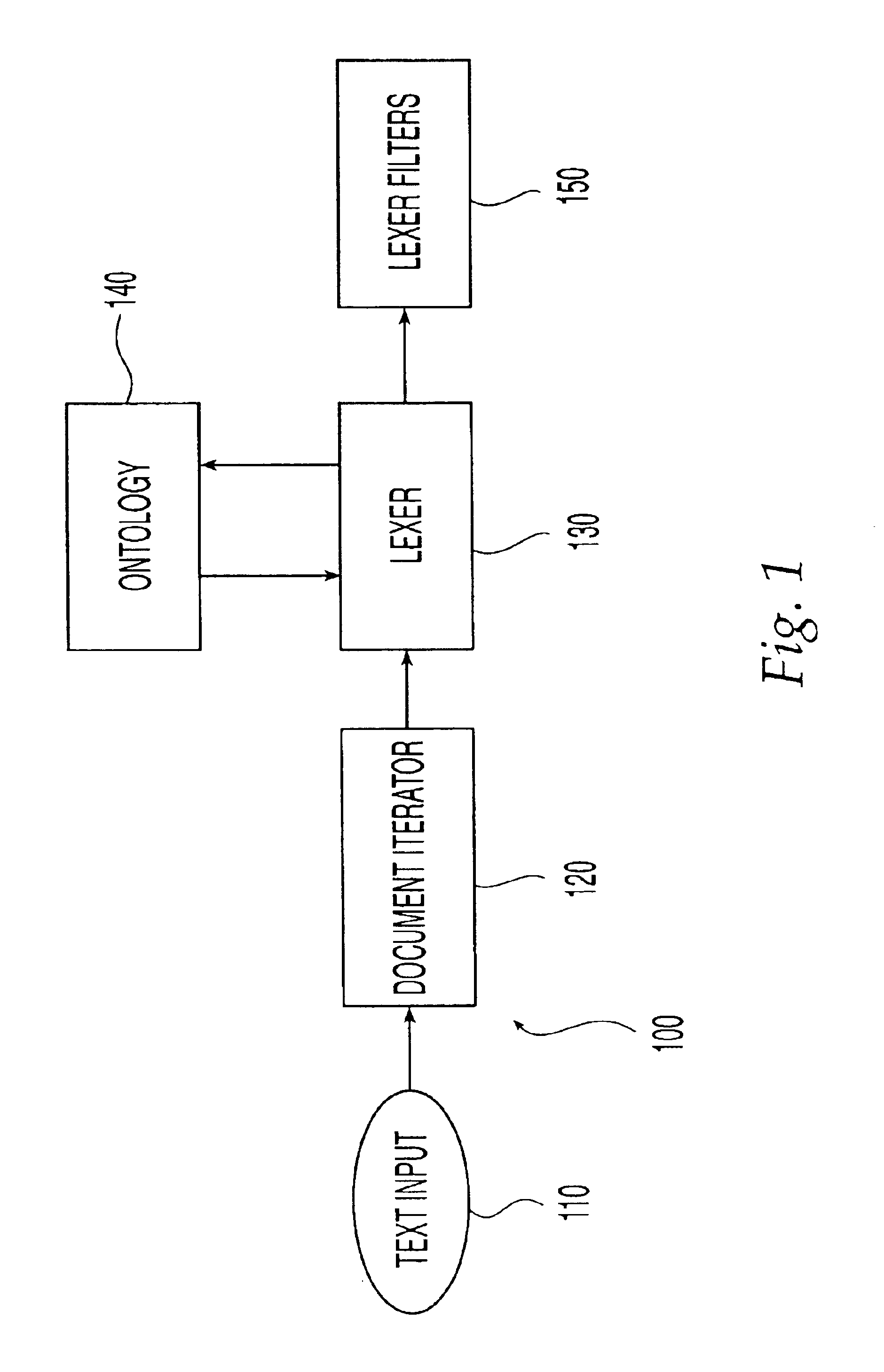

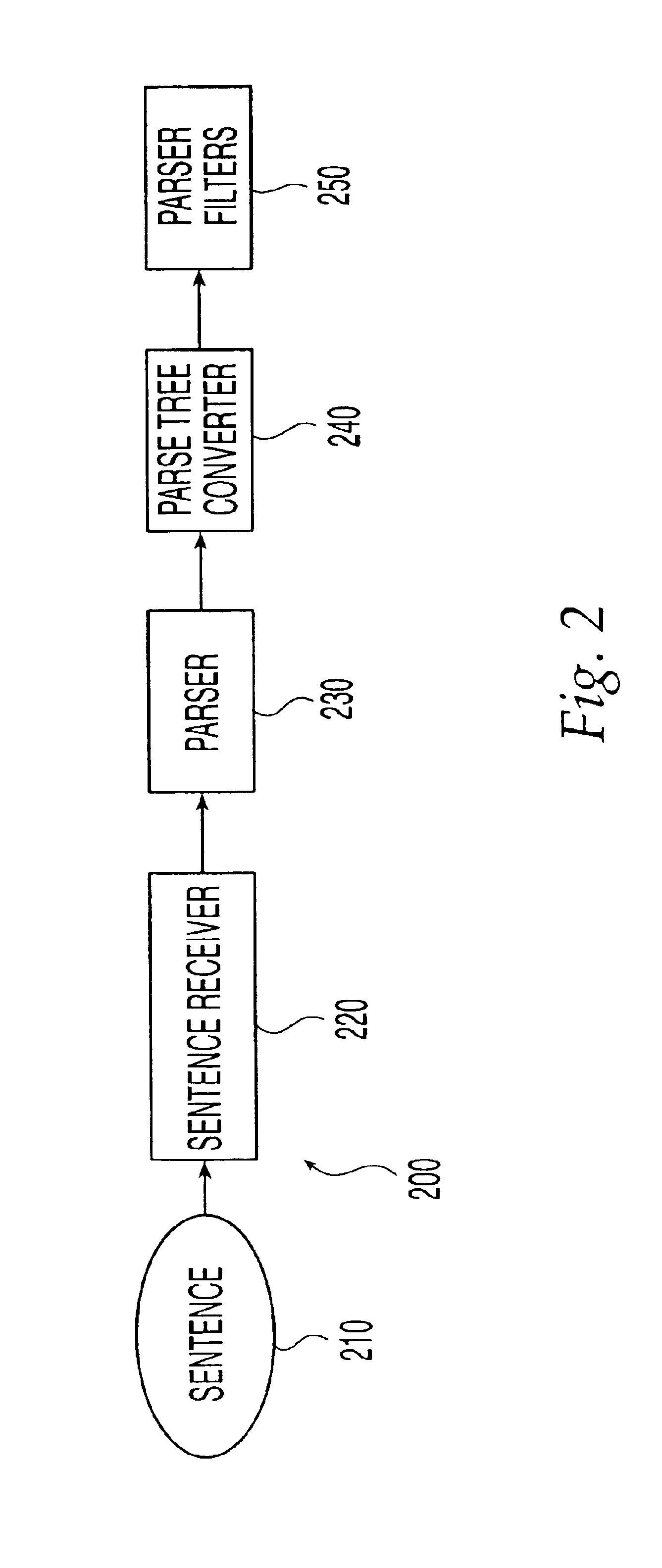

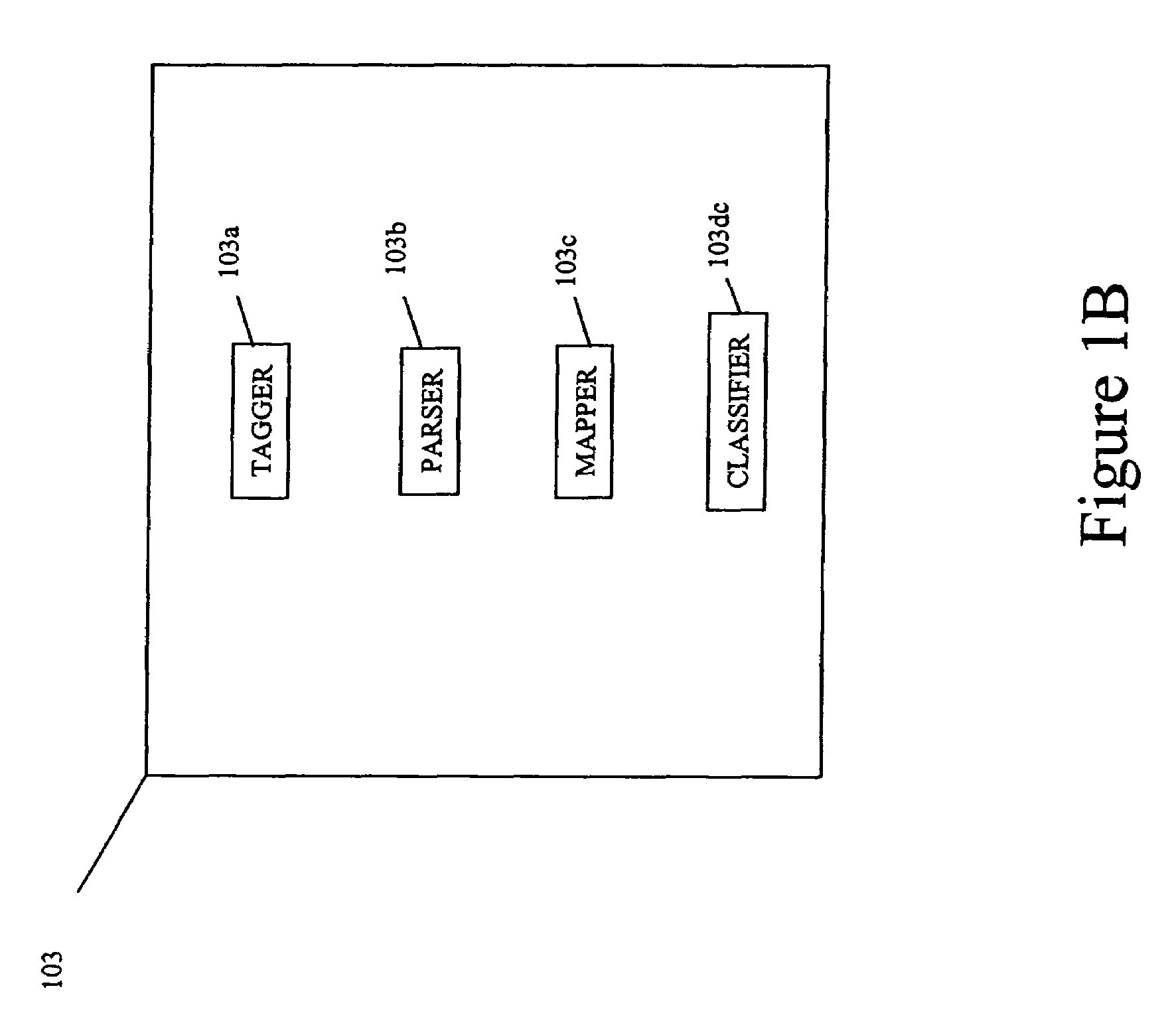

Ontology-based parser for natural language processing

Active · US7027974B1 · Maximum speed · Data processing applications · Natural language data processing · Part of speech · Natural language programming

An ontology-based parser incorporates both a system and method for converting natural-language text into predicate-argument format that can be easily used by a variety of applications, including search engines, summarization applications, categorization applications, and word processors. The ontology-based parser contains functional components for receiving documents in a plurality of formats, tokenizing them into instances of concepts from an ontology, and assembling the resulting concepts into predicates. The ontological parser has two major functional elements, a sentence lexer and a parser. The sentence lexer takes a sentence and converts it into a sequence of ontological entities that are tagged with part-of-speech information. The parser converts the sequence of ontological entities into predicate structures using a two-stage process that analyzes the grammatical structure of the sentence, and then applies rules to it that bind arguments into predicates.

Owner:LEIDOS

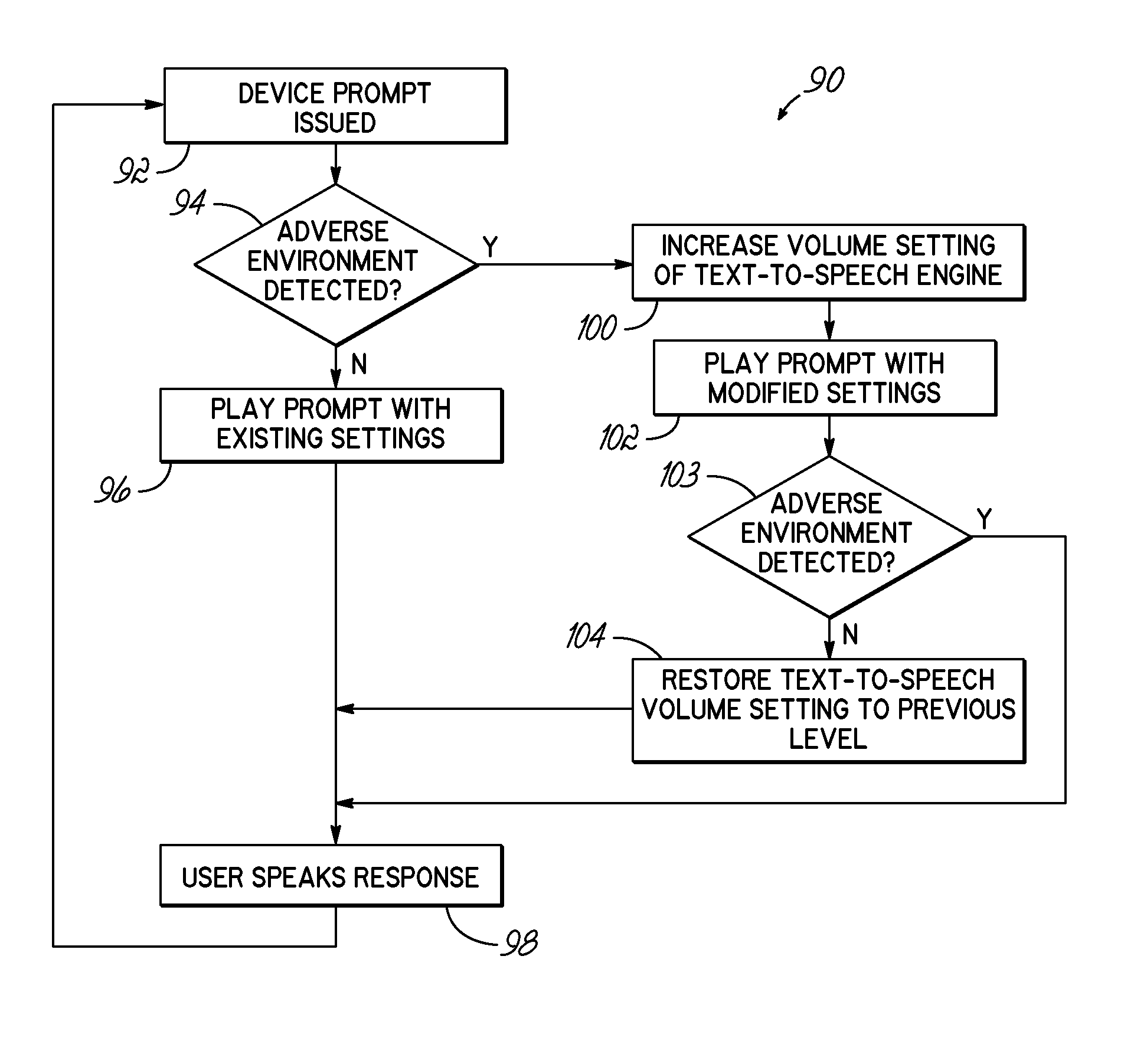

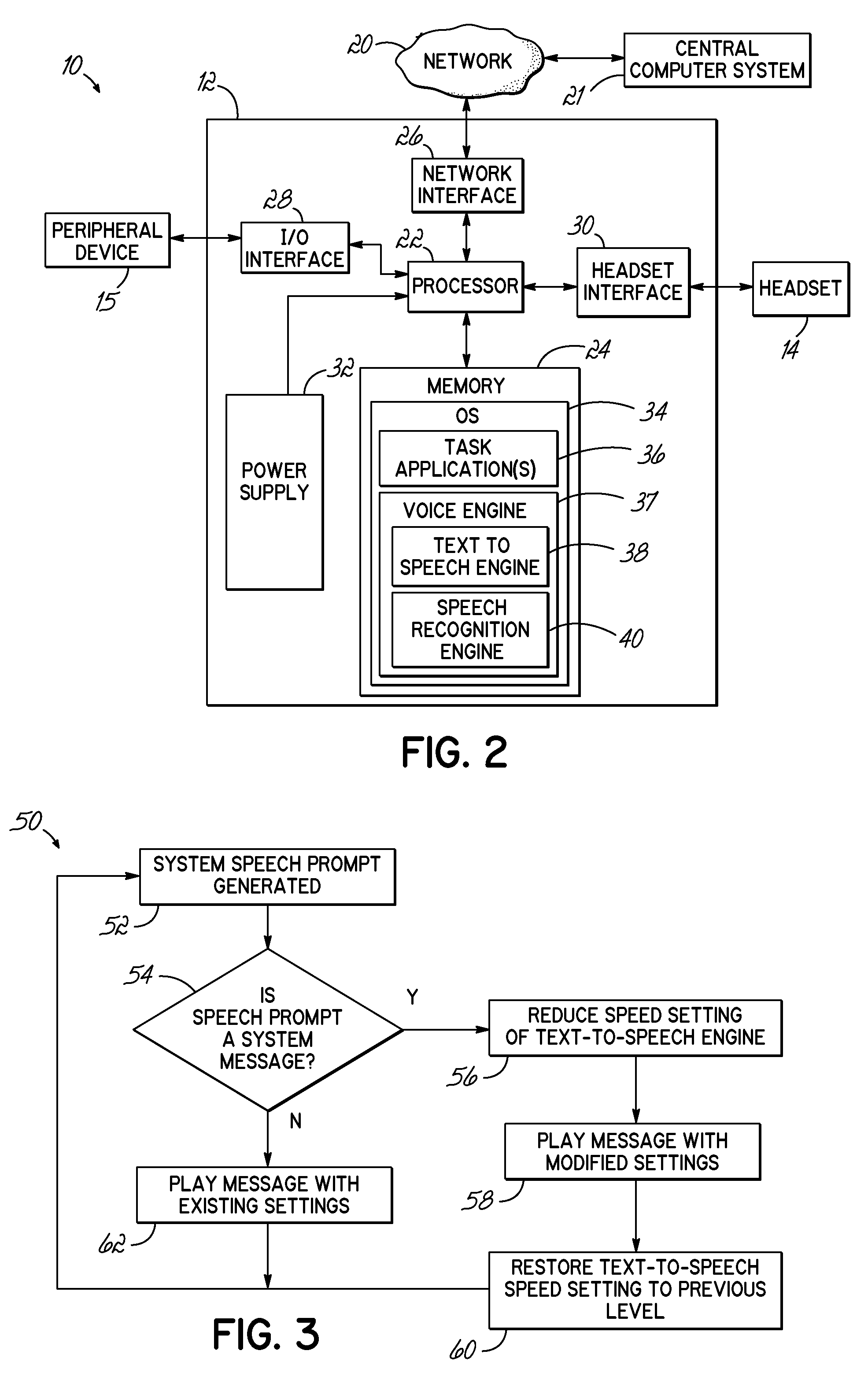

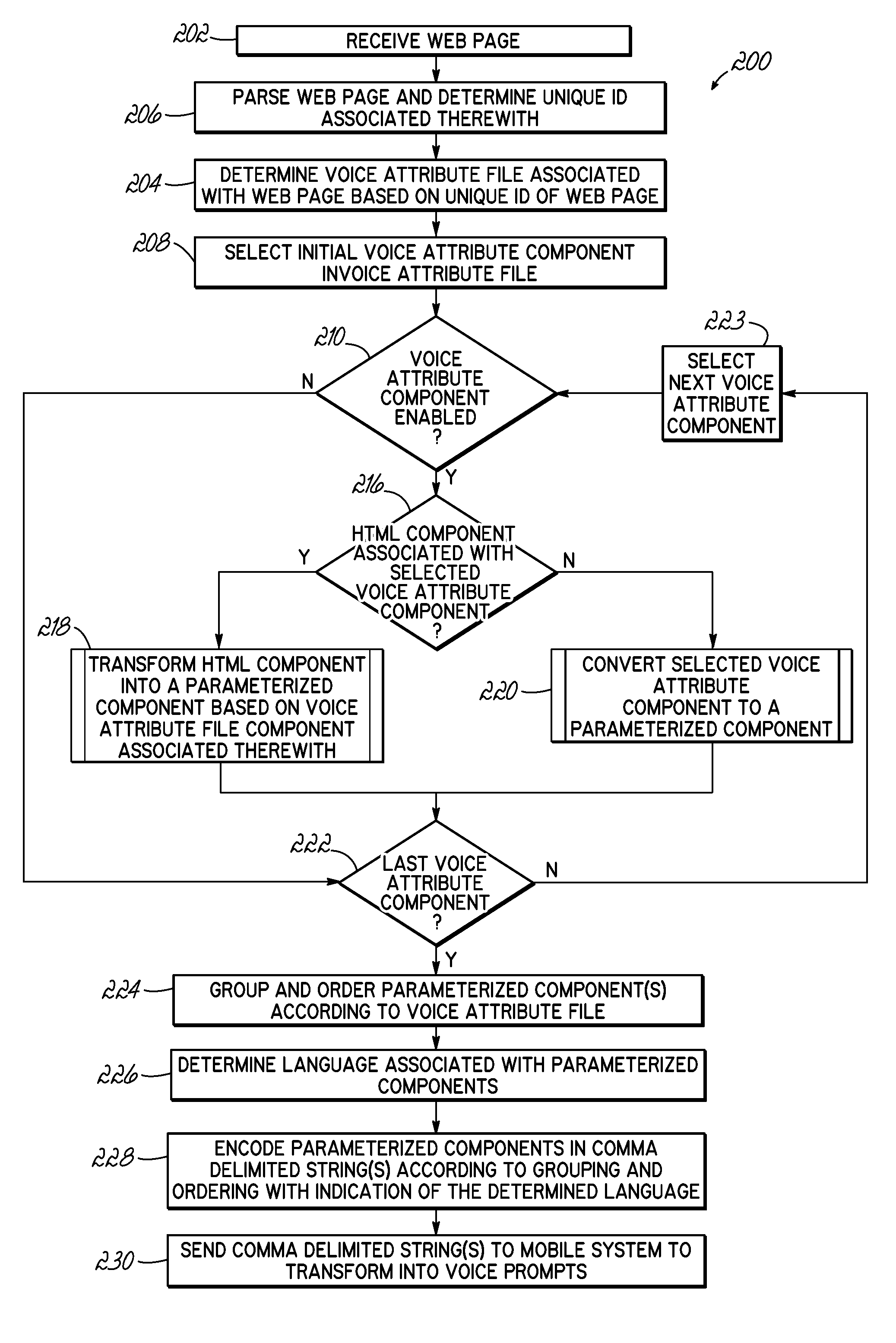

Systems and methods for dynamically improving user intelligibility of synthesized speech in a work environment

Method and apparatus that dynamically adjusts operational parameters of a text-to-speech engine in a speech-based system. A voice engine or other application of a device provides a mechanism to alter the adjustable operational parameters of the text-to-speech engine. In response to one or more environmental conditions, the adjustable operational parameters of the text-to-speech engine are modified to increase the intelligibility of synthesized speech.
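The adjustment described above can be pictured with a minimal sketch. Everything in it is an assumption made for illustration: the abstract does not say which parameters are adjusted or which environmental conditions are measured, so the `TtsParams` fields, the 60 dB threshold, and the scaling factors below are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TtsParams:
    """Hypothetical adjustable parameters of a text-to-speech engine."""
    volume: float = 0.6   # 0.0 .. 1.0
    rate_wpm: int = 180   # speaking rate in words per minute


def adjust_for_noise(params: TtsParams, noise_db: float) -> TtsParams:
    """Return parameters tuned for the measured ambient noise level.

    Assumption: ambient noise is the environmental condition, and speech
    becomes louder and slower as noise rises above a quiet threshold.
    """
    quiet_threshold_db = 60.0  # hypothetical threshold
    if noise_db < quiet_threshold_db:
        return params
    excess = noise_db - quiet_threshold_db
    volume = min(1.0, params.volume + 0.02 * excess)    # clamp to full volume
    rate = max(120, params.rate_wpm - int(2 * excess))  # never slower than 120 wpm
    return replace(params, volume=volume, rate_wpm=rate)
```

Returning a new `TtsParams` value rather than mutating the old one keeps the engine's defaults intact, so the original settings can be restored once the environment becomes quiet again.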

Owner:VOCOLLECT

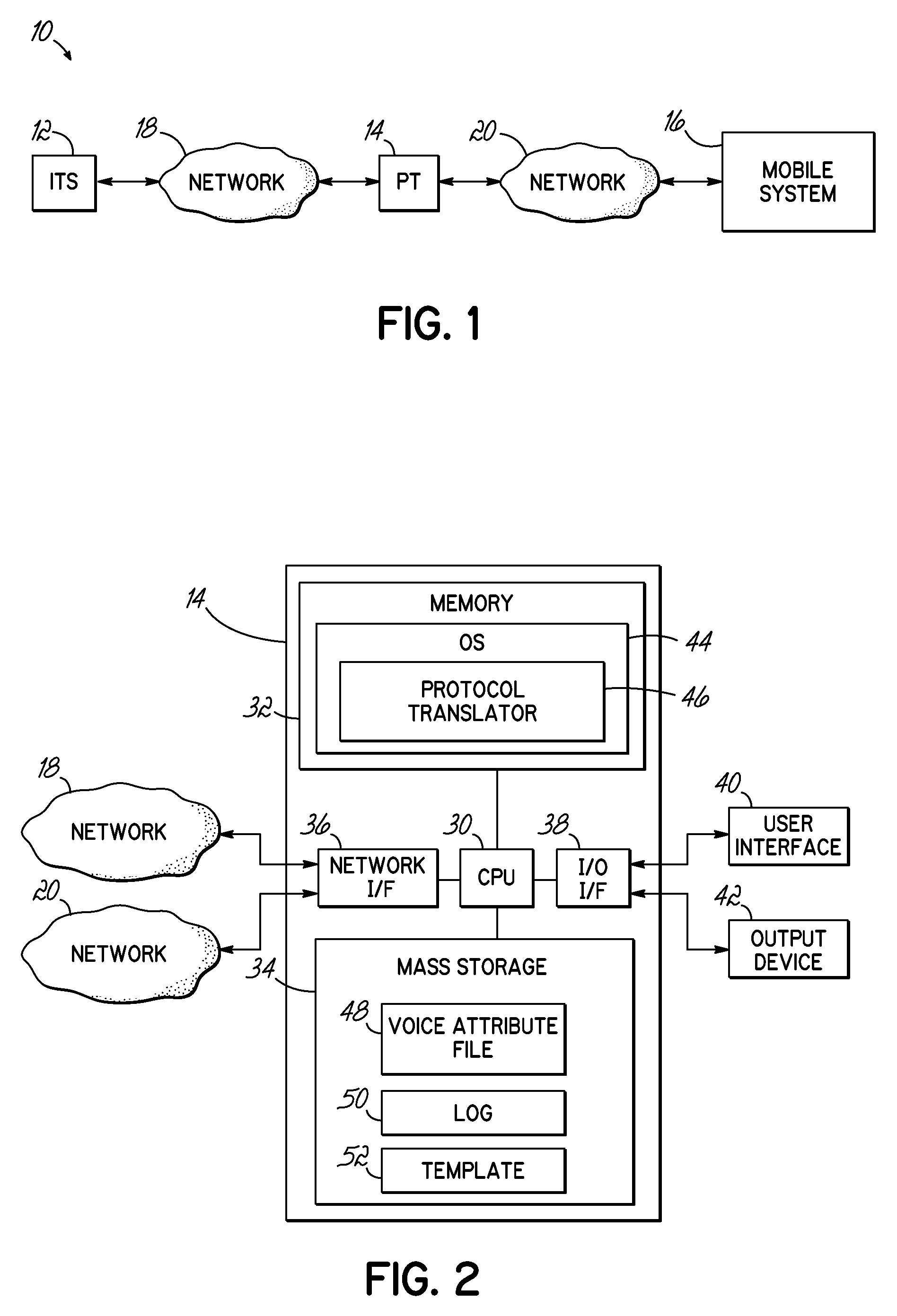

Transforming components of a web page to voice prompts

Embodiments of the invention address the deficiencies of the prior art by providing a method, apparatus, and program product for converting components of a web page to voice prompts for a user. In some embodiments, the method comprises selectively determining at least one HTML component from a plurality of HTML components of a web page to transform into a voice prompt for a mobile system based upon a voice attribute file associated with the web page. The method further comprises transforming the at least one HTML component into parameterized data suitable for use by the mobile system based upon at least a portion of the voice attribute file associated with the at least one HTML component and transmitting the parameterized data to the mobile system.
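A rough sketch of the selection and transformation steps follows. It assumes, purely for illustration, that the voice attribute file can be represented as a dictionary keyed by HTML element `id`; the abstract does not specify the file format, and the `priority` field and the function names are hypothetical. Only Python's standard `html.parser` module is used.

```python
from html.parser import HTMLParser


class PromptExtractor(HTMLParser):
    """Collects text from elements whose id appears in the voice attributes."""

    def __init__(self, voice_attrs):
        super().__init__()
        self.voice_attrs = voice_attrs  # hypothetical: {element_id: {attribute: value}}
        self.prompts = []
        self._current = None            # id of the selected element being read

    def handle_starttag(self, tag, attrs):
        element_id = dict(attrs).get("id")
        if element_id in self.voice_attrs:
            self._current = element_id

    def handle_endtag(self, tag):
        # Stop capturing at the first closing tag after a selected element opens.
        self._current = None

    def handle_data(self, data):
        text = data.strip()
        if self._current is not None and text:
            # Parameterized data: voice attributes plus the element's id and text.
            entry = dict(self.voice_attrs[self._current])
            entry["id"] = self._current
            entry["text"] = text
            self.prompts.append(entry)
            self._current = None


def to_voice_prompts(html, voice_attrs):
    """Return parameterized prompt records for the selected HTML components."""
    parser = PromptExtractor(voice_attrs)
    parser.feed(html)
    parser.close()
    return parser.prompts
```

Elements that are not listed in the attribute mapping are skipped, which mirrors the selective step in the abstract. Transmission to the mobile system is left out of the sketch.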

Owner:VOCOLLECT

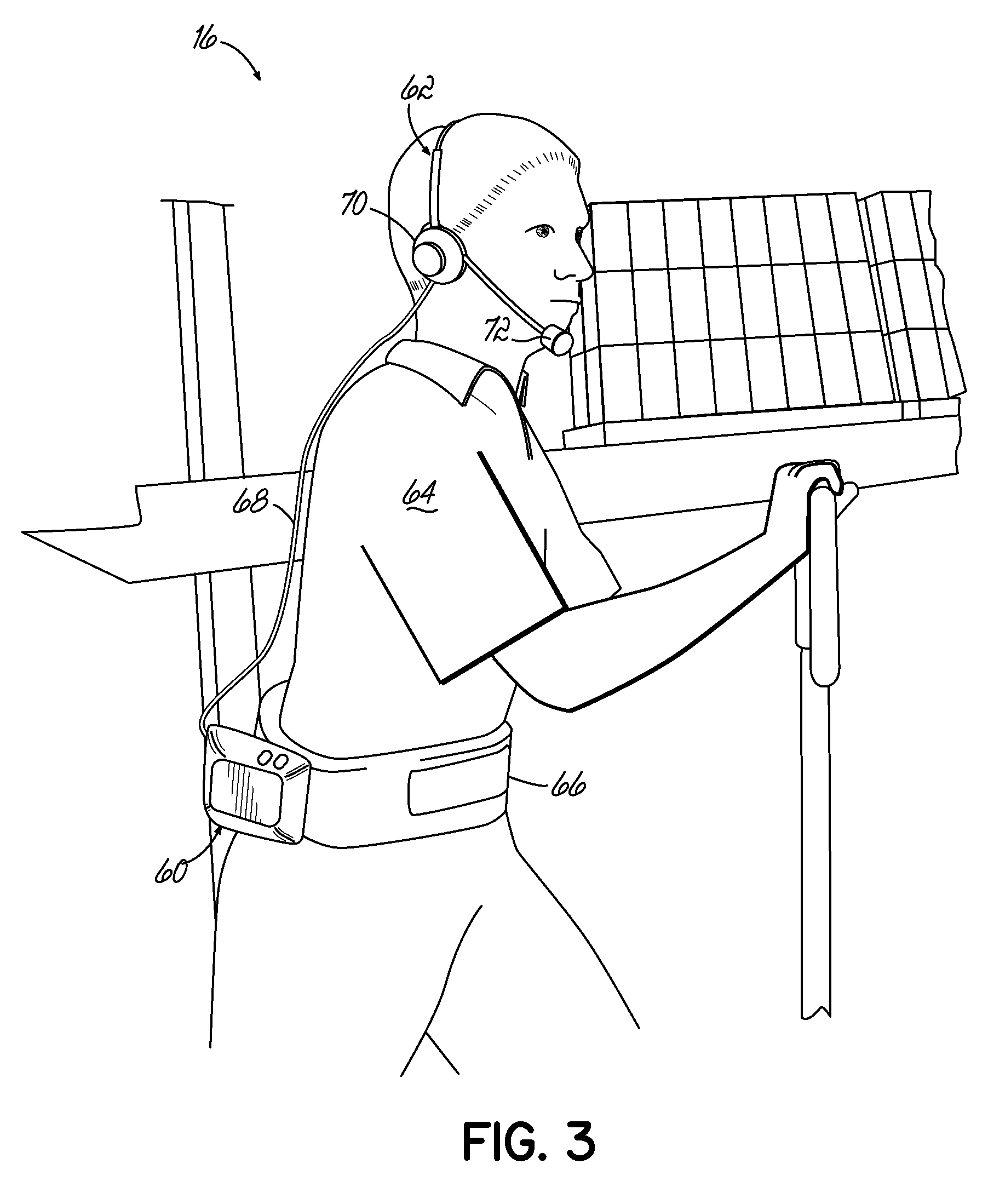

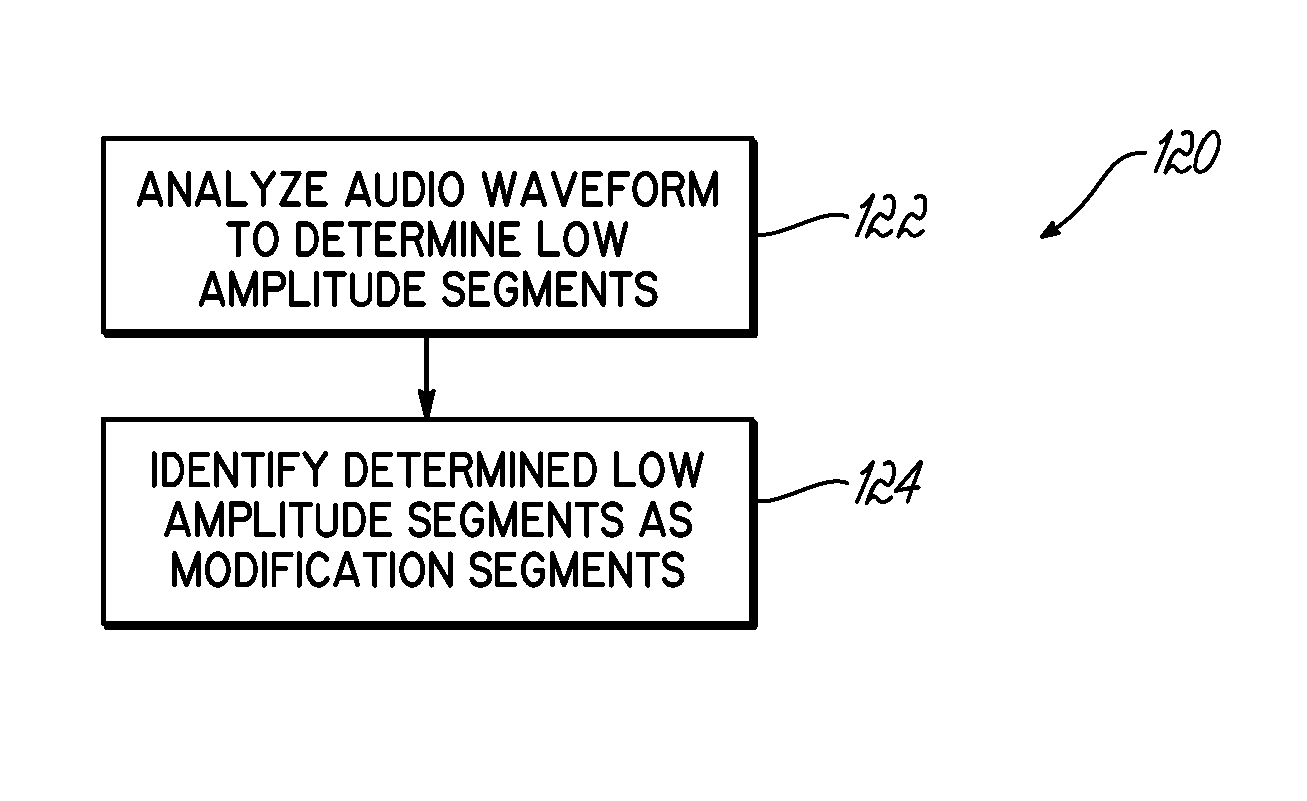

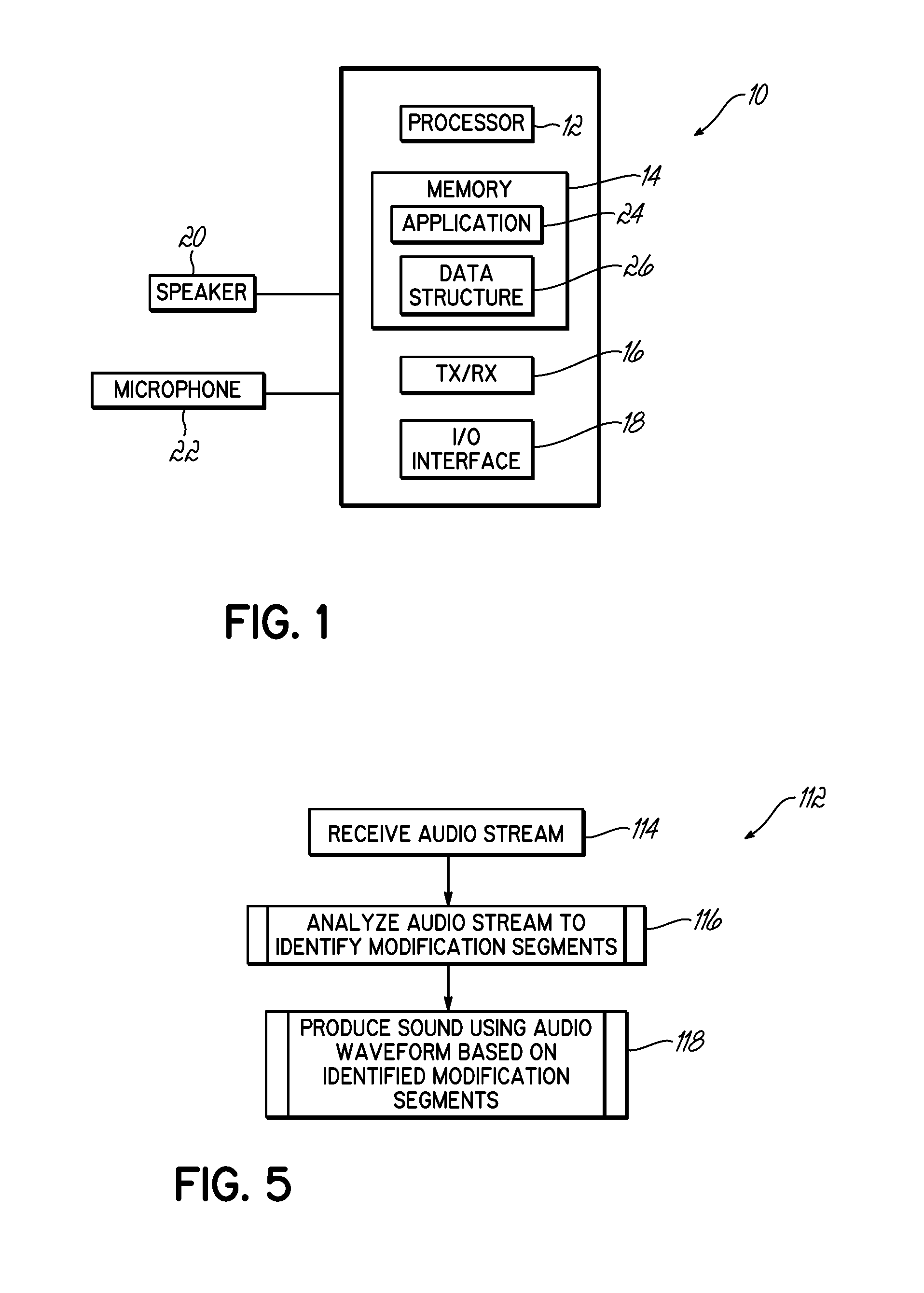

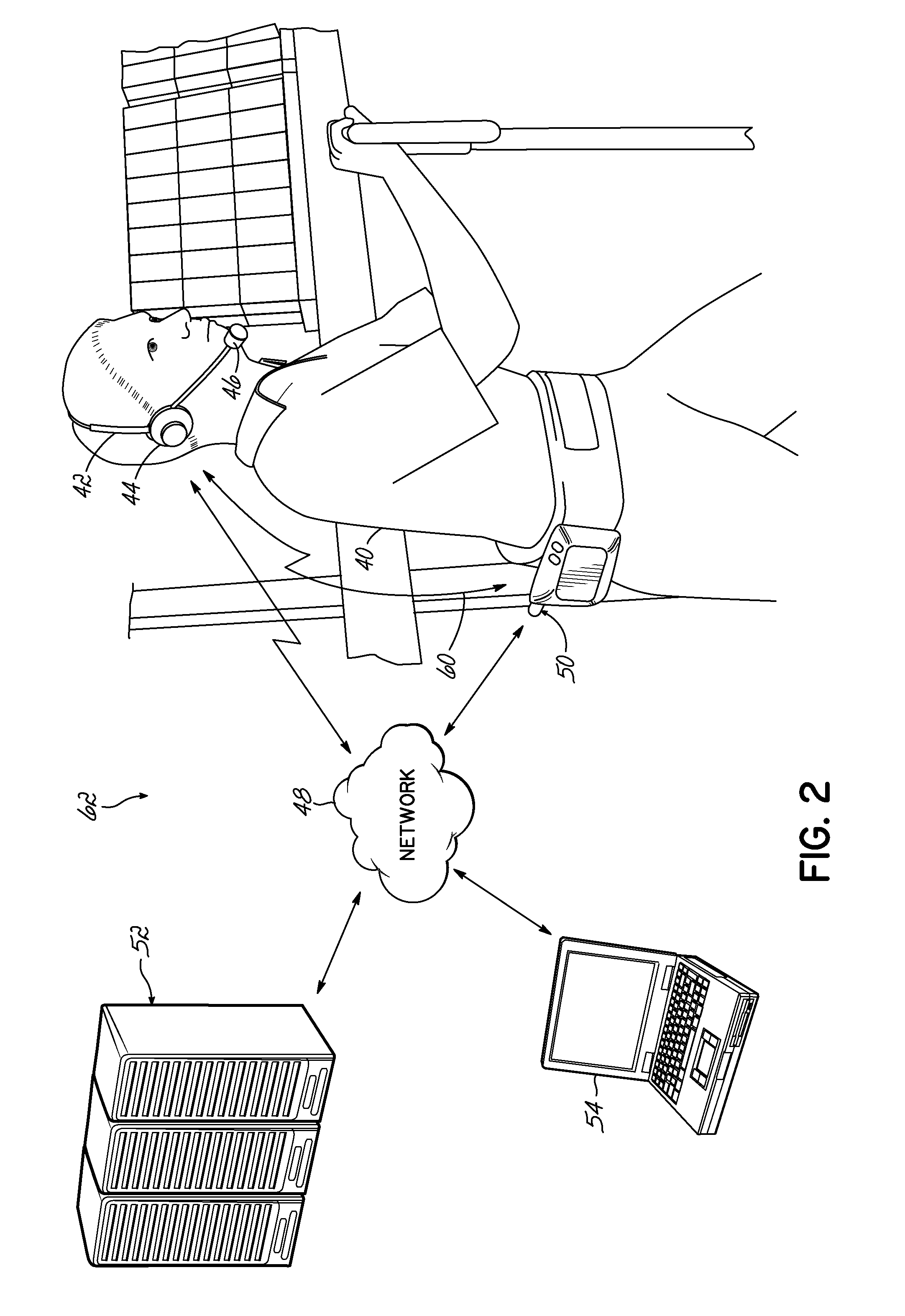

Method and system for mitigating delay in receiving audio stream during production of sound from audio stream

Active · US20140270196A1 · Reduce impact · Reduce delays · Microphones · Electrical transducers · CLARITY · Sound production

A communication component modifies production of an audio waveform at determined modification segments to thereby mitigate the effects of a delay in processing and/or receiving a subsequent audio waveform. The audio waveform and/or data associated with the audio waveform are analyzed to identify the modification segments based on characteristics of the audio waveform and/or data associated therewith. The modification segments show where the production of the audio waveform may be modified without substantially affecting the clarity of the sound or audio. In one embodiment, the invention modifies the sound production at the identified modification segments to extend production time and thereby mitigate the effects of delay in receiving and/or processing a subsequent audio waveform for production.
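One way to picture the idea is the sketch below. It assumes that modification segments are simply low-energy frames (for example, pauses between words) and that production time is extended by repeating those frames. The abstract does not state how segments are identified or modified, so the energy threshold and both function names are hypothetical.

```python
def find_modification_segments(frame_energies, threshold=0.01):
    """Return indices of frames quiet enough to be stretched (assumed criterion)."""
    return [i for i, energy in enumerate(frame_energies) if energy < threshold]


def stretch_playback(frames, frame_energies, extra_frames, threshold=0.01):
    """Extend playback by repeating quiet frames, up to extra_frames repeats."""
    quiet = set(find_modification_segments(frame_energies, threshold))
    output = []
    remaining = extra_frames
    for index, frame in enumerate(frames):
        output.append(frame)
        if index in quiet and remaining > 0:
            output.append(frame)  # repeat the quiet frame to buy time
            remaining -= 1
    return output
```

Because only quiet frames are duplicated, the audible content is untouched; the stretch is limited by how many such frames exist, so a long delay may not be fully covered.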

Owner:VOCOLLECT

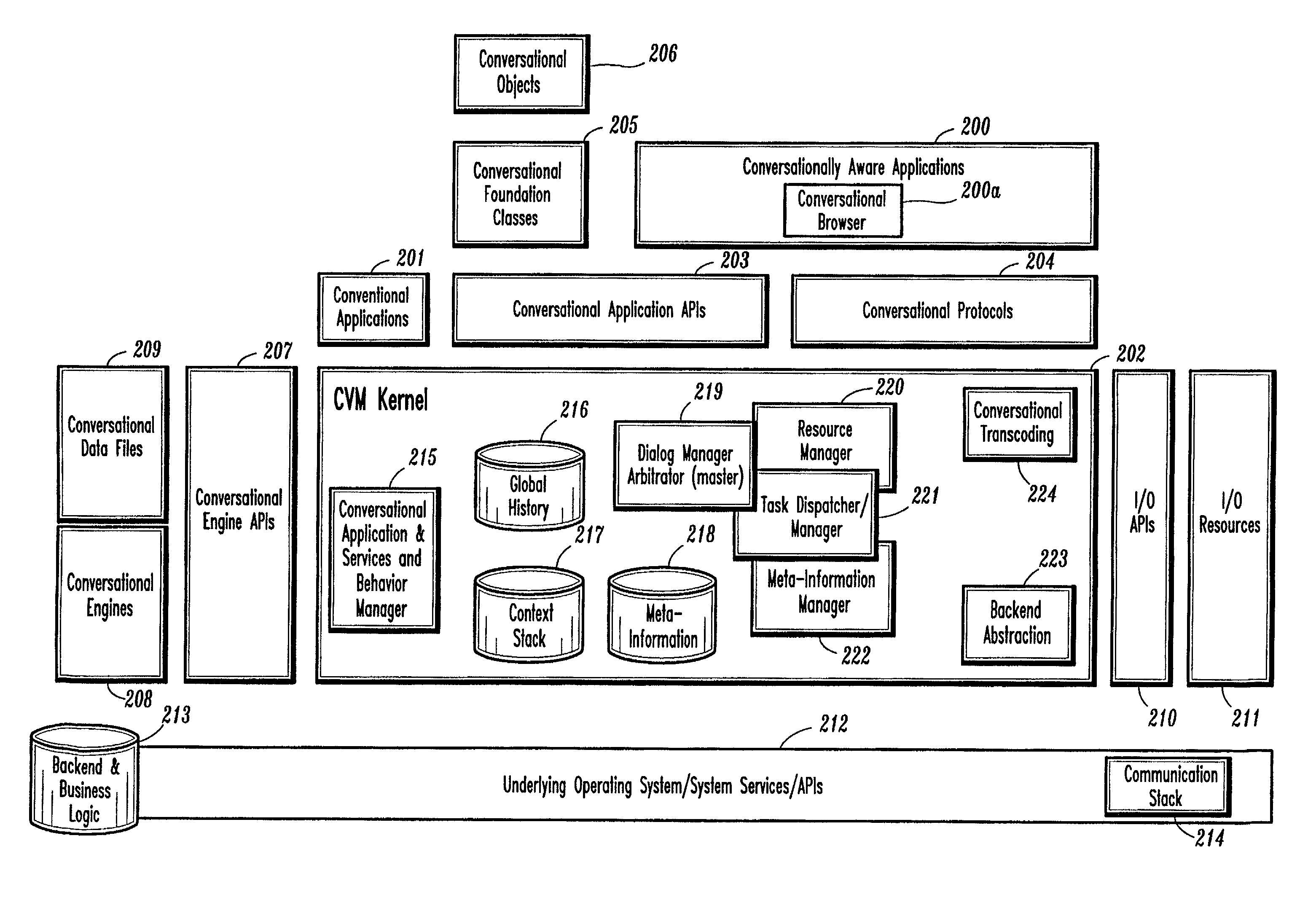

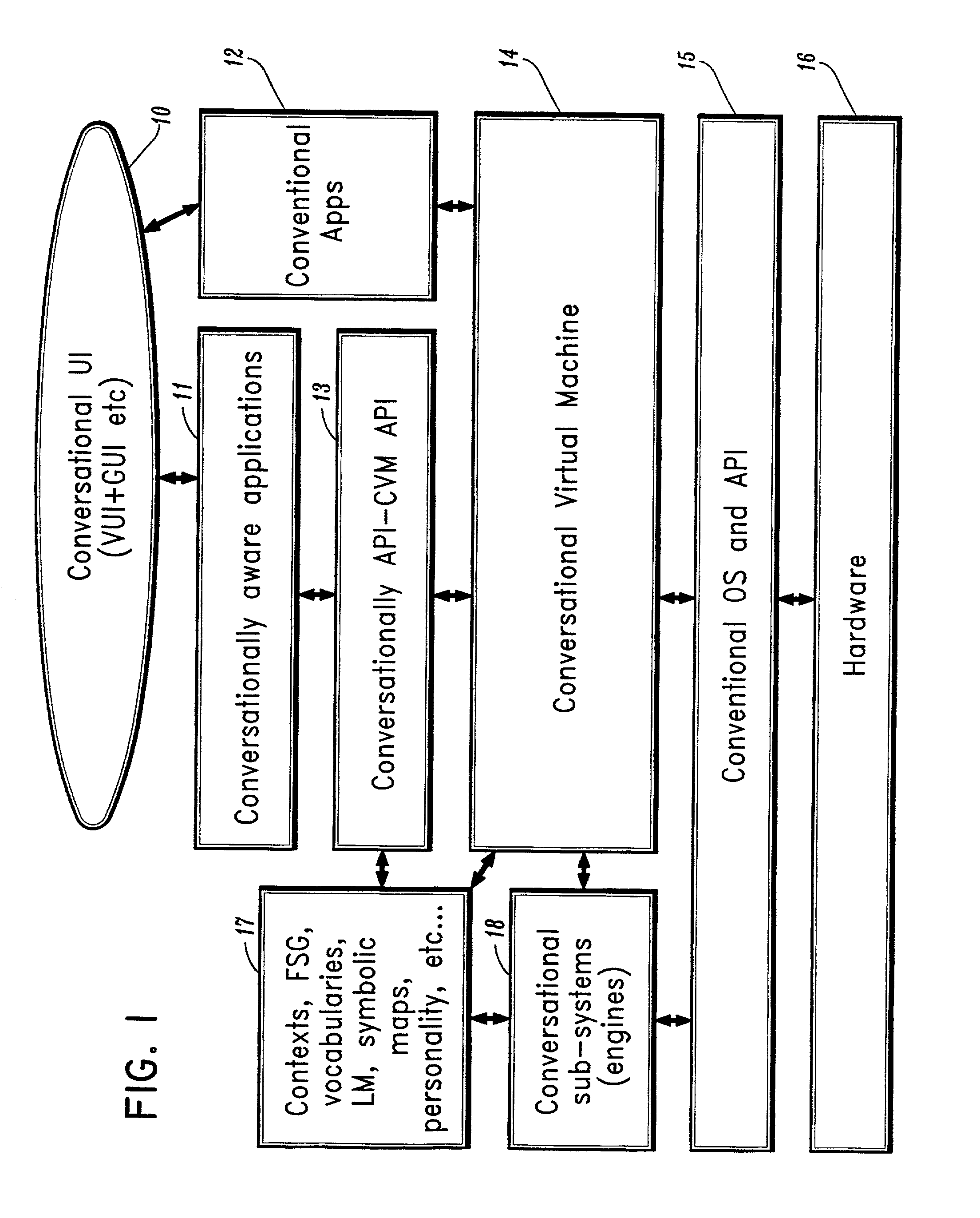

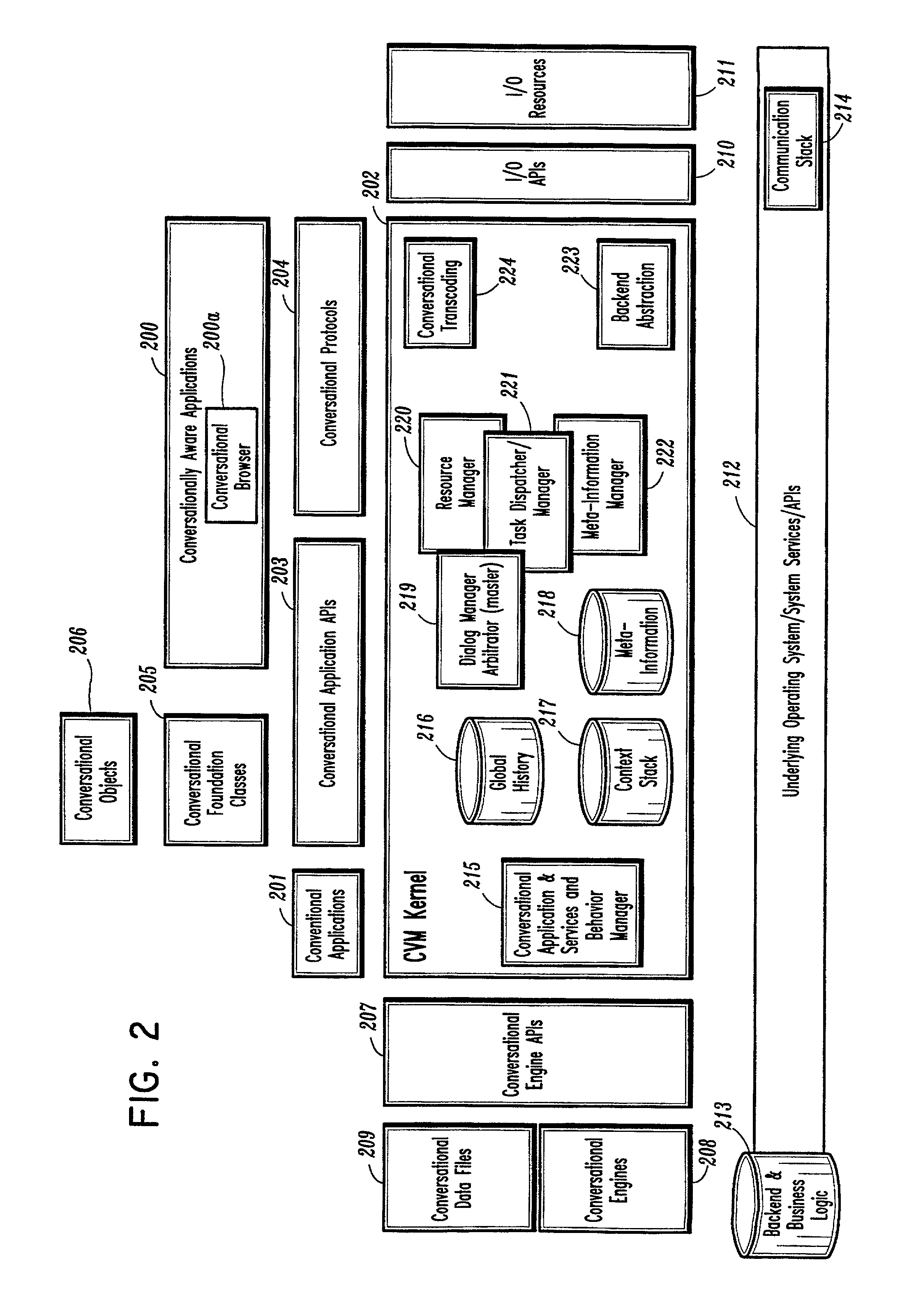

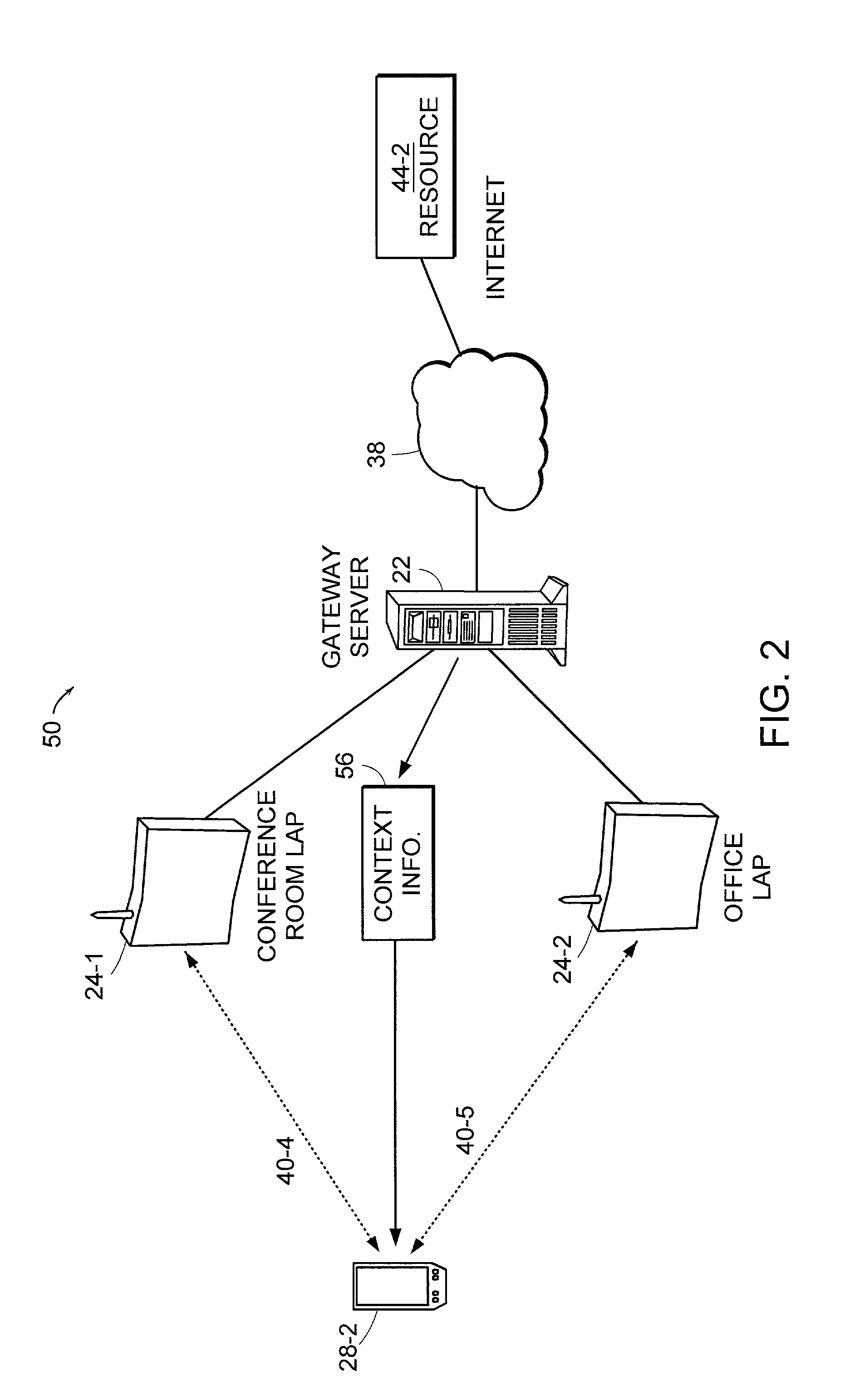

Conversational computing via conversational virtual machine

Inactive · US7137126B1 · Limitation for transfer · Reduce degradation · Interconnection arrangements · Resource allocation · Conversational speech · Application software

A conversational computing system that provides a universal coordinated multi-modal conversational user interface (CUI) (10) across a plurality of conversationally aware applications (11) (i.e., applications that “speak” conversational protocols) and conventional applications (12). The conversationally aware applications (11) communicate with a conversational kernel (14) via conversational application APIs (13). The conversational kernel (14) controls the dialog across applications and devices (local and networked) on the basis of their registered conversational capabilities and requirements and provides a unified conversational user interface and conversational services and behaviors. The conversational computing system may be built on top of a conventional operating system and APIs (15) and conventional device hardware (16). The conversational kernel (14) handles all I/O processing and controls conversational engines (18). The conversational kernel (14) converts voice requests into queries and converts outputs and results into spoken messages using conversational engines (18) and conversational arguments (17). The conversational application API (13) conveys all the information for the conversational kernel (14) to transform queries into application calls and conversely convert output into speech, appropriately sorted before being provided to the user.

Owner:UNILOC 2017 LLC

Intelligent Automated Assistant

Active · US20120245944A1 · Improve user interaction · Effectively engage · Natural language translation · Semantic analysis · Service provision · Computer science

The intelligent automated assistant system engages with the user in an integrated, conversational manner using natural language dialog, and invokes external services when appropriate to obtain information or perform various actions. The system can be implemented using any of a number of different platforms, such as the web, email, smartphone, and the like, or any combination thereof. In one embodiment, the system is based on sets of interrelated domains and tasks, and employs additional functionality powered by external services with which the system can interact.

Owner:APPLE INC

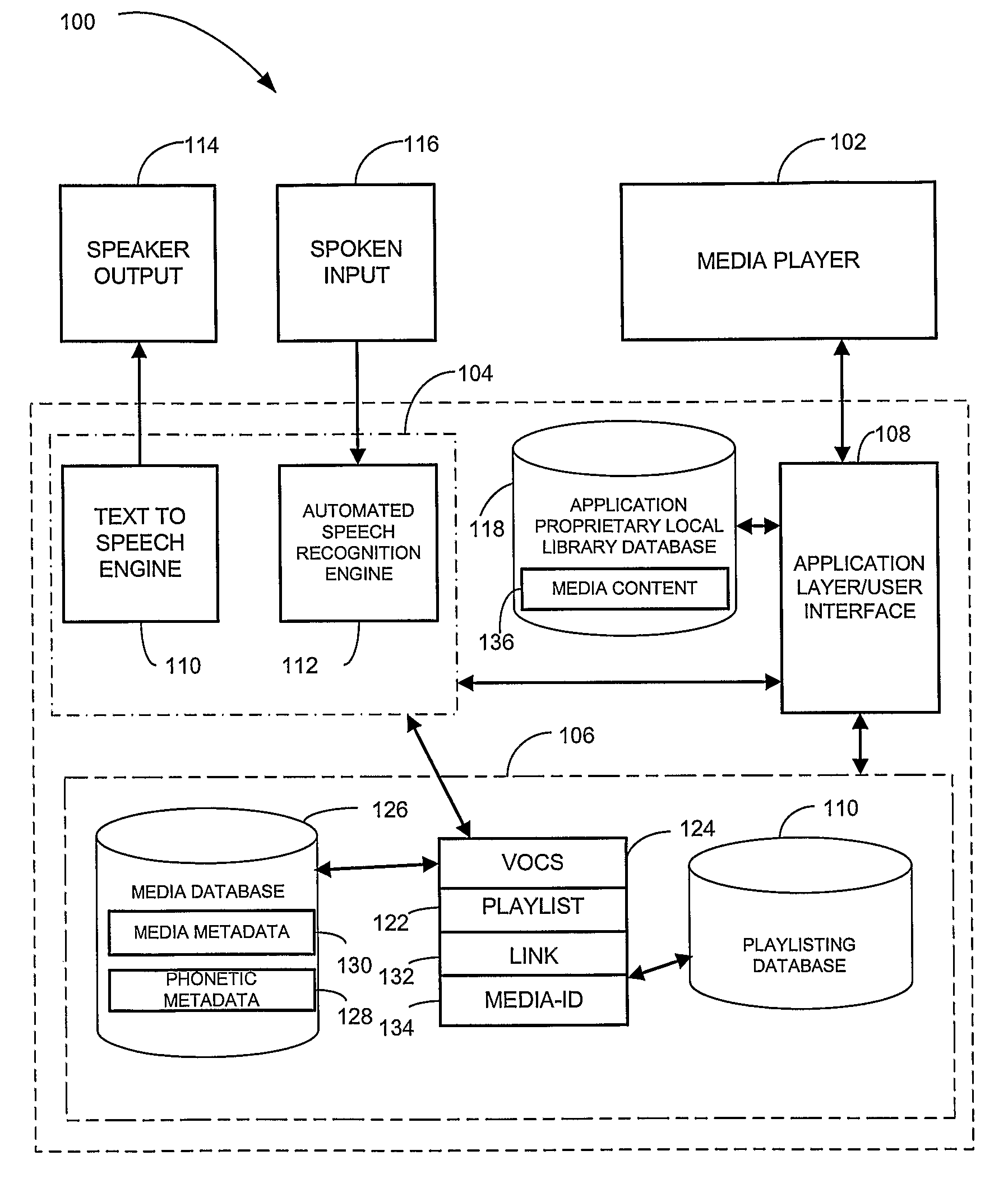

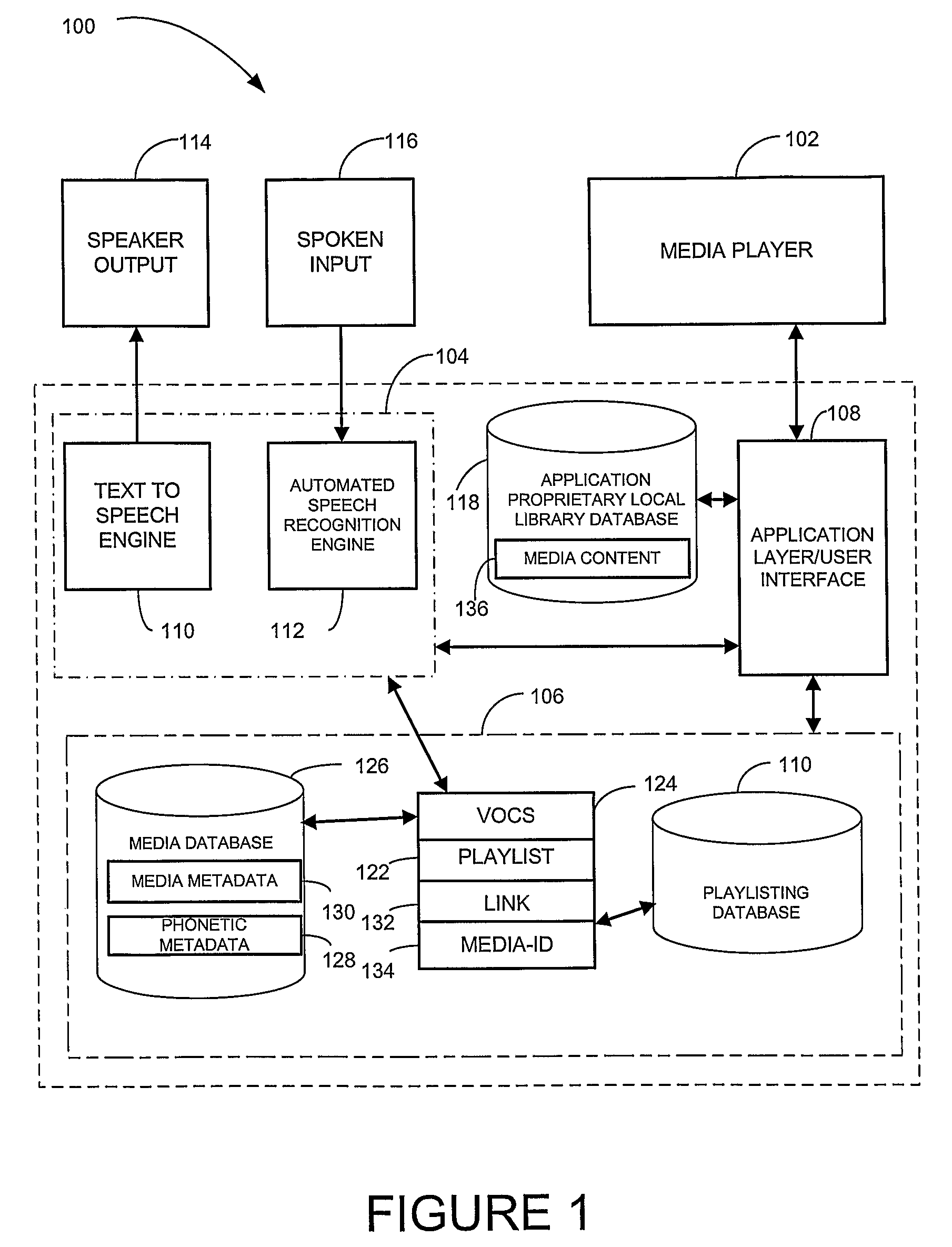

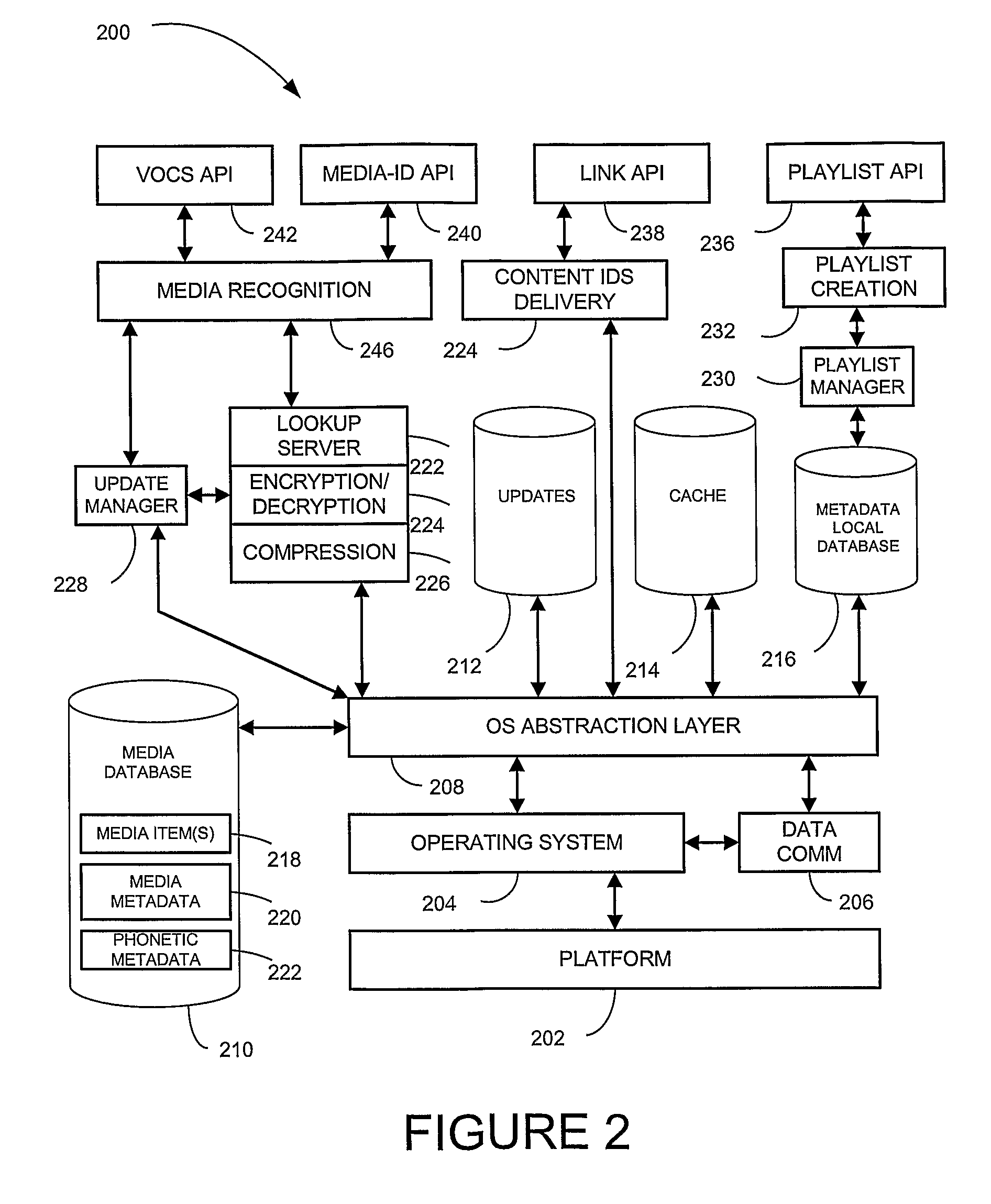

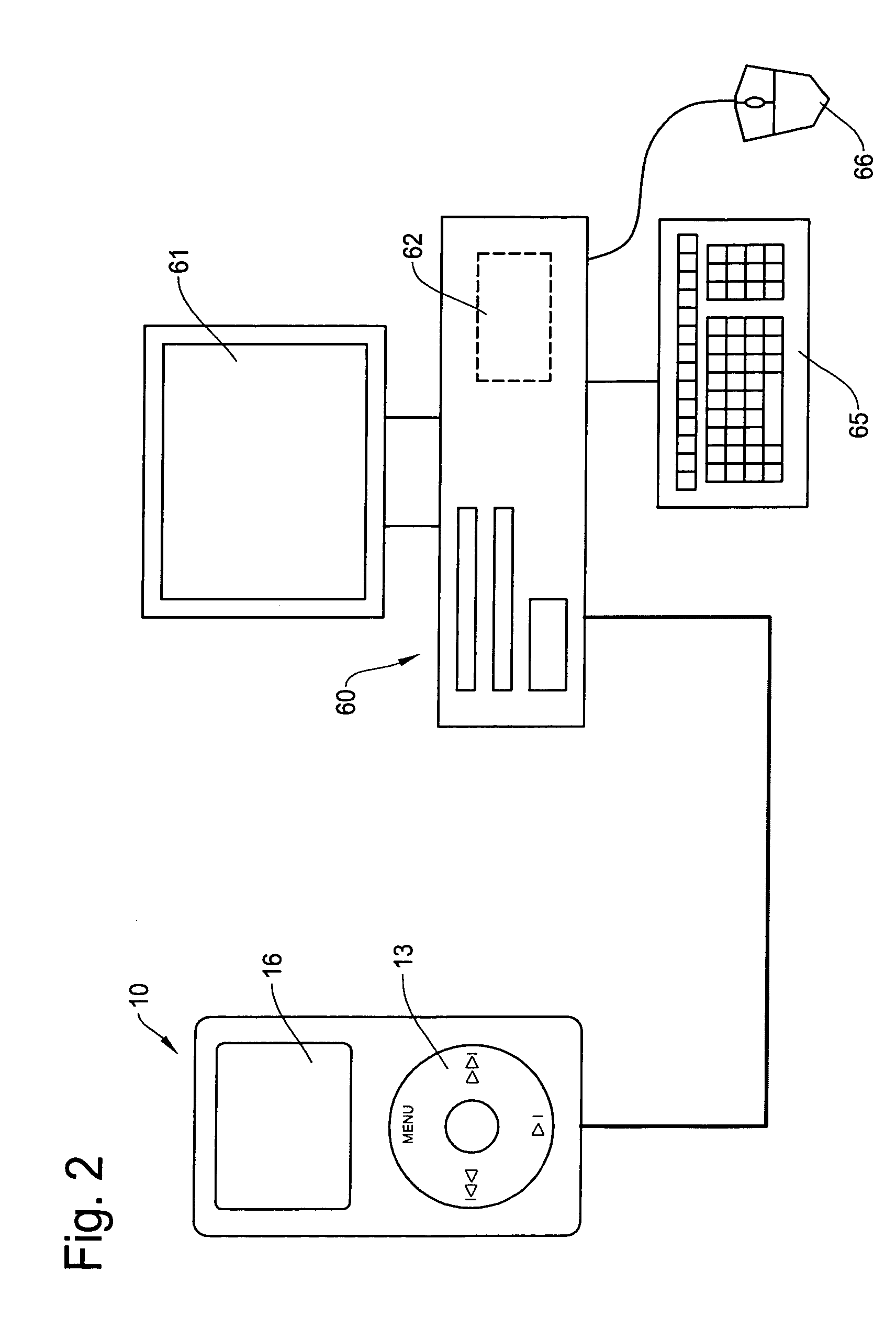

Method and apparatus to control operation of a playback device

Inactive · US20090076821A1 · Metadata audio data retrieval · Special data processing applications · Speech sound · Metadata

Media metadata is accessible for a plurality of media items (See FIG. 12). The media metadata includes a number of strings to identify information regarding the media items (See FIG. 12). Phonetic metadata is associated with the number of strings of the media metadata (See FIG. 12). Each portion of the phonetic metadata is stored in an original language of the string (See FIG. 12).

Owner:GRACENOTE

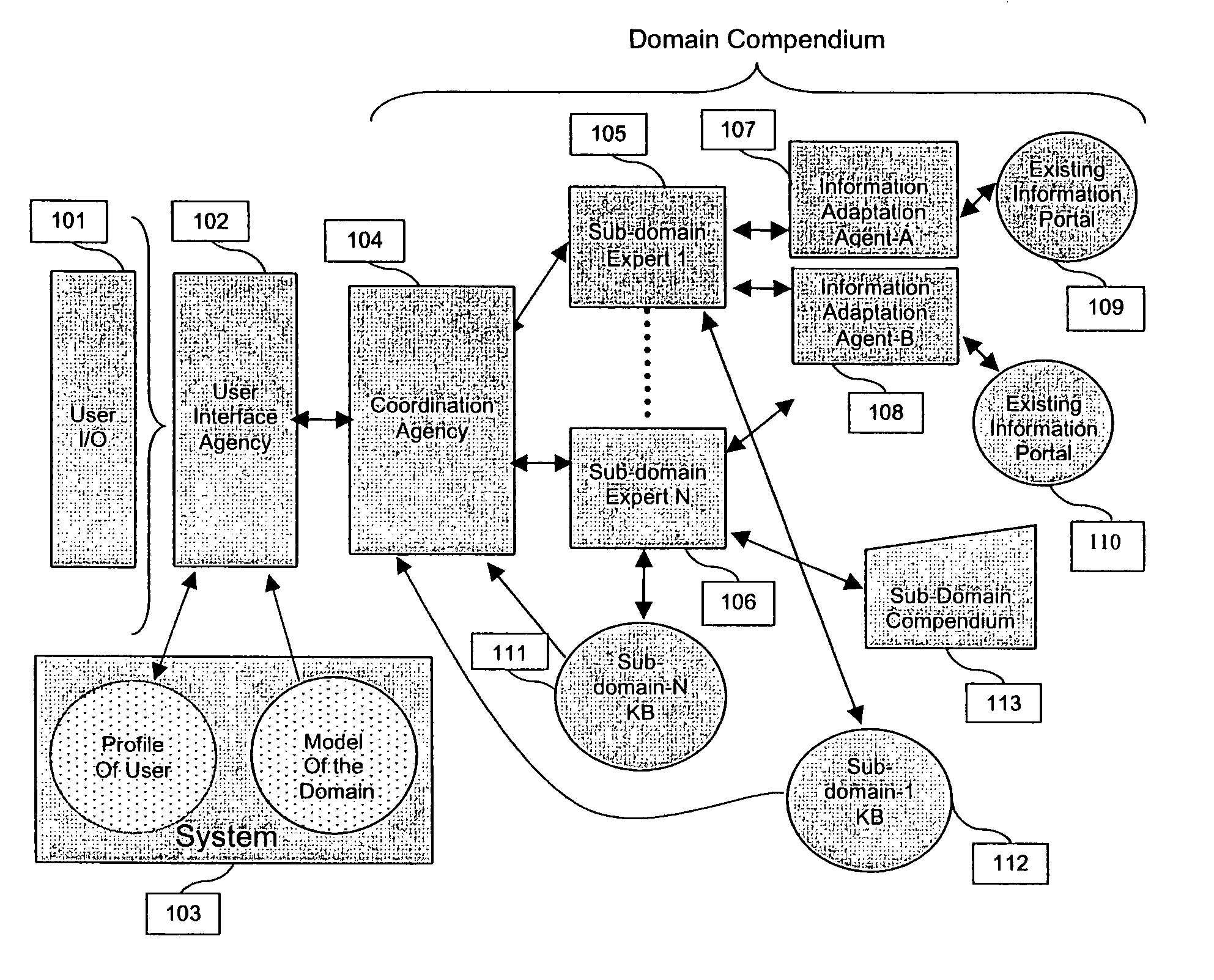

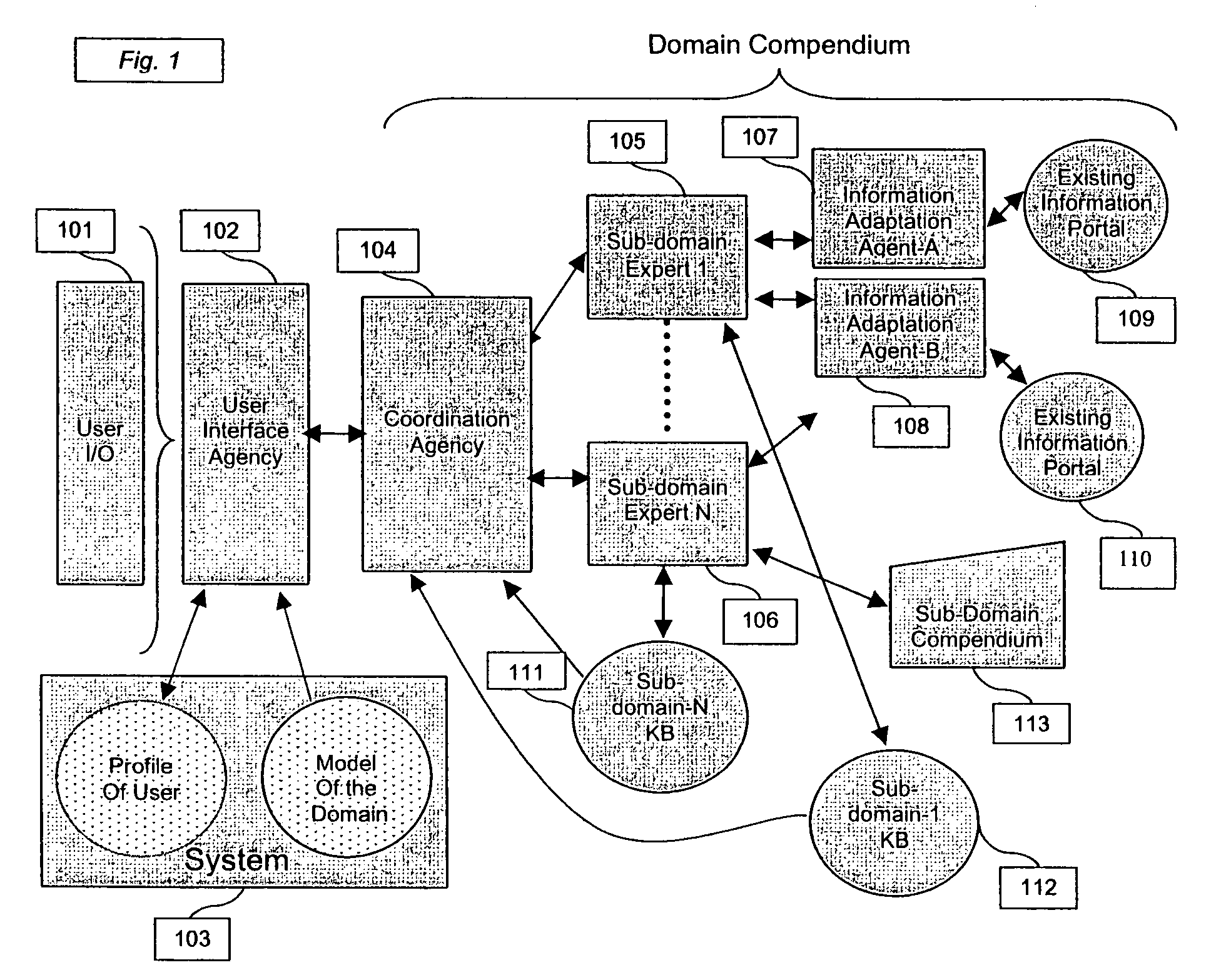

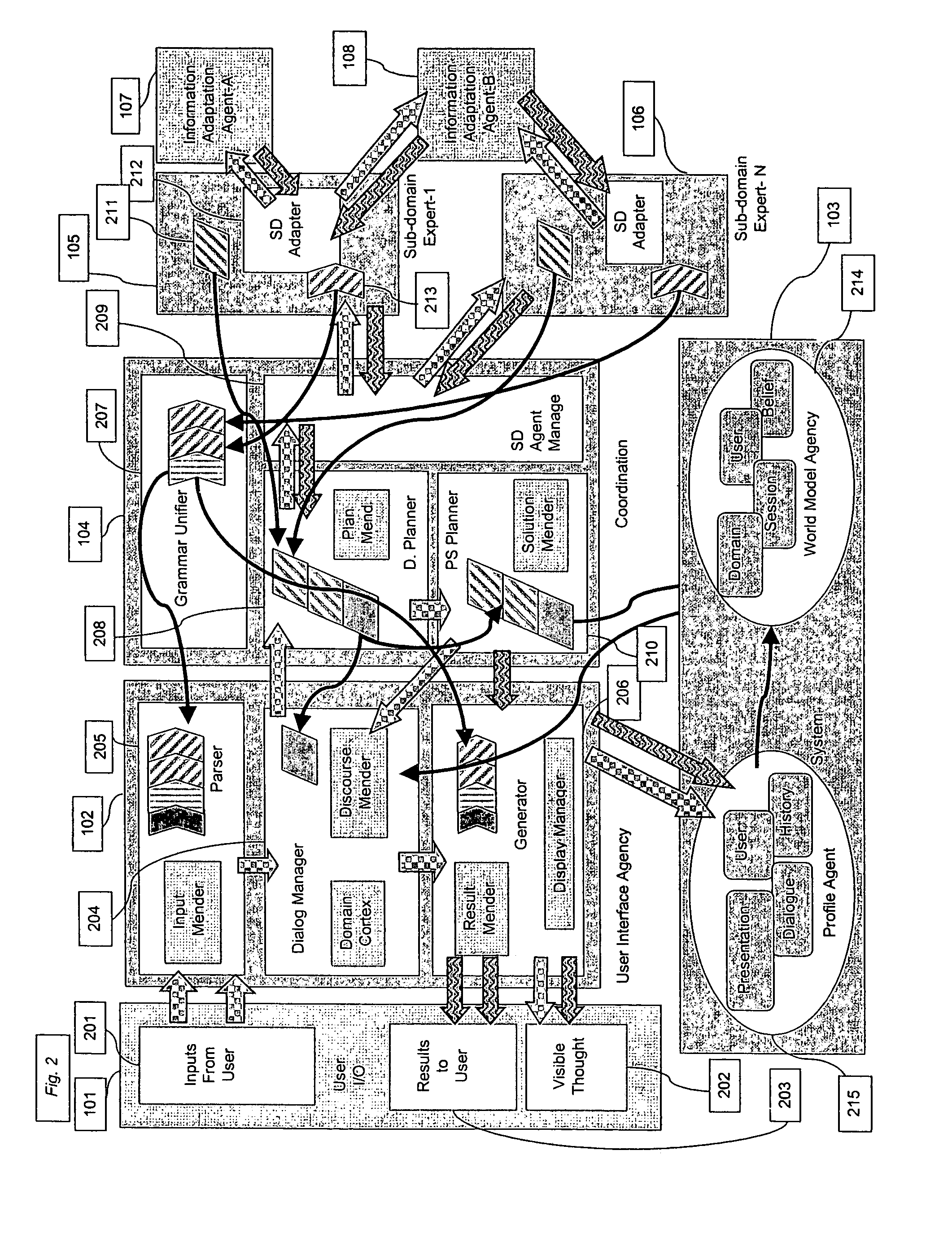

Intelligent portal engine

Active · US7092928B1 · Improve system performance · Reduce ambiguity · Digital computer details · Natural language data processing · Graphics · Human–machine interface

Owner:OL SECURITY LIABILITY CO

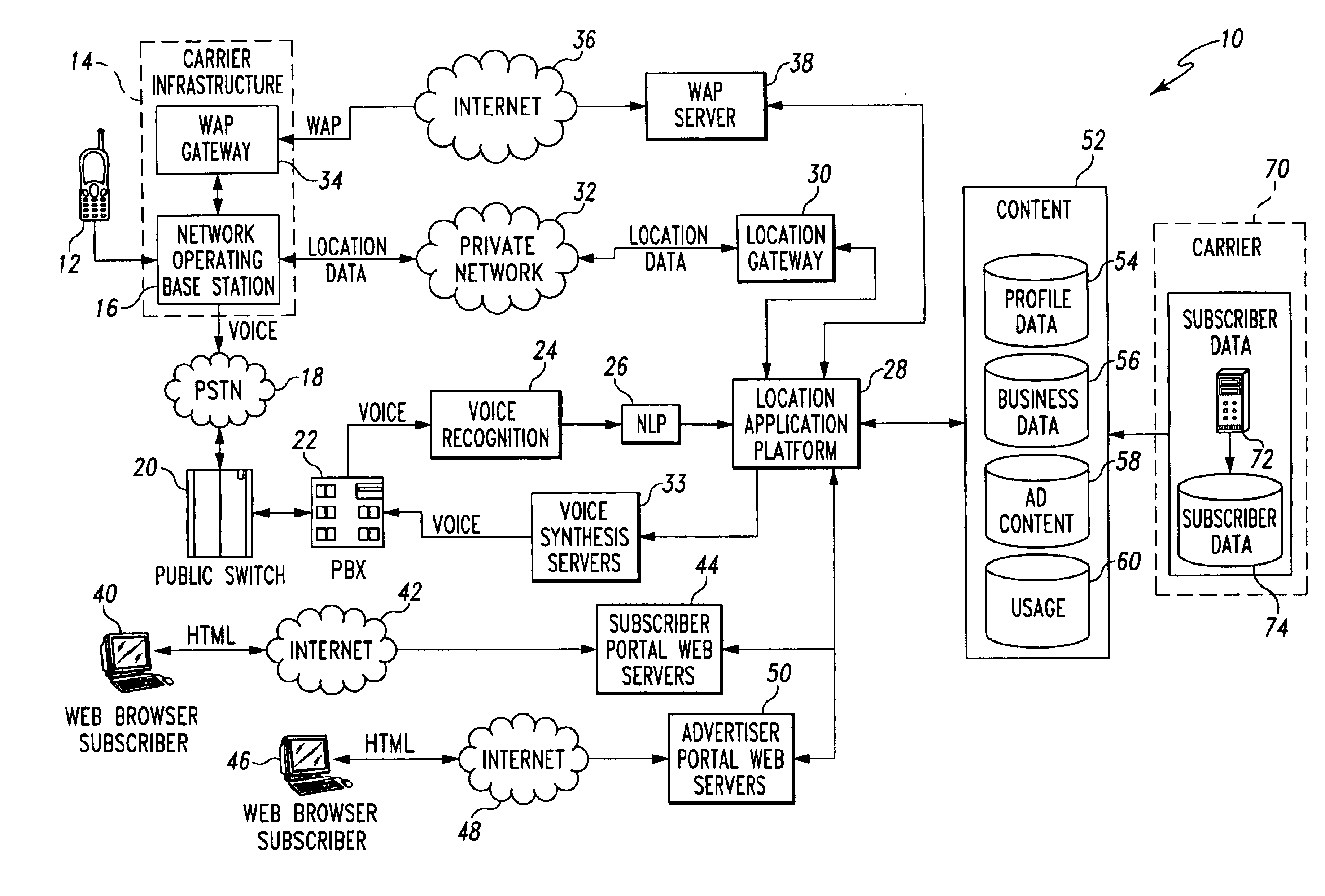

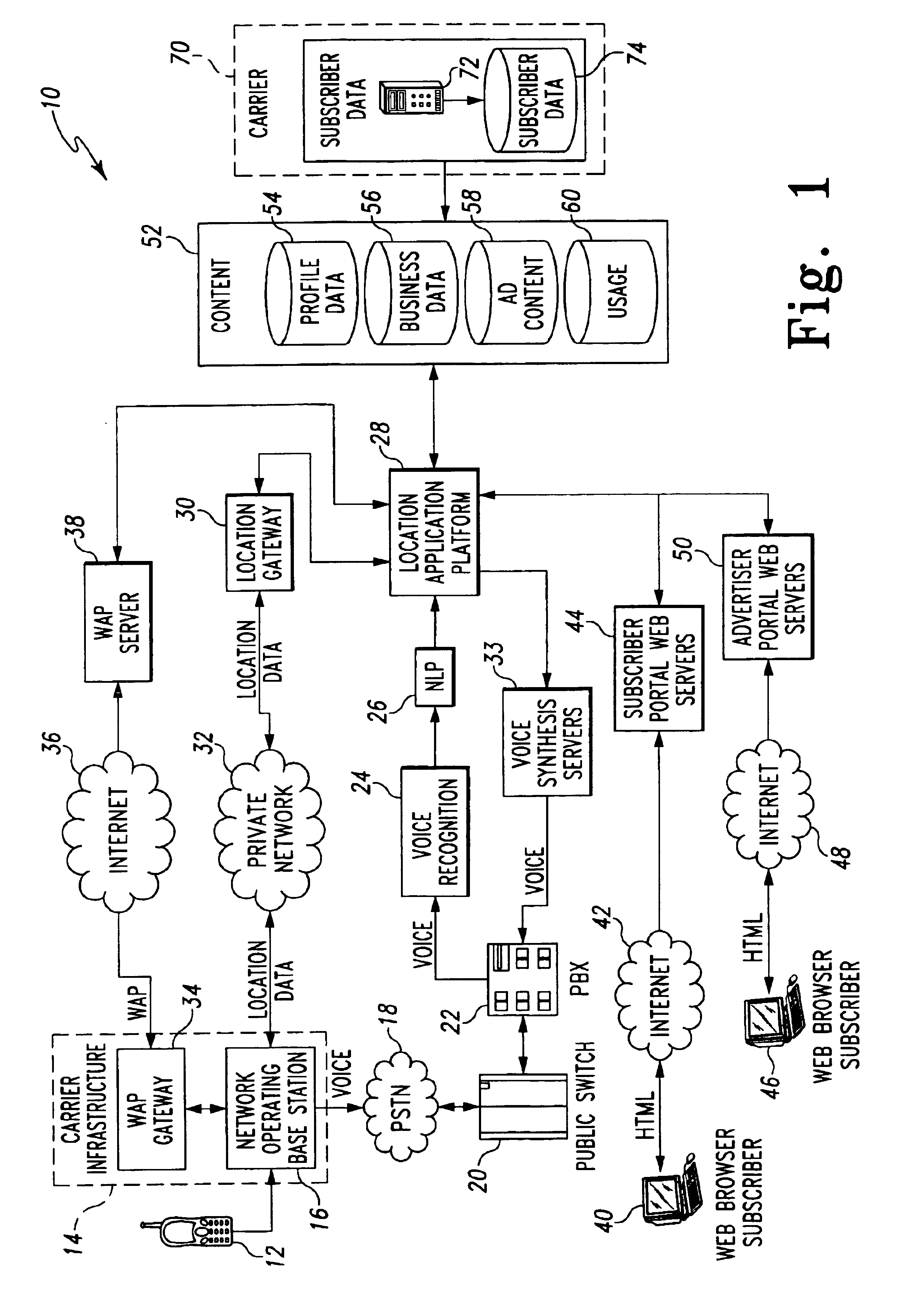

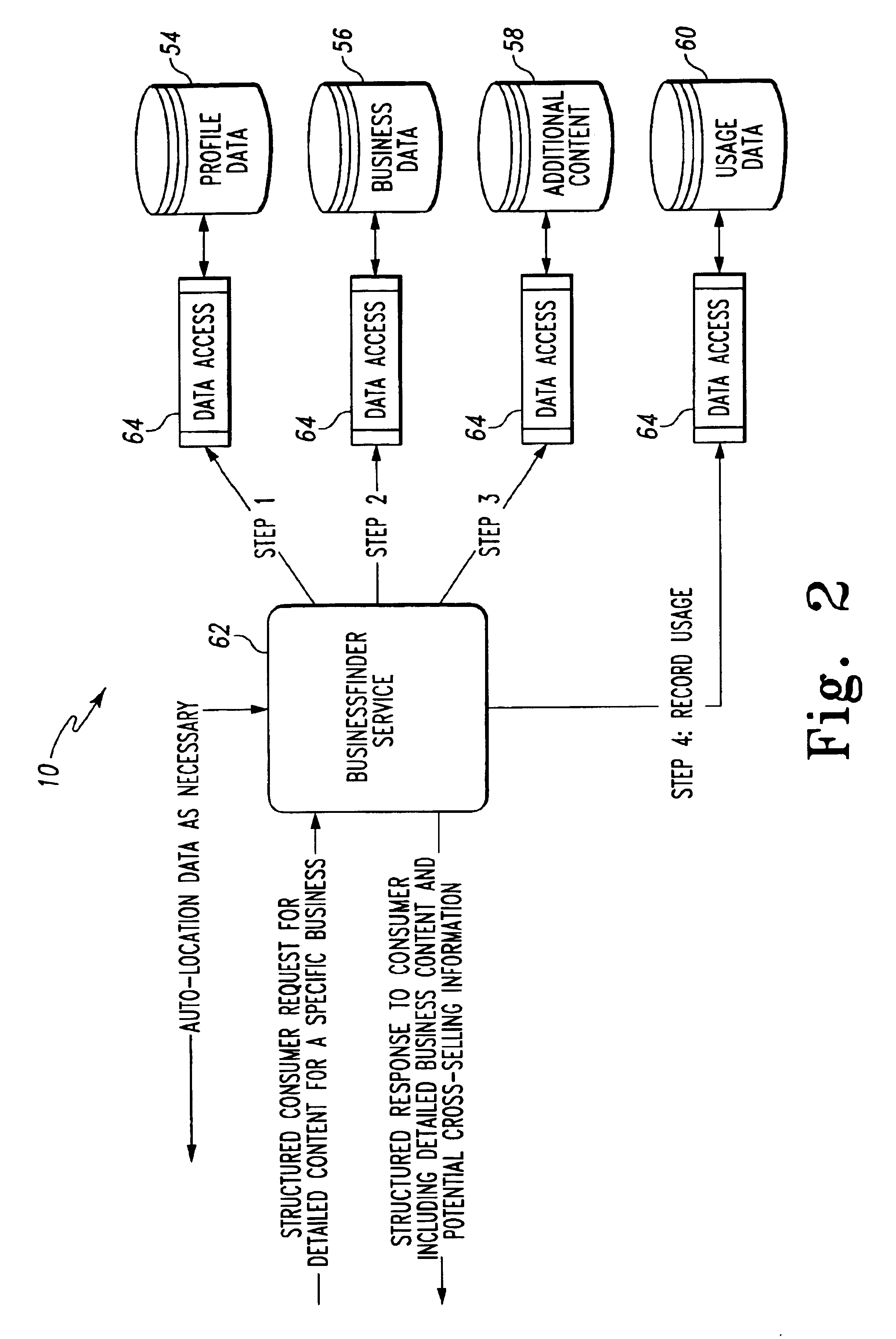

Location-based services

Inactive · US6944447B2 · Multiple digital computer combinations · Substation equipment · Communications system · Application server

The present invention discloses a method and system for providing location-based services to a remote terminal that is connected to various types of communication systems. A tailored request for information is generated with the remote terminal. In addition, a geographic indicator associated with the remote terminal is also generated in the preferred embodiment. The tailored request for information and the geographic indicator are transmitted to a location-based application server. A structured response to the tailored request for information is generated with the location-based application server, wherein the structured response is based on the geographic indicator of the remote terminal. The structured response is then transmitted to the remote terminal using one of several different types of communication protocols and/or mediums.

Owner:ACCENTURE GLOBAL SERVICES LTD

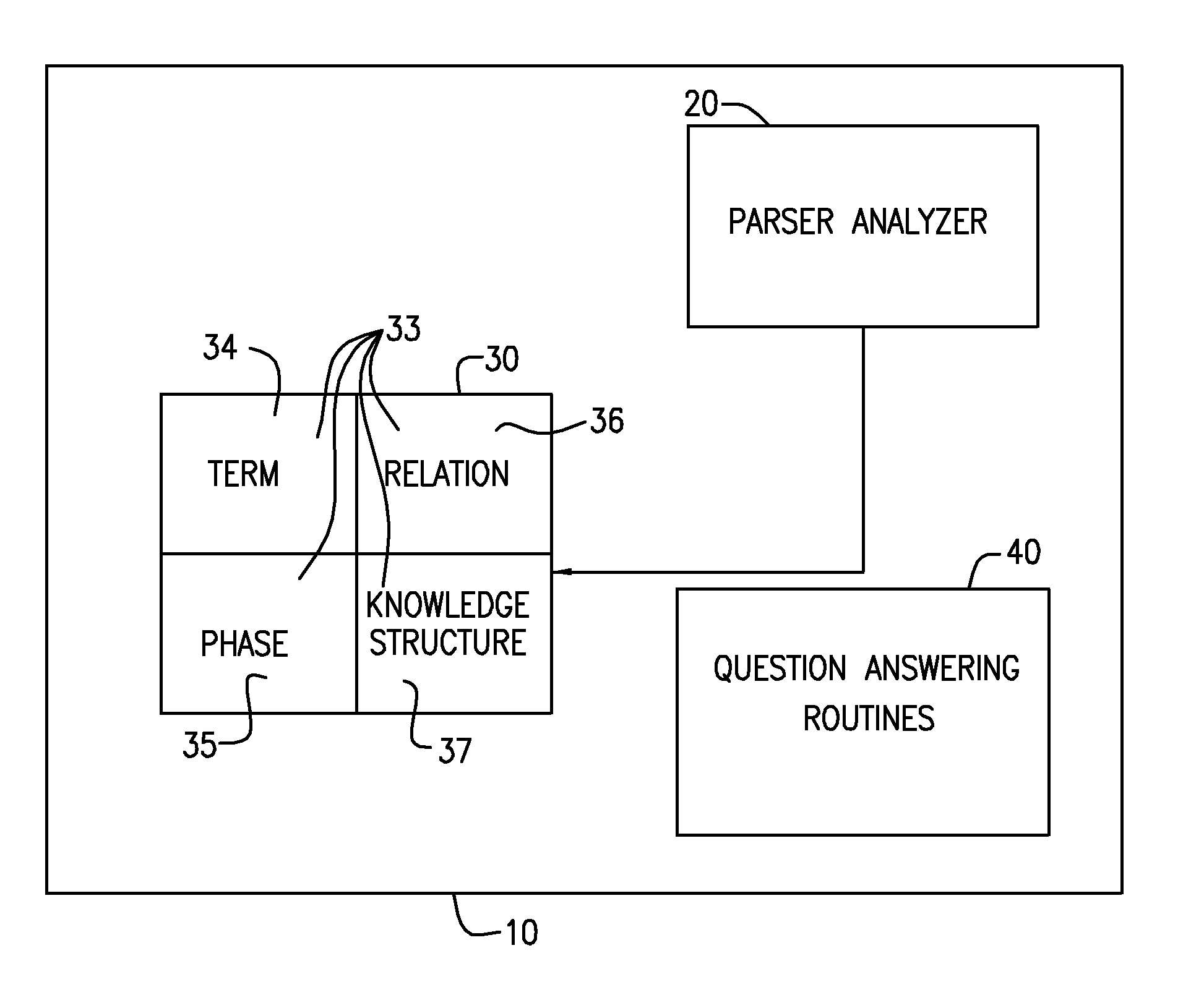

Intelligent home automation

Inactive · US20100332235A1 · Electric signal transmission systems · Multiple keys/algorithms usage · Spoken language · The Internet

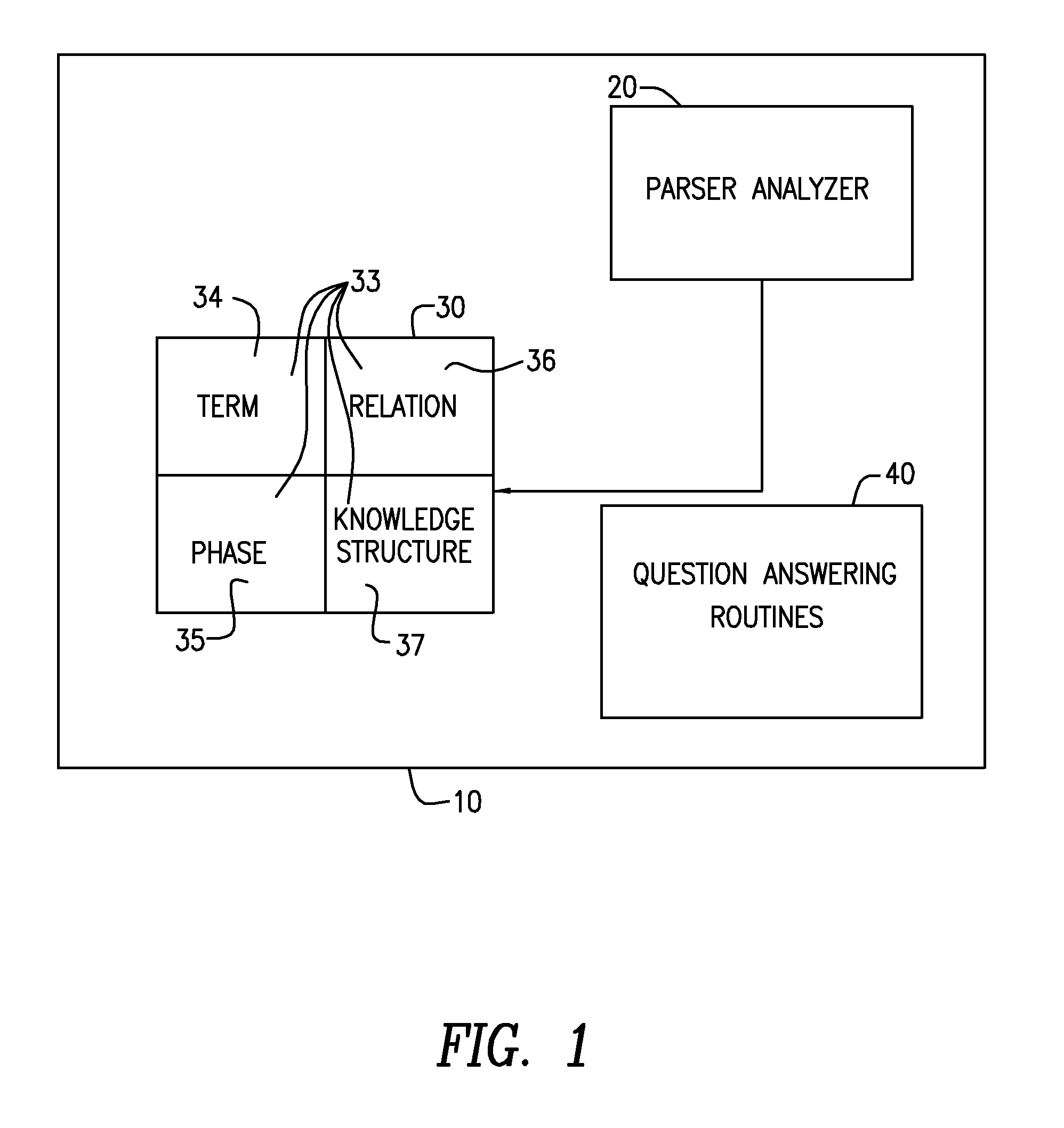

An intelligent home automation system answers questions of a user speaking “natural language” located in a home. The system is connected to, and may carry out the user's commands to control, any circuit, object, or system in the home. The system can answer questions by accessing the Internet. Using a transducer that “hears” human pulses, the system may be able to identify, announce and keep track of anyone entering or staying in the home or participating in a conversation, including announcing their identity in advance. The system may interrupt a conversation to implement specific commands and resume the conversation after implementation. The system may have extensible memory structures for term, phrase, relation and knowledge, question answering routines and a parser analyzer that uses transformational grammar and a modified three hypothesis analysis. The parser analyzer can be dormant unless spoken to. The system has emergency modes for prioritization of commands.

Owner:DAVID ABRAHAM BEN

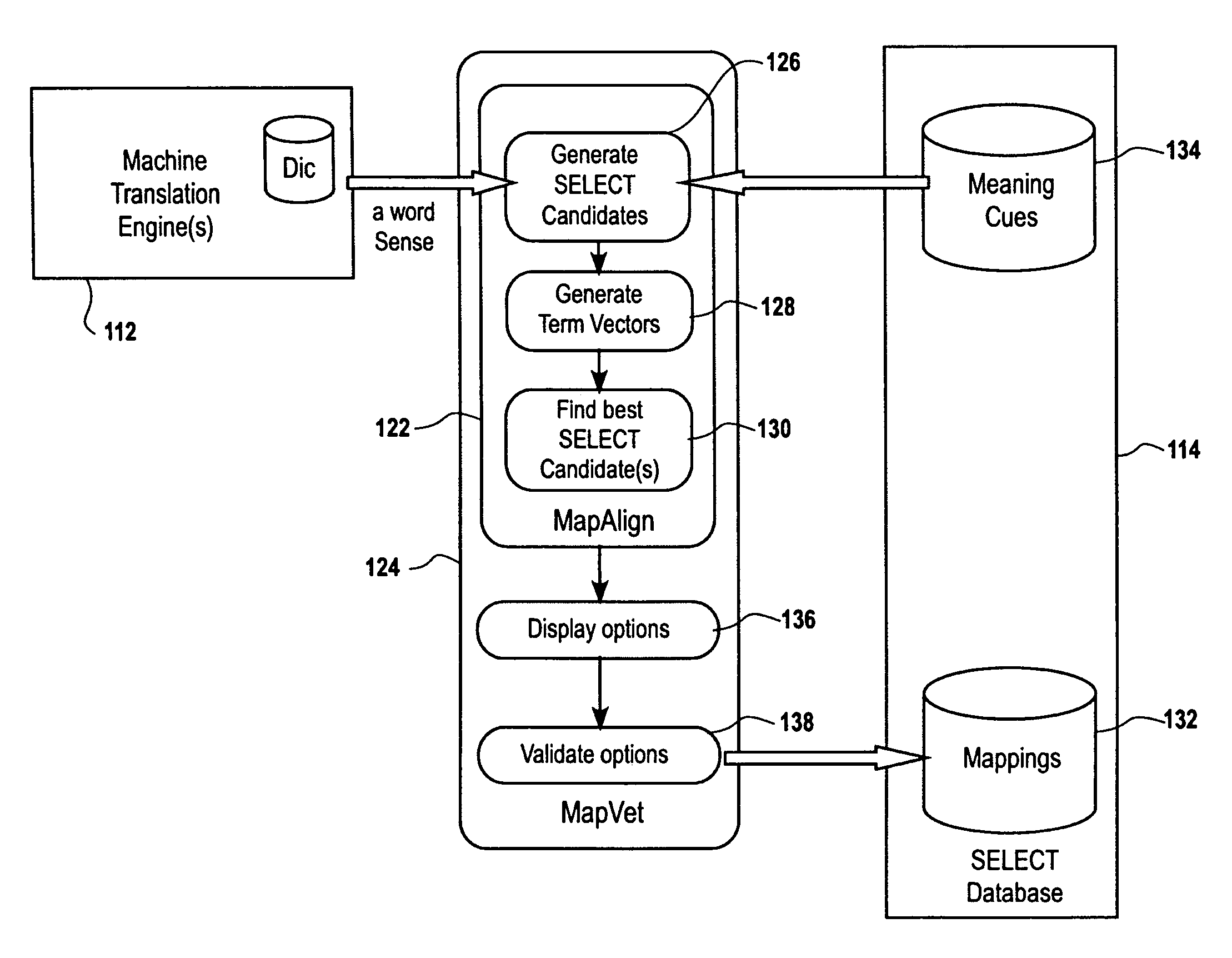

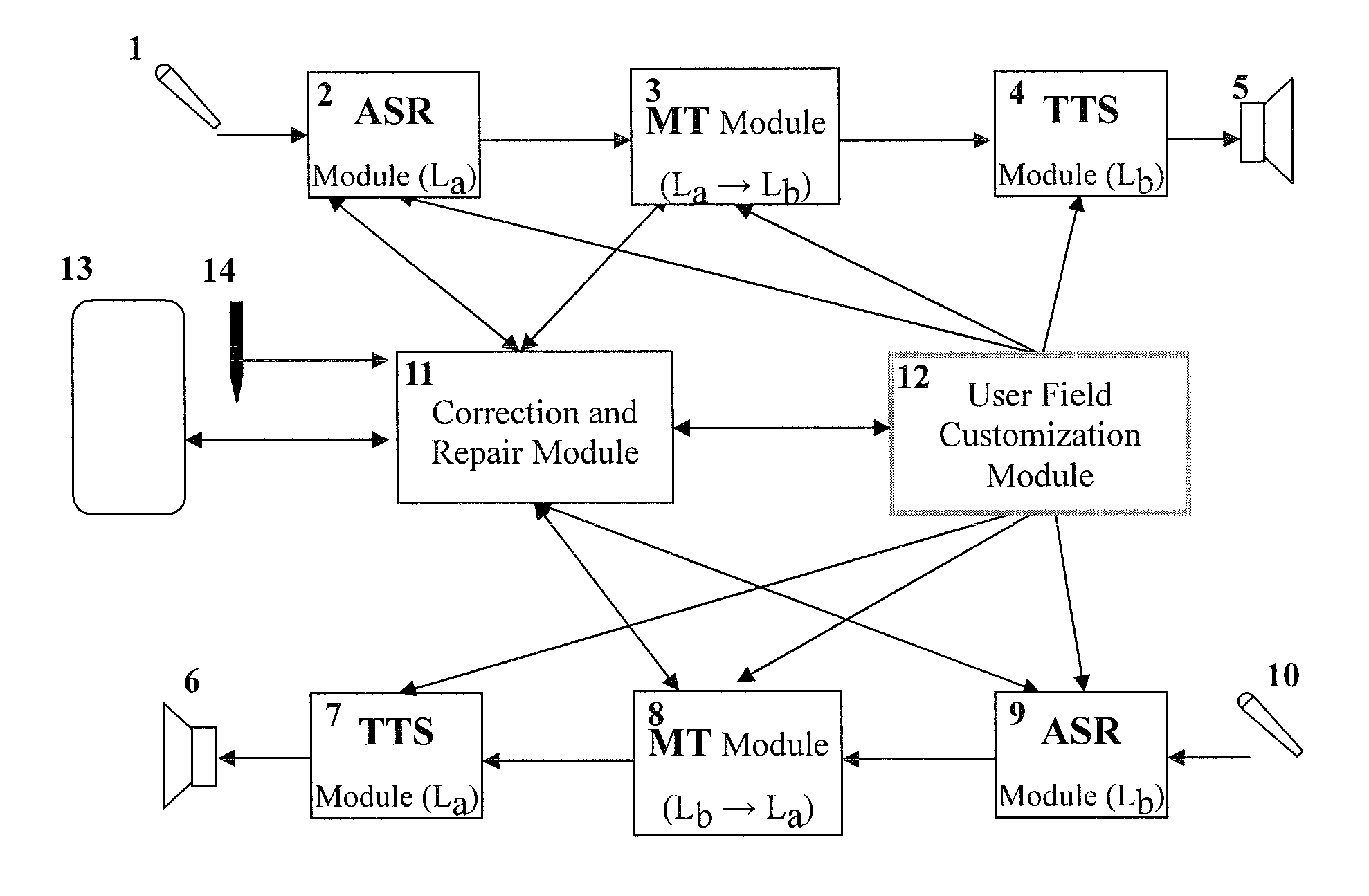

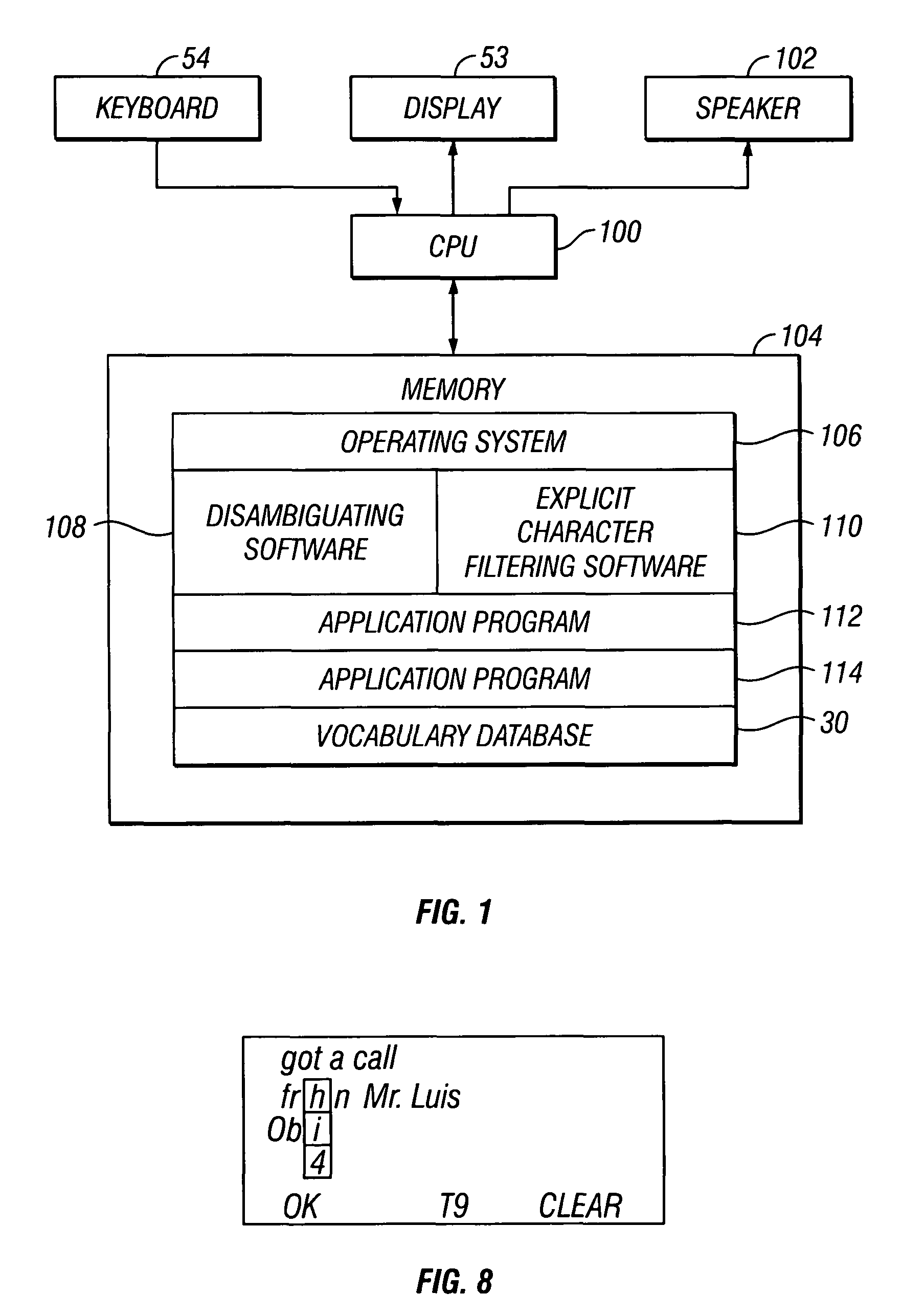

Speech-enabled language translation system and method enabling interactive user supervision of translation and speech recognition accuracy

Active · US7539619B1 · Natural language translation · Speech recognition · Speech to speech translation · Ambiguity

A system and method for a highly interactive style of speech-to-speech translation is provided. The interactive procedures enable a user to recognize, and if necessary correct, errors in both speech recognition and translation, thus providing more robust translation output than would otherwise be possible. The interactive techniques for monitoring and correcting word ambiguity errors during automatic translation, search, or other natural language processing tasks depend upon the correlation of Meaning Cues and their alignment with, or mapping into, the word senses of third party lexical resources, such as those of a machine translation or search lexicon. This correlation and mapping can be carried out through the creation and use of a database of Meaning Cues, i.e., SELECT. Embodiments described above permit the intelligent building and application of this database, which can be viewed as an interlingua, or language-neutral set of meaning symbols, applicable for many purposes. Innovative techniques for interactive correction of server-based speech recognition are also described.

Owner:ZAMA INNOVATIONS LLC

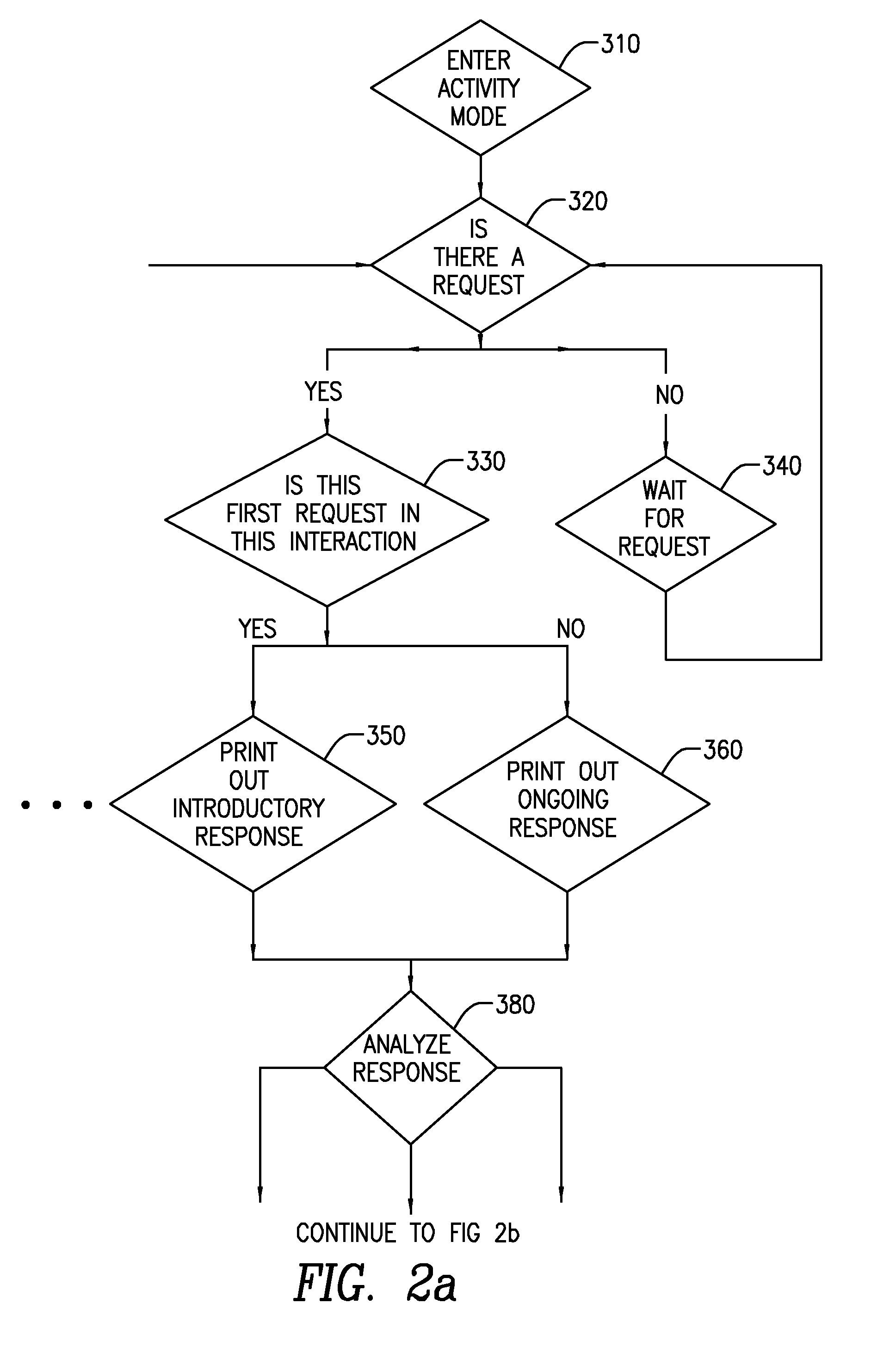

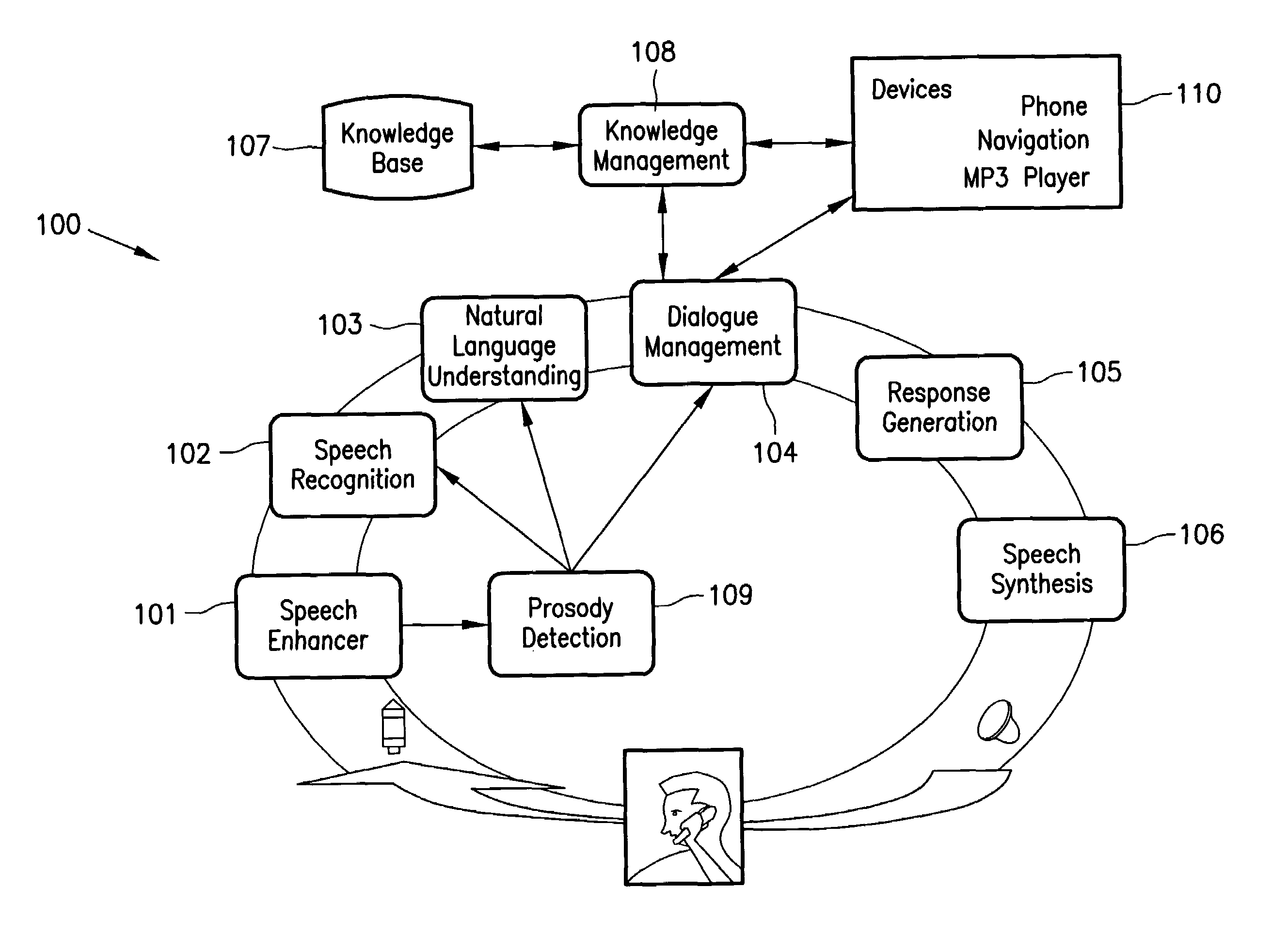

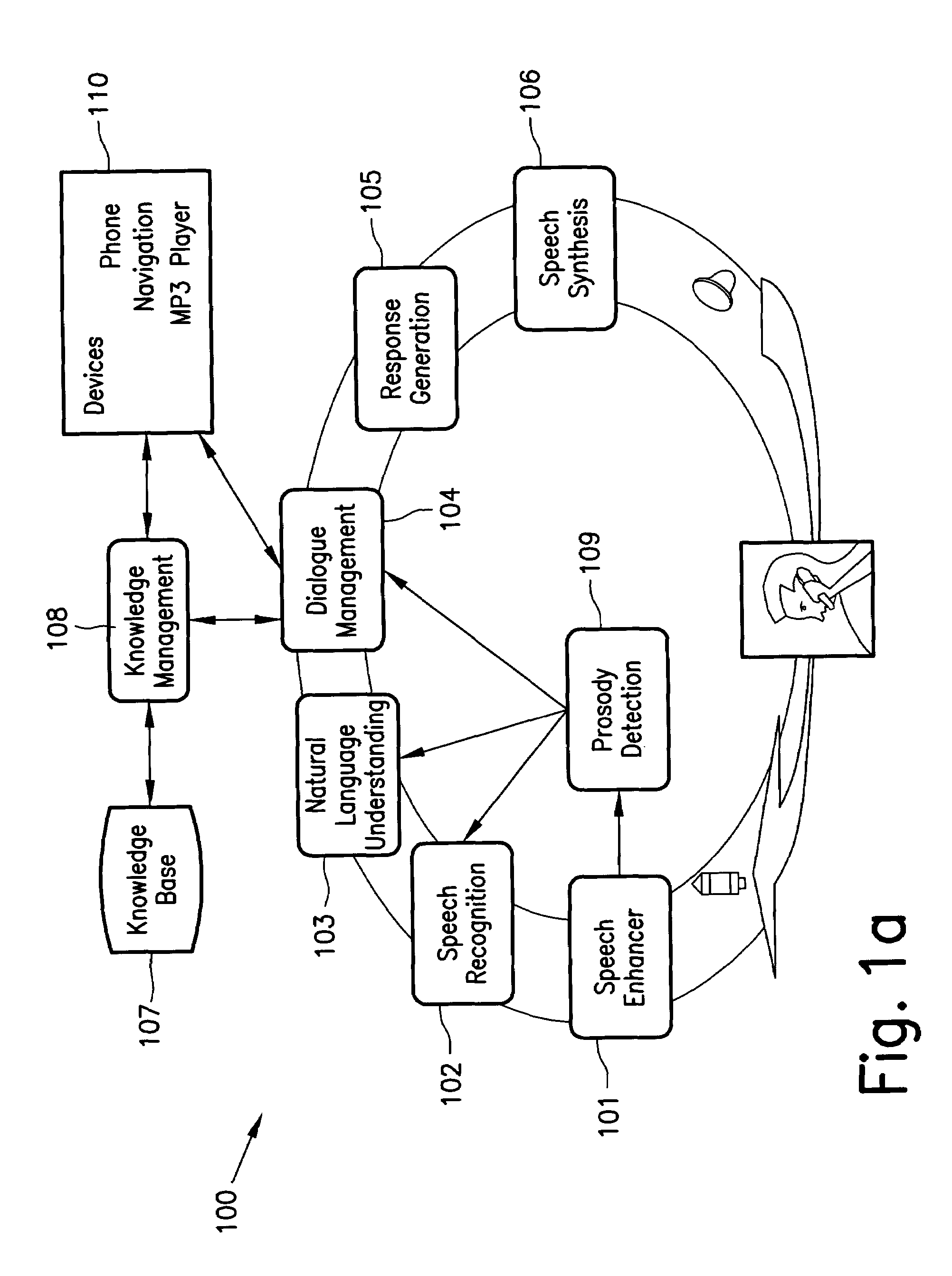

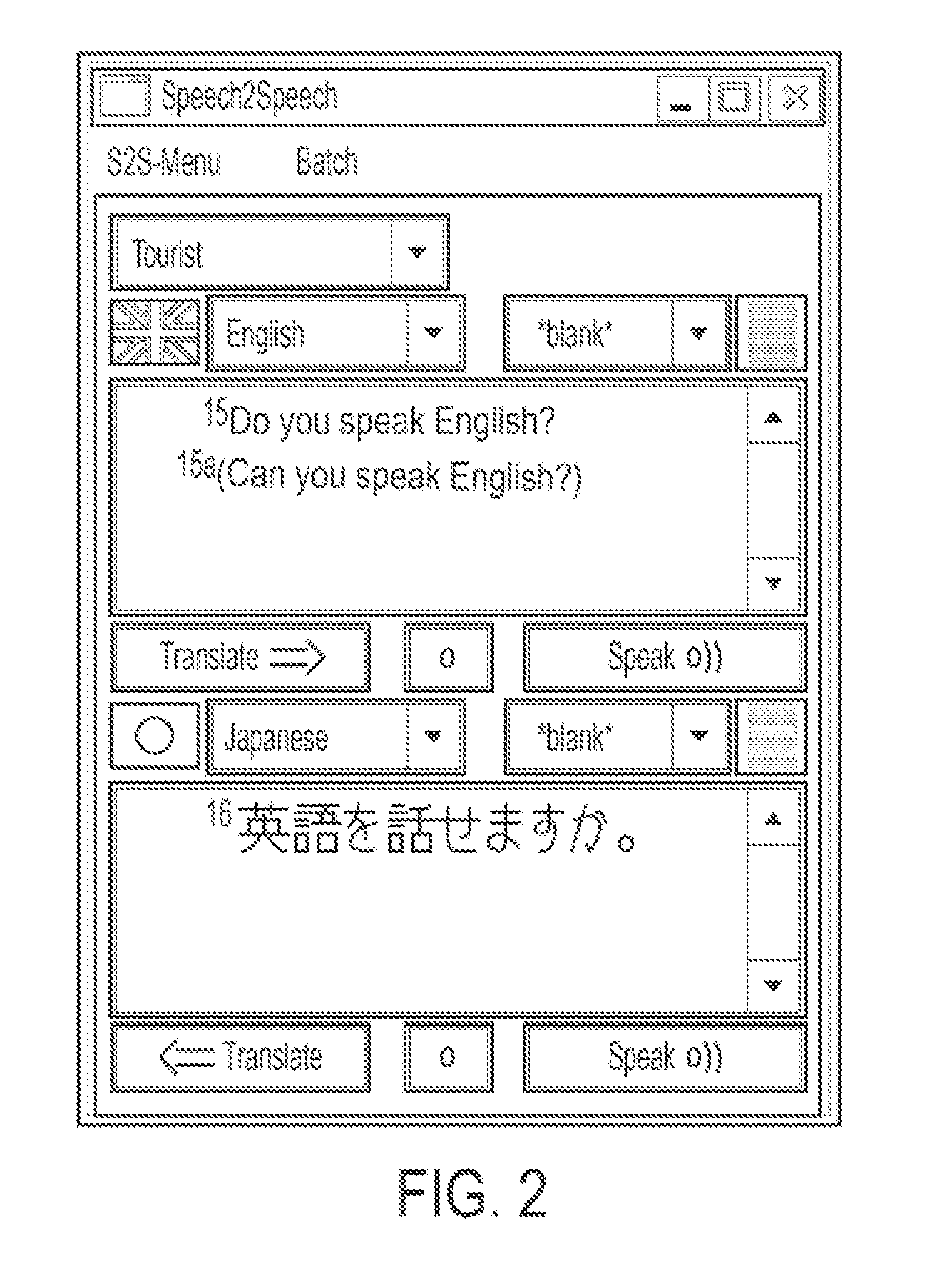

Method and system for interactive conversational dialogue for cognitively overloaded device users

Active · US7716056B2 · Easy and efficient · Adapt quickly · Natural language data processing · Speech recognition · Natural language processing · Wave form

A system and method to interactively converse with a cognitively overloaded user of a device includes maintaining a knowledge base of information regarding the device and a domain, organizing the information in at least one of a relational manner and an ontological manner, receiving speech from the user, converting the speech into a word sequence, recognizing a partial proper name in the word sequence, identifying meaning structures from the word sequence using a model of the domain information, adjusting a boundary of the partial proper name to enhance an accuracy of the meaning structures, interpreting the meaning structures in a context of the conversation with the cognitively overloaded user using the knowledge base, selecting a content for a response to the cognitively overloaded user, generating the response based on the selected content, the context of the conversation, and grammatical rules, and synthesizing speech wave forms for the response.

Owner:ROBERT BOSCH CORP +1

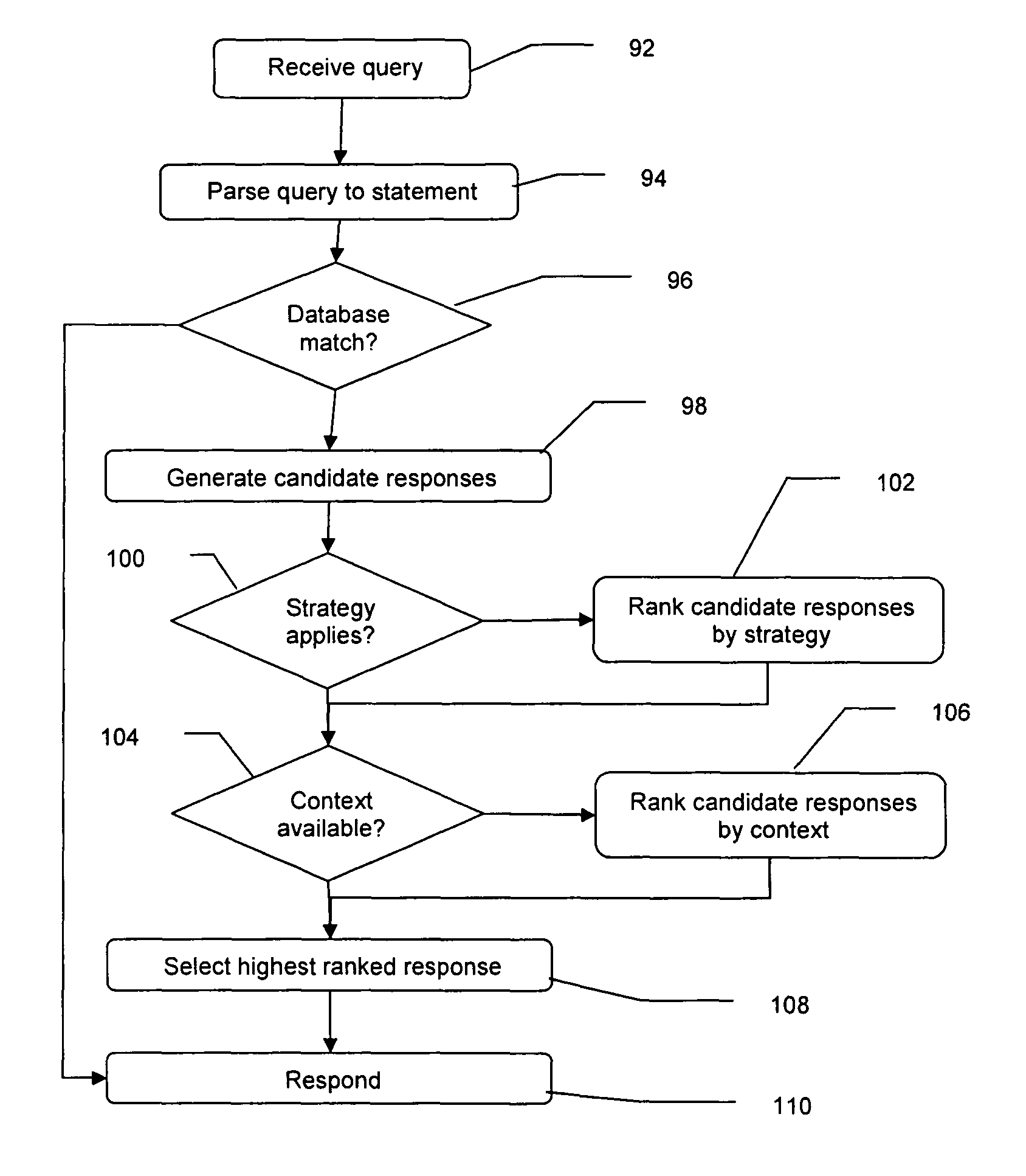

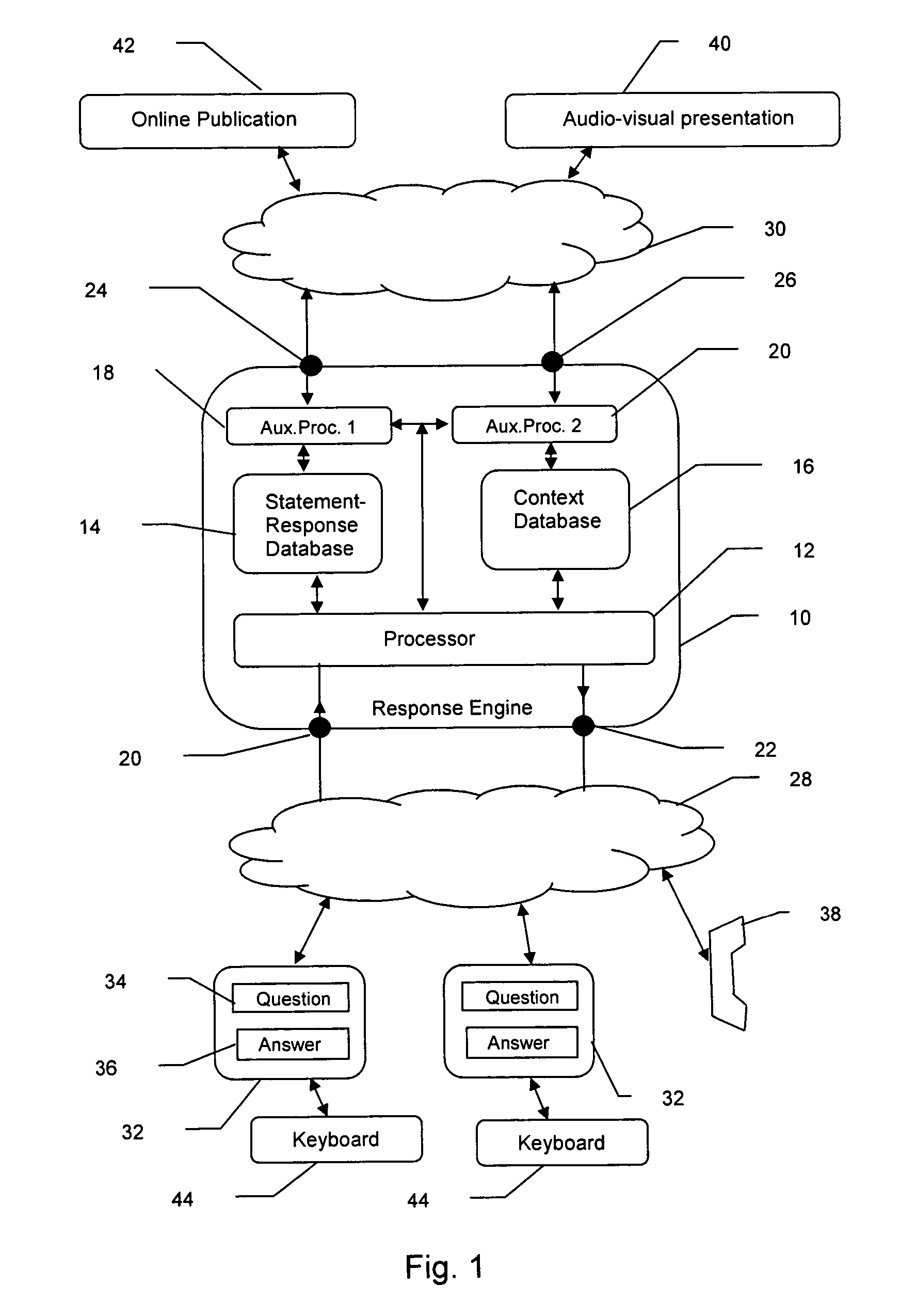

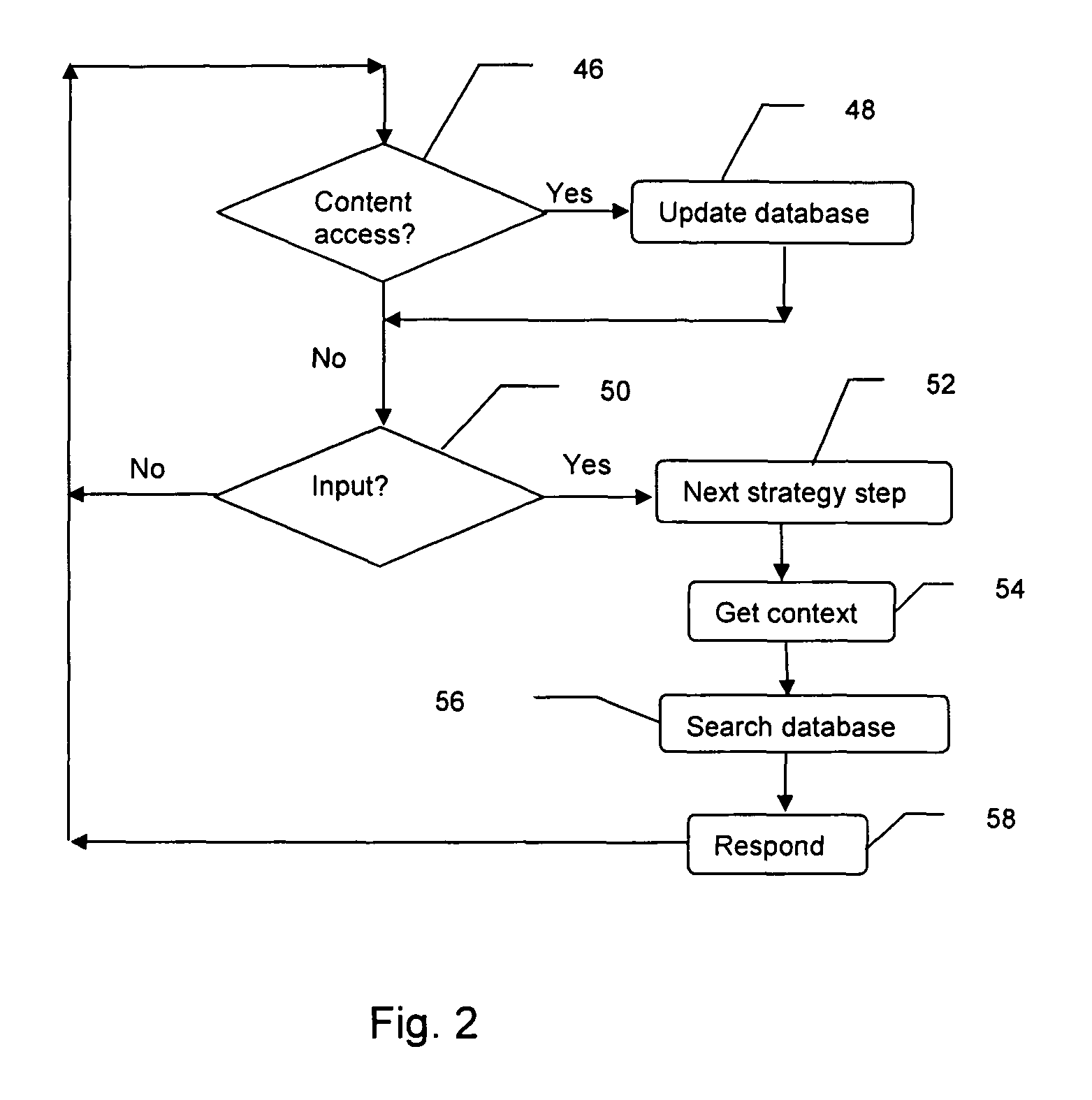

Response generator for mimicking human-computer natural language conversation

Inactive · US7783486B2 · Easy to create · Speech recognition · Special data processing applications · Context data · Database

The present invention is an autonomous response engine and method that can more successfully mimic a human conversational exchange. In an exemplary, preferred embodiment of the invention, the response engine has a statement-response database that is autonomously updated, thus enabling a database of significant size to be easily created and maintained with current information. The response engine autonomously generates natural language responses to natural language queries by following one of several conversation strategies, by choosing at least one context element from a context database and by searching the updated statement-response data base for appropriate matches to the queries.

Owner:ROSSER ROY JONATHAN +1

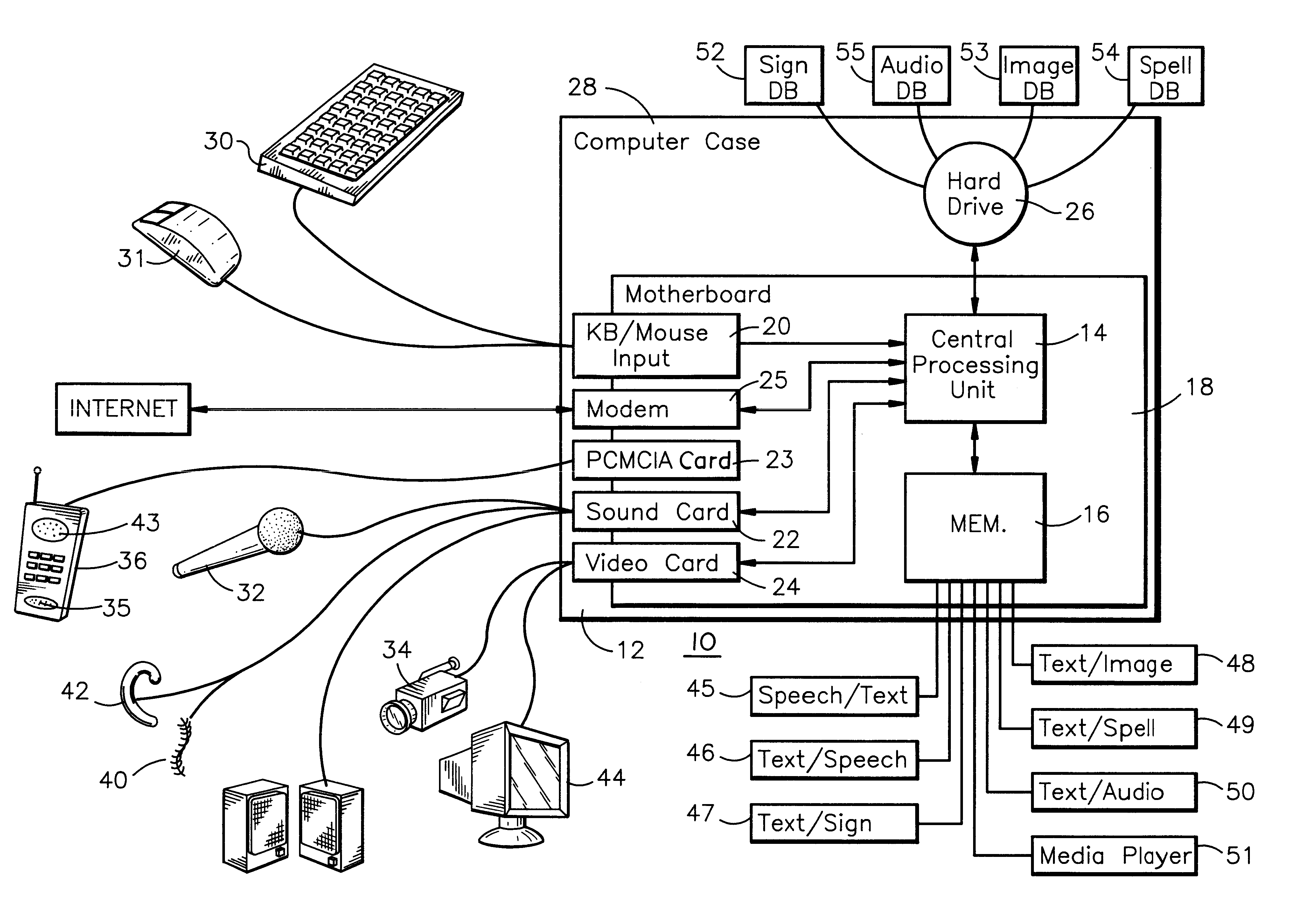

Electronic translator for assisting communications

Inactive · US6377925B1 · Hearing impaired stereophonic signal reproduction · Automatic exchanges · Data stream · Output device

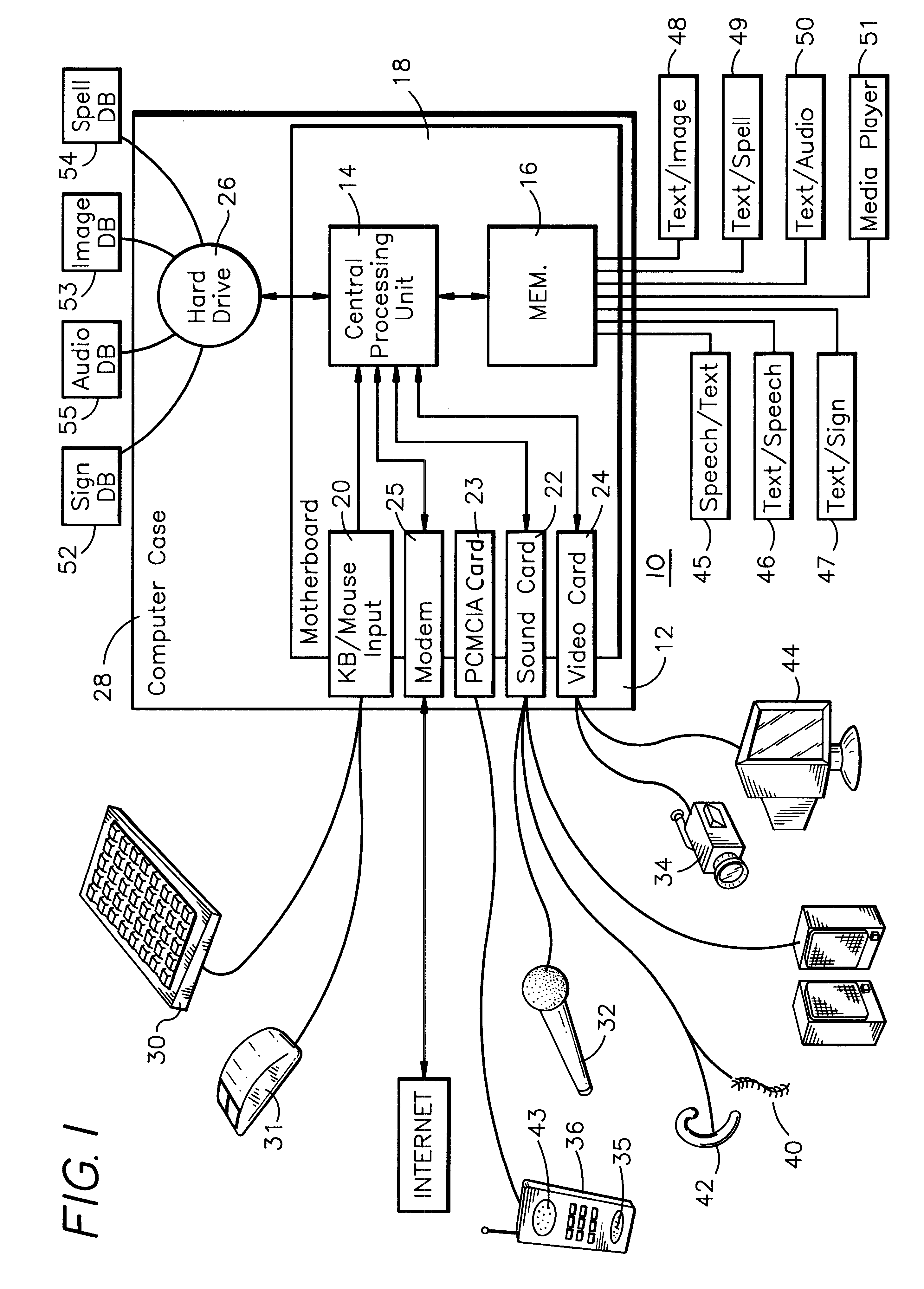

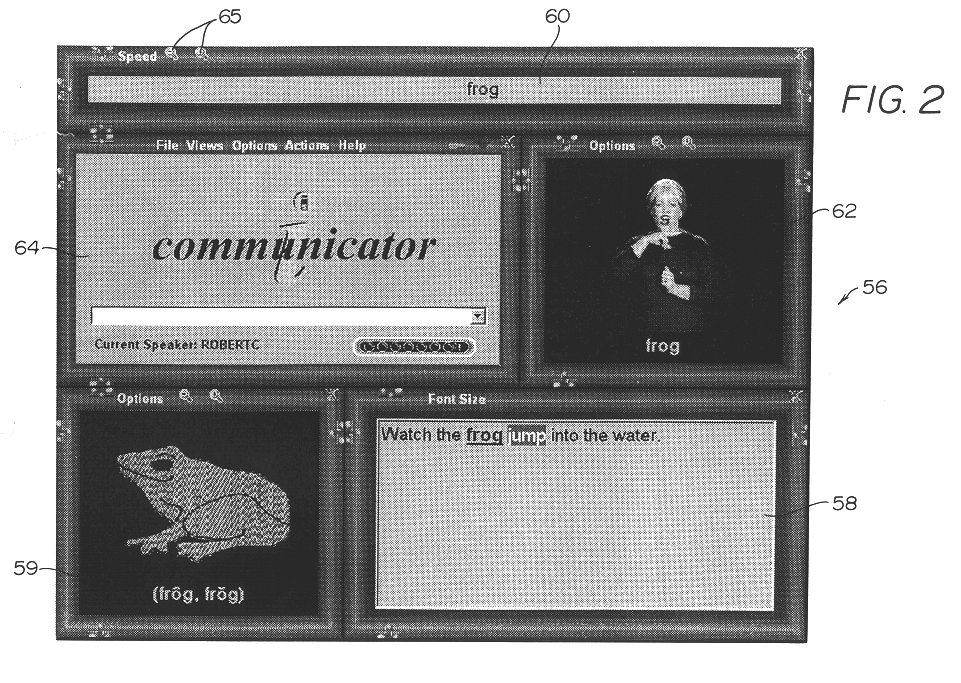

An electronic translator translates input speech into multiple streams of data that are simultaneously delivered to the user, such as a hearing-impaired individual. Preferably, the data is delivered in audible, visual and text formats. These multiple data streams are delivered to the hearing-impaired individual in a synchronized fashion, thereby creating a cognitive response. Preferably, the system of the present invention converts the input speech to a text format, and then translates the text to any of three other forms, including sign language, animation and computer-generated speech. The sign language and animation translations are preferably implemented by using the medium of digital movies in which videos of a person signing words, phrases and finger-spelled words, and of animations corresponding to the words, are selectively accessed from databases and displayed. Additionally, the received speech is converted to computer-generated speech for input to various hearing enhancement devices used by the deaf or hearing-impaired, such as cochlear implants and hearing aids, or other output devices such as speakers, etc. The data streams are synchronized utilizing a high-speed personal computer to facilitate sufficiently fast processing that the text, video signing and audible streams can be generated simultaneously in real time. Once synchronized, the data streams are presented to the subject concurrently in a method that allows the process of mental comprehension to occur. The electronic translator can also be interfaced to other communications devices, such as telephones. Preferably, the hearing-impaired person is also able to use the system's keyboard or mouse to converse or respond.

Owner:INTERACTIVE SOLUTIONS

System and method for supporting interactive user interface operations and storage medium

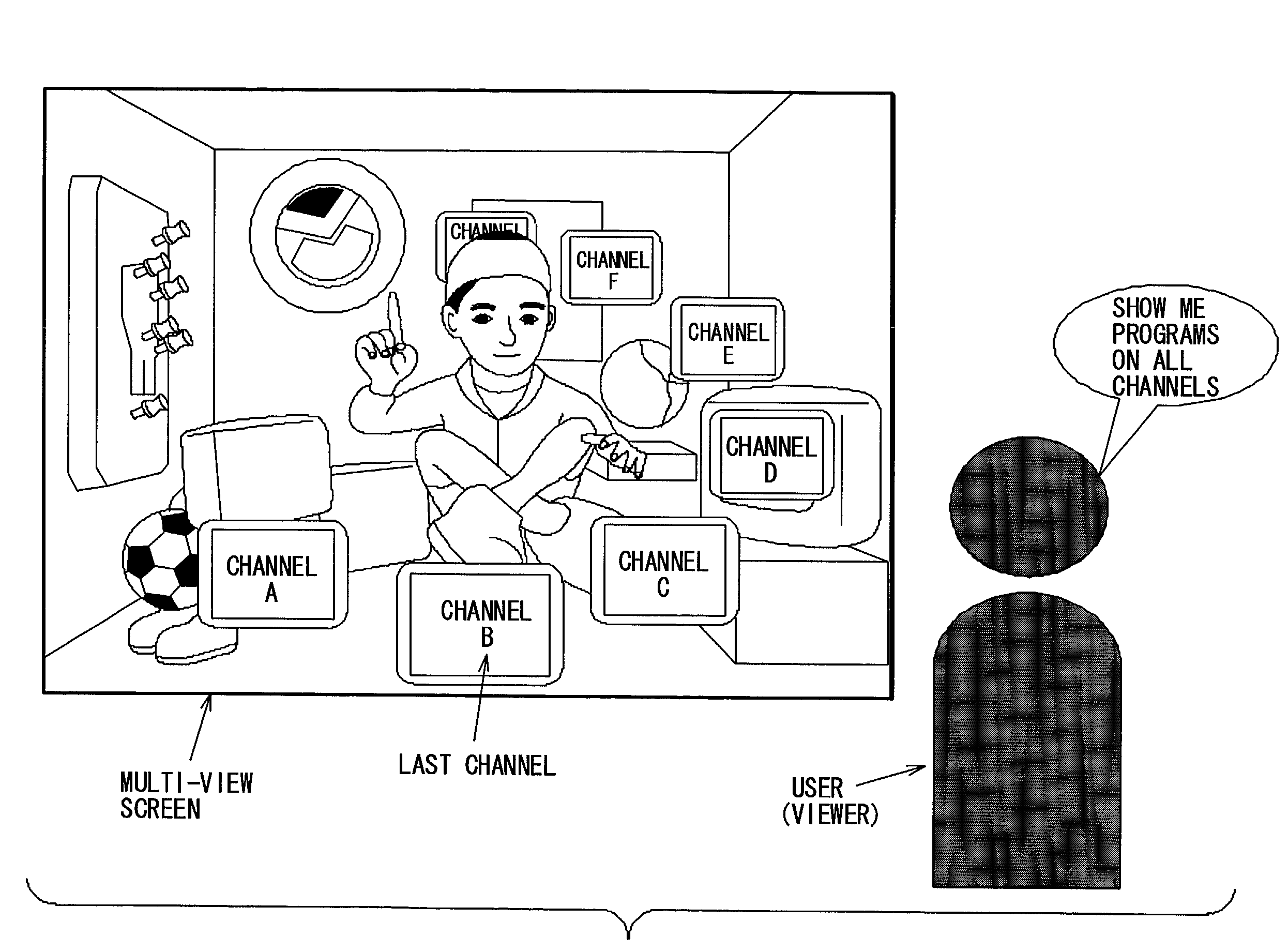

Inactive · US7426467B2 · Easy to operate · Meet demand · Input/output for user-computer interaction · Television system details · Animation · Command system

A system is provided for supporting interactive operations for inputting user commands to household electric apparatuses, such as a television set/monitor, and to information apparatuses. The system uses an animated character, called a personified assistant, that interacts with the user through speech synthesis and animation, realizing a user-friendly interface while making it possible to meet demand for complex commands or to provide an entry point for services. Further, since the system is provided with a command system producing an effect close to human natural language, the user can easily operate the apparatus with a feeling close to ordinary human conversation.

Owner:SONY CORP

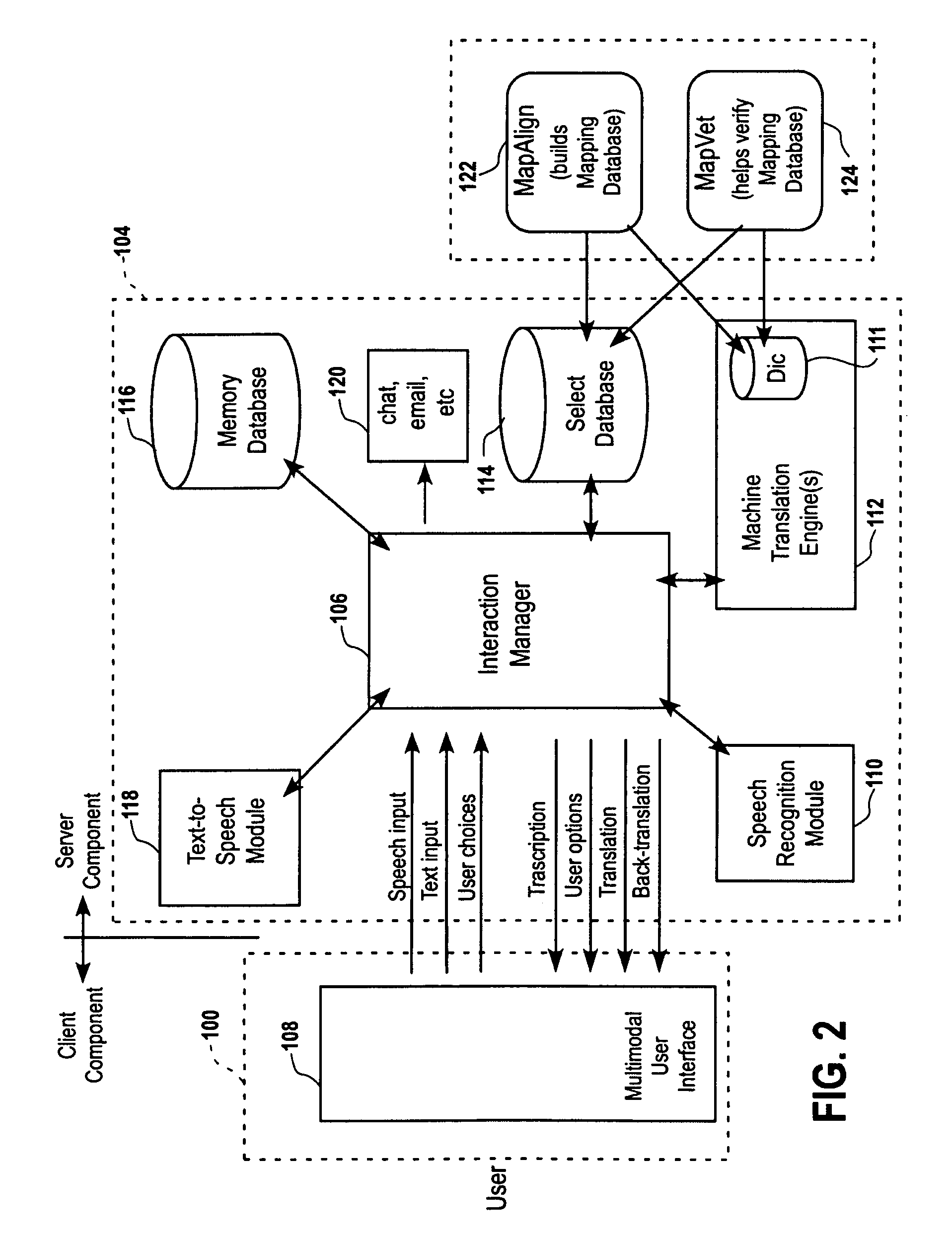

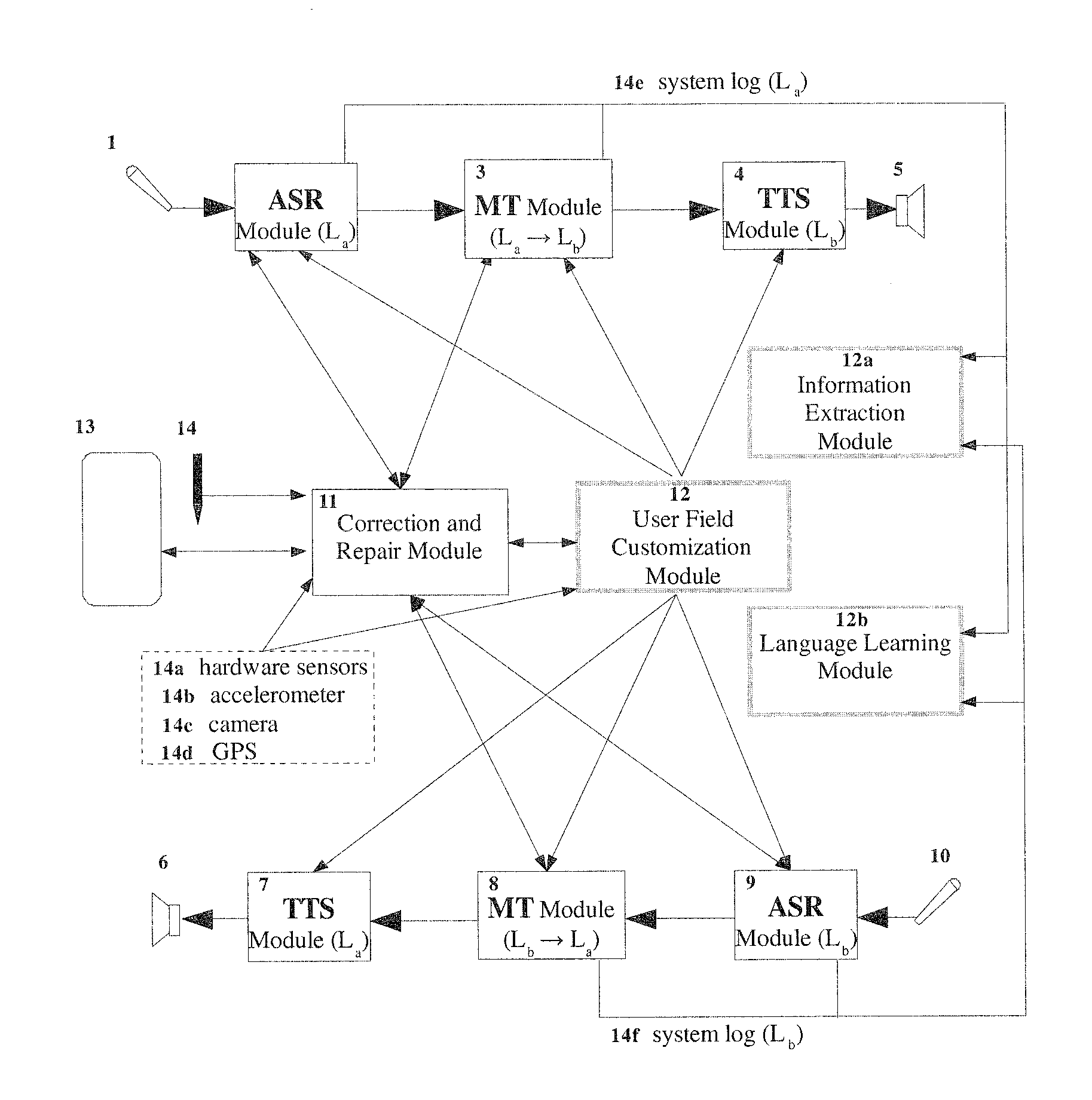

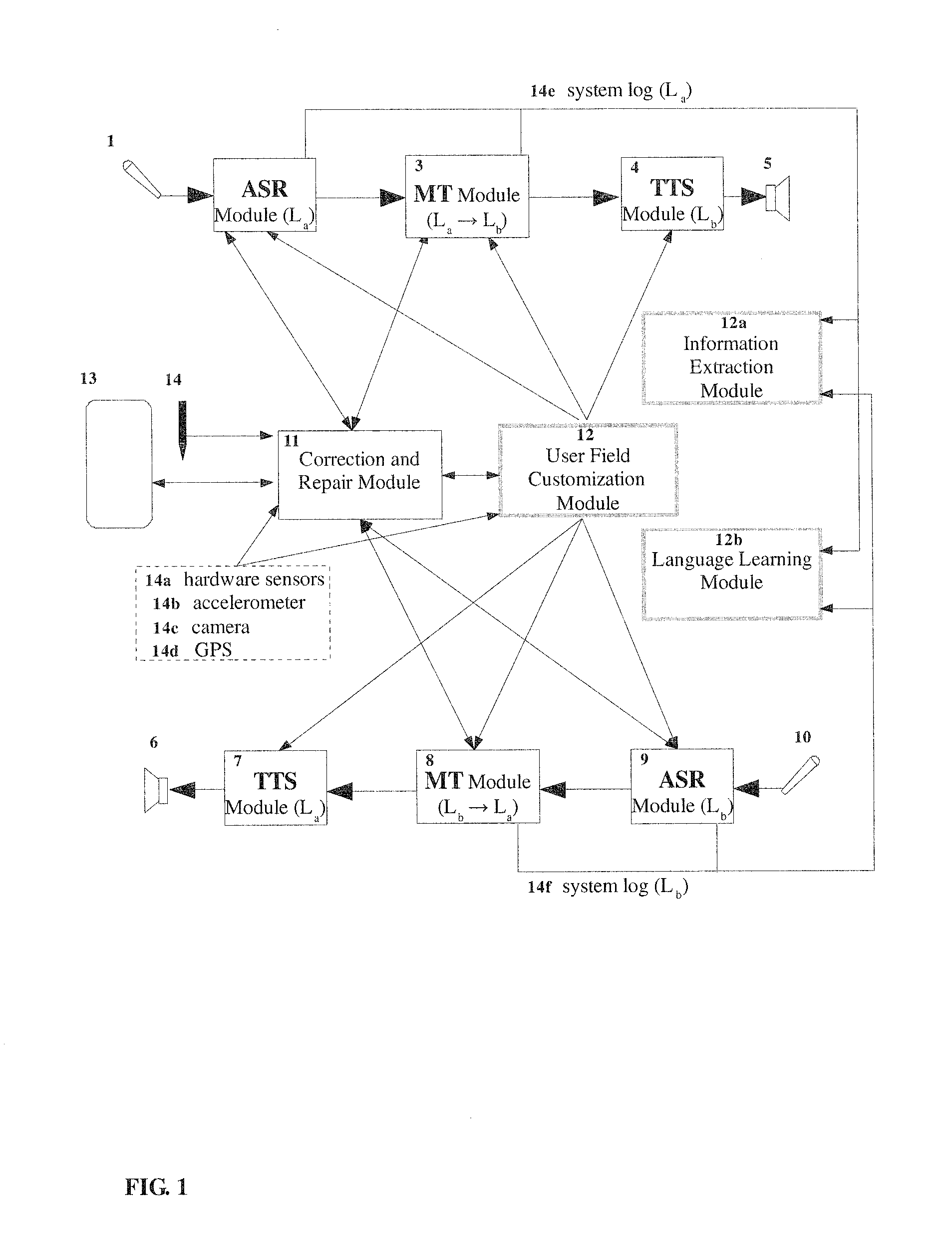

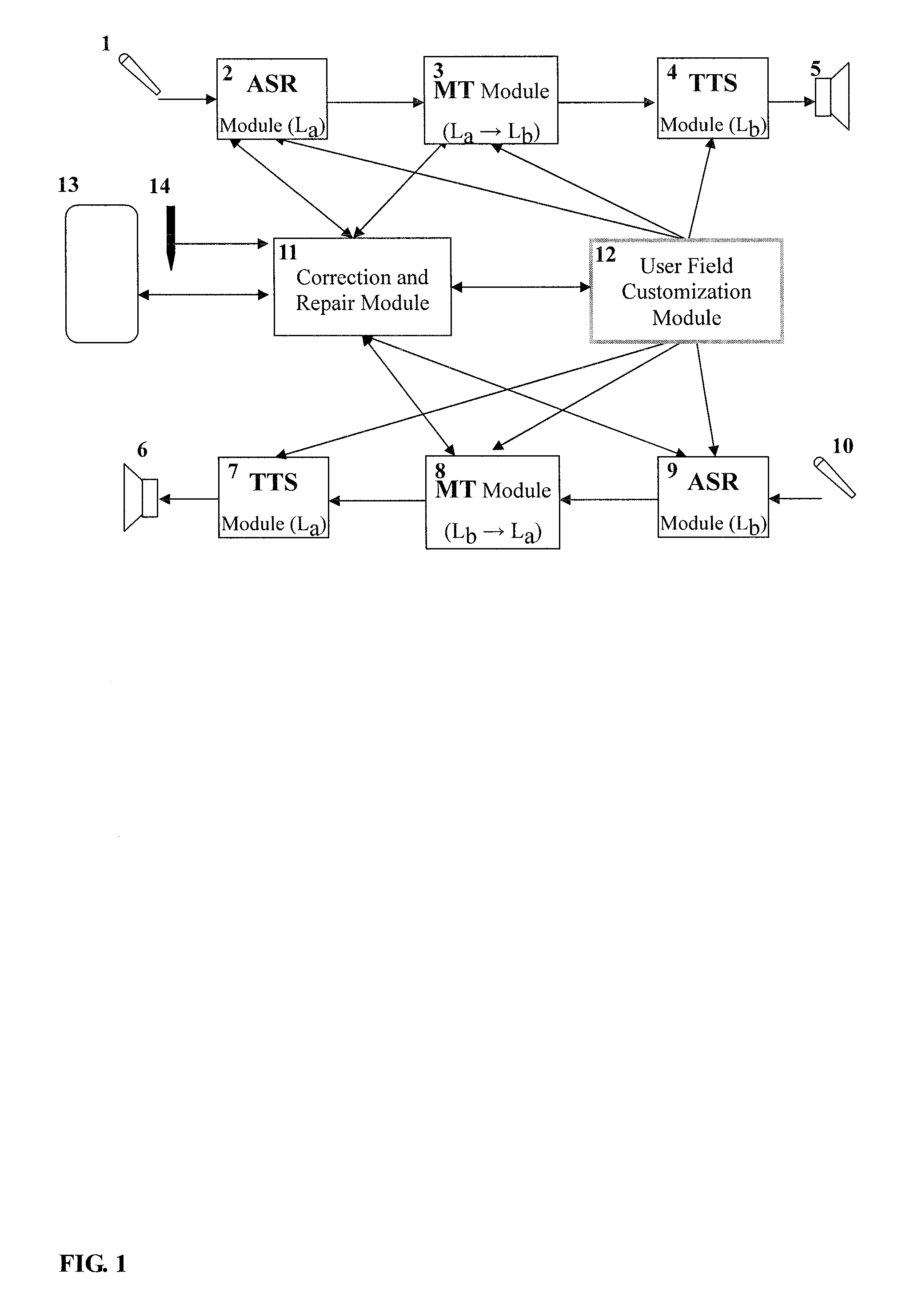

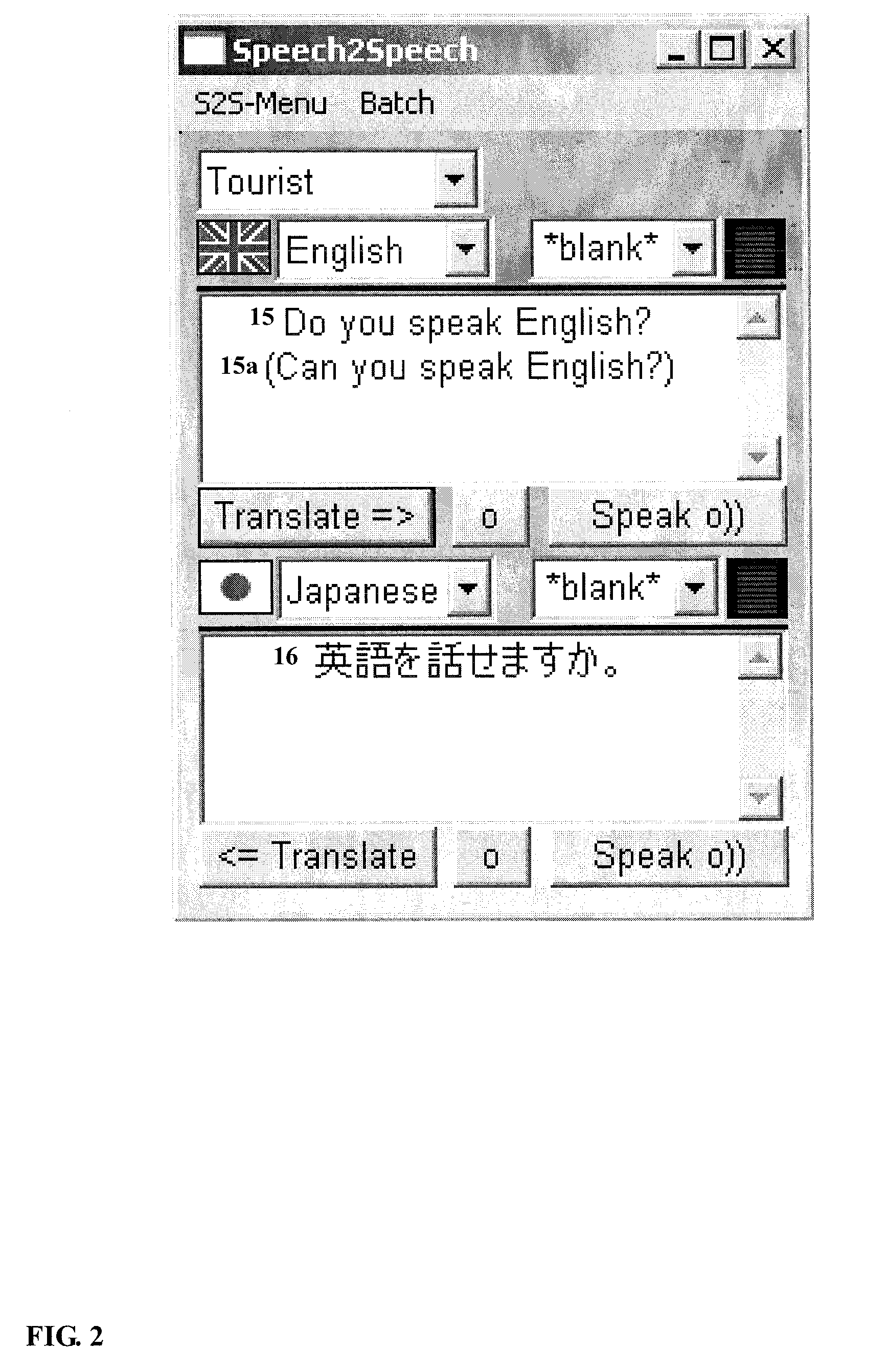

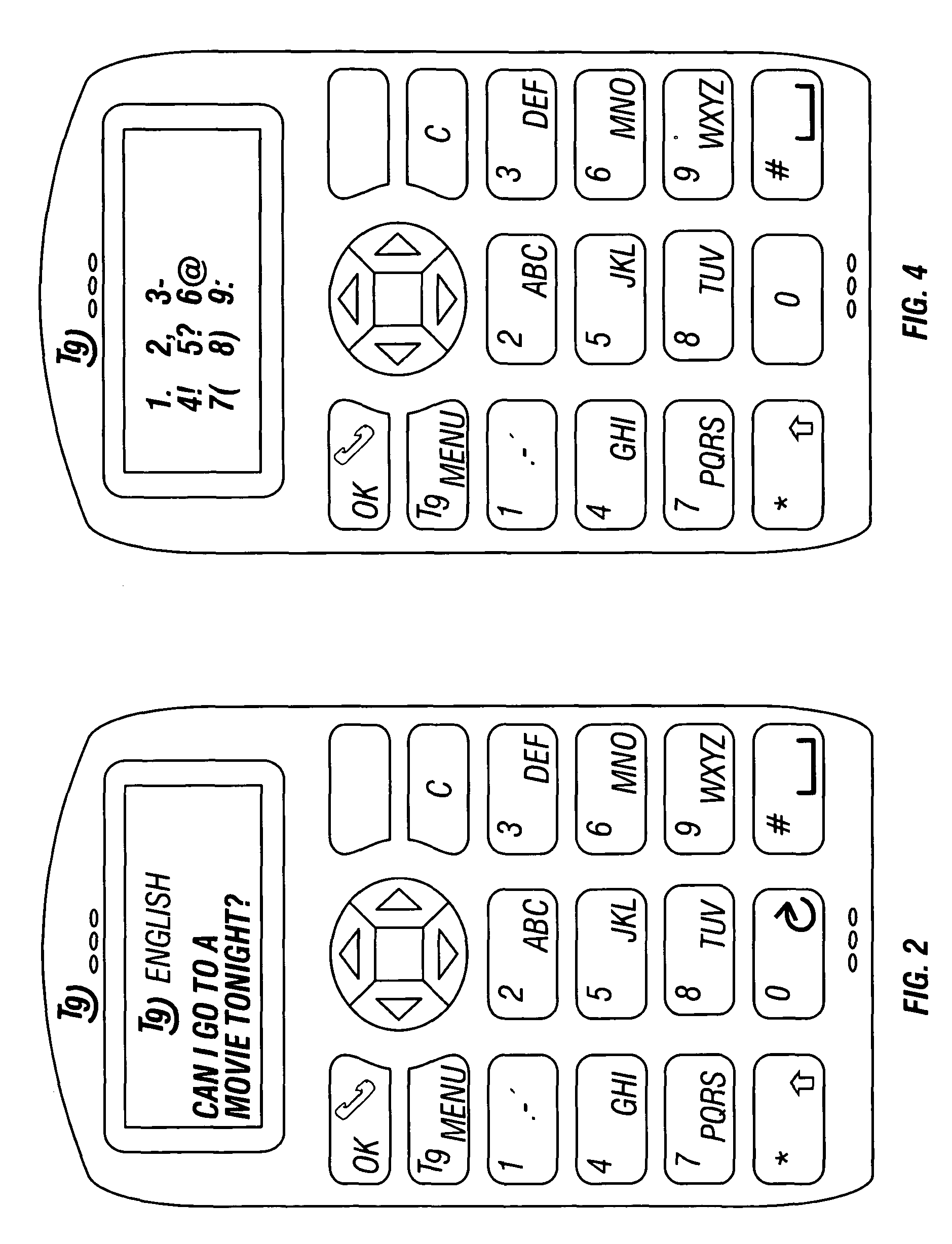

Enhanced speech-to-speech translation system and methods

Active · US20110307241A1 · Improve usability · Natural language translation · Speech recognition · Third party · Speech to speech translation

A speech translation system and methods for cross-lingual communication that enable users to improve and modify content and usage of the system and easily abort or reset translation. The system includes a speech recognition module configured for accepting an utterance, a machine translation module, an interface configured to communicate the utterance and proposed translation, a correction module and an abort action unit that removes any hypotheses or partial hypotheses and terminates translation. The system also includes modules for storing favorites, changing language mode, automatically identifying language, providing language drills, viewing third party information relevant to conversation, among other things.

Owner:META PLATFORMS INC

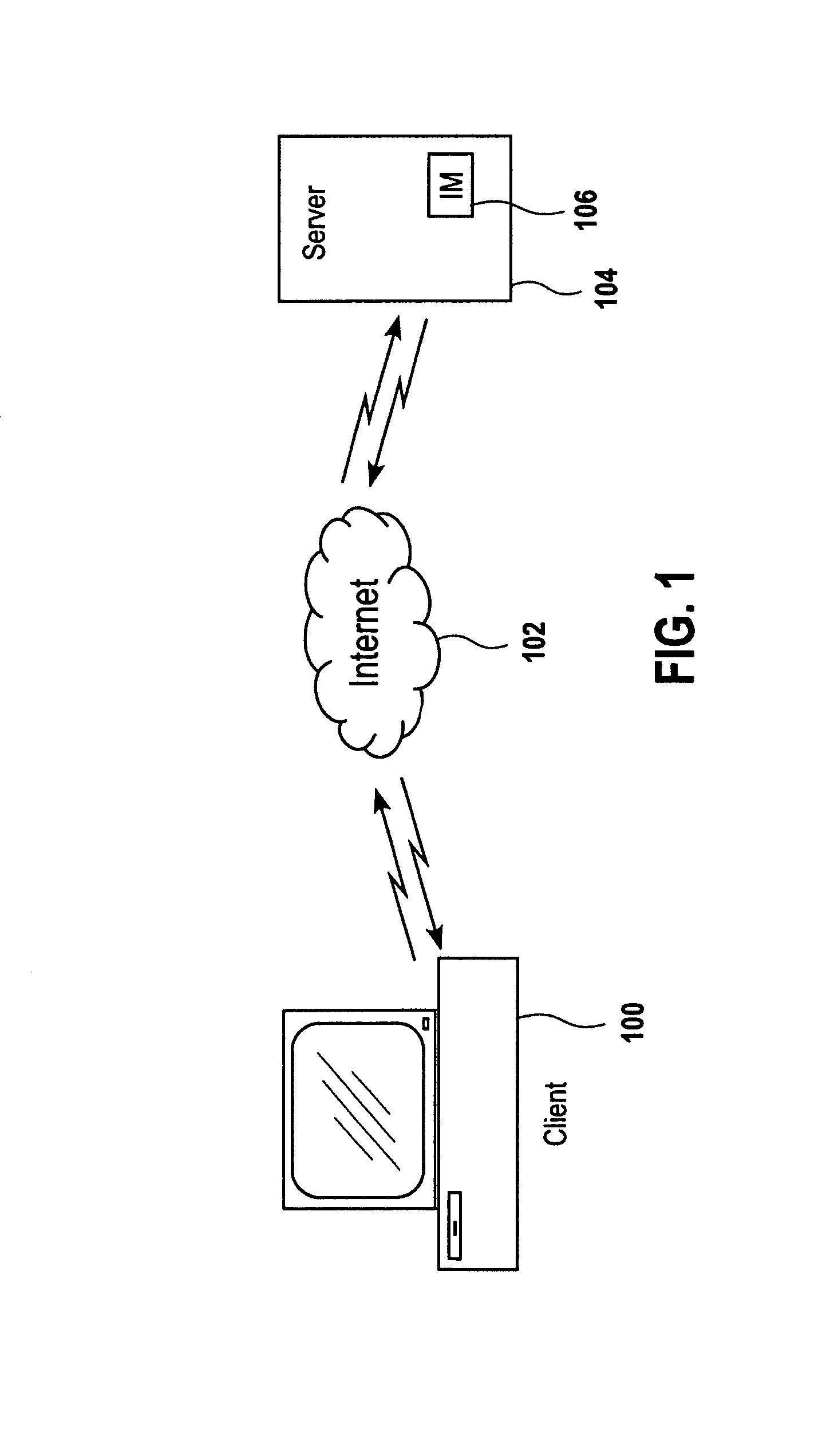

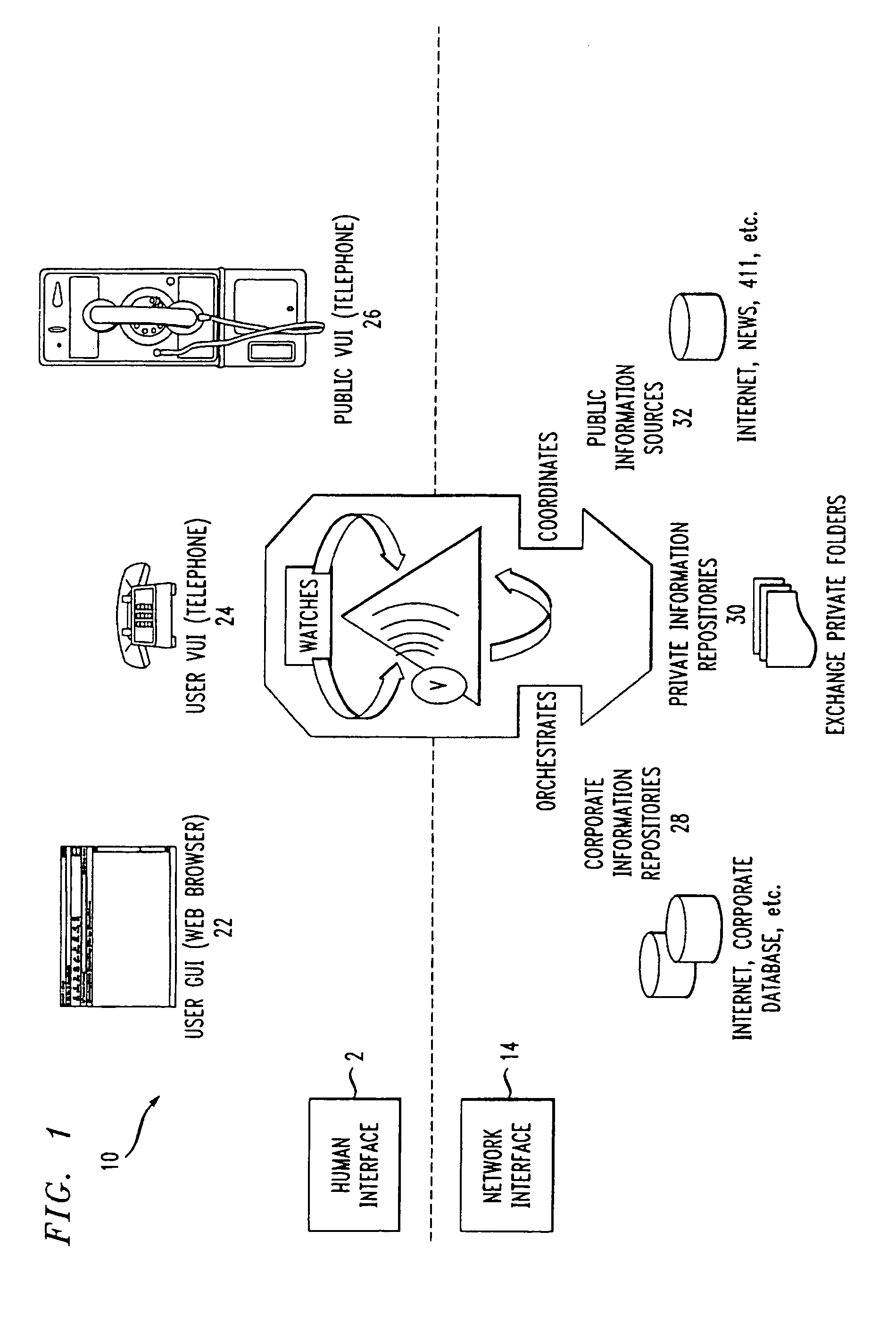

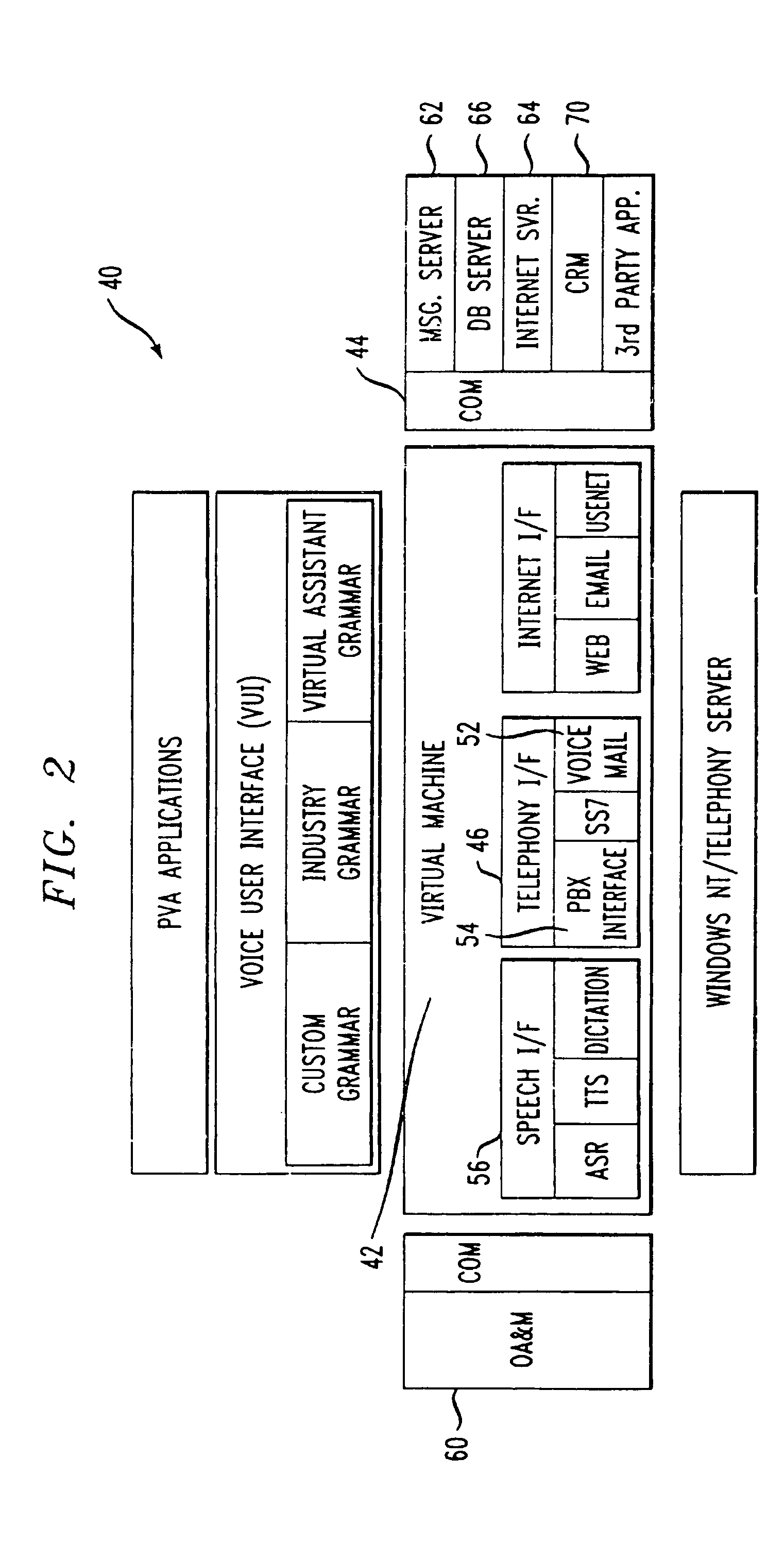

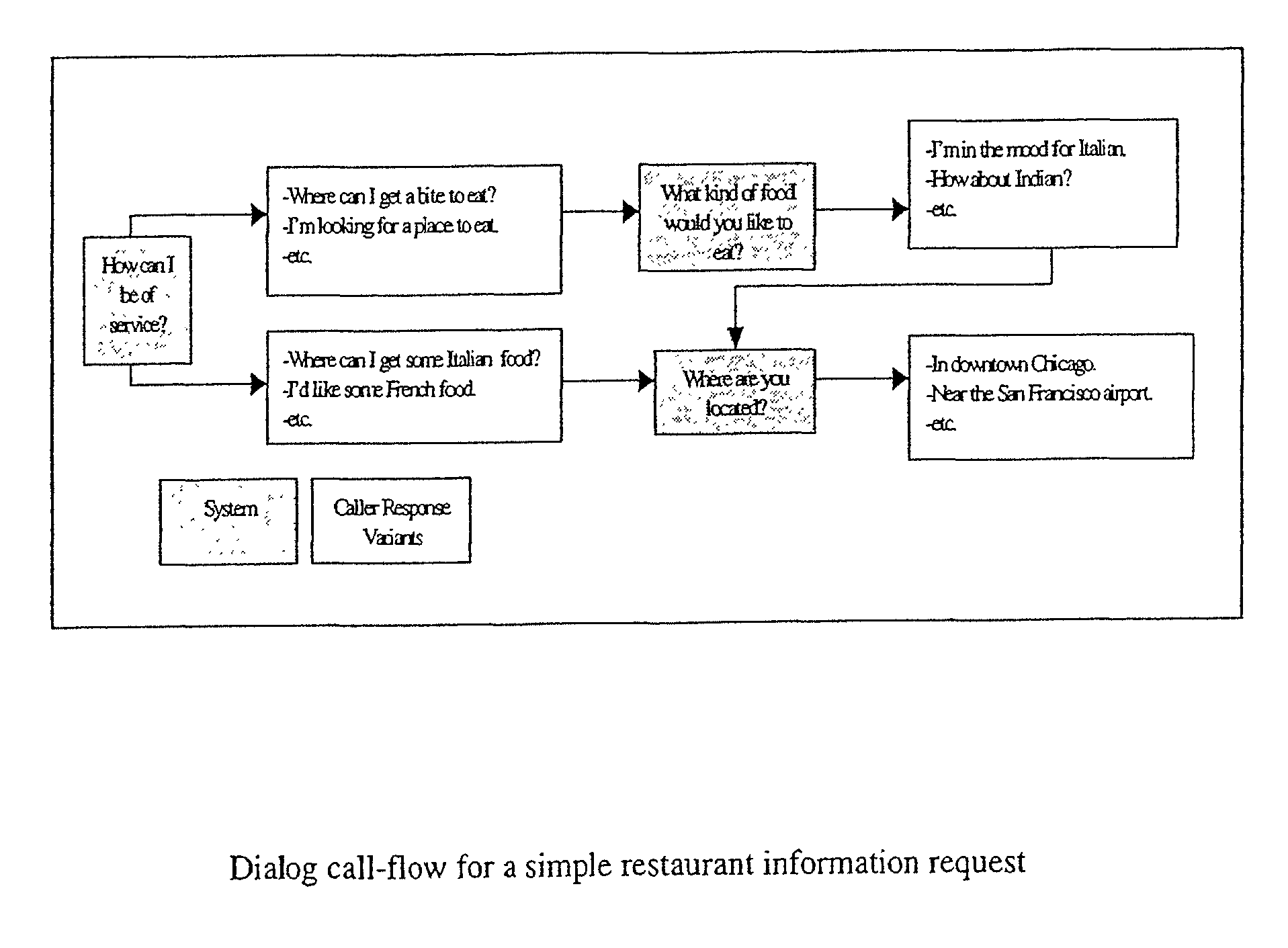

Personal virtual assistant

Inactive · US7415100B2 · Automatic call-answering/message-recording/conversation-recording · Automatic exchanges · User input · Zoom

A computer-based virtual assistant the behavior of which can be changed by the user, comprising a voice user interface for inputting information into and receiving information from the virtual assistant by speech, a communications network, a virtual assistant application running on a remote computer, the remote computer being electronically coupled to the user interface via the communications network, wherein the behavior of the virtual assistant changes responsive to user input. A computer-based virtual assistant that also automatically adapts its behavior is disclosed, comprising a voice user interface for inputting information into and receiving information from the virtual assistant by speech, a communications network, a virtual assistant application running on a remote computer, the remote computer being electronically coupled to the user interface via the communications network, wherein the remote computer is programmed to automatically change the behavior of the virtual assistant responsive to input received by the virtual assistant. As detailed below, the virtual assistant adapts to the user in many different ways based on the input the virtual assistant receives. Such input could be user information, such as information about the user's experience, the time between user sessions, the amount of time a user pauses when recording a message, the user's emotional state, whether the user uses words associated with polite discourse, and the amount of time since a user provided input to the virtual assistant during a session.

Owner:AVAYA TECH LLC

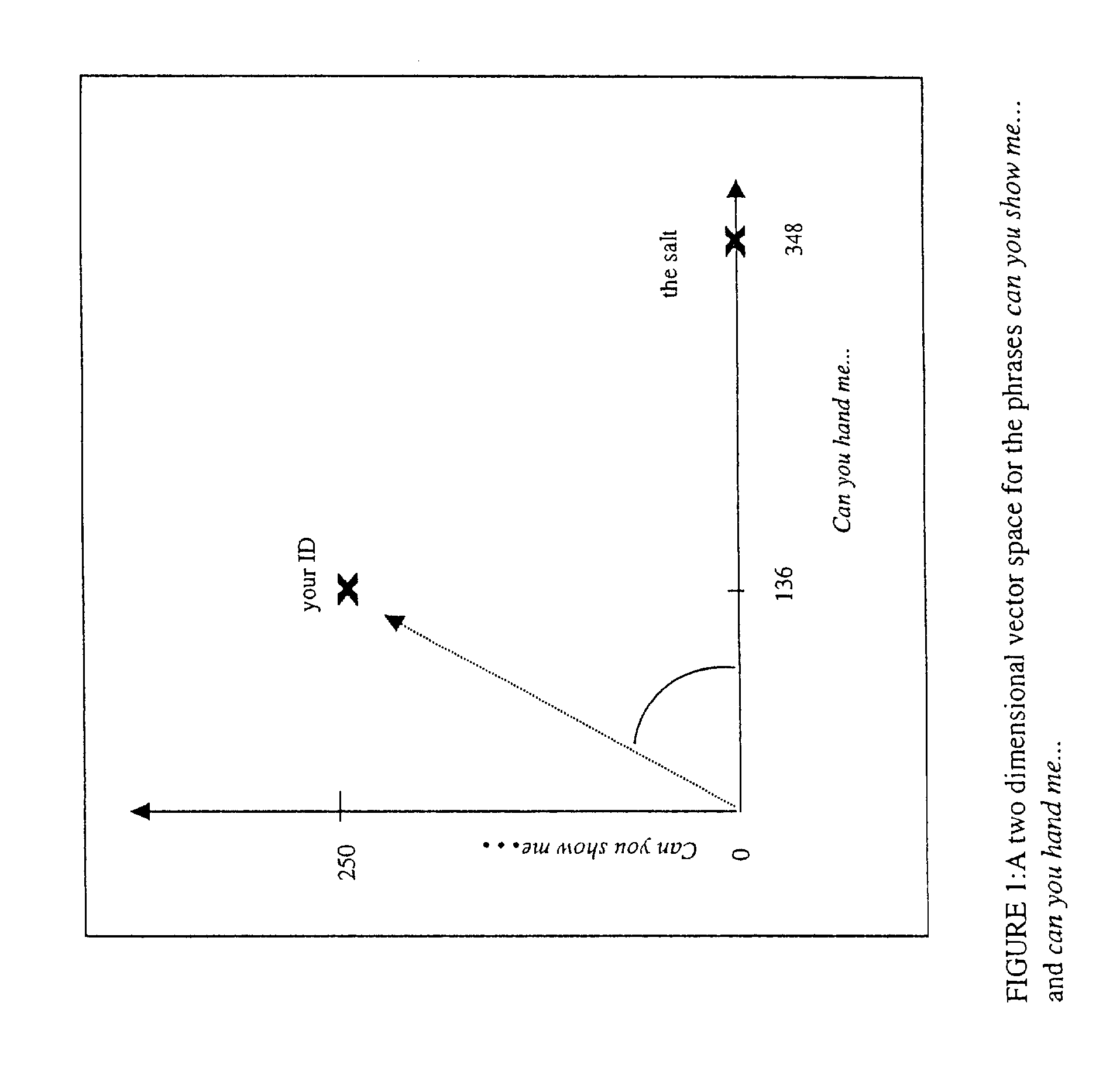

Methods for creating a phrase thesaurus

Inactive · US8374871B2 · Quick identification · More complex and better performing systems · Natural language data processing · Speech recognition · Human–robot interaction · Speech sound

The invention enables creation of grammar networks that can regulate, control, and define the content and scope of human-machine interaction in natural language voice user interfaces (NLVUI). More specifically, the invention concerns a phrase-based modeling of generic structures of verbal interaction and use of these models for the purpose of automating part of the design of such grammar networks.

Owner:NANT HLDG IP LLC

Prioritizing Selection Criteria by Automated Assistant

Active · US20130111348A1 · Improve user interaction · Effectively engage · Natural language translation · Semantic analysis · Selection criterion · Speech input

Methods, systems, and computer readable storage medium related to operating an intelligent digital assistant are disclosed. A user request is received, the user request including at least a speech input received from a user. The user request including the speech input is processed to obtain a representation of user intent for identifying items of a selection domain based on at least one selection criterion. A prompt is provided to the user, the prompt presenting two or more properties relevant to items of the selection domain and requesting the user to specify relative importance between the two or more properties. A listing of search results is provided to the user, where the listing of search results has been obtained based on the at least one selection criterion and the relative importance provided by the user.
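The final ranking step can be illustrated with a small sketch. It assumes the user's stated relative importance is captured as numeric weights per property and that each item carries property scores already normalized to the range 0 to 1. The abstract prescribes neither of these, so the weighting scheme and the `rank_items` function are hypothetical.

```python
def rank_items(items, importance):
    """Order items by a weighted sum of their normalized property scores.

    items: list of dicts holding a score in [0, 1] for each property.
    importance: hypothetical mapping {property: relative weight} from the user.
    """
    total = sum(importance.values())

    def score(item):
        # Normalize weights so they sum to 1 before combining the properties.
        return sum(weight / total * item[prop] for prop, weight in importance.items())

    return sorted(items, key=score, reverse=True)
```

For example, with two restaurants scored on `price` and `rating`, a user who says price matters three times as much as rating gets the cheaper option first, while reversing the weights reverses the order.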

Owner:APPLE INC

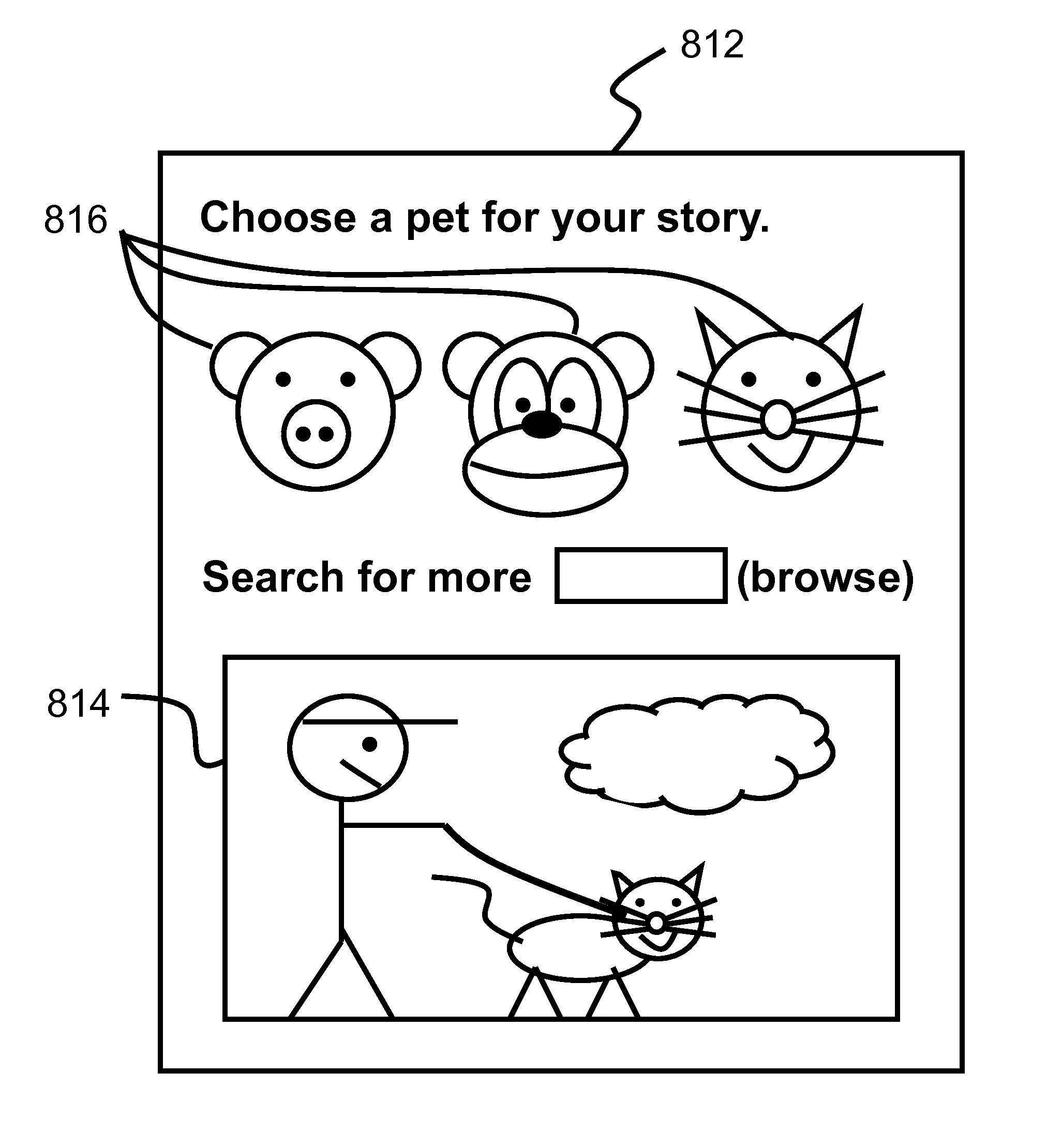

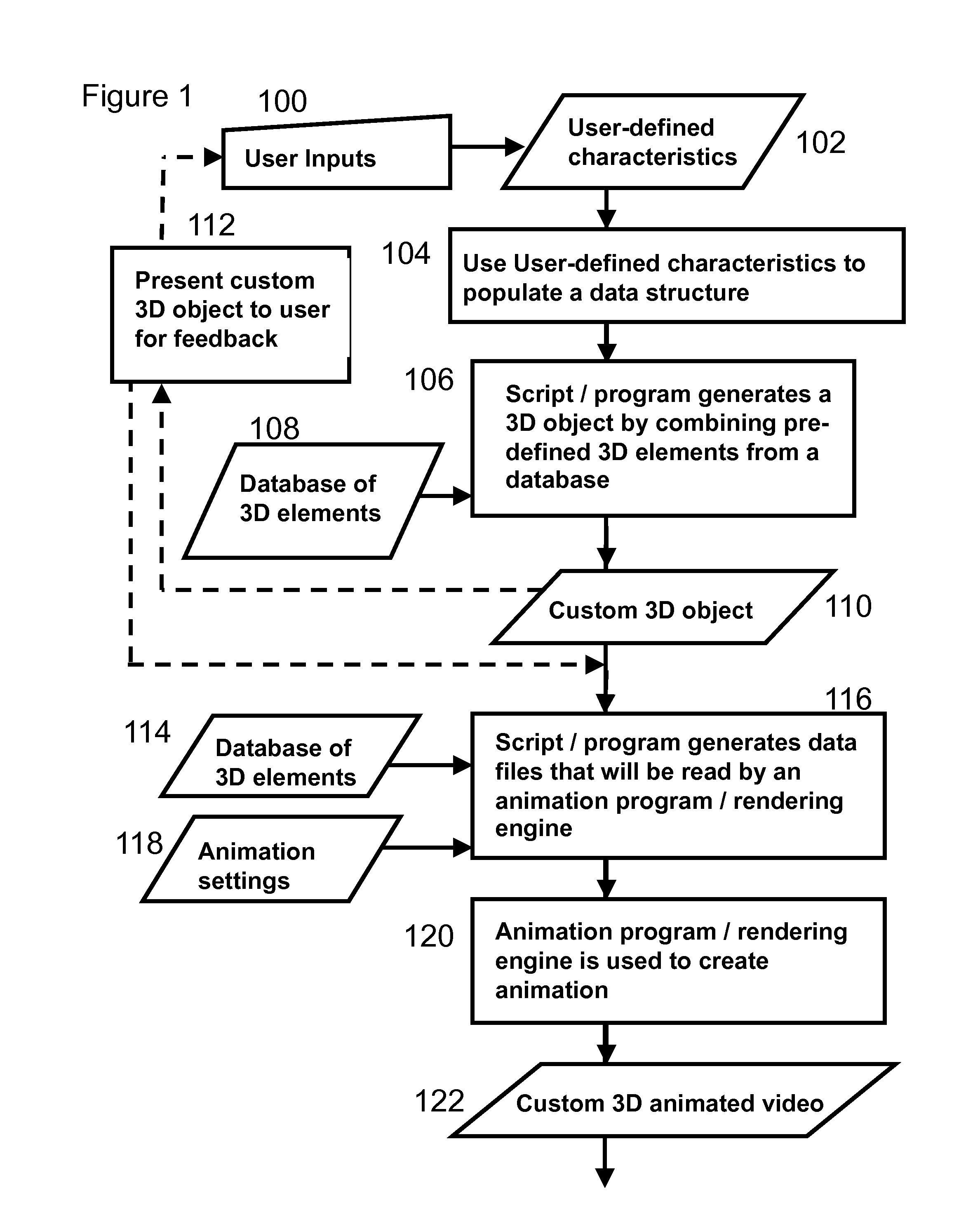

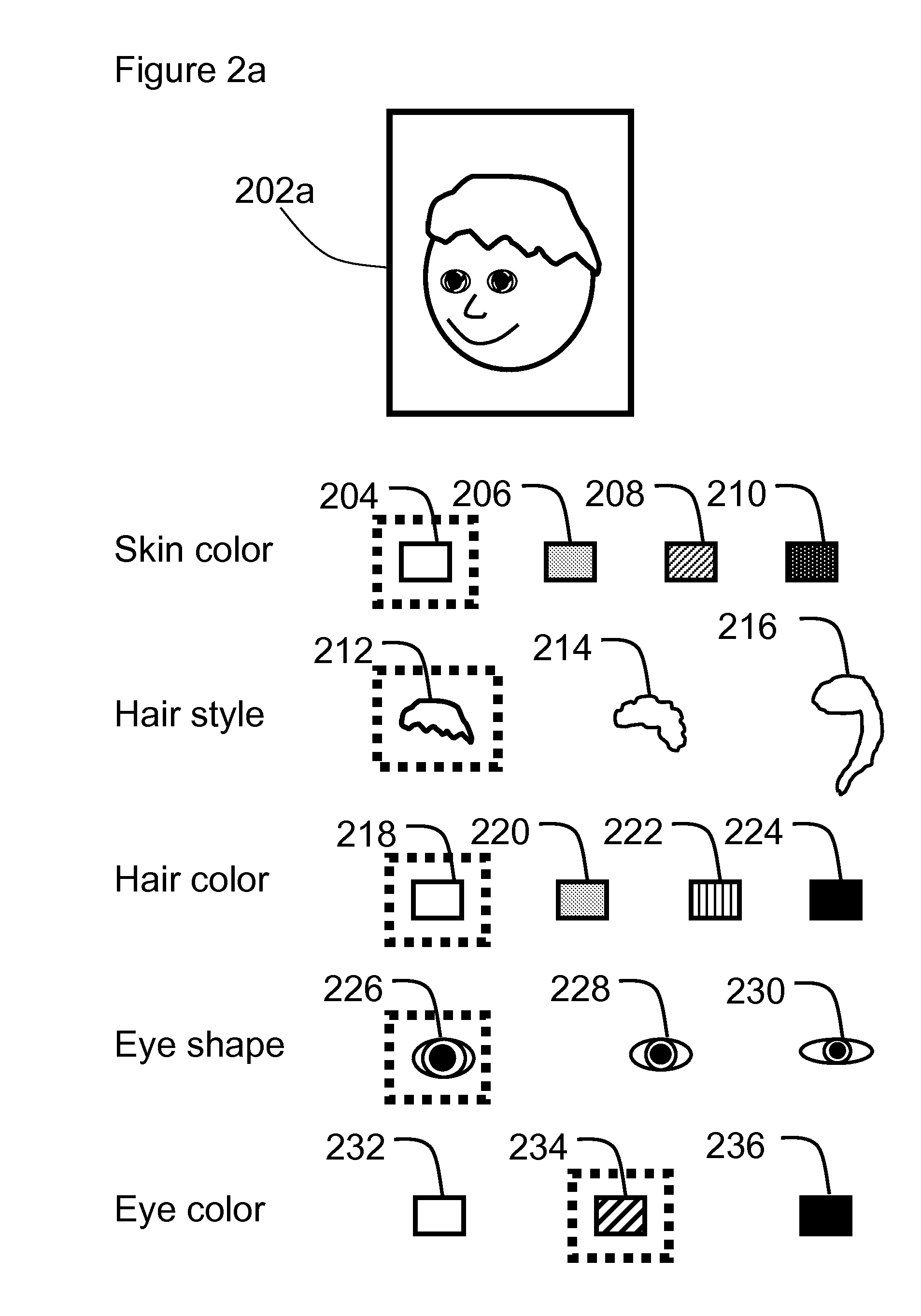

User Customized Animated Video and Method For Making the Same

A customized animation video system and method generate a customized or personalized animated video using user input where the customized animated video is rendered in a near immediate timeframe (for example, in less than 10 minutes). The customized animation video system and method of the present invention enable seamless integration of an animated representation of a subject or other custom object into the animated video. That is, the system and method of the present invention enable the generation of an animated representation of a subject that can be viewed from any desired perspective in the animated video without the use of multiple photographs or other 2D depictions of the subject. Furthermore, the system and method of the present invention enables the generation of an animated representation of a subject that is in the same graphic style as the rest of the animated video.

Owner:PANDOODLE CORPORATION

System and methods for maintaining speech-to-speech translation in the field

Active · US20090281789A1 · Increase probability · Natural language translation · Speech recognition · Speech to speech translation · Language module

A method and apparatus are provided for updating the vocabulary of a speech translation system for translating a first language into a second language including written and spoken words. The method includes adding a new word in the first language to a first recognition lexicon of the first language and associating a description with the new word, wherein the description contains pronunciation and word class information. The new word and description are then updated in a first machine translation module associated with the first language. The first machine translation module contains a first tagging module, a first translation model and a first language module, and is configured to translate the new word to a corresponding translated word in the second language. Optionally, the invention may be used for bidirectional or multi-directional translation.

Owner:META PLATFORMS INC

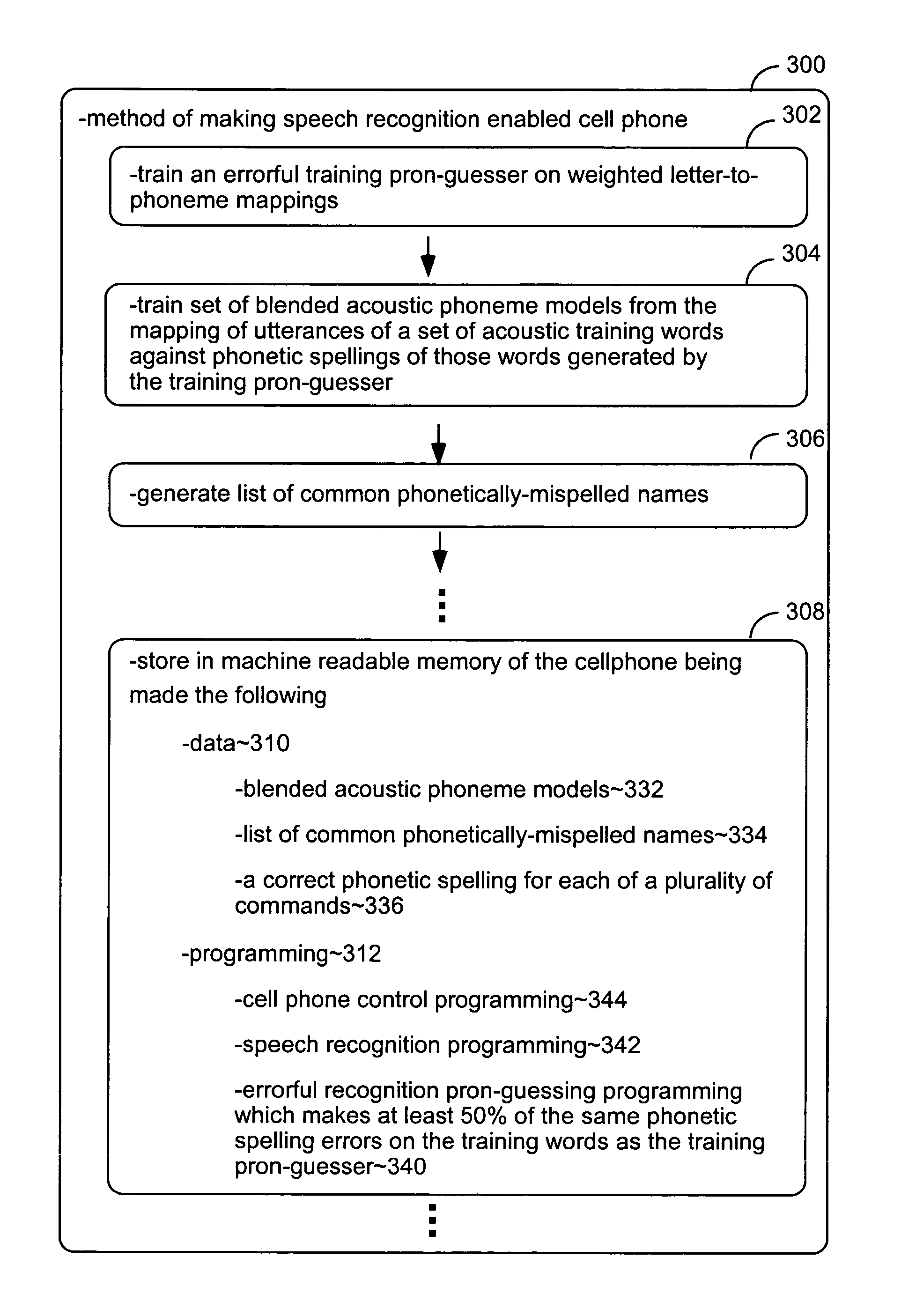

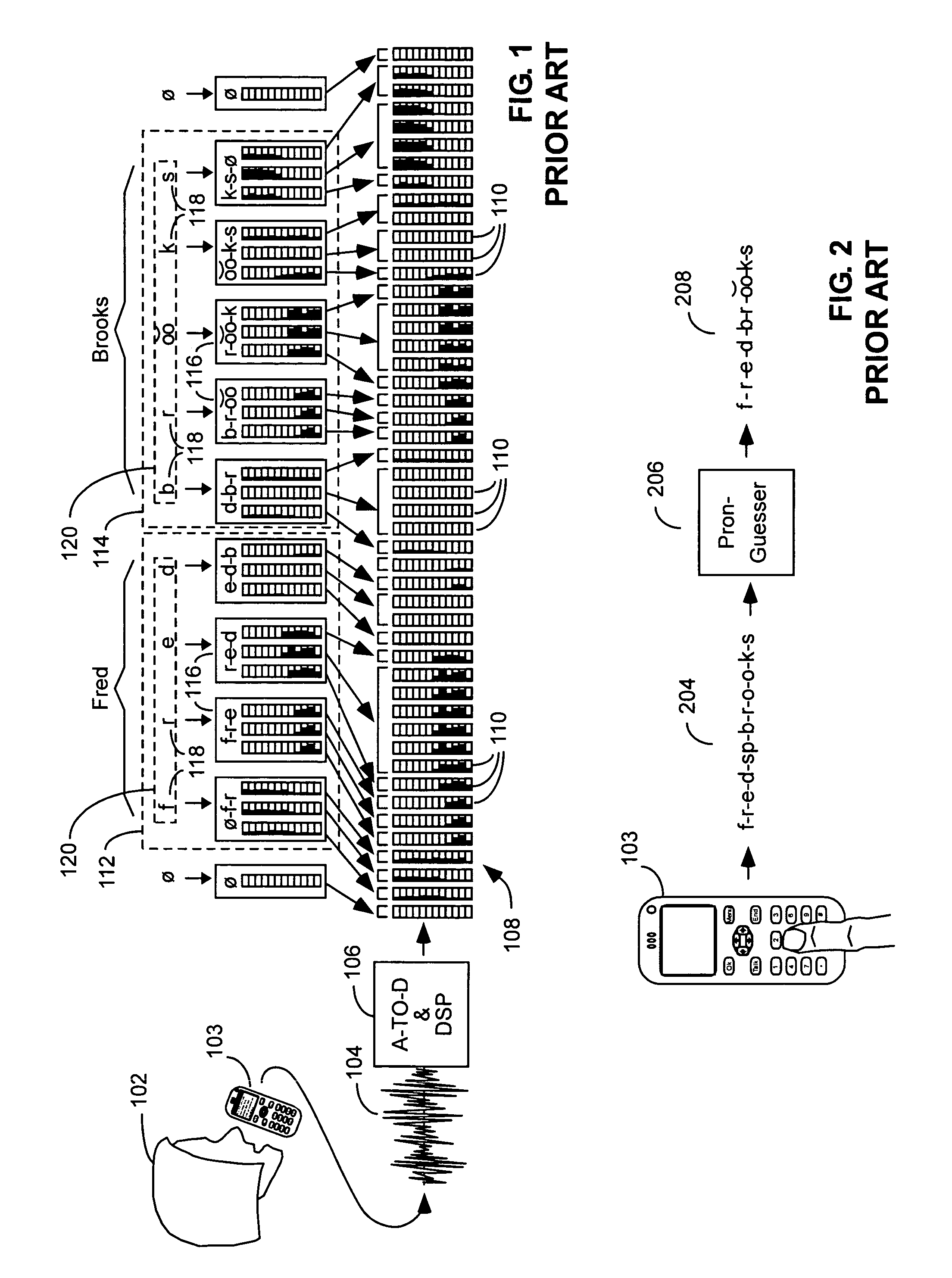

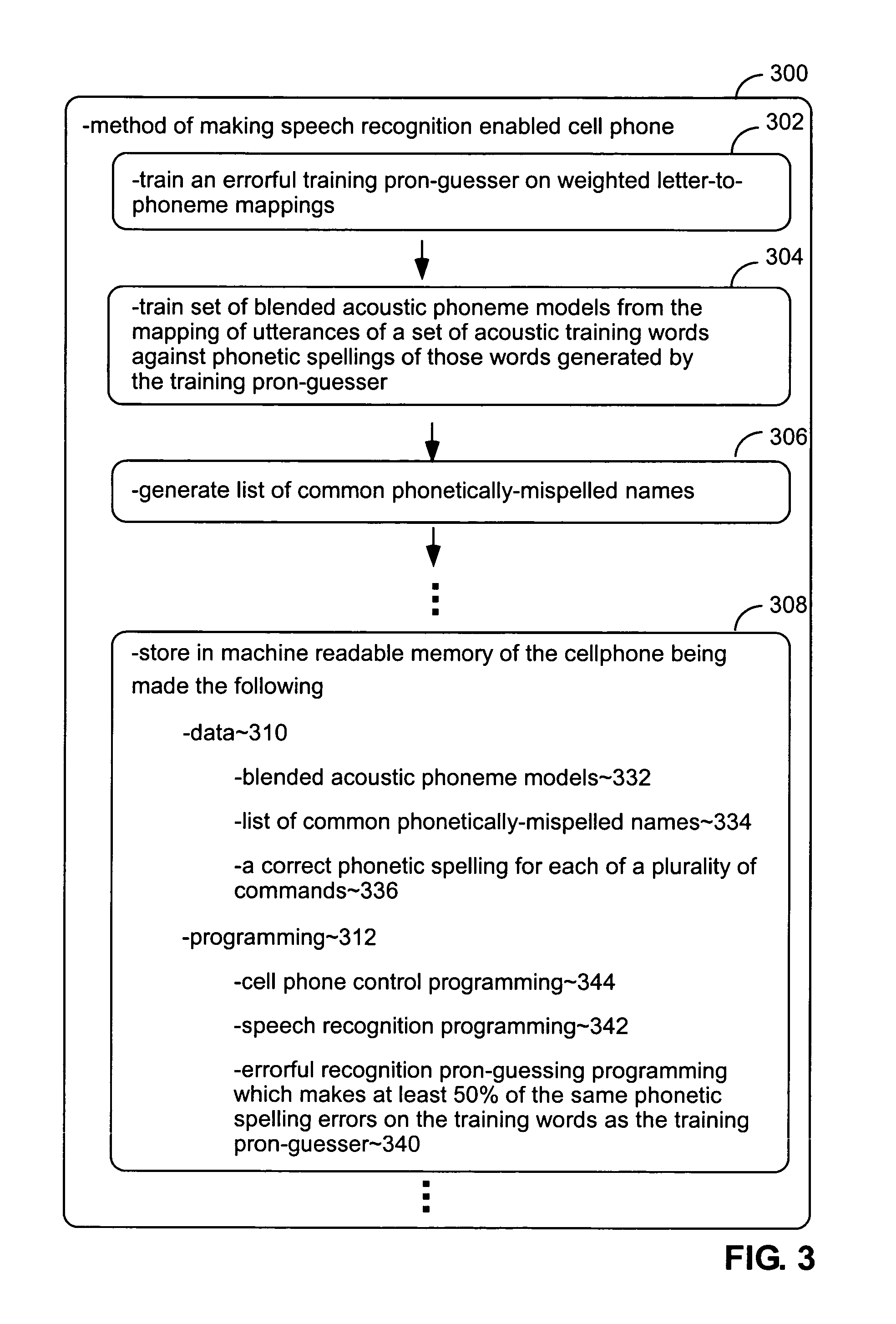

Training and using pronunciation guessers in speech recognition

Inactive · US7467087B1 · Reduce frequency · Reliable phonetic spelling · Speech recognition · Speech synthesis · Word model · Speech identification

The error rate of a pronunciation guesser that guesses the phonetic spelling of words used in speech recognition is improved by causing its training to weigh letter-to-phoneme mappings used as data in such training as a function of the frequency of the words in which such mappings occur. Preferably the ratio of the weight to word frequency increases as word frequency decreases. Acoustic phoneme models for use in speech recognition with phonetic spellings generated by a pronunciation guesser that makes errors are trained against word models whose phonetic spellings have been generated by a pronunciation guesser that makes similar errors. As a result, the acoustic models represent blends of phoneme sounds that reflect the spelling errors made by the pronunciation guessers. Speech recognition enabled systems are made by storing in them both a pronunciation guesser and a corresponding set of such blended acoustic models.
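The frequency weighting in the first two sentences can be made concrete with a short sketch. The abstract does not give the weighting function; a power law `weight = frequency ** alpha` with `0 < alpha < 1` is assumed here only because it has the stated property that the ratio of weight to frequency grows as frequency falls. The function names and the lexicon layout are likewise hypothetical.

```python
from collections import defaultdict


def mapping_weight(word_freq, alpha=0.5):
    """Sublinear weight (assumed form): weight / word_freq rises as word_freq falls."""
    return word_freq ** alpha


def weighted_mapping_counts(lexicon):
    """Accumulate frequency-weighted counts of letter-to-phoneme mappings.

    lexicon: iterable of (word_frequency, [(letters, phoneme), ...]) pairs,
    where each inner list is the aligned spelling of one training word.
    """
    counts = defaultdict(float)
    for word_freq, alignment in lexicon:
        weight = mapping_weight(word_freq)
        for letters, phoneme in alignment:
            counts[(letters, phoneme)] += weight
    return dict(counts)
```

With `alpha = 0.5`, a word seen 100 times contributes weight 10 while a word seen 4 times contributes weight 2, so rare words count for more per occurrence than they would under raw frequency weighting.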

Owner:CERENCE OPERATING CO

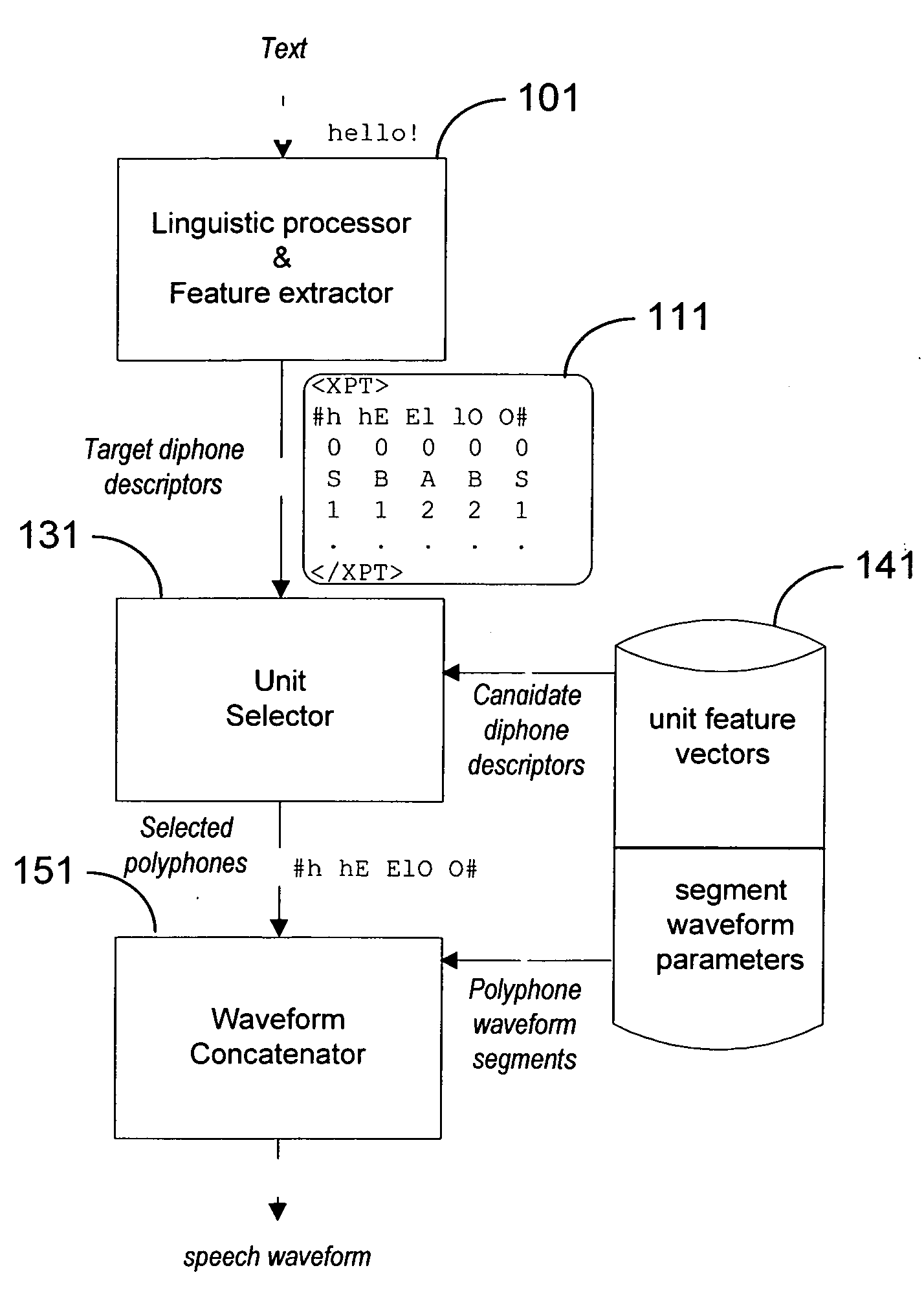

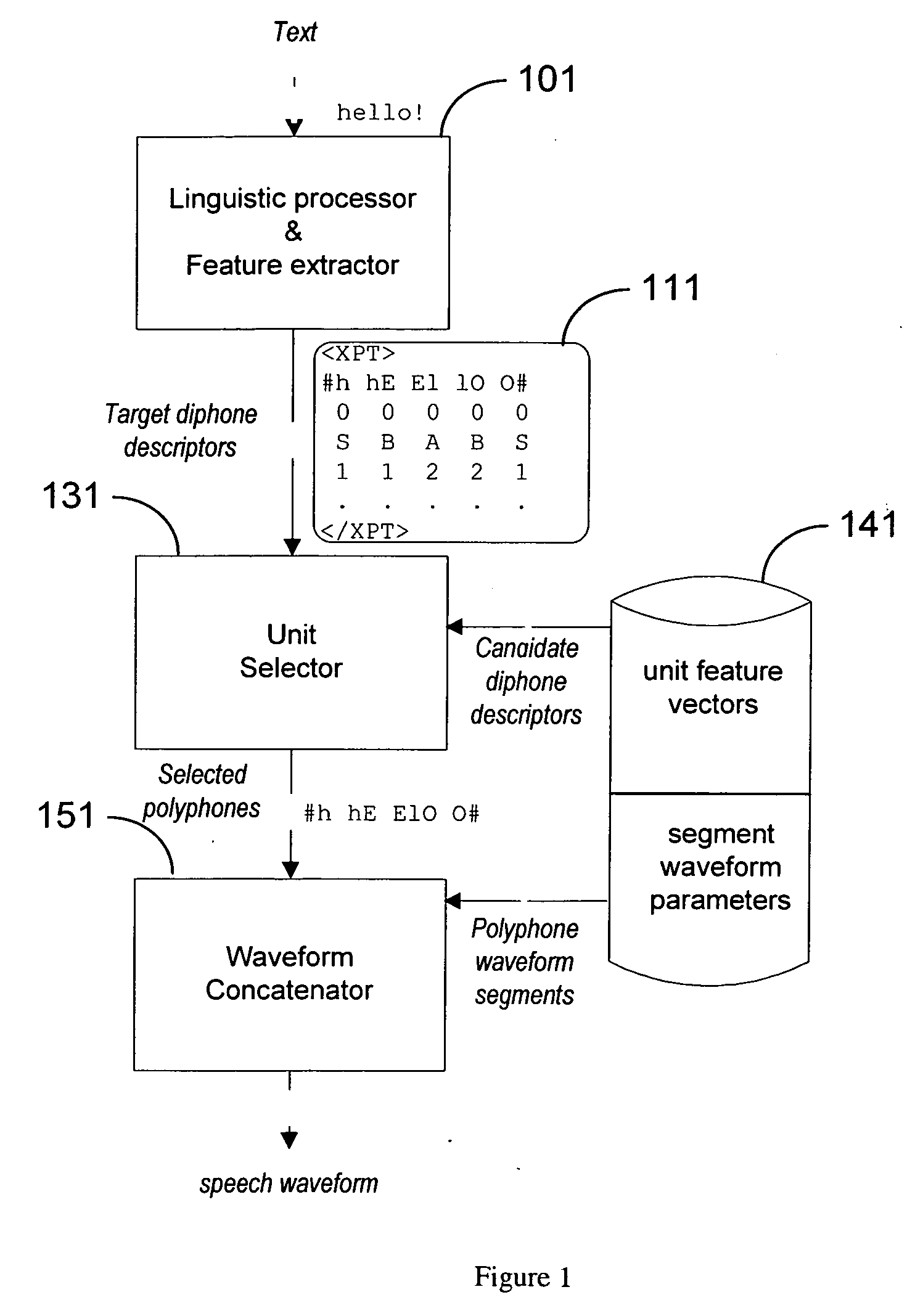

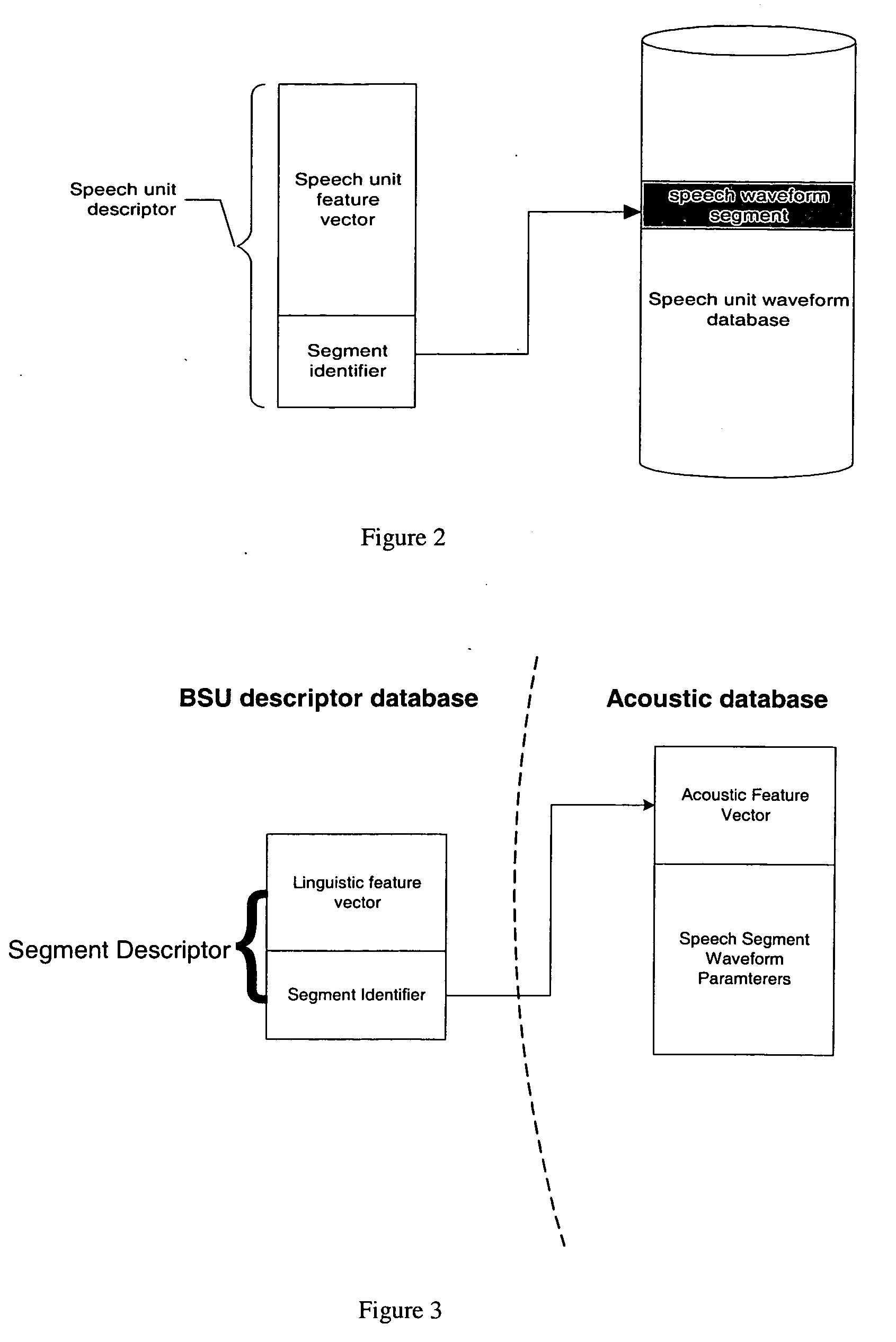

Corpus-based speech synthesis based on segment recombination

Active · US20050182629A1 · Quality improvement · Special data processing applications · Speech synthesis · Concatenation · Speech sound

A system and method generate synthesized speech through concatenation of speech segments that are derived from a large prosodically-rich corpus of speech segments including using an additional dictionary of speech segment identifier sequences.

Owner:NUANCE COMM INC

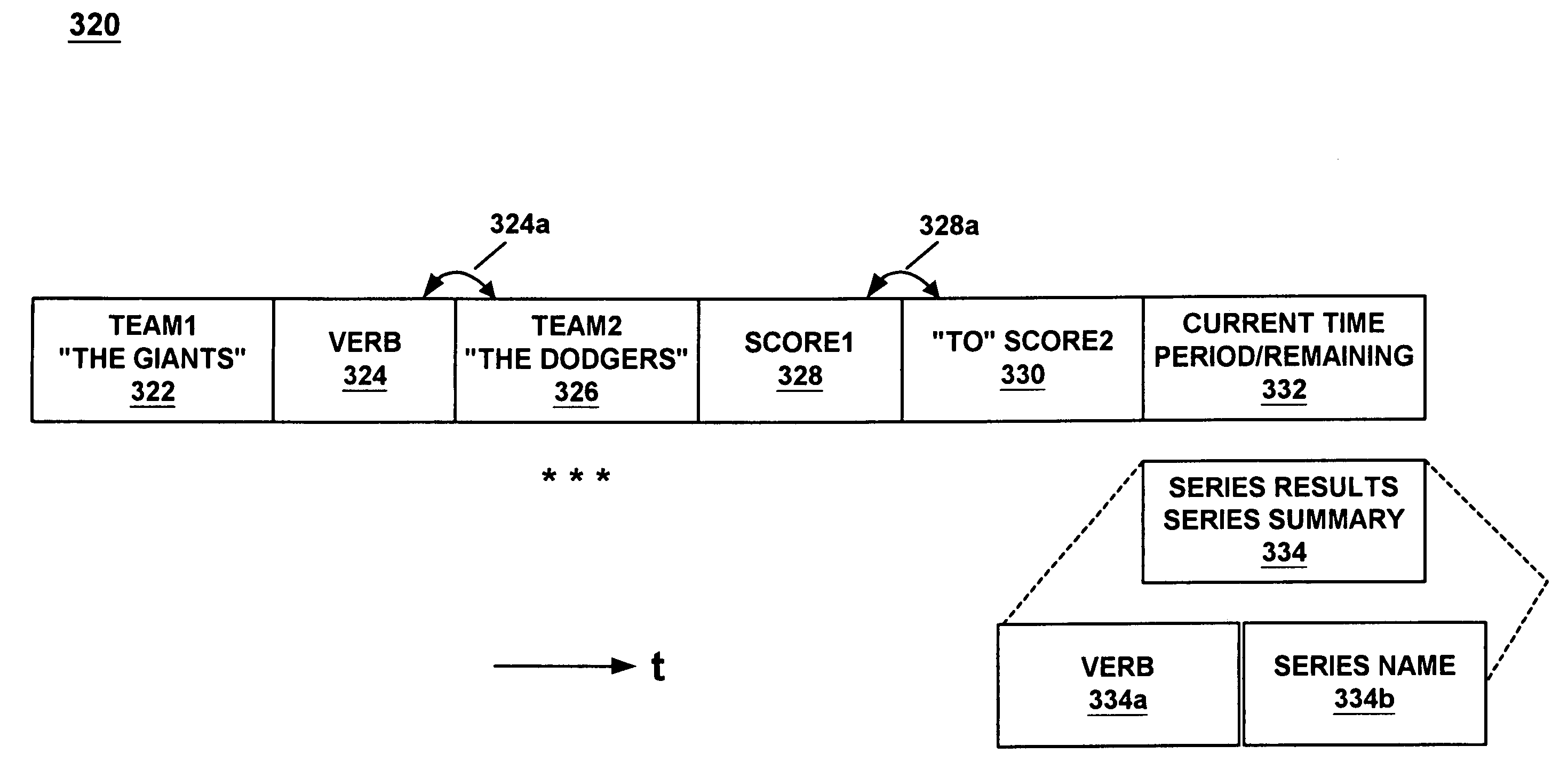

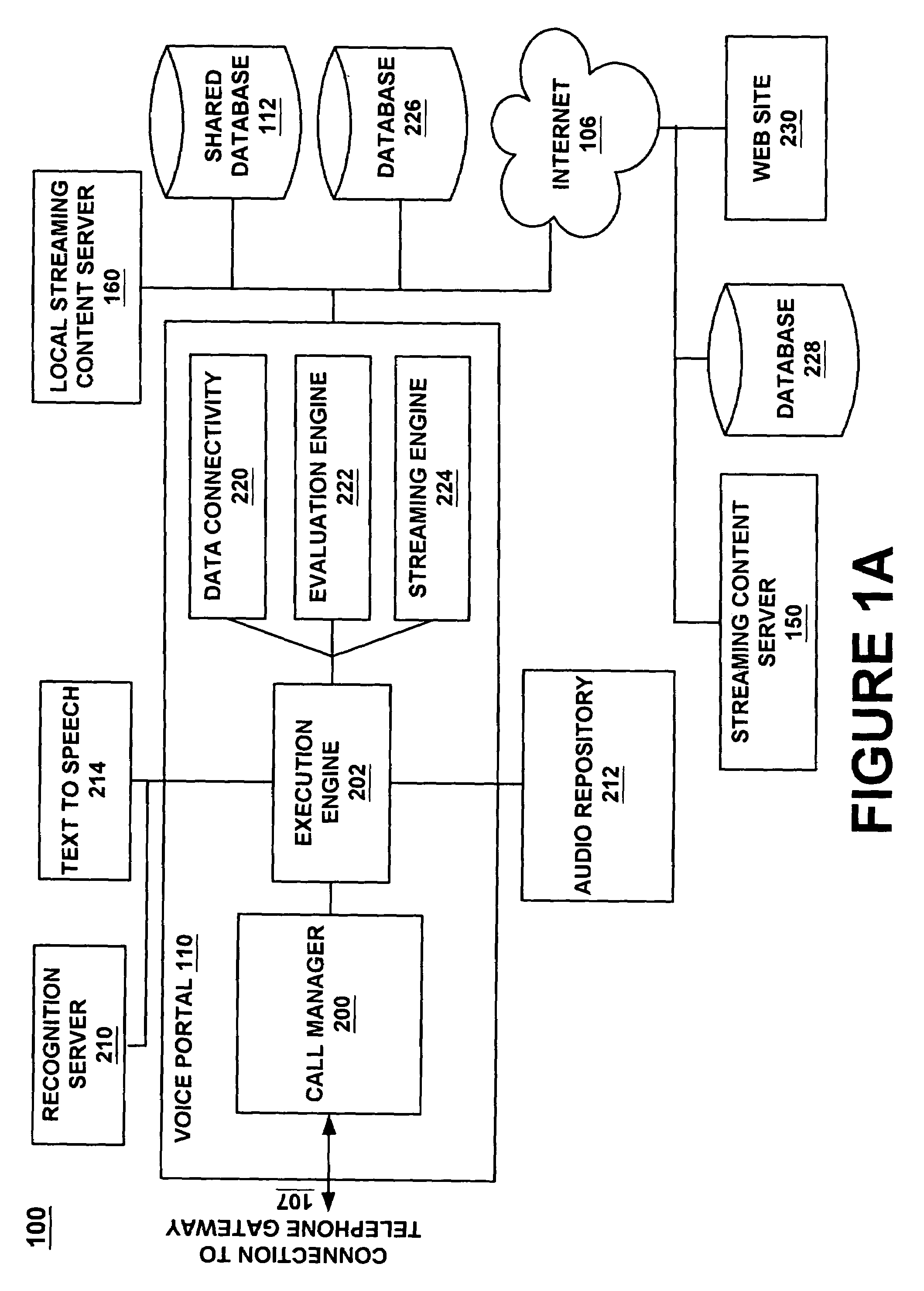

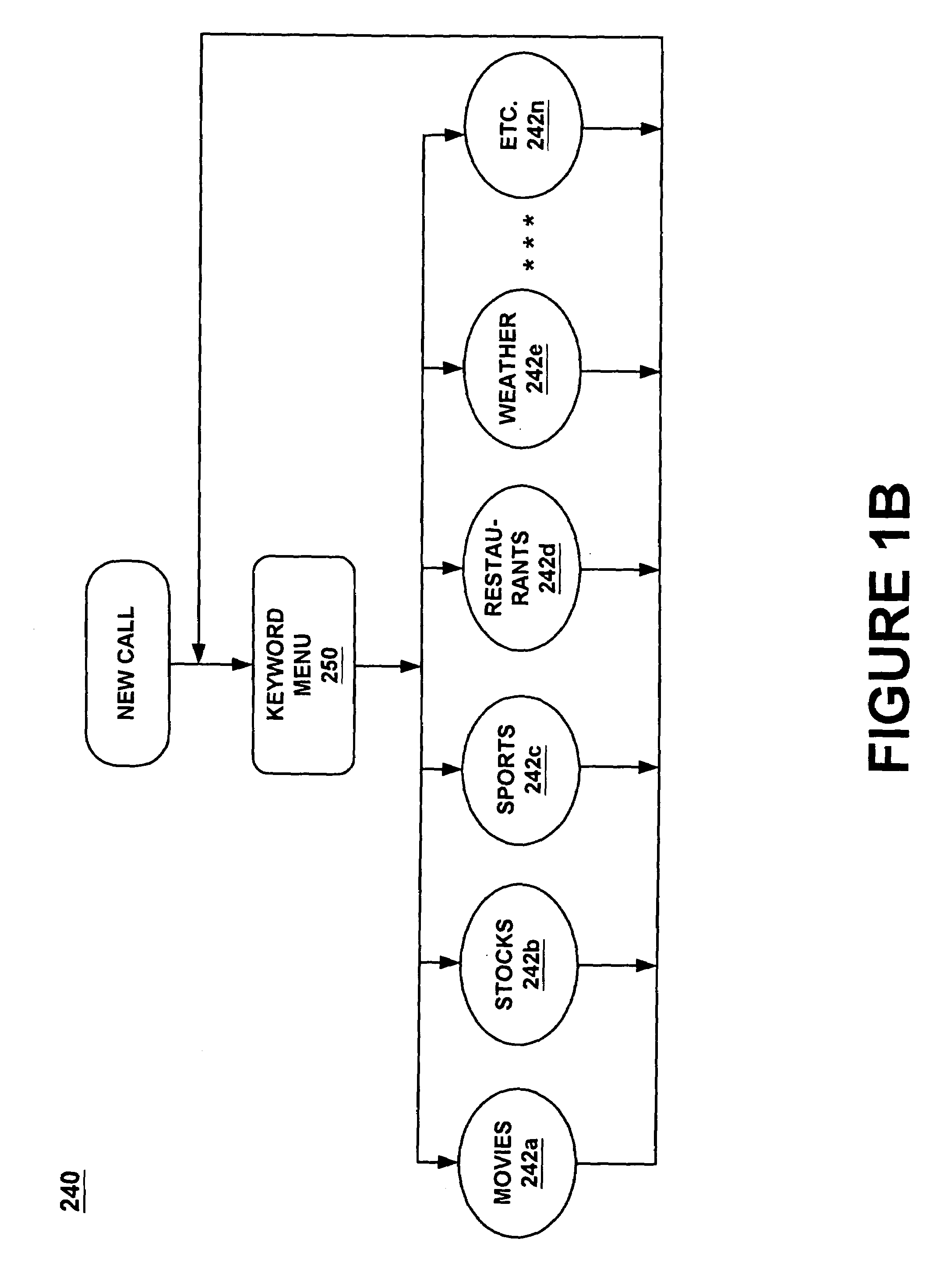

Providing services for an information processing system using an audio interface

Inactive · US7308408B1 · Structured and efficient and effective · Effectively · Automatic exchanges · Speech recognition · Information processing · Speech identification

A method and system for providing efficient menu services for an information processing system that uses a telephone or other form of audio user interface. In one embodiment, the menu services provide effective support for novice users by providing a full listing of available keywords and rotating house advertisements which inform novice users of potential features and information. For experienced users, cues are rendered so that at any time the user can say a desired keyword to invoke the corresponding application. The menu is flat to facilitate its usage. Full keyword listings are rendered after the user is given a brief cue to say a keyword. Service messages rotate words and word prosody. When listening to receive information from the user, after the user has been cued, soft background music or other audible signals are rendered to inform the user that a response may now be spoken to the service. Other embodiments determine default cities, on which to report information, based on characteristics of the caller or based on cities that were previously selected by the caller. Other embodiments provide speech concatenation processes that have co-articulation and real-time subject-matter-based word selection which generate human-sounding speech. Other embodiments reduce the occurrences of falsely triggered barge-ins during content delivery by only allowing interruption for certain special words. Other embodiments offer special services and modes for calls having voice recognition trouble. The special services are entered after predetermined criteria have been met by the call. Other embodiments provide special mechanisms for automatically recovering the address of a caller.

Owner:MICROSOFT TECH LICENSING LLC

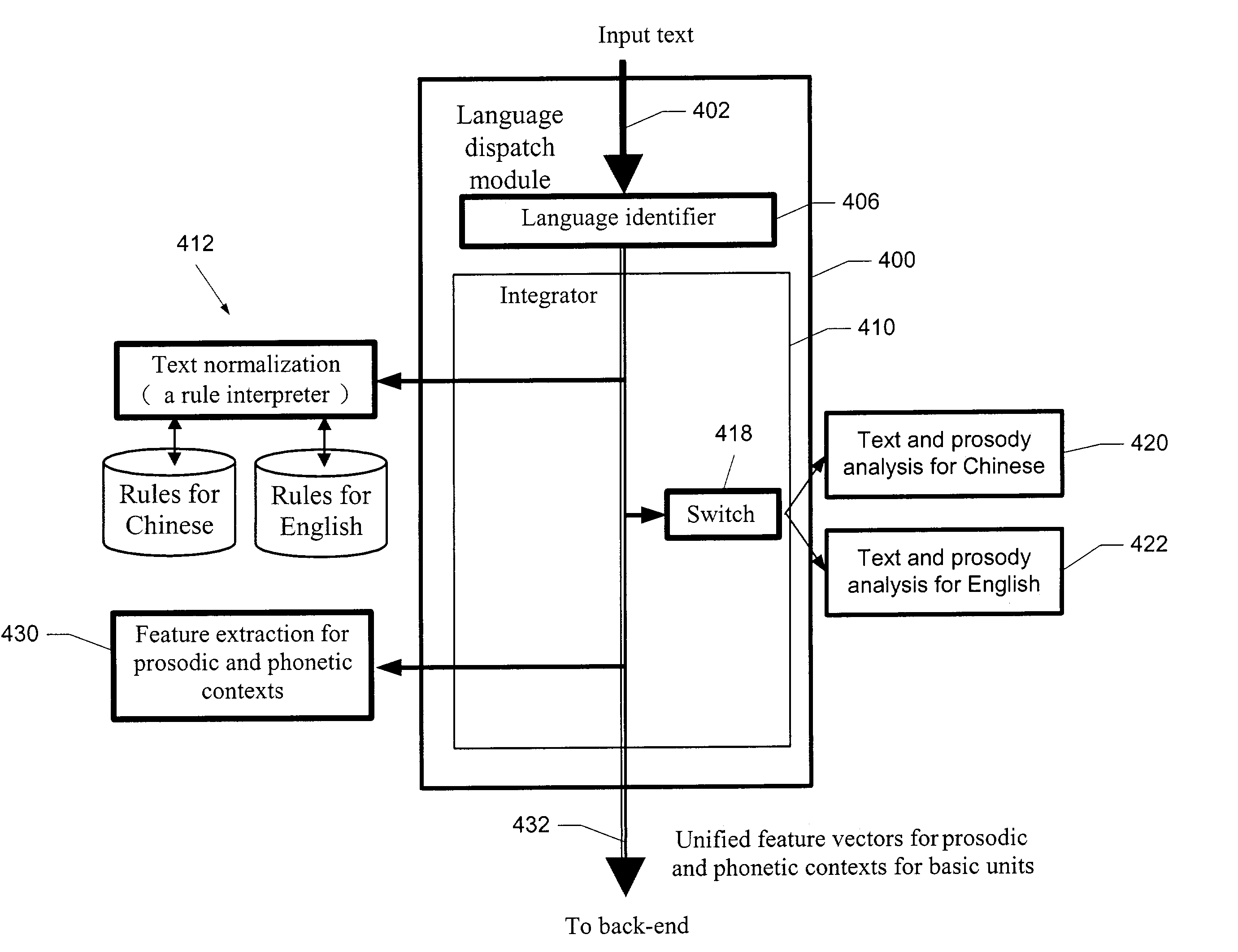

Front-end architecture for a multi-lingual text-to-speech system

Inactive · US7496498B2 · Smooth switching · Maintaining fluent intonation · Indoor games · Natural language data processing · Speech synthesis · Speech sound

A text processing system for processing multi-lingual text for a speech synthesizer includes a first language dependent module for performing at least one of text and prosody analysis on a portion of input text comprising a first language. A second language dependent module performs at least one of text and prosody analysis on a second portion of input text comprising a second language. A third module is adapted to receive outputs from the first and second dependent modules and performs prosodic and phonetic context abstraction over the outputs based on multi-lingual text.

Owner:MICROSOFT TECH LICENSING LLC

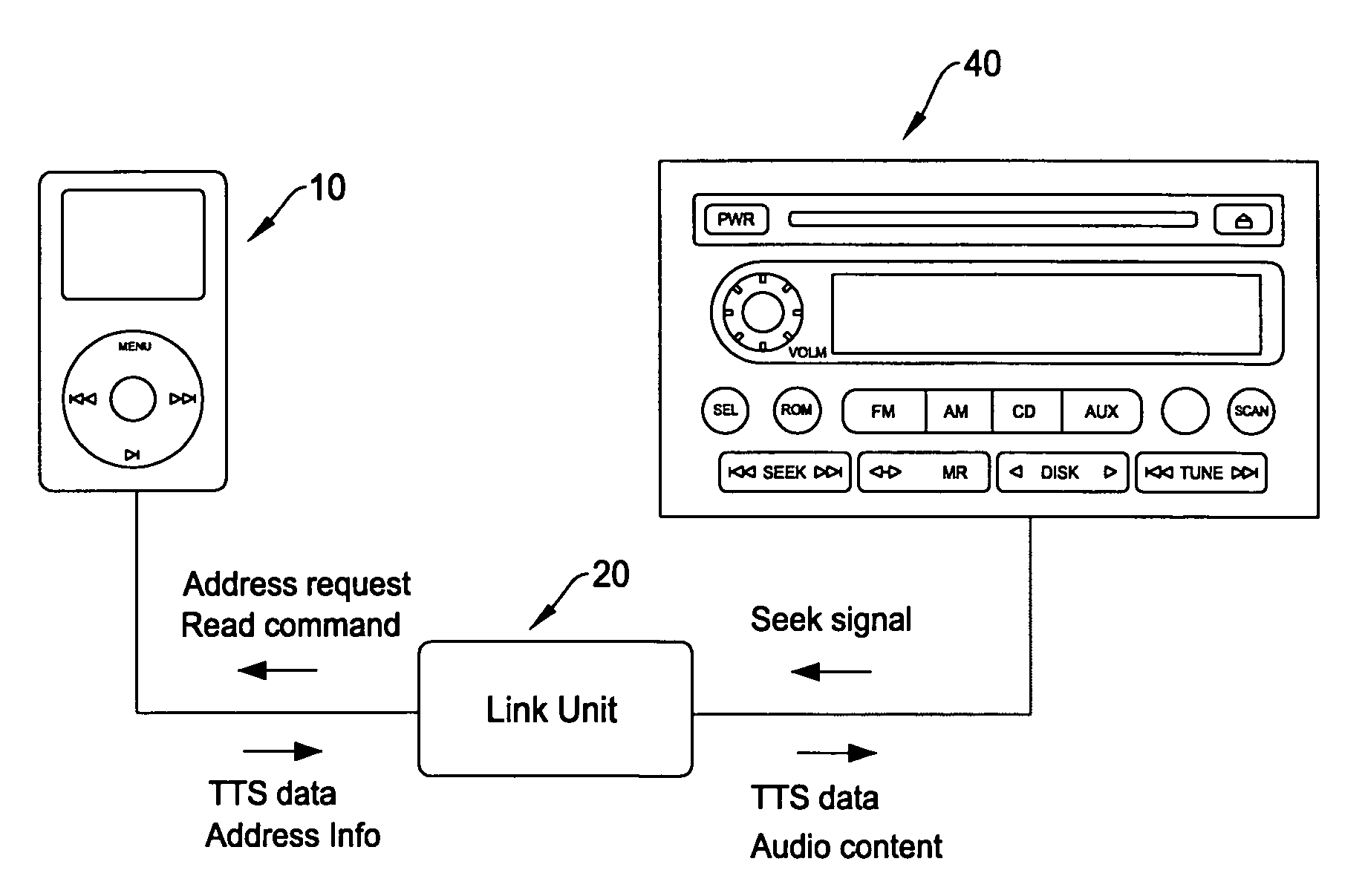

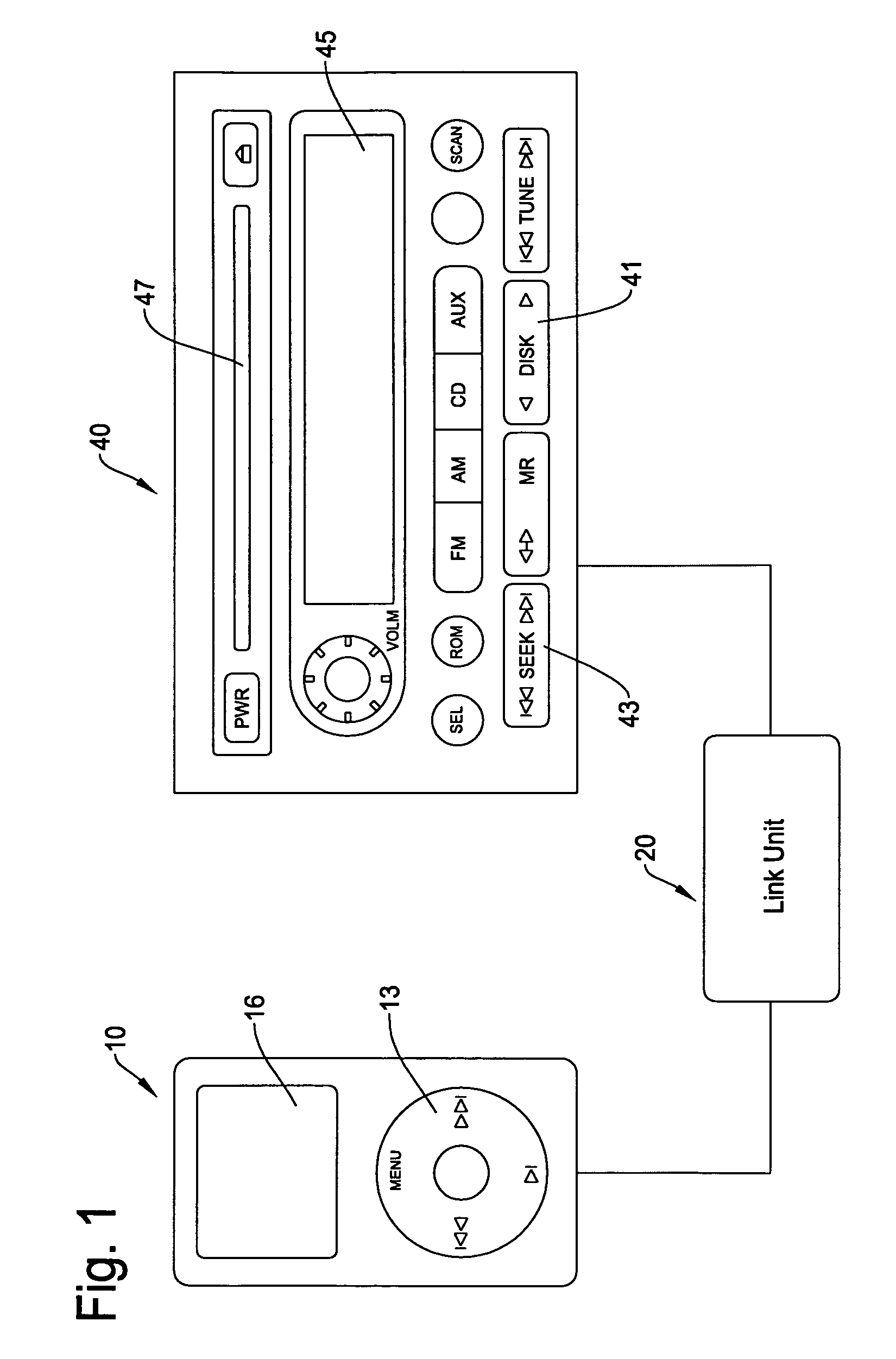

Digital audio file search method and apparatus using text-to-speech processing

Active · US7684991B2 · Avoid visual distractions · Metadata audio data retrieval · Special data processing applications · Digital Audio Tape · Speech technology

A digital audio file search method and apparatus for digital audio files is provided that allows a user to navigate the audio files by generating speech sounds related to the information of the audio files to facilitate searching and playback. The digital audio file search method and apparatus searches for audio files in a portable digital audio player in combination with an automobile audio system through speech sounds by utilizing text-to-speech processing and by prompting response from a user in response to the generated speech sounds. The text-to-speech technology is utilized to generate the speech sound based on tag-data of the audio files. When hearing the speech sounds, the user gives instruction for searching the files without being distracted from driving the automobile.

Owner:ALPINE ELECTRONICS INC
