5971 results about "Human–robot interaction" patented technology

Human–robot interaction is the study of interactions between humans and robots, often referred to as HRI by researchers. It is a multidisciplinary field drawing on human–computer interaction, artificial intelligence, robotics, natural language understanding, design, and the social sciences.

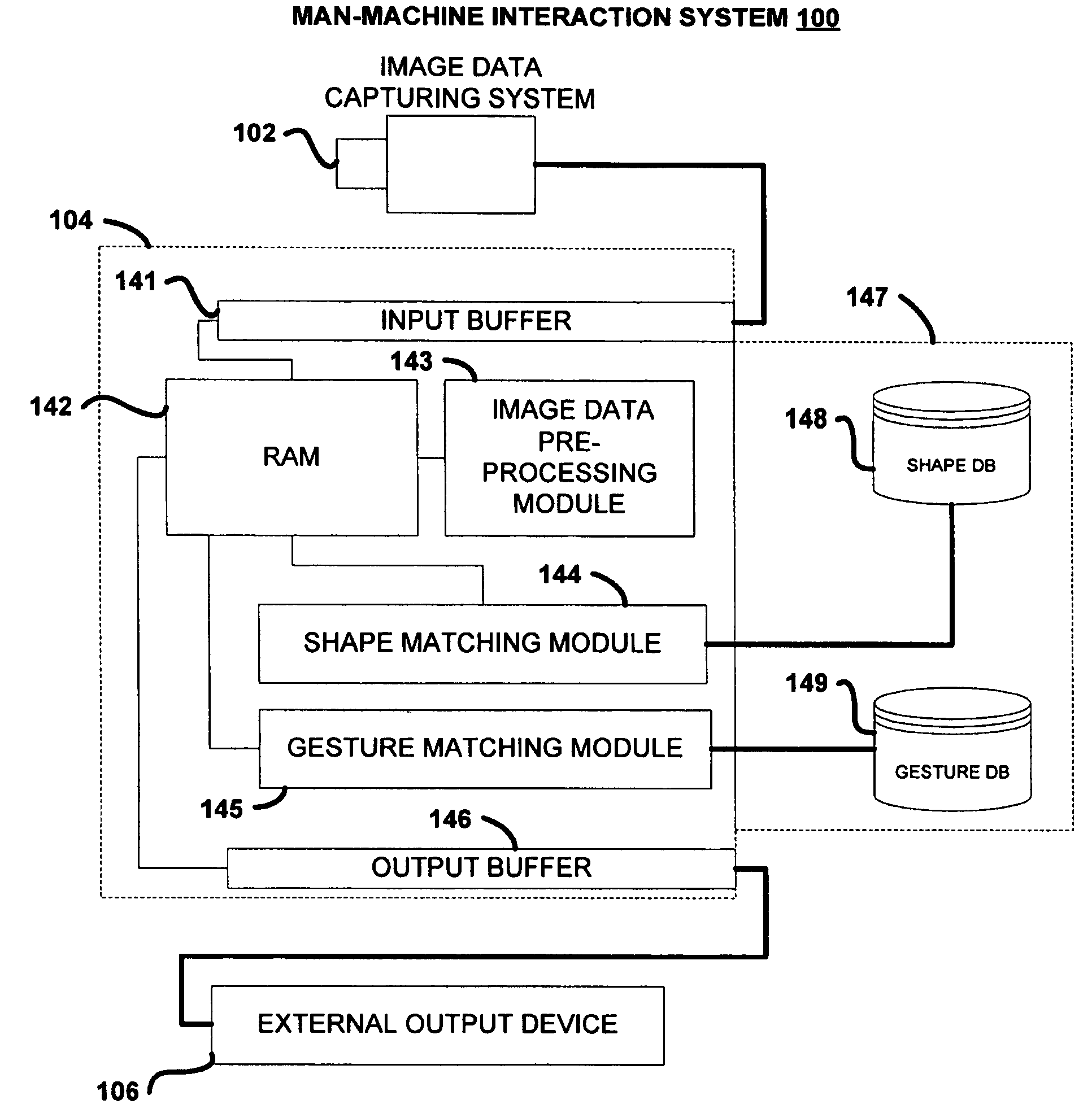

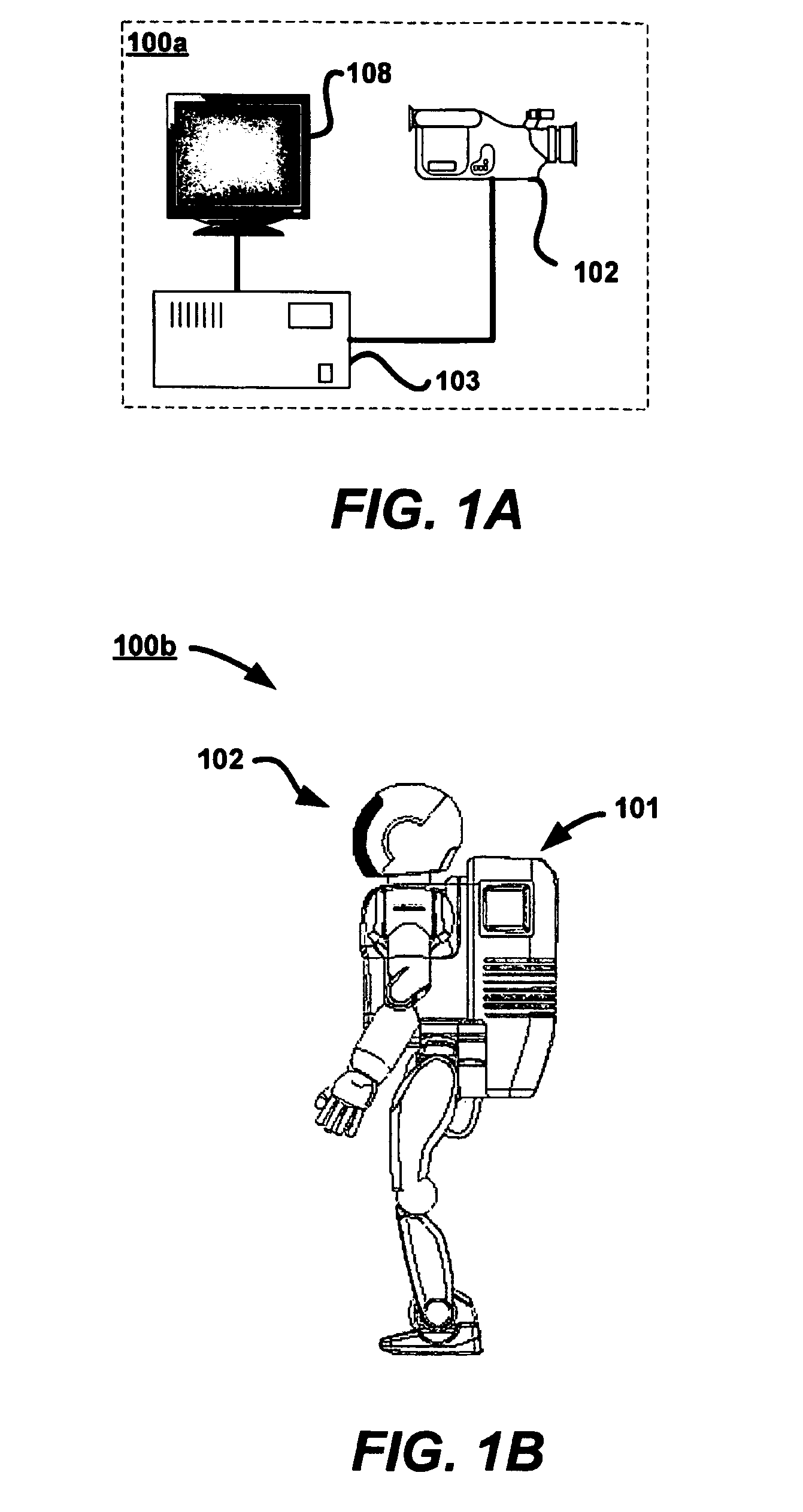

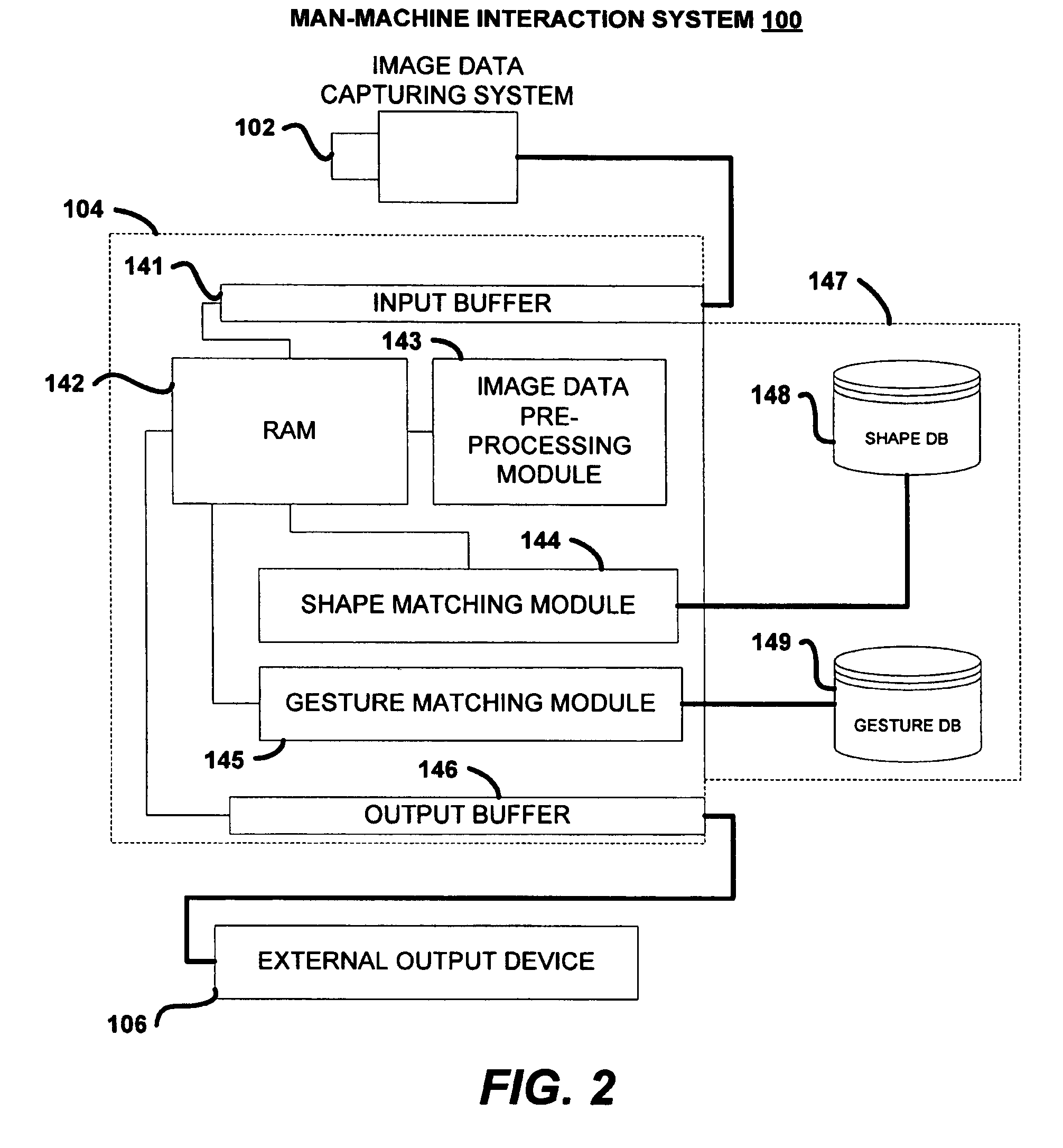

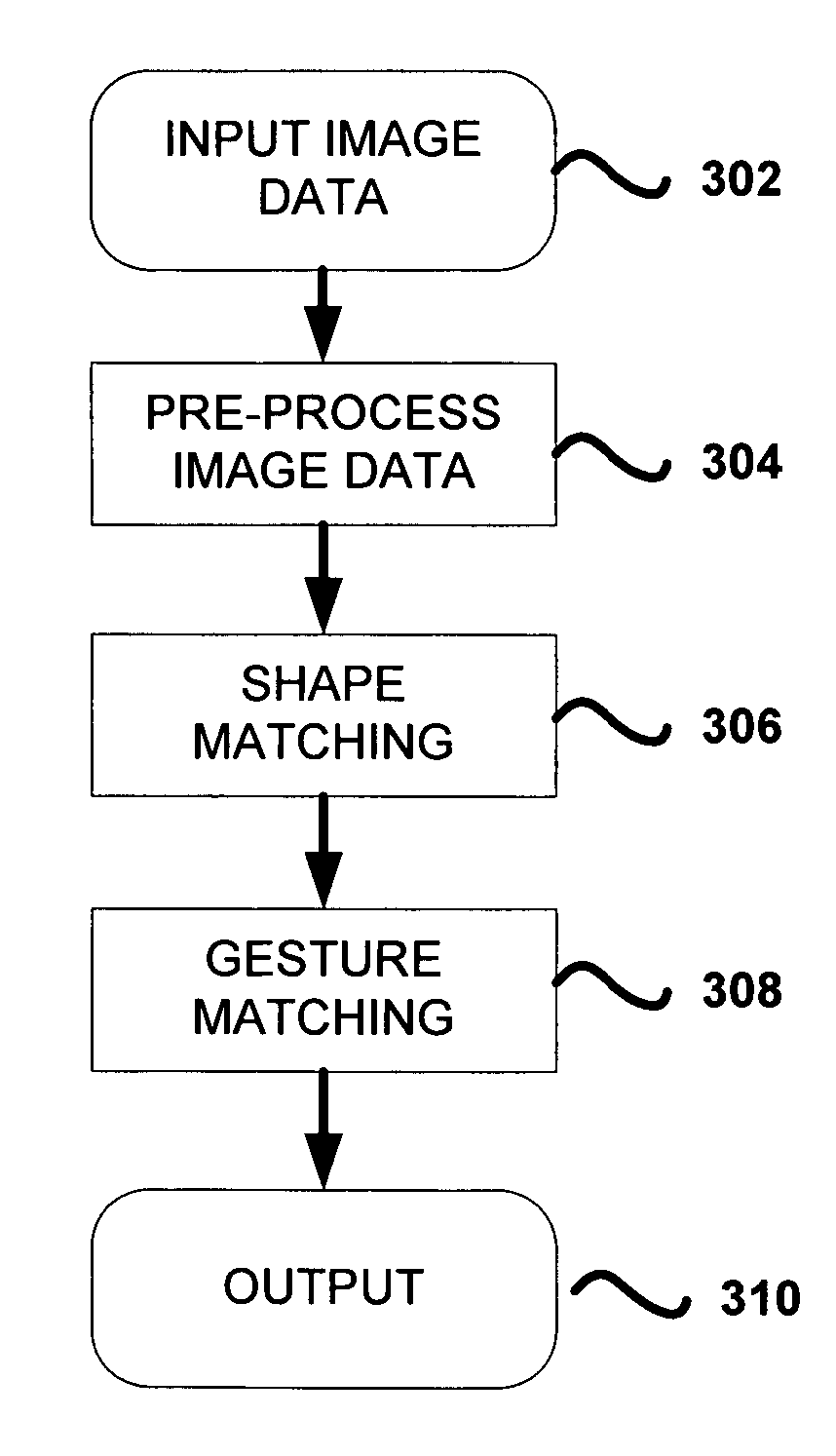

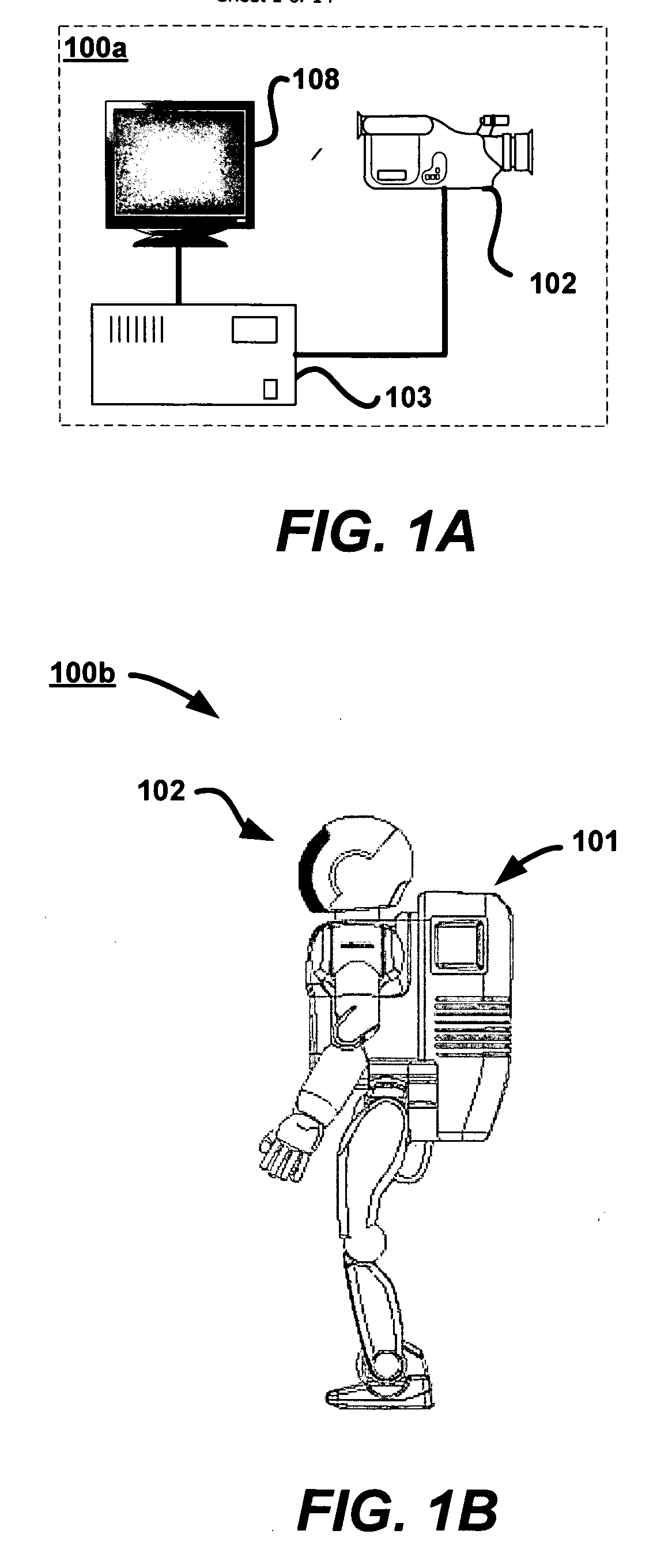

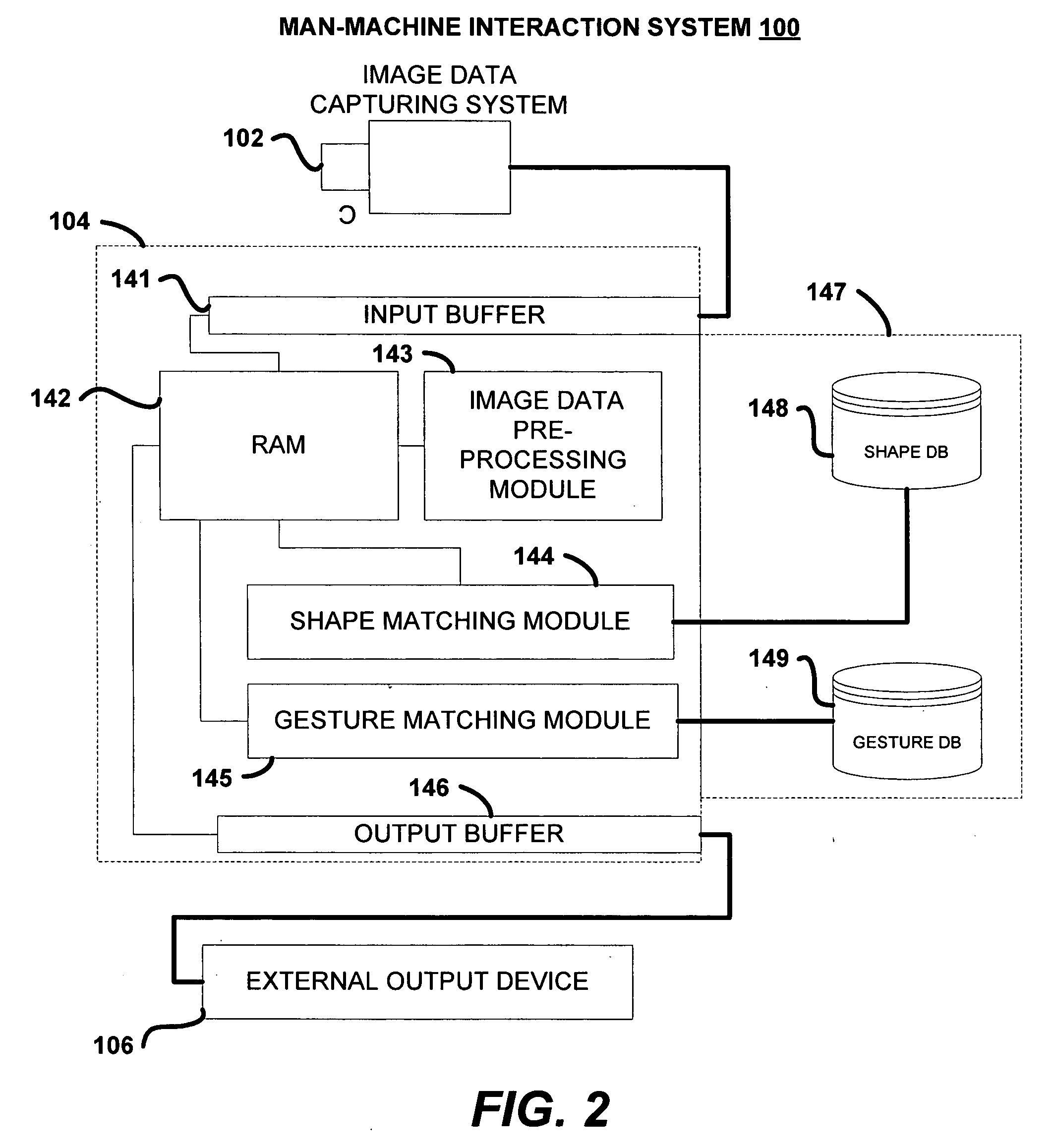

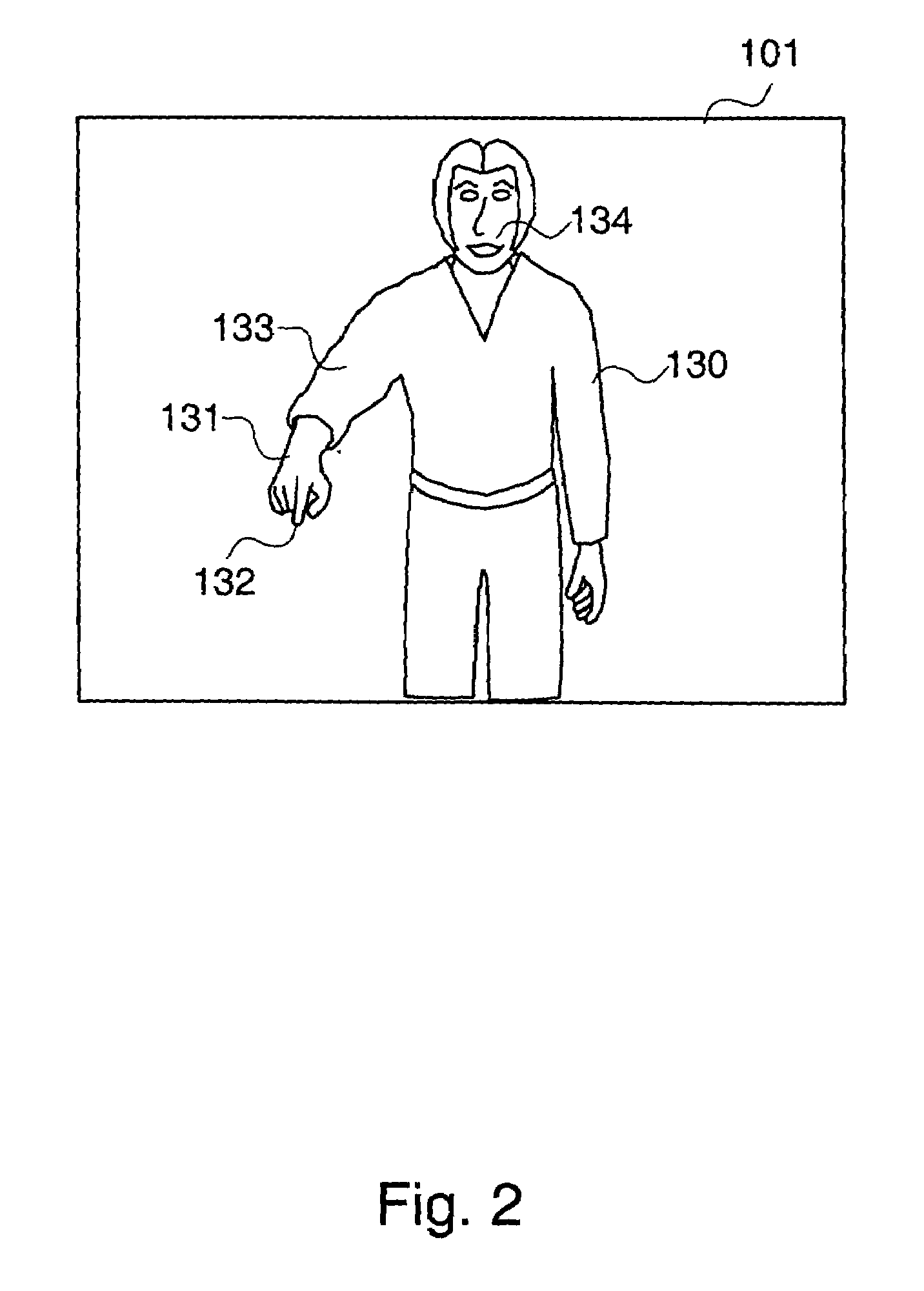

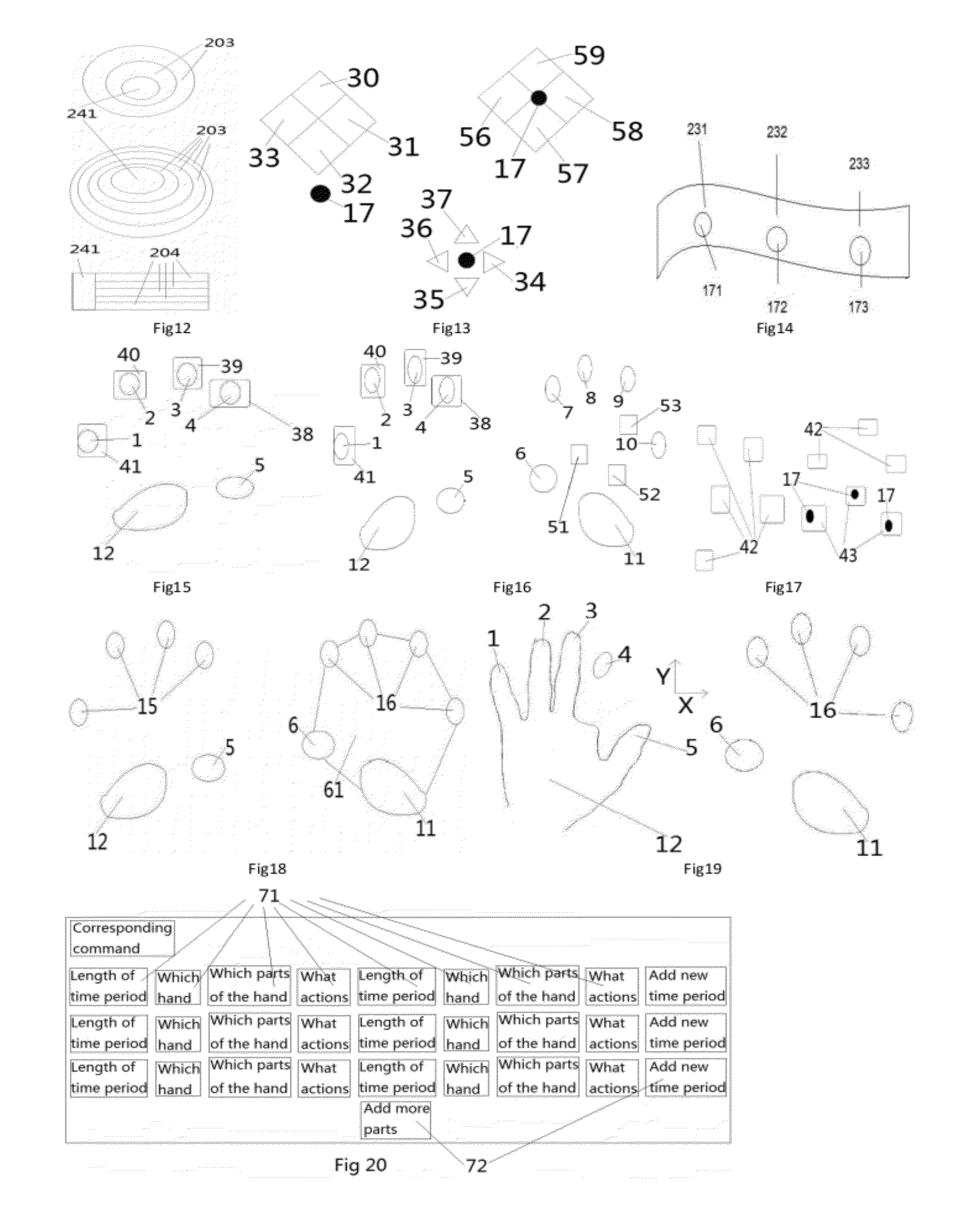

Sign based human-machine interaction

Communication is an important issue in human–robot interaction. Signs can be used to interact with machines by conveying user instructions or commands. Embodiments of the present invention include human detection, human body-part detection, hand shape analysis, trajectory analysis, orientation determination, gesture matching, and the like. Many types of hand shapes and gestures are recognized non-intrusively using computer vision. This sign-understanding technology makes a number of applications feasible, including remote control of home devices, mouse-less (and touch-less) operation of computer consoles, gaming, and giving instructions to robots. Active sensing hardware captures a stream of depth images at video rate, which is subsequently analyzed to extract information.

Owner:THE OHIO STATE UNIV RES FOUND +1

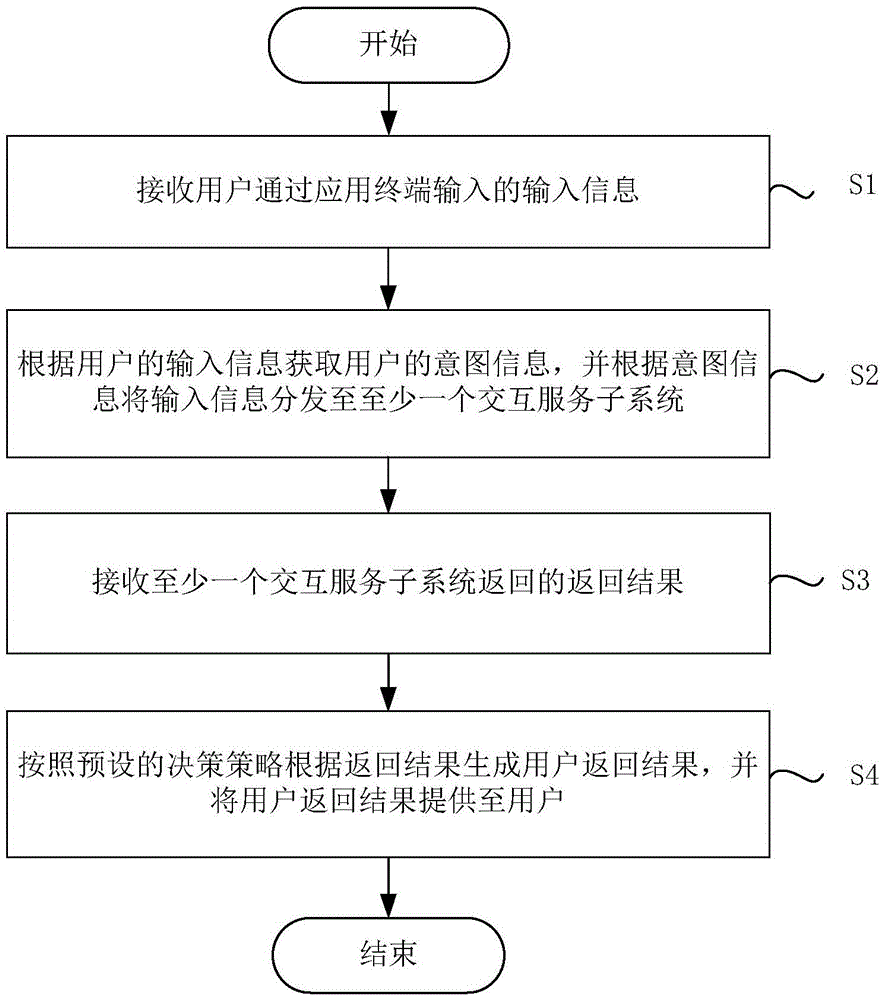

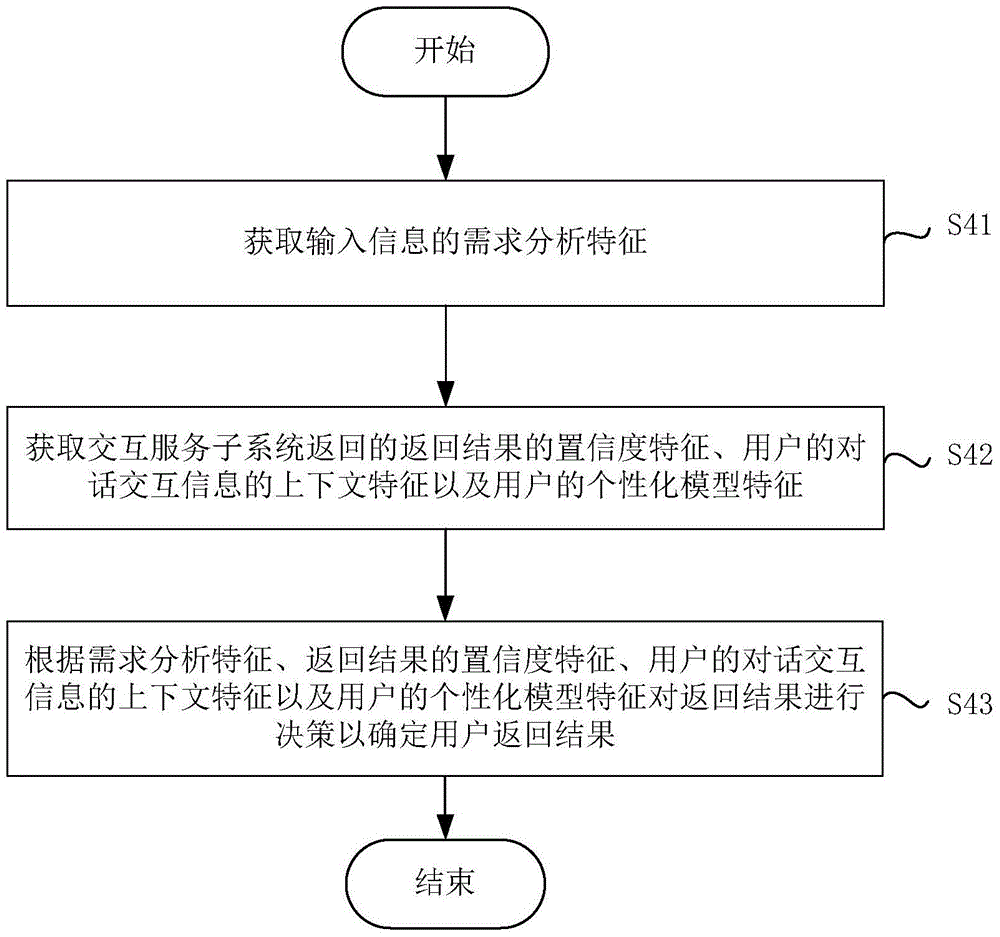

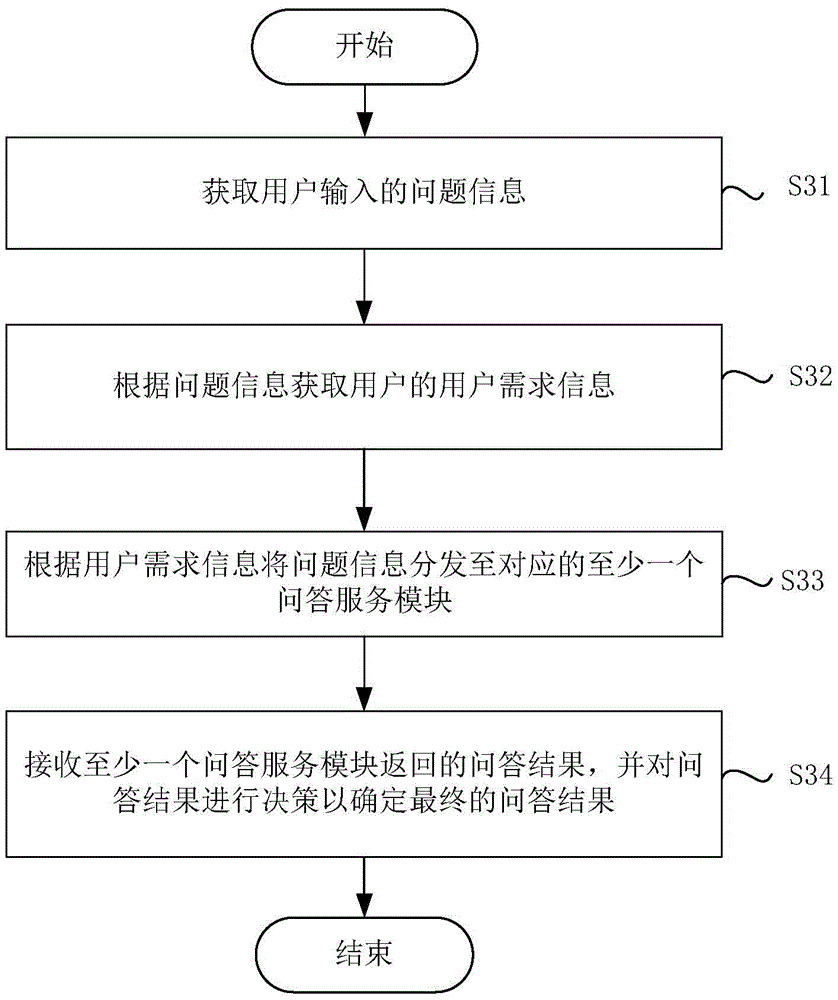

Man-machine interaction method and system based on artificial intelligence

Active | CN105068661A | Easy and pleasant interactive experience; Input/output for user-computer interaction; Graph reading; Decision strategy; Computer terminal

The invention discloses a man-machine interaction method and system based on artificial intelligence. The method includes the following steps: receiving information input by a user through an application terminal; obtaining the user's intent from that input; distributing the input to at least one interaction service subsystem according to the intent; receiving the results returned by the interaction service subsystems; generating a result for the user from the returned results through a preset decision strategy; and providing that result to the user. With this method and system, the man-machine interaction system acts as a virtual conversational partner rather than a mere tool, and the user can enjoy a relaxed and pleasant experience during intelligent interaction through chat, search, and other services. Keyword-based search is upgraded to natural-language search, so the user can express needs in flexible, free-form natural language, and the multi-round interaction process comes closer to the experience of interacting with another person.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
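The pipeline in this abstract (infer intent, fan the input out to service subsystems, then pick one reply with a decision strategy) can be illustrated with a minimal Python sketch. The keyword table, the three stub services, and the "highest confidence wins" rule are hypothetical stand-ins; the patent does not specify how intent is detected or what the preset decision strategy is.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Result:
    source: str   # which subsystem produced the reply
    text: str     # reply text
    score: float  # subsystem's self-reported confidence in [0, 1]


# Hypothetical keyword table standing in for a real intent classifier.
INTENT_KEYWORDS: Dict[str, List[str]] = {
    "weather": ["weather", "rain", "forecast"],
    "search": ["what is", "who is", "find"],
}


def detect_intents(text: str) -> List[str]:
    lowered = text.lower()
    hits = [intent for intent, words in INTENT_KEYWORDS.items()
            if any(w in lowered for w in words)]
    return hits or ["chat"]  # fall back to small talk


# Stub subsystems (hypothetical); each returns a reply with a confidence.
def weather_service(text: str) -> Result:
    return Result("weather", "Light rain expected tomorrow.", 0.9)


def search_service(text: str) -> Result:
    return Result("search", f"Top result for: {text}", 0.6)


def chat_service(text: str) -> Result:
    return Result("chat", "Tell me more!", 0.3)


SUBSYSTEMS: Dict[str, Callable[[str], Result]] = {
    "weather": weather_service,
    "search": search_service,
    "chat": chat_service,
}


def respond(text: str) -> Result:
    # Distribute the input to every subsystem matching an inferred intent...
    results = [SUBSYSTEMS[intent](text) for intent in detect_intents(text)]
    # ...then apply a simple decision strategy: keep the most confident reply.
    return max(results, key=lambda r: r.score)


print(respond("Will it rain tomorrow?").source)  # weather
print(respond("Hello there").source)             # chat
```

A real system would replace `detect_intents` with a trained classifier; the max-score rule is only the simplest possible decision strategy.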

Sign based human-machine interaction

Communication is an important issue in man-to-robot interaction. Signs can be used to interact with machines by providing user instructions or commands. Embodiment of the present invention include human detection, human body parts detection, hand shape analysis, trajectory analysis, orientation determination, gesture matching, and the like. Many types of shapes and gestures are recognized in a non-intrusive manner based on computer vision. A number of applications become feasible by this sign-understanding technology, including remote control of home devices, mouse-less (and touch-less) operation of computer consoles, gaming, and man-robot communication to give instructions among others. Active sensing hardware is used to capture a stream of depth images at a video rate, which is consequently analyzed for information extraction.

Owner:THE OHIO STATE UNIV RES FOUND +1

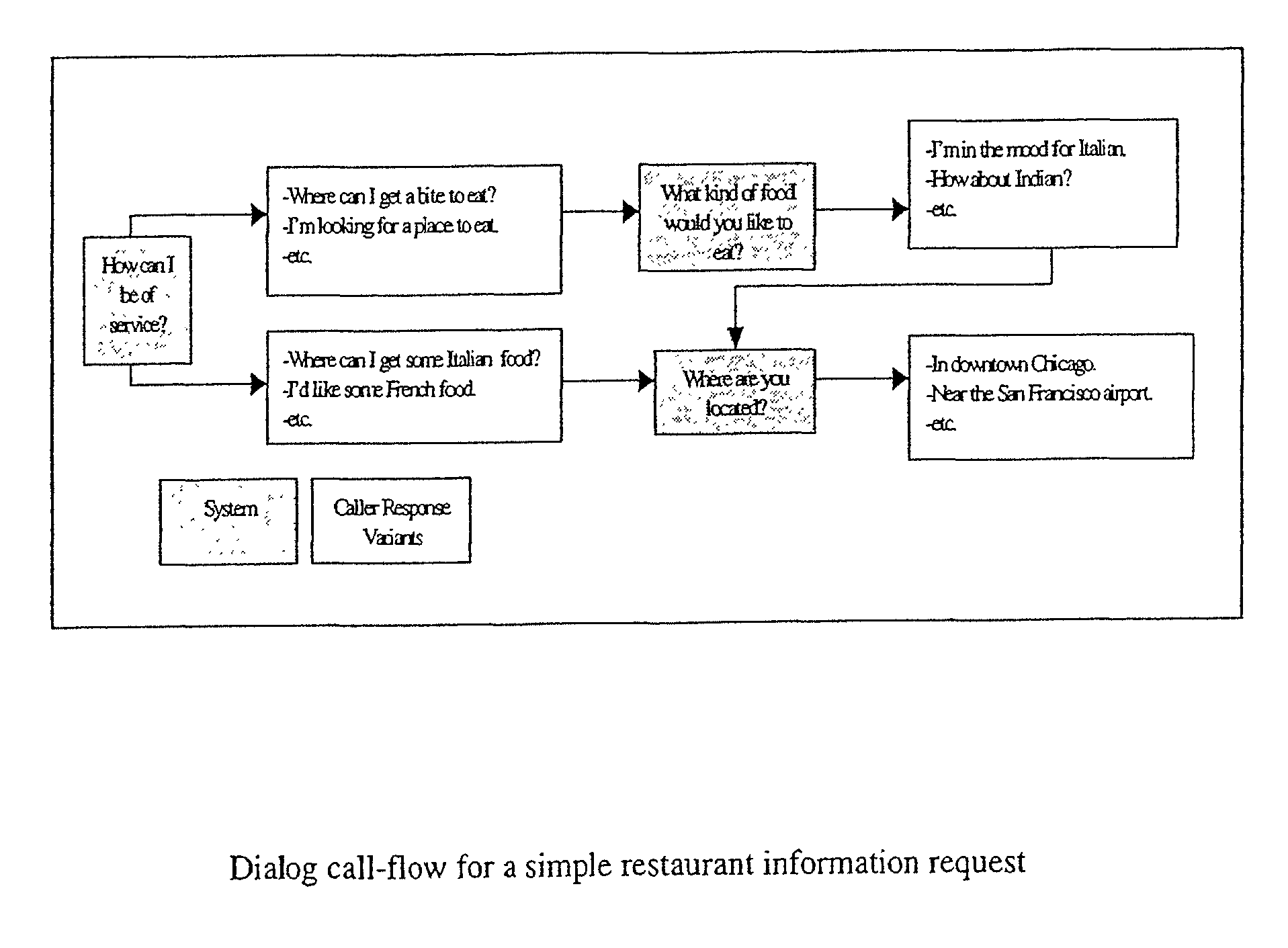

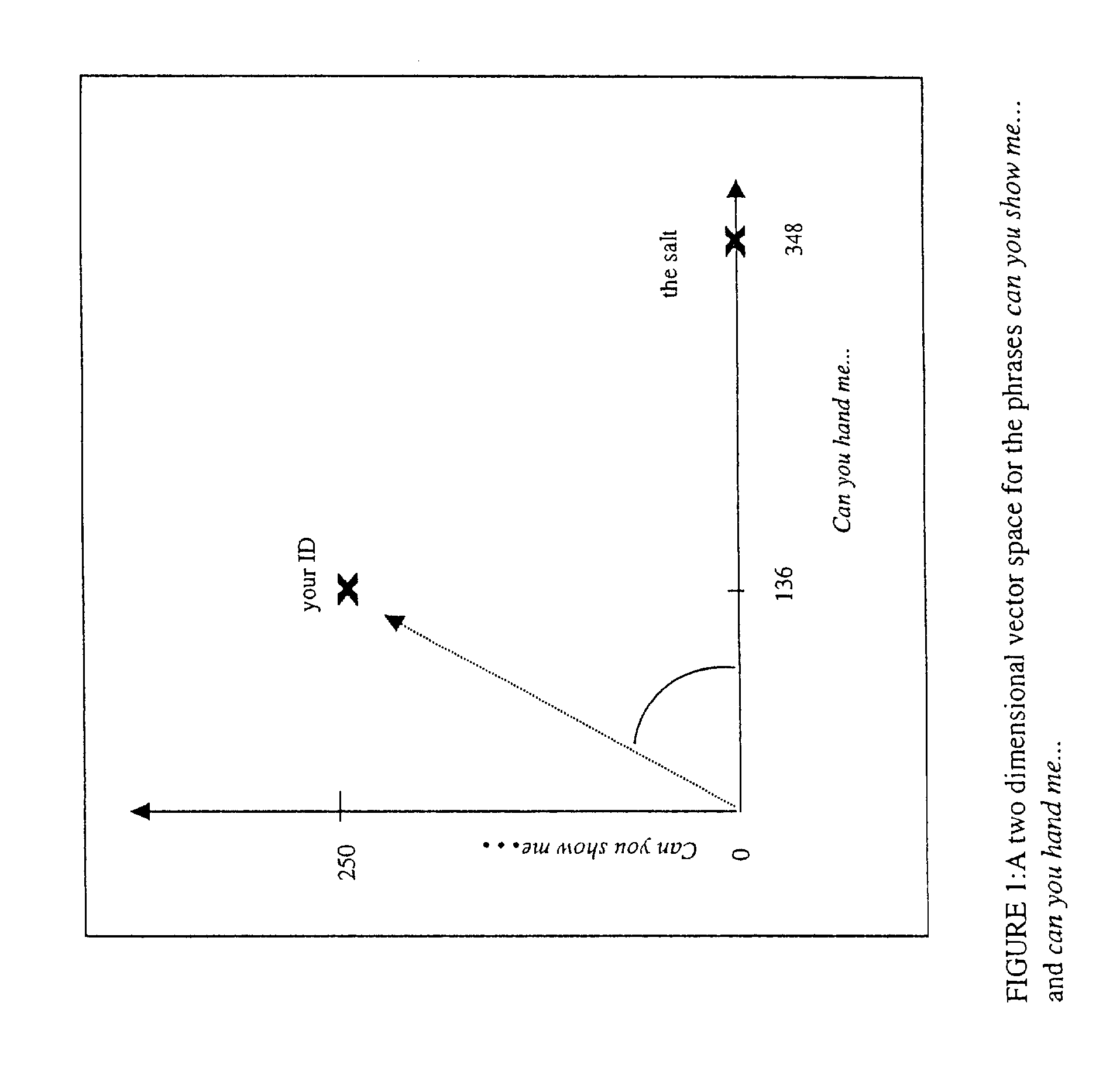

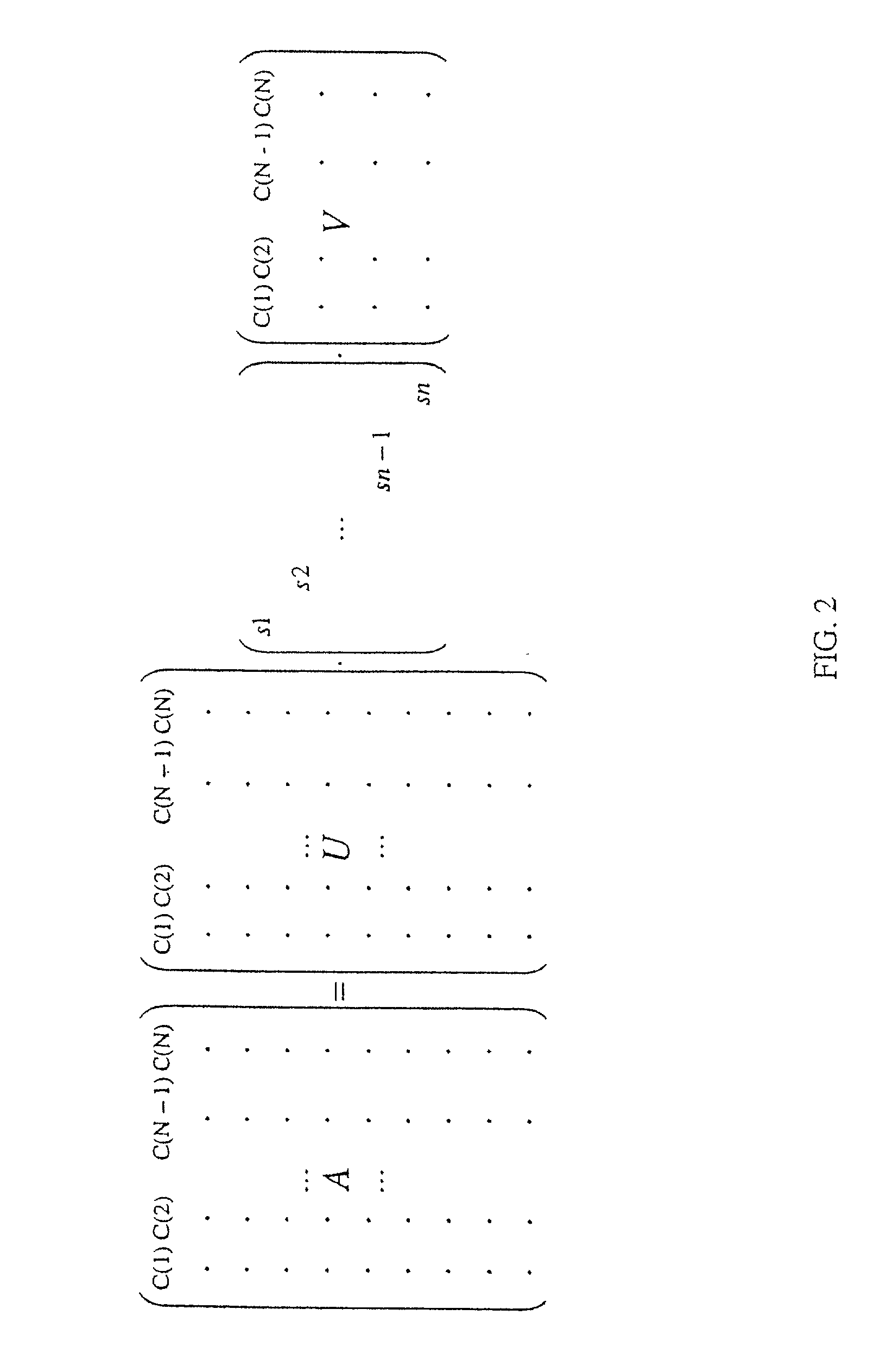

Methods for creating a phrase thesaurus

Inactive | US8374871B2 | Quick identification; More complex and better performing systems; Natural language data processing; Speech recognition; Human–robot interaction; Speech sound

The invention enables the creation of grammar networks that can regulate, control, and define the content and scope of human-machine interaction in natural language voice user interfaces (NLVUI). More specifically, the invention concerns phrase-based modeling of generic structures of verbal interaction and the use of these models to automate part of the design of such grammar networks.

Owner:NANT HLDG IP LLC

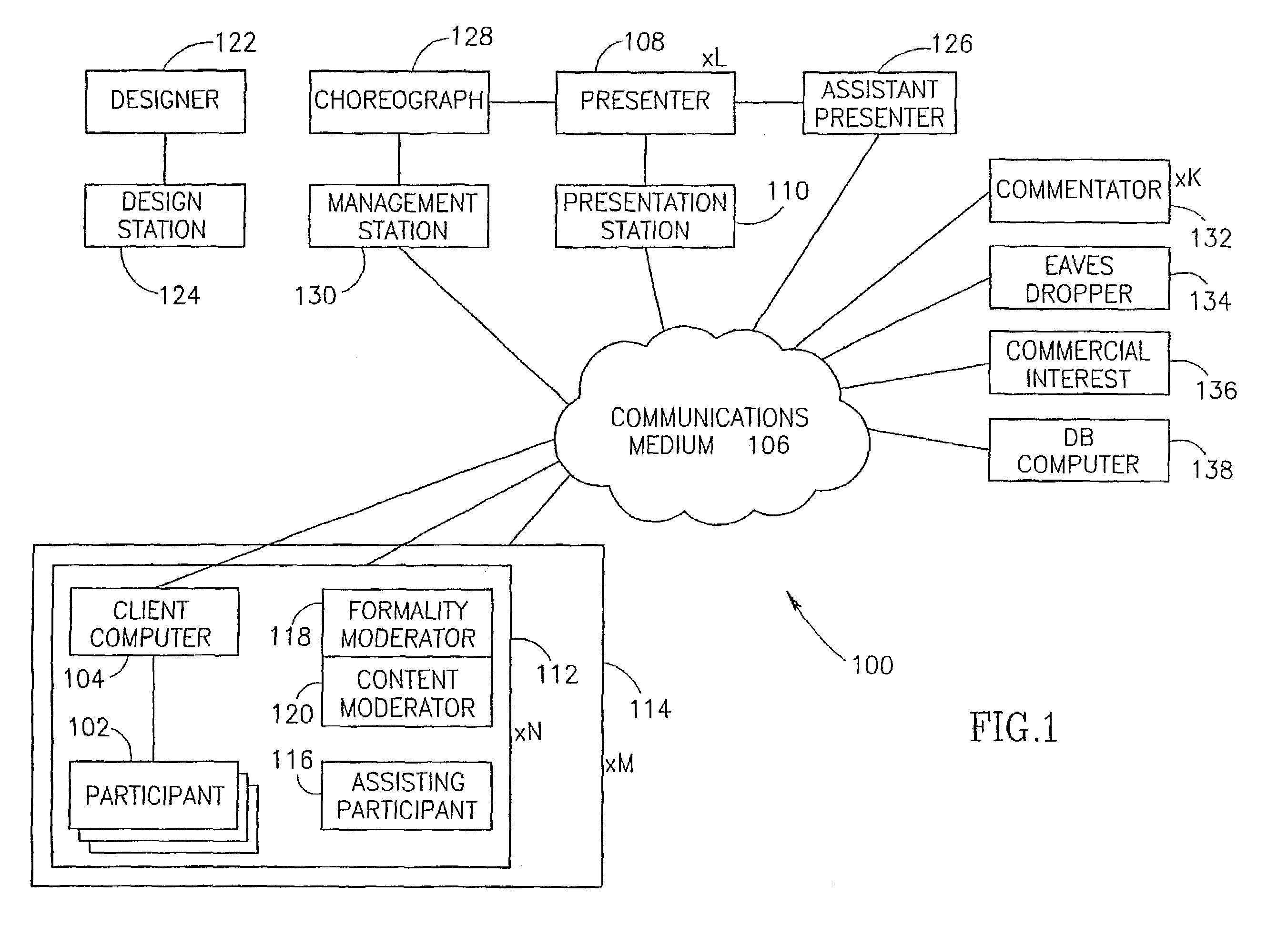

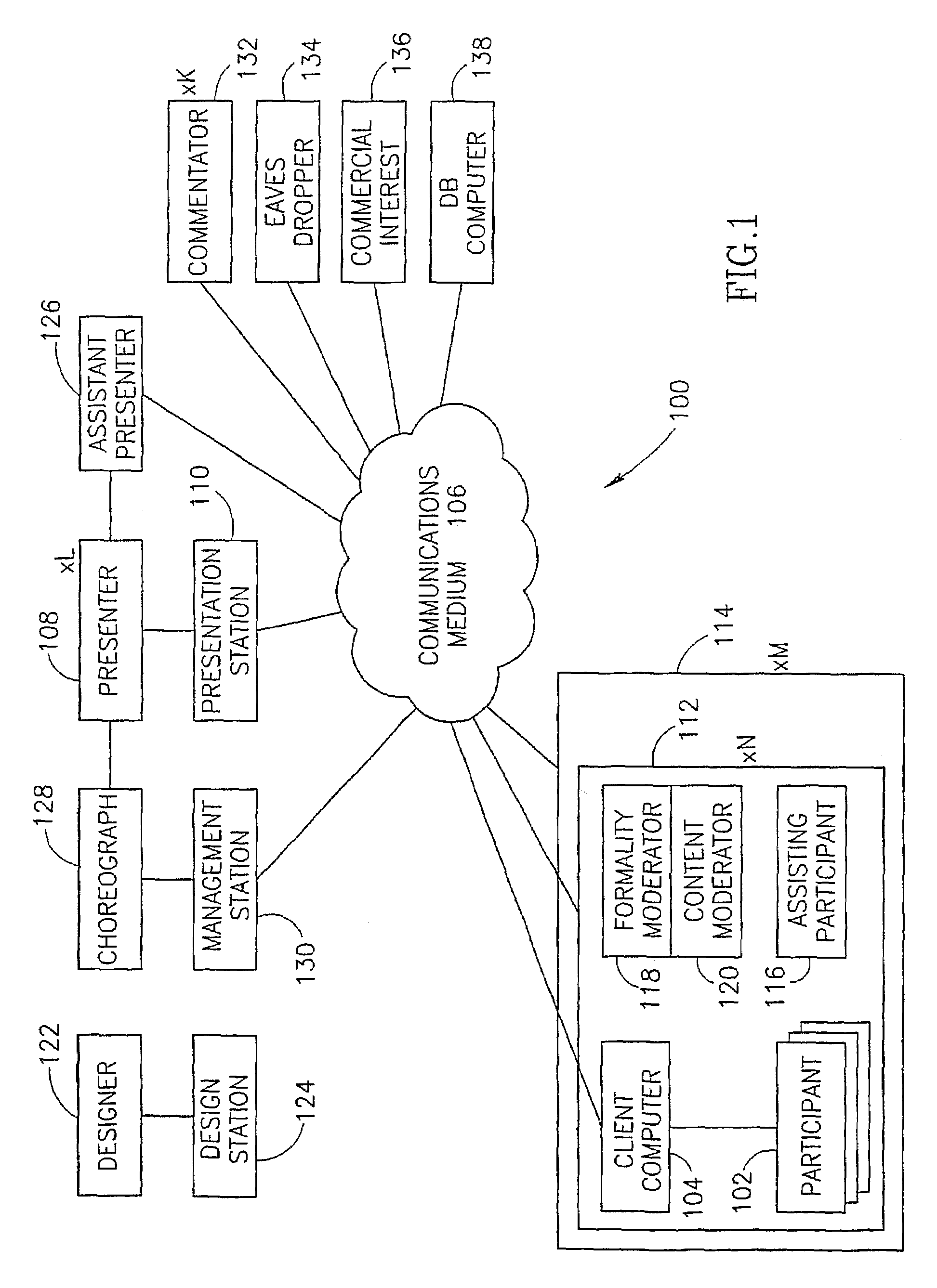

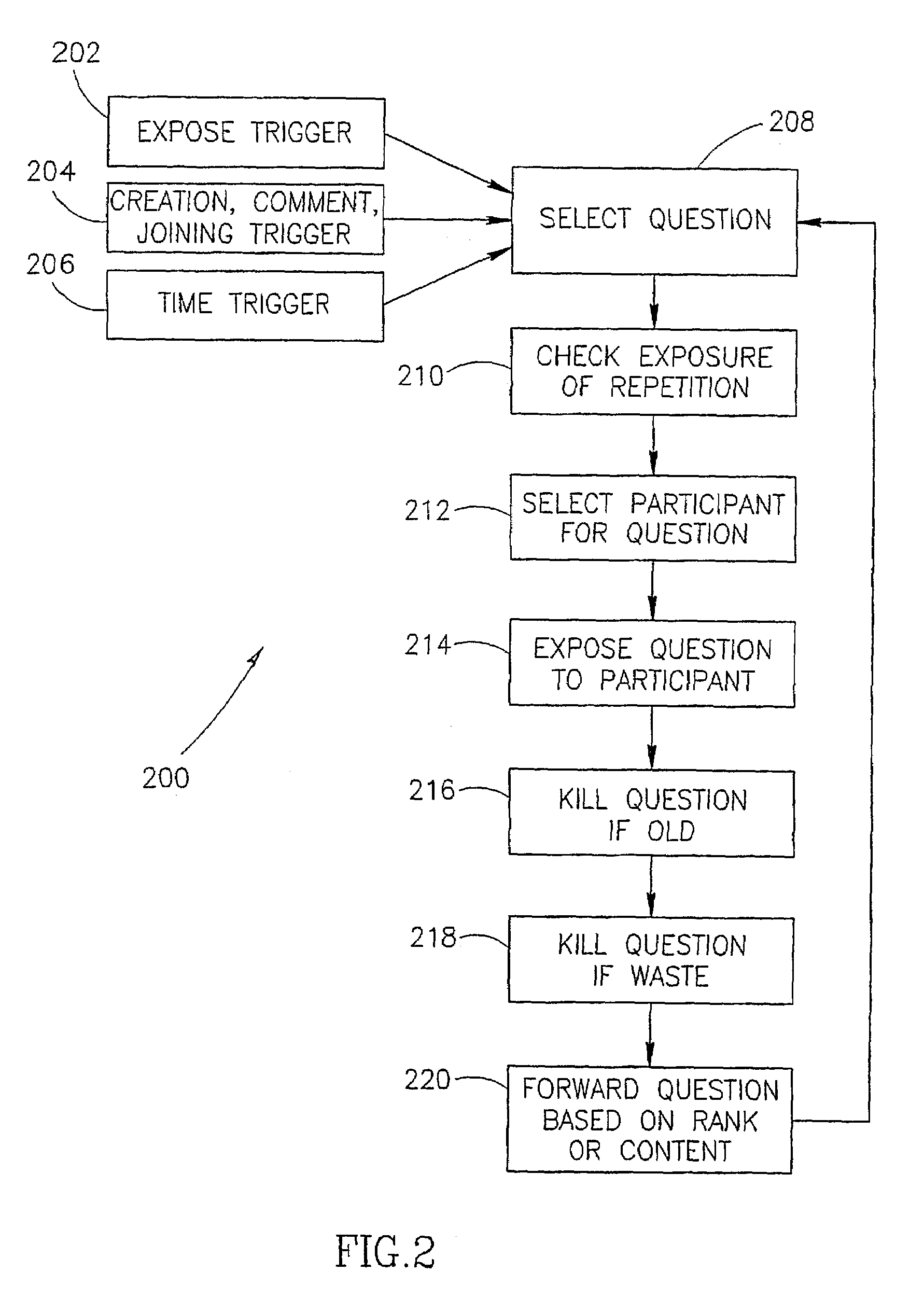

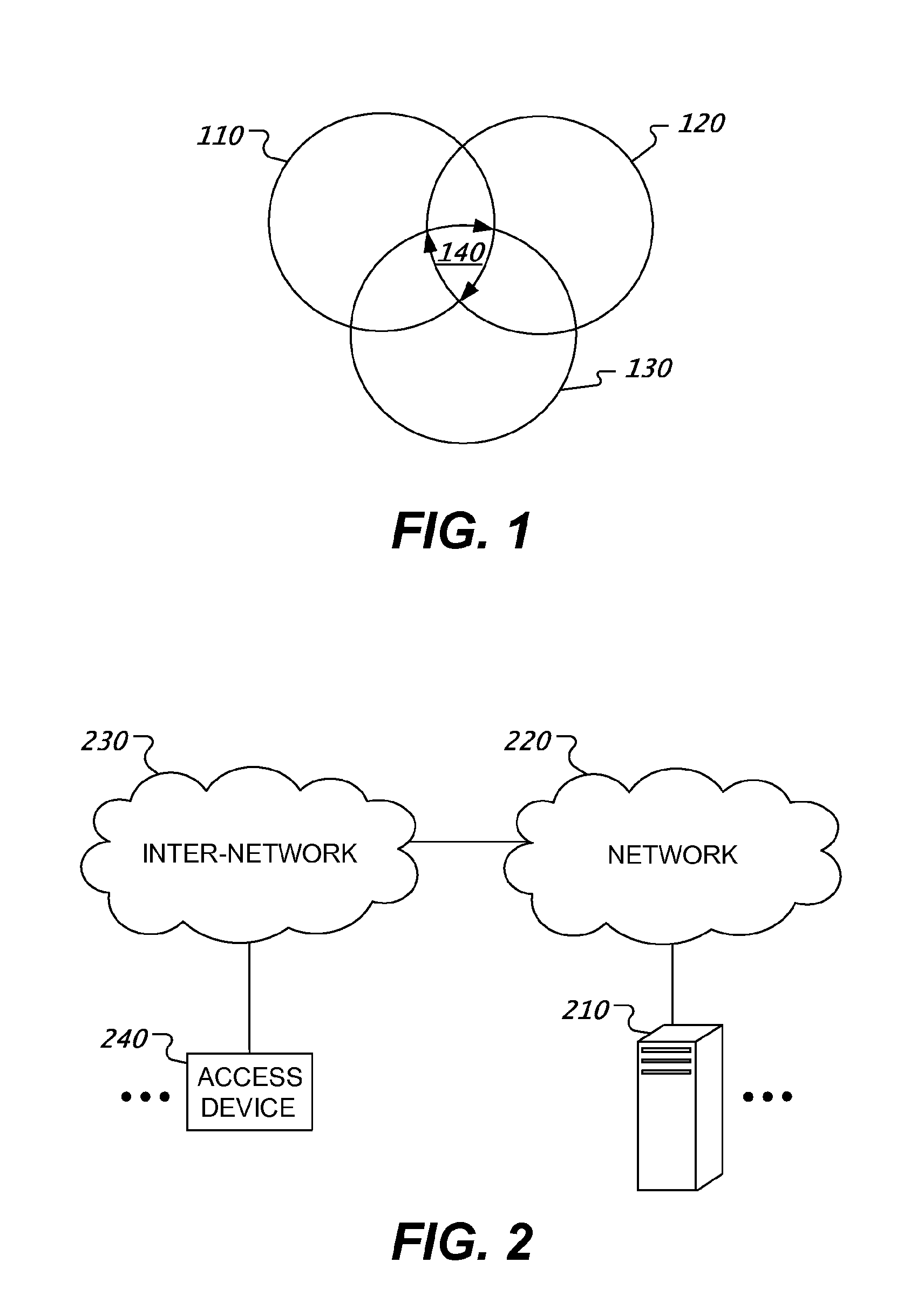

Large group interactions via mass communication network

Inactive | US7092821B2 | Reduces opportunity proliferation; Improve efficiency; Office automation; Two-way working systems; Human interaction; Automatic control

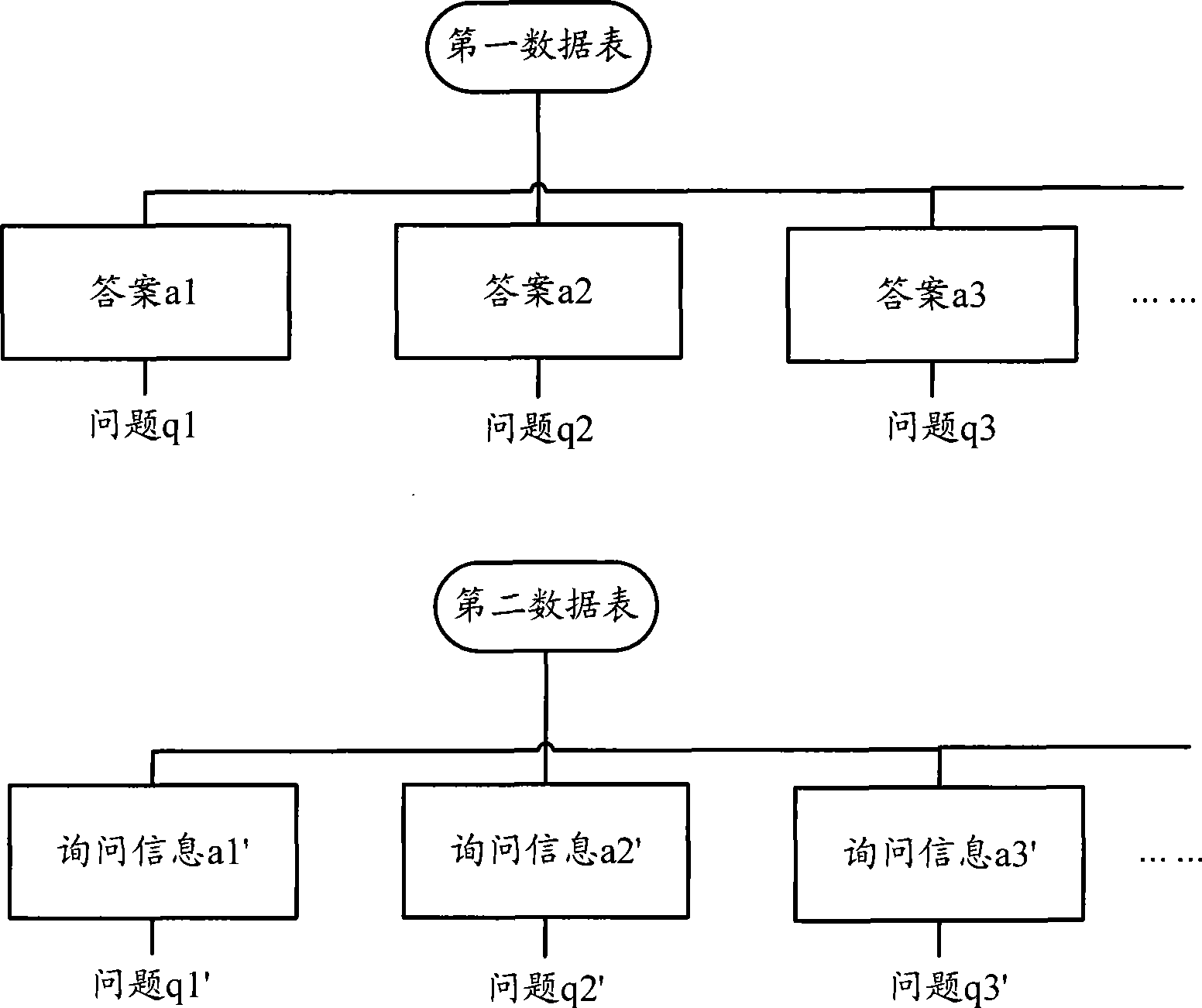

A method of supporting mass human-interaction events, including: providing a mass interaction event over a computer network (100) in which a plurality of participants (102) interact with each other by generating information, comprising questions, responses to questions, and factual information, for presentation to other participants, and by assimilating information; and automatically controlling, by a computer (104), the rate at which information is presented to each participant so that it stays below that participant's maximum information assimilation rate.

Owner:INVOKE SOLUTIONS
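The core claim, keeping each participant's presentation rate below a maximum assimilation rate, behaves like a per-participant rate limiter. The sketch below uses a token-bucket style credit counter; the class name, the `safety` factor, and the items-per-second unit are assumptions for illustration, not details from the patent.

```python
from collections import deque
from typing import Deque, List


class ParticipantFeed:
    """Queues messages for one participant and releases them below a rate cap."""

    def __init__(self, max_rate: float, safety: float = 0.8):
        # max_rate: assumed maximum assimilation rate in items per second.
        # safety < 1 keeps delivery strictly below that maximum.
        self.rate = max_rate * safety
        self.queue: Deque[str] = deque()
        self.credit = 0.0  # fractional delivery allowance carried between ticks

    def push(self, item: str) -> None:
        self.queue.append(item)

    def tick(self, dt: float) -> List[str]:
        """Return the items to present during the next dt seconds."""
        self.credit += self.rate * dt
        out: List[str] = []
        while self.queue and self.credit >= 1.0:
            out.append(self.queue.popleft())
            self.credit -= 1.0
        if not self.queue:
            # Don't bank unlimited credit while idle, or a burst could follow.
            self.credit = min(self.credit, 1.0)
        return out


feed = ParticipantFeed(max_rate=2.0)  # delivers at most ~1.6 items/s
for i in range(100):
    feed.push(f"msg{i}")
delivered = []
for _ in range(100):                  # simulate 10 s in 0.1 s ticks
    delivered += feed.tick(0.1)
print(len(delivered))                 # about 16 items in 10 s
```

A central server would keep one such feed per participant, each configured with that participant's own estimated assimilation rate.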

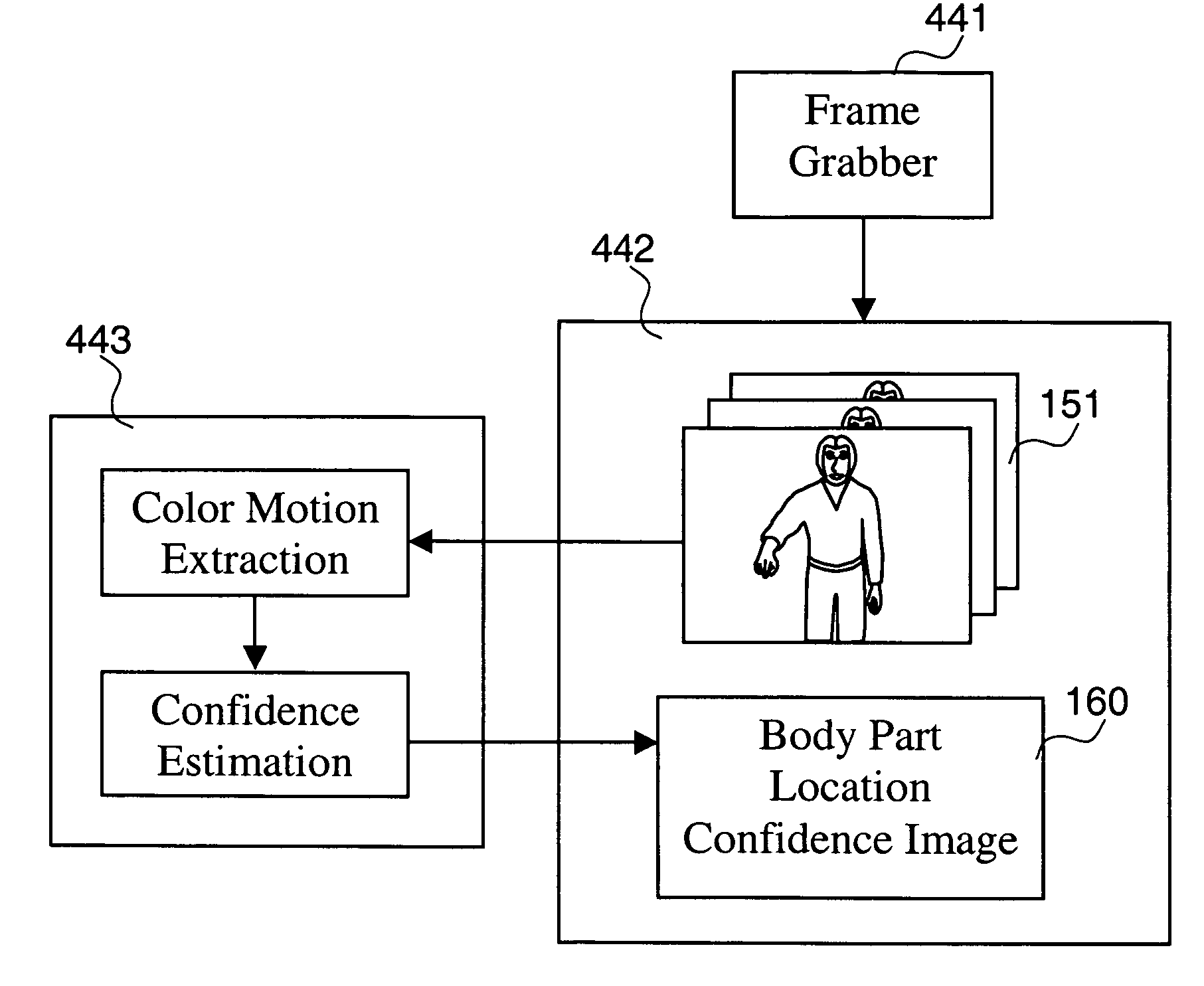

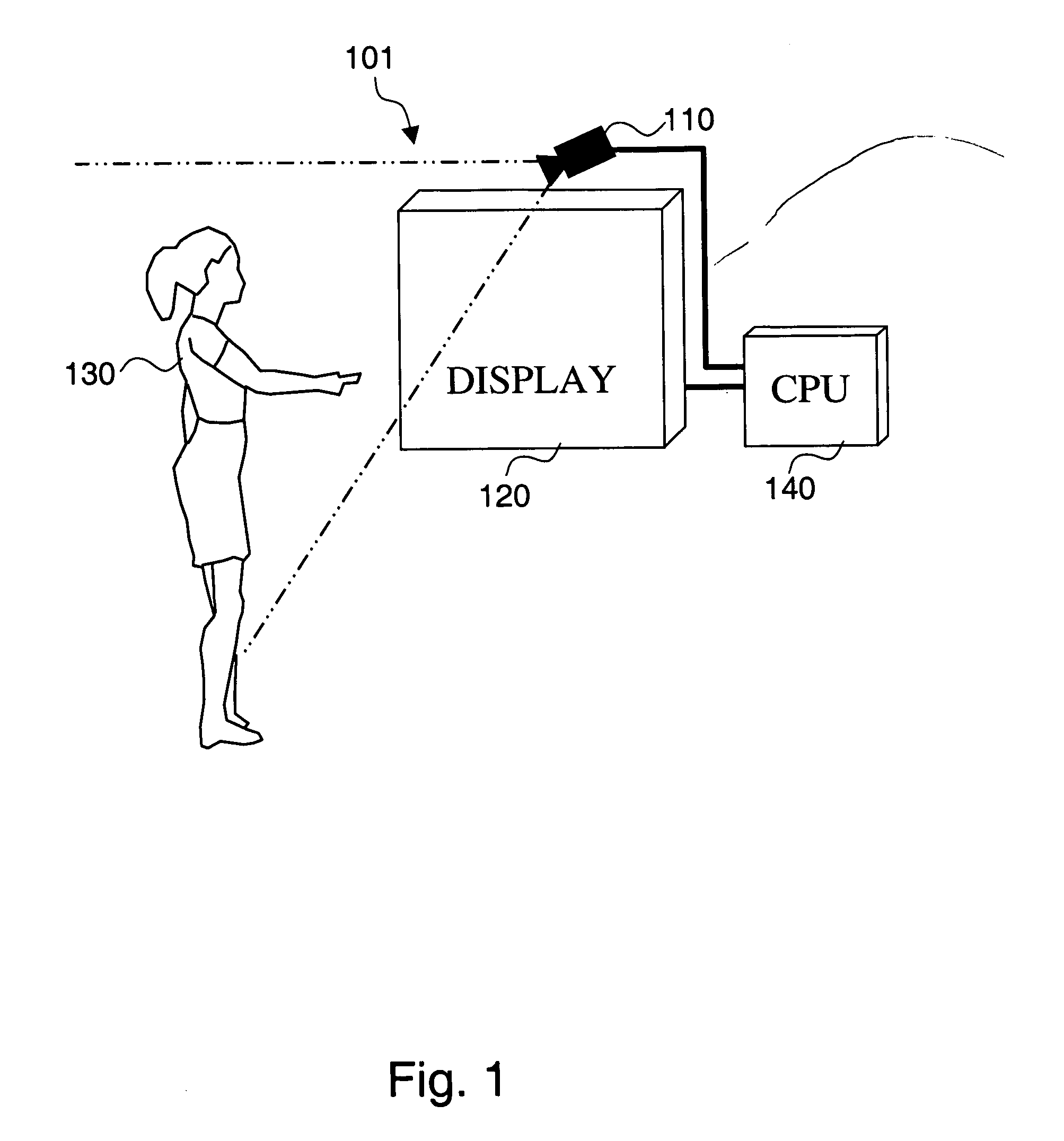

Method and system for detecting conscious hand movement patterns and computer-generated visual feedback for facilitating human-computer interaction

Active | US7274803B1 | Effective visual feedback; Efficient feedback; Character and pattern recognition; Color television details; Contact free; Public place

The present invention is a system and method for detecting and analyzing the motion patterns of individuals present at a multimedia computer terminal from a stream of video frames generated by a video camera, and for providing visual feedback of the extracted information to aid the interaction between a user and the system. The method allows multiple people to be present in front of the computer terminal while still allowing one active user to make selections on the computer display. The invention can thus be used as a method for contact-free human-computer interaction in a public place, where the computer terminal can be positioned in a variety of configurations, including behind a transparent glass window or at a height or location where the user cannot physically touch it.

Owner:F POSZAT HU

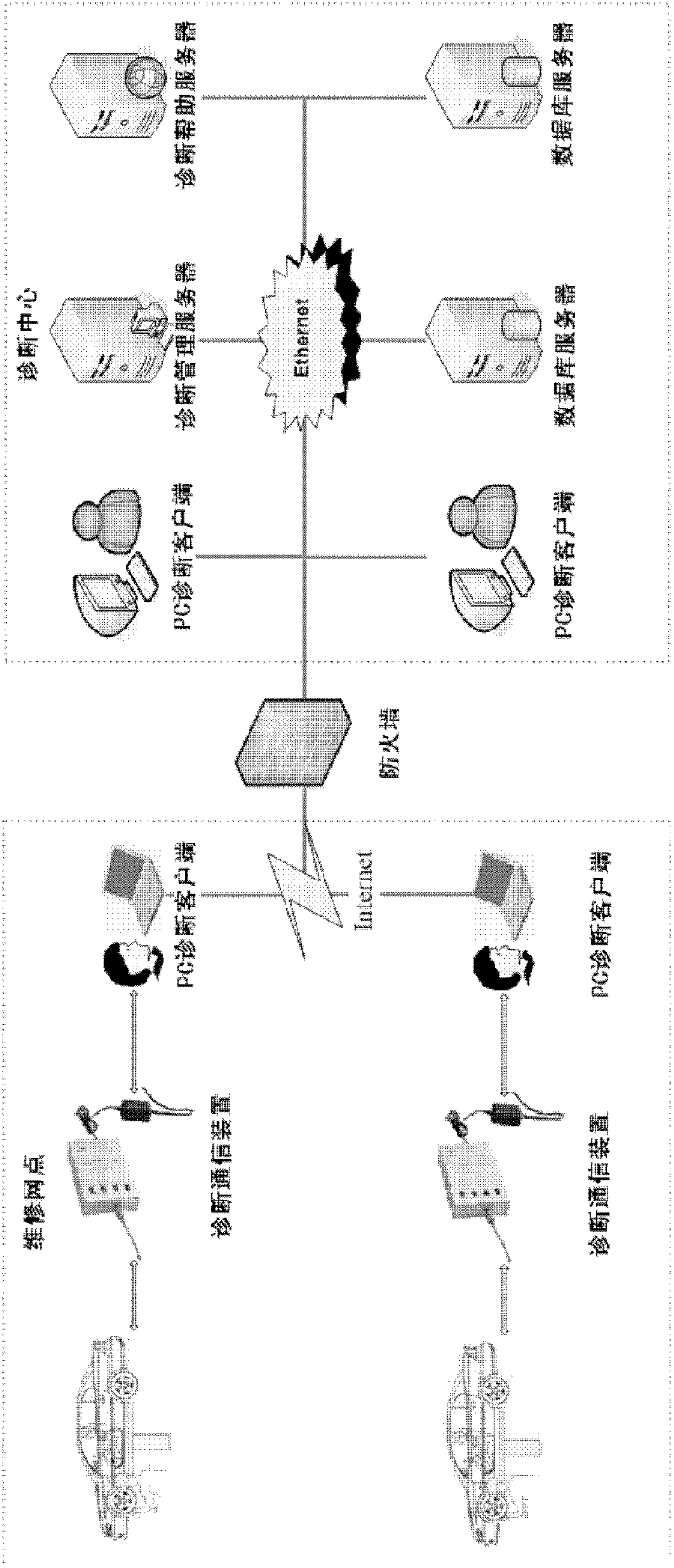

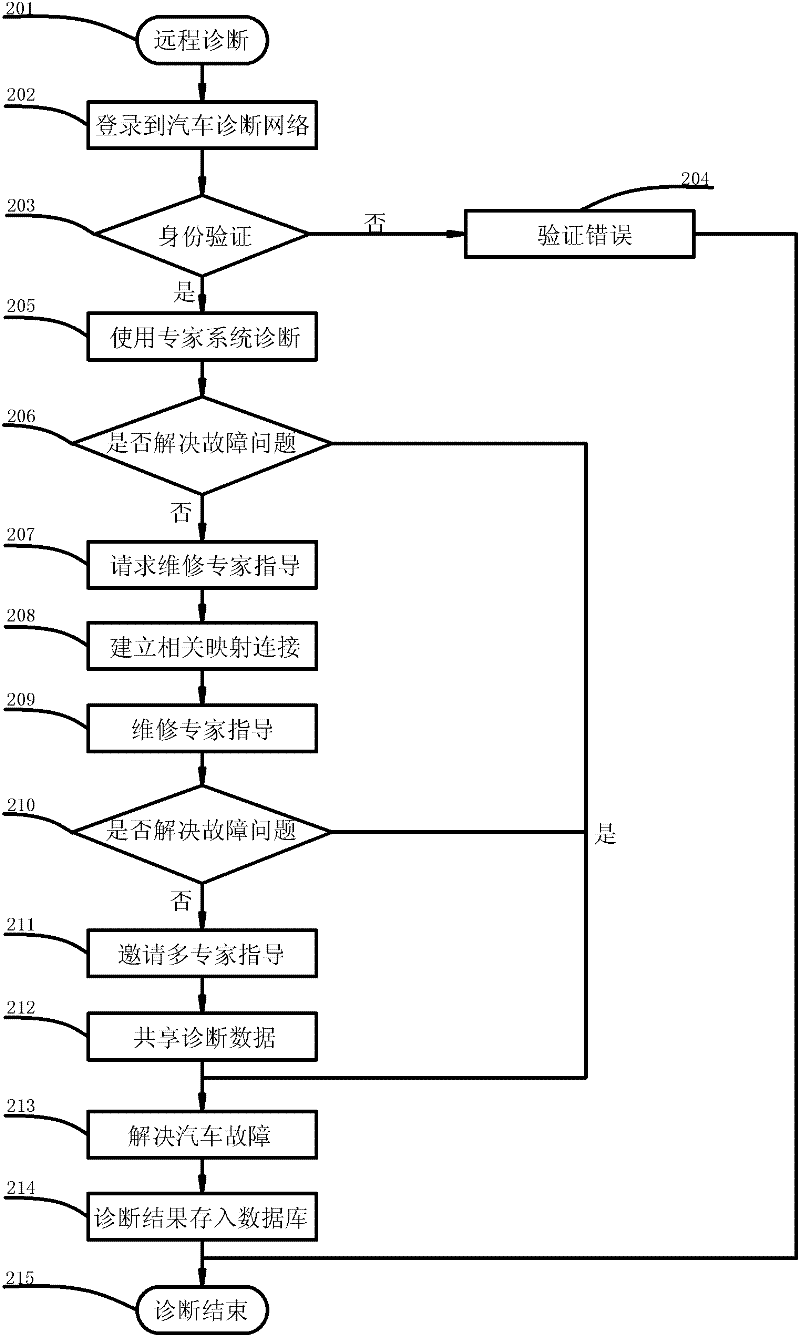

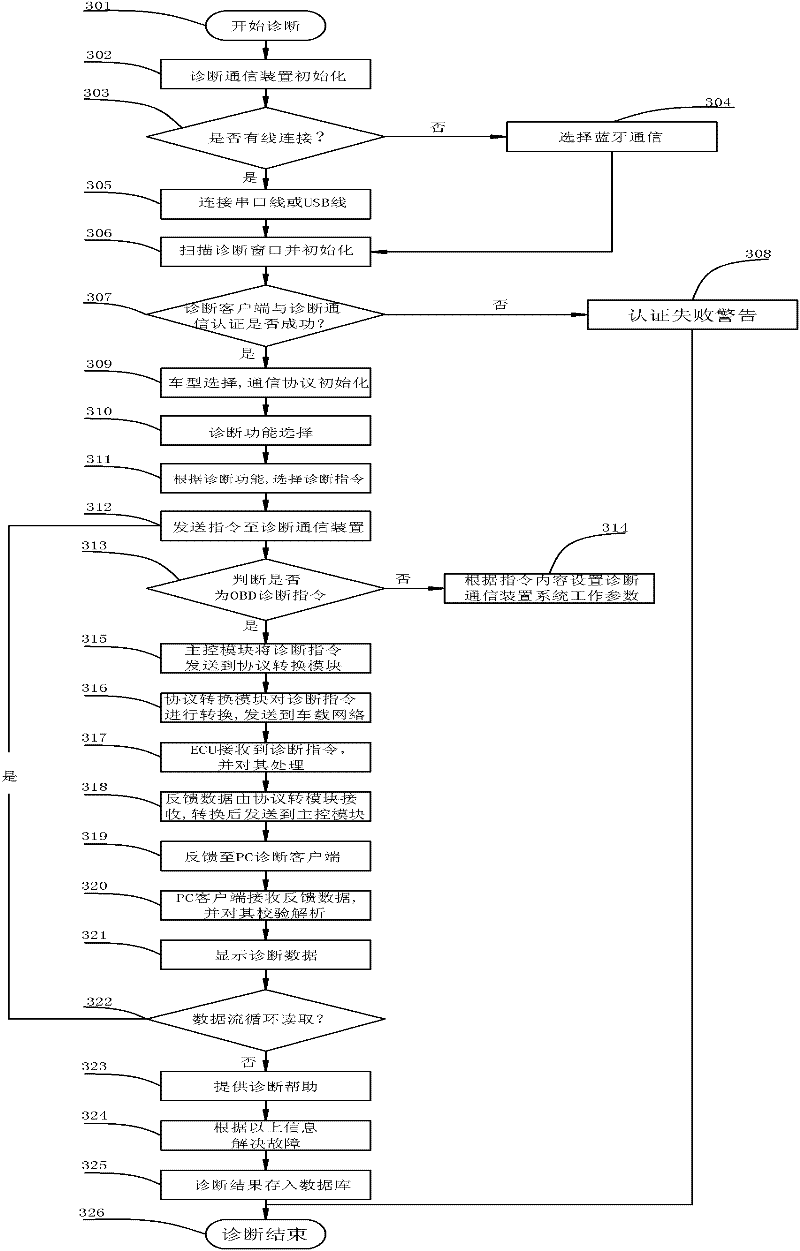

Multifunctional remote fault diagnosis system for electric control automobile

Active | CN102183945A | Reduce the cost of after-sales service; Improve the efficiency of after-sales service; Electric testing/monitoring; Interaction interface; Network management

The invention relates to a multifunctional remote fault diagnosis system for an electronically controlled automobile. The system comprises a remote fault diagnosis service center, PC (personal computer) diagnosis clients, and a diagnosis communication device. The remote fault diagnosis service center is the key component of the system and mainly provides an automobile fault diagnosis network management function and a remote fault diagnosis assistance function. The PC diagnosis clients mainly provide specific automobile diagnosis applications and remote diagnosis interfaces, through human-computer interaction interfaces, to users with different access rights. The diagnosis communication device mainly handles data communication between the PC diagnosis clients and the vehicle-mounted network and provides diagnosis data services to upper-layer applications. With the remote fault diagnosis service center as the core and the PC diagnosis clients as nodes, the system establishes an automobile fault diagnosis network, shares automobile diagnosis data via the diagnosis communication device, provides multifunctional remote fault assistance and fault-elimination help, performs statistical analysis of automobile fault information, and supplies automobile manufacturers with reliable quality reports.

Owner:WUHAN UNIV OF TECH +1

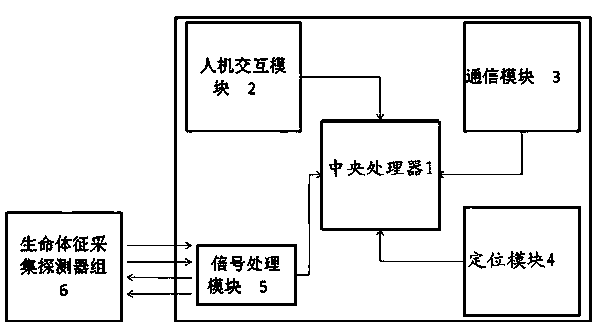

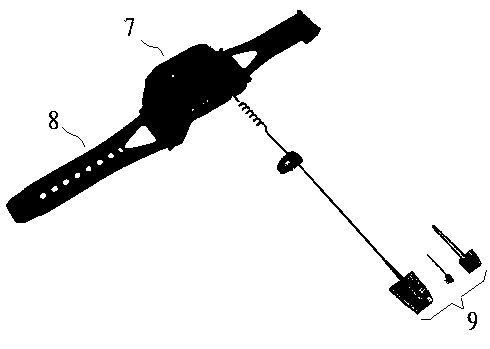

Wrist-type vital sign monitoring device and monitoring method thereof

Active | CN103417202A | Improve convenience; To achieve the practical purpose of real-time monitoring; Catheter; Diagnostic recording/measuring; Computer module; Engineering

The invention discloses a wrist-type vital sign monitoring device and a monitoring method thereof. A central processing unit, a human-computer interaction module, a communication module, a positioning module, a signal processing module, and a vital sign detector set are integrated into a wrist-type watch, which is worn on the wrist by a watchband. The vital sign detector set comprises a plurality of physiological index sensors, each of which is either integrated into the watch or detachably replaceable. This portable device has a positioning function and monitors the user's physiological signals in real time, comparing them with normal index parameters pre-stored for that user. When a sign of illness is found, the device prompts the user and at the same time sends the user's current physiological readings, the readings from the recent period, and the user's current geographical location to a service center, thereby gaining precious time for the user to receive timely rescue.

Owner:赵蕴博
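The comparison against pre-stored normal parameters amounts to a per-sensor range check. This sketch assumes each physiological index has a (low, high) normal range stored per user; the sensor names, the range representation, and the report format sent to the service center are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Range = Tuple[float, float]


@dataclass
class Alert:
    sensor: str
    value: float
    normal_range: Range


def check_vitals(readings: Dict[str, float], normal: Dict[str, Range]) -> List[Alert]:
    """Compare current readings against the user's stored normal ranges."""
    alerts = []
    for sensor, value in readings.items():
        if sensor not in normal:
            continue  # no baseline stored for this sensor
        low, high = normal[sensor]
        if not (low <= value <= high):
            alerts.append(Alert(sensor, value, (low, high)))
    return alerts


def build_report(alerts: List[Alert], recent: List[Dict[str, float]],
                 location: Tuple[float, float]) -> dict:
    """Bundle current abnormal values, recent history and location (hypothetical format)."""
    return {
        "alerts": [(a.sensor, a.value) for a in alerts],
        "recent": recent,
        "location": location,
    }


normal = {"heart_rate": (50.0, 100.0), "spo2": (95.0, 100.0)}
alerts = check_vitals({"heart_rate": 130.0, "spo2": 97.0}, normal)
if alerts:  # prompt the user and notify the service center
    print(build_report(alerts, recent=[], location=(30.5, 114.3))["alerts"])
```

In the usage above only the heart rate is out of range, so the report carries a single alert.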

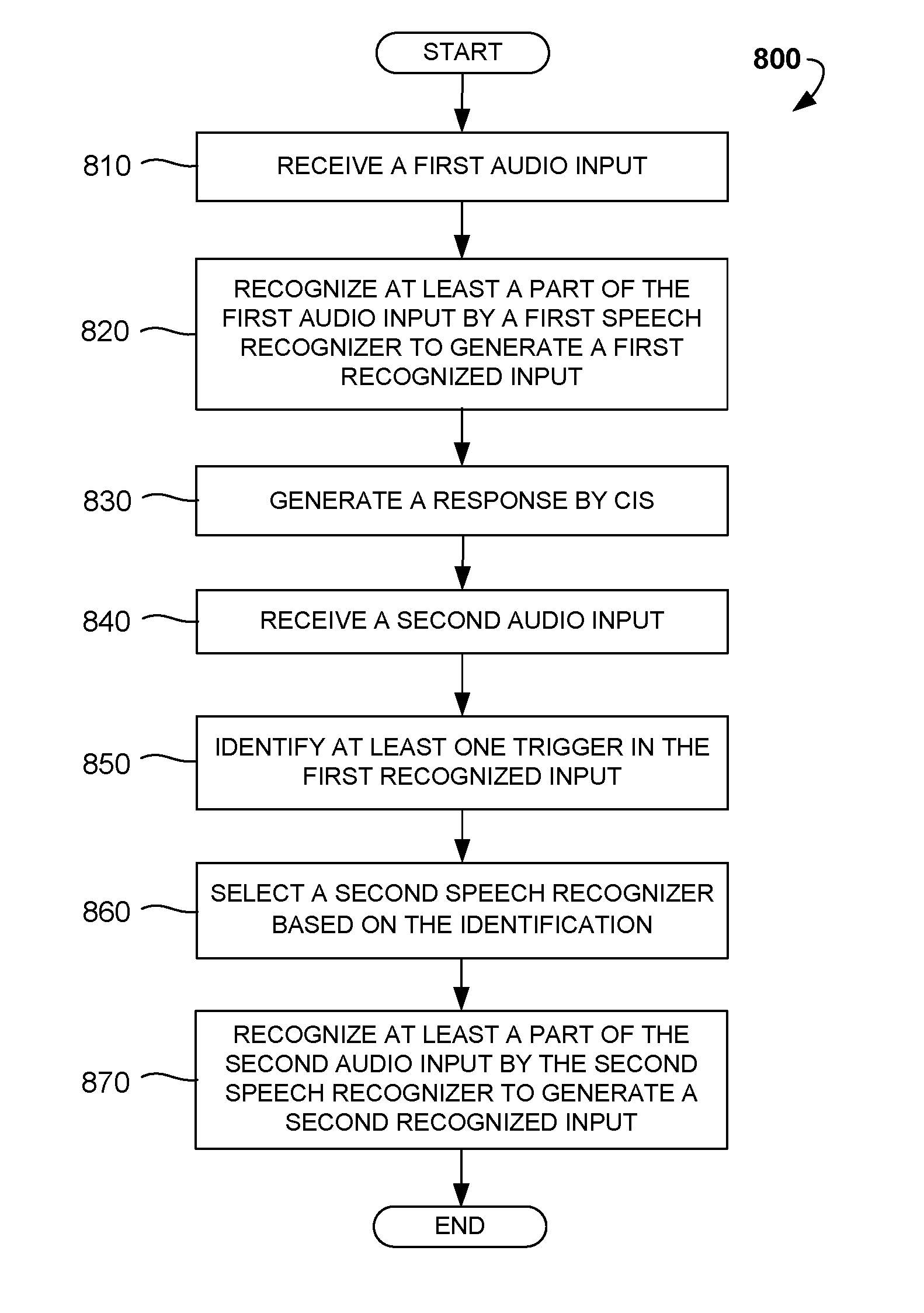

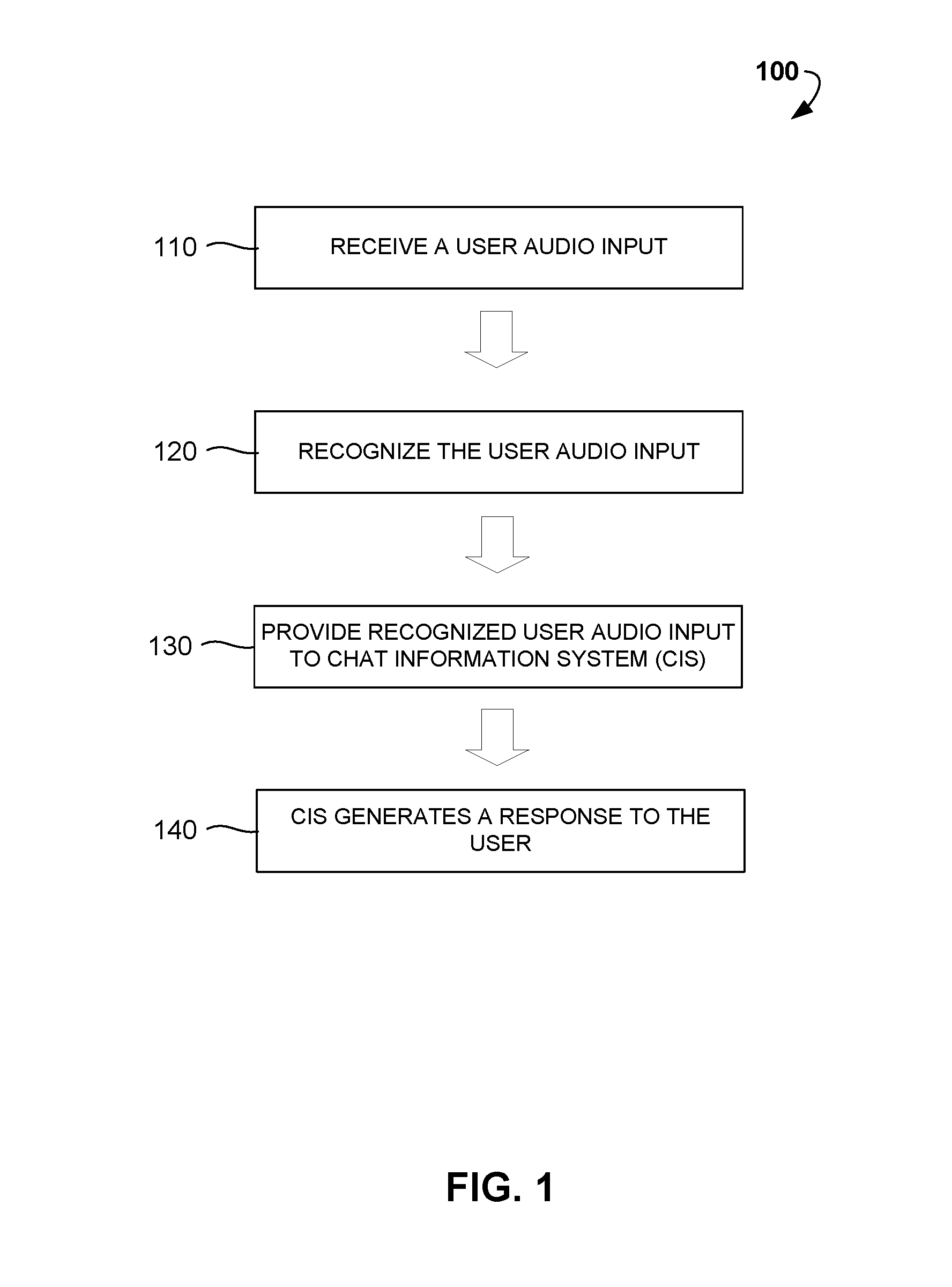

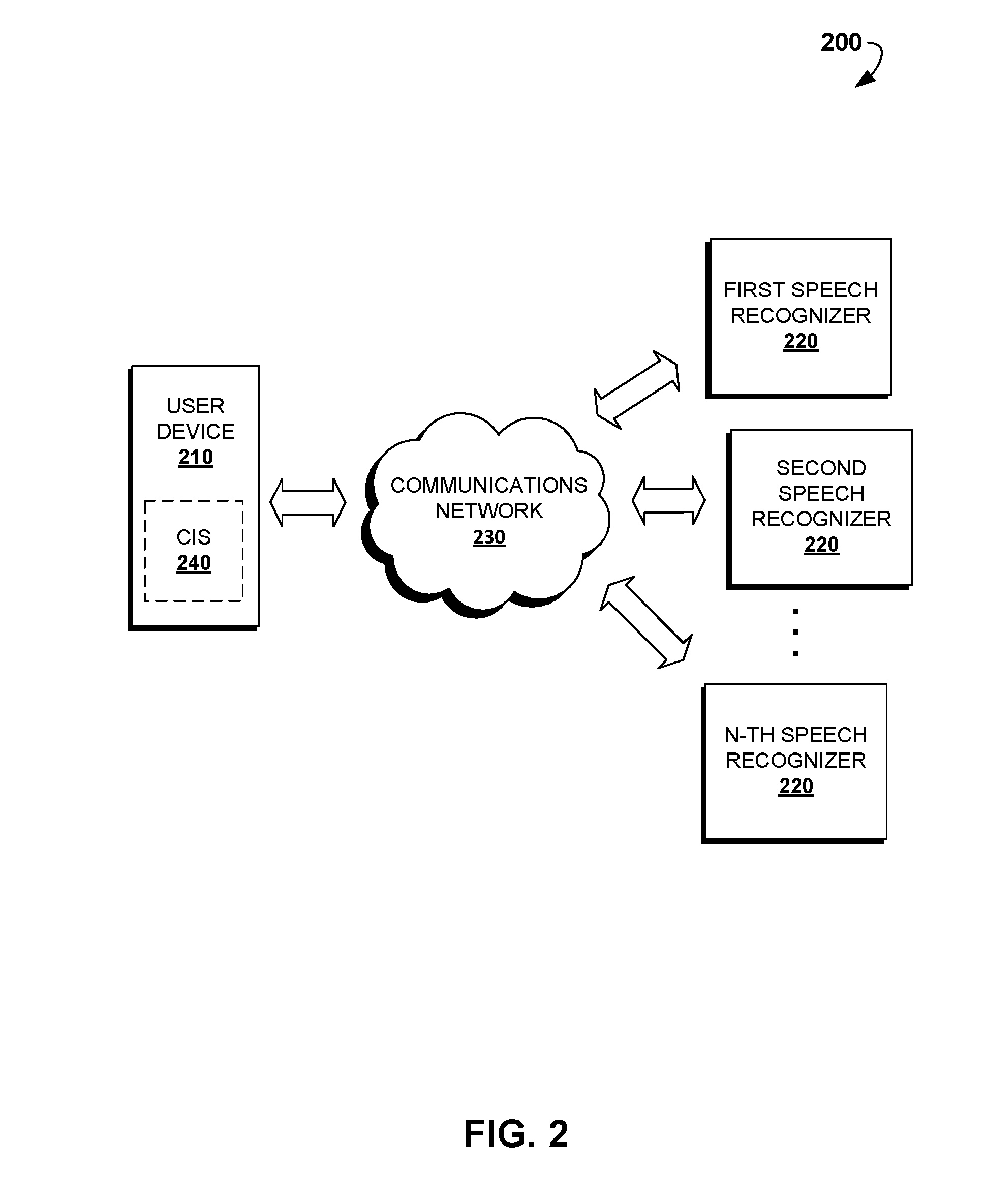

Selective speech recognition for chat and digital personal assistant systems

Active | US20160260434A1 | Simple technology; Improve accuracy; Speech recognition; Address book; Multiple criteria

Disclosed are computer-implemented methods and systems for dynamically selecting speech recognition systems for use in Chat Information Systems (CIS) based on multiple criteria and the context of human-machine interaction. Specifically, once a first user audio input is received, it is analyzed to locate specific triggers, determine the context of the interaction, or predict subsequent user audio inputs. Based on at least one of these criteria, a free-dictation recognizer, a pattern-based recognizer, an address-book-based recognizer, or a dynamically created recognizer is selected to recognize the subsequent user audio input. The methods described herein increase the accuracy of automatic recognition of user voice commands, thereby enhancing the overall user experience of CIS, chat agents, and similar digital personal assistant systems.

Owner:GOOGLE LLC
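One way to picture the selection step is a rule-based chooser over the four recognizer types named in the abstract. The trigger words and the rule order below are assumptions made for illustration; the patent describes the criteria (triggers, context, predicted input) but not these particular rules.

```python
import re
from typing import List, Optional

# Labels for the four recognizer kinds named in the abstract.
FREE_DICTATION = "free-dictation"
PATTERN_BASED = "pattern-based"
ADDRESS_BOOK = "address-book"
DYNAMIC = "dynamic"

# Hypothetical trigger vocabularies.
CONTACT_TRIGGERS = {"call", "text", "email"}
PATTERN_TRIGGERS = ("remind me", "set an alarm", "schedule")


def select_recognizer(first_input: str, expected_options: Optional[List[str]] = None) -> str:
    """Choose a recognizer for the *next* utterance from the current one."""
    text = first_input.lower()
    words = set(re.findall(r"[a-z']+", text))  # whole words, so "context" != "text"
    # Trigger words suggest the next utterance will be a contact name.
    if words & CONTACT_TRIGGERS:
        return ADDRESS_BOOK
    # If the agent just offered a fixed menu, build a recognizer for those options.
    if expected_options:
        return DYNAMIC
    # Structured requests (times, dates) suit a pattern grammar.
    if any(phrase in text for phrase in PATTERN_TRIGGERS):
        return PATTERN_BASED
    return FREE_DICTATION


print(select_recognizer("Call my brother"))           # address-book
print(select_recognizer("Tell me a story"))           # free-dictation
```

Matching whole words rather than substrings avoids false triggers such as "text" inside "context".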

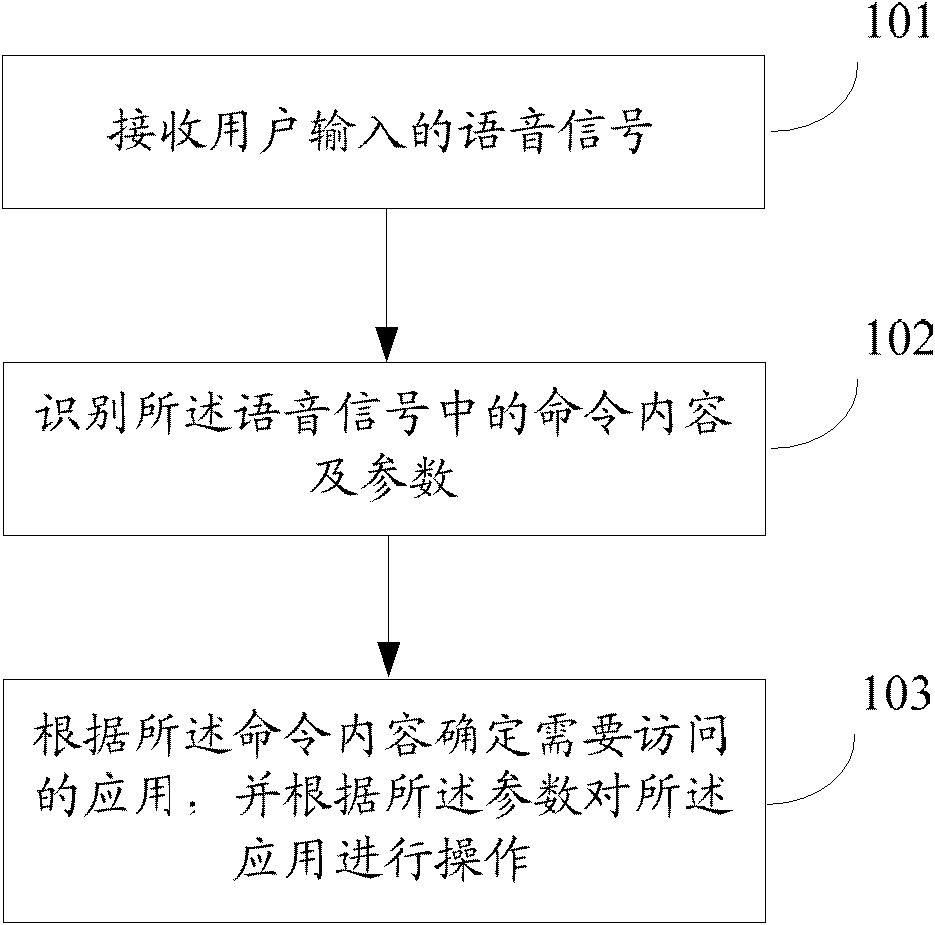

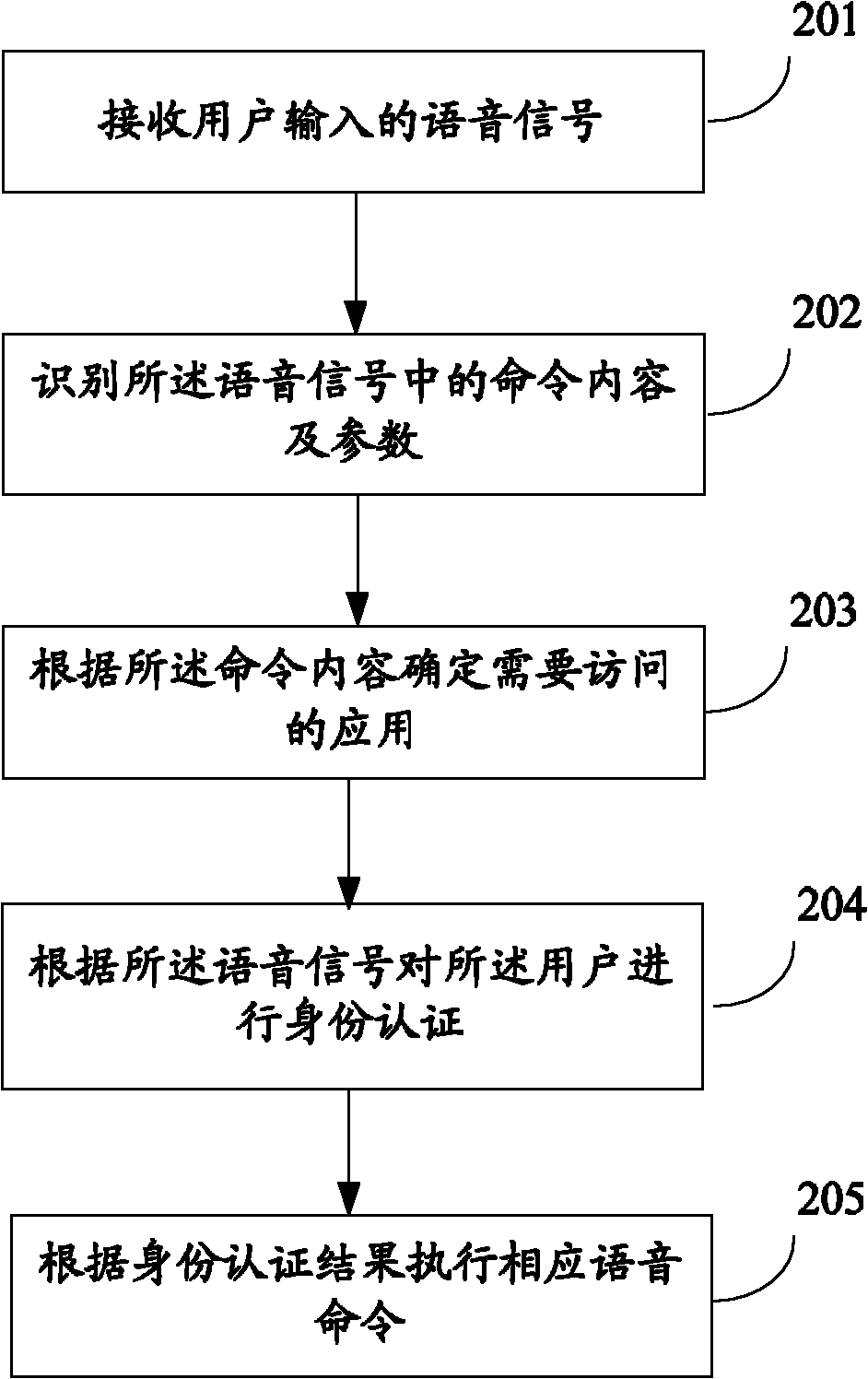

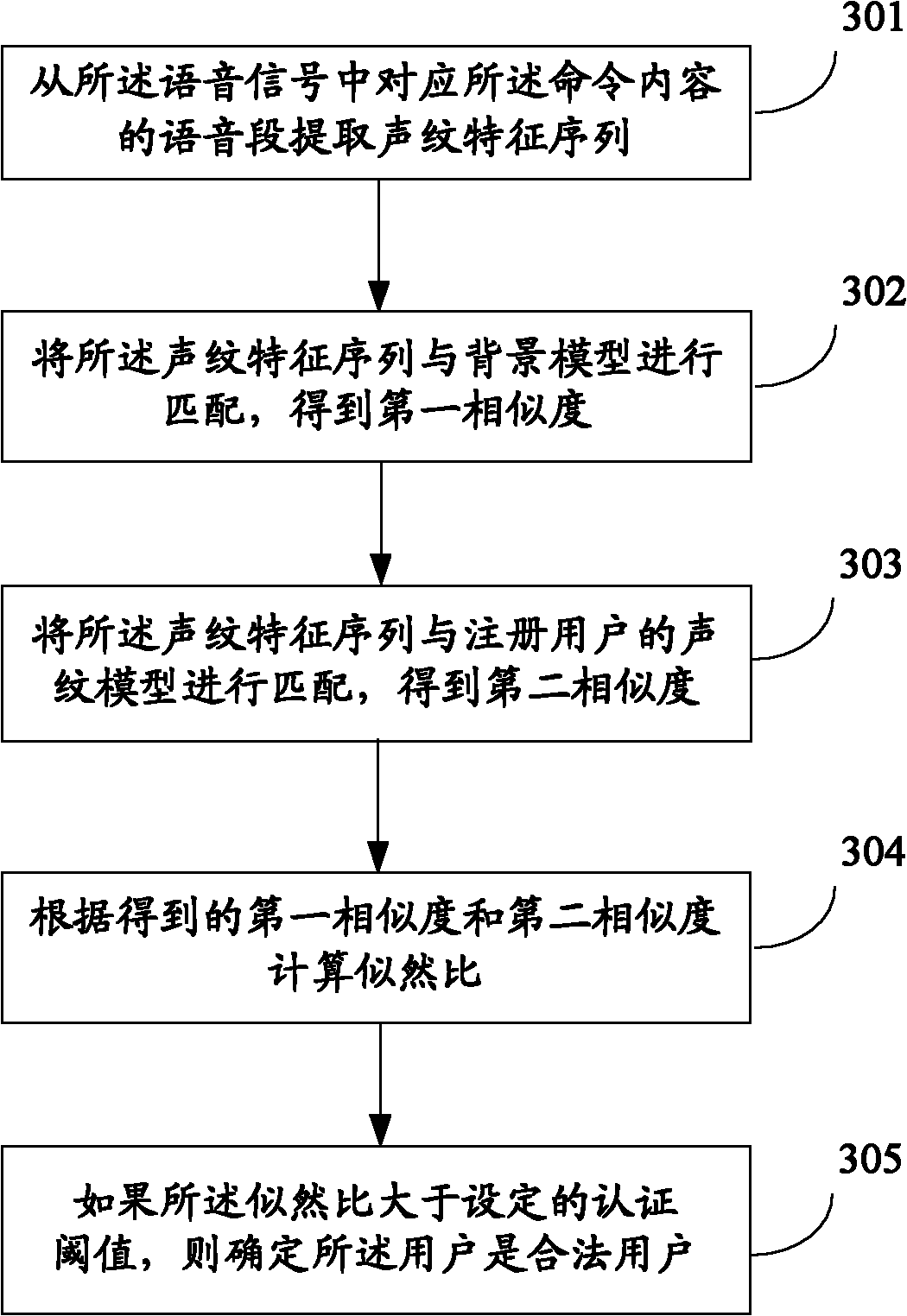

Personal assistant application access method and system

Inactive | CN102510426A | Realize the function of personal virtual assistant; Efficient shortcut command orientation; Speech analysis; Substation equipment; Access method; User input

The invention relates to the technical field of application access and discloses a personal assistant application access method and system. The method comprises the following steps: receiving a voice signal input by a user; identifying the command content and parameters in the voice signal; determining, according to the command content, the application to be accessed; and operating the application according to the parameters. The method and system can improve the efficiency of human-computer interaction.

Owner:IFLYTEK CO LTD
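Identifying "command content and parameters" in a recognized utterance and mapping the command to an application can be sketched with a small pattern table. This assumes speech has already been converted to text; the regular expressions, application names, and launcher callables are hypothetical.

```python
import re
from typing import Callable, Dict, Optional, Tuple

# Hypothetical command table: spoken command pattern -> target application.
COMMANDS: Dict[str, str] = {
    r"^(?:call|dial)\s+(?P<arg>.+)$": "phone",
    r"^play\s+(?P<arg>.+)$": "music",
    r"^(?:navigate to|take me to)\s+(?P<arg>.+)$": "maps",
}


def parse_command(transcript: str) -> Optional[Tuple[str, str]]:
    """Split a recognized utterance into (application, parameter)."""
    text = transcript.strip().lower().rstrip(".!?")
    for pattern, app in COMMANDS.items():
        m = re.match(pattern, text)
        if m:
            return app, m.group("arg")
    return None


def dispatch(transcript: str, launchers: Dict[str, Callable[[str], str]]) -> str:
    """Operate the chosen application with the extracted parameter."""
    parsed = parse_command(transcript)
    if parsed is None:
        return "Sorry, I did not understand."
    app, arg = parsed
    return launchers[app](arg)


launchers = {"phone": lambda who: f"dialing {who}",
             "music": lambda song: f"playing {song}",
             "maps": lambda place: f"routing to {place}"}
print(dispatch("Take me to the airport.", launchers))  # routing to the airport
```

The table-driven design keeps new commands a one-line addition; a statistical parser could replace the regular expressions without changing `dispatch`.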

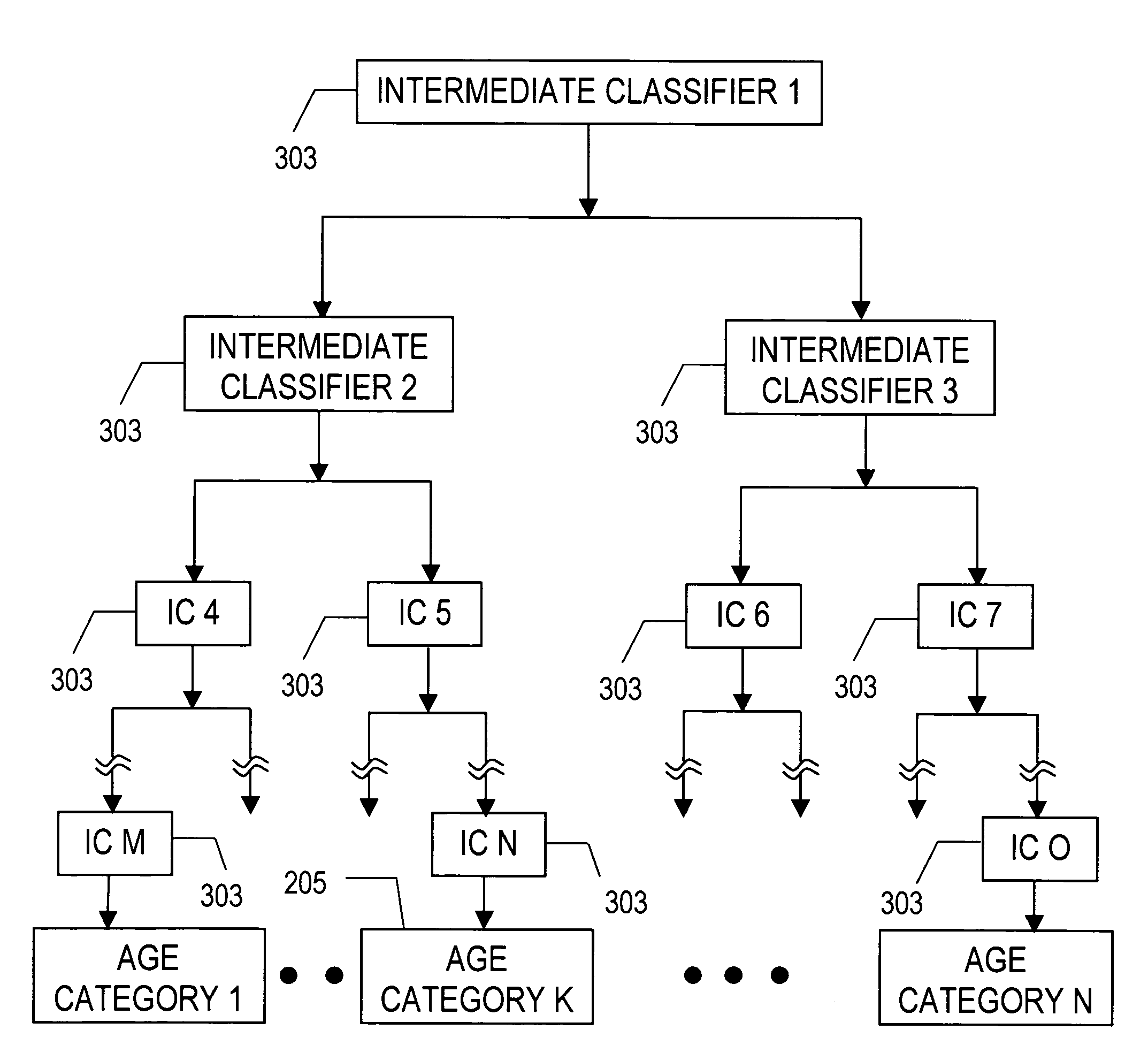

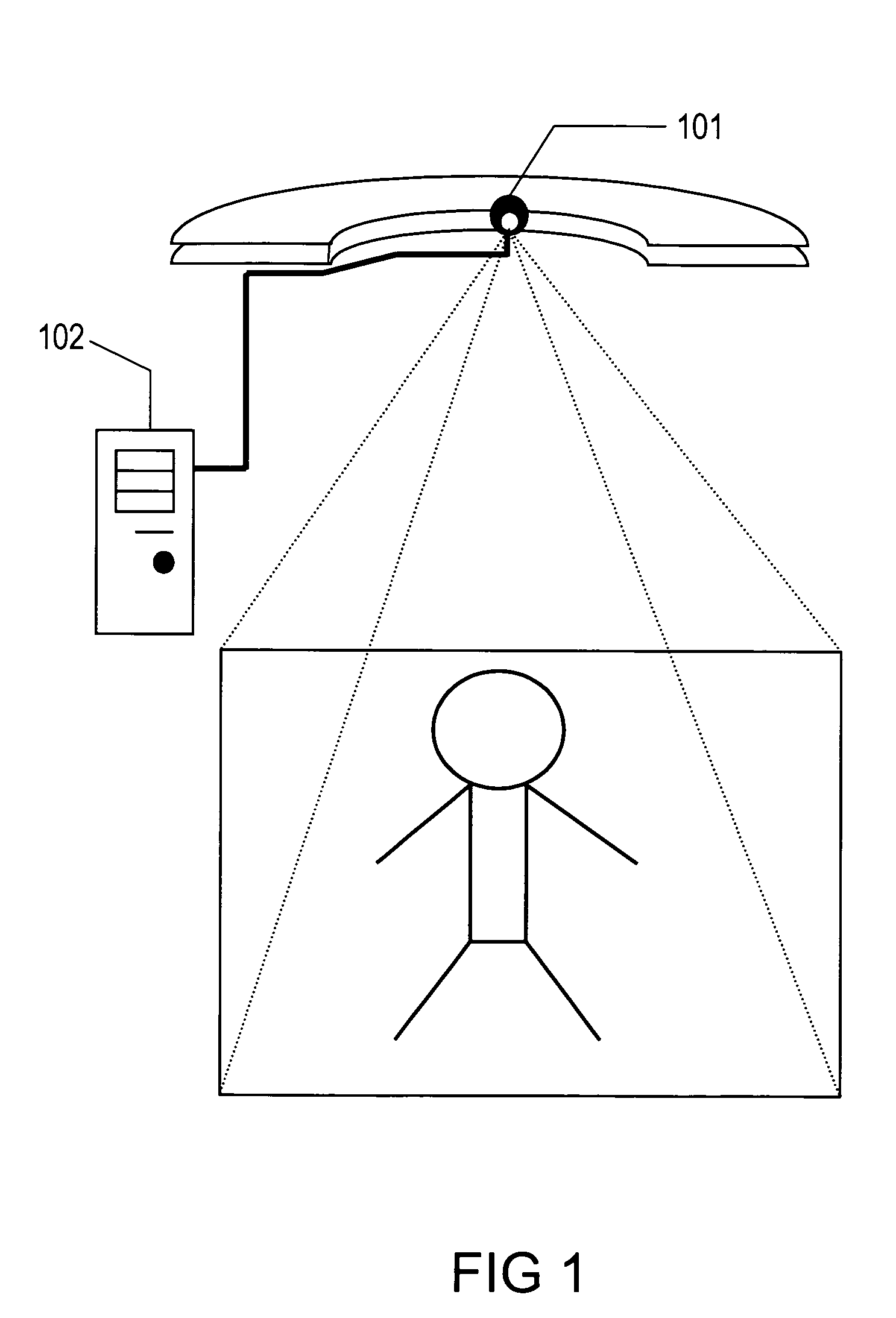

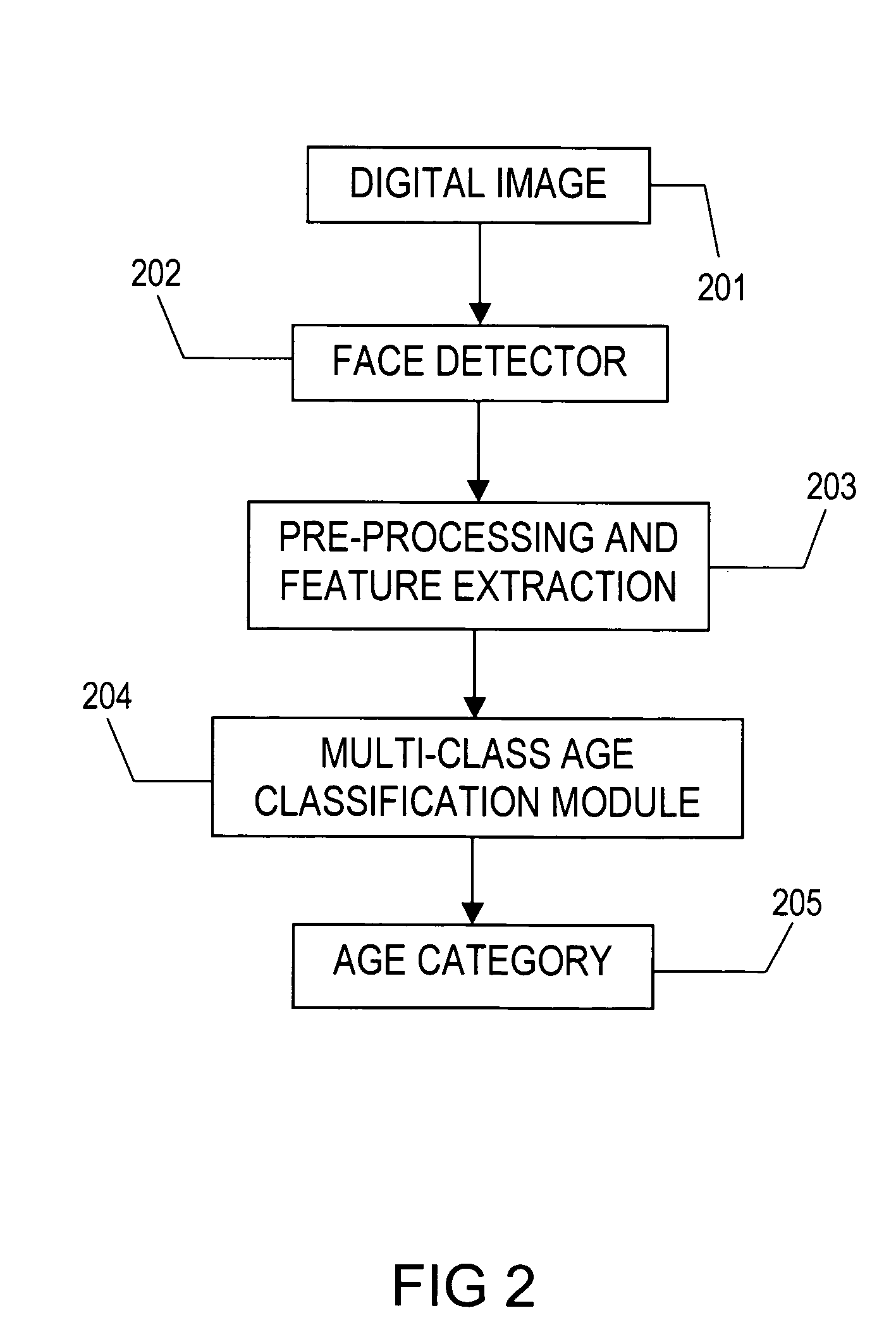

Classification of humans into multiple age categories from digital images

The present invention includes a method and system for automatically extracting multi-class age category information about a person from digital images. The system detects the face of each person in an image, extracts features from the face, and then classifies it into one of multiple age categories. Using appearance information from the entire face gives better results than currently known techniques. Moreover, the technique extracts age category information more robustly than currently known methods in environments with a high degree of variability in illumination and pose and with occlusions present. Besides serving as an automated data collection system, in which a person's age category is determined automatically from the available facial information, the method could also be used for targeting advertisements at certain age groups, surveillance, human-computer interaction, security enhancement, and immersive computer games.

Owner:VIDEOMINING CORP

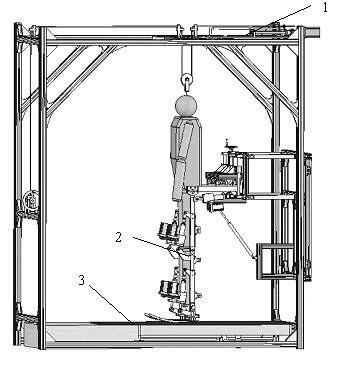

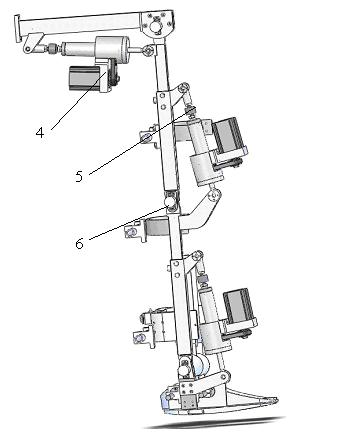

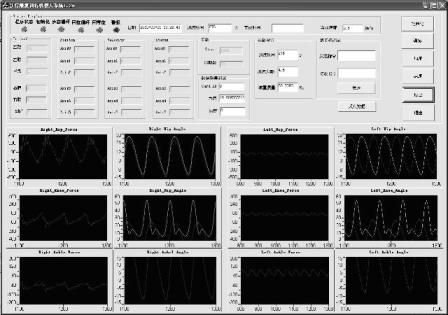

Motion control method of lower limb rehabilitative robot

Inactive | CN102058464A | Realize tracking control; Enhanced awareness of initiative; Chiropractic devices; Movement coordination devices; Engineering; Active participation

The invention relates to a motion control method for a lower limb rehabilitation robot. For different rehabilitation stages of a patient, the method provides two working modes, passive training and active training. In passive training mode, the robot is controlled to drive the patient through specific motions or along a correct physiological gait trajectory; abnormal motions of the patient are completely suppressed, and the patient passively follows the robot in walking rehabilitation training. In active training mode, the robot suppresses the patient's abnormal motions only within limits. The joint driving forces that the patient exerts on the robot during motion are detected in real time, and the human-machine interaction torque is extracted using an inverse dynamic model to judge the active motion intention of the patient's lower limbs. An impedance controller converts the interaction torque into a correction value for the gait trajectory, which is used either to correct the gait training trajectory directly or, through an adaptive controller, to generate the trajectory the patient expects; in this way the robot indirectly provides assistive and resistive forces for rehabilitation training. The method can provide rehabilitation training motions suited to different rehabilitation stages for patients with dysbasia (walking difficulty), thereby increasing the patient's active participation in training, building confidence in recovery and enthusiasm for movement, and ultimately improving the effect of rehabilitation training.

Owner:SHANGHAI UNIV
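The step where an impedance controller turns interaction torque into a gait-trajectory correction can be illustrated with the second-order impedance relation M·x'' + B·x' + K·x = τ, evaluated in admittance form (torque in, trajectory offset out). The parameter values and the single-joint simplification are assumptions; the abstract does not disclose the controller's gains or structure.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AdmittanceFilter:
    """Turns interaction torque into a joint-angle offset (single joint, assumed gains)."""
    M: float = 1.0   # virtual inertia   (assumed, kg*m^2)
    B: float = 8.0   # virtual damping   (assumed, N*m*s/rad)
    K: float = 40.0  # virtual stiffness (assumed, N*m/rad)
    x: float = 0.0   # trajectory correction (rad)
    v: float = 0.0   # rate of change of the correction (rad/s)

    def step(self, torque: float, dt: float) -> float:
        # Solve M*a + B*v + K*x = torque for the acceleration a,
        # then integrate with semi-implicit (symplectic) Euler.
        a = (torque - self.B * self.v - self.K * self.x) / self.M
        self.v += a * dt
        self.x += self.v * dt
        return self.x


def corrected_trajectory(reference: List[float], torques: List[float], dt: float) -> List[float]:
    """Shift a reference gait trajectory by the torque-driven correction."""
    f = AdmittanceFilter()
    return [q_ref + f.step(tau, dt) for q_ref, tau in zip(reference, torques)]


f = AdmittanceFilter()
for _ in range(5000):      # 5 s of constant 4 N*m patient effort at 1 kHz
    offset = f.step(4.0, 0.001)
print(round(offset, 3))    # settles at torque / K = 0.1 rad
```

With constant torque the offset settles at τ/K, so a larger virtual stiffness K means the robot yields less to the patient's own effort.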

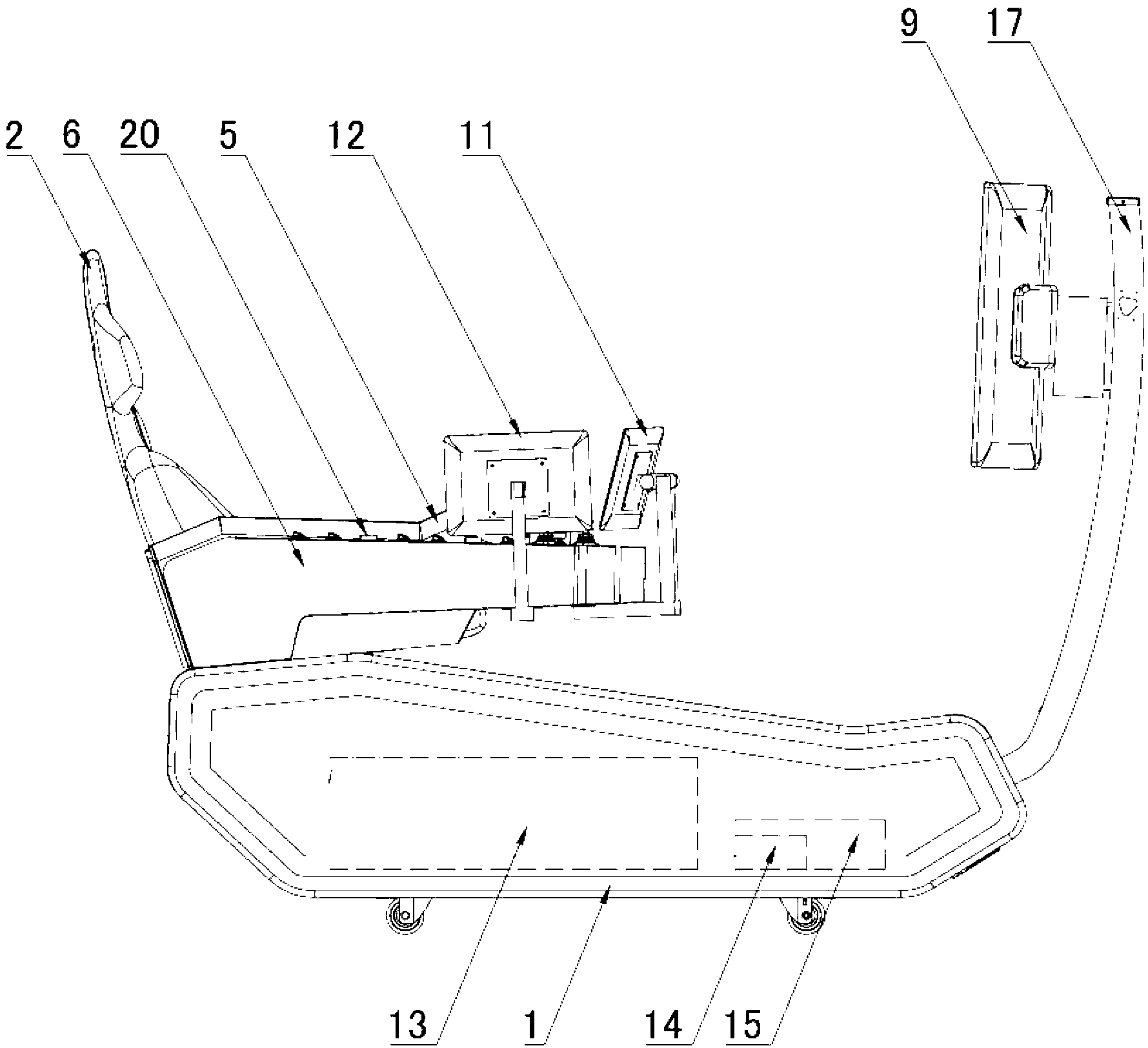

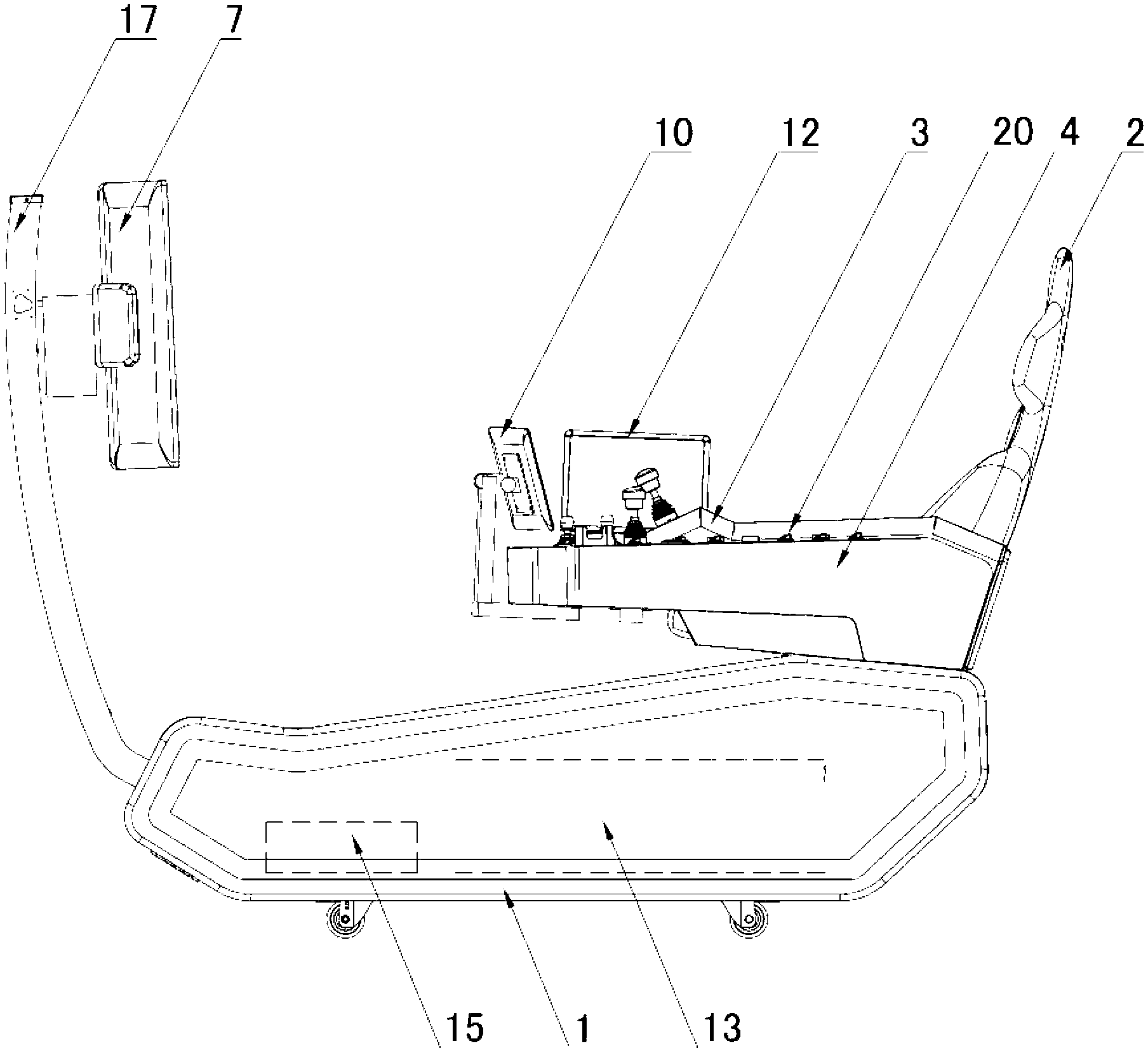

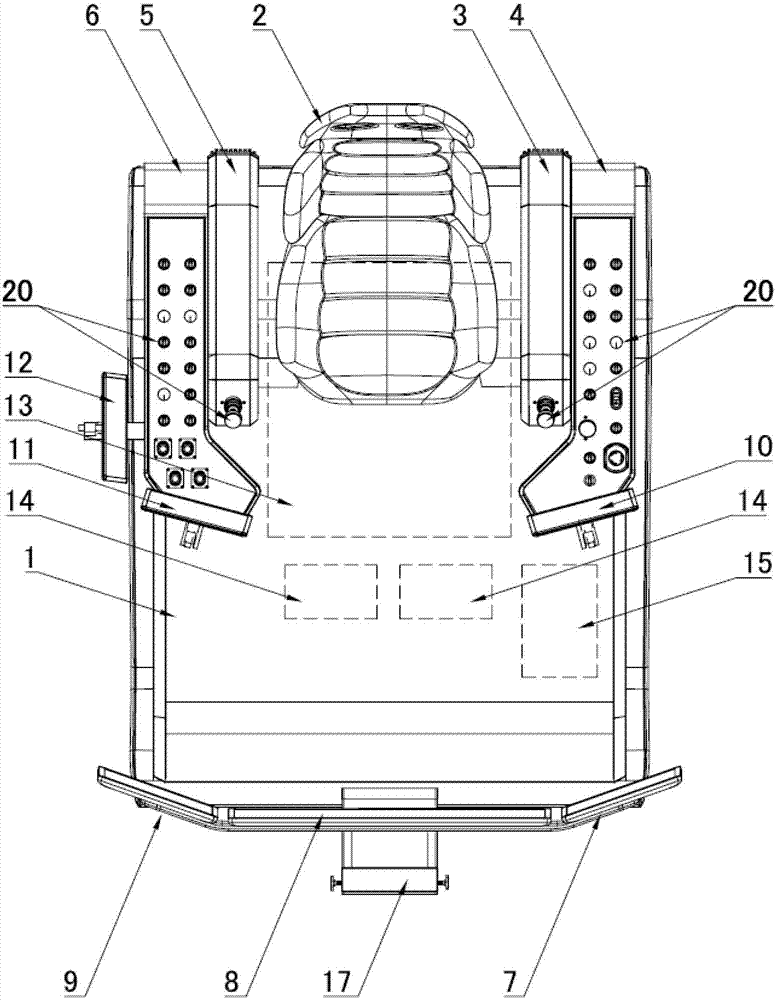

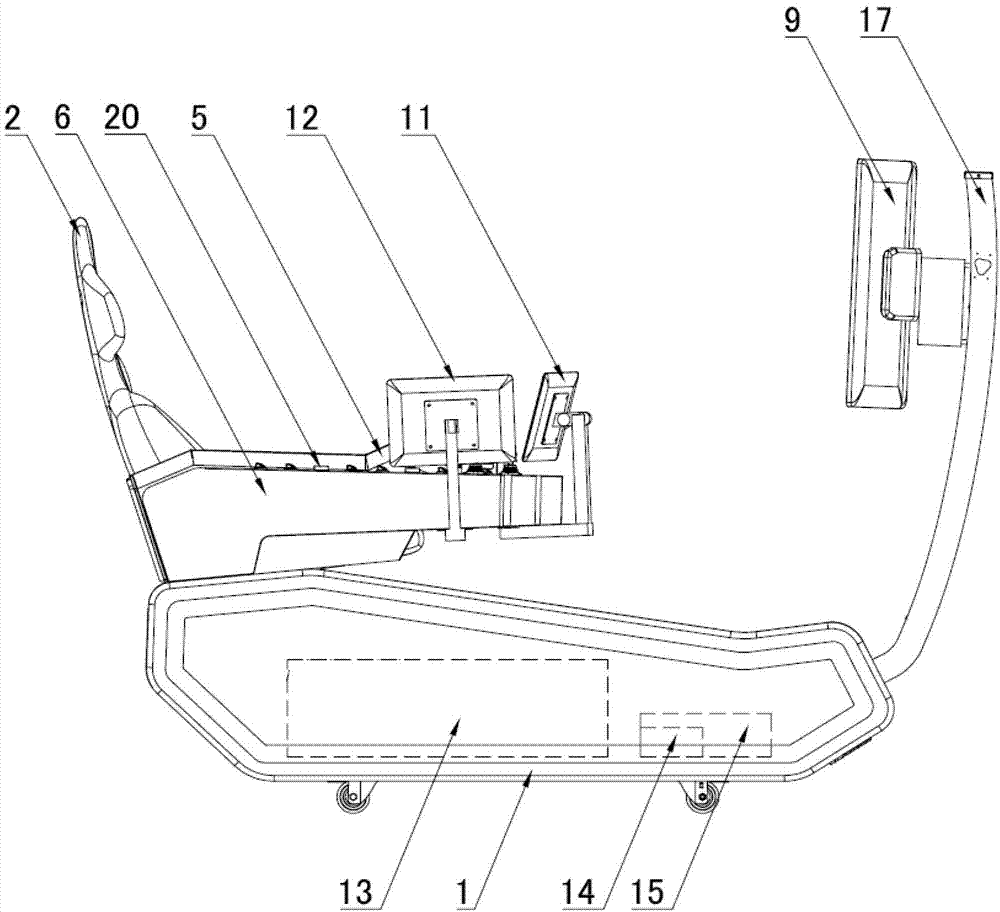

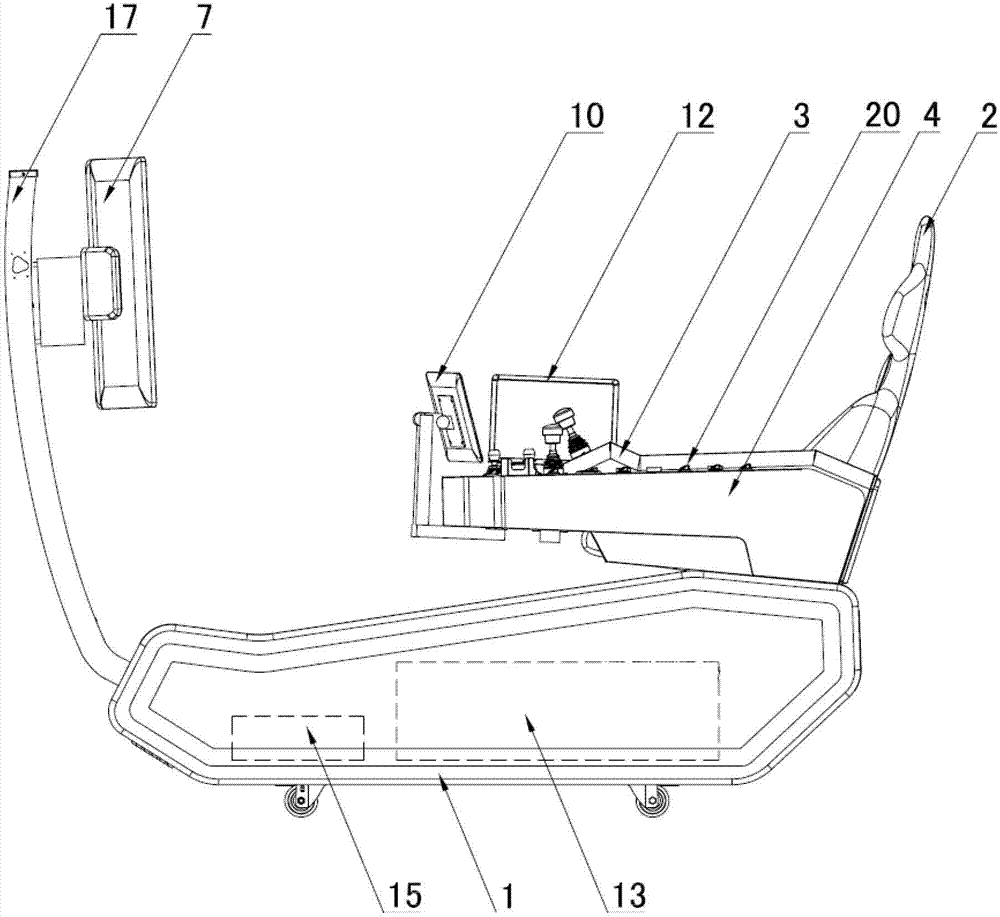

Coiled tubing operation equipment simulator

The invention discloses a coiled tubing operation equipment simulator, which comprises a base, a seat which is arranged at the middle part of the rear end of the base, simulation control parts which are arranged on the two sides of the seat, a data acquisition module which is used for receiving control signals of the simulation control parts, a main scene display which is arranged at the front of the seat, an injection head scene display and a downhole scene display which are arranged on the two sides of the main scene display, a computer system which is connected with the displays and the data acquisition module and can display simulated scenes in the displays, a simulation instrument display which is connected with the computer system, and an input device which is used for man-machine interaction. The coiled tubing operation equipment simulator can simulate various operation scenes in the actual working process of coiled tubing operation equipment, realize various simulation operations on the coiled tubing operation equipment and reproduce the actual operation and working process of the real coiled tubing operation equipment.

Owner:YANTAI JEREH PETROLEUM EQUIP & TECH CO LTD +1

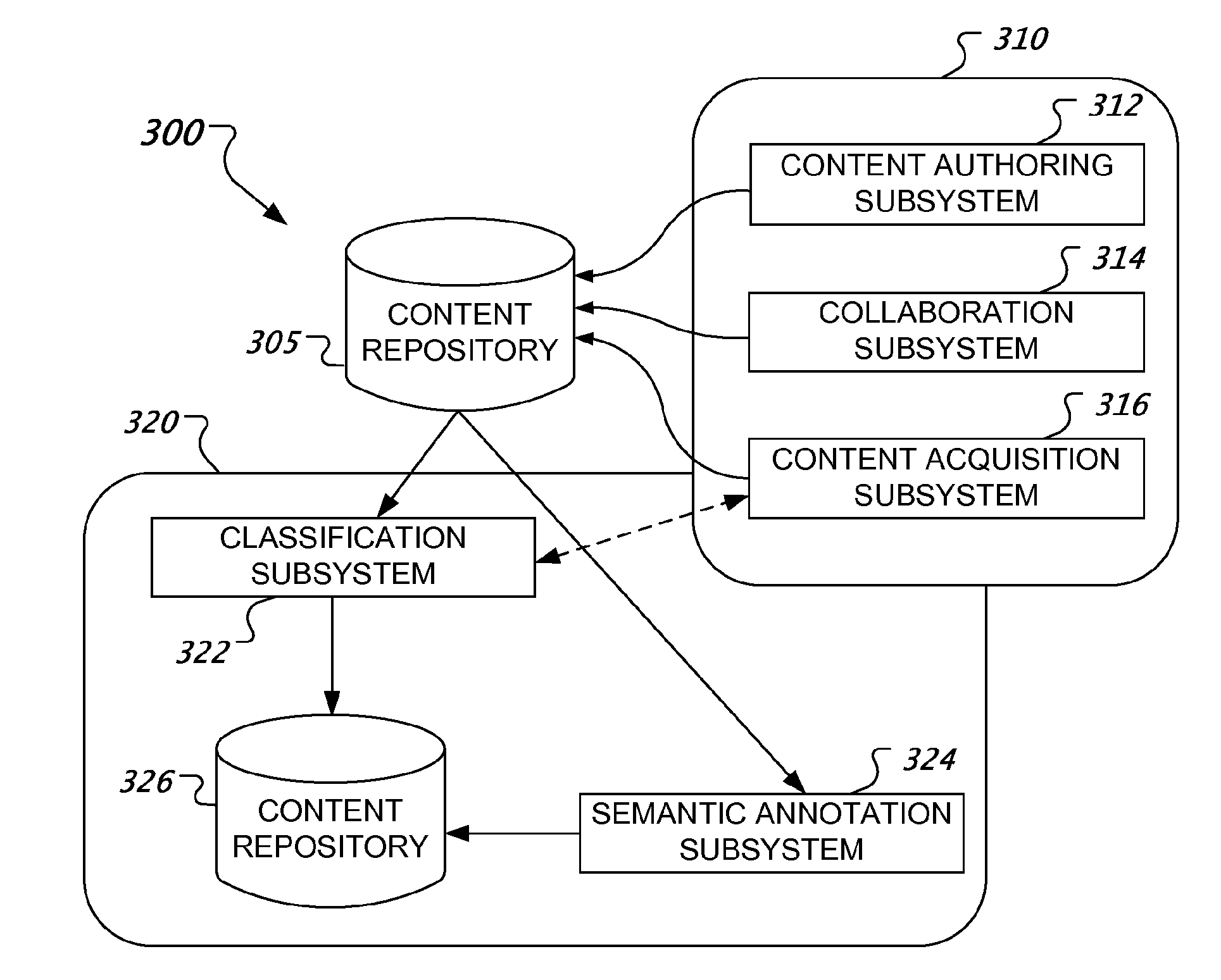

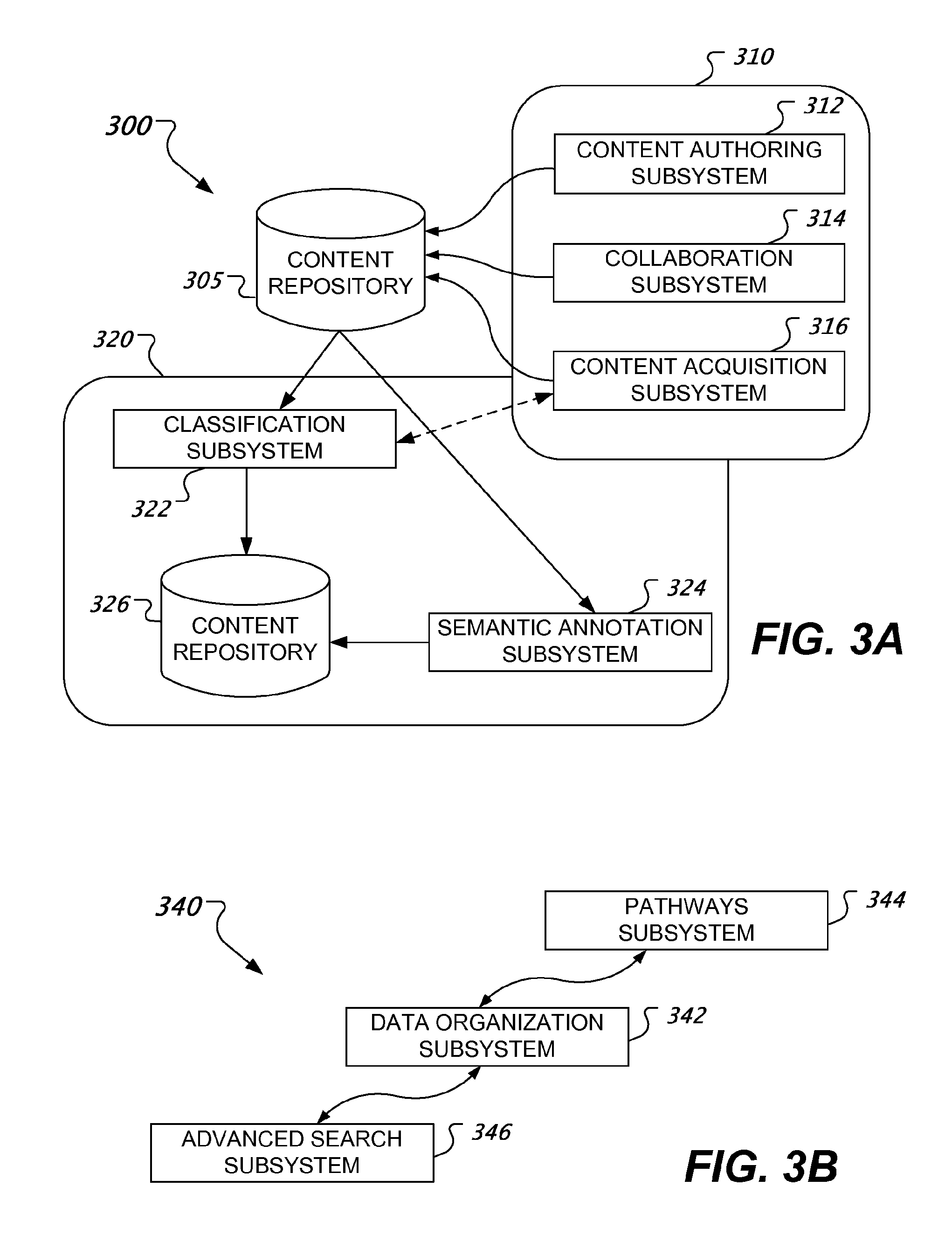

Adaptive Knowledge Platform

Inactive | US20110264649A1 | Easy to organize; Improve filtering effect; Data processing applications; Digital data processing details; Data set; Multiple perspective

Methods, systems, and apparatus, including medium-encoded computer program products, for providing an adaptive knowledge platform. In one or more aspects, a system can include a knowledge management component to acquire, classify and disseminate information of a dataset; a human-computer interaction component to visualize multiple perspectives of the dataset and to model user interactions with the multiple perspectives; and an adaptivity component to modify one or more of the multiple perspectives of the dataset based on a user-interaction model.

Owner:ALEXANDRIA INVESTMENT RES & TECH

Coiled tubing operation equipment simulator

The invention discloses a coiled tubing operation equipment simulator, which comprises a base, a seat which is arranged at the middle part of the rear end of the base, simulation control parts which are arranged on the two sides of the seat, a data acquisition module which is used for receiving control signals of the simulation control parts, a main scene display which is arranged at the front of the seat, an injection head scene display and a downhole scene display which are arranged on the two sides of the main scene display, a computer system which is connected with the displays and the data acquisition module and can display simulated scenes in the displays, a simulation instrument display which is connected with the computer system, and an input device which is used for man-machine interaction. The coiled tubing operation equipment simulator can simulate various operation scenes in the actual working process of coiled tubing operation equipment, realize various simulation operations on the coiled tubing operation equipment and reproduce the actual operation and working process of the real coiled tubing operation equipment.

Owner:YANTAI JEREH PETROLEUM EQUIP & TECH CO LTD +1

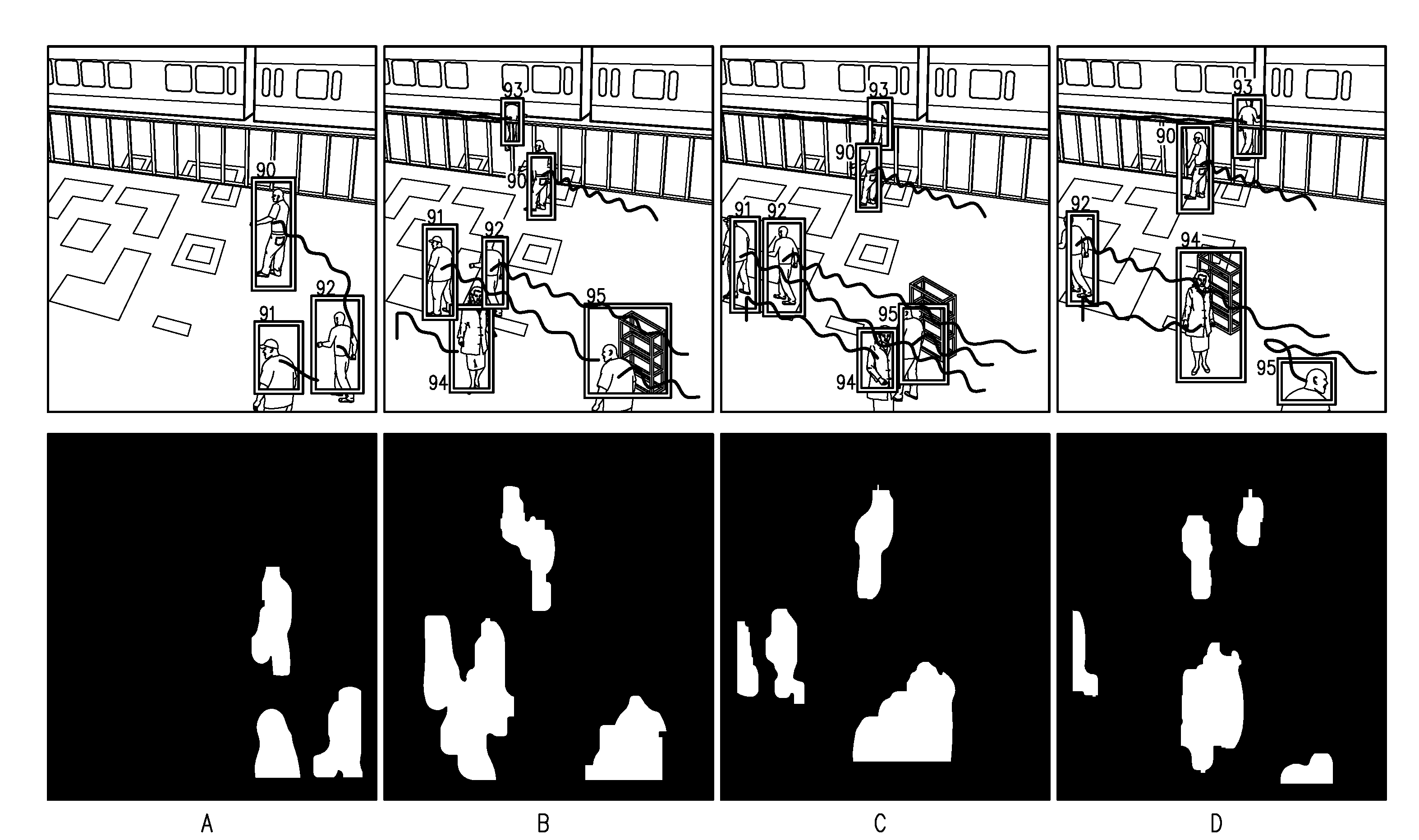

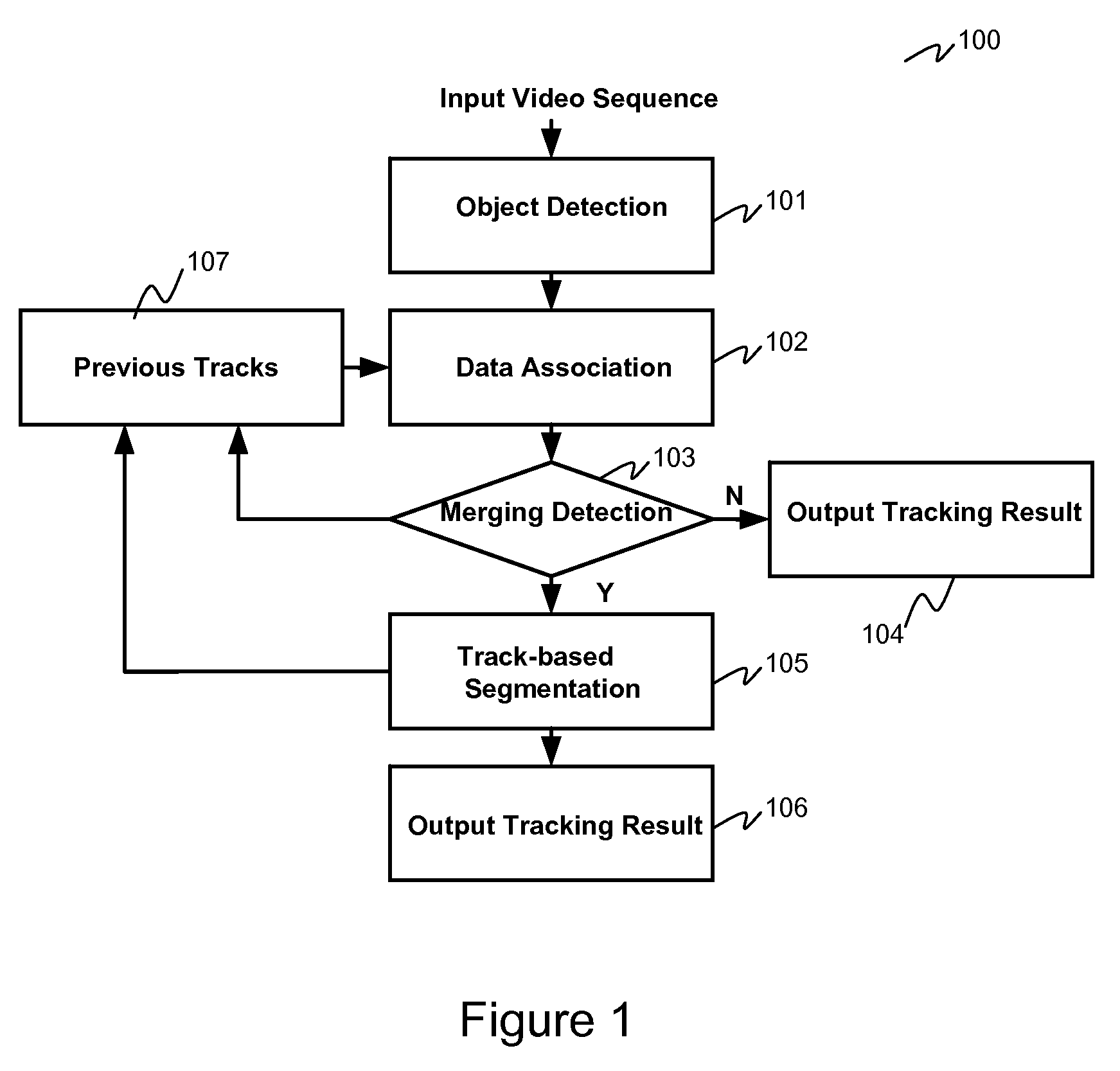

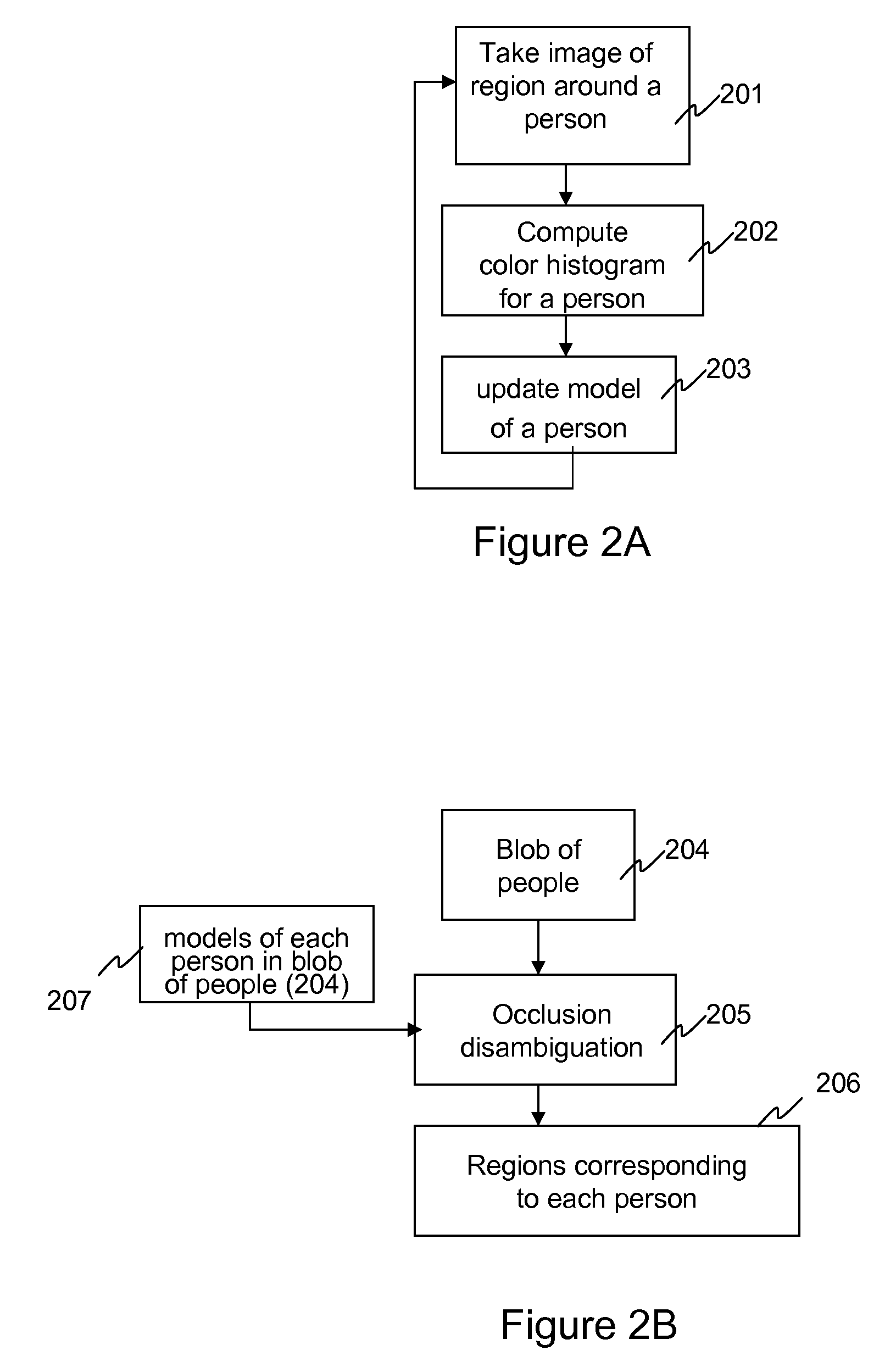

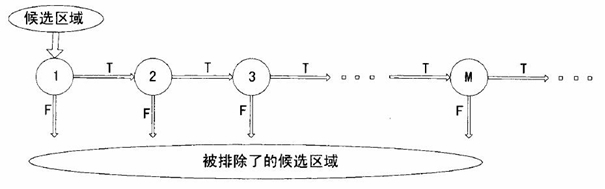

Efficient tracking multiple objects through occlusion

Inactive | US20090002489A1 | Eliminate the problem; Character and pattern recognition; Color television details; Video monitoring; Computer vision

Visual tracking of multiple objects in a crowded scene is critical for many applications, including surveillance, video conferencing, and human-computer interaction. Complex interactions between objects result in partial or significant occlusions, making tracking a highly challenging problem. Presented is a novel, efficient approach to tracking a varying number of objects through occlusion. Object tracking during occlusion is posed as a track-based segmentation problem in the joint-object space. Appearance models are used to interpret the foreground as multi-layer probabilistic masks in a Bayesian framework. The optimal segmentation is found by a greedy search algorithm, with integral images enabling real-time computation. Promising results have been demonstrated on several challenging video surveillance sequences.

Owner:FUJIFILM BUSINESS INNOVATION CORP
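The abstract credits integral images with making the greedy segmentation search real-time. An integral image (summed-area table) lets any axis-aligned box sum, such as the foreground-mask mass inside a candidate object region, be read in constant time. The sketch shows only that building block, not the patent's Bayesian layering or search; the function names are illustrative.

```python
from typing import List


def integral_image(img: List[List[float]]) -> List[List[float]]:
    """Summed-area table padded with a zero row and column on the top/left."""
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            # Everything above this row plus this row up to column x.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii: List[List[float]], top: int, left: int, bottom: int, right: int) -> float:
    """Sum of img[top:bottom][left:right] (half-open bounds) in O(1)."""
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


mask = [[1, 2, 3],
        [4, 5, 6],
        [7, 8, 9]]
ii = integral_image(mask)
print(box_sum(ii, 1, 1, 3, 3))  # 5 + 6 + 8 + 9 = 28.0
```

Building the table costs one pass over the image; after that, a greedy search can score each candidate region with four lookups instead of re-summing its pixels.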

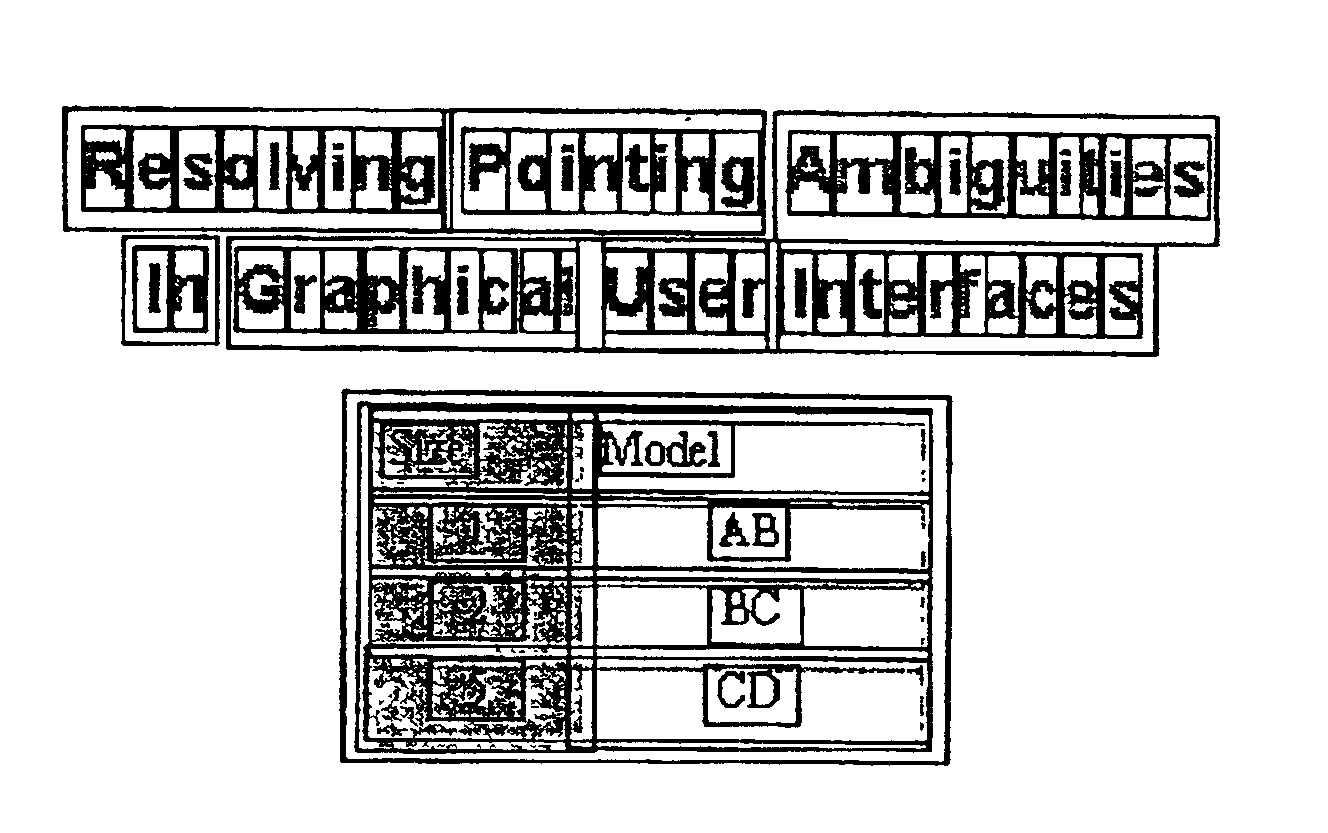

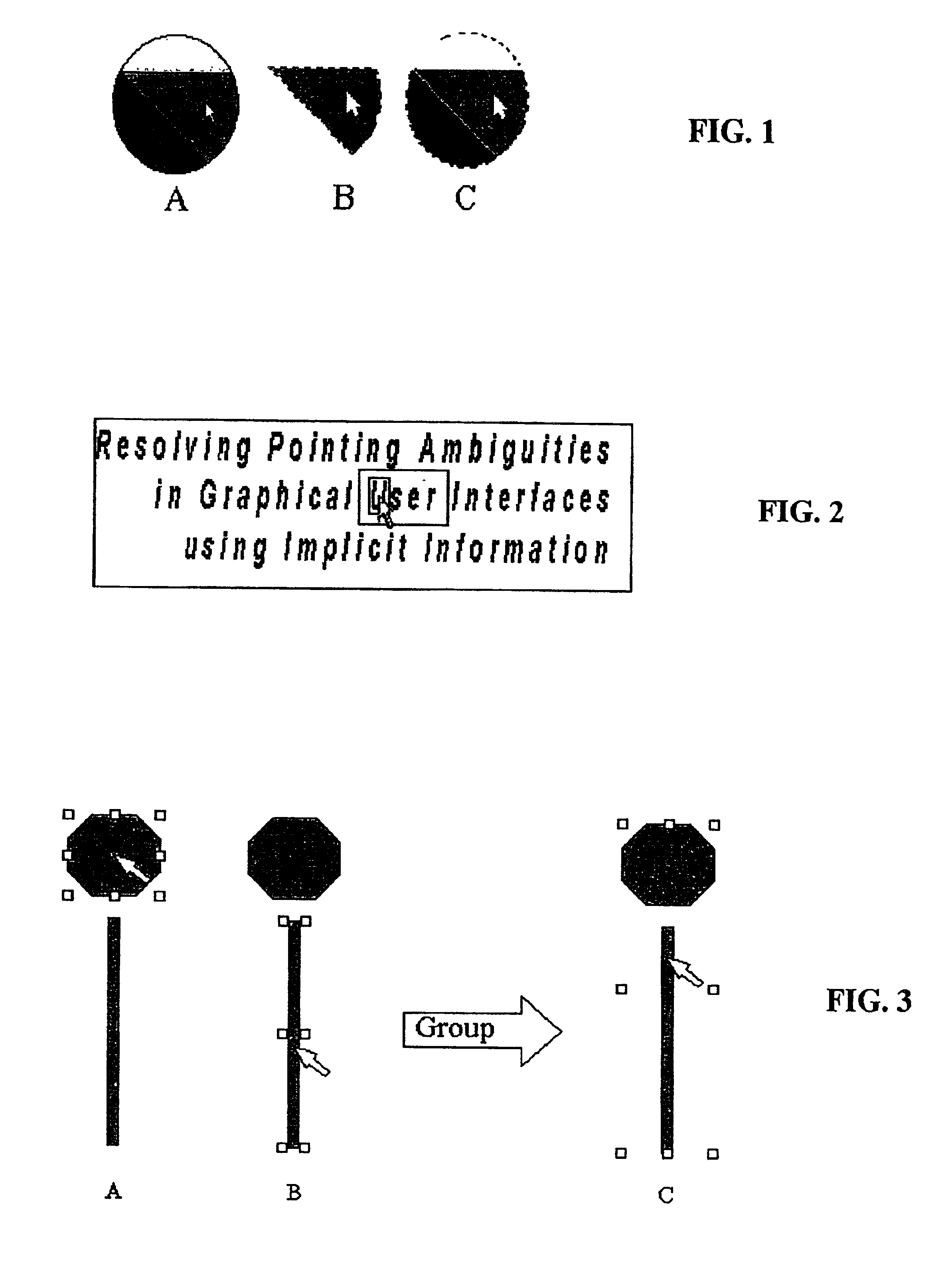

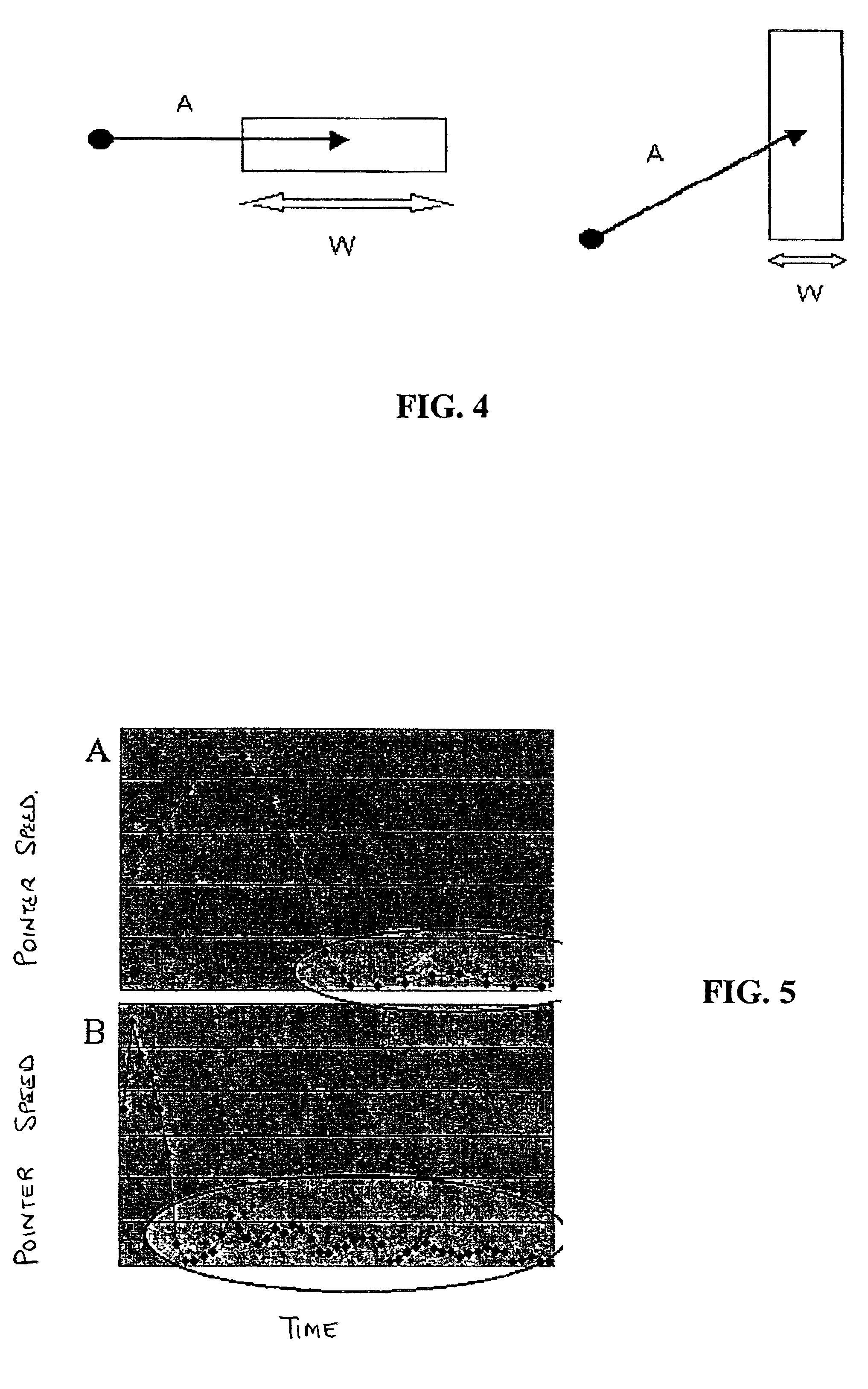

Method and system for implicitly resolving pointing ambiguities in human-computer interaction (HCI)

Inactive | US6907581B2 | Simple design and implementation; Easy access; Input/output processes for data processing; Object based; Ambiguity

Owner:RAMOT AT TEL AVIV UNIV LTD

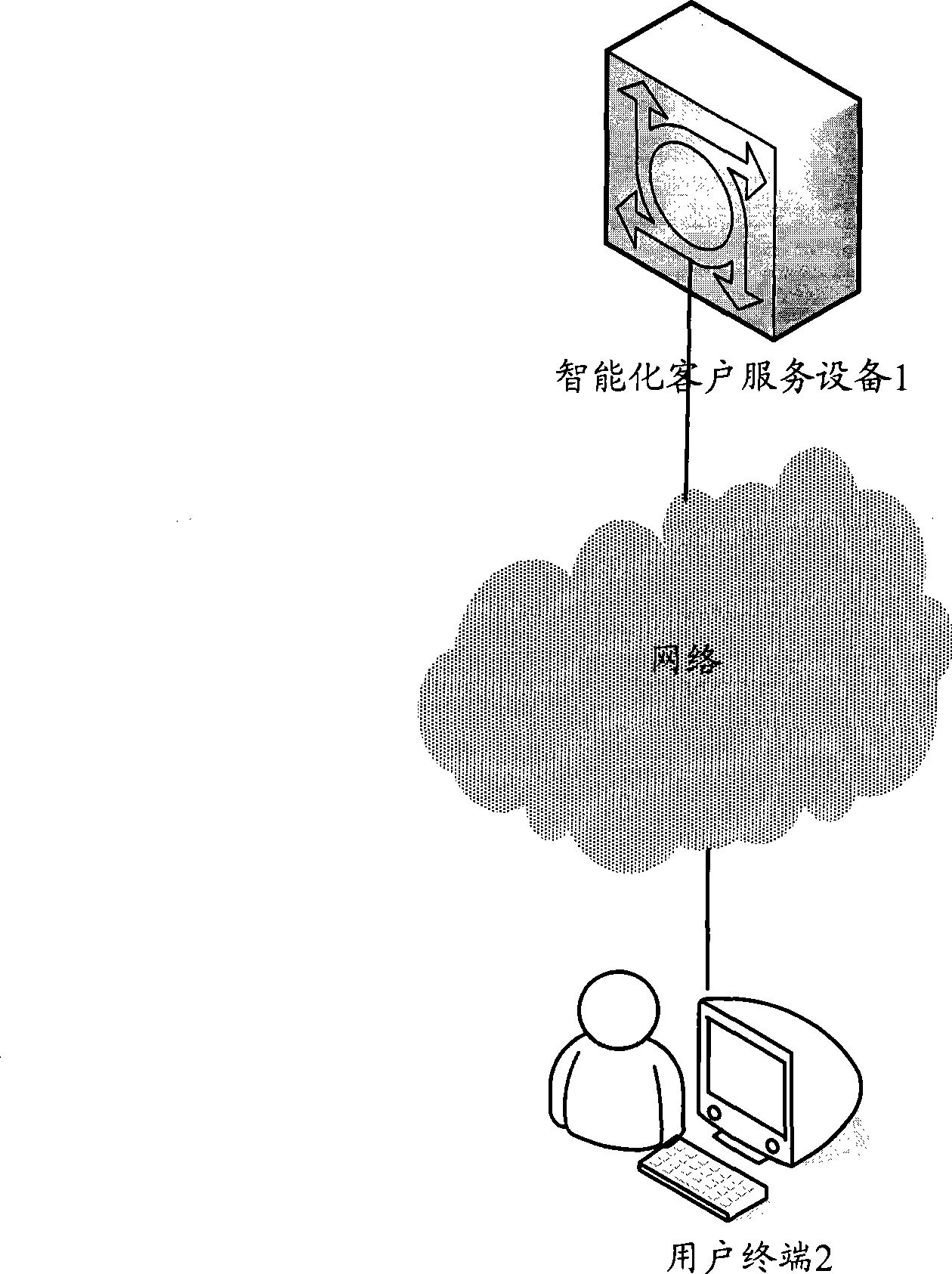

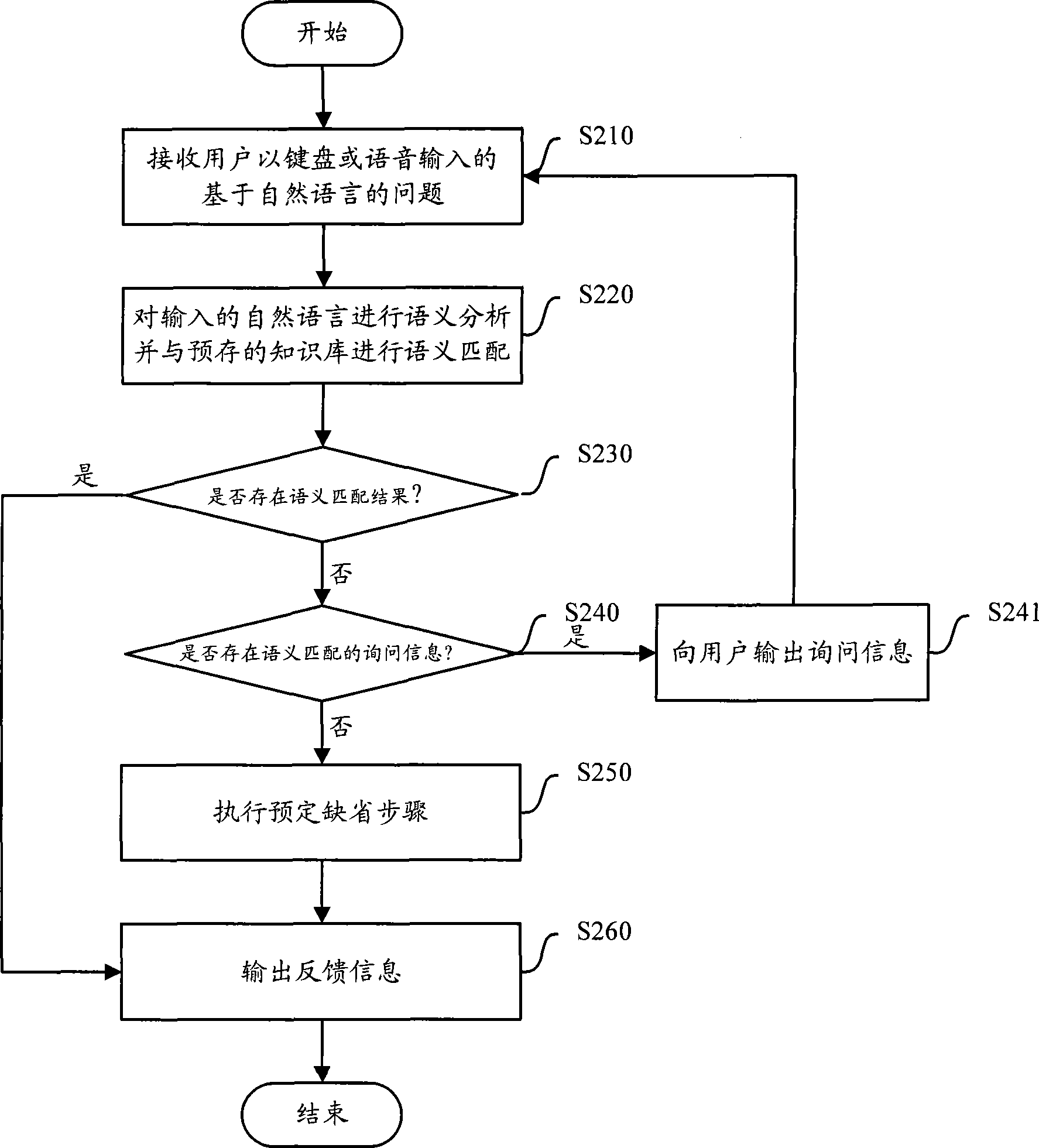

Method and equipment for implementing automatic customer service through human-machine interaction technology

Active | CN101431573A | Reduce reinvestment; Low running cost; Special service for subscribers; Supervisory/monitoring/testing arrangements; Human–robot interaction; Human system interaction

The invention provides a method for realizing automatic customer service using human-computer interaction technology, and equipment implementing the method. The method includes: receiving a question entered by the user in natural language; converting the natural language into information the computer can identify, using human-computer interaction technology; and outputting feedback information in accordance with the identified information.

Owner:SHANGHAI XIAOI ROBOT TECH CO LTD

Man-machine interactive system for unmanned vehicle

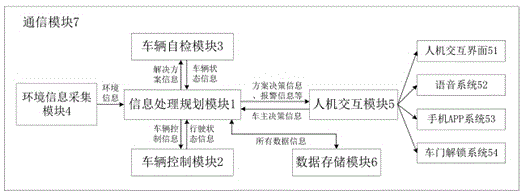

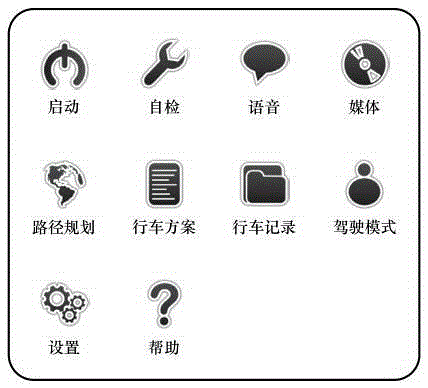

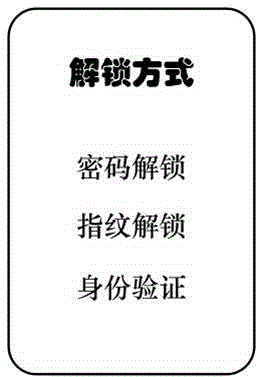

Active | CN105346483A | Improve completeness; Improve security; Electric/fluid circuit; Information processing; Response method

The invention provides a man-machine interactive system for an unmanned vehicle. The system comprises an information processing and planning module, a vehicle control module, a self-inspection module, an environment information acquisition module, a man-machine interaction module, a data storage module, and a communication module. The system supports three operation modes: manual operation, voice operation, and control through a mobile phone app. The system mainly operates in the following steps: first, a corresponding unlocking method and operation mode are adopted depending on whether the user enters the vehicle or controls it remotely; second, the vehicle is started, the man-machine interactive system is activated, and vehicle self-inspection is performed; third, after the vehicle is confirmed to be fault-free, a destination is input and a driving route is planned according to actual conditions; fourth, the vehicle is driven according to the driving plan; and fifth, the vehicle state and surroundings are monitored in real time, whether an accident has occurred is judged, and the driving manner and route are adjusted as surrounding conditions require until the vehicle reaches the destination. The invention further provides a method by which a standby computer takes over when the central computer fails, as well as three other accident-handling methods.

Owner:CHANGZHOU JIAMEI TECH CO LTD
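The five operating steps read naturally as a state machine. The state and event names below are hypothetical labels for the steps in the abstract; the standby-computer failover and the three accident-handling methods are not modeled.

```python
from enum import Enum, auto


class State(Enum):
    LOCKED = auto()
    SELF_CHECK = auto()
    AWAIT_DESTINATION = auto()
    DRIVING = auto()
    ARRIVED = auto()
    FAULT = auto()


# (current state, event) -> next state; events are hypothetical names.
TRANSITIONS = {
    (State.LOCKED, "unlock"): State.SELF_CHECK,            # steps 1-2: unlock (in car or remote), start, self-check
    (State.SELF_CHECK, "check_ok"): State.AWAIT_DESTINATION,
    (State.SELF_CHECK, "check_failed"): State.FAULT,
    (State.AWAIT_DESTINATION, "destination_set"): State.DRIVING,  # step 3: route planned here
    (State.DRIVING, "reroute"): State.DRIVING,             # step 5: adapt manner/route to surroundings
    (State.DRIVING, "accident"): State.FAULT,
    (State.DRIVING, "arrived"): State.ARRIVED,
}


def next_state(state: State, event: str) -> State:
    """Advance the vehicle's operating state, rejecting out-of-order events."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} not allowed in state {state.name}") from None


s = State.LOCKED
for event in ["unlock", "check_ok", "destination_set", "reroute", "arrived"]:
    s = next_state(s, event)
print(s.name)  # ARRIVED
```

An explicit transition table makes it impossible, for example, to start driving before the self-inspection has passed.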

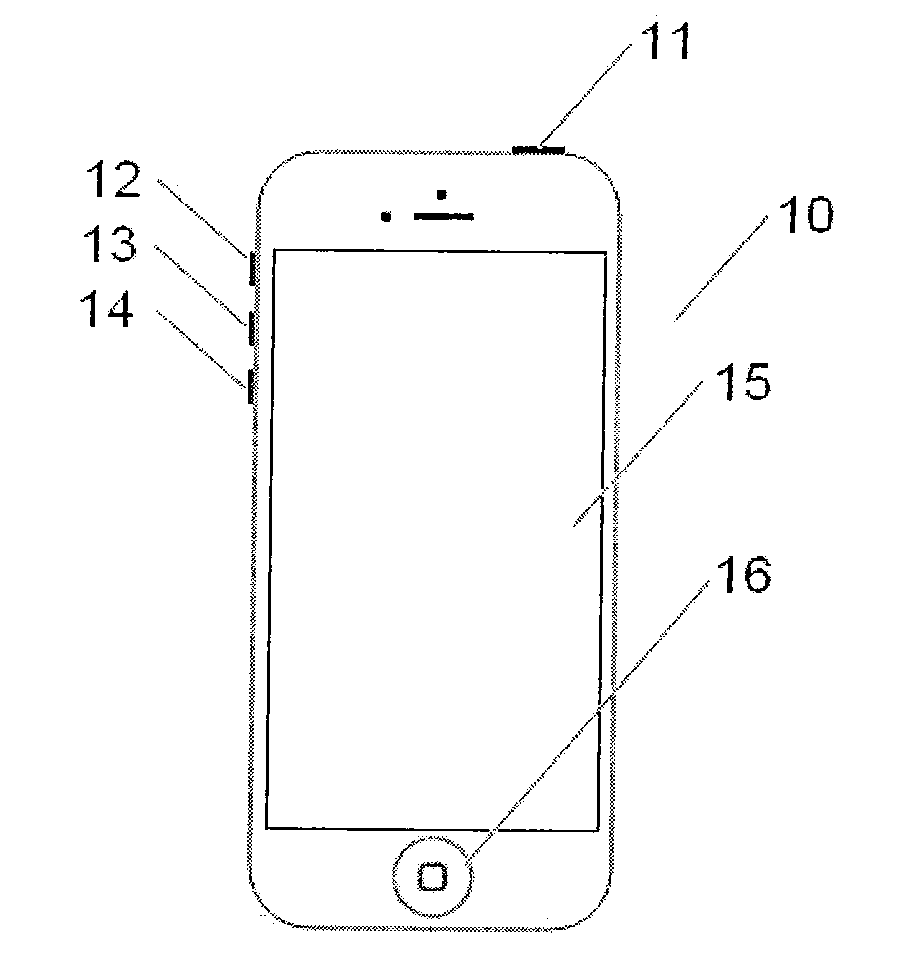

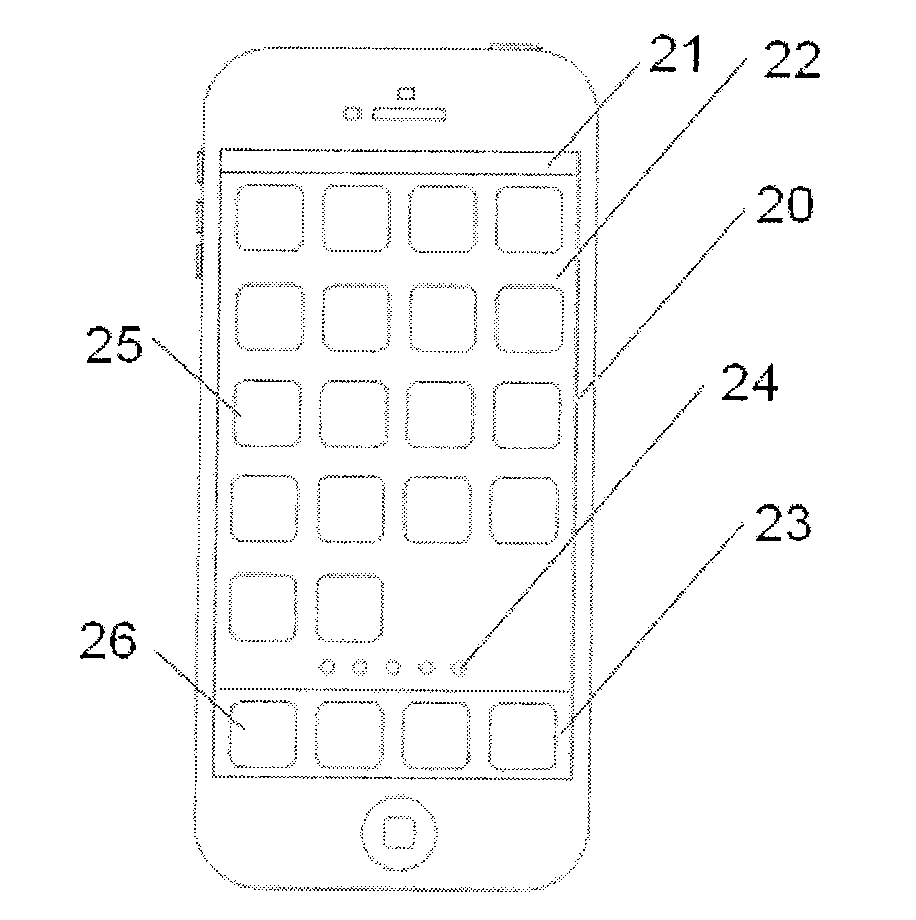

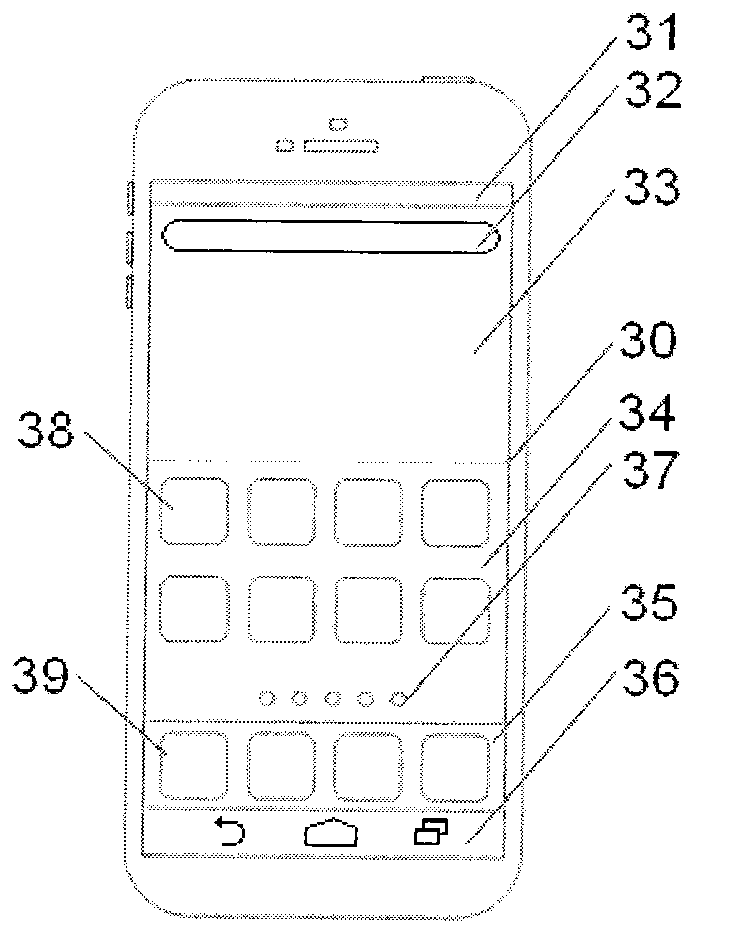

Mobile operating system

Inactive | CN103309618A | The main interface is clean; The main interface is beautiful; Digital data processing details; Substation equipment; Human–robot interaction; Change detection

The invention relates to a mobile operating system comprising a series of new technologies such as intelligent dynamic icons, a global icon interface, quick sliding start, a quick voice assistant, a quick delete assistant, intelligent motion start, intelligent state change detection, quick complete close, fastback, an efficient multi-task interface, and a global file browsing interface. It redesigns every aspect of the existing iOS and Android systems and establishes a human-computer interaction infrastructure for a next-generation mobile operating system.

Owner:JIANG HONGMING

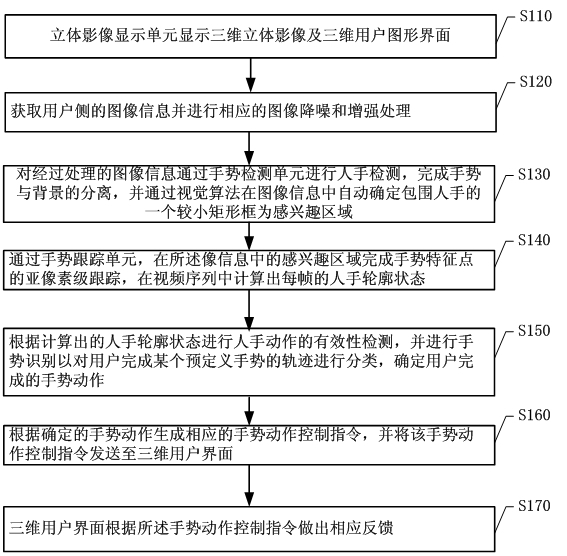

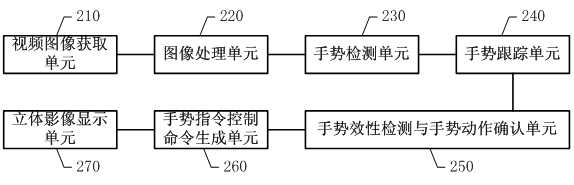

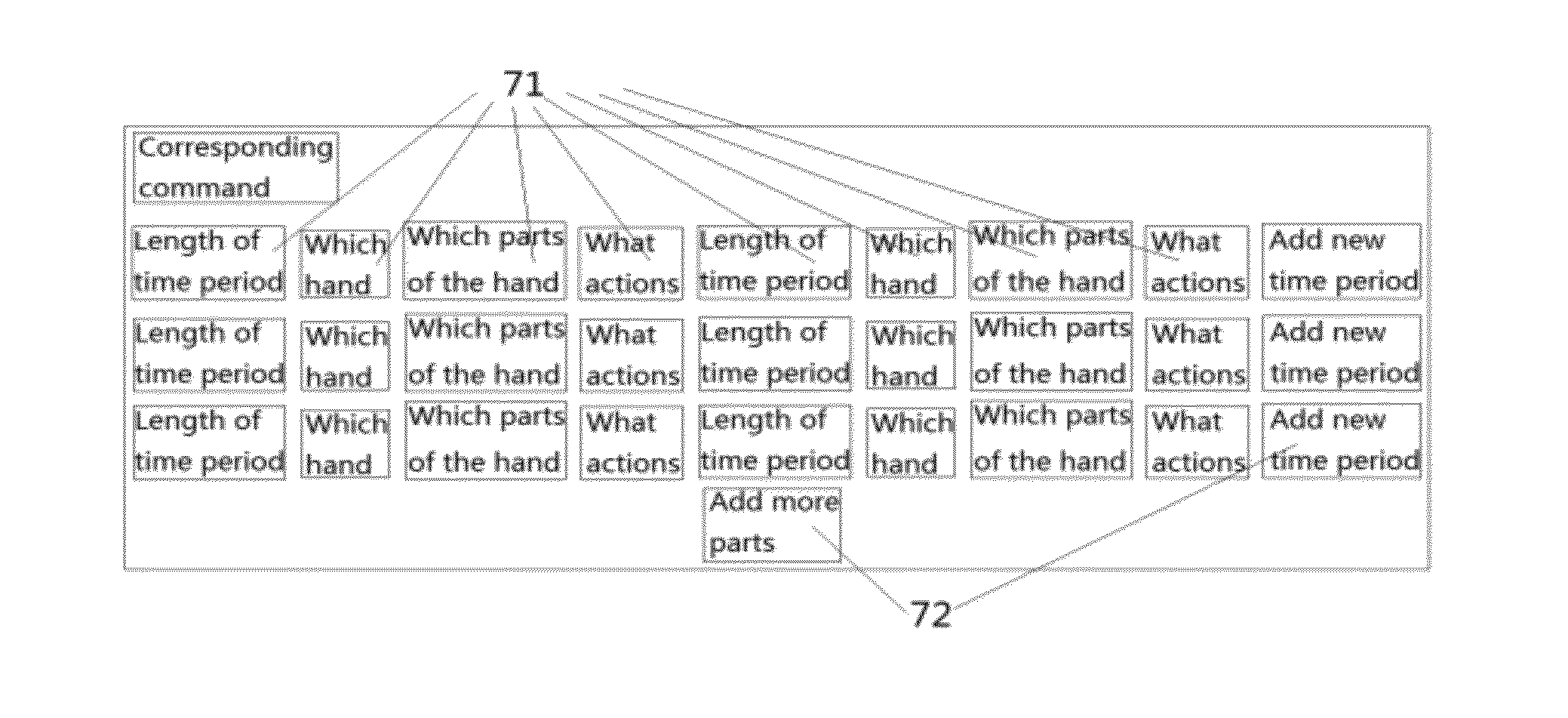

Man-machine interactive system and real-time gesture tracking processing method for same

Inactive | CN102426480A | Real-time hand detection; Real-time tracking; Input/output for user-computer interaction; Character and pattern recognition; Vision algorithms; Man machine

The invention discloses a man-machine interactive system and a real-time gesture tracking processing method for it. The method comprises the following steps: obtaining image information of the user; performing gesture detection with a gesture detection unit to separate the gesture from the background, and automatically determining, via a vision algorithm, a small rectangular frame surrounding the hand as the region of interest in the image; calculating the hand contour state in each frame of the video sequence with a gesture tracking unit; checking the validity of hand actions according to the calculated contour states and determining the gesture action completed by the user; and generating a corresponding gesture control instruction from the determined gesture action, to which a three-dimensional user interface responds with corresponding feedback. The system and method sense all or part of the user's gesture actions so as to track gestures accurately, providing a real-time, robust solution for an effective gesture-based man-machine interface built on a common vision sensor.

Owner:KONKA GROUP
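The validity check on tracked hand actions can be illustrated by classifying the trajectory of the hand's region-of-interest center as a swipe. The distance and off-axis thresholds, the four swipe labels, the command mapping, and the use of the ROI center as the hand position are assumptions, not details from the patent, which tracks the full hand contour.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]

# Hypothetical mapping from recognized swipes to UI control instructions.
SWIPE_COMMANDS = {"left": "previous_item", "right": "next_item",
                  "up": "scroll_up", "down": "scroll_down"}


def roi_center(x: float, y: float, w: float, h: float) -> Point:
    """Center of the rectangular region of interest around the hand."""
    return (x + w / 2, y + h / 2)


def classify_swipe(track: List[Point], min_dist: float = 80.0,
                   max_off_axis: float = 0.5) -> Optional[str]:
    """Classify a hand-center trajectory (image pixels); None if the motion is invalid."""
    if len(track) < 2:
        return None
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    horizontal = abs(dx) >= abs(dy)
    major, minor = (abs(dx), abs(dy)) if horizontal else (abs(dy), abs(dx))
    # Validity check: moved far enough, and mostly along a single axis.
    if major < min_dist or minor > max_off_axis * major:
        return None
    if horizontal:
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"  # image y axis points down


track = [roi_center(0, 0, 40, 60), roi_center(50, 5, 40, 60), roi_center(120, 10, 40, 60)]
gesture = classify_swipe(track)
print(gesture, SWIPE_COMMANDS.get(gesture))  # right next_item
```

Rejecting short or diagonal motions is what keeps incidental hand movement from firing commands.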

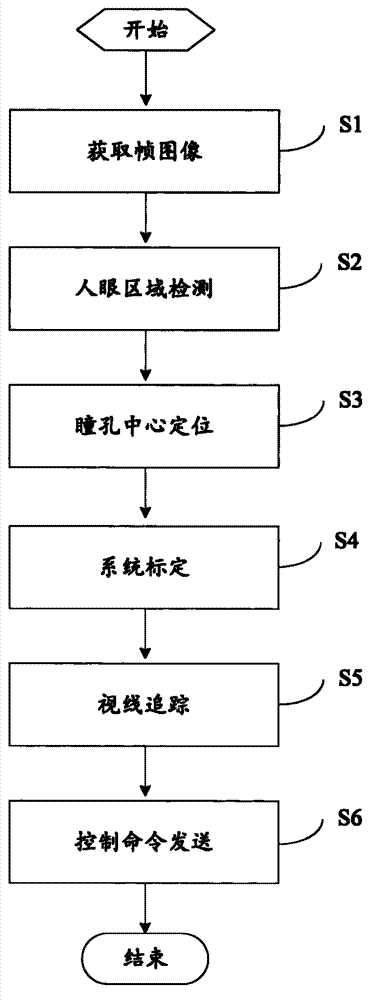

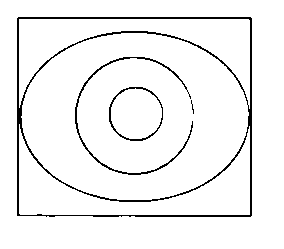

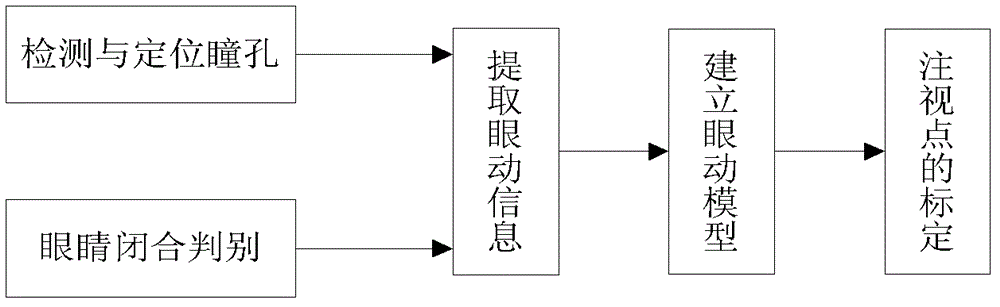

Man-machine interaction method and system based on sight judgment

Active | CN102830797A | Realize the operation; Easy to operate; Input/output for user-computer interaction; Graph reading; Eye closure; Pupil

The invention relates to the technical field of man-machine interaction and provides a man-machine interaction method based on sight judgment that allows a user to operate an electronic device. The method comprises the following steps: obtaining a facial image through a camera, detecting the human eye area in the image, and locating the pupil center within the detected eye area; calculating the correspondence between the image coordinate system and the screen coordinate system of the electronic device; tracking the position of the pupil center and calculating, from this correspondence, the coordinates of the eye's point of gaze on the screen; and detecting eye blinking or eye closure actions and issuing corresponding control commands to the electronic device. The invention further provides a man-machine interaction system based on sight judgment. With this method, stable judgment of the gaze focus on the electronic device is achieved through the camera, and control commands are issued by blinking or closing the eyes, making operation of the device simple and convenient for the user.

Owner:SHENZHEN INST OF ADVANCED TECH
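Issuing commands from "eye blinking or eye closure actions" requires telling the two apart, which a duration threshold on each run of closed-eye frames can do. The sketch assumes an upstream detector already yields one eye-open flag per frame; the 0.4 s and 1.0 s thresholds and the command mapping are hypothetical.

```python
from typing import List, Tuple

# Hypothetical mapping from eye events to device commands.
EYE_COMMANDS = {"blink": "select", "closure": "back"}


def eye_events(open_flags: List[bool], fps: float,
               blink_max_s: float = 0.4, closure_min_s: float = 1.0) -> List[Tuple[str, int]]:
    """Turn per-frame eye-open flags into ('blink' | 'closure', start_frame) events."""
    events = []
    start = None
    for i, is_open in enumerate(open_flags + [True]):  # sentinel ends a trailing closed run
        if not is_open and start is None:
            start = i                                  # eyes just closed
        elif is_open and start is not None:
            duration = (i - start) / fps               # length of the closed run in seconds
            if duration <= blink_max_s:
                events.append(("blink", start))
            elif duration >= closure_min_s:
                events.append(("closure", start))
            # Durations between the two thresholds are ignored as ambiguous.
            start = None
    return events


# 10 fps: a 0.3 s blink starting at frame 5, then a 1.2 s closure starting at frame 13.
flags = [True] * 5 + [False] * 3 + [True] * 5 + [False] * 12 + [True] * 2
for kind, frame in eye_events(flags, fps=10):
    print(frame, EYE_COMMANDS[kind])
```

Leaving a gap between the two thresholds avoids misreading a slightly long blink as a deliberate closure.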

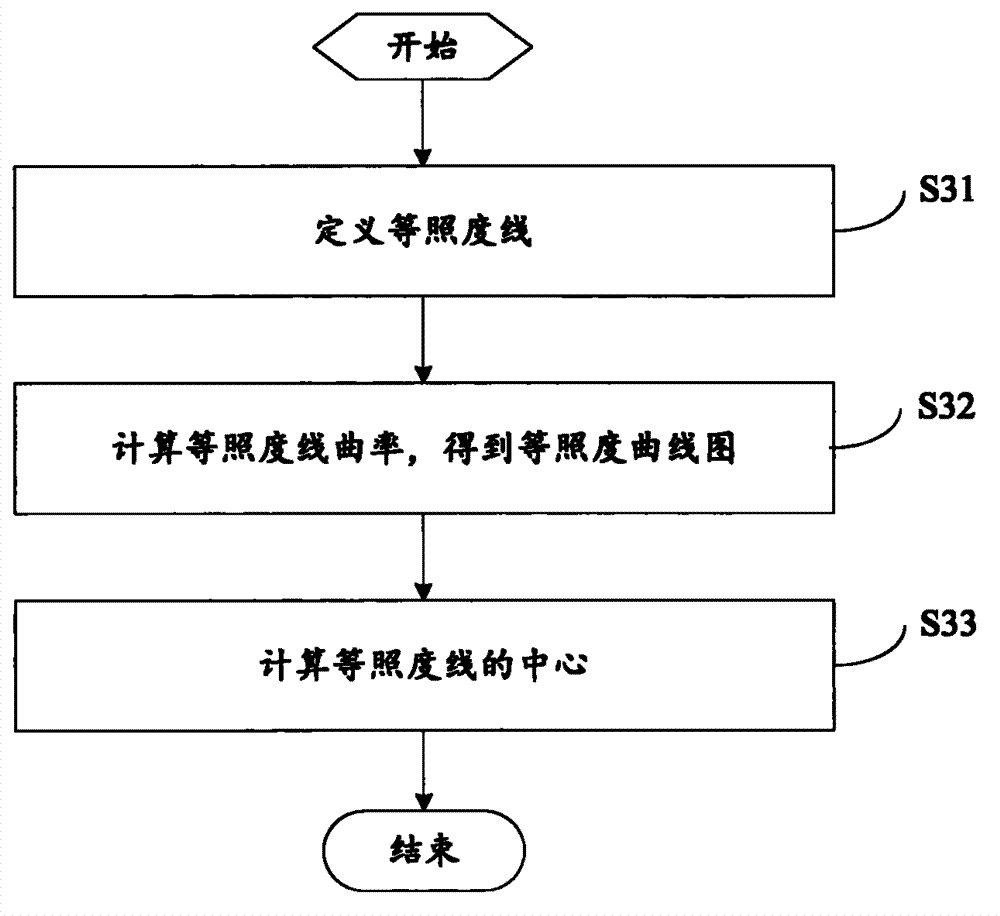

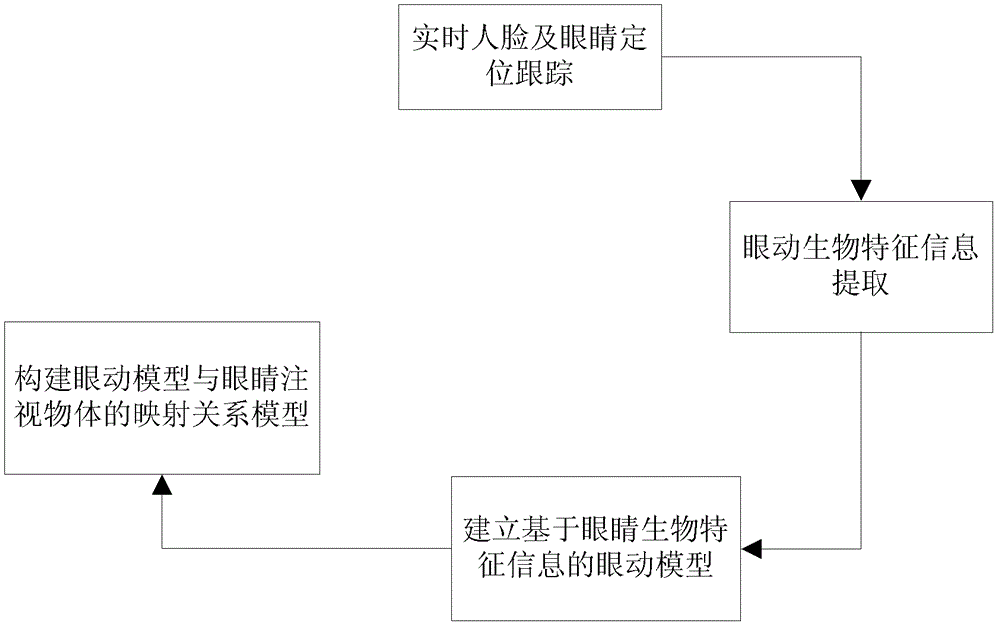

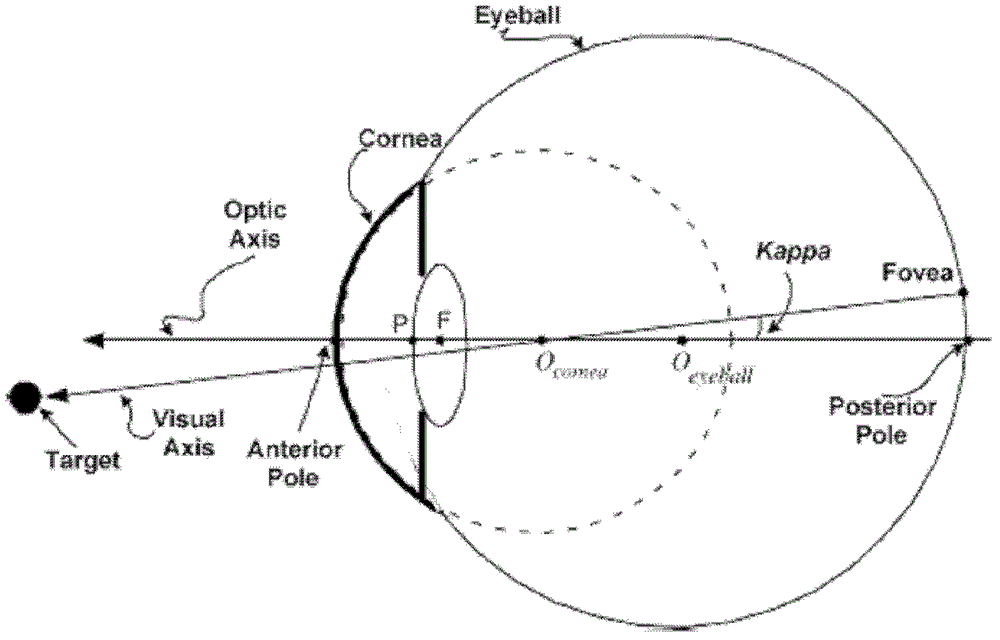

Non-contact free space eye-gaze tracking method suitable for man-machine interaction

Active | CN102749991A | Accurate detection; Precise positioning; Input/output for user-computer interaction; Character and pattern recognition; Jet aeroplane; Aviation

The invention provides a non-contact, free-space eye-gaze tracking method suitable for man-machine interaction. The method comprises steps such as locating and tracking the face and eyes in real time, extracting biological characteristics of eye movement, building an eye movement model from those characteristics, and building a model of the mapping between the eye movement model and the gazed-at object. The method draws on several intersecting fields, including image processing, computer vision, and pattern recognition, and has broad application prospects in next-generation man-machine interaction, assistance for disabled people, aerospace, sports, automobile and aircraft piloting, virtual reality, games, and other fields. In addition, the method is of great practical significance for improving the quality of life and self-care ability of disabled people, building a harmonious society, and strengthening independent innovation capability in national high-tech fields such as man-machine interaction and unmanned driving.

Owner:Guangdong Baitai Technology Co., Ltd. (广东百泰科技有限公司)

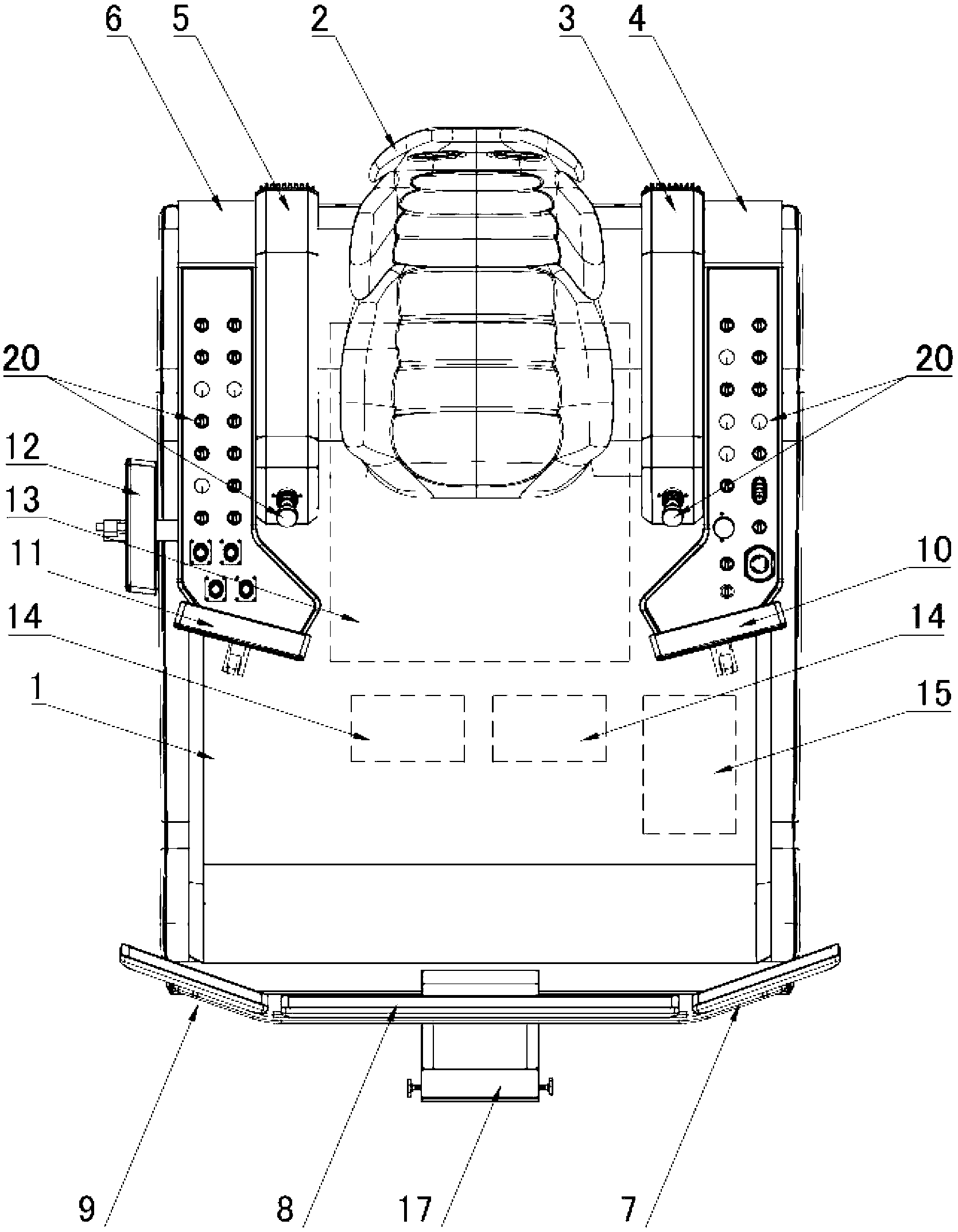

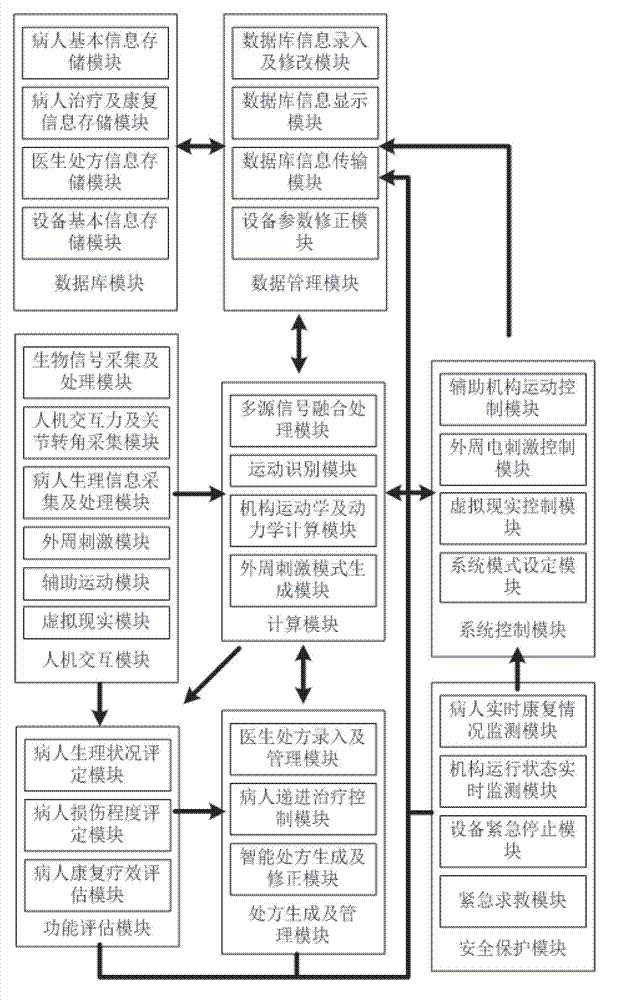

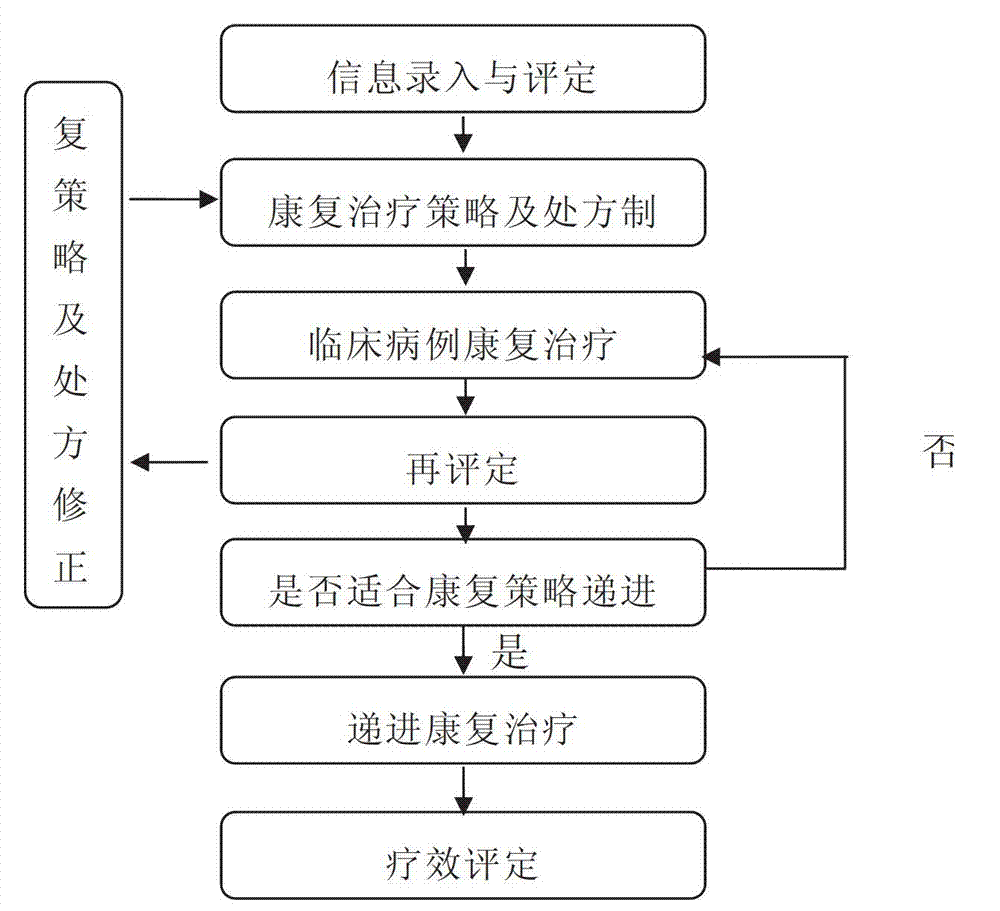

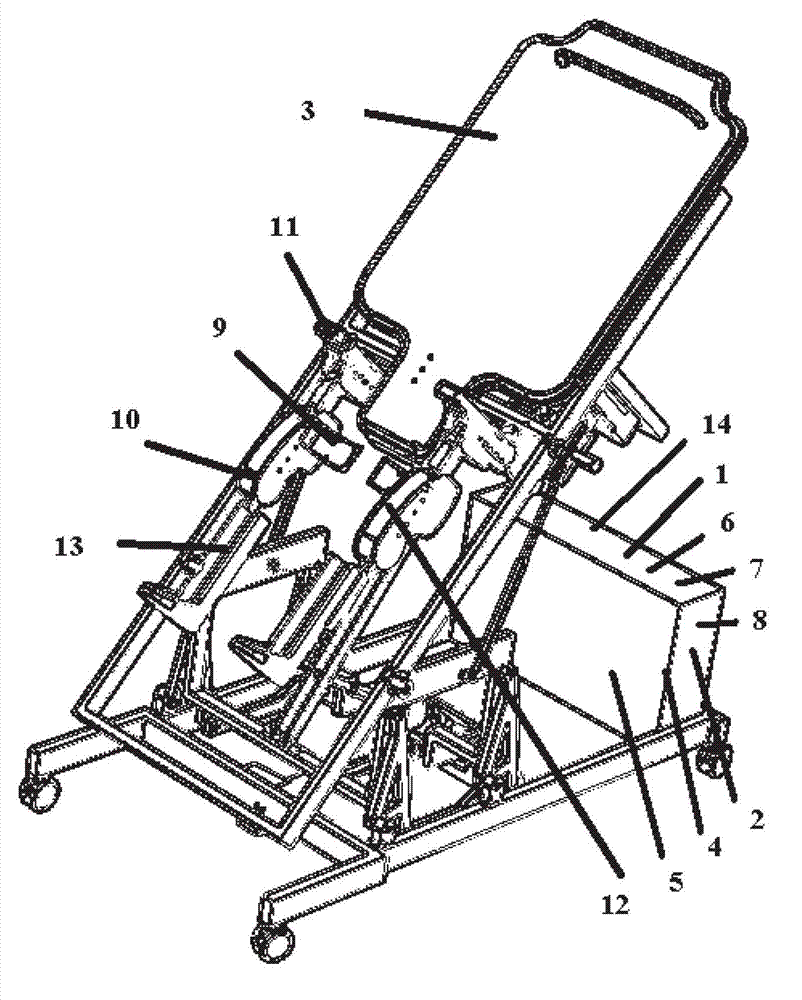

Multifunctional composite rehabilitation system for patient suffering from central nerve injury

Active | CN102813998A | Realize human-machine coordinated control; Good curative effect; Gymnastic exercising; Chiropractic devices; Disease; Human motion

The invention discloses a multifunctional composite rehabilitation system for patients with central nerve injury. The system comprises a database module, a data management module, a man-machine interaction module, a function evaluation module, a prescription generation and management module, a calculation module, a system control module, and a safety protection module. Using artificial intelligence, the system predicts and recognizes human motion intention, achieves human-machine coordinated control, and carries out active rehabilitation treatment of the patient. Through multi-source signal acquisition, signal fusion, and real-time control technologies, the functions are organically combined and the function modules deliver coordinated rehabilitation treatment, which effectively improves the rehabilitation effect and reduces labor consumption. During rehabilitation, virtual reality technology guides the patient through the treatment step by step. Through interaction among the doctor, the patient, and the computer, a progressive composite rehabilitation strategy matched to the patient's condition is determined, so that patients with nerve injury can receive the most effective rehabilitation treatment within the golden rehabilitation period of 50 days, thereby maximizing functional recovery.

Owner:SHANGHAI JIAO TONG UNIV

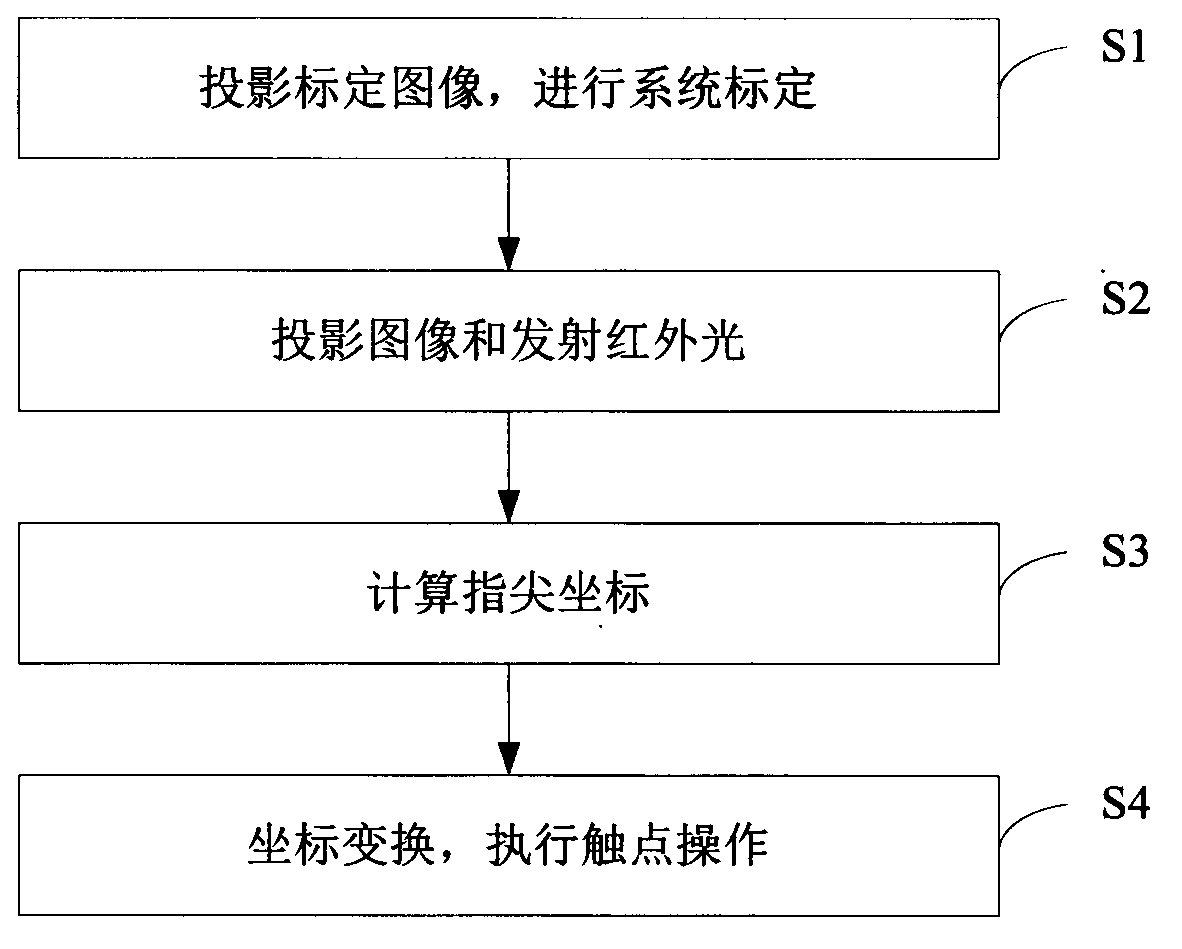

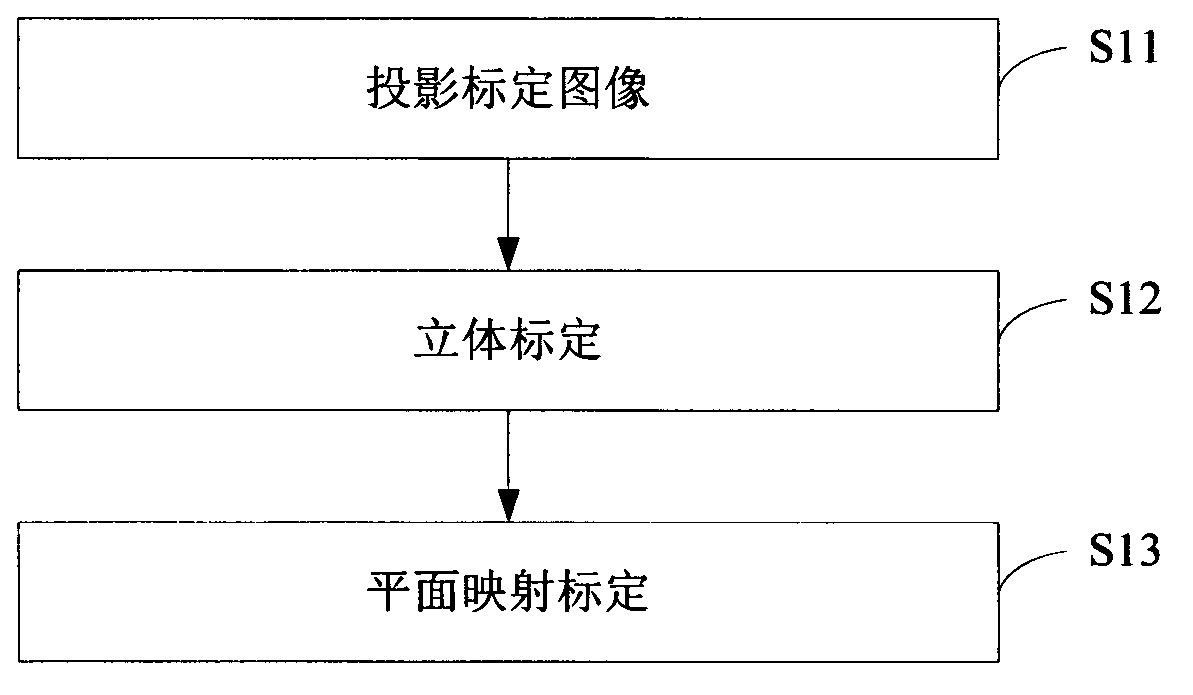

Human-machine interaction method and system based on binocular stereoscopic vision

Active | CN102799318A | Improve human-computer interaction; Enhanced interaction; Input/output processes for data processing; Human–robot interaction; Projection plane

The invention relates to the technical field of human-machine interaction and provides a human-machine interaction method and system based on binocular stereoscopic vision. The method comprises the following steps: projecting a screen calibration image onto a projection plane and capturing the calibration image on the projection plane for system calibration; projecting an image and emitting infrared light onto the projection plane, where the infrared light forms an infrared spot outlining the hand when it meets a human hand; capturing an image of the projection plane containing the hand-outline infrared spot and calculating the fingertip coordinates according to the system calibration; and converting the fingertip coordinates into screen coordinates according to the system calibration and executing the touch operation at the corresponding screen coordinates. Because the fingertip position and coordinates are obtained through system calibration and infrared detection, a user can carry out human-machine interaction more conveniently and quickly by touching an ordinary projection plane with a finger; no special panels or auxiliary positioning devices are needed on the projection plane; and the device is simple and convenient to install and use, and low in cost.

Owner:SHENZHEN INST OF ADVANCED TECH
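
The calibration-then-mapping steps above can be illustrated with a planar homography fitted from four calibration points. This is a simplified sketch, not the patent's binocular method: it assumes a single camera viewing a flat projection plane and assumes the fingertip pixel coordinate has already been extracted from the infrared spot. The function names are hypothetical.

```python
def solve_linear(a, b):
    """Solve a x = b (a is n x n) by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: calibration points may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / m[r][r]
    return x


def fit_homography(src, dst):
    """Fit a 3x3 homography (h33 = 1) mapping 4 camera points to 4 screen points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), linearized
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); b.append(u)
        # v = (h3 x + h4 y + h5) / (h6 x + h7 y + 1), linearized
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y]); b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]


def to_screen(h, x, y):
    """Map a fingertip camera coordinate (x, y) to a screen coordinate."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return u, v
```

For example, if the four corners of a projected 1920 x 1080 calibration image appear at camera pixels (100, 80), (540, 90), (530, 400) and (110, 390), `fit_homography` returns a matrix with which `to_screen` converts any detected fingertip pixel into the screen coordinate at which to inject a touch event.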

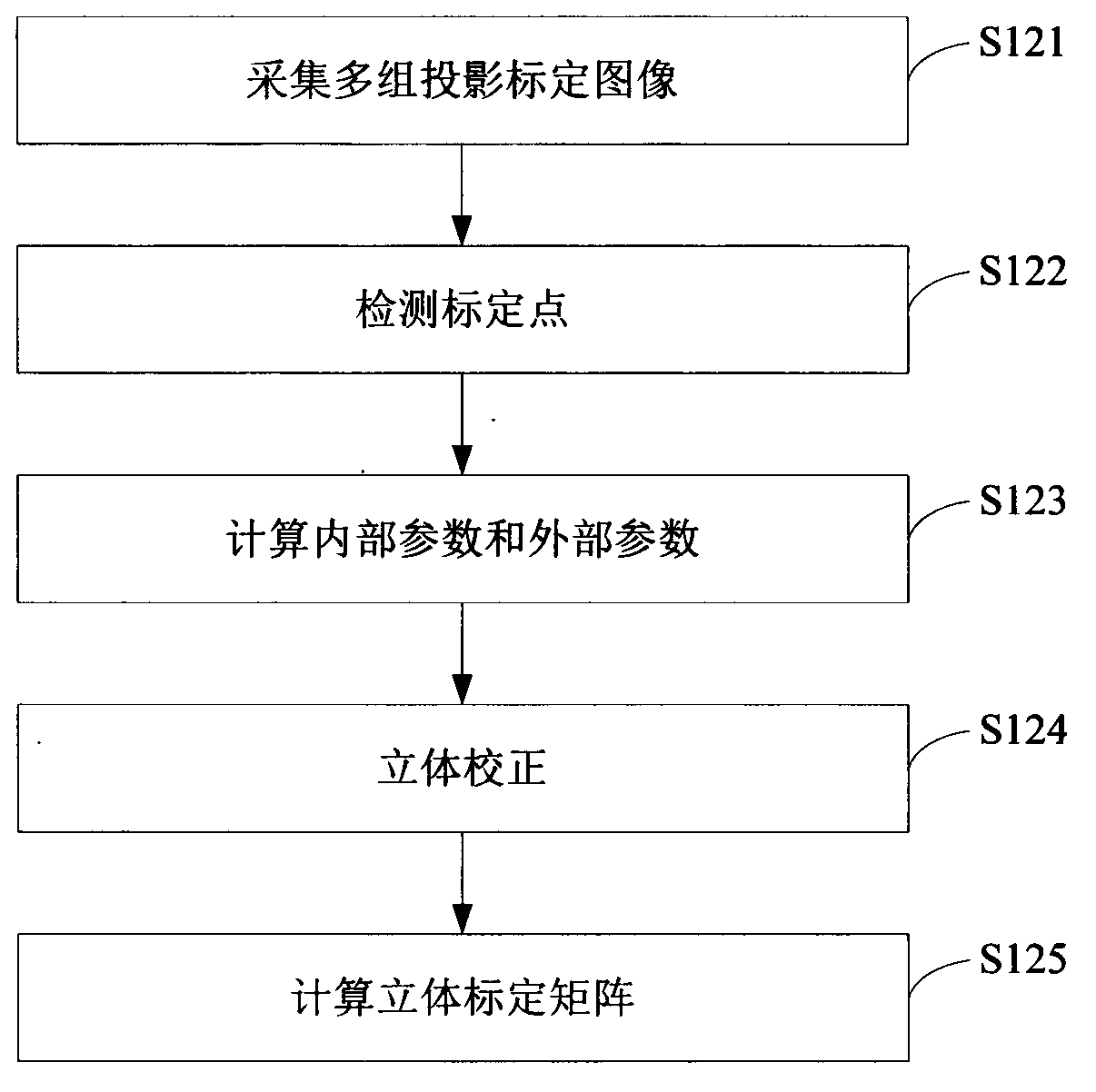

Method and interface for man-machine interaction

Inactive | US20120182296A1 | Cathode-ray tube indicators | Input/output processes for data processing | Graphics | Human–robot interaction

Provided are a mechanism and an interface for man-machine interaction, particularly for multi-touch input. A mode and method for constructing a graphical interface for touch operation are provided that make full use of the advantages of multi-touch devices, so that richer operation instructions can be entered with fewer operations. The solution mainly describes how to use multi-touch to provide richer input information, how to express commands with more complex structures in fewer steps, and how to make the interface smarter, more ergonomic, and easier to operate with several parts of the hand at the same time.

Owner:HAN DINGNAN
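
As one illustration of how a single multi-touch operation can carry richer input than a single-point touch, the sketch below decomposes one two-finger gesture into translation, scale and rotation at once. The function name and the decomposition are assumptions chosen for illustration, not the mechanism the patent claims.

```python
import math


def two_finger_transform(start, end):
    """Decompose one two-finger gesture into translation, scale, and rotation.

    start and end are ((x0, y0), (x1, y1)) touch positions at the beginning and
    end of the gesture. Returns (dx, dy, scale, angle_deg), with the angle
    normalized to [-180, 180).
    """
    (a0, a1), (b0, b1) = start, end
    # Translation: movement of the midpoint between the two fingers.
    dx = (b0[0] + b1[0]) / 2 - (a0[0] + a1[0]) / 2
    dy = (b0[1] + b1[1]) / 2 - (a0[1] + a1[1]) / 2
    # Scale and rotation: change in the vector from finger 0 to finger 1.
    vs = (a1[0] - a0[0], a1[1] - a0[1])
    ve = (b1[0] - b0[0], b1[1] - b0[1])
    ls, le = math.hypot(*vs), math.hypot(*ve)
    if ls == 0:
        raise ValueError("the two starting touch points coincide")
    scale = le / ls
    angle = math.degrees(math.atan2(ve[1], ve[0]) - math.atan2(vs[1], vs[0]))
    angle = (angle + 180.0) % 360.0 - 180.0
    return dx, dy, scale, angle
```

One gesture thus yields four parameters, so an interface could pan, zoom and rotate an object in a single step instead of three separate operations.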

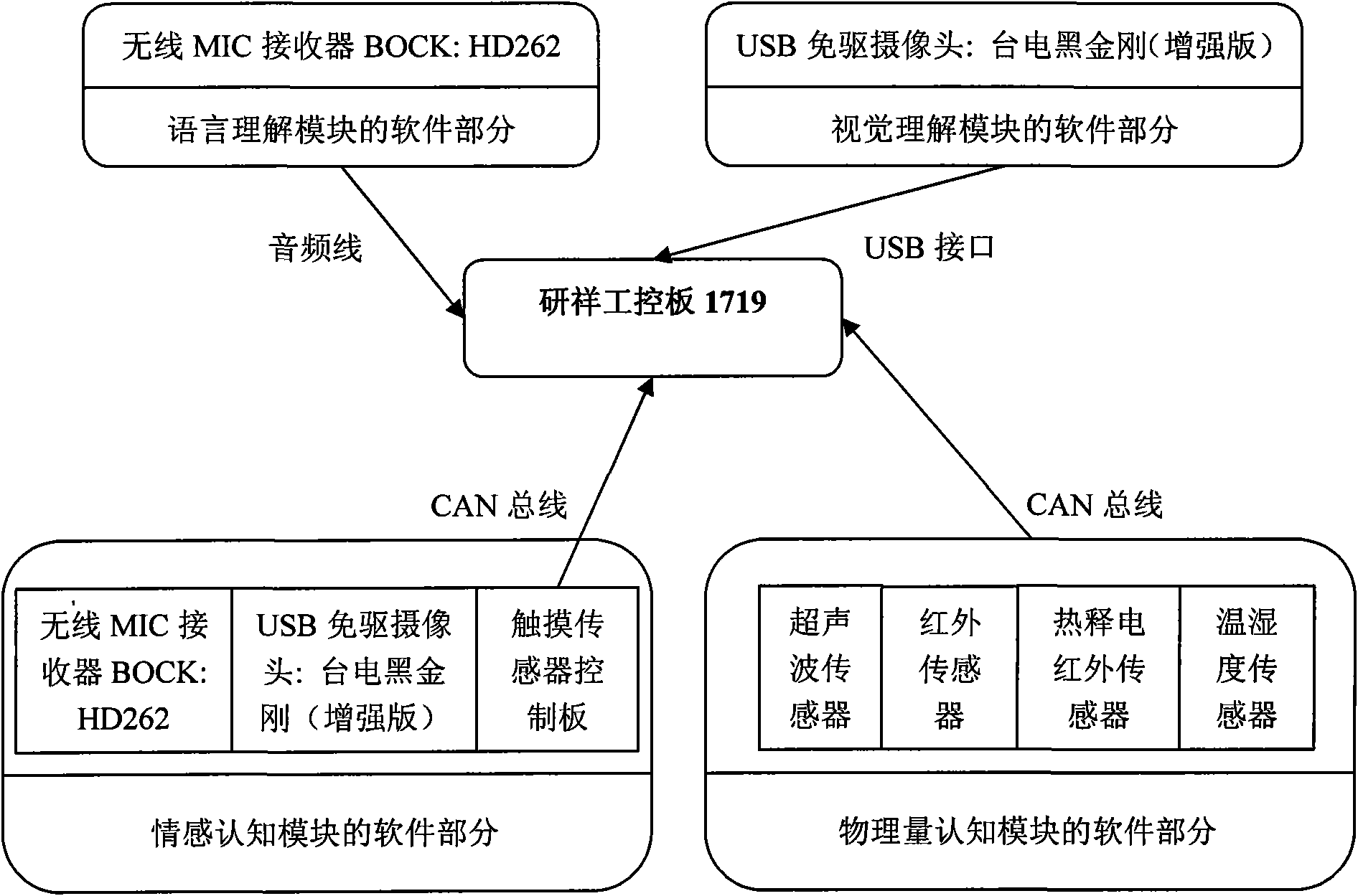

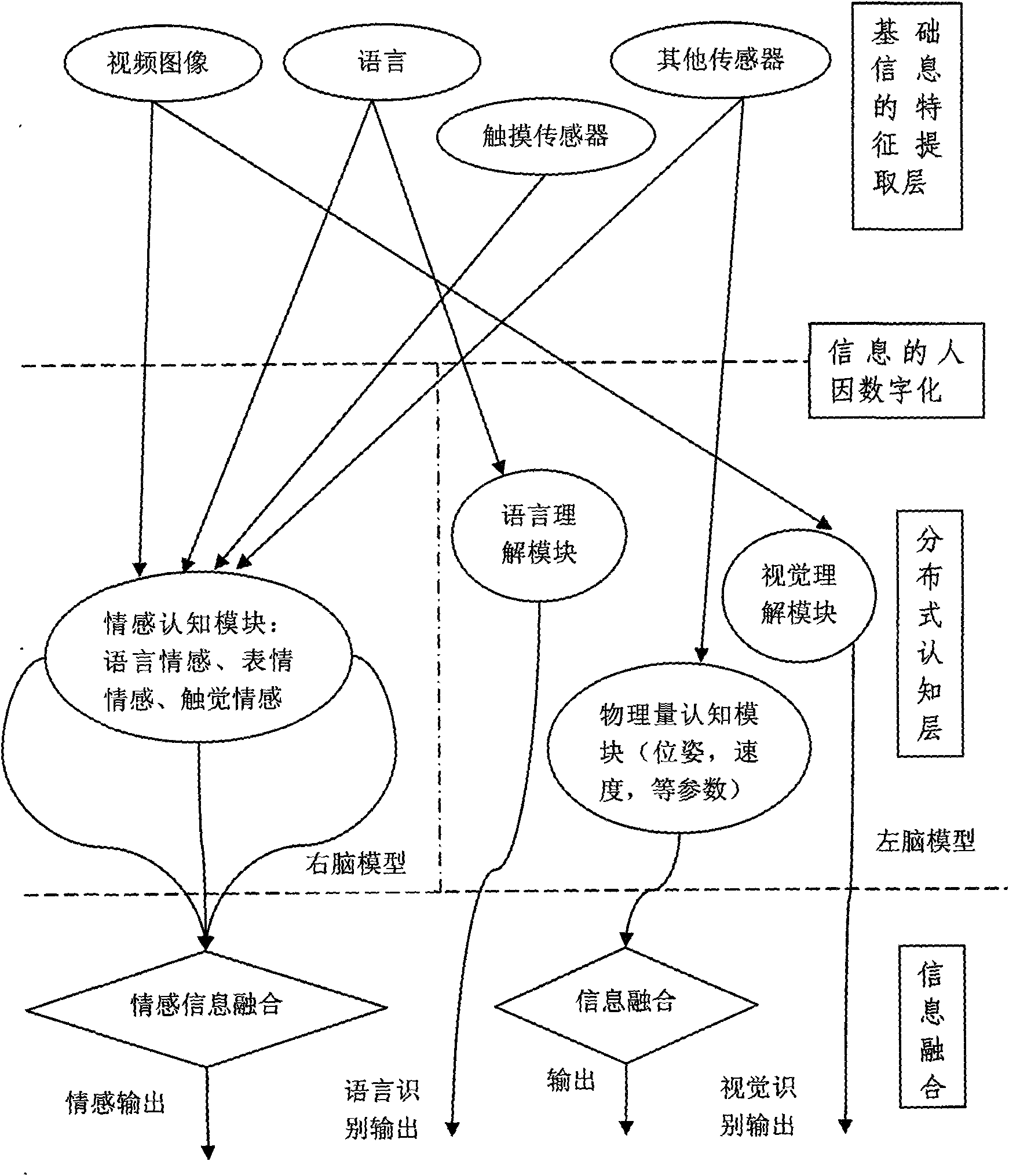

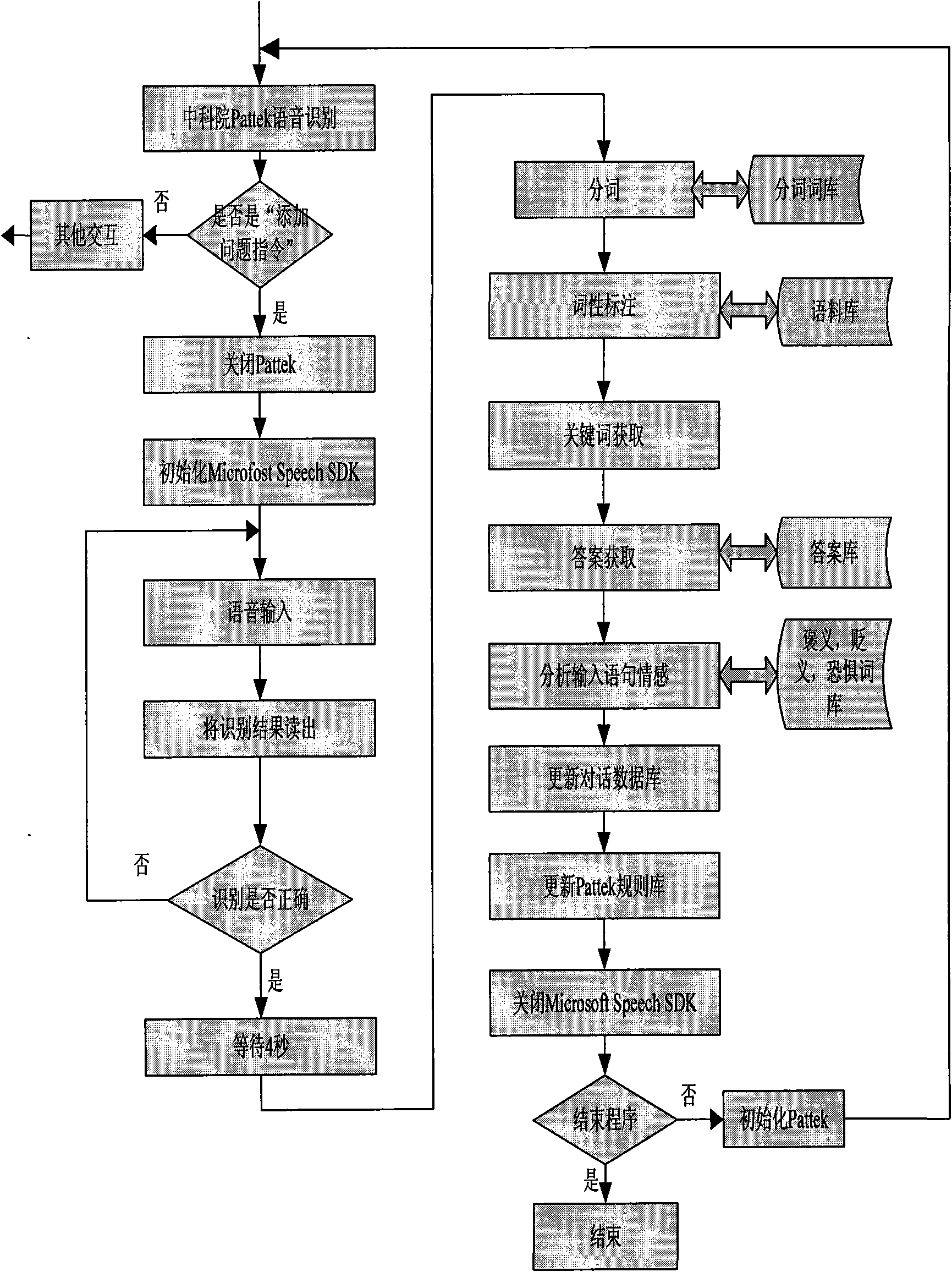

Distributed cognitive technology for intelligent emotional robot

Inactive | CN101604204A | Input/output for user-computer interaction | Character and pattern recognition | Human behavior | Language understanding

The invention provides a distributed cognitive technology for an intelligent emotional robot, applicable to multi-channel human-computer interaction in service robots, household robots and the like. During human-computer interaction, cognition of the environment and of people is distributed across multiple channels so that the interaction is more harmonious and natural. The technology comprises four parts: 1) a language comprehension module, which gives the robot the ability to understand human language through word segmentation, part-of-speech tagging, keyword extraction and similar steps; 2) a vision comprehension module, which covers vision functions such as face detection, feature extraction, feature recognition and human behavior understanding; 3) an emotion cognition module, which extracts relevant information from language, facial expression and touch, analyzes the user emotion contained in that information, and synthesizes a reasonably accurate emotional state so that the robot can recognize the user's current emotion; and 4) a physical quantity cognition module, which lets the robot understand the environment and its own state as the basis for self-adjustment.

Owner:UNIV OF SCI & TECH BEIJING
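
The emotion cognition module combines evidence from language, expression and touch into one emotional state. A common way to do this is late fusion by weighted averaging of per-channel probability distributions; the sketch below uses that swapped-in technique, and the emotion labels, channel names and weights are hypothetical rather than taken from the patent.

```python
EMOTIONS = ("happy", "neutral", "sad", "angry")  # hypothetical label set


def fuse_emotions(channel_probs, weights):
    """Weighted late fusion of per-channel emotion distributions.

    channel_probs: dict channel -> dict emotion -> probability
    weights: dict channel -> non-negative reliability weight
    Returns (most_likely_emotion, fused_distribution).
    """
    fused = {e: 0.0 for e in EMOTIONS}
    total = 0.0
    for channel, probs in channel_probs.items():
        w = weights.get(channel, 0.0)
        total += w
        for e in EMOTIONS:
            fused[e] += w * probs.get(e, 0.0)
    if total == 0.0:
        raise ValueError("all channel weights are zero")
    fused = {e: p / total for e, p in fused.items()}
    return max(fused, key=fused.get), fused
```

With language `{"happy": 0.6, "neutral": 0.4}`, expression `{"happy": 0.2, "sad": 0.8}` and touch `{"neutral": 1.0}`, weighted 0.5, 0.3 and 0.2, the fused state is neutral (0.40) ahead of happy (0.36), even though no single channel reports that ranking on its own.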

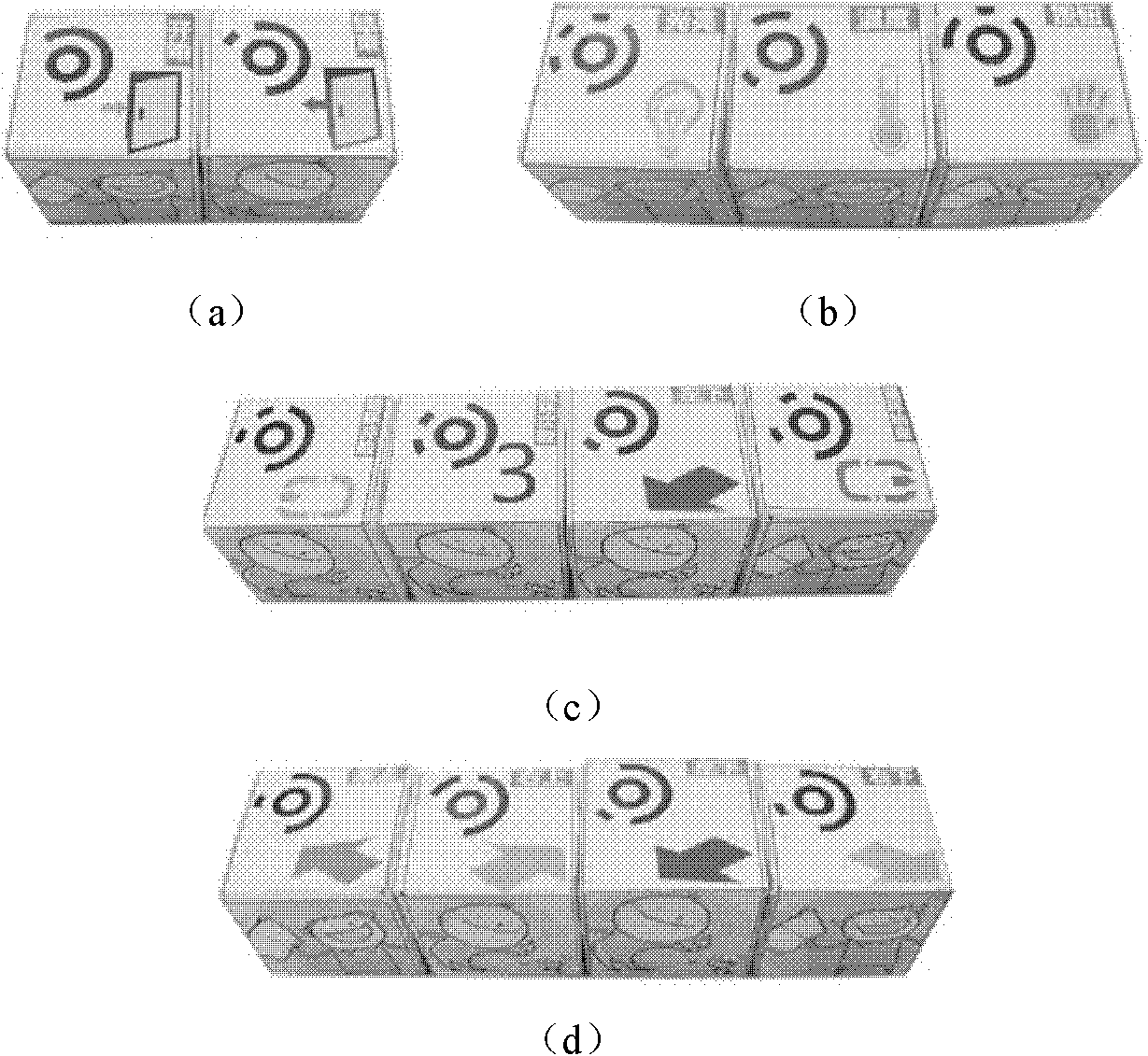

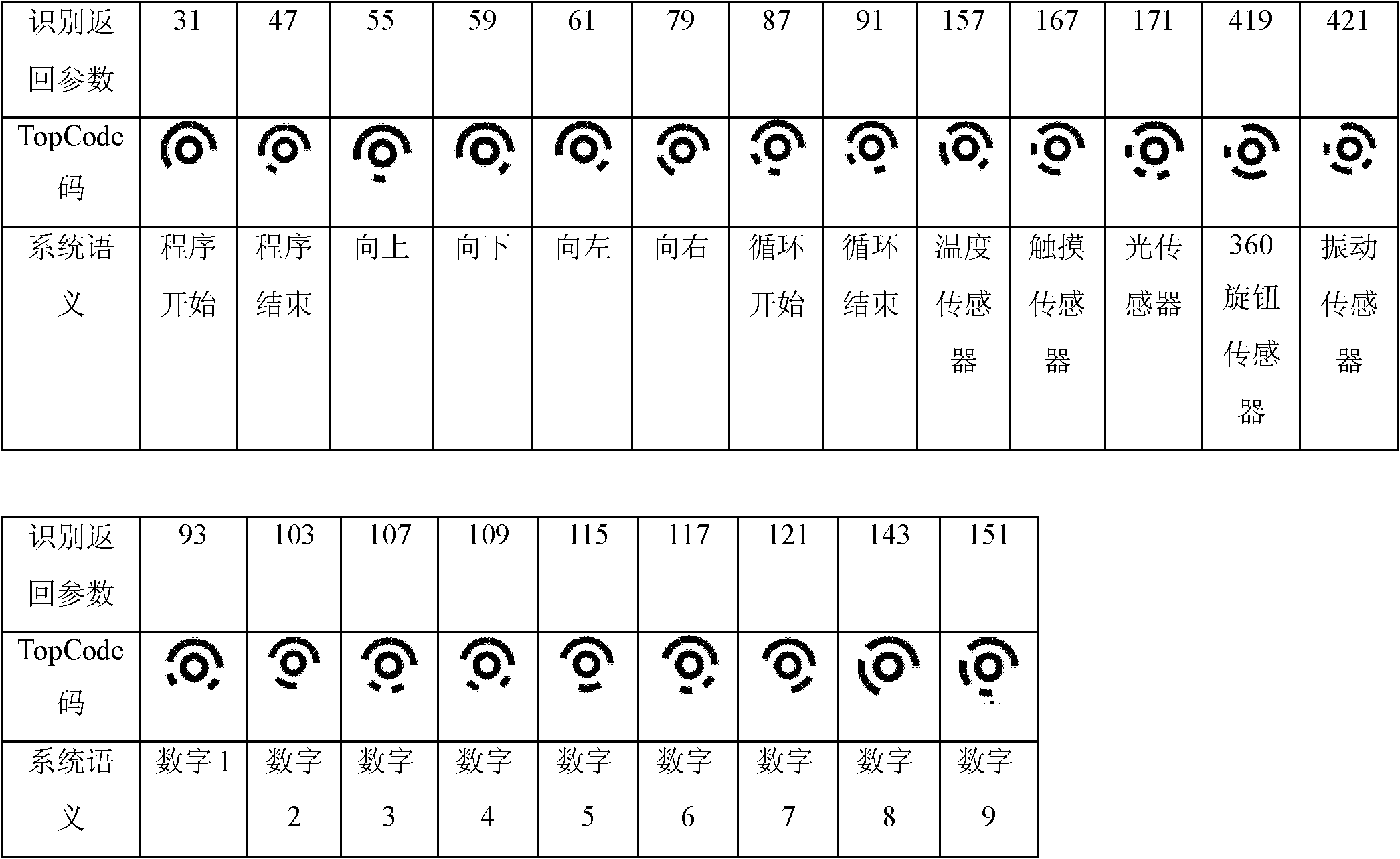

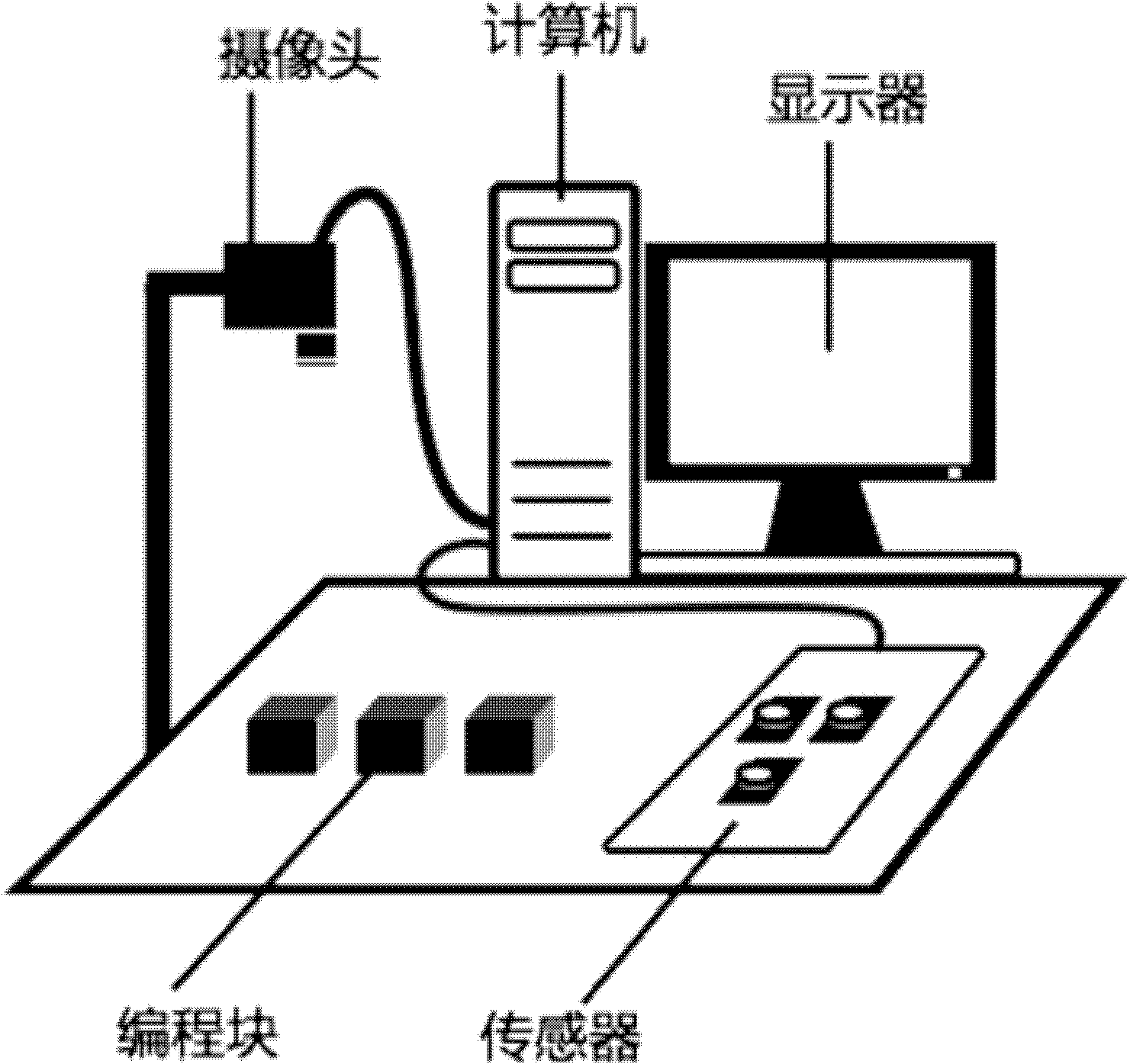

Material object programming method and system

The invention discloses a material object (tangible) programming method and system in the field of human-machine interaction. The method comprises the following steps: 1) establishing a tangible programming display environment; 2) using an image acquisition unit to photograph the sequence of programming blocks placed by the user and upload the image to a tangible programming processing module; 3) using the processing module to convert the block sequence into a corresponding functional semantic sequence according to the computer-vision recognition patterns and position information of the blocks; 4) determining whether the functional semantic sequence satisfies the grammatical and semantic rules of the display environment and, if not, feeding back a corresponding error prompt; 5) the user replacing the corresponding blocks according to the prompt; and 6) repeating steps 2) to 5) until the functional semantic sequence of the placed blocks satisfies the grammatical and semantic rules, which completes the programming task. The method and system address the difficulty children and novices have in learning programming, and the system is low in cost and easy to popularize.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI
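
Steps 3) and 4) above, converting recognized blocks into a semantic sequence and checking it against grammar rules, can be sketched as follows. The block IDs, token names and grammar rules (start with START, end with STOP, balanced REPEAT/END_REPEAT) are hypothetical examples; the patent does not specify them.

```python
# Hypothetical mapping from a vision-recognized block ID to a function token.
BLOCK_SEMANTICS = {
    7: "START", 12: "FORWARD", 13: "TURN_LEFT",
    21: "REPEAT", 22: "END_REPEAT", 9: "STOP",
}


def to_semantic_sequence(detections):
    """Order detected blocks left to right and map them to function tokens.

    detections: list of (block_id, x_position) pairs from the image step.
    """
    ordered = sorted(detections, key=lambda d: d[1])
    return [BLOCK_SEMANTICS.get(block_id, "UNKNOWN") for block_id, _ in ordered]


def check_program(tokens):
    """Return error prompts for the user; an empty list means the program is valid."""
    errors = []
    if not tokens or tokens[0] != "START":
        errors.append("Program must begin with a START block.")
    if not tokens or tokens[-1] != "STOP":
        errors.append("Program must end with a STOP block.")
    depth = 0
    for i, tok in enumerate(tokens):
        if tok == "UNKNOWN":
            errors.append(f"Block {i + 1} was not recognized; please replace it.")
        elif tok == "REPEAT":
            depth += 1
        elif tok == "END_REPEAT":
            depth -= 1
            if depth < 0:
                errors.append(f"Block {i + 1}: END_REPEAT without a matching REPEAT.")
                depth = 0
    if depth > 0:
        errors.append("A REPEAT block is missing its END_REPEAT.")
    return errors
```

In the loop of steps 2) to 6), each new photograph would be passed through `to_semantic_sequence` and `check_program`, and any returned messages shown to the user as the error prompts for replacing blocks.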

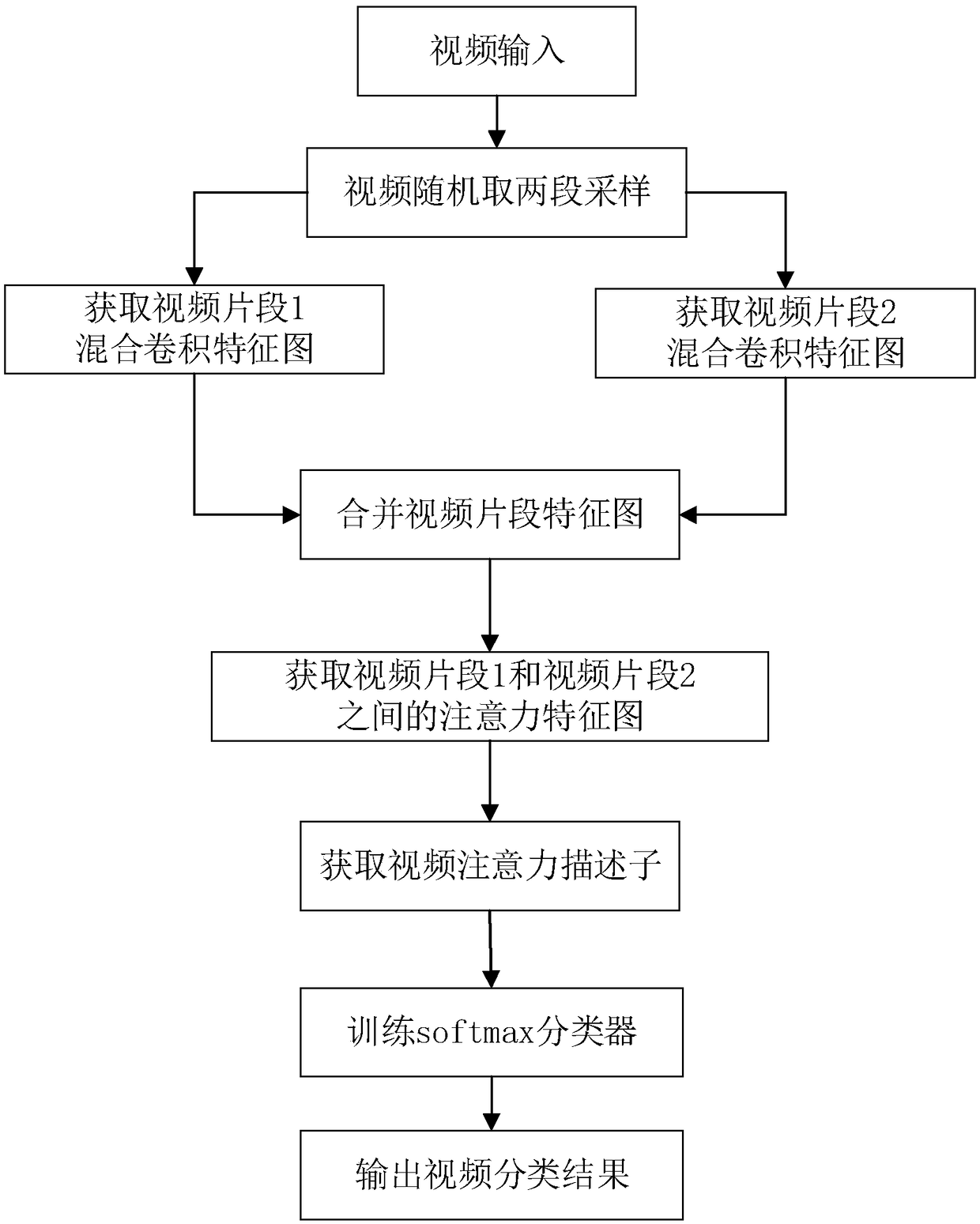

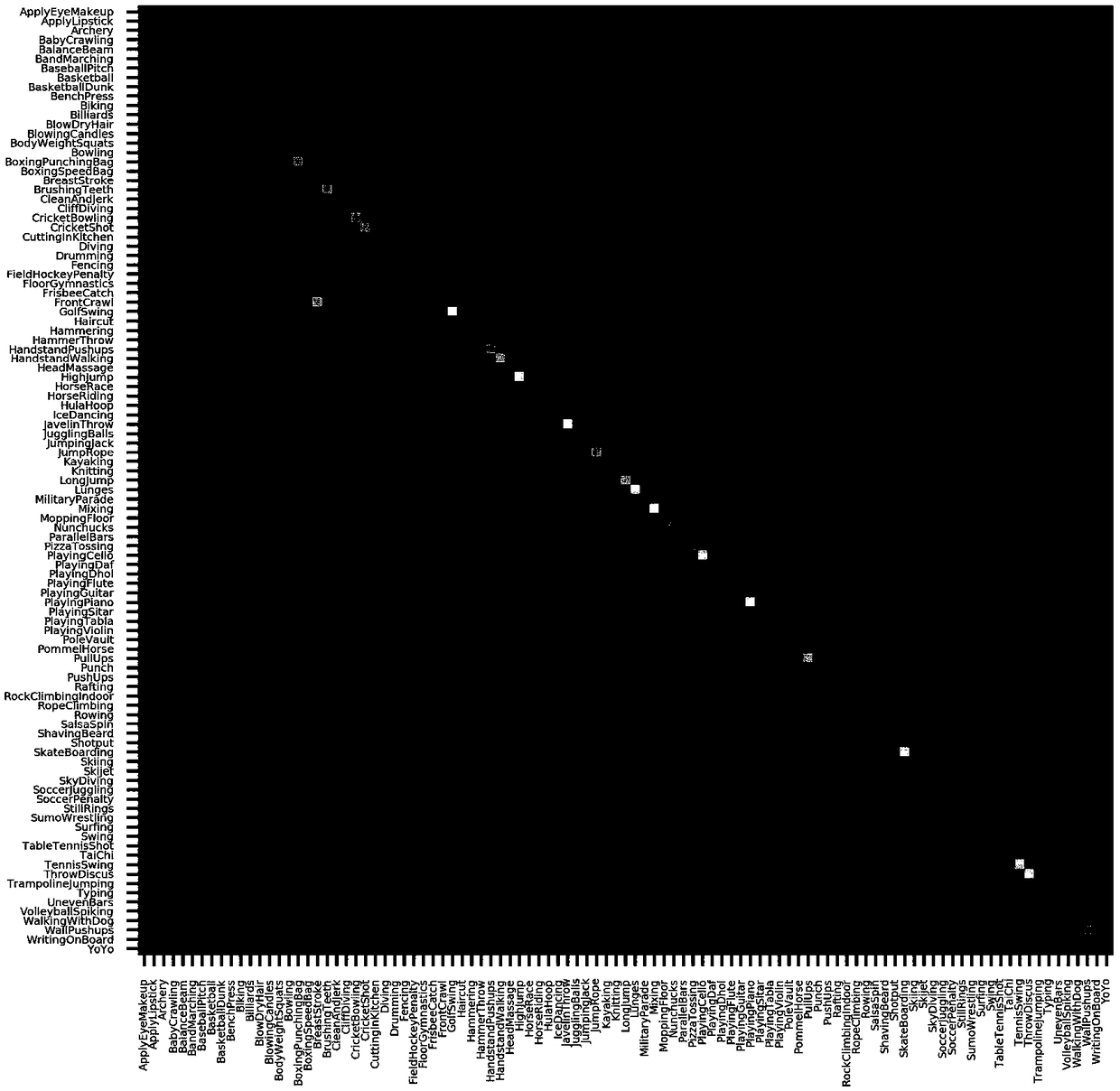

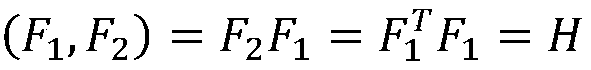

Video classification based on hybrid convolution and attention mechanism

Active | CN109389055A | Small amount of calculation | Improve accuracy | Character and pattern recognition | Neural architectures | Video retrieval | Data set

The invention discloses a video classification method based on hybrid convolution and an attention mechanism, which addresses the complex computation and low accuracy of the prior art. The method comprises the following steps: selecting a video classification data set; sampling the input video in segments; preprocessing two video segments; constructing a hybrid convolutional neural network model; obtaining hybrid convolution feature maps of the video along the temporal dimension; obtaining video attention feature maps through the attention mechanism; obtaining a video attention descriptor; training the entire video classification model end to end; and classifying the test video. The invention obtains hybrid convolution feature maps directly for different video segments; compared with methods that extract optical flow features, this reduces the computational burden and improves speed. It also introduces an attention mechanism between video segments that describes the relationship between them, improving accuracy and robustness. The method can be used for video retrieval, video tagging, human-computer interaction, behavior recognition, event detection and anomaly detection.

Owner:XIDIAN UNIV
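
The attention step between video segments can be illustrated with plain scaled dot-product self-attention over per-segment feature vectors, followed by mean pooling into a single descriptor. This is a generic sketch, not the patent's network: it assumes identity query, key and value projections (no learned weights) and that each segment has already been reduced to a feature vector by the convolutional backbone.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def segment_attention(features):
    """Self-attention across segments, with identity Q/K/V projections (assumption).

    features: list of equal-length feature vectors, one per video segment.
    Returns one attended vector per segment.
    """
    d = len(features[0])
    scale = 1.0 / math.sqrt(d)
    attended = []
    for q in features:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in features]
        w = softmax(scores)
        attended.append([sum(w[j] * features[j][c] for j in range(len(features)))
                         for c in range(d)])
    return attended


def attention_descriptor(features):
    """Mean-pool the attended segment vectors into a single video descriptor."""
    attended = segment_attention(features)
    n = len(attended)
    return [sum(v[c] for v in attended) / n for c in range(len(attended[0]))]
```

Each attended vector is a weighted mix of all segments, so the descriptor encodes relationships between segments rather than treating them independently; in a trained model, learned projections would replace the identity mapping used here.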

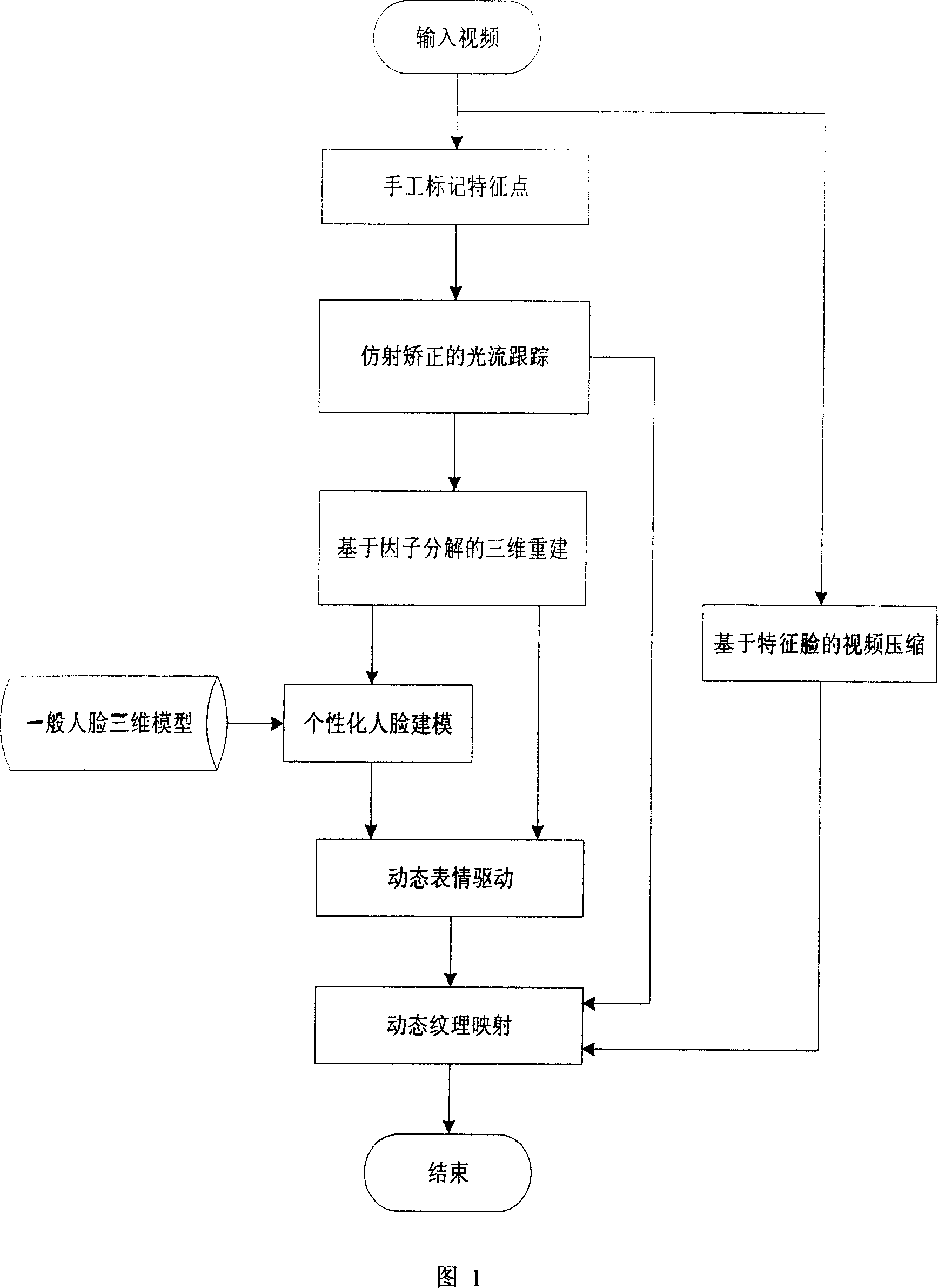

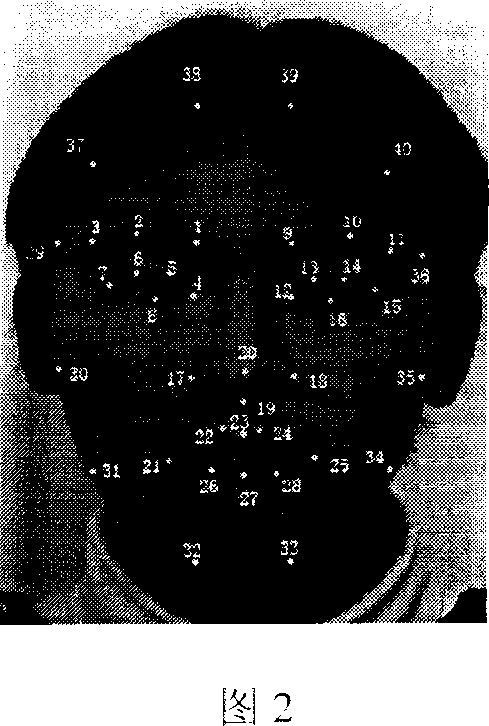

Video flow based three-dimensional dynamic human face expression model construction method

Inactive | CN1920886A | Improve efficiency | Rich expressiveness | Animation | 3D-image rendering | Decomposition | Personalization

The invention relates to a method for constructing a three-dimensional dynamic facial expression model from a video stream, which recovers three-dimensional facial expressions from the input video stream. The algorithm comprises: (1) marking facial feature points in the first frame of the input video; (2) tracking the feature points with an affine-corrected optical flow method; (3) reconstructing the two-dimensional tracking data into three-dimensional data by factorization; (4) fitting a generic face model to the reconstructed three-dimensional data to generate a personalized face and dynamic expression motion; (5) compressing the original video with the eigenface technique; and (6) reconstructing the input video from the eigenfaces and projecting the dynamic texture to synthesize a realistic virtual appearance. The invention has high time and space efficiency and high practical value.

Owner:ZHEJIANG UNIV
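
Step (3), turning 2D feature tracks into 3D data by factorization, is commonly done with rank-3 affine factorization in the style of Tomasi and Kanade. The sketch below shows that standard technique using NumPy; it is not necessarily the patent's exact variant, and it omits the metric upgrade, so the recovered shape is correct only up to an affine transformation.

```python
import numpy as np


def factorize_tracks(W):
    """Rank-3 affine factorization of a 2F x P measurement matrix.

    Rows 2f and 2f + 1 hold the x and y image coordinates of P tracked feature
    points in frame f. Returns (M, S, t): a 2F x 3 motion matrix, a 3 x P shape
    matrix (up to an affine ambiguity), and the 2F x 1 per-row translation.
    """
    W = np.asarray(W, dtype=float)
    t = W.mean(axis=1, keepdims=True)    # per-row centroid = image translation
    W0 = W - t                           # registered measurement matrix
    U, s, Vt = np.linalg.svd(W0, full_matrices=False)
    root = np.sqrt(s[:3])                # split singular values between factors
    M = U[:, :3] * root                  # 2F x 3 camera (motion) matrix
    S = root[:, None] * Vt[:3]           # 3 x P shape matrix
    return M, S, t
```

For noise-free affine projections, `M @ S + t` reproduces the tracks exactly; with real optical-flow tracks, truncating to rank 3 also suppresses part of the tracking noise.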

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com