1837 results about "Human system interaction" patented technology

Human System Interaction (HSI), or Human-Computer Interaction (HCI), focuses on the design, research and development of interfaces between people and systems such as computers. HSI and HCI are multi-disciplinary fields involving computer science, behavioral science, design technology and other related fields of study.

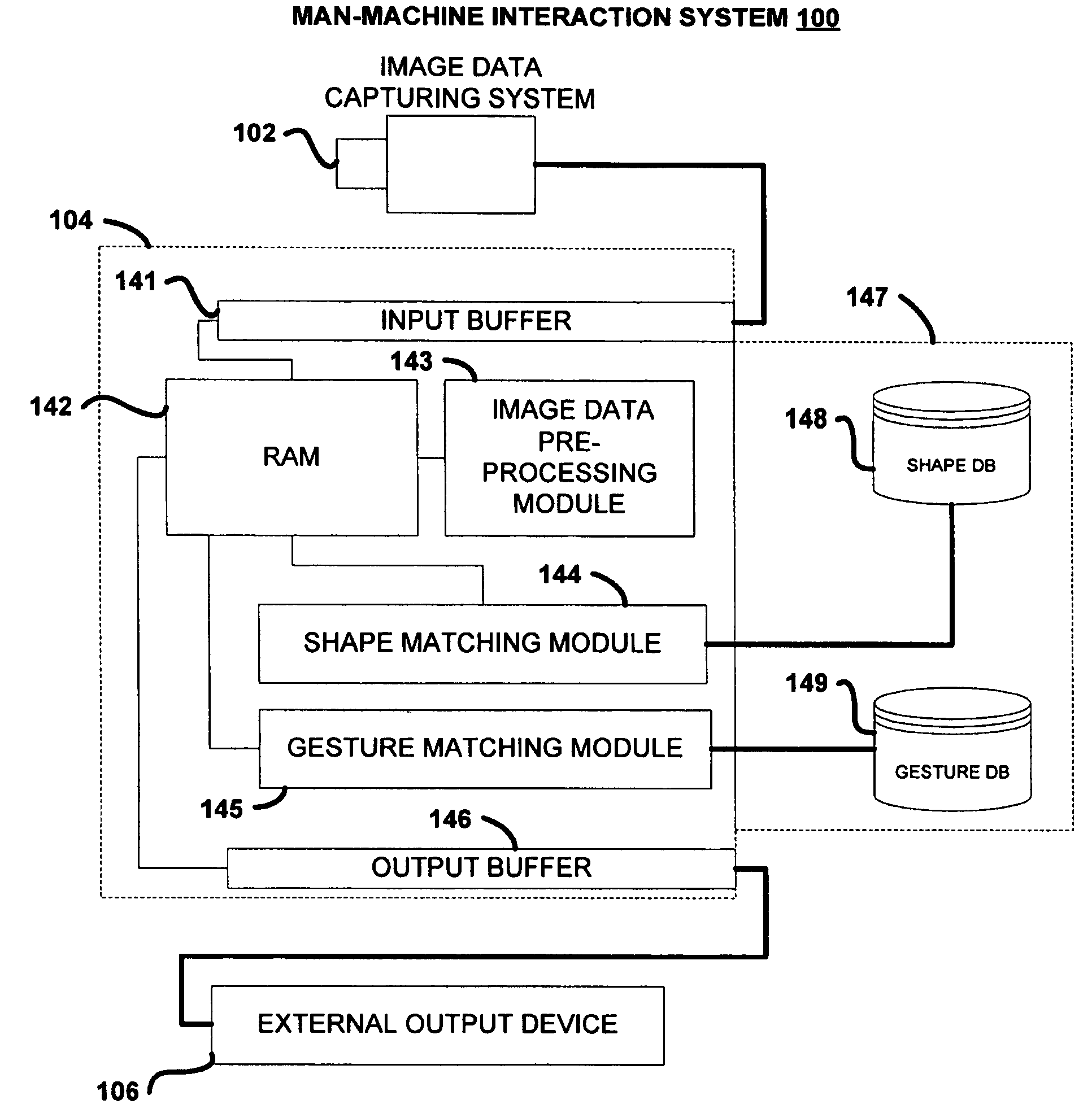

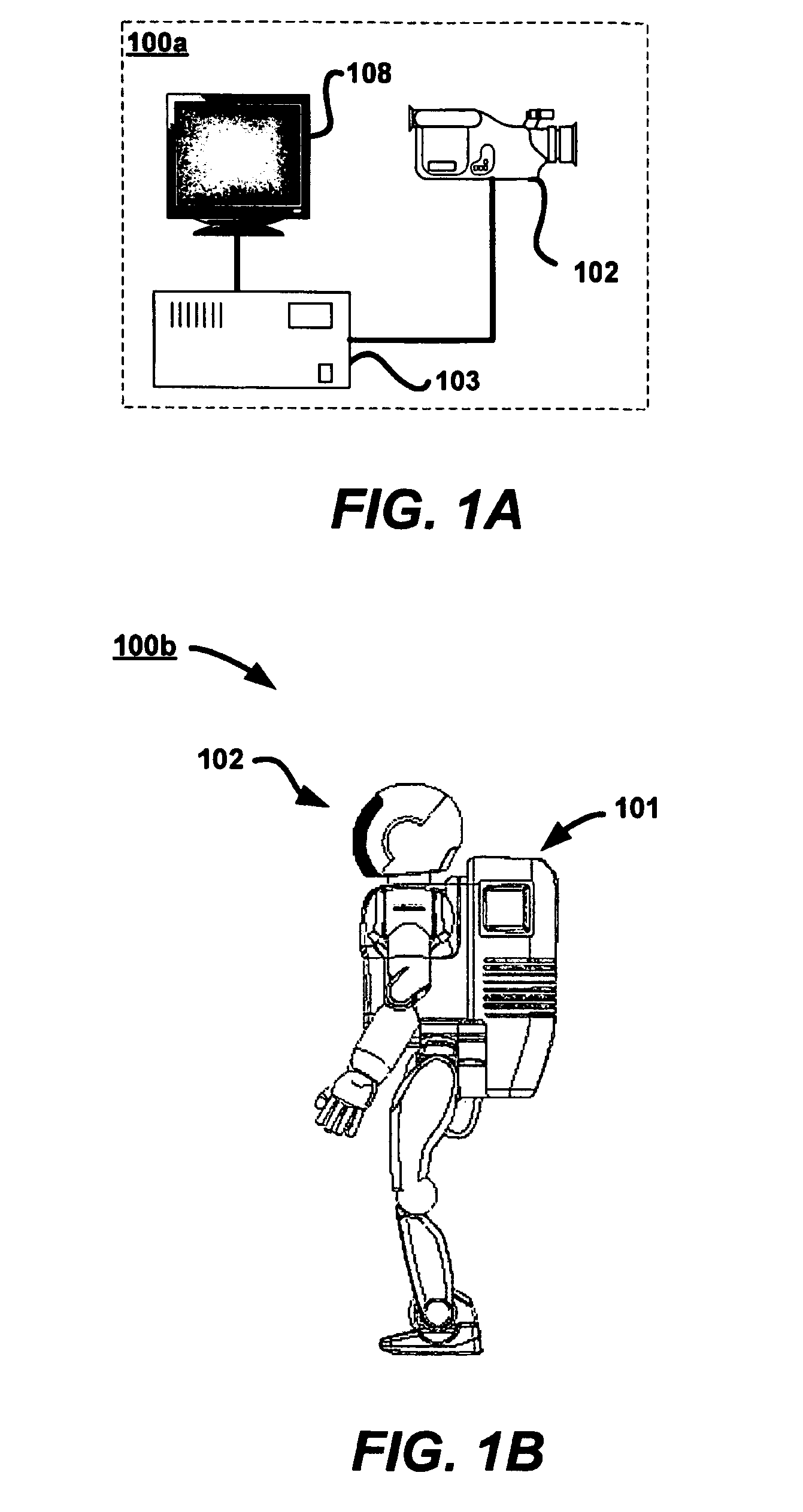

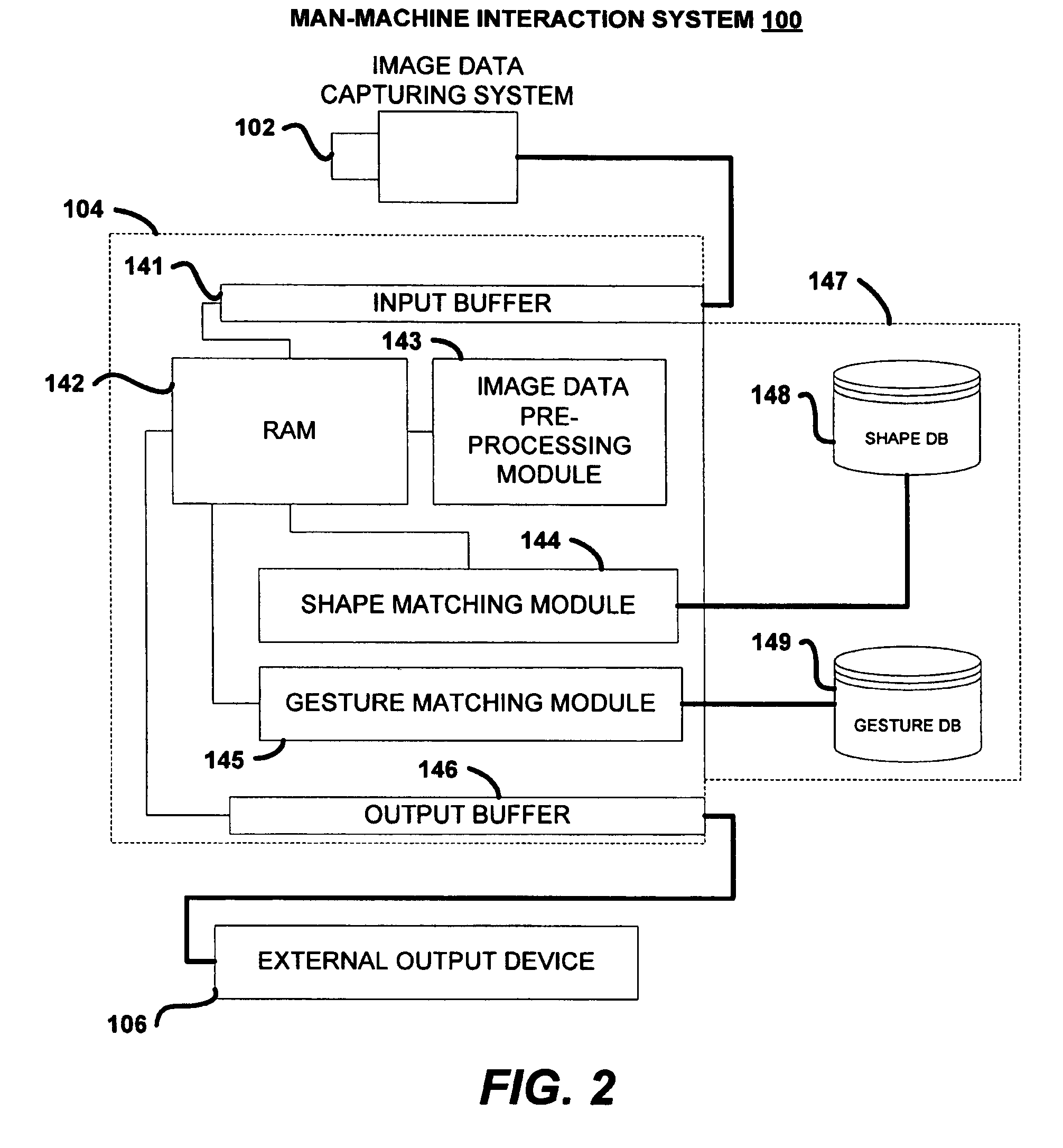

Sign based human-machine interaction

Communication is an important issue in man-to-robot interaction. Signs can be used to interact with machines by providing user instructions or commands. Embodiments of the present invention include human detection, human body part detection, hand shape analysis, trajectory analysis, orientation determination, gesture matching, and the like. Many types of shapes and gestures are recognized in a non-intrusive manner based on computer vision. This sign-understanding technology makes a number of applications feasible, including remote control of home devices, mouse-less (and touch-less) operation of computer consoles, gaming, and man-robot communication to give instructions, among others. Active sensing hardware is used to capture a stream of depth images at video rate, which is subsequently analyzed for information extraction.

Owner:THE OHIO STATE UNIV RES FOUND +1

Human-computer user interaction

Active · US20100261526A1 · Input/output for user-computer interaction · Electronic switching · Human interaction · Computer users

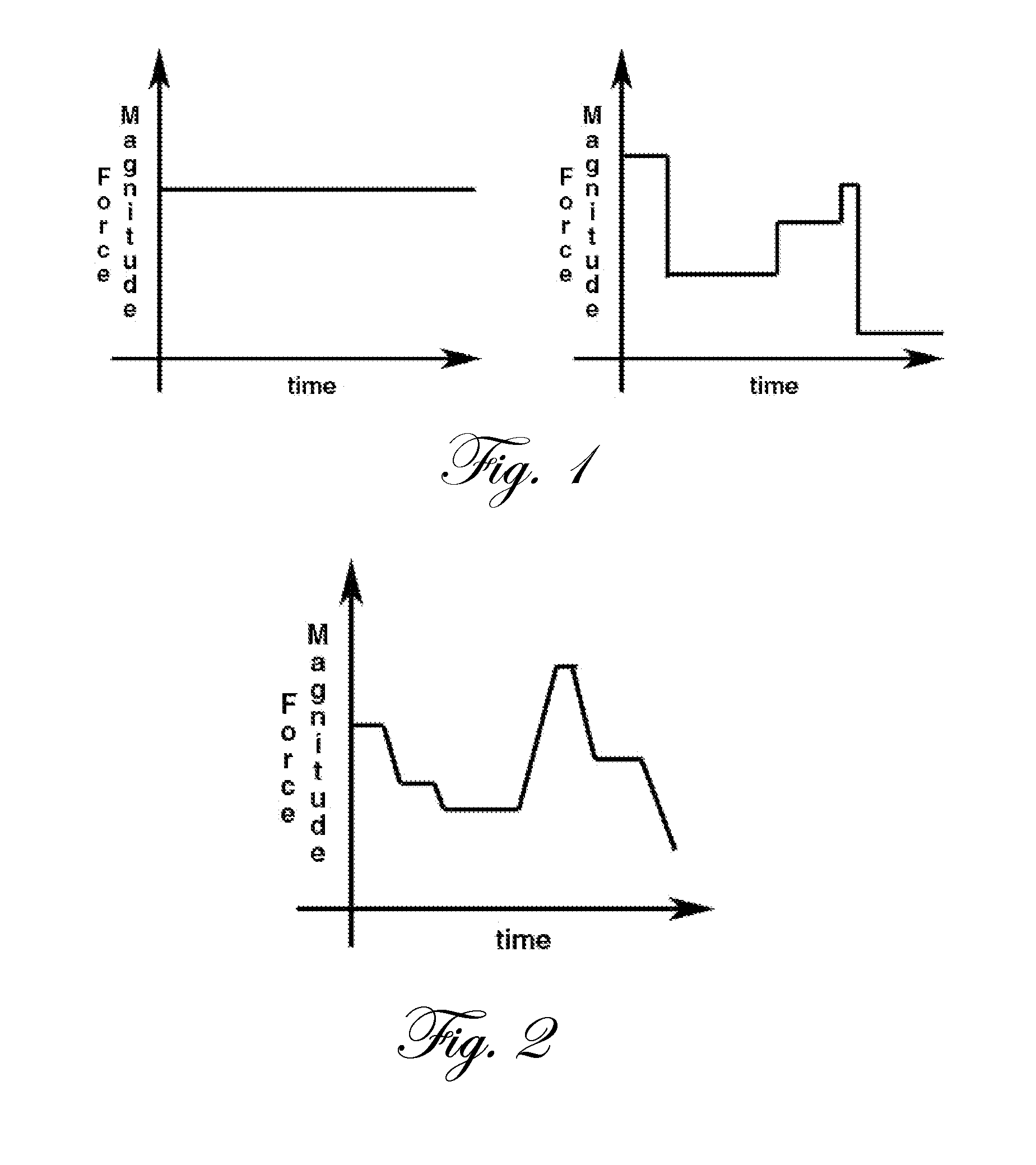

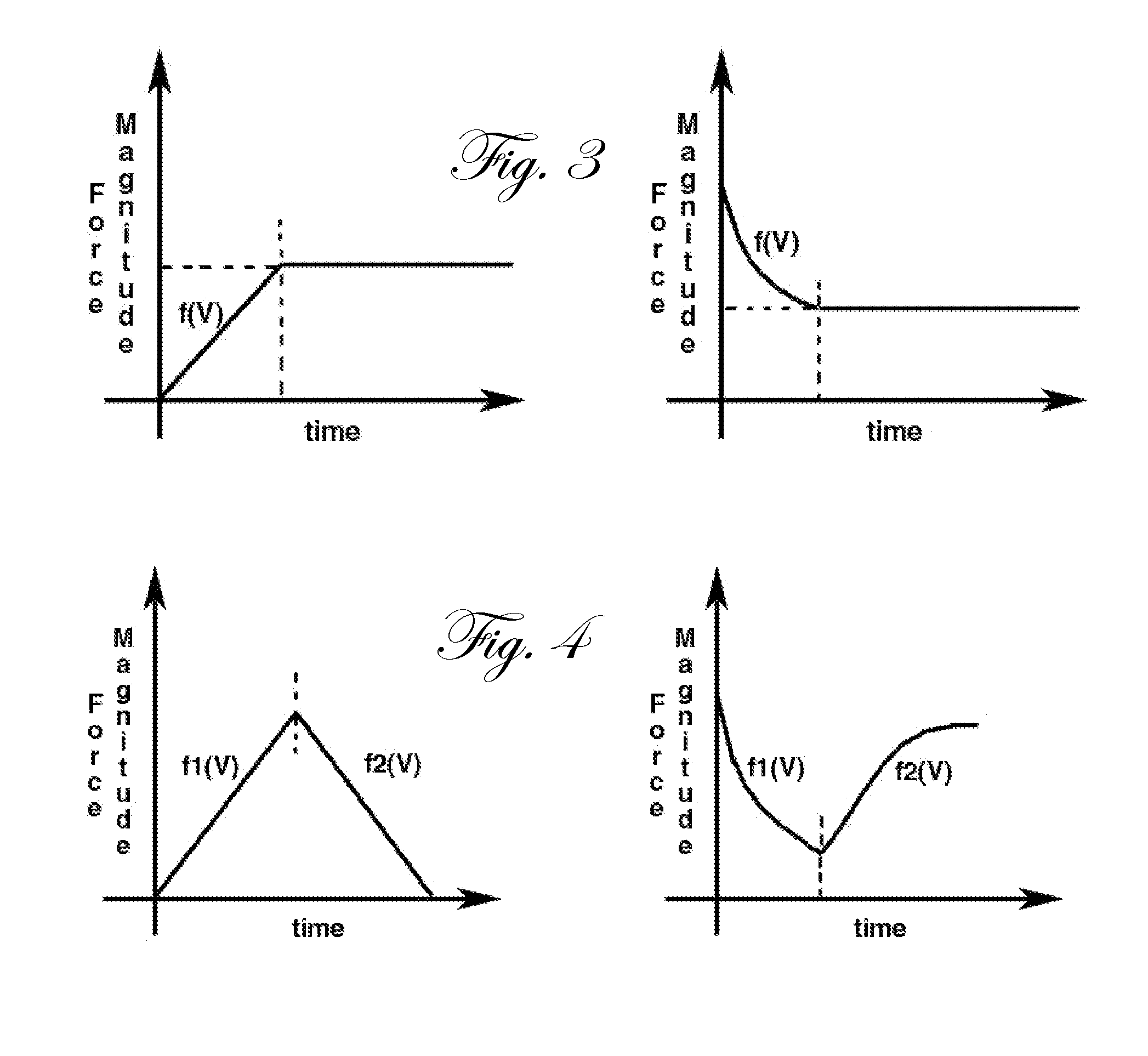

Methods of and apparatuses for providing human interaction with a computer, including human control of three dimensional input devices, force feedback, and force input.

Owner:META PLATFORMS INC

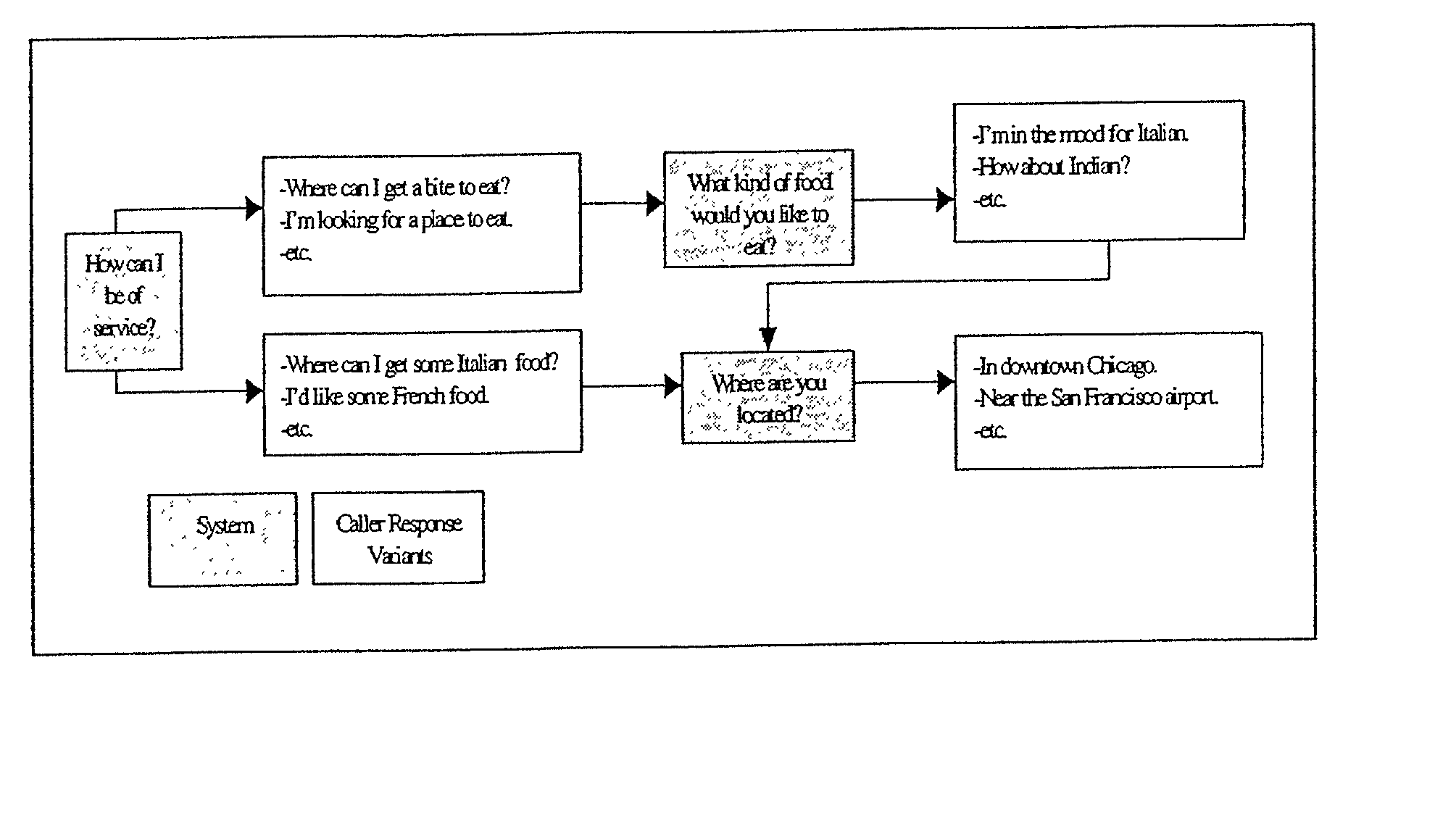

Phrase-based dialogue modeling with particular application to creating recognition grammars for voice-controlled user interfaces

Inactive · US20020128821A1 · Quick identification · Less time · Natural language data processing · Speech recognition · Speech sound · Human system interaction

The invention enables creation of grammar networks that can regulate, control, and define the content and scope of human-machine interaction in natural language voice user interfaces (NLVUI). More specifically, the invention concerns a phrase-based modeling of generic structures of verbal interaction and use of these models for the purpose of automating part of the design of such grammar networks.

Owner:NANT HLDG IP LLC

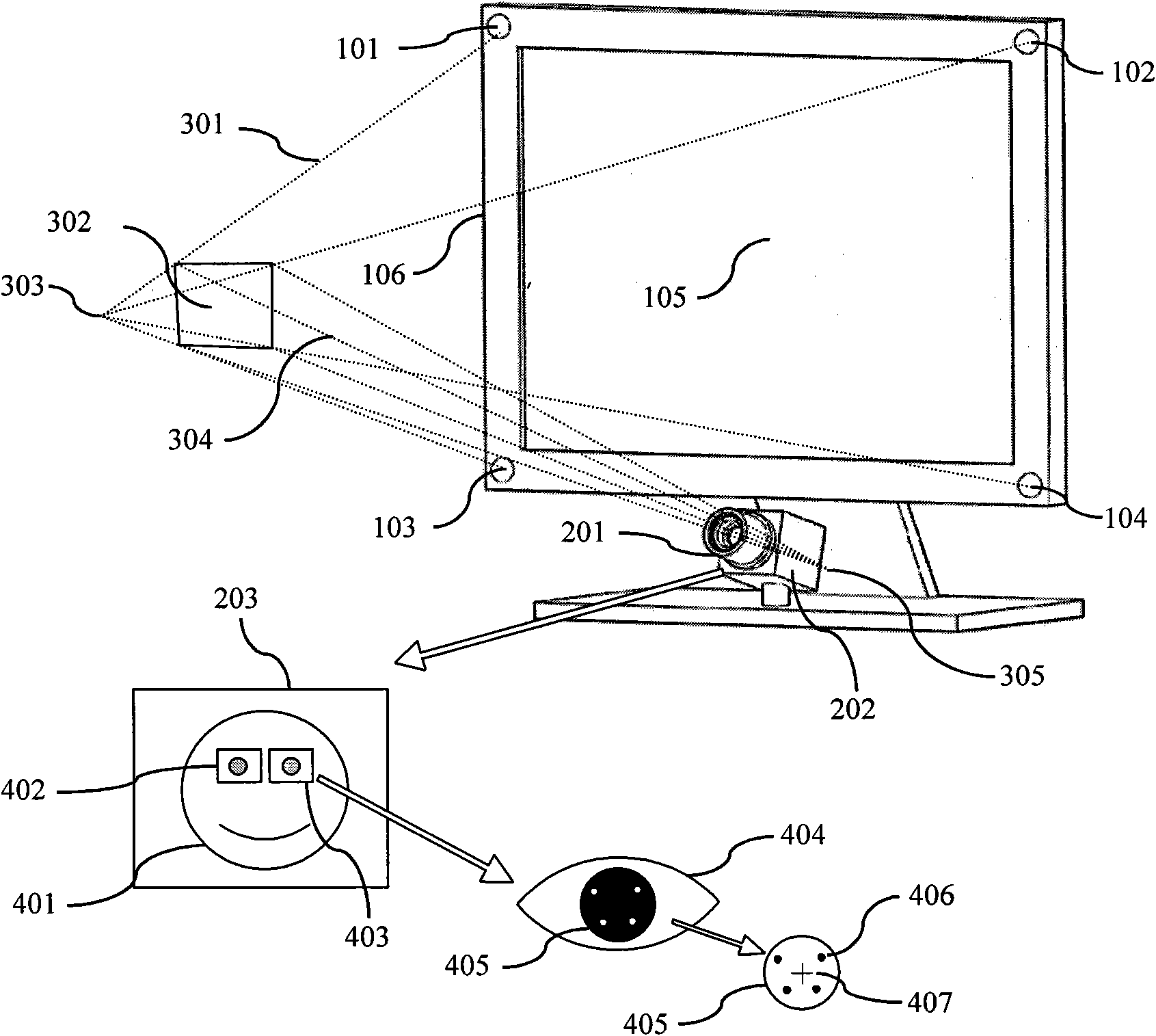

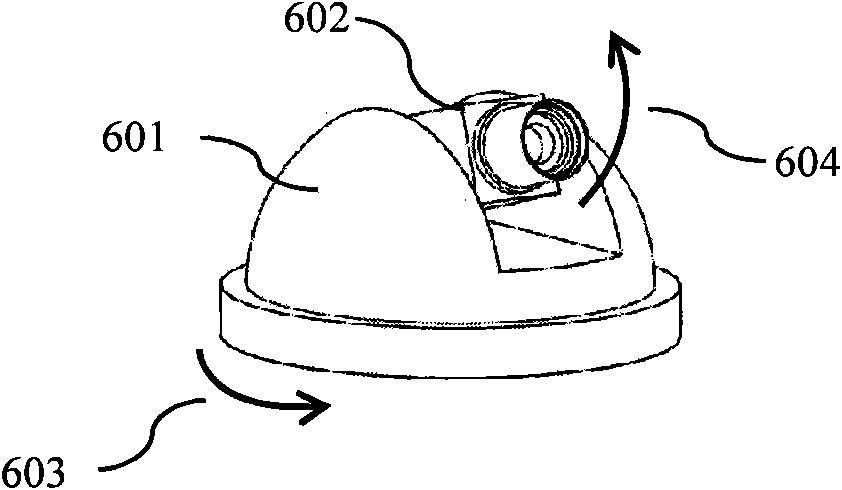

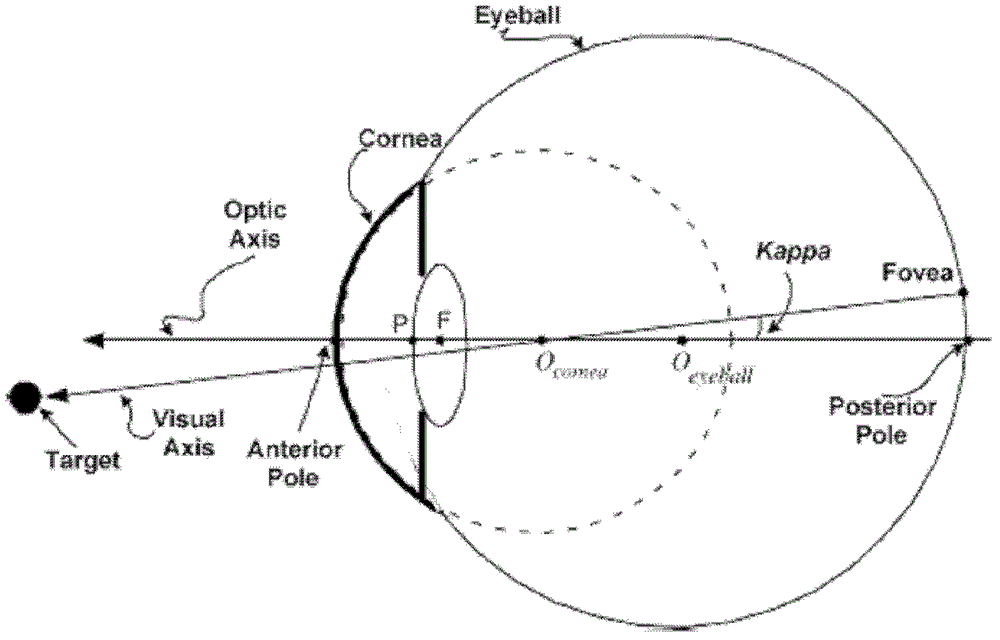

Real time eye tracking for human computer interaction

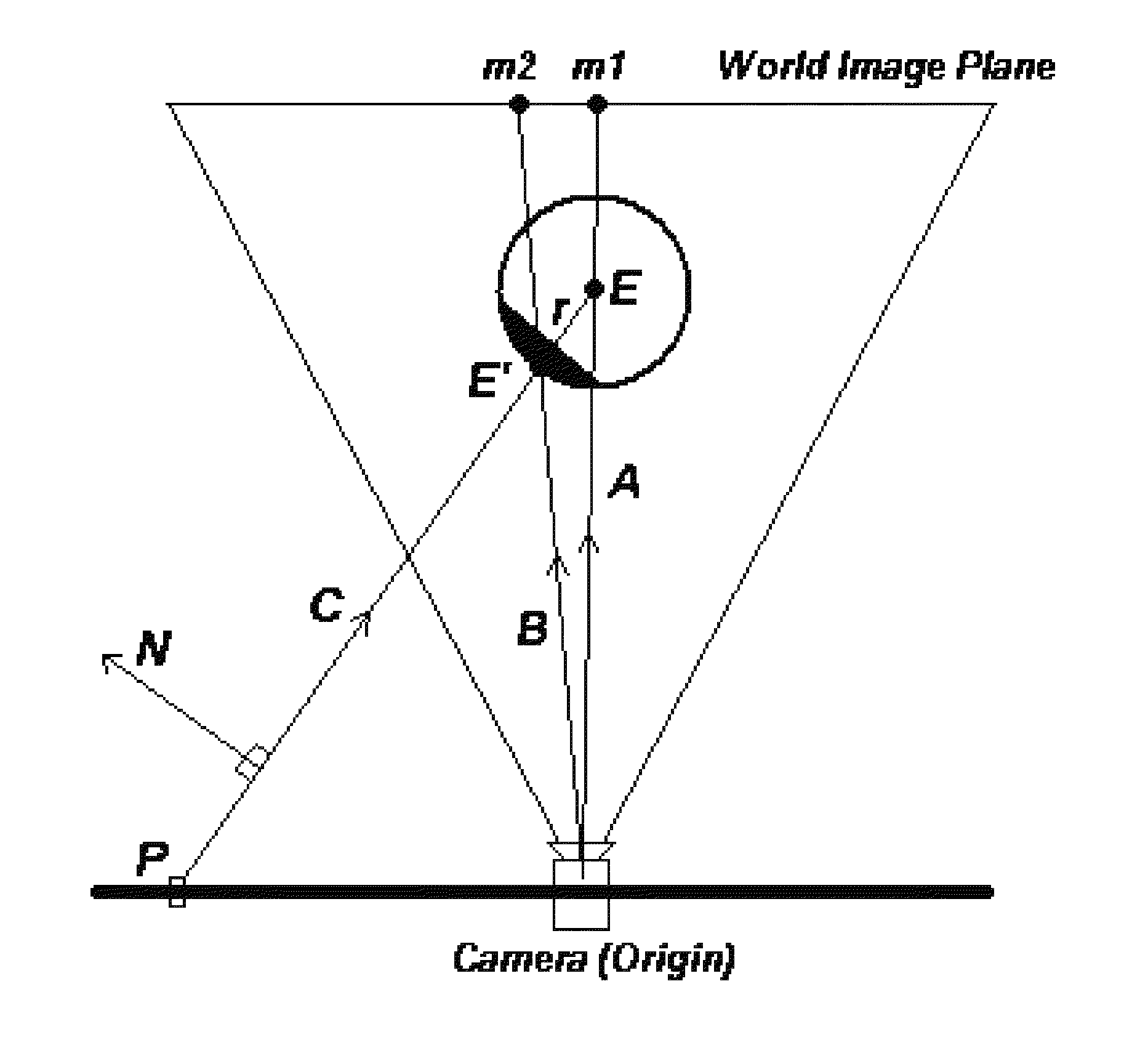

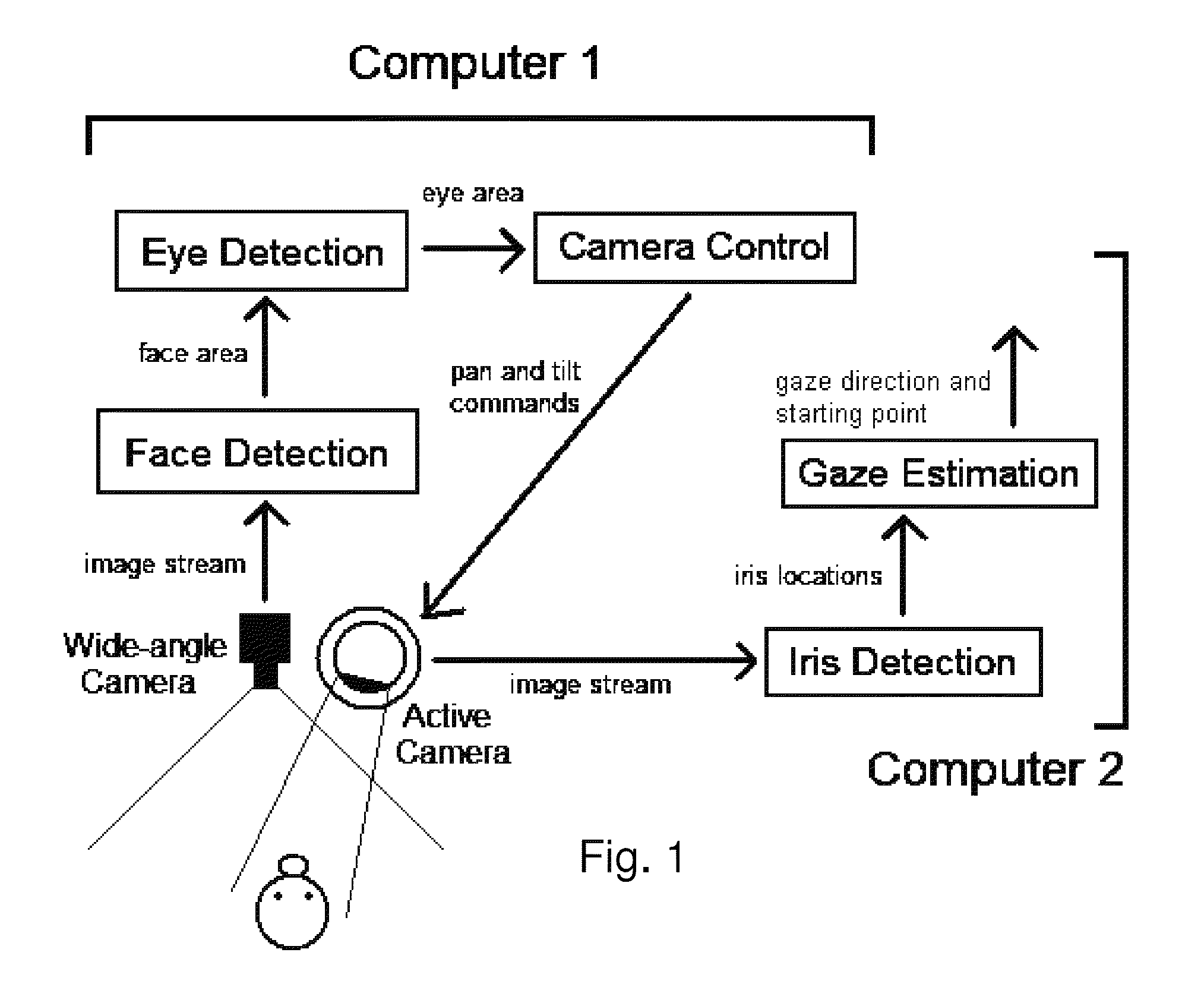

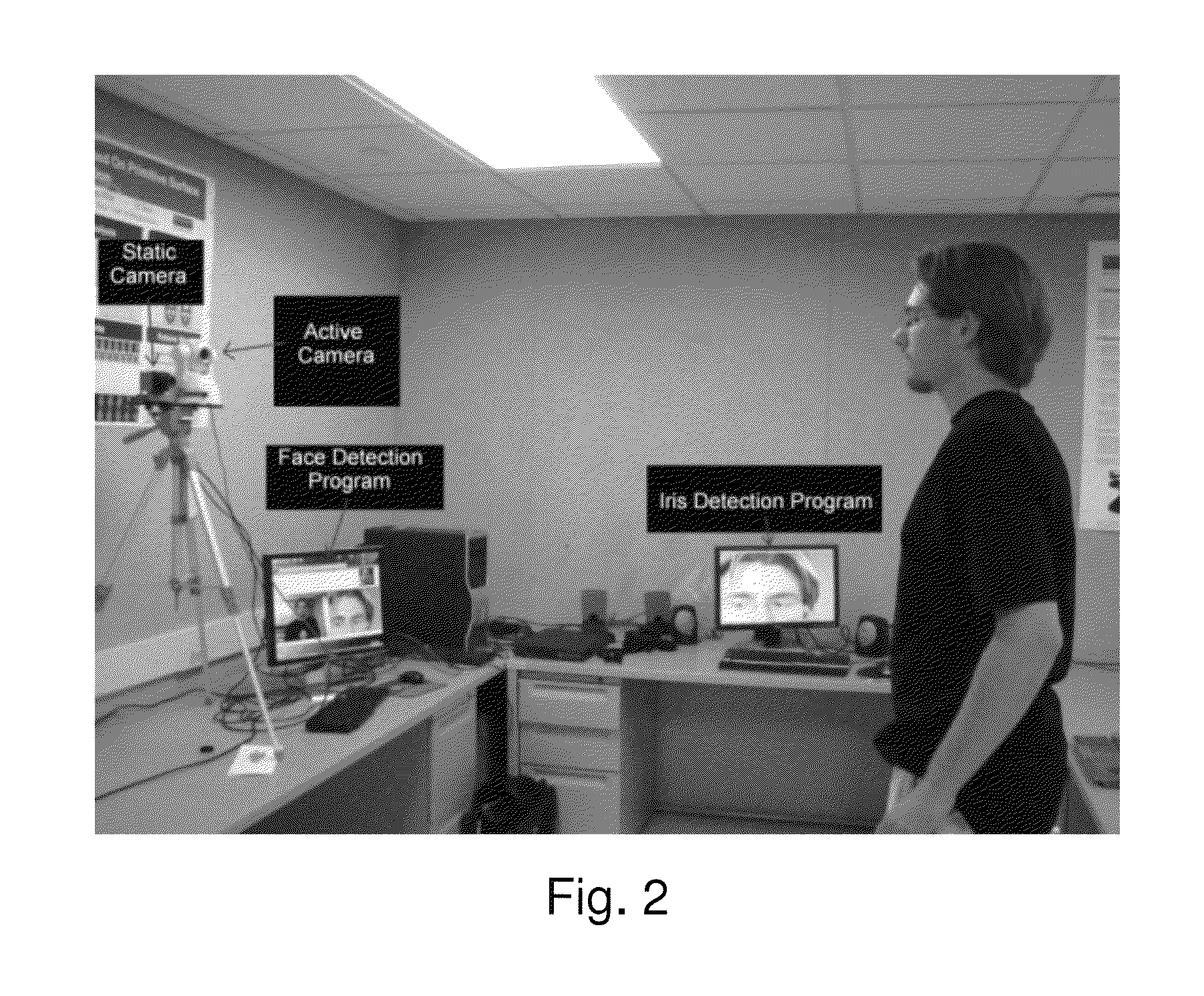

Active · US8885882B1 · Improve interactivity · More intelligent behavior · Image enhancement · Image analysis · Optical axis · Gaze directions

A gaze direction determining system and method is provided. A two-camera system may detect the face from a fixed, wide-angle camera, estimate a rough location for the eye region using an eye detector based on topographic features, and direct another active pan-tilt-zoom camera to focus in on this eye region. An eye gaze estimation approach employs point-of-regard (PoG) tracking on a large viewing screen. To allow for greater head pose freedom, a calibration approach is provided to find the 3D eyeball location, eyeball radius, and fovea position. Both the iris center and iris contour points are mapped to the eyeball sphere (creating a 3D iris disk) to get the optical axis; the fovea is then rotated accordingly and the final visual-axis gaze direction is computed.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

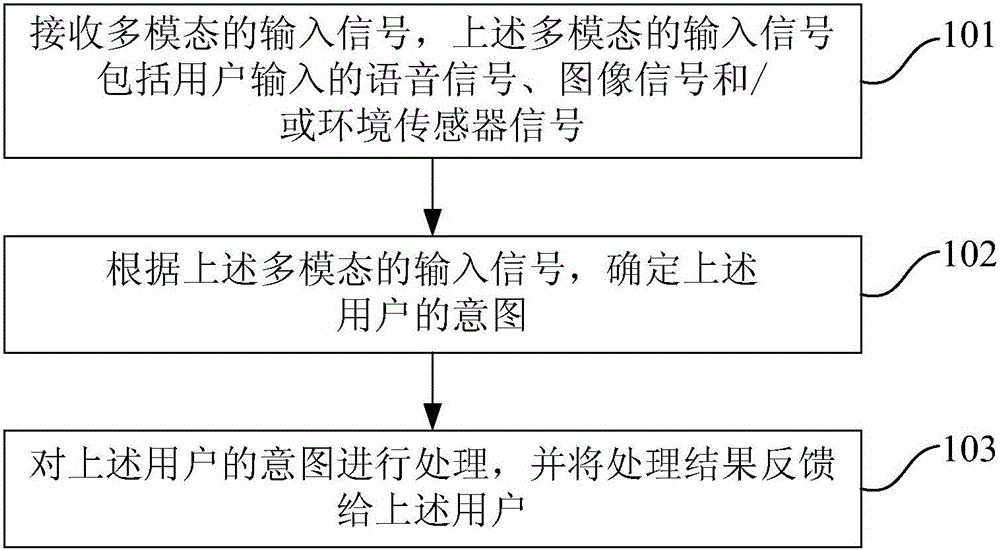

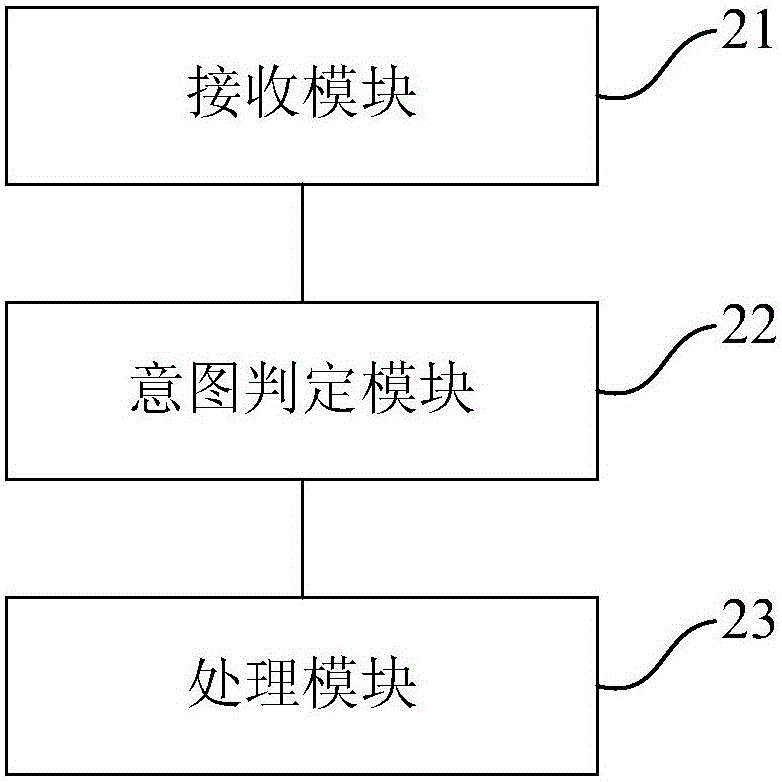

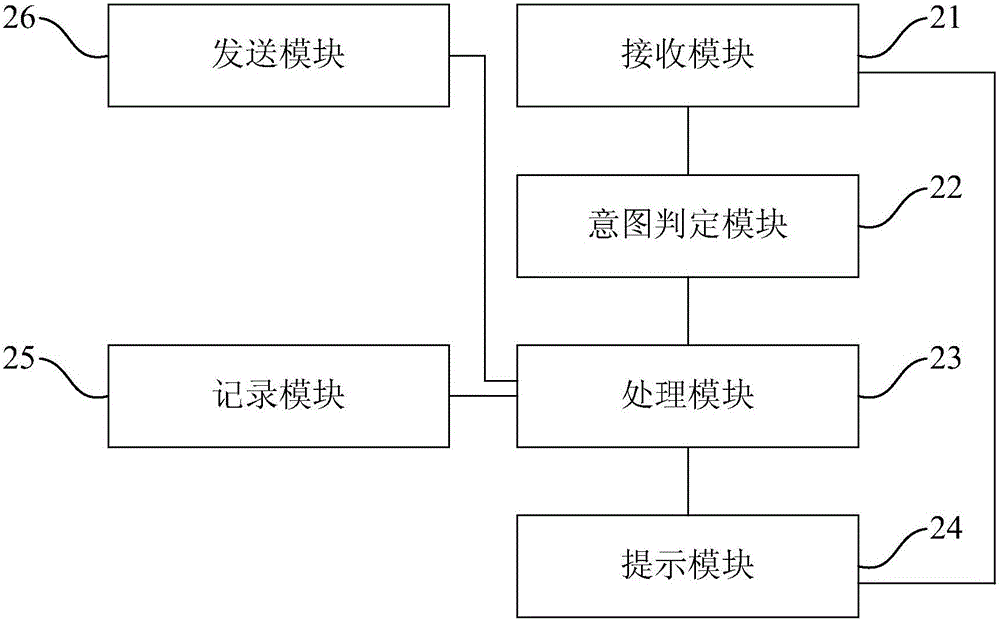

Man-machine interaction method and device based on artificial intelligence and terminal equipment

Inactive · CN104951077A · Improve experience · Good human-computer interaction function · Input/output for user-computer interaction · Artificial life · User input · Terminal equipment

The invention provides a man-machine interaction method and device based on artificial intelligence, and terminal equipment. The method comprises the steps of: receiving multi-modal input signals, wherein the multi-modal input signals comprise voice signals, image signals and/or environment sensor signals input by a user; determining the intention of the user according to the multi-modal input signals; and processing the intention of the user and feeding the processing result back to the user. The method, device and terminal equipment can achieve good man-machine interaction and provide highly functional, companion-style intelligent man-machine interaction.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

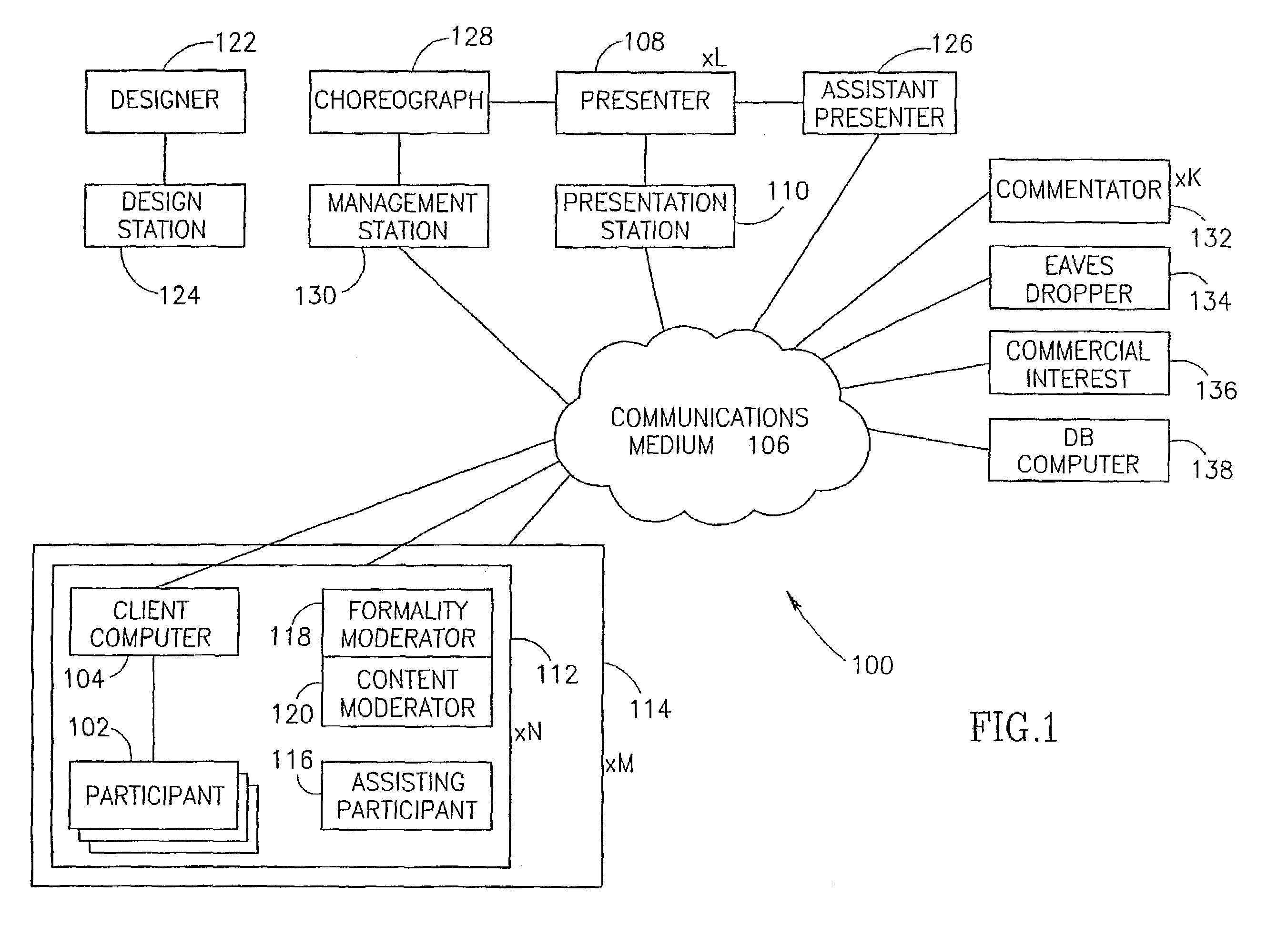

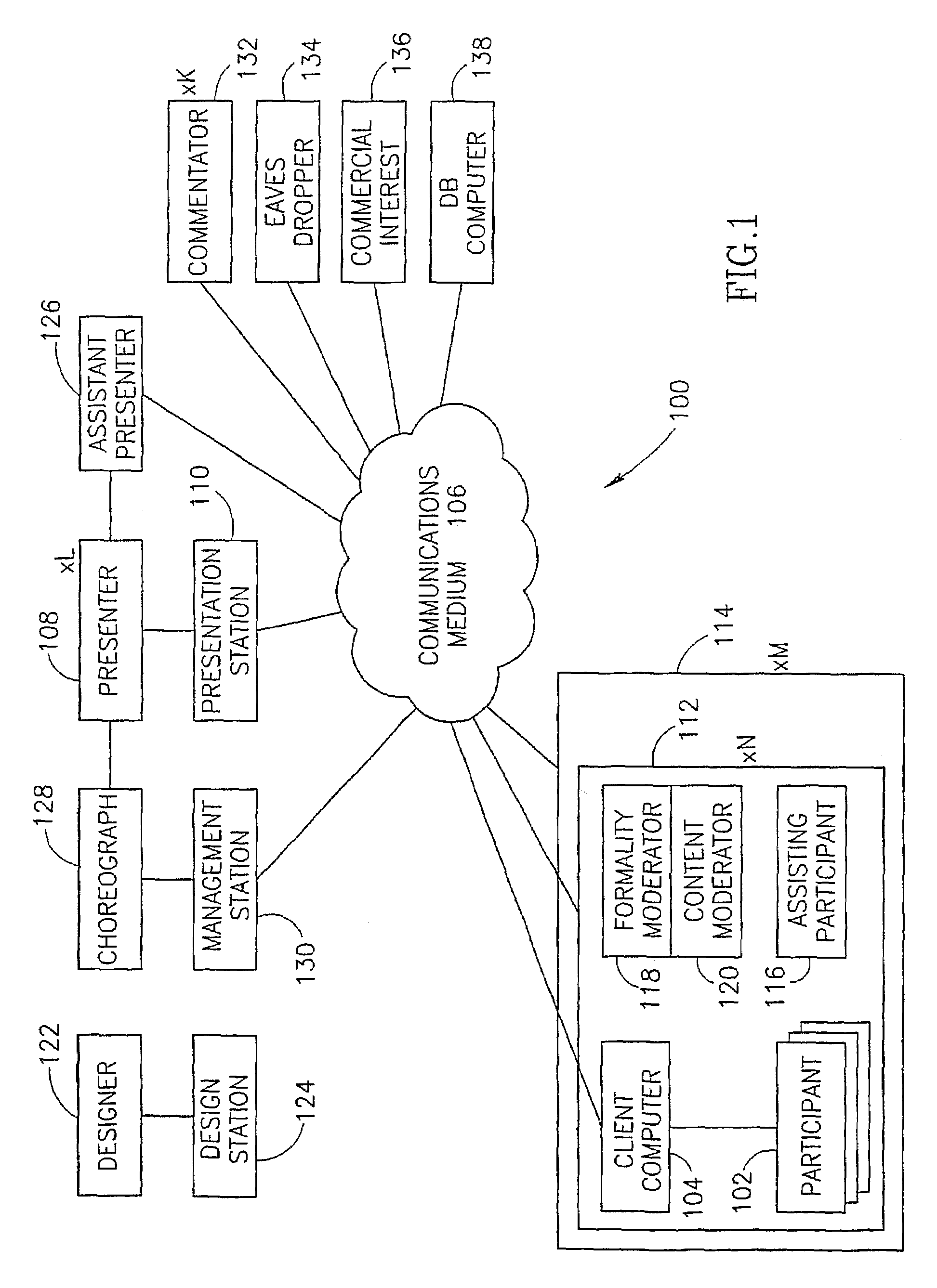

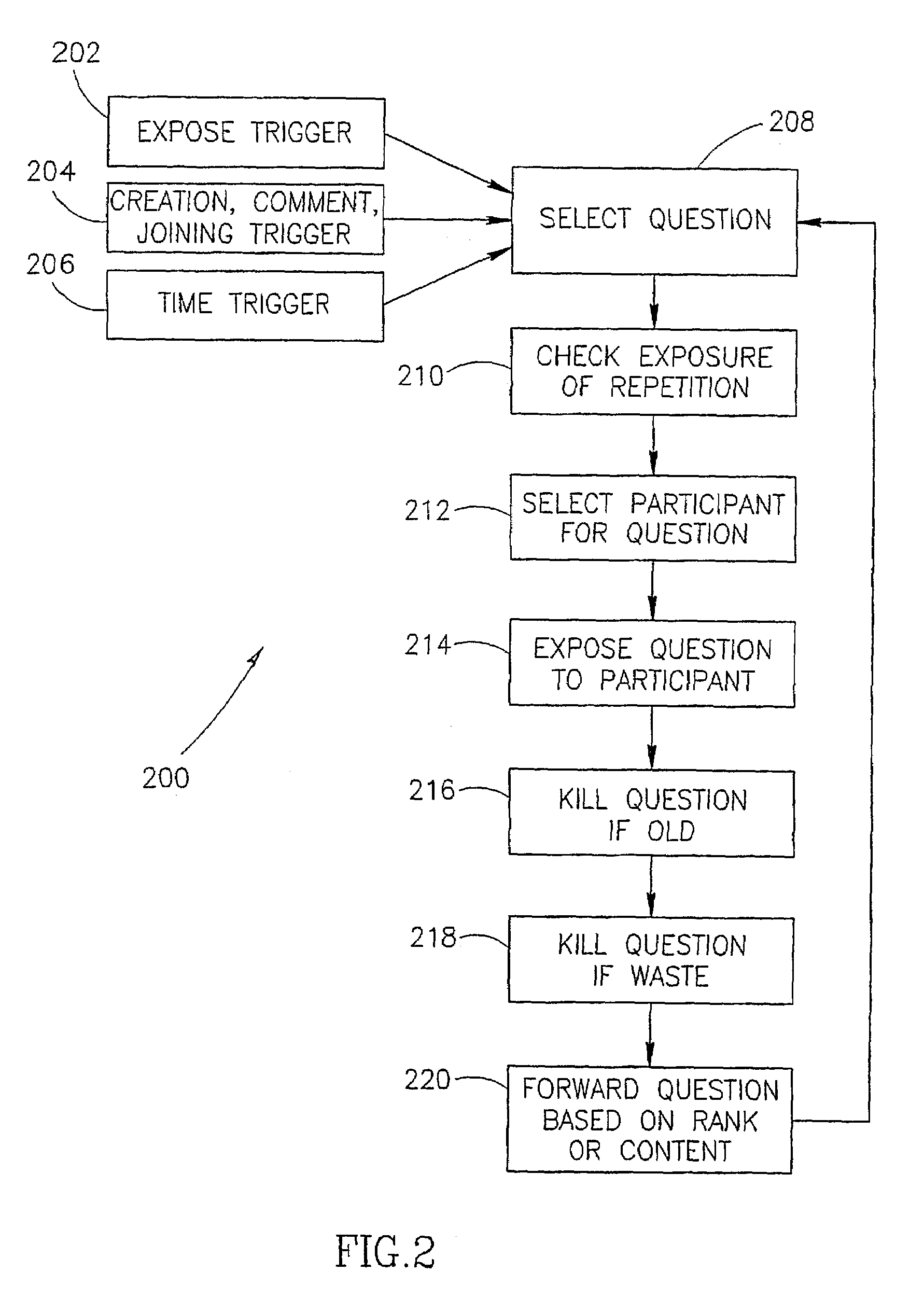

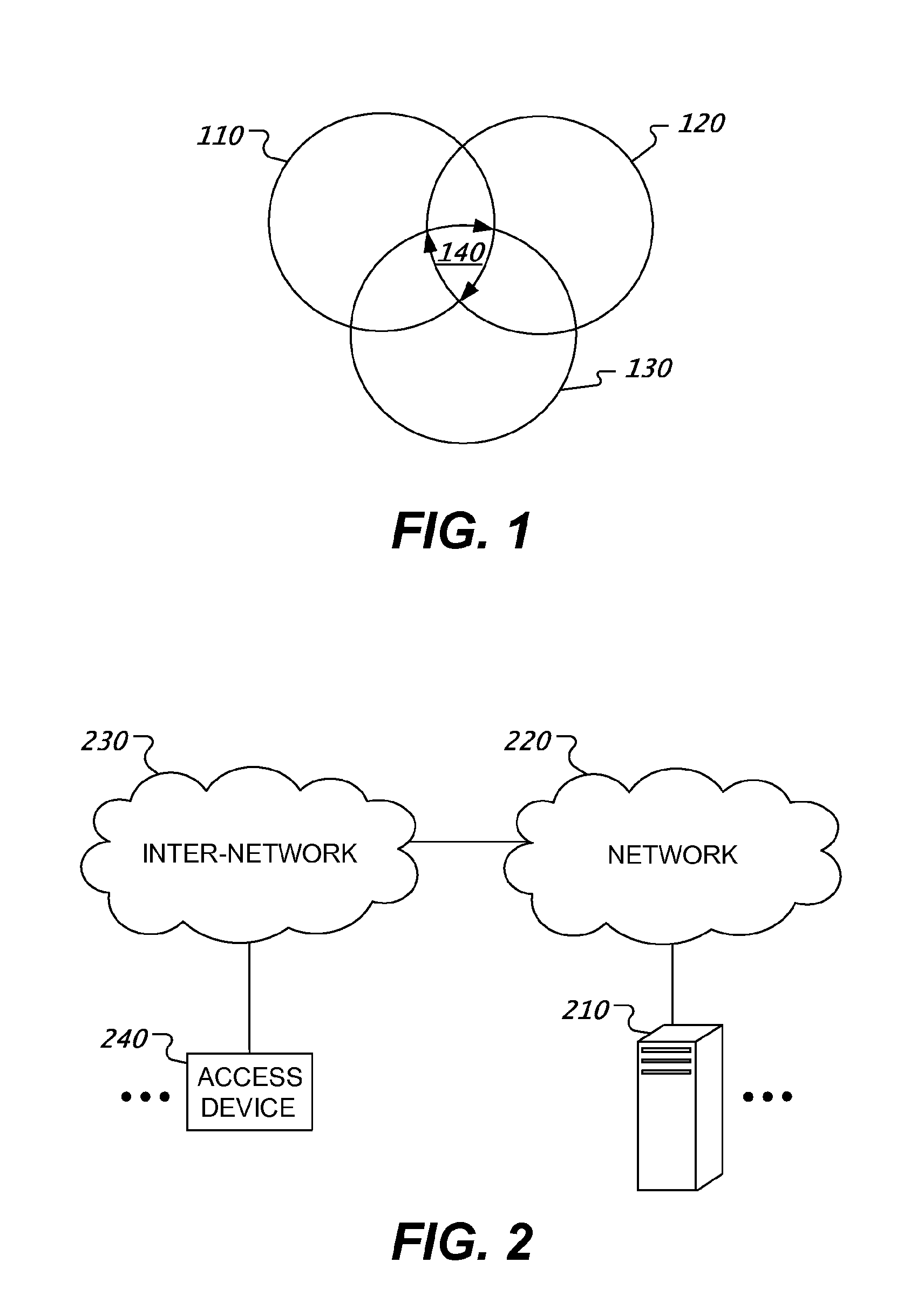

Large group interactions via mass communication network

Inactive · US7092821B2 · Reduces opportunity proliferation · Improve efficiency · Office automation · Two-way working systems · Human interaction · Automatic control

A method of supporting mass human-interaction events, including: providing a mass interaction event by a computer network (100) in which a plurality of participants (102) interact with each other by generating information comprising questions, responses to questions and fact information for presentation to other participants, and by assimilating information; and controlling, automatically by a computer (104), the rate of information presentation to each participant to be below a maximum information assimilation rate of each participant.

Owner:INVOKE SOLUTIONS
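The rate-control step in the abstract above can be illustrated with a small per-participant pacing queue. This is only a sketch under stated assumptions: the class name `PacedFeed`, the items-per-minute unit and the polling interface are hypothetical and do not come from the patent, which does not say how the assimilation rate is measured.

```python
from collections import deque


class PacedFeed:
    """Queue items for one participant and release them no faster than a cap.

    The cap stands in for the participant's maximum assimilation rate;
    how that rate is estimated is outside this sketch.
    """

    def __init__(self, max_items_per_minute: float):
        if max_items_per_minute <= 0:
            raise ValueError("rate must be positive")
        # Minimum spacing between two presented items, in seconds.
        self.min_gap = 60.0 / max_items_per_minute
        self.pending = deque()
        self.last_shown = None  # time of the most recent presentation

    def submit(self, item: str) -> None:
        """Add a question, response or fact destined for this participant."""
        self.pending.append(item)

    def poll(self, now: float):
        """Return the next item if the participant can take one, else None."""
        if not self.pending:
            return None
        if self.last_shown is not None and now - self.last_shown < self.min_gap:
            return None  # too soon: hold the item back
        self.last_shown = now
        return self.pending.popleft()
```

A server would keep one such object per participant and call `poll` on a timer, so a burst of incoming questions is spread out instead of being shown all at once.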

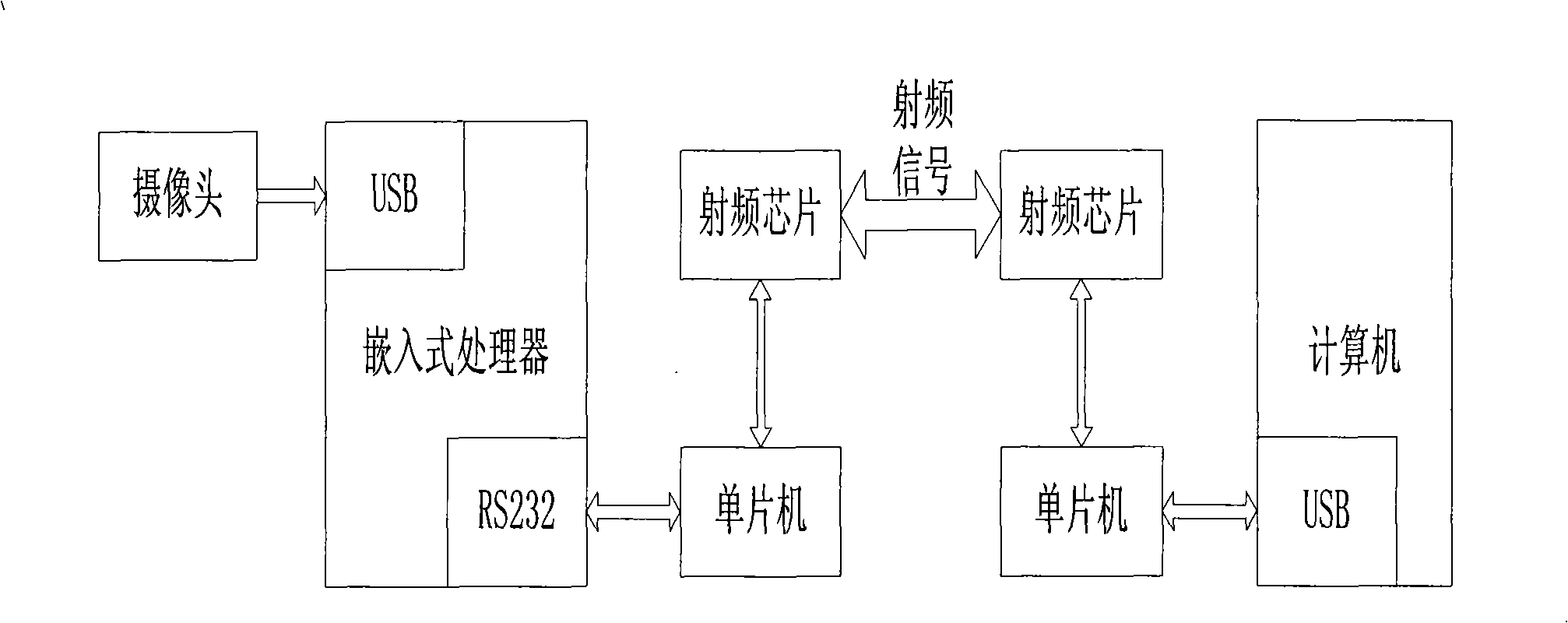

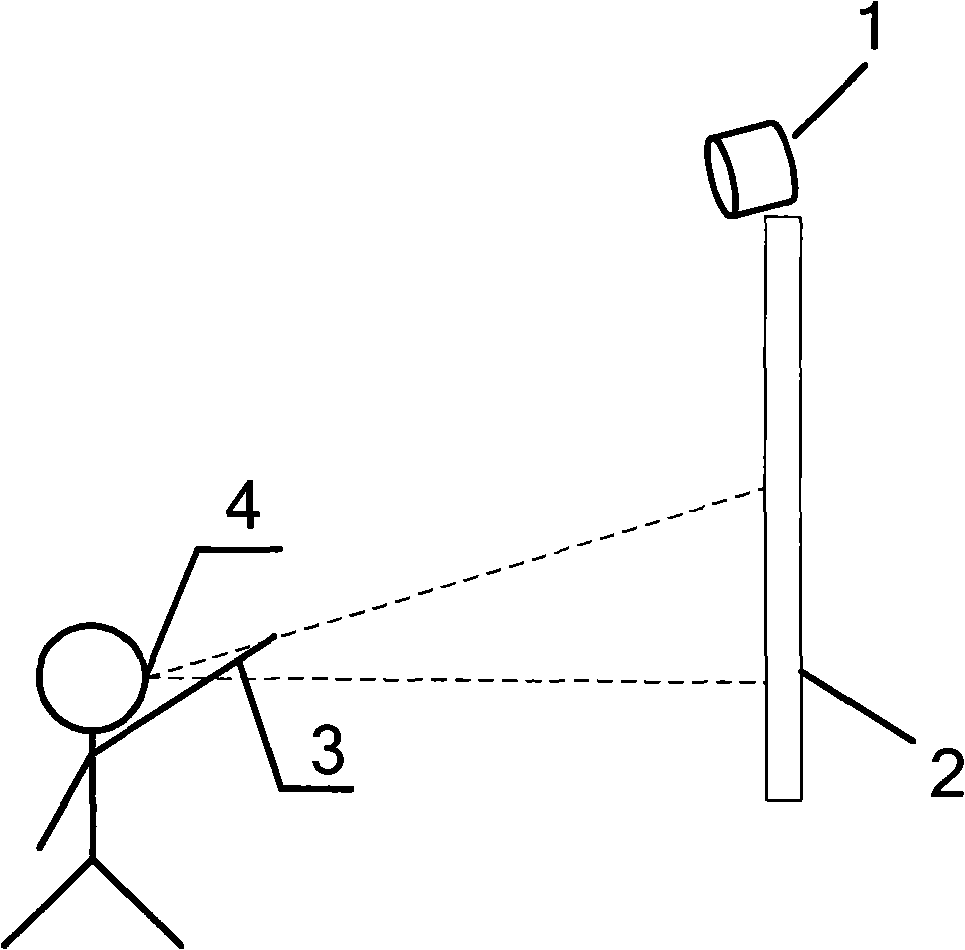

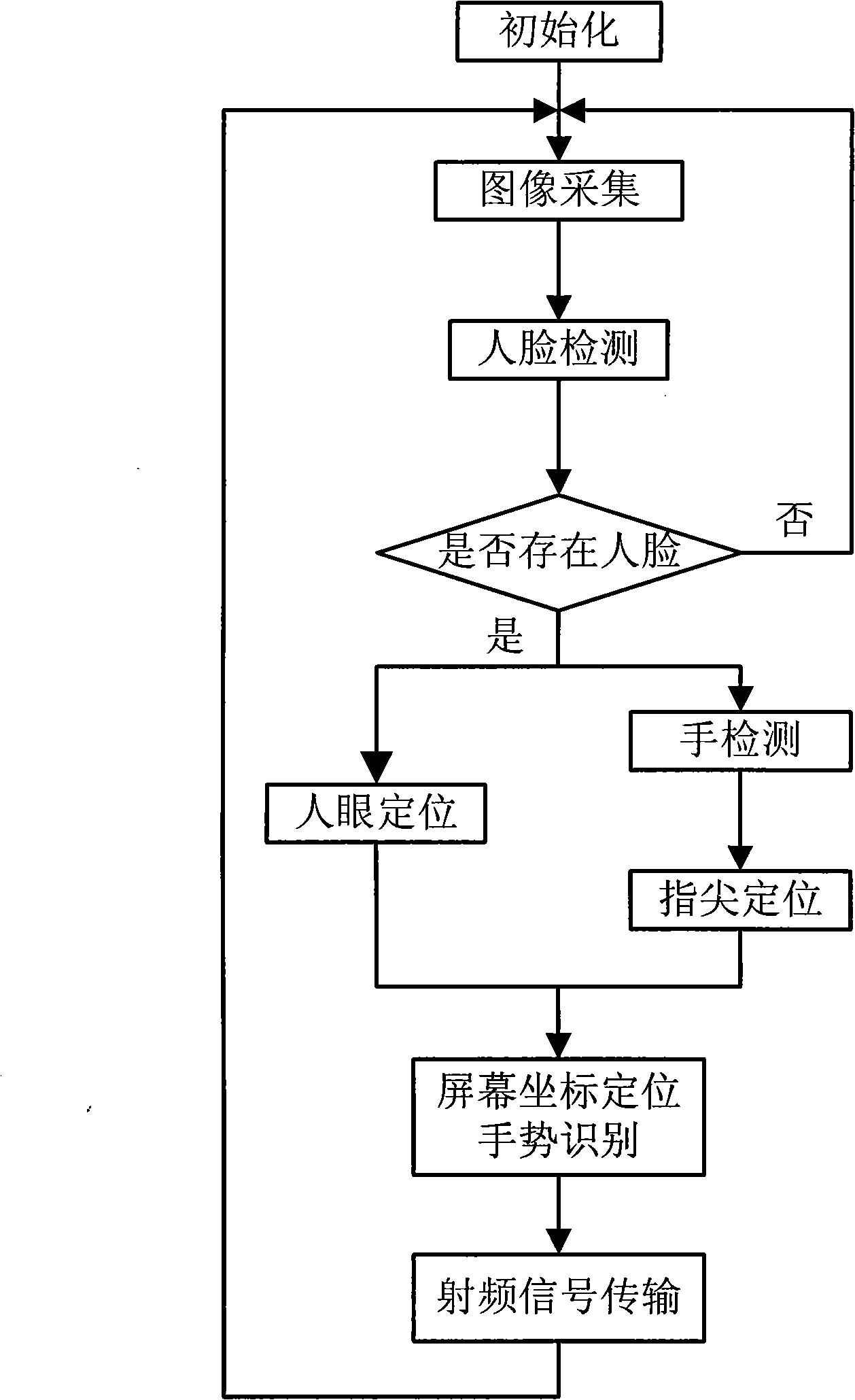

Human-machine interaction method and device based on sight tracing and gesture discriminating

Inactive · CN101344816A · Easy to control · Solve bugs that limit users' freedom of use · Input/output for user-computer interaction · Character and pattern recognition · Imaging processing · Wireless transmission

The invention discloses a human-computer interaction method and device based on sight tracking and gesture recognition. The method comprises the following steps: facial area detection, hand area detection, eye location, fingertip location, screen location and gesture recognition. A straight line is determined between an eye and a fingertip; the position where the straight line intersects the screen is transformed into the logical coordinates of the mouse on the screen, and the clicking operation of the mouse is simulated by detecting the pressing action of the finger. The device comprises an image collection module, an image processing module and a wireless transmission module. First, an image of the user is collected in real time by a camera and then analyzed with an image processing algorithm to transform the positions on the screen that the user points to, and changes in gesture, into logical screen coordinates and control commands for the computer; the processing results are then transmitted to the computer through the wireless transmission module. The invention provides a natural, intuitive and simple human-computer interaction method that enables remote operation of computers.

Owner:SOUTH CHINA UNIV OF TECH
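The eye-to-fingertip line described above reduces to a ray-plane intersection. The sketch below assumes a coordinate frame that the abstract does not specify: the screen lies in the plane z = 0 with its top-left corner at the origin, and the eye and fingertip positions are already known in metres. The function name and parameters are hypothetical.

```python
def pointing_target(eye, fingertip, screen_w_m, screen_h_m, res_x, res_y):
    """Intersect the eye->fingertip ray with the screen plane z = 0.

    Coordinates are in metres; the screen's top-left corner is the origin,
    x runs right, y runs down, and z points from the screen toward the user.
    Returns pixel coordinates (col, row), or None if the ray misses the screen.
    """
    ex, ey, ez = eye
    fx, fy, fz = fingertip
    dz = fz - ez
    if ez <= 0 or dz >= 0:   # eye not in front of screen, or ray points away
        return None
    t = -ez / dz             # ray parameter at which z reaches 0
    x = ex + t * (fx - ex)
    y = ey + t * (fy - ey)
    if not (0 <= x <= screen_w_m and 0 <= y <= screen_h_m):
        return None
    # Convert metres to pixel indices, clamping the far edge into range.
    col = min(int(x / screen_w_m * res_x), res_x - 1)
    row = min(int(y / screen_h_m * res_y), res_y - 1)
    return col, row
```

Recovering the 3D eye and fingertip positions from camera images is the harder part of such a system and is not shown here.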

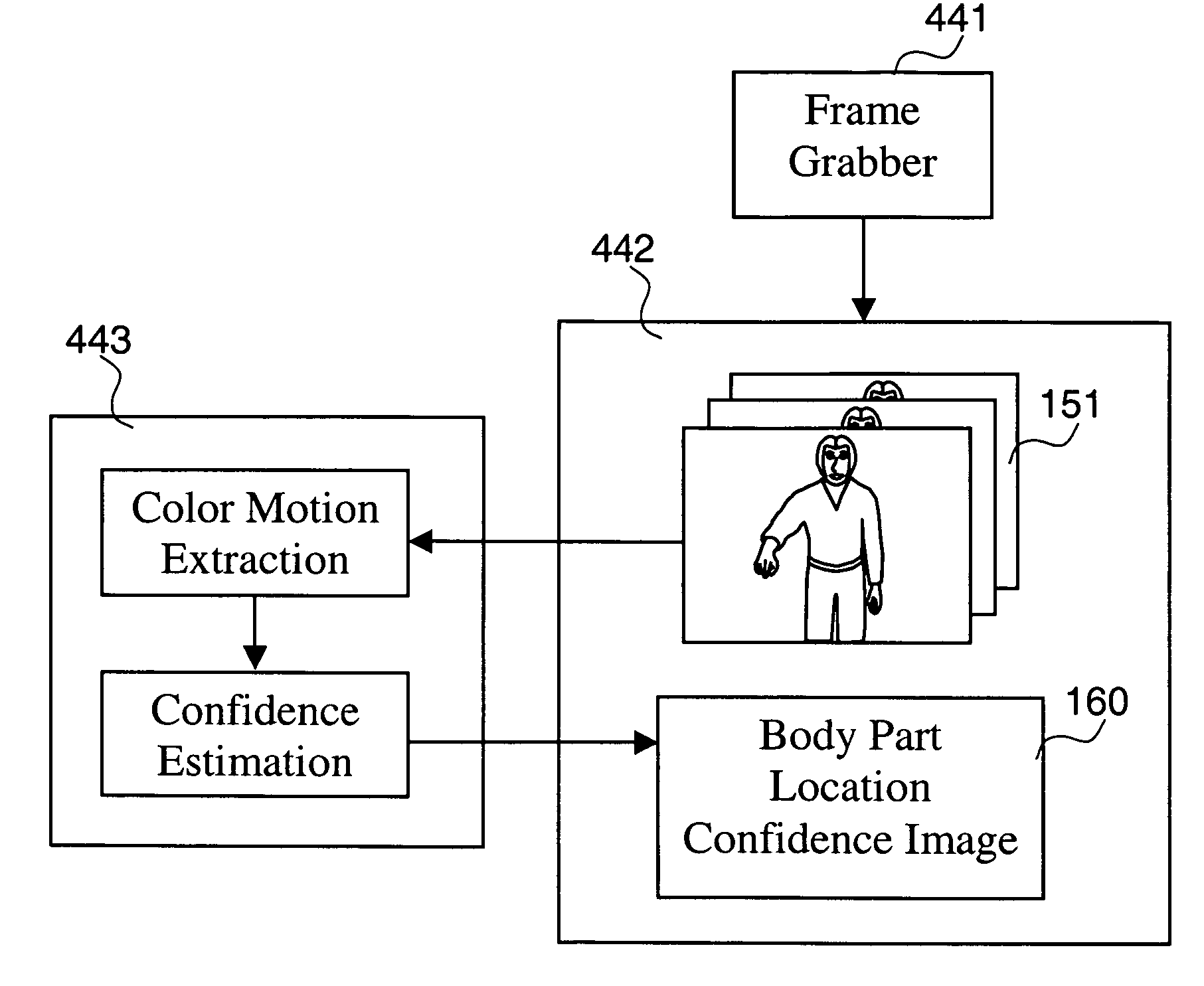

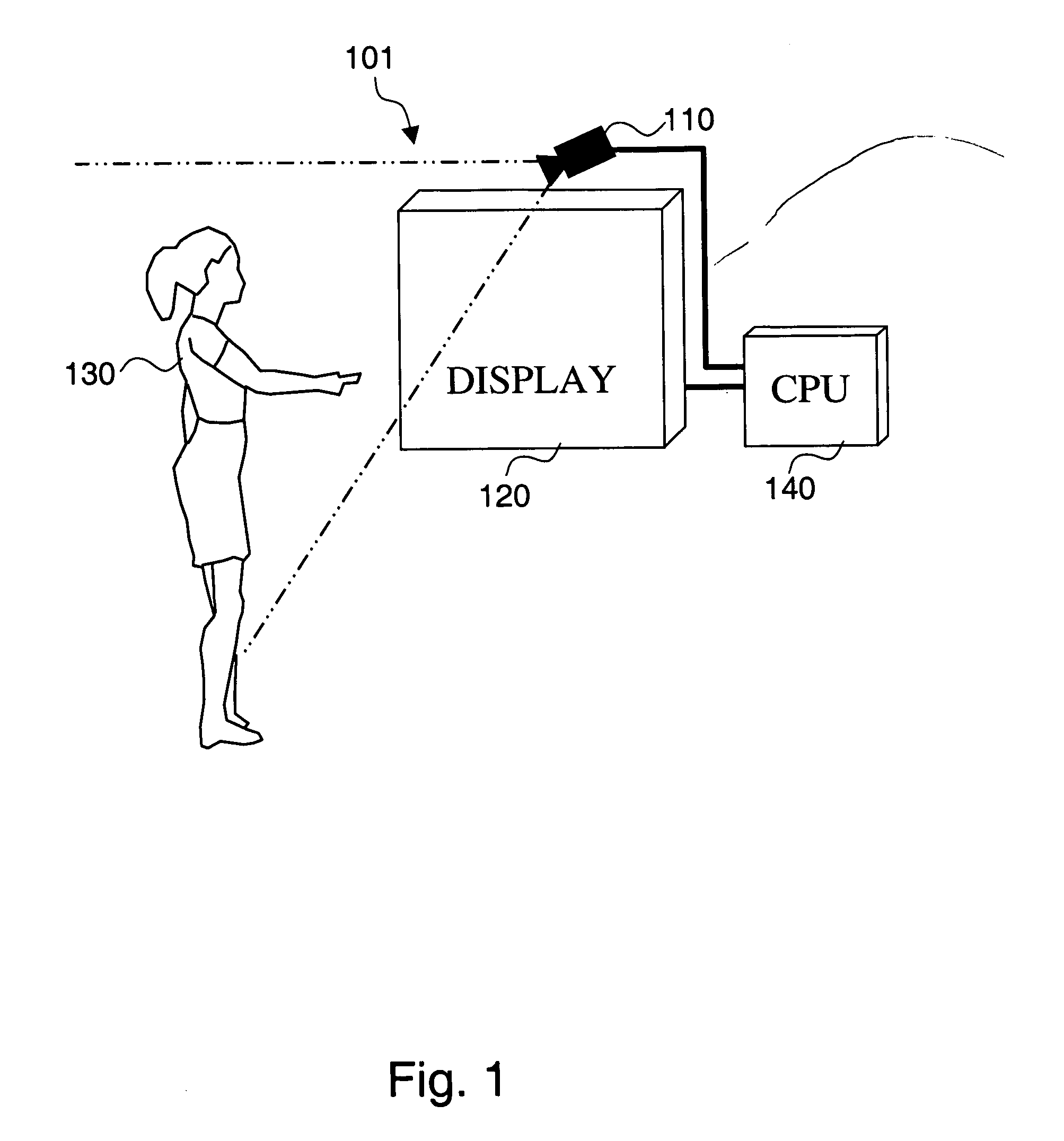

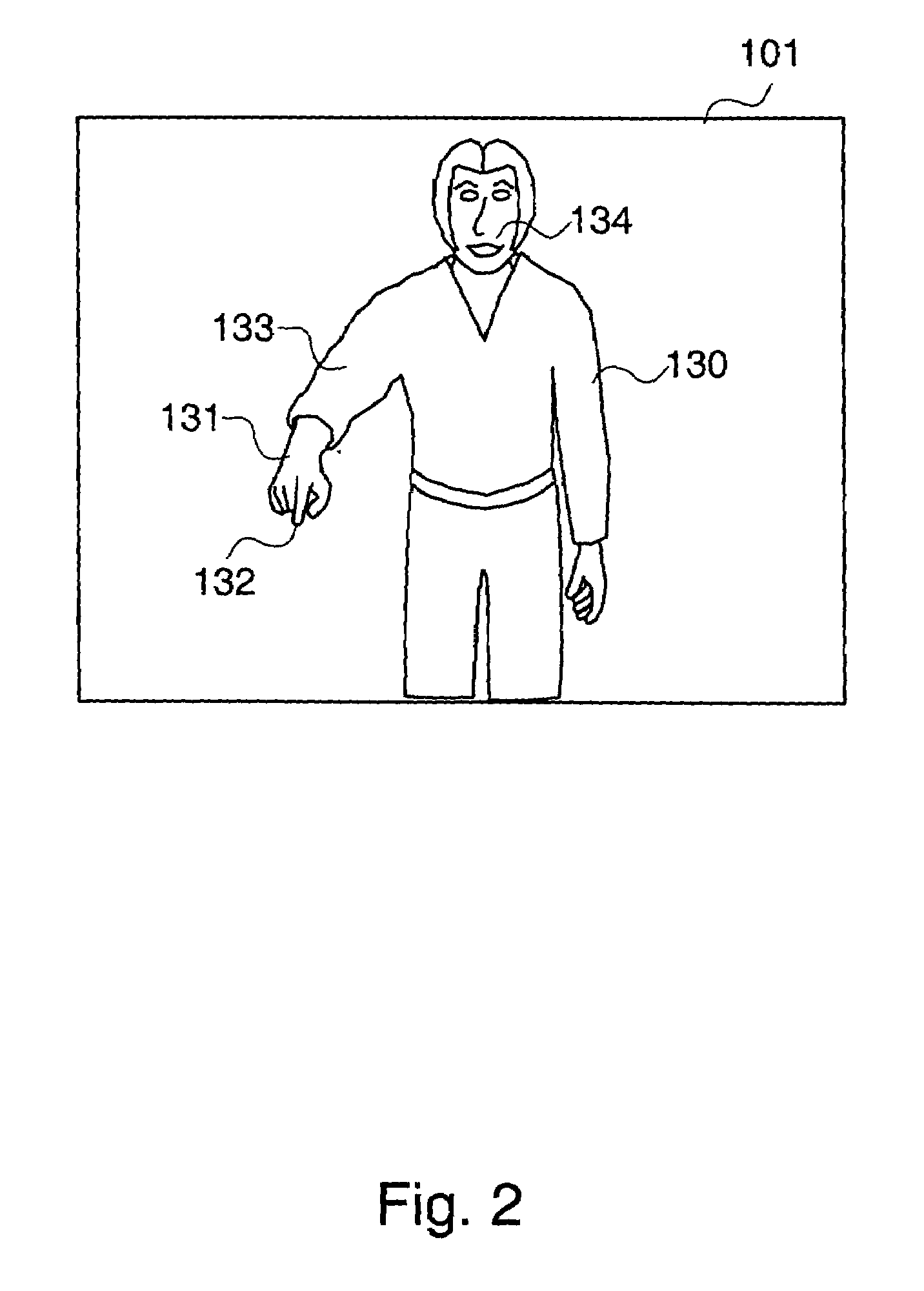

Method and system for detecting conscious hand movement patterns and computer-generated visual feedback for facilitating human-computer interaction

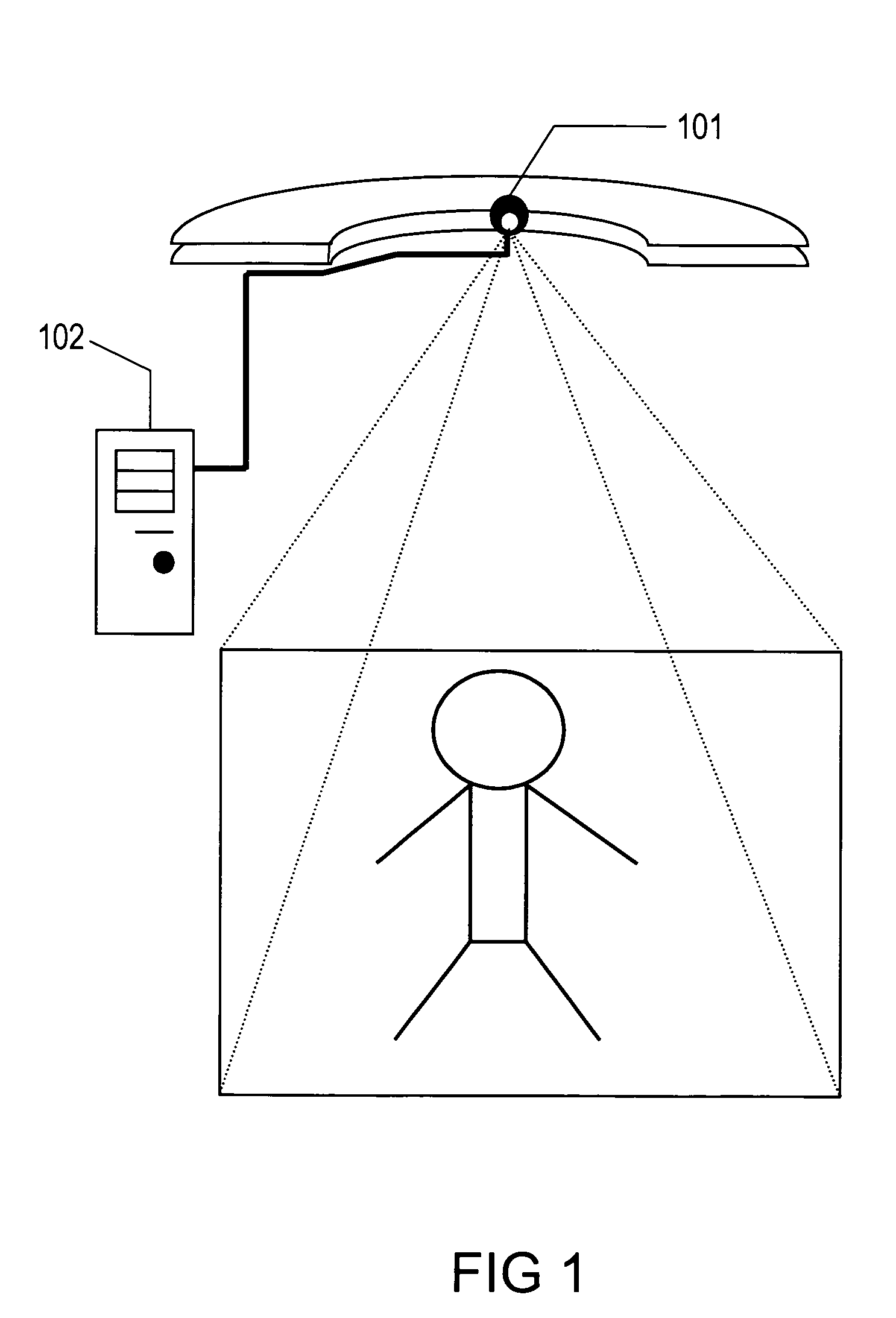

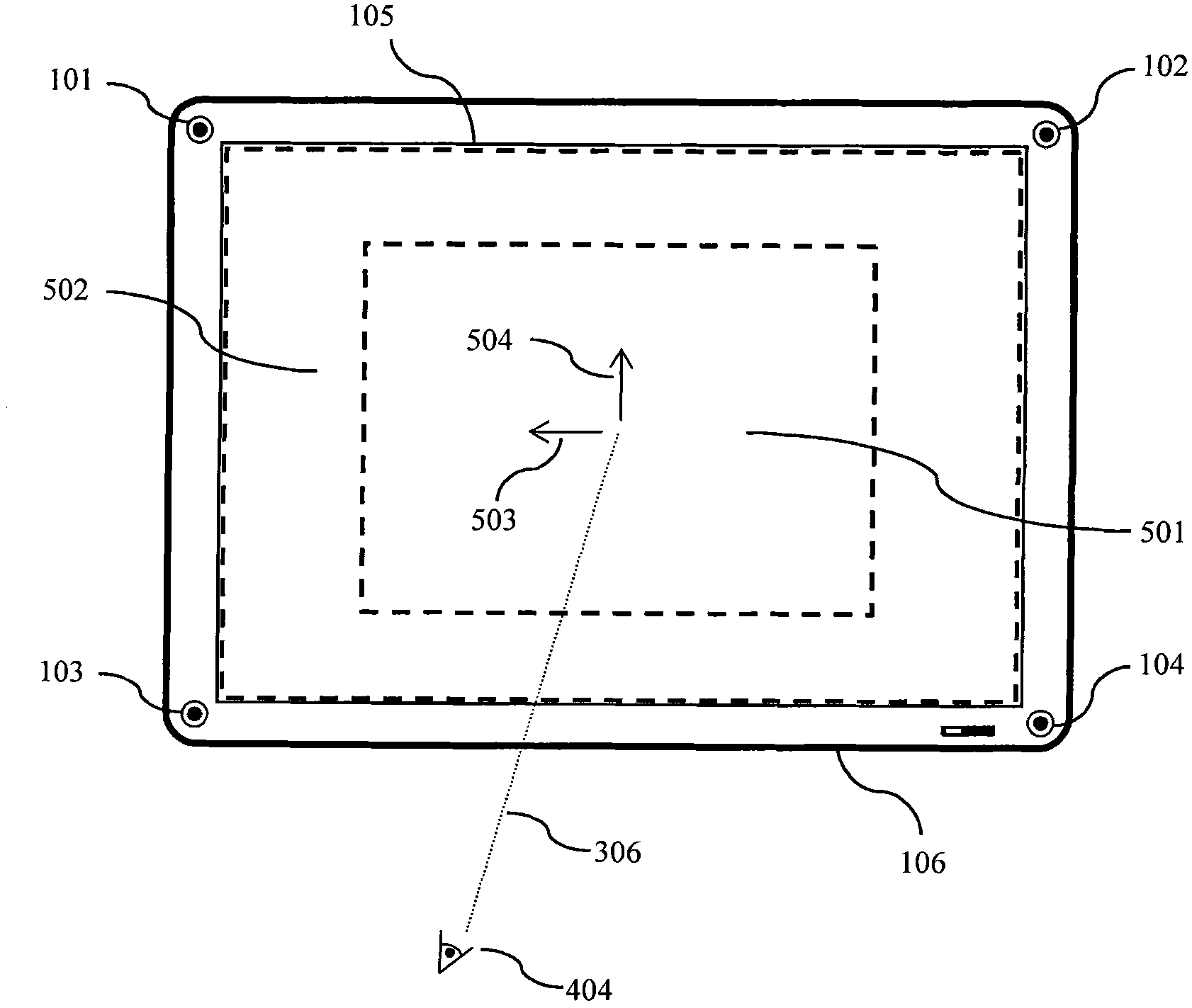

Active · US7274803B1 · Effective visual feedback · Efficient feedback · Character and pattern recognition · Color television details · Contact free · Public place

The present invention is a system and method for detecting and analyzing motion patterns of individuals present at a multimedia computer terminal from a stream of video frames generated by a video camera, and a method of providing visual feedback of the extracted information to aid the interaction process between a user and the system. The method allows multiple people to be present in front of the computer terminal and yet allows one active user to make selections on the computer display. Thus the invention can be used as a method for contact-free human-computer interaction in a public place, where the computer terminal can be positioned in a variety of configurations, including behind a transparent glass window or at a height or location where the user cannot touch the terminal physically.

Owner:F POSZAT HU

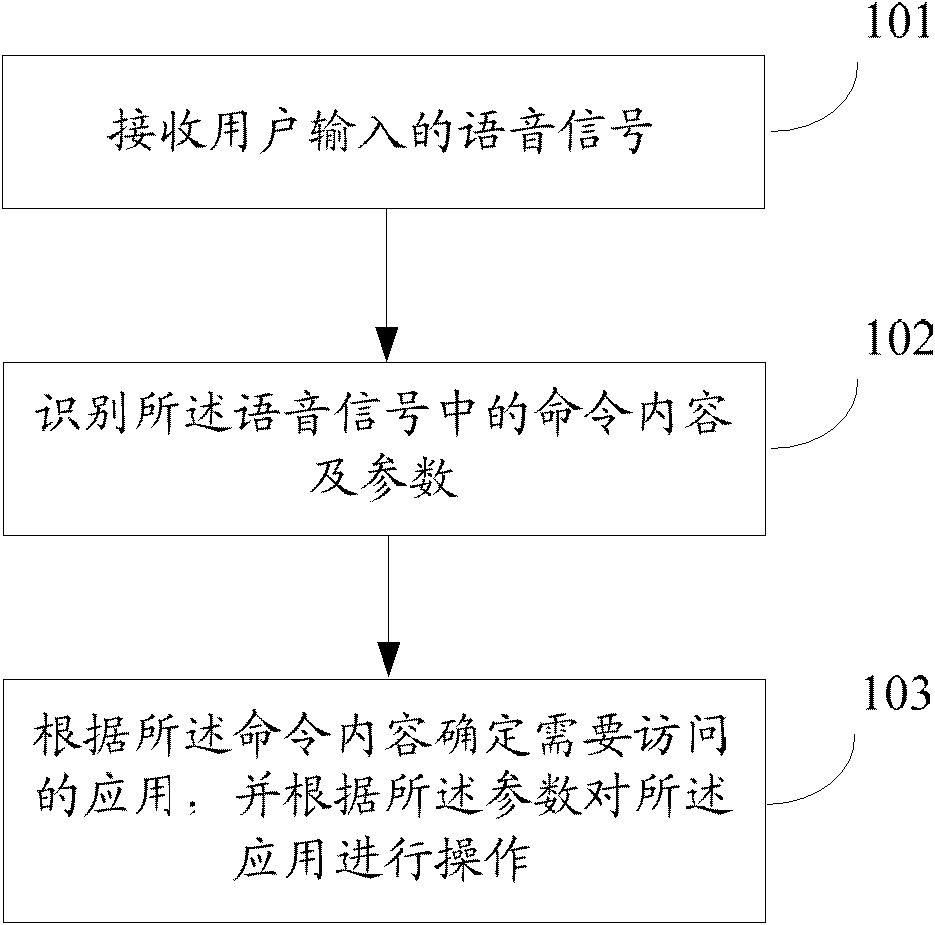

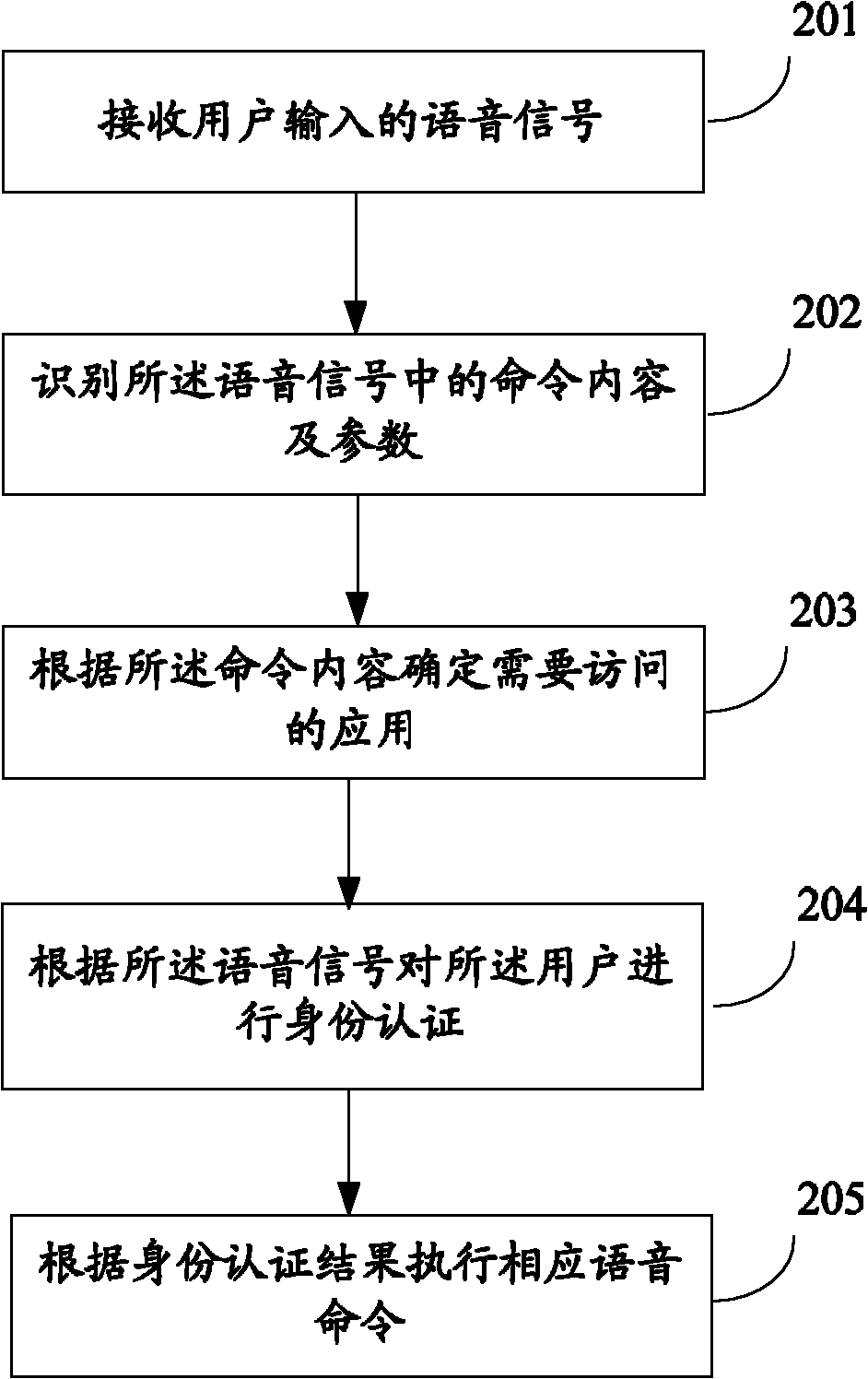

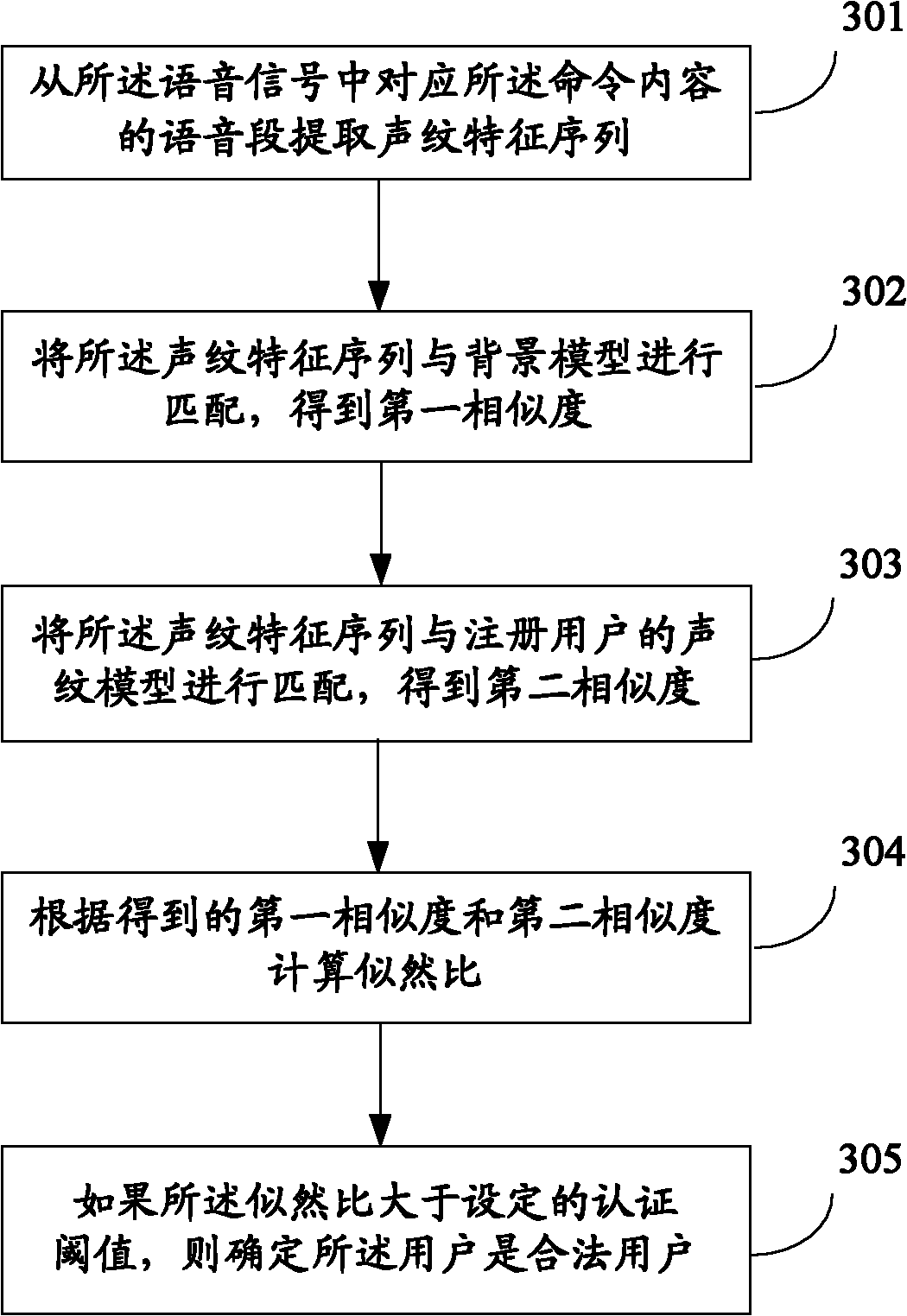

Personal assistant application access method and system

Inactive · CN102510426A · Realize the function of personal virtual assistant · Efficient shortcut command orientation · Speech analysis · Substation equipment · Access method · User input

The invention relates to the technical field of application access and discloses a personal assistant application access method and system. The method comprises the following steps: receiving a voice signal input by a user; identifying command content and parameters in the voice signal; determining, according to the command content, the application that needs to be accessed; and operating the application according to the parameters. The disclosed method and system can improve human-computer interaction efficiency.

Owner:IFLYTEK CO LTD

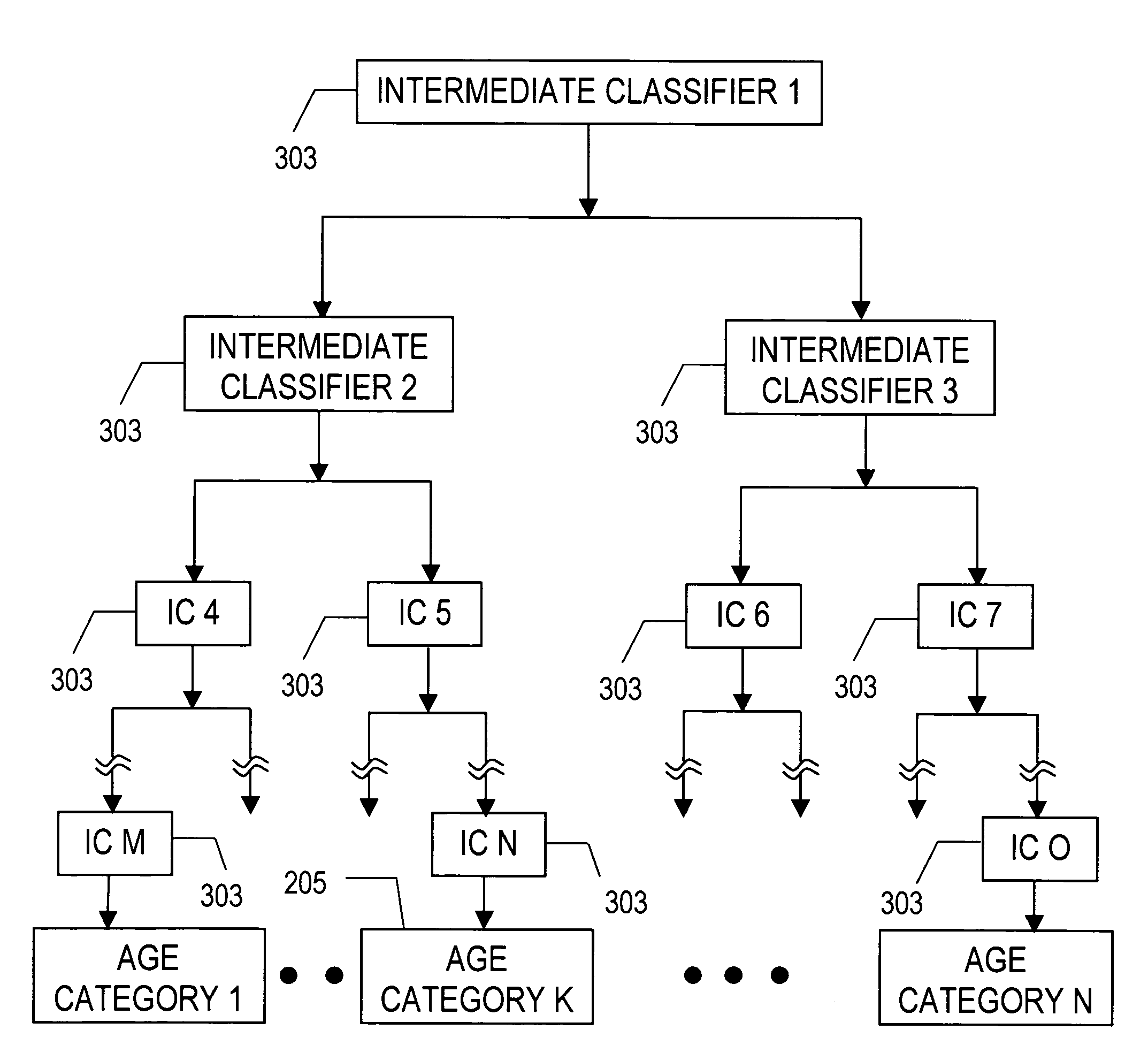

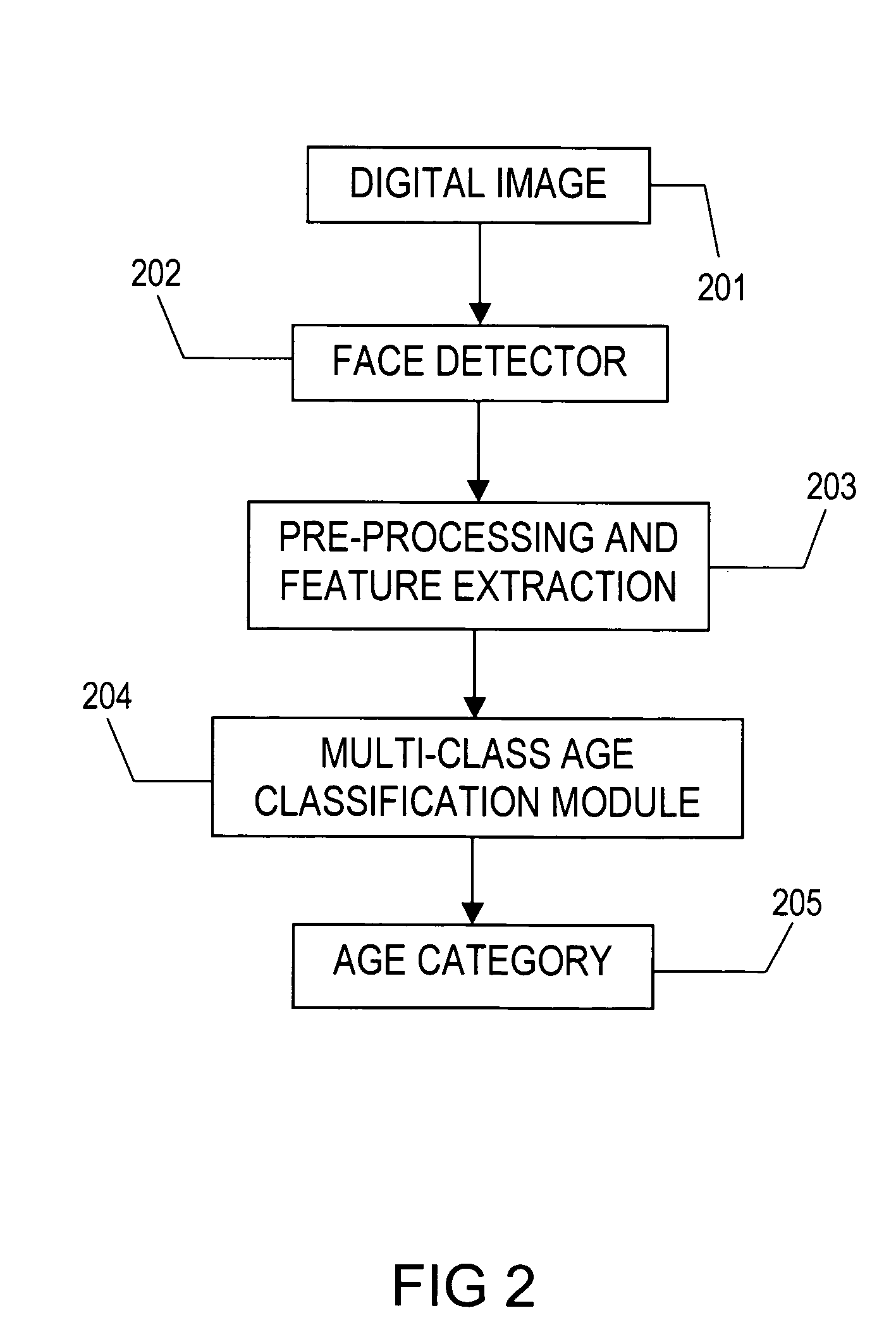

Classification of humans into multiple age categories from digital images

The present invention includes a method and system for automatically extracting the multi-class age category information of a person from digital images. The system detects the face of the person(s) in an image, extracts features from the face(s), and then classifies each face into one of the multiple age categories. Using appearance information from the entire face gives better results than currently known techniques. Moreover, the described technique can extract age category information in a more robust manner than currently known methods in environments with a high degree of variability in illumination, pose and presence of occlusion. Besides use as an automated data collection system, in which the age category of a person is determined automatically from the necessary facial information, the method could also be used for targeting certain age groups in advertisements, surveillance, human-computer interaction, security enhancements and immersive computer games.

Owner:VIDEOMINING CORP

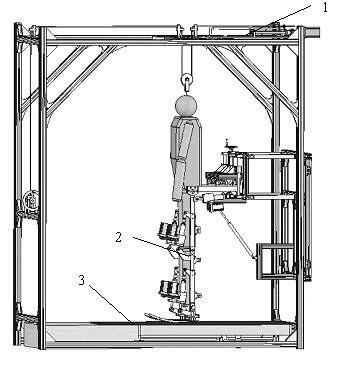

Motion control method of lower limb rehabilitative robot

Inactive · CN102058464A · Realize tracking control · Enhanced awareness of initiative · Chiropractic devices · Movement coordination devices · Engineering · Active participation

The invention relates to a motion control method for a lower limb rehabilitation robot. The method provides two working modes, passive training and active training, for different rehabilitation stages of a patient. In passive training mode, the robot is controlled to drive the patient through specific motions, or motions following a correct physiological gait trajectory; abnormal motions of the patient are completely restrained, and the patient passively follows the robot in walking rehabilitation training. In active training mode, abnormal motions of the patient are restrained by the robot to a limited degree; the joint driving forces generated when the patient acts on the robot during motion are detected in real time, and the human-computer interaction moment is extracted using an inverse dynamics model to judge the active motion intention of the patient's lower limbs; an impedance controller converts the interaction moment into a correction to the gait trajectory, which directly corrects the trajectory or, through an adaptive controller, generates the gait training trajectory the patient expects, so that the robot can indirectly provide assistive and resistive force for rehabilitation training. The method provides rehabilitation training motions suited to different rehabilitation stages for patients with dysbasia, thereby increasing the patient's active participation in rehabilitation training, building confidence in rehabilitation and motivation to move, and thus improving the effect of rehabilitation training.

Owner:SHANGHAI UNIV
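The step in which an impedance controller turns the interaction moment into a gait-trajectory correction can be sketched for a single joint with a second-order impedance model, M·e'' + B·e' + K·e = τ, where e is the correction and τ the measured interaction torque. The model form, the parameter values and the function names below are assumptions for illustration; the abstract does not give the controller's equations, and the inverse-dynamics extraction of τ is not shown.

```python
def gait_correction(torques, dt, inertia=1.0, damping=8.0, stiffness=16.0):
    """Integrate M*e'' + B*e' + K*e = tau and return the correction e per step.

    torques: interaction torque samples (N*m) for one joint
    dt: sample period in seconds
    The three impedance parameters are illustrative, not values from the patent.
    """
    e, e_dot = 0.0, 0.0
    corrections = []
    for tau in torques:
        e_ddot = (tau - damping * e_dot - stiffness * e) / inertia
        e_dot += e_ddot * dt      # semi-implicit Euler: update velocity first
        e += e_dot * dt           # then position, using the new velocity
        corrections.append(e)
    return corrections


def corrected_trajectory(reference, torques, dt):
    """Add the impedance-derived correction to a reference joint-angle track."""
    return [q + e for q, e in zip(reference, gait_correction(torques, dt))]
```

With a constant torque the correction settles at τ/K, so a lower stiffness lets the patient's effort bend the trajectory further, which is one way to trade assistance against resistance.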

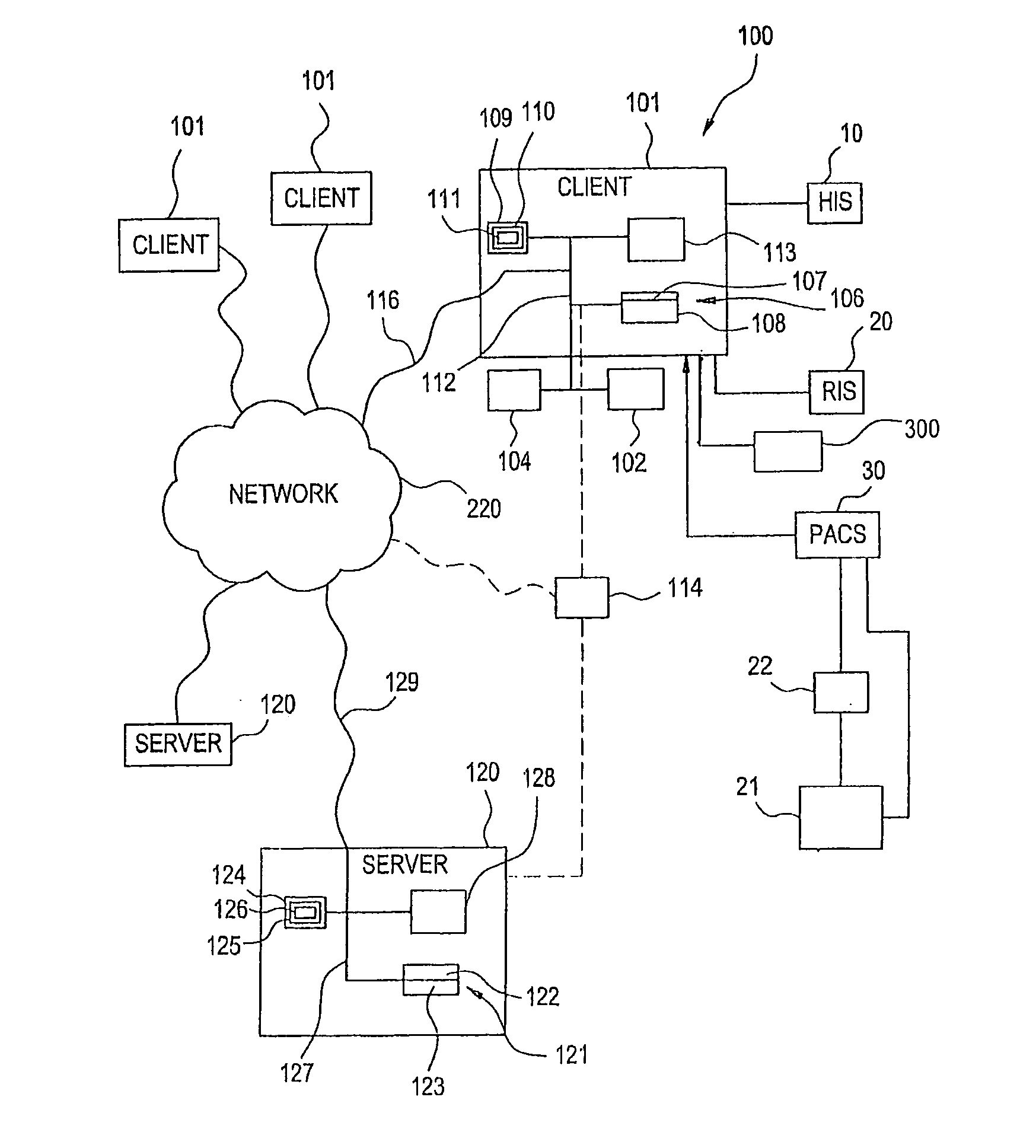

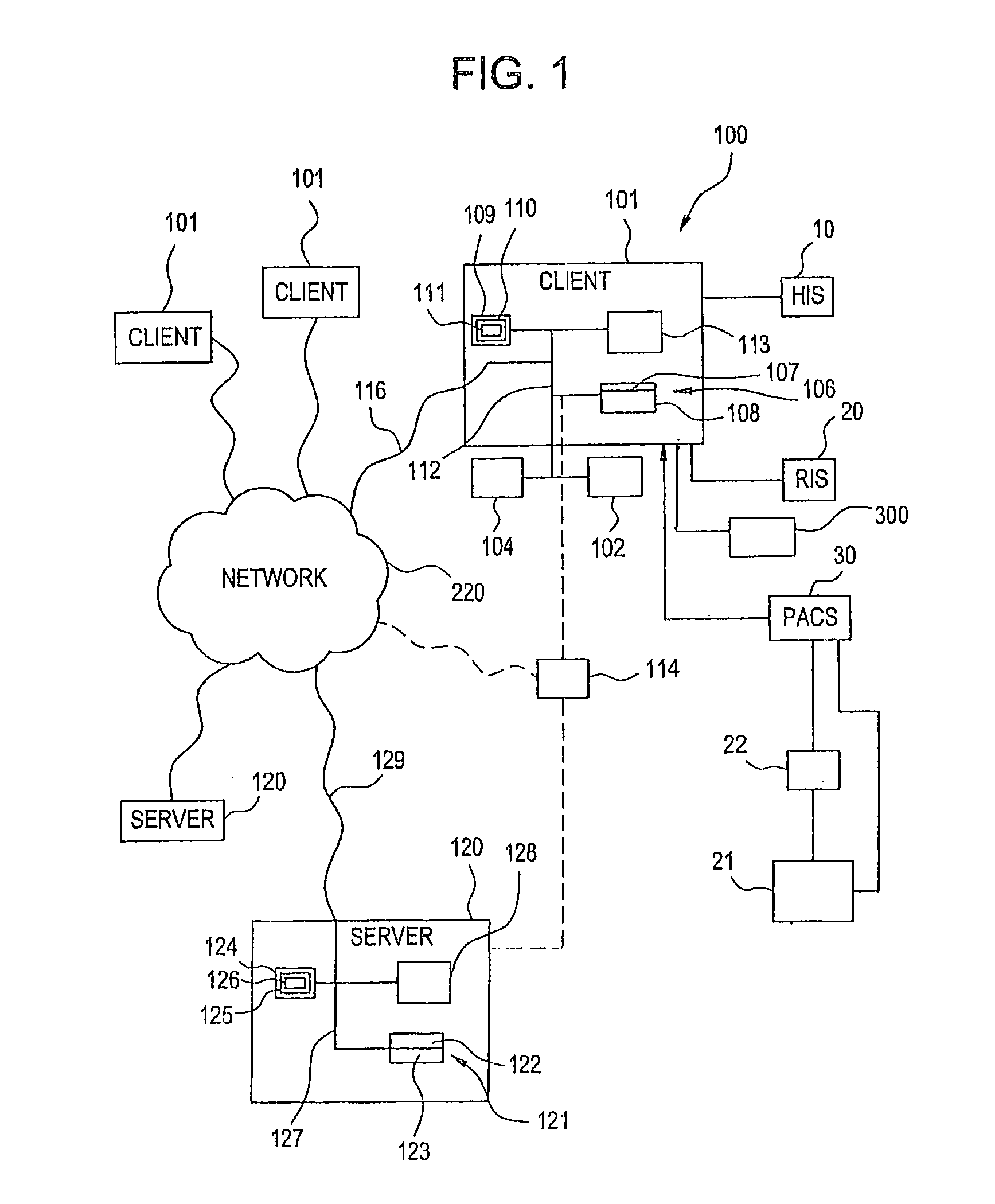

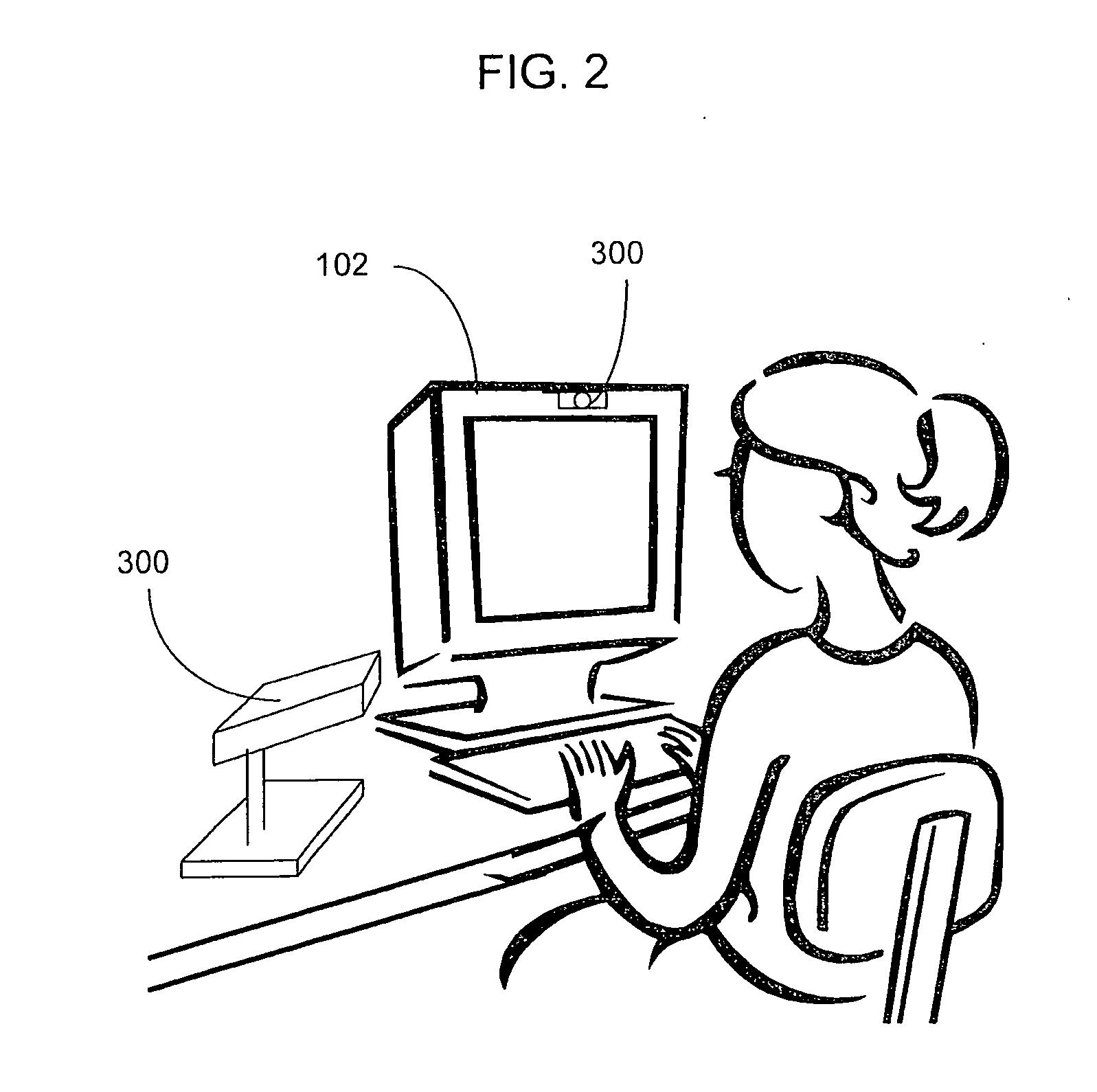

Visually directed human-computer interaction for medical applications

Inactive · US20110270123A1 · Faster and intuitive human-computer input · Faster and more intuitive human-computer input · Diagnostic recording/measuring · Sensors · Guideline · Application software

The present invention relates to a method and apparatus of utilizing an eye detection apparatus in a medical application, which includes calibrating the eye detection apparatus to a user; performing a predetermined set of visual and cognitive steps using the eye detection apparatus; determining a visual profile of a workflow of the user; creating a user-specific database to create an automated visual display protocol of the workflow; storing eye-tracking commands for individual user navigation and computer interactions; storing context-specific medical application eye-tracking commands, in a database; performing the medical application using the eye-tracking commands; and storing eye-tracking data and results of an analysis of data from performance of the medical application, in the database. The method includes performing an analysis of the database for determining best practice guidelines based on clinical outcome measures.

Owner:REINER BRUCE

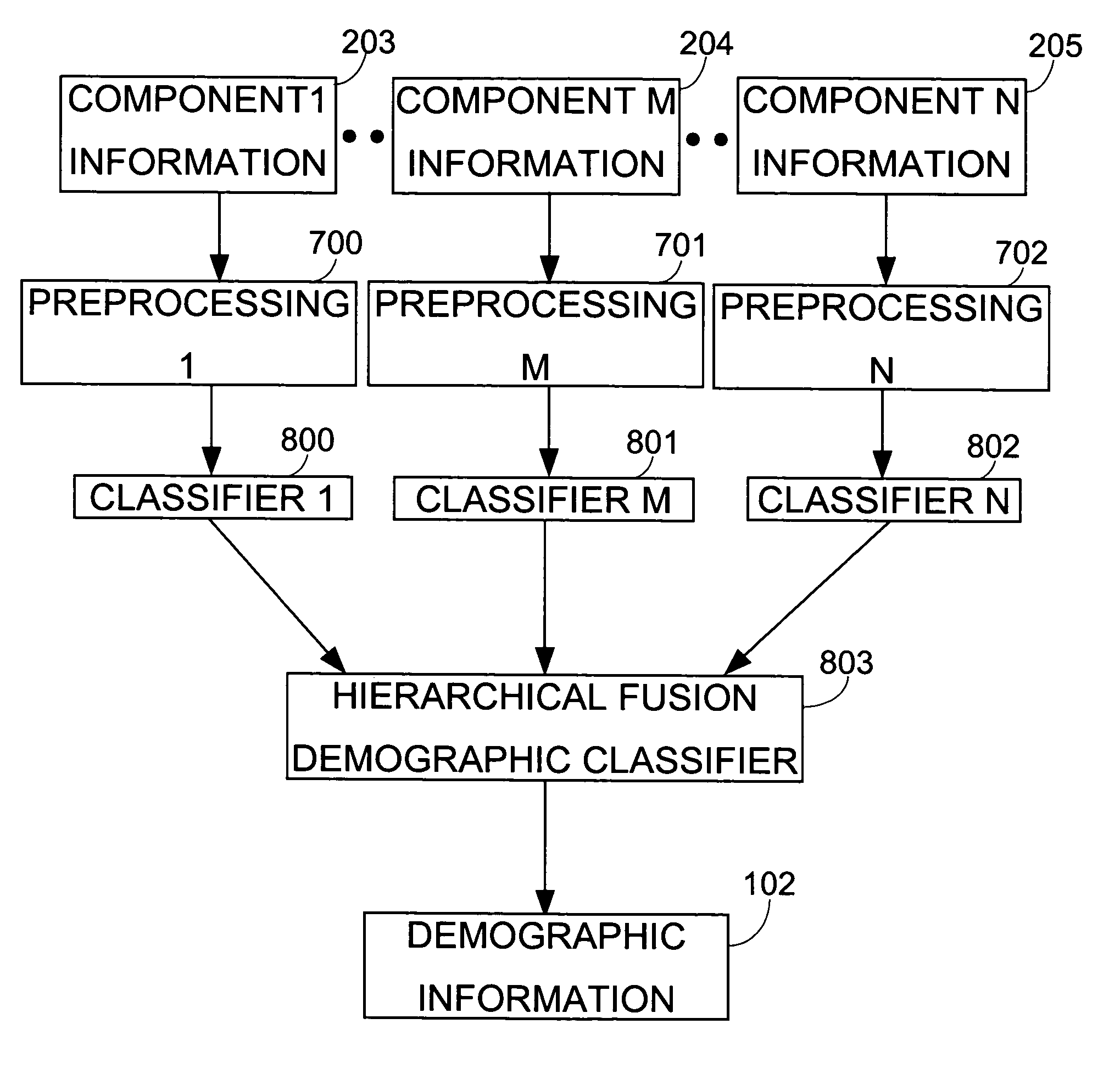

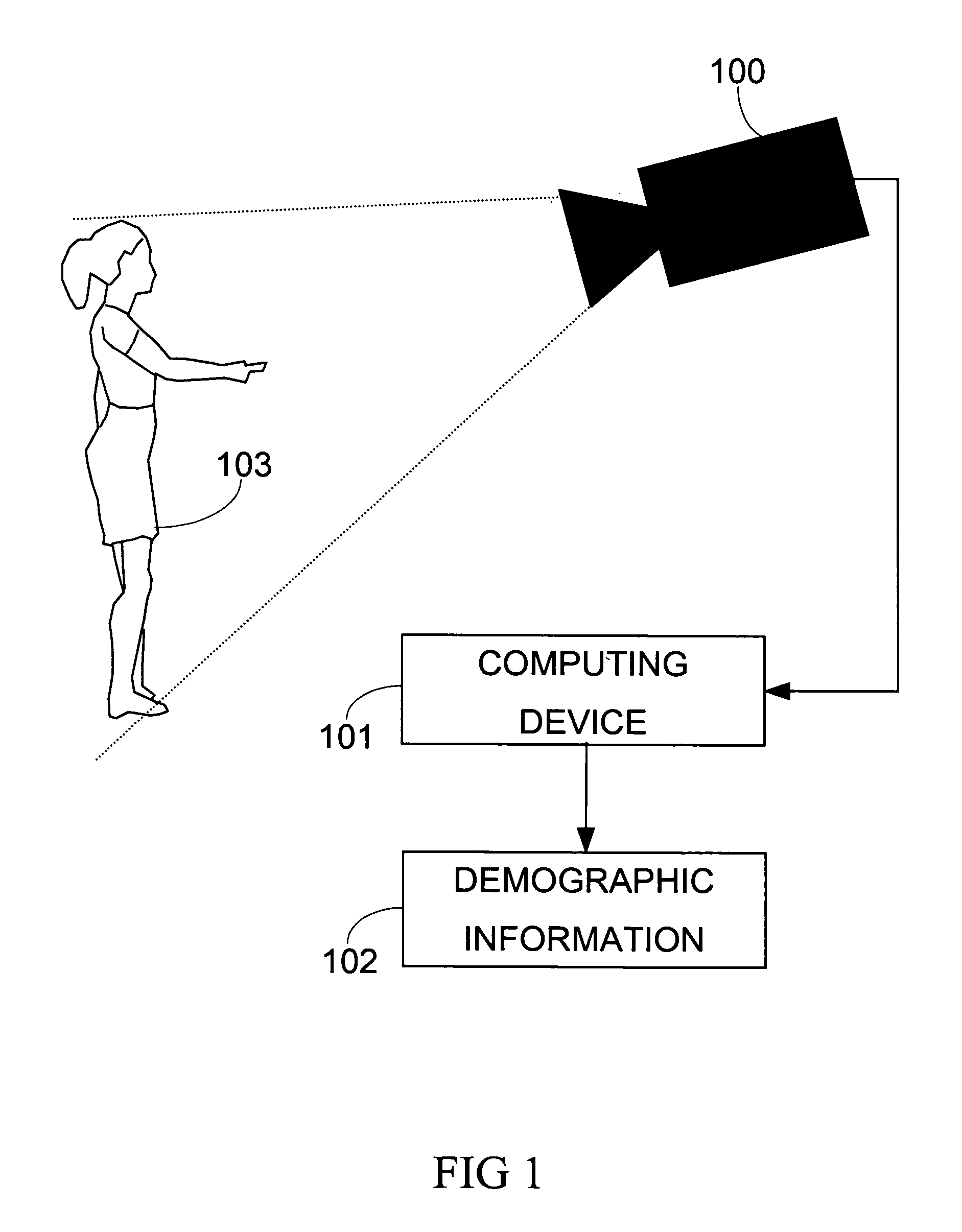

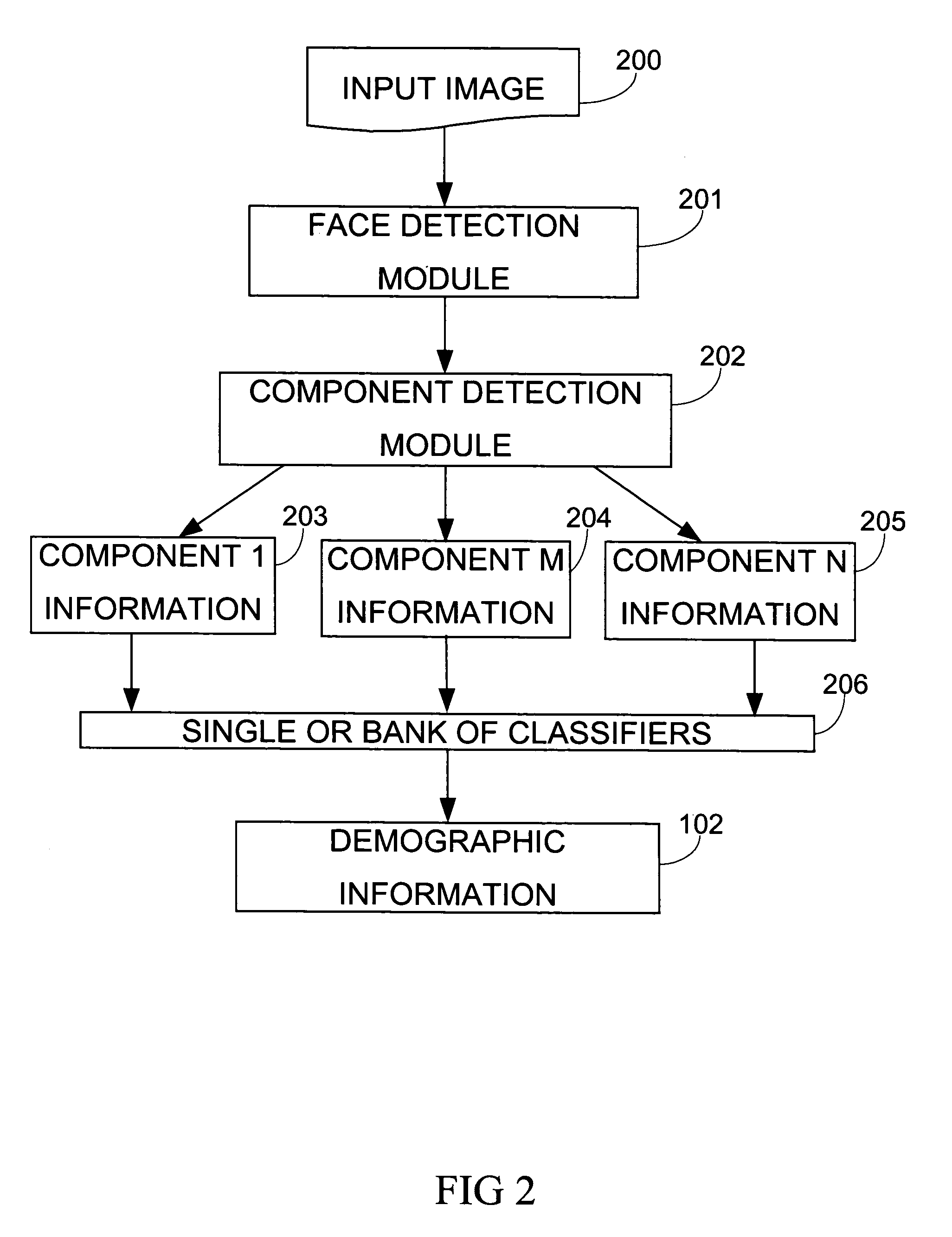

Demographic classification using image components

The present invention includes a system and method for automatically extracting demographic information from images. The system detects the face in an image, locates different components, extracts component features, and then classifies the components to identify the age, gender, or ethnicity of the person(s) in the image. Using components for demographic classification gives better results than currently known techniques. Moreover, the described system and technique can extract demographic information in a more robust manner than currently known methods in environments where a high degree of variability in size, shape, color, texture, pose, and occlusion exists. This invention also performs classifier fusion, using data-level fusion and multi-level classification to fuse the results of the various component demographic classifiers. Besides use as an automated data collection system, in which the demographic category of a person is determined automatically from the necessary facial information, the system could also be used for targeting advertisements, surveillance, human-computer interaction, security enhancements, immersive computer games and improving user interfaces based on demographic information.

Owner:VIDEOMINING CORP

Human-computer interaction device and method adopting eye tracking in video monitoring

Active · CN101866215A · Reduce the impact · Enhanced interaction · Input/output for user-computer interaction · Television system details · Video monitoring · Human–machine interface

The invention belongs to the technical field of video monitoring and in particular relates to a human-computer interaction device and method adopting eye tracking in video monitoring. The device comprises a non-invasive facial eye image video acquisition unit, a monitoring screen, an eye tracking image processing module and a human-computer interaction interface control module, wherein infrared reference light sources are arranged around the monitoring screen; and the eye tracking image processing module separates left-eye and right-eye sub-images from a captured facial image, identifies the two sub-images respectively and estimates the gaze position of the eyes on the monitoring screen. The invention also provides an efficient human-computer interaction mode based on eye tracking characteristics. The unified human-computer interaction mode disclosed by the invention can be used to select function menus with the eyes, switch monitoring video content, adjust the focus and shooting angle of a remote monitoring camera, and the like, improving the efficiency of operating video monitoring equipment and video monitoring systems.

Owner:FUDAN UNIV

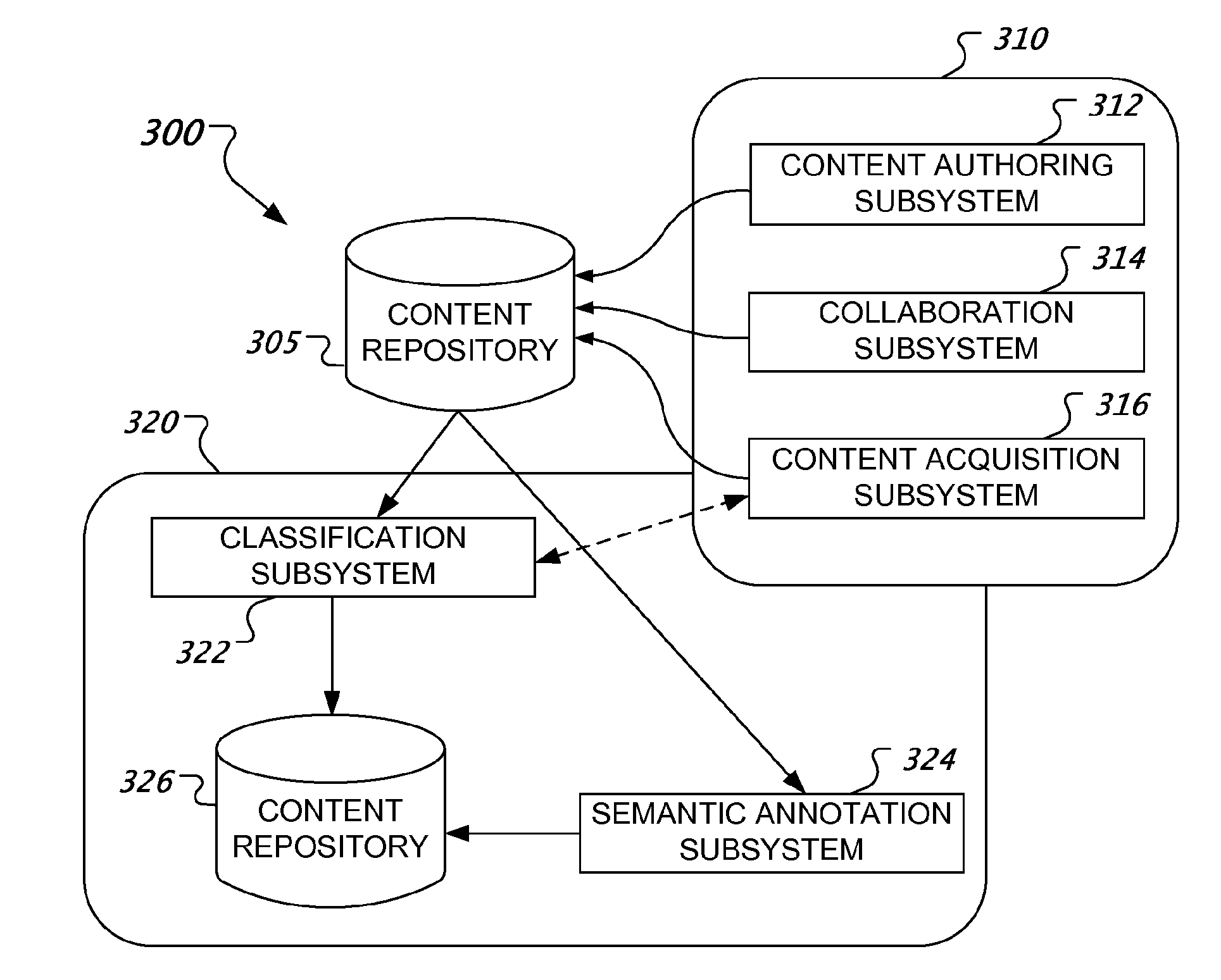

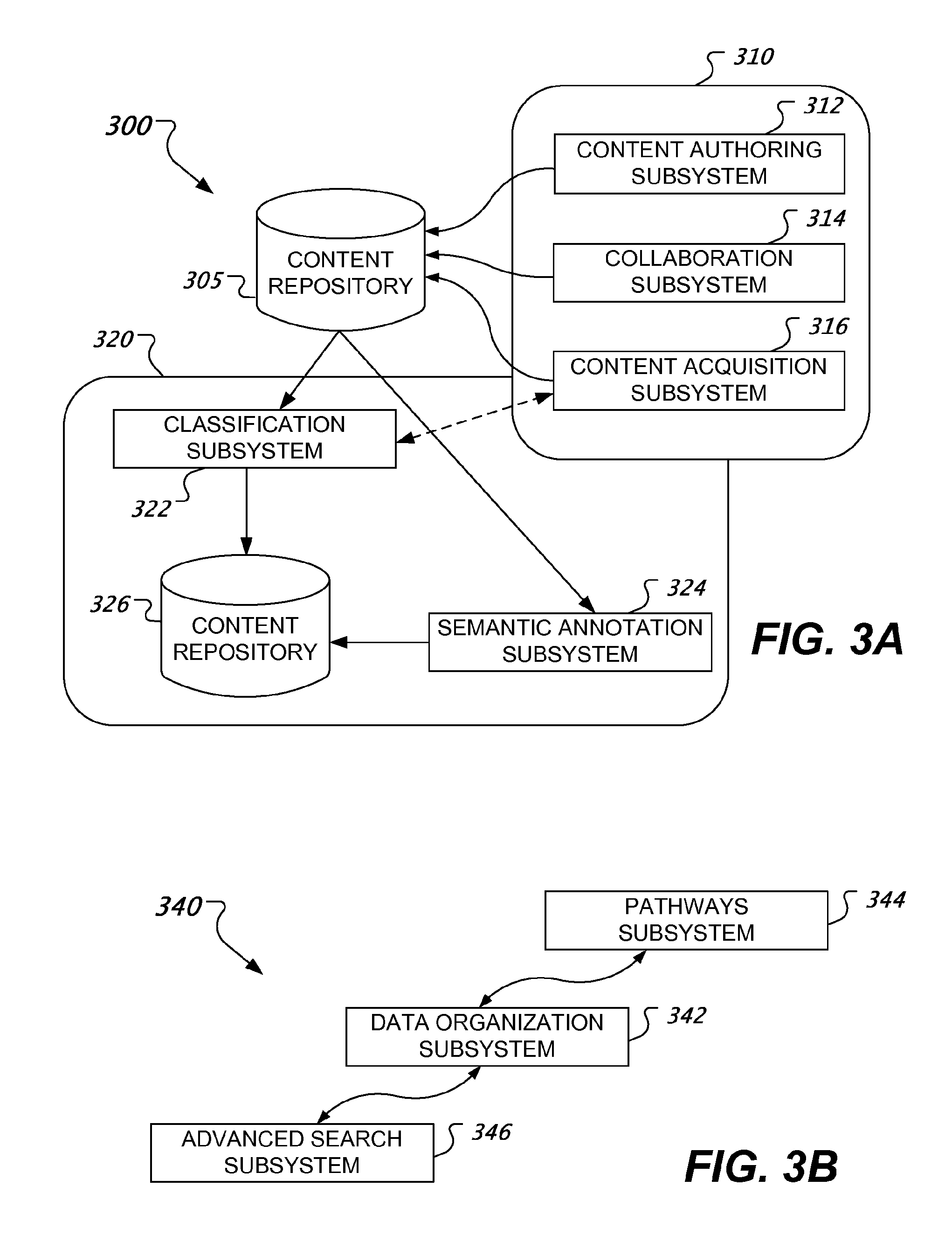

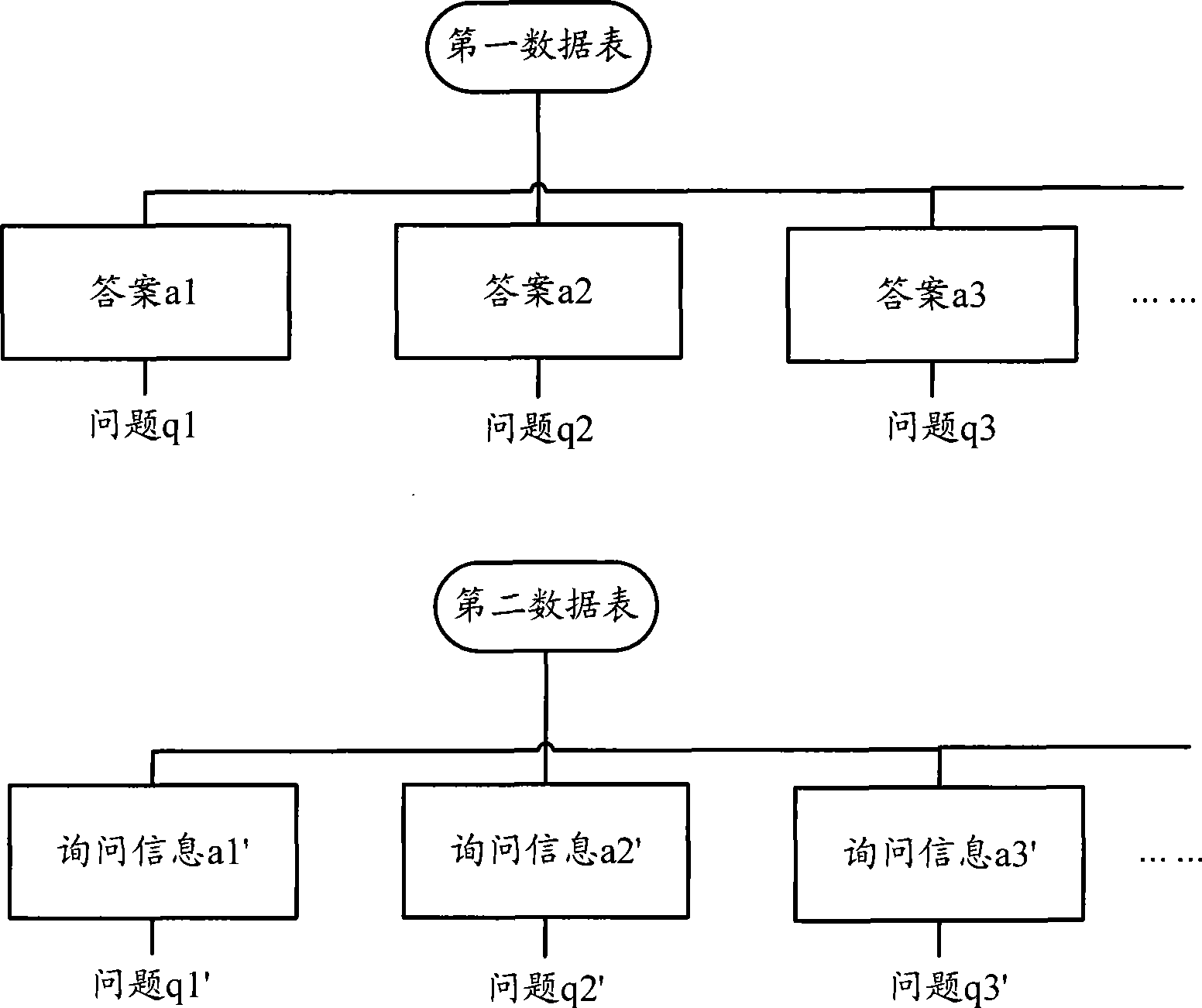

Adaptive Knowledge Platform

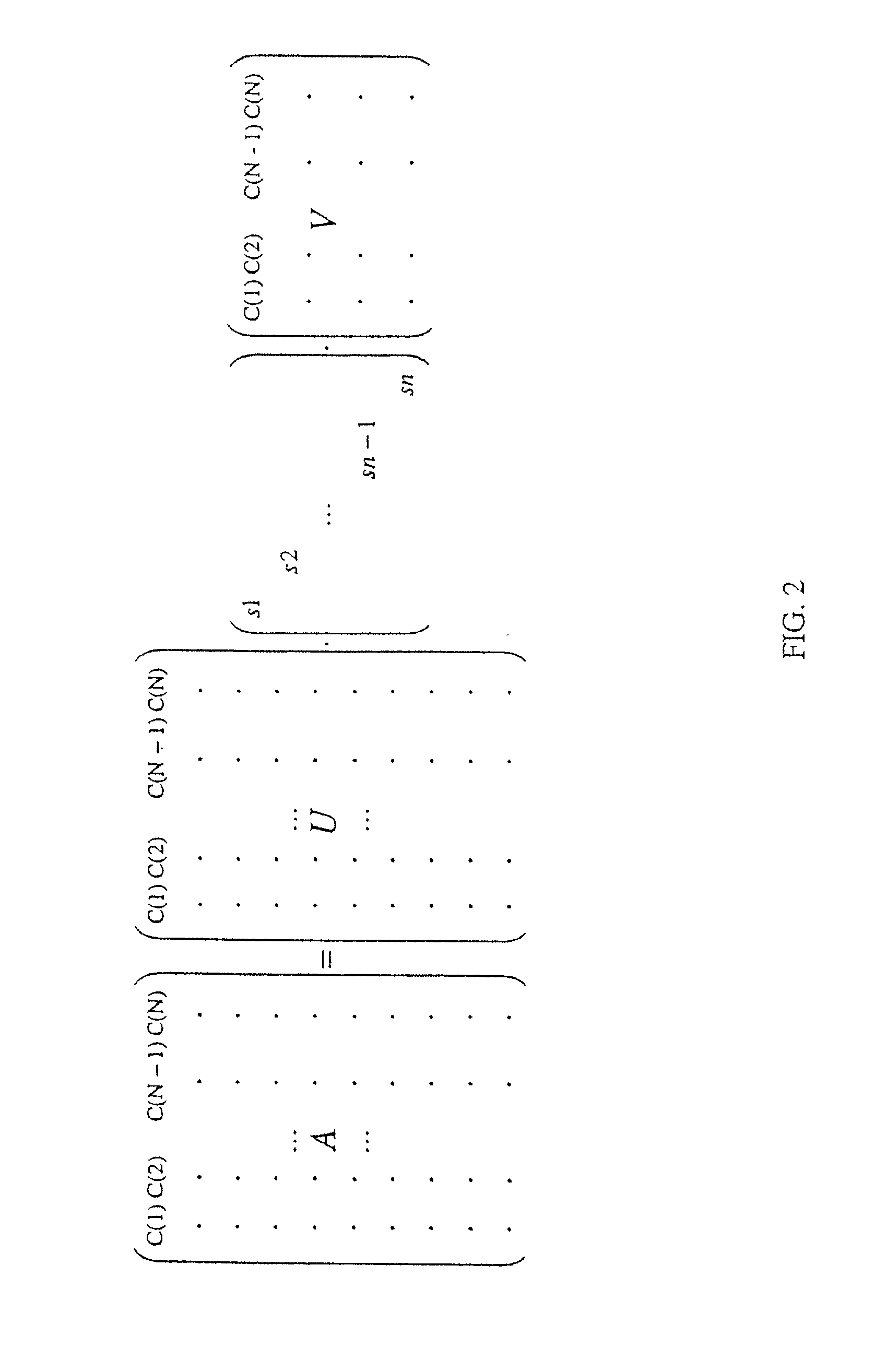

Inactive · US20110264649A1 · Easy to organize · Improve filtering effect · Data processing applications · Digital data processing details · Data set · Multiple perspective

Methods, systems, and apparatus, including medium-encoded computer program products, for providing an adaptive knowledge platform. In one or more aspects, a system can include a knowledge management component to acquire, classify and disseminate information of a dataset; a human-computer interaction component to visualize multiple perspectives of the dataset and to model user interactions with the multiple perspectives; and an adaptivity component to modify one or more of the multiple perspectives of the dataset based on a user-interaction model.

Owner:ALEXANDRIA INVESTMENT RES & TECH

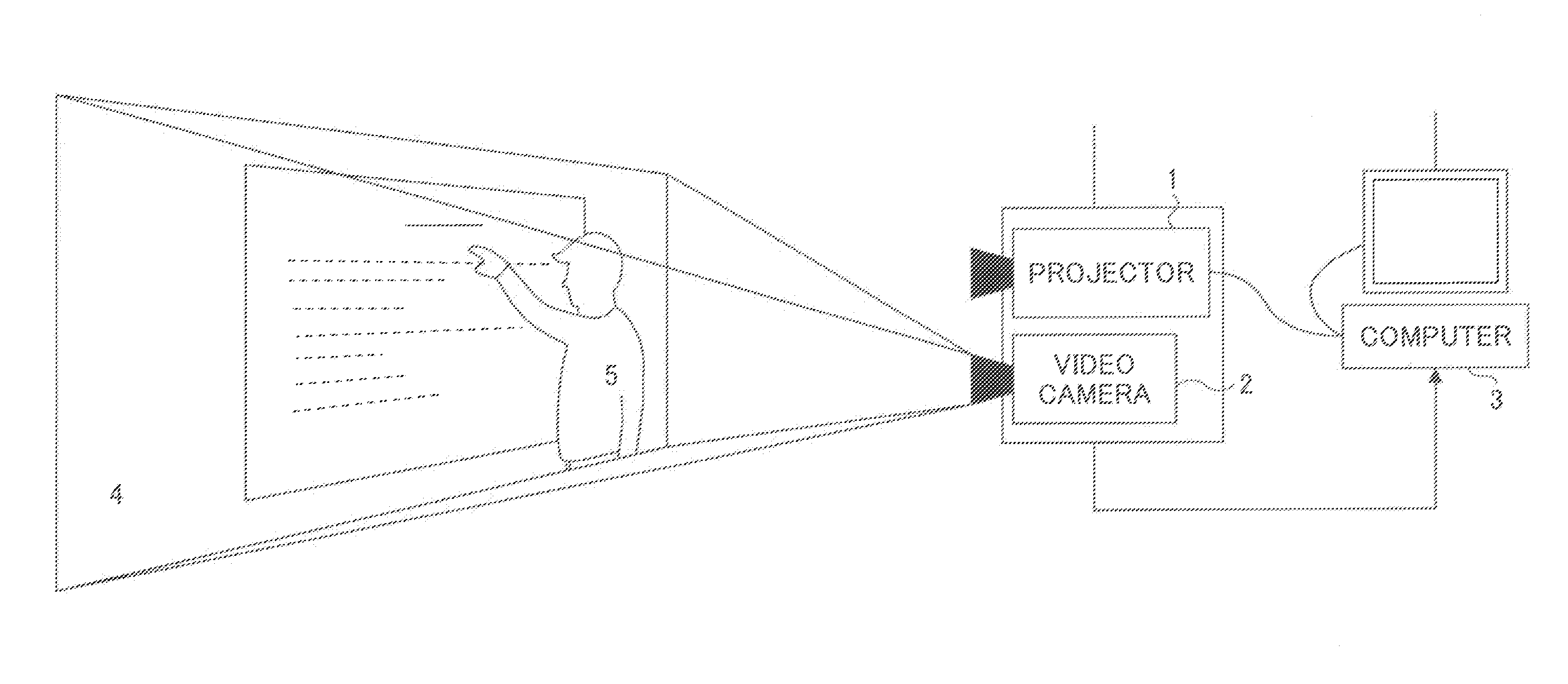

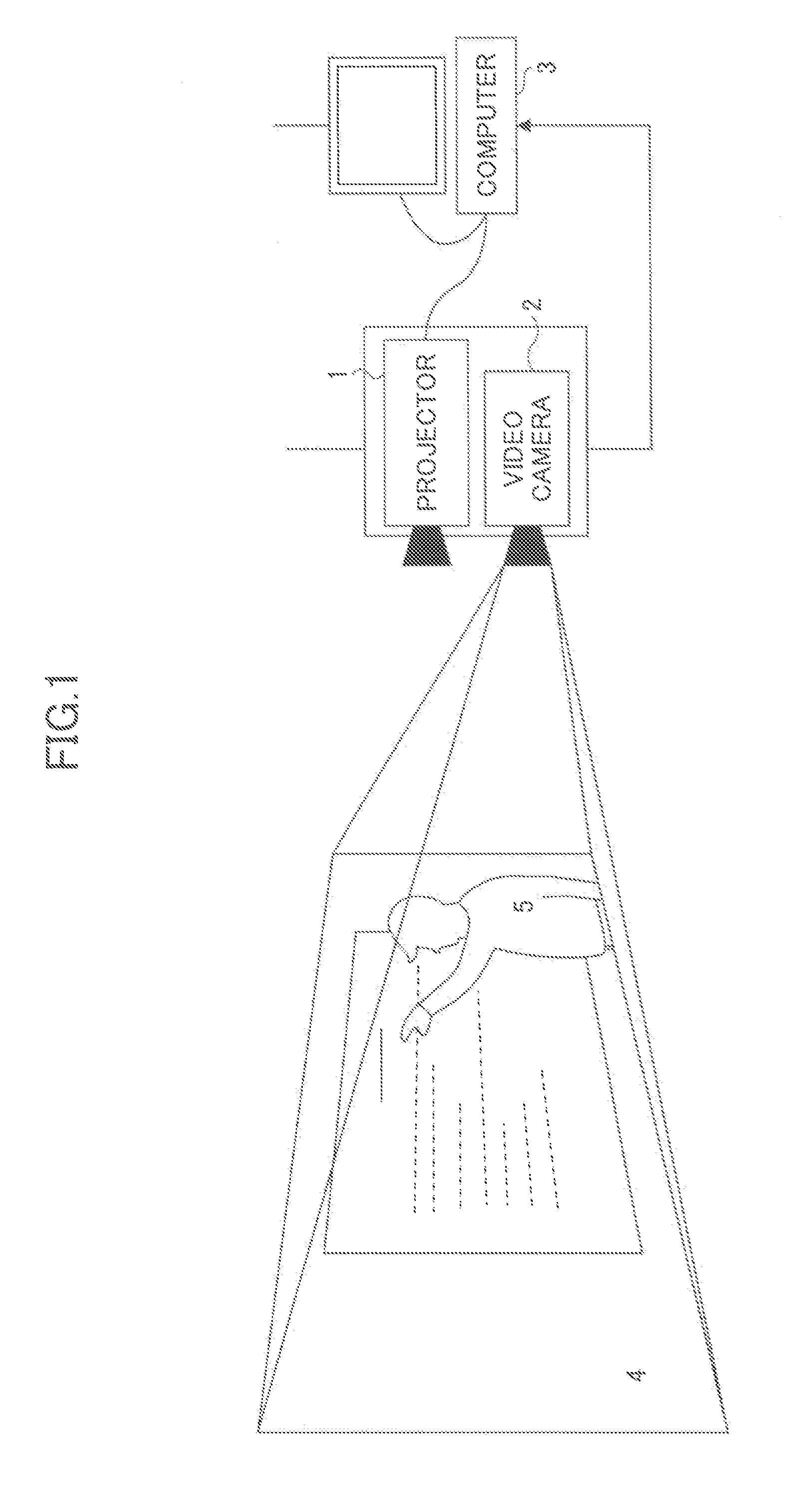

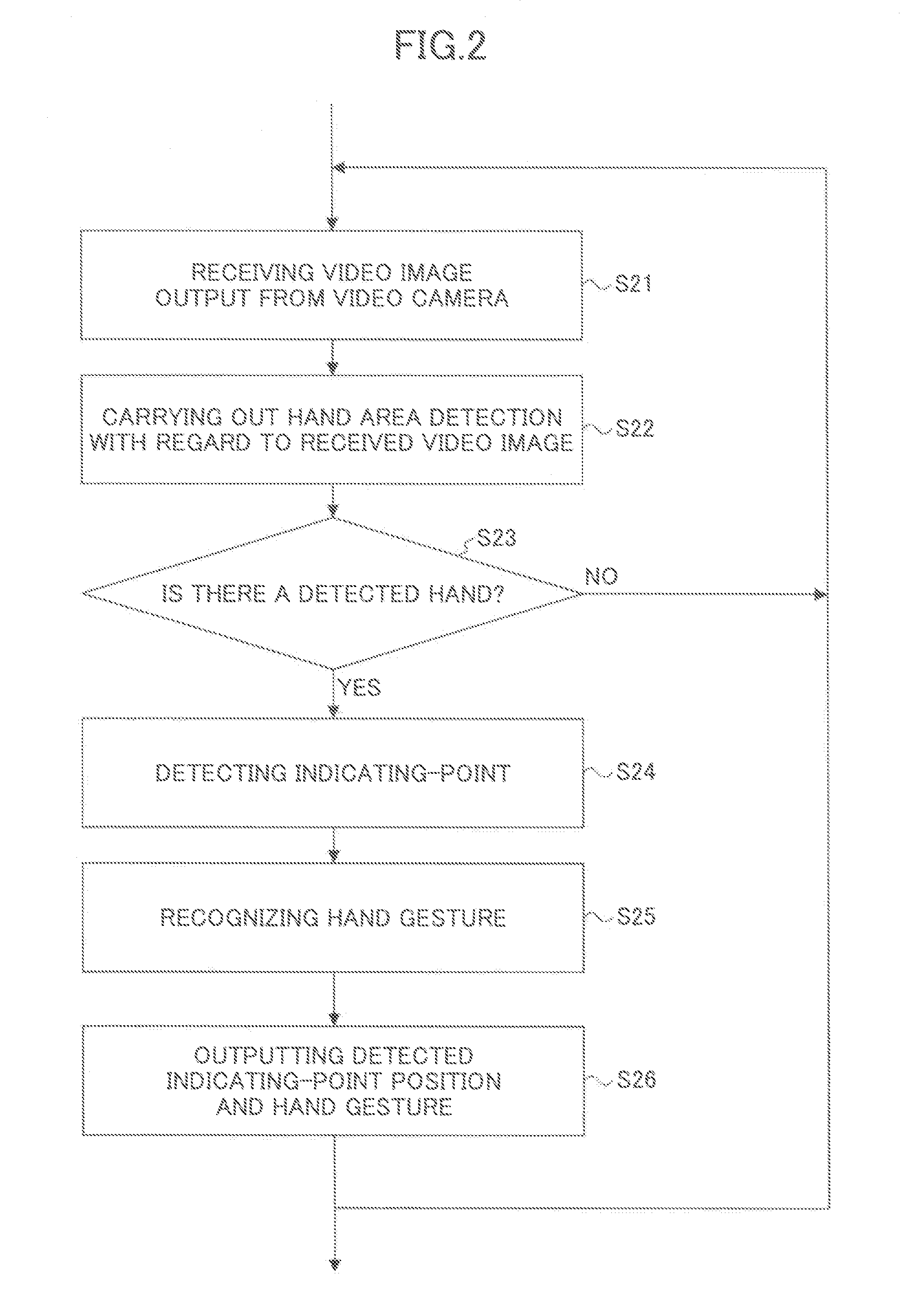

Hand and indicating-point positioning method and hand gesture determining method used in human-computer interaction system

Inactive · US20120062736A1 · Rapid positioning · Character and pattern recognition · Color television details · Interaction systems · Pattern recognition

Disclosed are a hand positioning method and a human-computer interaction system. The method comprises a step of continuously capturing a current image so as to obtain a sequence of video images; a step of extracting a foreground image from each of the captured video images, and then carrying out binary processing so as to obtain a binary foreground image; a step of obtaining a vertex set of a minimum convex hull of the binary foreground image, and then creating areas of concern serving as candidate hand areas; and a step of extracting hand imaging features from the respective created areas of concern, and then determining a hand area from the candidate hand areas by carrying out pattern recognition based on the extracted hand imaging features.

Owner:RICOH KK
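The convex-hull step of the hand positioning method above can be sketched in plain Python. Andrew's monotone chain algorithm is used here as a stand-in; the abstract does not say which hull algorithm the patent uses, and the function name and list-of-rows mask format are assumptions.

```python
def foreground_hull(mask):
    """Return convex-hull vertices (x, y) of the nonzero pixels in a binary mask.

    mask is a list of rows, each a list of 0/1 values. Vertices are returned
    in order around the hull, starting from the leftmost point; collinear
    points along an edge are dropped.
    """
    pts = sorted({(x, y) for y, row in enumerate(mask)
                  for x, v in enumerate(row) if v})
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); its sign gives the turn direction.
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half(points):
        chain = []
        for p in points:
            # Pop while the last two points and p fail to make a strict turn.
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]  # last point is the first point of the other half

    return half(pts) + half(reversed(pts))
```

Each hull vertex could then seed a candidate region (the "areas of concern" in the abstract) for the later pattern-recognition step; how those regions are sized is not specified in the abstract.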

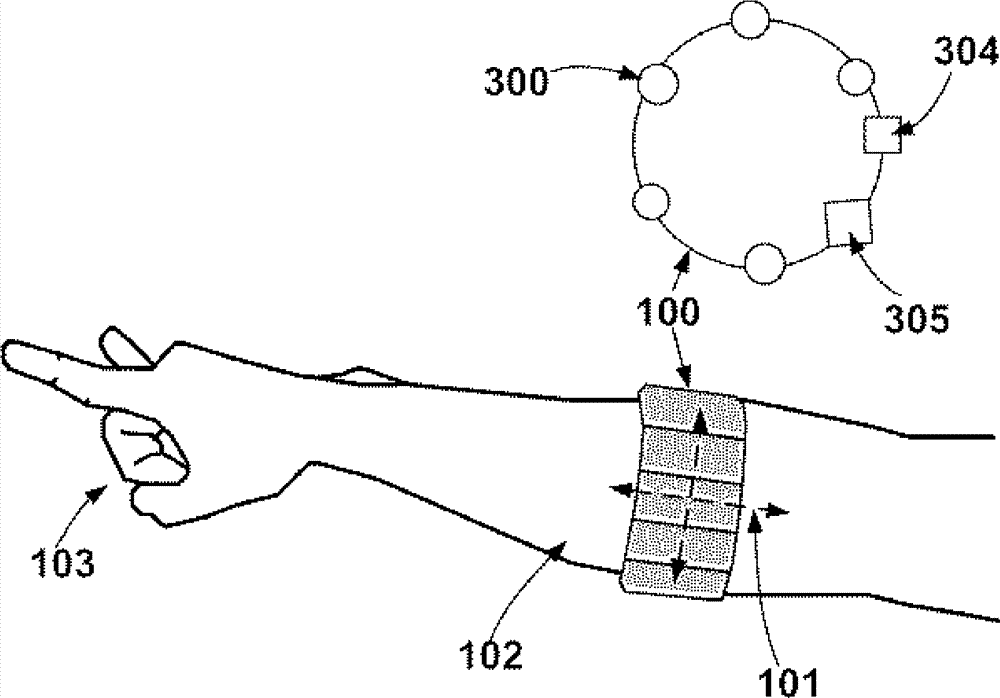

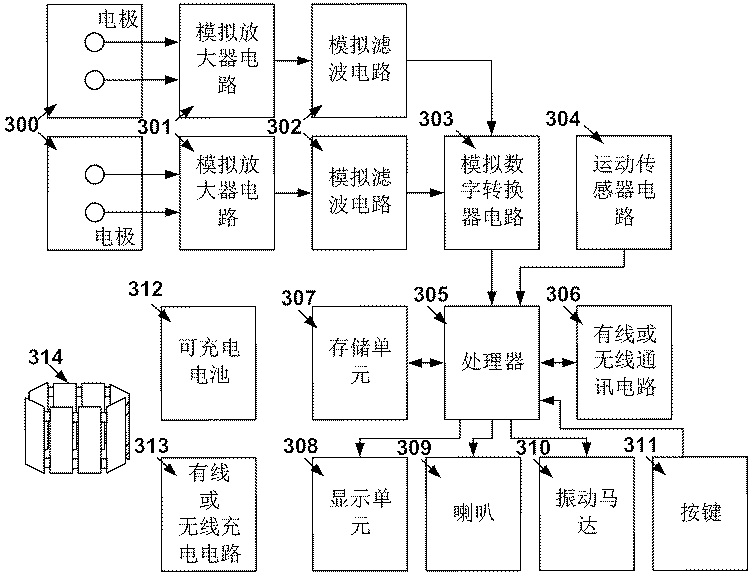

Gesture recognition device based on arm muscle current detection and motion sensor

Inactive · CN103777752A · Input/output for user-computer interaction · Graph reading · Current sensor · Machine instruction

The invention discloses a gesture recognition device based on arm muscle current signal detection and a motion sensor. Serving as a machine instruction input device, it can be used with any electronic system that needs man-machine interaction. The device comprises a wristband or an armband which, depending on its shape, can be worn on the wrist or the forearm. Biological currents generated by the arm muscles during motion are picked up by one or more muscle skin-current sensors held tightly against the skin; the currents pass through an amplifier circuit, a filter circuit and an analog-to-digital conversion circuit, and characteristic parameters of gestures are extracted by real-time digital signal processing, whereby the gestures are recognized. The recognized gestures are mapped to configurable control instructions, which are transmitted to the controlled host in a wireless or wired mode, through Bluetooth or other means. Gestures are judged from the arm muscle currents and the motion sensor, achieving a brand-new input mode for man-machine interaction instructions.

Owner:上海威璞电子科技有限公司
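The feature-extraction and recognition stages described above might look like the following for digitized muscle-current samples. This is a sketch under assumptions: root-mean-square amplitude per channel as the feature and nearest-template matching as the classifier are common choices picked for illustration, not methods named in the abstract, and the gesture names are hypothetical.

```python
import math


def rms_features(channels, window):
    """Root-mean-square amplitude of the last `window` samples of each channel.

    channels: one non-empty list of digitized samples per sensor.
    """
    feats = []
    for samples in channels:
        recent = samples[-window:]
        feats.append(math.sqrt(sum(s * s for s in recent) / len(recent)))
    return feats


def classify(features, templates):
    """Return the gesture whose stored template is nearest in Euclidean distance.

    templates: dict mapping a gesture name to a feature vector recorded
    during a (hypothetical) per-user calibration session.
    """
    return min(templates, key=lambda name: math.dist(features, templates[name]))
```

The recognized gesture name would then be looked up in a user-configurable table of control instructions before being sent to the host.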

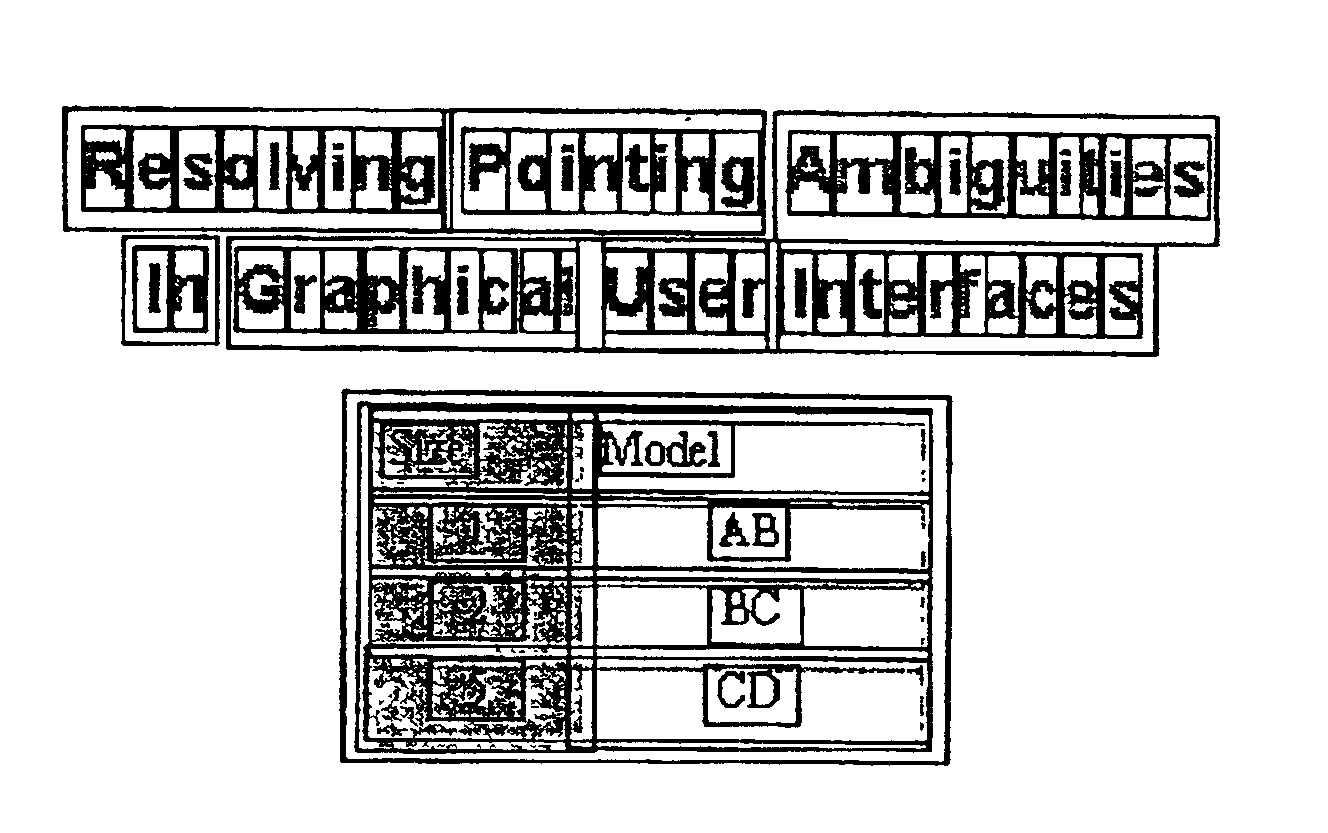

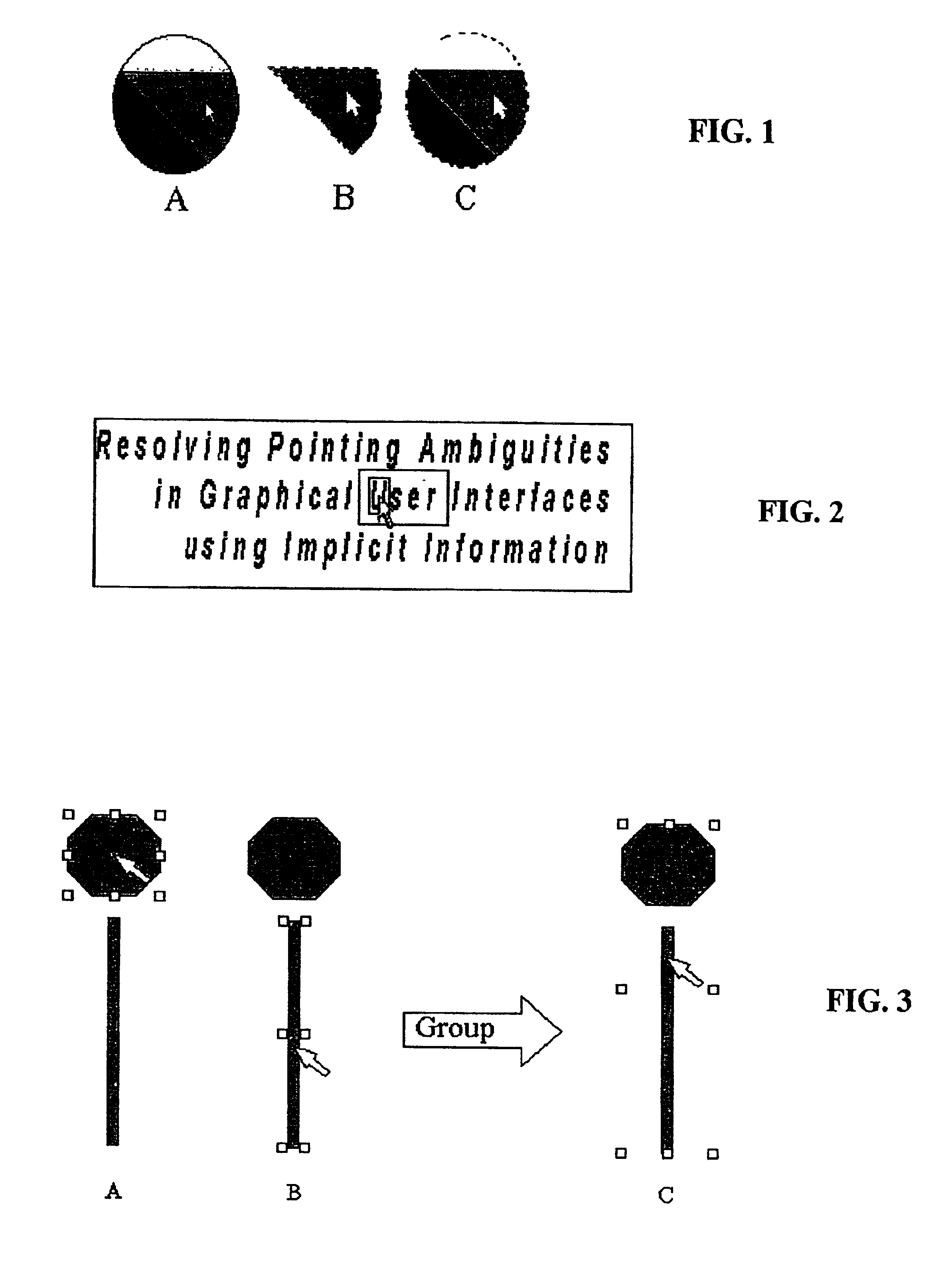

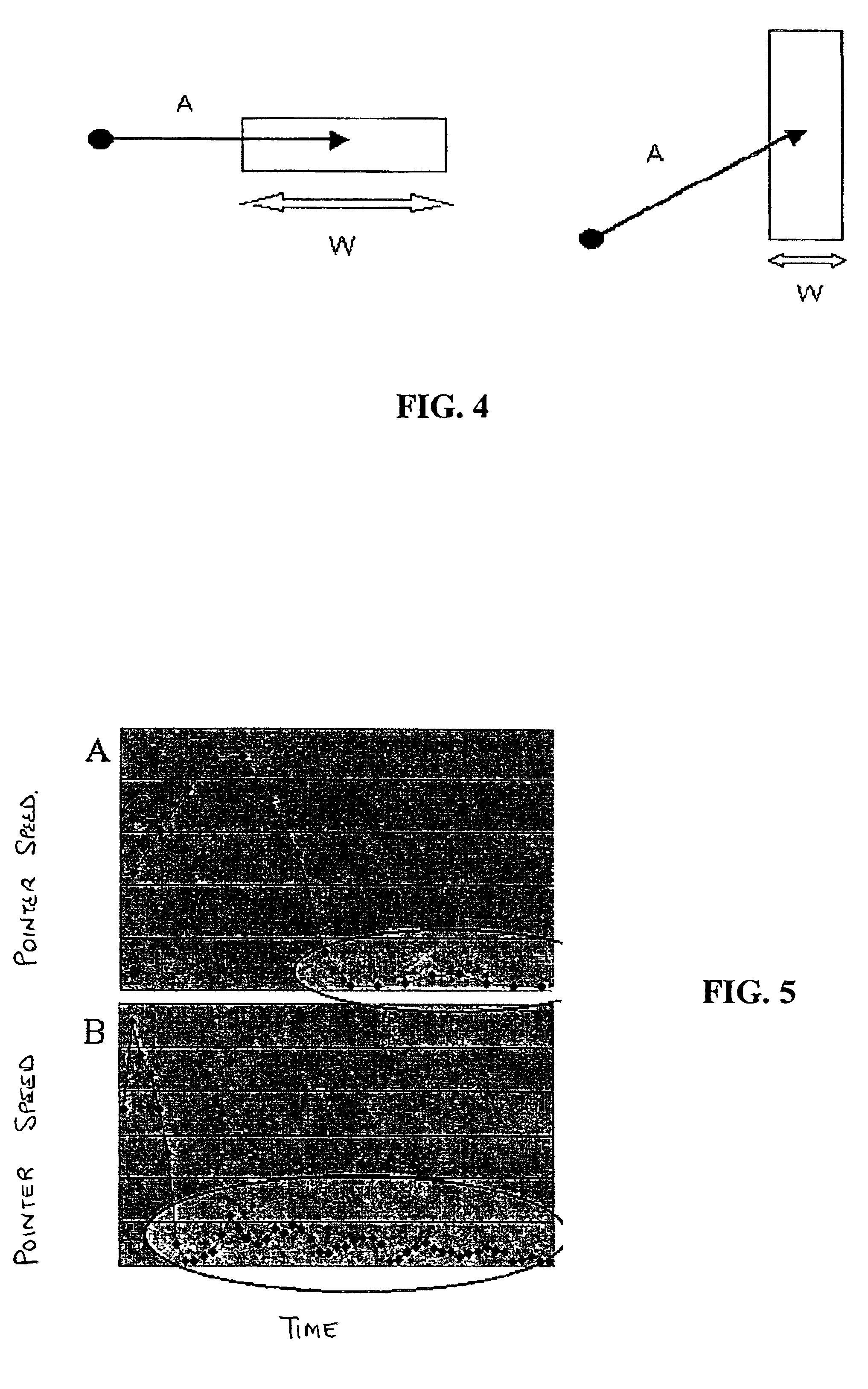

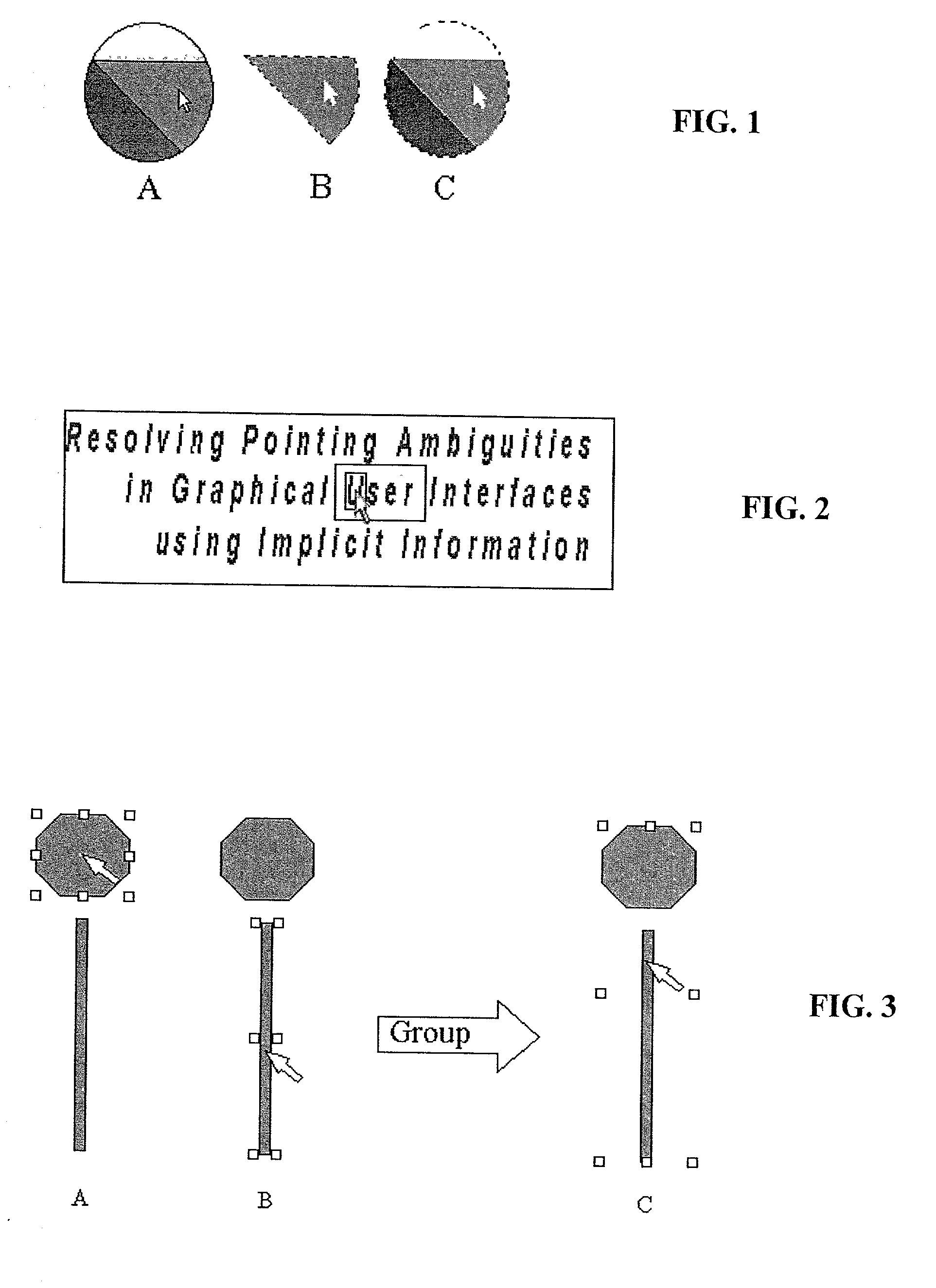

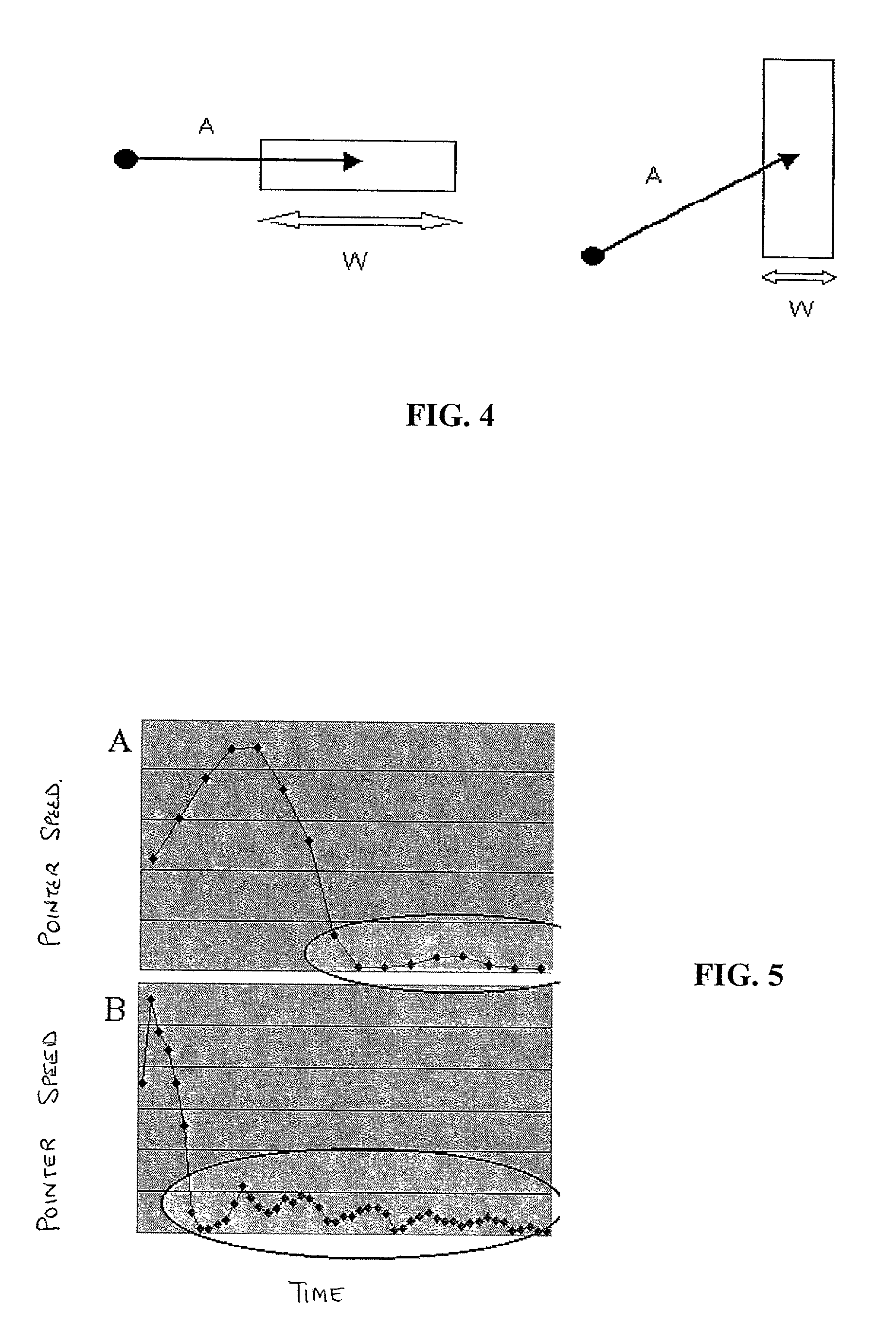

Method and system for implicitly resolving pointing ambiguities in human-computer interaction (HCI)

Inactive · US6907581B2 · Simple design and implementation · Easy access · Input/output processes for data processing · Object based · Ambiguity

Owner:RAMOT AT TEL AVIV UNIV LTD

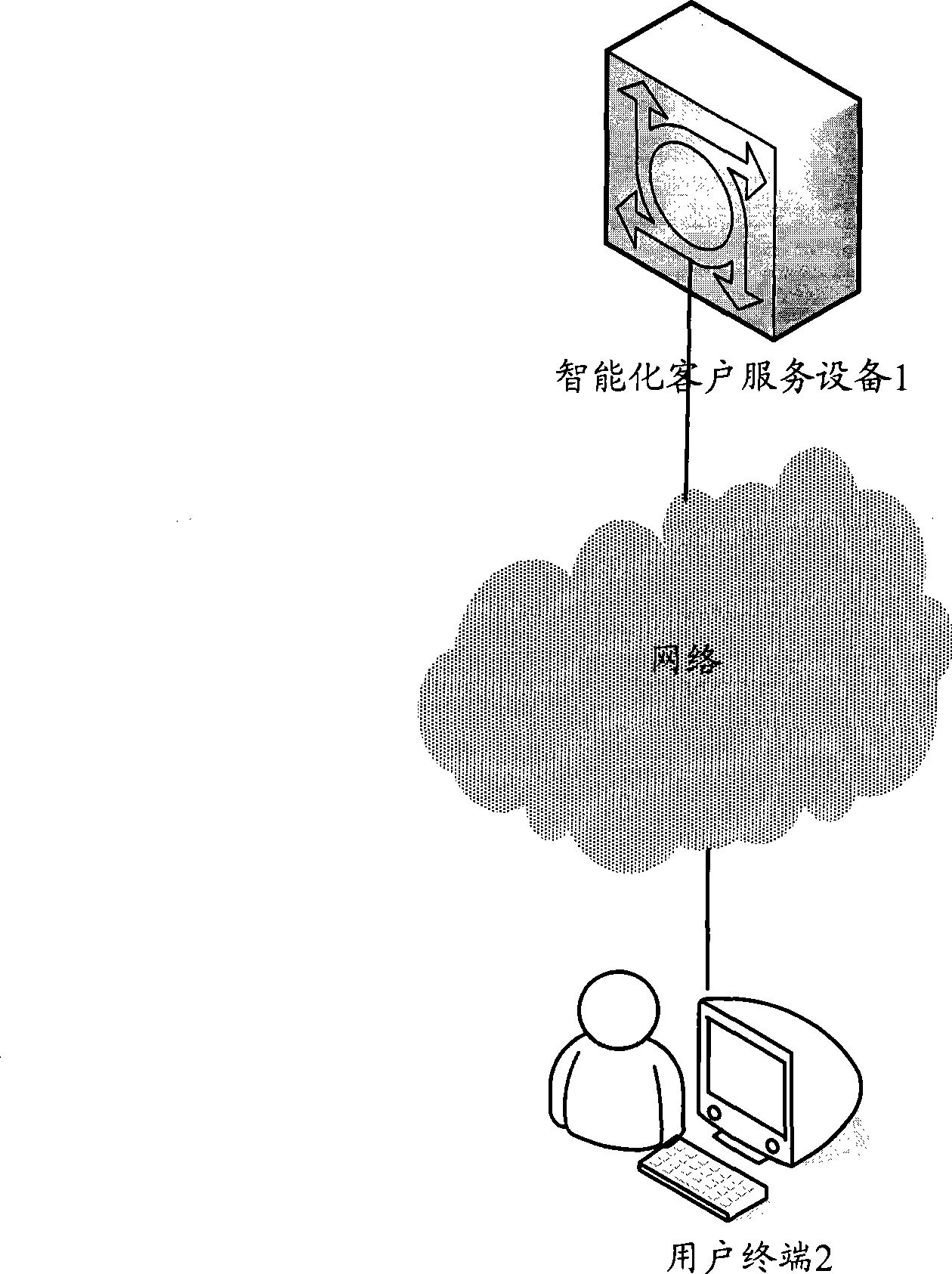

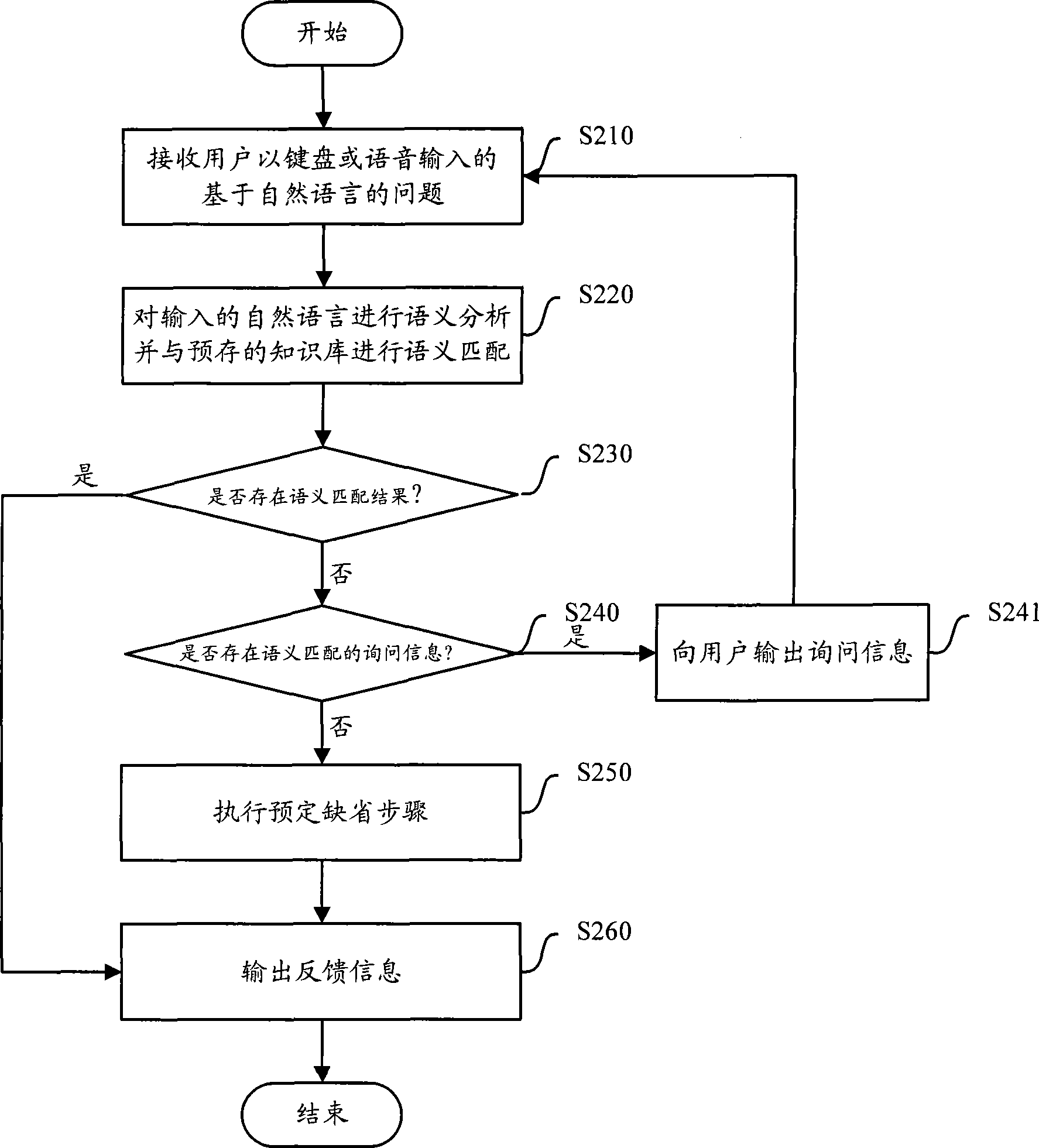

Method and equipment for implementing automatic customer service through human-machine interaction technology

Active · CN101431573A · Reduce reinvestment · Low running cost · Special service for subscribers · Supervisory/monitoring/testing arrangements · Human–robot interaction · Human system interaction

The invention provides a method for realizing automatic customer service using human-computer interaction technology, and equipment for realizing the method. The method includes: receiving a question entered by the user in natural language; converting the natural language into information the computer can recognize, using human-computer interaction technology; and outputting feedback information according to the information recognized by the computer.

Owner:SHANGHAI XIAOI ROBOT TECH CO LTD

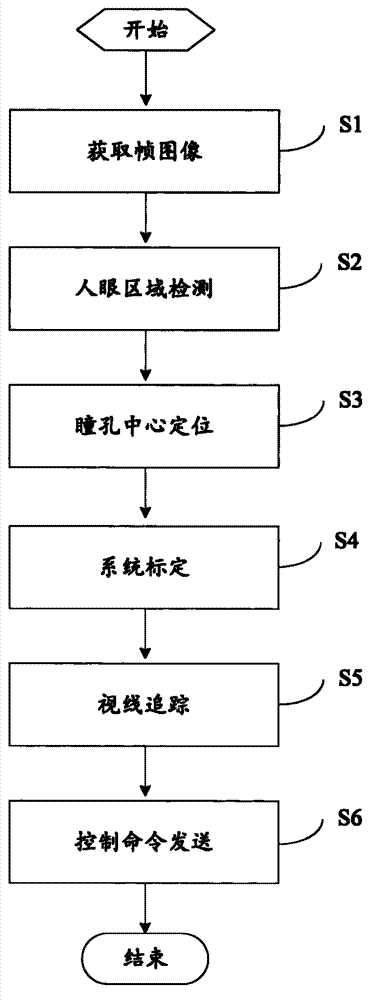

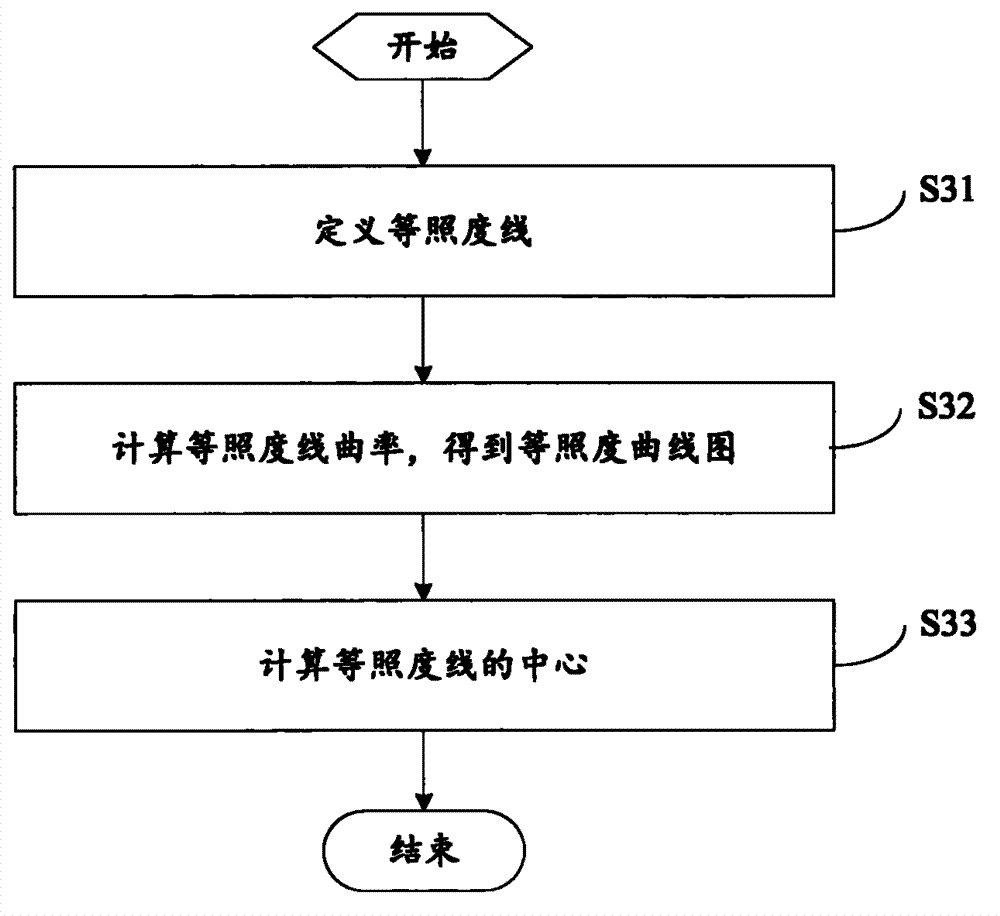

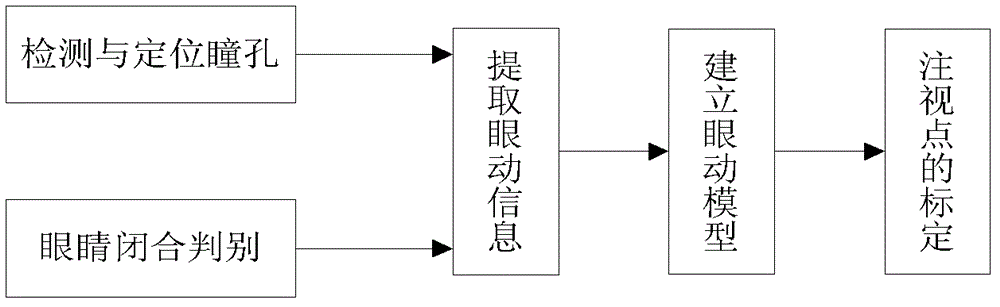

Man-machine interaction method and system based on sight judgment

Active · CN102830797A · Realize the operation · Easy to operate · Input/output for user-computer interaction · Graph reading · Eye closure · Pupil

The invention relates to the technical field of man-machine interaction and provides a man-machine interaction method based on sight judgment, to realize the operation on an electronic device by a user. The method comprises the following steps of: obtaining a facial image through a camera, carrying out human eye area detection on the image, and positioning a pupil center according to the detected human eye area; calculating a corresponding relationship between an image coordinate and an electronic device screen coordinate system; tracking the position of the pupil center, and calculating a view point coordinate of the human eye on an electronic device screen according to the corresponding relationship; and detecting an eye blinking action or an eye closure action, and issuing corresponding control orders to the electronic device according to the detected eye blinking action or the eye closure action. The invention further provides a man-machine interaction system based on sight judgment. With the adoption of the man-machine interaction method, the stable sight focus judgment on the electronic device is realized through the camera, and control orders are issued through eye blinking or eye closure, so that the operation on the electronic device by the user becomes simple and convenient.

Owner:SHENZHEN INST OF ADVANCED TECH
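Two steps of the sight-judgment method above lend themselves to a short sketch: mapping the tracked pupil center to screen coordinates, and turning eye-open/closed observations into blink or closure events. The abstract does not state the form of the image-to-screen correspondence, so a per-axis linear map from two calibration fixations is assumed here; the frame-count thresholds and all names are likewise hypothetical.

```python
def make_mapper(pupil_a, screen_a, pupil_b, screen_b):
    """Build a per-axis linear map from pupil image coords to screen coords.

    Uses two calibration fixations (for example, opposite screen corners).
    The two pupil positions must differ on both axes.
    """
    sx = (screen_b[0] - screen_a[0]) / (pupil_b[0] - pupil_a[0])
    sy = (screen_b[1] - screen_a[1]) / (pupil_b[1] - pupil_a[1])

    def to_screen(pupil):
        return (screen_a[0] + sx * (pupil[0] - pupil_a[0]),
                screen_a[1] + sy * (pupil[1] - pupil_a[1]))
    return to_screen


def detect_eye_events(eye_open_flags, blink_max=5, closure_min=15):
    """Label runs of closed-eye frames as 'blink' (short) or 'closure' (long).

    Runs longer than blink_max but shorter than closure_min are ignored.
    """
    events, run = [], 0
    for is_open in list(eye_open_flags) + [True]:   # sentinel flushes last run
        if not is_open:
            run += 1
            continue
        if 0 < run <= blink_max:
            events.append("blink")
        elif run >= closure_min:
            events.append("closure")
        run = 0
    return events
```

Each detected event would be paired with the current mapped gaze point to issue a command, such as a click at that screen position.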

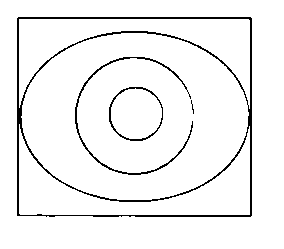

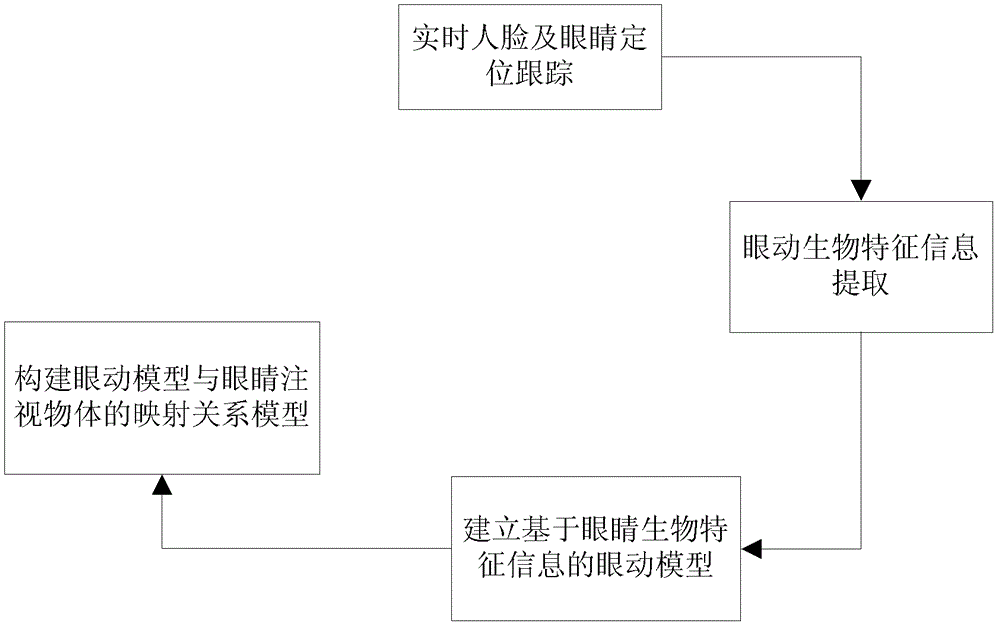

Non-contact free space eye-gaze tracking method suitable for man-machine interaction

Active · CN102749991A · Accurate detection · Precise positioning · Input/output for user-computer interaction · Character and pattern recognition · Jet aeroplane · Aviation

The invention provides a non-contact free space eye-gaze tracking method suitable for man-machine interaction. The method comprises the steps of positioning and tracking a face and eyes in real time, extracting eye movement biological characteristic information, building an eye movement model based on the eye movement biological characteristic information, building a mapping model between the eye movement model and the gazed-at object, and the like. The method draws on multiple intersecting fields such as image processing, computer vision and pattern recognition, and has wide application prospects in new-generation man-machine interaction, assistance for disabled people, aerospace-related fields, sports, automobile and airplane driving, virtual reality, games and the like. In addition, the method has great practical significance for improving the quality of life and self-care ability of disabled people, building a harmonious society, and improving independent innovation capability in national high-tech fields such as man-machine interaction and unmanned driving.

Owner:广东百泰科技有限公司

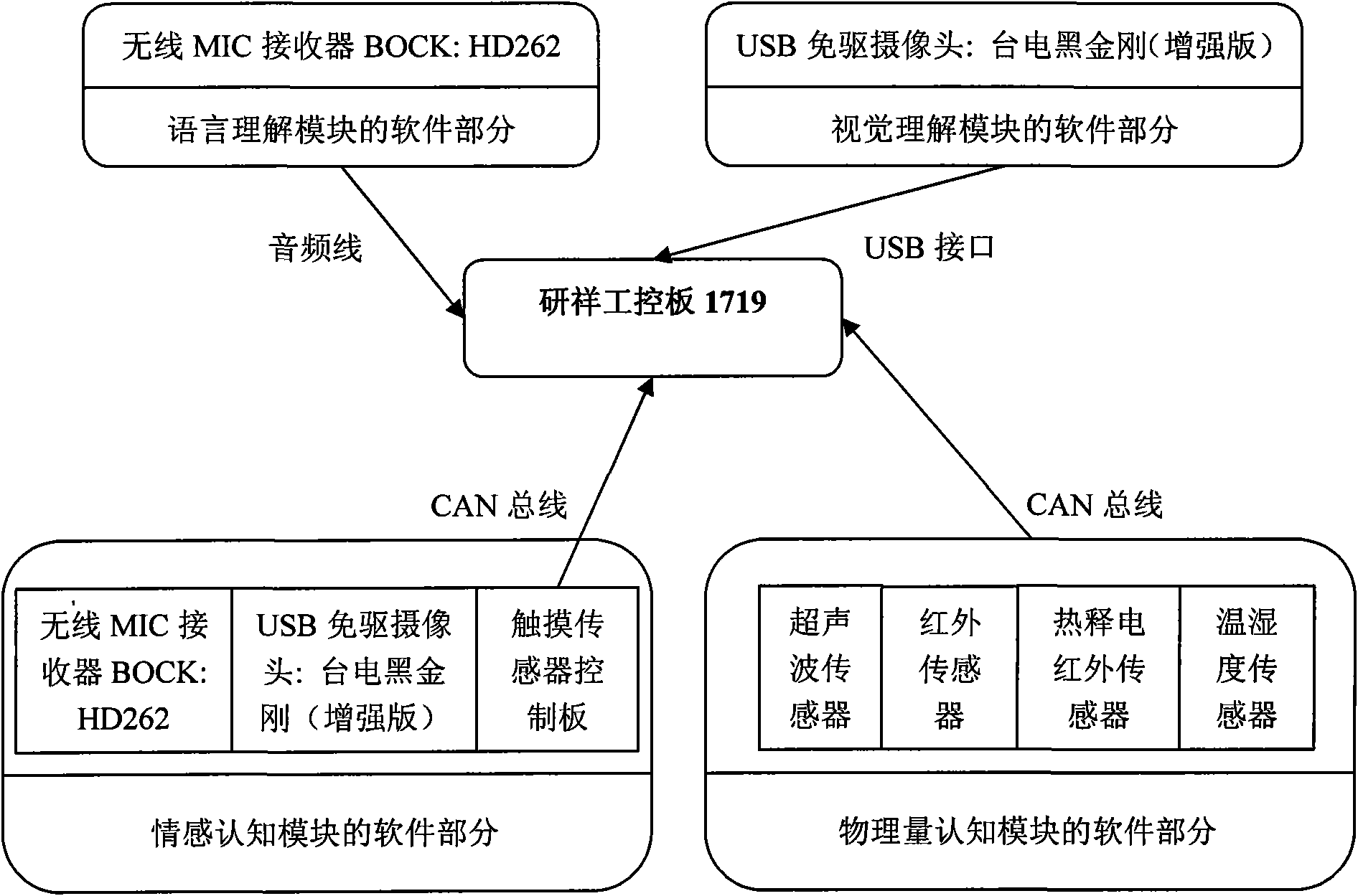

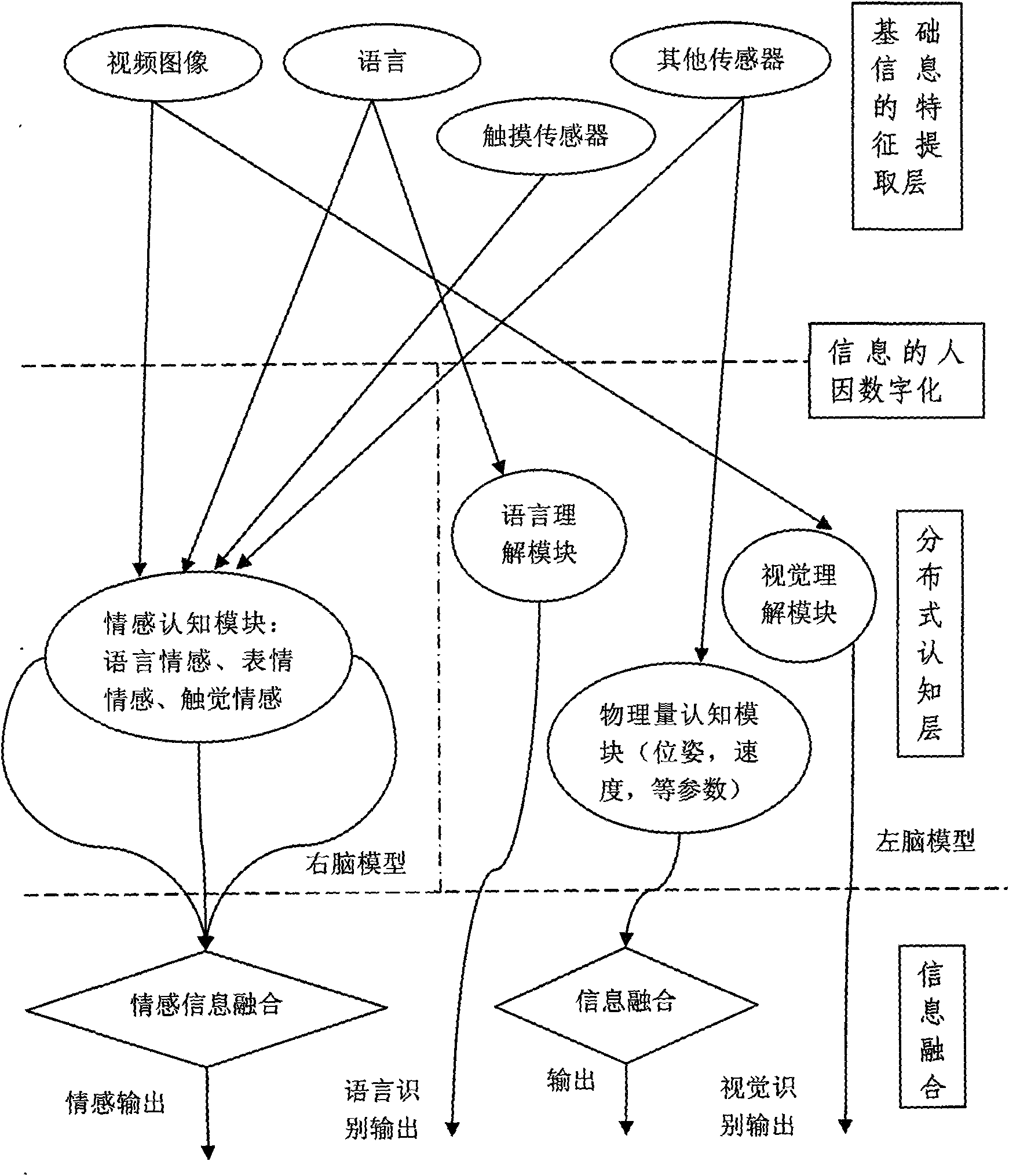

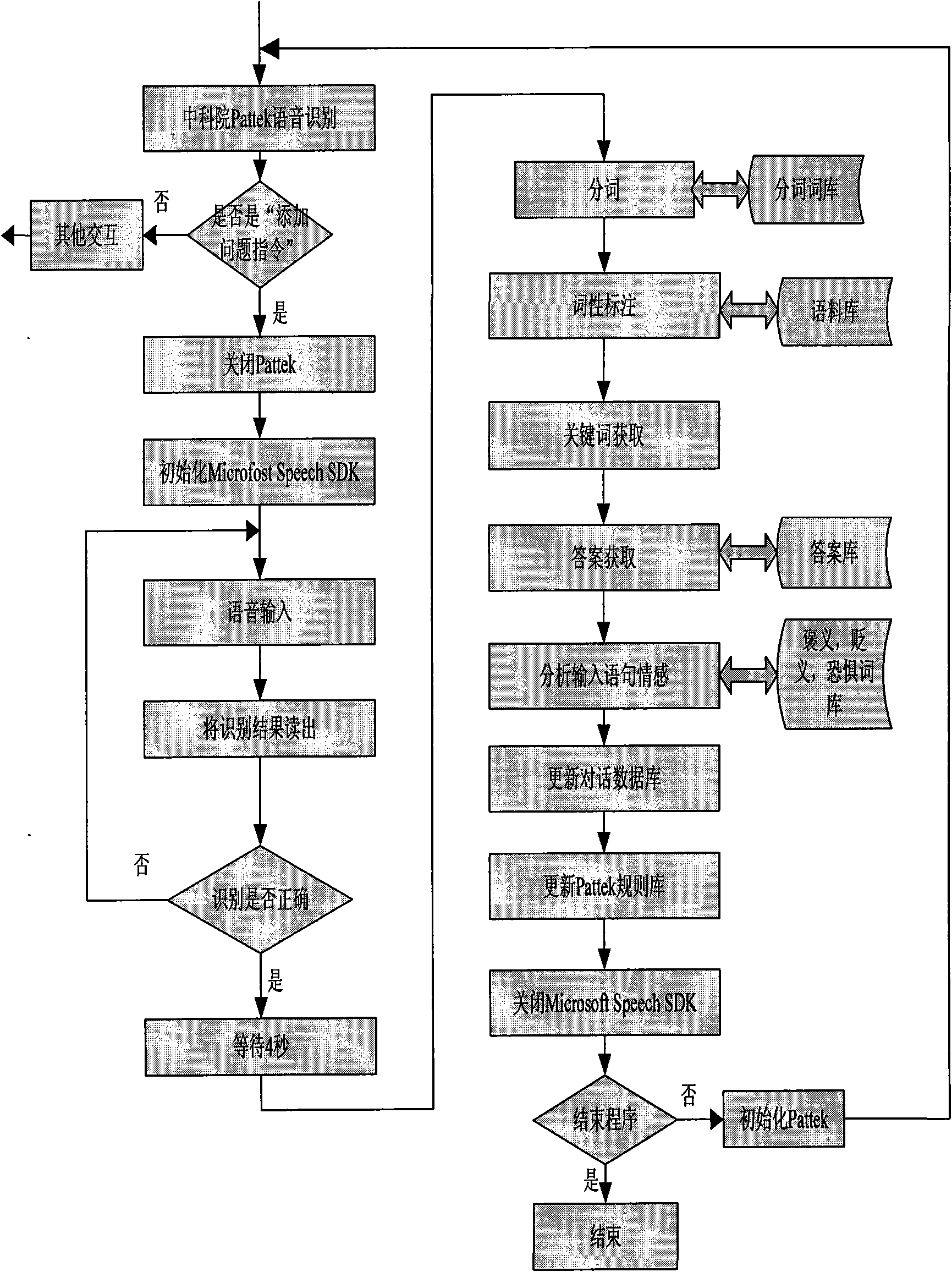

Distributed cognitive technology for intelligent emotional robot

Inactive · CN101604204A · Input/output for user-computer interaction · Character and pattern recognition · Human behavior · Language understanding

The invention provides distributed cognitive technology for an intelligent emotional robot, which can be applied in the field of multi-channel human-computer interaction in service robots, household robots, and the like. In a human-computer interaction process, the multi-channel cognition of the environment and of people is distributed so that the interaction is more harmonious and natural. The distributed cognitive technology comprises four parts, namely 1) a language comprehension module which gives the robot the ability to understand human language through steps such as word segmentation, part-of-speech tagging and keyword acquisition; 2) a vision comprehension module which comprises related vision functions such as face detection, feature extraction, feature identification and human behavior comprehension; 3) an emotion cognition module which extracts related information from language, expression and touch, analyzes the user emotion contained in the information, synthesizes a comparatively accurate emotional state, and lets the intelligent emotional robot recognize the current emotion of the user; and 4) a physical quantity cognition module which lets the robot understand the environment and its own state as the basis for self-adjustment.

Owner:UNIV OF SCI & TECH BEIJING

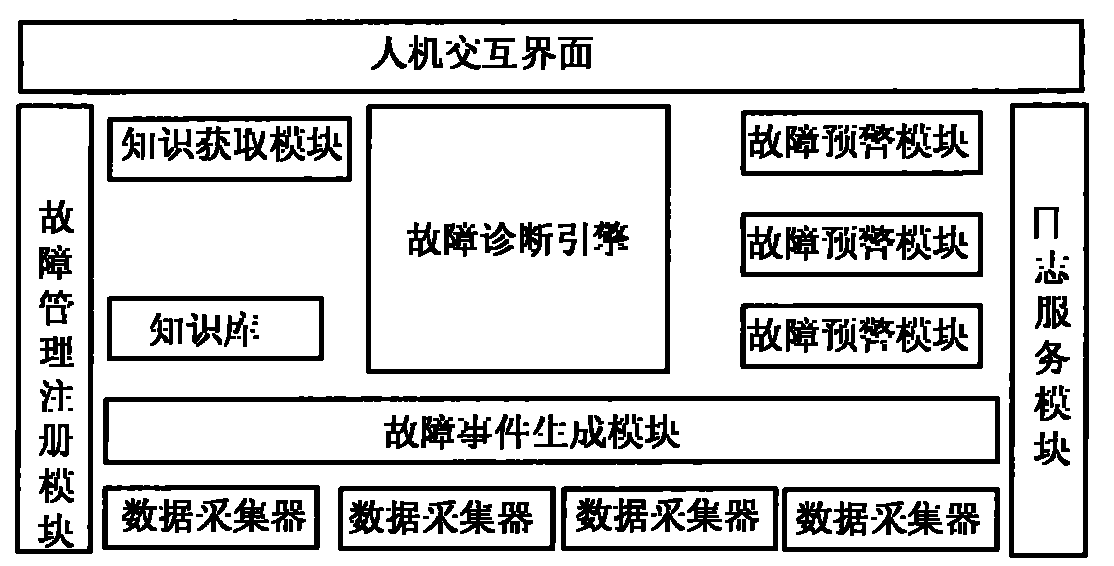

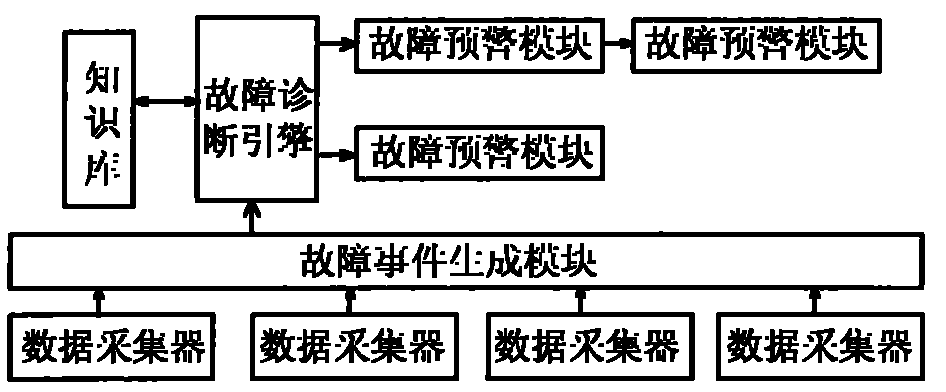

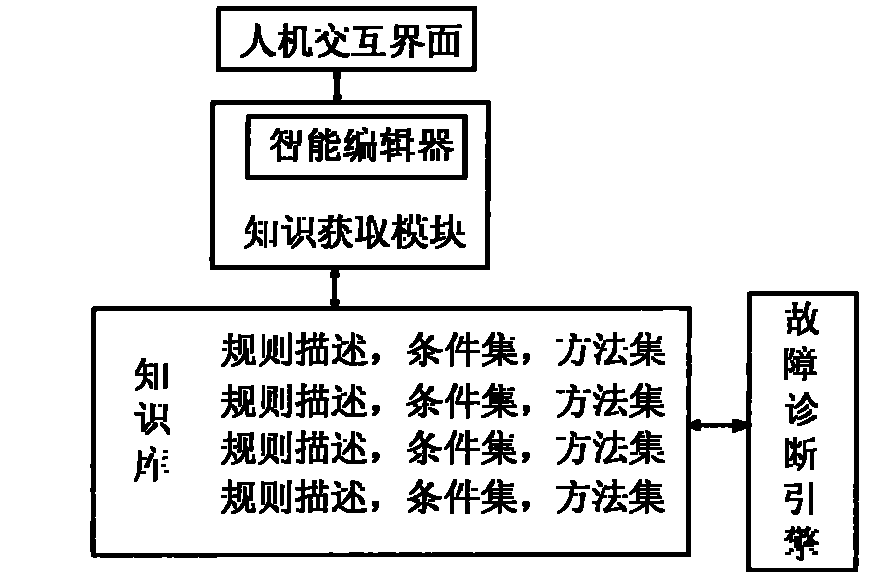

Computer fault management system based on expert system method

Active · CN101833497A · Well structured design · Make full use of resources · Hardware monitoring · Systems management · Data acquisition

The invention provides a computer fault management system based on an expert system method, which comprises a data acquisition unit (1), a fault event generation module (2), a fault diagnosis engine (3), a knowledge base (4), a knowledge acquisition module (5), a fault isolation module (6), a fault recovery module (7), a fault early-warning module (8), a log service module (9), a fault management registration module (10) and a human-computer interaction interface (11); and a system administrator monitors and manages the data acquisition unit (1), the fault event generation module (2), the fault diagnosis engine (3), the knowledge base (4), the fault isolation module (6), the fault recovery module (7), the fault early-warning module (8) and the log service module (9) through the human-computer interaction interface (11), and accesses an intelligent editor provided by the knowledge acquisition module (5) through the human-computer interaction interface (11).

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

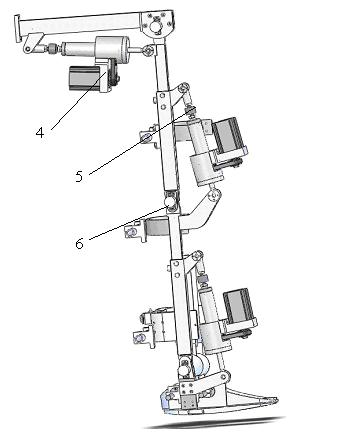

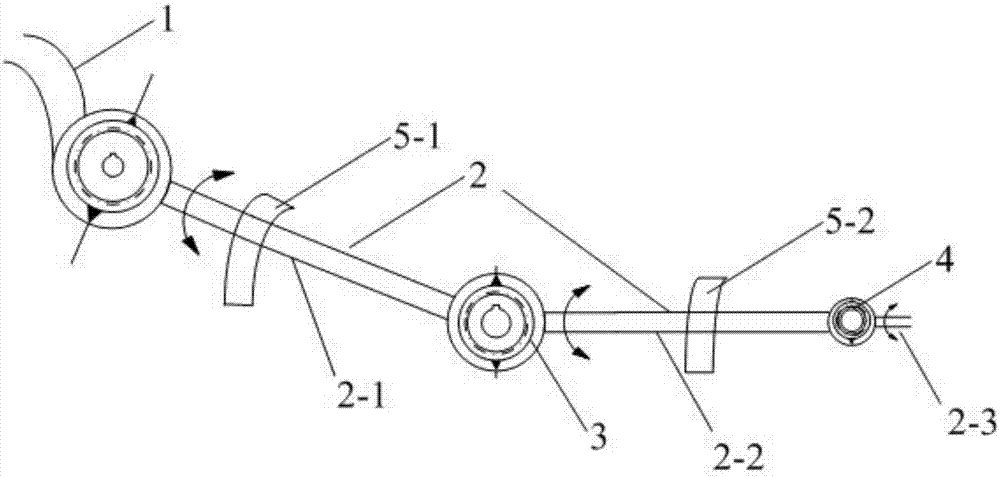

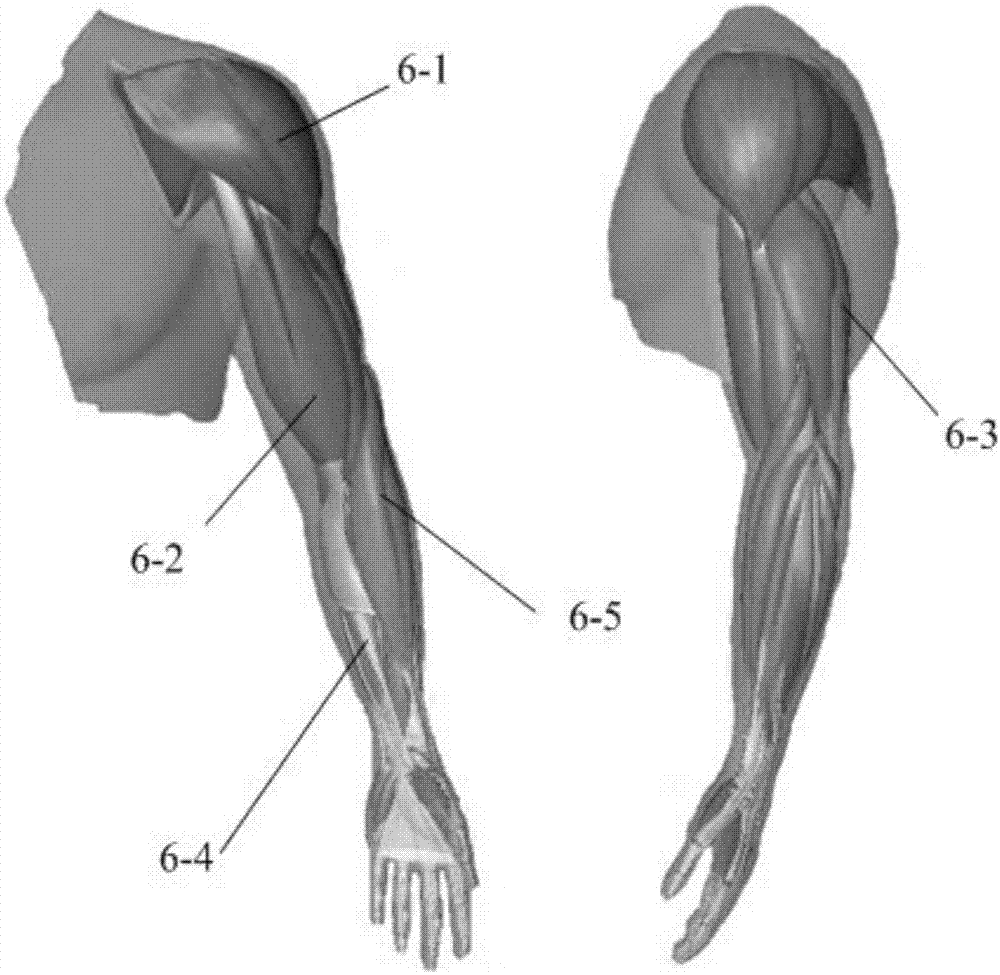

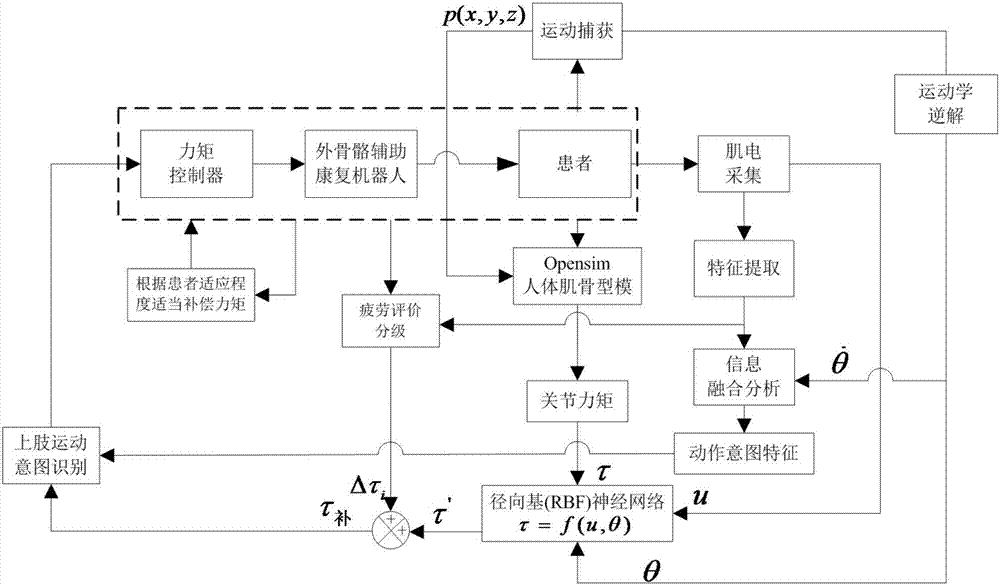

Upper limb exoskeleton rehabilitation robot control method based on radial basis neural network

Active · CN107397649A · Realize interactive intelligent rehabilitation · Improve rehabilitation effect · Chiropractic devices · Diagnostic recording/measuring · Upper limb muscle · Network model

The invention discloses an upper limb exoskeleton rehabilitation robot control method based on a radial basis neural network. The method comprises the following steps: a musculoskeletal model of the human upper limb is established; upper limb myoelectric signals and upper limb movement data are collected, the movement data are imported into the musculoskeletal model to obtain the upper limb joint torque, and a radial basis neural network is built to give a neural network model; the patient's movement intention is recognized by fusion analysis of the joint angular velocity, the result of which is used to identify the stretching state of the training subject's joint and thus determine the limb movement intention; and myoelectric signals and joint angles are collected in real time during rehabilitation training of the affected side, the affected-side joint torque is obtained through the neural network, the joint torque to be compensated by the exoskeleton mechanical arm is calculated, myoelectric fatigue characteristics are analyzed, the magnitude of the compensation torque is adjusted according to the classified degree of fatigue, and, combined with the movement intention, a torque controller is driven so that the upper limb rehabilitation robot assists the patient in rehabilitation training. The method makes the rehabilitation training process better suited to the patient, strengthens man-machine interaction, and improves the rehabilitation effect.

Owner:YANSHAN UNIV

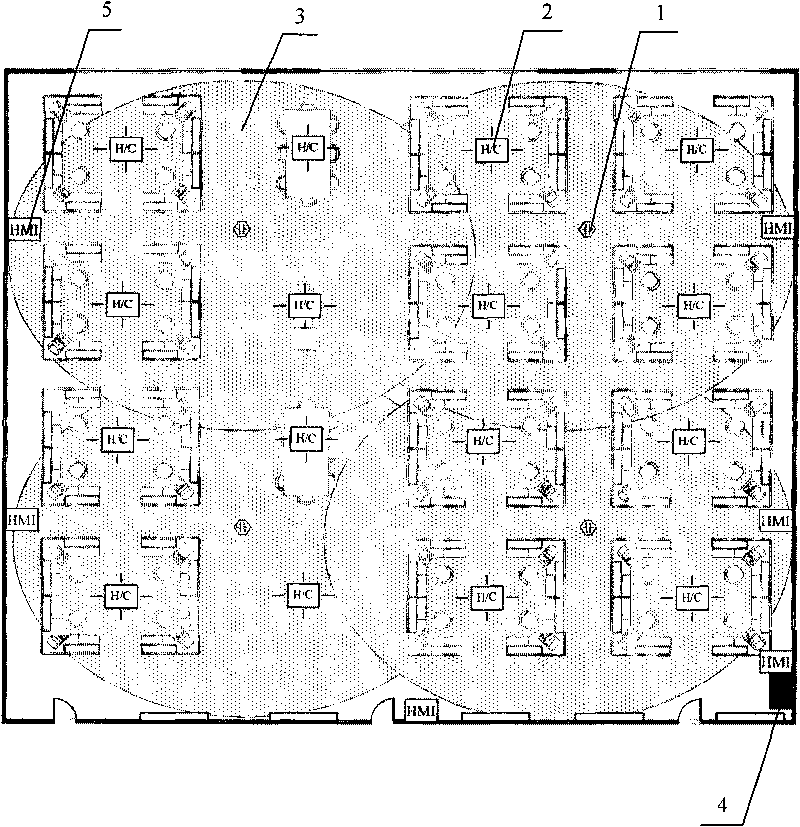

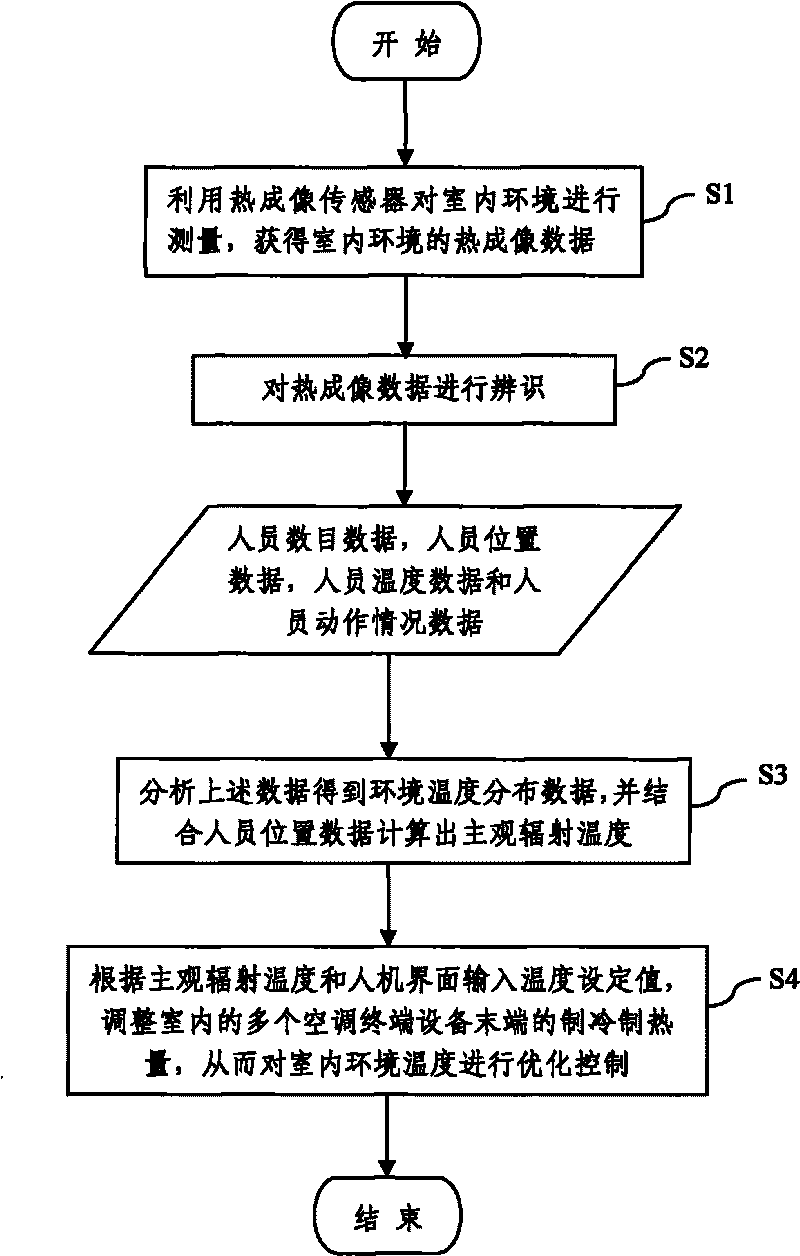

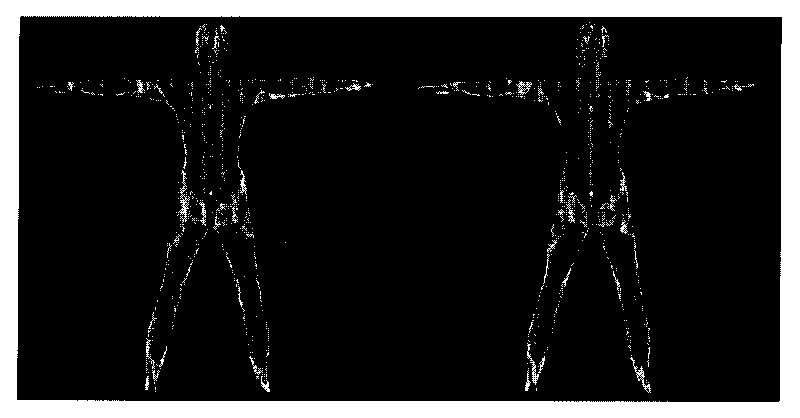

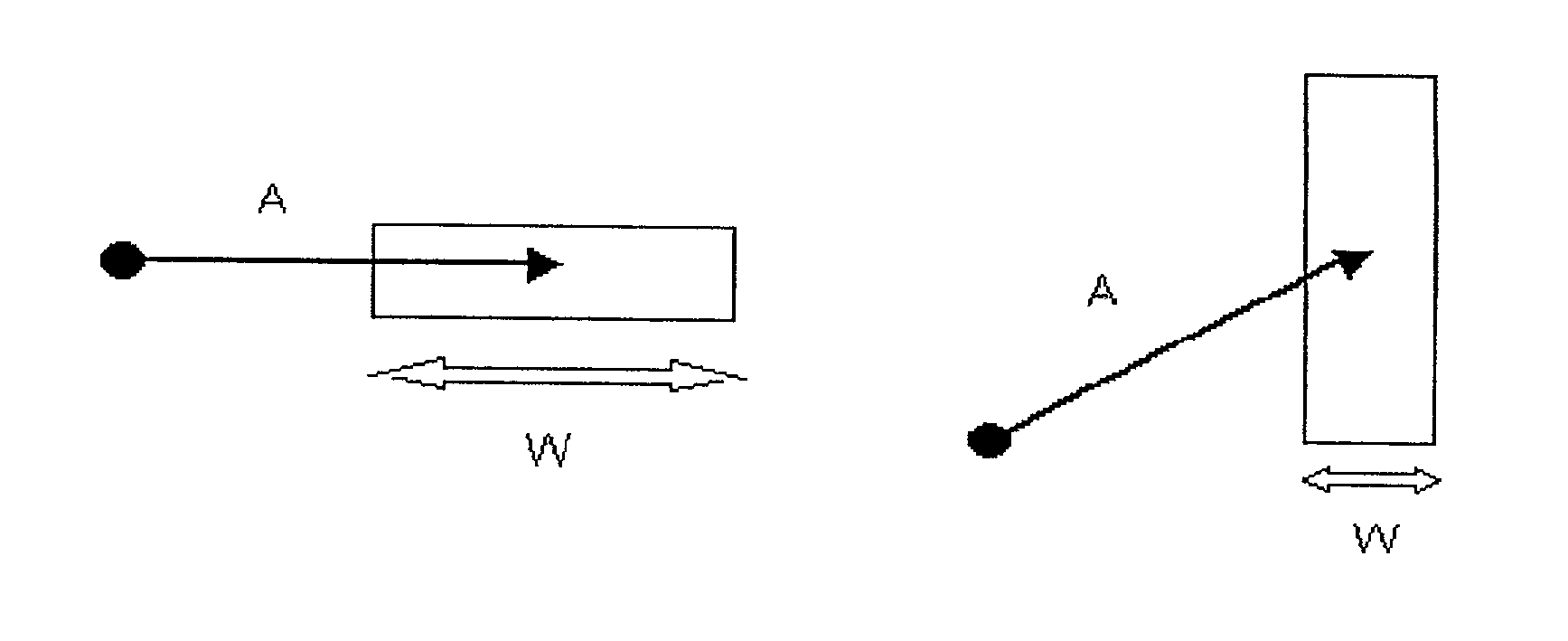

System and method for intelligently controlling indoor environment based on thermal imaging technology

Inactive · CN101737907A · Improve comfort · Improve work efficiency · Space heating and ventilation safety systems · Lighting and heating apparatus · Automatic control · Interaction interface

The invention discloses a system for intelligently controlling an indoor environment based on thermal imaging technology, which comprises a thermal imaging sensor, a human-computer interaction interface device and an area controller, wherein the thermal imaging sensor acquires thermal imaging data of the indoor environment and identifies and analyzes the thermal imaging data so as to calculate a subjective radiation temperature; and the area controller adjusts the refrigerating and heating capacities at the tail end of an indoor air conditioner according to the subjective radiation temperature and the temperature set by the human-computer interaction interface device so as to perform optimized control on the indoor environment to achieve a temperature comfortable for indoor personnel. The system and a method for intelligently controlling the indoor environment based on the thermal imaging technology can adjust the temperature of the indoor environment, improve the personnel comfort level and work efficiency, reduce the energy consumption and improve the management level of an automatic control system of a building under the condition of acquiring comprehensive environment and personnel information.

Owner:于震

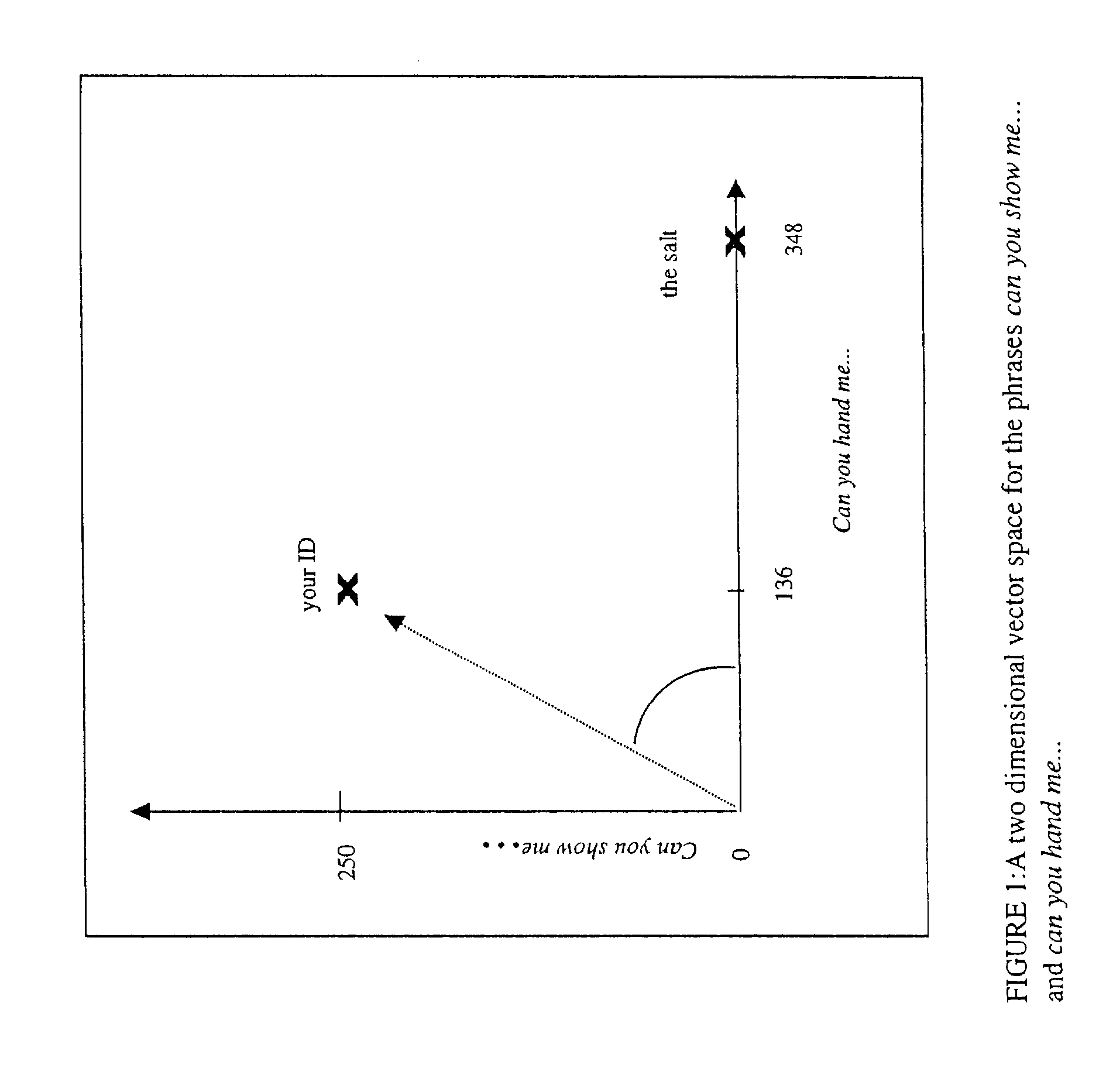

Method and system for implicitly resolving pointing ambiguities in human-computer interaction (HCI)

Inactive · US20030016252A1 · Accurate understanding · Static indicating devices · Input/output processes for data processing · Object based · Ambiguity

A method and system for implicitly resolving pointing ambiguities in human-computer interaction by implicitly analyzing user movements of a pointer toward a user targeted object located in an ambiguous multiple object domain and predicting the user targeted object, using different categories of heuristic (statically and / or dynamically learned) measures, such as (i) implicit user pointing gesture measures, (ii) application context, and (iii) number of computer suggestions of each predicted user targeted object. Featured are user pointing gesture measures of (1) speed-accuracy tradeoff, referred to as total movement time (TMT), and, amount of fine tuning (AFT) or tail-length (TL), and, (2) exact pointer position. A particular application context heuristic measure used is referred to as containment hierarchy. The invention is widely applicable to resolving a variety of different types of pointing ambiguities such as composite object types of pointing ambiguities, involving different types of pointing devices, and which are widely applicable to essentially any type of software and / or hardware methodology involving using a pointer, such as in computer aided design (CAD), object based graphical editing, and text editing.

Owner:RAMOT AT TEL AVIV UNIV LTD
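
One way to picture how several heuristic measures might be combined into a single prediction is the weighted score below. It is only a sketch under assumptions: the `Candidate` fields, the weights, the preference for more deeply nested objects and the penalty for objects already suggested are all hypothetical choices, and the TMT and AFT gesture measures are not modeled.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Candidate:
    name: str
    center: tuple       # (x, y) of the object's center
    depth: int          # nesting depth in the containment hierarchy
    suggested: int = 0  # times this object was already suggested to the user


def score(cand, pointer, w_dist=1.0, w_depth=5.0, w_sugg=10.0):
    """Higher is better: near the pointer, deeply nested, rarely suggested."""
    dist = hypot(cand.center[0] - pointer[0], cand.center[1] - pointer[1])
    return -w_dist * dist + w_depth * cand.depth - w_sugg * cand.suggested


def predict_target(cands, pointer):
    """Return the candidate with the highest combined heuristic score."""
    return max(cands, key=lambda c: score(c, pointer))


cands = [
    Candidate("group", (50, 50), depth=0),
    Candidate("rectangle", (52, 50), depth=1),
    Candidate("label", (70, 50), depth=2),
]
target = predict_target(cands, pointer=(51, 50))
```

With these weights the rectangle wins because it is as close to the pointer as the enclosing group but sits deeper in the hierarchy. If the user rejects it and its `suggested` count is raised, the next prediction falls back to the group.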

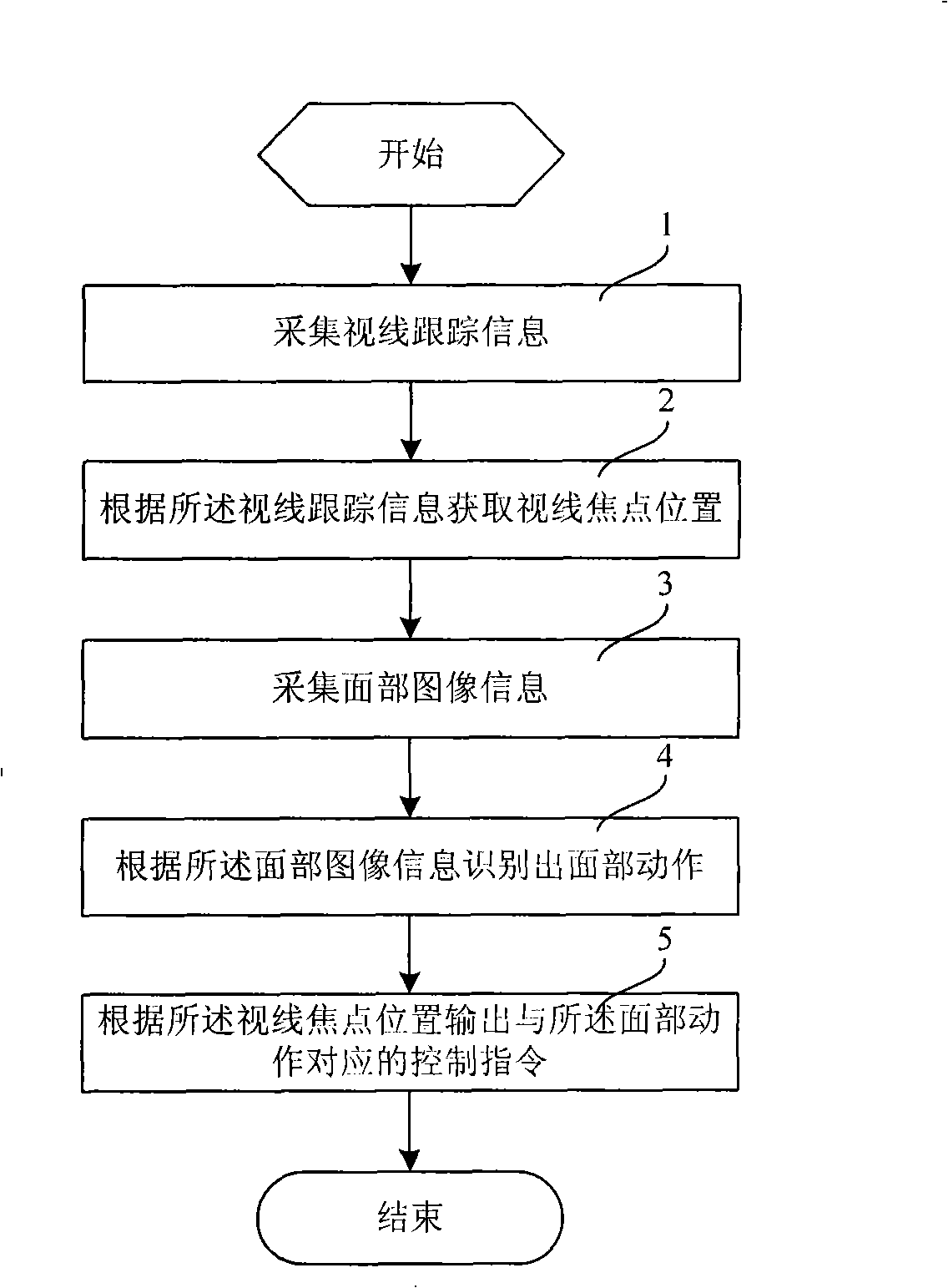

Eye tracking human-machine interaction method and apparatus

Inactive | CN101311882A | Accurate output | Simple protocol for interaction | Input/output for user-computer interaction | Character and pattern recognition | Computer graphics (images) | Man machine

The invention relates to a gaze-tracking (line-of-sight tracking) human-machine interaction method, comprising: collecting gaze-tracking information and obtaining a gaze focus position from it; collecting facial image information and recognizing a facial action from it; and outputting a control command corresponding to the facial action according to the gaze focus position. The invention also relates to a gaze-tracking human-machine interaction device comprising a gaze-tracking processing unit that performs the gaze-tracking operation; a facial action recognition unit that collects the facial image information and recognizes the facial action from it; and a control command output unit that is connected to both the gaze-tracking processing unit and the facial action recognition unit and outputs the control command corresponding to the facial action according to the gaze focus position. Embodiments of the invention provide a non-contact gaze-tracking human-machine interaction method and device with a simple interaction protocol.

Owner:HUAWEI TECH CO LTD
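
The final step of the method, turning a gaze focus position plus a recognized facial action into a control command, might look like the sketch below. The screen regions, the facial action labels and the command names are hypothetical, and the gaze tracking and facial action recognition themselves are assumed to happen upstream.

```python
def region_at(focus, regions):
    """Return the name of the first screen region containing the gaze focus."""
    x, y = focus
    for name, (left, top, right, bottom) in regions.items():
        if left <= x < right and top <= y < bottom:
            return name
    return None


def control_command(focus, facial_action, regions, action_map):
    """Combine where the user looks with what their face does.

    Returns a (verb, target) tuple, or None when the gaze is outside every
    region or the facial action is not mapped to a command.
    """
    target = region_at(focus, regions)
    verb = action_map.get(facial_action)
    if target is None or verb is None:
        return None
    return (verb, target)


# Hypothetical layout: regions are (left, top, right, bottom) in pixels.
REGIONS = {"ok_button": (0, 0, 100, 50), "cancel_button": (100, 0, 200, 50)}
ACTIONS = {"blink": "click", "open_mouth": "double_click"}

command = control_command((120, 20), "blink", REGIONS, ACTIONS)
```

Keeping the gaze lookup and the action lookup as two independent tables mirrors the abstract's split between the gaze-tracking processing unit and the facial action recognition unit.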

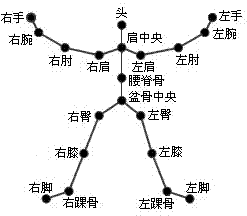

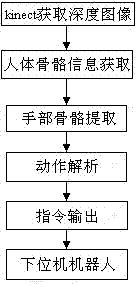

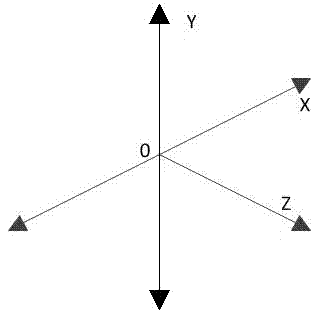

Man-computer interaction method for intelligent human skeleton tracking control robot on basis of kinect

Inactive | CN103399637A | Interactive nature | Input/output for user-computer interaction | Graph reading | Human body | Depth of field

The invention provides a Kinect-based human-computer interaction method for a robot controlled through intelligent human skeleton tracking. The method comprises: detecting the operator's actions with a 3D depth sensor and obtaining data frames; converting the data frames into an image; segmenting human-like objects from the background in the image; obtaining depth-of-field data; extracting human skeleton information; identifying the different parts of the human body; building 3D coordinates for the joints of the human body; identifying rotation information for the skeleton joints of both hands; determining which hand has been triggered by capturing changes in the angles of the different skeleton joints; analyzing the operator's different action characteristics; sending the corresponding characters as control instructions to the robot on the lower computer; and receiving and processing the characters in an AVR single-chip microcomputer master controller, which controls the robot to carry out the corresponding actions. The method removes the constraints that traditional external equipment places on human-computer interaction and achieves natural human-computer interaction.

Owner:NORTHWEST NORMAL UNIVERSITY
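
The joint-angle and character-command steps can be sketched as below. This assumes the 3D joint coordinates have already been extracted by the depth sensor's skeleton tracking. The 120-degree threshold and the characters `F`, `L`, `R` and `S` are hypothetical, since the abstract does not list the actual angles or instruction characters.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at joint b formed by the 3D points a-b-c."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(p * q for p, q in zip(v1, v2))
    norm = math.sqrt(sum(p * p for p in v1)) * math.sqrt(sum(q * q for q in v2))
    if norm == 0:
        raise ValueError("joints must not coincide")
    cos = max(-1.0, min(1.0, dot / norm))  # guard against rounding drift
    return math.degrees(math.acos(cos))


def command_char(left_elbow_deg, right_elbow_deg, bent_below=120.0):
    """Map elbow angles to one control character (hypothetical mapping)."""
    left_bent = left_elbow_deg < bent_below
    right_bent = right_elbow_deg < bent_below
    if left_bent and right_bent:
        return "F"  # both arms bent
    if left_bent:
        return "L"  # only the left arm bent
    if right_bent:
        return "R"  # only the right arm bent
    return "S"      # both arms straight


# Shoulder, elbow and wrist coordinates in meters.
left = joint_angle((0, 1, 0), (0, 0, 0), (1, 0, 0))    # bent at a right angle
right = joint_angle((0, 1, 0), (0, 0, 0), (0, -1, 0))  # fully extended
char = command_char(left, right)
```

In the setup the abstract describes, a character like this would then be sent to the AVR master controller, for example over a serial link; that transport is left out here.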

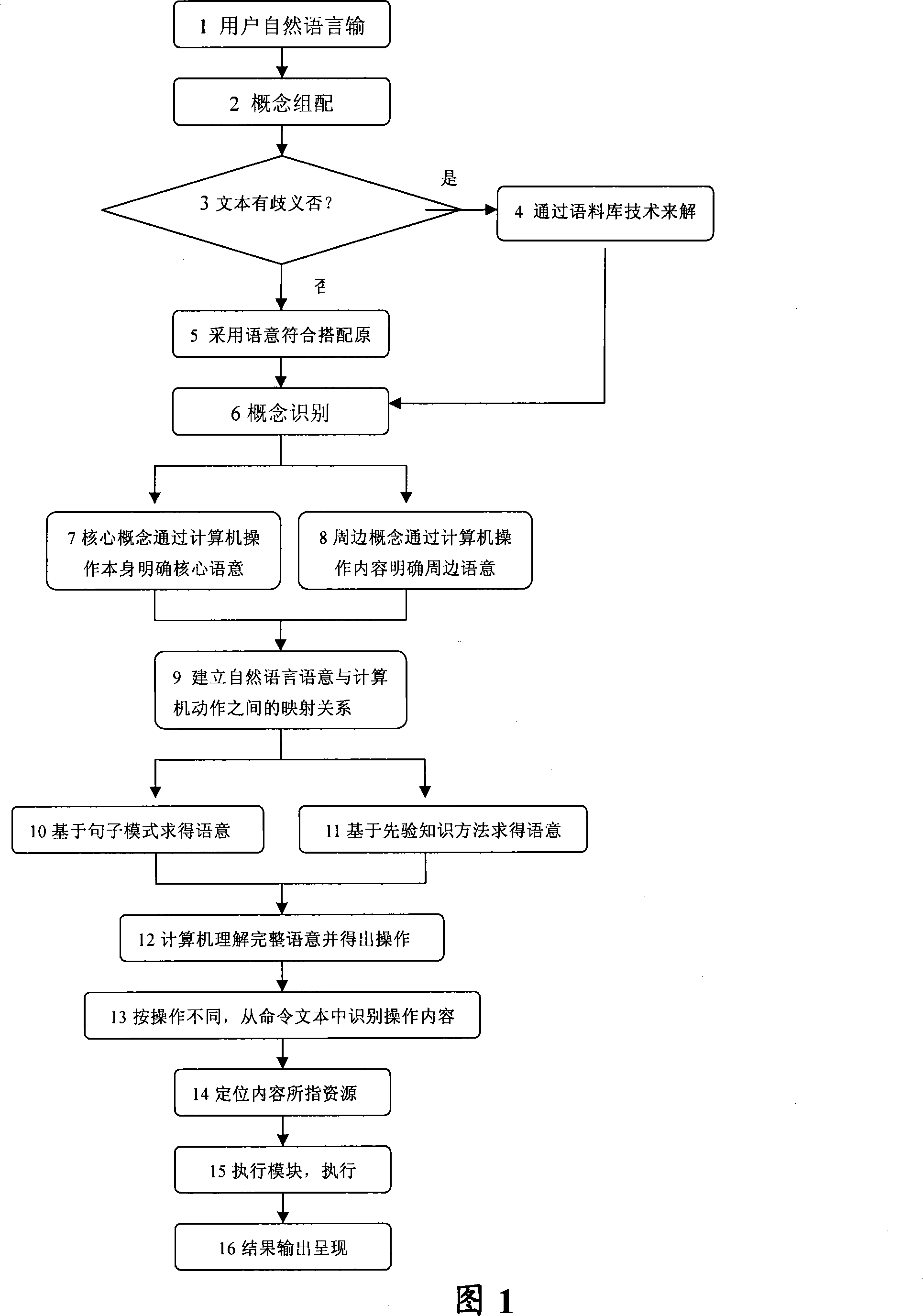

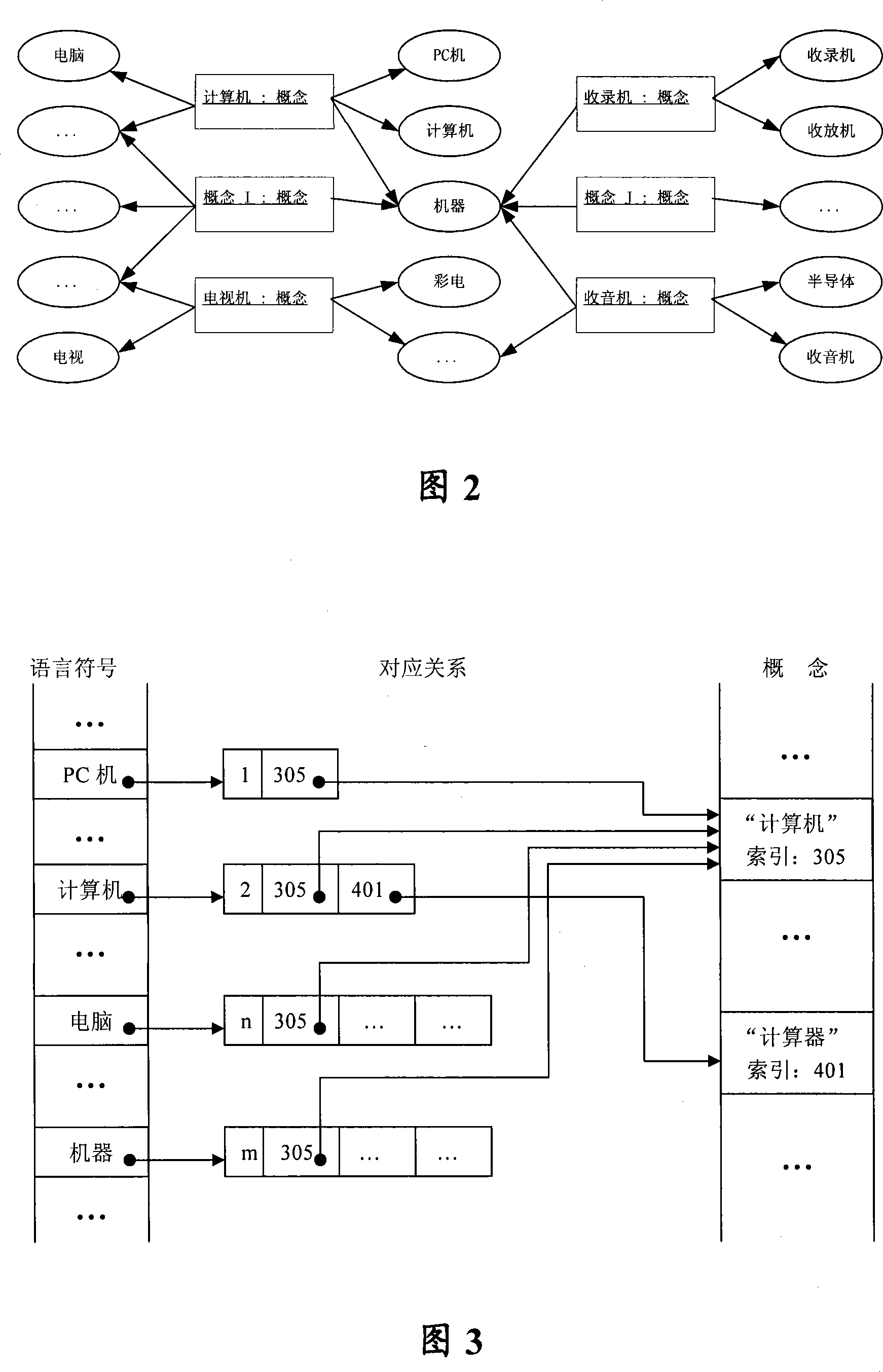

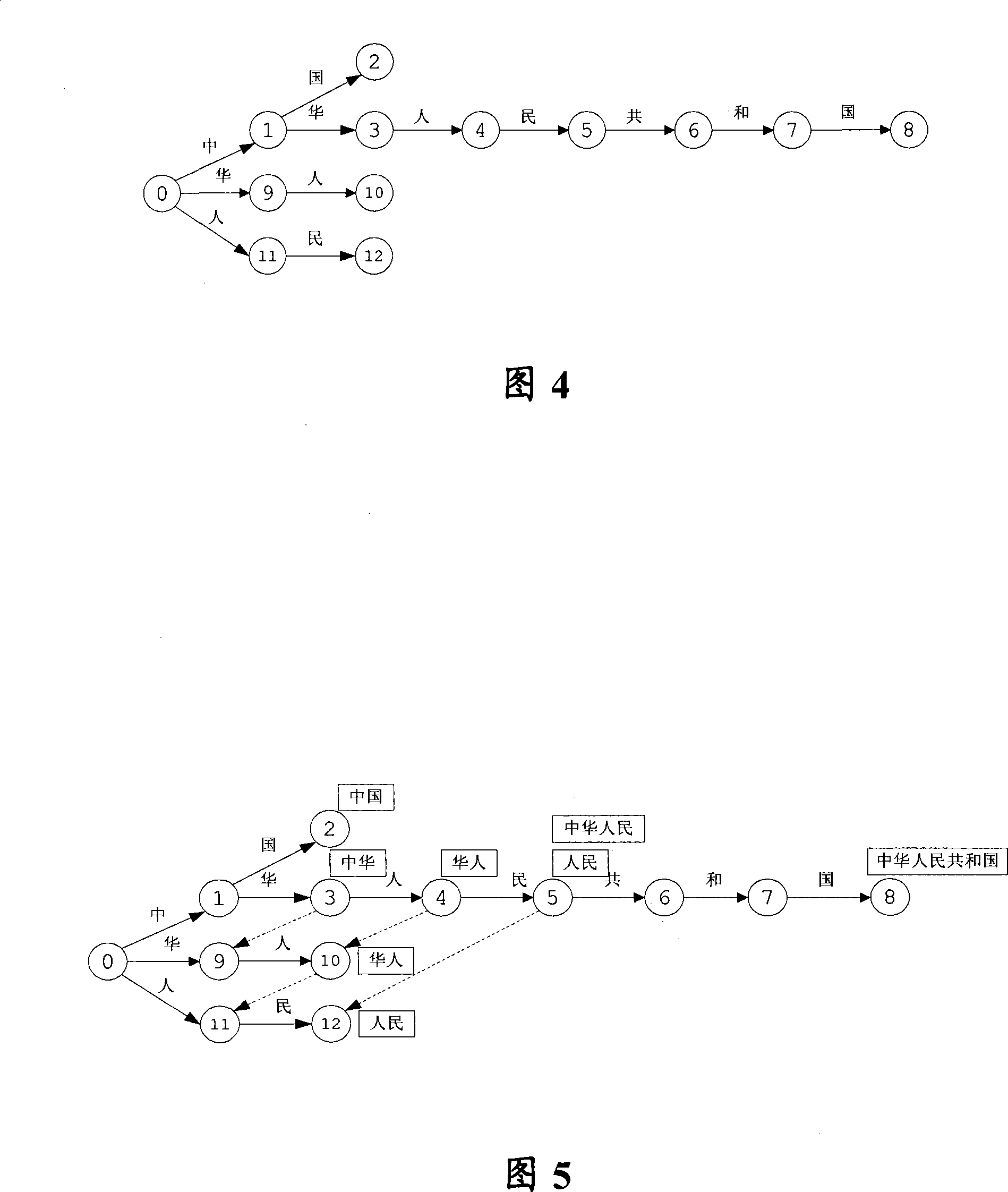

Free-running speech comprehend method and man-machine interactive intelligent system

Inactive | CN101178705A | Easy to understand | Correct answer | Speech recognition | Special data processing applications | Natural language understanding | Ambiguity

The invention discloses a natural language understanding method comprising the following steps. After natural language input by the user is received, it is matched with conceptual language symbols, and concepts are associated with those symbols. The concept best suited to the current language context is selected by comparison with a preset concept dictionary, and the method then judges whether the concept is ambiguous: if it is, the concept is obtained from a language database; if it is not, the concept is obtained by the principle of matching the language context. In either case the method proceeds to the next step, in which concept reorganization yields a core concept and a sub-concept. The core meaning of the core concept is defined by a computer operation, while the sub-meaning of the sub-concept is defined by the content of that operation. The complete meaning is obtained by combining the core meaning with the sub-meaning. The invention also provides a human-computer interaction intelligent system based on this method. The invention recognizes the user's natural speech input more accurately and thereby provides the user with more intelligent and complete services.

Owner:CHINA TELECOM CORP LTD
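
A toy version of the pipeline (concept lookup, ambiguity resolution, then a split into an operation and its content) is sketched below. Everything in it is an assumption made for illustration: the tiny `CONCEPTS` dictionary, the `CONTEXT_HINTS` table that stands in for the patent's language database, and the rule that a concept counts as "core" when it names an operation.

```python
# Hypothetical concept dictionary: one word may map to several concepts.
CONCEPTS = {
    "check": ["QUERY"],
    "balance": ["ACCOUNT_BALANCE", "PHYSICAL_BALANCE"],  # ambiguous word
    "phone": ["PHONE_SERVICE"],
}
# Stand-in for the language database used to resolve ambiguous concepts.
CONTEXT_HINTS = {
    "ACCOUNT_BALANCE": {"phone", "bill", "check"},
    "PHYSICAL_BALANCE": {"yoga", "fall"},
}
OPERATIONS = {"QUERY"}  # concepts that name a computer operation


def pick_concept(word, words):
    """Choose one concept for a word, using context only when ambiguous."""
    options = CONCEPTS.get(word, [])
    if not options:
        return None
    if len(options) == 1:
        return options[0]
    others = set(words) - {word}
    return max(options, key=lambda c: len(CONTEXT_HINTS.get(c, set()) & others))


def understand(utterance):
    """Return the core operation and the sub-concepts that describe its content."""
    words = utterance.lower().split()
    concepts = [c for c in (pick_concept(w, words) for w in words) if c]
    core = next((c for c in concepts if c in OPERATIONS), None)
    subs = [c for c in concepts if c not in OPERATIONS]
    return {"operation": core, "content": subs}


meaning = understand("check my phone balance")
```

Here "balance" is resolved to `ACCOUNT_BALANCE` because the surrounding words overlap with that concept's hints, and the result pairs the `QUERY` operation with the content concepts it acts on.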

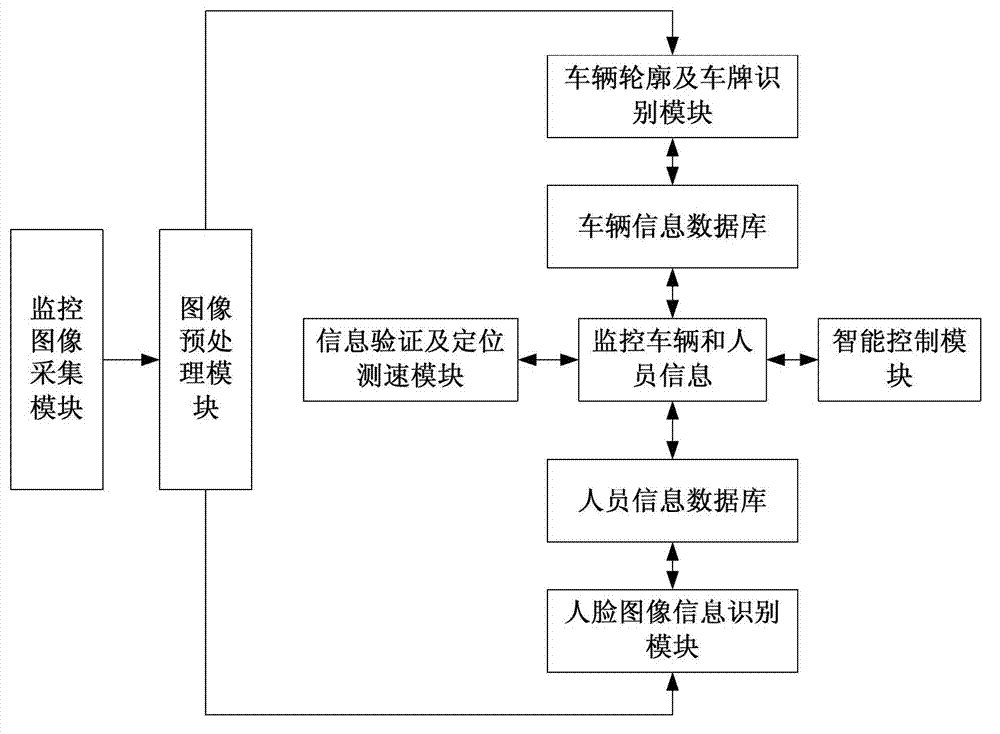

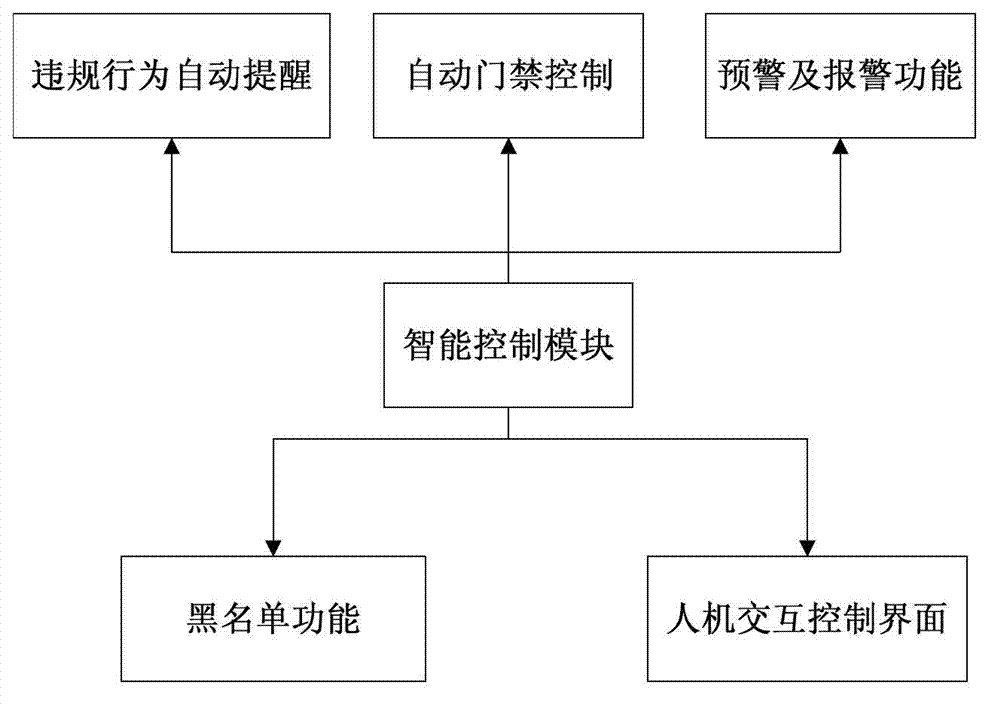

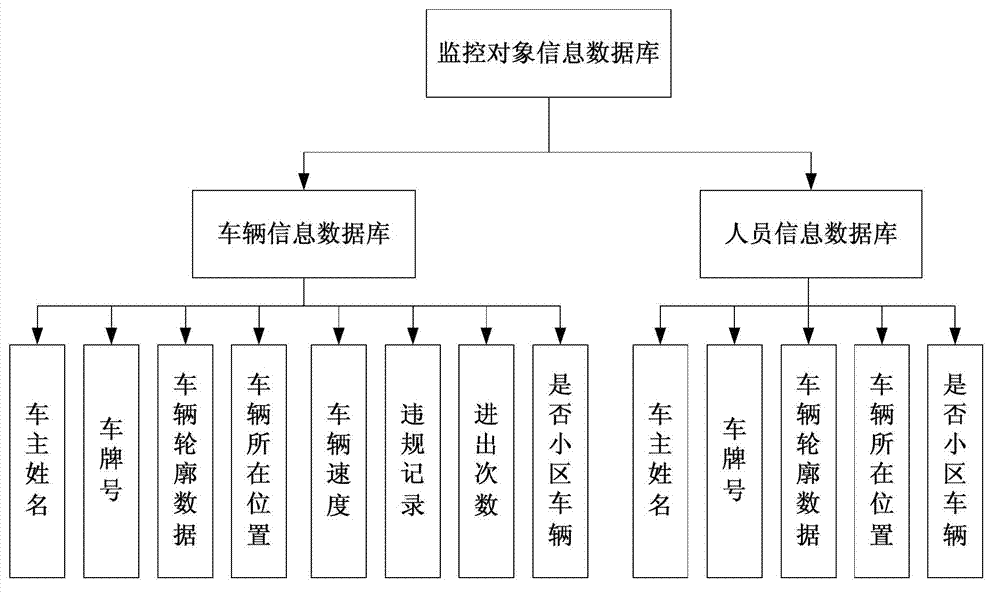

Intelligent system for monitoring vehicles and people in community

Inactive | CN103093528A | Realize fully automatic intelligent monitoring | Real-time management | Road vehicles traffic control | Individual entry/exit registers | Intelligent management | Computer vision

The invention discloses an intelligent system for monitoring vehicles and people in a community. The intelligent system comprises a monitor image collection module, a monitor image pre-processing module, a vehicle contour and license plate image information recognition module, a vehicle information database, a face image information recognition module, a people information database, an image information identification verification and positioning speed-measurement module and an intelligent management module. The monitor image collection module collects image information of the vehicles and the people; the monitor image pre-processing module pre-processes the image information; the vehicle contour and license plate image information recognition module processes, extracts and recognizes the vehicle image information; and the face image information recognition module extracts and recognizes the captured face information. The image information identification verification and positioning speed-measurement module performs identification verification and positioning tests, and an intelligent control module is used for human-machine interface operation and intelligent processing. The intelligent system monitors the vehicles and the people in the community and performs access control management, thereby safeguarding the travel safety of the people in the community.

Owner:SUN YAT SEN UNIV
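
The identification verification and speed-measurement stage could be pictured as follows. This is a hedged sketch only: the abstract does not describe the database schema or how speed is measured, so the dictionary lookup, the two-point speed estimate and all names and sample values here are hypothetical.

```python
from math import hypot


def verify_vehicle(plate, vehicle_db):
    """Return the registered owner for a recognized plate, or None if unknown."""
    return vehicle_db.get(plate)


def speed_kmh(pos_a, time_a, pos_b, time_b):
    """Estimate speed from two ground positions (meters) and timestamps (seconds)."""
    if time_b <= time_a:
        raise ValueError("timestamps must be increasing")
    meters = hypot(pos_b[0] - pos_a[0], pos_b[1] - pos_a[1])
    return meters / (time_b - time_a) * 3.6  # m/s to km/h


# Hypothetical vehicle information database keyed by license plate.
VEHICLE_DB = {"ABC123": "Unit 4-02"}

owner = verify_vehicle("ABC123", VEHICLE_DB)
speed = speed_kmh((0.0, 0.0), 0.0, (30.0, 40.0), 10.0)
```

The positions are assumed to be already projected from image coordinates onto the ground plane; that calibration step depends on the camera setup and is outside this sketch.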

