628 results about "Gaze directions" patented technology

Geometry of gaze direction. Gaze direction is the vector positioned along the visual axis, pointing from the fovea of the looker through the center of the pupil to the gazed-at spot. Binocular looking involves two such vectors, but for simplicity I will disregard here the fact that their directions differ somewhat.
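The definition can be made concrete as a unit vector. The Python sketch below assumes the 3D positions of the fovea and the pupil center are already known in one common frame; `gaze_direction` and `angle_between` are illustrative names, not from any library. The second function measures the angle between the two eyes' vectors, which is the binocular difference set aside in the definition.

```python
import math

Vec3 = tuple[float, float, float]


def gaze_direction(fovea: Vec3, pupil_center: Vec3) -> Vec3:
    """Unit vector pointing from the fovea through the pupil center."""
    d = tuple(p - f for p, f in zip(pupil_center, fovea))
    norm = math.sqrt(sum(c * c for c in d))
    if norm == 0.0:
        raise ValueError("fovea and pupil center coincide")
    return tuple(c / norm for c in d)


def angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two unit gaze vectors (e.g. left and right eye)."""
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # clamp rounding error before acos
    return math.degrees(math.acos(dot))
```

For two eyes converging on a nearby point, `angle_between` returns a small nonzero angle; for a distant point it approaches zero, which is why ignoring the difference is often acceptable.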

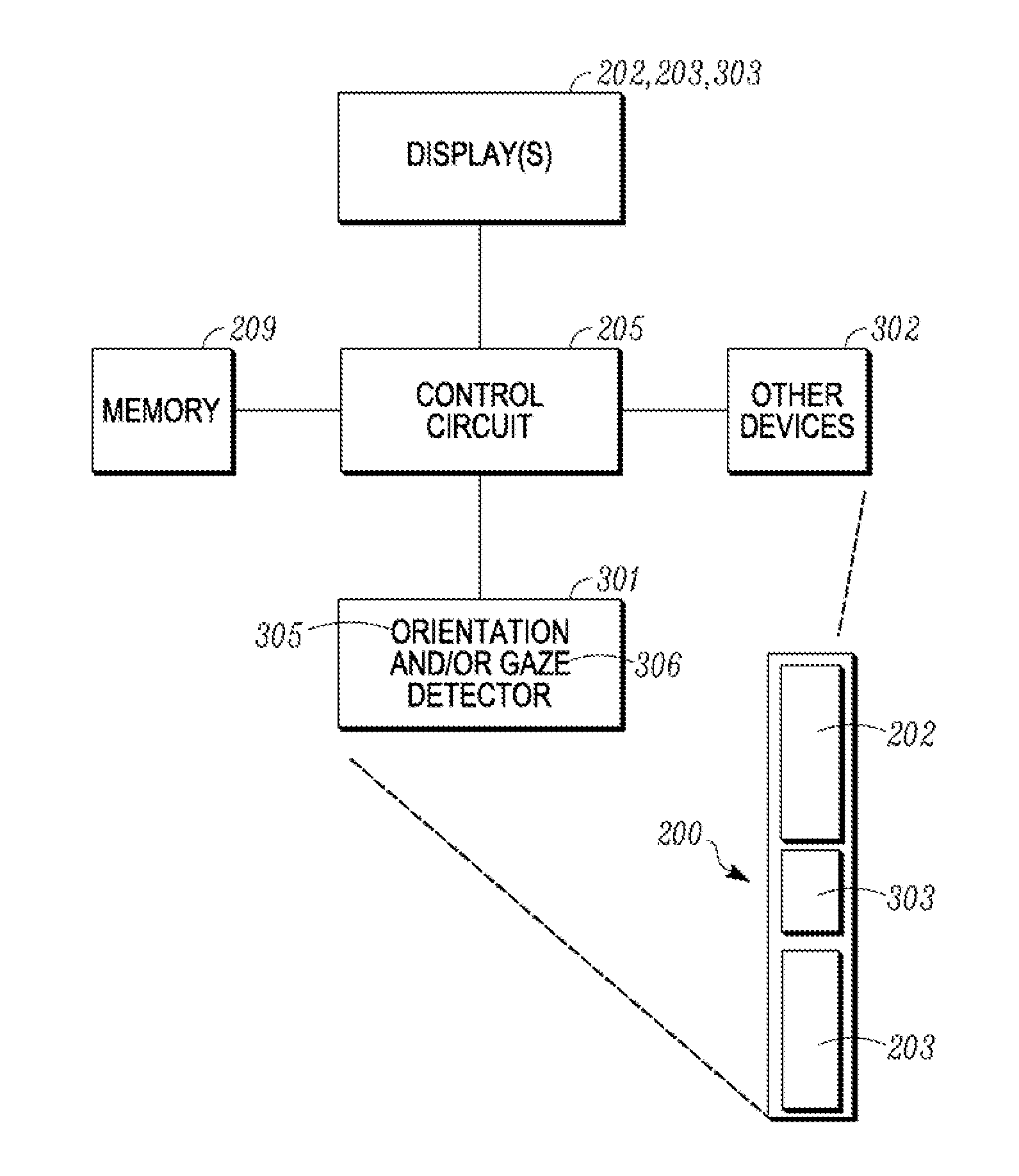

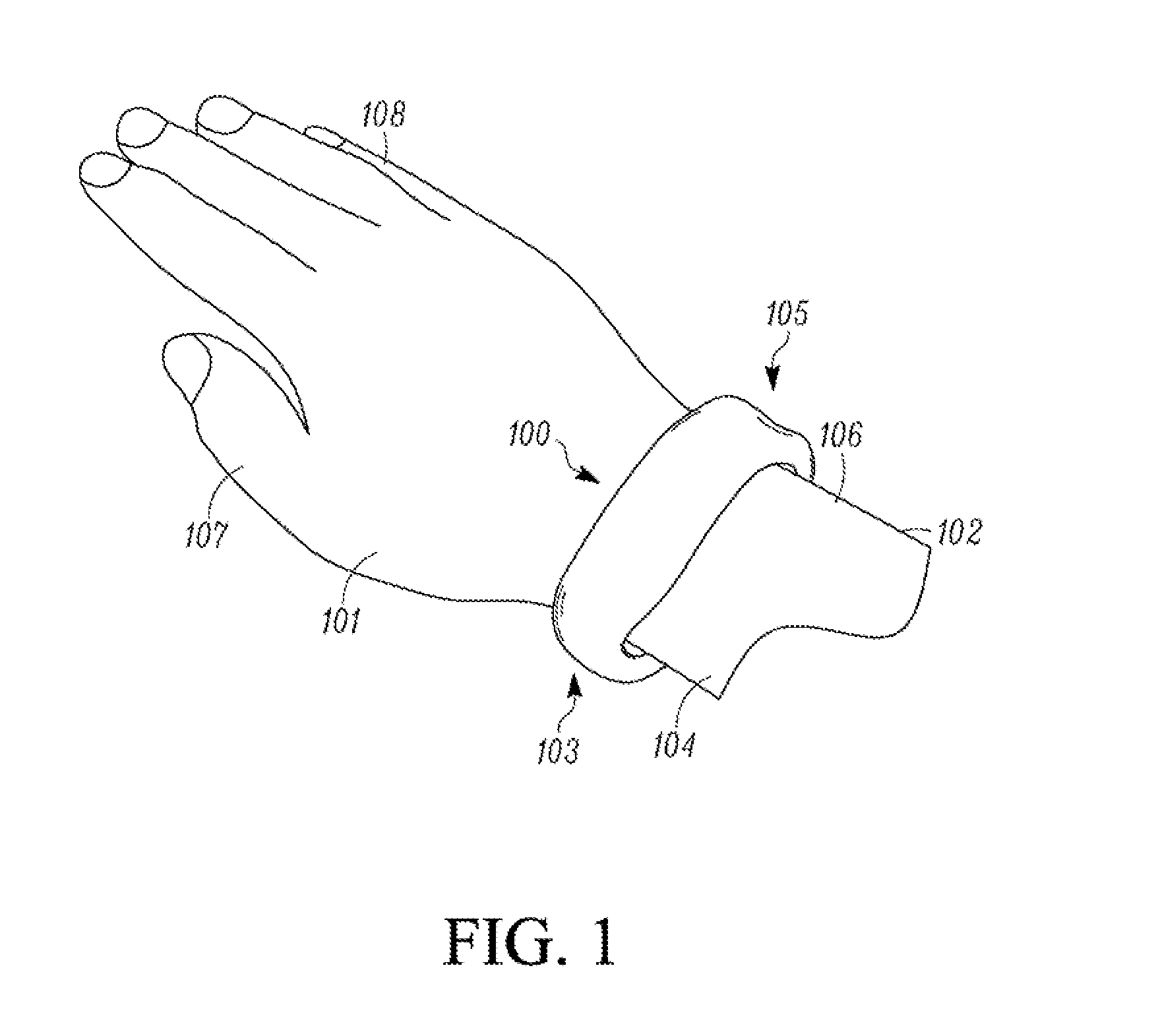

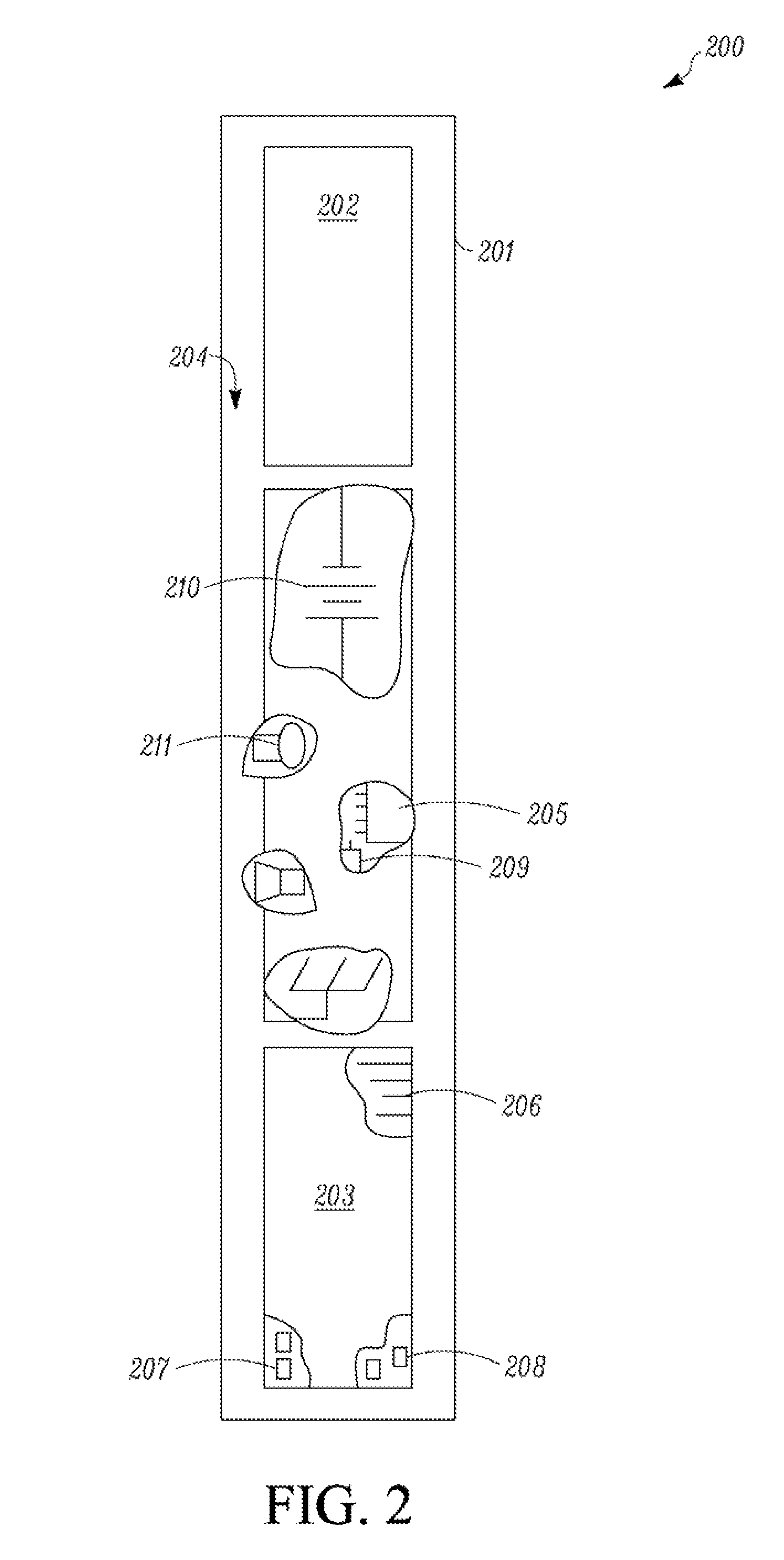

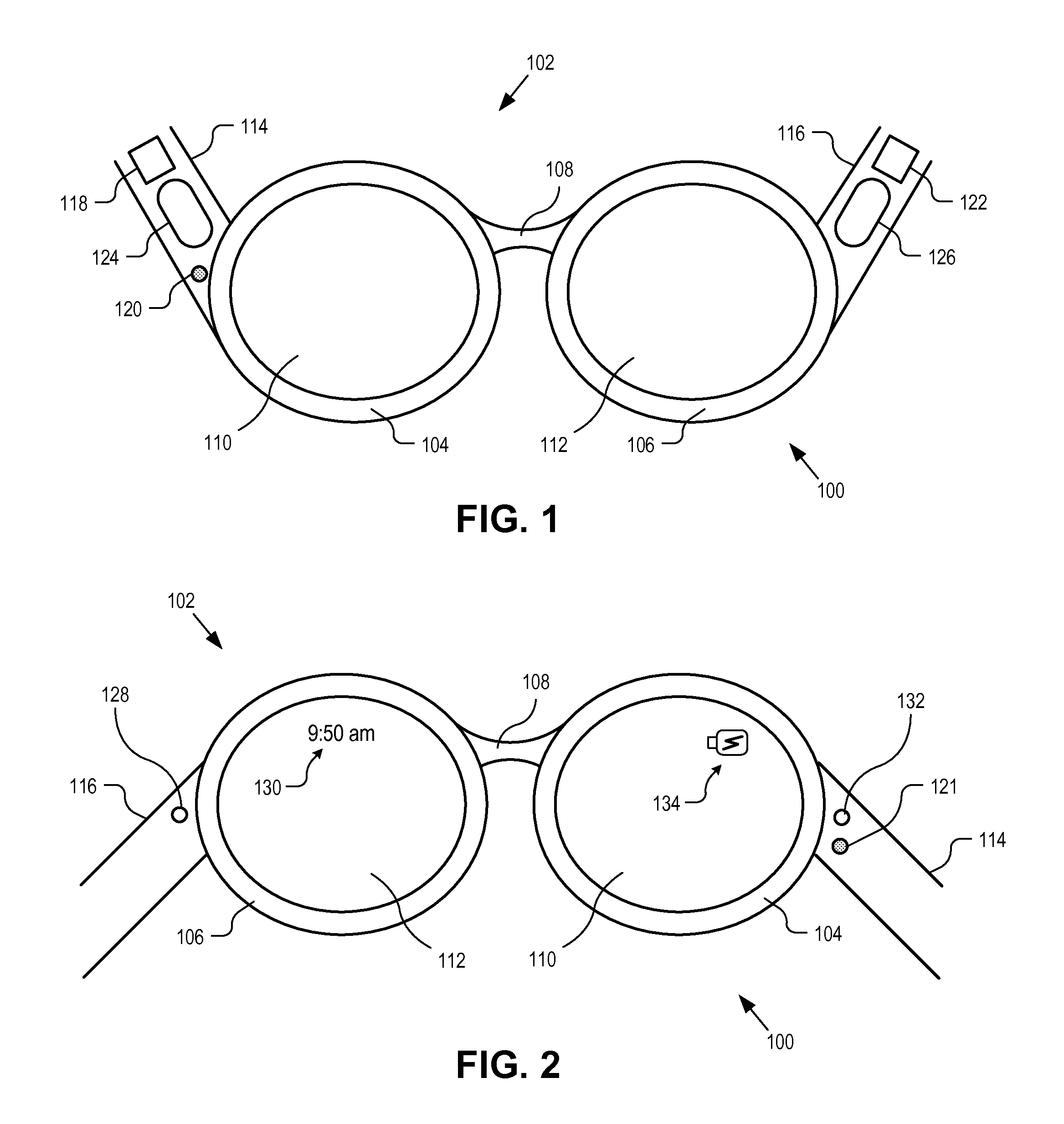

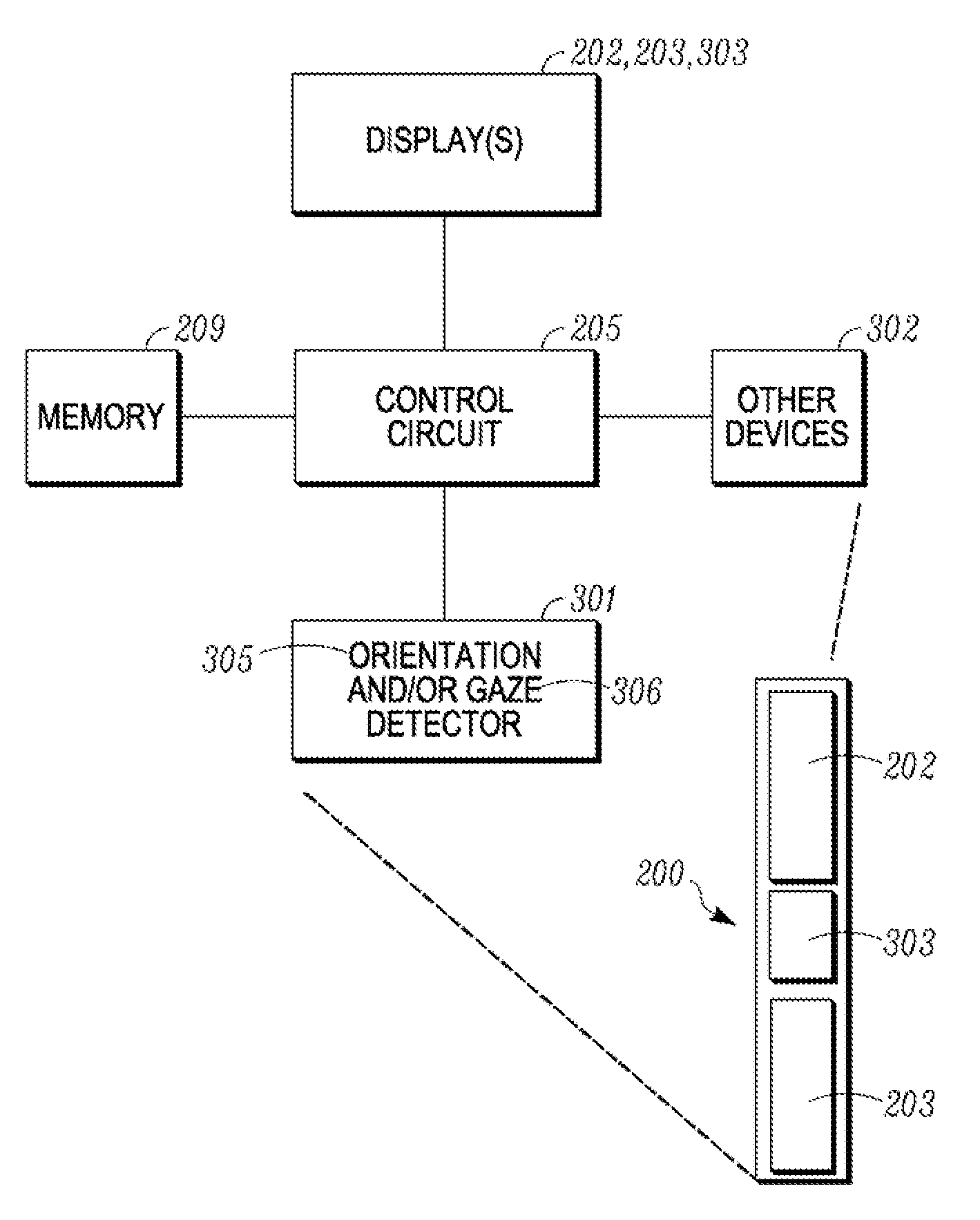

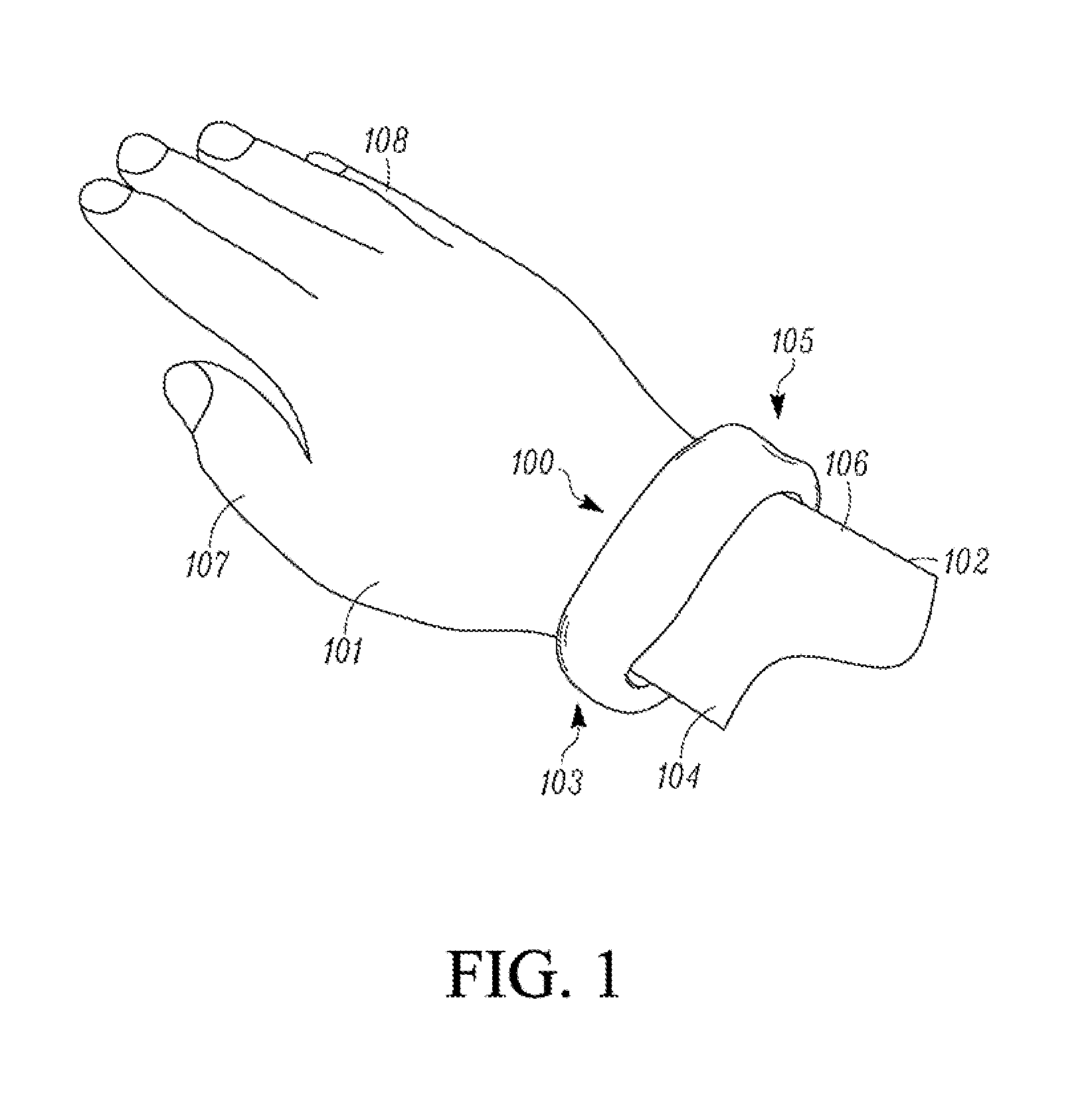

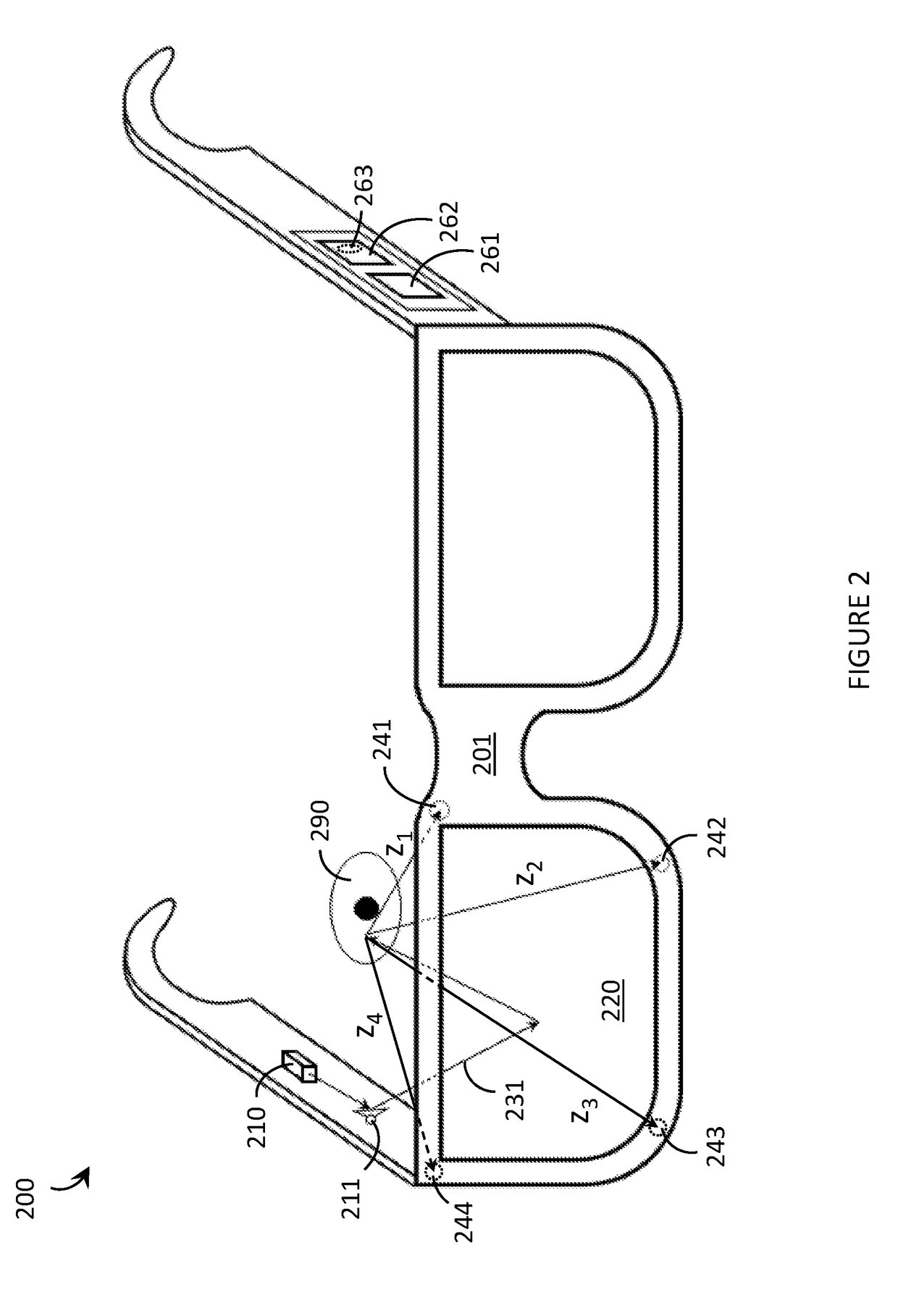

Wearable display device, corresponding systems, and method for presenting output on the same

Active | US20130222270A1 | Power management; Devices with multiple display units; Display device; Human–computer interaction

An electronic device can include detectors for altering the presentation of data on one or more displays. In a wearable electronic device, a flexible housing can be configured to enfold about an appendage of a user, such as a user's wrist. A display can be disposed along a major face of the flexible housing. A control circuit can be operable with the display. A gaze detector can be included to detect a gaze direction, and optionally a gaze cone. An orientation detector can be configured to detect an orientation of the electronic device relative to the user. The control circuit can alter a presentation of data on the display in response to a detected gaze direction, in response to detected orientation of the wearable electronic device relative to the user, in response to touch or gesture input, or combinations thereof. Secondary displays can be hingedly coupled to the electronic device.

Owner: GOOGLE TECH HLDG LLC
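One way to read "a gaze direction, and optionally a gaze cone" is as a cone of some half-angle around the gaze vector: the display counts as looked at when the direction from the eye to the display falls inside it. This is a minimal sketch under that assumption; the function name, coordinate frame, and 15° default half-angle are hypothetical, not taken from the patent.

```python
import math


def display_in_gaze_cone(eye, gaze_dir, display_center, half_angle_deg=15.0):
    """Return True if display_center lies within the gaze cone.

    eye, display_center: 3D points in one frame; gaze_dir: 3D vector (any length).
    half_angle_deg: assumed cone half-angle (hypothetical default).
    """
    to_display = [d - e for d, e in zip(display_center, eye)]
    dot = sum(g * t for g, t in zip(gaze_dir, to_display))
    norm = math.hypot(*gaze_dir) * math.hypot(*to_display)
    if norm == 0.0:
        return False
    # Inside the cone when the angle is at most the half-angle, i.e. cos >= cos(half-angle).
    return dot / norm >= math.cos(math.radians(half_angle_deg))
```

A control circuit could, for example, wake the display or re-orient its content only while this check returns True.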

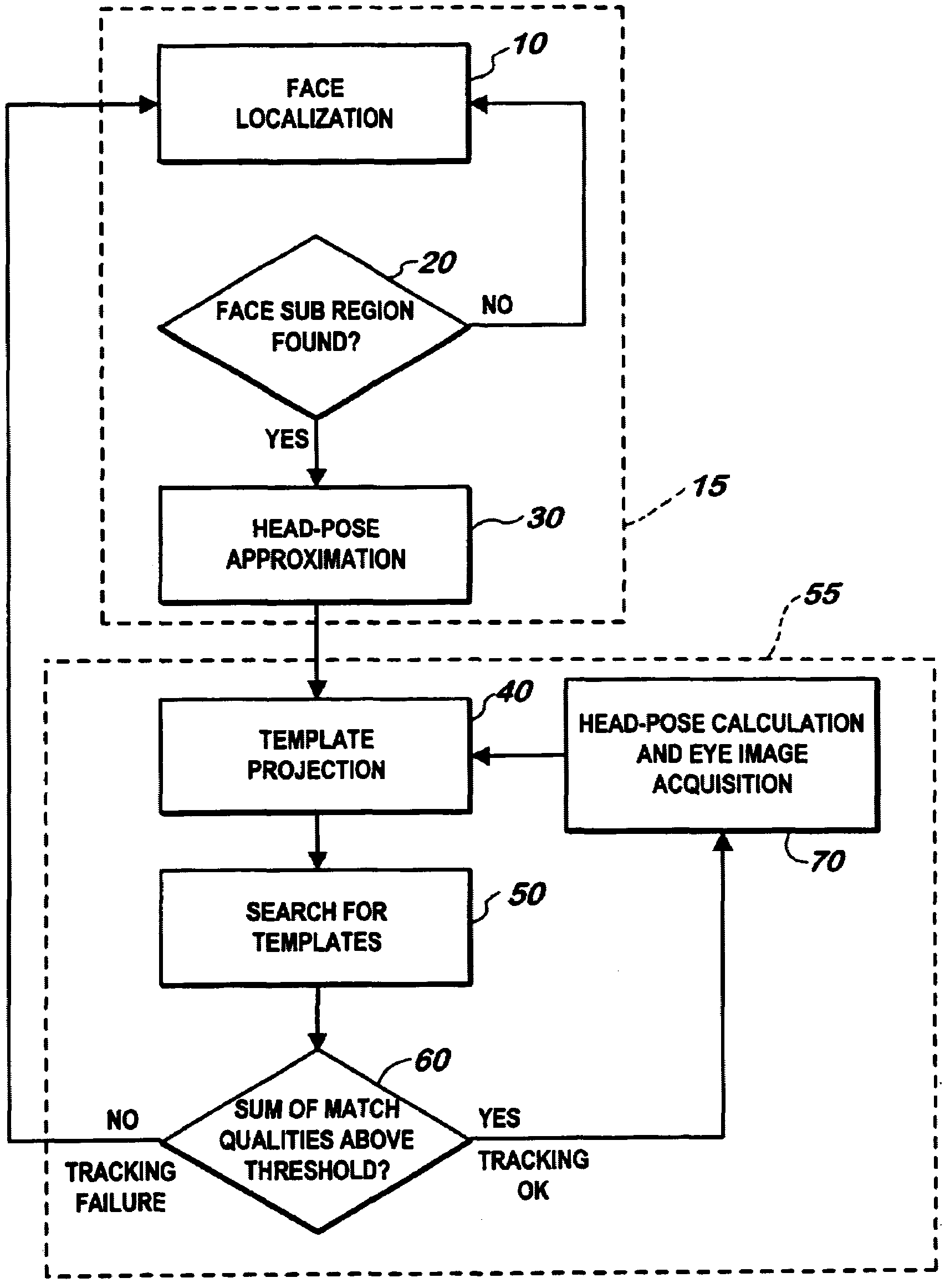

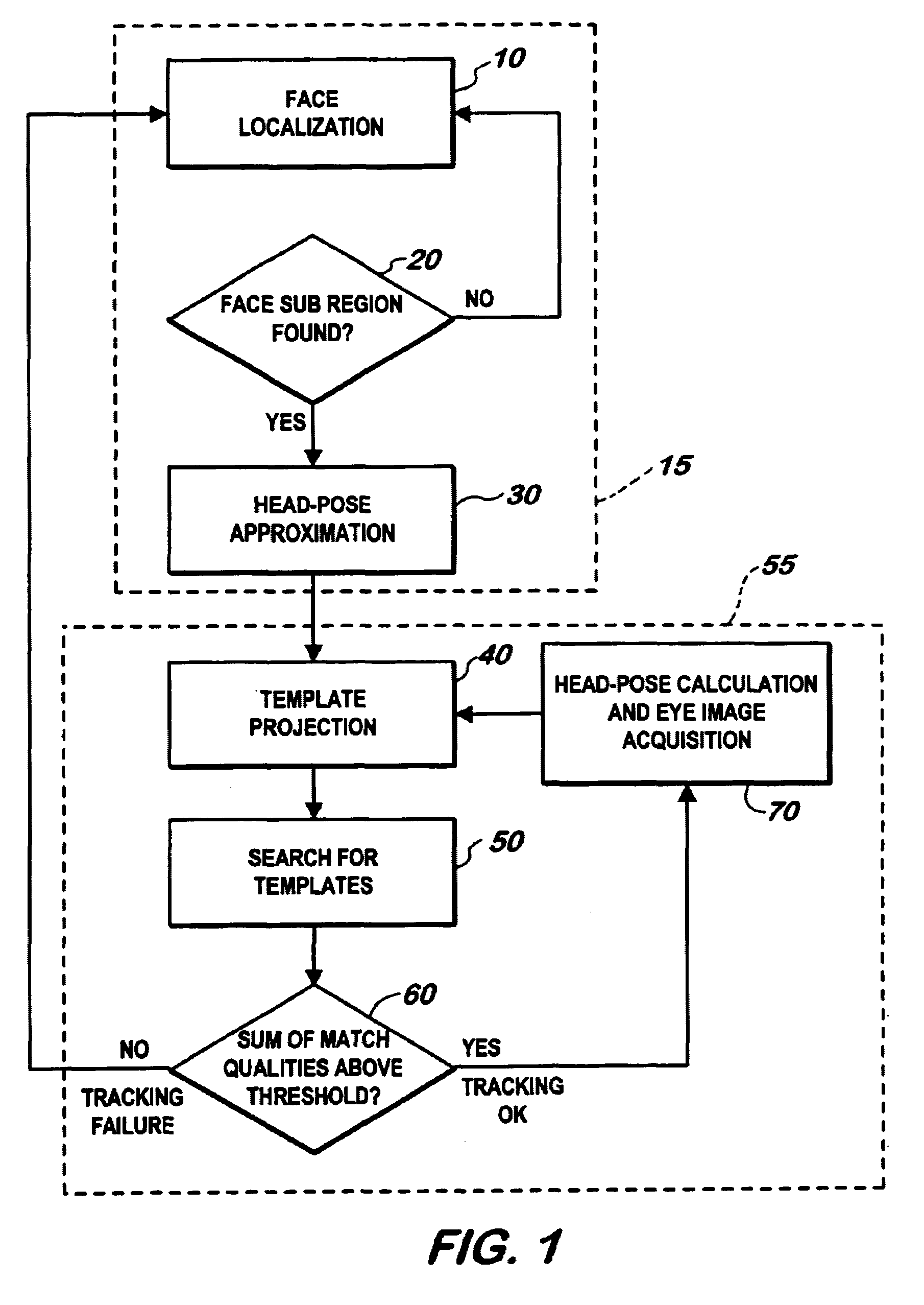

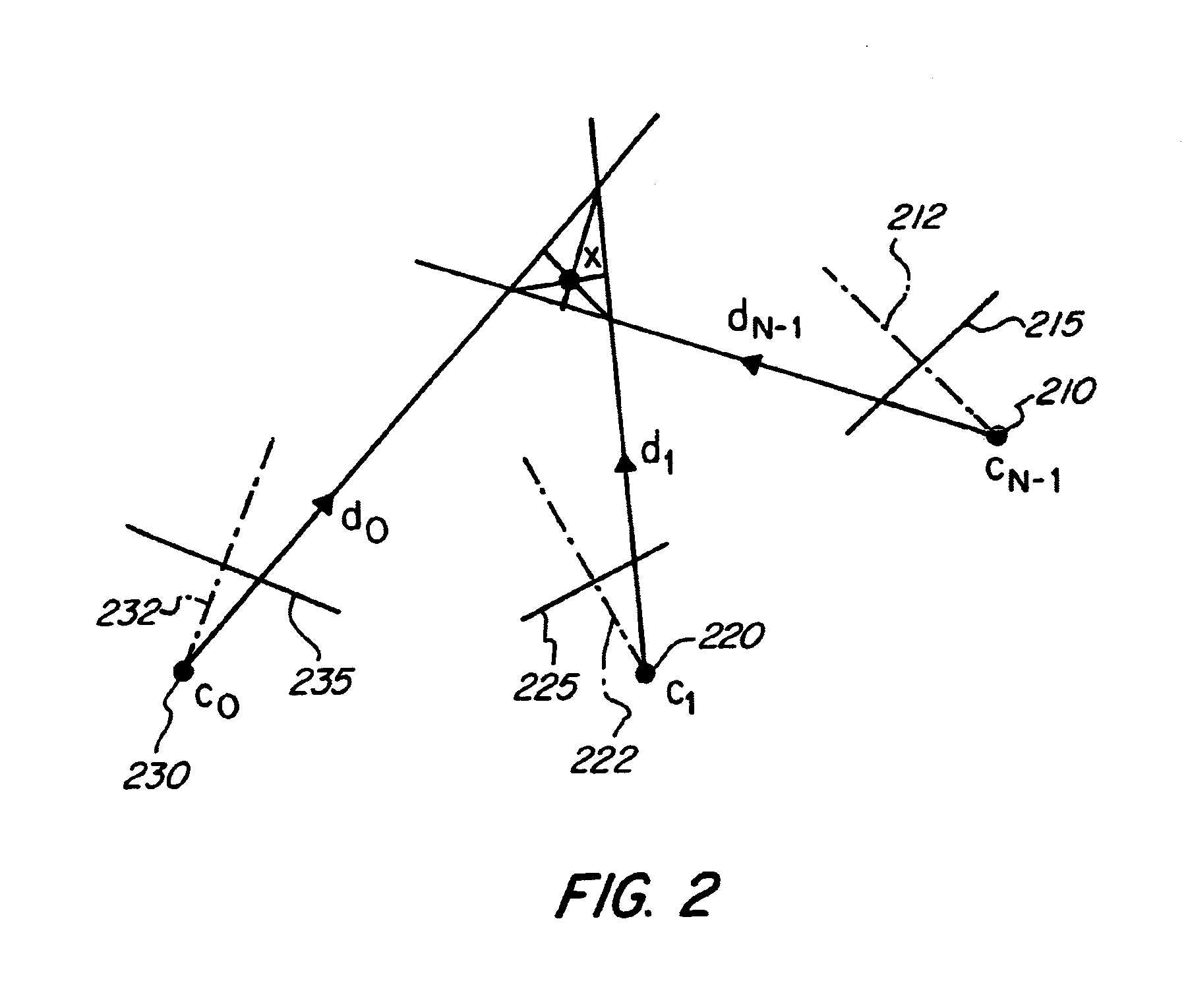

Facial image processing system

Inactive | US7043056B2 | Image analysis; Character and pattern recognition; Imaging processing; Postural orientation

A method of determining an eye gaze direction of an observer is disclosed, comprising the steps of: (a) capturing at least one image of the observer and determining a head pose angle of the observer; (b) utilizing the head pose angle to locate an expected eye position of the observer; and (c) analyzing the expected eye position to locate at least one eye of the observer and observing the location of the eye to determine the gaze direction.

Owner: NXP BV +1
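Step (b), using the head pose angle to predict where the eye should appear, can be sketched with a rigid head model and an orthographic camera. Everything here is an assumption for illustration: the eye offset from the head's rotation center, the axis and sign conventions, and the helper names `expected_eye_position` and `search_window`.

```python
import math


def expected_eye_position(face_center_px, yaw_deg, pitch_deg, eye_offset_mm, px_per_mm):
    """Predict where an eye should appear in the image, given the head pose.

    face_center_px: (u, v) image position of the head's rotation center.
    eye_offset_mm: (x, y, z) of the eye relative to that center in a head frame
        (x right, y up, z toward the camera); an assumed face model.
    px_per_mm: image scale at the face (orthographic approximation).
    """
    x, y, z = eye_offset_mm
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    # Rotate about the vertical axis (yaw), then about the horizontal axis (pitch).
    x1 = x * math.cos(yaw) + z * math.sin(yaw)
    z1 = -x * math.sin(yaw) + z * math.cos(yaw)
    y1 = y * math.cos(pitch) - z1 * math.sin(pitch)
    # Orthographic projection: drop depth; image v grows downward.
    u = face_center_px[0] + px_per_mm * x1
    v = face_center_px[1] - px_per_mm * y1
    return u, v


def search_window(center, half_size):
    """Square region around the predicted eye position to analyze in step (c)."""
    u, v = center
    return (u - half_size, v - half_size, u + half_size, v + half_size)
```

With the head turned 90° the lateral eye offset collapses toward the face center in the image, so the search window moves accordingly instead of scanning the whole frame.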

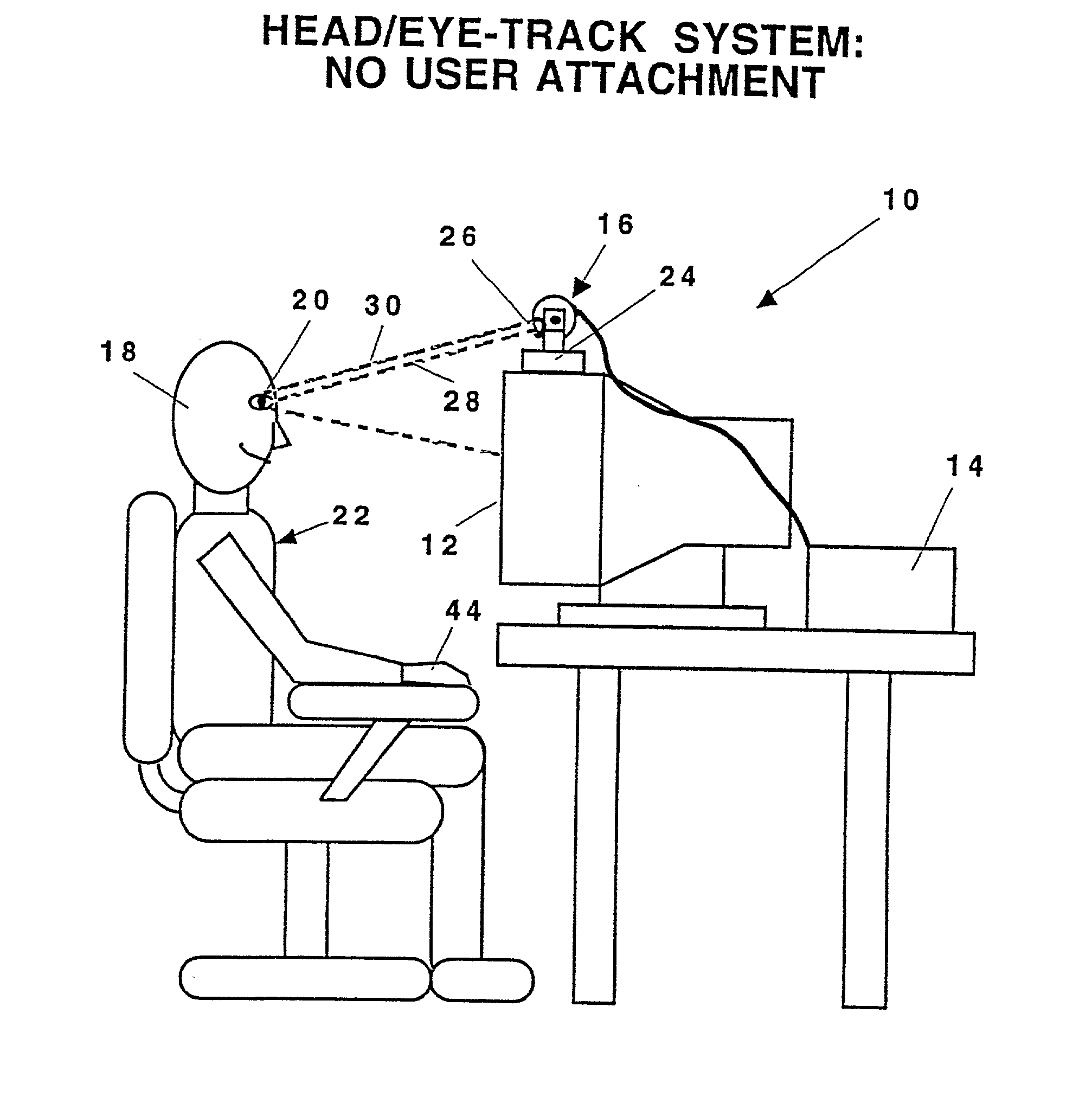

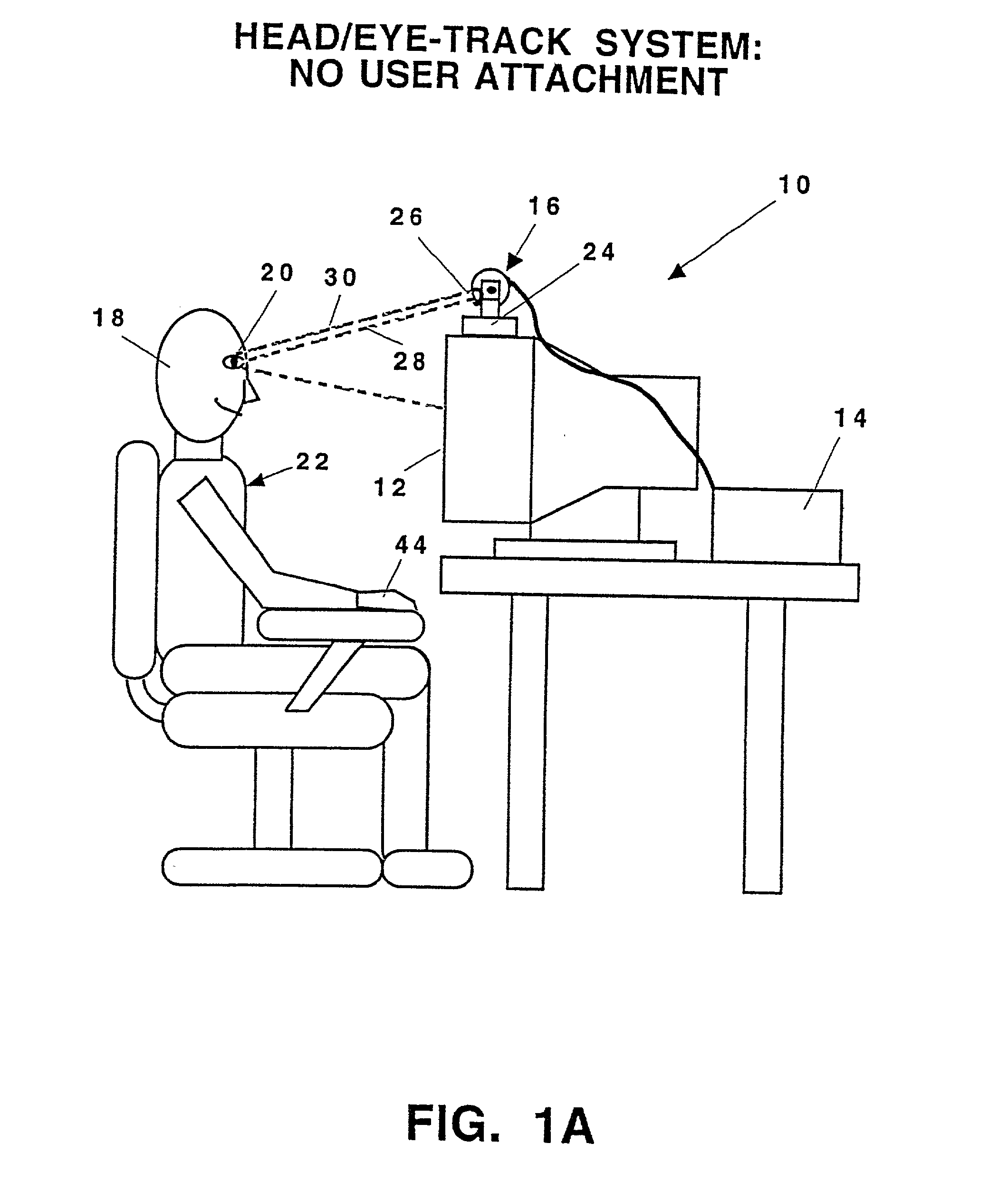

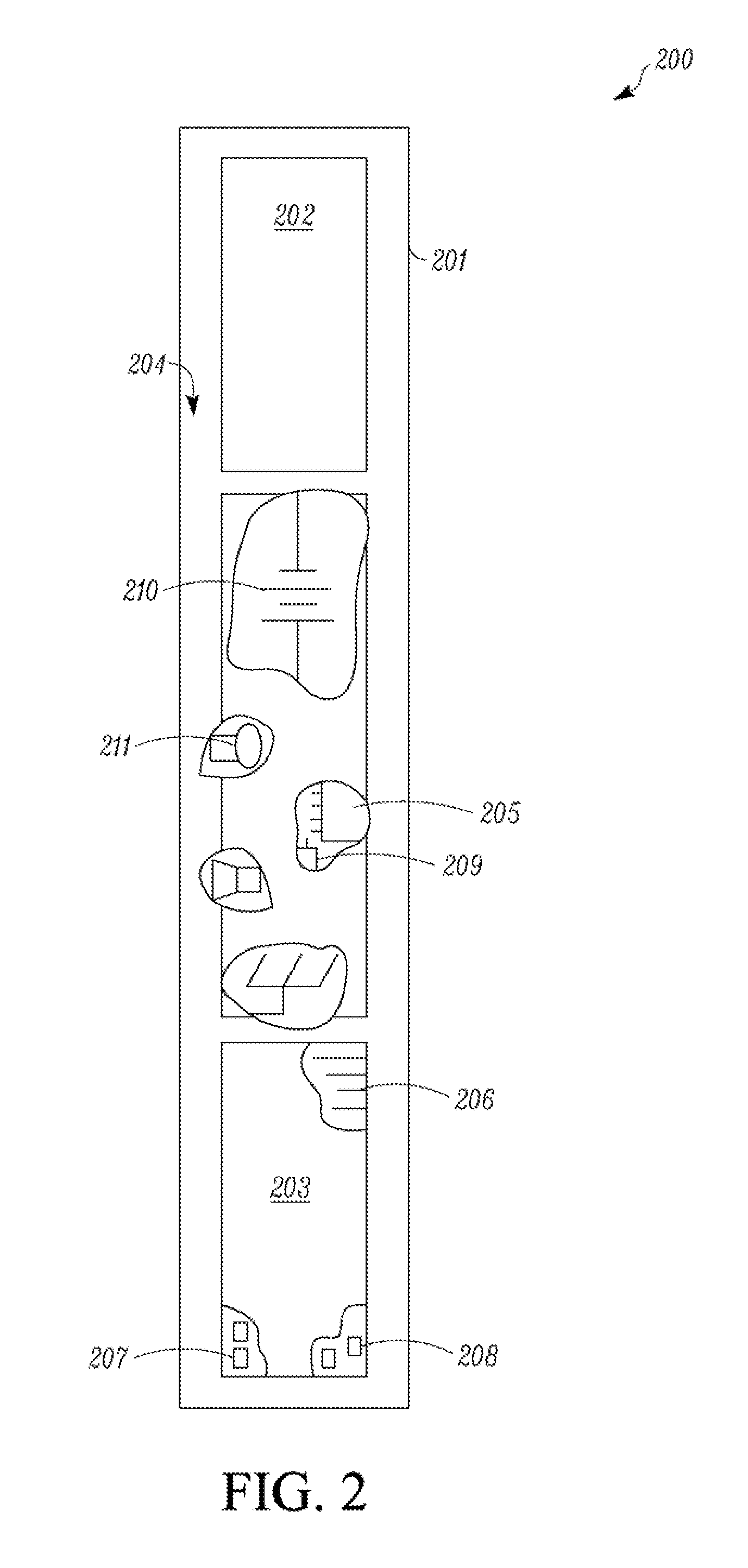

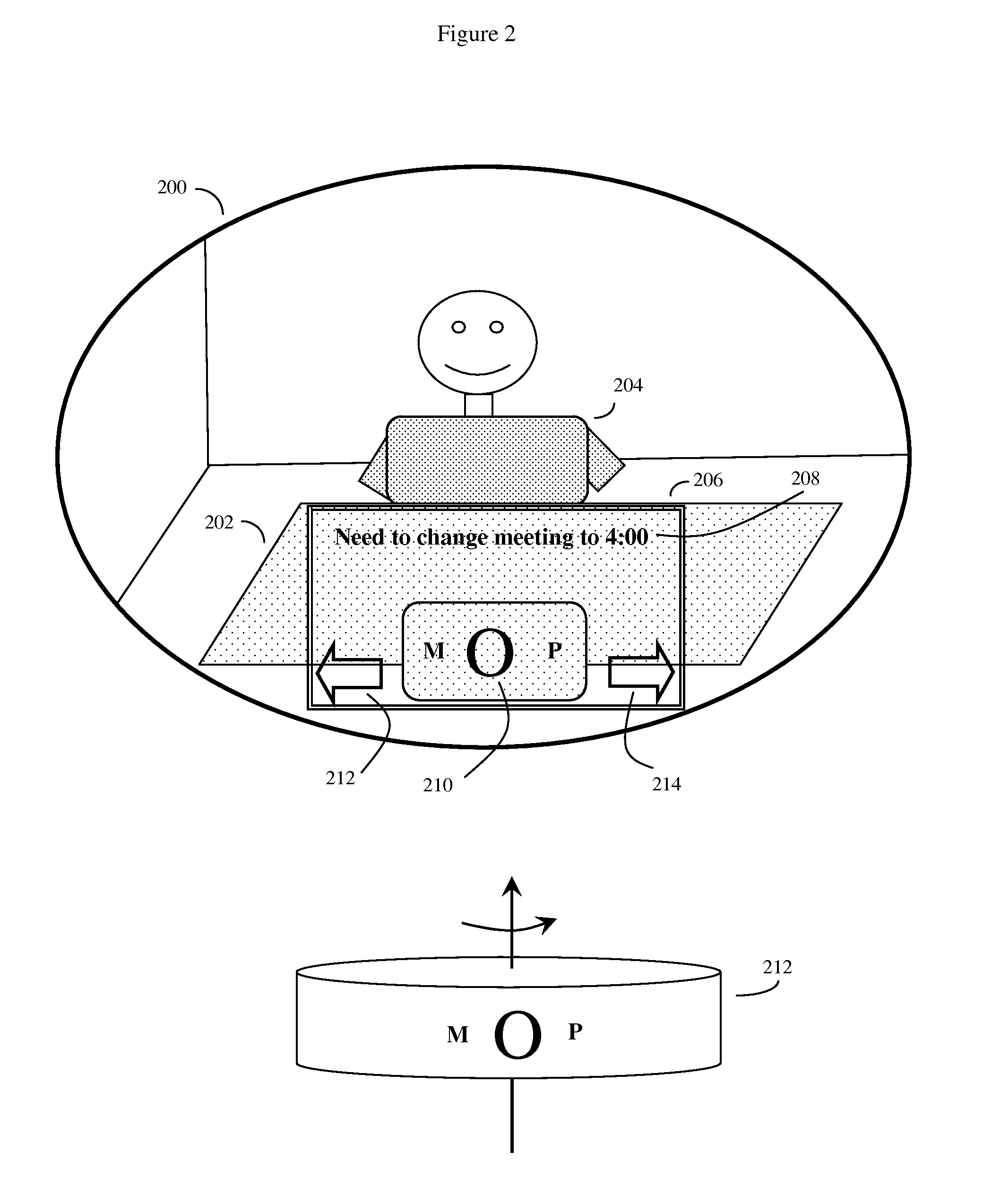

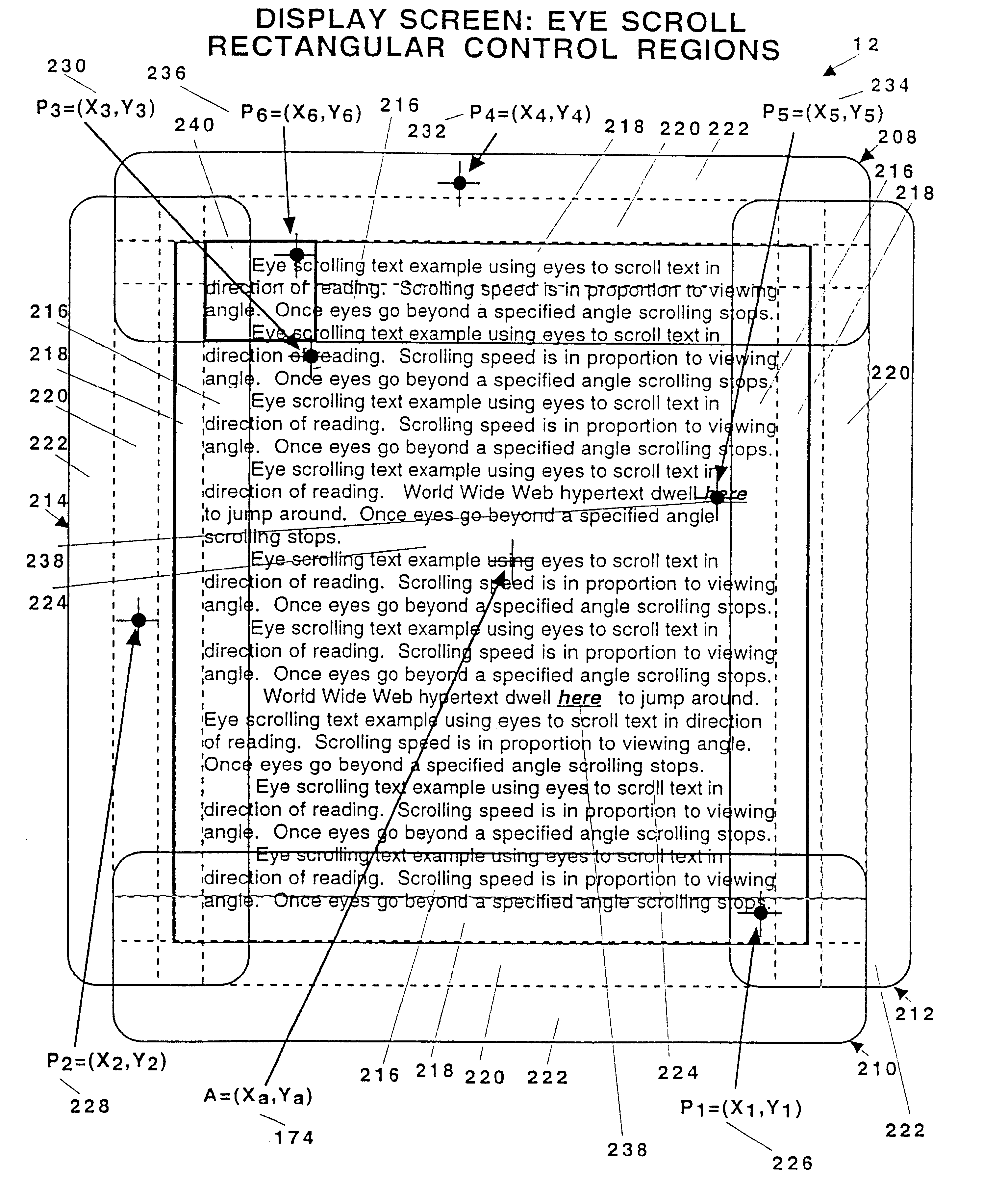

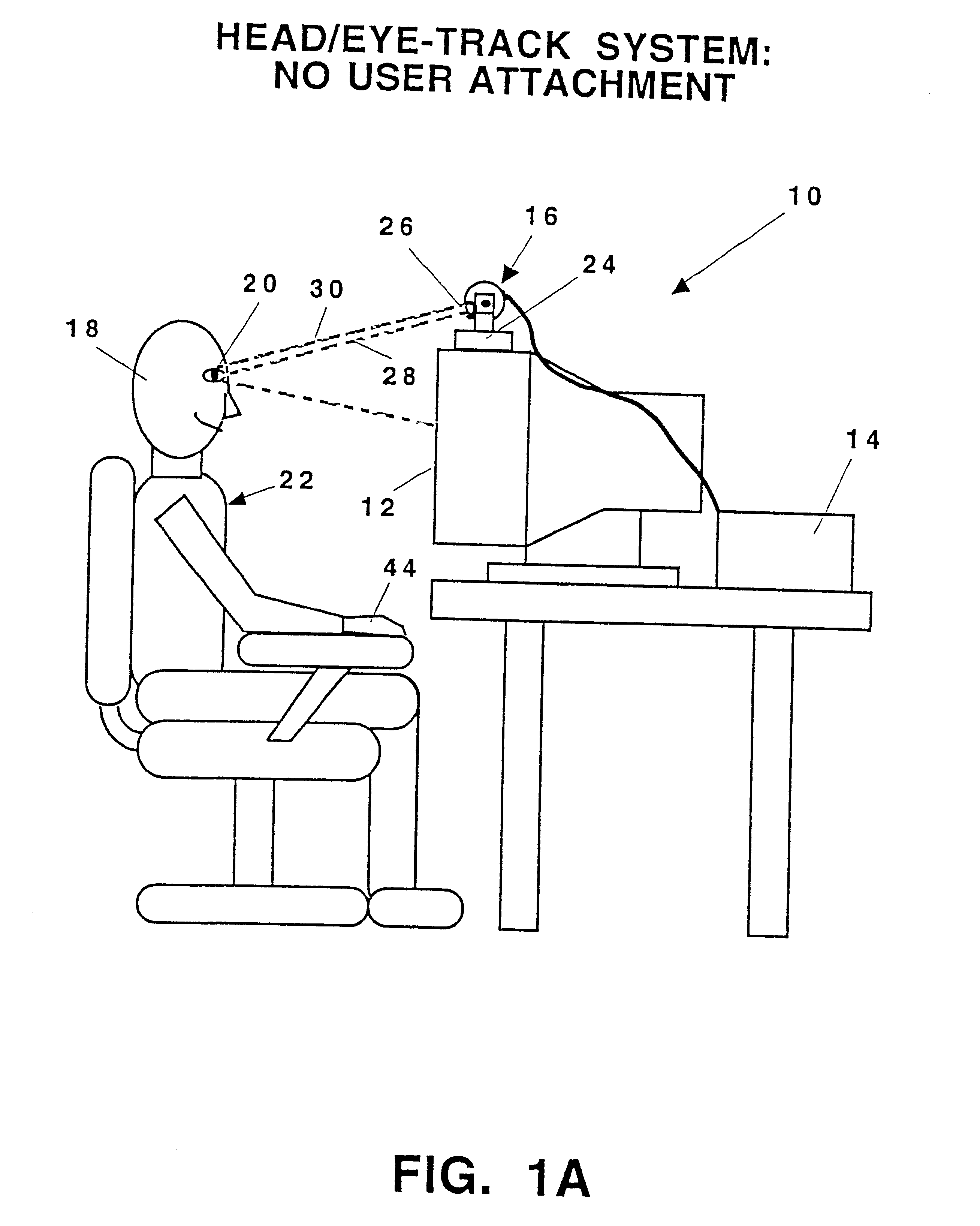

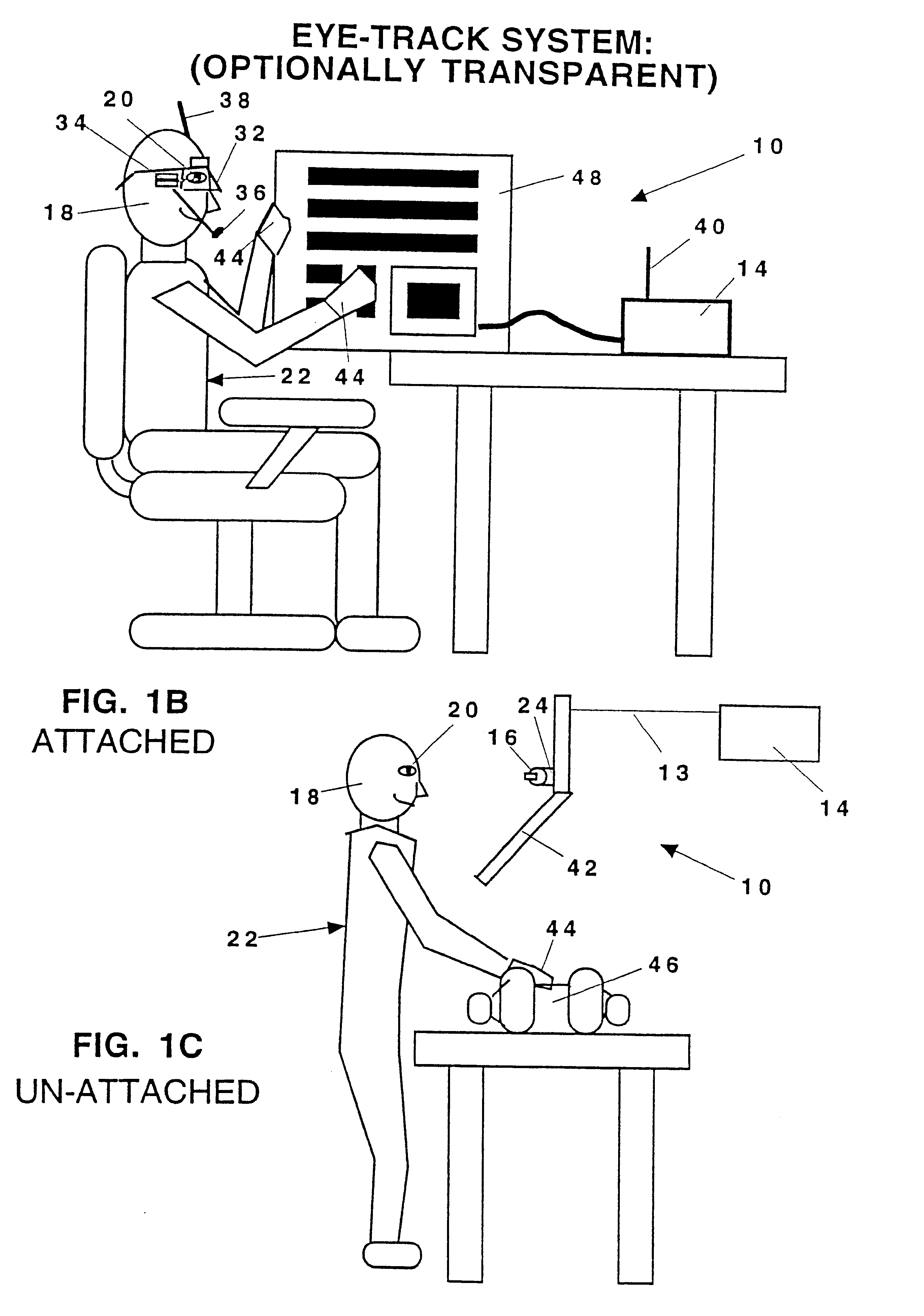

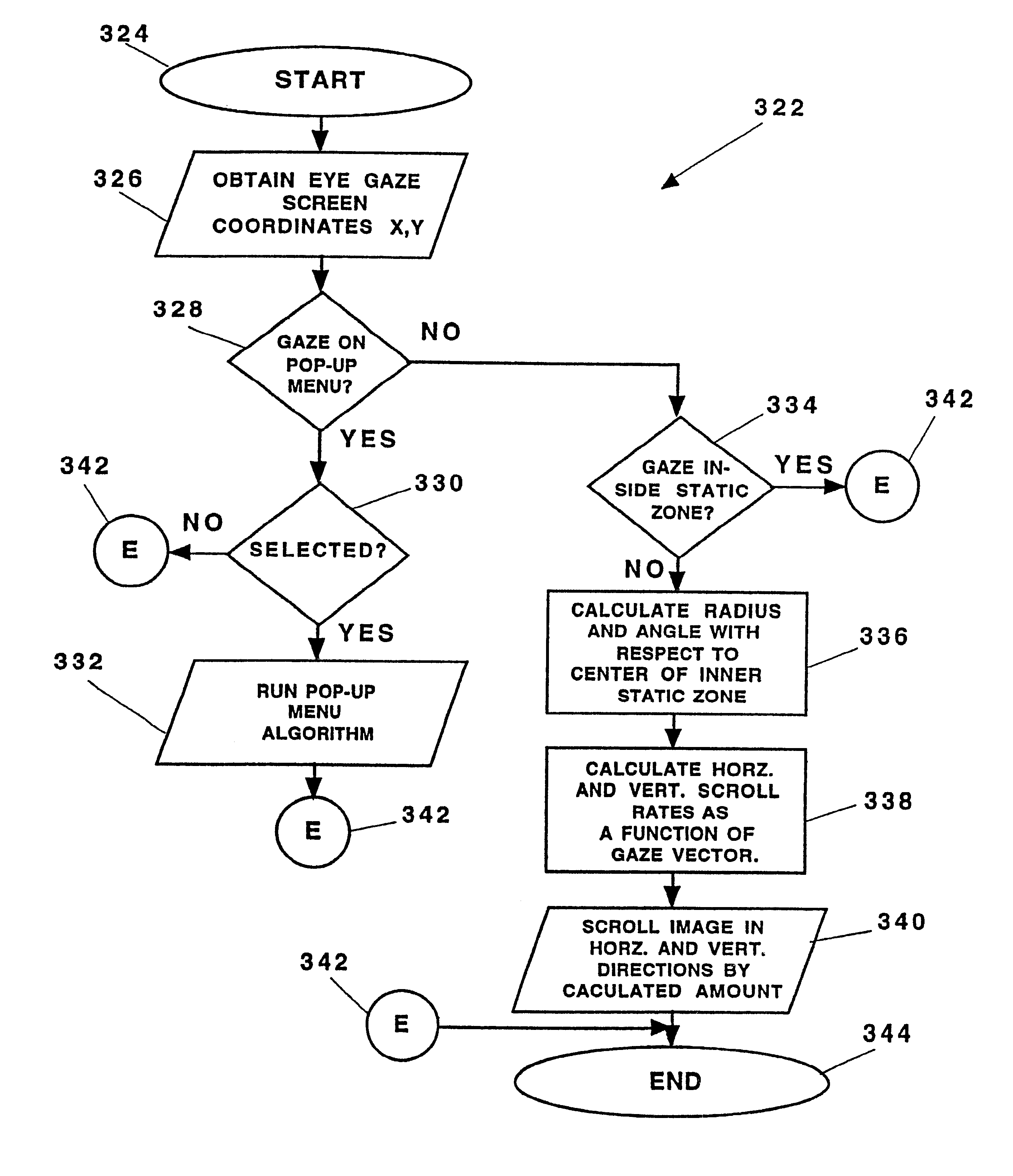

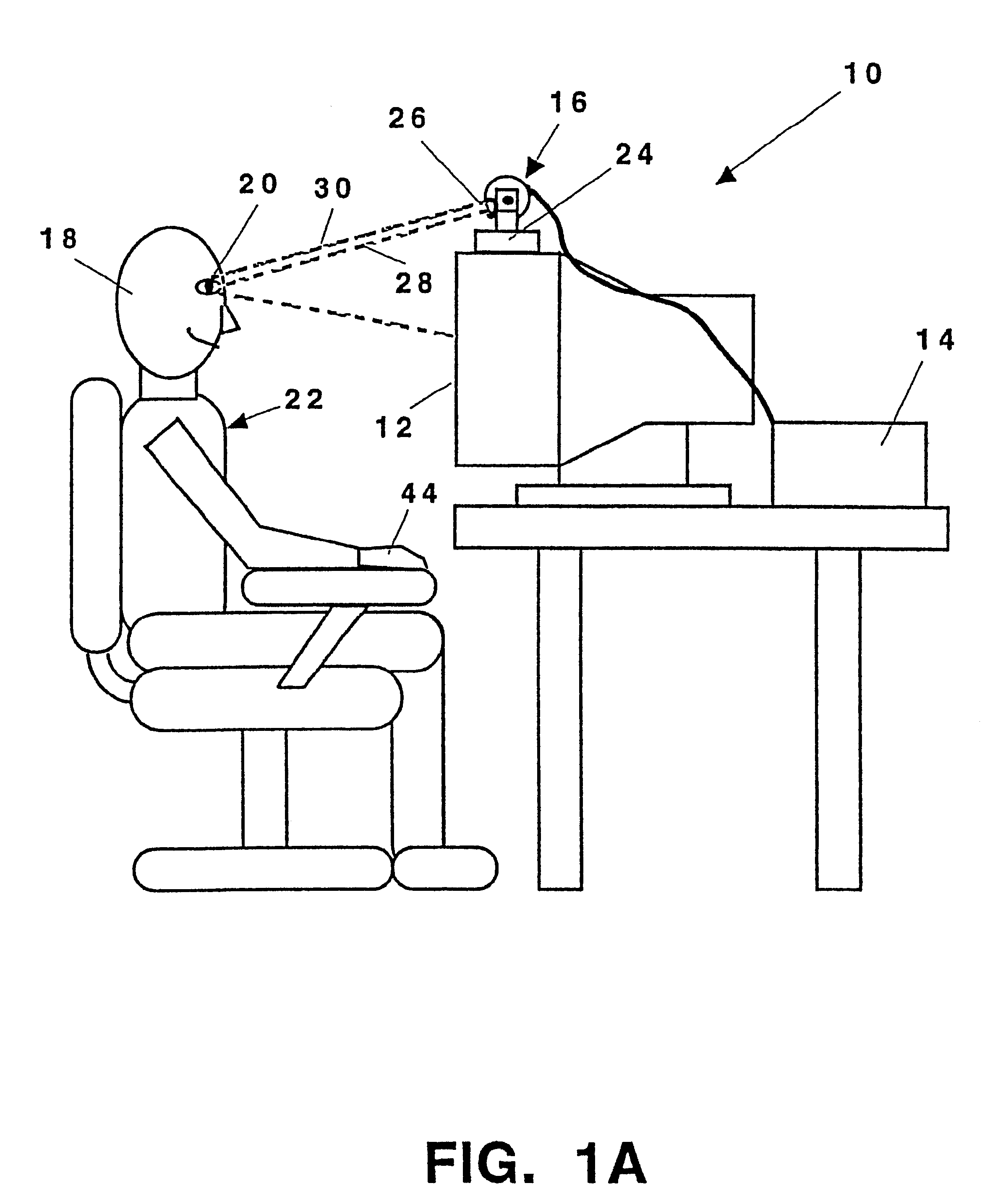

System and methods for controlling automatic scrolling of information on a display or screen

Inactive | US20020105482A1 | Input/output for user-computer interaction; Cathode-ray tube indicators; Display device; Scrolling

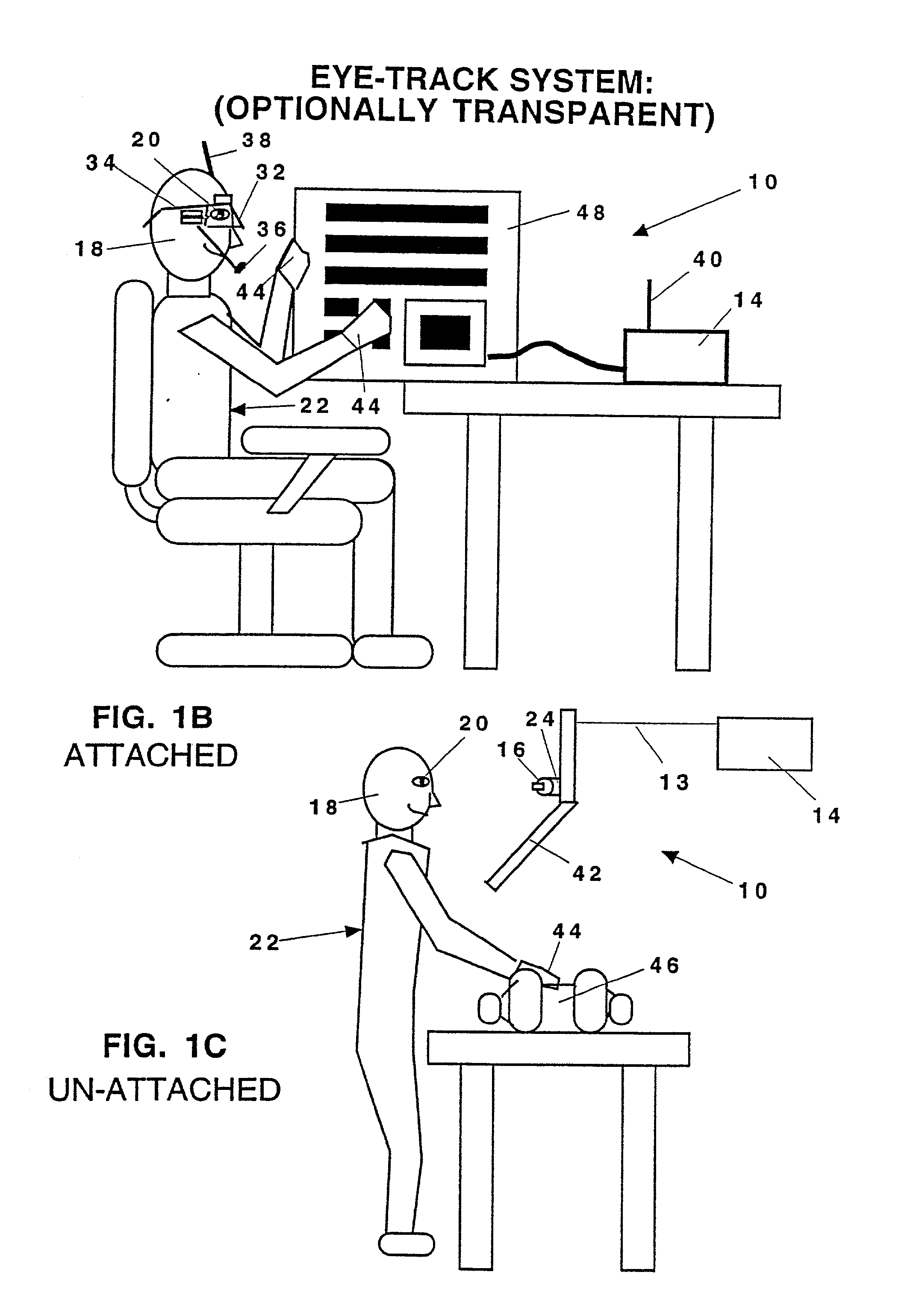

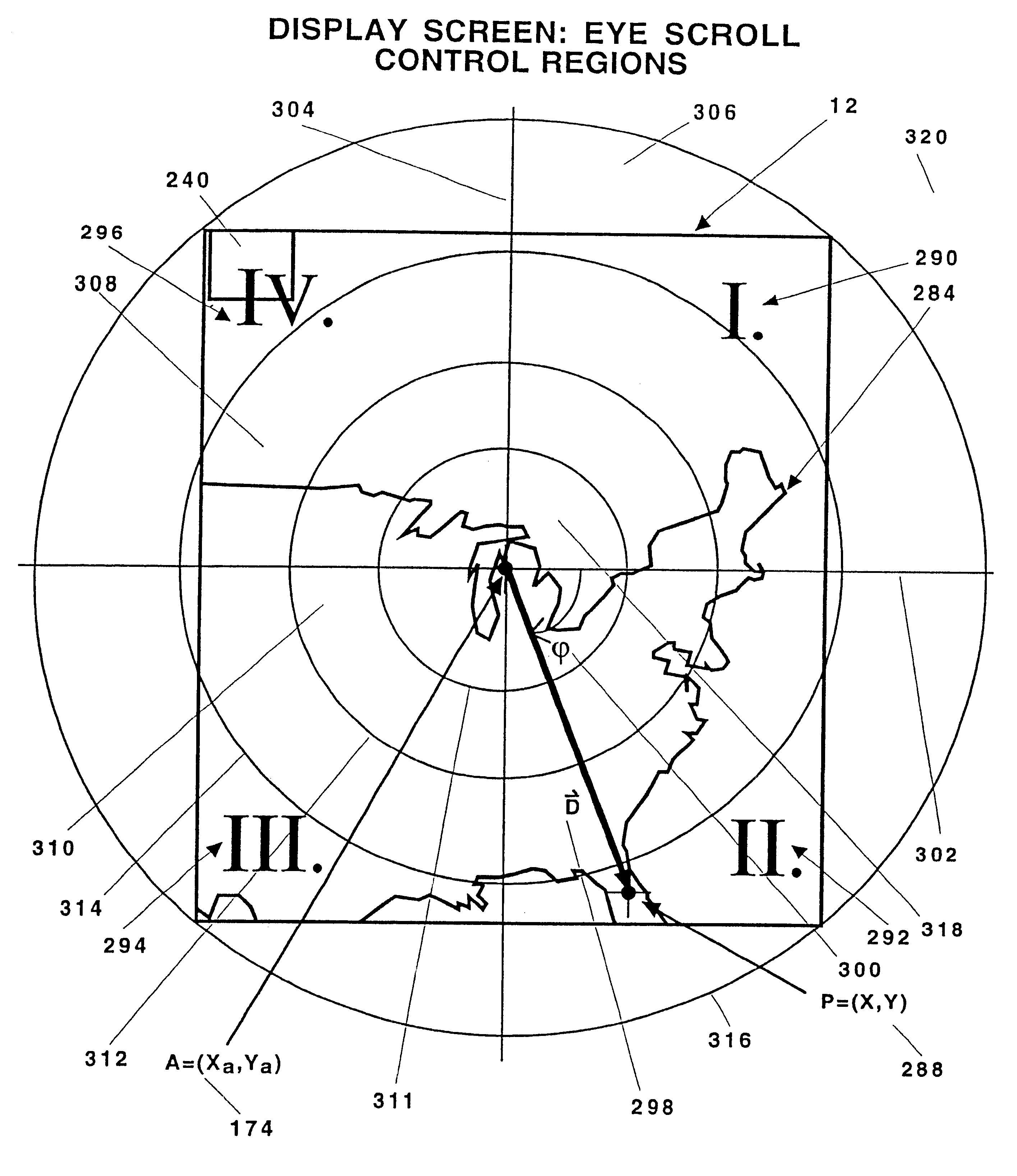

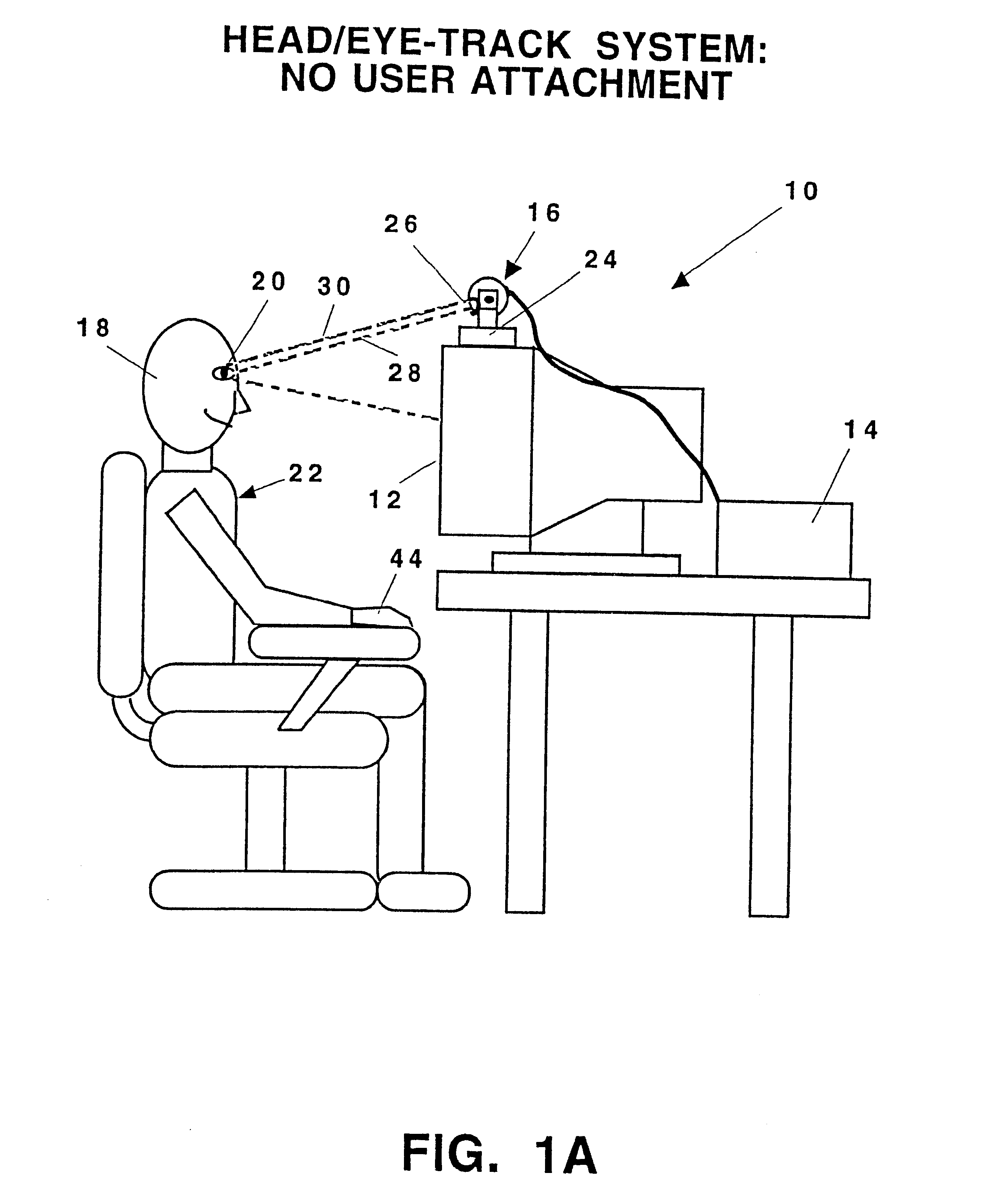

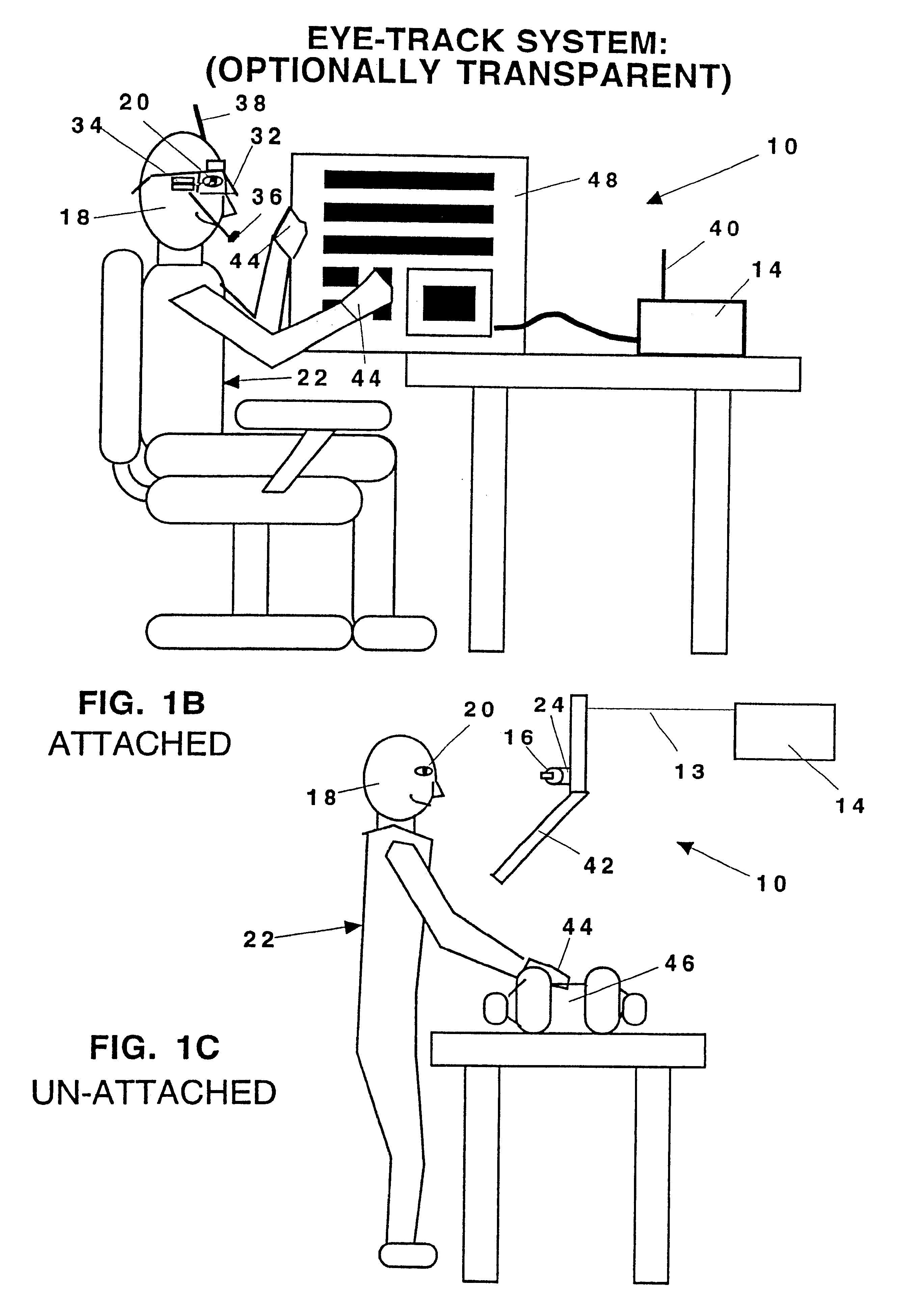

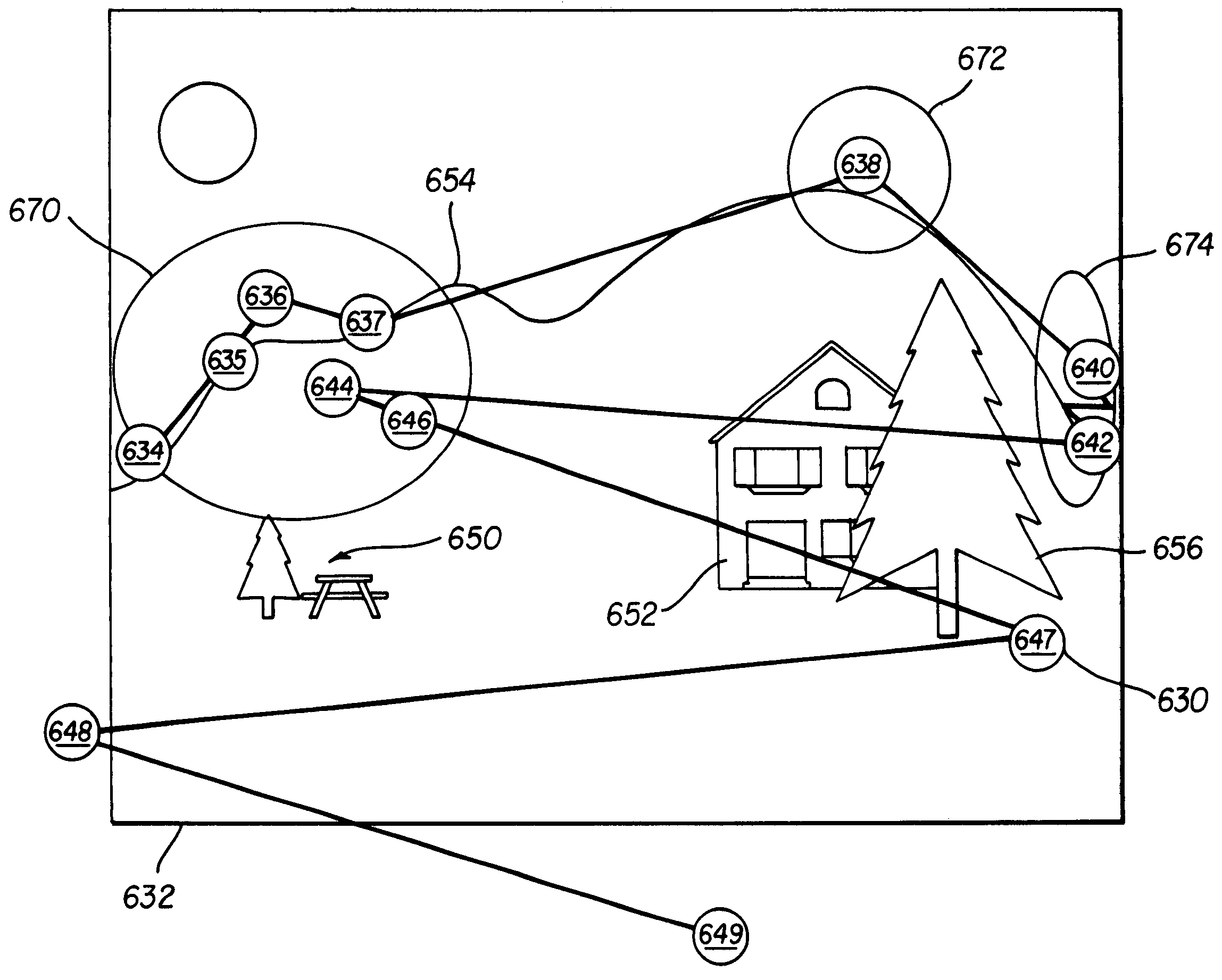

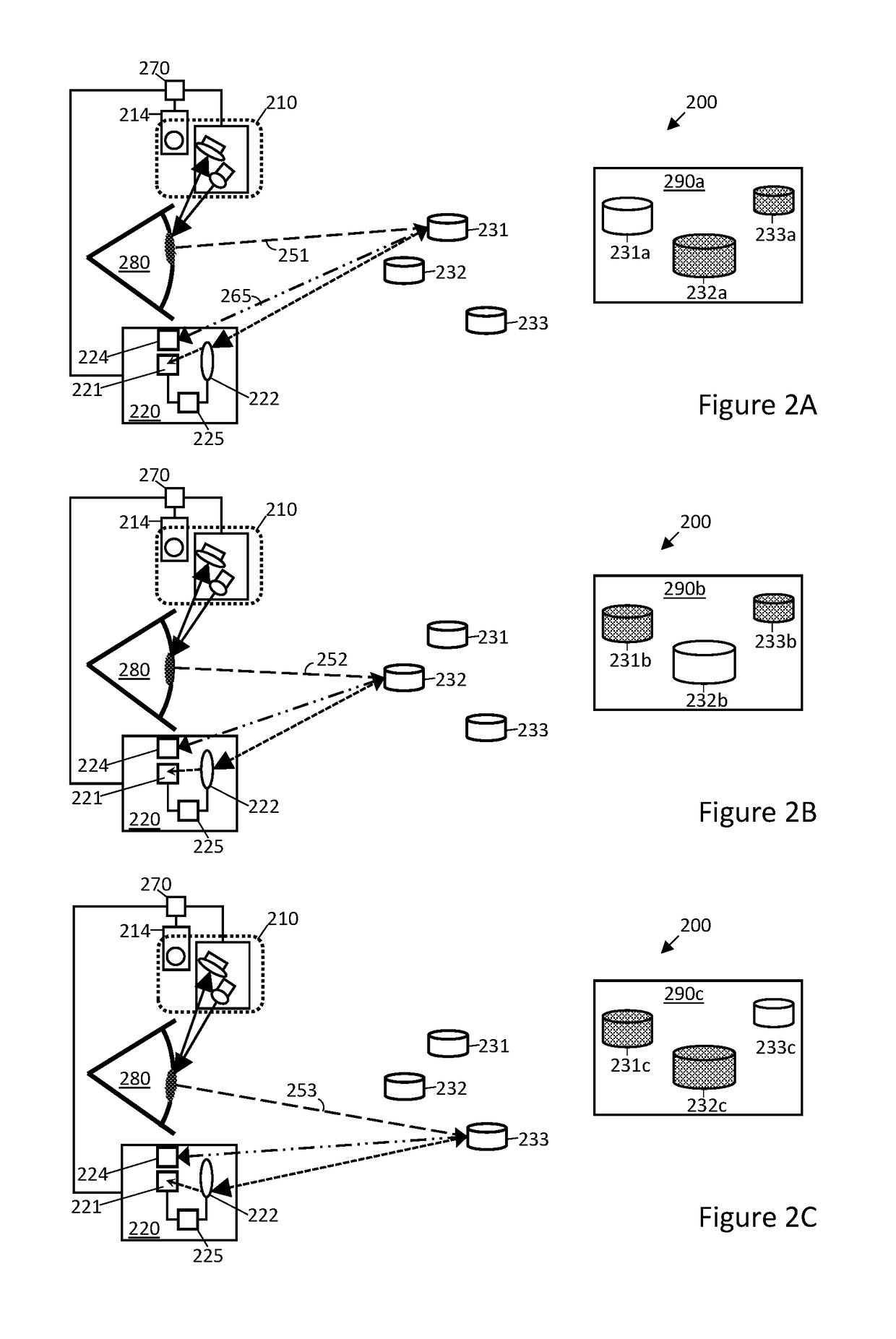

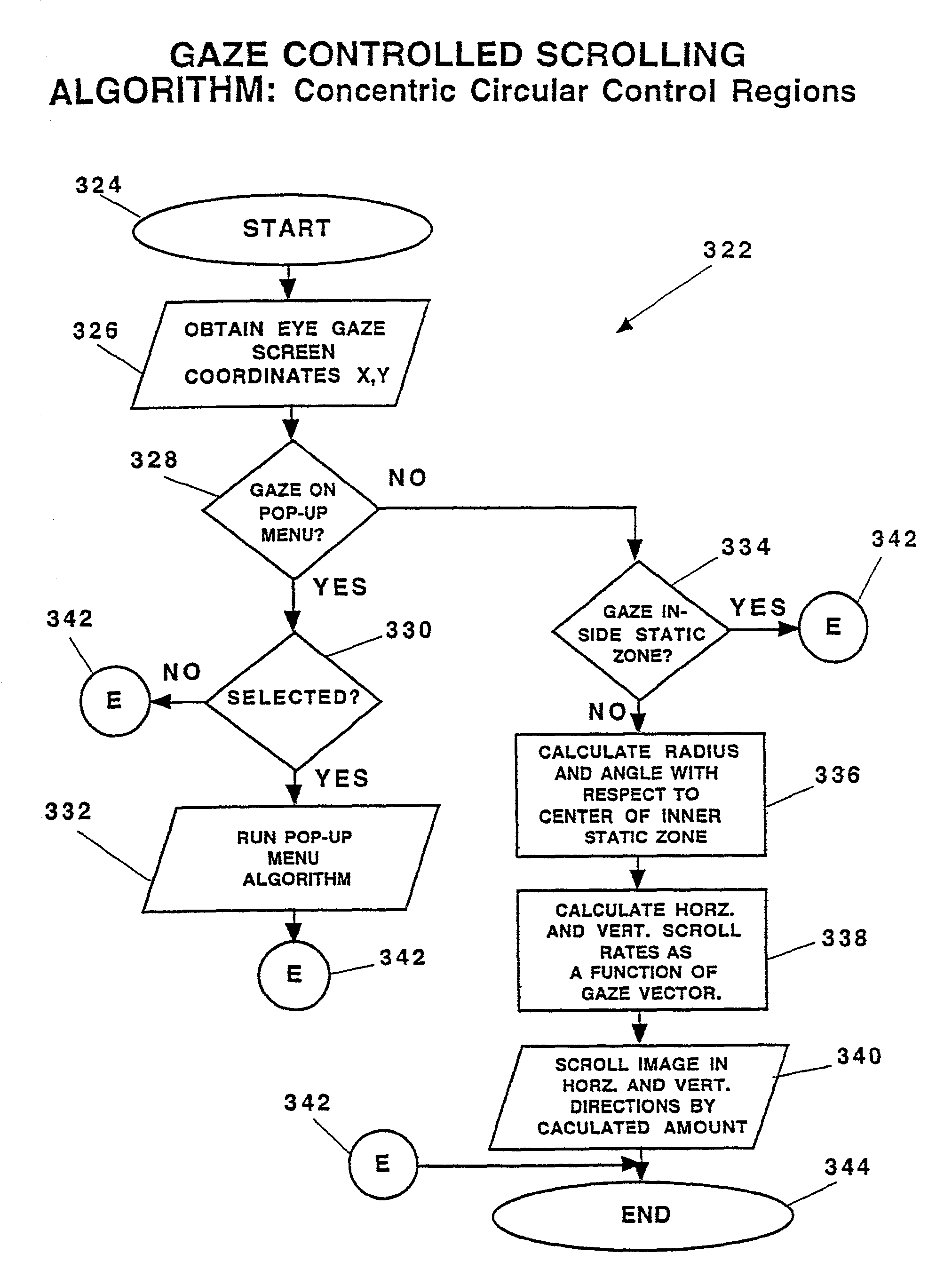

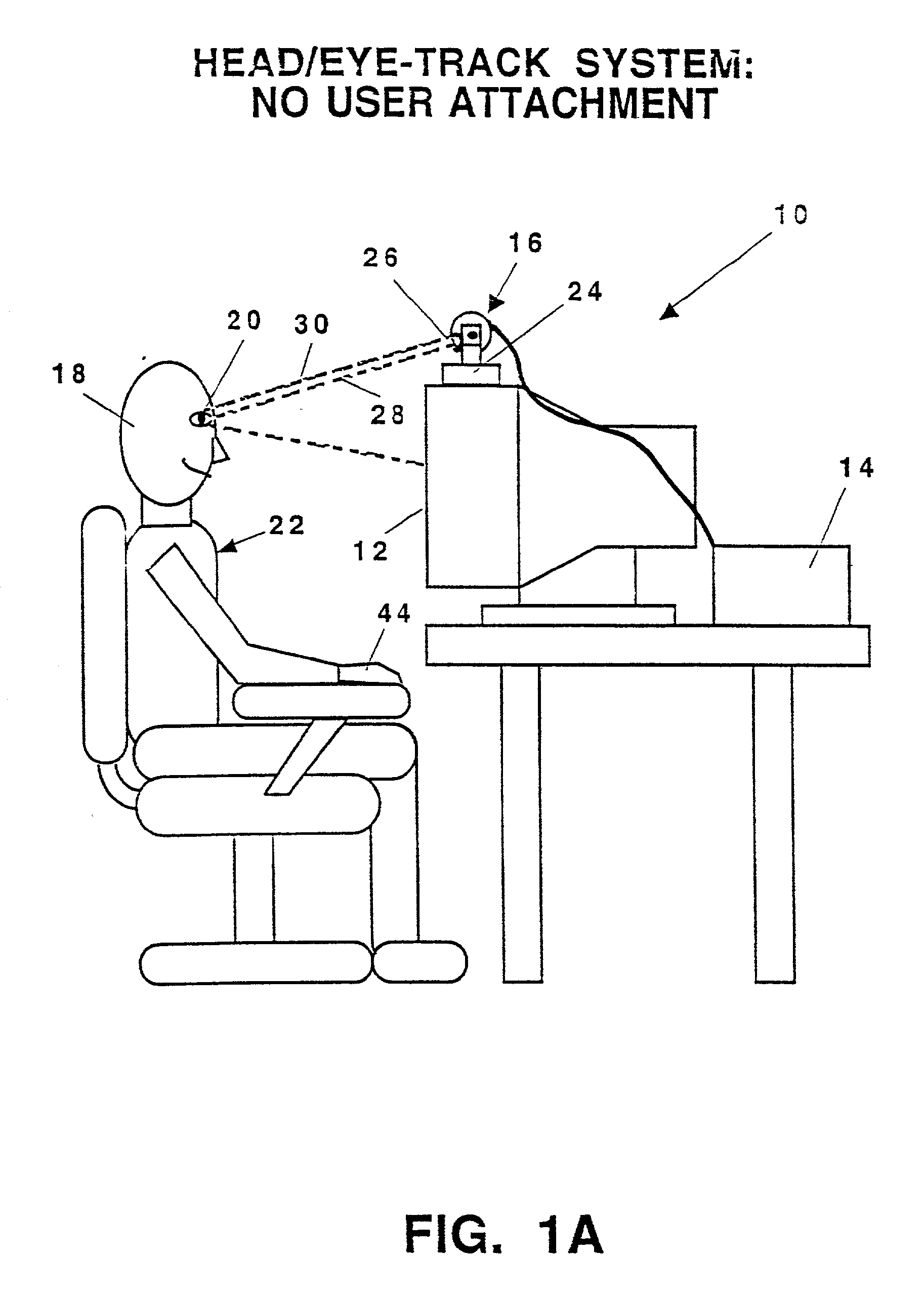

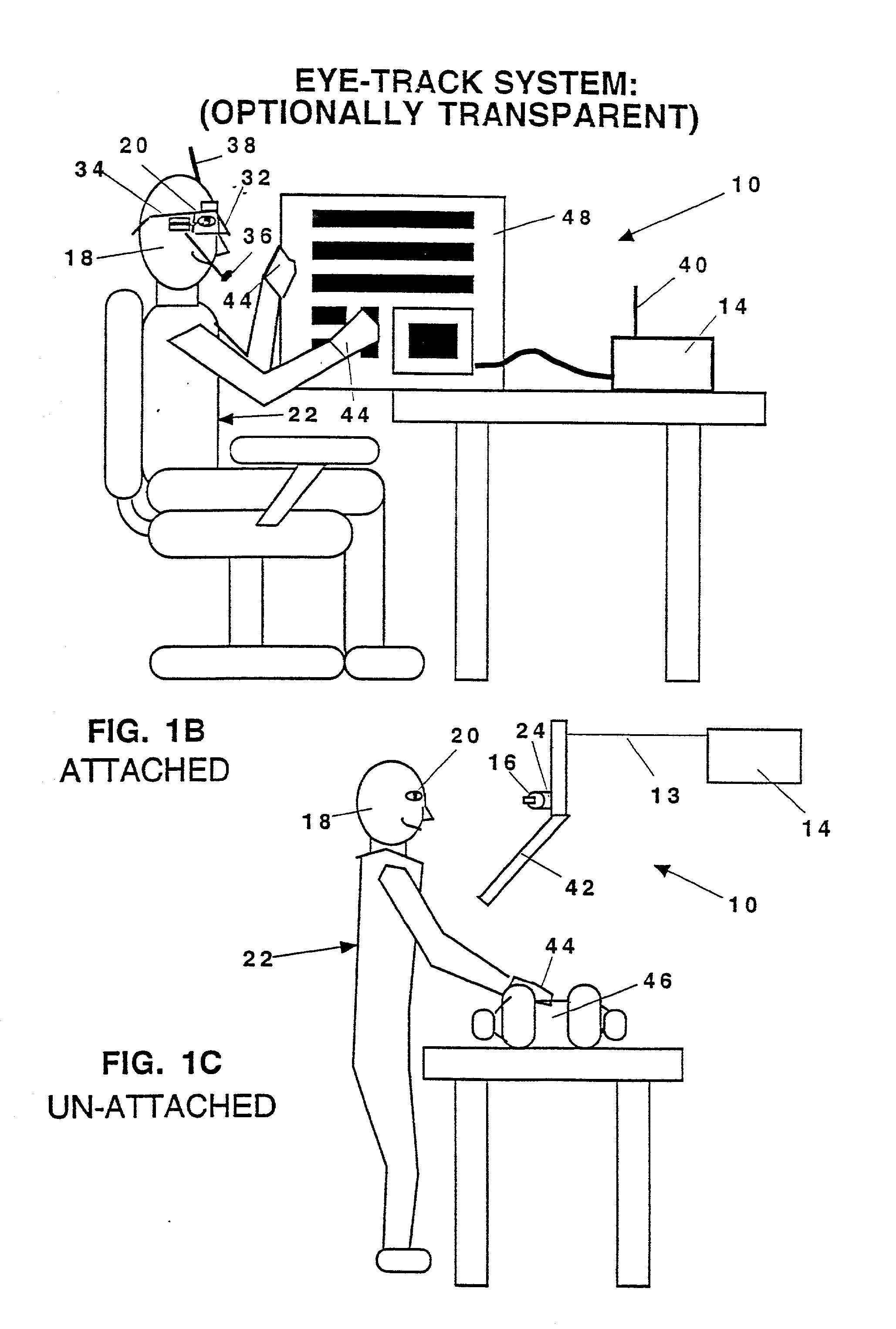

A system 10 for controlling the automatic scrolling of information on a computer display or screen 12 is disclosed. The system 10 generally includes a computer display or screen 12, a computer system 14, a gimbaled sensor system 16 for following and tracking the position and movement of the user's head 18 and user's eye 20, and a scroll-activating interface algorithm using a neural network to find screen gaze coordinates, implemented by the computer system 14 so that the corresponding scrolling function is performed based upon the screen gaze coordinates of the user's eye 20 relative to a certain activation area(s) on the display or screen 12. The gimbaled sensor system 16 contains a gimbaled platform 24 mounted at the top of the display or screen 12. The gimbaled sensor system 16 tracks the user's 22 head 18 and eye 20, allows the user to be free from any attachments while the gimbaled sensor system 16 is eye tracking, and still allows the user to freely move his or her head when the system 10 is in use. A method of controlling automatic scrolling of information on a display or screen 12 by a user 22 is also disclosed. The method generally includes the steps of finding a screen gaze coordinate 146 of the user 22 on the display or screen 12, determining whether the screen gaze coordinate 146 is within at least one activated control region, and activating scrolling to provide a desired display of information when the gaze direction is within at least one activated control region. In one embodiment, the control regions are defined as upper control region 208, lower region 210, right region 212, and left region 214 for controlling the scrolling respectively in the downward, upward, leftward, and rightward directions. In another embodiment, the control regions are defined by concentric rings 306, 308, and 310 for maintaining the stationary position of the information or controlling the scrolling of the information towards the center of the display or screen 12.

Owner: LEMELSON JEROME H +1
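The two control-region layouts described in the Lemelson scrolling abstracts can be sketched as simple hit tests on the screen gaze coordinate. The region-to-direction mapping follows the abstract; the 15% edge bands, the ring radii, the speed-per-ring rule, and the function names are hypothetical.

```python
import math

# Mapping as stated in the abstract: upper region -> downward, lower -> upward,
# right -> leftward, left -> rightward.
REGION_TO_SCROLL = {"upper": "down", "lower": "up", "right": "left", "left": "right"}


def edge_region(x, y, width, height, margin=0.15):
    """Return the edge control region containing the gaze point, or None.

    Screen coordinates: origin top-left, y grows downward.
    margin: assumed fraction of the screen taken by each edge band.
    """
    if y < margin * height:
        return "upper"
    if y > (1 - margin) * height:
        return "lower"
    if x > (1 - margin) * width:
        return "right"
    if x < margin * width:
        return "left"
    return None


def ring_scroll(x, y, width, height, ring_radii=(0.2, 0.35, 0.5)):
    """Concentric-ring variant: return a (dx, dy) scroll velocity.

    Inside the first ring the information stays still; in outer rings it scrolls
    so the gazed-at content moves toward the center, one speed step per ring.
    ring_radii: assumed fractions of the screen diagonal.
    """
    cx, cy = width / 2, height / 2
    dx, dy = cx - x, cy - y
    dist = math.hypot(dx, dy)
    r = dist / math.hypot(width, height)
    ring = sum(r > radius for radius in ring_radii)  # number of ring boundaries crossed
    if ring == 0:
        return (0.0, 0.0)
    return (ring * dx / dist, ring * dy / dist)
```

Edge bands are tested in a fixed order, so a gaze point in a corner resolves to the upper or lower region first; a real implementation might scroll diagonally instead.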

System and methods for controlling automatic scrolling of information on a display or screen

Inactive | US6351273B1 | Input/output for user-computer interaction; Speech analysis; Automatic control; Display device

A system for controlling the automatic scrolling of information on a computer display. The system includes a computer display, a computer, a gimbaled sensor for tracking the position of the user's head and user's eye, and a scroll-activating interface algorithm, implemented by the computer, that uses a neural network to find screen gaze coordinates. A scrolling function is performed based upon the screen gaze coordinates of the user's eye relative to activation area(s) on the display. The gimbaled sensor system contains a platform mounted at the top of the display. The gimbaled sensor system tracks the user's head and eye, allowing the user to be free from attachments while the gimbaled sensor system is tracking and still allowing the user to move his head freely. A method of controlling automatic scrolling of information on a display includes the steps of finding the user's screen gaze coordinate on the display, determining whether the screen gaze coordinate is within at least one activated control region, and activating scrolling to provide a desired display of information when the gaze direction is within at least one activated control region. In one embodiment, the control regions are defined as upper control region, lower region, right region and left region for controlling the scrolling respectively in downward, upward, leftward and rightward directions. In another embodiment, control regions are defined by concentric rings for maintaining the stationary position of the information or controlling the scrolling of the information towards the center of the display or screen.

Owner: LEMELSON JEROME H +1

Real time eye tracking for human computer interaction

Active | US8885882B1 | Improve interactivity; More intelligent behavior; Image enhancement; Image analysis; Optical axis; Gaze directions

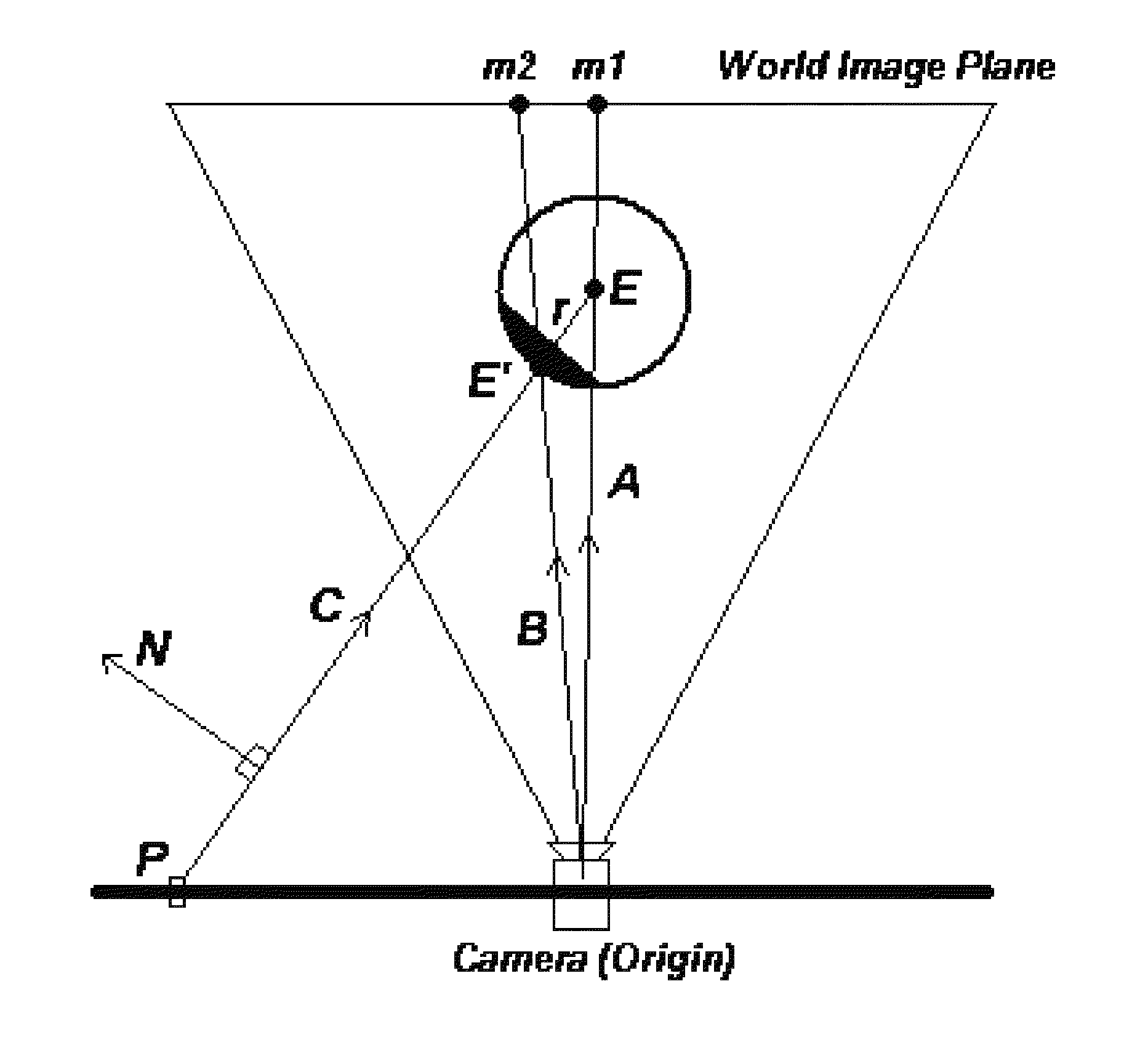

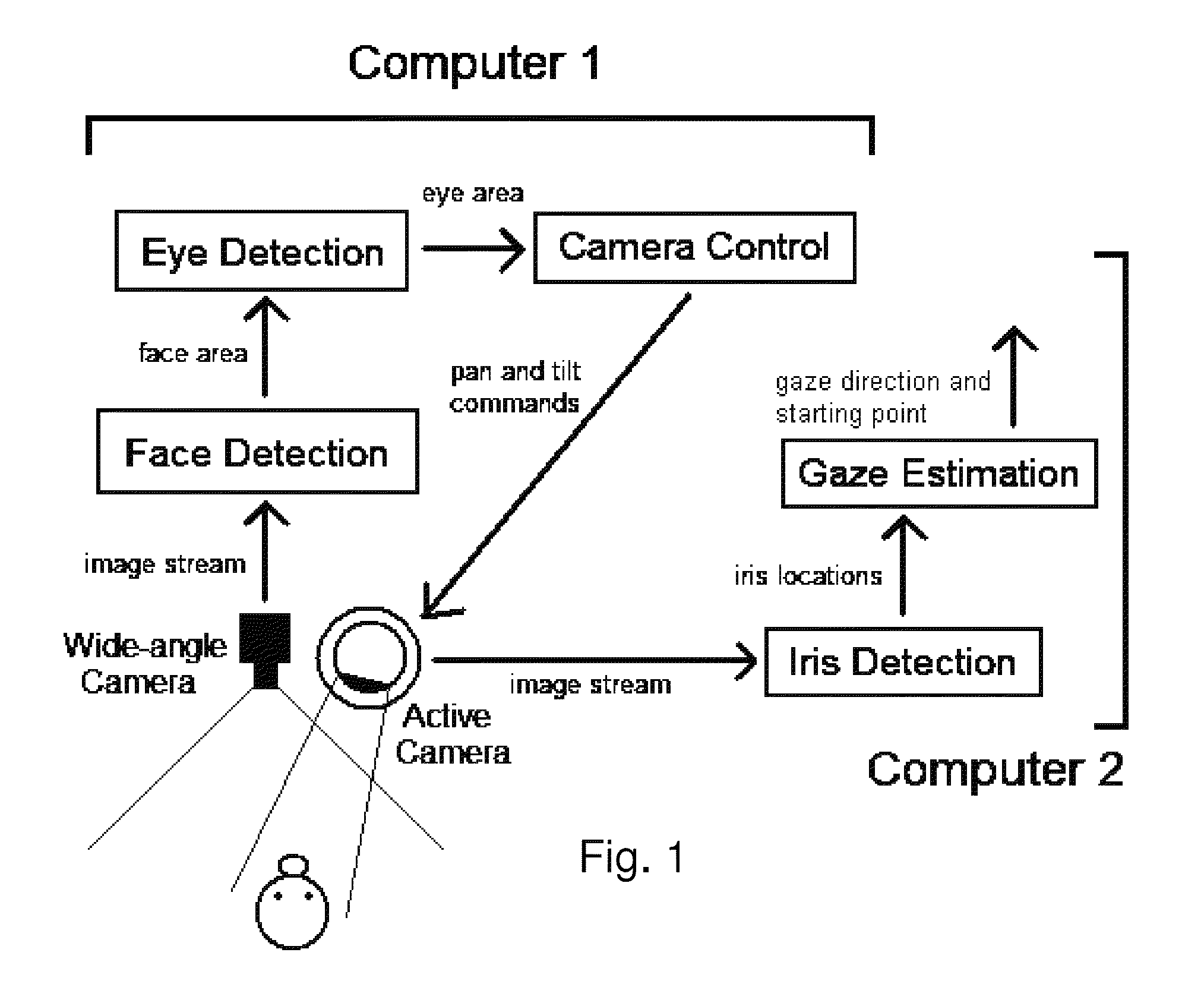

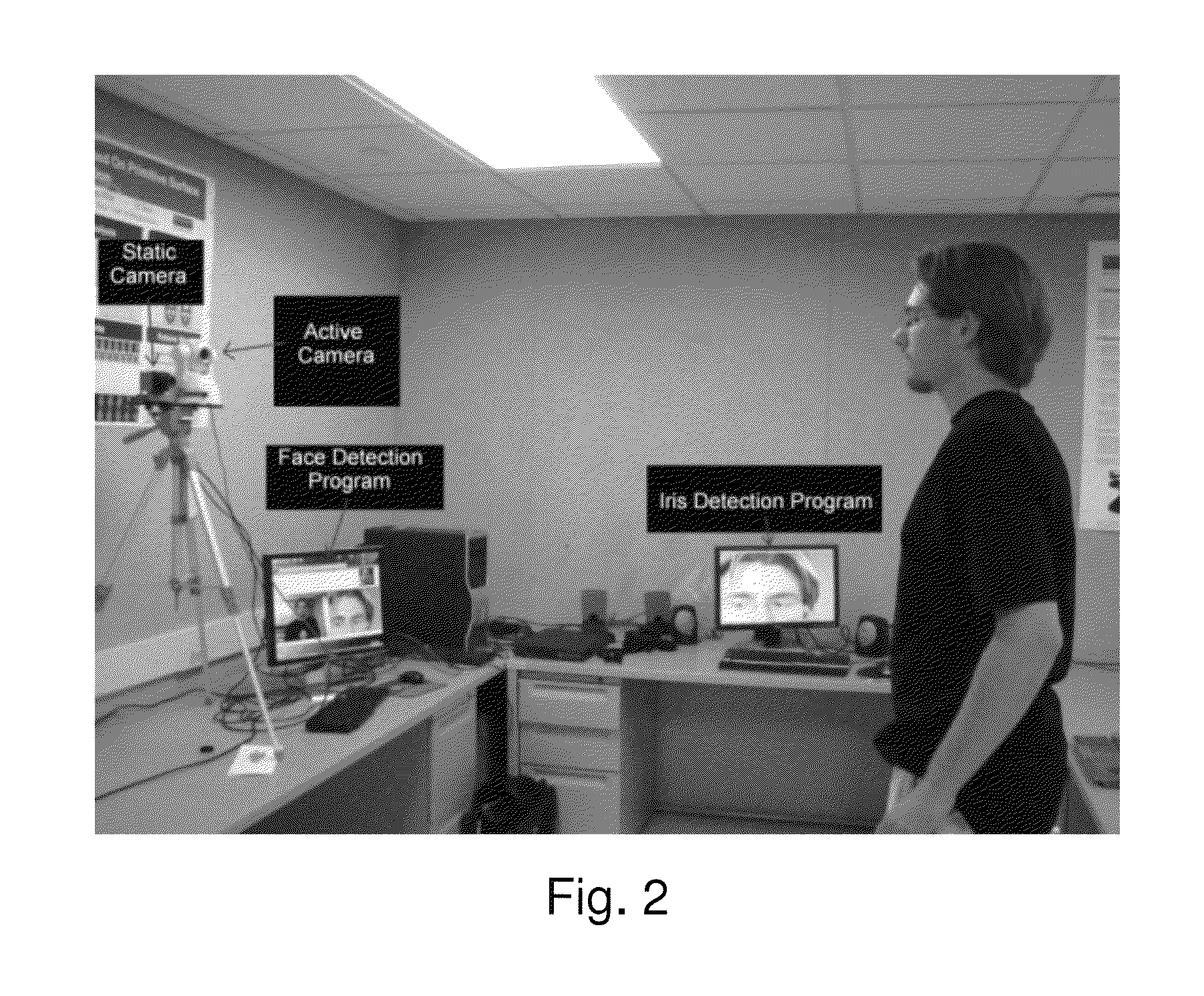

A gaze direction determining system and method is provided. A two-camera system may detect the face from a fixed, wide-angle camera, estimate a rough location for the eye region using an eye detector based on topographic features, and direct another, active pan-tilt-zoom camera to focus in on this eye region. An eye gaze estimation approach employs point-of-regard (PoG) tracking on a large viewing screen. To allow for greater head pose freedom, a calibration approach is provided to find the 3D eyeball location, eyeball radius, and fovea position. Both the iris center and the iris contour points are mapped to the eyeball sphere (creating a 3D iris disk) to get the optical axis; the fovea is then rotated accordingly and the final, visual-axis gaze direction is computed.

Owner: THE RES FOUND OF STATE UNIV OF NEW YORK
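The last step, turning the optical axis into the visual axis by rotating a calibrated fovea with the eye, can be sketched as follows. This is not the patent's code: it assumes calibration yields the eyeball center and a fovea offset expressed in a rest frame whose optical axis is +z, and it uses Rodrigues' rotation formula to carry that offset along with the eye. The visual axis is then taken from the rotated fovea through the iris center, consistent with the fovea-through-pupil definition of gaze direction.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _unit(a):
    n = math.sqrt(_dot(a, a))
    return tuple(x / n for x in a)


def _rotate(v, axis, angle):
    """Rodrigues' formula: rotate v about the unit vector `axis` by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    kxv, kdv = _cross(axis, v), _dot(axis, v)
    return tuple(v[i] * c + kxv[i] * s + axis[i] * kdv * (1 - c) for i in range(3))


def visual_axis(eyeball_center, iris_center, fovea_local):
    """Gaze along the visual axis, from a fovea rotated together with the eye.

    fovea_local: calibrated fovea offset from the eyeball center in a rest frame
    whose optical axis is +z (an assumption of this sketch).
    """
    optical = _unit(_sub(iris_center, eyeball_center))
    rest = (0.0, 0.0, 1.0)
    axis = _cross(rest, optical)
    sin_a = math.sqrt(_dot(axis, axis))
    if sin_a < 1e-12:
        fovea_rot = fovea_local  # already aligned; the anti-parallel case is not handled
    else:
        angle = math.atan2(sin_a, _dot(rest, optical))
        fovea_rot = _rotate(fovea_local, tuple(x / sin_a for x in axis), angle)
    fovea = _add(eyeball_center, fovea_rot)
    return _unit(_sub(iris_center, fovea))
```

When the calibrated fovea lies exactly behind the eyeball center the visual and optical axes coincide; any lateral fovea offset tilts the visual axis away from the optical axis.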

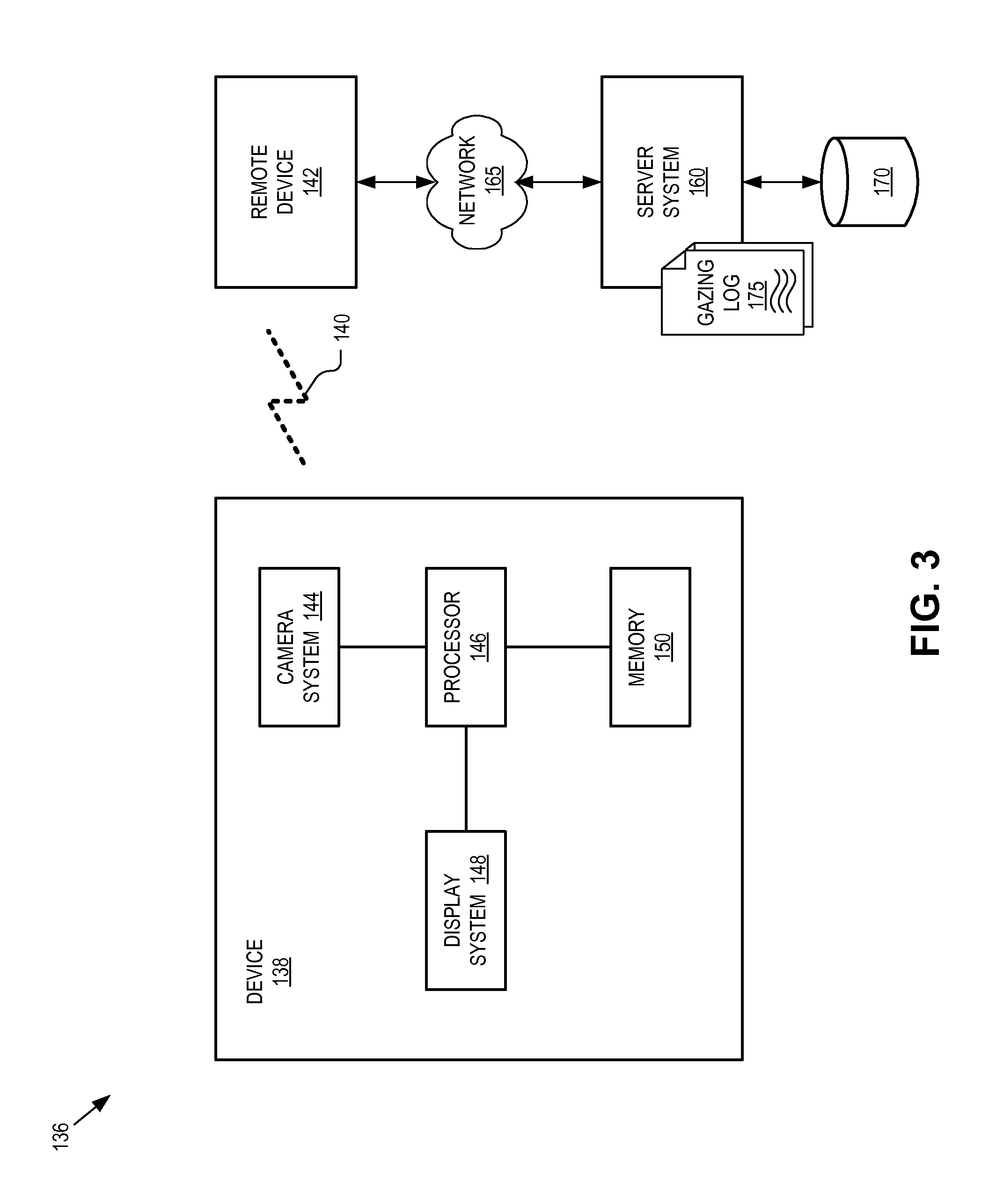

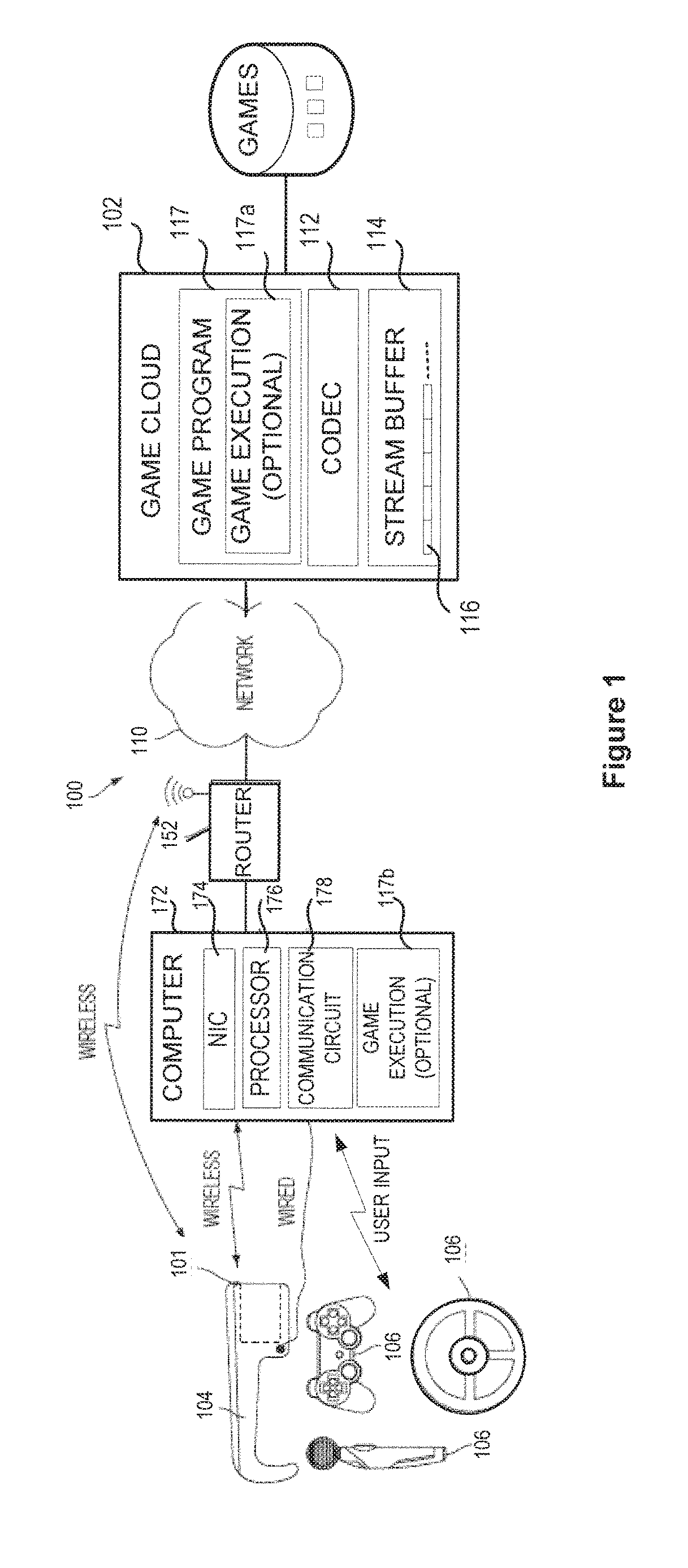

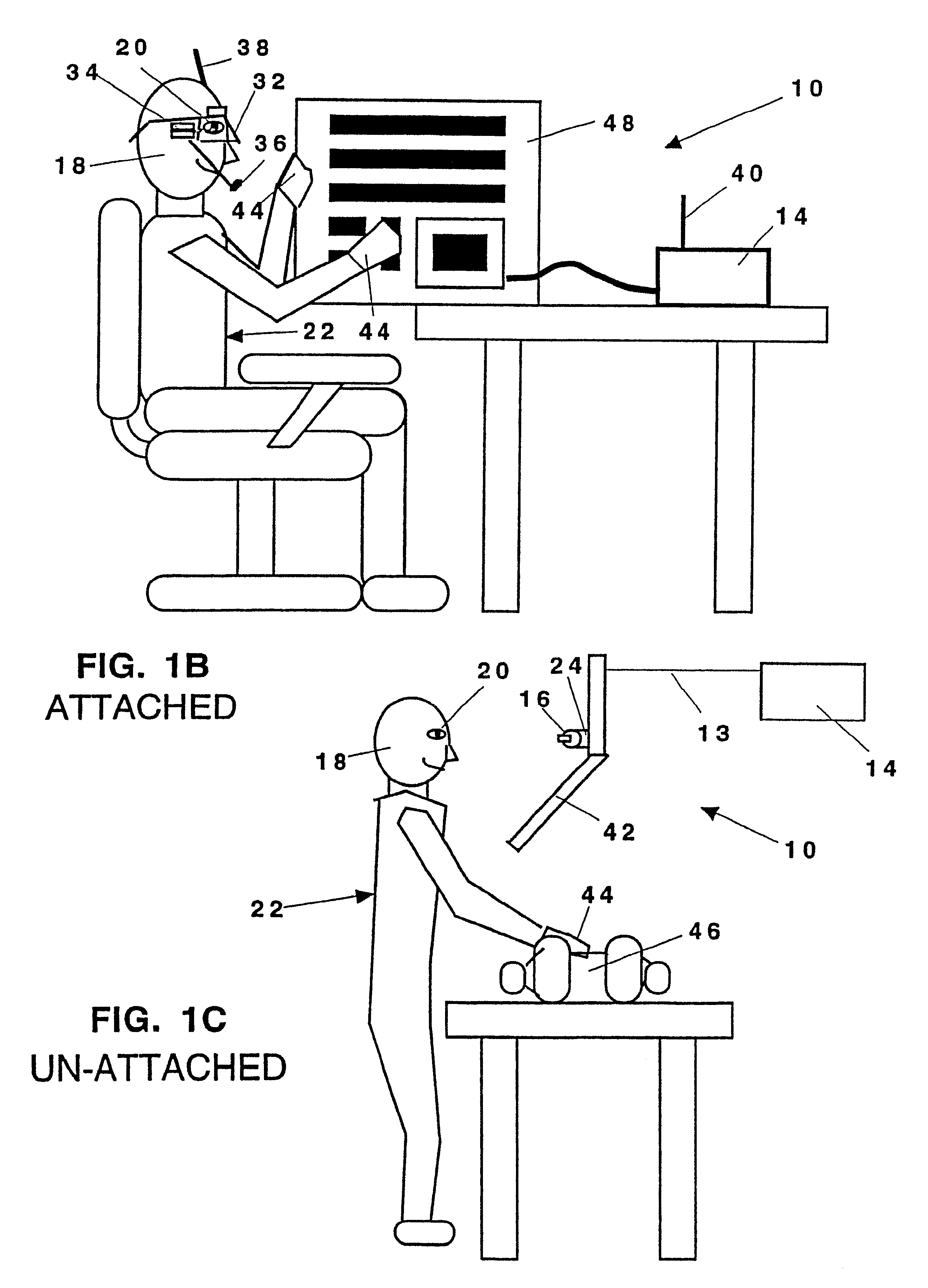

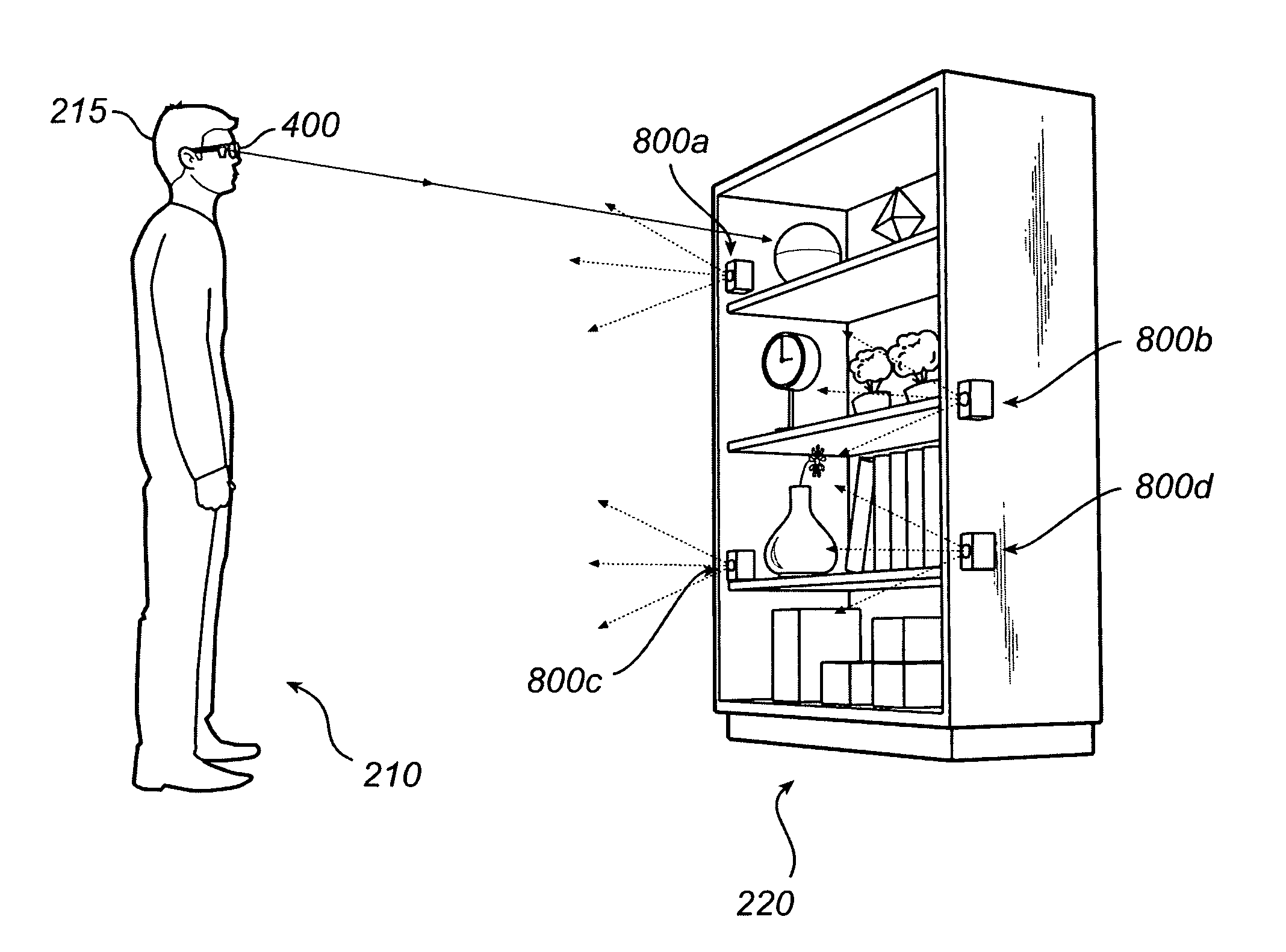

Gaze tracking system

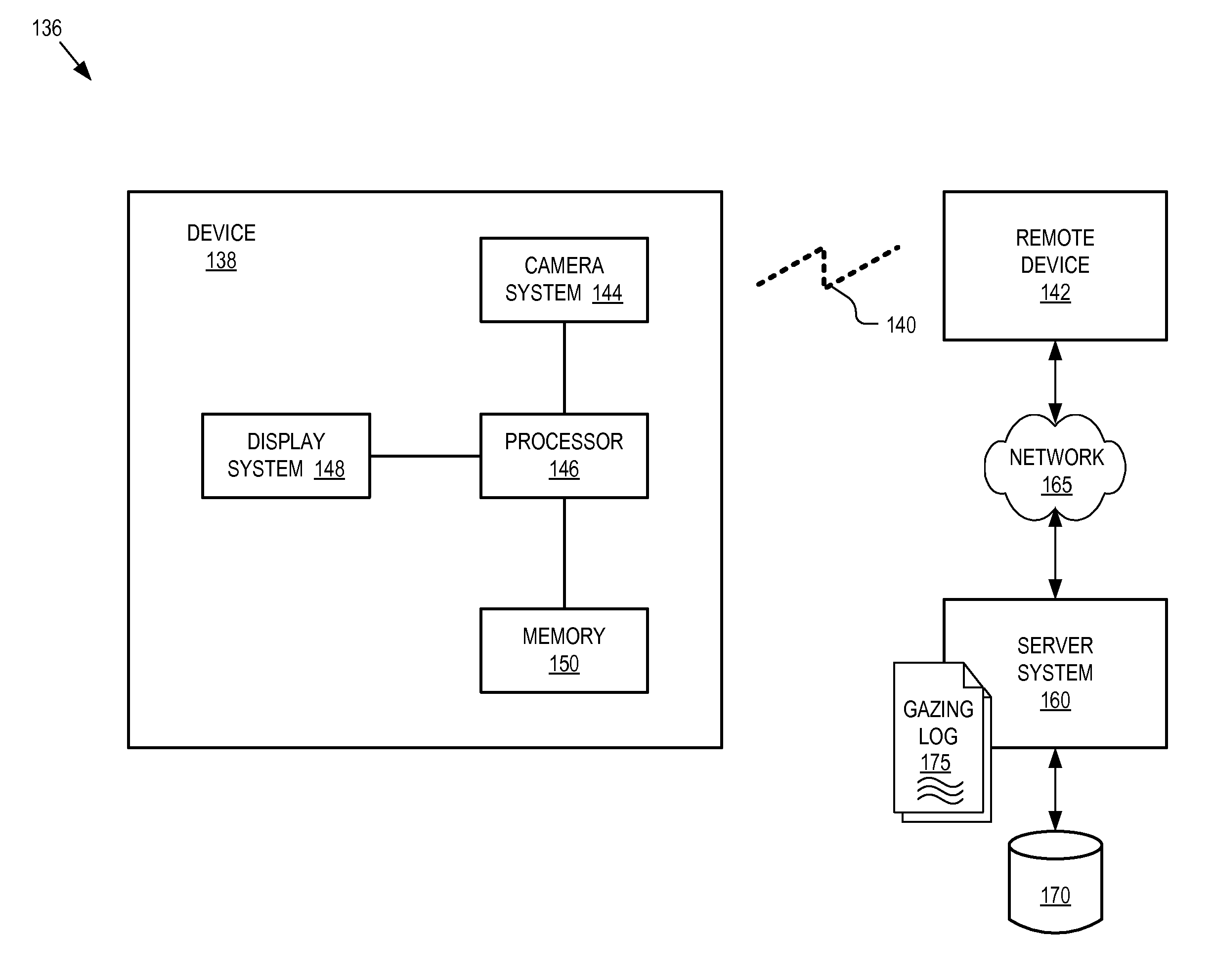

A gaze tracking technique is implemented with a head-mounted gaze tracking device that communicates with a server. The server receives scene images from the head-mounted gaze tracking device, which captures external scenes viewed by a user wearing the head-mounted device. The server also receives gaze direction information from the head-mounted gaze tracking device. The gaze direction information indicates where in the external scenes the user was gazing when viewing the external scenes. An image recognition algorithm is executed on the scene images to identify items within the external scenes viewed by the user. A gazing log tracking the identified items viewed by the user is generated.

Owner: GOOGLE LLC
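A minimal sketch of how a gazing log might be built, assuming the image-recognition step returns labelled bounding boxes and the gaze direction has already been mapped to a pixel in the scene image. The `Detection` type, the smallest-box tie-break, and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    box: tuple[float, float, float, float]  # (x0, y0, x1, y1) in scene-image pixels


def gazed_item(detections, gaze_xy):
    """Label of the smallest detected box containing the gaze point, or None."""
    x, y = gaze_xy
    hits = [d for d in detections
            if d.box[0] <= x <= d.box[2] and d.box[1] <= y <= d.box[3]]
    if not hits:
        return None
    # Prefer the most specific (smallest) item when boxes are nested.
    return min(hits, key=lambda d: (d.box[2] - d.box[0]) * (d.box[3] - d.box[1])).label


def update_gazing_log(log, timestamp, detections, gaze_xy):
    """Append (timestamp, label) to the log when the gaze lands on an identified item."""
    label = gazed_item(detections, gaze_xy)
    if label is not None:
        log.append((timestamp, label))
    return log
```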

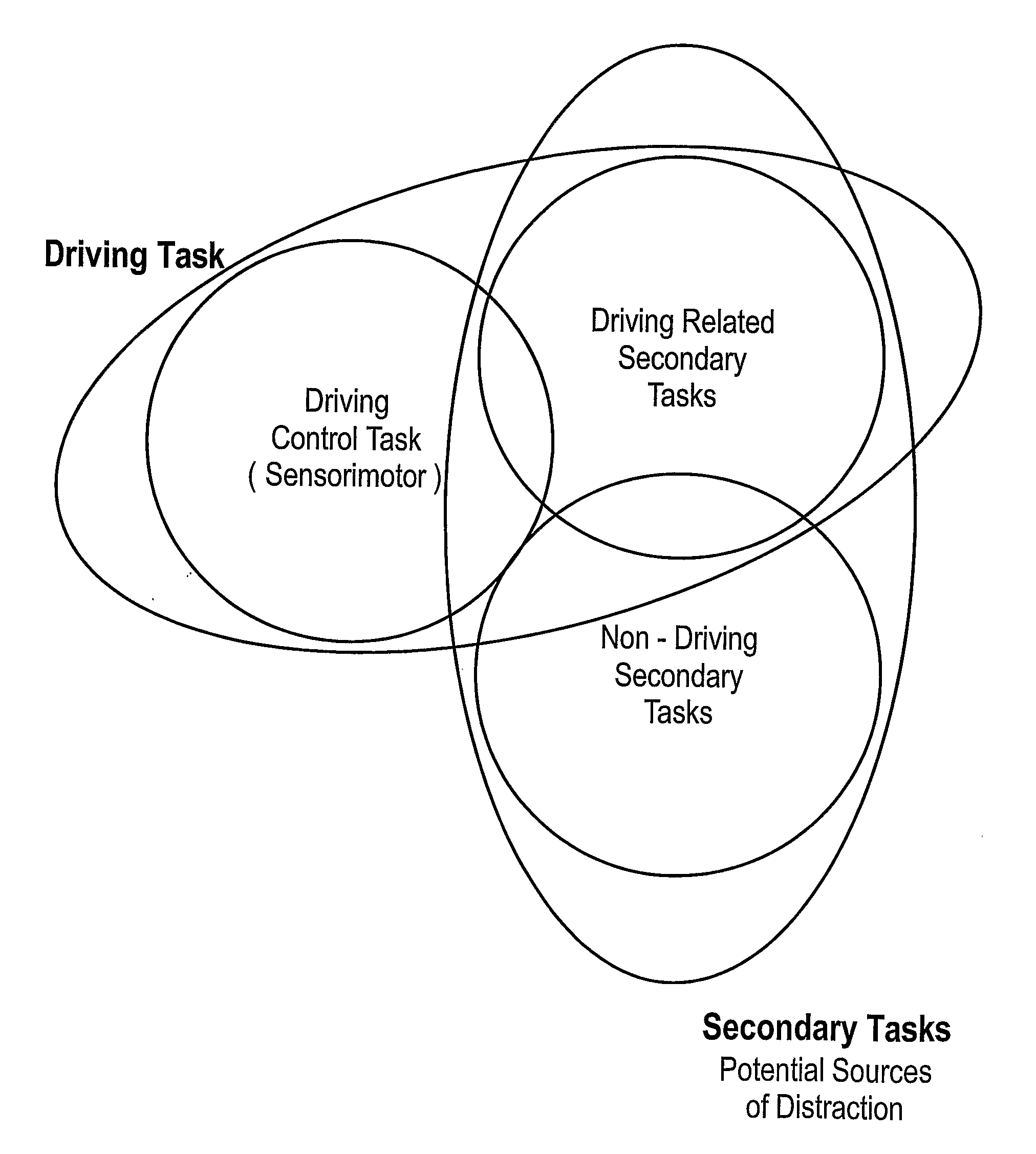

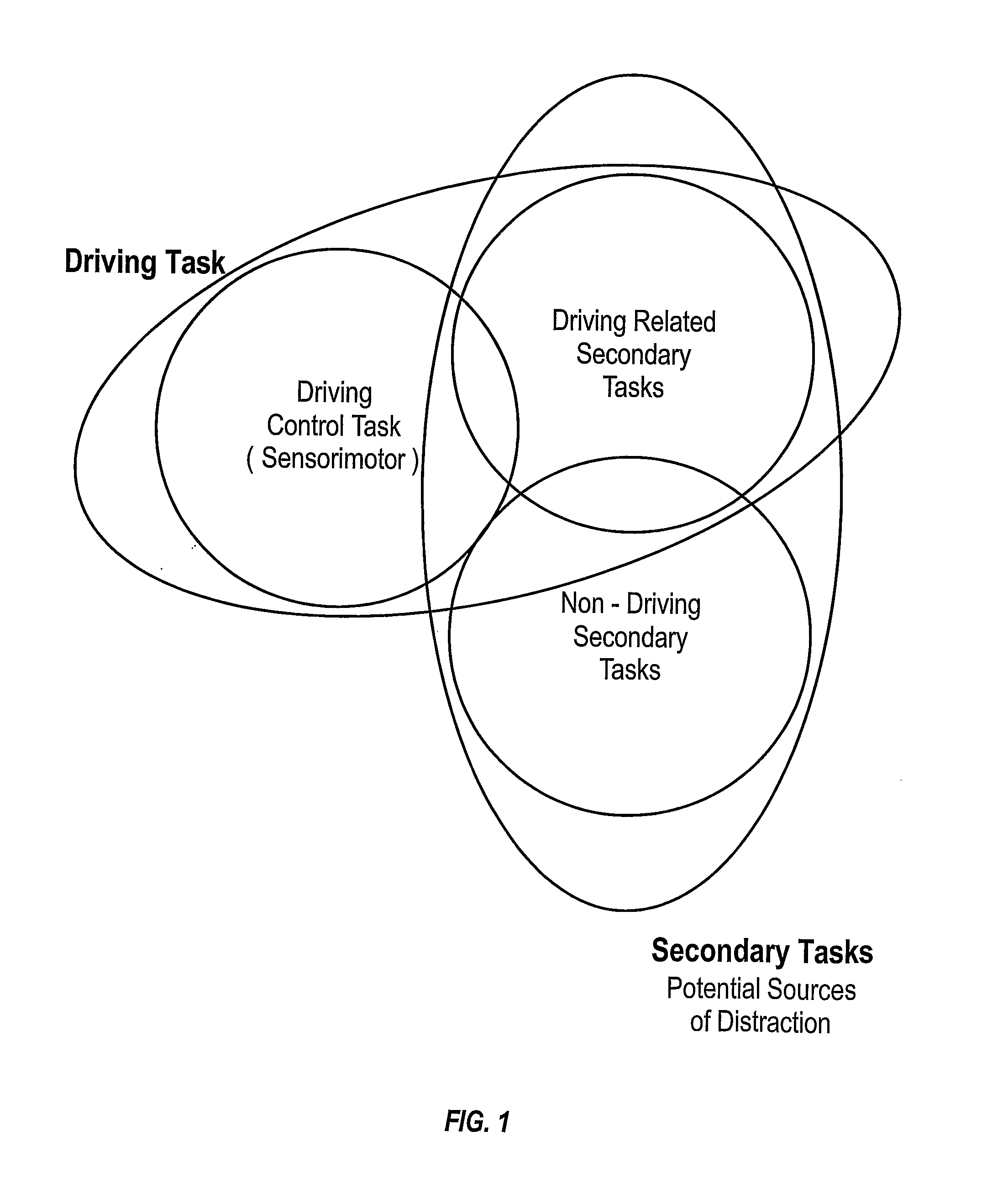

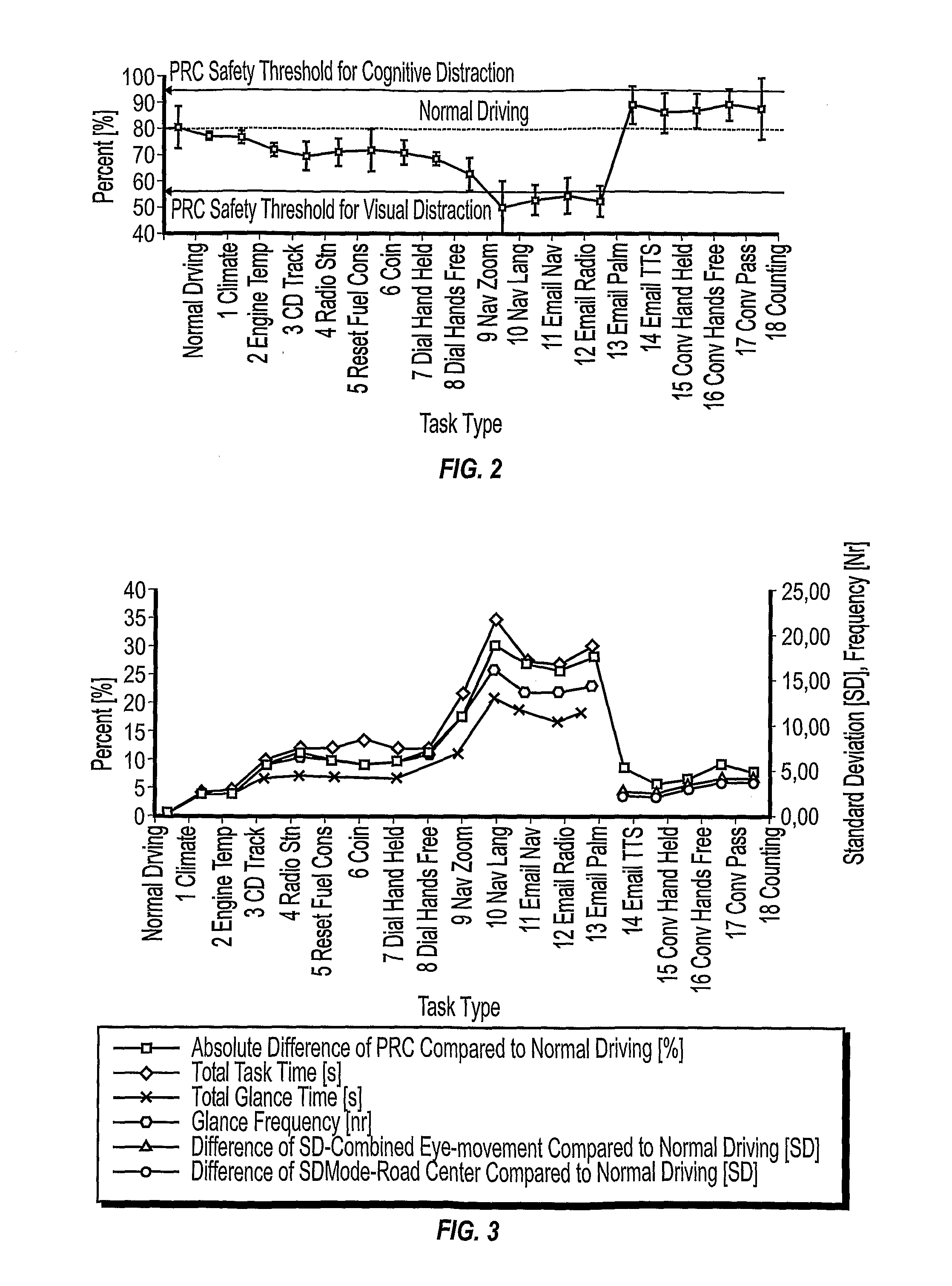

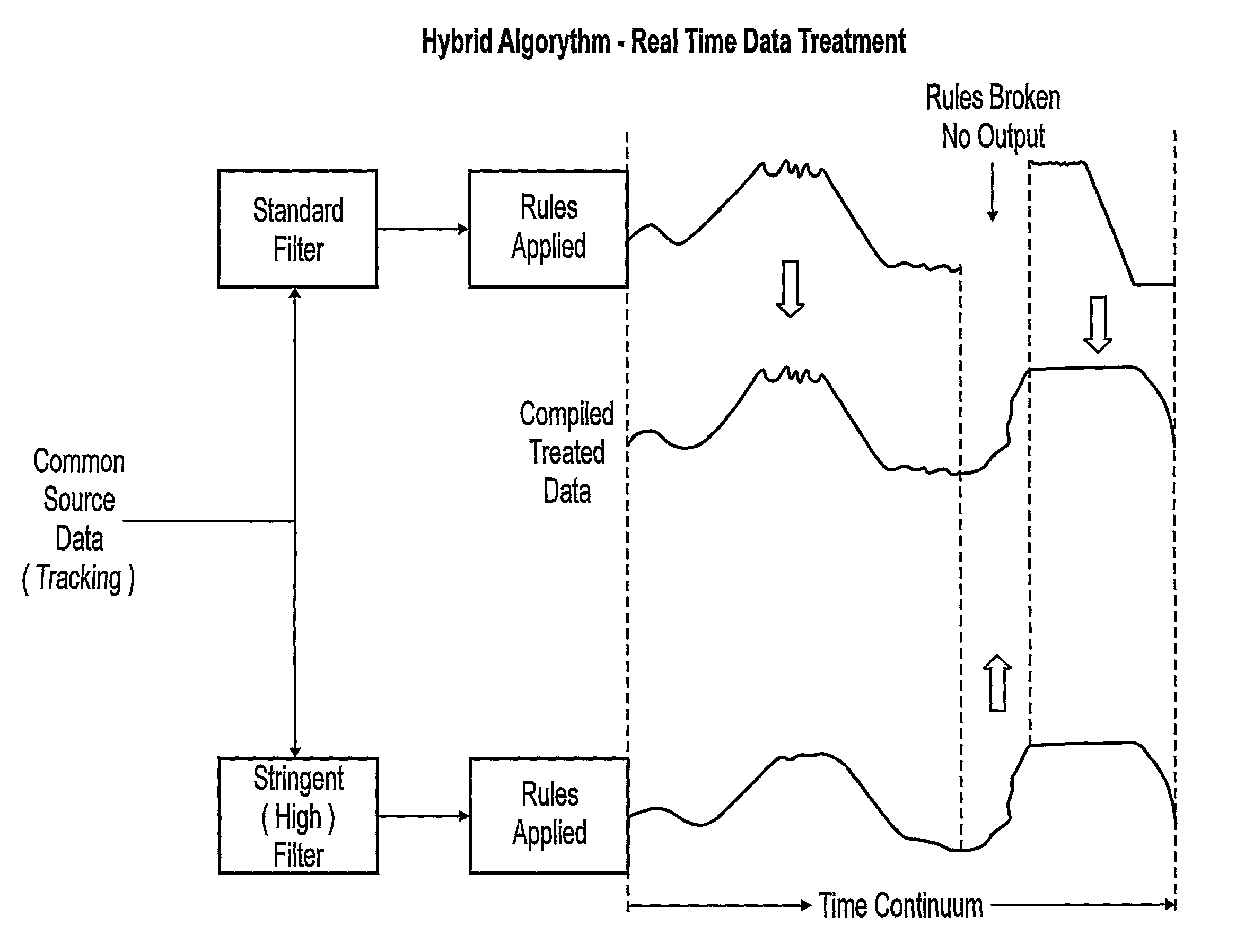

Method and apparatus for determining and analyzing a location of visual interest

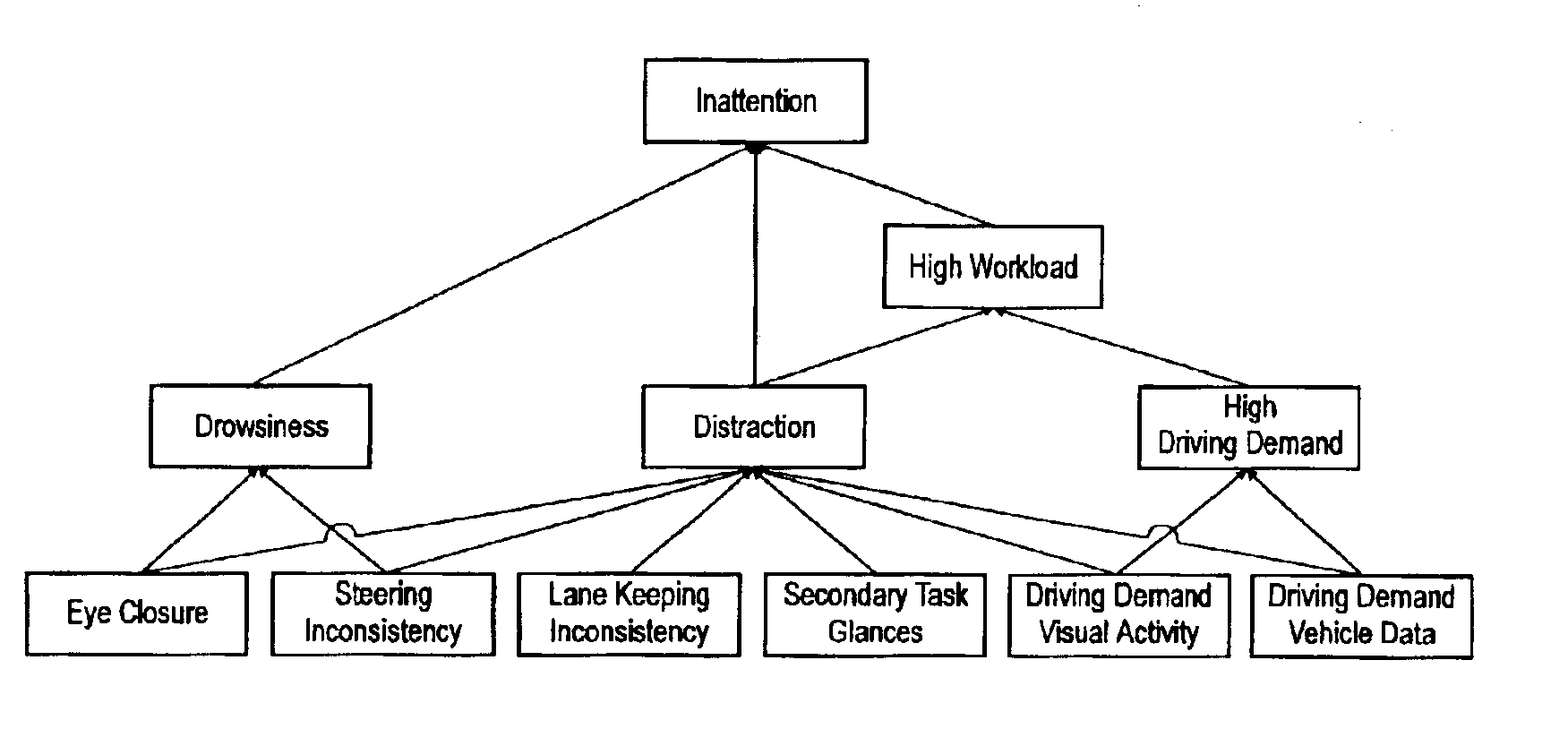

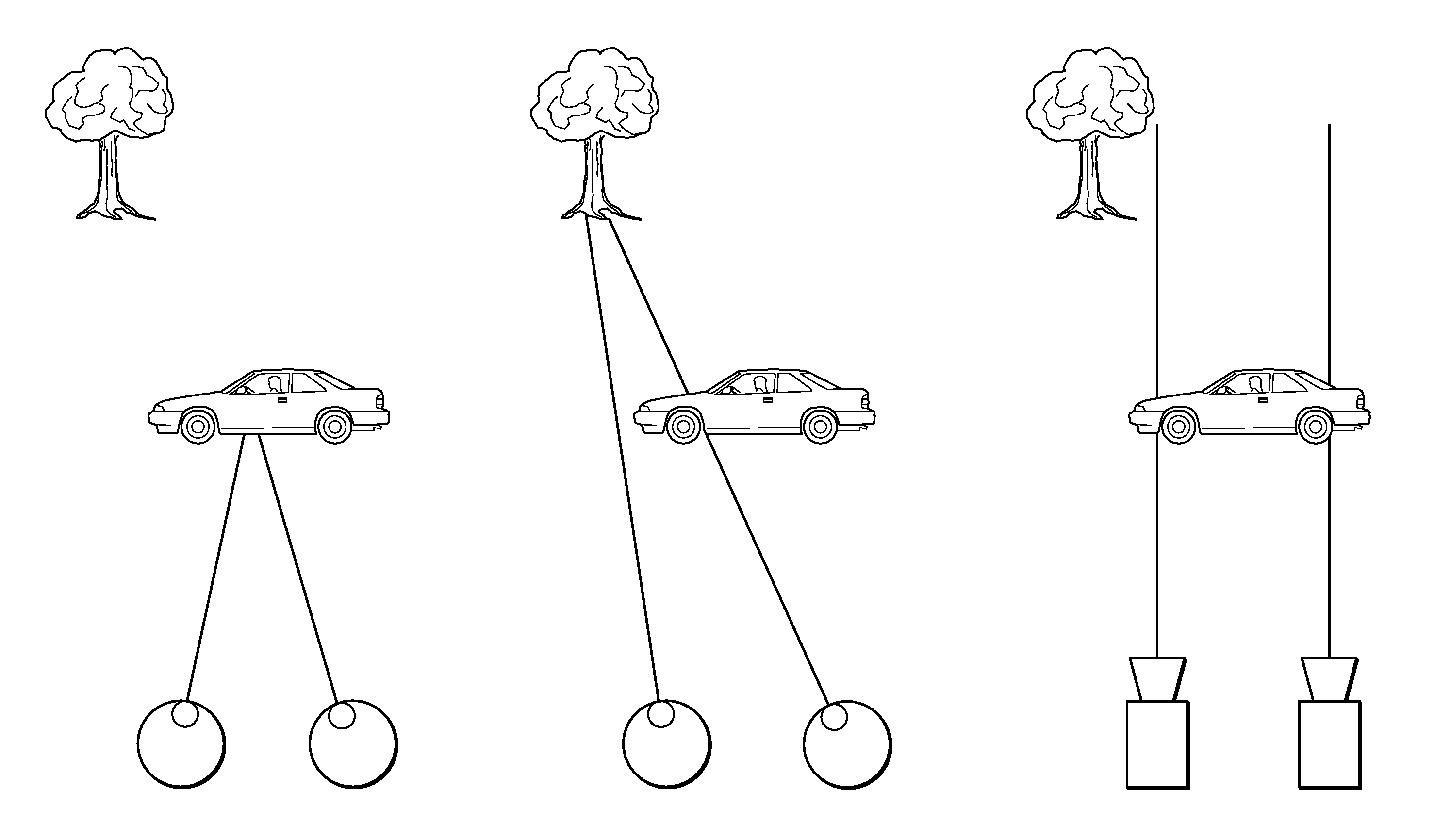

Inactive | US20100033333A1 | Effect of occurrence is minimized; Minimize impact; Electric devices; Acquiring/recognising eyes; Pattern recognition; Driver/operator

A method of analyzing data based on the physiological orientation of a driver is provided. Data descriptive of a driver's gaze direction is processed, and criteria defining a location of driver interest are determined. Based on the determined criteria, gaze-direction instances are classified as either on-location or off-location. The classified instances can then be used for further analysis, generally relating to times of elevated driver workload and not driver drowsiness. The classified instances are transformed into one of two binary values (e.g., 1 and 0) representing whether the respective classified instance is on or off location. The use of binary values makes processing and analysis of the data faster and more efficient. Furthermore, classification of at least some of the off-location gaze direction instances can be inferred from the failure to meet the determined criteria for being classified as an on-location driver gaze direction instance.

Owner: VOLVO LASTVAGNAR AB
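The on/off-location classification and its binary encoding can be sketched as below. The criterion used here, gaze within a fixed angular radius of an assumed road-center direction, is an illustrative assumption; the patent determines its own criteria. Any sample that fails the on-location test becomes 0, mirroring the abstract's point that off-location instances can be inferred from that failure.

```python
import math


def classify_on_location(gaze_yaw_pitch, center=(0.0, 0.0), radius_deg=8.0):
    """Return 1 for an on-location gaze instance, 0 for off-location.

    Assumed criterion: gaze within radius_deg of an assumed road-center
    direction, both given as (yaw, pitch) in degrees.
    """
    yaw, pitch = gaze_yaw_pitch
    return 1 if math.hypot(yaw - center[0], pitch - center[1]) <= radius_deg else 0


def percent_on_location(samples, **criteria):
    """Share of samples classified on-location, computed directly from the binary values."""
    bits = [classify_on_location(s, **criteria) for s in samples]
    return 100.0 * sum(bits) / len(bits) if bits else 0.0
```

Because each instance reduces to a single bit, summary statistics such as the on-location percentage over a time window are just sums over the bit sequence.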

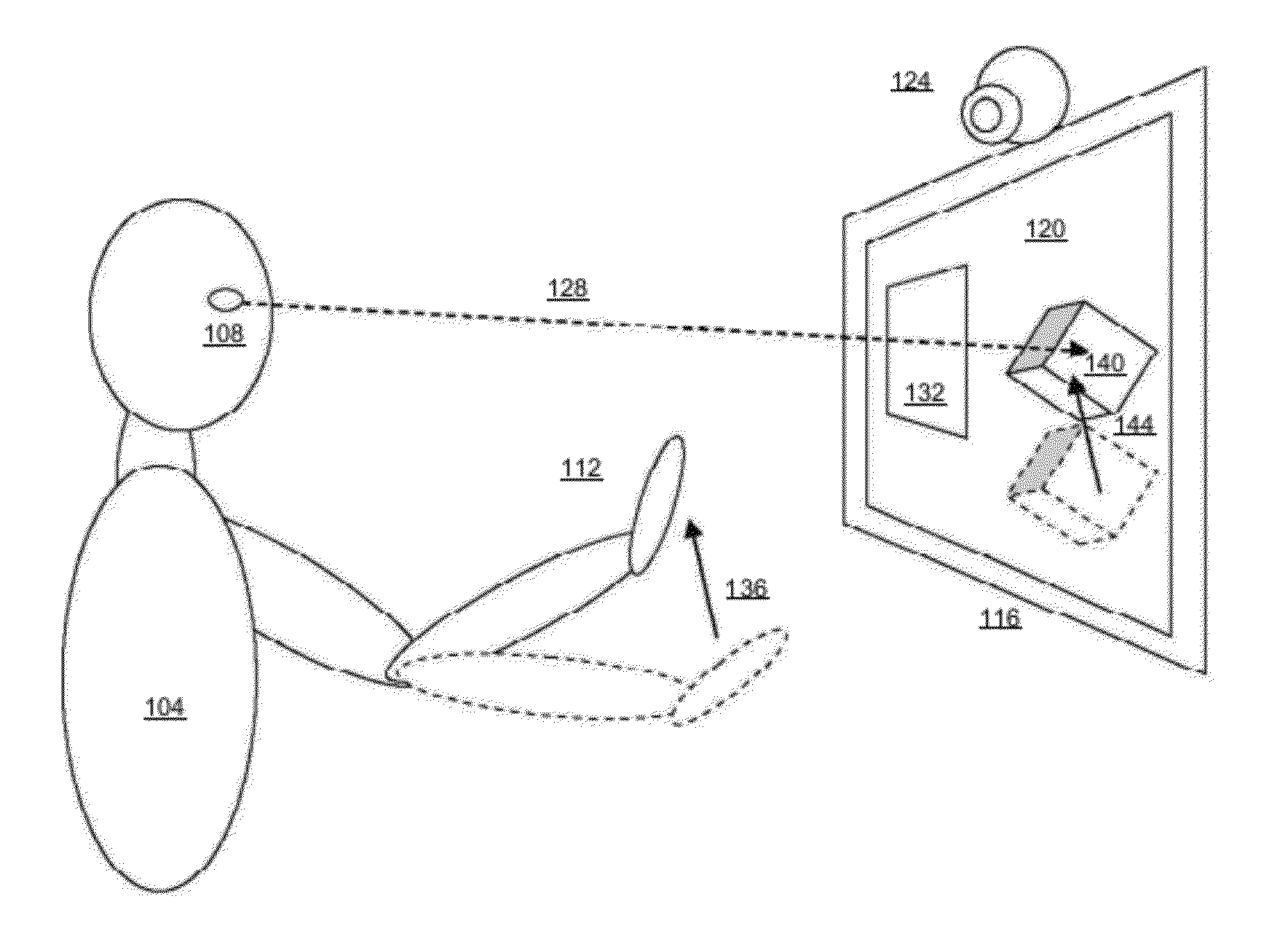

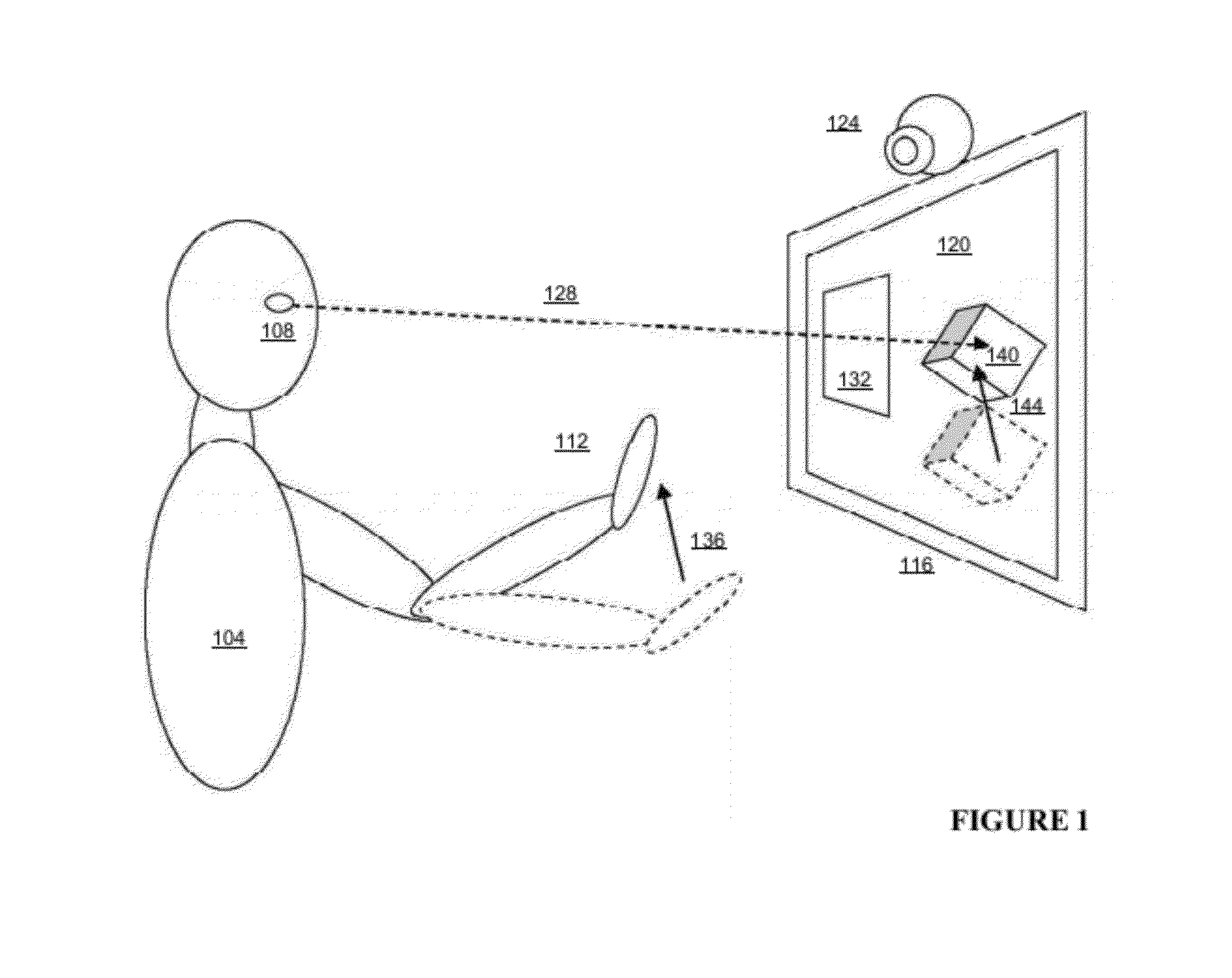

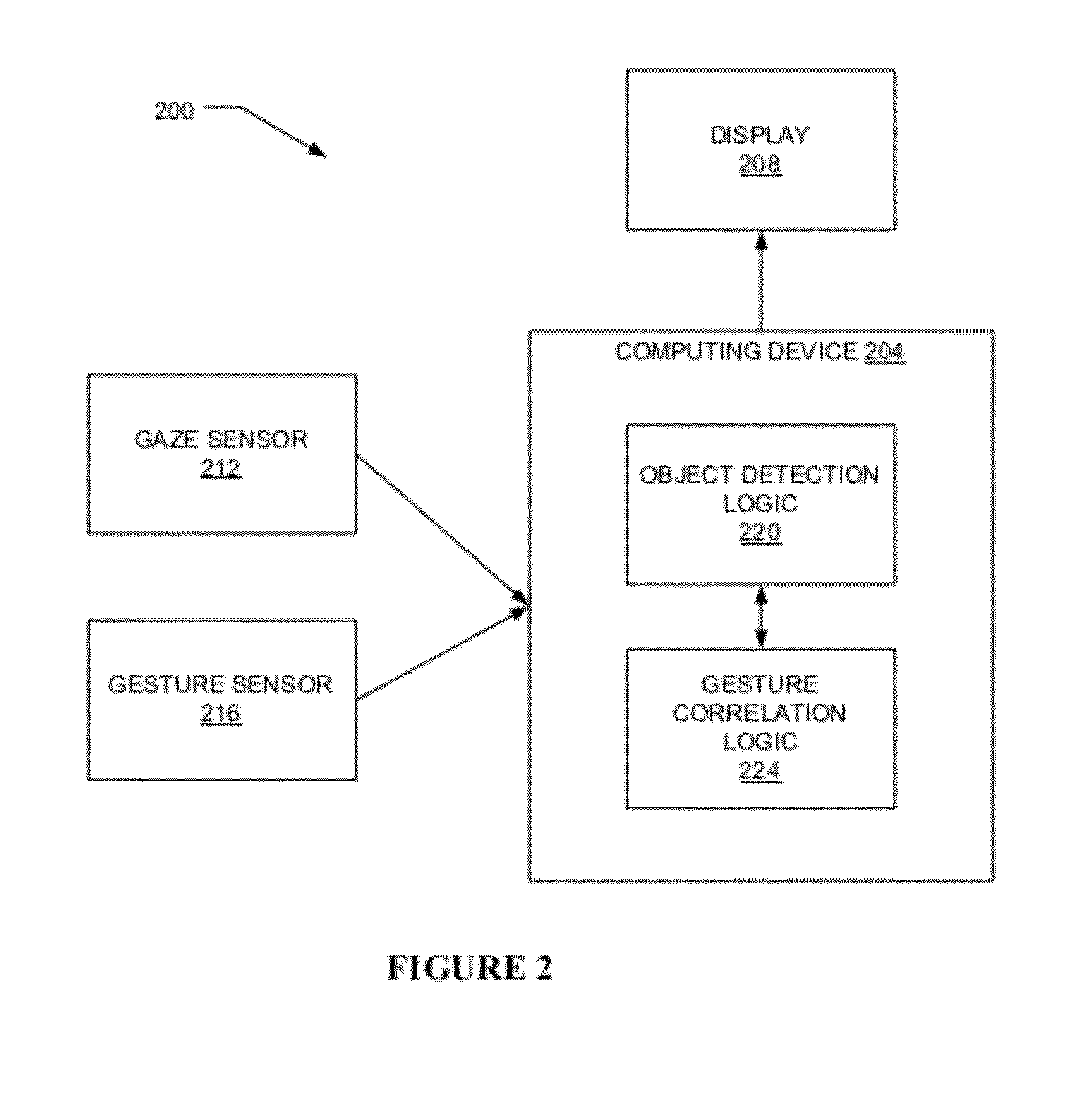

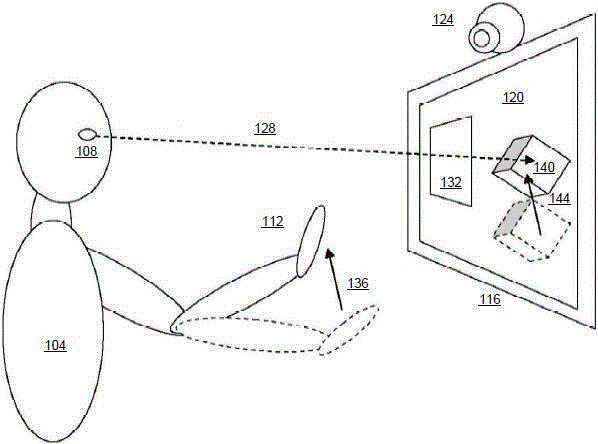

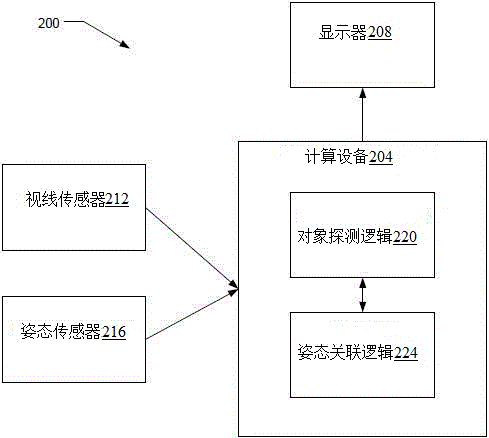

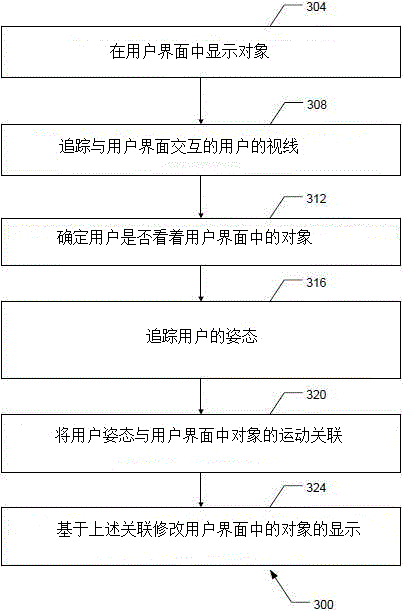

Systems and methods for providing feedback by tracking user gaze and gestures

Inactive | US20120257035A1 | Color television details; Closed circuit television systems; User input; Gaze directions

User interface technology that provides feedback to the user based on the user's gaze and a secondary user input, such as a hand gesture, is provided. A camera-based tracking system may track the gaze direction of a user to detect which object displayed in the user interface is being viewed. The tracking system also recognizes hand or other body gestures to control the action or motion of that object, using, for example, a separate camera and/or sensor. The user interface is then updated based on the tracked gaze and gesture data to provide the feedback.

Owner: SONY COMPUTER ENTERTAINMENT INC
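A minimal sketch of the gaze-selects, gesture-acts pattern, assuming a tracked on-screen gaze point and a hand displacement from a separate tracker; `UIObject` and `apply_feedback` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class UIObject:
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, px, py):
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h


def apply_feedback(objects, gaze_xy, gesture_delta):
    """Move the object under the gaze point by the gesture displacement.

    gaze_xy: tracked gaze point on screen; gesture_delta: (dx, dy) from the
    hand tracker (both assumed inputs). Returns the moved object or None.
    """
    target = next((o for o in objects if o.contains(*gaze_xy)), None)
    if target is not None:
        target.x += gesture_delta[0]
        target.y += gesture_delta[1]
    return target
```

Gaze picks the target and the gesture supplies the action, so neither input alone changes the interface.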

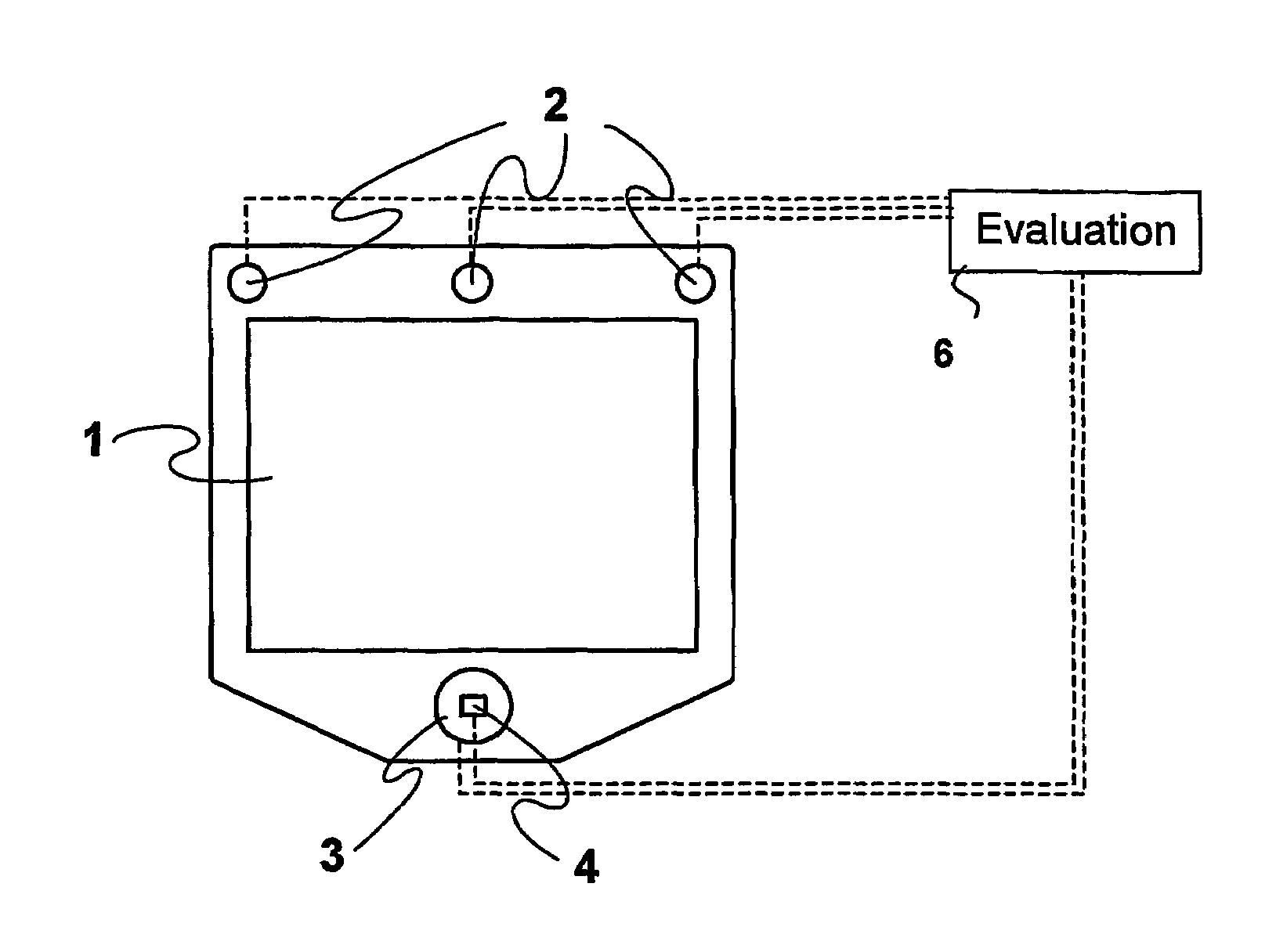

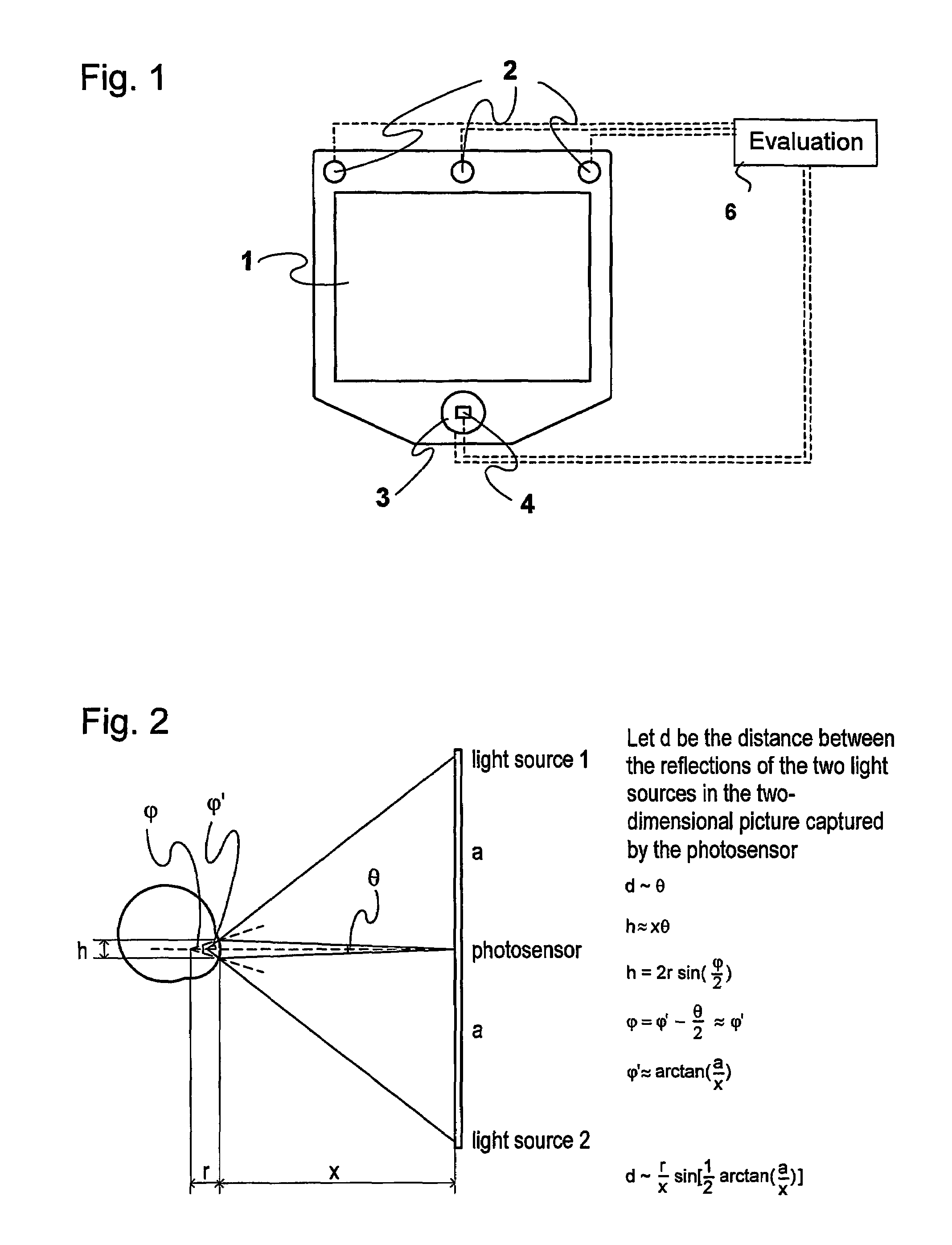

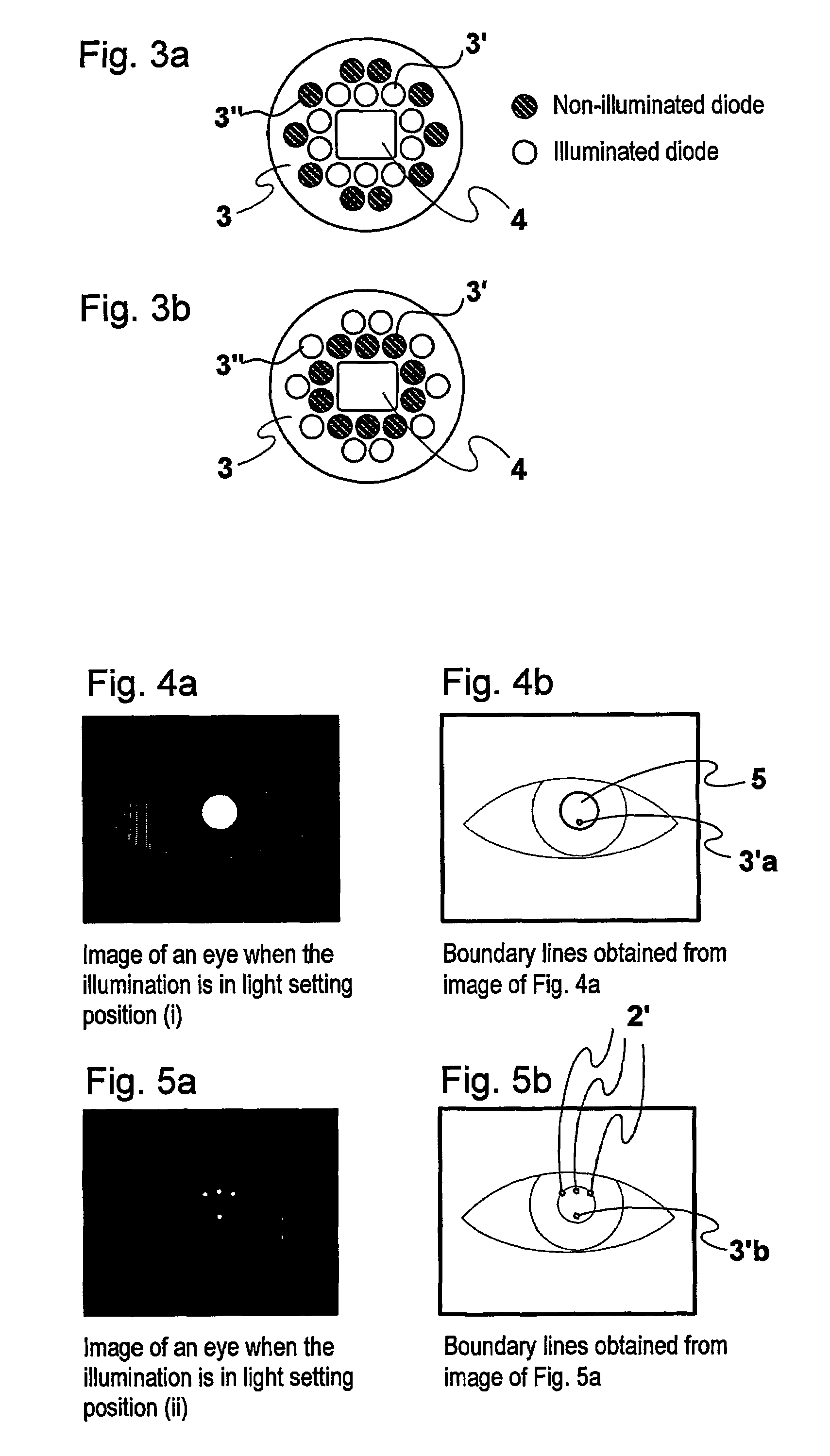

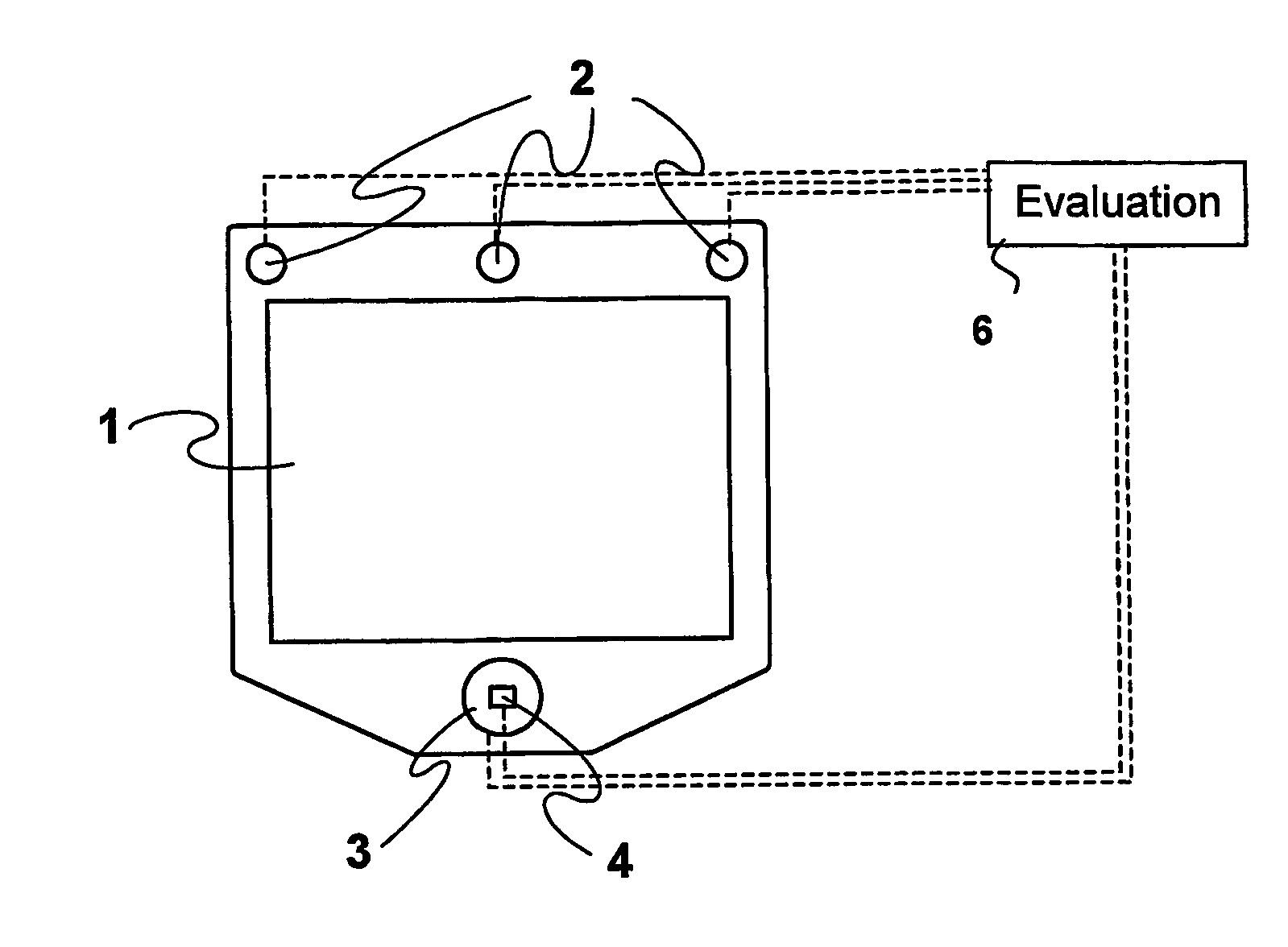

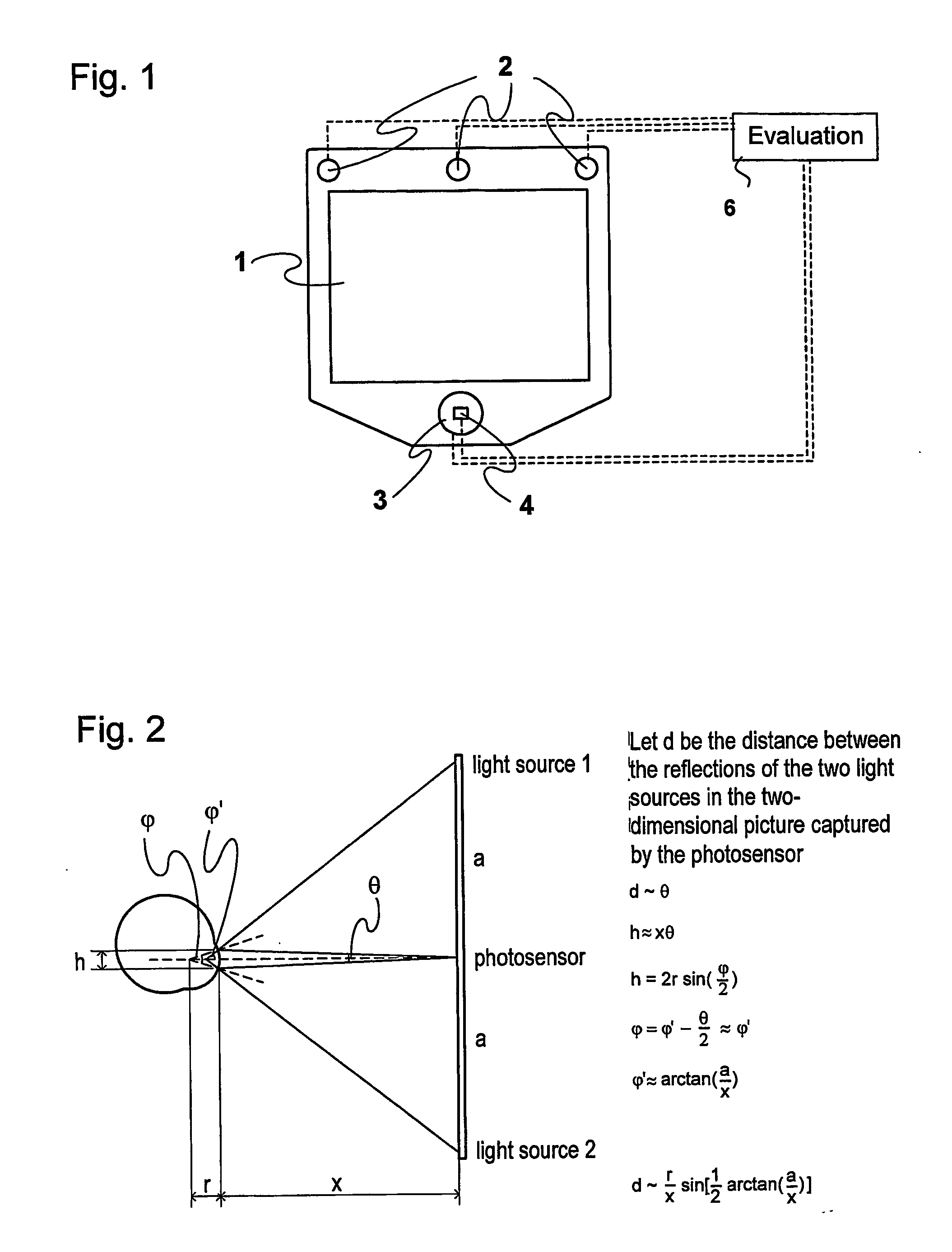

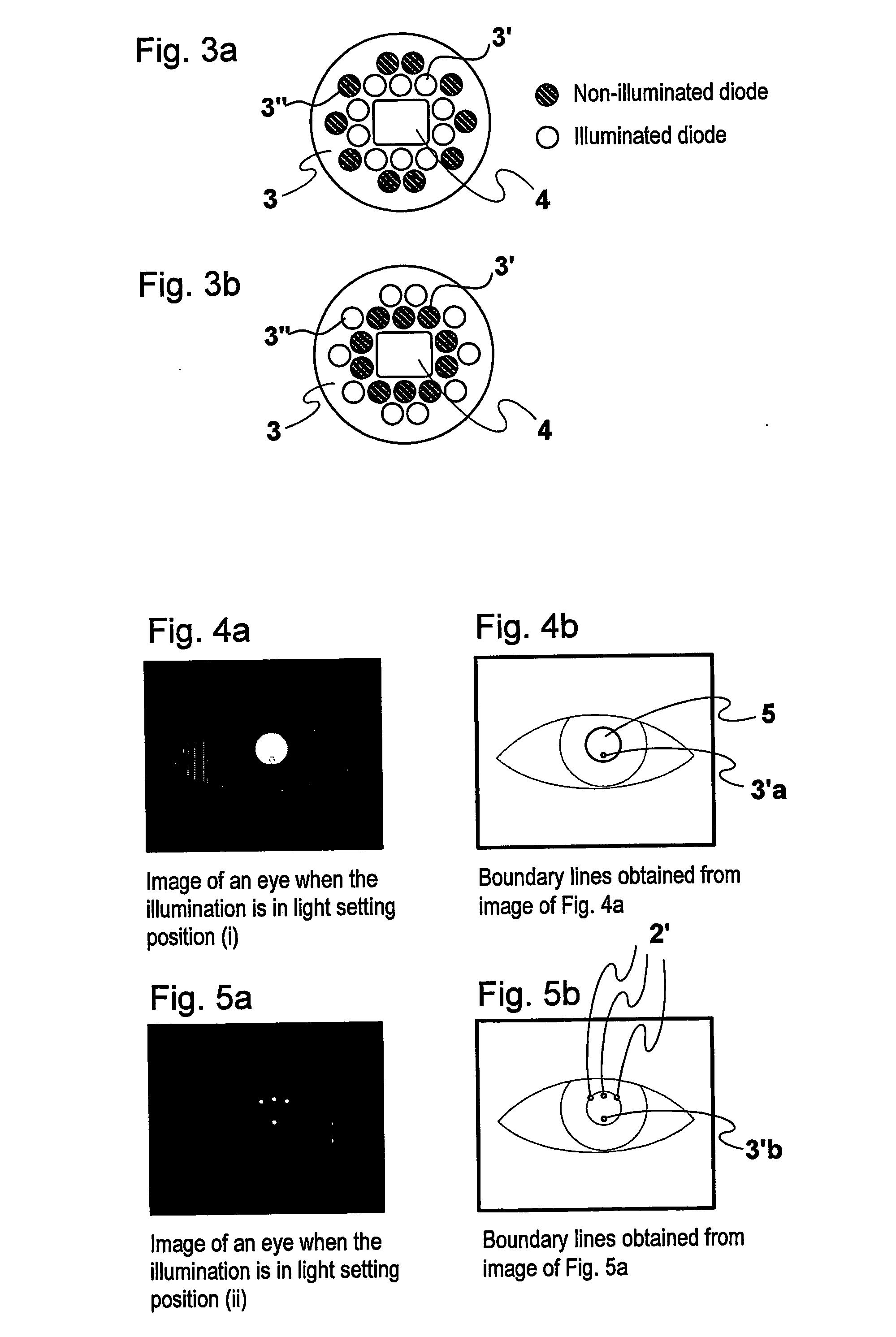

Method and installation for detecting and following an eye and the gaze direction thereof

When detecting the position and gaze direction of eyes, a photo sensor (1), light sources (2, 3) placed around a display (1), and a calculation and control unit (6) are used. One of the light sources is placed around the sensor and includes inner and outer elements (3′; 3″). When only the inner elements are illuminated, a strong bright-eye effect is obtained in the captured image, which makes the pupils simple to detect and thereby allows the gaze direction to be determined reliably. When only the outer elements and the outer light sources (2) are illuminated, the distance of the eye from the photo sensor is determined. Once the pupils have been located in an image, only the areas around the pupils, where the eyes are imaged, are evaluated in subsequent captured images. Which eye is the left eye and which is the right eye can be determined by following the images of the eyes and evaluating their positions in successively captured images.

Owner: TOBII TECH AB
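A common way to exploit the bright-eye effect, assumed here rather than taken from the abstract, is to subtract an image lit by off-axis light from one lit by the on-axis (inner) elements, so that only the pupils remain bright. The sketch locates one pupil as the centroid of those pixels and then restricts later searches to a window around it, echoing the region-of-interest step described above. Images are plain 2D lists, the threshold and window size are arbitrary, and separating the two eyes (for example by connected-component labelling) is left out.

```python
def pupil_centroid(bright, dark, threshold=50, roi=None):
    """Locate a pupil as the centroid of pixels much brighter under on-axis light.

    bright, dark: equally sized 2D lists of grey values (0-255).
    roi: optional (row0, col0, row1, col1) window with exclusive upper bounds,
    used once the pupil has been found in an earlier frame.
    Returns (row, col) or None.
    """
    rows, cols = len(bright), len(bright[0])
    r0, c0, r1, c1 = roi if roi else (0, 0, rows, cols)
    r0, c0, r1, c1 = max(r0, 0), max(c0, 0), min(r1, rows), min(c1, cols)
    sr = sc = n = 0
    for r in range(r0, r1):
        for c in range(c0, c1):
            if bright[r][c] - dark[r][c] > threshold:  # bright-eye difference
                sr += r
                sc += c
                n += 1
    return (sr / n, sc / n) if n else None


def roi_around(center, half=3):
    """Search window for the next frame, centered on the last pupil position."""
    r, c = round(center[0]), round(center[1])
    return (r - half, c - half, r + half + 1, c + half + 1)
```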

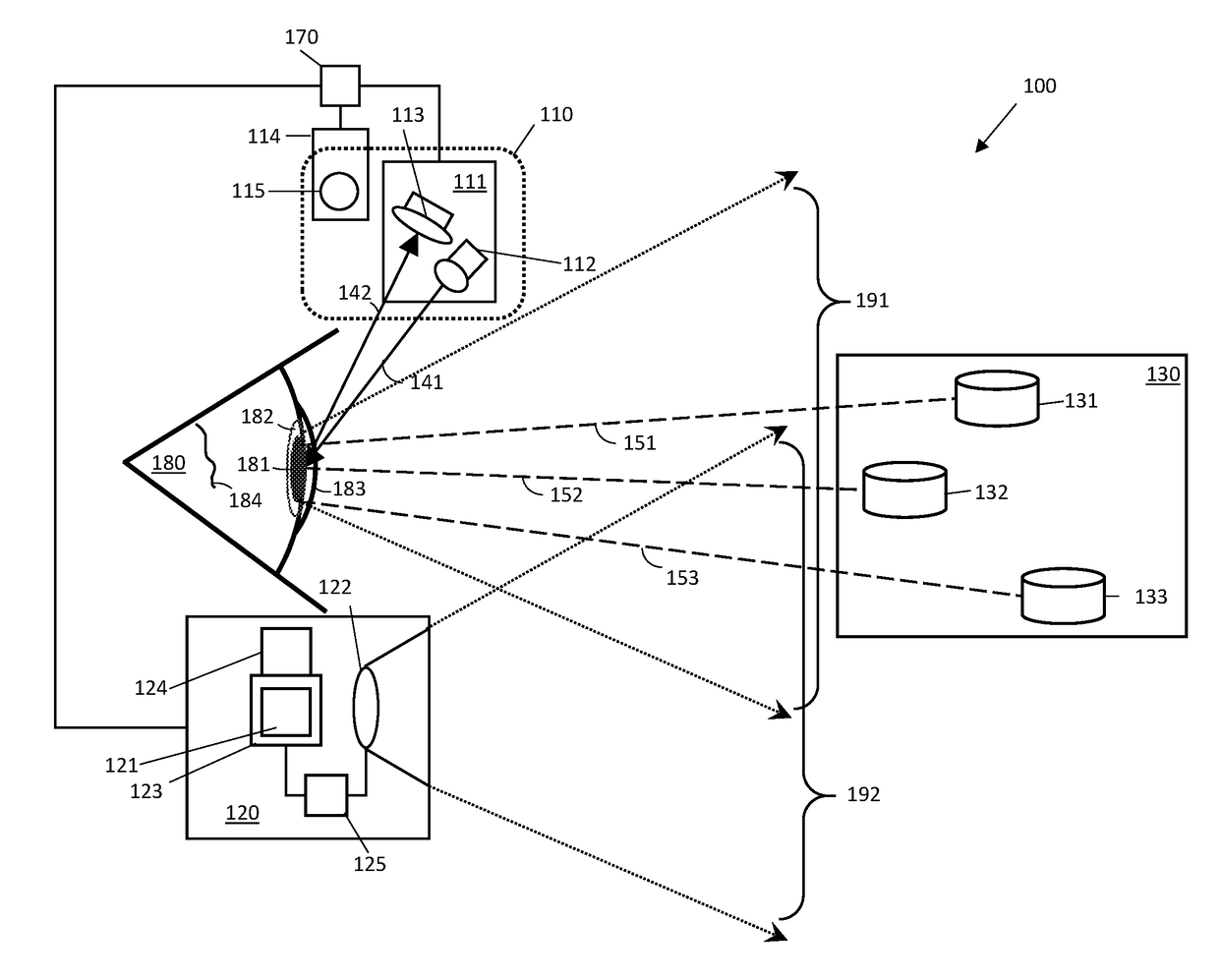

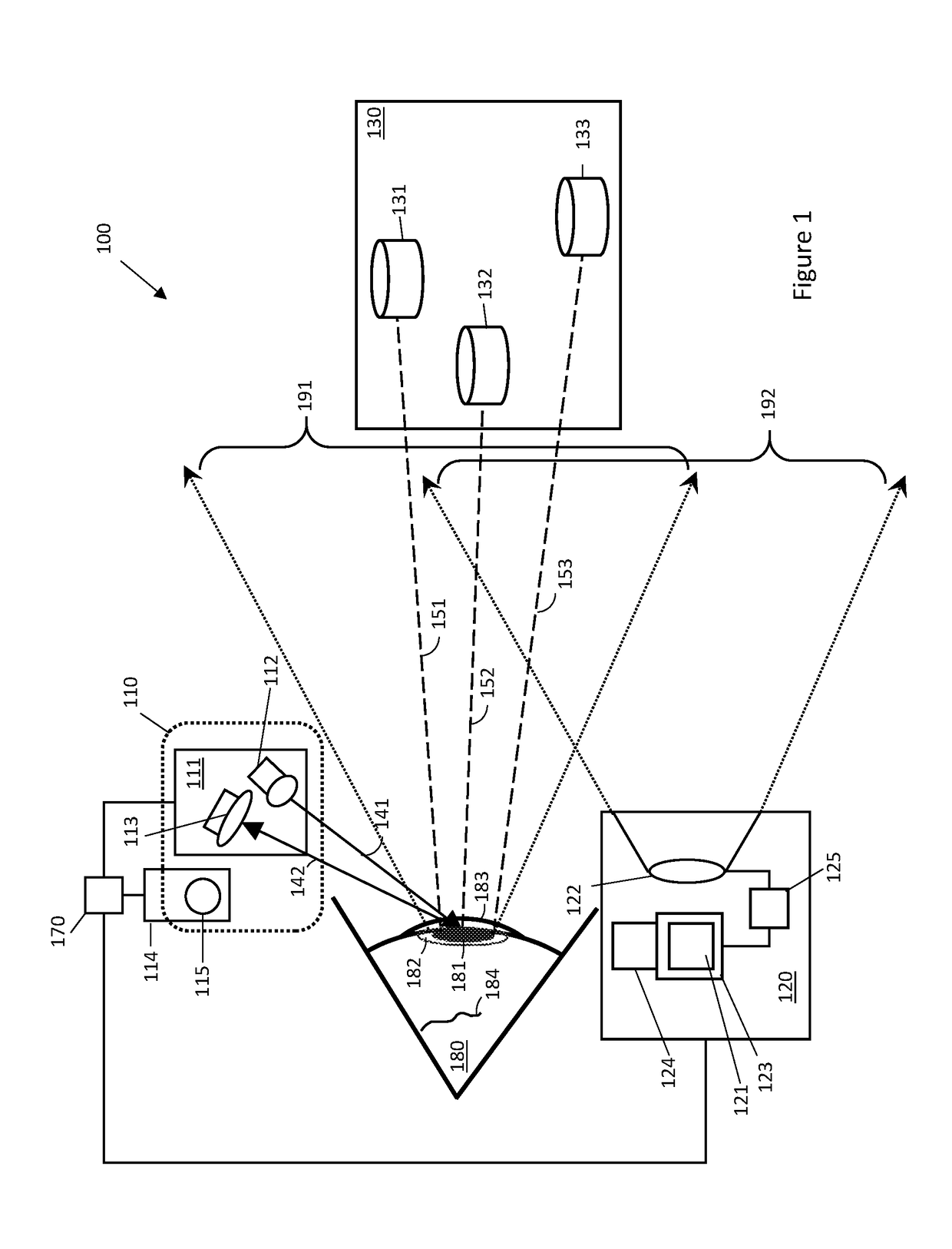

Methods and Apparatuses for Operating a Display in an Electronic Device

An electronic device can include detectors for altering the presentation of data on one or more displays. In a wearable electronic device, a flexible housing can be configured to enfold about an appendage of a user, such as a user's wrist. A display can be disposed along a major face of the flexible housing. A control circuit can be operable with the display. A gaze detector can be included to detect a gaze direction, and optionally a gaze cone. An orientation detector can be configured to detect an orientation of the electronic device relative to the user. The control circuit can alter a presentation of data on the display in response to a detected gaze direction, in response to detected orientation of the wearable electronic device relative to the user, in response to touch or gesture input, or combinations thereof. Secondary displays can be hingedly coupled to the electronic device.

Owner: GOOGLE TECH HLDG LLC

Method and apparatus for determining and analyzing a location of visual interest

Inactive | US8487775B2 | Efficient analysis; Easy to handle; Electric devices; Acquiring/recognising eyes; Driver/operator; Workload

A method of analyzing data based on the physiological orientation of a driver is provided. Data descriptive of a driver's gaze direction is processed, and criteria defining a location of driver interest are determined. Based on the determined criteria, gaze-direction instances are classified as either on-location or off-location. The classified instances can then be used for further analysis, generally relating to times of elevated driver workload and not driver drowsiness. The classified instances are transformed into one of two binary values (e.g., 1 and 0) representing whether the respective classified instance is on or off location. The use of binary values makes processing and analysis of the data faster and more efficient. Furthermore, classification of at least some of the off-location gaze direction instances can be inferred from the failure to meet the determined criteria for being classified as an on-location driver gaze direction instance.

Owner: VOLVO LASTVAGNAR AB

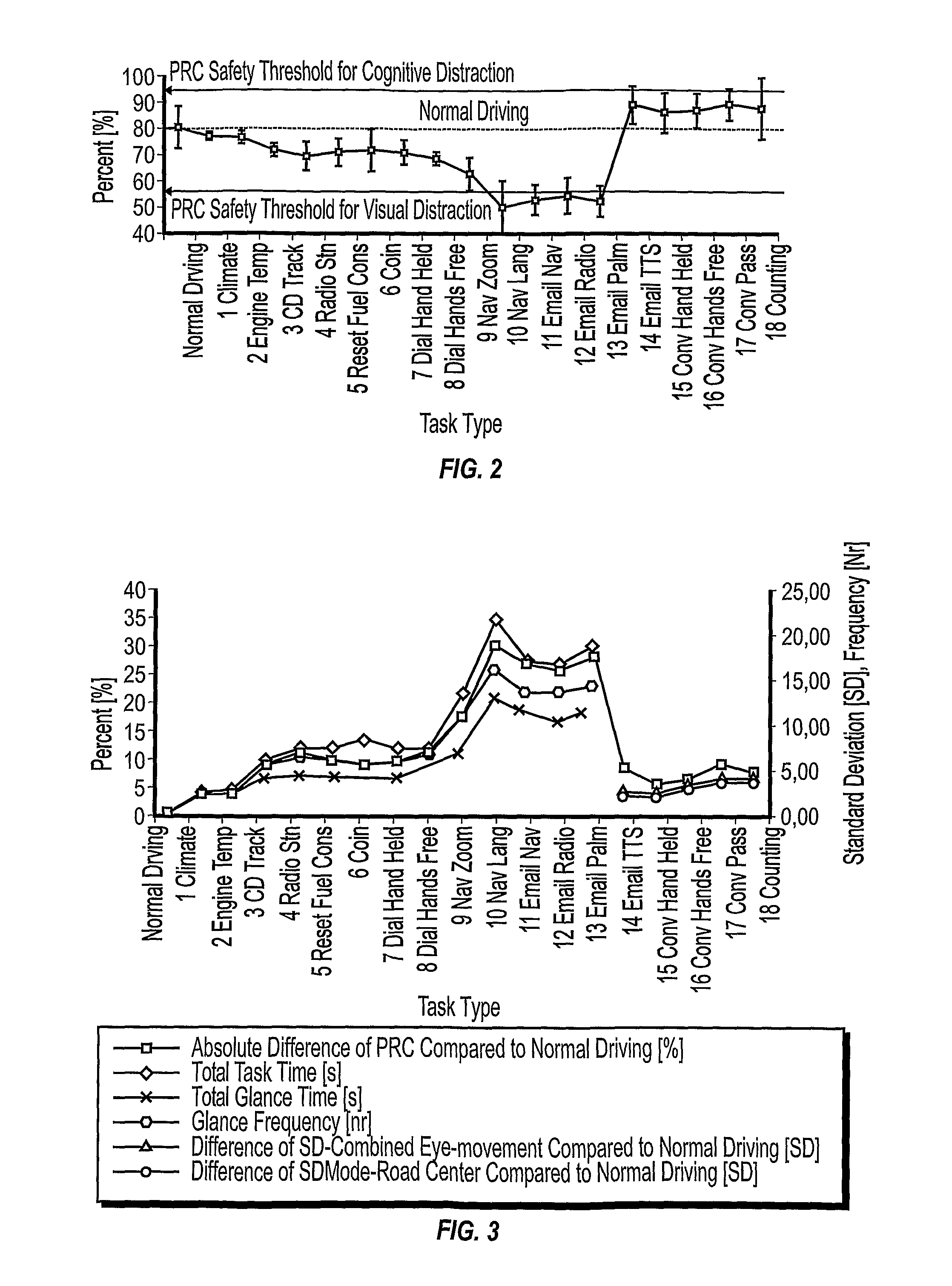

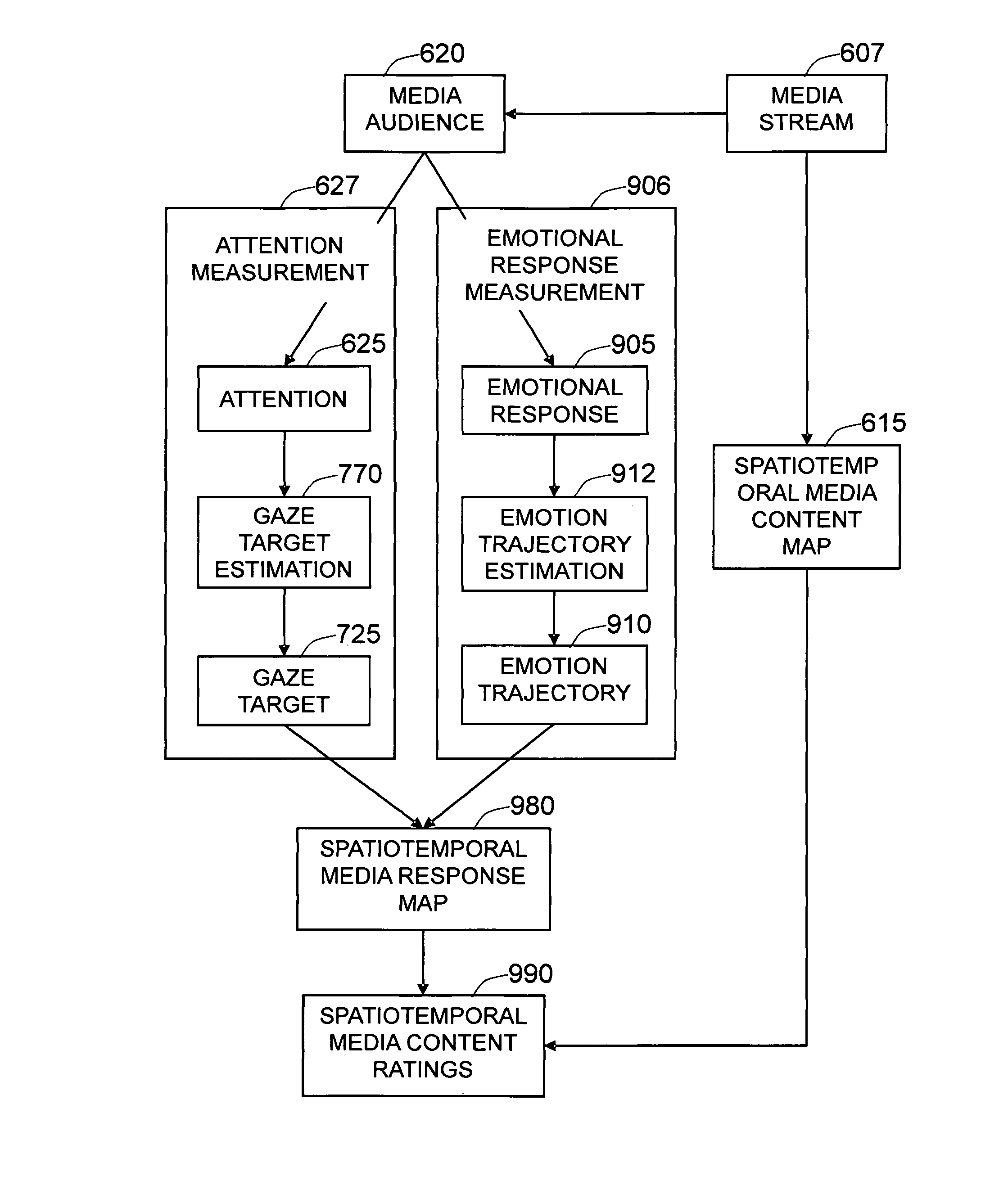

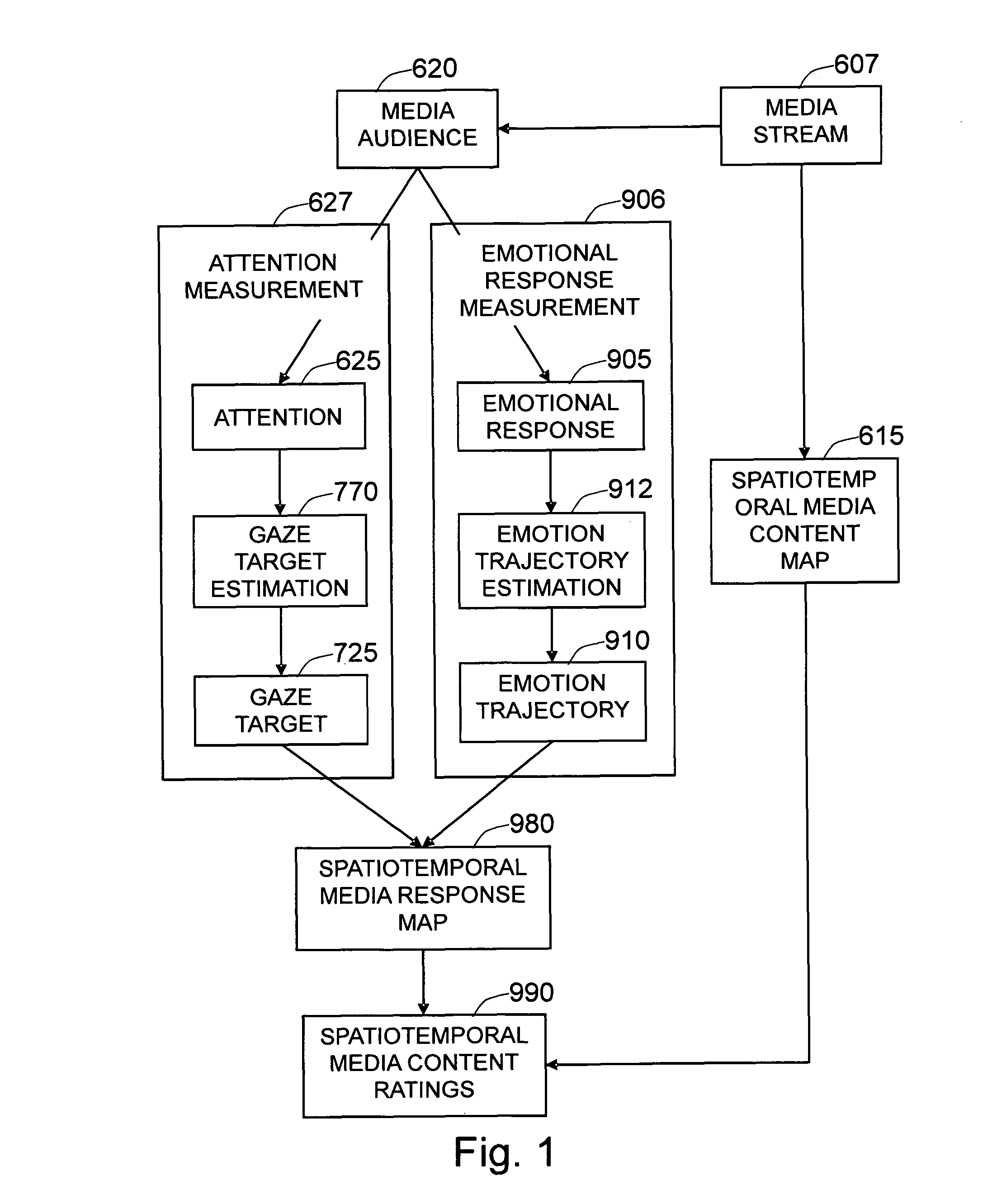

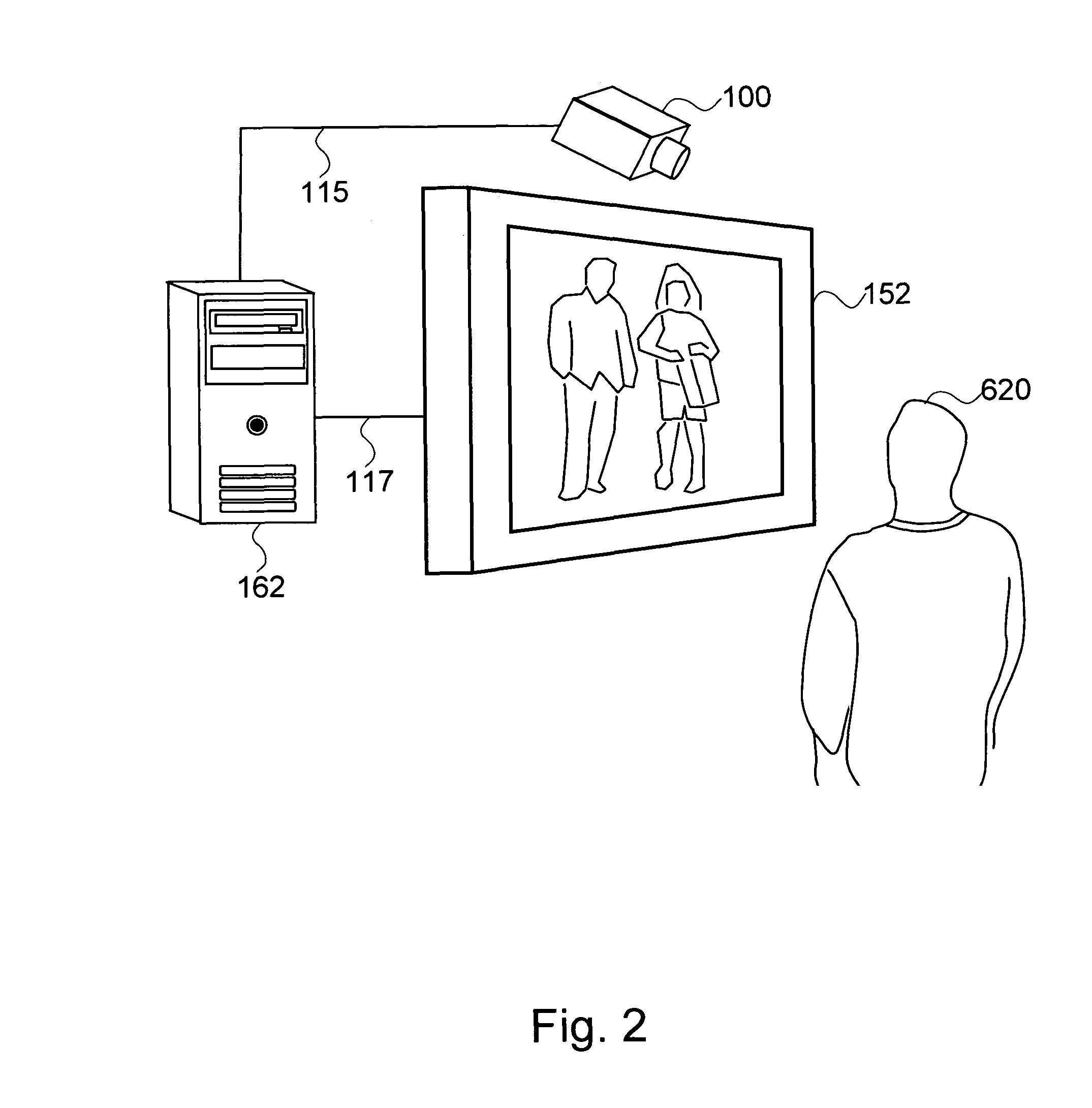

Method and system for measuring emotional and attentional response to dynamic digital media content

The present invention is a method and system to provide an automatic measurement of people's responses to dynamic digital media, based on changes in their facial expressions and attention to specific content. First, the method detects and tracks faces from the audience. It then localizes each of the faces and its facial features and applies emotion-sensitive feature filters to extract emotion-sensitive features, from which the facial muscle actions of the face are determined. The changes in facial muscle actions are then converted to changes in affective state, called an emotion trajectory. In parallel, the method estimates the eye gaze from the extracted eye images and the three-dimensional pose of the face from the localized facial images. The gaze direction of the person is estimated based on the estimated eye gaze and the three-dimensional facial pose of the person. The gaze target on the media display is then estimated based on the estimated gaze direction and the position of the person. Finally, the response of the person to the dynamic digital media content is determined by analyzing the emotion trajectory in relation to the time and screen positions of the specific digital media sub-content that the person is watching.

Owner: MOTOROLA SOLUTIONS INC
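Estimating "the gaze target on the media display" from a gaze direction and the person's position amounts to intersecting a ray with the display plane. The sketch assumes a display-aligned frame in metres (origin at the display's top-left corner, x right, y down, z pointing toward the audience); the function name is hypothetical.

```python
def gaze_target_on_display(eye_pos, gaze_dir):
    """Intersect a gaze ray with the display plane z = 0.

    Returns (x, y) on the display plane, or None if the person is not in front
    of the display or is looking away from it.
    """
    ex, ey, ez = eye_pos
    dx, dy, dz = gaze_dir
    if ez <= 0 or dz >= 0:  # behind the display, or ray parallel to / moving away from it
        return None
    t = -ez / dz  # ray parameter where z reaches 0
    return (ex + t * dx, ey + t * dy)
```

The resulting point can then be looked up against the screen positions of the sub-content shown at that moment, which is the pairing the abstract relies on.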

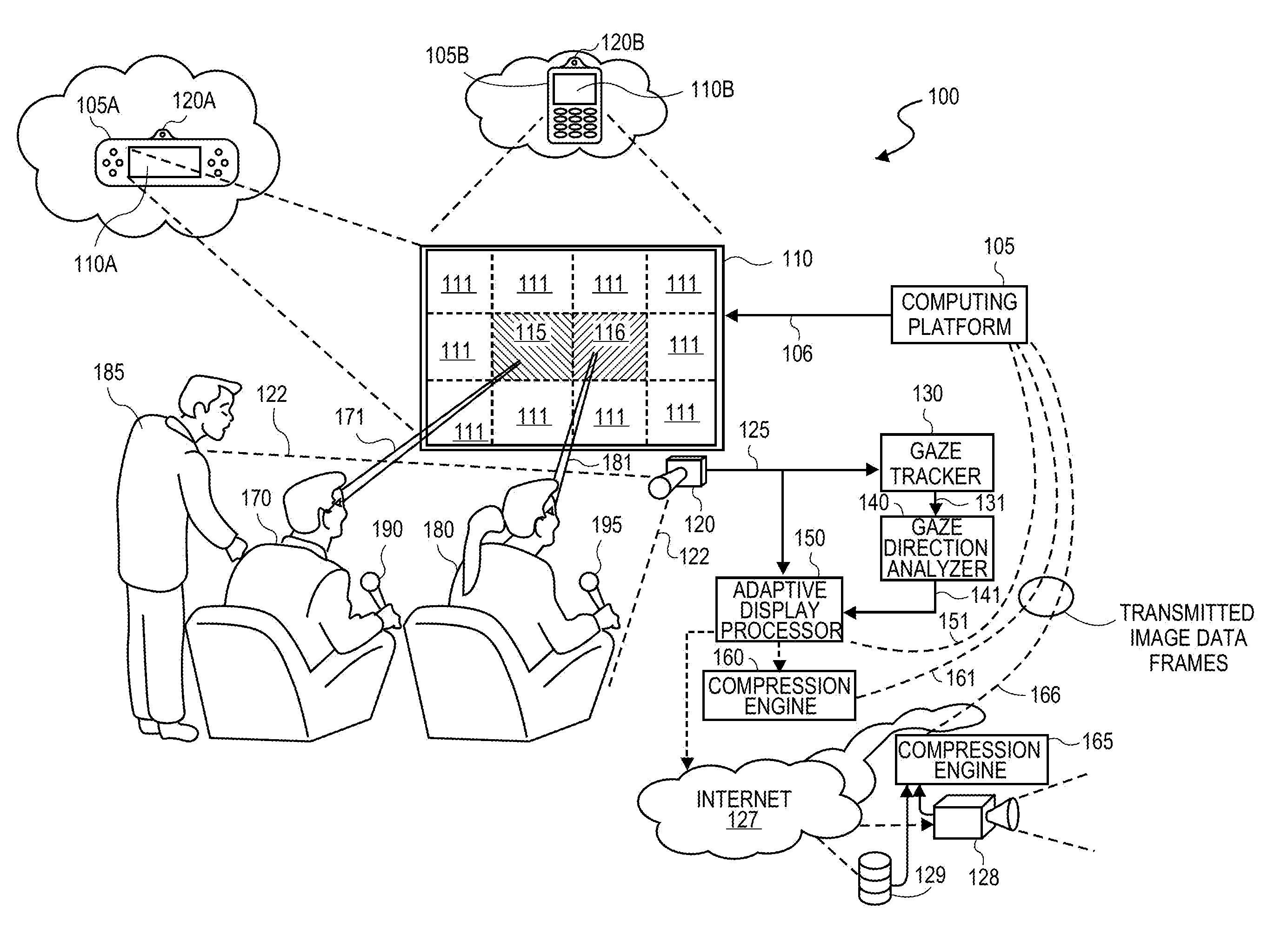

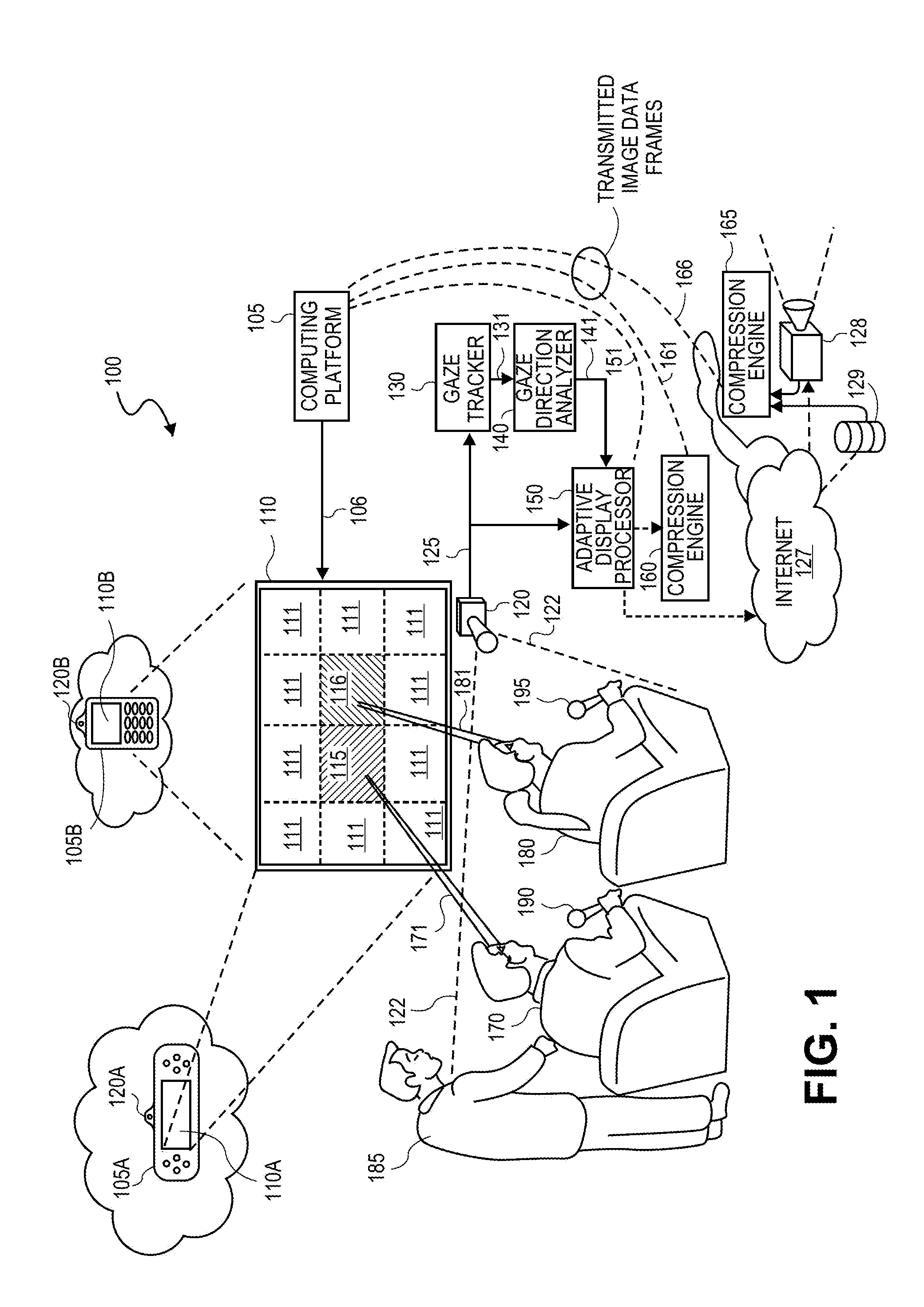

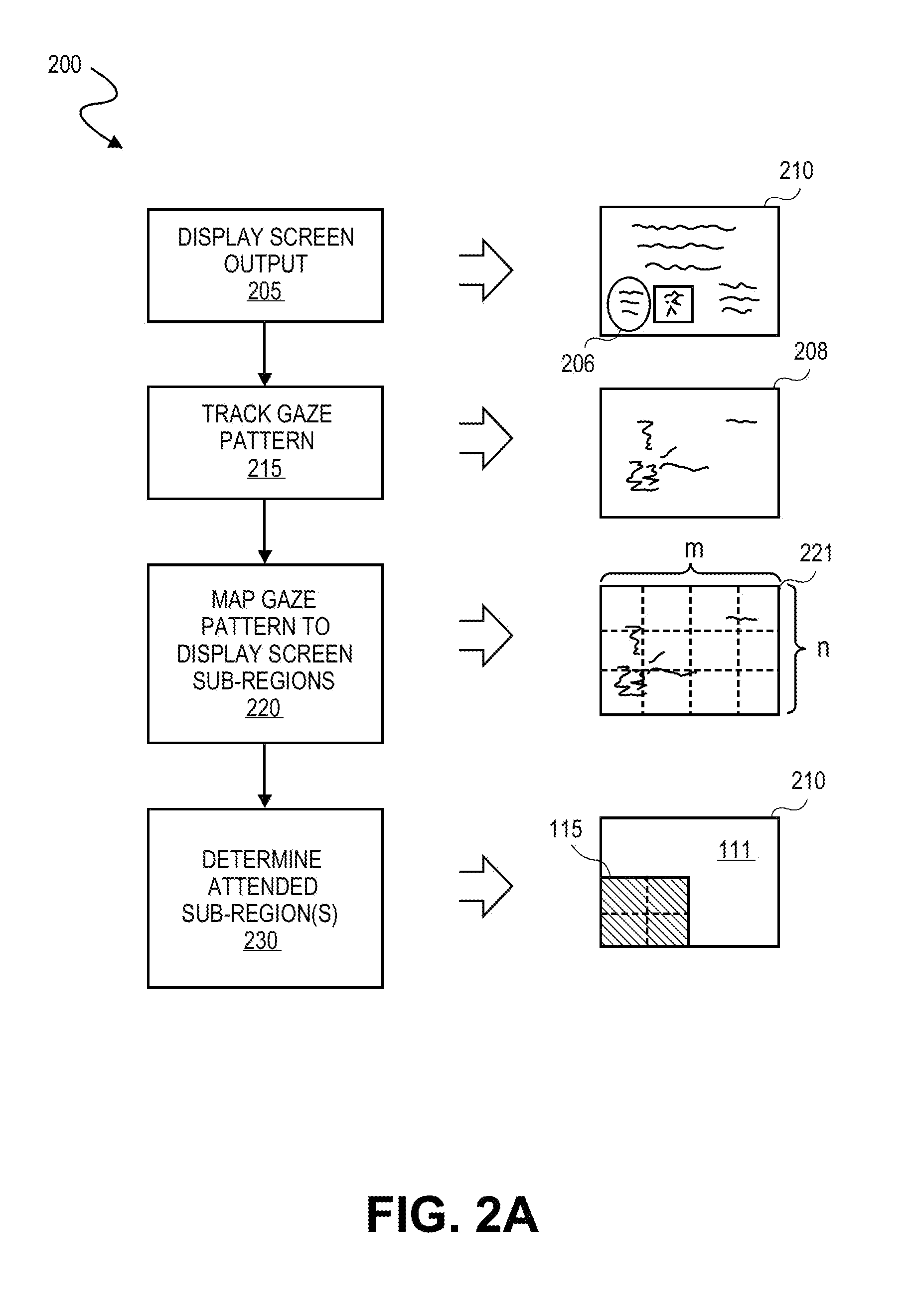

Adaptive displays using gaze tracking

Active | US20120146891A1 | Cathode-ray tube indicators; Digital video signal modification; Gaze directions; Human–computer interaction

Methods and systems for adapting a display screen output based on a display user's attention. Gaze direction tracking is employed to determine a sub-region of a display screen area to which a user is attending. Display of the attended sub-region is modified relative to the remainder of the display screen, for example, by changing the quantity of data representing an object displayed within the attended sub-region relative to an object displayed in an unattended sub-region of the display screen.

Owner: SONY COMPUTER ENTERTAINMENT INC
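A minimal sketch of changing "the quantity of data representing an object" by attention, assuming the attended sub-region is a circle around the gaze point and that detail is an integer level (for example mesh or texture resolution). The radius, levels, and function name are illustrative assumptions.

```python
def detail_levels(objects, gaze_xy, attended_radius=200.0, high=3, low=1):
    """Map each object name to a level of detail.

    objects: dict name -> (x, y) screen position in pixels; gaze_xy: tracked
    gaze point. Objects inside the attended circle get `high` detail, the rest
    get `low`.
    """
    gx, gy = gaze_xy
    r2 = attended_radius ** 2
    return {name: high if (x - gx) ** 2 + (y - gy) ** 2 <= r2 else low
            for name, (x, y) in objects.items()}
```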

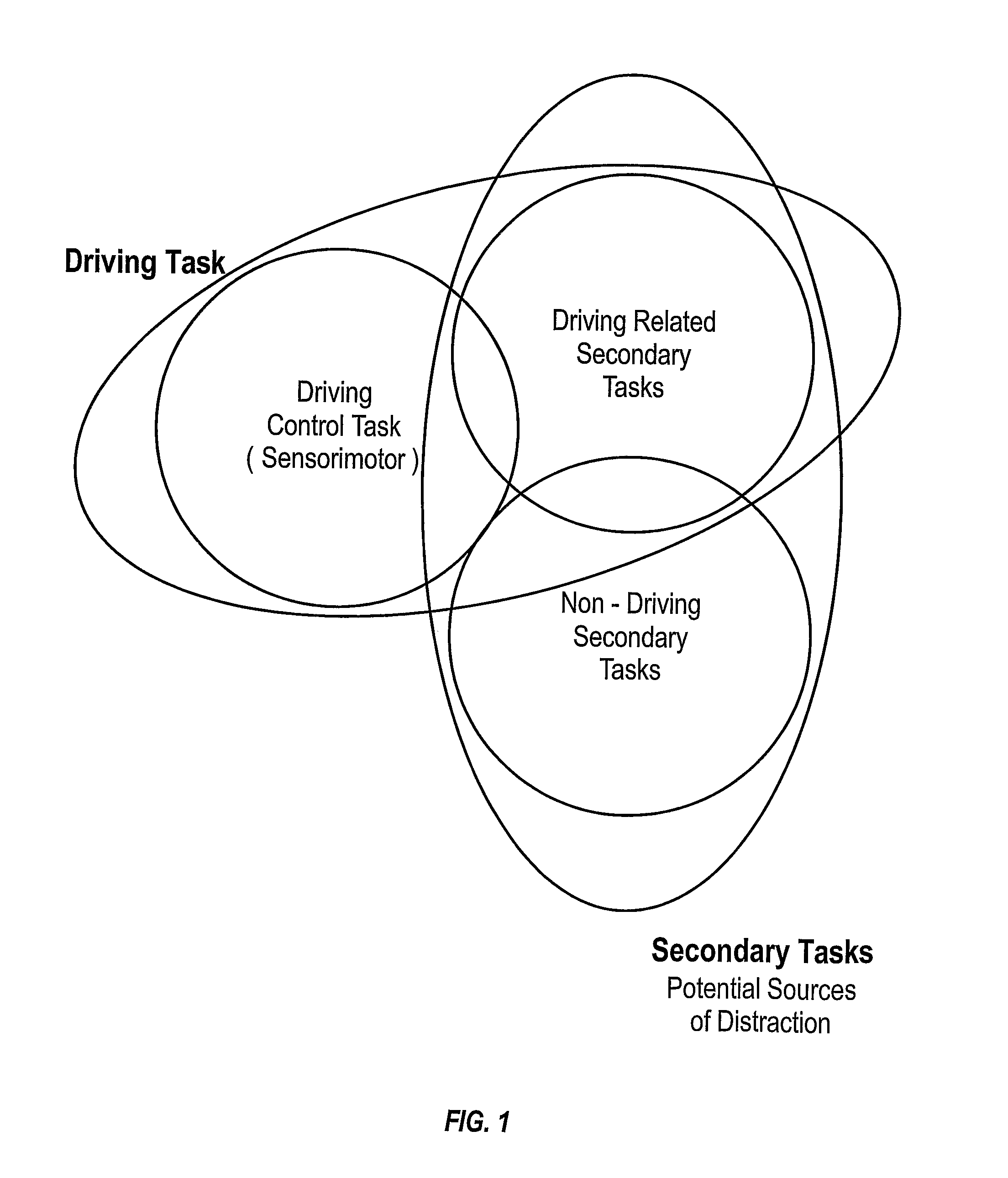

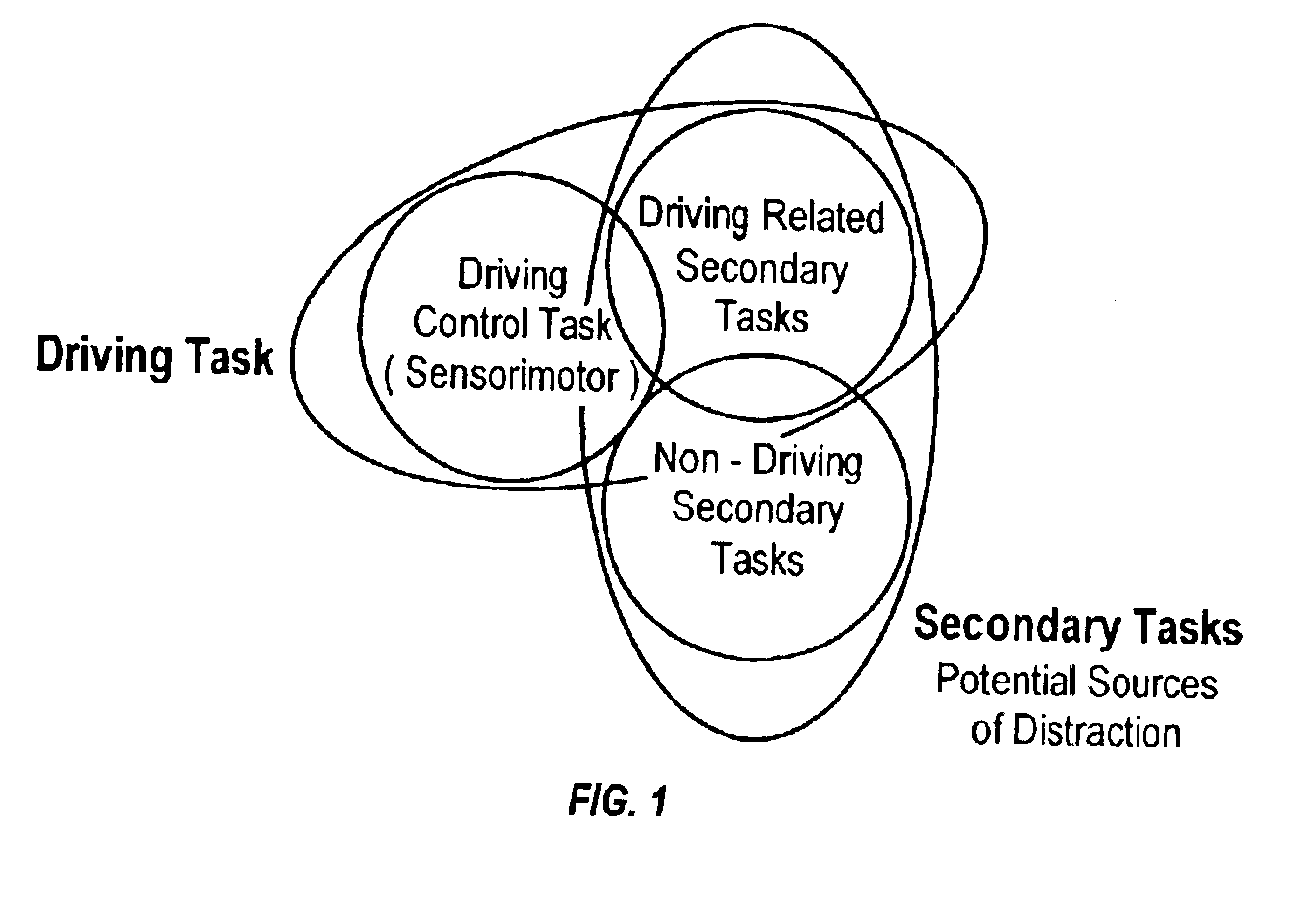

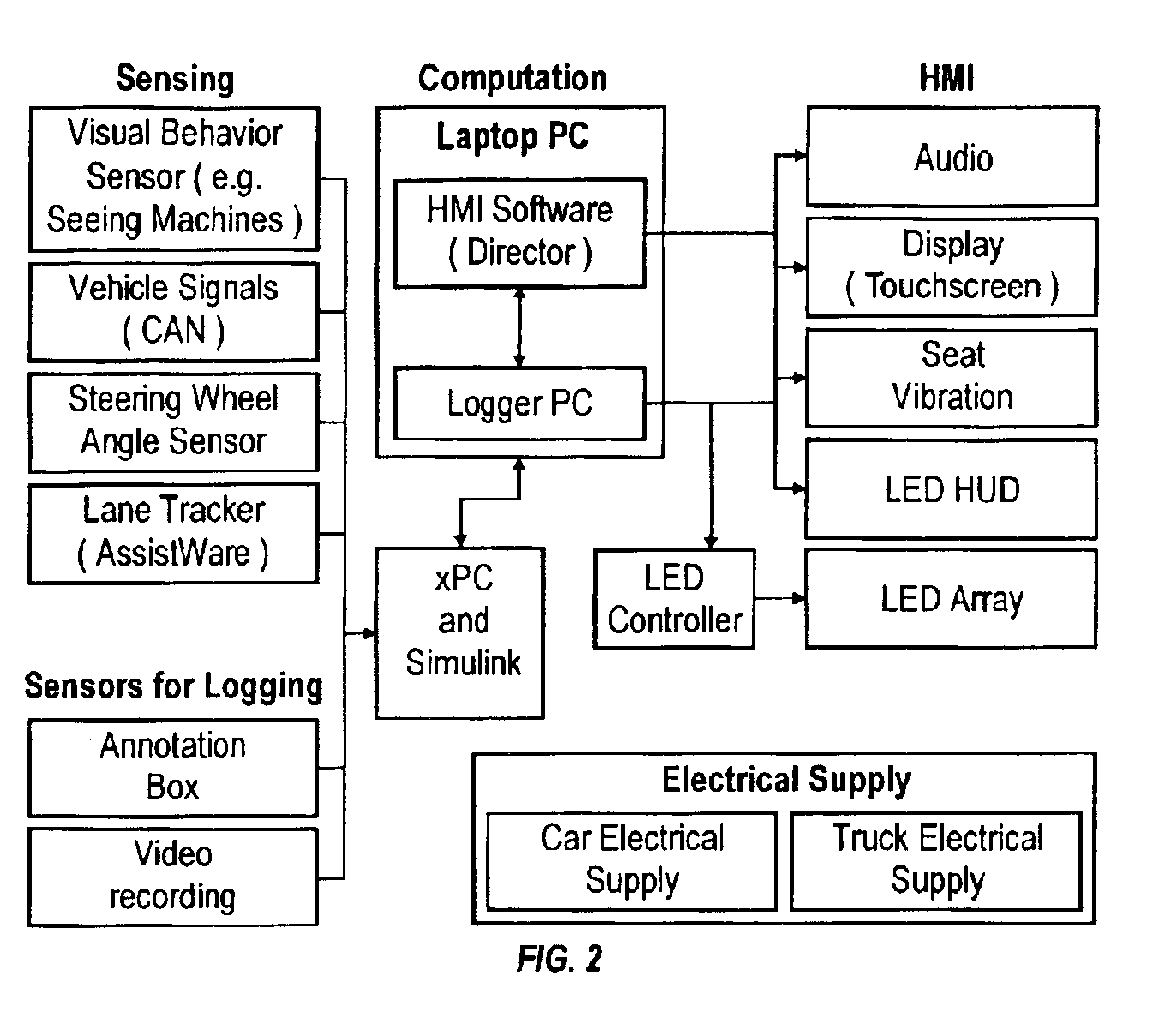

System and method for monitoring and managing driver attention loads

Inactive | US6974414B2 | Improve security; Increase workload; Character and pattern recognition; Diagnostic recording/measuring; Head movements; Driver/operator

System and method for monitoring the physiological behavior of a driver that includes measuring a physiological variable of a driver, assessing a driver's behavioral parameter on the basis of at least said measured physiological variable, and informing the driver of the assessed driver's behavioral parameter. The measurement of the physiological variable can include measuring a driver's eye movement, eye-gaze direction, eye-closure amount, blinking movement, head movement, head position, head orientation, movable facial features, and facial temperature image.

Owner: VOLVO TECH

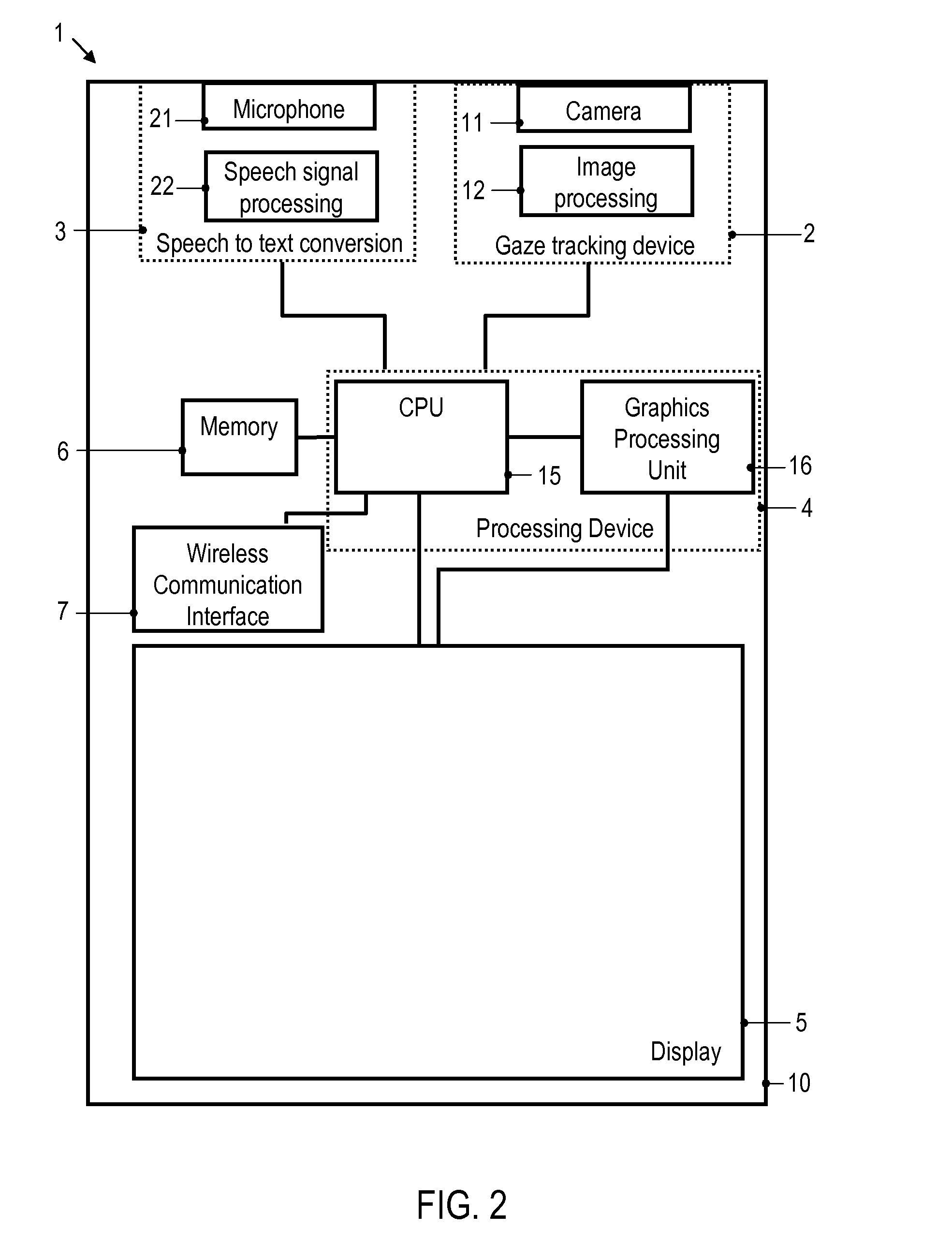

Portable Electronic Equipment and Method of Operating a User Interface

Inactive | US20150364140A1 | Easy to edit; Input/output for user-computer interaction; Natural language data processing; Text editing; Display device

A portable electronic equipment comprises a speech to text conversion module configured to generate a text by performing a speech to text conversion. A gaze tracking device is configured to track an eye gaze direction of a user on a display on which the text is displayed. The portable electronic equipment is configured to selectively activate a text editing function based on the tracked eye gaze direction.

Owner: SONY MOBILE COMM INC
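The abstract does not say how the tracked gaze selectively activates editing; one plausible trigger, assumed here, is dwell time: editing starts on the word the user has looked at continuously for some threshold. The 0.8 s threshold, box format, and function name are hypothetical.

```python
def dwell_activation(samples, word_boxes, dwell_s=0.8):
    """Return the index of the first word gazed at continuously for dwell_s seconds.

    samples: time-ordered (t_seconds, x, y) gaze points on the display.
    word_boxes: list of (x0, y0, x1, y1) boxes of the displayed words.
    """
    current, start = None, None
    for t, x, y in samples:
        hit = next((i for i, (x0, y0, x1, y1) in enumerate(word_boxes)
                    if x0 <= x <= x1 and y0 <= y <= y1), None)
        if hit != current:  # gaze moved to another word (or off the text): restart timer
            current, start = hit, t
        if current is not None and t - start >= dwell_s:
            return current
    return None
```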

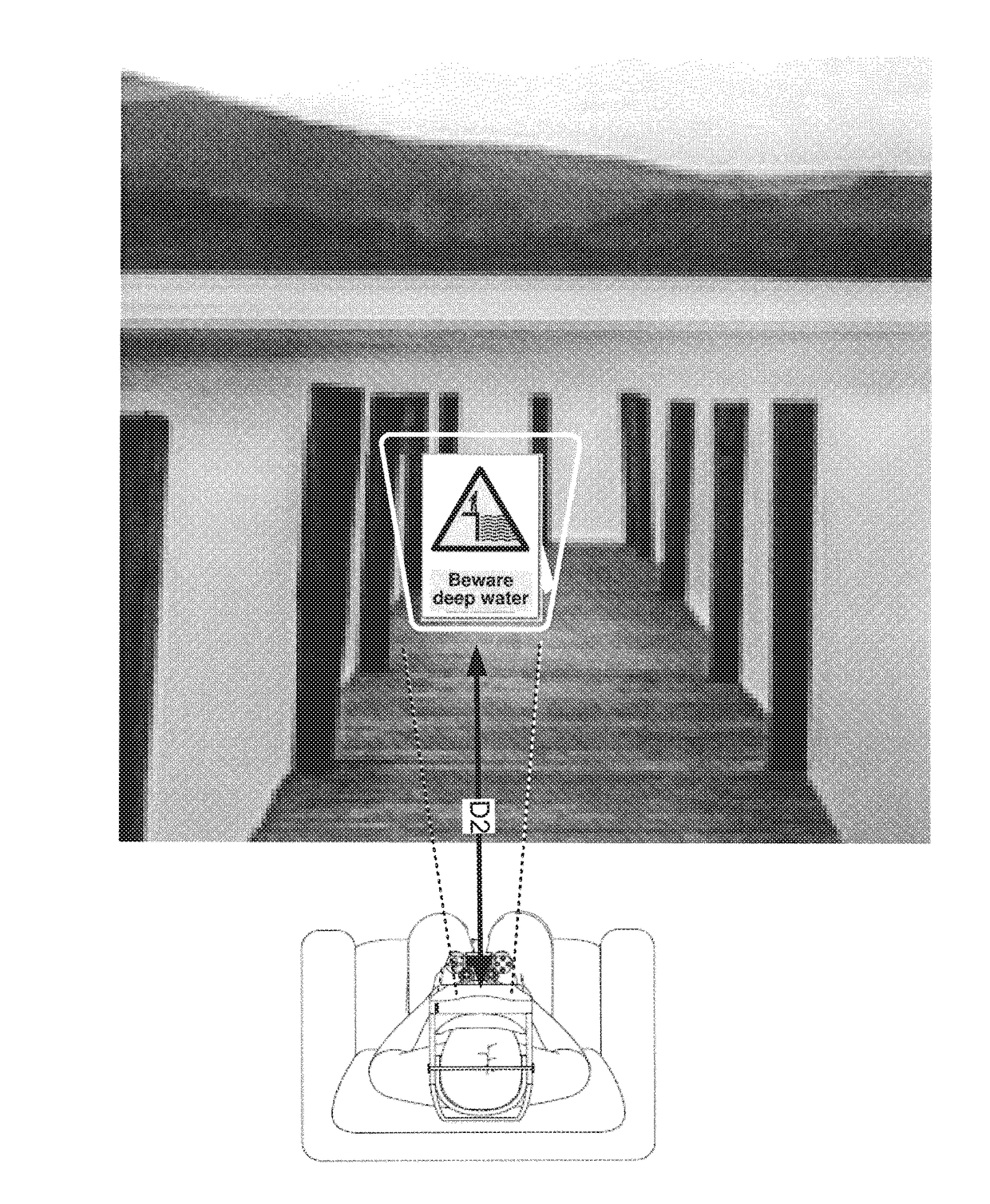

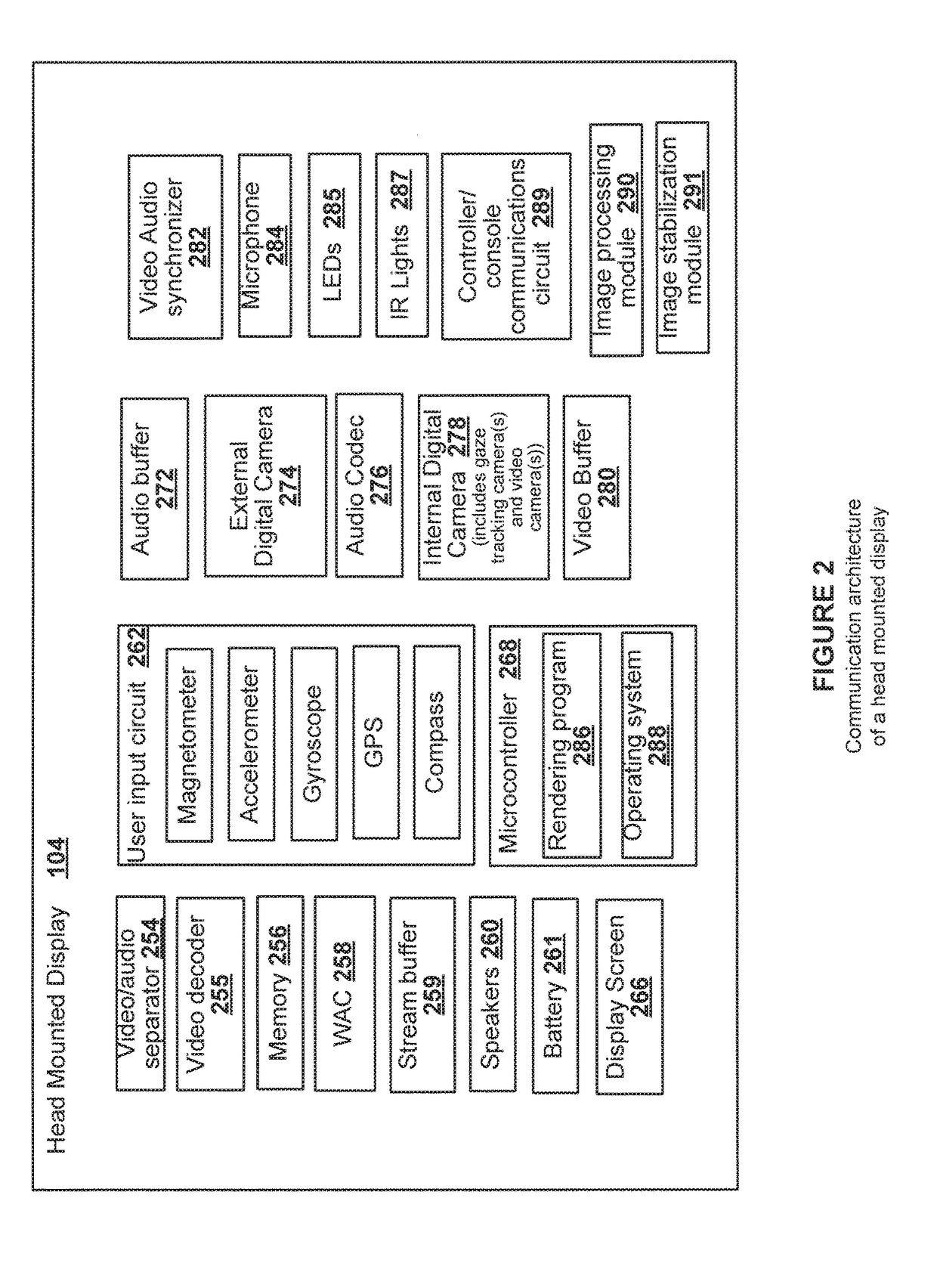

HMD Transitions for Focusing on Specific Content in Virtual-Reality Environments

Active | US20170358141A1 | Improve viewing effect; Satisfying immersive experience; Input/output for user-computer interaction; Television system details; Camera lens; Display device

Methods and systems for presenting an object onto a screen of a head-mounted display (HMD) include receiving an image of a real-world environment in proximity of a user wearing the HMD. The image is received from one or more forward-facing cameras of the HMD and processed for rendering on a screen of the HMD by a processor within the HMD. A gaze direction of the user wearing the HMD is detected using one or more gaze-detecting cameras of the HMD that are directed toward one or each eye of the user. Images captured by the forward-facing cameras are analyzed to identify an object captured in the real-world environment that is in line with the gaze direction of the user, wherein the image of the object is rendered at a first virtual distance that causes the object to appear out of focus when presented to the user. A signal is generated to adjust a zoom factor for the lens of the one or more forward-facing cameras so as to cause the object to be brought into focus. The adjustment of the zoom factor causes the image of the object to be presented on the screen of the HMD at a second virtual distance that allows the object to be discernible by the user.

Owner: SONY COMPUTER ENTERTAINMENT INC
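Two pieces of this pipeline can be sketched in isolation: choosing the detected object most nearly in line with the gaze, and the zoom needed to move its image from one virtual distance to another. The object positions, the 3° tolerance, and the small-angle magnification rule (apparent size roughly proportional to 1/distance) are assumptions of this sketch, not details from the patent.

```python
import math


def object_in_line(gaze_dir, objects, tolerance_deg=3.0):
    """Name of the object whose direction is closest to the gaze, or None.

    objects: dict name -> 3D position relative to the HMD, assumed to come from
    analysing the forward-camera images.
    """
    def angle(v):
        dot = sum(a * b for a, b in zip(gaze_dir, v))
        cos = dot / (math.hypot(*gaze_dir) * math.hypot(*v))
        return math.degrees(math.acos(max(-1.0, min(1.0, cos))))

    best = min(objects, key=lambda name: angle(objects[name]), default=None)
    if best is None or angle(objects[best]) > tolerance_deg:
        return None
    return best


def zoom_factor(first_distance, second_distance):
    """Magnification that makes an object at first_distance look as if at second_distance."""
    return first_distance / second_distance
```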

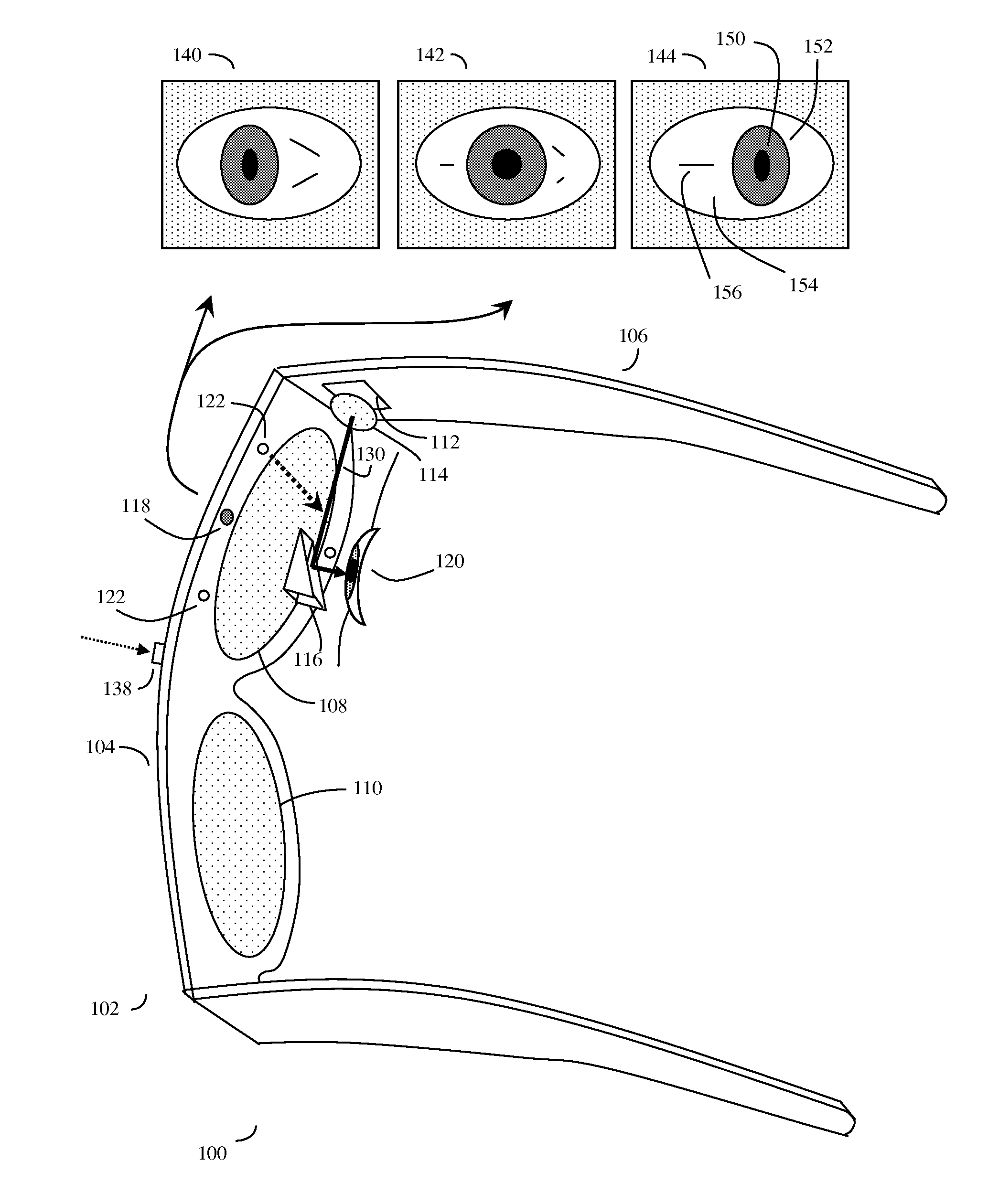

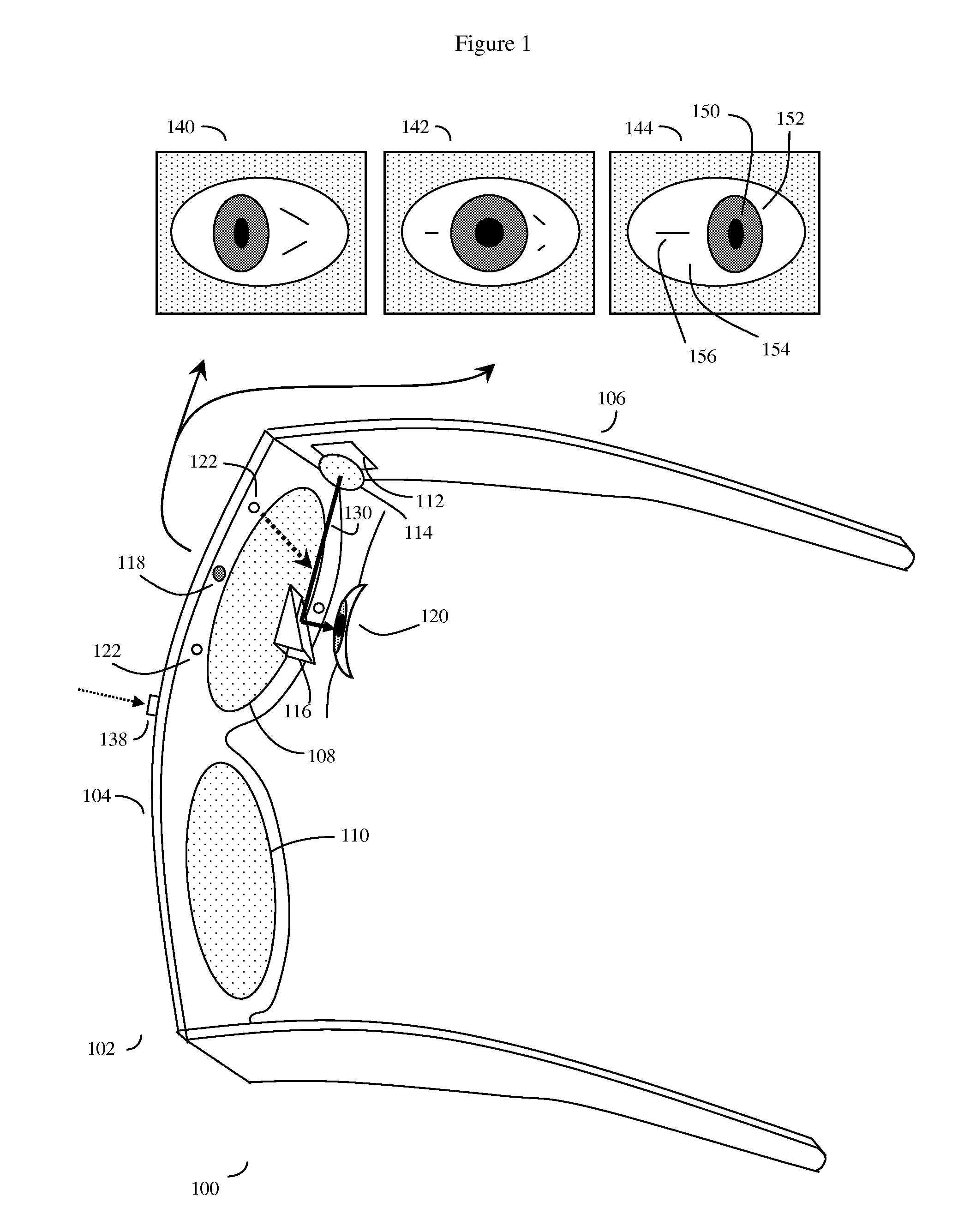

Eye gaze user interface and method

Active | US20120019662A1 | Cathode-ray tube indicators; Closed circuit television systems; Gaze directions; Visual perception

A software-controlled user interface and method for an eye gaze controlled device, designed to accommodate the tradeoff between angular accuracy and time averaging in eye gaze direction sensors. The method can scale between displaying a small and a large number of different eye gaze target symbols at any given time, yet still transmit a large array of different symbols to outside devices with minimal user training. At least part of the method may be implemented by way of a virtual window onto the surface of a virtual cylinder, with eye gaze sensitive symbols that can be rotated by eye gaze, thus bringing various groups of symbols into view, and then selected by continual gazing. Specific examples of use of this interface and method on an eyeglasses-like, head-mountable, vision-controlled device are disclosed, along with various operation examples including sending and receiving text messages, control of robotic devices, and control of remote vehicles.

Owner: SNAP INC
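The virtual-cylinder interface can be sketched as a circular list of symbols viewed through a fixed-size window: gazing at an edge zone rotates the cylinder, and continual gazing at one slot for several frames selects its symbol (a simple form of time averaging). The class name, zone encoding, window size, and dwell length are all assumptions.

```python
class SymbolCylinder:
    """Symbols on a virtual cylinder seen through a window of `visible` slots."""

    def __init__(self, symbols, visible=4, dwell_frames=10):
        self.symbols = list(symbols)
        self.visible = visible
        self.dwell_frames = dwell_frames
        self.offset = 0
        self._slot, self._count = None, 0

    def window(self):
        """Symbols currently in view, wrapping around the cylinder."""
        n = len(self.symbols)
        return [self.symbols[(self.offset + i) % n] for i in range(self.visible)]

    def update(self, zone):
        """Process one gaze frame.

        zone: 'left', 'right', a slot index 0..visible-1, or None.
        Returns the selected symbol, if any.
        """
        if zone in ("left", "right"):
            step = -1 if zone == "left" else 1
            self.offset = (self.offset + step) % len(self.symbols)
            self._slot, self._count = None, 0
            return None
        if zone != self._slot:  # gaze moved: restart the dwell count
            self._slot, self._count = zone, 0
        if zone is None:
            return None
        self._count += 1
        if self._count >= self.dwell_frames:
            self._count = 0
            return self.window()[zone]
        return None
```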

Method and installation for detecting and following an eye and the gaze direction thereof

Active | US20060238707A1 | Effective installation; Acquiring/recognising eyes; Eye diagnostics; Display device; Pupil

When detecting the position and gaze direction of eyes, a photo sensor (1), light sources (2, 3) placed around a display (1), and a calculation and control unit (6) are used. One of the light sources is placed around the sensor and includes inner and outer elements (3′; 3″). When only the inner elements are illuminated, a strong bright-eye effect is obtained in the captured image, which makes the pupils simple to detect and thereby allows the gaze direction to be determined reliably. When only the outer elements and the outer light sources (2) are illuminated, the distance of the eye from the photo sensor is determined. Once the pupils have been located in an image, only the areas around the pupils, where the eyes are imaged, are evaluated in subsequent captured images. Which eye is the left eye and which is the right eye can be determined by following the images of the eyes and evaluating their positions in successively captured images.

Owner: TOBII TECH AB

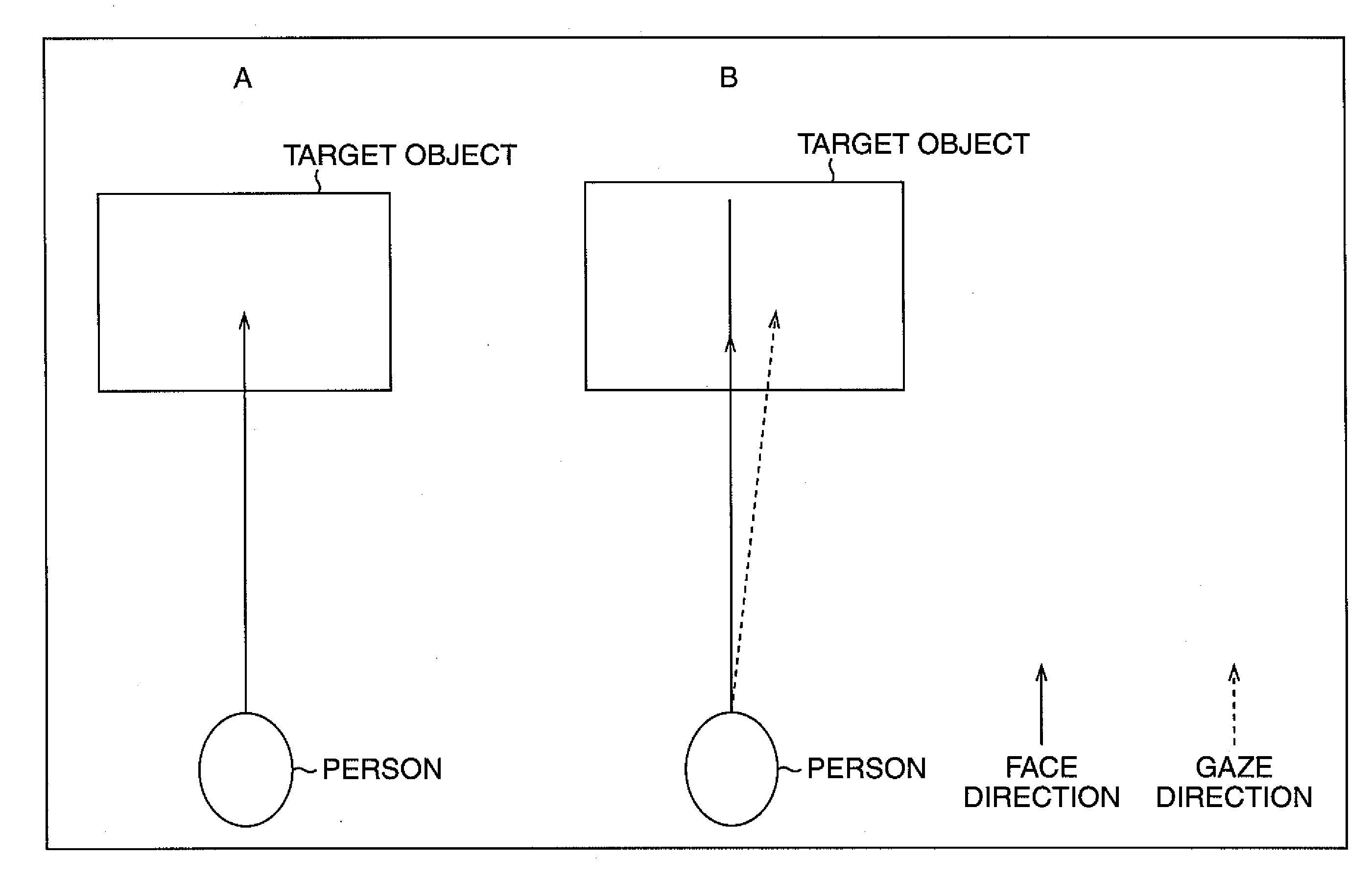

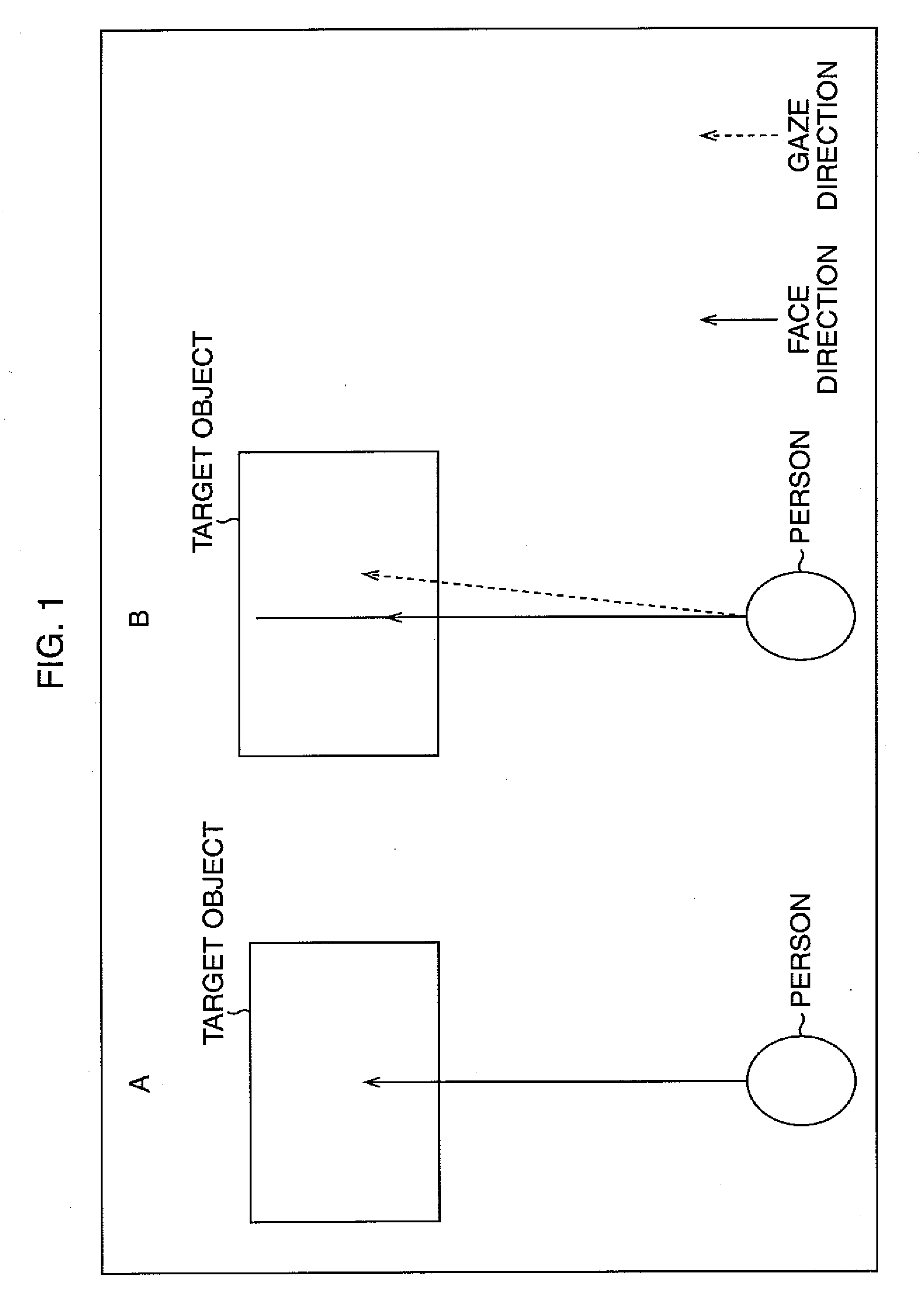

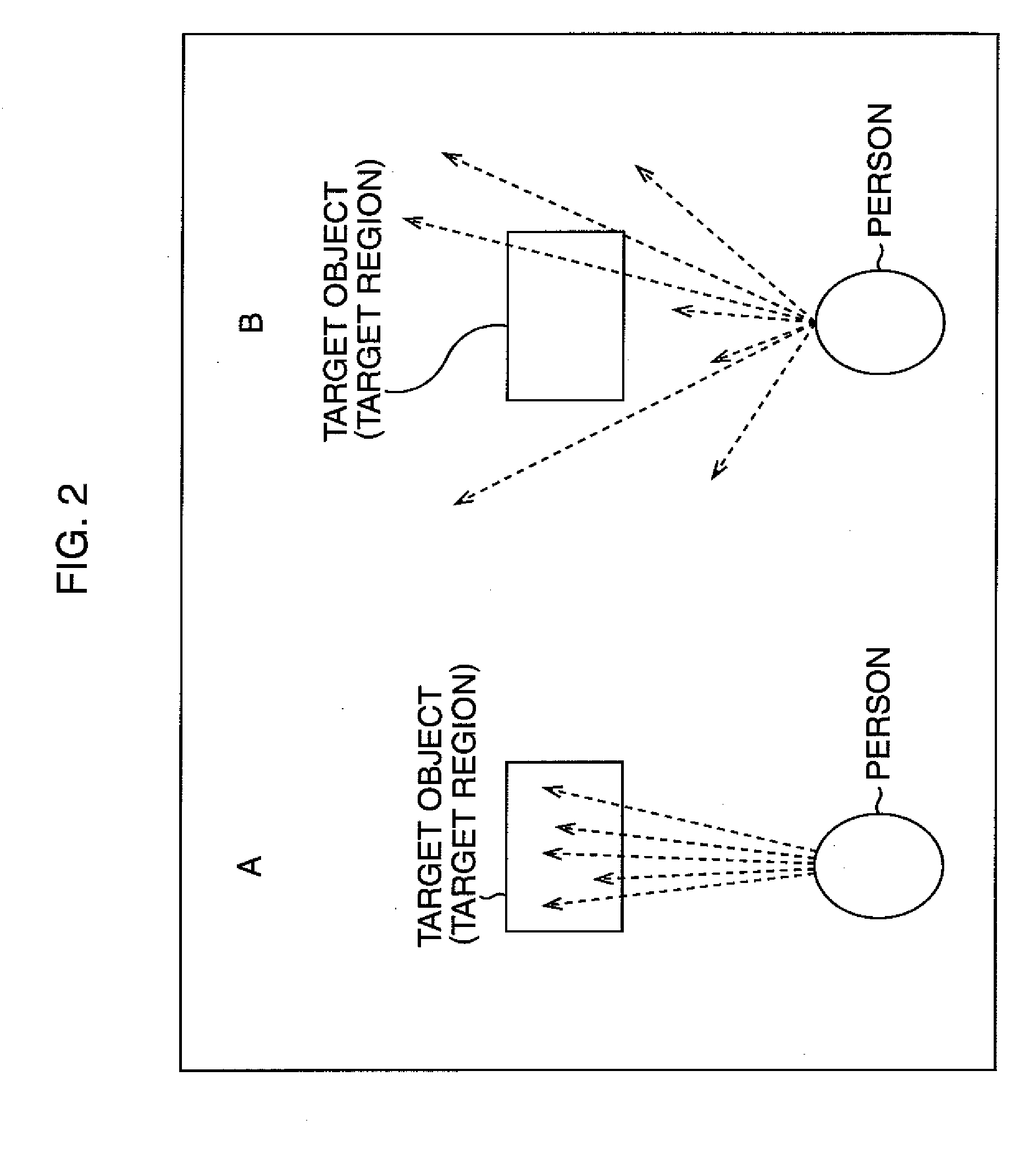

Monitoring device, monitoring method, control device, control method, and program

Active | US20090022368A1 | Process be perform; Scene recognition; Input/output processes for data processing; Gaze directions; Face orientation

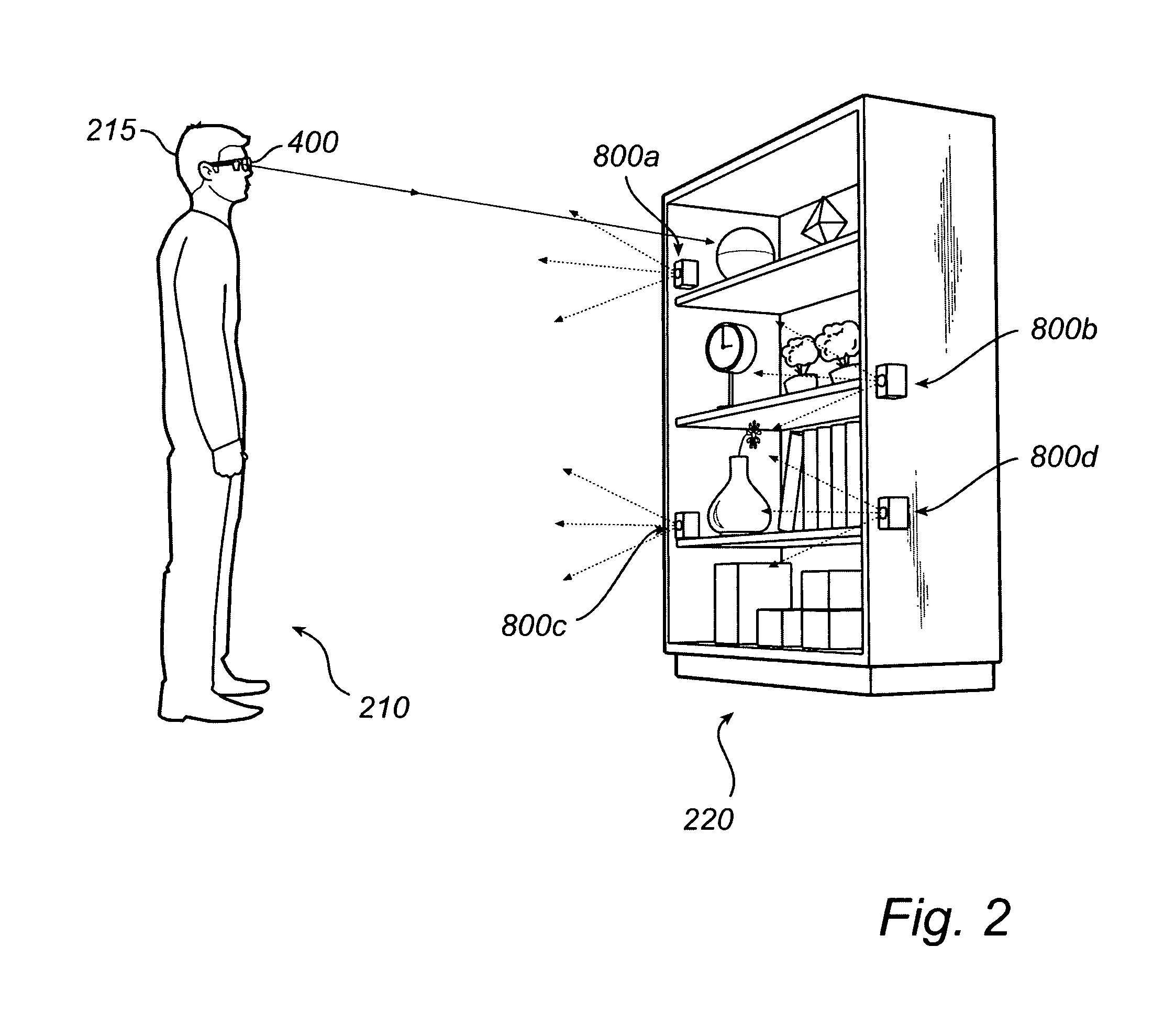

The present invention relates to a monitoring device, monitoring method, control device, control method, and program that use information on a face direction or gaze direction of a person to cause a device to perform processing in accordance with a movement or status of the person. A target detector 251 detects a target object. A face direction / gaze direction detector 252 detects a face direction and gaze direction of a person, determines a movement or status of the person on the basis of the relationship between the detected target object and the face direction or gaze direction of the person, and notifies face direction / gaze direction information using portions 253-1 to 253-3 of a result of the determination. The face direction / gaze direction information using portions 253-1 to 253-3 cause the device to execute predetermined processing on the basis of the result of the determination. The present invention can be applied to, for example, an on-vehicle system.

Owner: OMRON CORP

System and methods for controlling automatic scrolling of information on a display or screen

Inactive | US6603491B2 | Input/output for user-computer interaction; Cathode-ray tube indicators; Display device; Biological activation

A system for controlling the automatic scrolling of information on a computer display or screen is disclosed. The system includes a gimbaled sensor system for following the position of the user's head and user's eye, and a scroll-activating interface algorithm that finds screen gaze coordinates, so that the scrolling function is performed based upon the screen gaze coordinates of the user's eye relative to a certain activation area(s) on the display or screen. A method of controlling automatic scrolling of information on a display or screen by a user is also disclosed. The method generally includes the acts of finding the user's screen gaze coordinate on the display or screen, determining whether the screen gaze coordinate is within at least one activated control region, and activating scrolling to provide a desired display of information when the gaze direction is within at least one activated control region.

Owner: LEMELSON JEROME H +1

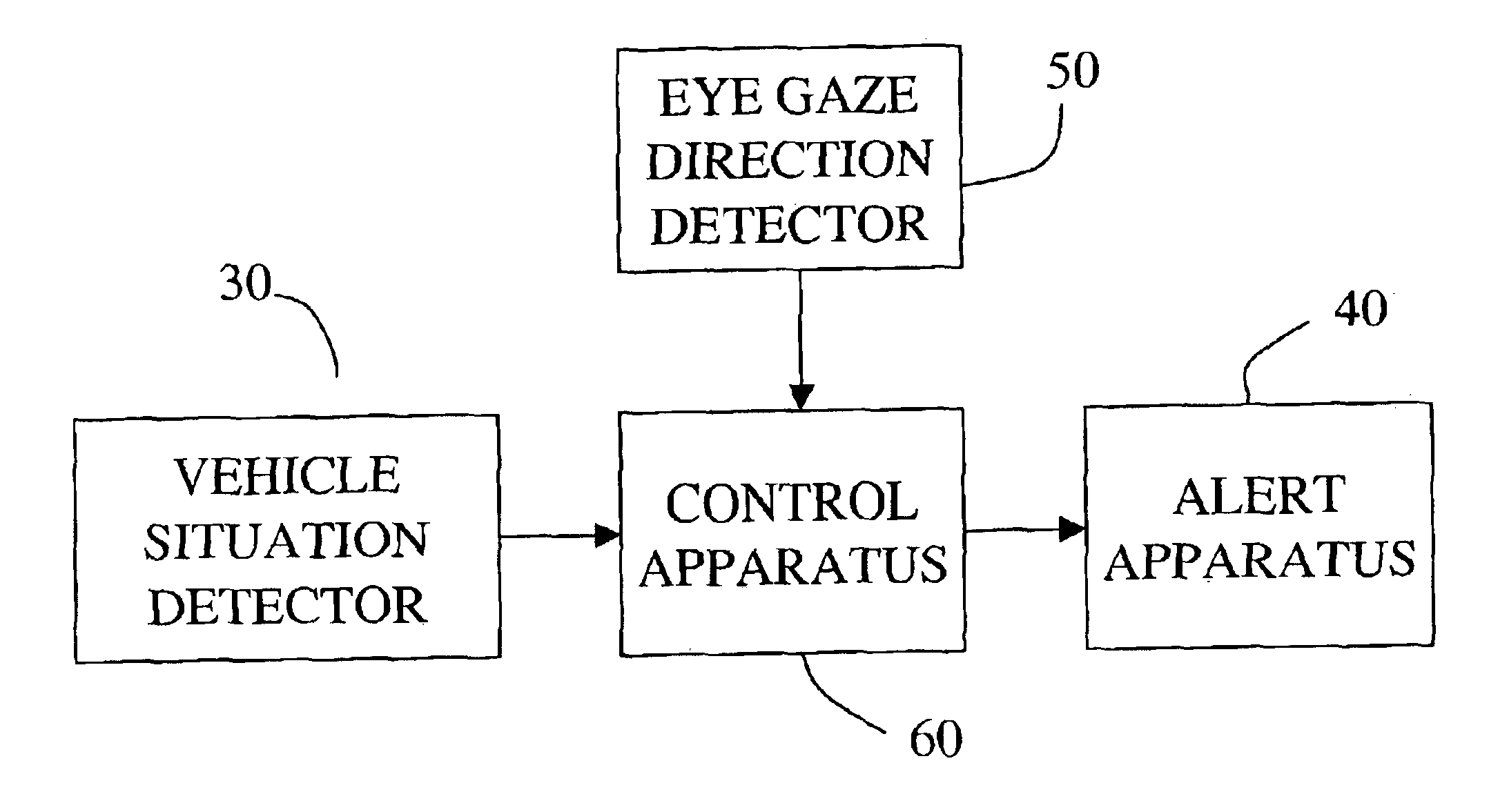

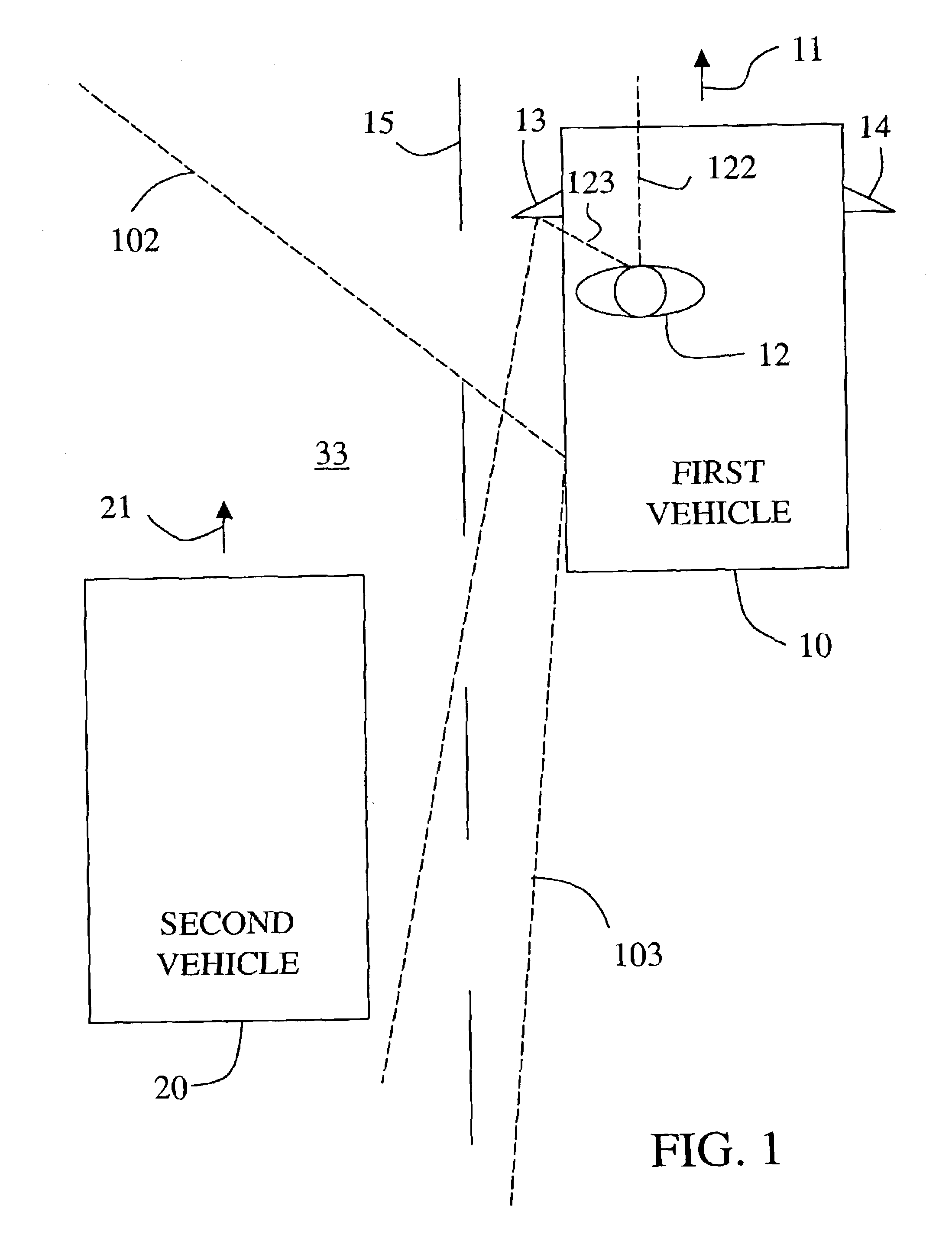

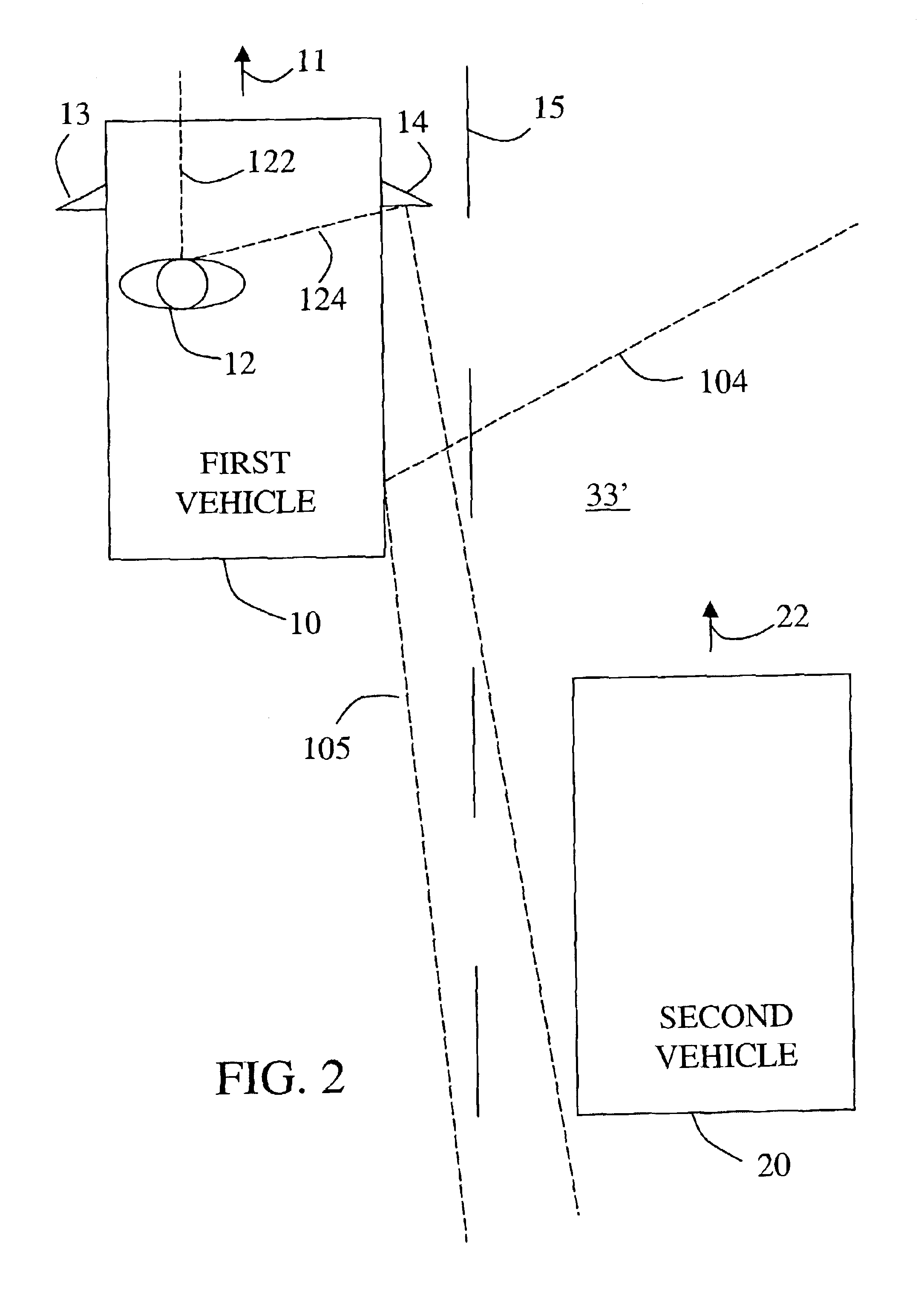

Vehicle situation alert system with eye gaze controlled alert signal generation

Inactive | US6859144B2 | Quick response; Reduce the possibility; Anti-collision systems; Character and pattern recognition; High probability; Data storing

A system responds to detection of a vehicle situation by comparing a sensed eye gaze direction of the vehicle operator with data stored in memory. The stored data defines a first predetermined vehicle operator eye gaze direction indicating a high probability of operator desire that an alert signal be given and a second predetermined vehicle operator eye gaze direction indicating a low probability of operator desire that an alert signal be given. On the basis of the comparison, a first or second alert action is selected and an alert apparatus controlled accordingly. For example, the alternative alert actions may include (1) generating an alert signal versus not generating the alert signal, (2) generating an alert signal in a first manner versus generating an alert signal in a second manner, or (3) selecting a first value versus selecting a second value for a parameter in a mathematical control algorithm to determine when or whether to generate an alert signal.

Owner: APTIV TECH LTD
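A minimal sketch of the comparison step, illustrating option (3) in the abstract: the sensed gaze is matched against the two stored directions, and the result selects a parameter value for a downstream alert algorithm. The example directions, the 10° match tolerance, and the time-to-collision thresholds are hypothetical.

```python
import math


def angle_deg(a, b):
    """Angle in degrees between two 3D direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    cos = dot / (math.hypot(*a) * math.hypot(*b))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))


def select_alert_action(sensed_gaze, stored_high, stored_low, match_deg=10.0):
    """Pick a time-to-collision threshold (s) for a downstream alert algorithm.

    stored_high: stored gaze direction indicating the operator probably wants an alert.
    stored_low: stored gaze direction indicating an alert is probably unwanted.
    """
    if angle_deg(sensed_gaze, stored_low) <= match_deg:
        return 1.5  # low desire for an alert: warn later
    if angle_deg(sensed_gaze, stored_high) <= match_deg:
        return 3.0  # high desire for an alert: warn earlier
    return 2.5      # no stored direction matched: assumed default
```

For a forward-collision scenario one might store "looking ahead" as the low-desire direction and "looking at a side mirror" as the high-desire one; that pairing is only an example.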

System and methods for controlling automatic scrolling of information on a display screen

Inactive | US6421064B1 | Input/output for user-computer interaction; Cathode-ray tube indicators; Computerized system; Biological activation

A system for controlling the automatic scrolling of information includes a screen, a computer system, a gimbaled sensor system for following and tracking the position and movement of the user's head and user's eye, and a scroll-activating interface algorithm using a neural network to find screen gaze coordinates, implemented by the computer system so that the scrolling function is performed based upon the screen gaze coordinates of the user's eye relative to a certain activation area on the screen. A method of controlling scrolling includes the acts of finding a screen gaze coordinate on the screen, determining whether the screen gaze coordinate is within at least one activated control region, and activating scrolling to provide a display of information when the gaze direction is within at least one activated control region.

Owner: LEMELSON JEROME H +1

Detection of gaze point assisted by optical reference signal

Active | US20110279666A1 | Extension of time; Reduce energy consumption; Acquiring/recognising eyes; Color television details; Uses eyeglasses; Eyewear

A gaze-point detection system includes at least one infrared (IR) signal source to be placed in a test scene as a reference point, a pair of eye glasses to be worn by a person, and a data processing and storage unit for calculating a gaze point of the person wearing the pair of eye glasses. The pair of eye glasses includes an image sensor, an eye-tracking unit and a camera. The image sensor detects IR signals from the at least one IR signal source and generates an IR signal source tracking signal. The eye-tracking unit determines the gaze direction of the person and generates an eye-tracking signal, and the camera acquires a test scene picture. The data processing and storage unit communicates with the pair of eye glasses and calculates the gaze point relative to the test scene picture.

Owner: TOBII TECH AB
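Once the eye-tracking signal gives a gaze direction, a gaze point on the test scene picture can be obtained by projecting that direction through a pinhole camera model. The sketch assumes the direction is already expressed in the scene camera's frame (x right, y down, z forward) and ignores the small eye-to-camera offset; in the described system the IR reference signals would help establish that relationship, which is not modelled here. The intrinsics `fx, fy, cx, cy` are assumed calibration values and the function name is hypothetical.

```python
def gaze_point_in_picture(gaze_dir, fx, fy, cx, cy):
    """Project a gaze direction into the scene picture (pixel coordinates).

    Returns None when the direction points behind the camera.
    """
    x, y, z = gaze_dir
    if z <= 0:
        return None
    # Pinhole projection: normalise by depth, then apply focal lengths and principal point.
    return (fx * x / z + cx, fy * y / z + cy)
```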

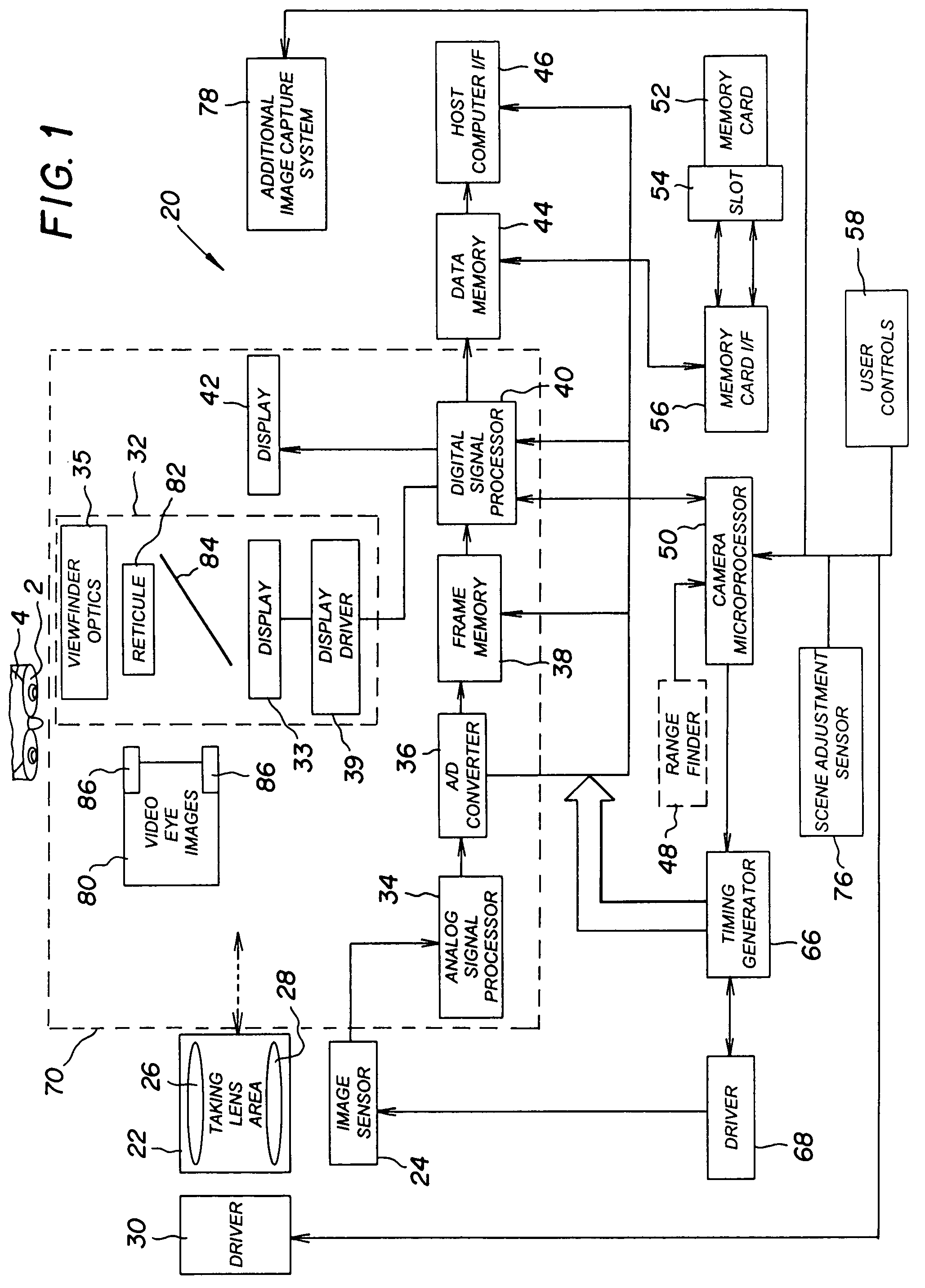

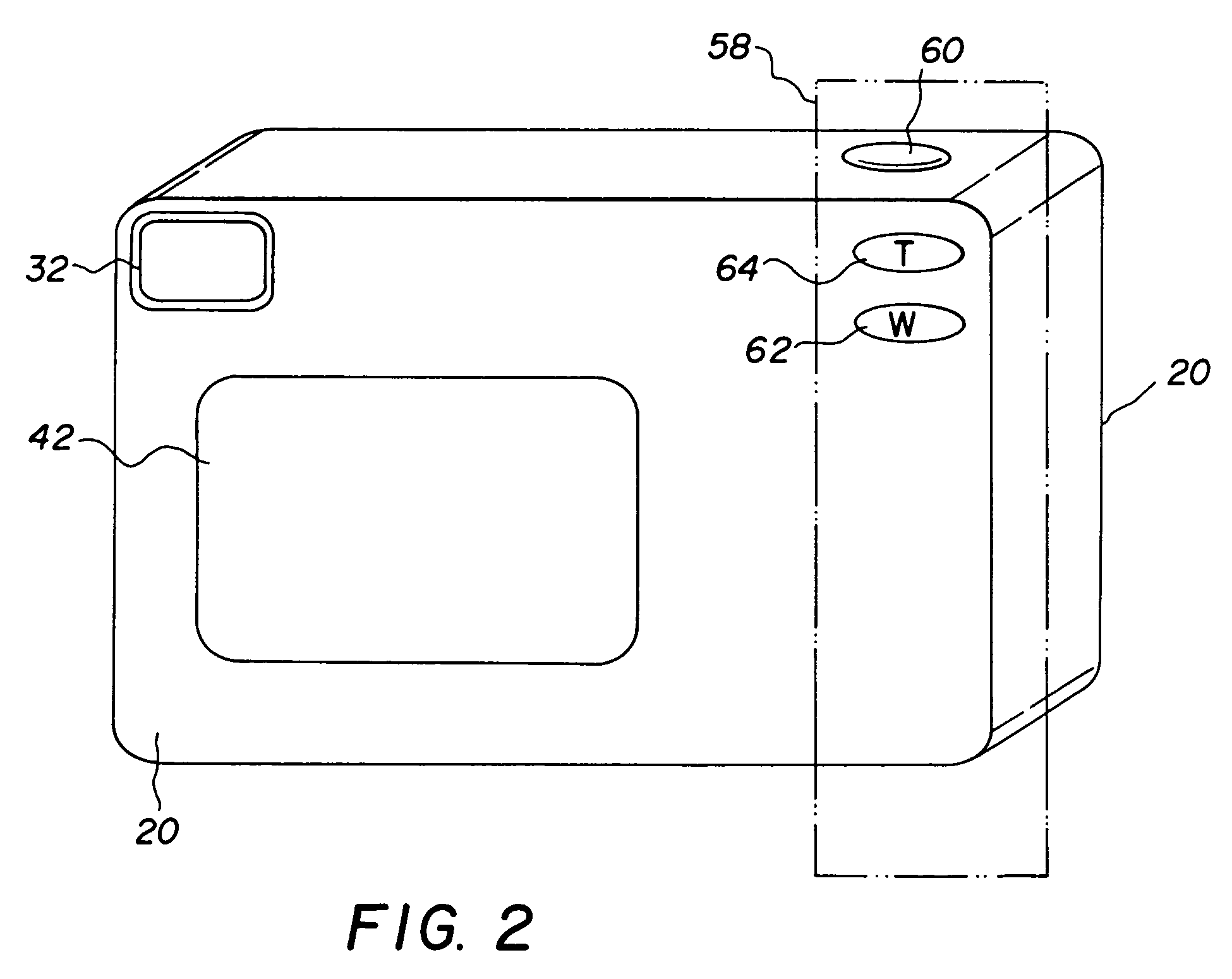

Camera system with eye monitoring

Inactive | US7206022B2 | Efficient and naturalistic method; Improve image searching; Television system details; Character and pattern recognition; Monitoring system; Gaze directions

In one aspect of the invention a camera system is provided having an image capture system adapted to capture an image of a scene during an image capture sequence and an eye monitoring system adapted to determine eye information including a direction of the gaze of an eye of a user of the camera system. A controller is adapted to store the determined eye information including information characterizing eye gaze direction during the image capture sequence and to associate the stored eye information with the scene image.

Owner: MONUMENT PEAK VENTURES LLC

Image capture systems, devices, and methods that autofocus based on eye-tracking

Image capture systems, devices, and methods that automatically focus on objects in the user's field of view based on where the user is looking/gazing are described. The image capture system includes an eye tracker subsystem in communication with an autofocus camera to facilitate effortless and precise focusing of the autofocus camera on objects of interest to the user. The autofocus camera automatically focuses on what the user is looking at based on gaze direction determined by the eye tracker subsystem and one or more focus property(ies) of the object, such as its physical distance or light characteristics such as contrast and/or phase. The image capture system is particularly well-suited for use in a wearable heads-up display to capture focused images of objects in the user's field of view with minimal intervention from the user.

Owner: GOOGLE LLC
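The abstract leaves open how the object's physical distance is obtained. One option, assumed here and not stated in the patent, is binocular vergence: for a target straight ahead, the interpupillary distance IPD and the vergence angle θ between the two gaze vectors give a distance of about (IPD/2)/tan(θ/2), which an autofocus camera could use as its focus distance. The function name is hypothetical.

```python
import math


def fixation_distance(ipd_m, vergence_deg):
    """Distance (m) to a fixation point straight ahead, from symmetric vergence.

    Returns math.inf for parallel gaze (vergence <= 0), i.e. focus at infinity.
    """
    if vergence_deg <= 0:
        return math.inf
    return (ipd_m / 2) / math.tan(math.radians(vergence_deg) / 2)
```

Because θ shrinks quickly with distance, small angular errors matter more for far targets, which is one reason the abstract also mentions contrast- or phase-based focus properties.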

System and methods for controlling automatic scrolling of information on a display or screen

Inactive | US20030020755A1 | Input/output for user-computer interaction; Cathode-ray tube indicators; Display device; Scrolling

Owner: LEMELSON JEROME H +1

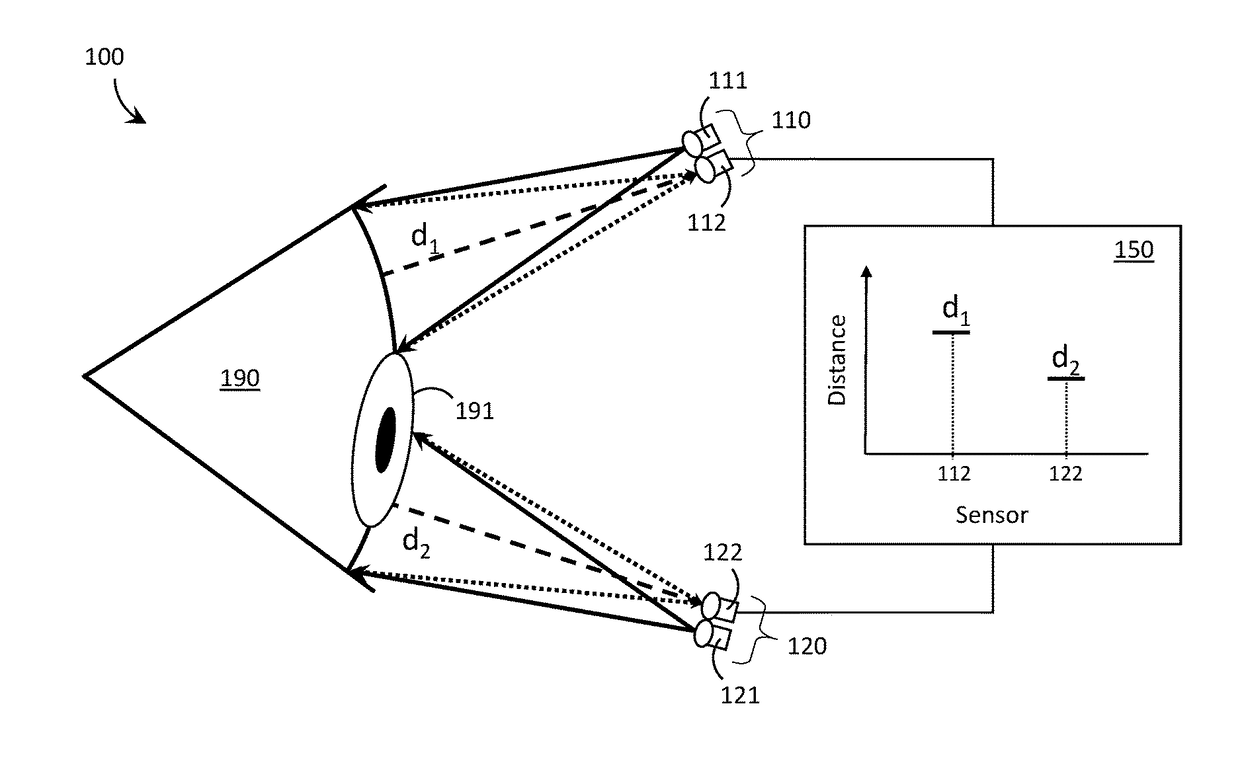

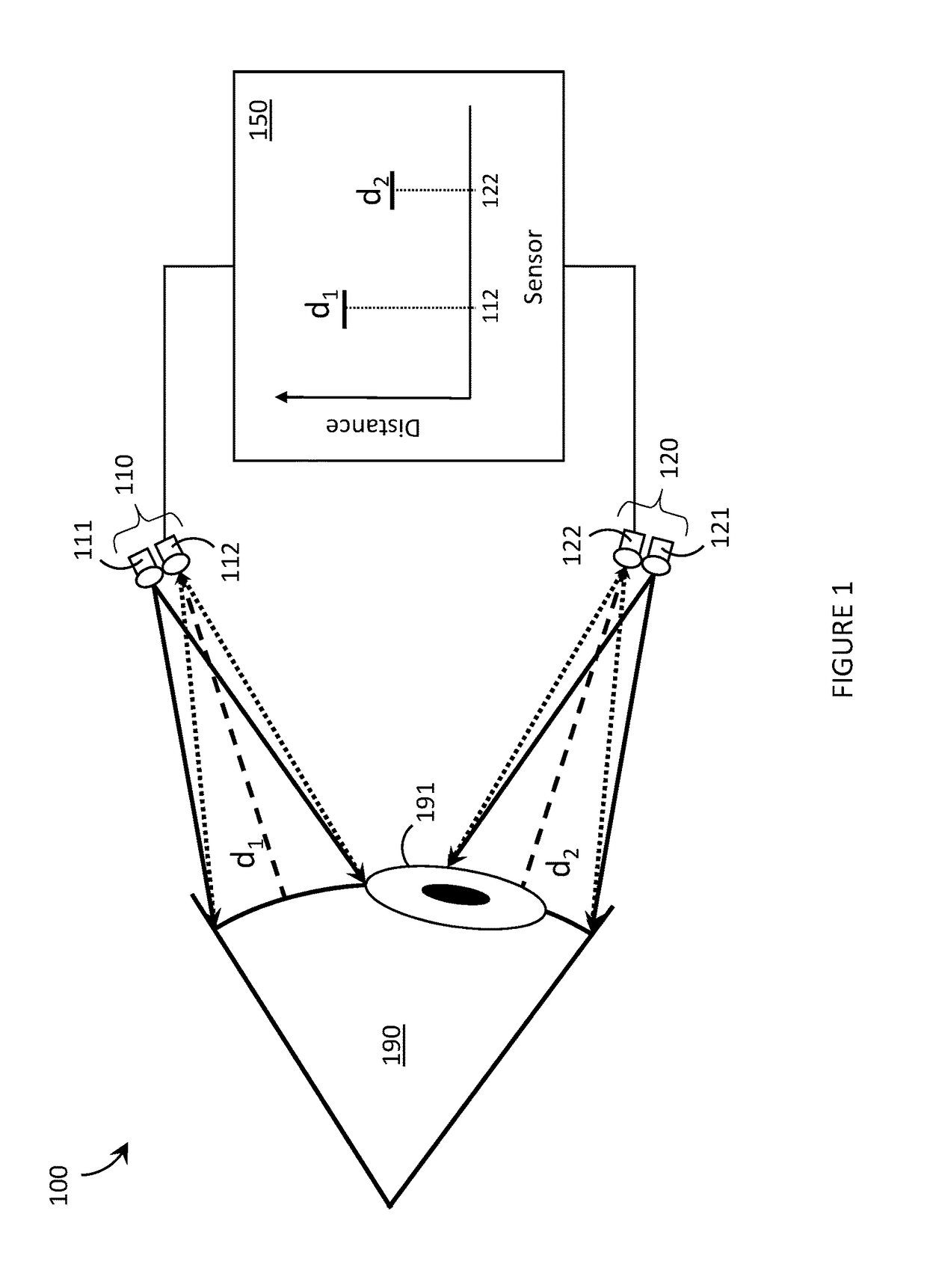

Systems, devices, and methods for proximity-based eye tracking

Active | US20170205876A1 | Input/output for user-computer interaction; Details for portable computers; Head-up display; Proximity sensor

Systems, devices, and methods for proximity-based eye tracking are described. A proximity sensor positioned near the eye monitors the distance to the eye, which varies depending on the position of the corneal bulge. The corneal bulge protrudes outward from the surface of the eye and so, all other things being equal, a static proximity sensor detects a shorter distance to the eye when the cornea is directed towards the proximity sensor and a longer distance to the eye when the cornea is directed away from the proximity sensor. Optical proximity sensors that operate with infrared light are used as a non-limiting example of proximity sensors. Multiple proximity sensors may be used and processed simultaneously in order to provide a more accurate/precise determination of the gaze direction of the user. Implementations in which proximity-based eye trackers are incorporated into wearable heads-up displays are described.

Owner: GOOGLE LLC
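One simple way, assumed here, to turn several proximity readings into a gaze estimate is a calibration table: record the sensor distances while the user looks at known targets, then return the gaze of the nearest recorded reading. It relies on the effect described above, where each sensor reads shorter when the cornea turns toward it. The table values and function name below are illustrative only.

```python
import math


def estimate_gaze(calibration, distances):
    """Gaze (yaw_deg, pitch_deg) of the calibration entry nearest to the current readings.

    calibration: list of (sensor_distances_tuple, (yaw_deg, pitch_deg)) pairs
    recorded while the user looked at known targets.
    distances: current readings, one per proximity sensor, in the same order.
    """
    best = min(calibration, key=lambda entry: math.dist(entry[0], distances))
    return best[1]
```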

Systems and methods for providing feedback by tracking user gaze and gestures

Inactive | CN102749990A | Input/output for user-computer interaction; Graph reading; User input; Gaze directions

User interface technology that provides feedback to the user based on the user's gaze and a secondary user input, such as a hand gesture, is provided. A camera-based tracking system may track the gaze direction of a user to detect which object displayed in the user interface is being viewed. The tracking system also recognizes hand or other body gestures to control the action or motion of that object, using, for example, a separate camera and/or sensor. The user interface is then updated based on the tracked gaze and gesture data to provide the feedback.

Owner: SONY COMPUTER ENTERTAINMENT INC

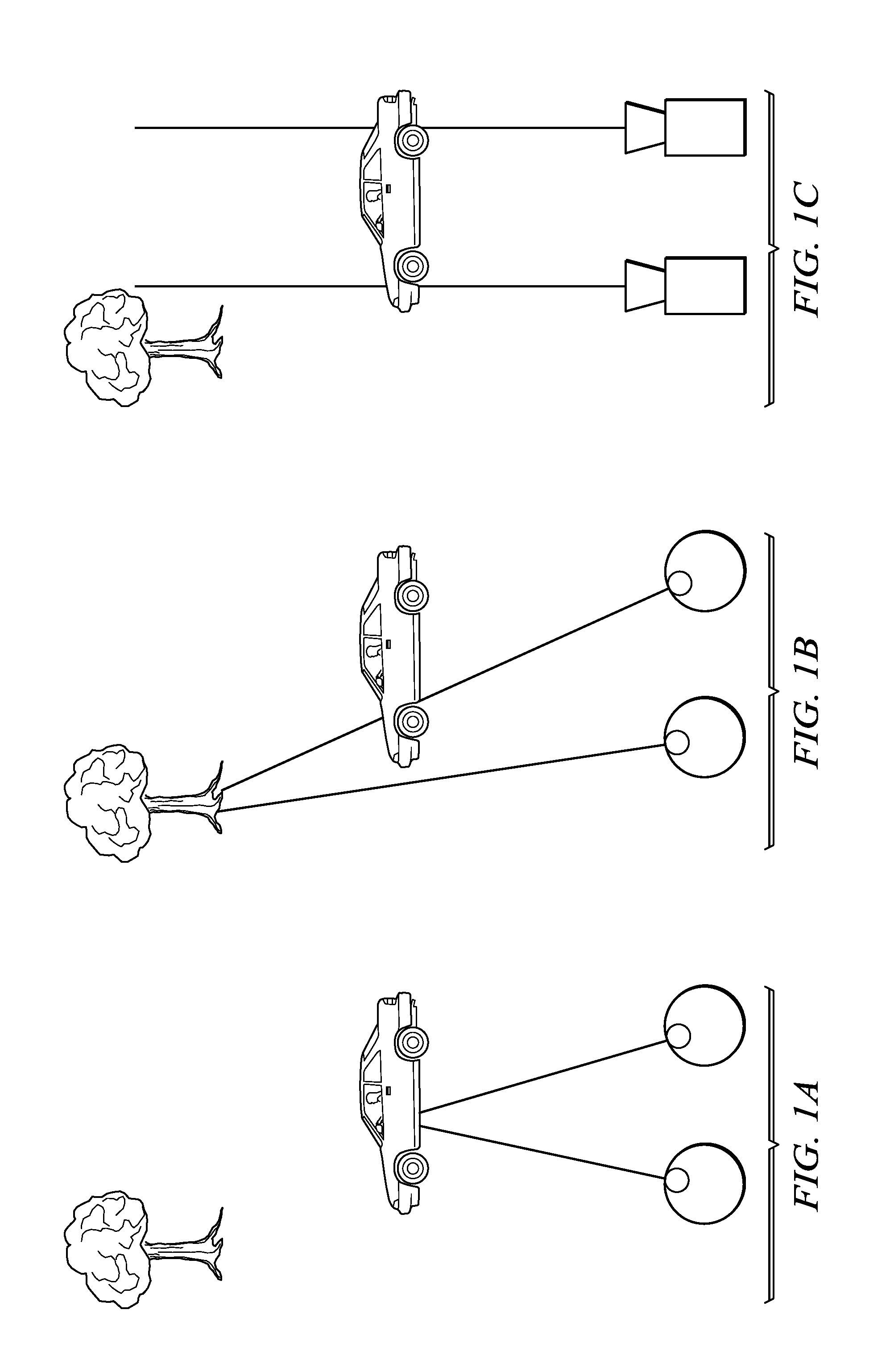

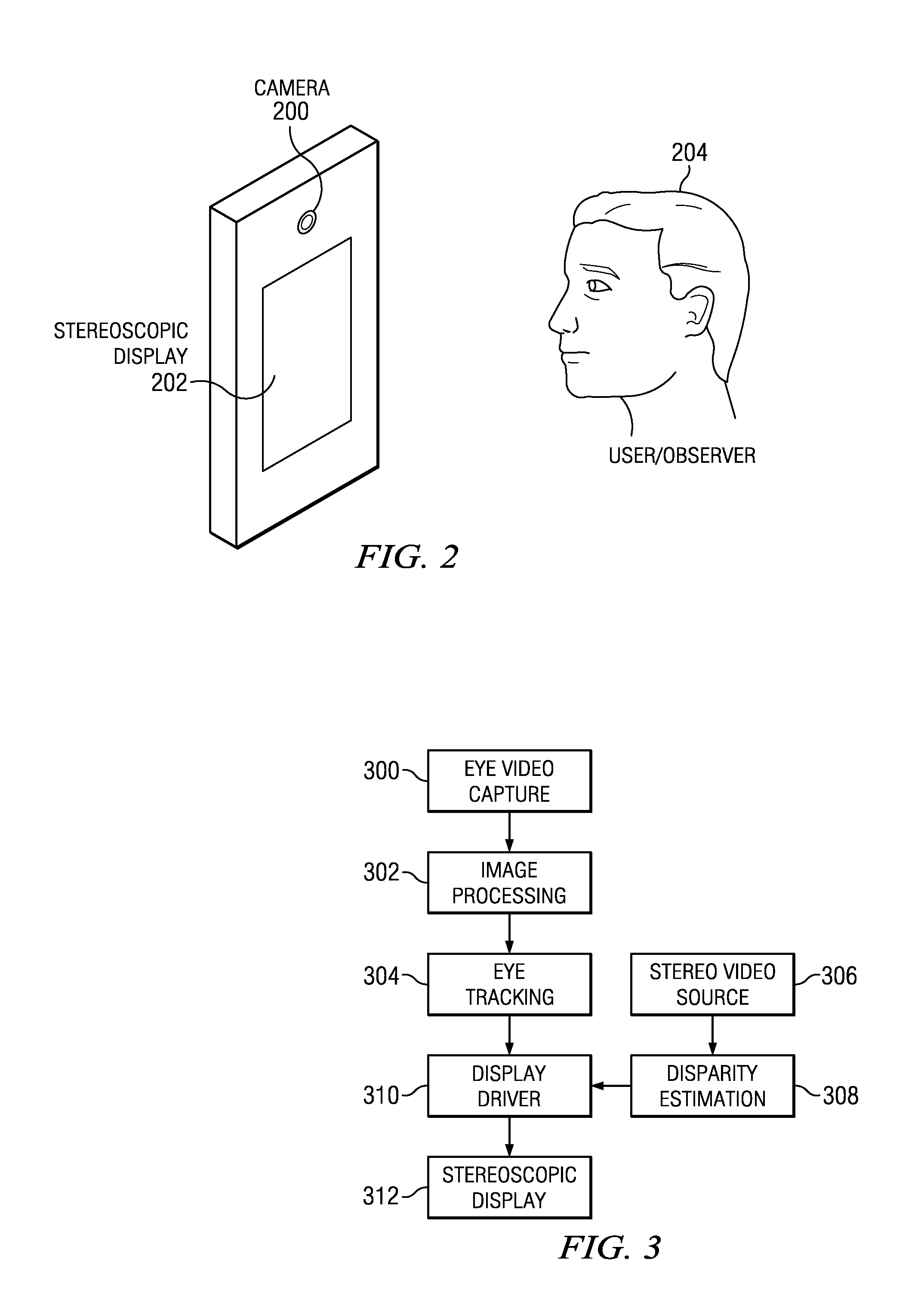

Stereoscopic Viewing Comfort Through Gaze Estimation

A method of improving stereo video viewing comfort is provided that includes capturing a video sequence of eyes of an observer viewing a stereo video sequence on a stereoscopic display, estimating gaze direction of the eyes from the video sequence, and manipulating stereo images in the stereo video sequence based on the estimated gaze direction, whereby viewing comfort of the observer is improved.

Owner: TEXAS INSTR INC
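The abstract does not specify the manipulation. One common approach, assumed here, is to shift the views horizontally so that the content at the estimated gaze point has zero disparity and therefore appears on the screen plane. The disparity convention (right minus left, in pixels) and the function names are assumptions.

```python
def shift_for_gaze(disparity_map, gaze_rc):
    """Horizontal shift (pixels) that zeroes the disparity at the gazed point.

    disparity_map: 2D list of per-pixel disparities (right minus left, pixels).
    gaze_rc: (row, col) of the estimated gaze point in the image.
    """
    r, c = gaze_rc
    return disparity_map[r][c]


def adjusted_disparities(disparity_map, shift):
    """Disparities after shifting the right view by -shift pixels."""
    return [[d - shift for d in row] for row in disparity_map]
```

After the shift, content in front of or behind the fixated object keeps its relative depth, but the fixated object itself no longer demands a vergence different from the screen distance.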

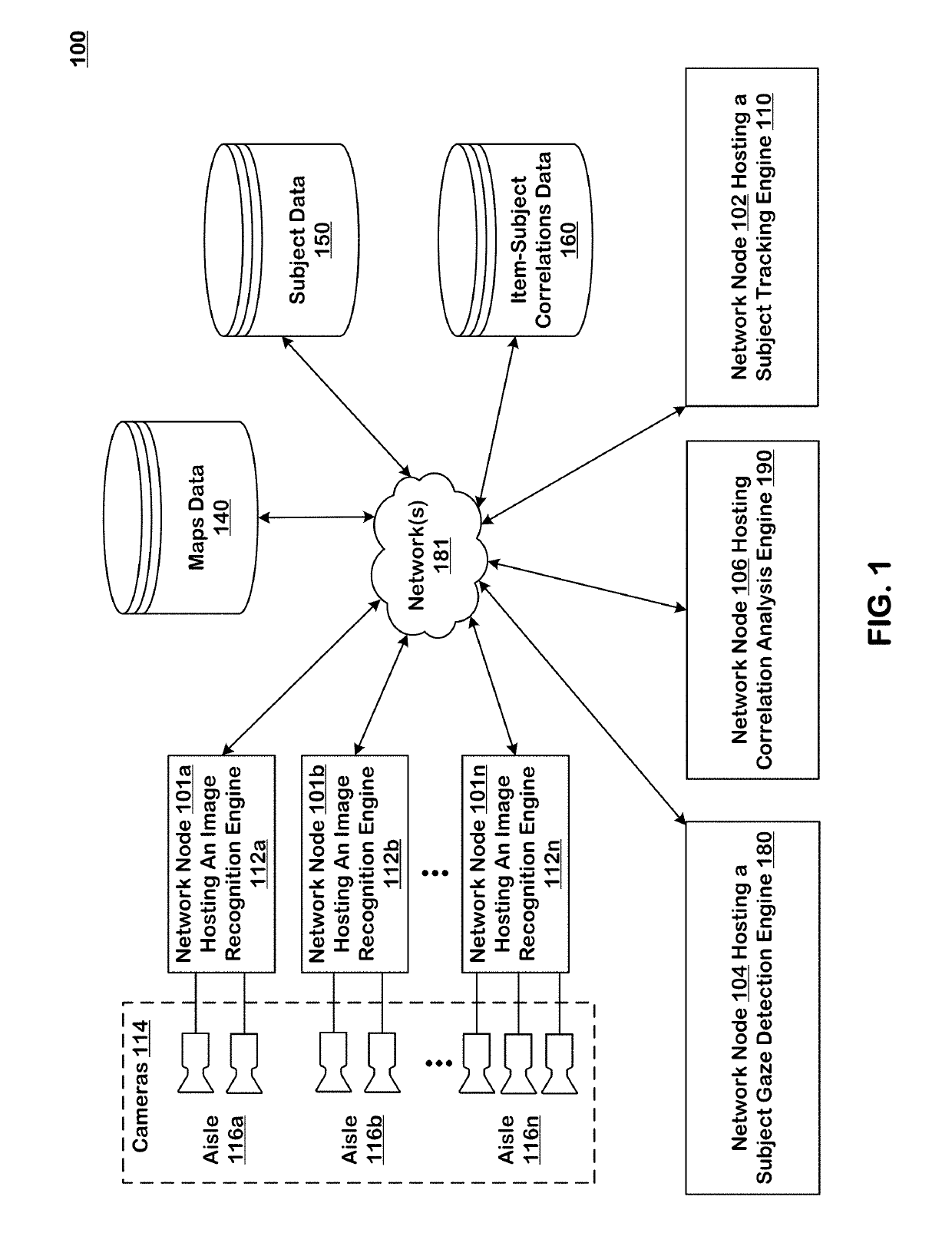

Directional impression analysis using deep learning

Active | US20190244386A1 | Improve reliability; Image enhancement; Image analysis; Gaze directions; System identification

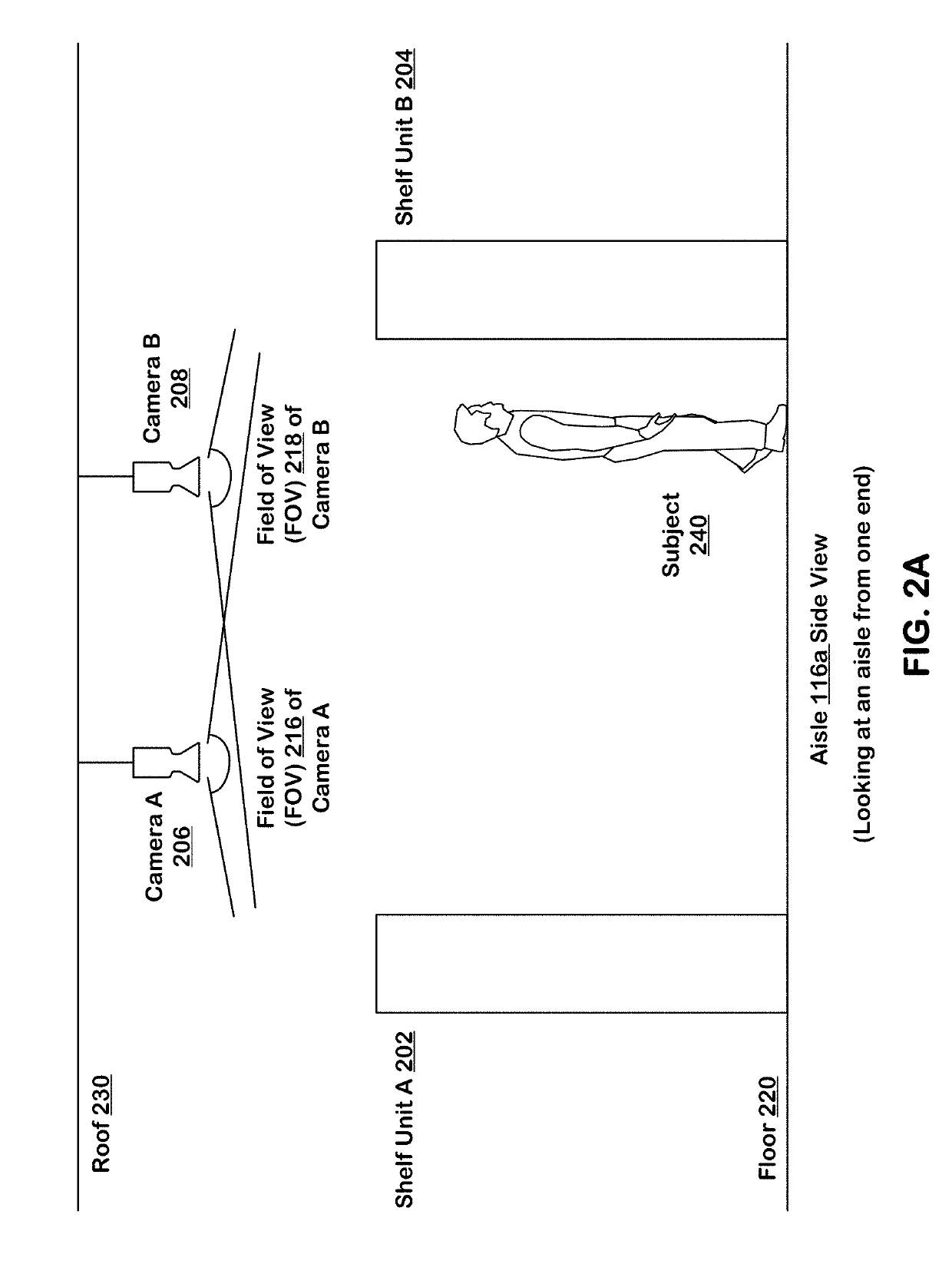

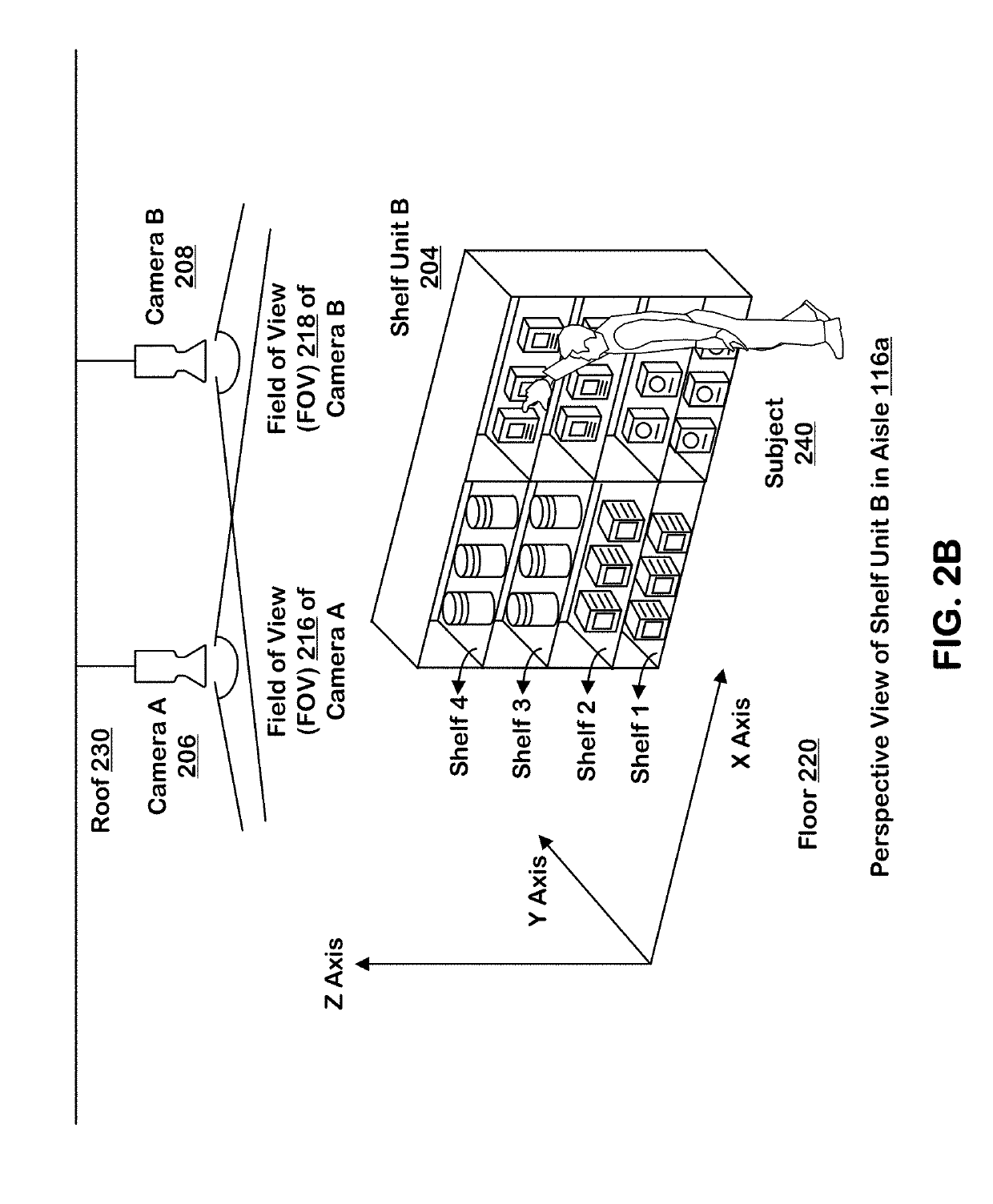

Systems and techniques are provided for detecting gaze direction of subjects in an area of real space. The system receives a plurality of sequences of frames of corresponding fields of view in the real space. The system uses sequences of frames in a plurality of sequences of frames to identify locations of an identified subject and gaze directions of the subject in the area of real space over time. The system includes logic having access to a database identifying locations of items in the area of real space. The system identifies items in the area of real space matching the identified gaze directions of the identified subject.

Owner: STANDARD COGNITION CORP
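Matching gaze directions against the database of item locations can be sketched on the floor plane: keep items that lie within a viewing wedge around the subject's gaze direction and within a maximum range, nearest first. The wedge half-angle, range, data layout, and function name are assumptions for illustration.

```python
import math


def items_in_gaze(subject_xy, gaze_dir_xy, items, half_angle_deg=10.0, max_range=3.0):
    """Item names inside an assumed viewing wedge, nearest first.

    subject_xy: subject location on the floor plane (m); gaze_dir_xy: gaze
    direction projected onto that plane; items: dict name -> (x, y) location.
    """
    gx, gy = gaze_dir_xy
    gnorm = math.hypot(gx, gy)
    cos_limit = math.cos(math.radians(half_angle_deg))
    matches = []
    for name, (ix, iy) in items.items():
        dx, dy = ix - subject_xy[0], iy - subject_xy[1]
        dist = math.hypot(dx, dy)
        if dist == 0 or dist > max_range:
            continue
        # Keep the item if its bearing is within the wedge around the gaze direction.
        if (gx * dx + gy * dy) / (gnorm * dist) >= cos_limit:
            matches.append((dist, name))
    return [name for _, name in sorted(matches)]
```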

© 2025 PatSnap. All rights reserved.