Patented technology related to "Recognition algorithm" (3772 results)

The Text Recognition Algorithm Independent Evaluation (TRAIT) is being conducted to assess the capability of text detection and recognition algorithms to correctly detect and recognize text appearing in unconstrained imagery.

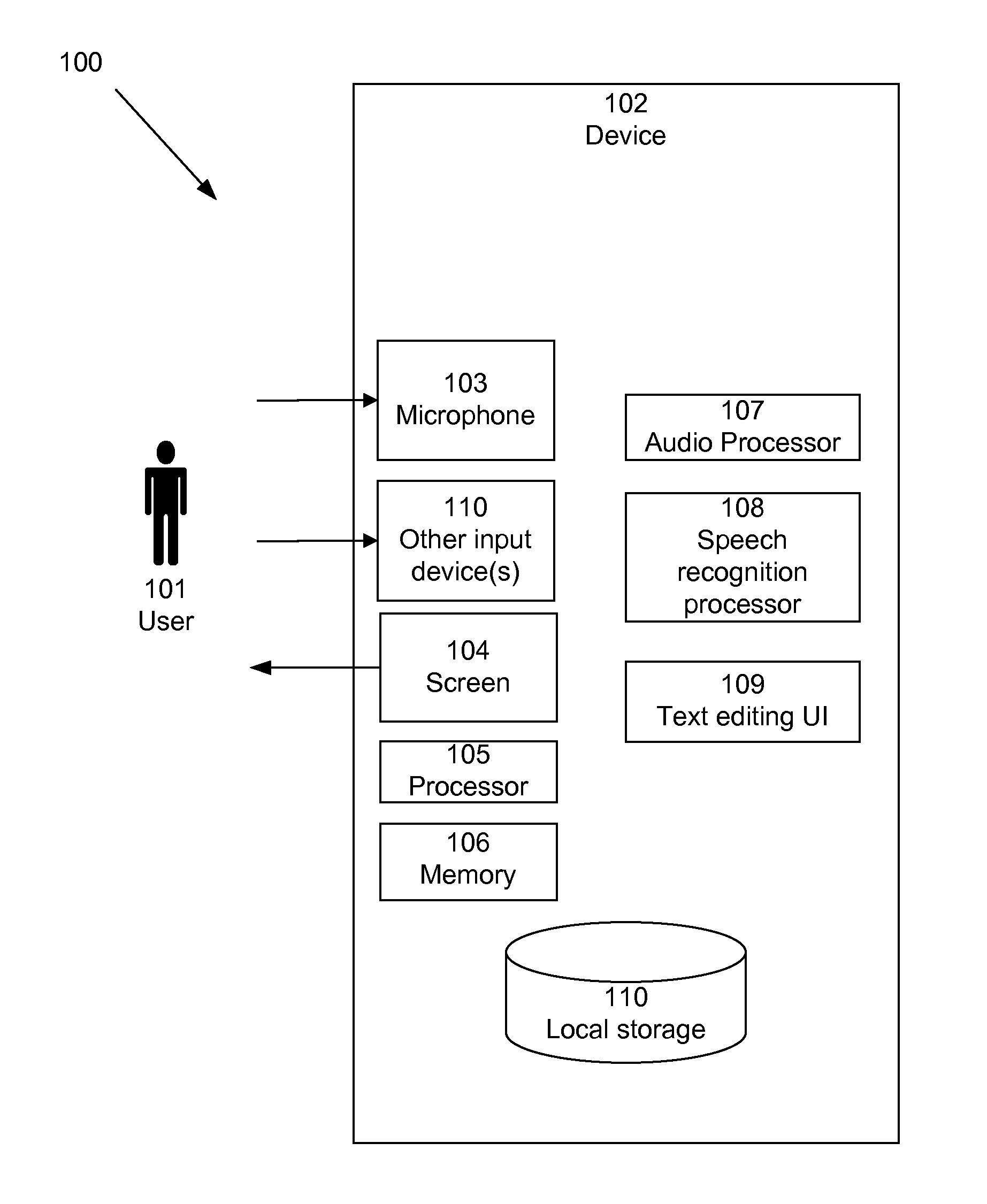

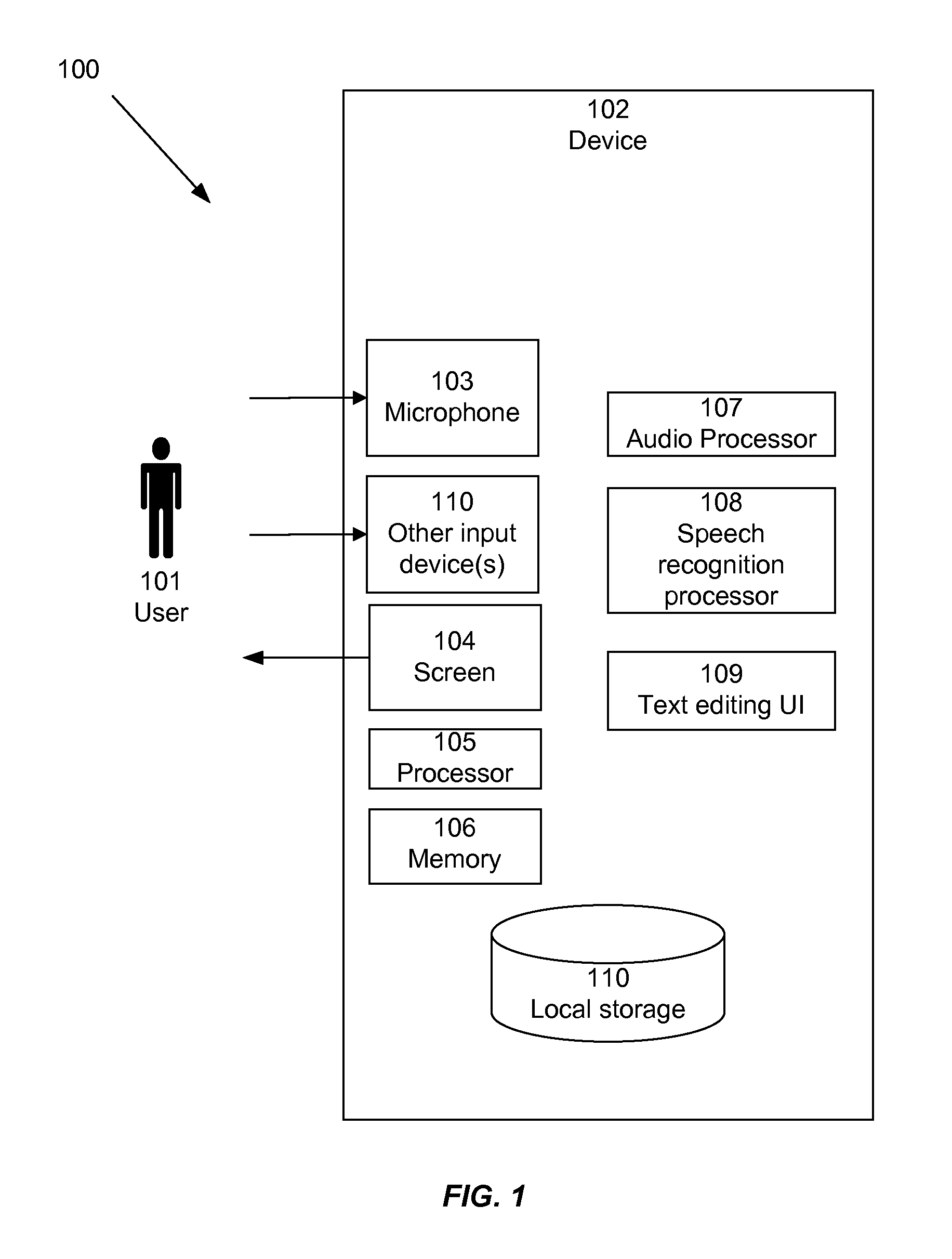

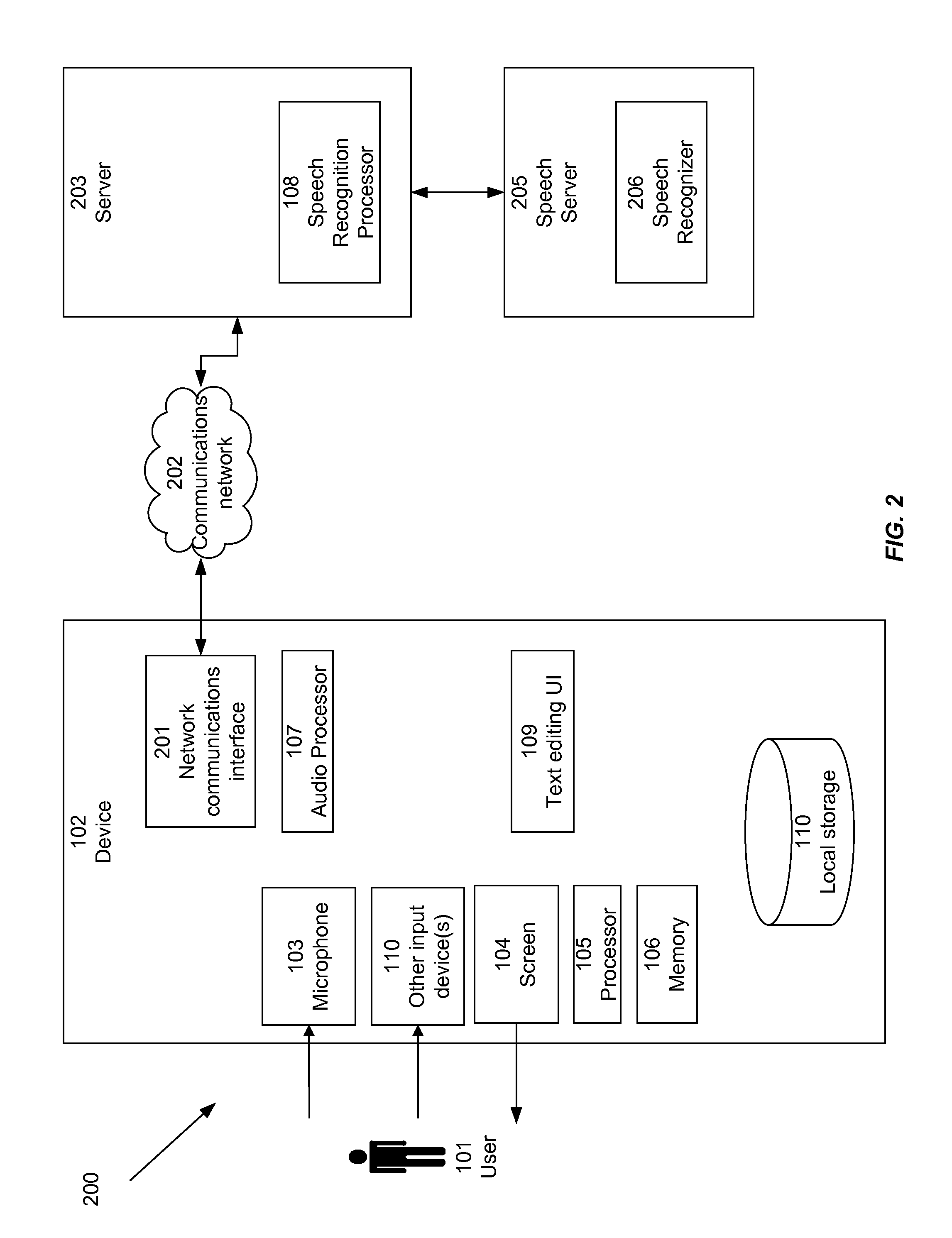

Consolidating Speech Recognition Results

Status: Inactive | Publication: US20130073286A1 | Tags: Redundant elements are minimized or eliminated; Choose simple; Speech recognition; Sound input/output; Recognition algorithm; Speech identification

Candidate interpretations resulting from application of speech recognition algorithms to spoken input are presented in a consolidated manner that reduces redundancy. A list of candidate interpretations is generated, and each candidate interpretation is subdivided into time-based portions, forming a grid. Those time-based portions that duplicate portions from other candidate interpretations are removed from the grid. A user interface is provided that presents the user with an opportunity to select among the candidate interpretations; the user interface is configured to present these alternatives without duplicate elements.

Owner:APPLE INC
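
As a rough illustration of the consolidation step, the Python sketch below assumes the candidate interpretations have already been aligned into the same number of time-based portions (the alignment itself is not shown). The function name `consolidate_candidates` is hypothetical and is not Apple's API.

```python
from typing import List


def consolidate_candidates(candidates: List[List[str]]) -> List[List[str]]:
    """Collapse aligned candidate interpretations into a grid without duplicates.

    Each candidate is a list of time-based portions (e.g. words). The result
    holds, for each time slot, the distinct portions in first-seen order, so a
    UI can offer one choice per slot instead of repeating identical text.
    """
    if not candidates:
        return []
    n_slots = len(candidates[0])
    if any(len(c) != n_slots for c in candidates):
        raise ValueError("candidates must share the same time-slot alignment")

    grid: List[List[str]] = []
    for slot in range(n_slots):
        distinct: List[str] = []
        for candidate in candidates:
            portion = candidate[slot]
            if portion not in distinct:  # drop portions duplicated across candidates
                distinct.append(portion)
        grid.append(distinct)
    return grid


if __name__ == "__main__":
    candidates = [
        ["call", "Adam", "Shire"],
        ["call", "Adam", "Shrier"],
        ["call", "Aidan", "Shire"],
    ]
    print(consolidate_candidates(candidates))
    # [['call'], ['Adam', 'Aidan'], ['Shire', 'Shrier']]
```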

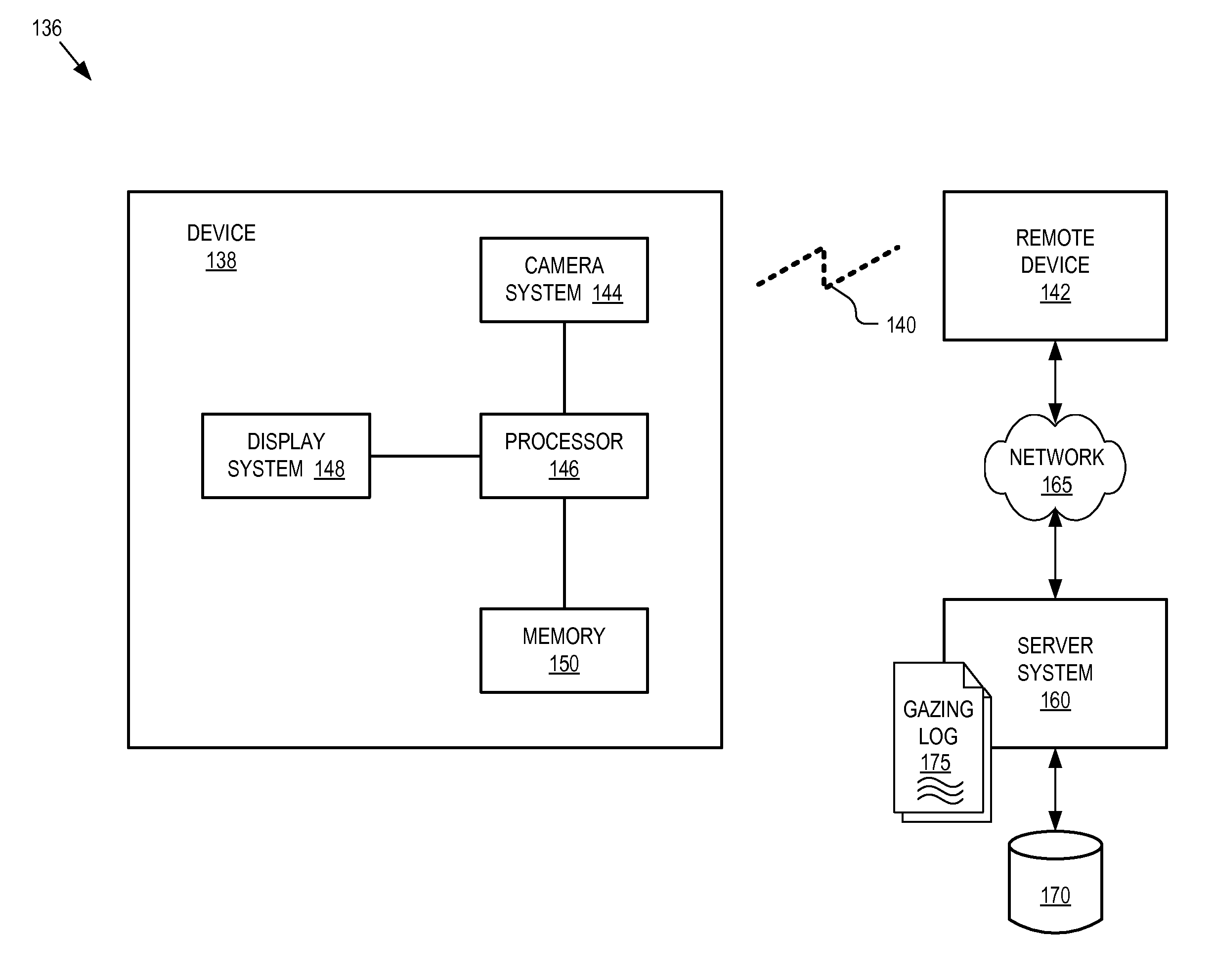

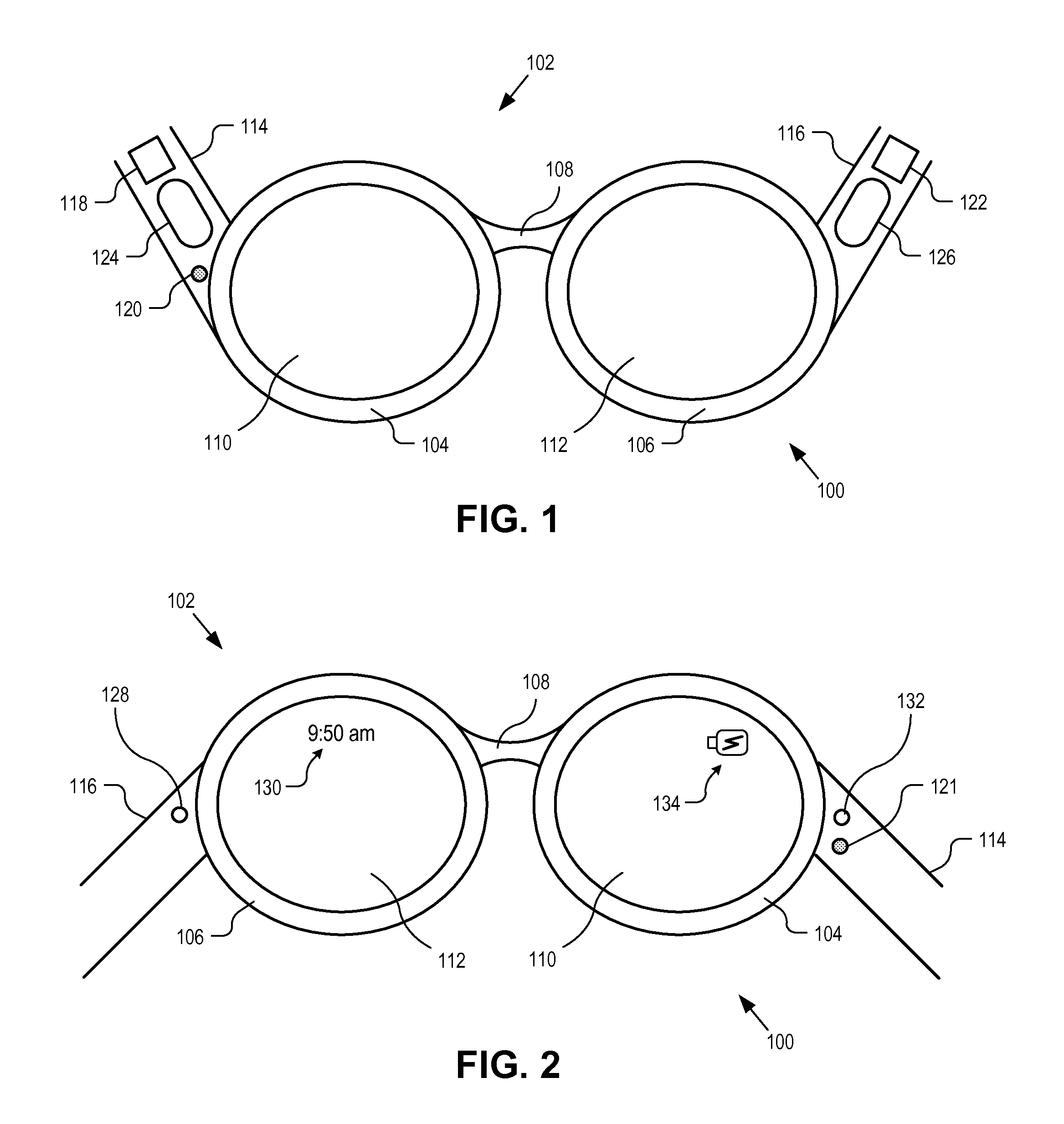

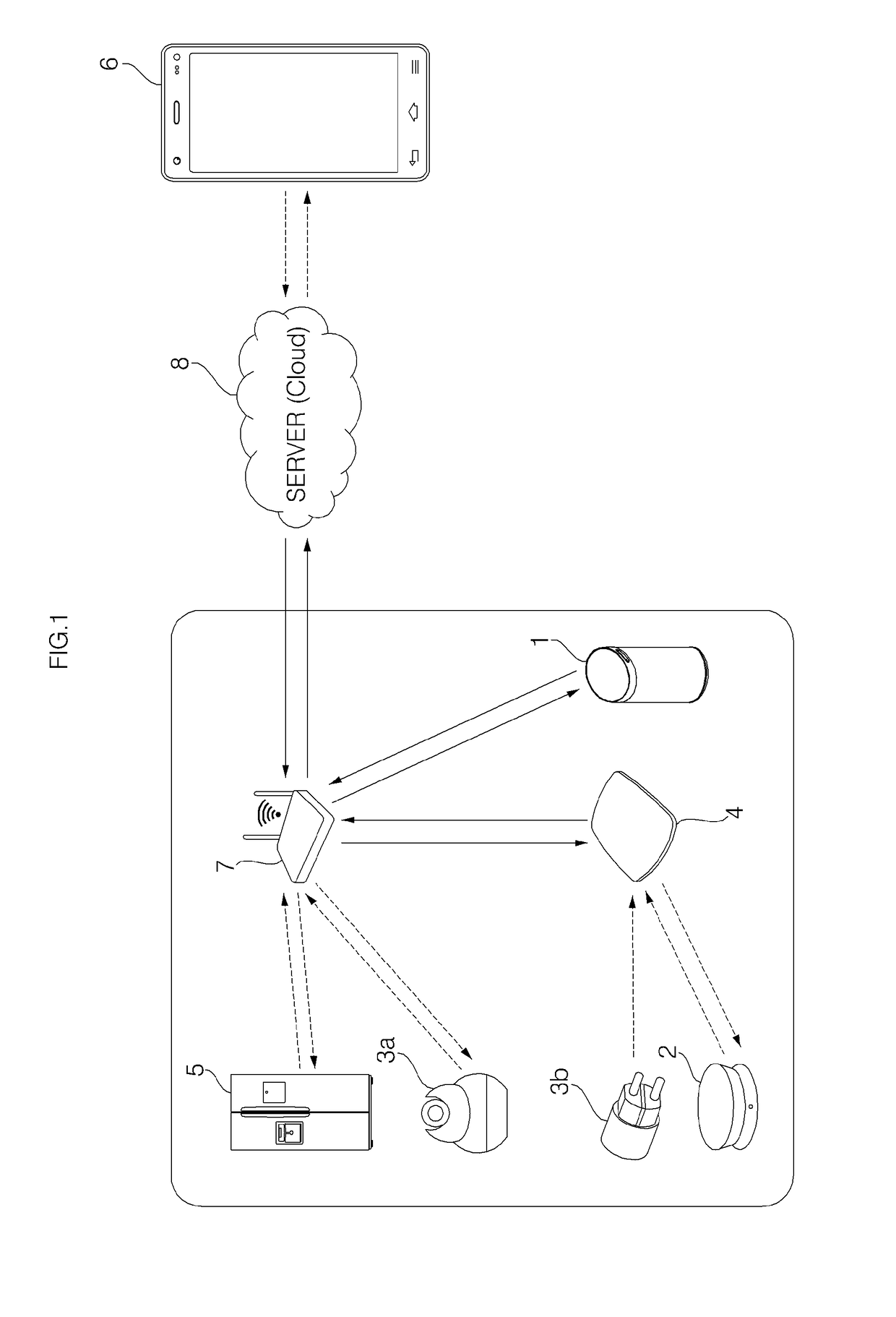

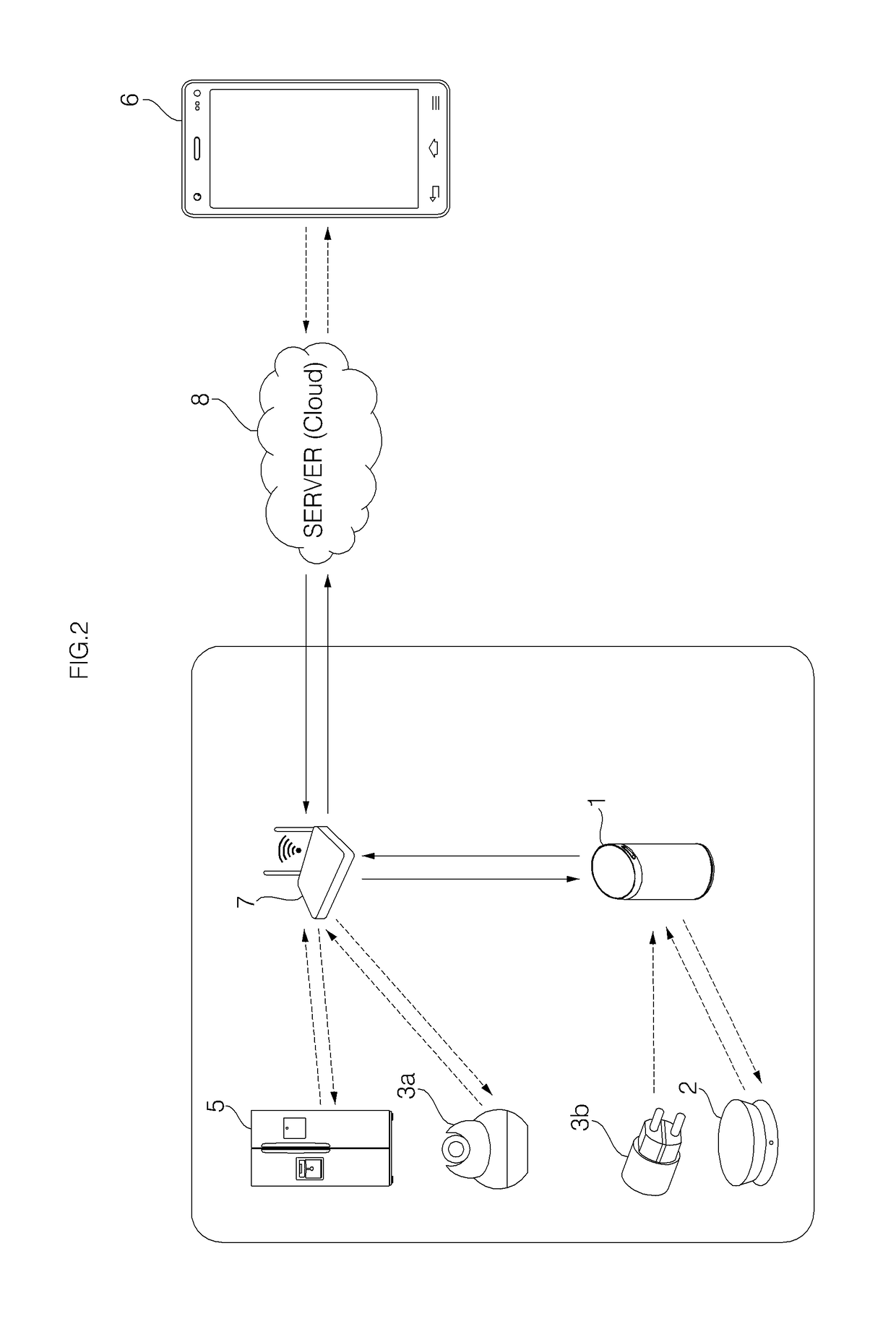

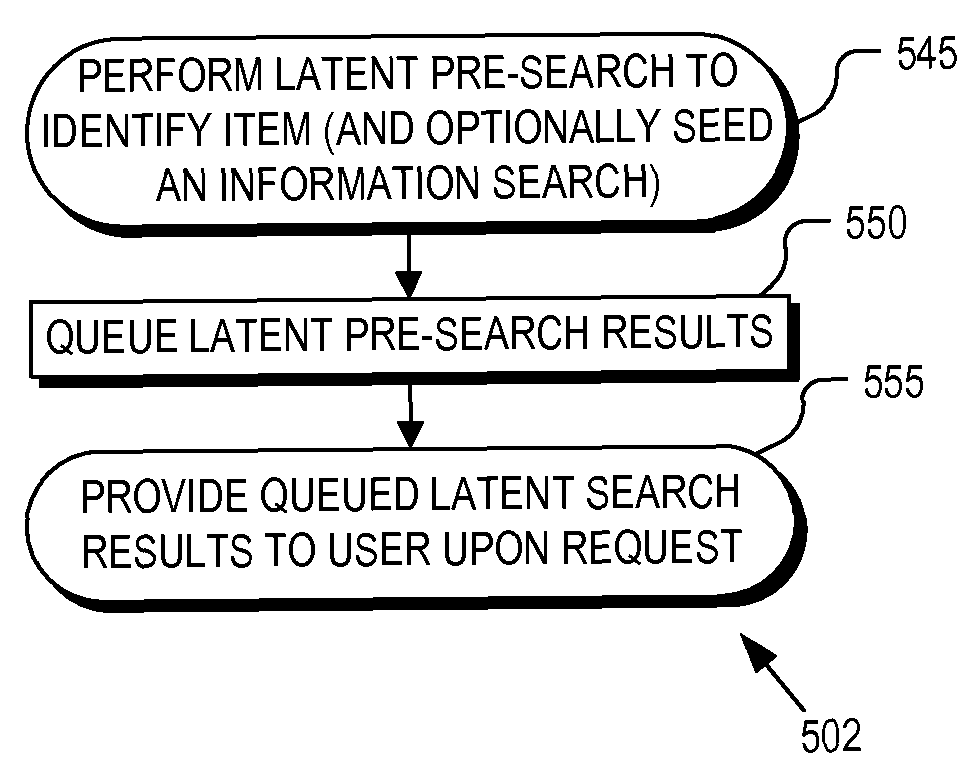

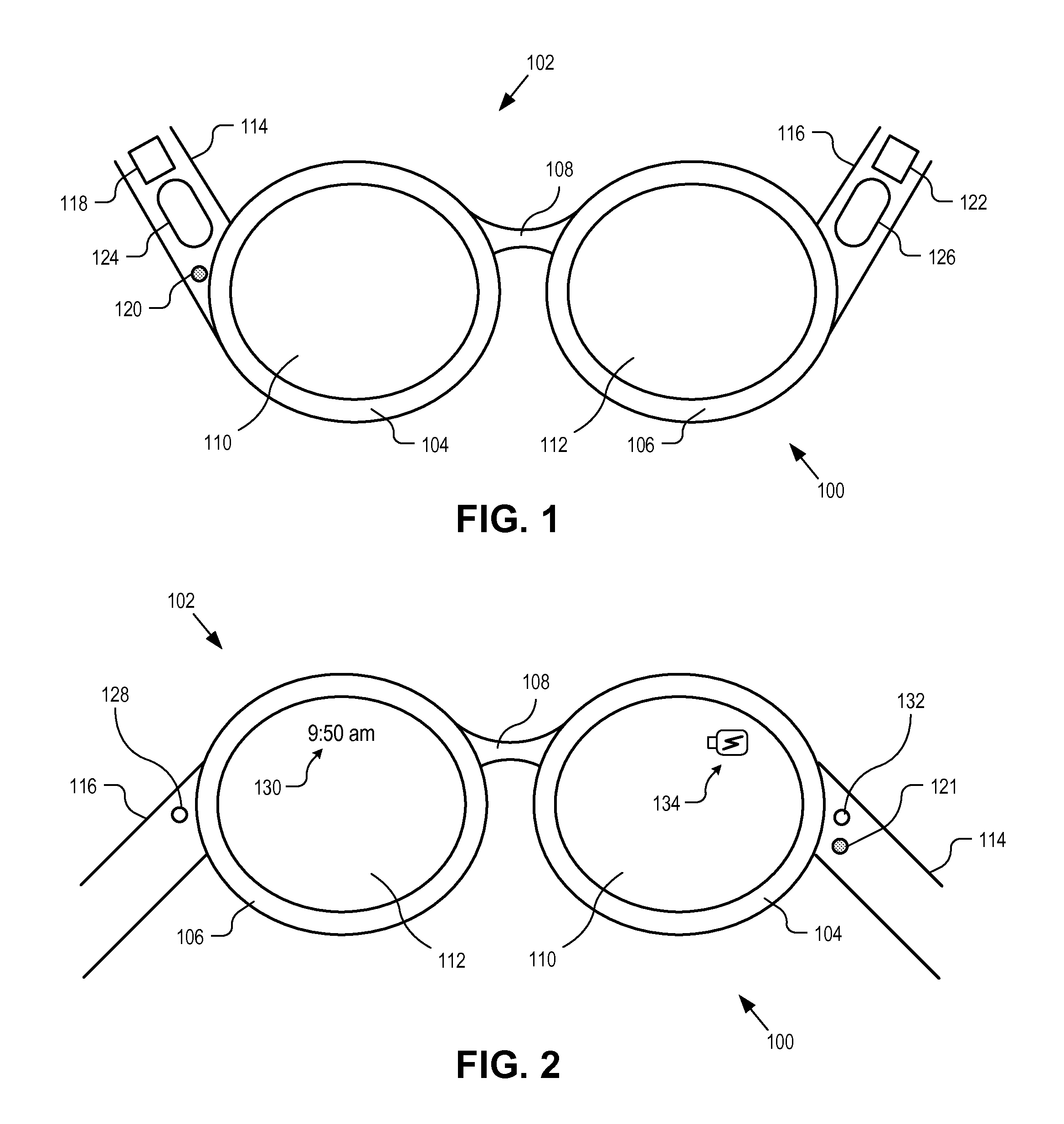

Gaze tracking system

A gaze tracking technique is implemented with a head mounted gaze tracking device that communicates with a server. The server receives scene images from the head mounted gaze tracking device which captures external scenes viewed by a user wearing the head mounted device. The server also receives gaze direction information from the head mounted gaze tracking device. The gaze direction information indicates where in the external scenes the user was gazing when viewing the external scenes. An image recognition algorithm is executed on the scene images to identify items within the external scenes viewed by the user. A gazing log tracking the identified items viewed by the user is generated.

Owner:GOOGLE LLC
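
One way to picture the last two steps is the Python sketch below. It assumes the recognition step has already returned labelled bounding boxes in scene-image pixel coordinates and that the gaze direction has already been projected to a pixel in the same frame; `RecognizedItem`, `item_at_gaze`, and `update_gaze_log` are hypothetical names, not Google's implementation.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class RecognizedItem:
    label: str
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def item_at_gaze(items: Iterable[RecognizedItem], gaze: Tuple[int, int]) -> Optional[str]:
    """Return the label of the first recognized item whose box contains the gaze point."""
    gx, gy = gaze
    for item in items:
        x0, y0, x1, y1 = item.box
        if x0 <= gx <= x1 and y0 <= gy <= y1:
            return item.label
    return None


def update_gaze_log(log: Counter, items: Iterable[RecognizedItem], gaze: Tuple[int, int]) -> None:
    """Increment the gazing log for whichever item (if any) the user was looking at."""
    label = item_at_gaze(items, gaze)
    if label is not None:
        log[label] += 1
```

Gaze points that fall outside every recognized item are simply not logged in this sketch.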

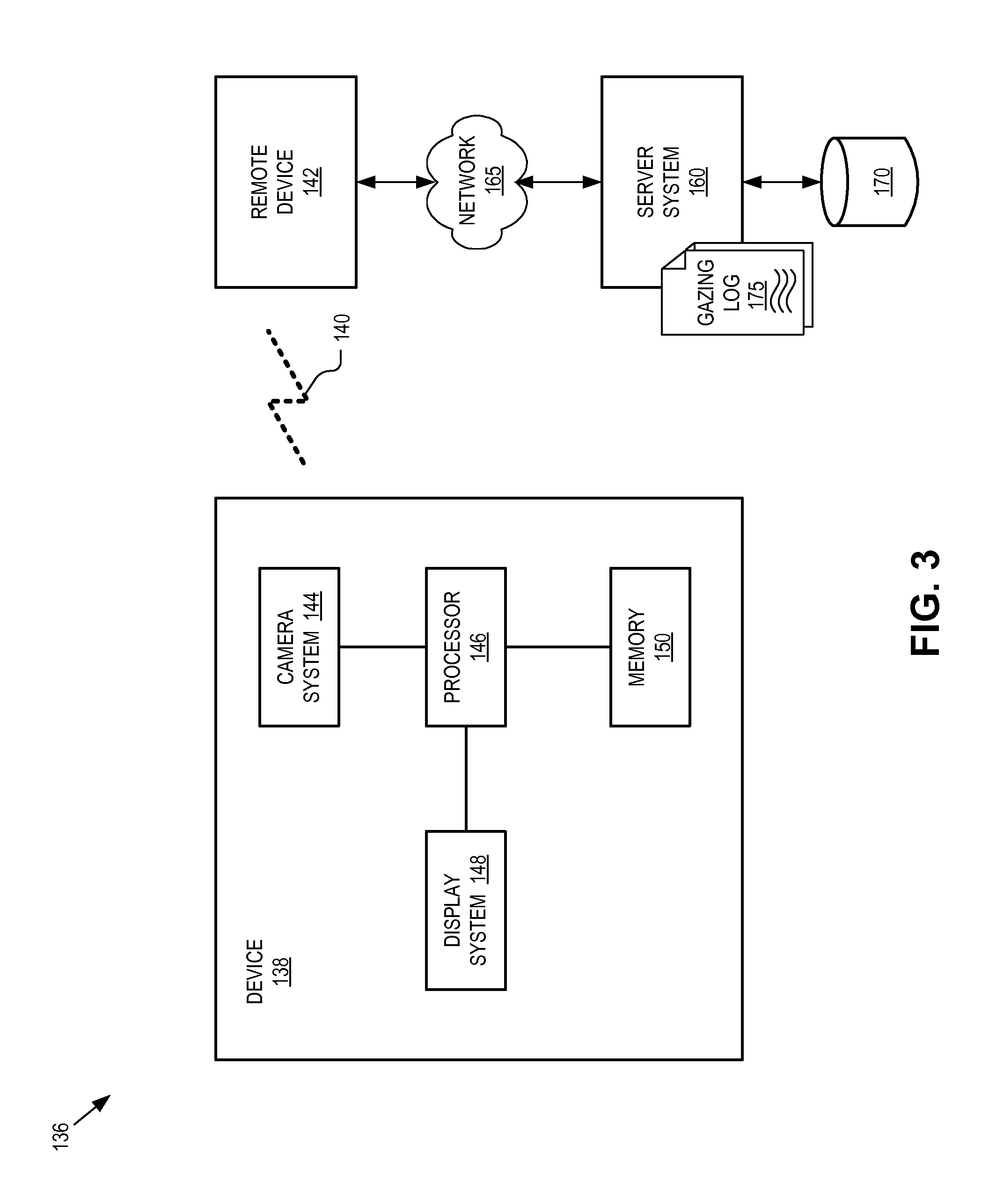

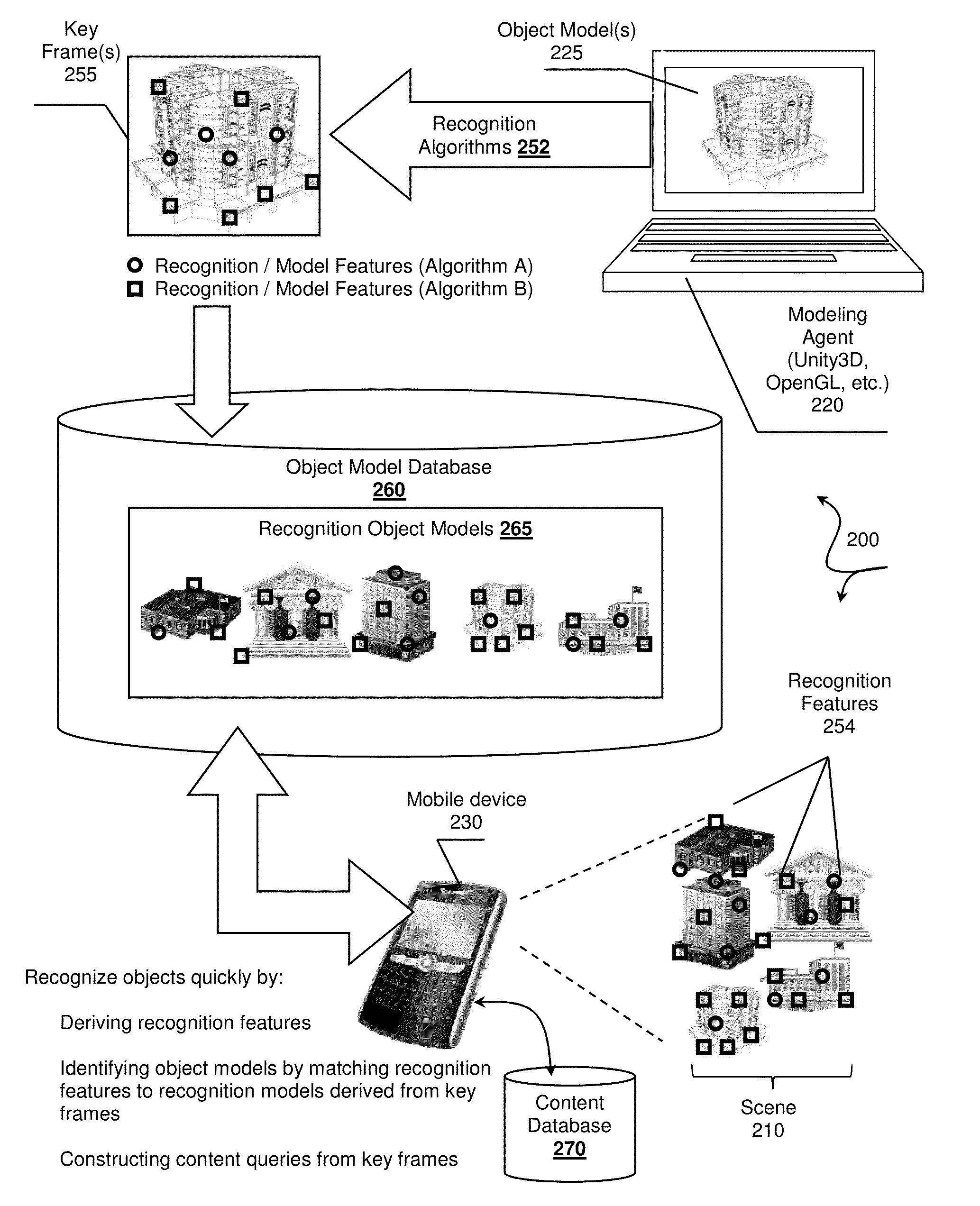

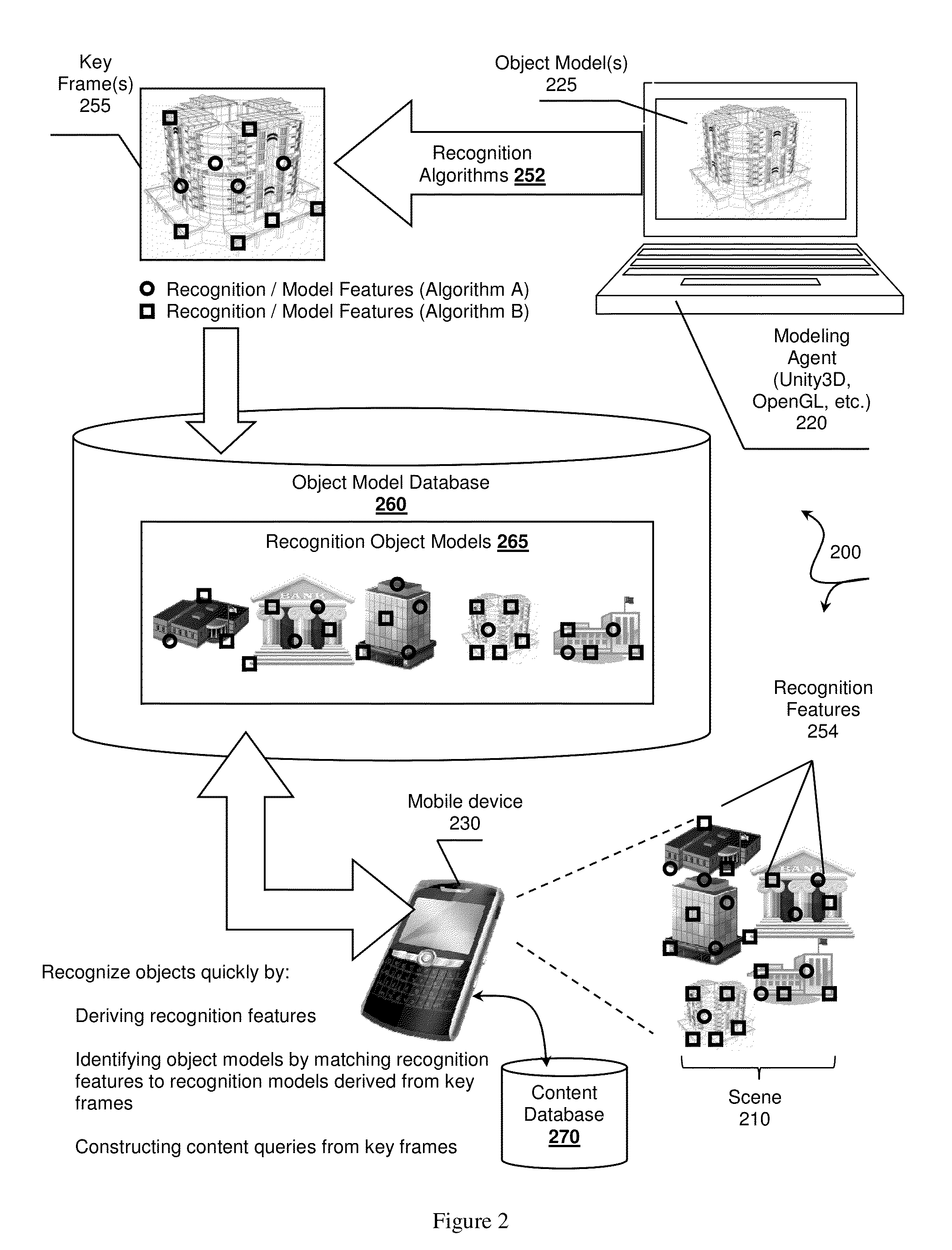

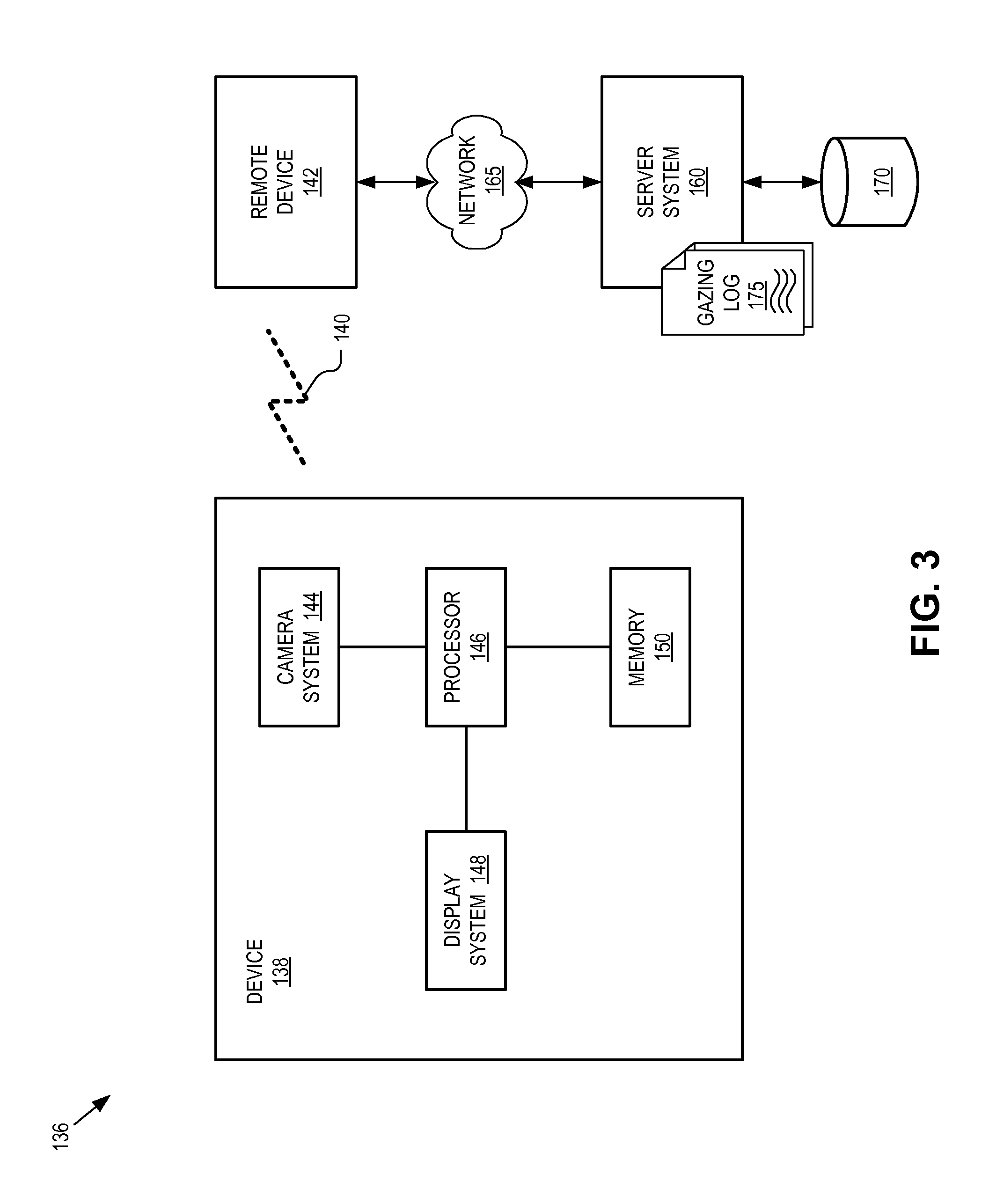

Fast recognition algorithm processing, systems and methods

Status: Active | Publication: US20150023602A1 | Tags: Image enhancement; Digital data information retrieval; Recognition algorithm; Object identifier

Systems and methods of quickly recognizing or differentiating many objects are presented. Contemplated systems include an object model database storing recognition models associated with known modeled objects. The object identifiers can be indexed in the object model database based on recognition features derived from key frames of the modeled object. Such objects are recognized by a recognition engine at a later time. The recognition engine can construct a recognition strategy based on a current context where the recognition strategy includes rules for executing one or more recognition algorithms on a digital representation of a scene. The recognition engine can recognize an object from the object model database, and then attempt to identify key frame bundles that are contextually relevant, which can then be used to track the object or to query a content database for content information.

Owner:NANTMOBILE +1

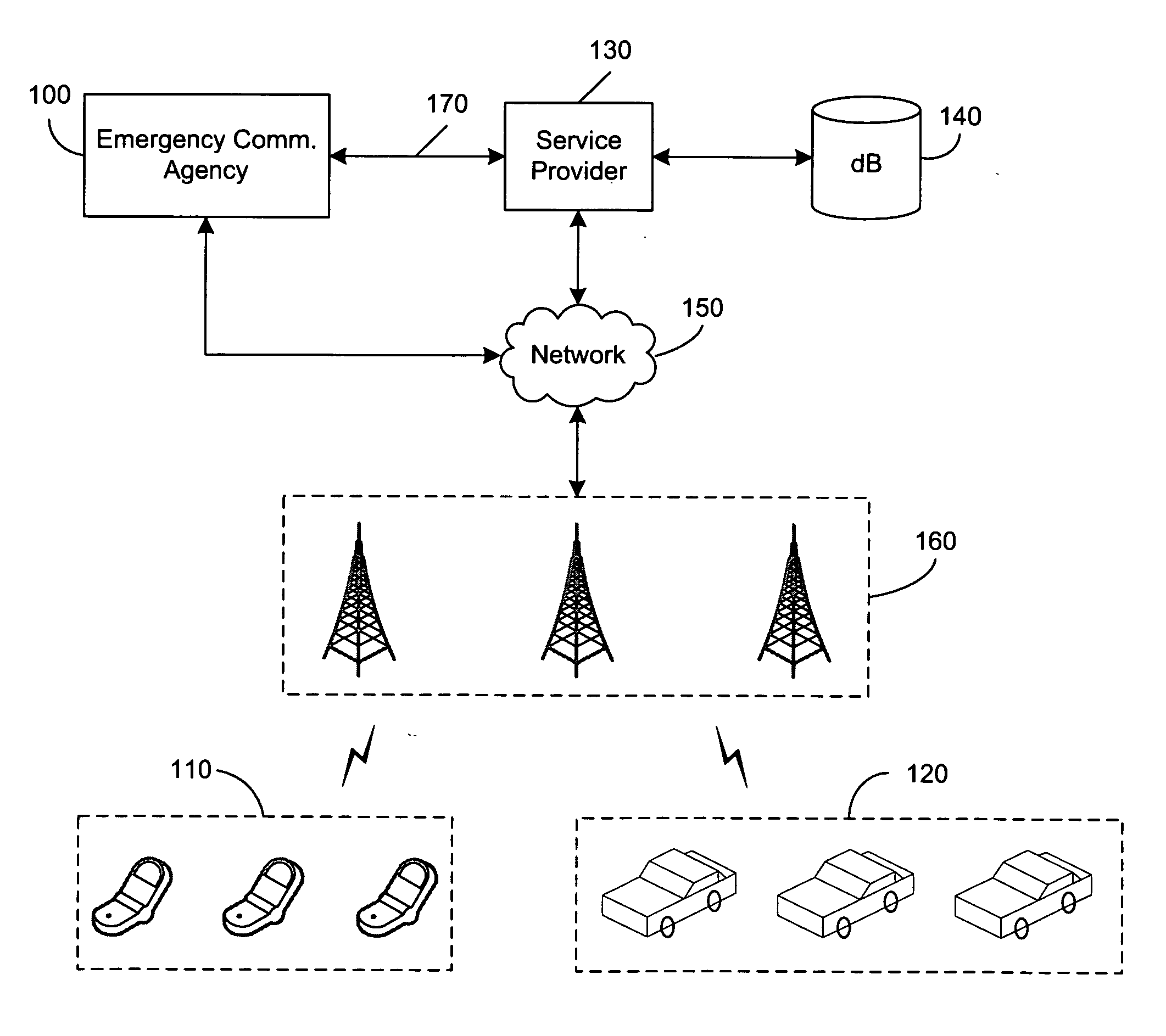

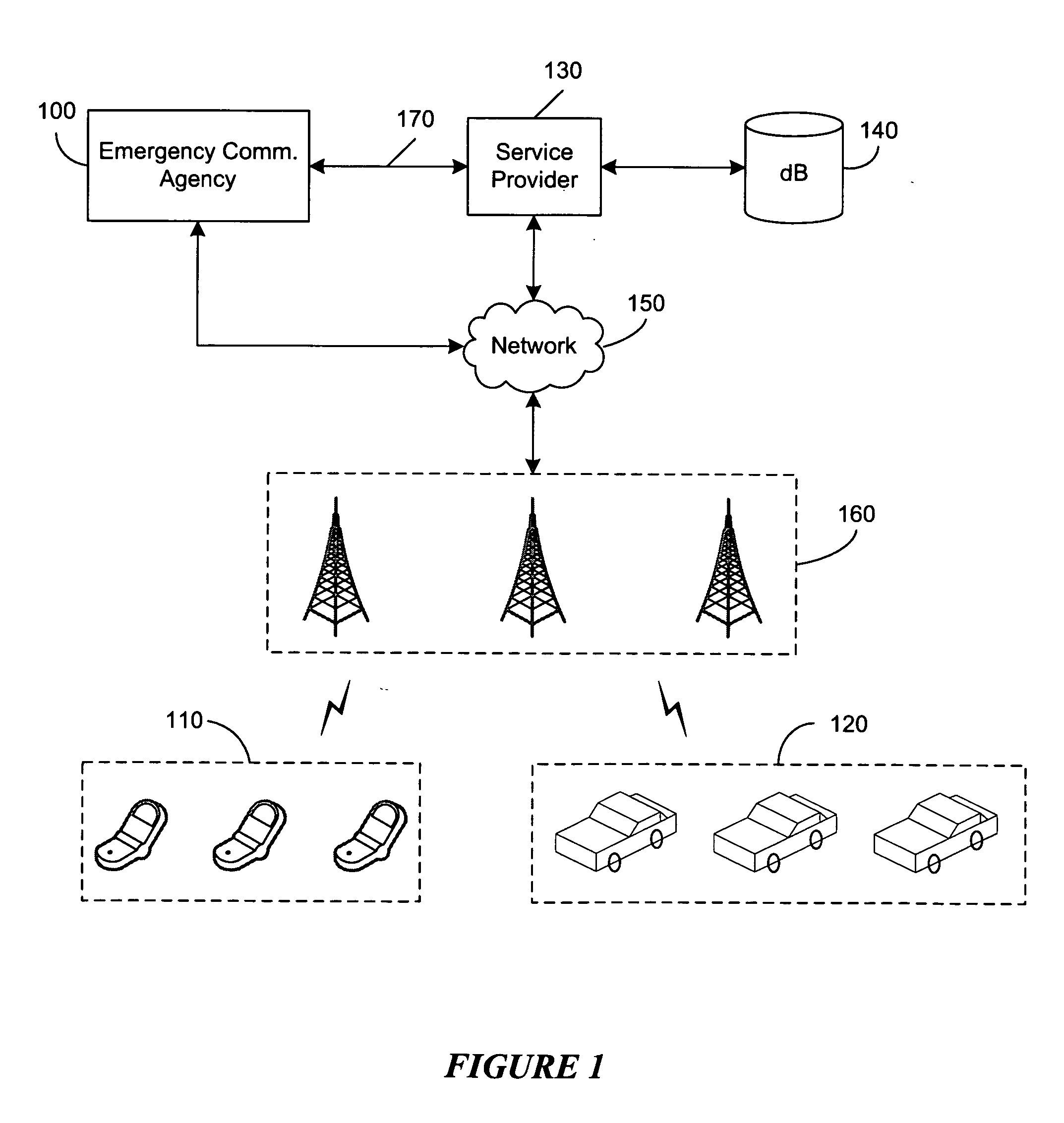

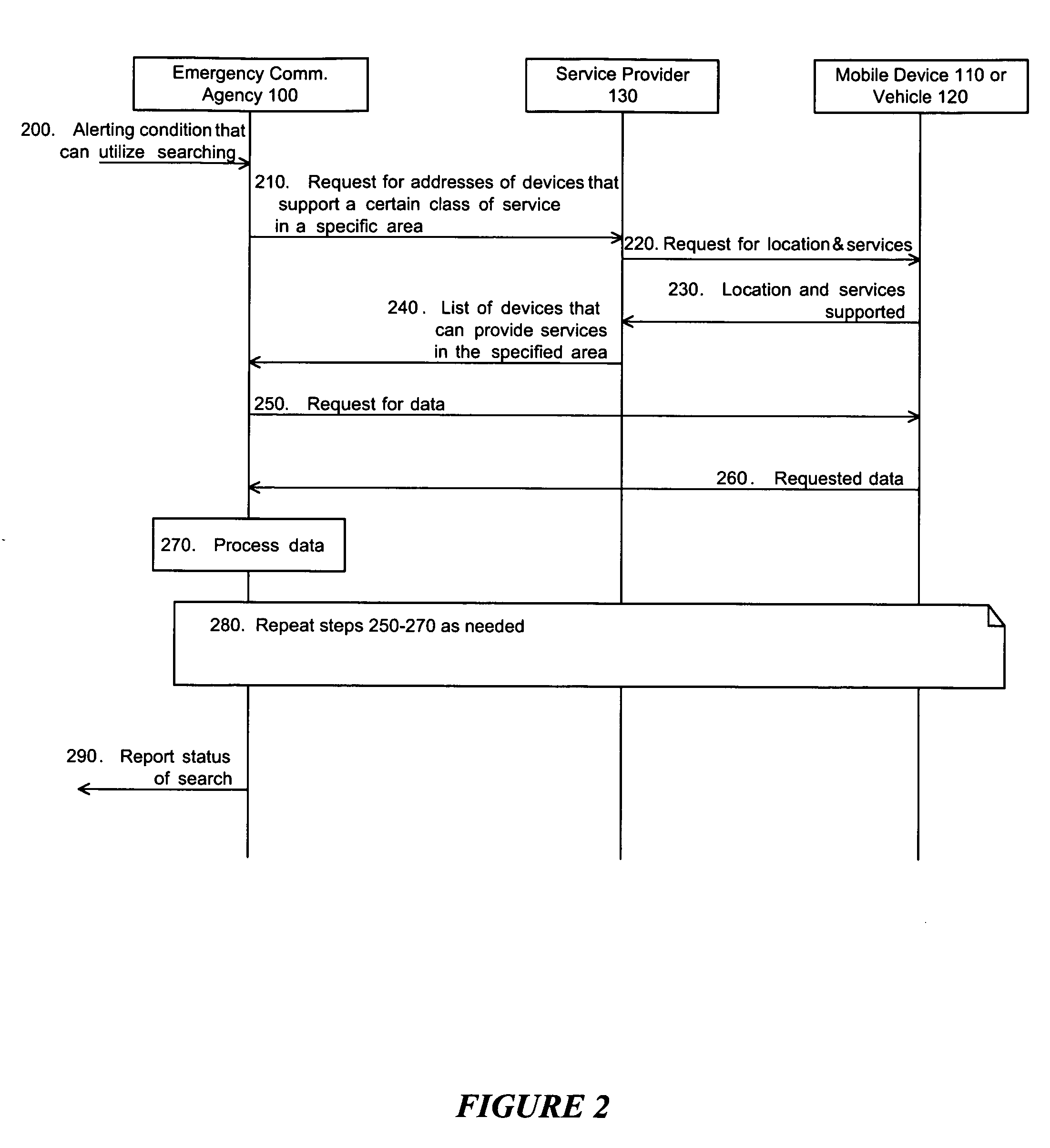

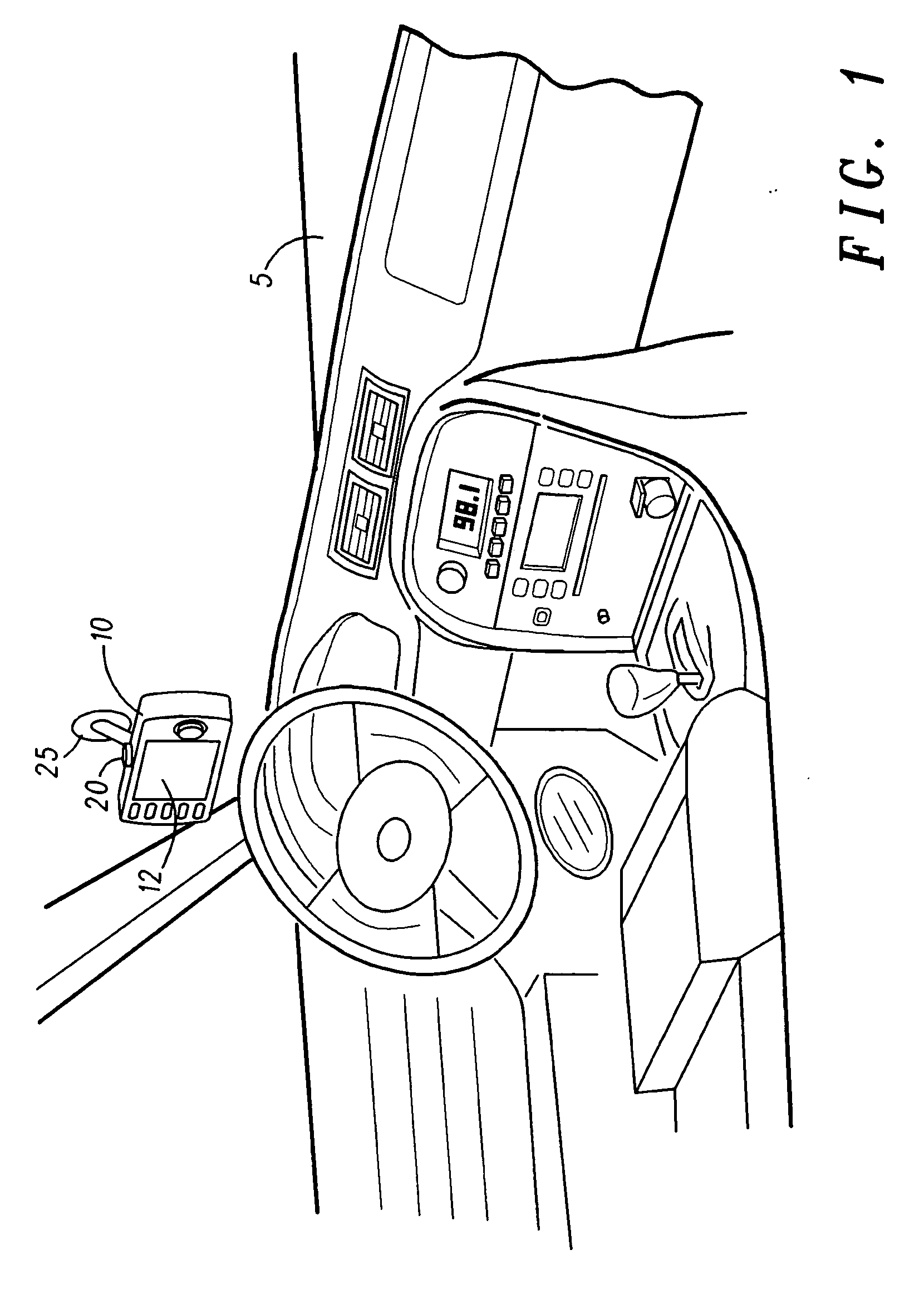

Emergency communications for the mobile environment

Status: Inactive | Publication: US20070139182A1 | Tags: Beneficial and manner; Emergency connection handling; Connection management; Information processing; Telematics

Systems and methods for two-way, interactive communication regarding emergency notifications and responses for mobile environments are disclosed. In accordance with one embodiment of the present invention, a specific geographic area is designated for selective emergency communications. The emergency communications may comprise text, audio, video, and other types of data. The emergency notification is sent to users' mobile communications devices such as in-vehicle telematics units, cellular phones, personal digital assistants (PDAs), laptops, etc. that are currently located in the designated area. The sender of the emergency message or the users' service provider(s) may remotely control cameras and microphones associated with the users' mobile communications devices. For example, a rear camera ordinarily used when driving in reverse may be used to capture images and video that may assist authorities in searching for a suspect. The users' vehicles may send photographs or video streams of nearby individuals, cars and license plates, along with real-time location information, in response to the emergency notification. Image recognition algorithms may be used to analyze license plates, vehicles, and faces captured by the users' cameras and determine whether they match a suspect's description. Advantageously, the present invention utilizes dormant resources in a highly beneficial and time-saving manner that increases public safety and national security.

Owner:MOTOROLA INC

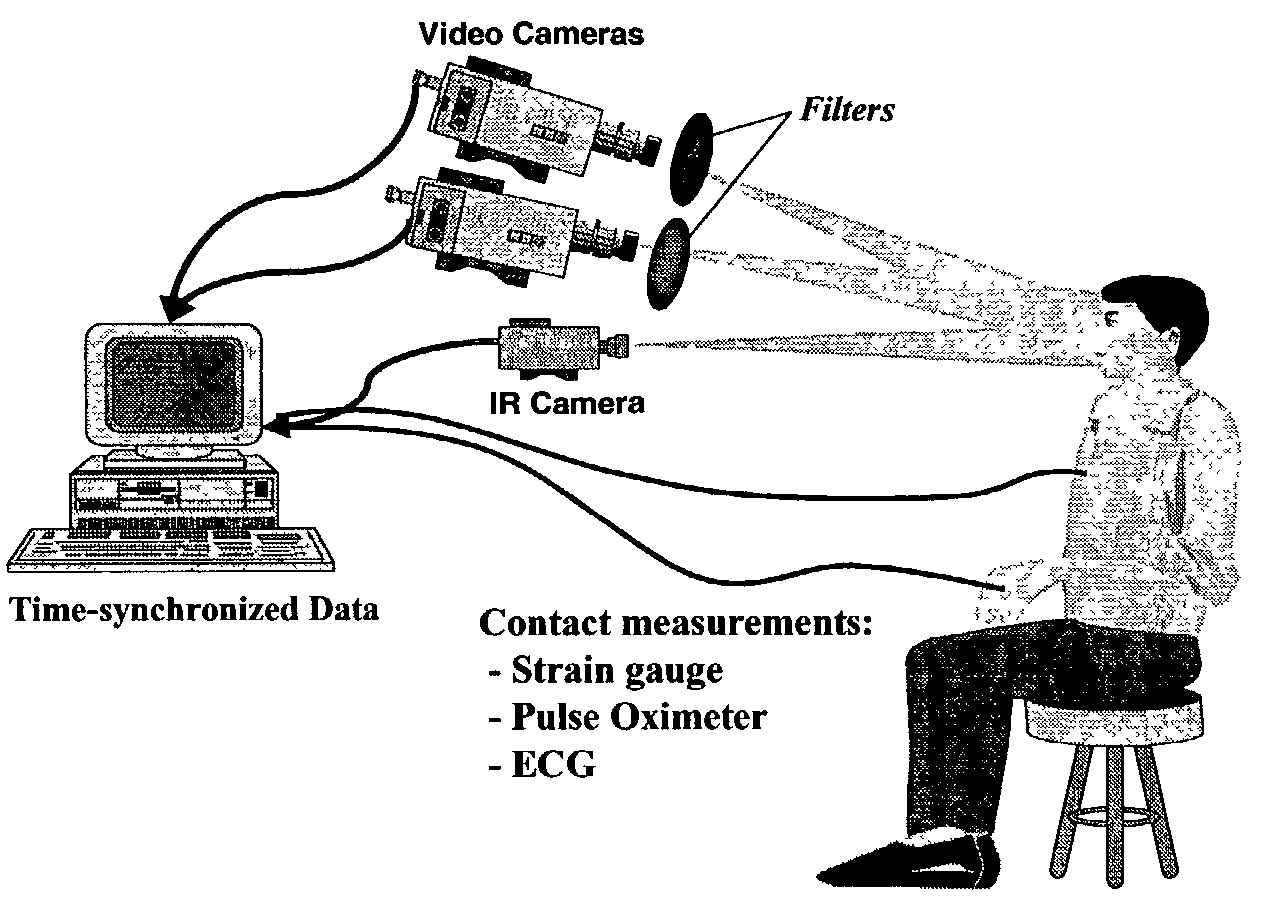

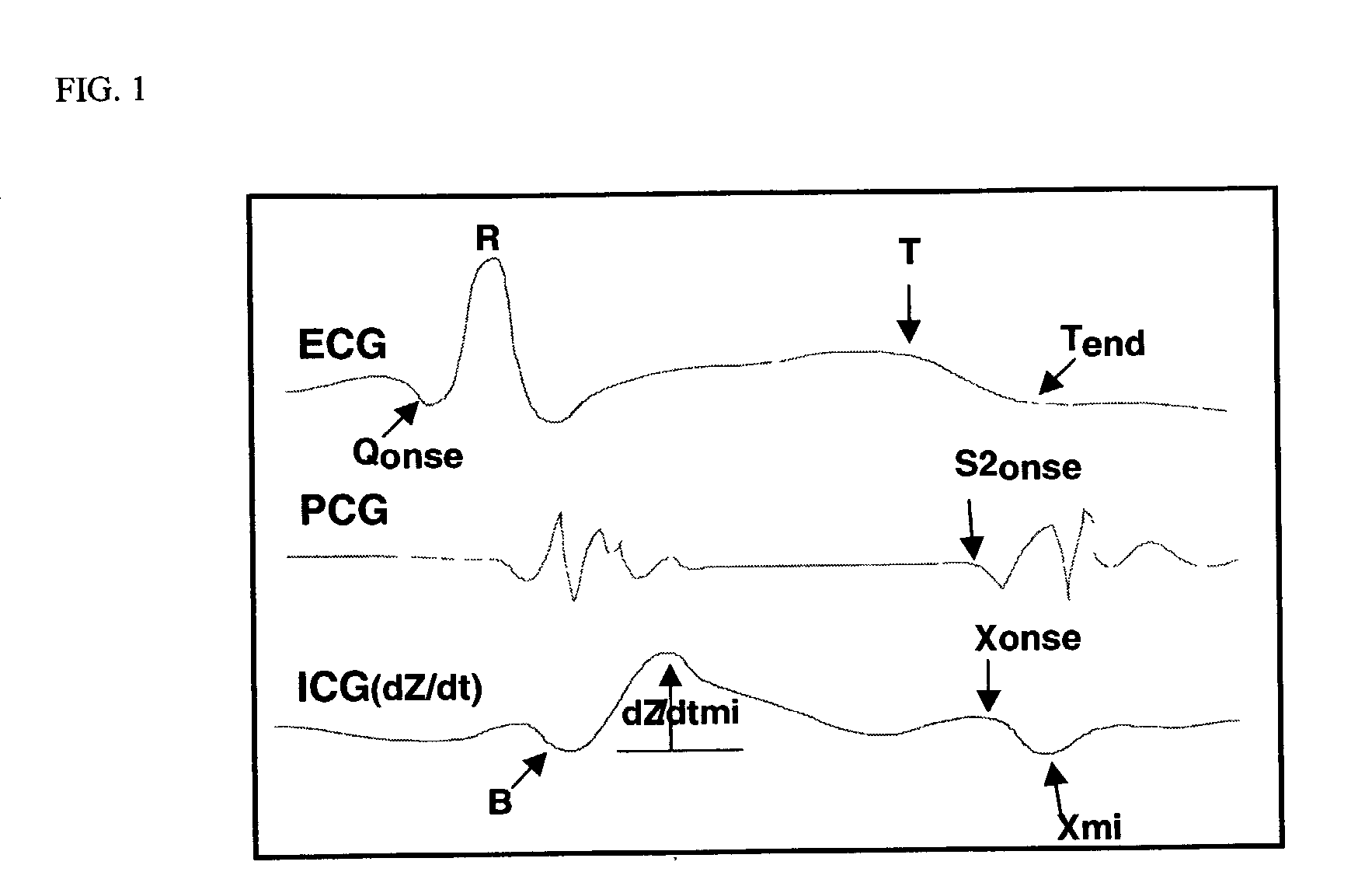

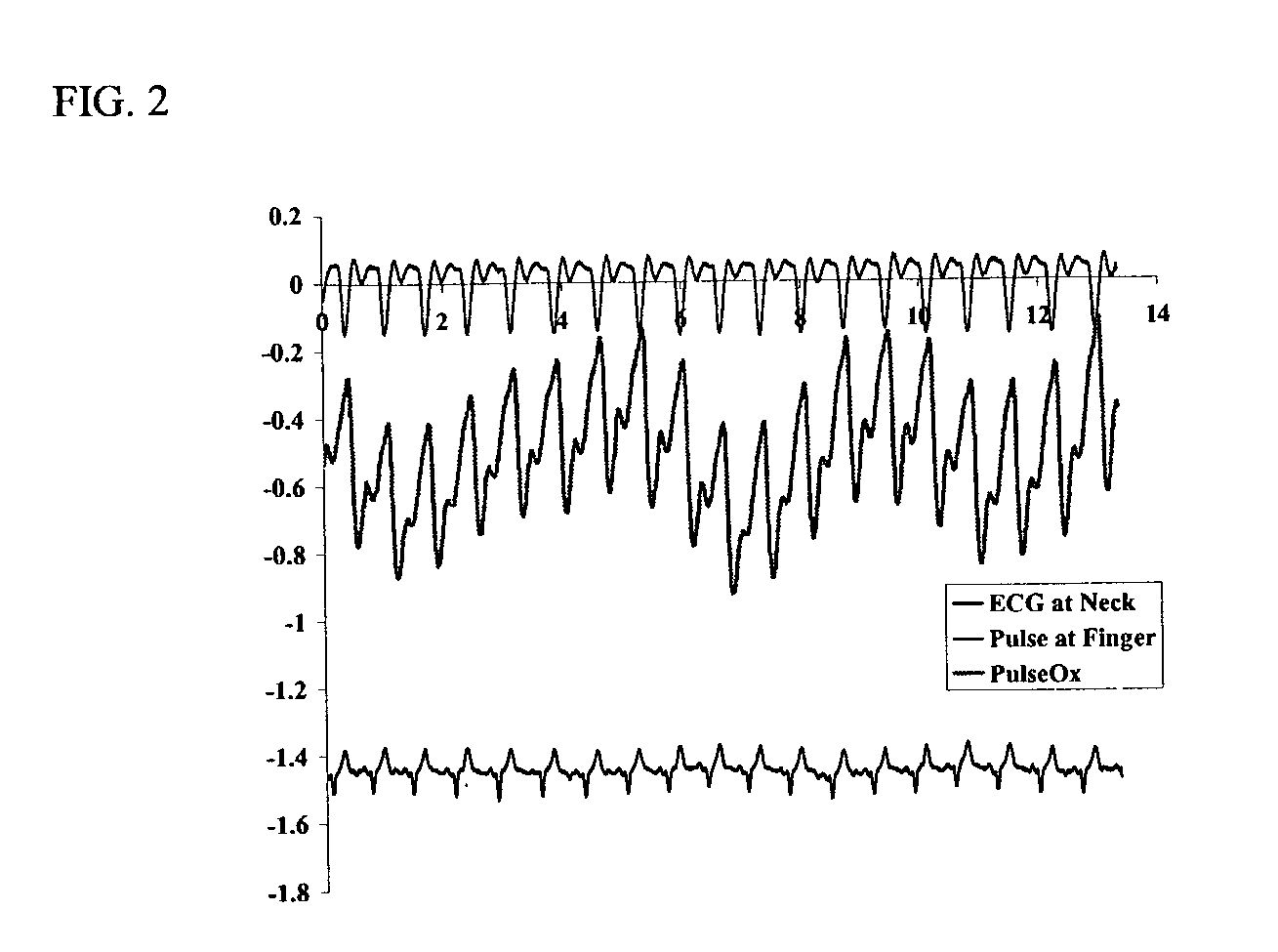

Identification by analysis of physiometric variation

Methods involving extraction of information from the inherent variability of physiometrics, including data on cardiovascular and pulmonary functions such as heart rate variability, characteristics of ECG traces, pulse, oxygenation of subcutaneous blood, respiration rate, temperature or CO2 content of exhaled air, heart sounds, and body resonance, can be used to identify individual subjects, particularly humans. Biometric data for use in the methods can be obtained either from contact sensors or at a distance. The methods can be performed alone or can be fused with previous identification algorithms.

Owner:LEIDOS

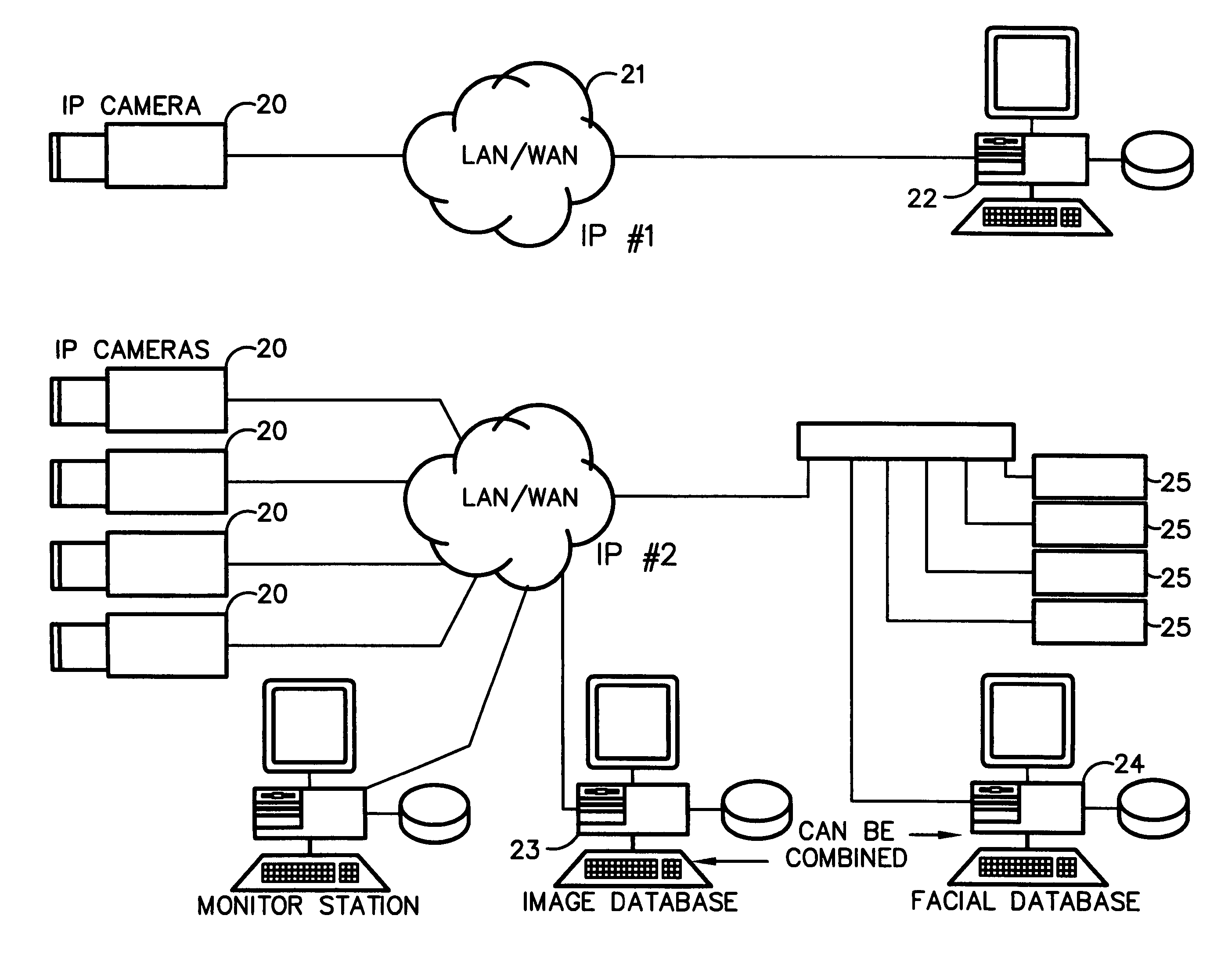

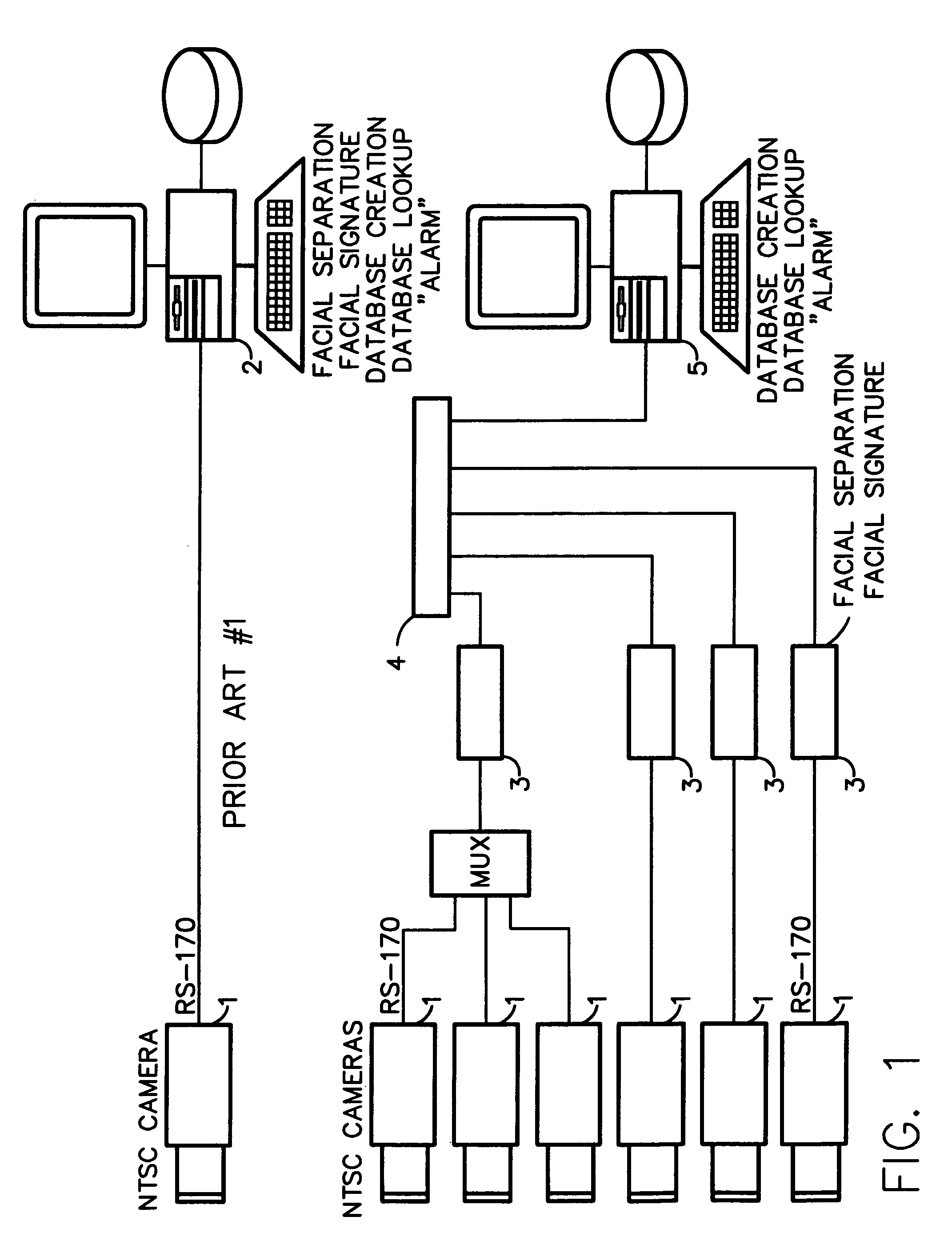

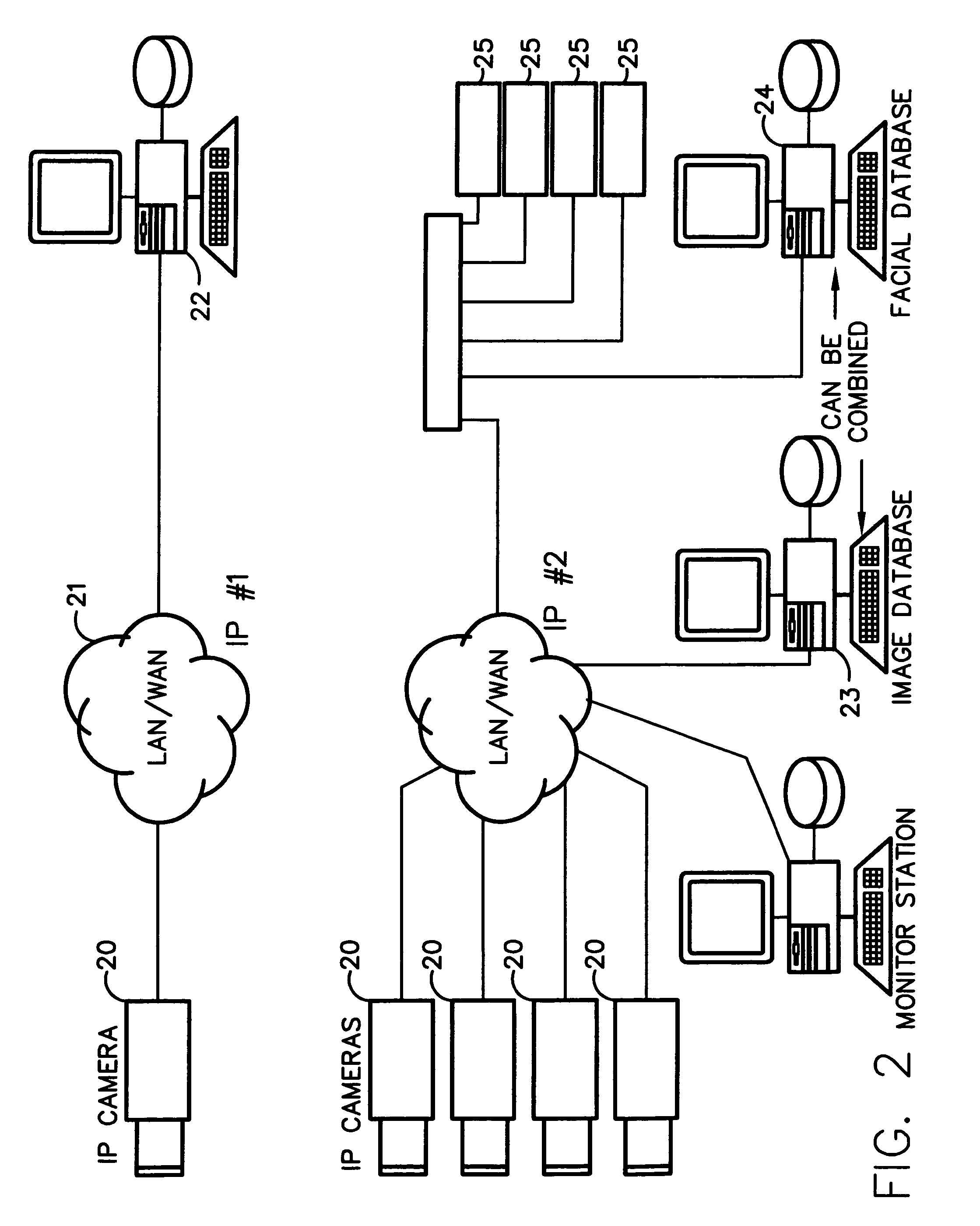

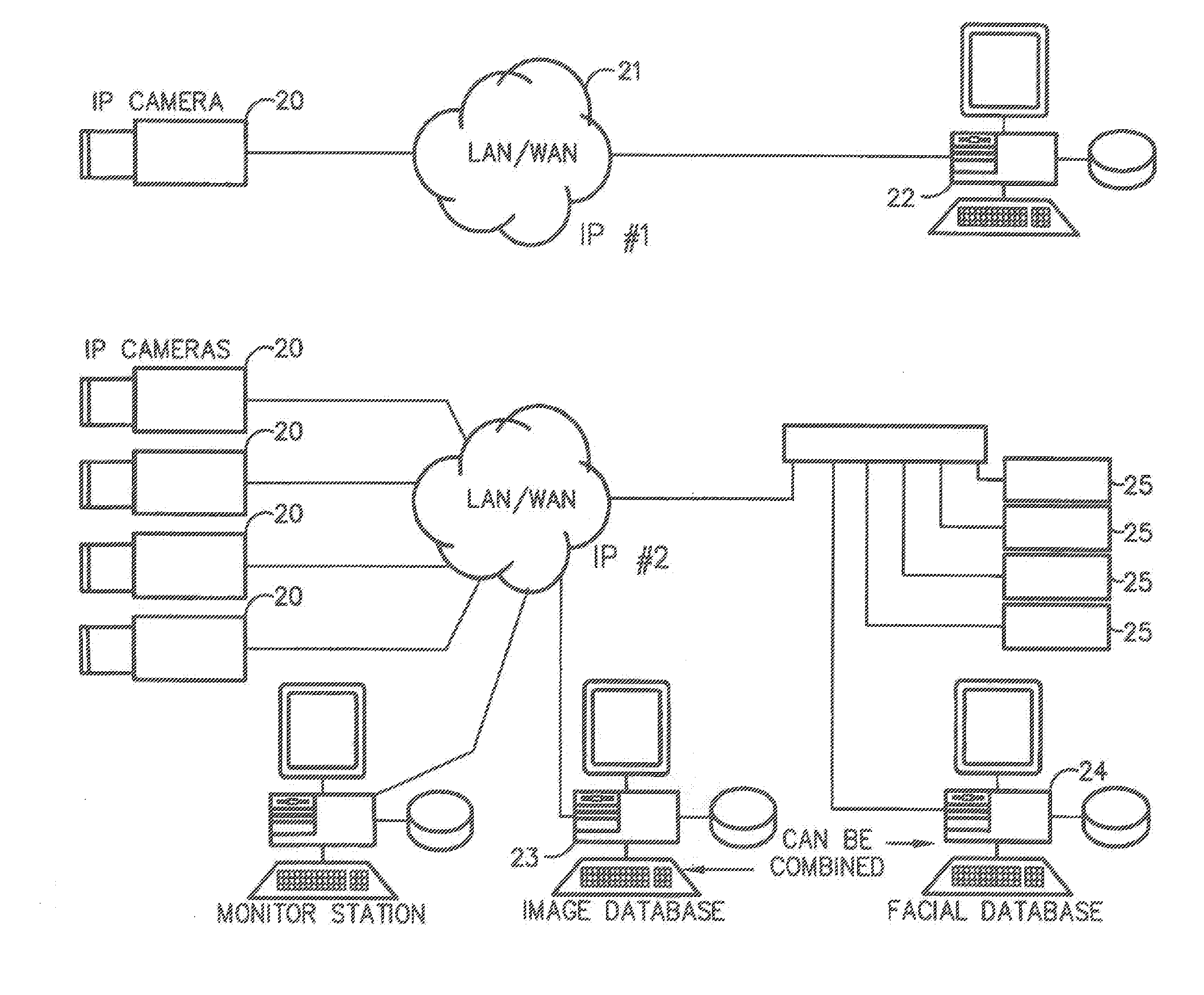

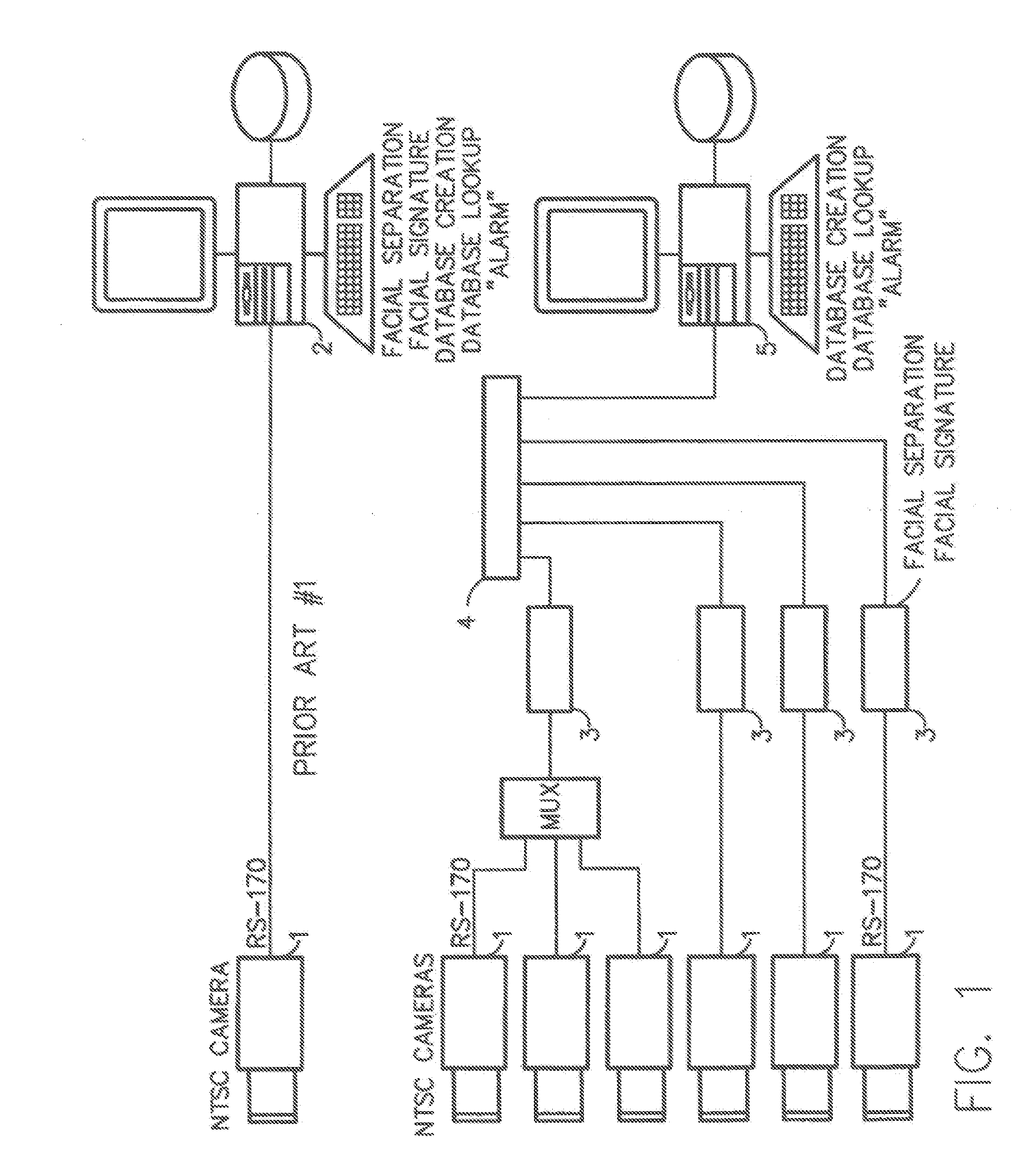

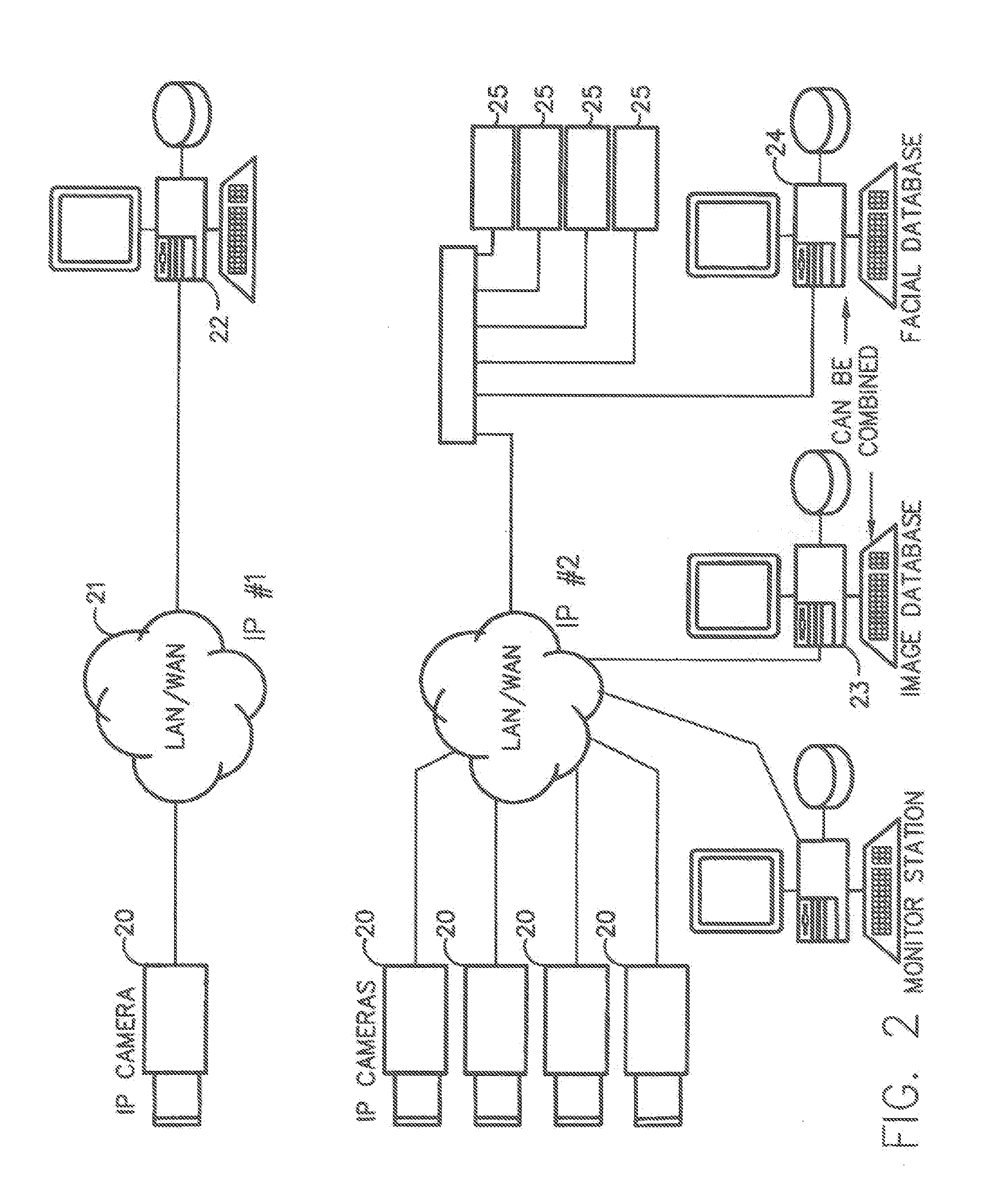

Method for incorporating facial recognition technology in a multimedia surveillance system

Status: Inactive | Publication: US7634662B2 | Tags: Function increase; Improve economy; Checking time patrols; Character and pattern recognition; Surveillance camera; Recognition algorithm

Embodiments provide a surveillance camera adapted to be connected to an Internet Protocol (IP) network. The camera includes at least one facial processor and at least one facial recognition algorithm embodied in suitable media. The facial processor executes the facial recognition algorithm on digital-format image data to detect faces, producing unique facial image data; executes at least one facial separation algorithm to produce facial separation data; and communicates with at least one facial signature database to obtain reference data, executing at least one facial signature algorithm that compares the facial separation data with the reference data to identify correlations. At least one compression algorithm produces compressed image data, and a network stack is configured to transmit the unique facial image data for each detected face, together with the compressed image data, to the network.

Owner:PR NEWSWIRE

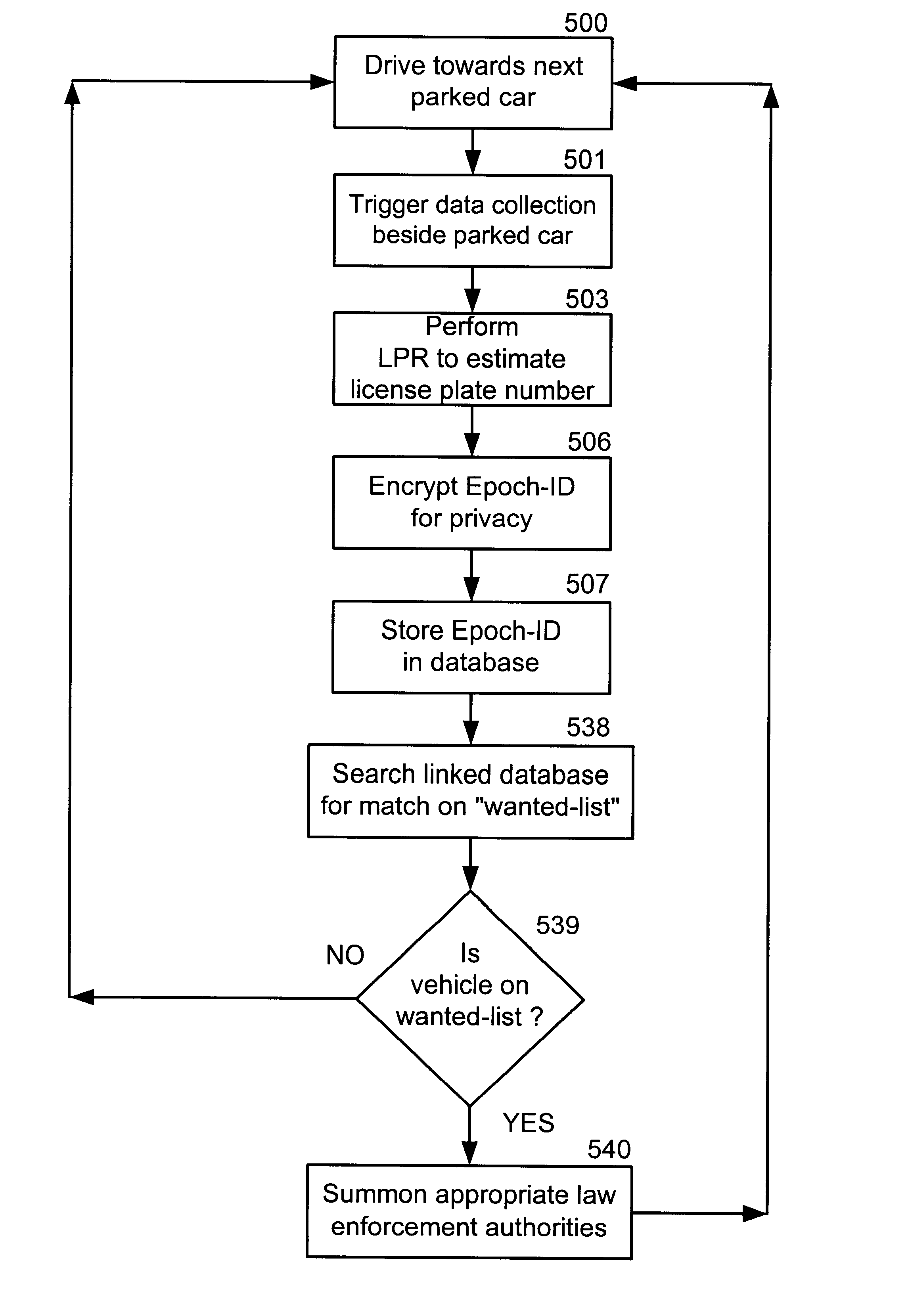

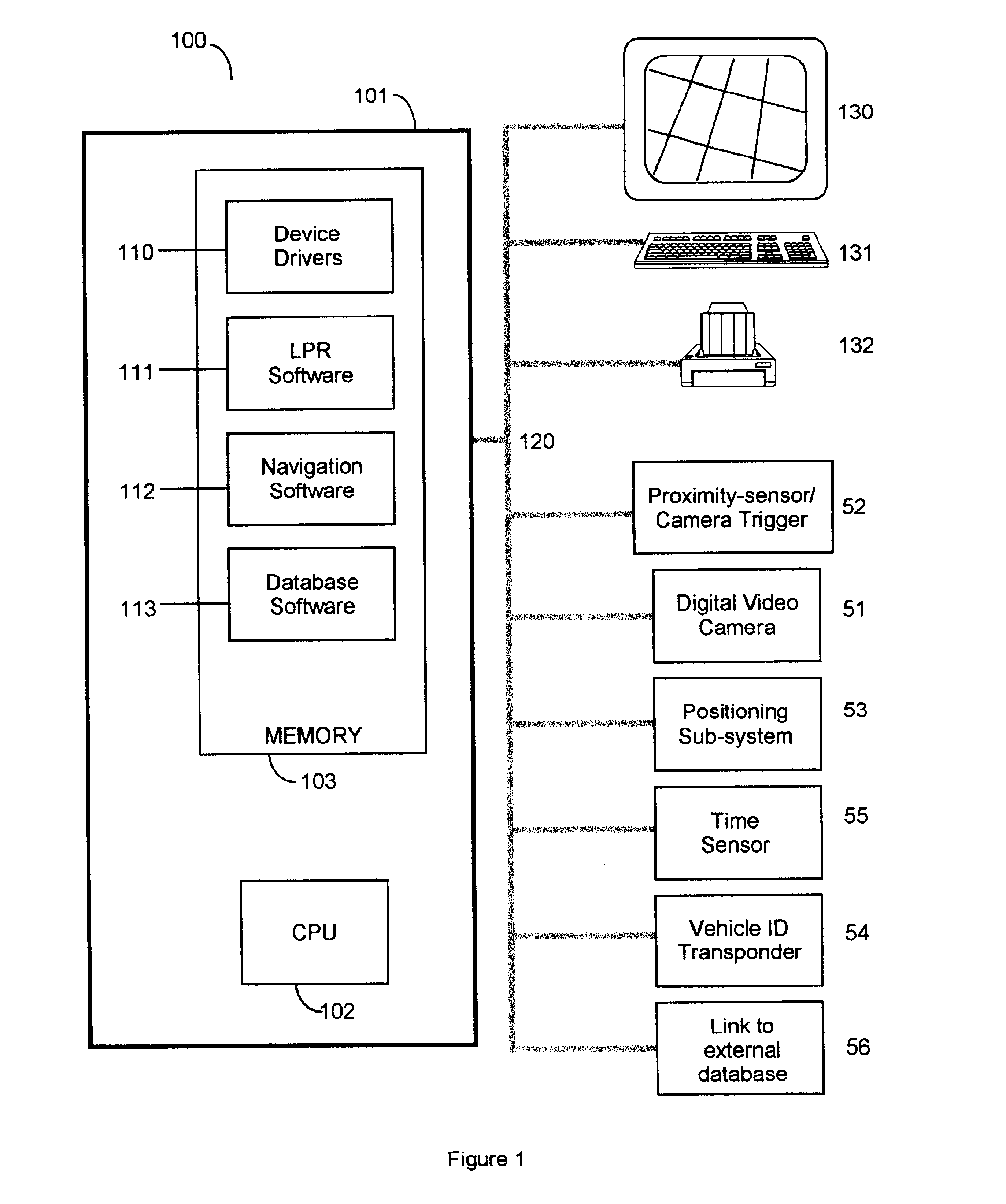

Parking regulation enforcement system

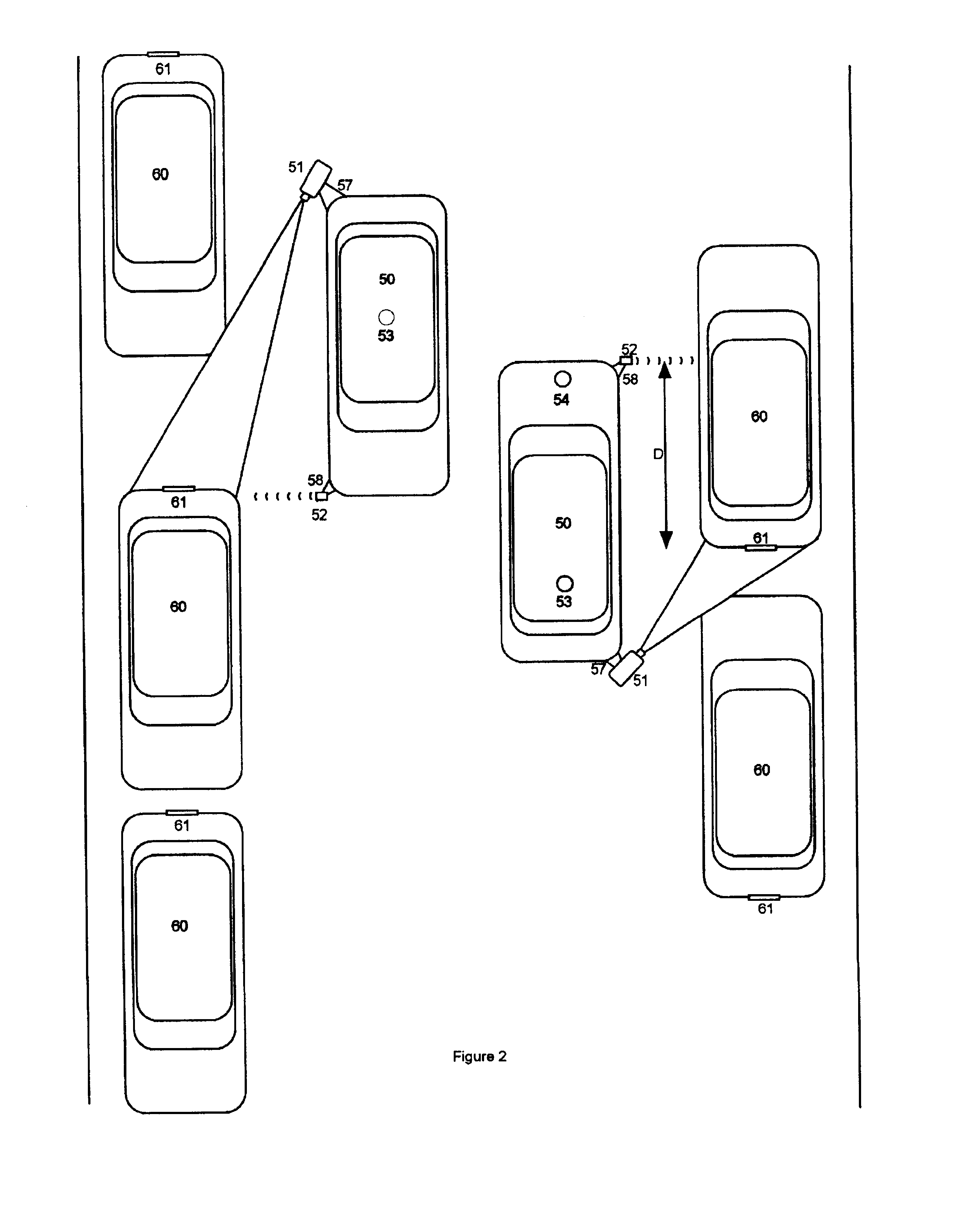

A video camera is mounted on a parking enforcement patrol vehicle and connected to a computer near the operator. The patrol vehicle is driven along a route where parked vehicles are subject to a posted time limit. The system enforces the local parking regulation by automatically determining whether each parked car has been parked longer than the posted limit. Violations are detected by applying a License Plate Recognition algorithm to the images: each license plate number is time-tagged, geo-referenced, and entered into a local database. When the patrol vehicle retraces the route after the posted time limit has expired, the database is searched to flag vehicles that were observed at the same location during the previous circuit and are therefore in violation of the parking regulations. When the system detects a violation, it prints a parking citation that the operator affixes to the offending vehicle.

Owner:AUTO VU TECH INC
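
The two-circuit check can be sketched in Python as follows. This is a minimal illustration under stated assumptions: plate reads are already produced by the License Plate Recognition step, and geo-referencing is simplified to a hypothetical discrete `zone` identifier rather than coordinates. `PlateRead` and `flag_violations` are illustrative names.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class PlateRead:
    plate: str
    zone: str       # hypothetical discrete location ID standing in for a geo-reference
    minutes: float  # time tag, minutes since the start of the shift


def flag_violations(
    previous_circuit: List[PlateRead],
    current_circuit: List[PlateRead],
    limit_minutes: float,
) -> List[str]:
    """Return plates seen at the same location on both circuits more than `limit_minutes` apart."""
    # Earliest sighting of each (plate, zone) pair on the previous circuit
    first_seen: Dict[Tuple[str, str], float] = {}
    for read in previous_circuit:
        key = (read.plate, read.zone)
        first_seen[key] = min(read.minutes, first_seen.get(key, read.minutes))

    violations: List[str] = []
    for read in current_circuit:
        start = first_seen.get((read.plate, read.zone))
        # A car that moved to another zone has no match and is not flagged
        if start is not None and read.minutes - start > limit_minutes:
            violations.append(read.plate)
    return violations
```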

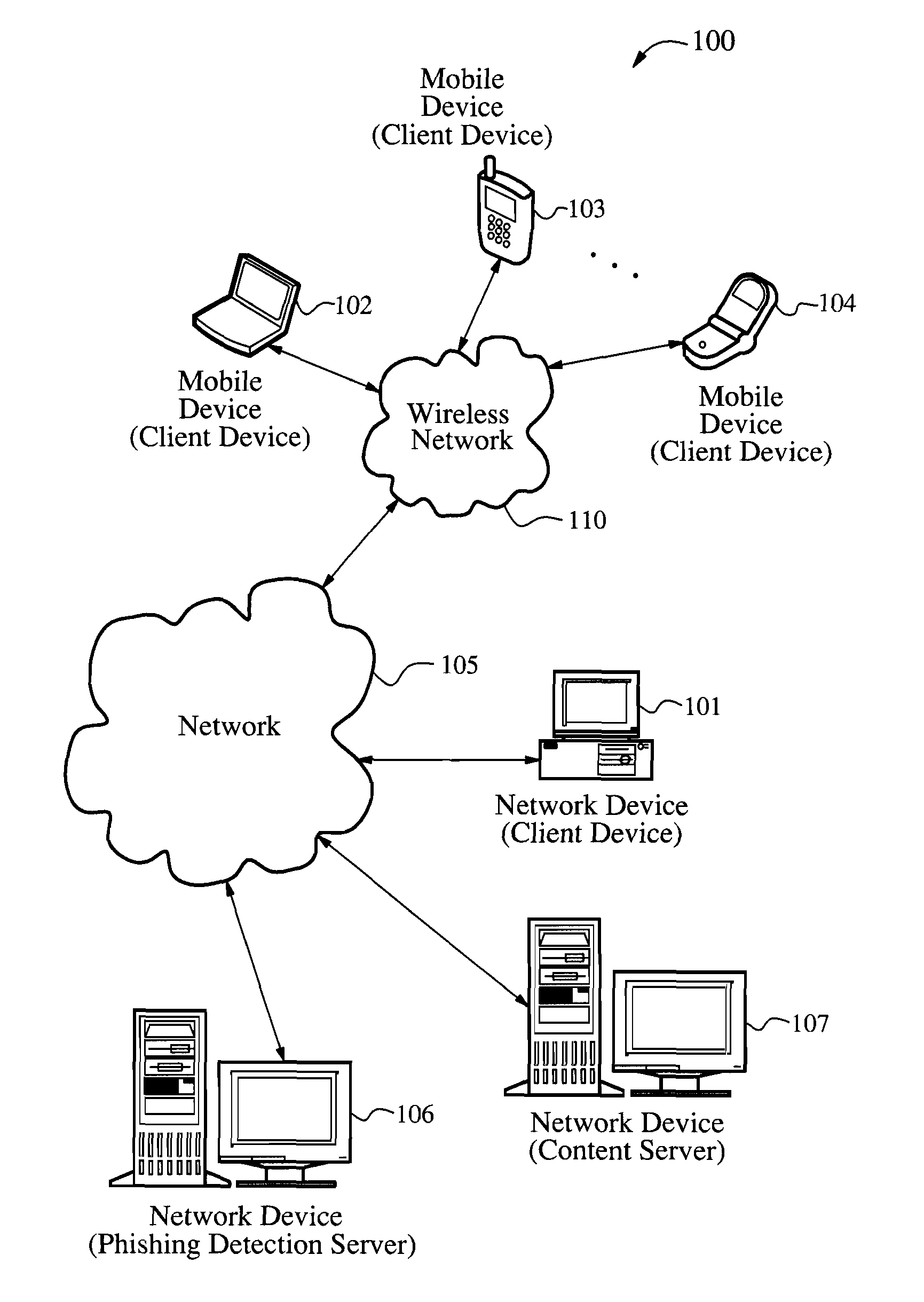

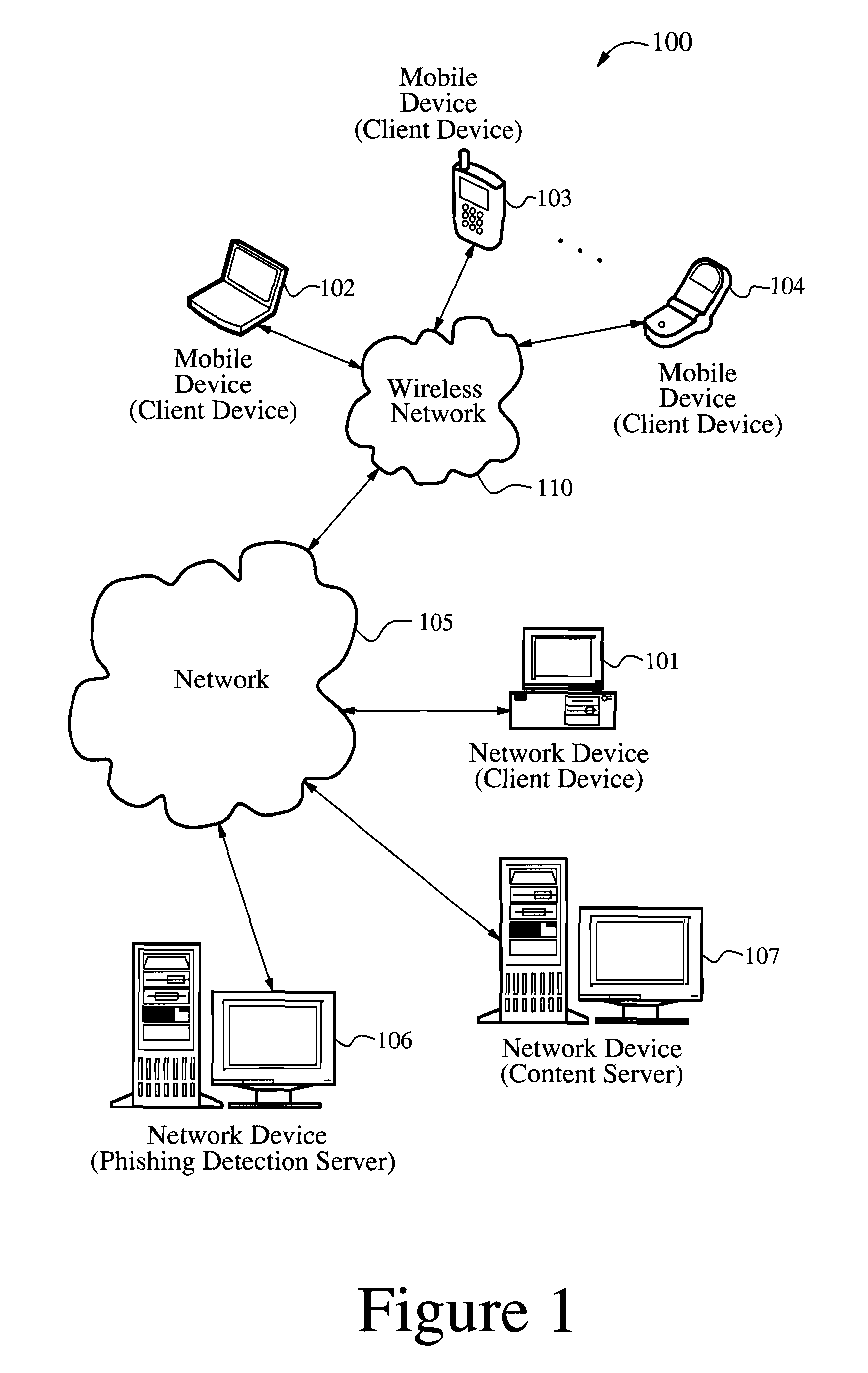

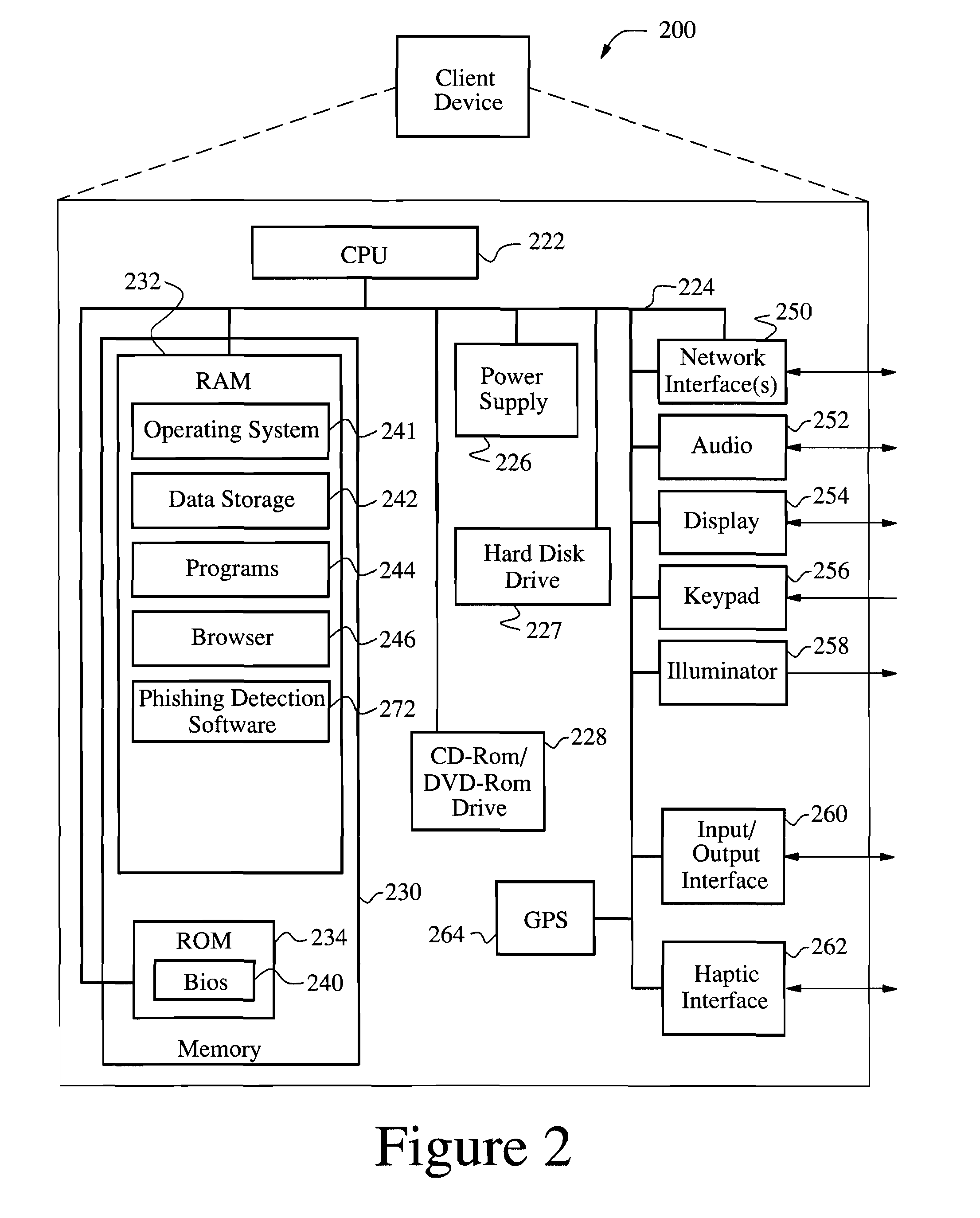

Anti-phishing agent

A phishing detection agent is provided. In one embodiment, a plug-in application or agent in the user's browser may capture a visual record of a webpage and, using cached copies of known authentic websites supplied through periodic updates, perform a series of image comparisons to determine whether the suspected website is attempting to deceive the user. The agent can run an image recognition algorithm, such as a logo recognition algorithm, optical character recognition, an image similarity algorithm, or a combination of two or more of these. If the suspected web page corresponds to one of the authentic web pages but its domain name does not match that authentic page's domain name, the suspected page is flagged as a phishing site.

Owner:OATH INC
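
A simplified Python sketch of the compare-then-check-domain logic follows. It swaps in an average hash over small grayscale thumbnails as the image-similarity algorithm (the abstract does not specify one), assumes screenshots have already been downscaled to equal-sized grids, and compares hostnames exactly; `is_phishing`, the 0.9 threshold, and the example domains are hypothetical.

```python
from typing import Dict, List
from urllib.parse import urlparse

Grid = List[List[int]]  # small grayscale thumbnail, values 0..255


def average_hash(pixels: Grid) -> List[int]:
    """One bit per pixel: 1 if brighter than the thumbnail's mean, else 0."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def hash_similarity(h1: List[int], h2: List[int]) -> float:
    """Fraction of matching bits (1.0 means identical hashes)."""
    if not h1 or len(h1) != len(h2):
        return 0.0
    return sum(a == b for a, b in zip(h1, h2)) / len(h1)


def is_phishing(url: str, screenshot: Grid, authentic: Dict[str, Grid], threshold: float = 0.9) -> bool:
    """Flag a page that looks like a known authentic page but is served from another domain."""
    host = urlparse(url).hostname or ""
    page_hash = average_hash(screenshot)
    matched_domains = [
        domain
        for domain, reference in authentic.items()
        if hash_similarity(page_hash, average_hash(reference)) >= threshold
    ]
    return bool(matched_domains) and host not in matched_domains
```

A production agent would likely combine several signals (logo recognition, OCR) rather than rely on a single coarse hash.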

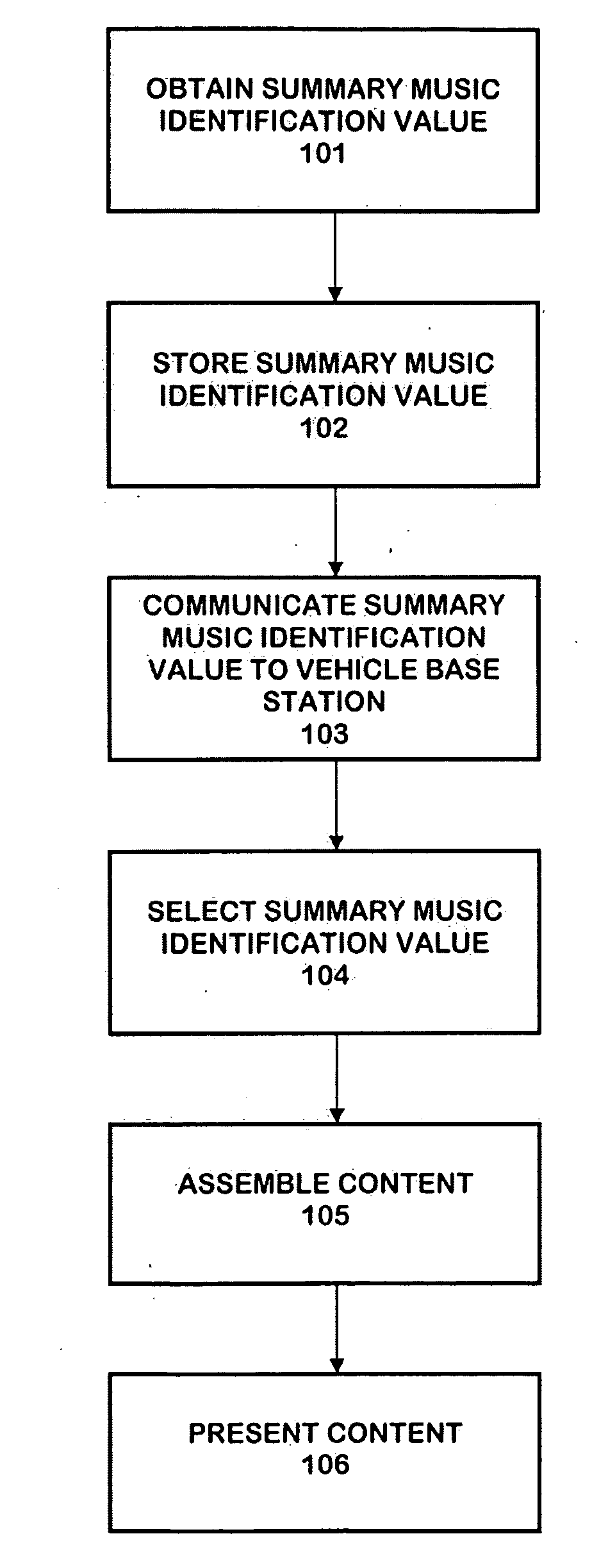

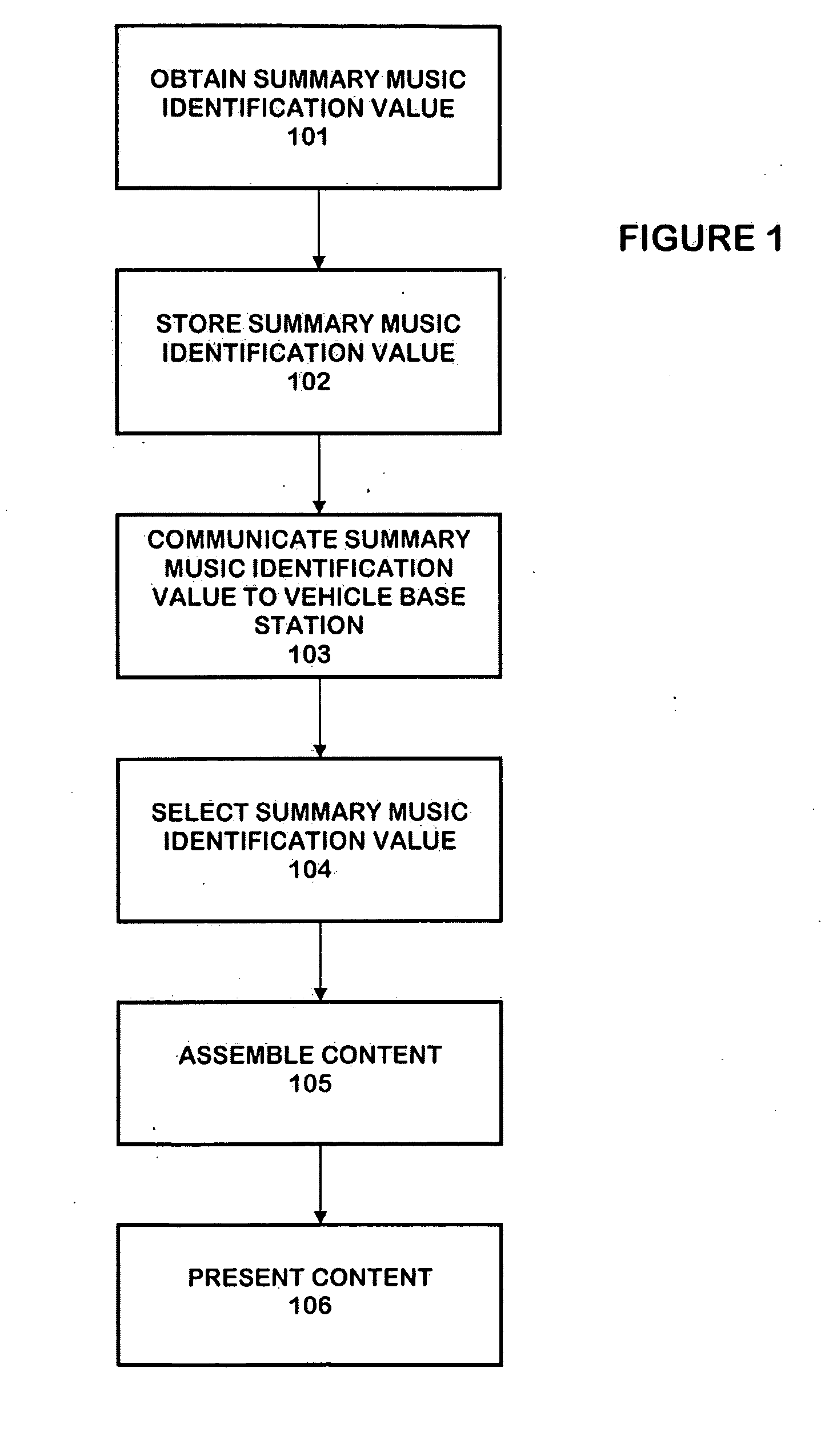

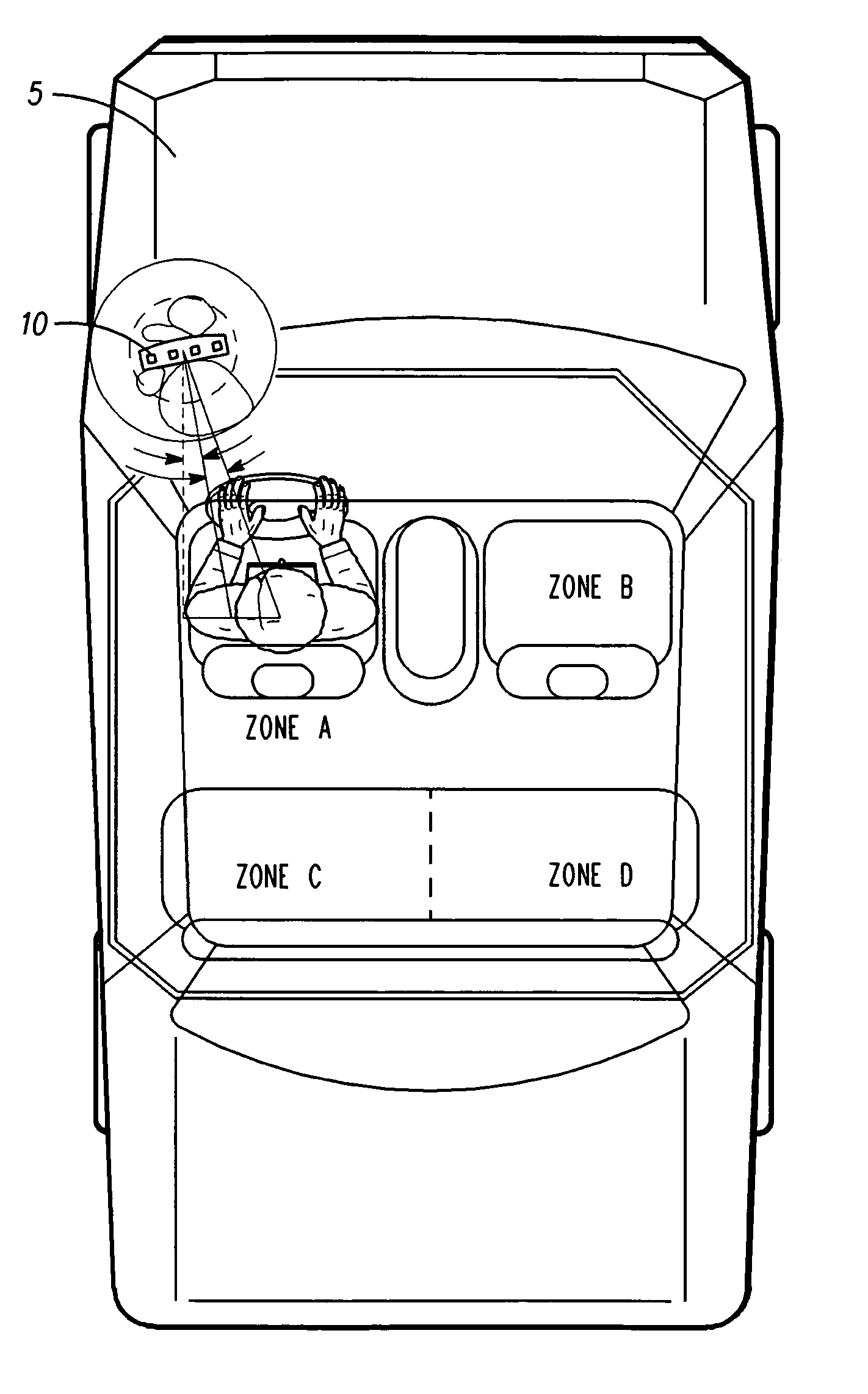

Vehicle infotainment system with virtual personalization settings

Status: Inactive | Publication: US20090164473A1 | Tags: Reduce distractions; Scalable functionality; Electrophonic musical instruments; Electronic editing digitised analogue information signals; Personalization; Distraction

The system's personalized content component combines an algorithm for creating and identifying a summary music identification value, which mathematically summarizes a song, an audio file, or other relational music criteria and data (e.g., title, artist, genre, style, beats per minute) or any combination thereof. The derived value represents the musical taste or style attributes of a song or style. The summary music identification value is intended to be analyzed and generated outside the vehicle, where the user can pay attention and driver distraction and safety are not an issue. A user can generate a single summary music identification value or multiple values for different criteria. In other cases, summary music identification values can be defined for a genre, with a much more personal touch than fixed-playlist radio stations offer. In another embodiment, a user can obtain someone else's summary music identification value (e.g., a celebrity's, an artist's, or a friend's) and use it with the system.

Owner:HARMAN INT IND INC +1

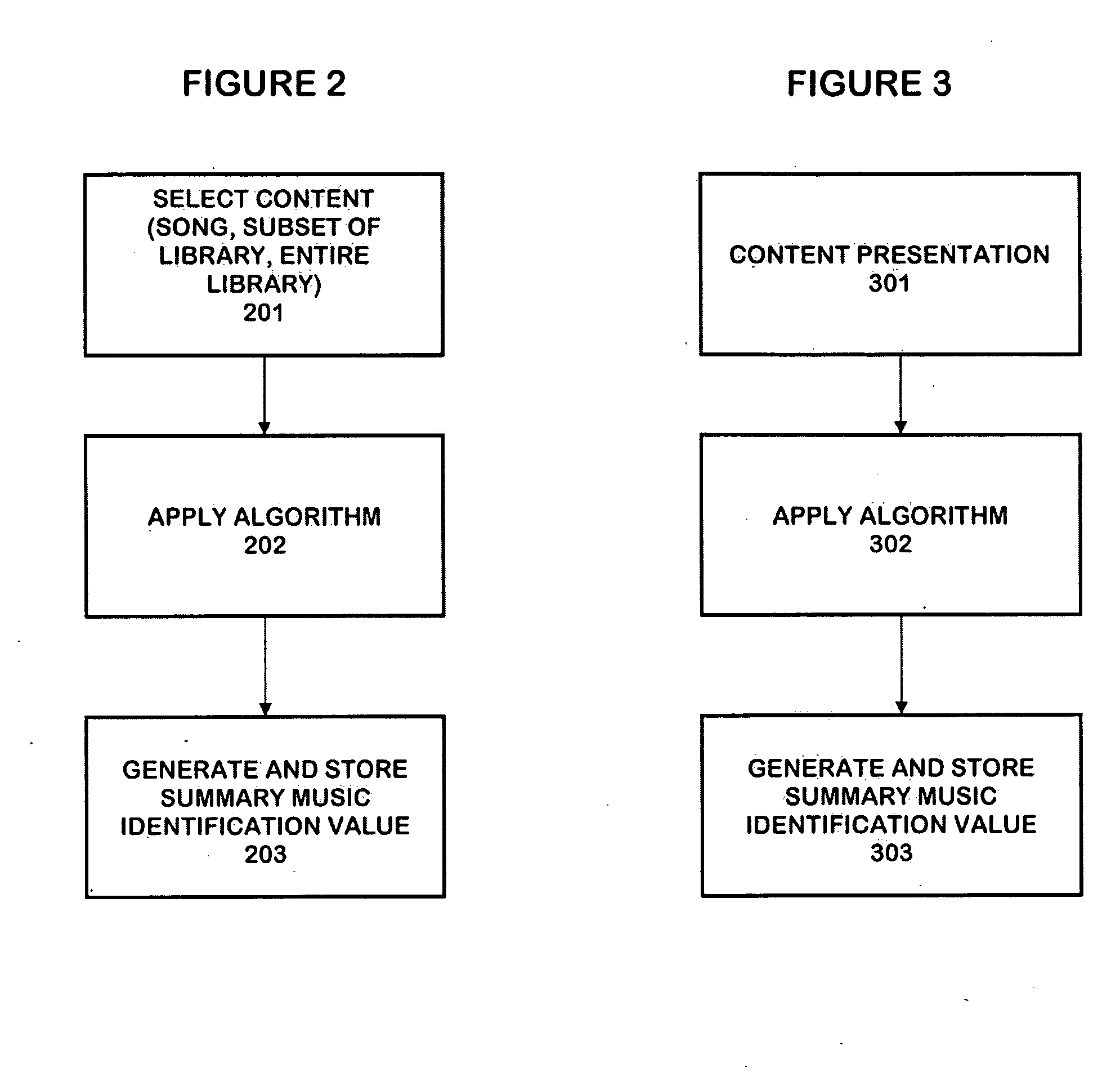

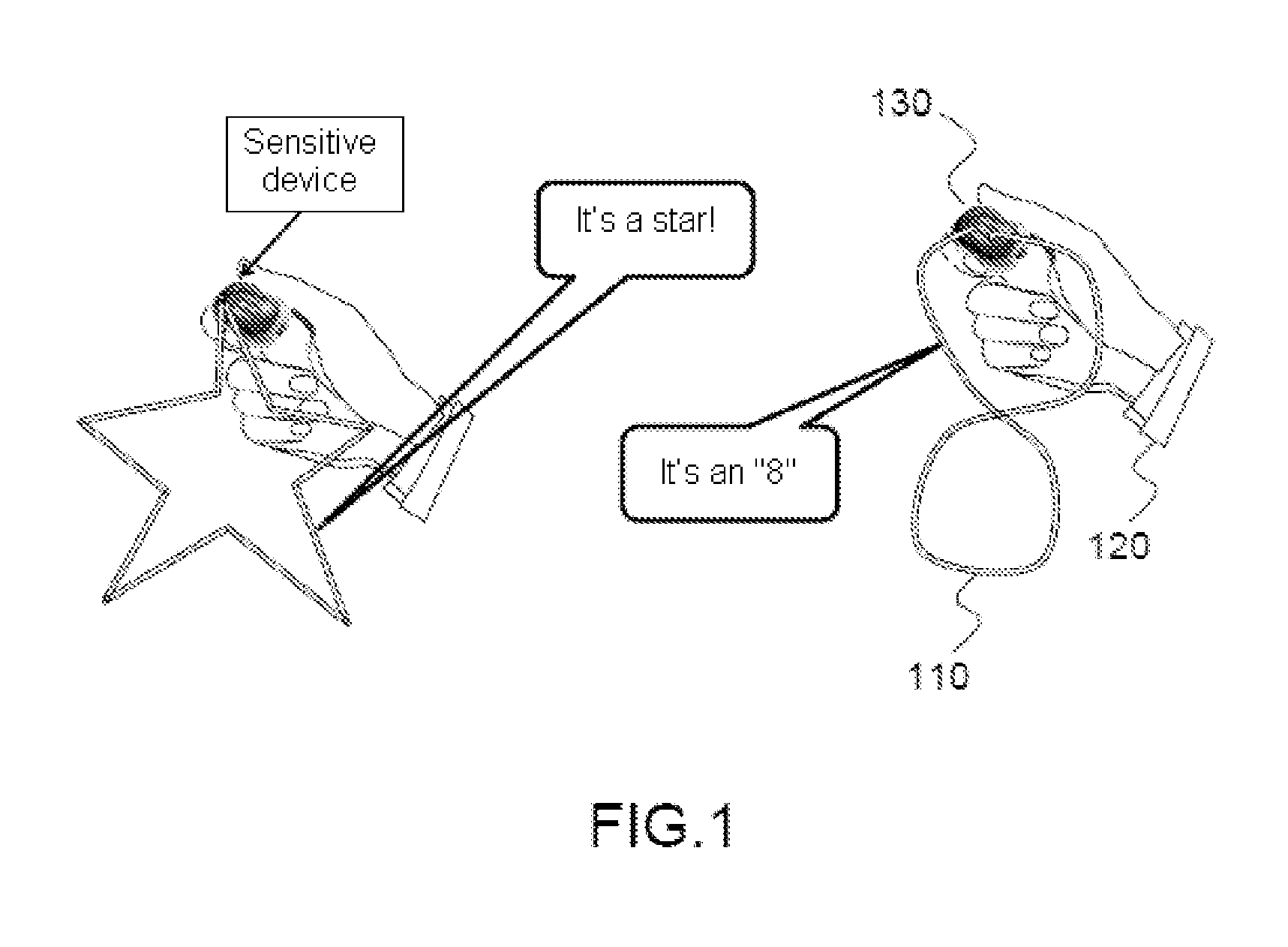

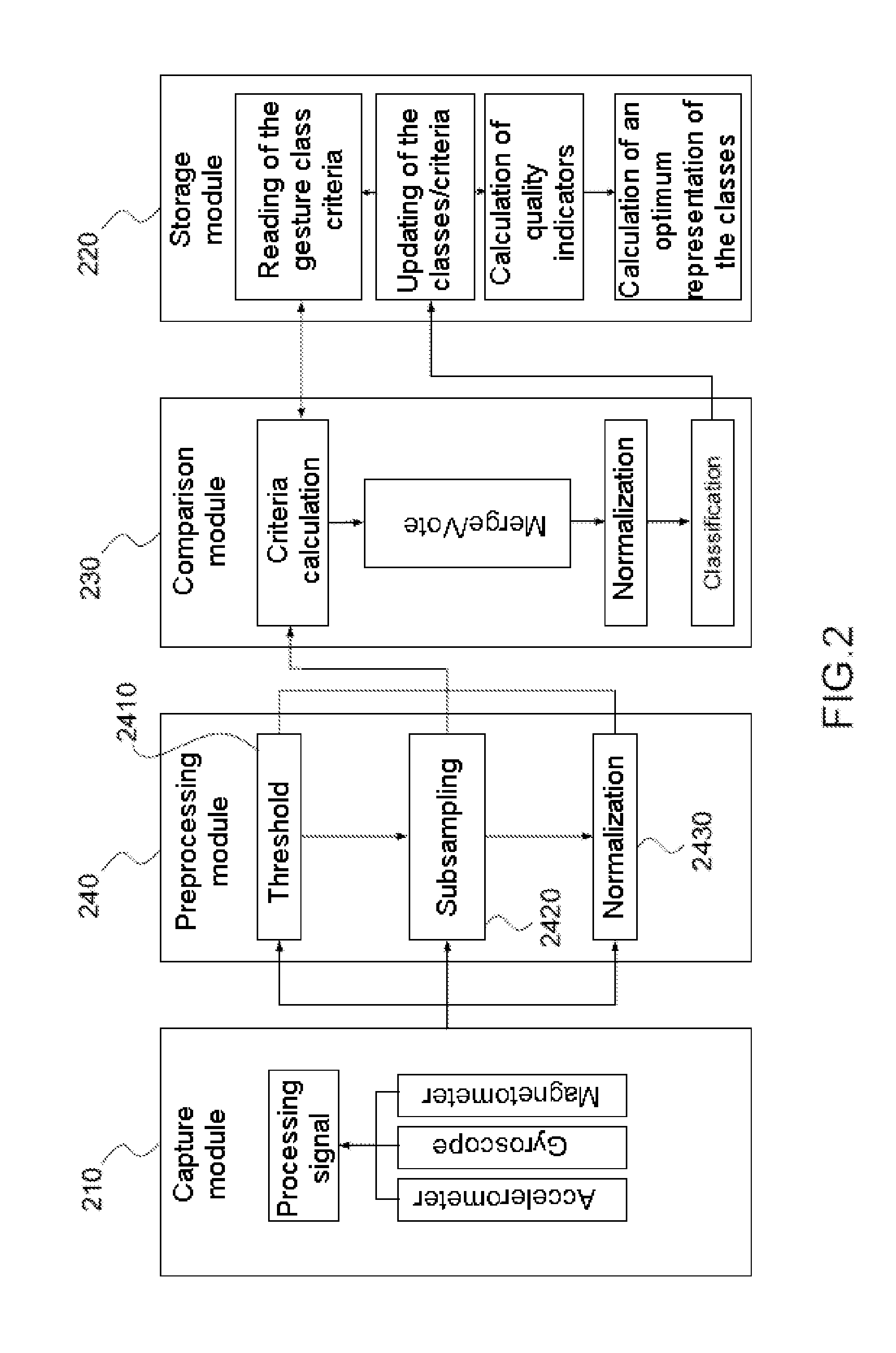

System and method for recognizing gestures

Status: Inactive | Publication: US20120323521A1 | Tags: Reduce processing time; High degree; Digital computer details; Acceleration measurement; Electronic systems; Recognition algorithm

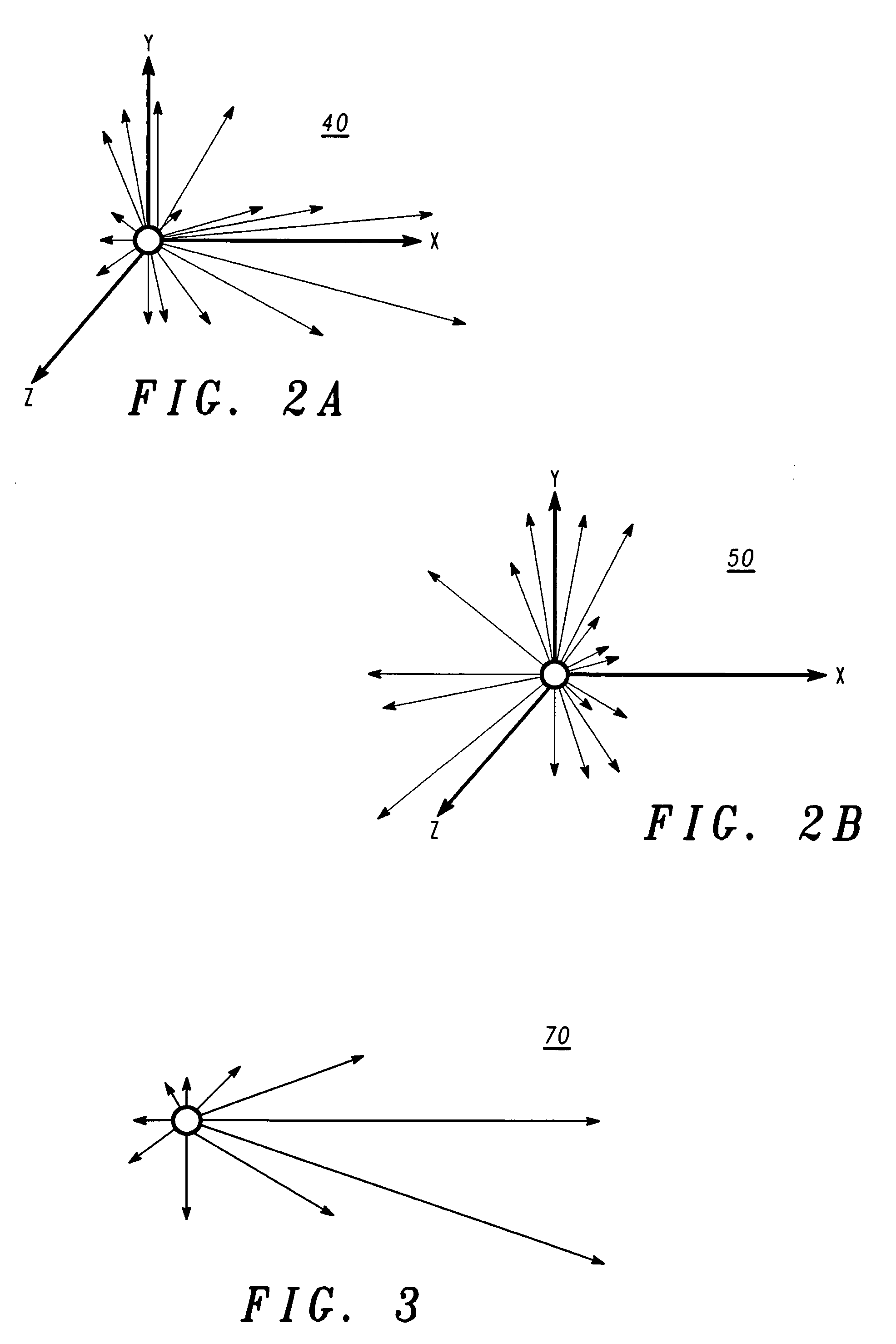

Systems and methods are discussed for recognizing the gestures of an entity, notably a human being, and, optionally, for controlling an electrical or electronic system or apparatus. The system uses sensors that measure signals, preferably representative of inertial data about the entity's movements, and implements a process for enriching a dictionary of the gestures to be recognized, together with a recognition algorithm that classifies gestures among the classes in that dictionary. The algorithm is preferably of the dynamic time warping (DTW) type. The system carries out preprocessing operations such as eliminating signals captured while the entity is inactive, subsampling the signals, and normalizing the measurements by reduction, and, to classify the gestures, preferably uses specific distance calculation modes and modes for merging or voting among the measurements from the various sensors.

Owner:MOVEA +1
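
Dynamic time warping, the algorithm type named above, can be sketched in Python for single-axis signals. This is an illustrative sketch, not Movea's implementation: it assumes the preprocessing (inactivity removal, subsampling, normalization) has already been applied, uses one sensor axis, and classifies by the nearest template in a dictionary; `classify_gesture` and the example gestures are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic dynamic time warping distance with absolute-difference cost."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: insertion, deletion, or match
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify_gesture(signal: Sequence[float], dictionary: Dict[str, List[Sequence[float]]]) -> str:
    """Return the dictionary class whose closest template has the smallest DTW distance."""
    return min(
        dictionary,
        key=lambda label: min(dtw_distance(signal, t) for t in dictionary[label]),
    )
```

In this sketch, enriching the dictionary simply means appending a newly recorded template to a class's list; merging or voting across several sensors would repeat the distance computation per axis and combine the results.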

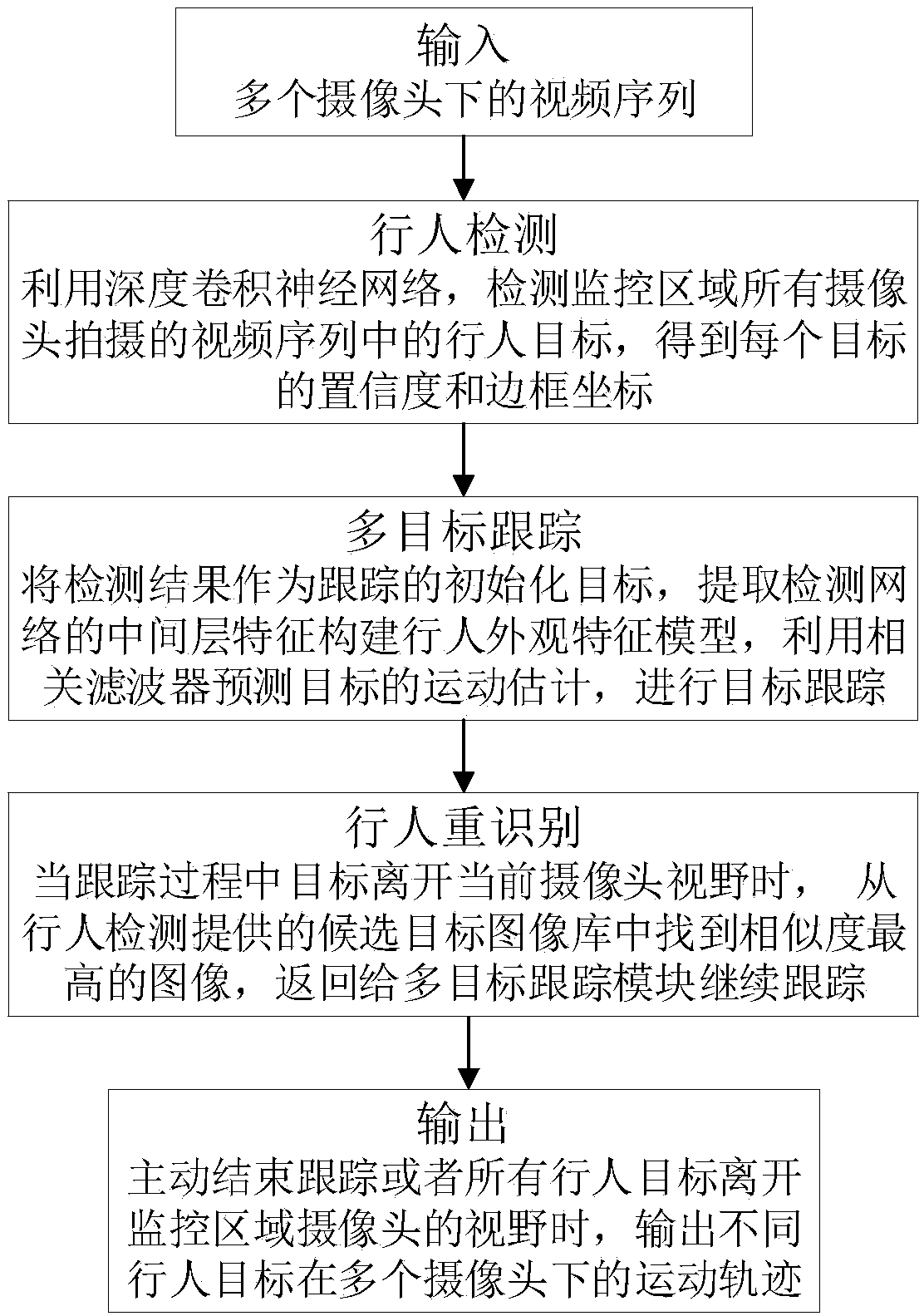

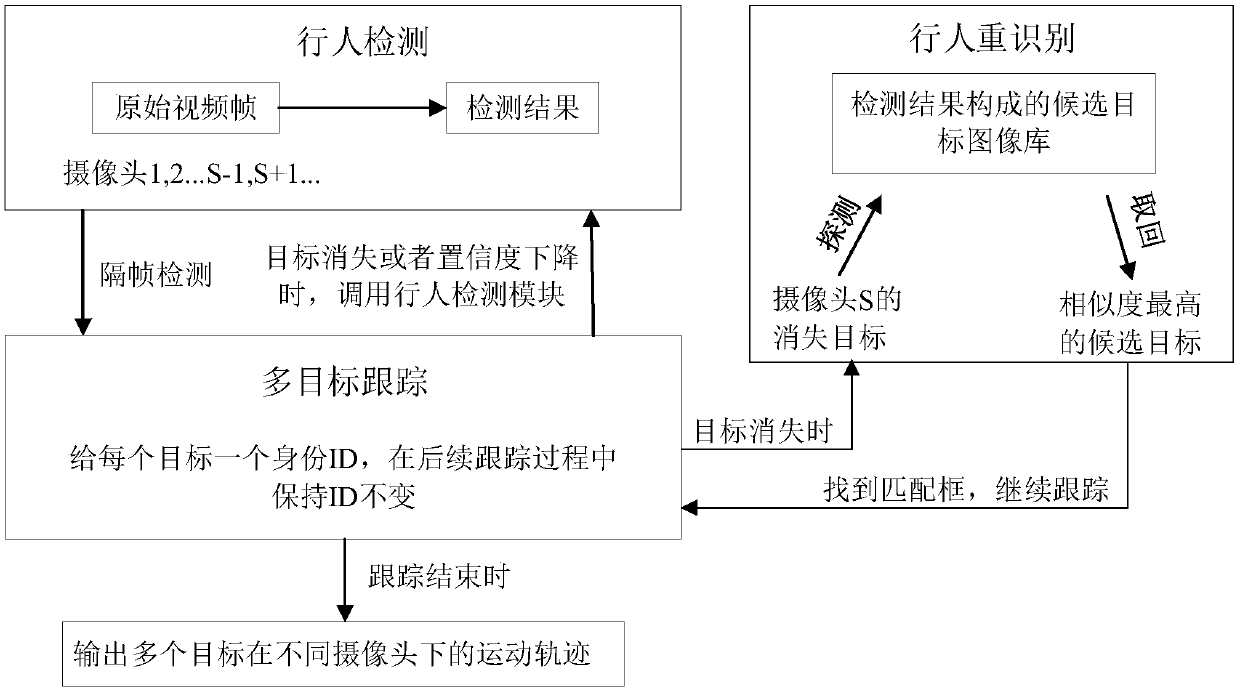

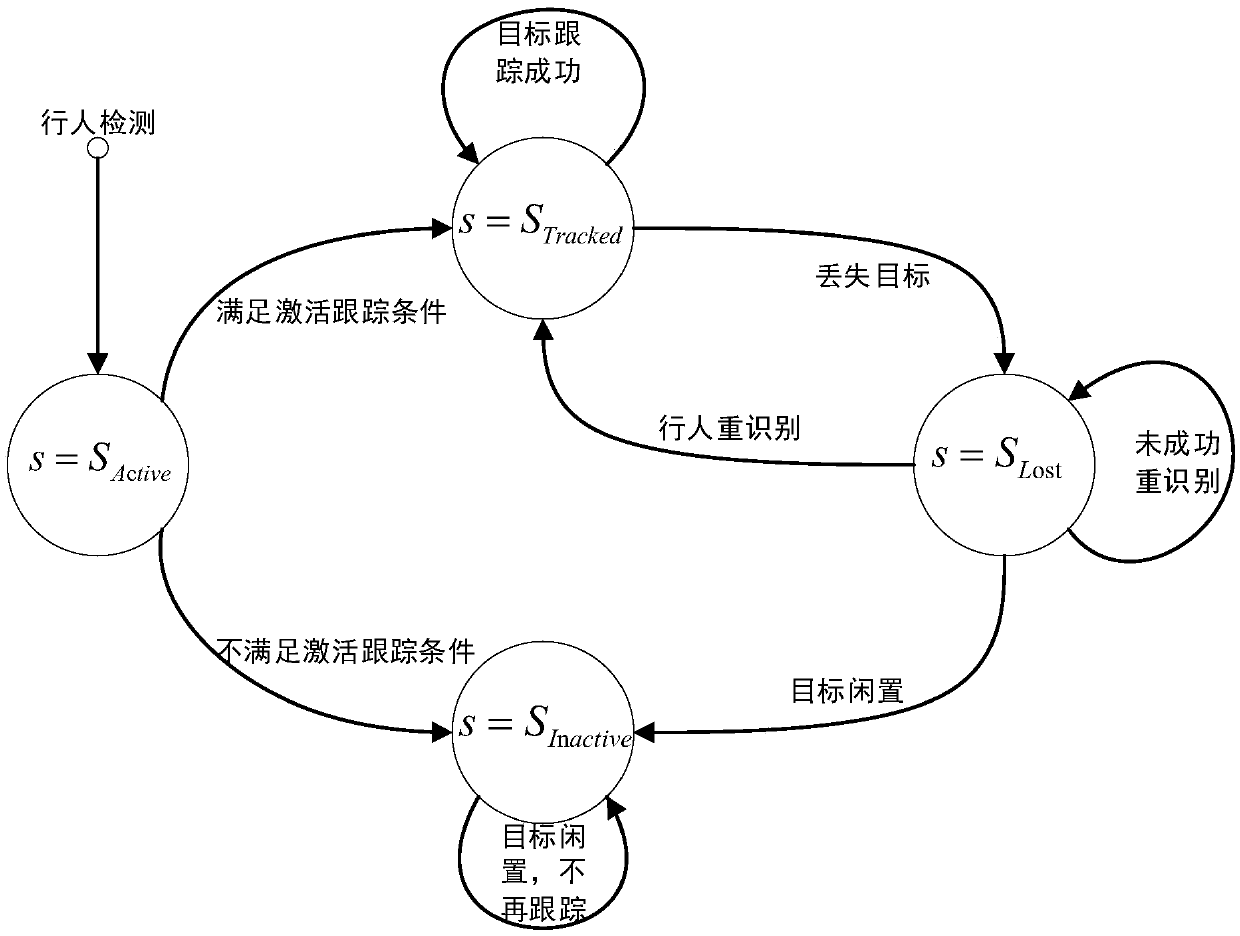

Cross-camera pedestrian detection and tracking method based on deep learning

Status: Active | Publication: CN108875588A | Tags: Overcome occlusion; Overcome lighting changes; Character and pattern recognition; Neural architectures; Multi target tracking; Recognition algorithm

The invention discloses a cross-camera pedestrian detection and tracking method based on deep learning. The method comprises the following steps: training a pedestrian detection network and using it to detect pedestrians in an input surveillance video sequence; initializing tracking targets with the target boxes obtained from pedestrian detection, extracting shallow and deep features of the regions corresponding to candidate boxes in the pedestrian detection network, and tracking the targets; when a target disappears, performing pedestrian re-identification, in which, after the target-disappearance information is obtained, the images with the highest matching degree to the disappeared target are found among the candidate images produced by the pedestrian detection network and tracking continues; and when tracking ends, outputting the motion trajectories of the pedestrian targets across multiple cameras. The extracted features can overcome the influence of illumination and viewing-angle variations. Moreover, both the tracking and the pedestrian re-identification stages use features extracted from the pedestrian detection network, so pedestrian detection, multi-target tracking, and pedestrian re-identification are organically fused, enabling accurate cross-camera pedestrian detection and tracking in large-scale scenes.

Owner:WUHAN UNIV
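
The re-identification step (finding the candidate with the highest matching degree) might look like the Python sketch below. It assumes each detection has already been reduced to a fixed-length feature vector by the detection network and uses cosine similarity as the matching degree; the function names and the 0.7 acceptance threshold are hypothetical.

```python
import math
from typing import List, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def reidentify(
    lost: Sequence[float],
    candidates: List[Sequence[float]],
    min_similarity: float = 0.7,
) -> Optional[int]:
    """Return the index of the candidate most similar to the lost target, or None."""
    if not candidates:
        return None
    sims = [cosine_similarity(lost, c) for c in candidates]
    best = max(range(len(sims)), key=sims.__getitem__)
    # Reject weak matches so tracking is not resumed on the wrong pedestrian
    return best if sims[best] >= min_similarity else None
```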

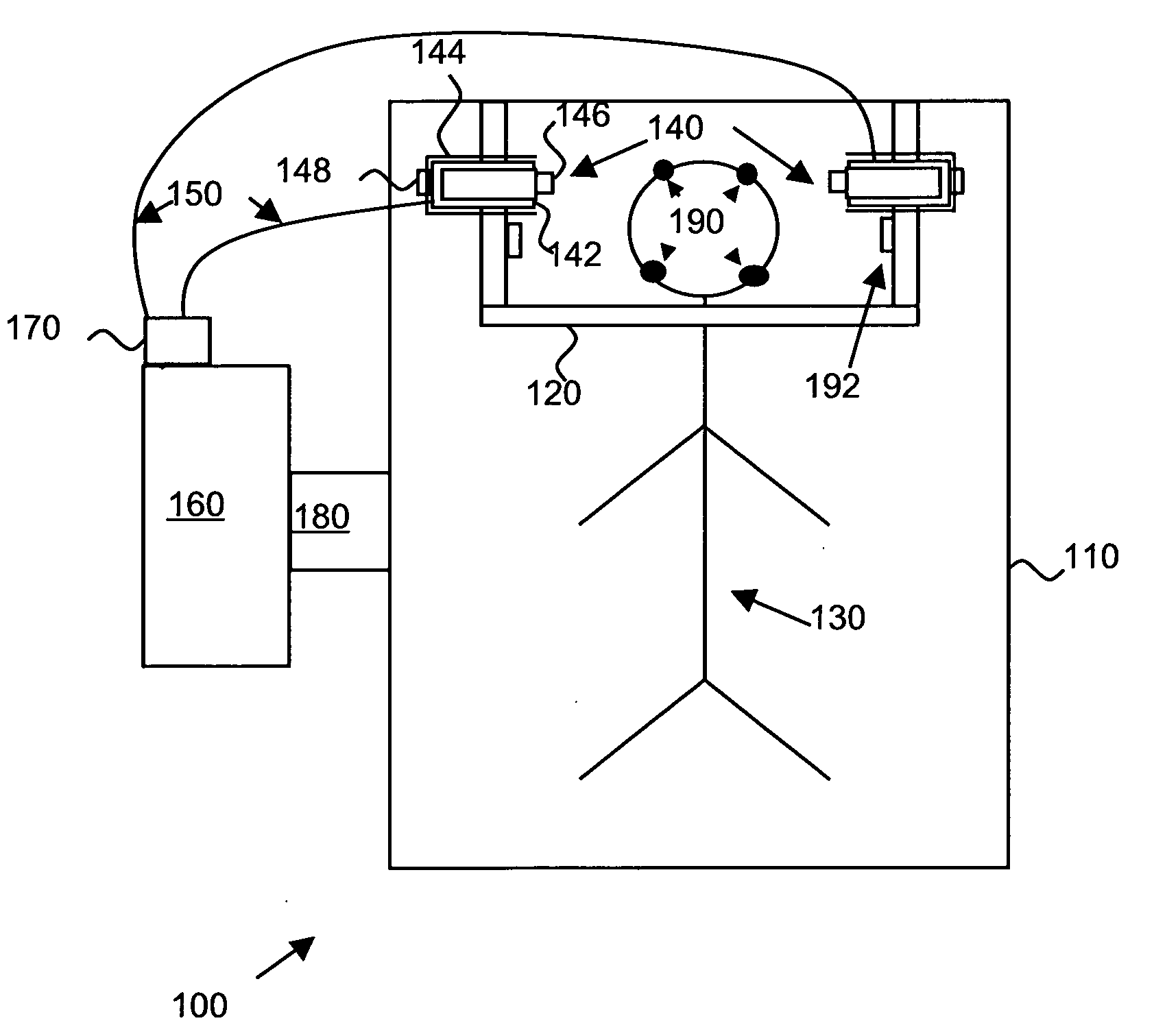

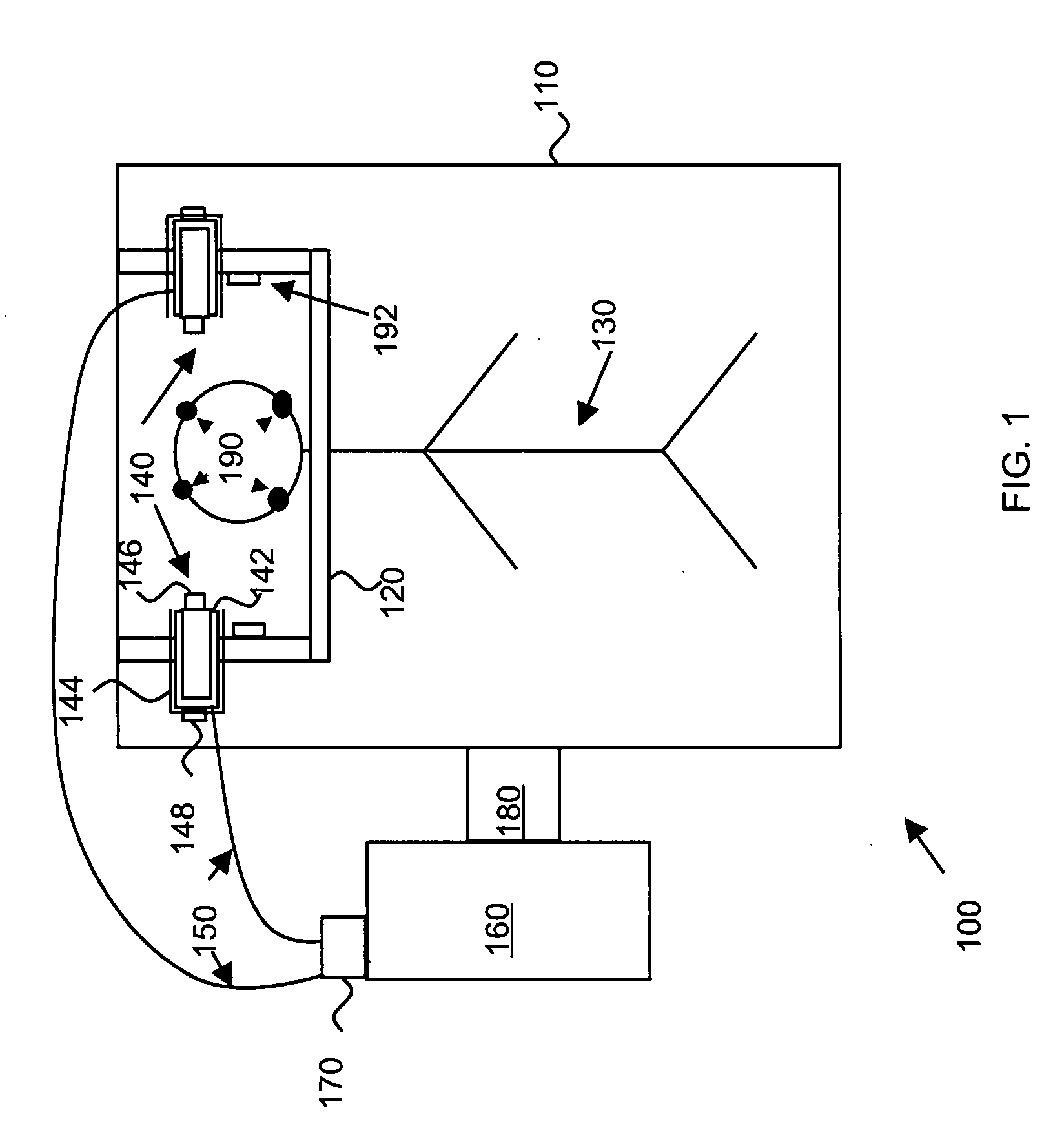

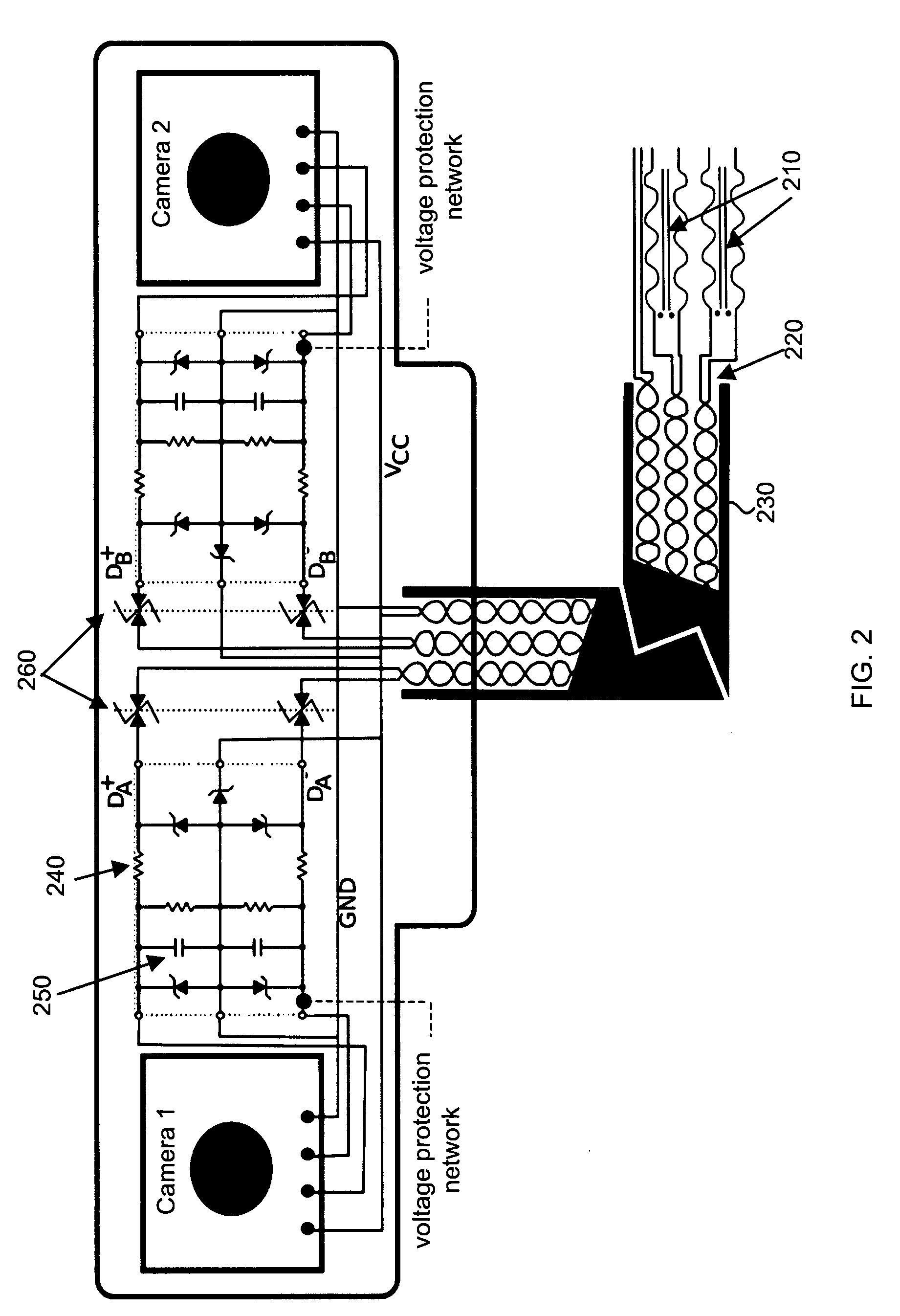

Apparatus and method for real-time motion-compensated magnetic resonance imaging

Status: Inactive | Publication: US20090209846A1 | Tags: Magnetic measurements; Character and pattern recognition; Resonance; Real Time Kinematic

The present invention provides an apparatus and method for real-time motion compensated magnetic resonance imaging (MRI) of a human or animal. The apparatus includes one or more magnetic-resonance compatible cameras mounted on a coil of the MRI device, a calculation and storage device, and an interface operably connected to the MRI device and the calculation and storage device. The apparatus may also include a set of magnetic resonance compatible markers, where the markers are positioned on the human or animal. Alternatively, the apparatus may use a facial recognition algorithm to identify features of the human or animal. For the present invention, the frame of reference is defined by the animal or human being imaged, instead of the typical magnetic resonance coordinate system. Based on continuous positional information, the apparatus controls the magnetic resonance scanner so that it follows the human or animal's motion.

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

Method for Incorporating Facial Recognition Technology in a Multimedia Surveillance System

Status: Inactive | Publication: US20100111377A1 | Tags: Function increase; Improve economy; Checking time patrols; Checking apparatus; Monitoring system; Recognition algorithm

Embodiments provide a surveillance system having at least one camera adapted to produce an IP signal. The camera has an image collection device configured to collect image data and at least one facial processor configured to execute at least one facial recognition algorithm on digital-format image data. Executing the facial recognition algorithm detects faces when they are present in the image data and provides at least one set of unique facial image data for each detected face.

Owner:MONROE DAVID A

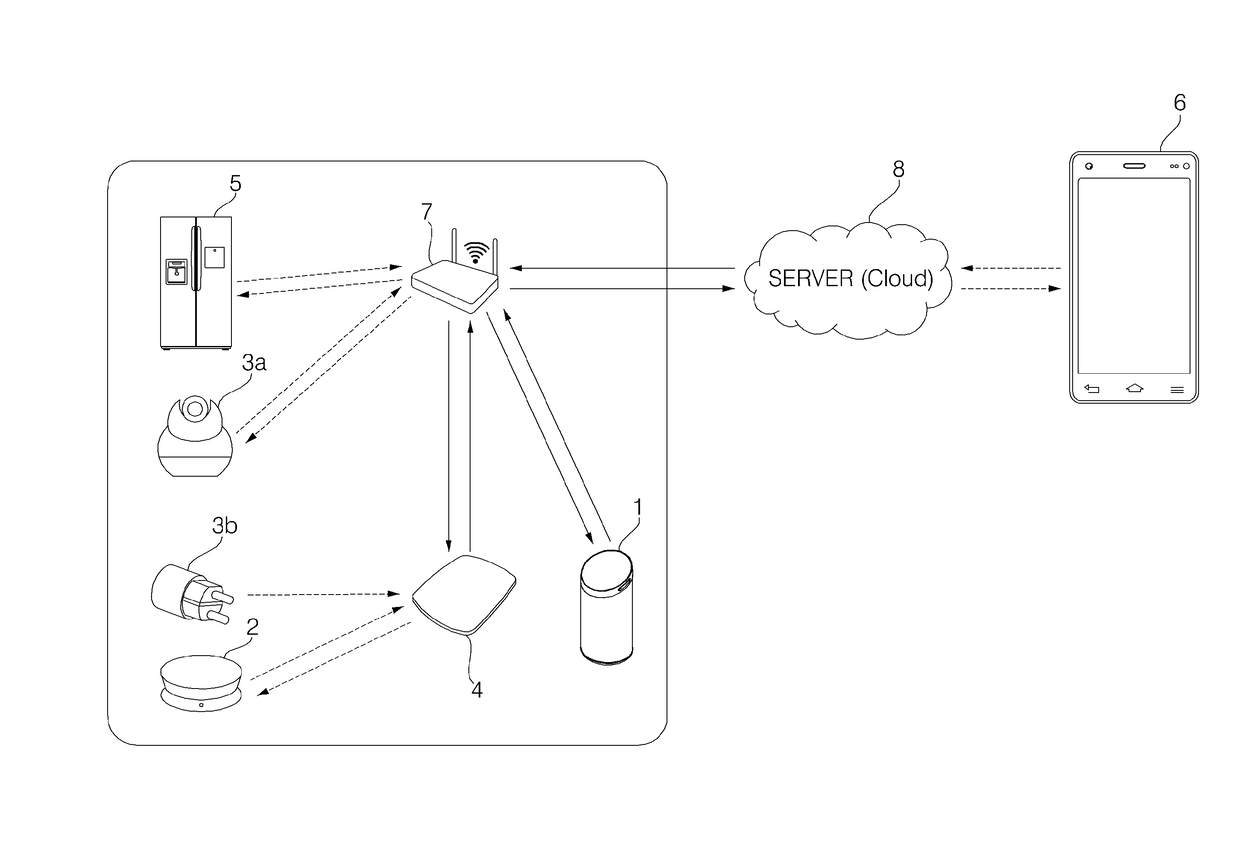

Voice recognition apparatus and voice recognition system

Status: Active | Publication: US20180308470A1 | Tags: Improve features; Software maintenance/management; Sound input/output; Recognition algorithm; Identification device

Disclosed is a voice recognition apparatus including: a microphone configured to receive a voice command; a memory configured to store a first voice recognition algorithm; a communication module configured to transmit the voice command to a server system and receive update data related to the first voice recognition algorithm from the server system; and a controller configured to update the first voice recognition algorithm stored in the memory based on that update data. Accordingly, the voice recognition apparatus can provide a voice recognition algorithm suited to the user's characteristics.

Owner:LG ELECTRONICS INC

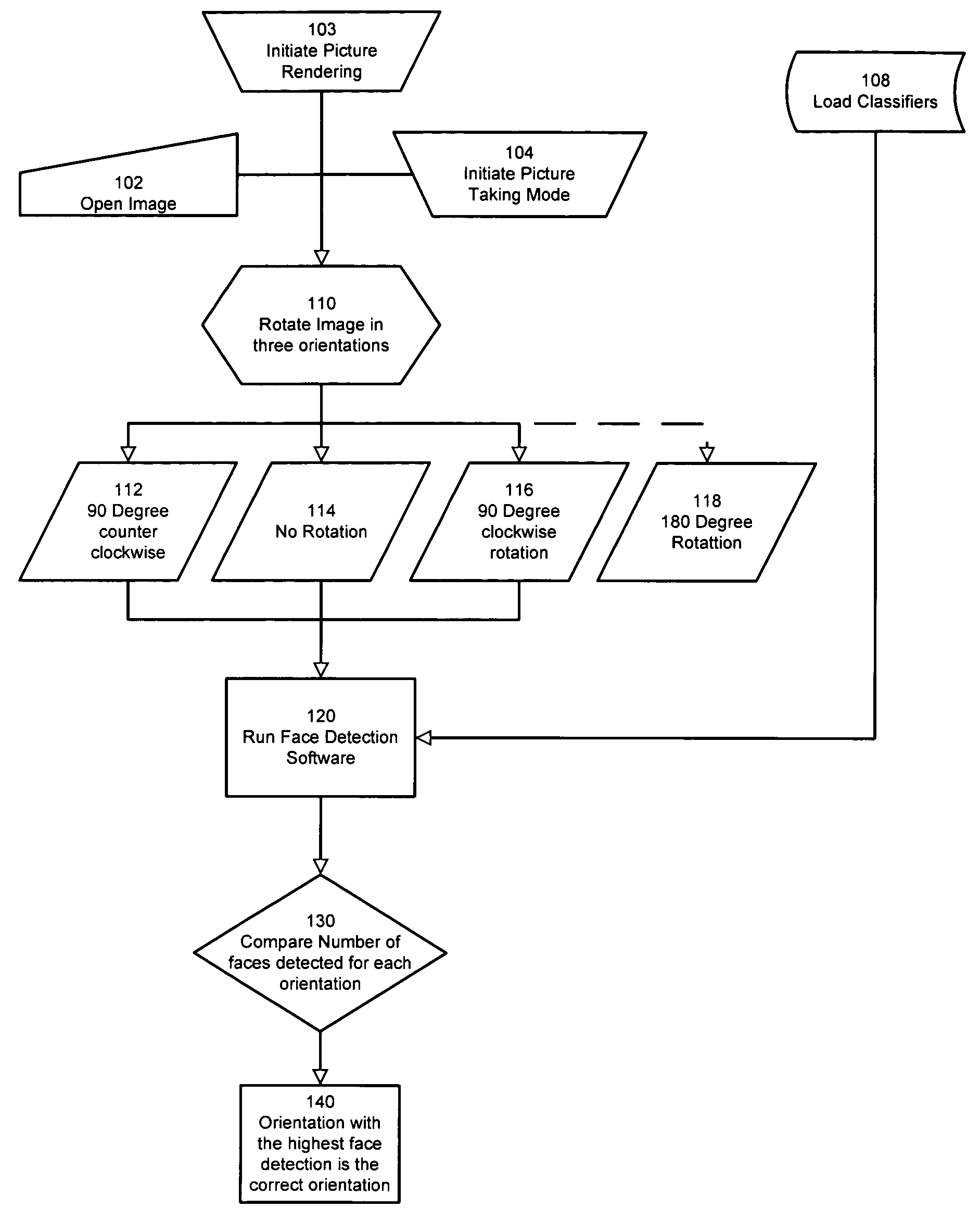

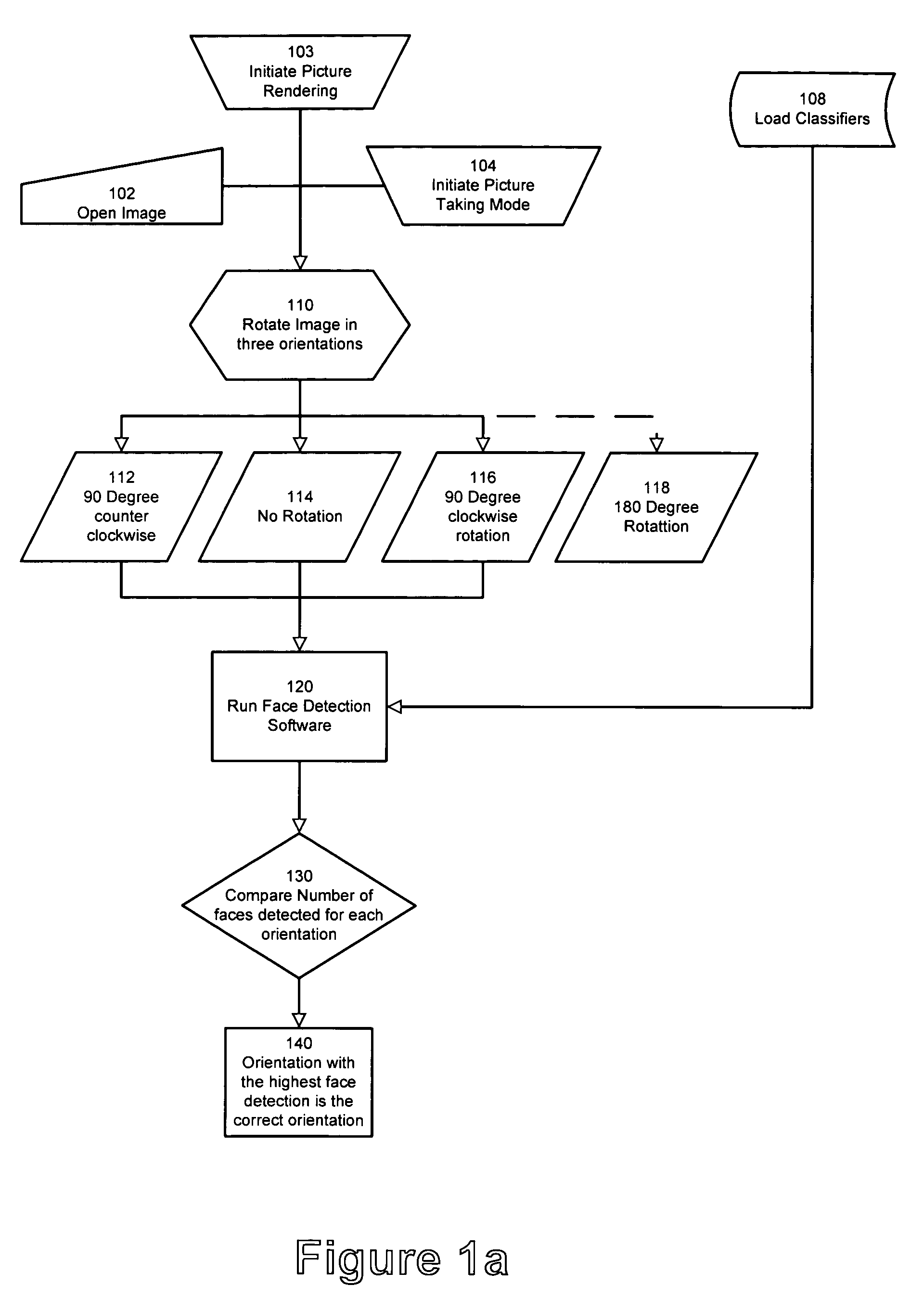

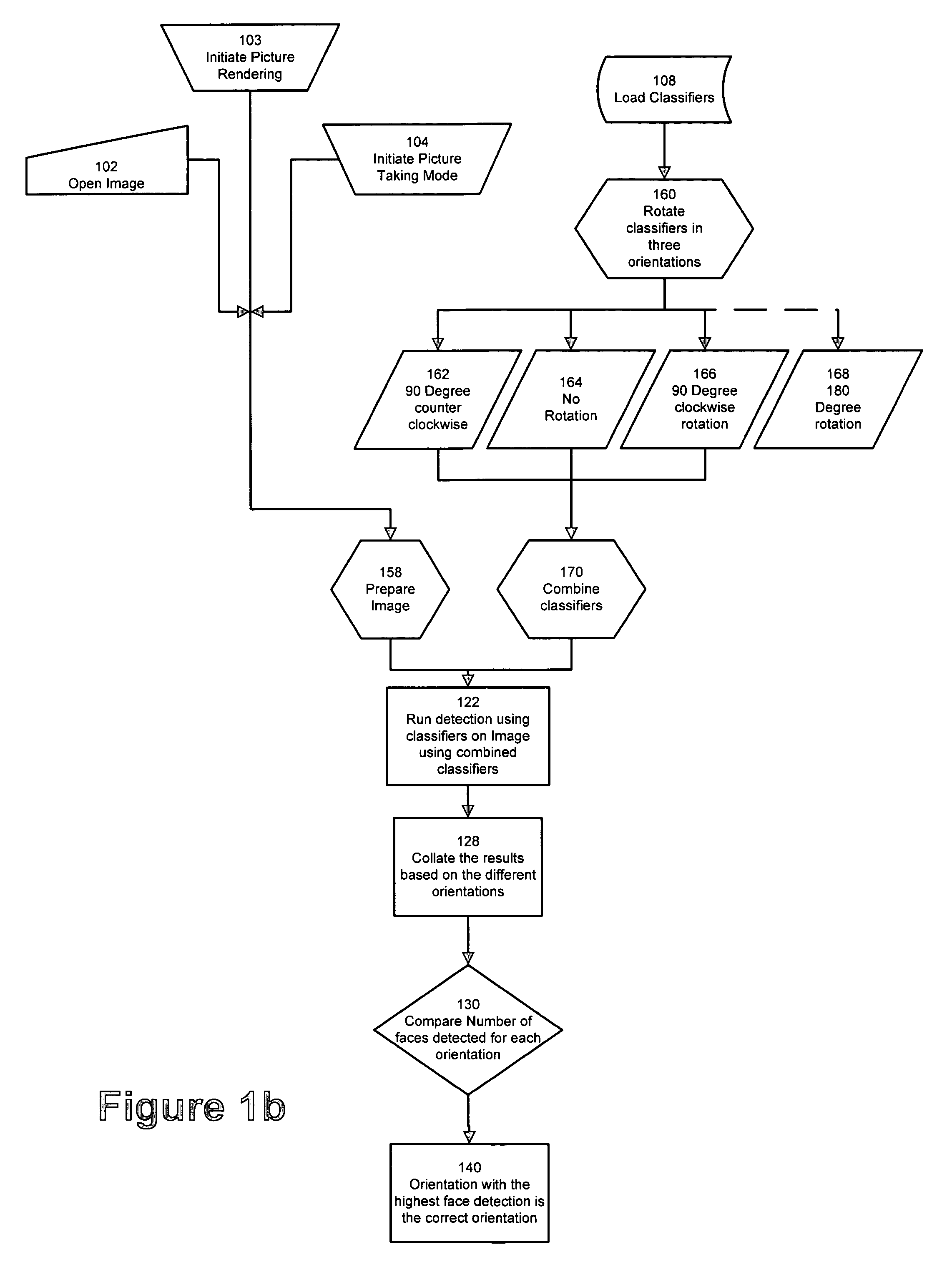

Detecting orientation of digital images using face detection information

A method of automatically establishing the correct orientation of an image using facial information. The method exploits an inherent property of image recognition algorithms in general, and face detection in particular: recognition is based on criteria that are highly orientation-sensitive. By applying a detection algorithm to the image in various orientations, or alternatively by rotating the classifiers, and comparing the number of faces successfully detected in each orientation, one can infer the most likely correct orientation. The method can be implemented as an automatic or semi-automatic method to guide users in viewing, capturing, or printing images.

Owner:FOTONATION VISION
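
The orientation test can be sketched in Python as below. The face detector is passed in as a callable because the abstract does not specify one; `most_likely_orientation`, `rotate90`, and the stand-in detector used in the checks are hypothetical, and images are modelled as plain 2D lists.

```python
from typing import Callable, Dict, List, Sequence

Image = List[List[int]]


def rotate90(img: Image) -> Image:
    """Rotate a 2D pixel grid 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def most_likely_orientation(img: Image, detect_faces: Callable[[Image], Sequence]) -> int:
    """Return the clockwise rotation (0, 90, 180 or 270) that yields the most detected faces."""
    counts: Dict[int, int] = {}
    current = img
    for angle in (0, 90, 180, 270):
        counts[angle] = len(detect_faces(current))
        current = rotate90(current)
    # max() keeps the first maximum, so ties favour leaving the image unrotated
    return max(counts, key=counts.get)
```

Rotating the image, rather than the classifiers, keeps the sketch detector-agnostic; the abstract notes that rotating the classifiers is an alternative.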

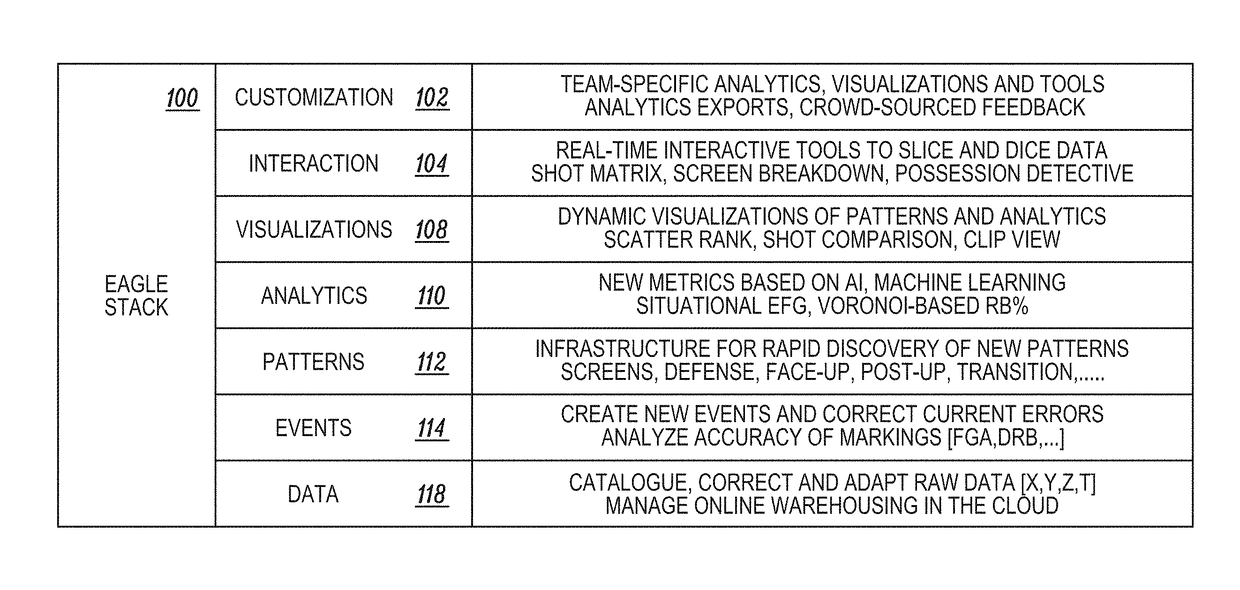

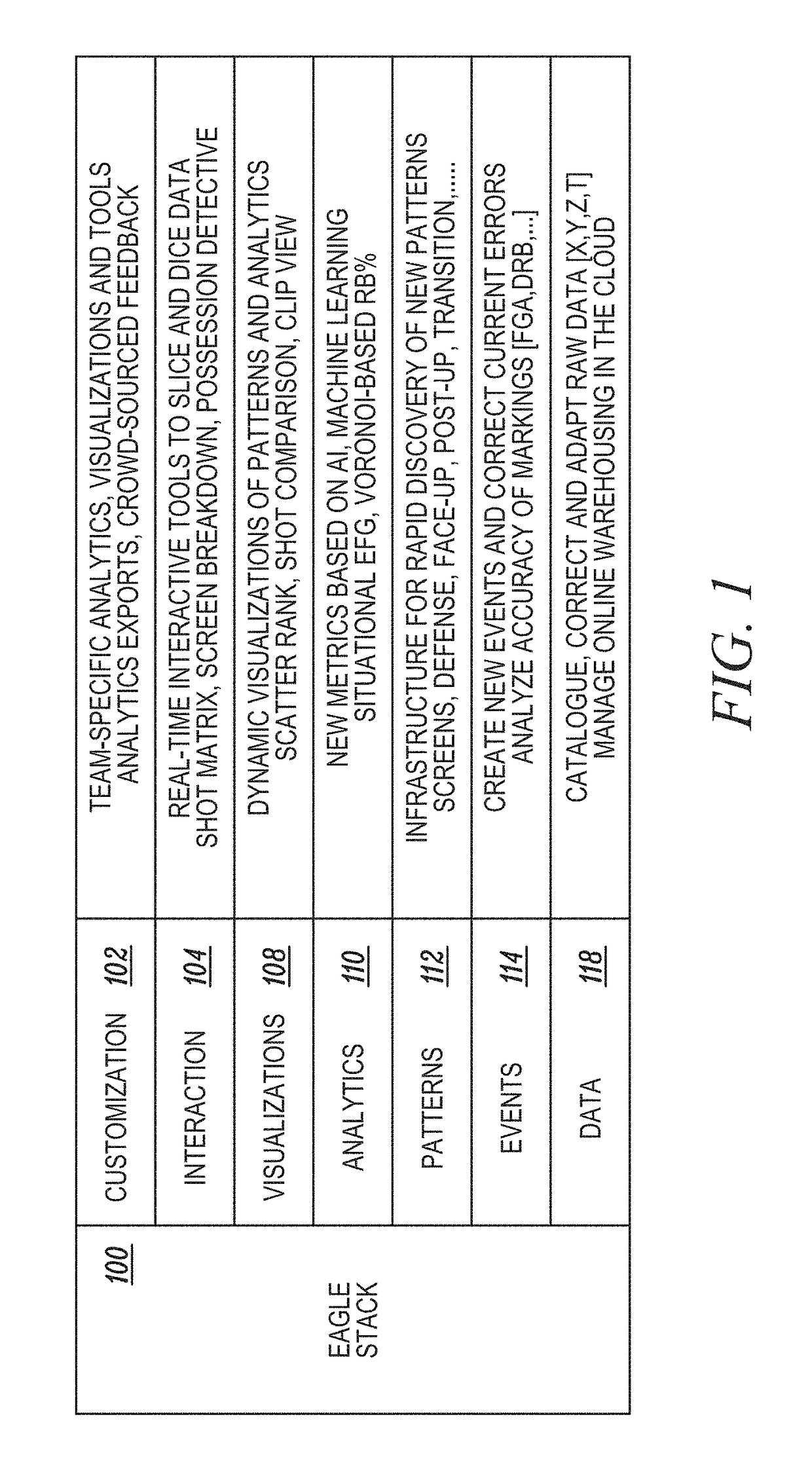

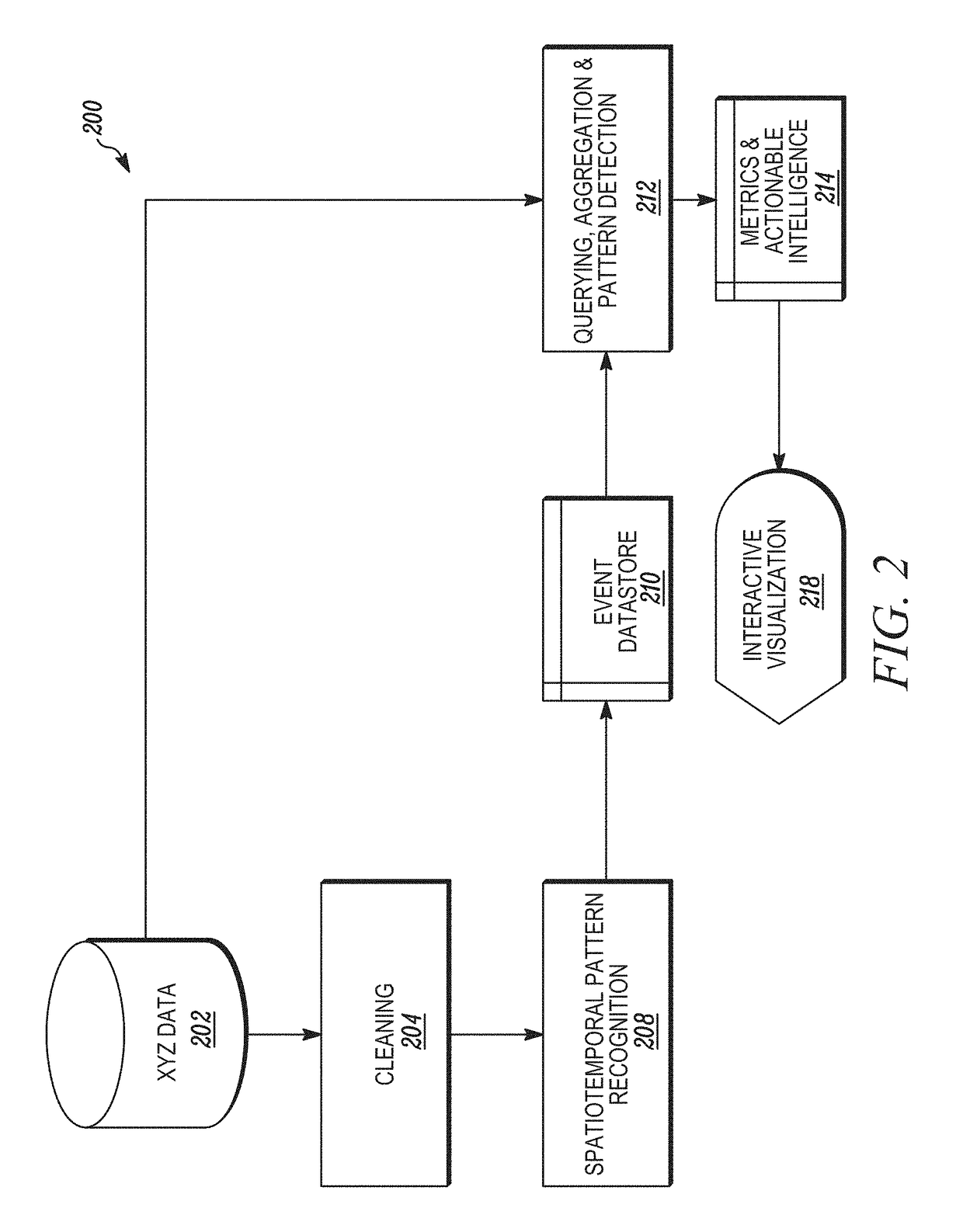

Methods and systems of spatiotemporal pattern recognition for video content development

Status: Active | Publication: US20170238055A1 | Tags: Facilitate decision-making; Increase entertainment; Image enhancement; Television system details; Event type; Recognition algorithm

Providing enhanced video content includes processing at least one video feed through at least one spatiotemporal pattern recognition algorithm that uses machine learning to develop an understanding of a plurality of events and to determine at least one event type for each of the plurality of events. The event type includes an entry in a relationship library detailing a relationship between two visible features. Extracting and indexing a plurality of video cuts from the video feed is performed based on the at least one event type determined by the understanding that corresponds to an event in the plurality of events detectable in the video cuts. Lastly, automatically and under computer control, an enhanced video content data structure is generated using the extracted plurality of video cuts based on the indexing of the extracted plurality of video cuts.

Owner:GENIUS SPORTS SS LLC

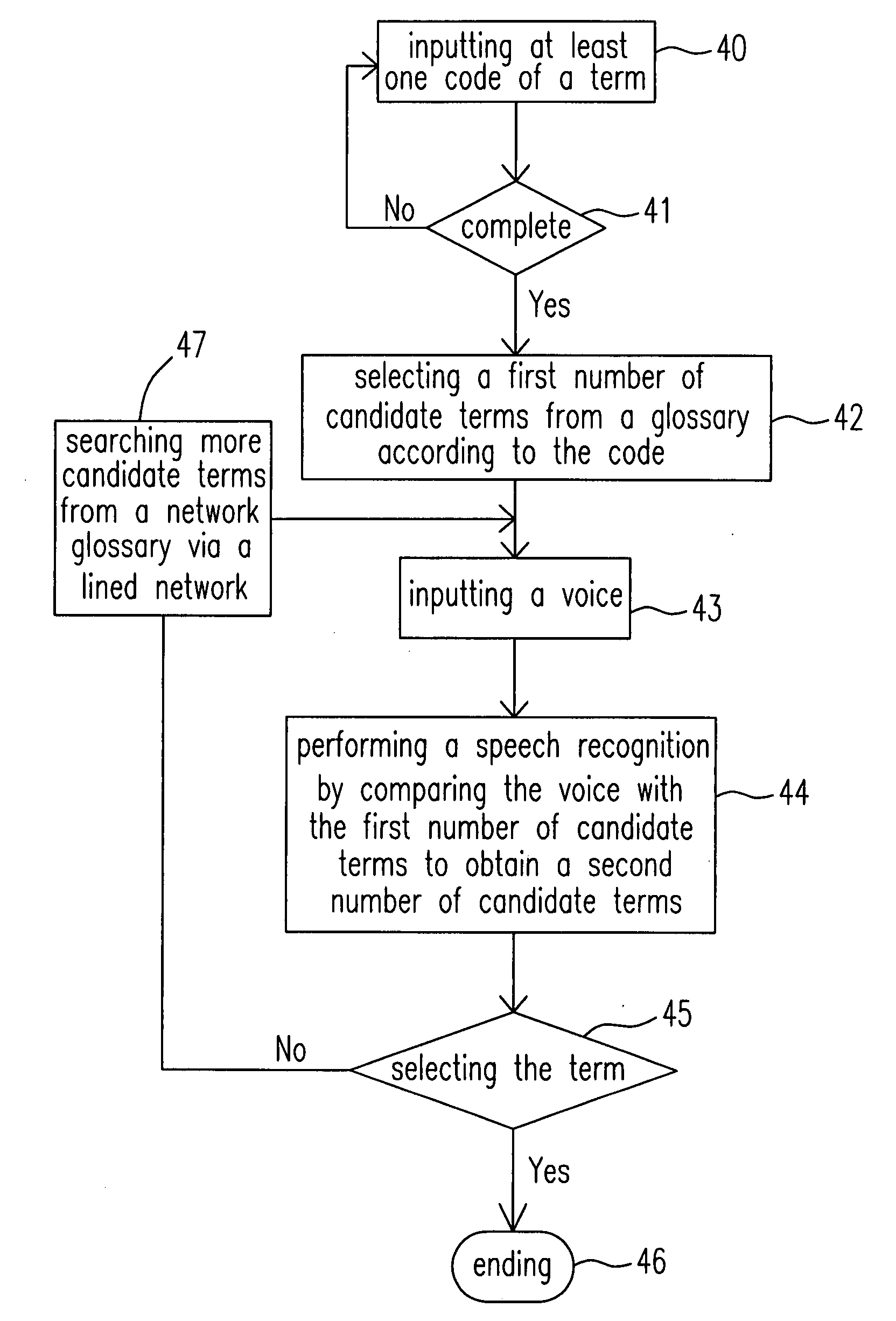

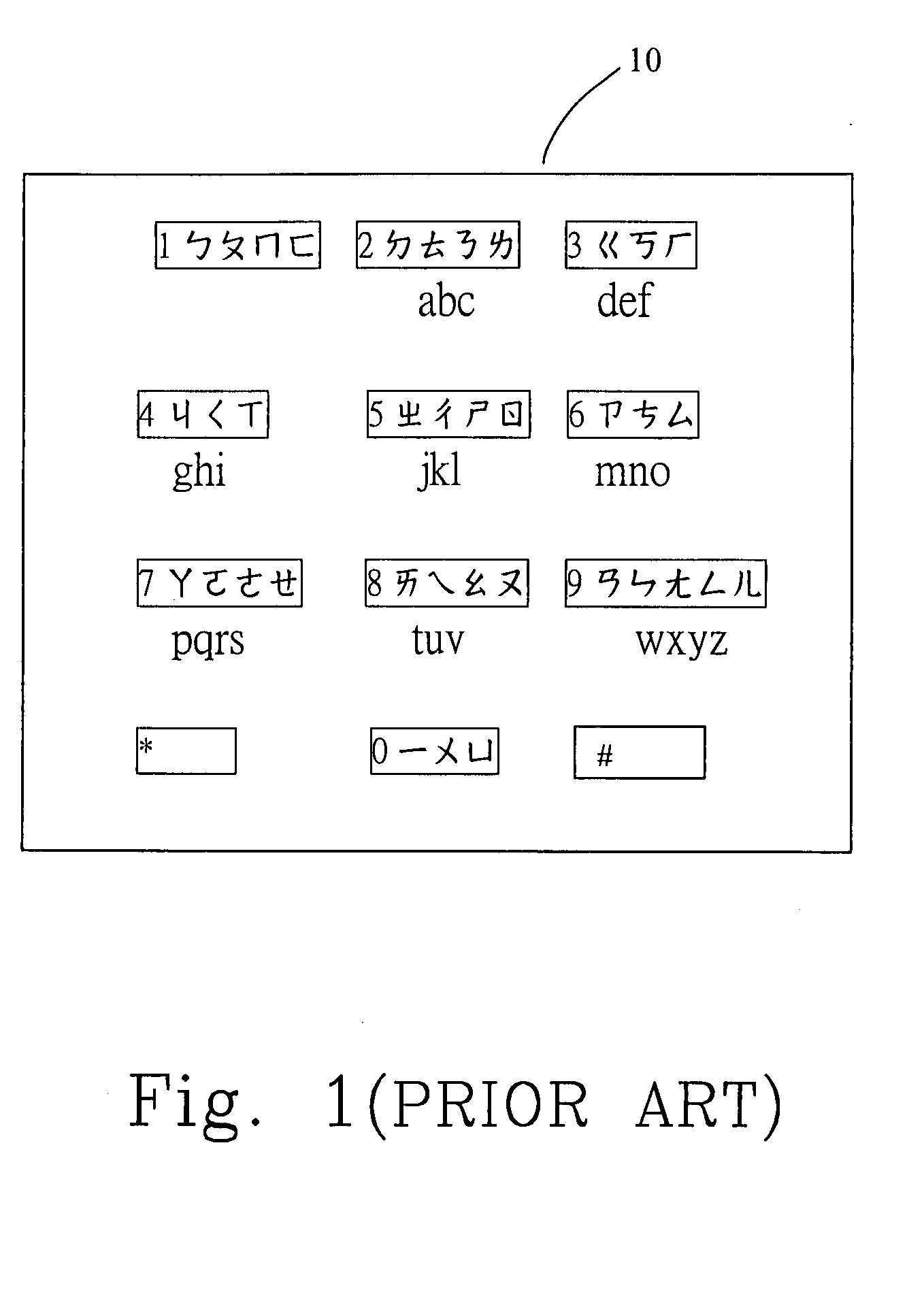

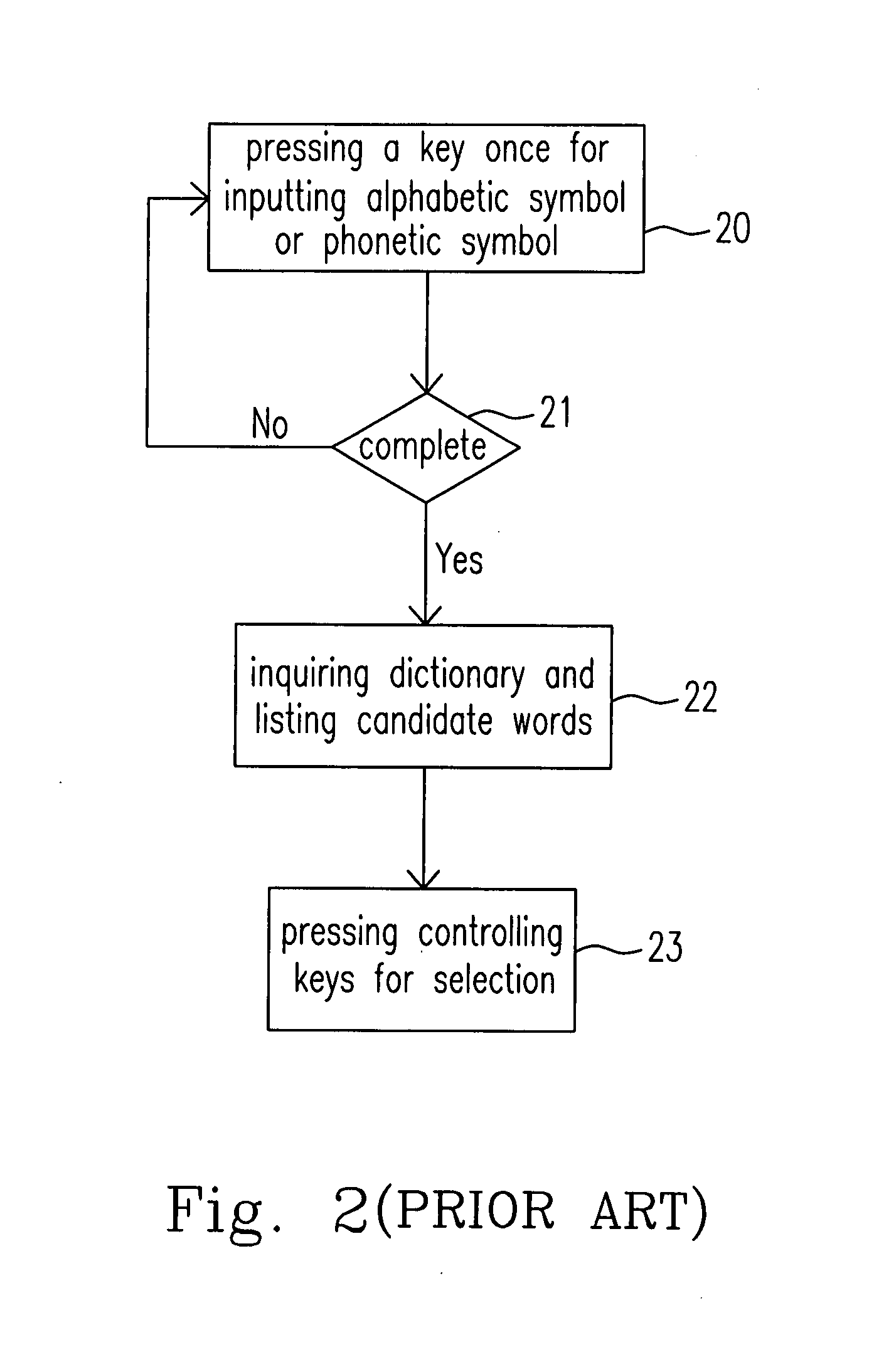

Input system for mobile search and method therefor

Status: Inactive | Publication: US20080281582A1 | Tags: Decrease keying number; The process is convenient and fast; Digital data processing details; Natural language data processing; Mobile search; Process module

An input system for mobile search and a method therefor are provided. The input system includes an input module receiving a code input for a specific term and a voice input corresponding thereto, a database including a glossary and an acoustic model, wherein the glossary includes a plurality of terms and a sequence list, and each of the terms has a search weight based on an order of the sequence list, a process module selecting a first number of candidate terms from the glossary according to the code input by using an input algorithm and obtaining a second number of candidate terms by using a speech recognition algorithm to compare the voice input with the first number of candidate terms via the acoustic model, wherein the second number of candidate terms are listed in a particular order based on their respective search weights, and an output module showing the second number of candidate terms in the particular order for selecting the specific term therefrom.

Owner:DELTA ELECTRONICS INC

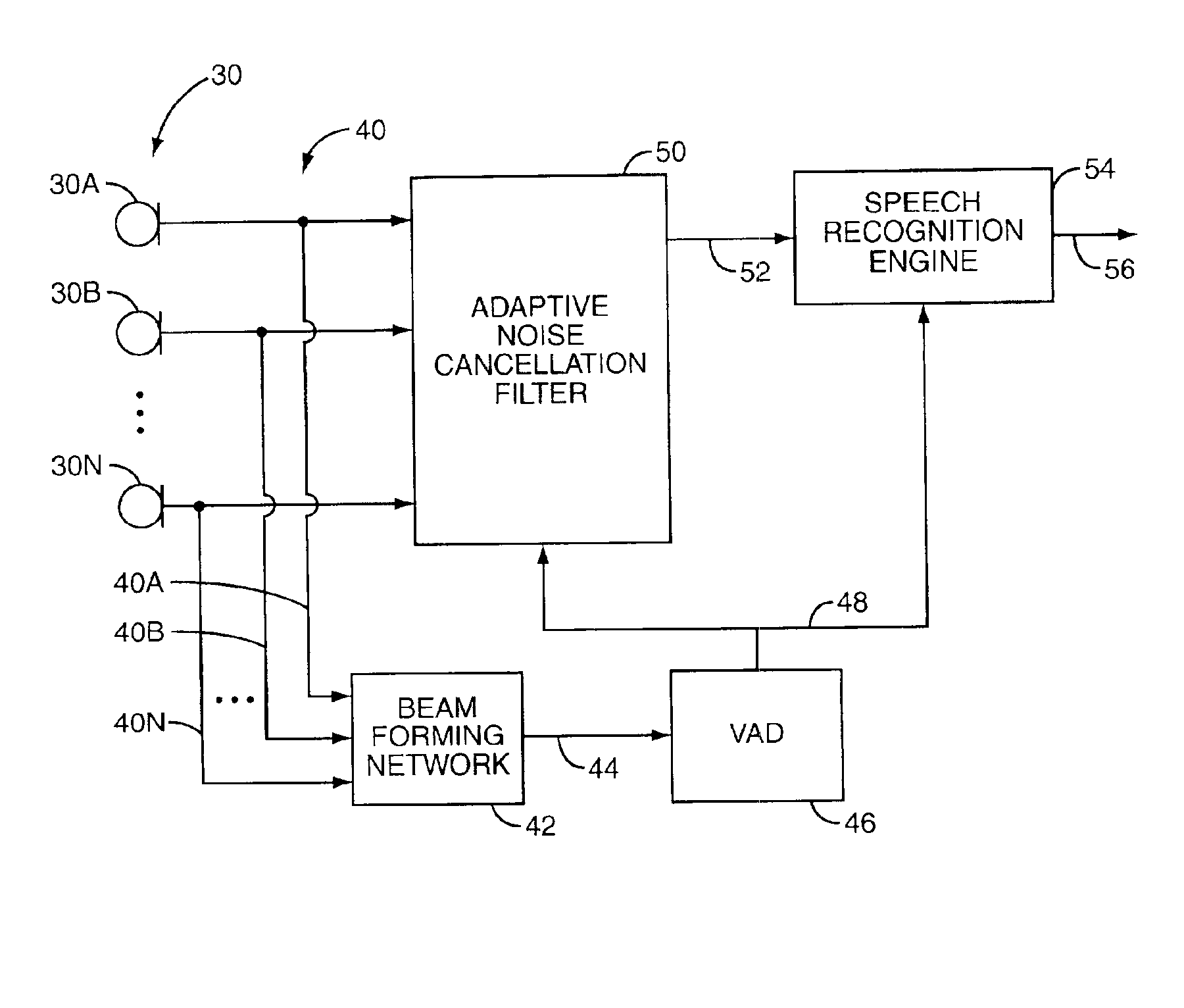

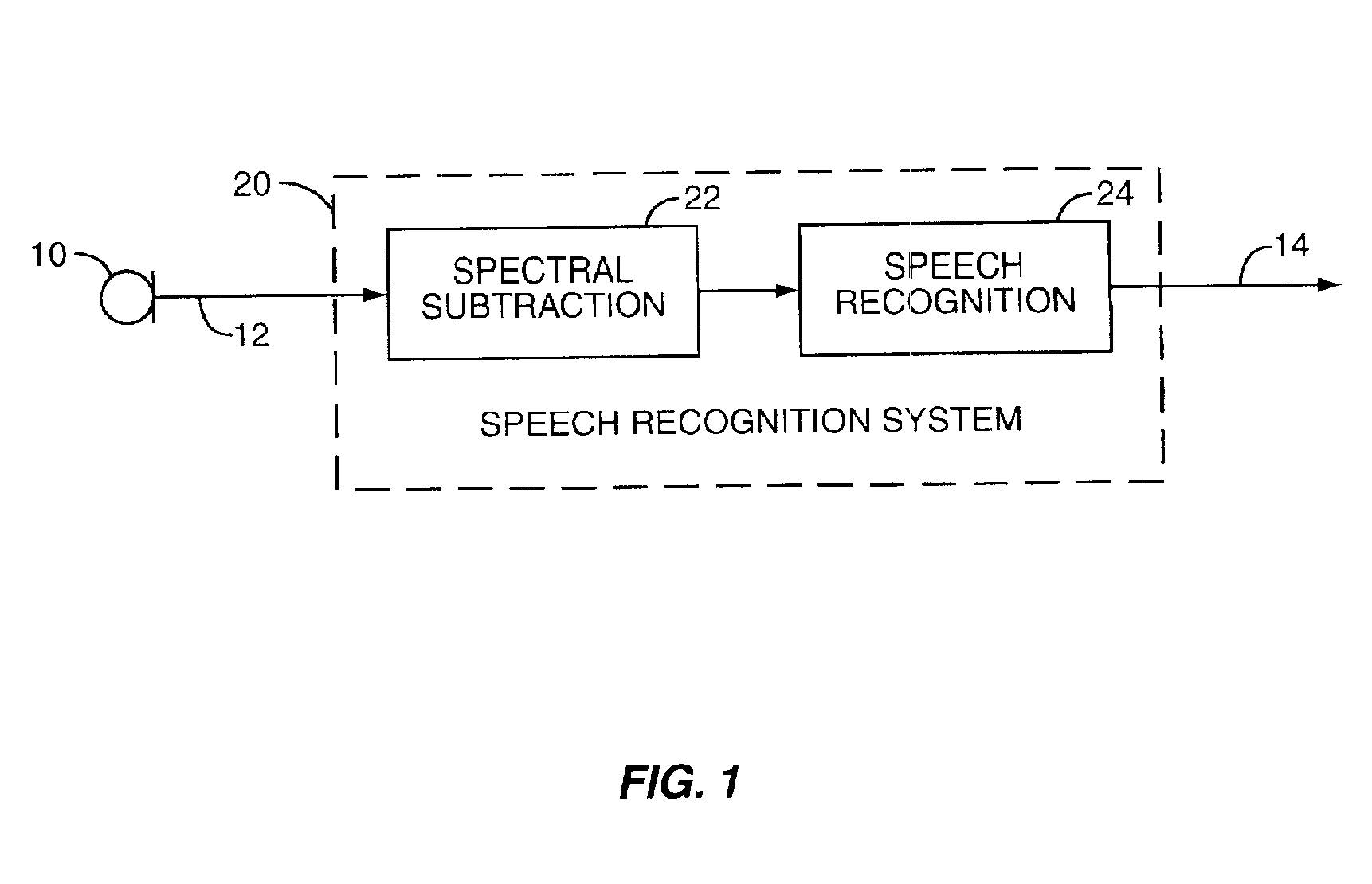

Speech recognition using microphone antenna array

A system and method of audio processing provides enhanced speech recognition. Audio input is received at a plurality of microphones. The multi-channel audio signal from the microphones may be processed by a beamforming network to generate a single-channel enhanced audio signal, on which voice activity is detected. Audio signals from the microphones are additionally processed by an adaptable noise cancellation filter having variable filter coefficients to generate a noise-suppressed audio signal. The variable filter coefficients are updated during periods of voice inactivity. A speech recognition engine may apply a speech recognition algorithm to the noise-suppressed audio signal and generate an appropriate output. The operation of the speech recognition engine and the adaptable noise cancellation filter may advantageously be controlled based on voice activity detected in the single-channel enhanced audio signal from the beamforming network.

Owner:HIGHBRIDGE PRINCIPAL STRATEGIES LLC AS COLLATERAL AGENT
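
The gated adaptation of the noise canceller can be illustrated with a least-mean-squares (LMS) filter, a standard adaptive filter swapped in here as an assumption since the abstract does not name the update rule. The sketch assumes a reference microphone signal correlated with the noise and per-sample voice-activity flags already derived from the beamformed channel (beamforming itself is not shown); the function name and the `taps`/`mu` defaults are hypothetical.

```python
import math
from typing import List, Sequence


def lms_noise_canceller(
    primary: Sequence[float],
    reference: Sequence[float],
    voice_active: Sequence[bool],
    taps: int = 4,
    mu: float = 0.05,
) -> List[float]:
    """Subtract an adaptively filtered reference from the primary signal.

    Weights are updated only where voice_active is False, mirroring the
    'update during voice inactivity' rule described above.
    """
    weights = [0.0] * taps
    output: List[float] = []
    for n in range(len(primary)):
        # Most recent `taps` reference samples, zero-padded at the start
        window = [reference[n - k] if n - k >= 0 else 0.0 for k in range(taps)]
        noise_estimate = sum(w * x for w, x in zip(weights, window))
        error = primary[n] - noise_estimate  # noise-suppressed output sample
        output.append(error)
        if not voice_active[n]:
            weights = [w + mu * error * x for w, x in zip(weights, window)]
    return output


if __name__ == "__main__":
    ref = [math.sin(0.3 * n) for n in range(2000)]
    noisy = [0.5 * r for r in ref]  # primary mic picks up a scaled copy of the noise
    cleaned = lms_noise_canceller(noisy, ref, [False] * len(ref))
    print(max(abs(v) for v in cleaned[-100:]))  # residual noise after adaptation
```

A common rationale for freezing the weights during speech is to keep the filter from adapting toward the talker's voice; the abstract itself does not state the reason.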

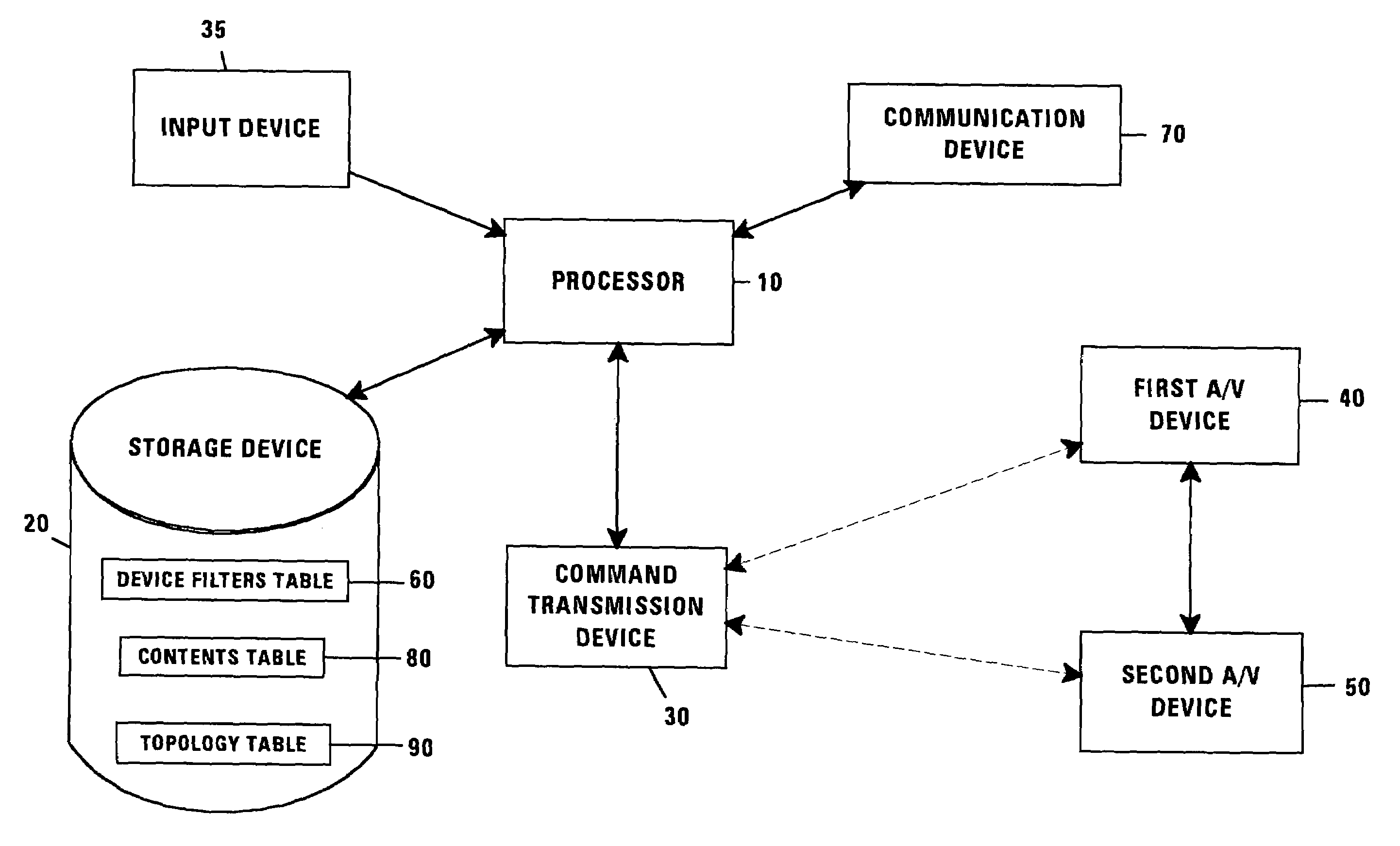

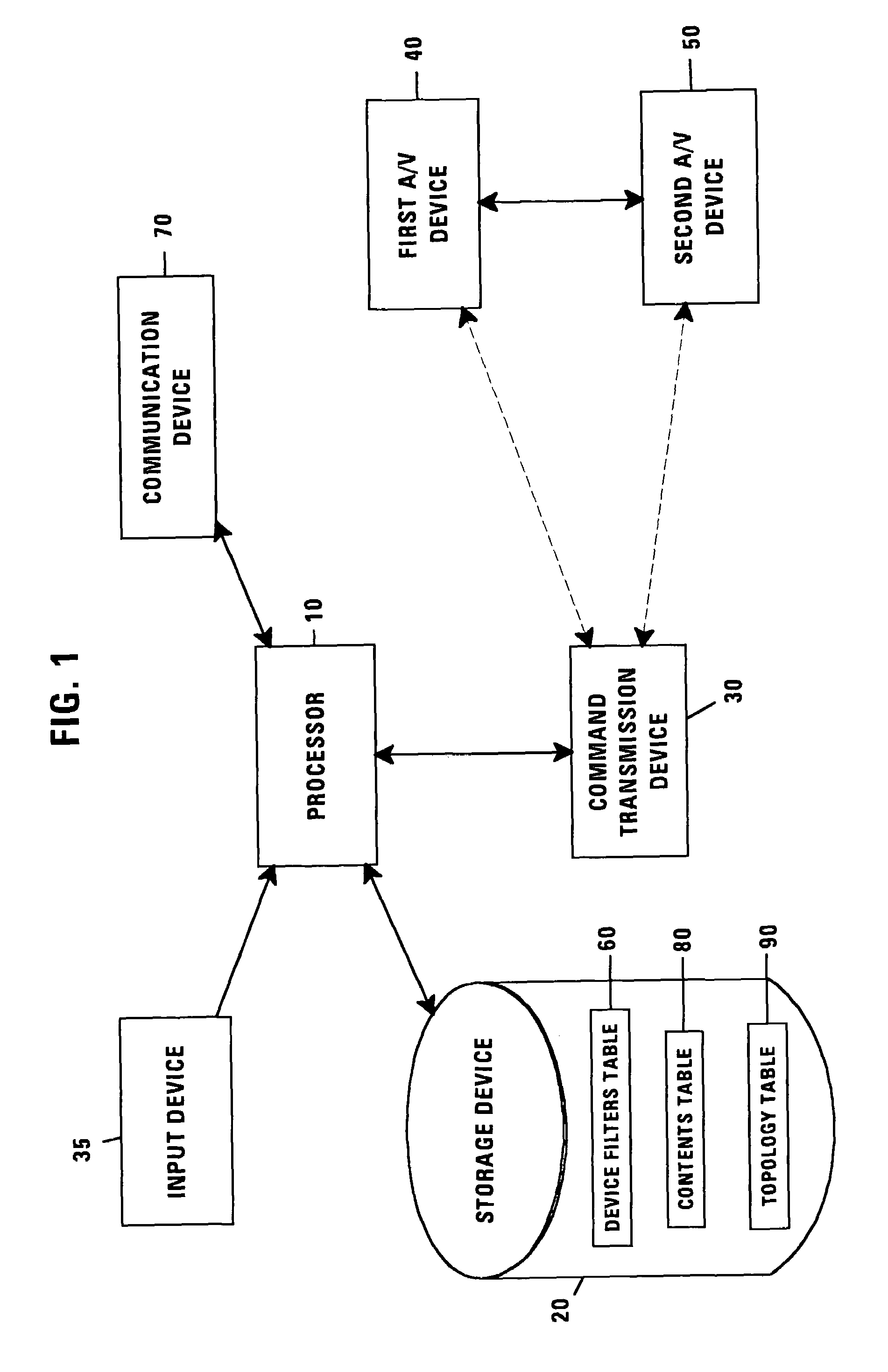

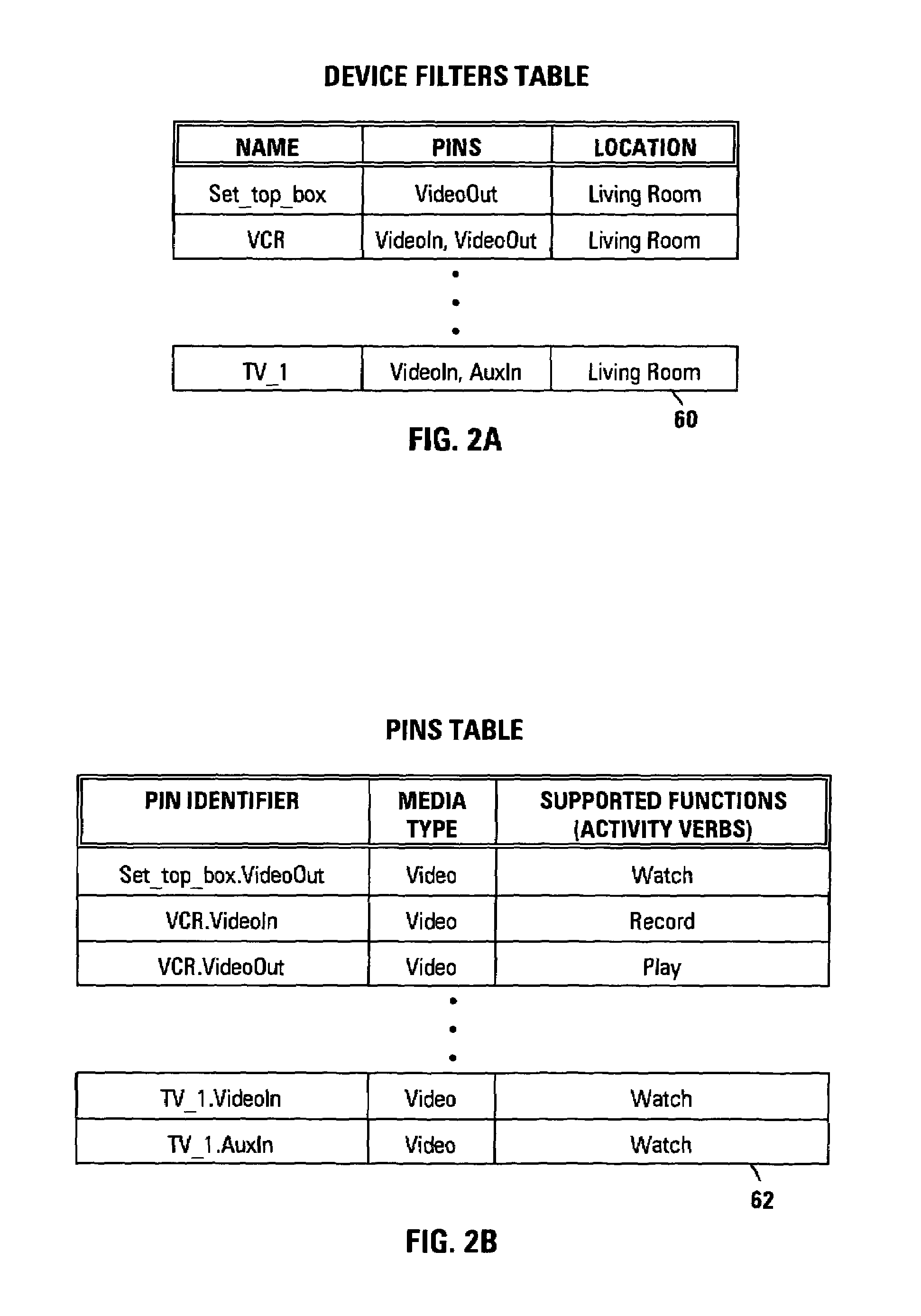

System and method for integrating and controlling audio/video devices

A processor integrates and controls at least two A/V devices by constructing a control model, referred to as a filter graph, of the devices as a function of their physical connection topology and of the desired content to be rendered by one of them. The filter graph may be constructed from at least two device filters corresponding to the A/V devices, where the device filters capture certain characteristics of each A/V device. These characteristics may include each device's input and output pins, the media types the device can process, and the types of functions the device can serve. The desired content may be received as user input entered via a keyboard, mouse, or other comparable input device. The user input may also be entered as a voice command, which the processor may parse using conventional speech recognition algorithms or natural language processing to extract the necessary information. Once the filter graph is constructed, the processor may control the A/V devices through it by invoking predetermined operations on the filter graph, which send the appropriate commands to the devices and thereby render the desired content.

Owner:INTEL CORP

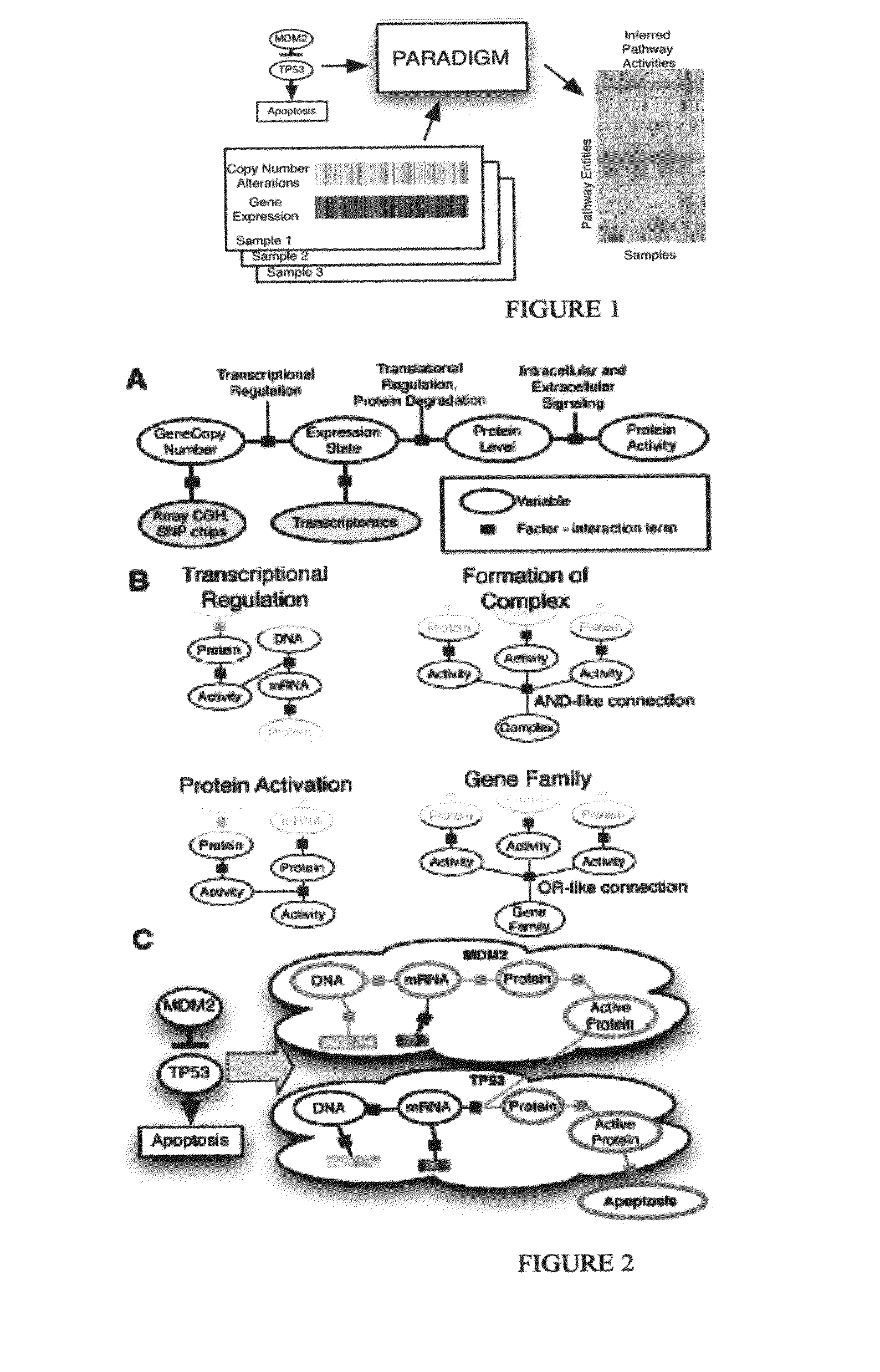

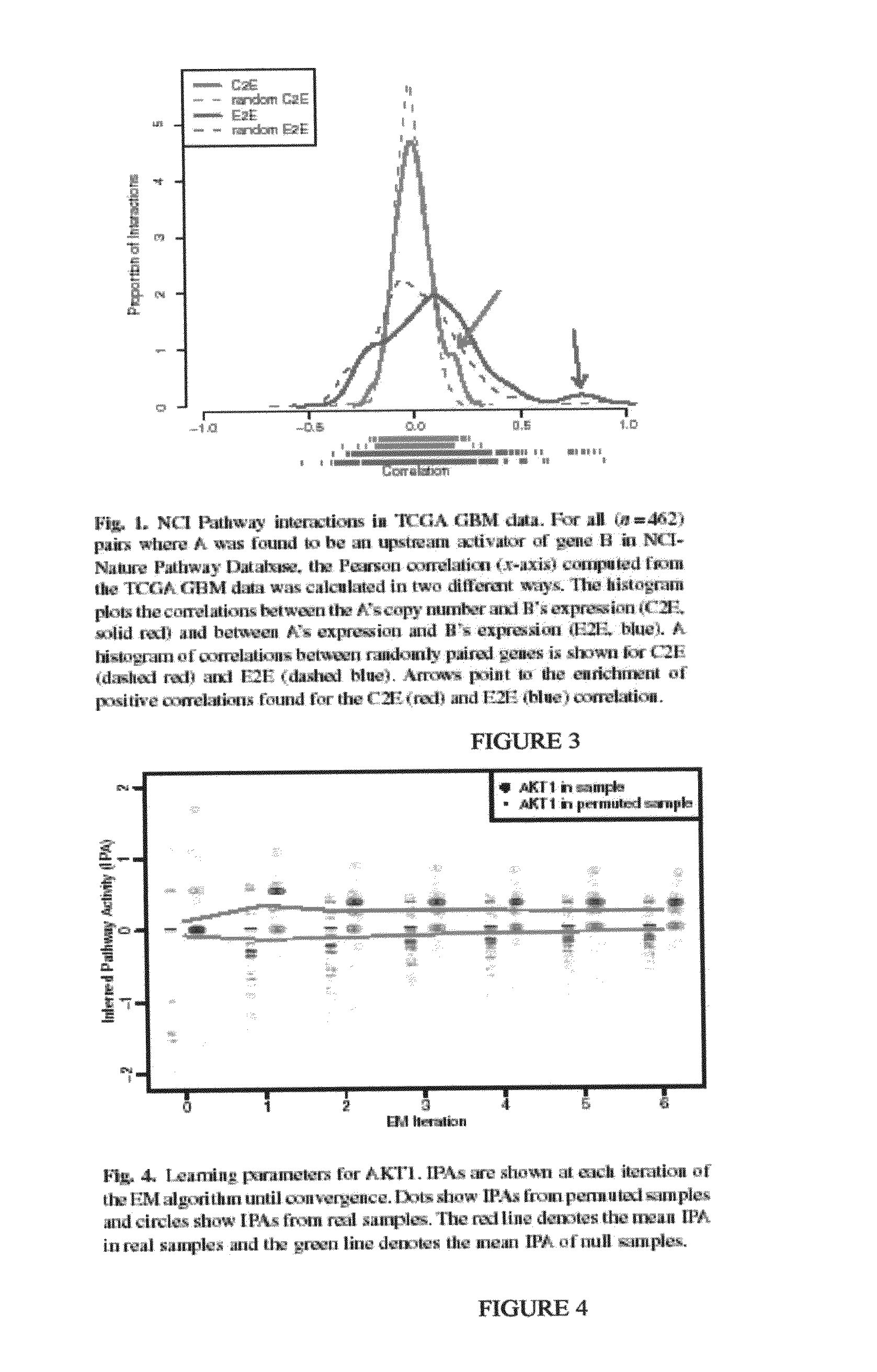

Pathway recognition algorithm using data integration on genomic models (PARADIGM)

Status: Active | Publication: US20120041683A1 | Tags: Divergent outcome; Conducive to survival; Data visualisation; Digital computer details; Regimen; Medicine

The present invention relates to methods for evaluating the probability that a patient's diagnosis may be treated with a particular clinical regimen or therapy.

Owner:RGT UNIV OF CALIFORNIA

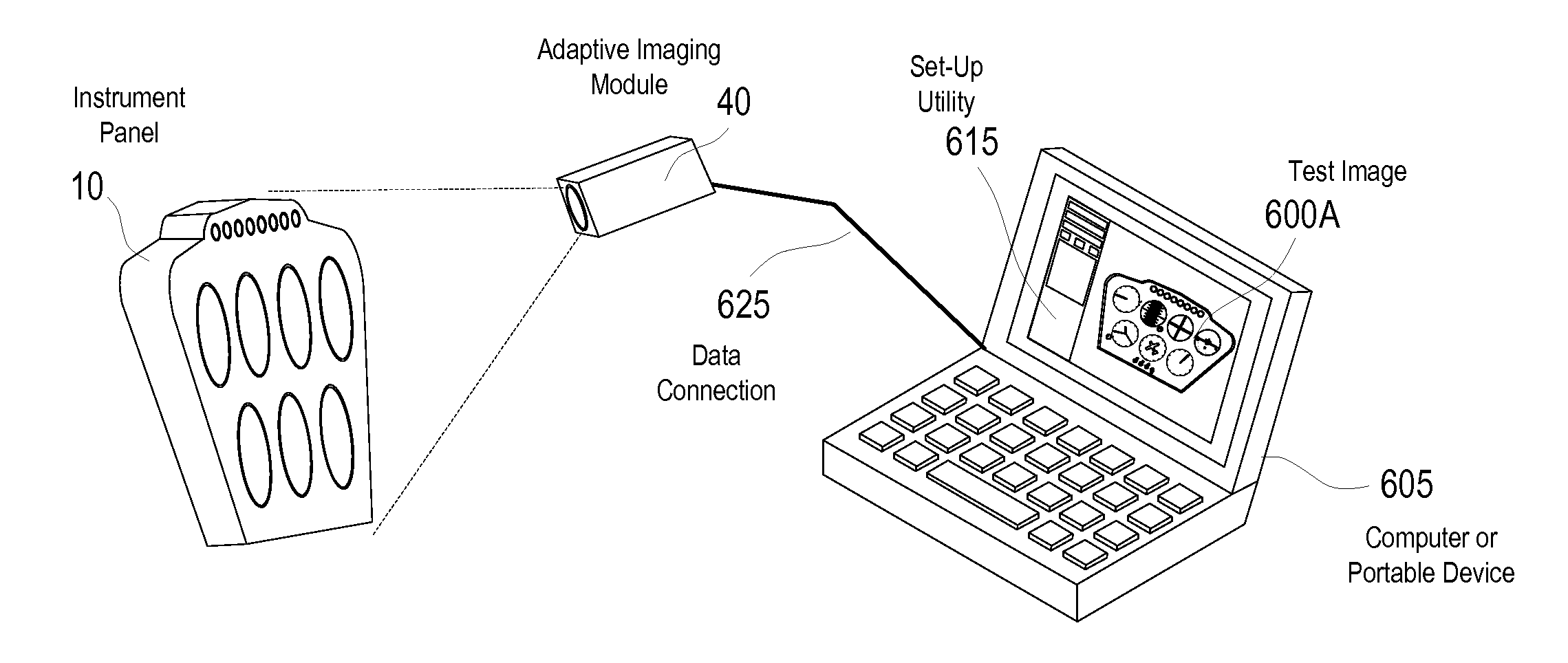

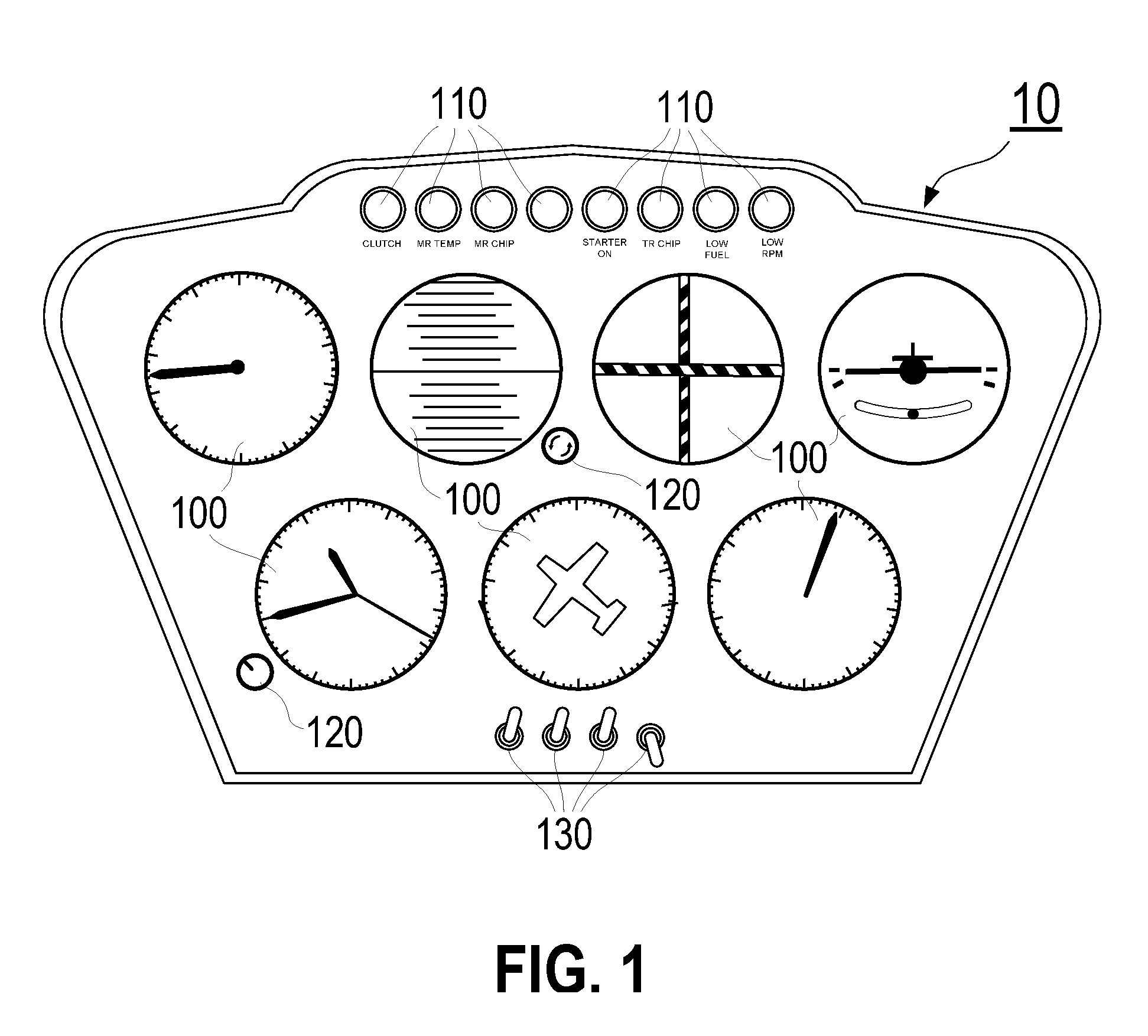

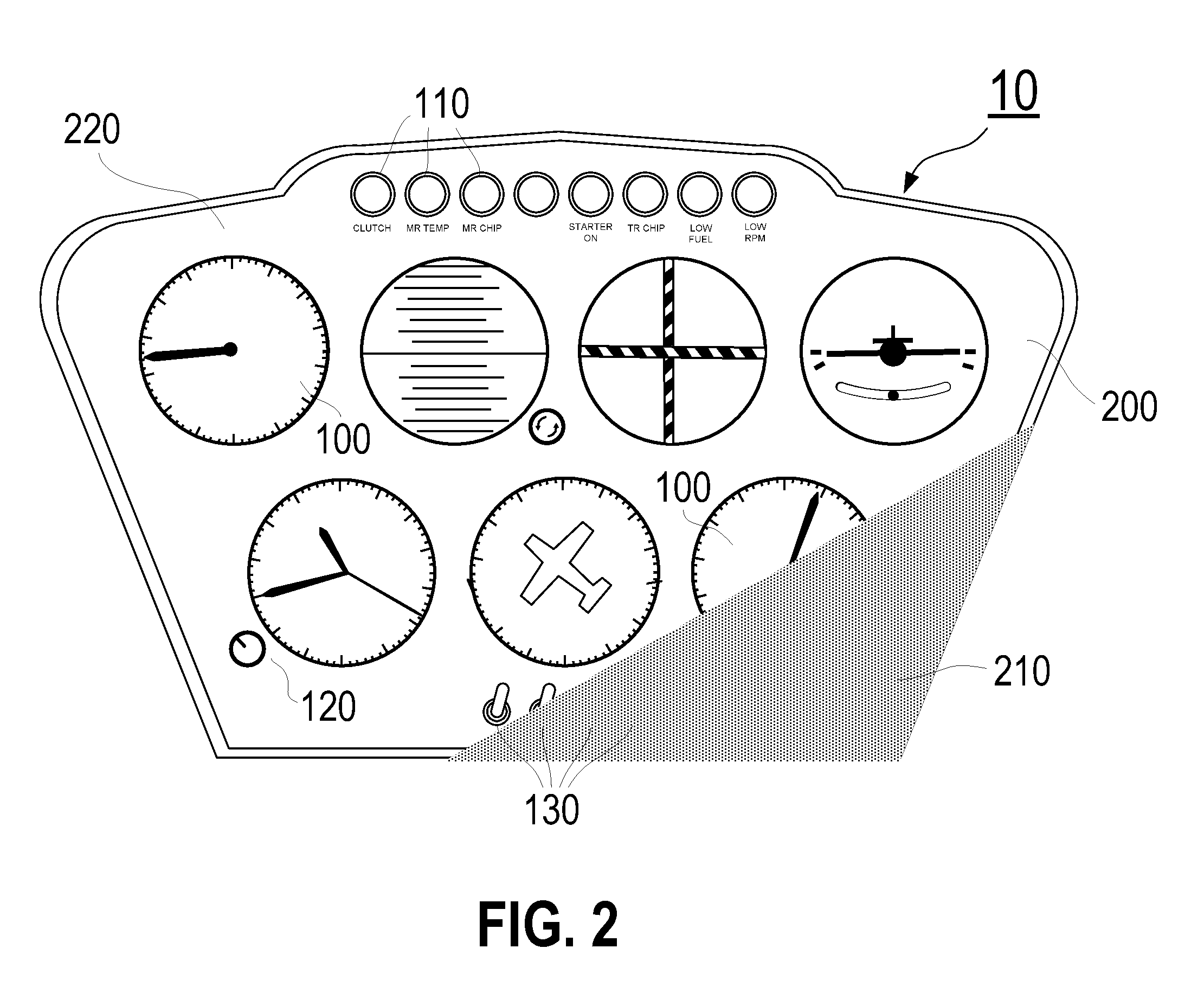

Optical image monitoring system and method for vehicles

Status: Active | Publication: US8319666B2 | Tags: Registering/indicating working of vehicles; Road vehicles traffic control; Recognition algorithm; Monitoring system

A system and method of acquiring information from an image of a vehicle in real time wherein at least one imaging device with advanced light metering capabilities is placed aboard a vehicle, a computer processor means is provided to control the imaging device and the advanced light metering capabilities, the advanced light metering capabilities are used to capture an image of at least a portion of the vehicle, and image recognition algorithms are used to identify the current state or position of the corresponding portion of the vehicle.

Owner:APPAREO SYST

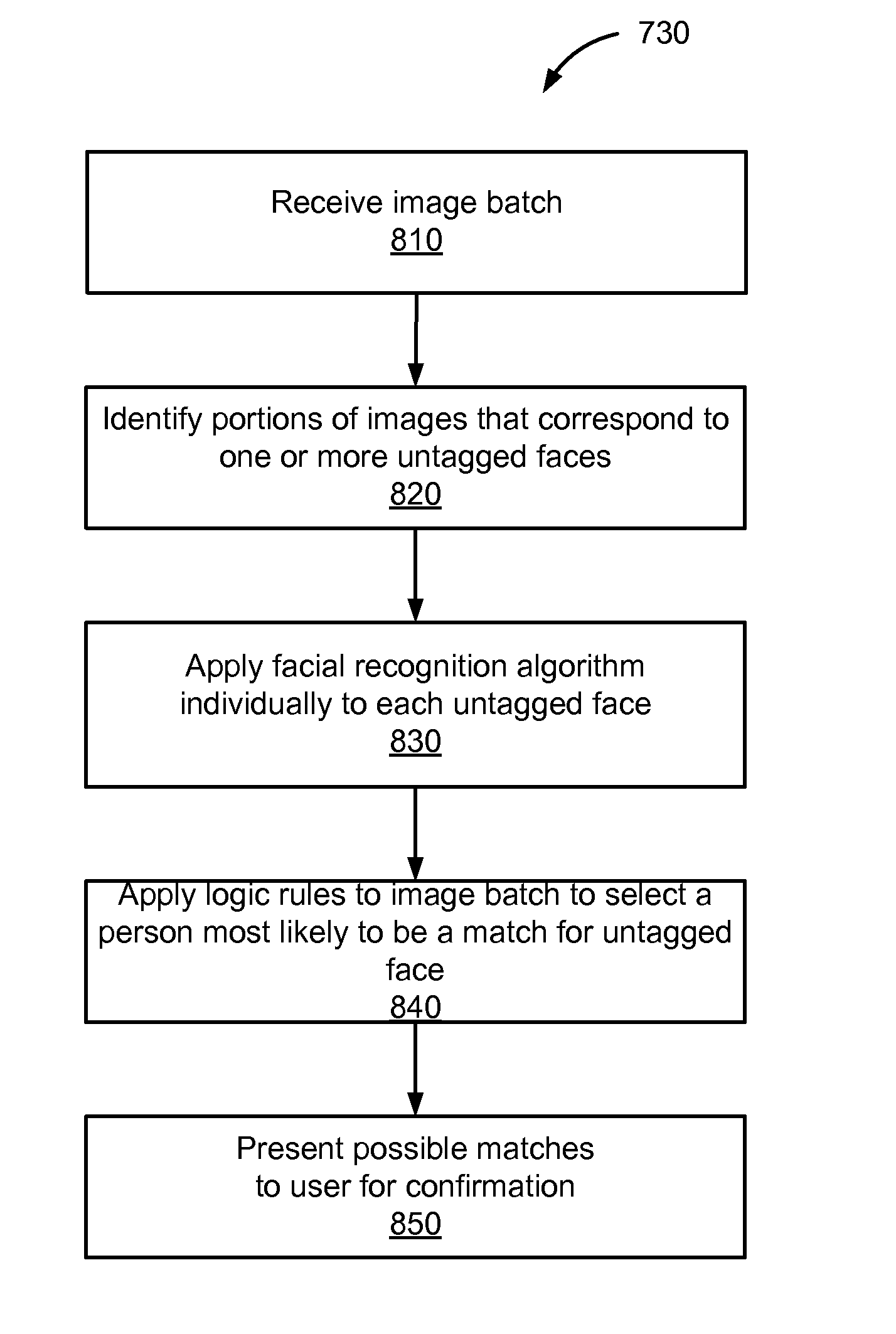

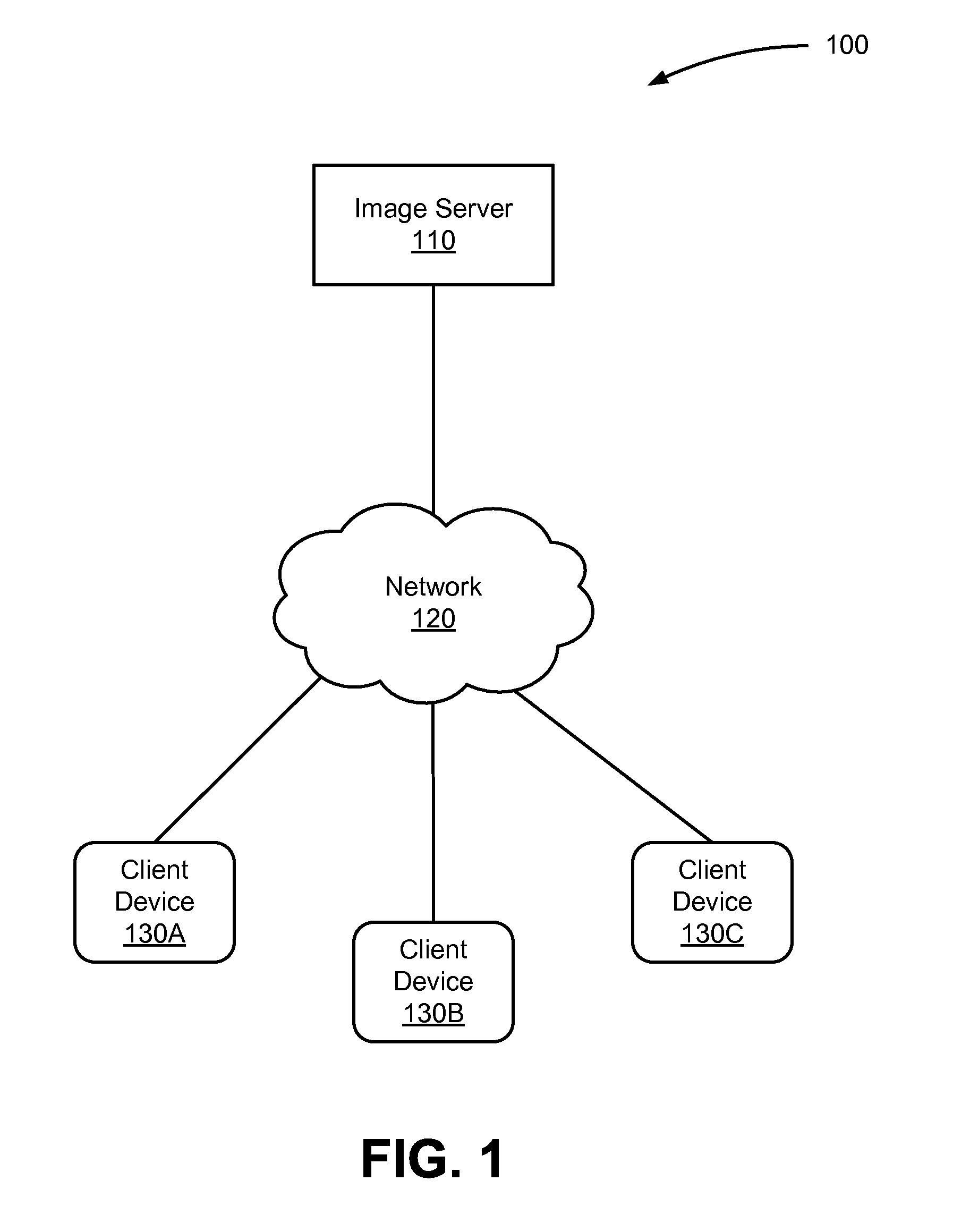

Assisted photo-tagging with facial recognition models

Status: Active | Publication: US8861804B1 | Tags: Reduce the possibility; Television system details; Database updating; Recognition algorithm; Facial recognition system

Embodiments of the invention perform assisted tagging of images, including tagging of people, locations, and activities depicted in those images. A batch of images is received comprising images of faces, including at least some faces that have not yet been tagged. A facial recognition algorithm is applied to the faces to determine matching data comprising possible tags for each untagged face. A logic engine applies logic rules to reduce the likelihood that certain matches are correct. The most likely match from among the possible matches is selected for suggestion to the user for verification. Once verified, the metadata of the image indicating the recognized people within the image is updated.

Owner:SHUTTERFLY LLC
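
The logic-rule step can be sketched in Python with one illustrative rule. The rule used here (a person already suggested in the same photo is unlikely to appear again, so their score is halved) is a hypothetical example, not one taken from the patent, and the recognition scores are assumed to come from an upstream facial recognition algorithm.

```python
from typing import Dict, List, Optional, Set


def suggest_tags(faces: List[Dict[str, float]], repeat_penalty: float = 0.5) -> List[Optional[str]]:
    """Suggest one tag per untagged face in a single image.

    faces: one dict per face mapping candidate person to recognition score.
    Hypothetical logic rule: a person already suggested for this image has
    their score scaled by `repeat_penalty` for the remaining faces.
    """
    suggestions: List[Optional[str]] = []
    already_suggested: Set[str] = set()
    for candidates in faces:
        adjusted = {
            person: score * (repeat_penalty if person in already_suggested else 1.0)
            for person, score in candidates.items()
        }
        best = max(adjusted, key=adjusted.get) if adjusted else None
        suggestions.append(best)  # still shown to the user for verification
        if best is not None:
            already_suggested.add(best)
    return suggestions
```

Faces are processed greedily in list order, so a real system might instead solve a joint assignment across all faces in the photo.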

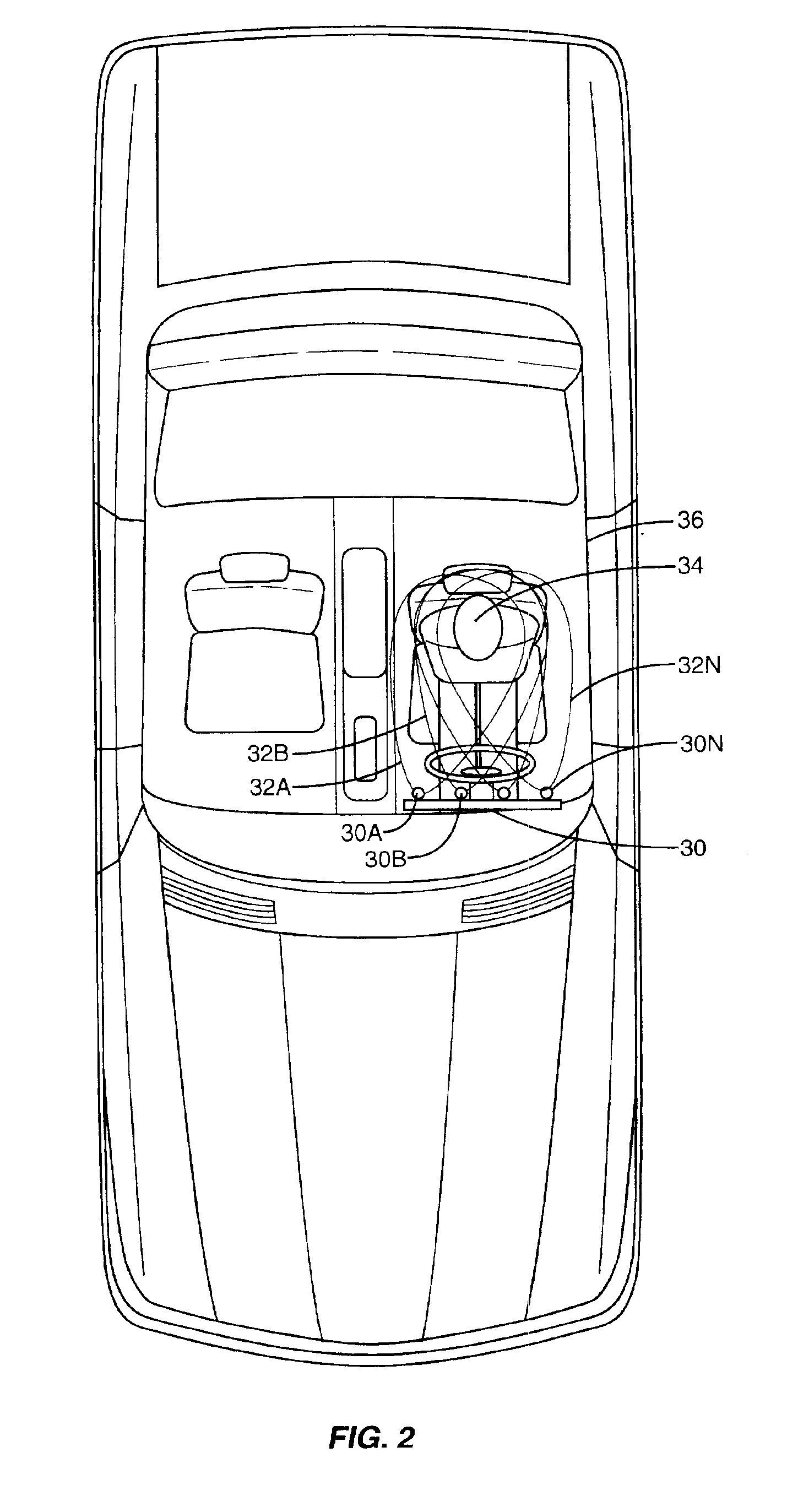

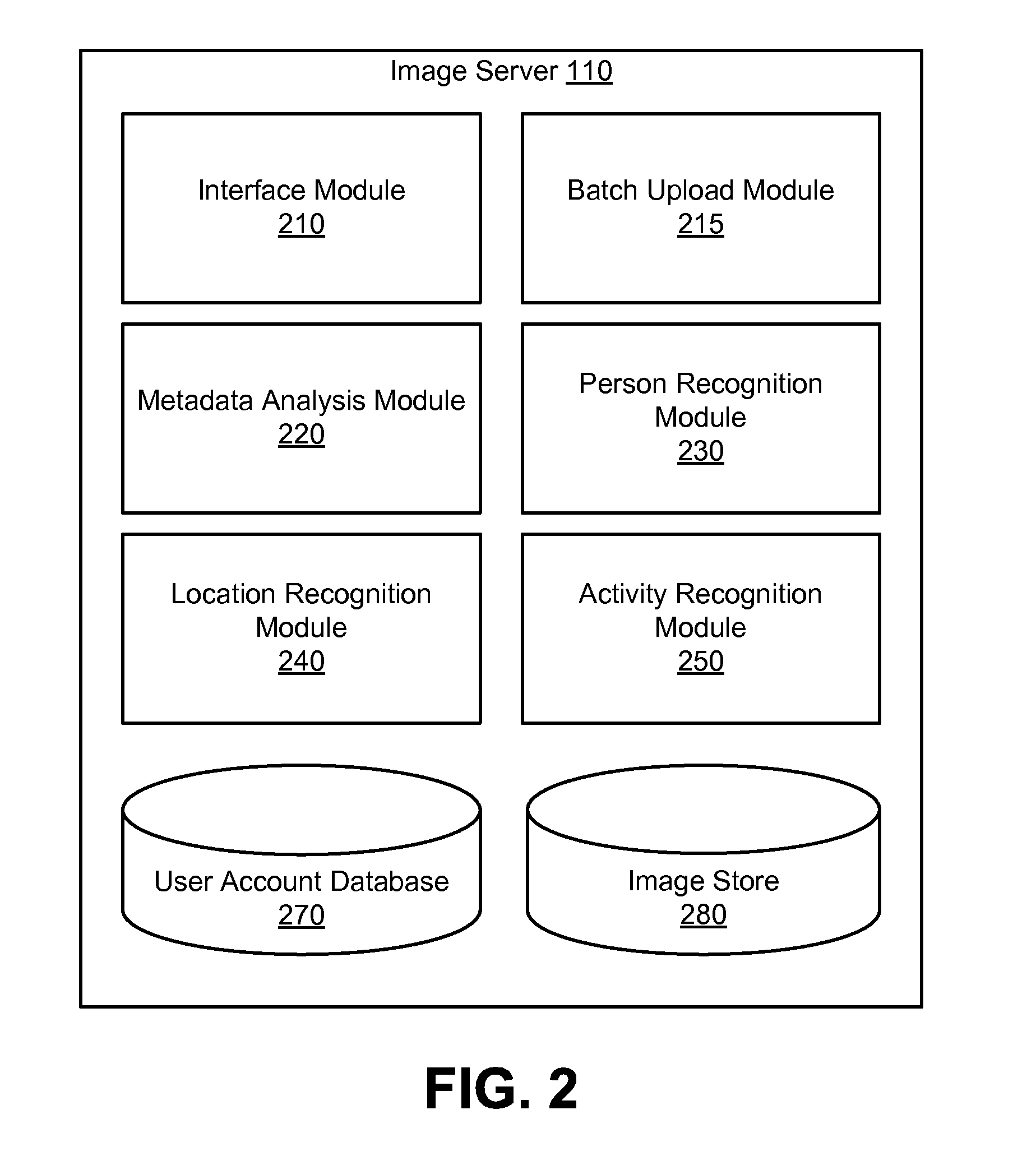

Method and device for determining a location and orientation of a device in a vehicle

Status: Inactive | Publication: US20070120697A1 | Tags: Dashboard fitting arrangements; Instrument arrangements/adaptations; Camera lens; Recognition algorithm

Systems, methods, and devices for accurately determining the location and orientation of a device in a vehicle are disclosed. A device can automatically determine its location in a vehicle using images from a camera or distance measurements. The images or distance measurements are compared to previously stored data, and an image recognition algorithm or pattern recognition algorithm is used to determine the closest match and thus the location of the device. The camera is also configured to rotate itself so that the camera lens is substantially aligned with the user interface of the device. Once oriented in this manner, the camera captures an image that allows the device to determine which occupants of the vehicle are able to access it. In other embodiments, the orientation of the device can be determined from the offset angle between a reference line and the user interface of the device. The occupants with access to the device can then be determined by reference to a look-up table of angles and vehicle areas. The operation of the device can advantageously be modified based on its location and on which occupants are able to access it.

Owner:MOTOROLA INC
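
The angle look-up could be sketched in Python as a small table. The angle convention, ranges, occupant labels, and the playback policy are all hypothetical, chosen only to show how a look-up result might modify device operation.

```python
from typing import FrozenSet, List, Tuple

# Hypothetical look-up table: (min_angle, max_angle, occupants), in degrees.
# Assumed convention: 0 degrees means the user interface faces straight back
# along the vehicle's centre line; negative angles turn toward the driver.
ANGLE_TABLE: List[Tuple[float, float, FrozenSet[str]]] = [
    (-90.0, -30.0, frozenset({"driver"})),
    (-30.0, 30.0, frozenset({"driver", "front passenger"})),
    (30.0, 90.0, frozenset({"front passenger"})),
]


def occupants_with_access(offset_deg: float) -> FrozenSet[str]:
    """Look up which occupants can reach the device for a given offset angle."""
    for low, high, occupants in ANGLE_TABLE:
        if low <= offset_deg < high:
            return occupants
    return frozenset()


def allow_video_playback(offset_deg: float, vehicle_moving: bool) -> bool:
    """Example policy: block video while moving if the driver can reach the screen."""
    return not (vehicle_moving and "driver" in occupants_with_access(offset_deg))
```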

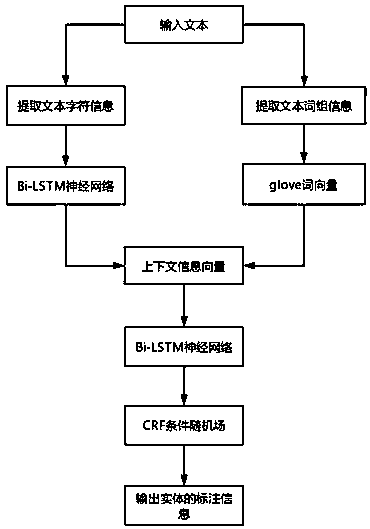

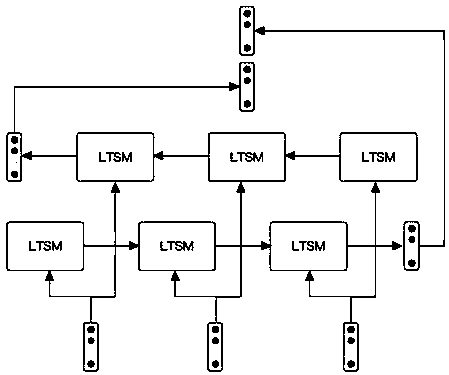

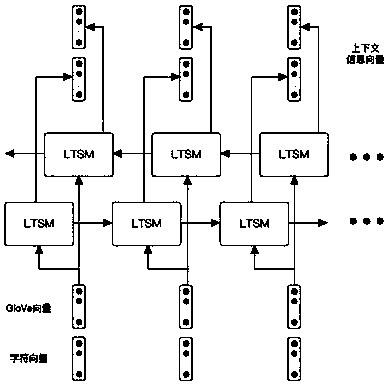

Named entity recognition method based on bidirectional LSTM and CRF

Status: Inactive | Publication: CN107644014A | Tags: Easy to handle; Improve training efficiency; Special data processing applications; Neural learning methods; Conditional random field; Named-entity recognition

The invention discloses a named entity recognition method based on bidirectional LSTM and CRF, which improves and optimizes the traditional named entity recognition algorithms of the prior art. The method comprises the following steps: (1) preprocessing a text and extracting its phrase information and character information; (2) encoding the character information with a bidirectional LSTM neural network to convert it into character vectors; (3) encoding the phrase information into word vectors with the GloVe model; (4) combining the character vectors and word vectors into a context information vector and feeding it into a bidirectional LSTM neural network; and (5) decoding the output of the bidirectional LSTM with a linear-chain conditional random field to obtain the annotated entities in the text. Because the invention uses a deep neural network to extract text features and decodes them with a conditional random field, text feature information can be extracted effectively, and good results can be achieved in entity recognition tasks across different languages.

Owner:南京安链数据科技有限公司
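
Step (5), decoding with a linear-chain CRF, is usually done with the Viterbi algorithm. The Python sketch below assumes the BiLSTM has already produced per-token emission scores and that a learned transition matrix is available; `viterbi_decode`, the tag set, and the scores in the checks are made up for illustration.

```python
from typing import List, Sequence


def viterbi_decode(
    emissions: Sequence[Sequence[float]],
    transitions: Sequence[Sequence[float]],
    tags: Sequence[str],
) -> List[str]:
    """Highest-scoring tag path for a linear-chain CRF.

    emissions[t][j]: score of tag j at token t (e.g. BiLSTM output).
    transitions[i][j]: score of moving from tag i to tag j.
    """
    if not emissions:
        return []
    n_tags = len(tags)
    scores = list(emissions[0])
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_scores: List[float] = []
        pointers: List[int] = []
        for j in range(n_tags):
            # Best previous tag i for ending at tag j at step t
            best_i = max(range(n_tags), key=lambda i: scores[i] + transitions[i][j])
            new_scores.append(scores[best_i] + transitions[best_i][j] + emissions[t][j])
            pointers.append(best_i)
        scores = new_scores
        backpointers.append(pointers)
    # Trace the best path backwards from the highest-scoring final tag
    path = [max(range(n_tags), key=lambda j: scores[j])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return [tags[j] for j in path]
```

With a large negative transition score from O to I-PER, emissions of `[[1.0, 0.2, 0.0], [0.0, 0.1, 1.0]]` over tags `O, B-PER, I-PER` decode to `B-PER, I-PER`, whereas taking the best tag at each token independently would give the invalid sequence `O, I-PER`.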

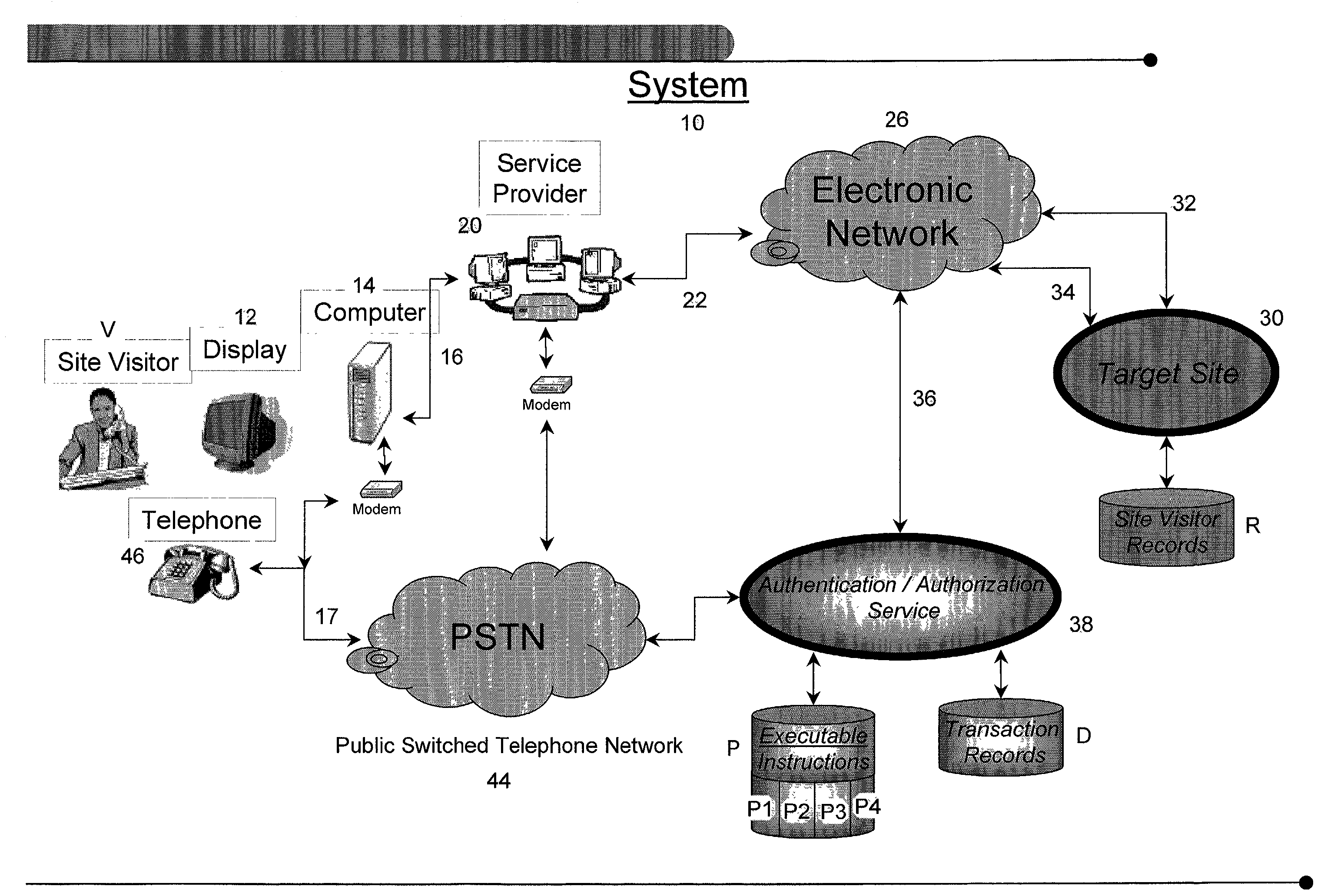

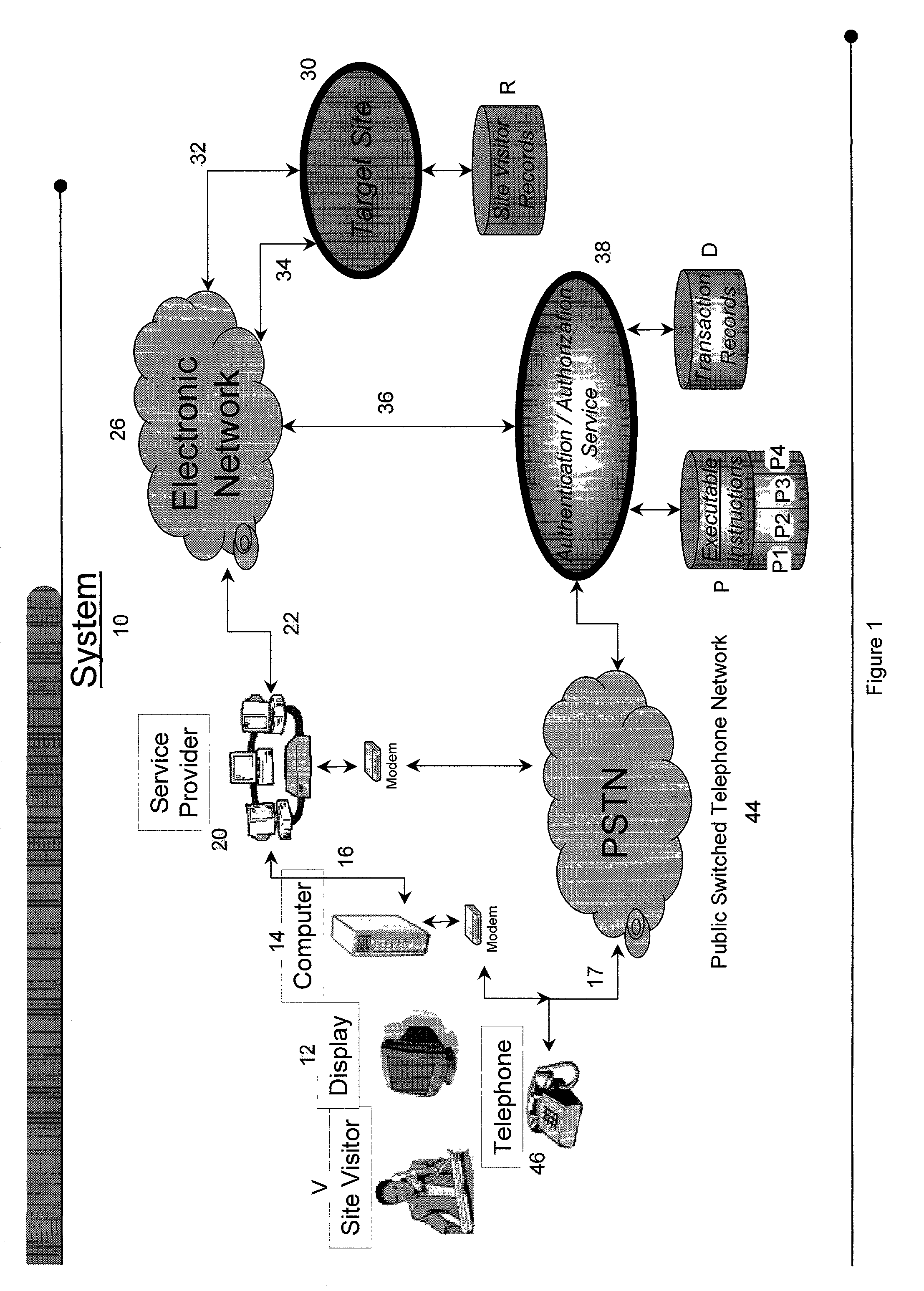

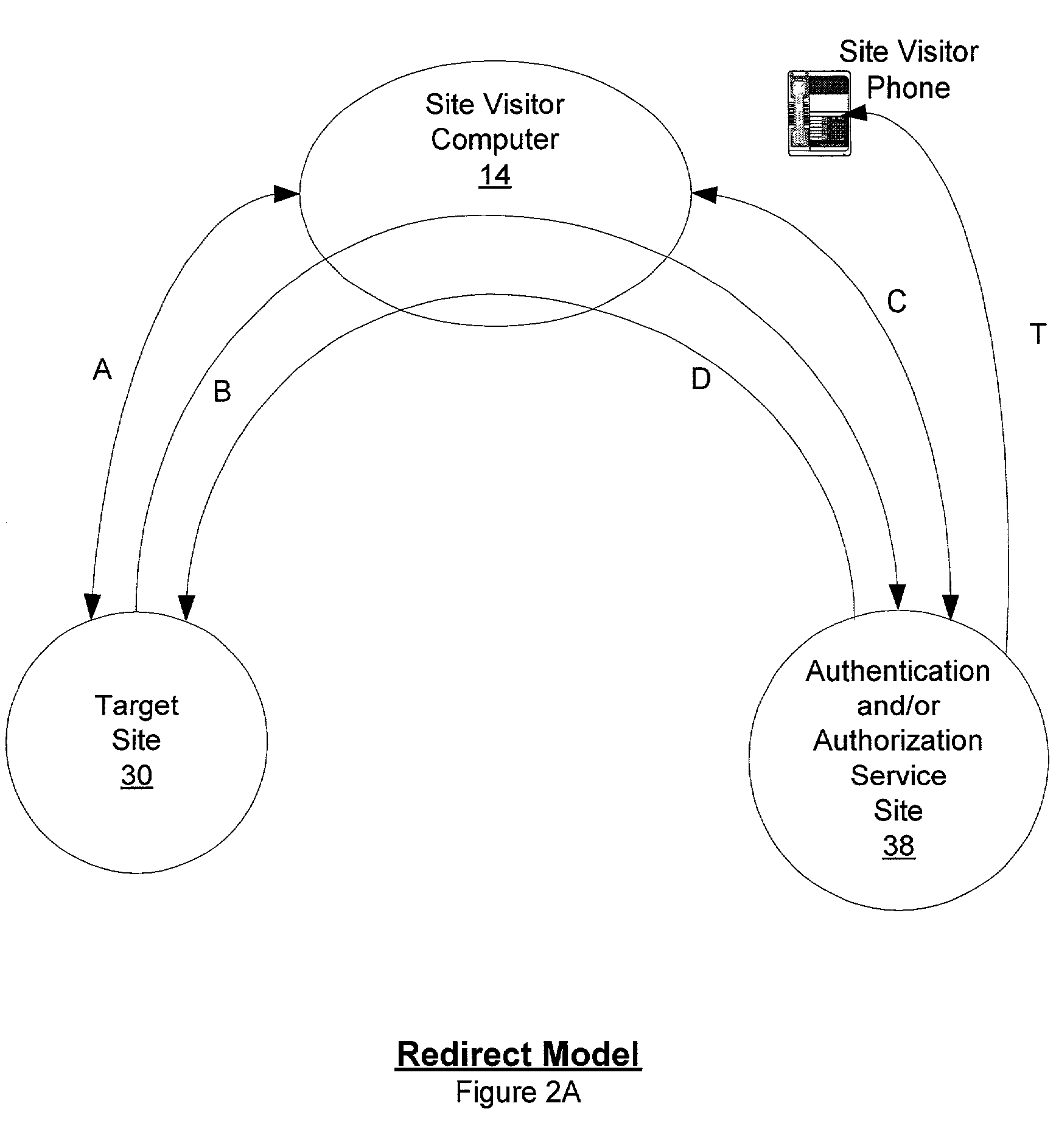

Use of public switched telephone network for authentication and authorization in on-line transactions

A system for authentication and/or authorization that uses two communication channels and at least one of the following: third-party data sources, geographic correlation algorithms, speech recognition algorithms, voice biometric comparison algorithms, and mechanisms for converting textual data into speech. A site visitor's identity can be verified using one or all of these features in combination with the visitor's address on one of the channels.

Owner:PAYFONE

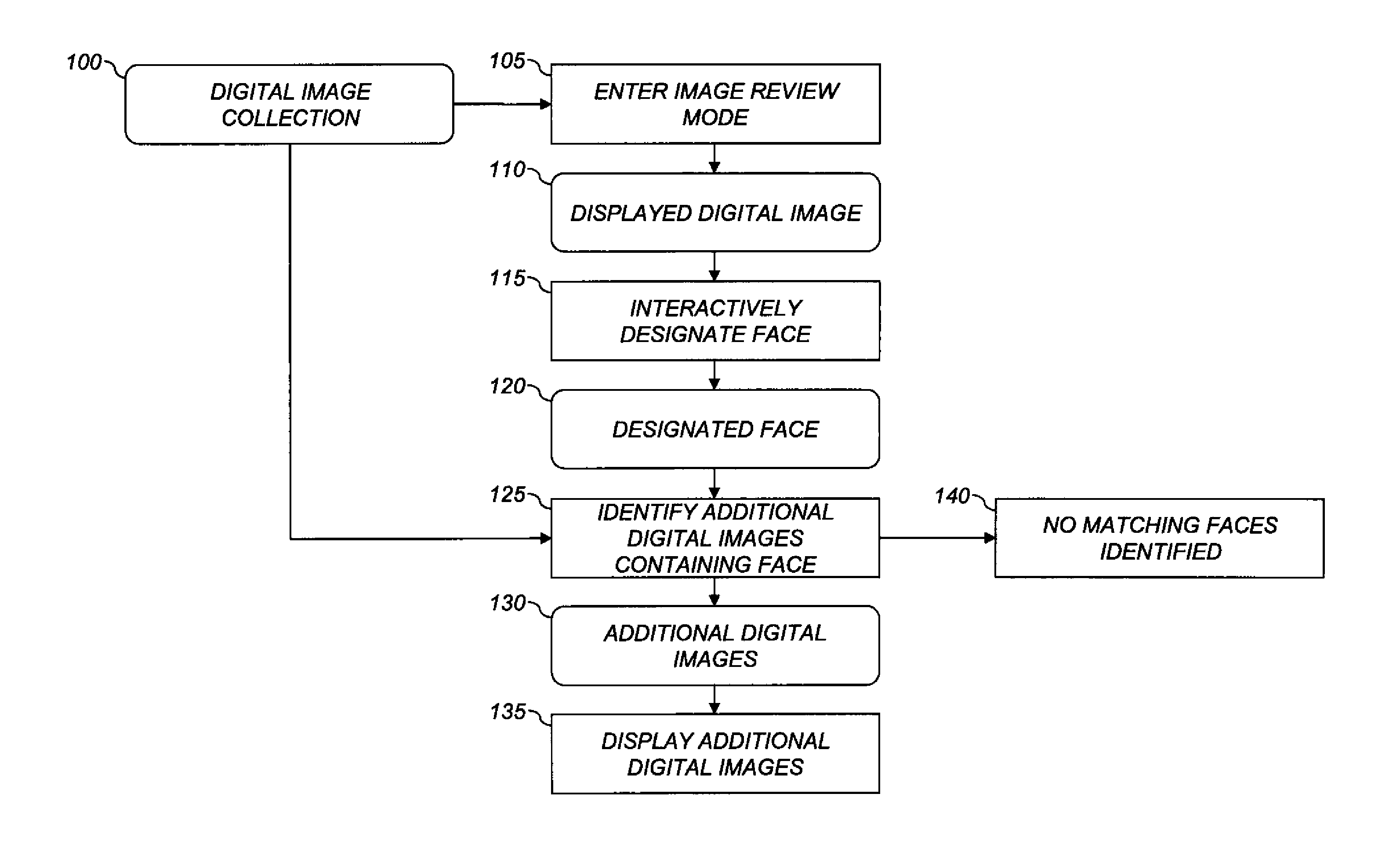

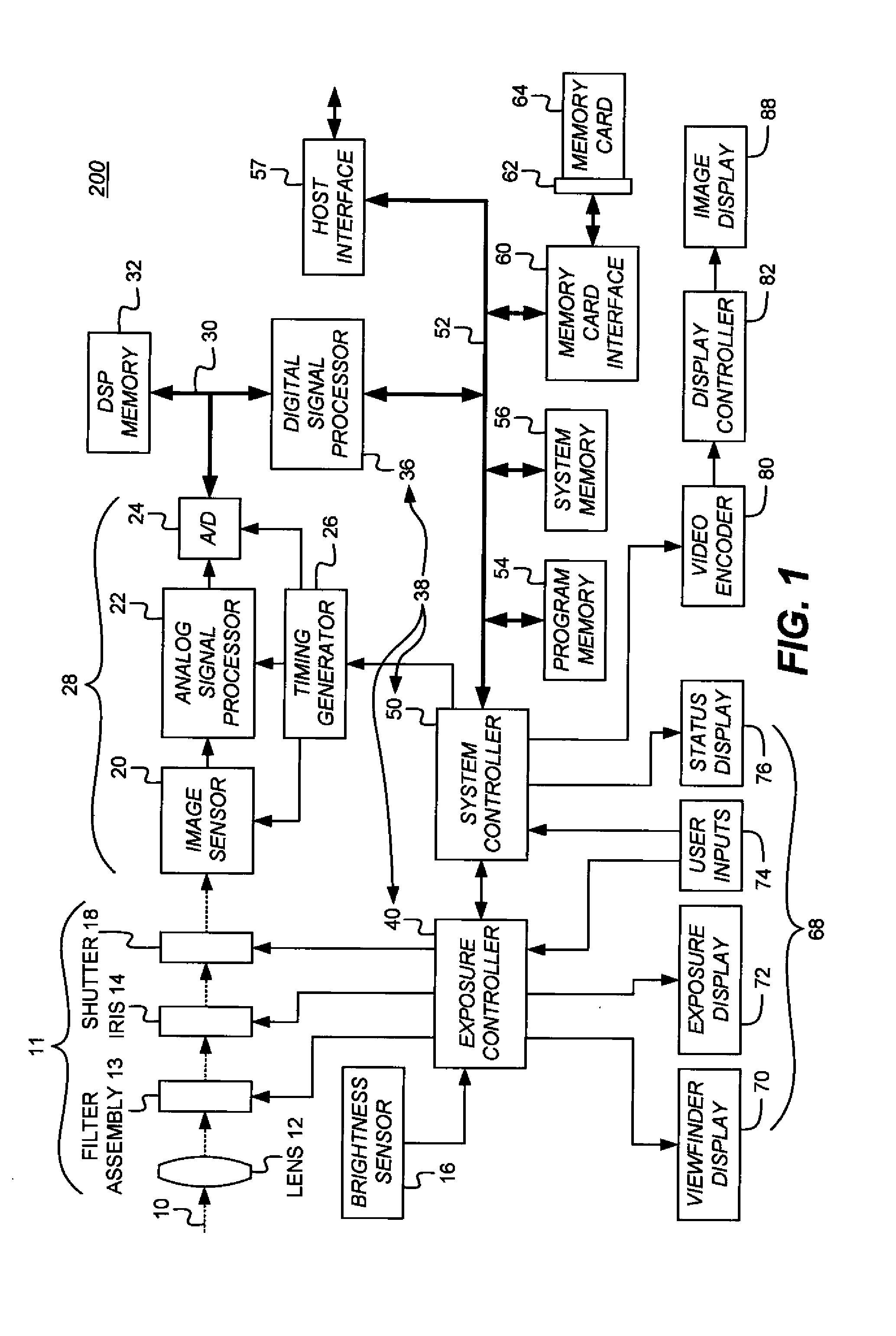

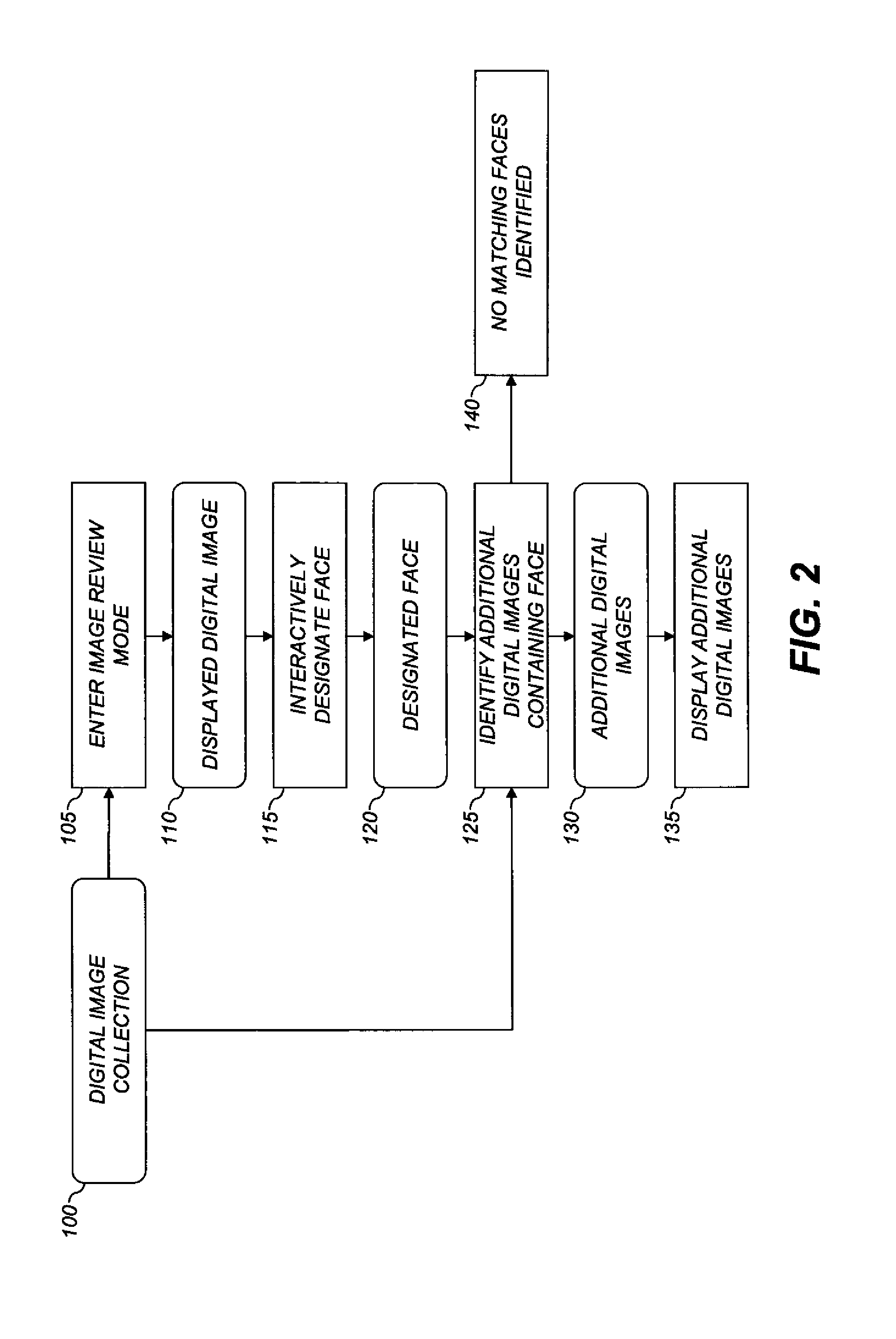

Searching digital image collections using face recognition

Status: Inactive | Publication: US20110243397A1 | Tags: Facilitates efficient searching; Improve organization; Television system details; Digital data information retrieval; Recognition algorithm; Digital image

A method for searching a collection of digital images on a display screen, comprising: entering an image review mode and displaying on the display screen a first digital image from the collection of digital images; designating a face contained in the first digital image by using an interactive user interface to indicate a region of the displayed first digital image containing the face; using a processor to execute an automatic face recognition algorithm to identify one or more additional digital images from the collection of digital images that contain the designated face; and displaying the identified one or more additional digital images on the display screen.

Owner:EASTMAN KODAK CO

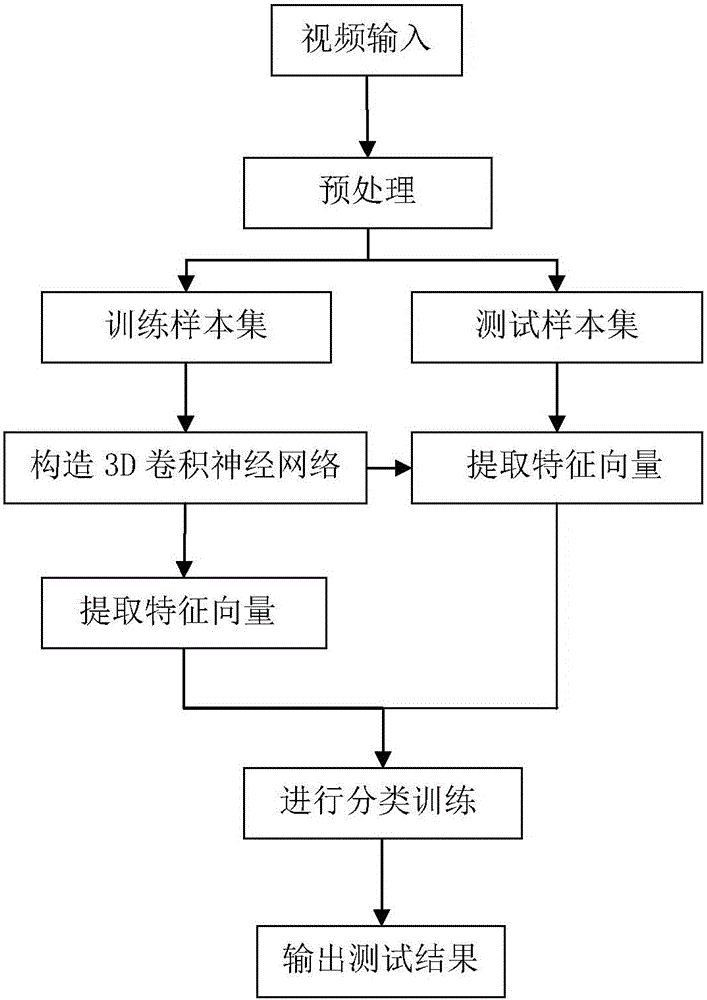

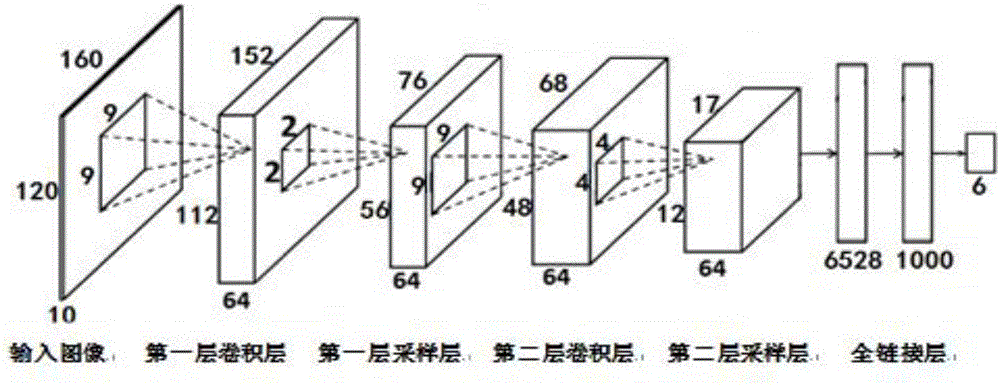

3D (three-dimensional) convolutional neural network based human body behavior recognition method

Status: Inactive | Publication: CN105160310A | Tags: The extracted features are highly representative; Fast extraction; Character and pattern recognition; Human body; Feature vector

The present invention discloses a human body behavior recognition method based on a 3D (three-dimensional) convolutional neural network, mainly intended to solve the problem of recognizing specific human behaviors in computer vision and pattern recognition. The method is implemented in the following steps: (1) video input; (2) preprocessing to obtain a training sample set and a test sample set; (3) constructing a 3D convolutional neural network; (4) extracting feature vectors; (5) classification training; and (6) outputting test results. Human body detection and motion estimation are performed with an optical flow method, so a moving object can be detected without any prior information about the scene. The method performs particularly well when the network input is a multi-dimensional image, and it allows images to be fed directly into the network, avoiding the complex feature extraction and data reconstruction required by conventional recognition algorithms and making human behavior recognition more accurate.

Owner:XIDIAN UNIV

Computation of a recognizability score (quality predictor) for image retrieval

Status: Active | Publication: US20090067726A1 | Tags: Data processing applications; Character and pattern recognition; Image query; Recognition algorithm

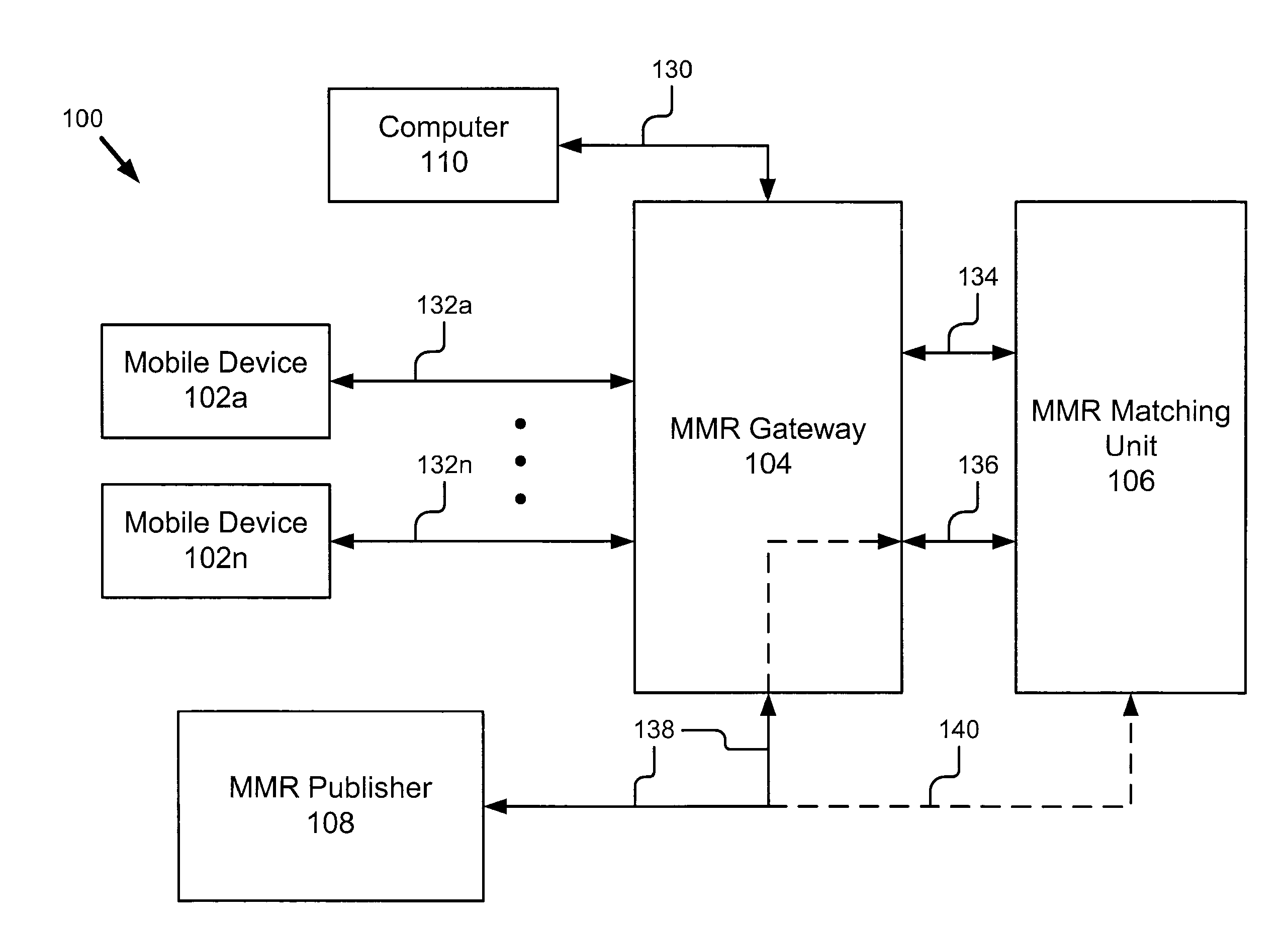

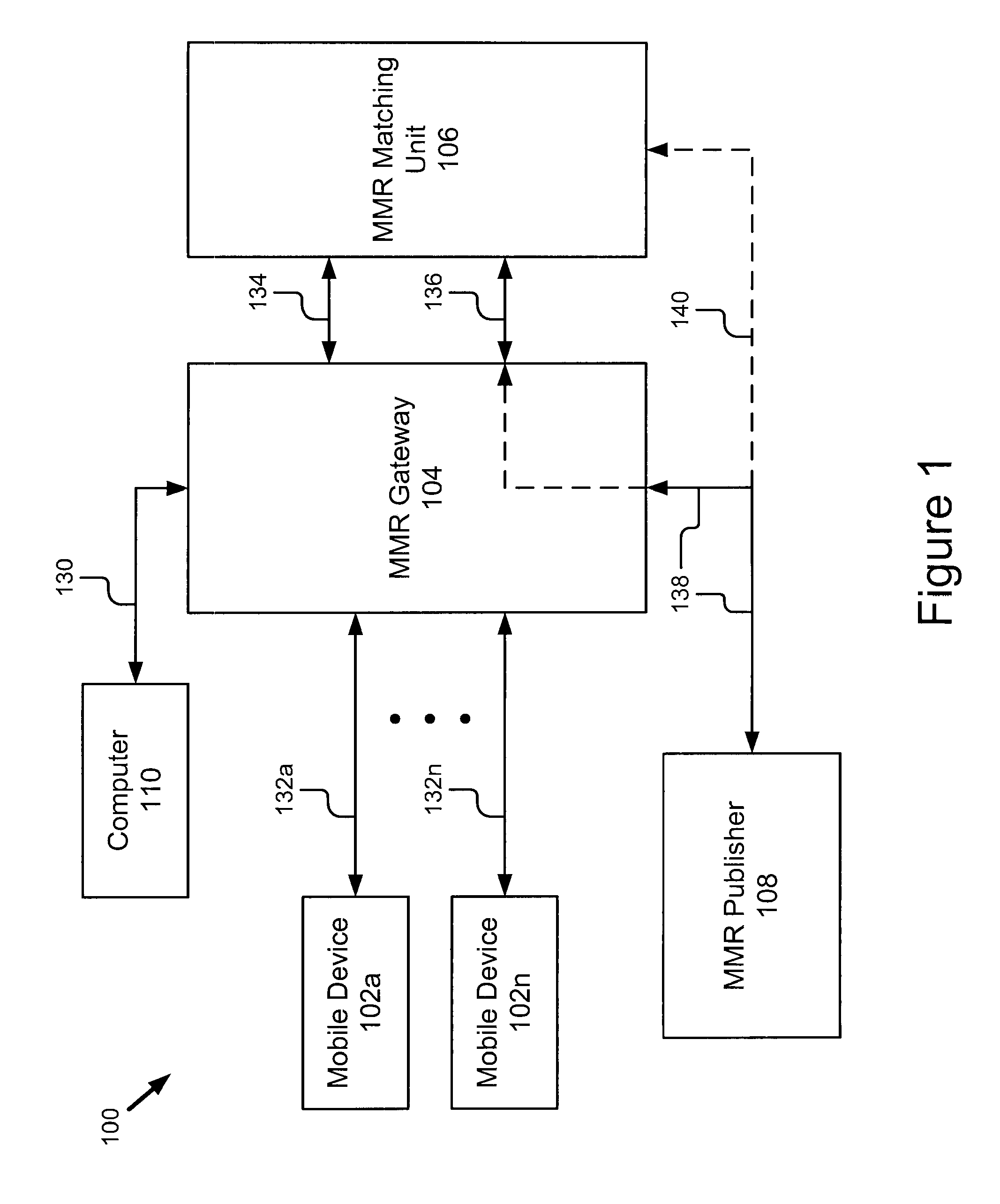

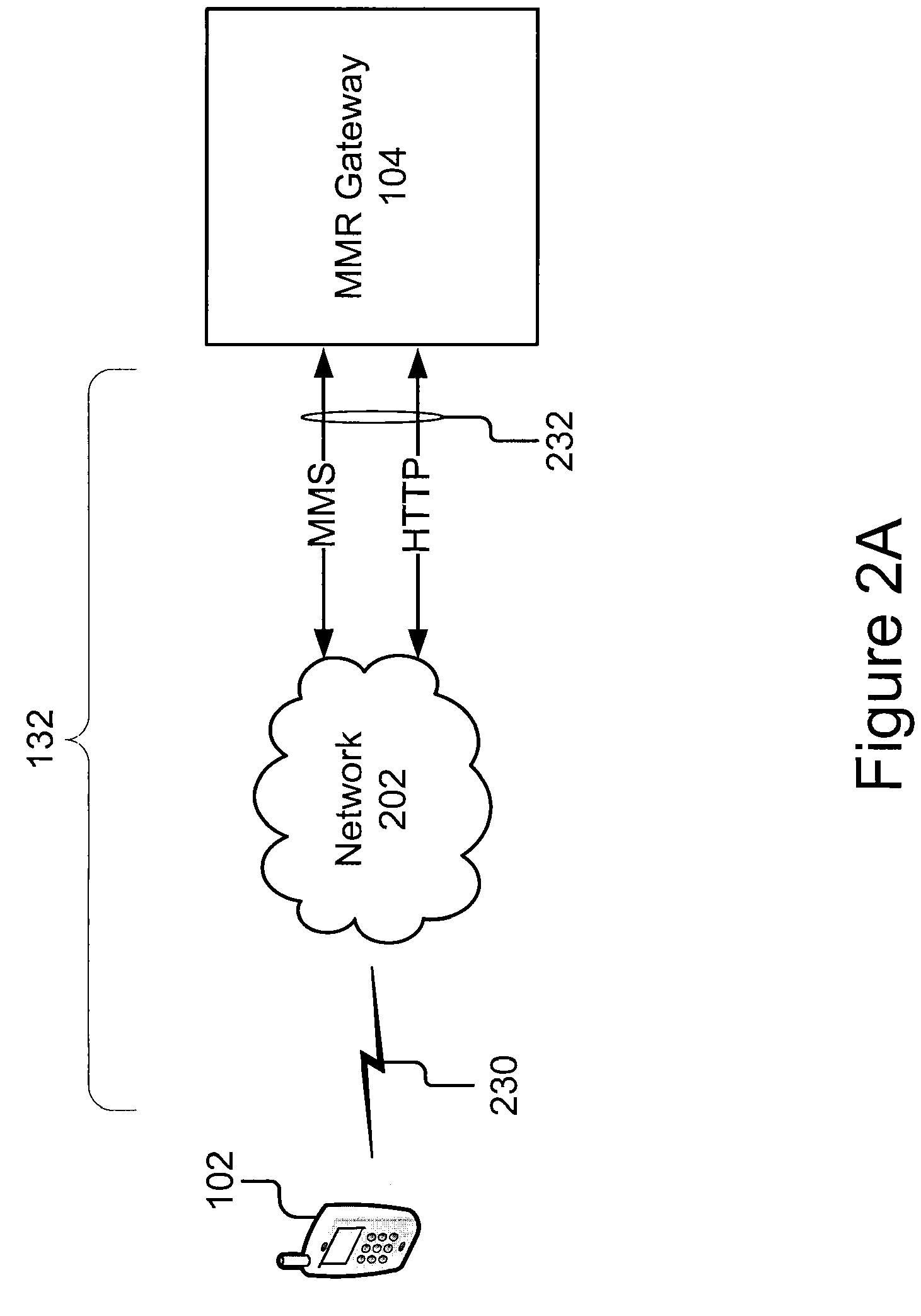

An MMR system for newspaper publishing comprises a plurality of mobile devices, an MMR gateway, an MMR matching unit, and an MMR publisher. The MMR matching unit receives an image query from the MMR gateway and sends it to one or more recognition units to identify a result including a document, the page, and the location on the page. The MMR system also includes a quality predictor, installed either as a plug-in on the mobile device to filter images before they are included in a retrieval request, or as part of the MMR matching unit. The quality predictor comprises an input for receiving recognition algorithm information, a vector calculator, a score generator, and a scoring module. It receives an image query, context information, and device parameters as inputs, and generates and outputs a recognizability score. The present invention also includes a method for generating robustness features.

Owner:RICOH KK

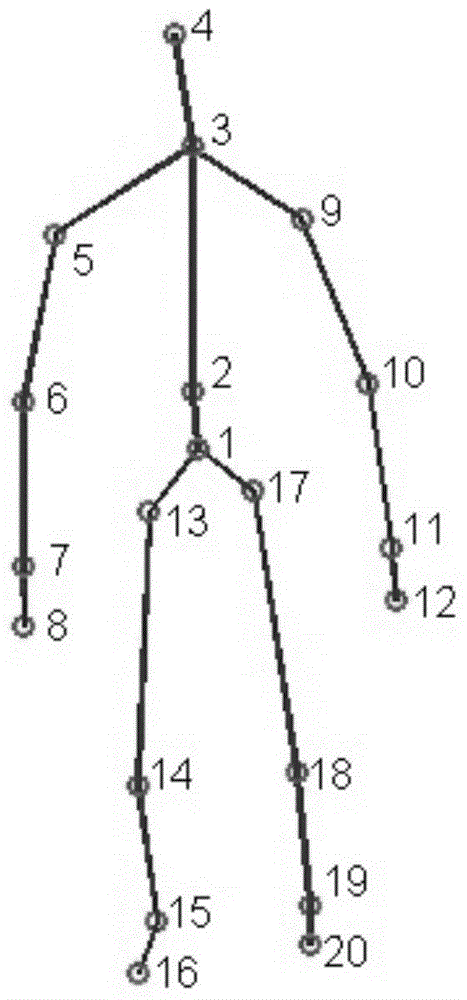

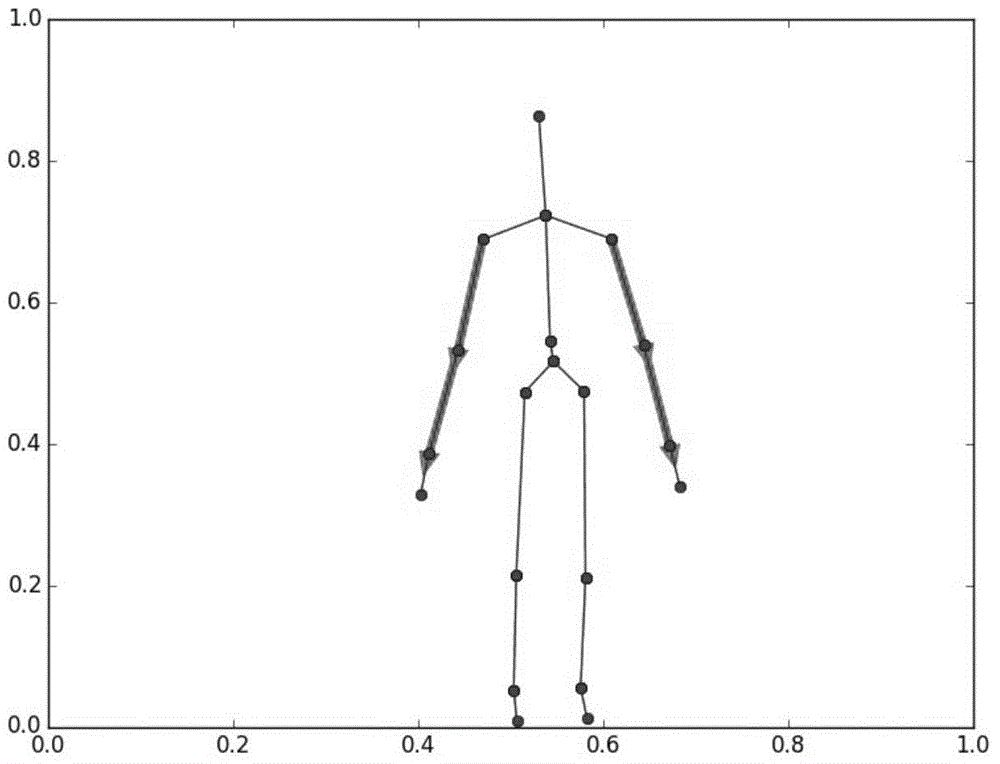

Parallelized human body behavior identification method

Status: Inactive | Publication: CN104899561A | Tags: Increase training rate; Reduce generation; Character and pattern recognition; Extensibility; Human body

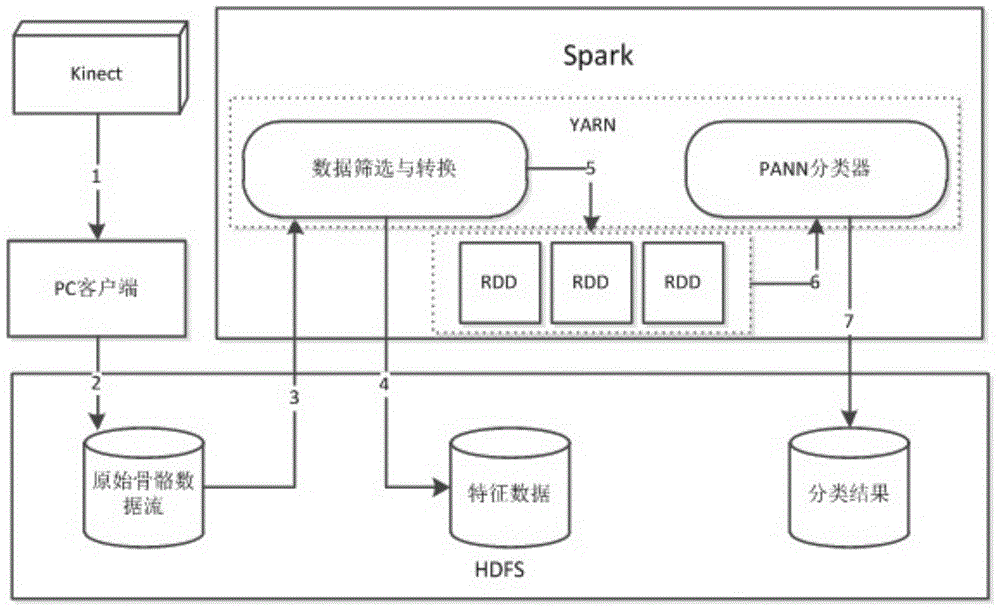

The present invention discloses a parallelized human body behavior identification method. The method takes Kinect skeleton data as input, implements a distributed behavior identification algorithm on the Spark computing framework, and forms a complete parallel identification pipeline. Human skeleton data is acquired using Kinect's scene-depth capture capability and preprocessed so that the features are invariant to displacement and scale. A human body structure vector, joint angle information, and skeleton weight bias are selected as static behavior features, and a dynamic behavior search algorithm based on structural similarity is provided. For identification, a neural network algorithm is parallelized on Spark, and the quasi-Newton method L-BFGS is used to optimize the network weight updates, which markedly increases training speed. In the identification platform, the Hadoop Distributed File System (HDFS) serves as the behavior data storage layer, Spark runs on the general-purpose resource manager YARN, and the parallel neural network algorithm serves as the upper-layer application; the overall system architecture is highly extensible.

Owner:SOUTH CHINA UNIV OF TECH
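
Joint angle features are invariant to displacement and scale by construction, which fits the preprocessing goal described above. A minimal Python sketch, assuming each joint is given as an (x, y, z) position in Kinect camera space; `joint_angle` is a hypothetical helper, not part of the Kinect SDK.

```python
import math
from typing import Sequence


def joint_angle(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> float:
    """Angle in degrees at joint b between segments b->a and b->c.

    Example: shoulder (a), elbow (b), wrist (c) gives the elbow angle.
    """
    ba = [p - q for p, q in zip(a, b)]
    bc = [p - q for p, q in zip(c, b)]
    norm = math.hypot(*ba) * math.hypot(*bc)
    if norm == 0:
        raise ValueError("joints must not coincide")
    cos_angle = sum(x * y for x, y in zip(ba, bc)) / norm
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_angle))
```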

© 2025 PatSnap. All rights reserved.