3D (three-dimensional) convolutional neural network based human body behavior recognition method

A human behavior recognition method based on a 3D convolutional neural network, applied in the field of human behavior recognition. The stated effects are highly representative extracted features, fast feature extraction, and strong resistance to interference.

Embodiment Construction

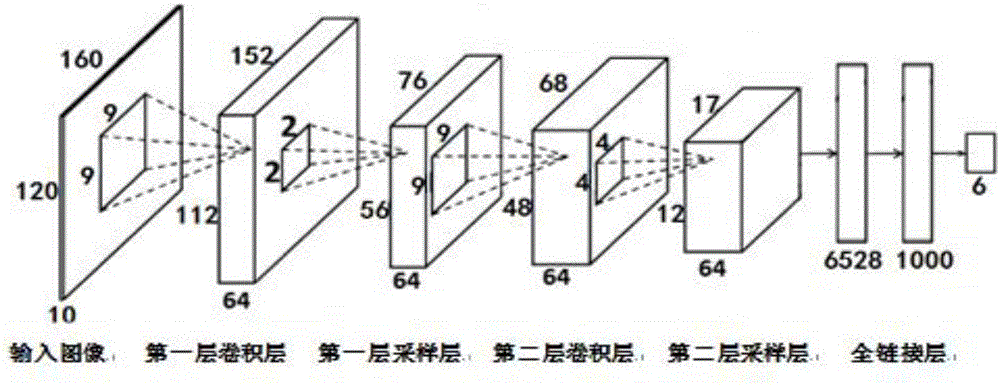

[0044] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings.

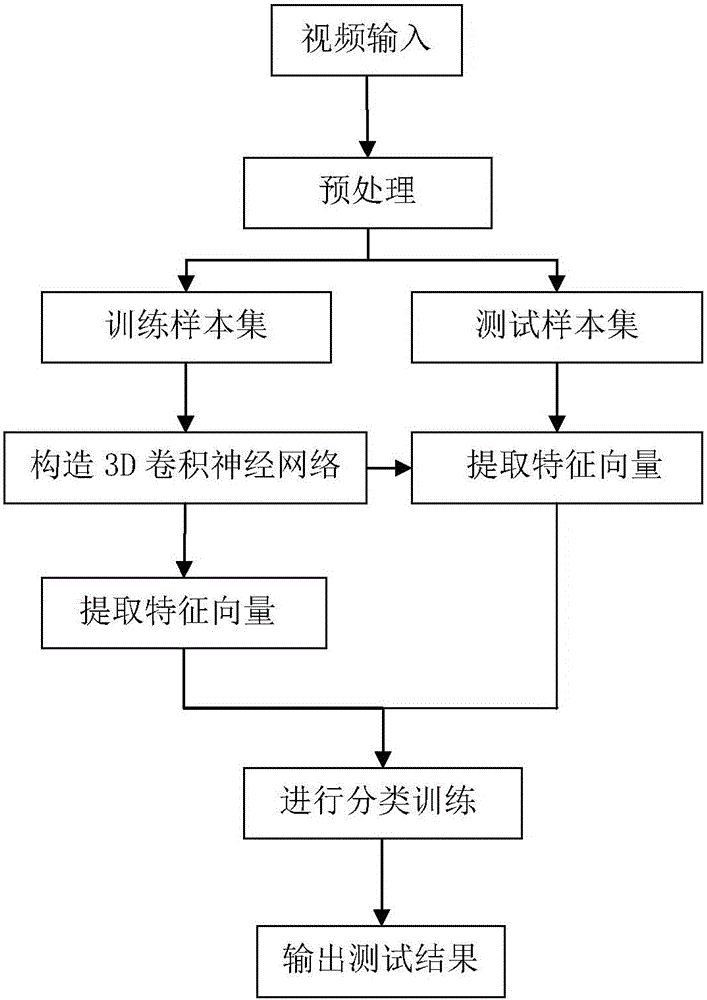

[0045] With reference to Figure 1, the specific steps of the present invention are as follows:

[0046] Step 1, video input.

[0047] Load the video files for the six KTH dataset actions (walking, jogging, running, boxing, handwaving, and handclapping) into the computer, and read the video frame by frame to obtain the image information.
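The frame-by-frame read in Step 1 can be sketched as follows. This is a minimal illustration rather than the patent's implementation: it assumes OpenCV (`cv2`) as the video reader, and `iter_frames`, `read_video`, and any file name passed in are hypothetical names introduced here.

```python
from typing import Any, Iterator, List


def iter_frames(capture: Any) -> Iterator[Any]:
    """Yield frames from a capture object until no more can be read.

    `capture` is assumed to behave like cv2.VideoCapture, whose read()
    returns a (success, frame) pair.
    """
    while True:
        ok, frame = capture.read()
        if not ok:
            break
        yield frame


def read_video(path: str) -> List[Any]:
    """Read every frame of one video file into a list (assumes OpenCV is installed)."""
    import cv2  # imported lazily so iter_frames can be used without OpenCV

    capture = cv2.VideoCapture(path)
    if not capture.isOpened():
        raise IOError(f"cannot open video: {path}")
    try:
        return list(iter_frames(capture))
    finally:
        capture.release()
```

Calling `read_video` once per file and grouping the results by action label would give the per-behavior image information that the preprocessing step works on.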

[0048] Step 2, preprocessing.

[0049] In the first step, images showing clear human behavior characteristics are selected from the image information and saved. The image information for each behavior is inspected, and blank images containing no human body, as well as images showing less than two-thirds of the body's limbs, are deleted manually.

[0050] In the second step, the screened images are resized to a uniform 120×160 pixels to obtain an image of the ...
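The size unification in the second preprocessing step can be illustrated with a small standard-library sketch. Several things here are assumptions and not stated in the source: "120×160" is read as 120 rows by 160 columns, images are treated as 2-D grayscale grids, and nearest-neighbour sampling is used only because it is the simplest scheme to show. `resize_nearest` is a hypothetical helper name.

```python
from typing import List, Sequence

# Assumption: "120×160" is interpreted as 120 rows (height) by 160 columns (width).
TARGET_HEIGHT = 120
TARGET_WIDTH = 160


def resize_nearest(image: Sequence[Sequence[int]],
                   height: int = TARGET_HEIGHT,
                   width: int = TARGET_WIDTH) -> List[List[int]]:
    """Resize a 2-D grayscale image (rows of pixel values) by nearest-neighbour sampling."""
    src_h = len(image)
    src_w = len(image[0])
    # Map each target pixel back to the source pixel it falls on.
    return [
        [image[r * src_h // height][c * src_w // width] for c in range(width)]
        for r in range(height)
    ]
```

In practice an image library would more likely do this step; for instance, OpenCV's `cv2.resize` takes its target size as (width, height), so the same assumption about axis order would need to be made explicit there as well.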
