4627 results about "Speech identification" patented technology

Speech ID helps protect the authenticity and accuracy of speech by helping the hearing aid prioritize conversation in difficult listening situations. This feature works to isolate the unique frequencies associated with various letters and words and helps maintain the crispness of conversation.

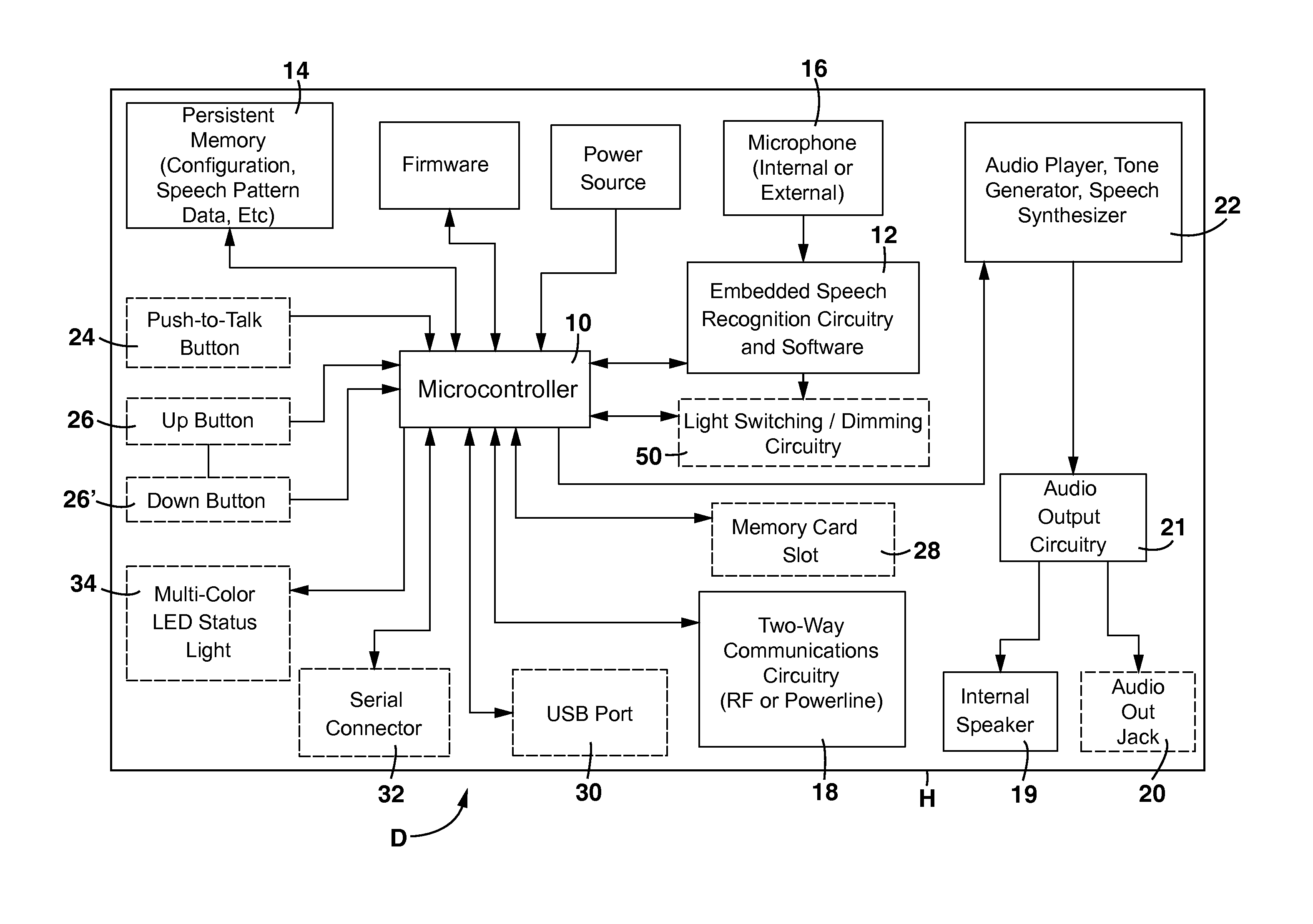

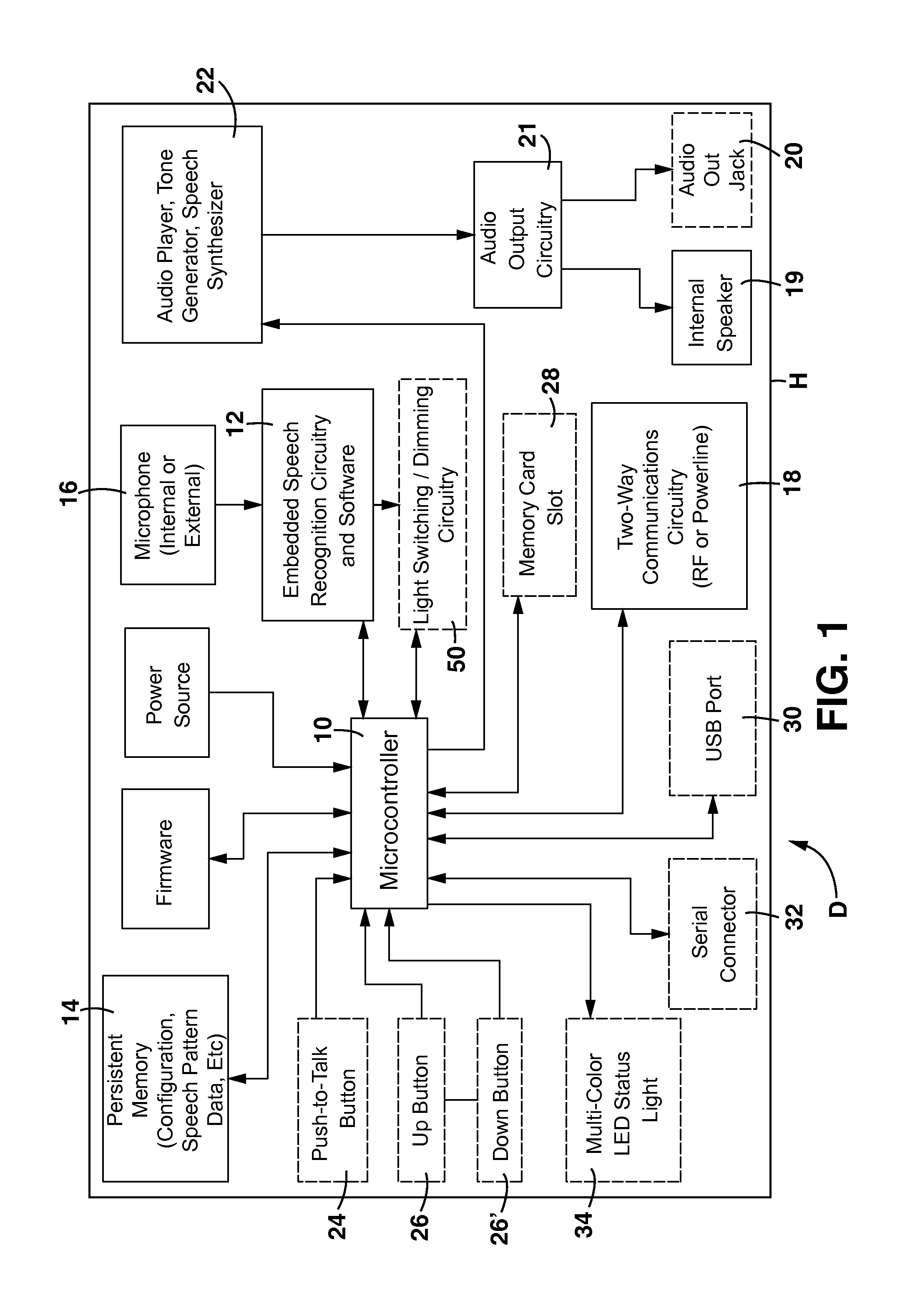

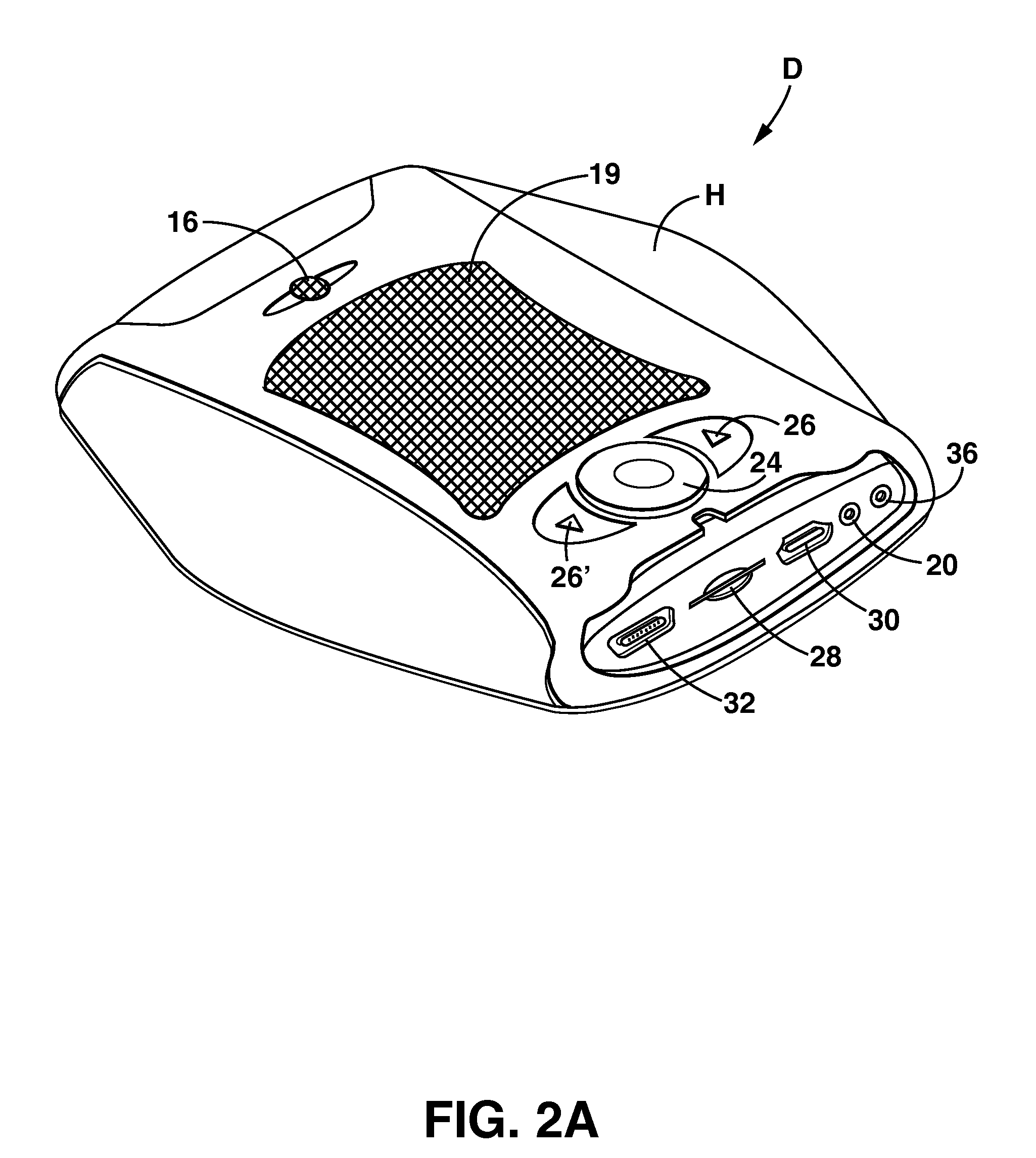

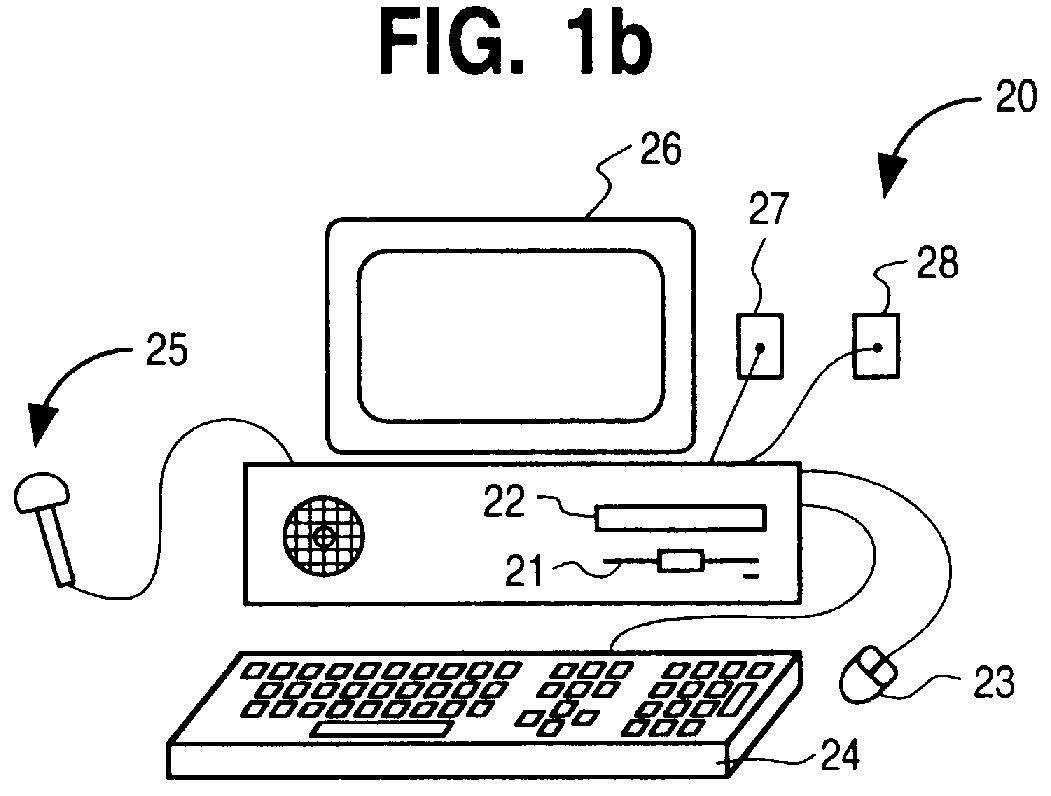

Interactive speech recognition device and system for hands-free building control

A self-contained wireless interactive speech recognition control device and system that integrates with automated systems and appliances to provide totally hands-free speech control capabilities for a given space. Preferably, each device comprises a programmable microcontroller having embedded speech recognition and audio output capabilities, a microphone, a speaker and a wireless communication system through which a plurality of devices can communicate with each other and with one or more system controllers or automated mechanisms. The device may be enclosed in a stand-alone housing or within a standard electrical wall box. Several devices may be installed in close proximity to one another to ensure hands-free coverage throughout the space. When two or more devices are triggered simultaneously by the same speech command, real time coordination ensures that only one device will respond to the command.

Owner:ROSENBERGER THEODORE ALFRED

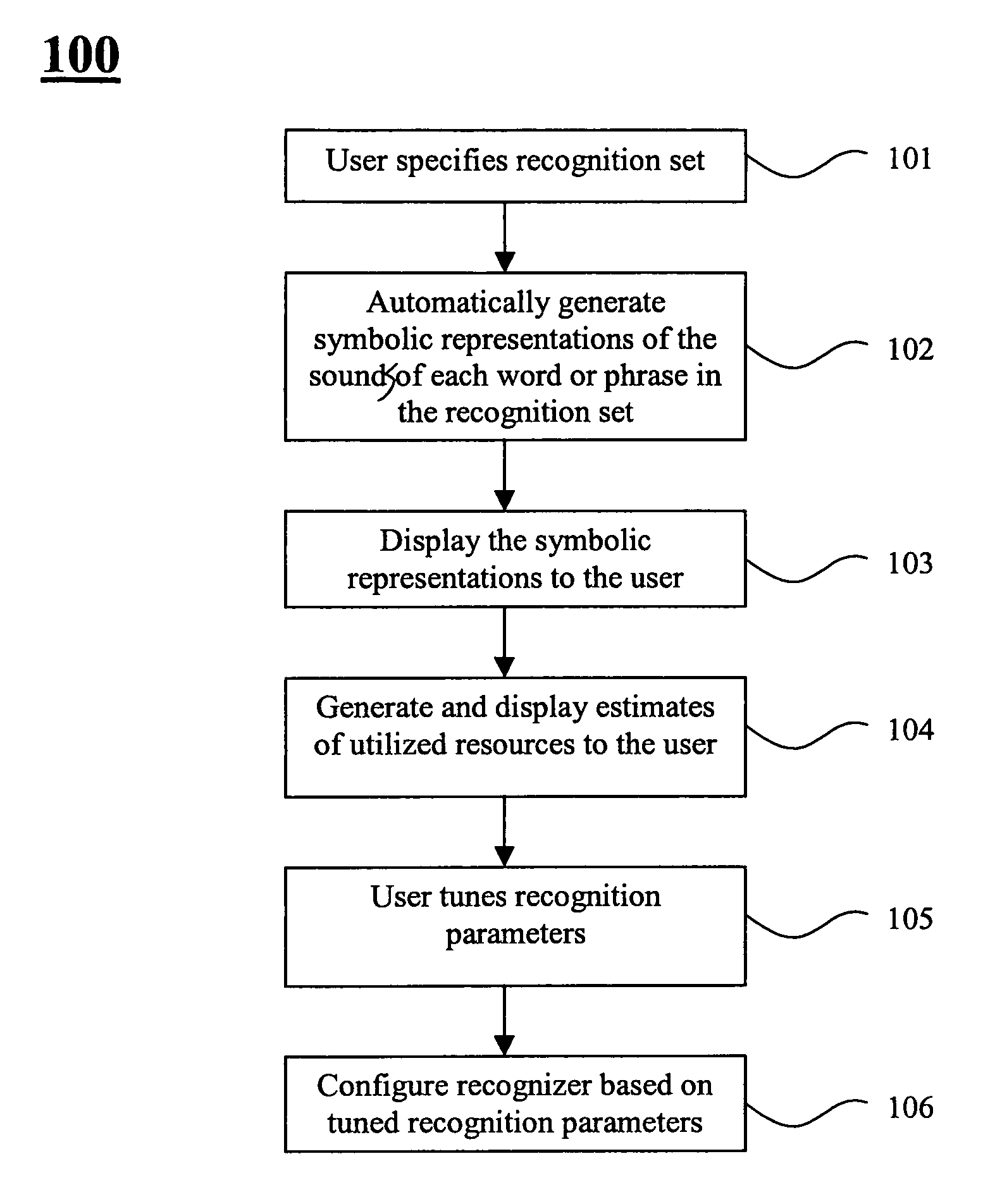

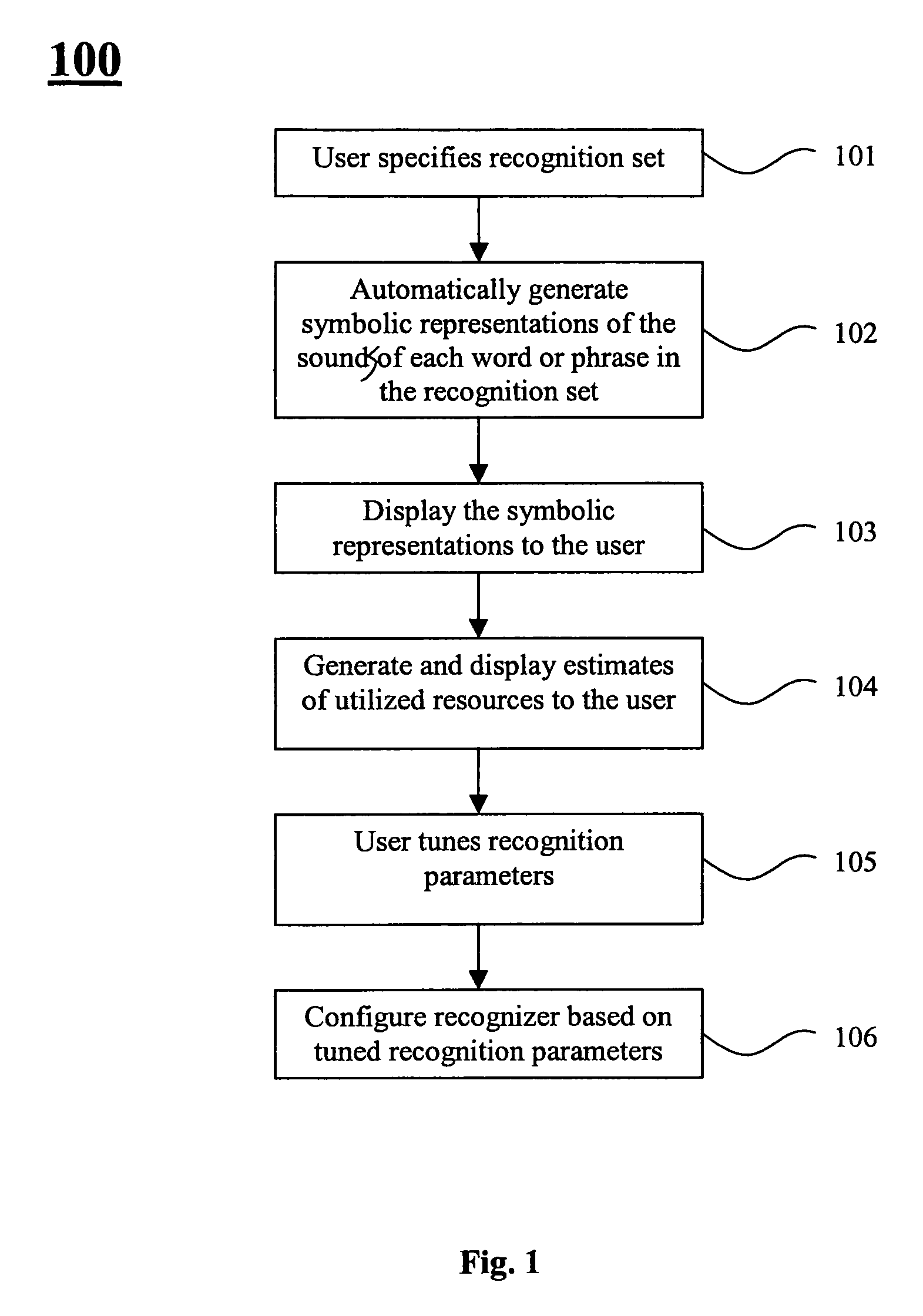

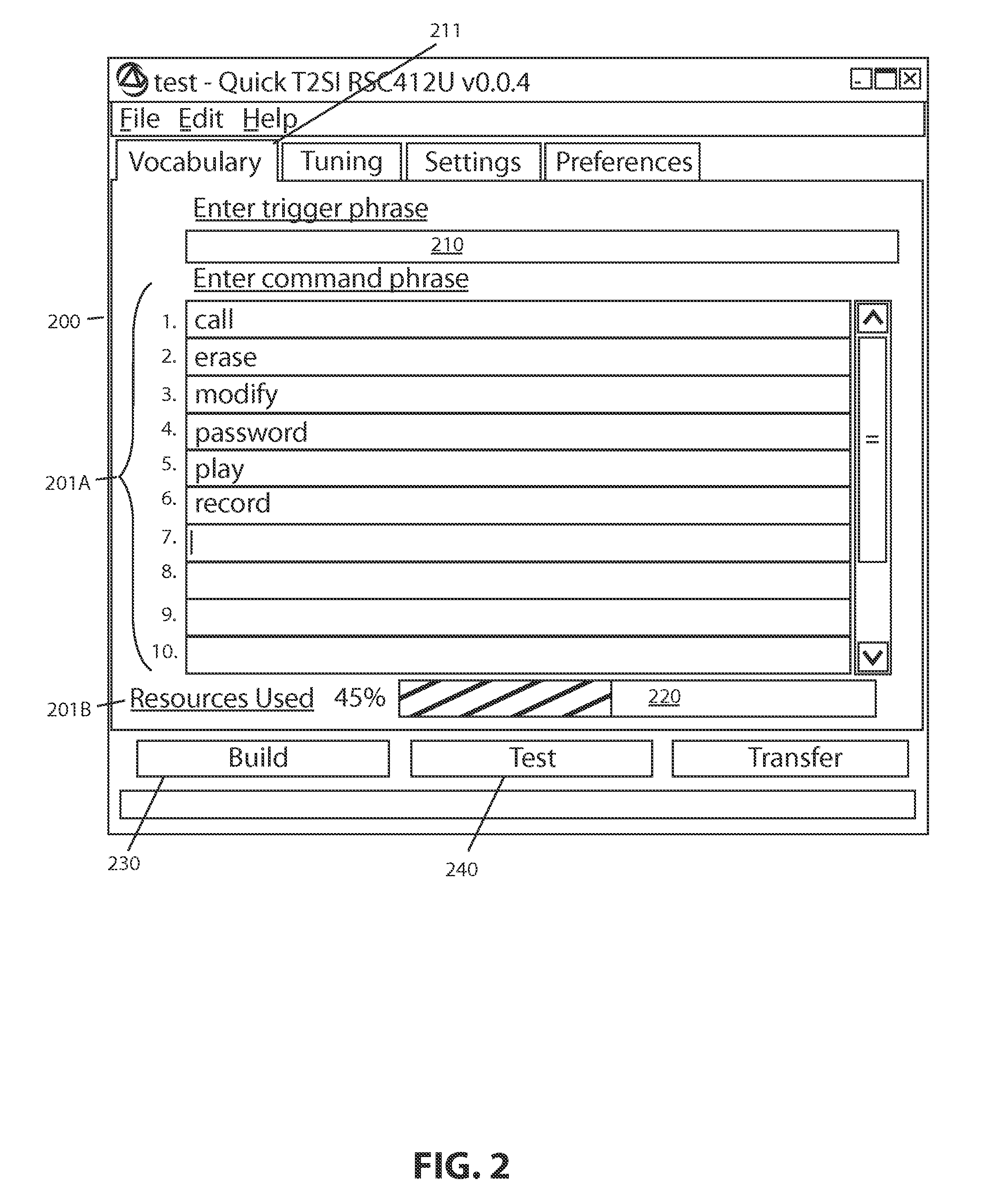

Method and apparatus of specifying and performing speech recognition operations

Active | US7720683B1 | Efficient execution | Shorten cycle time | Speech recognition | Speech identification | Speech sound

A speech recognition technique is described that has the dual benefits of not requiring collection of recordings for training while using computational resources that are cost-compatible with consumer electronic products. Methods are described for improving the recognition accuracy of a recognizer by developer interaction with a design tool that iterates the recognition data during development of a recognition set of utterances and that allows controlling and minimizing the computational resources required to implement the recognizer in hardware.

Owner:SENSORY

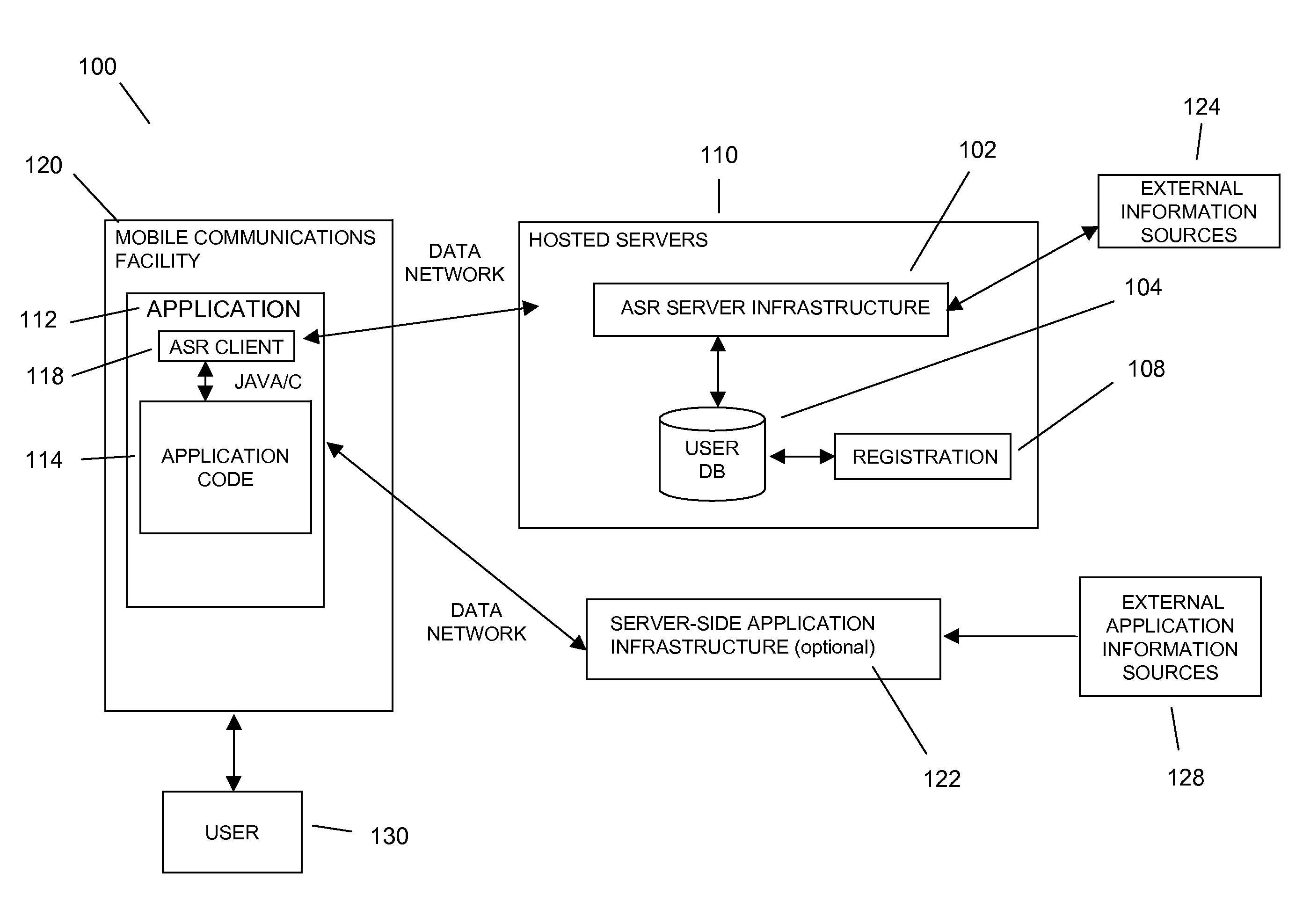

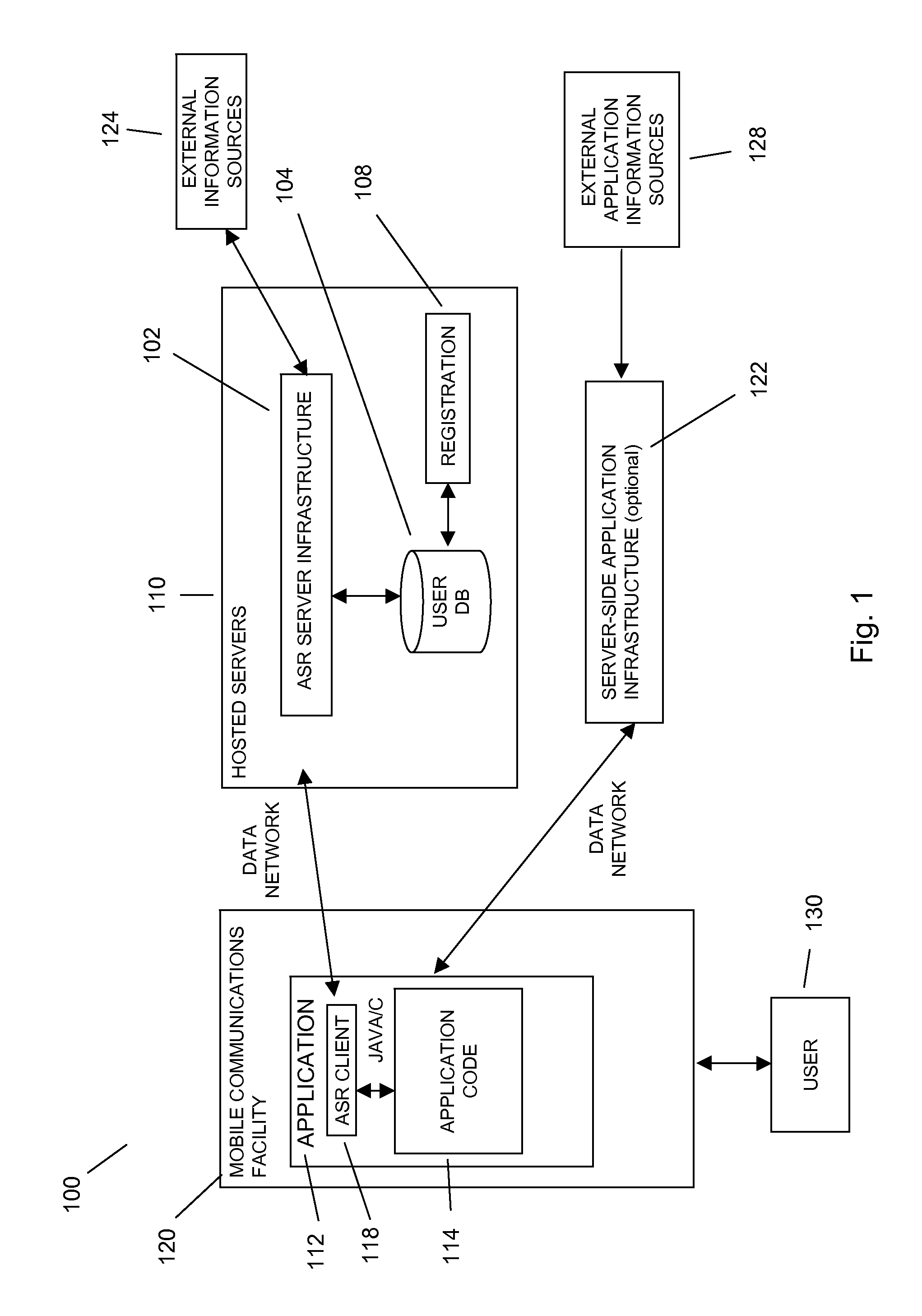

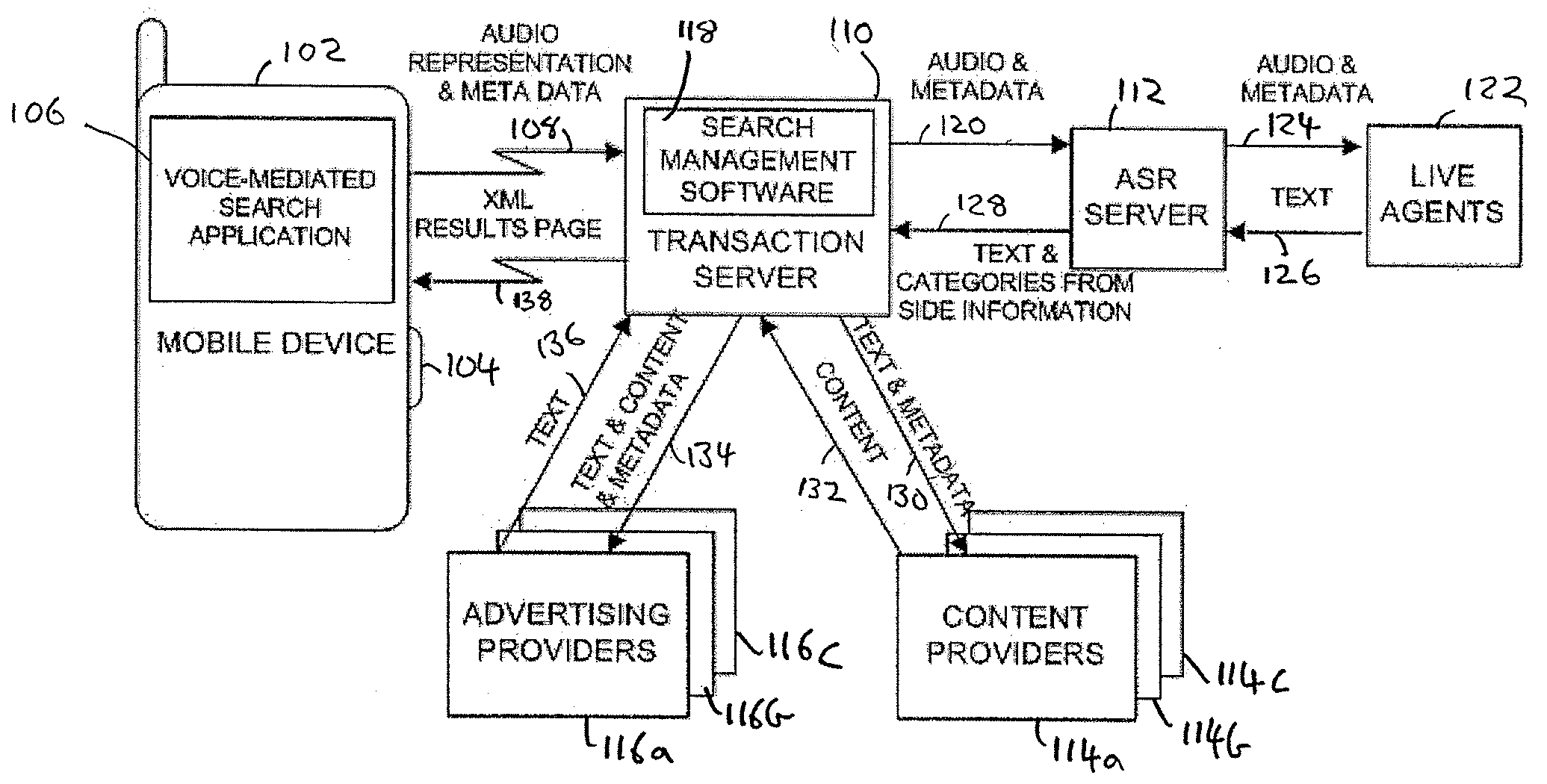

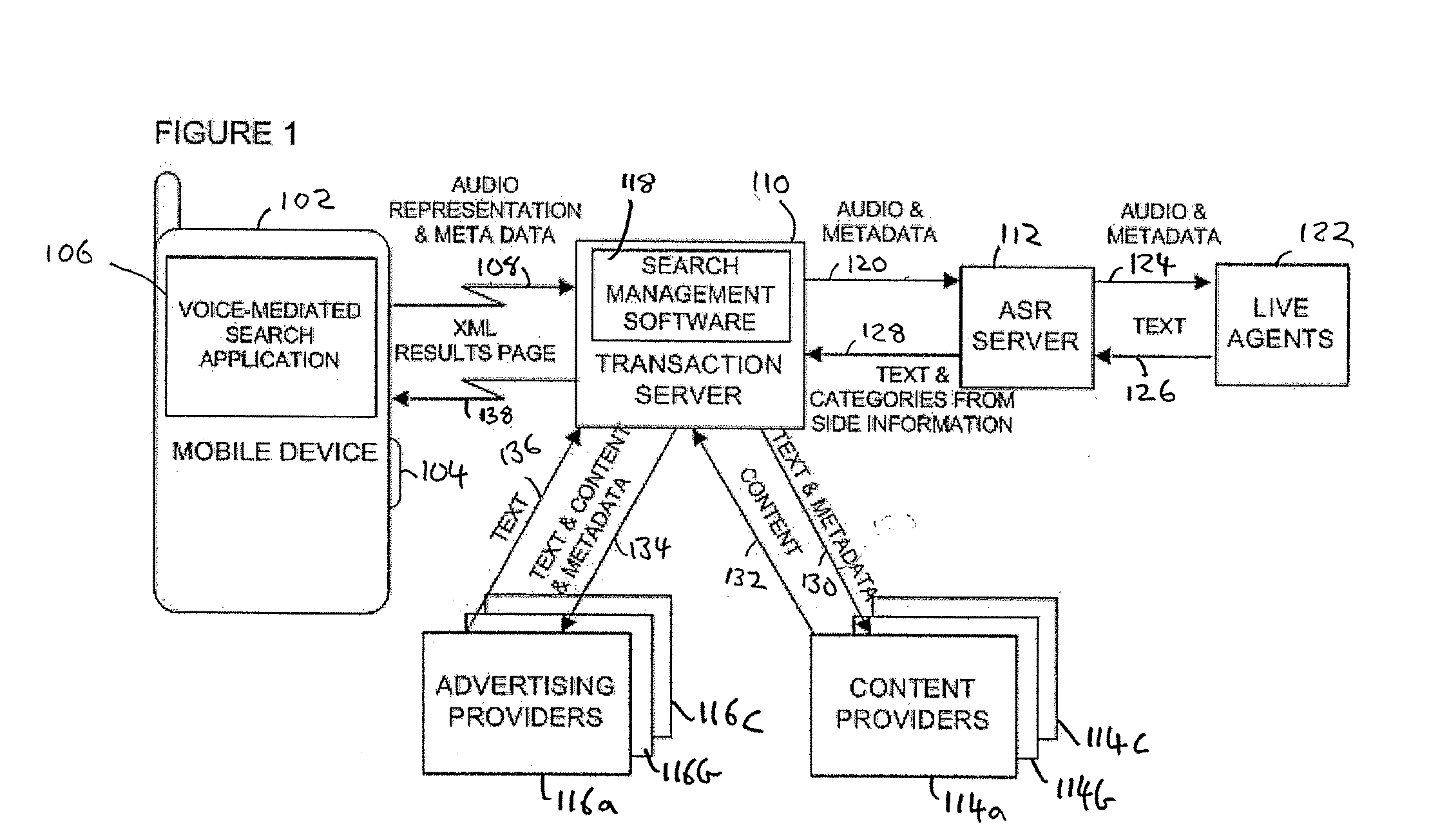

Command and control utilizing ancillary information in a mobile voice-to-speech application

In embodiments of the present invention improved capabilities are described for controlling a mobile communication facility utilizing ancillary information comprising accepting speech presented by a user using a resident capture facility on the mobile communication facility while the user engages an interface that enables a command mode for the mobile communications facility; processing the speech using a resident speech recognition facility to recognize command elements and content elements; transmitting at least a portion of the speech through a wireless communication facility to a remote speech recognition facility; transmitting information from the mobile communication facility to the remote speech recognition facility, wherein the information includes information about a command recognizable by the resident speech recognition facility and at least one of language, location, display type, model, identifier, network provider, and phone number associated with the mobile communication facility; generating speech-to-text results utilizing the remote speech recognition facility based at least in part on the speech and on the information related to the mobile communication facility; and transmitting the text results for use on the mobile communications facility.

Owner:VLINGO CORP

Consolidating Speech Recognition Results

Inactive | US20130073286A1 | Redundant elements are minimized or eliminated | Choose simple | Speech recognition | Sound input/output | Recognition algorithm | Speech identification

Candidate interpretations resulting from application of speech recognition algorithms to spoken input are presented in a consolidated manner that reduces redundancy. A list of candidate interpretations is generated, and each candidate interpretation is subdivided into time-based portions, forming a grid. Those time-based portions that duplicate portions from other candidate interpretations are removed from the grid. A user interface is provided that presents the user with an opportunity to select among the candidate interpretations; the user interface is configured to present these alternatives without duplicate elements.

Owner:APPLE INC
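
The grid idea in the abstract above can be illustrated with a minimal sketch. It assumes that every candidate interpretation has already been split into the same number of time-aligned portions, which is a simplification; the function name `consolidate` and the sample phrases are hypothetical and do not come from the patent.

```python
def consolidate(candidates):
    """Collapse time-aligned candidate interpretations into a grid of columns.

    Assumption: each candidate is a list with one entry per time-based
    portion, and all candidates have the same number of portions.
    """
    columns = []
    for portions in zip(*candidates):
        unique = []
        for portion in portions:
            # Keep the first occurrence only, preserving candidate rank order.
            if portion not in unique:
                unique.append(portion)
        columns.append(unique)
    return columns


# Hypothetical recognizer output, best candidate first.
candidates = [
    ["call", "Anna", "Smith", "at work"],
    ["call", "Anna", "Smyth", "at work"],
    ["call", "Anna", "Smith", "at home"],
]
grid = consolidate(candidates)
```

A user interface built on `grid` would show "call" and "Anna" once each and offer a choice only where the candidates actually differ.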

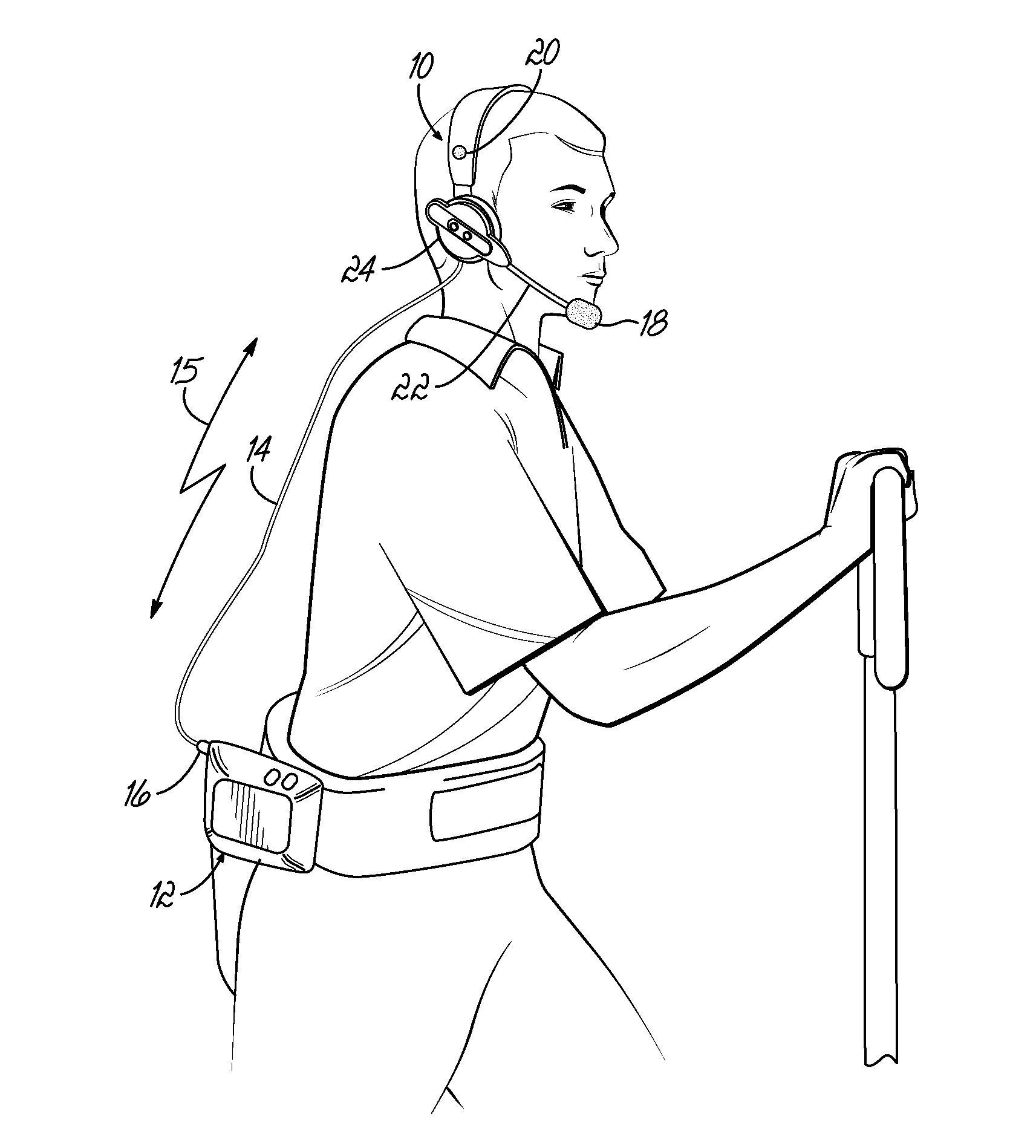

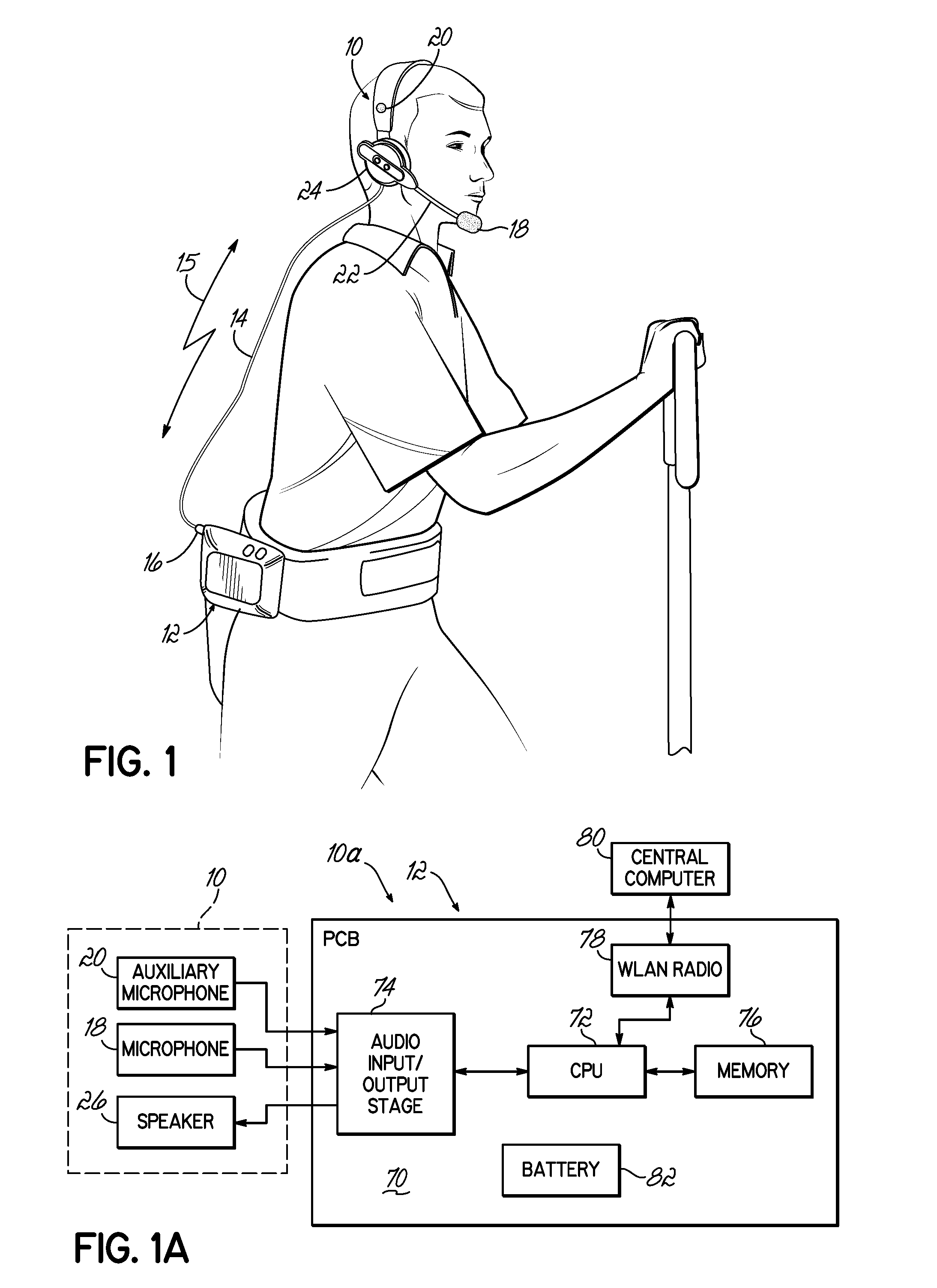

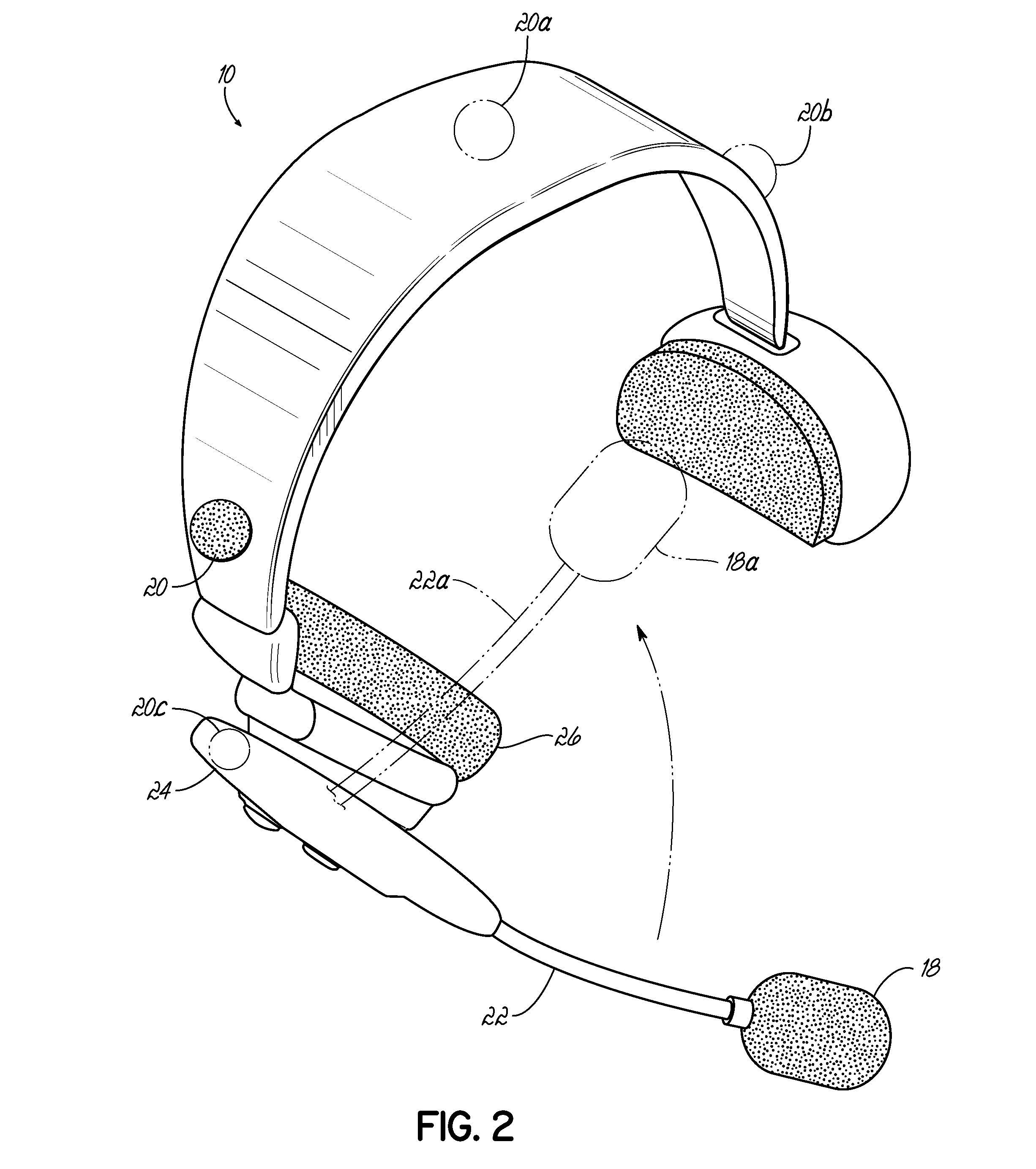

System and method for improving speech recognition accuracy in a work environment

Apparatus and method that improves speech recognition accuracy, by monitoring the position of a user's headset-mounted speech microphone, and prompting the user to reconfigure the speech microphone's orientation if required. A microprocessor or other application specific integrated circuit provides a mechanism for comparing the relative transit times between a user's voice, a primary speech microphone, and a secondary compliance microphone. The difference in transit times may be used to determine if the speech microphone is placed in an appropriate proximity to the user's mouth. If required, the user is automatically prompted to reposition the speech microphone.

Owner:VOCOLLECT
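
As a rough illustration of the transit-time comparison described above, the sketch below converts the difference in arrival times at the two microphones into a path-length difference. The geometry is an assumption made for this example: the compliance microphone is taken to sit farther from the mouth than a correctly placed speech microphone, so a small difference suggests the speech microphone has moved away. The threshold value and all names are hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value for dry air at about 20 degrees C


def path_difference_m(arrival_primary_s, arrival_secondary_s):
    """Extra distance the voice travels to reach the secondary microphone."""
    return (arrival_secondary_s - arrival_primary_s) * SPEED_OF_SOUND_M_PER_S


def needs_repositioning(arrival_primary_s, arrival_secondary_s,
                        min_difference_m=0.05):
    """Return True when the primary microphone seems too far from the mouth.

    Assumption: with the speech microphone near the mouth, the voice reaches
    it noticeably earlier than it reaches the compliance microphone.
    """
    difference = path_difference_m(arrival_primary_s, arrival_secondary_s)
    return difference < min_difference_m
```

A caller could, for instance, play a "please adjust your microphone" prompt whenever `needs_repositioning` returns `True`.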

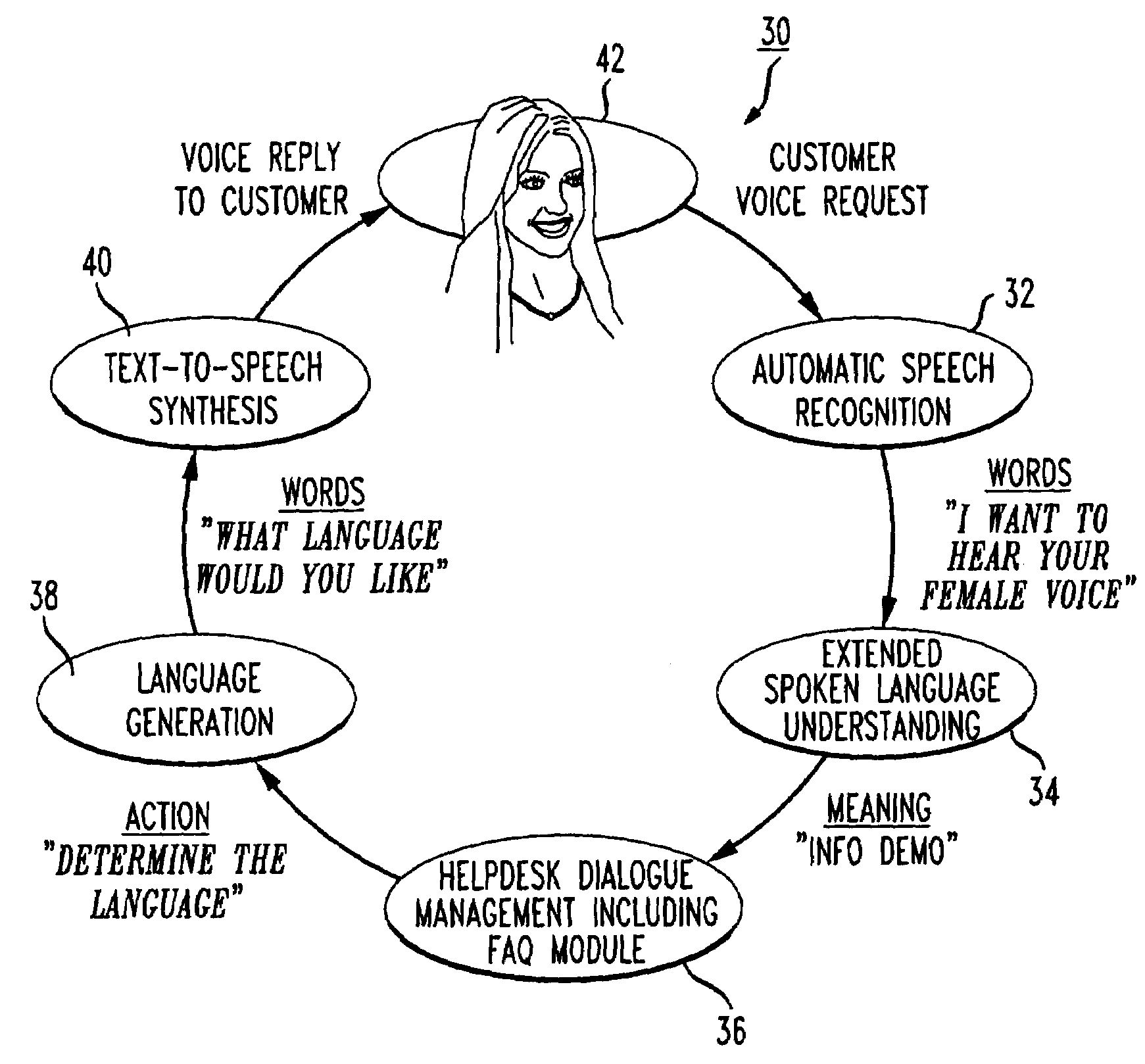

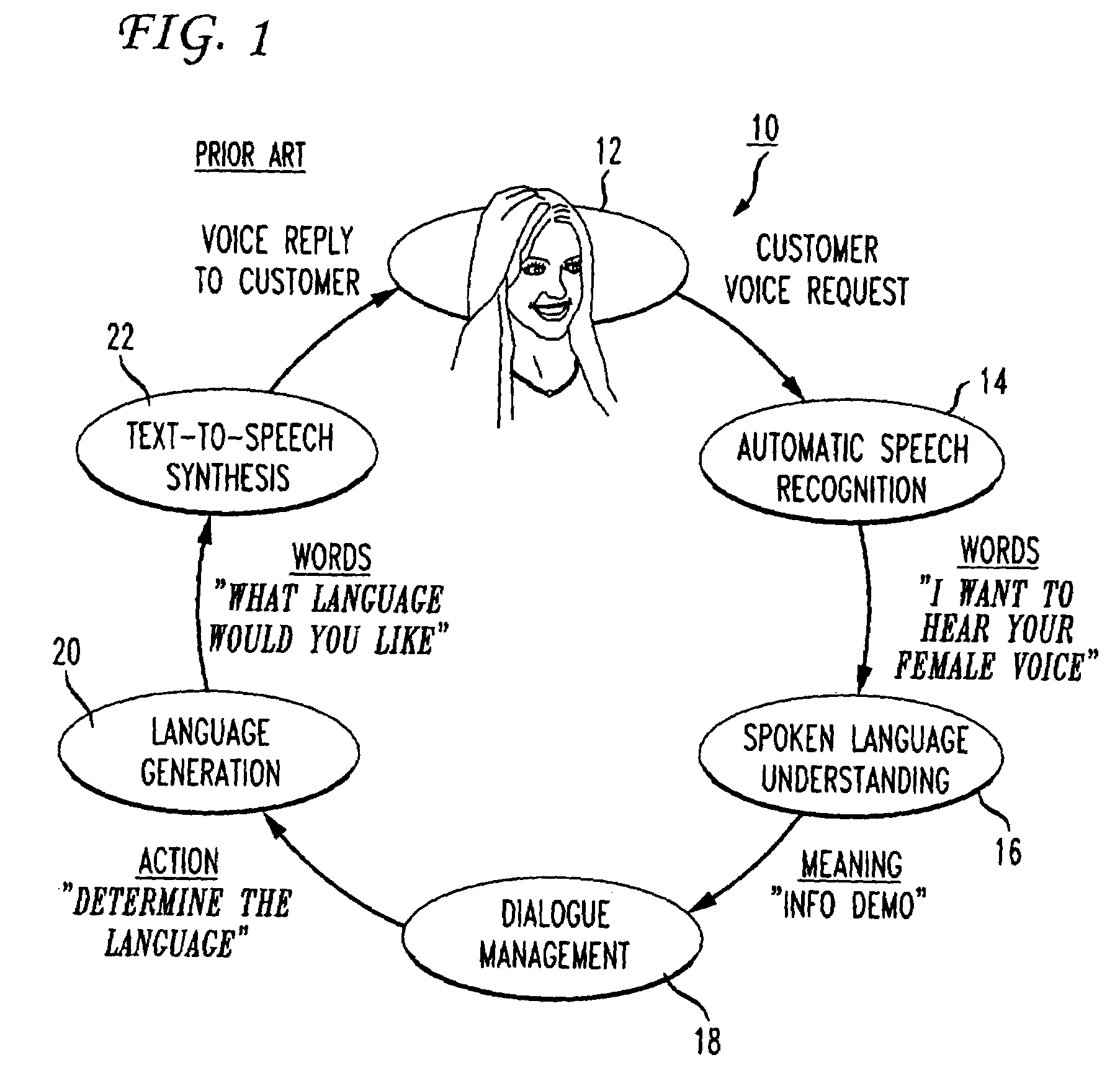

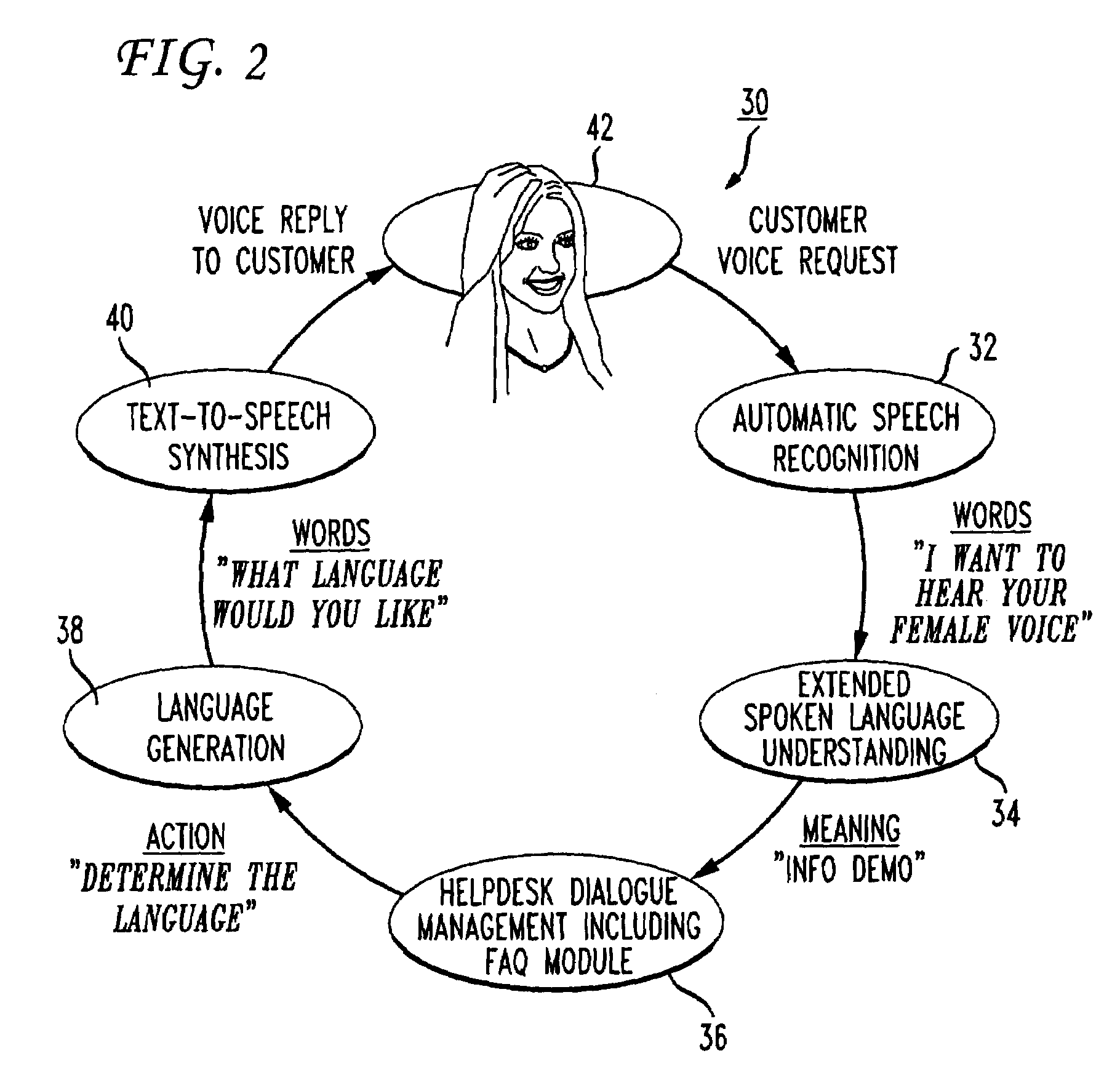

System for handling frequently asked questions in a natural language dialog service

Inactive | US7197460B1 | Improve customer relationship | Efficient manner | Speech recognition | Input/output processes for data processing | Spoken language | Dialog management

A voice-enabled help desk service is disclosed. The service comprises an automatic speech recognition module for recognizing speech from a user, a spoken language understanding module for understanding the output from the automatic speech recognition module, a dialog management module for generating a response to speech from the user, a natural voices text-to-speech synthesis module for synthesizing speech to generate the response to the user, and a frequently asked questions module. The frequently asked questions module handles frequently asked questions from the user by changing voices and providing predetermined prompts to answer the frequently asked question.

Owner:NUANCE COMM INC

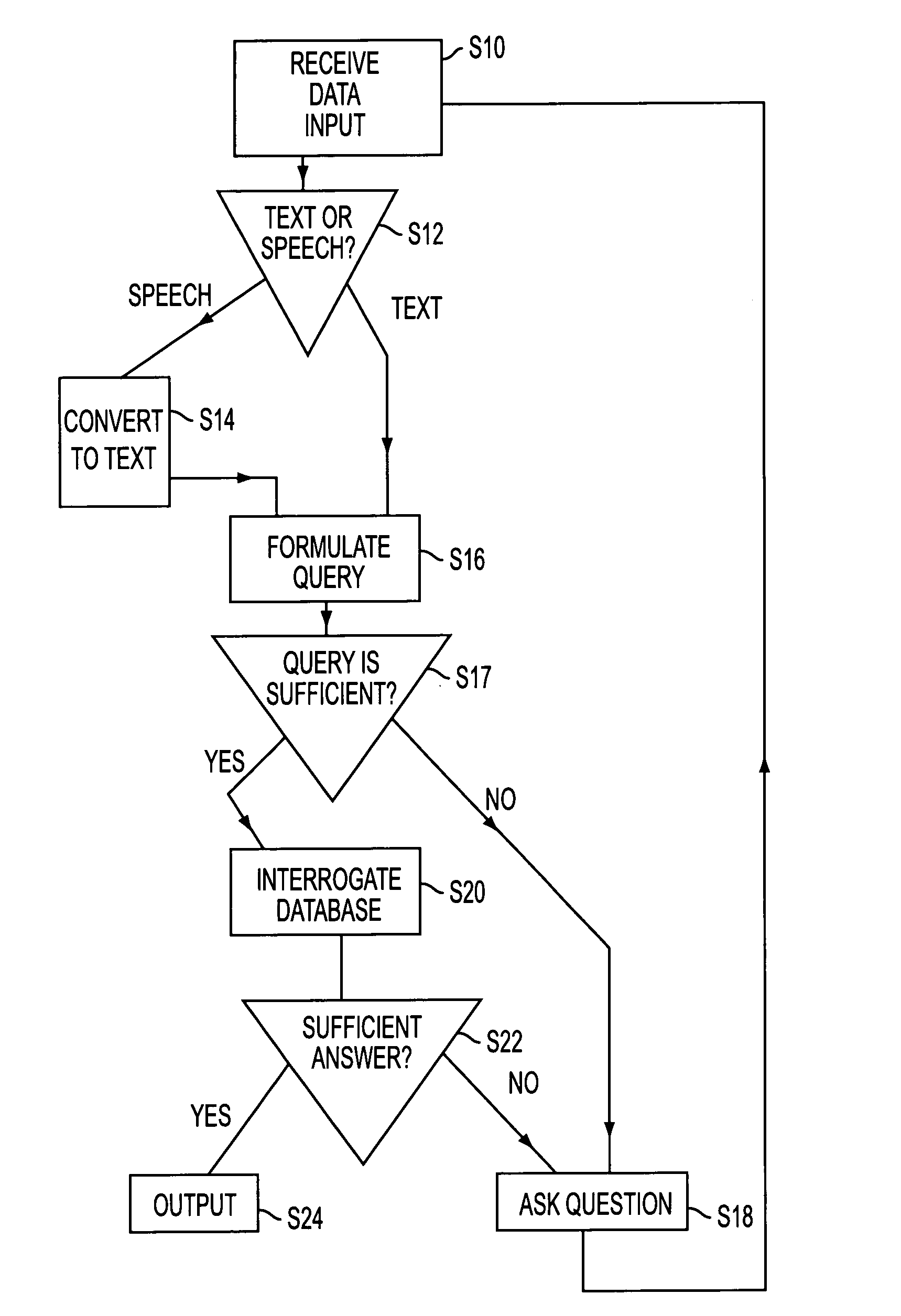

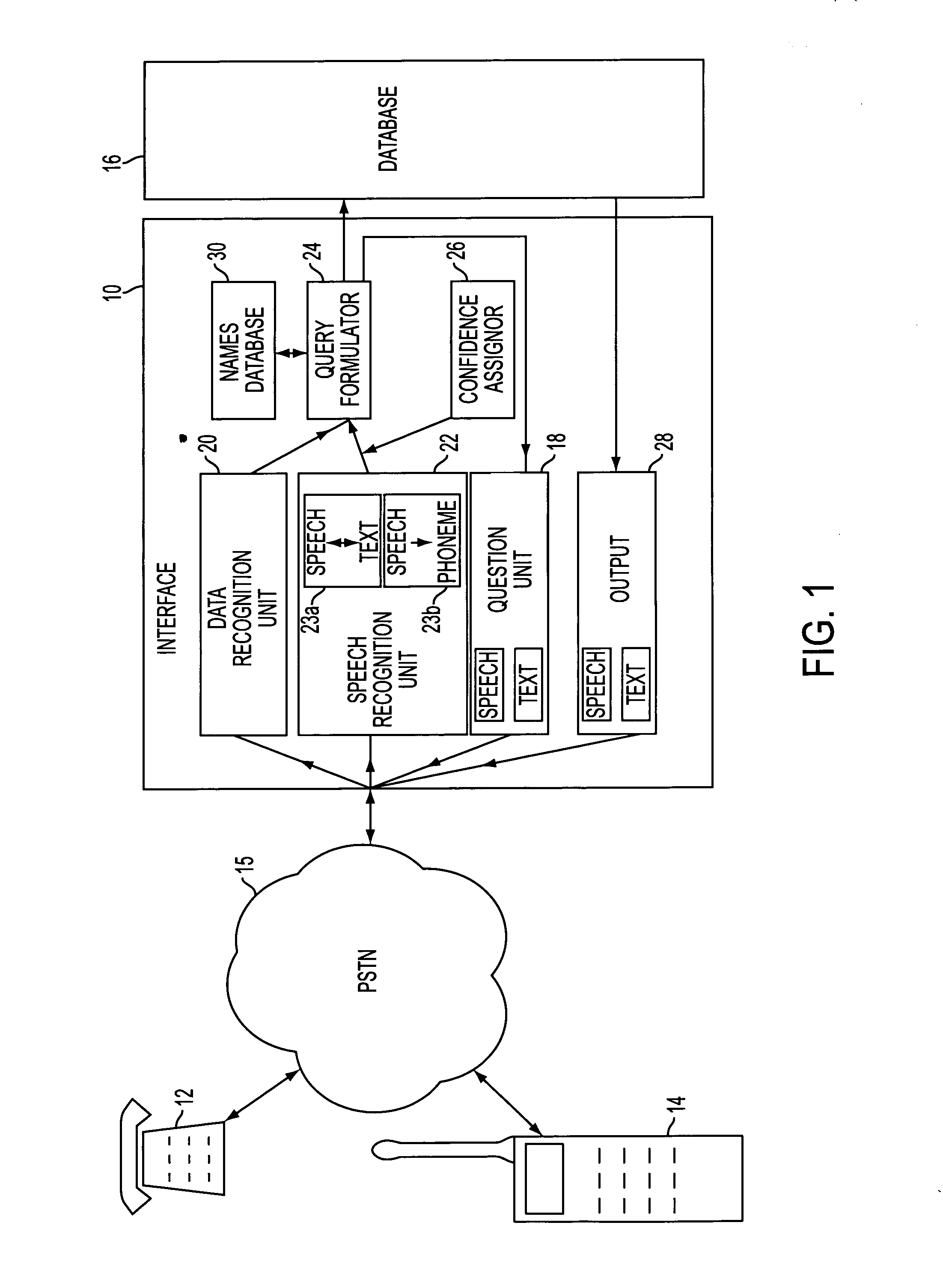

Automated database assistance using a telephone for a speech based or text based multimedia communication mode

Inactive | US6996531B2 | Digital data information retrieval | Automatic call-answering/message-recording/conversation-recording | Automated database | Speech identification

An interface for remote human input for reading a database, the interface including an automatic voice question unit for eliciting speech input, a speech recognition unit for recognizing human speech input, and a data recognition unit for recognizing remote data input. The interface is associated with a database to search the database using the recognized input. A typical application is as an automated directory enquiry service.

Owner:AMAZON TECH INC

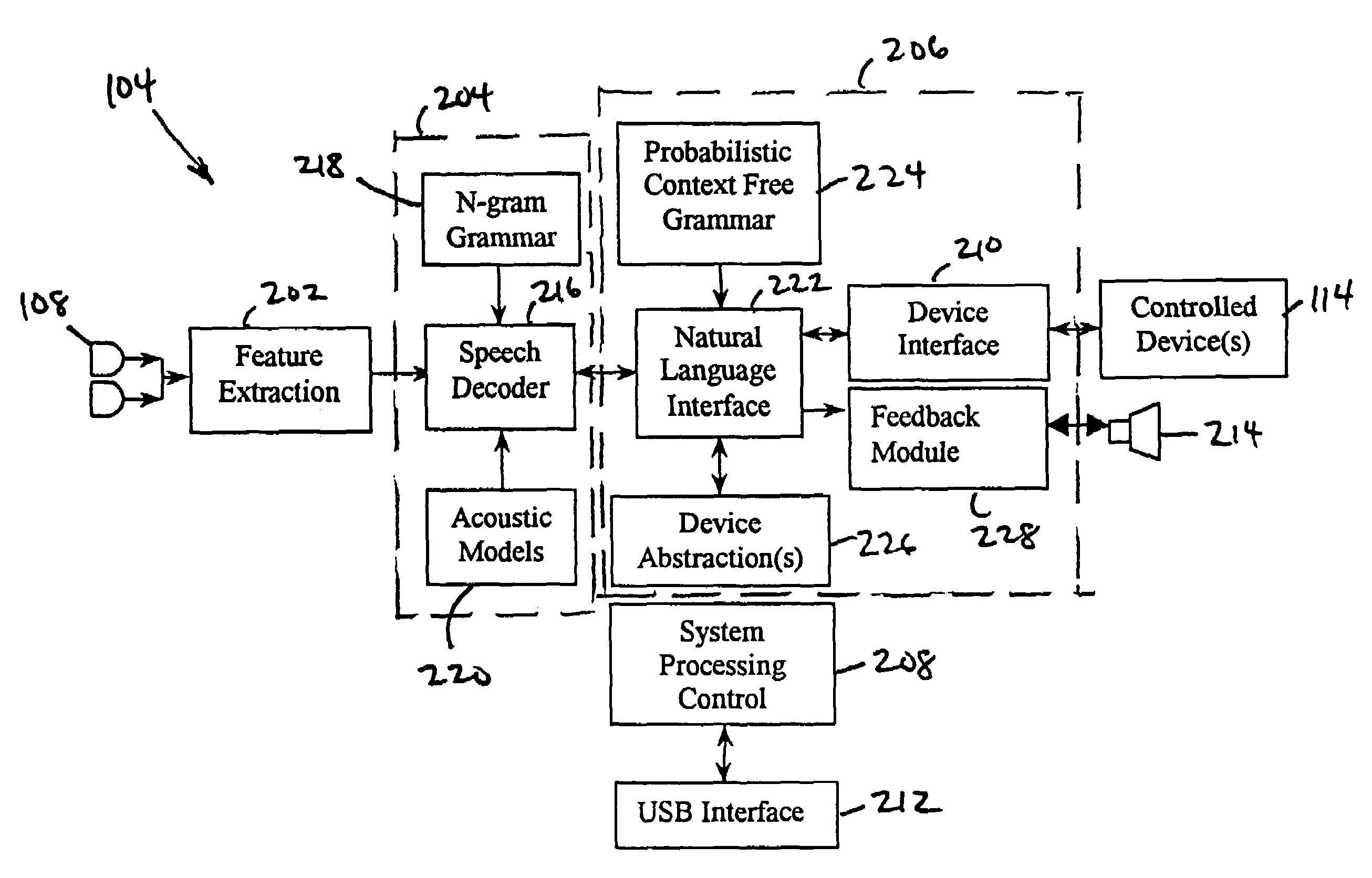

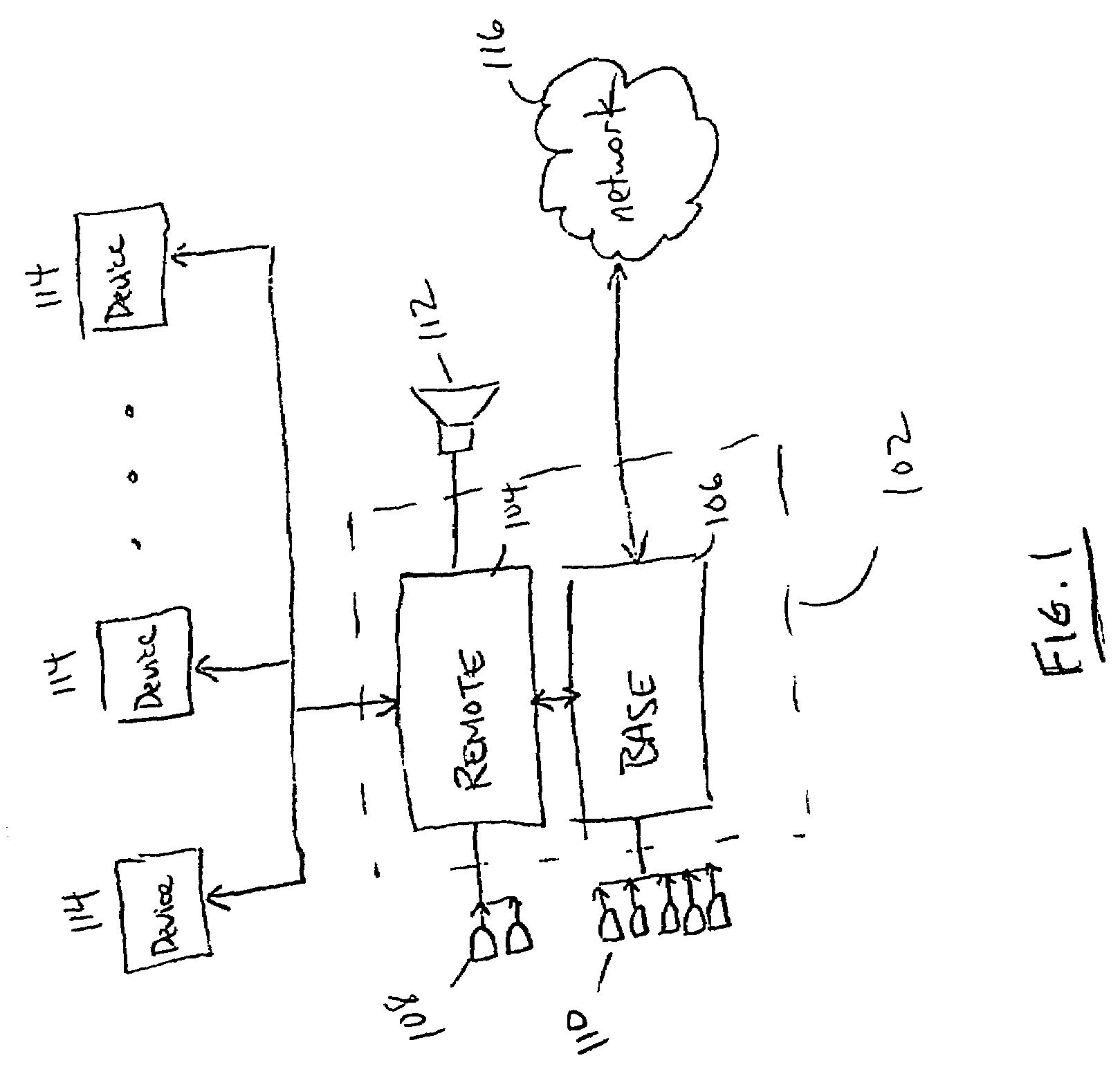

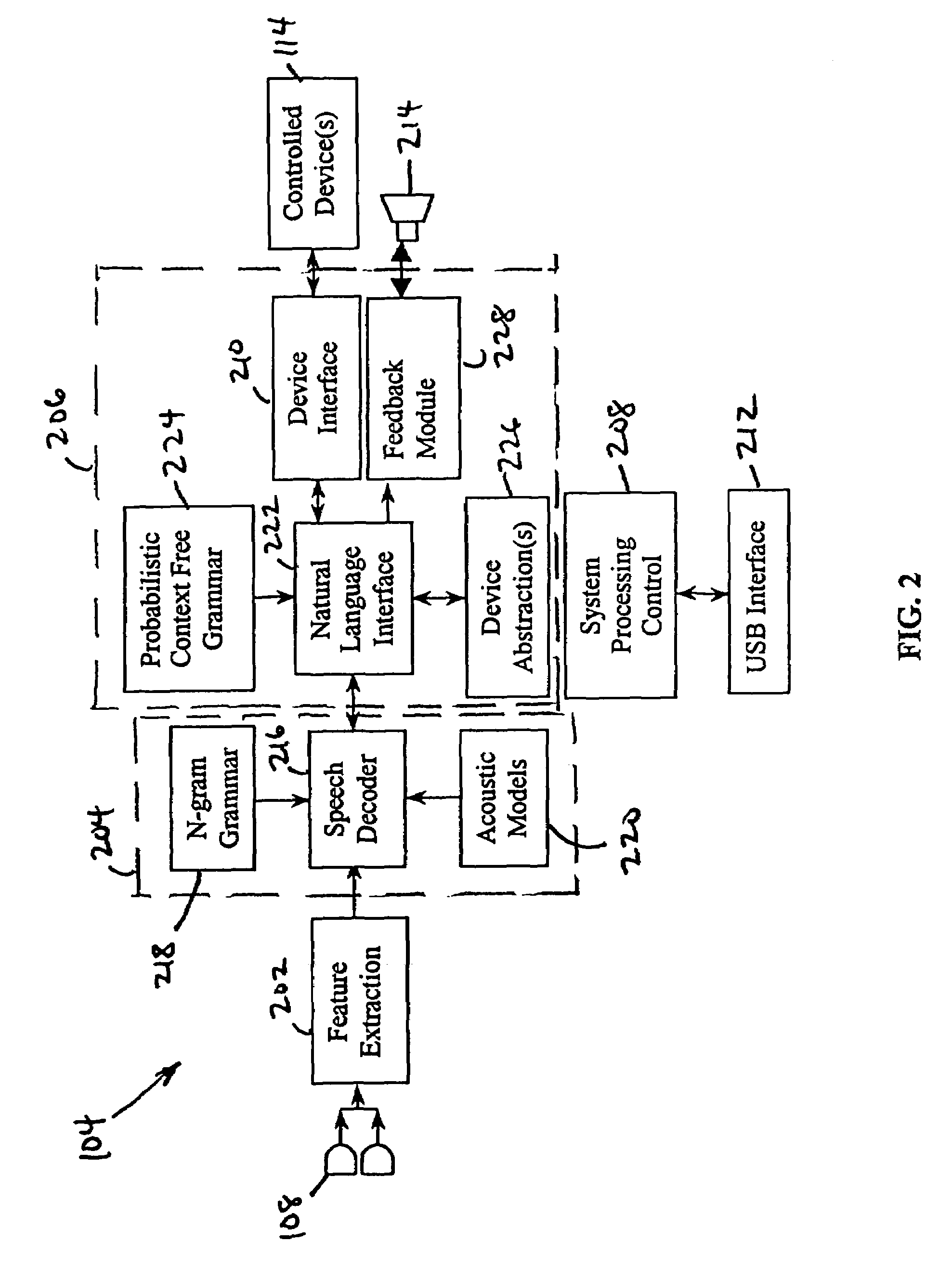

Natural language interface control system

Inactive | US7447635B1 | Speech recognition | Special data processing applications | Feature extraction | Hidden Markov model

A natural language interface control system for operating a plurality of devices consists of a first microphone array, a feature extraction module coupled to the first microphone array, and a speech recognition module coupled to the feature extraction module, wherein the speech recognition module utilizes hidden Markov models. The system also comprises a natural language interface module coupled to the speech recognition module and a device interface coupled to the natural language interface module, wherein the natural language interface module is for operating a plurality of devices coupled to the device interface based upon non-prompted, open-ended natural language requests from a user.

Owner:SONY CORP +1

Performing actions identified in recognized speech

Inactive | US6839669B1 | Easy to handle | Eliminate processing | Speech recognition | Speech identification | Utterance

A computer is used to perform recorded actions. The computer receives recorded spoken utterances of actions. The computer then performs speech recognition on the recorded spoken utterances to generate texts of the actions. The computer then parses the texts to determine properties of the actions. After parsing the texts, the computer permits the user to indicate that the user has reviewed one or more actions. The computer then automatically carries out the actions indicated as having been reviewed by the user.

Owner:NUANCE COMM INC

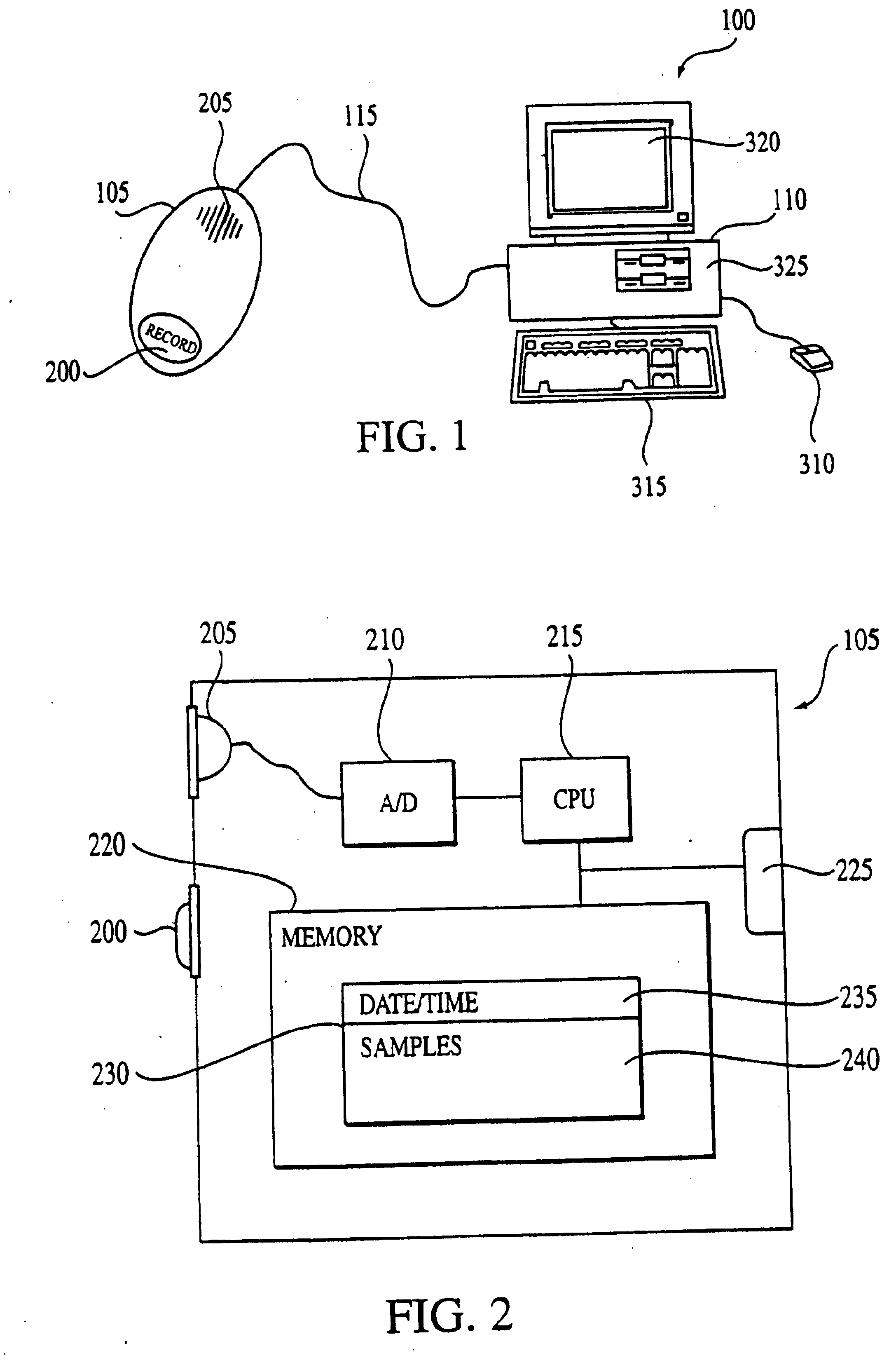

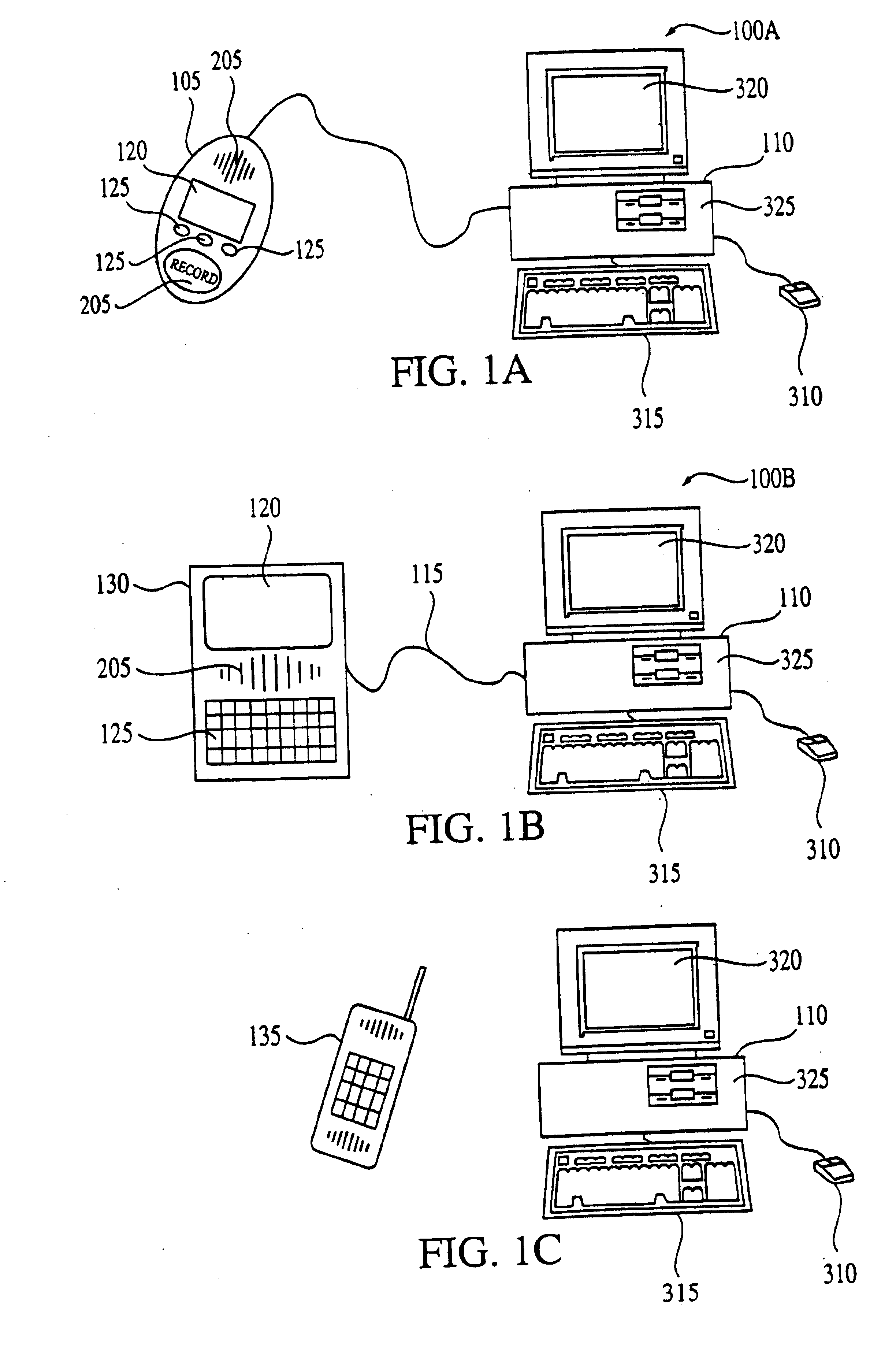

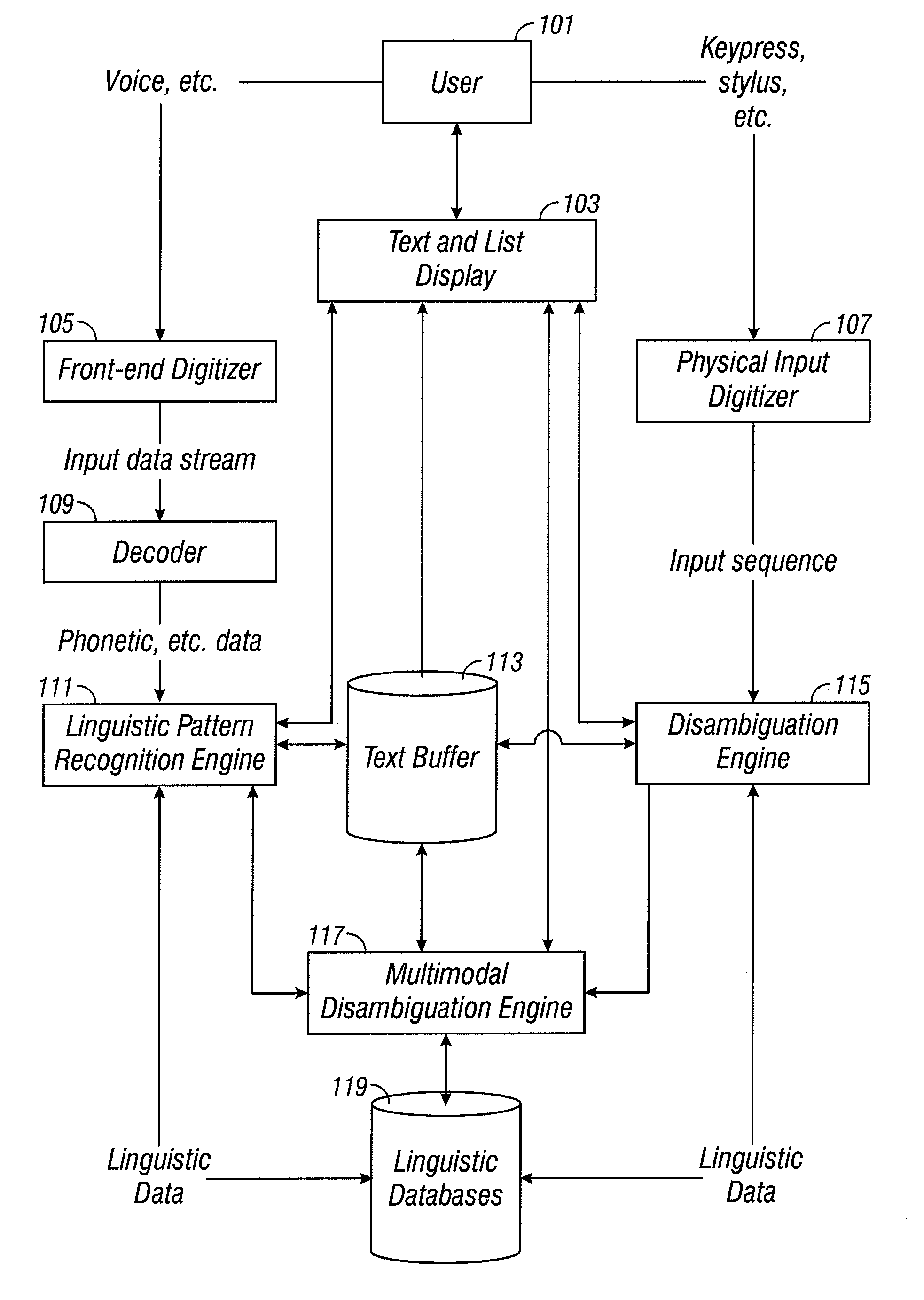

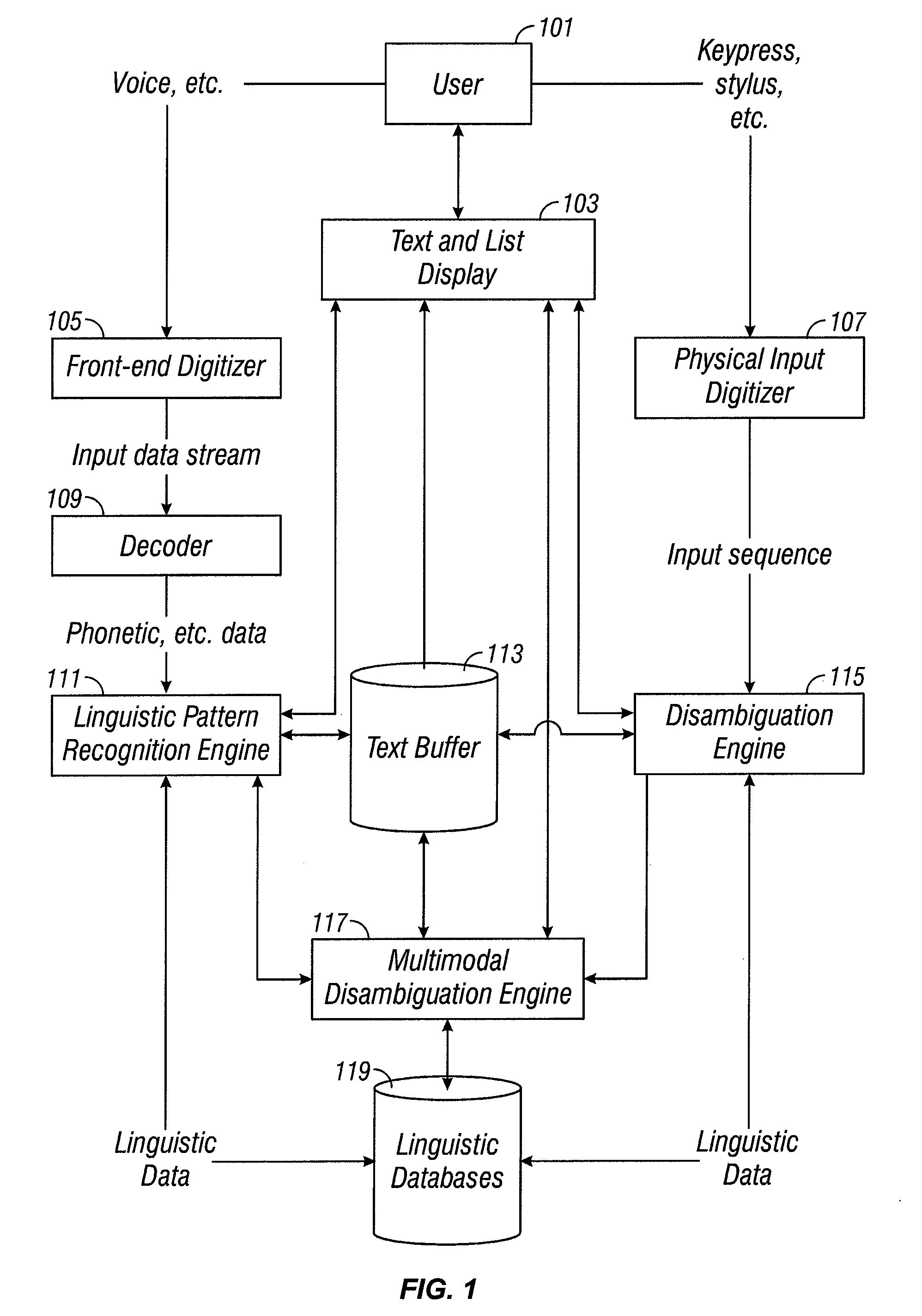

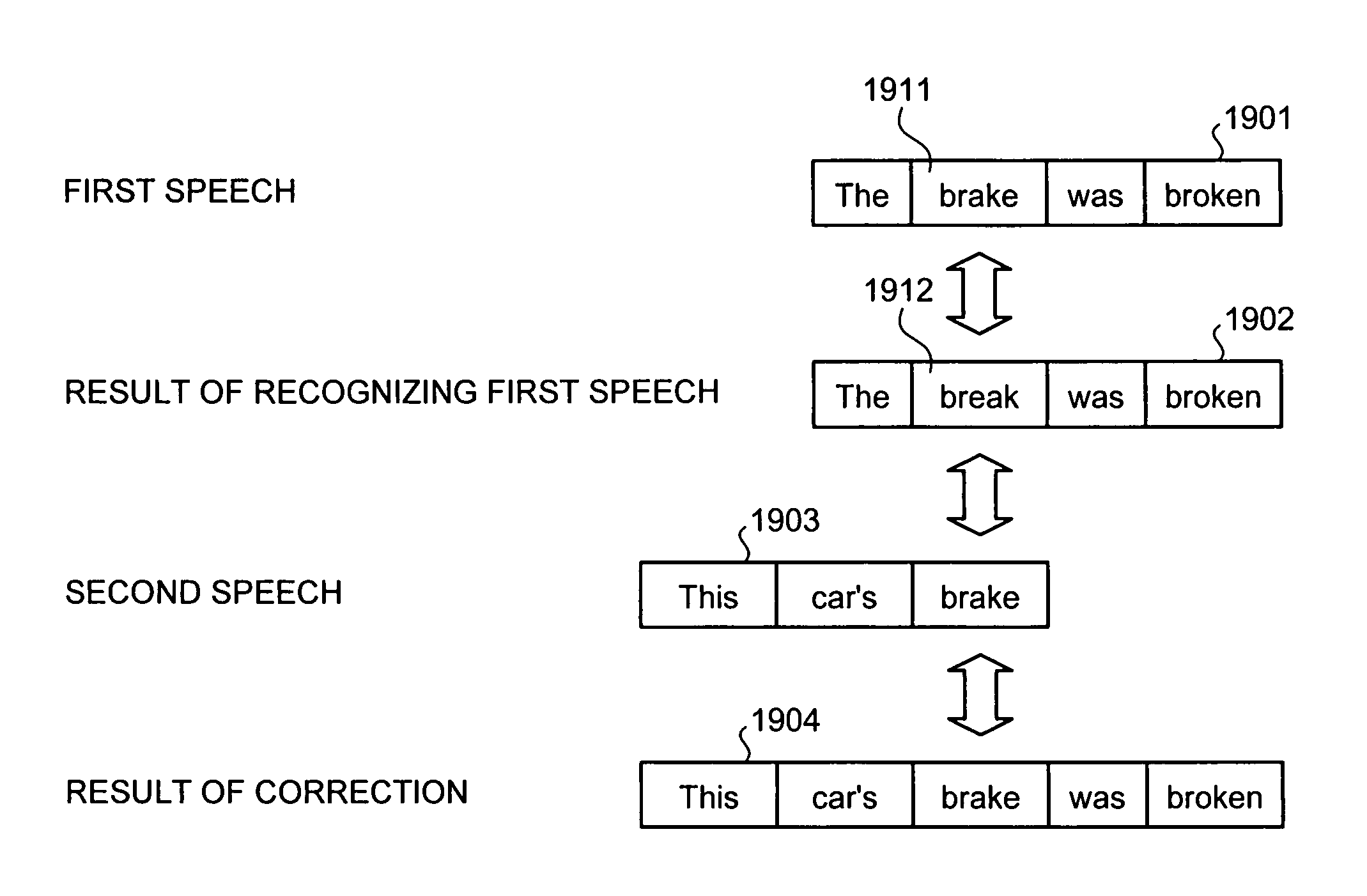

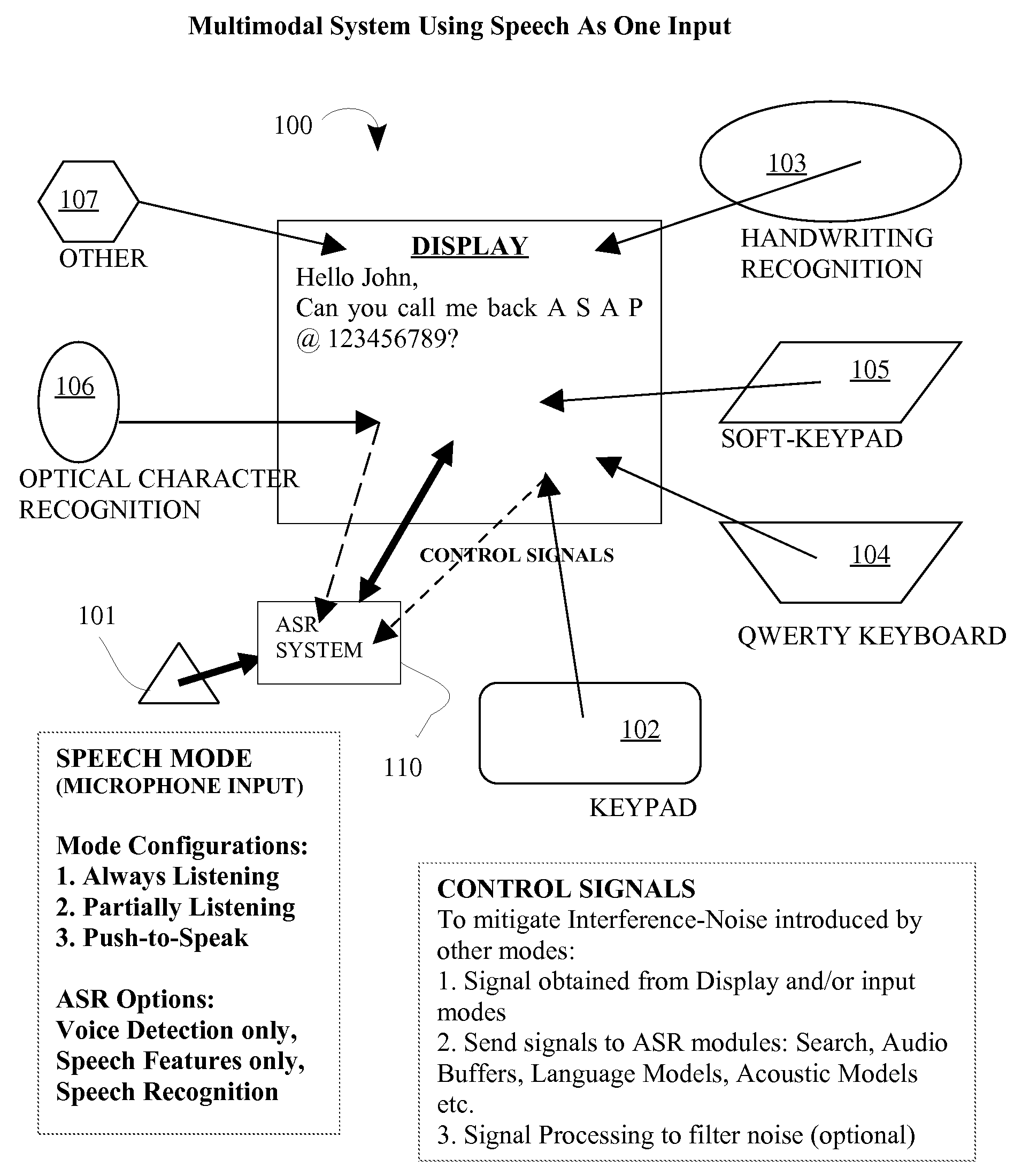

Multimodal disambiguation of speech recognition

Inactive | US8095364B2 | Resolves the recognition errors efficiently and accurately | Speech recognition | Environmental noise | Ambiguity

The present invention provides a speech recognition system combined with one or more alternate input modalities to ensure efficient and accurate text input. The speech recognition system achieves less than perfect accuracy due to limited processing power, environmental noise, and/or natural variations in speaking style. The alternate input modalities use disambiguation or recognition engines to compensate for reduced keyboards, sloppy input, and/or natural variations in writing style. The ambiguity remaining in the speech recognition process is mostly orthogonal to the ambiguity inherent in the alternate input modality, such that the combination of the two modalities resolves the recognition errors efficiently and accurately. The invention is especially well suited for mobile devices with limited space for keyboards or touch-screen input.

Owner:CERENCE OPERATING CO

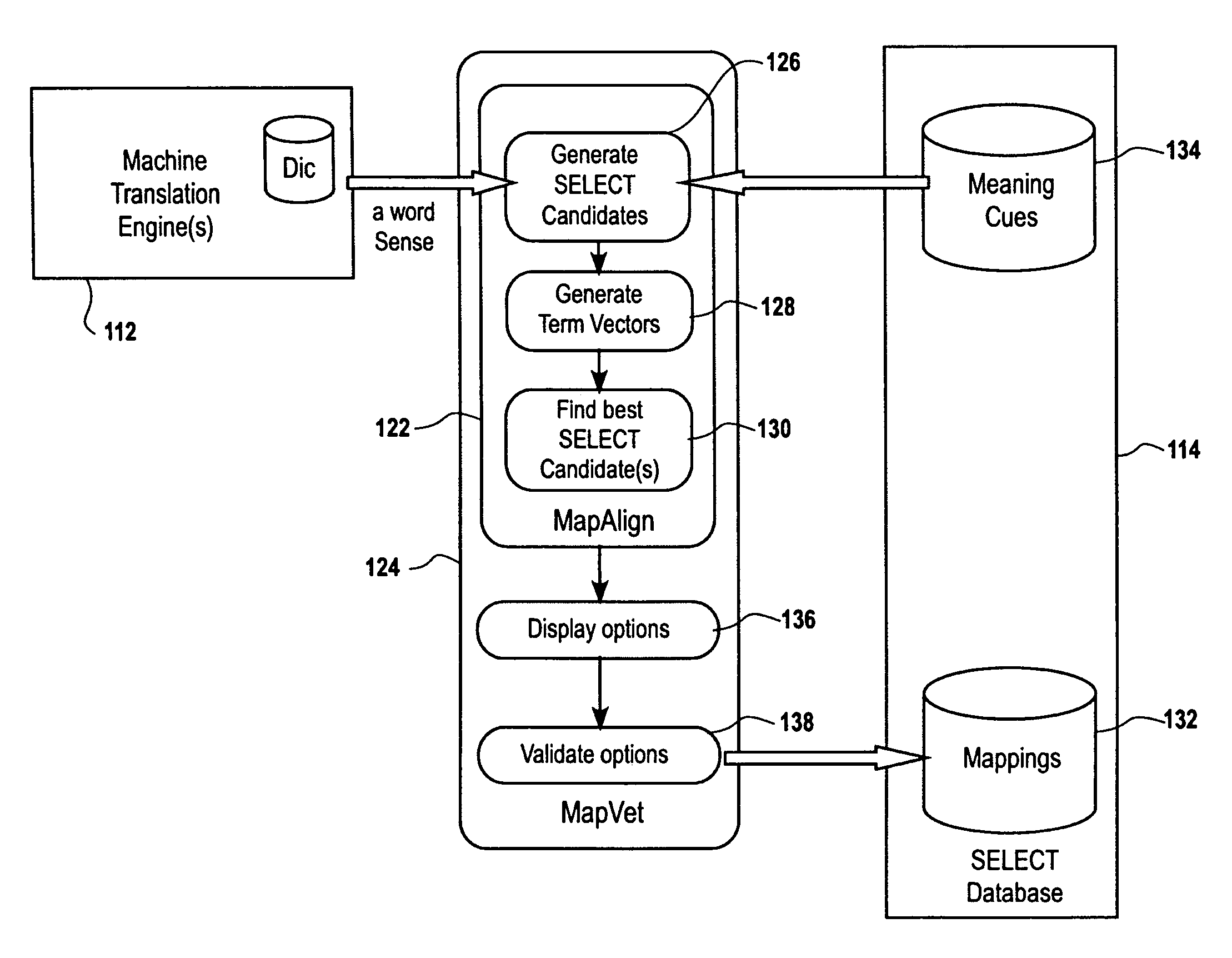

Speech-enabled language translation system and method enabling interactive user supervision of translation and speech recognition accuracy

Active | US7539619B1 | Natural language translation | Speech recognition | Speech to speech translation | Ambiguity

A system and method for a highly interactive style of speech-to-speech translation is provided. The interactive procedures enable a user to recognize, and if necessary correct, errors in both speech recognition and translation, thus providing more robust translation output than would otherwise be possible. The interactive techniques for monitoring and correcting word ambiguity errors during automatic translation, search, or other natural language processing tasks depend upon the correlation of Meaning Cues and their alignment with, or mapping into, the word senses of third party lexical resources, such as those of a machine translation or search lexicon. This correlation and mapping can be carried out through the creation and use of a database of Meaning Cues, i.e., SELECT. Embodiments described above permit the intelligent building and application of this database, which can be viewed as an interlingua, or language-neutral set of meaning symbols, applicable for many purposes. Innovative techniques for interactive correction of server-based speech recognition are also described.

Owner:ZAMA INNOVATIONS LLC

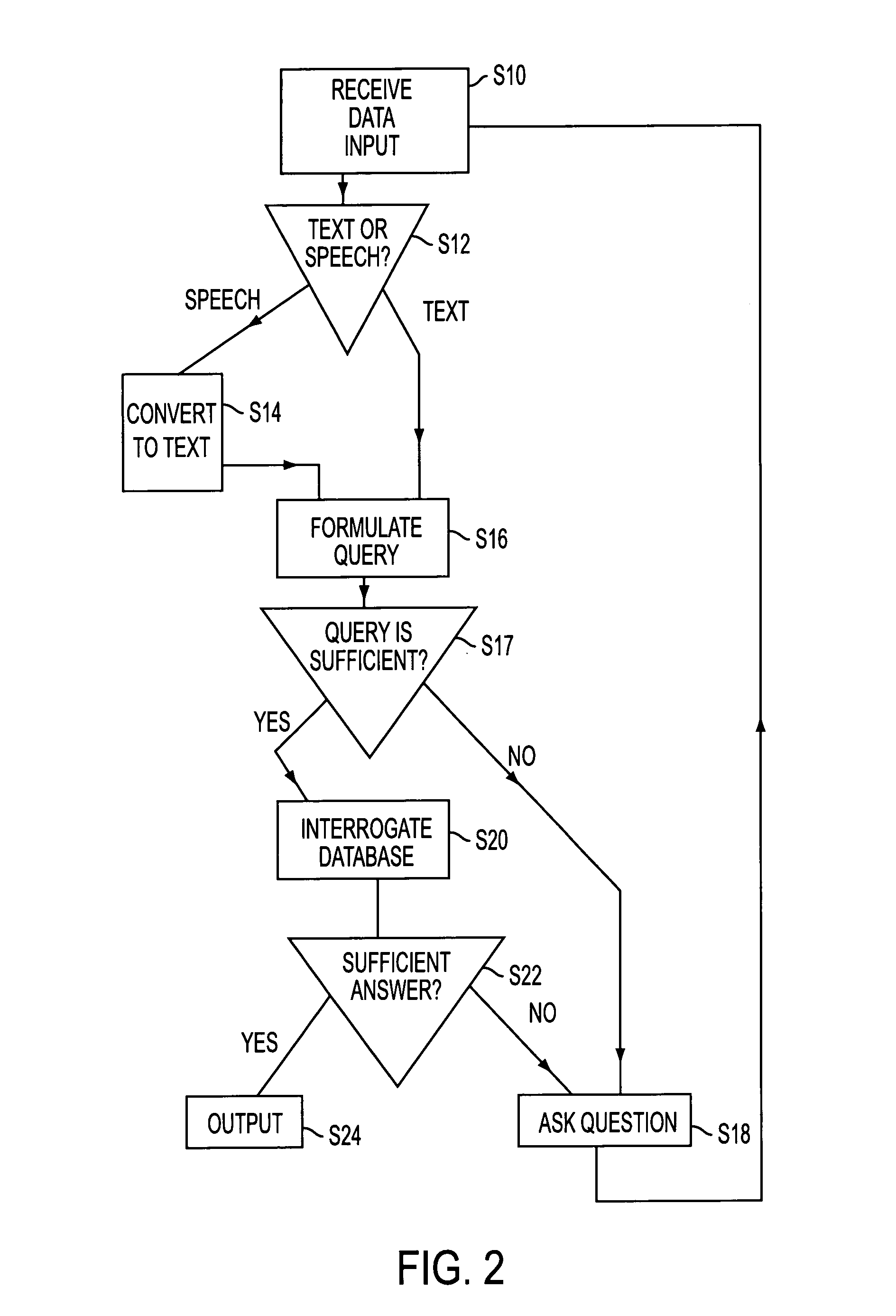

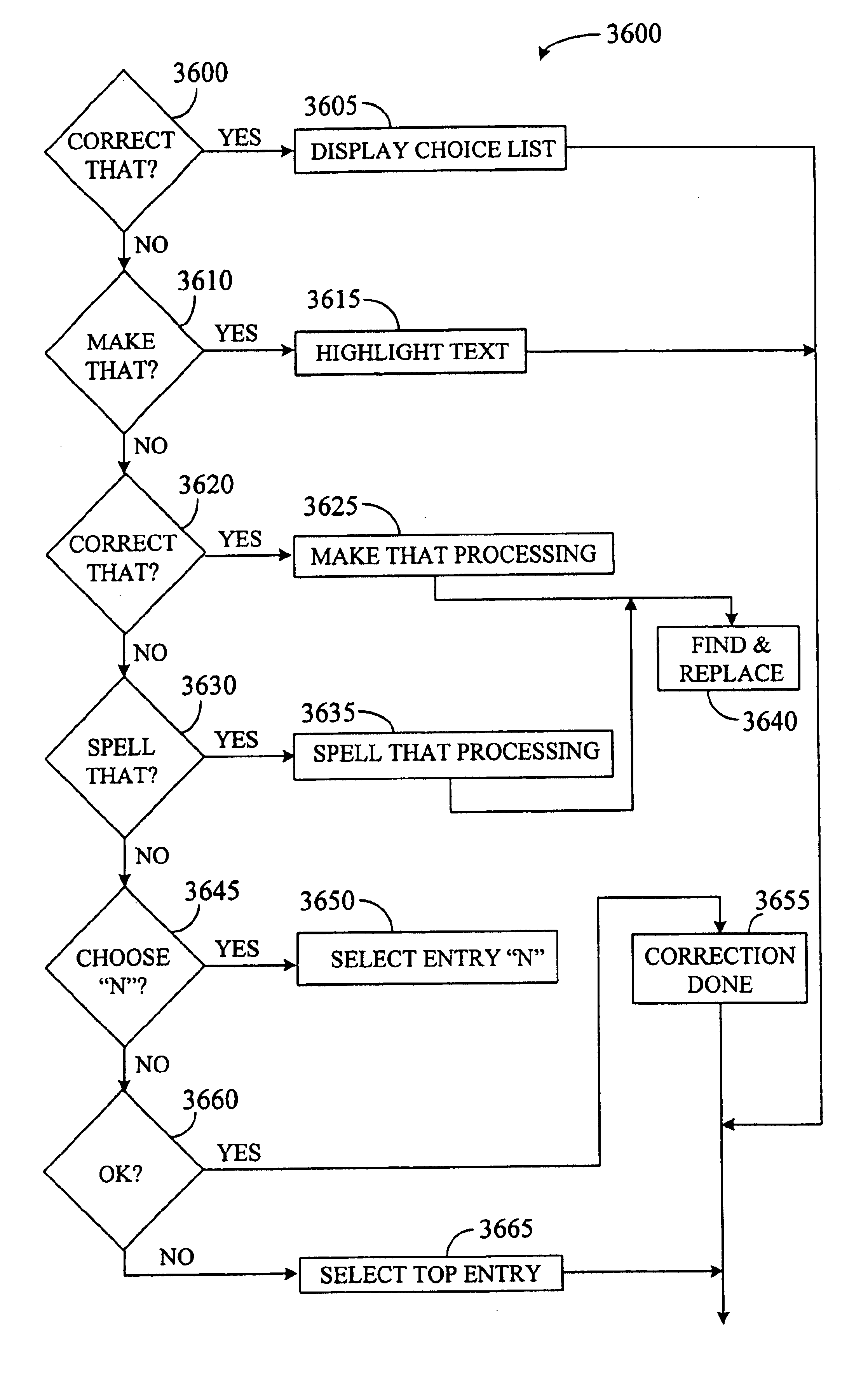

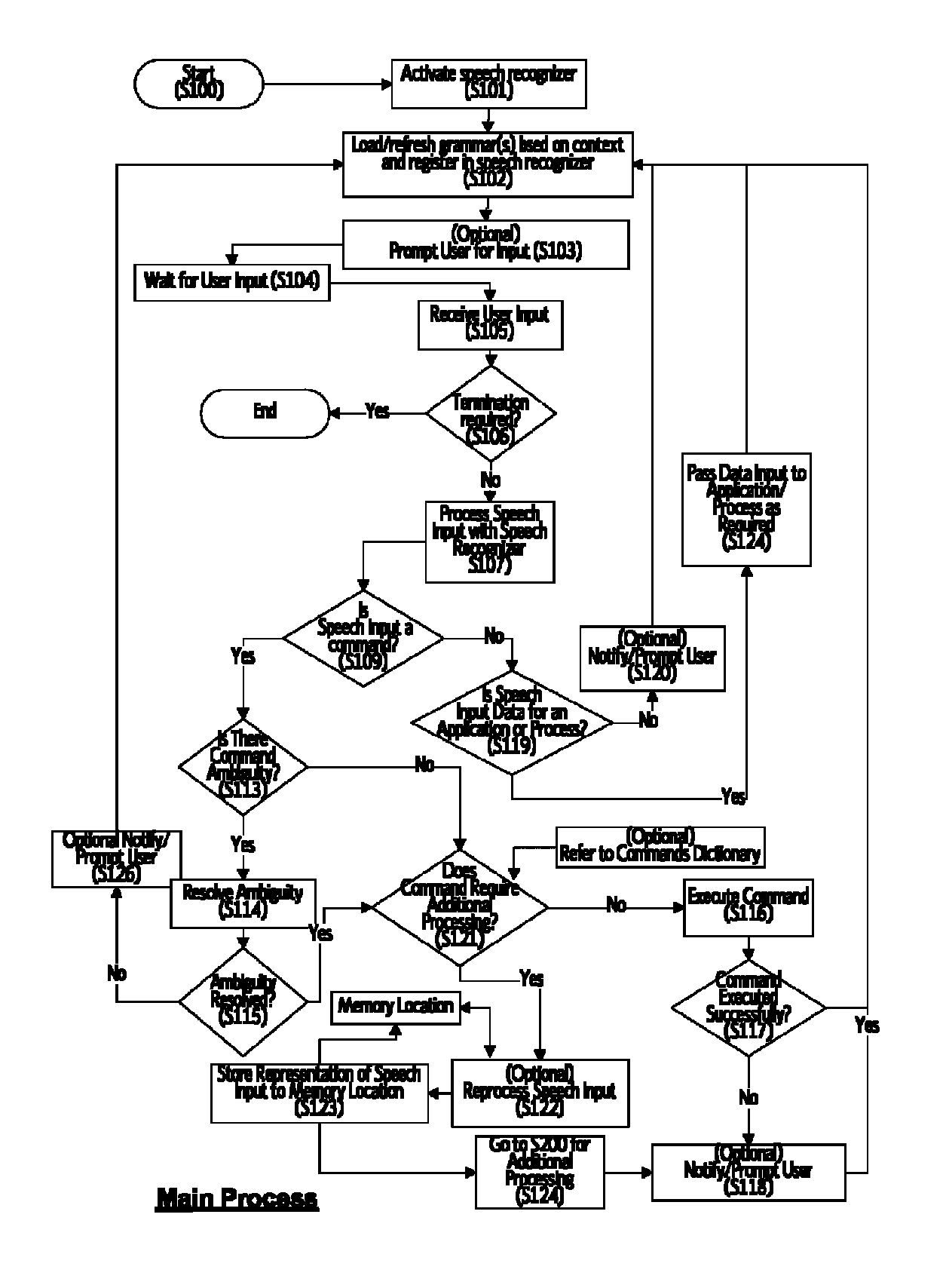

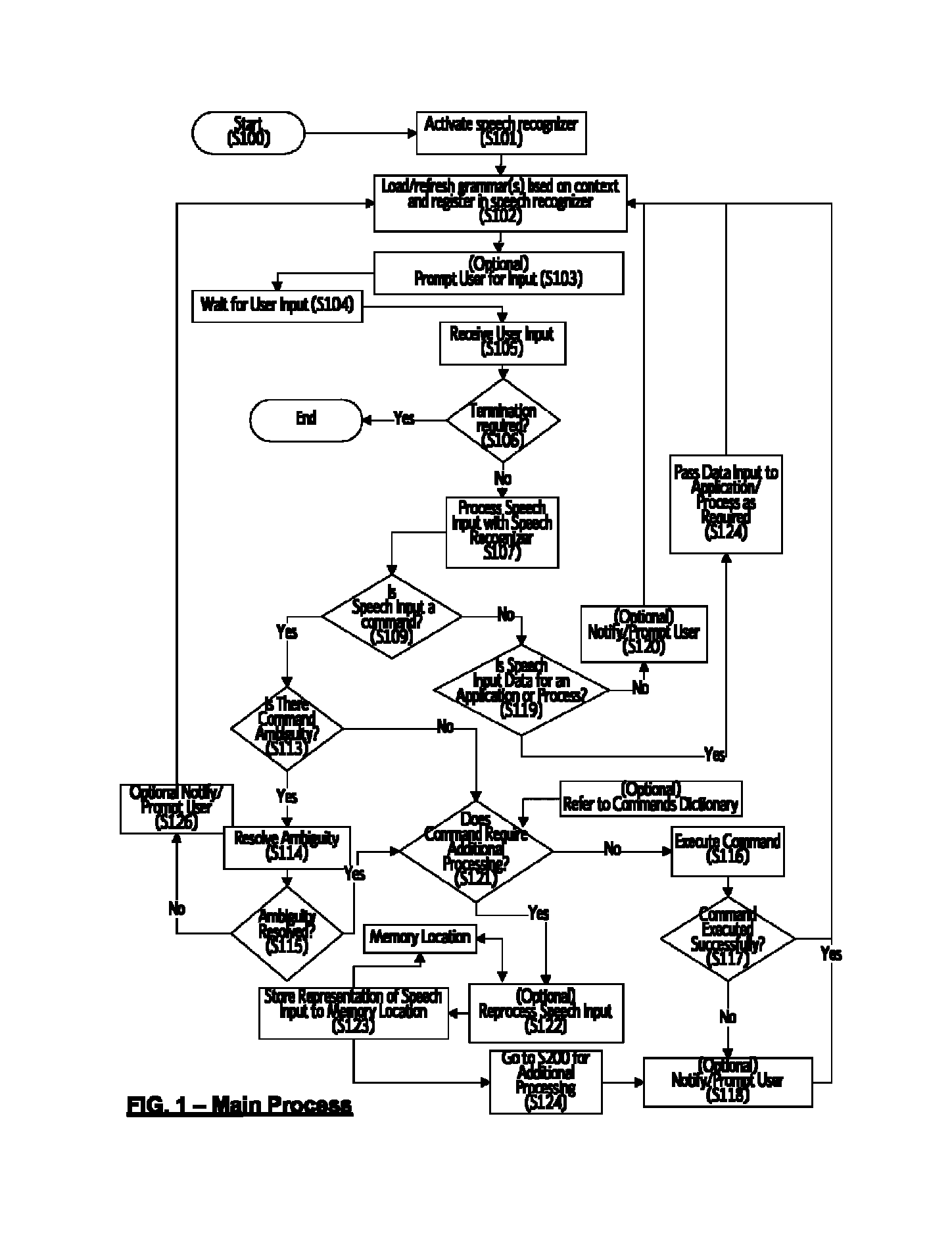

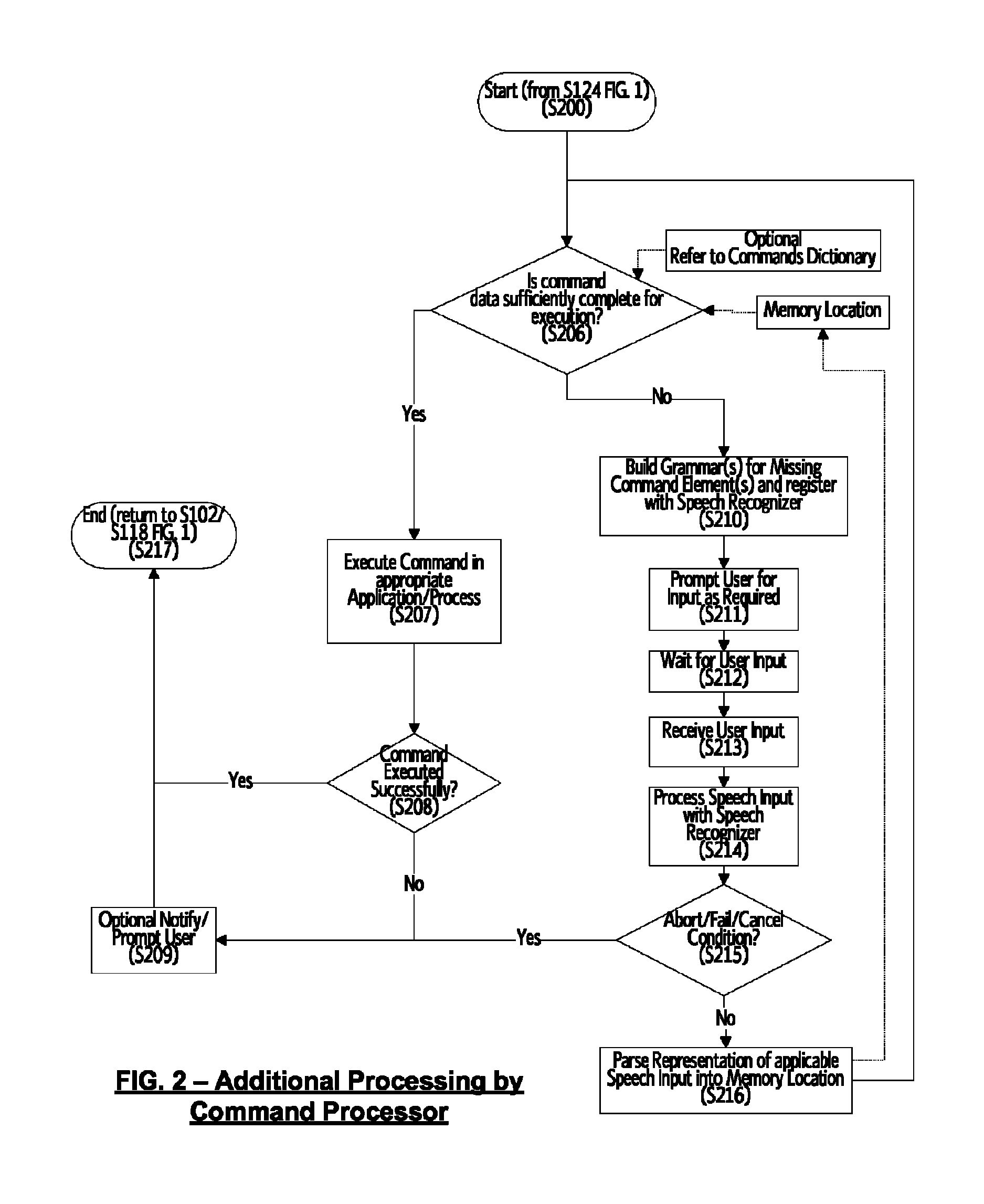

Method for processing the output of a speech recognizer

Active | US8219407B1 | Improve integrity | Reduce ambiguity | Engine functions | Blade accessories | User input | Speech identification

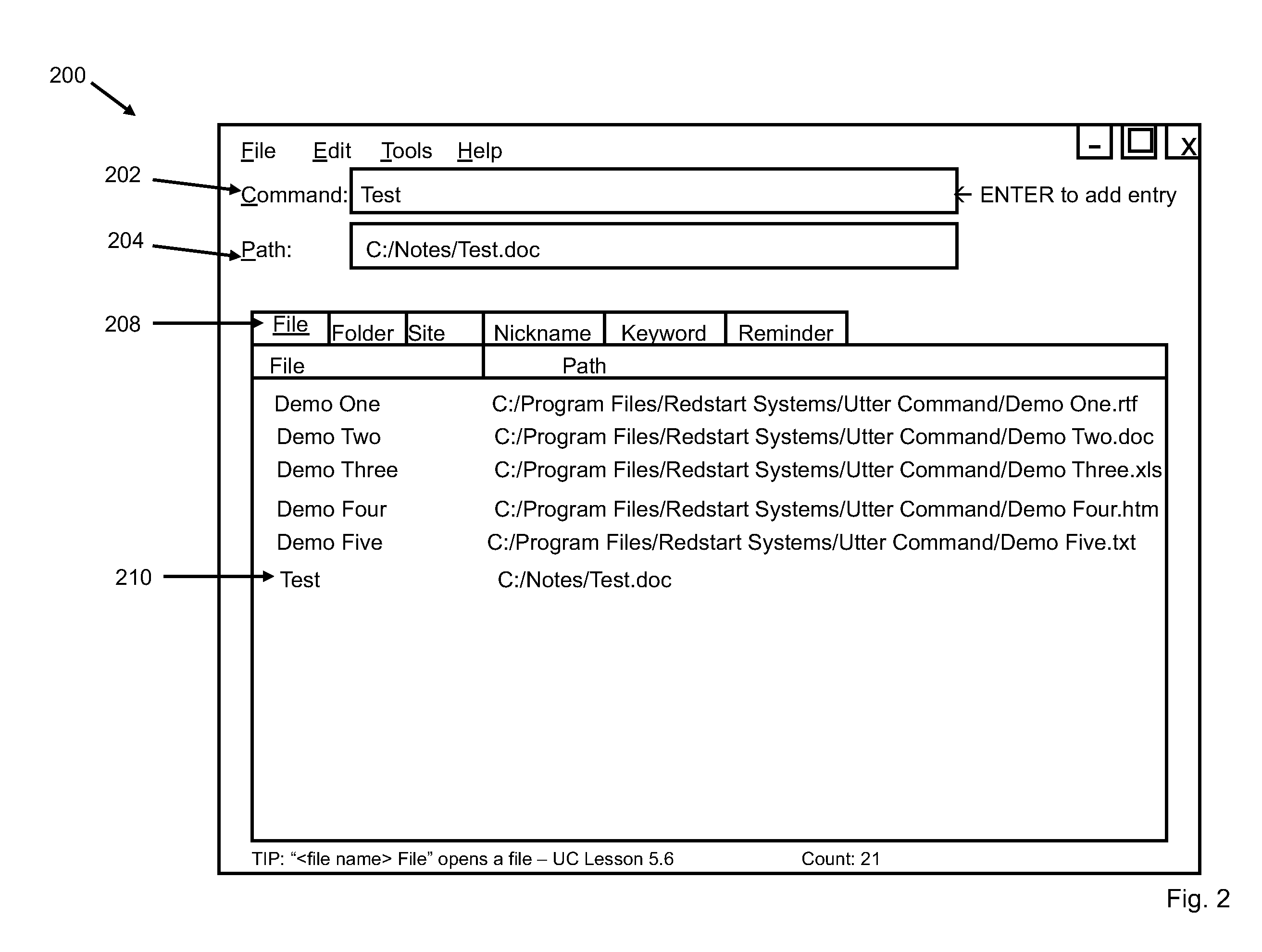

A system and method for processing speech input comprising a speech recognizer and a logical command processor which facilitates additional processing of speech input beyond the speech recognizer level. A speech recognizer receives input from a user, and when a command is identified in the speech input, if the command meets conditions that require additional processing, a representation of the speech input is stored for subsequent processing. A logical command processor performs additional processing of command input by analyzing the command and its elements, determining which elements are required for successfully processing the command and which elements are present and lacking. The user is prompted to supply missing information, and subsequent user input is added to the command structure until the command input is aborted or the command structure reaches sufficient completeness to enable execution of the command. Thereby, speech input of complex commands in natural language in a system running a plurality of applications and processes is made possible.

Owner:GREAT NORTHERN RES
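
The prompt-until-complete behavior described above can be sketched as a small loop. Everything specific here is hypothetical: the `REQUIRED` table, the `send_email` command, and the `ask` callback (which stands in for prompting the user and recognizing the reply) are assumptions for illustration, not details from the patent.

```python
# Hypothetical table of the elements each command needs before it can run.
REQUIRED = {"send_email": ["recipient", "subject", "body"]}


def missing_elements(command, elements):
    """List the required elements that the command structure still lacks."""
    return [name for name in REQUIRED[command] if name not in elements]


def complete_command(command, elements, ask):
    """Prompt for each missing element until the command is complete.

    `ask(name)` returns the user's answer, or None if the user aborts.
    Returns the completed element dict, or None when the input is aborted.
    """
    elements = dict(elements)  # do not mutate the caller's structure
    for name in missing_elements(command, elements):
        answer = ask(name)
        if answer is None:
            return None
        elements[name] = answer
    return elements
```

For example, `complete_command("send_email", {"recipient": "Ana"}, ask)` would call `ask` for `subject` and `body` only, since `recipient` is already present.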

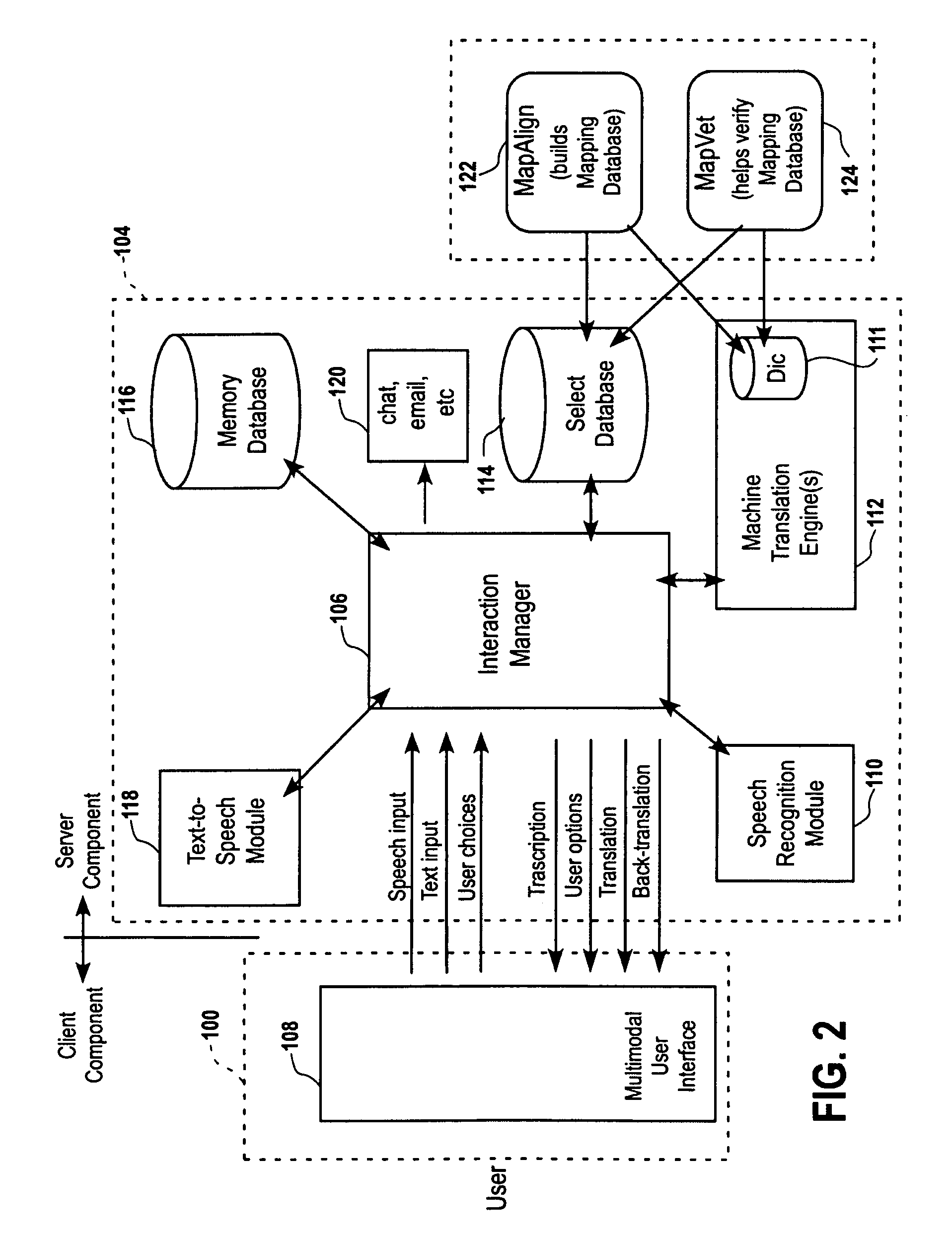

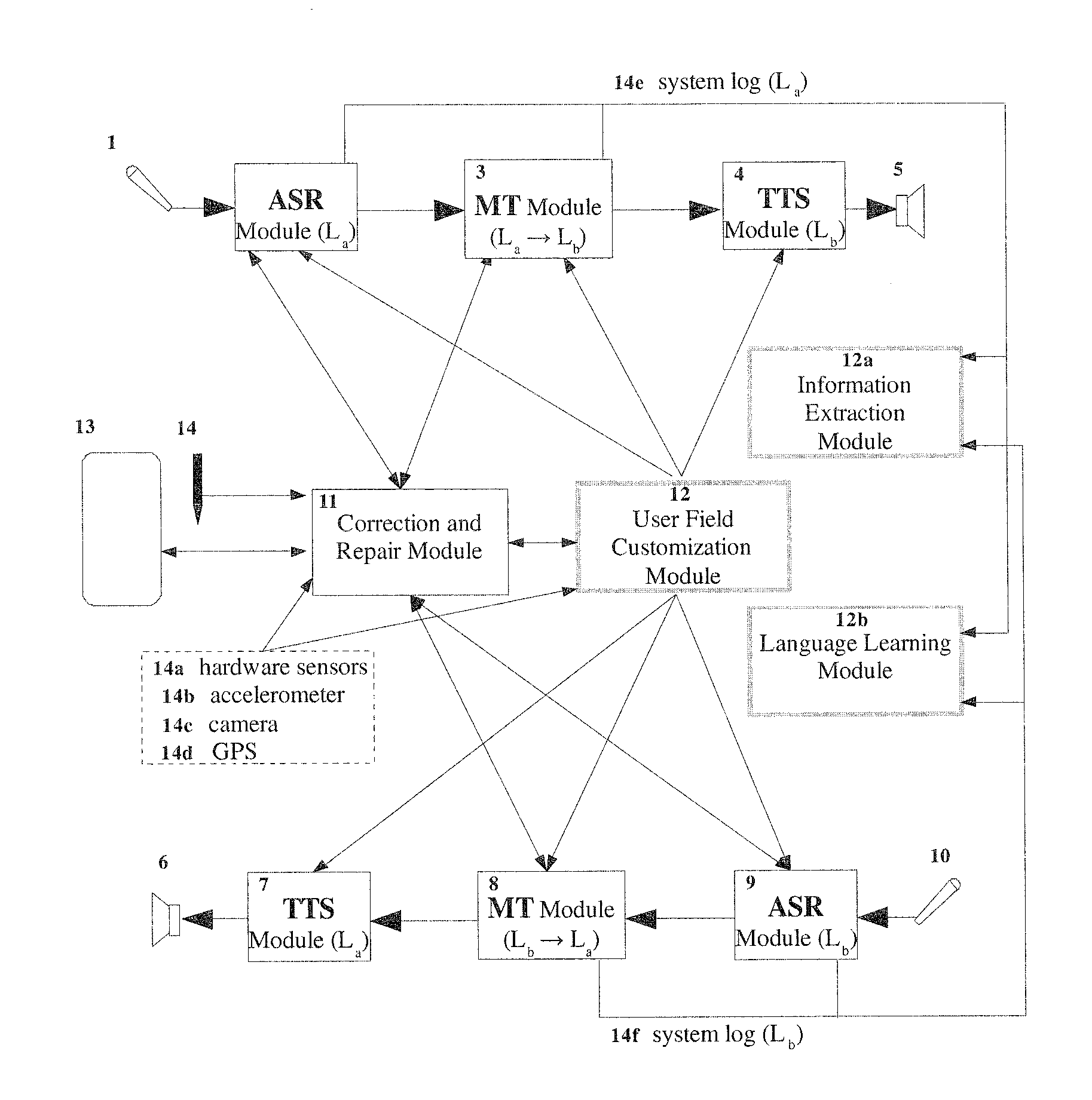

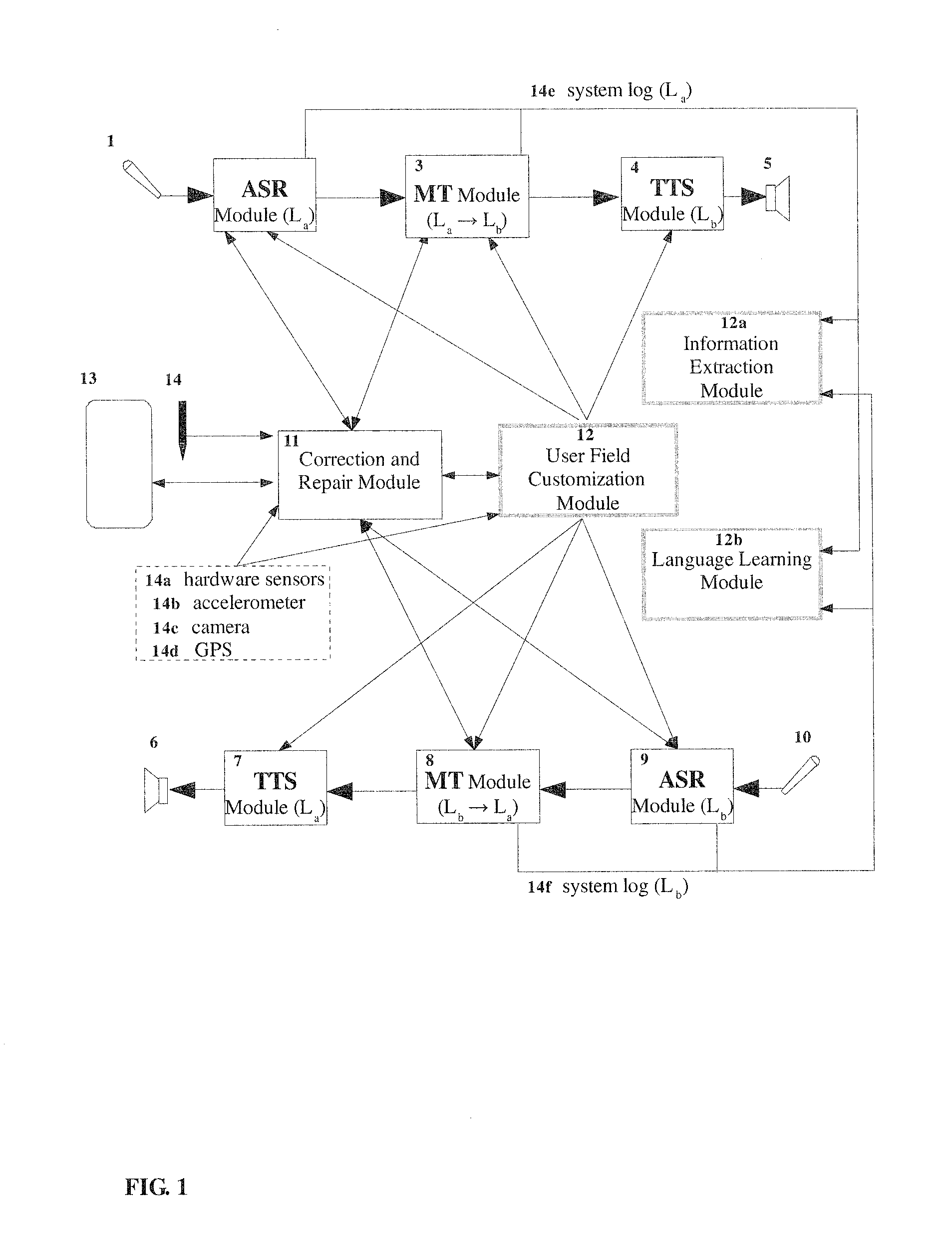

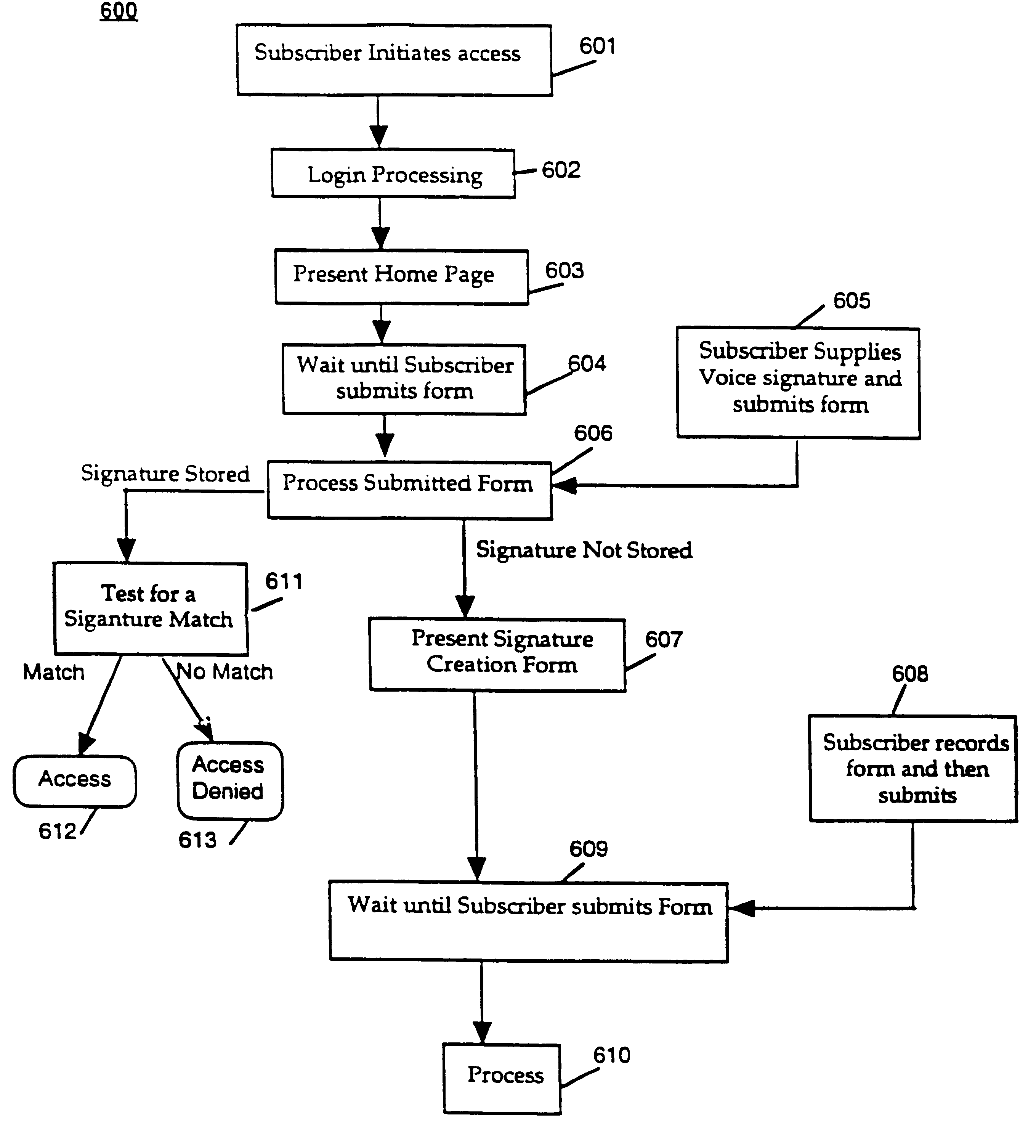

Enhanced speech-to-speech translation system and methods

Active | US20110307241A1 | Improve usability | Natural language translation | Speech recognition | Third party | Speech to speech translation

A speech translation system and methods for cross-lingual communication that enable users to improve and modify content and usage of the system and easily abort or reset translation. The system includes a speech recognition module configured for accepting an utterance, a machine translation module, an interface configured to communicate the utterance and proposed translation, a correction module and an abort action unit that removes any hypotheses or partial hypotheses and terminates translation. The system also includes modules for storing favorites, changing language mode, automatically identifying language, providing language drills, viewing third party information relevant to conversation, among other things.

Owner:META PLATFORMS INC

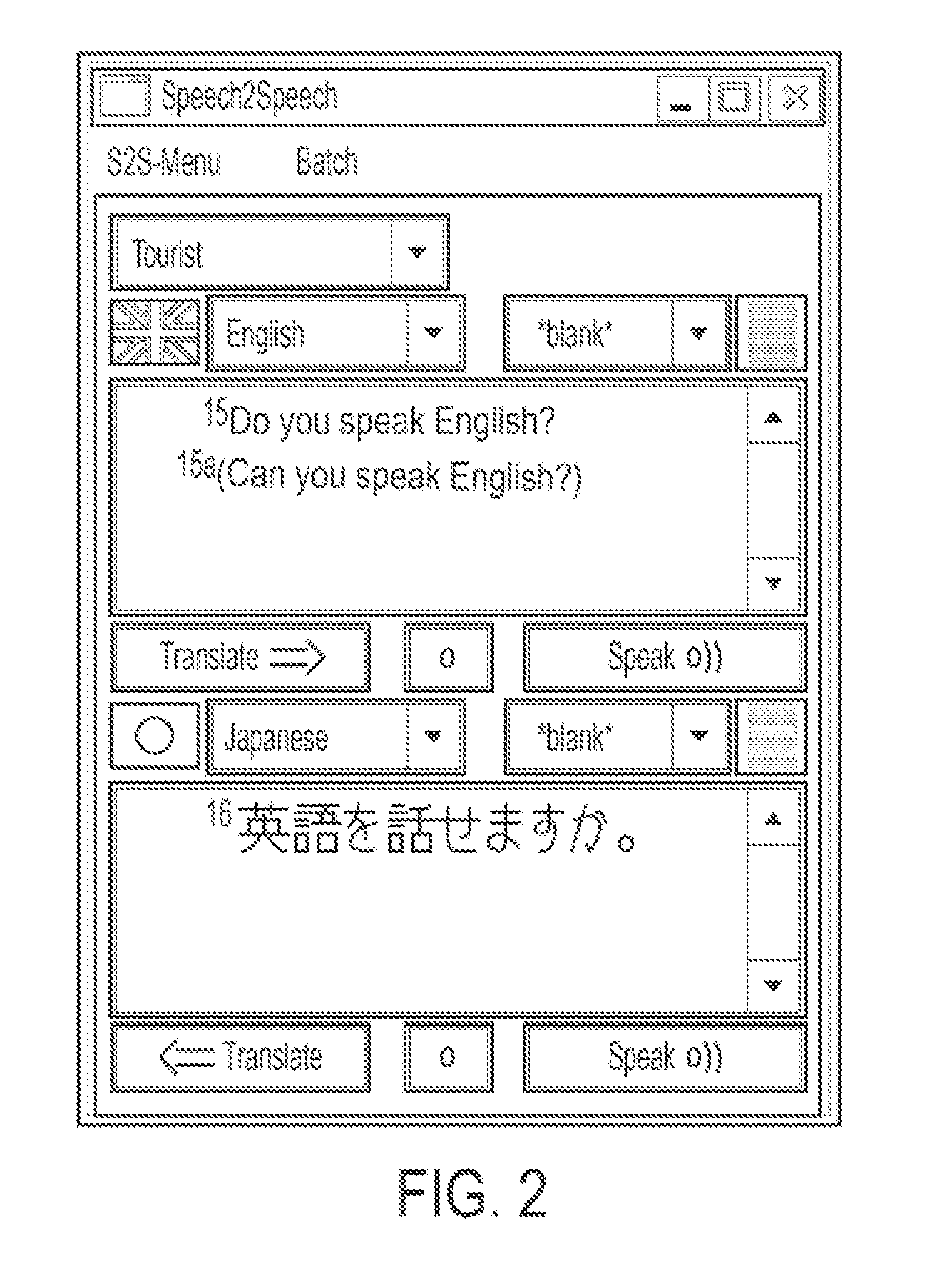

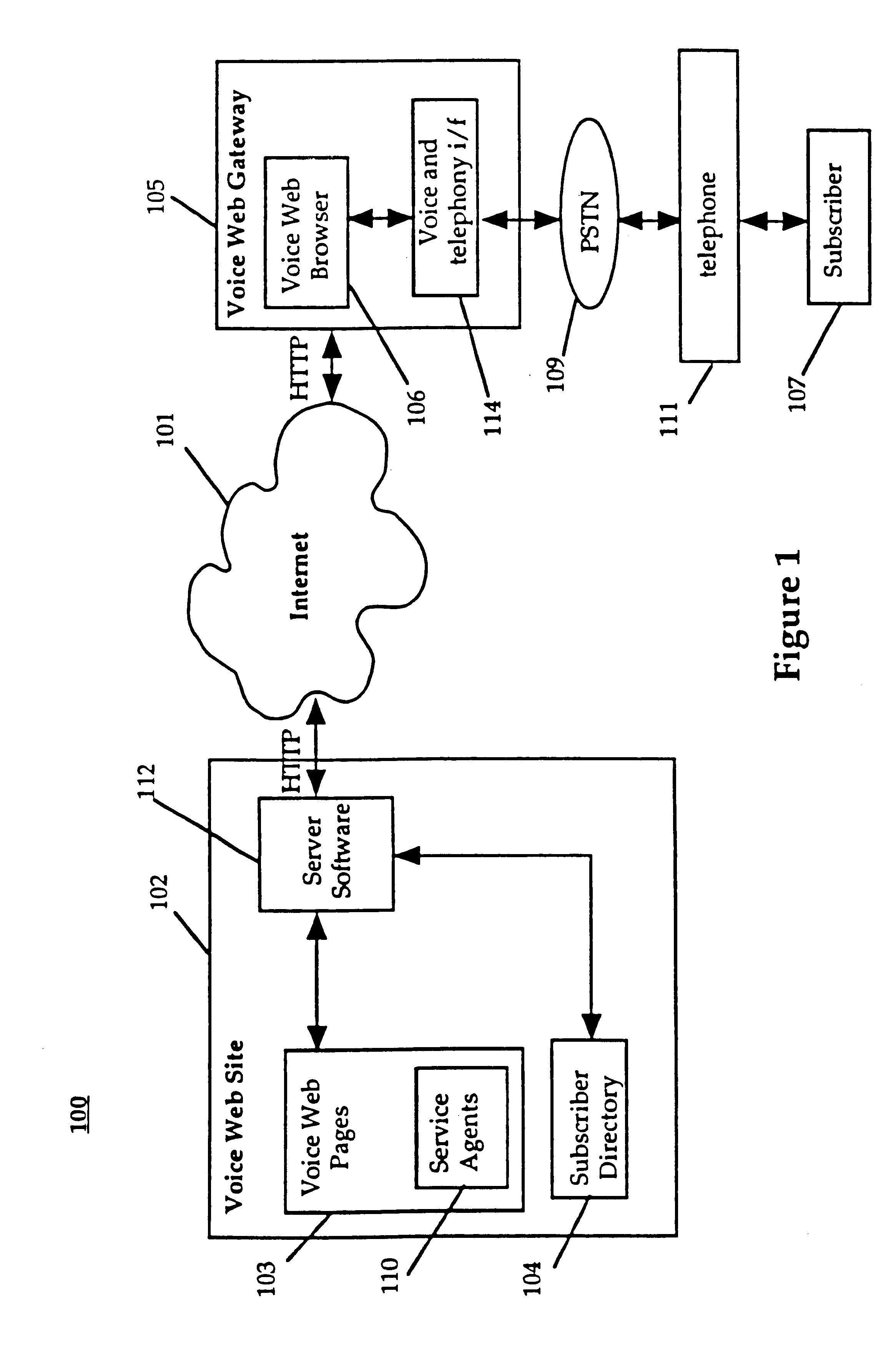

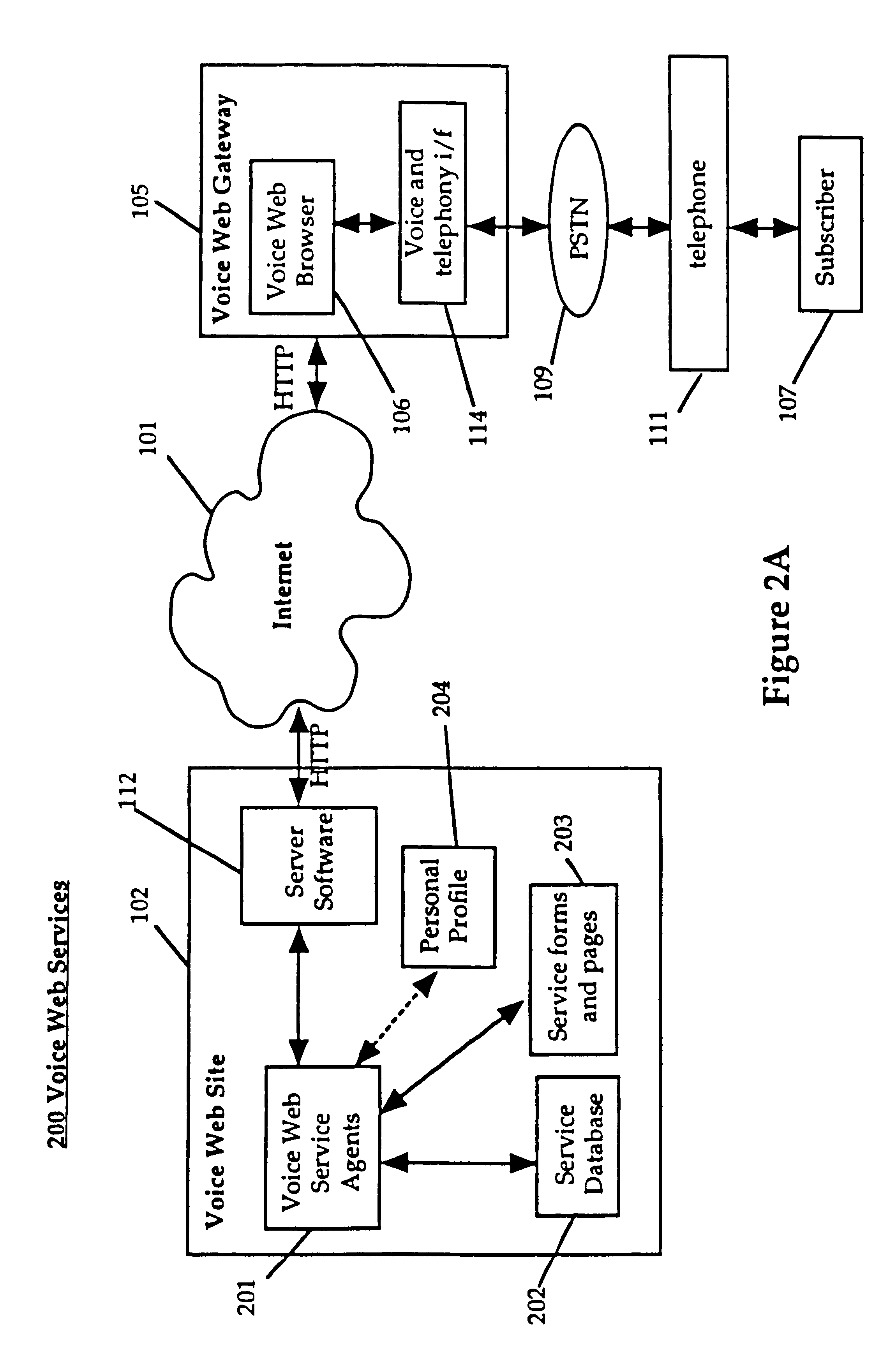

System and method for providing and using universally accessible voice and speech data files

Inactive | US6400806B1 | Automatic call-answering/message-recording/conversation-recording | Automatic exchanges | Speech training | Hyperlink

A system and method provides universal access to voice-based documents containing information formatted using MIME and HTML standards using customized extensions for voice information access and navigation. These voice documents are linked using HTML hyper-links that are accessible to subscribers using voice commands, touch-tone inputs and other selection means. These voice documents and components in them are addressable using HTML anchors embedding HTML universal resource locators (URLs) rendering them universally accessible over the Internet. This collection of connected documents forms a voice web. The voice web includes subscriber-specific documents including speech training files for speaker dependent speech recognition, voice print files for authenticating the identity of a user and personal preference and attribute files for customizing other aspects of the system in accordance with a specific subscriber.

Owner:NUANCE COMM INC

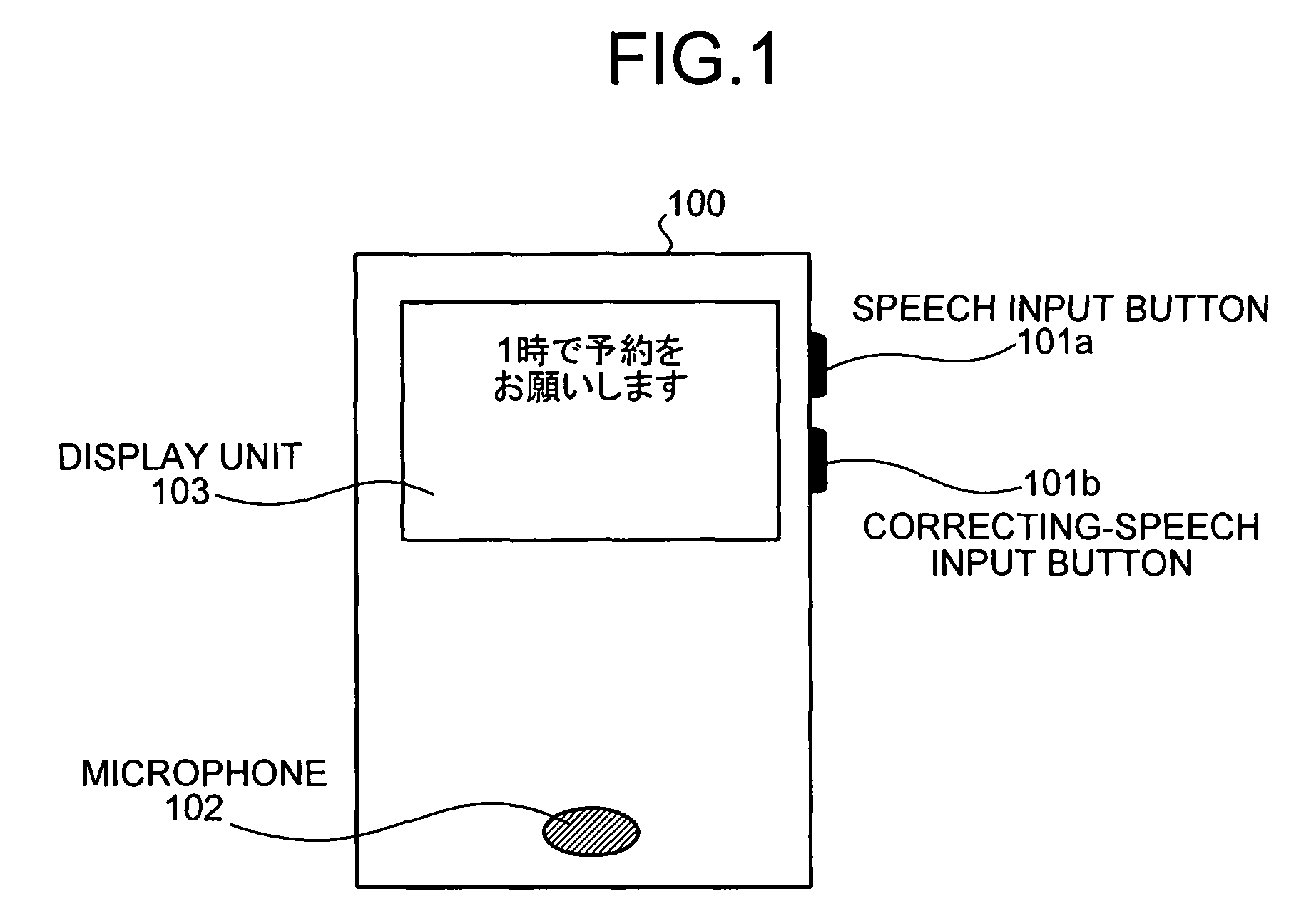

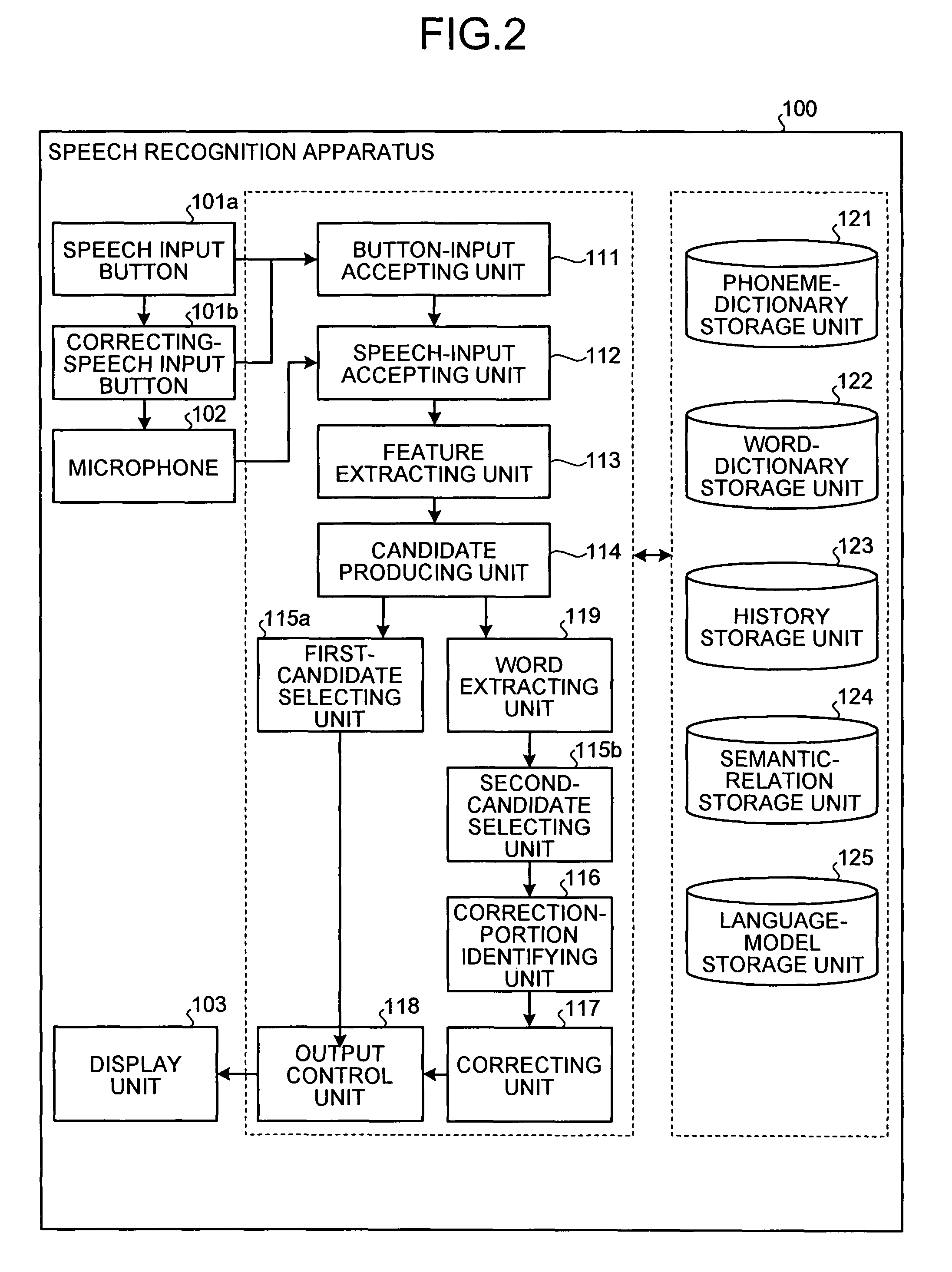

Apparatus, method and computer program product for recognizing speech

Inactive | US7974844B2 | Speech recognition | Special data processing applications | Speech identification | Speech sound

Owner:TOSHIBA DIGITAL SOLUTIONS CORP

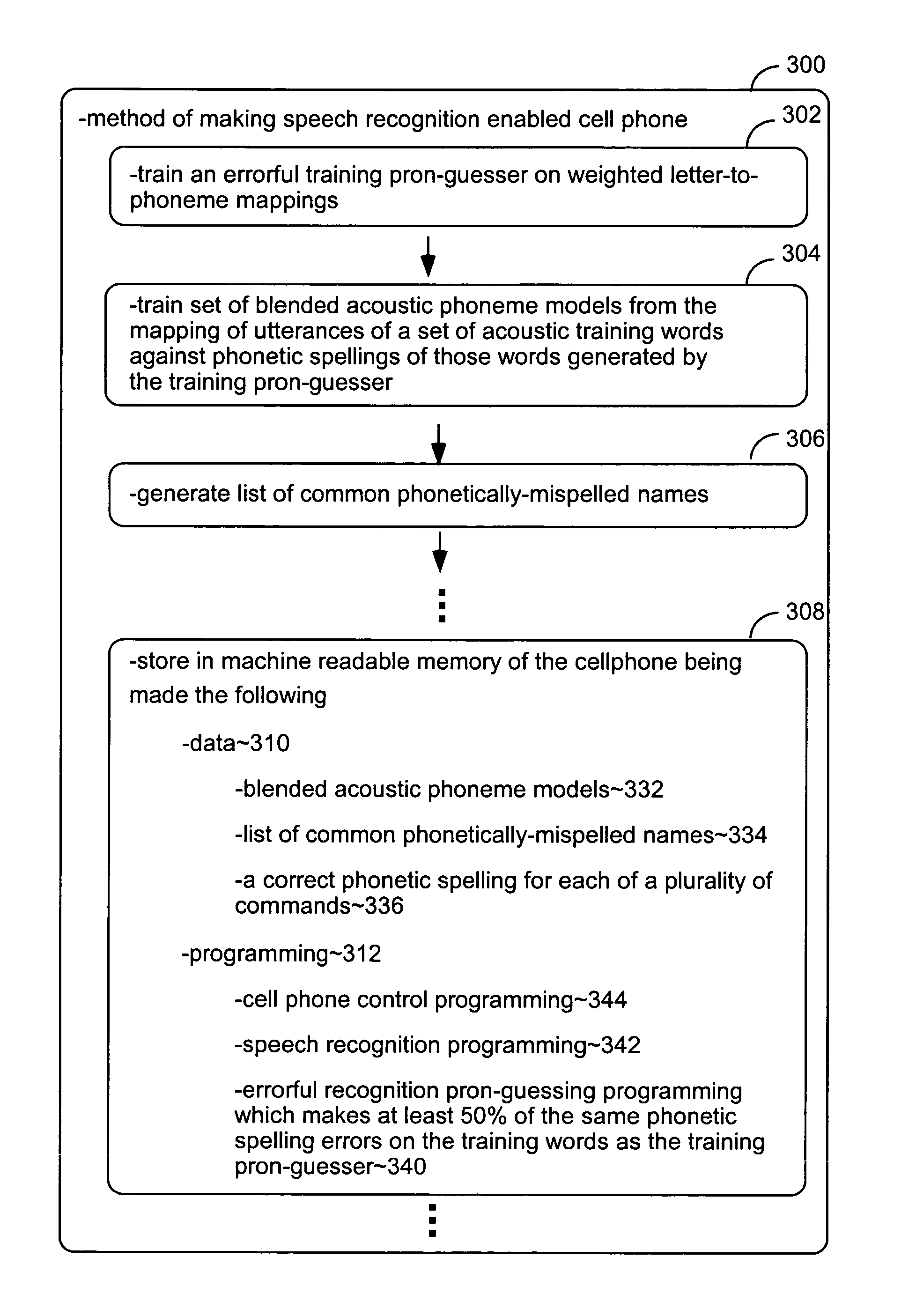

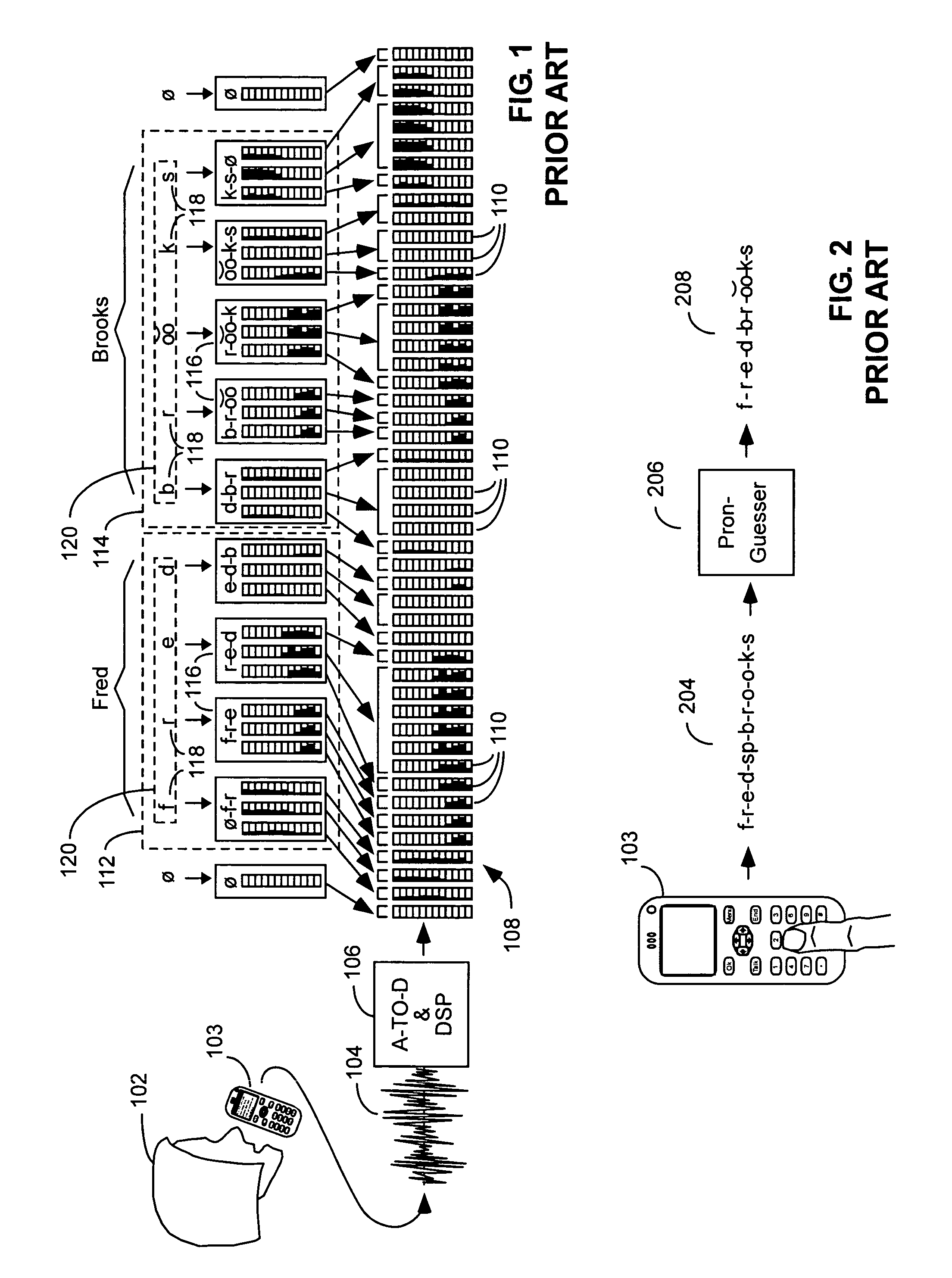

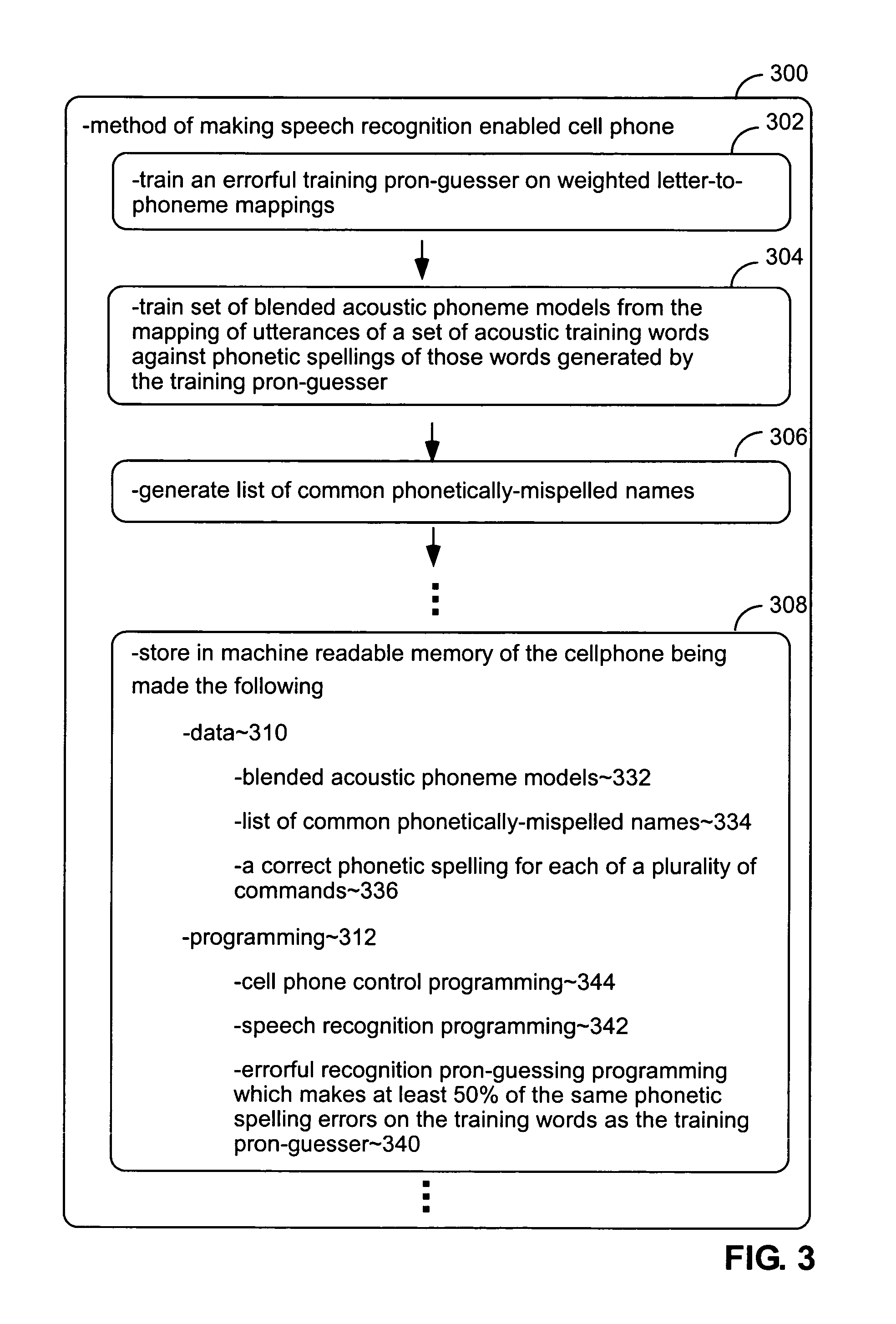

Training and using pronunciation guessers in speech recognition

Inactive | US7467087B1 | Reduce frequency | Reliable phonetic spelling | Speech recognition | Speech synthesis | Word model | Speech identification

The error rate of a pronunciation guesser that guesses the phonetic spelling of words used in speech recognition is improved by causing its training to weigh letter-to-phoneme mappings used as data in such training as a function of the frequency of the words in which such mappings occur. Preferably the ratio of the weight to word frequency increases as word frequency decreases. Acoustic phoneme models for use in speech recognition with phonetic spellings generated by a pronunciation guesser that makes errors are trained against word models whose phonetic spellings have been generated by a pronunciation guesser that makes similar errors. As a result, the acoustic models represent blends of phoneme sounds that reflect the spelling errors made by the pronunciation guessers. Speech recognition enabled systems are made by storing in them both a pronunciation guesser and a corresponding set of such blended acoustic models.

Owner:CERENCE OPERATING CO

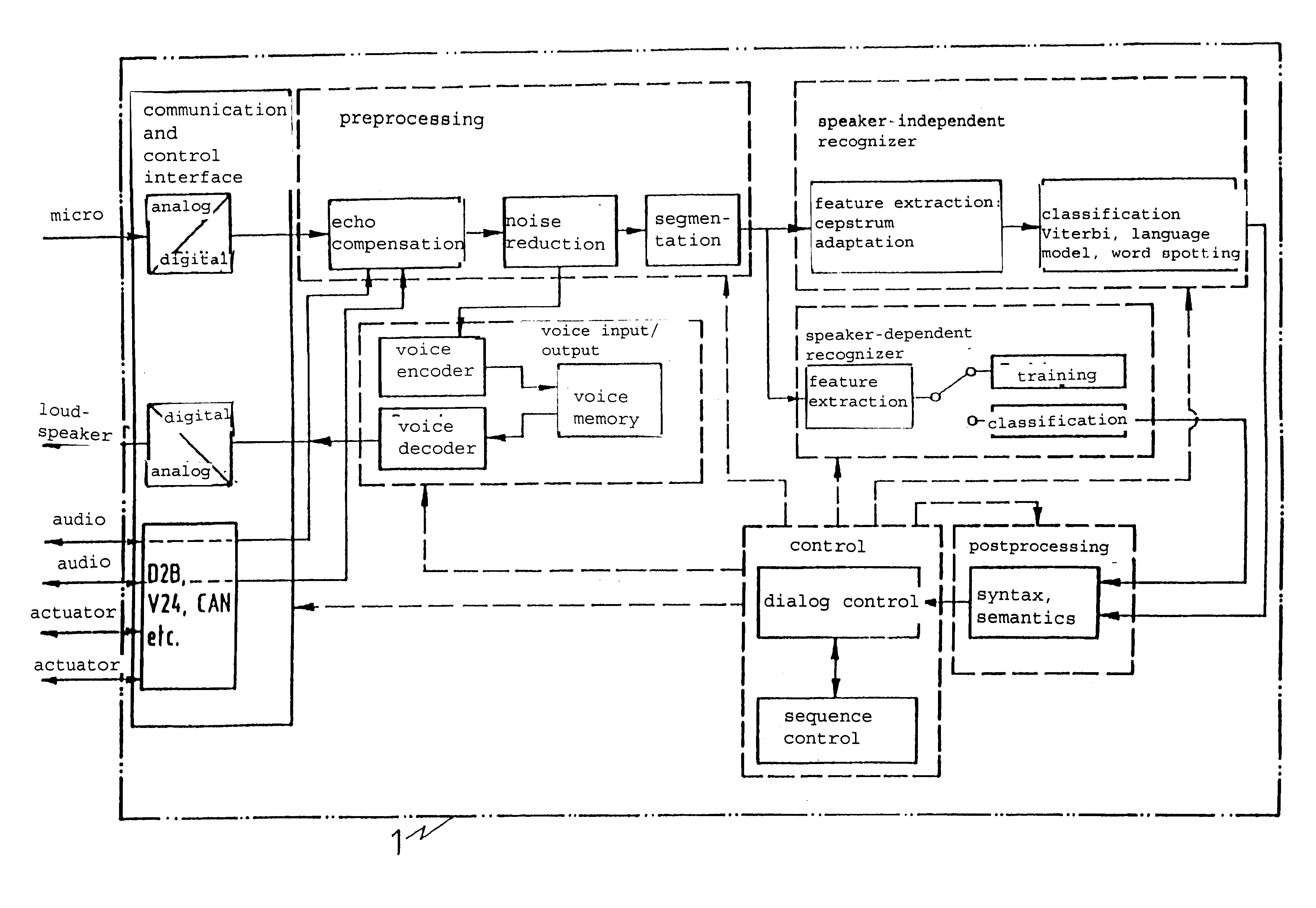

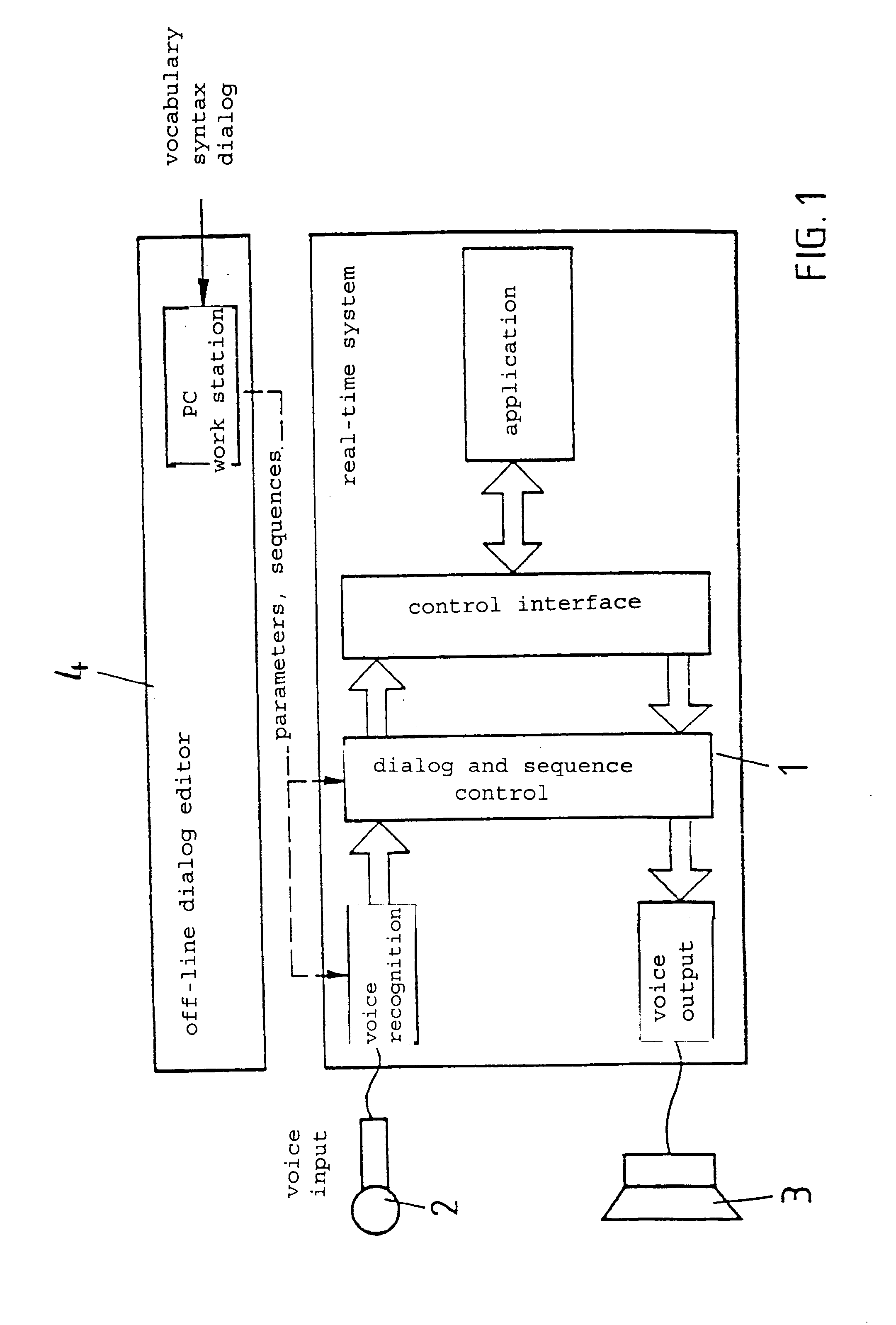

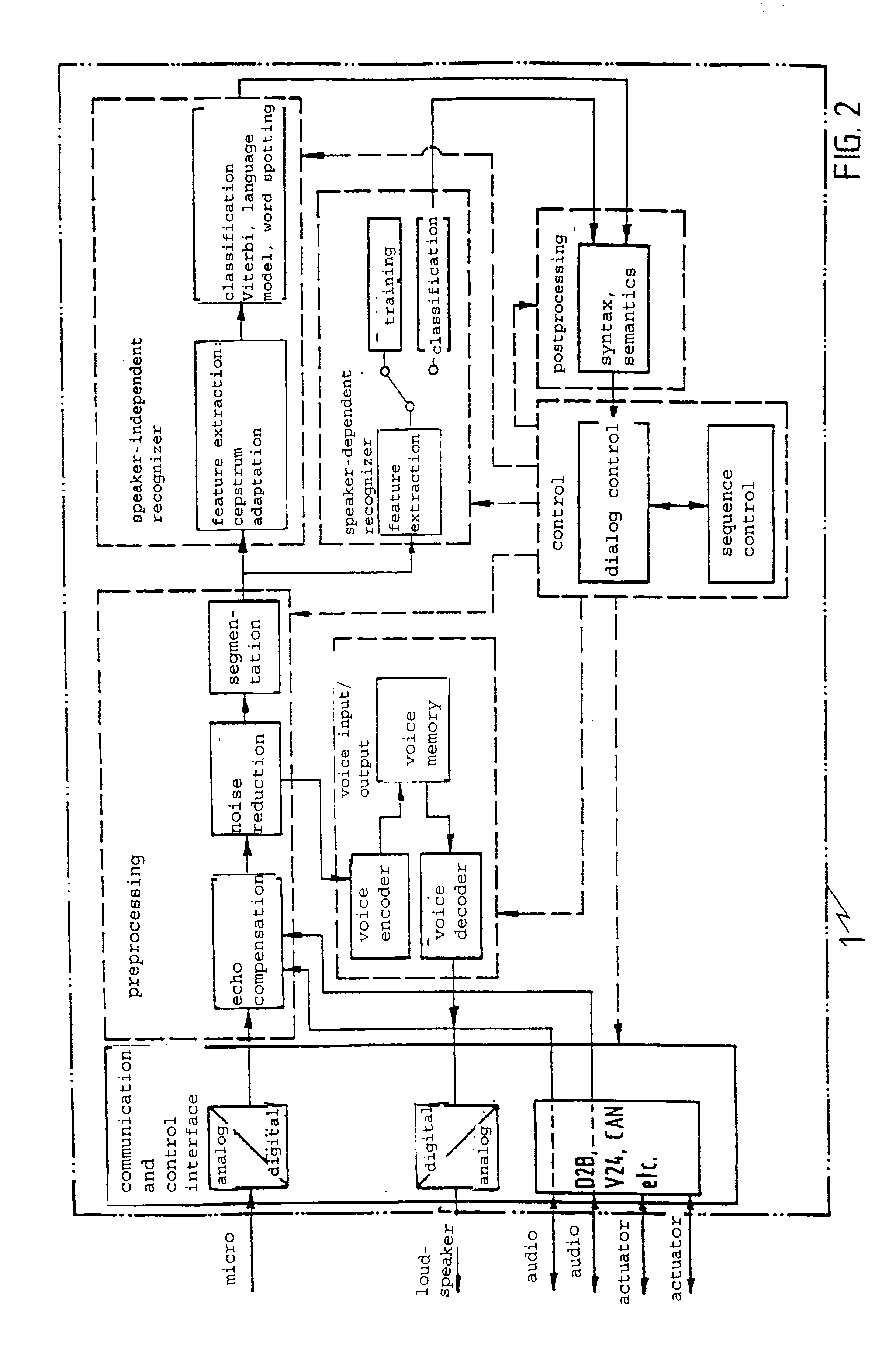

Process for automatic control of one or more devices by voice commands or by real-time voice dialog and apparatus for carrying out this process

Inactive | US6839670B1 | Reduce spending | Speech recognition | Electric/fluid circuit | Automatic control | Speech identification

A speech dialog system wherein a process for automatic control of devices by speech dialog is used applying methods of speech input, speech signal processing and speech recognition, syntactical-grammatical postediting as well as dialog, executive sequencing and interface control, and which is characterized in that syntax and command structures are set during real-time dialog operation; preprocessing, recognition and dialog control are designed for operation in a noise-encumbered environment; no user training is required for recognition of general commands; training of individual users is necessary for recognition of special commands; the input of commands is done in linked form, the number of words used to form a command for speech input being variable; a real-time processing and execution of the speech dialog is established; and the speech input and output is done in the hands-free mode.

Owner:NUANCE COMM INC

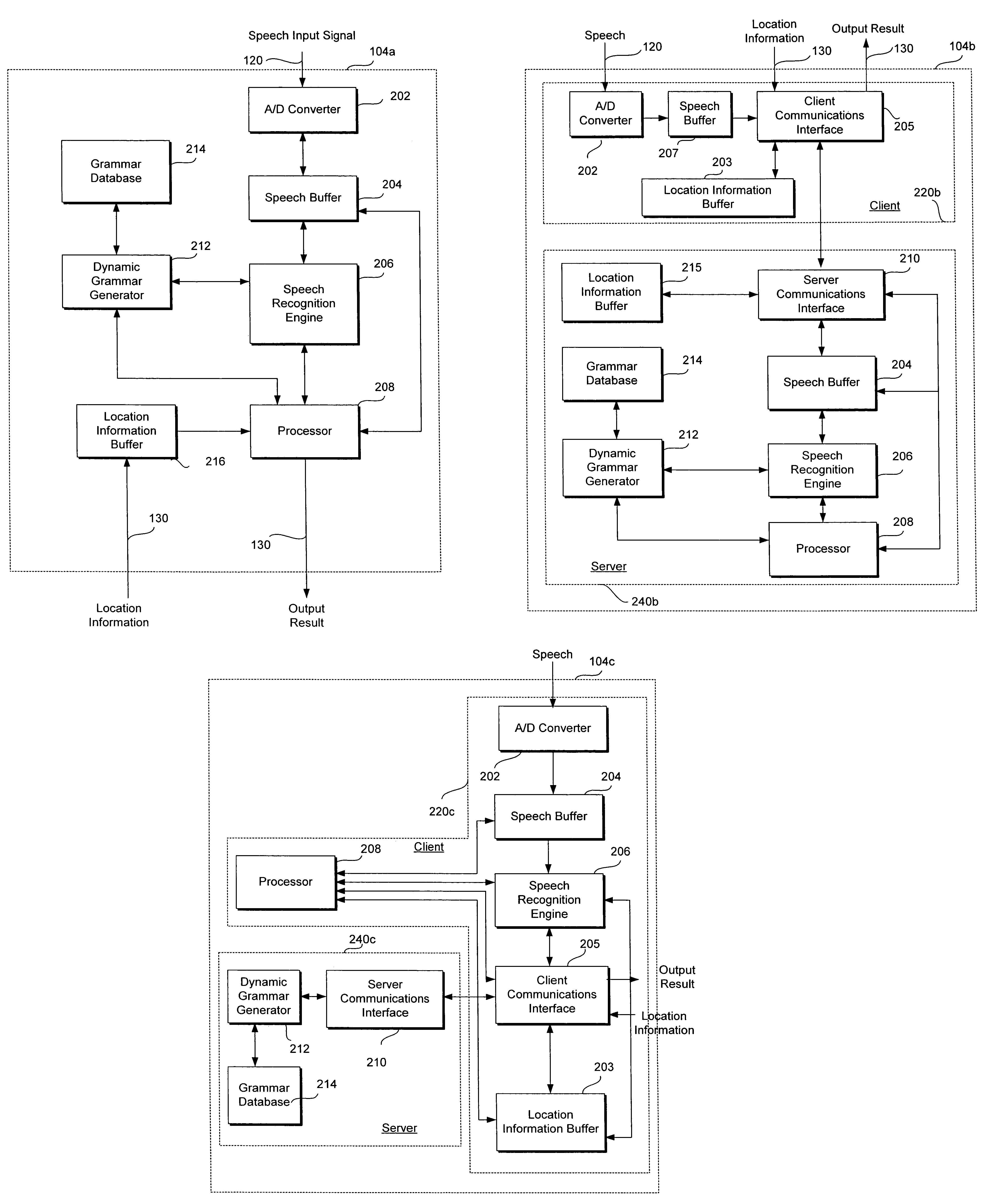

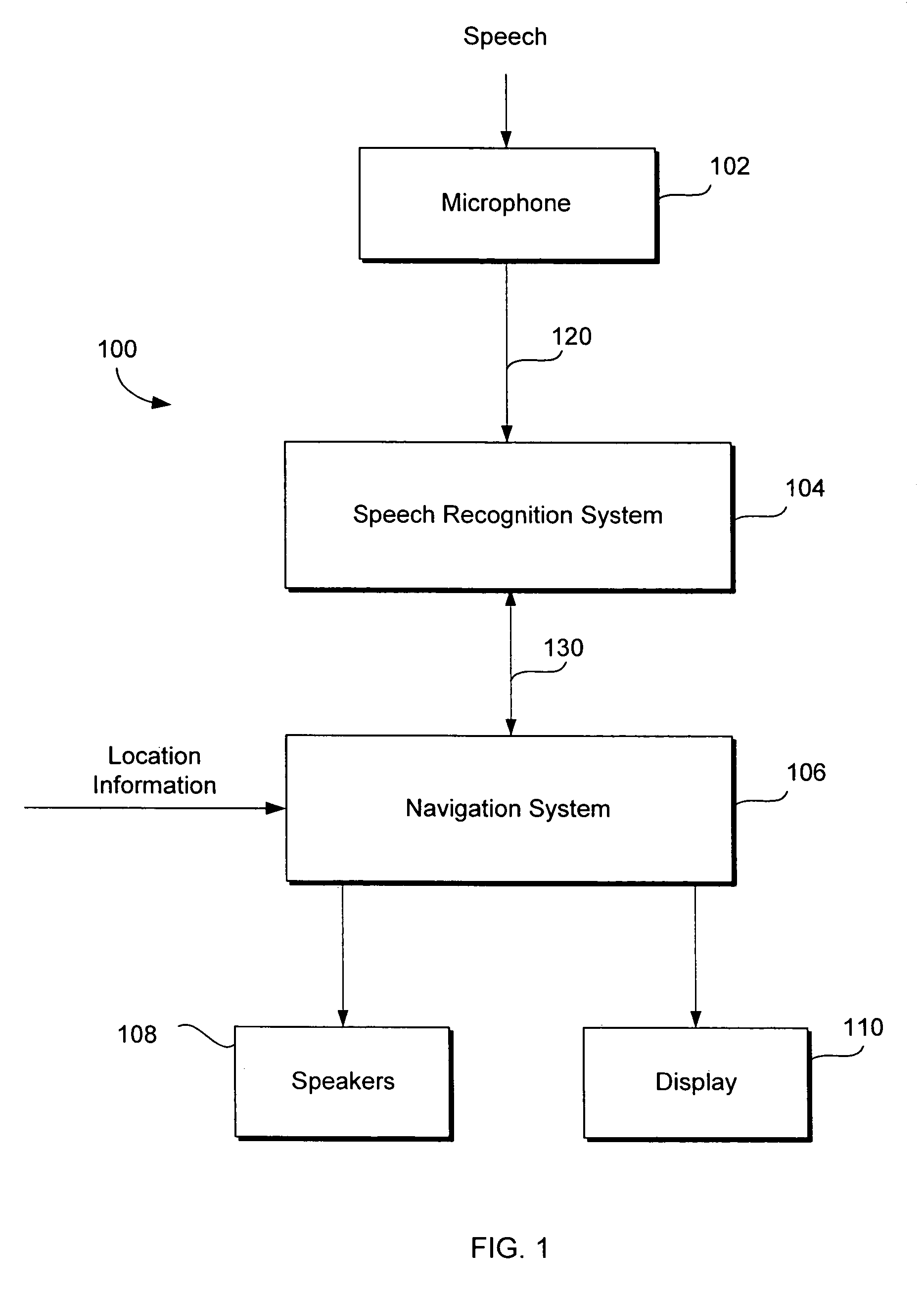

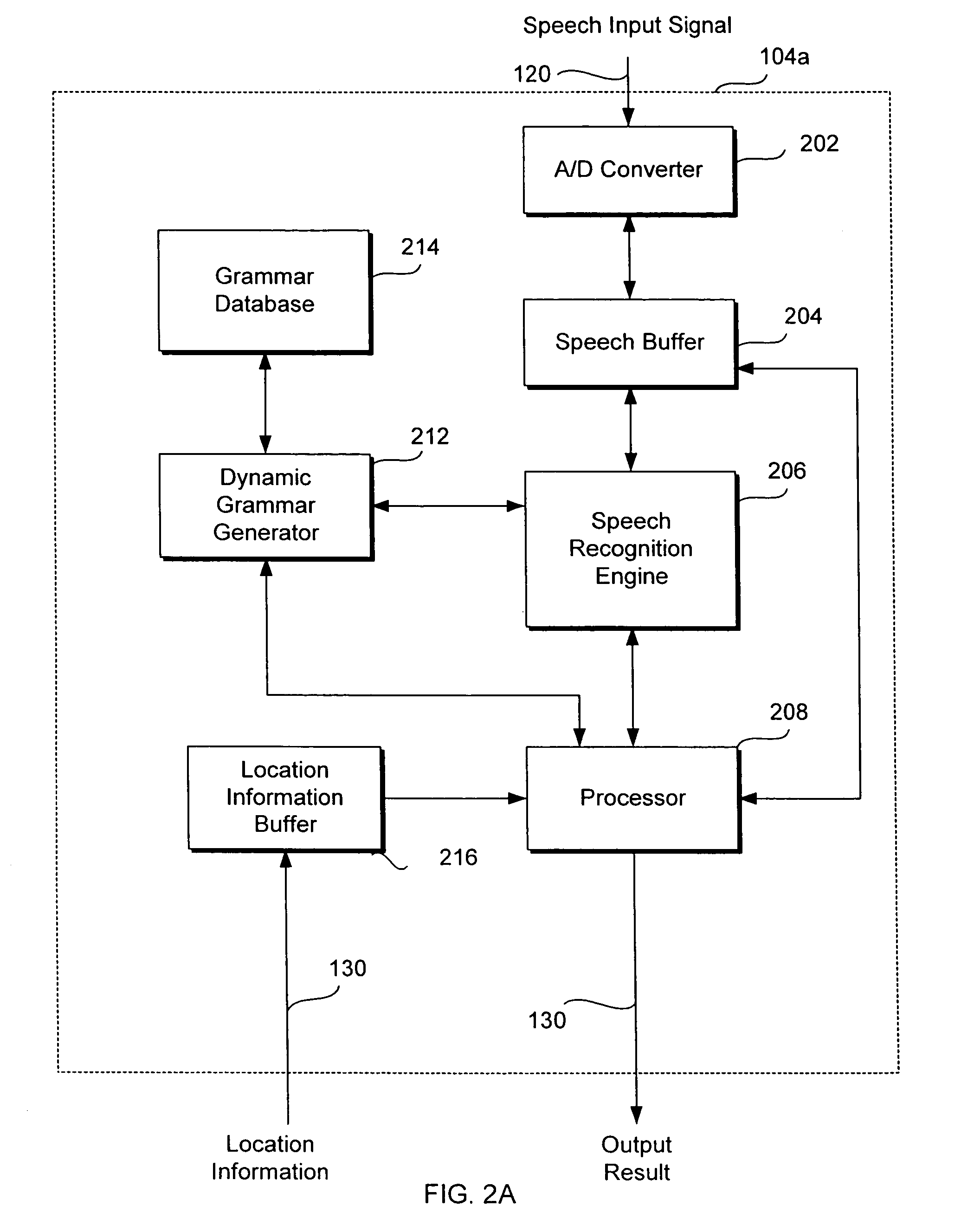

Method and system for speech recognition using grammar weighted based upon location information

Inactive | US7328155B2 | Overcome inaccurate recognition | The recognition result is accurate | Speech recognition | Speech identification | Navigation system

A speech recognition method and system for use in a vehicle navigation system utilize grammar weighted based upon geographical information regarding the locations corresponding to the tokens in the grammars and/or the location of the vehicle for which the vehicle navigation system is used, in order to enhance the performance of speech recognition. The geographical information includes the distances between the vehicle location and the locations corresponding to the tokens, as well as the size, population, and popularity of the locations corresponding to the tokens.

Owner:TOYOTA INFOTECHNOLOGY CENT CO LTD
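
One way to picture location-based grammar weighting is the sketch below, which favors tokens for places that are close to the vehicle and populous. The scoring formula (a proximity factor multiplied by the base-10 logarithm of population), the 50 km scale, and the sample data are all assumptions chosen for illustration; the abstract does not specify how the weights are computed.

```python
import math


def token_weight(distance_km, population, distance_scale_km=50.0):
    """Score a grammar token: closer and more populous places score higher.

    The formula is a hypothetical example, not the patented method.
    """
    proximity = 1.0 / (1.0 + distance_km / distance_scale_km)
    size = math.log10(max(population, 10))
    return proximity * size


def weight_grammar(tokens):
    """Map each token to a normalized weight; the weights sum to 1."""
    raw = {name: token_weight(dist, pop) for name, (dist, pop) in tokens.items()}
    total = sum(raw.values())
    return {name: score / total for name, score in raw.items()}


# Hypothetical tokens: name -> (distance from vehicle in km, population).
tokens = {
    "Springfield (nearby)": (10.0, 100_000),
    "Springfield (distant)": (1500.0, 100_000),
}
weights = weight_grammar(tokens)
```

With these inputs the nearby "Springfield" receives the larger weight, so an ambiguous utterance would be resolved in its favor.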

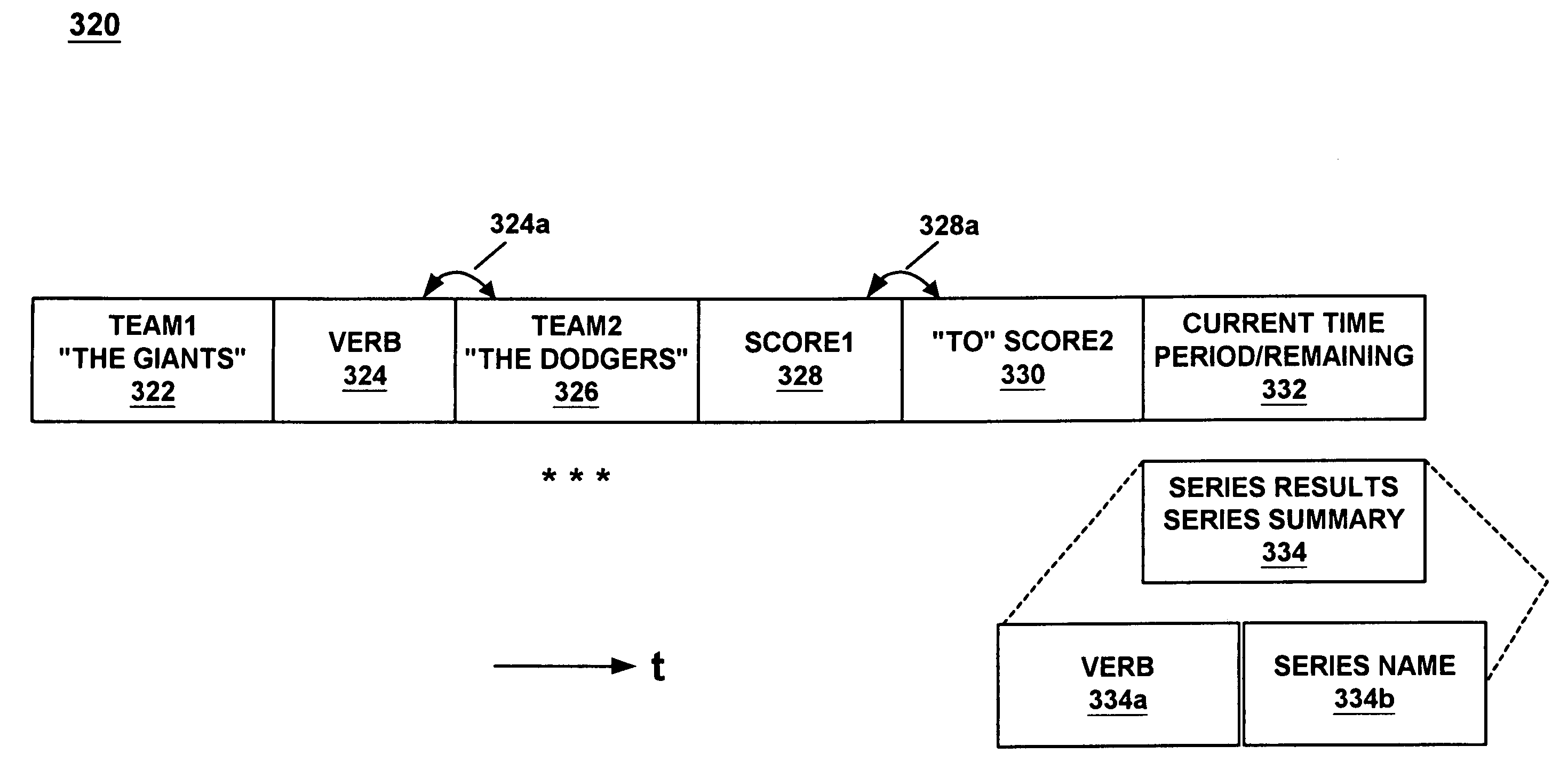

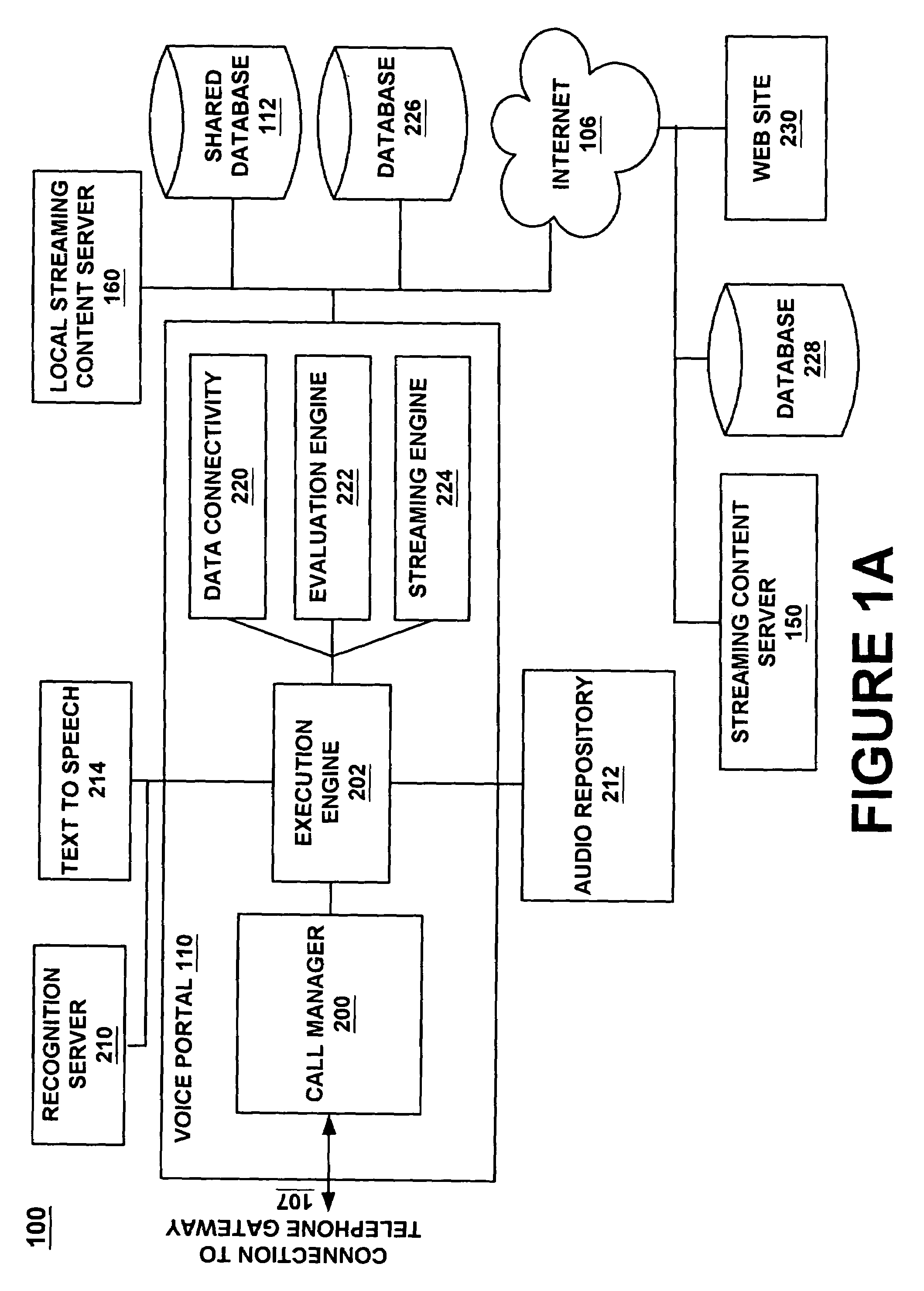

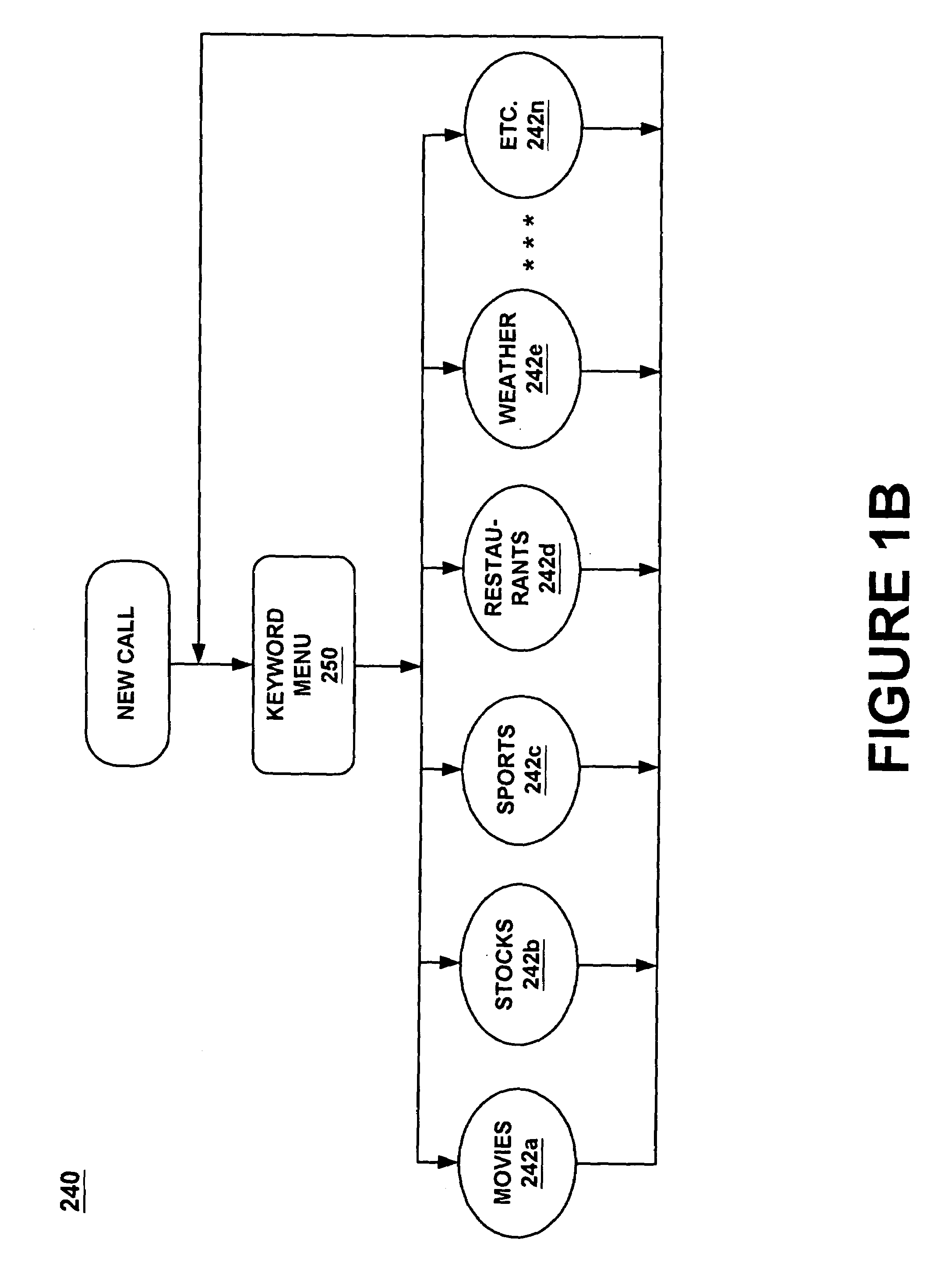

Providing services for an information processing system using an audio interface

Inactive | US7308408B1 | Structured and efficient and effective | Effectively | Automatic exchanges | Speech recognition | Information processing | Speech identification

A method and system for providing efficient menu services for an information processing system that uses a telephone or other form of audio user interface. In one embodiment, the menu services provide effective support for novice users by providing a full listing of available keywords and rotating house advertisements which inform novice users of potential features and information. For experienced users, cues are rendered so that at any time the user can say a desired keyword to invoke the corresponding application. The menu is flat to facilitate its usage. Full keyword listings are rendered after the user is given a brief cue to say a keyword. Service messages rotate words and word prosody. When listening to receive information from the user, after the user has been cued, soft background music or other audible signals are rendered to inform the user that a response may now be spoken to the service. Other embodiments determine default cities, on which to report information, based on characteristics of the caller or based on cities that were previously selected by the caller. Other embodiments provide speech concatenation processes that have co-articulation and real-time subject-matter-based word selection which generate human-sounding speech. Other embodiments reduce the occurrences of falsely triggered barge-ins during content delivery by only allowing interruption for certain special words. Other embodiments offer special services and modes for calls having voice recognition trouble. The special services are entered after predetermined criteria have been met by the call. Other embodiments provide special mechanisms for automatically recovering the address of a caller.

Owner:MICROSOFT TECH LICENSING LLC

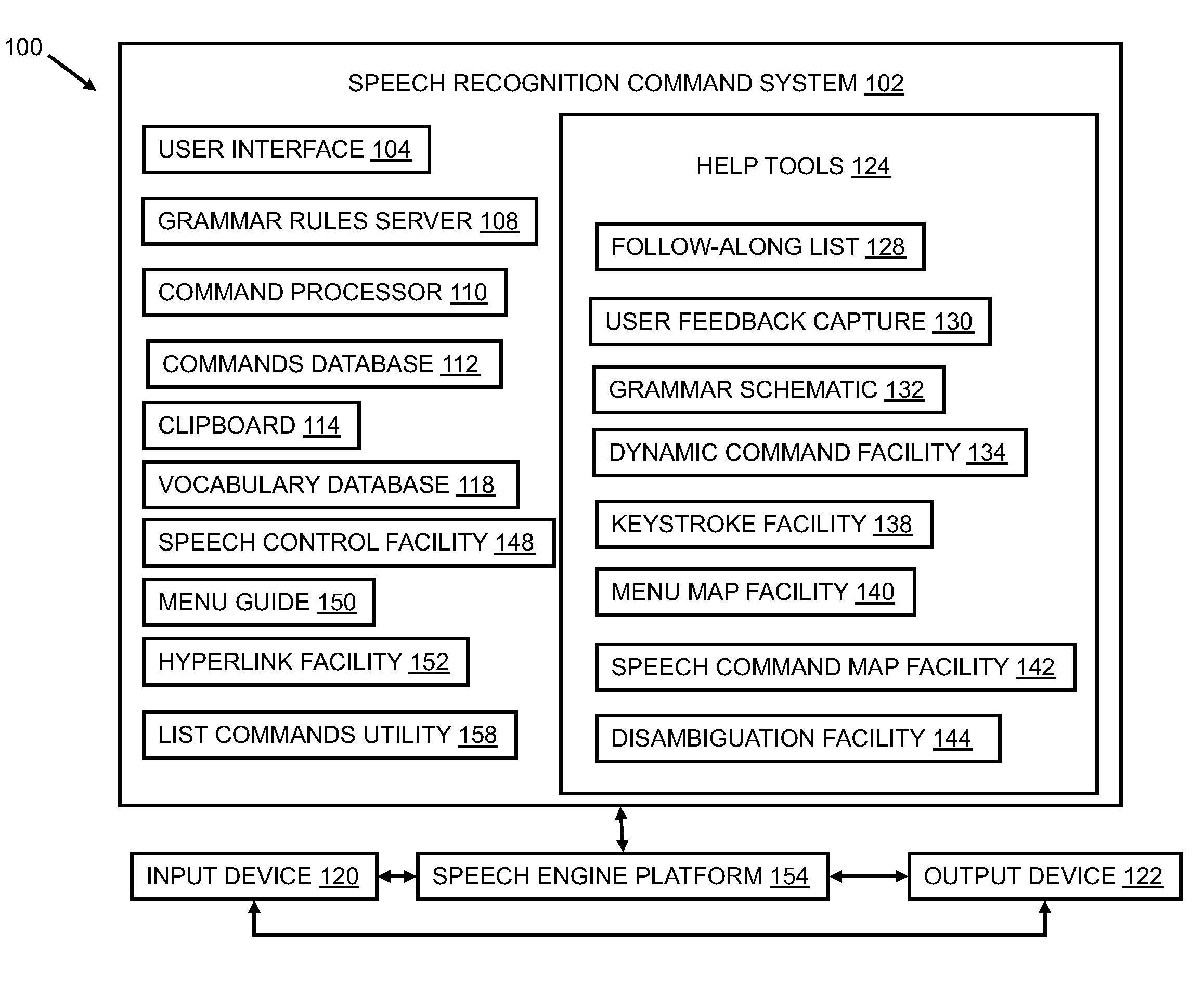

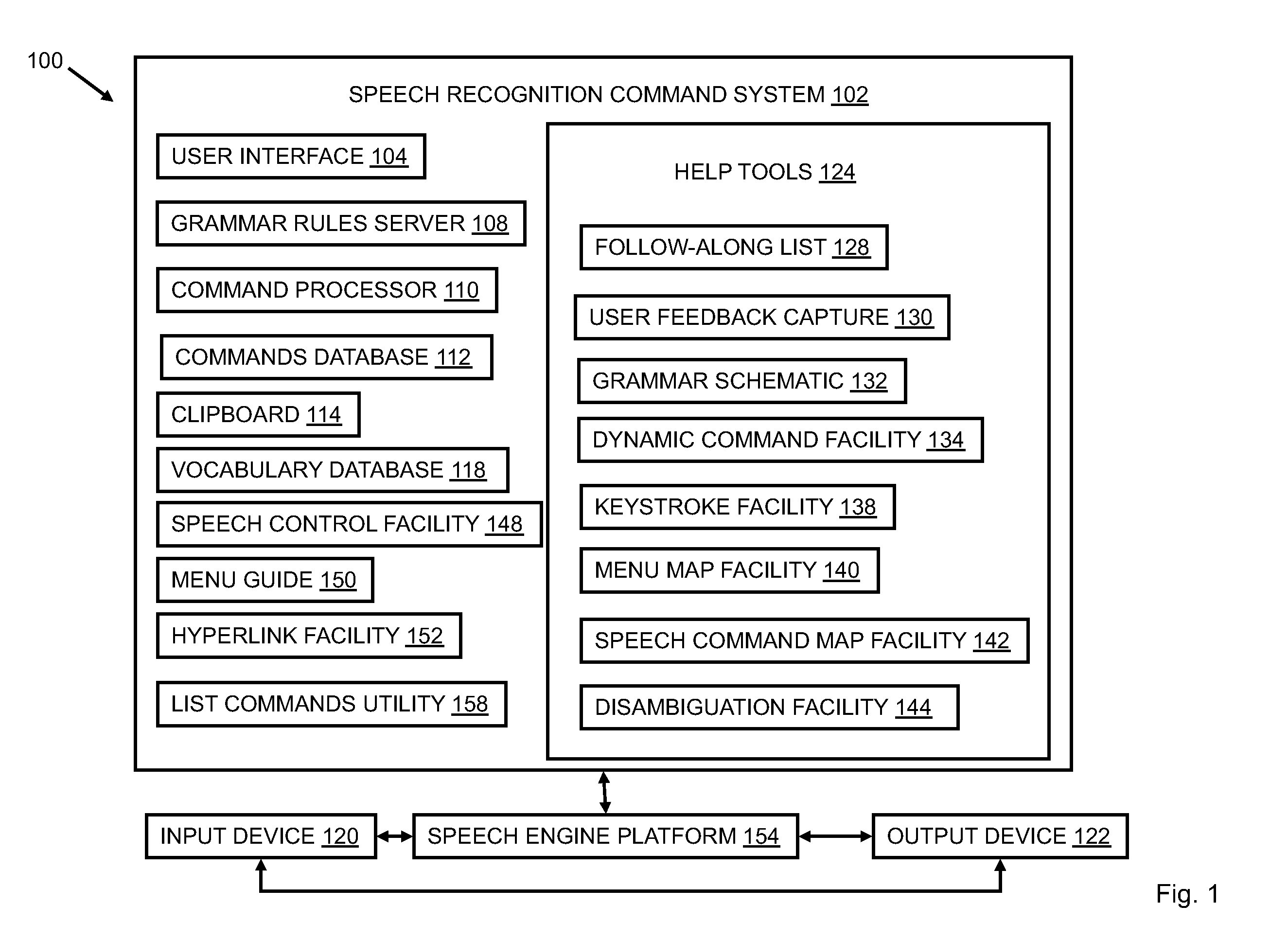

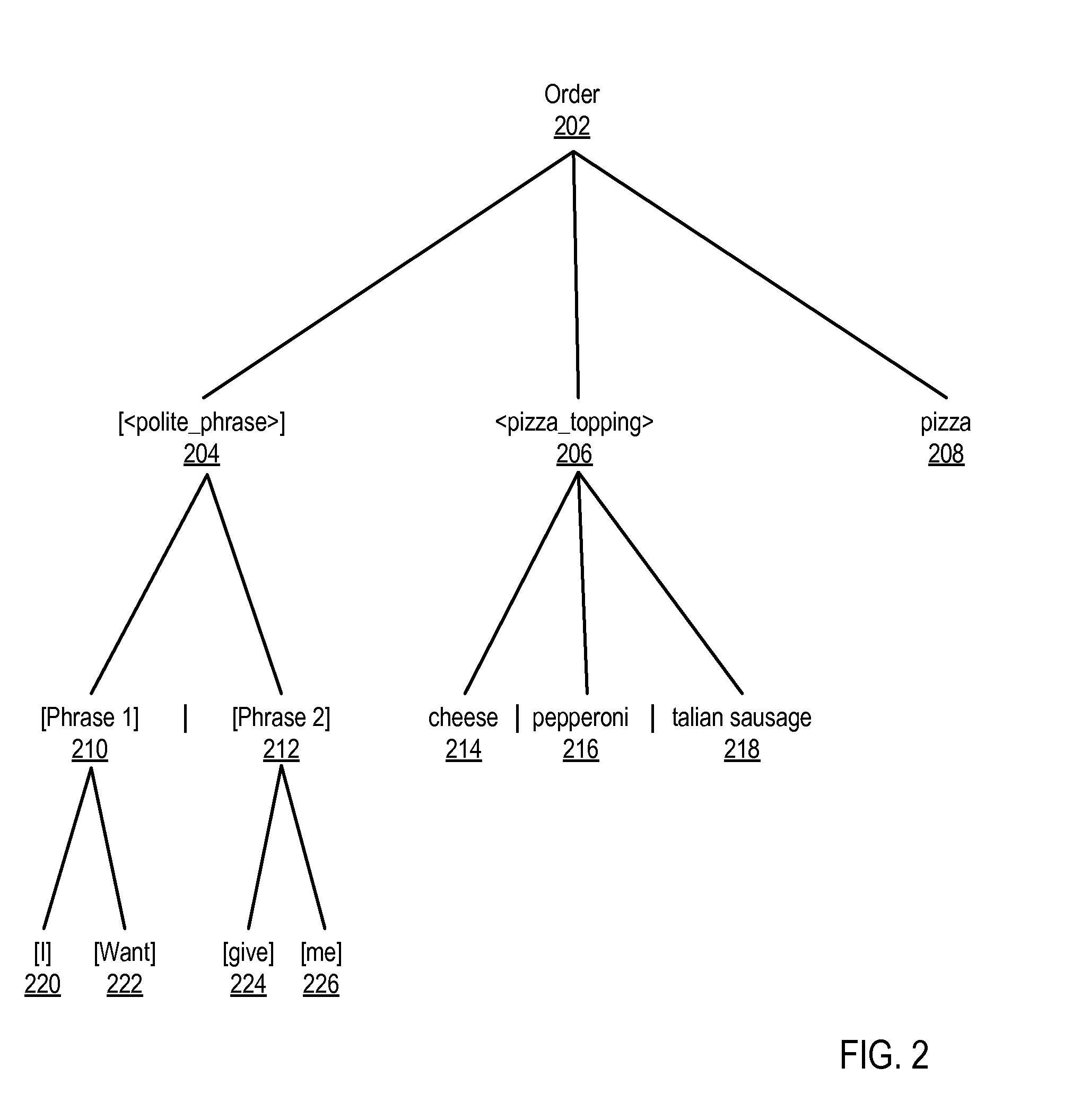

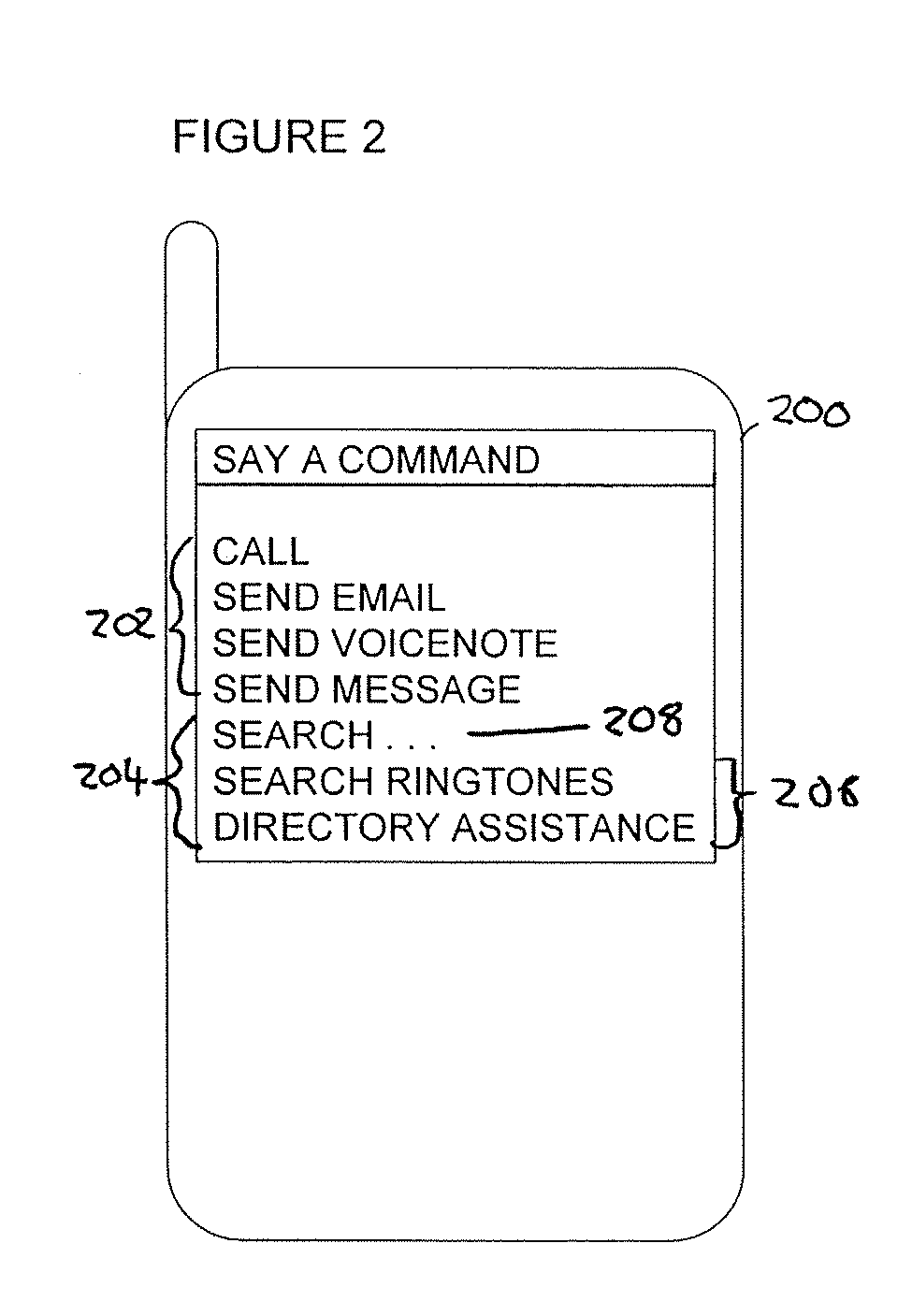

System and method of a list commands utility for a speech recognition command system

Inactive | US20100169098A1 | Facilitates a phrase mode | Easy to install | Speech recognition | Special data processing applications | Command and control | Command system

In embodiments of the present invention, a system and computer-implemented method for enabling a user to interact with a mobile device using a voice command may include the steps of defining a structured grammar for generating a global voice command, defining a global voice command of the structured grammar, wherein the global voice command enables access to an object of the mobile device using a single command, and mapping at least one function of the object to the global voice command, wherein upon receiving voice input from the user of the mobile device, the object recognizes the global voice command and controls the function.

Owner:PATCH KIMBERLY C

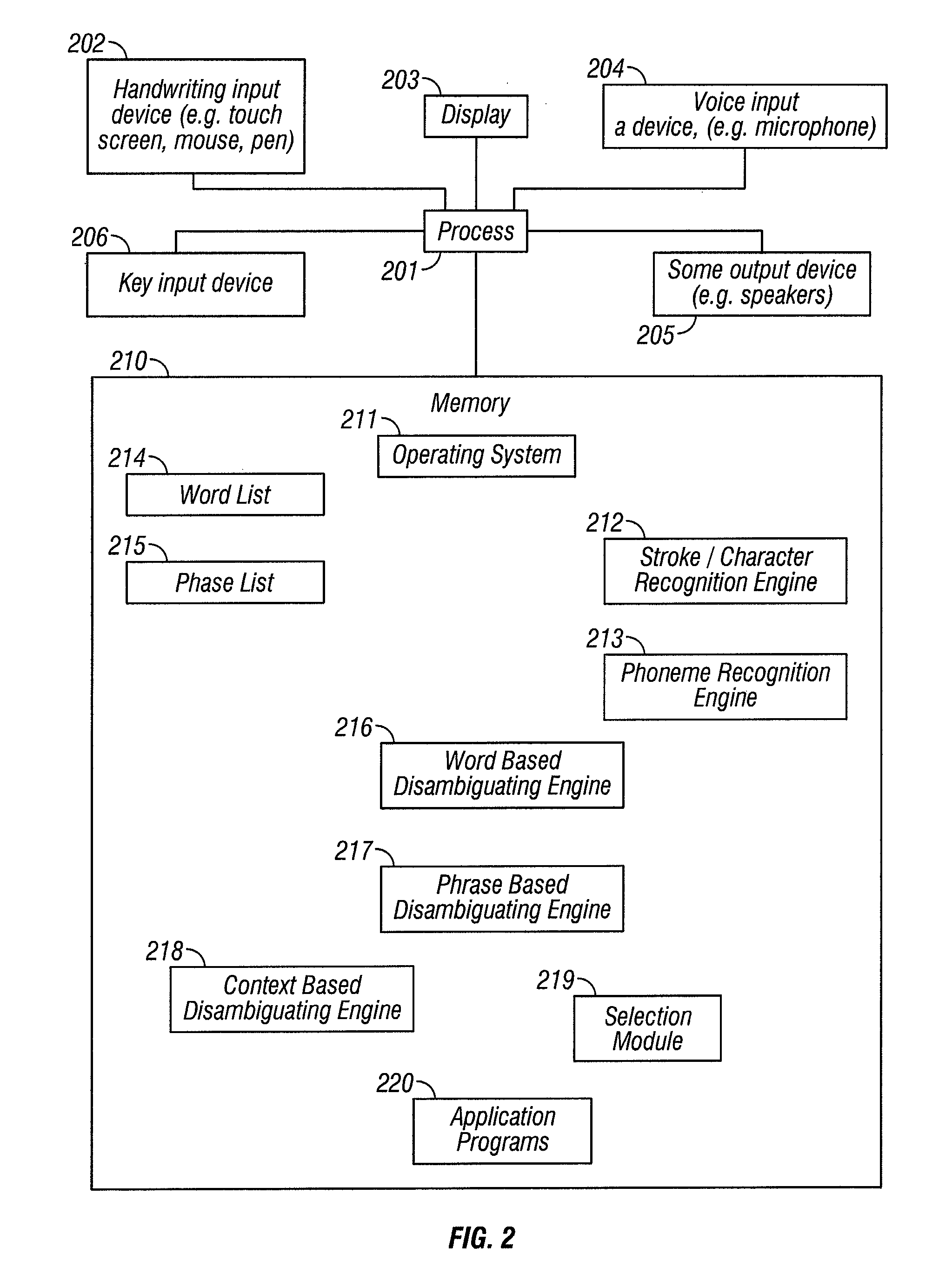

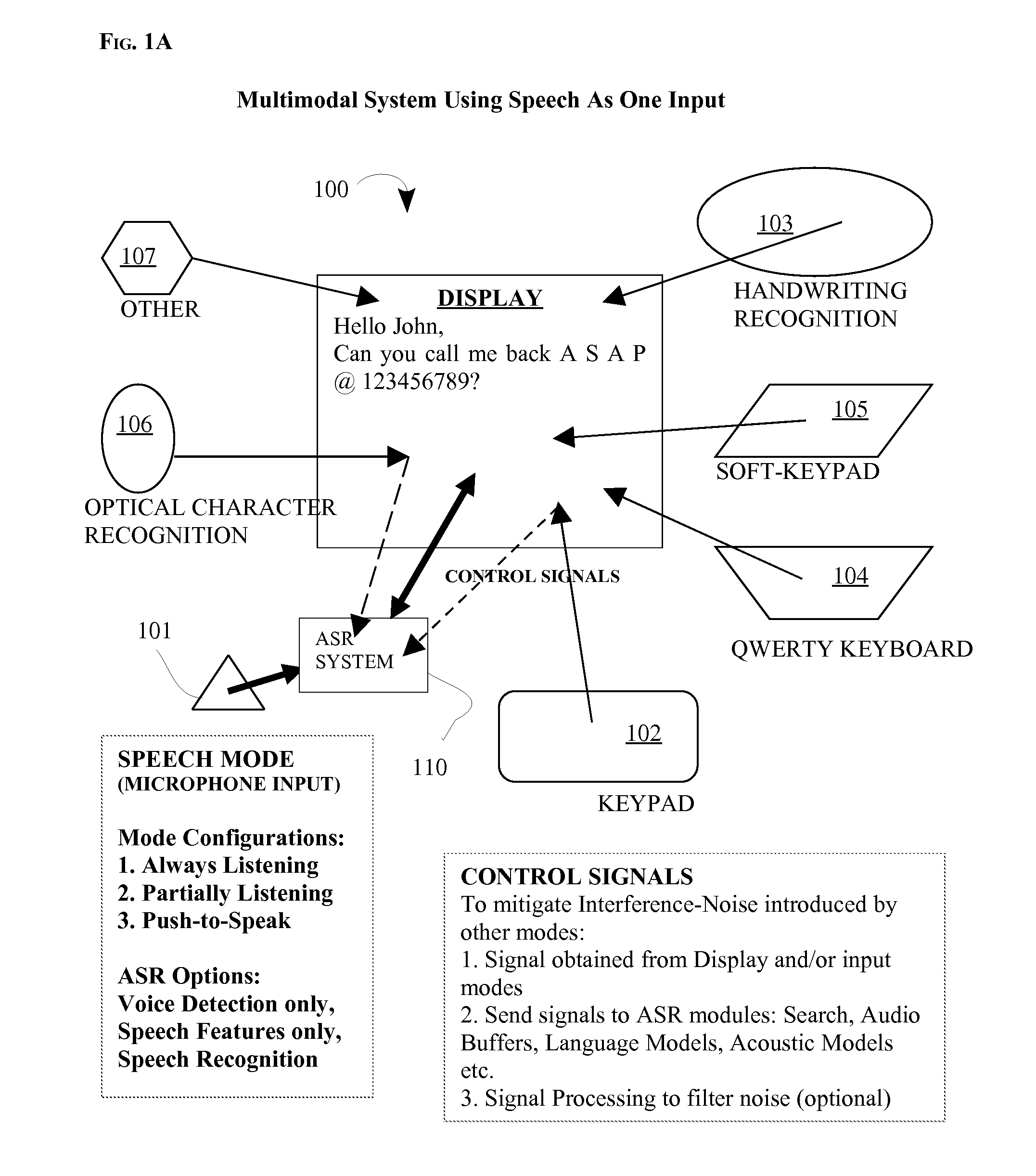

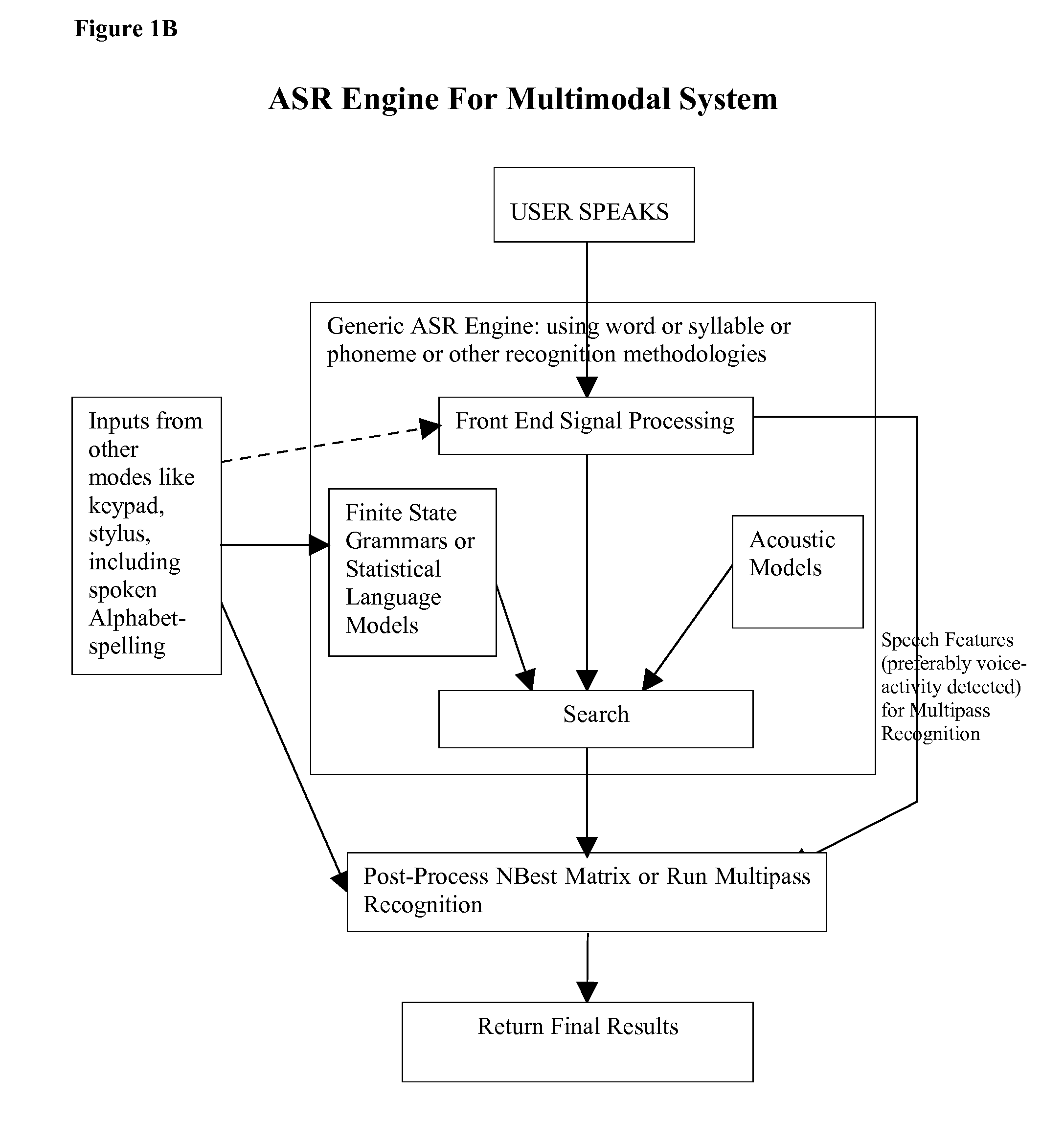

Multimodal speech recognition system

Active | US20080133228A1 | Increase speed | Improve ease | Speech recognition | Speech identification | System configuration

The disclosure describes an overall system/method for text input using a multimodal interface with speech recognition. Specifically, pluralities of modes interact with the main speech mode to provide the speech recognition system with partial knowledge of the text corresponding to the spoken utterance forming the input to the speech recognition system. The knowledge from other modes is used to dynamically change the ASR system's active vocabulary, thereby significantly increasing recognition accuracy and significantly reducing processing requirements. Additionally, the speech recognition system is configured using three different system configurations (always listening, partially listening, and push-to-speak), and for each of those, three different user interfaces are proposed (speak-and-type, type-and-speak, and speak-while-typing). Finally, the overall user interface of the proposed system is designed such that it enhances existing standard text-input methods, thereby minimizing the behavior change for mobile users.

Owner:RAO ASHWIN P

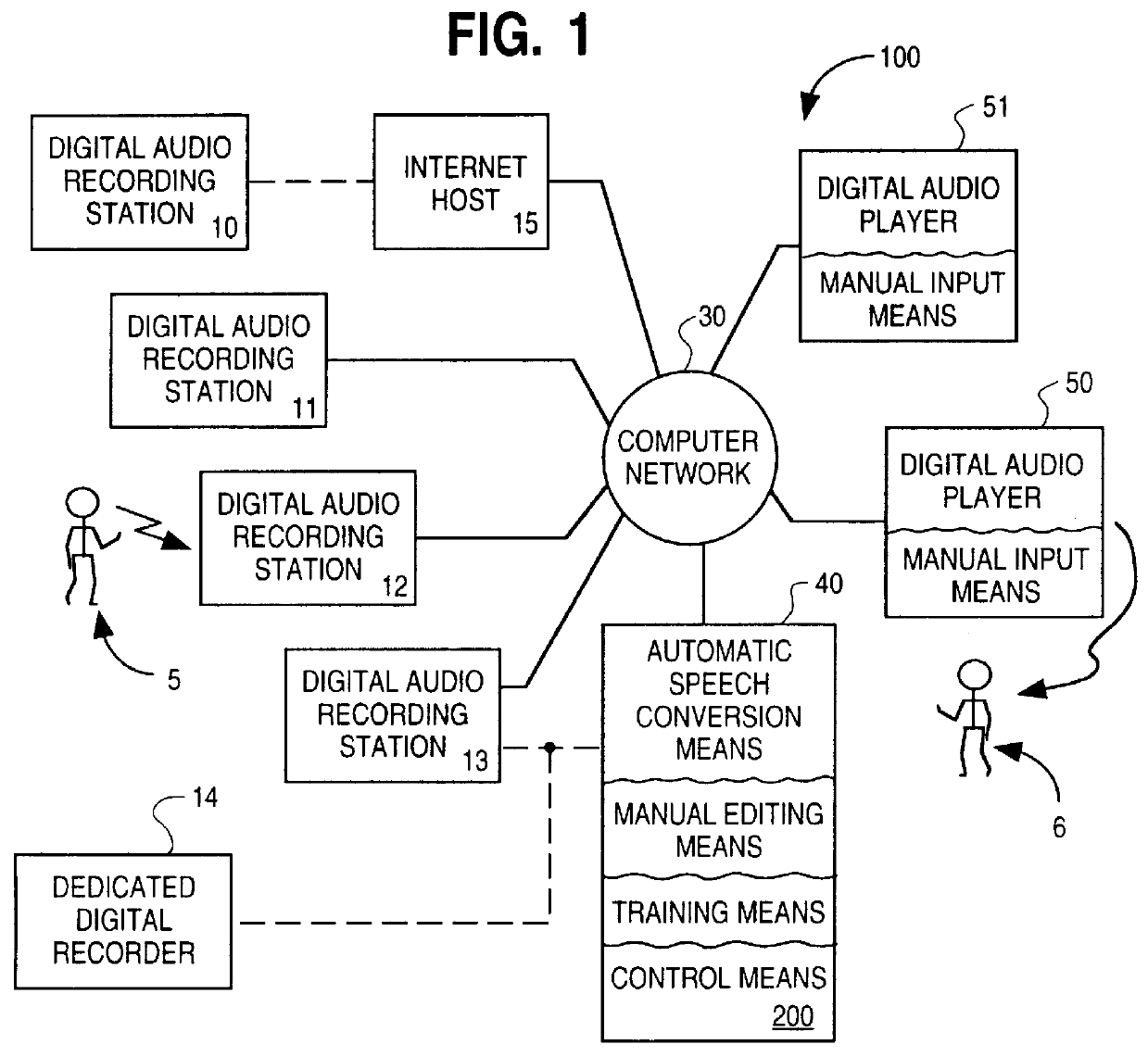

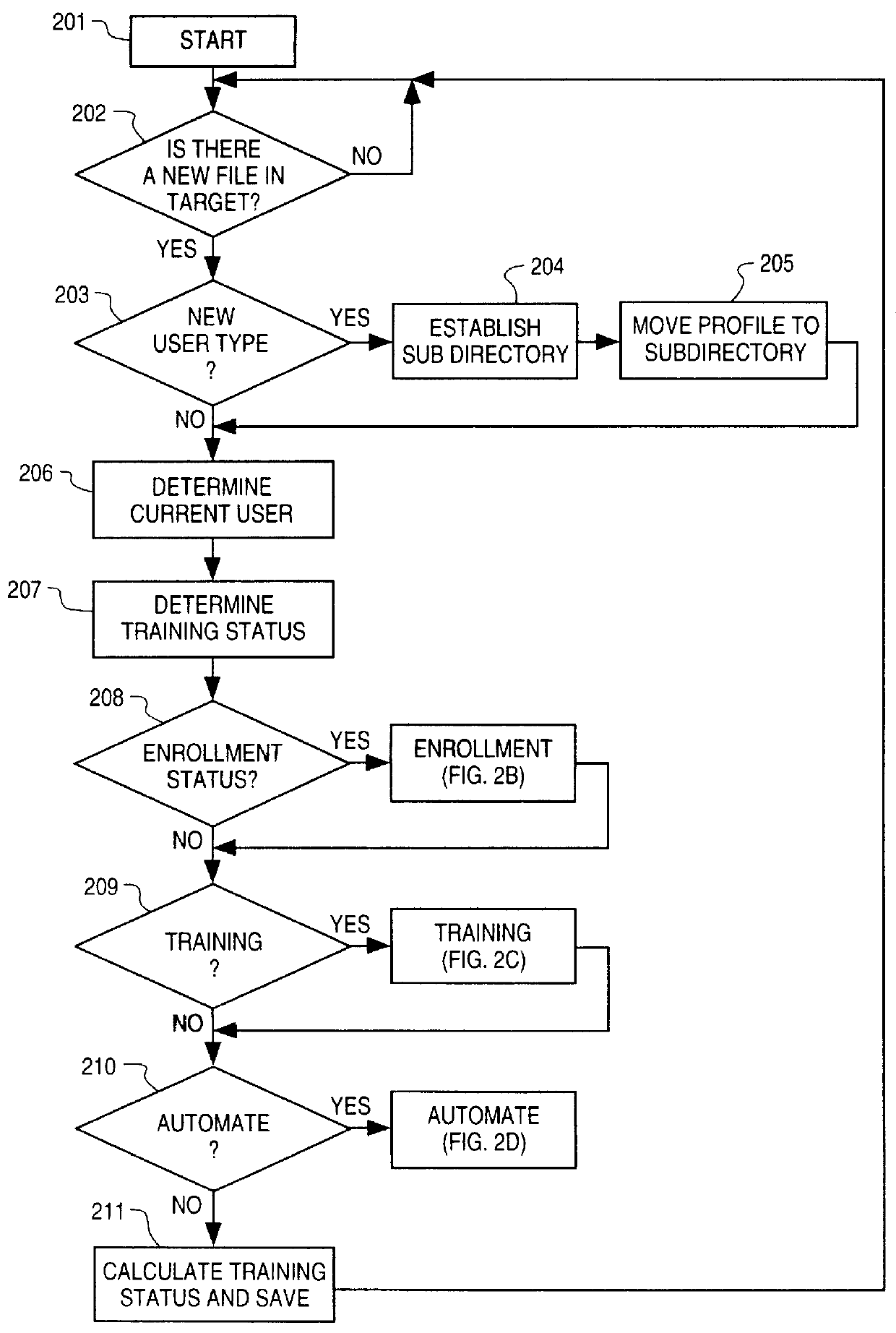

System and method for automating transcription services

Inactive | US6122614A | Minimize the number | Simple means | Speech recognition | Speech synthesis | Acoustic model | Speech identification

A system for substantially automating transcription services for multiple voice users including a manual transcription station, a speech recognition program and a routing program. The system establishes a profile for each of the voice users containing a training status which is selected from the group of enrollment, training, automated and stop automation. When the system receives a voice dictation file from a current voice user, based on the training status the system routes the voice dictation file to a manual transcription station and the speech recognition program. A human transcriptionist creates a transcribed file for each received voice dictation file. The speech recognition program automatically creates a written text for each received voice dictation file if the training status of the current user is training or automated. A verbatim file is manually established if the training status of the current user is enrollment or training, and the speech recognition program is trained with an acoustic model for the current user using the verbatim file and the voice dictation file if the training status of the current user is enrollment or training. The transcribed file is returned to the current user if the training status of the current user is enrollment or training, or the written text is returned if the training status of the current user is automated. An apparatus and method are also disclosed for simplifying the manual establishment of the verbatim file. A method for substantially automating transcription services is also disclosed.

Owner:CUSTOM SPEECH USA +1
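
The status-dependent rules in the abstract above can be summarized as a small lookup, sketched here under stated assumptions. The enum and the `plan_for` function are hypothetical names; the abstract does not say what is returned in the "stop automation" status, so the sketch leaves that value as `None` rather than guessing.

```python
from enum import Enum


class TrainingStatus(Enum):
    ENROLLMENT = "enrollment"
    TRAINING = "training"
    AUTOMATED = "automated"
    STOP_AUTOMATION = "stop automation"


def plan_for(status):
    """Summarize what happens to a voice dictation file for a given status."""
    learning = status in (TrainingStatus.ENROLLMENT, TrainingStatus.TRAINING)
    if learning:
        returned = "transcribed file"
    elif status is TrainingStatus.AUTOMATED:
        returned = "written text"
    else:
        returned = None  # not specified in the abstract for stop automation
    return {
        # Written text is created automatically only in these two statuses.
        "run_speech_recognition": status in (TrainingStatus.TRAINING,
                                             TrainingStatus.AUTOMATED),
        # Verbatim file and acoustic-model training share the same condition.
        "create_verbatim_file": learning,
        "train_acoustic_model": learning,
        "returned_to_user": returned,
    }
```

Calling `plan_for(TrainingStatus.TRAINING)` shows the one status in which both the human transcript and the automatic written text are produced for the same file.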

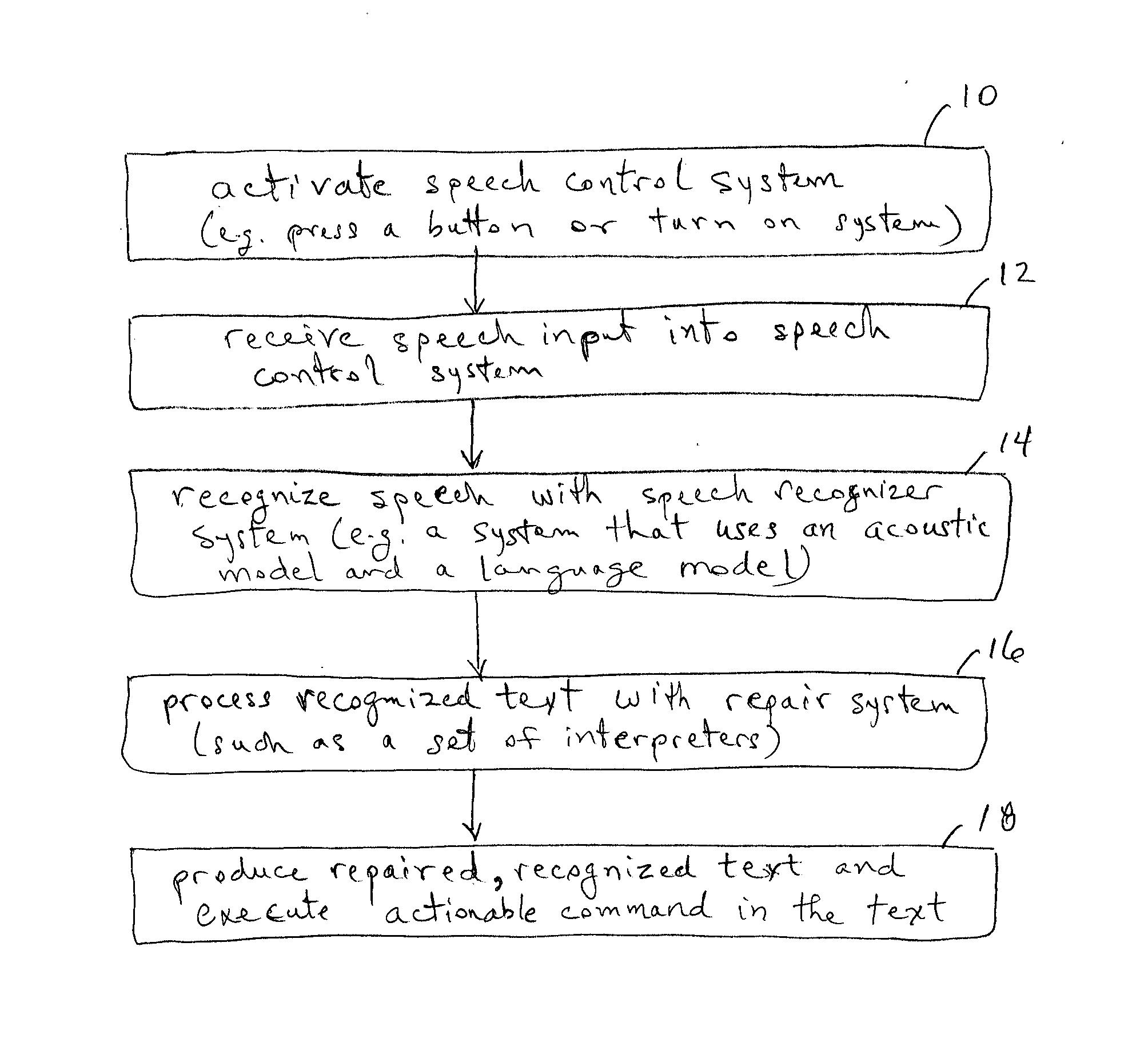

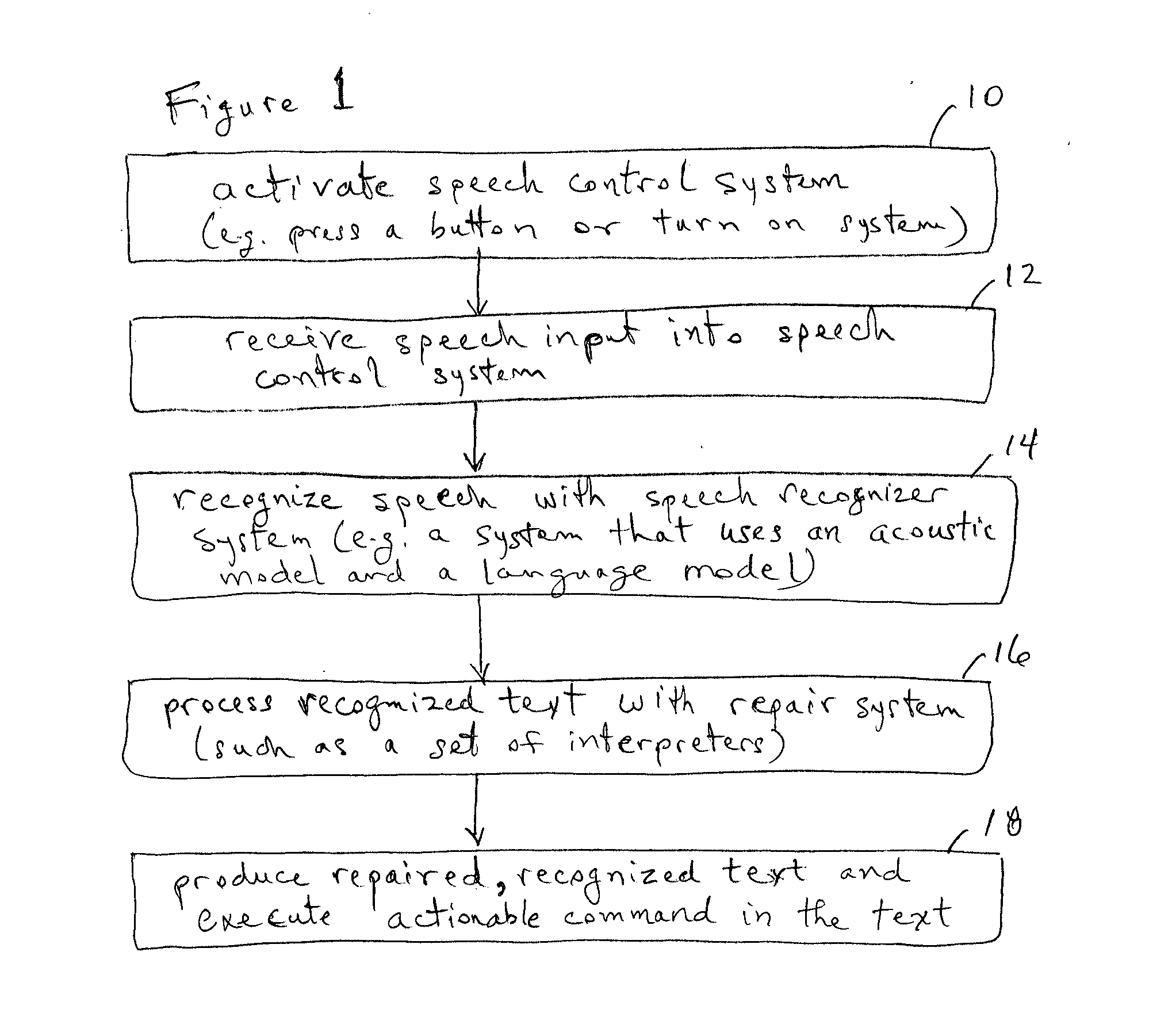

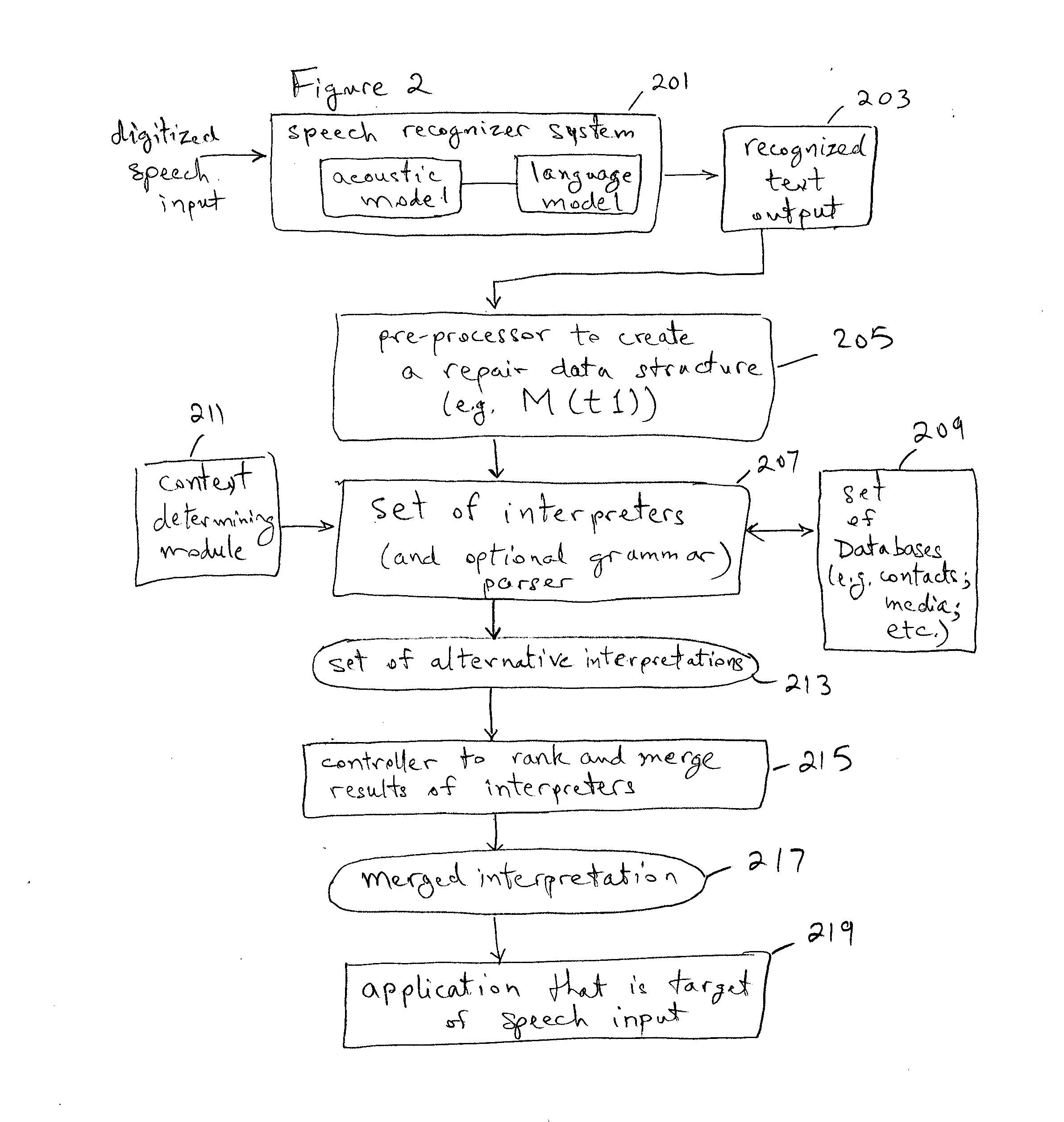

Speech recognition repair using contextual information

A speech control system can recognize a spoken command and associated words (such as “call mom at home”) and can cause a selected application (such as a telephone dialer) to execute the command to cause a data processing system, such as a smartphone, to perform an operation based on the command (such as look up mom's phone number at home and dial it to establish a telephone call). The speech control system can use a set of interpreters to repair recognized text from a speech recognition system, and results from the set can be merged into a final repaired transcription which is provided to the selected application.

Owner:APPLE INC

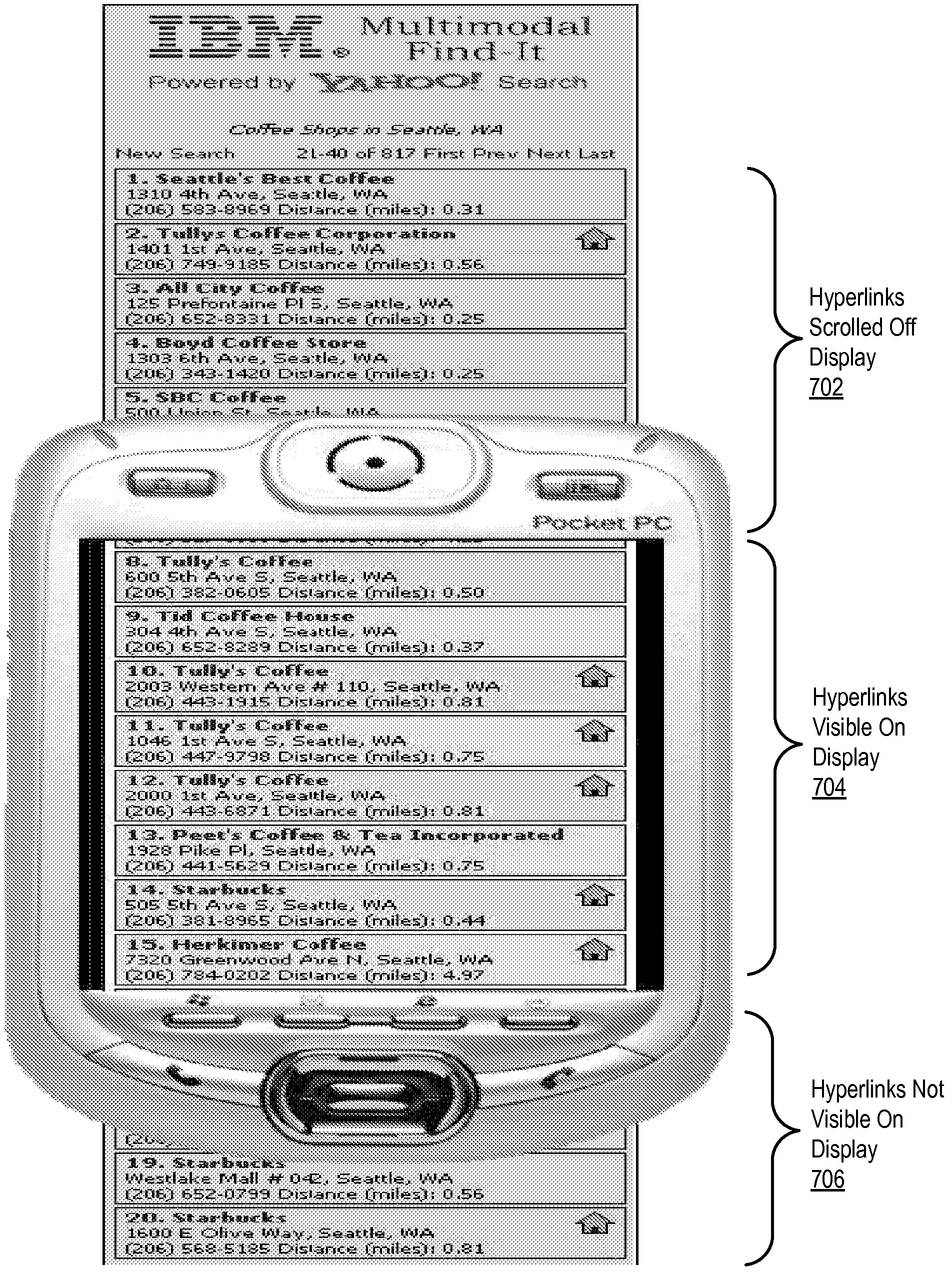

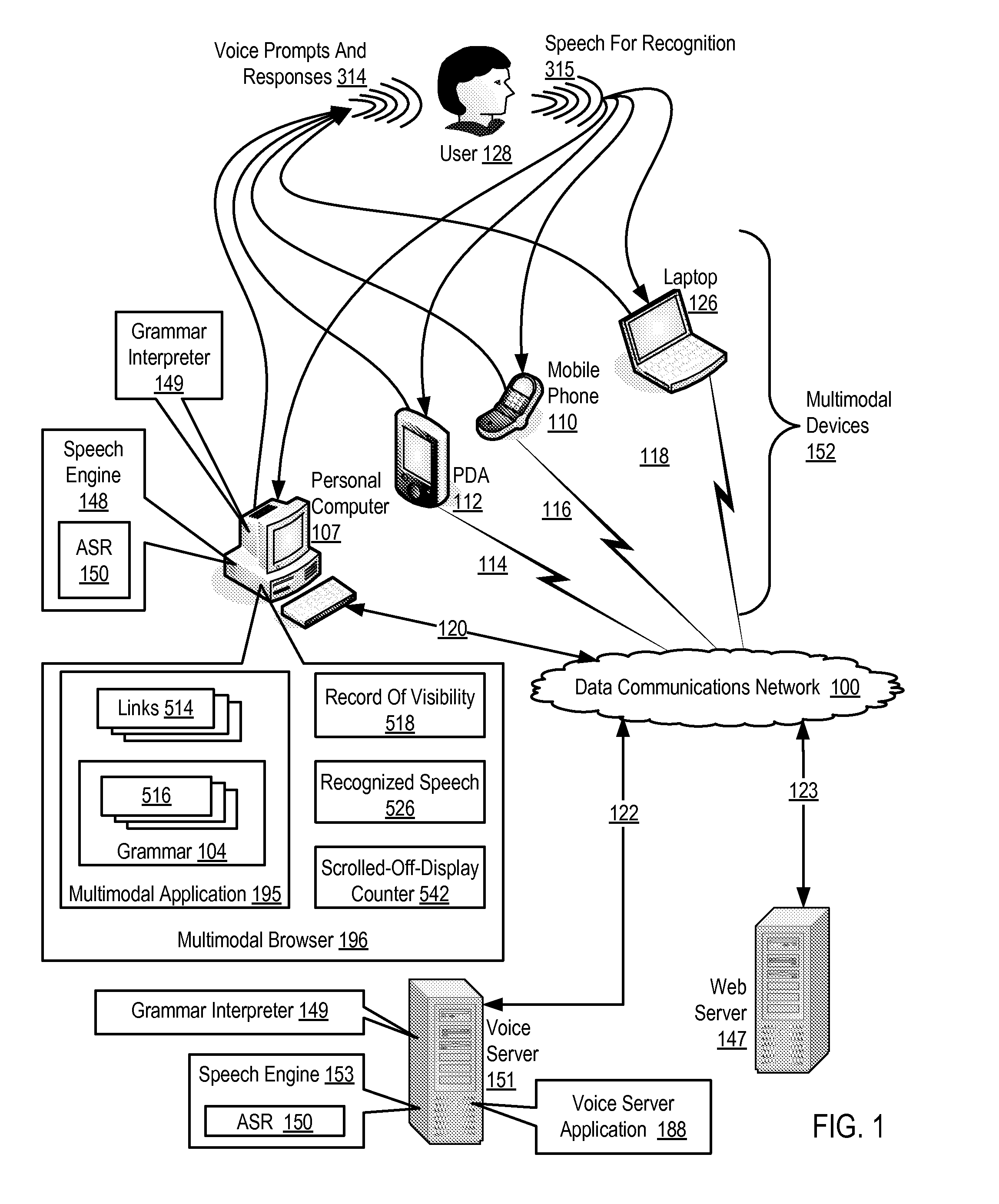

Disambiguating a speech recognition grammar in a multimodal application

Disambiguating a speech recognition grammar in a multimodal application, the multimodal application including voice activated hyperlinks, the voice activated hyperlinks voice enabled by a speech recognition grammar characterized by ambiguous terminal grammar elements, including maintaining by the multimodal browser a record of visibility of each voice activated hyperlink, the record of visibility including current visibility and past visibility on a display of the multimodal device of each voice activated hyperlink, the record of visibility further including an ordinal indication, for each voice activated hyperlink scrolled off display, of the sequence in which each such voice activated hyperlink was scrolled off display; recognizing by the multimodal browser speech from a user matching an ambiguous terminal element of the speech recognition grammar; selecting by the multimodal browser a voice activated hyperlink for activation, the selecting carried out in dependence upon the recognized speech and the record of visibility.

Owner:MICROSOFT TECH LICENSING LLC

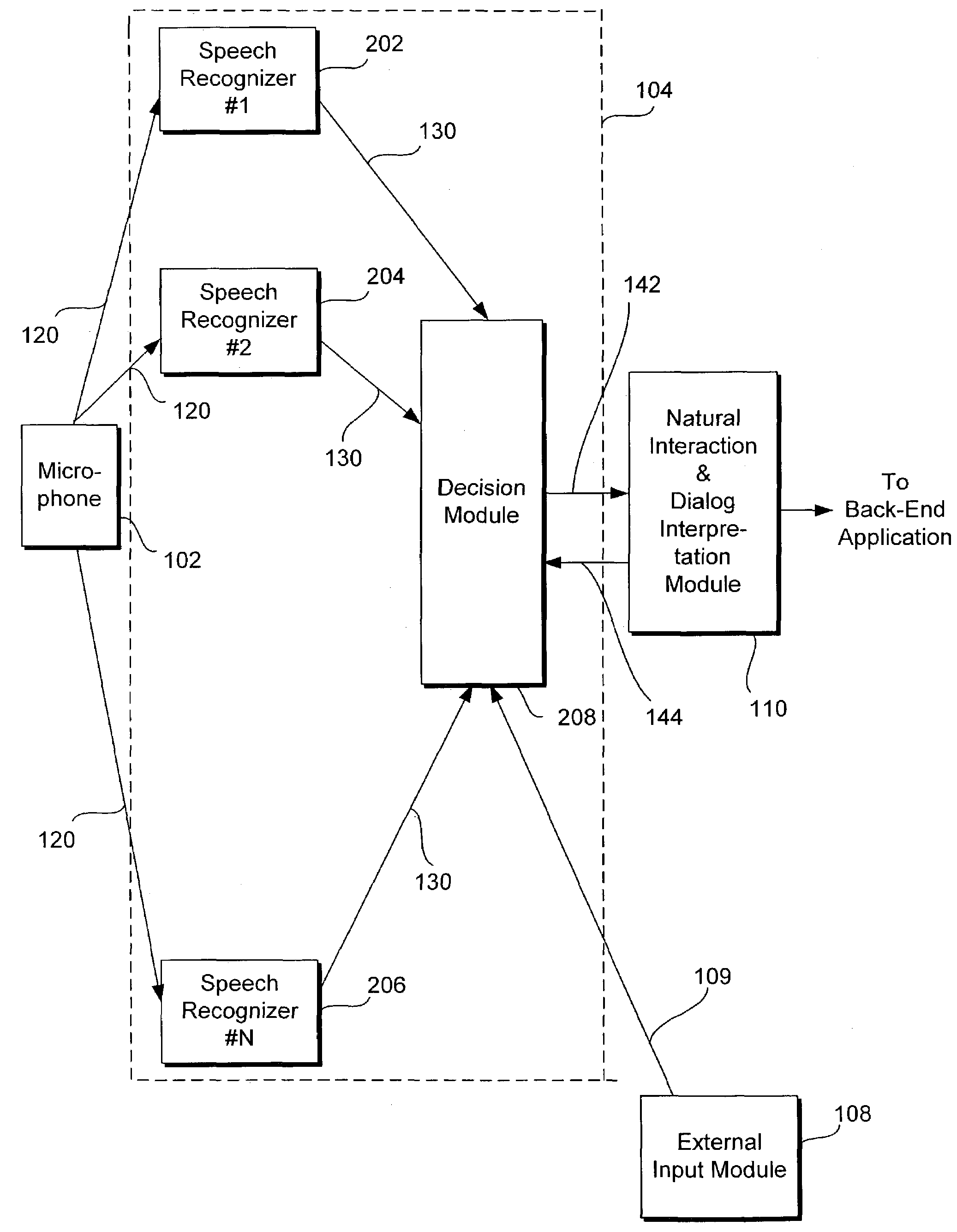

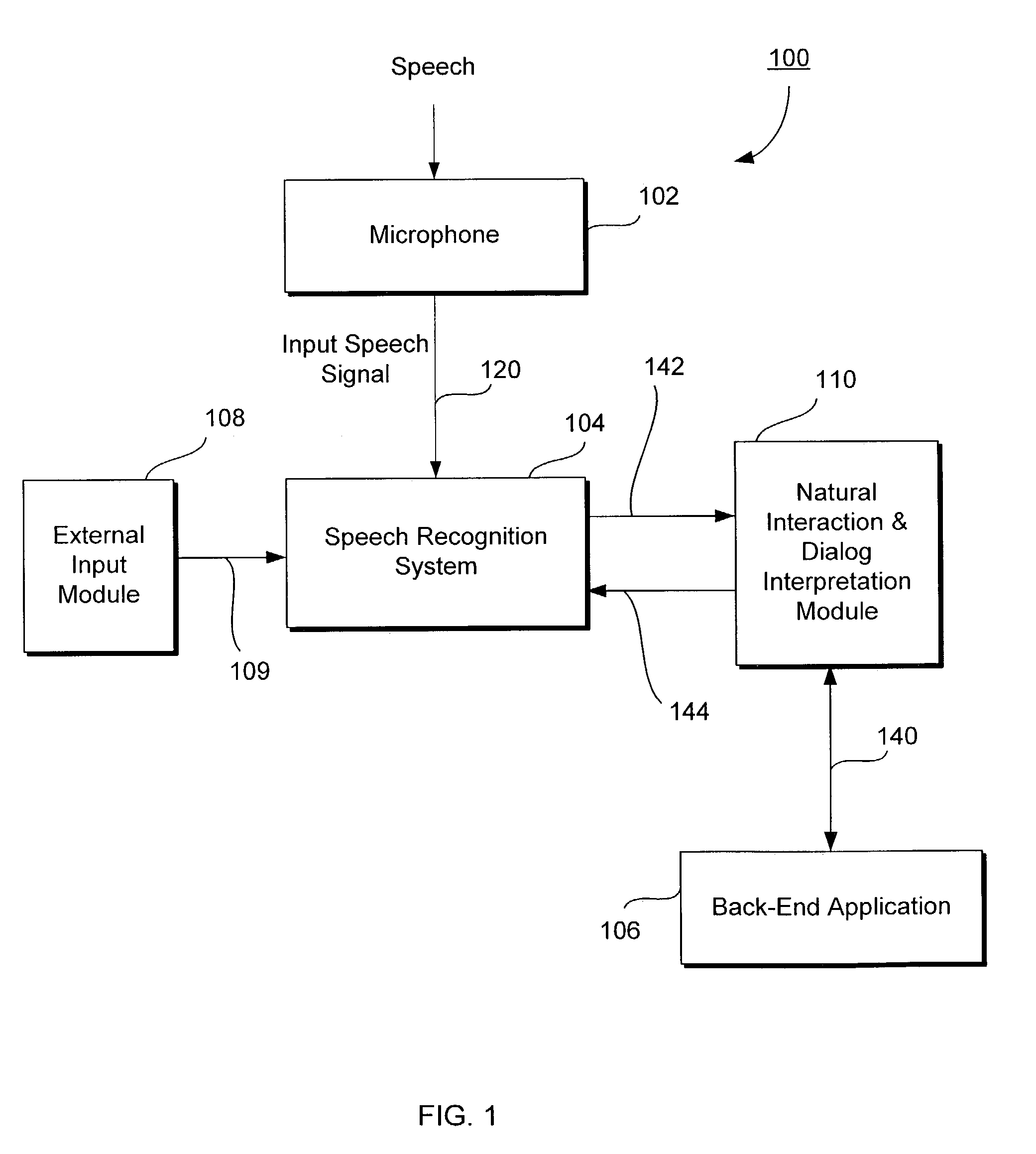

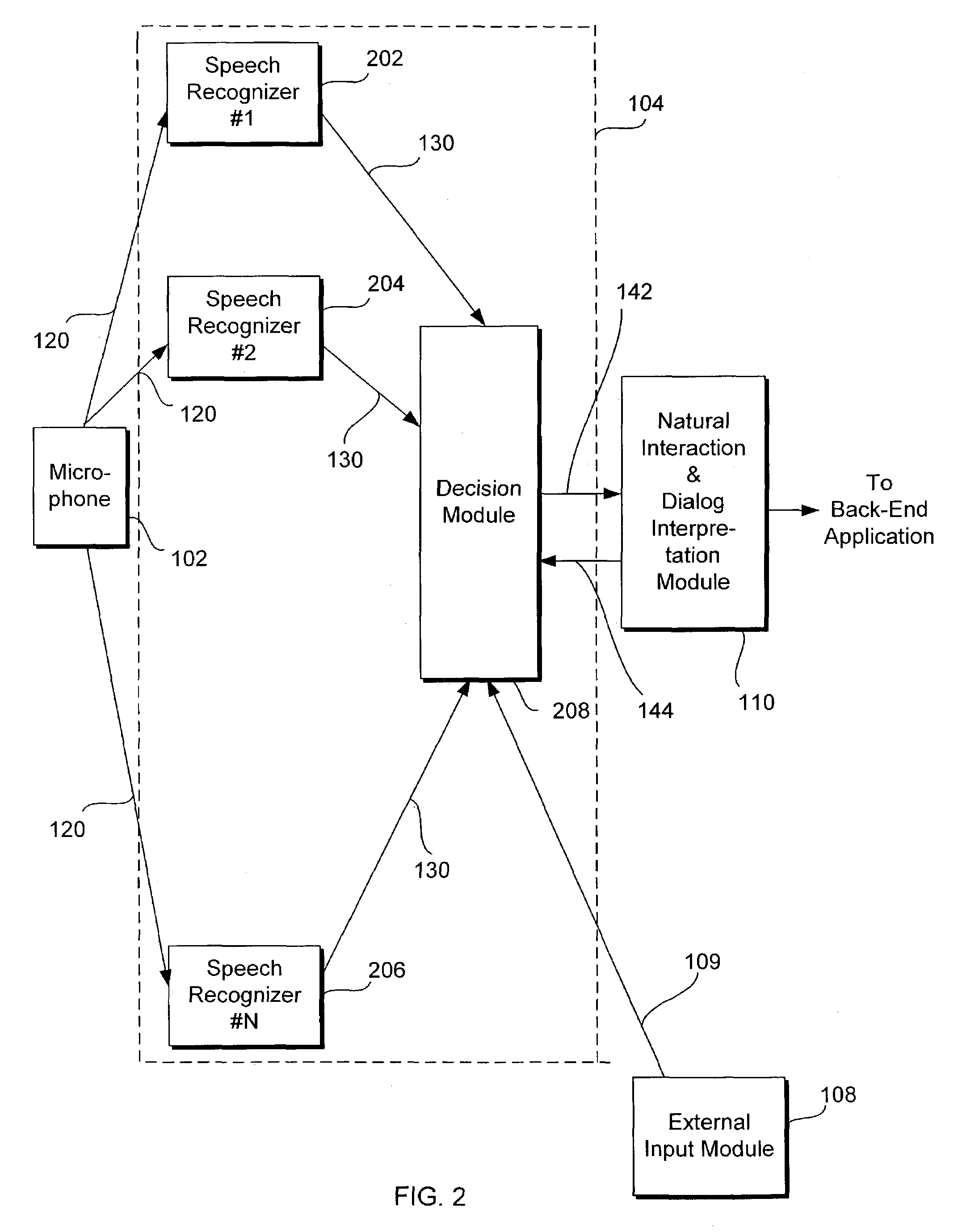

Speech recognition system having multiple speech recognizers

Inactive | US7228275B1 | Reduce intensity | Excessive signaling | Speech recognition | Speech identification | Decision taking

A speech recognition system recognizes an input speech signal by using a first speech recognizer and a second speech recognizer each coupled to a decision module. Each of the first and second speech recognizers outputs first and second recognized speech texts and first and second associated confidence scores, respectively, and the decision module selects either the first or the second speech text depending upon which of the first or second confidence score is higher. The decision module may also adjust the first and second confidence scores to generate first and second adjusted confidence scores, respectively, and select either the first or second speech text depending upon which of the first or second adjusted confidence scores is higher. The first and second confidence scores may be adjusted based upon the location of a speaker, the identity or accent of the speaker, the context of the speech, and the like.

Owner:TOYOTA INFOTECHNOLOGY CENT CO LTD +1
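
The decision module described above amounts to comparing two confidence scores, optionally after adjusting them. A minimal sketch follows; the additive form of the adjustment, the tie-breaking rule in favor of the first recognizer, and all names are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    text: str
    confidence: float


def select(first, second, first_adjust=0.0, second_adjust=0.0):
    """Return the hypothesis with the higher (adjusted) confidence score.

    Assumptions: adjustments are additive offsets (for example, derived from
    speaker location or accent), and ties go to the first recognizer.
    """
    first_score = first.confidence + first_adjust
    second_score = second.confidence + second_adjust
    return first if first_score >= second_score else second


# Hypothetical outputs from two recognizers for the same utterance.
local = Hypothesis("turn left", 0.72)
remote = Hypothesis("turn lift", 0.80)
```

Here `select(local, remote)` picks the remote result, while `select(local, remote, first_adjust=0.1)` picks the local one, mirroring how context-based adjustment can change the outcome.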

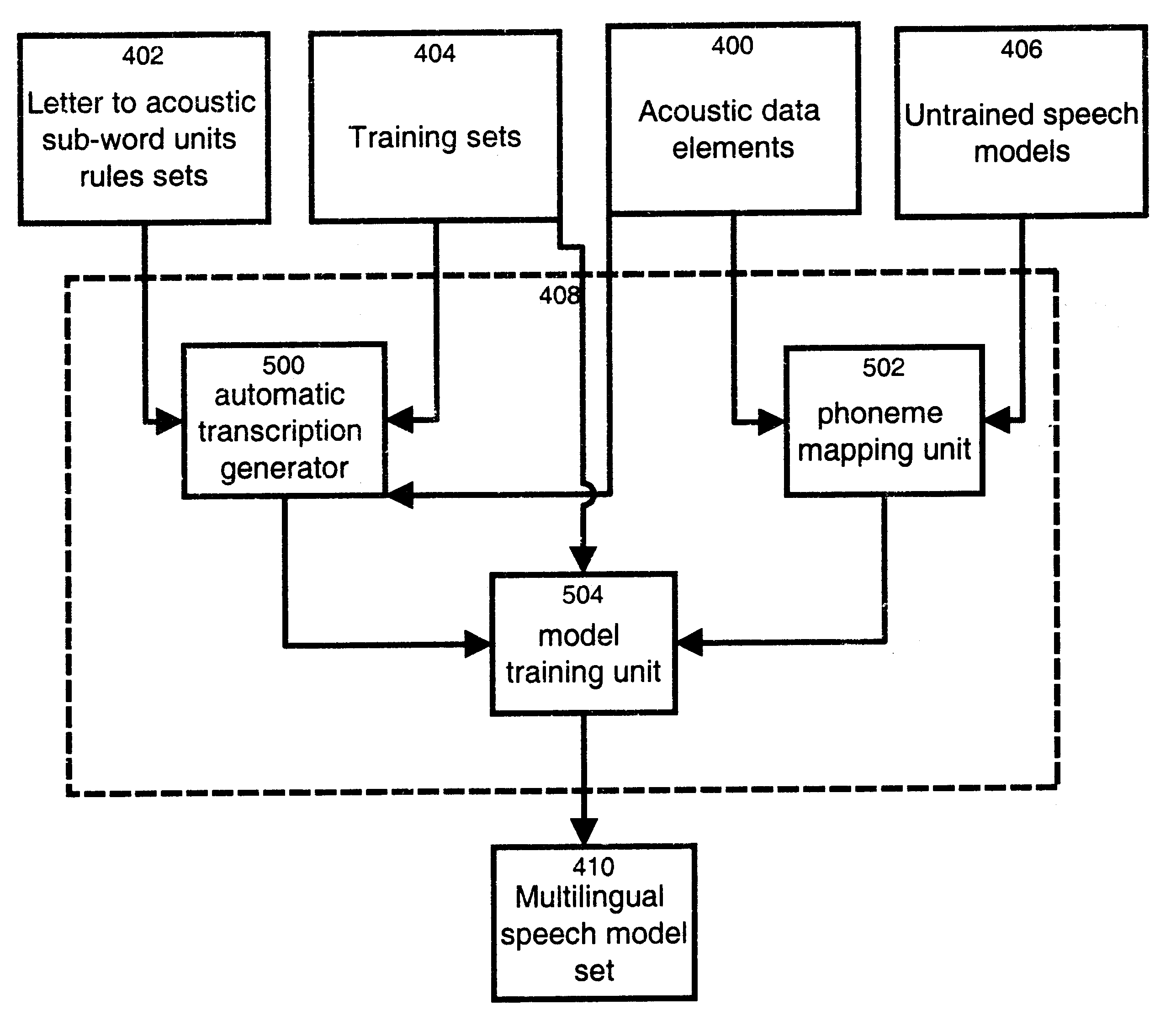

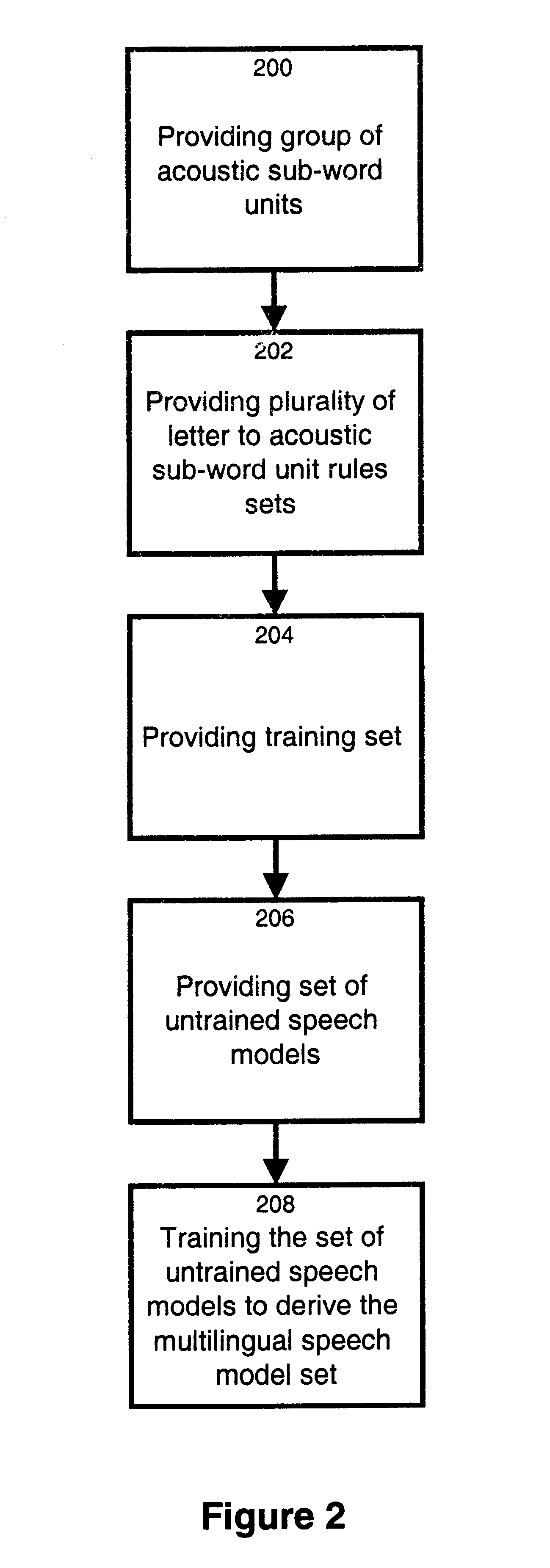

Method and apparatus for training a multilingual speech model set

Inactive | US6912499B1 | Reduce development costs | Shorten development time | Speech recognition | Special data processing applications | More language | Speech identification

The invention relates to a method and apparatus for training a multilingual speech model set. The multilingual speech model set generated is suitable for use by a speech recognition system for recognizing spoken utterances for at least two different languages. The invention allows using a single speech recognition unit with a single speech model set to perform speech recognition on utterances from two or more languages. The method and apparatus make use of a group of acoustic sub-word units comprised of a first subgroup of acoustic sub-word units associated to a first language and a second subgroup of acoustic sub-word units associated to a second language where the first subgroup and the second subgroup share at least one common acoustic sub-word unit. The method and apparatus also make use of a plurality of letter to acoustic sub-word unit rules sets, each letter to acoustic sub-word unit rules set being associated to a different language. A set of untrained speech models is trained on the basis of a training set comprising speech tokens and their associated labels in combination with the group of acoustic sub-word units and the plurality of letter to acoustic sub-word unit rules sets. The invention also provides a computer readable storage medium comprising a program element for implementing the method for training a multilingual speech model set.

Owner:RPX CLEARINGHOUSE

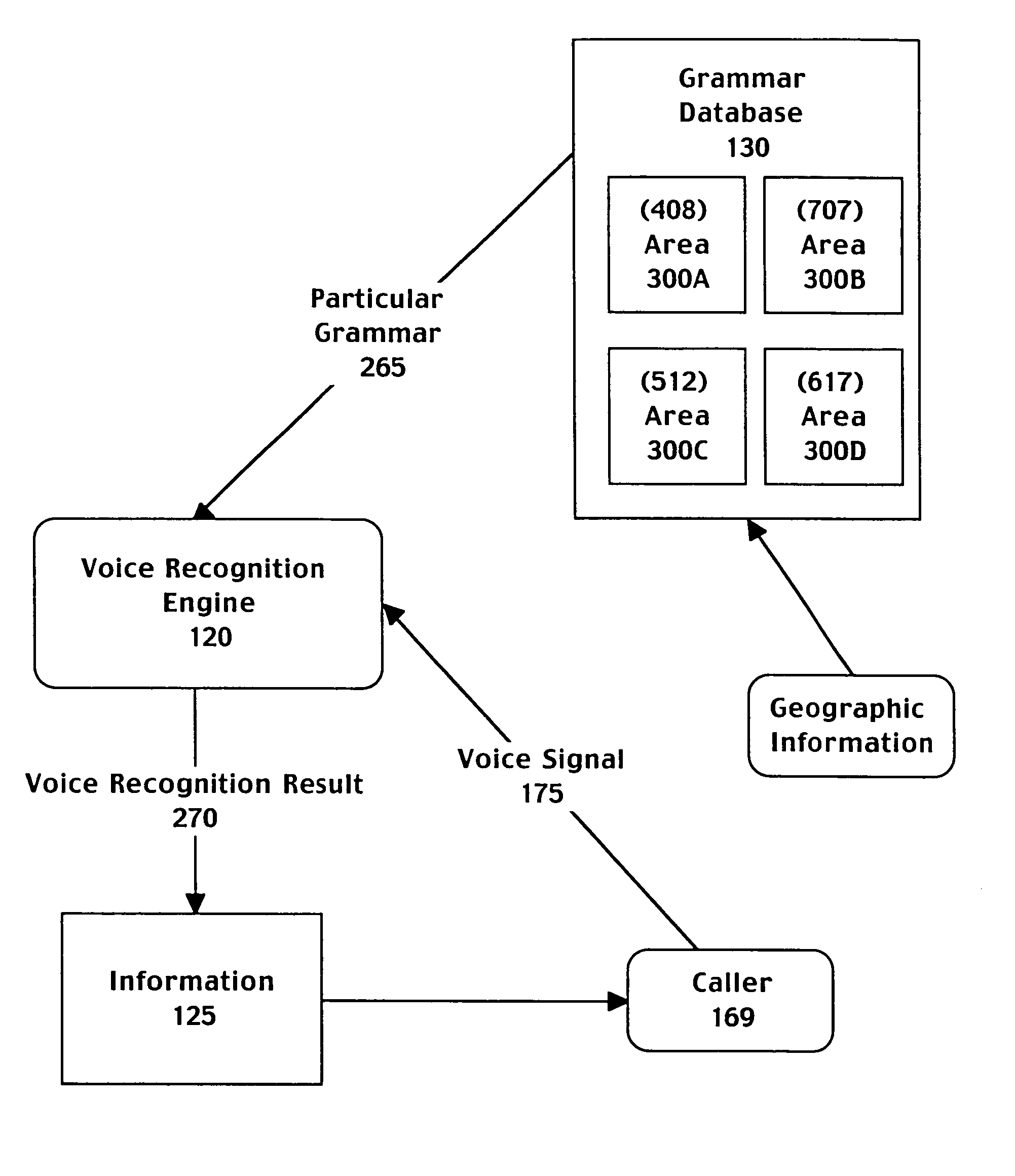

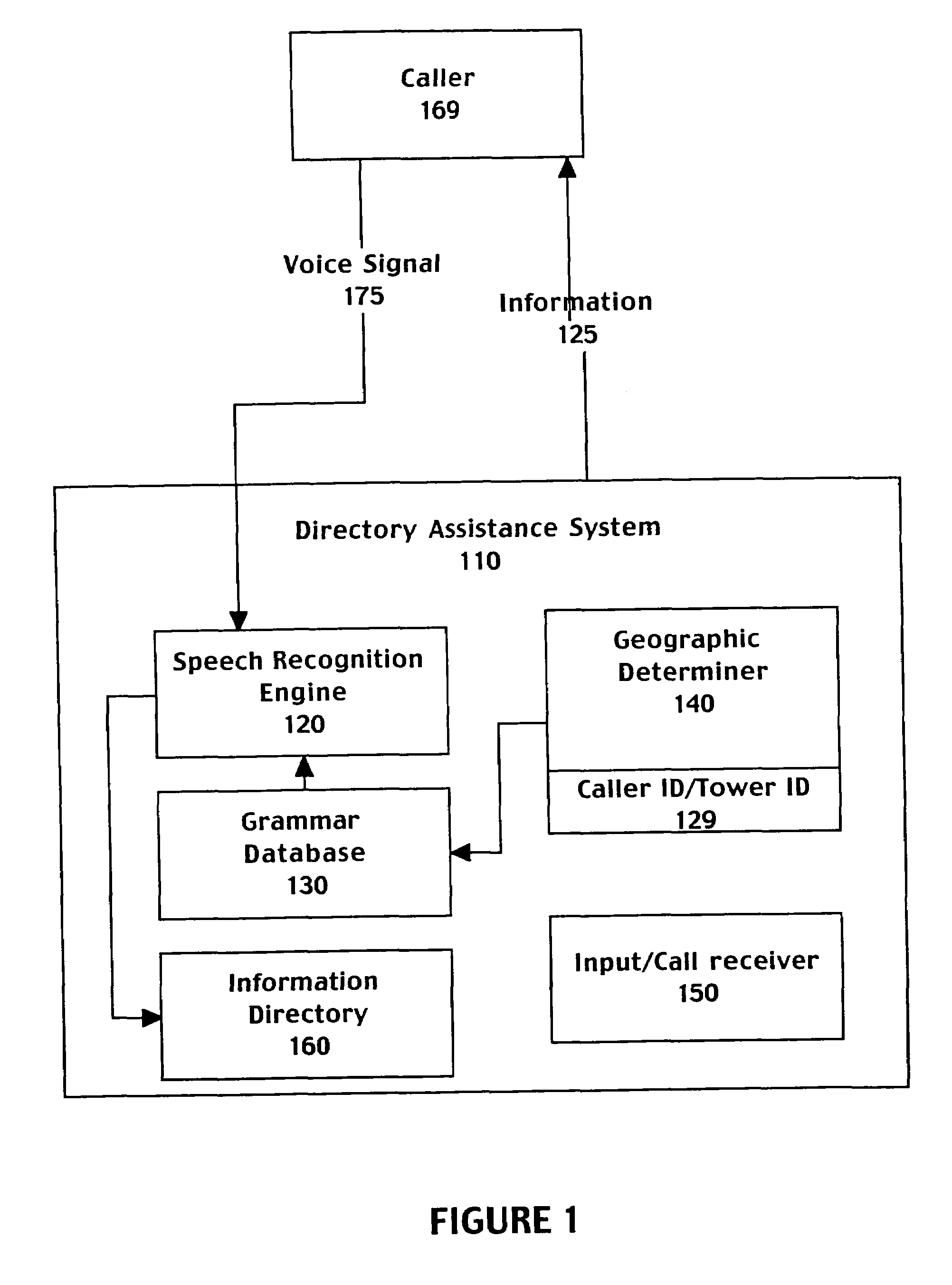

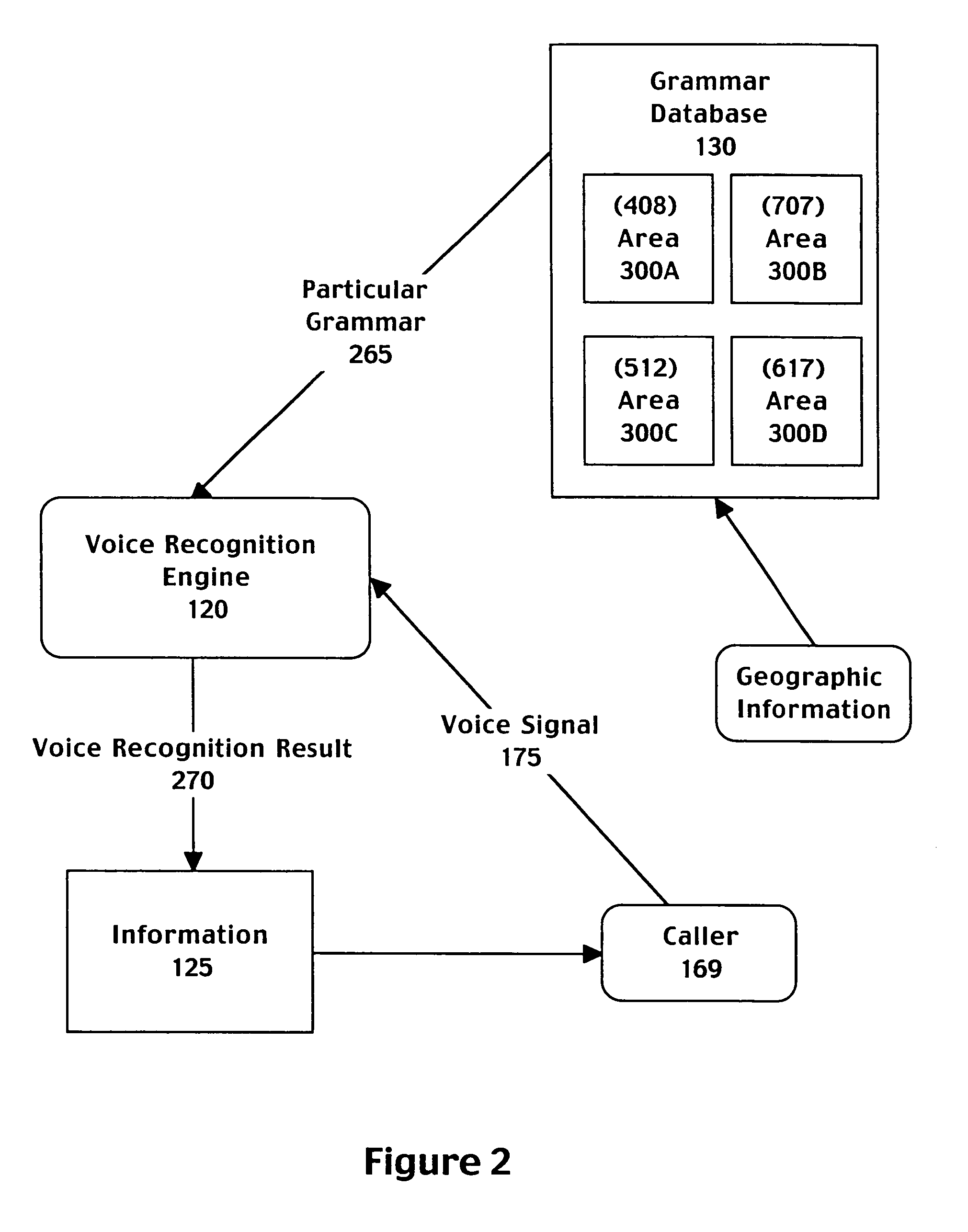

Method and system for selecting grammars based on geographic information associated with a caller

A computer implemented method for automatically processing a data request comprising accessing a voice signal from a caller, determining geographic information associated with the caller from telephone network information associated with a call made by the caller, and retrieving a speech recognition grammar customized to the geographic information associated with the caller. The method further includes recognizing the voice signal by matching the voice signal to an entry of the speech recognition grammar and providing a directory listing to the caller based on the entry of the speech recognition grammar. The selected grammar may be customized to bias speech recognition to more frequently recognize cities local to the geographic information.

Owner:MICROSOFT TECH LICENSING LLC

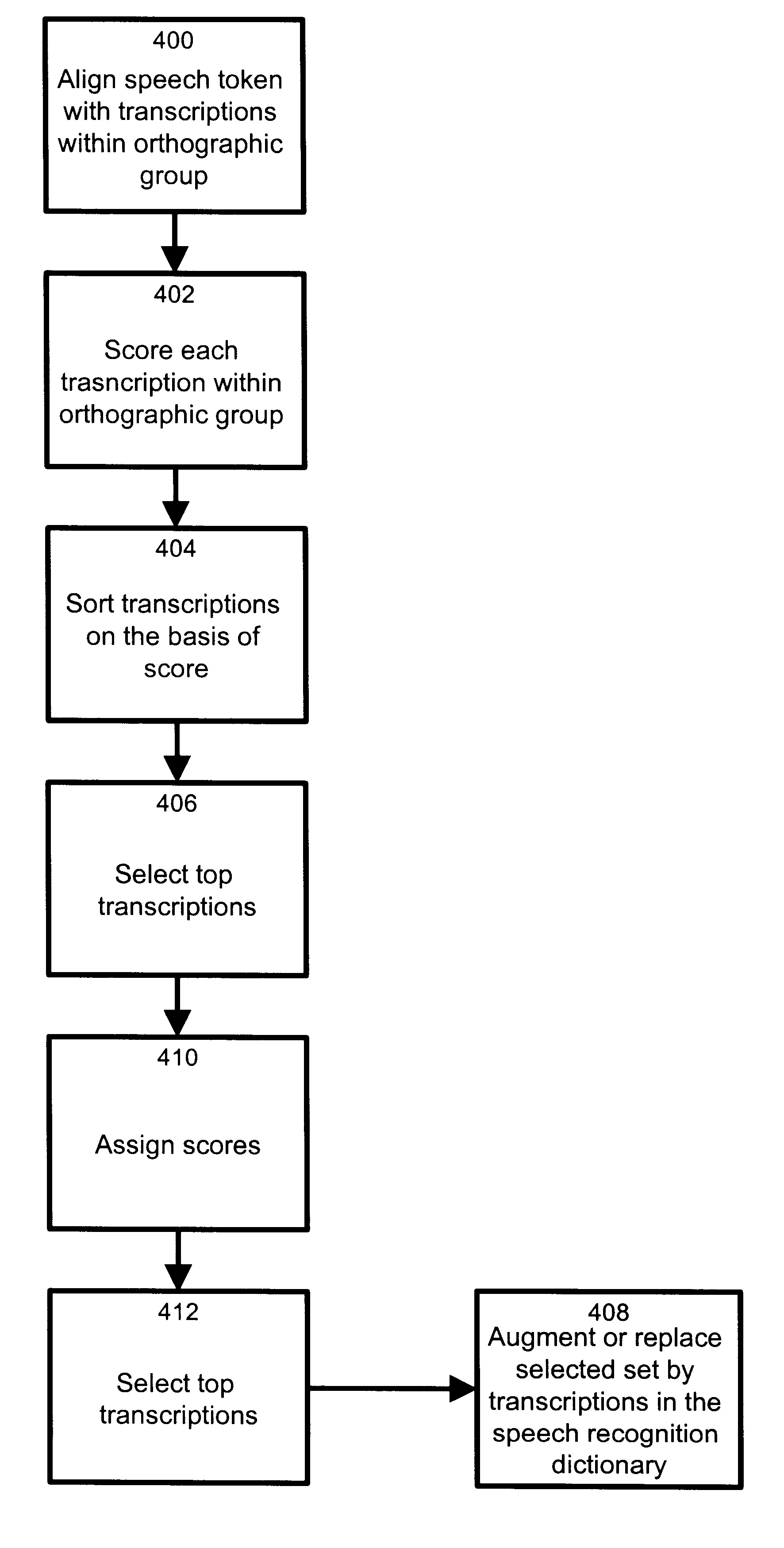

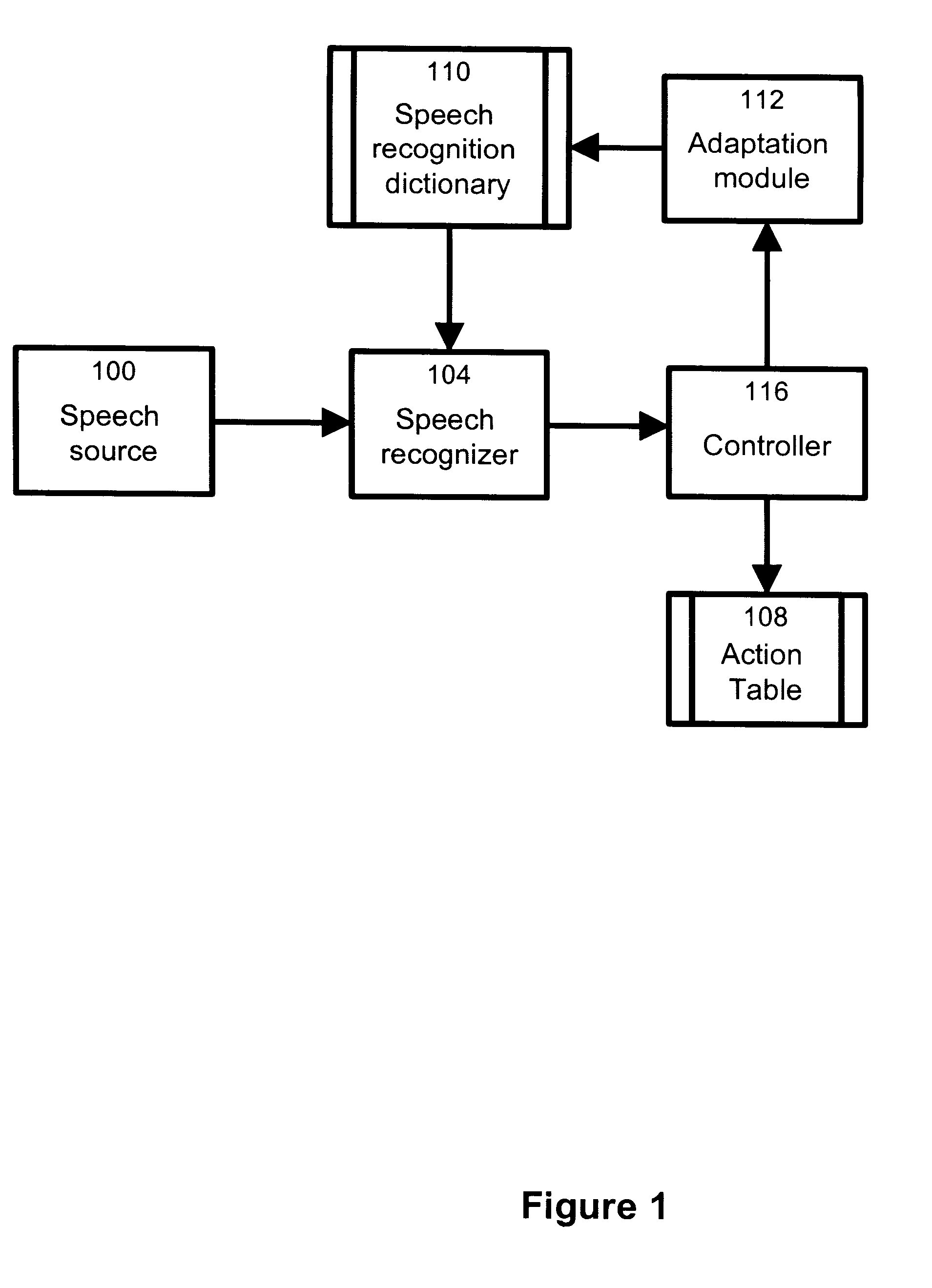

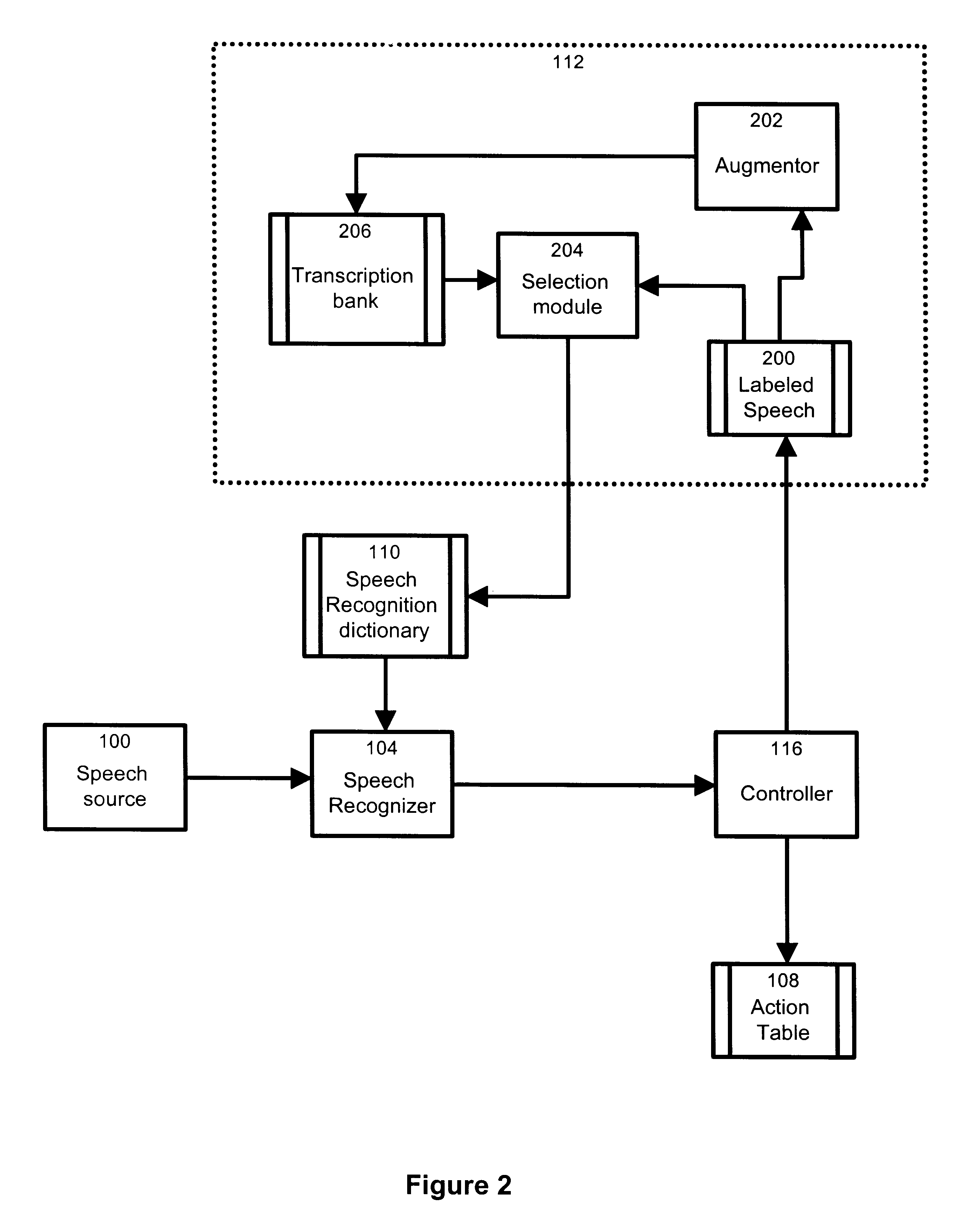

Method and apparatus for providing unsupervised adaptation of phonetic transcriptions in a speech recognition dictionary

An adaptive speech recognition system is provided including an input for receiving a signal derived from a spoken utterance indicative of a certain vocabulary item, a speech recognition dictionary, a speech recognition unit and an adaptation module. The speech recognition dictionary has a plurality of vocabulary items each being associated to a respective dictionary transcription group. The speech recognition unit is in an operative relationship with the speech recognition dictionary and selects a certain vocabulary item from the speech recognition dictionary as being a likely match to the signal received at the input. The results of the speech recognition process are provided to the adaptation module. The adaptation module includes a transcriptions bank having a plurality of orthographic groups, each including a plurality of transcriptions associated with a common vocabulary item. A transcription selector module in the adaptation module retrieves a given orthographic group from the transcriptions bank on a basis of the vocabulary item recognized by the speech recognition unit. The transcription selector module processes the given orthographic group on the basis of the signal received at the input to select a certain transcription from the transcriptions bank. The adaptation module then modifies a dictionary transcription group corresponding to the vocabulary item selected as being a likely match to the signal received at the input on the basis of the selected certain transcription.

Owner:AVAYA INC

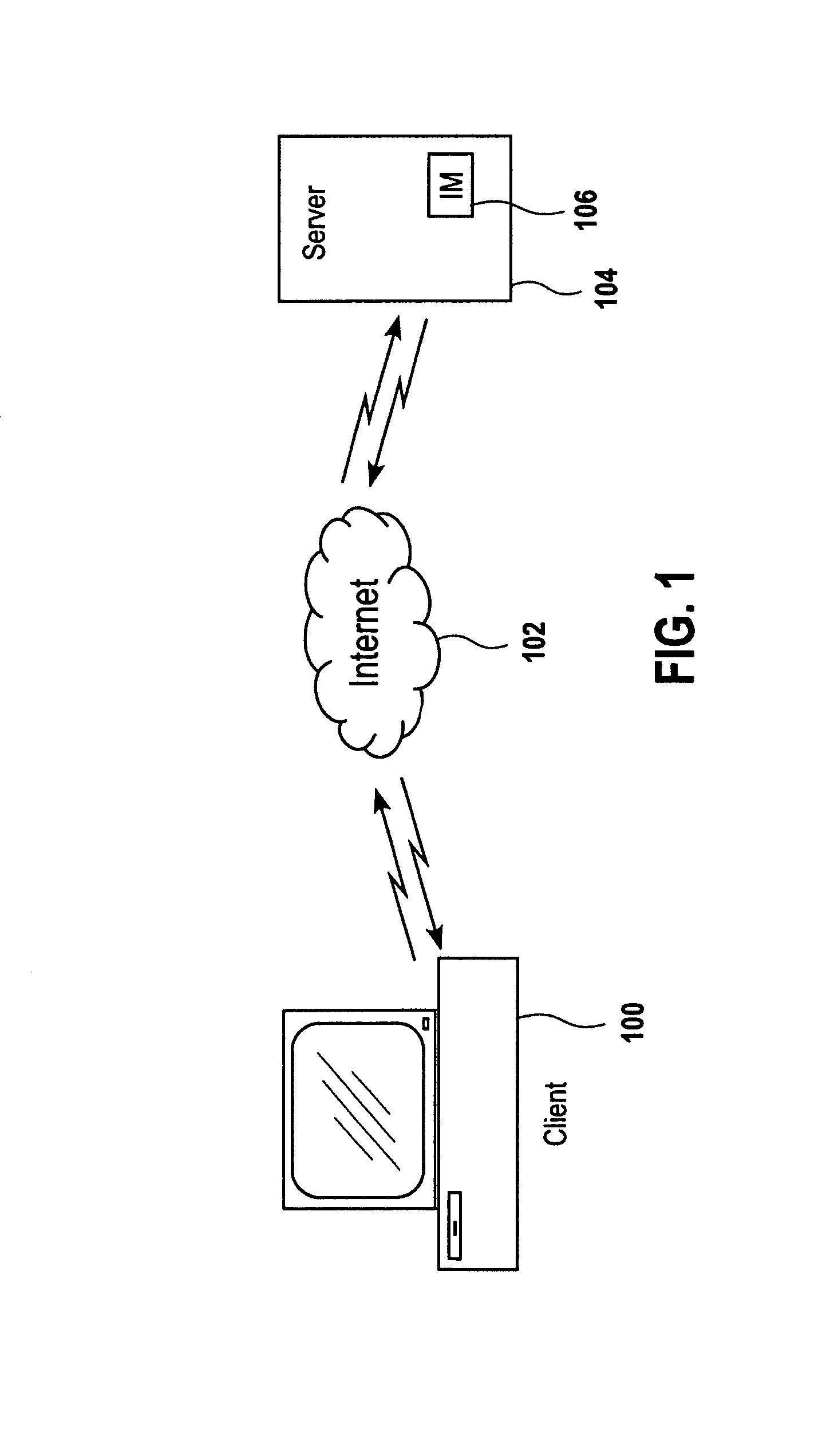

Local storage and use of search results for voice-enabled mobile communications devices

Inactive | US20080154612A1 | Improve abilities | Improve responsiveness | Devices with voice recognition | Substation equipment | Wireless data | Speech identification

A method implemented on a mobile device that includes speech recognition functionality involves: receiving an utterance from a user of the mobile device, the utterance including a spoken search request; recognizing that the utterance includes a spoken search request; sending a representation of the spoken search request to a remote server over a wireless data connection; receiving search results over the wireless data connection that are responsive to the search request; storing the results on the mobile device; receiving a subsequent search request; performing a subsequent search responsive to the subsequent search request to generate subsequent search results, the subsequent search including searching the stored search results; and presenting the subsequent results on the mobile device. The method also involves indexing the stored results according to the user's search request, enhancing the device's ability to recognize frequently requested searches, and pre-loading the device with results corresponding to certain frequently requested searches.

Owner:CERENCE OPERATING CO

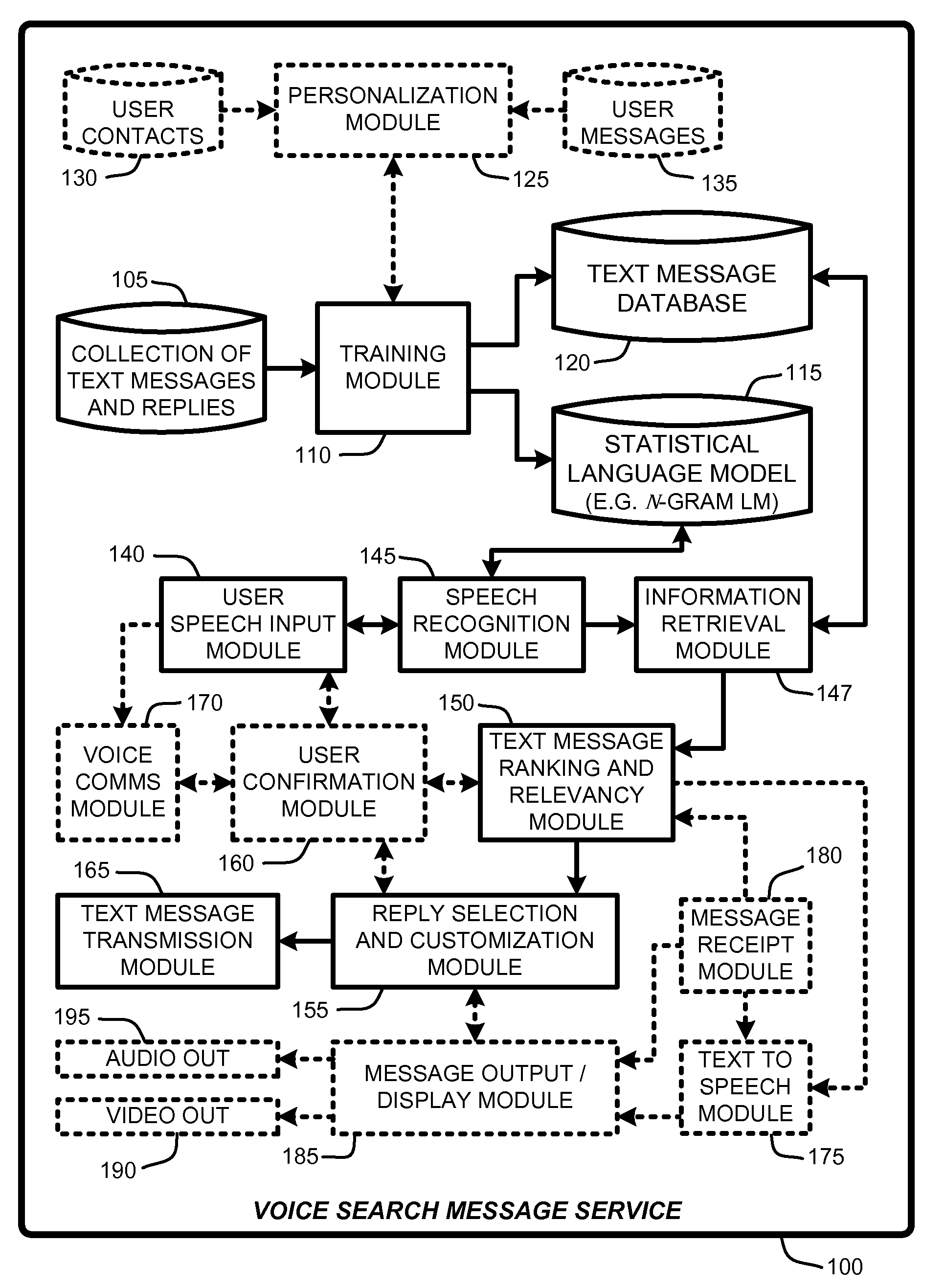

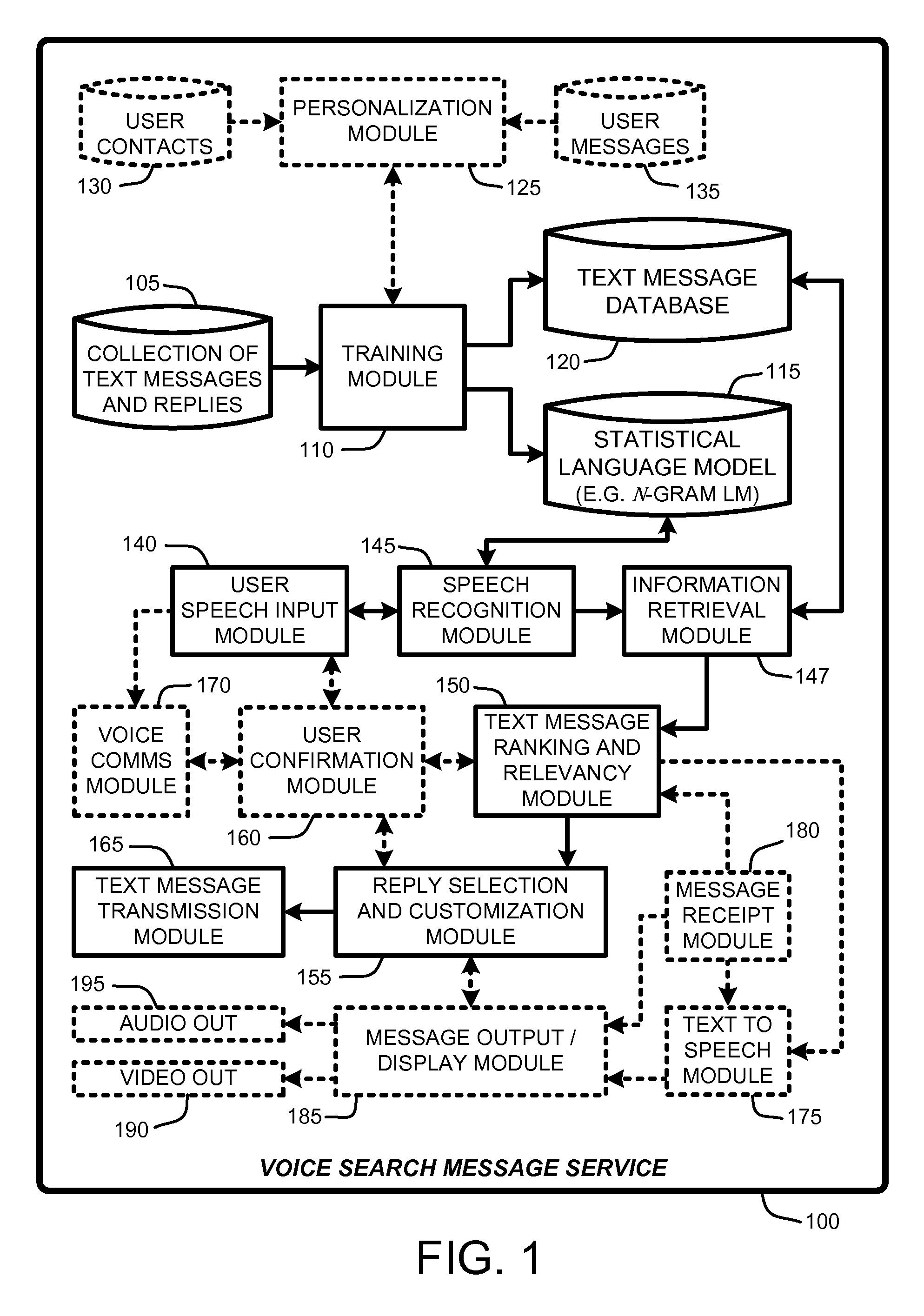

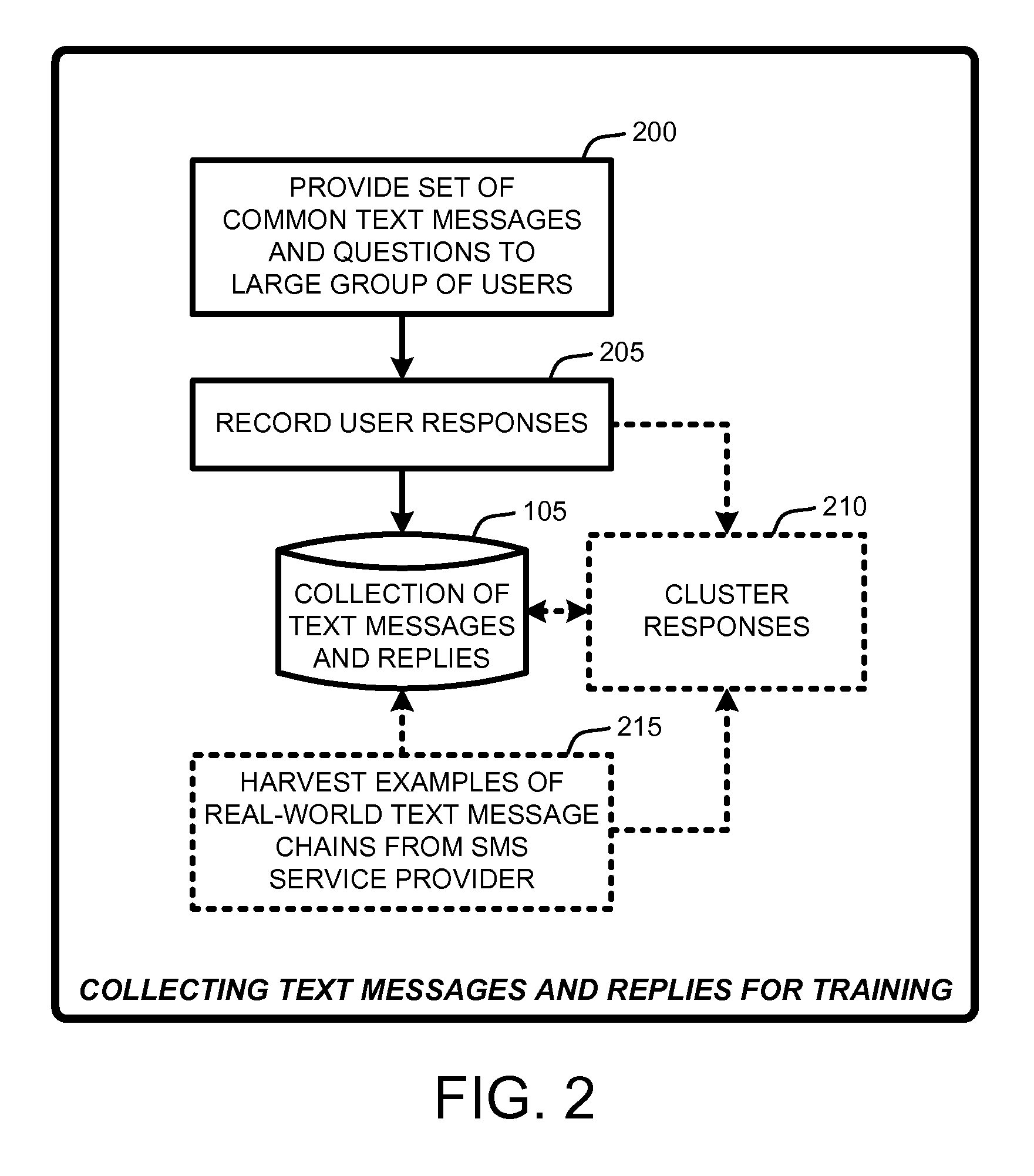

Replying to text messages via automated voice search techniques

Owner:MICROSOFT TECH LICENSING LLC

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com