2189 results about "Utterance" patented technology

In spoken language analysis, an utterance is the smallest unit of speech. It is a continuous piece of speech beginning and ending with a clear pause. In oral languages it is generally, but not always, bounded by silence. Utterances do not exist in written language; only their representations do, and they can be represented and delineated in writing in many ways.
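The idea of an utterance as speech bounded by pauses can be illustrated with a minimal sketch that splits a sequence of per-frame energy values at runs of silence. The function name `split_utterances`, the threshold values, and the use of frame energies as input are assumptions for illustration only; none of them come from the patents listed on this page.

```python
from typing import List, Tuple


def split_utterances(
    energies: List[float],
    silence_threshold: float = 0.1,  # assumed: frames below this count as silent
    min_silence_frames: int = 3,     # assumed: a pause must last this many frames
) -> List[Tuple[int, int]]:
    """Return (start, end) frame indices (end exclusive) for each utterance."""
    utterances: List[Tuple[int, int]] = []
    start = None    # index of the first voiced frame of the current utterance
    silent_run = 0  # consecutive silent frames since the last voiced frame

    for i, energy in enumerate(energies):
        if energy >= silence_threshold:
            if start is None:
                start = i
            silent_run = 0
        elif start is not None:
            silent_run += 1
            if silent_run >= min_silence_frames:
                # The pause is long enough: close the utterance just after
                # its last voiced frame.
                utterances.append((start, i - silent_run + 1))
                start = None
                silent_run = 0

    if start is not None:
        # The input ended mid-utterance: drop any trailing short silence.
        utterances.append((start, len(energies) - silent_run))
    return utterances


frames = [0, 0, 0.5, 0.6, 0, 0.7, 0, 0, 0, 0.9, 0.8, 0]
print(split_utterances(frames))
```

A short dip in energy (the single silent frame at index 4) does not end the utterance, which mirrors the point that utterances are bounded by clear pauses rather than by every brief gap.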

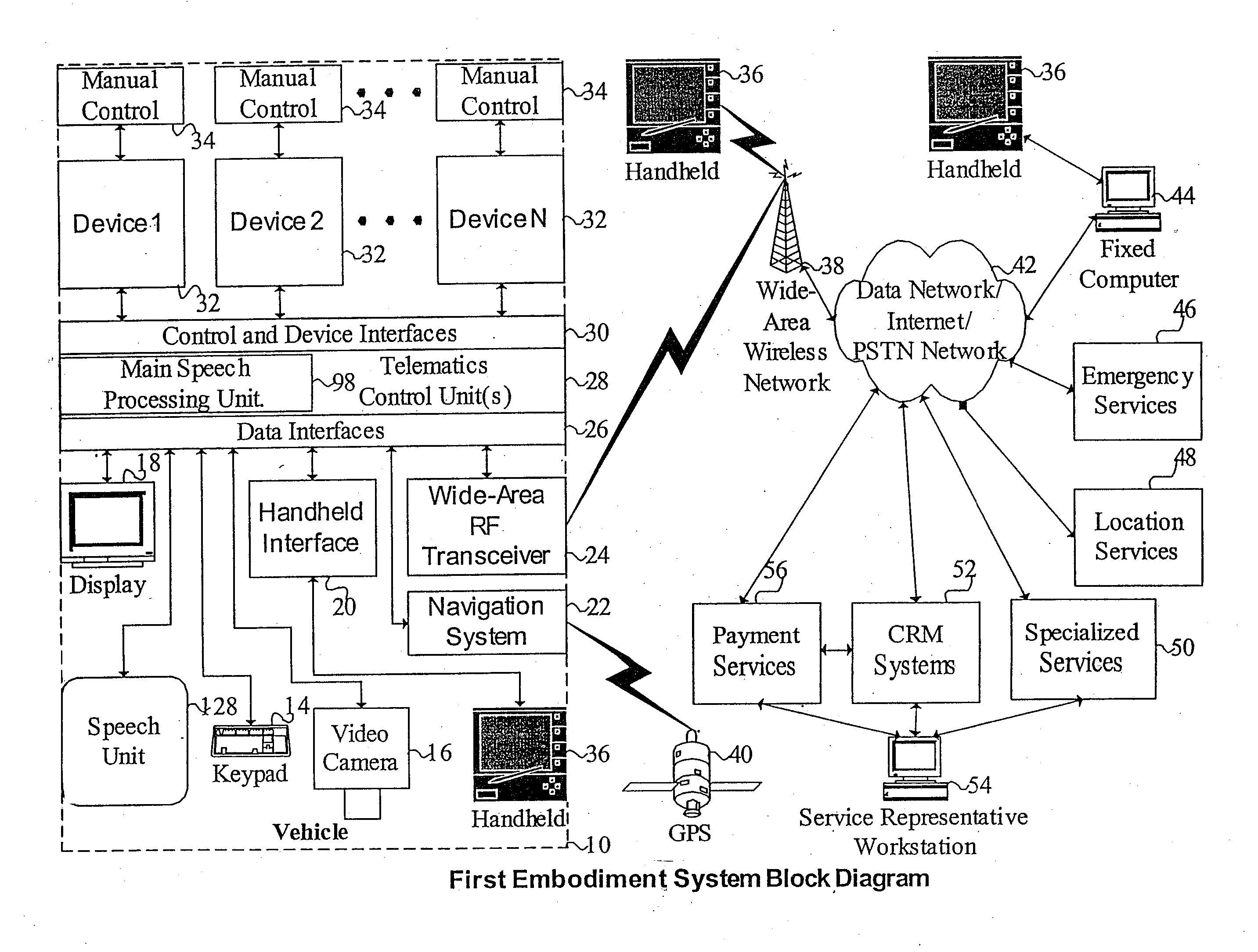

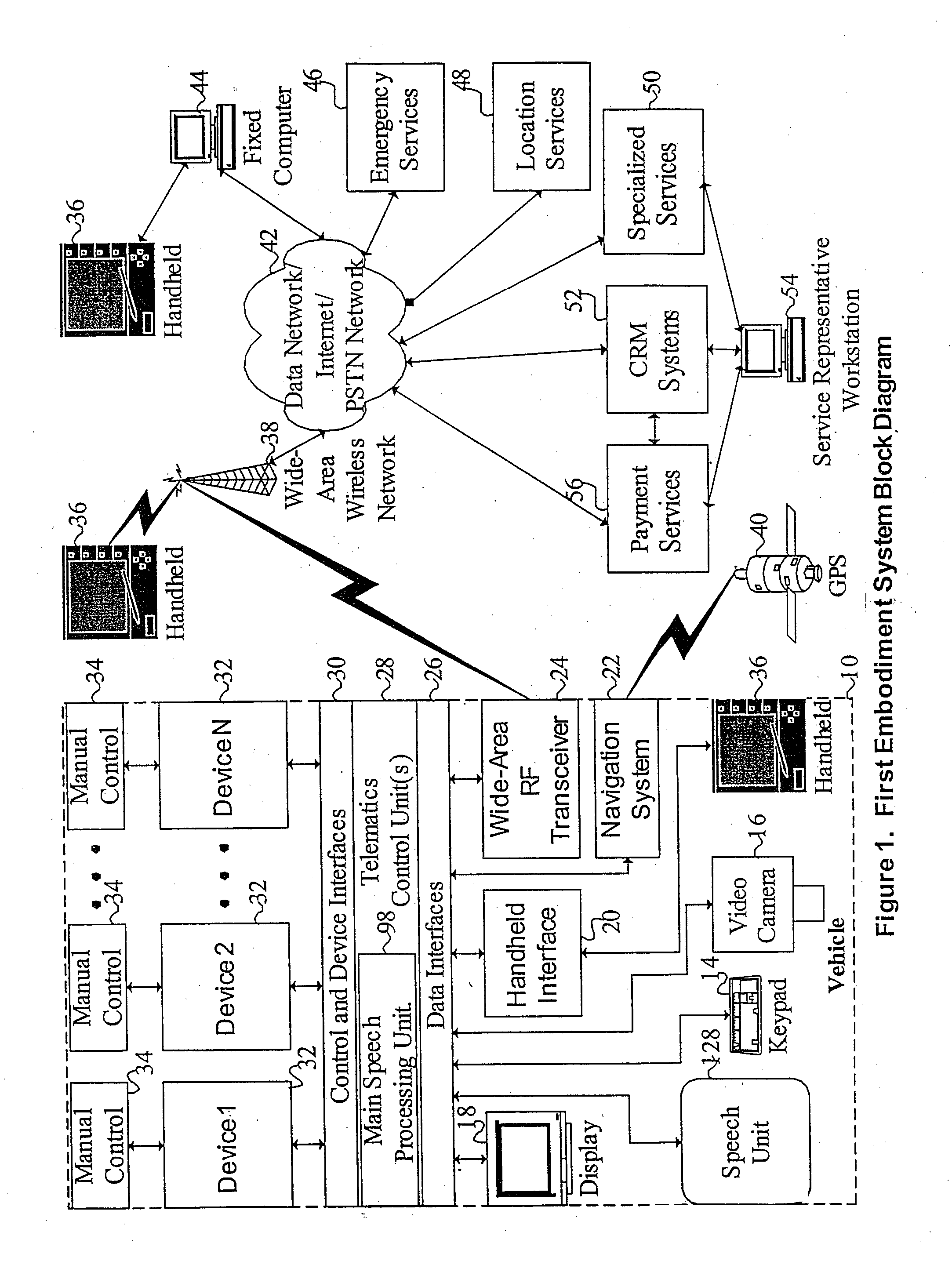

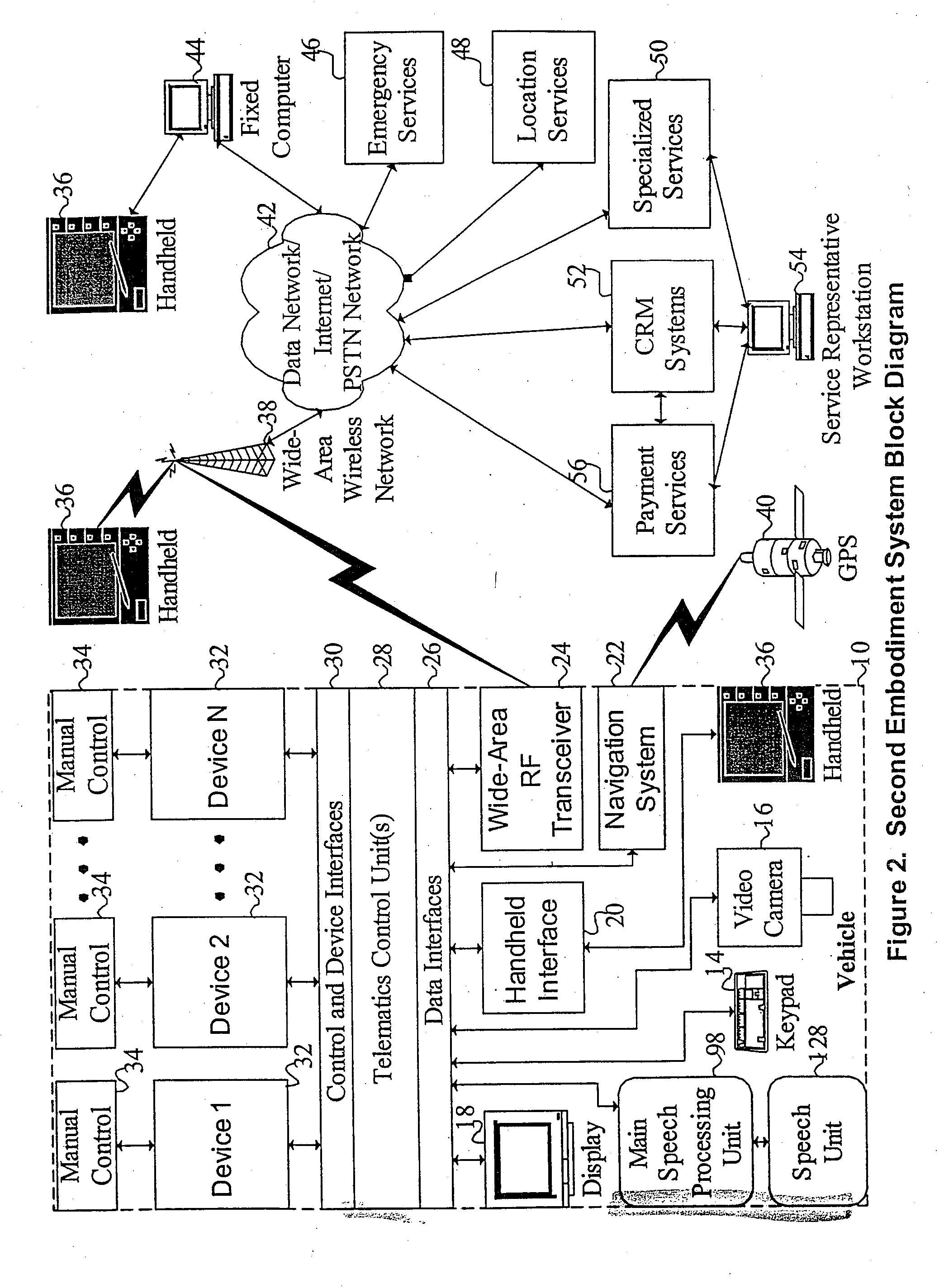

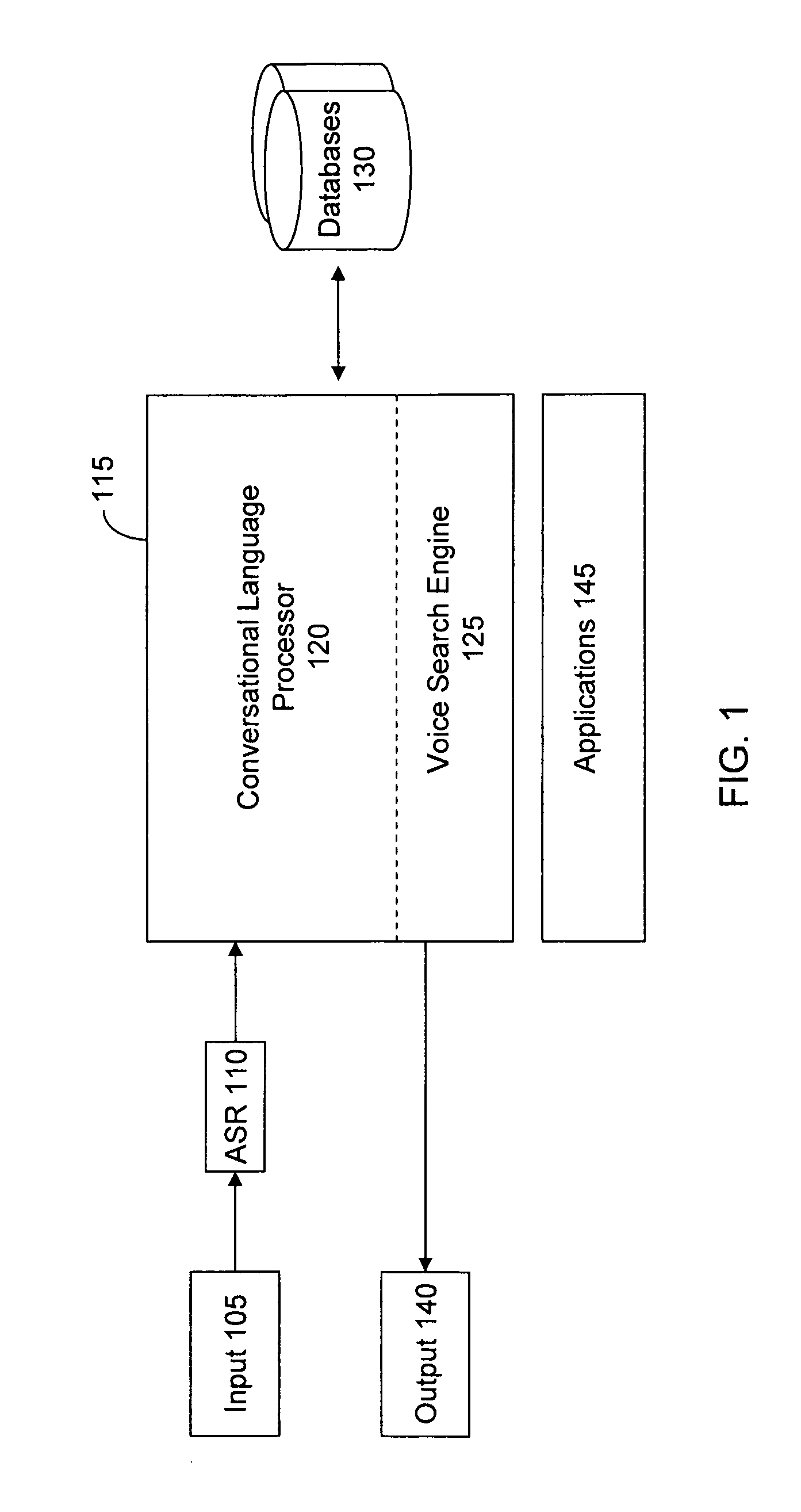

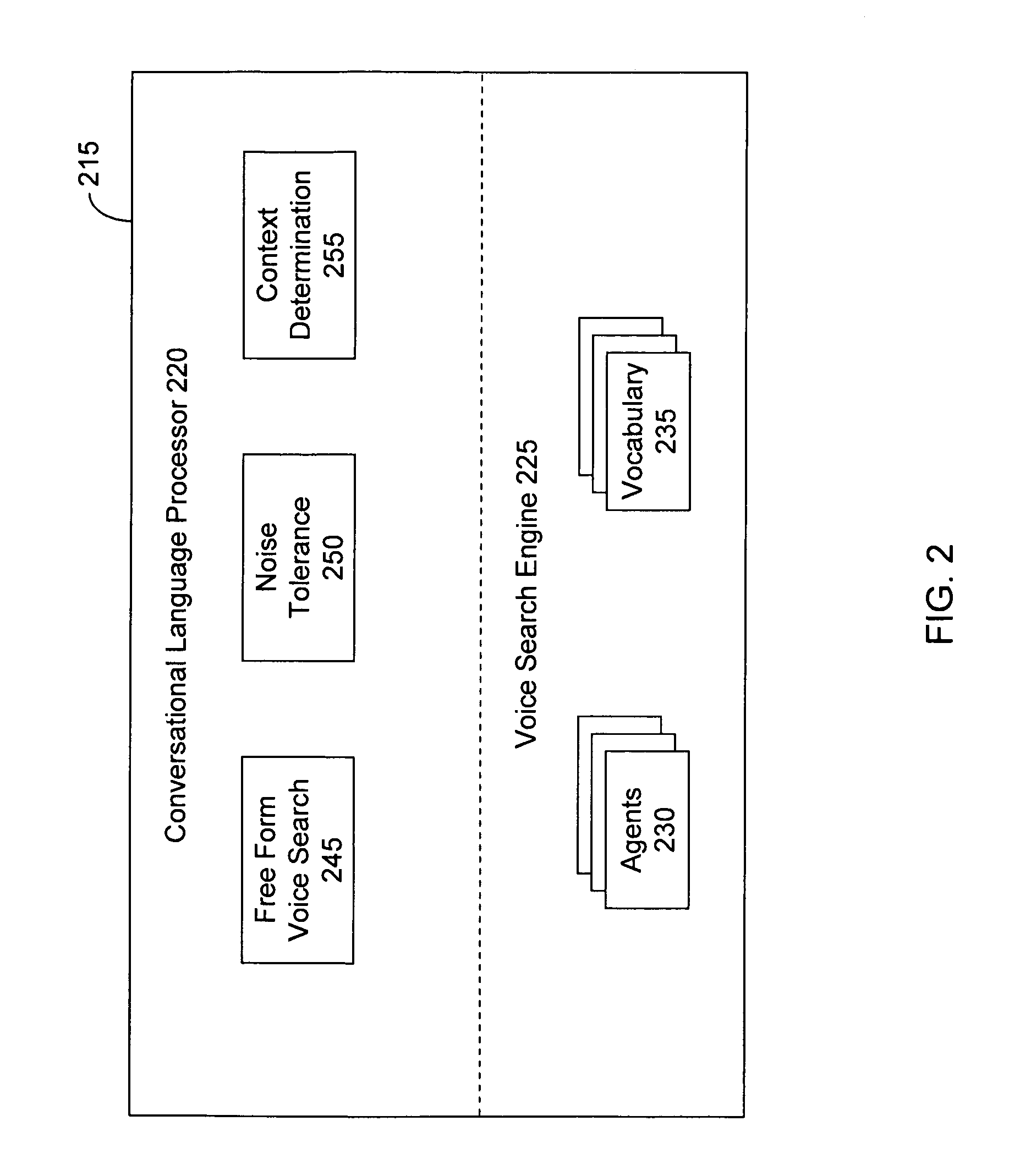

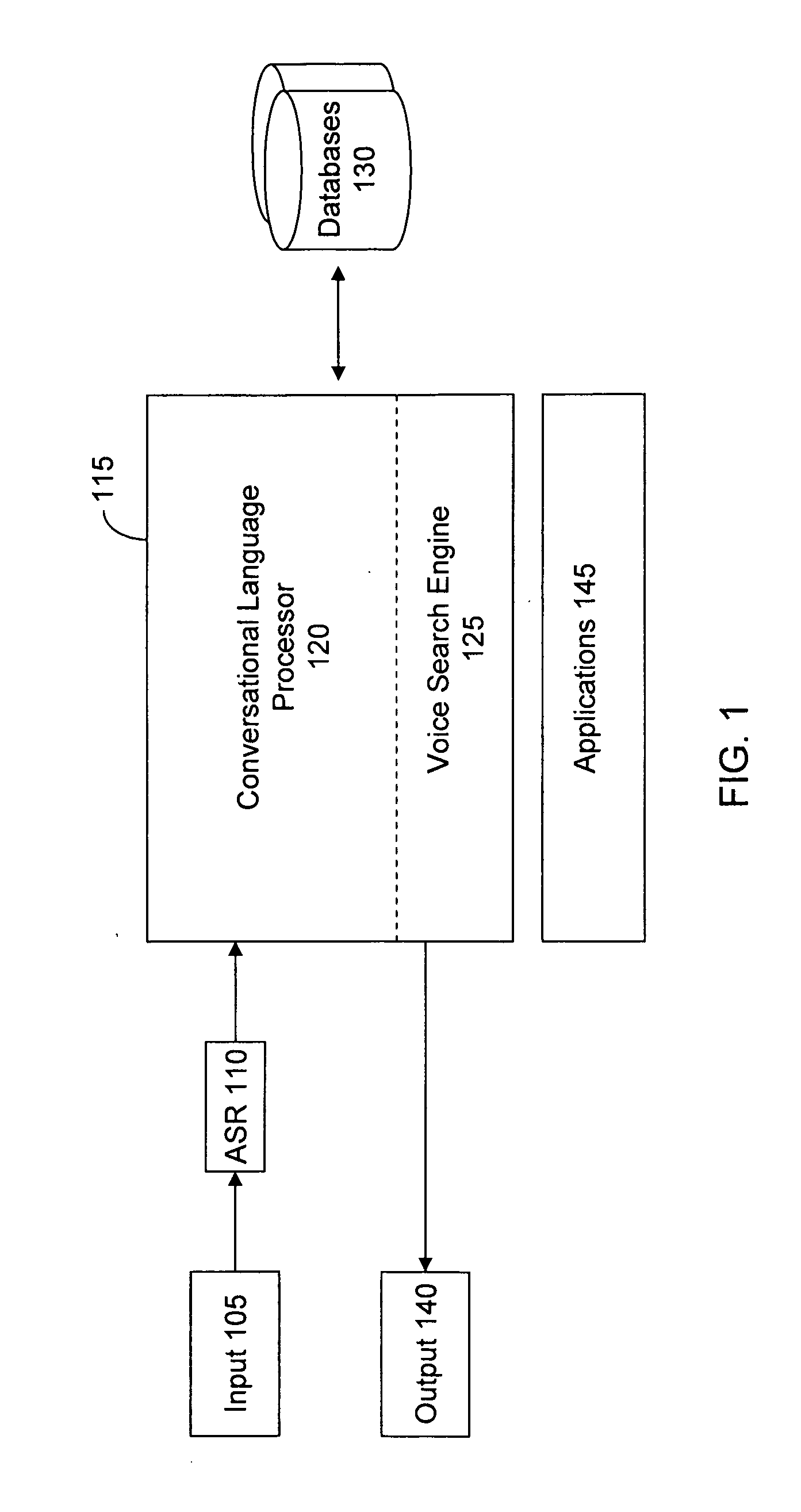

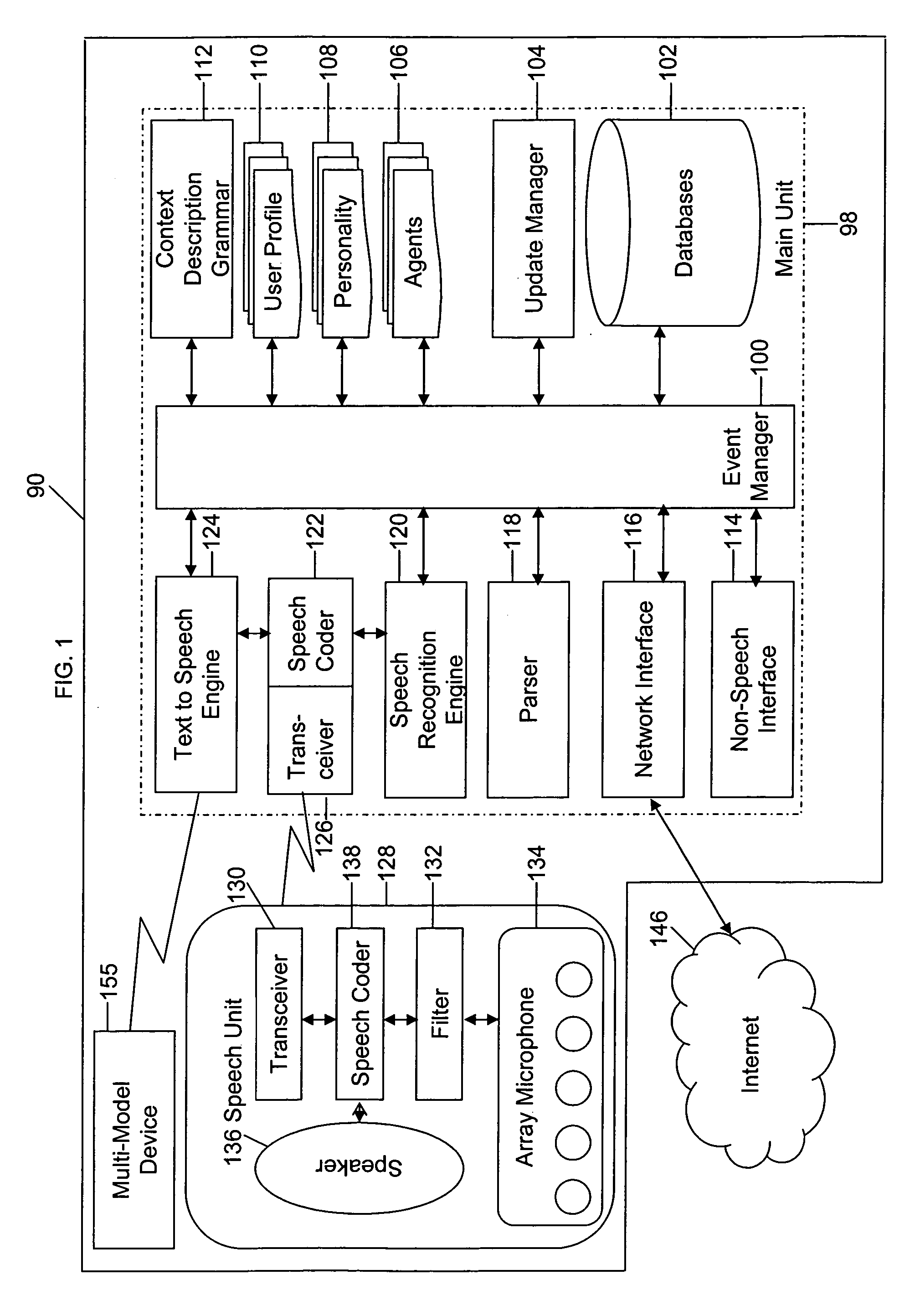

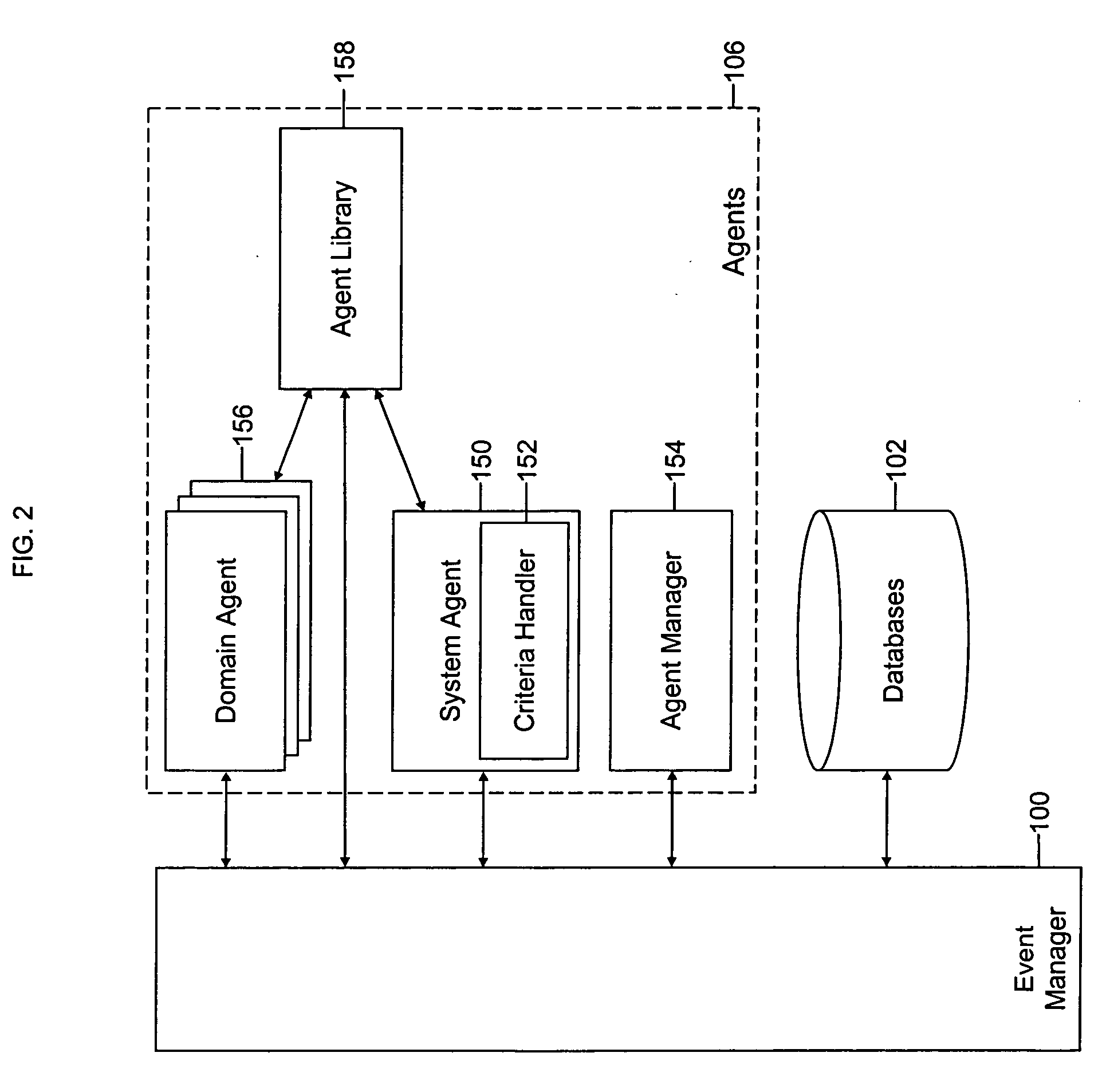

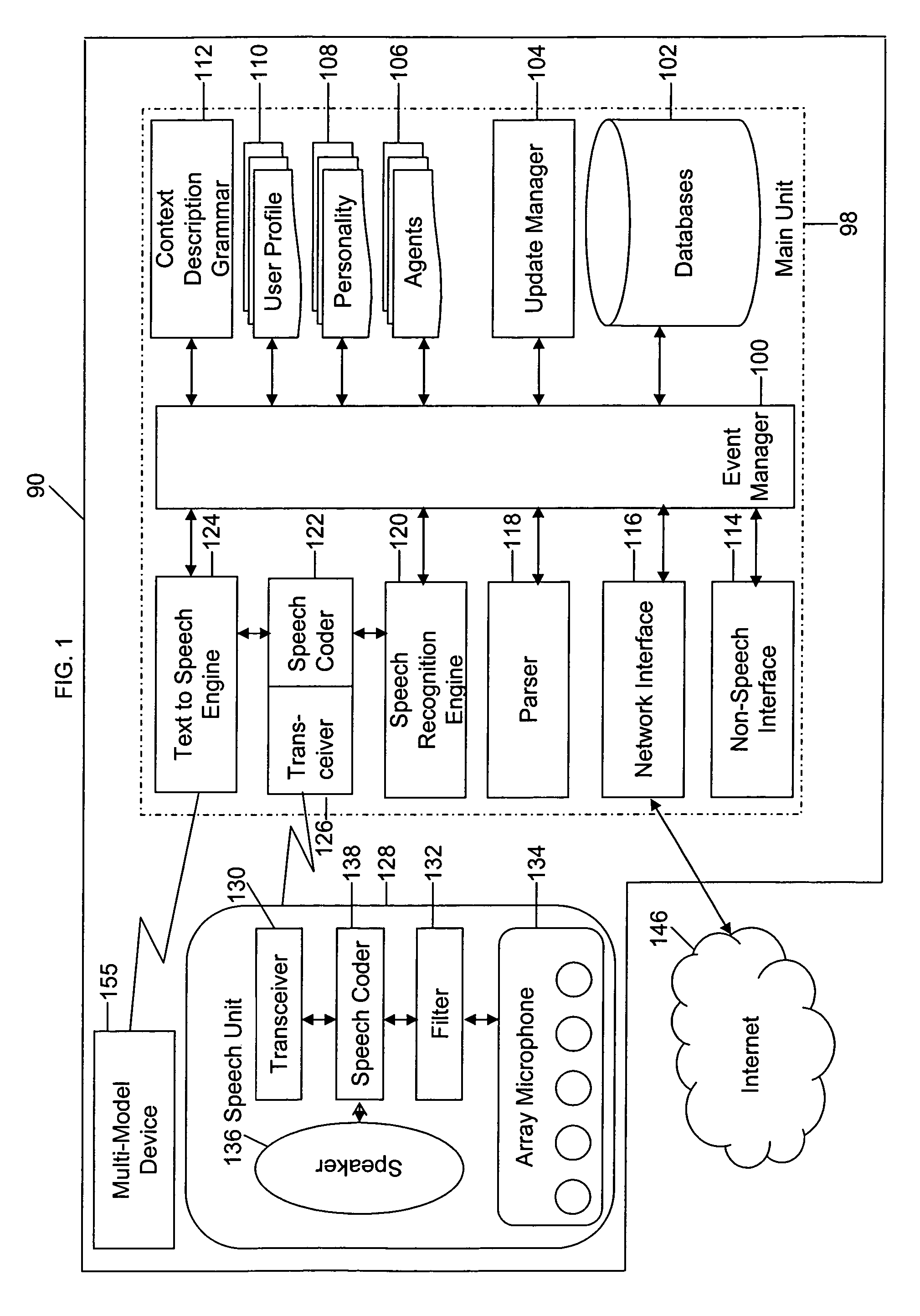

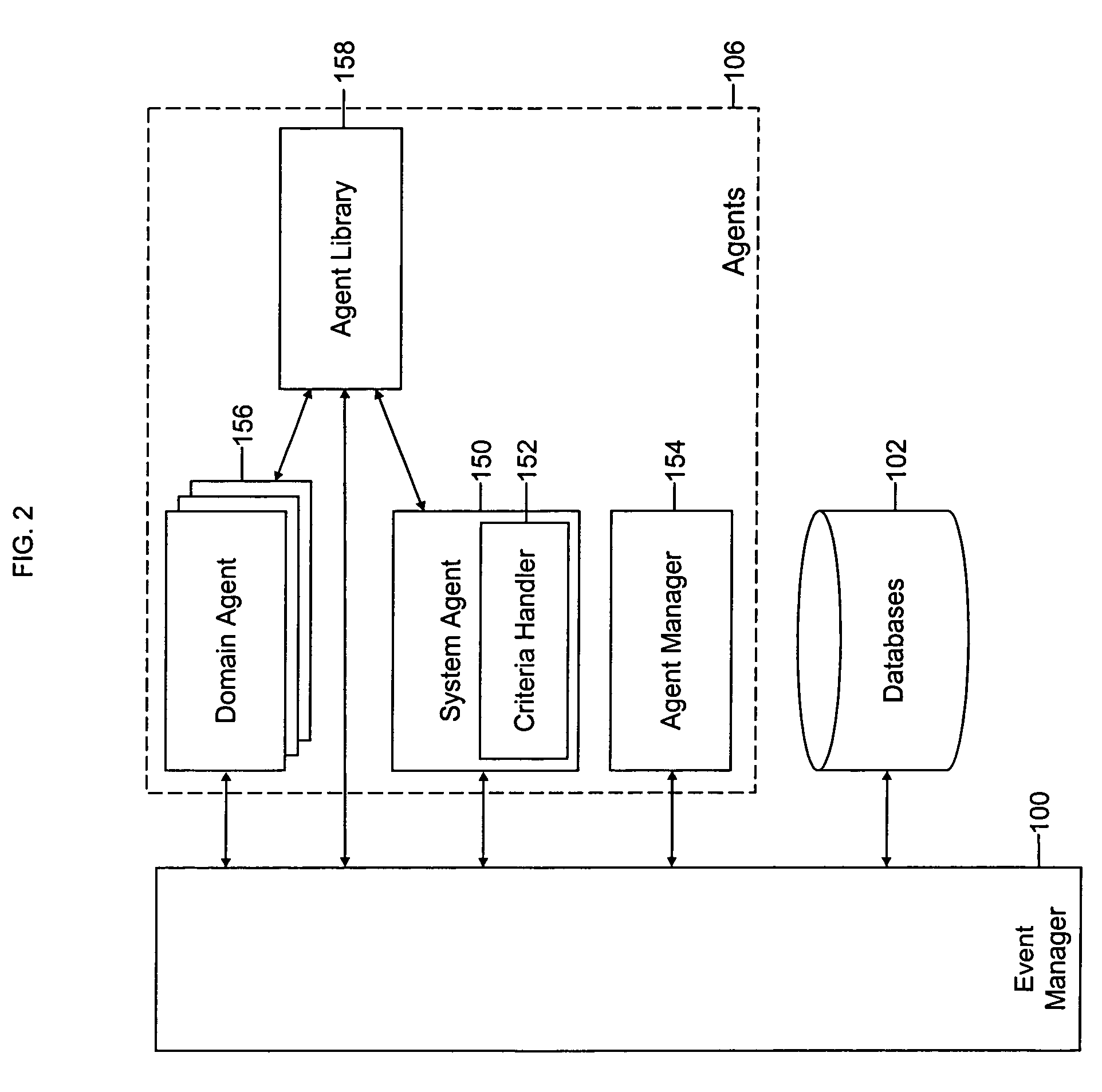

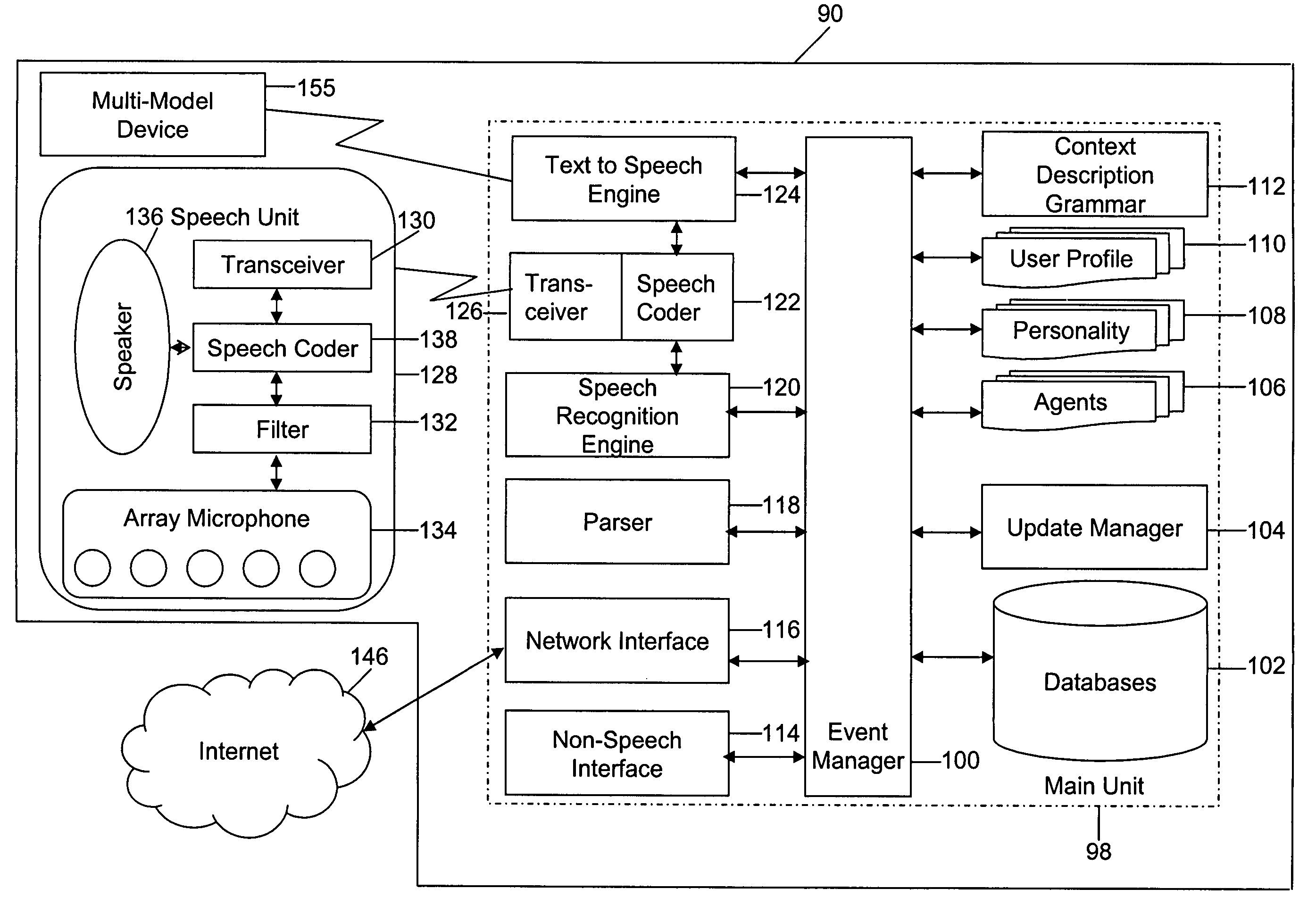

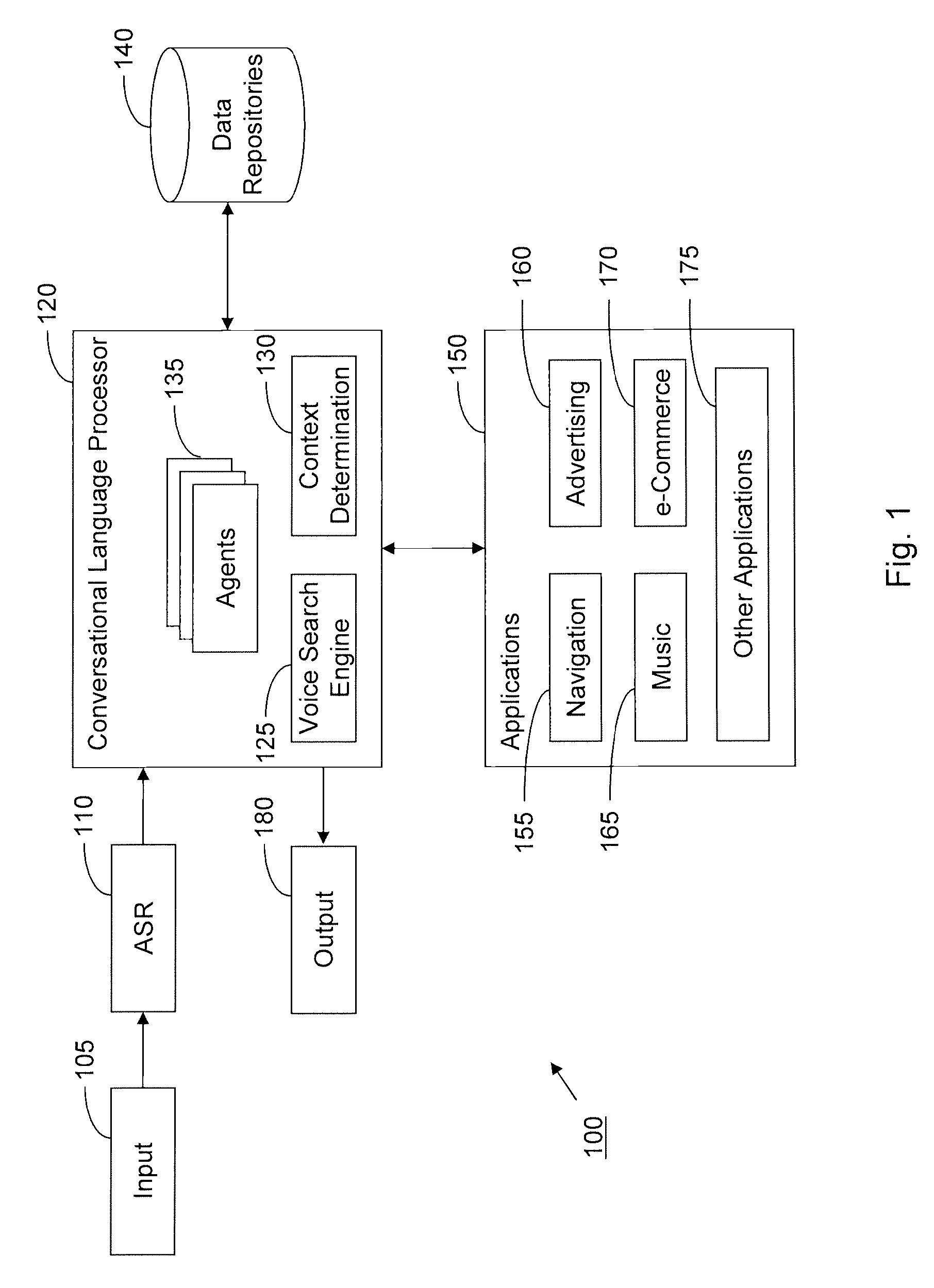

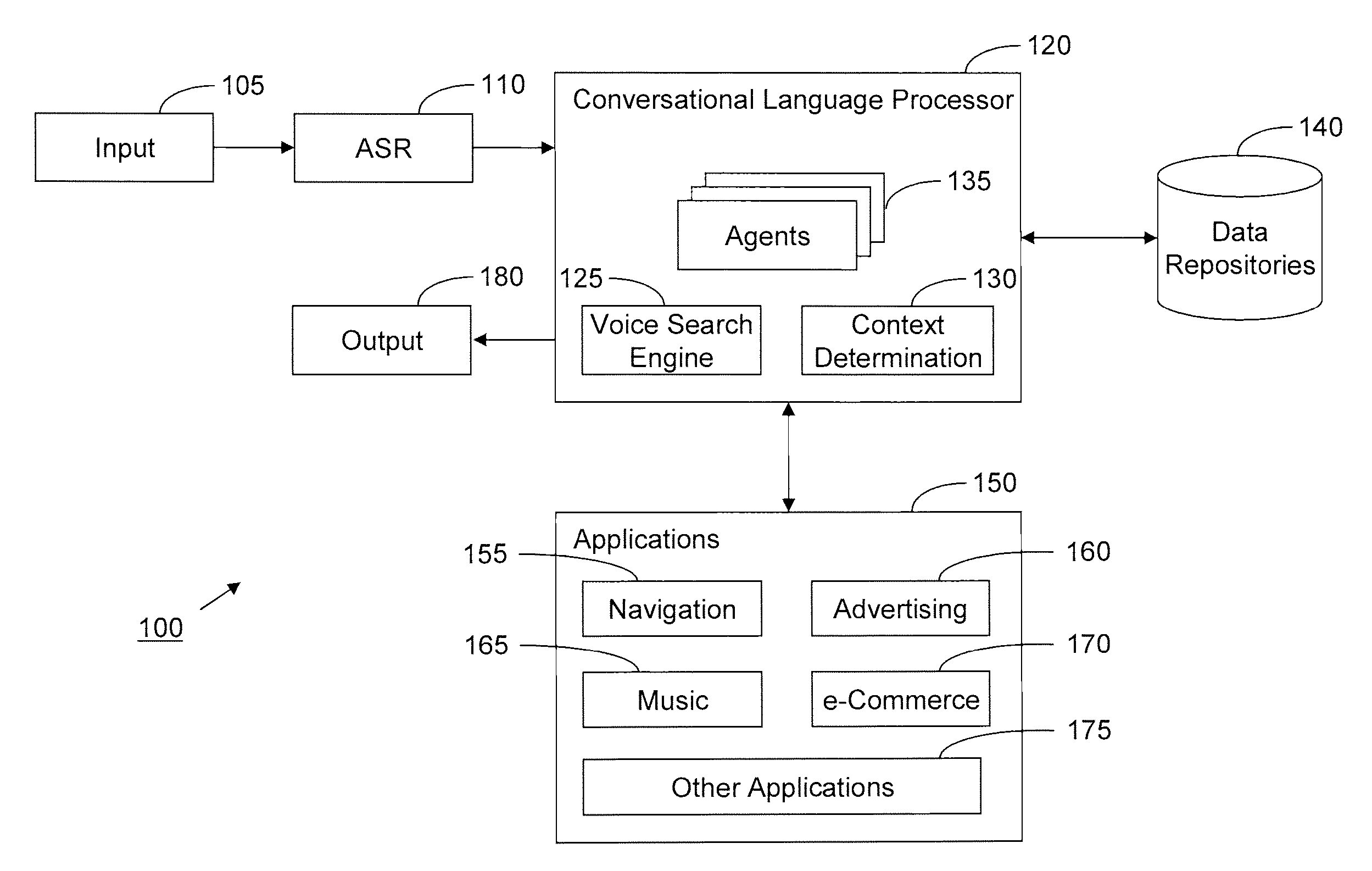

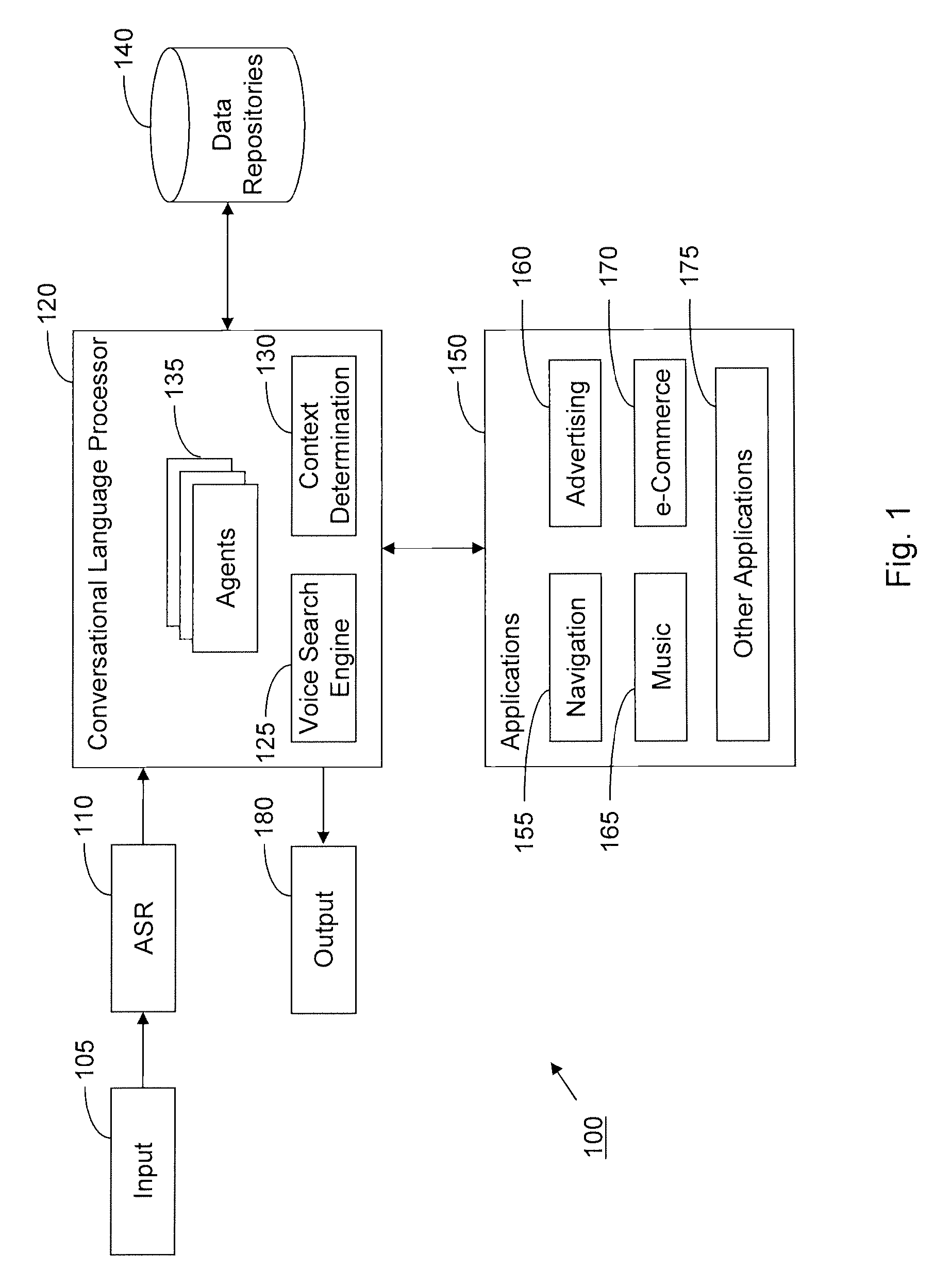

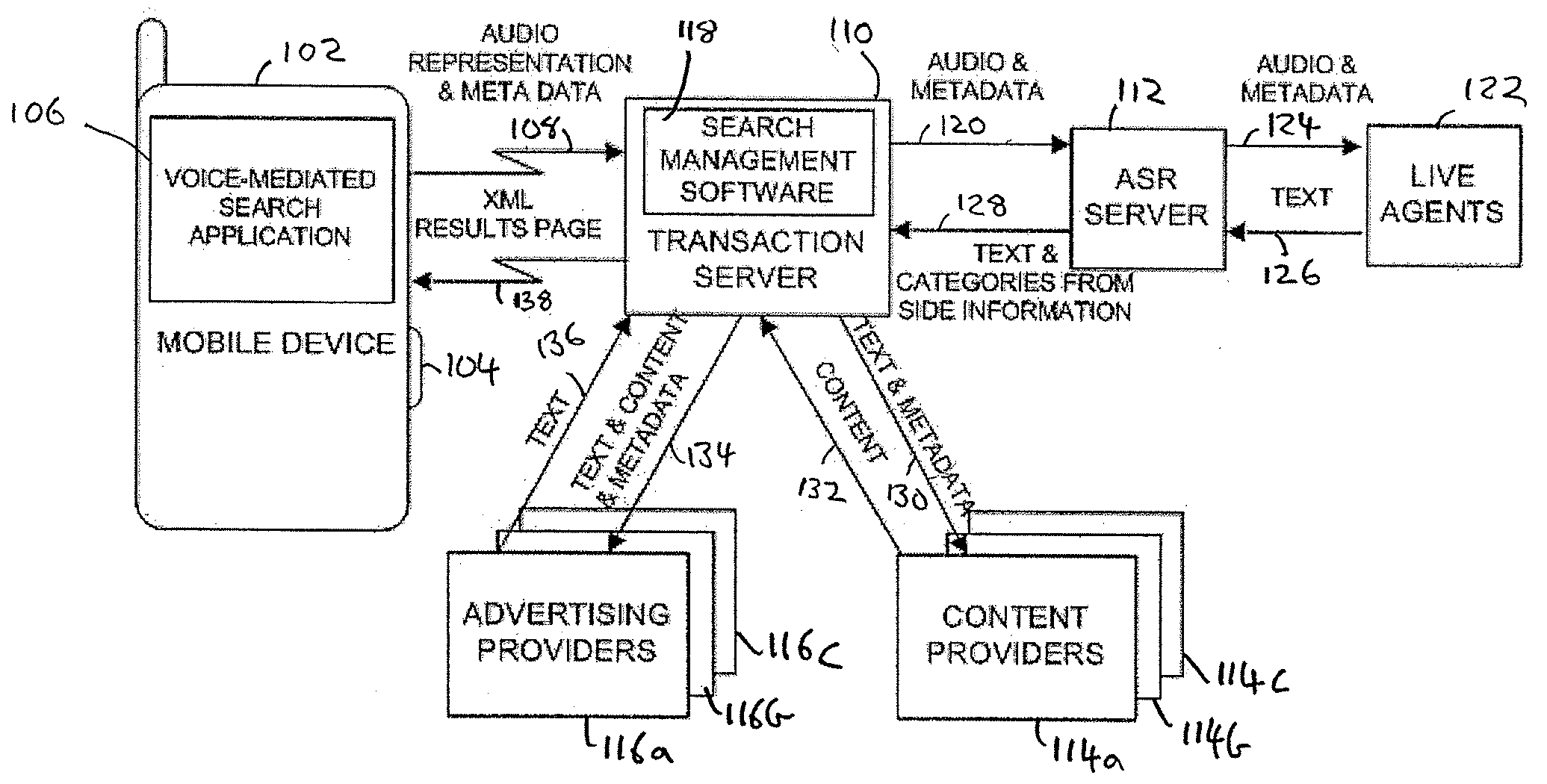

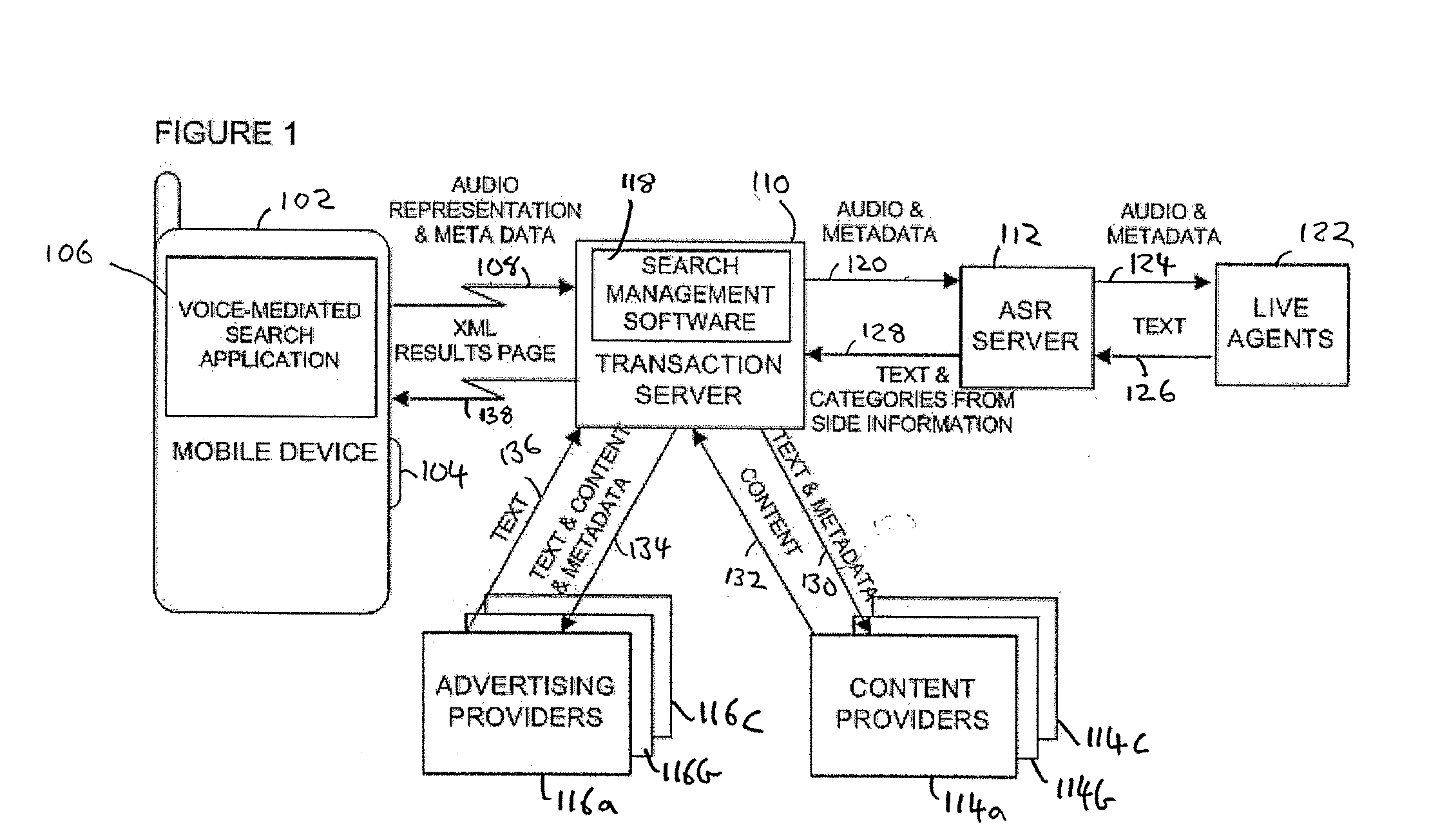

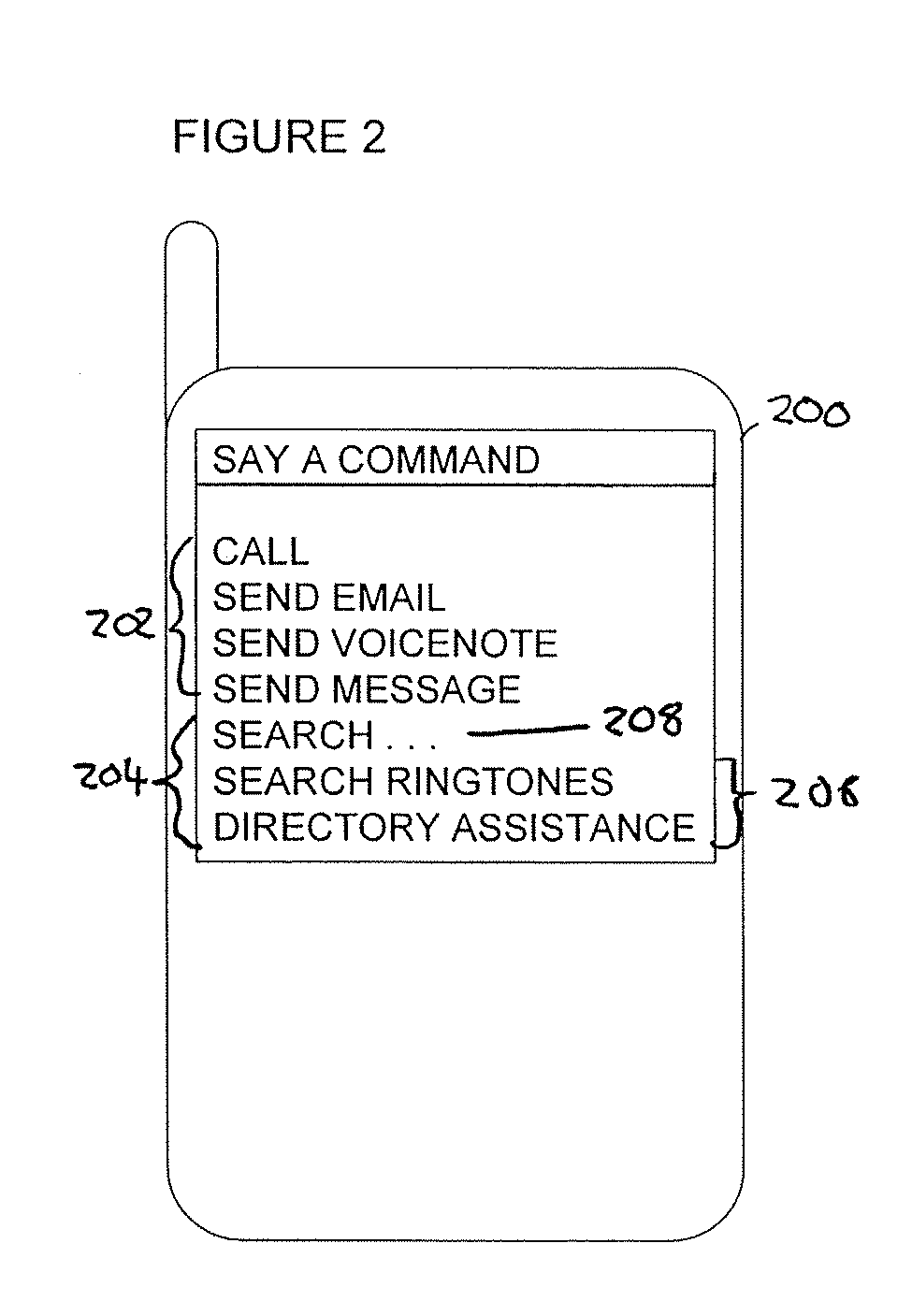

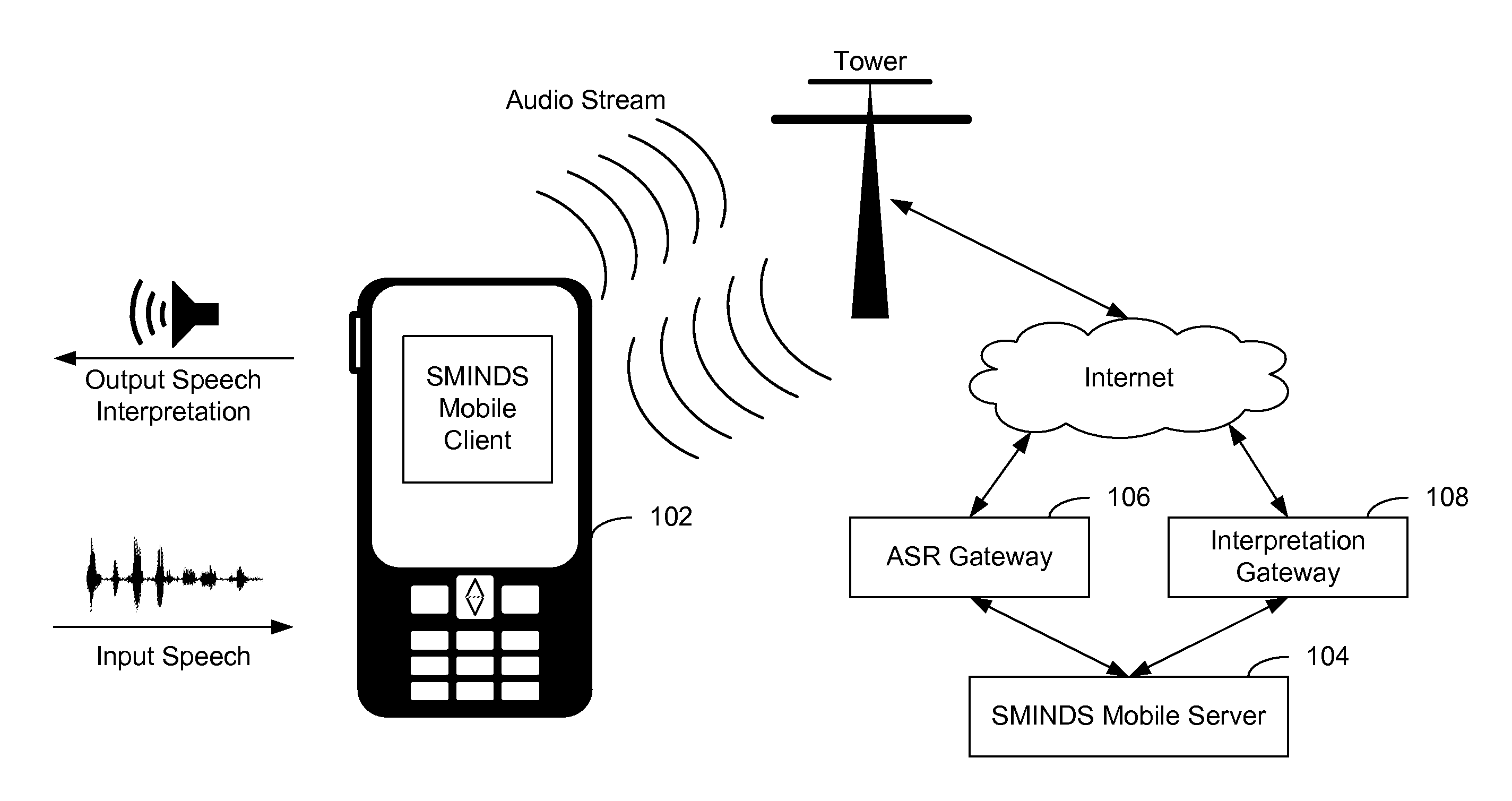

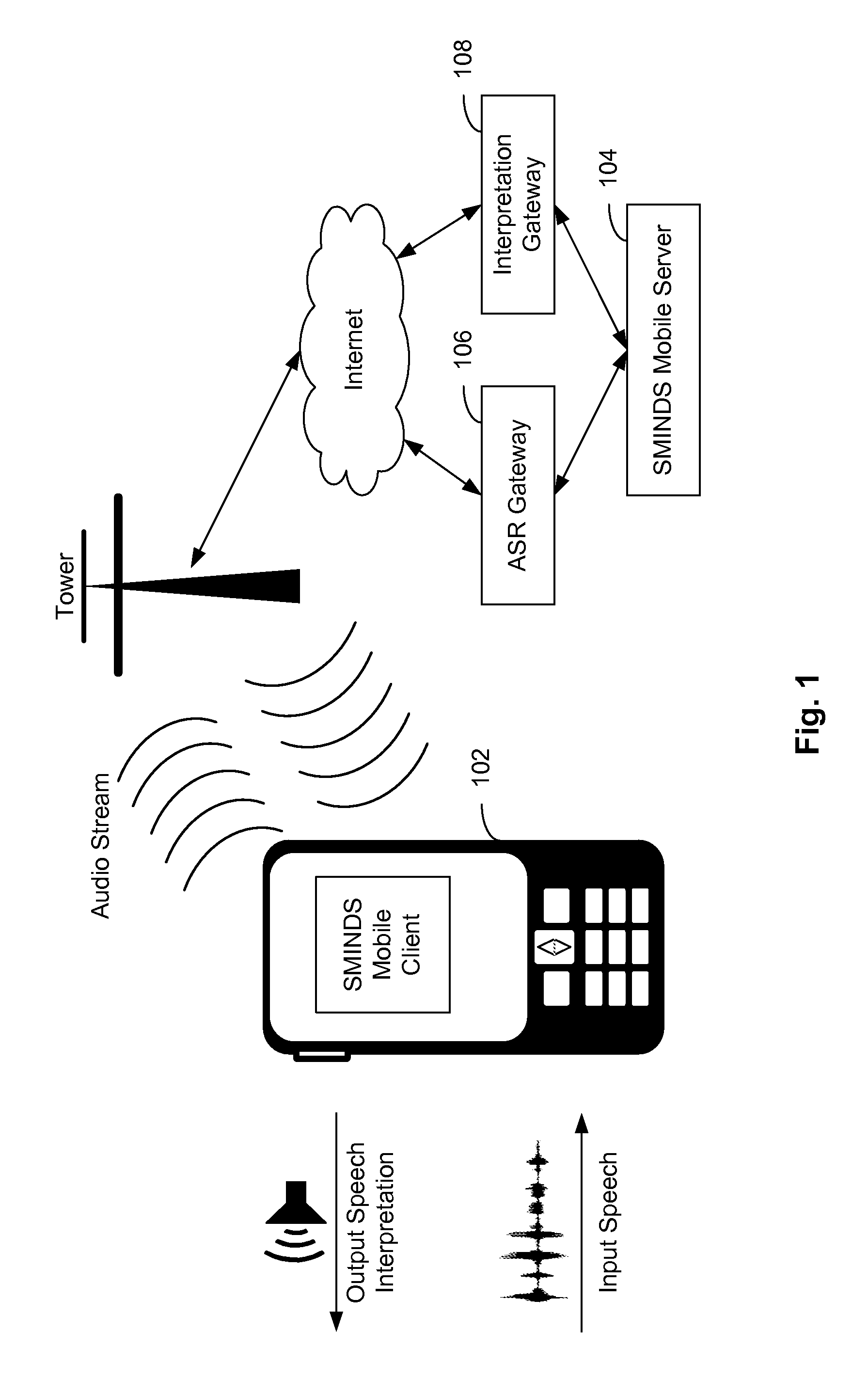

Mobile systems and methods for responding to natural language speech utterance

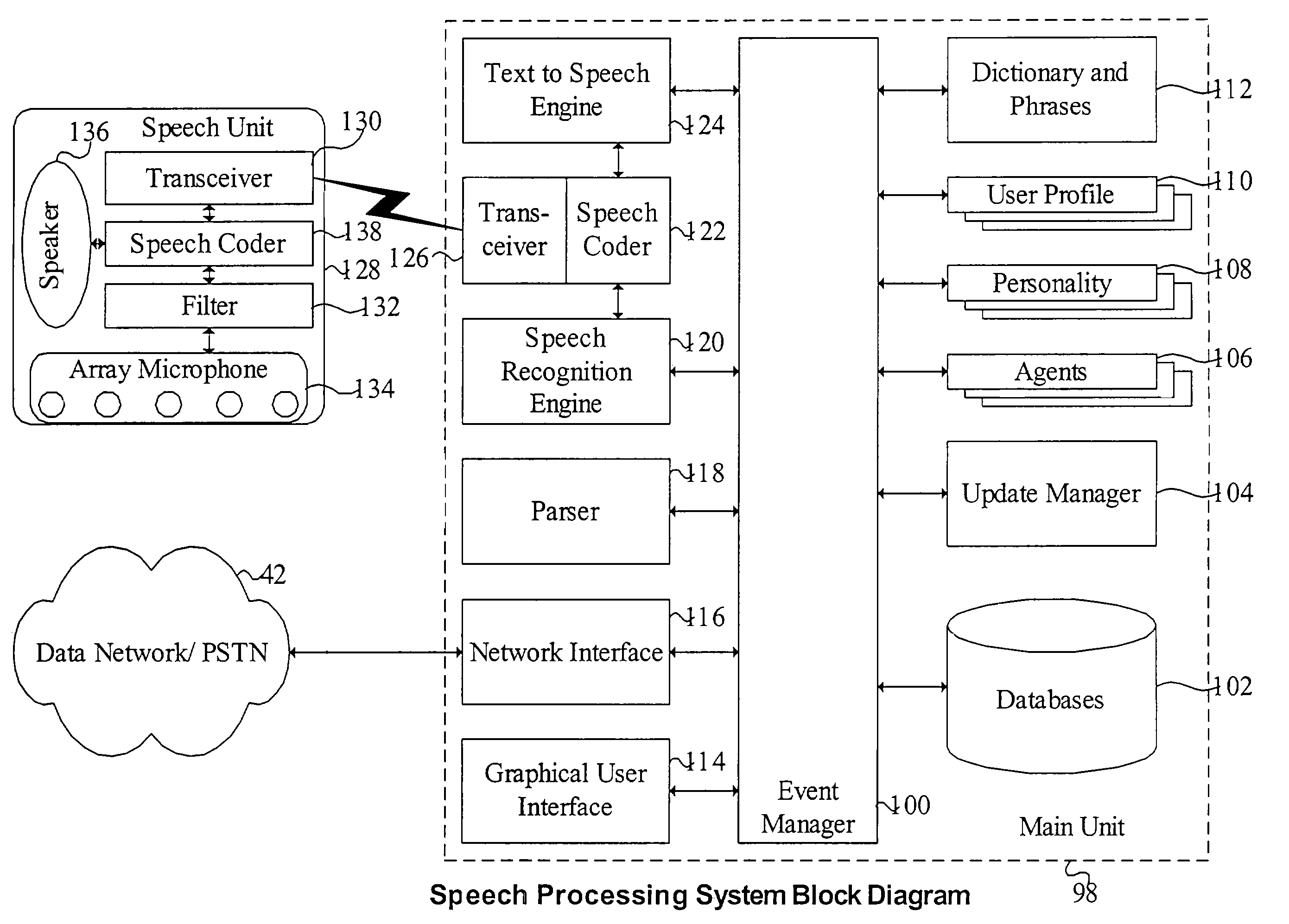

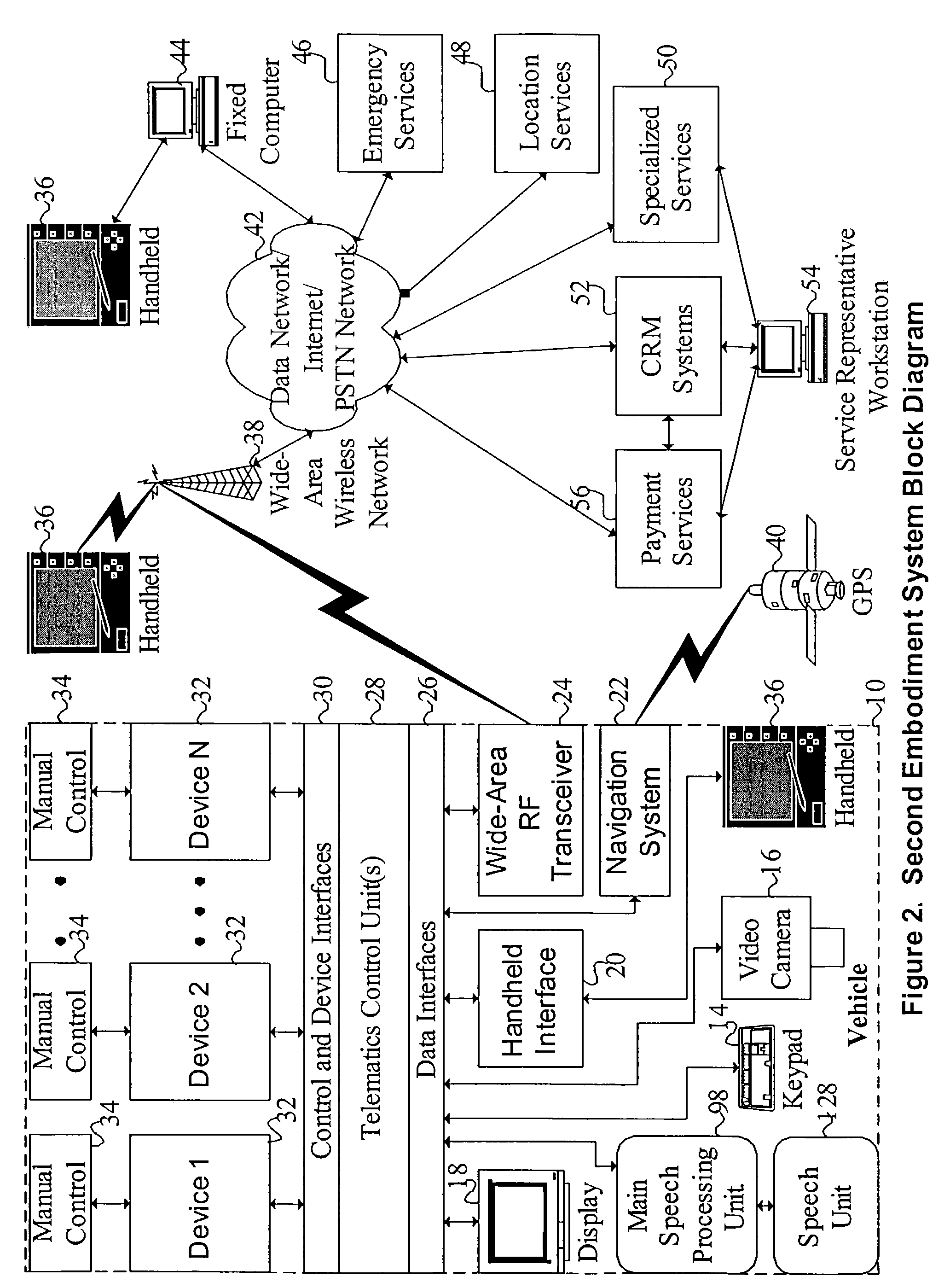

Status: Active | Patent: US7693720B2 | Tags: Promotes feeling of natural; Overcome deficiencies; Digital data information retrieval; Speech recognition; Remote system; Telematics

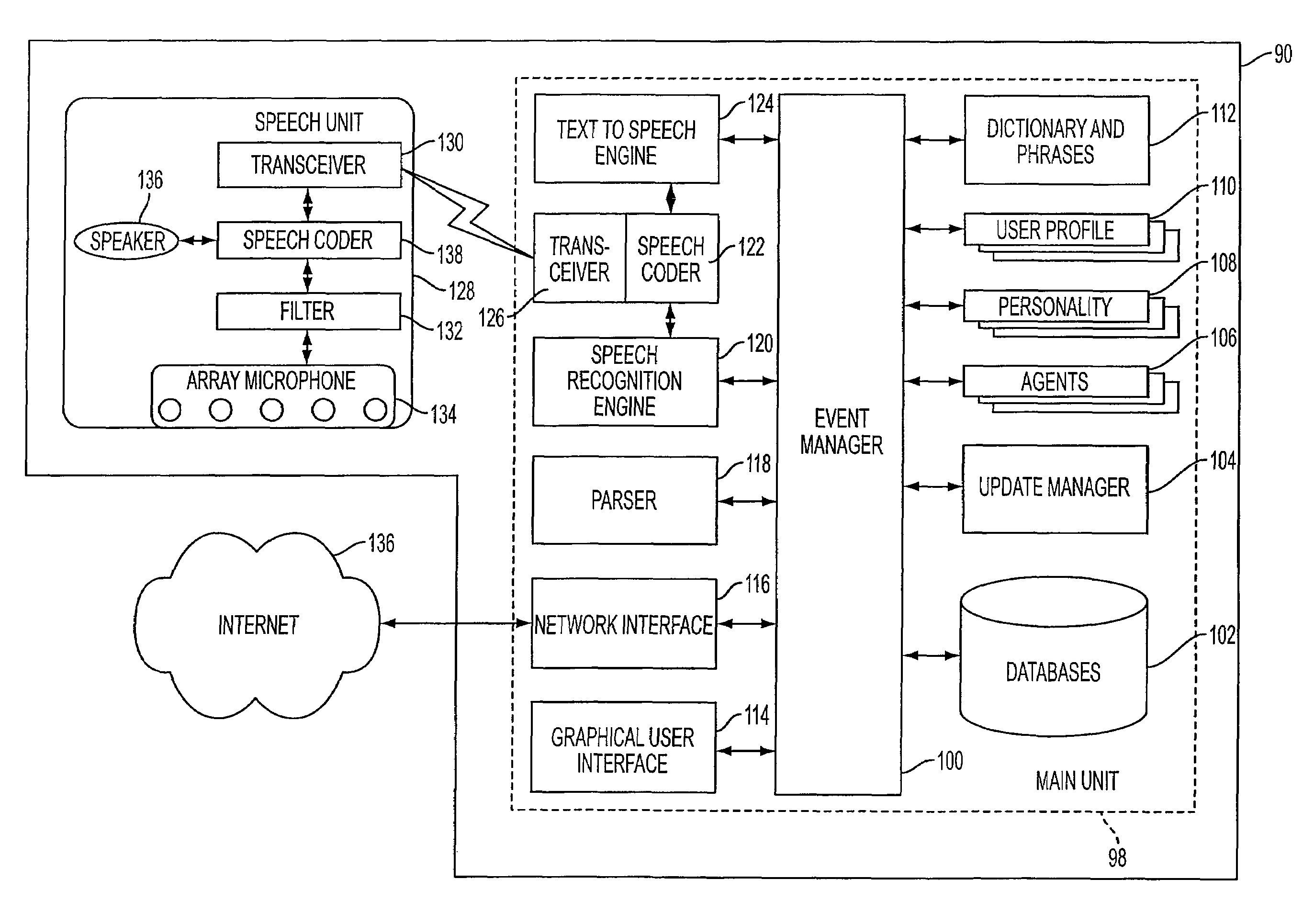

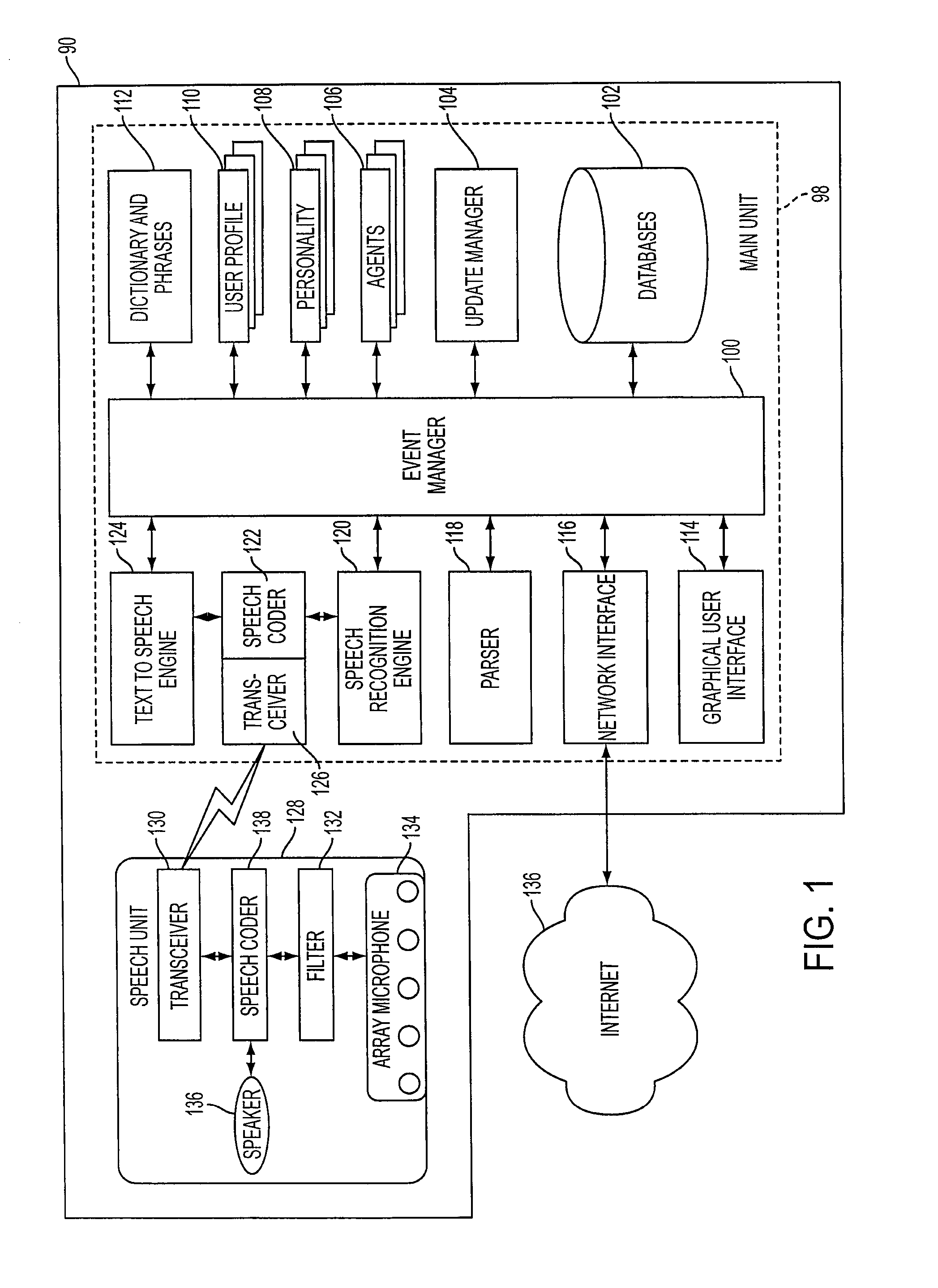

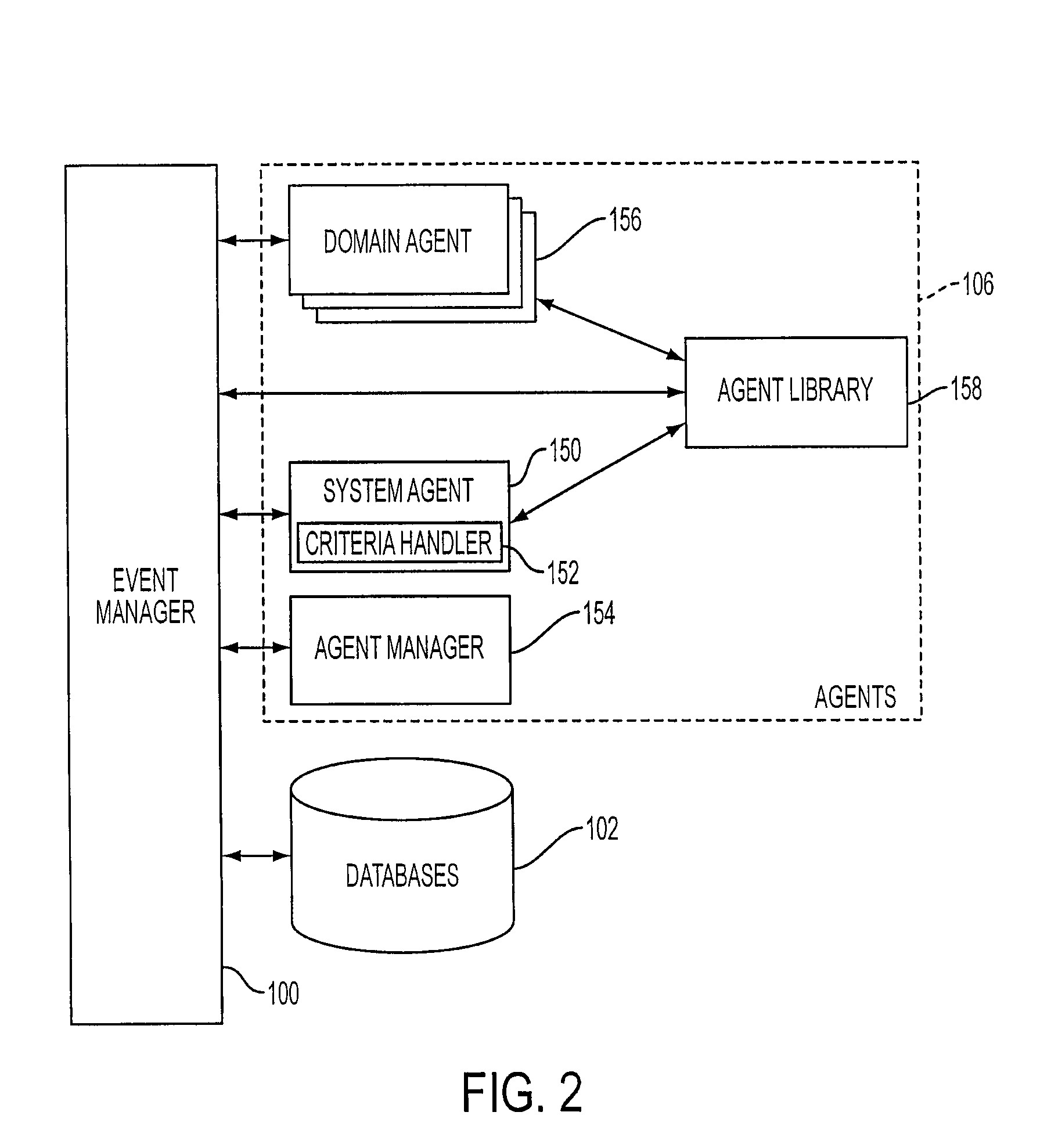

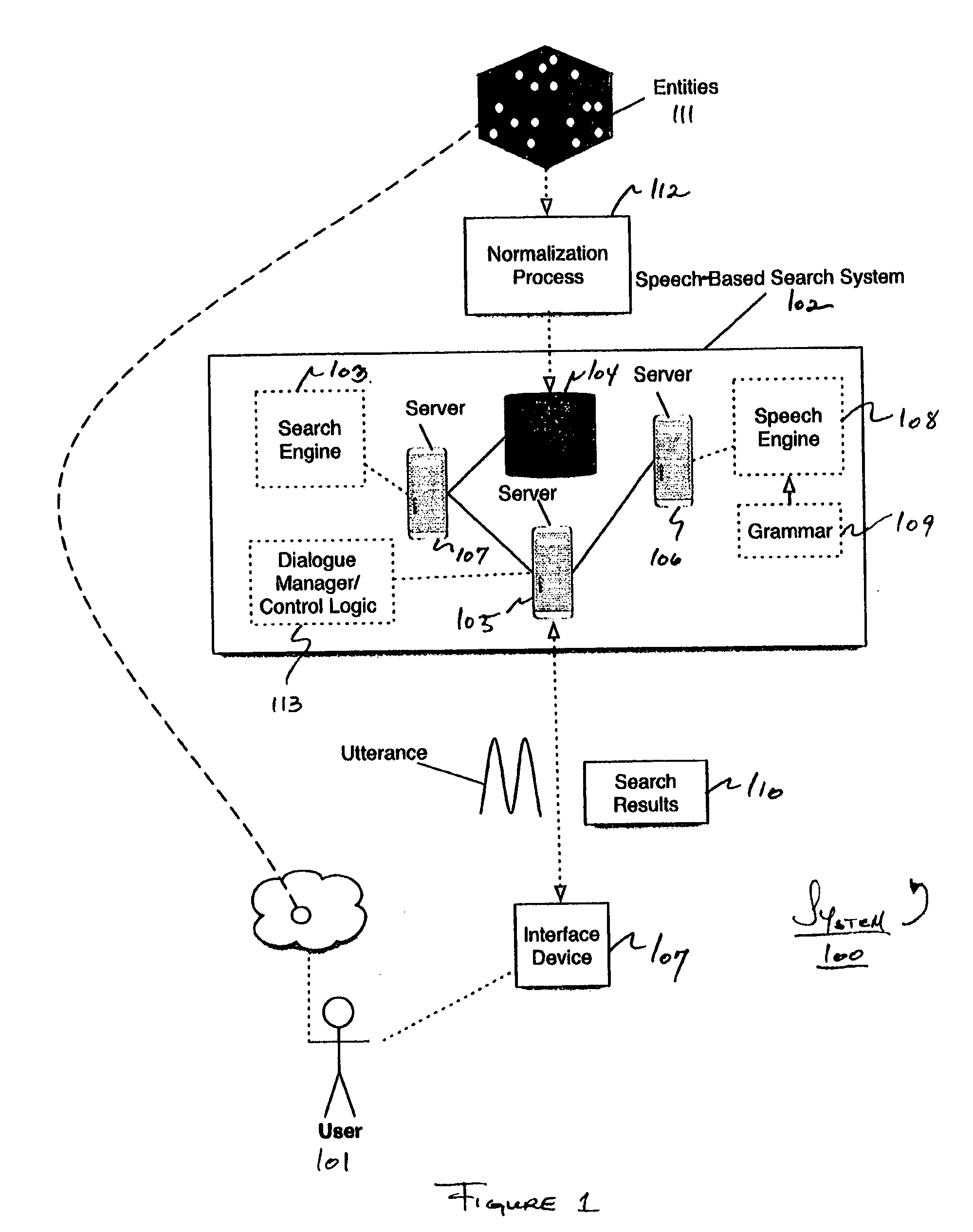

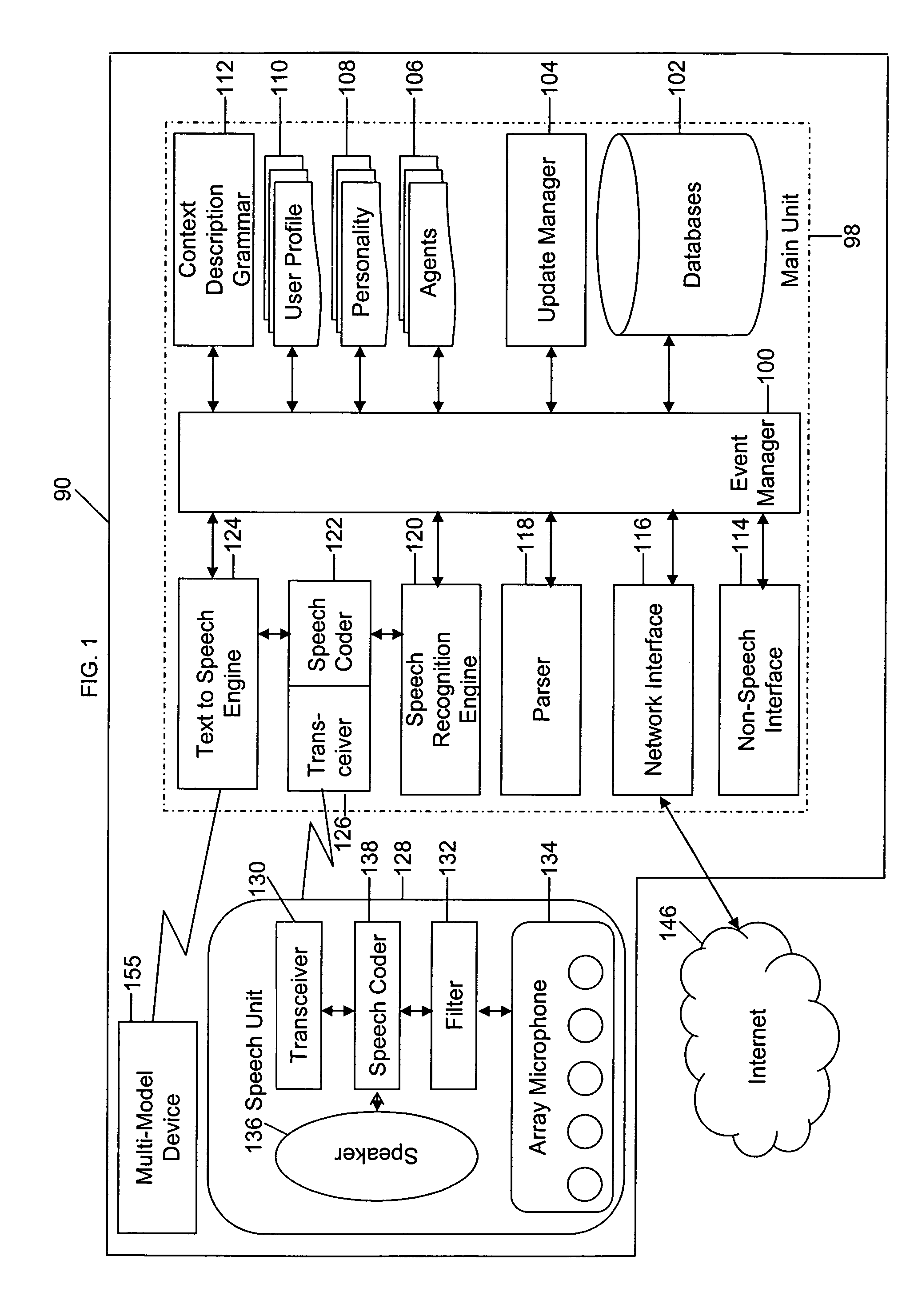

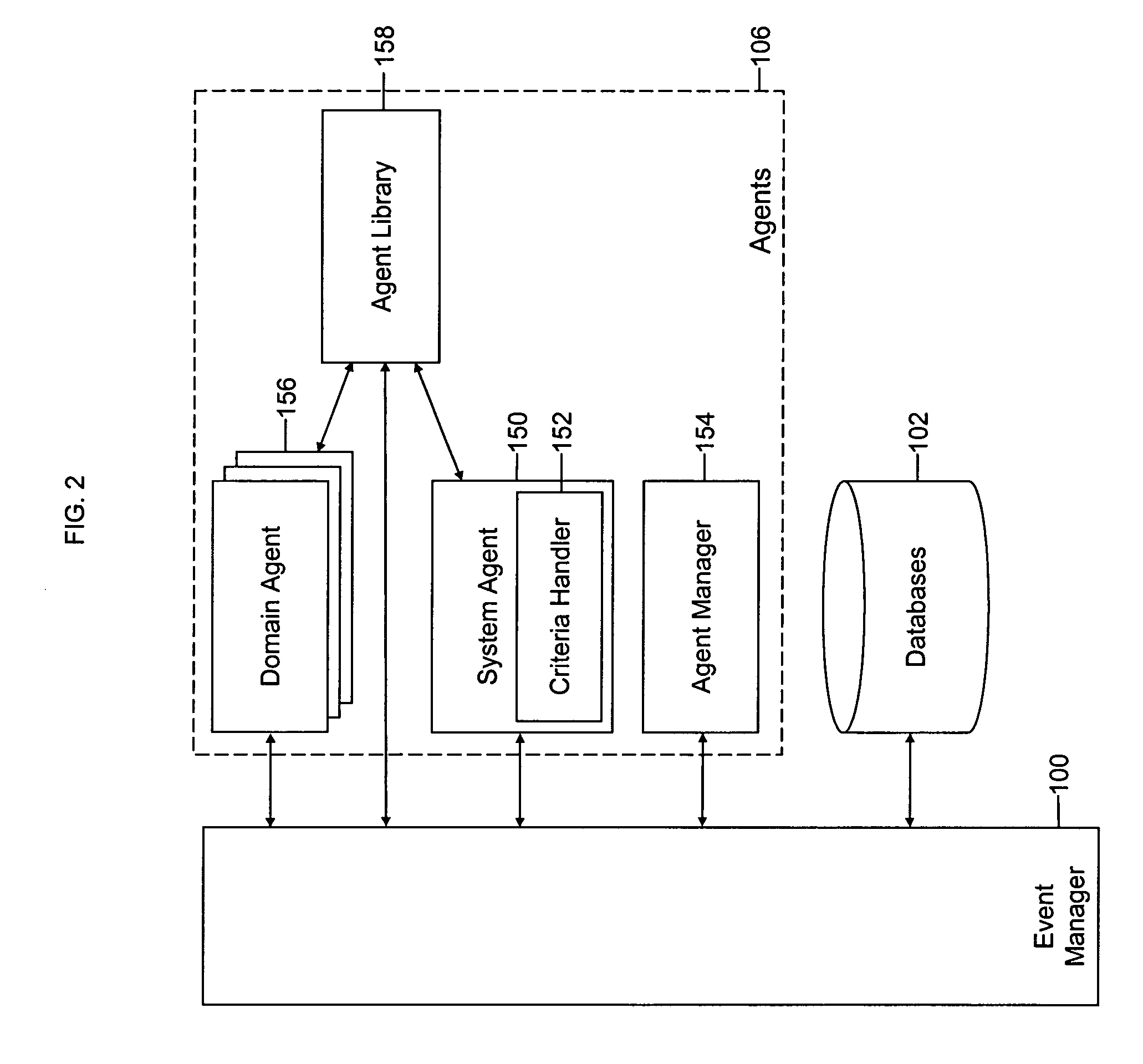

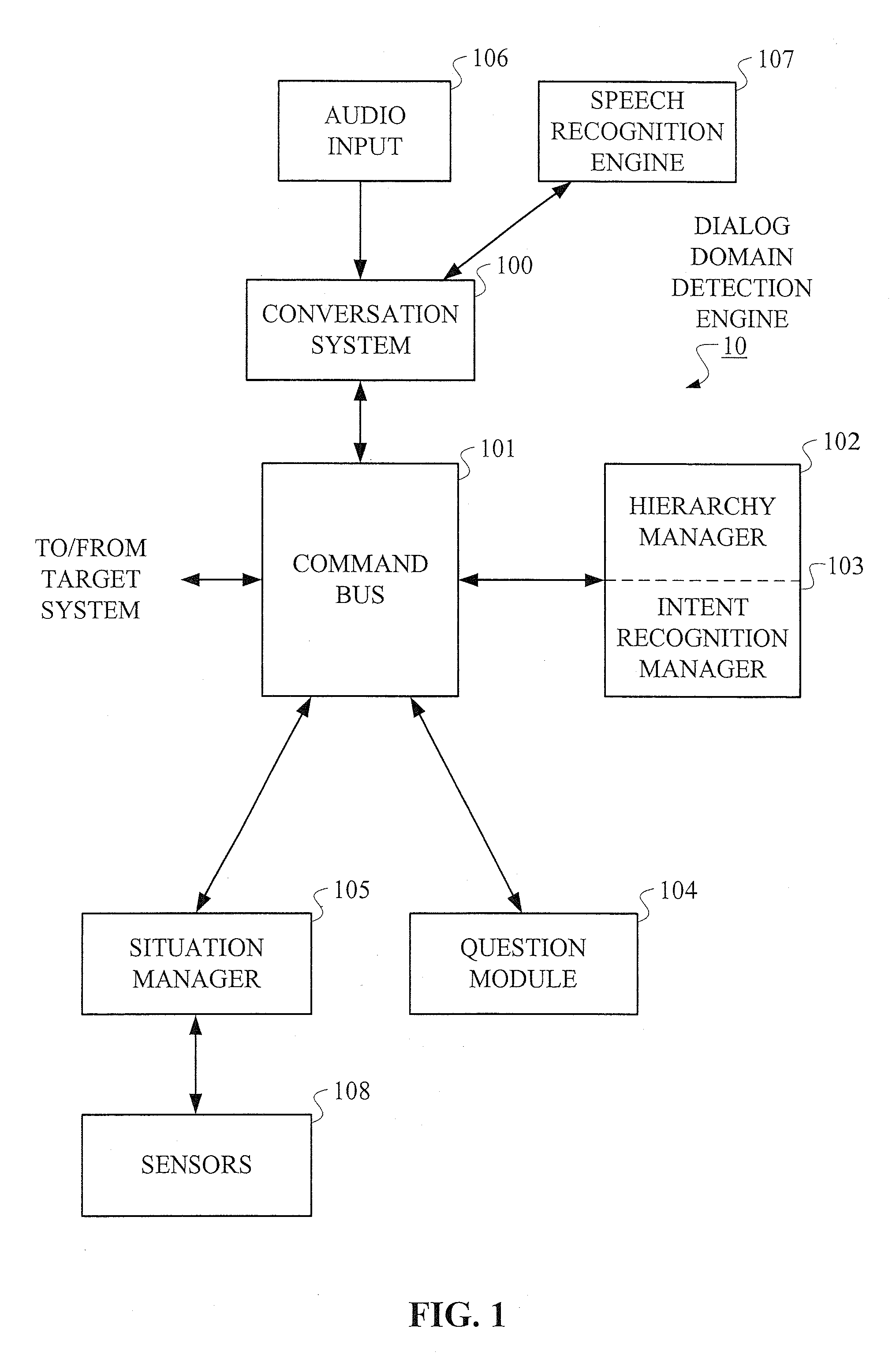

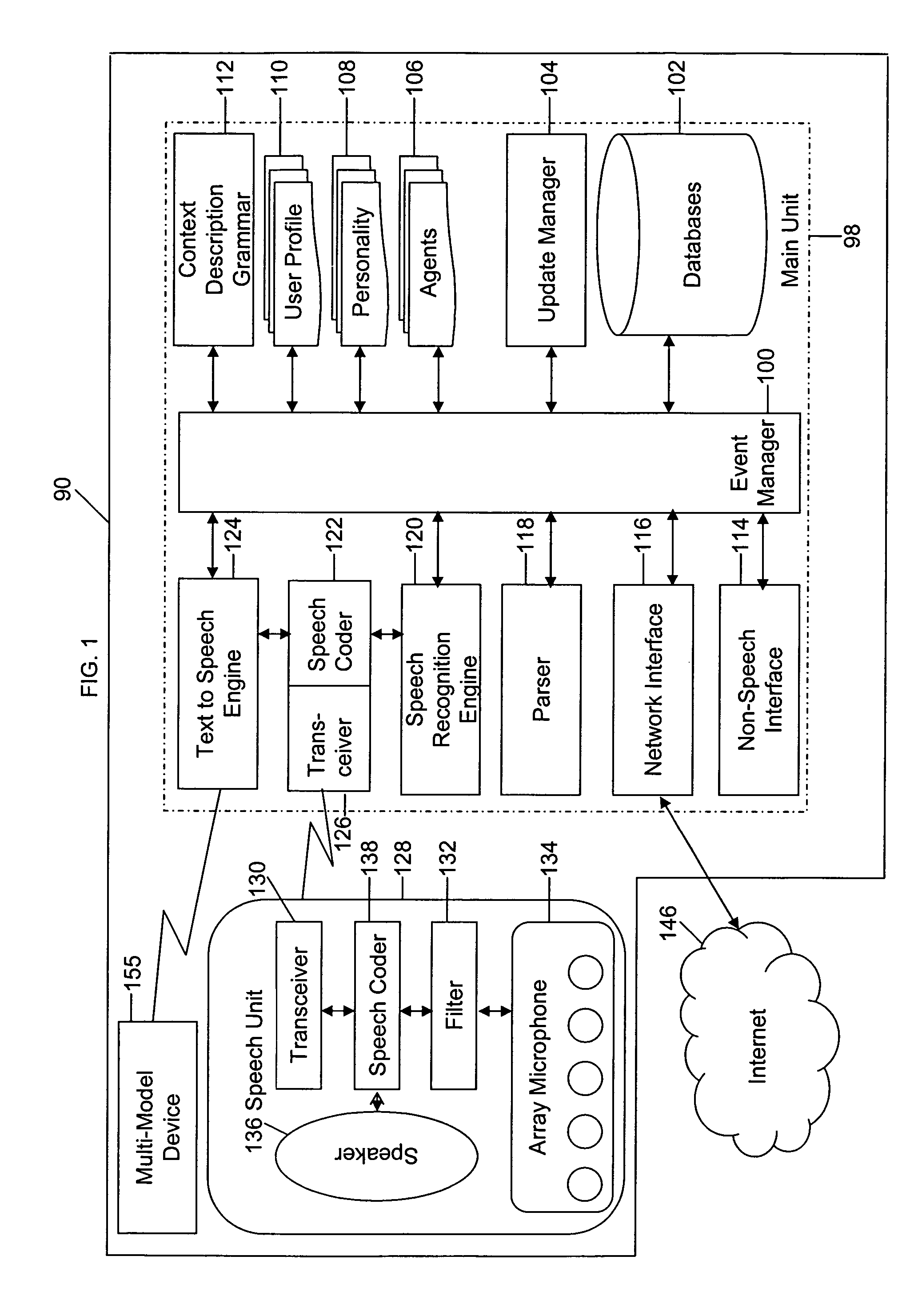

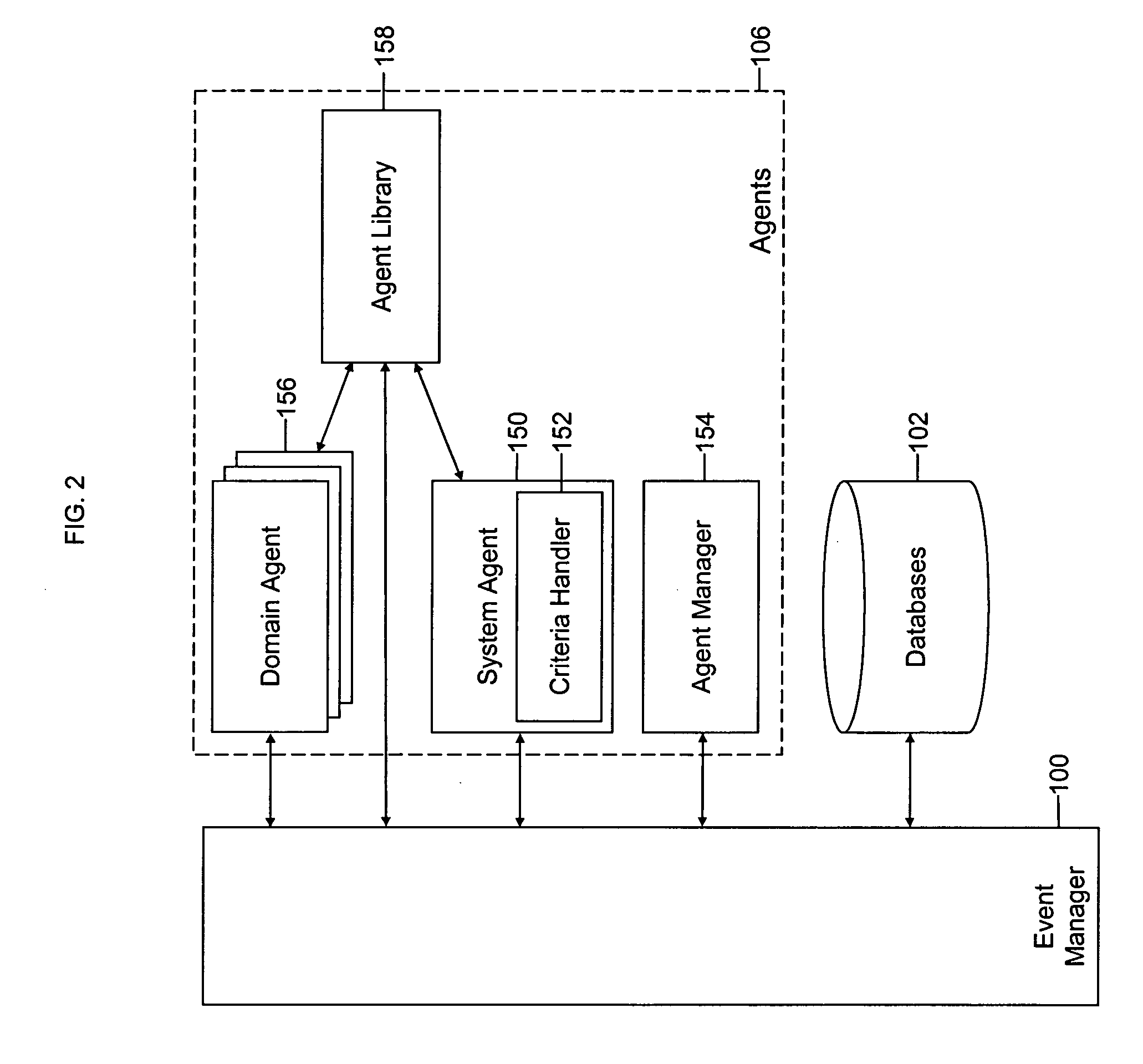

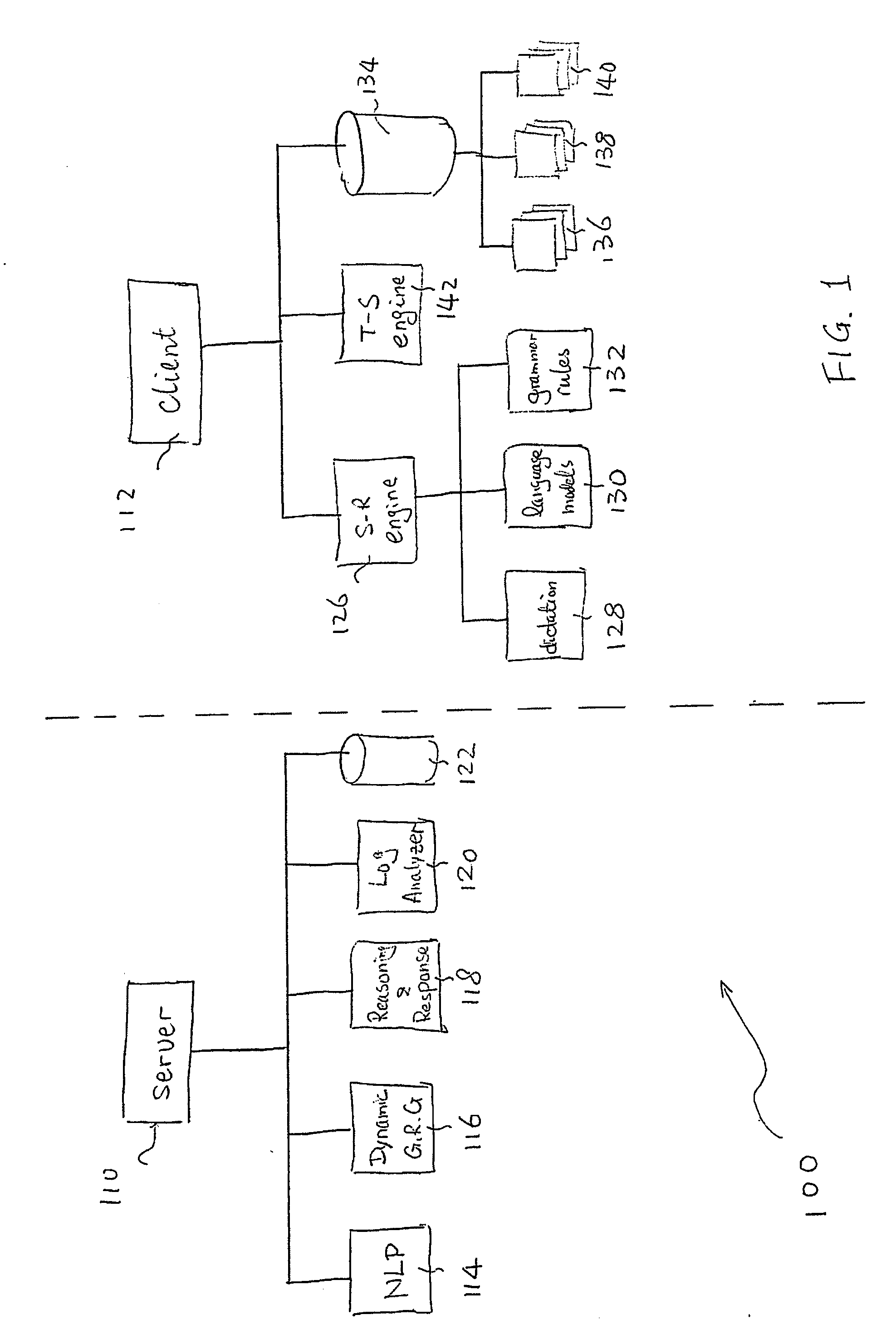

Mobile systems and methods that overcome the deficiencies of prior art speech-based interfaces for telematics applications through the use of a complete speech-based information query, retrieval, presentation and local or remote command environment. This environment makes significant use of context, prior information, domain knowledge, and user-specific profile data to achieve a natural environment for one or more users making queries or commands in multiple domains. Through this integrated approach, a complete speech-based natural language query and response environment can be created. The invention creates, stores and uses extensive personal profile information for each user, thereby improving the reliability of determining the context and presenting the expected results for a particular question or command. The invention may organize domain-specific behavior and information into agents that are distributable or updateable over a wide area network. The invention can be used in dynamic environments such as those of mobile vehicles to control and communicate with both vehicle systems and remote systems and devices.

Owner:DIALECT LLC

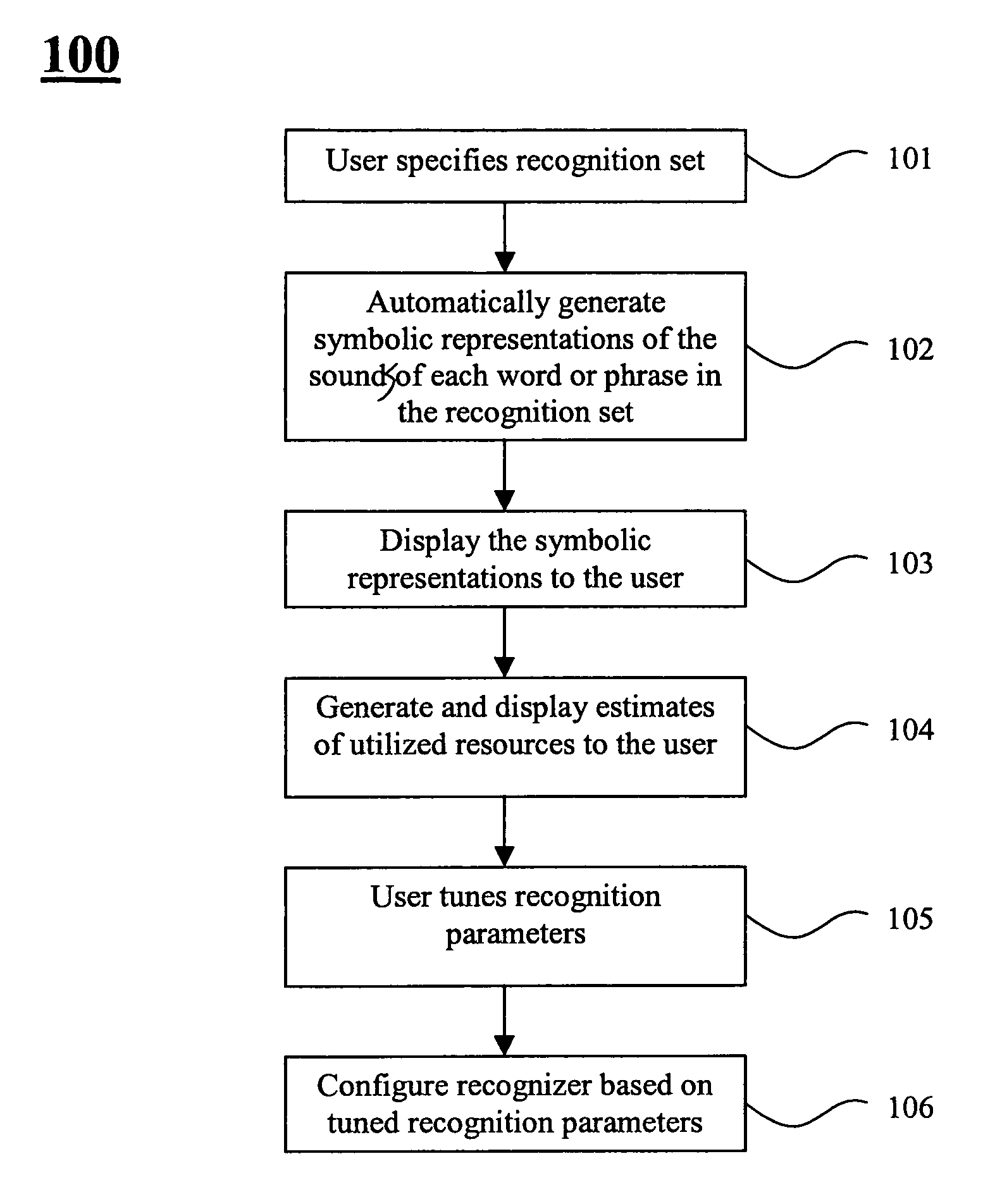

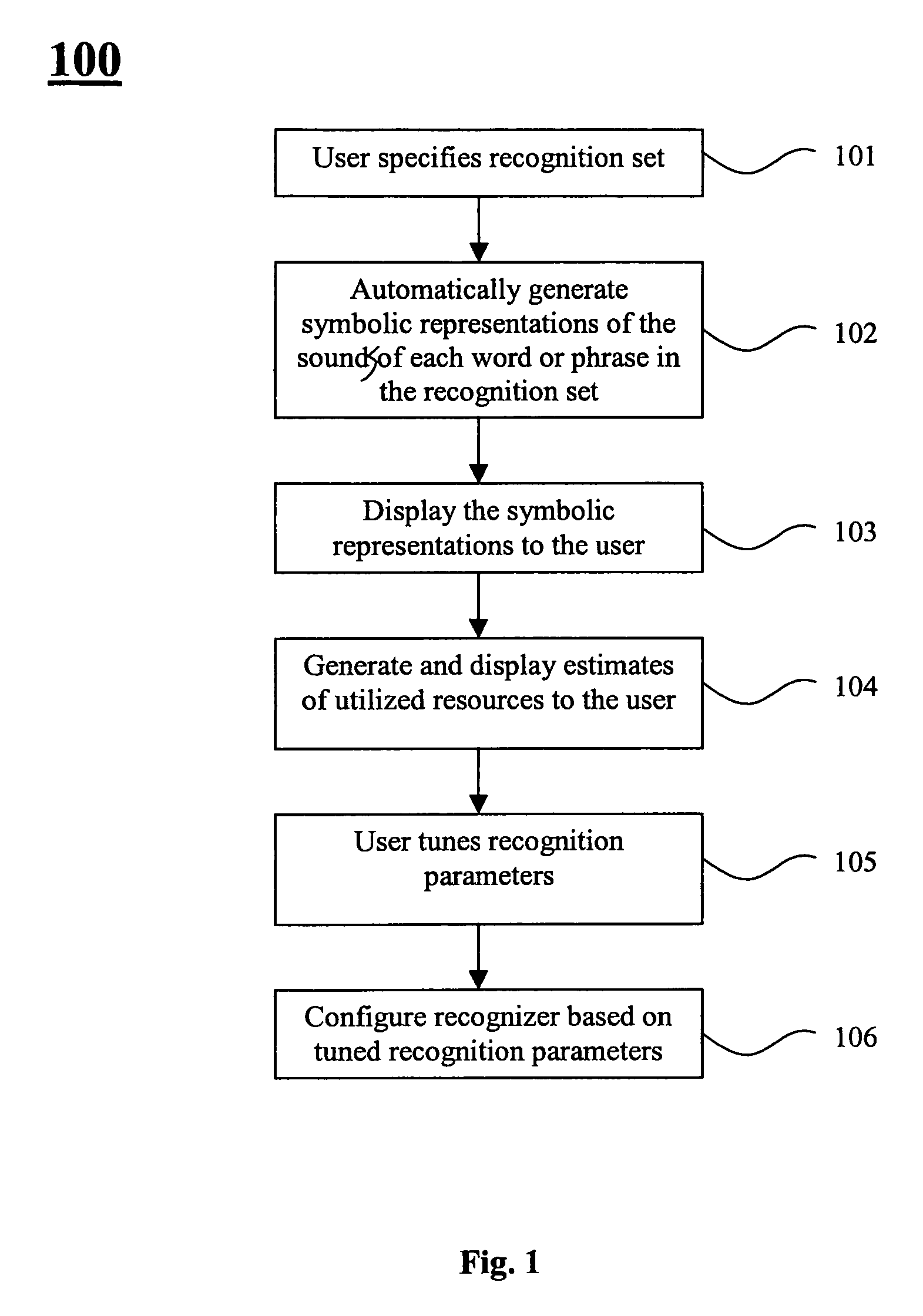

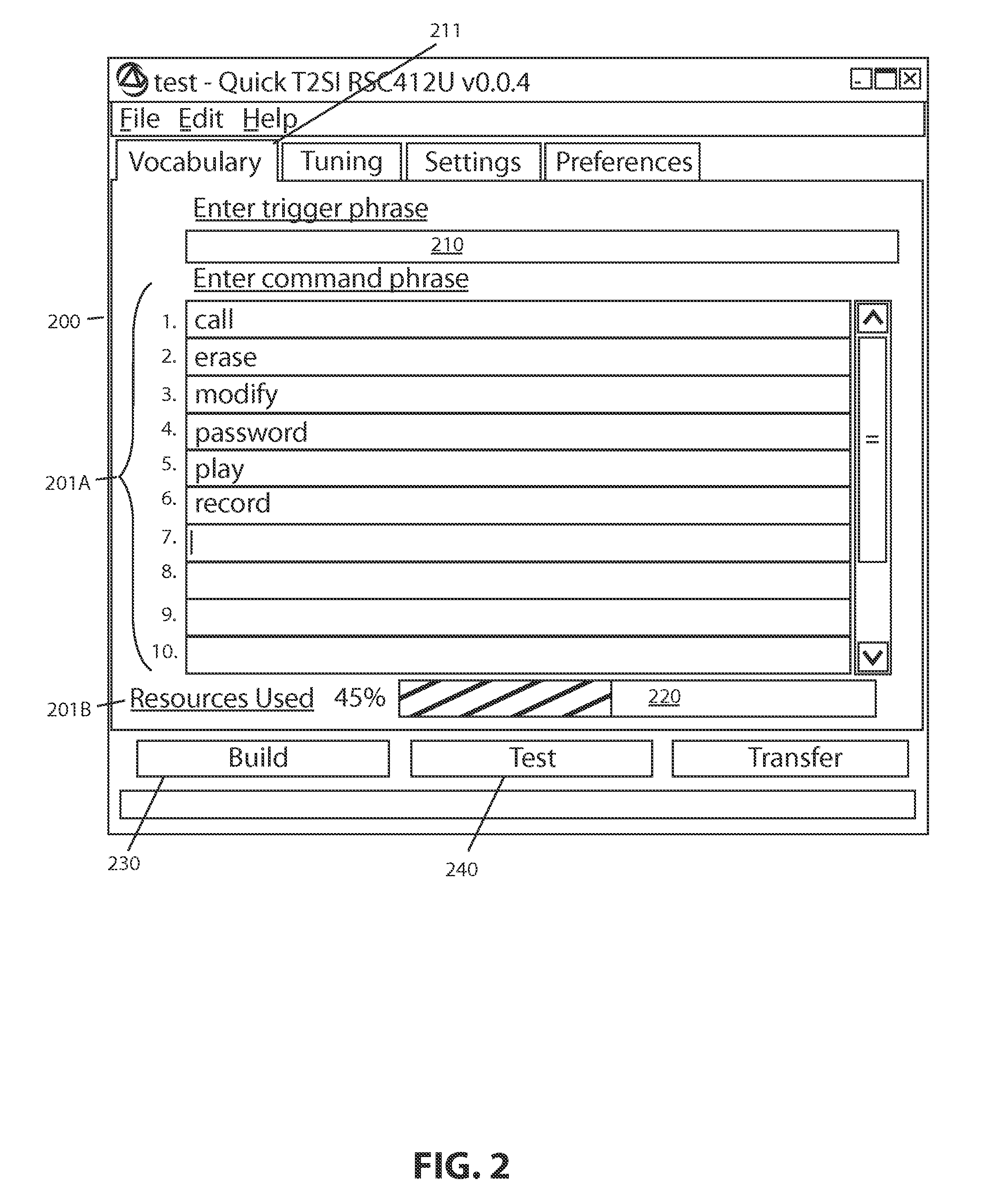

Method and apparatus of specifying and performing speech recognition operations

Status: Active | Patent: US7720683B1 | Tags: Efficient execution; Shorten cycle time; Speech recognition; Speech identification; Speech sound

A speech recognition technique is described that has the dual benefits of not requiring collection of recordings for training while using computational resources that are cost-compatible with consumer electronic products. Methods are described for improving the recognition accuracy of a recognizer by developer interaction with a design tool that iterates the recognition data during development of a recognition set of utterances and that allows controlling and minimizing the computational resources required to implement the recognizer in hardware.

Owner:SENSORY

Mobile systems and methods for responding to natural language speech utterance

Status: Active | Patent: US20100145700A1 | Tags: Promotes feeling of natural; Overcome deficiencies; Vehicle testing; Instruments for road network navigation; Information processing; Remote system

Owner:DIALECT LLC

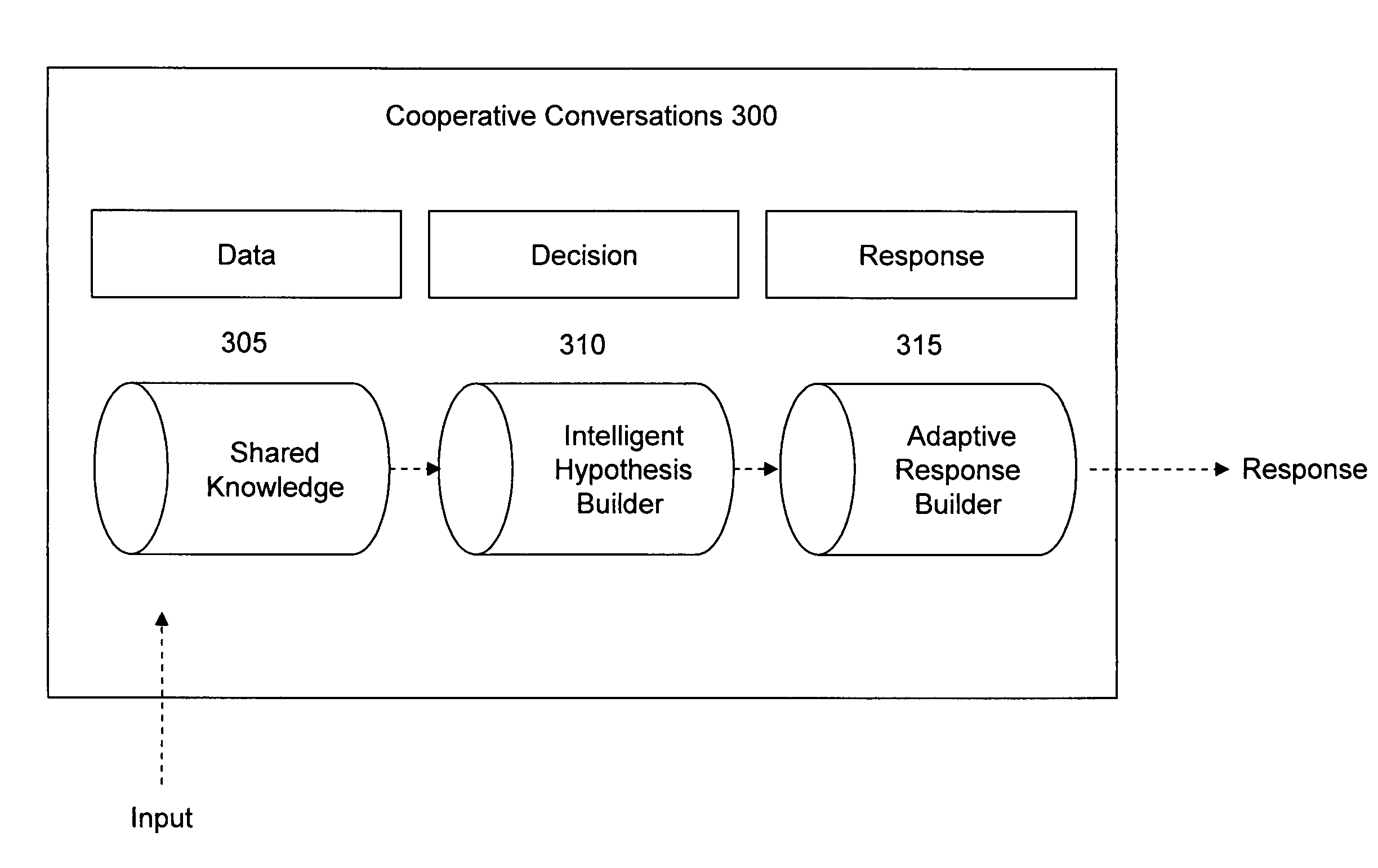

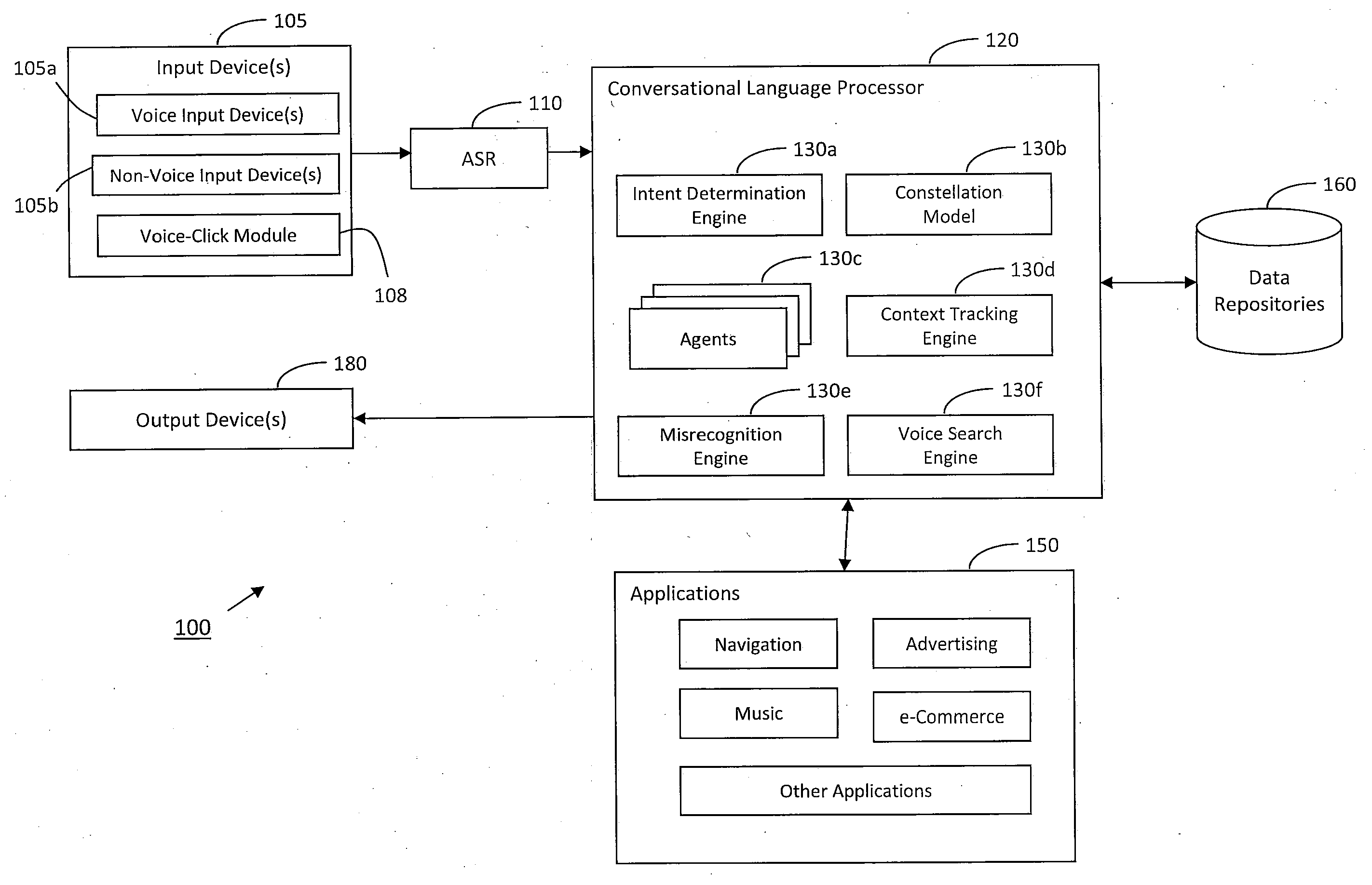

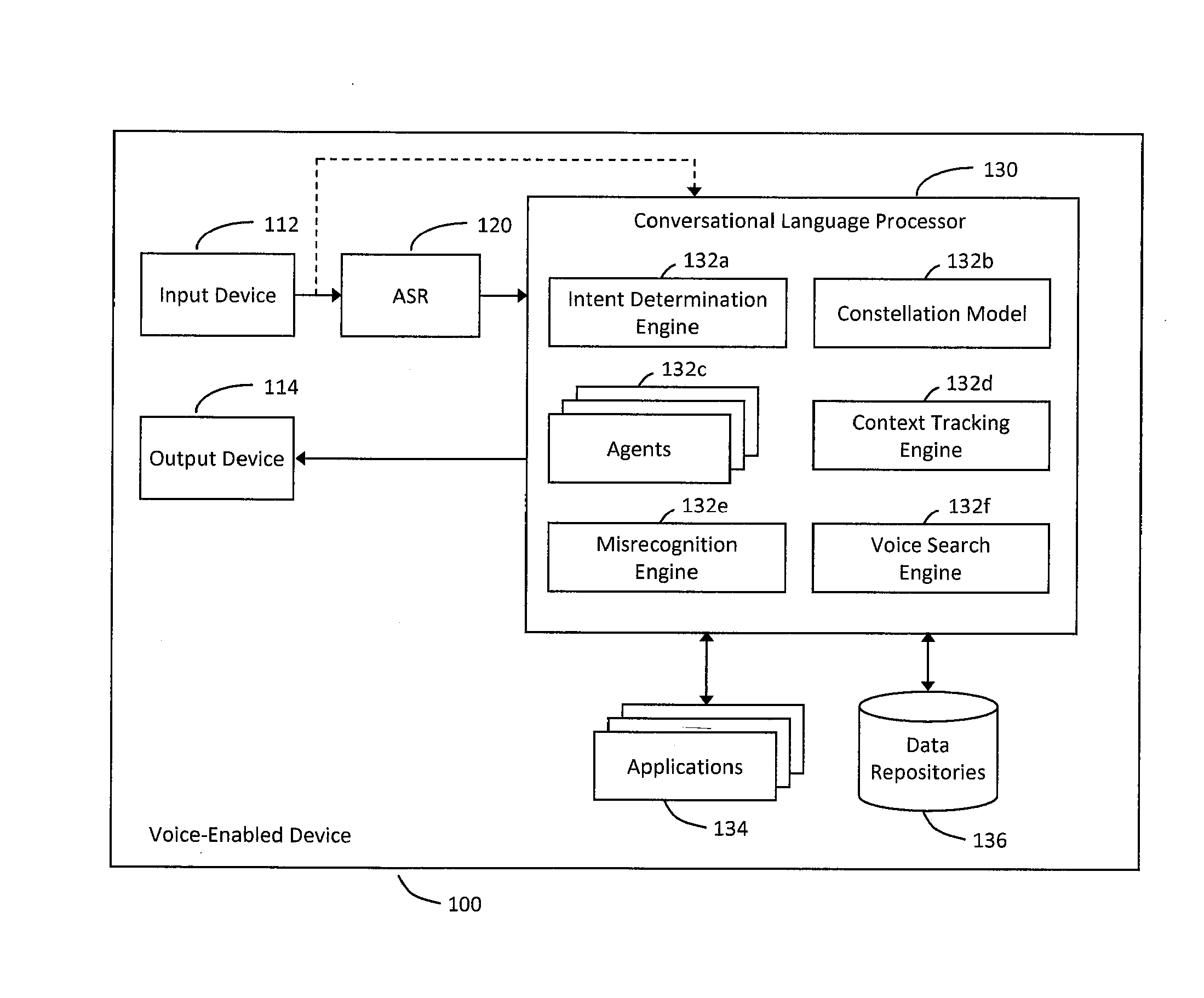

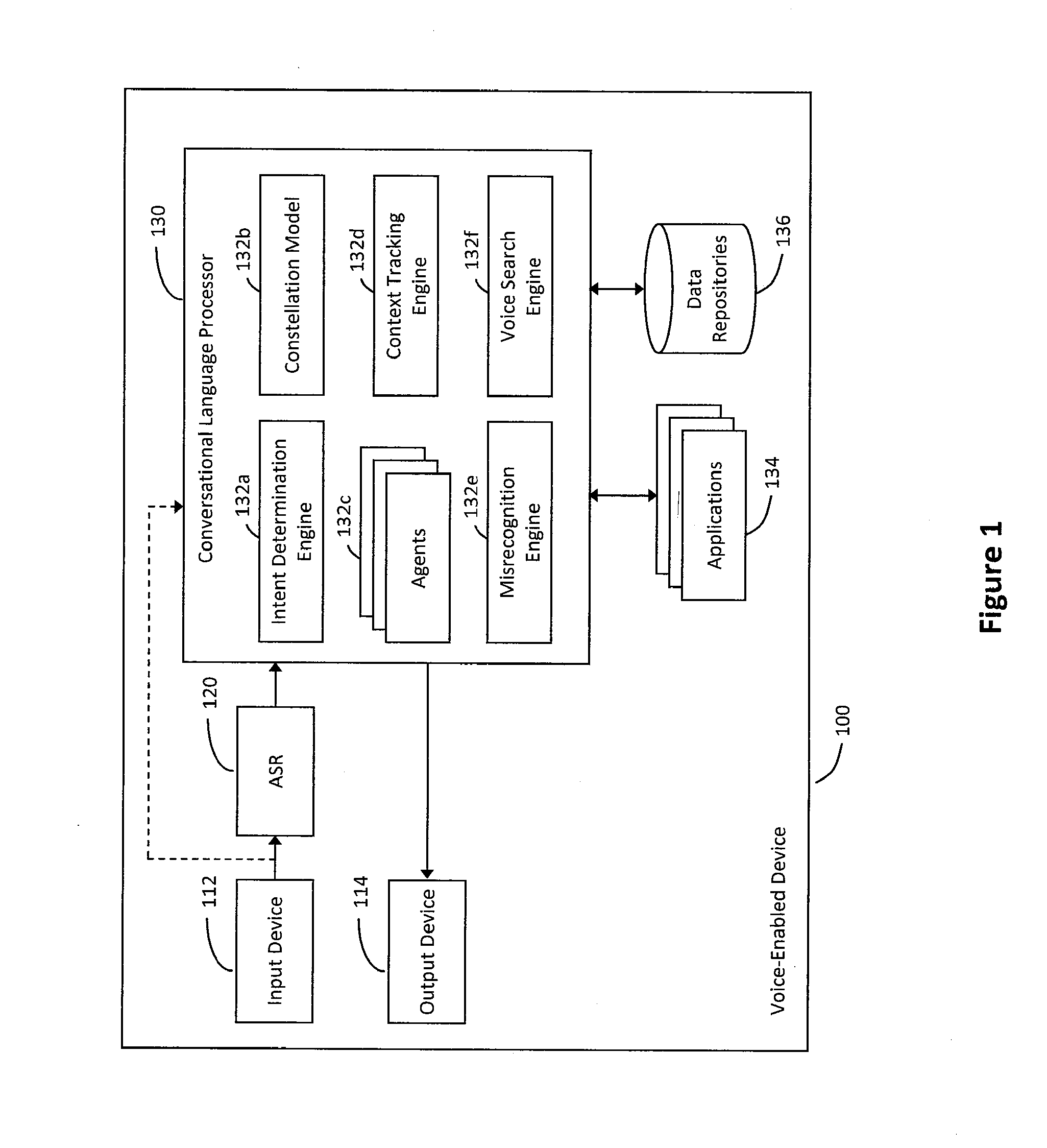

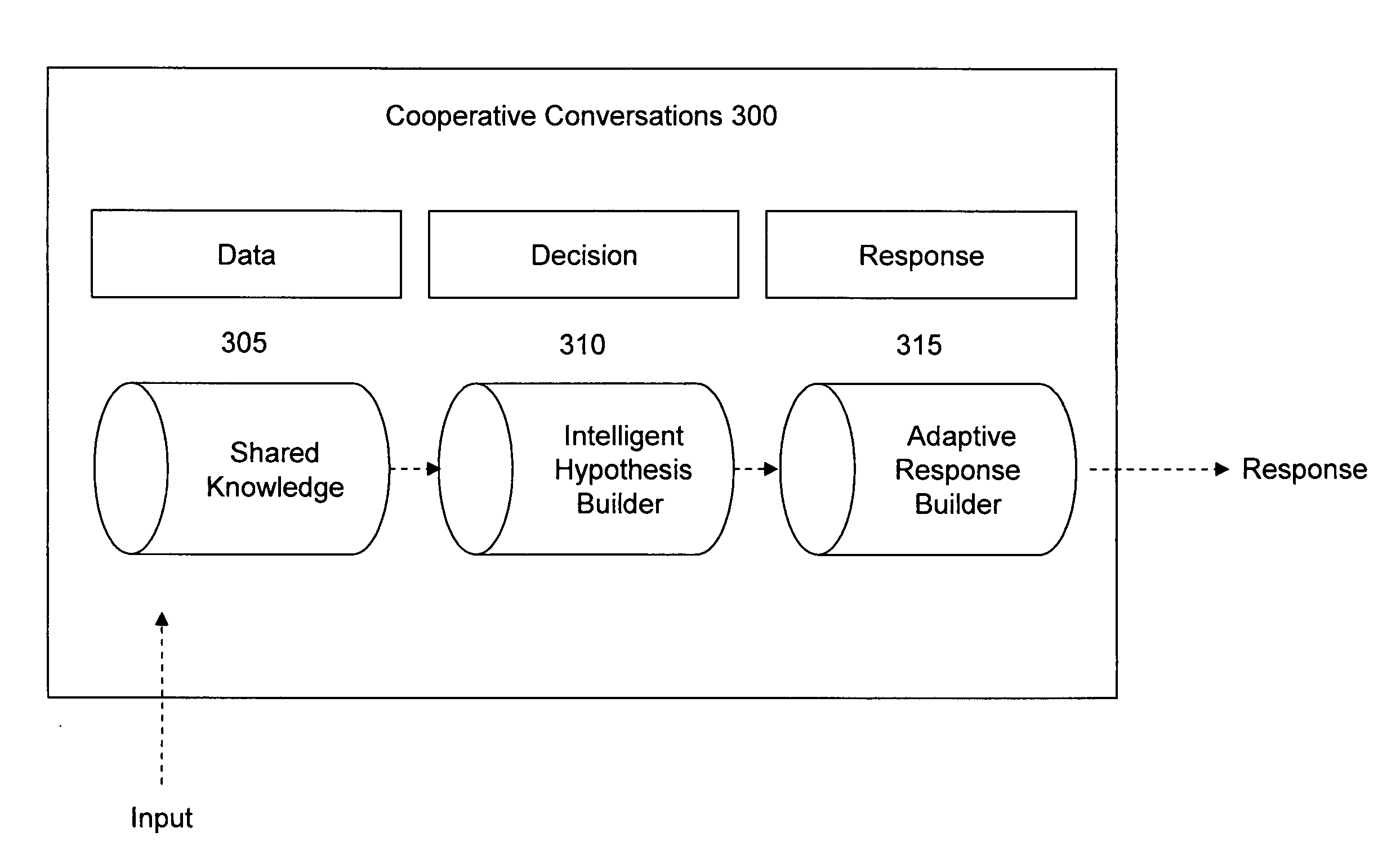

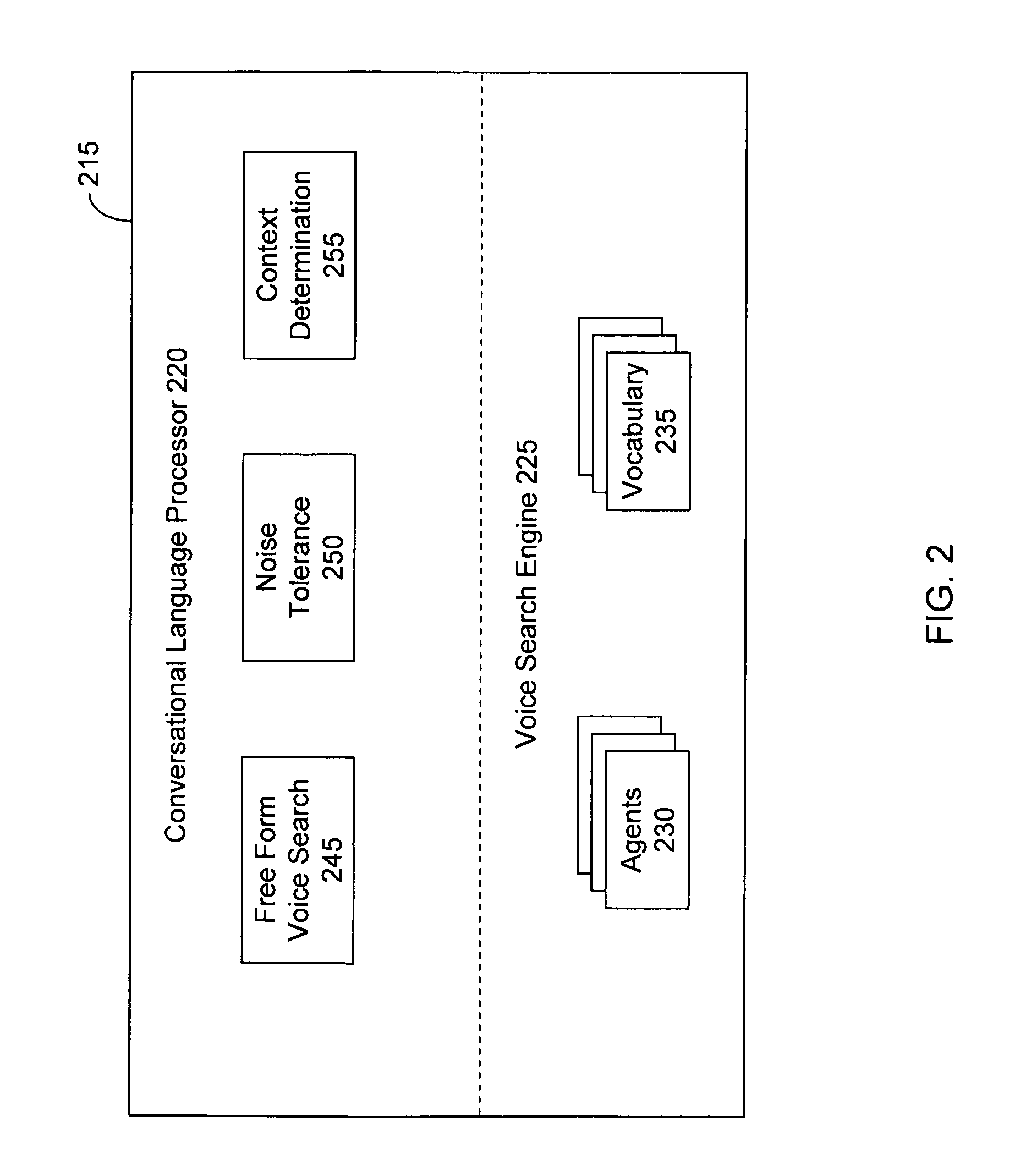

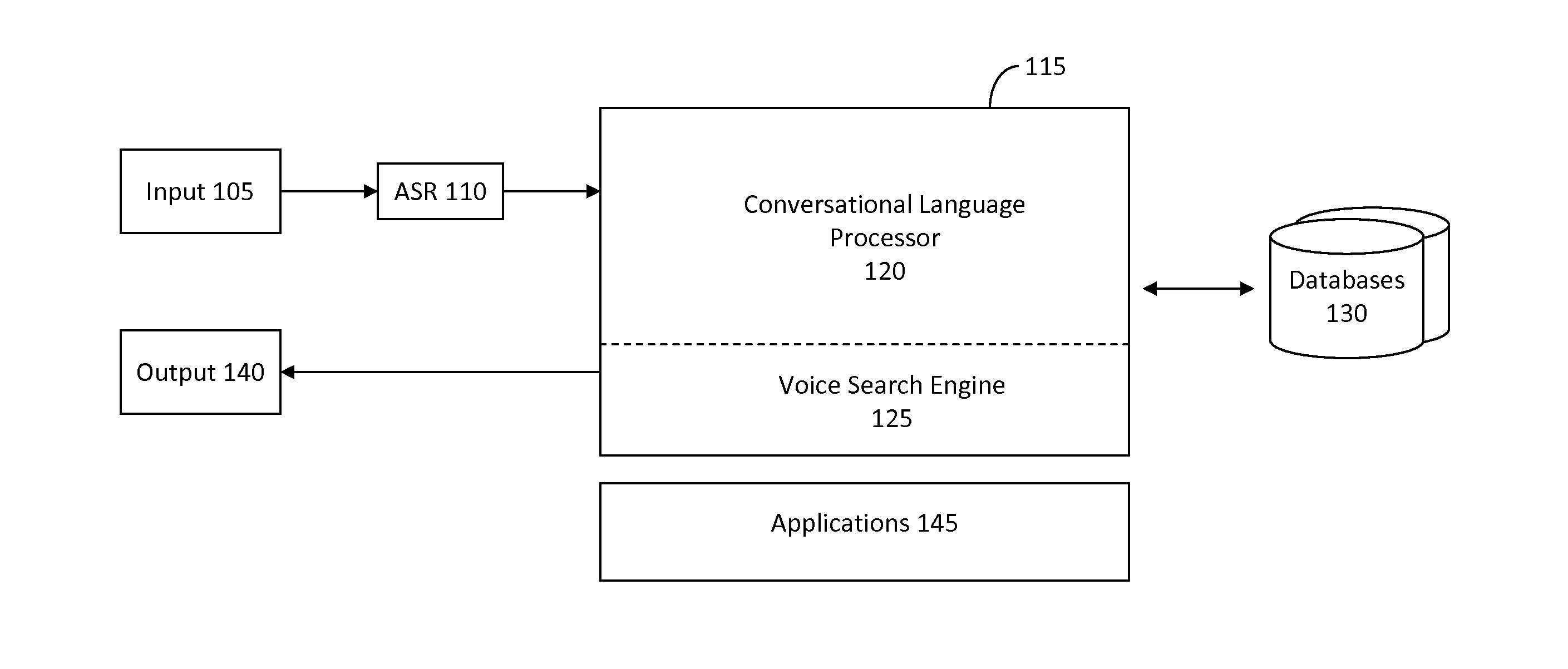

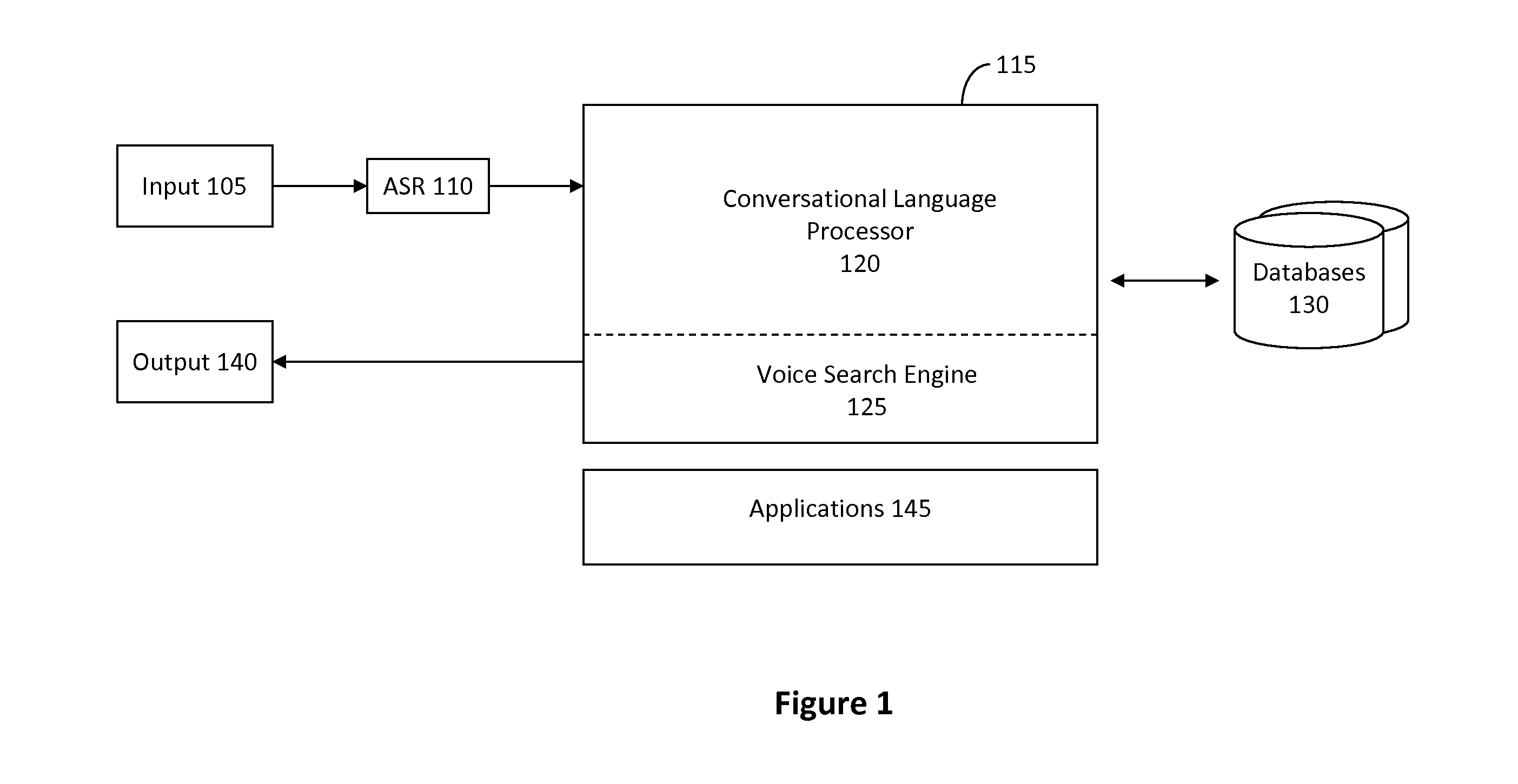

System and method for a cooperative conversational voice user interface

A cooperative conversational voice user interface is provided. The cooperative conversational voice user interface may build upon short-term and long-term shared knowledge to generate one or more explicit and/or implicit hypotheses about the intent of a user utterance. The hypotheses may be ranked based on varying degrees of certainty, and an adaptive response may be generated for the user. Responses may be worded based on the degrees of certainty and to frame an appropriate domain for a subsequent utterance. In one implementation, misrecognitions may be tolerated, and the conversational course may be corrected based on subsequent utterances and/or responses.

Owner:VB ASSETS LLC

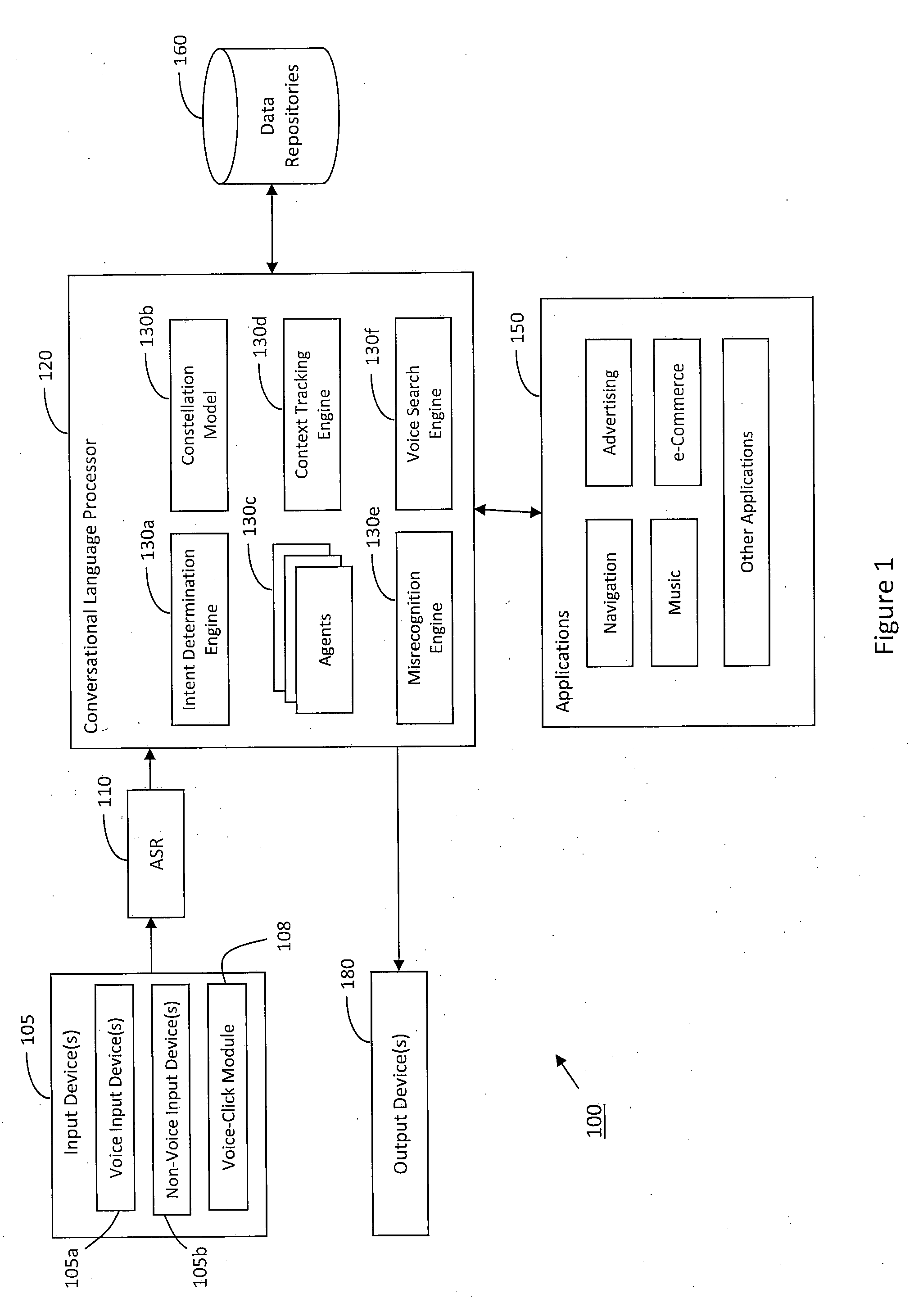

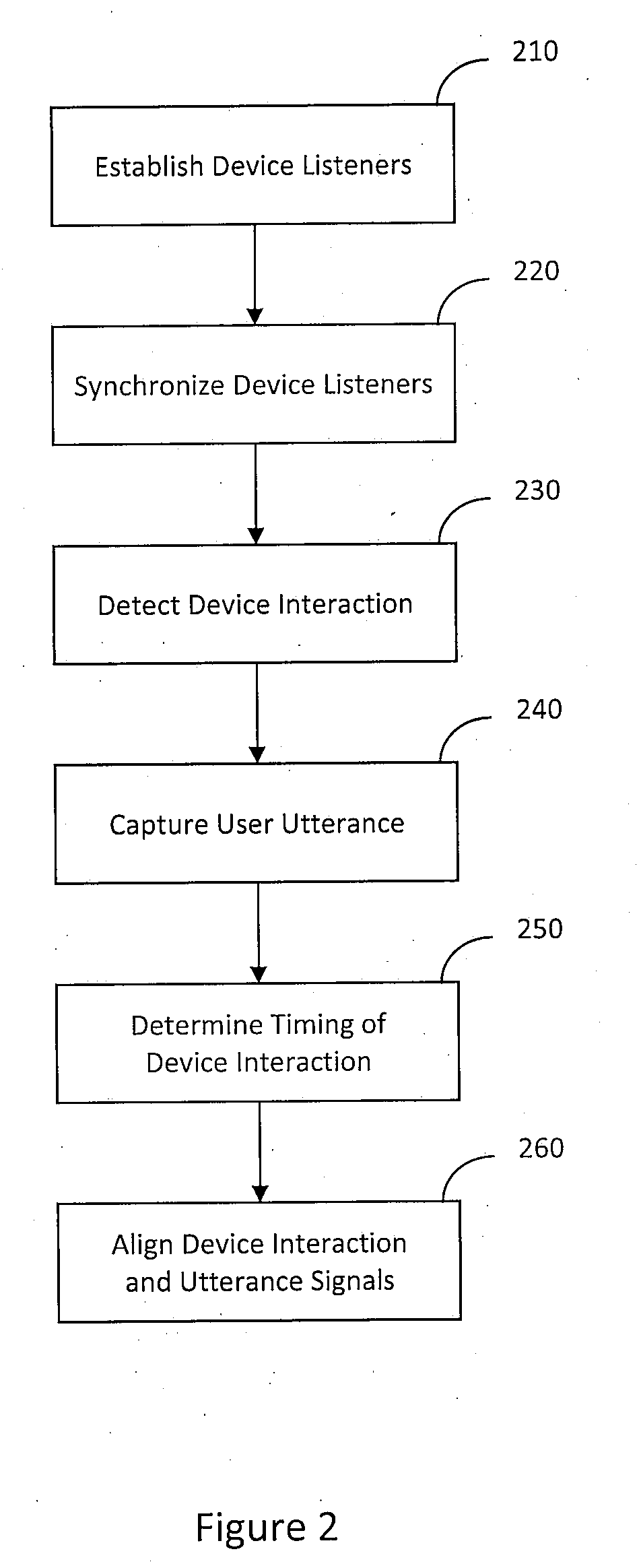

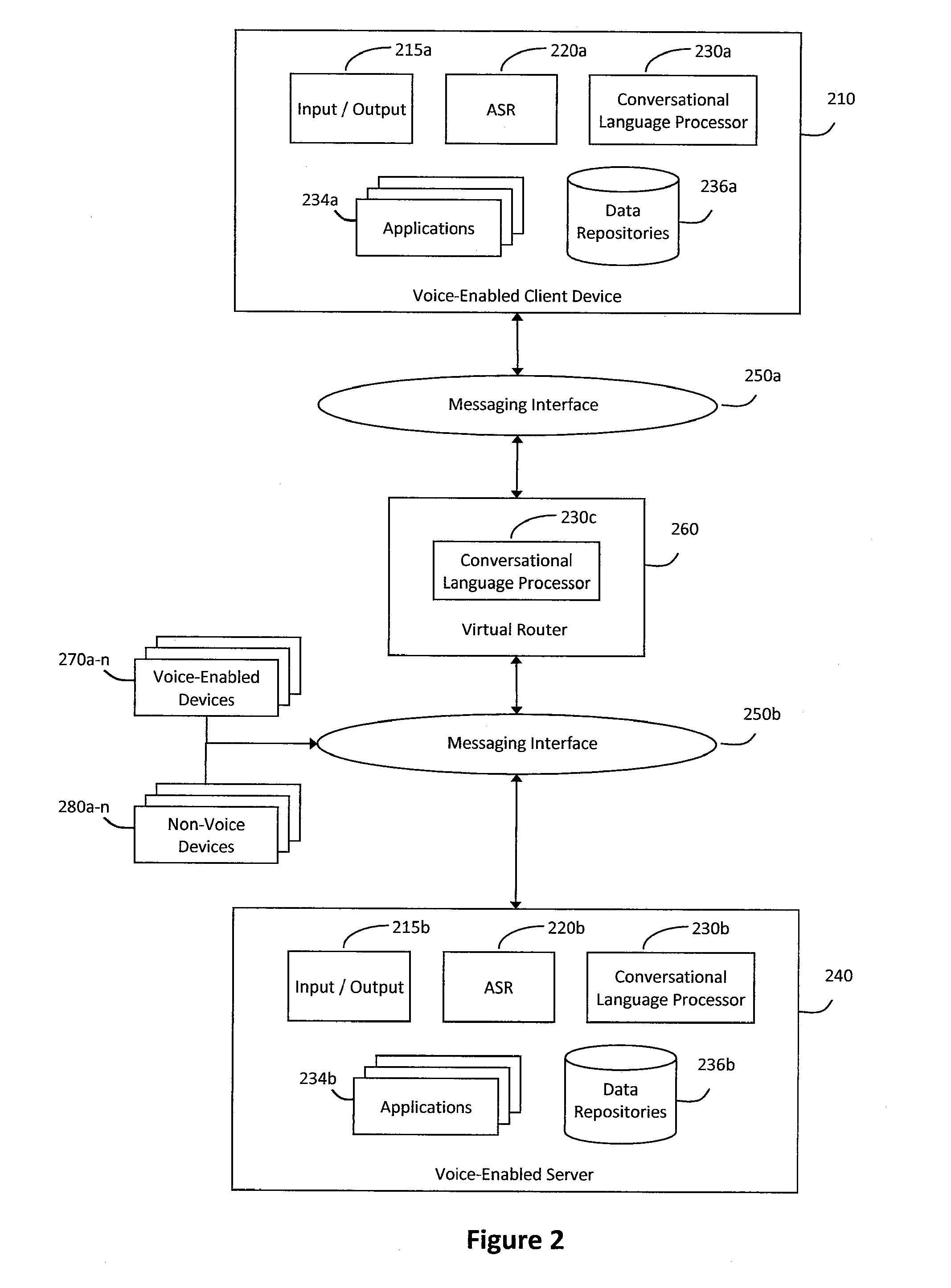

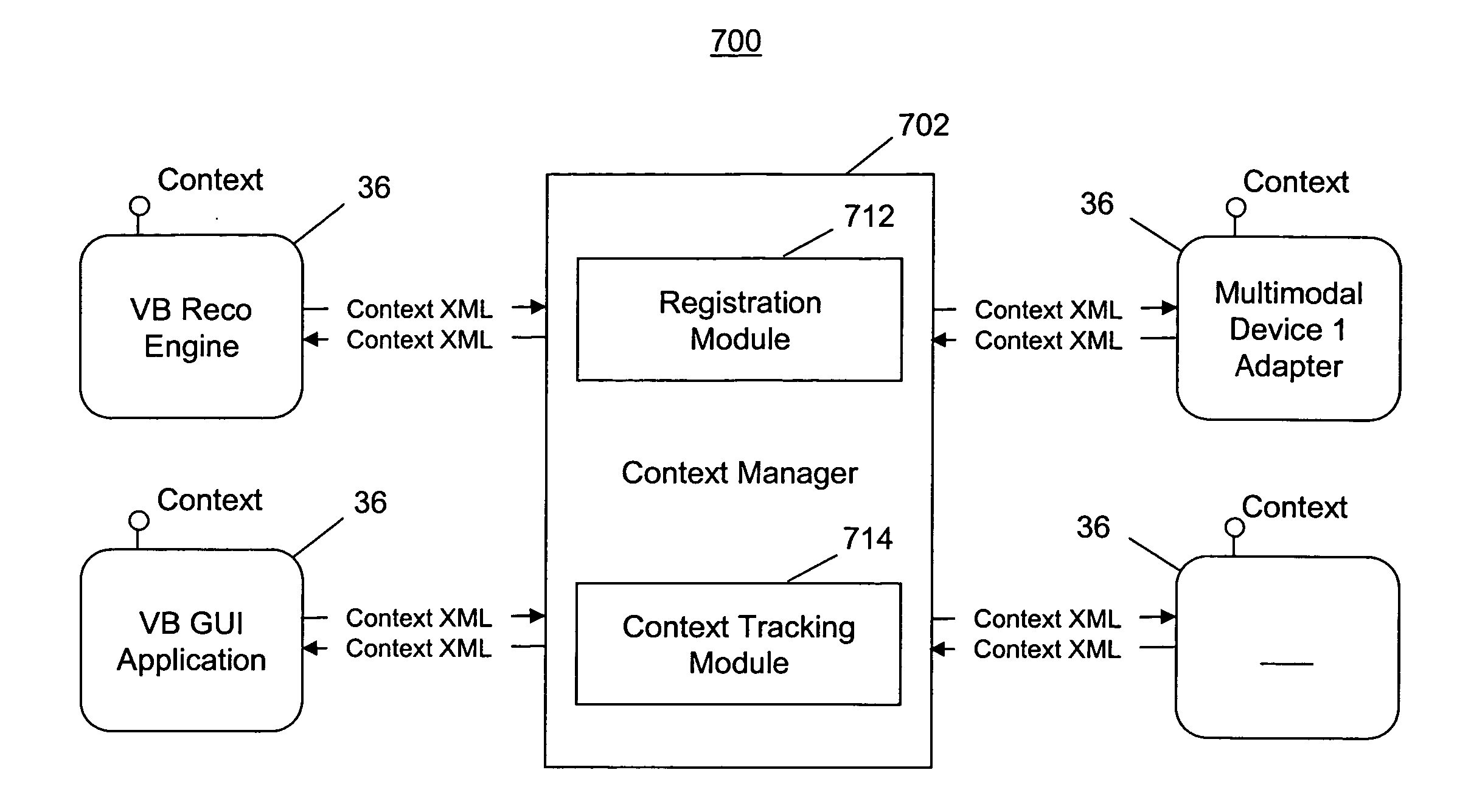

System and method for processing multi-modal device interactions in a natural language voice services environment

A system and method for processing multi-modal device interactions in a natural language voice services environment may be provided. In particular, one or more multi-modal device interactions may be received in a natural language voice services environment that includes one or more electronic devices. The multi-modal device interactions may include a non-voice interaction with at least one of the electronic devices or an application associated therewith, and may further include a natural language utterance relating to the non-voice interaction. Context relating to the non-voice interaction and the natural language utterance may be extracted and combined to determine an intent of the multi-modal device interaction, and a request may then be routed to one or more of the electronic devices based on the determined intent of the multi-modal device interaction.

Owner:ORACLE INT CORP

System and method for providing a natural language content dedication service

Status: Active | Patent: US20110112921A1 | Tags: Less charge; Natural language translation; Speech recognition; Speech sound; World Wide Web

The system and method described herein may provide a natural language content dedication service in a voice services environment. In particular, providing the natural language content dedication service may generally include detecting multi-modal device interactions that include requests to dedicate content, identifying the content requested for dedication from natural language utterances included in the multi-modal device interactions, processing transactions for the content requested for dedication, processing natural language to customize the content for recipients of the dedications, and delivering the customized content to the recipients of the dedications.

Owner:VB ASSETS LLC

Systems and methods for responding to natural language speech utterance

Status: Active | Patent: US7398209B2 | Tags: Promotes feeling of natural; Overcome deficiencies; Data processing applications; Natural language data processing; Prior information; Dependability

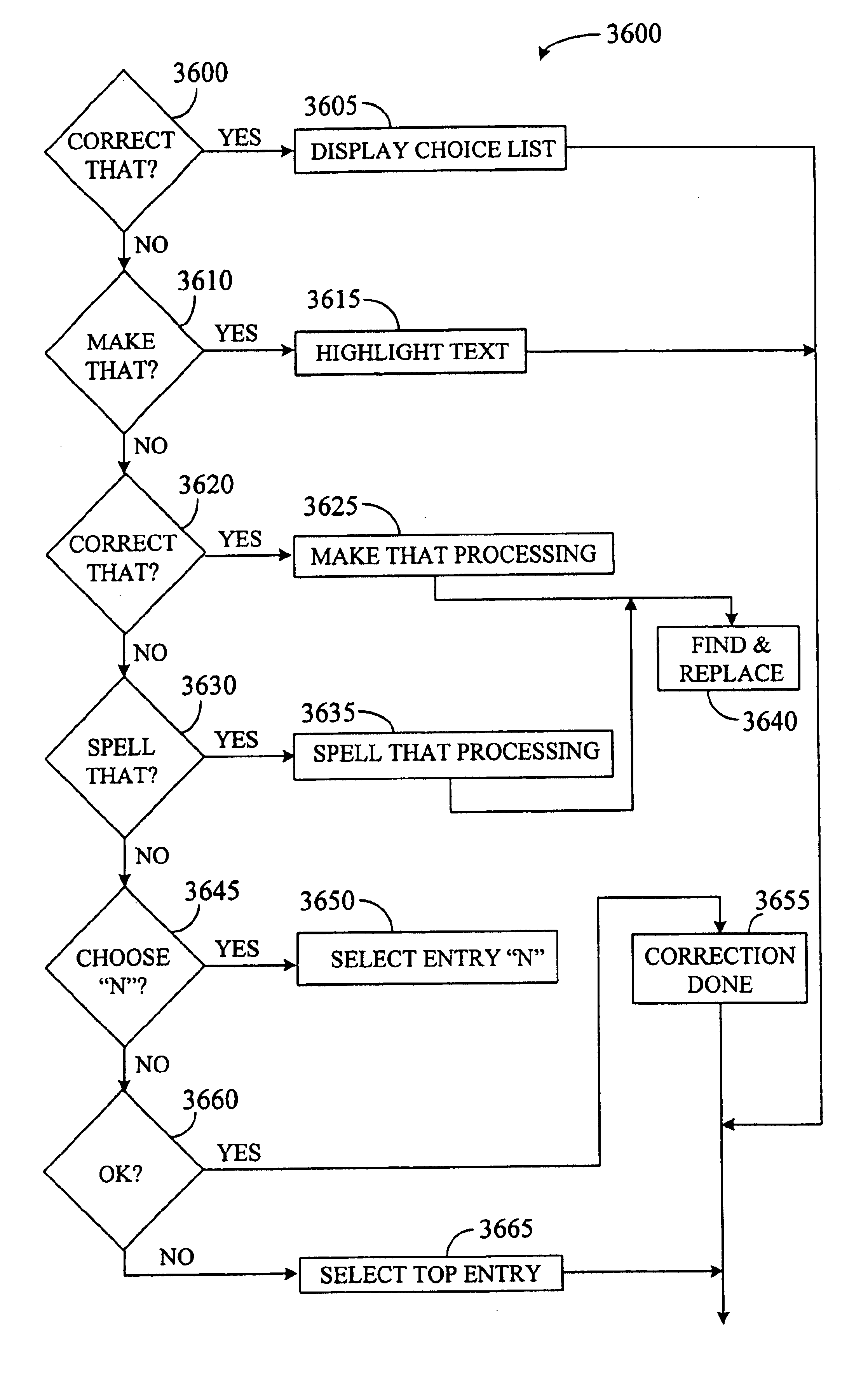

Systems and methods for receiving natural language queries and/or commands and executing the queries and/or commands. The systems and methods overcome the deficiencies of prior art speech query and response systems through the application of a complete speech-based information query, retrieval, presentation and command environment. This environment makes significant use of context, prior information, domain knowledge, and user-specific profile data to achieve a natural environment for one or more users making queries or commands in multiple domains. Through this integrated approach, a complete speech-based natural language query and response environment can be created. The systems and methods create, store and use extensive personal profile information for each user, thereby improving the reliability of determining the context and presenting the expected results for a particular question or command.

Owner:DIALECT LLC

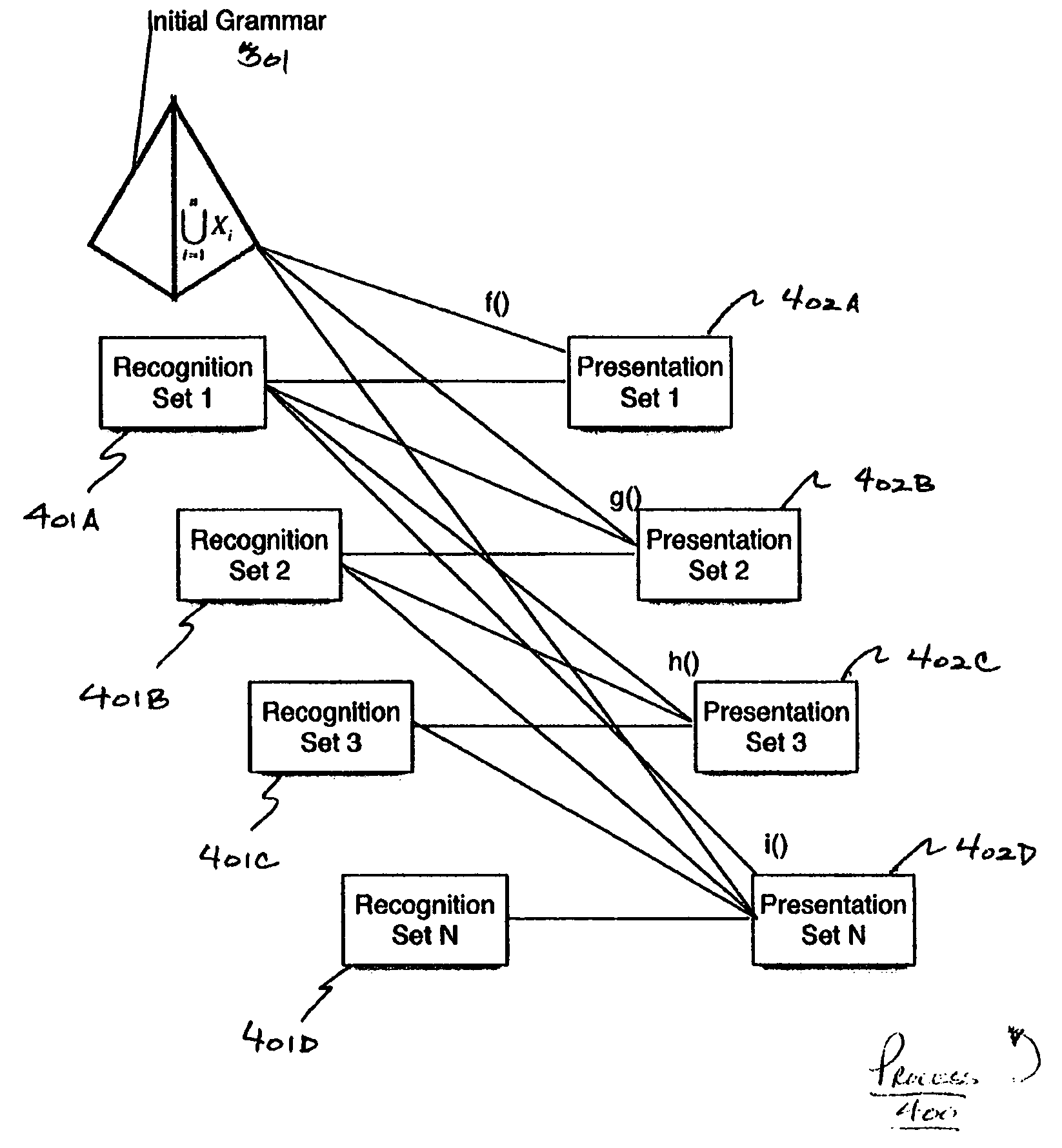

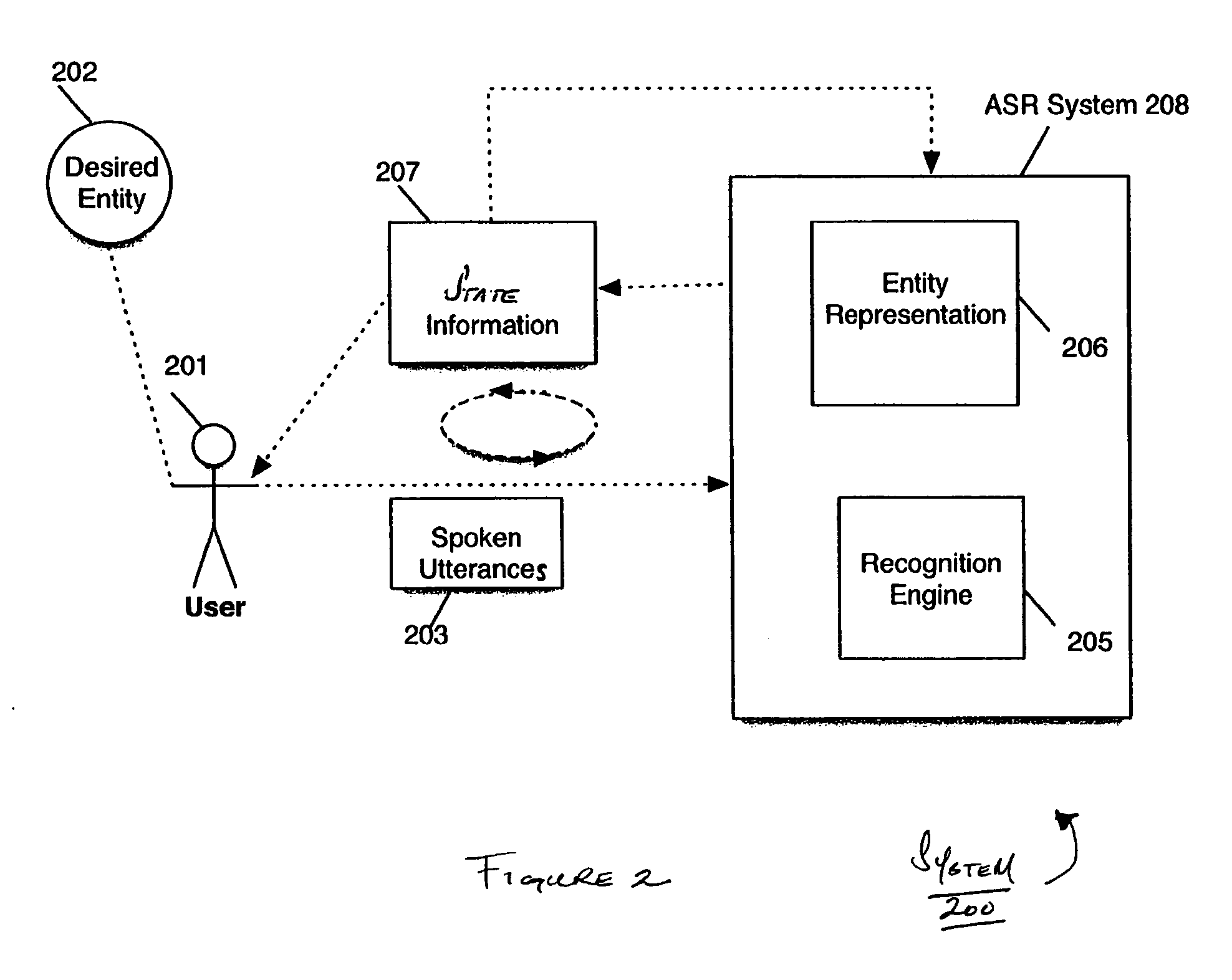

User interaction with voice information services

Status: Inactive | Patent: US20060143007A1 | Tags: Reduce input; The result is accurate; Speech recognition; Automatic speech; Ancillary service

An iterative process is provided for interacting with a voice information service. Such a service may permit, for example, a user to search one or more databases and may provide one or more search results to the user. Such a service may be suitable, for example, for searching for a desired entity or object within the database(s) using speech as an input and navigational tool. Applications of such a service may include, for instance, speech-enabled searching services such as a directory assistance service or any other service or application involving a search of information. In one example implementation, an automatic speech recognition (ASR) system is provided that performs a speech recognition and database search in an iterative fashion. With each iteration, feedback may be provided to the user presenting potentially relevant results. In one specific ASR system, a user desiring to locate information relating to a particular entity or object provides an utterance to the ASR. Upon receiving the utterance, the ASR determines a recognition set of potentially relevant search results related to the utterance and presents to the user recognition set information in an interface of the ASR. The recognition set information includes, for instance, reference information stored internally at the ASR for a plurality of potentially relevant recognition results. The recognition set information may be used as input to the ASR providing a feedback mechanism. In one example implementation, the recognition set information may be used to determine a restricted grammar for performing a further recognition.

Owner:MICROSOFT TECH LICENSING LLC

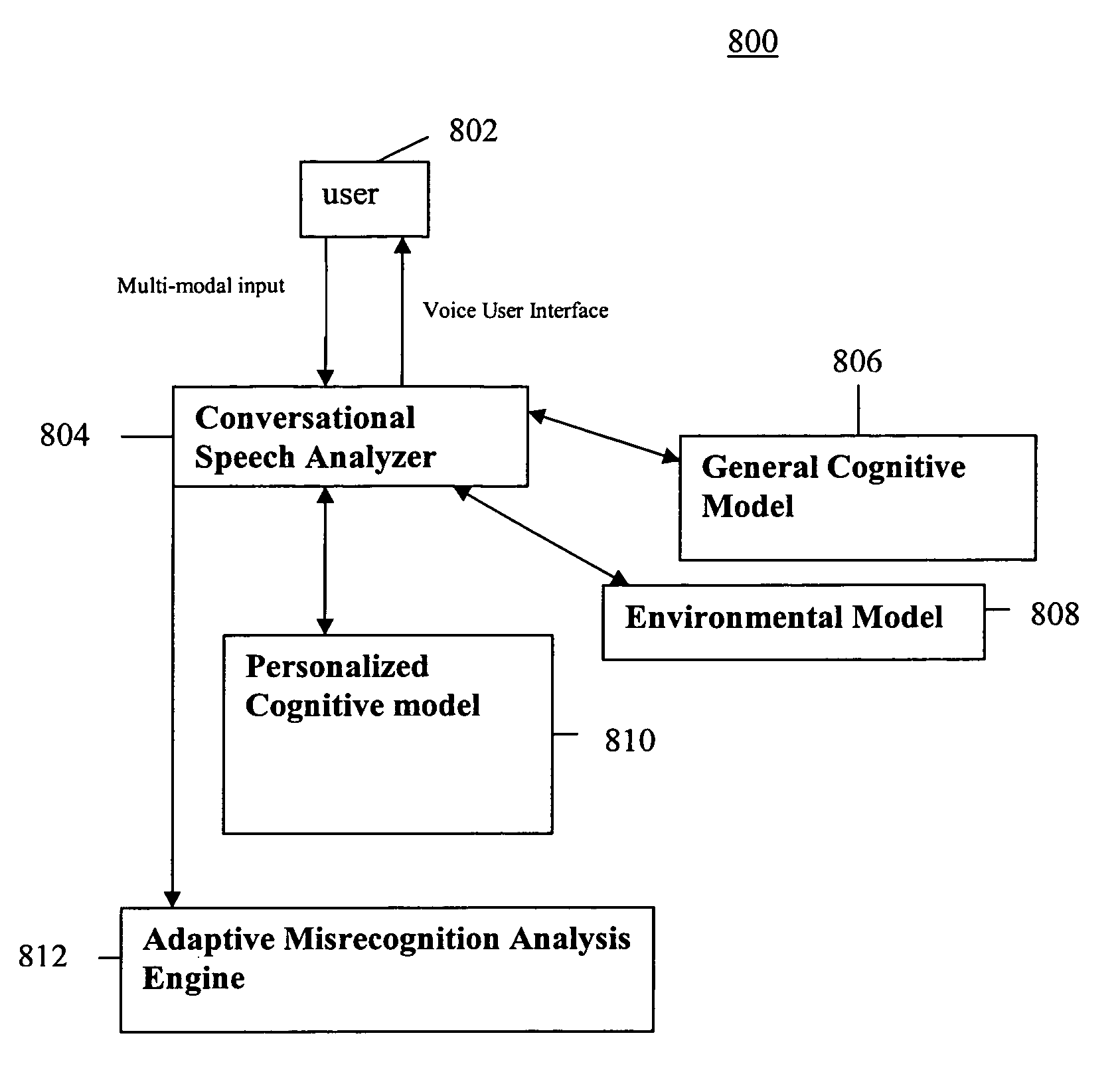

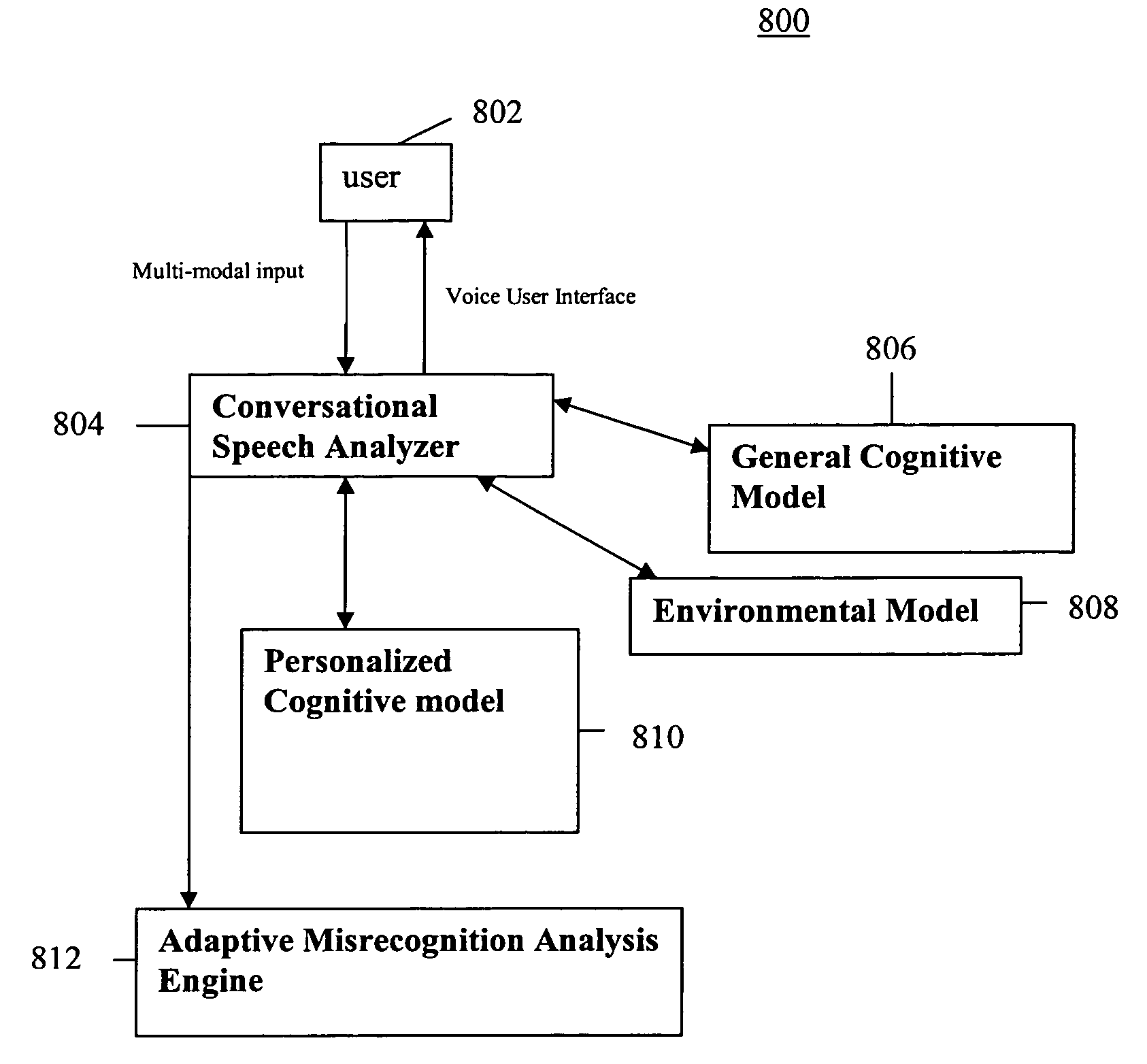

System and method of supporting adaptive misrecognition in conversational speech

Status: Active | Patent: US20070038436A1 | Tags: Improve maximization; High bandwidth; Natural language data processing; Speech recognition; Personalization; Spoken language

A system and method are provided for receiving speech and/or non-speech communications of natural language questions and/or commands and executing the questions and/or commands. The invention provides a conversational human-machine interface that includes a conversational speech analyzer, a general cognitive model, an environmental model, and a personalized cognitive model to determine context and domain knowledge and to invoke prior information to interpret a spoken utterance or a received non-spoken message. The system and method create, store and use extensive personal profile information for each user, thereby improving the reliability of determining the context of the speech or non-speech communication and presenting the expected results for a particular question or command.

Owner:DIALECT LLC

System and method of supporting adaptive misrecognition in conversational speech

Status: Active | Patent: US7620549B2 | Tags: Promotes feeling of natural; Significant to use; Natural language data processing; Speech recognition; Personalization; Human–machine interface

A system and method are provided for receiving speech and/or non-speech communications of natural language questions and/or commands and executing the questions and/or commands. The invention provides a conversational human-machine interface that includes a conversational speech analyzer, a general cognitive model, an environmental model, and a personalized cognitive model to determine context and domain knowledge and to invoke prior information to interpret a spoken utterance or a received non-spoken message. The system and method create, store and use extensive personal profile information for each user, thereby improving the reliability of determining the context of the speech or non-speech communication and presenting the expected results for a particular question or command.

Owner:DIALECT LLC

Systems and methods for responding to natural language speech utterance

Status: Active | Patent: US7640160B2 | Tags: Promotes feeling of natural; Significant to use; Digital data information retrieval; Semantic analysis; Prior information; Speech sound

Systems and methods are provided for receiving speech and non-speech communications of natural language questions and/or commands, transcribing the speech and non-speech communications to textual messages, and executing the questions and/or commands. The invention applies context, prior information, domain knowledge, and user-specific profile data to achieve a natural environment for one or more users presenting questions or commands across multiple domains. The systems and methods create, store and use extensive personal profile information for each user, thereby improving the reliability of determining the context of the speech and non-speech communications and presenting the expected results for a particular question or command.

Owner:DIALECT LLC

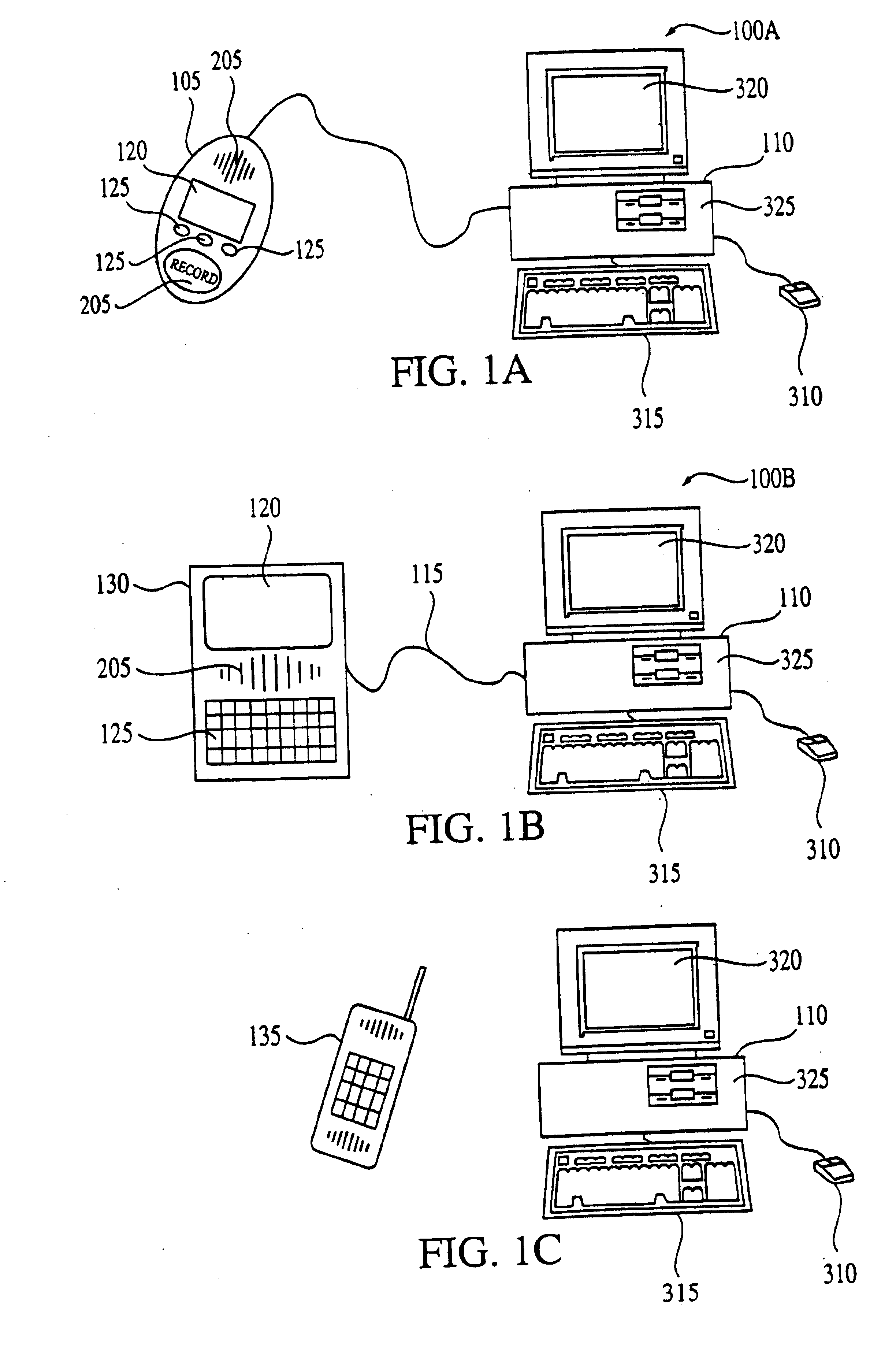

Performing actions identified in recognized speech

Status: Inactive | Patent: US6839669B1 | Tags: Easy to handle; Eliminate processing; Speech recognition; Speech identification; Utterance

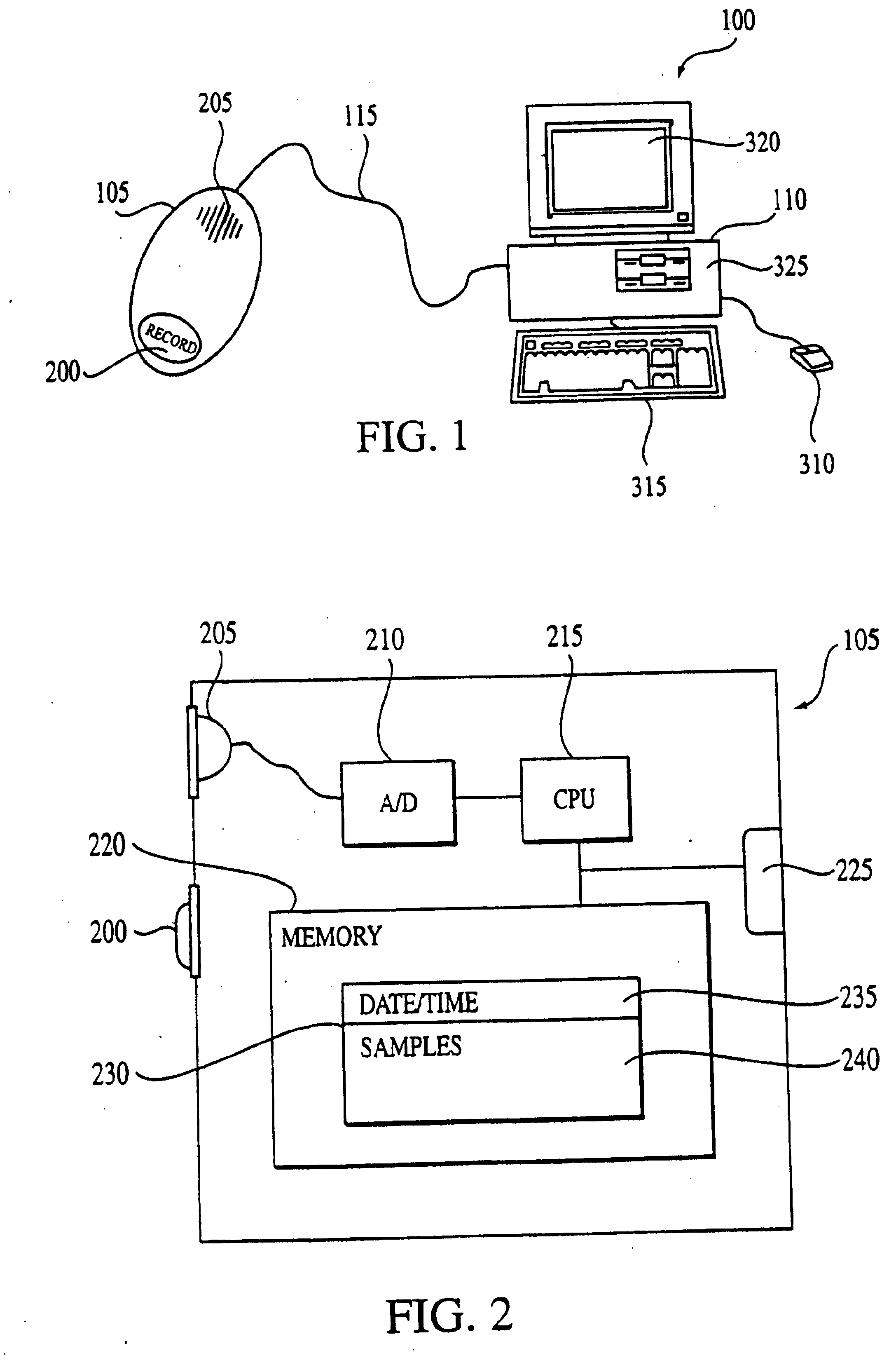

A computer is used to perform recorded actions. The computer receives recorded spoken utterances of actions. The computer then performs speech recognition on the recorded spoken utterances to generate texts of the actions. The computer then parses the texts to determine properties of the actions. After parsing the texts, the computer permits the user to indicate that the user has reviewed one or more actions. The computer then automatically carries out the actions indicated as having been reviewed by the user.

Owner:NUANCE COMM INC

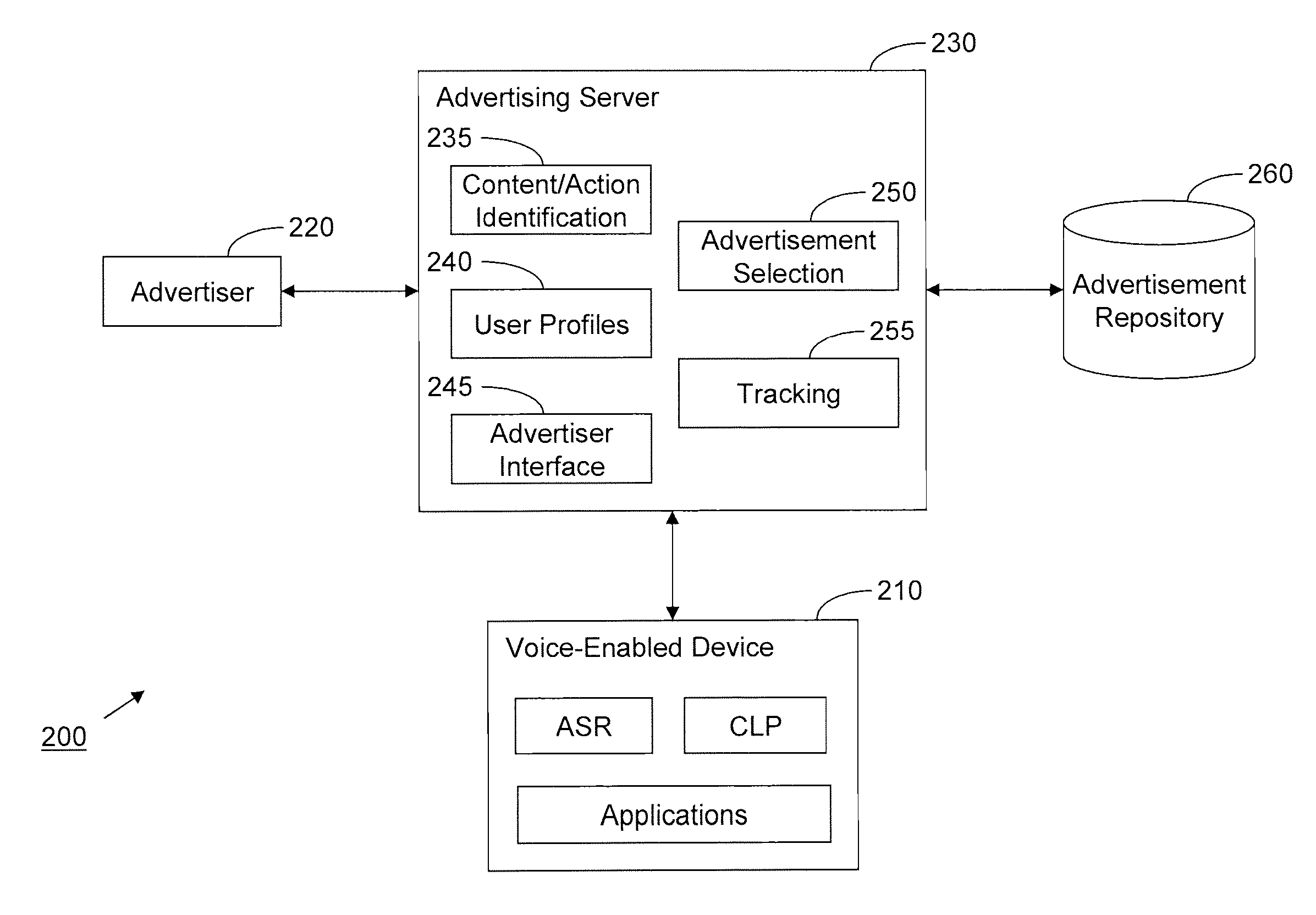

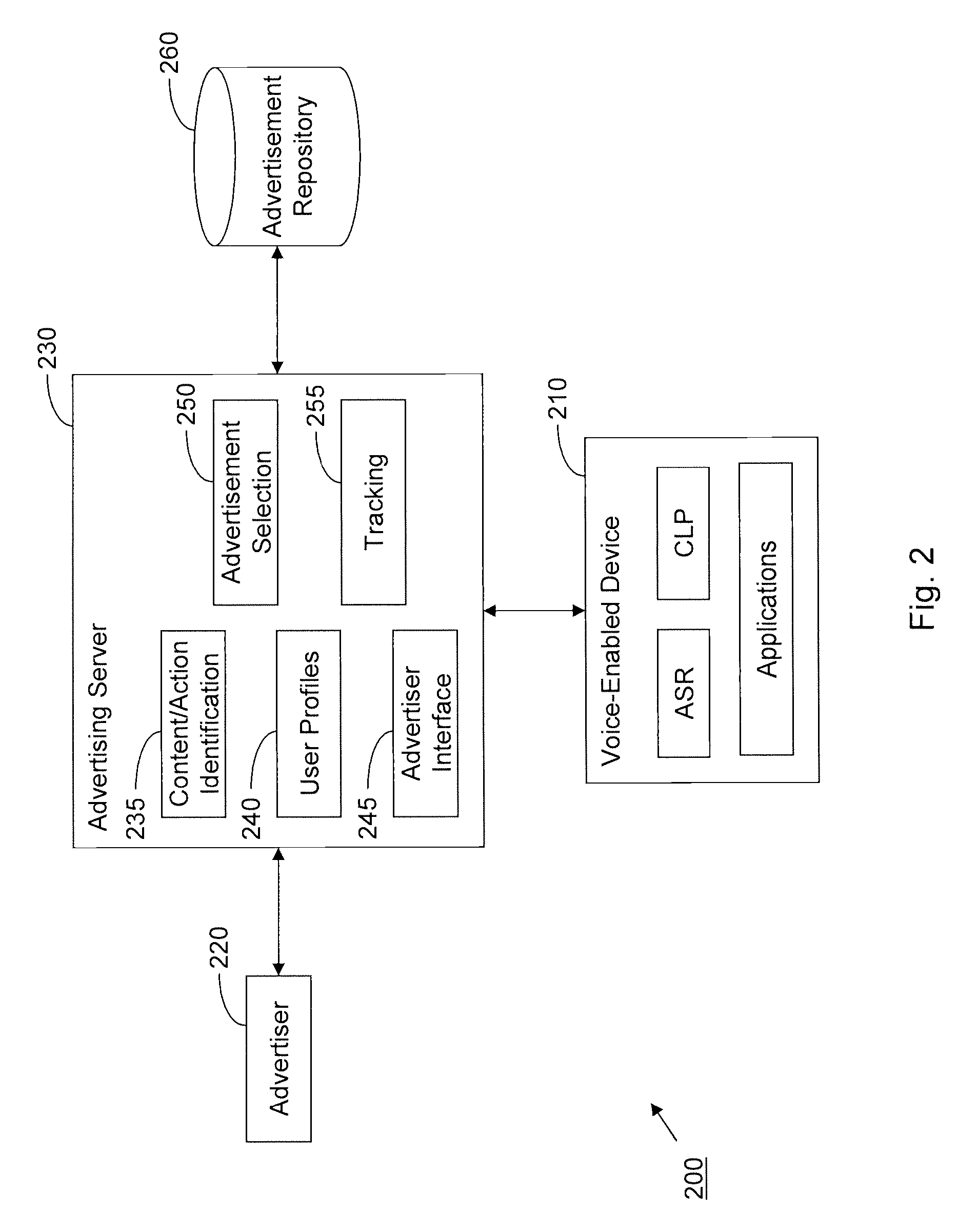

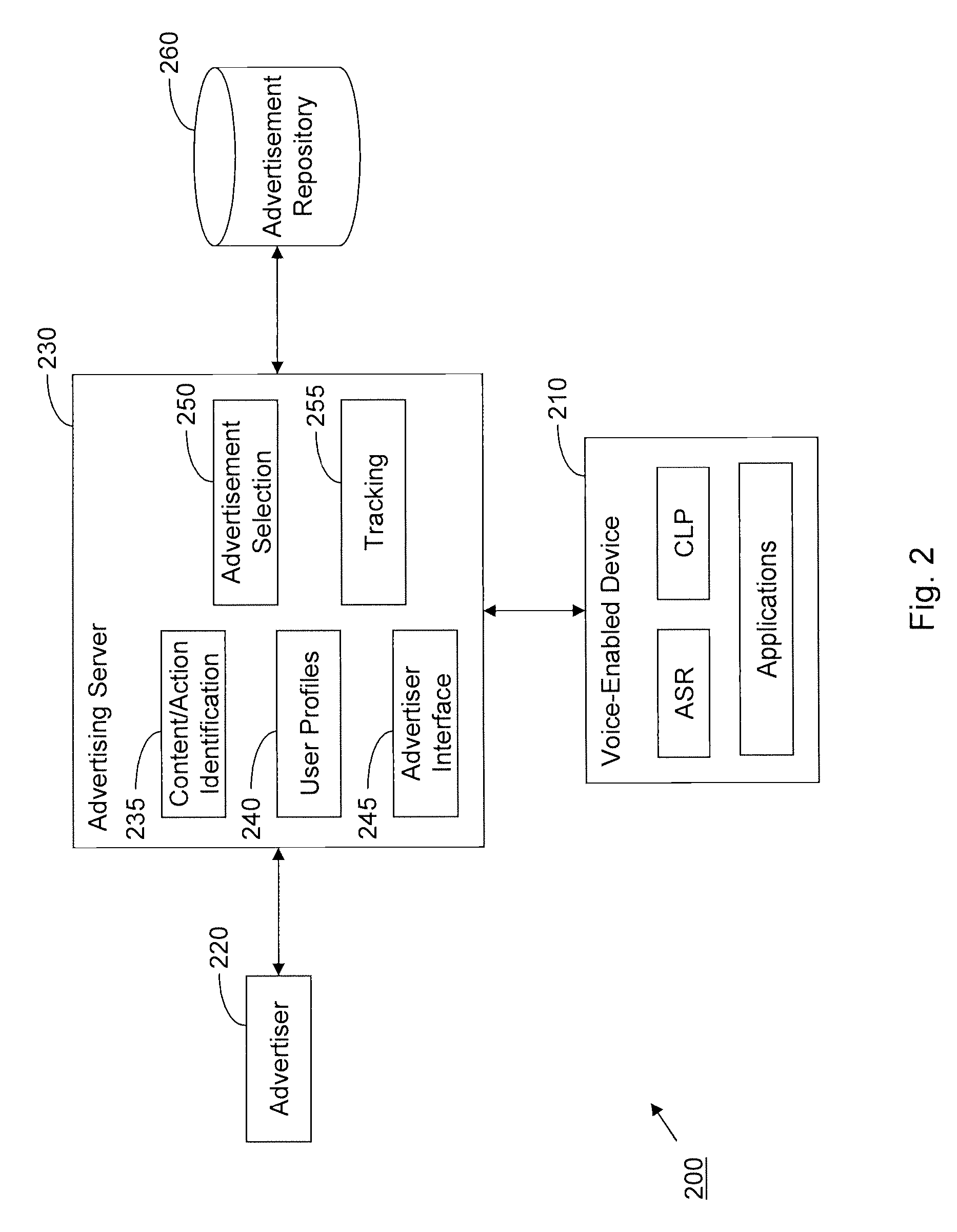

System and method for selecting and presenting advertisements based on natural language processing of voice-based input

A system and method for selecting and presenting advertisements based on natural language processing of voice-based inputs is provided. A user utterance may be received at an input device, and a conversational, natural language processor may identify a request from the utterance. At least one advertisement may be selected and presented to the user based on the identified request. The advertisement may be presented as a natural language response, thereby creating a conversational feel to the presentation of advertisements. The request and the user's subsequent interaction with the advertisement may be tracked to build user statistical profiles, thus enhancing subsequent selection and presentation of advertisements.

Owner:VB ASSETS LLC

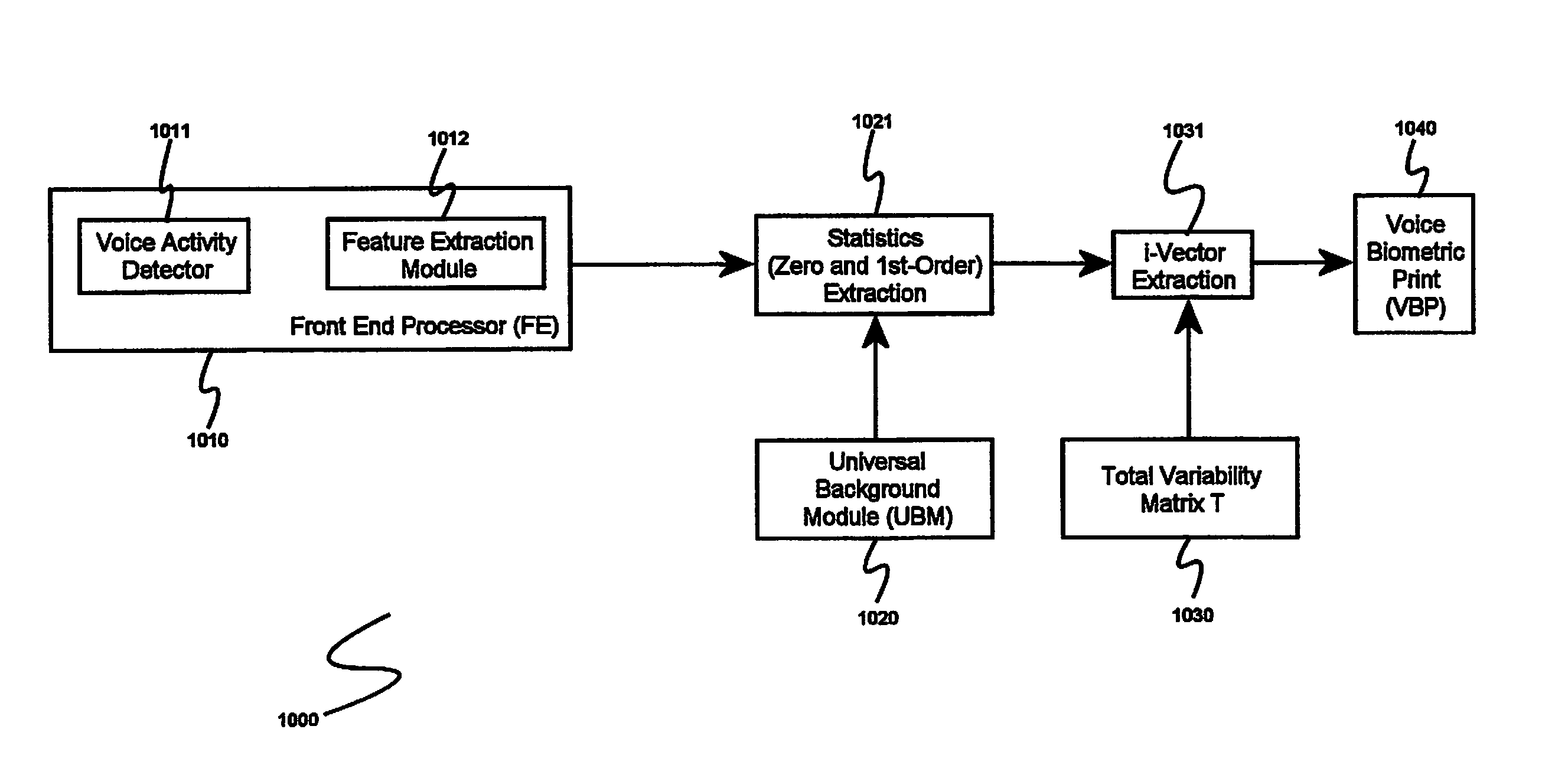

System and method for speaker recognition on mobile devices

Status: Inactive | Patent: US20130225128A1 | Tags: Reduce storage; Reduce process; Unauthorised/fraudulent call prevention; Eavesdropping prevention circuits; Speaker recognition system; Mobile device

A speaker recognition system for authenticating a mobile device user includes an enrollment and learning software module, a voice biometric authentication software module, and a secure software application. Upon request by a user of the mobile device, the enrollment and learning software module displays text prompts to the user, receives speech utterances from the user, and produces a voice biometric print. The enrollment and training software module determines when a voice biometric print has met at least a quality threshold before storing it on the mobile device. The secure software application prompts a user requiring authentication to repeat an utterance based at least on an attribute of a selected voice biometric print, receives a corresponding utterance, requests the voice biometric authentication software module to verify the identity of the second user using the utterance, and, if the user is authenticated, imports the voice biometric print.

Owner:CIRRUS LOGIC INC

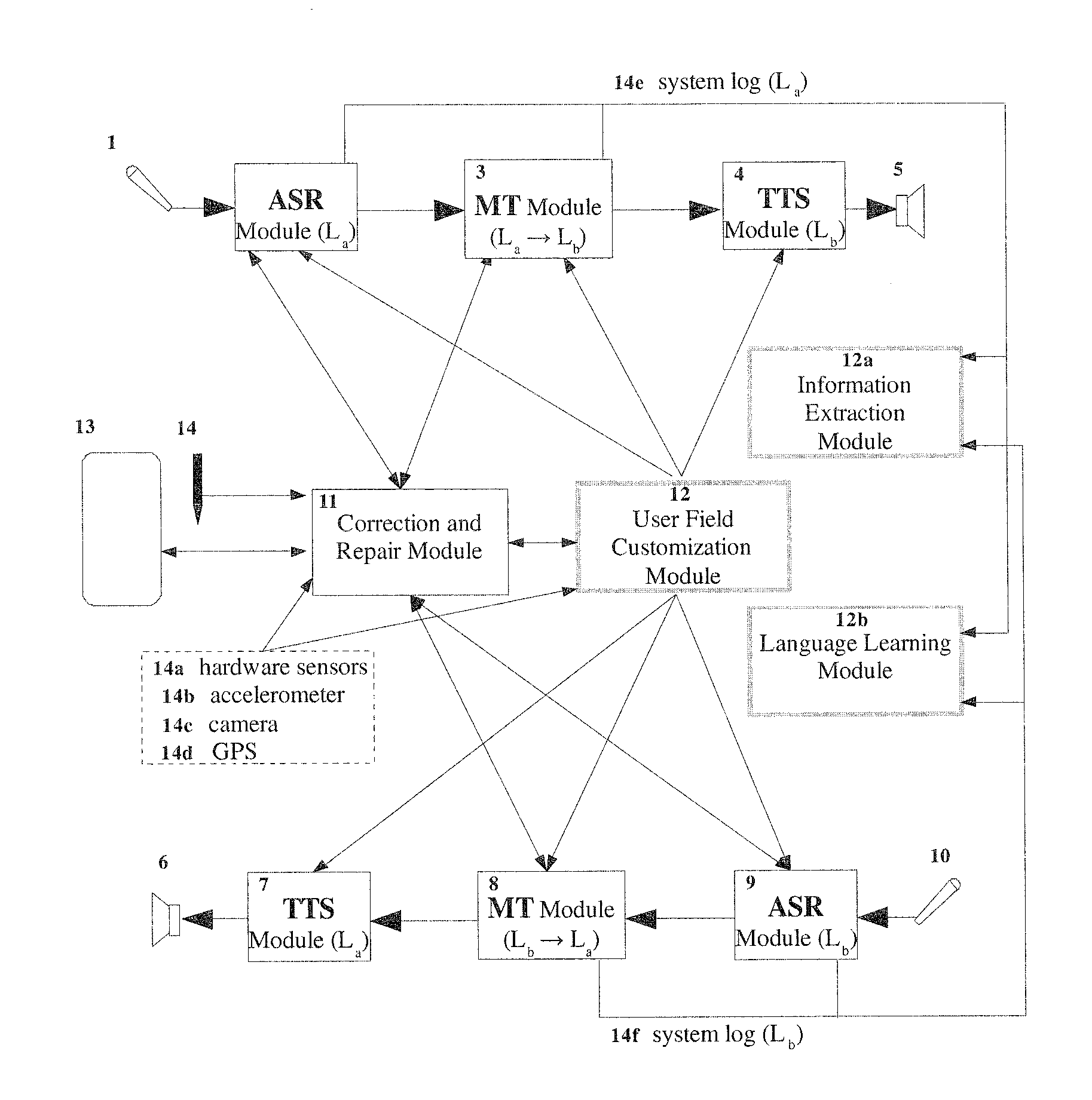

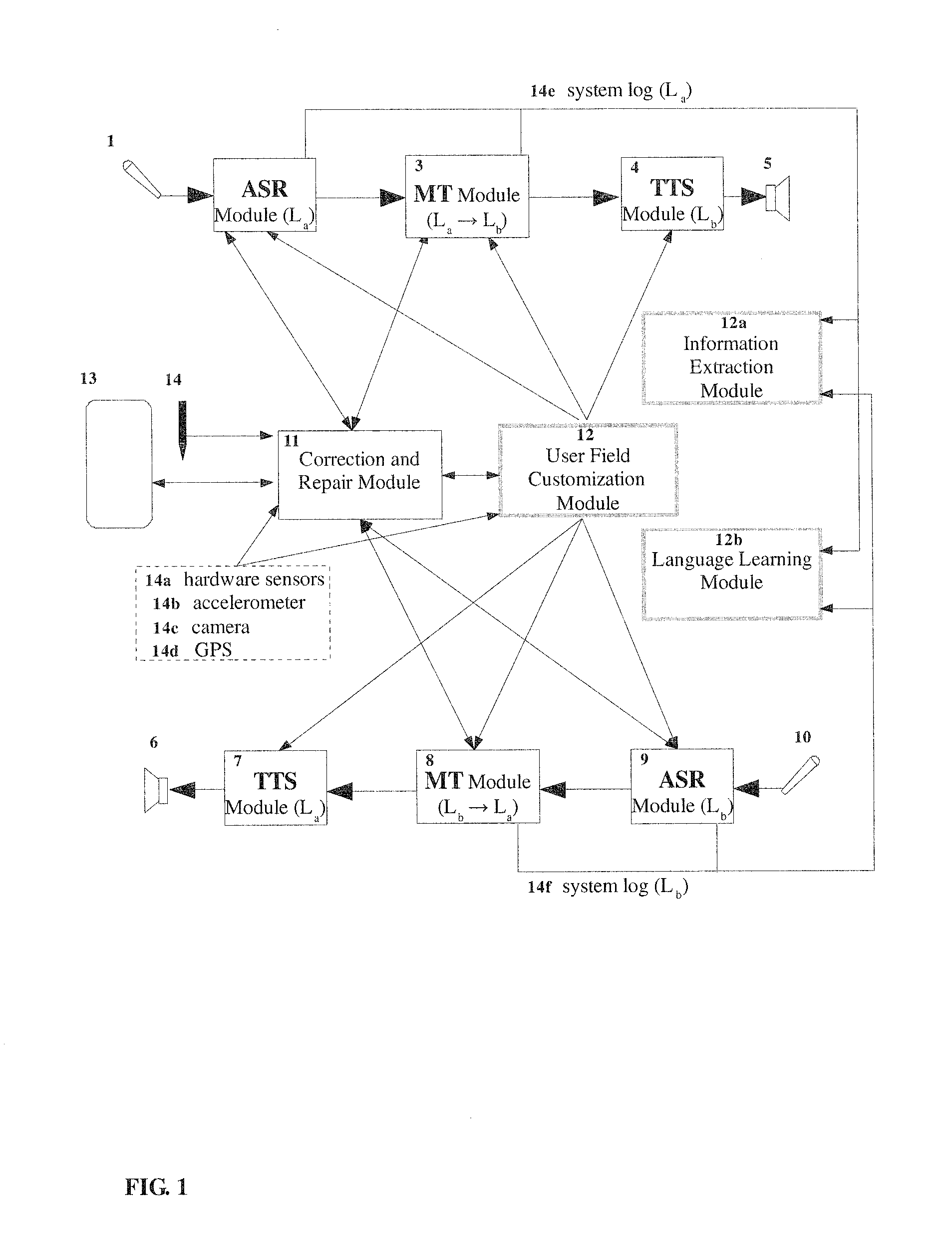

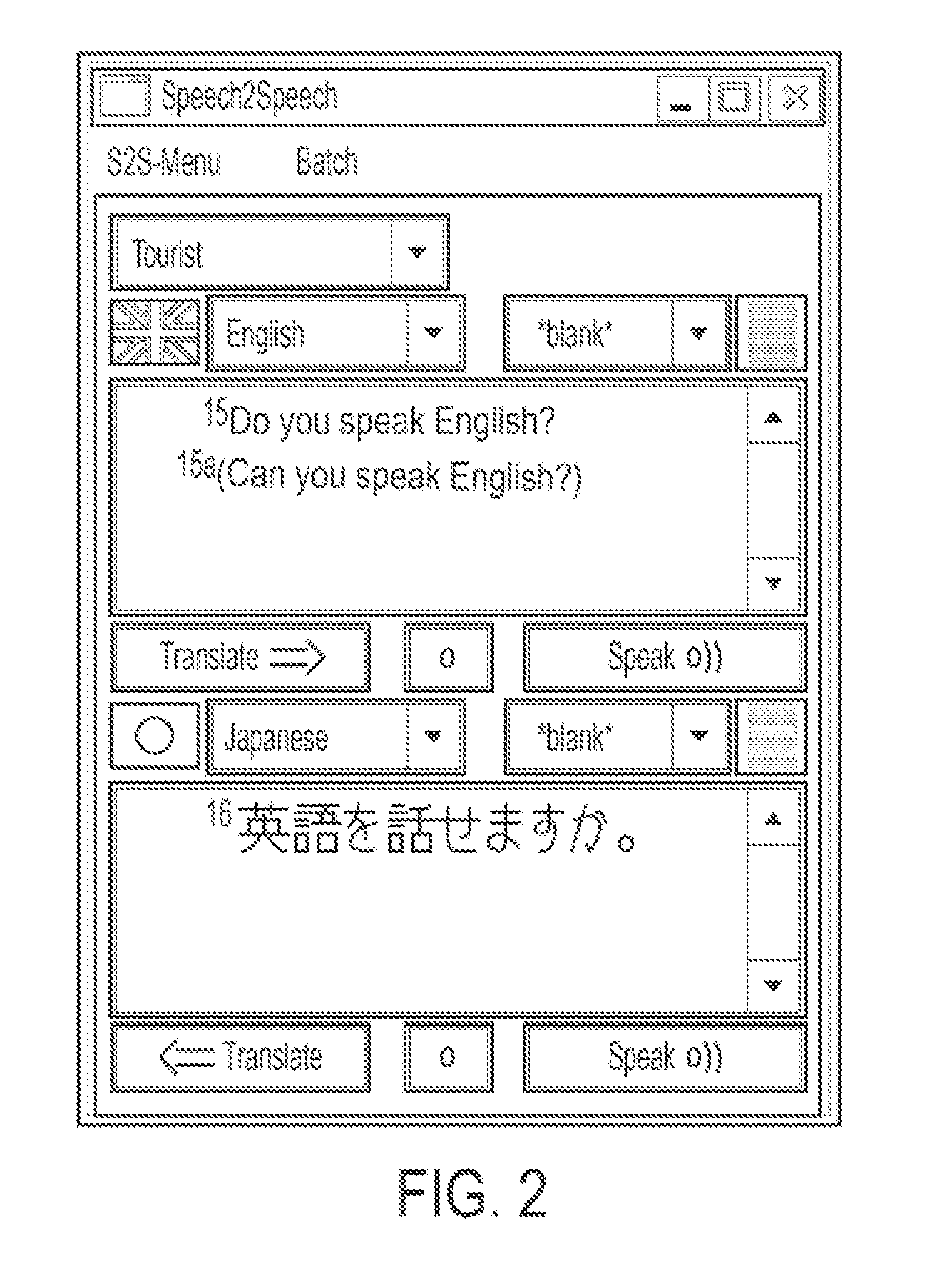

Enhanced speech-to-speech translation system and methods

Status: Active | Patent: US20110307241A1 | Tags: Improve usability; Natural language translation; Speech recognition; Third party; Speech to speech translation

A speech translation system and methods for cross-lingual communication that enable users to improve and modify content and usage of the system and easily abort or reset translation. The system includes a speech recognition module configured for accepting an utterance, a machine translation module, an interface configured to communicate the utterance and proposed translation, a correction module and an abort action unit that removes any hypotheses or partial hypotheses and terminates translation. The system also includes modules for storing favorites, changing language mode, automatically identifying language, providing language drills, viewing third party information relevant to conversation, among other things.

Owner:META PLATFORMS INC

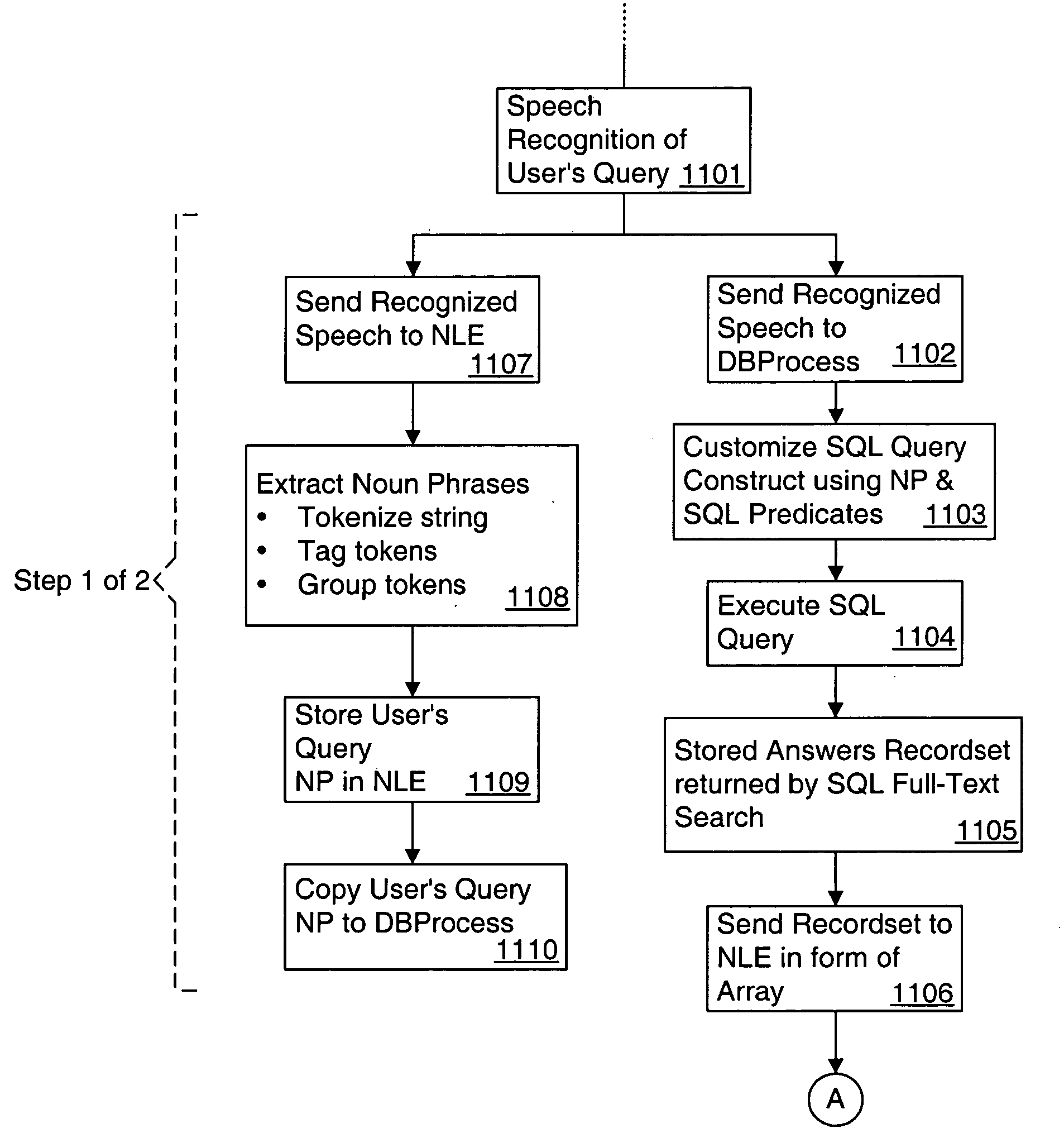

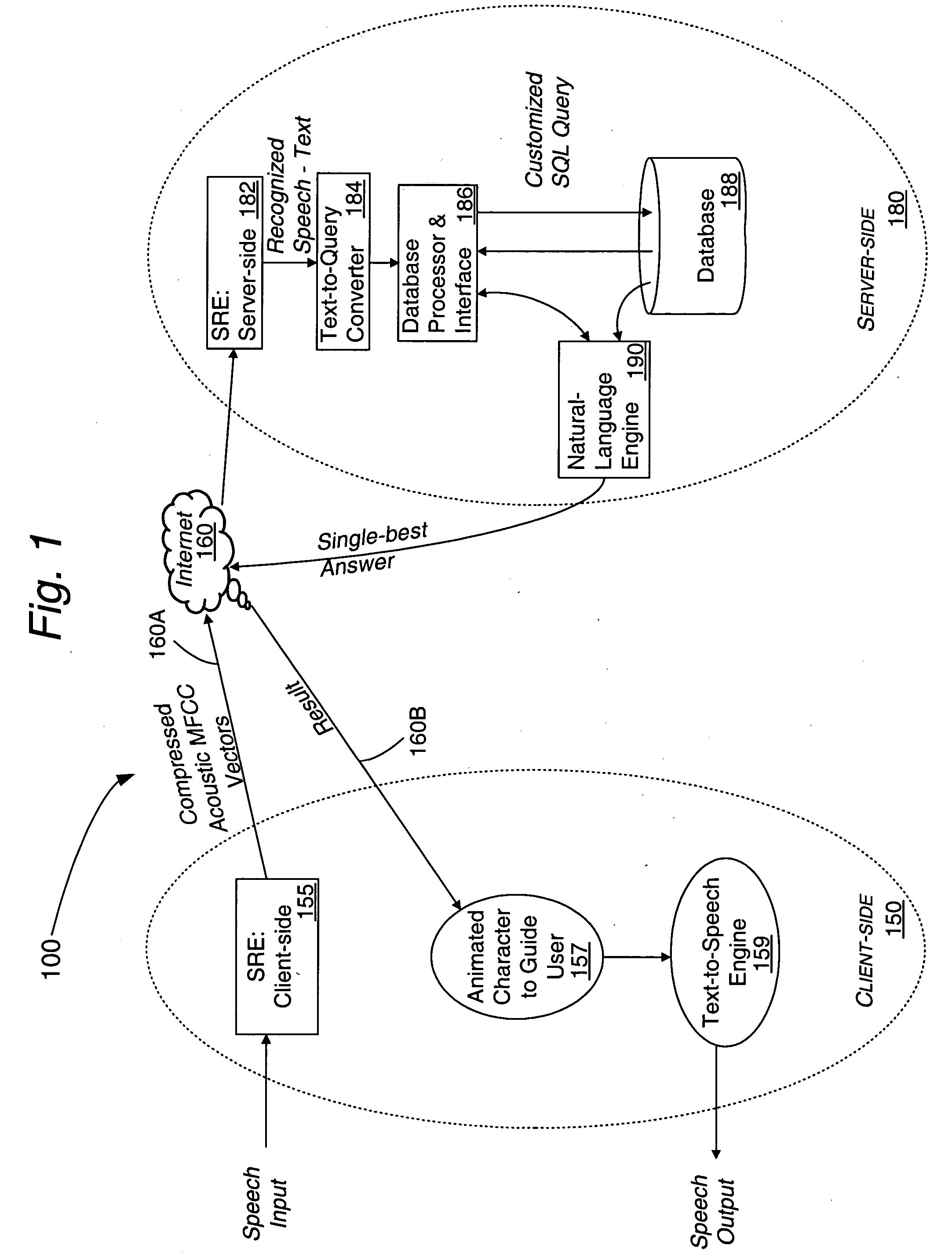

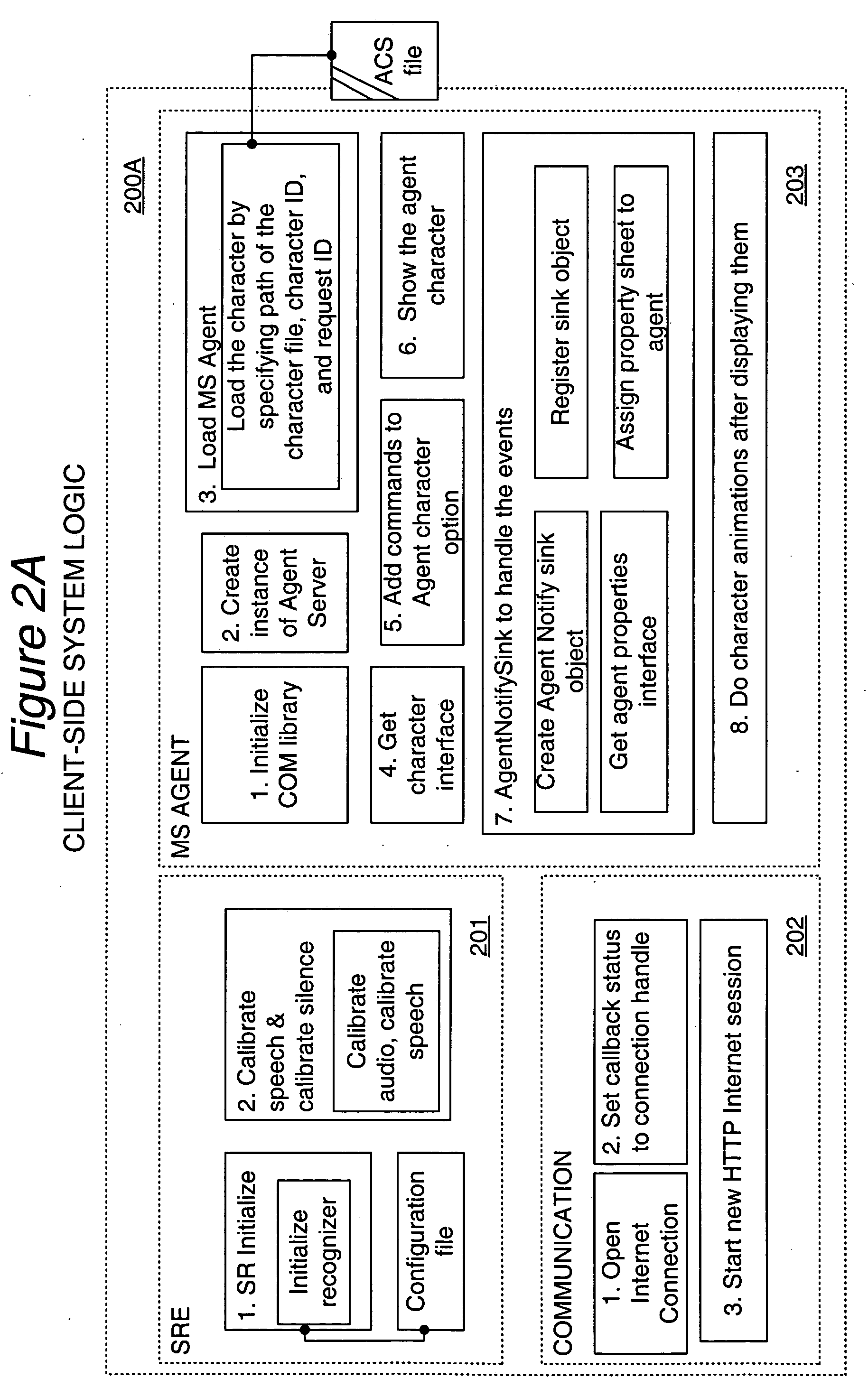

Distributed real time speech recognition system

Status: Inactive | Patent: US20050080625A1 | Tags: Facilitates query recognition; Accurate best response; Natural language translation; Data processing applications; Full text search; Time system

A real-time system incorporating speech recognition and linguistic processing for recognizing a spoken query by a user and distributed between client and server, is disclosed. The system accepts user's queries in the form of speech at the client where minimal processing extracts a sufficient number of acoustic speech vectors representing the utterance. These vectors are sent via a communications channel to the server where additional acoustic vectors are derived. Using Hidden Markov Models (HMMs), and appropriate grammars and dictionaries conditioned by the selections made by the user, the speech representing the user's query is fully decoded into text (or some other suitable form) at the server. This text corresponding to the user's query is then simultaneously sent to a natural language engine and a database processor where optimized SQL statements are constructed for a full-text search from a database for a recordset of several stored questions that best matches the user's query. Further processing in the natural language engine narrows the search to a single stored question. The answer corresponding to this single stored question is next retrieved from the file path and sent to the client in compressed form. At the client, the answer to the user's query is articulated to the user using a text-to-speech engine in his or her native natural language. The system requires no training and can operate in several natural languages.

Owner:NUANCE COMM INC

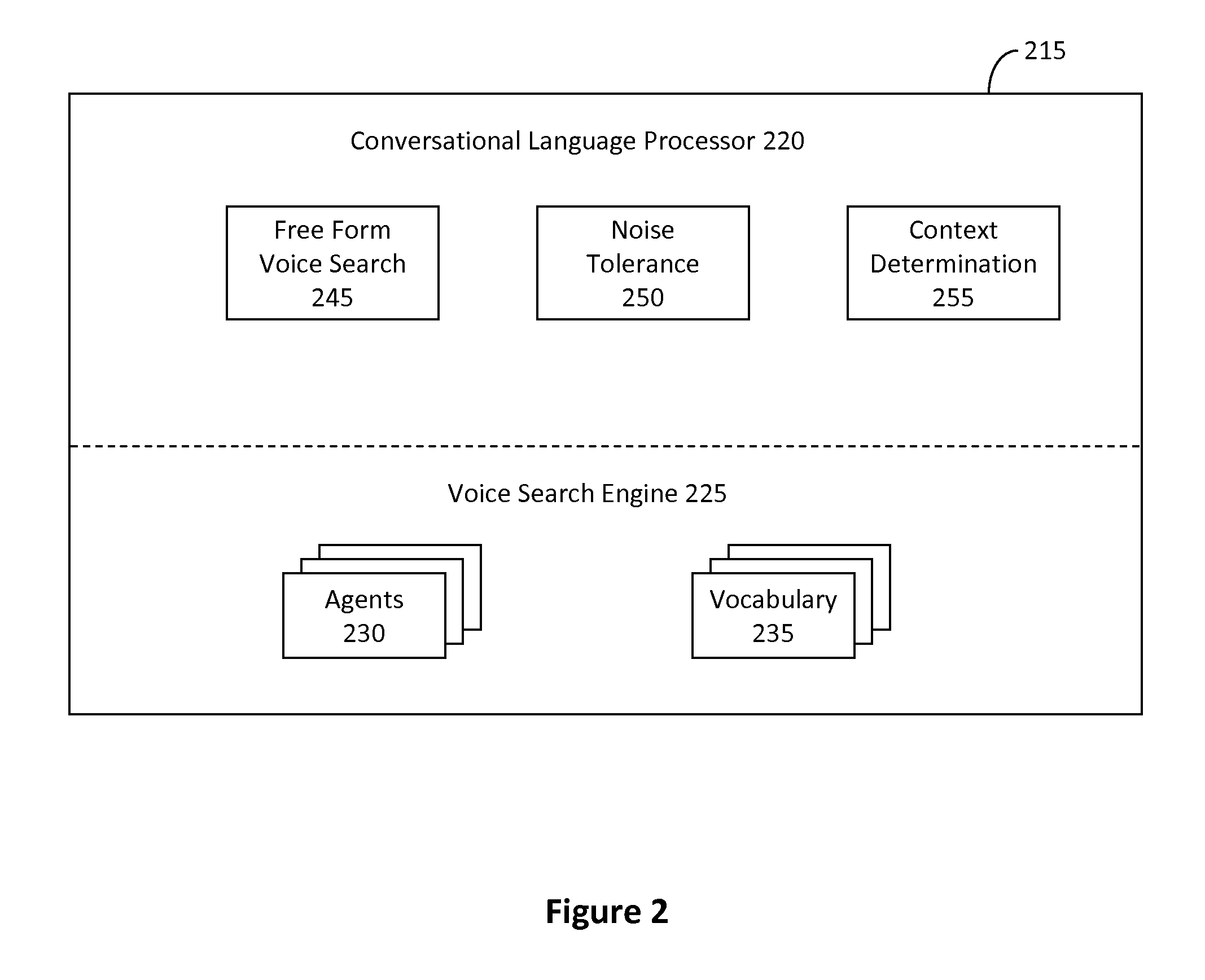

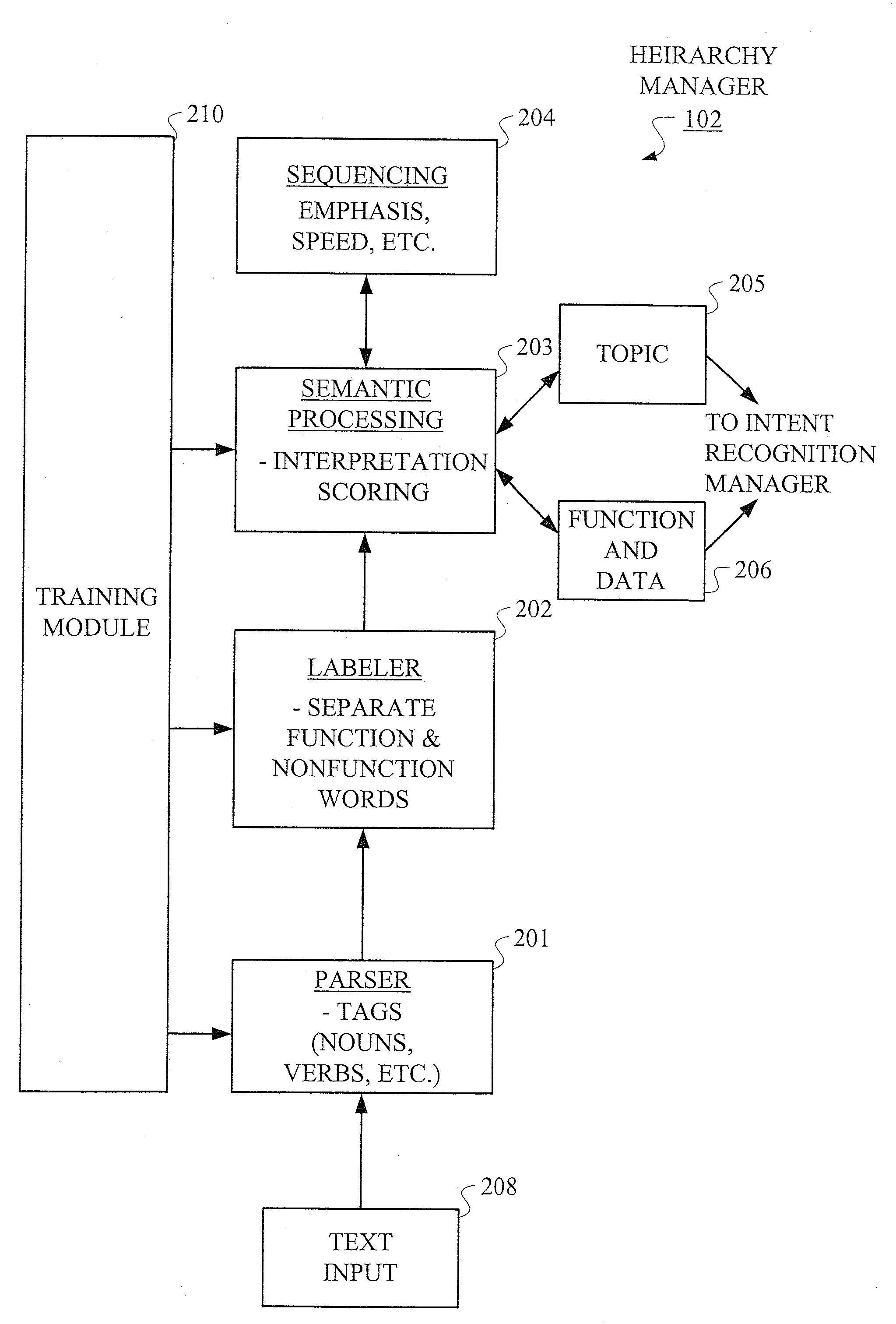

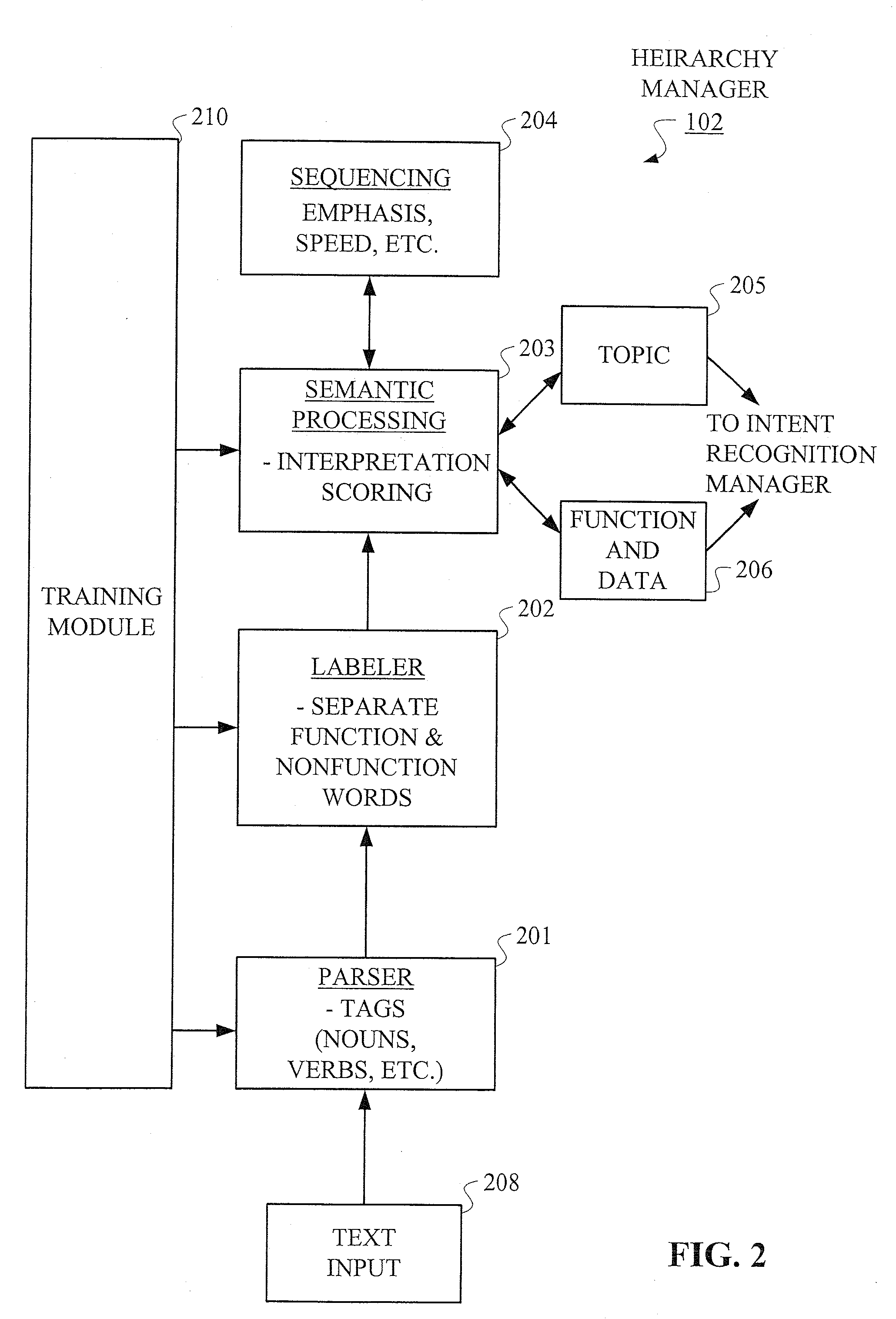

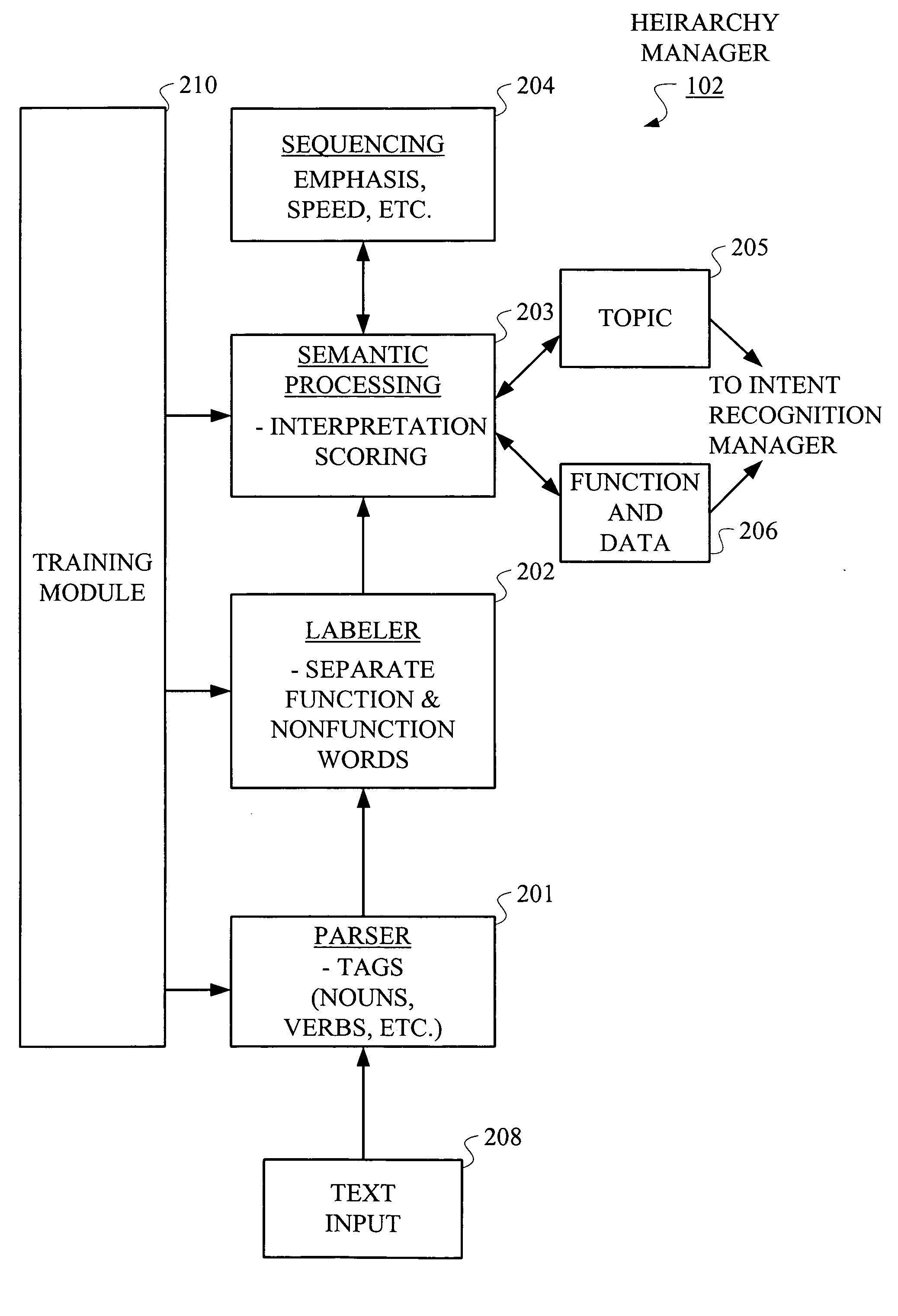

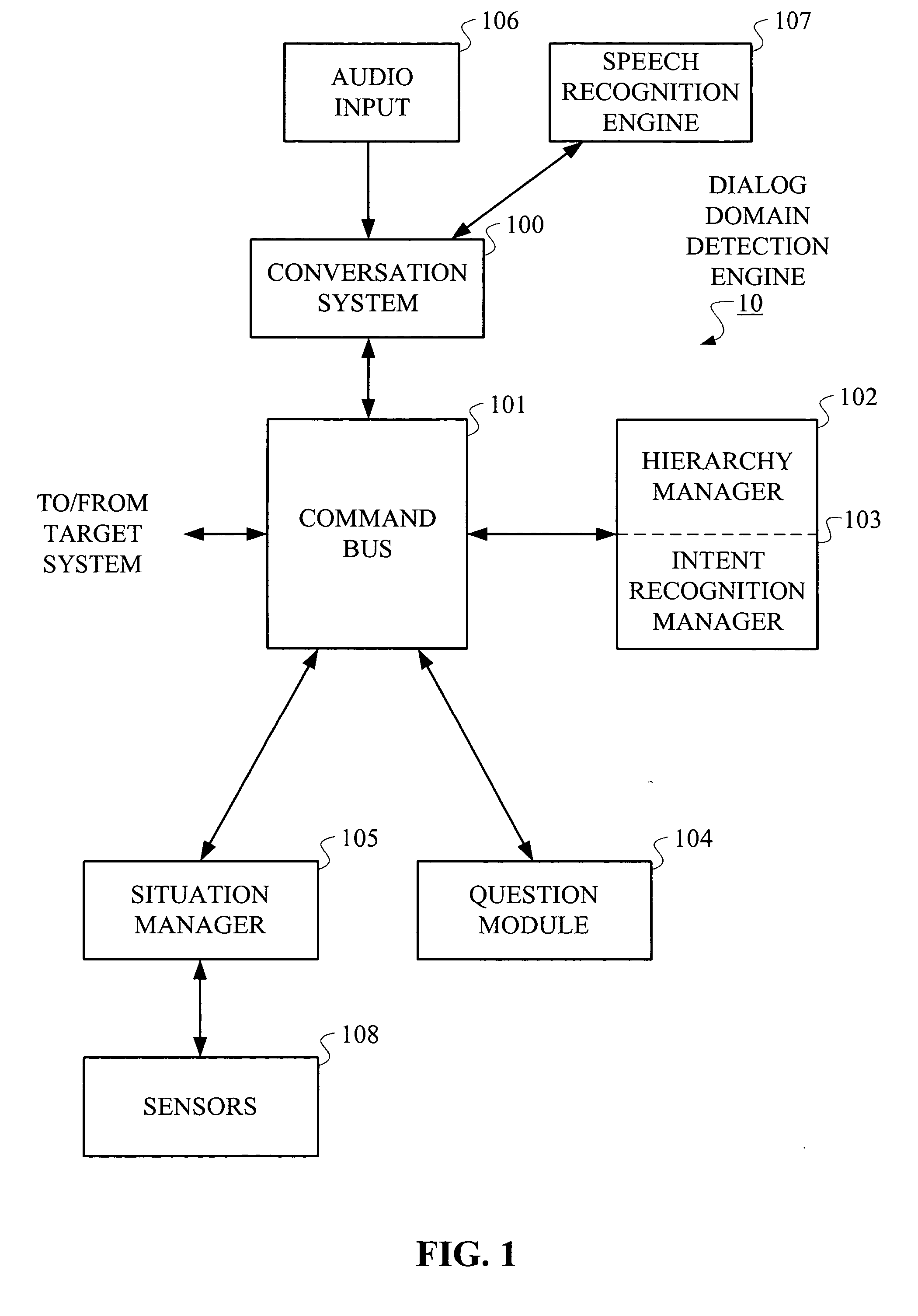

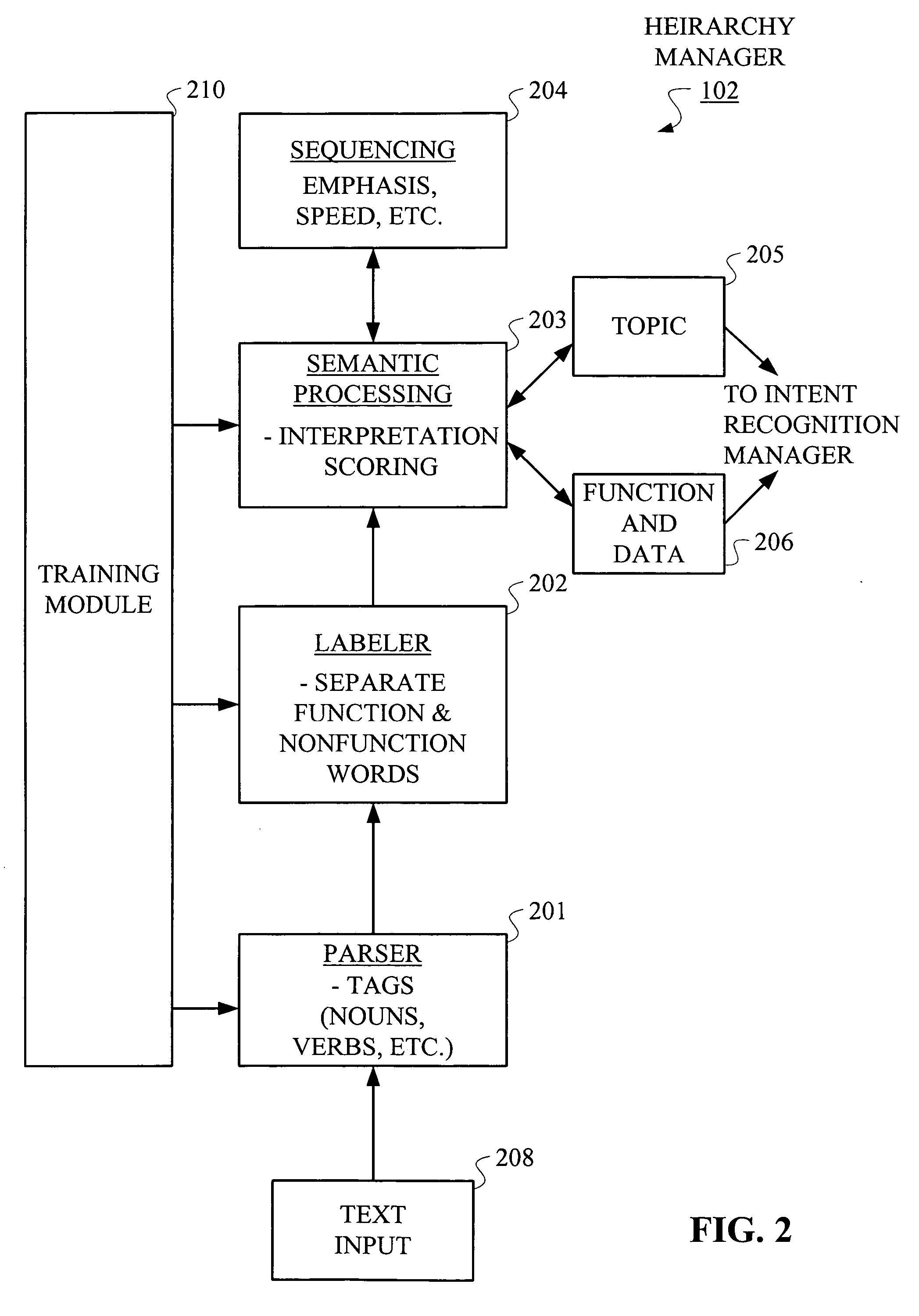

Hierarchical Methods and Apparatus for Extracting User Intent from Spoken Utterances

Improved techniques are disclosed for permitting a user to employ more human-based grammar (i.e., free form or conversational input) while addressing a target system via a voice system. For example, a technique for determining intent associated with a spoken utterance of a user comprises the following steps / operations. Decoded speech uttered by the user is obtained. An intent is then extracted from the decoded speech uttered by the user. The intent is extracted in an iterative manner such that a first class is determined after a first iteration and a sub-class of the first class is determined after a second iteration. The first class and the sub-class of the first class are hierarchically indicative of the intent of the user, e.g., a target and data that may be associated with the target. The multi-stage intent extraction approach may have more than two iterations. By way of example only, the user intent extracting step may further determine a sub-class of the sub-class of the first class after a third iteration, such that the first class, the sub-class of the first class, and the sub-class of the sub-class of the first class are hierarchically indicative of the intent of the user.
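The iterative class, then sub-class, narrowing described in this abstract can be sketched roughly as follows. This is a toy illustration, not the patented method: the `TAXONOMY` contents, the keyword-overlap scoring, and the function name `extract_intent` are all hypothetical stand-ins for whatever classifier a real system would use at each level.

```python
from typing import Dict, List

# Hypothetical two-level taxonomy: each class has keywords and sub-classes.
TAXONOMY: Dict[str, dict] = {
    "radio": {
        "keywords": {"radio", "station", "tune"},
        "children": {
            "set_station": {"keywords": {"tune", "station"}, "children": {}},
            "volume": {"keywords": {"volume", "louder", "quieter"}, "children": {}},
        },
    },
    "climate": {
        "keywords": {"temperature", "heat", "cool", "fan"},
        "children": {
            "set_temperature": {"keywords": {"temperature", "degrees"}, "children": {}},
            "fan_speed": {"keywords": {"fan"}, "children": {}},
        },
    },
}


def extract_intent(
    decoded_text: str,
    taxonomy: Dict[str, dict] = TAXONOMY,
    max_iterations: int = 3,
) -> List[str]:
    """Return the path of classes, from broadest to most specific."""
    words = set(decoded_text.lower().split())
    path: List[str] = []
    level = taxonomy
    for _ in range(max_iterations):
        # One iteration: pick the class at this level that best matches.
        best_name, best_score = None, 0
        for name, node in level.items():
            score = len(words & node["keywords"])
            if score > best_score:
                best_name, best_score = name, score
        if best_name is None:
            break  # nothing matched at this level, or no deeper level exists
        path.append(best_name)
        level = level[best_name]["children"]
    return path


print(extract_intent("set the temperature to seventy degrees"))
```

Each pass through the loop corresponds to one iteration in the abstract: the first yields the class (the target), the second a sub-class of it, and so on while deeper levels exist.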

Owner:NUANCE COMM INC

Systems and methods for responding to natural language speech utterance

Status: Active | Patent: US20070033005A1 | Tags: Improve maximization; High bandwidth; Digital data information retrieval; Semantic analysis; Prior information; Voice communication

Owner:DIALECT LLC

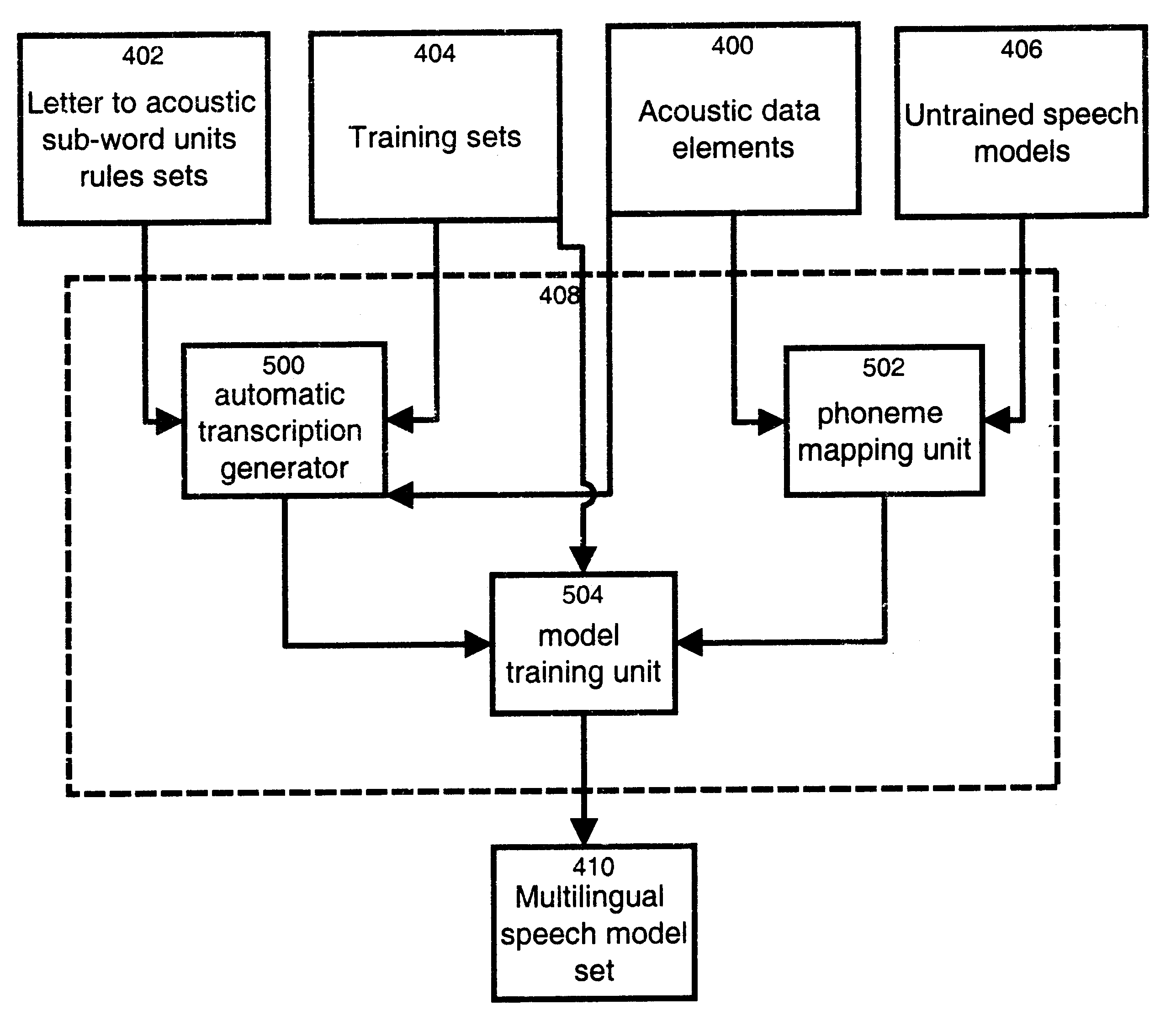

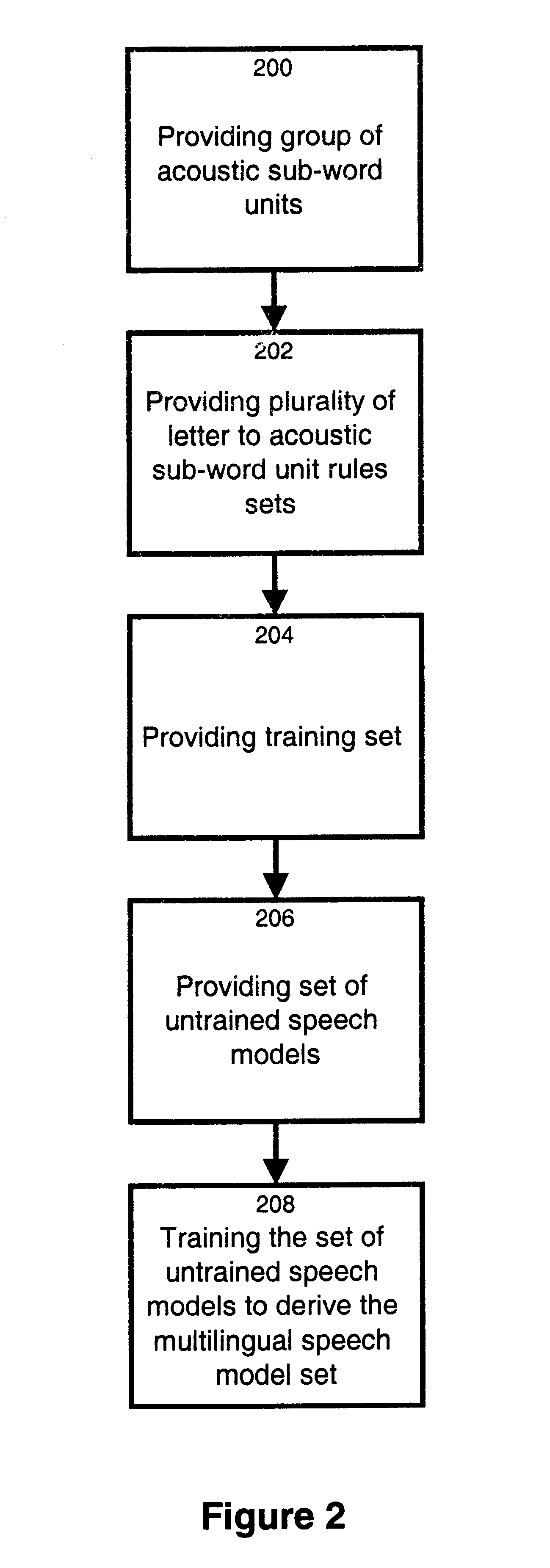

Method and apparatus for training a multilingual speech model set

Status: Inactive | Patent: US6912499B1 | Tags: Reduce development costs; Shorten development time; Speech recognition; Special data processing applications; More language; Speech identification

The invention relates to a method and apparatus for training a multilingual speech model set. The multilingual speech model set generated is suitable for use by a speech recognition system for recognizing spoken utterances for at least two different languages. The invention allows using a single speech recognition unit with a single speech model set to perform speech recognition on utterances from two or more languages. The method and apparatus make use of a group of acoustic sub-word units comprised of a first subgroup of acoustic sub-word units associated to a first language and a second subgroup of acoustic sub-word units associated to a second language where the first subgroup and the second subgroup share at least one common acoustic sub-word unit. The method and apparatus also make use of a plurality of letter to acoustic sub-word unit rules sets, each letter to acoustic sub-word unit rules set being associated to a different language. A set of untrained speech models is trained on the basis of a training set comprising speech tokens and their associated labels in combination with the group of acoustic sub-word units and the plurality of letter to acoustic sub-word unit rules sets. The invention also provides a computer readable storage medium comprising a program element for implementing the method for training a multilingual speech model set.

Owner:RPX CLEARINGHOUSE

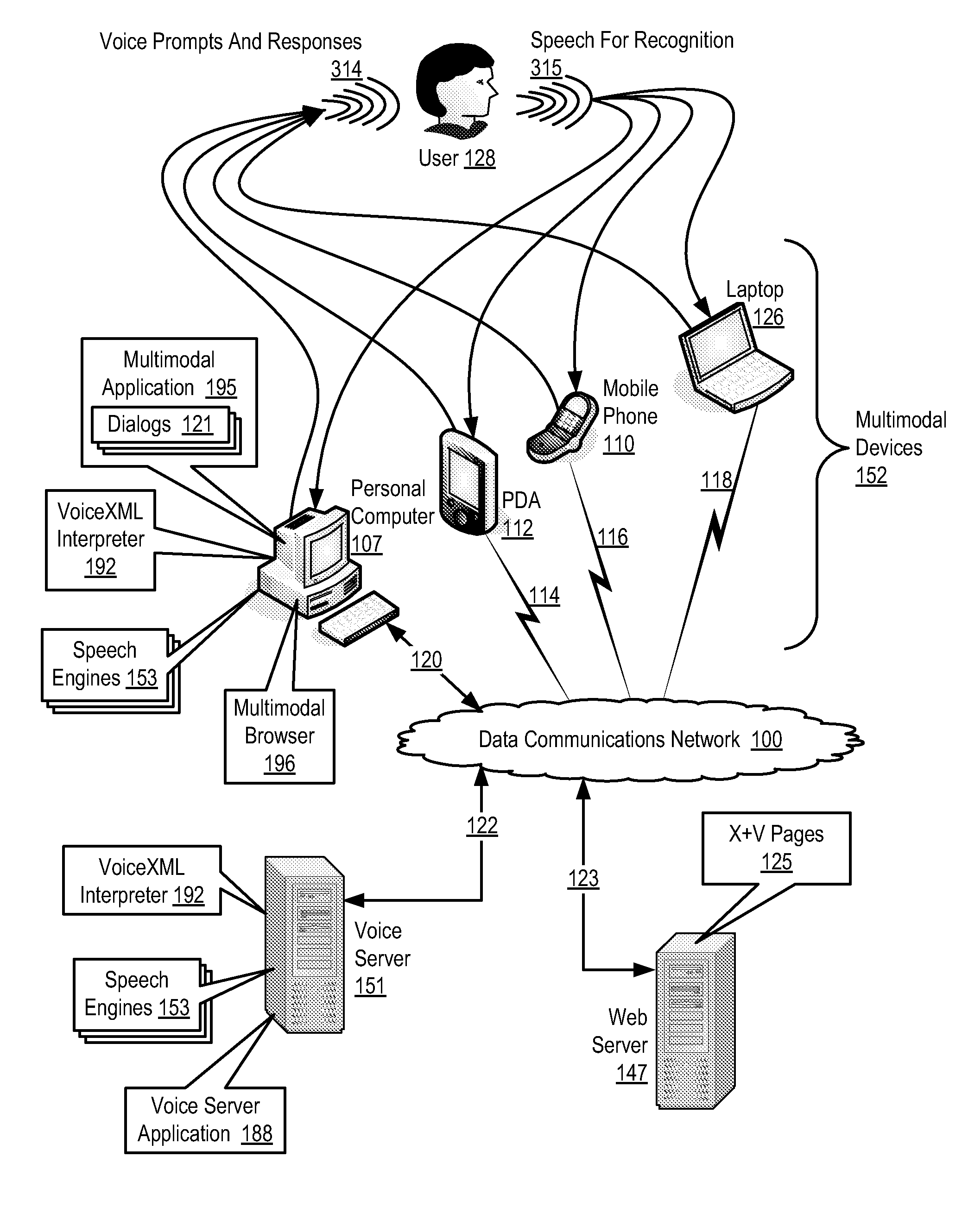

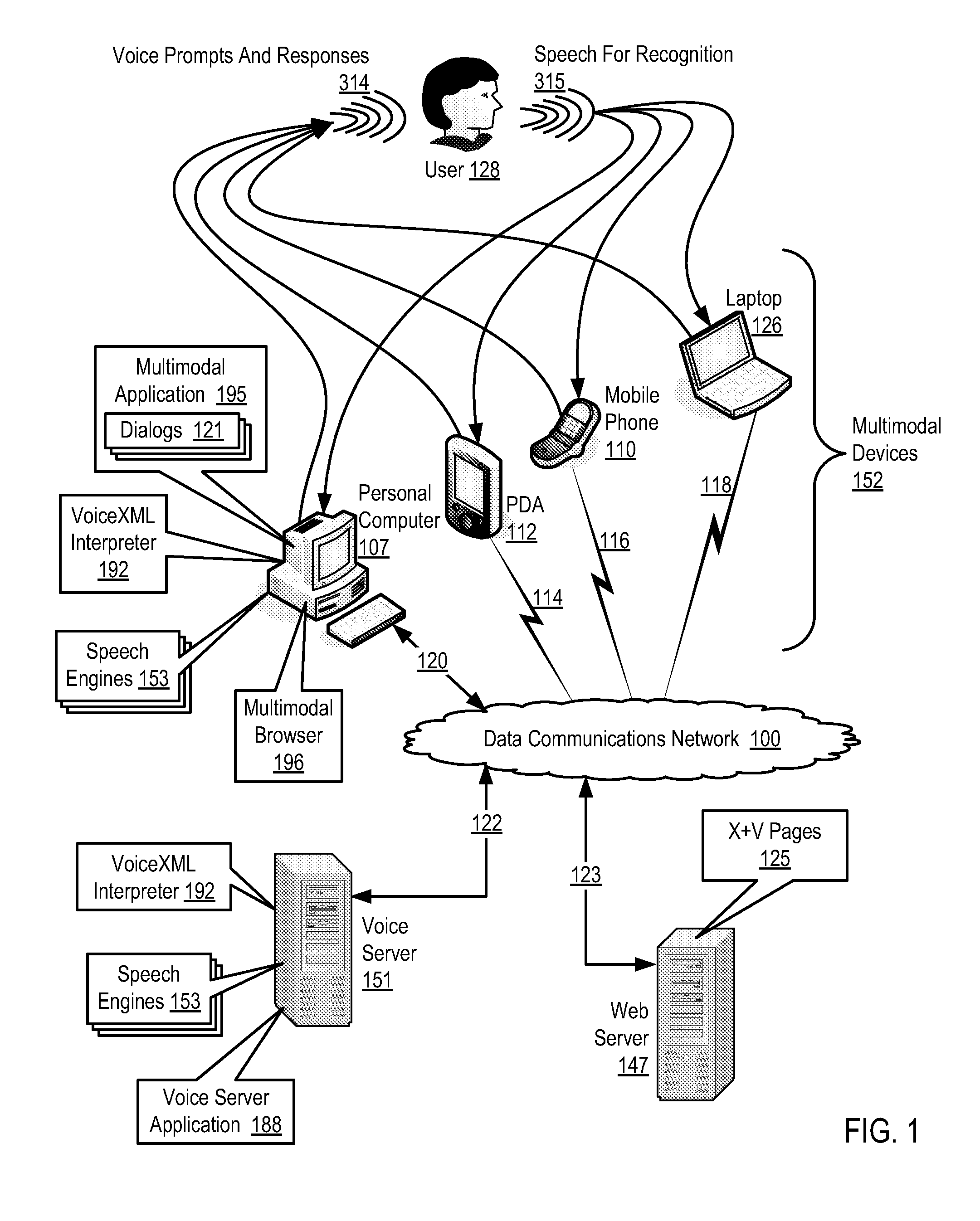

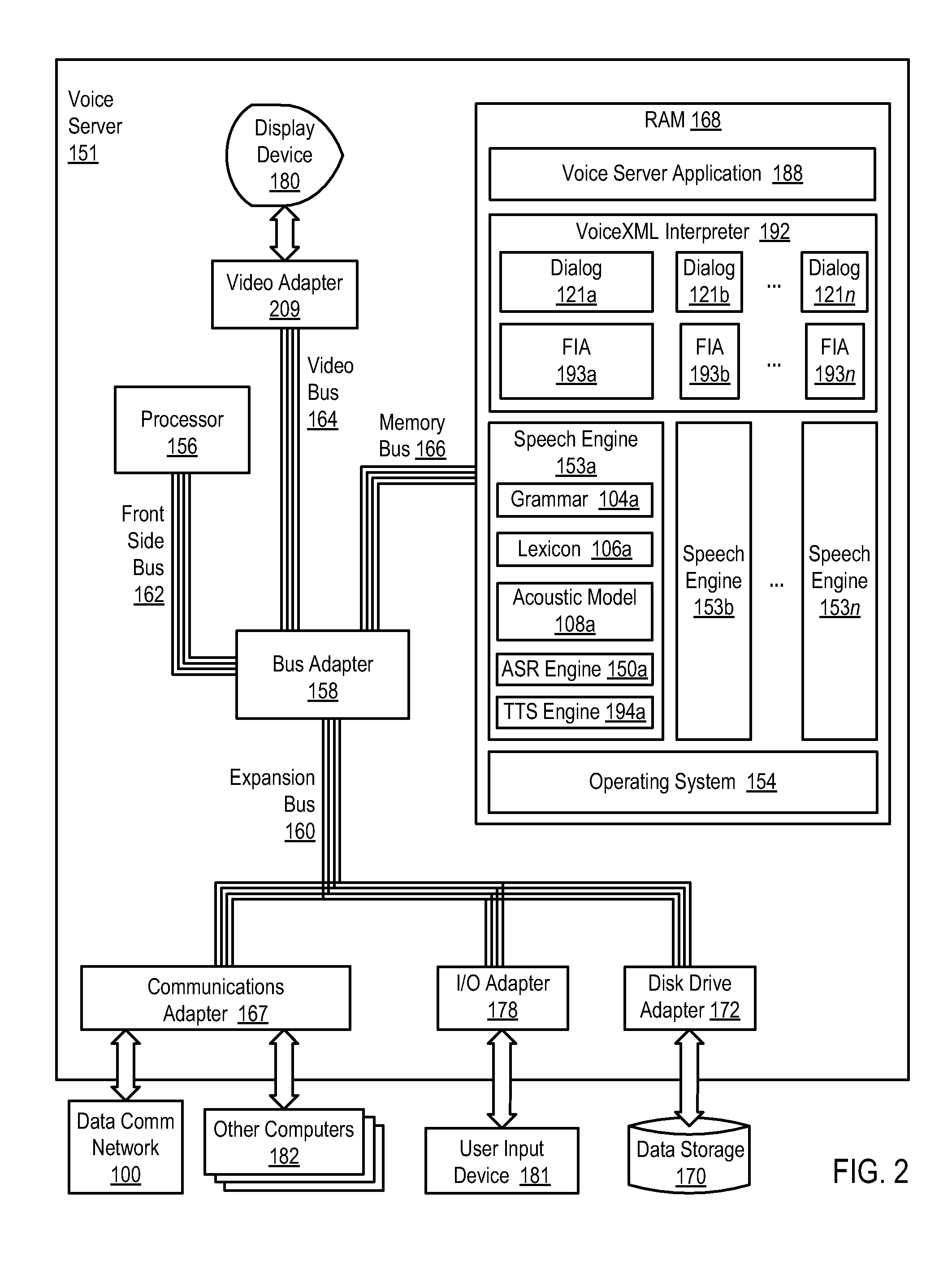

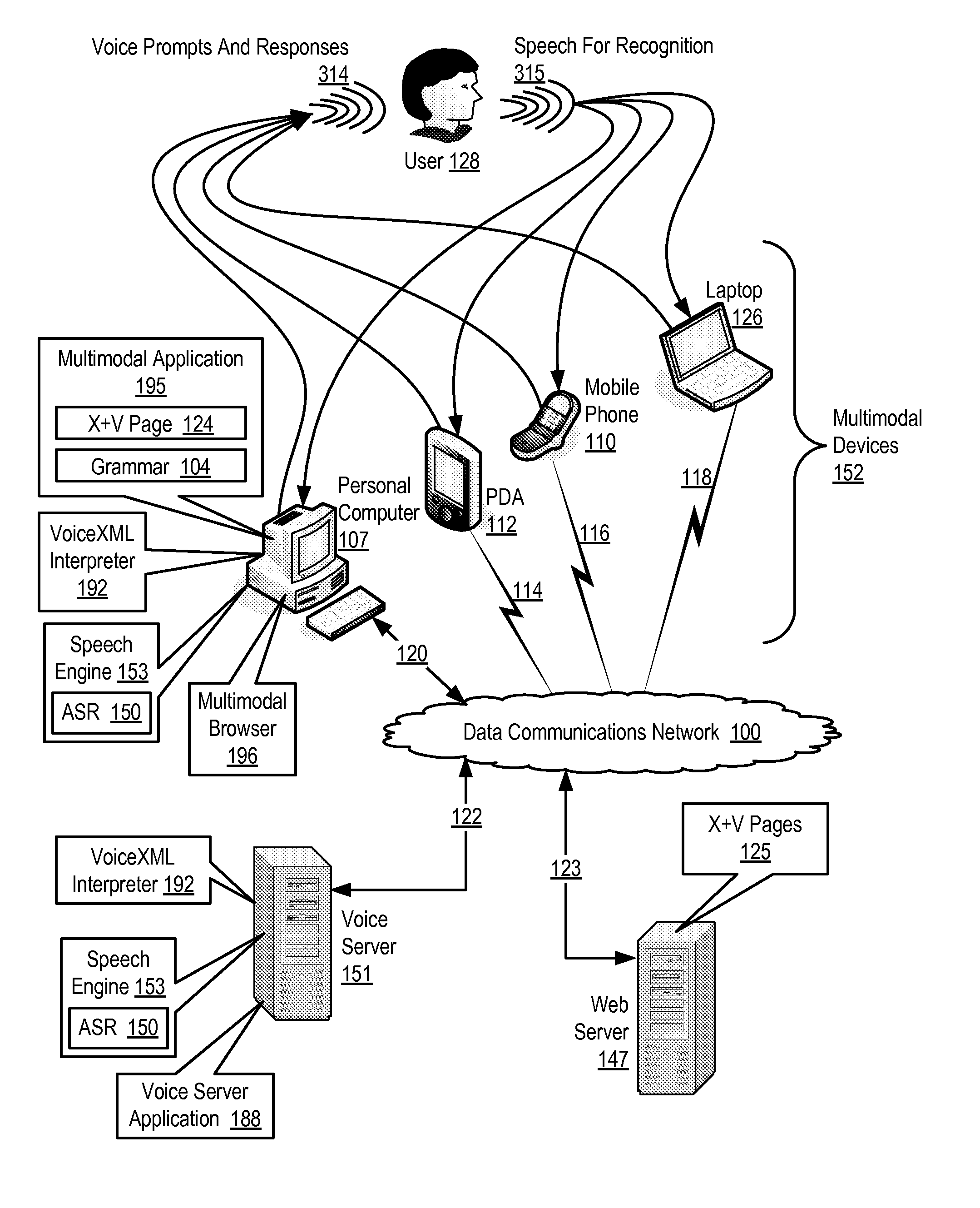

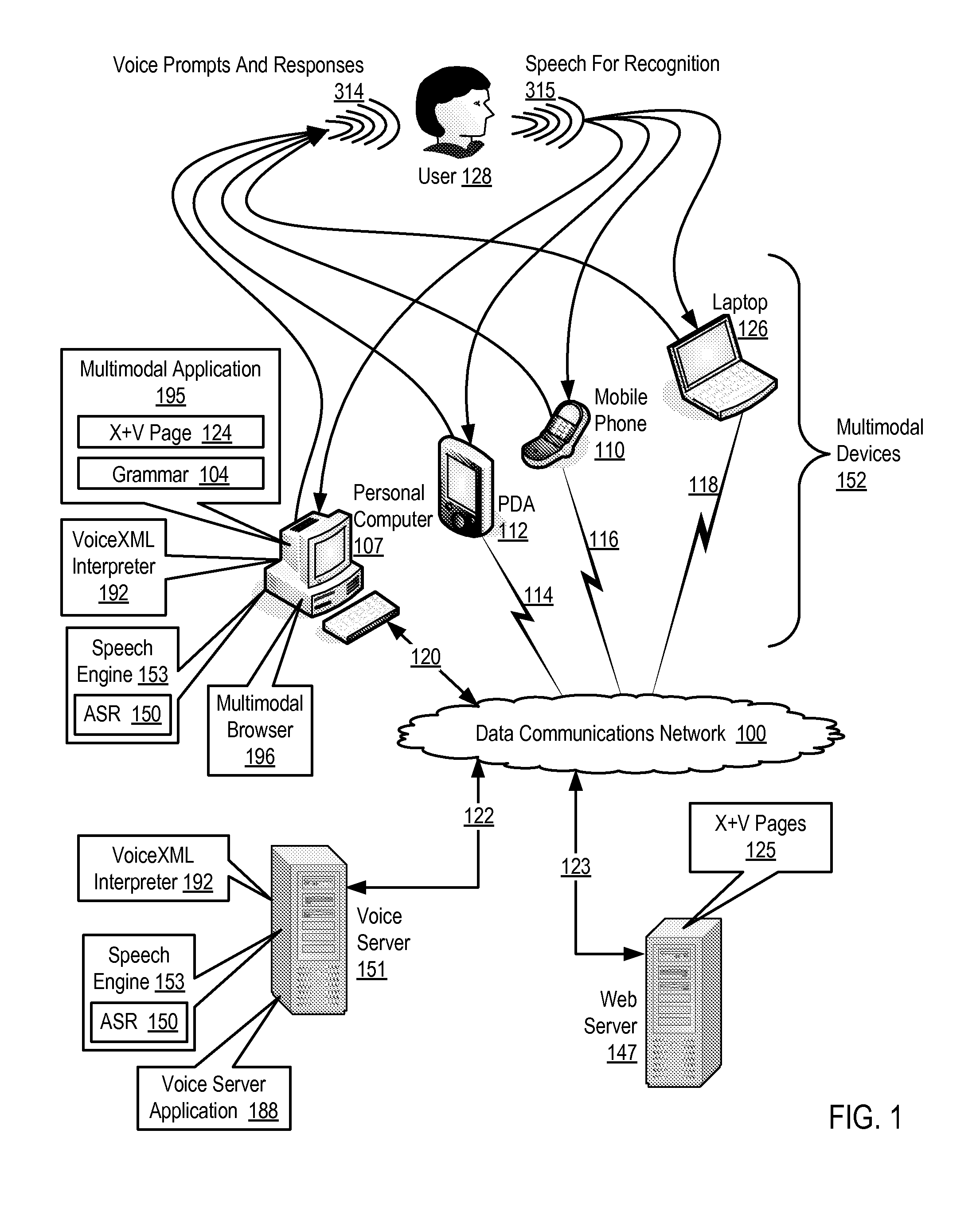

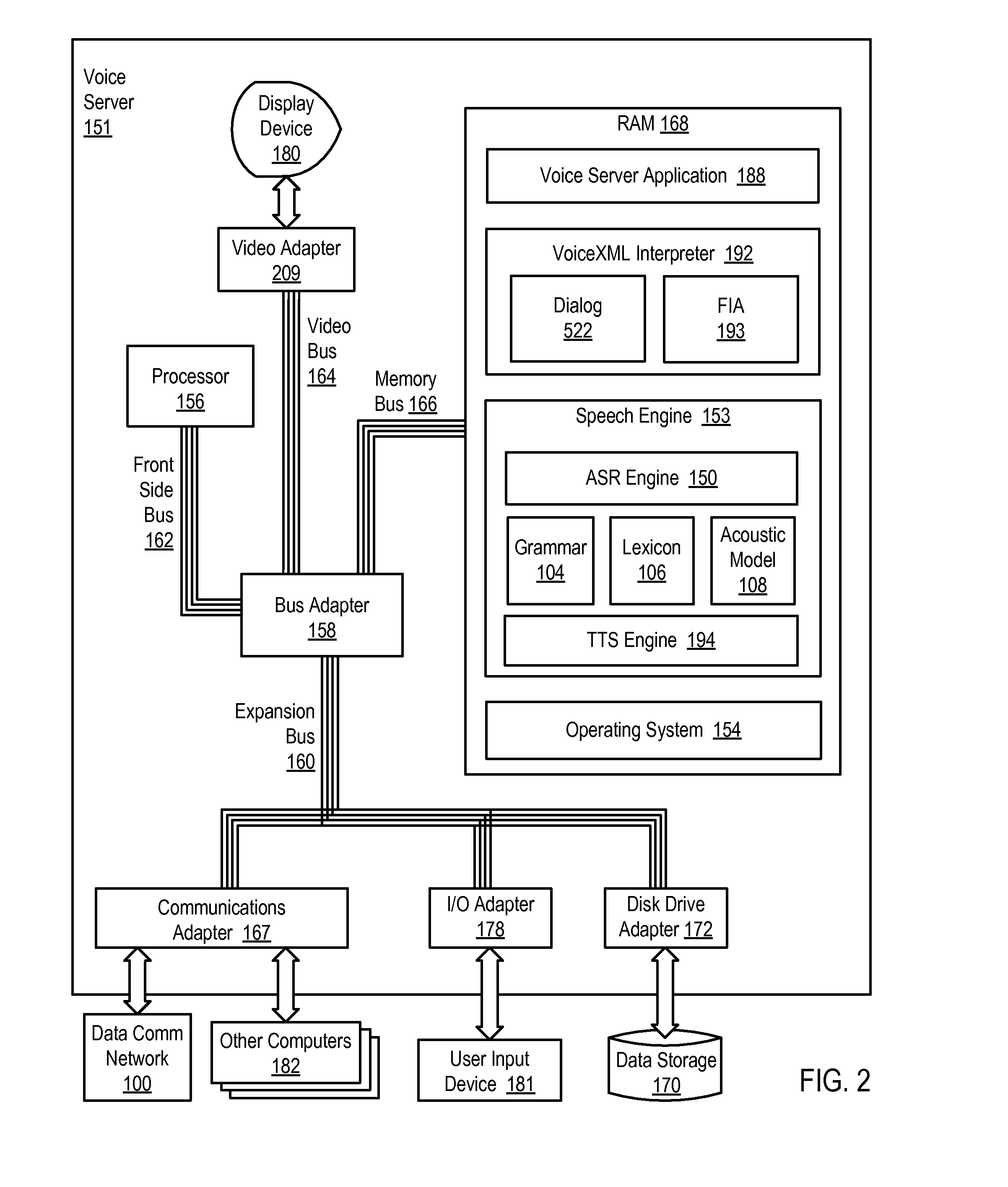

Supporting Multi-Lingual User Interaction With A Multimodal Application

Methods, apparatus, and products are disclosed for supporting multi-lingual user interaction with a multimodal application, the application including a plurality of VoiceXML dialogs, each dialog characterized by a particular language, supporting multi-lingual user interaction implemented with a plurality of speech engines, each speech engine having a grammar and characterized by a language corresponding to one of the dialogs, with the application operating on a multimodal device supporting multiple modes of interaction including a voice mode and one or more non-voice modes, the application operatively coupled to the speech engines through a VoiceXML interpreter, the VoiceXML interpreter: receiving a voice utterance from a user; determining in parallel, using the speech engines, recognition results for each dialog in dependence upon the voice utterance and the grammar for each speech engine; administering the recognition results for the dialogs; and selecting a language for user interaction in dependence upon the administered recognition results.
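As a rough sketch of the flow in this abstract, the snippet below runs several per-language recognizers in parallel on the same utterance and then picks a language from the results. The engines here are plain Python callables standing in for real speech engines, and choosing the language with the highest confidence score is an assumption; the abstract only says the selection depends on the administered recognition results.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Tuple

# Hypothetical engine interface: audio bytes in, (text, confidence) out.
Engine = Callable[[bytes], Tuple[str, float]]


def select_language(
    utterance: bytes, engines: Dict[str, Engine]
) -> Tuple[str, str, float]:
    """Recognize in parallel; return (language, text, confidence) of the best result."""
    if not engines:
        raise ValueError("at least one speech engine is required")

    # Submit the same utterance to every engine at once.
    with ThreadPoolExecutor(max_workers=len(engines)) as pool:
        futures = {lang: pool.submit(engine, utterance) for lang, engine in engines.items()}
        results = {lang: future.result() for lang, future in futures.items()}

    # Assumed selection rule: highest confidence wins.
    best_lang = max(results, key=lambda lang: results[lang][1])
    text, confidence = results[best_lang]
    return best_lang, text, confidence


# Stub engines for demonstration only.
stub_engines: Dict[str, Engine] = {
    "en-US": lambda audio: ("where is the station", 0.42),
    "fr-FR": lambda audio: ("où est la gare", 0.91),
}
print(select_language(b"\x00\x01", stub_engines))
```

A thread pool suits this shape of work when each engine call mostly waits on I/O or native code; a real deployment might use processes or remote calls instead.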

Owner:NUANCE COMM INC

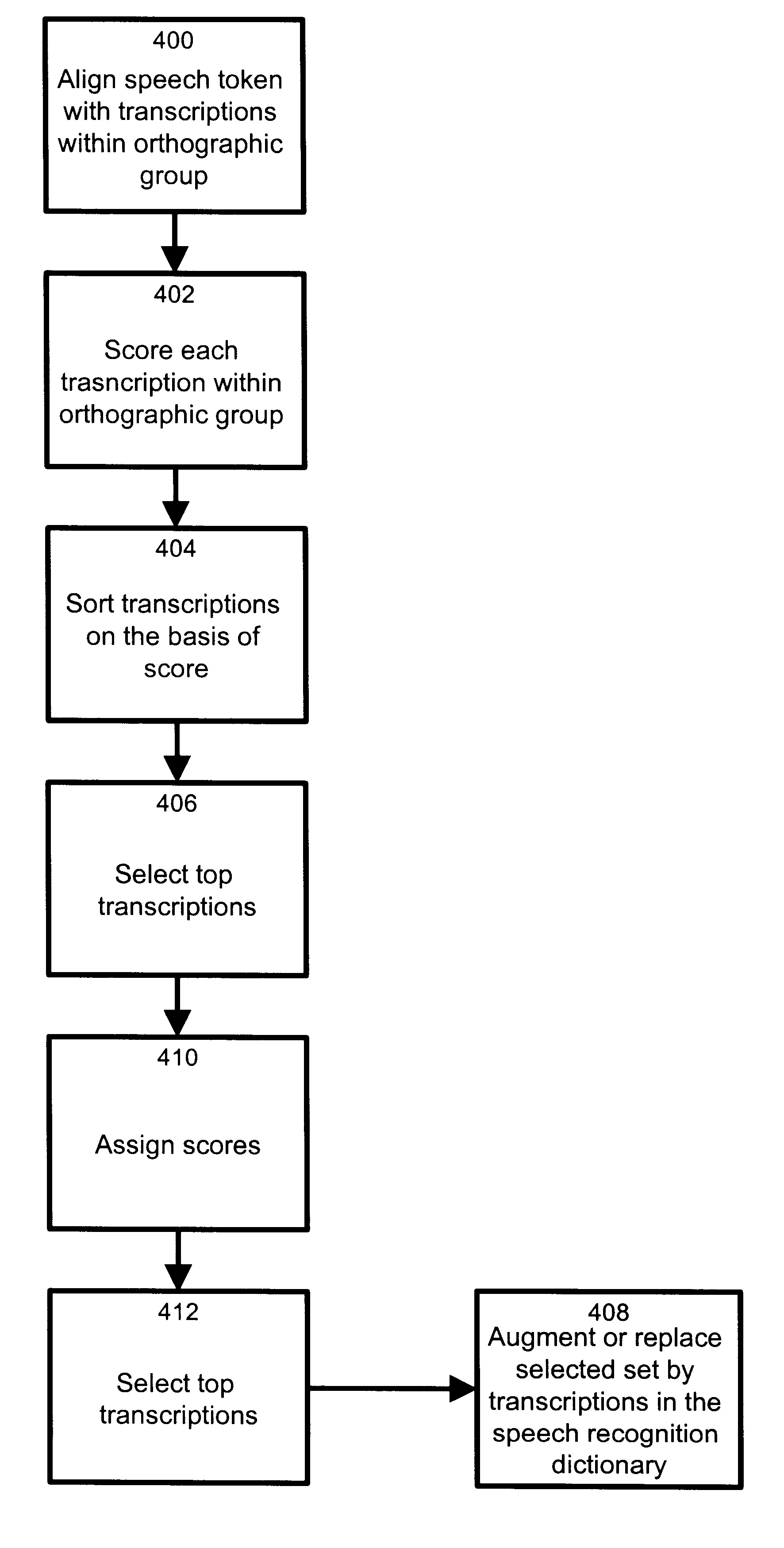

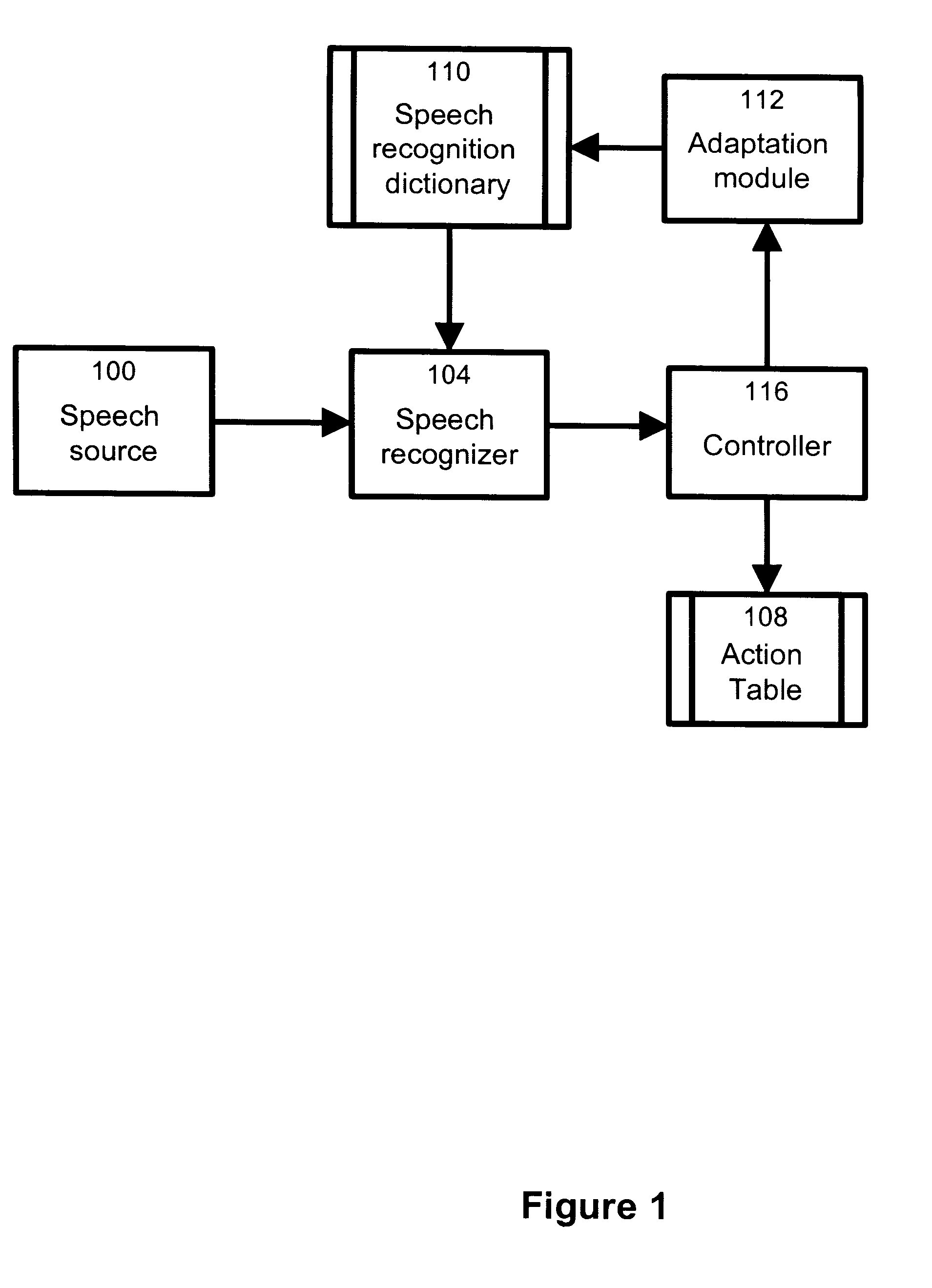

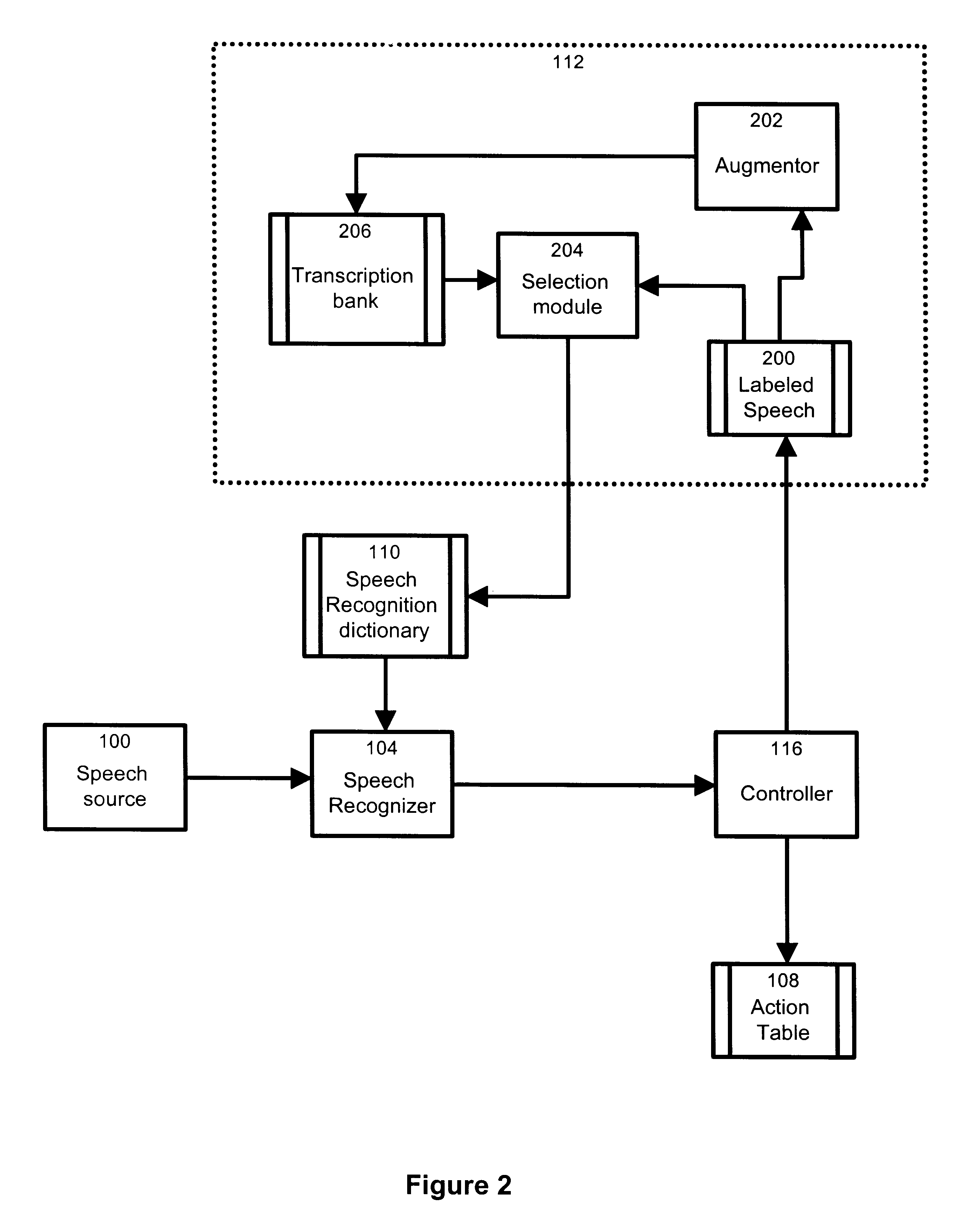

Method and apparatus for providing unsupervised adaptation of phonetic transcriptions in a speech recognition dictionary

An adaptive speech recognition system is provided including an input for receiving a signal derived from a spoken utterance indicative of a certain vocabulary item, a speech recognition dictionary, a speech recognition unit and an adaptation module. The speech recognition dictionary has a plurality of vocabulary items each being associated to a respective dictionary transcription group. The speech recognition unit is in an operative relationship with the speech recognition dictionary and selects a certain vocabulary item from the speech recognition dictionary as being a likely match to the signal received at the input. The results of the speech recognition process are provided to the adaptation module. The adaptation module includes a transcriptions bank having a plurality of orthographic groups, each including a plurality of transcriptions associated with a common vocabulary item. A transcription selector module in the adaptation module retrieves a given orthographic group from the transcriptions bank on a basis of the vocabulary item recognized by the speech recognition unit. The transcription selector module processes the given orthographic group on the basis of the signal received at the input to select a certain transcription from the transcriptions bank. The adaptation module then modifies a dictionary transcription group corresponding to the vocabulary item selected as being a likely match to the signal received at the input on the basis of the selected certain transcription.

Owner:AVAYA INC

Local storage and use of search results for voice-enabled mobile communications devices

Status: Inactive | Patent: US20080154612A1 | Tags: Improve abilities; Improve responsiveness; Devices with voice recognition; Substation equipment; Wireless data; Speech identification

A method implemented on a mobile device that includes speech recognition functionality involves: receiving an utterance from a user of the mobile device, the utterance including a spoken search request; recognizing that the utterance includes a spoken search request; sending a representation of the spoken search request to a remote server over a wireless data connection; receiving search results over the wireless data connection that are responsive to the search request; storing the results on the mobile device; receiving a subsequent search request; performing a subsequent search responsive to the subsequent search request to generate subsequent search results, the subsequent search including searching the stored search results; and presenting the subsequent results on the mobile device. The method also involves indexing the stored results according to the user's search request, enhancing the device's ability to recognize frequently requested searches, and pre-loading the device with results corresponding to certain frequently requested searches.
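The store-then-reuse behaviour in this abstract can be sketched as a small on-device index keyed by the normalized search request. The class name `LocalResultStore`, the `remote_search` callable, and the exact-match lookup are assumptions made for illustration; the abstract does not specify how stored results are indexed or searched.

```python
from typing import Callable, Dict, List, Tuple


class LocalResultStore:
    """Keeps results of earlier searches so later requests can be served locally."""

    def __init__(self, remote_search: Callable[[str], List[str]]) -> None:
        self._remote_search = remote_search      # hypothetical call to the remote server
        self._index: Dict[str, List[str]] = {}   # stored results, indexed by request

    @staticmethod
    def _normalize(request: str) -> str:
        # Collapse case and whitespace so repeated requests share one key.
        return " ".join(request.lower().split())

    def preload(self, request: str, results: List[str]) -> None:
        """Pre-load results for a frequently requested search."""
        self._index[self._normalize(request)] = list(results)

    def search(self, request: str) -> Tuple[List[str], bool]:
        """Return (results, served_locally)."""
        key = self._normalize(request)
        if key in self._index:
            return self._index[key], True
        results = self._remote_search(request)
        self._index[key] = results
        return results, False


store = LocalResultStore(lambda request: [f"result for {request}"])
print(store.search("pizza near me"))    # first request goes to the server
print(store.search("Pizza  near me"))   # repeat is answered from local storage
```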

Owner:CERENCE OPERATING CO

Ordering Recognition Results Produced By An Automatic Speech Recognition Engine For A Multimodal Application

Ordering recognition results produced by an automatic speech recognition (‘ASR’) engine for a multimodal application implemented with a grammar of the multimodal application in the ASR engine, with the multimodal application operating in a multimodal browser on a multimodal device supporting multiple modes of interaction including a voice mode and one or more non-voice modes, the multimodal application operatively coupled to the ASR engine through a VoiceXML interpreter, includes: receiving, in the VoiceXML interpreter from the multimodal application, a voice utterance; determining, by the VoiceXML interpreter using the ASR engine, a plurality of recognition results in dependence upon the voice utterance and the grammar; determining, by the VoiceXML interpreter according to semantic interpretation scripts of the grammar, a weight for each recognition result; and sorting, by the VoiceXML interpreter, the plurality of recognition results in dependence upon the weight for each recognition result.
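The weigh-then-sort step at the end of this abstract can be sketched in a few lines. The `RecognitionResult` fields and the example weighting function are hypothetical; in the described system the weights come from semantic interpretation scripts of the grammar, which are not reproduced here.

```python
from dataclasses import dataclass, replace
from typing import Callable, List


@dataclass(frozen=True)
class RecognitionResult:
    text: str
    confidence: float
    weight: float = 0.0


def order_results(
    results: List[RecognitionResult],
    weigh: Callable[[RecognitionResult], float],
) -> List[RecognitionResult]:
    """Assign a weight to each result, then sort by weight, highest first."""
    weighted = [replace(result, weight=weigh(result)) for result in results]
    return sorted(weighted, key=lambda result: result.weight, reverse=True)


def example_weigh(result: RecognitionResult) -> float:
    # Stand-in for a semantic interpretation script: favour results that
    # contain a word the application cares about.
    bonus = 0.5 if "pizza" in result.text.split() else 0.0
    return result.confidence + bonus


candidates = [
    RecognitionResult("order a pizza", 0.6),
    RecognitionResult("order a visa", 0.7),
    RecognitionResult("border a pizza", 0.3),
]
for result in order_results(candidates, example_weigh):
    print(result.text)
```

Because the weight is computed separately from the recognizer's own confidence, a lower-confidence result can still rank first when the application-level semantics favour it.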

Owner:NUANCE COMM INC

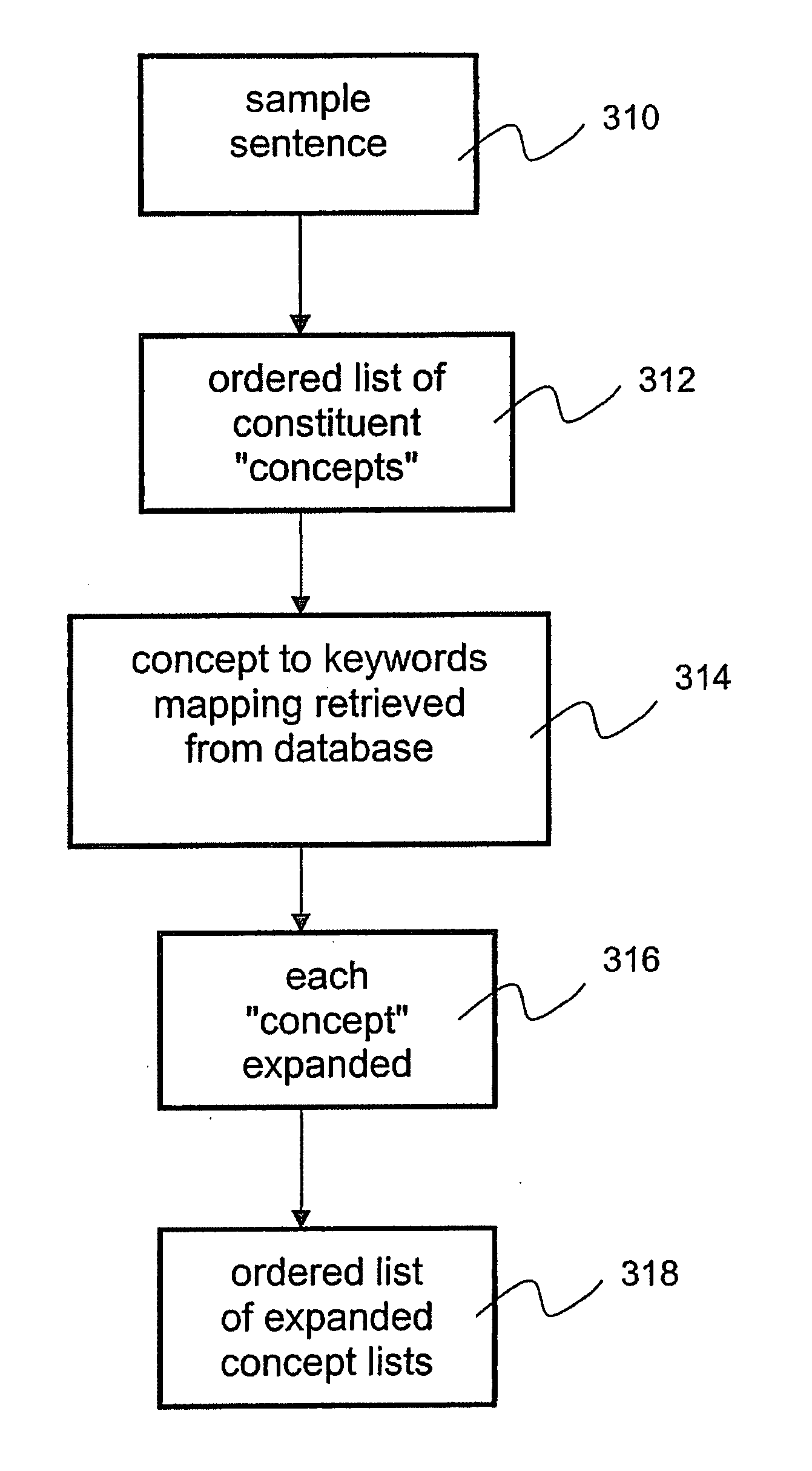

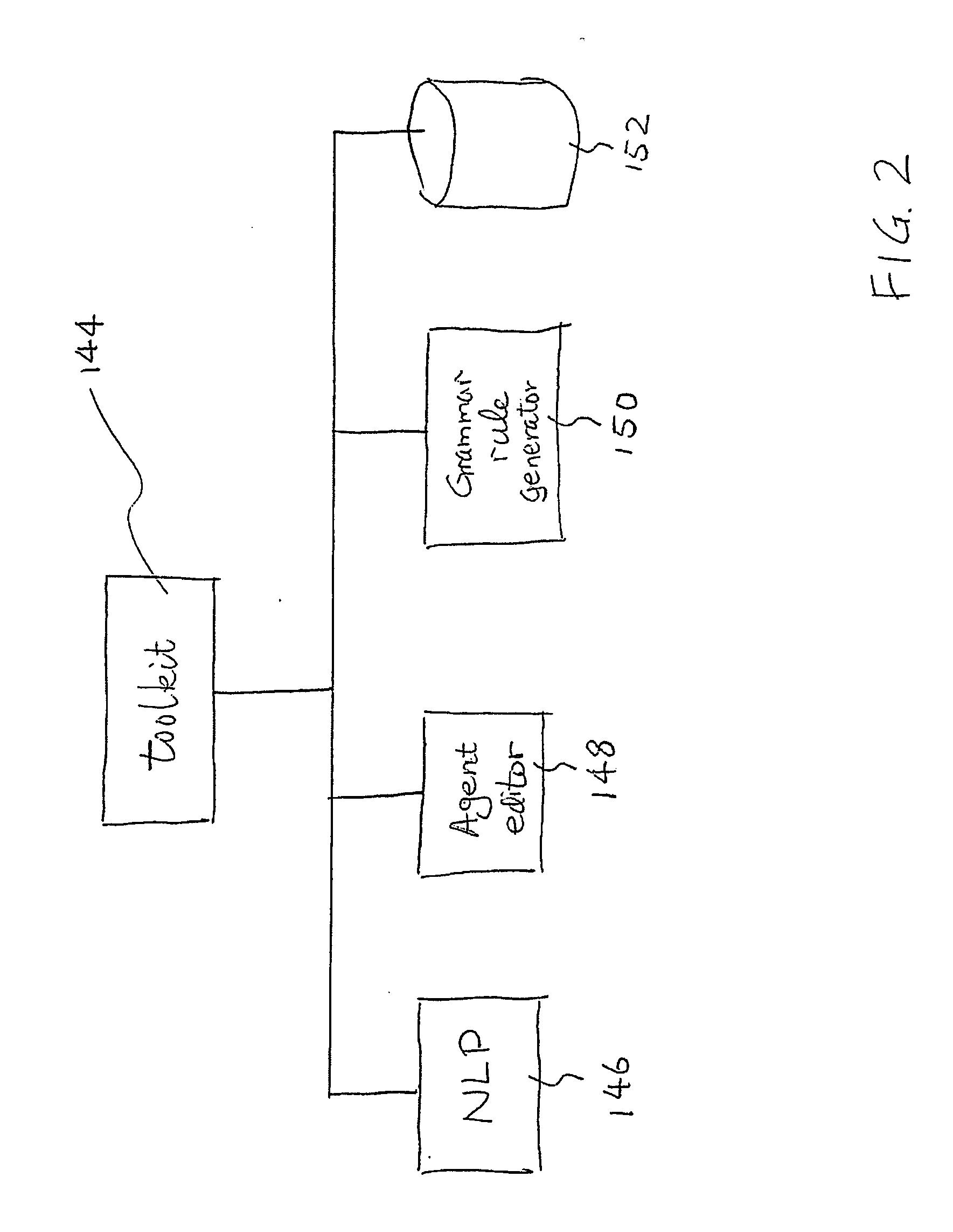

System and methods for improving accuracy of speech recognition

The invention provides a system and method for improving speech recognition. A computer software system is provided for implementing the system and method. A user of the computer software system may speak to the system directly and the system may respond, in spoken language, with an appropriate response. Grammar rules may be generated automatically from sample utterances when implementing the system for a particular application. Dynamic grammar rules may also be generated during interaction between the user and the system. In addition to arranging searching order of grammar files based on a predetermined hierarchy, a dynamically generated searching order based on history of contexts of a single conversation may be provided for further improved speech recognition. Dialogue between the system and the user of the system may be recorded and extracted for use by a speech recognition engine to refine or create language models so that accuracy of speech recognition relevant to a particular knowledge area may be improved.

Owner:INAGO CORP

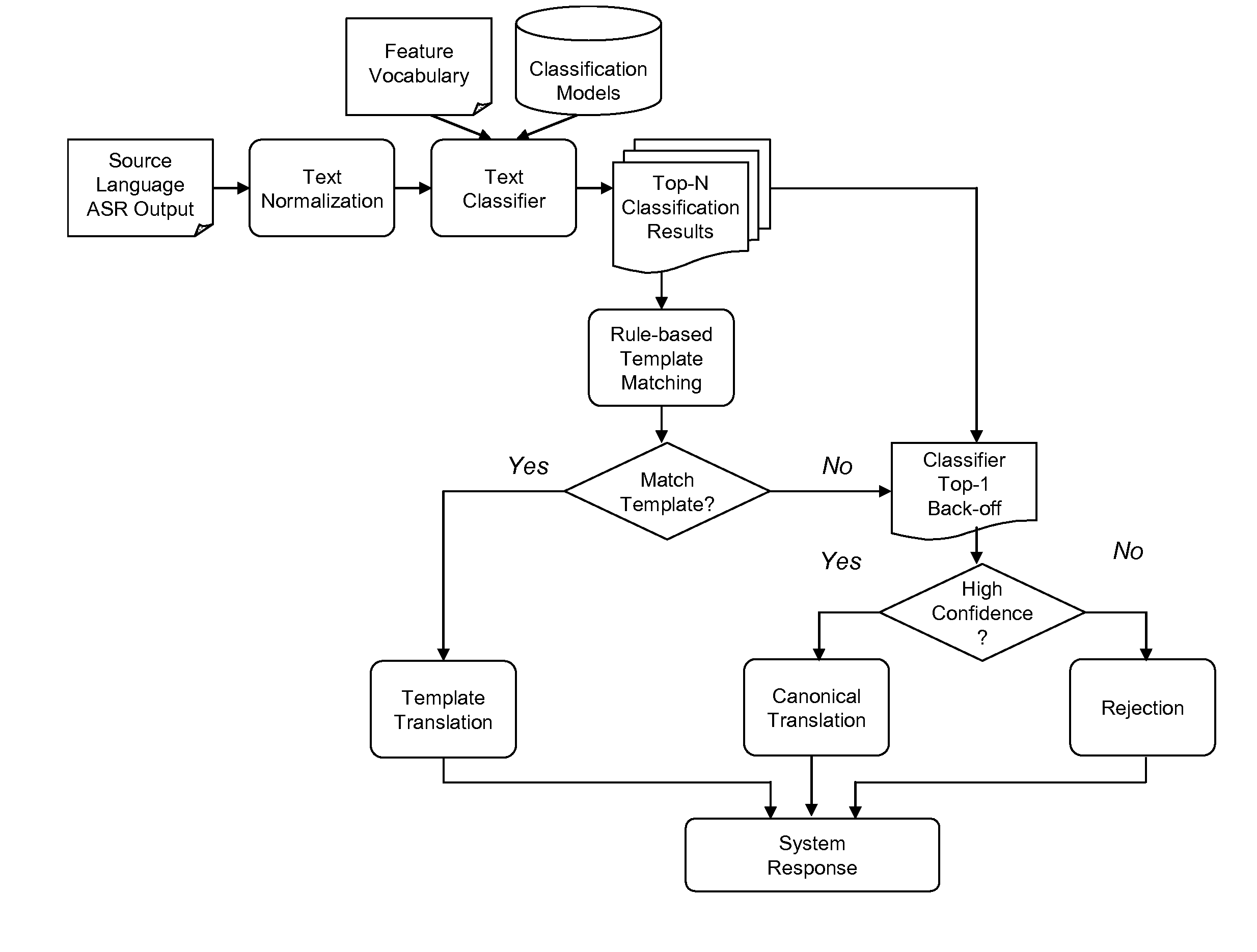

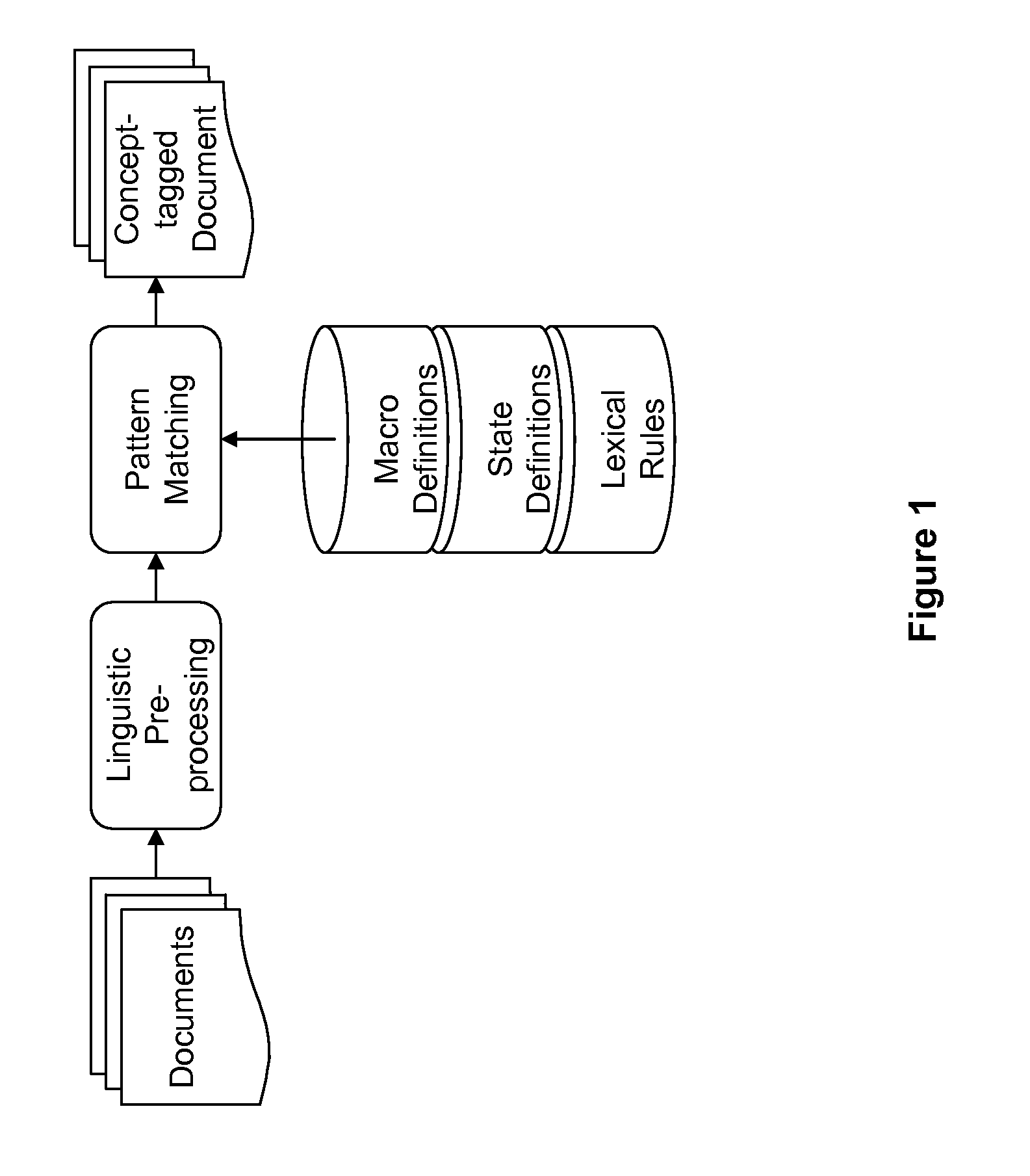

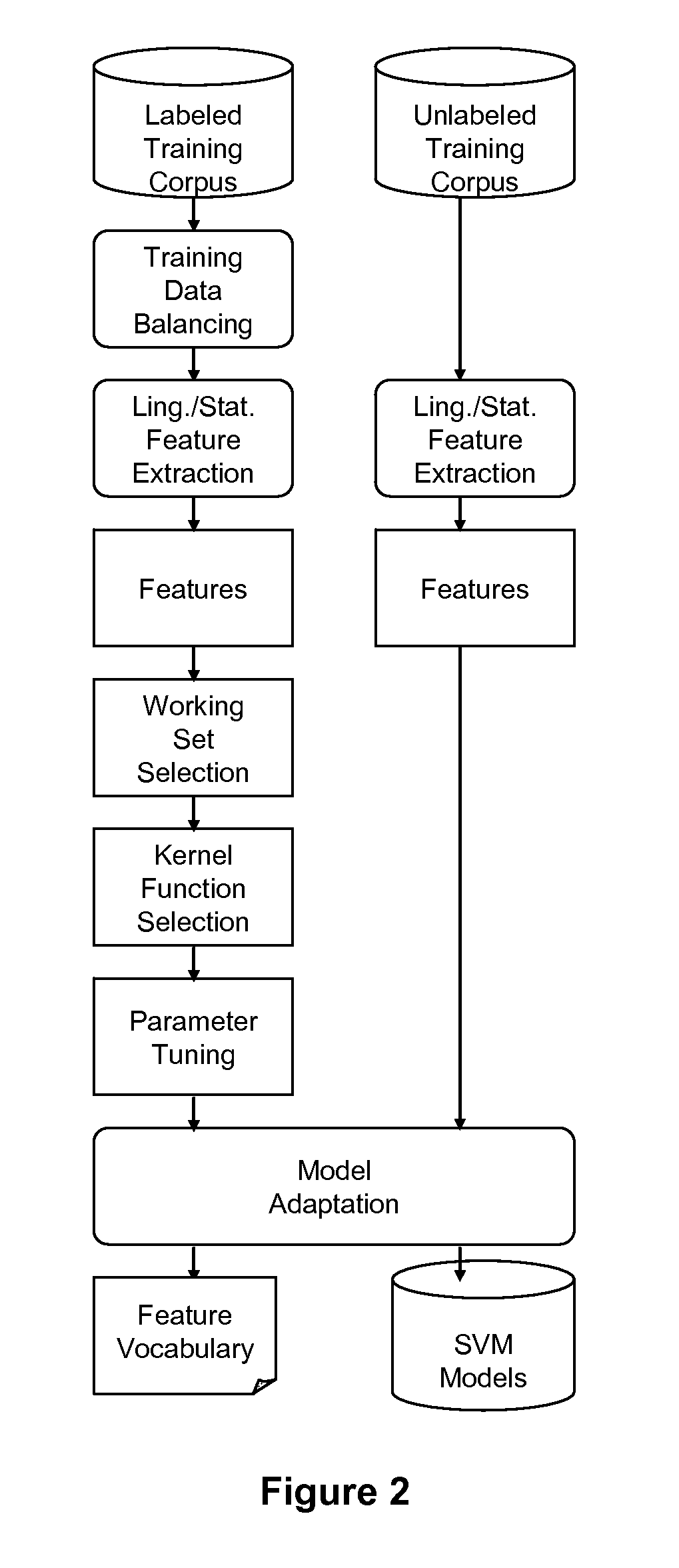

Robust information extraction from utterances

Status: Active | Patent: US8583416B2 | Tags: Increase cross-entropy; High precision; Speech recognition; Special data processing applications; Feature extraction; Text categorization

The performance of traditional speech recognition systems (as applied to information extraction or translation) decreases significantly with larger domain size, scarce training data, and noisy environmental conditions. This invention mitigates these problems by introducing a novel predictive feature extraction method that combines linguistic and statistical information to represent information embedded in a noisy source language. The predictive features are combined with text classifiers to map the noisy text to one of the semantically or functionally similar groups. The features used by the classifier can be syntactic, semantic, and statistical.

Owner:NANT HLDG IP LLC

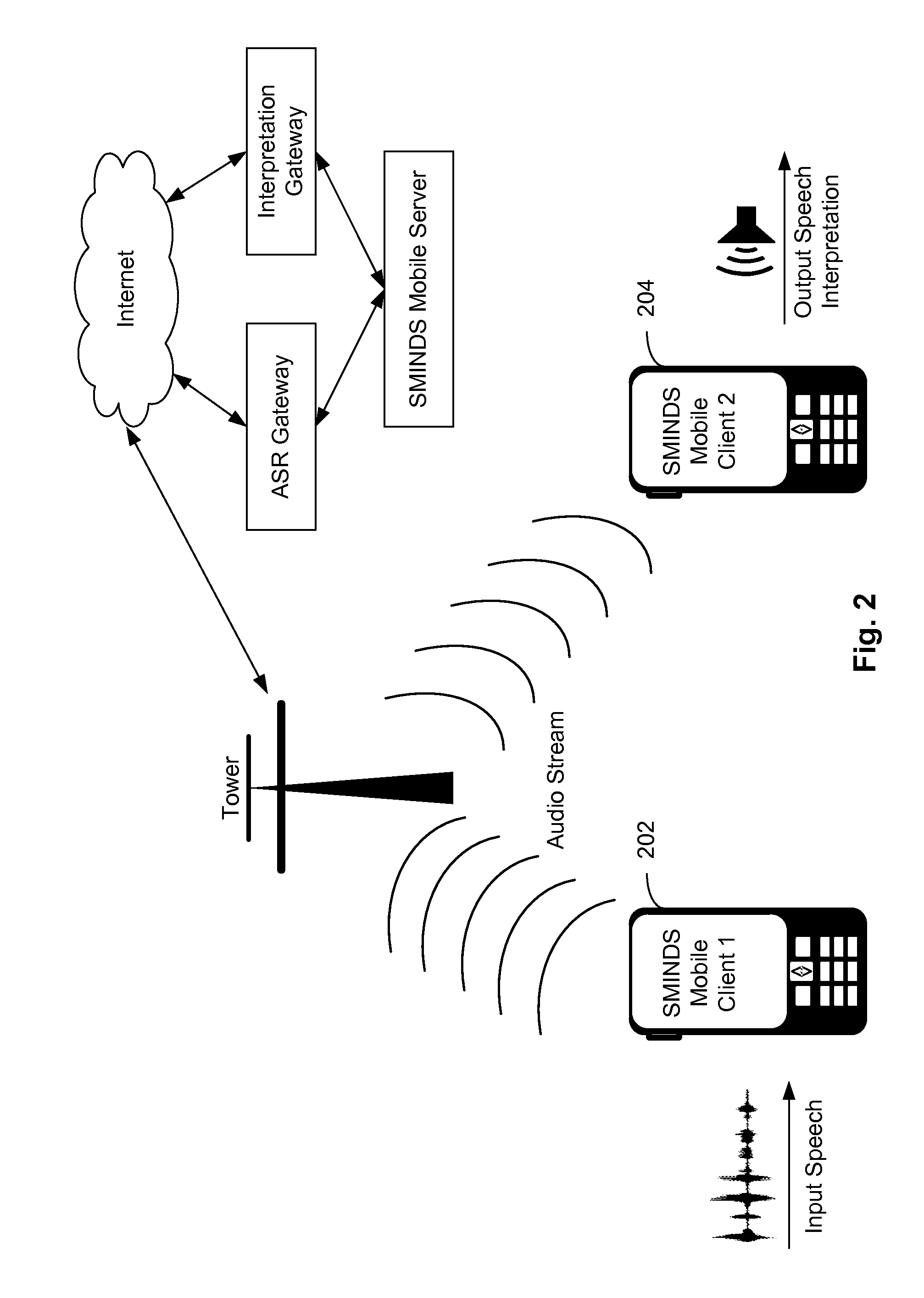

Mobile Speech-to-Speech Interpretation System

Interpretation from a first language to a second language via one or more communication devices is performed through a communication network (e.g. phone network or the internet) using a server for performing recognition and interpretation tasks, comprising the steps of: receiving an input speech utterance in a first language on a first mobile communication device; conditioning said input speech utterance; first transmitting said conditioned input speech utterance to a server; recognizing said first transmitted speech utterance to generate one or more recognition results; interpreting said recognition results to generate one or more interpretation results in an interlingua; mapping the interlingua to a second language in a first selected format; second transmitting said interpretation results in the first selected format to a second mobile communication device; and presenting said interpretation results in a second selected format on said second communication device.

Owner:NANT HLDG IP LLC
