872 results about "Automatic speech" patented technology

Formulaic language (previously known as automatic speech or embolalia) is a linguistic term for verbal expressions that are fixed in form, often non-literal in meaning with attitudinal nuances, and closely related to communicative-pragmatic context. Along with idioms, expletives and proverbs, formulaic language includes pause fillers (e.g., "Like", "Er" or "Uhm") and conversational speech formulas (e.g., "You've got to be kidding," "Excuse me?" or "Hang on a minute").

User interaction with voice information services

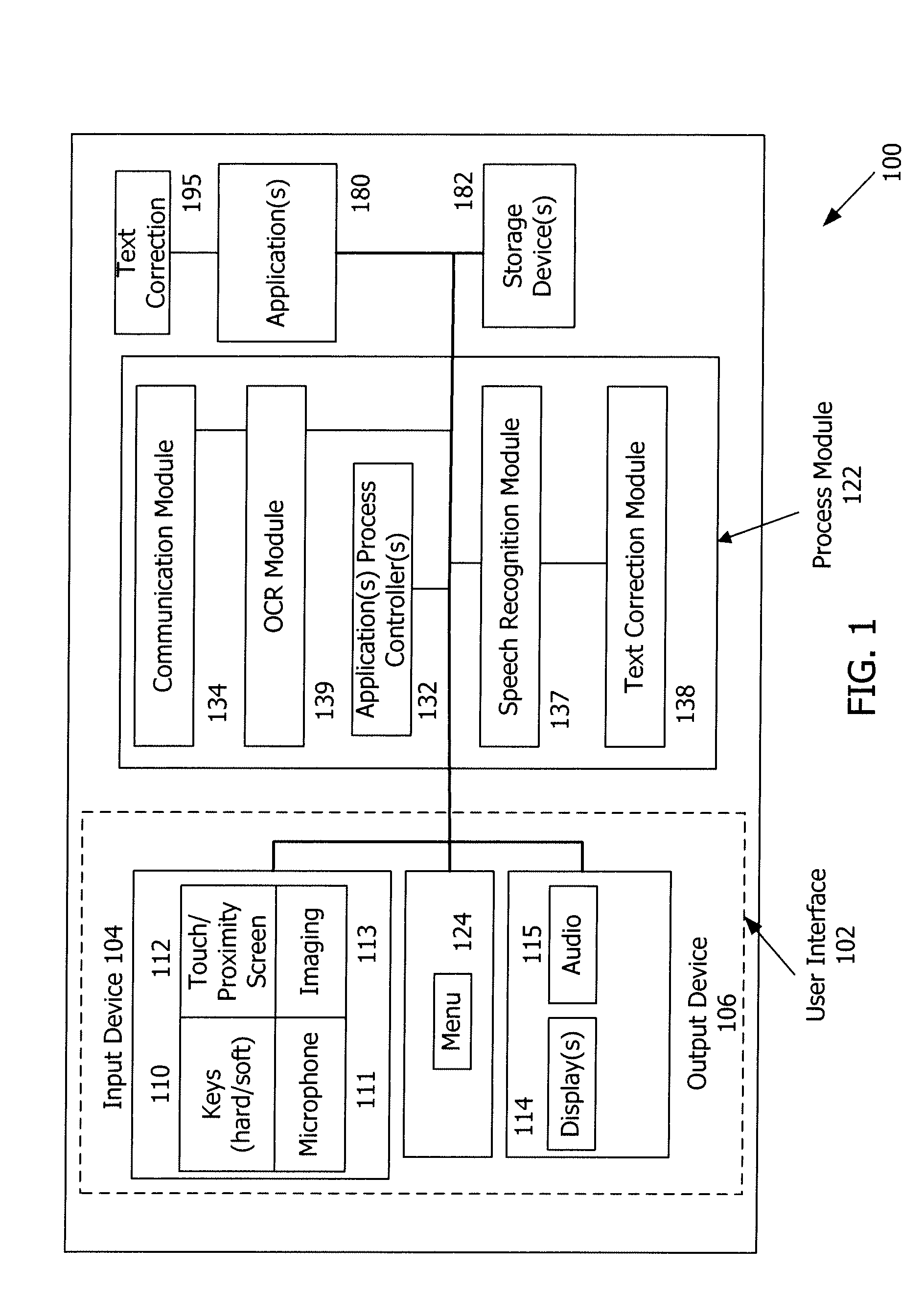

Inactive | US20060143007A1 | Reduce input; The result is accurate; Speech recognition; Automatic speech; Ancillary service

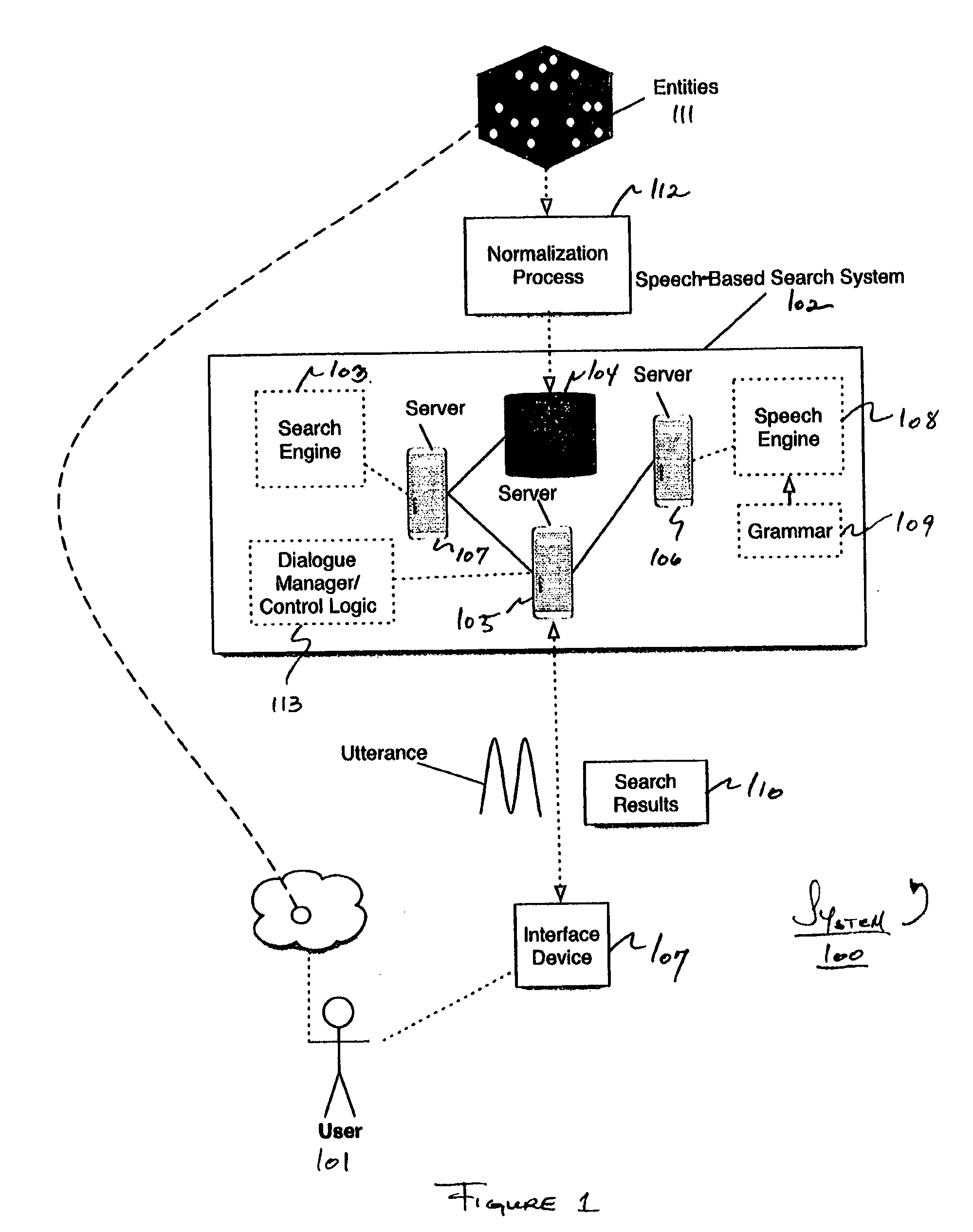

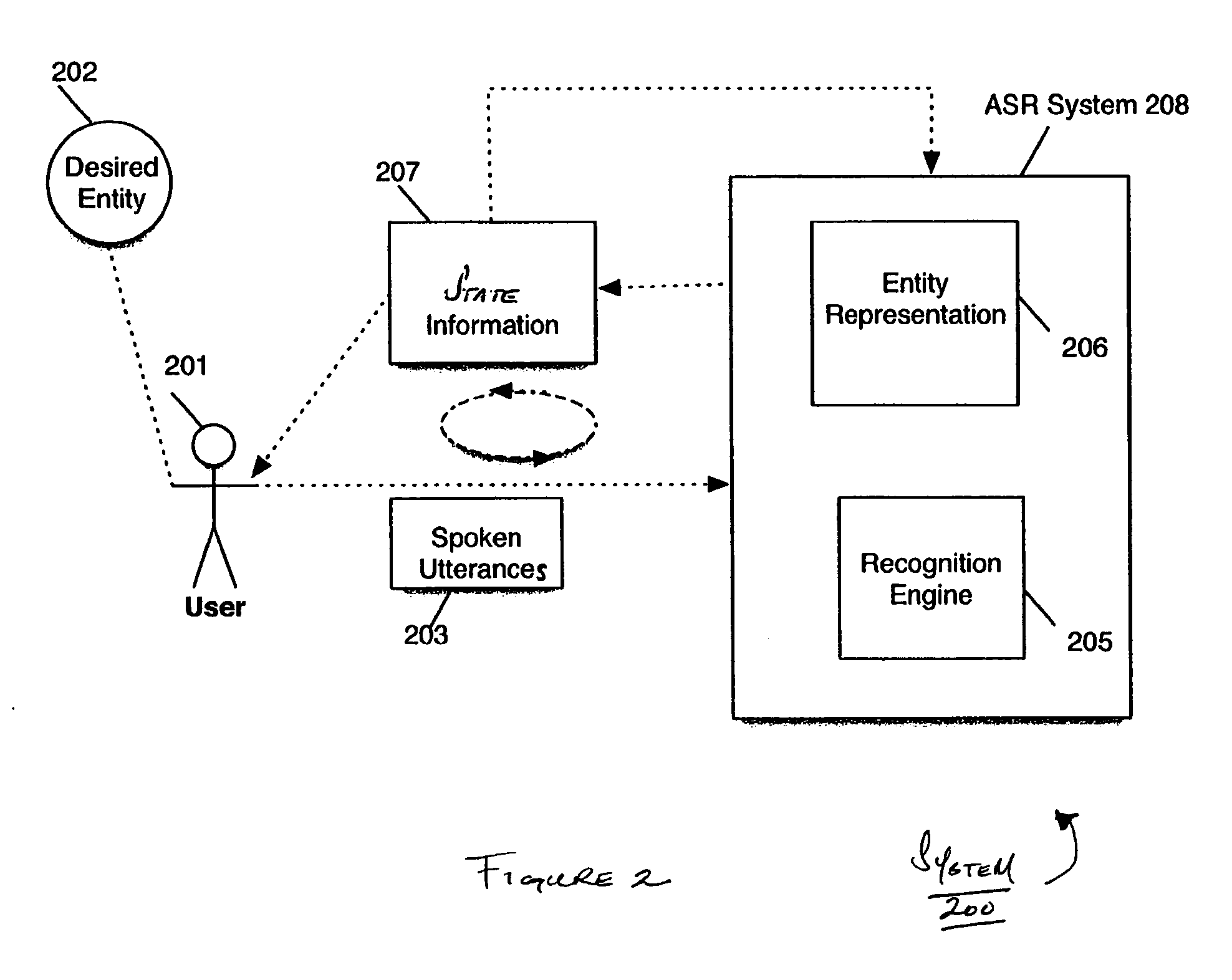

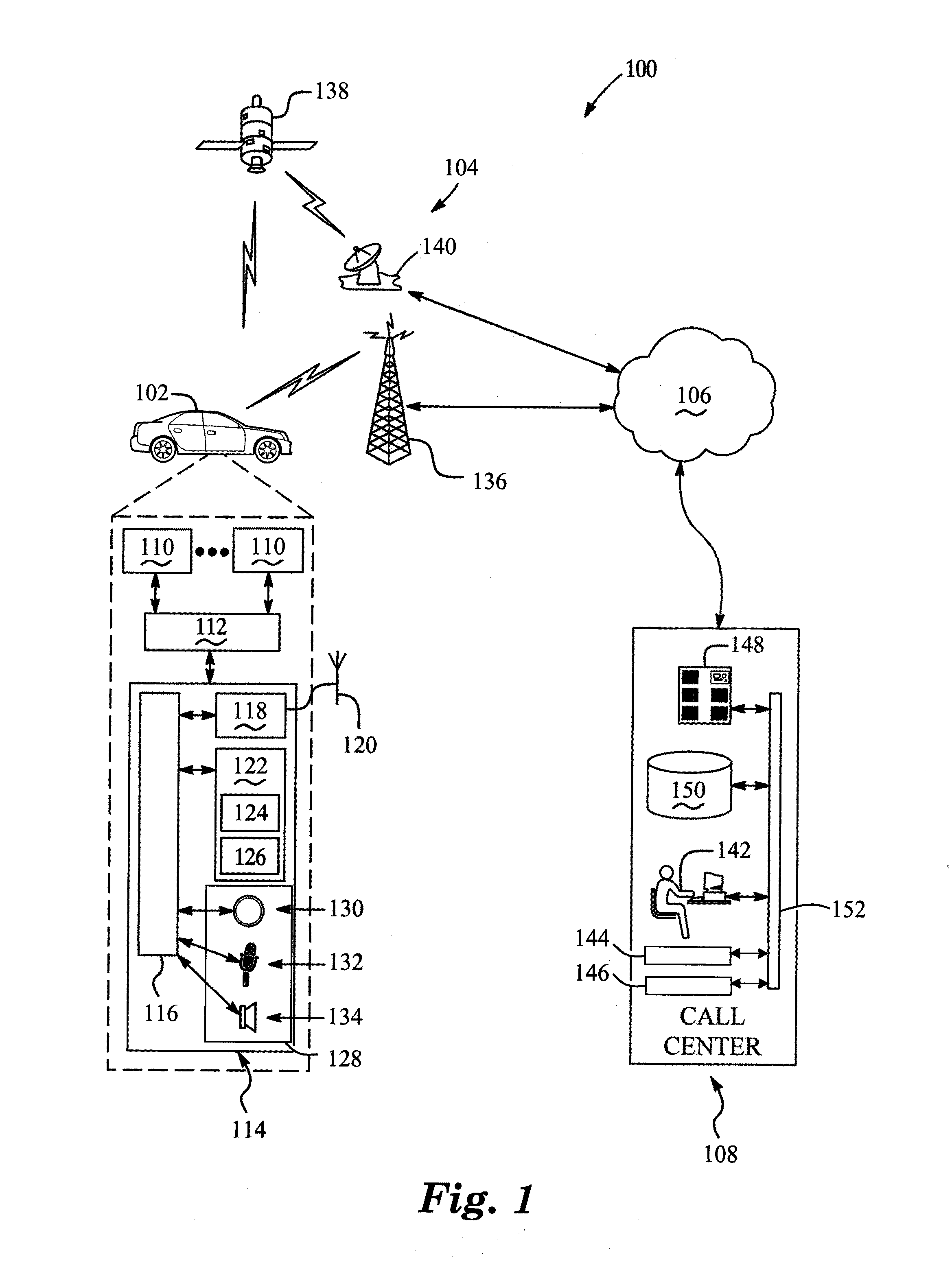

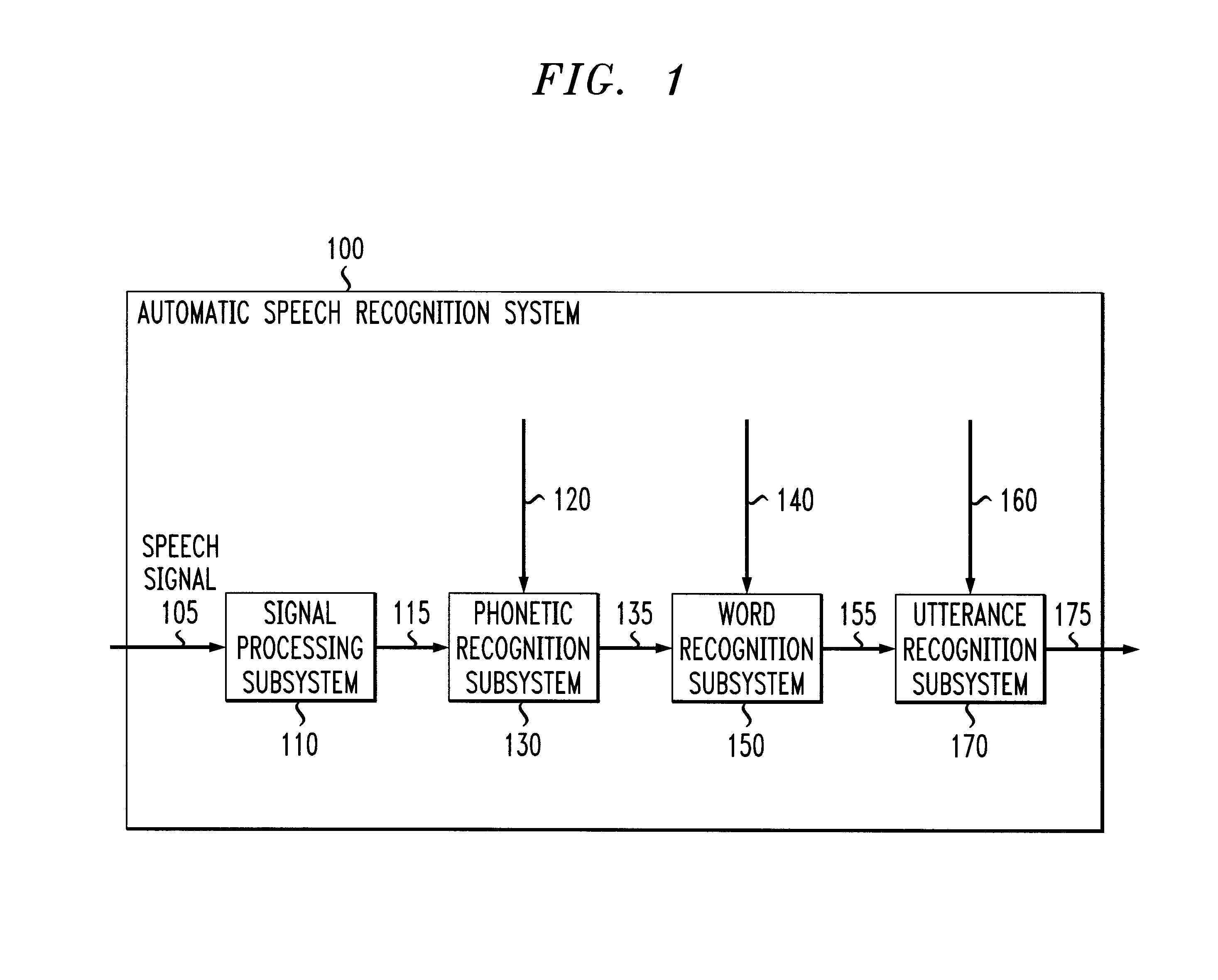

An iterative process is provided for interacting with a voice information service. Such a service may permit, for example, a user to search one or more databases and may provide one or more search results to the user. Such a service may be suitable, for example, for searching for a desired entity or object within the database(s) using speech as an input and navigational tool. Applications of such a service may include, for instance, speech-enabled searching services such as a directory assistance service or any other service or application involving a search of information. In one example implementation, an automatic speech recognition (ASR) system is provided that performs a speech recognition and database search in an iterative fashion. With each iteration, feedback may be provided to the user presenting potentially relevant results. In one specific ASR system, a user desiring to locate information relating to a particular entity or object provides an utterance to the ASR. Upon receiving the utterance, the ASR determines a recognition set of potentially relevant search results related to the utterance and presents to the user recognition set information in an interface of the ASR. The recognition set information includes, for instance, reference information stored internally at the ASR for a plurality of potentially relevant recognition results. The recognition set information may be used as input to the ASR providing a feedback mechanism. In one example implementation, the recognition set information may be used to determine a restricted grammar for performing a further recognition.

Owner:MICROSOFT TECH LICENSING LLC
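
The iterative search loop in the abstract above (recognize, present the recognition set, then use that set as a restricted grammar for the next pass) can be sketched as follows. This is a minimal illustration, not the patented implementation: the recognizer is a hypothetical stand-in that scores grammar entries by word overlap with a text utterance, where a real ASR would decode audio.

```python
def recognize(utterance: str, grammar: list[str], n: int = 5) -> list[str]:
    """Hypothetical ASR stand-in: rank grammar entries by word overlap."""
    tokens = set(utterance.lower().split())
    scored = [(len(tokens & set(entry.lower().split())), entry) for entry in grammar]
    hits = [s for s in scored if s[0] > 0]
    # sort() is stable, so entries with equal overlap keep their grammar order
    hits.sort(key=lambda s: s[0], reverse=True)
    return [entry for _, entry in hits[:n]]


def iterative_search(utterances: list[str], database: list[str], n: int = 5) -> list[str]:
    """Repeat recognition, narrowing the grammar to the last recognition set."""
    grammar = list(database)
    results: list[str] = []
    for utterance in utterances:
        results = recognize(utterance, grammar, n)
        print("Did you mean:", results)  # feedback presented to the user
        if len(results) <= 1:
            break  # unambiguous (or nothing matched): stop iterating
        # Restricted grammar for the next recognition pass
        grammar = results
    return results


if __name__ == "__main__":
    listings = ["john smith pharmacy", "smith hardware", "jones bakery"]
    print(iterative_search(["smith", "hardware"], listings))
```

Restricting the grammar shrinks the search space on each turn, which is why a short follow-up utterance such as "hardware" suffices to disambiguate.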

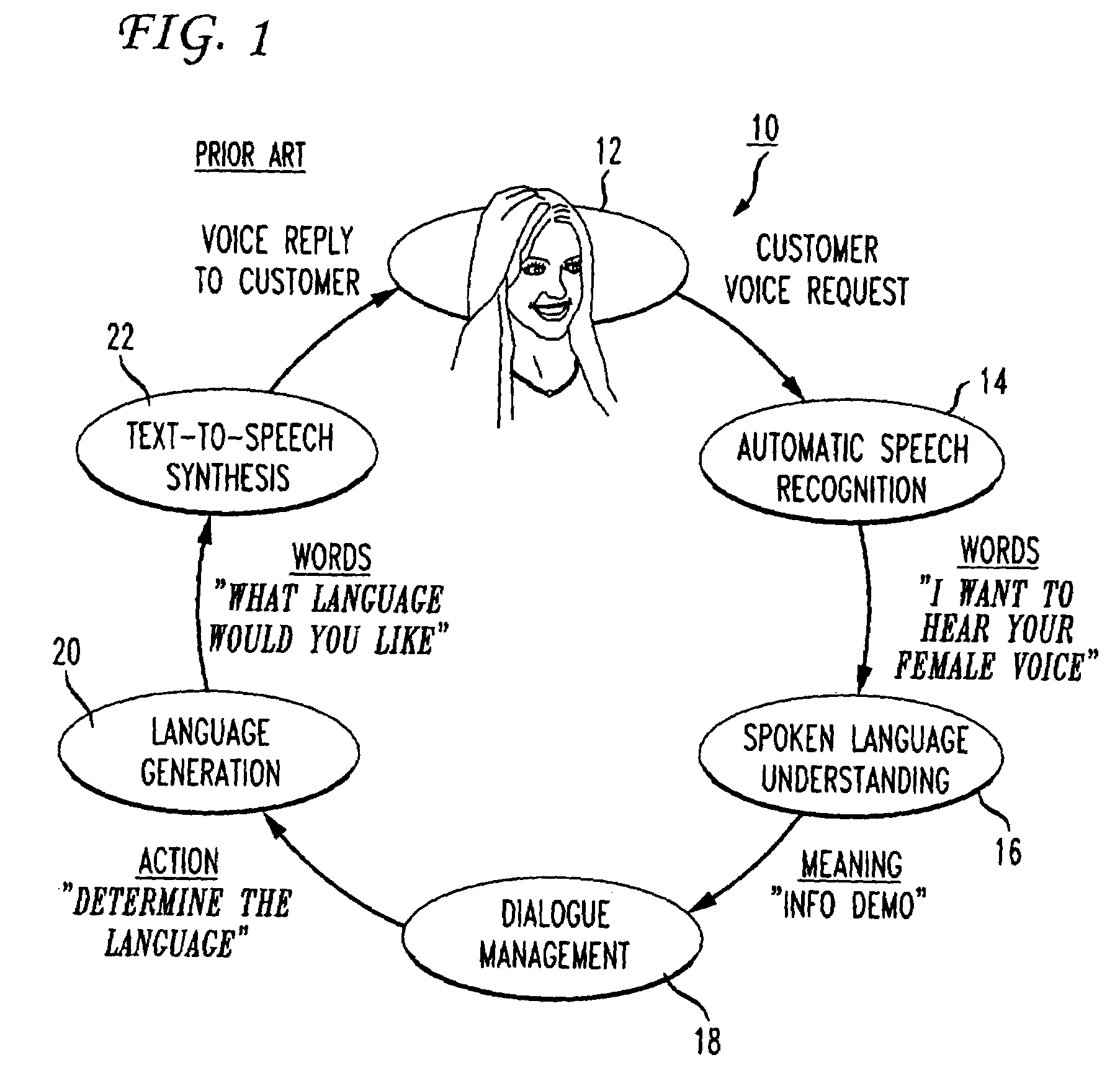

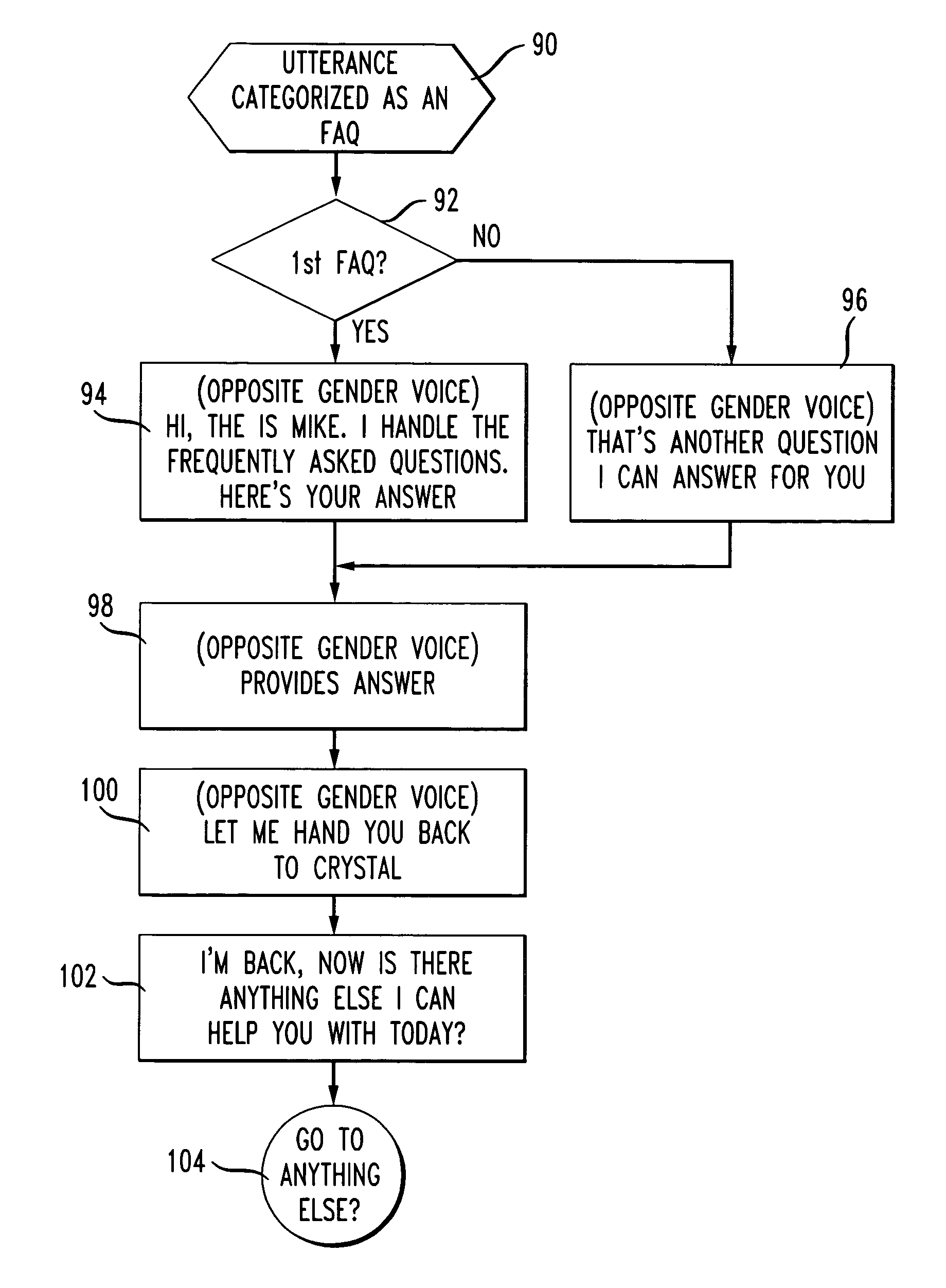

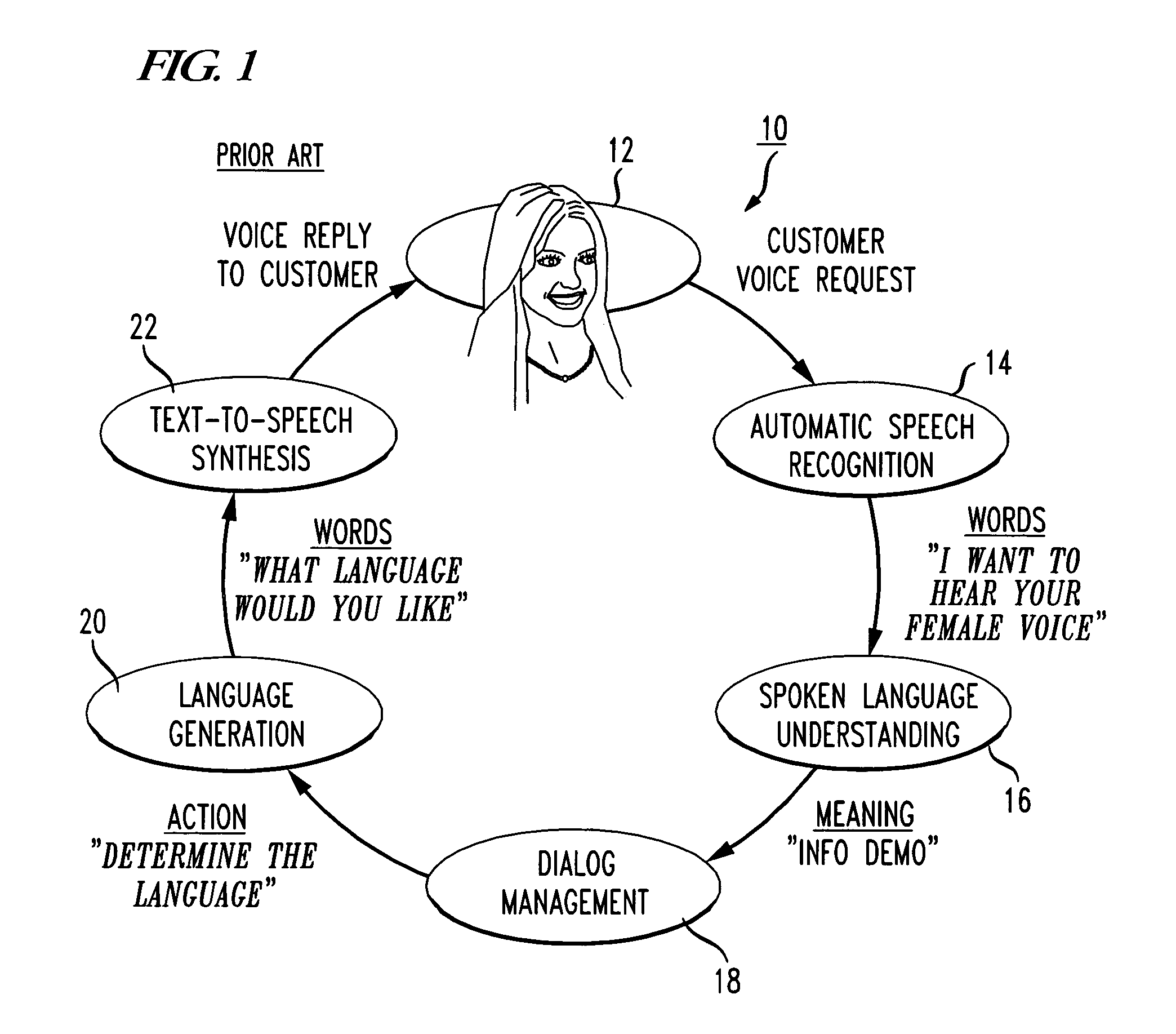

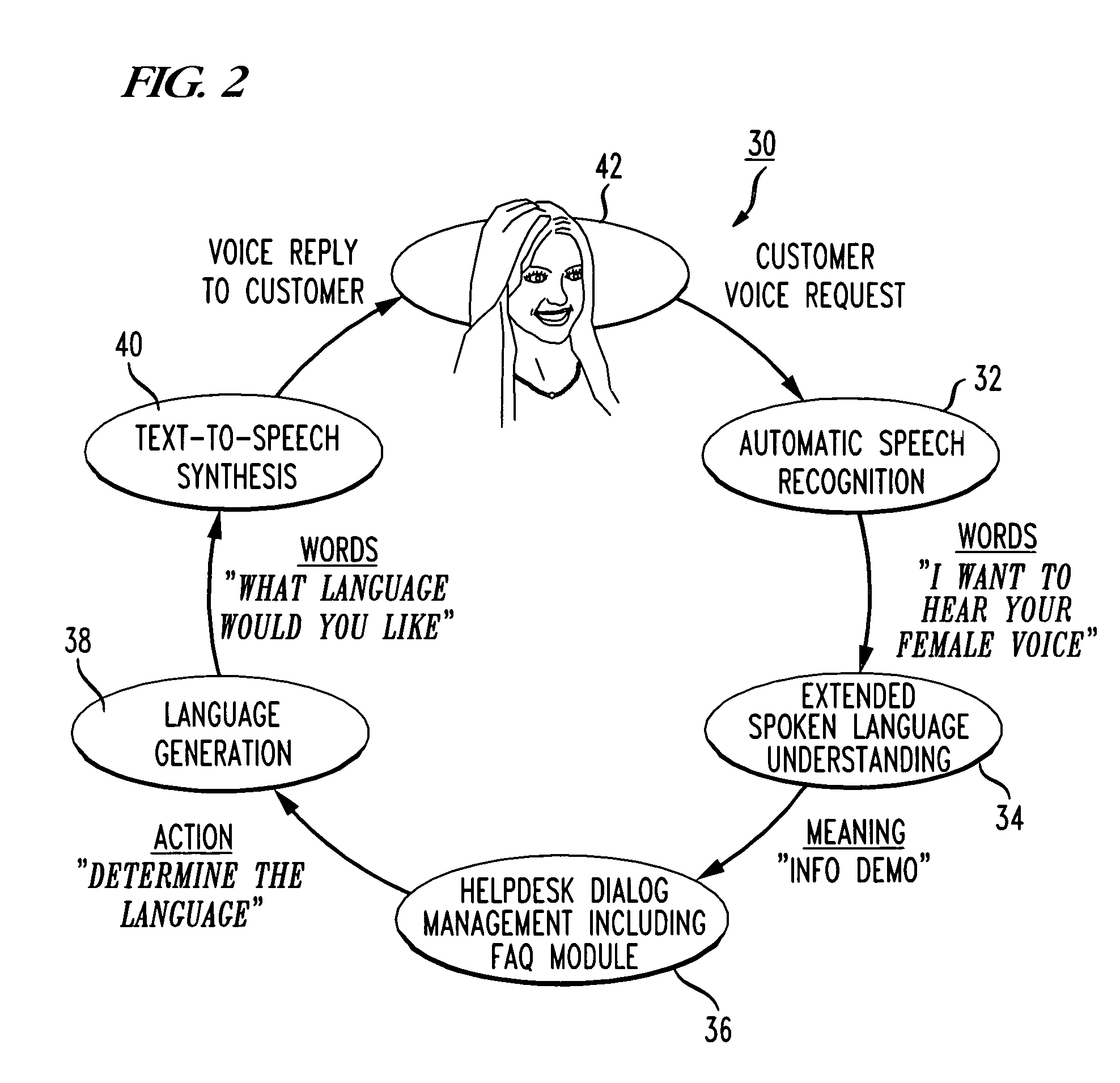

System for handling frequently asked questions in a natural language dialog service

Inactive | US7197460B1 | Improve customer relationship; Efficient manner; Speech recognition; Input/output processes for data processing; Spoken language; Dialog management

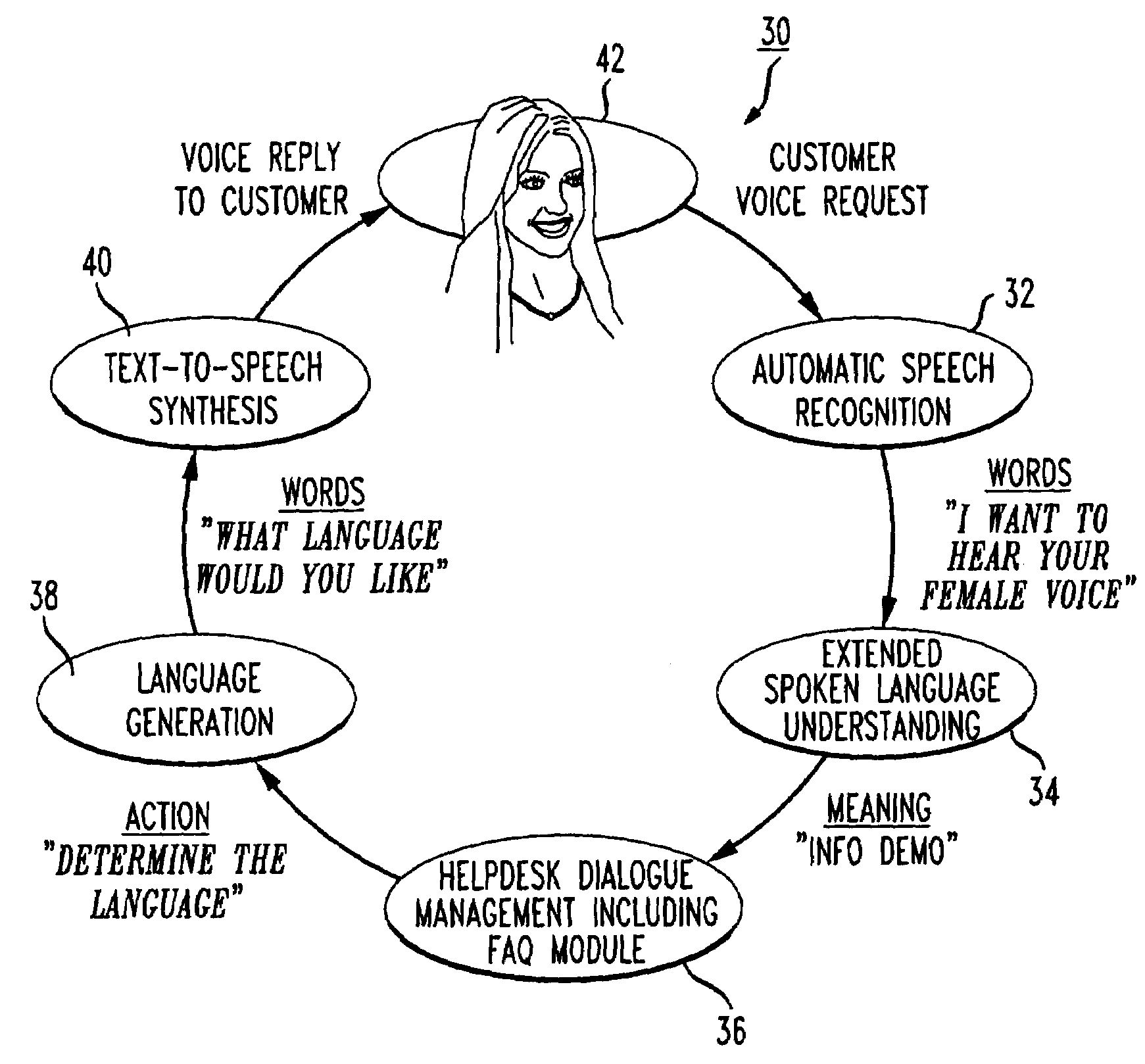

A voice-enabled help desk service is disclosed. The service comprises an automatic speech recognition module for recognizing speech from a user, a spoken language understanding module for understanding the output from the automatic speech recognition module, a dialog management module for generating a response to speech from the user, a natural voices text-to-speech synthesis module for synthesizing speech to generate the response to the user, and a frequently asked questions module. The frequently asked questions module handles frequently asked questions from the user by changing voices and providing predetermined prompts to answer the frequently asked question.

Owner:NUANCE COMM INC

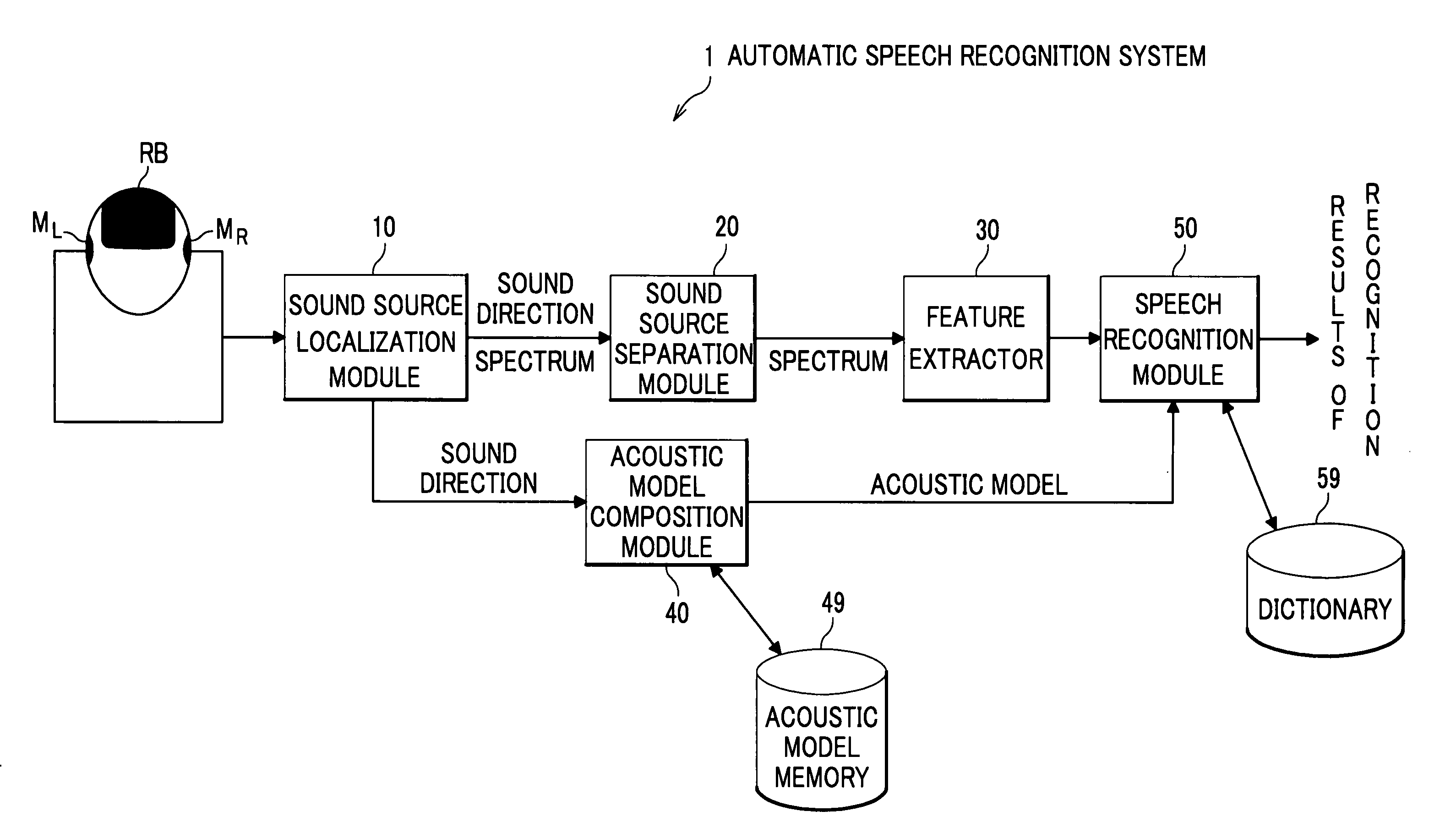

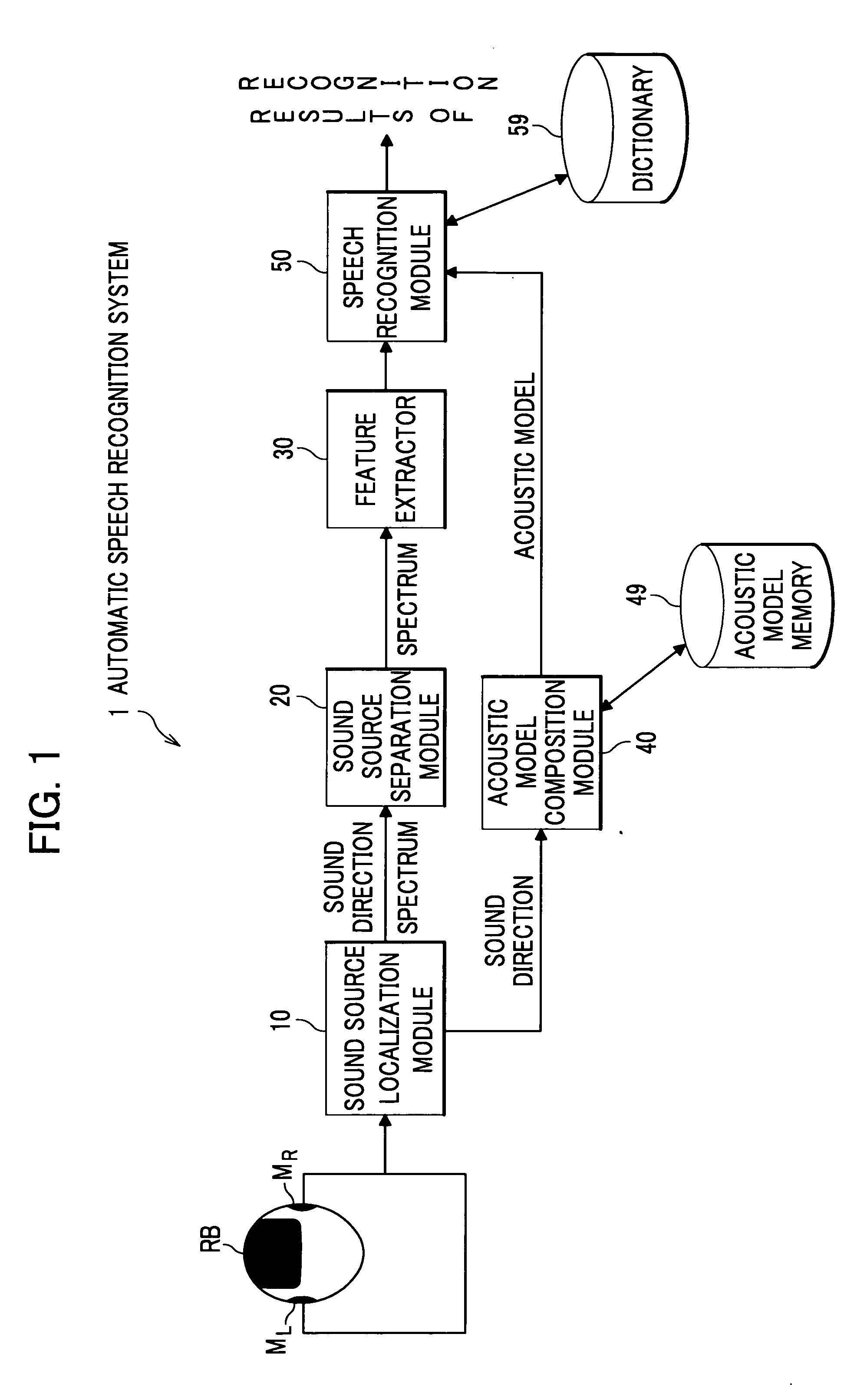

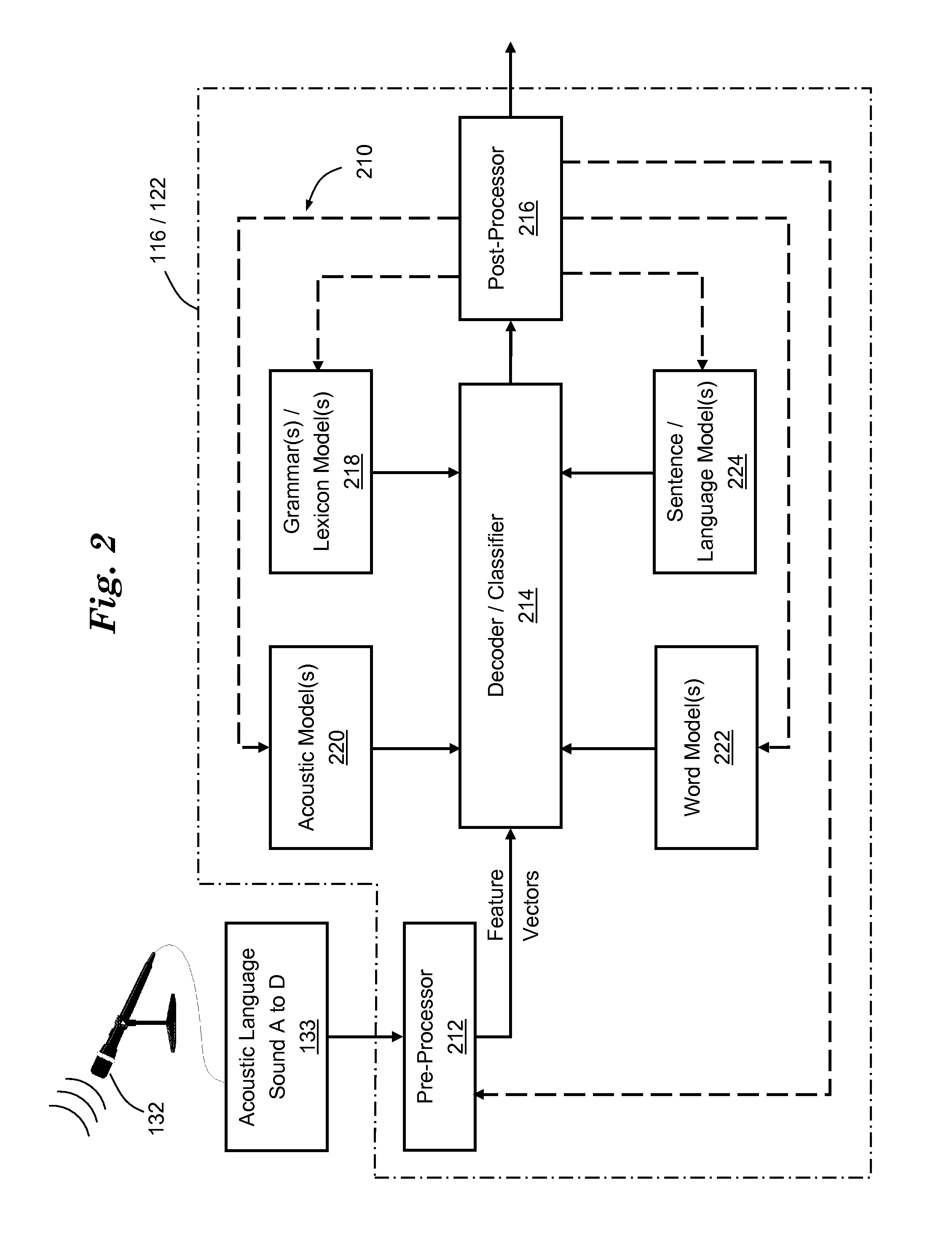

Automatic Speech Recognition System

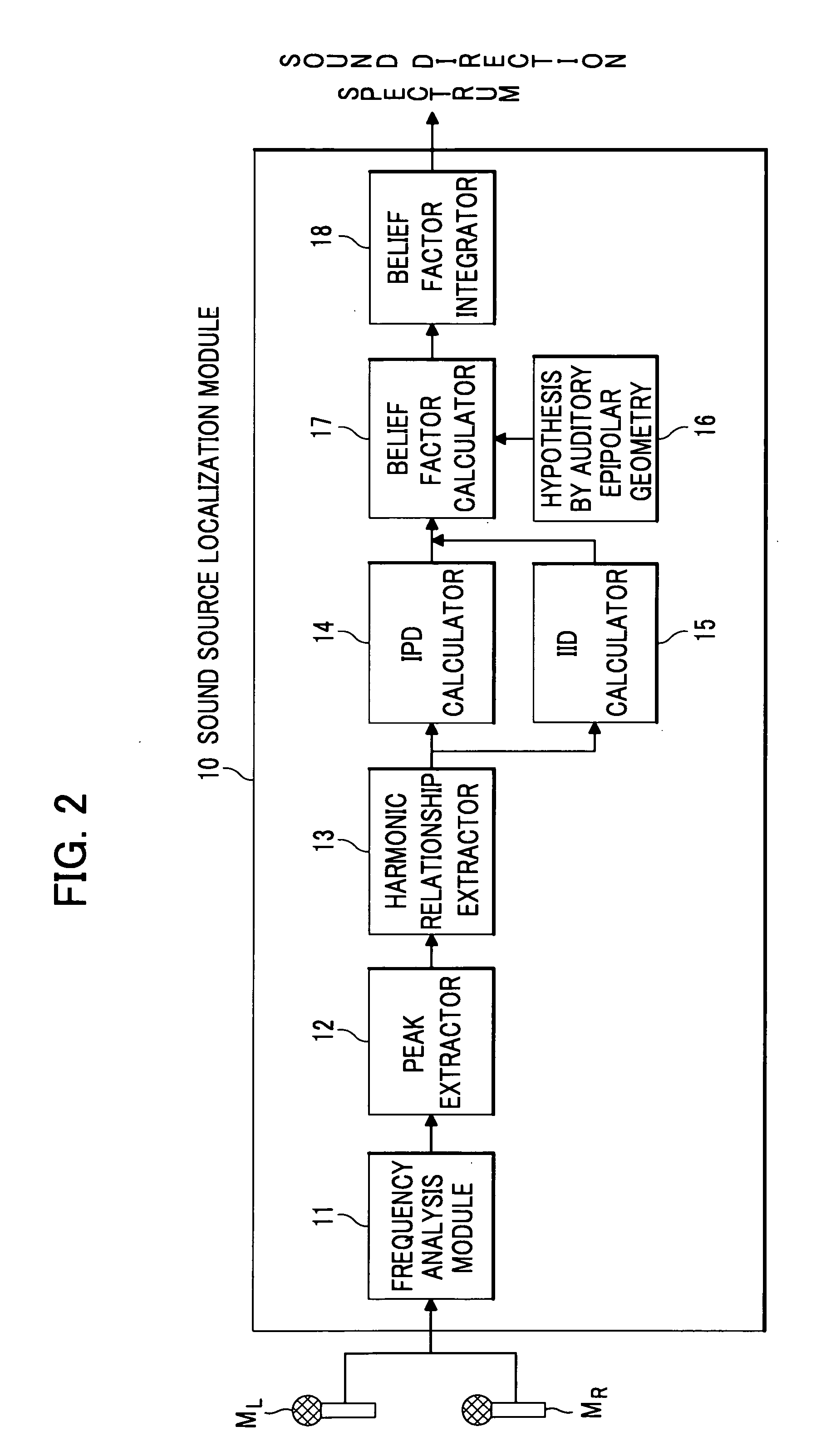

Inactive | US20090018828A1 | Improve speech recognition rate; Improve accuracy; Speech recognition; Sound source separation; Feature extraction

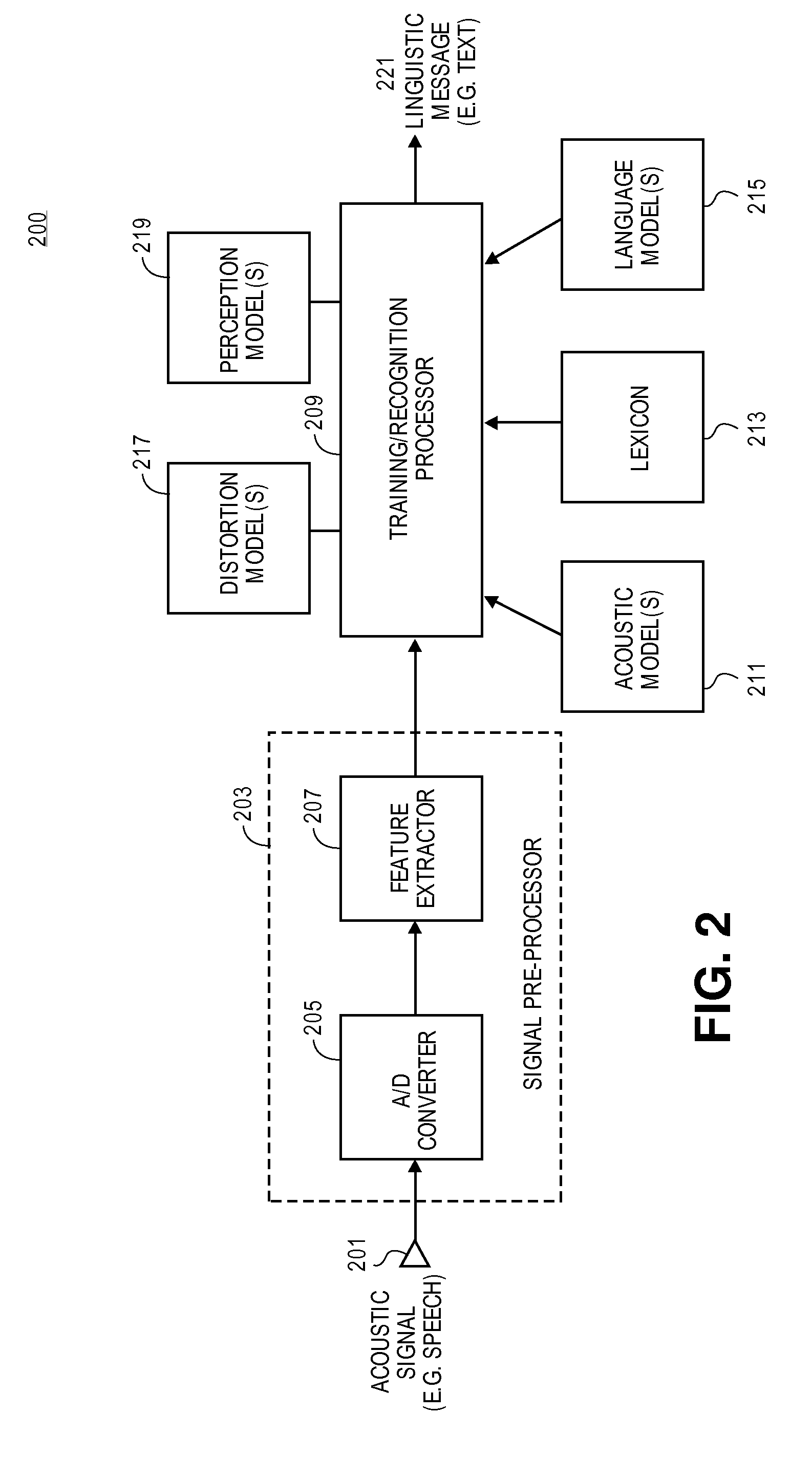

An automatic speech recognition system includes: a sound source localization module for localizing a sound direction of a speaker based on the acoustic signals detected by the plurality of microphones; a sound source separation module for separating a speech signal of the speaker from the acoustic signals according to the sound direction; an acoustic model memory which stores direction-dependent acoustic models that are adjusted to a plurality of directions at intervals; an acoustic model composition module which composes an acoustic model adjusted to the sound direction, which is localized by the sound source localization module, based on the direction-dependent acoustic models, the acoustic model composition module storing the acoustic model in the acoustic model memory; and a speech recognition module which recognizes the features extracted by a feature extractor as character information using the acoustic model composed by the acoustic model composition module.

Owner:HONDA MOTOR CO LTD
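
The Honda abstract describes composing an acoustic model for a localized sound direction from models stored at fixed angular intervals. One plausible composition rule, assumed here and not stated in the abstract, is to linearly interpolate the parameter vectors (for example, Gaussian means) of the two stored models that bracket the direction. The plain list of floats used as a "model" is a hypothetical simplification.

```python
from bisect import bisect_left


def compose_acoustic_model(direction: float, models: dict[float, list[float]]) -> list[float]:
    """Compose a model for `direction` (degrees) by interpolating between the
    two nearest stored direction-dependent models (assumed composition rule)."""
    angles = sorted(models)
    # Outside the stored range, fall back to the nearest stored model
    if direction <= angles[0]:
        return list(models[angles[0]])
    if direction >= angles[-1]:
        return list(models[angles[-1]])
    i = bisect_left(angles, direction)
    upper = angles[i]
    if upper == direction:
        return list(models[upper])
    lower = angles[i - 1]
    w = (direction - lower) / (upper - lower)  # 0 at `lower`, 1 at `upper`
    return [(1 - w) * a + w * b for a, b in zip(models[lower], models[upper])]


if __name__ == "__main__":
    stored = {0.0: [0.0, 10.0], 30.0: [3.0, 40.0], 60.0: [6.0, 10.0]}
    print(compose_acoustic_model(45.0, stored))  # halfway between 30 and 60 degrees
```

The abstract also says the composed model is written back to the acoustic model memory; a caller could mimic that with `stored[direction] = composed`. Angles are treated as a bounded range here, so wrap-around at 360 degrees is not handled.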

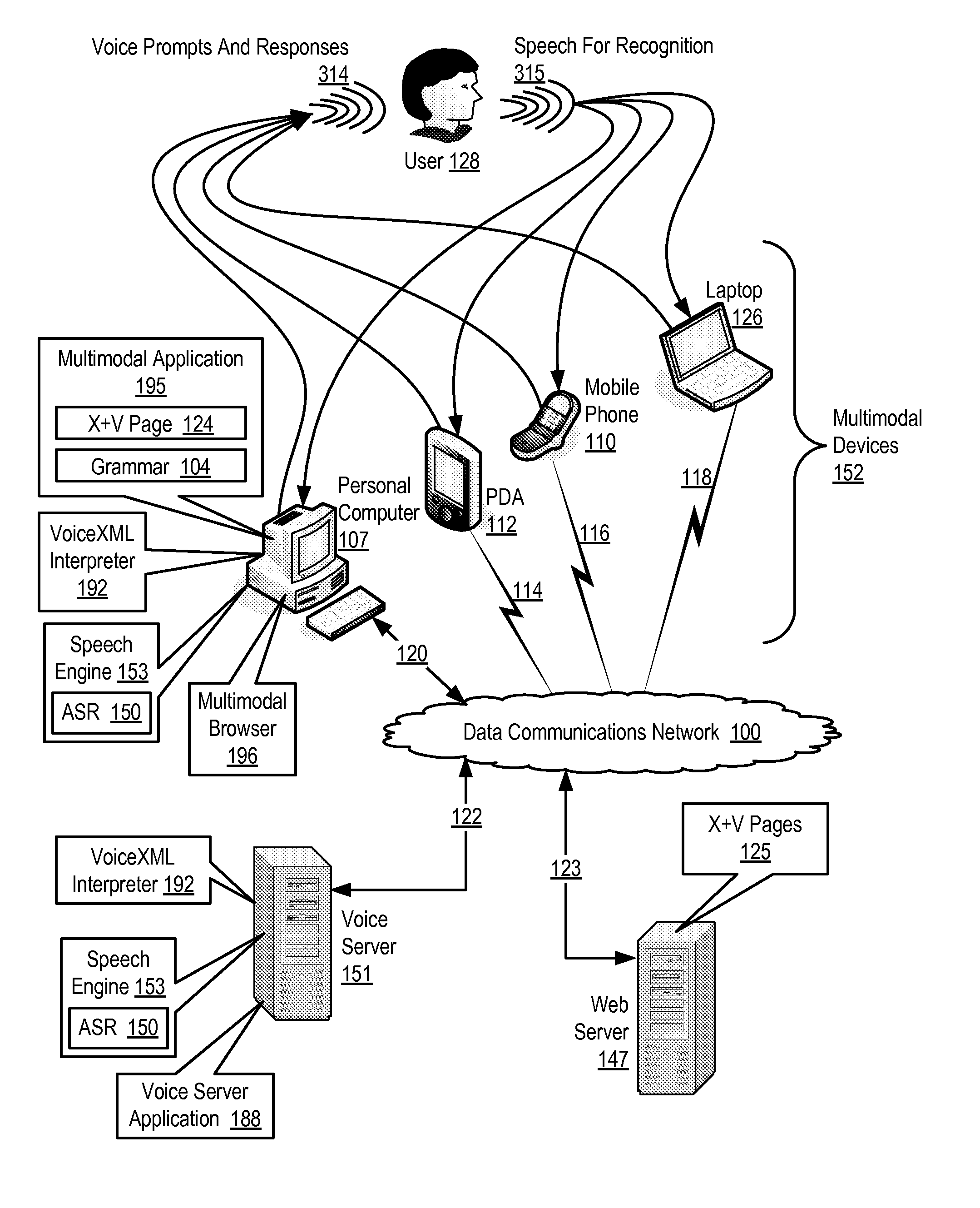

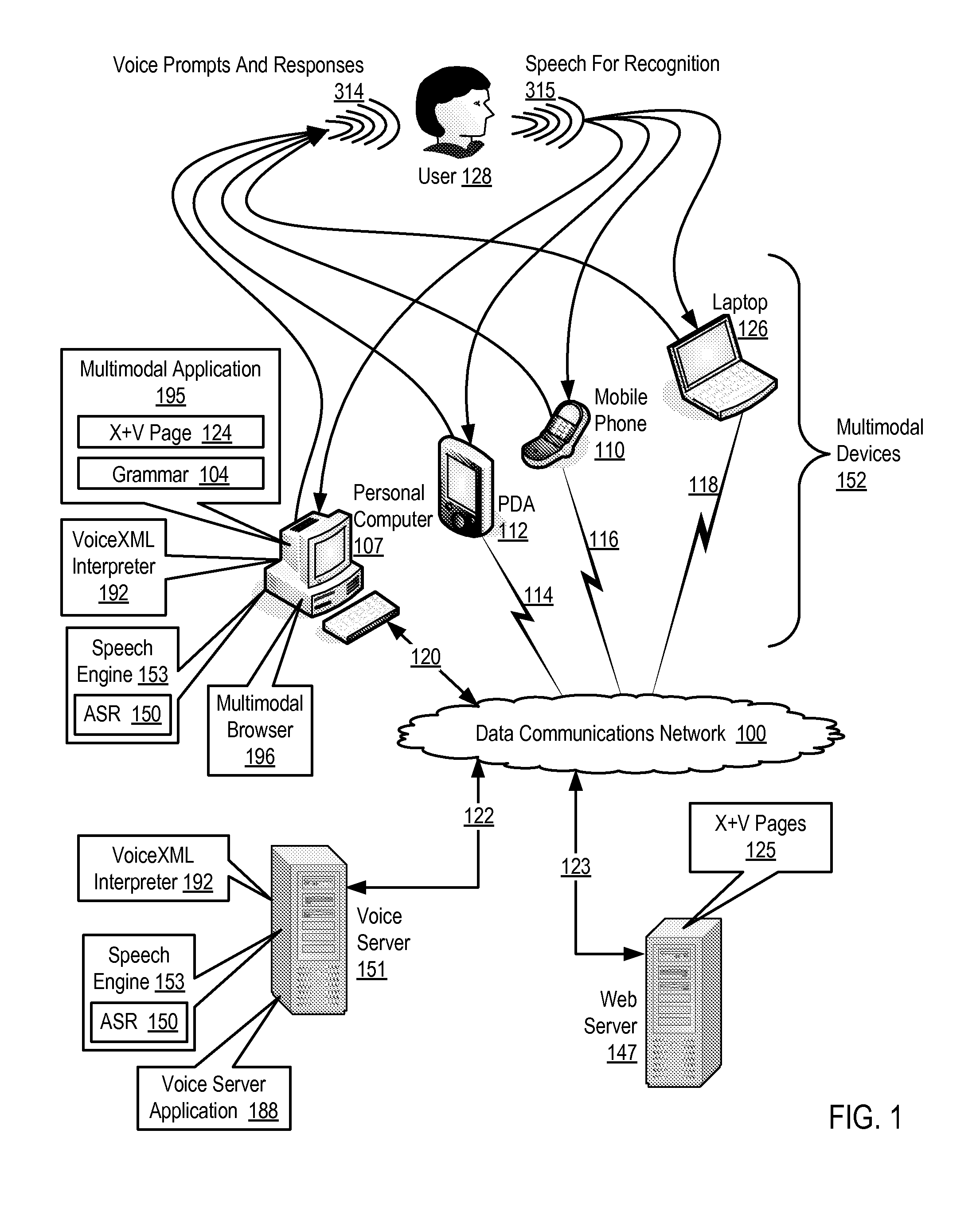

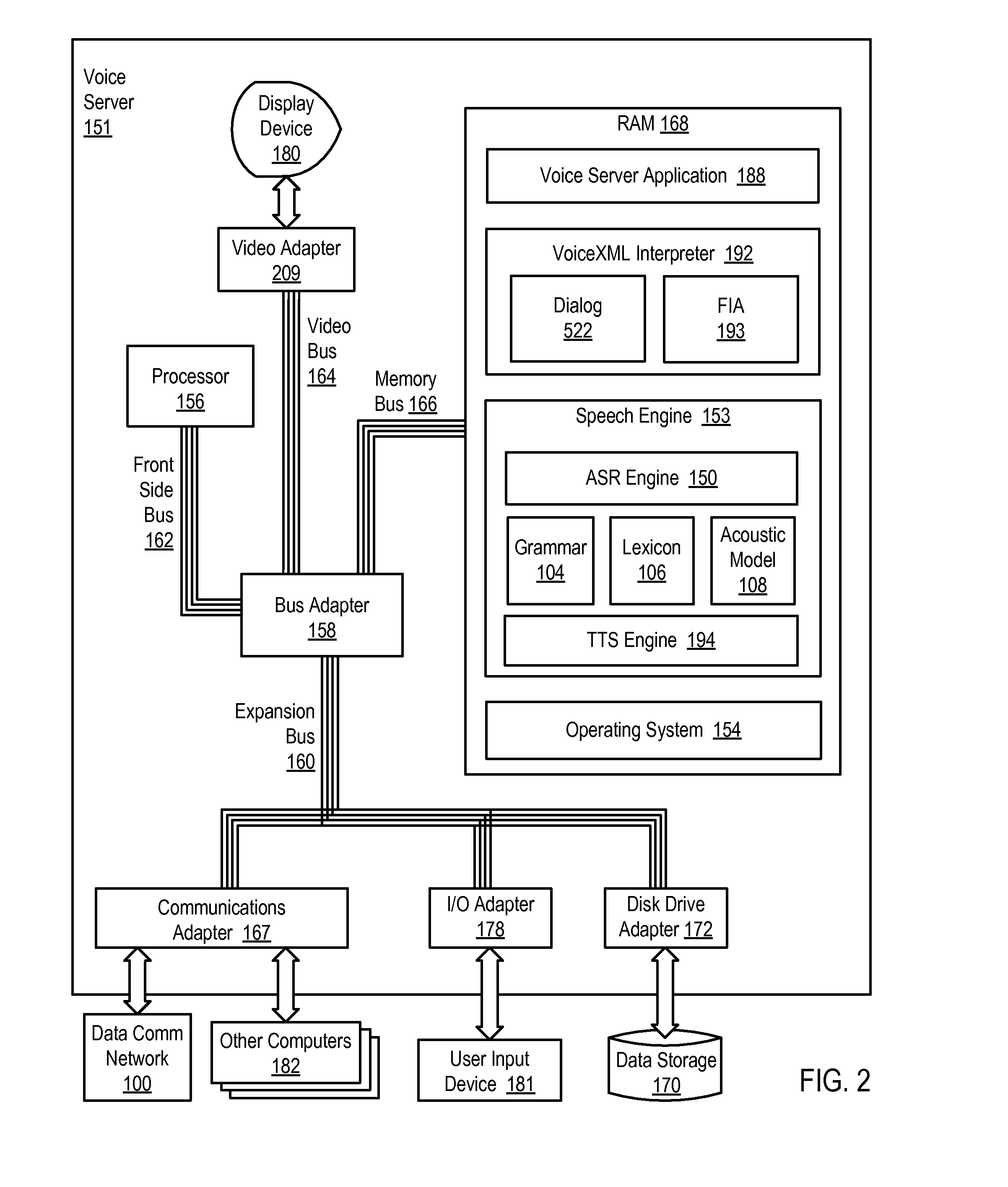

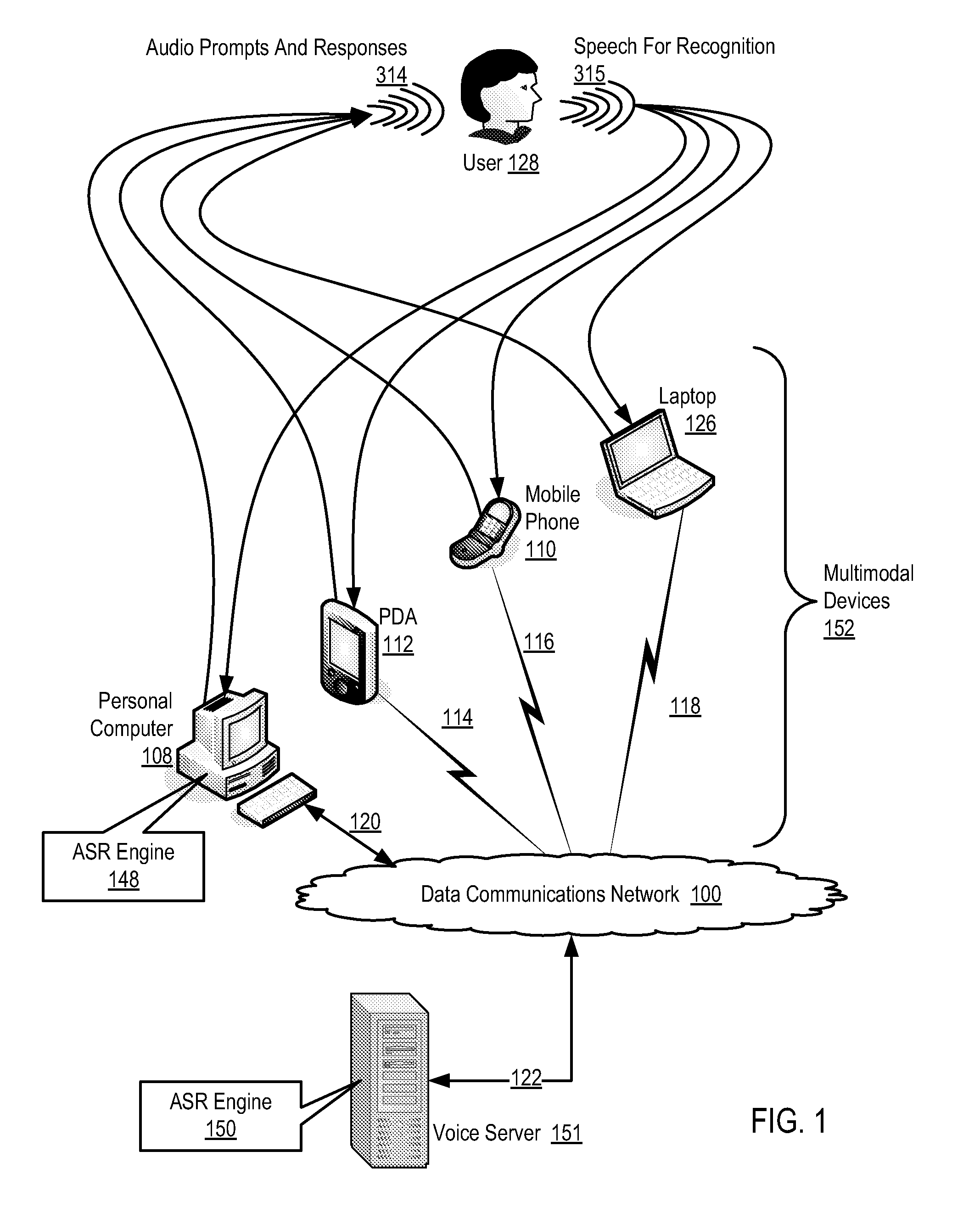

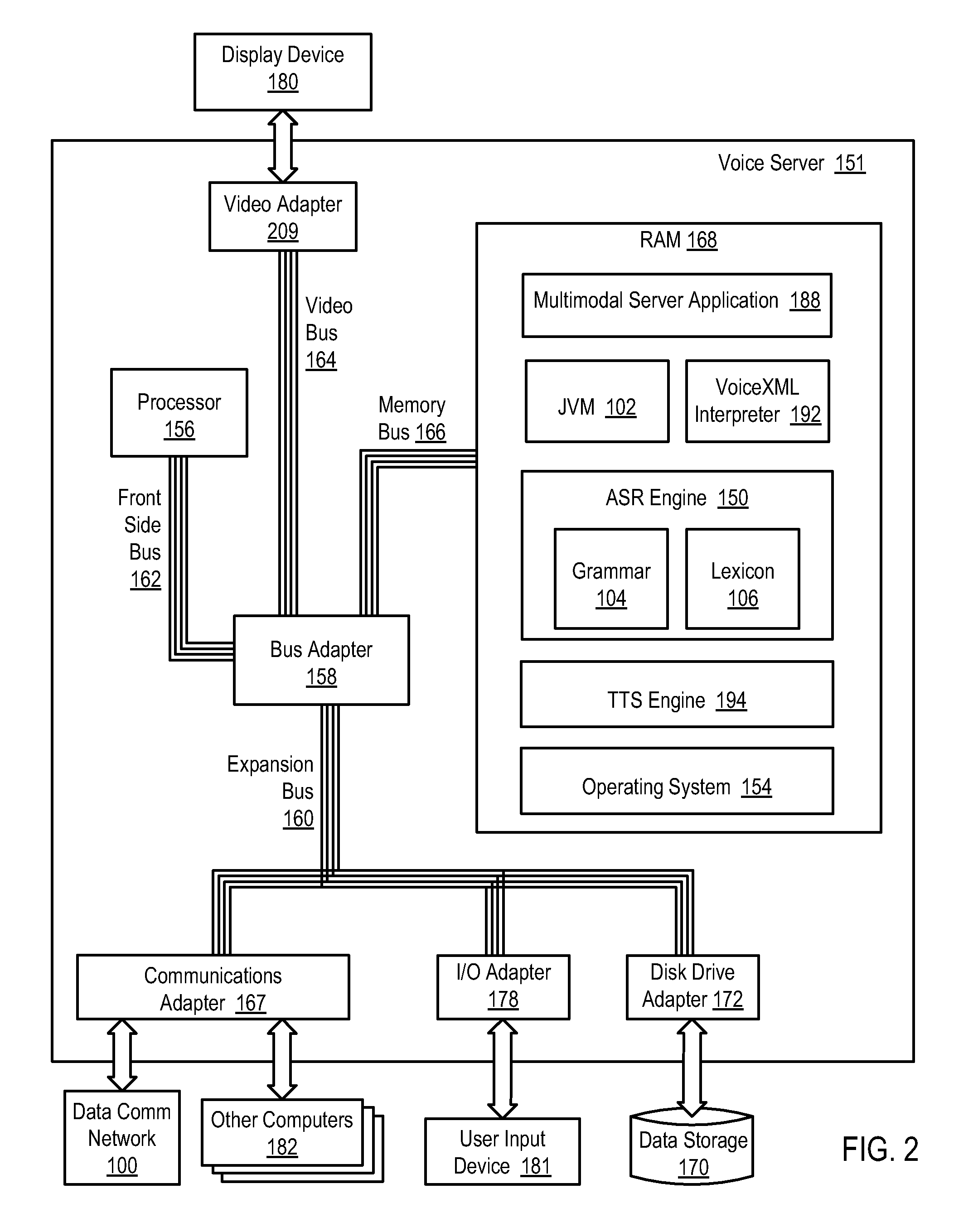

Ordering Recognition Results Produced By An Automatic Speech Recognition Engine For A Multimodal Application

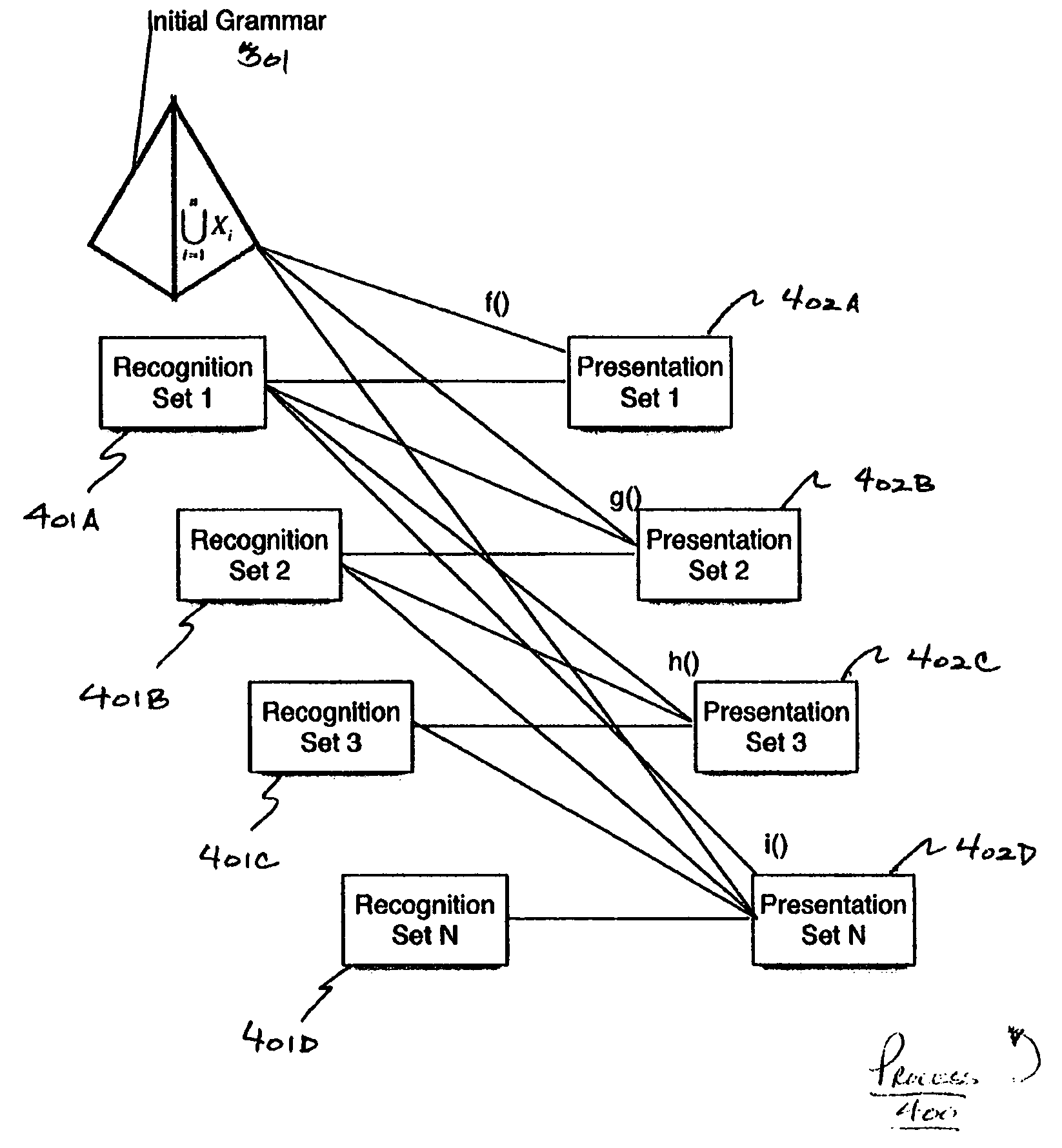

Ordering recognition results produced by an automatic speech recognition (‘ASR’) engine for a multimodal application implemented with a grammar of the multimodal application in the ASR engine, with the multimodal application operating in a multimodal browser on a multimodal device supporting multiple modes of interaction including a voice mode and one or more non-voice modes, the multimodal application operatively coupled to the ASR engine through a VoiceXML interpreter, includes: receiving, in the VoiceXML interpreter from the multimodal application, a voice utterance; determining, by the VoiceXML interpreter using the ASR engine, a plurality of recognition results in dependence upon the voice utterance and the grammar; determining, by the VoiceXML interpreter according to semantic interpretation scripts of the grammar, a weight for each recognition result; and sorting, by the VoiceXML interpreter, the plurality of recognition results in dependence upon the weight for each recognition result.

Owner:NUANCE COMM INC
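
The ordering step in the abstract above (compute a weight for each recognition result, then sort by weight) reduces to a keyed sort. In this sketch the `weight` callable stands in for the grammar's semantic interpretation scripts; the `VISITED` boost rule is purely hypothetical and is not part of VoiceXML or the patent.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RecognitionResult:
    text: str
    confidence: float  # recognizer confidence in [0, 1]


def order_results(results: list[RecognitionResult],
                  weight: Callable[[RecognitionResult], float]) -> list[RecognitionResult]:
    """Sort recognition results by descending weight."""
    return sorted(results, key=weight, reverse=True)


# Hypothetical weighting rule: boost cities the user has visited before
VISITED = {"boston"}


def semantic_weight(r: RecognitionResult) -> float:
    return r.confidence * (2.0 if r.text in VISITED else 1.0)


if __name__ == "__main__":
    nbest = [RecognitionResult("austin", 0.7), RecognitionResult("boston", 0.6)]
    print([r.text for r in order_results(nbest, semantic_weight)])  # ['boston', 'austin']
```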

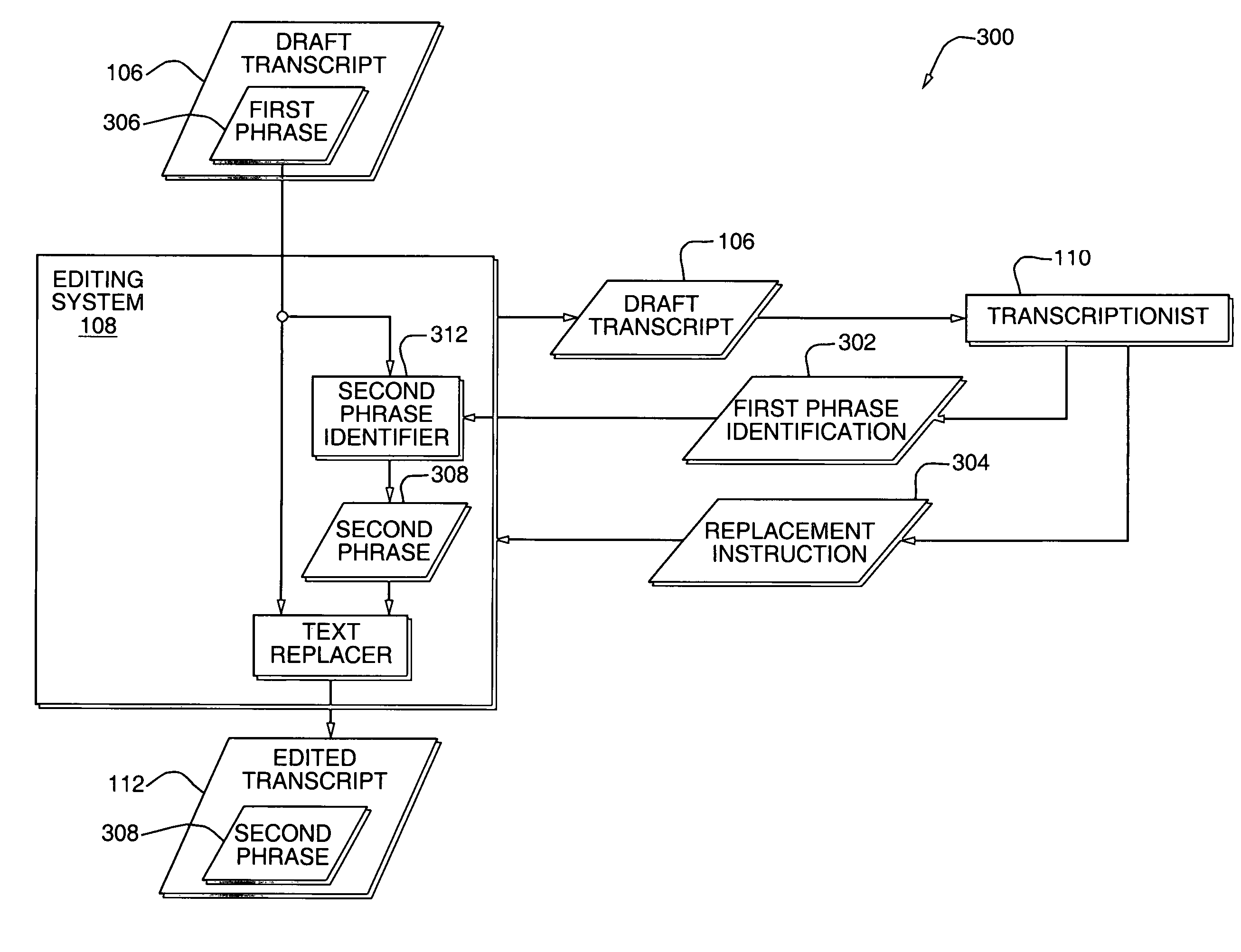

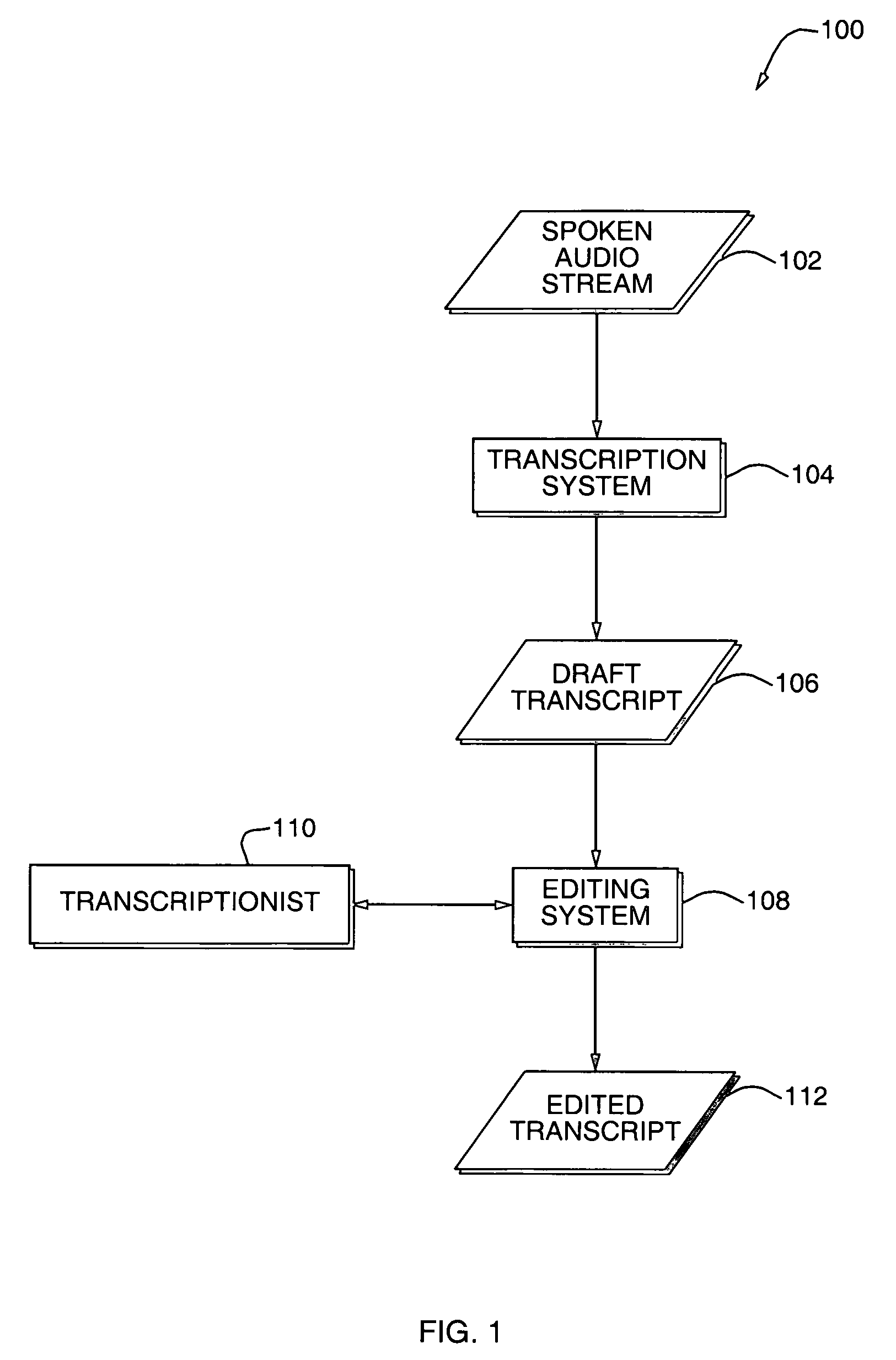

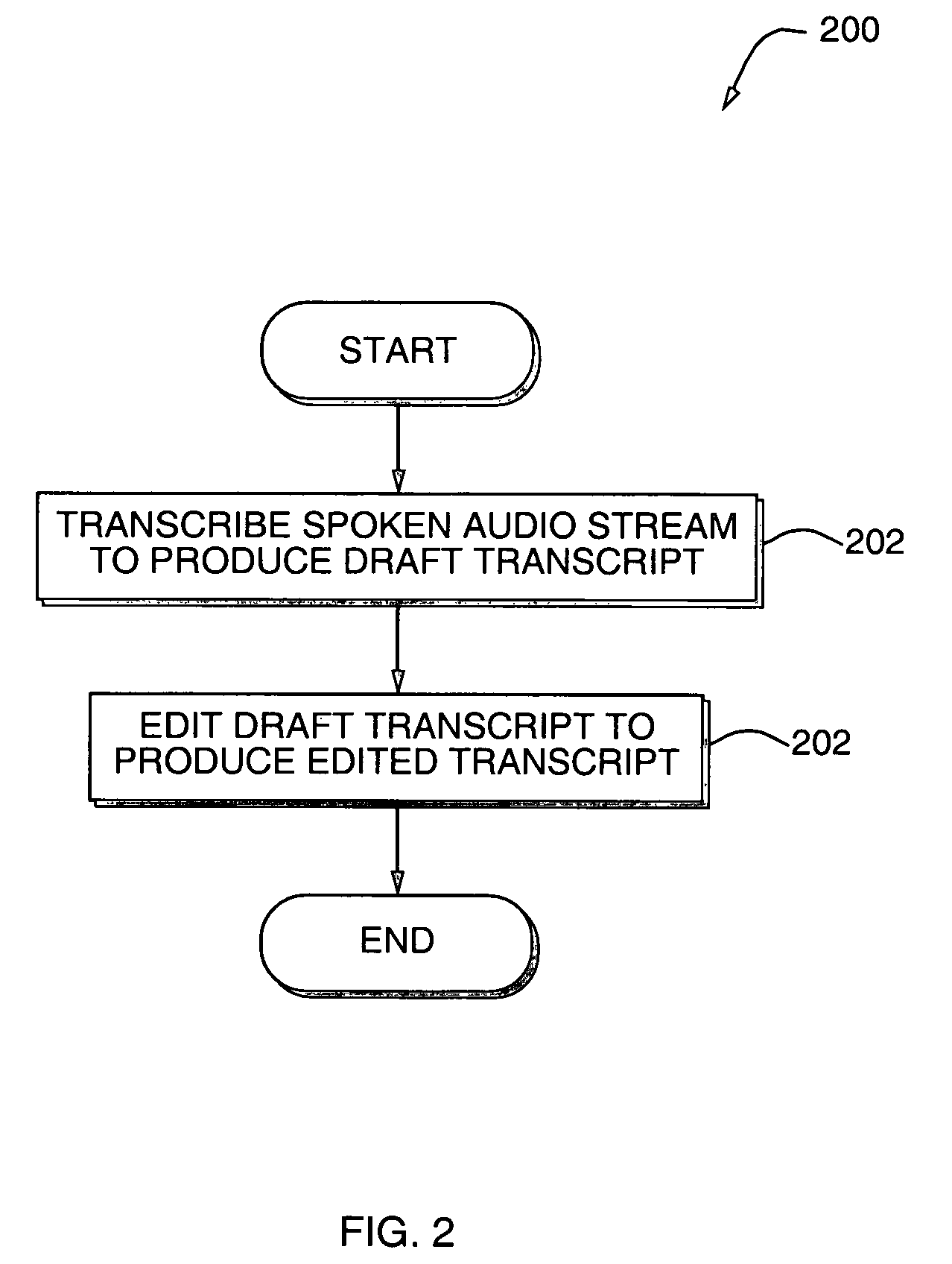

Replacing text representing a concept with an alternate written form of the concept

A system enables a transcriptionist to replace a first written form (such as an abbreviation) of a concept with a second written form (such as an expanded form) of the same concept. For example, the system may display to the transcriptionist a draft document produced from speech by an automatic speech recognizer. If the transcriptionist recognizes a first written form of a concept that should be replaced with a second written form of the same concept, the transcriptionist may provide the system with a replacement command. In response, the system may identify the second written form of the concept and replace the first written form with the second written form in the draft document.

Owner:3M HEALTH INFORMATION SYST INC

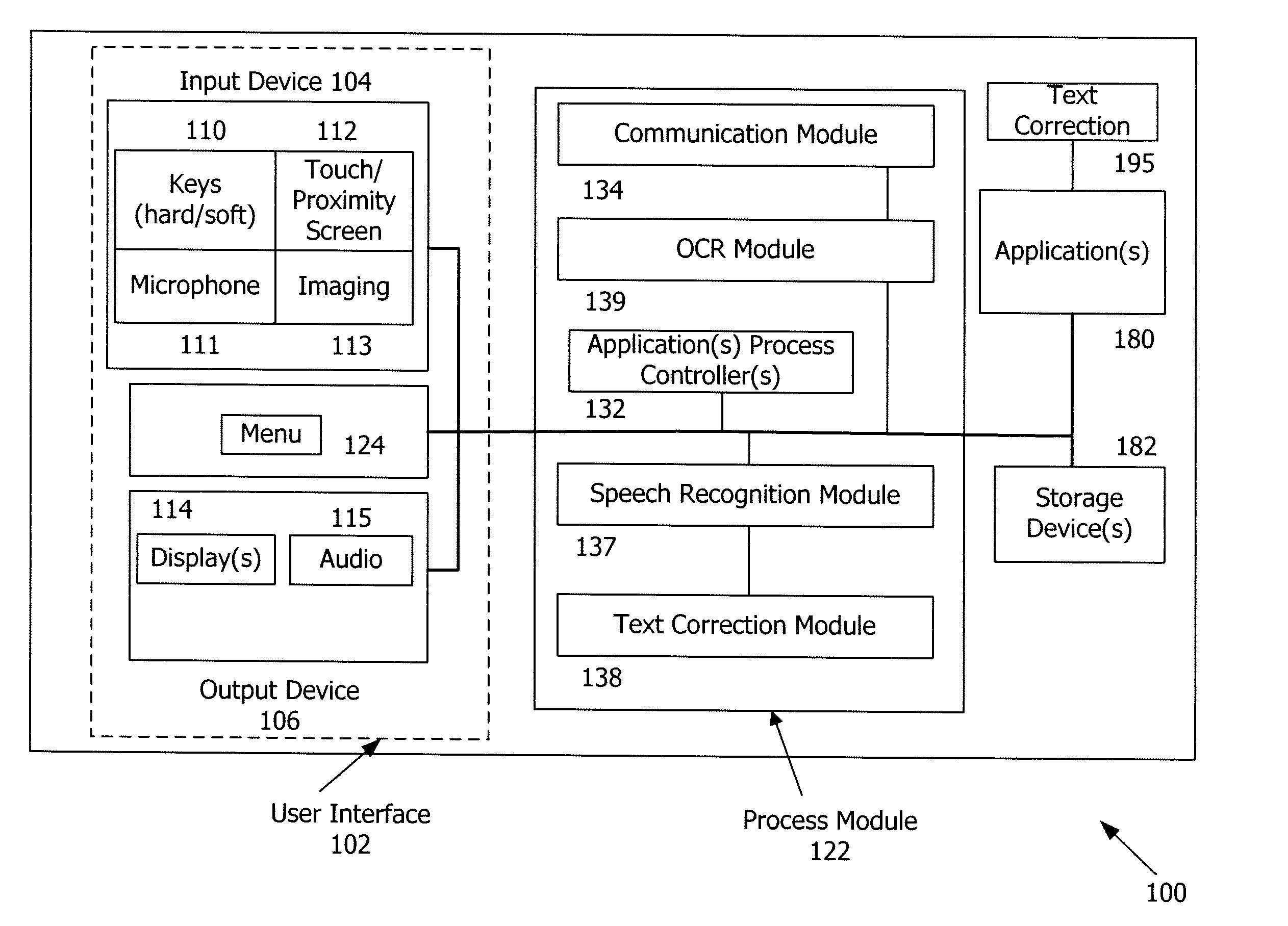

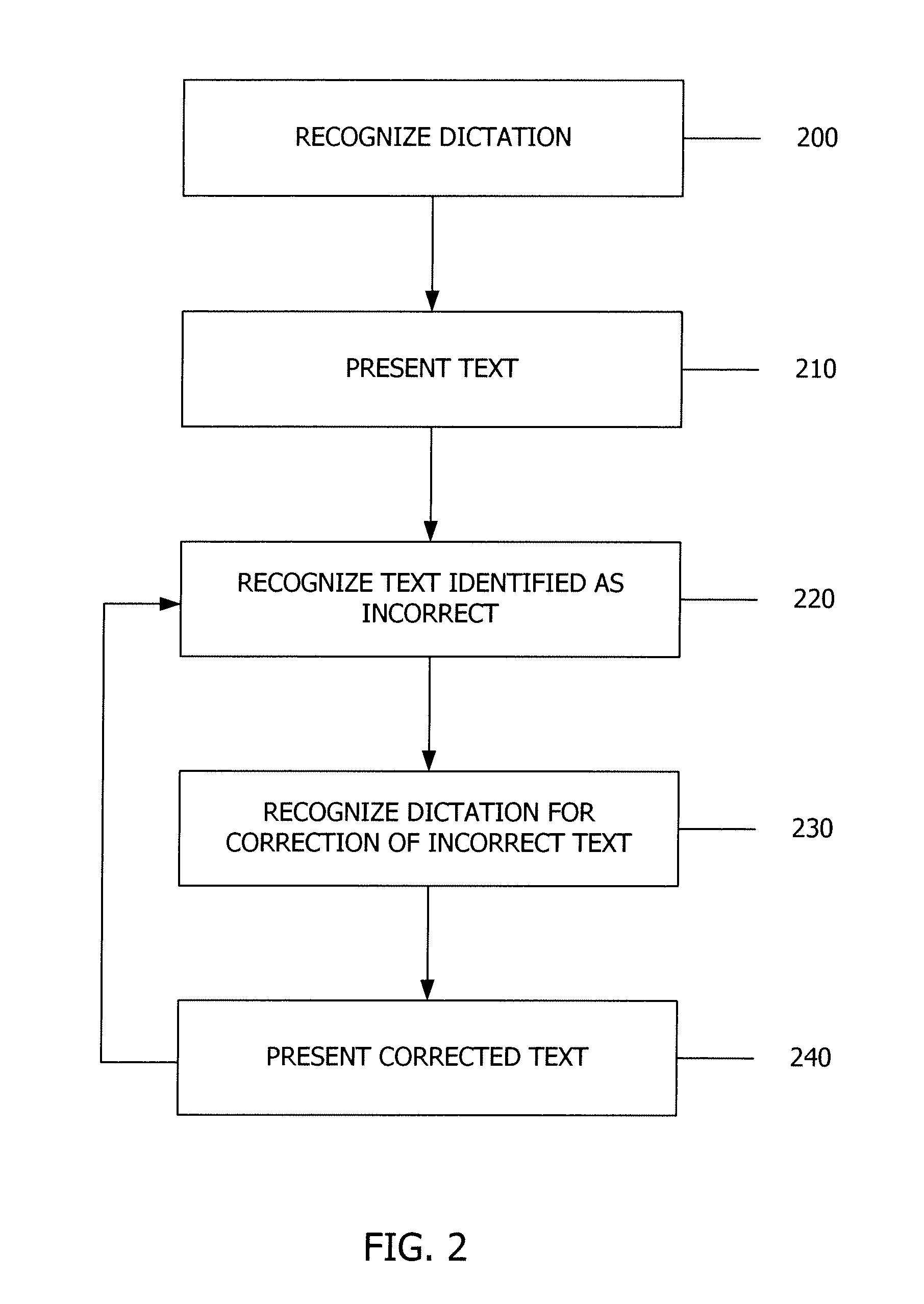

Multiword text correction

A method including detecting a selection of a plurality of erroneous words in text presented on a display of a device, in an automatic speech recognition system, receiving sequentially dictated corrections for the selected erroneous words in a single, continuous operation where each dictated correction corresponds to at least one of the selected erroneous words, and replacing the plurality of erroneous words with one or more corresponding words of the dictated corrections where each erroneous word is matched with the one or more corresponding words of the dictated corrections in an order the erroneous words appear according to a reading direction of the text.

Owner:NOKIA CORP
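
The matching rule in the multiword correction abstract (dictated corrections are paired with the selected erroneous words in reading order) can be sketched as below. It assumes one dictated correction, of one or more words, per selected word and left-to-right token indices; the function name and data layout are illustrative.

```python
def apply_corrections(words: list[str], selected: list[int],
                      corrections: list[list[str]]) -> list[str]:
    """Replace selected erroneous words with dictated corrections.

    `selected` holds indices of erroneous words in any order; `corrections`
    holds one dictated correction per selected word, in spoken order.
    Corrections are matched to erroneous words in reading order.
    """
    in_reading_order = sorted(selected)
    if len(in_reading_order) != len(corrections):
        raise ValueError("expected one dictated correction per selected word")
    replacement = dict(zip(in_reading_order, corrections))
    out: list[str] = []
    for i, word in enumerate(words):
        # Expand the correction in place, or keep the original word
        out.extend(replacement.get(i, [word]))
    return out


if __name__ == "__main__":
    text = "I red a gud book".split()
    # The user selects "gud" then "red", but corrections follow reading order
    print(" ".join(apply_corrections(text, [3, 1], [["read"], ["good"]])))
```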

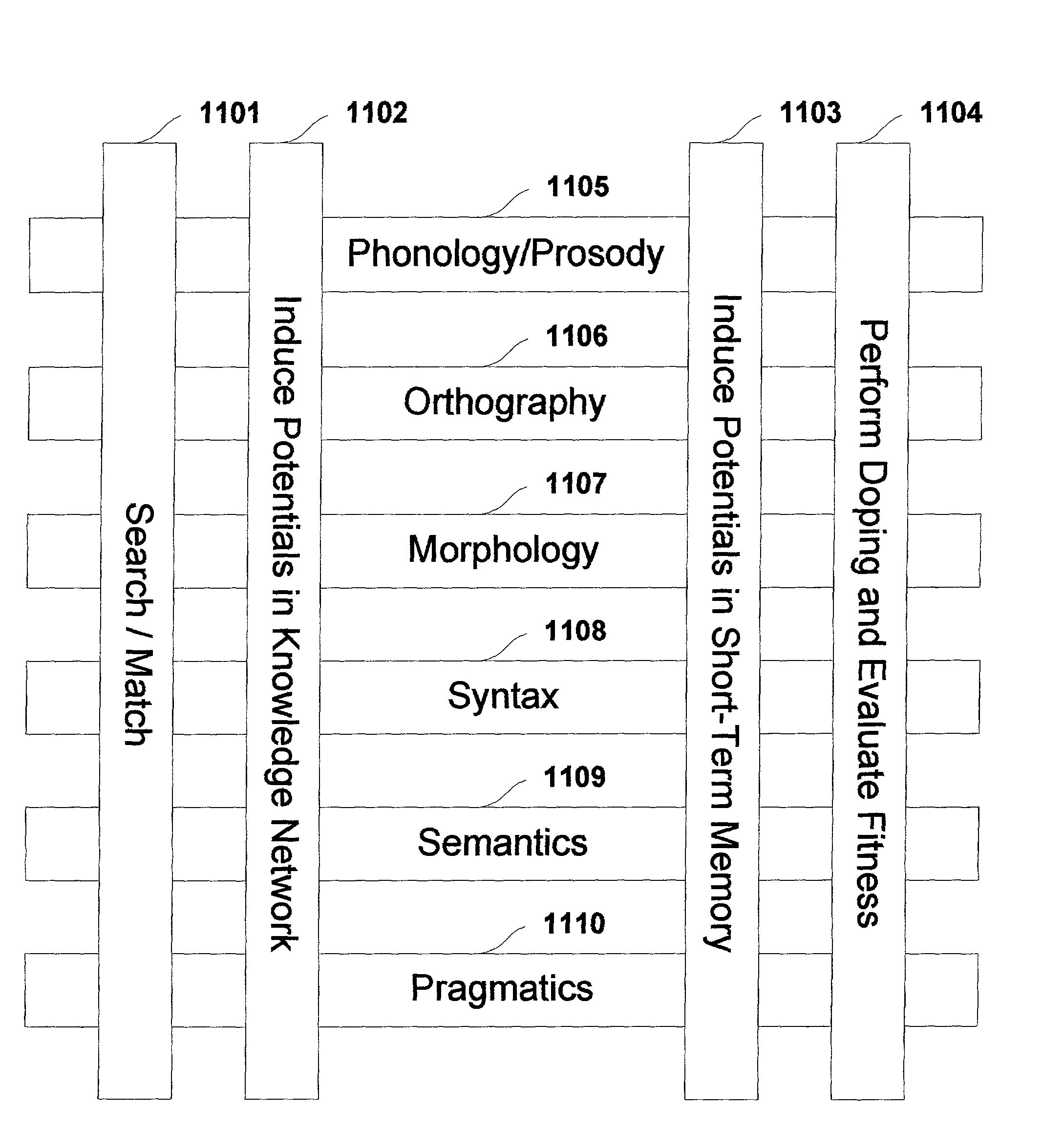

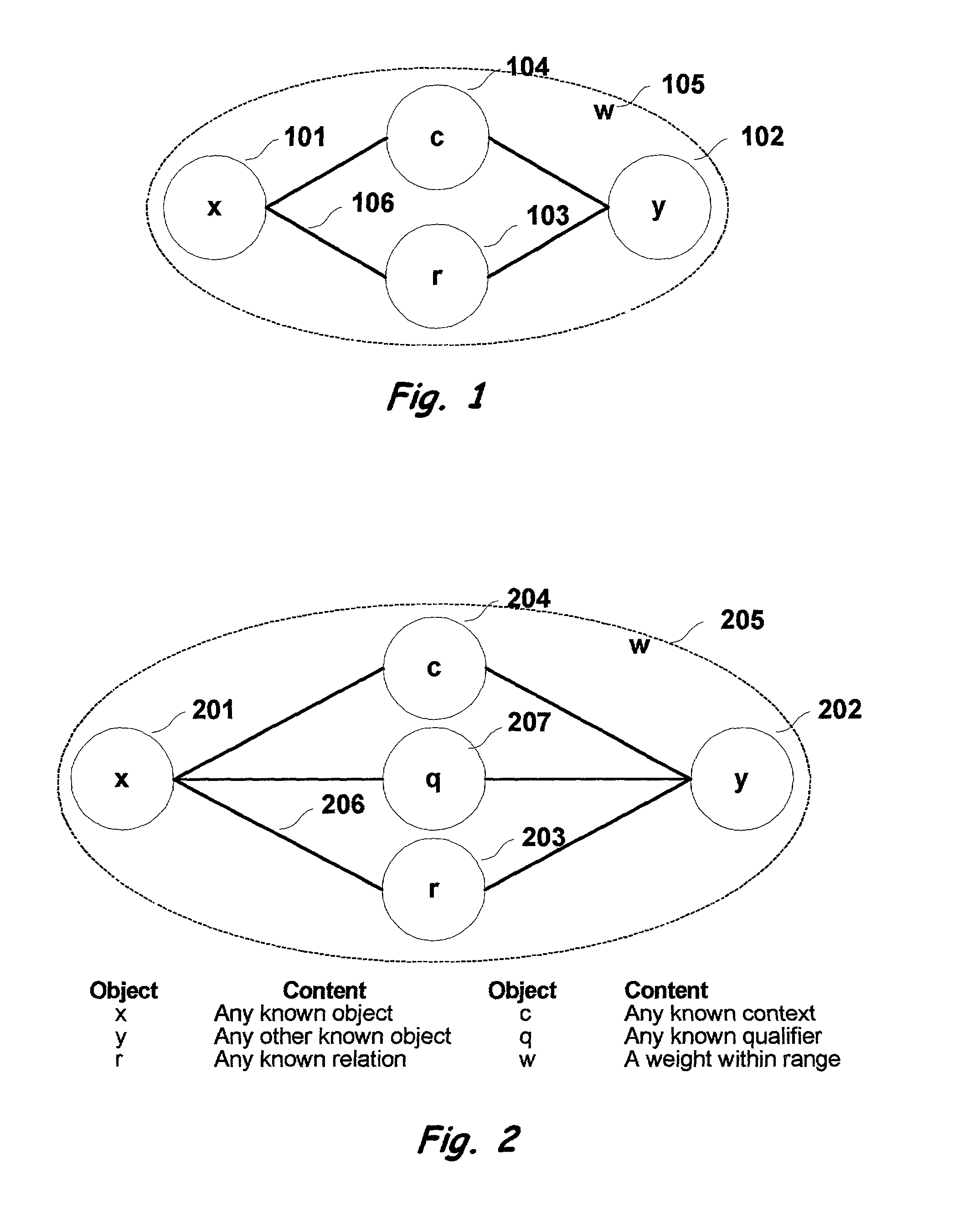

Multi-dimensional method and apparatus for automated language interpretation

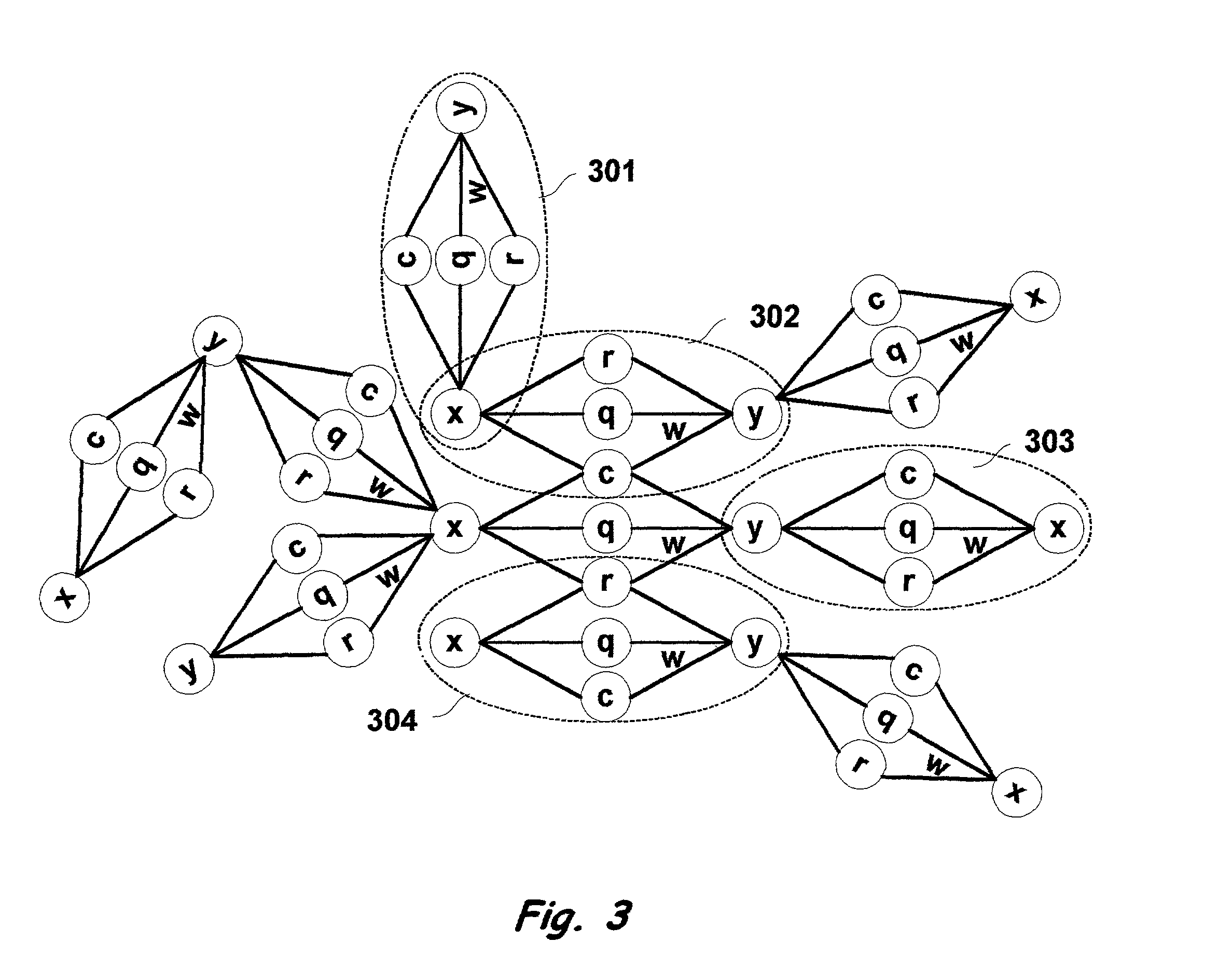

A method and apparatus for natural language interpretation are described. The invention includes a schema and apparatus for storing, in digital, analog, or other machine-readable format, a network of propositions formed of a plurality of text and / or non-text objects, and the steps of retrieving a string of input text, and locating all associated propositions in the network for each word in the input string. Embodiments of the invention also include optimization steps for locating said propositions, and specialized structures for storing them in a ready access storage area simulating human short-term memory. The schema and steps may also include structures and processes for obtaining and adjusting the weights of said propositions to determine posterior probabilities representing the intended meaning. Embodiments of the invention also include an apparatus designed to apply an automated interpretation algorithm to automated voice response systems and portable knowledge appliance devices.

Owner:KNOWLEDGENETICA CORP

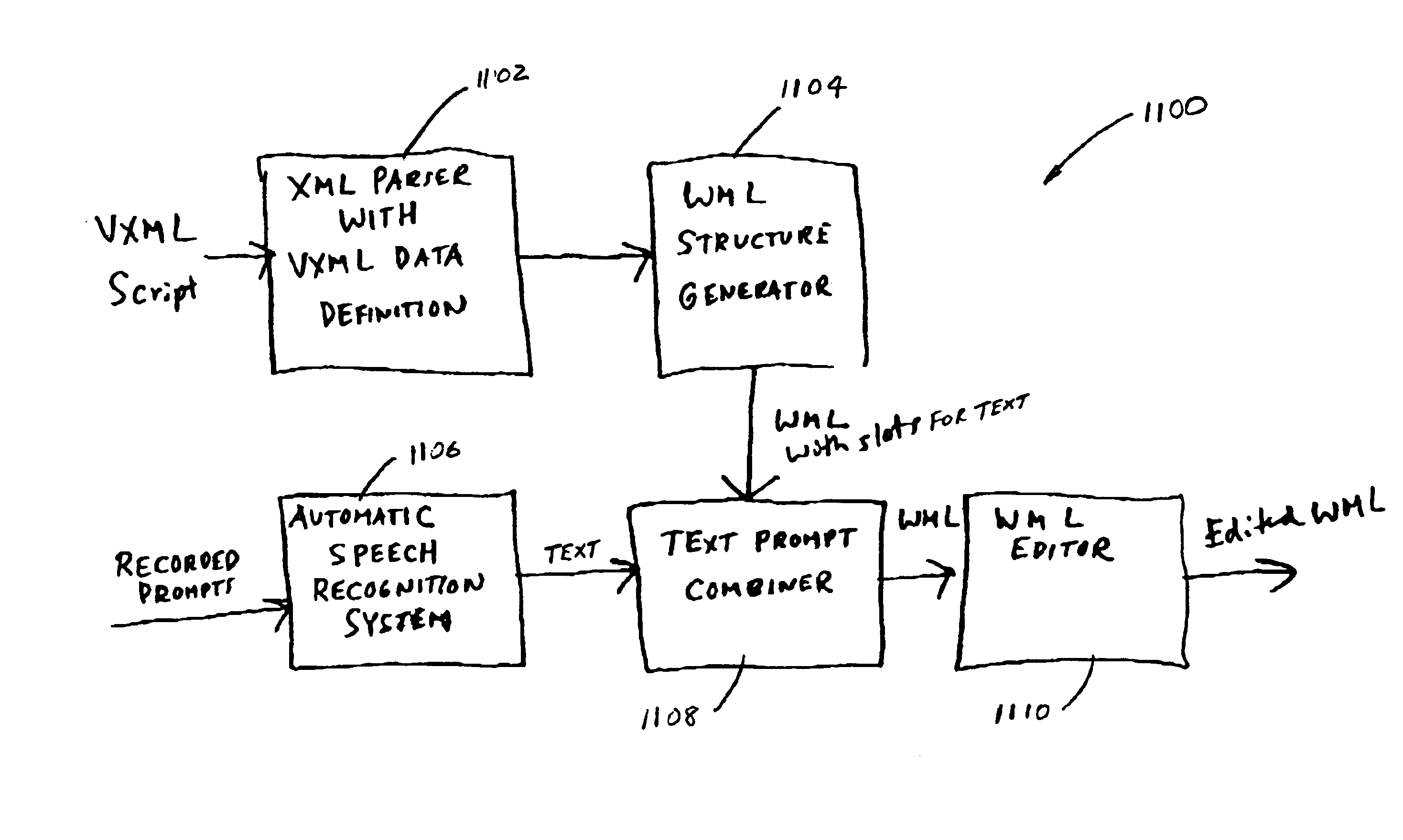

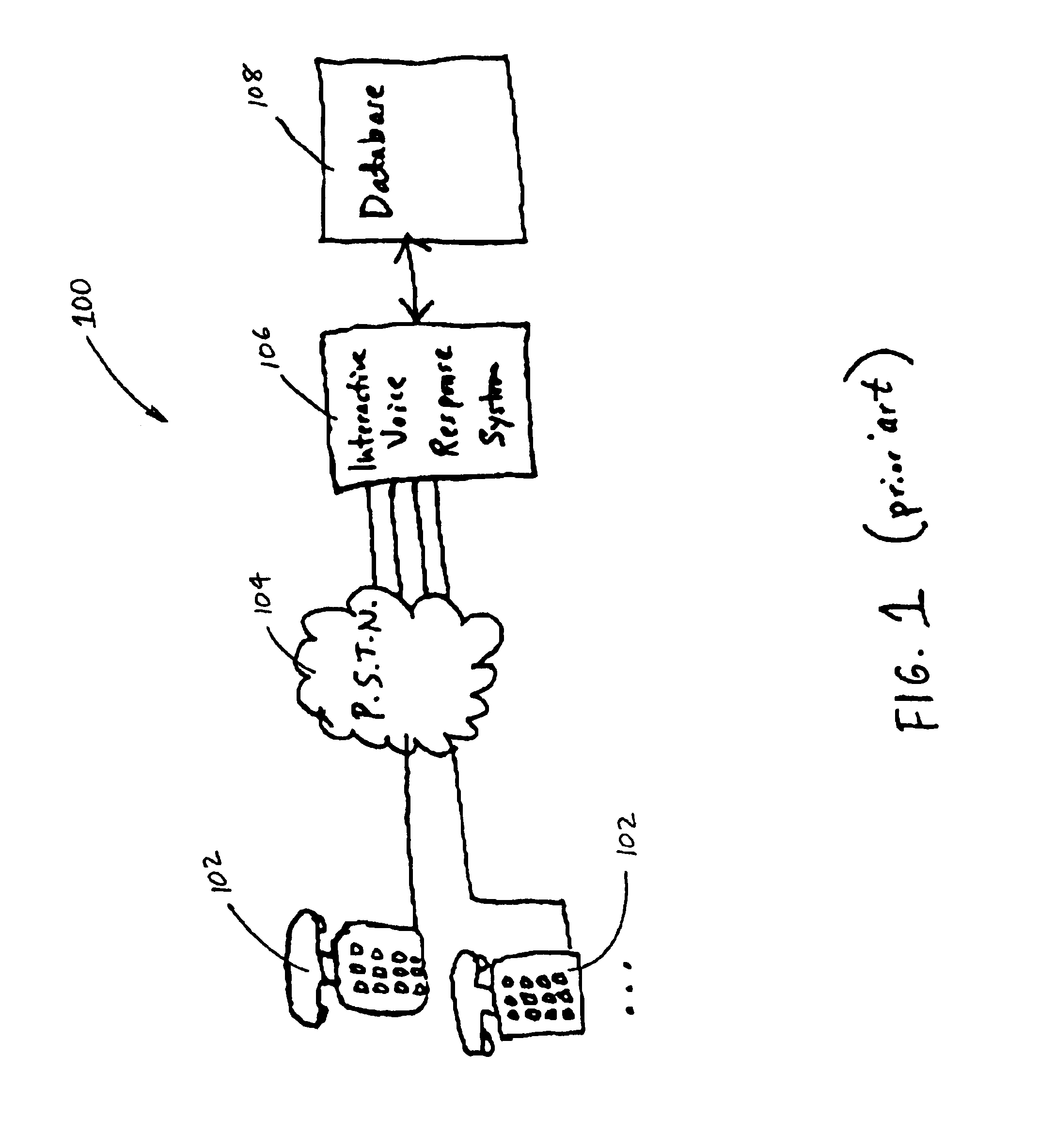

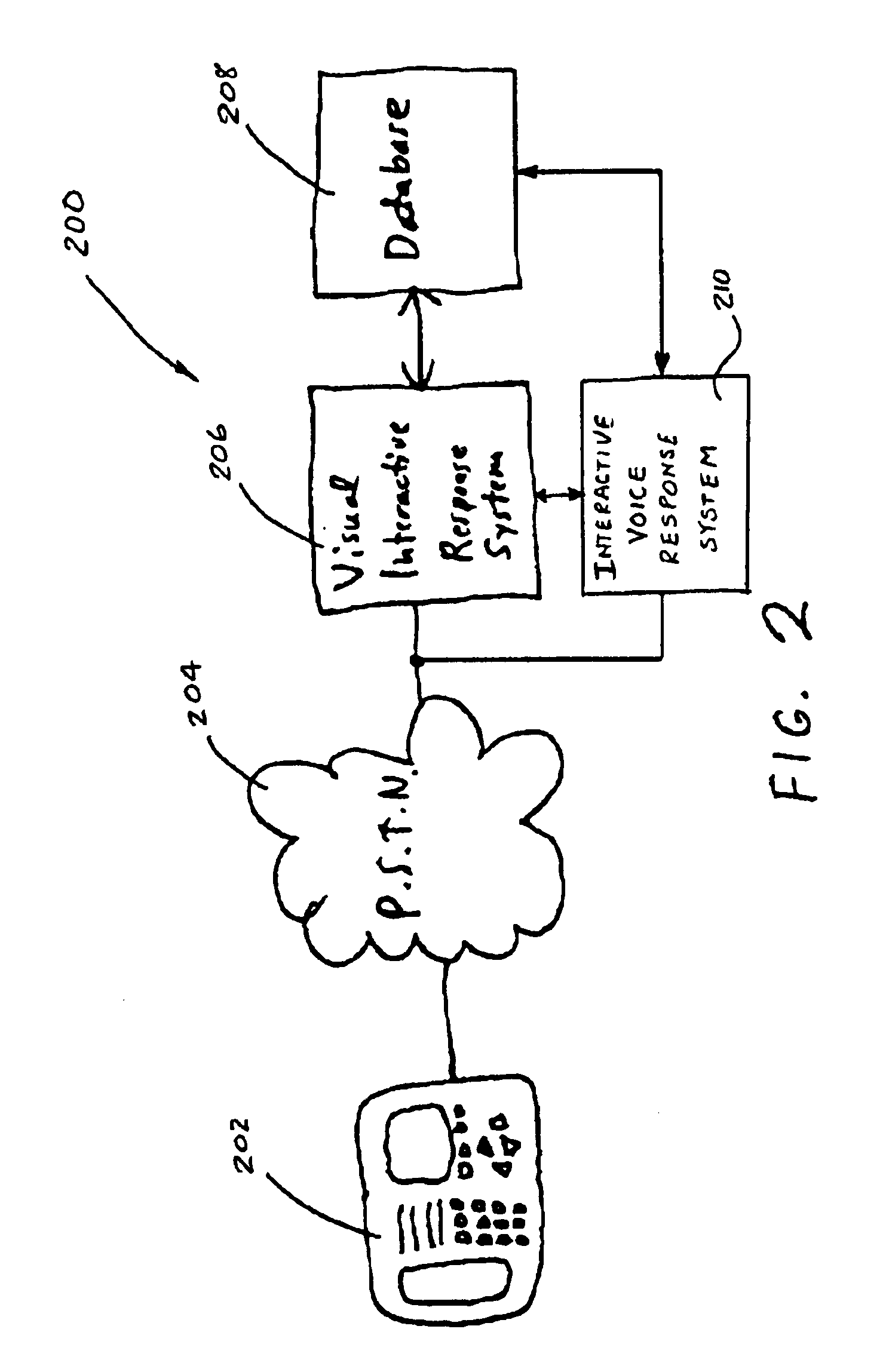

Visual interactive response system and method translated from interactive voice response for telephone utility

Inactive | US6920425B1 | Interconnection arrangements; Automatic exchanges; Interactive voice response system; Wireless Markup Language

A system, method, and computer readable medium storing a software program for translating a script for an interactive voice response system to a script for a visual interactive response system. The visual interactive response system executes the translated visual-based script when a user using a display telephone calls the visual interactive response system. The visual interactive response system then transmits a visual menu to the display telephone to allow the user to select a desired response, which is subsequently sent back to the visual interactive response system for processing. The voice-based script may be defined in voice extensible markup language and the visual-based script may be defined in wireless markup language, hypertext markup language, or handheld device markup language. The translation system and program includes a parser for extracting command structures from the voice-based script, a visual-based structure generator for generating corresponding command structure for the visual-based script, a text prompt combiner for incorporating text translated from voice prompts into command structure generated by the structure generator, an automatic speech recognition routine for automatically converting voice prompts into translated text, and an editor for editing said visual-based script.

Owner:RPX CLEARINGHOUSE
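
The translation pipeline in the abstract above (parse the voice script, generate a visual command structure, merge in the prompt text) can be illustrated for one construct: a VoiceXML `<menu>` with a `<prompt>` and `<choice next="...">` elements becomes a WML card with a `<select>` of `<option onpick="...">` entries. This is a minimal sketch, assuming the prompts are already text (the patent uses ASR to transcribe recorded prompts) and omitting the WML DOCTYPE and every other VoiceXML element.

```python
import xml.etree.ElementTree as ET


def _local(tag: str) -> str:
    """Strip an XML namespace such as {http://www.w3.org/2001/vxml}."""
    return tag.rsplit("}", 1)[-1]


def vxml_menu_to_wml(vxml: str) -> str:
    root = ET.fromstring(vxml)
    # Parser step: extract the first <menu> with its prompt and choices
    menu = next((el for el in root.iter() if _local(el.tag) == "menu"), None)
    if menu is None:
        raise ValueError("no <menu> element found")
    prompt = next((el for el in menu if _local(el.tag) == "prompt"), None)
    prompt_text = "".join(prompt.itertext()).strip() if prompt is not None else ""
    choices = [("".join(el.itertext()).strip(), el.get("next", ""))
               for el in menu if _local(el.tag) == "choice"]

    # Structure generator and prompt combiner: build the equivalent WML card
    wml = ET.Element("wml")
    card = ET.SubElement(wml, "card", id=menu.get("id", "main"))
    p = ET.SubElement(card, "p")
    p.text = prompt_text
    select = ET.SubElement(p, "select", name="choice")
    for label, target in choices:
        option = ET.SubElement(select, "option", onpick=target)
        option.text = label
    return ET.tostring(wml, encoding="unicode")


if __name__ == "__main__":
    sample = ('<vxml xmlns="http://www.w3.org/2001/vxml" version="2.1">'
              '<menu id="main"><prompt>Say balance or transfer</prompt>'
              '<choice next="#balance">balance</choice>'
              '<choice next="#transfer">transfer</choice></menu></vxml>')
    print(vxml_menu_to_wml(sample))
```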

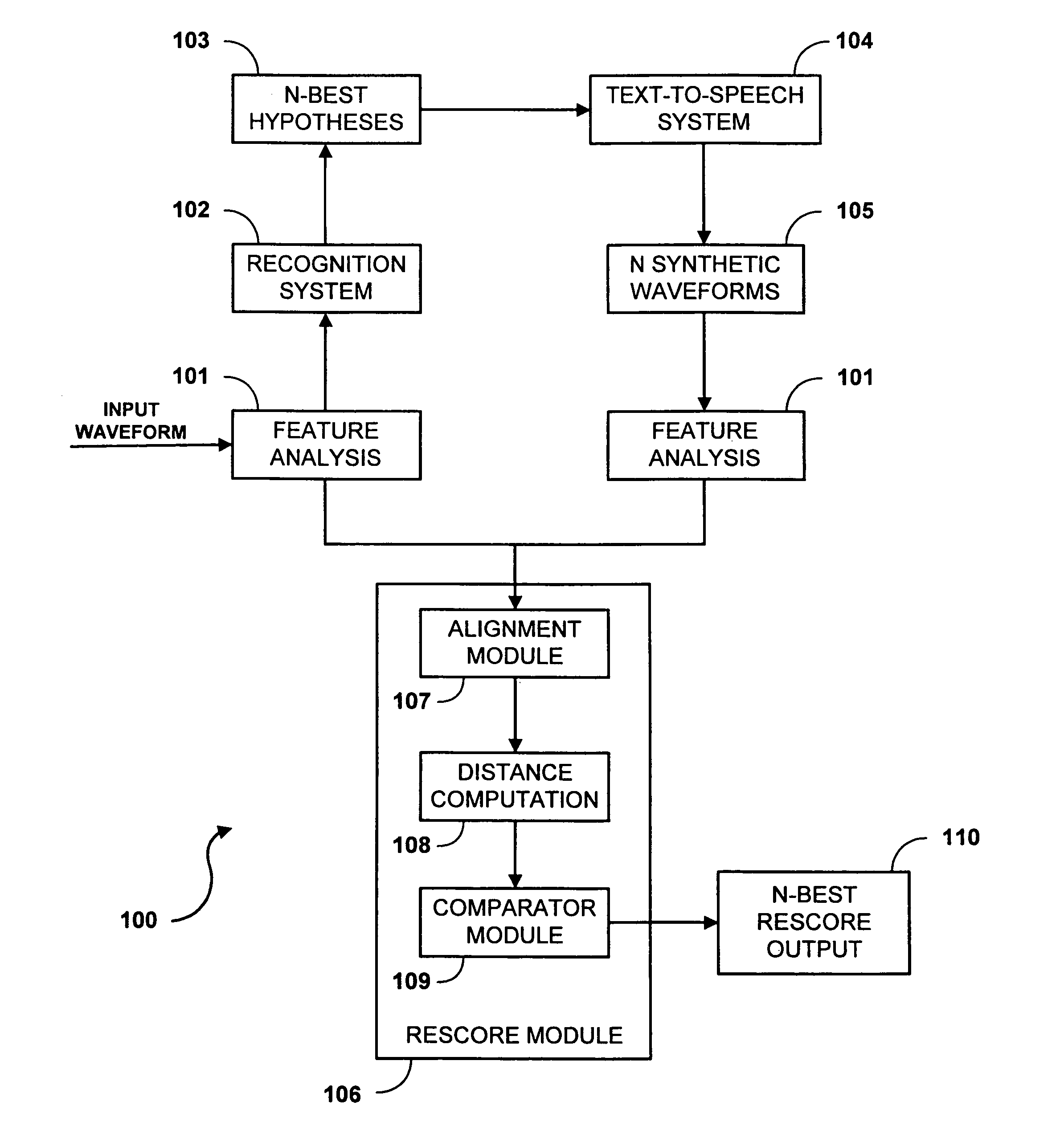

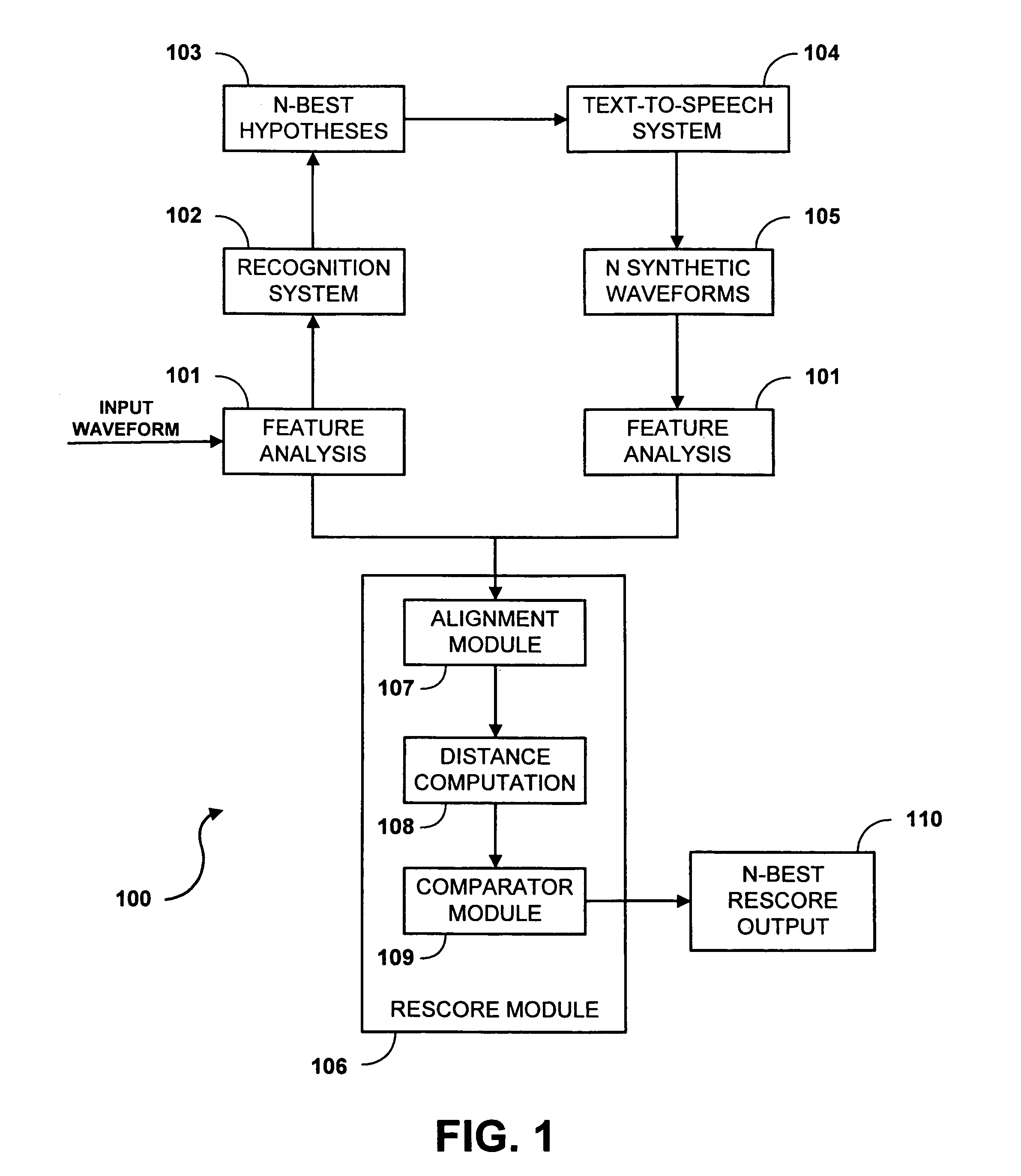

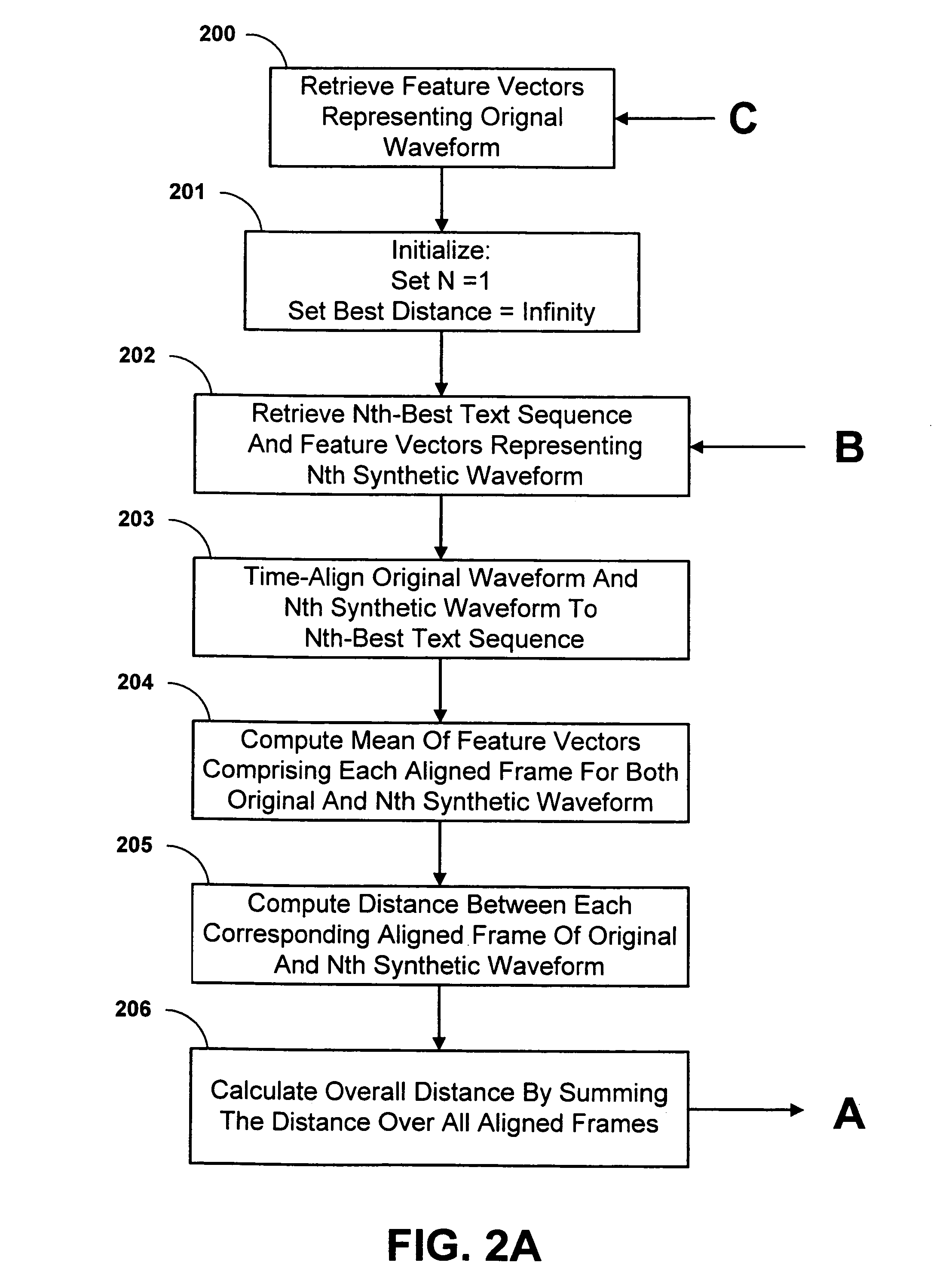

System and method for rescoring N-best hypotheses of an automatic speech recognition system

A system and method for rescoring the N-best hypotheses from an automatic speech recognition system by comparing an original speech waveform to synthetic speech waveforms that are generated for each text sequence of the N-best hypotheses. A distance is calculated from the original speech waveform to each of the synthesized waveforms, and the text associated with the synthesized waveform that is determined to be closest to the original waveform is selected as the final hypothesis. The original waveform and each synthesized waveform are aligned to a corresponding text sequence on a phoneme level. The mean of the feature vectors which align to each phoneme is computed for the original waveform as well as for each of the synthesized hypotheses. The distance of a synthesized hypothesis to the original speech signal is then computed as the sum over all phonemes in the hypothesis of the Euclidean distance between the means of the feature vectors of the frames aligning to that phoneme for the original and the synthesized signals. The text of the hypothesis which is closest under the above metric to the original waveform is chosen as the final system output.

Owner:IBM CORP
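
The distance metric in the IBM abstract is concrete enough to code directly: for each hypothesis, average the feature vectors aligned to each phoneme in the original and synthesized signals, sum the per-phoneme Euclidean distances, and keep the closest hypothesis. The sketch assumes the phoneme-level alignments have already been produced (forced alignment and speech synthesis are not shown); the data layout is illustrative.

```python
import math
from typing import Sequence

# Ordered (phoneme, frames) pairs; each frame is one feature vector
Alignment = list[tuple[str, list[Sequence[float]]]]


def phoneme_means(alignment: Alignment) -> list[tuple[str, list[float]]]:
    """Mean feature vector of the frames aligned to each phoneme."""
    means = []
    for phone, frames in alignment:
        dim = len(frames[0])
        means.append((phone, [sum(f[d] for f in frames) / len(frames) for d in range(dim)]))
    return means


def hypothesis_distance(original: Alignment, synthetic: Alignment) -> float:
    """Sum over phonemes of the Euclidean distance between mean vectors."""
    orig, syn = phoneme_means(original), phoneme_means(synthetic)
    if [p for p, _ in orig] != [p for p, _ in syn]:
        raise ValueError("alignments must cover the same phoneme sequence")
    return sum(math.dist(m_o, m_s) for (_, m_o), (_, m_s) in zip(orig, syn))


def rescore(nbest: dict[str, tuple[Alignment, Alignment]]) -> str:
    """nbest maps hypothesis text -> (original alignment, synthetic alignment)."""
    return min(nbest, key=lambda text: hypothesis_distance(*nbest[text]))
```

Note that the original waveform is aligned separately to each hypothesis text, which is why every N-best entry carries its own original alignment.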

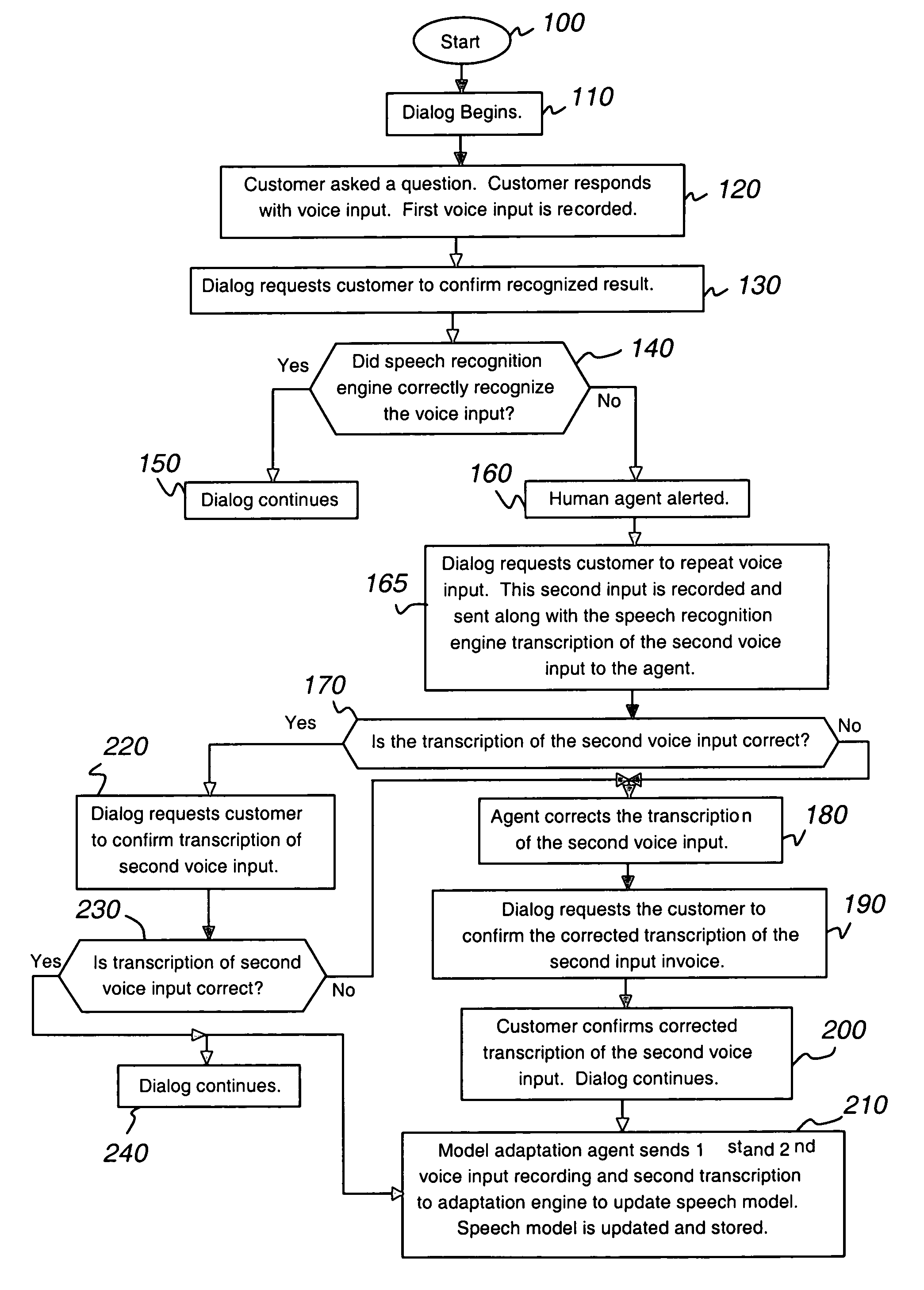

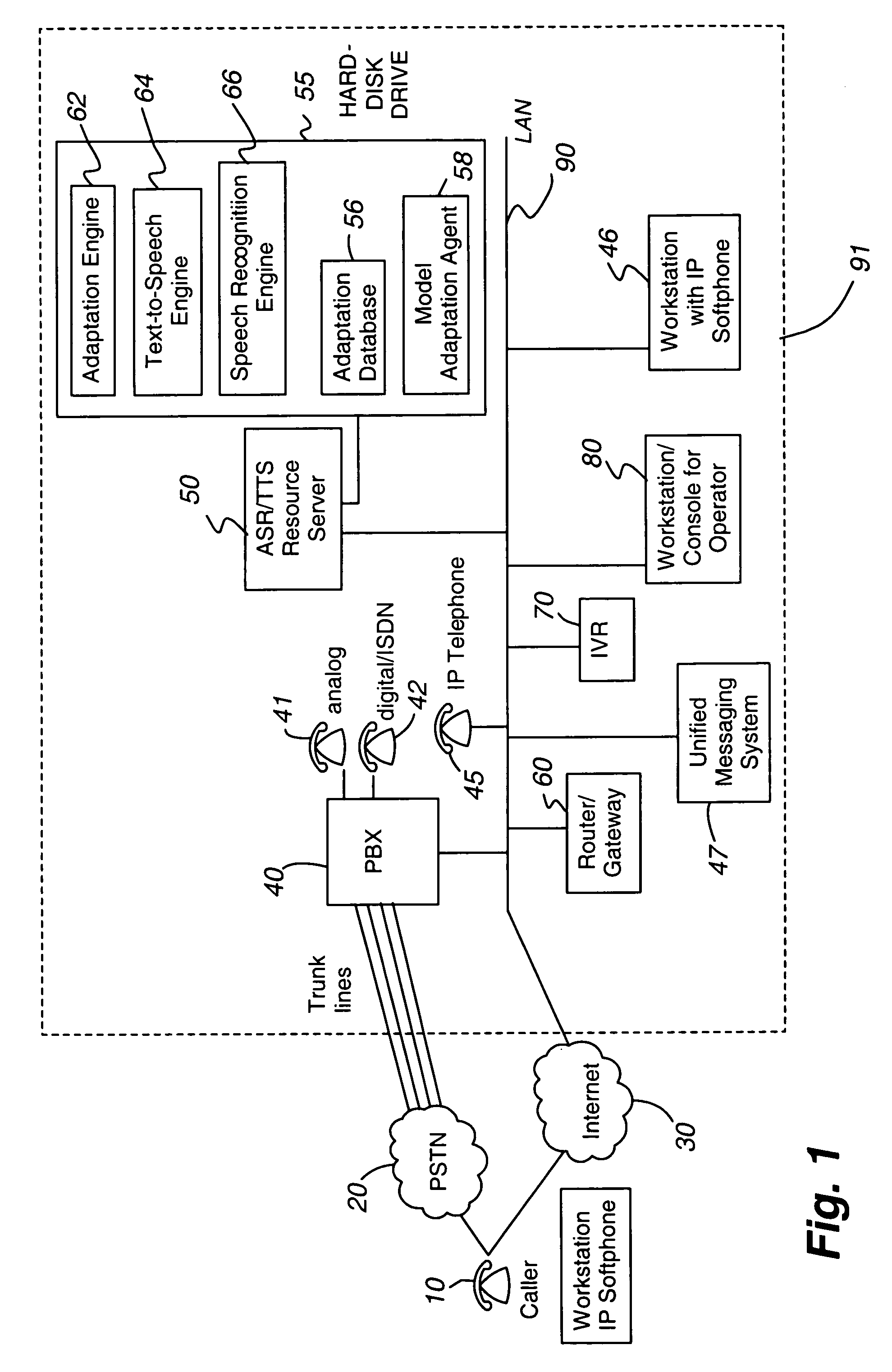

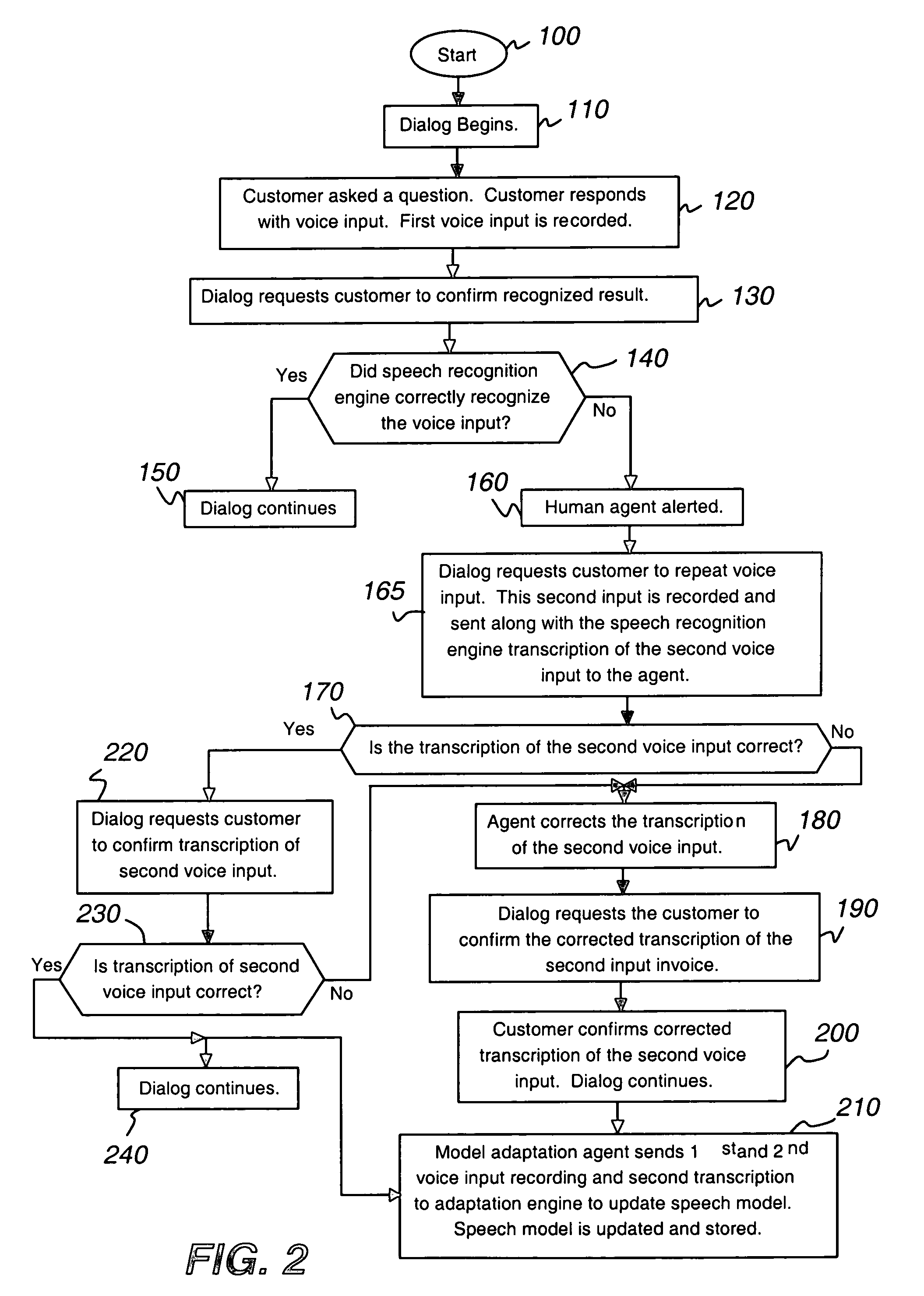

Transparent monitoring and intervention to improve automatic adaptation of speech models

Active | US7660715B1 | Improve speech recognition accuracy; Quality improvement; Speech recognition; Frustration; Mostly True

A system and method to improve the automatic adaptation of one or more speech models in automatic speech recognition systems. After a dialog begins, for example, the dialog asks the customer to provide spoken input, and the input is recorded. If the speech recognizer determines that it may not have correctly transcribed the verbal response, i.e., the voice input, the invention uses monitoring and, if necessary, intervention to guarantee that the next transcription of the verbal response is correct. The dialog asks the customer to repeat the verbal response, which is recorded, and a transcription of the input is sent to a human monitor, i.e., an agent or operator. If the transcription of the spoken input is correct, the human does not intervene and the transcription remains unmodified. If the transcription of the verbal response is incorrect, the human intervenes and corrects the transcription of the misrecognized word. In both cases, the dialog asks the customer to confirm the unmodified or corrected transcription. If the customer confirms it, the dialog continues, and the customer does not hang up in frustration because, in most cases, only one misrecognition occurred. Finally, the invention uses the first and second customer recordings of the misrecognized word or utterance, along with the corrected or unmodified transcription, to automatically adapt one or more speech models, which improves the performance of the speech recognition system.

Owner:AVAYA INC

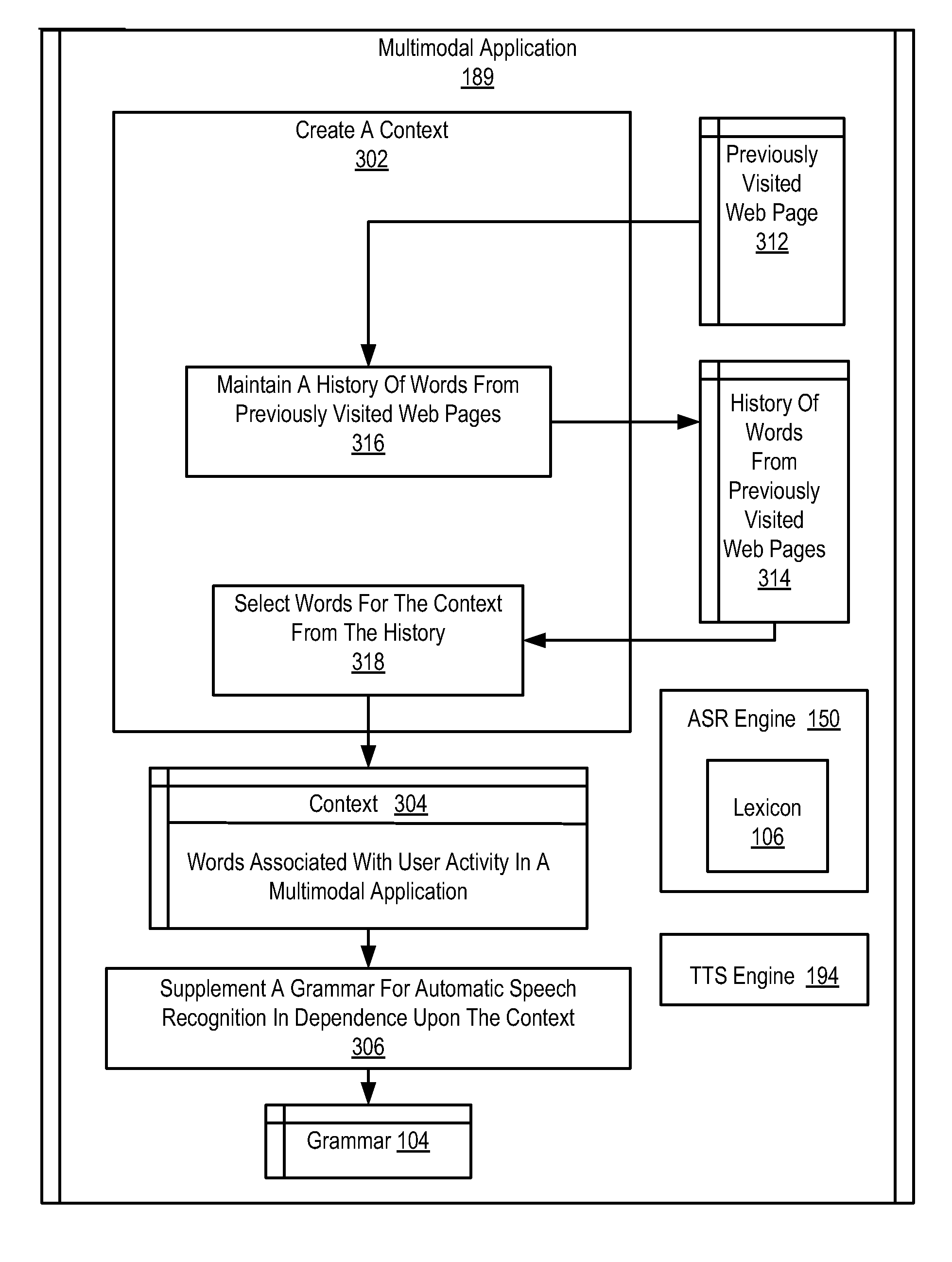

Context-based grammars for automated speech recognition

Methods, apparatus, and computer program products for providing a context-based grammar for automatic speech recognition, including creating by a multimodal application a context, the context comprising words associated with user activity in the multimodal application, and supplementing by the multimodal application a grammar for automatic speech recognition in dependence upon the context.

Owner:MICROSOFT TECH LICENSING LLC
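
The two steps in the context-based grammar abstract above (create a context of words tied to user activity, then supplement the recognition grammar with it) can be sketched with plain lists. Representing the grammar as a flat list of phrases and the activity as a list of strings (for example, titles the user browsed) are assumptions; a production grammar would more likely be an SRGS document.

```python
def create_context(activity: list[str], max_words: int = 50) -> list[str]:
    """Collect distinct lowercase words from user activity, in first-seen
    order, keeping at most `max_words` (the earliest are dropped first)."""
    words: list[str] = []
    for item in activity:
        for word in item.lower().split():
            if word not in words:
                words.append(word)
    return words[-max_words:]


def supplement_grammar(grammar: list[str], context: list[str]) -> list[str]:
    """Return the grammar extended with context words it does not already contain."""
    seen = set(grammar)
    return grammar + [w for w in context if w not in seen]


if __name__ == "__main__":
    ctx = create_context(["Abbey Road", "Let It Be"])
    print(supplement_grammar(["play", "stop"], ctx))
```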

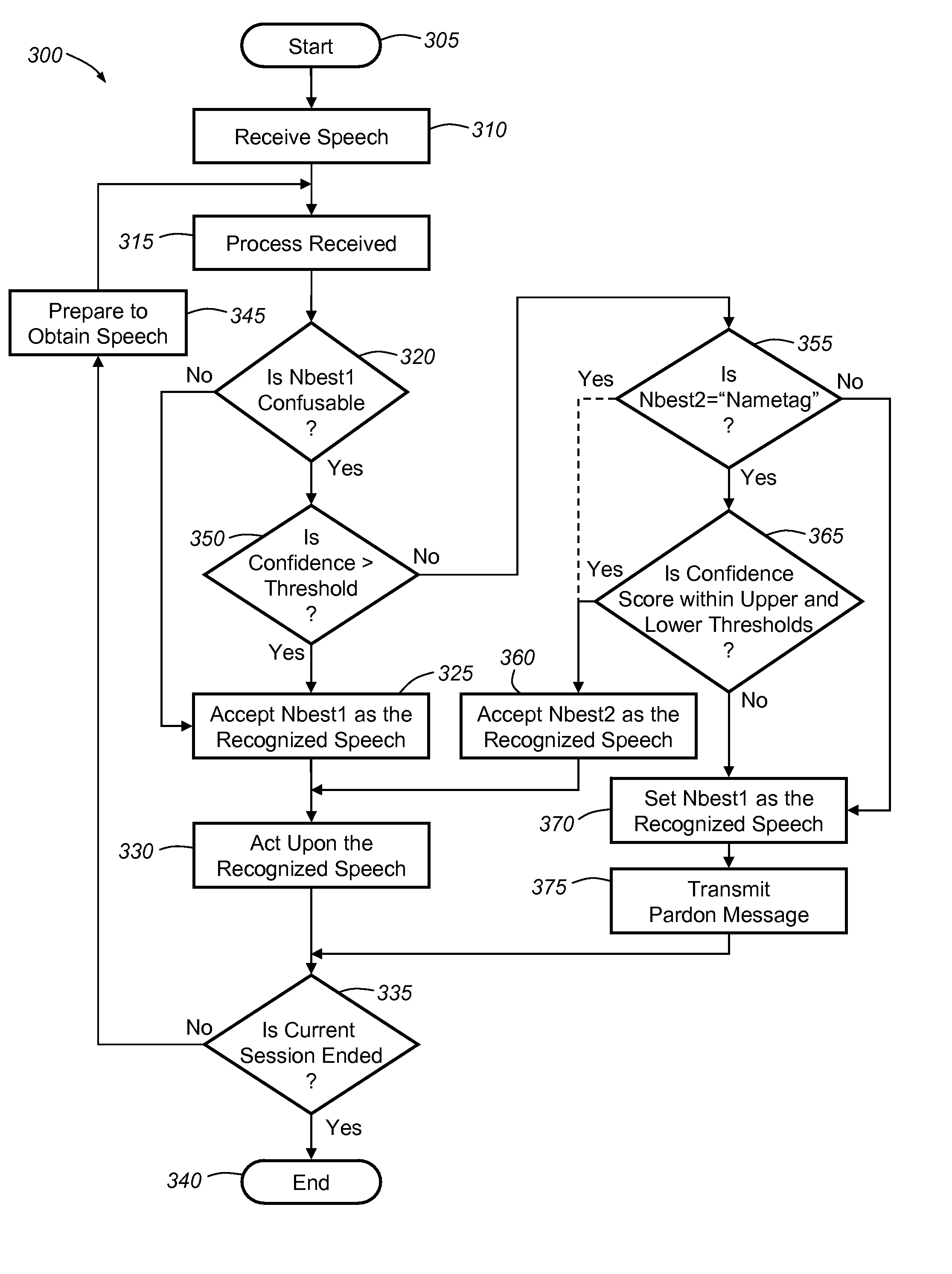

Correcting substitution errors during automatic speech recognition

A speech recognition method includes the steps of receiving input speech containing vocabulary, processing the input speech with a grammar to obtain N-best hypotheses and associated parameter values, and determining whether a first-best hypothesis of the N-best hypotheses is confusable with any vocabulary within the grammar. The first-best hypothesis is accepted as recognized speech corresponding to the received input speech if the first-best hypothesis is not determined to be confusable with any vocabulary within the grammar. Where the first-best hypothesis is determined to be confusable, at least one parameter value of the first-best hypothesis can be compared to at least one threshold value. The first-best hypothesis can be accepted as recognized speech corresponding to the received input speech, if the parameter value of the first-best hypothesis is greater than the threshold value.

Owner:GENERA MOTORS LLC

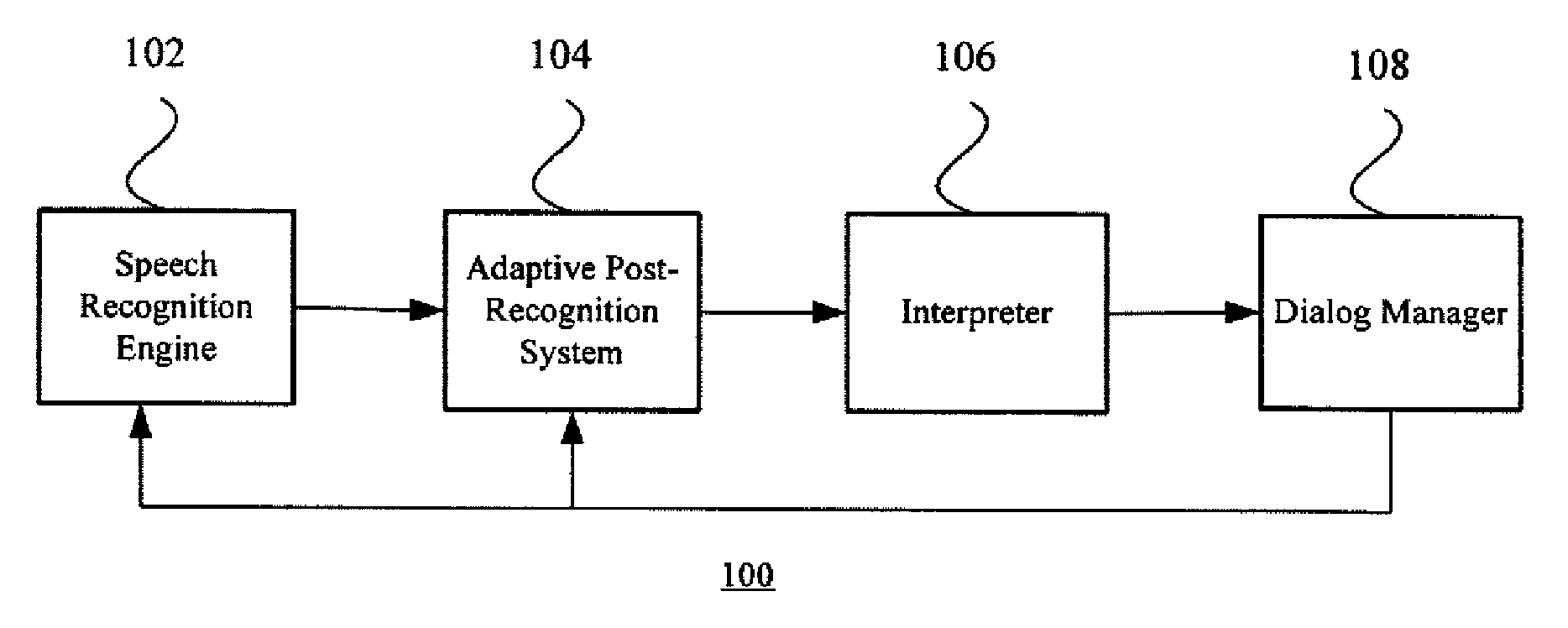

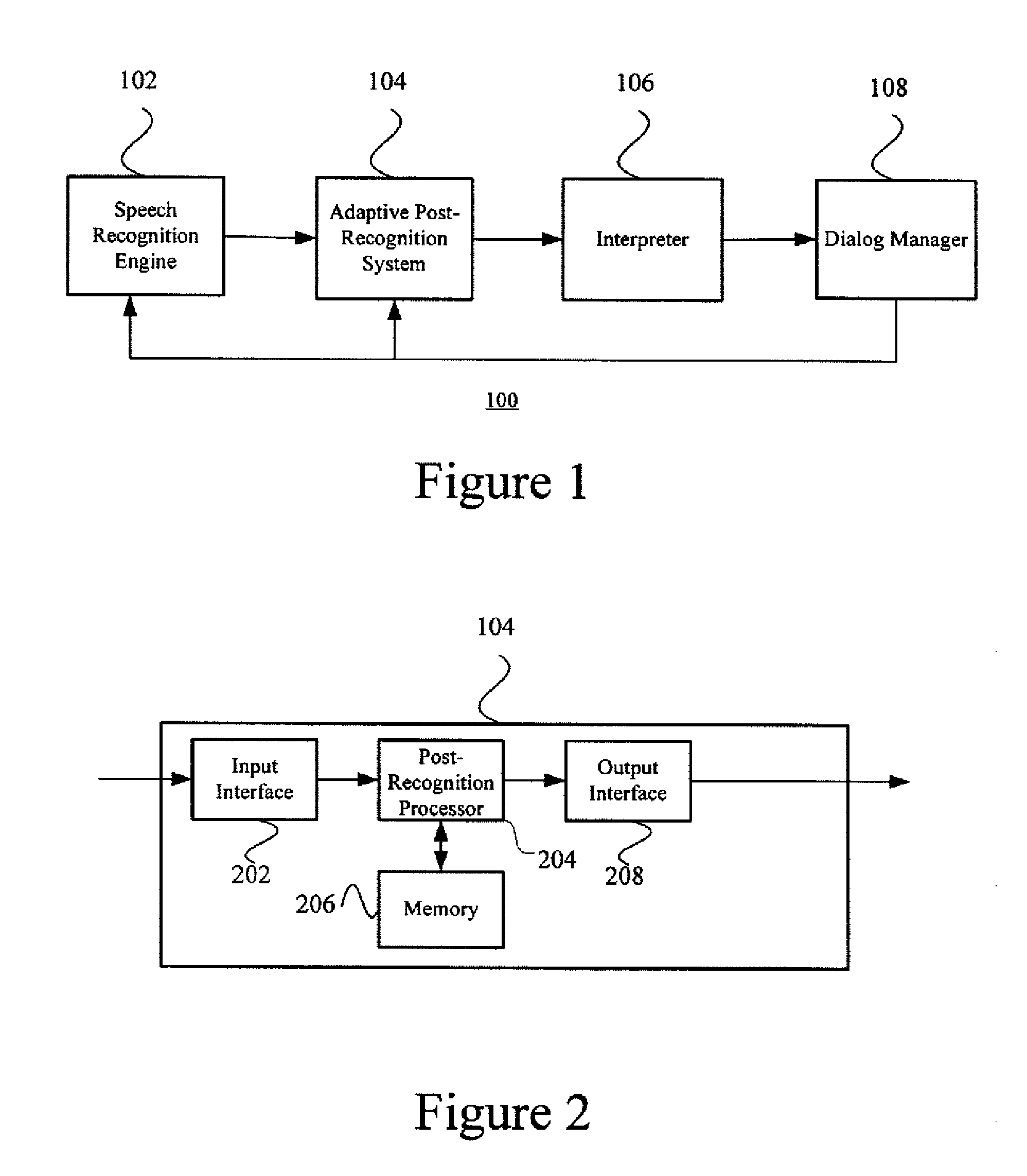

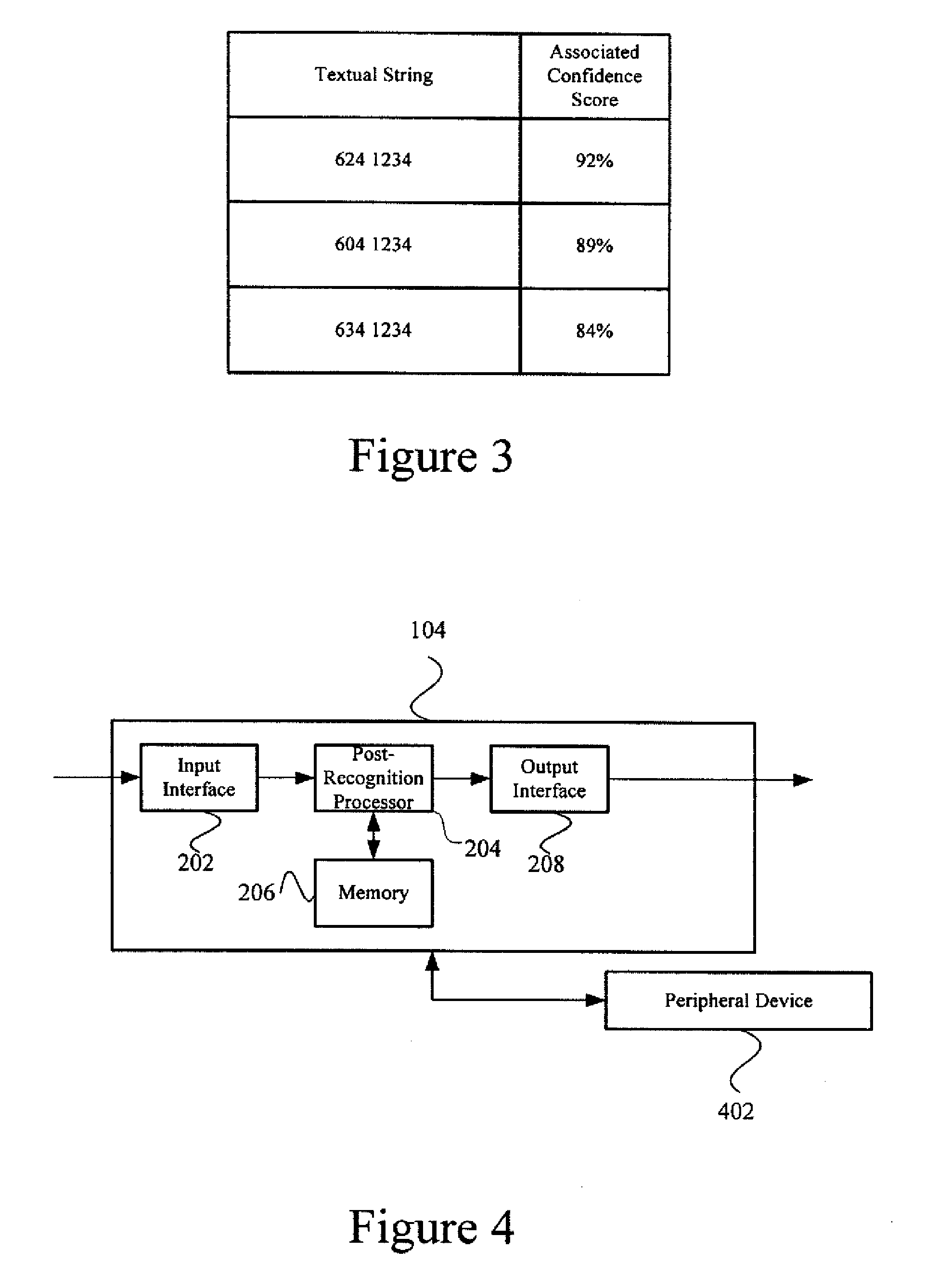

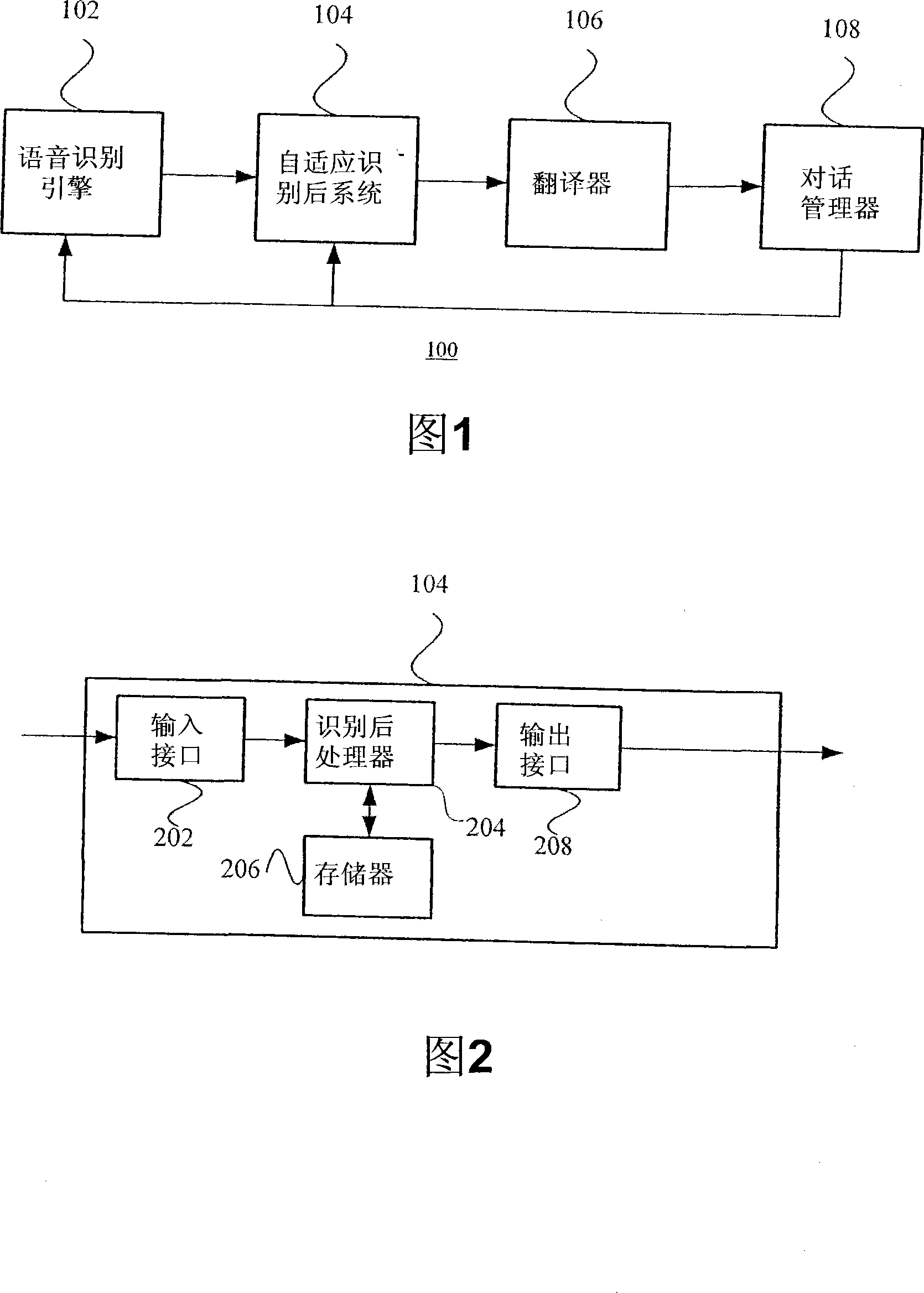

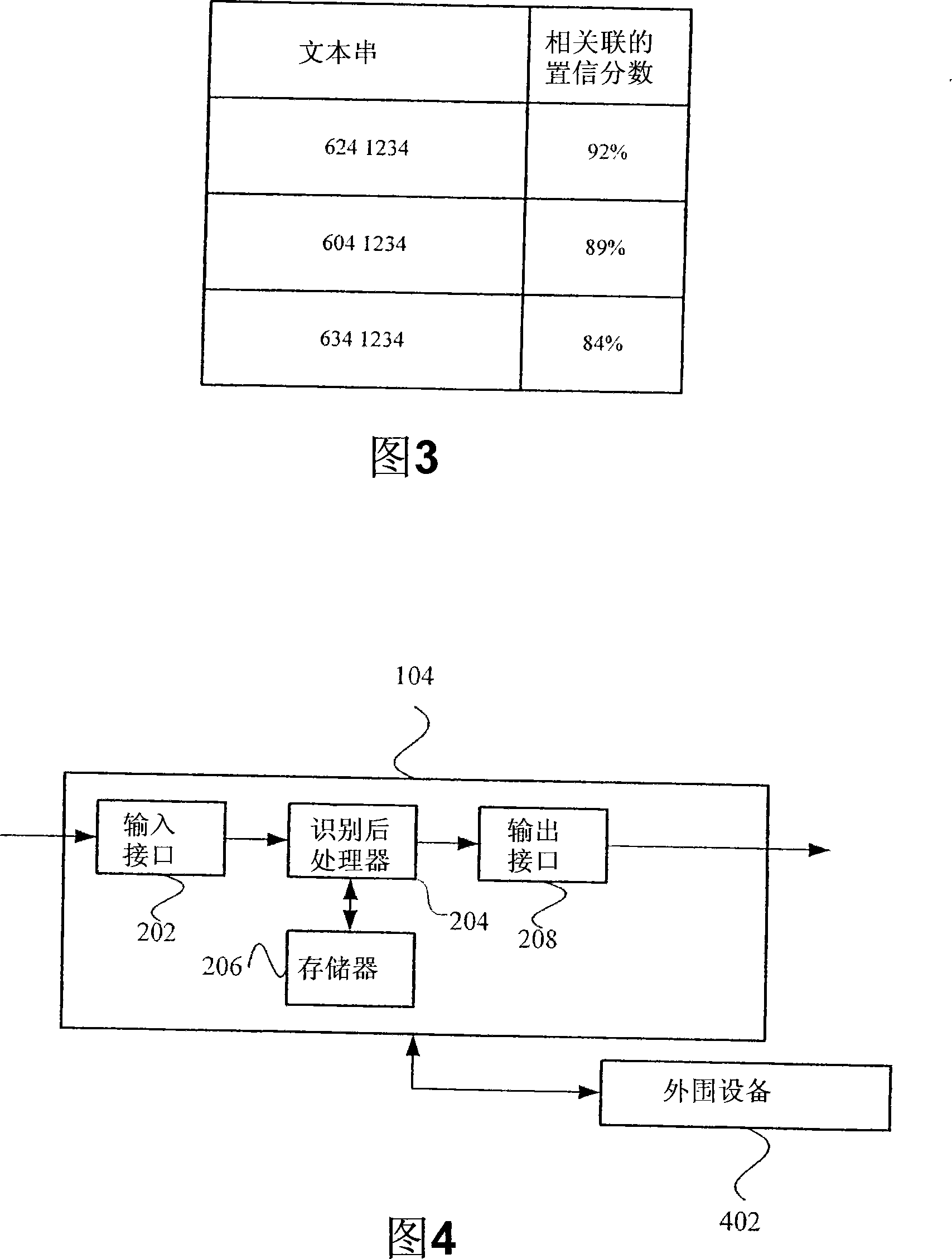

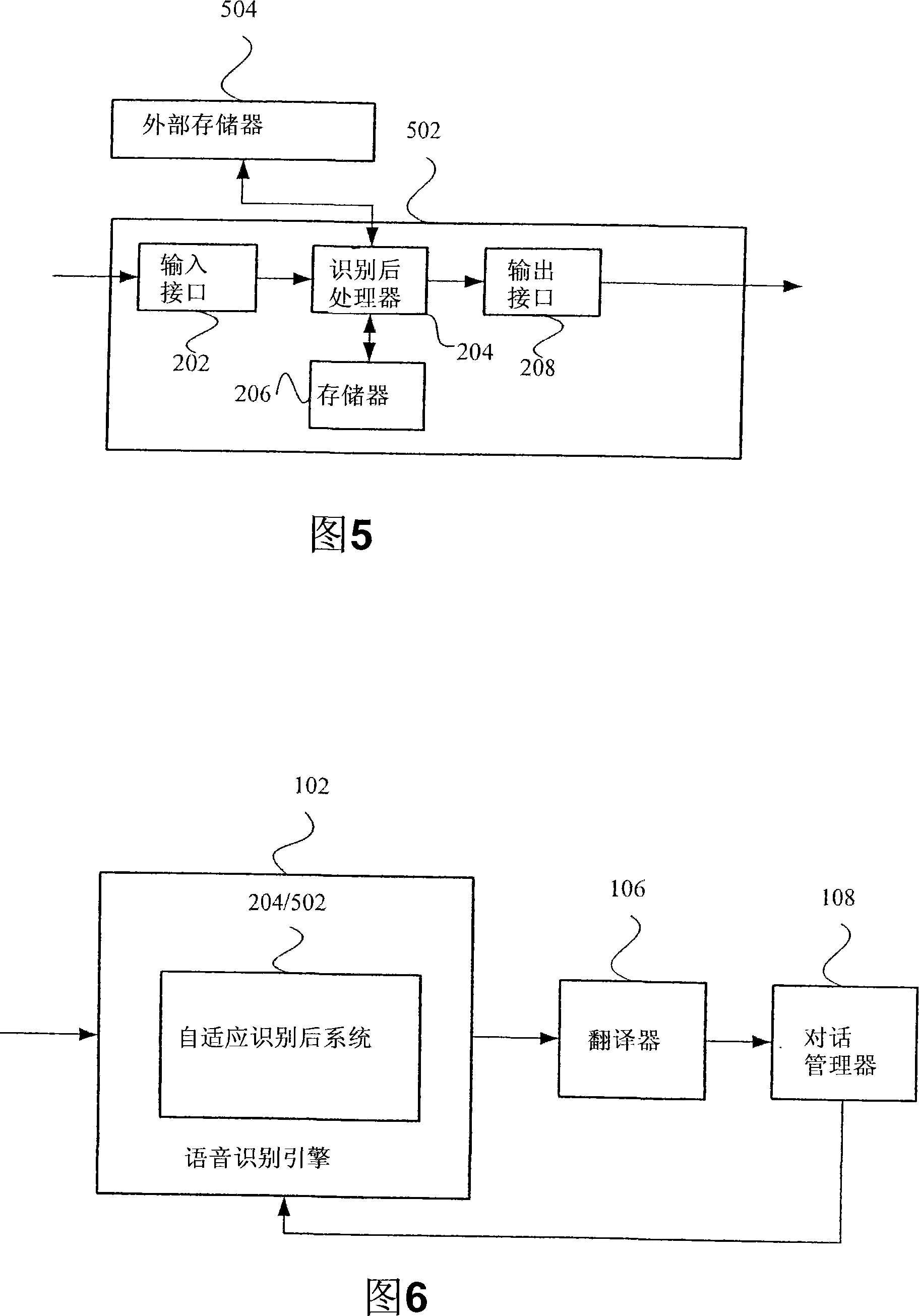

Adaptive context for automatic speech recognition systems

A system that improves speech recognition includes an interface linked to a speech recognition engine. A post-recognition processor coupled to the interface compares recognized speech data generated by the speech recognition engine to contextual information retained in a memory, generates modified recognized speech data, and transmits the modified recognized speech data to a parsing component.

Owner:NUANCE COMM INC +1

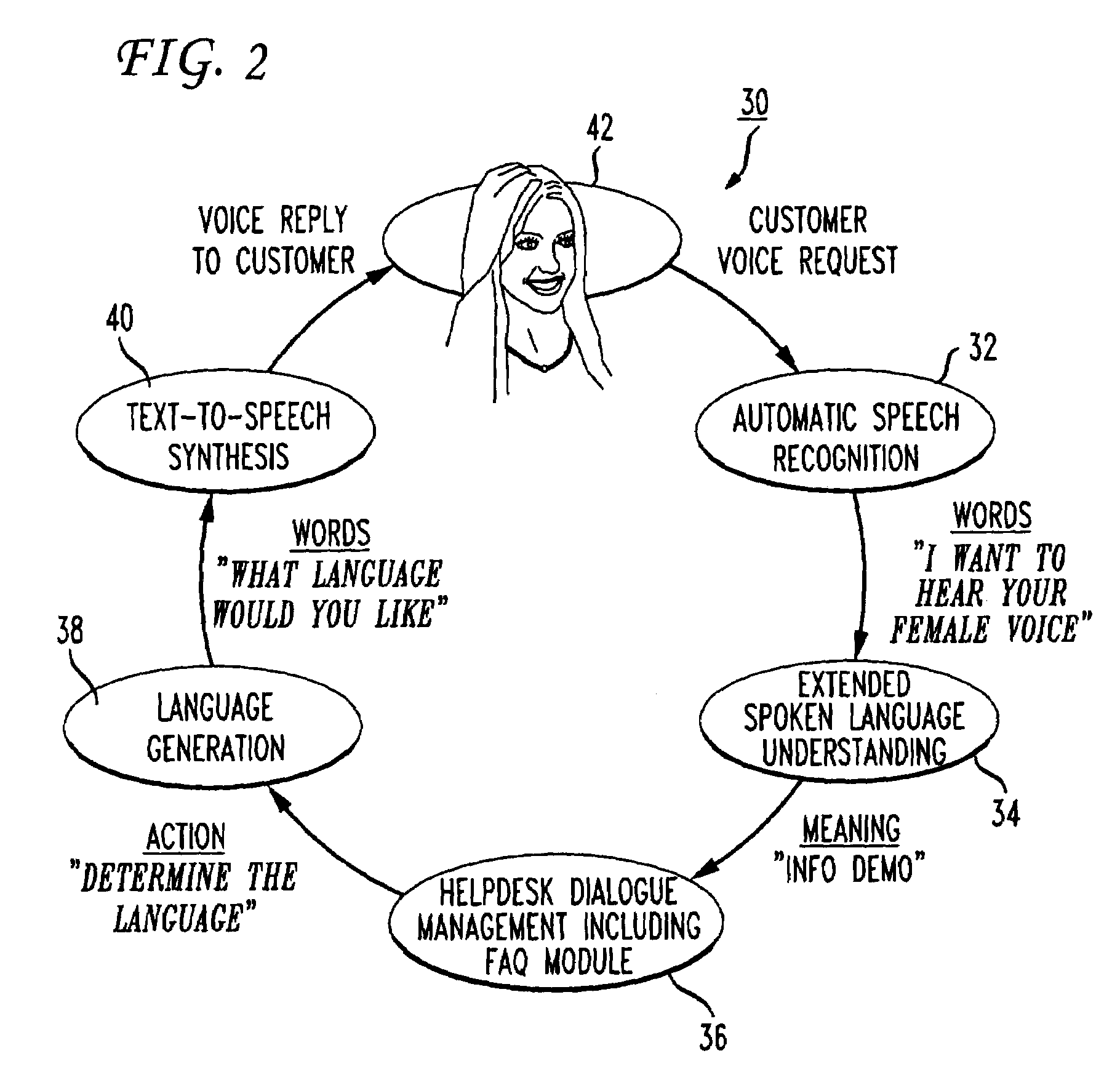

Voice-enabled dialog system

Active | US7869998B1 | Improve customer relationship; Efficient manner; Speech recognition; Special data processing applications; Spoken language; Dialog management

A voice-enabled help desk service is disclosed. The service comprises an automatic speech recognition module for recognizing speech from a user, a spoken language understanding module for understanding the output from the automatic speech recognition module, a dialog management module for generating a response to speech from the user, a natural voices text-to-speech synthesis module for synthesizing speech to generate the response to the user, and a frequently asked questions module. The frequently asked questions module handles frequently asked questions from the user by changing voices and providing predetermined prompts to answer the frequently asked question.

Owner:NUANCE COMM INC

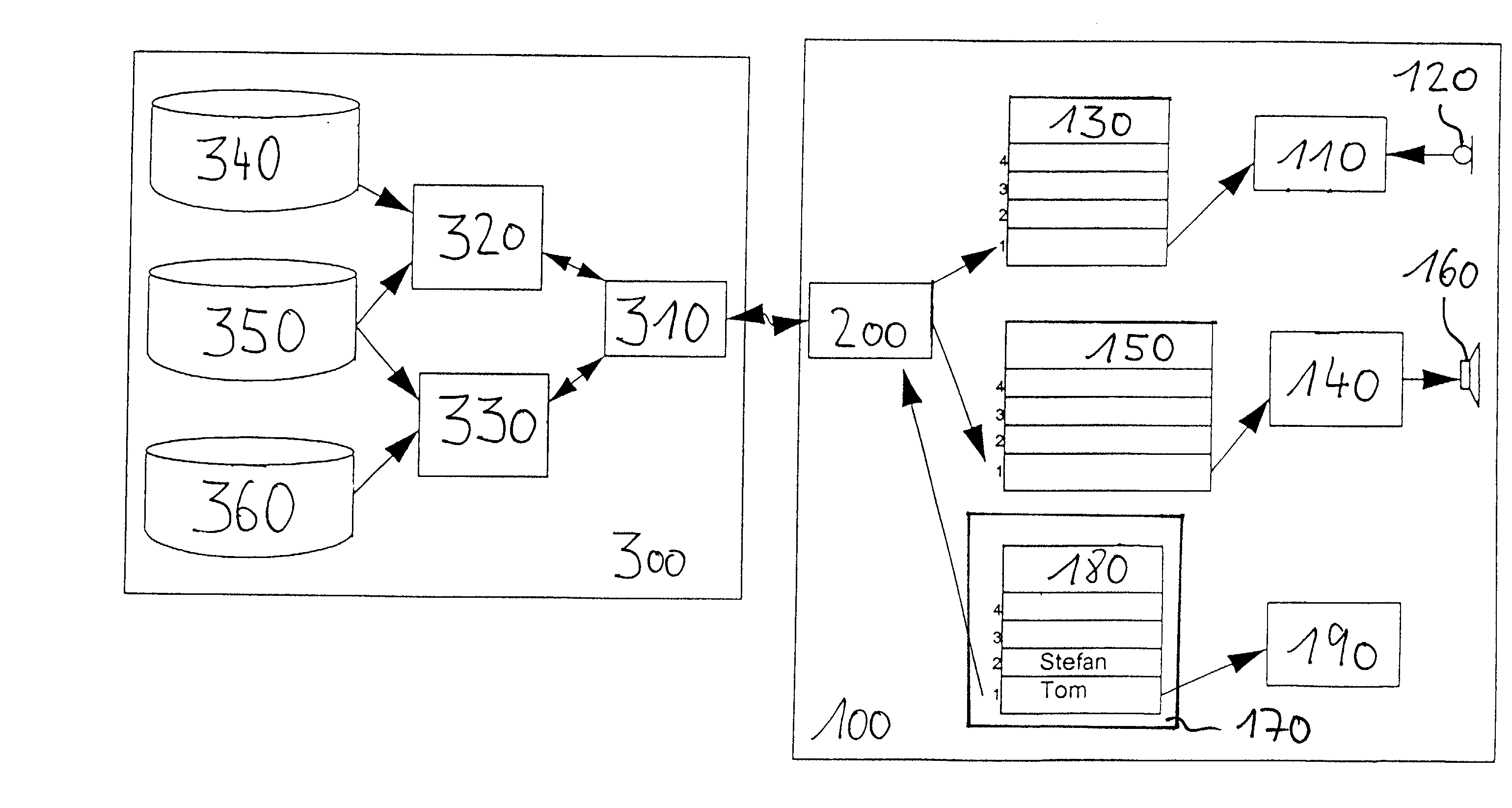

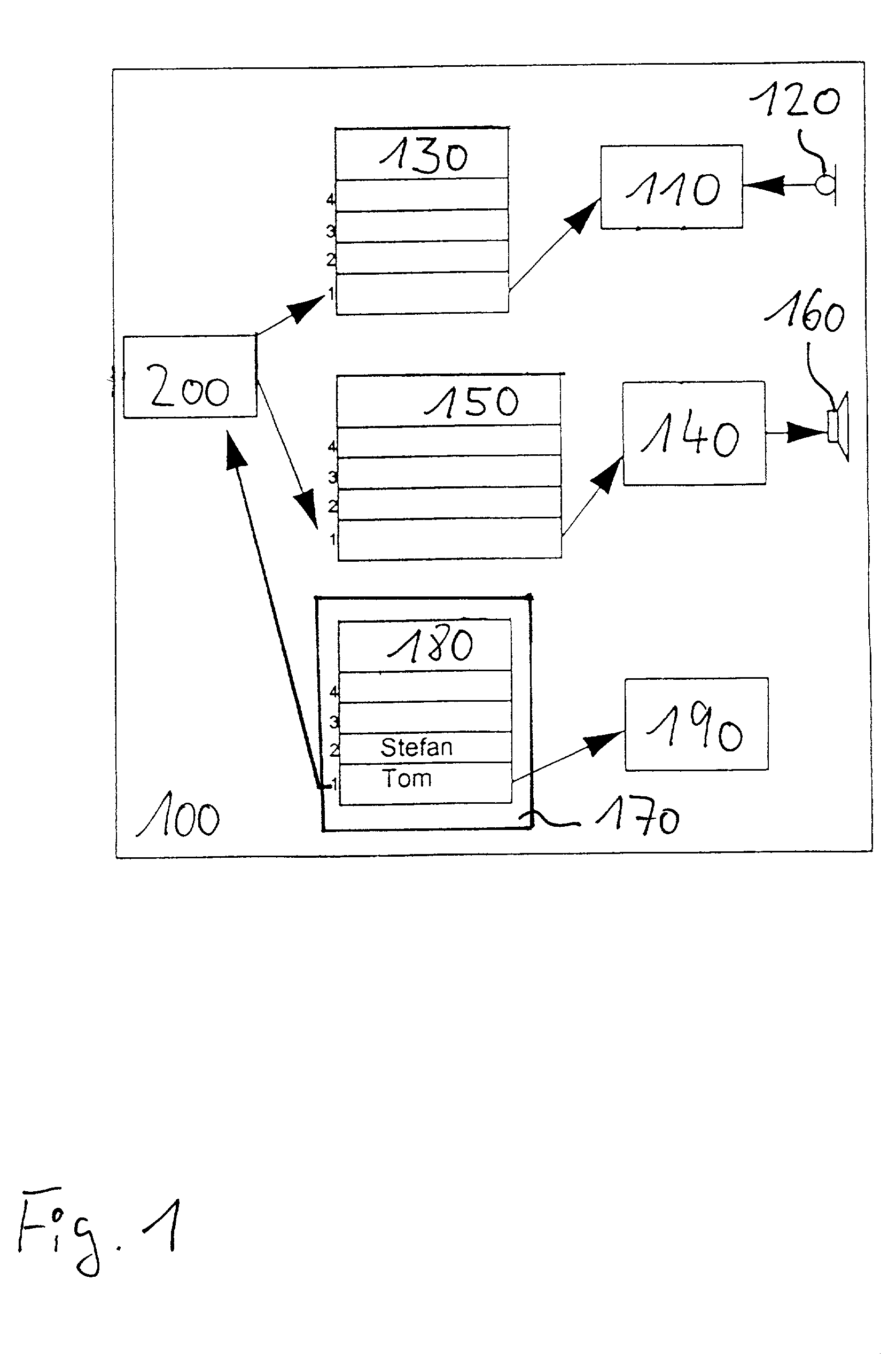

Mobile terminal controllable by spoken utterances

Inactive | US20020091511A1 | Reduce complexity; Low cost; Substation equipment; Speech recognition; Acoustic model; Automatic speech

A mobile terminal (100) which is controllable by spoken utterances like proper names or command words is described. The mobile terminal (100) comprises an interface (200) for receiving from a network server (300) acoustic models for automatic speech recognition and an automatic speech recognizer (110) for recognizing the spoken utterances based on the received acoustic models. The invention further relates to a network server (300) for mobile terminals (100) which are controllable by spoken utterances and to a method for obtaining acoustic models for a mobile terminal (100) controllable by spoken utterances.

Owner:TELEFON AB LM ERICSSON (PUBL)

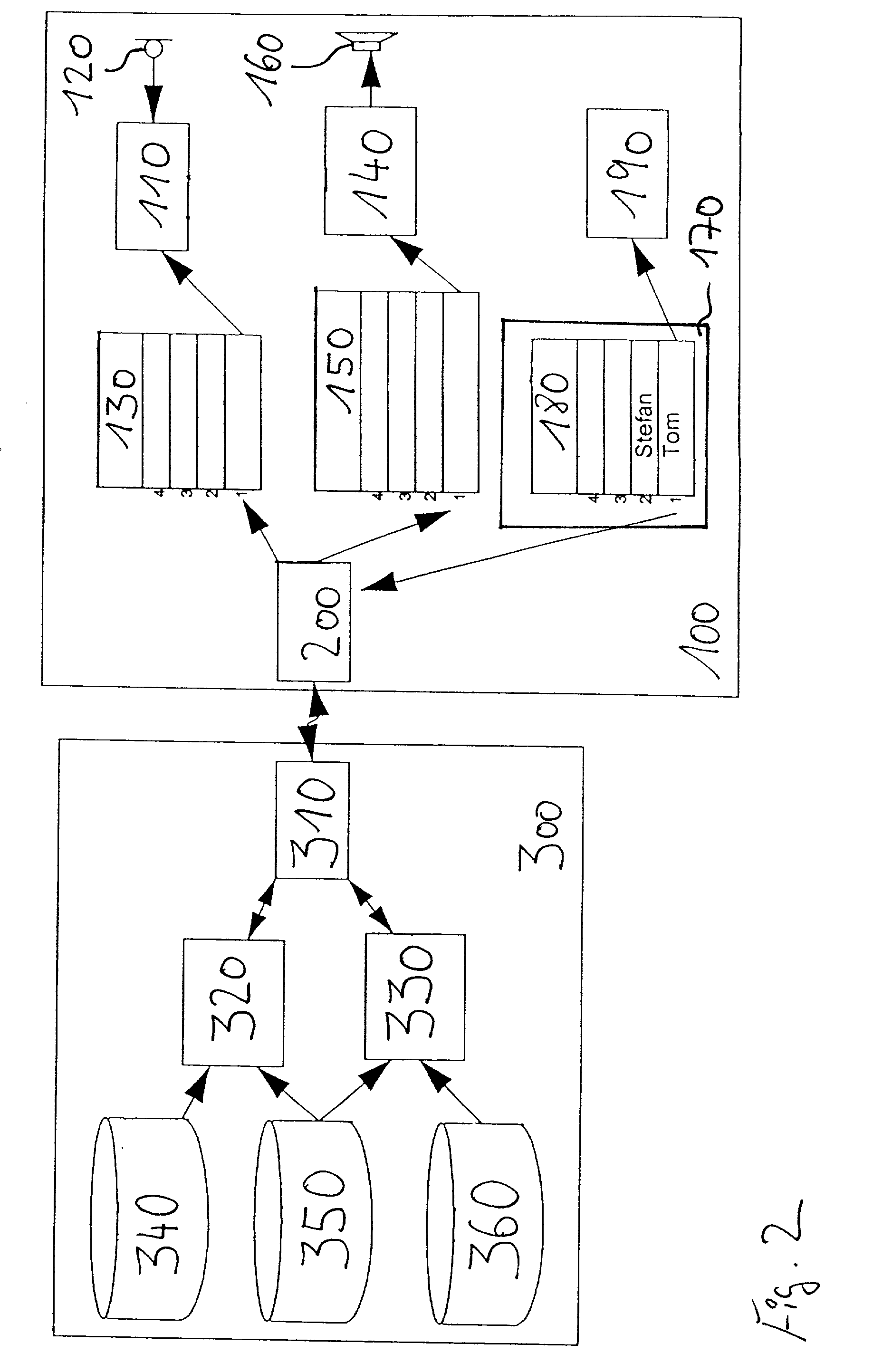

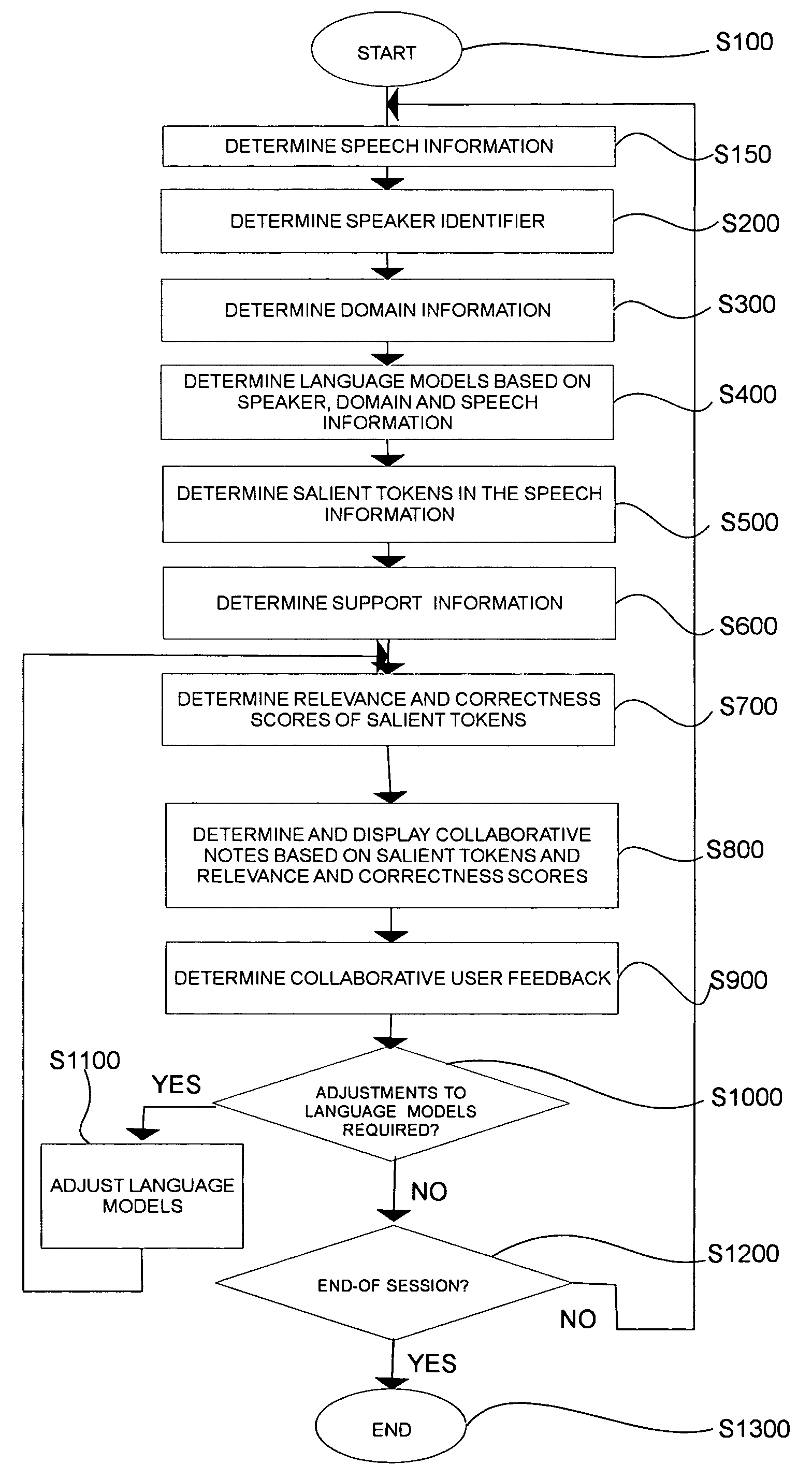

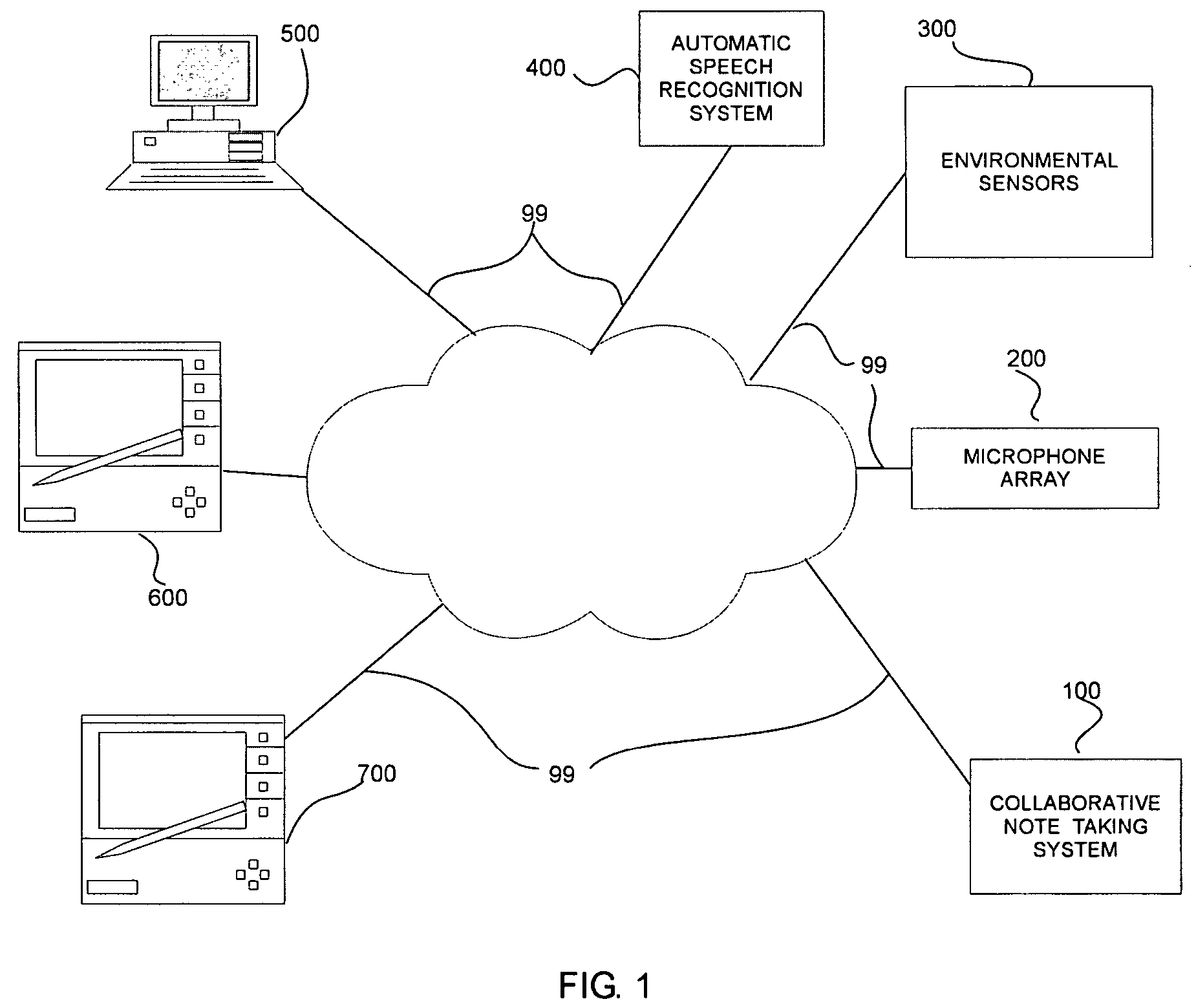

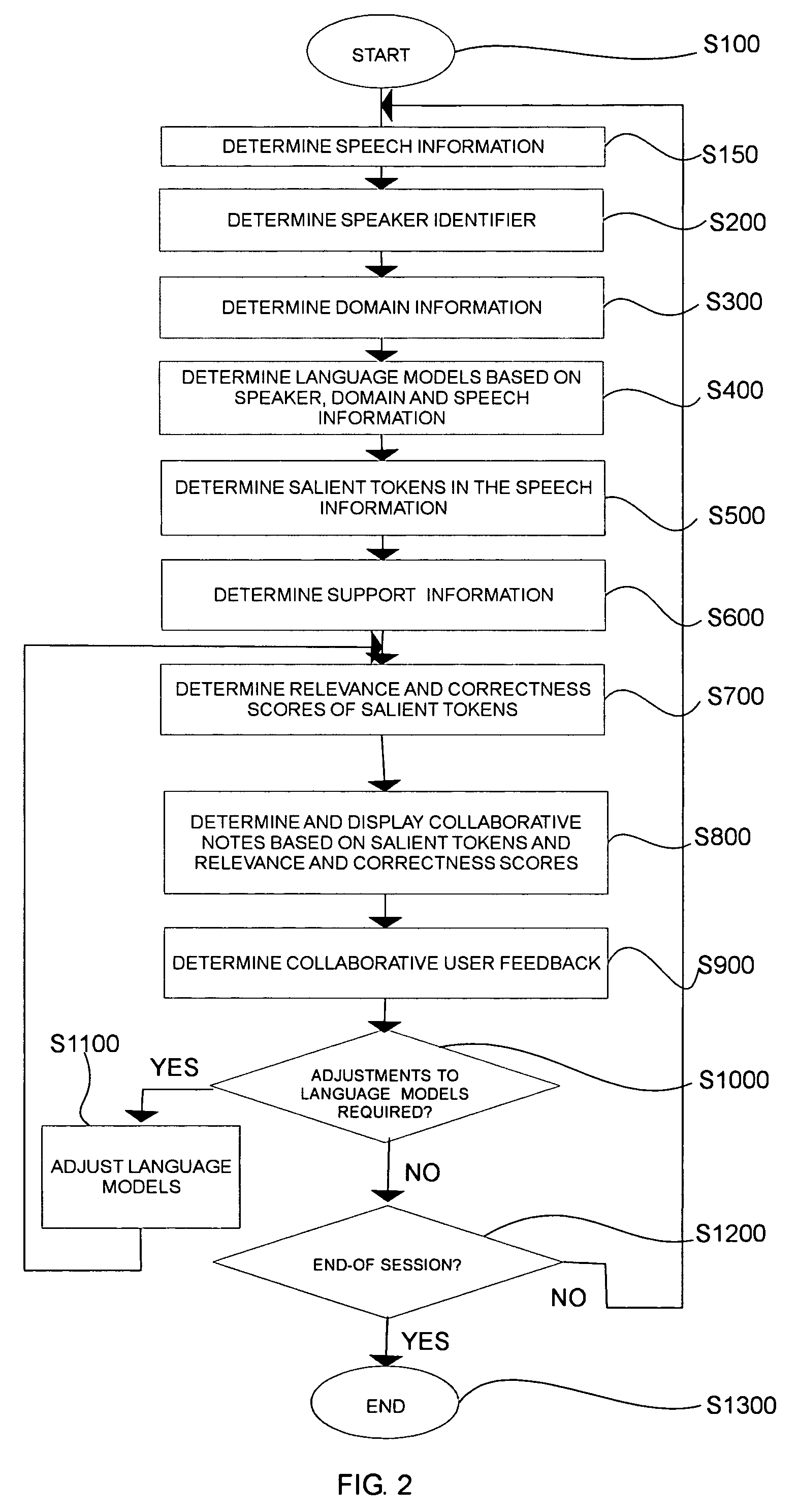

Systems and methods for collaborative note-taking

Inactive | US7542971B2 | Digital data processing details; Natural language data processing; Handwriting; Function word

Owner:FUJIFILM BUSINESS INNOVATION CORP

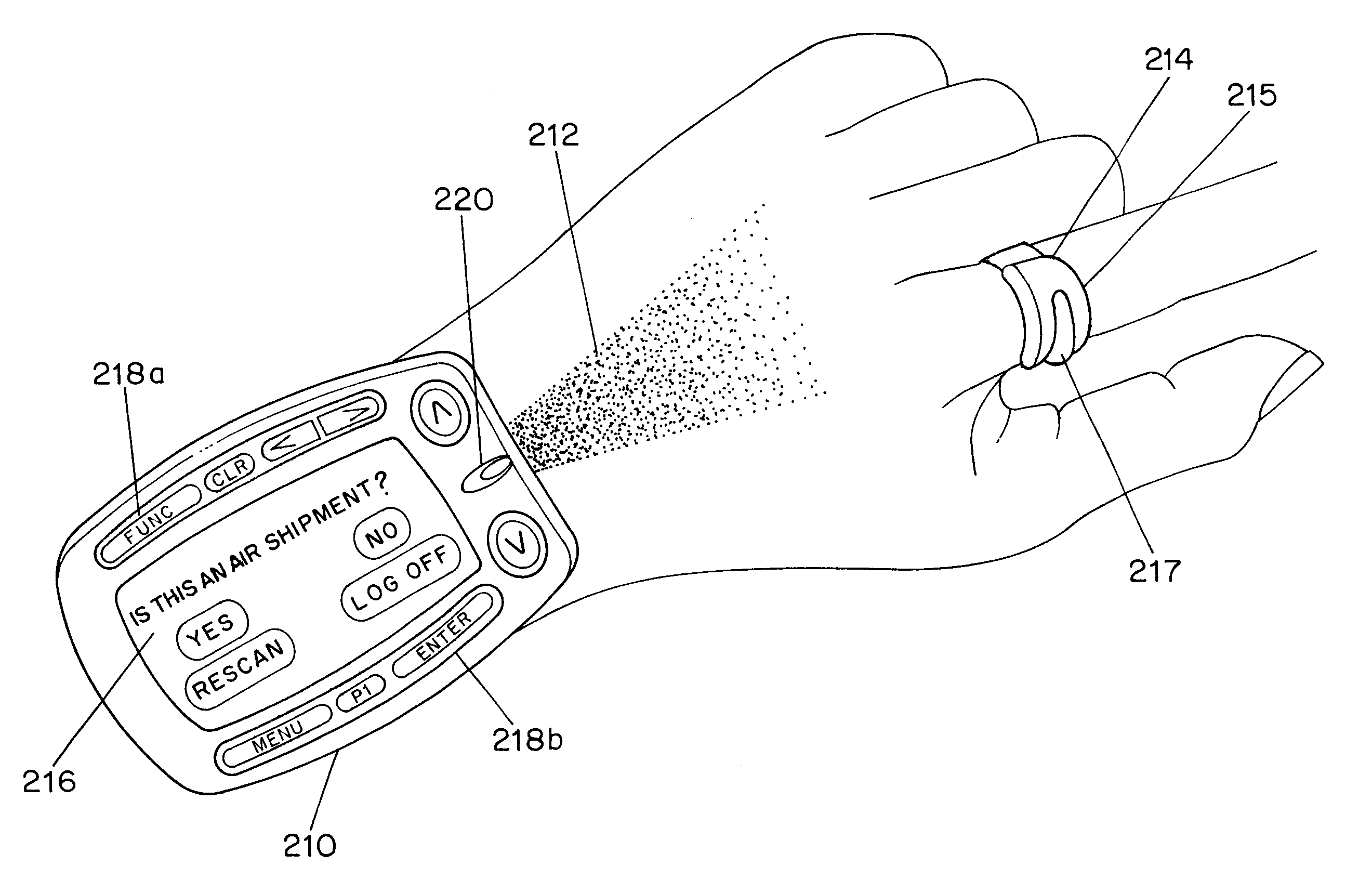

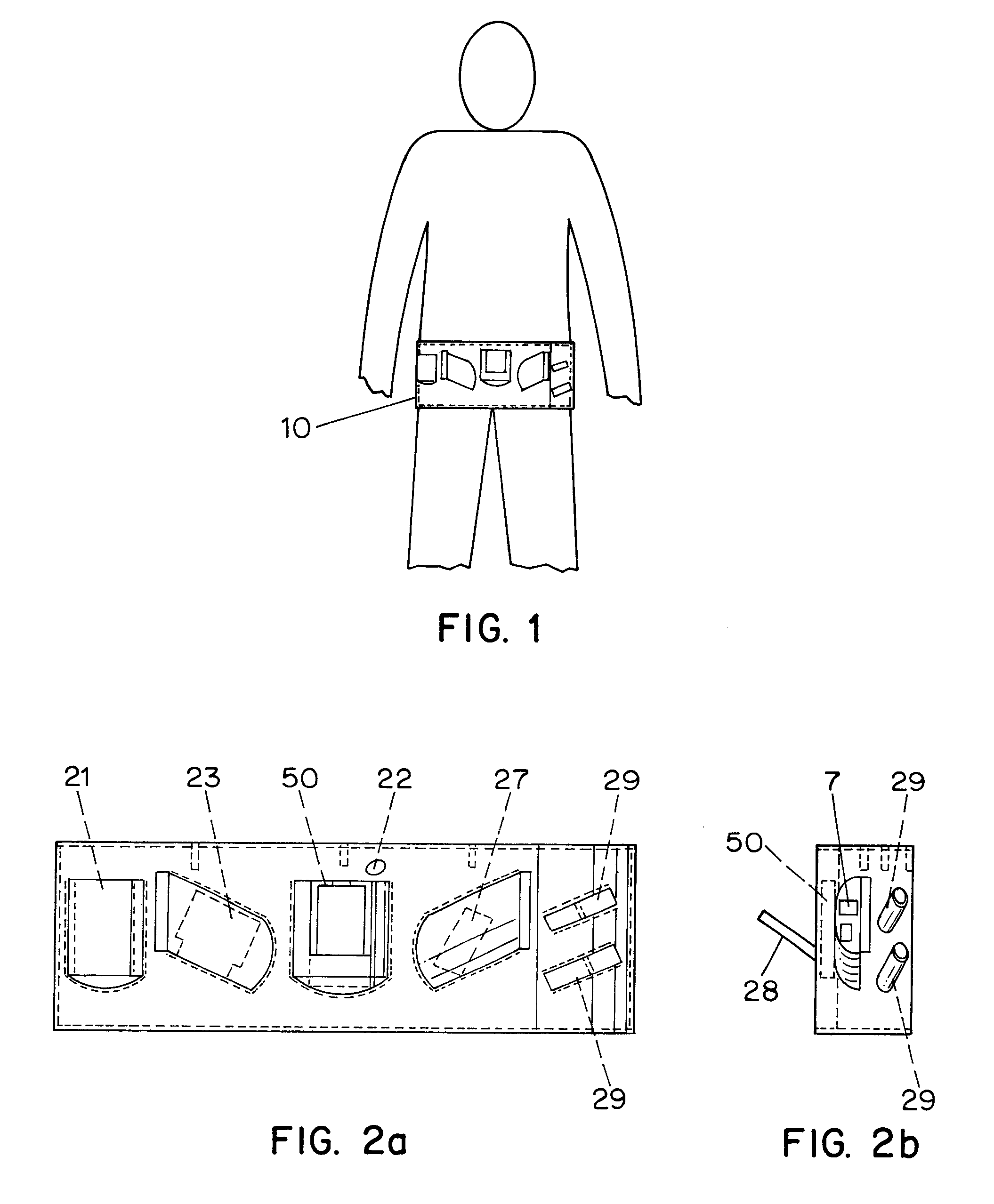

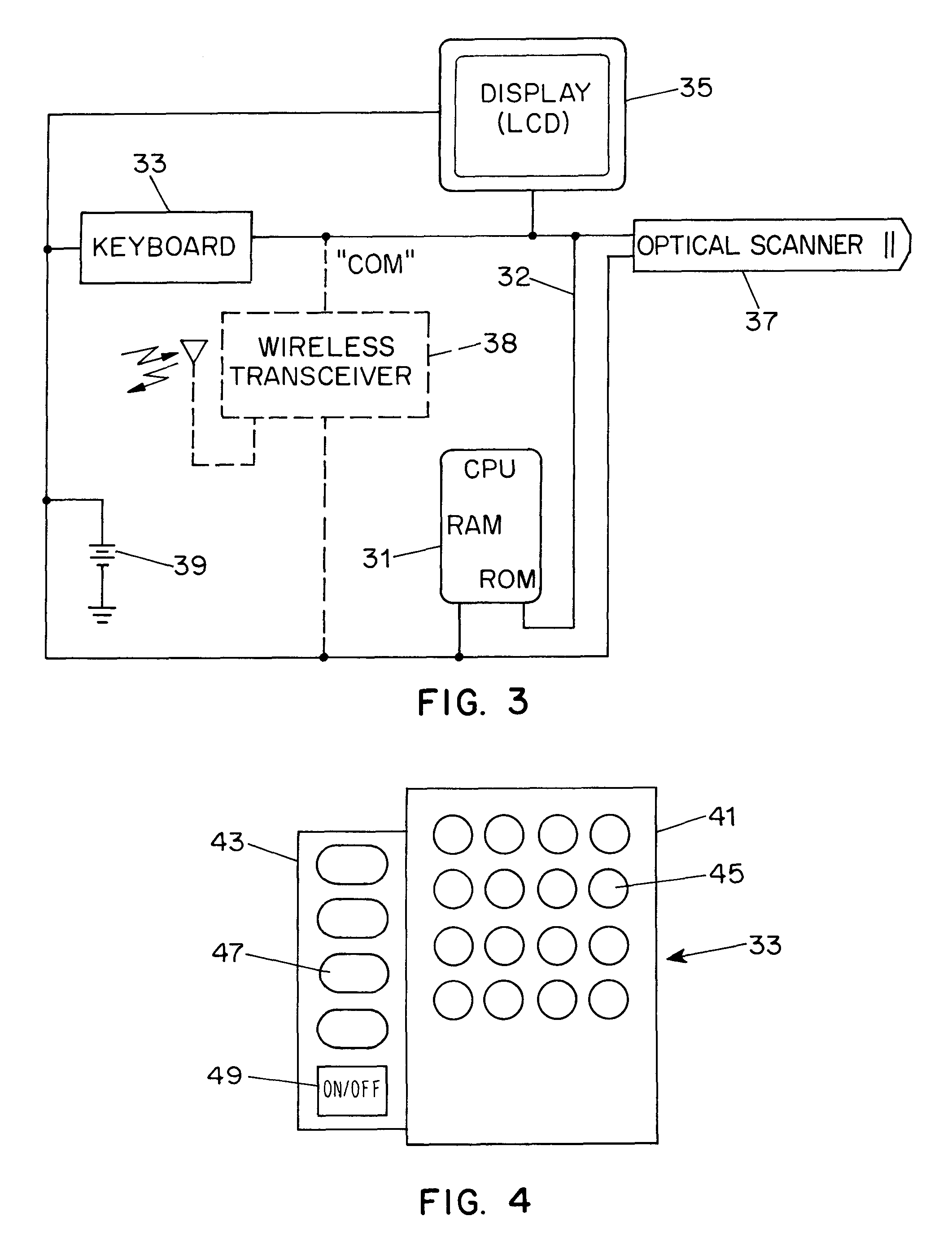

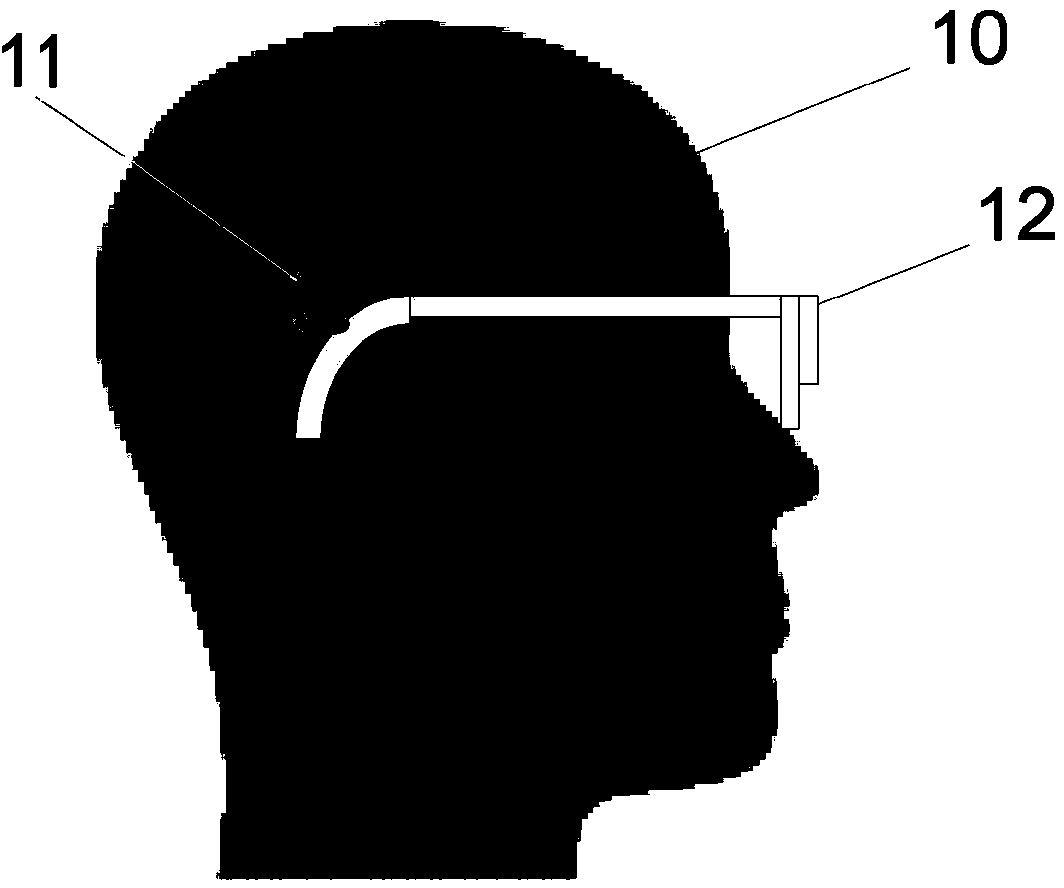

Wearable communication system

Inactive | US6853293B2 | Function increase; Enhance ergonomic usefulness; Programme control; Electric signal transmission systems; Eyepiece; Pager

A portable data input or computer system includes an input/output device such as a keyboard and a display, another data input device such as an optical bar code scanner, and a data processor module. To scan bar code type indicia, the operator points the scanner at the bar code and triggers the scanner to read the indicia. All the system components are distributed on an operator's body and together form a personal area system (PAS). Components may include a scanner or imager, a wrist unit, and a headpiece including an eyepiece display, a speaker and a microphone. Components within a particular PAS communicate with each other over a personal area network (PAN). Individual PASs may be combined into a network of PASs called a PAS cluster. PASs in a particular PAS cluster can communicate with each other over another wireless communication channel. Individual PASs can gain access to a Local Area Network (LAN) and/or a Wide Area Network (WAN) via an access point. Individual PASs can use devices, such as servers and PCs situated on either the LAN or the WAN, to retrieve and exchange information. Individual PAS components can provide automatic speech and image recognition. PAS components may also act as a telephone, a pager, or any other communication device having access to a LAN or a WAN. Transmission of digitized voice and/or video data can be achieved over an Internet link.

Owner:SYMBOL TECH INC

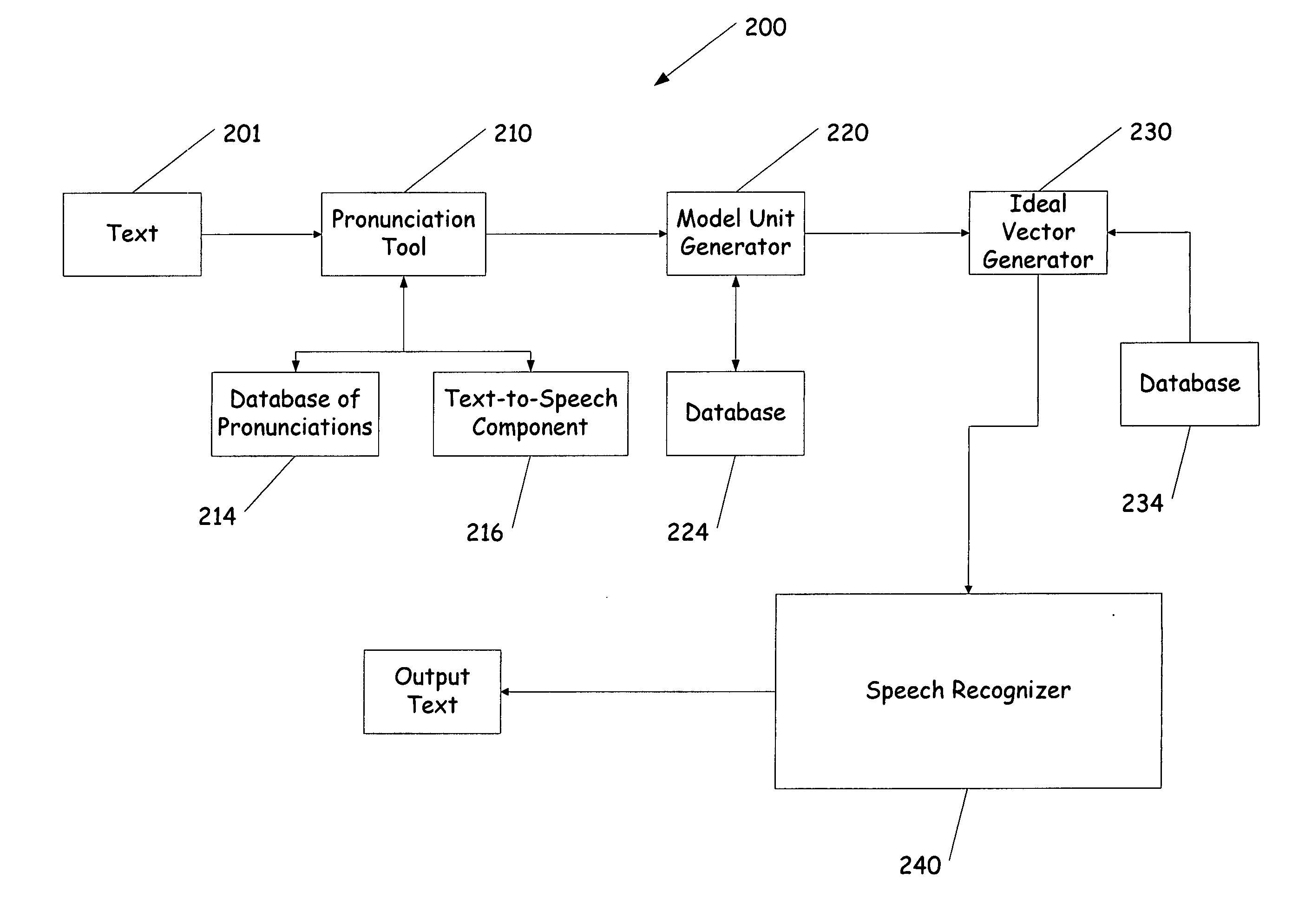

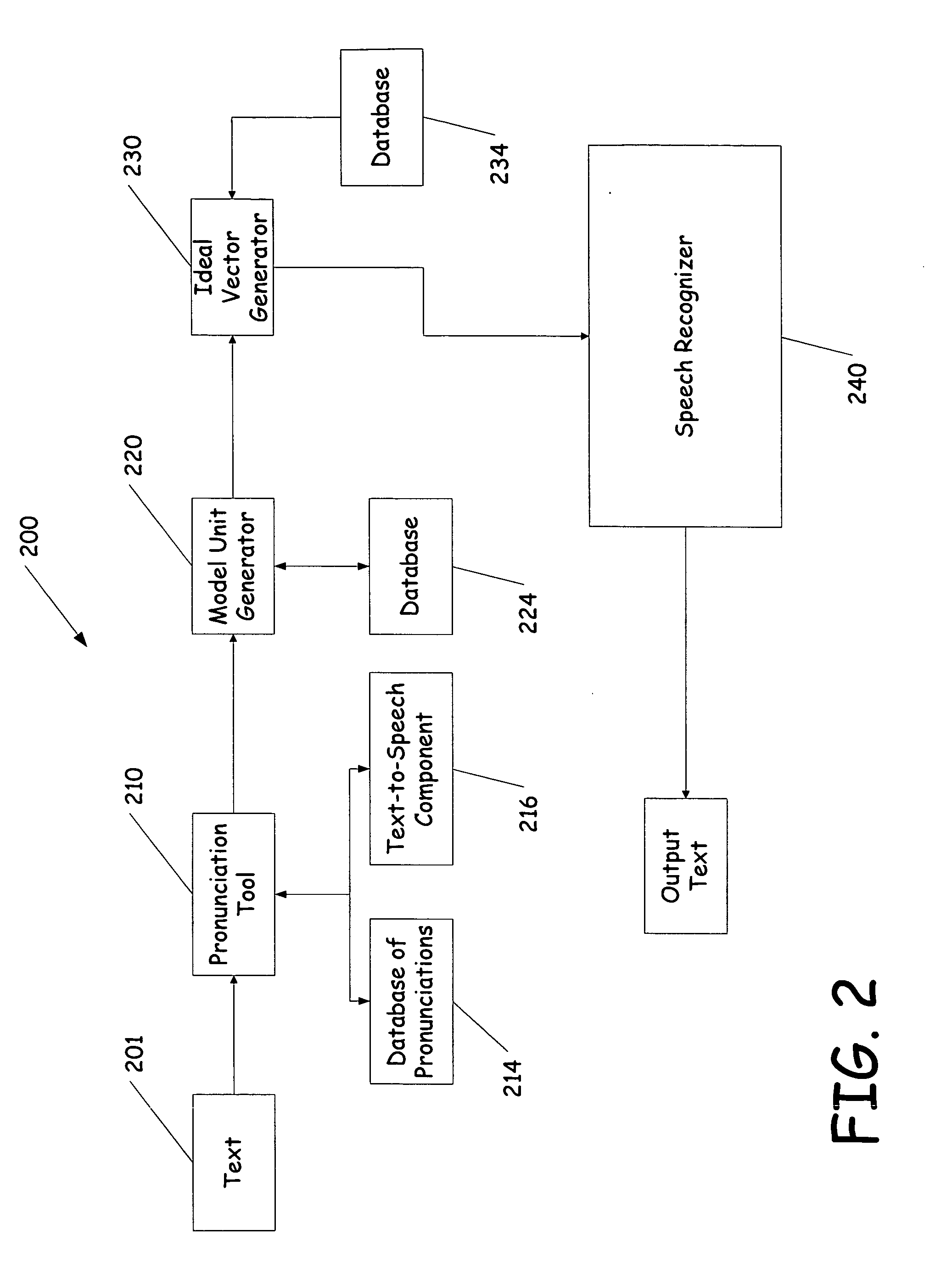

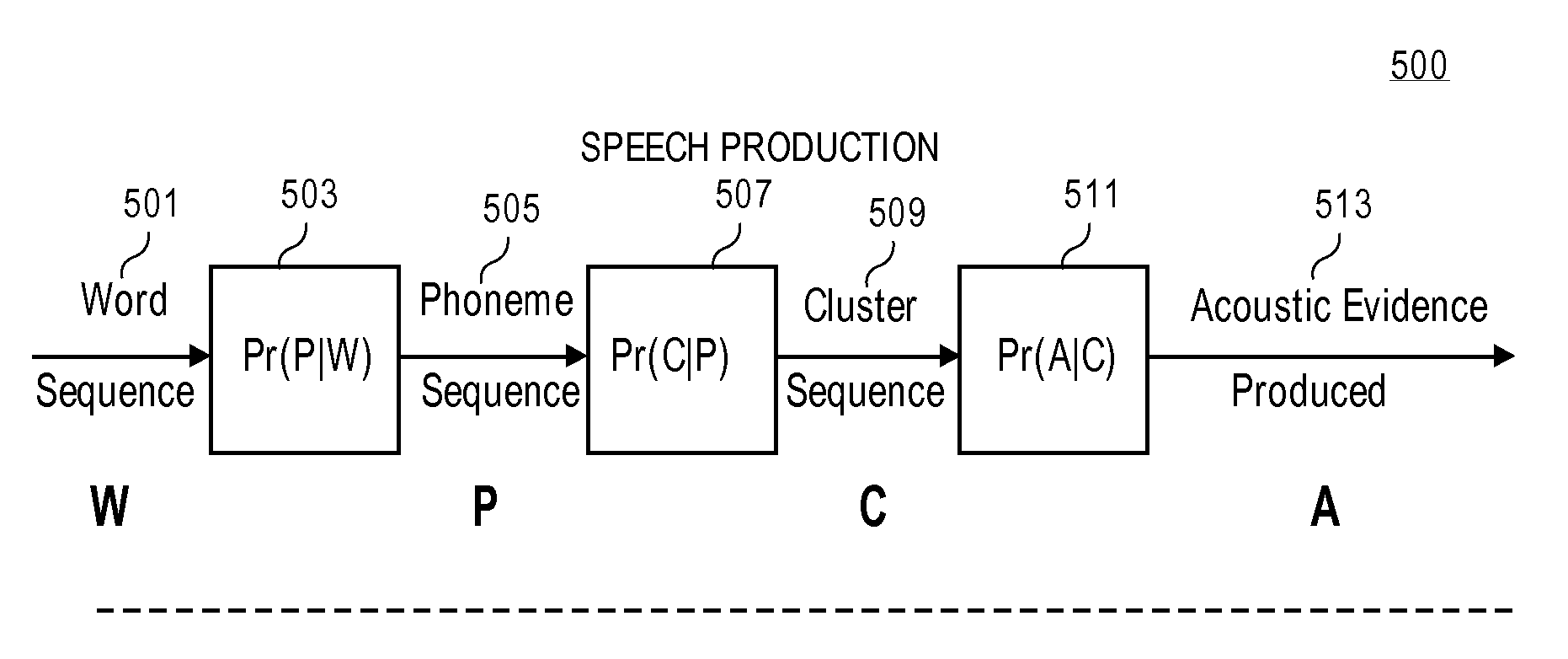

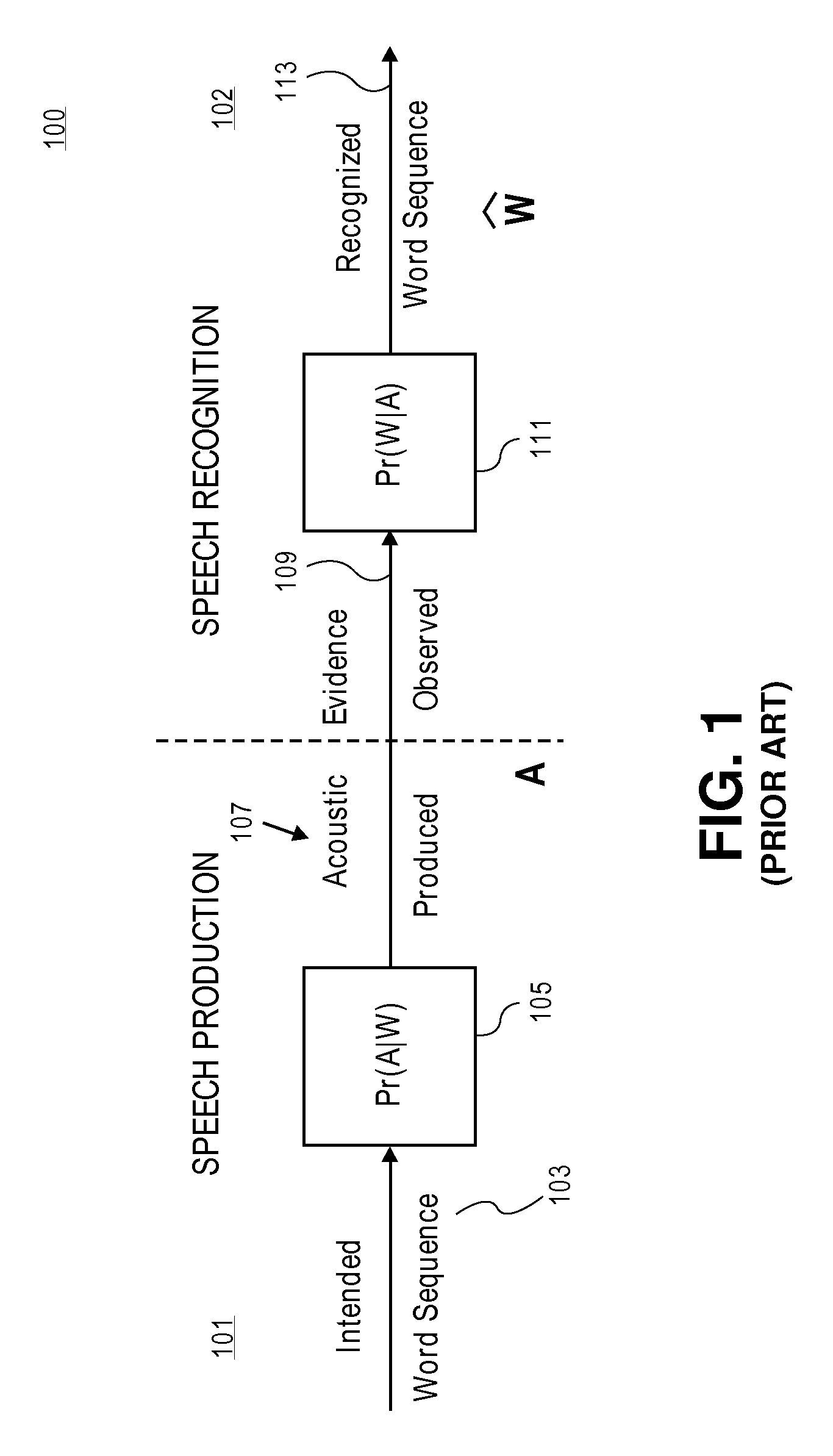

Testing and tuning of automatic speech recognition systems using synthetic inputs generated from its acoustic models

Inactive | US20060085187A1 | Errors in predicting; Avoids acoustic mismatches; Speech recognition; Speech synthesis; Feature vector; Model selection

A system and method of testing and tuning a speech recognition system by providing pronunciations to the speech recognizer. First a text document is provided to the system and converted into a sequence of phonemes representative of the words in the text. The phonemes are then converted to model units, such as Hidden Markov Models. From the models a probability is obtained for each model or state, and feature vectors are determined. The feature vector matching the most probable vector for each state is selected for each model. These ideal feature vectors are provided to the speech recognizer, and processed. The end result is compared with the original text, and modifications to the system can be made based on the output text.

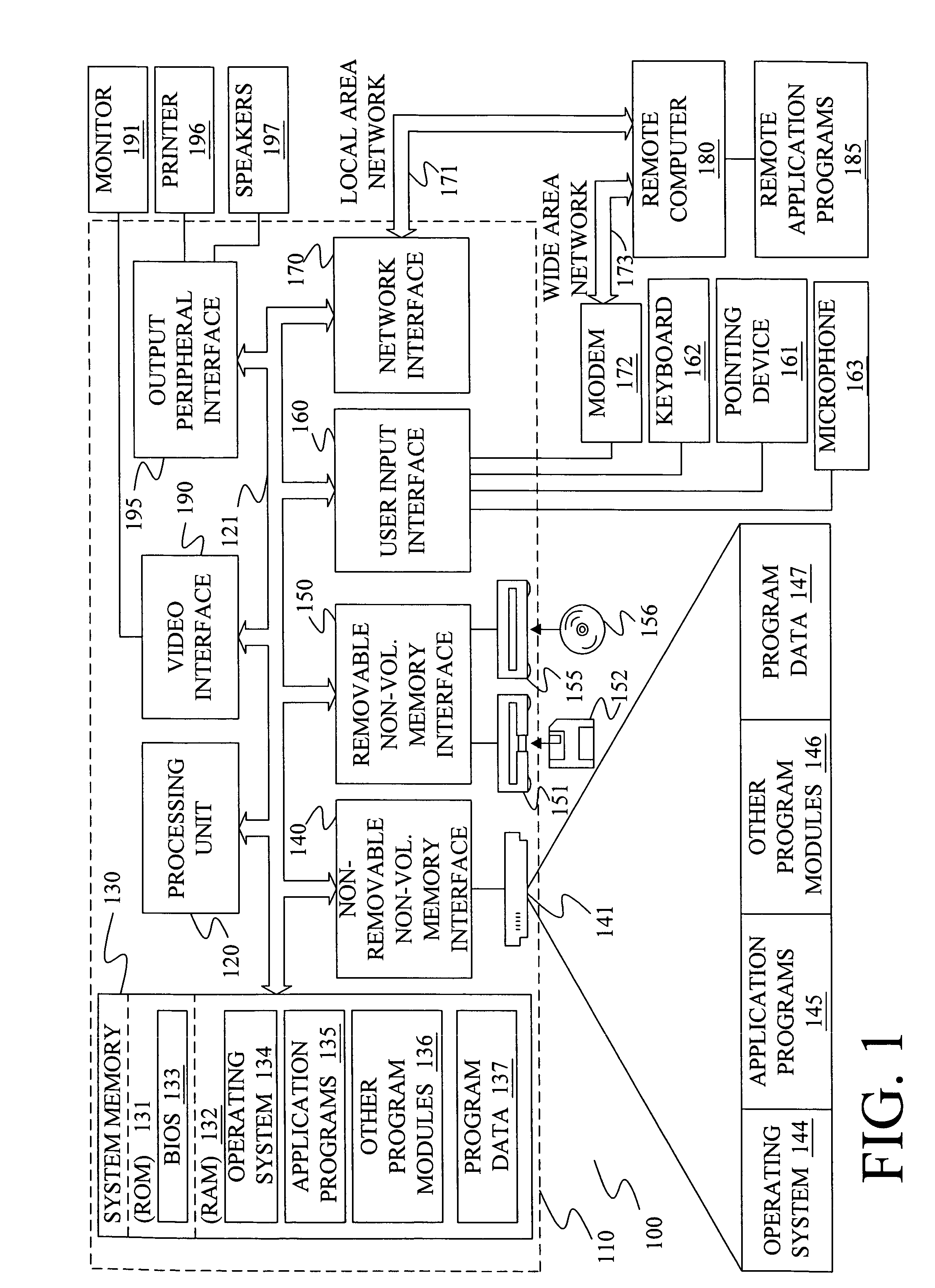

Owner:MICROSOFT TECH LICENSING LLC
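
The test-input generation in the abstract above (text to phonemes, phonemes to HMM states, then the most probable feature vector per state) can be sketched as follows. The data layout is hypothetical: `lexicon` maps words to phonemes, and `acoustic_model` maps each phoneme to a list of states, each state a list of `(mixture_weight, mean_vector)` components. A single Gaussian peaks at its mean; for a mixture, taking the mean of the heaviest component is an approximation assumed here, not the patent's stated rule.

```python
Component = tuple[float, list[float]]  # (mixture weight, mean vector)


def text_to_phonemes(text: str, lexicon: dict[str, list[str]]) -> list[str]:
    phonemes: list[str] = []
    for word in text.lower().split():
        phonemes.extend(lexicon[word])
    return phonemes


def ideal_feature_vectors(text: str,
                          lexicon: dict[str, list[str]],
                          acoustic_model: dict[str, list[list[Component]]],
                          frames_per_state: int = 1) -> list[list[float]]:
    """One 'ideal' feature vector per HMM state, repeated frames_per_state times."""
    vectors: list[list[float]] = []
    for phone in text_to_phonemes(text, lexicon):
        for state in acoustic_model[phone]:
            # Approximate the state's most probable vector by the mean of
            # its highest-weight mixture component
            _, mean = max(state, key=lambda component: component[0])
            for _ in range(frames_per_state):
                vectors.append(list(mean))
    return vectors
```

The resulting vectors would then be fed to the recognizer's decoder in place of front-end features, and the decoded text compared with the source text.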

Methods and apparatuses for automatic speech recognition

Active | US20110004475A1 | Reduce the amount required; Speech recognition; Model parameters; Continuous parameter

Exemplary embodiments of methods and apparatuses for automatic speech recognition are described. First model parameters associated with a first representation of an input signal are generated. The first representation of the input signal is a discrete parameter representation. Second model parameters associated with a second representation of the input signal are generated. The second representation of the input signal includes a continuous parameter representation of residuals of the input signal. The first representation of the input signal includes discrete parameters representing first portions of the input signal. The second representation includes discrete parameters representing second portions of the input signal that are smaller than the first portions. Third model parameters are generated to couple the first representation of the input signal with the second representation of the input signal. The first representation and the second representation of the input signal are mapped into a vector space.

Owner:APPLE INC

Adaptive context for automatic speech recognition systems

Owner:QNX SOFTWARE SYST (WAVEMAKERS) INC (CA) +1

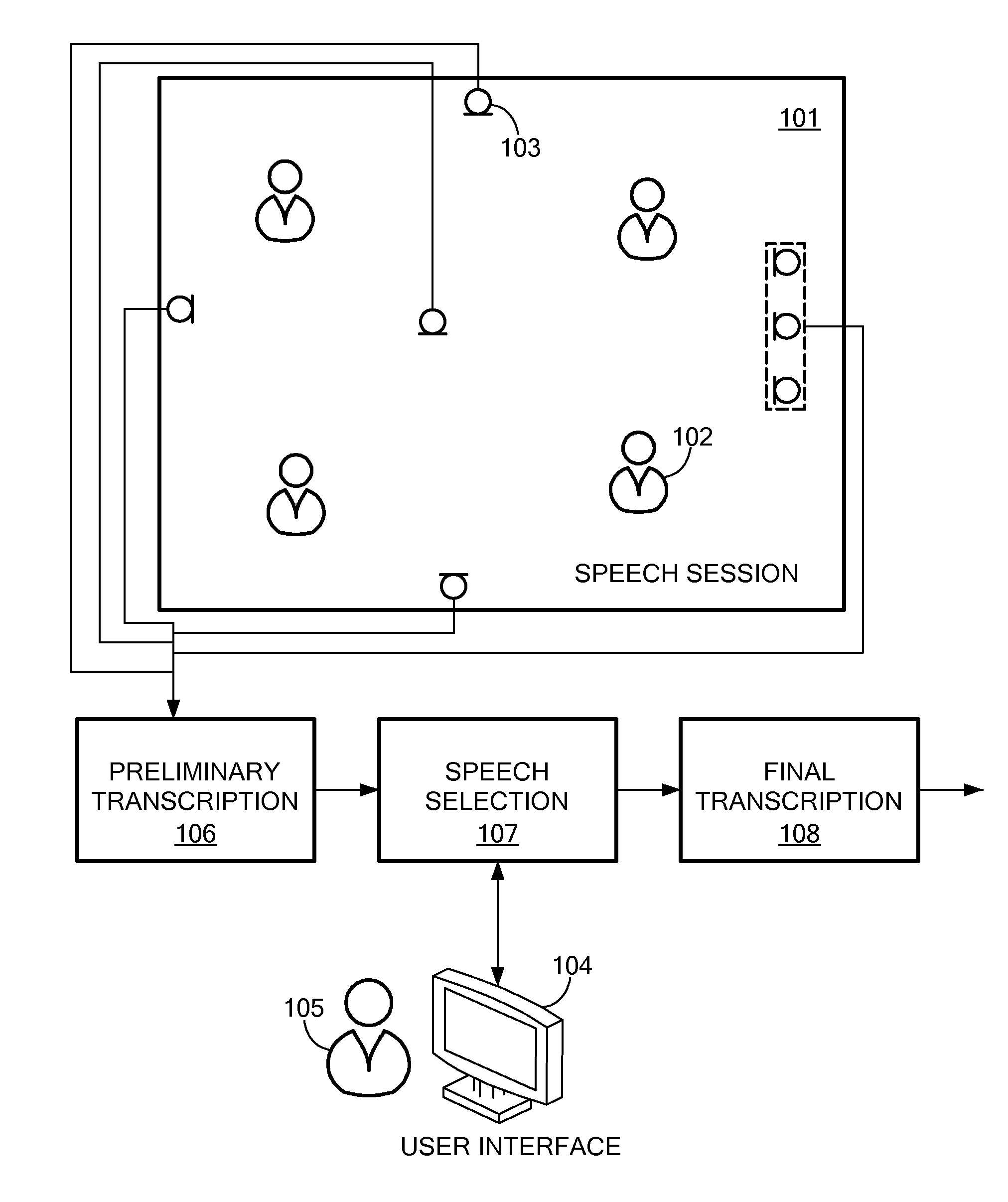

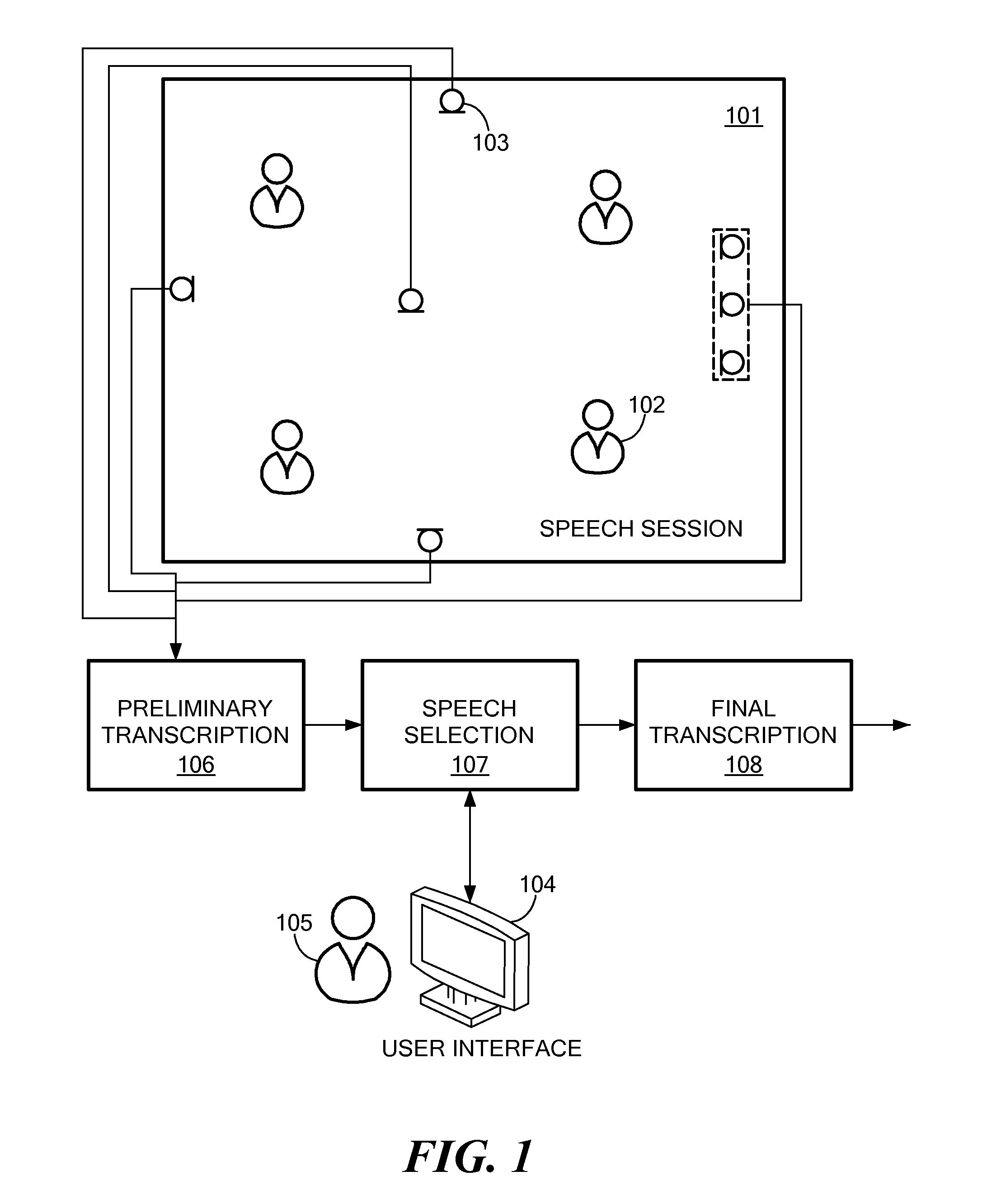

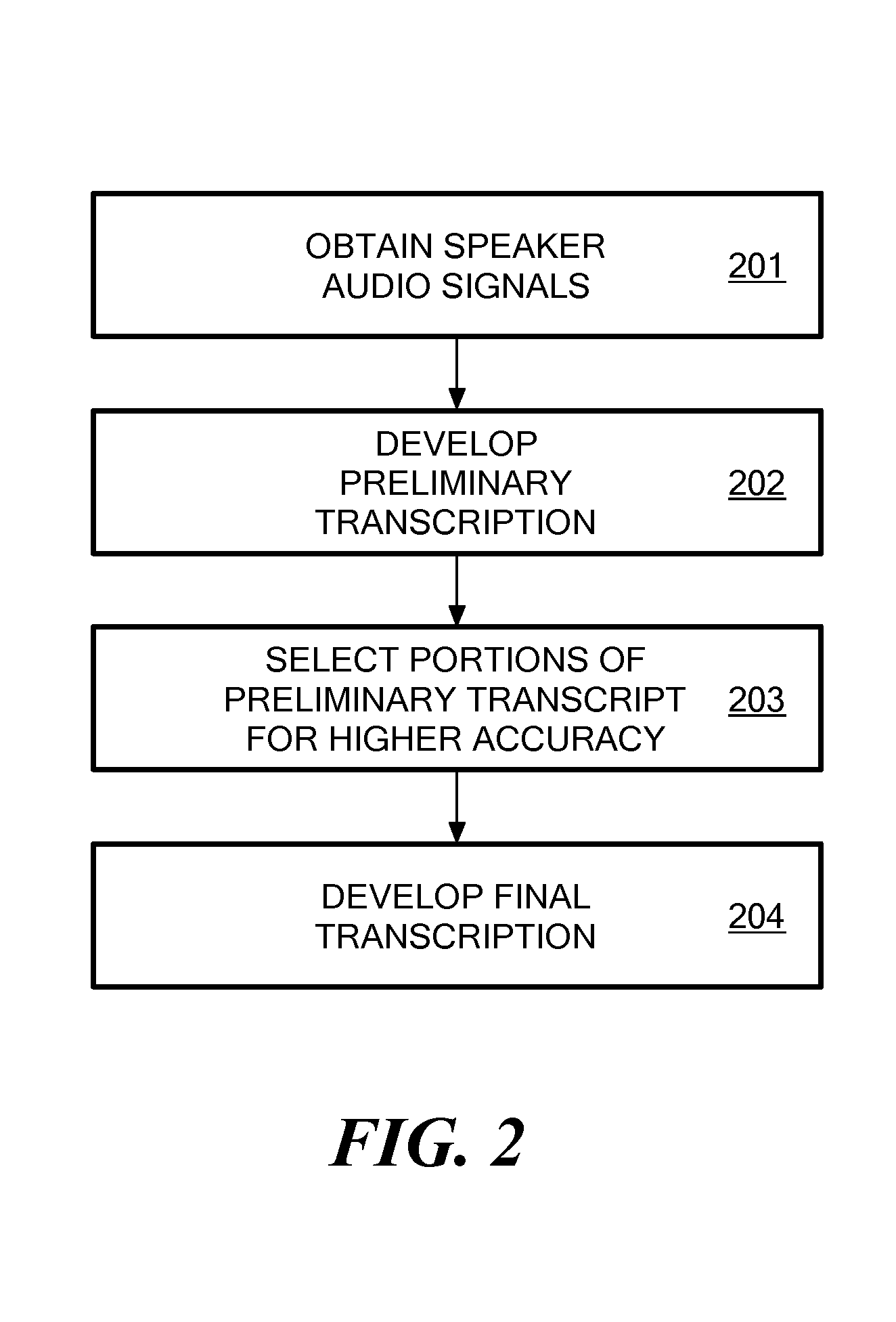

Combining Re-Speaking, Partial Agent Transcription and ASR for Improved Accuracy / Human Guided ASR

A speech transcription system is described for producing a representative transcription text from one or more different audio signals representing one or more different speakers participating in a speech session. A preliminary transcription module develops a preliminary transcription of the speech session using automatic speech recognition having a preliminary recognition accuracy performance. A speech selection module enables user selection of one or more portions of the preliminary transcription to receive higher accuracy transcription processing. A final transcription module is responsive to the user selection for developing a final transcription output for the speech session having a final recognition accuracy performance for the selected one or more portions which is higher than the preliminary recognition accuracy performance.

Owner:NUANCE COMM INC

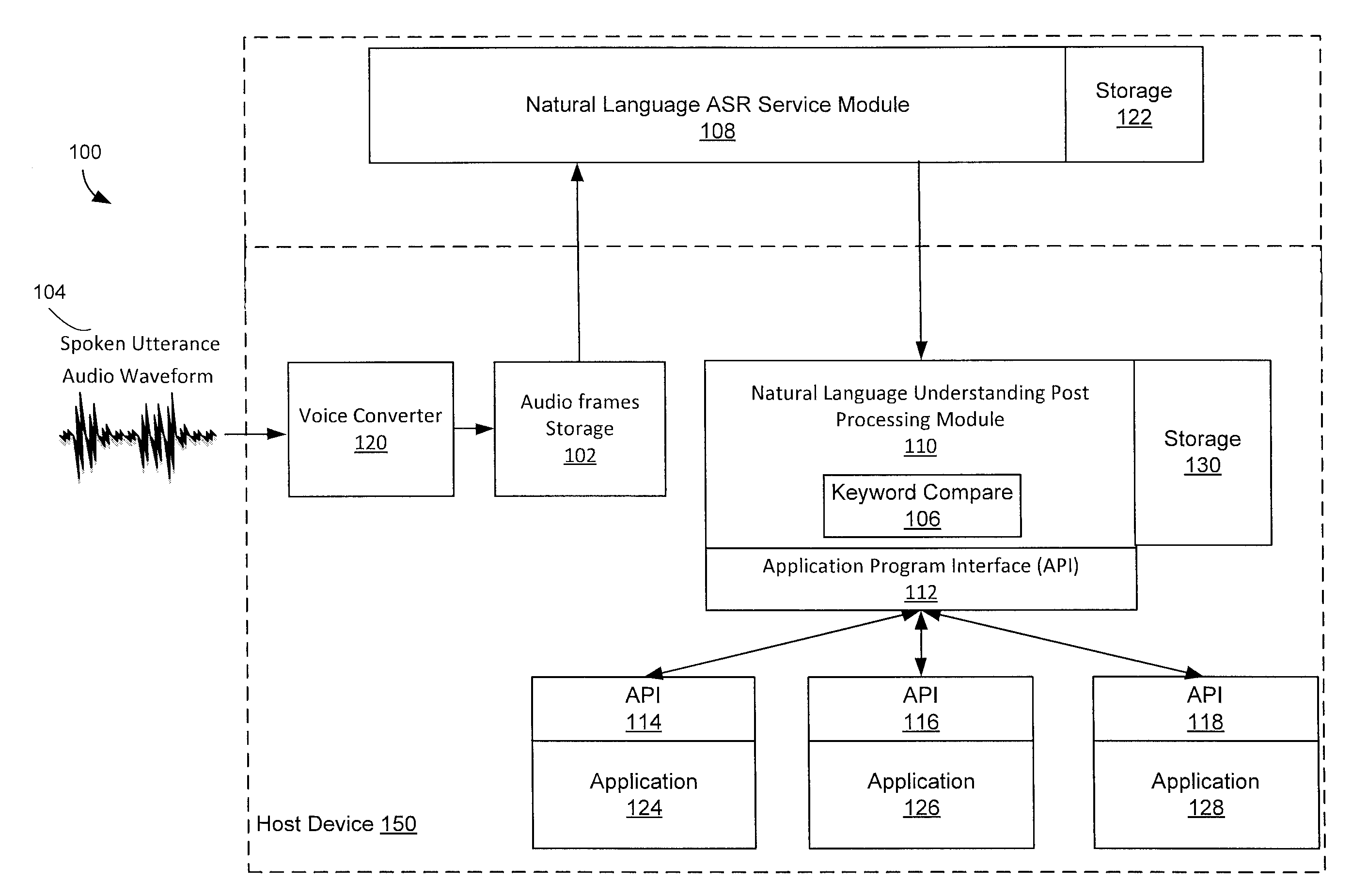

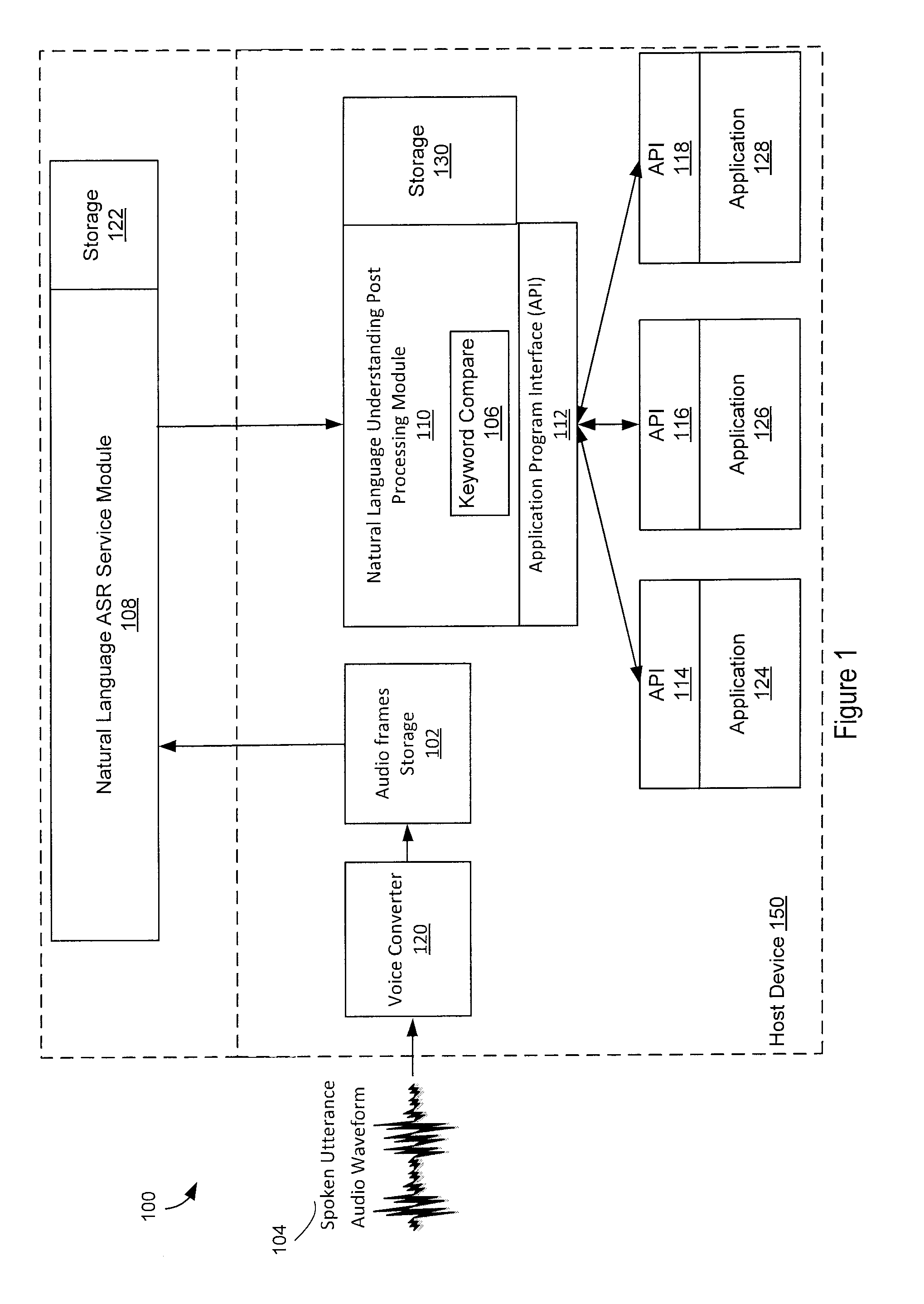

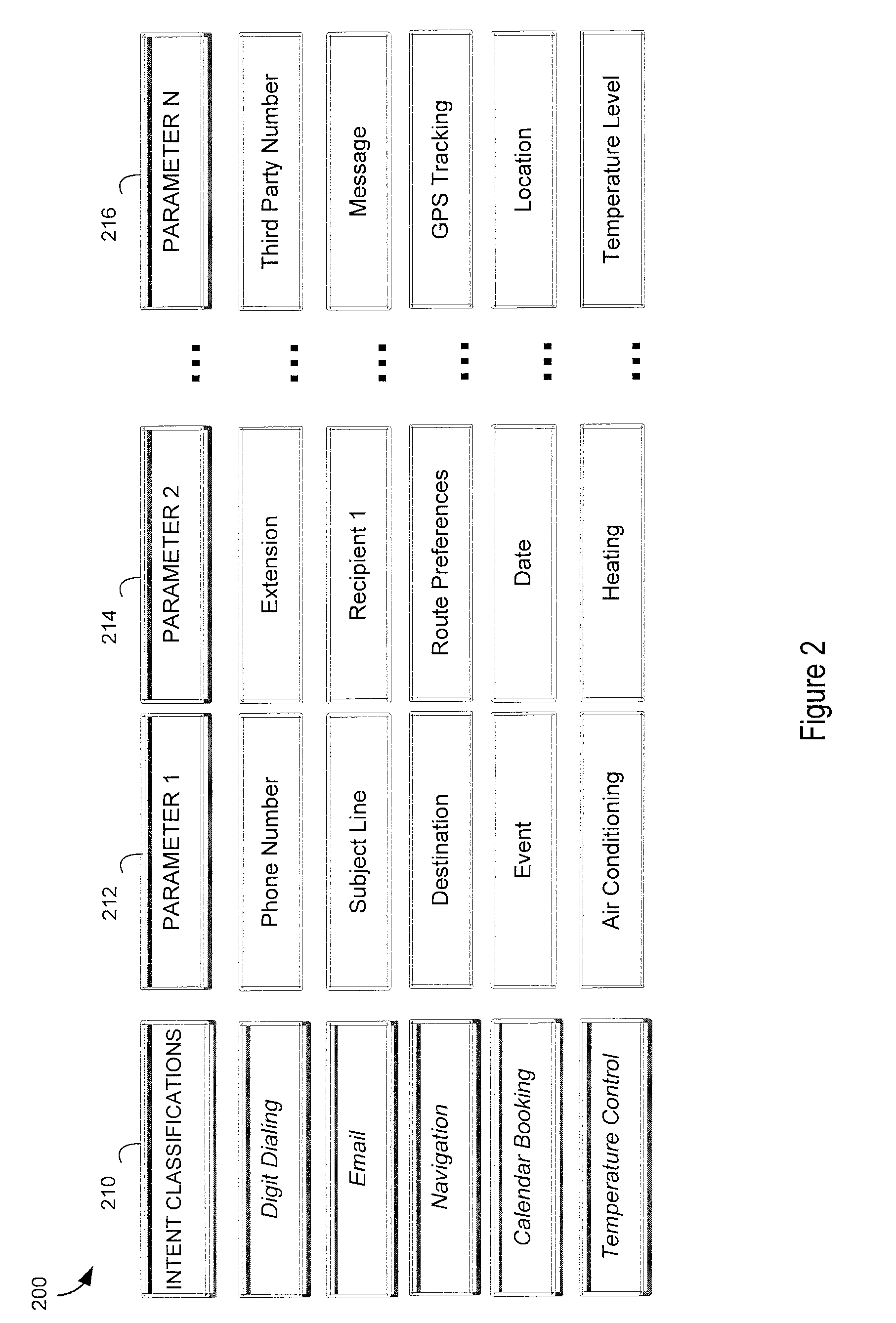

Natural language understanding automatic speech recognition post processing

In an automatic speech recognition post processing system, speech recognition results are received from an automatic speech recognition service. The speech recognition results may include transcribed speech, an intent classification and / or extracted fields of intent parameters. The speech recognition results are post processed for use in a specified context. All or a portion of the speech recognition results are compared to keywords that are sensitive to the specified context. The post processed speech recognition results are provided to an appropriate application which is operable to utilize the context sensitive product of post processing.

Owner:ONTARIO INC
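
The post-processing step in the abstract above (compare recognition results with keywords that are sensitive to a given context, then hand the adjusted result to the application) can be sketched as a dictionary pass. The result layout (`transcript`, `intent`, `fields`) mirrors the abstract's wording, but the keys, the keyword table and the substring match are assumptions.

```python
def post_process(result: dict, context_keywords: dict[str, str]) -> dict:
    """Normalize extracted fields against keywords for one context.

    `context_keywords` maps a lowercase keyword to the value the target
    application expects (a hypothetical table, one per context).
    """
    fields = {
        name: context_keywords.get(value.lower(), value)
        for name, value in result.get("fields", {}).items()
    }
    transcript = result.get("transcript", "").lower()
    matched = [kw for kw in context_keywords if kw in transcript]
    return {**result, "fields": fields, "matched_keywords": matched}


if __name__ == "__main__":
    asr = {"transcript": "Navigate to the mall", "intent": "navigate",
           "fields": {"destination": "The Mall"}}
    print(post_process(asr, {"the mall": "Riverside Shopping Centre"}))
```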

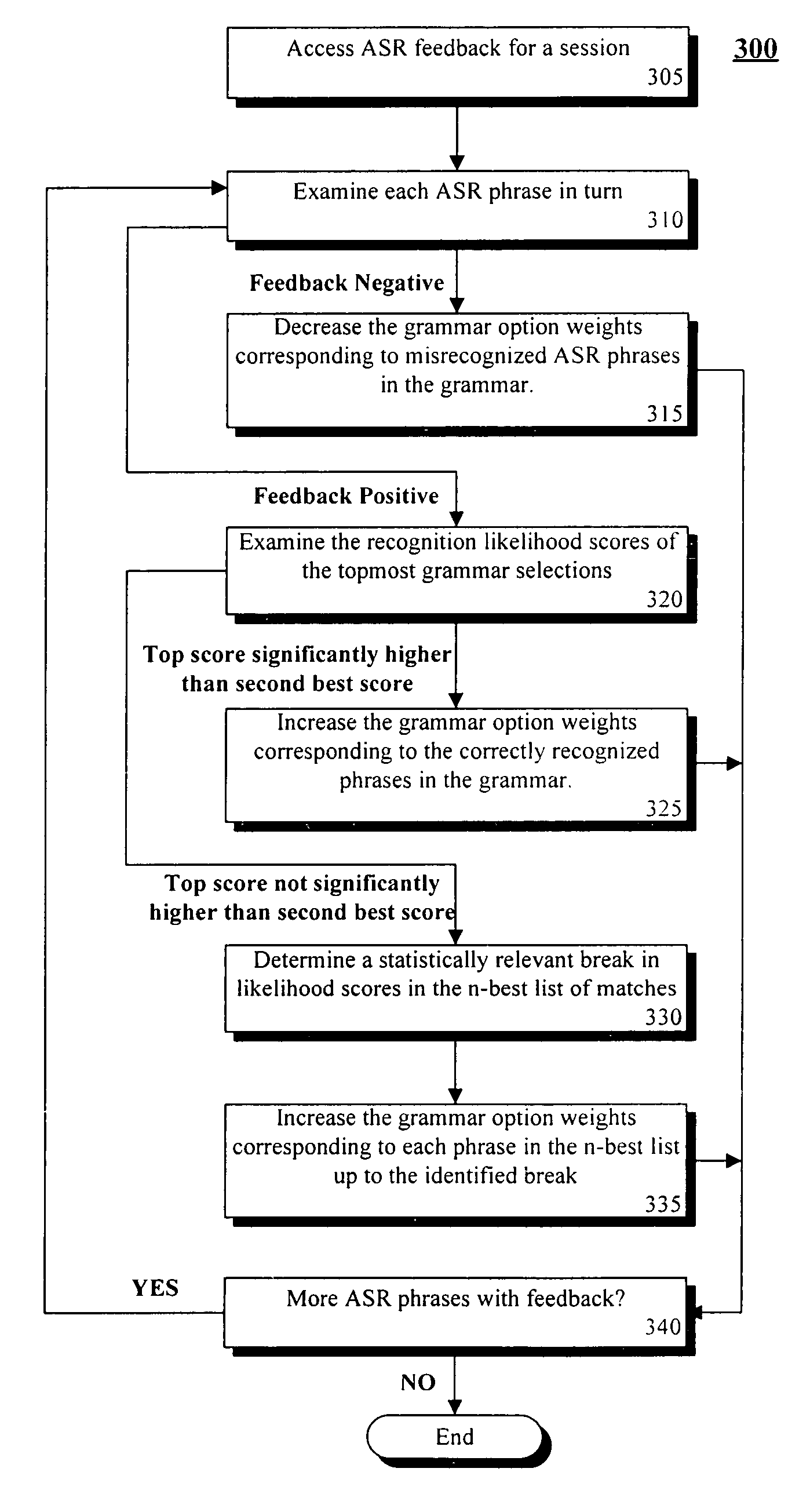

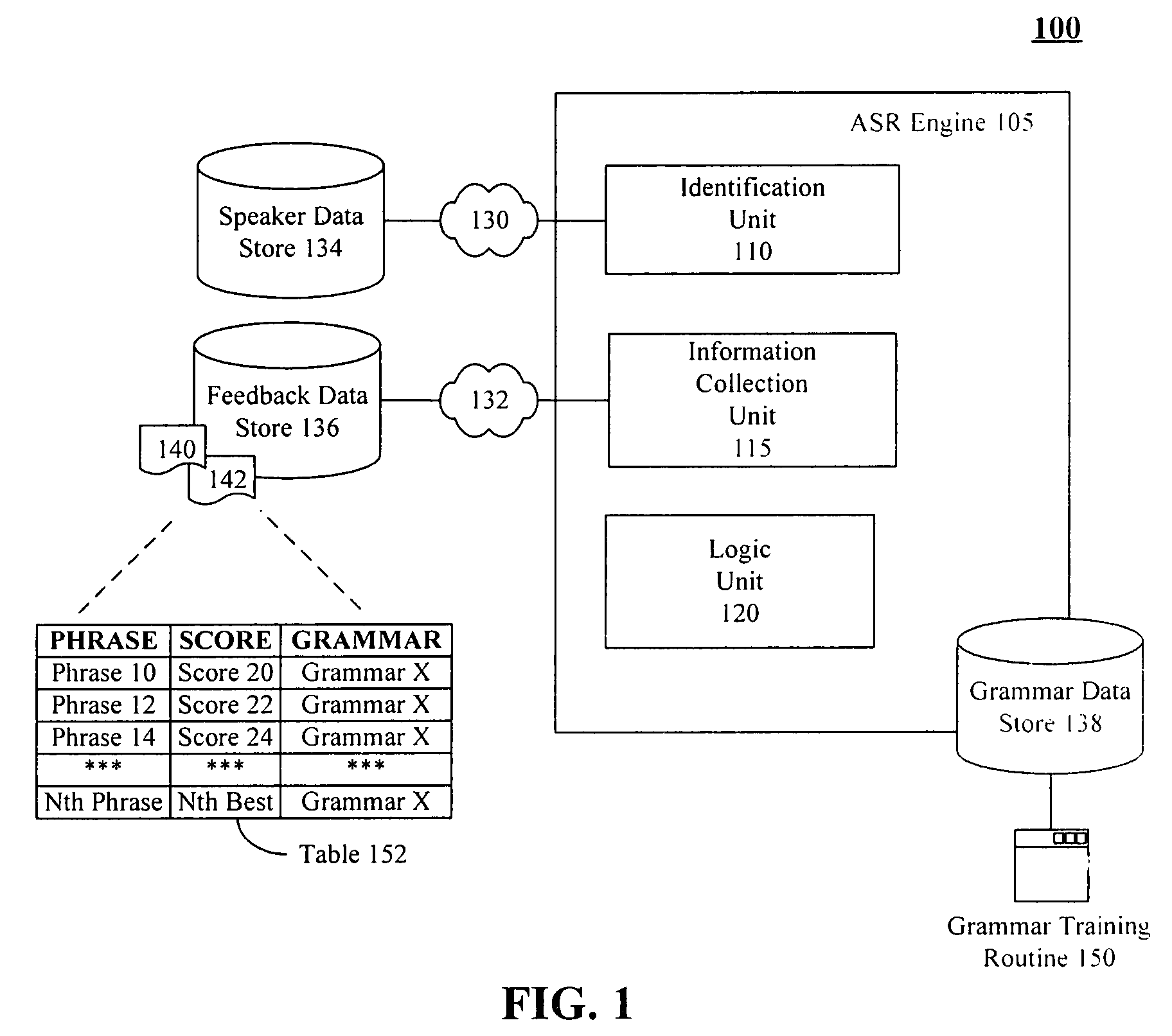

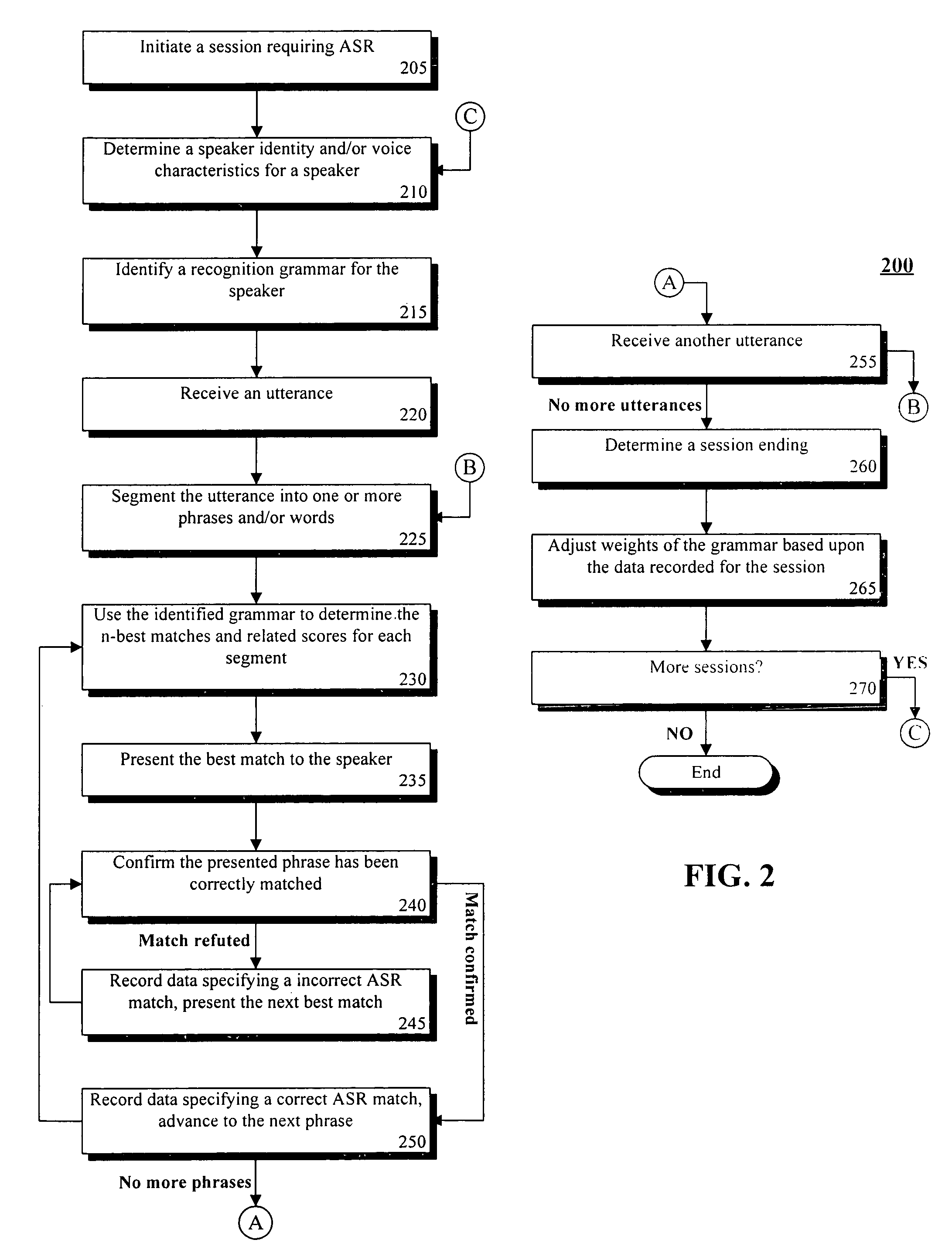

Training speaker-dependent, phrase-based speech grammars using an unsupervised automated technique

Inactive | US7778830B2 | Improve accuracy; Lower performance requirements; Speech recognition; Special data processing applications; Automatic speech; Speech sound

The present invention can include a method for tuning grammar option weights of a phrase-based, automatic speech recognition (ASR) grammar, where the grammar option weights affect which entries within the grammar are matched to spoken utterances. The tuning can occur in an unsupervised fashion, meaning no special training session or manual transcription of data from an ASR session is needed. The method can include the step of selecting a phrase-based grammar to use in a communication session with a user wherein different phrase-based grammars can be selected for different users. Feedback of ASR phrase processing operations can be recorded during the communication session. Each ASR phrase processing operation can match a spoken utterance against at least one entry within the selected phrase-based grammar. At least one of the grammar option weights can be automatically adjusted based upon the feedback to improve accuracy of the phrase-based grammar.

Owner:NUANCE COMM INC
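
The unsupervised tuning in the abstract above (record feedback on phrase matches during a session, then adjust grammar option weights) can be sketched with an additive update followed by renormalization. The update rule, the `step` and `floor` parameters, and the notion of a match being "accepted" (for example, not corrected by the caller) are all assumptions; keeping one weight table per user would reflect the per-user grammar selection the abstract mentions.

```python
def tune_weights(weights: dict[str, float],
                 feedback: list[tuple[str, bool]],
                 step: float = 0.1,
                 floor: float = 0.01) -> dict[str, float]:
    """Nudge grammar option weights from session feedback, then renormalize.

    `feedback` lists (matched grammar entry, accepted) pairs recorded during
    the session. Weights never drop below `floor`, so no entry is disabled.
    """
    updated = dict(weights)
    for entry, accepted in feedback:
        if entry not in updated:
            continue  # ignore matches outside this grammar
        delta = step if accepted else -step
        updated[entry] = max(floor, updated[entry] + delta)
    total = sum(updated.values())
    return {entry: w / total for entry, w in updated.items()}
```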

Low power activation of voice activated device

In a mobile device, a bone conduction or vibration sensor is used to detect the user's speech and the resulting output is used as the source for a low power Voice Trigger (VT) circuit that can activate the Automatic Speech Recognition (ASR) of the host device. This invention is applicable to mobile devices such as wearable computers with head mounted display, mobile phones and wireless headsets and headphones which use speech recognition for the entering of input commands and control. The speech sensor can be a bone conduction microphone used to detect sound vibrations in the skull, or a vibration sensor, used to detect sound pressure vibrations from the user's speech. This VT circuit can be independent of any audio components of the host device and can therefore be designed to consume ultra-low power. Hence, this VT circuit can be active when the host device is in a sleeping state and can be used to wake the host device on detection of speech from the user. This VT circuit will be resistant to outside noise and react solely to the user's voice.

Owner:DSP GROUP

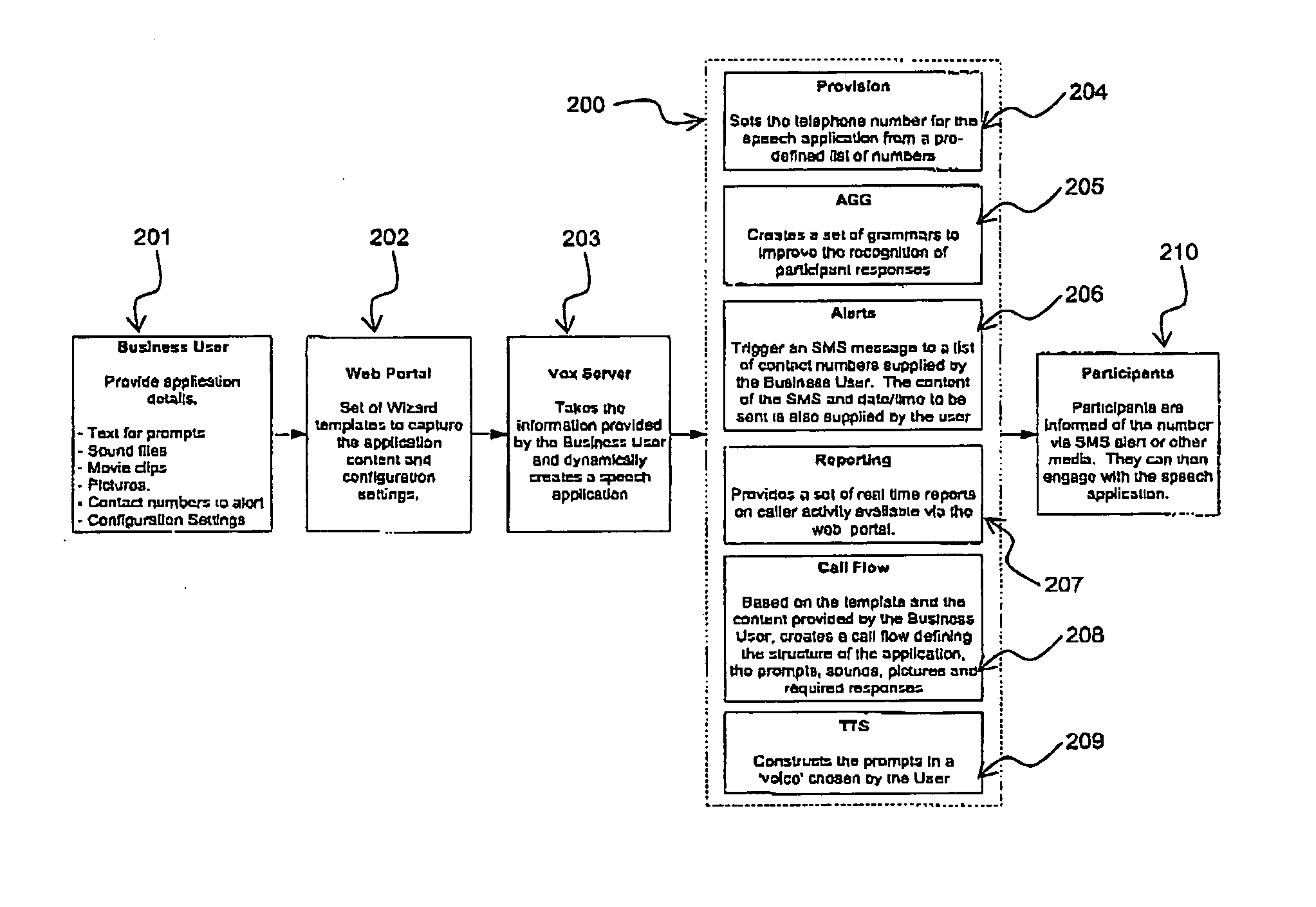

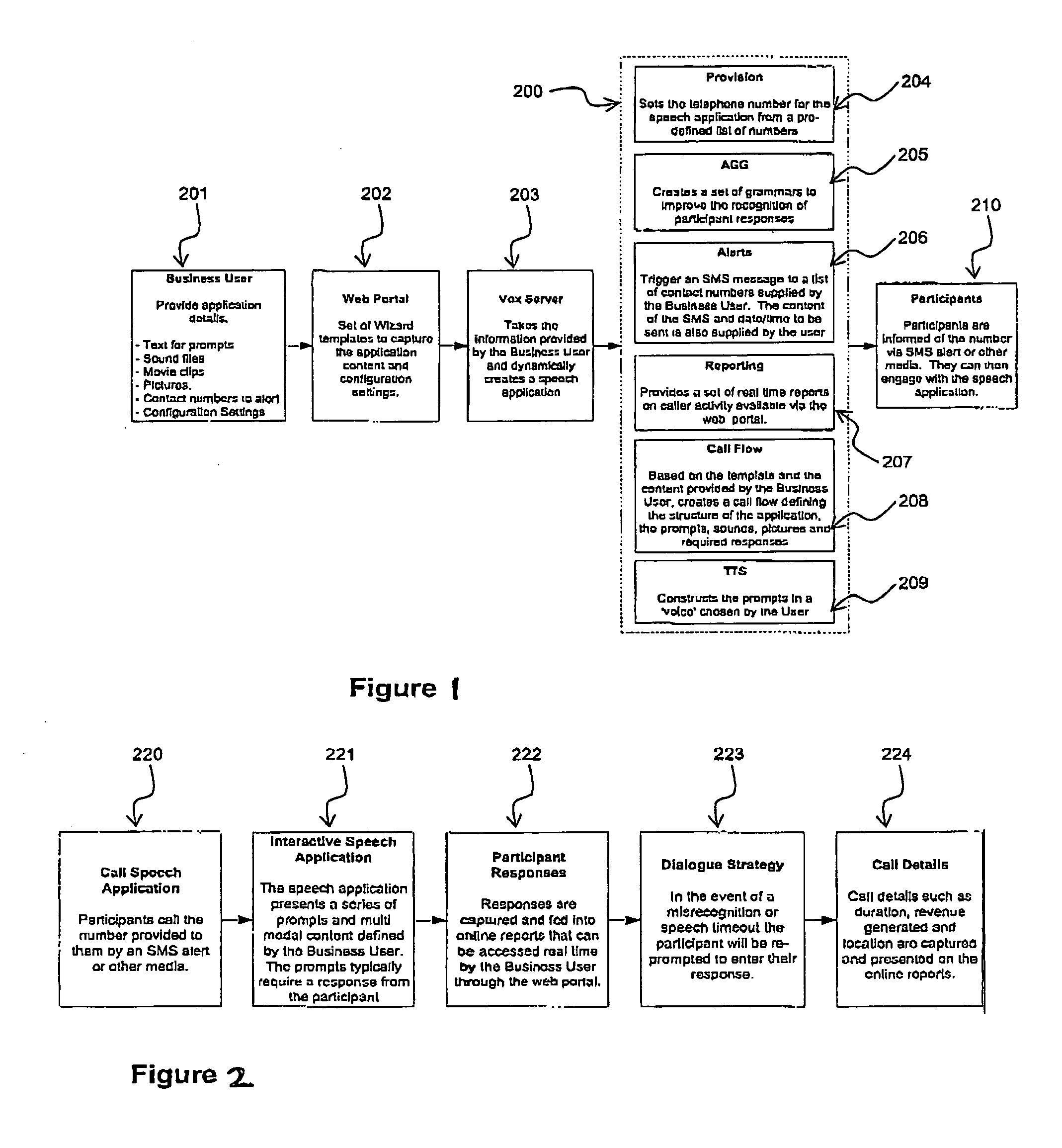

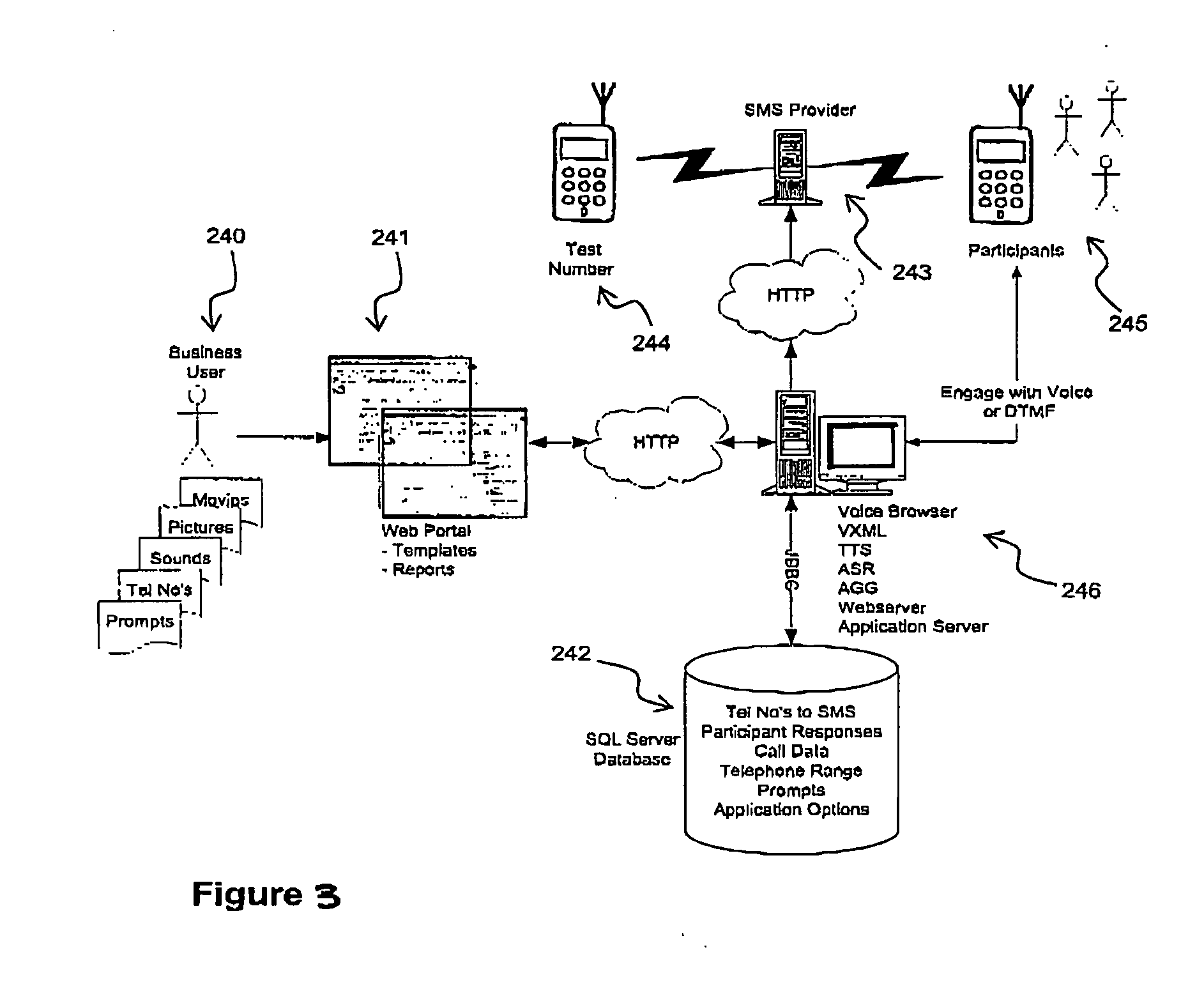

Automated speech-enabled application creation method and apparatus

Inactive | US20050125232A1 | Easy to use; Constrain complexity; Speech recognition; Marketing; Speech sound; Embedded system

A system for creating and hosting speech-enabled applications having a speech interface that can be customised by a user is disclosed. The system comprises a customisation module that manages the components, e.g. templates, needed to enable the user to create a speech-enabled application. The customisation module allows a non-expert user rapidly to design and deploy complex speech interfaces. Additionally, the system can automatically manage the deployment of the speech-enabled applications once they have been created by the user, without the need for any further intervention by the user or use of the user's own computer processing resources.

Owner:VOX GENERATION LTD

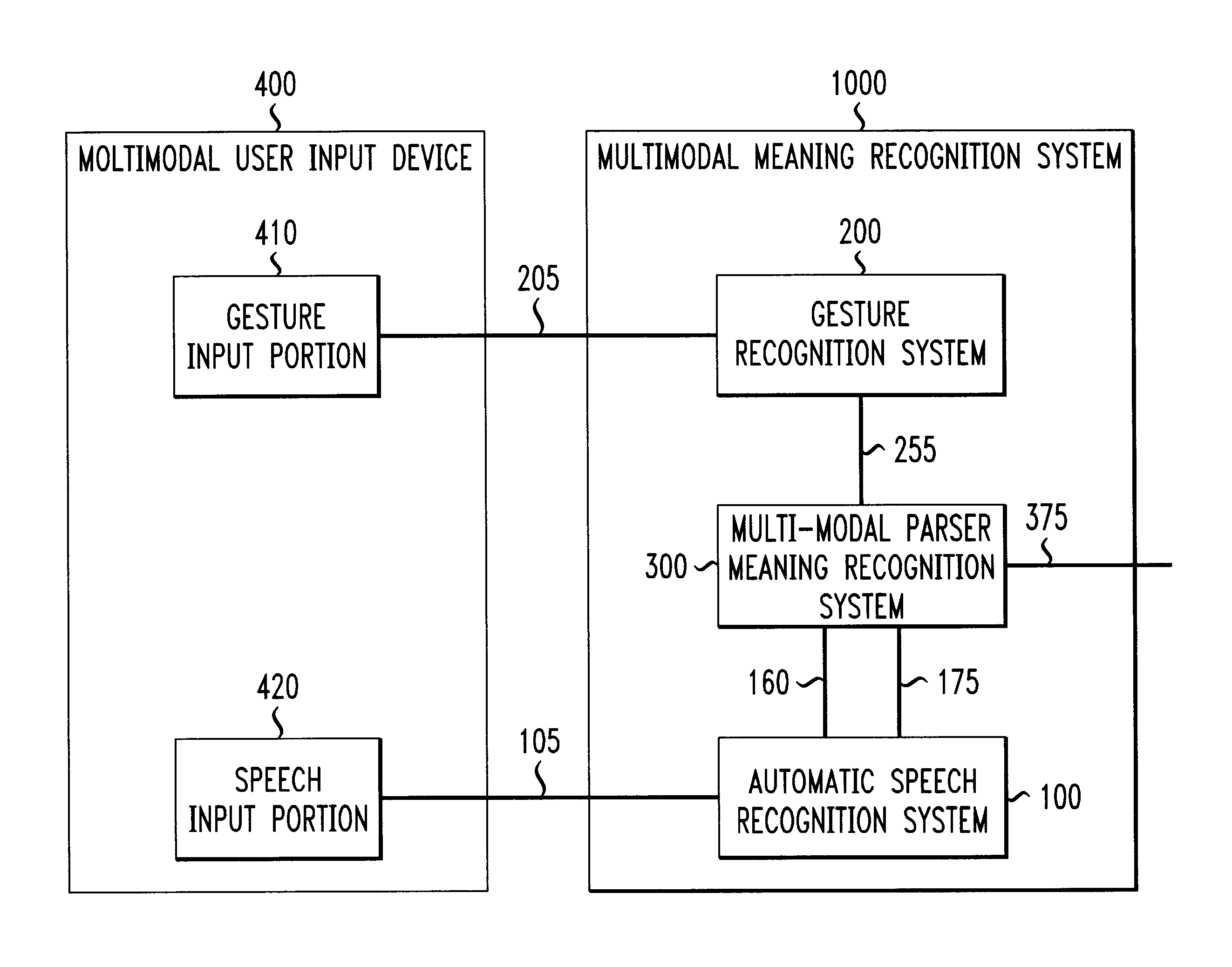

Systems and methods for extracting meaning from multimodal inputs using finite-state devices

Inactive | US6868383B1 | Computational complexity is reduced; Character and pattern recognition; Speech recognition; Speech input; Automatic speech

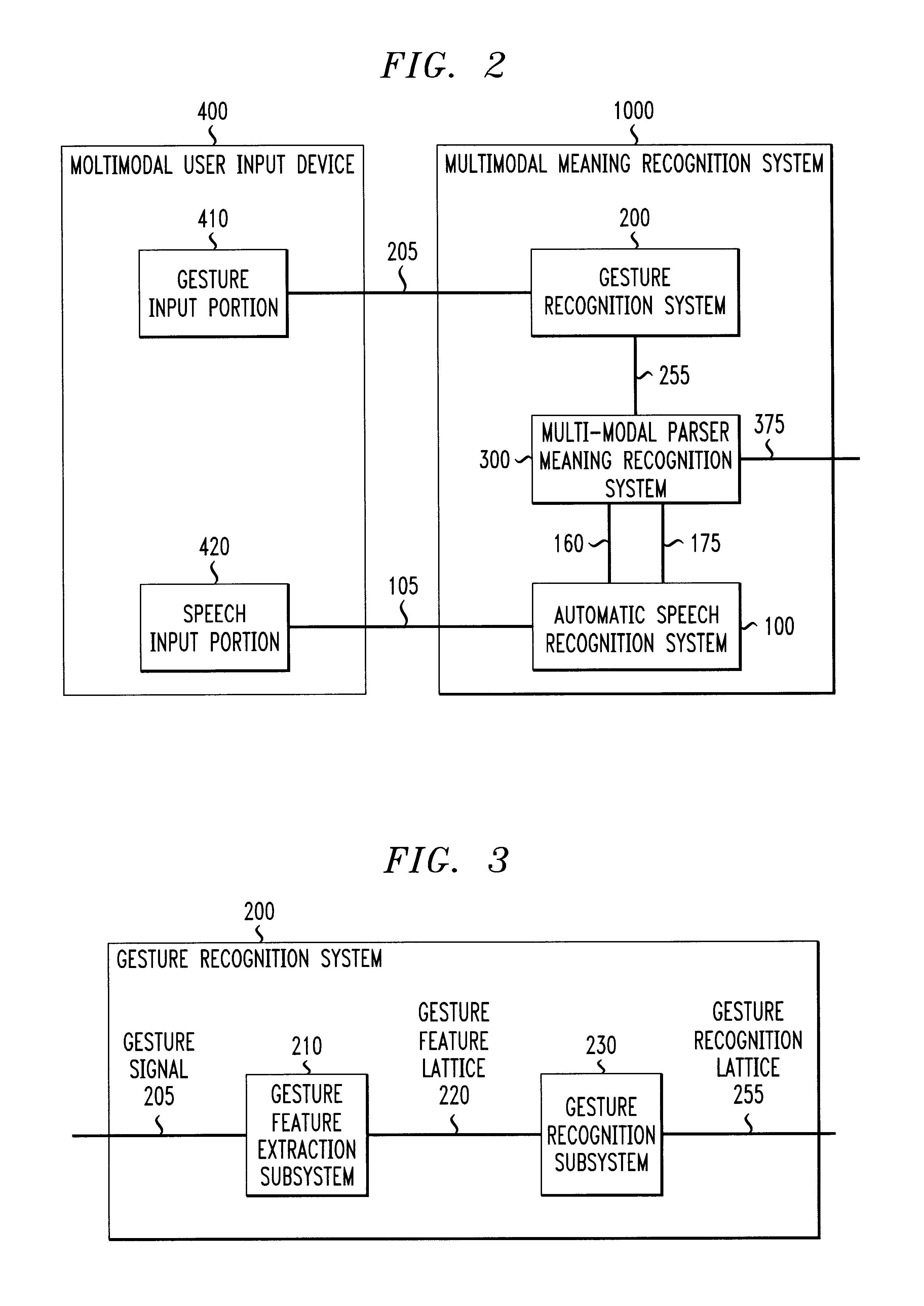

Multimodal utterances contain a number of different modes. These modes can include speech, gestures, and pen, haptic, and gaze inputs, and the like. This invention uses recognition results from one or more of these modes to provide compensation to the recognition process of one or more other modes. In various exemplary embodiments, a multimodal recognition system inputs one or more recognition lattices from one or more of these modes, and generates one or more models to be used by one or more mode recognizers to recognize the one or more other modes. In one exemplary embodiment, a gesture recognizer inputs a gesture input and outputs a gesture recognition lattice to a multimodal parser. The multimodal parser generates a language model and outputs it to an automatic speech recognition system, which uses the received language model to recognize the speech input that corresponds to the recognized gesture input.

Owner:INTERACTIONS LLC (US)

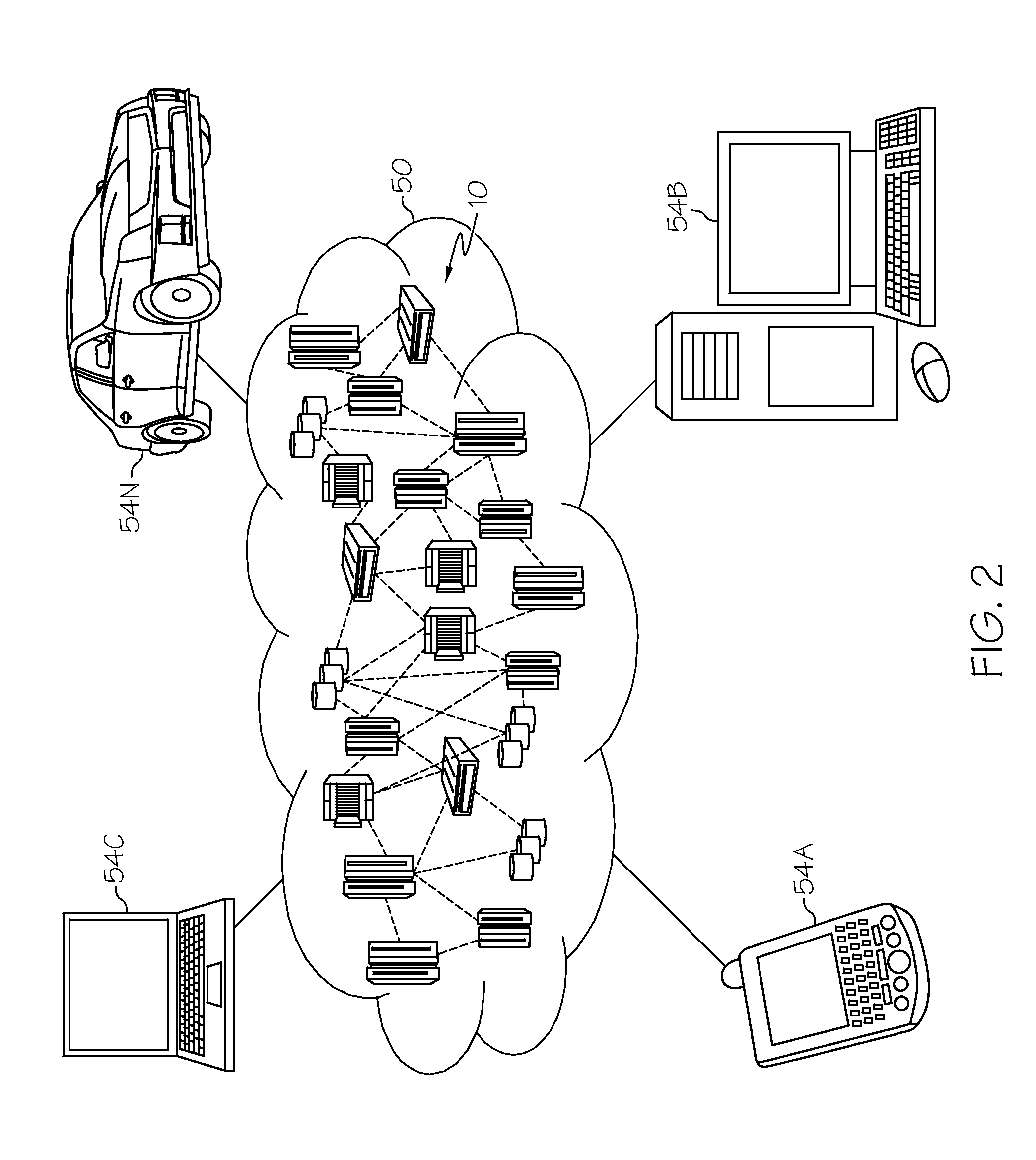

System, method and program product for providing automatic speech recognition (ASR) in a shared resource environment

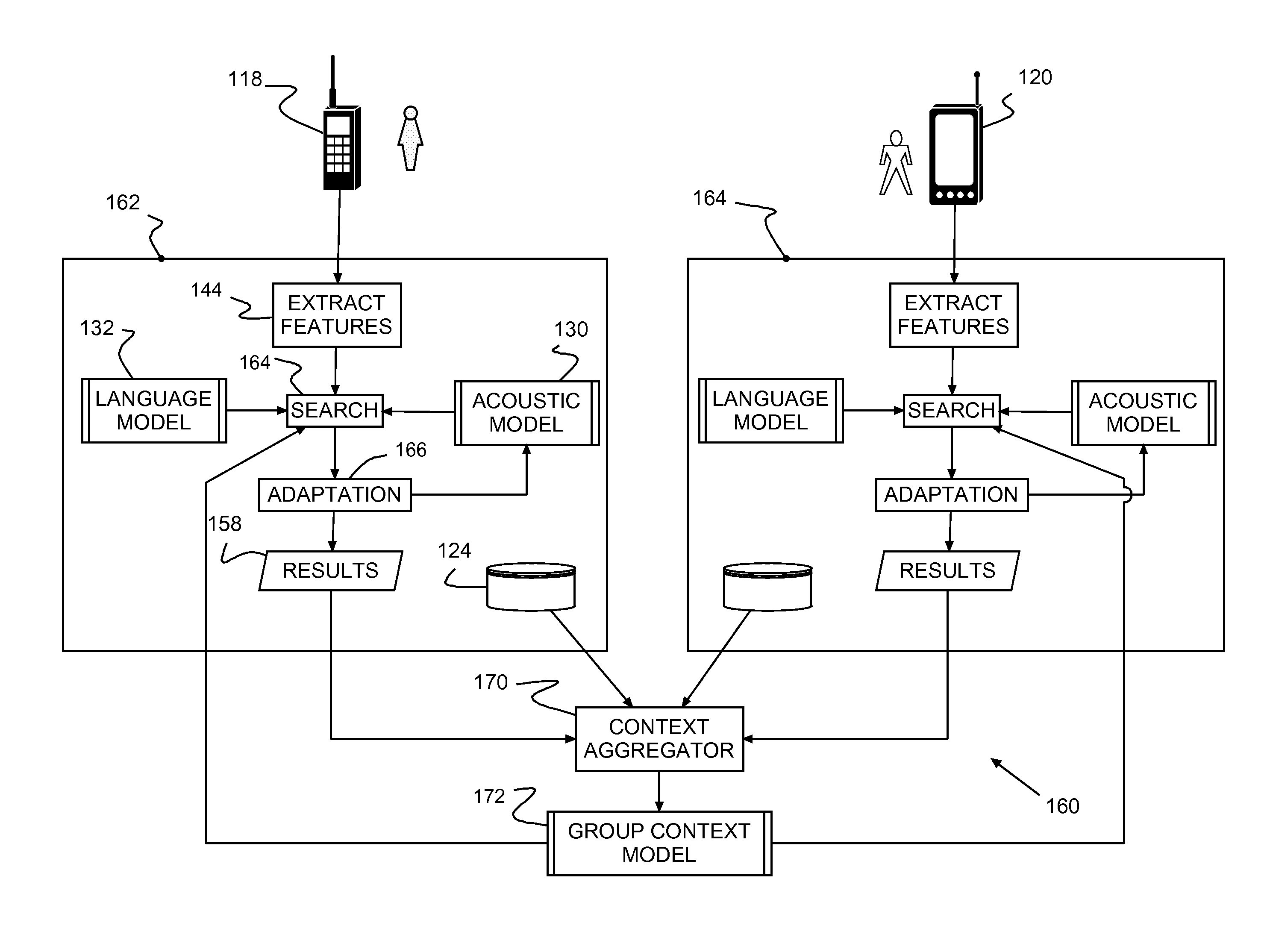

Inactive | US9043208B2 | Improve speech recognition performance; Leveling precision; Speech recognition; Context model; Ambiguity

A speech recognition system, method of recognizing speech and a computer program product therefor. A client device identified with a context for an associated user selectively streams audio to a provider computer, e.g., a cloud computer. Speech recognition receives streaming audio, maps utterances to specific textual candidates and determines a likelihood of a correct match for each mapped textual candidate. A context model selectively winnows candidates to resolve recognition ambiguity according to context whenever multiple textual candidates are recognized as potential matches for the same mapped utterance. Matches are used to update the context model, which may be used for multiple users in the same context.

Owner:INT BUSINESS MASCH CORP
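
The winnowing step in the abstract above (when several textual candidates match one utterance, let a context model pick among them, then feed the match back into the model) can be sketched with per-context counts. Scoring each candidate as recognizer likelihood times an add-one-smoothed count of past matches in that context is an assumed rule, not the patent's. Keying counts by context rather than by user lets several users in the same context share one model, as the abstract describes.

```python
from collections import Counter, defaultdict


class ContextModel:
    """Per-context counts of past matches, shared by all users in a context."""

    def __init__(self) -> None:
        self.counts: defaultdict[str, Counter] = defaultdict(Counter)

    def score(self, context: str, text: str, likelihood: float) -> float:
        # Recognizer likelihood times an add-one-smoothed context count
        return likelihood * (self.counts[context][text] + 1)

    def resolve(self, context: str, candidates: list[tuple[str, float]]) -> str:
        """Pick one (text, likelihood) candidate; winnow only when ambiguous."""
        if len(candidates) == 1:
            best = candidates[0][0]
        else:
            best = max(candidates, key=lambda c: self.score(context, c[0], c[1]))[0]
        self.counts[context][best] += 1  # matches update the context model
        return best
```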

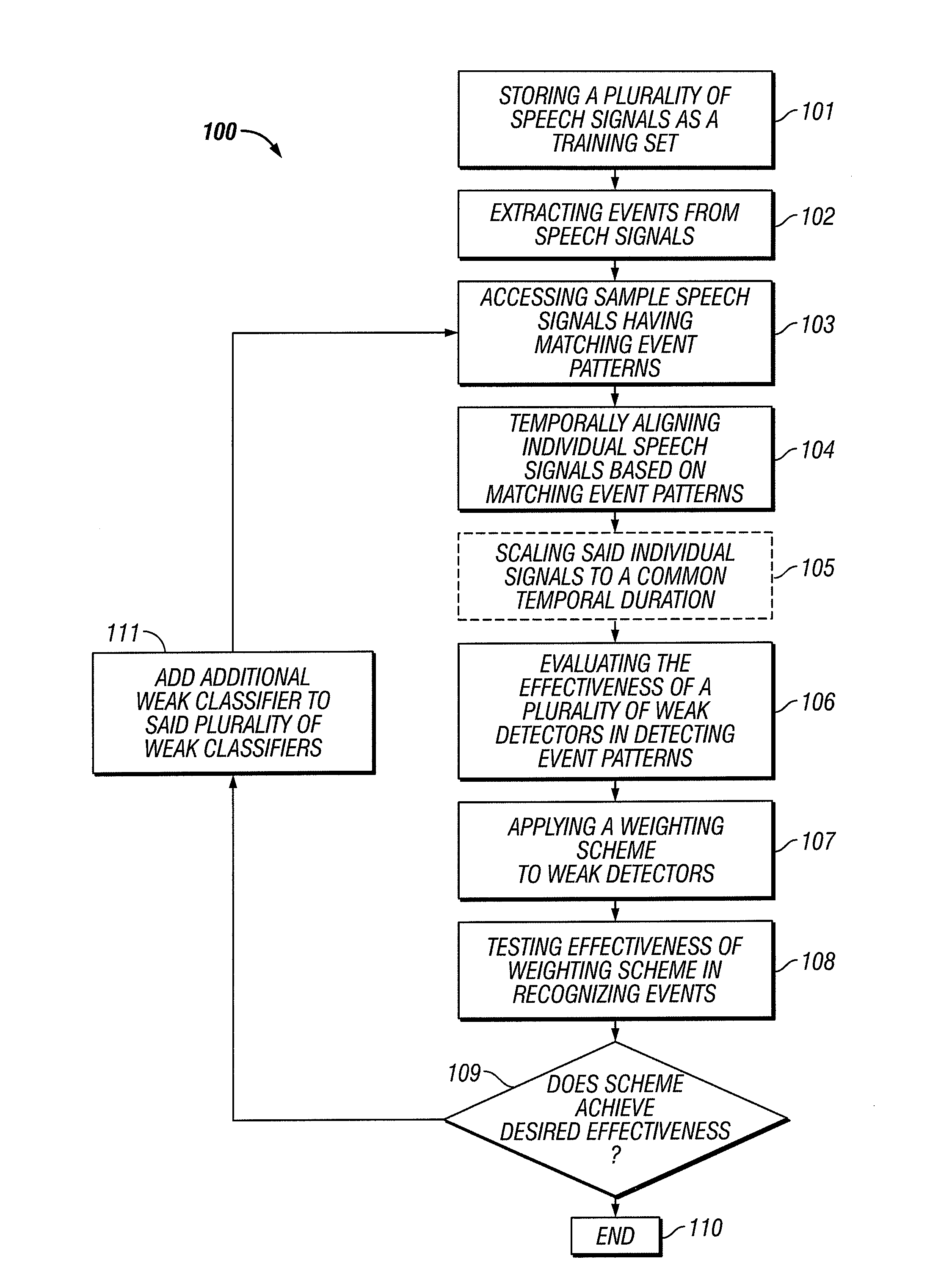

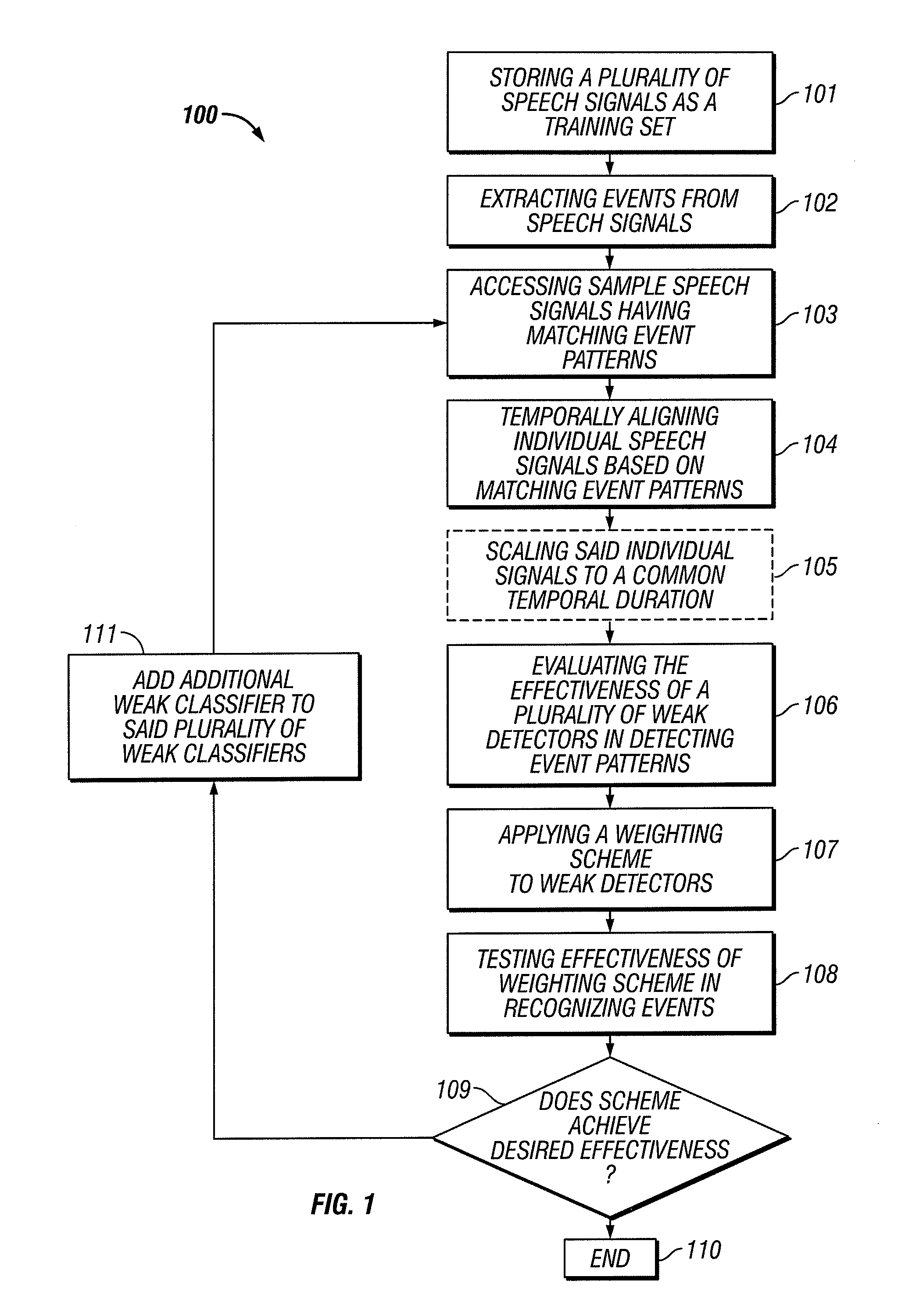

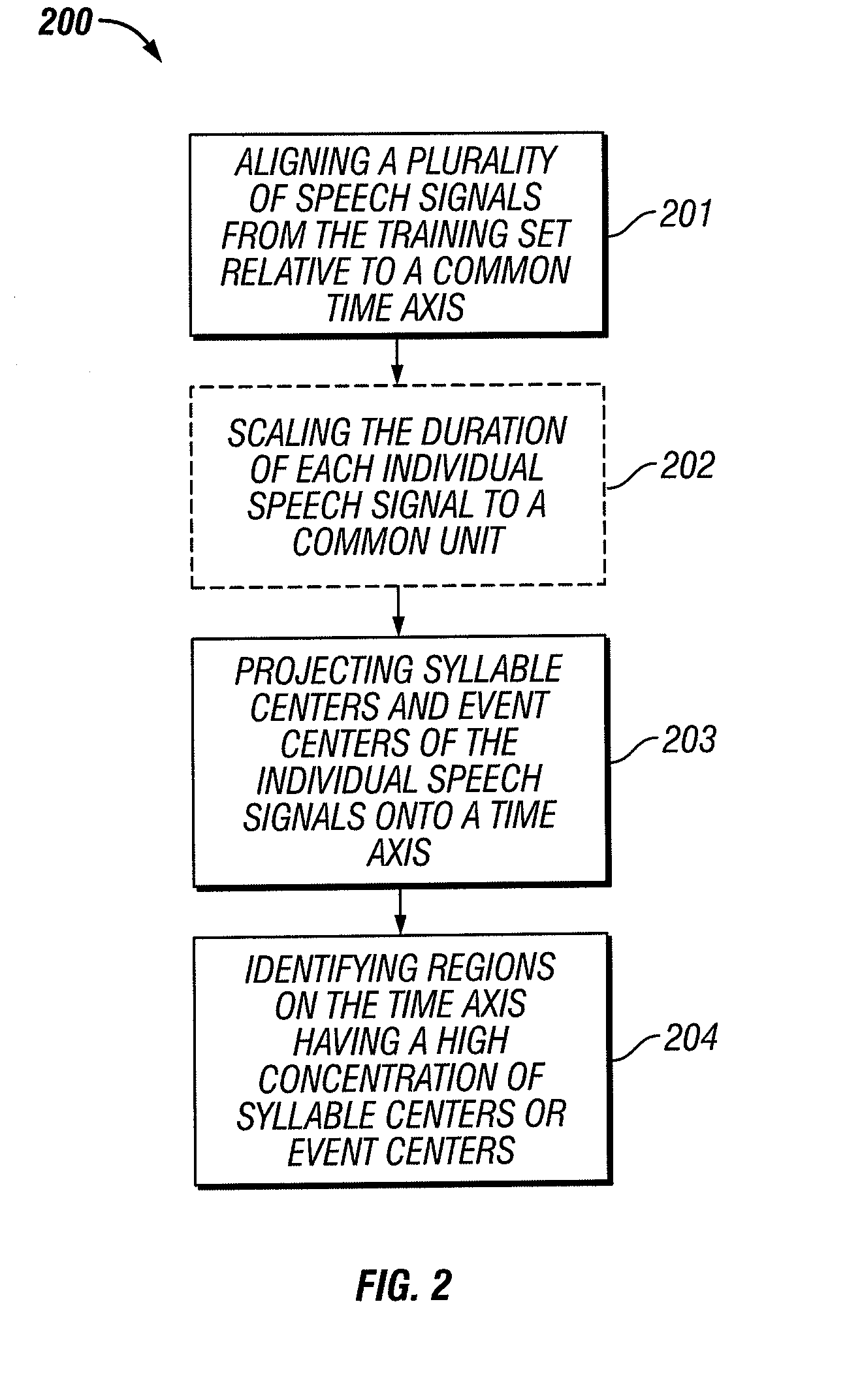

System and method for automatic speech to text conversion

Active | US20100121638A1 | Easy to identify; Reduce complexity; Speech recognition; Text stream; Speech identification

Speech recognition is performed in near-real-time and improved by exploiting events and event sequences, employing machine learning techniques including boosted classifiers, ensembles, detectors and cascades and using perceptual clusters. Speech recognition is also improved using tandem processing. An automatic punctuator injects punctuation into recognized text streams.

Owner:SCTI HLDG INC

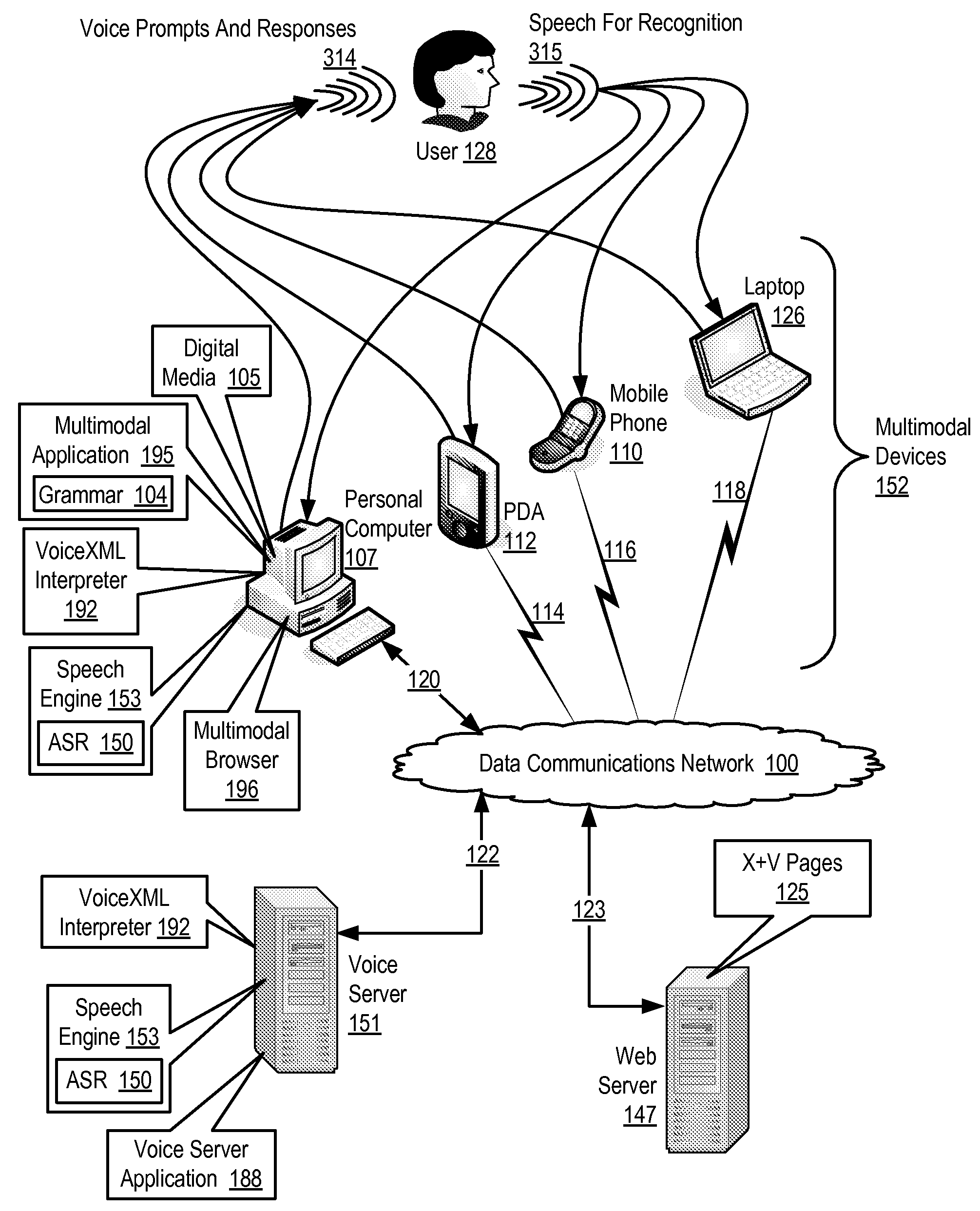

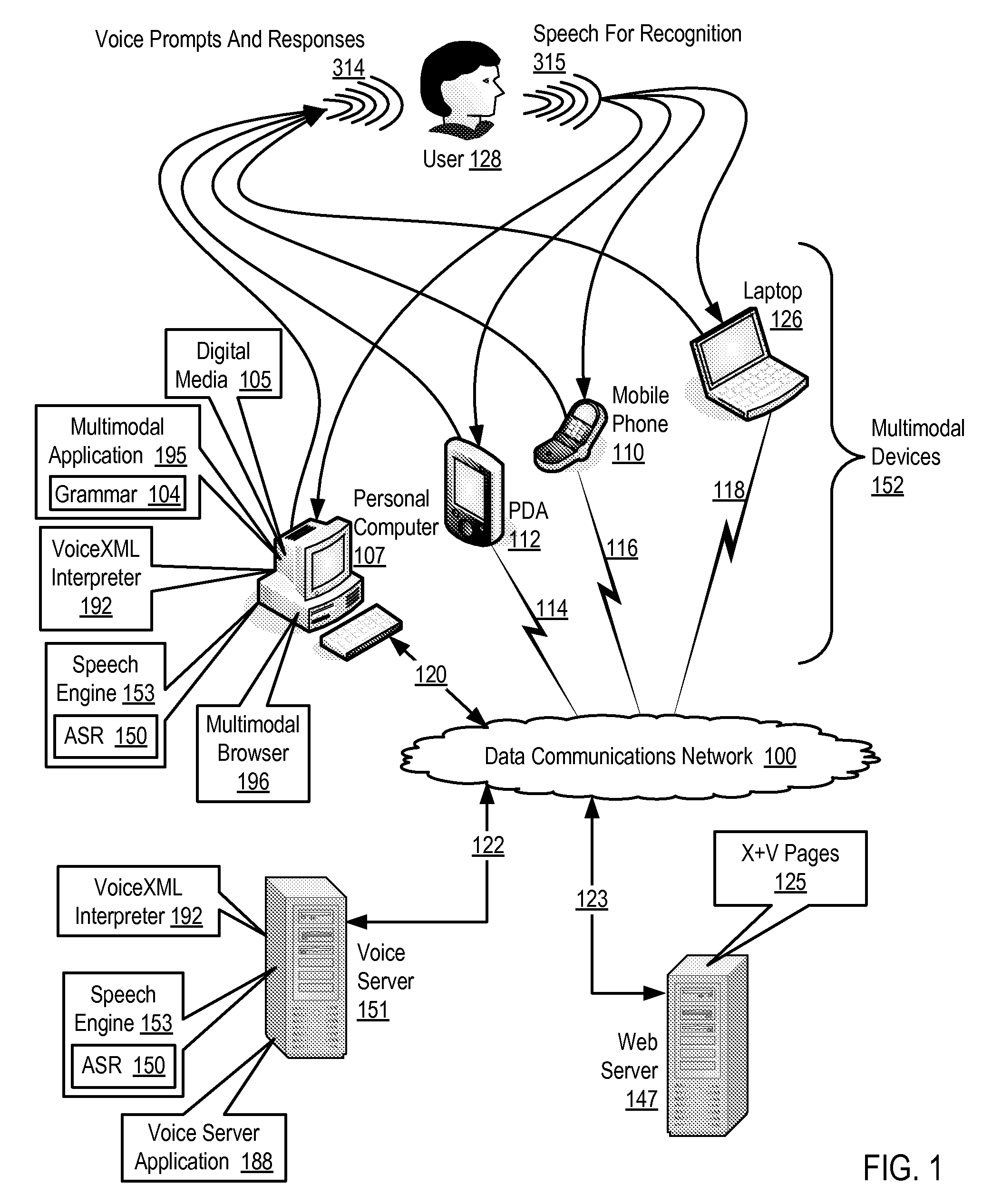

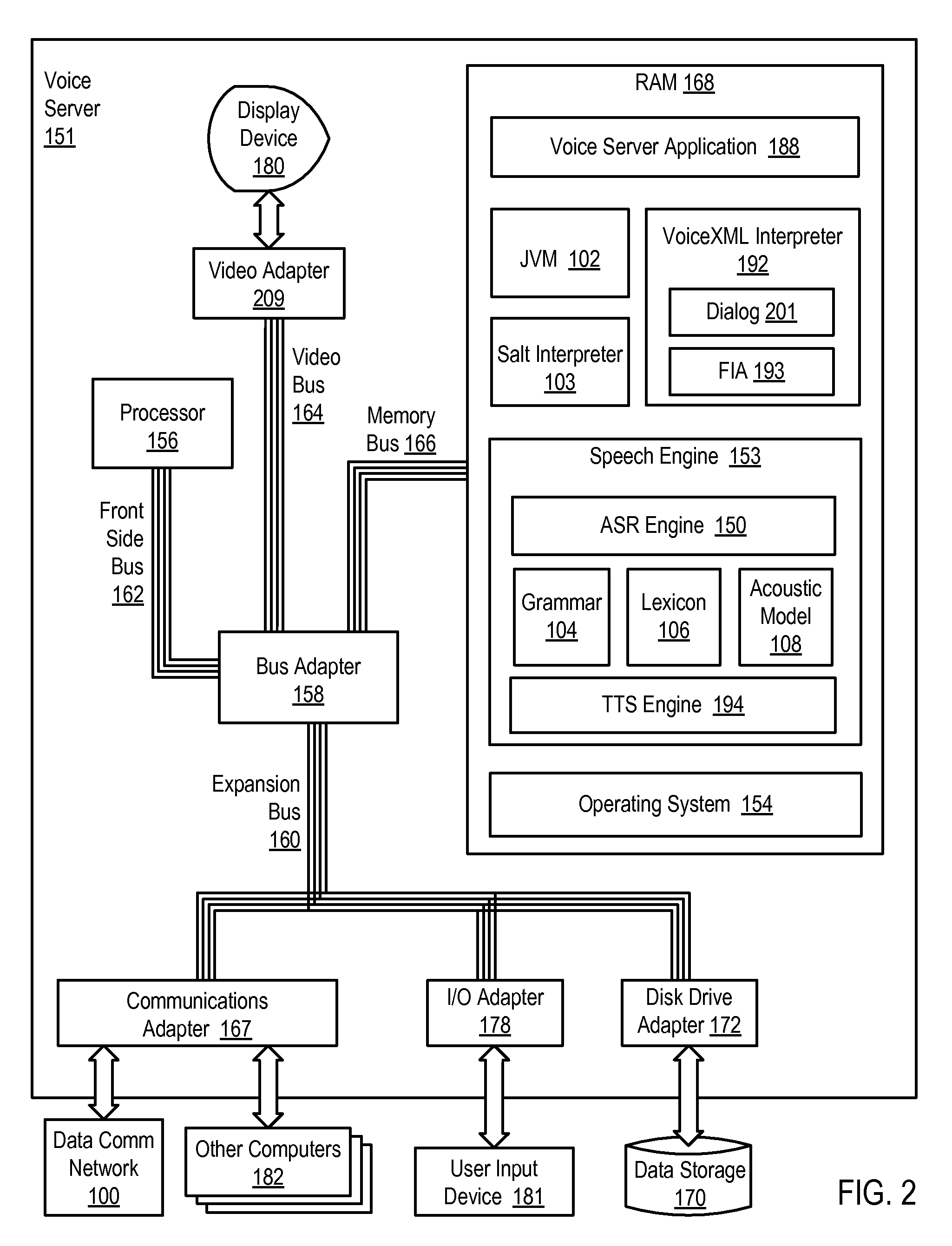

Presenting Supplemental Content For Digital Media Using A Multimodal Application

Presenting supplemental content for digital media using a multimodal application, implemented with a grammar of the multimodal application in an automatic speech recognition (‘ASR’) engine, with the multimodal application operating on a multimodal device supporting multiple modes of interaction including a voice mode and one or more non-voice modes, the multimodal application operatively coupled to the ASR engine, includes: rendering, by the multimodal application, a portion of the digital media; receiving, by the multimodal application, a voice utterance from a user; determining, by the multimodal application using the ASR engine, a recognition result in dependence upon the voice utterance and the grammar; identifying, by the multimodal application, supplemental content for the rendered portion of the digital media in dependence upon the recognition result; and rendering, by the multimodal application, the supplemental content.

Owner:NUANCE COMM INC

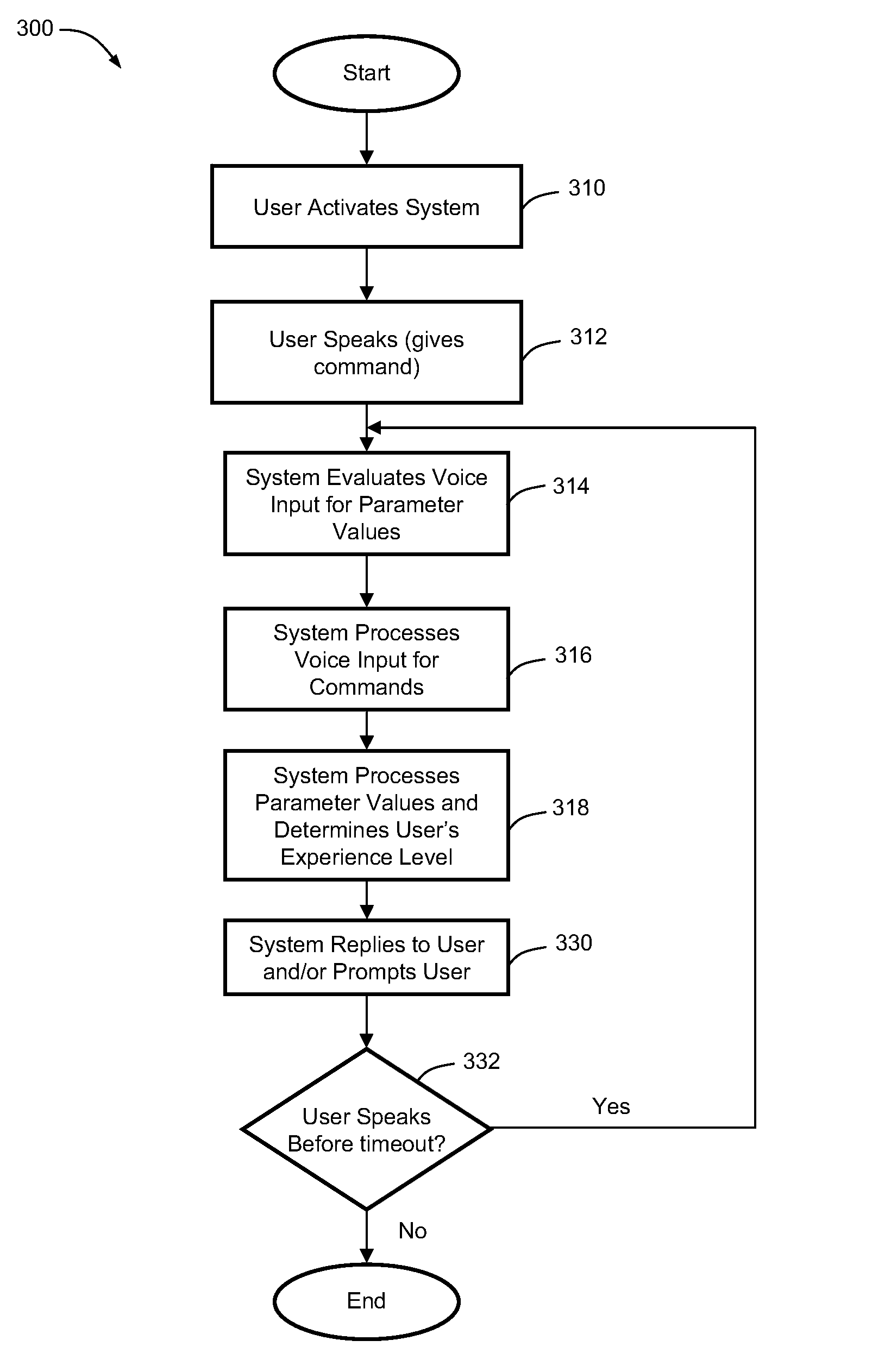

Automatically adapting user guidance in automated speech recognition

A speech recognition method includes receiving input speech from a user, processing the input speech to obtain at least one parameter value, and determining an experience level of the user using the parameter value(s). The method can also include prompting the user based upon the determined experience level of the user to assist the user in delivering speech commands.

Owner:GENERA MOTORS LLC

© 2025 PatSnap. All rights reserved.