2699 results about "Speech processing" patented technology

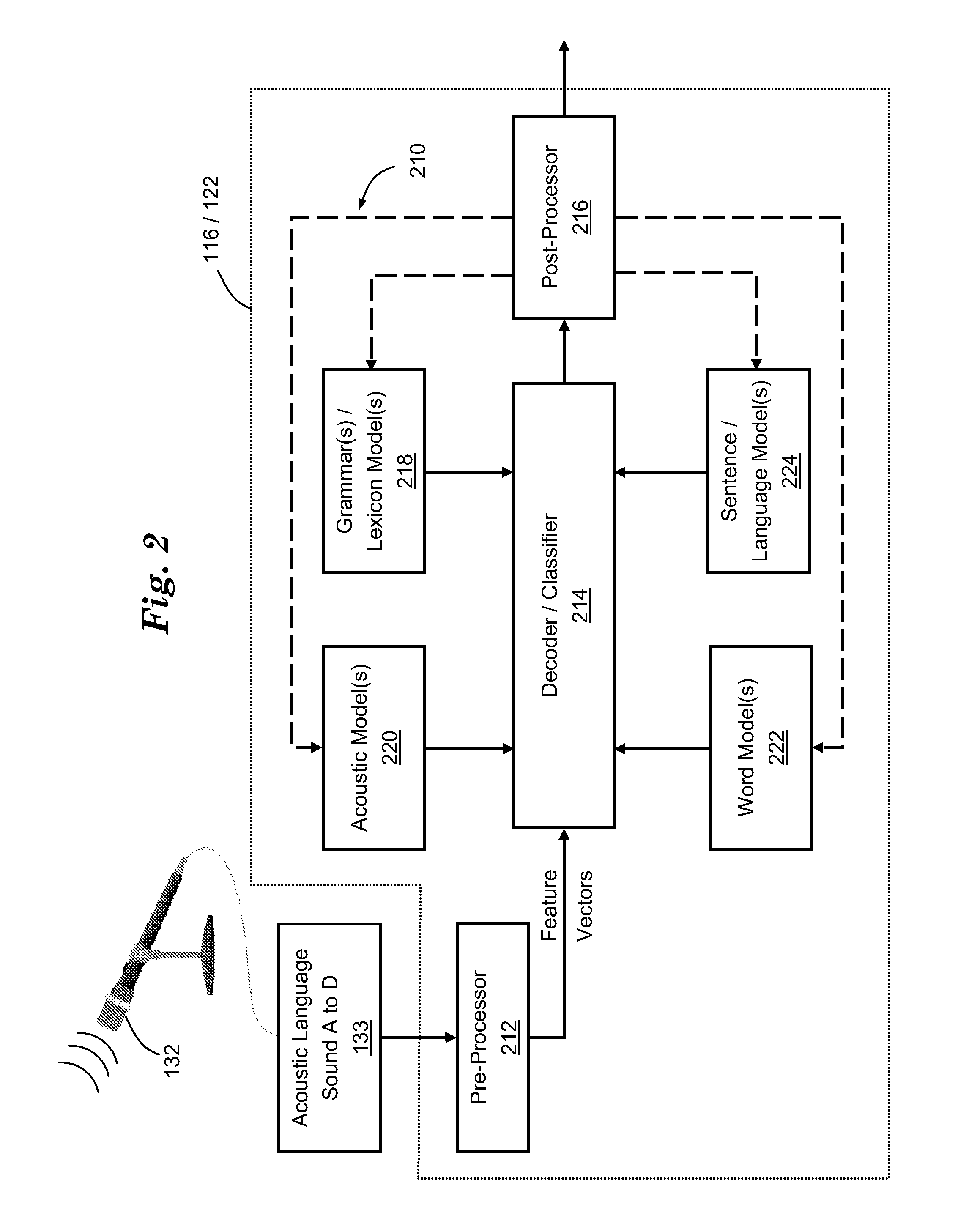

Speech processing is the study of speech signals and of the methods used to process them. The signals are usually processed in a digital representation, so speech processing can be regarded as a special case of digital signal processing applied to speech signals. Aspects of speech processing include the acquisition, manipulation, storage, transfer, and output of speech signals. Processing of speech input is called speech recognition, and generation of speech output is called speech synthesis.

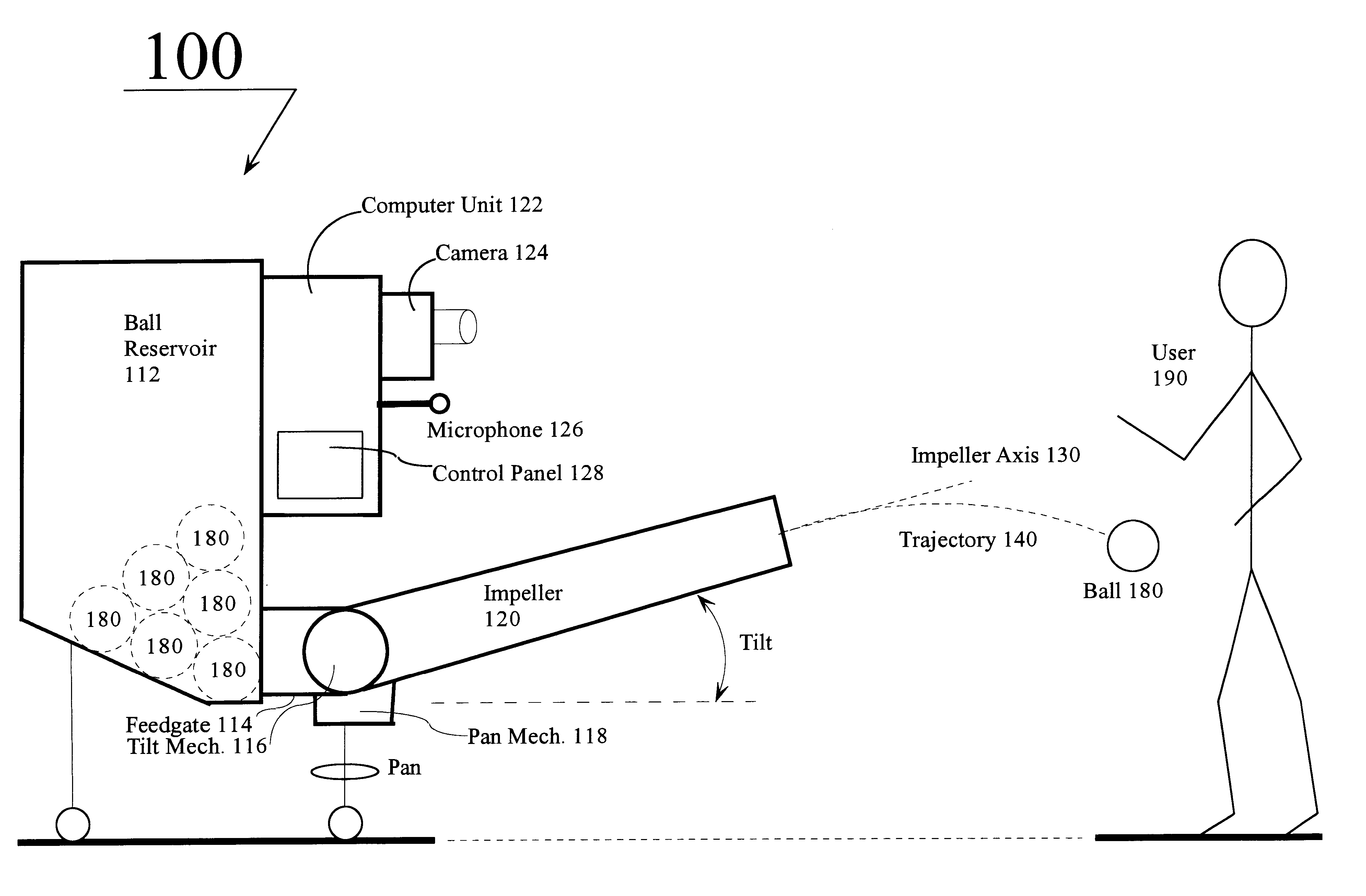

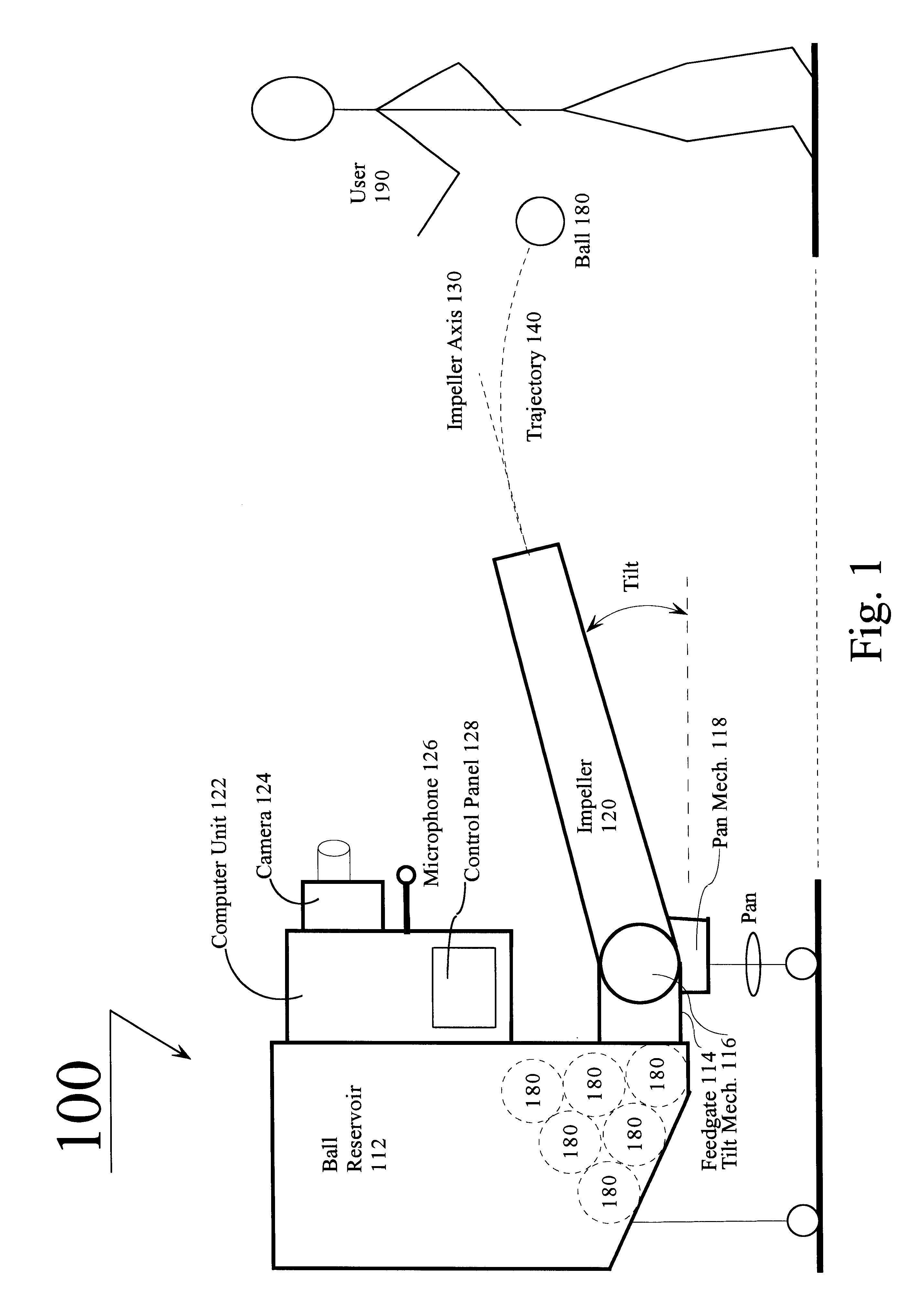

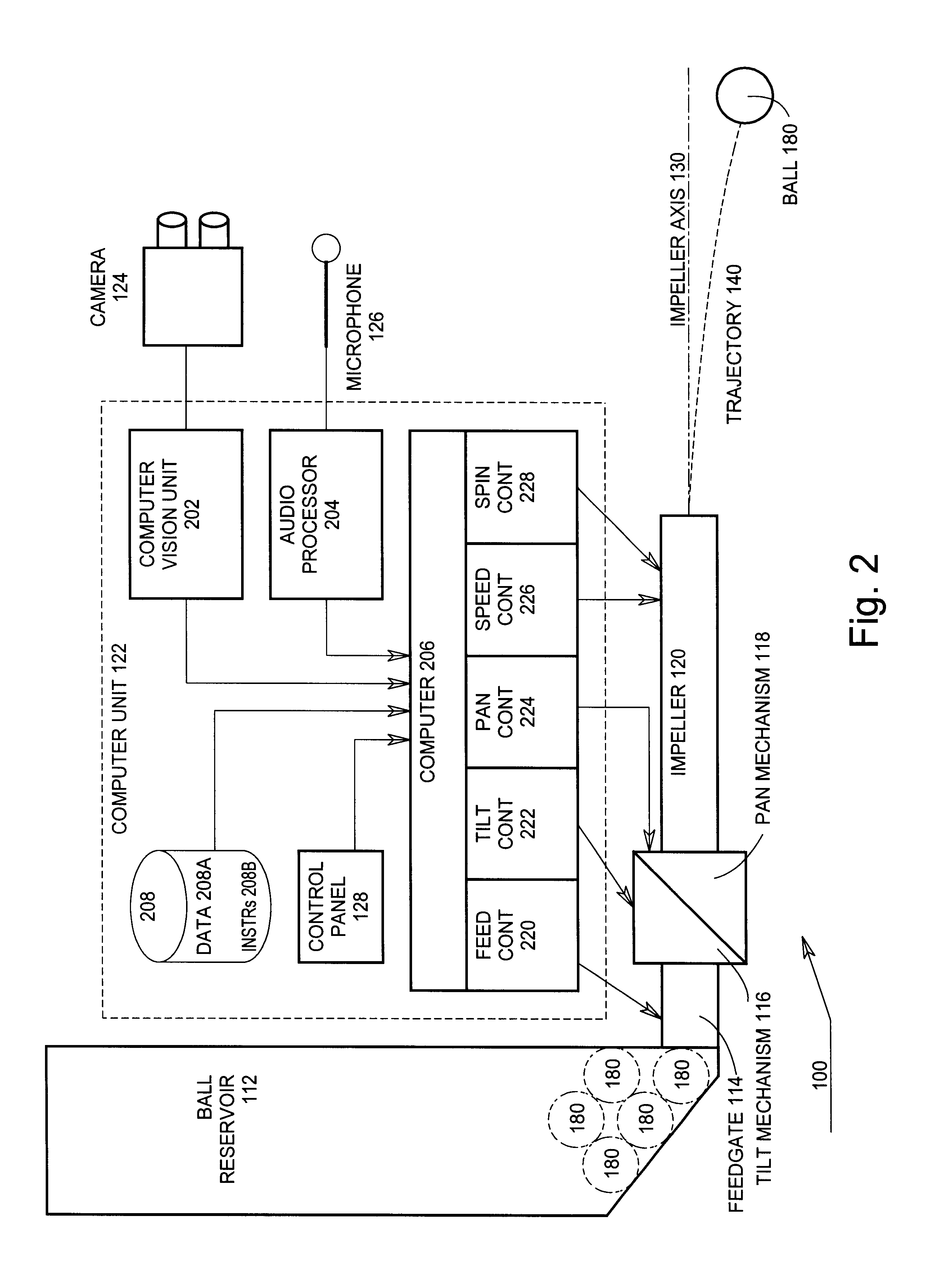

Ball throwing assistant

Owner:KONINK PHILIPS ELECTRONICS NV

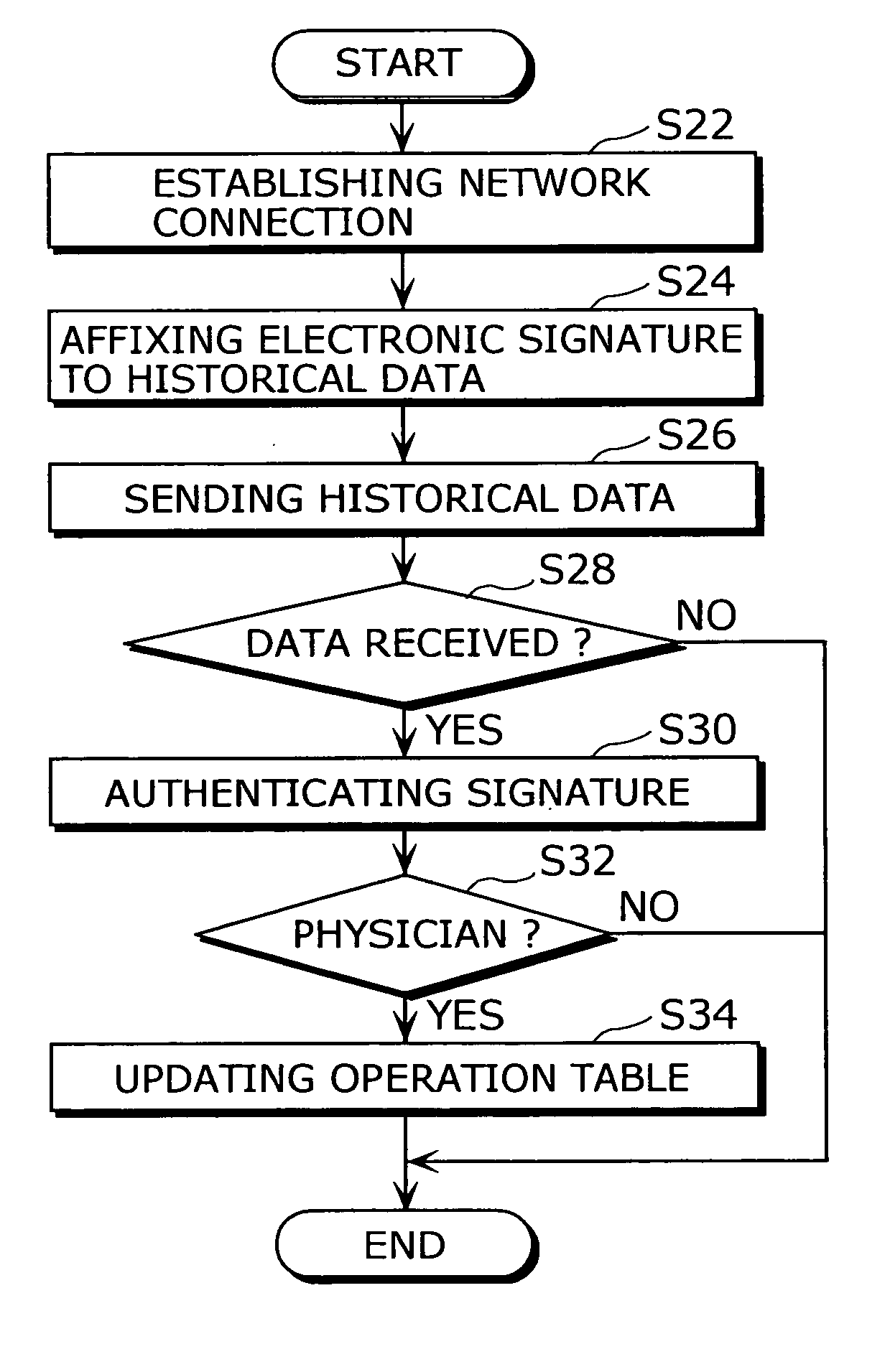

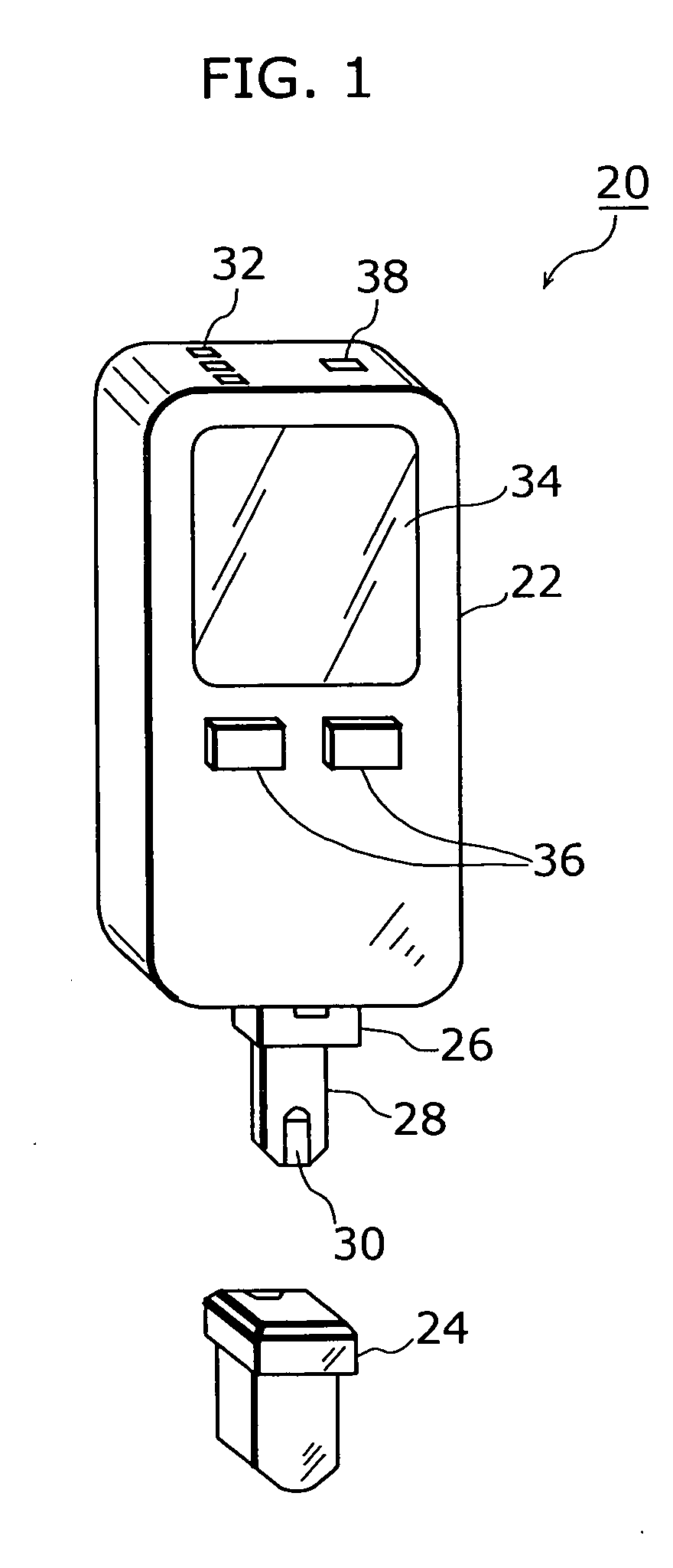

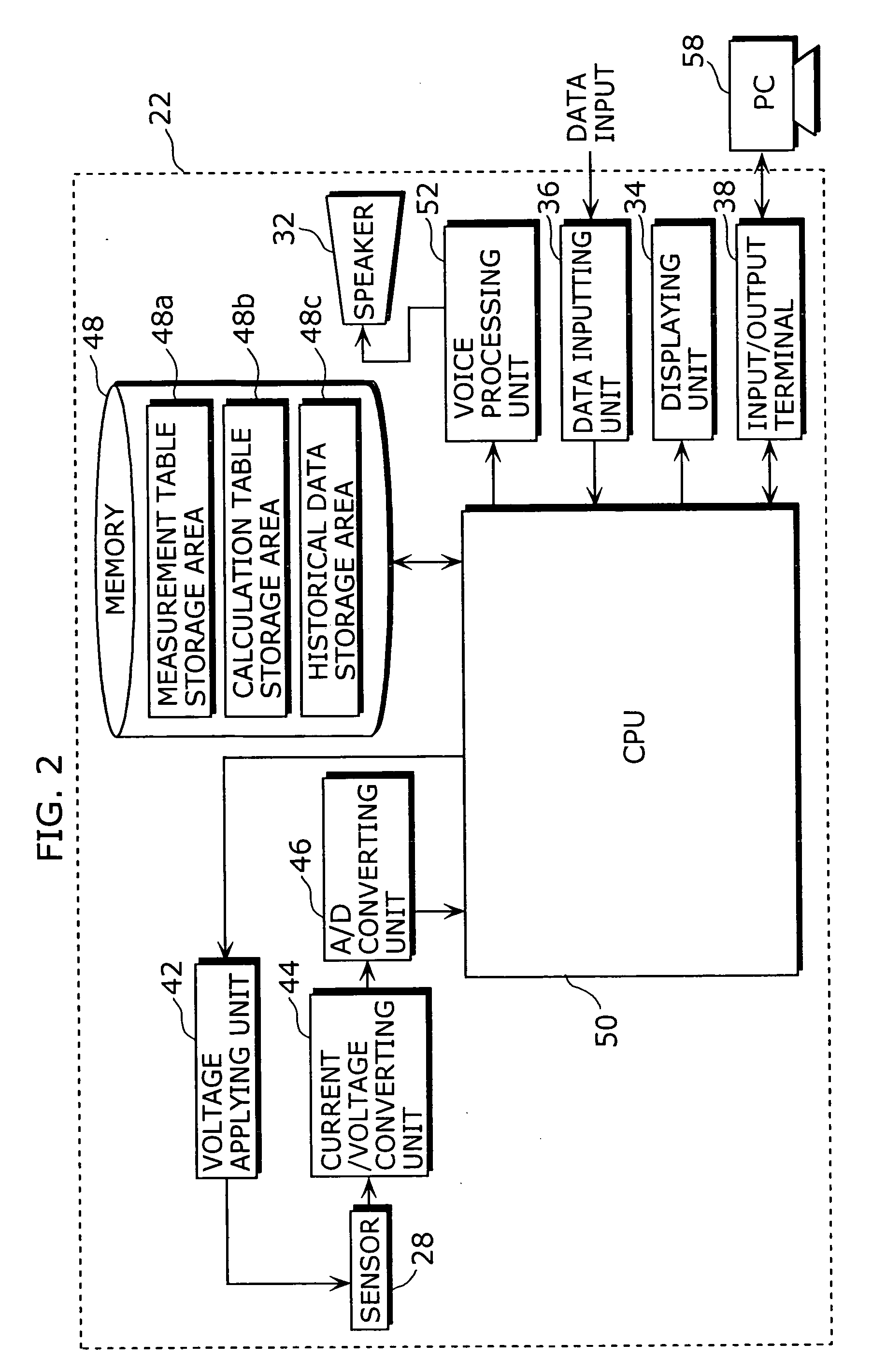

Dosage determination supporting device, injector, and health management supporting system

Inactive | US20050177398A1 | Improve reliability | Improve portability | Data processing applications | Infusion syringes | Supporting system | Medicine

A dosage determination supporting apparatus, which is able to precisely determine a dosage in accordance with the health condition of a user, is provided with: a sensor (28) for measuring the blood sugar level obtained from the blood of the user; a memory (48) for storing an operation table showing a correspondence between the blood sugar level and the amount of insulin; a CPU (50) for calculating the amount of insulin corresponding to the blood sugar level, with reference to the operation table stored in the memory (48); a displaying unit (34) for displaying the amount of insulin; and a voice processing unit (52) for performing the voice processing on the amount of insulin and outputting the voice through a speaker (32).

Owner:PANASONIC CORP

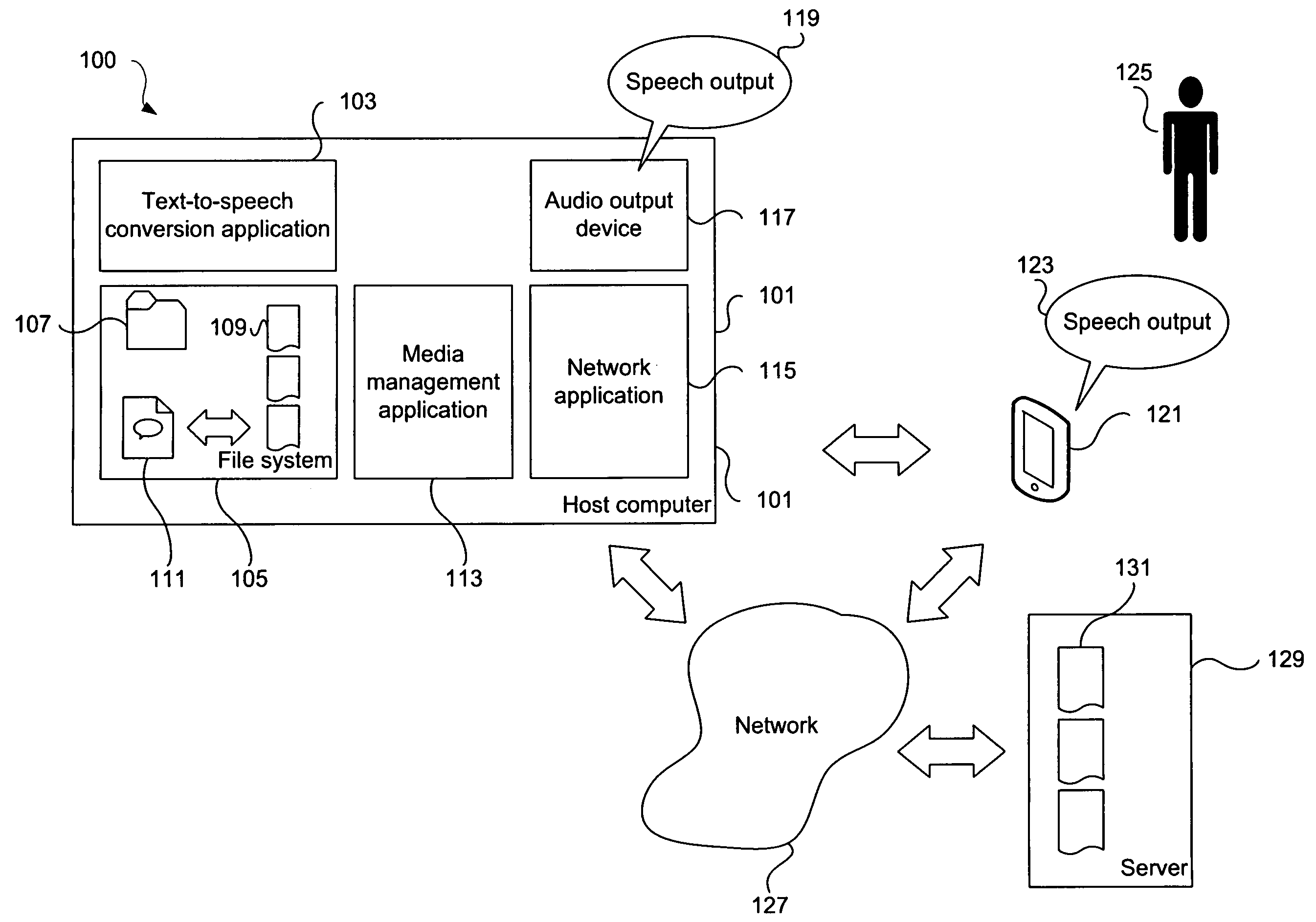

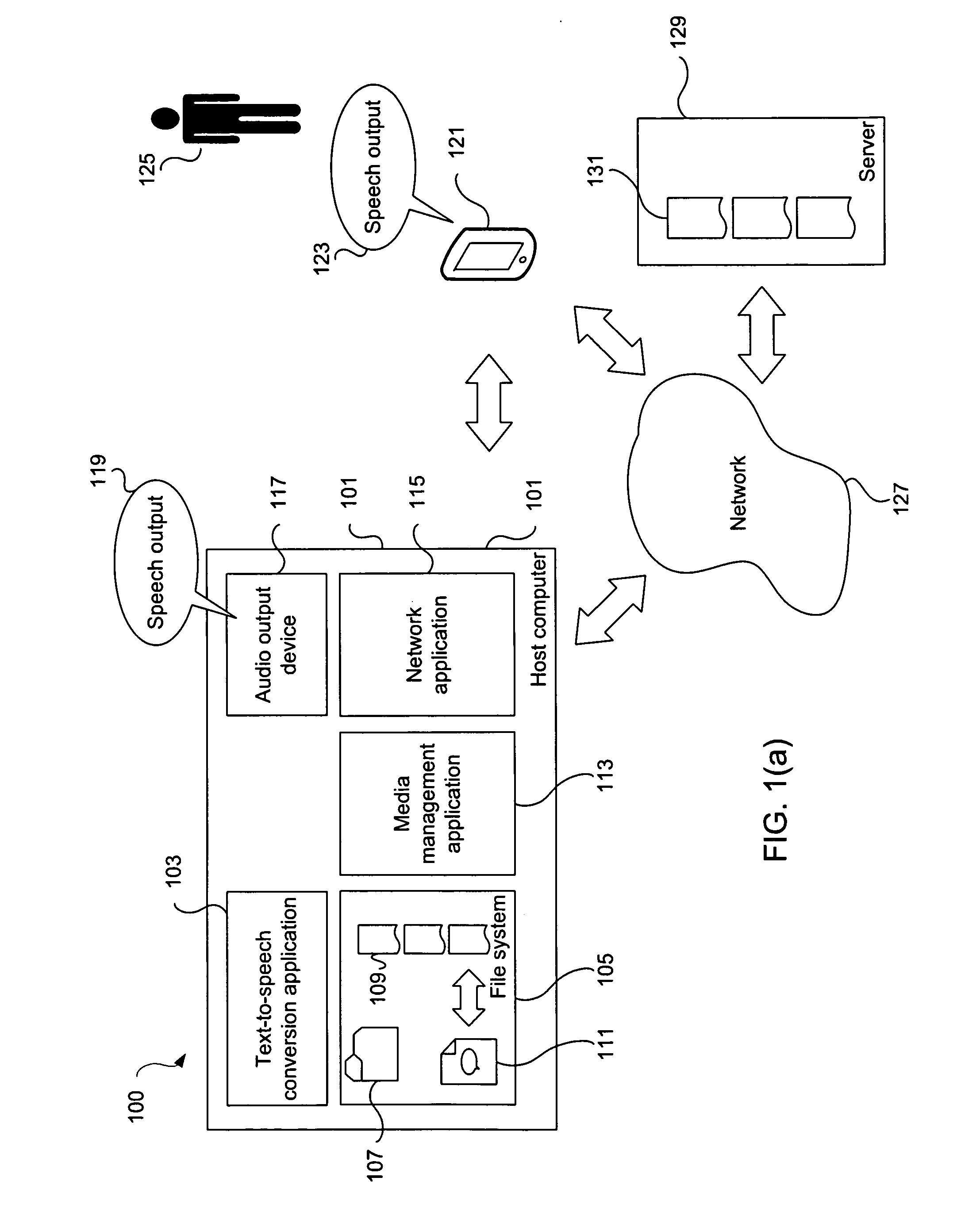

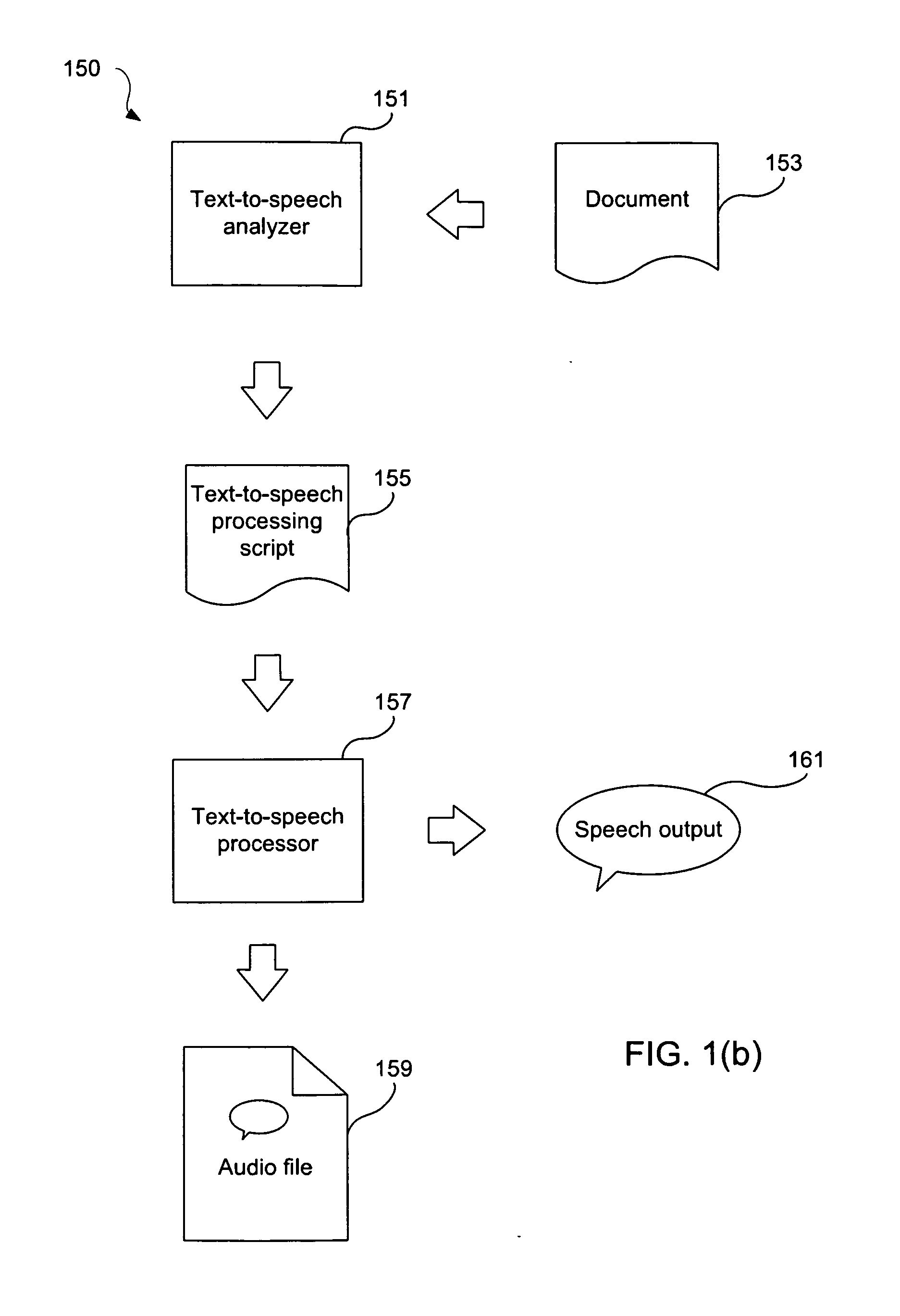

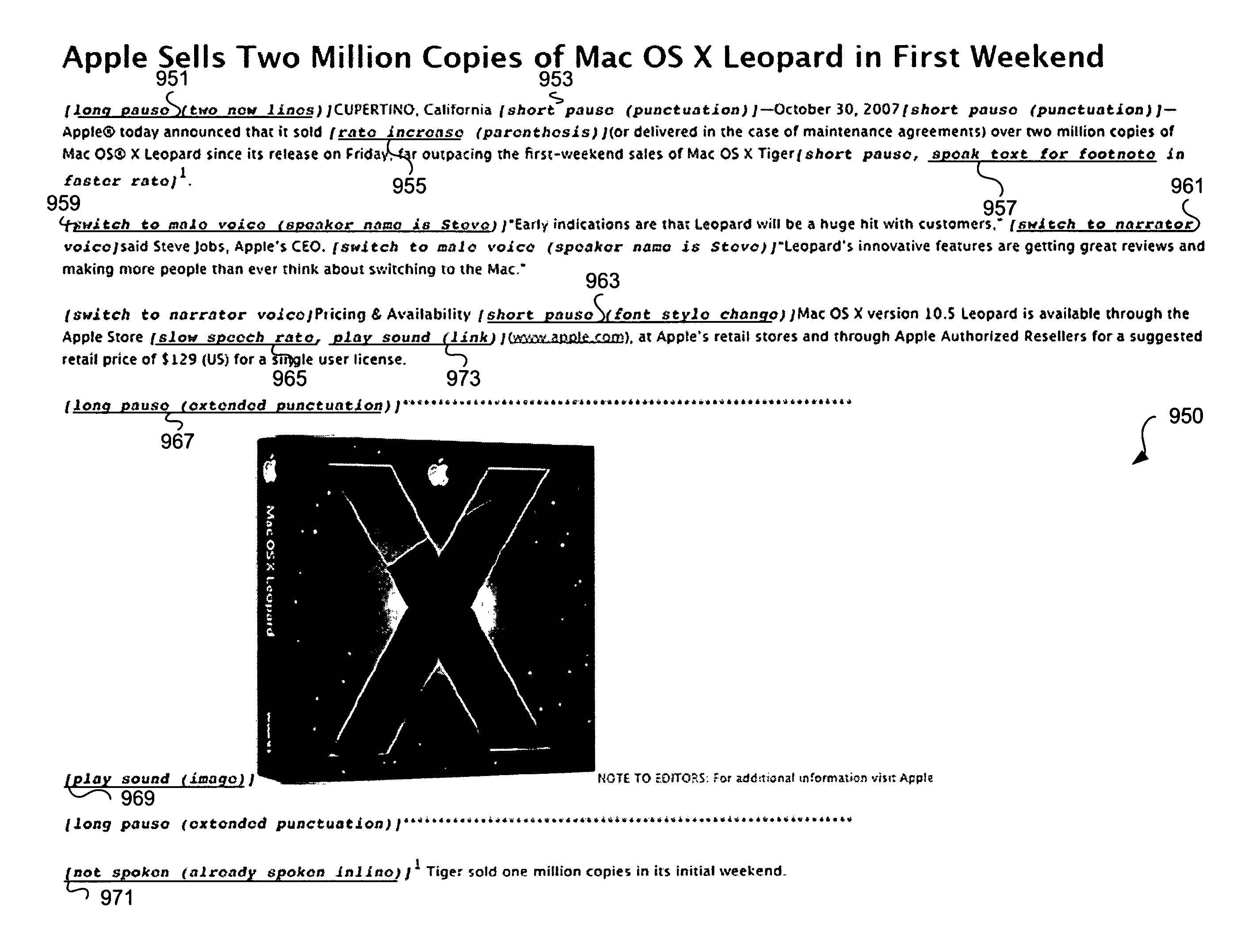

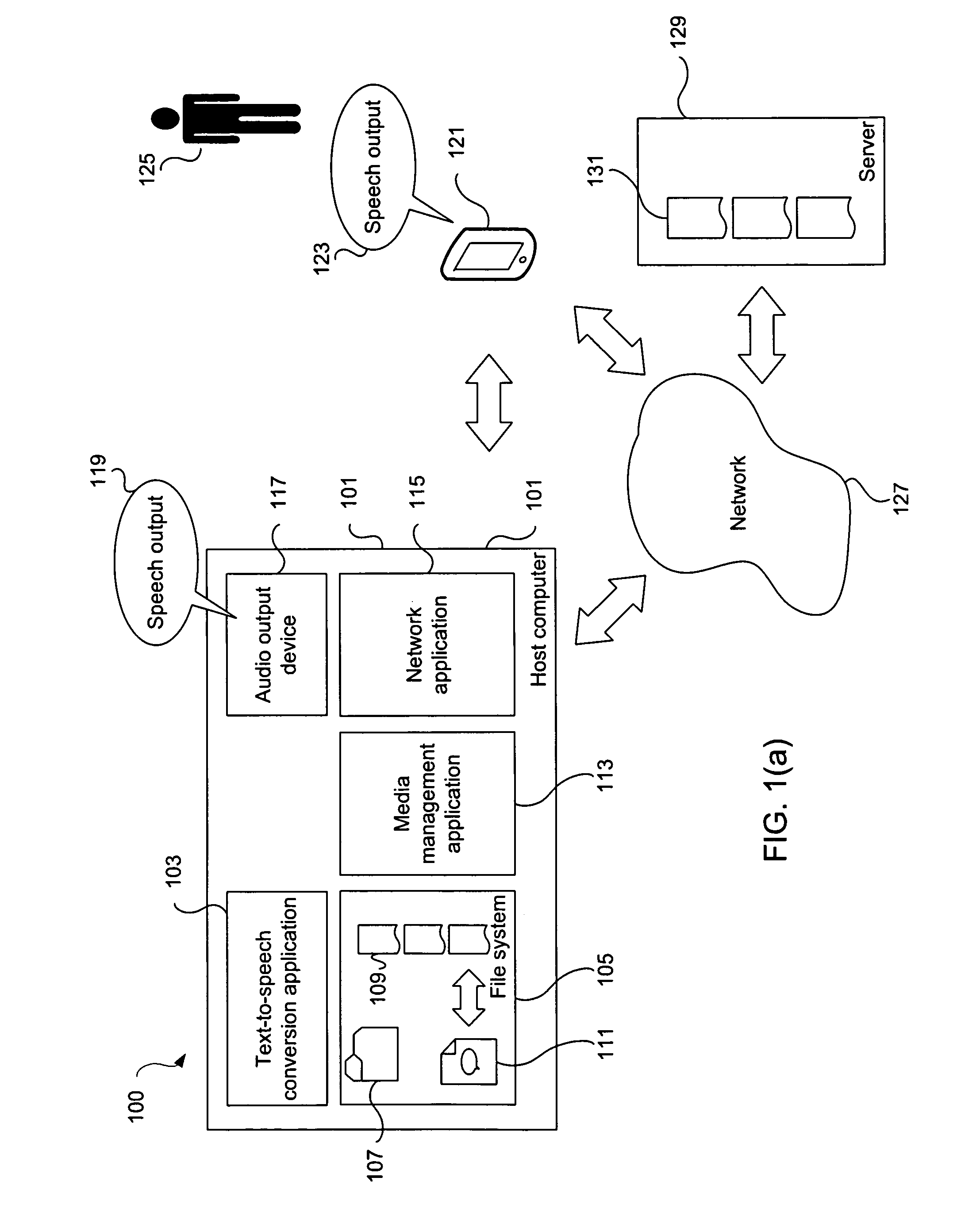

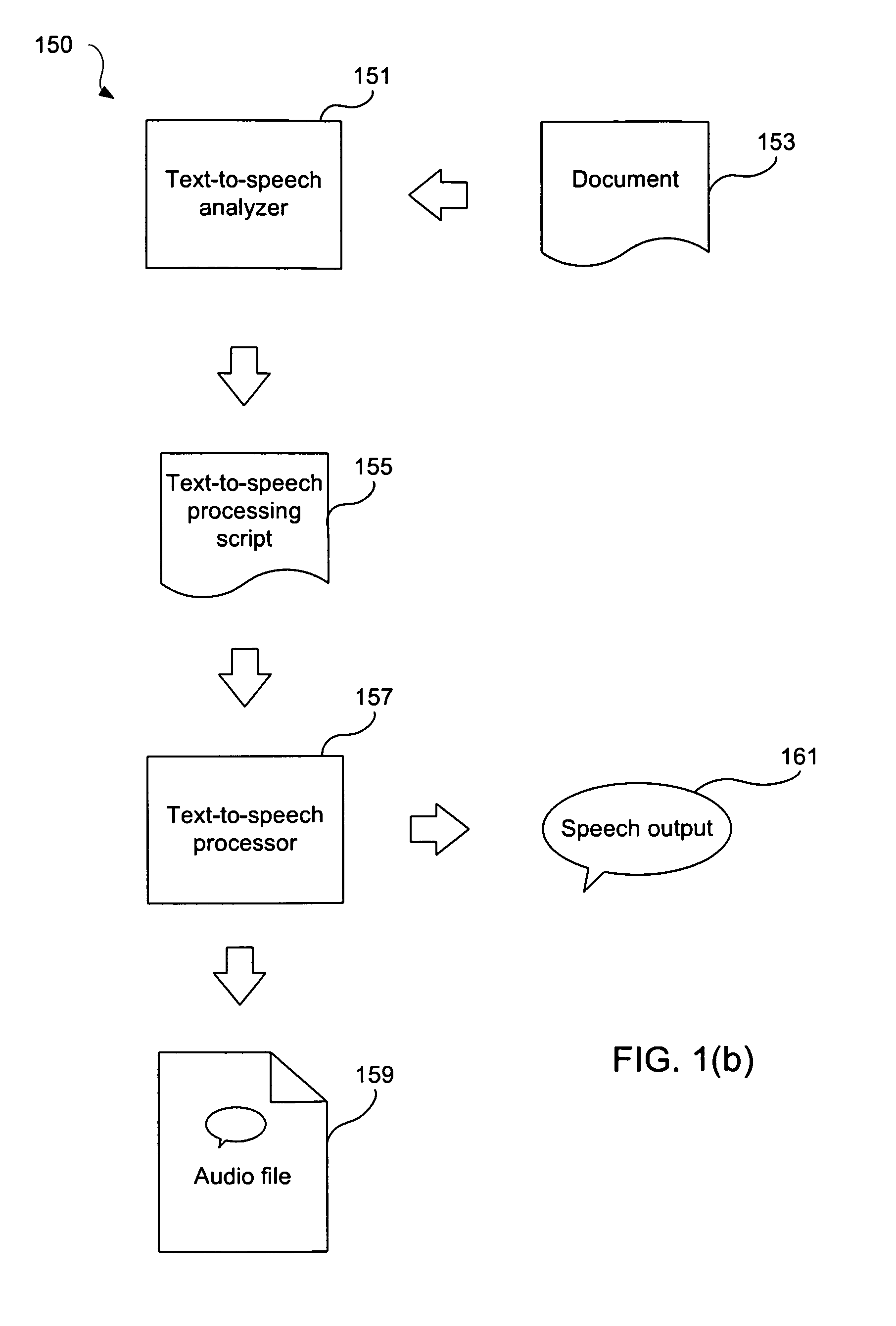

Intelligent Text-to-Speech Conversion

Active | US20090254345A1 | Improved text-to-speech processing | Speed up the process | Natural language data processing | Speech synthesis | Electronic document | Documentation

Techniques for improved text-to-speech processing are disclosed. The improved text-to-speech processing can convert text from an electronic document into an audio output that includes speech associated with the text as well as audio contextual cues. One aspect provides audio contextual cues to the listener when outputting speech (spoken text) pertaining to a document. The audio contextual cues can be based on an analysis of a document prior to a text-to-speech conversion. Another aspect can produce an audio summary for a file. The audio summary for a document can thereafter be presented to a user so that the user can hear a summary of the document without having to process the document to produce its spoken text via text-to-speech conversion.

Owner:APPLE INC

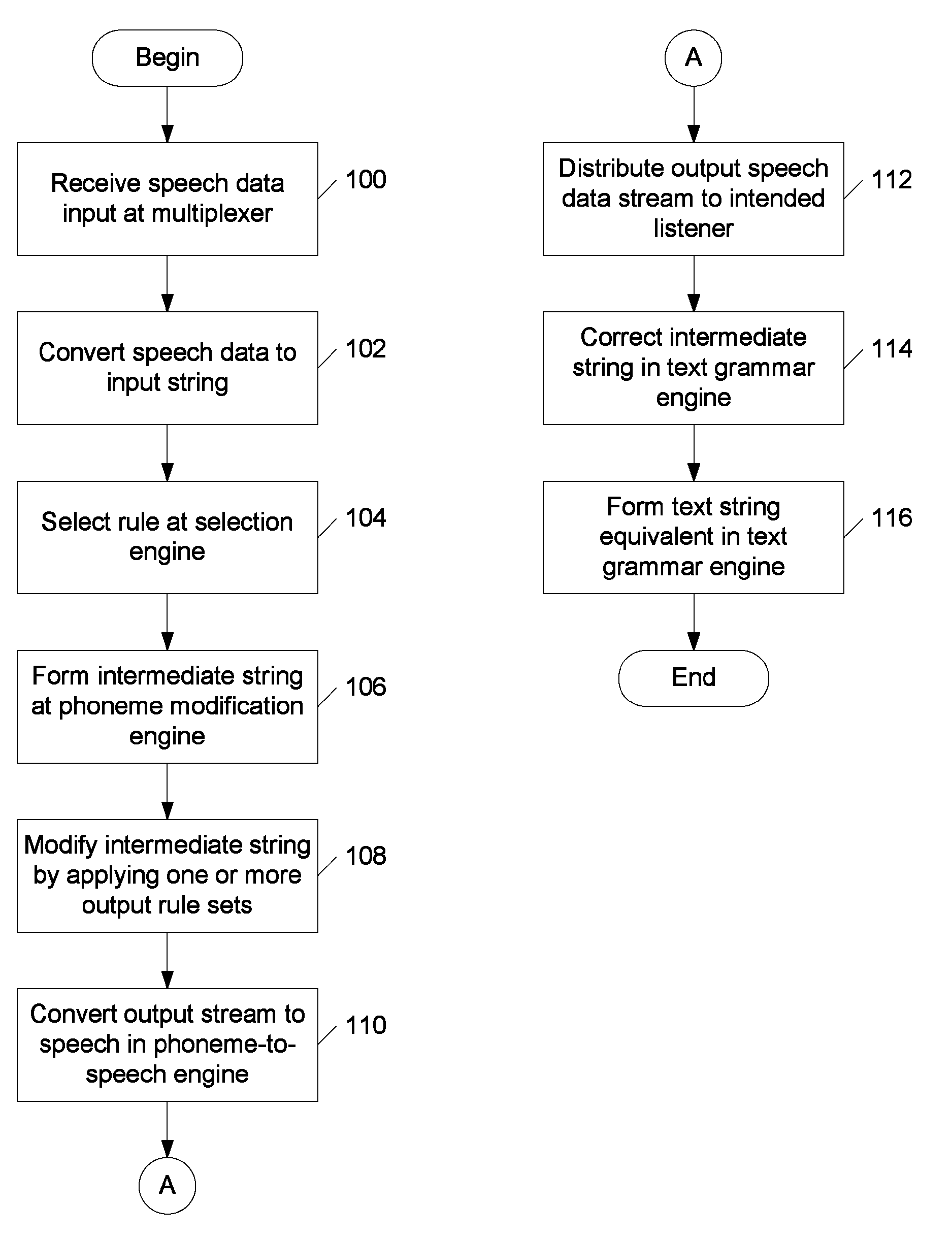

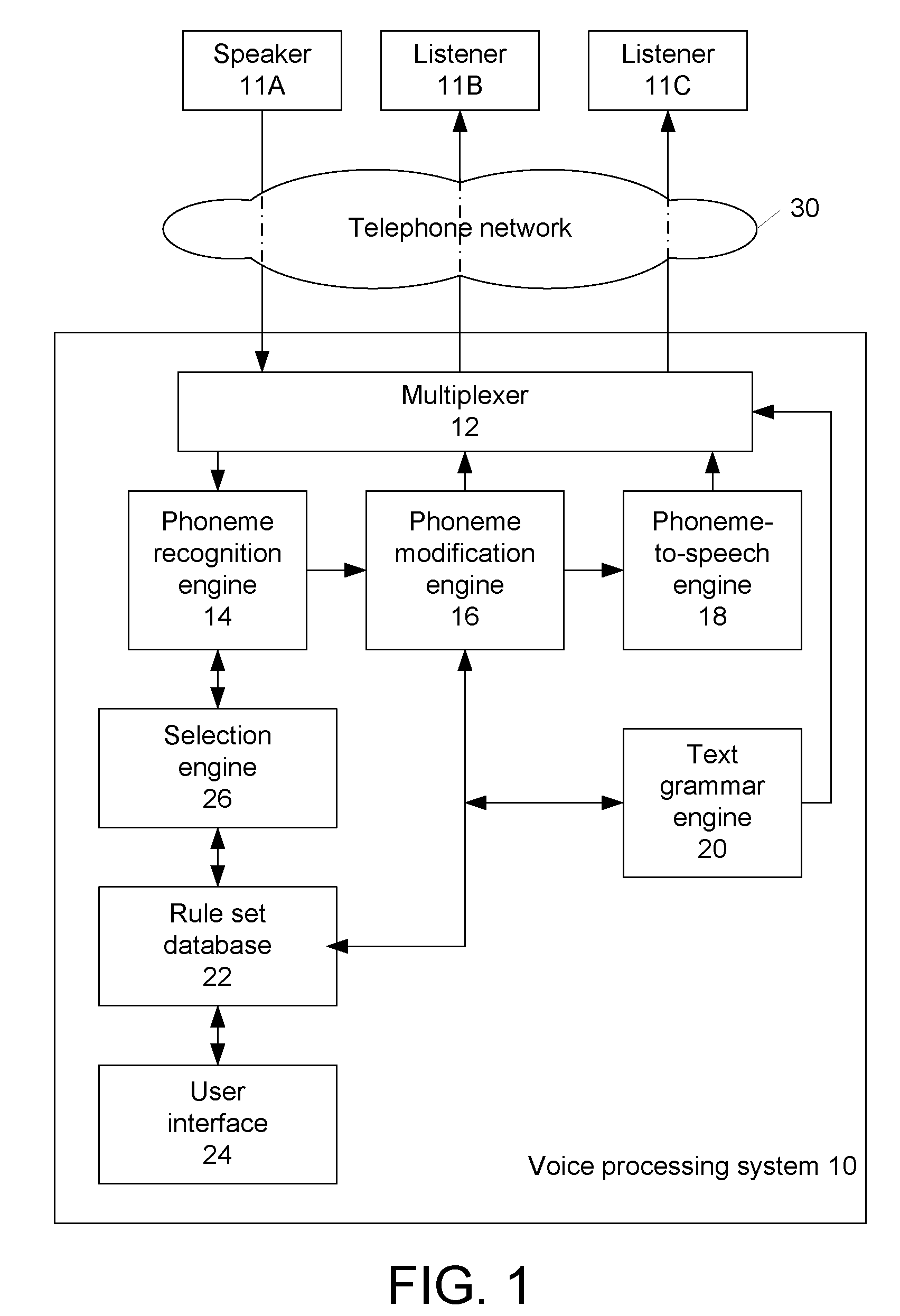

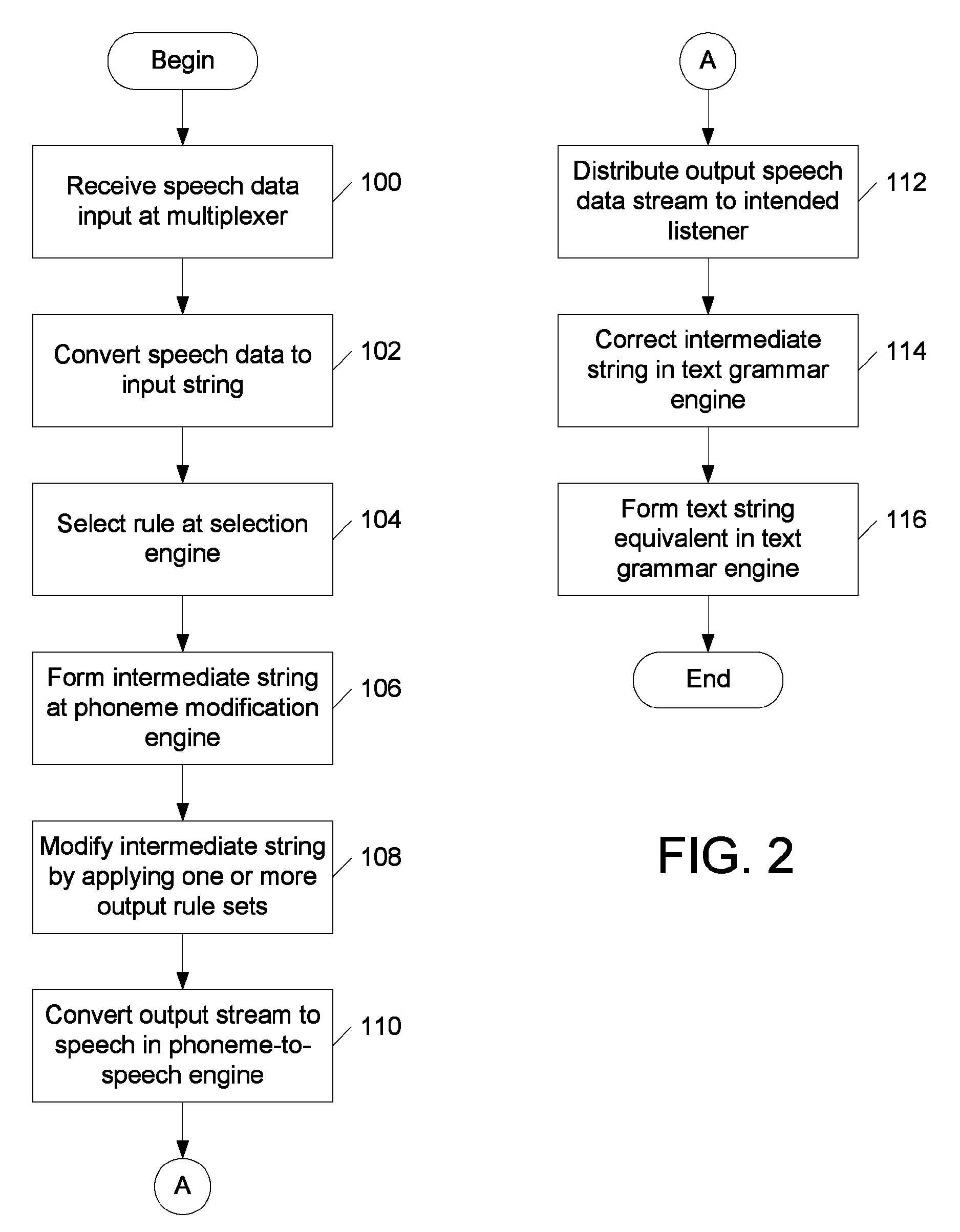

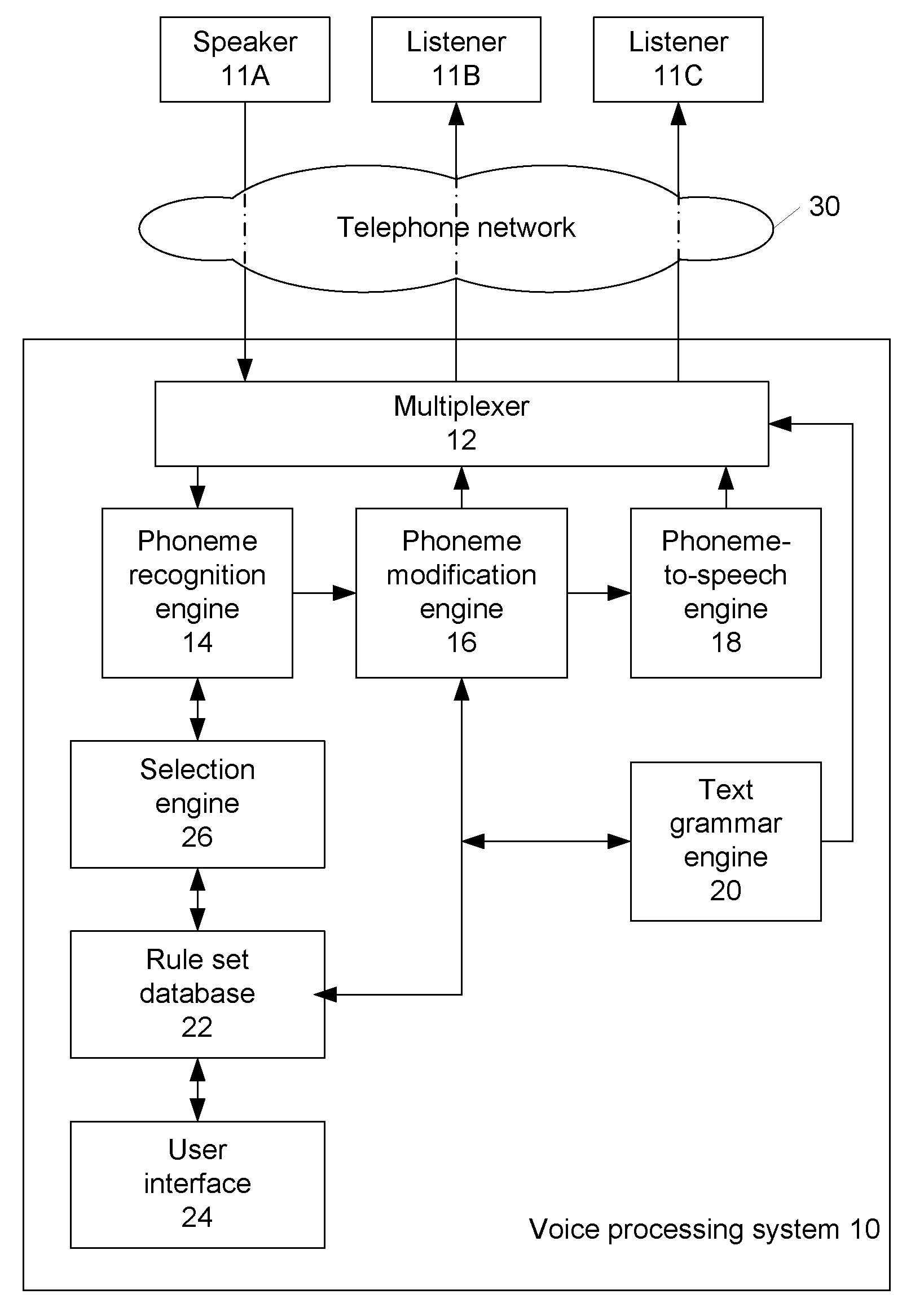

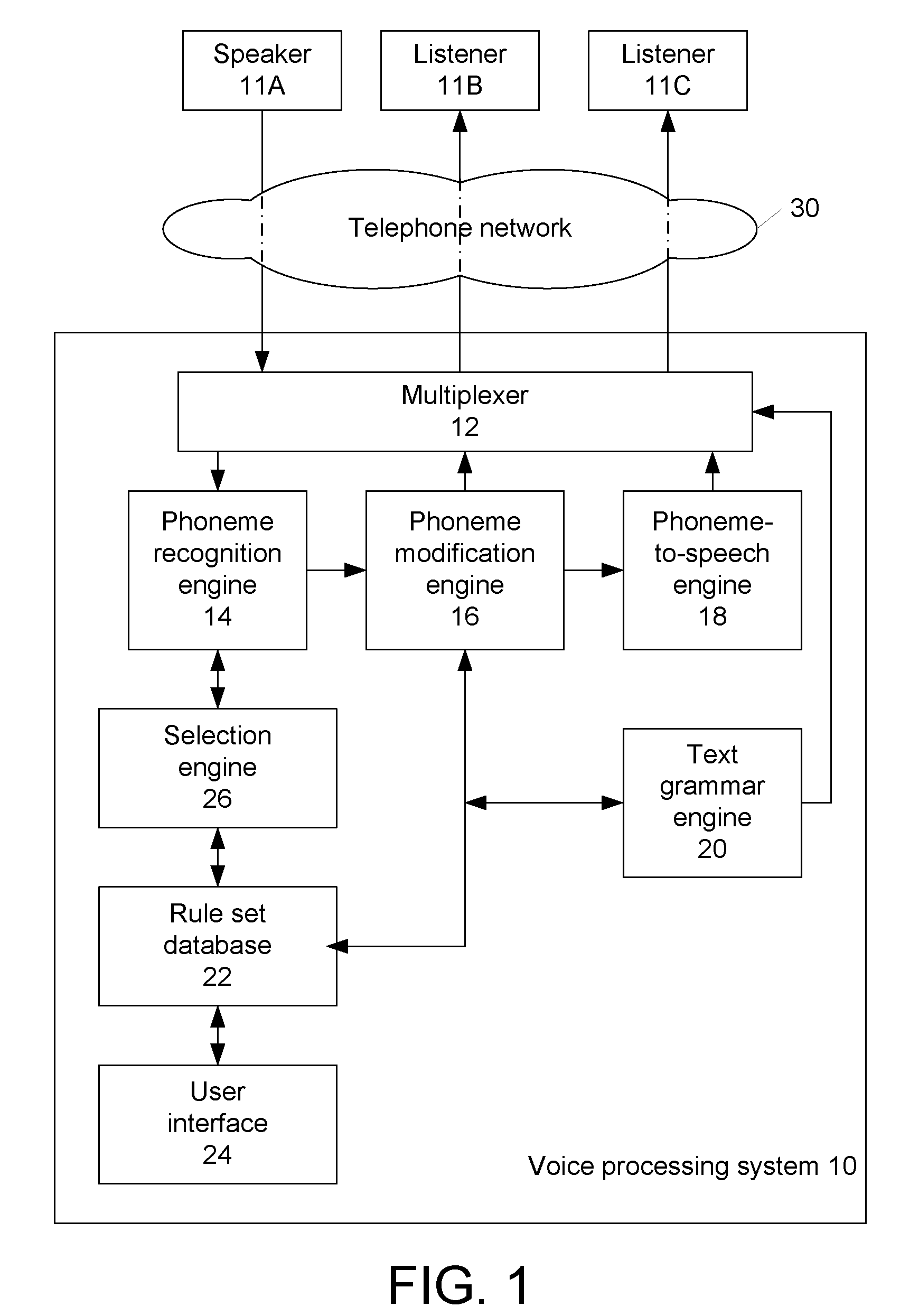

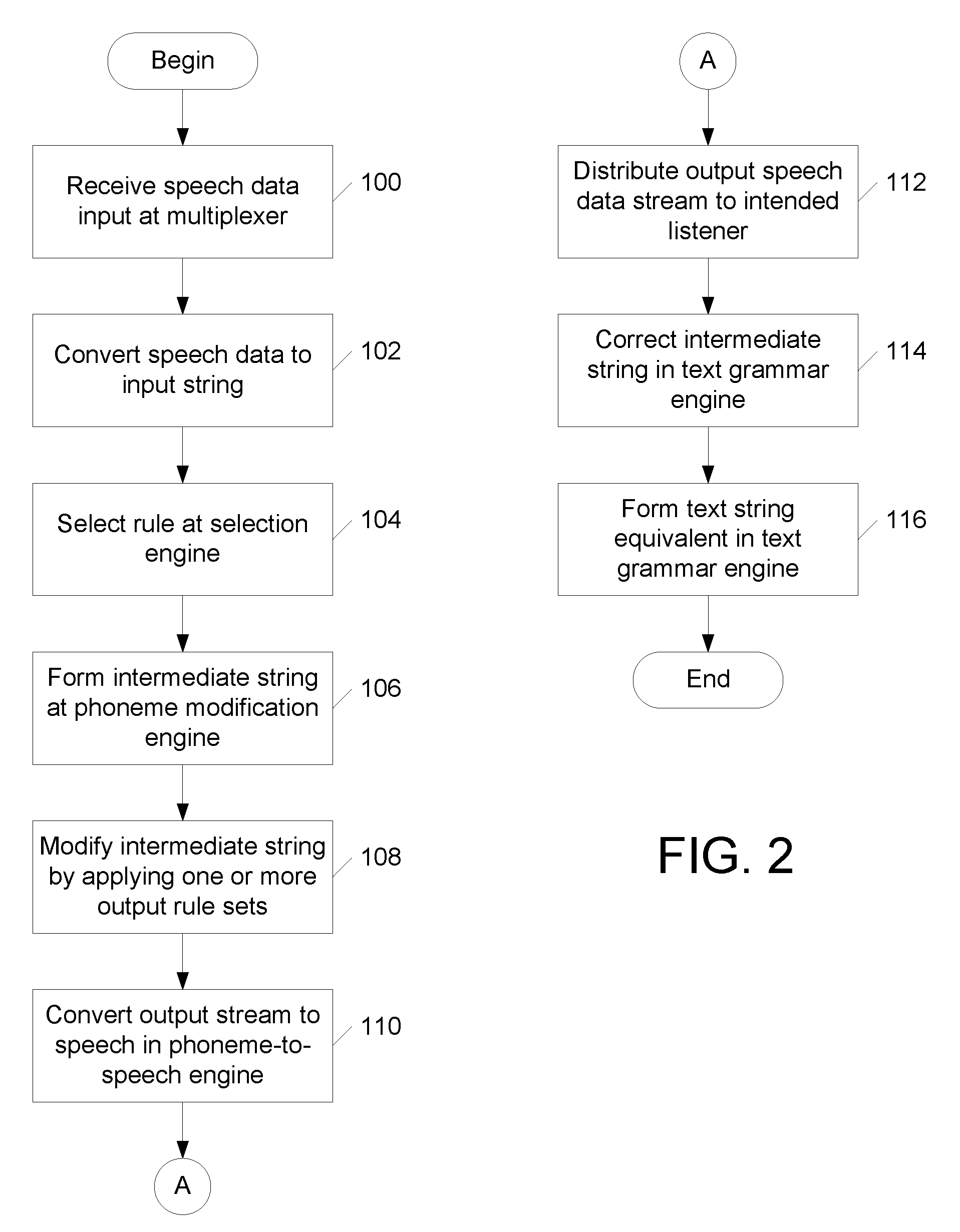

Phonetic decoding and concatenative speech synthesis

A speech processing system includes a multiplexer that receives speech data input as part of a conversation turn in a conversation session between two or more users where one user is a speaker and each of the other users is a listener in each conversation turn. A speech recognizing engine converts the speech data to an input string of acoustic data while a speech modifier forms an output string based on the input string by changing an item of acoustic data according to a rule. The system also includes a phoneme speech engine for converting the first output string of acoustic data including modified and unmodified data to speech data for output via the multiplexer to listeners during the conversation turn.

Owner:CERENCE OPERATING CO

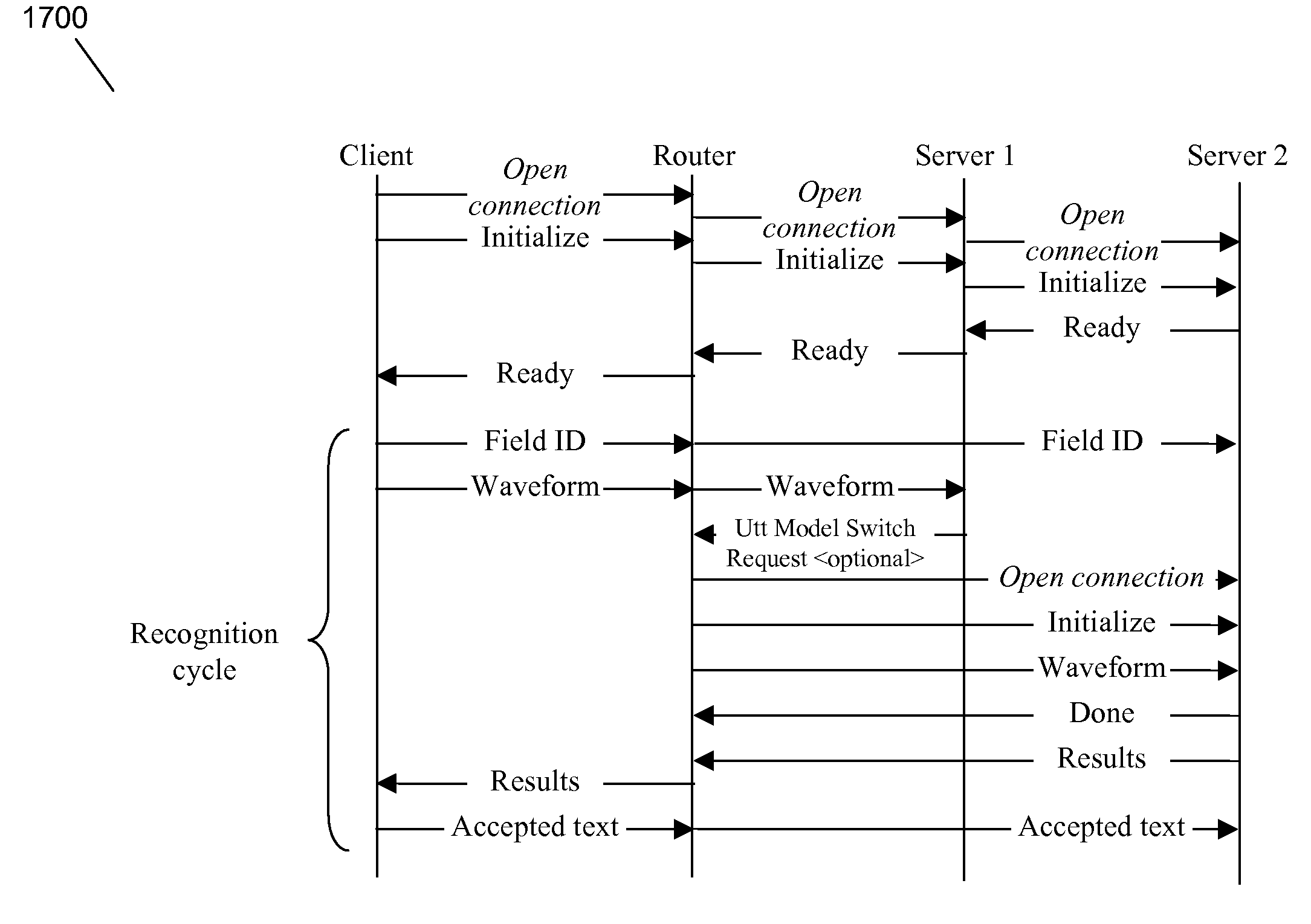

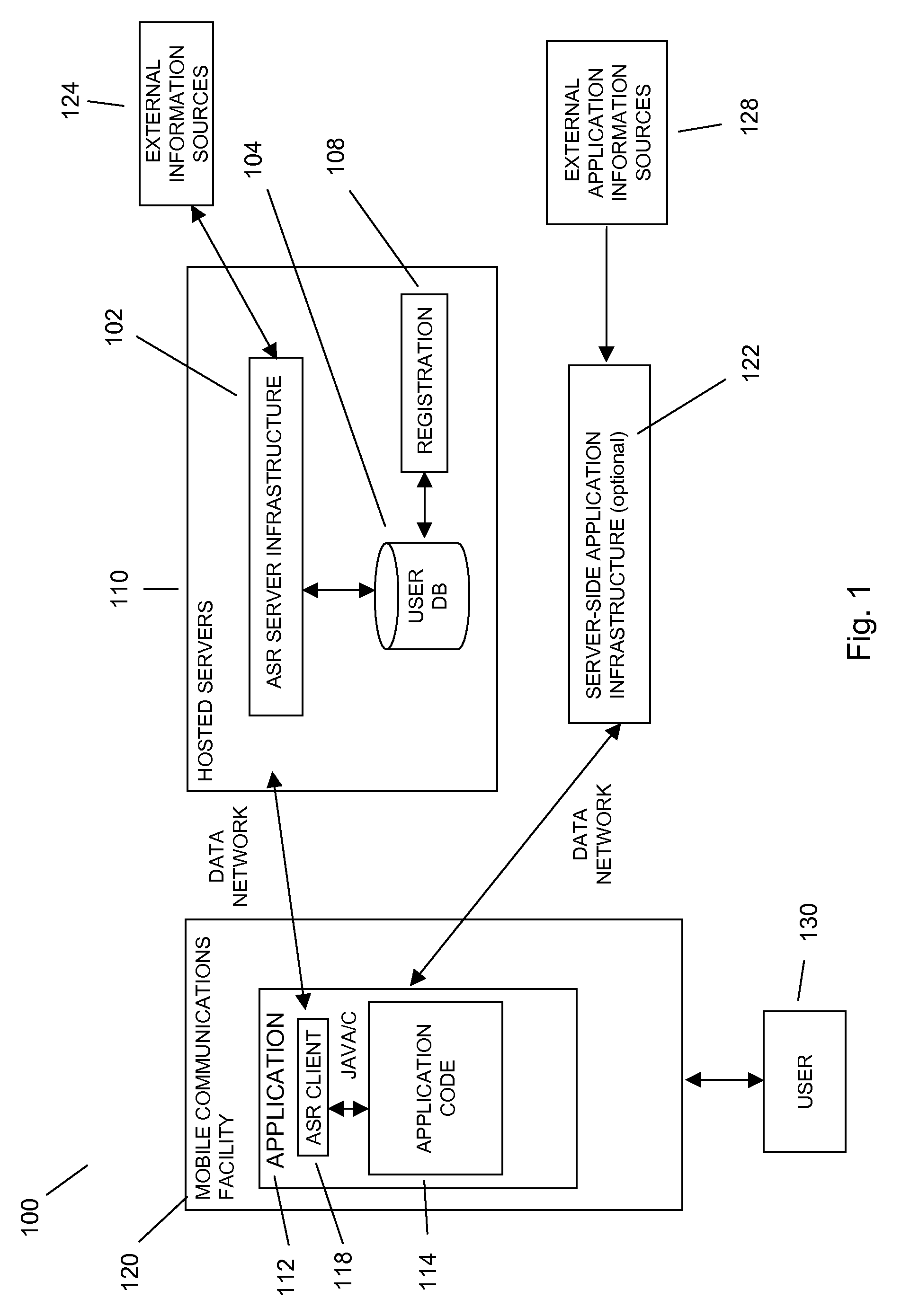

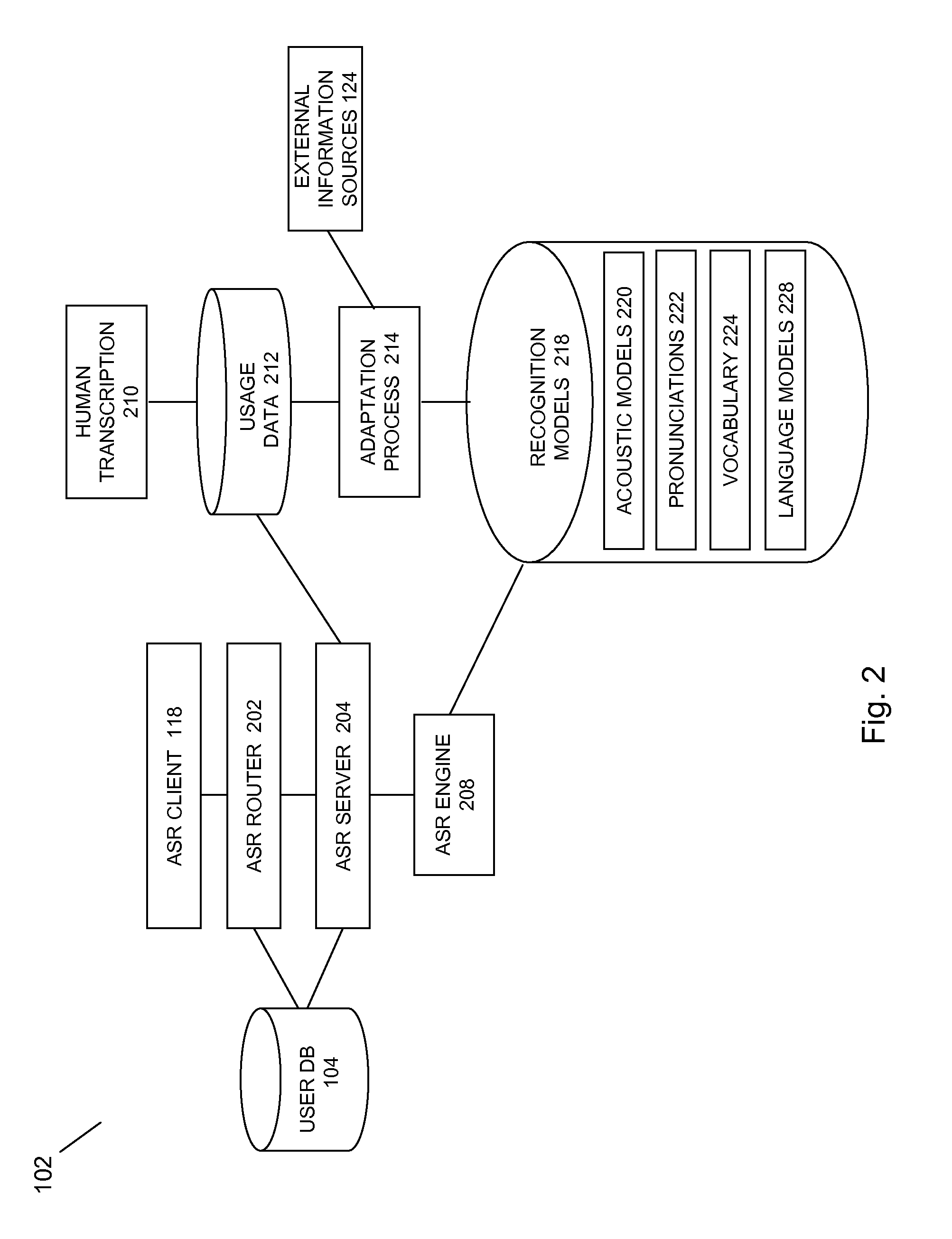

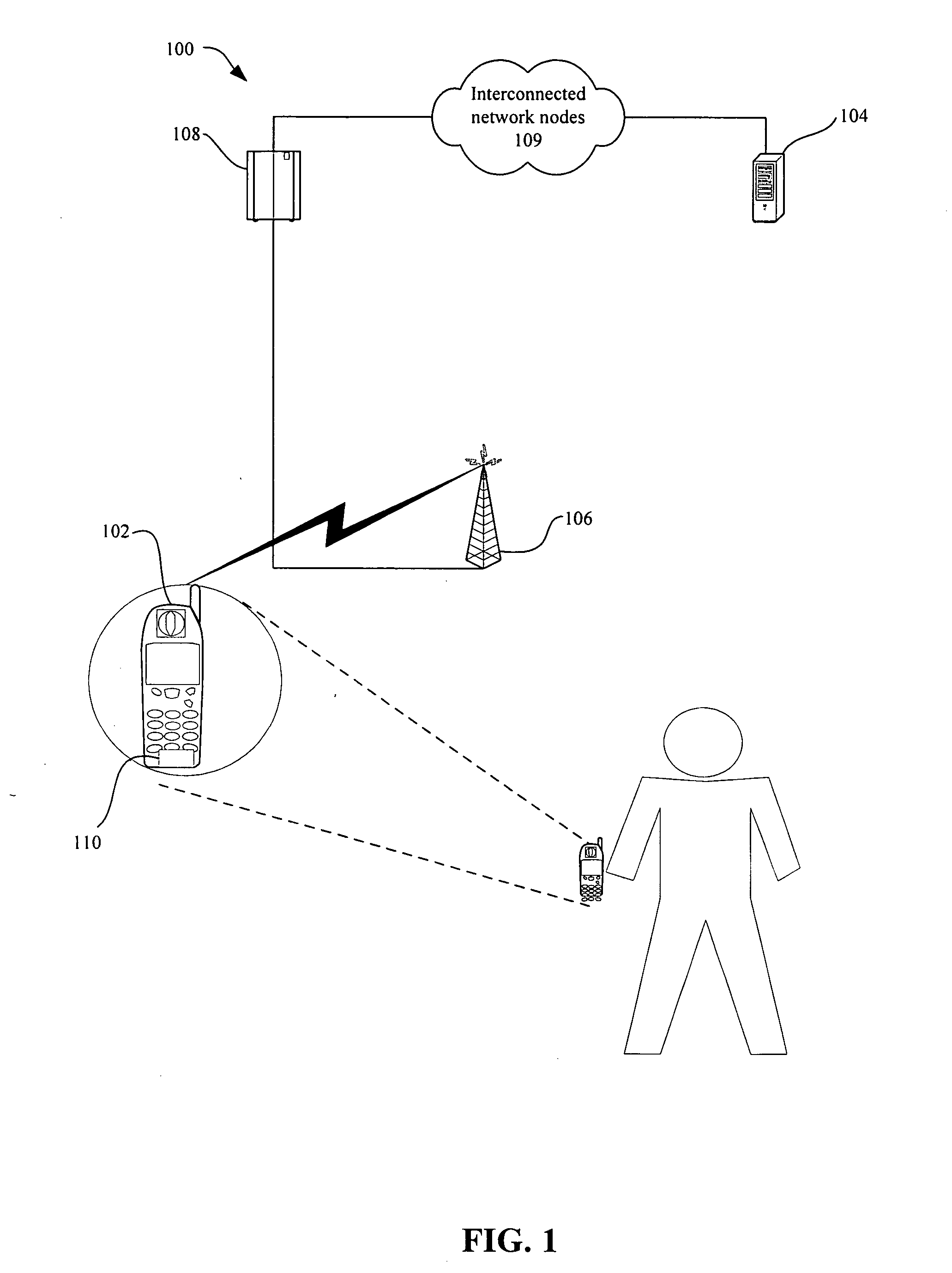

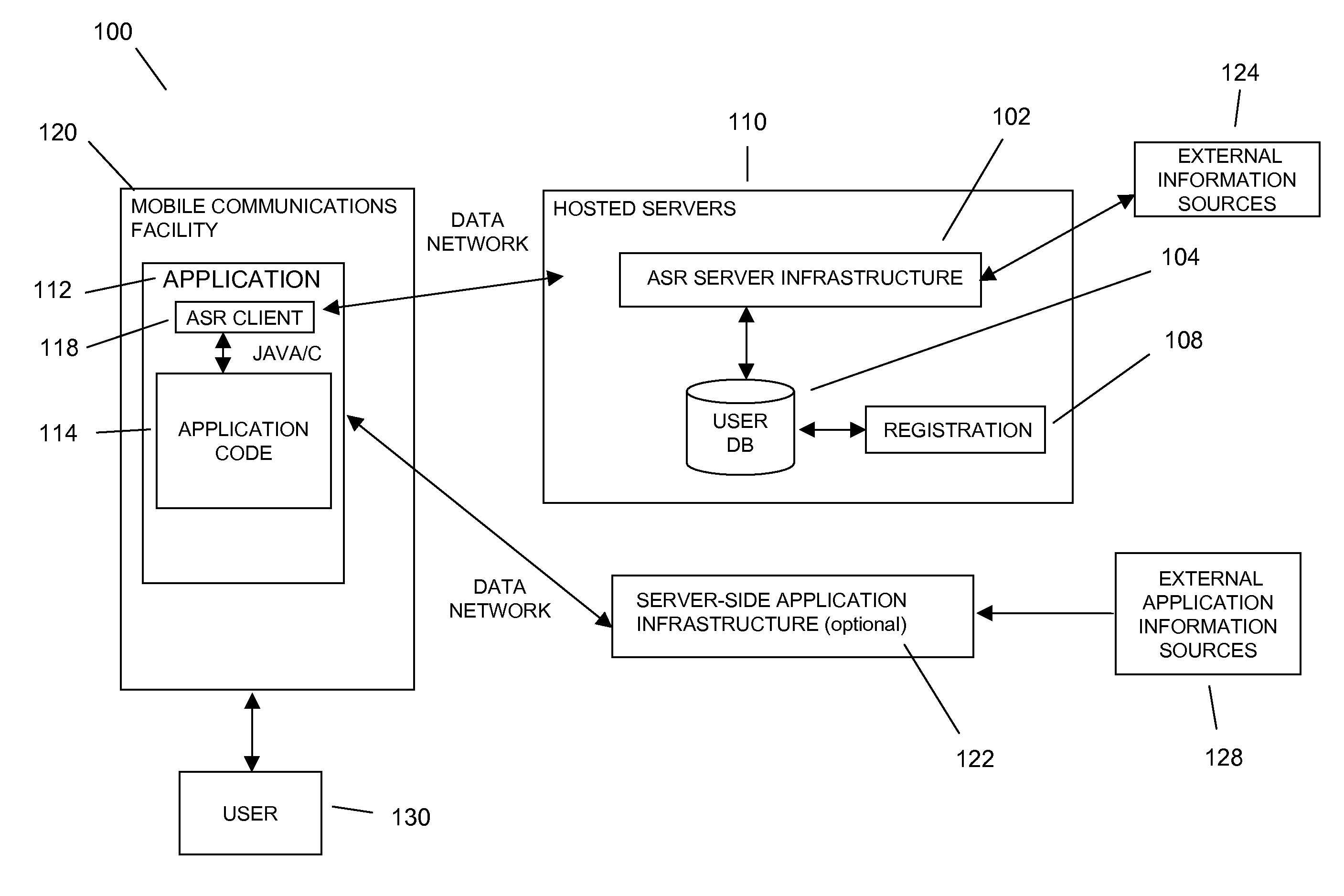

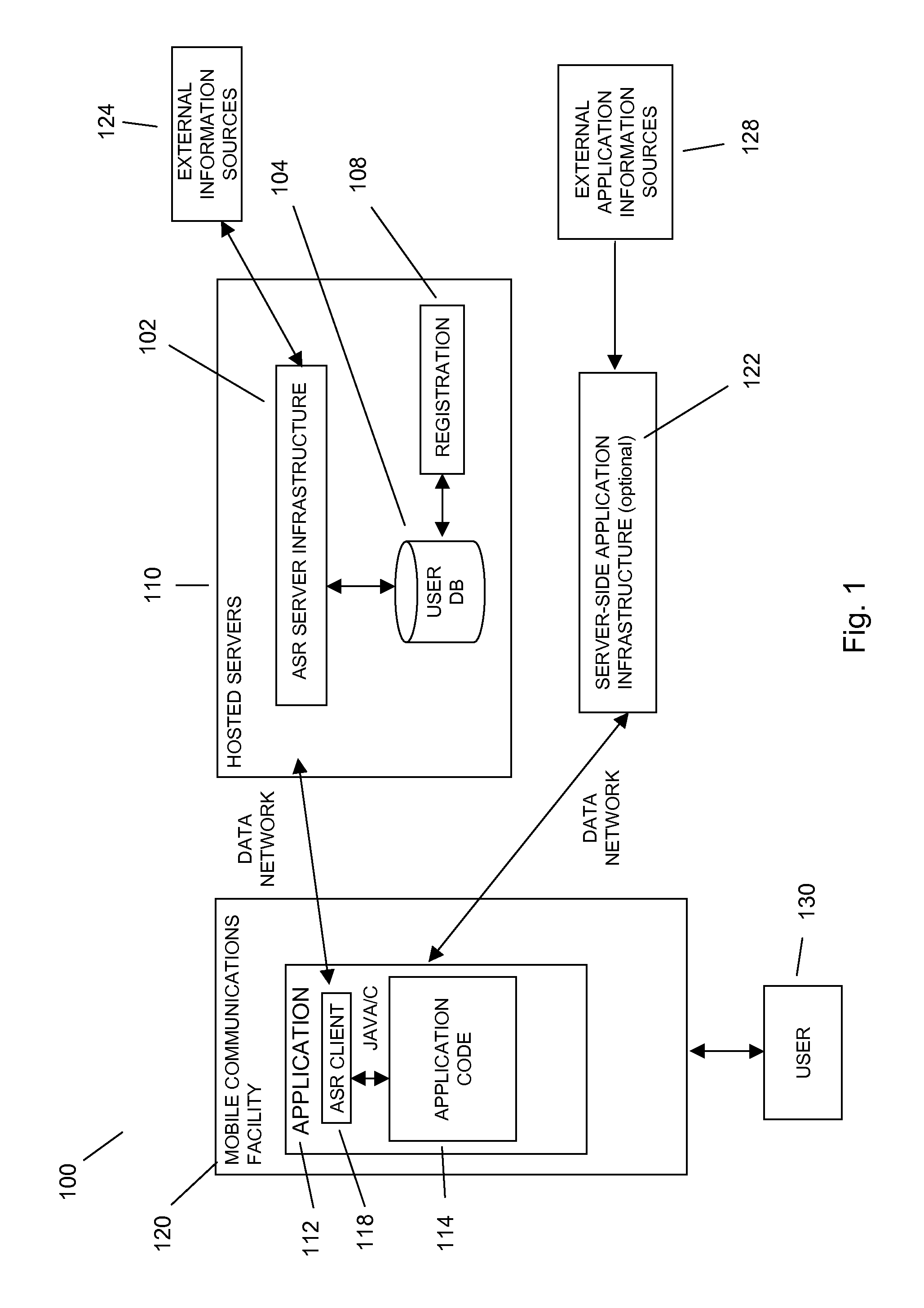

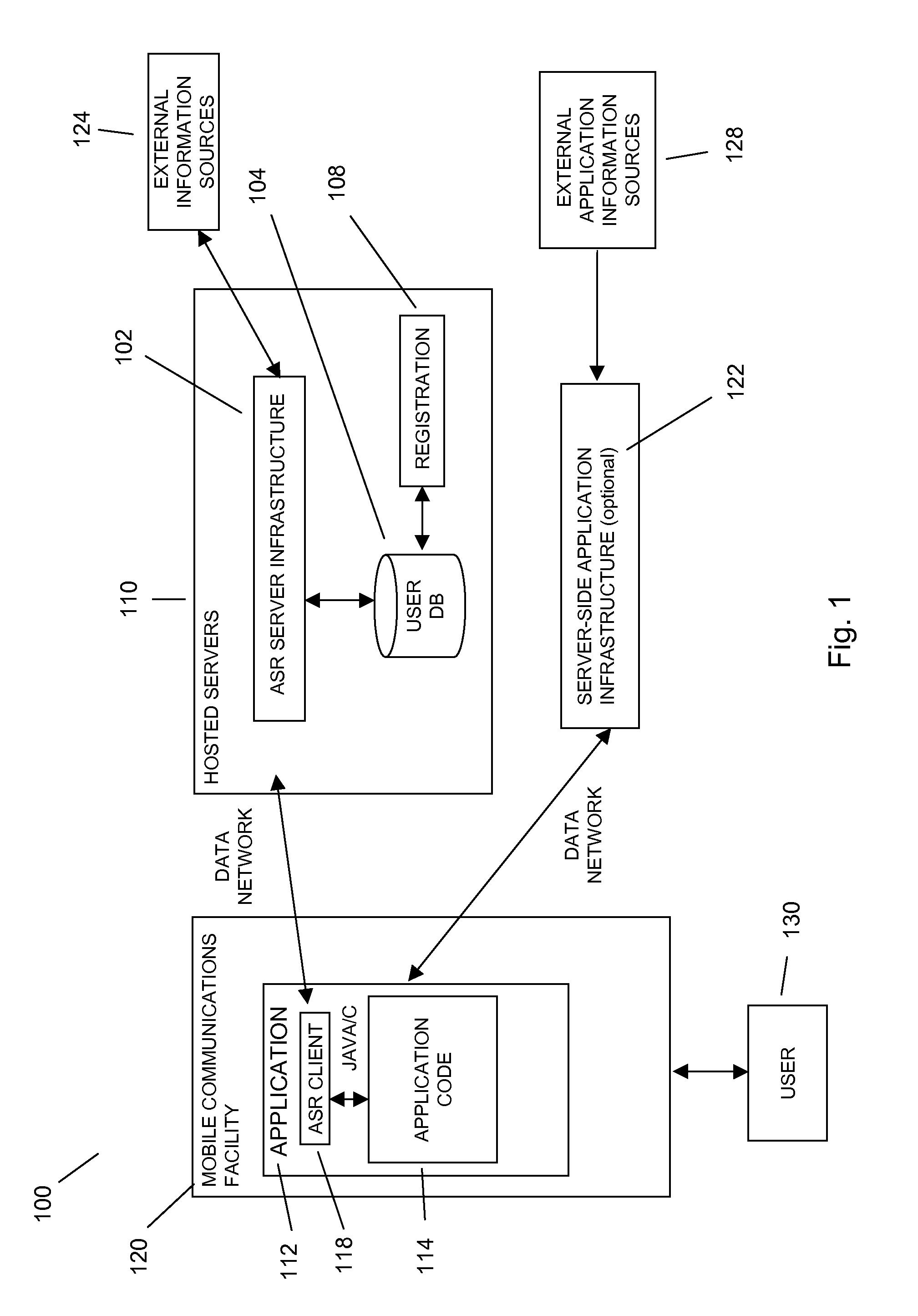

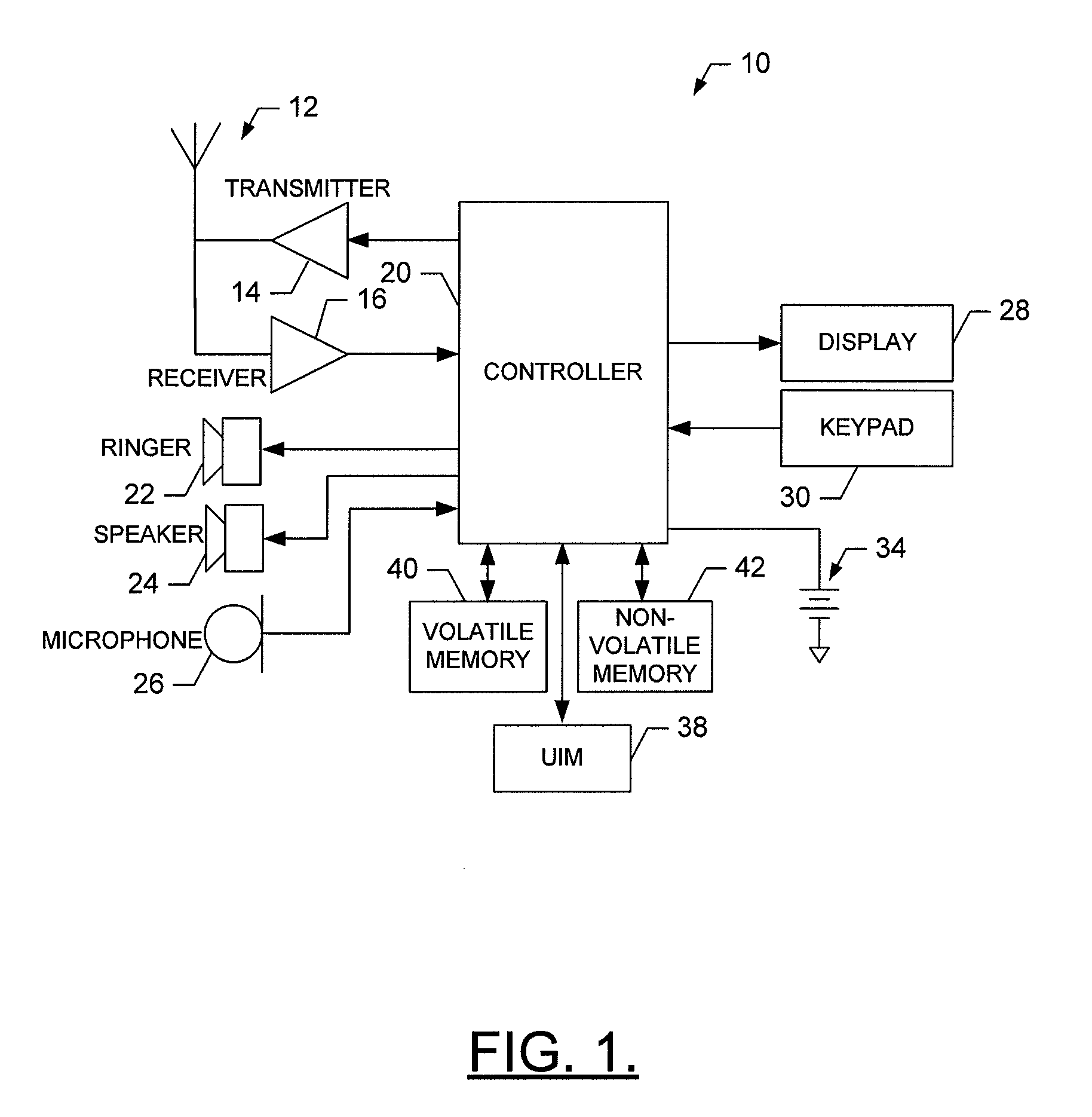

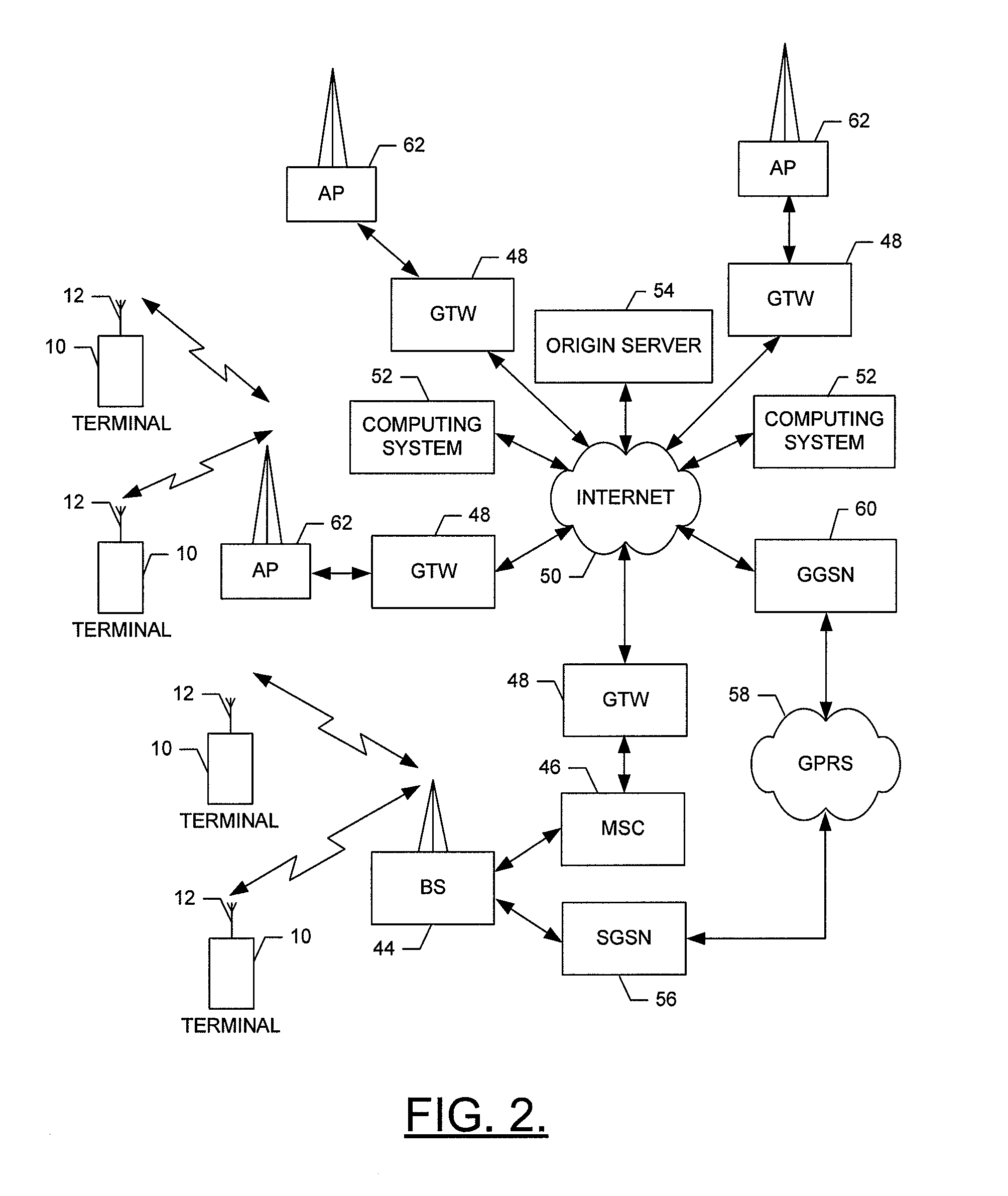

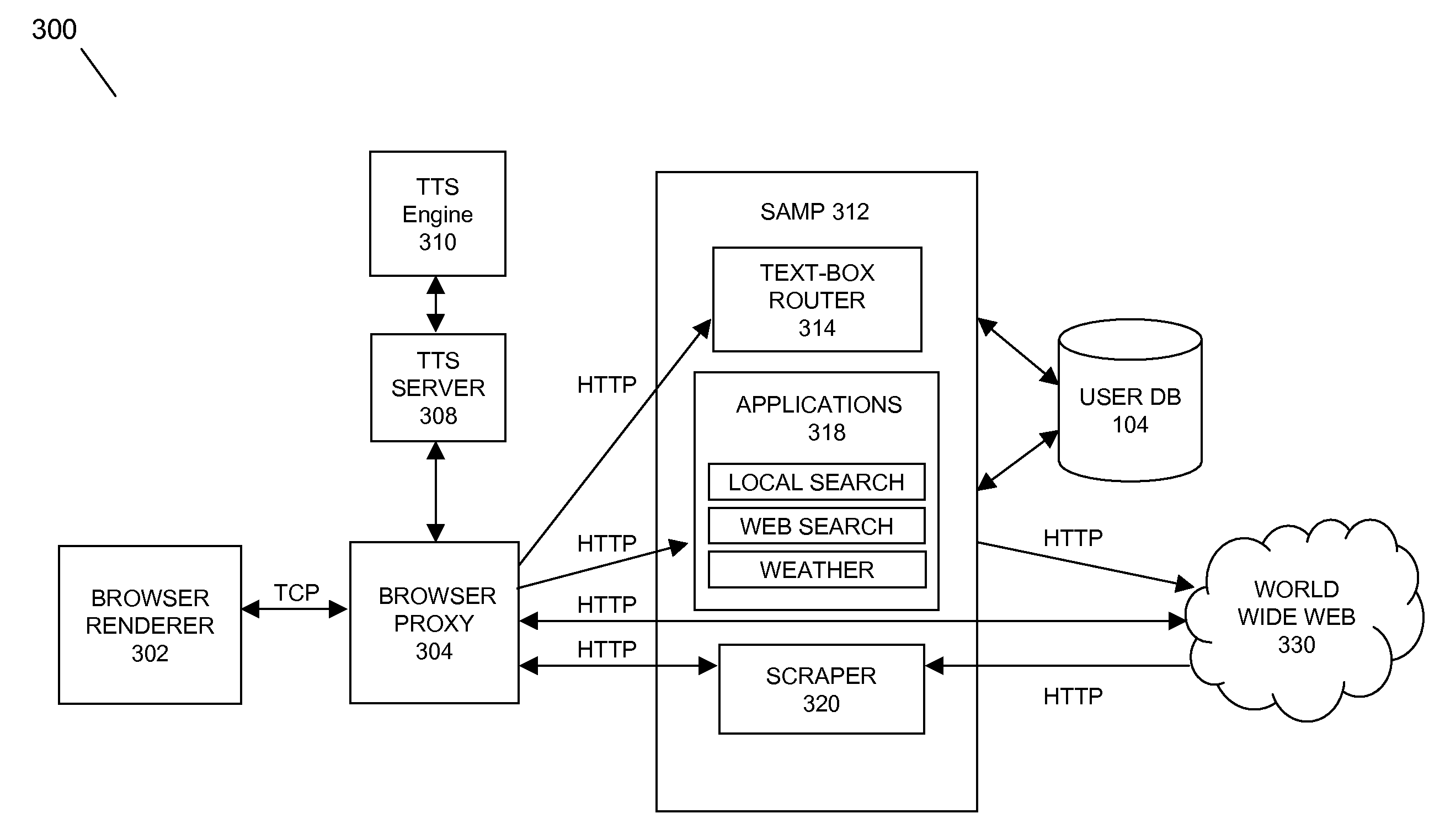

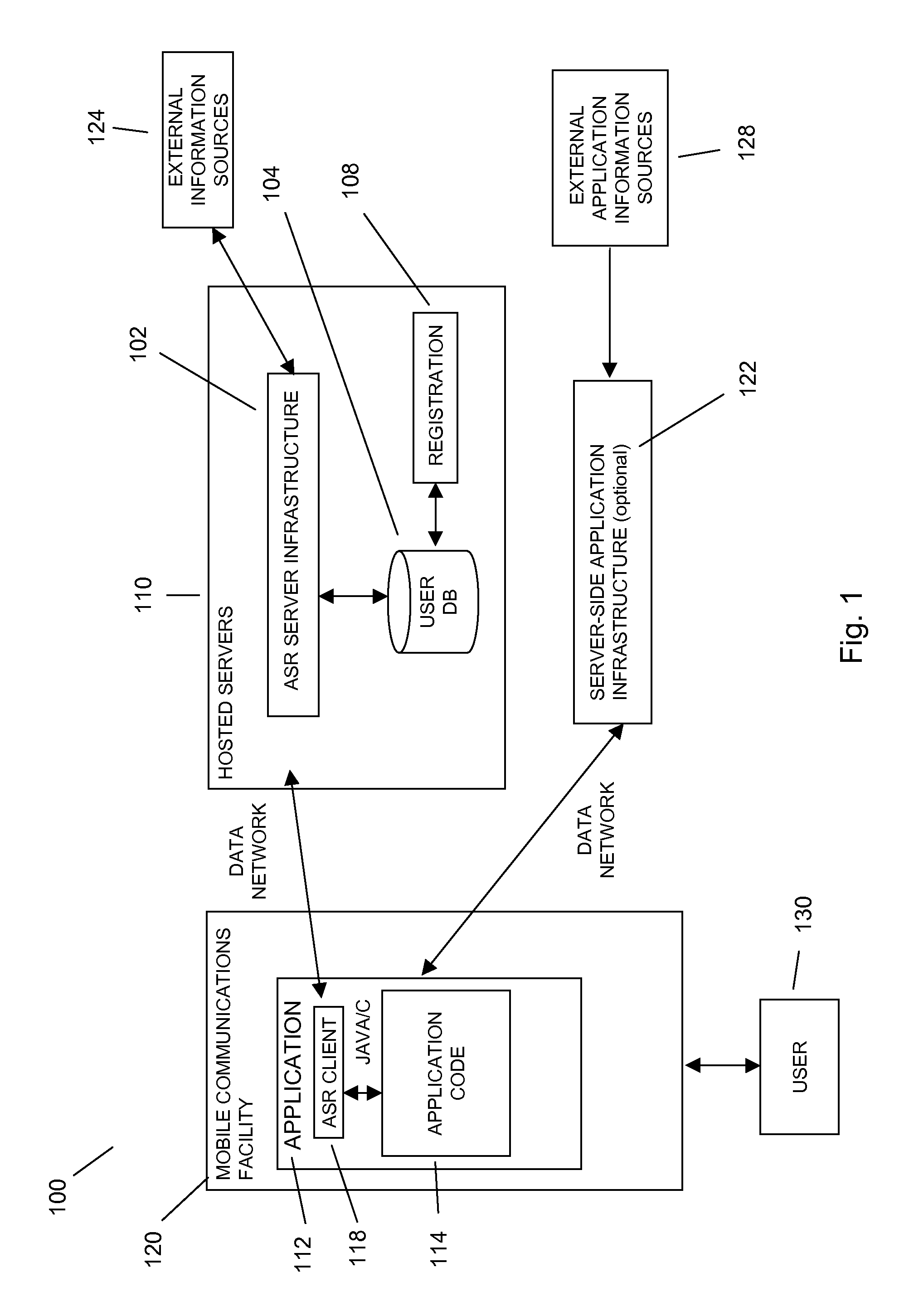

Application text entry in a mobile environment using a speech processing facility

In embodiments of the present invention improved capabilities are described for a mobile environment speech processing facility. The present invention may provide for the entering of text into a software application resident on a mobile communication facility, where recorded speech may be presented by the user using the mobile communications facility's resident capture facility. Transmission of the recording may be provided through a wireless communication facility to a speech recognition facility, and may be accompanied by information related to the software application. Results may be generated utilizing the speech recognition facility that may be independent of structured grammar, and may be based at least in part on the information relating to the software application and the recording. The results may then be transmitted to the mobile communications facility, where they may be loaded into the software application.

Owner:NUANCE COMM INC +1

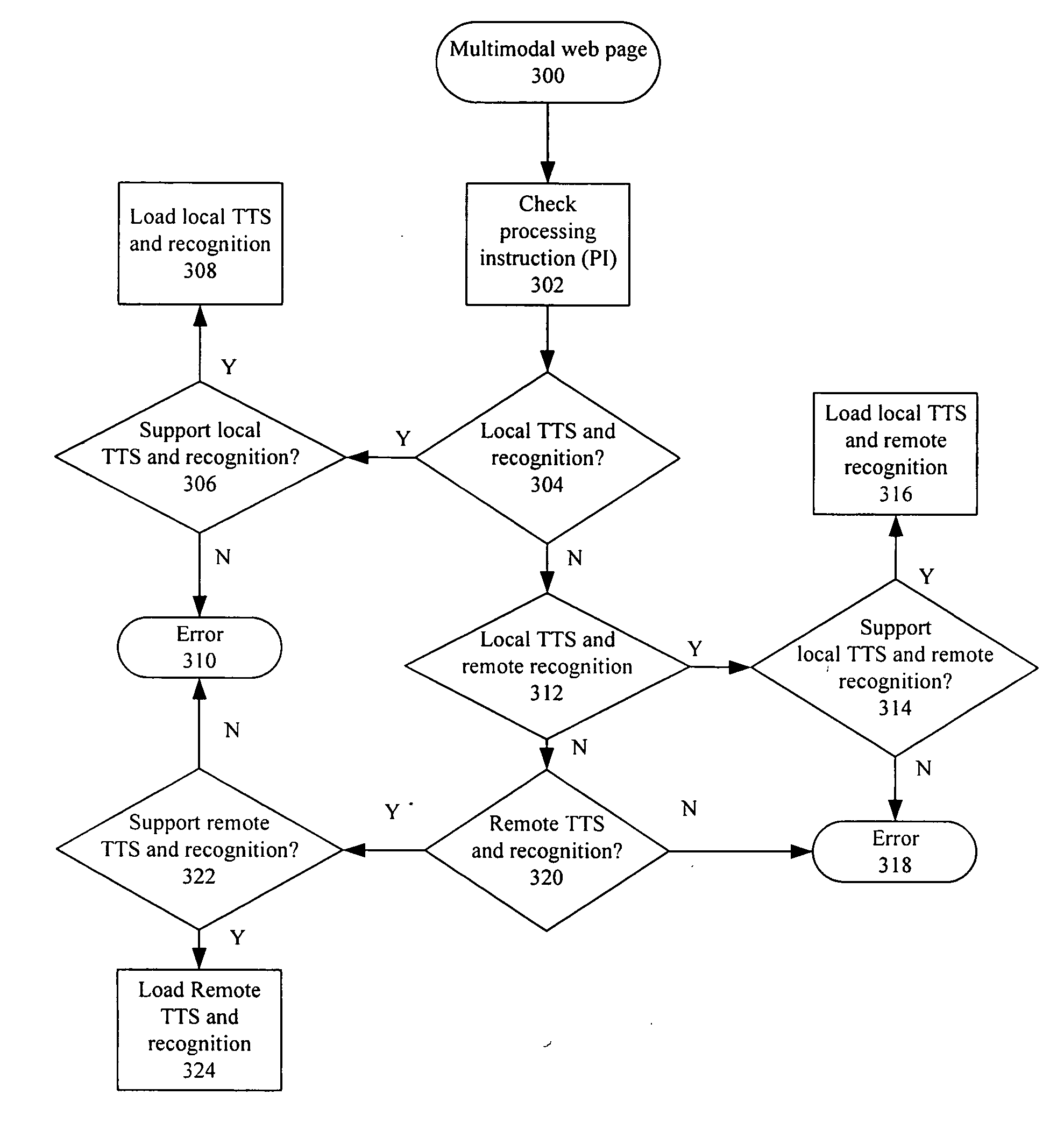

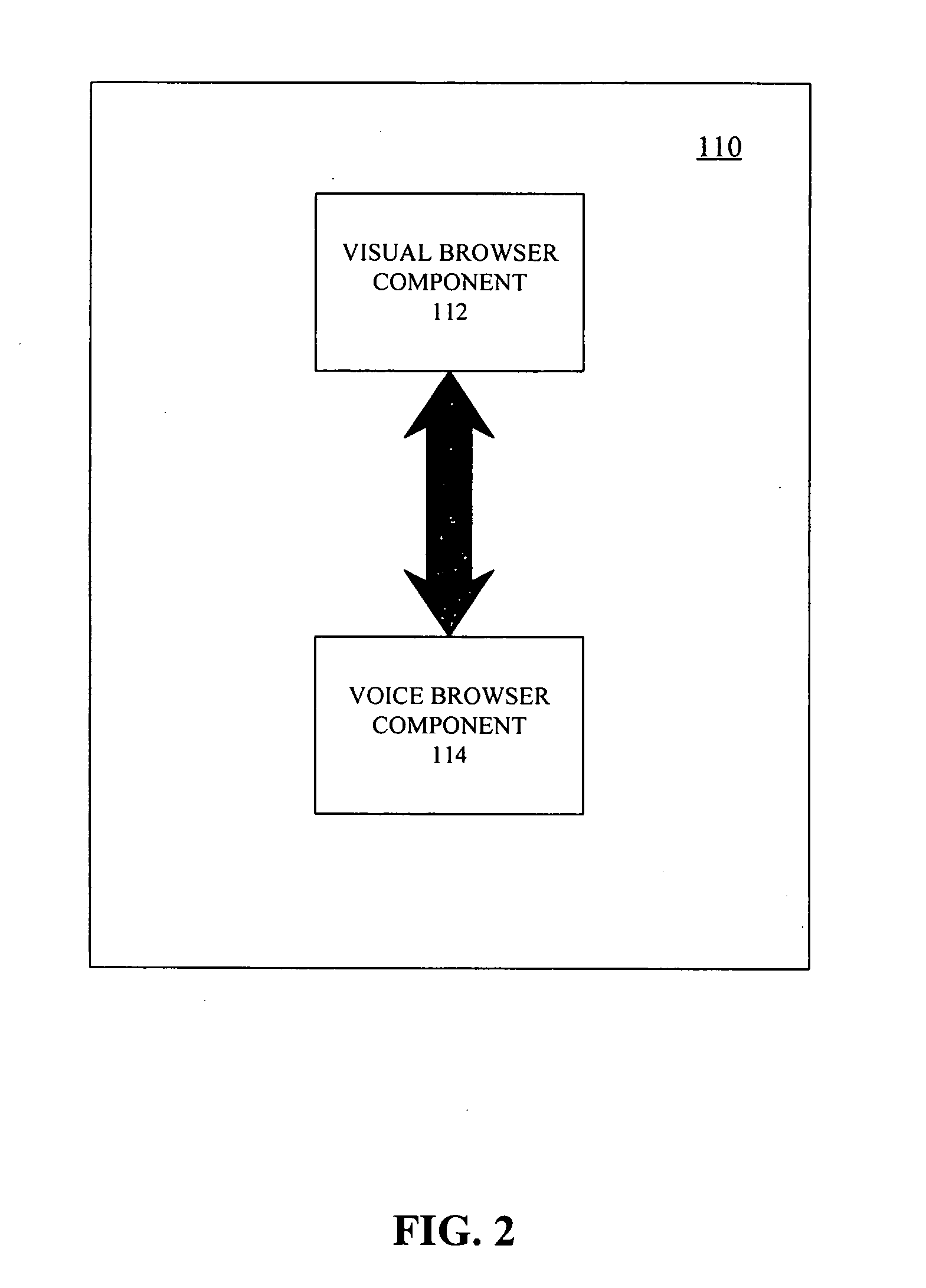

Dynamic switching between local and remote speech rendering

Active | US20060122836A1 | Devices with voice recognition | Substation equipment | Processing Instruction | End system

A multimodal browser for rendering a multimodal document on an end system defining a host can include a visual browser component for rendering visual content, if any, of the multimodal document, and a voice browser component for rendering voice-based content, if any, of the multimodal document. The voice browser component can determine which of a plurality of speech processing configurations is used by the host in rendering the voice-based content. The determination can be based upon the resources of the host running the application. The determination also can be based upon a processing instruction contained in the application.

Owner:NUANCE COMM INC

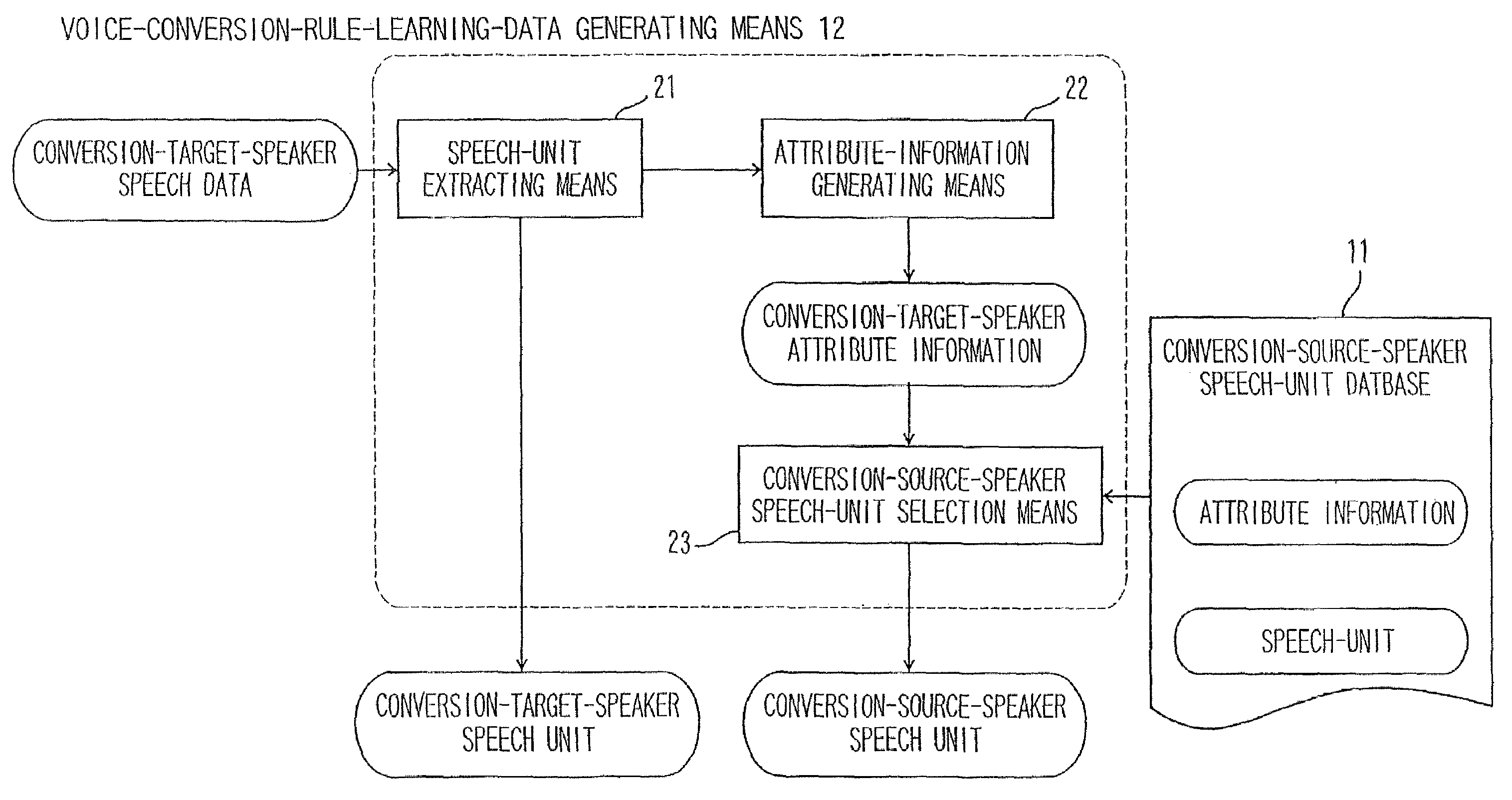

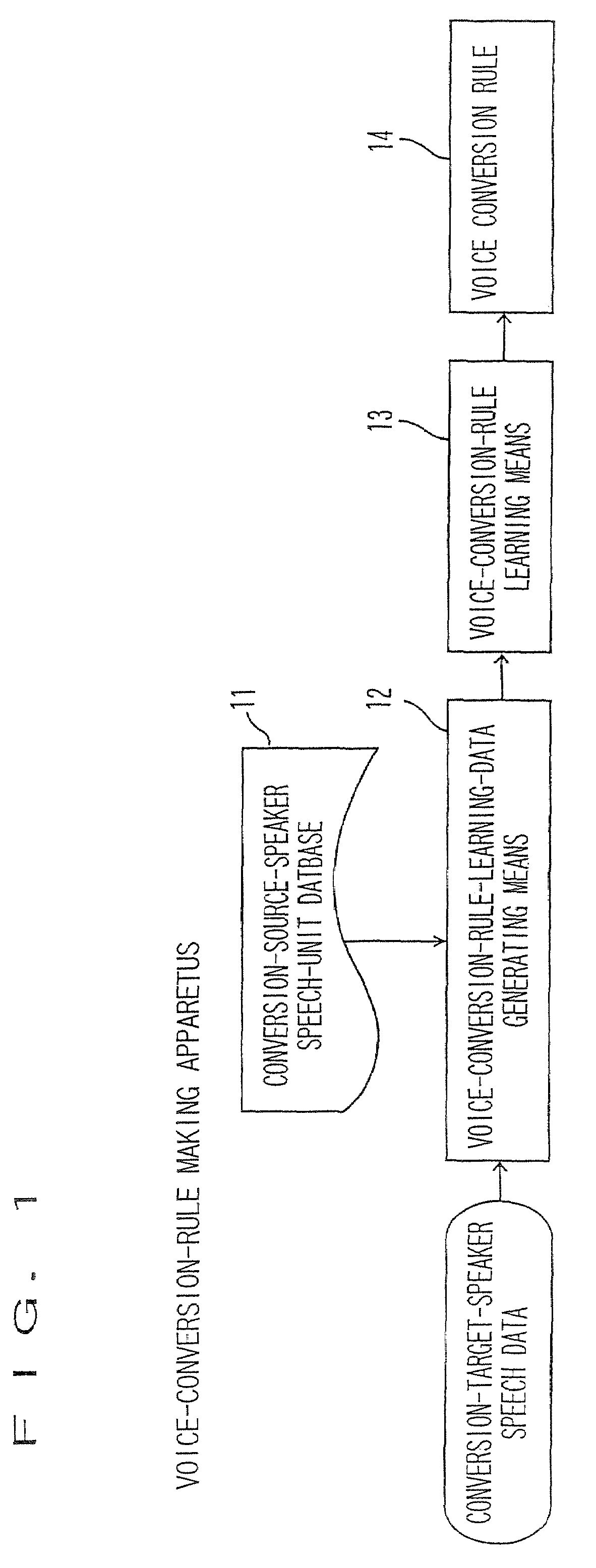

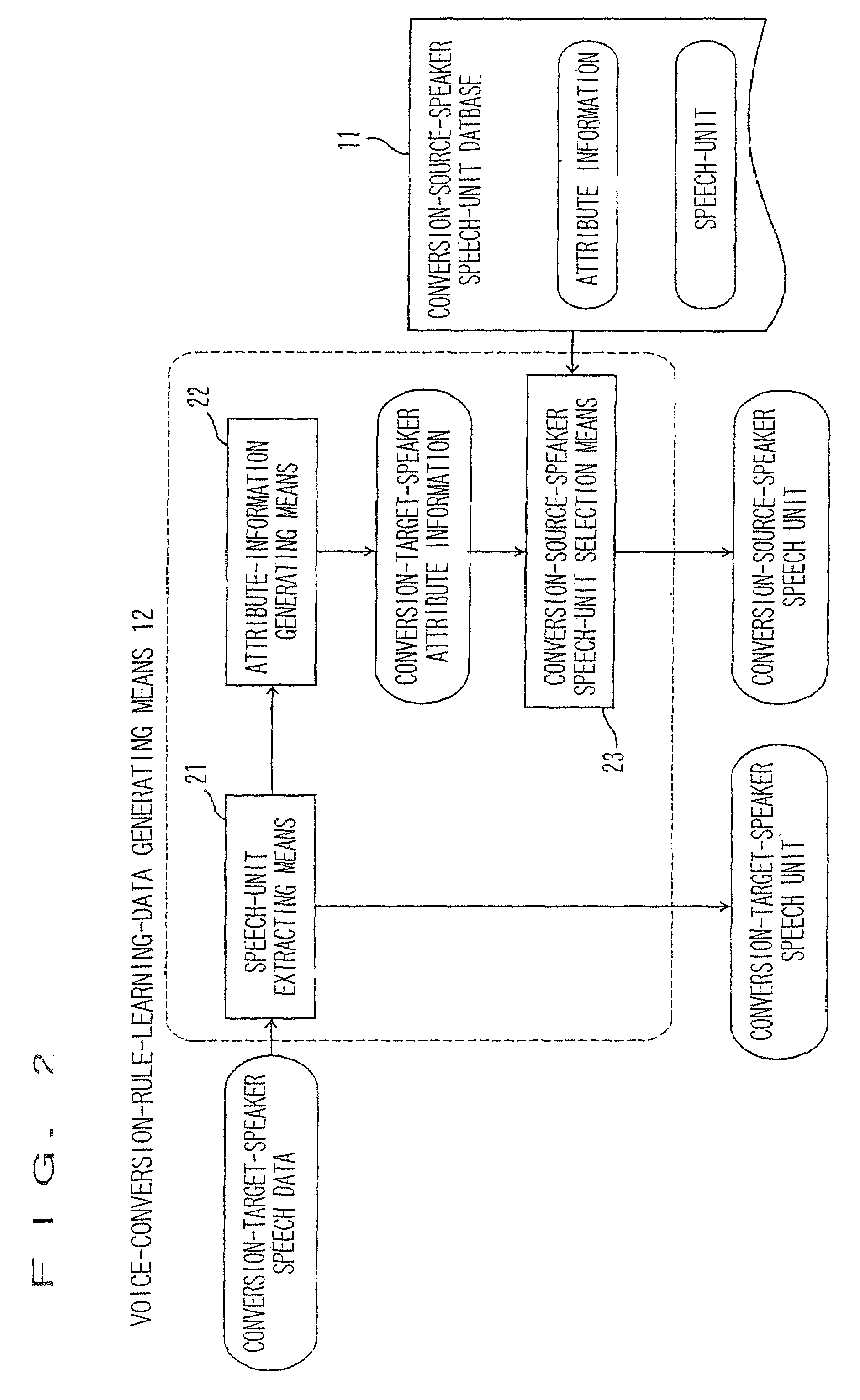

Apparatus and method for voice conversion using attribute information

Active | US7580839B2 | Automatic call-answering/message-recording/conversation-recording | Speech recognition | Learning data | Loudspeaker

A speech processing apparatus according to an embodiment of the invention includes a conversion-source-speaker speech-unit database; a voice-conversion-rule-learning-data generating means; and a voice-conversion-rule learning means, with which it makes voice conversion rules. The voice-conversion-rule-learning-data generating means includes a conversion-target-speaker speech-unit extracting means; an attribute-information generating means; a conversion-source-speaker speech-unit database; and a conversion-source-speaker speech-unit selection means. The conversion-source-speaker speech-unit selection means selects conversion-source-speaker speech units corresponding to conversion-target-speaker speech units based on the mismatch between the attribute information of the conversion-target-speaker speech units and that of the conversion-source-speaker speech units, whereby the voice conversion rules are made from the selected pair of the conversion-target-speaker speech units and the conversion-source-speaker speech units.

Owner:TOSHIBA DIGITAL SOLUTIONS CORP

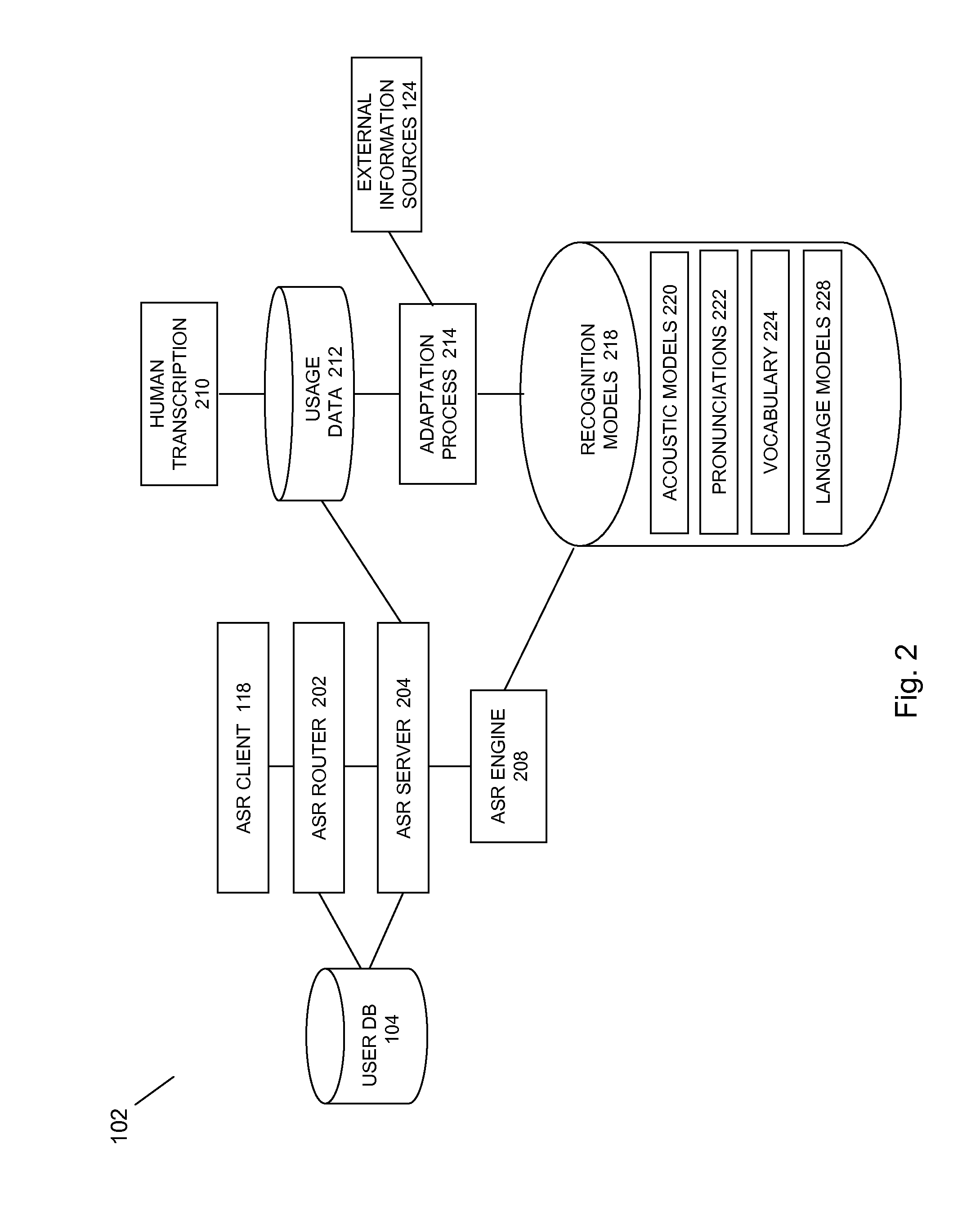

Mobile music environment speech processing facility

Inactive | US20080221880A1 | Devices with voice recognition | Substation equipment | Speech identification | Mobile music

In embodiments of the present invention improved capabilities are described for a mobile environment speech processing facility. The present invention may provide for the entering of text into a music software application resident on a mobile communication facility, where speech may be recorded using the mobile communications facility's resident capture facility. Transmission of the recording may be provided through a wireless communication facility to a speech recognition facility. Results may be generated utilizing the speech recognition facility that may be independent of structured grammar, and may be based at least in part on the information relating to the recording. The results may then be transmitted to the mobile communications facility, where they may be loaded into the music software application. In embodiments, the user may be allowed to alter the results that are received from the speech recognition facility. In addition, the speech recognition facility may be adapted based on usage.

Owner:MOBEUS CORP +1

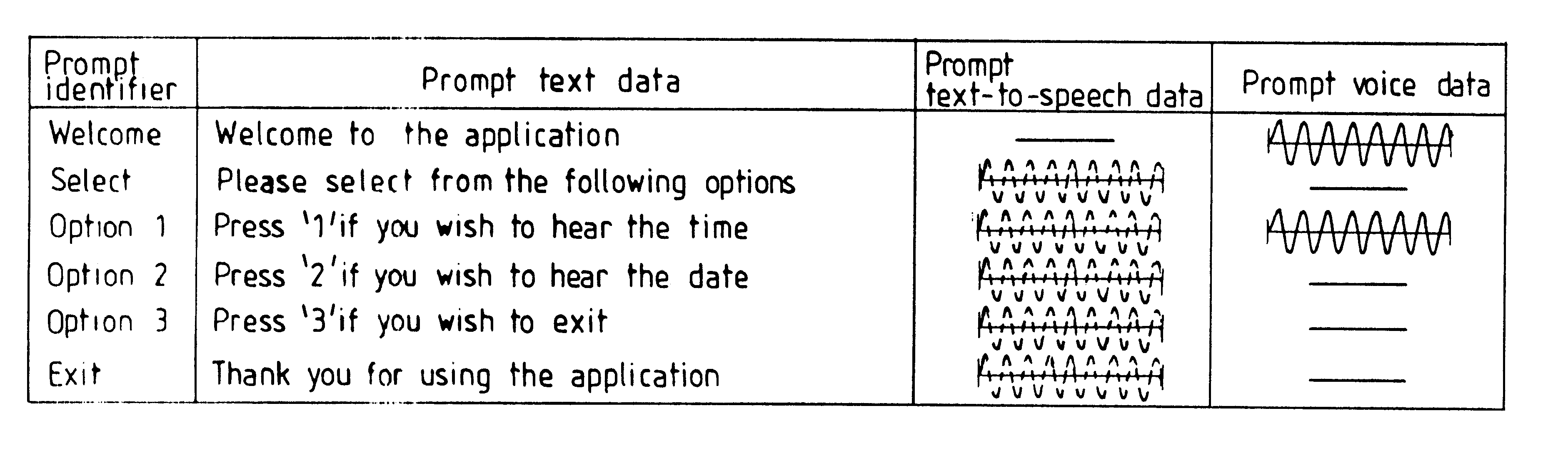

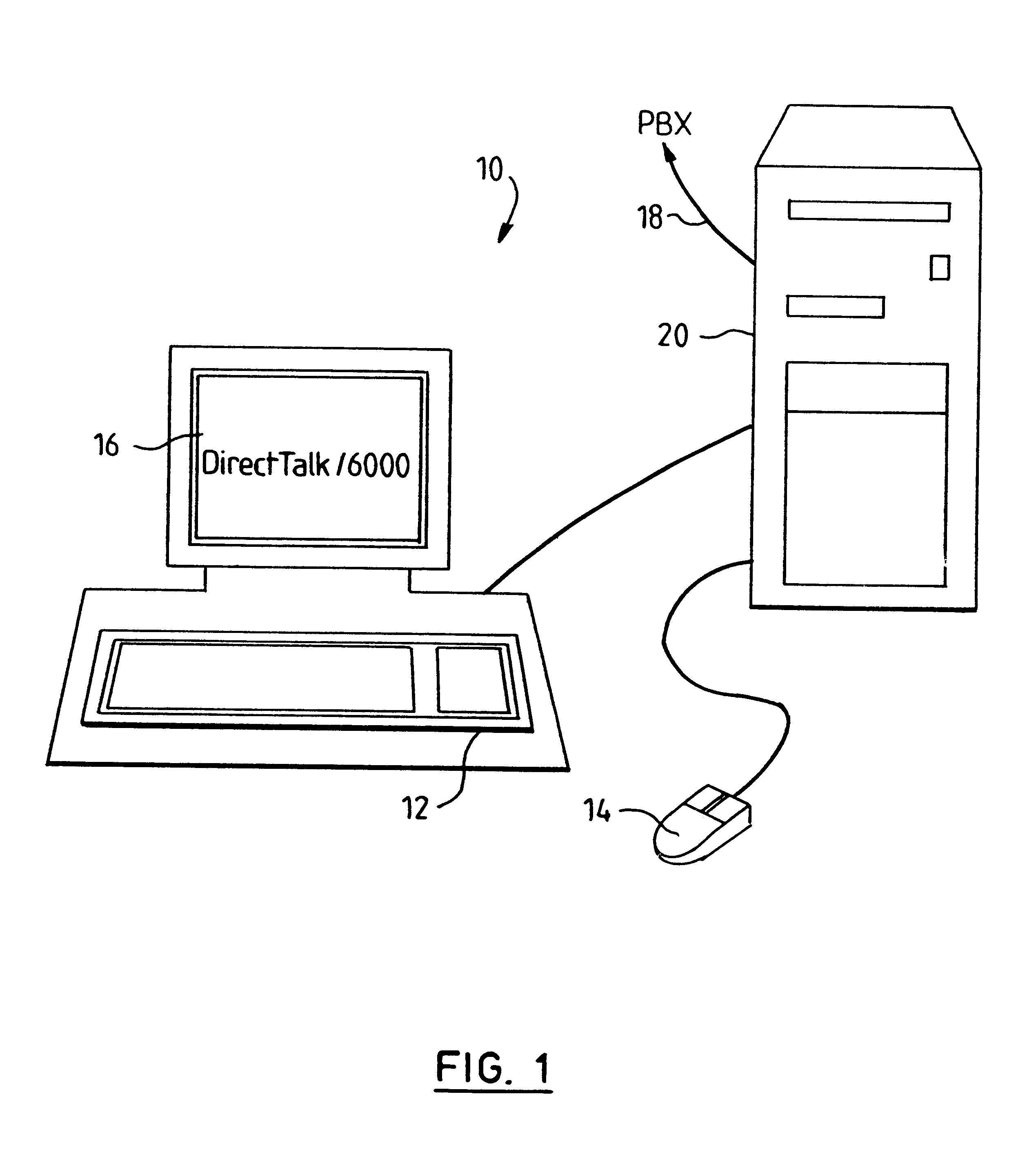

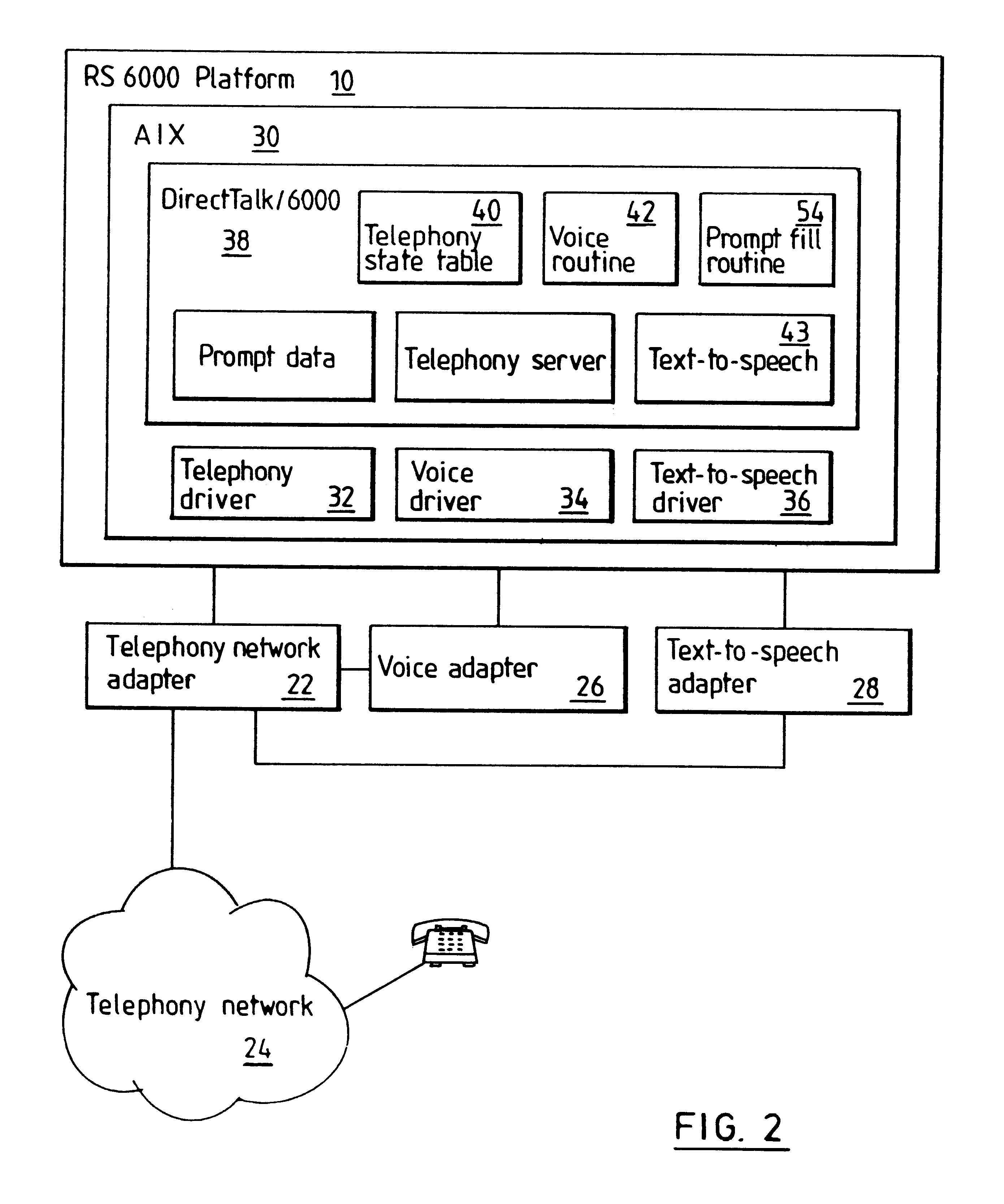

Developing voice response applications from pre-recorded voice and stored text-to-speech prompts

Inactive | US6345250B1 | Faster and more programmer friendly | Faster and more programmer friendly environment | Interconnection arrangements | Automatic exchanges | Speech sound | Interactive Voice Response Technology

An interactive voice response application on a computer telephony system includes a method of playing voice prompts from a mixed set of pre-recorded voice prompts and voice prompts synthesised from a text-to-speech process. The method comprises: reserving memory for a synthesised prompt and a pre-recorded prompt associated with a particular prompt identifier; on a play prompt request, selecting the pre-recorded prompt if available and outputting it through a voice output; otherwise selecting the synthesised prompt and playing the selected voice prompt through the voice output. If neither pre-recorded nor synthesised data are available, then text associated with the voice prompt is output through a text-to-speech output.

Owner:NUANCE COMM INC
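
The fallback order described in this abstract (pre-recorded prompt, then stored synthesised prompt, then live text-to-speech) can be illustrated with a minimal Python sketch. The `PromptSlot` structure, the `select_prompt` function, and the `"voice"` / `"tts"` channel labels are hypothetical names introduced here for illustration; they are not taken from the patent.

```python
from dataclasses import dataclass
from typing import Optional, Tuple, Union


@dataclass
class PromptSlot:
    """Storage reserved for one prompt identifier (hypothetical structure)."""
    text: str                             # text associated with the prompt
    prerecorded: Optional[bytes] = None   # recorded audio, if any
    synthesised: Optional[bytes] = None   # stored text-to-speech audio, if any


def select_prompt(slot: PromptSlot) -> Tuple[str, Union[bytes, str]]:
    """Return (output channel, payload) using the fallback order in the abstract."""
    if slot.prerecorded is not None:
        return ("voice", slot.prerecorded)   # first choice: pre-recorded audio
    if slot.synthesised is not None:
        return ("voice", slot.synthesised)   # second choice: stored synthesised audio
    # Neither audio form is stored: pass the text to the text-to-speech output.
    return ("tts", slot.text)
```

For example, `select_prompt(PromptSlot("Welcome"))` falls through to the text-to-speech channel because no audio has been stored for that prompt.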

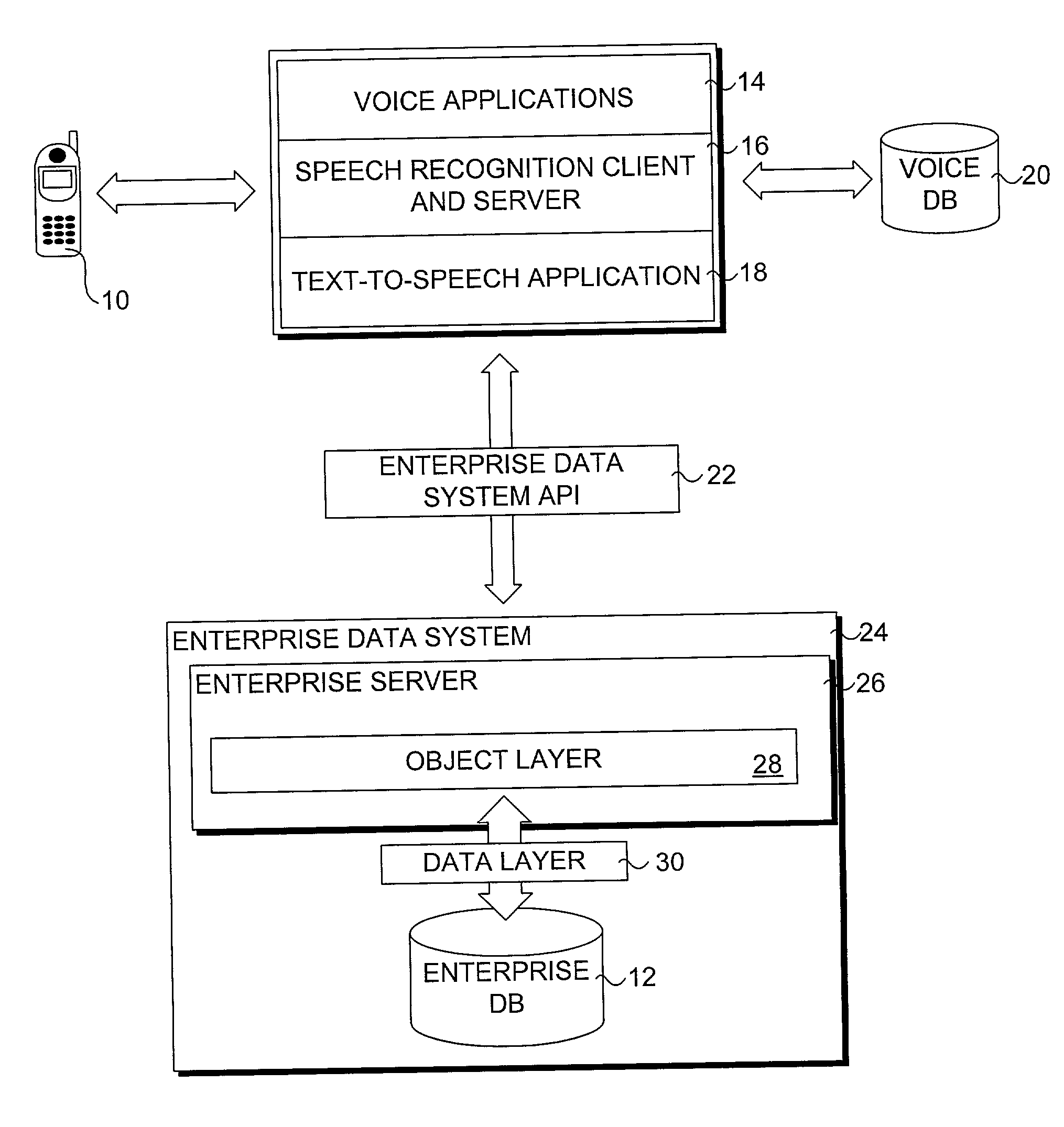

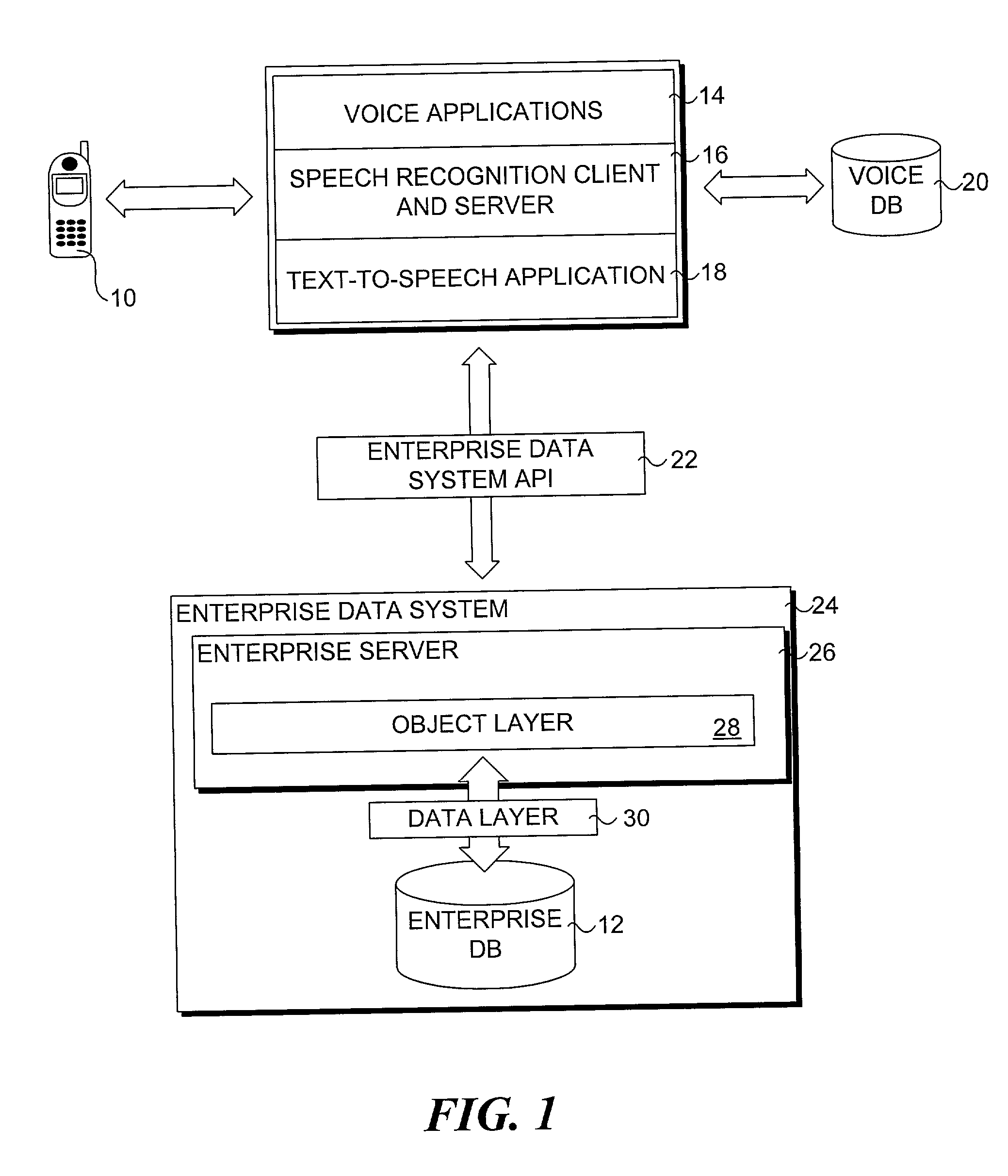

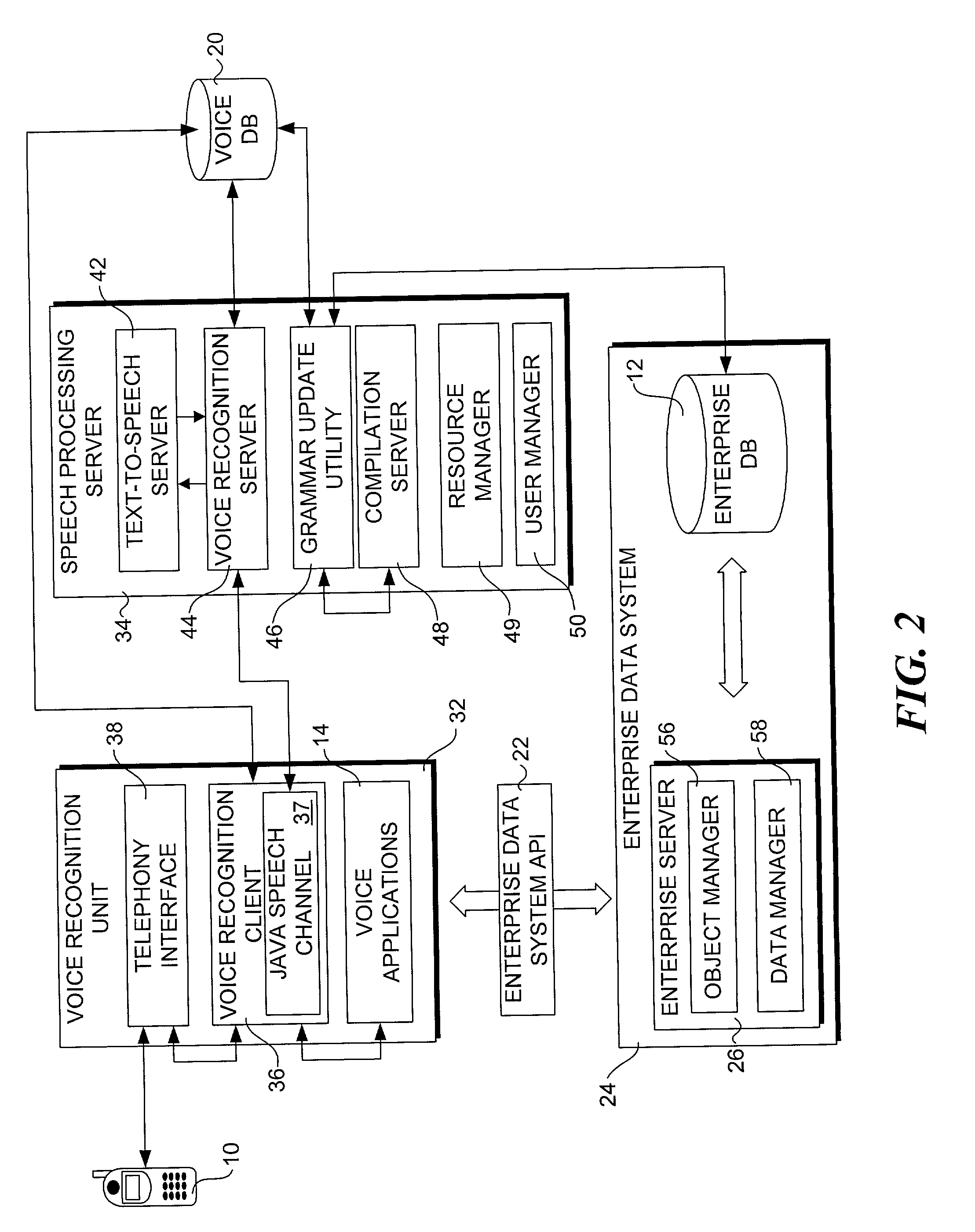

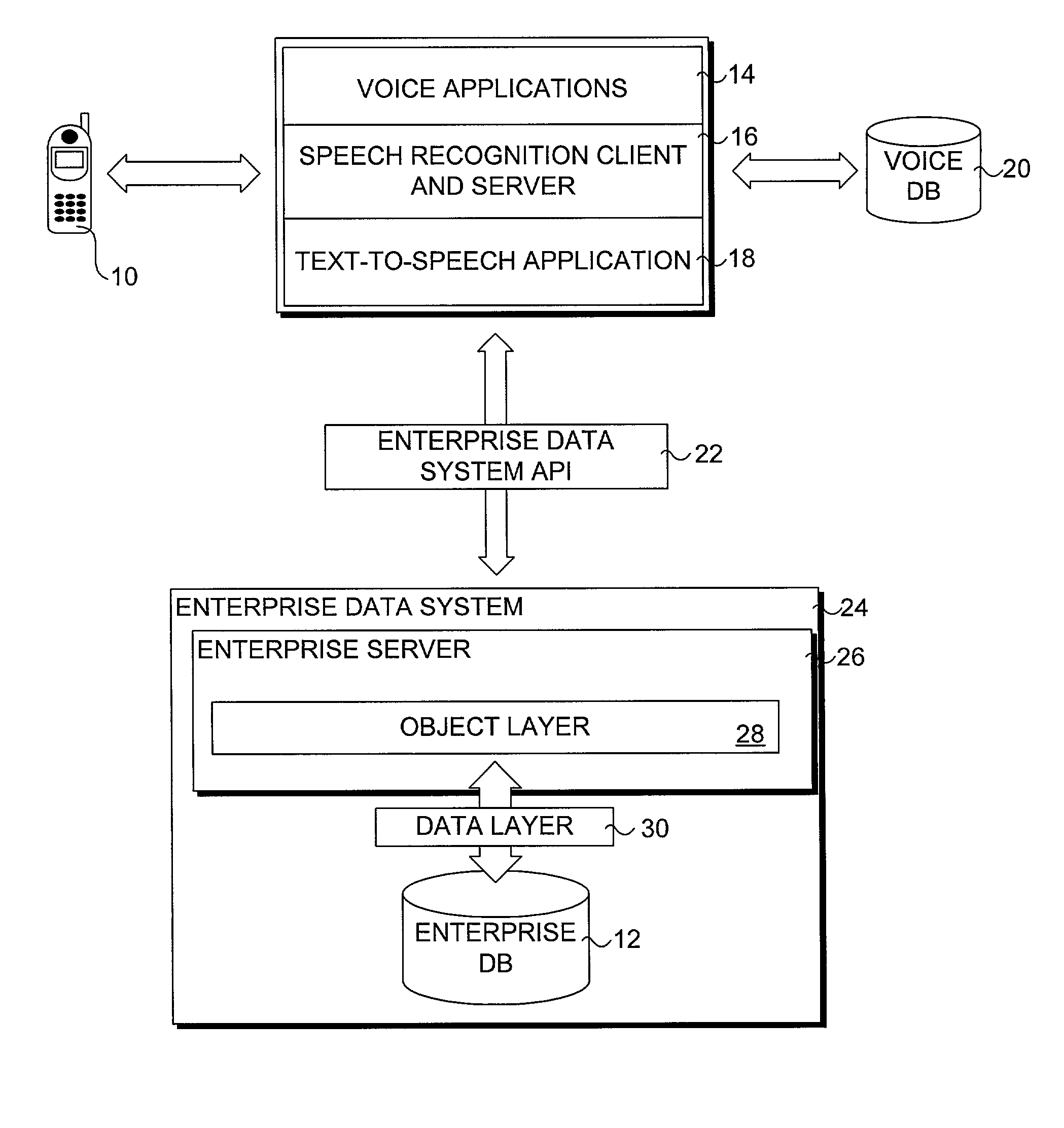

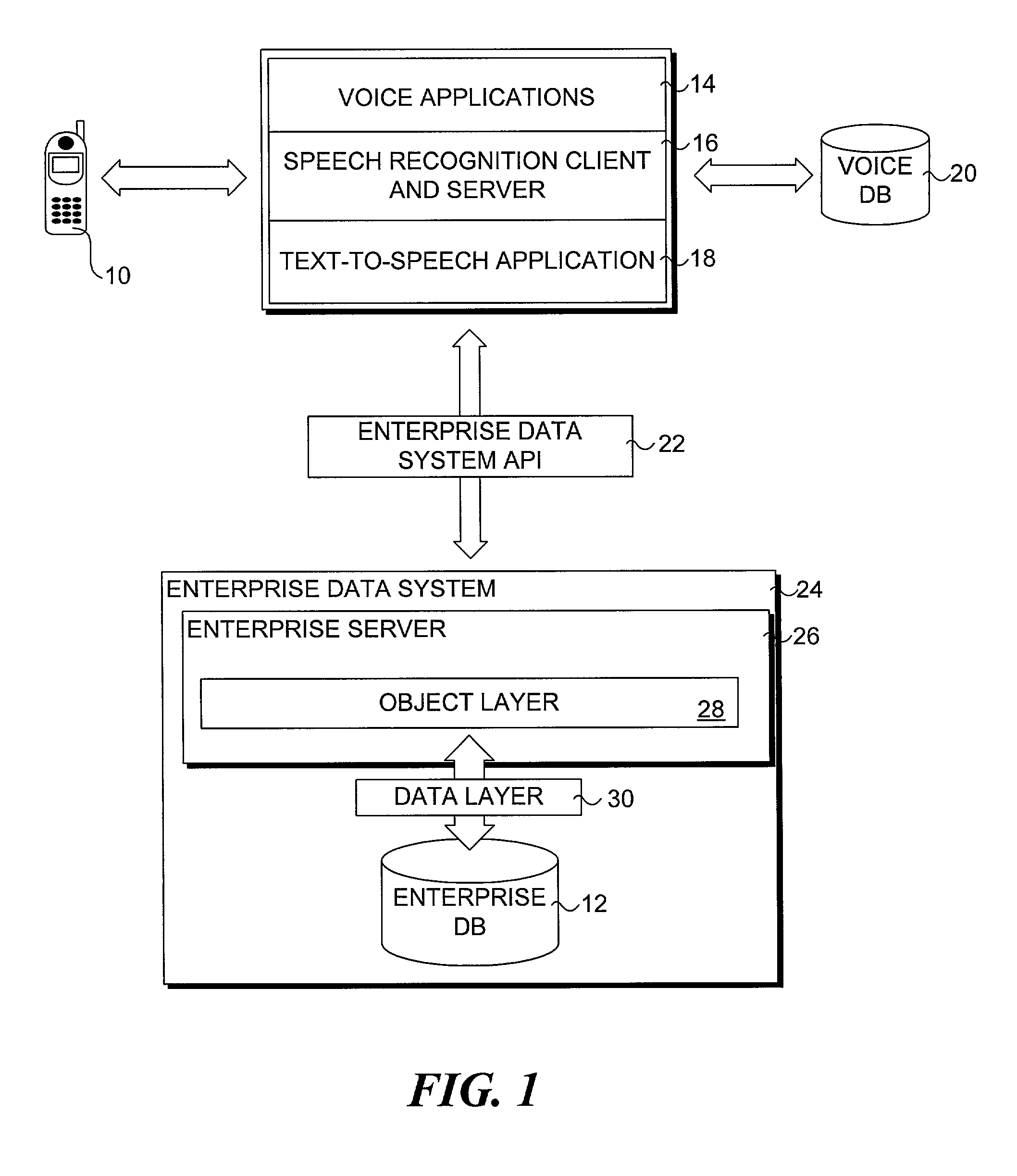

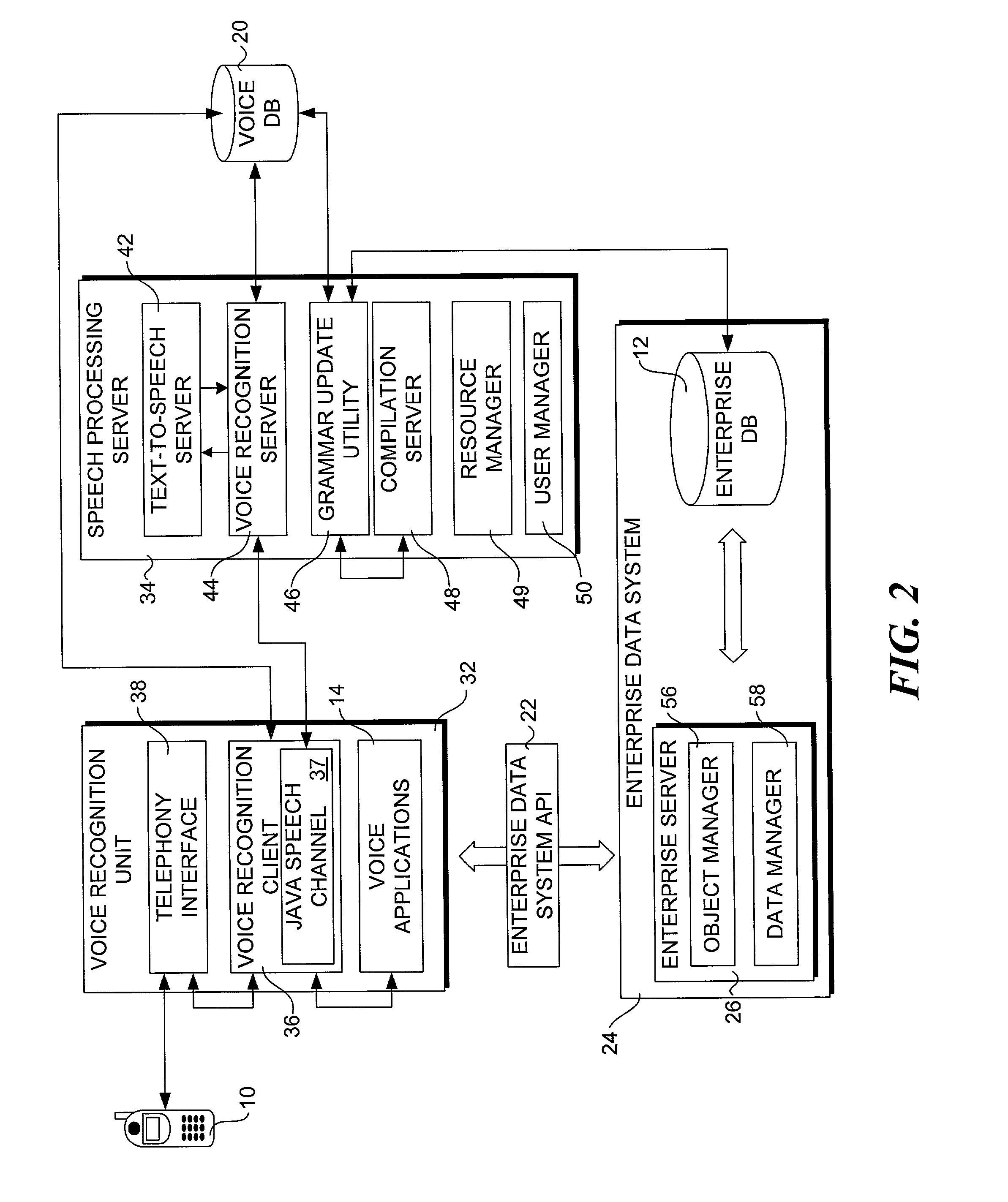

Method for accessing data via voice

Inactive | US20070198267A1 | Digital data information retrieval | Natural language data processing | Data set | System usage

A method for providing access to data via a voice interface. In one embodiment, the system includes a voice recognition unit and a speech processing server that work together to enable users to interact with the system using voice commands guided by navigation context sensitive voice prompts, and provide user-requested data in a verbalized format back to the users. Digitized voice waveform data are processed to determine the voice commands of the user. The system also uses a “grammar” that enables users to retrieve data using intuitive natural language speech queries. In response to such a query, a corresponding data query is generated by the system to retrieve one or more data sets corresponding to the query. The user is then enabled to browse the data that are returned through voice command navigation, wherein the system “reads” the data back to the user using text-to-speech (TTS) conversion and system prompts.

Owner:ORACLE INT CORP

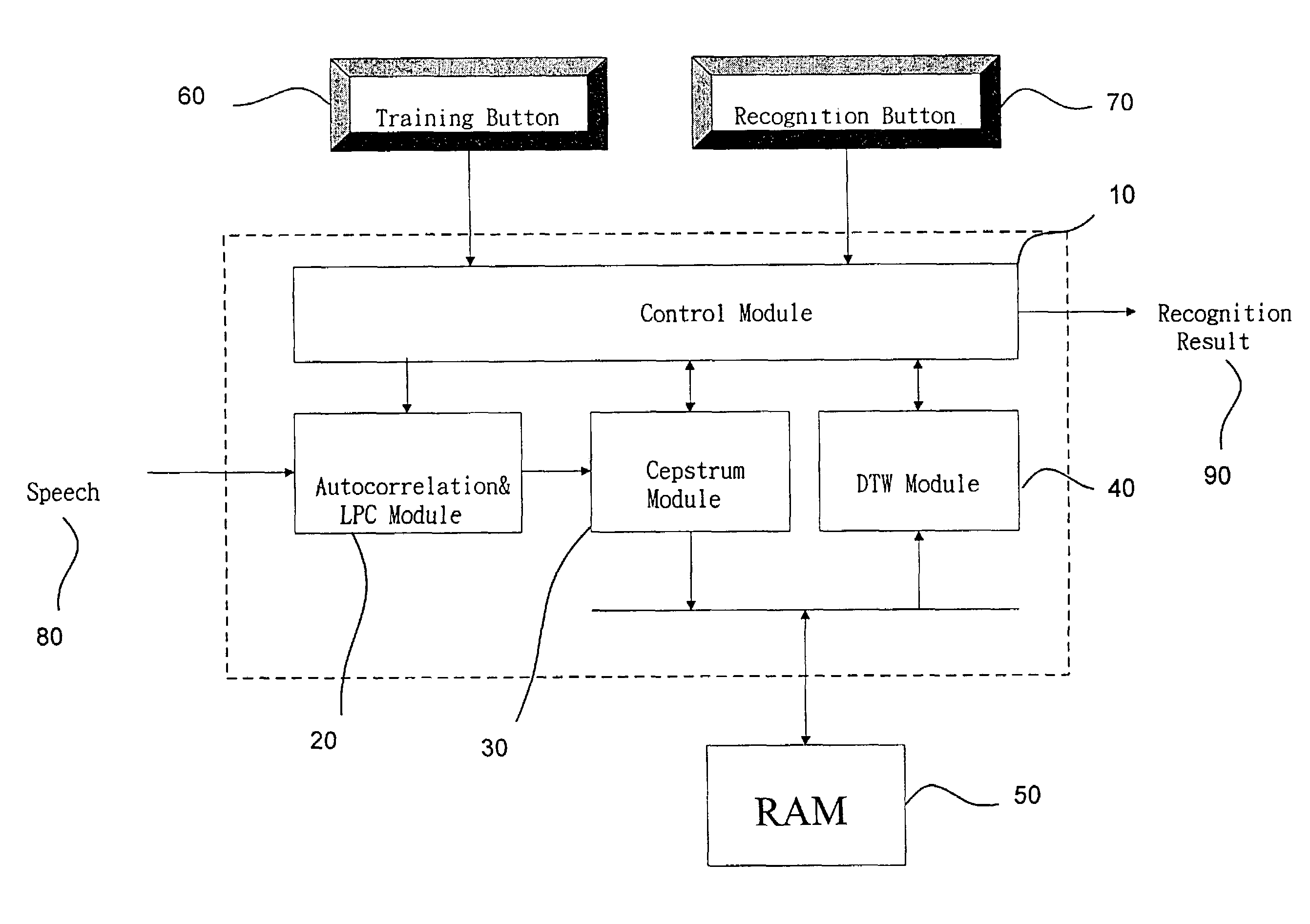

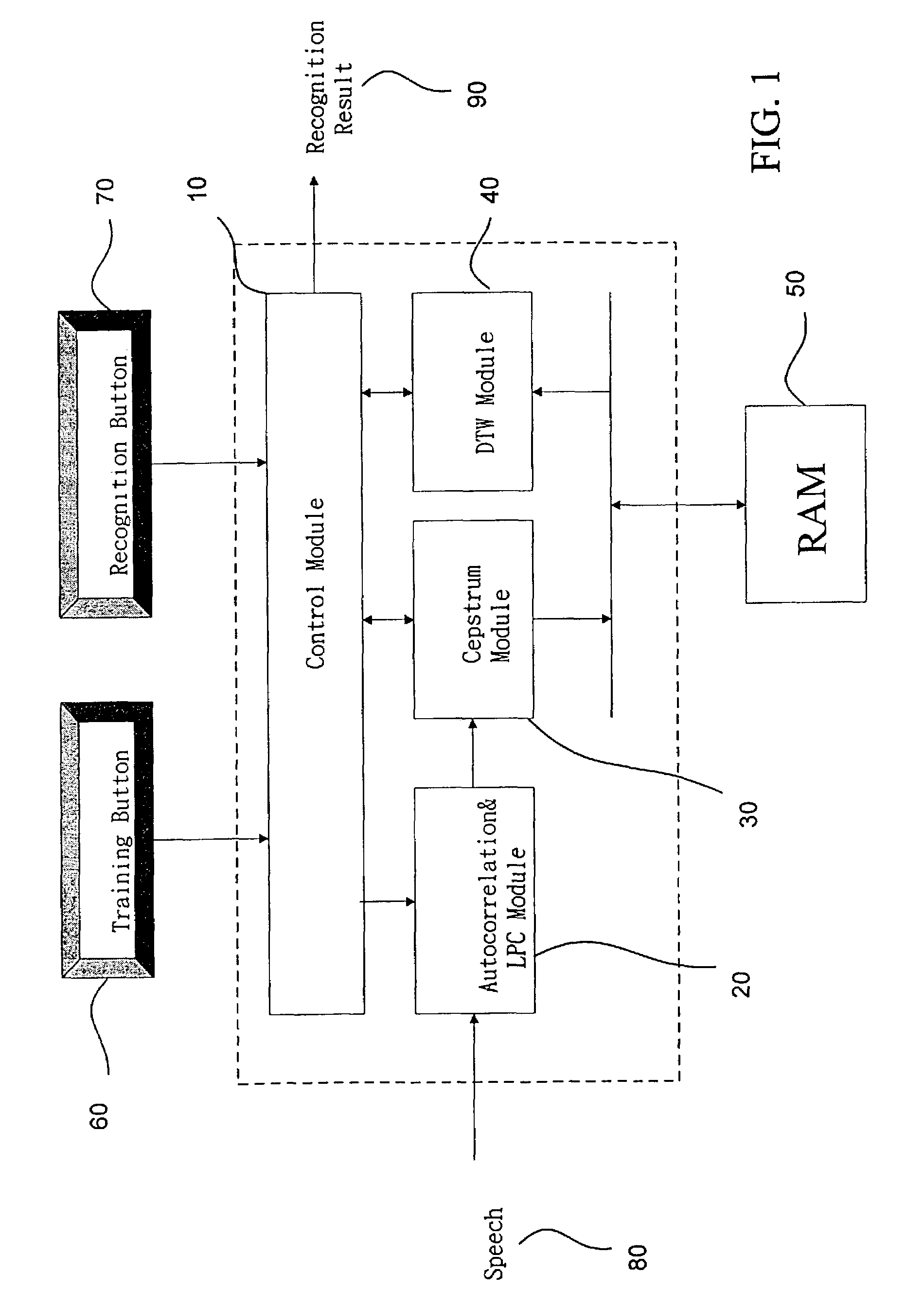

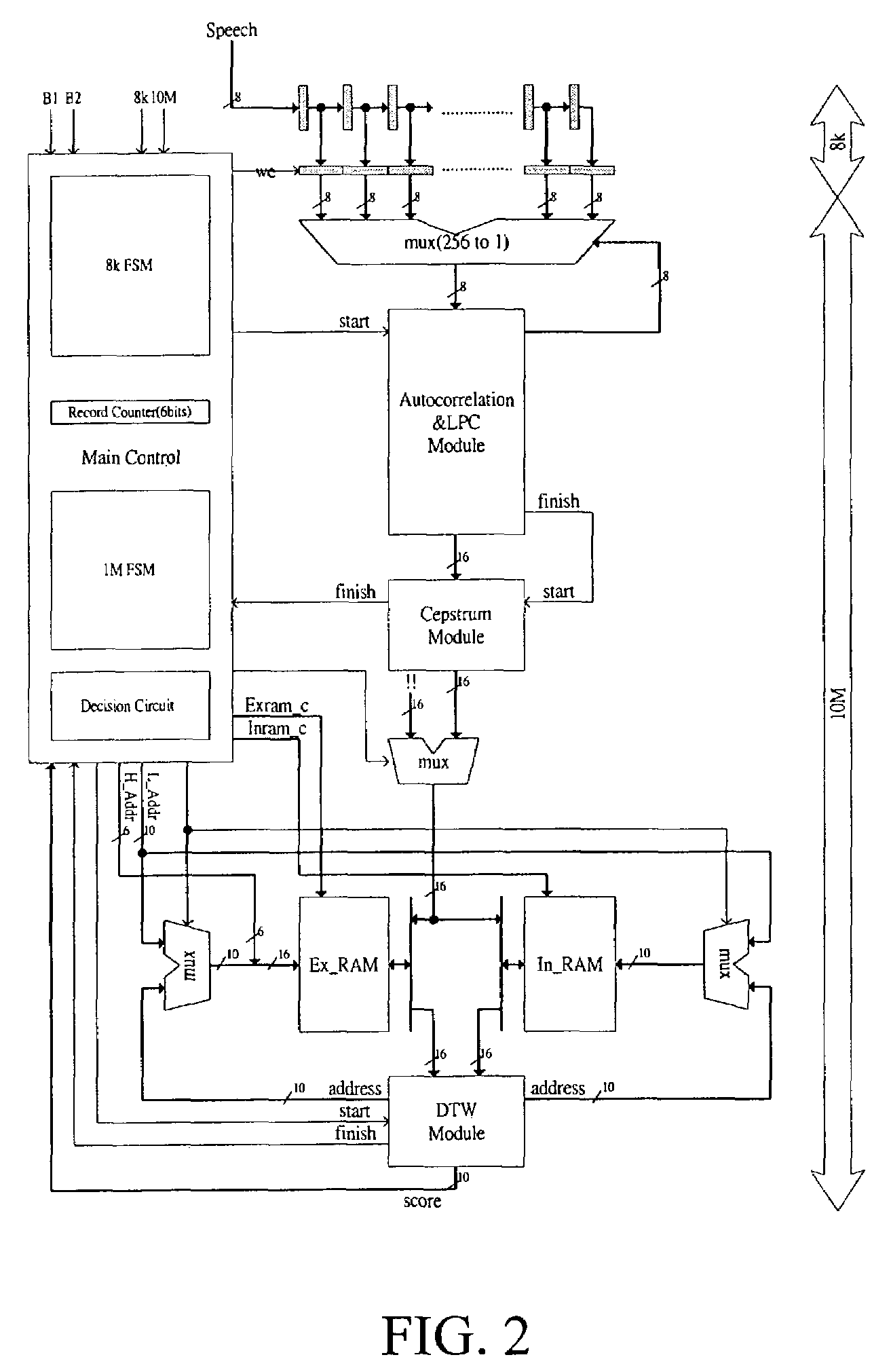

Speech recognition system

Inactive | US7266496B2 | Improve execution speed | Low cost | Speech recognition | Linearity | Linear prediction coefficient

The present invention discloses a complete speech recognition system having a training button and a recognition button. The whole system is designed on an application-specific integrated circuit (ASIC) architecture and uses a modular design that divides the speech processing into four modules: a system control module, an autocorrelation and linear predictive coefficient module, a cepstrum module, and a dynamic time warping (DTW) recognition module. Each module forms an intellectual property (IP) component by itself. Each IP component can be reused across various products and application requirements, greatly shortening the time to market.

Owner:NAT CHENG KUNG UNIV
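
The abstract names a DTW recognition module but does not describe its internals. As a rough software illustration only (the patent targets an ASIC design), the sketch below shows textbook dynamic time warping over sequences of feature frames, followed by nearest-template matching. The function names, the Euclidean frame distance, and the template dictionary are assumptions made for this example.

```python
import math
from typing import Dict, List, Sequence

Frame = Sequence[float]  # one feature vector, e.g. cepstral coefficients


def frame_distance(a: Frame, b: Frame) -> float:
    """Euclidean distance between two feature frames (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_distance(seq_a: List[Frame], seq_b: List[Frame]) -> float:
    """Minimum cumulative frame distance over all monotonic alignments."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_distance(seq_a[i - 1], seq_b[j - 1])
            # Extend the cheapest of the three allowed predecessor cells.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def recognise(utterance: List[Frame], templates: Dict[str, List[Frame]]) -> str:
    """Return the label of the trained template closest to the utterance."""
    return min(templates, key=lambda word: dtw_distance(utterance, templates[word]))
```

In this sketch, "training" would amount to storing one feature sequence per word in `templates`, and "recognition" to calling `recognise` on a new feature sequence.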

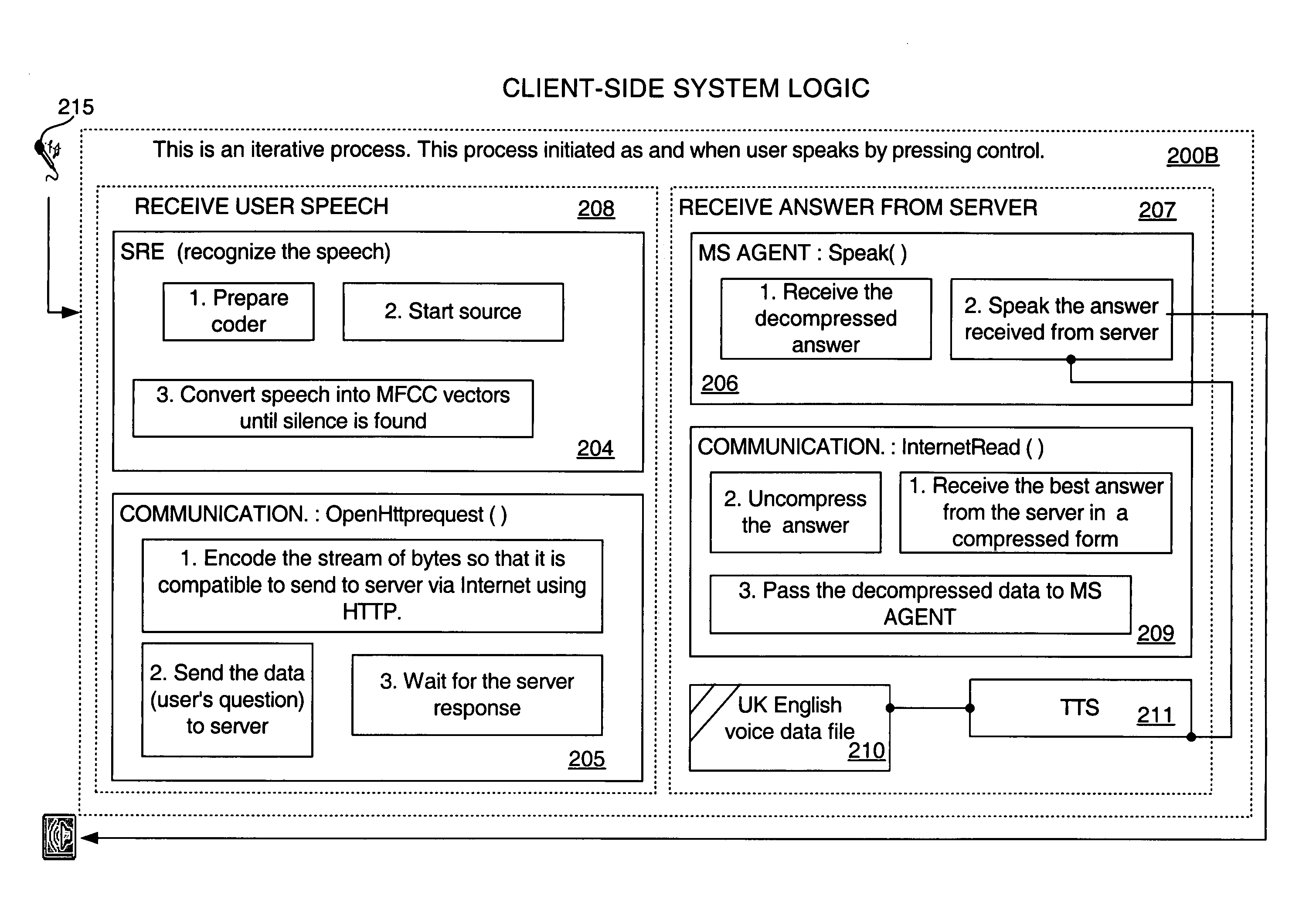

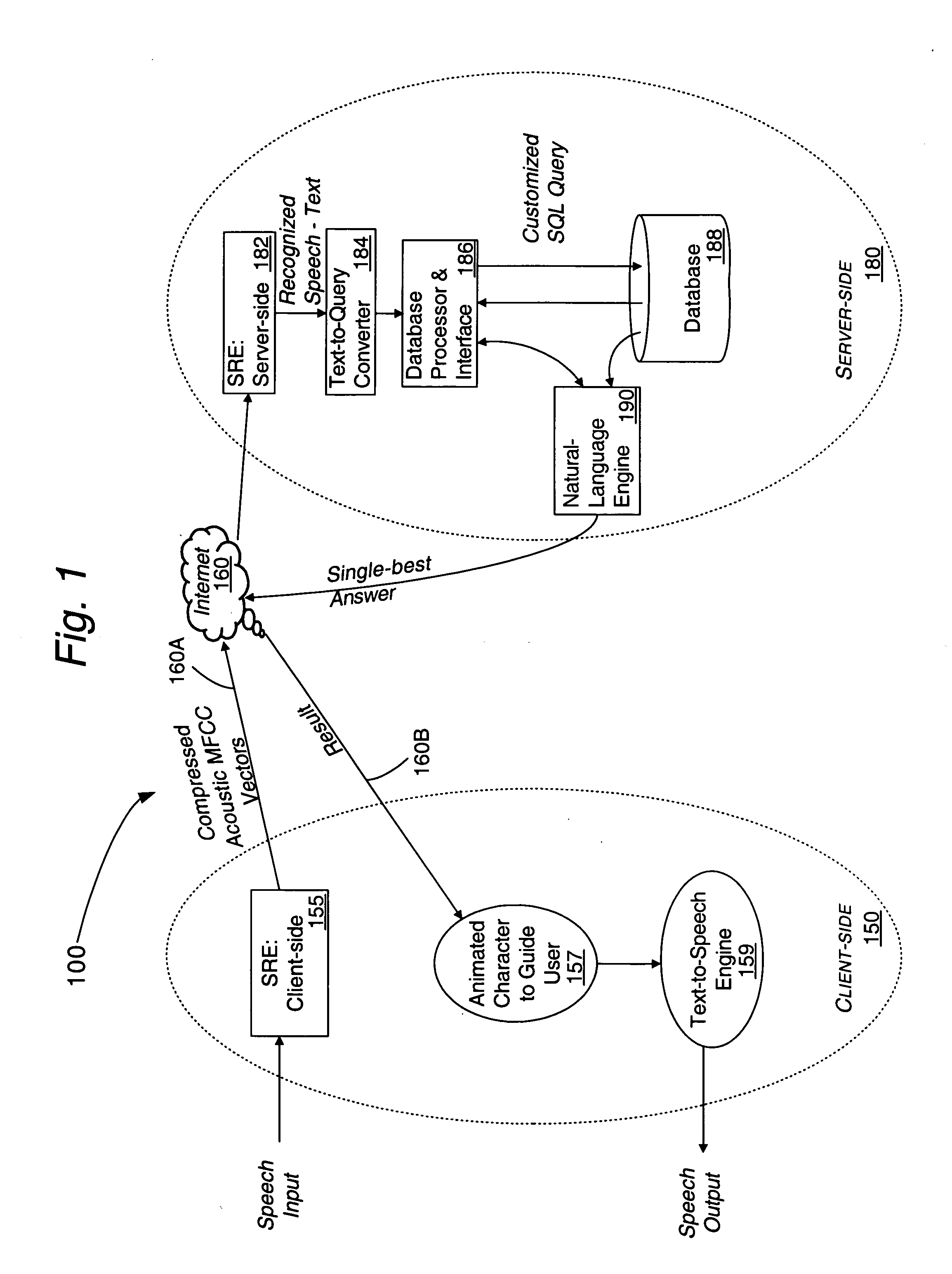

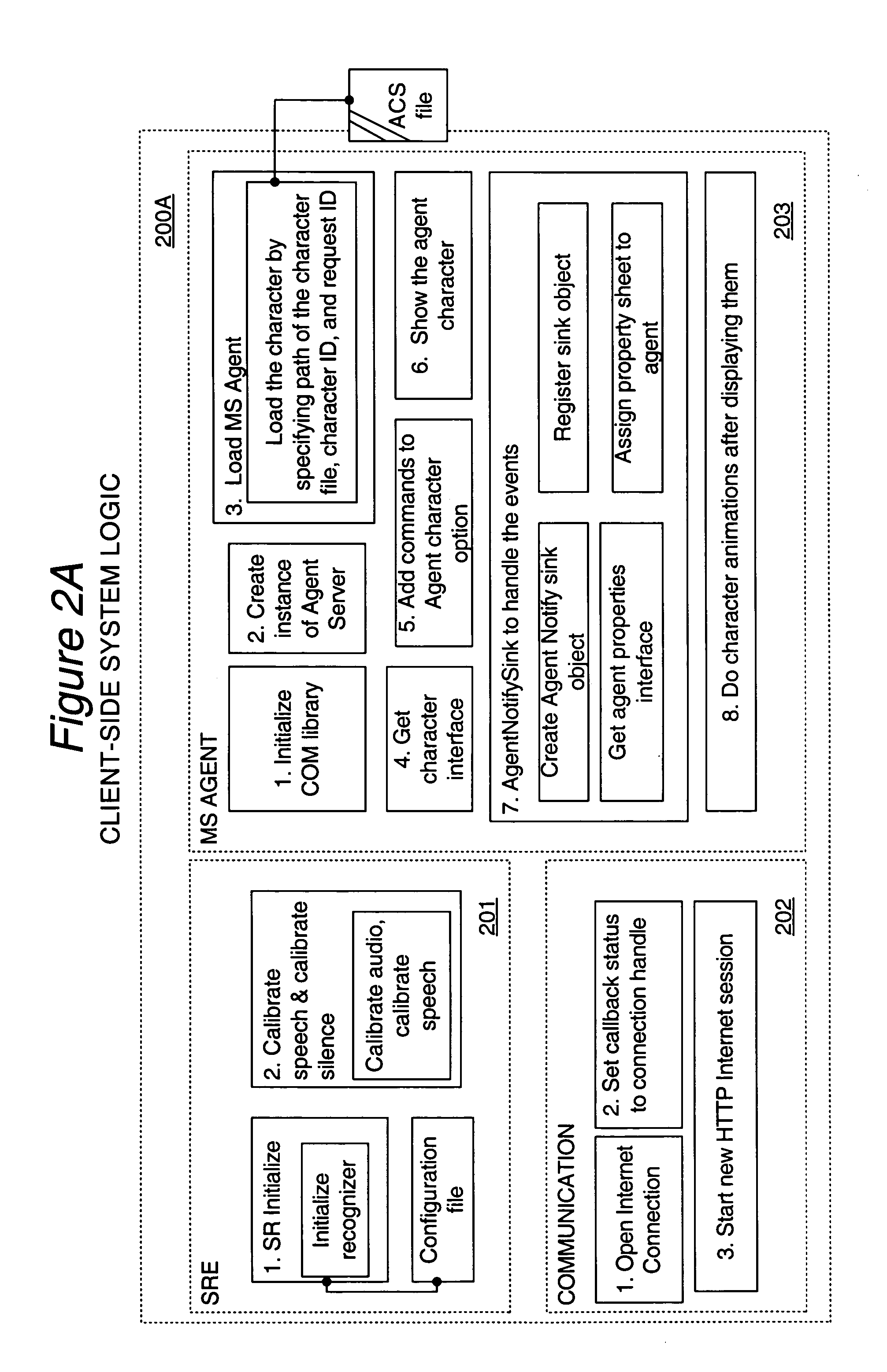

Partial speech processing device & method for use in distributed systems

Inactive | US20050086059A1 | Flexibly and optimally distributed | Improve accuracy | Natural language translation | Data processing applications | Time response | Client-side

A client device incorporates partial speech recognition for recognizing a spoken query by a user. The full recognition process is distributed over a client / server architecture, so that the amount of partial recognition signal processing tasks can be allocated on a dynamic basis based on processing resources, channel conditions, etc. Partially processed speech data from the client device can be streamed to a server for a real-time response. Additional natural language processing operations can also be performed to implement sentence recognition functionality.

Owner:NUANCE COMM INC

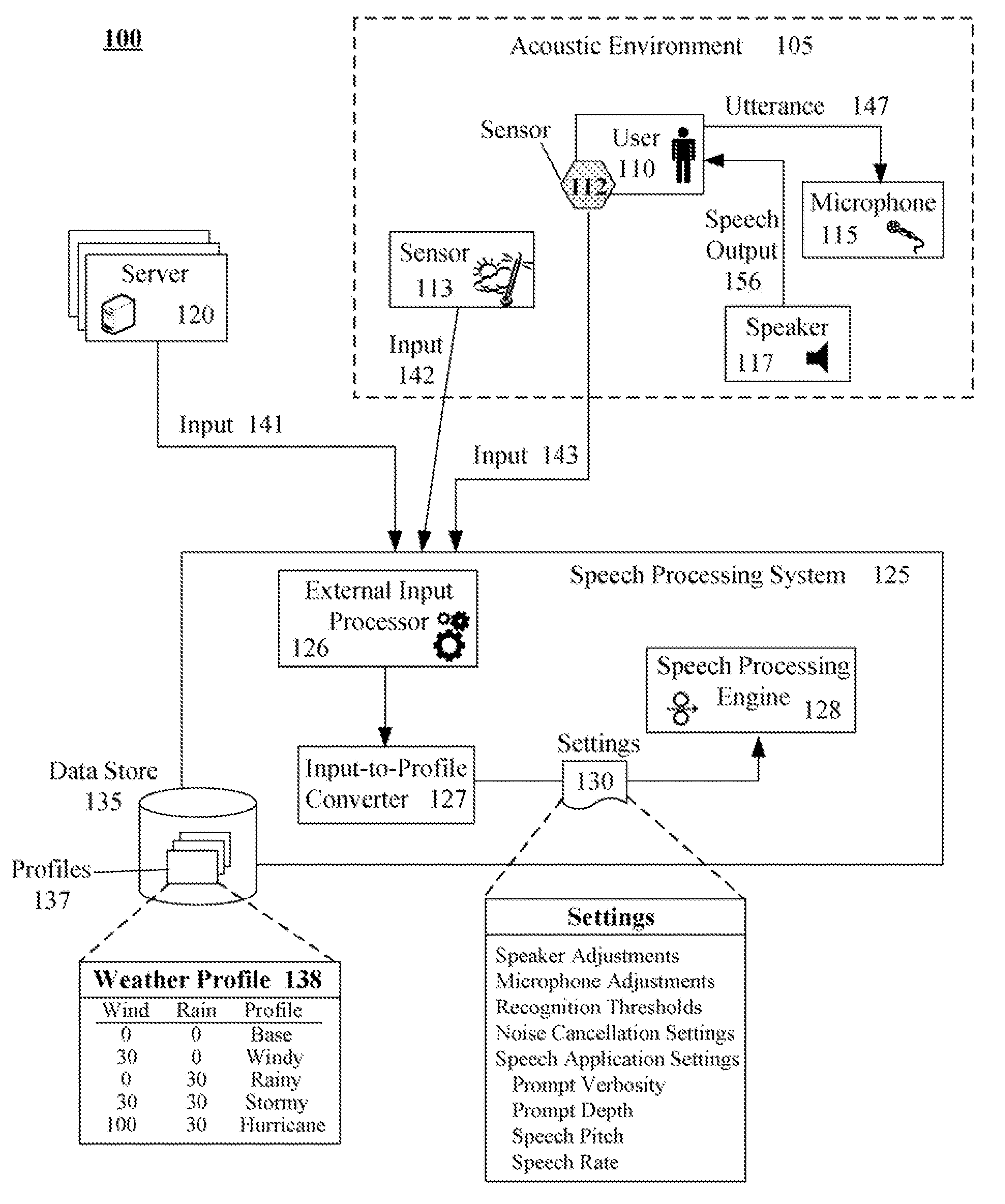

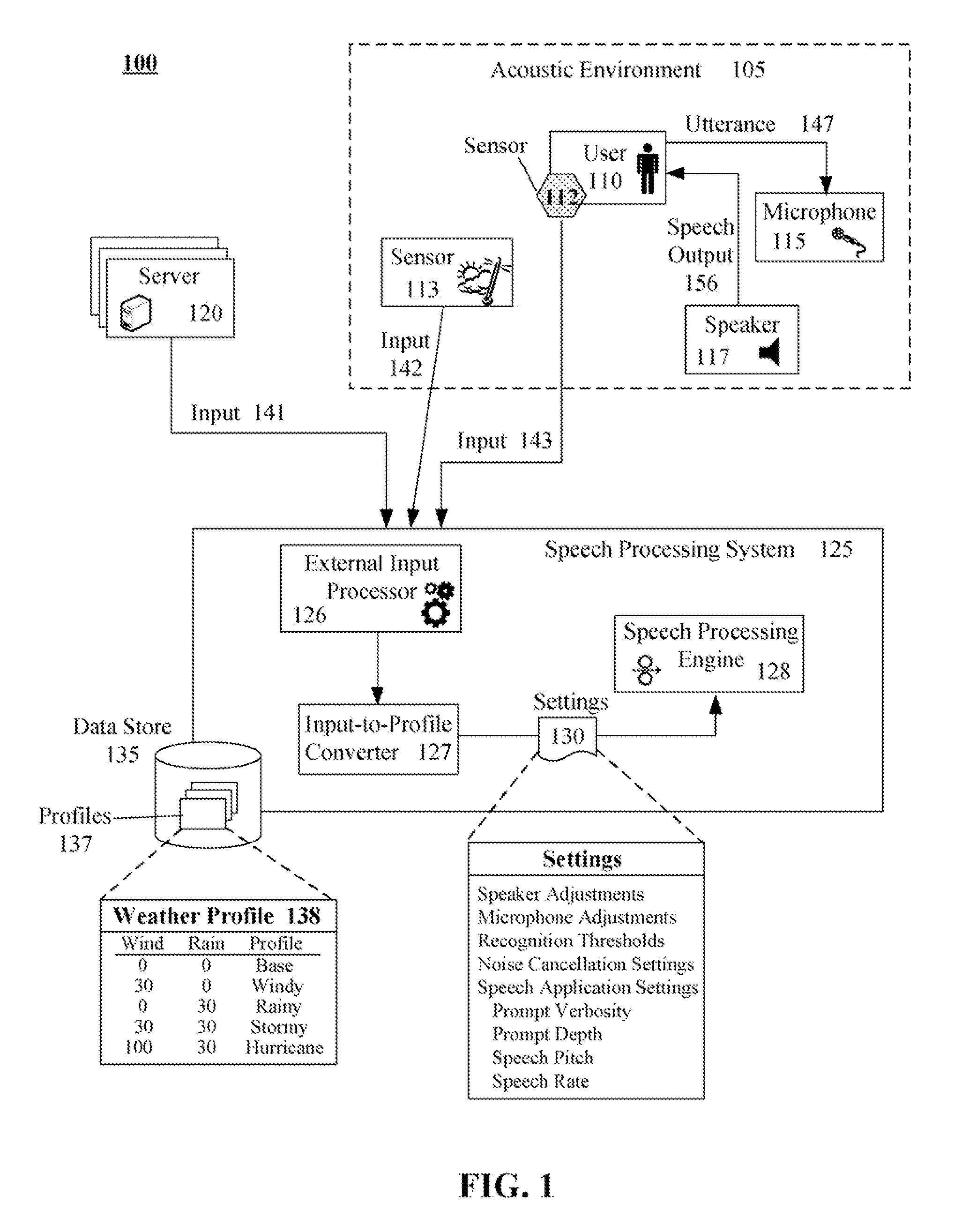

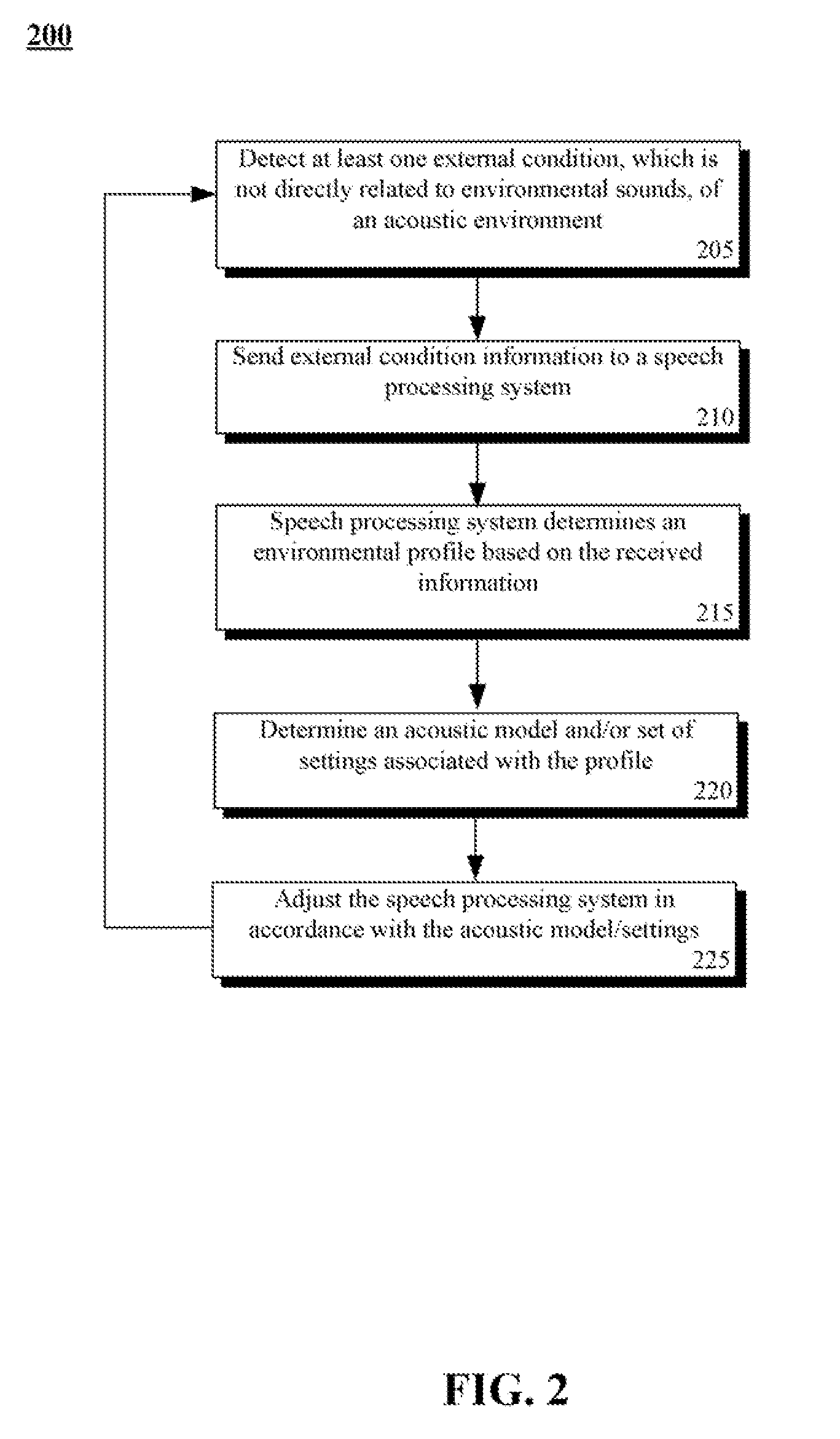

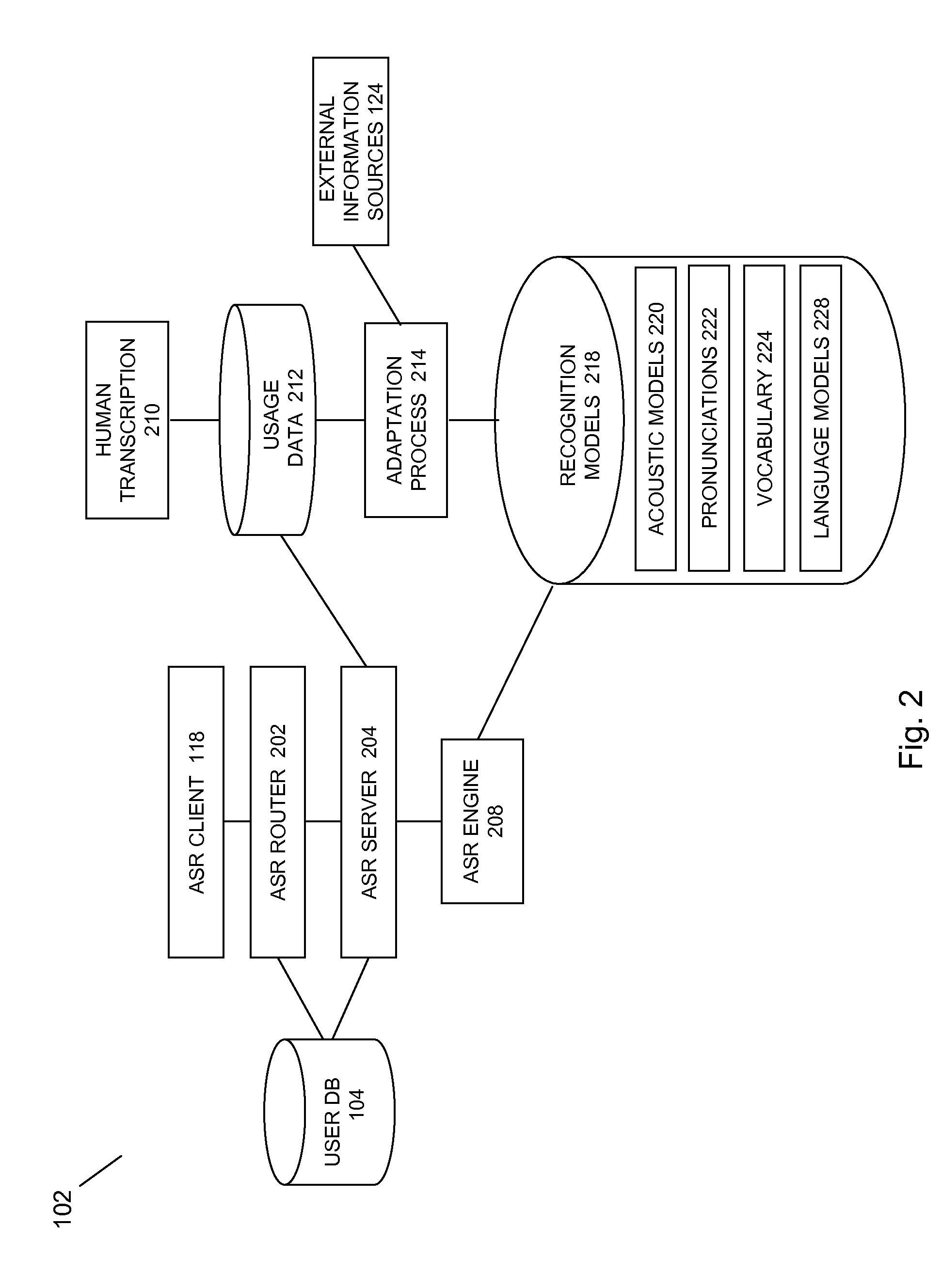

Adaptation of a speech processing system from external input that is not directly related to sounds in an operational acoustic environment

Inactive | US20080147411A1 | Improve system performance | Improve performance | Speech recognition | Filter algorithm | Transducer

A speech processing system that performs adaptations based upon non-sound external input, such as weather input. In the system, an acoustic environment can include a microphone and speaker. The microphone/speaker can receive/produce speech input/output to/from a speech processing system. An external input processor can receive non-sound input relating to the acoustic environment and match the received input to a related profile. A setting adjustor can automatically adjust settings of the speech processing system based upon a profile selected from the input processed by the external input processor. For example, the settings can include customized noise filtering algorithms, recognition confidence thresholds, output energy levels, and/or transducer gain settings.

Owner:NUANCE COMM INC

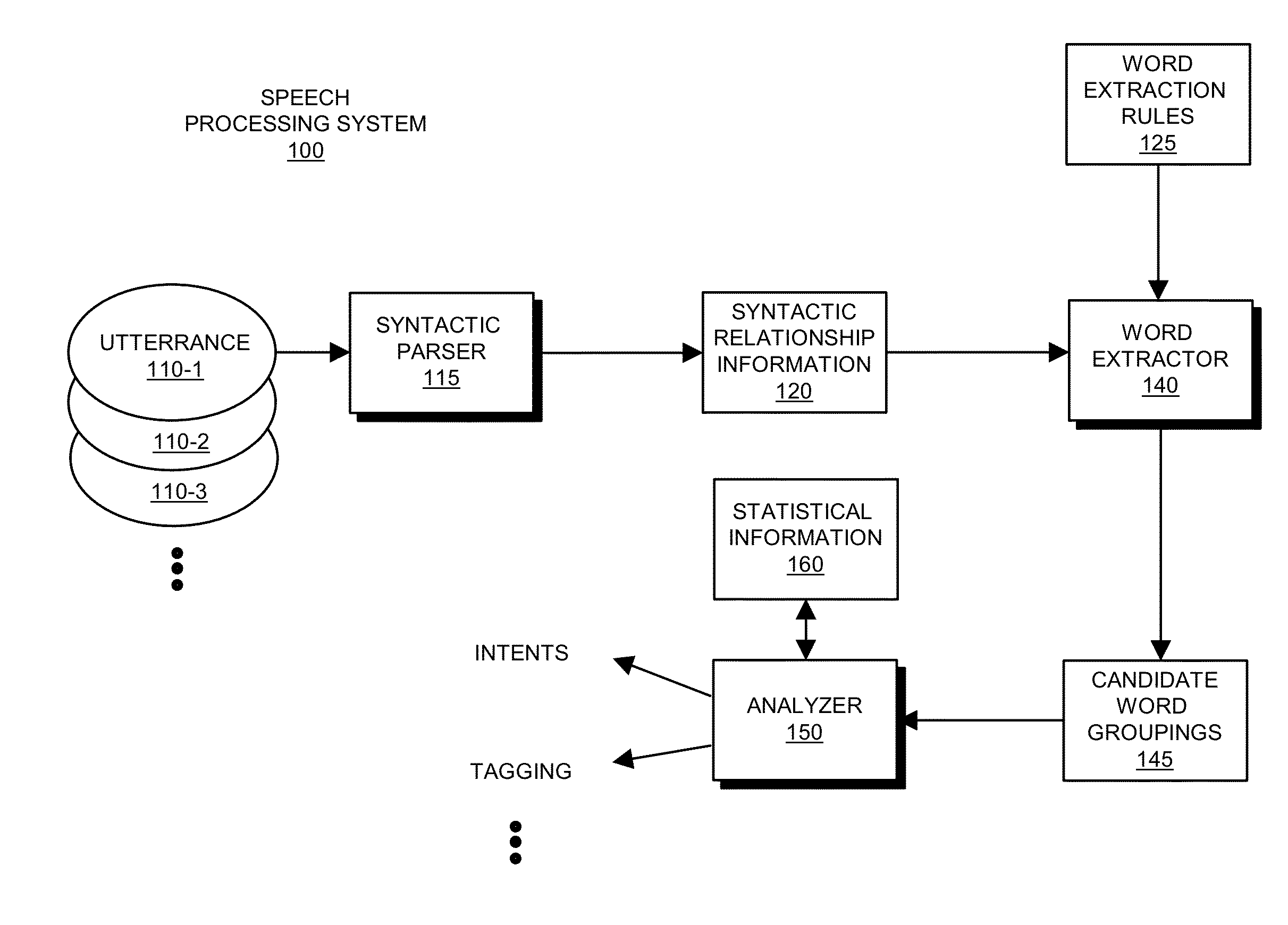

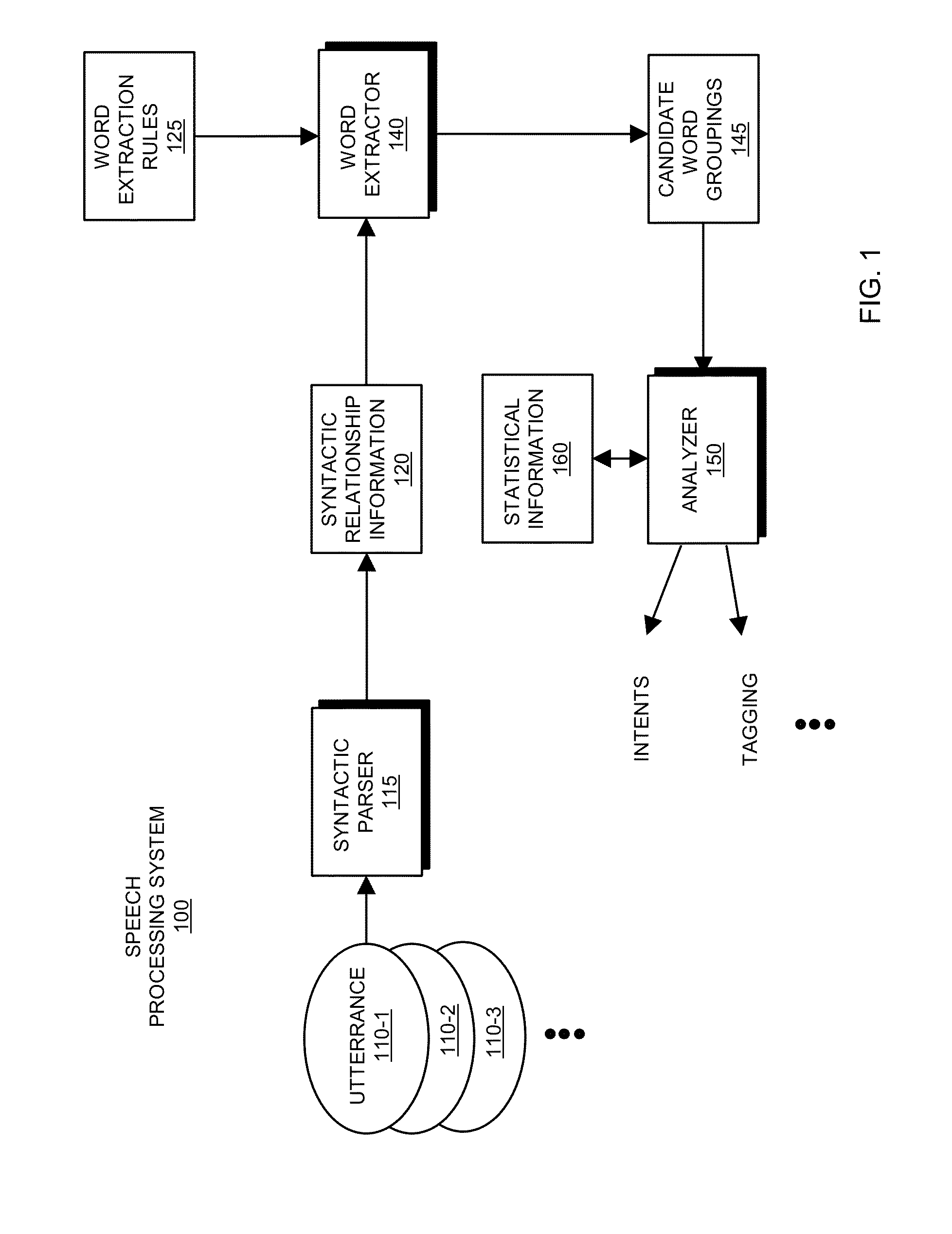

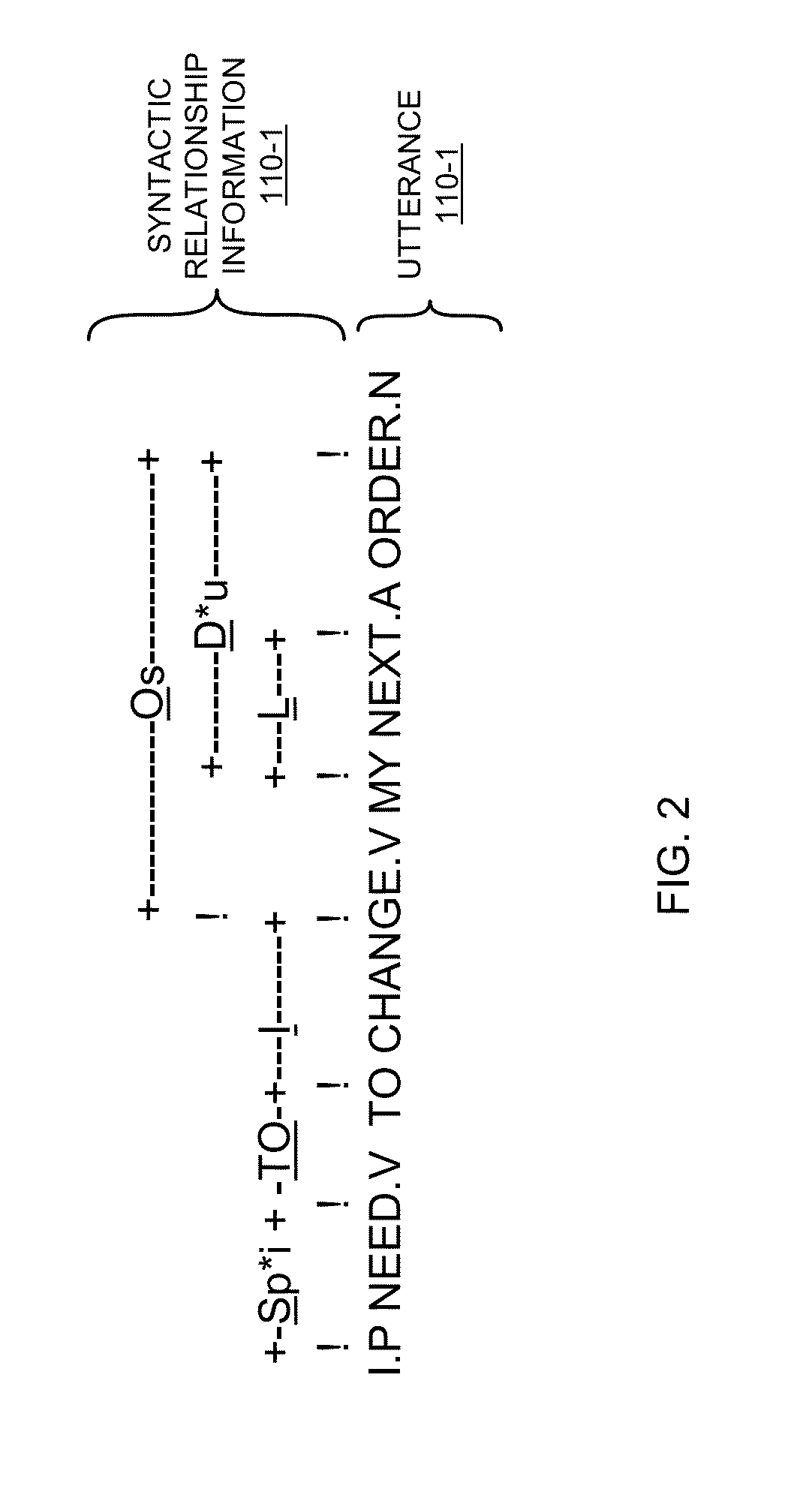

Intent mining via analysis of utterances

According to example configurations, a speech processing system can include a syntactic parser, a word extractor, word extraction rules, and an analyzer. The syntactic parser of the speech processing system parses the utterance to identify syntactic relationships amongst words in the utterance. The word extractor utilizes word extraction rules to identify groupings of related words in the utterance that most likely represent an intended meaning of the utterance. The analyzer in the speech processing system maps each set of the sets of words produced by the word extractor to a respective candidate intent value to produce a list of candidate intent values for the utterance. The analyzer is configured to select, from the list of candidate intent values (i.e., possible intended meanings) of the utterance, a particular candidate intent value as being representative of the intent (i.e., intended meaning) of the utterance.

Owner:NUANCE COMM INC

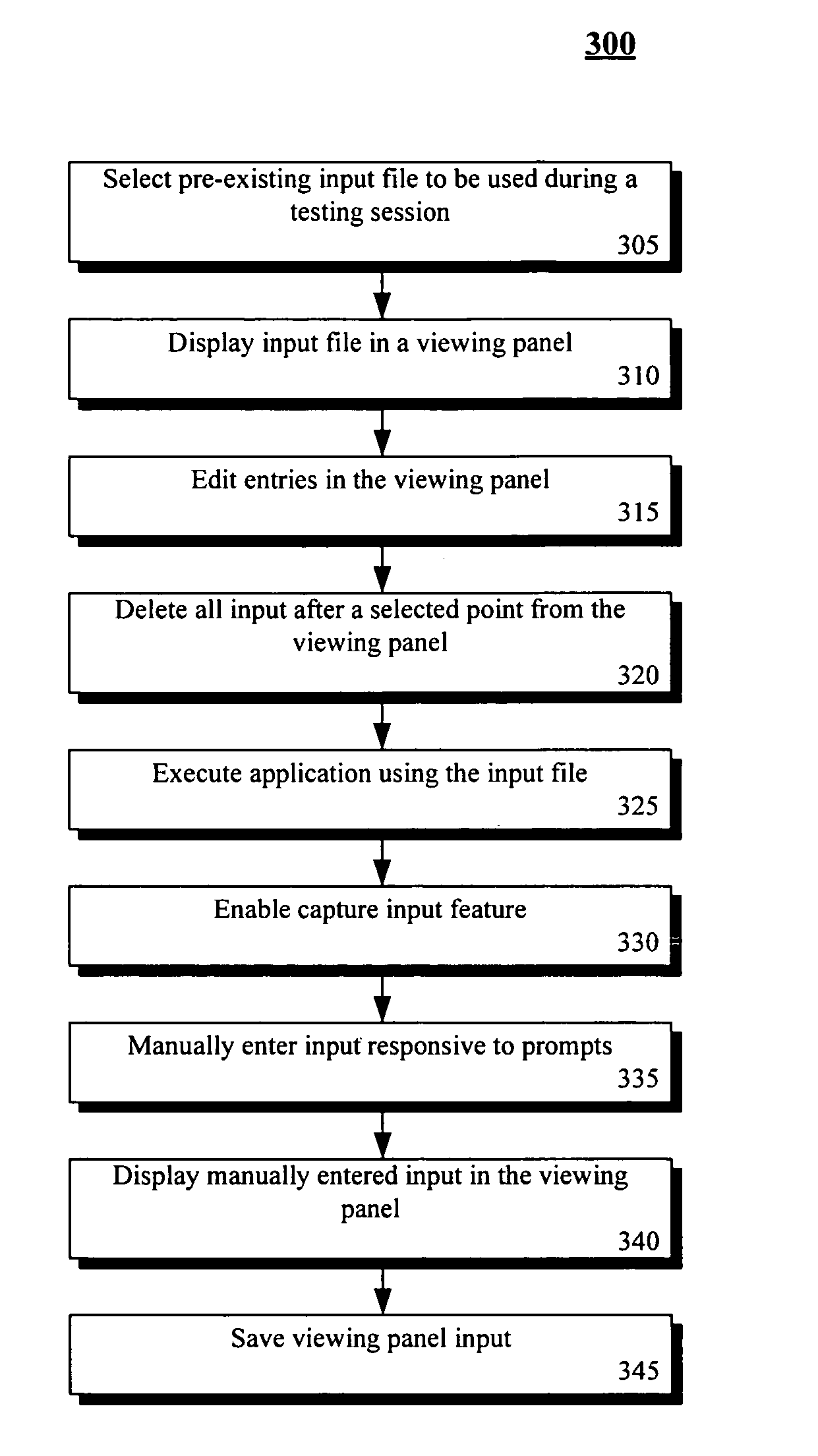

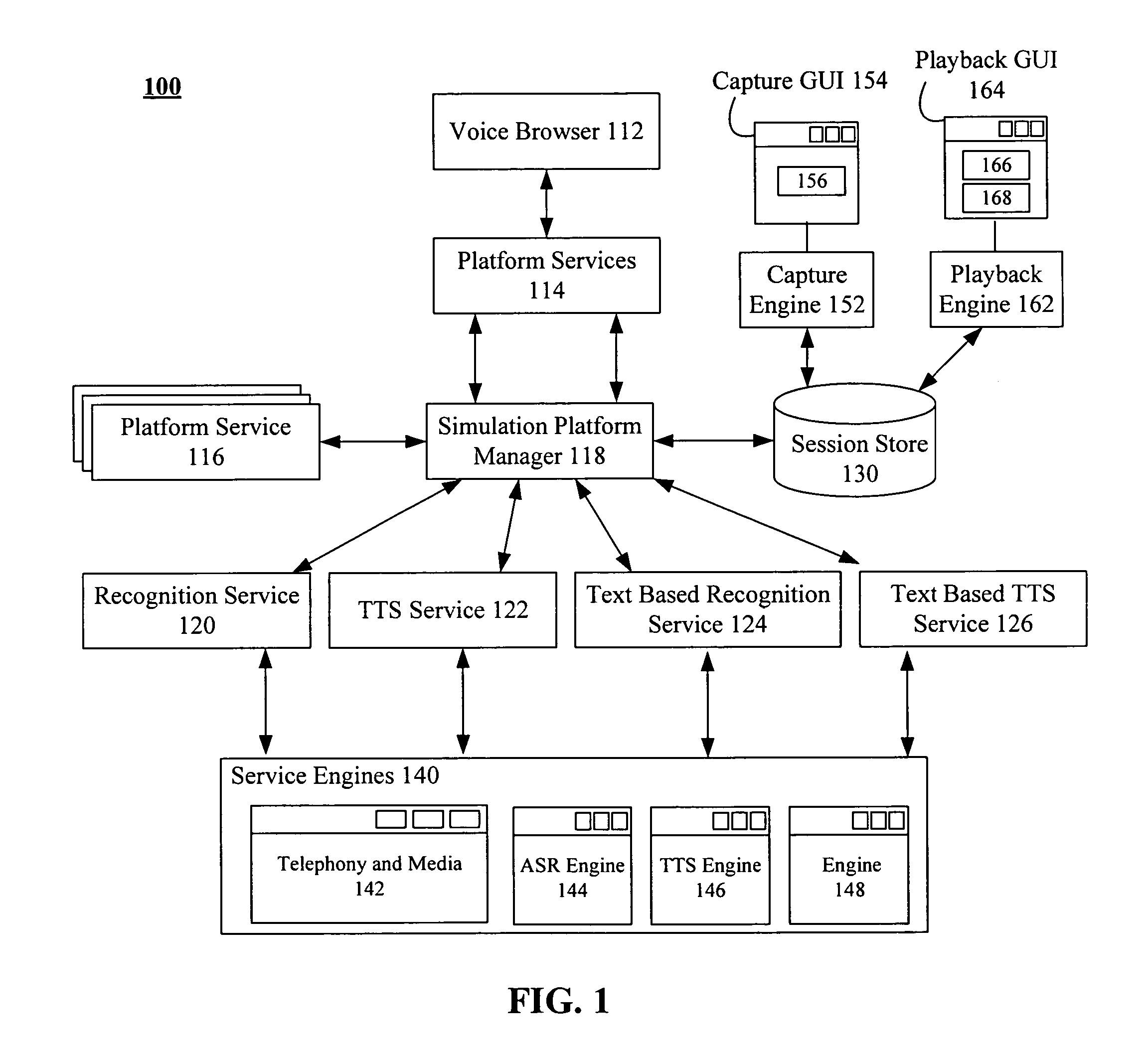

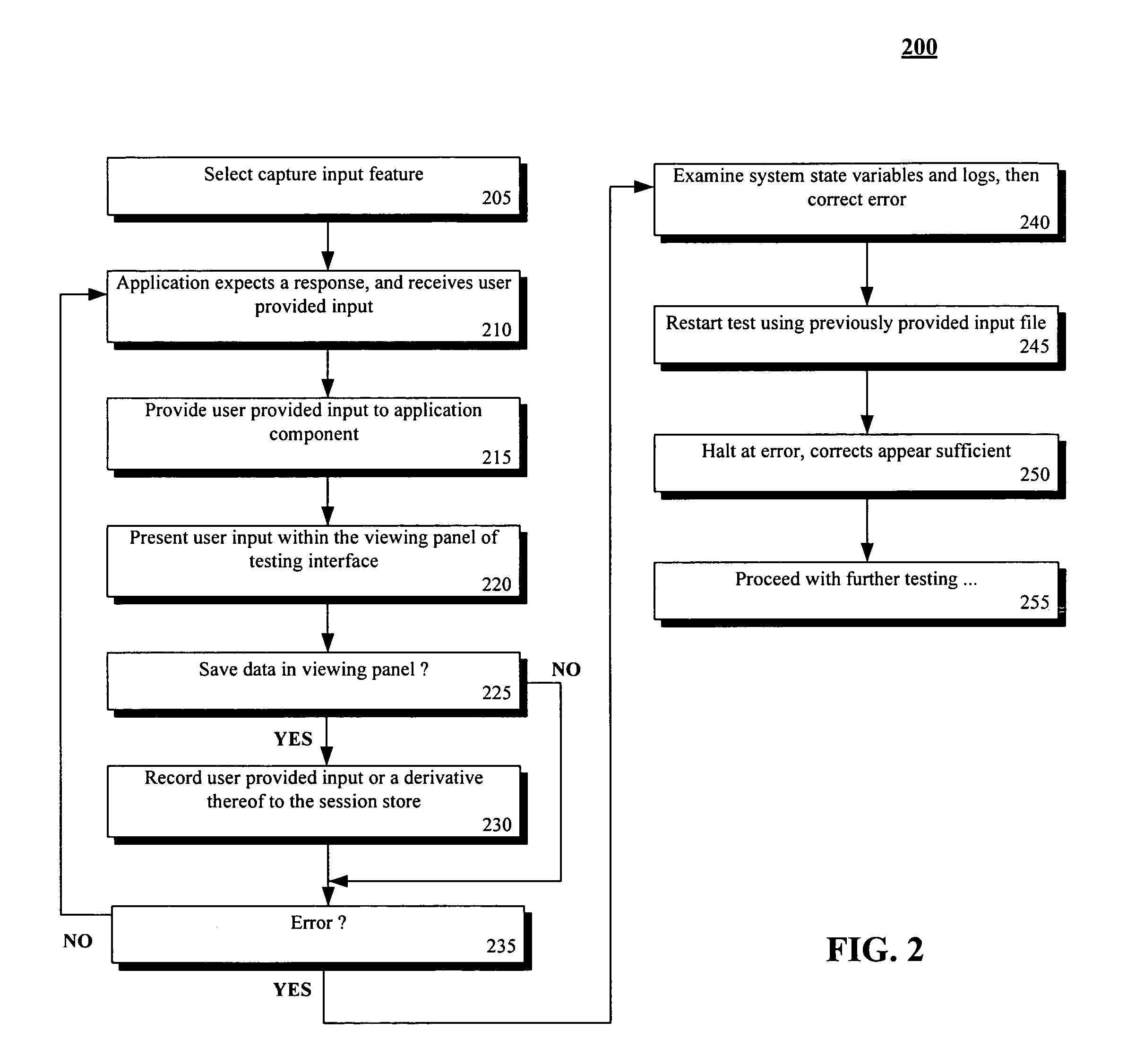

Automating input when testing voice-enabled applications

Active | US8260617B2 | Automatic call-answering/message-recording/conversation-recording | Speech recognition | User input | Application software

A method for automating an input process during a testing of a voice-enabled application so that a tester is not required to manually respond to each prompt of the voice-enabled application. The method including the step of identifying a session input store that includes input data associated with voice-enabled application prompts and the step of executing the voice-enabled application. The voice-enabled application can prompt for user input that is to be used when performing a speech processing task. Input data from the session input store can be automatically extracted responsive to the prompting and used in place of manually entered input. The speech processing service can perform the speech processing task based upon the extracted data.

Owner:NUANCE COMM INC

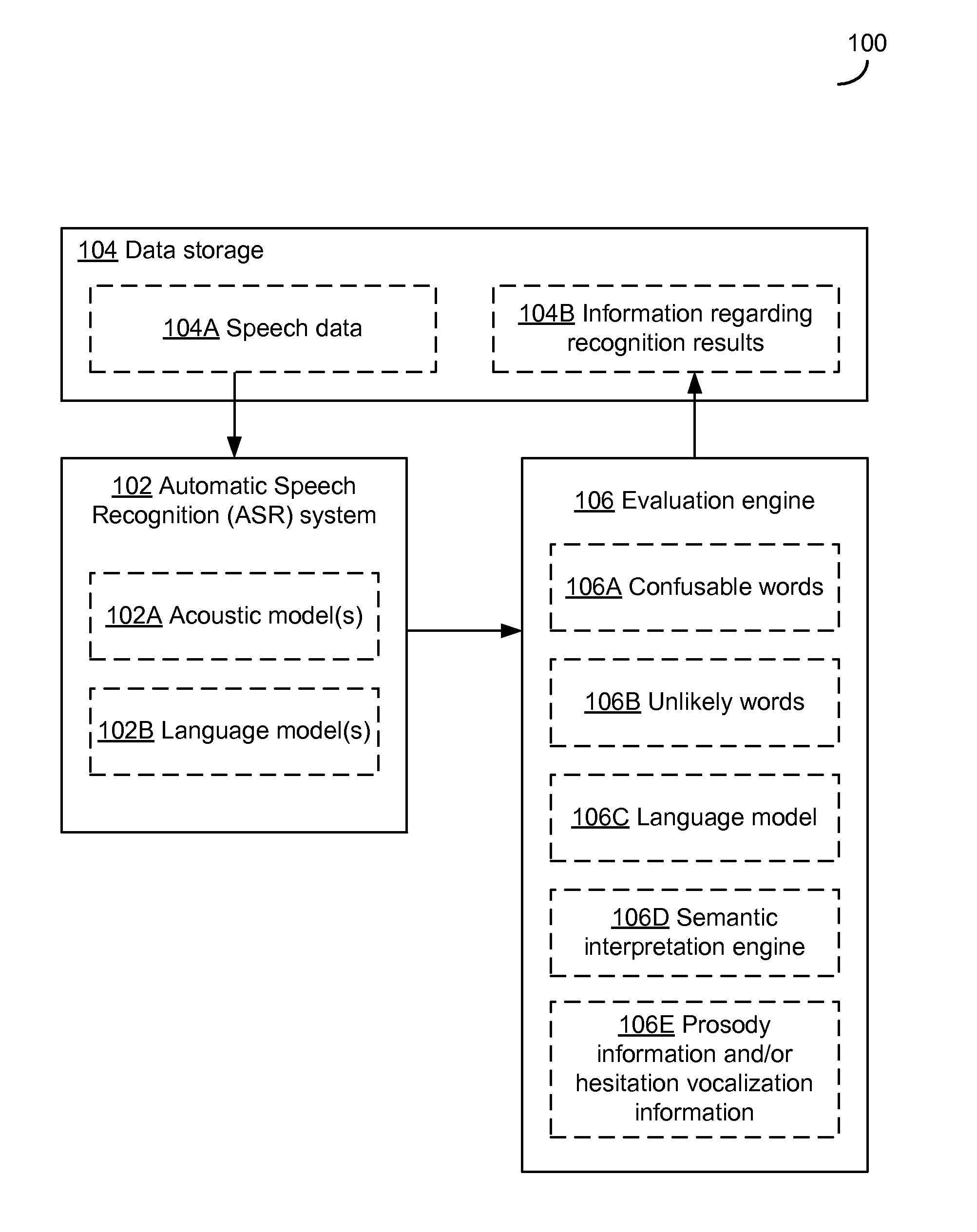

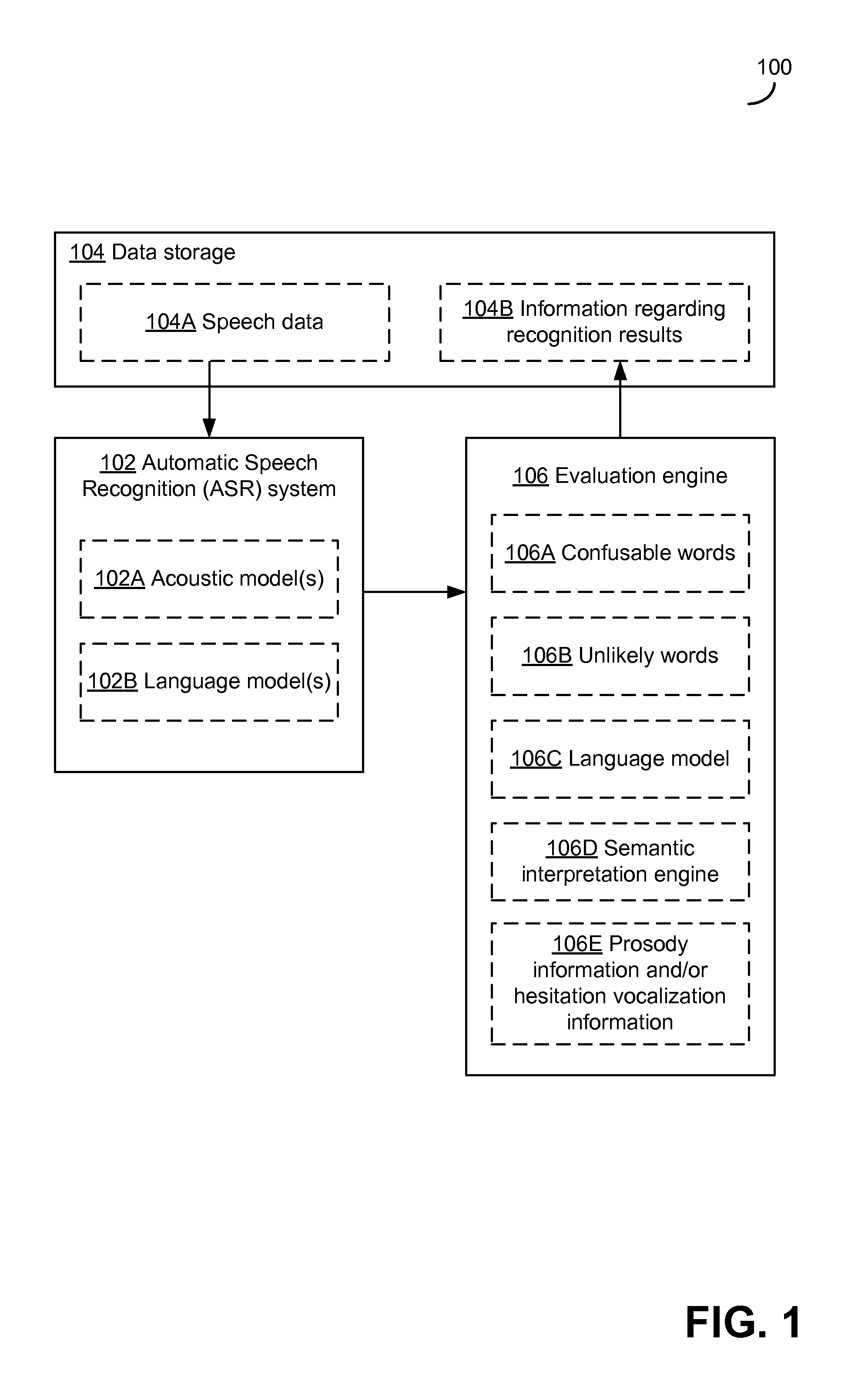

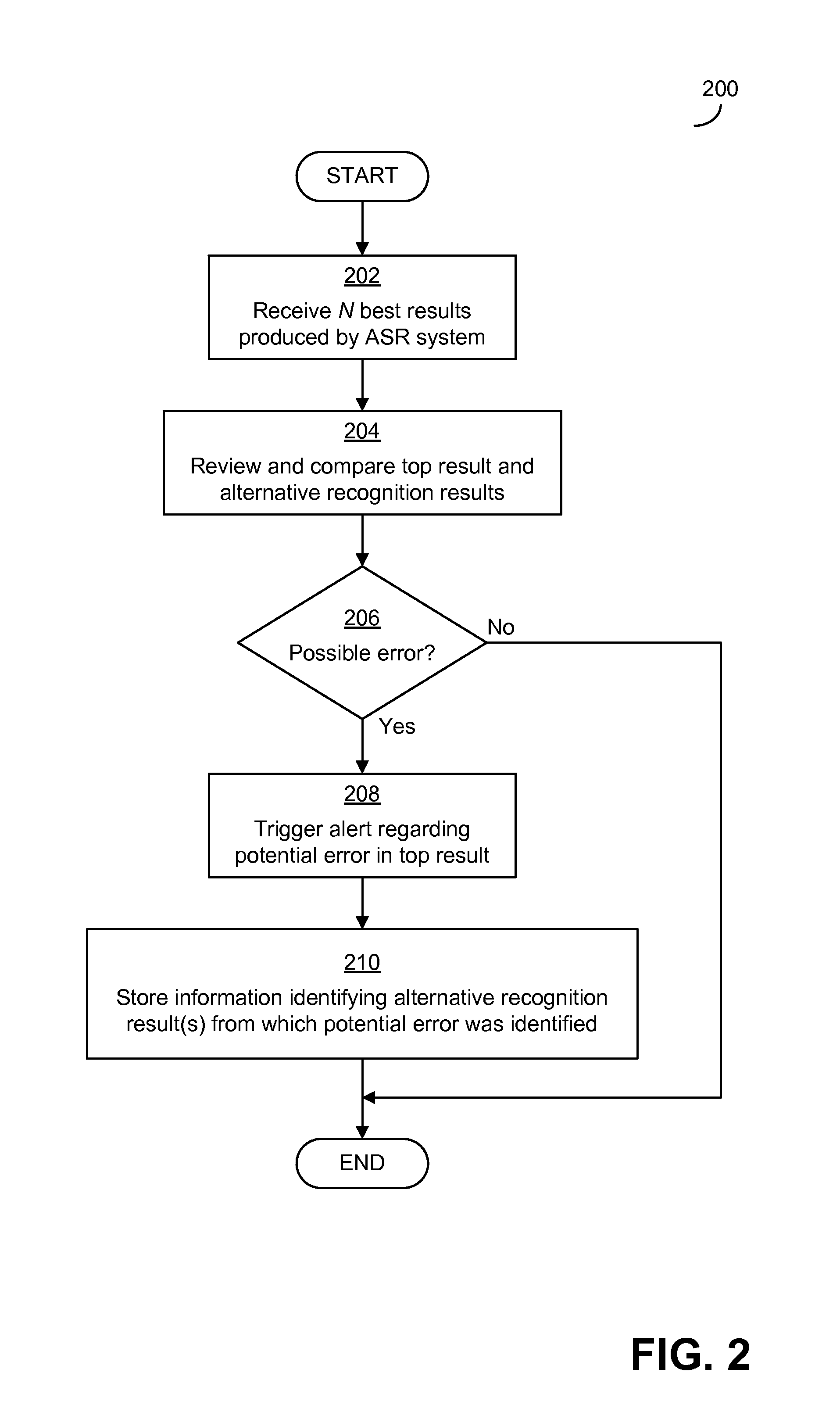

Detecting potential significant errors in speech recognition results

In some embodiments, the recognition results produced by a speech processing system (which may include a top recognition result and one or more alternative recognition results) based on an analysis of a speech input, are evaluated for indications of potential significant errors. In some embodiments, the recognition results may be evaluated to determine whether a meaning of any of the alternative recognition results differs from a meaning of the top recognition result in a manner that is significant for the domain. In some embodiments, one or more of the recognition results may be evaluated to determine whether the result(s) include one or more words or phrases that, when included in a result, would change a meaning of the result in a manner that would be significant for the domain.

Owner:NUANCE COMM INC
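
The abstract does not say how meanings are compared. One crude way to picture the idea is to compare which domain-significant words appear in the top result and in each alternative, and to flag alternatives where that set differs. The word list and function names below are hypothetical, and a real system would need a far richer notion of meaning than word membership.

```python
from typing import List, Set

# Hypothetical list of words whose presence or absence changes meaning
# in a way that matters for the domain (assumption for illustration).
SIGNIFICANT_WORDS: Set[str] = {"no", "not", "without", "left", "right"}


def significant_terms(result: str) -> Set[str]:
    """Collect the domain-significant words found in one recognition result."""
    return {word for word in result.lower().split() if word in SIGNIFICANT_WORDS}


def flag_potential_errors(top: str, alternatives: List[str]) -> List[str]:
    """Return the alternatives whose significant words differ from the top result."""
    top_terms = significant_terms(top)
    return [alt for alt in alternatives if significant_terms(alt) != top_terms]
```

Given the top result `"patient has no fever"`, the alternative `"patient has a fever"` would be flagged because the negation is missing, while `"patient has no fevers"` would not.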

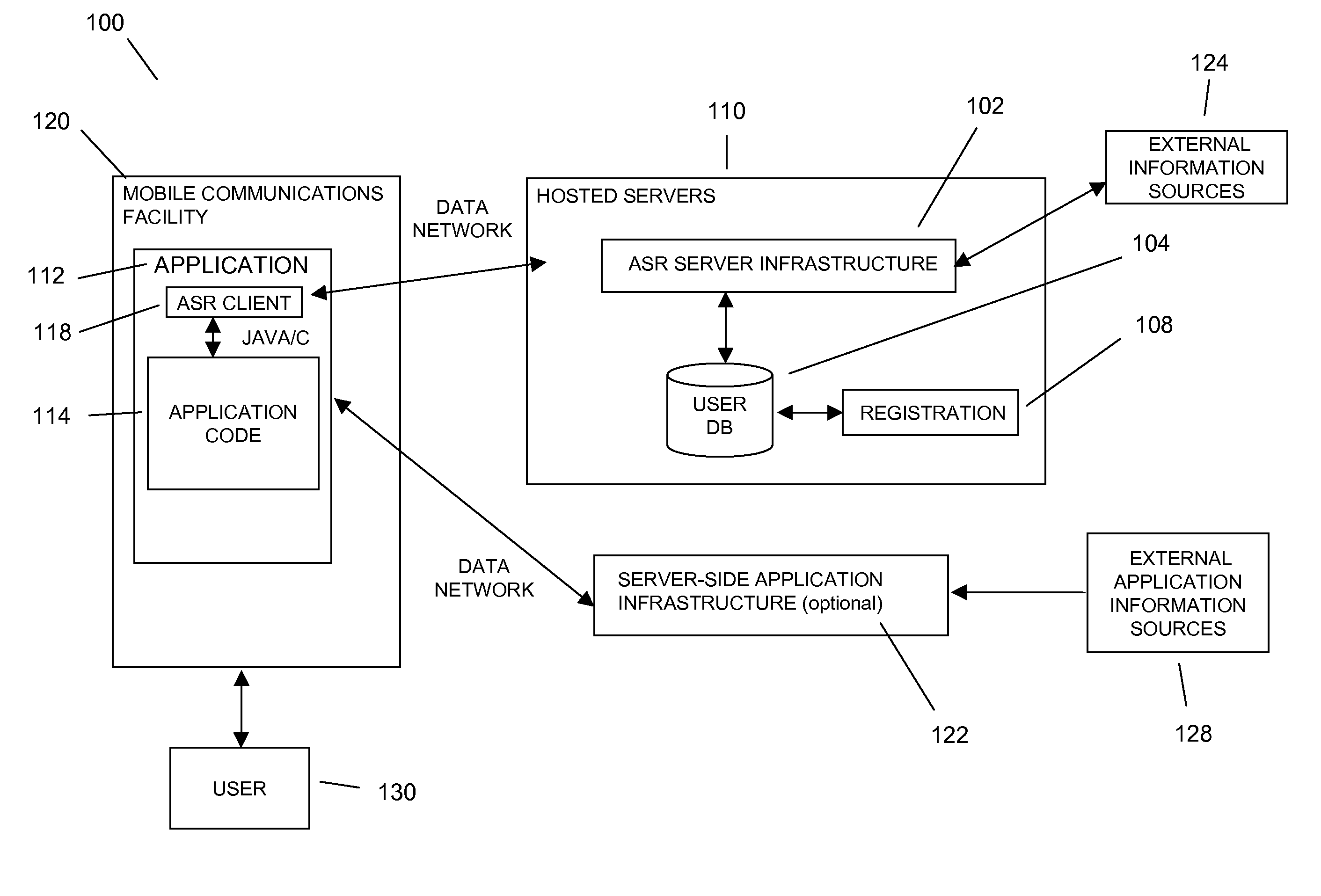

Mobile environment speech processing facility

In embodiments of the present invention improved capabilities are described for a mobile environment speech processing facility. The present invention may provide for the entering of text into a software application resident on a mobile communication facility, where recorded speech may be presented by the user using the mobile communications facility's resident capture facility. Transmission of the recording may be provided through a wireless communication facility to a speech recognition facility, and may be accompanied by information related to the software application. Results may be generated utilizing the speech recognition facility that may be independent of structured grammar, and may be based at least in part on the information relating to the software application and the recording. The results may then be transmitted to the mobile communications facility, where they may be loaded into the software application. In addition, the speech recognition facility may be adapted based on usage.

Owner:MOBEUS CORP +1

Intelligent text-to-speech conversion

Active | US8996376B2 | Natural language data processing | Digital storage | Electronic document | Voice transformation

Owner:APPLE INC

Method, Apparatus and Computer Program Product for Providing a Language Based Interactive Multimedia System

Inactive | US20080126093A1 | Improve rendering capabilities | Improve efficiency | Speech recognition | Speech synthesis | Processing element | Speech sound

An apparatus for providing a language based interactive multimedia system includes a selection element, a comparison element and a processing element. The selection element may be configured to select a phoneme graph based on a type of speech processing associated with an input sequence of phonemes. The comparison element may be configured to compare the input sequence of phonemes to the selected phoneme graph. The processing element may be in communication with the comparison element and configured to process the input sequence of phonemes based on the comparison.

Owner:NOKIA CORP

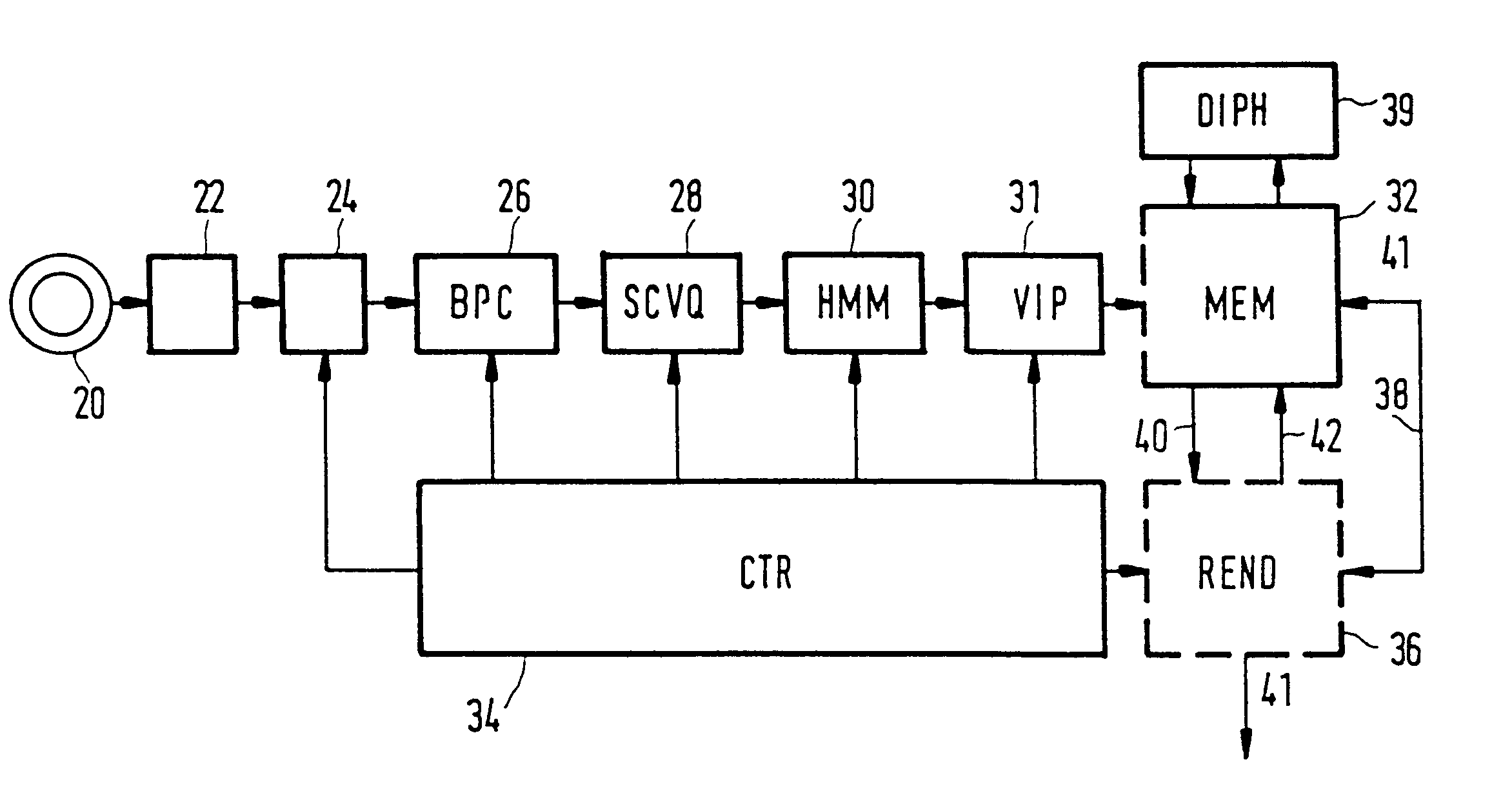

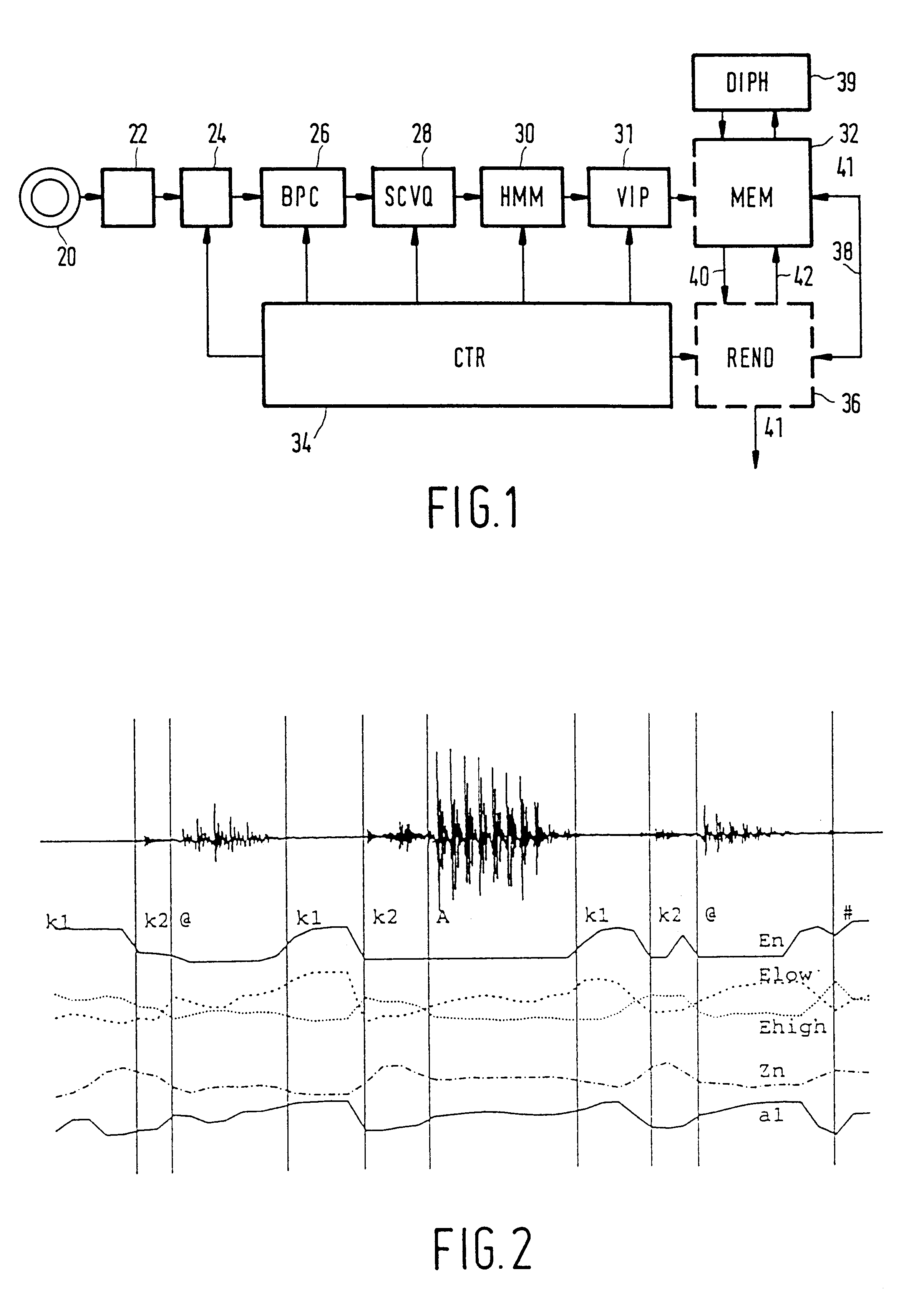

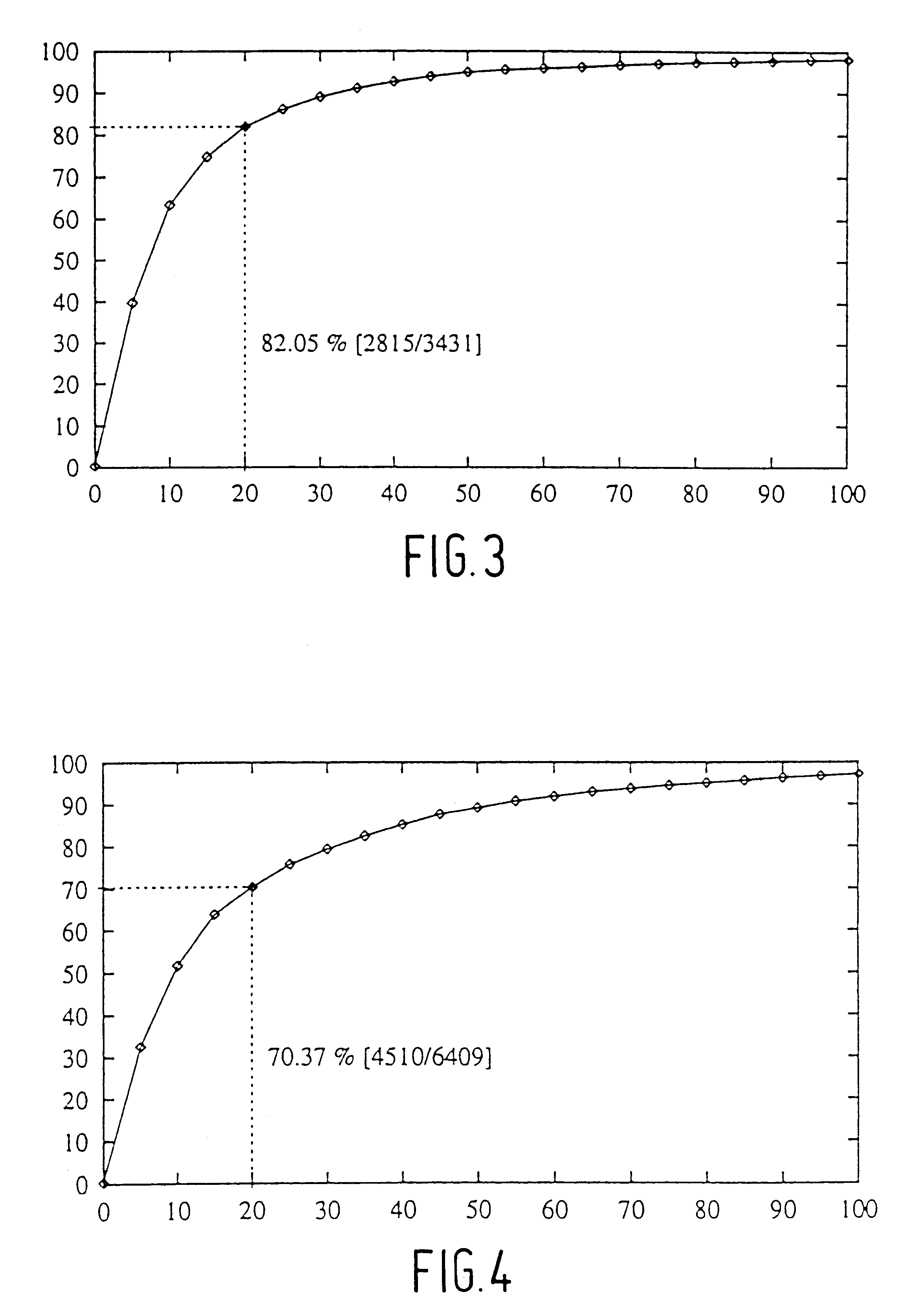

Method and apparatus for automatic speech segmentation into phoneme-like units for use in speech processing applications, and based on segmentation into broad phonetic classes, sequence-constrained vector quantization and hidden-markov-models

Inactive | US6208967B1 | Straightforward and inexpensive | Digital computer details | Biological models | Automatic speech segmentation | Spoken language

For machine segmenting of speech, first utterances from a database of known spoken words are classified and segmented into three broad phonetic classes (BPC): voiced, unvoiced, and silence. Next, using preliminary segmentation positions as anchor points, sequence-constrained vector quantization is used for further segmentation into phoneme-like units. Finally, exact tuning to the segmented phonemes is done through Hidden-Markov Modelling, and after training a diphone set is composed for further usage.

Owner:U S PHILIPS CORP

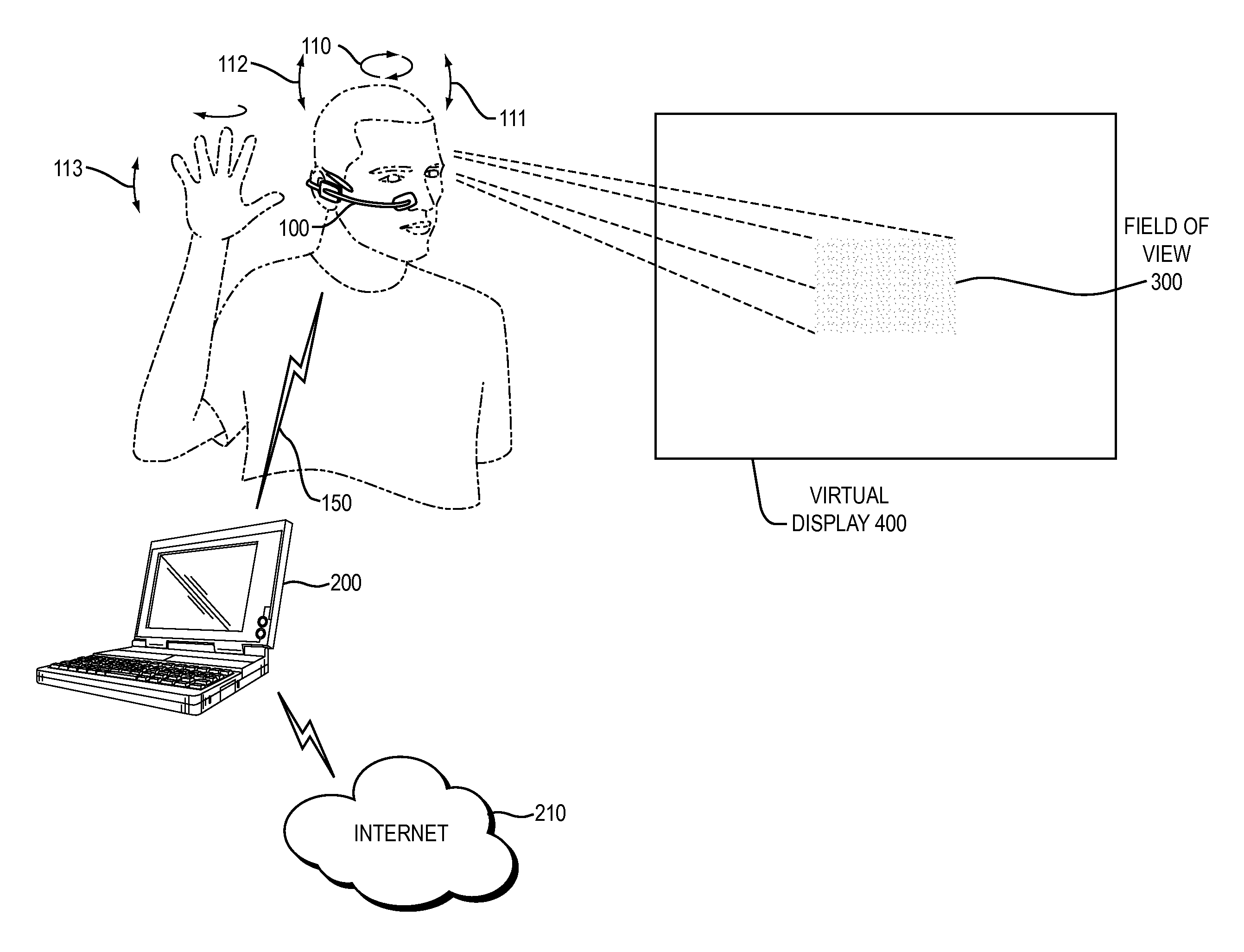

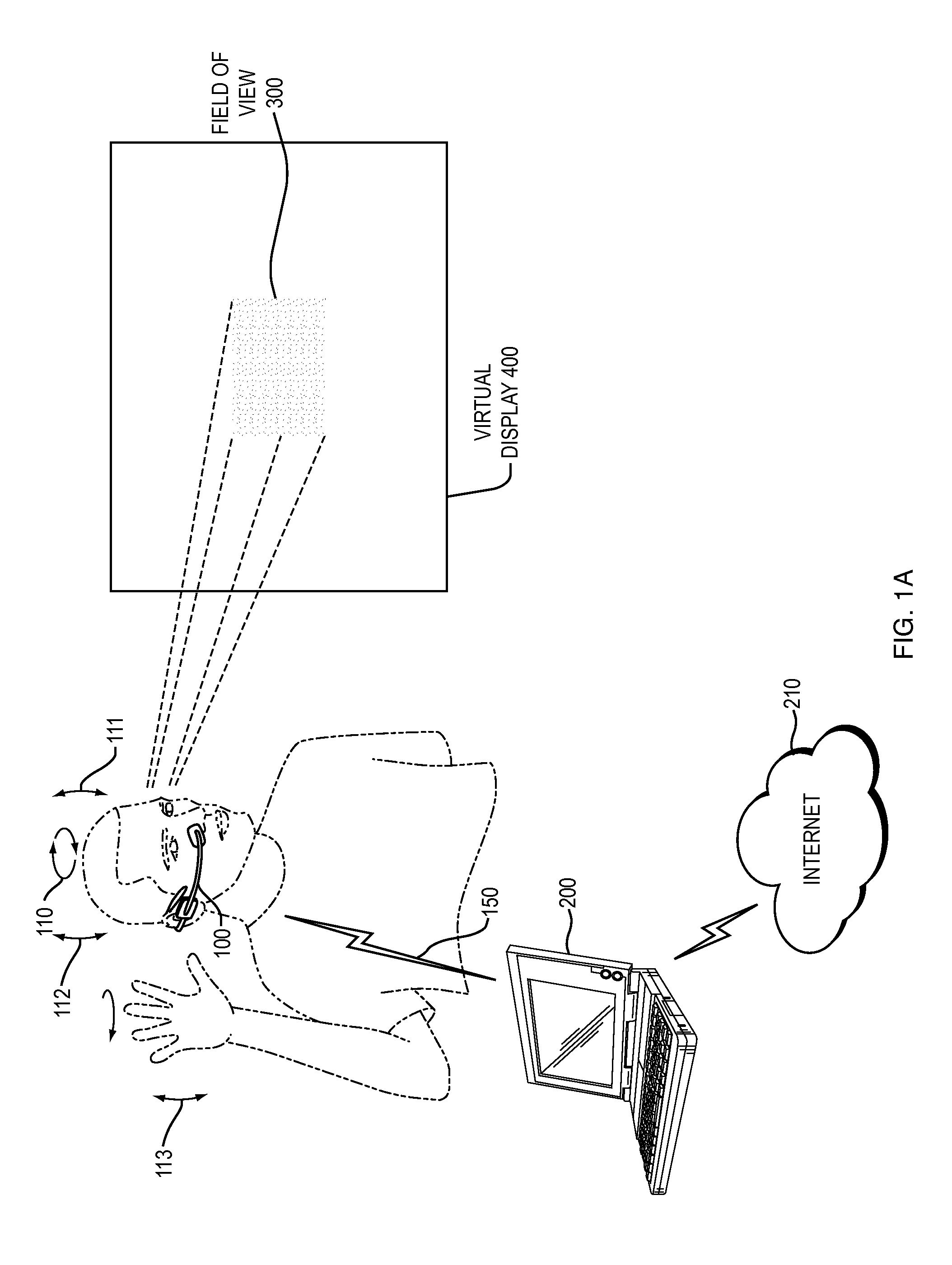

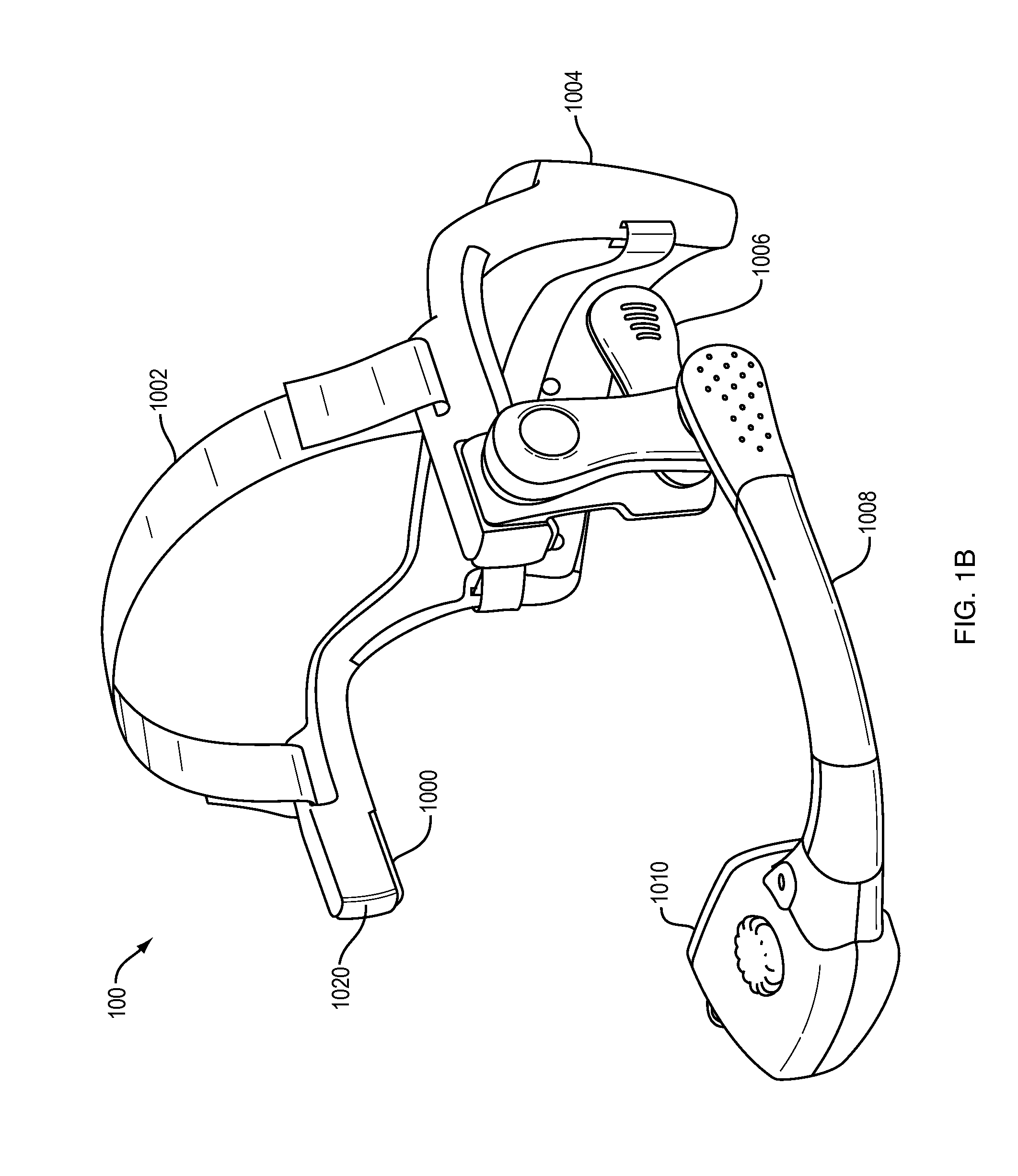

Text Editing With Gesture Control And Natural Speech

Active | US20150187355A1 | Small size | Natural language data processing | Details for portable computers | Address book | Text editing

Owner:KOPIN CORPORATION

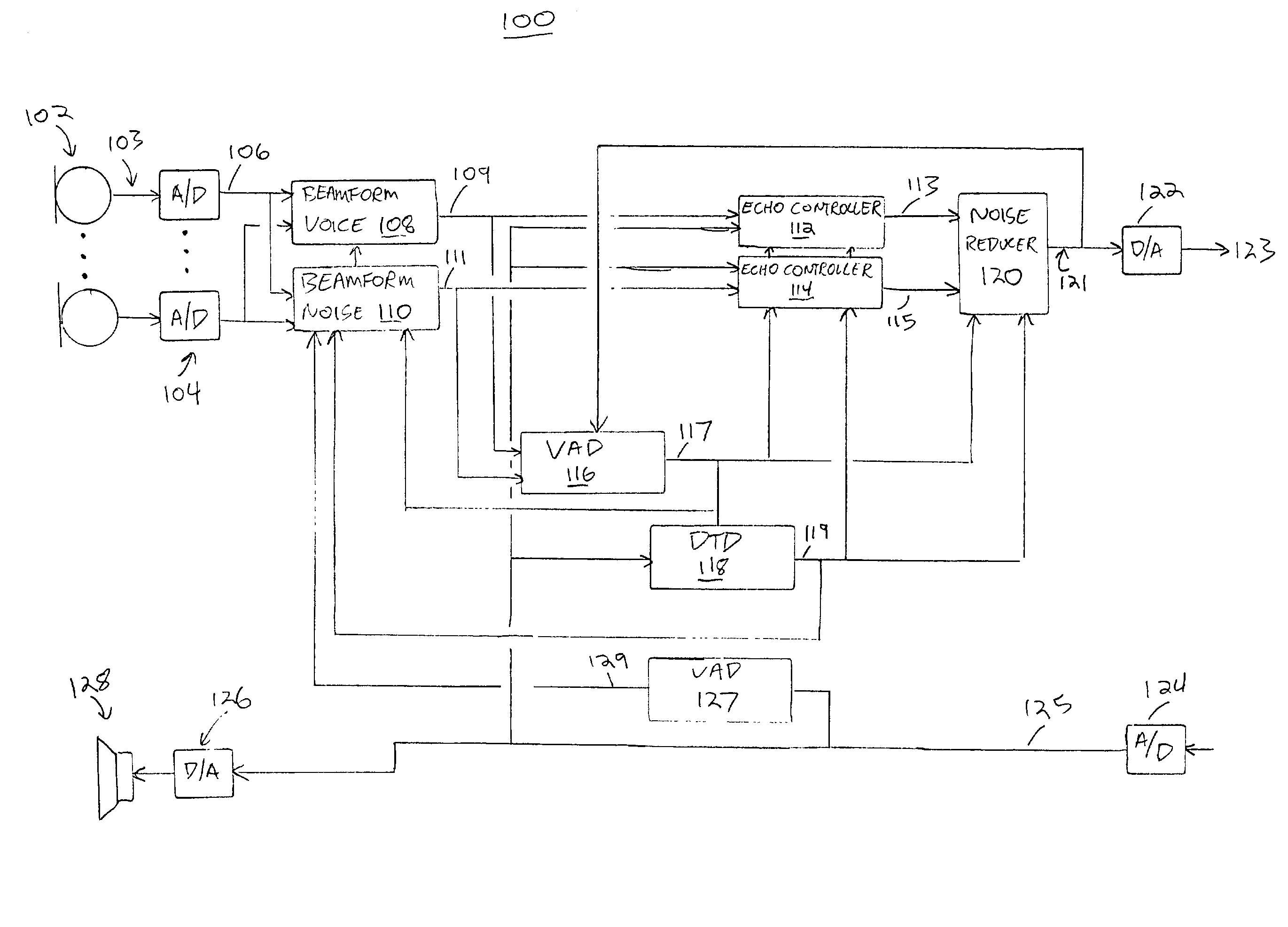

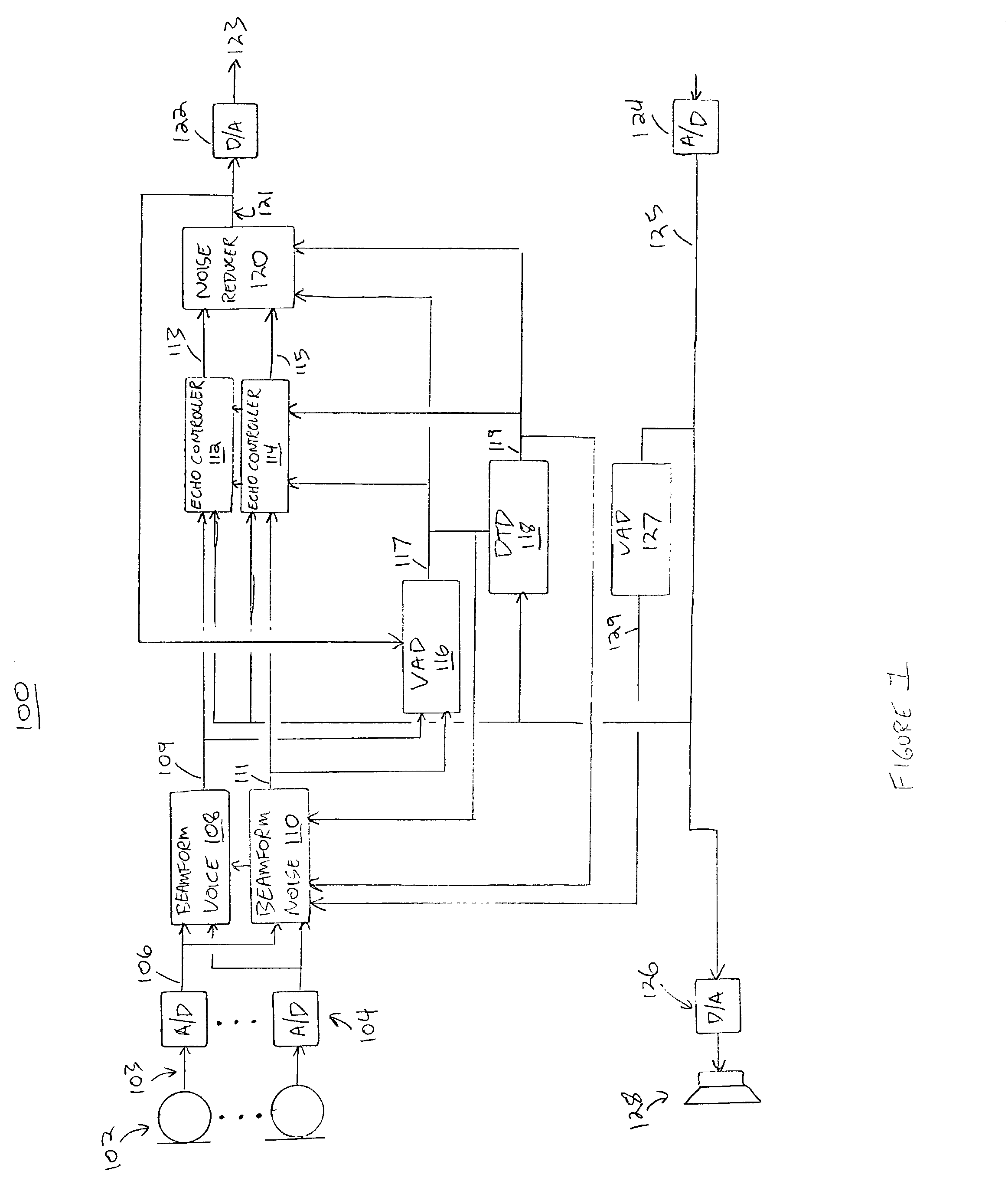

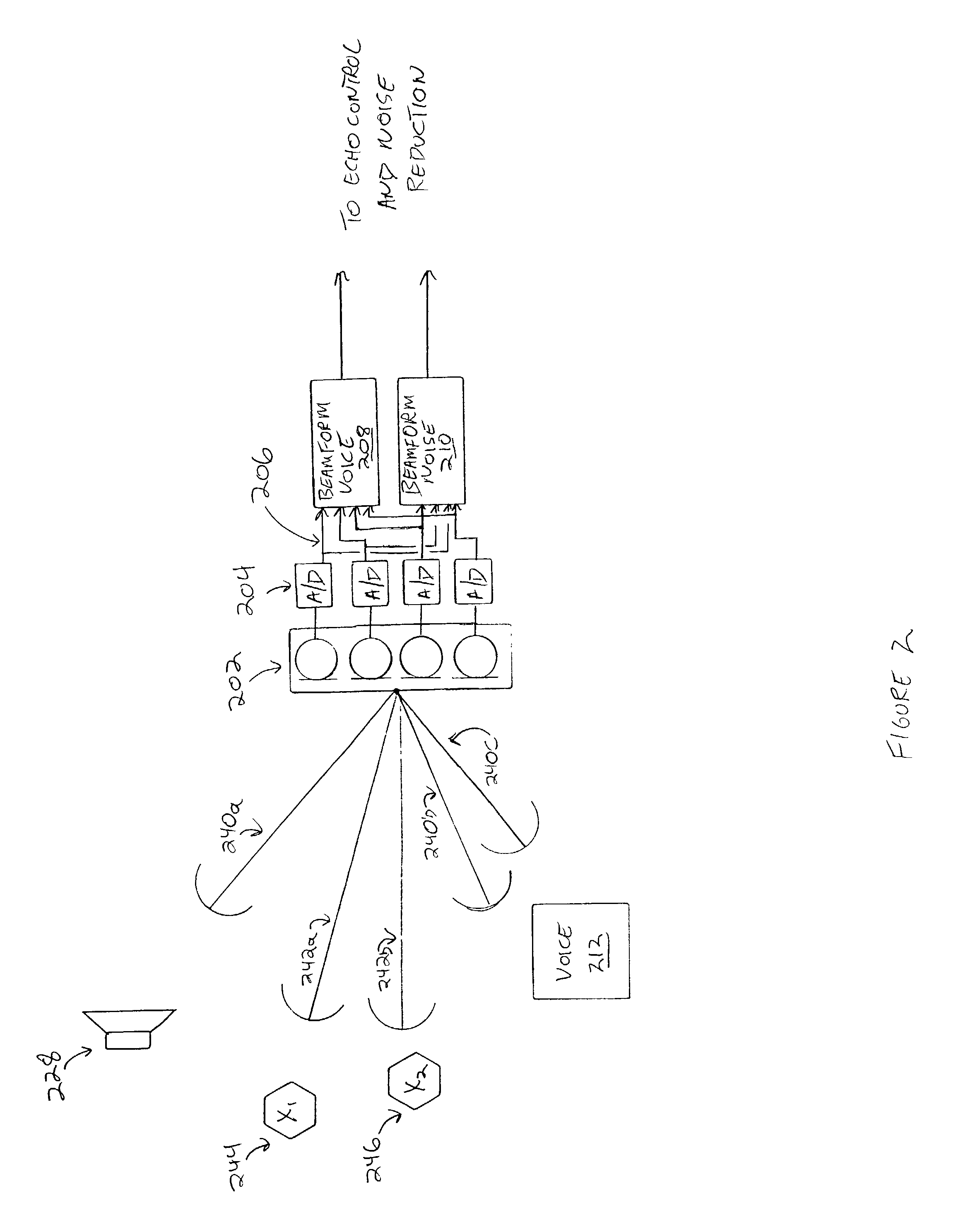

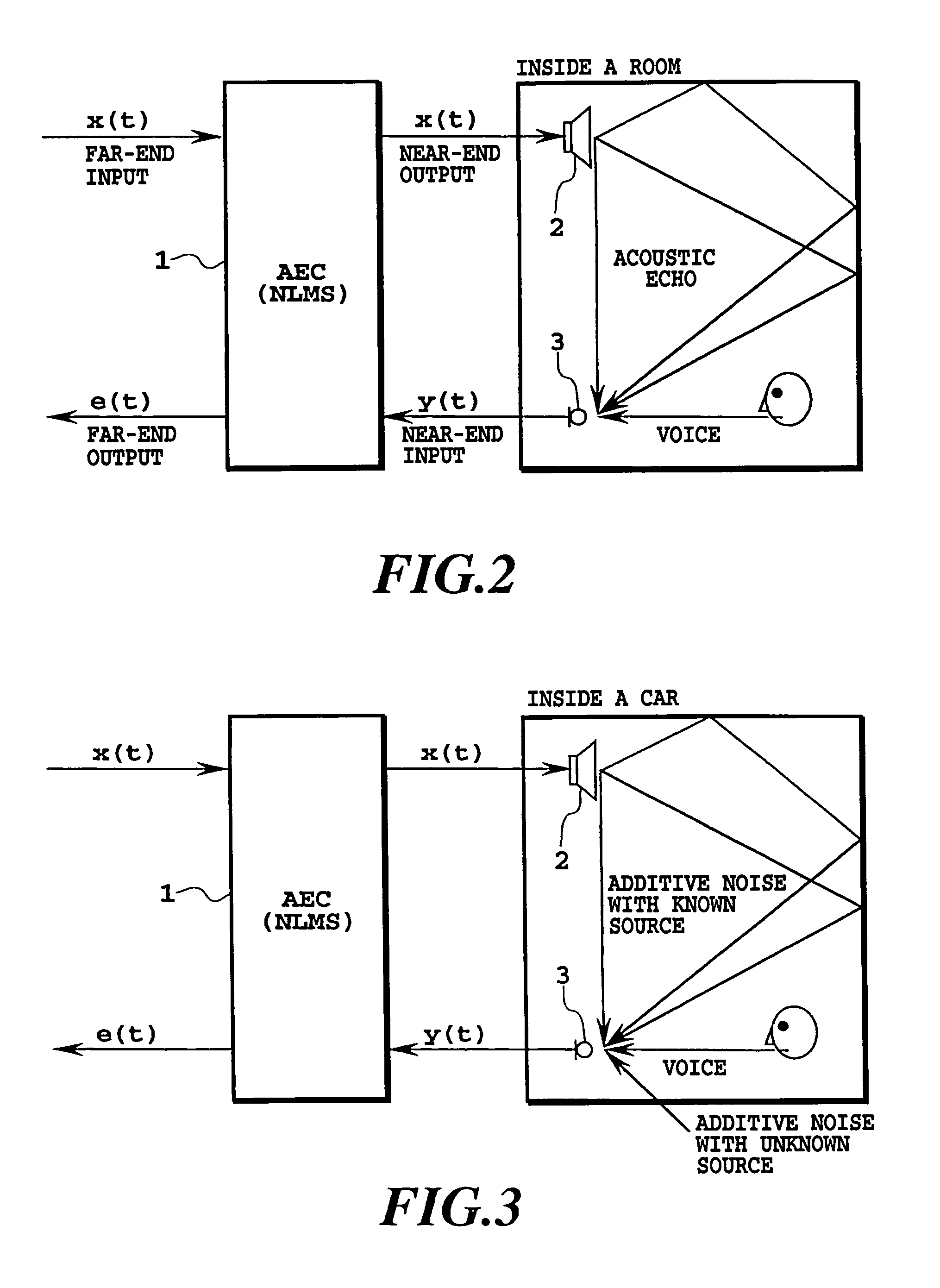

Method and apparatus for reducing echo and noise

Inactive | US7359504B1 | Reducing acoustic echo | Reduce background noise | Two-way loud-speaking telephone systems | Reducer | Computer science

The present invention provides a solution to the needs described above through a method and apparatus for reducing echo and noise. The apparatus includes a microphone array for receiving an audio signal, the audio signal including a voice signal component and a noise signal component. The apparatus further includes a voice processing path having an input coupled to the microphone array and a noise processing path having an input coupled to the microphone array. The voice processing path is adapted to detect voice signals and the noise processing path is adapted to detect noise signals. A first echo controller is coupled to the voice processing path and a second echo controller is coupled to the noise processing path. A noise reducer is coupled to the output of the first echo controller and second echo controller.

Owner:PLANTRONICS

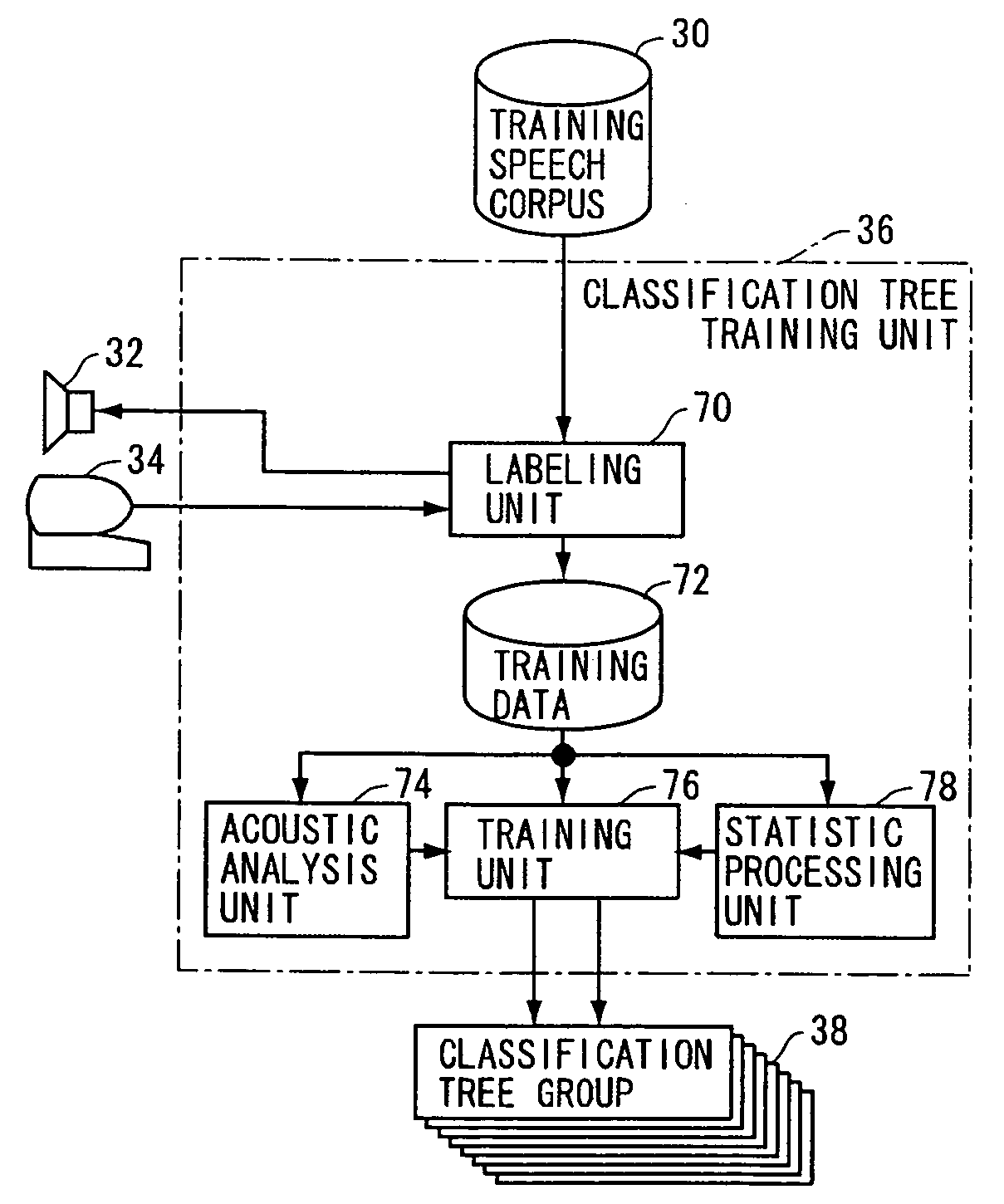

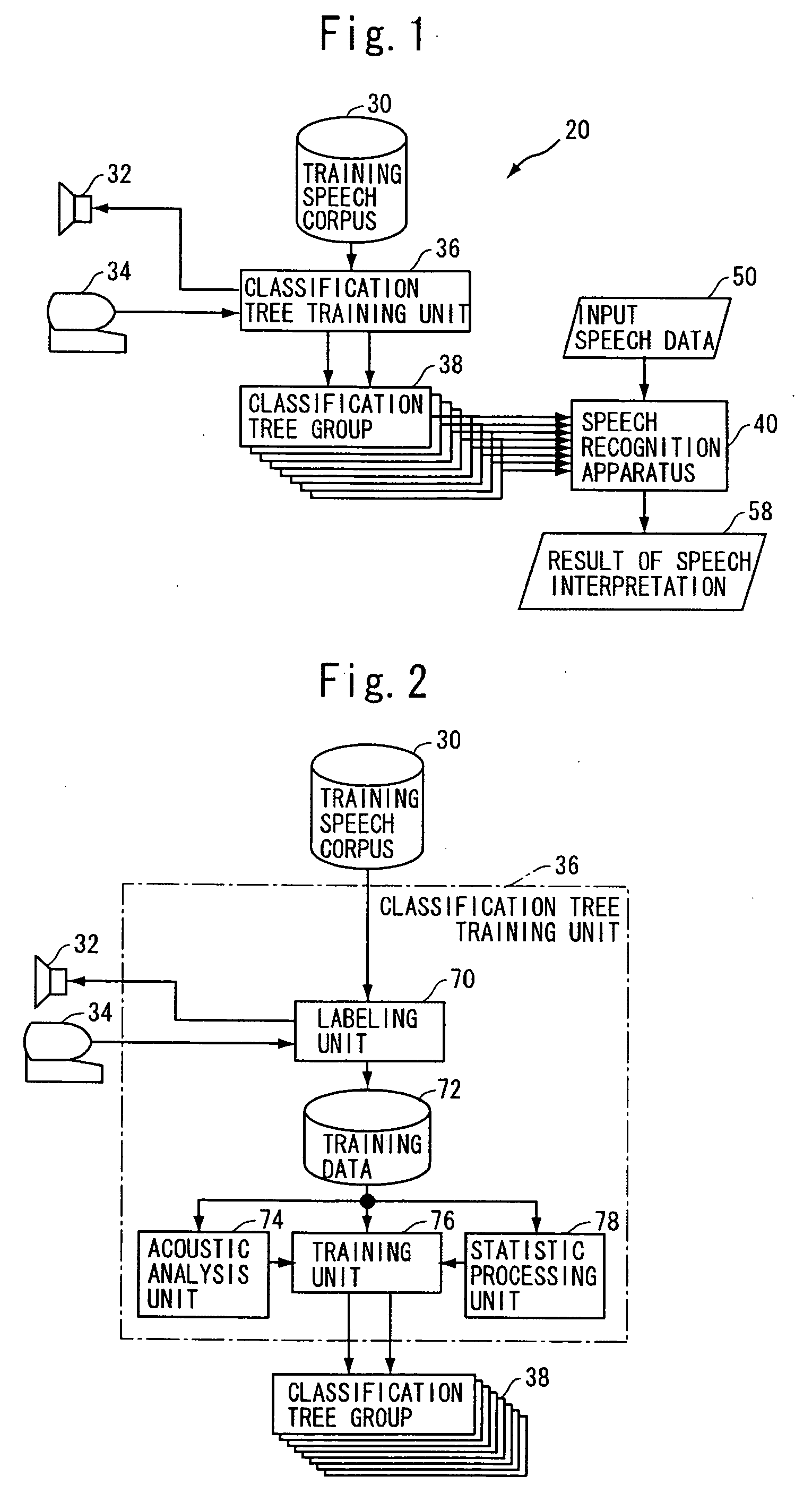

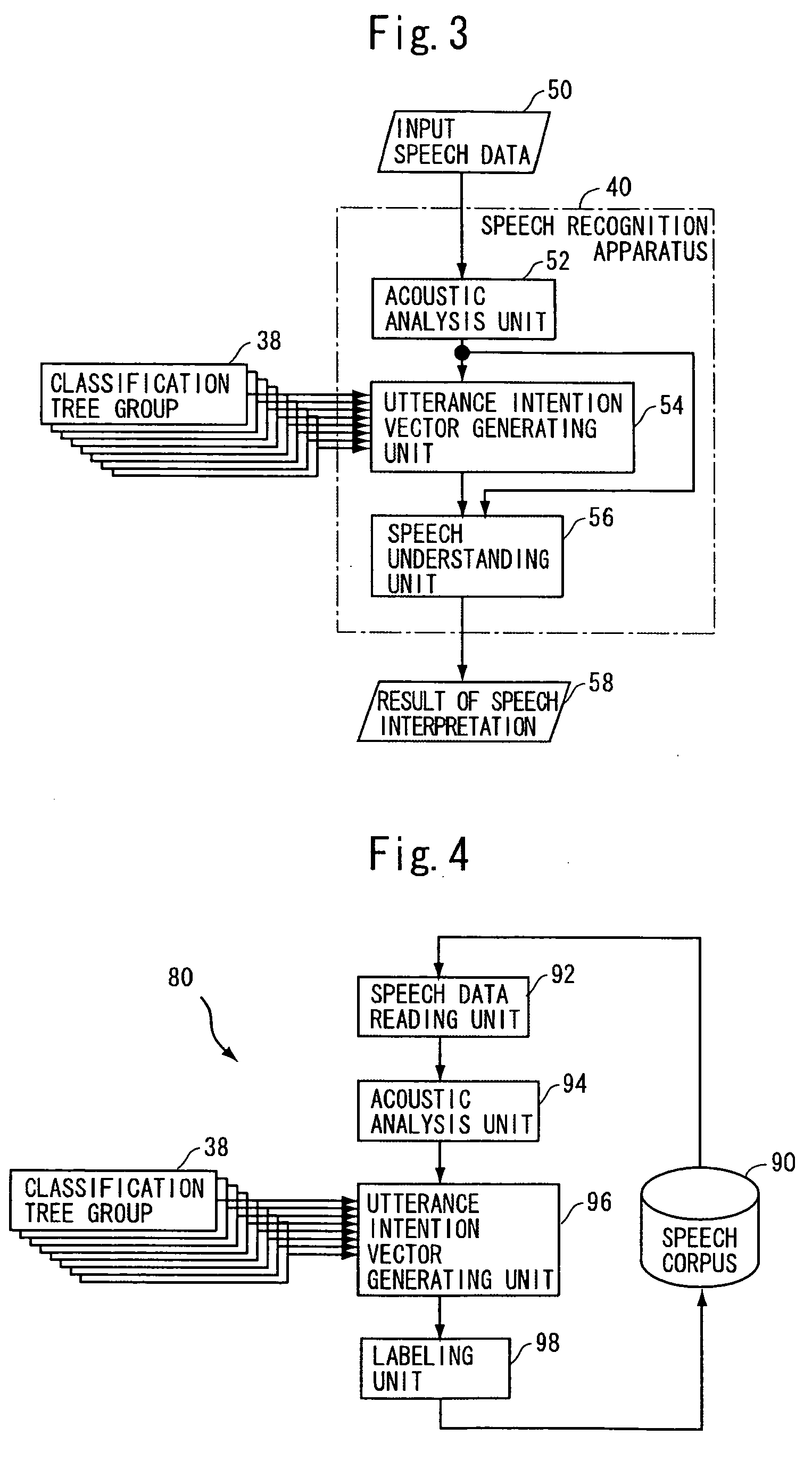

Apparatus and method for speech processing using paralinguistic information in vector form

Inactive | US20060080098A1 | Appropriately processed | Suitable for processing | Speech recognition | Speech synthesis | Speech corpus | Speech sound

A speech processing apparatus includes a statistics collecting module operable to collect, for each of the prescribed utterance units of a speech in a training speech corpus, a prescribed type of acoustic feature and statistic information on a plurality of paralinguistic information labels being selected by a plurality of listeners to a speech corresponding to the utterance unit; and a training apparatus trained by supervised machine training using said prescribed acoustic feature as input data and using the statistic information as answer data, to output probability of allocation of the label to a given acoustic feature, for each of said plurality of paralinguistic information labels, forming a paralinguistic information vector.

Owner:ATR ADVANCED TELECOMM RES INST INT

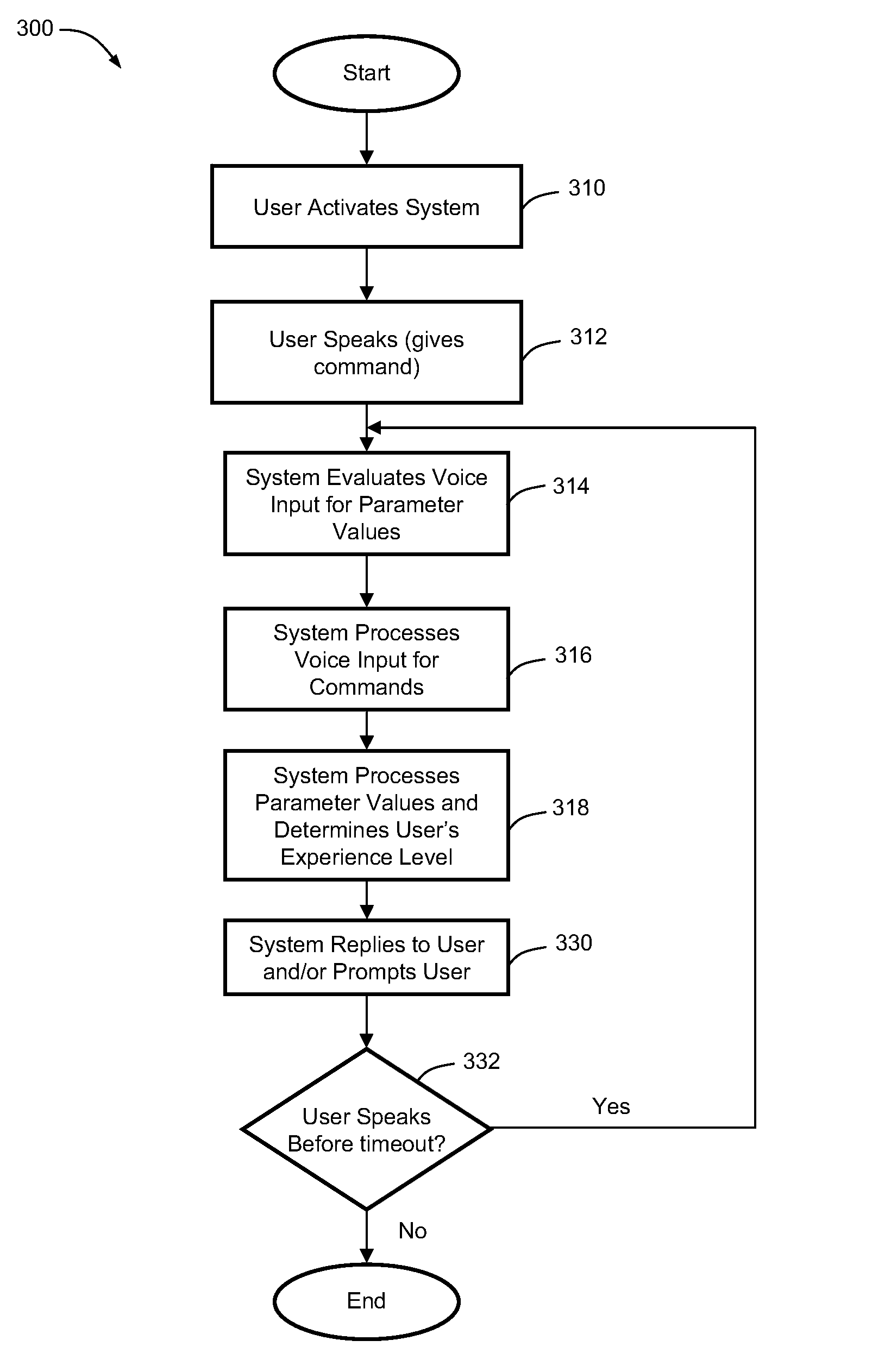

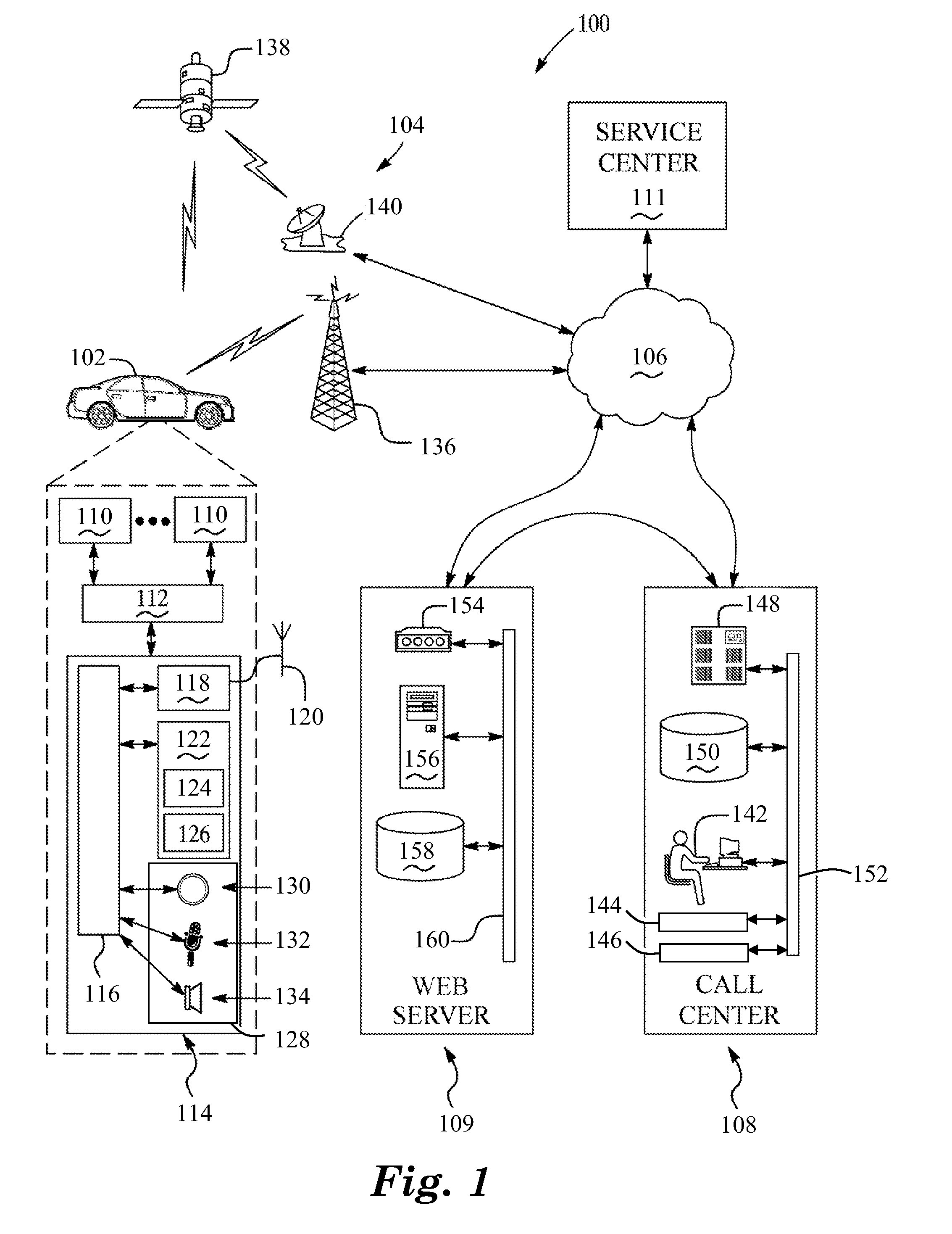

Automatically adapting user guidance in automated speech recognition

A speech recognition method includes receiving input speech from a user, processing the input speech to obtain at least one parameter value, and determining an experience level of the user using the parameter value(s). The method can also include prompting the user based upon the determined experience level of the user to assist the user in delivering speech commands.

Owner:GENERAL MOTORS LLC

Mobile environment speech processing facility

In embodiments of the present invention improved capabilities are described for a mobile environment speech processing facility. The present invention may provide for the entering of text into a software application resident on a mobile communication facility, where recorded speech may be presented by the user using the mobile communications facility's resident capture facility. Transmission of the recording may be provided through a wireless communication facility to a speech recognition facility, and may be accompanied by information related to the software application. Results may be generated utilizing the speech recognition facility that may be independent of structured grammar, and may be based at least in part on the information relating to the software application and the recording. The results may then be transmitted to the mobile communications facility, where they may be loaded into the software application.

Owner:NUANCE COMM INC +1

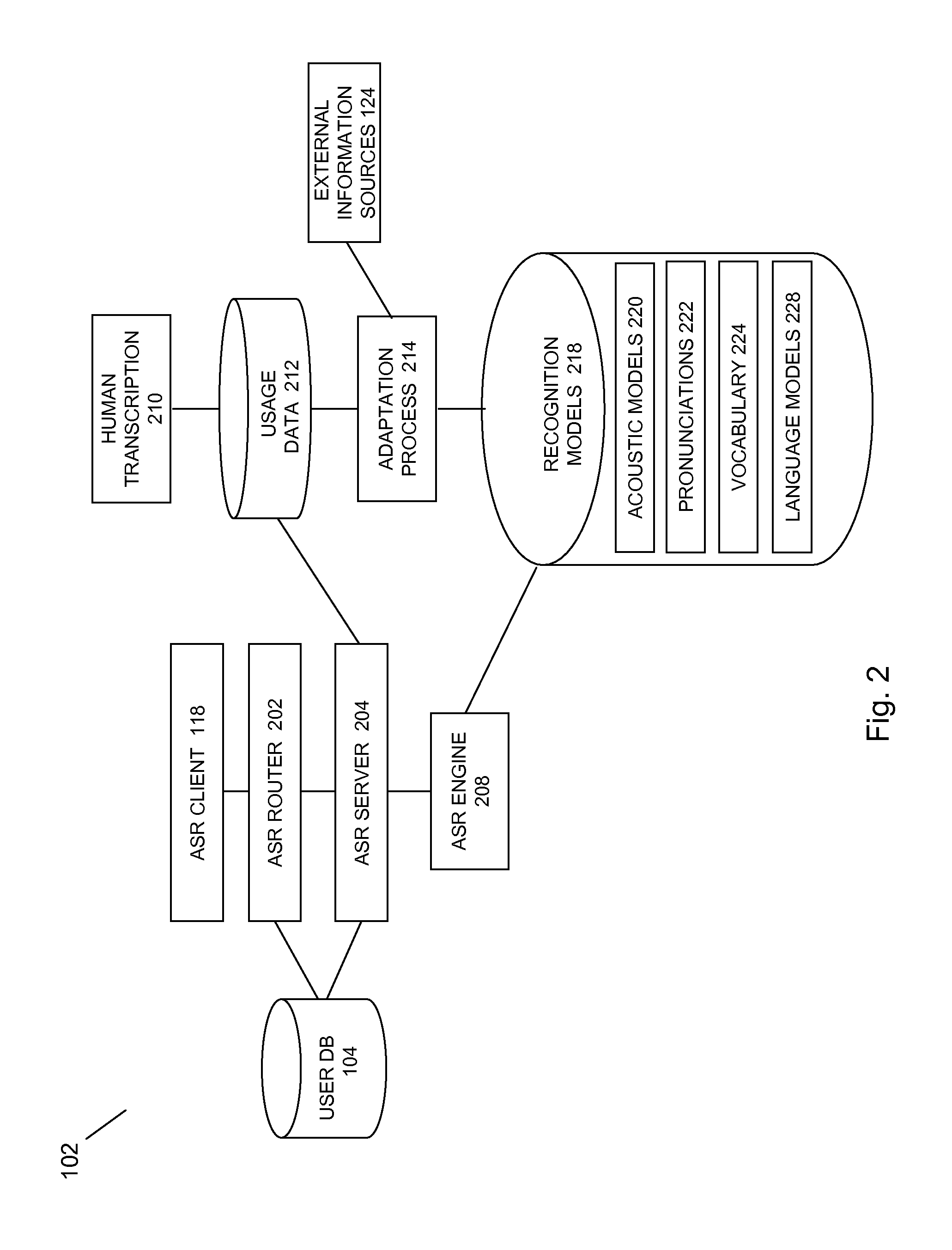

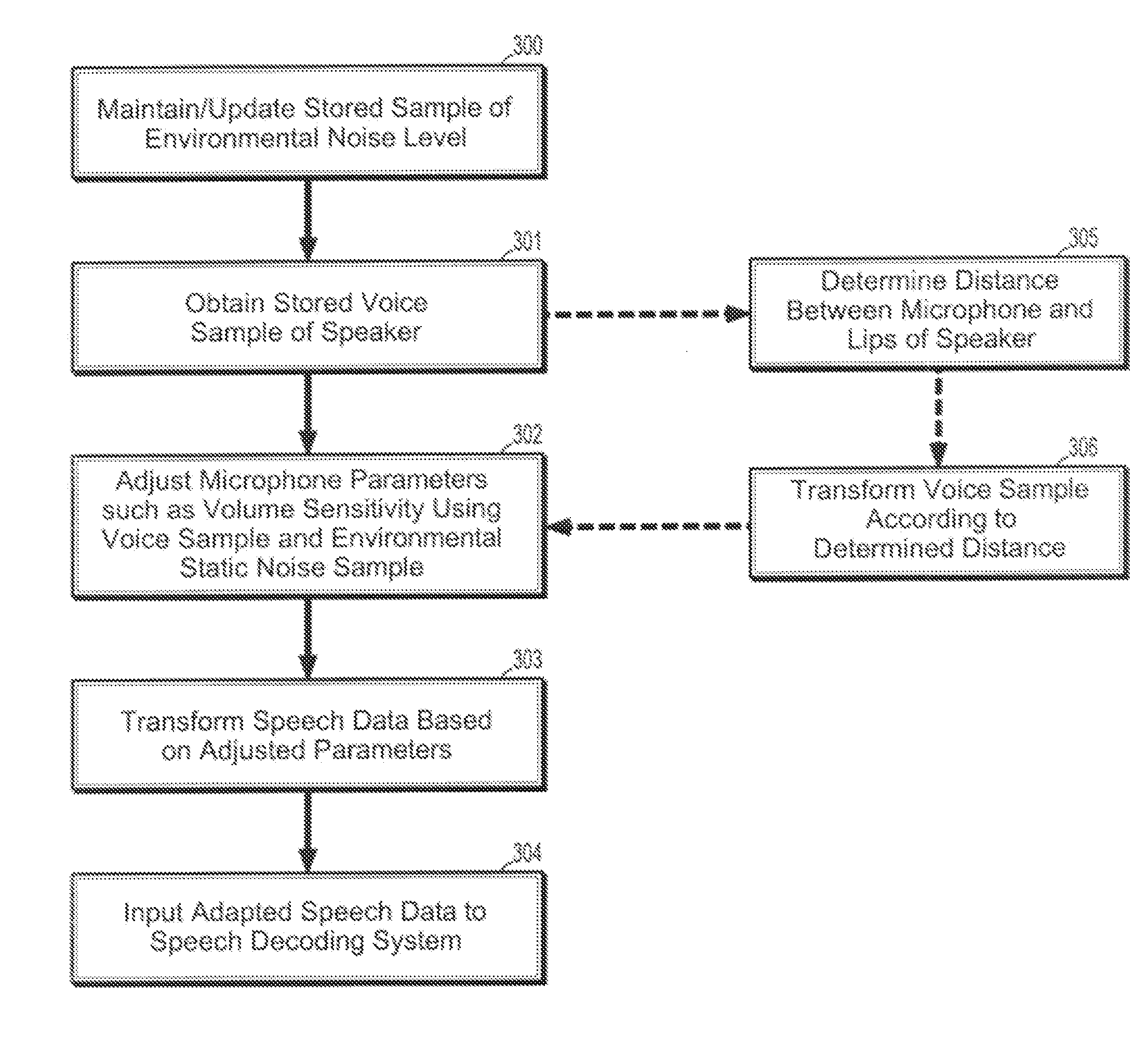

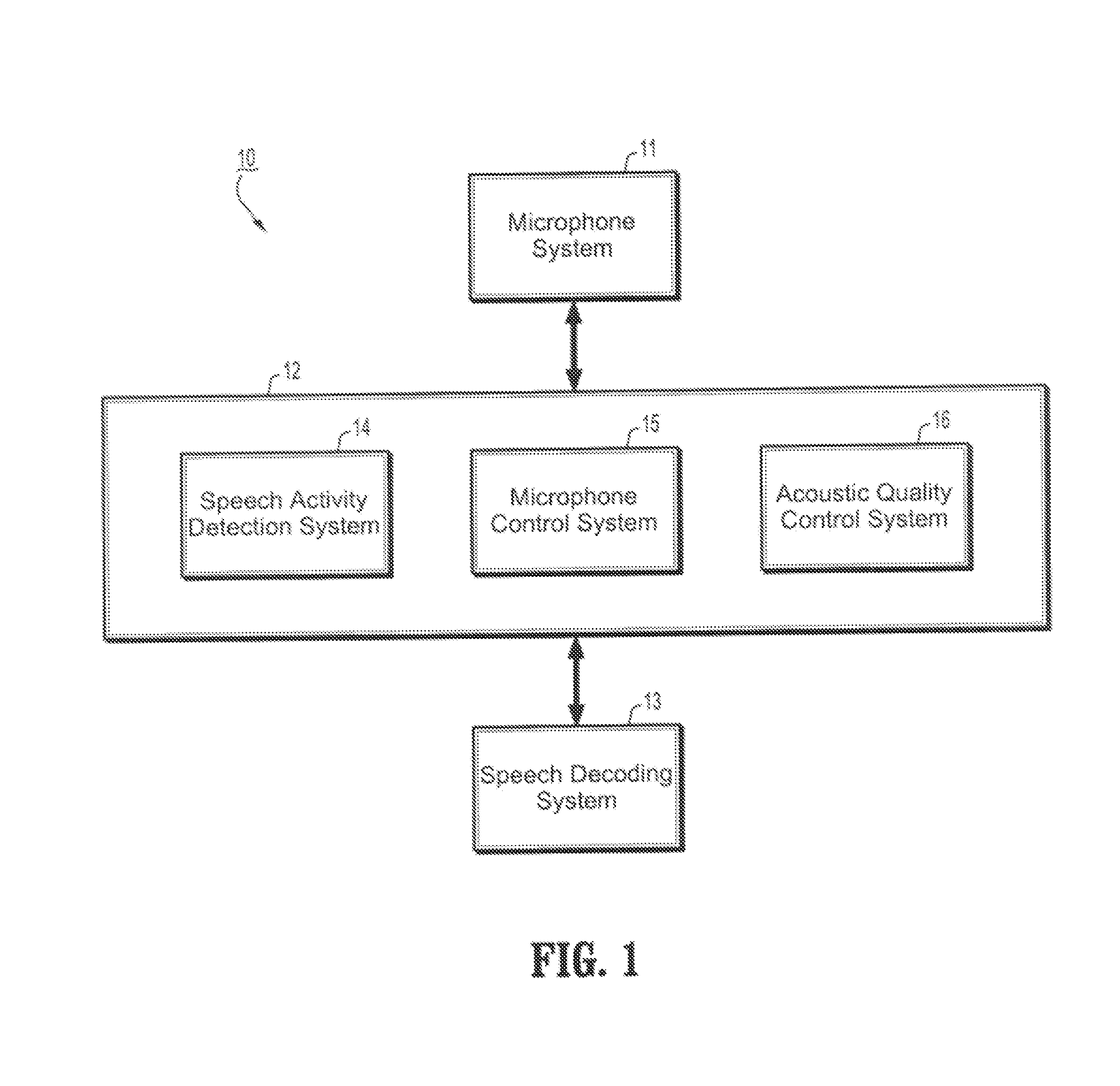

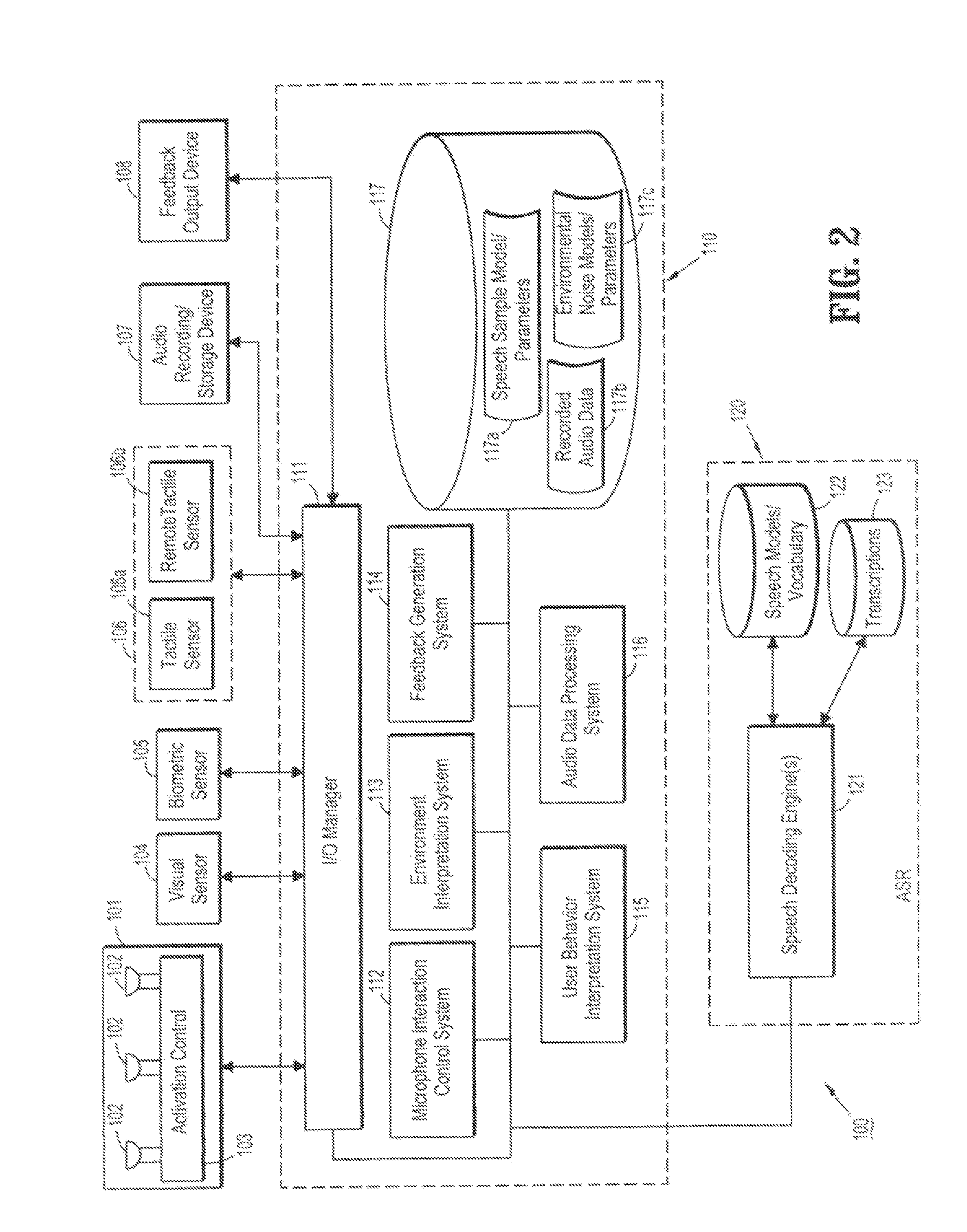

Systems and methods for intelligent control of microphones for speech recognition applications

Inactive | US20080167868A1 | Optimizes decoding accuracy | Gain control | Speech recognition | Speech identification | Audio frequency

Systems and methods for intelligent control of microphones in speech processing applications, which allows the capturing, recording and preprocessing of speech data in the captured audio in a way that optimizes speech decoding accuracy.

Owner:IBM CORP

System for accessing data via voice

Active | US7194069B1 | Automatic call-answering/message-recording/conversation-recording | Special service for subscribers | Data set | Data query

A system for providing access to data via a voice interface. In one embodiment, the system includes a voice recognition unit and a speech processing server that work together to enable users to interact with the system using voice commands guided by navigation context sensitive voice prompts, and provide user-requested data in a verbalized format back to the users. Digitized voice waveform data are processed to determine the voice commands of the user. The system also uses a “grammar” that enables users to retrieve data using intuitive natural language speech queries. In response to such a query, a corresponding data query is generated by the system to retrieve one or more data sets corresponding to the query. The user is then enabled to browse the data that are returned through voice command navigation, wherein the system “reads” the data back to the user using text-to-speech (TTS) conversion and system prompts.

Owner:ORACLE INT CORP

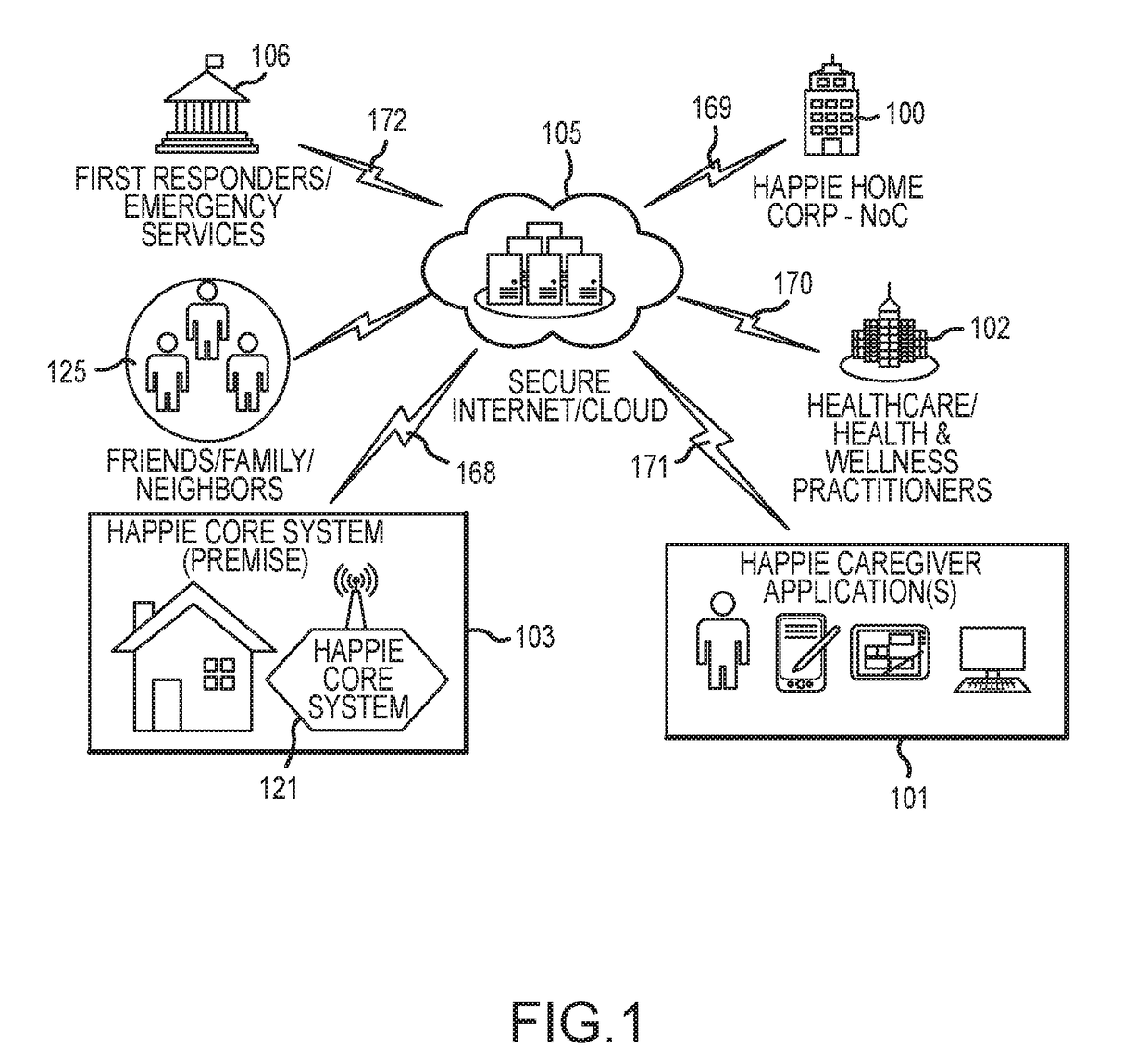

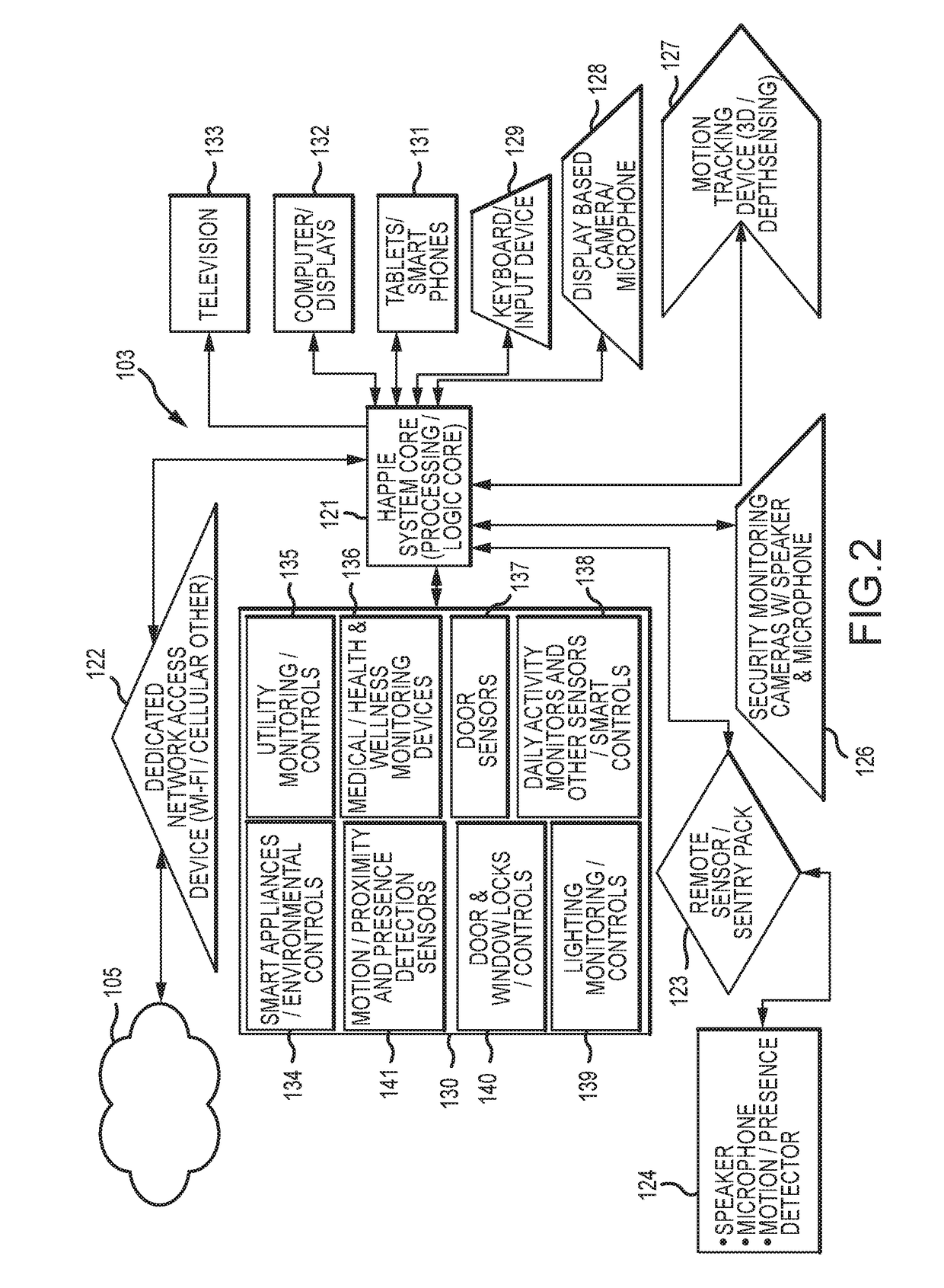

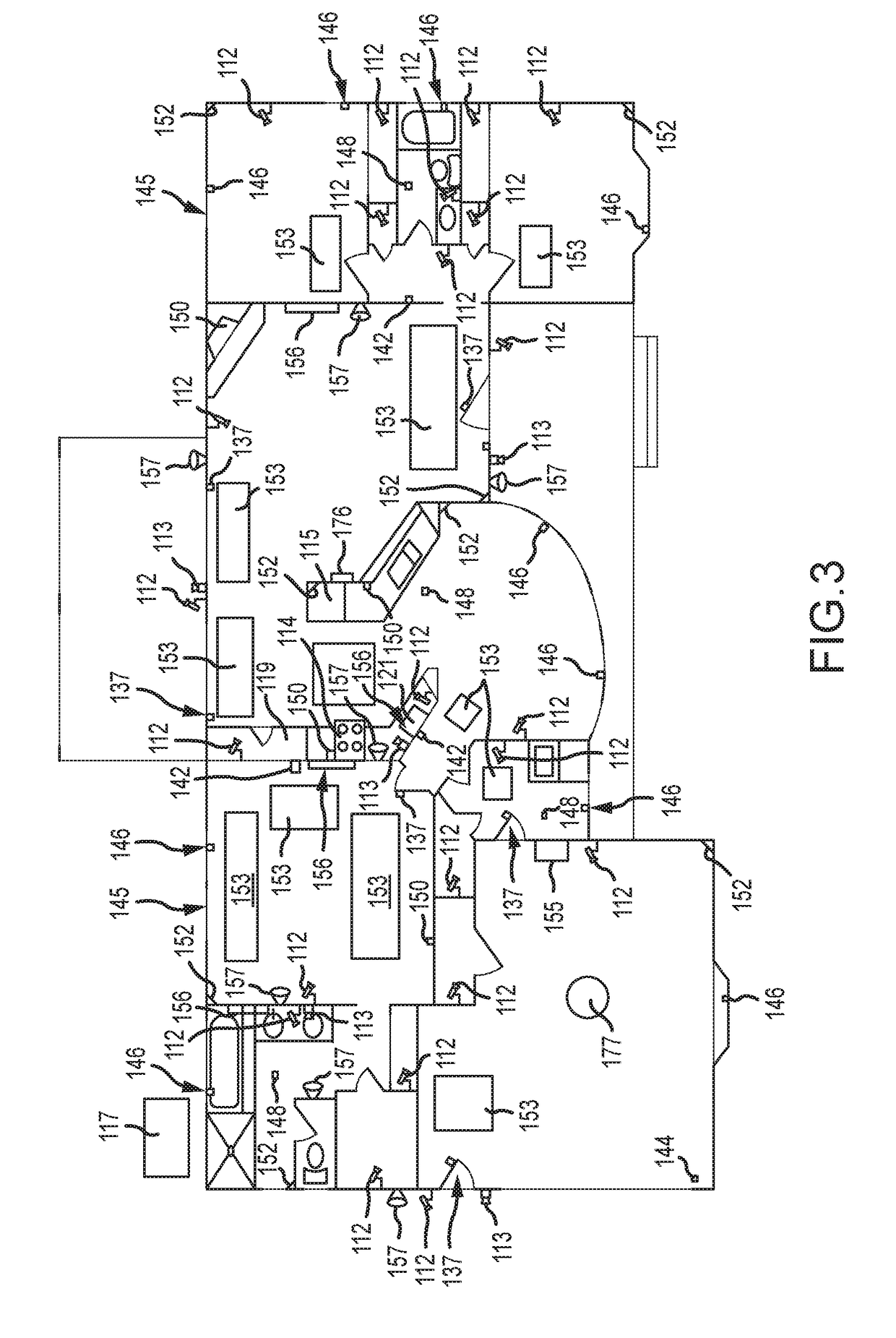

Happie home system

Inactive | US20180342329A1 | Reduce the burden on | Efficiently provide | Medical communication | Data switching by path configuration | Disease | Motion detector

An improved home automation system is provided to facilitate senior care, as well as to facilitate care for individuals suffering from Alzheimer's disease or other dementias. A home control unit is provided that is connected to, and interfaces with, a combination of health equipment, smart home appliances, a smart medicine cabinet, a smart pantry, wearable sensors, motion detectors, video cameras, microphones, video monitors, speakers, smart thermostat, lighting, floor sensors, bed sensors, smoke detectors, glass breakage detectors, door sensors, and other perimeter sensors. A distributed computational architecture is provided having a CPU associated with each video camera and an associated proximate microphone and speaker, wherein speech detection and processing, and video processing, are performed by each such CPU in conjunction with its associated video camera, microphone, and speaker. Remote backup for such distributed speech processing is selectively provided by a remote server based upon confidence scores generated by each such CPU. The distributed computational architecture is also utilized for video processing to facilitate peer-to-peer video conferencing communication using industry standard formats and to reduce latency and response times that would otherwise be encountered using remote servers.

Owner:HAPPIE HOME INC

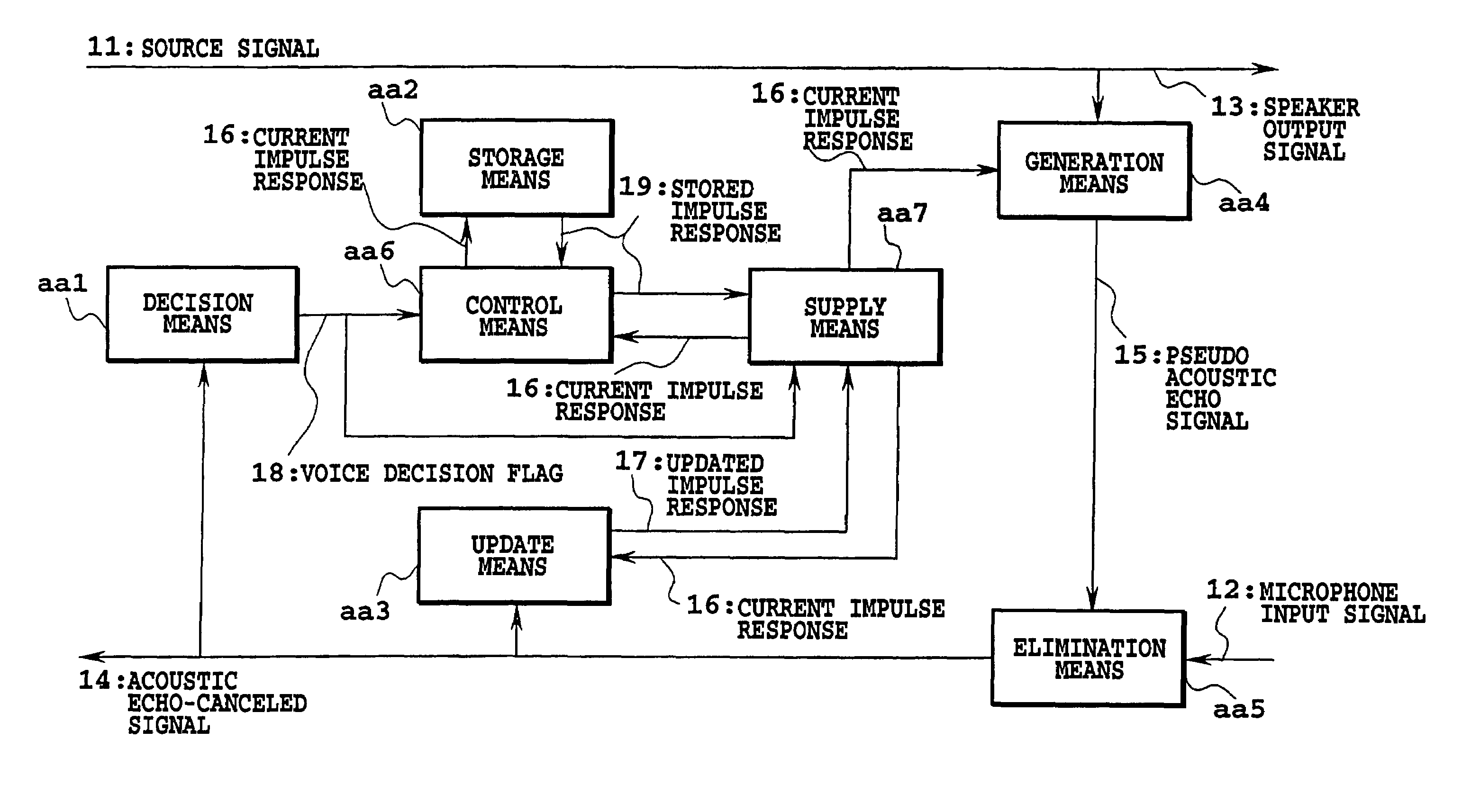

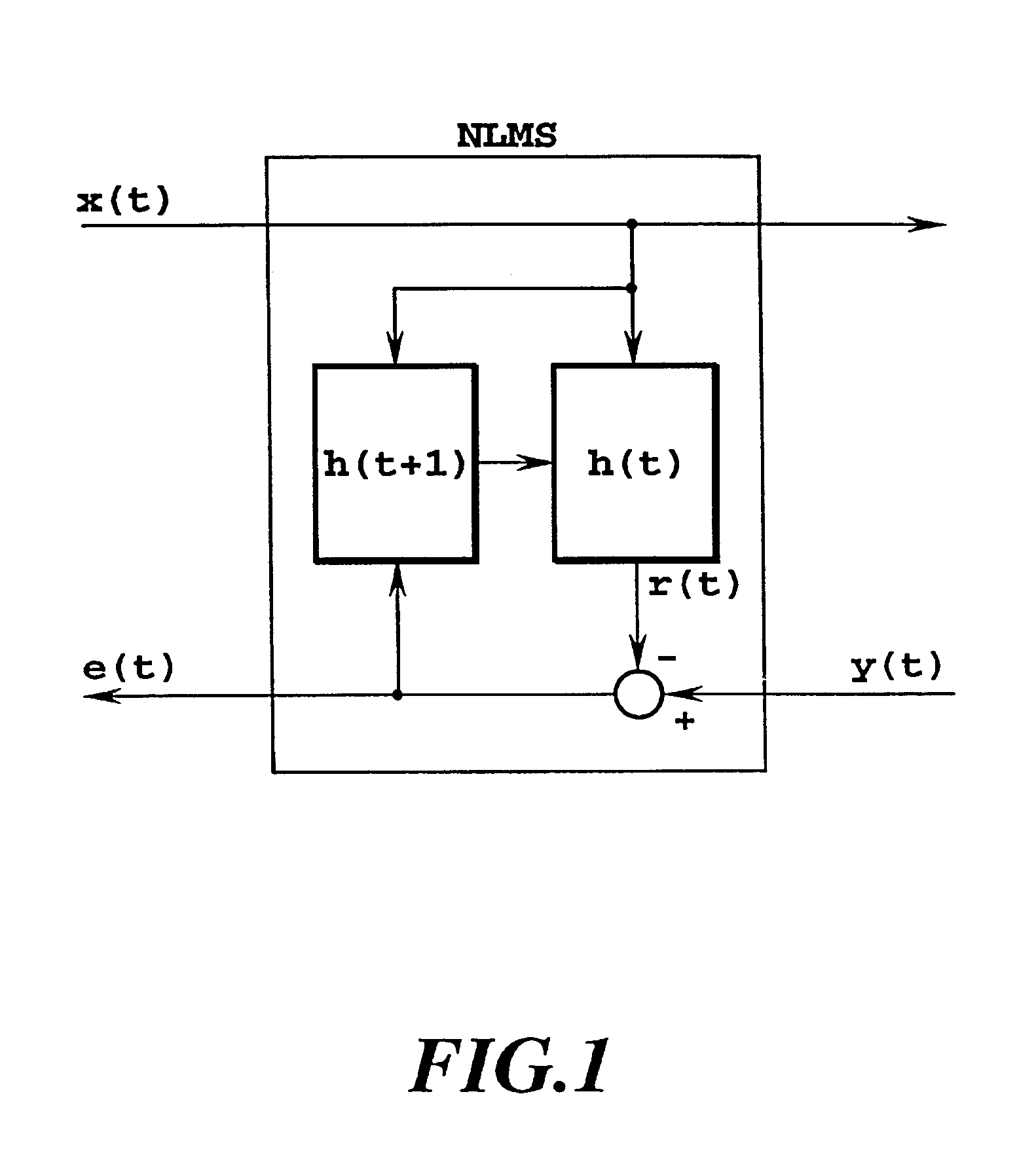

Speech processing method and apparatus for improving speech quality and speech recognition performance

Inactive | US7440891B1 | Remove additive noise | Improve abilities | Two-way loud-speaking telephone systems | Digital technique network | Speech recognition performance | Speech sound

A speech processing apparatus which, in the process of performing echo canceling by using a pseudo acoustic echo signal, continuously uses an impulse response used for the previous frame as an impulse response to generate the pseudo acoustic echo signal when a voice is contained in the microphone input signal, and which uses a newly updated impulse response when a voice is not contained in the microphone input signal.

Owner:ASAHI KASEI KK
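
The behaviour described in this abstract (keep the previous impulse response while a voice is present, adopt an updated one otherwise) can be sketched as a frame-based adaptive echo canceller. The sketch below is an illustration under stated assumptions: the normalised LMS (NLMS) update rule, the class and parameter names, and the externally supplied `voice_present` flag are all choices made here, not details from the patent. It assumes `taps >= 1`.

```python
from typing import List, Sequence


class FrameEchoCanceller:
    """Echo canceller that freezes adaptation while near-end voice is present."""

    def __init__(self, taps: int, step: float = 0.5, eps: float = 1e-8) -> None:
        self.h = [0.0] * taps        # estimated echo-path impulse response
        self.history = [0.0] * taps  # recent far-end samples, newest first
        self.step = step             # NLMS step size (assumed update rule)
        self.eps = eps               # guards against division by zero

    def process(self, far_end: Sequence[float], mic: Sequence[float],
                voice_present: bool) -> List[float]:
        """Return the echo-reduced samples for one frame."""
        out = []
        for x, d in zip(far_end, mic):
            self.history = [x] + self.history[:-1]
            # Pseudo acoustic echo generated from the current impulse response.
            pseudo_echo = sum(h * s for h, s in zip(self.h, self.history))
            err = d - pseudo_echo
            out.append(err)
            if not voice_present:
                # No near-end voice: update the impulse response (NLMS step).
                power = sum(s * s for s in self.history) + self.eps
                gain = self.step * err / power
                self.h = [h + gain * s for h, s in zip(self.h, self.history)]
            # With voice present, self.h is left as it was for the previous frame.
        return out
```

Freezing the update while someone is talking keeps the near-end voice from being mistaken for echo and corrupting the echo-path estimate, which is one common motivation for this kind of gating.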

© 2025 PatSnap. All rights reserved.