61 results about "Speech corpus" patented technology

A speech corpus (or spoken corpus) is a database of speech audio files and text transcriptions. In speech technology, speech corpora are used, among other things, to create acoustic models (which can then be used with a speech recognition engine). In linguistics, spoken corpora are used for research in phonetics, conversation analysis, dialectology, and other fields.

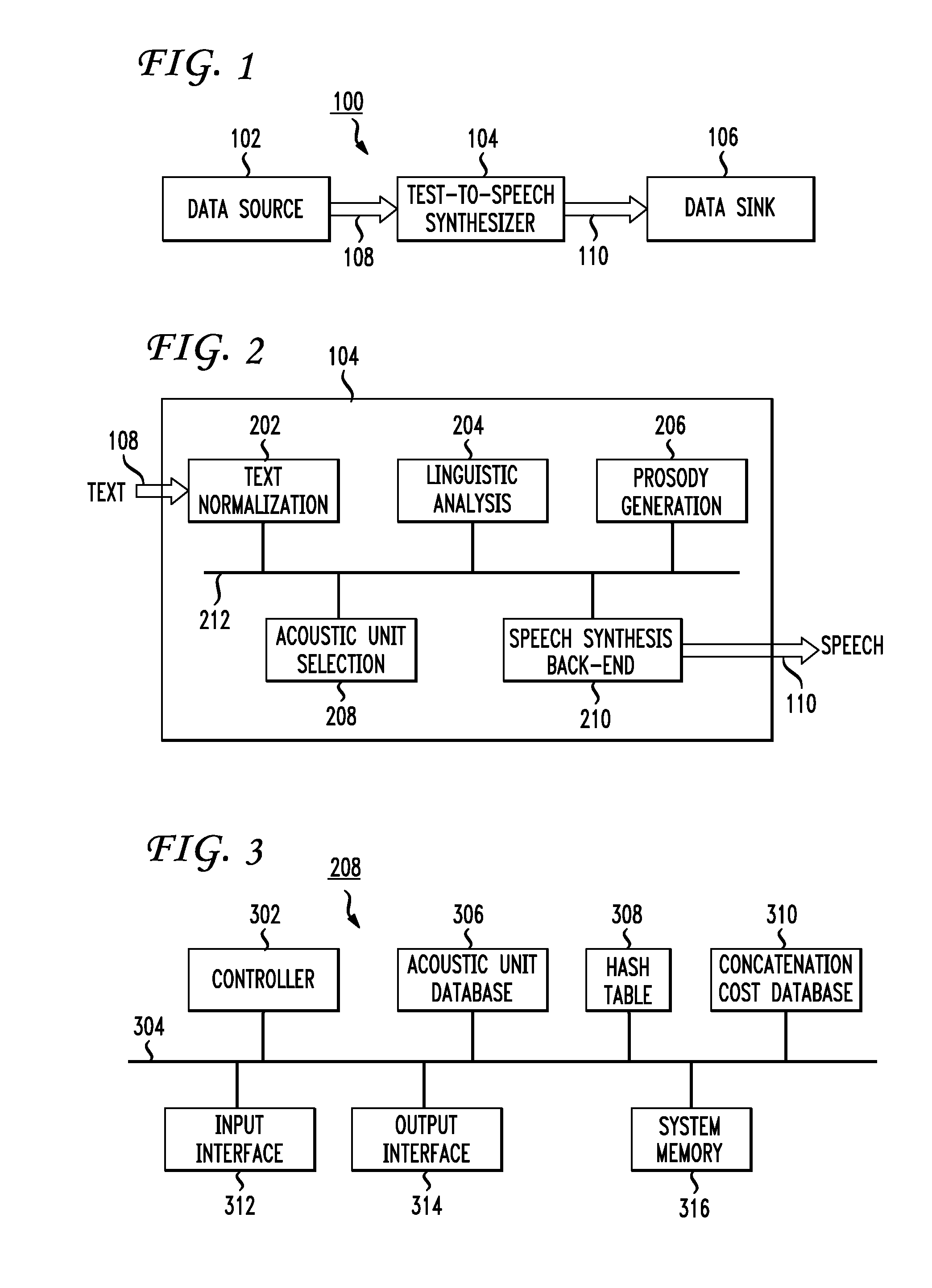

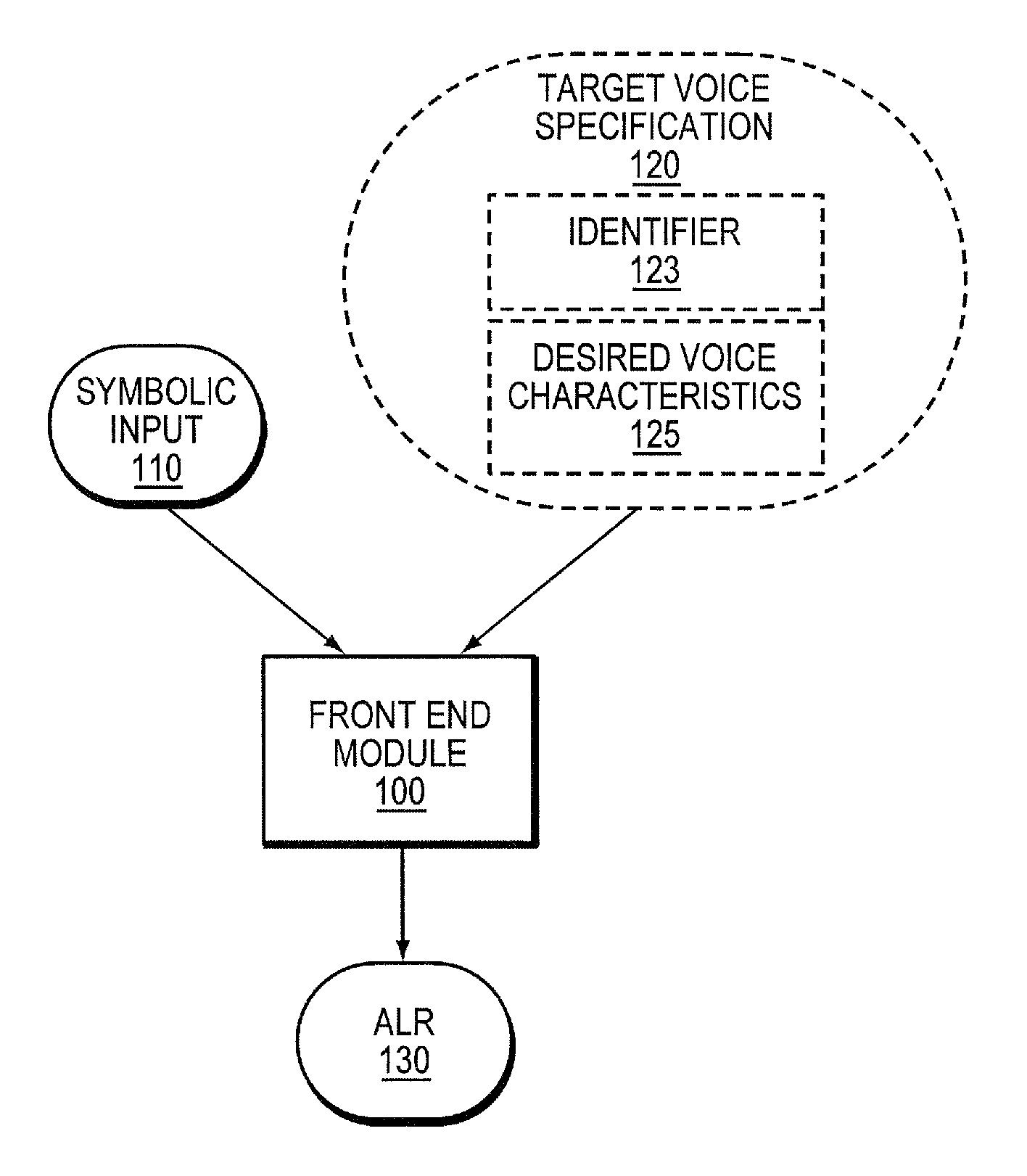

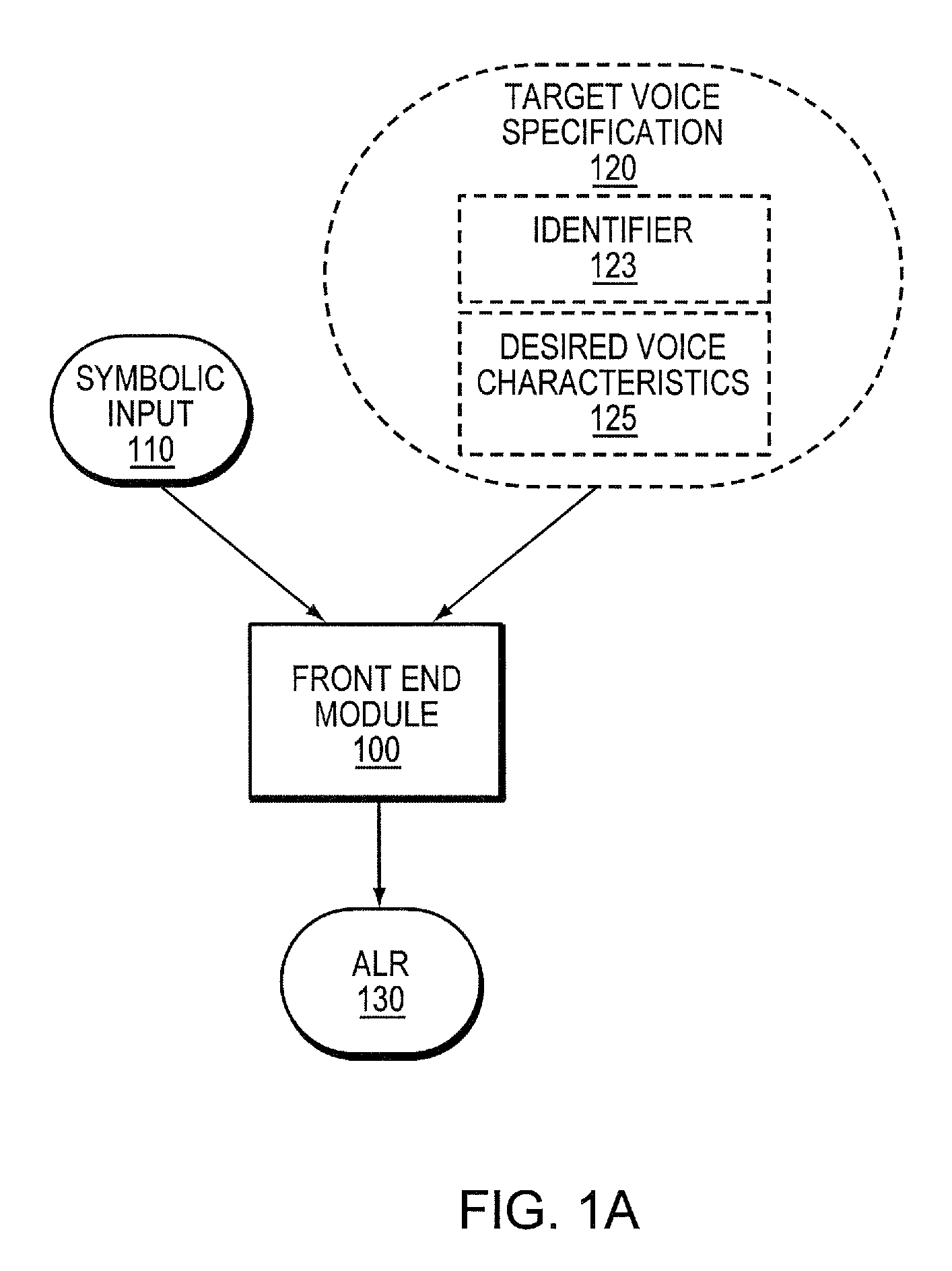

System and method for hybrid speech synthesis

Active · US20080270140A1 · Cost-efficiently · Solve the real problem · Speech synthesis · Speech corpus · Speech sound

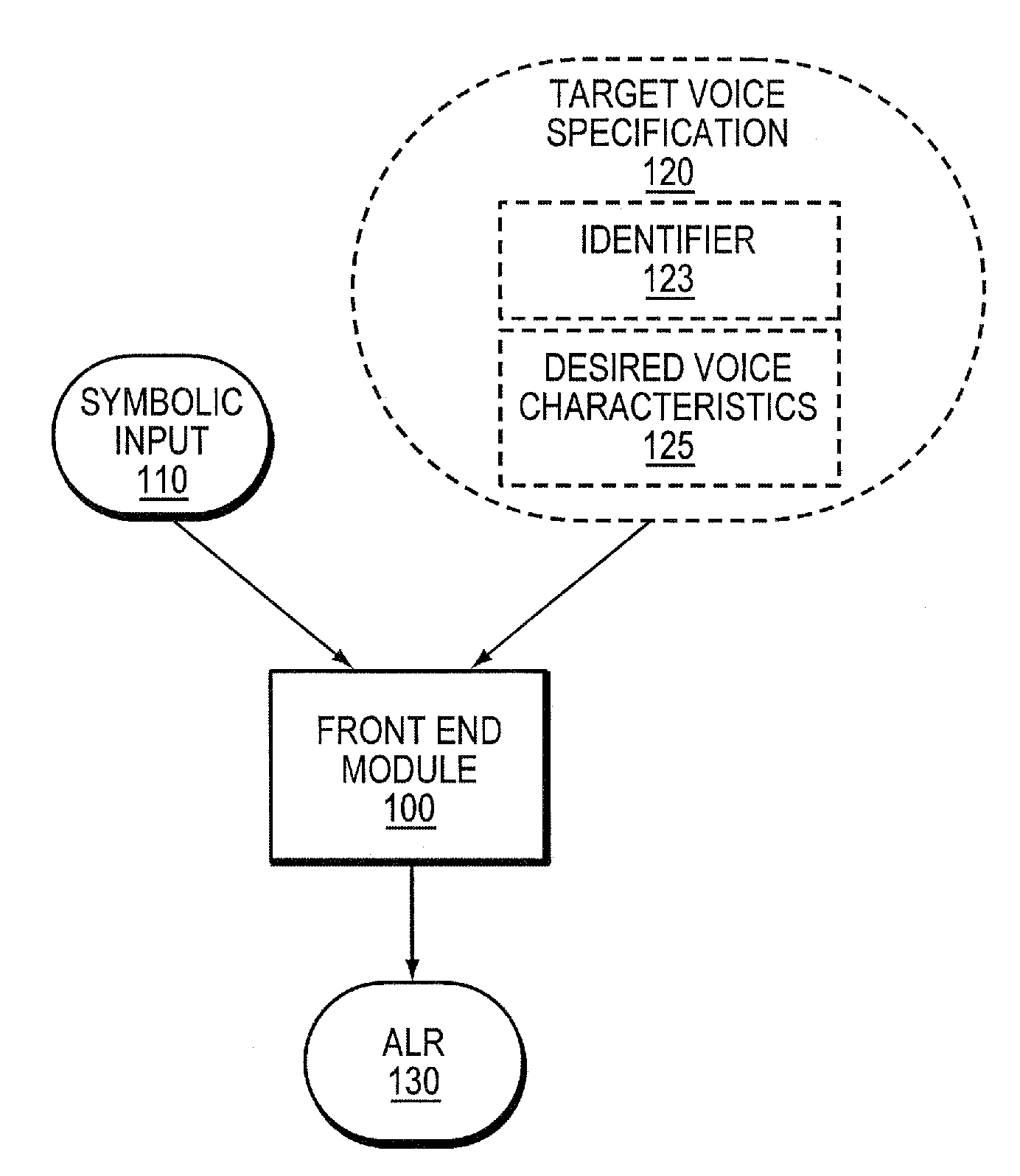

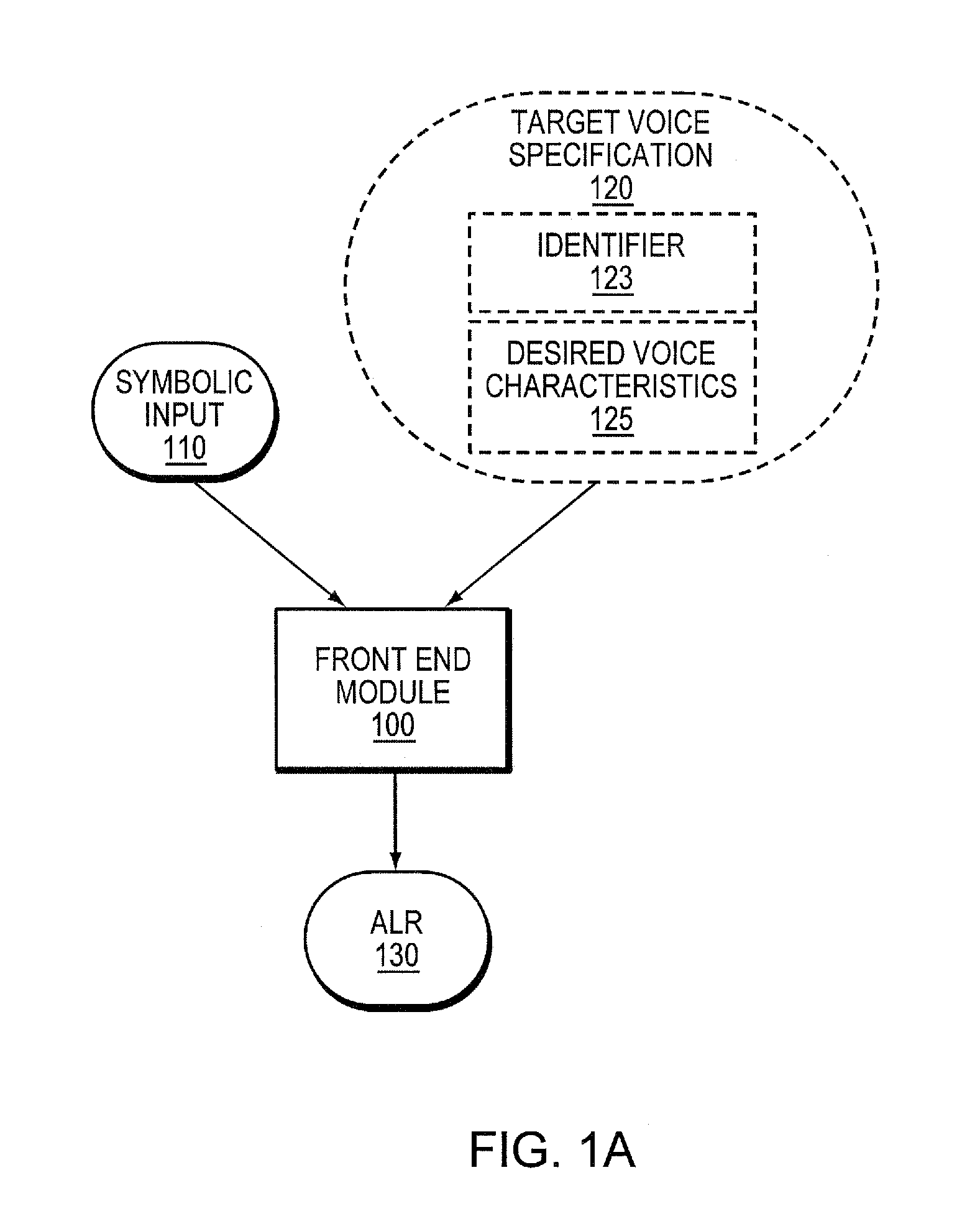

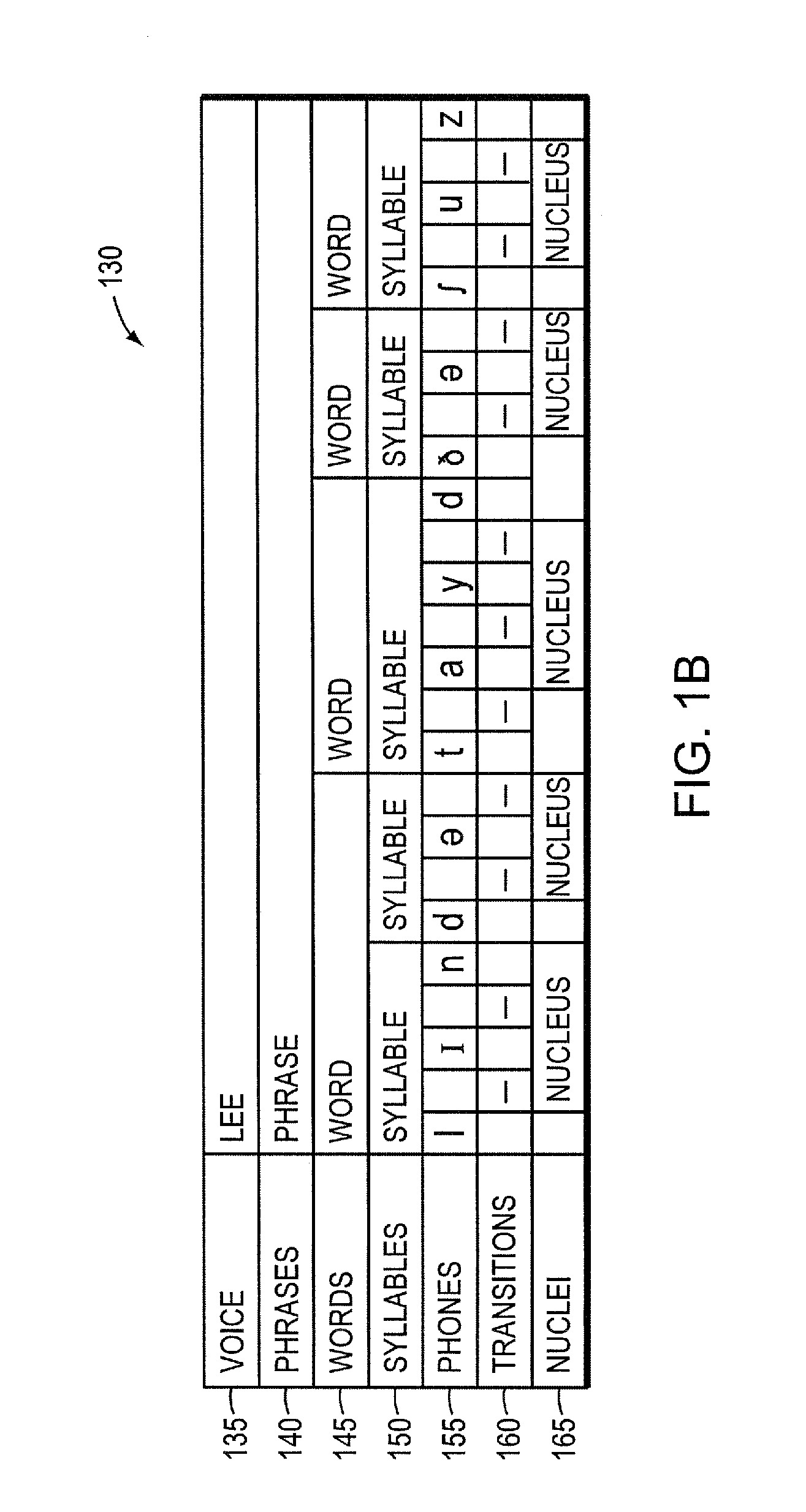

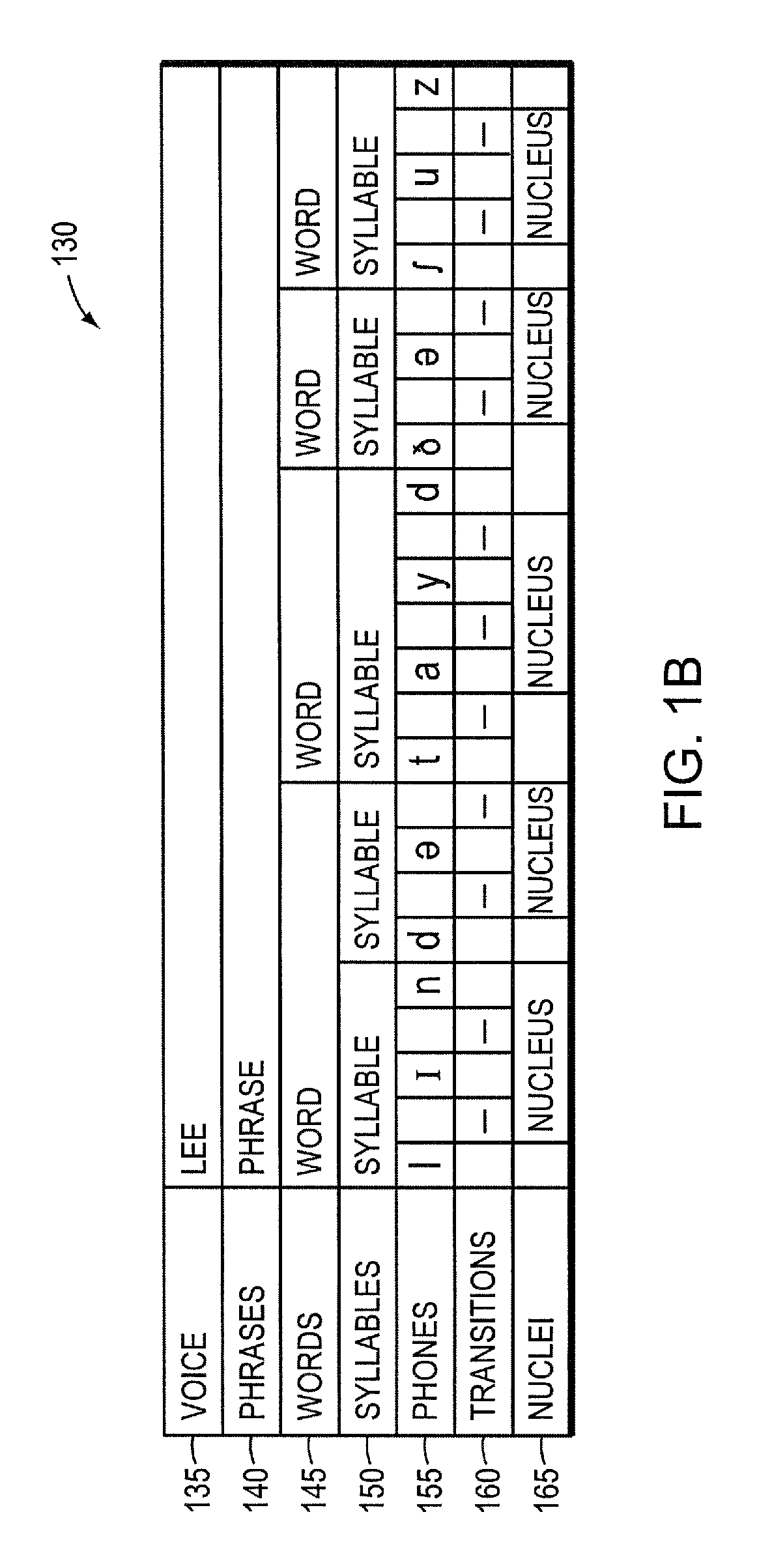

A speech synthesis system receives symbolic input describing an utterance to be synthesized. In one embodiment, different portions of the utterance are constructed from different sources, one of which is a speech corpus recorded from a human speaker whose voice is to be modeled. The other sources may include other human speech corpora or speech produced using Rule-Based Speech Synthesis (RBSS). At least some portions of the utterance may be constructed by modifying prototype speech units to produce adapted speech units that are contextually appropriate for the utterance. The system concatenates the adapted speech units with the other speech units to produce a speech waveform. In another embodiment, a speech unit of a speech corpus recorded from a human speaker lacks transitions at one or both of its edges. A transition is synthesized using RBSS and concatenated with the speech unit in producing a speech waveform for the utterance.

Owner:NOVASPEECH

Method and apparatus for speech synthesis without prosody modification

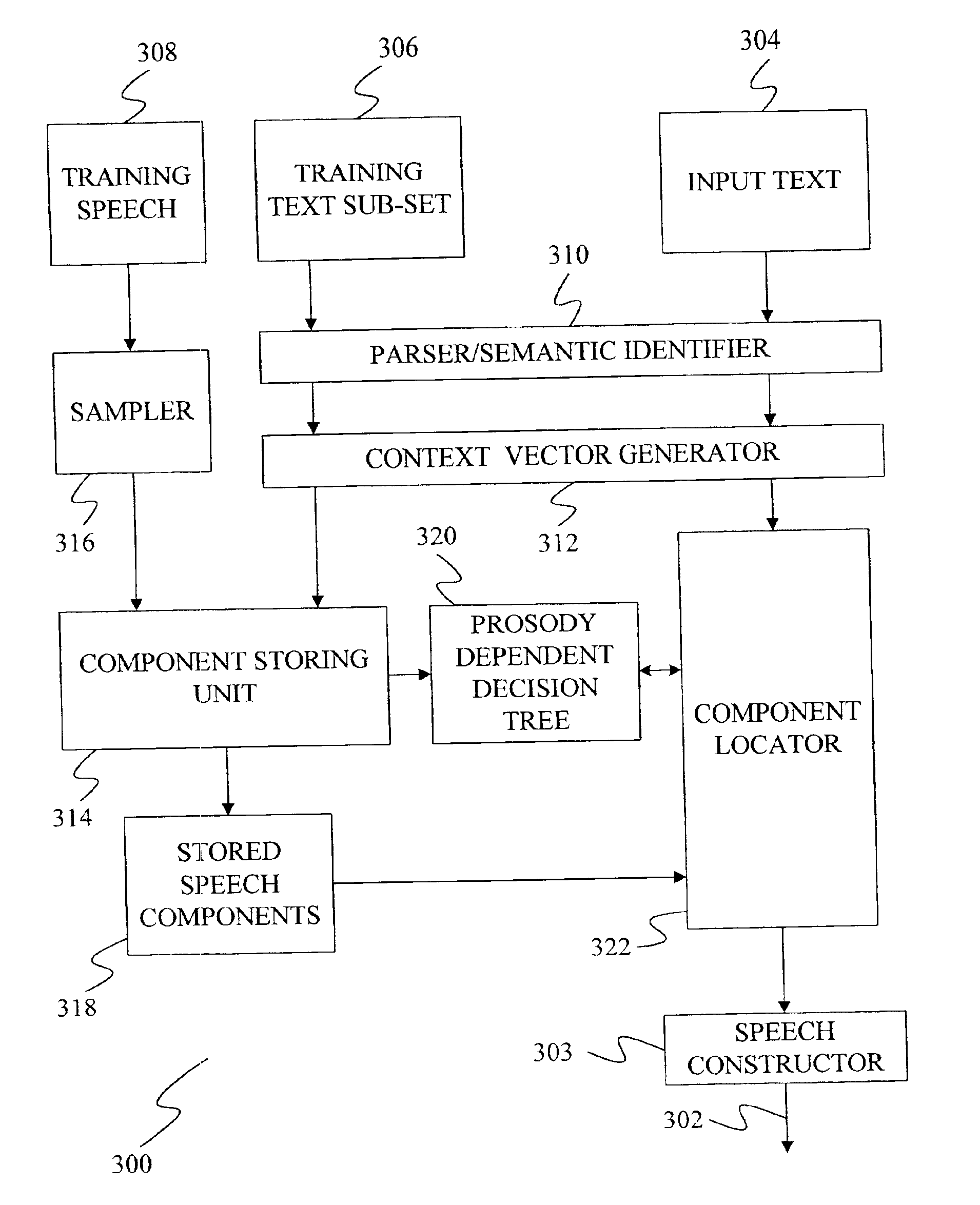

A speech synthesizer is provided that concatenates stored samples of speech units without modifying the prosody of the samples. The present invention is able to achieve a high level of naturalness in synthesized speech with a carefully designed training speech corpus by storing samples based on the prosodic and phonetic context in which they occur. In particular, some embodiments of the present invention limit the training text to those sentences that will produce the most frequent sets of prosodic contexts for each speech unit. Further embodiments of the present invention also provide a multi-tier selection mechanism for selecting a set of samples that will produce the most natural sounding speech.

Owner:MICROSOFT TECH LICENSING LLC

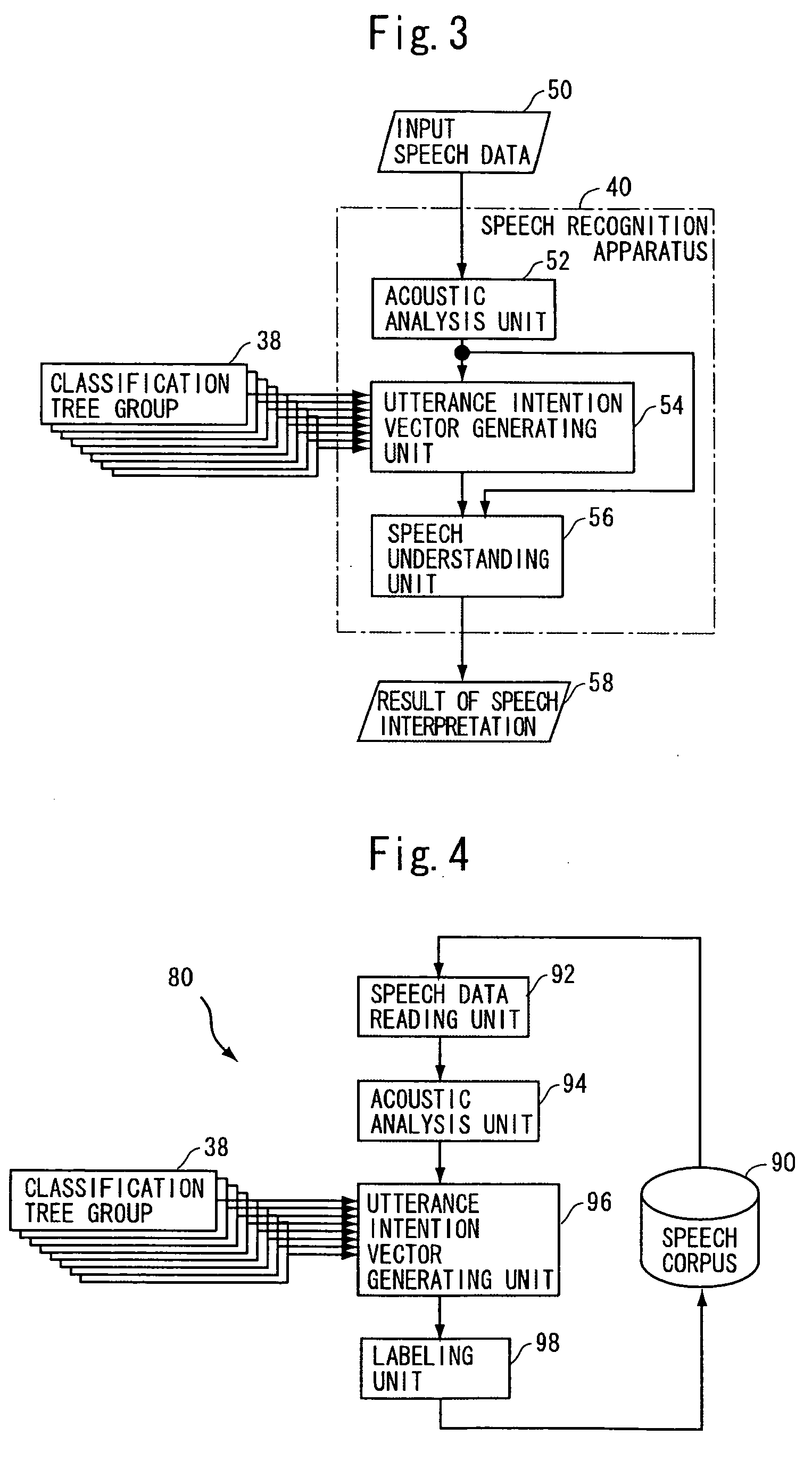

Apparatus and method for speech processing using paralinguistic information in vector form

Inactive · US20060080098A1 · Appropriately processed · Suitable for processing · Speech recognition · Speech synthesis · Speech corpus · Speech sound

A speech processing apparatus includes a statistics collecting module operable to collect, for each prescribed utterance unit of speech in a training speech corpus, a prescribed type of acoustic feature and statistical information on a plurality of paralinguistic information labels selected by a plurality of listeners to the speech corresponding to the utterance unit; and a training apparatus trained by supervised machine learning, using the prescribed acoustic feature as input data and the statistical information as answer data, to output, for each of the paralinguistic information labels, the probability that the label is allocated to a given acoustic feature, thereby forming a paralinguistic information vector.

Owner:ATR ADVANCED TELECOMM RES INST INT
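The statistical information on listener-selected labels described above can be illustrated with a minimal sketch. It assumes the statistic is simply the fraction of listeners who chose each label for one utterance unit; the label names and the function `label_statistics` are hypothetical and are not taken from the patent.

```python
# Hypothetical label set; the abstract does not name specific labels.
LABELS = ["friendly", "angry", "doubtful"]


def label_statistics(listener_choices, labels=LABELS):
    """Return, for one utterance unit, the fraction of listeners who chose each label.

    listener_choices holds one set of labels per listener (a listener may pick several).
    """
    if not listener_choices:
        raise ValueError("at least one listener is required")
    n_listeners = len(listener_choices)
    return [
        sum(label in chosen for chosen in listener_choices) / n_listeners
        for label in labels
    ]


# Four listeners judged the same utterance unit.
target_vector = label_statistics(
    [{"friendly"}, {"friendly", "doubtful"}, {"angry"}, {"friendly"}]
)
```

A vector of this kind could serve as the answer data for supervised training, with the acoustic feature of the same utterance unit as input.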

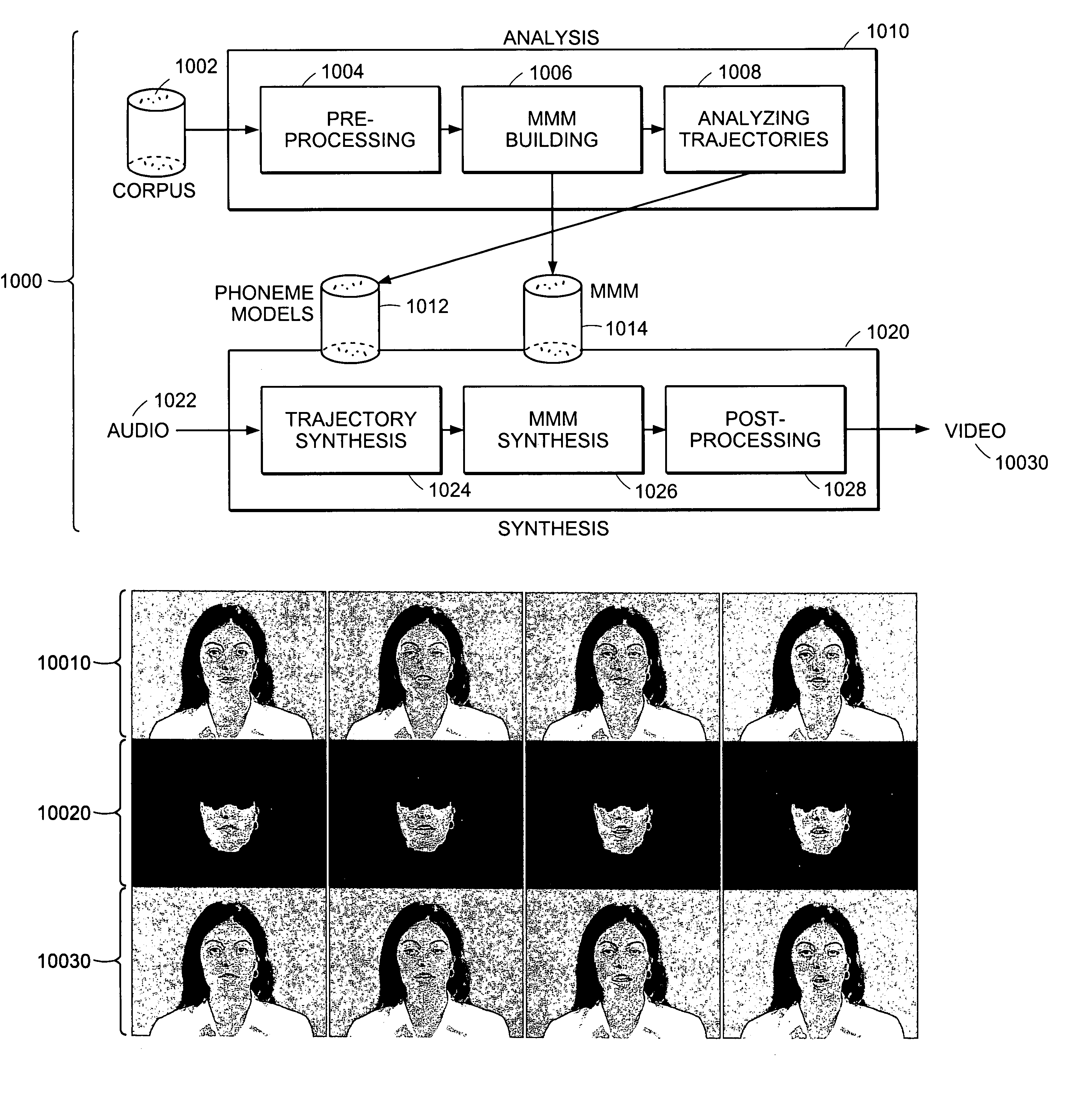

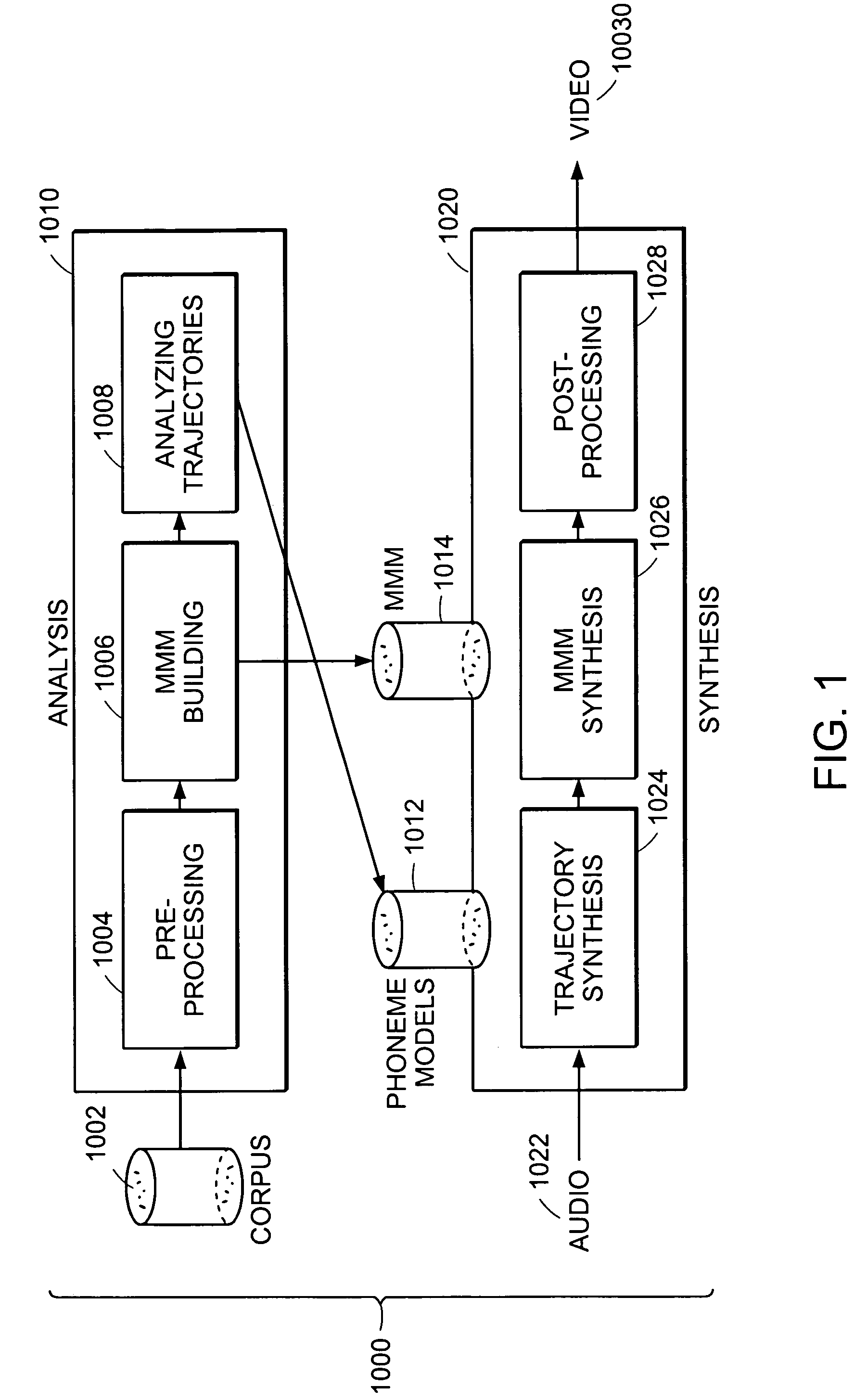

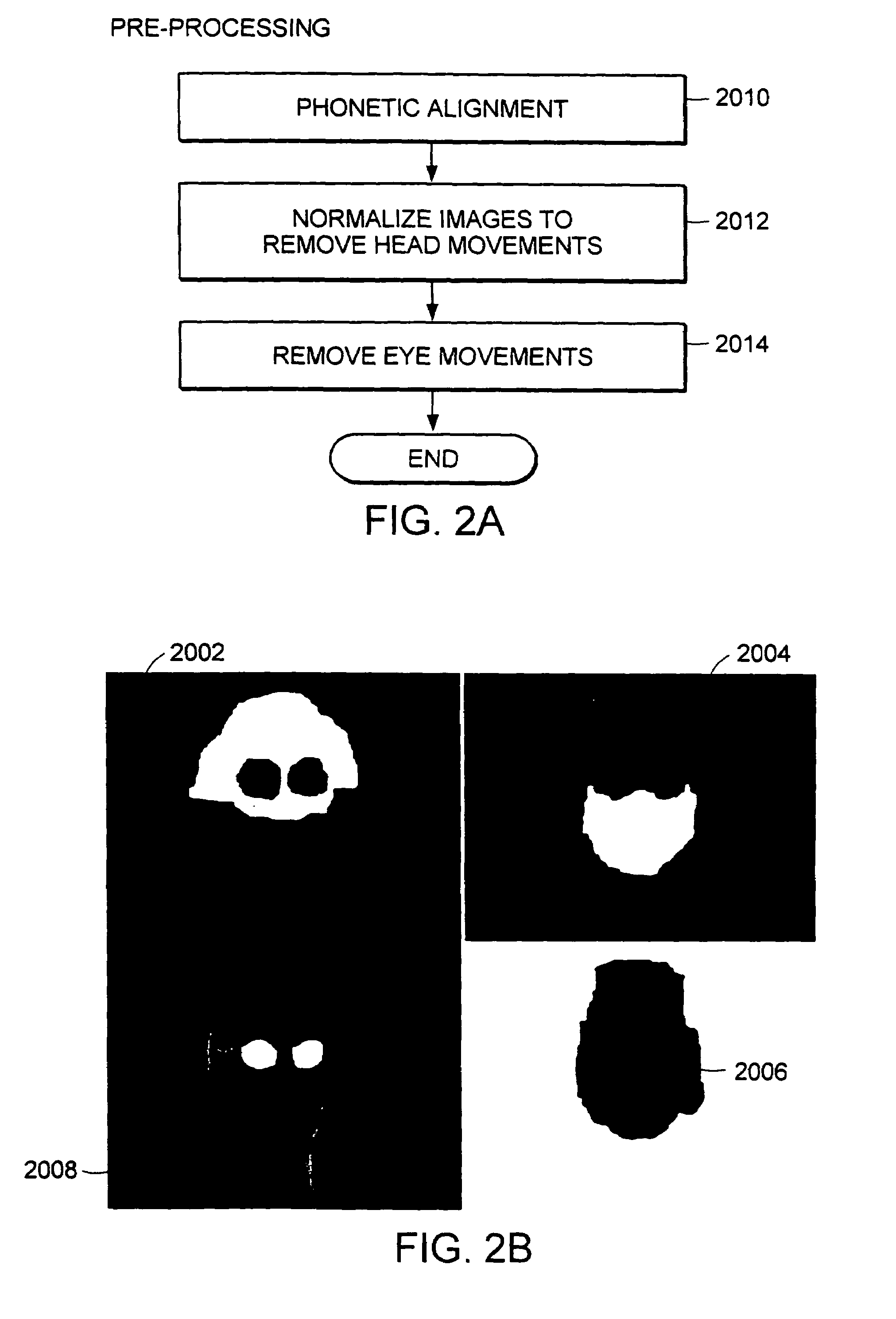

Trainable videorealistic speech animation

A method and apparatus for videorealistic speech animation is disclosed. A human subject is recorded using a video camera as he/she utters a predetermined speech corpus. After processing the corpus automatically, a visual speech module is learned from the data that is capable of synthesizing the human subject's mouth uttering entirely novel utterances that were not recorded in the original video. The synthesized utterance is re-composited onto a background sequence which contains natural head and eye movement. The final output is videorealistic in the sense that it looks like a video camera recording of the subject. The two key components of this invention are 1) a multidimensional morphable model (MMM) to synthesize new, previously unseen mouth configurations from a small set of mouth image prototypes; and 2) a trajectory synthesis technique based on regularization, which is automatically trained from the recorded video corpus, and which is capable of synthesizing trajectories in MMM space corresponding to any desired utterance.

Owner:MASSACHUSETTS INST OF TECH

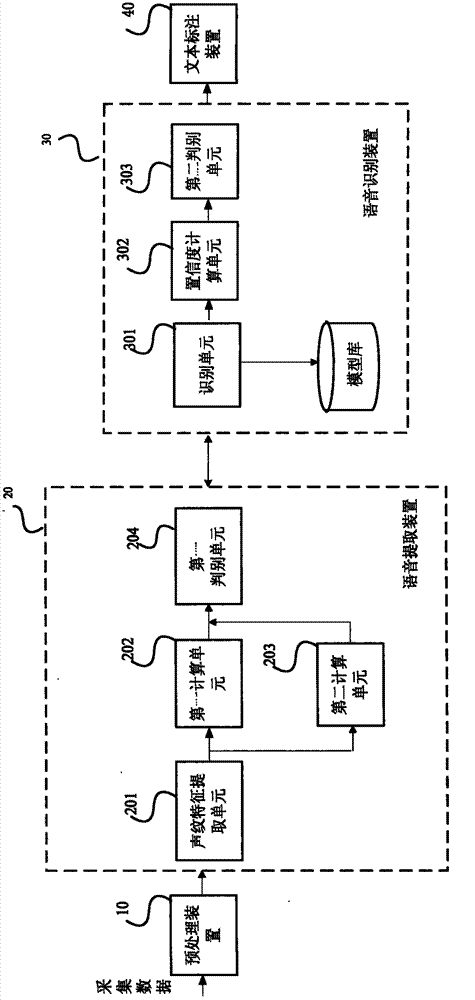

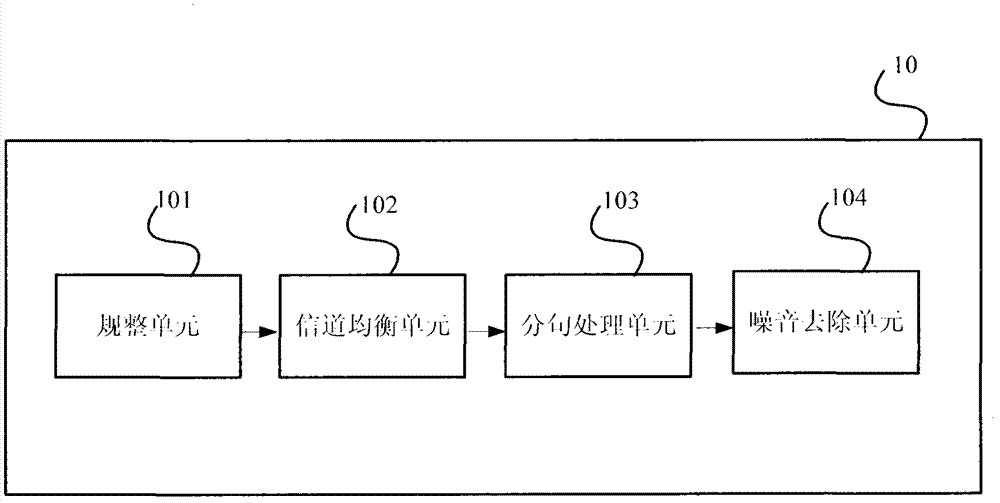

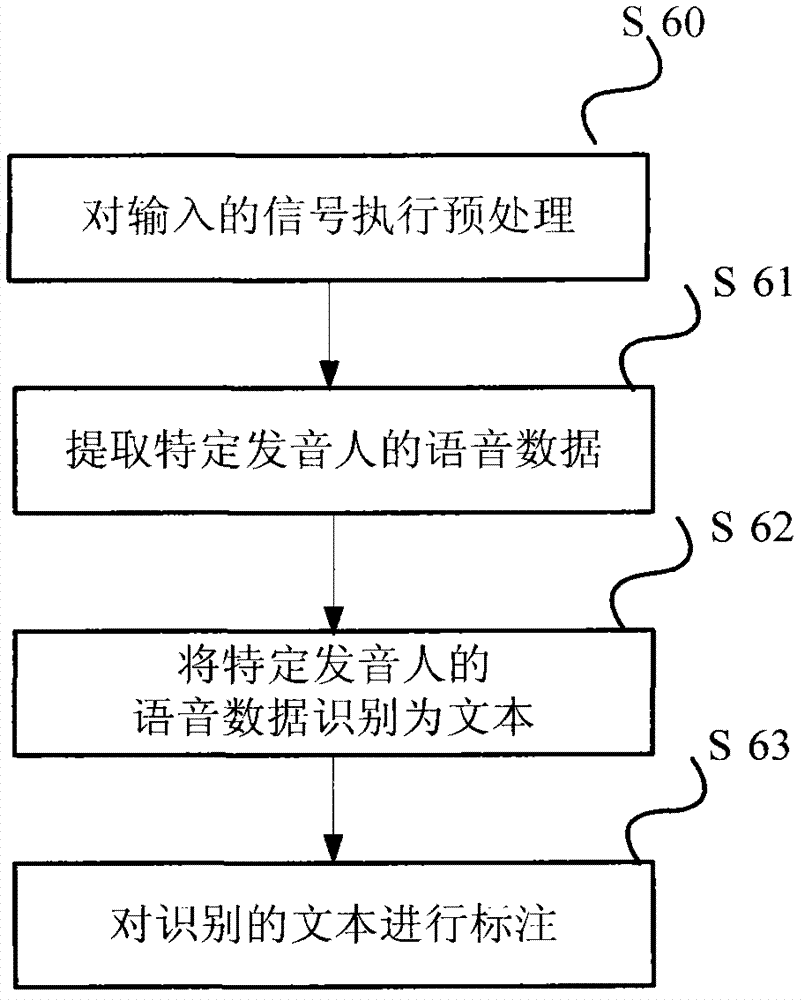

Speech corpus generating device and method, speech synthesizing system and method

Active · CN102779508A · Short build cycle · Easy to update · Special data processing applications · Speech synthesis · Personalization · Speech corpus

The invention provides a speech corpus generating device and method. The speech corpus generating device comprises a speech extracting device, a speech identifying device and a text marking device, wherein the speech extracting device is used for extracting speech data of a preset pronouncing person from collected data; the speech identifying device is used for identifying the speech data of the preset pronouncing person as a text; and the text marking device is used for marking the text. The invention also provides a speech synthesizing system and method. According to the speech corpus generating device and method provided by the invention, the speech corpus is generated by automatically collecting the data and automatically processing the data, so that a large amount of labor cost is saved. Besides, a construction period of the speech synthesizing system is shortened, the speech synthesizing system is conveniently updated and the personalized customization is realized.

Owner:IFLYTEK CO LTD

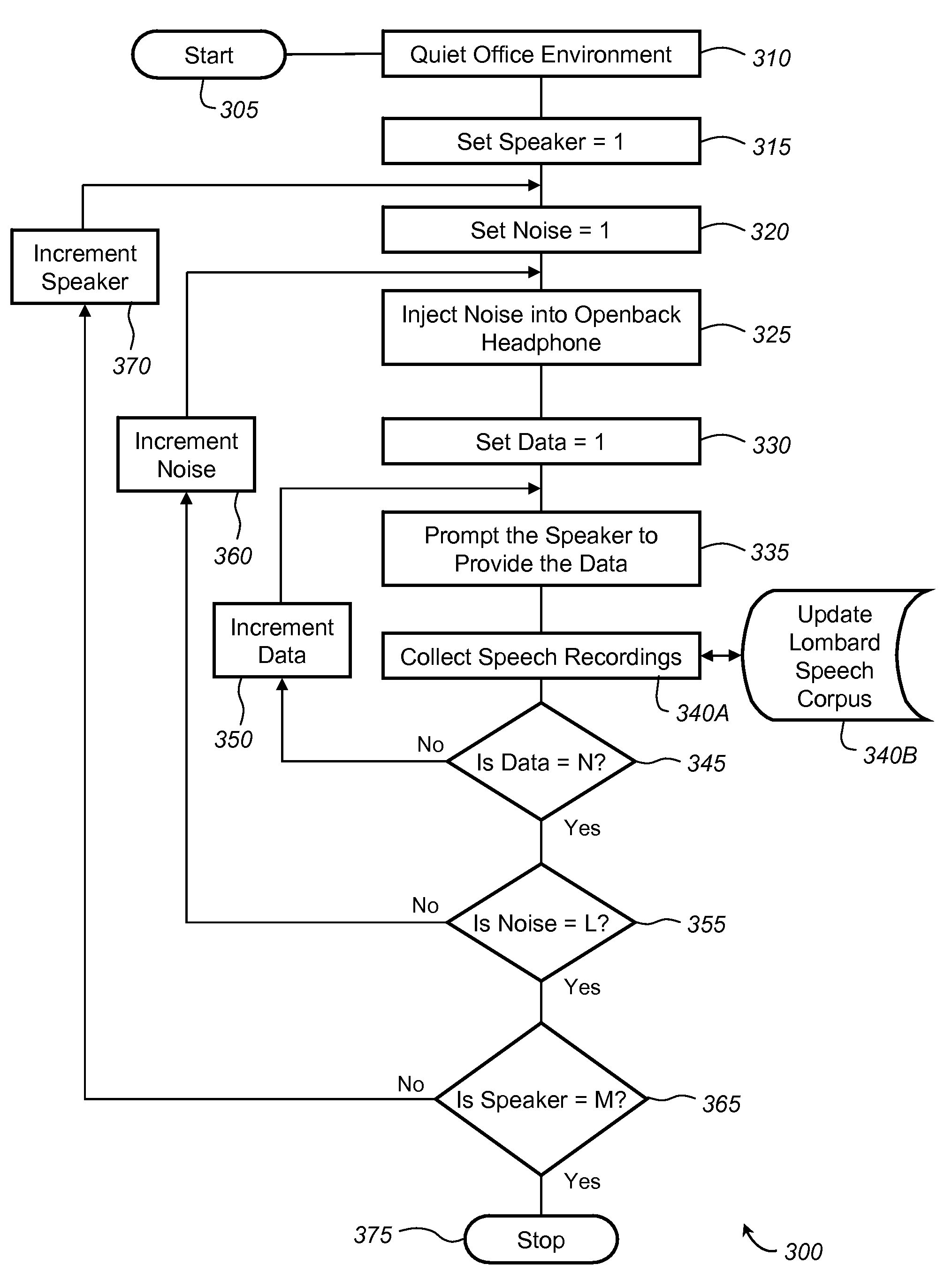

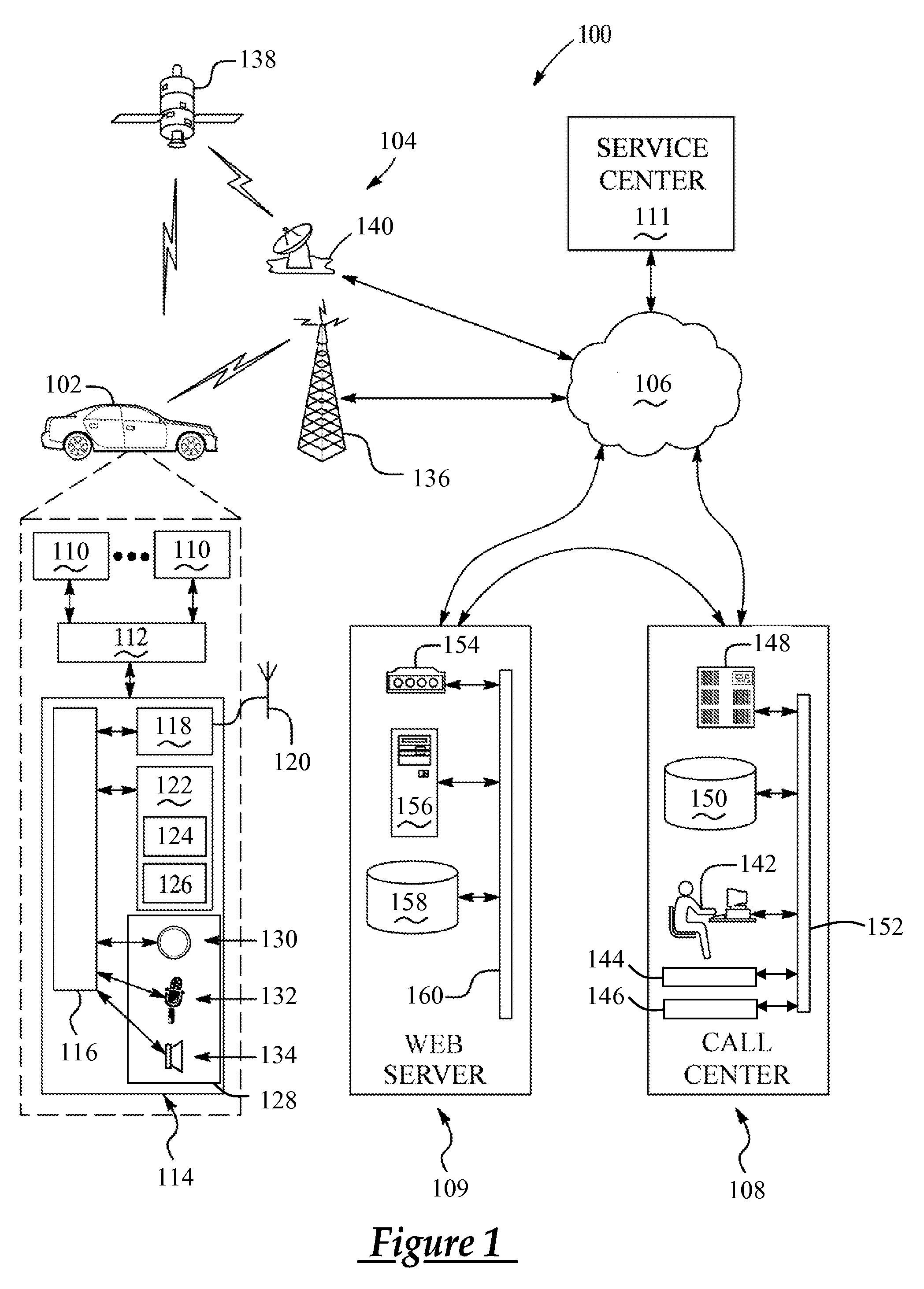

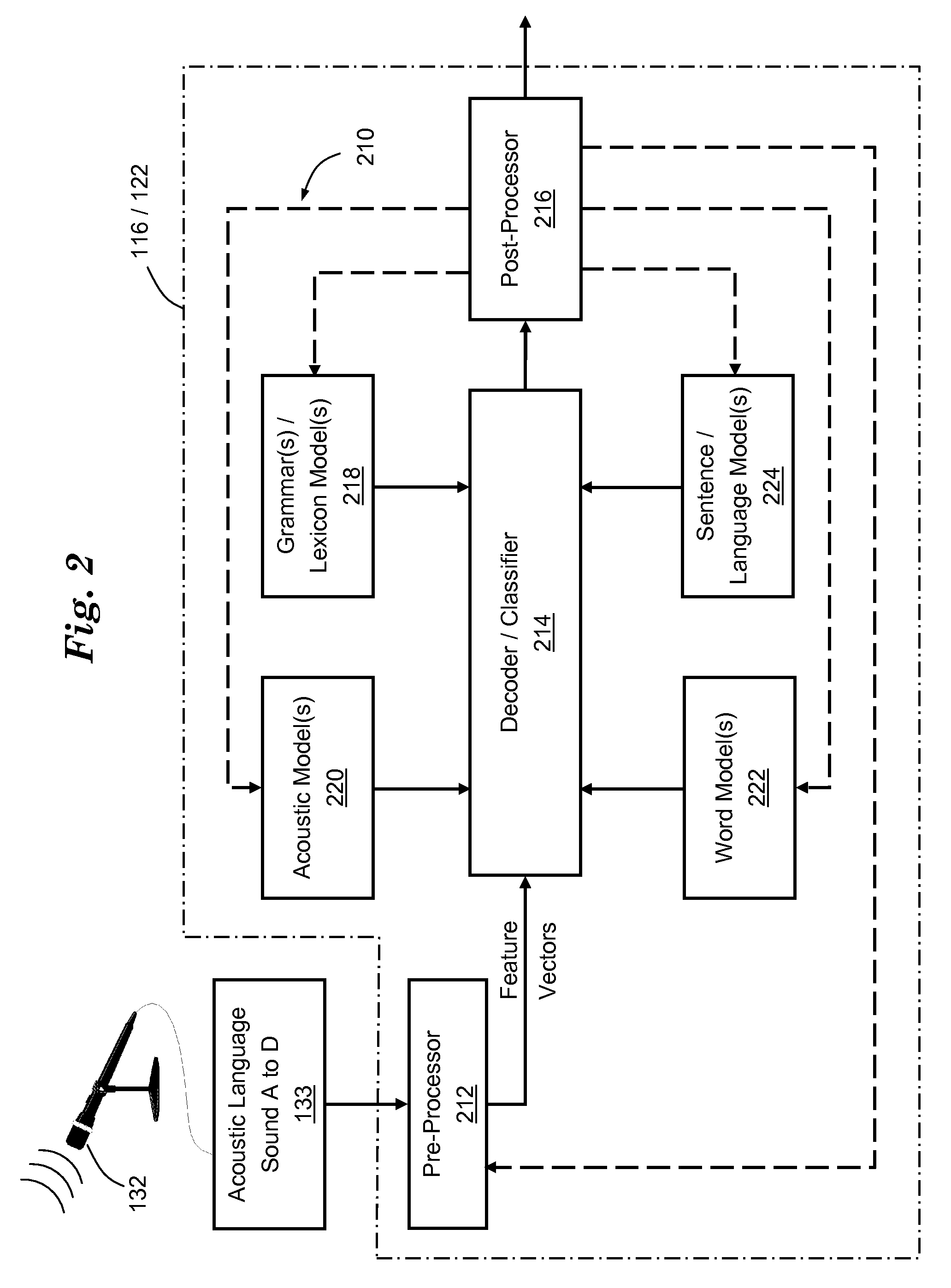

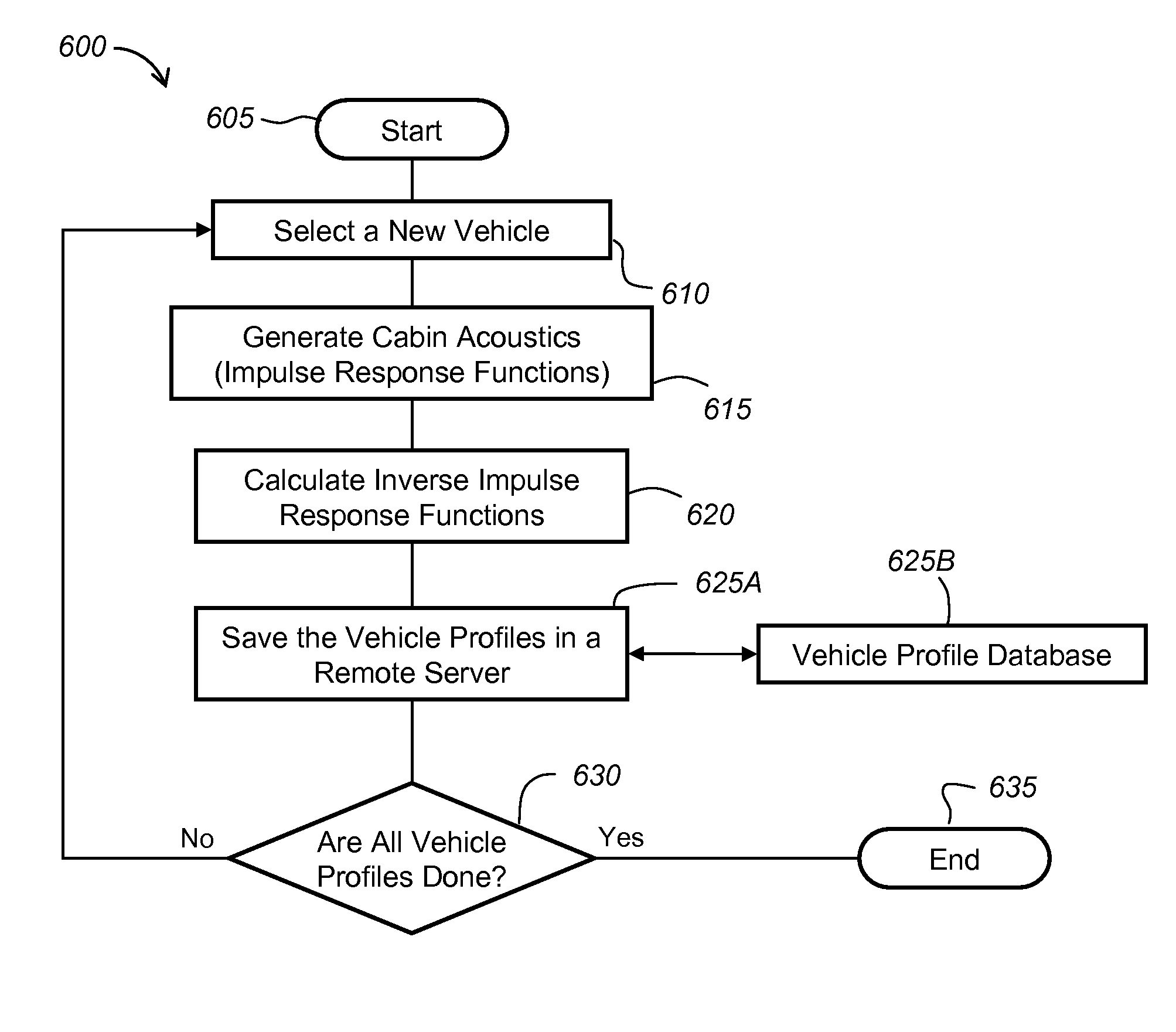

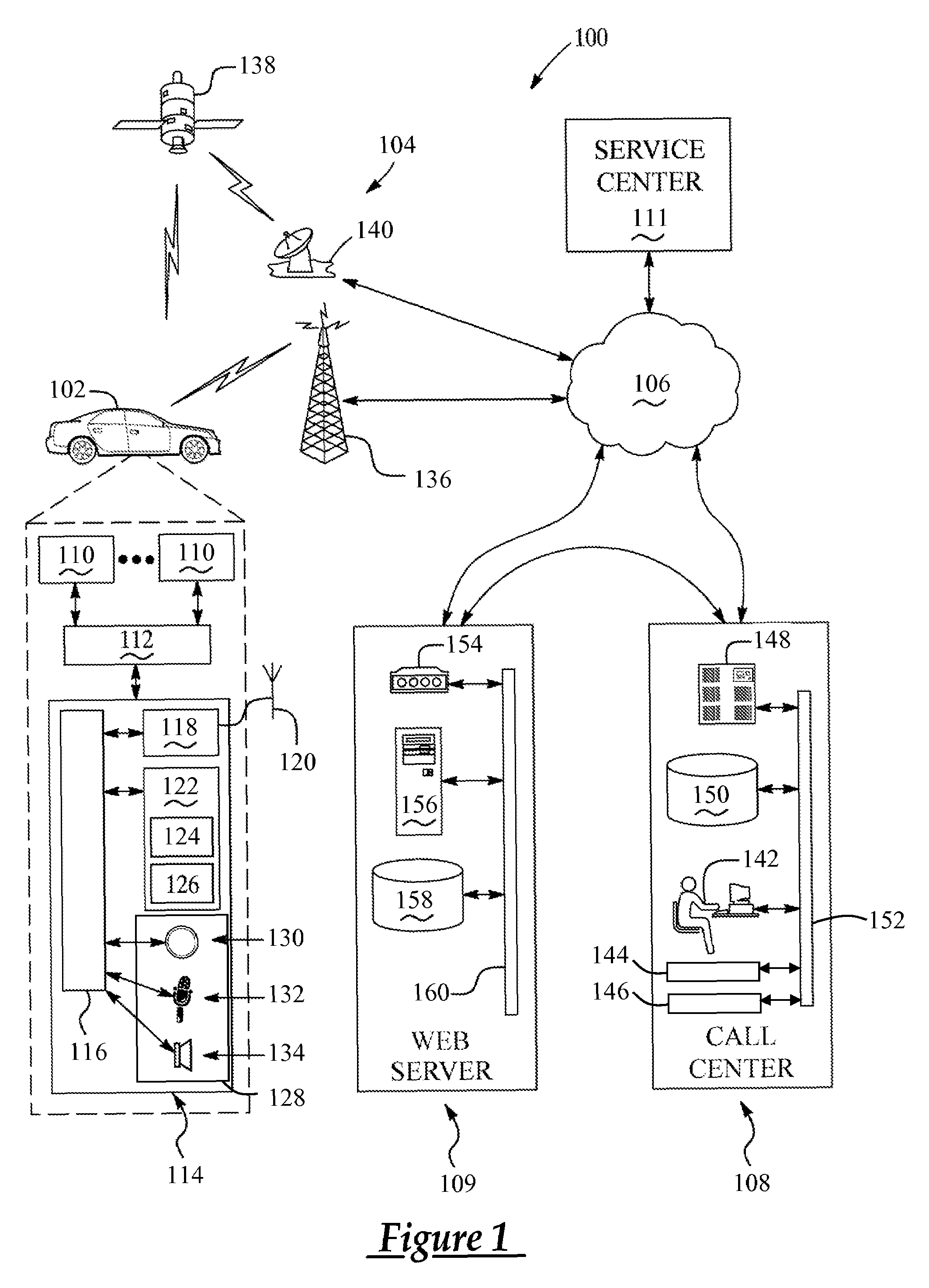

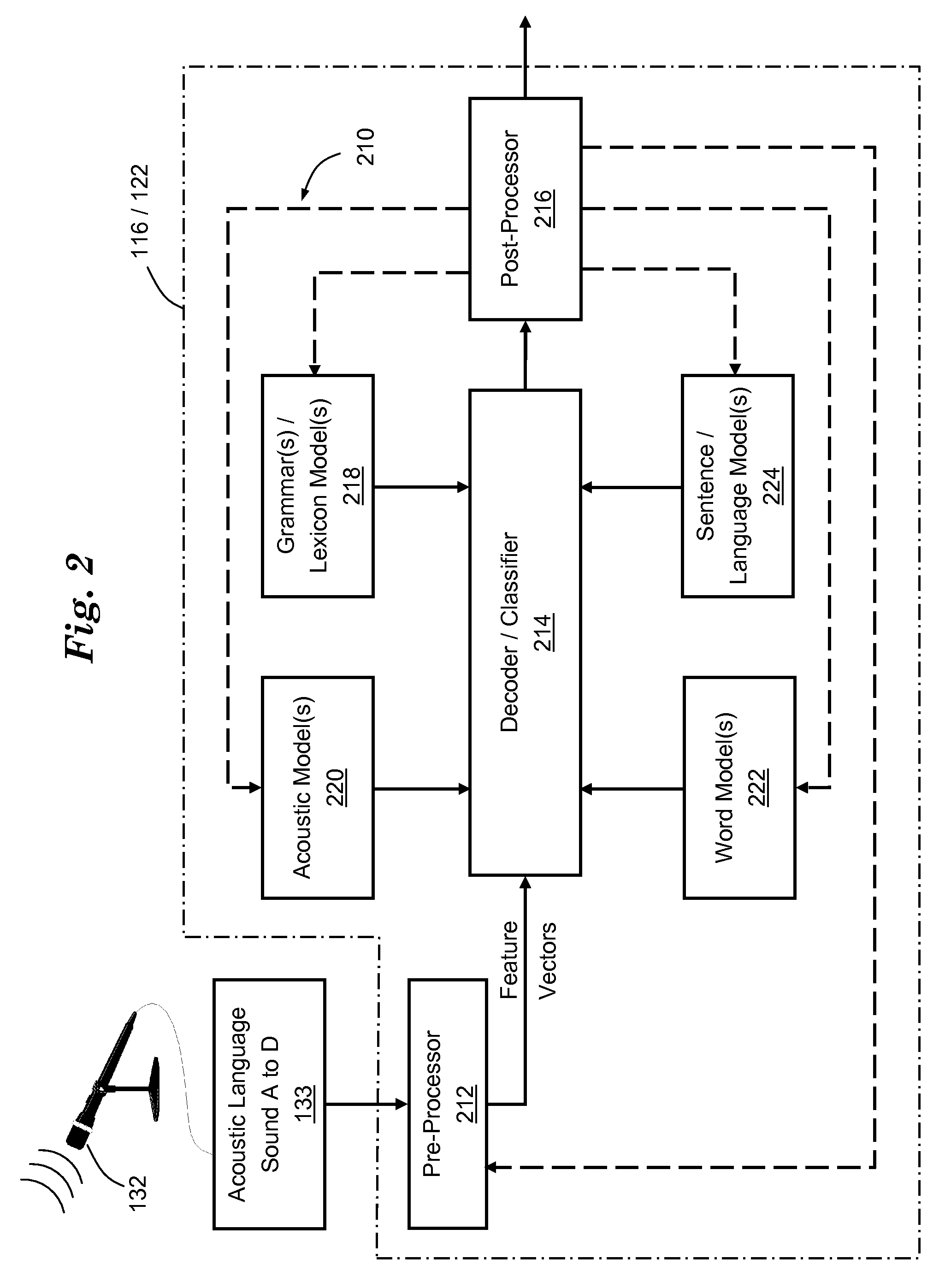

Automated speech recognition using normalized in-vehicle speech

A speech recognition method includes the steps of receiving speech in a vehicle, extracting acoustic data from the received speech, and applying a vehicle-specific inverse impulse response function to the extracted acoustic data to produce normalized acoustic data. The speech recognition method may also include one or more of the following steps: pre-processing the normalized acoustic data to extract acoustic feature vectors; decoding the normalized acoustic feature vectors using as input at least one of a plurality of global acoustic models built according to a plurality of Lombard levels of a Lombard speech corpus covering a plurality of vehicles; calculating the Lombard level of vehicle noise; and/or selecting the at least one of the plurality of global acoustic models that corresponds to the calculated Lombard level for application during the decoding step.

Owner:GENERAL MOTORS LLC

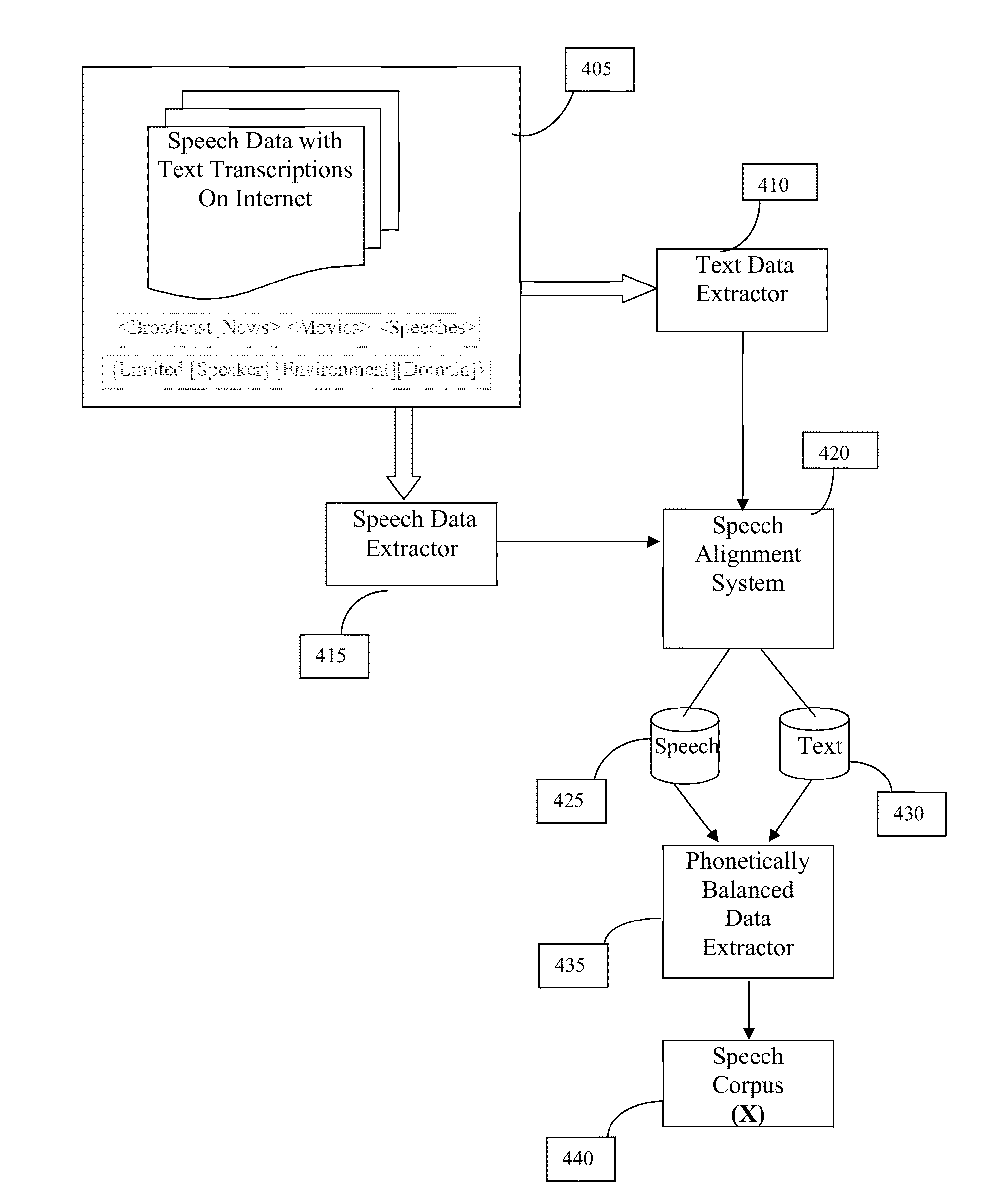

Frugal method and system for creating speech corpus

Active · US20130030810A1 · Minimization of effort · Minimization of expense · Speech recognition · Web data navigation · The Internet · Speech corpus

The present invention provides a frugal method for extracting speech data and associated transcriptions from a plurality of web resources (the internet) for speech corpus creation, characterized by automation of the corpus creation and by cost reduction. An existing speech corpus is integrated with the speech data and transcriptions extracted from the web resources to build an aggregated, rich speech corpus that is effective and easy to adapt for generating acoustic and language models for Automatic Speech Recognition (ASR) systems.

Owner:TATA CONSULTANCY SERVICES LTD

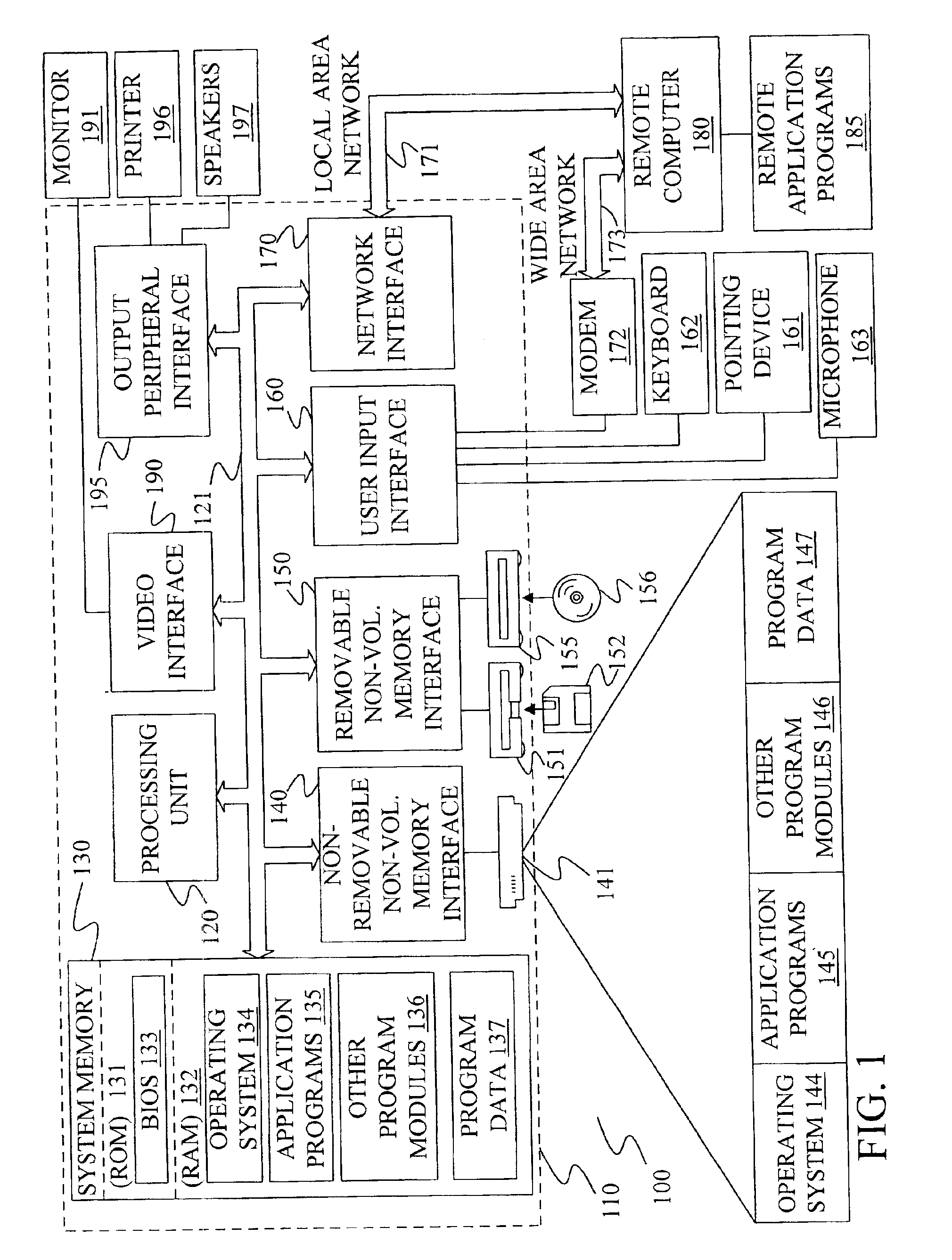

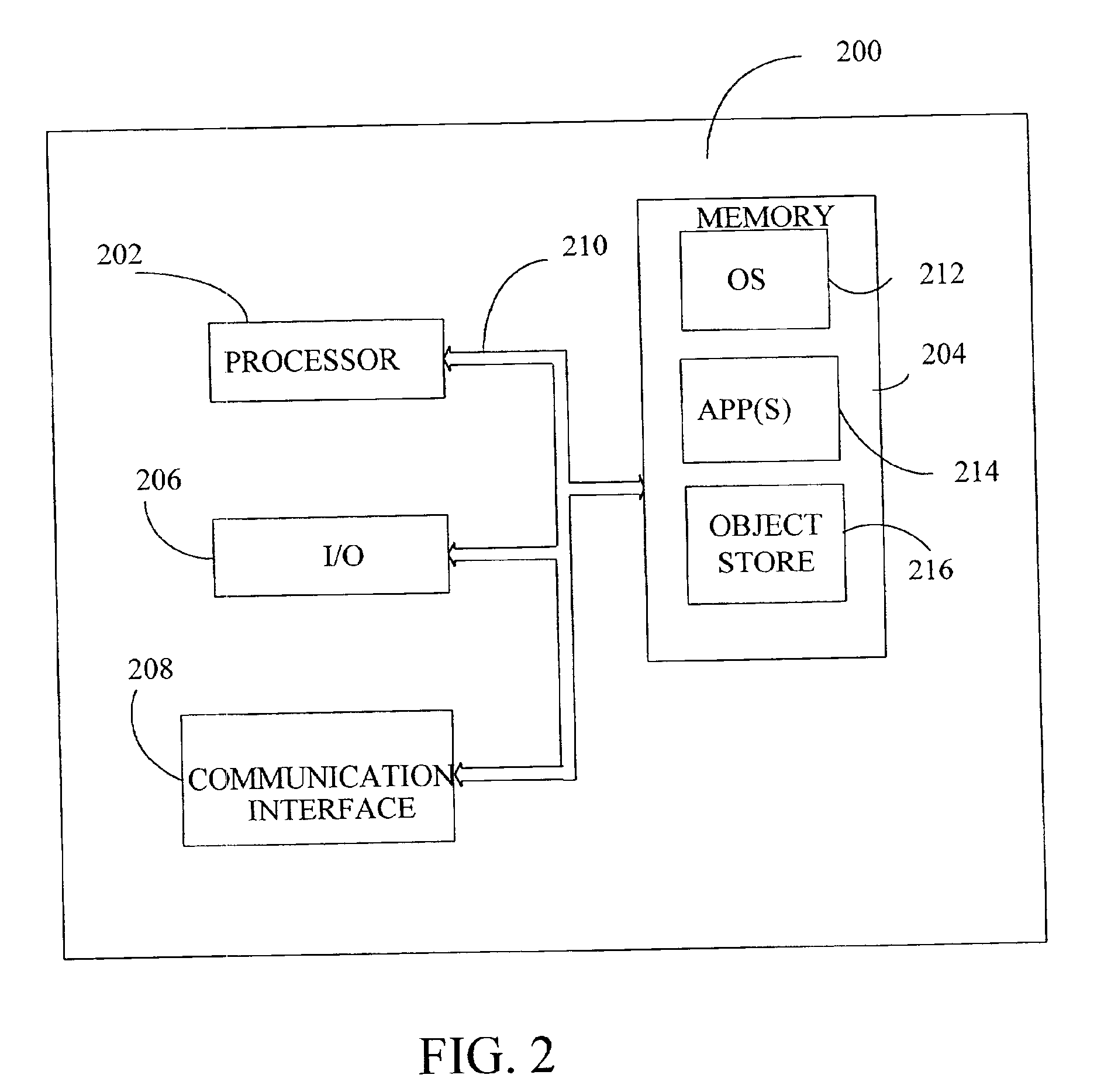

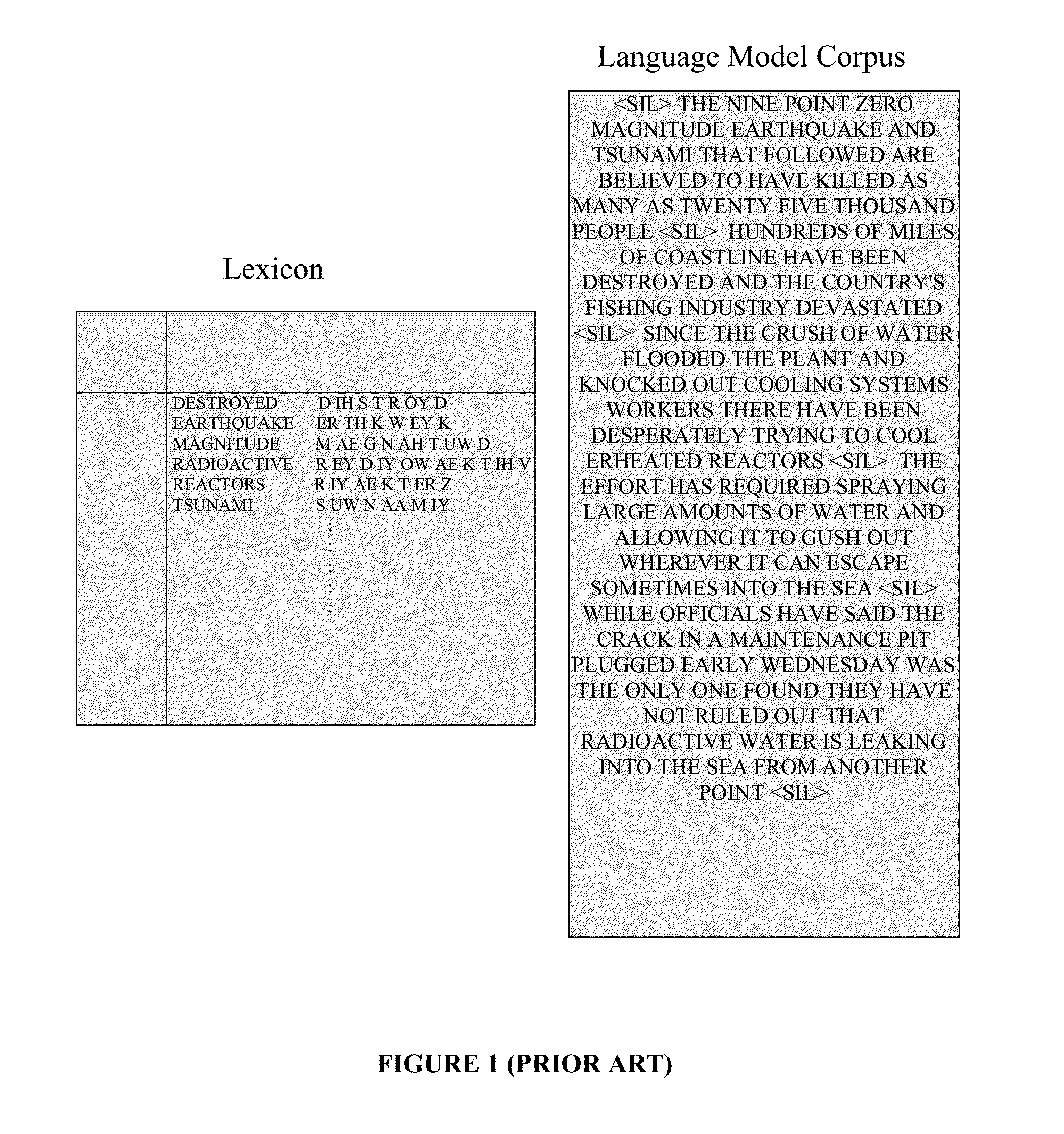

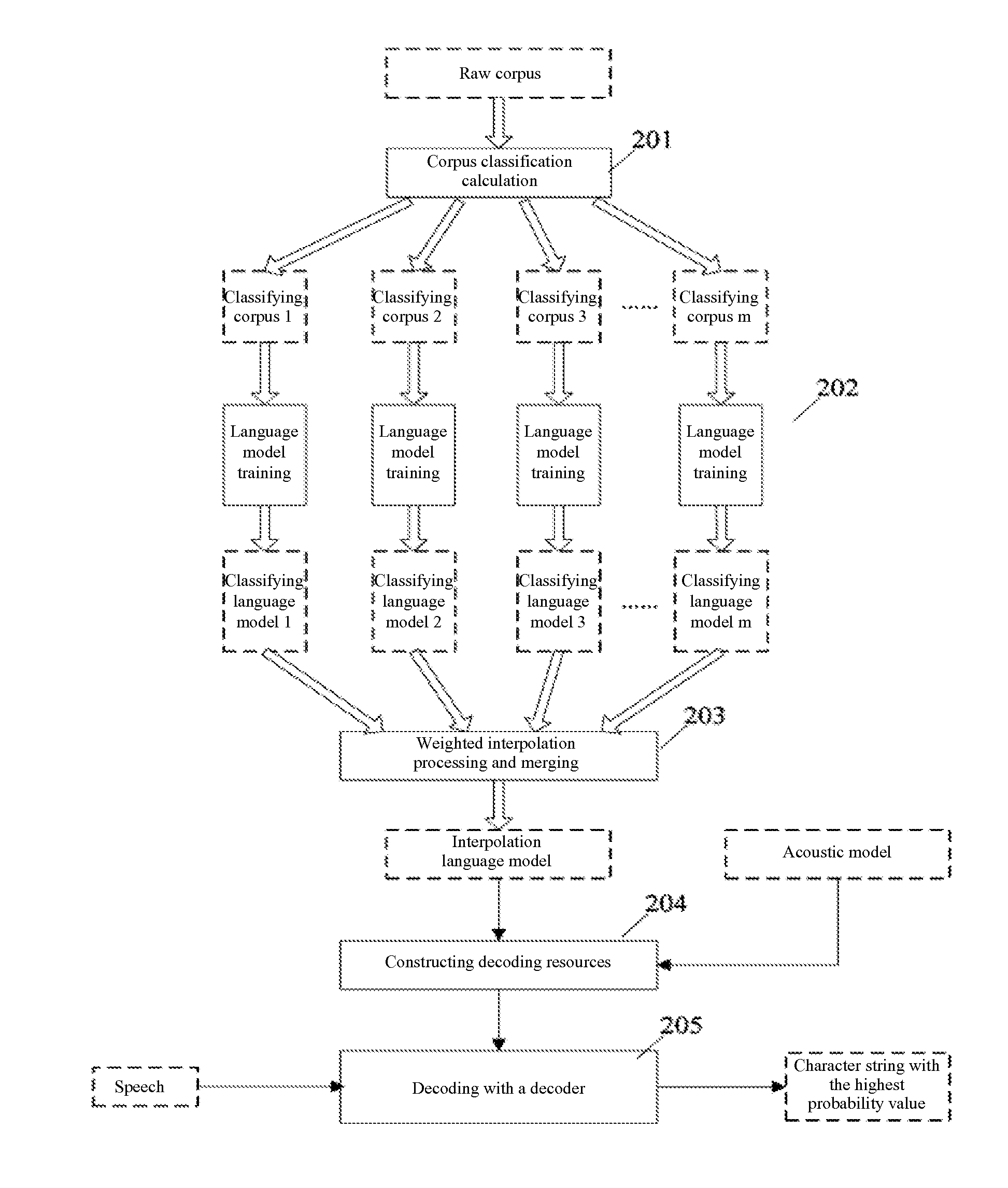

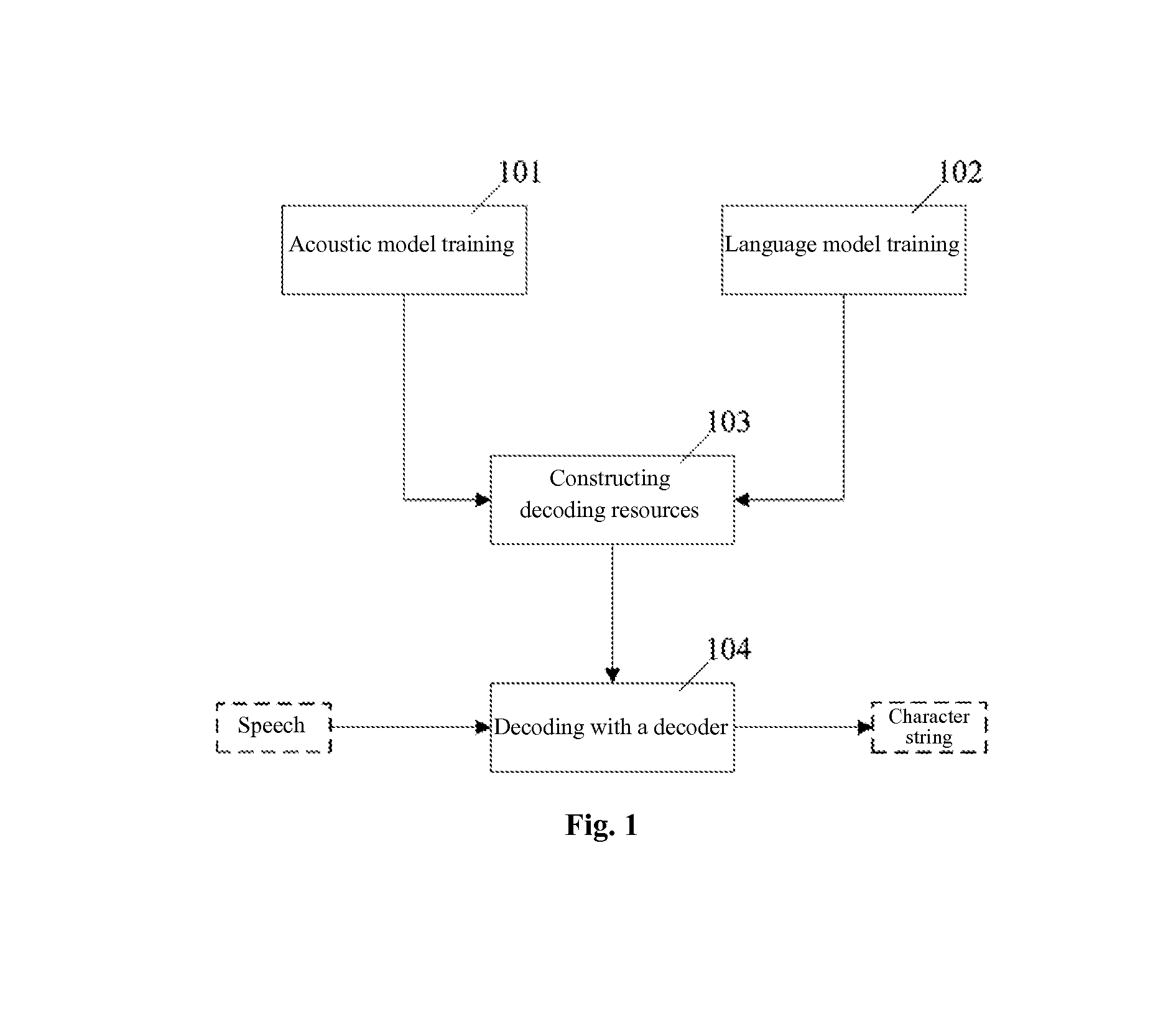

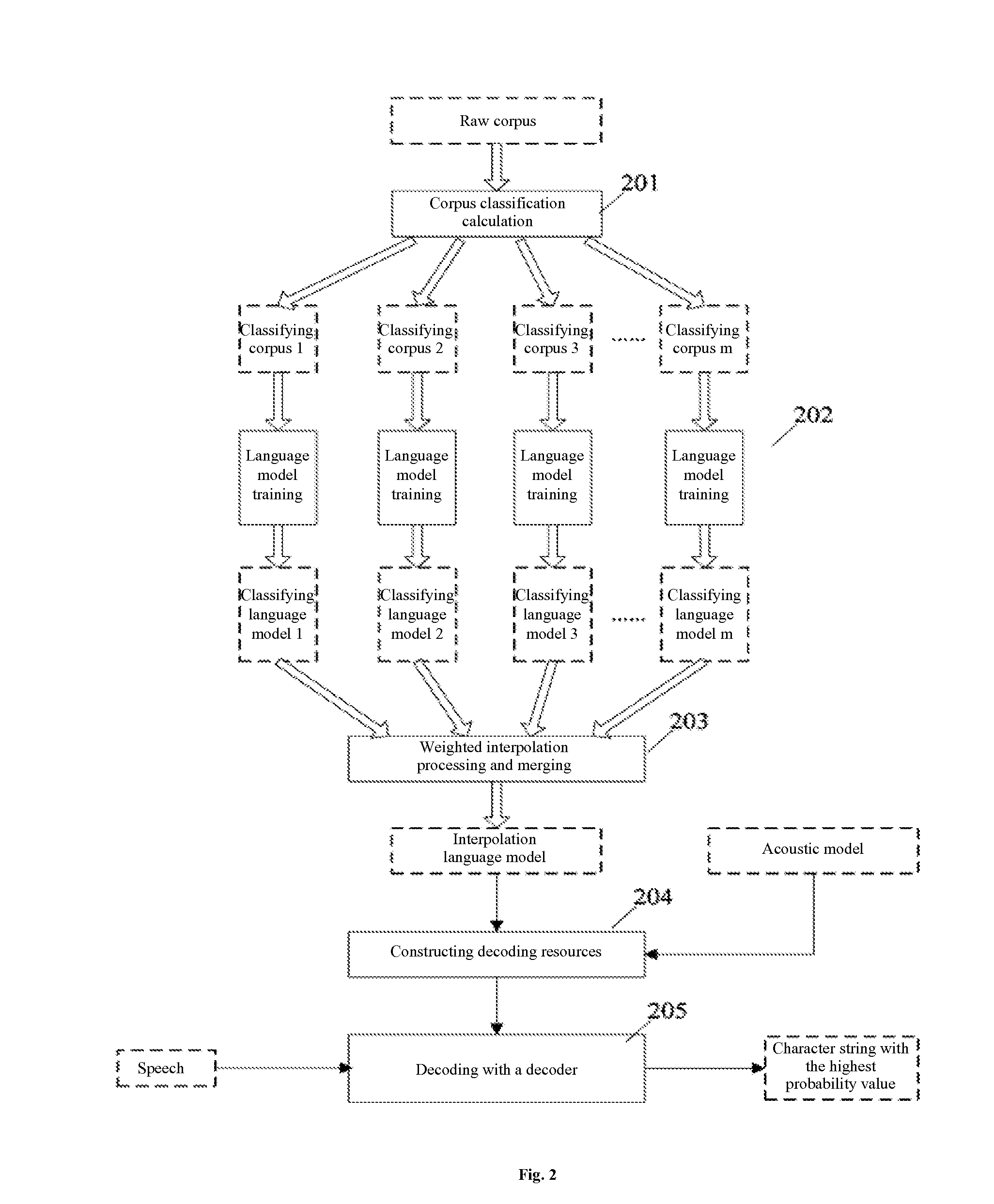

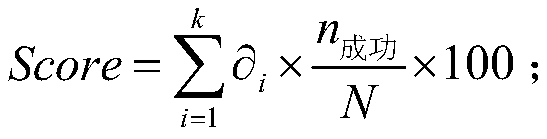

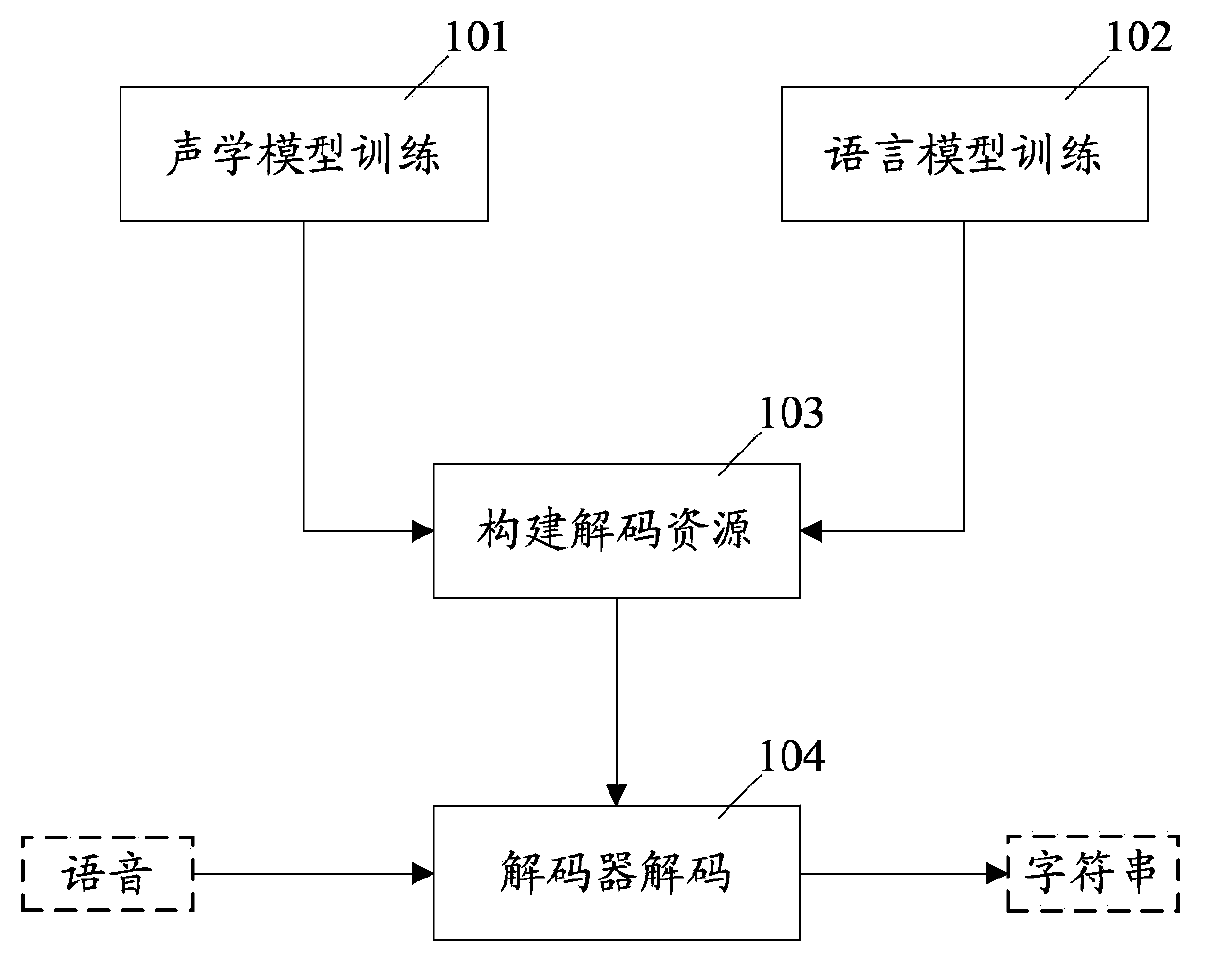

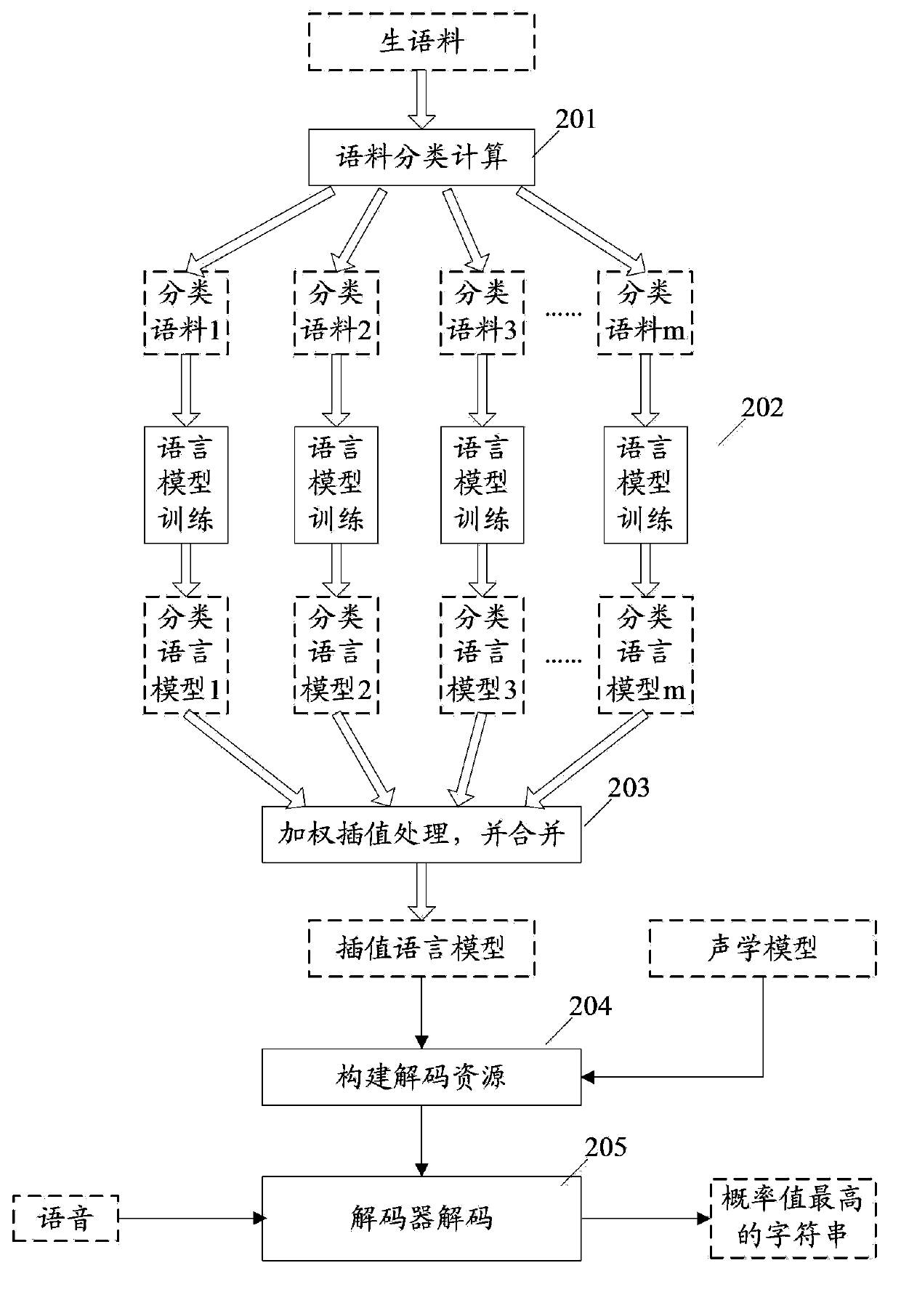

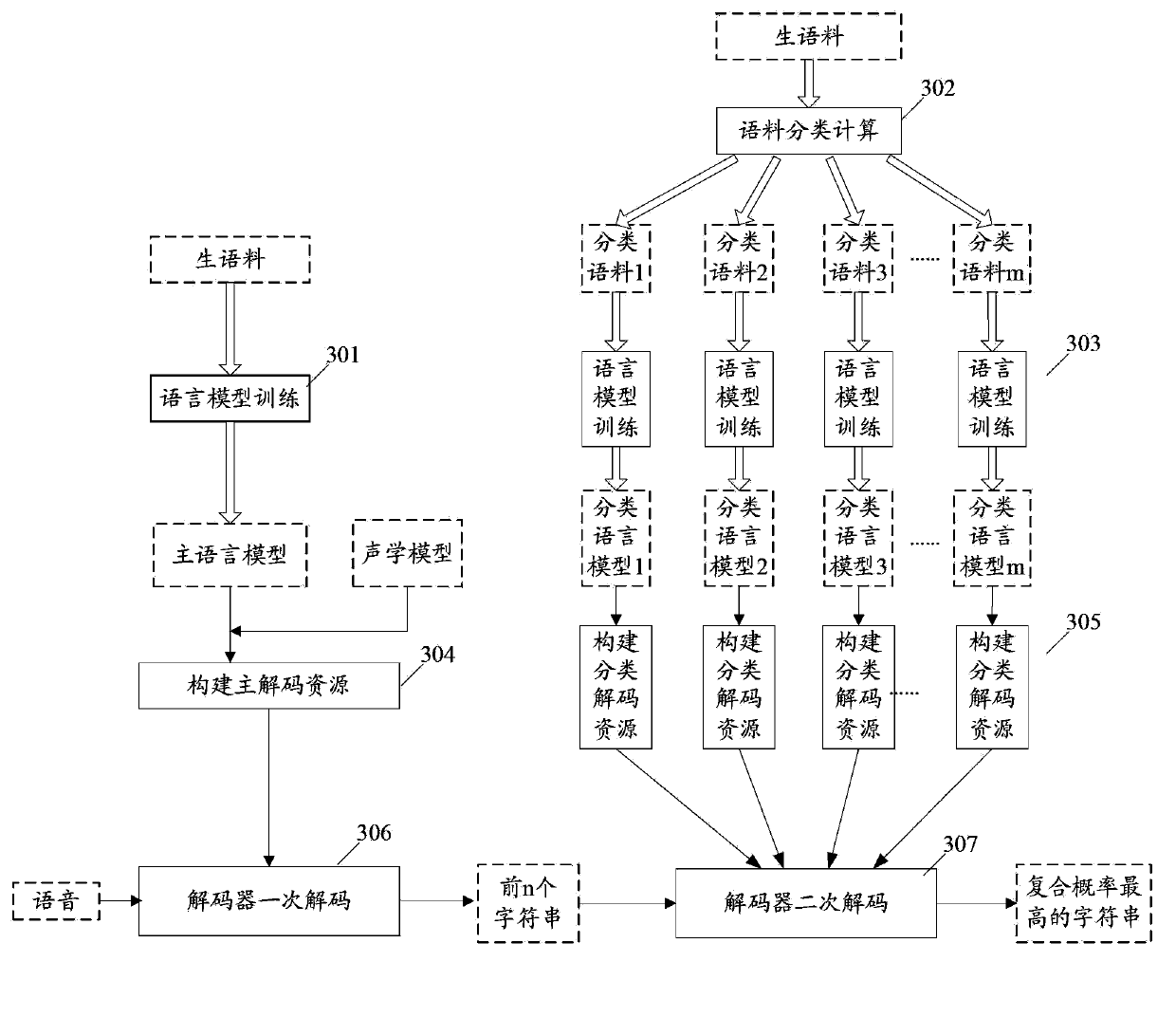

Method and system for automatic speech recognition

An automatic speech recognition method includes at a computer having one or more processors and memory for storing one or more programs to be executed by the processors, obtaining a plurality of speech corpus categories through classifying and calculating raw speech corpus; obtaining a plurality of classified language models that respectively correspond to the plurality of speech corpus categories through a language model training applied on each speech corpus category; obtaining an interpolation language model through implementing a weighted interpolation on each classified language model and merging the interpolated plurality of classified language models; constructing a decoding resource in accordance with an acoustic model and the interpolation language model; and decoding input speech using the decoding resource, and outputting a character string with a highest probability as a recognition result of the input speech.

Owner:TENCENT TECH (SHENZHEN) CO LTD
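The weighted interpolation step in the abstract above can be sketched as follows. This is a minimal illustration, assuming linear interpolation and unigram models stored as plain dictionaries; the abstract does not specify the model order or the interpolation formula, and `interpolate_language_models` is a hypothetical name.

```python
def interpolate_language_models(models, weights):
    """Linearly interpolate unigram models given as {word: probability} dicts.

    Weights are normalized to sum to 1 so the result stays a probability distribution.
    """
    if len(models) != len(weights):
        raise ValueError("one weight is required per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    vocabulary = set().union(*models)  # union of the dict keys
    return {
        word: sum(w / total * model.get(word, 0.0) for model, w in zip(models, weights))
        for word in vocabulary
    }


# Two toy category models (for example, one per speech corpus category).
sports_lm = {"goal": 0.5, "match": 0.5}
finance_lm = {"stock": 0.75, "match": 0.25}
merged_lm = interpolate_language_models([sports_lm, finance_lm], [1, 3])
```

In a real recognizer the interpolated model would be an n-gram or neural model combined with the acoustic model to build the decoding resource; the dictionary form here only shows the arithmetic.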

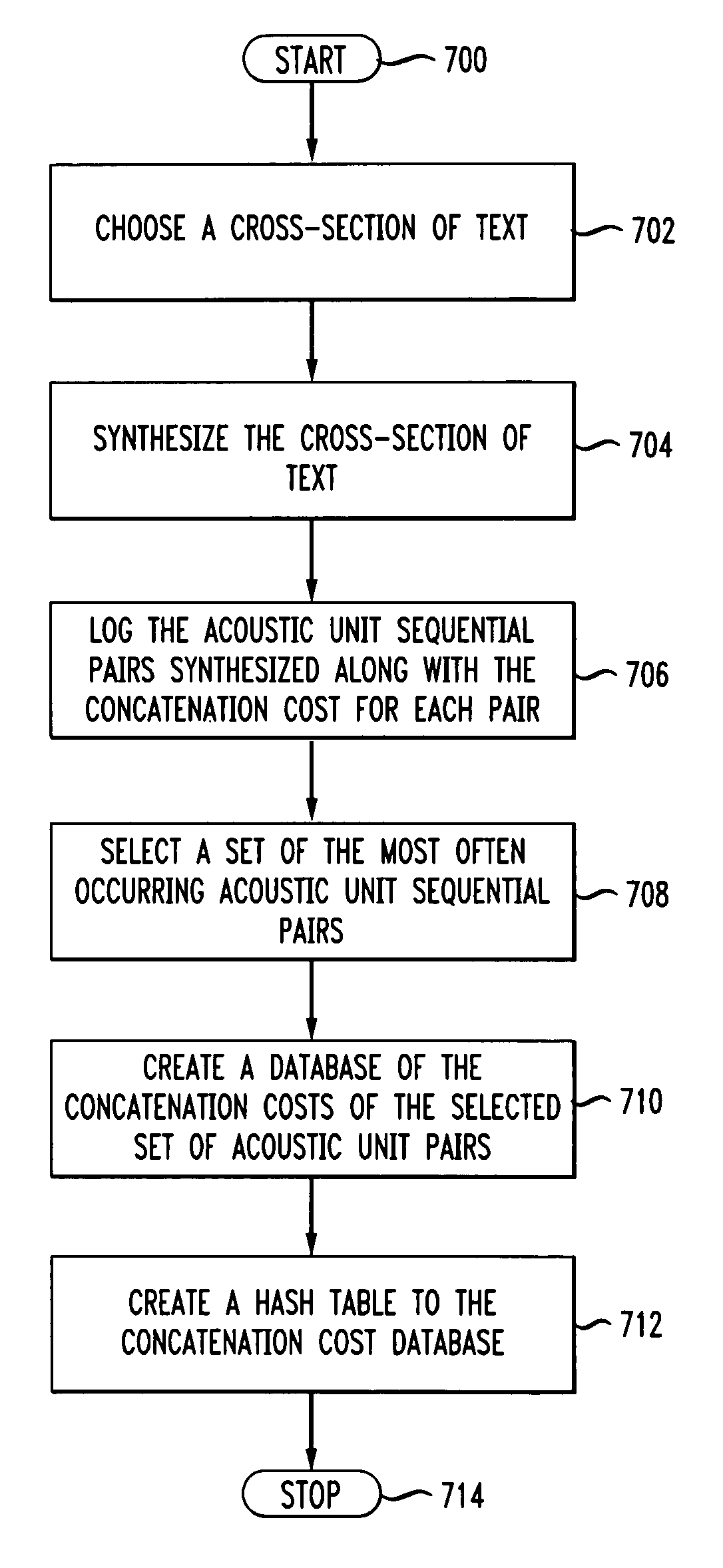

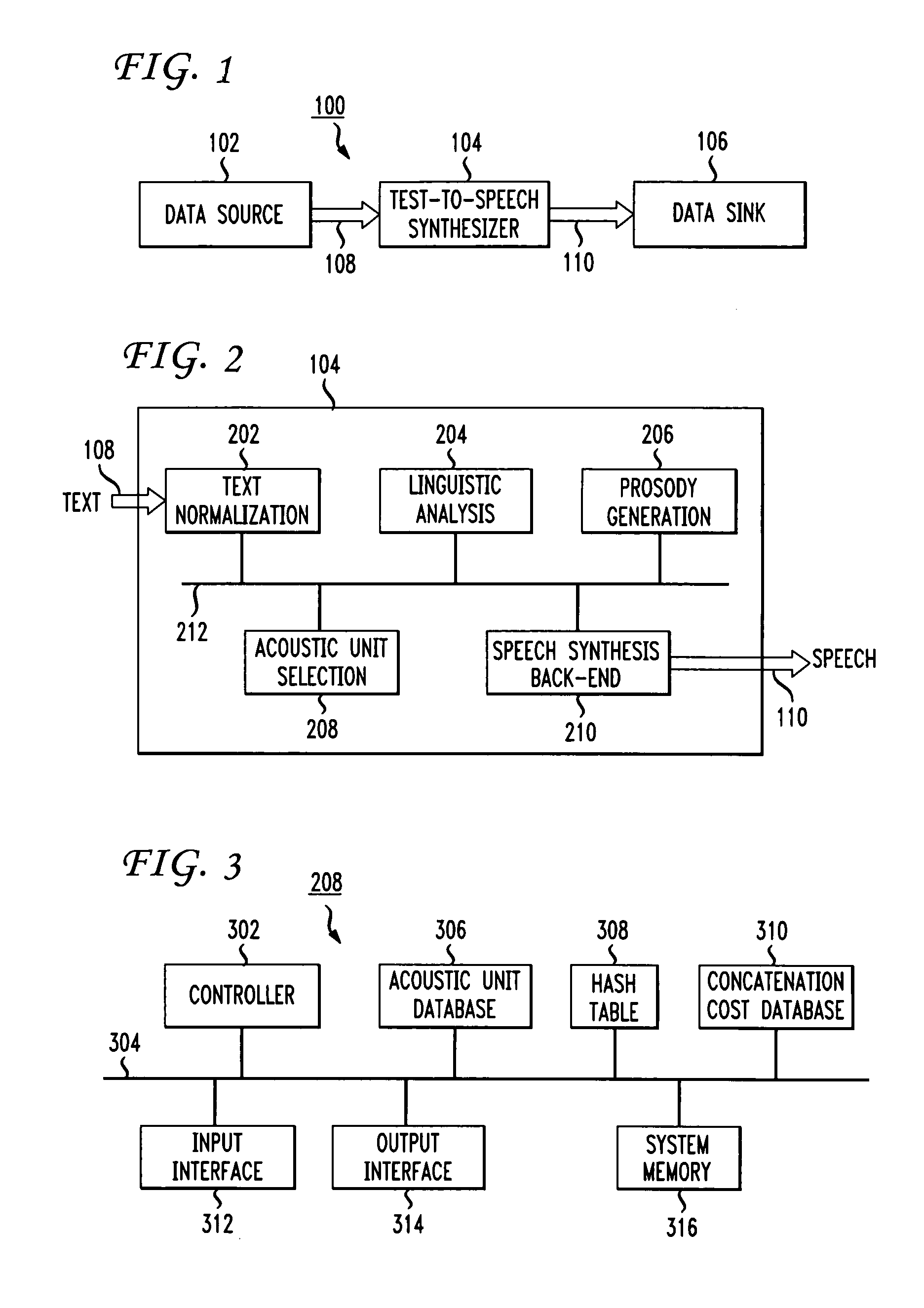

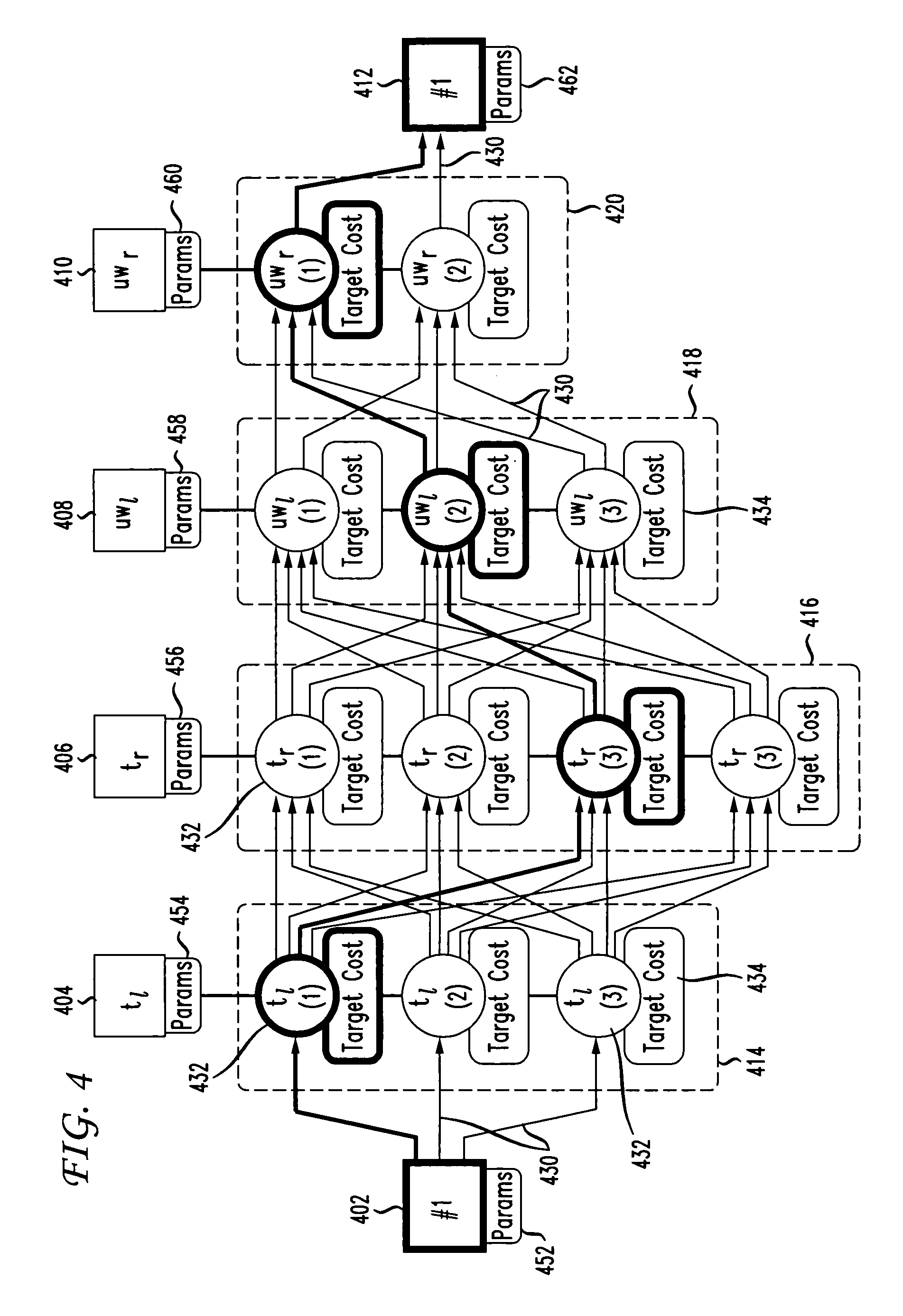

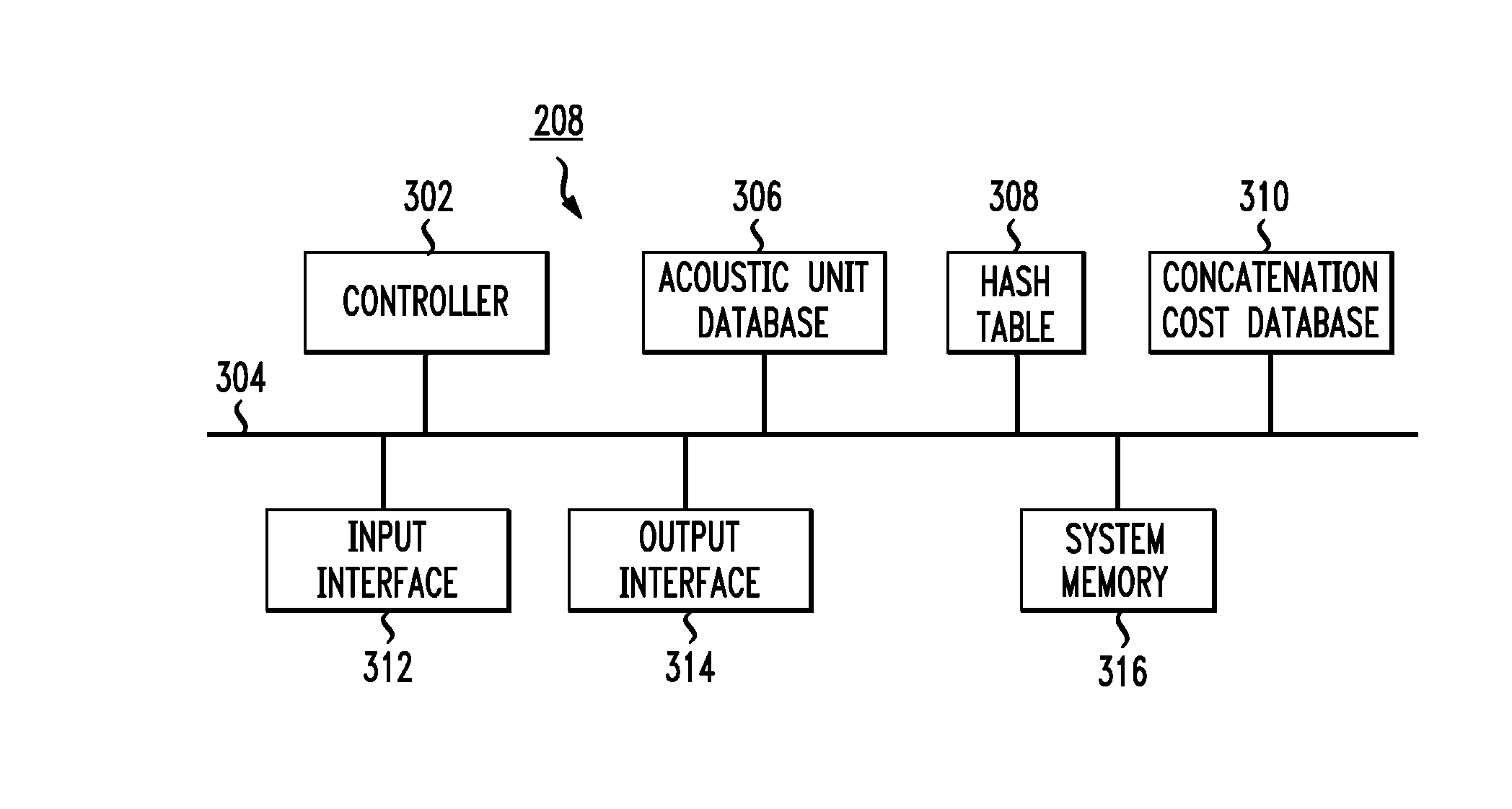

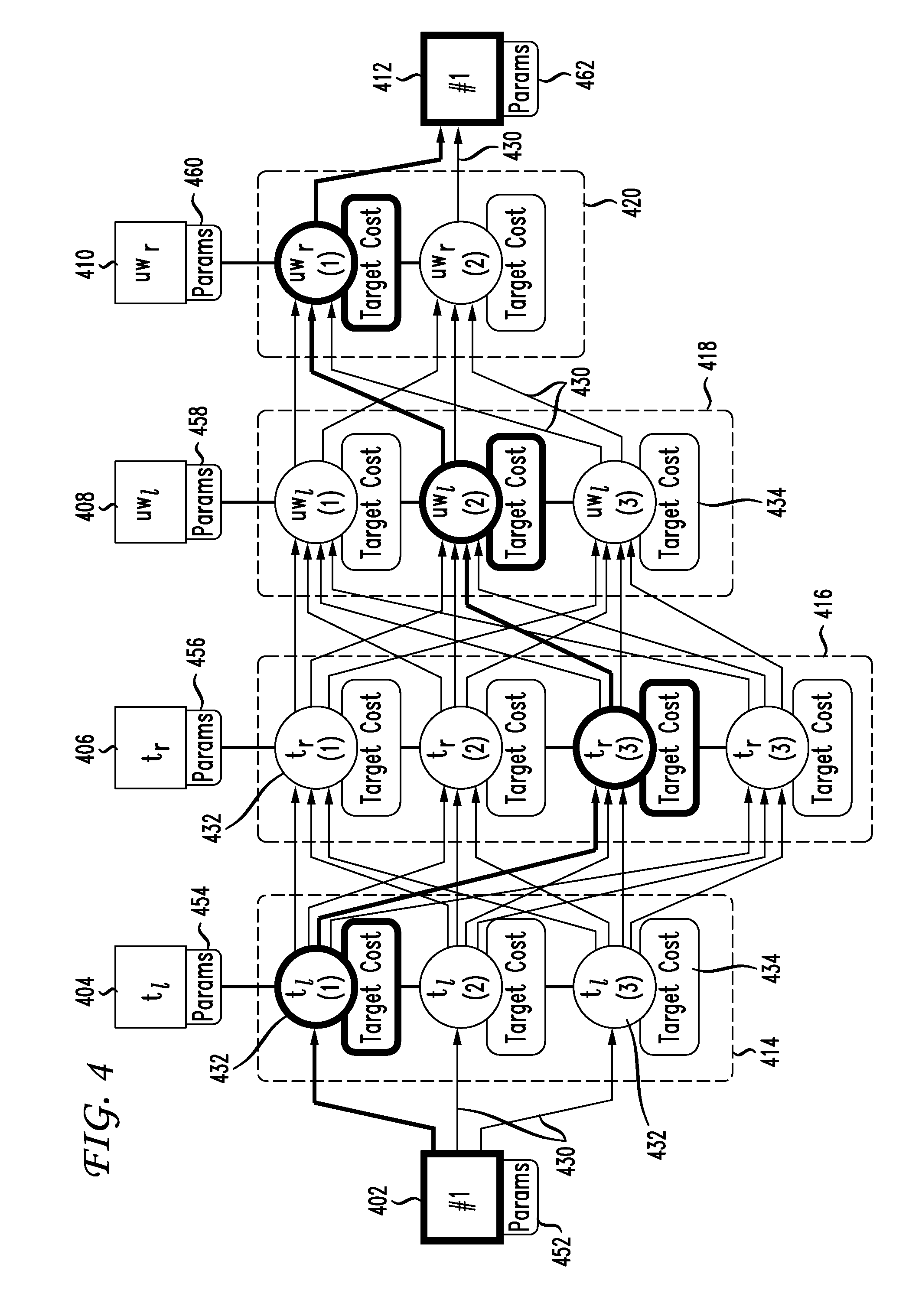

Methods and apparatus for rapid acoustic unit selection from a large speech corpus

A speech synthesis system can select recorded speech fragments, or acoustic units, from a very large database of acoustic units to produce artificial speech. The selected acoustic units are chosen to minimize a combination of target and concatenation costs for a given sentence. However, as concatenation costs, which are measures of the mismatch between sequential pairs of acoustic units, are expensive to compute, processing can be greatly reduced by pre-computing and caching the concatenation costs. Unfortunately, the number of possible sequential pairs of acoustic units makes such caching prohibitive. However, statistical experiments reveal that while about 85% of the acoustic units are typically used in common speech, less than 1% of the possible sequential pairs of acoustic units occur in practice. A method for constructing an efficient concatenation cost database is provided by synthesizing a large body of speech, identifying the acoustic unit sequential pairs generated and their respective concatenation costs, and storing those concatenation costs likely to occur. By constructing a concatenation cost database in this fashion, the processing power required at run-time is greatly reduced with negligible effect on speech quality.

Owner:CERENCE OPERATING CO
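The idea of caching only the concatenation costs that actually occur can be sketched as below. This is a simplified illustration, not the patented method: the unit sequences stand in for the output of synthesizing a large body of text, and `concat_cost` is a placeholder for whatever mismatch measure a real system uses.

```python
def build_concat_cost_cache(unit_sequences, concat_cost):
    """Precompute costs only for the adjacent unit pairs observed in the sequences."""
    cache = {}
    for sequence in unit_sequences:
        for left, right in zip(sequence, sequence[1:]):
            if (left, right) not in cache:
                cache[(left, right)] = concat_cost(left, right)
    return cache


def lookup_concat_cost(cache, left, right, concat_cost):
    """Return the cached cost, computing it on demand for a rare unseen pair."""
    try:
        return cache[(left, right)]
    except KeyError:
        return concat_cost(left, right)


# Toy example: units are identified by name and the cost is a pitch mismatch
# (hypothetical values).
pitch = {"a": 100.0, "b": 110.0, "c": 95.0, "d": 130.0}


def toy_cost(left, right):
    return abs(pitch[left] - pitch[right])


cache = build_concat_cost_cache([["a", "b", "c"], ["a", "b", "d"]], toy_cost)
```

With four units there are sixteen possible ordered pairs, but only three are stored here, which mirrors the observation that few of the possible pairs occur in practice.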

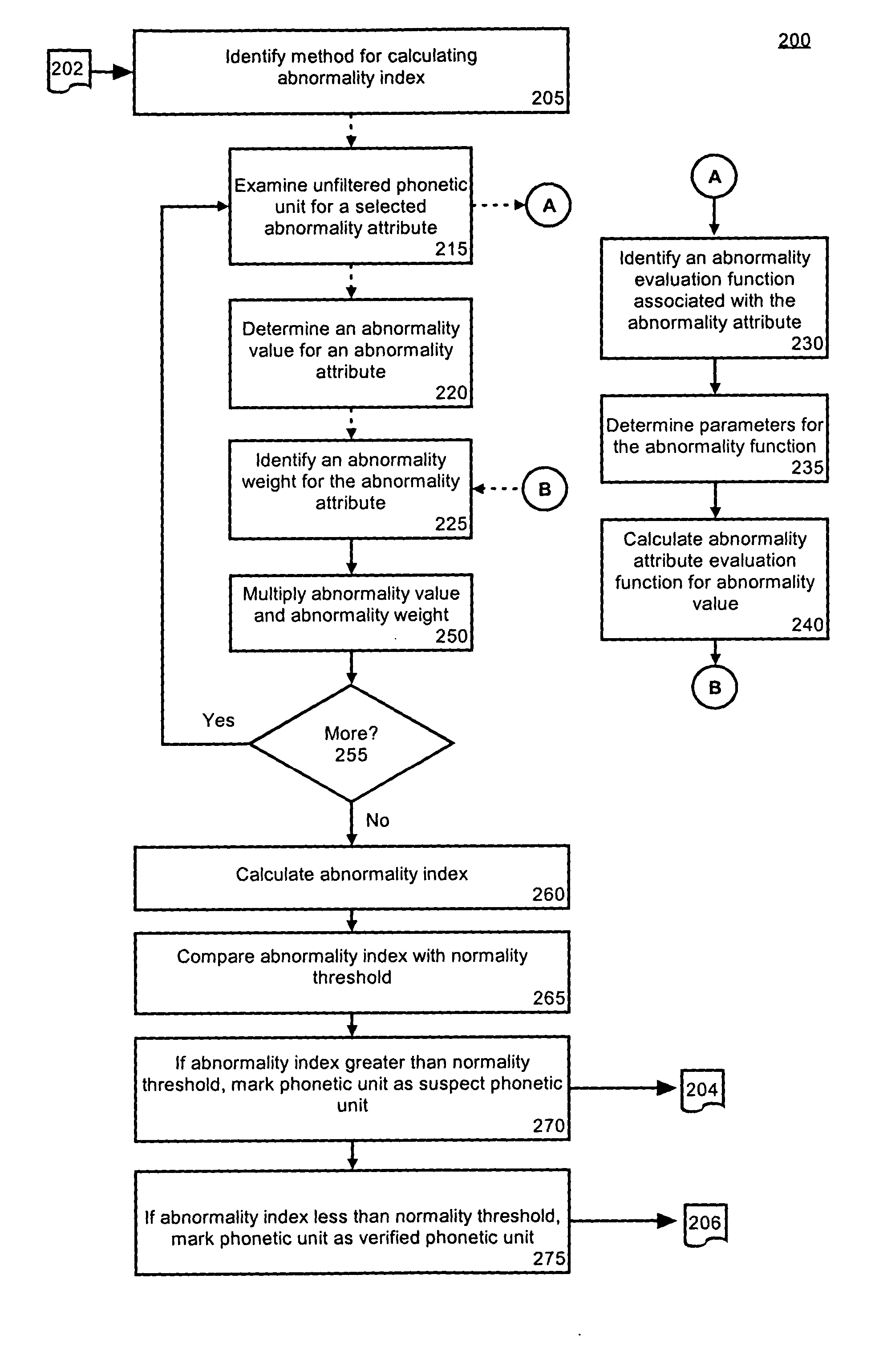

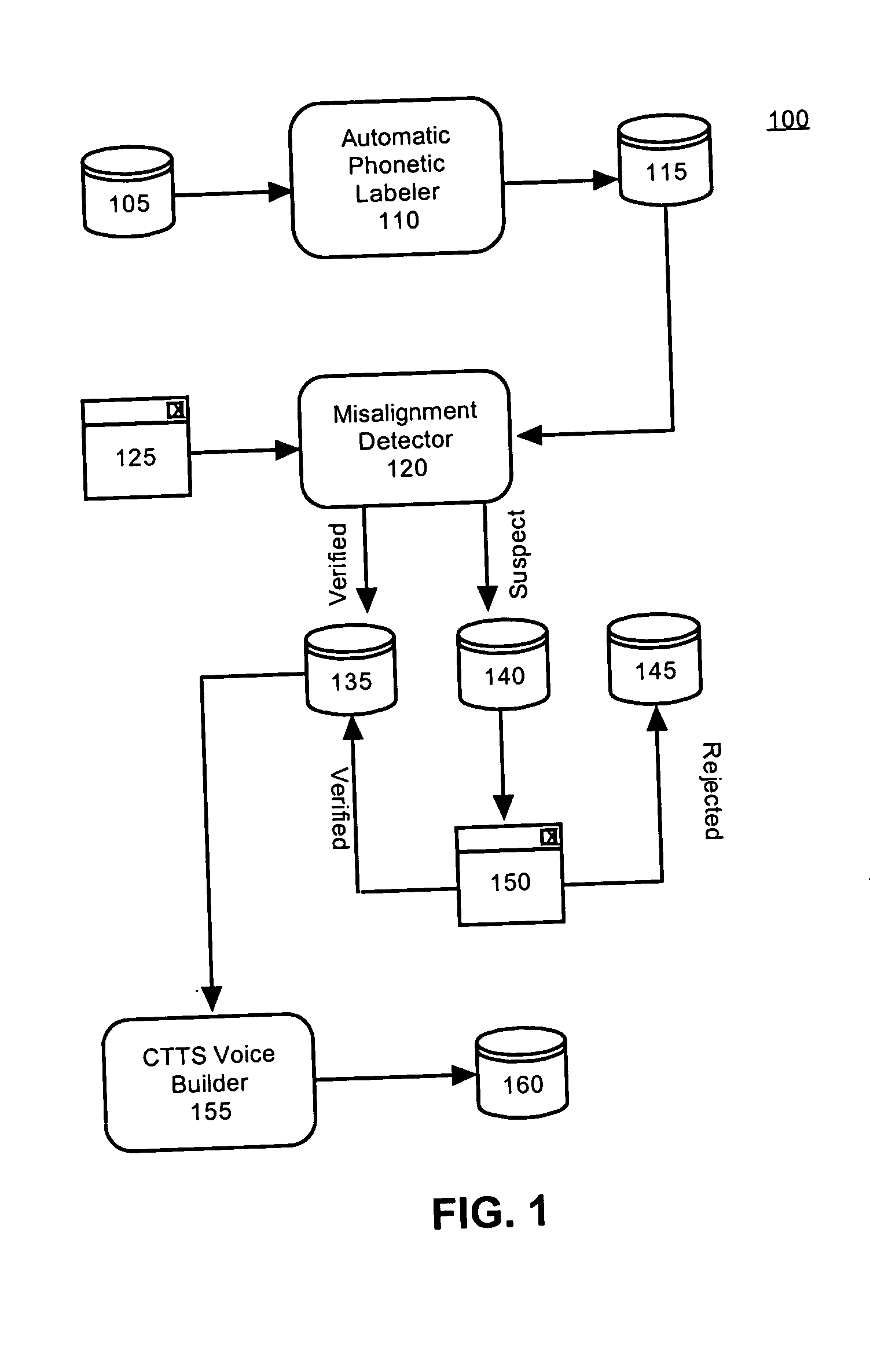

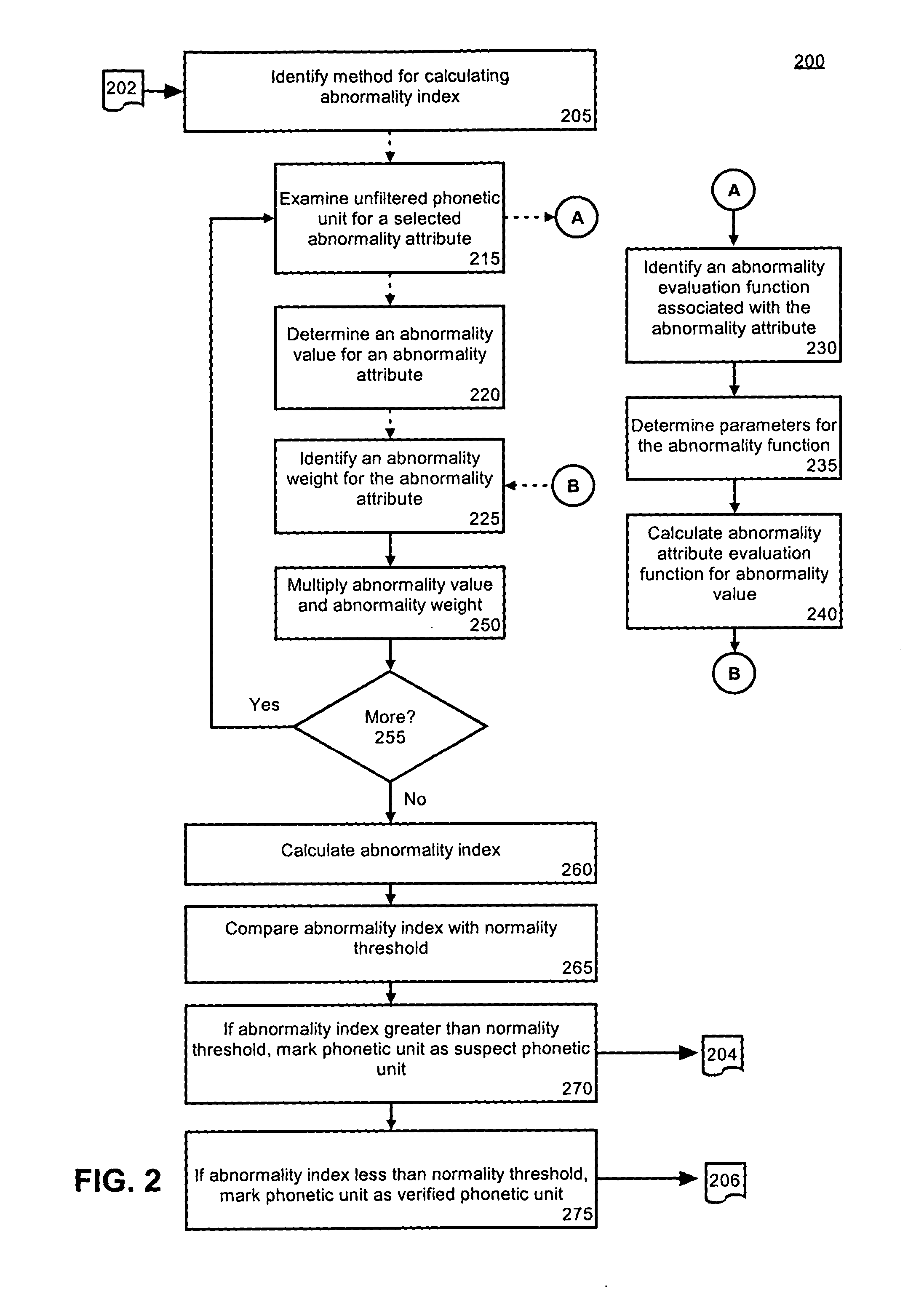

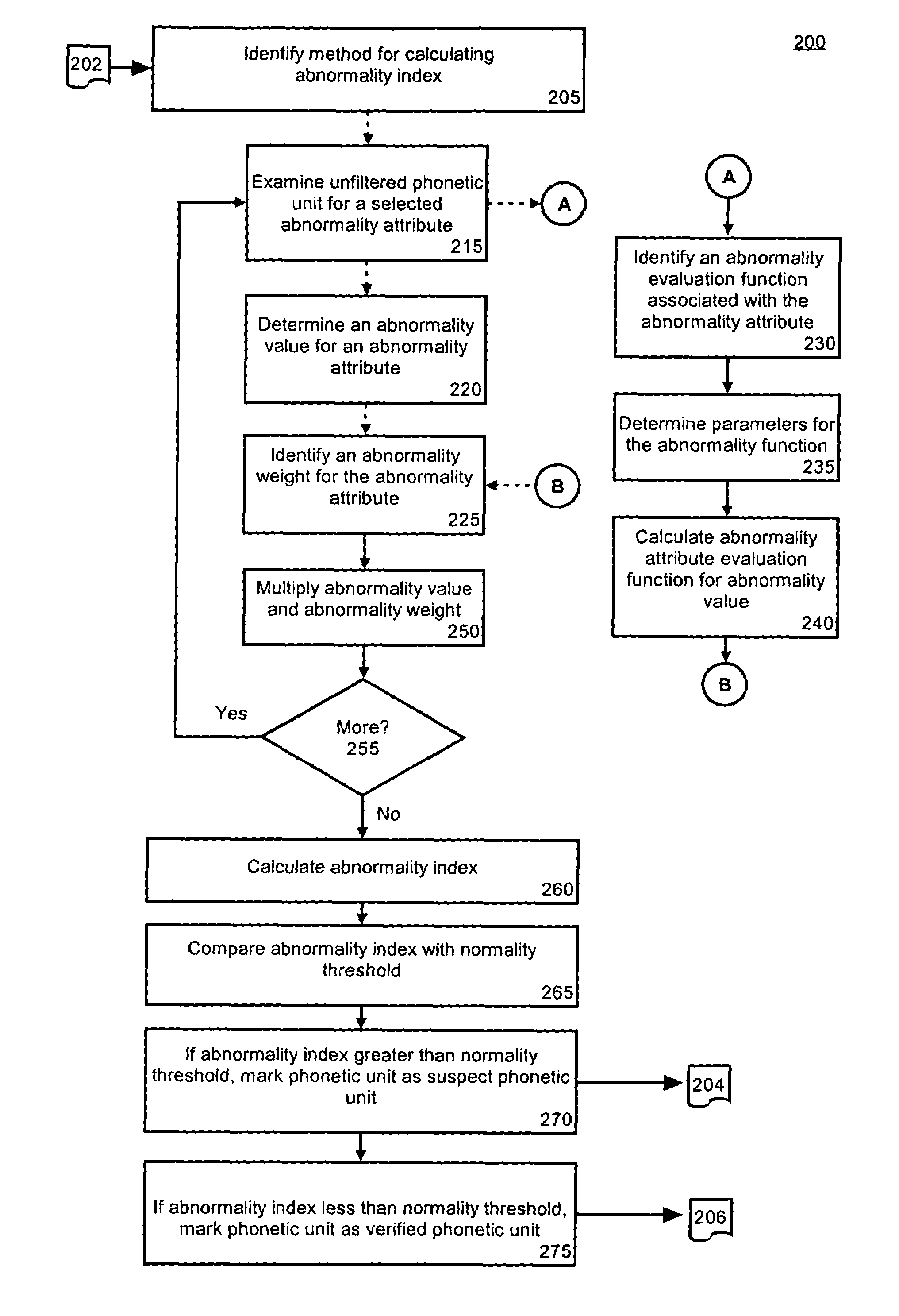

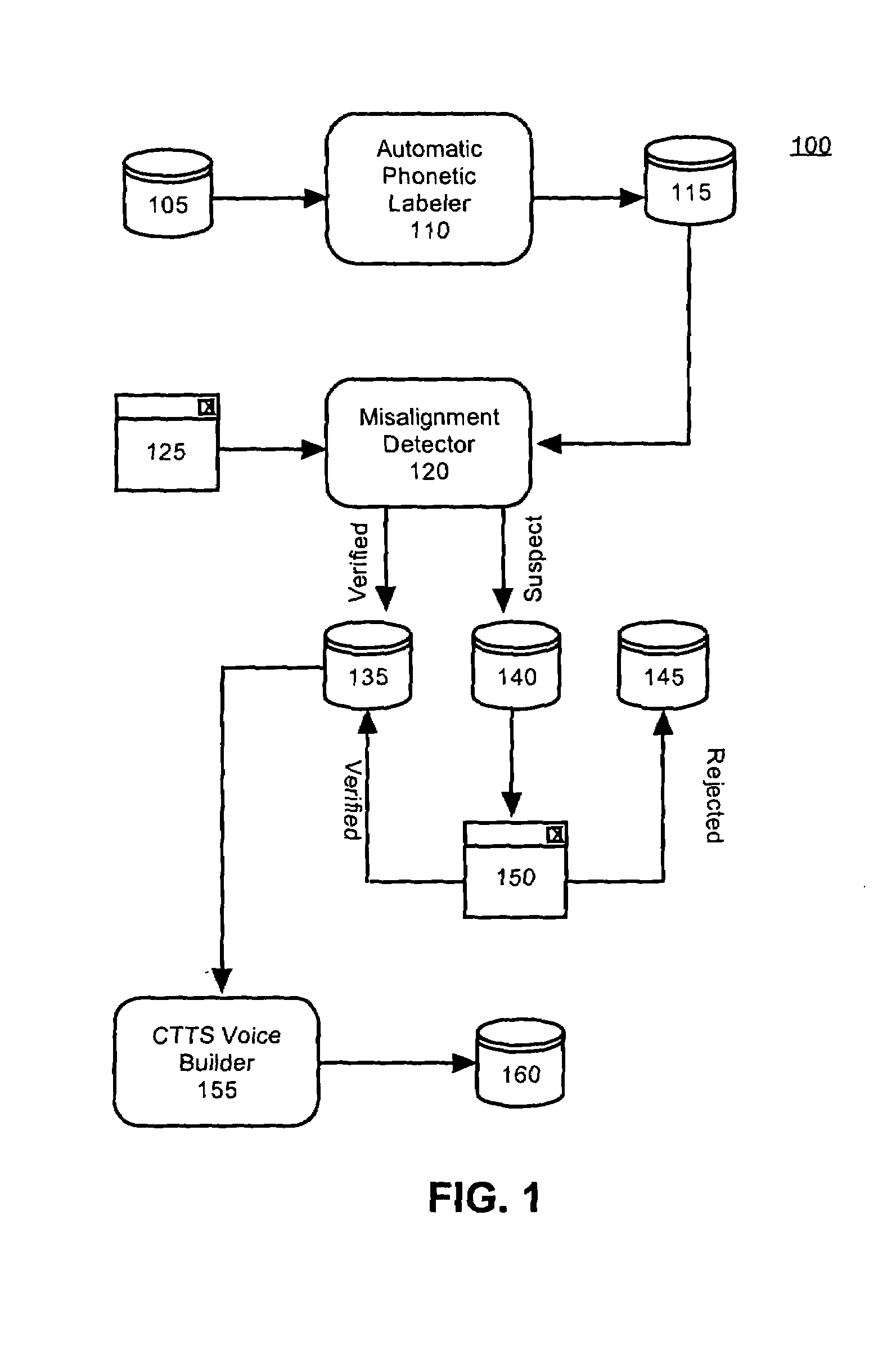

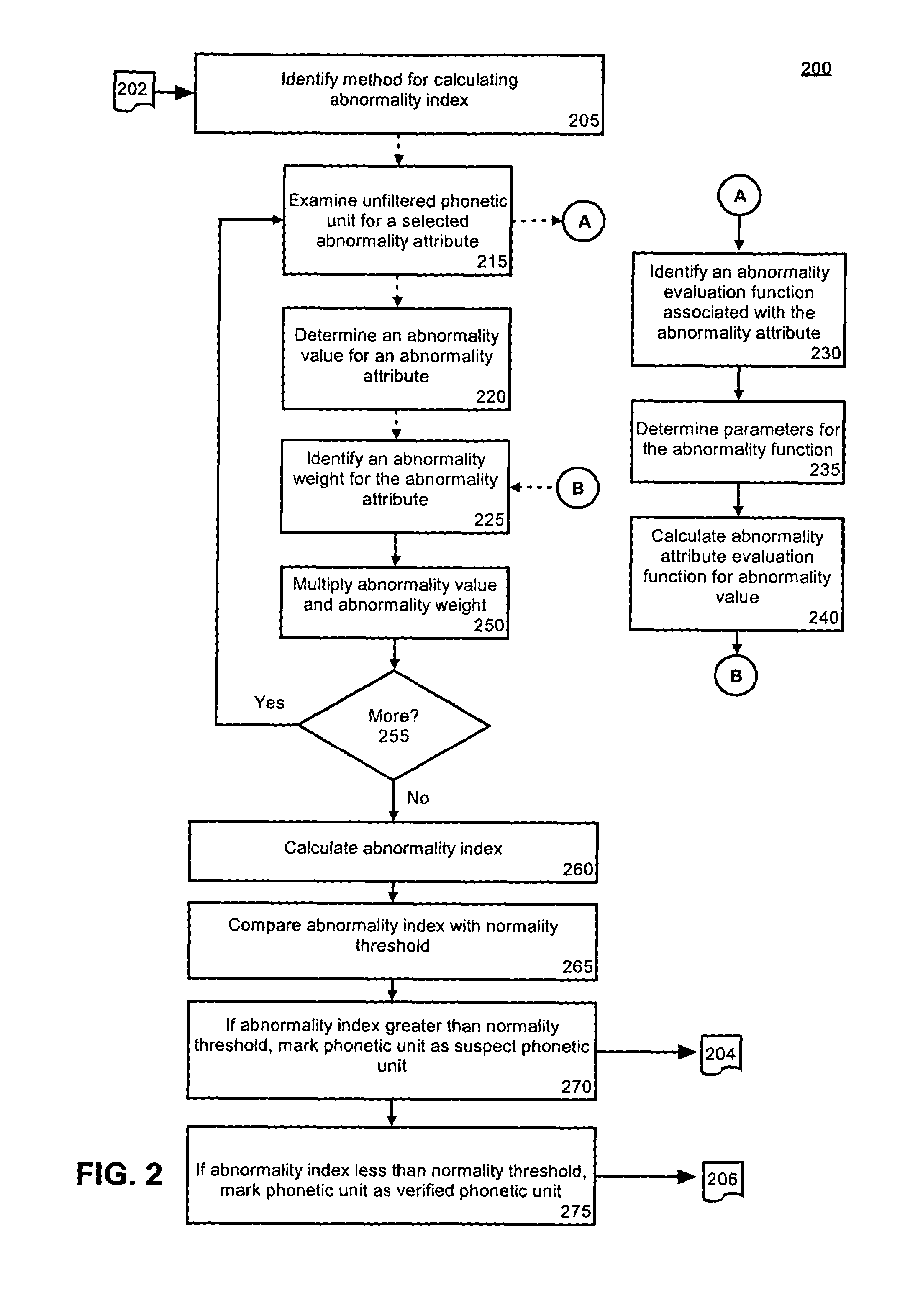

Method for detecting misaligned phonetic units for a concatenative text-to-speech voice

A method of filtering phonetic units to be used within a concatenative text-to-speech (CTTS) voice. Initially, a normality threshold can be established. At least one phonetic unit that has been automatically extracted from a speech corpus in order to construct the CTTS voice can be received. An abnormality index can be calculated for the phonetic unit. Then, the abnormality index can be compared to the established normality threshold. If the abnormality index exceeds the normality threshold, the phonetic unit can be marked as a suspect phonetic unit. If the abnormality index does not exceed the normality threshold, the phonetic unit can be marked as a verified phonetic unit. The concatenative text-to-speech voice can be built using the verified phonetic units.

Owner:CERENCE OPERATING CO
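The threshold comparison described above can be sketched in a few lines. The abstract does not define how the abnormality index is calculated, so the duration-based z-score below is purely an assumption for illustration, and both function names are hypothetical.

```python
from statistics import mean, pstdev


def duration_abnormality(duration, reference_durations):
    """Assumed abnormality index: absolute z-score of a unit's duration."""
    mu = mean(reference_durations)
    sigma = pstdev(reference_durations)
    if sigma == 0:
        return 0.0 if duration == mu else float("inf")
    return abs(duration - mu) / sigma


def filter_units(indexed_units, normality_threshold):
    """Split (unit_id, abnormality_index) pairs into verified and suspect unit ids."""
    verified, suspect = [], []
    for unit_id, index in indexed_units:
        if index > normality_threshold:
            suspect.append(unit_id)   # index exceeds the threshold
        else:
            verified.append(unit_id)  # index does not exceed the threshold
    return verified, suspect


verified, suspect = filter_units([("u1", 0.5), ("u2", 3.0), ("u3", 2.0)], 2.0)
```

Only the verified units would then be used to build the voice; suspect units could be reviewed or discarded.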

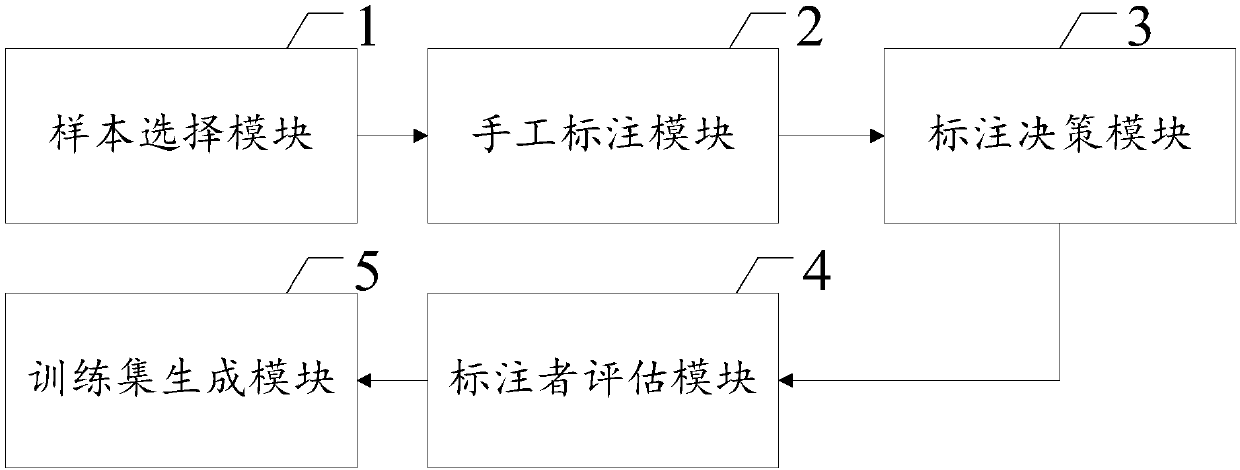

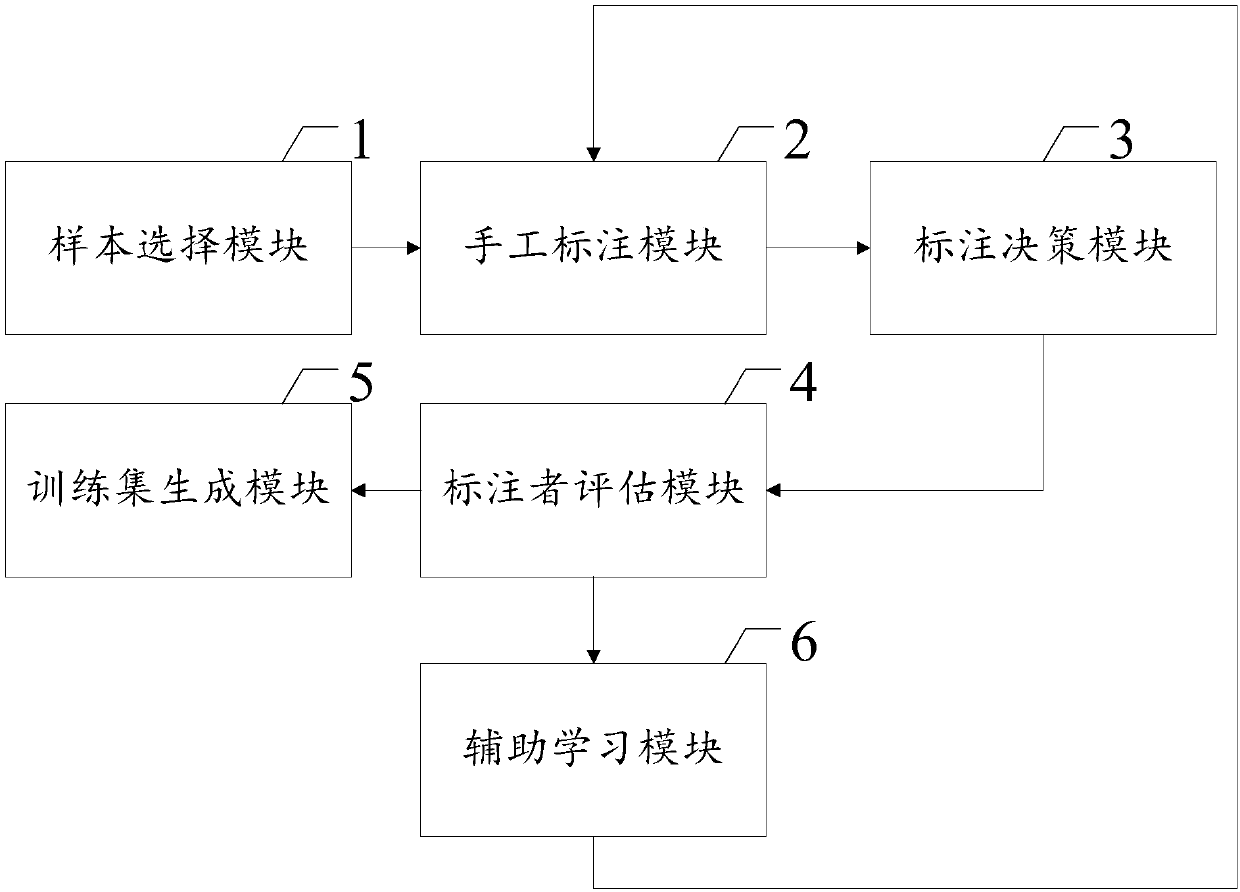

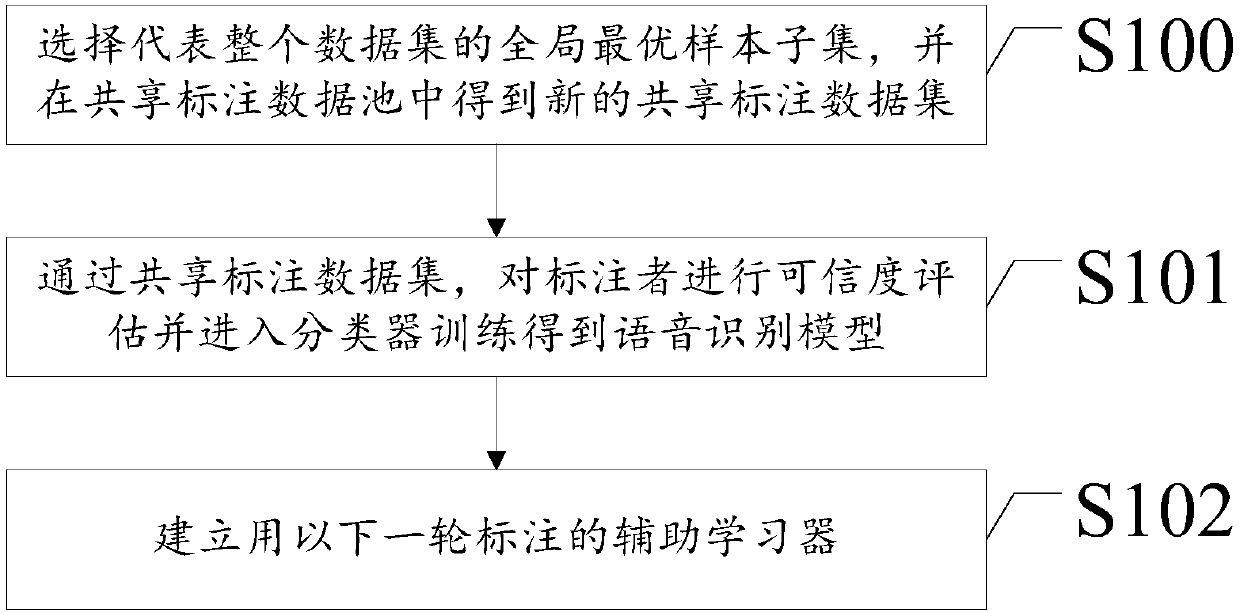

Tibetan speech corpus labeling method and system based on cooperative batch active learning

Active · CN107808661A · Sufficient training data · Trusted training data · Mathematical models · Character and pattern recognition · Algorithm · Speech corpus

The invention discloses a Tibetan speech corpus labeling method and a Tibetan speech corpus labeling system based on cooperative batch active learning. The system comprises a sample selection module, a manual labeling module, a labeling decision-making module, a labeling person evaluation module and a training set generation module. According to the method and the system, the construction of a sample evaluation function and the proof of its submodular property are solved through the adjacent optimal batch sample selection method, and the construction of a labeling decision function and the modeling of a labeling person evaluation model and a labeling person auxiliary learning model are solved through the labeling committee collaborative labeling method. In addition, the system disclosed by the invention can realize functions including the optimal selection of samples, the labeling and evaluation of users, the sharing of labeling information and Tibetan speech knowledge, auxiliary learning for the labeling persons and the like, so that the labeling quality of the Tibetan speech data is improved and the construction of the speech corpus is accelerated.

Owner:MINZU UNIVERSITY OF CHINA

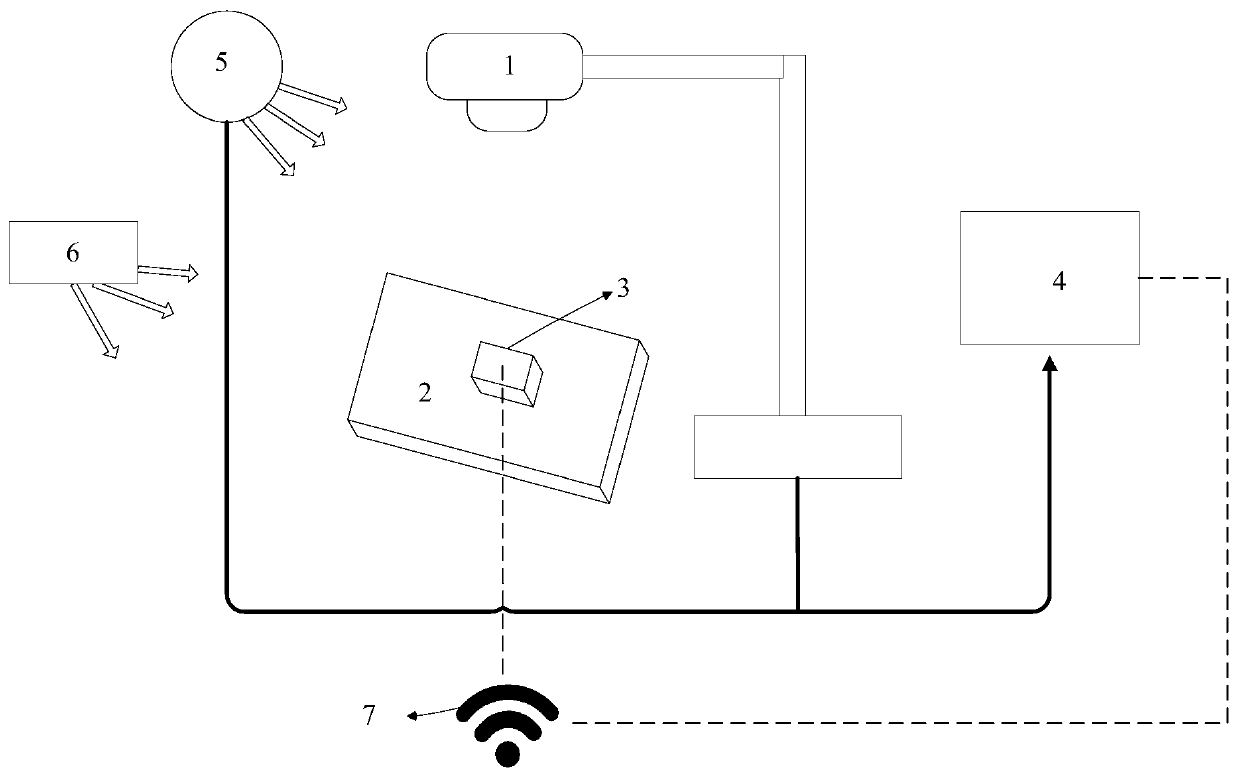

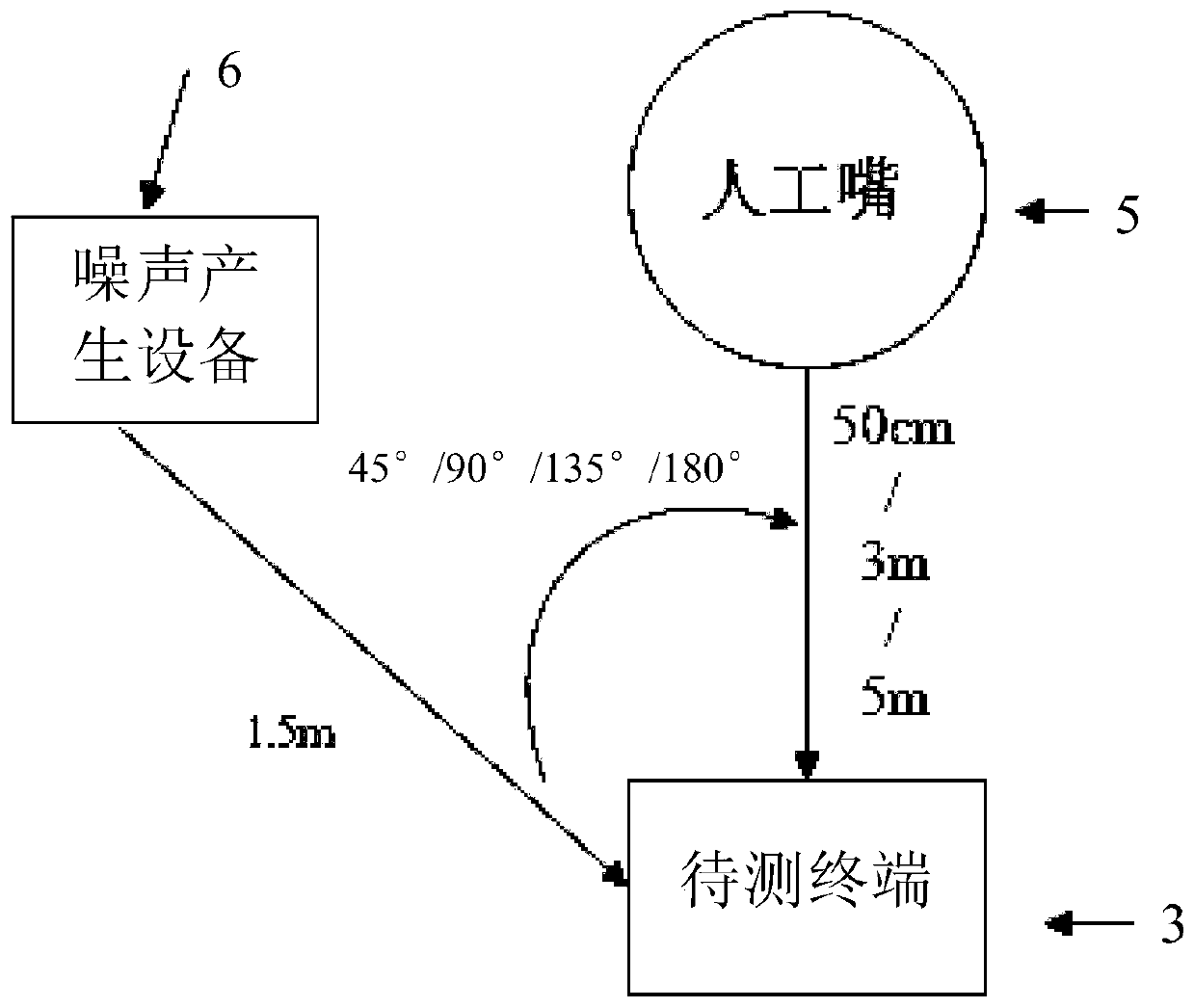

Speech recognition terminal evaluation system and method

Inactive · CN110211567A · Reduce labor costs · Speech recognition · Speech identification · Speech recognition performance

The invention provides a speech recognition terminal evaluation system and method. The speech recognition terminal evaluation system comprises: a speech playback device for outputting a test speech corpus; a terminal to be tested for recognizing the test speech corpus in different test environments, including a noise test environment, to obtain a recognition result; a noise generating device for generating the noise required for the test; an image collecting device for performing image acquisition on the recognition result to obtain a speech recognition image and transmit it to a control device; and the control device, which is used for converting a test text corpus into the test speech corpus through a speech synthesis method, performing image recognition on the speech recognition image based on a deep learning algorithm to obtain the recognition result, and comparing the recognition result with preset tagged data to obtain a comparison result indicating the speech recognition performance of the terminal to be tested. According to the scheme, automated testing is adopted to support repetitive tests, and the use of functional testing based on deep learning algorithm comparison can reduce labor costs.

Owner:CHINA ACADEMY OF INFORMATION & COMM

Automatic voice recognizing method and system

Active · CN103971675A · Probability value increased · Reduce the chance of drift · Speech recognition · High probability · Speech corpus

An automatic speech recognition method includes at a computer having one or more processors and a memory for storing one or more programs to be executed by the processors, obtaining a plurality of speech corpus categories through classifying and calculating raw speech corpus (801); obtaining a plurality of classified language models that respectively correspond to the plurality of speech corpus categories through language model training applied on each speech corpus category (802); obtaining an interpolation language model through implementing a weighted interpolation on each classified language model and merging the interpolated plurality of classified language models (803); constructing a decoding resource in accordance with an acoustic model and the interpolation language model (804); decoding input speech using the decoding resource, and outputting a character string with a highest probability as the recognition result of the input speech (805).

Owner:TENCENT TECH (SHENZHEN) CO LTD +1

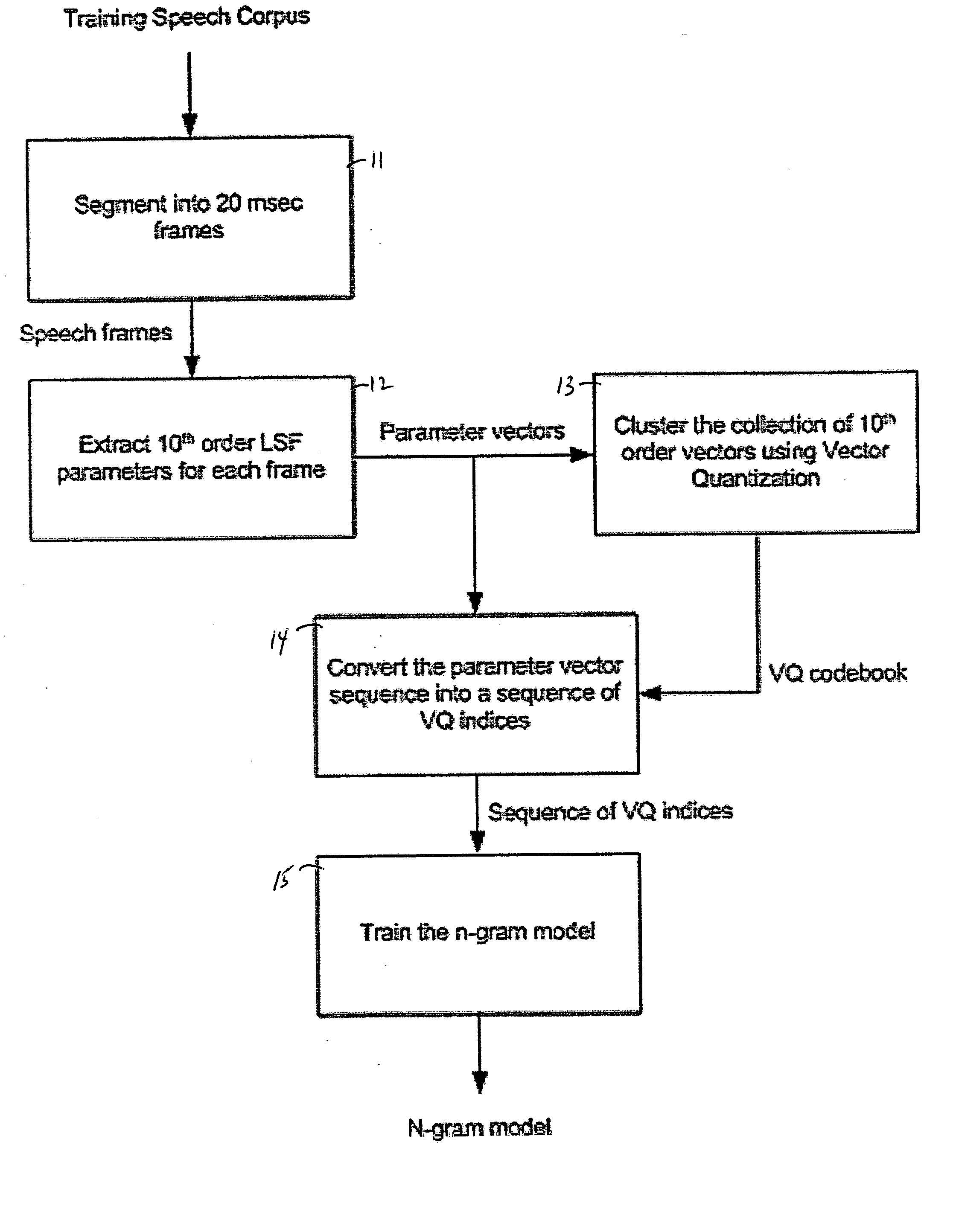

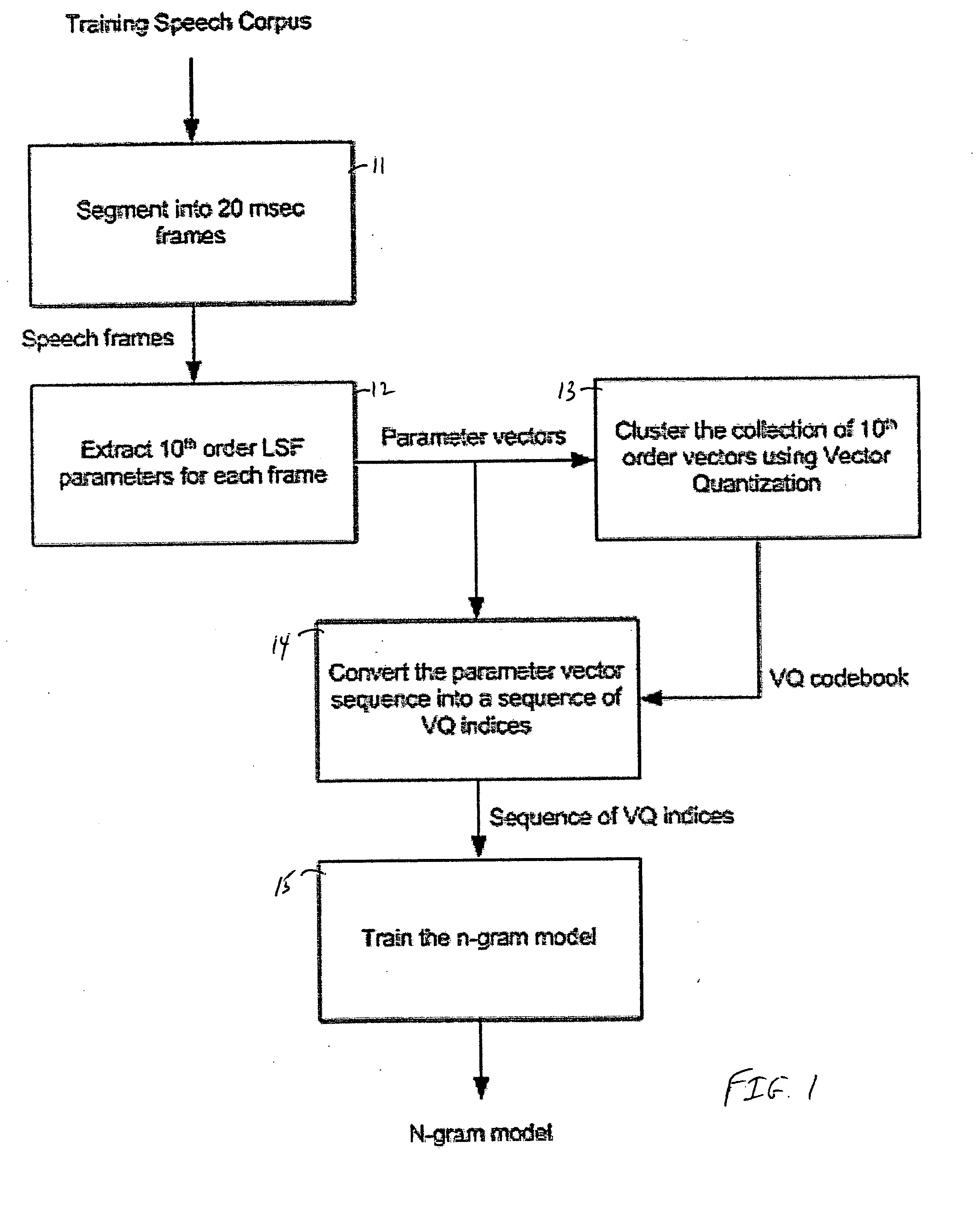

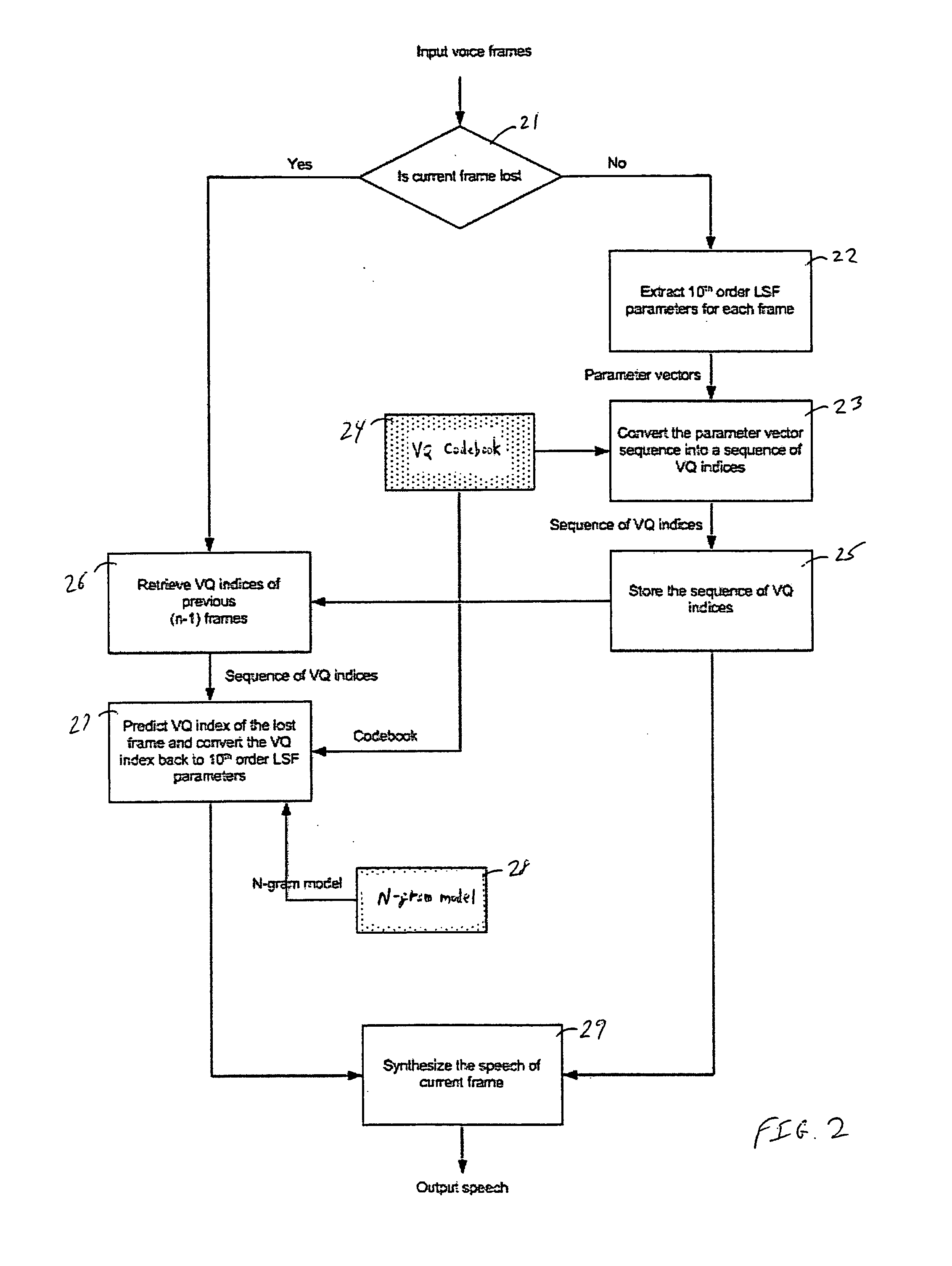

Packet loss concealment based on statistical n-gram predictive models for use in voice-over-IP speech transmission

Inactive · US20050276235A1 · Improved packet loss concealment (PLC) algorithm · Special service provision for substation · Multiplex system selection arrangements · Statistical correlation · Algorithm

A method for performing packet loss concealment of lost packets in Voice over IP (Internet Protocol) speech transmission. Statistical n-gram models are initially created with use of a training speech corpus, and then, packets lost during transmission are advantageously replaced based on these models. In particular, the existence of statistical patterns in successive voice over IP (VoIP) packets is advantageously exploited by first using conventional vector quantization (VQ) techniques to quantize the parameter data for each packet with use of a corresponding VQ index, and then determining statistical correlations between consecutive sequences of such VQ indices representative of the corresponding sequences of n packets. The statistical n-gram predictive models so created are then used to predict parameter data for use in representing lost data packets.

Owner:ALCATEL-LUCENT USA INC +1
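The n-gram prediction over VQ indices described above can be sketched as a simple count-based model. This is a minimal illustration under stated assumptions: packets are already reduced to integer VQ indices, the most frequent continuation is chosen, and repeating the last received index is an assumed fallback that the abstract does not mention.

```python
from collections import Counter, defaultdict


def train_ngram(index_sequences, n=3):
    """Count which VQ index follows each context of n-1 consecutive indices."""
    if n < 2:
        raise ValueError("n must be at least 2")
    model = defaultdict(Counter)
    for sequence in index_sequences:
        for i in range(len(sequence) - n + 1):
            context = tuple(sequence[i:i + n - 1])
            model[context][sequence[i + n - 1]] += 1
    return model


def predict_lost_index(model, received, n=3):
    """Predict the VQ index of a lost packet from the most recent received indices."""
    if not received:
        raise ValueError("at least one received index is required")
    context = tuple(received[-(n - 1):])
    candidates = model.get(context)
    if not candidates:
        return received[-1]  # assumed fallback: repeat the last packet
    return candidates.most_common(1)[0][0]


model = train_ngram([[1, 2, 3, 1, 2, 3, 1, 2, 4]], n=3)
predicted = predict_lost_index(model, [5, 1, 2], n=3)
```

The predicted index would then be mapped back through the VQ codebook to obtain parameter data for the missing packet.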

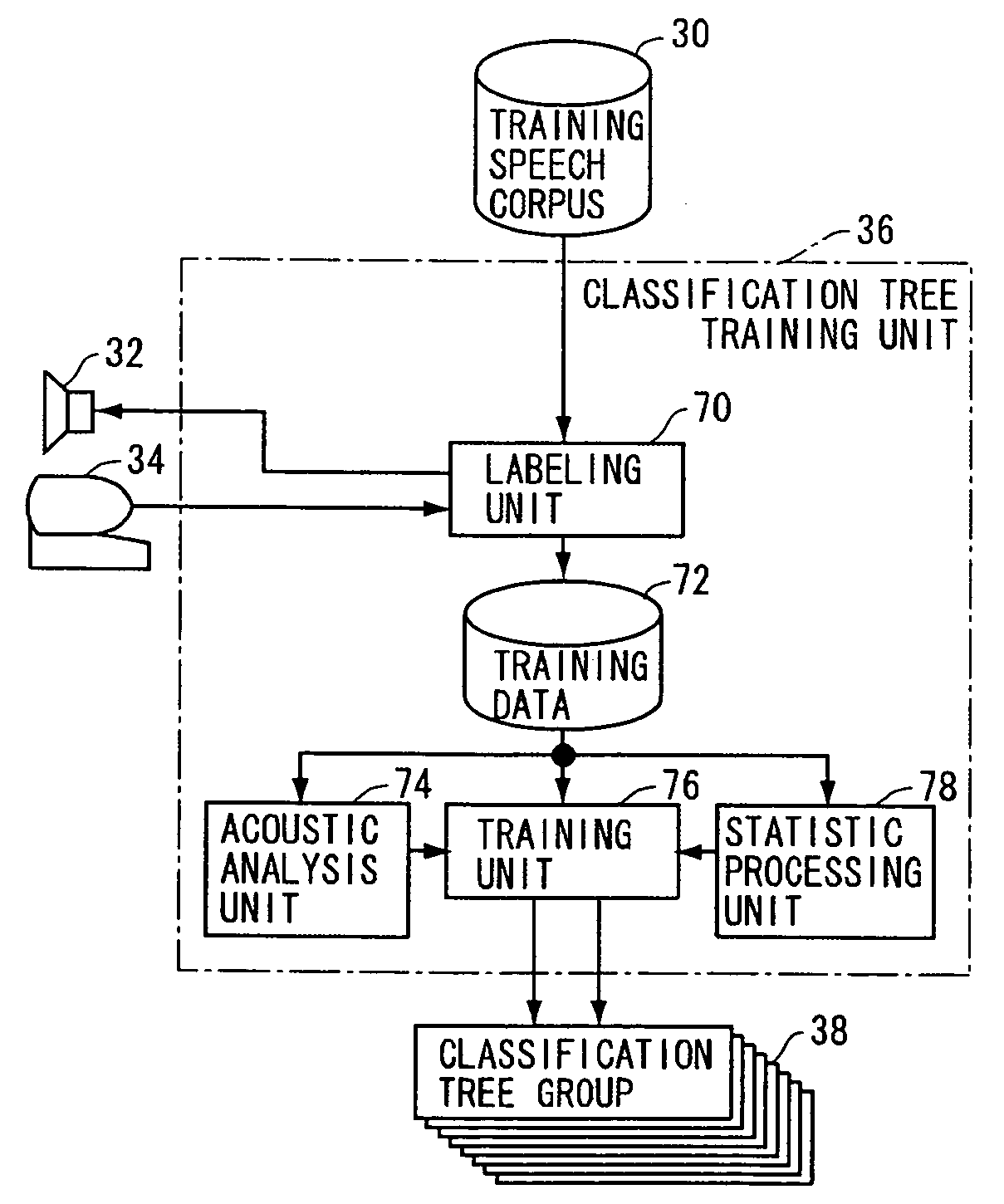

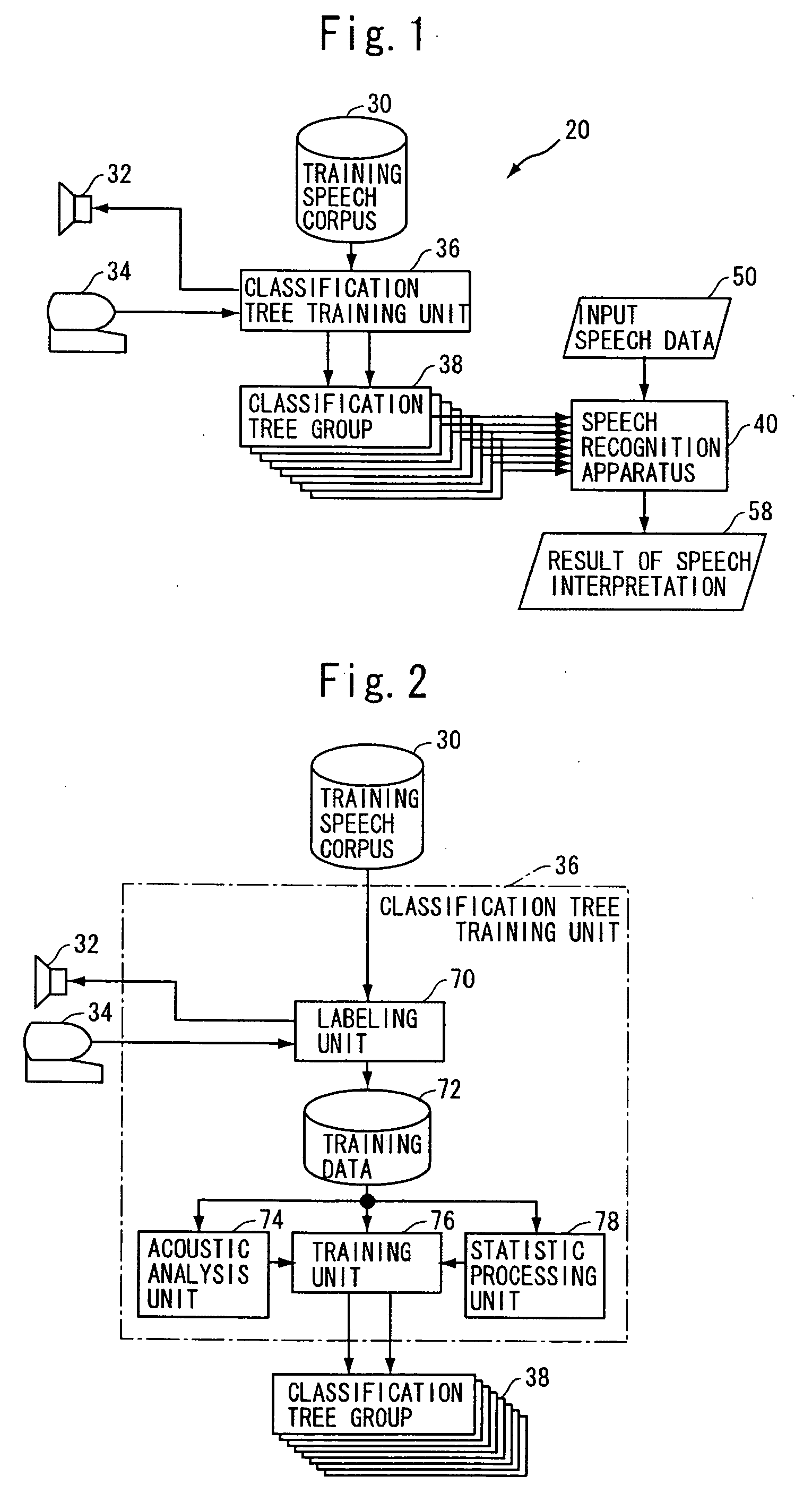

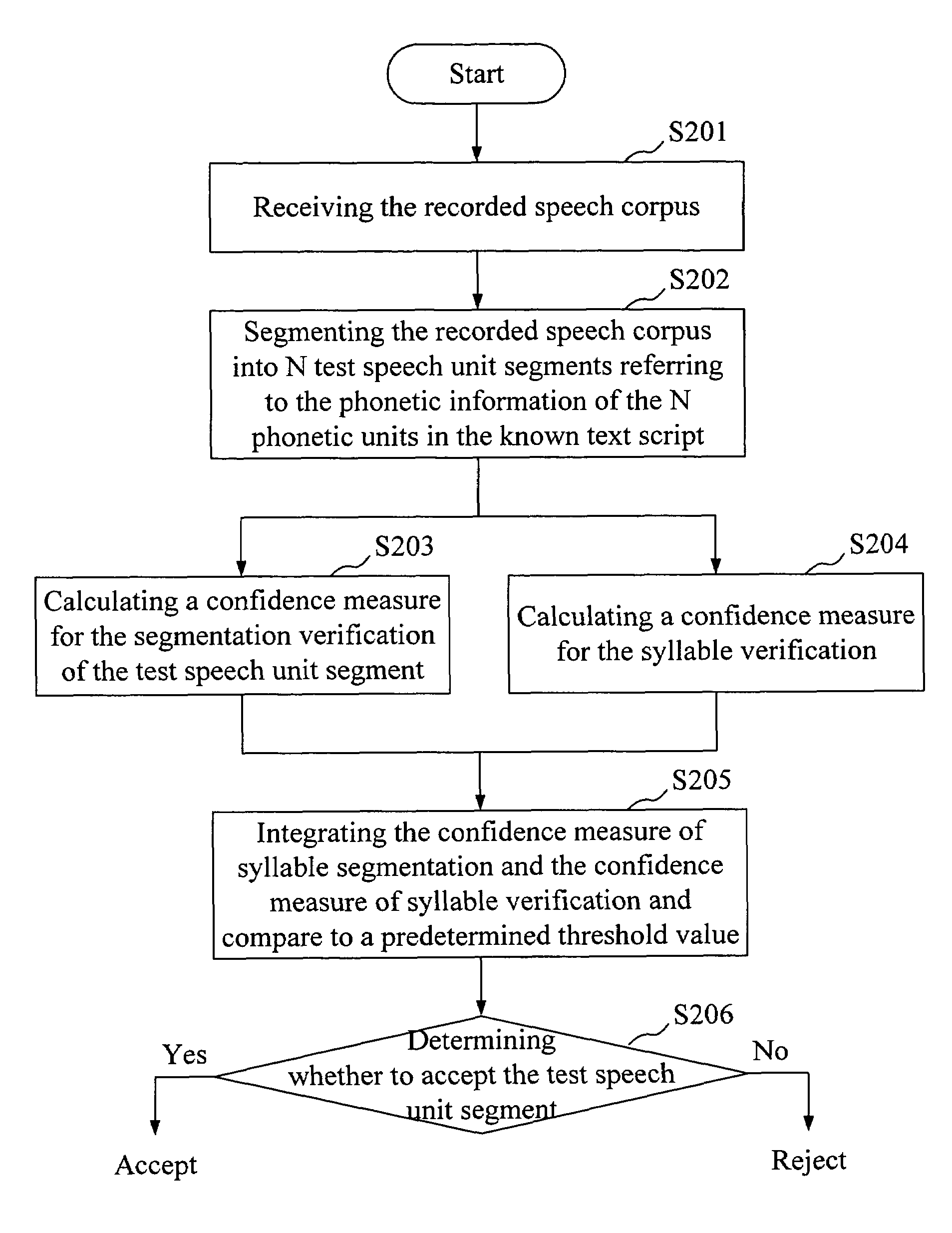

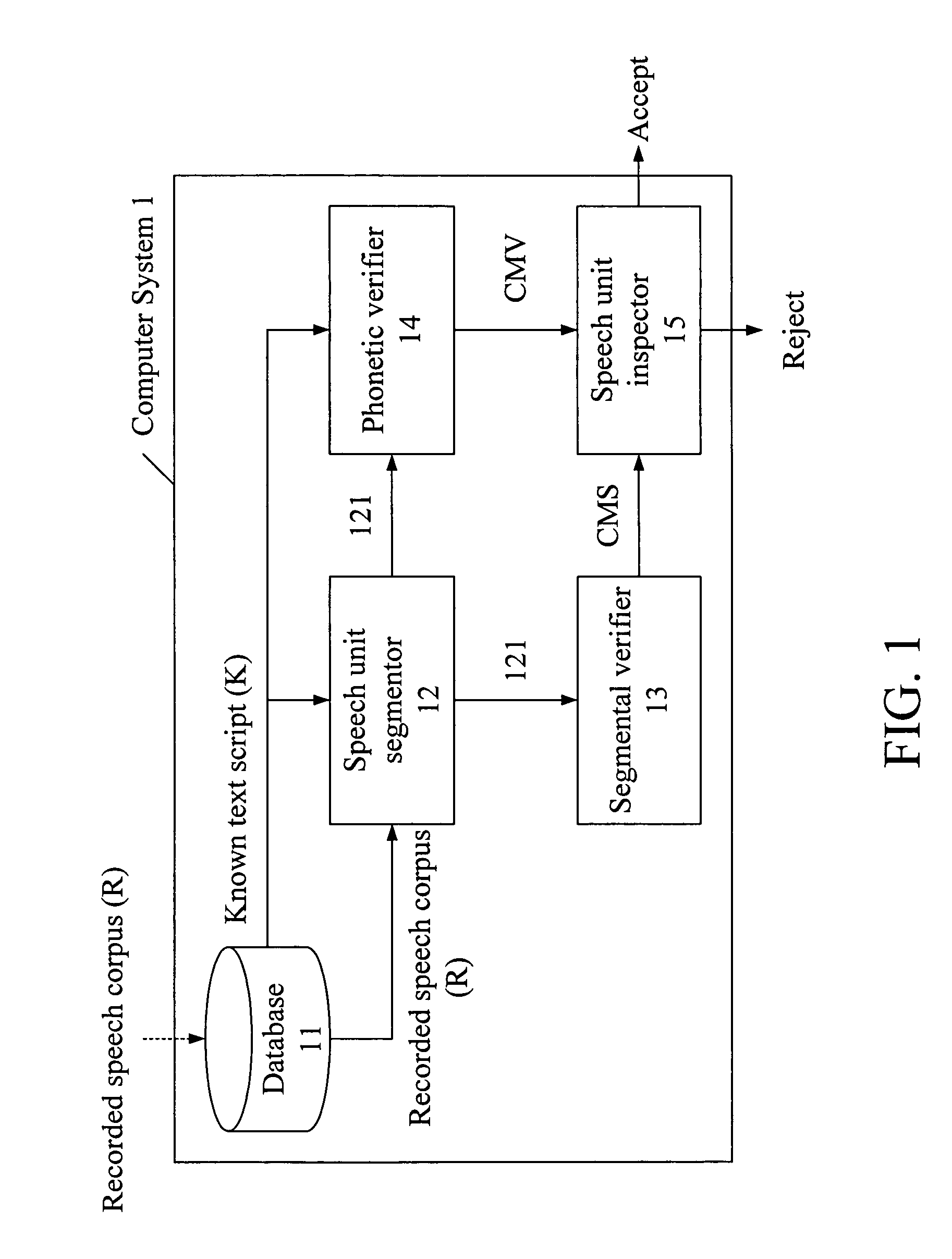

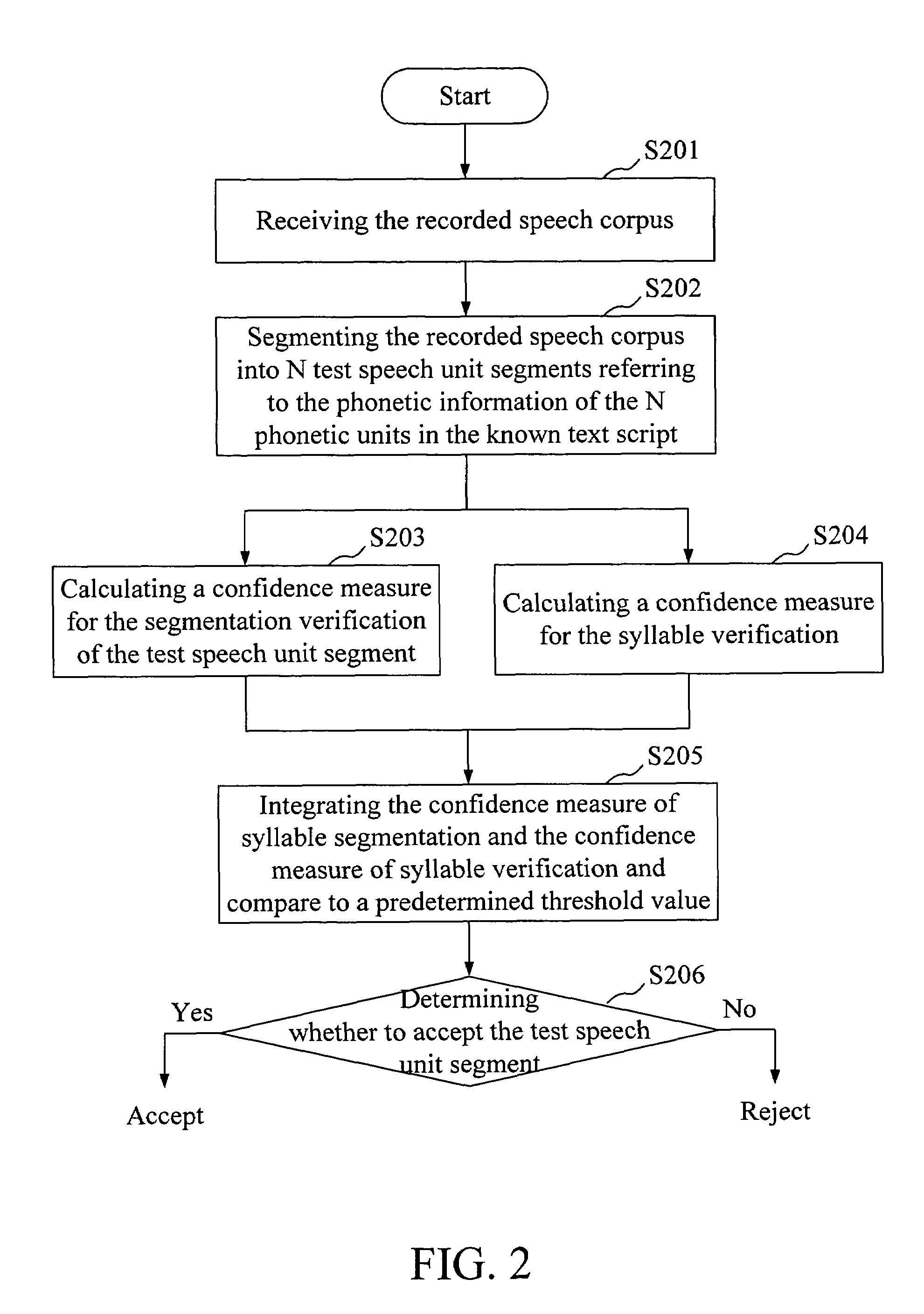

Automatic speech segmentation and verification using segment confidence measures

Active · US7472066B2 · Improve output quality · Automatic collection · Speech recognition · Speech synthesis · Automatic speech segmentation · Speech corpus

An automatic speech segmentation and verification system and method is disclosed, which has a known text script and a recorded speech corpus corresponding to the known text script. A speech unit segmentor segments the recorded speech corpus into N test speech unit segments referring to the phonetic information of the known text script. Then, a segmental verifier is applied to obtain a confidence measure of syllable segmentation for verifying the correctness of the cutting points of test speech unit segments. A phonetic verifier obtains a confidence measure of syllable verification by using verification models for verifying whether the recorded speech corpus is correctly recorded. Finally, a speech unit inspector integrates the confidence measure of syllable segmentation and the confidence measure of syllable verification to determine whether the test speech unit segment is accepted or not.

Owner:IND TECH RES INST

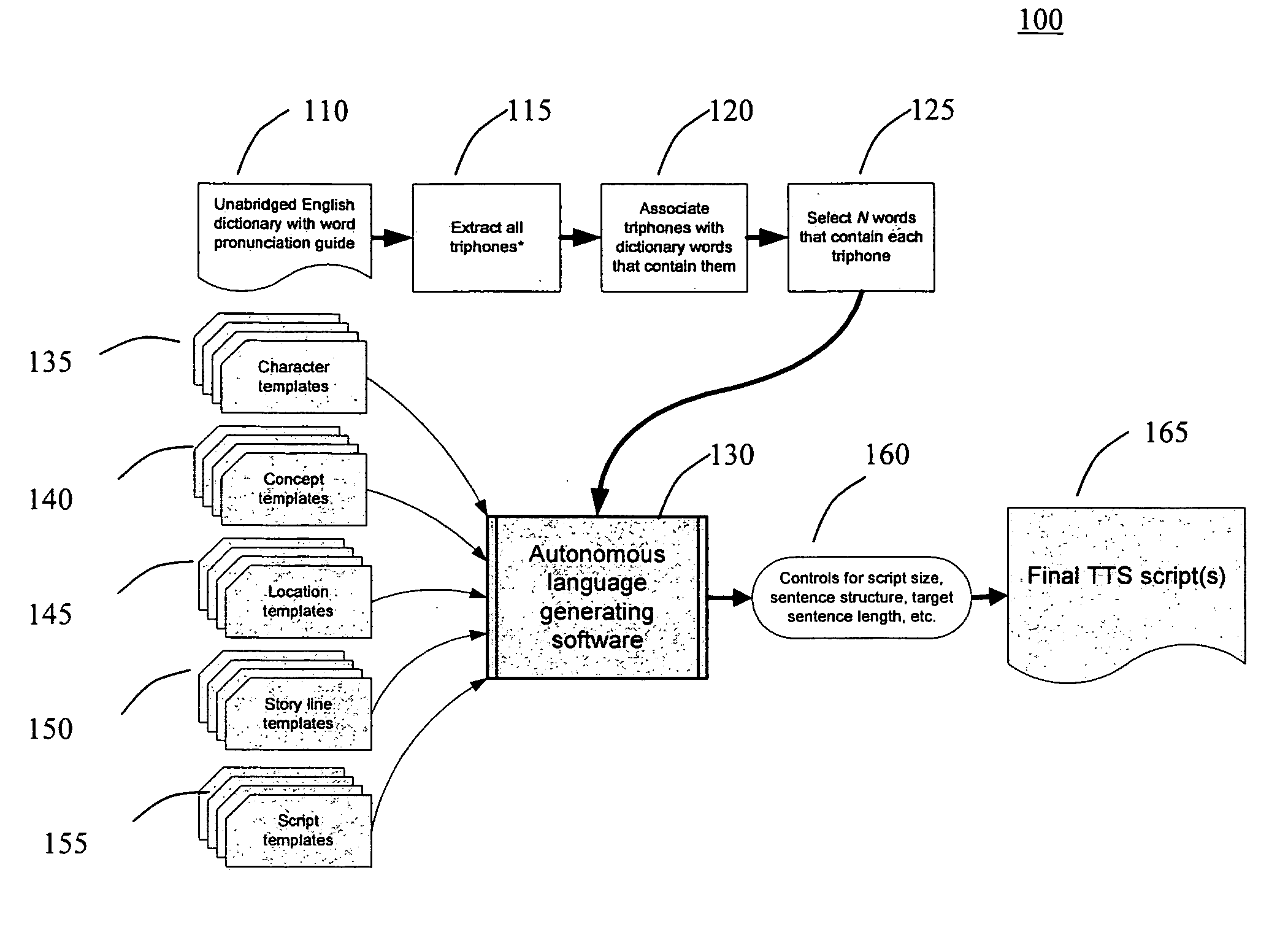

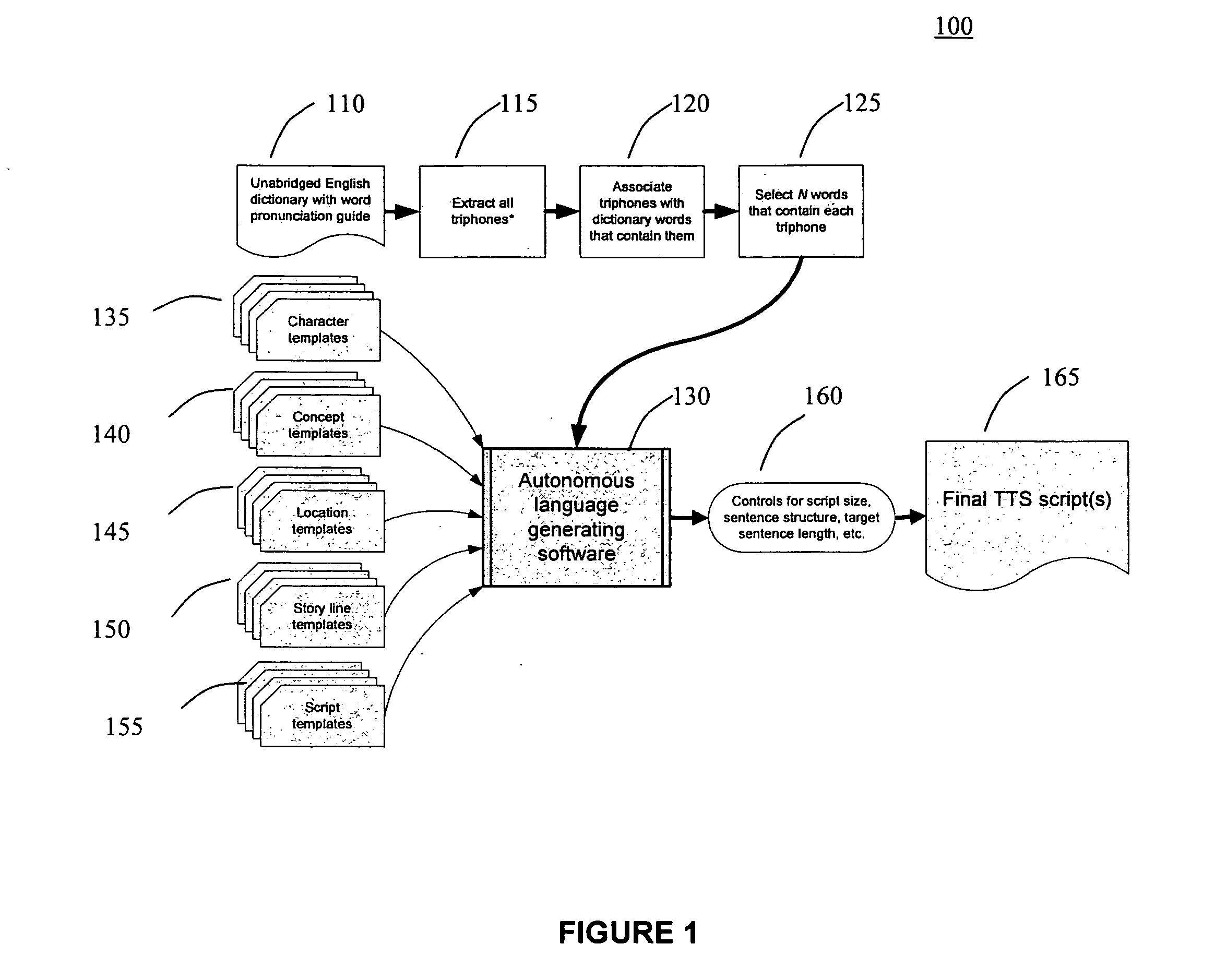

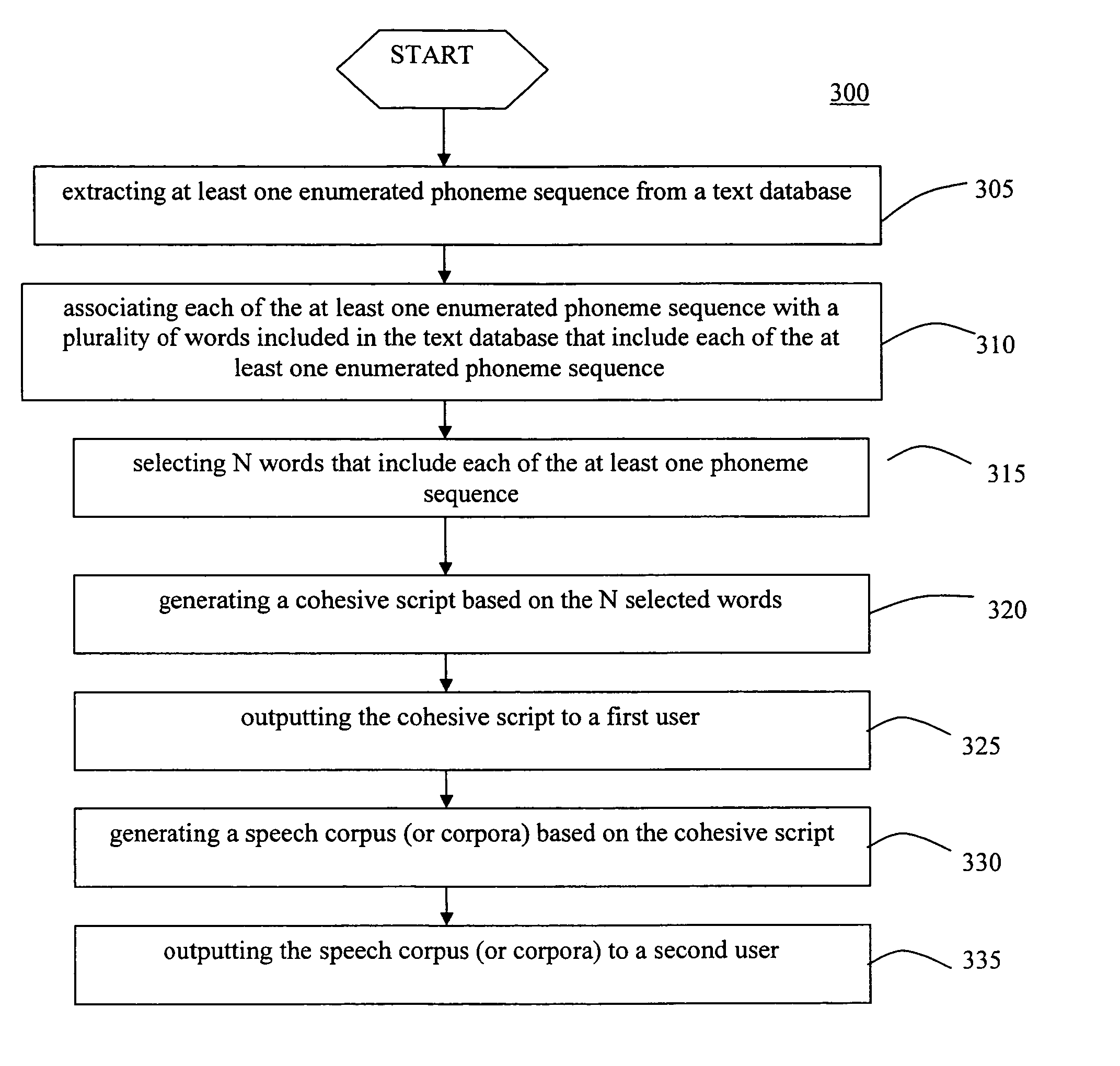

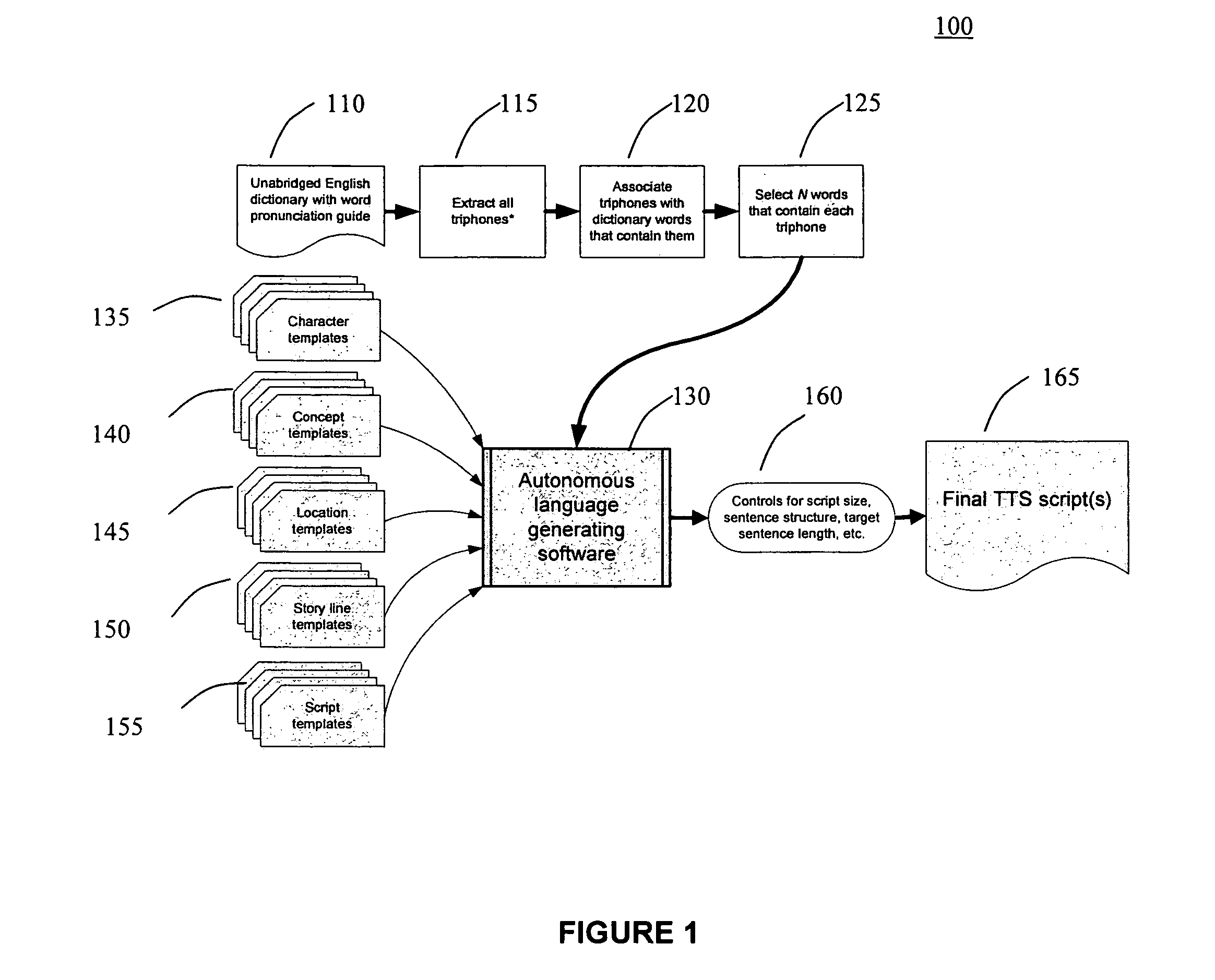

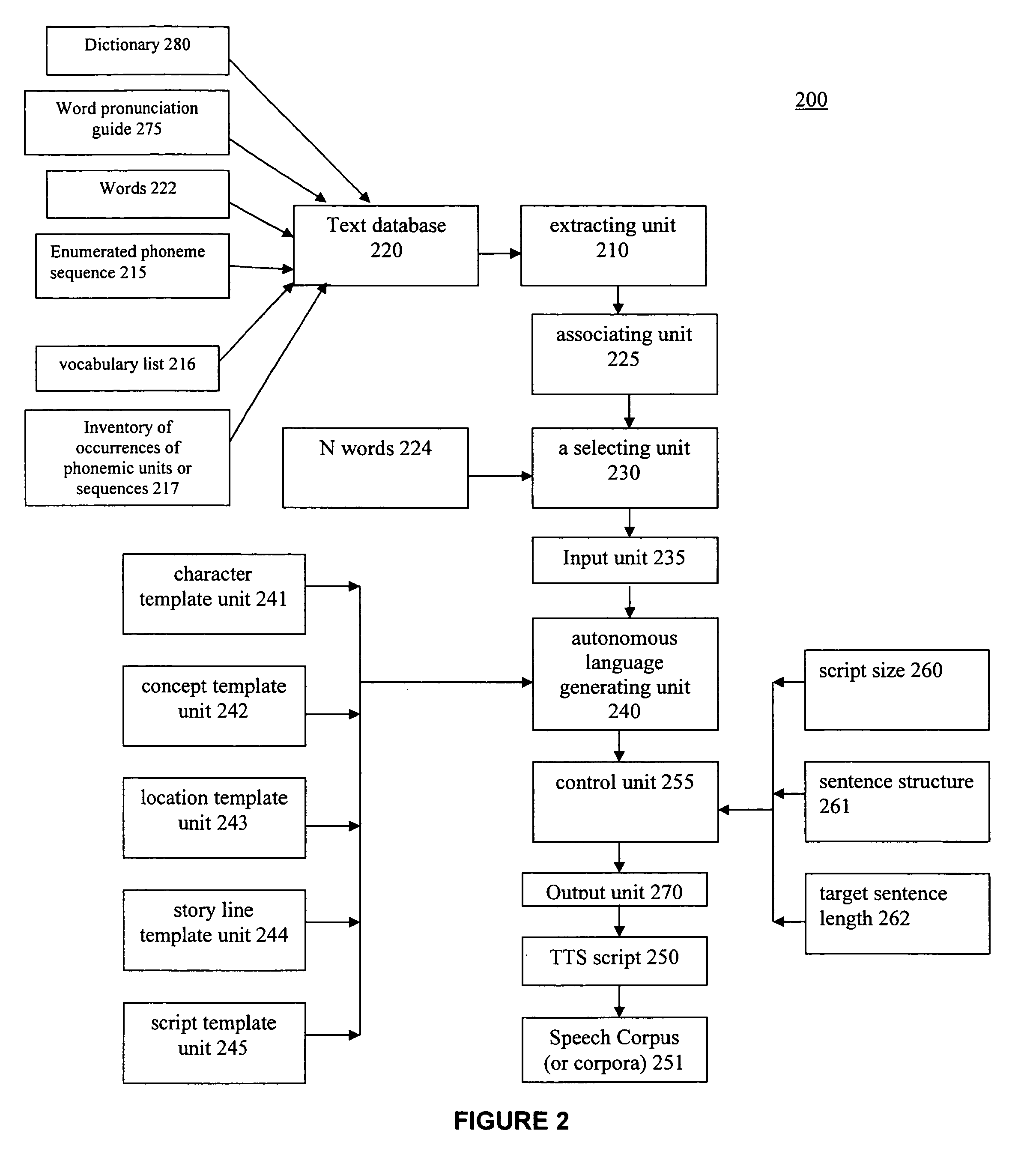

Autonomous system and method for creating readable scripts for concatenative text-to-speech synthesis (TTS) corpora

A method (and system) which autonomously generates a cohesive script from a text database for creating a speech corpus for concatenative text-to-speech, and more particularly, which generates cohesive scripts having fluency and natural prosody that can be used to generate compact text-to-speech recordings that cover a plurality of phonetic events.

Owner:CERENCE OPERATING CO

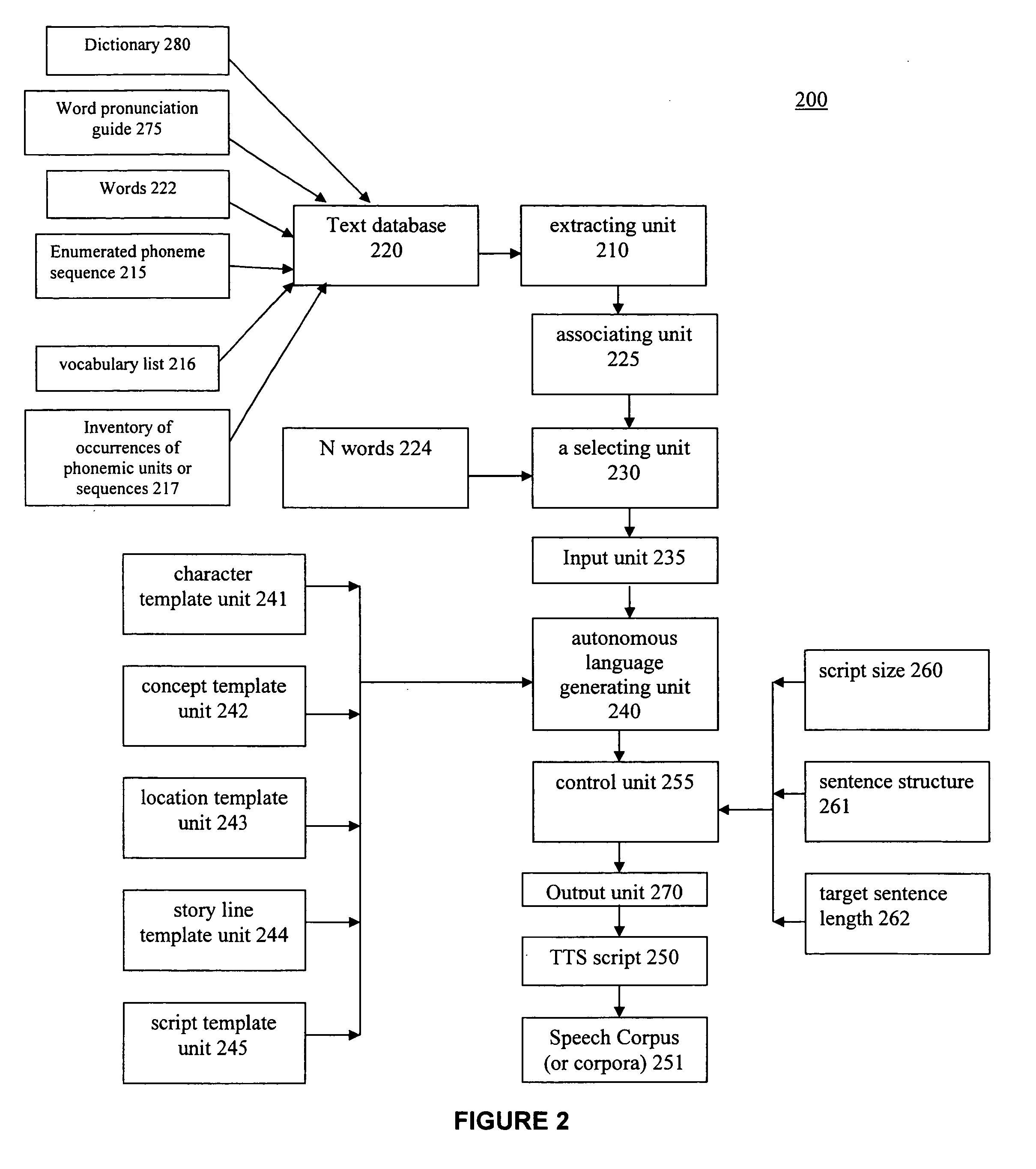

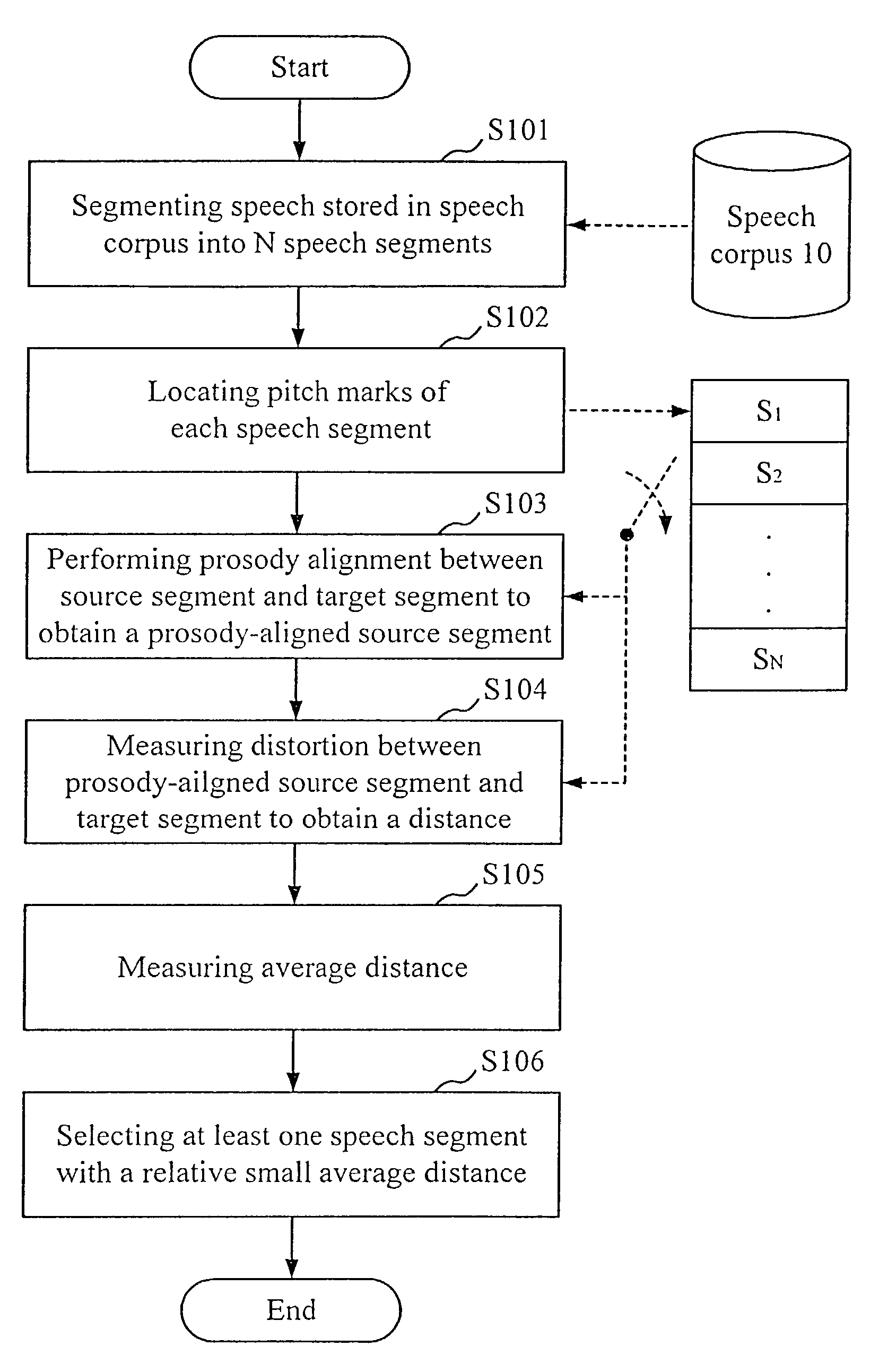

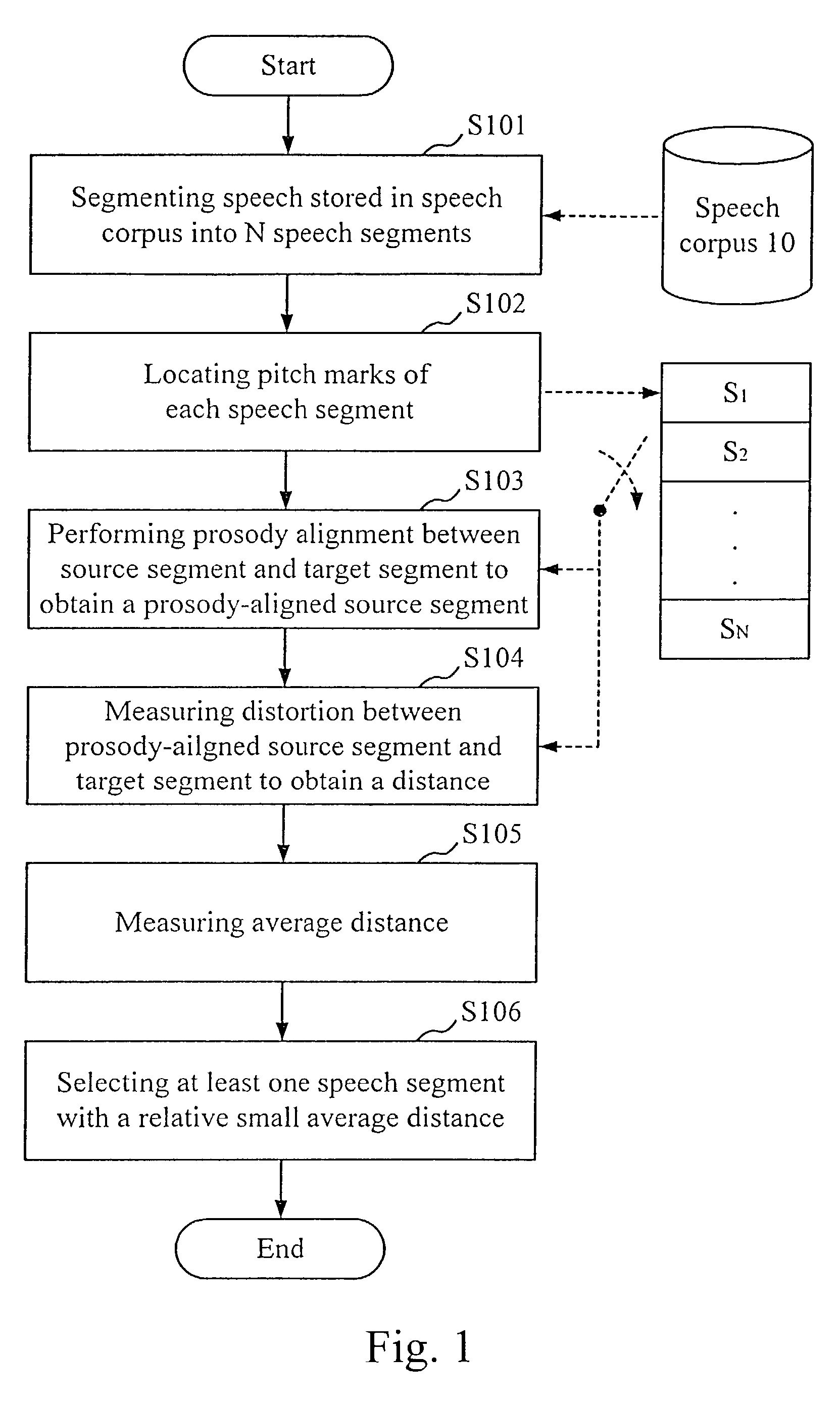

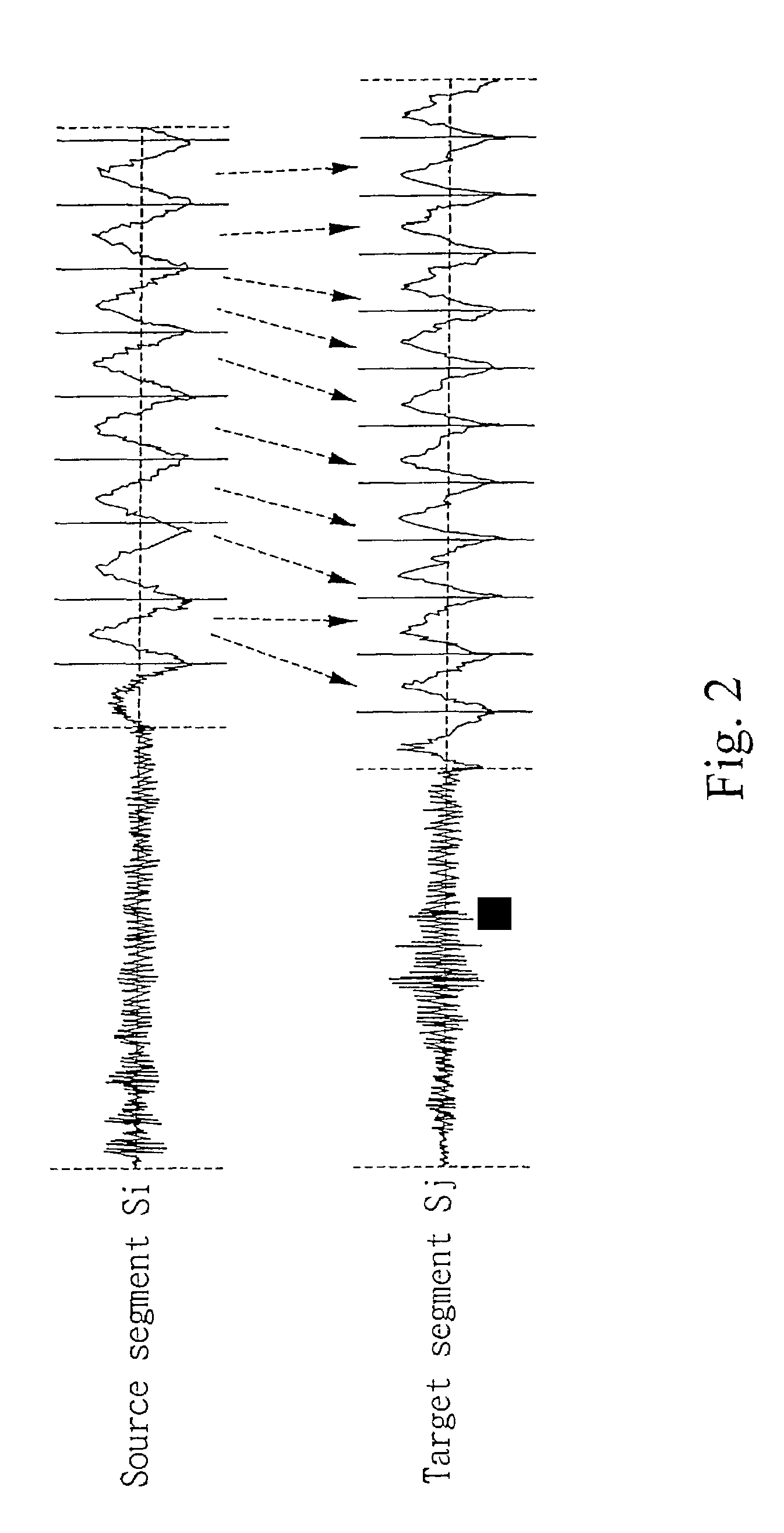

Method of speech segment selection for concatenative synthesis based on prosody-aligned distance measure

Active · US7315813B2 · Minimize total acoustic distortion · Minimize distortion · Speech recognition · Speech synthesis · Automatic segmentation · Speech corpus

A method of speech segment selection for concatenative synthesis based on a prosody-aligned distance measure is disclosed. The method is based on a comparison of speech segments taken from a speech corpus, wherein the speech segments are fully prosody-aligned to each other before distortion is measured. With prosody alignment embedded in the selection process, distortion resulting from possible prosody modification in synthesis can be taken into account objectively in the selection phase. To this end, automatic segmentation, pitch marking and the PSOLA method work together for prosody alignment. Two distortion measures, MFCC and PSQM, are used for comparing two prosody-aligned segments of speech, in consideration of human perception.

Owner:IND TECH RES INST

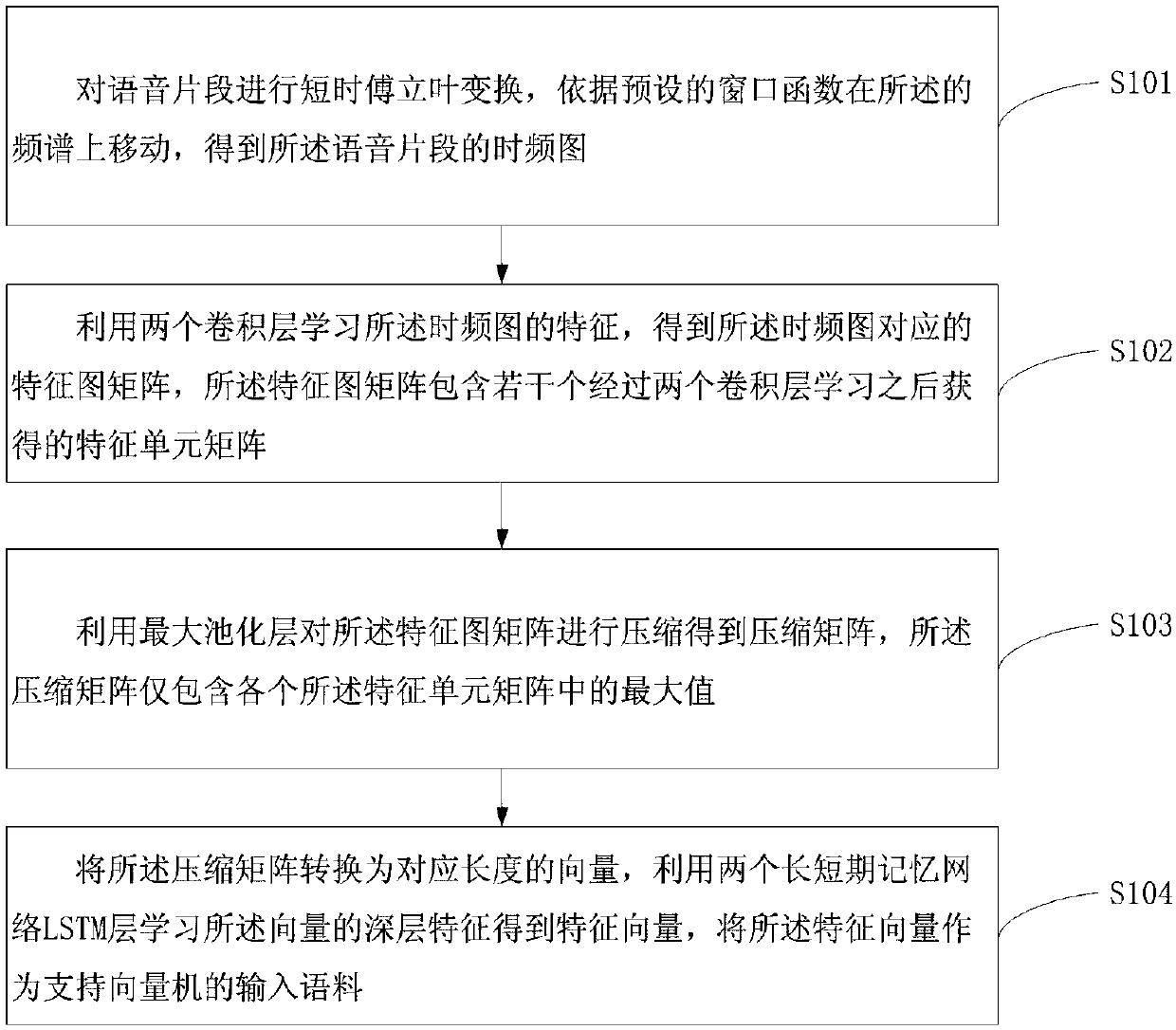

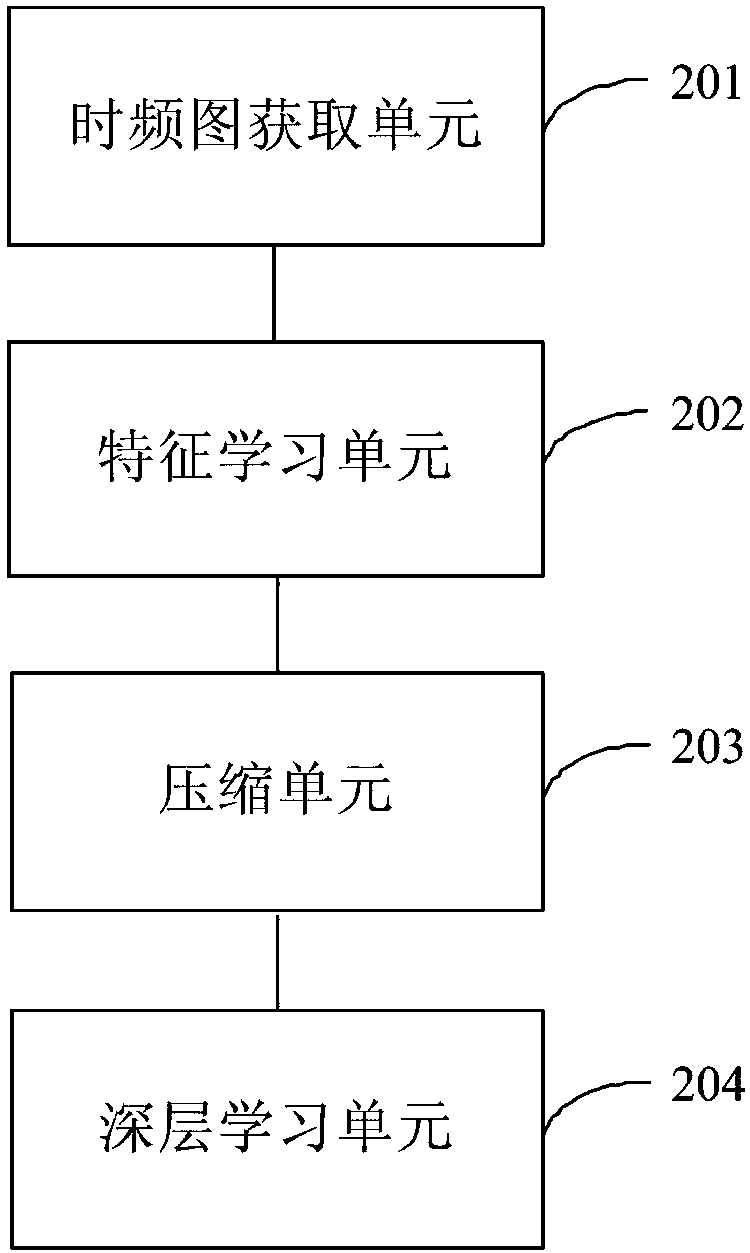

Interaction-oriented speech corpus processing method and device

The embodiments of the invention provide an interaction-oriented speech corpus processing method and device. The method includes the following steps: a speech fragment is converted into a time-frequency graph; the features of the time-frequency graph are learned by using two convolution layers to get a feature graph matrix; the feature graph matrix is compressed by using a maximum pooling layer, and the compressed matrix is converted into a vector; and the generation of the vector is learned by using two LSTM layers, and the learned feature vector is taken as the input corpus of an SVM. Therefore, the amount of effective corpus data can be increased, the training of a speech emotion recognition model can be facilitated, and the recognition ability of the speech emotion recognition model can be improved.

Owner:HEFEI UNIV OF TECH +1

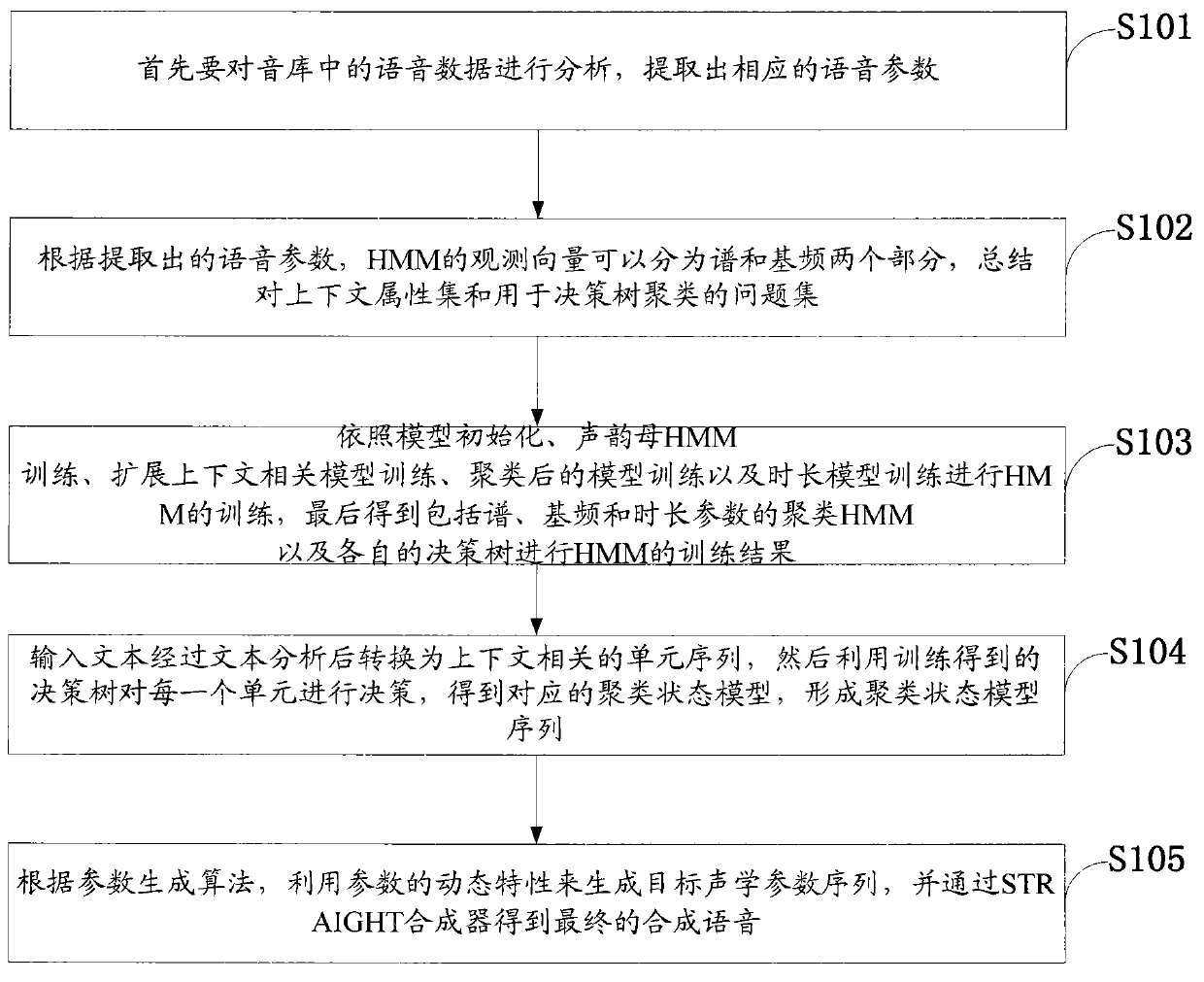

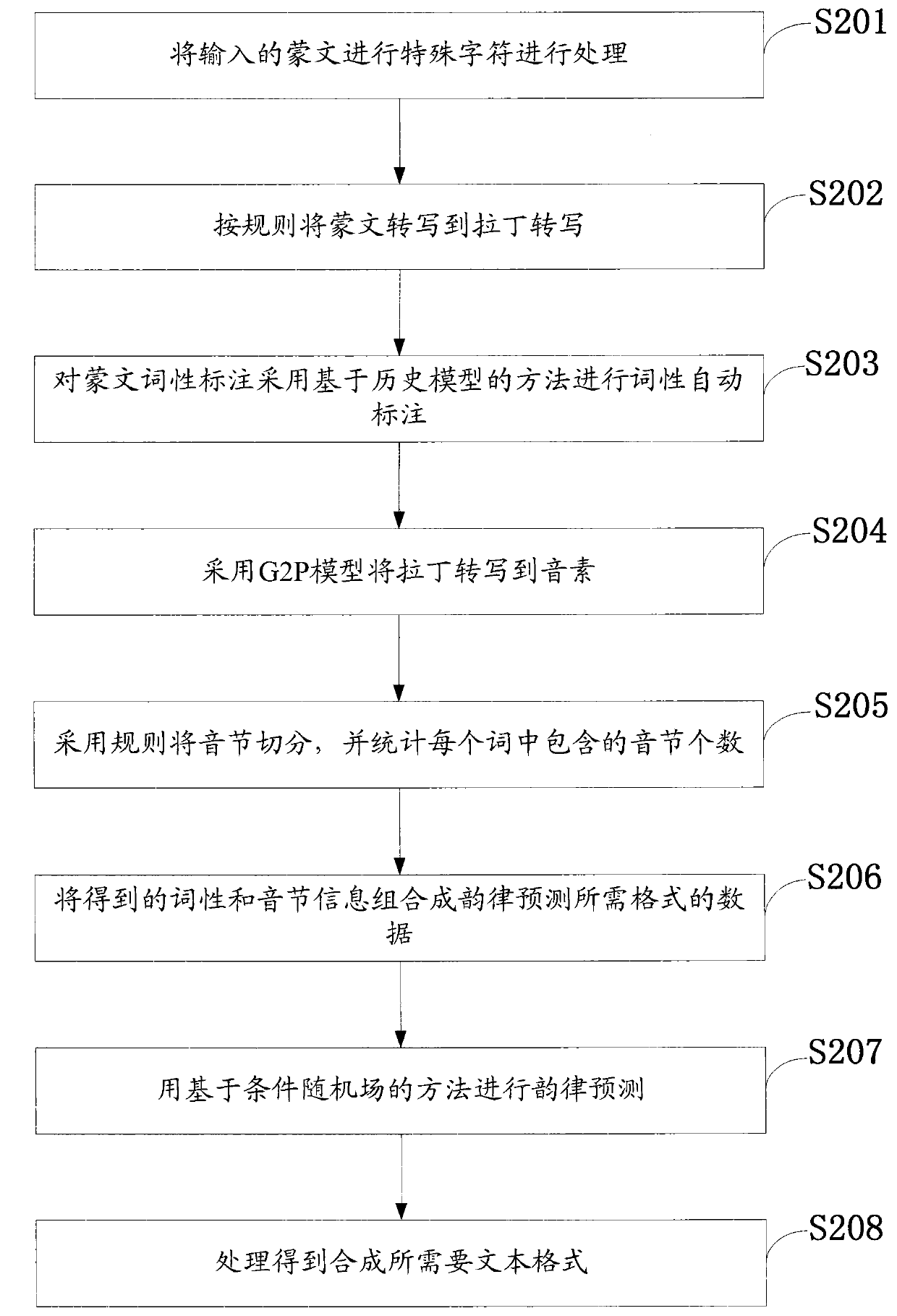

HMM-based method of Mongolian speech synthesis and front-end processing

The invention discloses an HMM-based method of Mongolian speech synthesis and front-end processing. The method comprises the following steps of analyzing speech data in a speech corpus; summarizing a context attribute set and a problem set for clustering decision-making trees; performing HMM training and acquiring HMM training results; acquiring corresponding clustering state models, and forming a clustering state model sequence; generating a target acoustic parameter sequence through the dynamic characteristics of parameters, and acquiring synthesized speech through a STRAIGHT synthesizer. The method of front-end processing comprises the following steps of performing special character processing on input Mongolian; converting Mongolian to Latin script; annotating the part of speech of Mongolian; converting Latin script to phonemes by adopting a G2P model; segmenting syllables by adopting rules; synthesizing data in the format required by rhythm forecasting; performing the rhythm forecasting; processing and acquiring a text format required by the synthesis. By the method, the duration and the pitch period parameter of the synthesized speech can be adjusted freely, the naturalness and fluency of the synthesized speech are ensured, and the cost of the synthesis is reduced.

Owner:INNER MONGOLIA UNIVERSITY

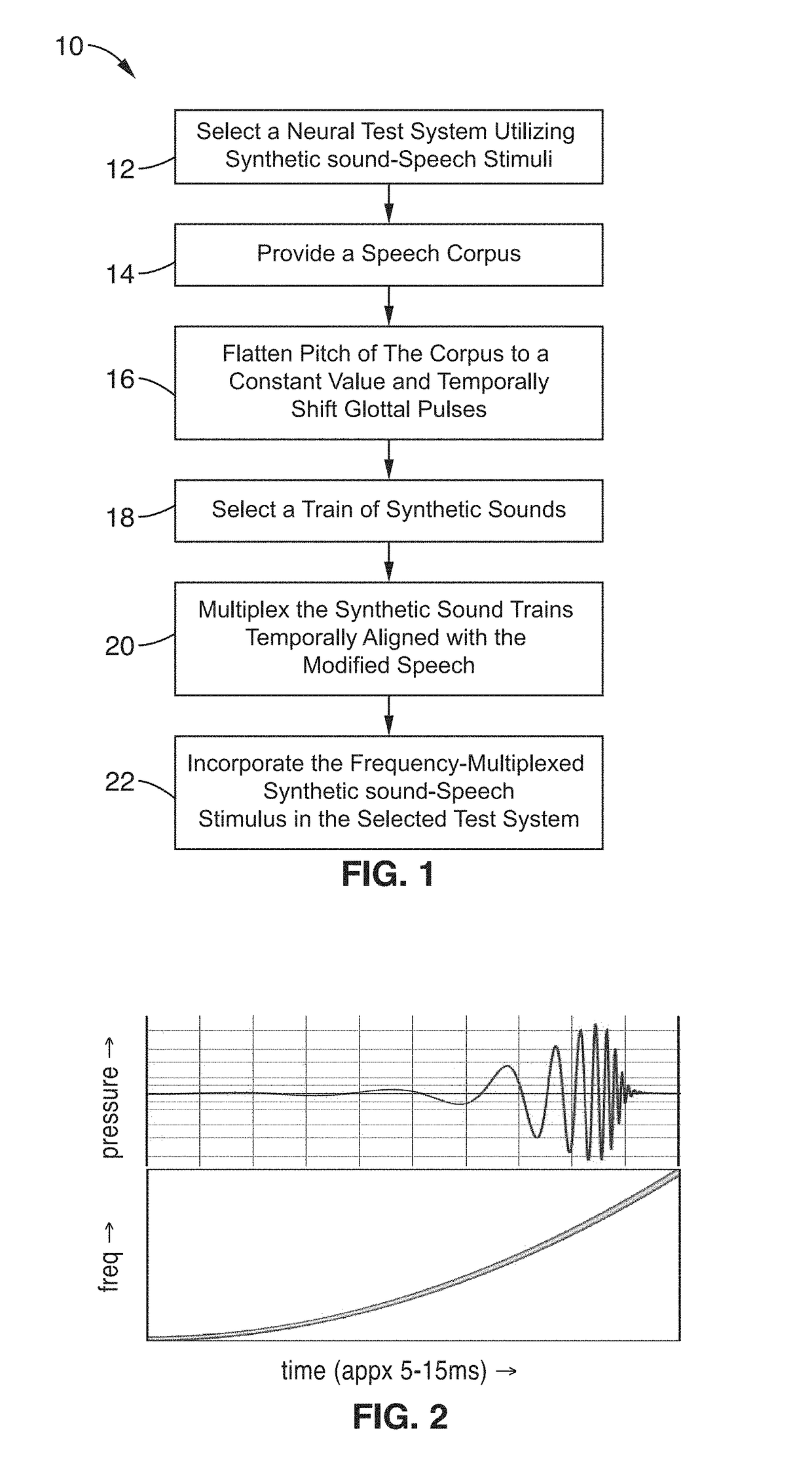

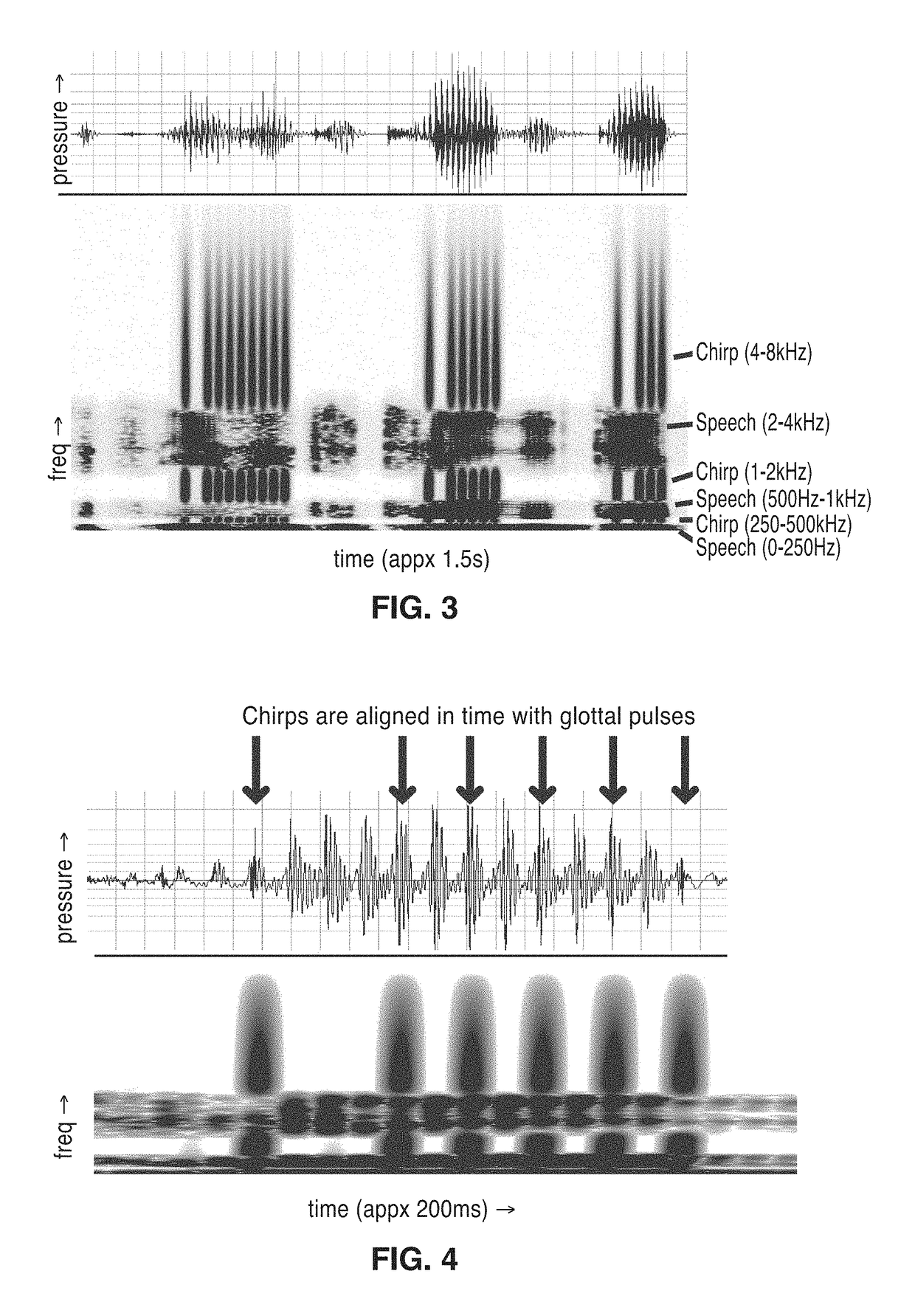

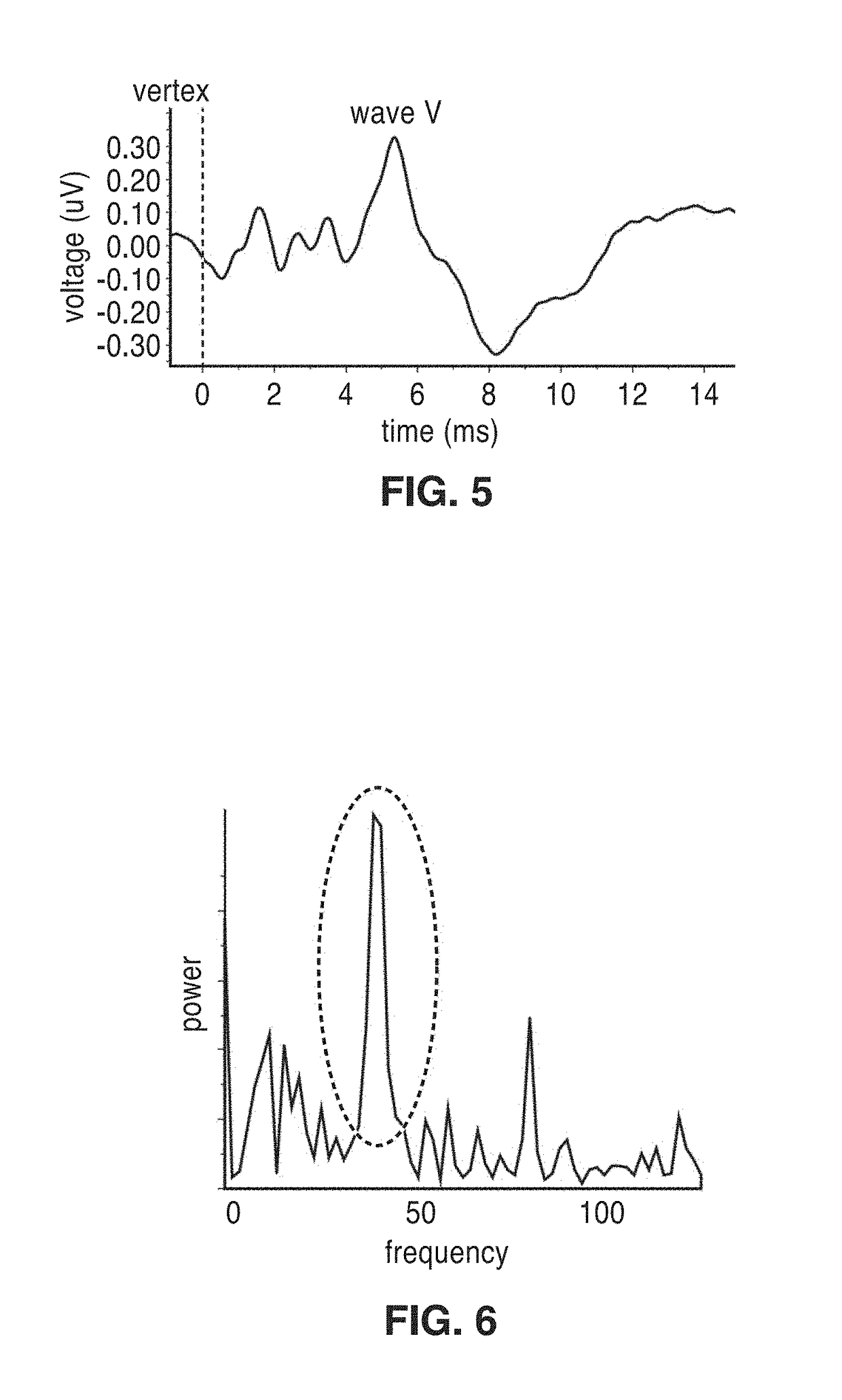

Frequency-multiplexed speech-sound stimuli for hierarchical neural characterization of speech processing

Active · US20170196519A1 · Accelerated program · Maximize response · Electroencephalography · Sensors · Multiplexing · Auditory system

A system and method for generating frequency-multiplexed synthetic sound-speech stimuli and for detecting and analyzing electrical brain activity of a subject in response to the stimuli. Frequency-multiplexing of speech corpora and synthetic sounds helps the composite sound to blend into a single auditory object. The synthetic sounds are temporally aligned with the utterances of the speech corpus. Frequency multiplexing may include splitting the frequency axis into alternating bands of speech and synthetic sound to minimize the disruptive interaction between the speech and synthetic sounds along the basilar membrane and in their neural representations. The generated stimuli can be used with both traditional and advanced techniques to analyze electrical brain activity and provide a rapid, synoptic view into the functional health of the early auditory system, including how speech is processed at different levels and how these levels interact.

Owner:RGT UNIV OF CALIFORNIA

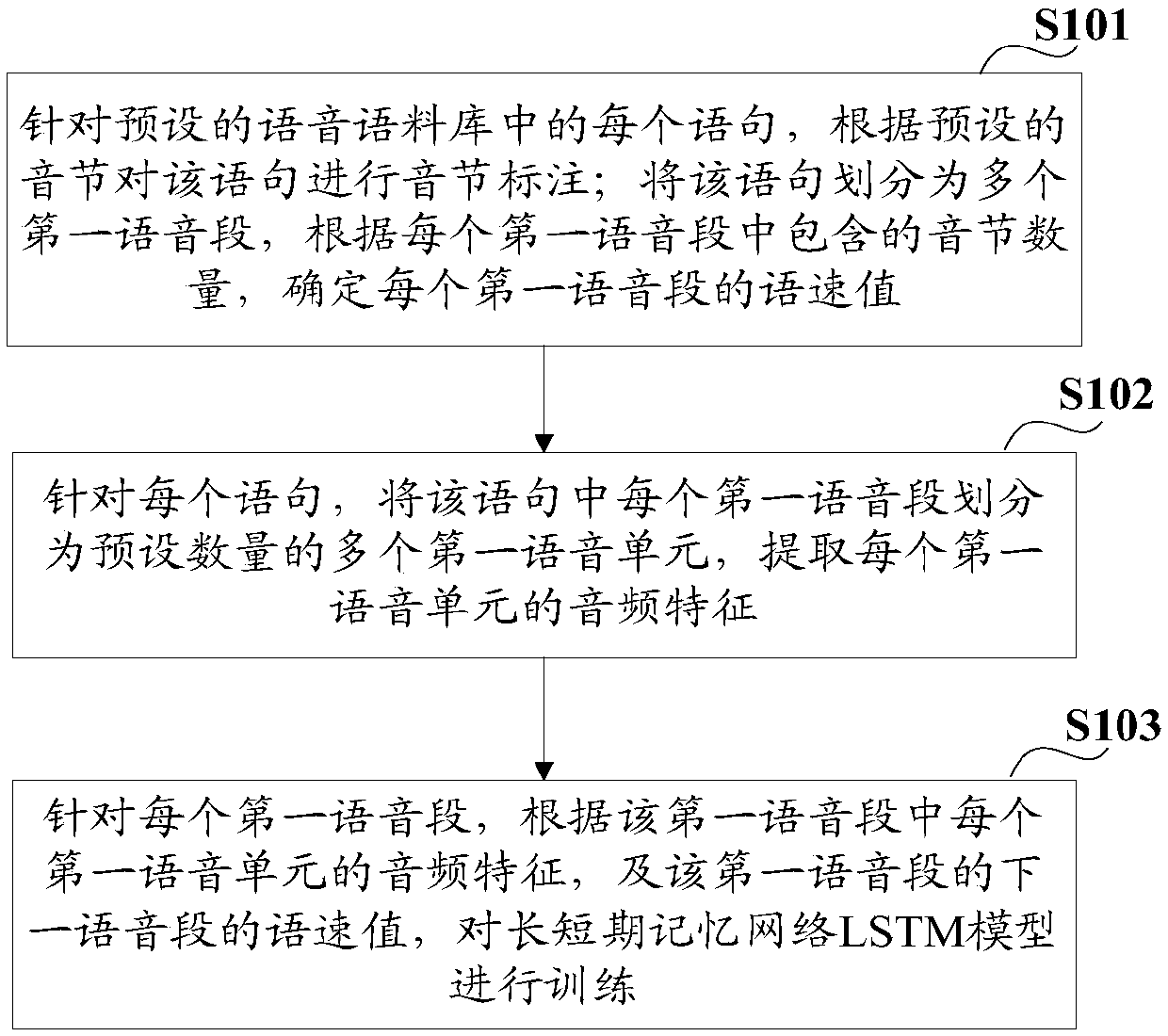

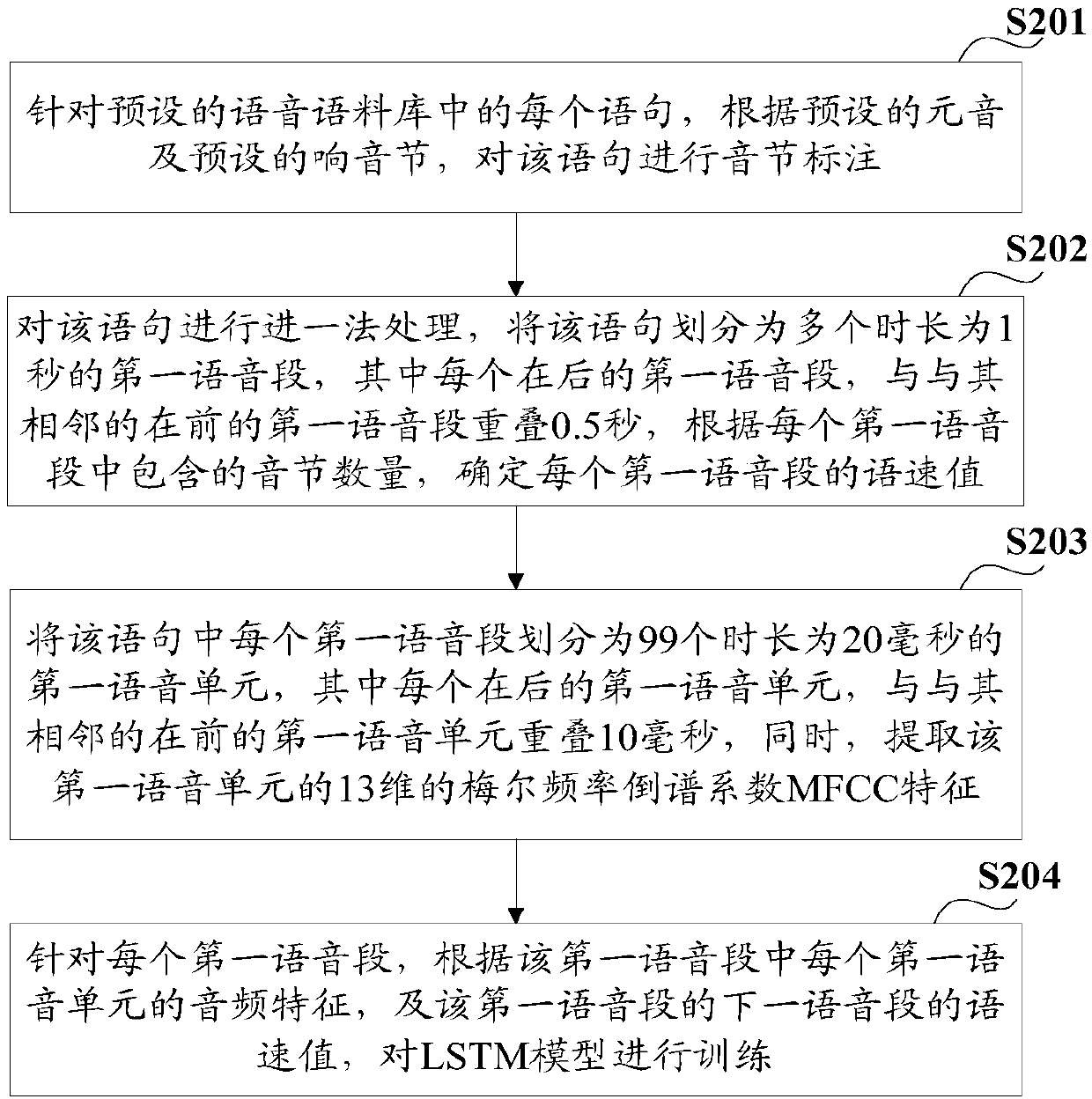

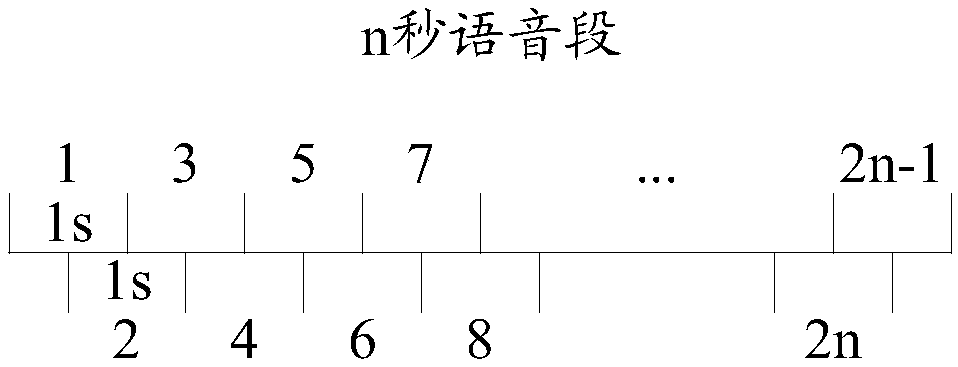

Speech speed estimation model training, speech speed estimation method, device, equipment and medium

The invention discloses speech speed estimation model training, a speech speed estimation method, a device, equipment and a medium, and aims to solve the problem that existing speech speed estimation methods cannot predict the real speech speed value. The method comprises the following steps: marking syllables for each sentence in a preset speech corpus according to preset syllables; dividing the sentence into a plurality of first speech segments, and determining the speech speed value of each first speech segment according to the number of syllables contained in that segment; dividing each first speech segment into a preset number of first speech units, and extracting the audio features of each first speech unit; and using the audio features of each first speech unit in the first speech segment, together with the speech speed value of the speech segment after the first speech segment, to train an LSTM model. The method marks the syllables of the sentences in the speech corpus and determines the real speech speed value, thus enabling the LSTM model to estimate the real speech speed value of a to-be-estimated sentence.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
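The labeling step in the abstract above, which derives a speech speed value per segment from syllable counts, can be sketched as follows. The sketch assumes fixed-length segments, syllable onset times in seconds, and a speed value measured in syllables per second; none of these details are stated in the abstract, and the function names are hypothetical.

```python
import math


def segment_speech_rates(syllable_onsets, sentence_duration, segment_length):
    """Return syllables per second for consecutive segments of one sentence."""
    if segment_length <= 0 or sentence_duration <= 0:
        raise ValueError("durations must be positive")
    n_segments = math.ceil(sentence_duration / segment_length)
    counts = [0] * n_segments
    for onset in syllable_onsets:
        index = min(int(onset // segment_length), n_segments - 1)
        counts[index] += 1
    rates = []
    for i, count in enumerate(counts):
        # The last segment may be shorter than segment_length.
        length = min(segment_length, sentence_duration - i * segment_length)
        rates.append(count / length)
    return rates


def training_pairs(segment_features, rates):
    """Pair each segment's features with the speed value of the following segment."""
    return list(zip(segment_features[:-1], rates[1:]))


rates = segment_speech_rates([0.1, 0.4, 0.8, 1.2, 1.9, 2.5], 3.0, 1.0)
pairs = training_pairs(["feat0", "feat1", "feat2"], rates)
```

The string placeholders stand in for per-unit audio feature sequences, which a real pipeline would feed to the LSTM model.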

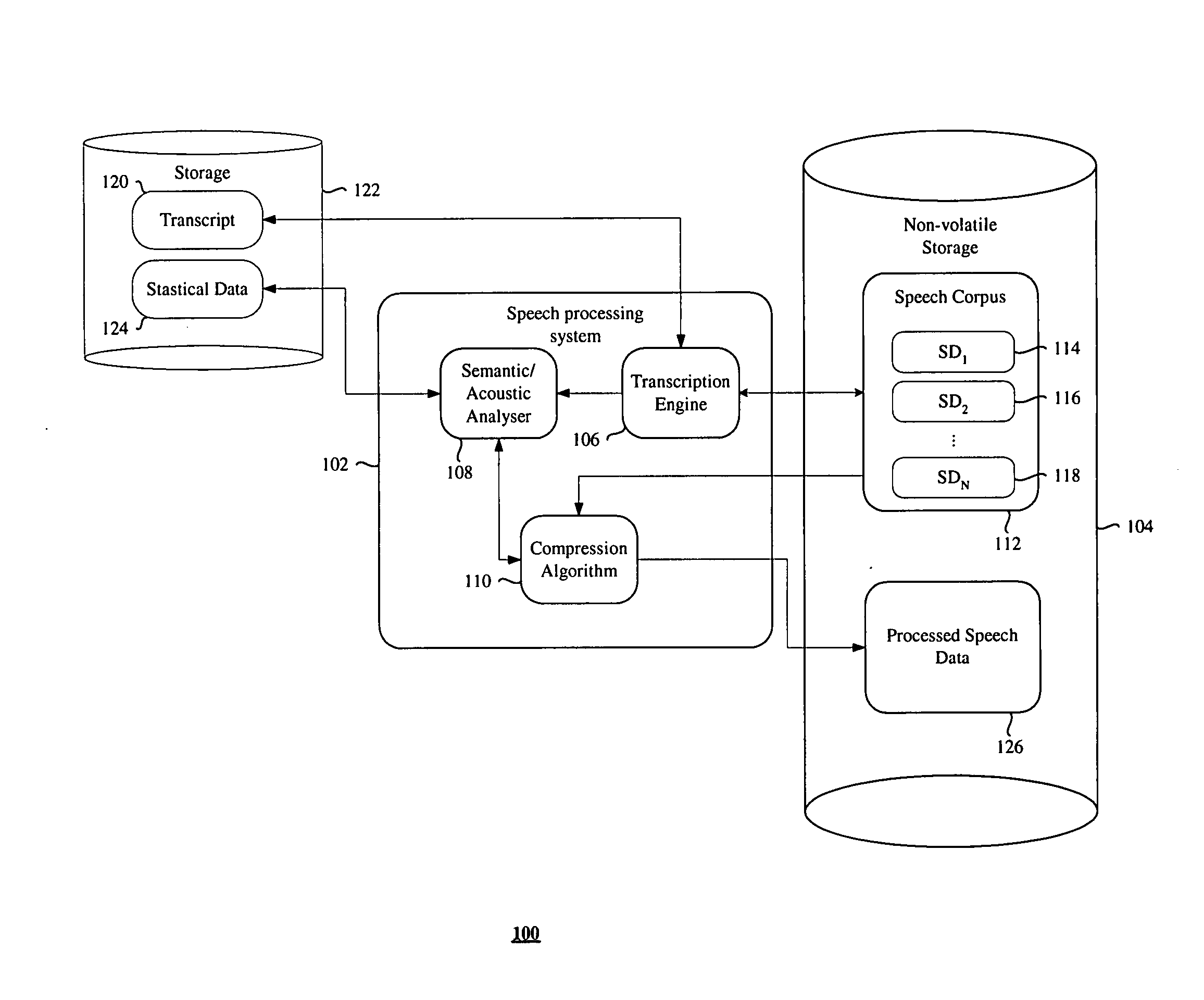

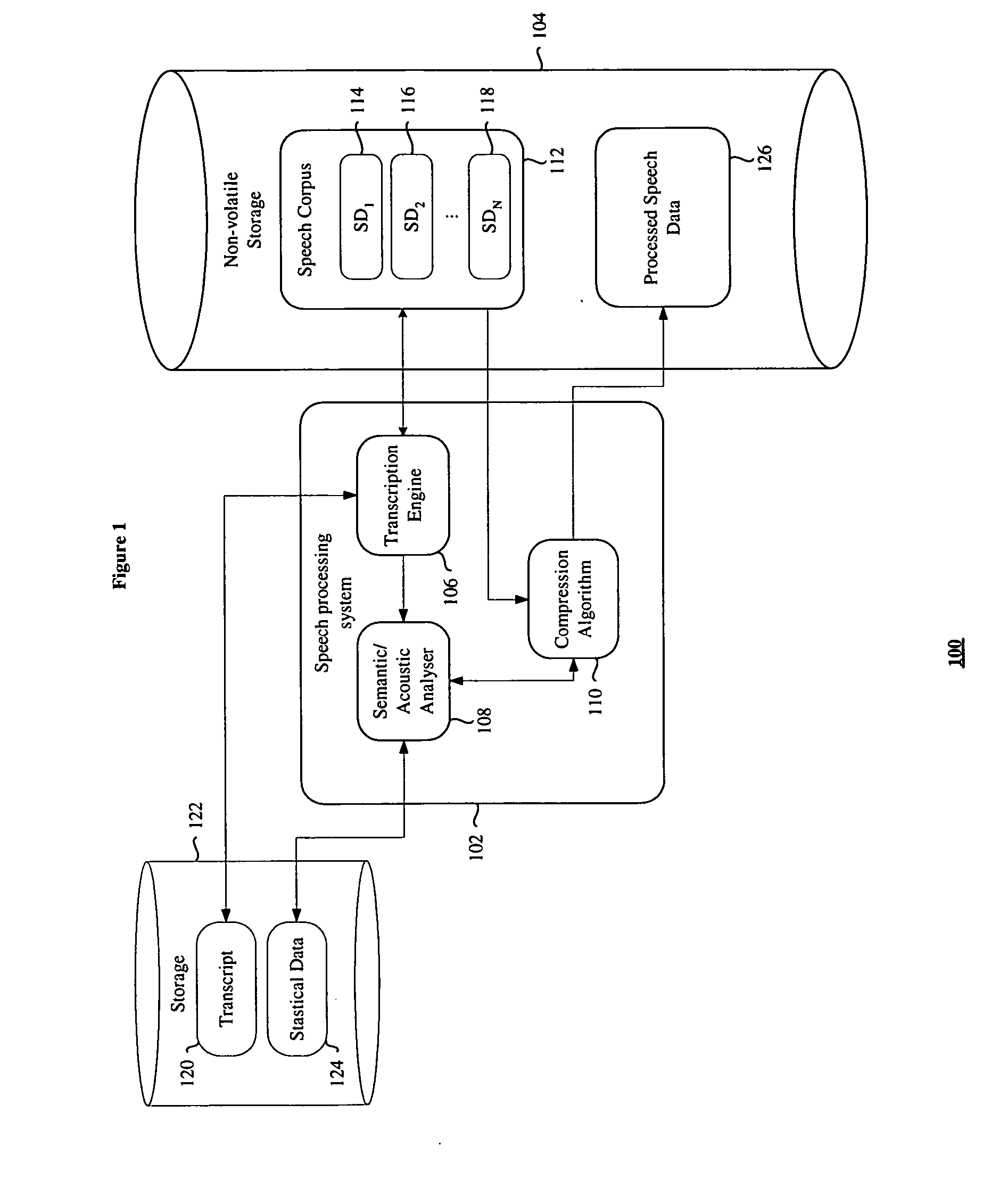

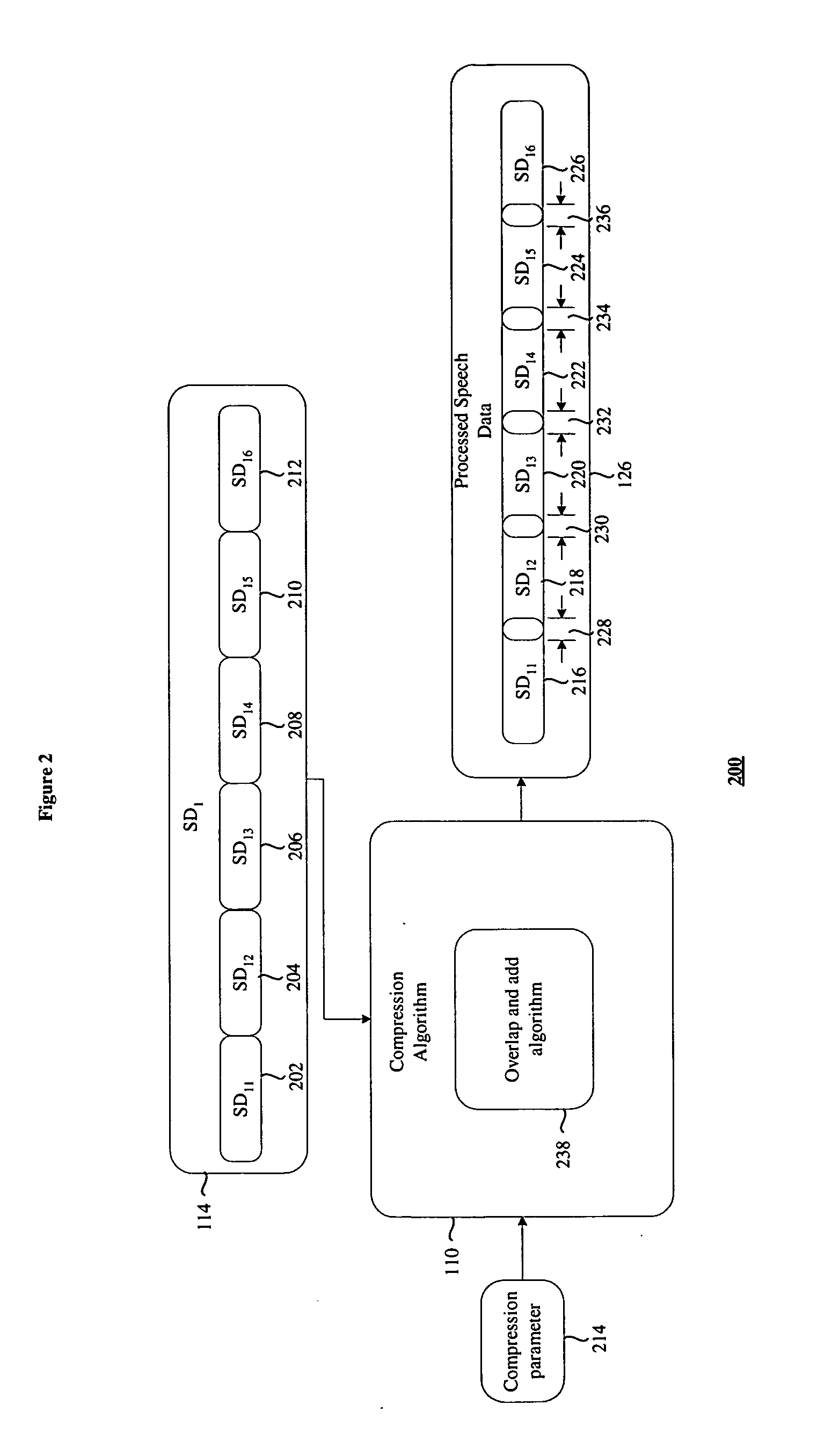

Speech processing system

Inactive · US20070219778A1 · Speech analysis · Special data processing applications · Database manager · Speech corpus

Embodiments of the present invention relate to a speech processing system comprising a data base manager to access a speech corpus comprising a plurality of sets of speech data; means for processing a selectable set of speech data to produce correlated redundancy data and means for creating a speech file comprising speech data according to the correlated redundancy data having a playback speed other than the normal playback speed of the selected speech data.

Owner:UNIV OF SHEFFIELD

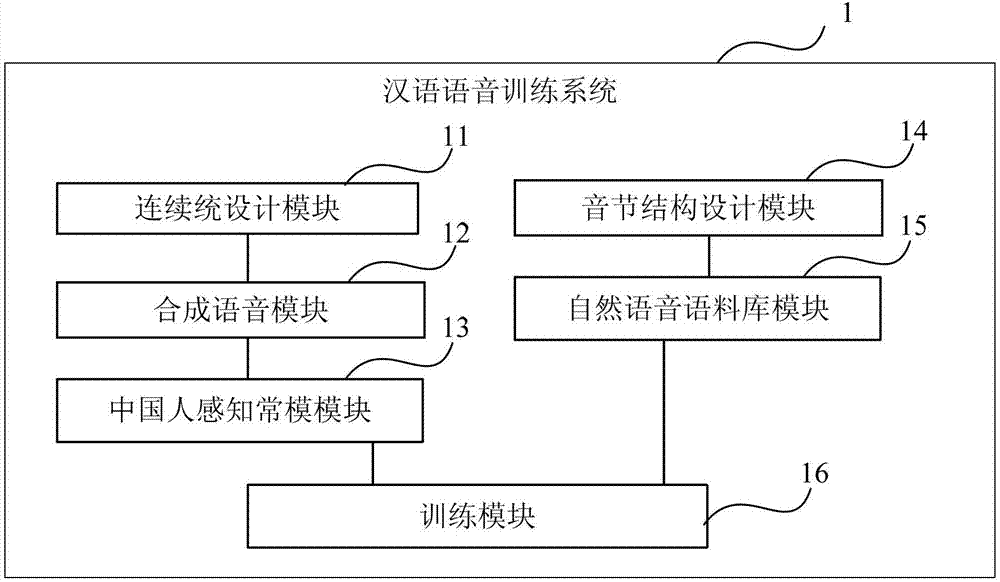

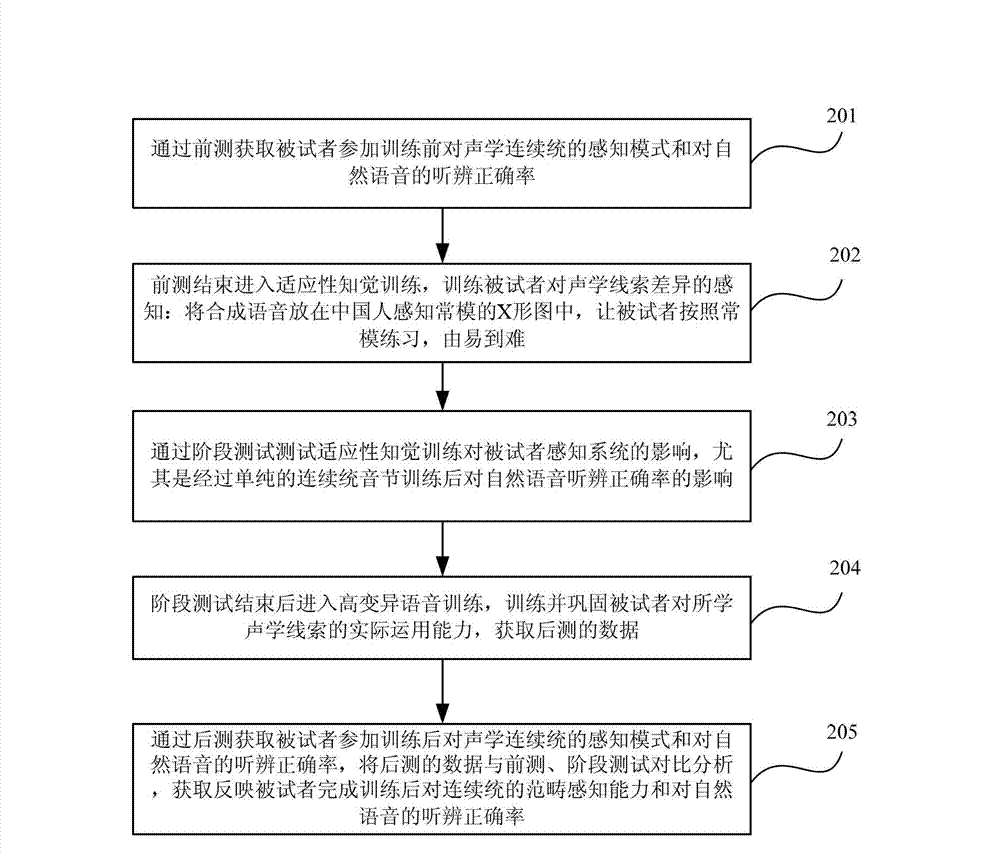

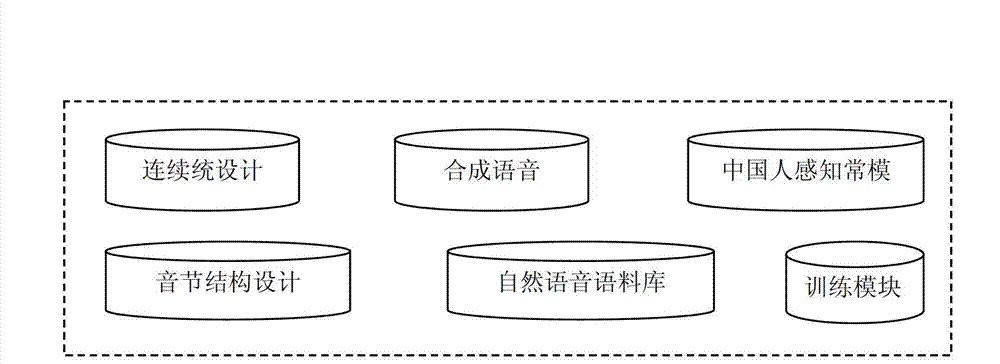

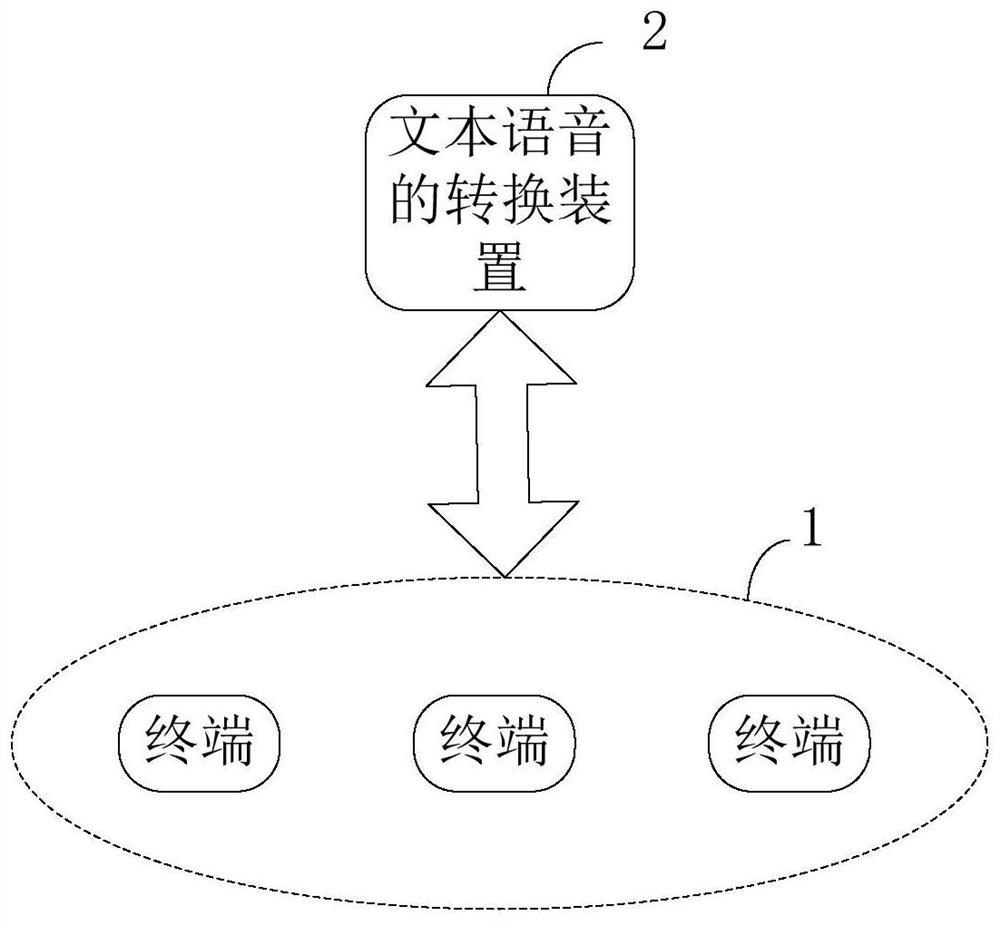

Chinese speech training system and Chinese speech training method

Active · CN102968921A · Improve listening accuracy · Speech synthesis · Teaching apparatus · Speech training · Syllable

The invention provides a Chinese speech training system and a Chinese speech training method. The system comprises a continuum design module, a synthesis speech module, a Chinese perception norm module, a syllable structure design module, a natural speech corpus module and a training module, wherein the continuum design module obtains a targeted acoustic continuum designed by a user according to the perception bias errors of a testee and existing Chinese speech theory; the synthesis speech module converts the acoustic continuum designed by the continuum design module into synthesized speech; the Chinese perception norm module has Chinese listeners listen to and distinguish the synthesized speech to obtain a Chinese perception norm of the acoustic continuum; the syllable structure design module obtains a training order designed by the user for the syllable structure at the level of the testee; the natural speech corpus module extracts the needed natural speech corpus according to the training order designed by the syllable structure design module; and the training module is used for Chinese speech training of the testee by using the natural speech corpus and the Chinese perception norm. According to the system and the method, overseas students can master the main distinctions in Chinese speech within a short time and rapidly improve their listening and distinguishing accuracy.

Owner:BEIJING LANGUAGE AND CULTURE UNIVERSITY

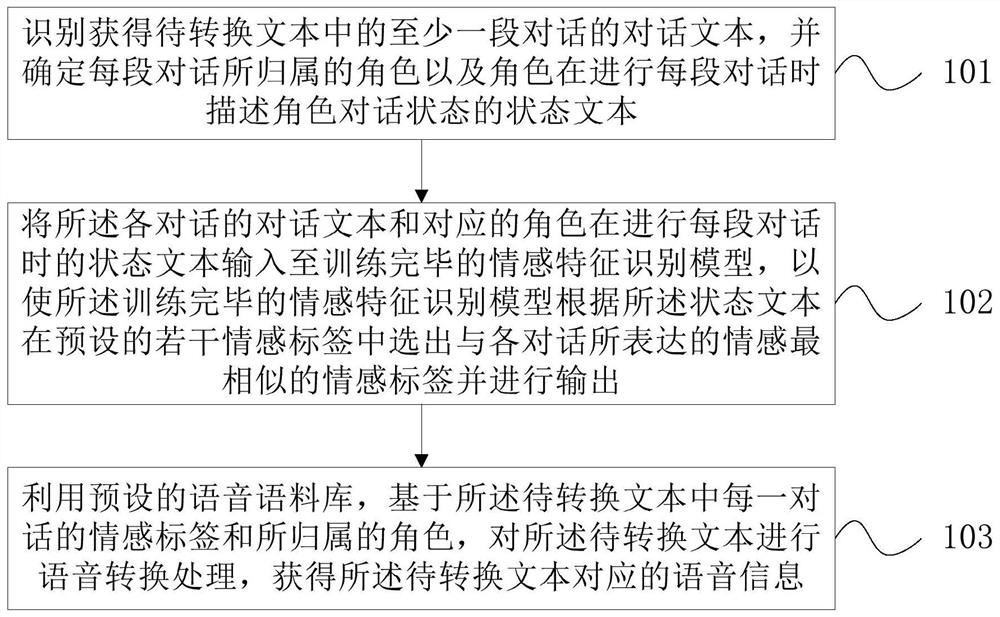

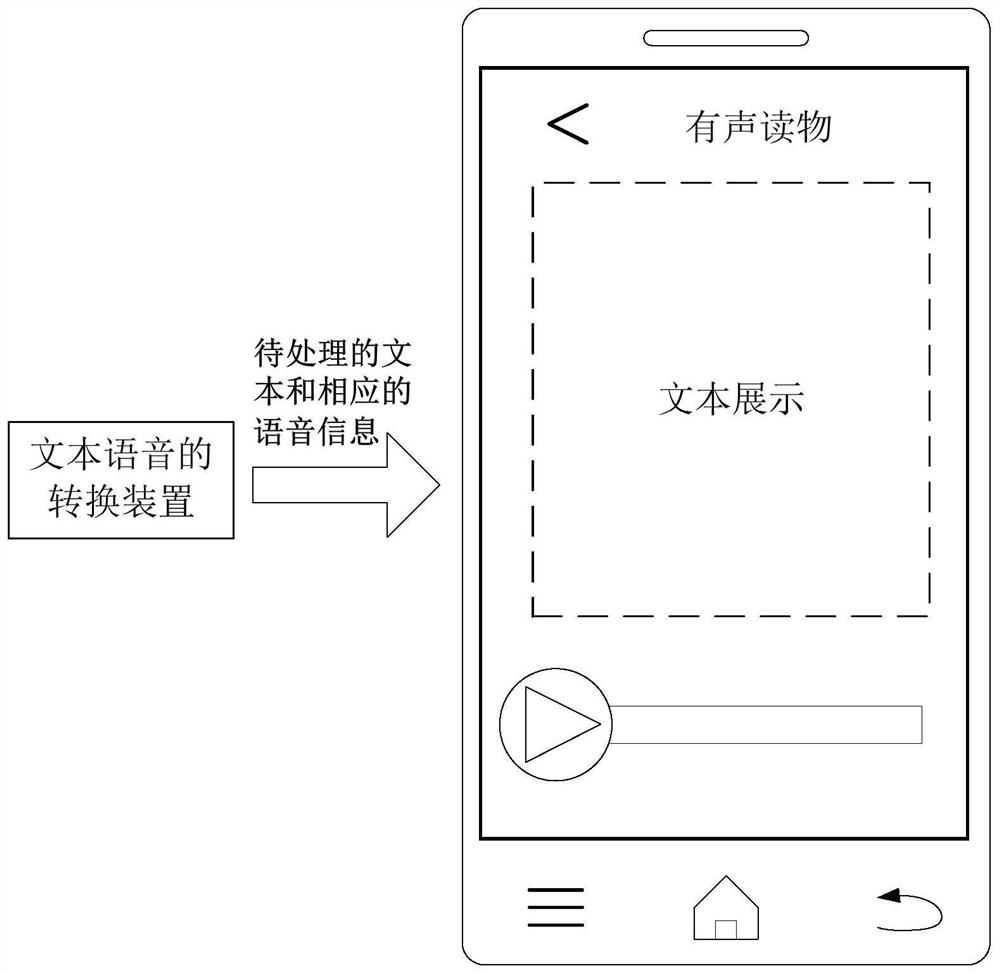

Text-to-speech conversion method, device, electronic equipment and storage medium

Pending · CN112765971A · Rich tone of voice · Expressive · Digital data information retrieval · Natural language data processing · Text entry · Speech corpus

The embodiment of the invention provides a text-to-speech conversion method, a device, electronic equipment and a storage medium. The method comprises the steps of recognizing and obtaining the conversation text of at least one conversation in the to-be-converted text, and determining the role to which each conversation belongs and the state text describing the conversation state of the role when the role performs each conversation; inputting the conversation text and the state text of each conversation into a trained emotion feature recognition model, so that the trained emotion feature recognition model selects, according to the state text, the emotion tag most similar to the emotion expressed by each conversation from a plurality of preset emotion tags and outputs the emotion tag; and performing voice conversion processing on the to-be-converted text by using a preset voice corpus, based on the emotion tag of each conversation in the to-be-converted text and the role to which it belongs, to obtain the voice information. The voice information corresponding to the to-be-converted text obtained in the embodiment of the invention is rich in voice tone, and the user experience is improved. The emotional change of each task in the to-be-converted text can be reflected, and the expressive force is high.

Owner:BEIJING VOLCANO ENGINE TECH CO LTD
