303 results for "Expressive" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

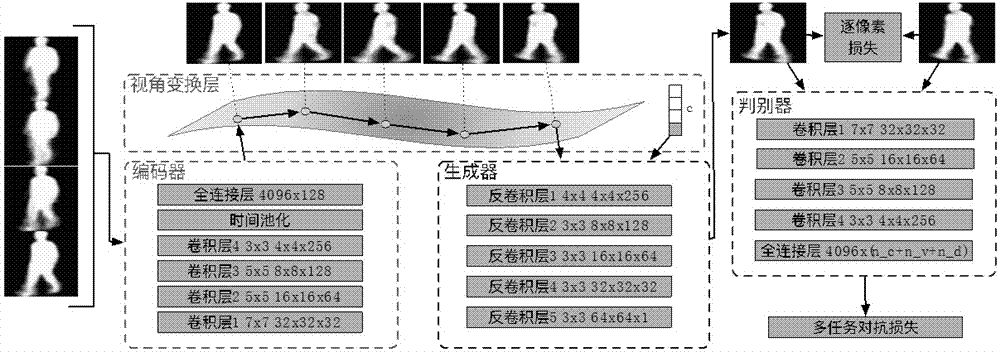

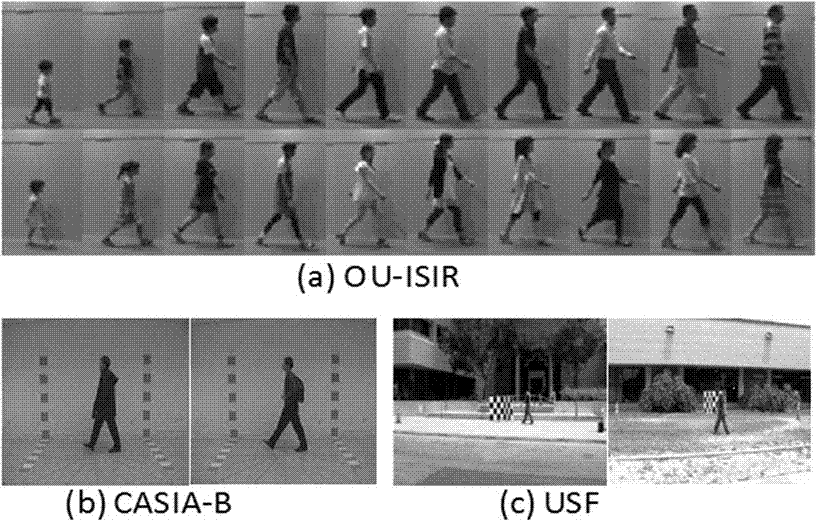

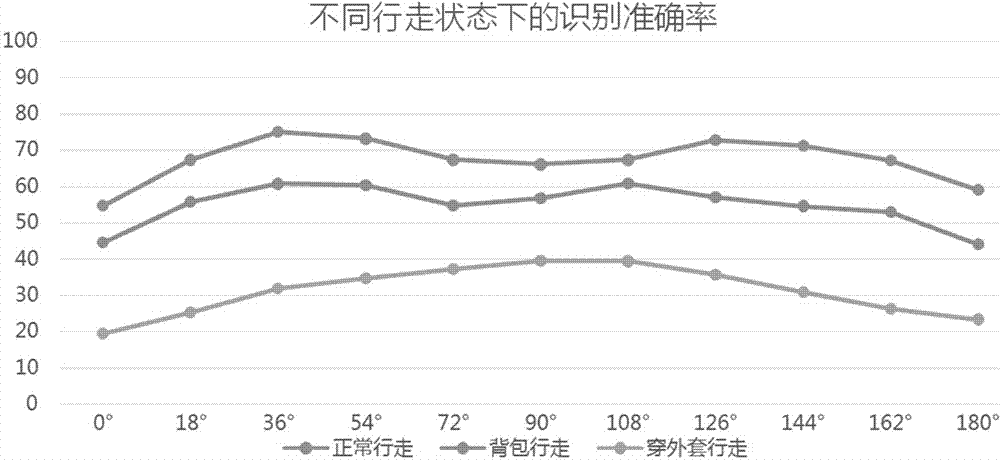

Cross-view gait recognition method based on a multi-task generative adversarial network

Active | CN107085716A | Reduce computational overhead | Expressive | Character and pattern recognition | Neural architectures | Pattern recognition | Frame sequence

The invention belongs to the fields of computer vision and machine learning and relates to a cross-view gait recognition method based on a multi-task generative adversarial network. Its objective is to address the degraded generalization of gait recognition models under large changes in viewing angle. The method first preprocesses each frame of the original pedestrian video sequence and extracts gait template features; it then encodes these with a neural network into a latent gait representation and performs the viewing-angle transformation in the latent space; next, it uses the multi-task generative adversarial network to construct gait template features for other viewing angles; finally, it performs recognition using the latent gait representation. Compared with classification-based or reconstruction-based methods, the method is considerably more interpretable and can improve recognition performance.

Owner:FUDAN UNIV

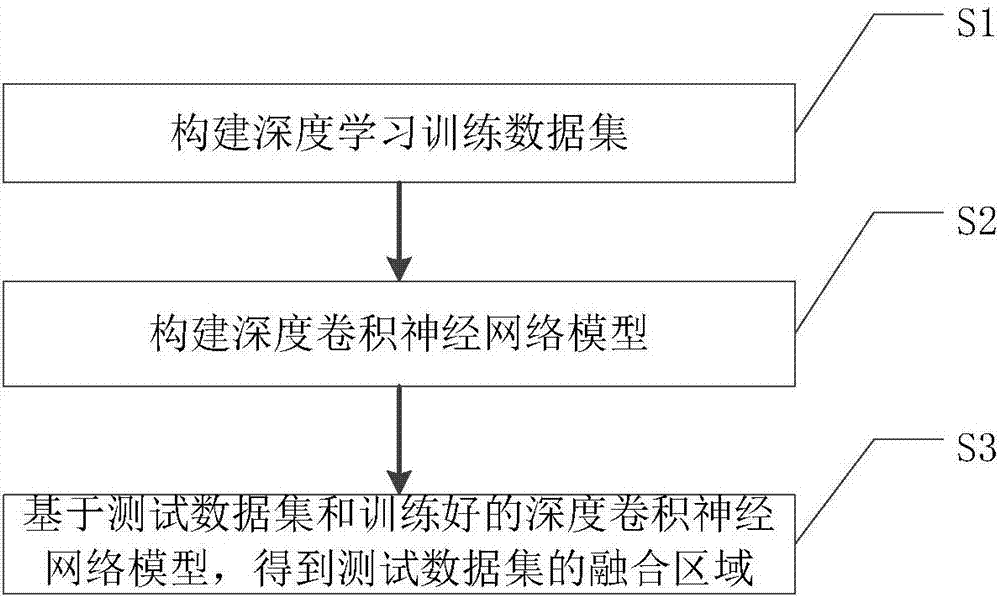

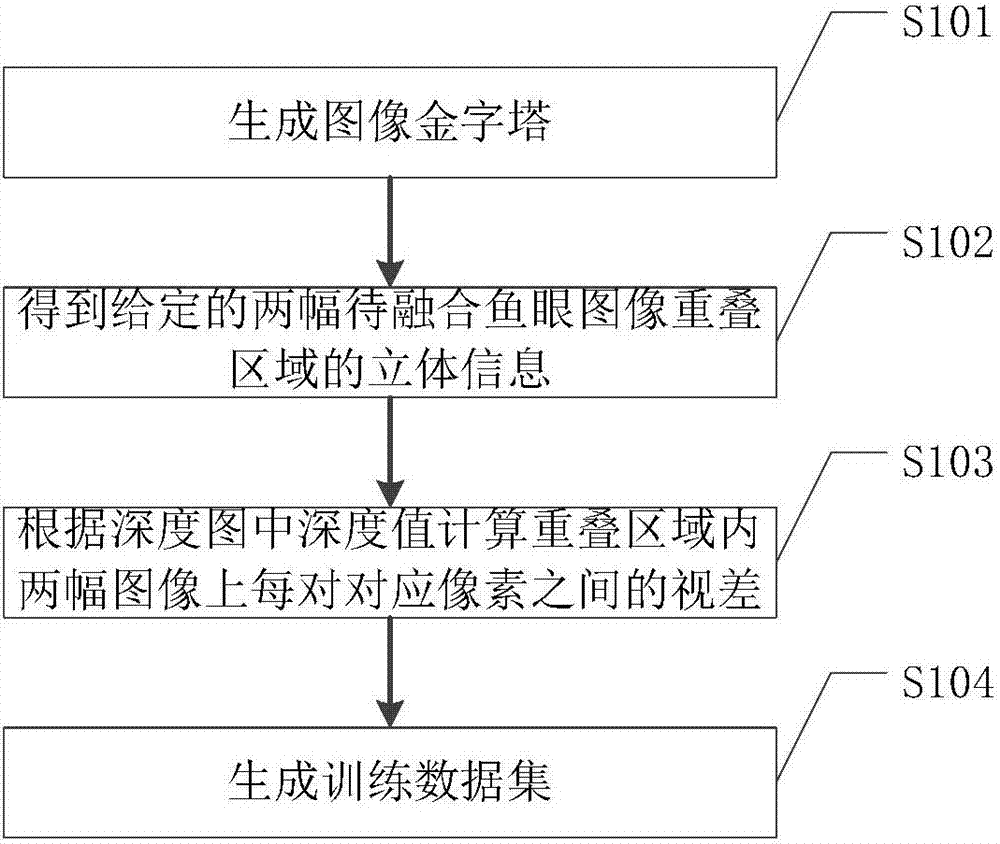

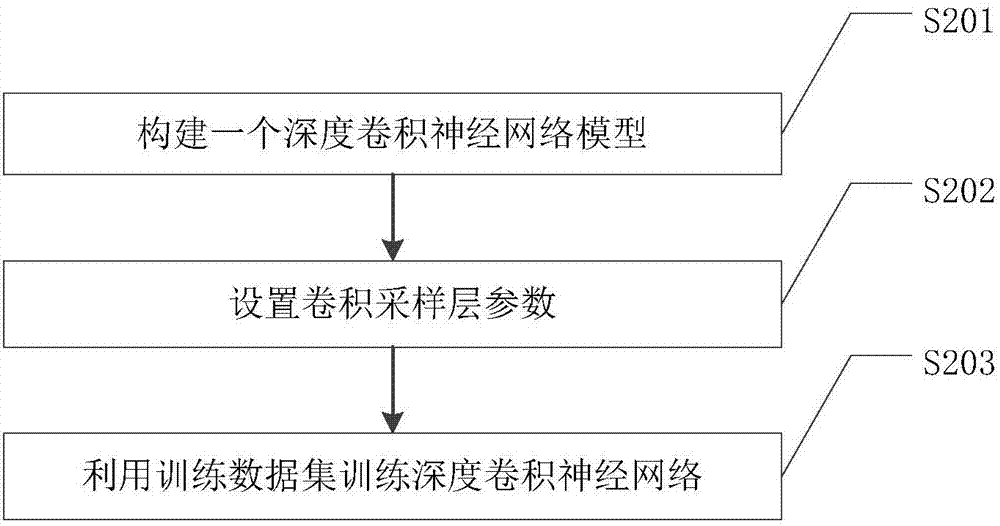

Panoramic image fusion method based on a deep convolutional neural network and depth information

Inactive | CN106934765A | Reduce ghosting | Implement automatic selection | Image enhancement | Image analysis | Data set | Semantic representation

The invention discloses a panoramic image fusion method based on a deep convolutional neural network and depth information. The method comprises the steps of (S1) constructing a deep learning training data set: selecting, for training, the overlap regions xe1 and xe2 of two fisheye images to be fused and the ideal fusion area ye of the panoramic image formed by fusing them, and constructing a training set {xe1, xe2, ye} of image-to-be-fused and panoramic-image block pairs; (S2) constructing a convolutional neural network model; and (S3) obtaining the fusion area of a test data set using the trained deep convolutional neural network model. The method represents images more comprehensively and deeply, achieving semantic image representation at multiple levels of abstraction, and improves the accuracy of image fusion.

Owner:CHANGSHA PANODUX TECH CO LTD

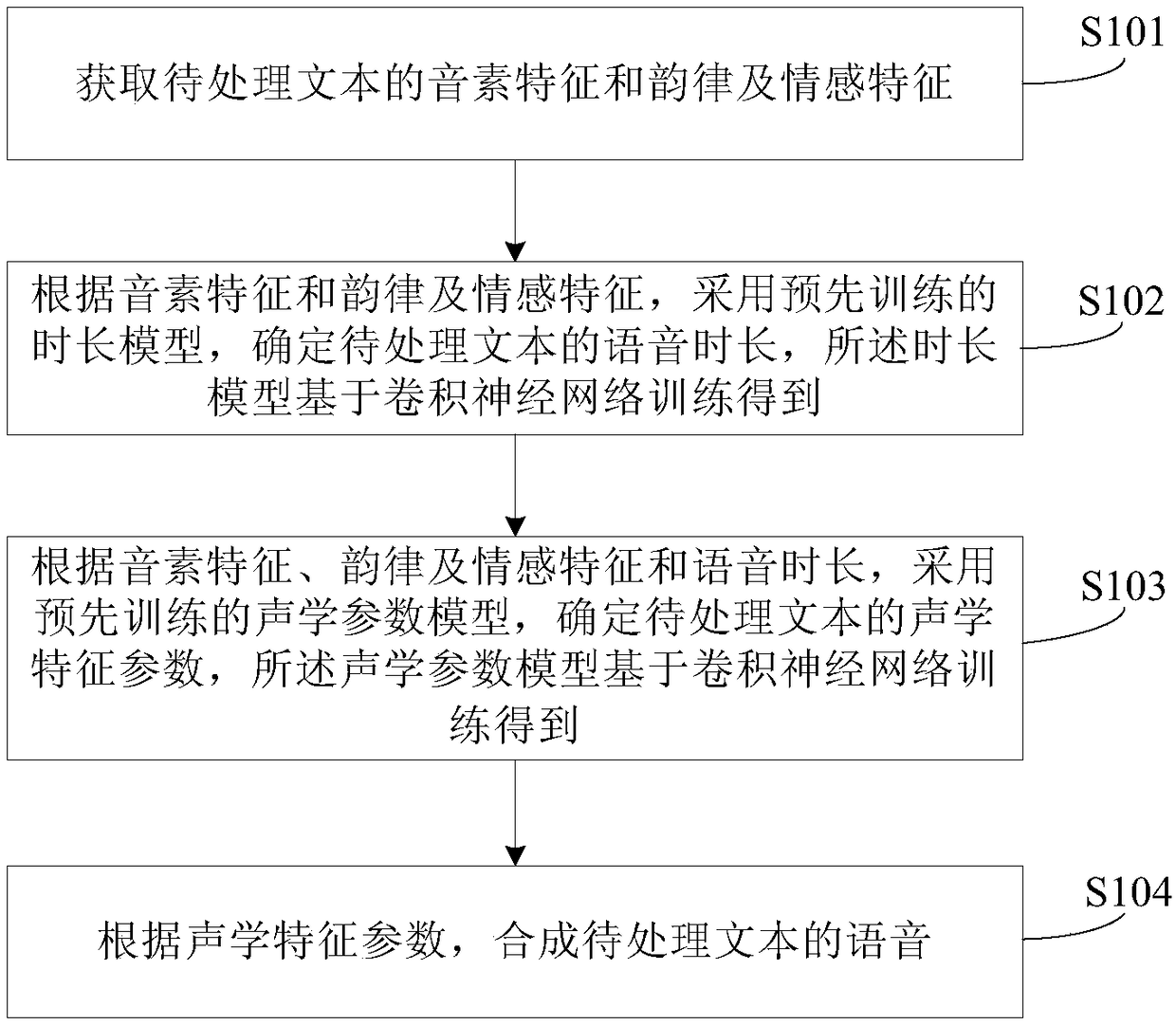

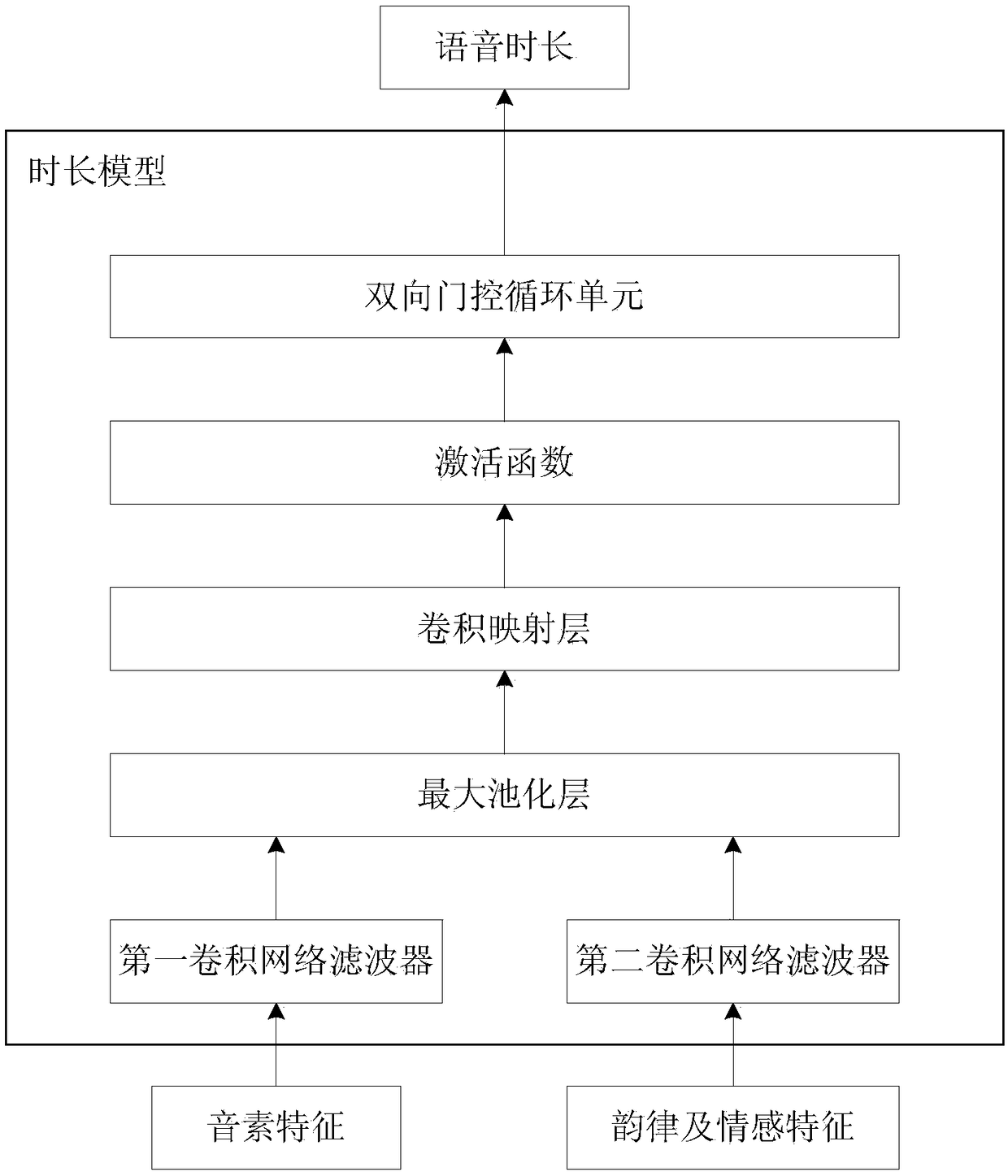

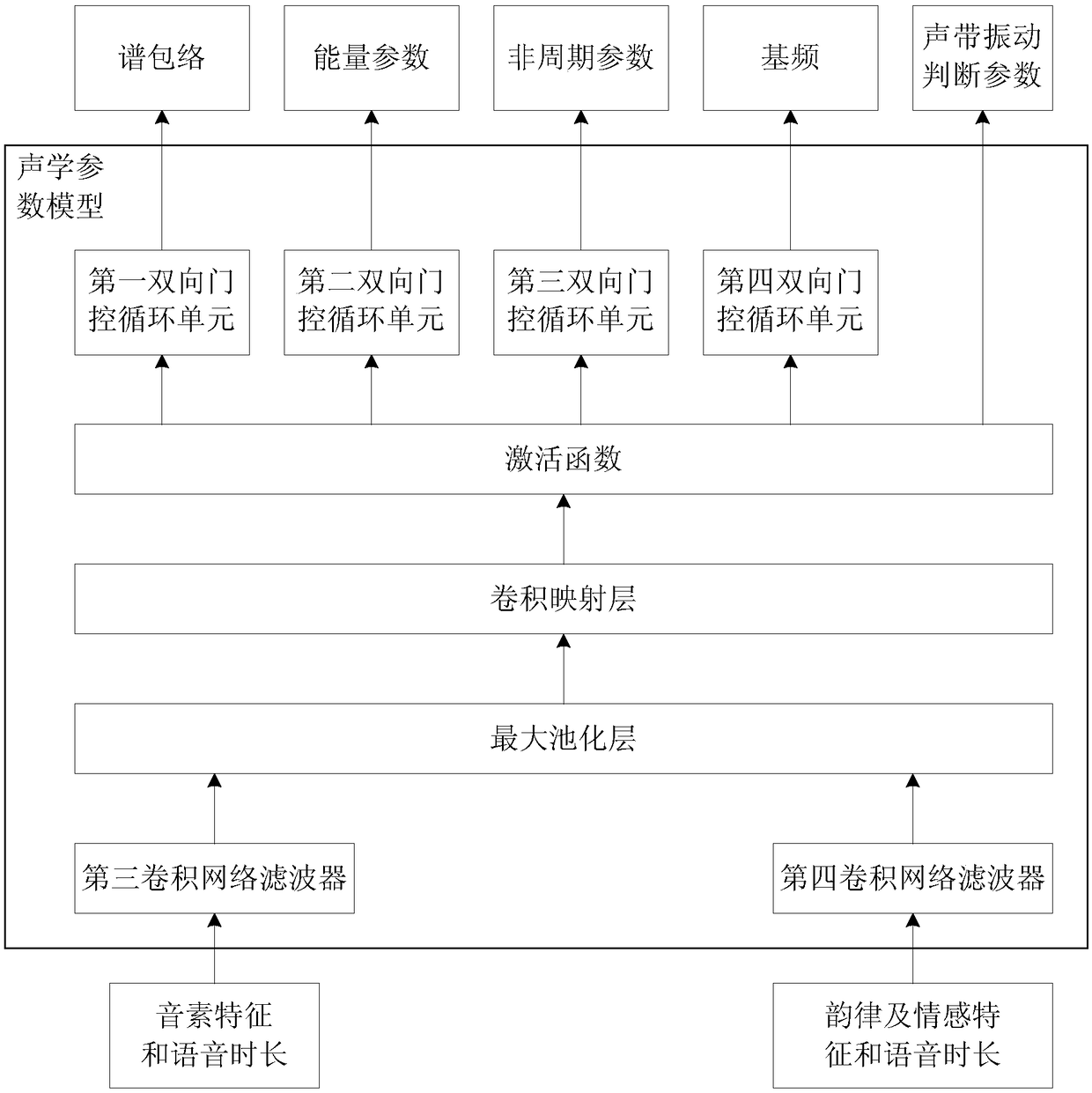

Voice synthesis method and device

An embodiment of the invention provides a voice synthesis method and device. The method comprises the following steps: obtaining the phoneme features and the prosodic and emotional features of a text to be processed; determining the speech duration of the text from the phoneme features and the prosodic and emotional features by means of a pre-trained duration model, the duration model being obtained by training a convolutional neural network; determining the acoustic feature parameters of the text from the phoneme features, the prosodic and emotional features and the speech duration by means of a pre-trained acoustic parameter model, also obtained by training a convolutional neural network; and synthesizing speech for the text from the acoustic feature parameters. While meeting real-time requirements, the method can produce synthesized speech of higher quality, with better emotional expressiveness, that sounds more natural and fluent.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

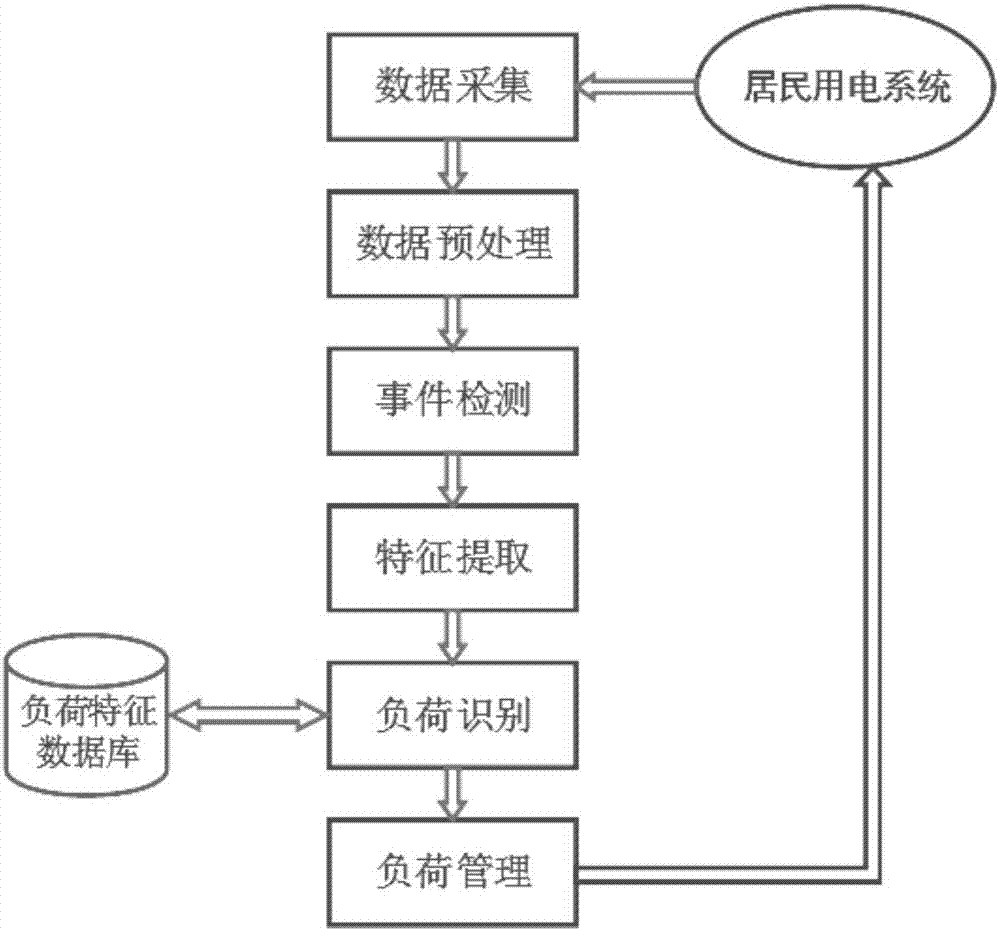

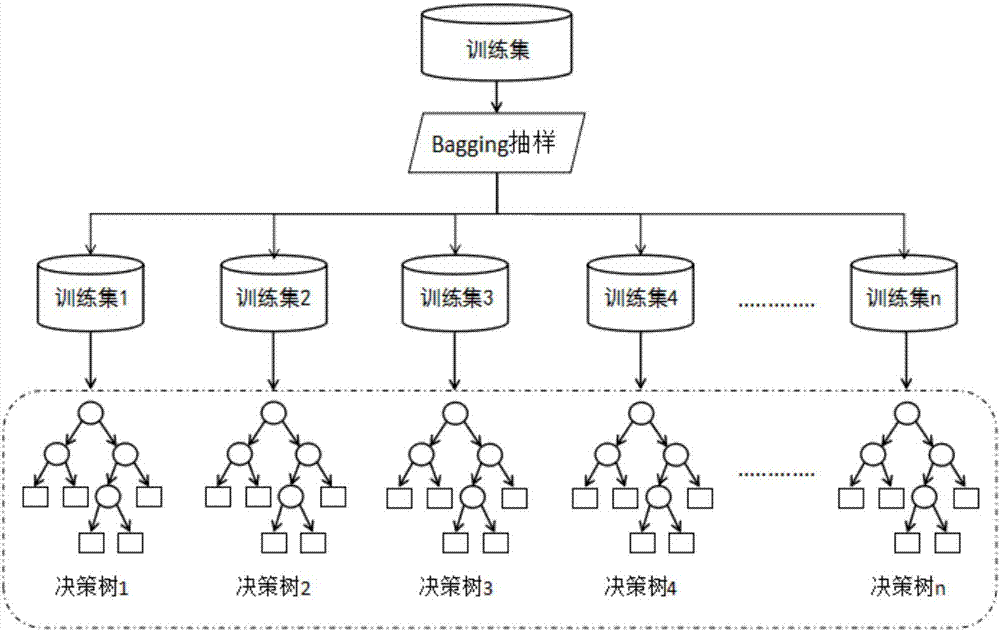

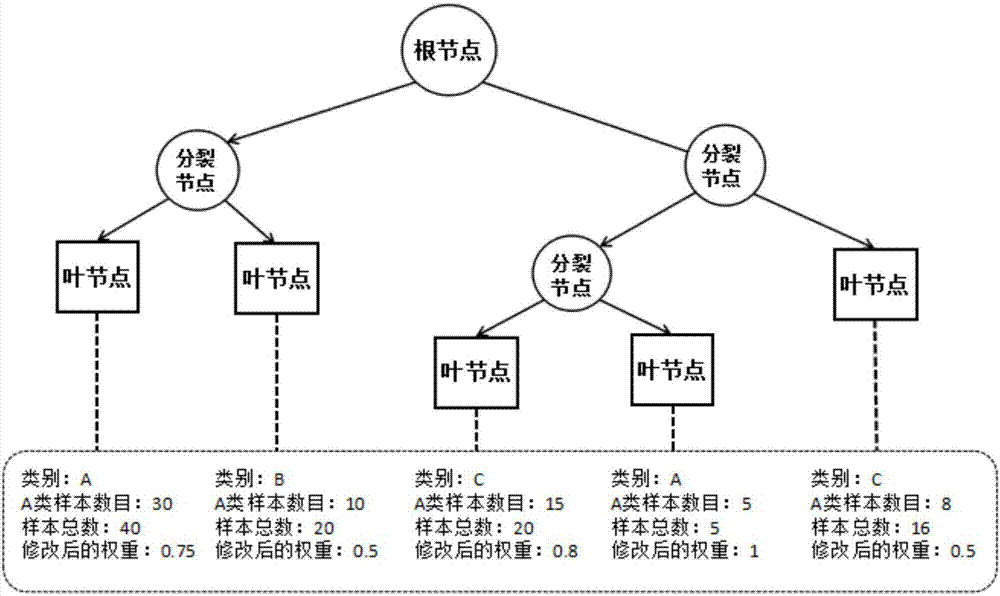

Random forest-based non-invasive home appliance identification method

Inactive | CN107273920A | Good anti-noise performance | Simplify the modeling process | Character and pattern recognition | Time integral measurement | Non invasive | Voltage

The invention discloses a random forest-based non-invasive home appliance identification method that monitors household electricity use without intruding into the user's home, with high identification speed and accuracy. The method comprises the following steps: establishing a load feature database; using the load features stored in the database as the original training set and generating N training subsets from it; combining the N resulting decision trees into a random forest; completing the training of the random forest by optimizing the weights of the leaf nodes of the different decision trees; detecting appliance switching events with a secondary detection algorithm to obtain the start and end times of each event, separating the current and voltage signals of the switched appliance from the bus signal, and extracting load features from the separated data; and finally feeding these load features into the trained random forest, which identifies the appliance by voting.

Owner:XI AN JIAOTONG UNIV
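
The bagging-and-voting core of the random forest method above can be sketched in a few lines of Python. This is a minimal illustration, not the patented implementation: each "decision tree" is reduced to a depth-1 stump, the leaf-weight optimization and switching-event detection steps are omitted, and the two load features (steady-state active and reactive power) and all sample values are hypothetical.

```python
import random
from collections import Counter

# Hypothetical load-feature database: (active power W, reactive power var) -> appliance.
SAMPLES = [
    ((1500.0, 50.0), "kettle"),
    ((1450.0, 40.0), "kettle"),
    ((1600.0, 60.0), "kettle"),
    ((120.0, 300.0), "fridge"),
    ((110.0, 280.0), "fridge"),
    ((130.0, 320.0), "fridge"),
]


def train_stump(samples):
    """Fit a depth-1 tree: the (feature, threshold) split with the fewest training errors."""
    best = None
    for f in range(len(samples[0][0])):
        for threshold in sorted({x[f] for x, _ in samples}):
            left = [y for x, y in samples if x[f] <= threshold]
            right = [y for x, y in samples if x[f] > threshold]
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0] if right else left_label
            errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    _, f, threshold, left_label, right_label = best
    return lambda x: left_label if x[f] <= threshold else right_label


def train_forest(samples, n_trees, seed=0):
    """Bagging: each tree is trained on a bootstrap subset drawn with replacement."""
    rng = random.Random(seed)
    return [train_stump([rng.choice(samples) for _ in samples]) for _ in range(n_trees)]


def identify(forest, features):
    """Every tree votes; the most common appliance label wins."""
    return Counter(tree(features) for tree in forest).most_common(1)[0][0]
```

With these toy samples, `identify(train_forest(SAMPLES, n_trees=15), (1520.0, 45.0))` is expected to return `"kettle"`. A real system would use full decision trees over a richer load-feature vector.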

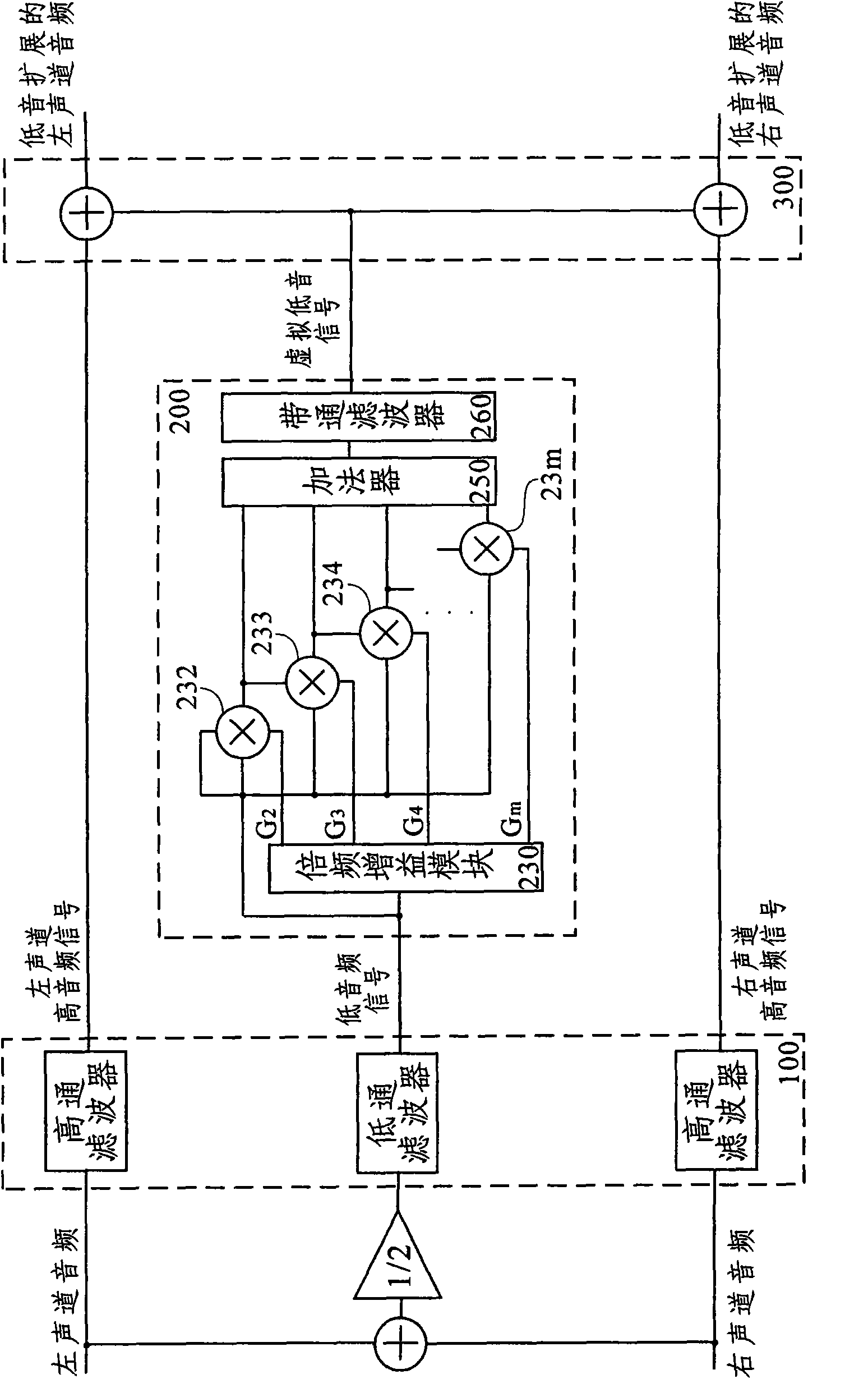

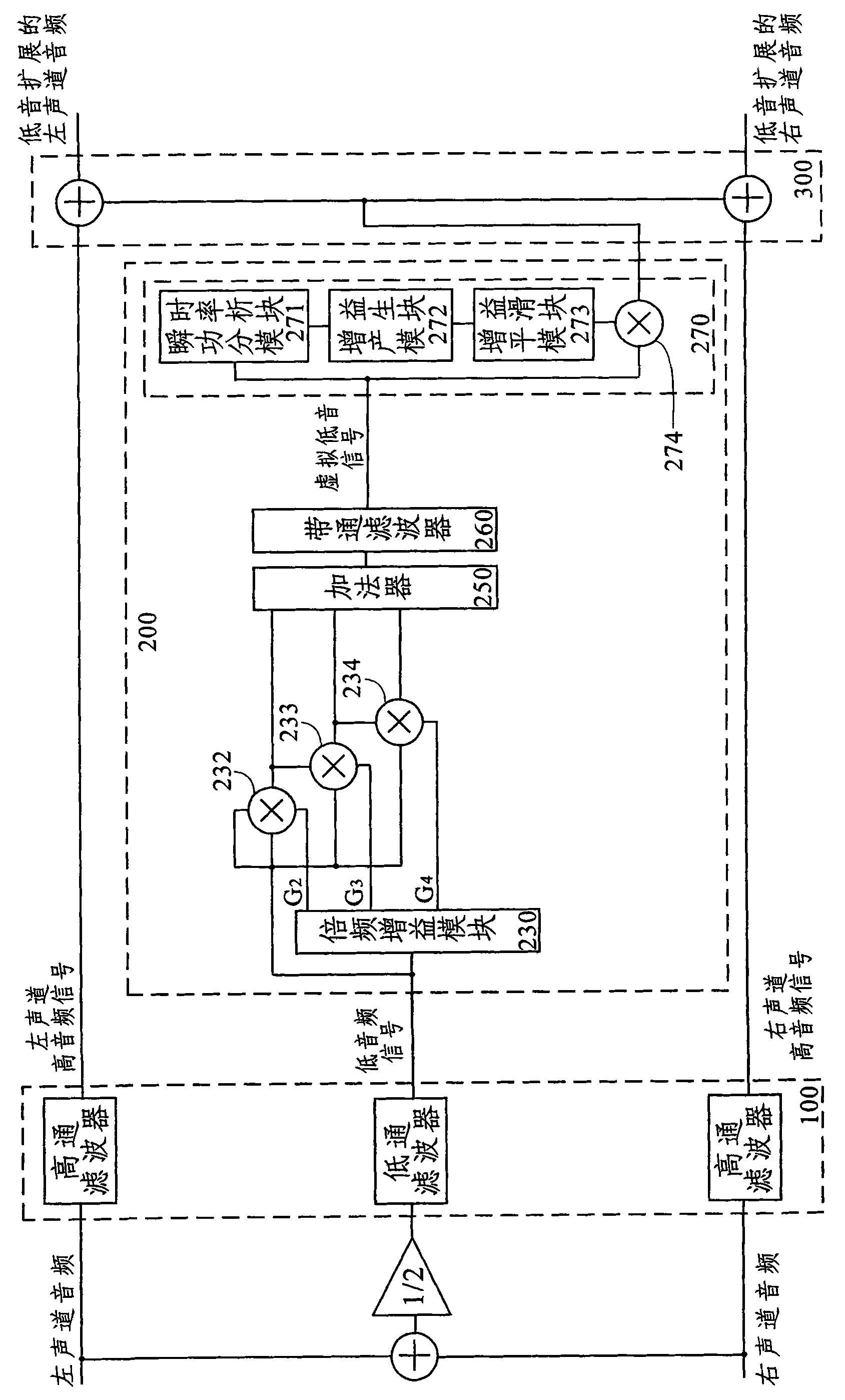

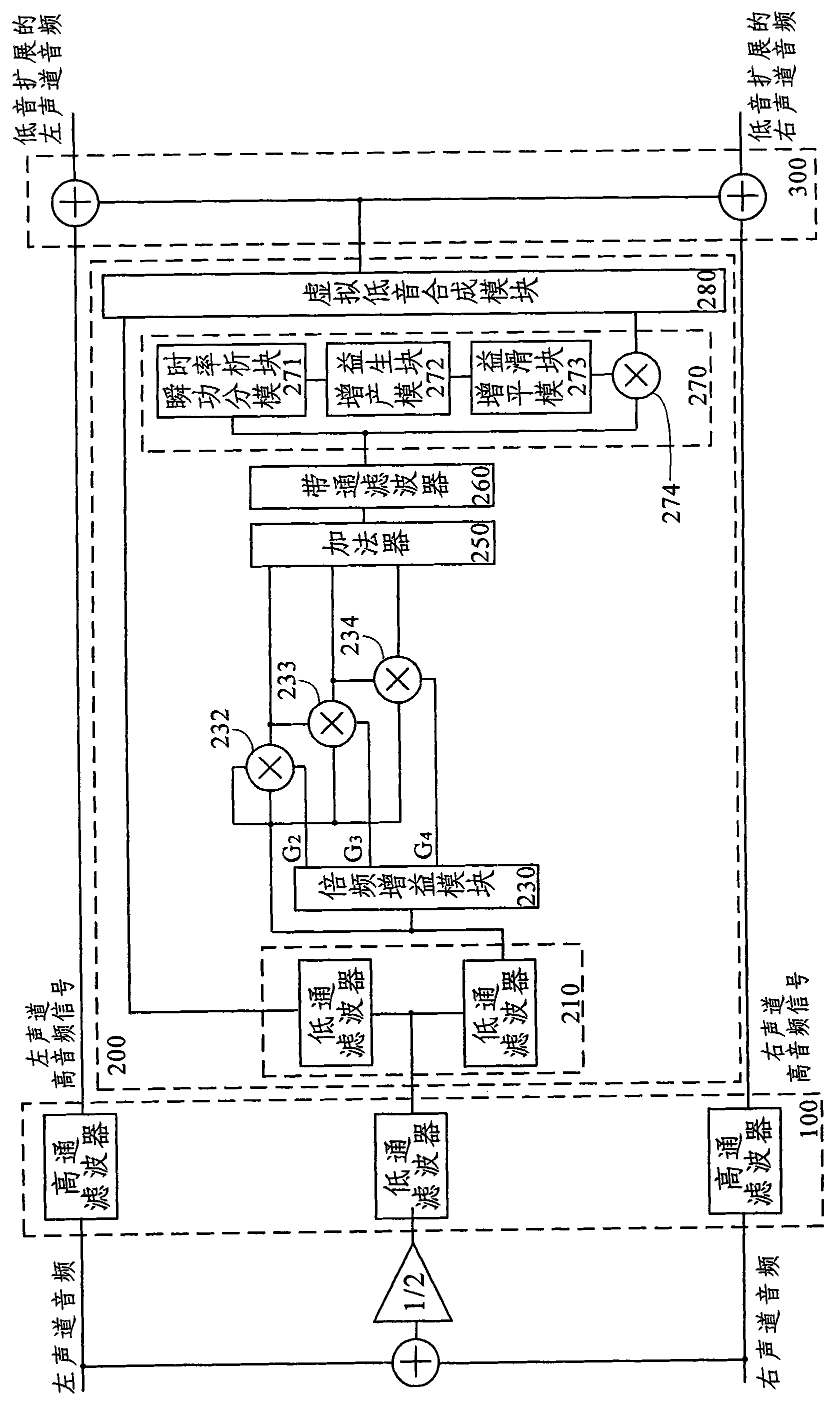

Method and device for restoring signals below a speaker's cut-off frequency to the original sound

Active | CN101964190A | Avoid distortion | Wide frequency response range | Speech synthesis | Transducer circuits | Dynamic range compression | Harmonic

The invention relates to an audio signal processing method and device for restoring signals below a speaker's cut-off frequency to the original sound, with the aim of extending the effective bass range of small speakers and enhancing bass reproduction. A virtual bass harmonic sequence is generated by feedforward self-multiplication, with each harmonic scaled by its own gain, so that the proportion of each harmonic, and hence the timbre, can be freely controlled. A sound-shape control method for the virtual bass signal adjusts the gain applied to it according to the attack (impact) and release phases, so that the character of the virtual bass, and therefore the perceived sound of the final signal, can be tuned as required and percussive ("knocking") sounds become richer. The virtual bass effect is further enhanced by combining techniques such as bass dynamic range compression, power-conserving filtering and bass frequency re-division, so that even with a high speaker cut-off frequency, the quality of the original bass can be effectively restored through the virtual bass signal.

Owner:SHENZHEN FOCALTECH SYST
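
One way feedforward self-multiplication can produce harmonics with individually controlled gains is sketched below. For a unit-amplitude sinusoid x = cos θ, the Chebyshev polynomial T_n satisfies T_n(cos θ) = cos(nθ), and T_n can be built only from products of x with itself via the recurrence T_{n+1} = 2x·T_n - T_{n-1}. Using Chebyshev polynomials, the unit-amplitude input, the sample rate and the gain values are assumptions for illustration; the abstract does not specify the polynomial form.

```python
import numpy as np


def virtual_bass_harmonics(x, gains):
    """Weighted sum of harmonics 2, 3, ... of a unit-amplitude sinusoid x.

    gains[k] scales harmonic k + 2. T_n is built by the recurrence
    T_{n+1} = 2 * x * T_n - T_{n-1}, i.e. only by multiplying the signal with
    itself and adding (a feedforward structure with no feedback path).
    """
    x = np.asarray(x, dtype=float)
    t_prev, t_curr = np.ones_like(x), x              # T_0 and T_1
    out = np.zeros_like(x)
    for gain in gains:
        t_prev, t_curr = t_curr, 2.0 * x * t_curr - t_prev   # next Chebyshev order
        out += gain * t_curr
    return out


fs, f0 = 8000, 50.0                                  # hypothetical sample rate and bass tone
t = np.arange(fs) / fs
bass = np.cos(2 * np.pi * f0 * t)
harmonics = virtual_bass_harmonics(bass, [0.5, 0.25])  # 100 Hz at 0.5, 150 Hz at 0.25
```

Because each harmonic has its own gain, the harmonic proportions (and so the timbre) can be set independently, which is the property the abstract emphasizes. Real signals are not unit-amplitude sinusoids, so a practical design would also need level normalization.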

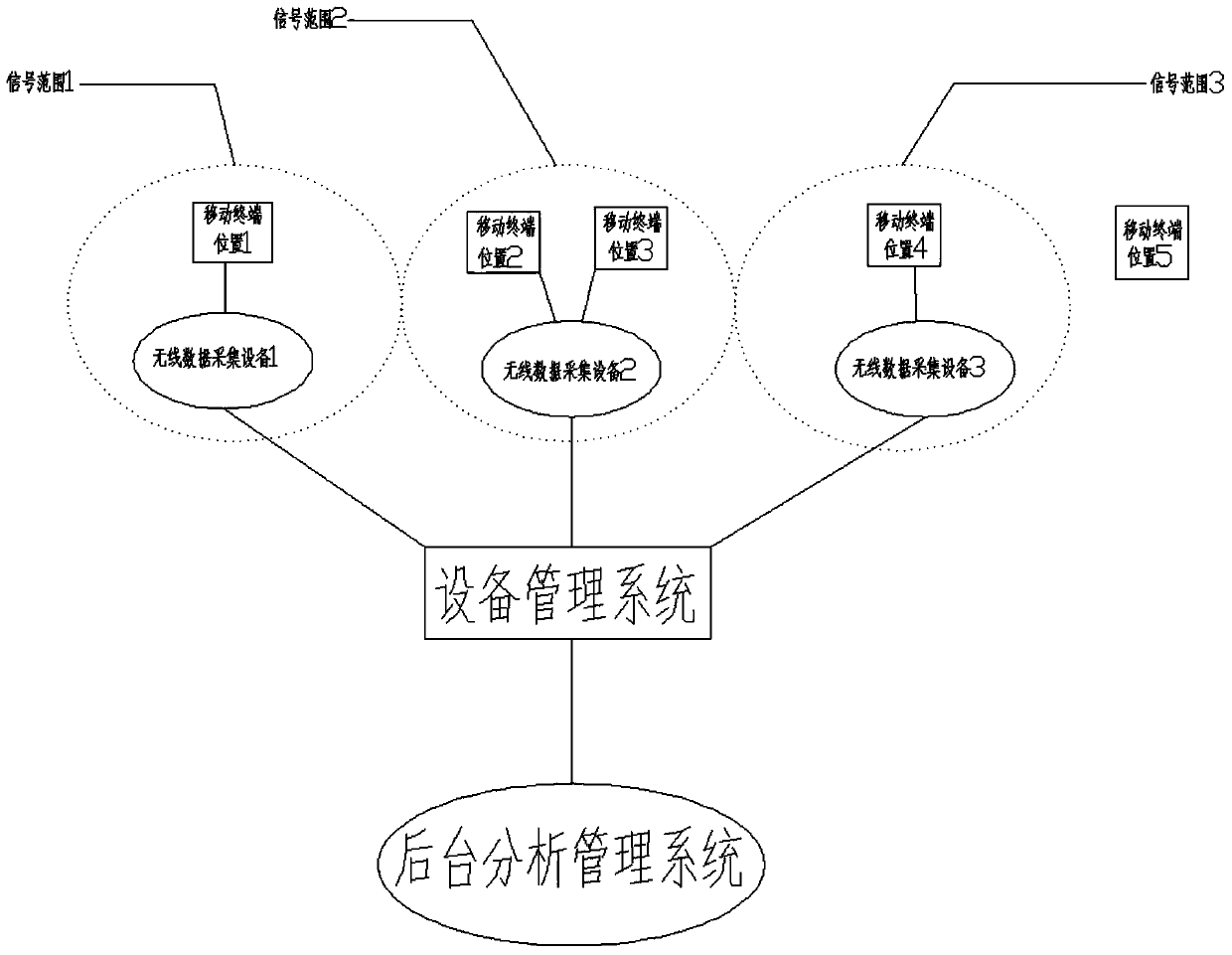

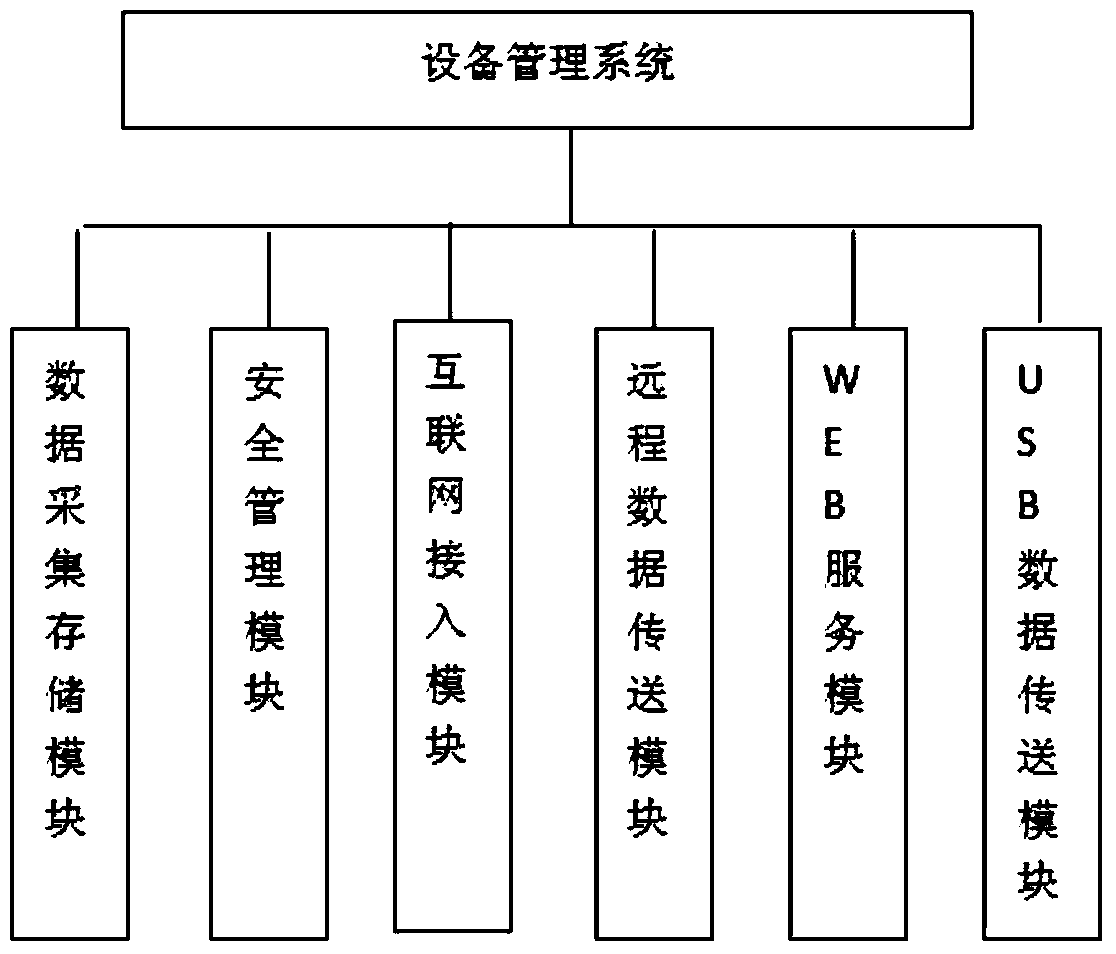

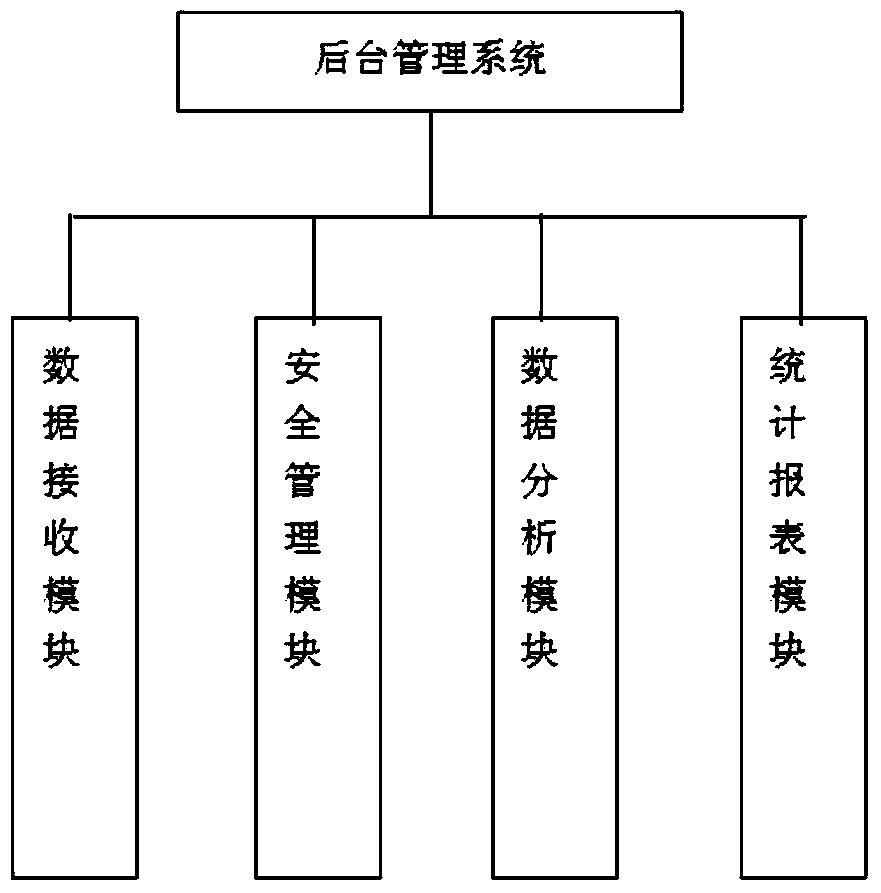

Mobile terminal motion trail analyzing system

The invention relates to a mobile terminal motion trail analysis system. A mobile terminal with its wireless LAN function enabled periodically sends probe request frames. Mobile terminal acquisition devices installed in different areas capture the probe request frames sent by mobile terminals in each area and parse them to obtain the terminals' MAC addresses. The acquisition devices send the collected data to a back-end management and analysis system, which derives the motion trails of the mobile terminals from the MAC addresses, acquisition times and acquisition areas. The system can be used to compile statistics on passenger flow volume and movement direction in areas of public places such as retail storefronts and shopping malls, and these statistics are more accurate and more convenient than traditional manual counting or infrared counting.

Owner:BEIJING WINCHANNEL SOFTWARE TECH
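
The trail-building step of the system above amounts to grouping probe-request records by MAC address and ordering them by time. The sketch below assumes each acquisition device reports hypothetical `(mac, timestamp, area)` tuples; collapsing consecutive sightings in the same area into one stop, and counting distinct MAC addresses per area as a passenger-flow figure, are illustrative choices rather than details from the abstract.

```python
from collections import defaultdict


def build_trails(records):
    """records: iterable of (mac, timestamp, area) tuples from the acquisition devices."""
    by_mac = defaultdict(list)
    for mac, ts, area in records:
        by_mac[mac].append((ts, area))
    trails = {}
    for mac, hits in by_mac.items():
        hits.sort()                                   # order each terminal's sightings by time
        trail = []
        for ts, area in hits:
            # Repeated probe requests heard in the same area count as one stop.
            if not trail or trail[-1][1] != area:
                trail.append((ts, area))
        trails[mac] = trail
    return trails


def visitors_per_area(records):
    """Passenger-flow count: number of distinct MAC addresses seen in each area."""
    seen = defaultdict(set)
    for mac, _, area in records:
        seen[area].add(mac)
    return {area: len(macs) for area, macs in seen.items()}


# Hypothetical records (MAC, seconds since opening, area).
RECORDS = [
    ("aa:01", 10, "entrance"), ("aa:01", 70, "entrance"), ("aa:01", 130, "shop A"),
    ("bb:02", 20, "entrance"), ("aa:01", 200, "checkout"),
]
```

Movement direction between areas can then be read off consecutive entries of each trail.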

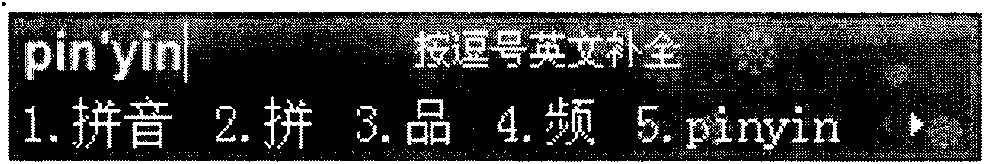

Method and device for implementing a dynamic input method skin

Active | CN101866282A | Add animation effects | Visual and Mental Enrichment | Specific program execution arrangements | Physical therapy | Physical medicine and rehabilitation

The invention discloses a method for implementing a dynamic input method skin. The method comprises the following steps: obtaining the user's input behavior information; adjusting the state of the input method skin according to that information; and presenting the input behavior information through the state of the skin. The invention also discloses a corresponding device. With this method and device, the skin shown to the user is adjusted in real time rather than remaining fixed or switching randomly among a few options, and the adjustment follows changes in the user's input behavior. The input behavior information is thus fully exploited, and the input method skin changes dynamically with the user's input.

Owner:BEIJING SOGOU TECHNOLOGY DEVELOPMENT CO LTD

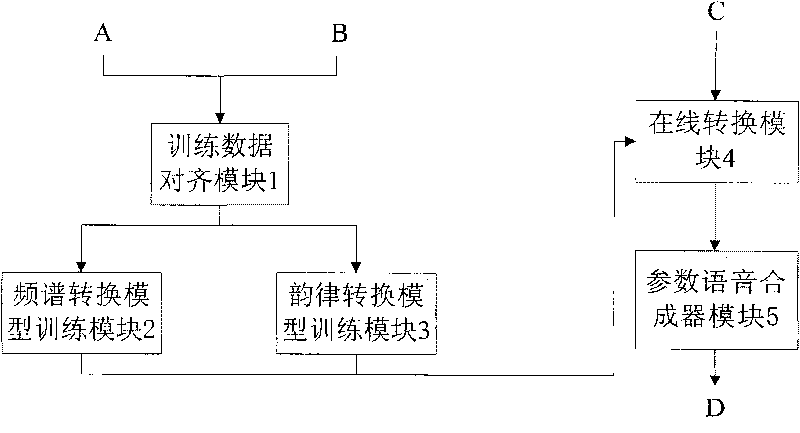

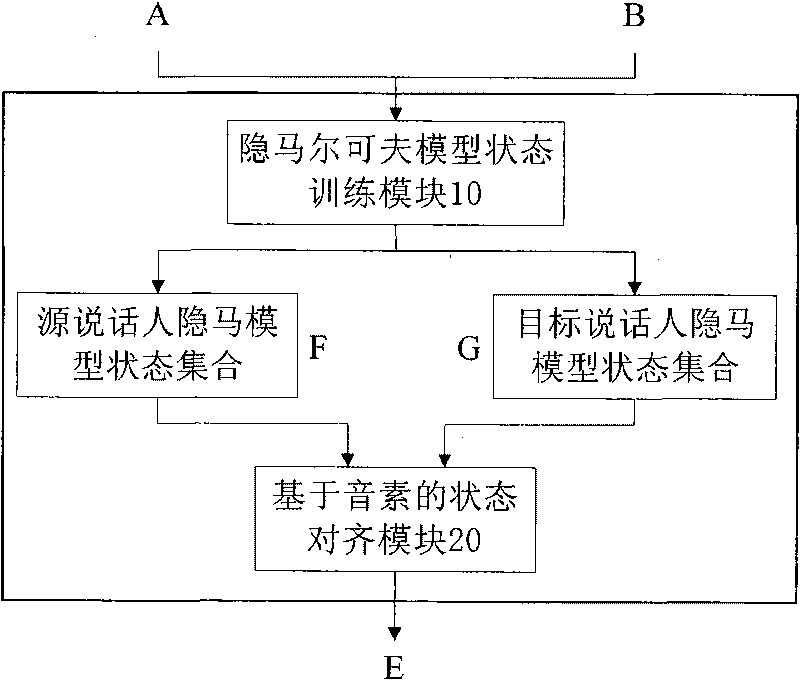

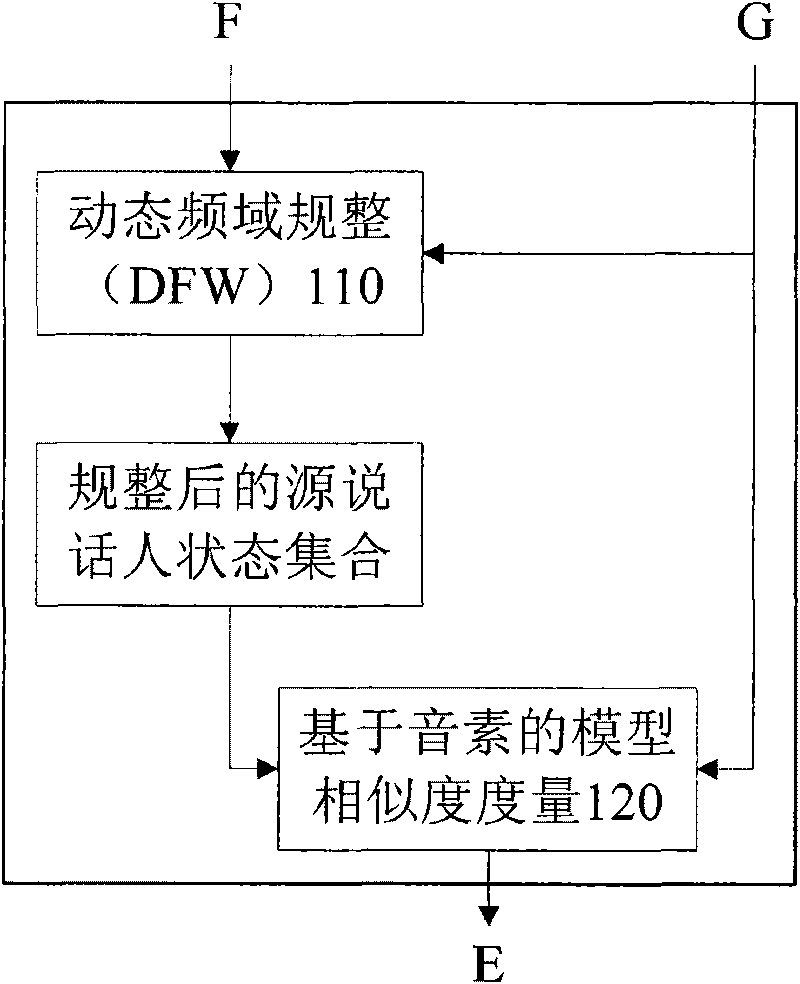

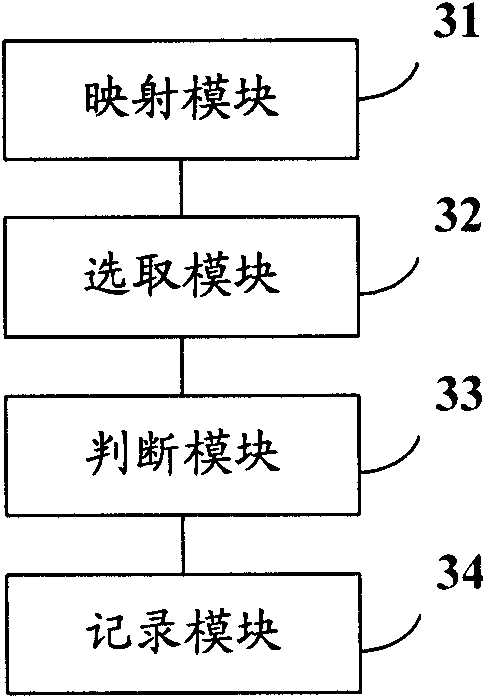

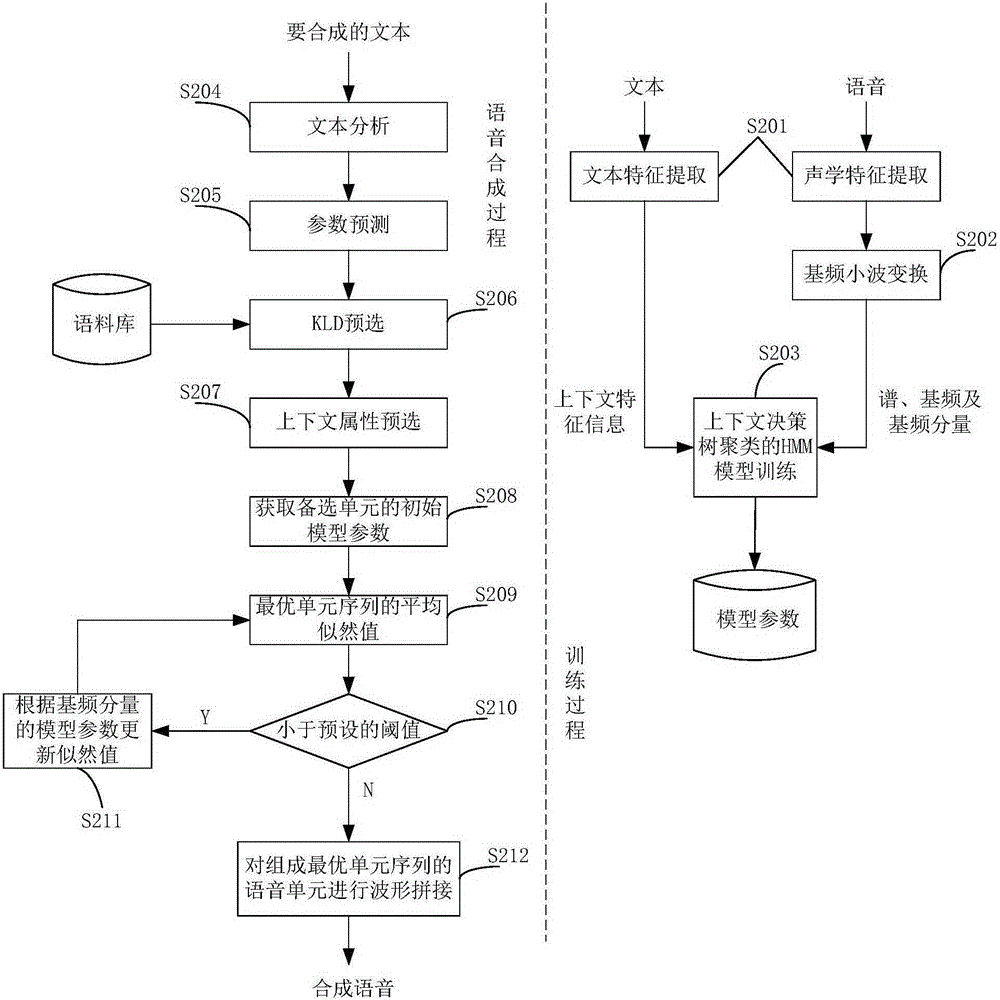

Text-independent speech conversion system based on HMM state mapping

Active | CN101751922A | Improve accuracy | Rich conversion results | Speech recognition | Speech synthesis | Frequency spectrum | Voice transformation

The invention discloses a text-independent speech conversion system based on HMM state mapping, composed of a data alignment module, a spectrum conversion model generation module, a prosody conversion model generation module, an online conversion module and a parametric speech synthesizer. The data alignment module receives the speech parameters of the source and target speakers and aligns the input data according to phoneme information to produce state-aligned data pairs. The spectrum conversion model generation module receives the aligned data pairs and builds from them a spectral parameter conversion model between the source and target speakers; the prosody conversion model generation module likewise builds a prosodic parameter conversion model from the aligned pairs. The online conversion module applies these two conversion models to the source speaker's speech data to obtain converted spectral and prosodic parameters. The parametric speech synthesizer receives the converted spectral and prosodic information from the online conversion module and outputs the converted speech.

Owner:北京中科欧科科技有限公司 (Beijing Zhongke Ouke Technology Co., Ltd.)

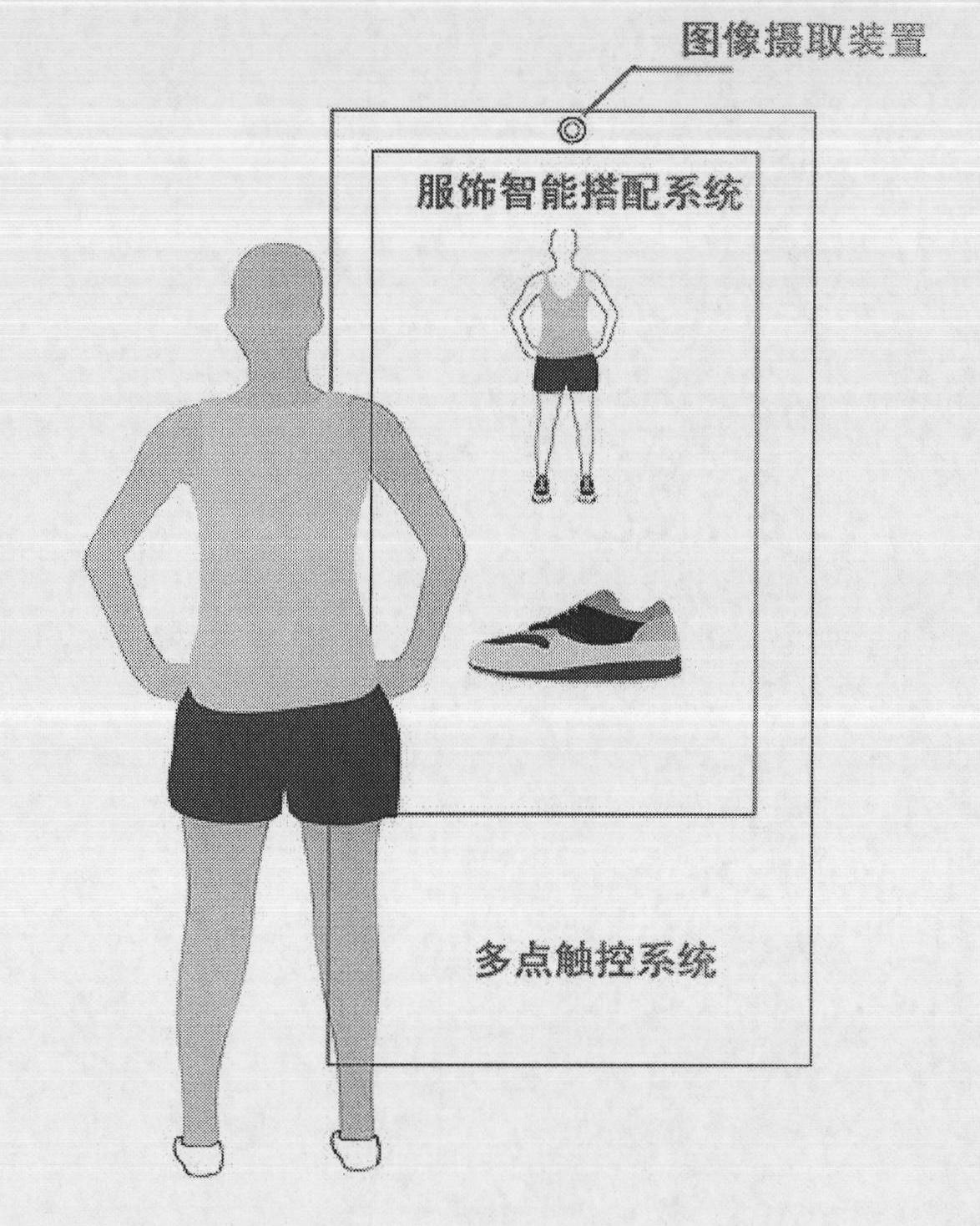

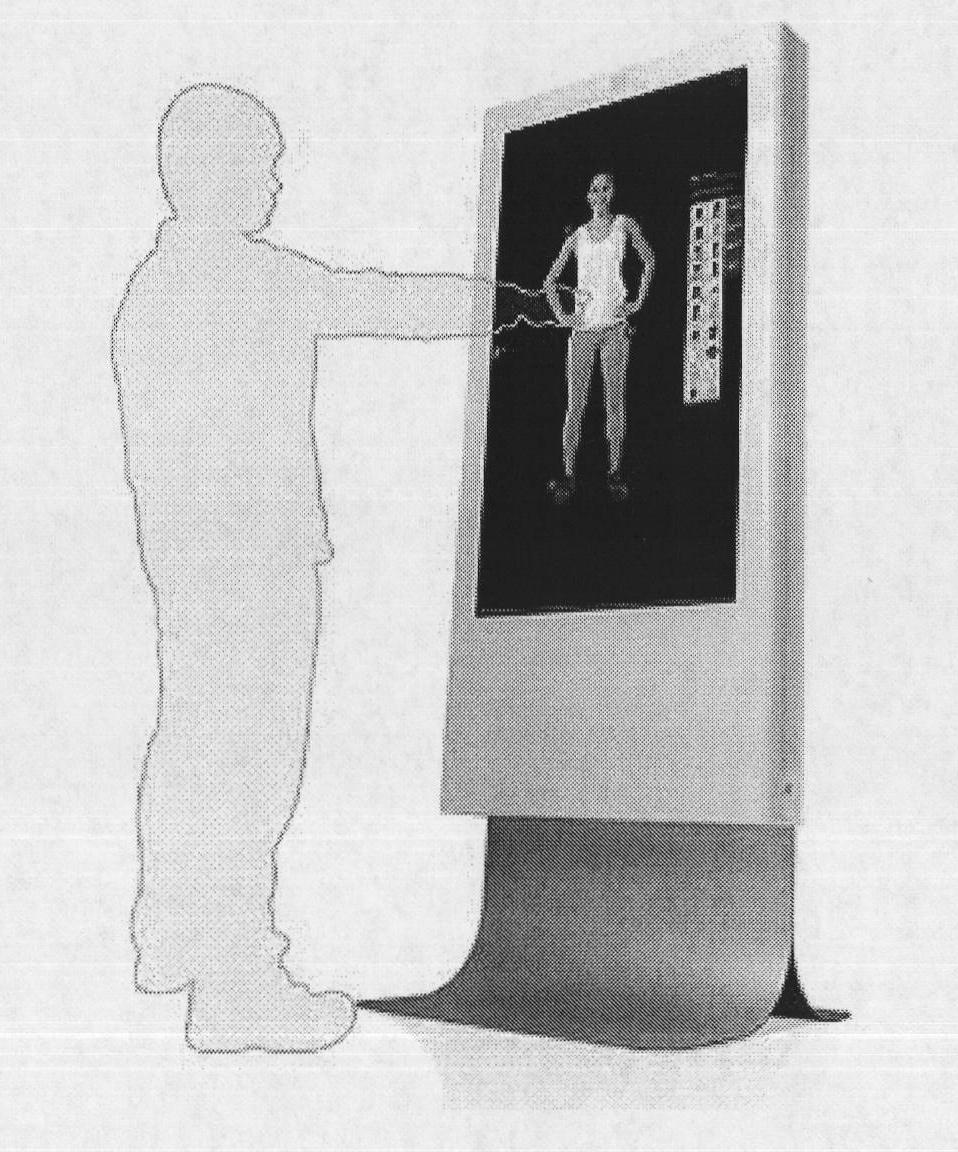

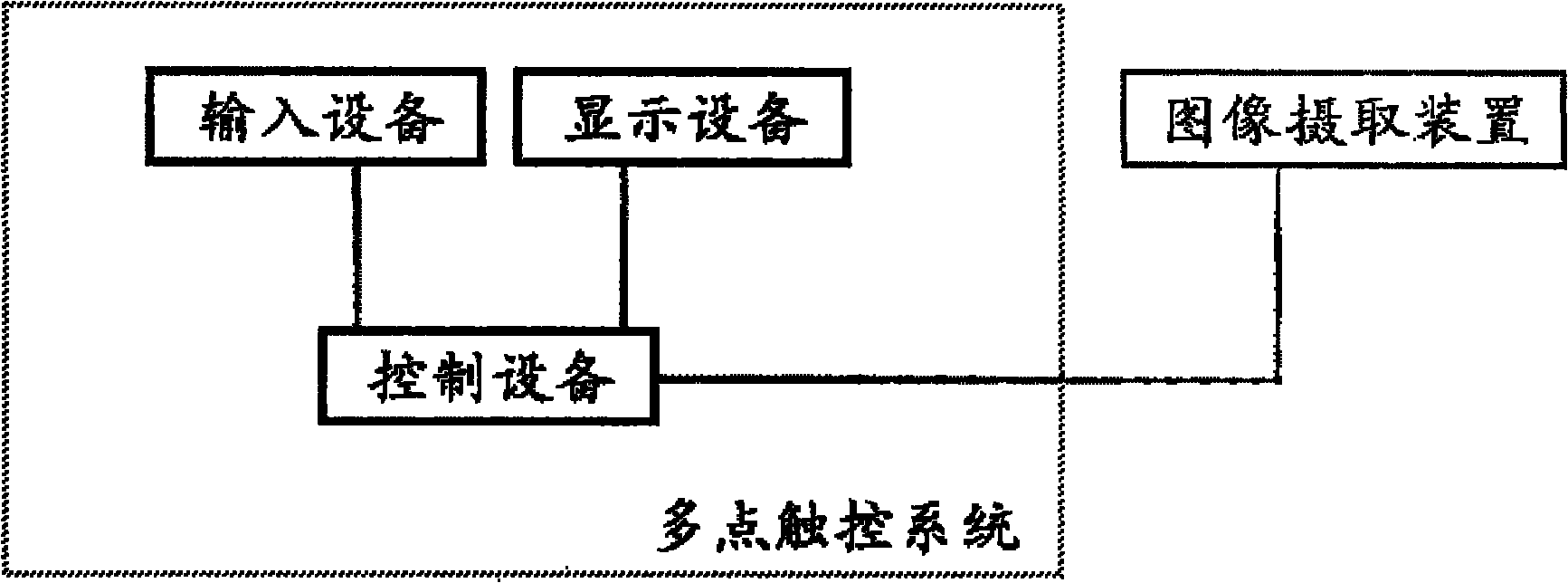

Intelligent clothing matching system and method for point-of-sale terminals

The invention relates to an intelligent clothing matching system and method for point-of-sale terminals. The system comprises a multi-touch control system (1), an image acquisition device (2) and an intelligent clothing matching system (3). The user operates the user control interface of the multi-touch control system with a variety of hand gestures; the multi-touch control system detects multiple touch points generated on the screen at the same time, transmits the data generated by the user's operations to the intelligent clothing matching system, and provides gesture recognition, image recognition, finger trajectory determination and multi-touch control. The image acquisition device (2) captures photographs of the user and transmits them to the intelligent clothing matching system. The intelligent clothing matching system (3) stores and analyzes the user photographs sent by the image acquisition device, retrieves products suited to the user from a product database and displays them on the user control interface, and the user virtually tries the products on a virtual model by finger operation. The invention simplifies and speeds up product selection, sparing the user lengthy browsing and tedious, repeated fitting.

Owner:翁荣森 (Weng Rongsen) +2

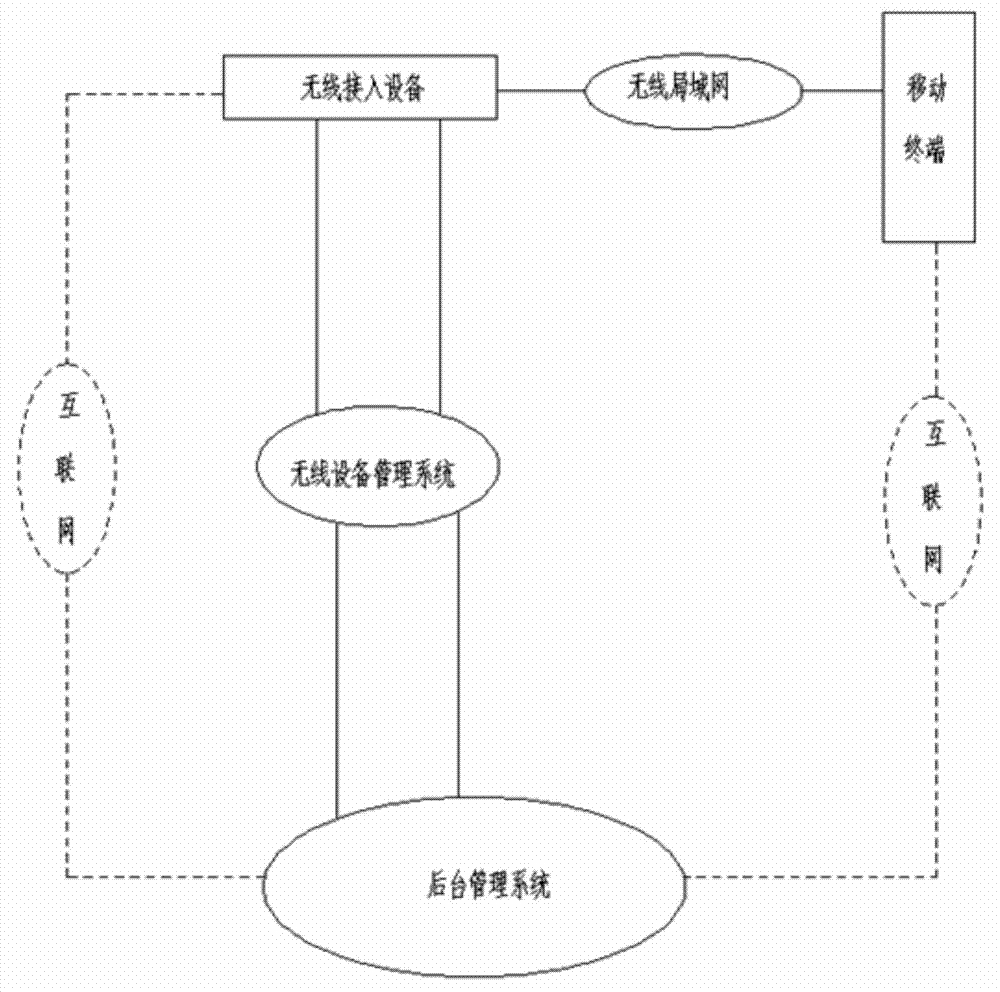

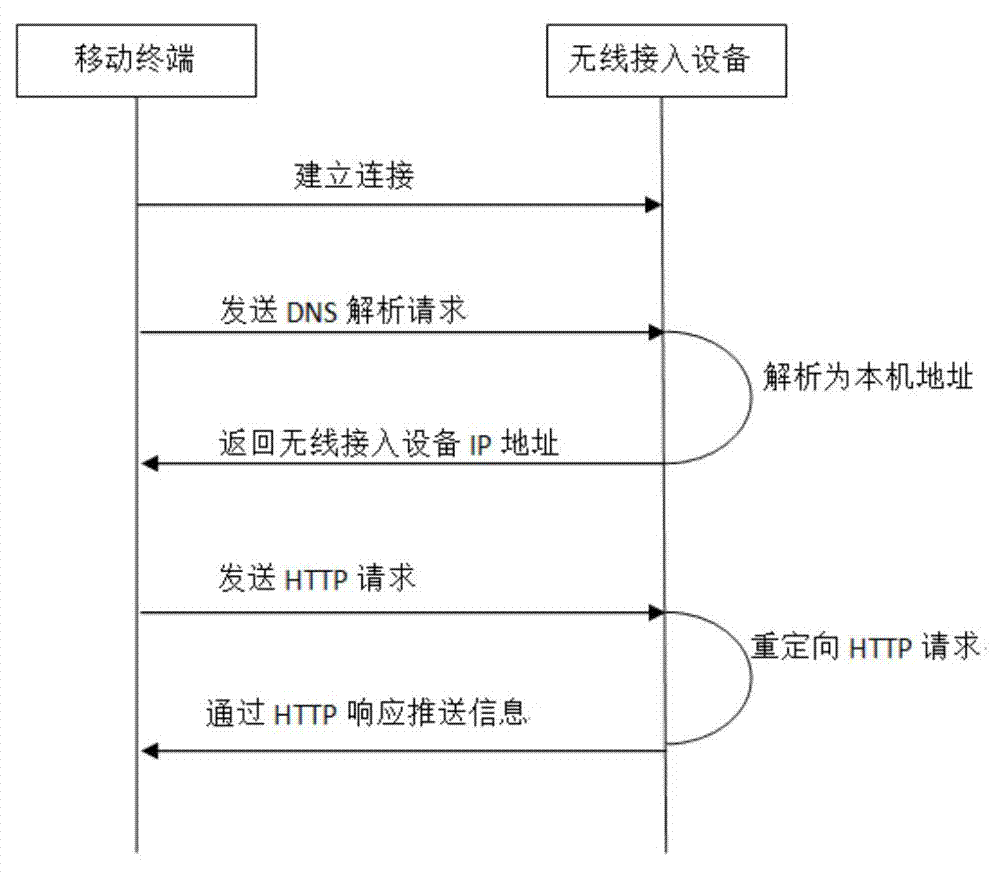

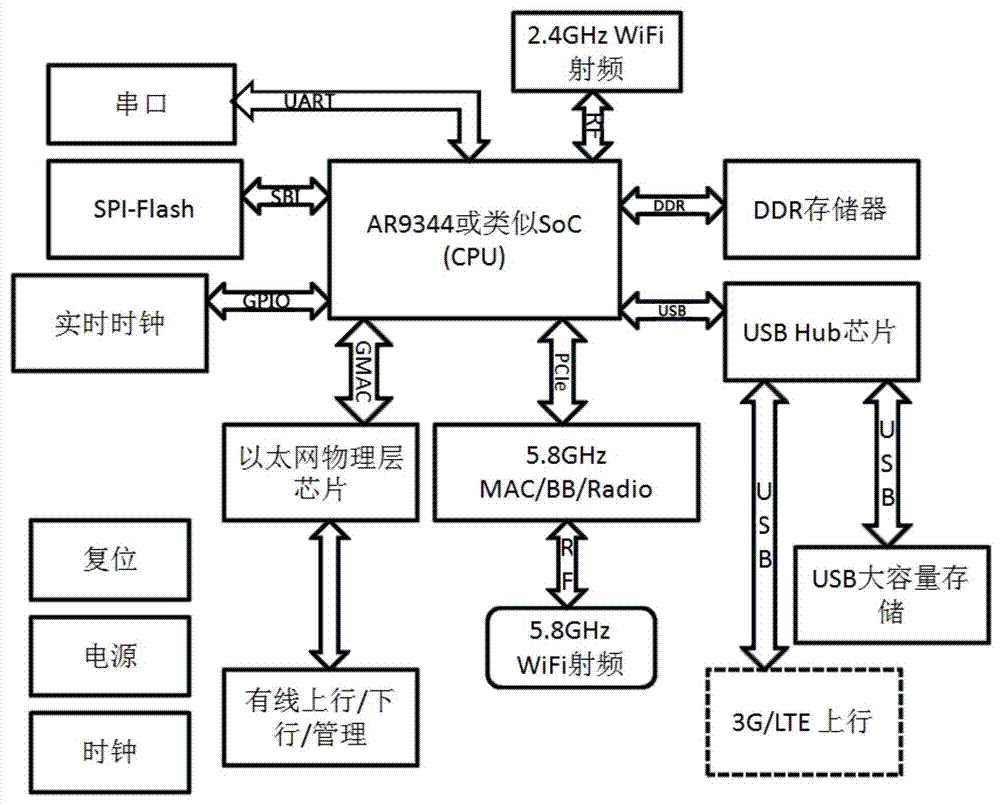

Wireless network-based method and device for pushing promotional information

Inactive | CN103501481A | Easy to operate | Customer service traffic charges and download speed limitations | Location information based service | Transmission | Telecommunications | Local area network

The invention relates to a wireless network-based method and device for pushing promotional information. After a mobile terminal establishes a wireless LAN connection with a wireless access device, the terminal sends an HTTP request to access the network through that device. The wireless access device records the MAC address of the request and redirects the HTTP request to a network resource address (a URL) within the wireless access device. On receiving the request, that address pushes to the mobile terminal, in the form of an HTTP response, either promotional information and an application built into the wireless access device or promotional information and an application downloaded from a back-end management system through a wireless device management system. Once the application pushed by the wireless access device is installed on the mobile terminal, the back-end management system pushes information directly to the terminal.

Owner:BEIJING WINCHANNEL SOFTWARE TECH

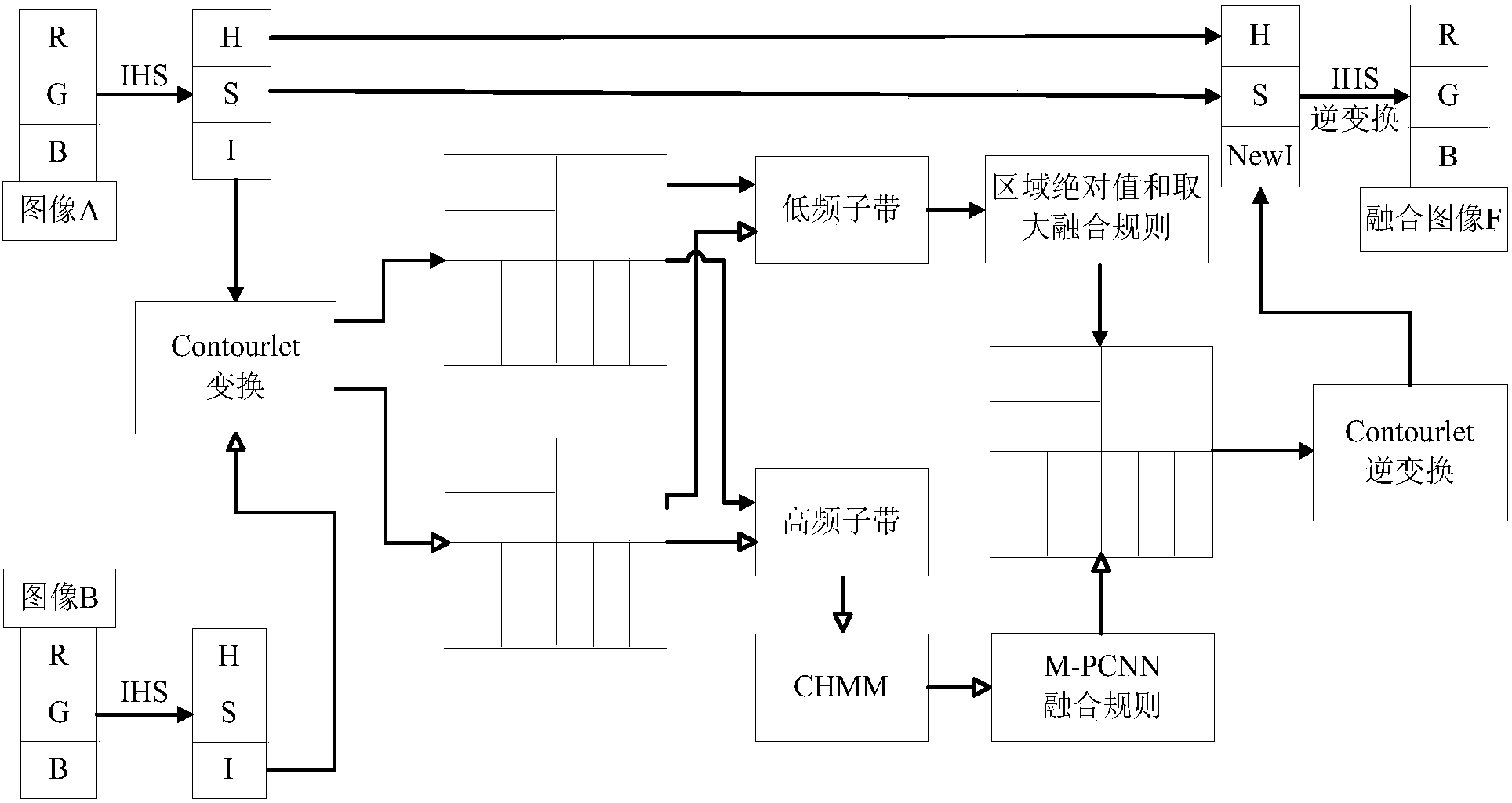

Contourlet domain multi-modal medical image fusion method based on statistical modeling

The invention discloses a statistical-modeling-based multi-modal medical image fusion method in the Contourlet domain, mainly intended to solve the difficulty of balancing spatial resolution and spectral information in medical image fusion. The implementation steps are: 1) apply the IHS transform to the images to be fused to obtain intensity, hue and saturation components; 2) apply the Contourlet transform to the intensity components and estimate the parameters of a contextual hidden Markov model (CHMM) for the high-frequency sub-bands with the EM algorithm; 3) fuse the low-frequency sub-bands with a rule that selects the coefficient with the larger regional sum of absolute values, and fuse the high-frequency sub-bands with a rule based on the CHMM and an improved pulse-coupled neural network (M-PCNN); 4) apply the inverse Contourlet transform to the fused high- and low-frequency coefficients to reconstruct a new intensity component; and 5) obtain the fused image by the inverse IHS transform. The method fully integrates the structural and functional information of medical images, effectively preserves image detail, improves visual quality and, compared with conventional fusion methods, substantially improves the quality of the fused image.

Owner:JIANGNAN UNIV
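
Step 3 of the method above fuses the low-frequency sub-bands by keeping, at each position, the coefficient whose regional sum of absolute values is larger. The sketch below applies that rule to two low-frequency sub-bands that are assumed to have already been produced by the Contourlet transform (not shown); the 3x3 window, edge padding and tie-breaking in favour of the first image are assumptions.

```python
import numpy as np


def window_abs_sum(c, radius=1):
    """Sum of |c| over the (2r+1) x (2r+1) window around each coefficient (edge-padded)."""
    padded = np.pad(np.abs(np.asarray(c, dtype=float)), radius, mode="edge")
    h, w = np.shape(c)
    total = np.zeros((h, w))
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            total += padded[dy:dy + h, dx:dx + w]
    return total


def fuse_lowpass(c_a, c_b, radius=1):
    """Take each coefficient from the source with the larger regional |.|-sum (ties -> c_a)."""
    c_a, c_b = np.asarray(c_a, dtype=float), np.asarray(c_b, dtype=float)
    return np.where(window_abs_sum(c_a, radius) >= window_abs_sum(c_b, radius), c_a, c_b)
```

Comparing regional sums rather than single coefficients makes the choice less sensitive to isolated noisy coefficients.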

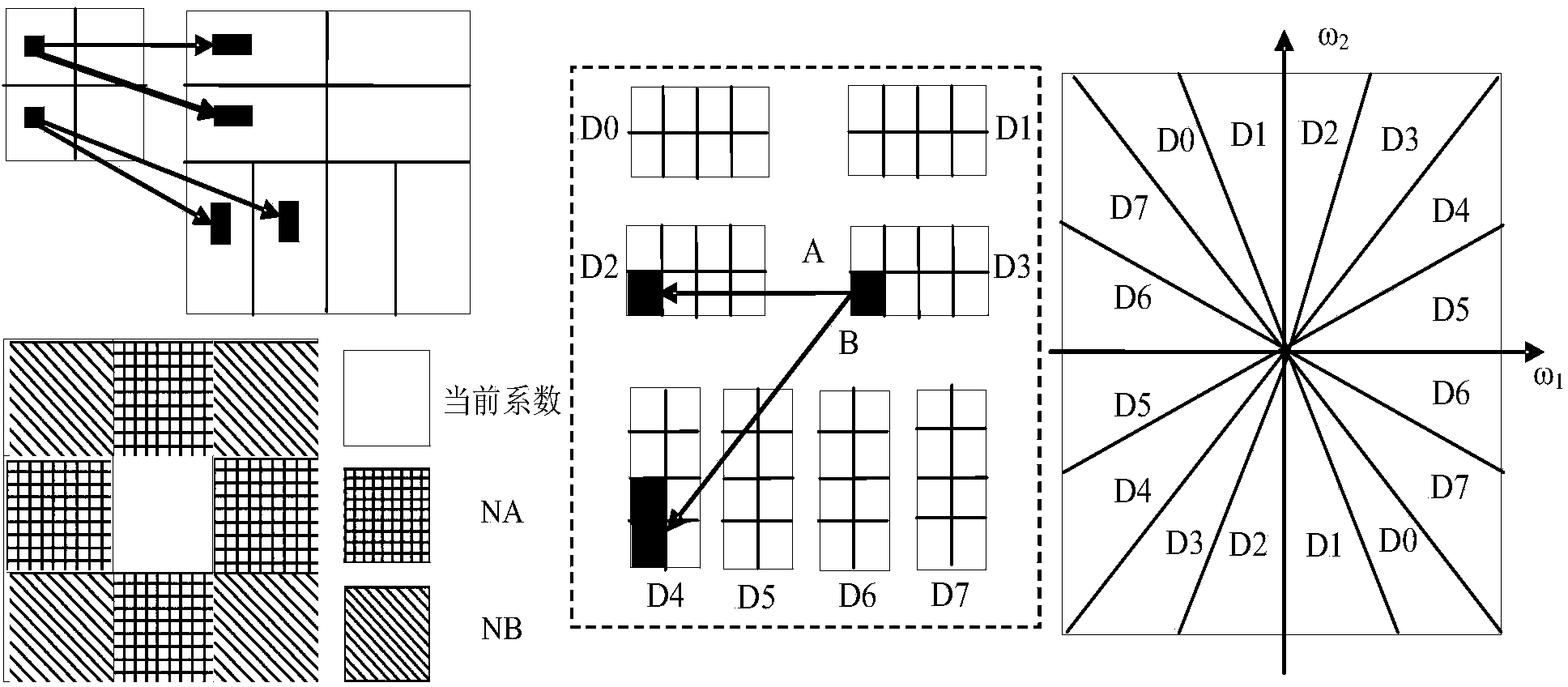

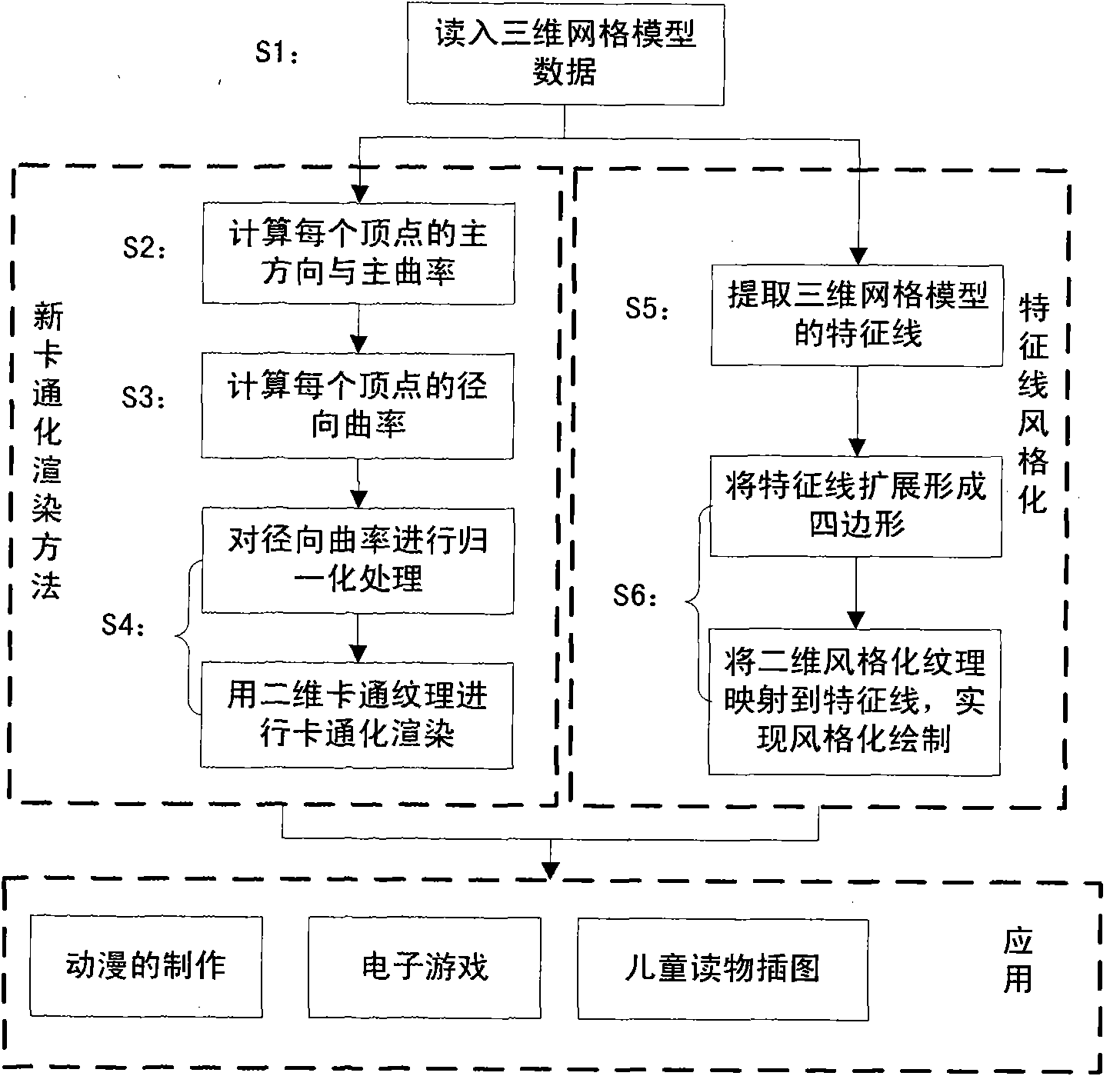

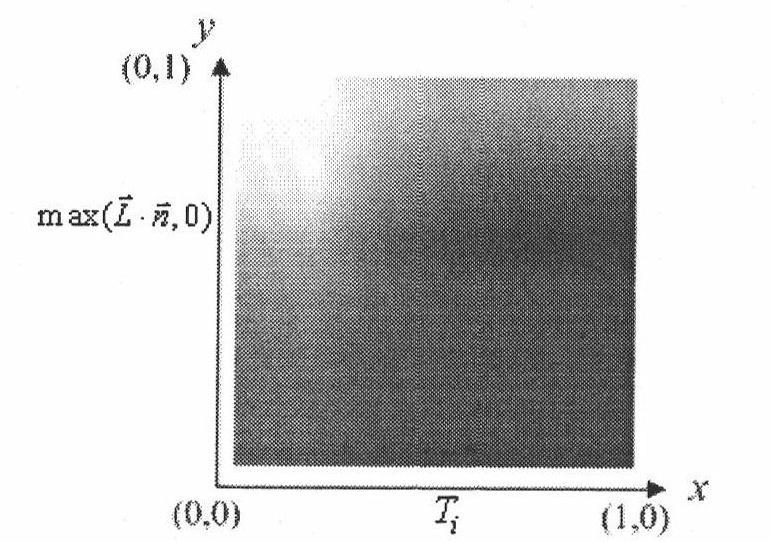

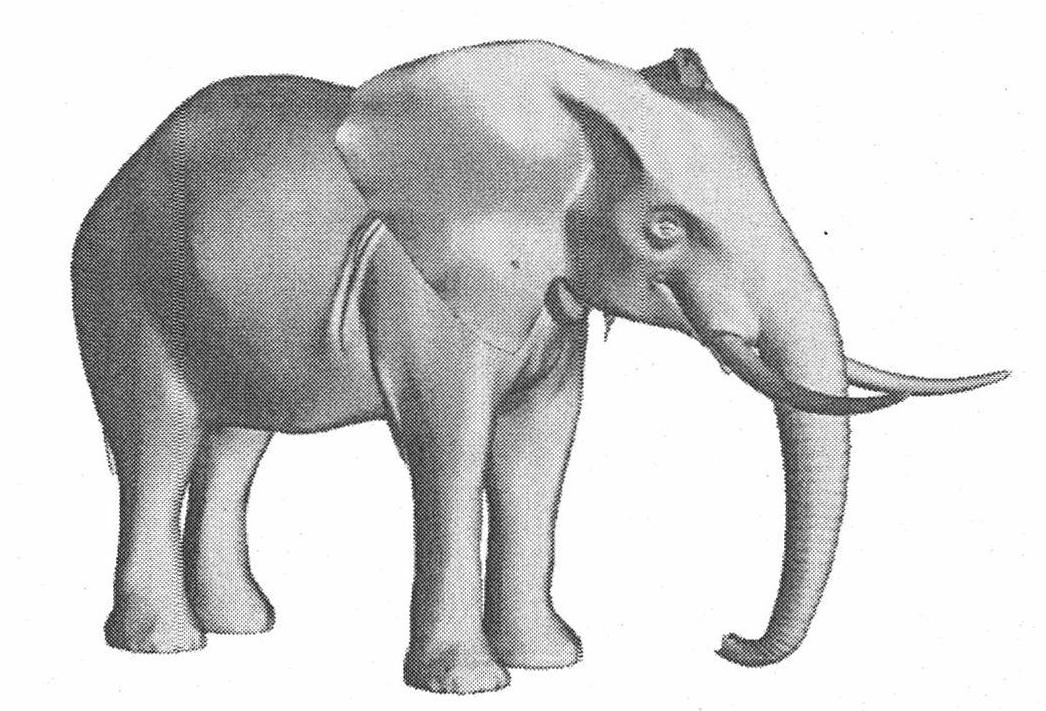

Non-photorealistic rendering method for 3D mesh models with stylized typical lines

Inactive | CN101984467A | Enrich internal feature details | Good drawing effect | 3D-image rendering | Principal direction | Network model

The invention relates to a non-photorealistic rendering method for 3D mesh models with stylized typical lines, comprising the following main steps: 1) computing the principal directions and principal curvatures at each vertex of the 3D mesh model; 2) computing the radial curvature at each vertex for the current viewpoint and normalizing all radial curvatures; 3) applying cartoon rendering to the mesh model with two-dimensional cartoon textures based on the normalized radial curvatures; 4) extracting the typical lines of the mesh model and expanding them along the vertex normals to form quadrilaterals; and 5) texture-mapping the typical lines with two-dimensional stylized textures to produce the stylized line effect. Together these steps achieve non-photorealistic rendering of 3D mesh models with stylized typical lines.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
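
In step 2 above, the radial curvature at a vertex is the normal curvature along the view vector projected onto the vertex's tangent plane. Given the principal curvatures k1, k2 from step 1 and the angle φ between that projected direction and the first principal direction, Euler's formula gives κr = k1·cos²φ + k2·sin²φ. The sketch below computes this per vertex and then min-max normalizes the results so they can serve as 1-D texture coordinates for the cartoon shading in step 3; the min-max normalization and the fallback used when the view is parallel to the normal are assumptions.

```python
import numpy as np


def radial_curvature(normal, e1, k1, k2, vertex, eye):
    """Normal curvature along the view vector projected onto the tangent plane.

    normal and e1 are the unit normal and first principal direction at the vertex;
    by Euler's formula the curvature along a tangent direction at angle phi from
    e1 is k1*cos(phi)**2 + k2*sin(phi)**2.
    """
    normal, e1 = np.asarray(normal, float), np.asarray(e1, float)
    view = np.asarray(eye, float) - np.asarray(vertex, float)
    w = view - np.dot(view, normal) * normal        # project the view vector onto the tangent plane
    length = np.linalg.norm(w)
    if length < 1e-12:
        # Looking straight down the normal: radial curvature is undefined here;
        # this sketch arbitrarily falls back to the mean curvature.
        return 0.5 * (k1 + k2)
    cos2 = (np.dot(w, e1) / length) ** 2
    return k1 * cos2 + k2 * (1.0 - cos2)


def normalize(values):
    """Min-max scale to [0, 1] so the values can index a 1-D cartoon texture."""
    v = np.asarray(values, dtype=float)
    span = v.max() - v.min()
    return np.zeros_like(v) if span == 0 else (v - v.min()) / span
```

Because κr depends on the eye position, it must be recomputed whenever the viewpoint changes, which matches the "under the current viewpoint position" wording of step 2.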

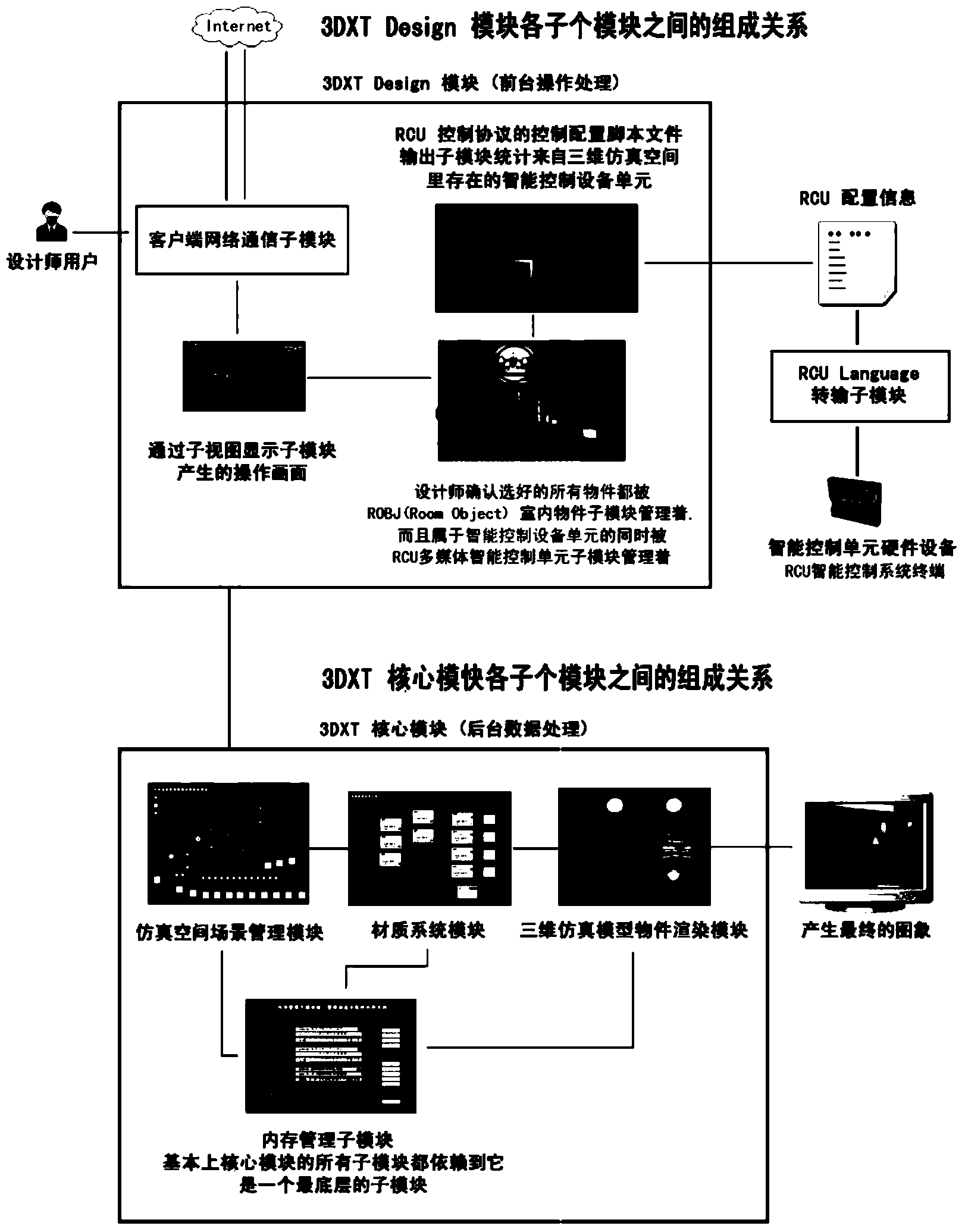

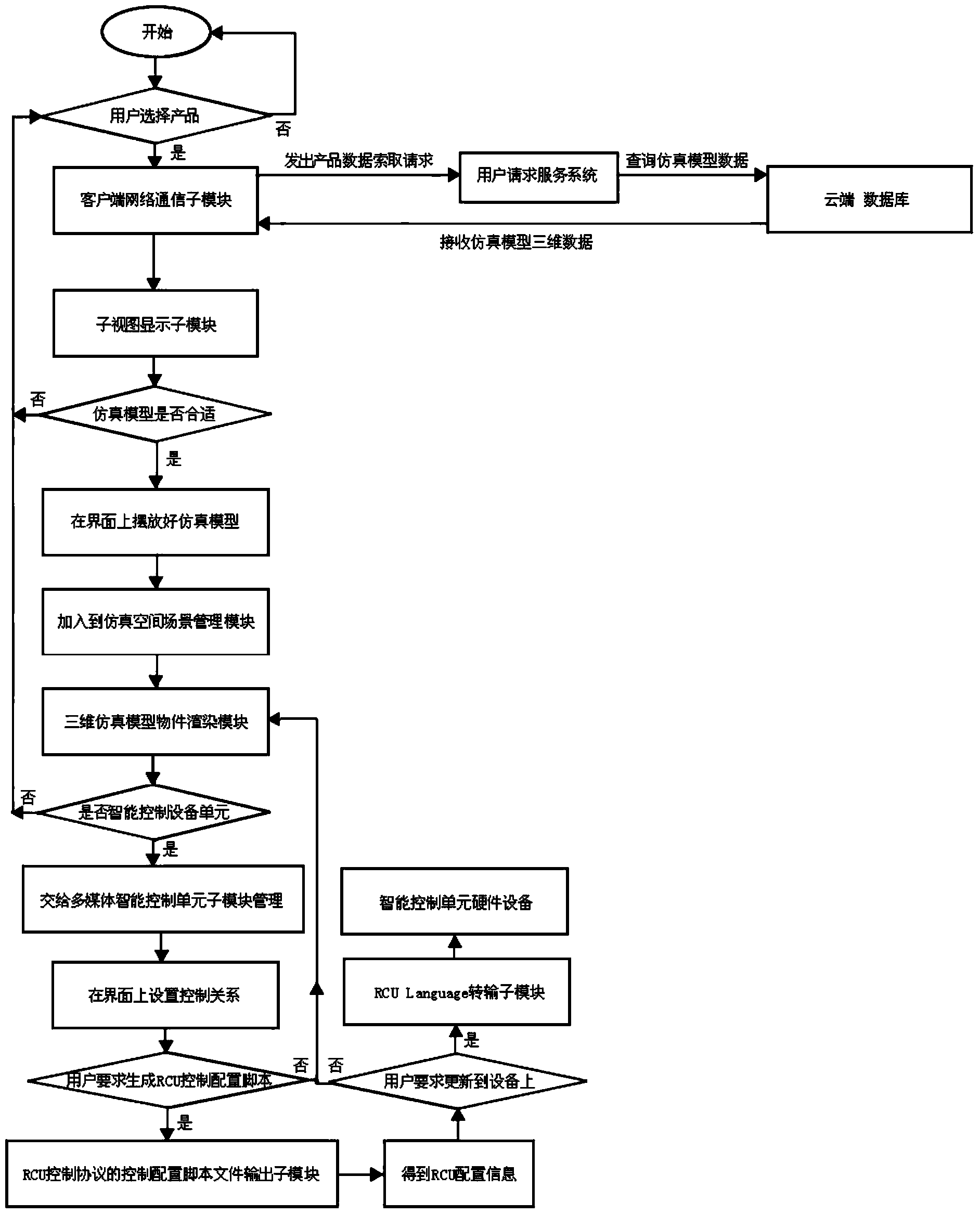

Intelligent device control and arrangement system and method for interior design

Inactive | CN103823949A | Improve the display effect | Easy to find | Special data processing applications | Three dimensional simulation | Control system

The invention discloses an intelligent device control and arrangement system for interior design, comprising a database, a user demand service system and a real-time 3D virtual simulation design control system, the latter consisting of a core module and a design application module. The design application module comprises: a sub-view display sub-module that shows the appearance and detailed parameter data of 3D simulated products in picture-in-picture mode; an indoor article sub-module that defines the furnishings of a guest room as a mathematical-logical abstract model in an object-oriented (OOP) manner and provides their operation and processing methods; an RCU multimedia intelligent control unit sub-module that likewise defines the intelligent control unit as an OOP abstract model and provides its operation and processing methods; a control configuration script file output sub-module; and an RCU Language transmission sub-module implementing the RCU control protocol. The system and method have a low barrier to use, are easy to modify, and give a strong sense of three-dimensionality.

Owner:黄健华 (Huang Jianhua)

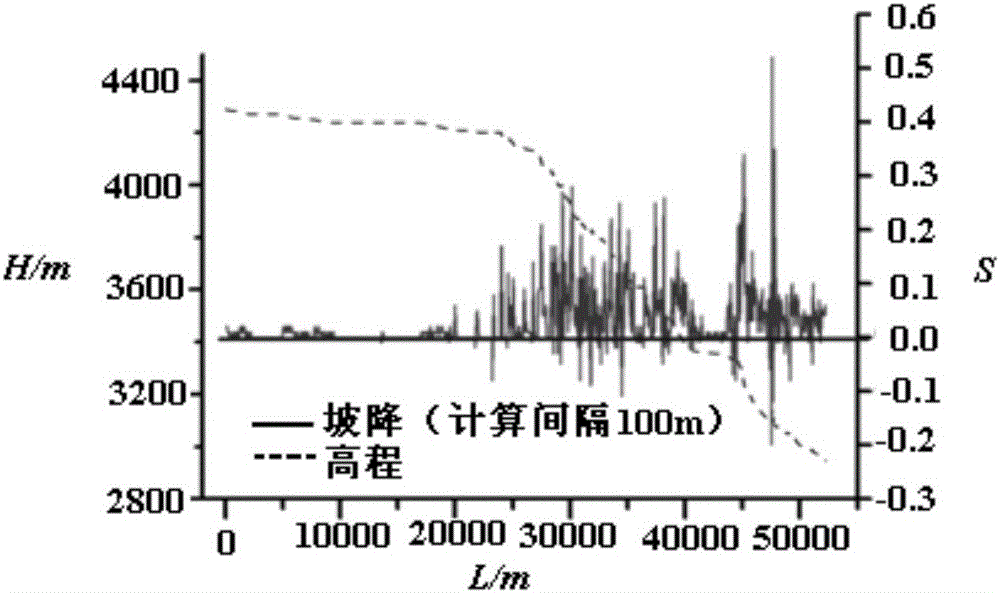

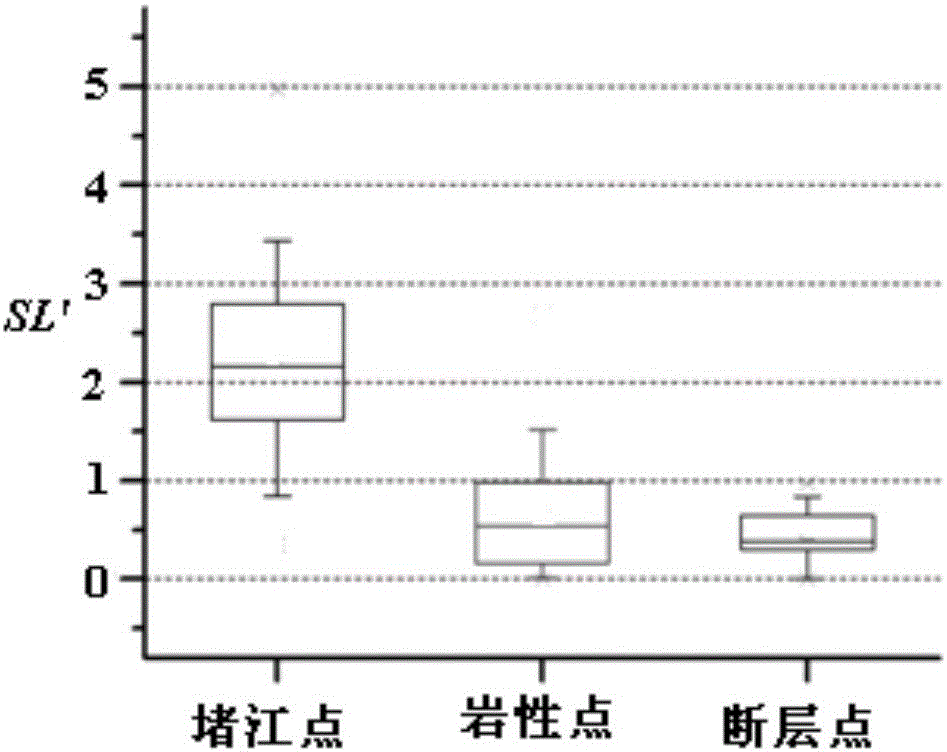

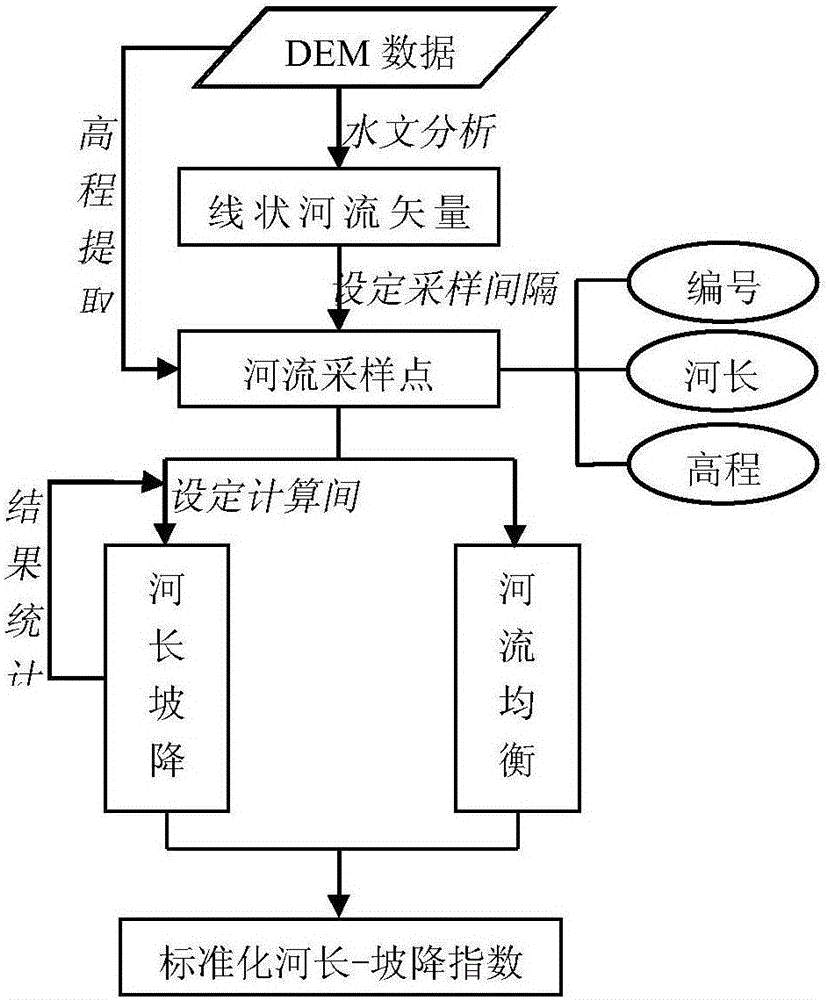

DEM-based method for automatically extracting river-valley morphological parameters

Inactive | CN105740464A | Geometric meaning representation is clear | Easy to analyze | Image enhancement | Image analysis | Terrain | Interference factor

The invention discloses a DEM-based method for automatically extracting river-valley morphological parameters, in the field of digital geomorphological analysis. The method includes the steps of: obtaining DEM data for the river-valley drainage basin to be analyzed; obtaining and numbering all rivers belonging to the basin, and taking longitudinal-section sampling points at a fixed sampling interval a; and calculating the morphological parameters of the longitudinal and transverse sections of the river valley. The method can represent river-valley geomorphology and differences between segments at a fine scale while also allowing the valley's overall terrain to be analyzed, giving a comprehensive representation; the calculated parameters are little affected by interfering factors, and the method is accurate and computationally efficient.

Owner:CHINA AERO GEOPHYSICAL SURVEY & REMOTE SENSING CENT FOR LAND & RESOURCES
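
The longitudinal-section sampling step can be illustrated by resampling a river centerline at the interval a and reading elevations from the DEM. In this sketch the centerline is a hypothetical list of map coordinates, the DEM is a hypothetical 2-D list indexed `dem[row][col]` with x mapped to columns and y to rows, elevations are read from the nearest cell, and the mean gradient stands in for the morphological parameters, which the abstract does not list.

```python
import math


def resample_polyline(points, a):
    """Points every `a` map units along a polyline, starting at its first vertex (a > 0)."""
    samples = [points[0]]
    carried = 0.0                        # distance from the last sample to the current vertex
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        d = a - carried                  # position of the next sample along this segment
        while d <= seg:
            t = d / seg
            samples.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
            d += a
        carried = seg - (d - a)
    return samples


def longitudinal_profile(points, a, dem, cell):
    """(distance along river, elevation) pairs, using the nearest DEM cell of size `cell`."""
    return [
        (i * a, dem[int(round(y / cell))][int(round(x / cell))])
        for i, (x, y) in enumerate(resample_polyline(points, a))
    ]


def mean_gradient(profile):
    """Average drop in elevation per unit distance between the first and last sample."""
    (d0, z0), (d1, z1) = profile[0], profile[-1]
    return (z0 - z1) / (d1 - d0)
```

For example, a straight 4-cell reach over `dem = [[100, 98, 96, 94, 92]]` sampled at a = 1 gives a mean gradient of 2.0 elevation units per cell.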

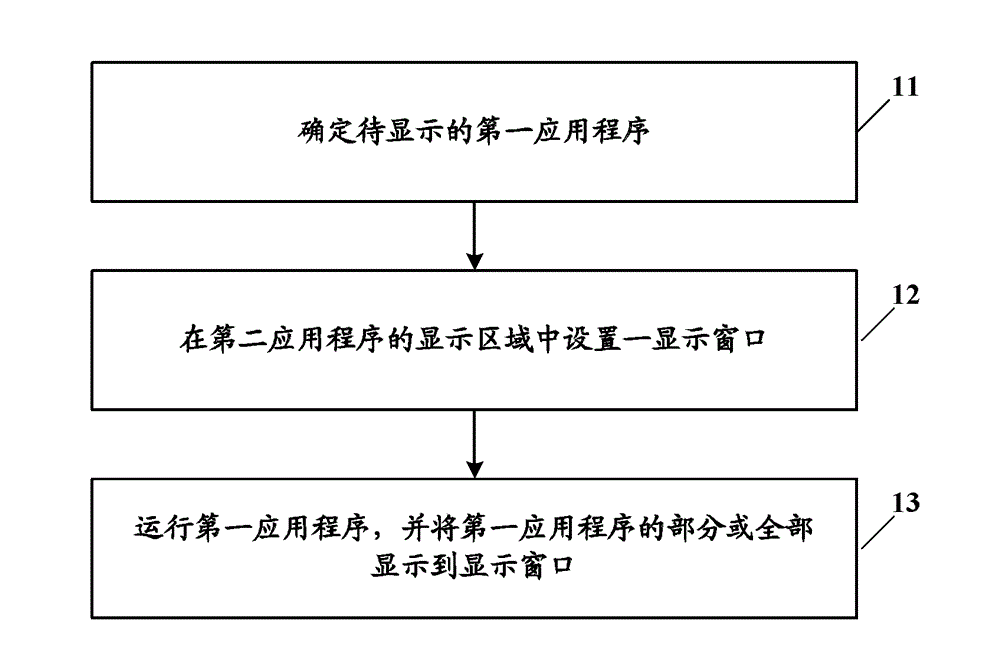

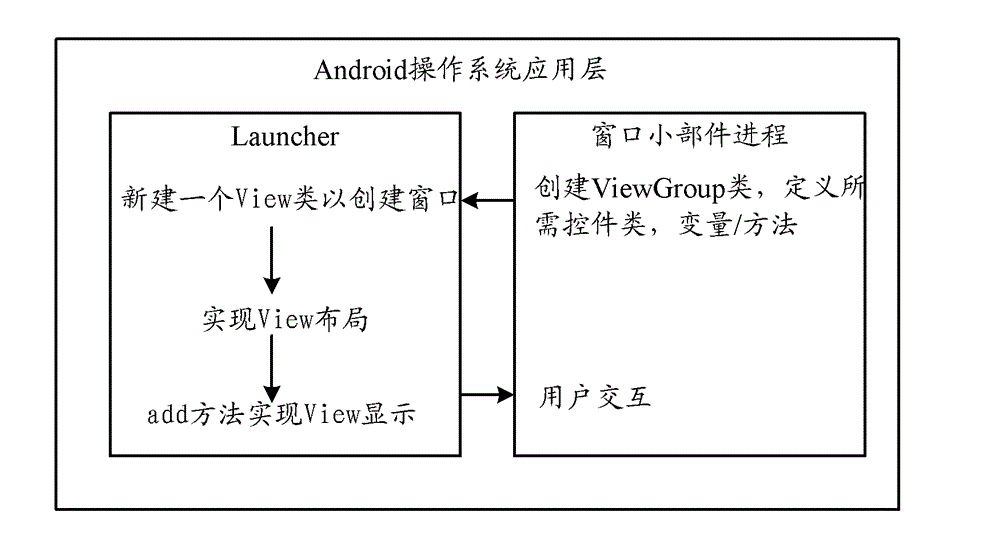

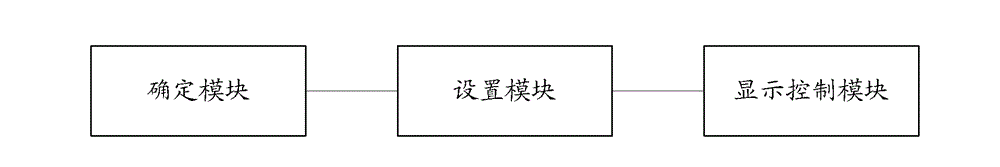

Display processing method, display device and electronic equipment

Inactive | CN103064735A | Increase flexibility | Expressive | Program initiation/switching | Display processing | Display device

The invention discloses a display processing method, a display device and electronic equipment. The method is applied to electronic equipment that stores a second application program, which provides a display area when in use. The method includes: determining a first application program to be displayed; setting up a display window within the display area of the second application program; and running the first application program and displaying part or all of it in that display window. The method, display device and electronic equipment make display more flexible.

Owner:LENOVO (BEIJING) CO LTD

Water-absorbent canvas coating and manufacturing method thereof

The invention relates to a water-absorbent canvas coating made from the following materials, in percent by weight: water 30 to 33%, polyacrylic emulsion 18 to 23%, polyvinyl alcohol 8 to 13%, titanium dioxide 12 to 15%, talc 10 to 12%, light calcium carbonate 7 to 10%, heavy calcium carbonate 7 to 10%, silica white 2 to 4%, dispersant 0.3 to 0.4%, antifoaming agent 0.2 to 0.3%, and thickener 1 to 2%. Canvas with this coating is particularly suited to acrylic painting: because the coating matches acrylic paints, the paint bonds very firmly to the canvas, and acrylic paints can be adjusted on the canvas to give watercolor or poster-color effects as required, improving the color tolerance and expressiveness of acrylic paints.

Owner:WUXI PHOENIX ARTIST MATERIALS
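
A short worked check shows that the stated weight-percent ranges are compatible with a 100% total: the minimums add up to 95.5% and the maximums to 122.7%, so any actual formulation must pick values between these extremes that sum to exactly 100%. The example formulation below is hypothetical (the minimums plus 4.5 points spread over three components) and is not taken from the patent.

```python
import math

# Weight-percent ranges from the abstract: component -> (min %, max %).
RANGES = {
    "water": (30, 33),
    "polyacrylic emulsion": (18, 23),
    "polyvinyl alcohol": (8, 13),
    "titanium dioxide": (12, 15),
    "talc": (10, 12),
    "light calcium carbonate": (7, 10),
    "heavy calcium carbonate": (7, 10),
    "silica white": (2, 4),
    "dispersant": (0.3, 0.4),
    "antifoaming agent": (0.2, 0.3),
    "thickener": (1, 2),
}


def range_totals(ranges=RANGES):
    """Smallest and largest total the stated ranges allow."""
    return sum(lo for lo, _ in ranges.values()), sum(hi for _, hi in ranges.values())


def check_formulation(formulation, ranges=RANGES):
    """List the problems with a candidate formulation (an empty list means it is valid)."""
    problems = [name for name, pct in formulation.items()
                if name not in ranges or not ranges[name][0] <= pct <= ranges[name][1]]
    problems += [f"missing {name}" for name in ranges if name not in formulation]
    if not math.isclose(sum(formulation.values()), 100.0, abs_tol=1e-6):
        problems.append("total is not 100%")
    return problems


# Hypothetical formulation: every component at its minimum, then 4.5 points added.
EXAMPLE = {name: lo for name, (lo, _) in RANGES.items()}
EXAMPLE.update({"water": 31.5, "polyacrylic emulsion": 20, "titanium dioxide": 13})
```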

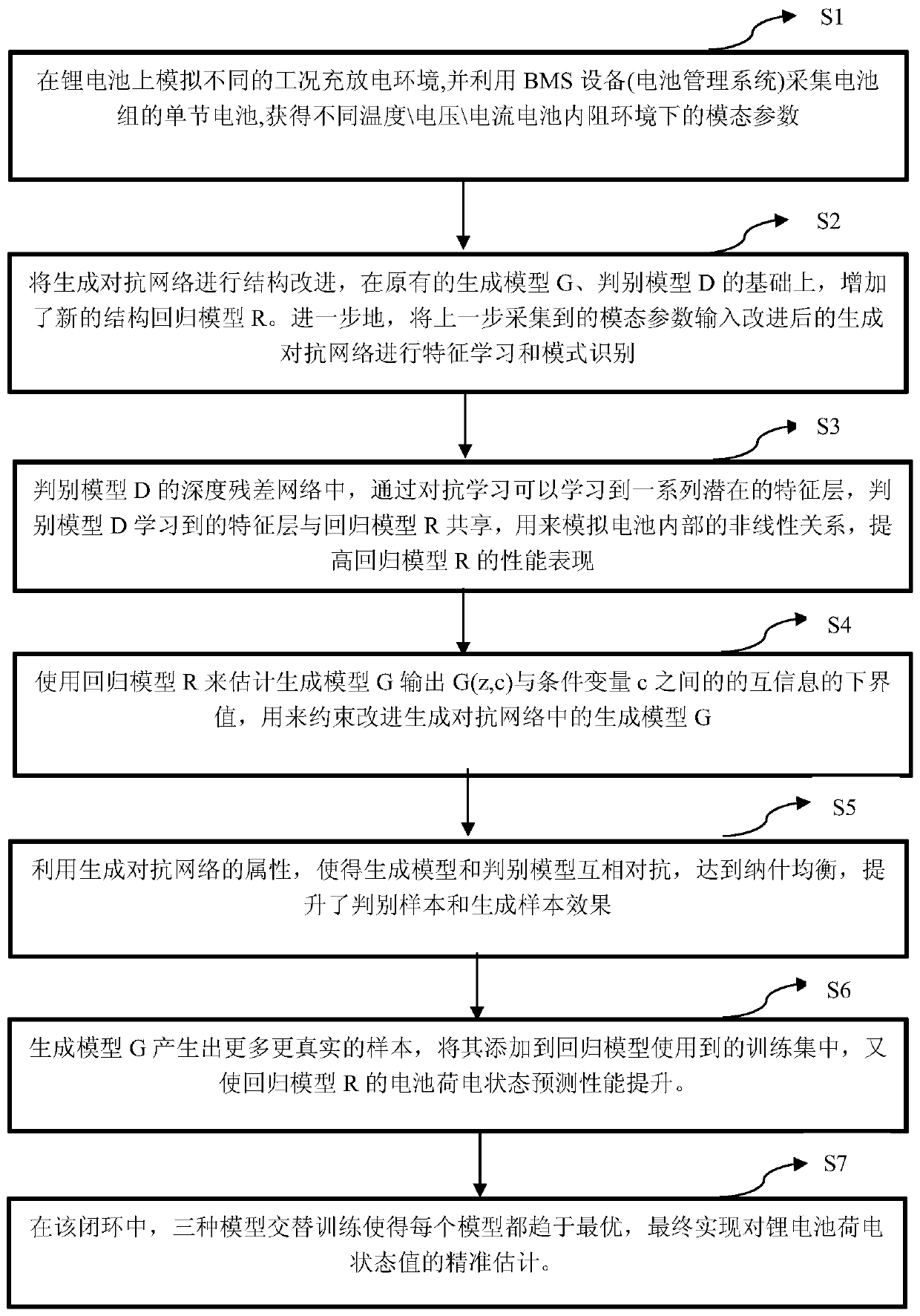

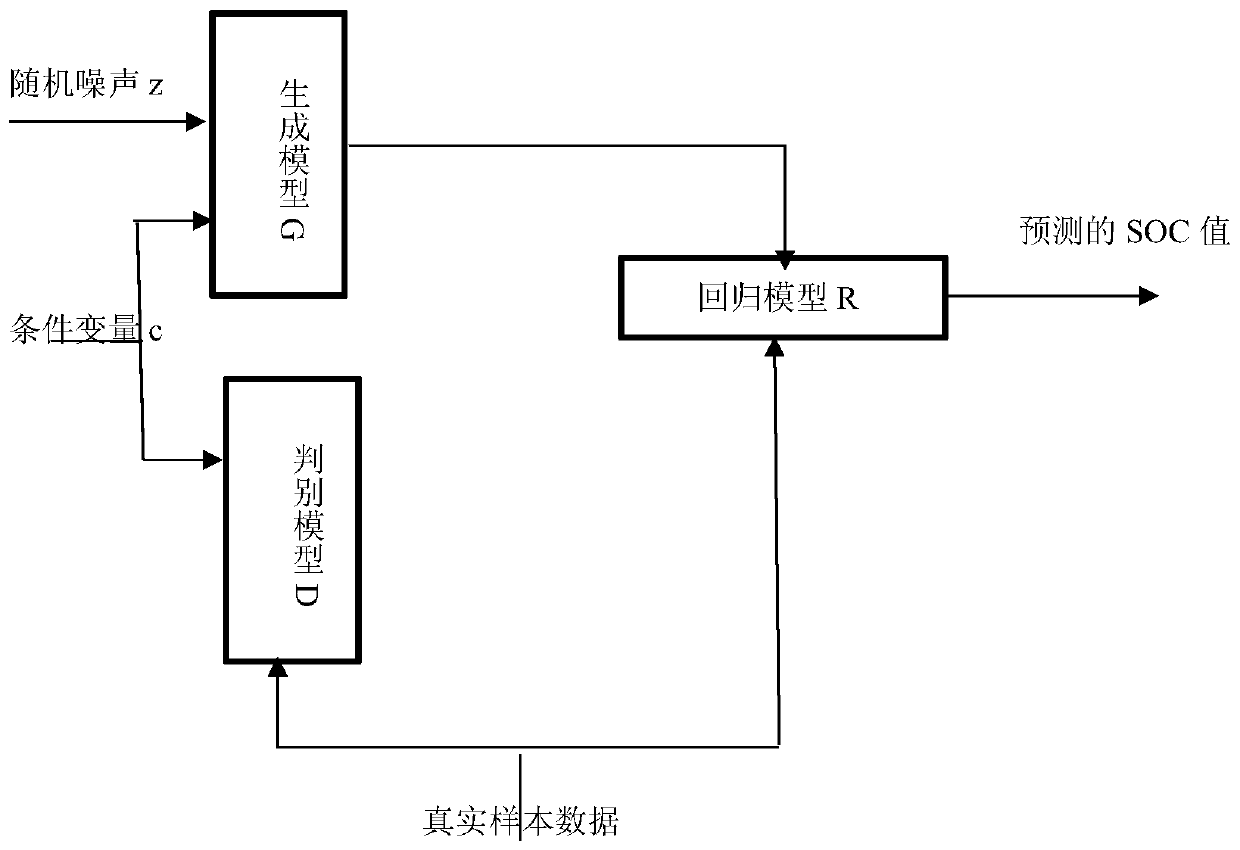

Lithium battery state-of-charge prediction method based on an improved generative adversarial network

Active | CN111007399A | Nash Equilibrium | Boost discriminative samples | Electrical testing | Neural architectures | Algorithm | Generative adversarial network

The invention provides a lithium battery state-of-charge (SOC) prediction method based on an improved generative adversarial network. The method comprises the following steps: collecting the modal parameters and the true SOC of lithium battery samples; estimating a lower bound on the mutual information between the output G(z, c) of the generator G and the condition variable c using a regression model R; letting the generator G and the discriminator D compete until they reach a Nash equilibrium; generating samples with G and adding them to the training set of the regression model R; and training G, D and R alternately until each converges. The generator is thus used to expand the training set in a way consistent with the original distribution. In addition, the improved generative adversarial network uses two activation functions, the randomized leaky rectified linear unit (RReLU) and the exponential linear unit (ELU), giving the model greater expressive power so that it better learns the nonlinear characteristics of the lithium battery.

Owner:ZHEJIANG UNIV
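
The two activation functions named in the abstract are standard and can be written directly with NumPy. The default RReLU bounds of 1/8 and 1/3 and the ELU alpha of 1.0 follow common library defaults (PyTorch uses these values); the abstract does not state which values the patent uses.

```python
import numpy as np


def elu(x, alpha=1.0):
    """Exponential linear unit: x for x > 0, alpha * (exp(x) - 1) otherwise."""
    x = np.asarray(x, dtype=float)
    # np.minimum keeps expm1 from overflowing on the (discarded) positive branch.
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))


def rrelu(x, lower=1 / 8, upper=1 / 3, training=False, rng=None):
    """Randomized leaky ReLU: negative inputs are scaled by a slope a.

    In training, a is drawn per element from U(lower, upper); at inference the
    fixed mean slope (lower + upper) / 2 is used.
    """
    x = np.asarray(x, dtype=float)
    if training:
        rng = rng if rng is not None else np.random.default_rng()
        slope = rng.uniform(lower, upper, size=x.shape)
    else:
        slope = (lower + upper) / 2
    return np.where(x >= 0, x, slope * x)
```

Both functions pass negative inputs through with a non-zero response, unlike a plain ReLU, which is one reason they are credited with greater expressive power.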

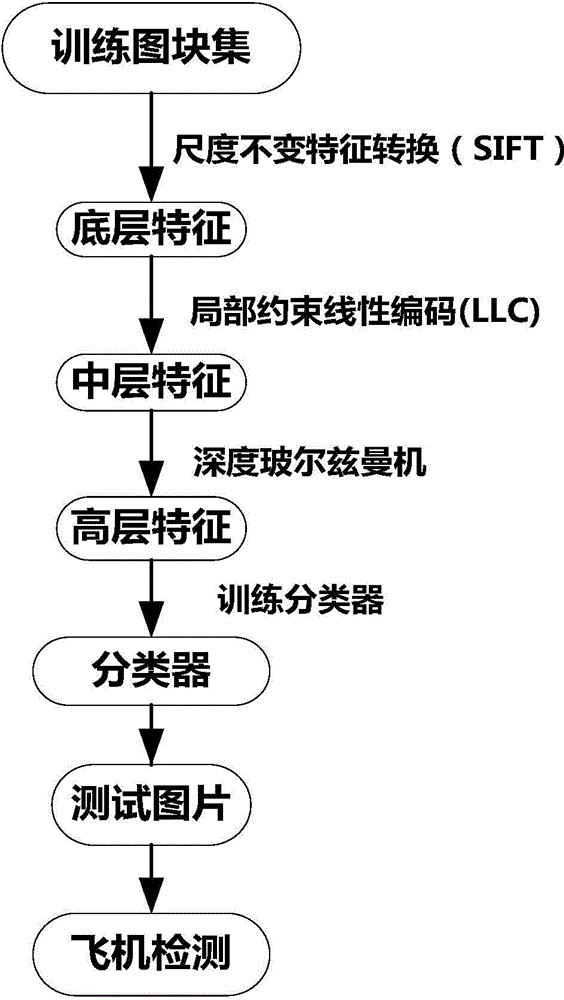

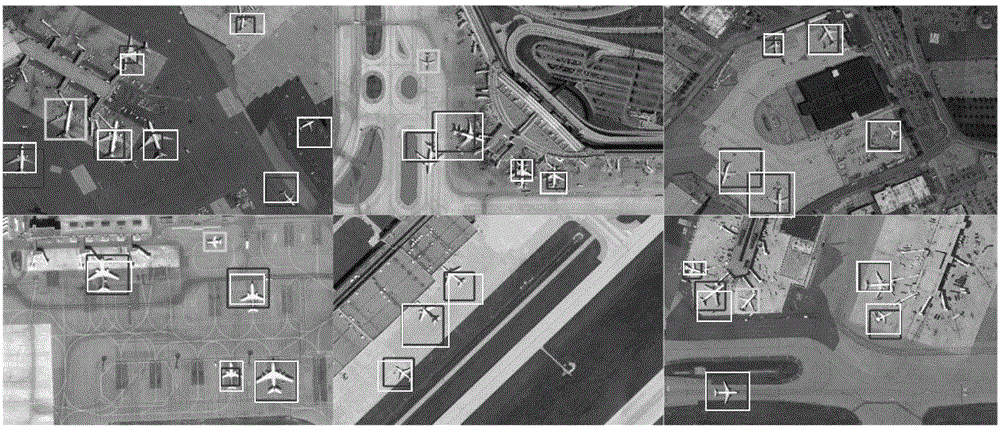

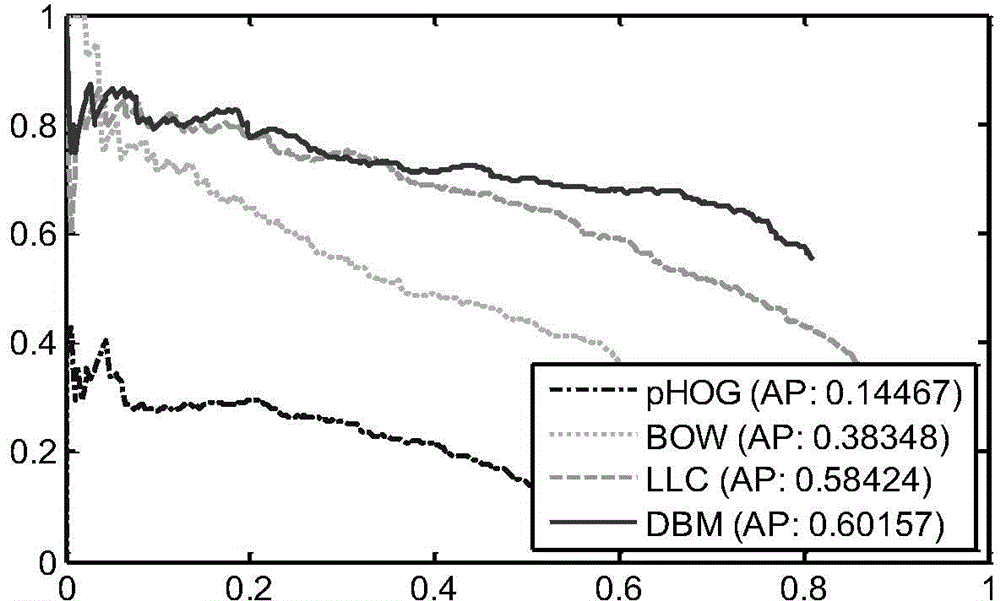

Airplane detection method for high-resolution remote sensing images based on high-level feature extraction with a deep Boltzmann machine

Inactive | CN104463248A | Enhance expressive ability | Expressive | Image analysis | Kernel methods | Jet aeroplane | Scale-invariant feature transform

The invention relates to an airplane detection method for high-resolution remote sensing images based on high-level feature extraction with a deep Boltzmann machine. The method first divides an image into a number of segments and uses the scale-invariant feature transform (SIFT) to extract key points in each segment as its low-level features. A locality-constrained linear coding algorithm then encodes the low-level features into mid-level features, and a three-layer deep Boltzmann machine derives the segments' high-level features from the mid-level features. These high-level features are used to train a support vector machine classifier, which is finally applied to detect airplanes in the test image, giving highly accurate and robust detection results.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

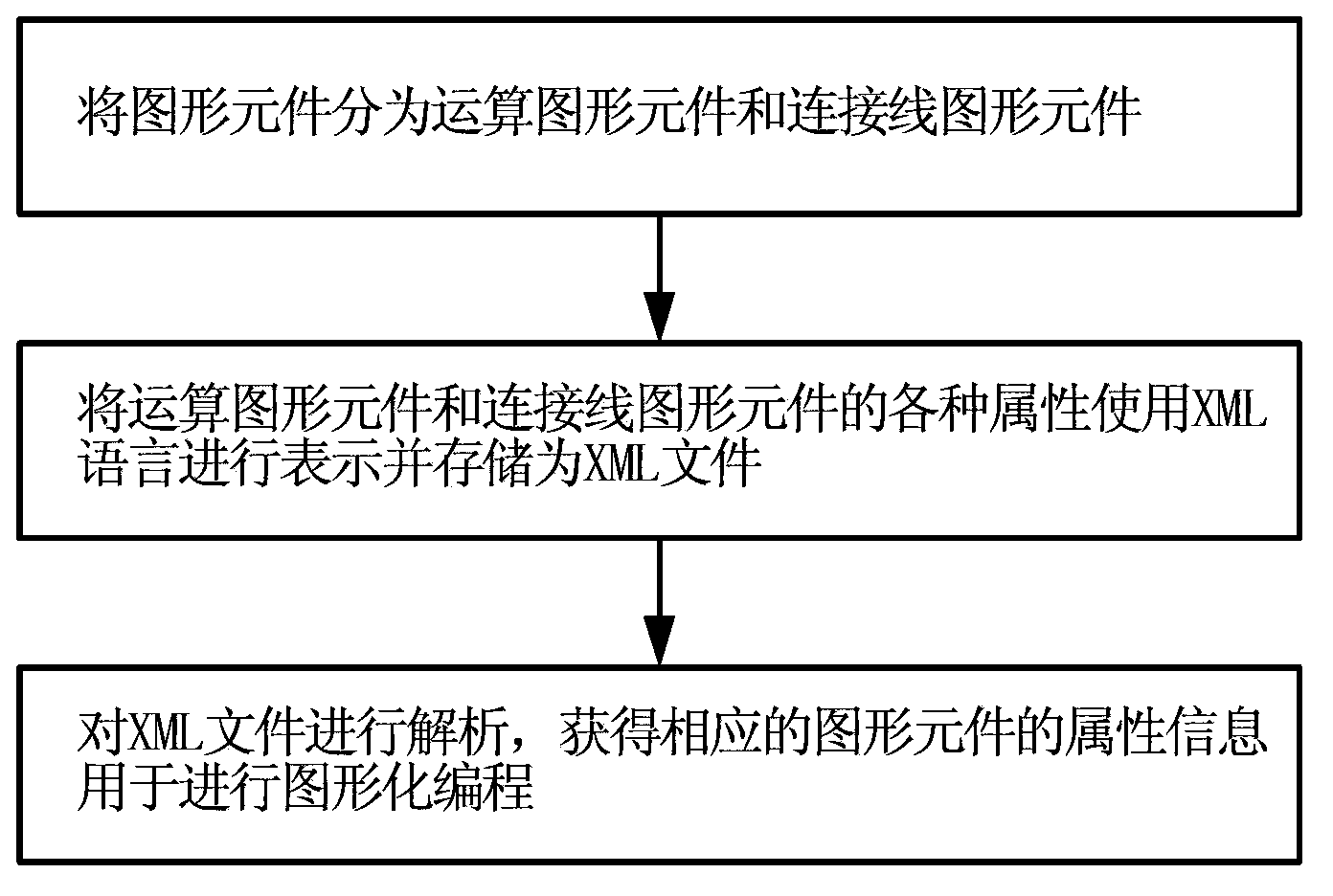

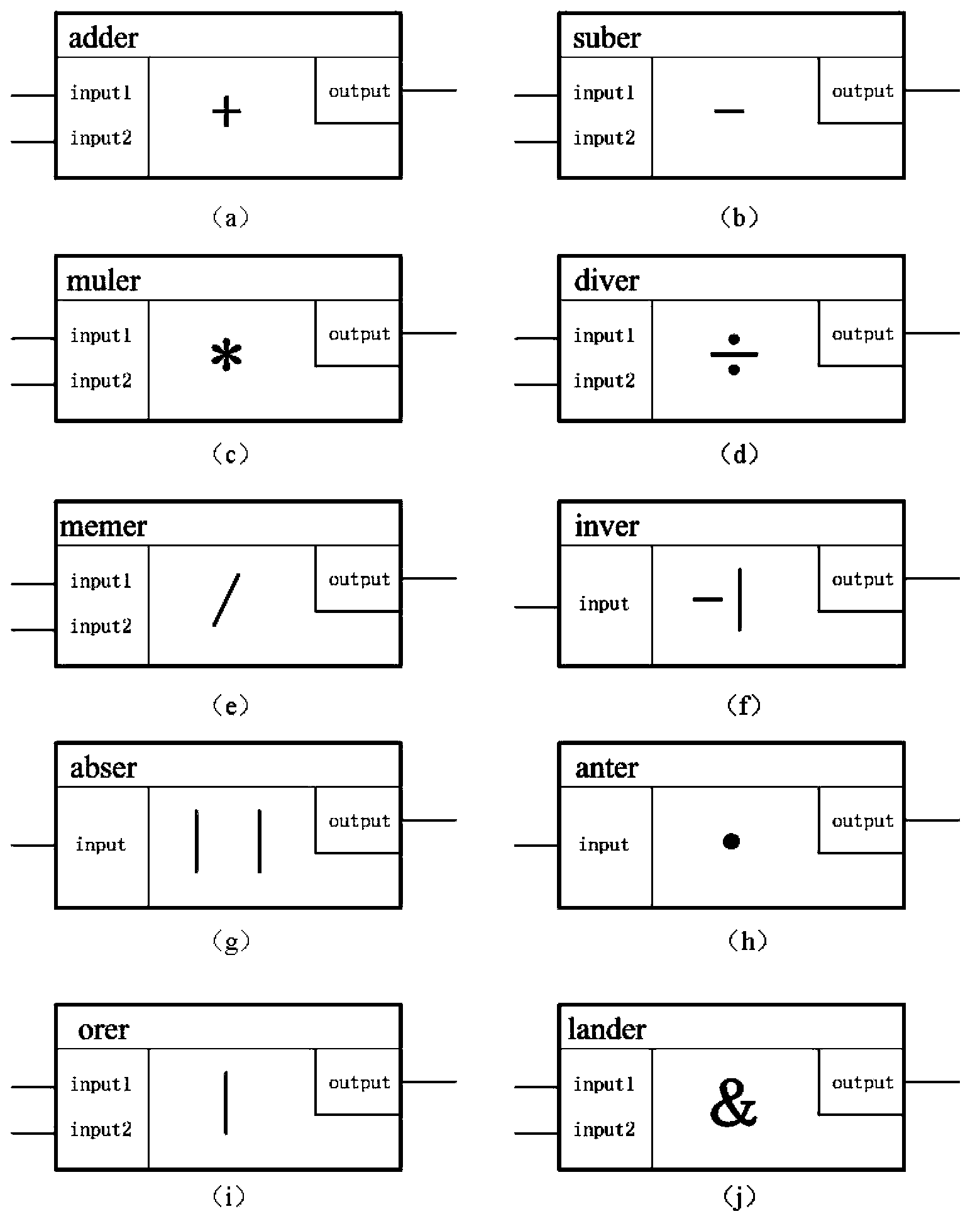

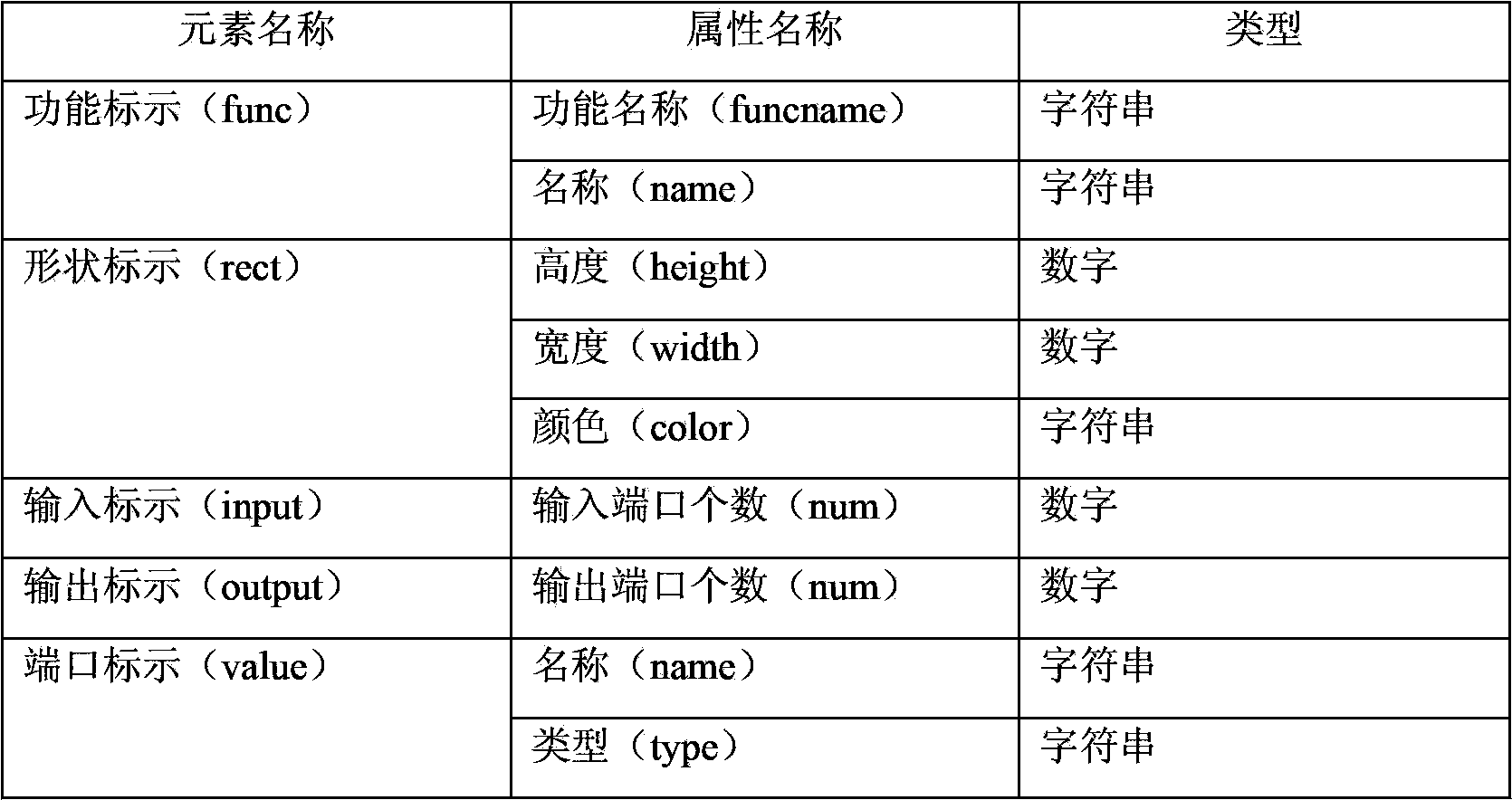

Graphical programming source file storage and parsing method

The invention provides a method for storing and parsing graphical programming source files. The graphic elements needed for graphical programming are divided into operation graphic elements and connecting-line graphic elements; the attributes of both kinds are expressed in XML, with each type of graphic element stored as an XML file; and the XML files are parsed to obtain the attribute information of the corresponding graphic elements for use in graphical programming. Using XML to store and convert graphical programming source files makes it easy to convert between graphical programs and their XML descriptions, makes programming more efficient and convenient, and shortens product development cycles.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI
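
The storage scheme above, one XML file per graphic-element type with each element's attributes written as XML attributes, can be sketched with Python's standard `xml.etree.ElementTree`. The file names, tag names and attribute names used here (`operations.xml`, `operation`, `id`, `x`, and so on) are hypothetical; the abstract does not give the patent's schema.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def to_xml(tag, elements):
    """Serialize a list of attribute dicts as <tag .../> children of a single root."""
    root = ET.Element(tag + "s")
    for attrs in elements:
        # XML attributes are strings, so every value is converted with str().
        ET.SubElement(root, tag, {key: str(value) for key, value in attrs.items()})
    return ET.tostring(root, encoding="unicode")


def from_xml(text):
    """Parse the XML back into a list of attribute dicts (values come back as strings)."""
    return [dict(child.attrib) for child in ET.fromstring(text)]


def save(directory, operations, connections):
    """Store each graphic-element type in its own XML file (hypothetical file names)."""
    d = Path(directory)
    (d / "operations.xml").write_text(to_xml("operation", operations), encoding="utf-8")
    (d / "connections.xml").write_text(to_xml("connection", connections), encoding="utf-8")


def load(directory):
    """Read both files back into (operations, connections) attribute lists."""
    d = Path(directory)
    return (from_xml((d / "operations.xml").read_text(encoding="utf-8")),
            from_xml((d / "connections.xml").read_text(encoding="utf-8")))
```

A graphical editor would then convert the string attributes back to numbers or enums when rebuilding the diagram.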

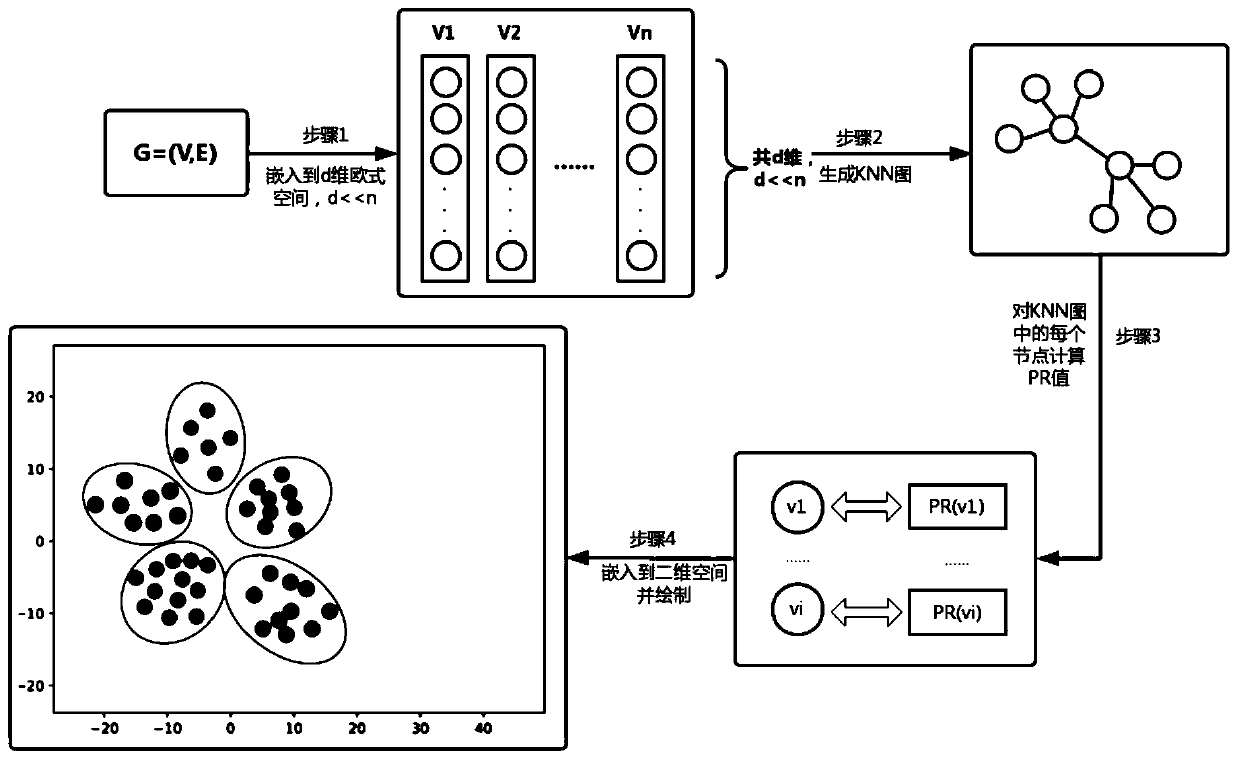

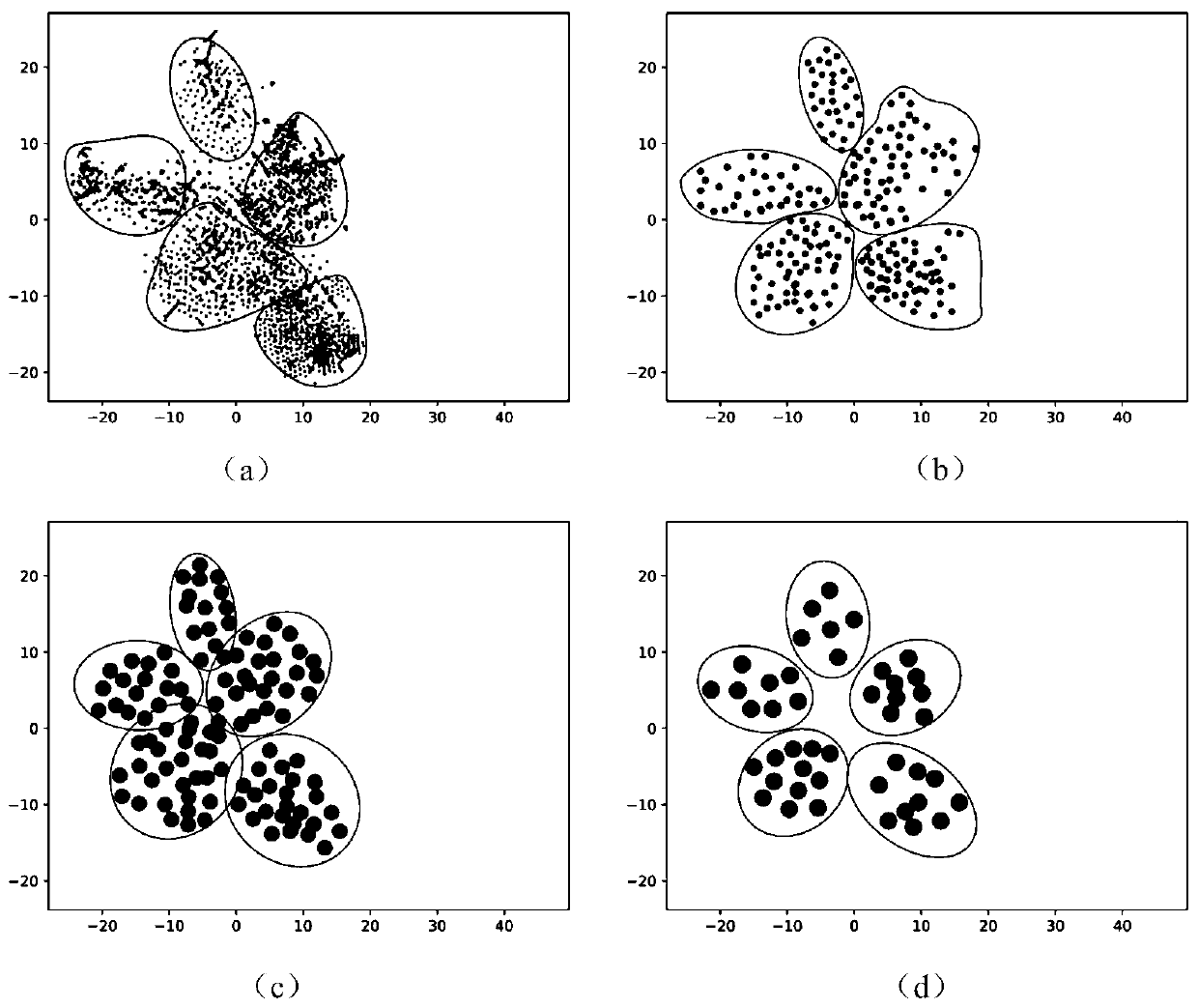

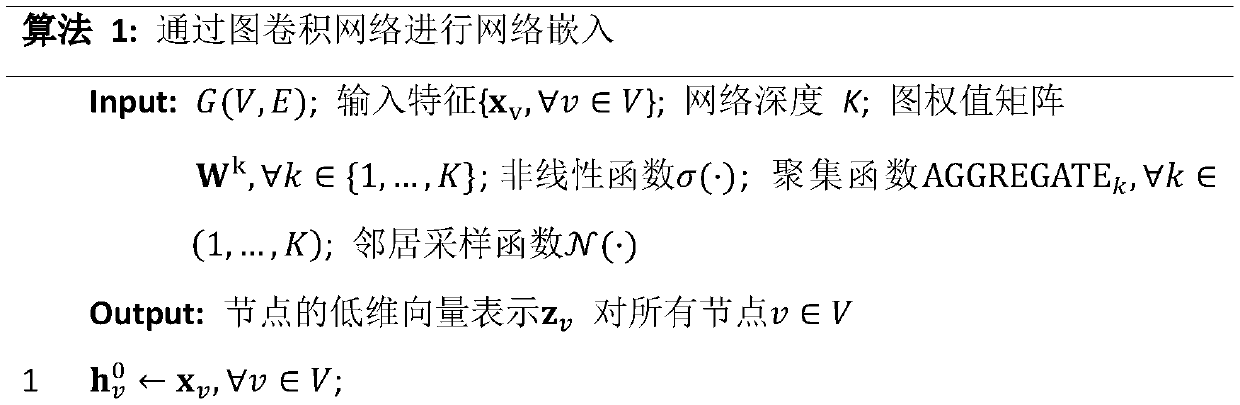

Graph visualization method based on a graph convolutional network

Inactive | CN109753589A | Expressive | Other databases indexing | Other databases browsing/visualisation | NODAL | Granularity

The invention discloses a graph visualization method based on a graph convolutional network. The method comprises the following steps: 1) for a network G = (V, E) in a target domain, where V is the node set and E is the edge set, embedding the nodes of G into a low-dimensional Euclidean space to obtain low-dimensional embedding vectors that contain both the feature information of the nodes and the topological structure information of G; 2) constructing a K-nearest-neighbor (KNN) graph from the low-dimensional embedding vectors; and 3) drawing the KNN graph in two-dimensional space based on a probability model. The learned embedding vectors retain both the structural and the feature information of the nodes, and the granularity of the visualization result can be adjusted.

Owner:INST OF INFORMATION ENG CAS
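
Step 2 of the method above, turning low-dimensional node embeddings into a KNN graph, can be sketched with NumPy. The embeddings are assumed to come from the graph convolutional network in step 1 (not shown), Euclidean distance is used, and making the graph undirected by taking the union of each node's k neighbour links is an assumption.

```python
import numpy as np


def knn_graph(emb, k):
    """Undirected edges (i, j), i < j, linking each node to its k nearest neighbours."""
    emb = np.asarray(emb, dtype=float)
    sq = np.sum(emb ** 2, axis=1)
    # Squared Euclidean distances via |a|^2 + |b|^2 - 2 a.b for all pairs at once.
    d2 = sq[:, None] + sq[None, :] - 2.0 * emb @ emb.T
    np.fill_diagonal(d2, np.inf)                 # a node is not its own neighbour
    edges = set()
    for i, row in enumerate(d2):
        for j in np.argsort(row, kind="stable")[:k]:
            edges.add((min(i, int(j)), max(i, int(j))))
    return edges
```

For example, with embeddings `[[0, 0], [0, 1], [10, 0], [10, 1]]` and k = 1, the graph has the two edges `(0, 1)` and `(2, 3)`, i.e. one cluster per pair. Varying k is one natural way to change the granularity of the resulting layout.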

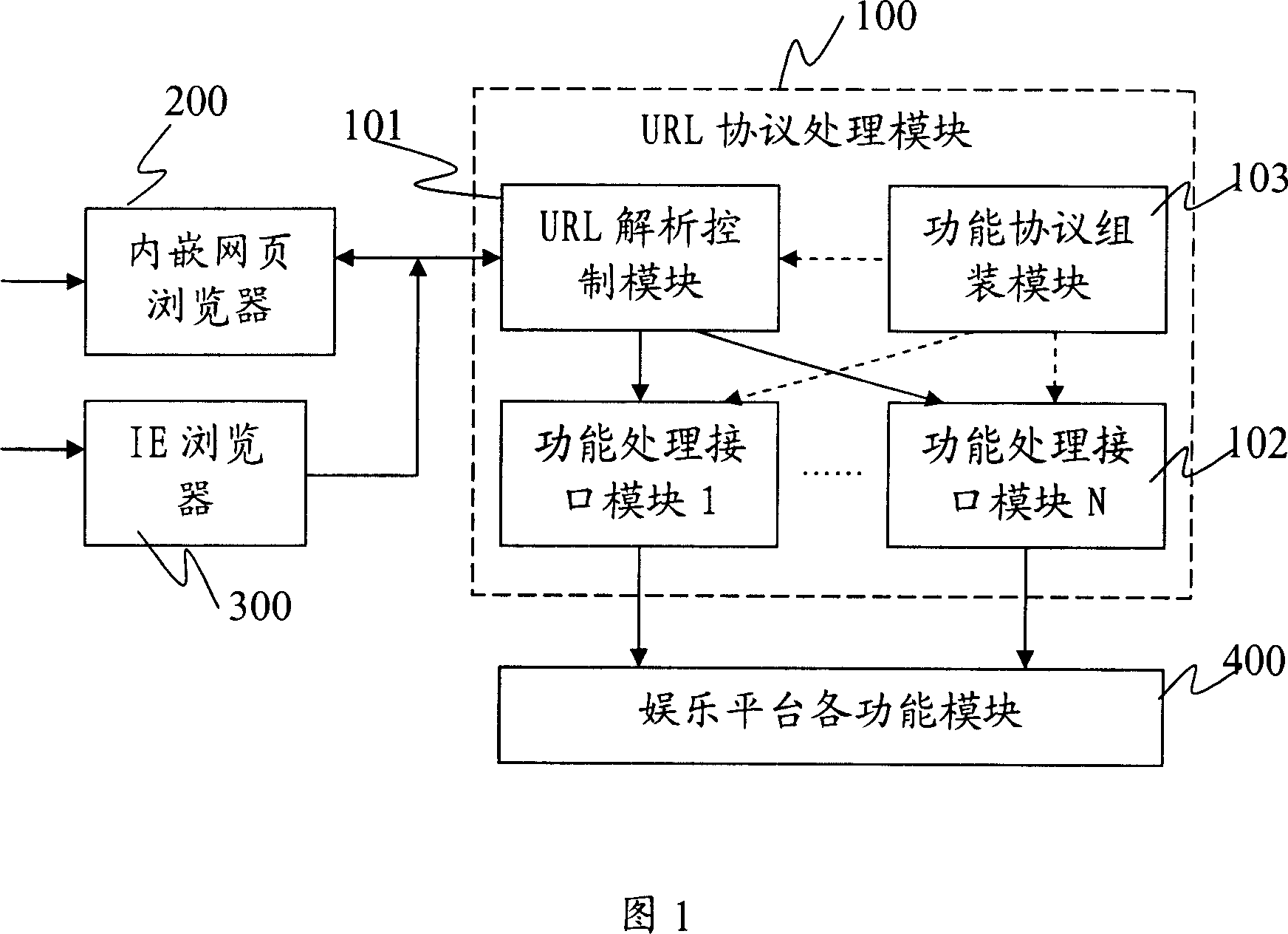

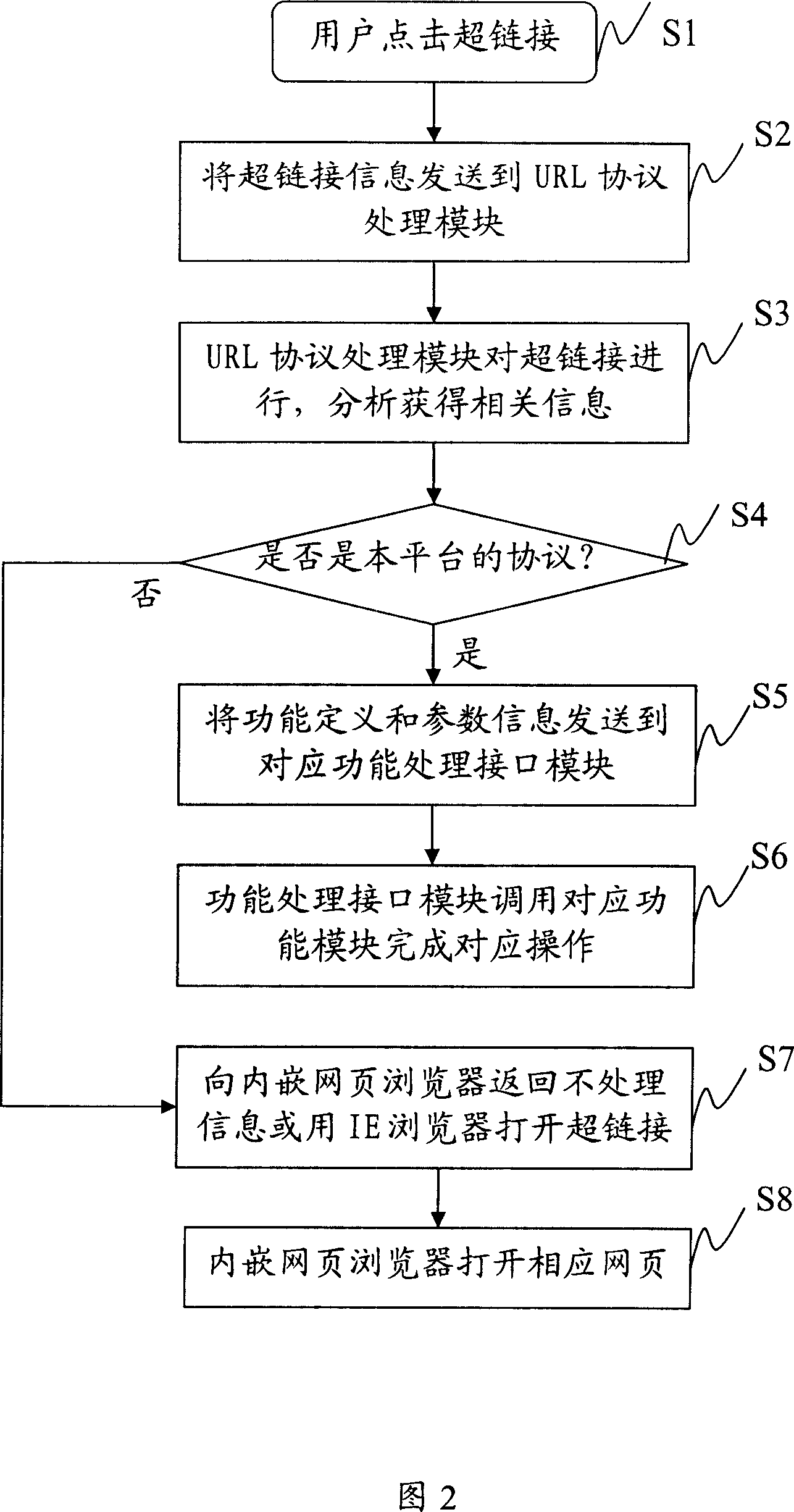

Method and system for controlling an entertainment platform by configuring hyperlinks

Active | CN101079047A | Increase content | Expressive | Special data processing applications | Hyperlink | Function definition

The invention, in the field of computer communication technology, relates to a method and system for controlling an entertainment platform by configuring hyperlinks. The method comprises the following steps: A1) configuring a uniform resource locator (URL); A2) using the URL as the hyperlink target, creating a hyperlink and displaying it on the entertainment platform, where the protocol header of the URL identifies the entertainment platform and the function definition and parameters form the protocol content. The invention also discloses a system for controlling an entertainment platform by configuring hyperlinks. Text and images carrying such hyperlinks can be displayed anywhere on the entertainment platform (dialog boxes, chat areas and the like) and can be sent to the platform by the server at scheduled times; by clicking a hyperlink, the user can enter a room, open the props shop and buy props. The invention simplifies the use of game platform functions and improves the interaction between users and the entertainment platform.

Owner:TENCENT TECH (SHENZHEN) CO LTD
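
The hyperlink scheme above, a URL whose protocol header names the platform and whose content carries a function name and parameters, can be sketched with Python's `urllib.parse`. The scheme `gameplat`, the function names `enterroom` and `openshop`, and their parameters are all hypothetical.

```python
from urllib.parse import parse_qs, urlsplit

PLATFORM_SCHEME = "gameplat"    # hypothetical protocol header identifying the platform
HANDLERS = {}


def platform_function(name):
    """Register a function that a platform hyperlink may invoke by name."""
    def register(fn):
        HANDLERS[name] = fn
        return fn
    return register


@platform_function("enterroom")
def enter_room(room):
    return f"entering room {room}"


@platform_function("openshop")
def open_shop():
    return "opening the props shop"


def dispatch(url):
    """Run the platform function named in a link such as gameplat://enterroom?room=1024."""
    parts = urlsplit(url)
    if parts.scheme != PLATFORM_SCHEME:
        raise ValueError(f"not a platform hyperlink: {url}")
    # Function name sits in the network-location slot; parameters in the query string.
    params = {key: values[0] for key, values in parse_qs(parts.query).items()}
    return HANDLERS[parts.netloc](**params)
```

Restricting dispatch to registered names keeps a server-pushed link from invoking arbitrary client code.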

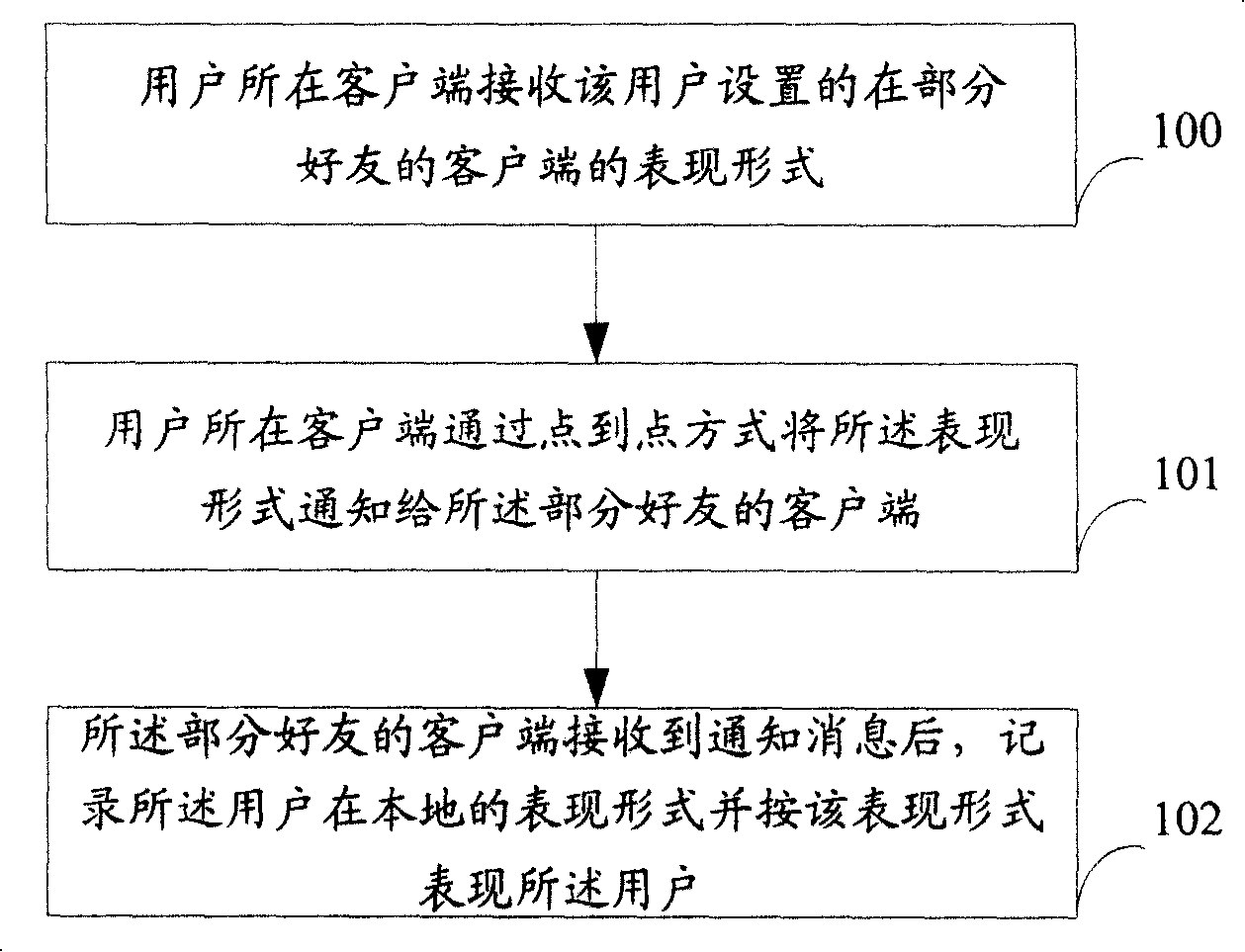

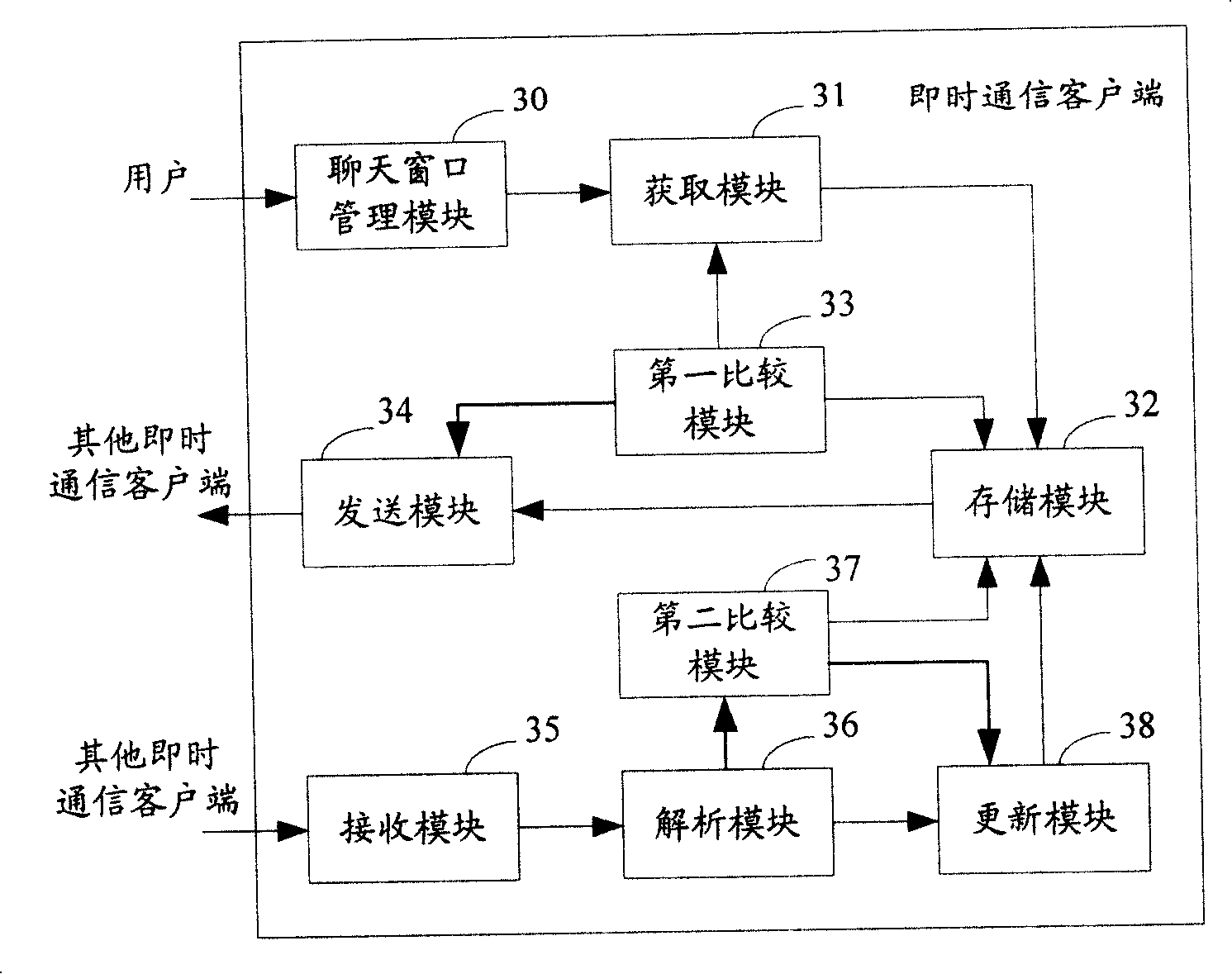

Method and system for customizing user representation in instant messaging

Active | CN101179407A | Expressive | Meet individual needs | Special service provision for substation | Personalization | Client-side

The invention discloses a method and system for customizing how a user is represented in instant messaging, addressing the problem in the prior art that a user can present only a single form of representation to all friends. In the method, the user's client notifies, point to point, the clients of selected friends of the representation the user has set for those friends; after receiving the notification, each of those friends' clients records the user's representation locally and displays the user in that form. The invention lets users present different, personalized representations to different friends and improves the user experience.

Owner:TENCENT TECH (SHENZHEN) CO LTD

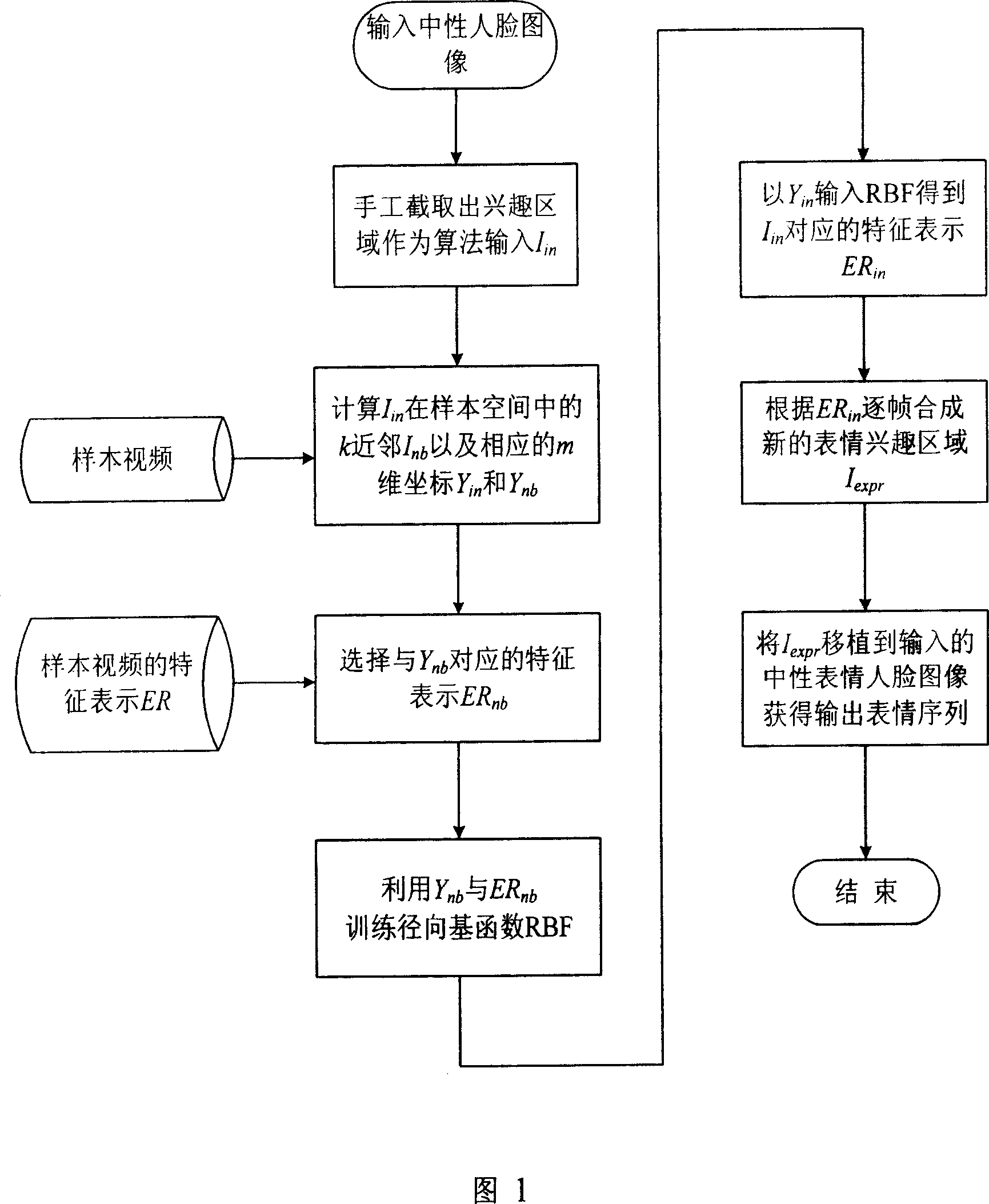

Facial expression hallucination method based on video streams

Inactive | CN1920880A | Efficient synthesis | Increase credibility | Image enhancement | Geometric image transformation | Pattern recognition | Imaging technique

The invention relates to a video-stream facial expression technique that generates several expression sequences from a single input neutral-expression face image. The algorithm comprises: (1) manually selecting a facial sub-region of interest in the input face image; (2) computing its k nearest neighbors and the corresponding m-dimensional feature coordinates in the sample space; (3) using these coordinates and features to train a radial basis function; (4) using the coordinates of the input image as input to the radial basis function to obtain the corresponding feature representation, and composing the dynamic sequence of the facial sub-region frame by frame; and (5) embedding the composed dynamic sequence into the input neutral face image to obtain the final expression effect. The invention can quickly generate several dynamic expression sequences from a single image and has wide applications.

Owner:ZHEJIANG UNIV

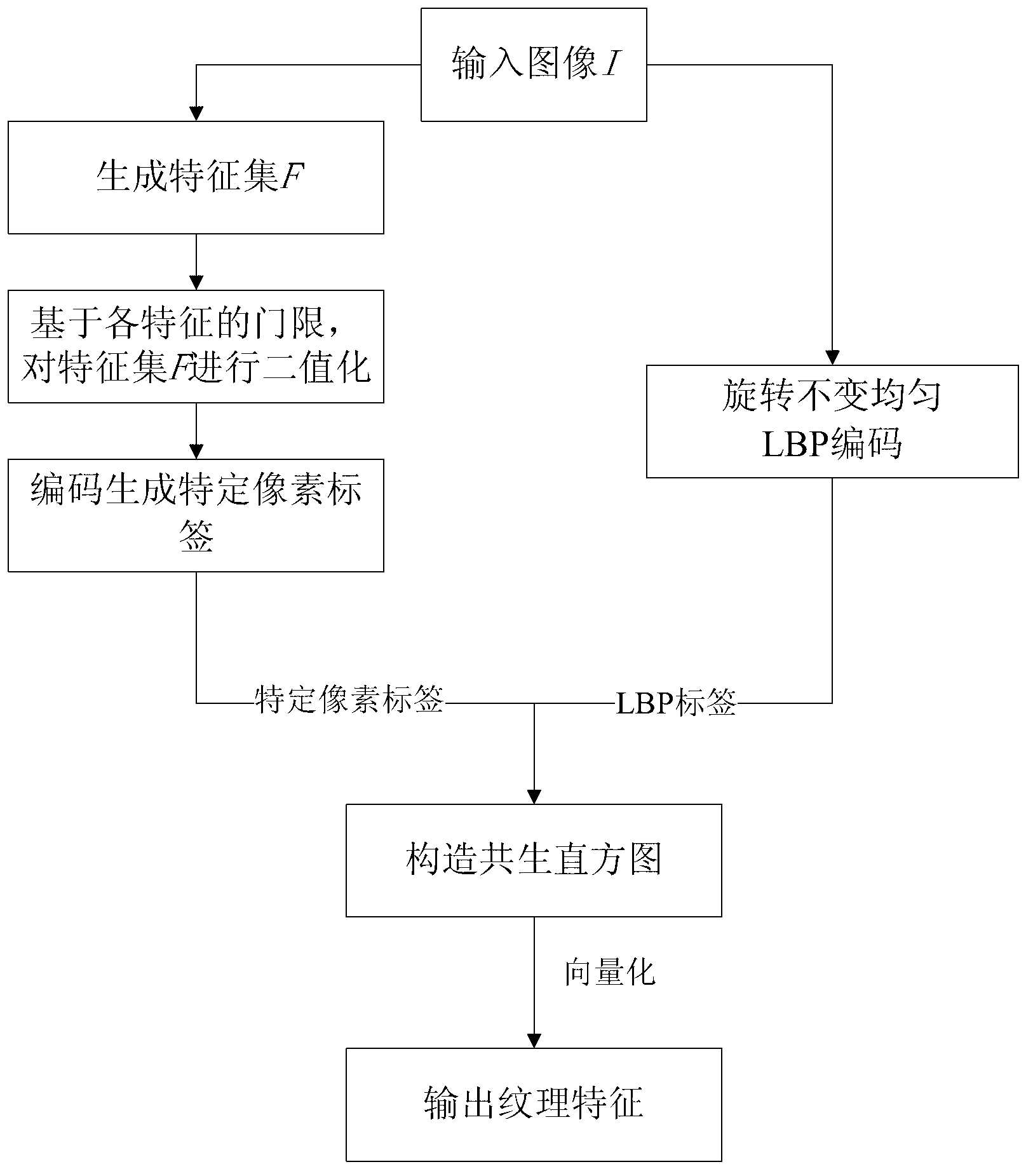

Robust texture feature extraction method

Active | CN103258202A | Expressive | Discriminating | Image analysis | Character and pattern recognition | Feature set | Imaging processing

The invention discloses a robust texture feature extraction method in the technical field of image processing. Implementation steps: preprocess the input image and generate a feature set F; binarize each feature in F against its own threshold and binary-encode the results to produce a feature label for each pixel; apply rotation-invariant uniform local binary pattern (LBP) encoding to the input image to produce an LBP label for each pixel; build a 2-D co-occurrence histogram from each pixel's feature label and LBP label; and vectorize this histogram for use as the texture representation. The method reduces the binary quantization loss of existing LBP schemes while keeping the extracted features robust to changes in illumination, rotation, scale and viewing angle.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

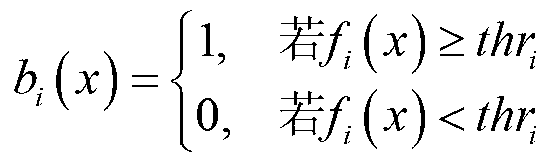

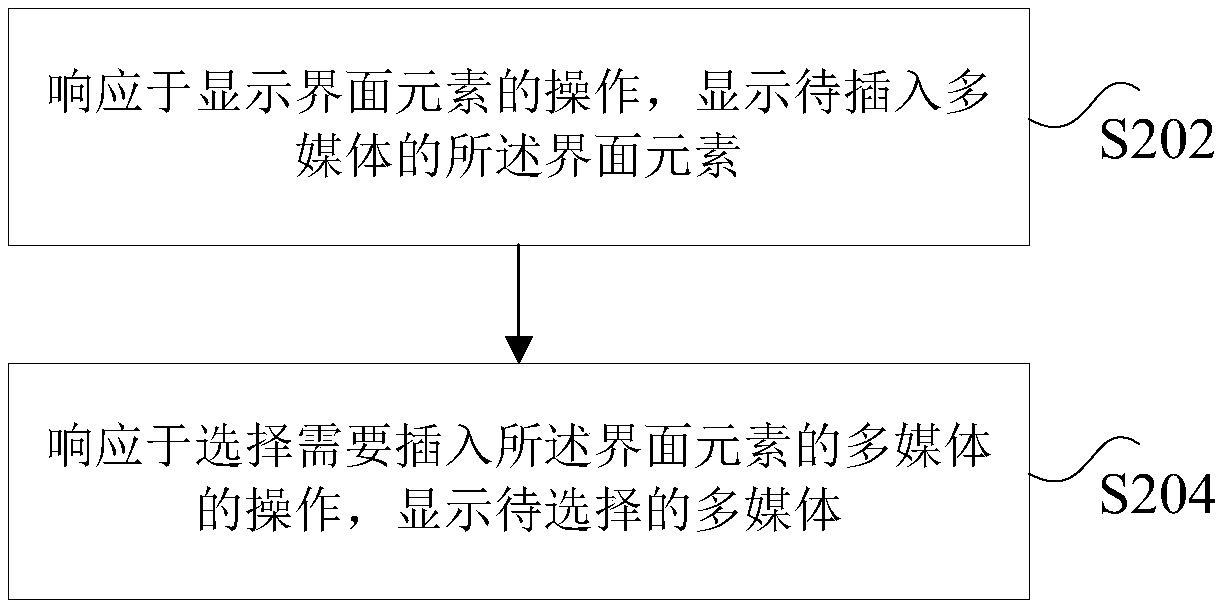

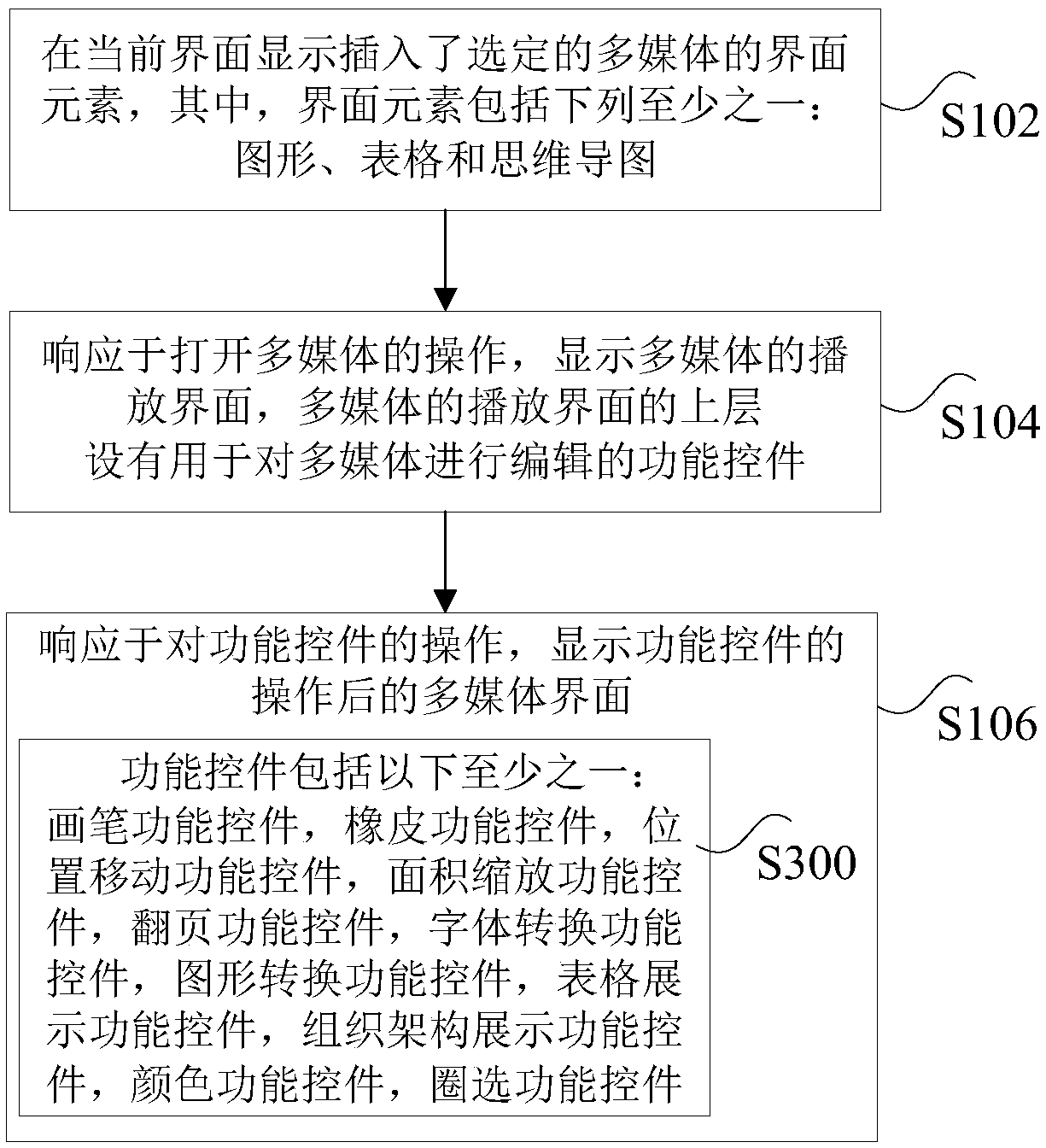

Interface element operation method and device of electronic whiteboard and interactive intelligent device

Active | CN108958608A | Expressive | Improve expressiveness | Natural language data processing | Execution for user interfaces | Graphics | User needs

The invention discloses an interface element operation method and device for an electronic whiteboard, and an interactive intelligent device. The method comprises: displaying, on the current interface, an interface element into which a selected multimedia item has been inserted, the interface element comprising at least one of a graph, a table, and a mind map; in response to an operation for opening the multimedia, displaying a playback interface for it, with a function control for editing the multimedia provided on a layer above the playback interface; and, in response to an operation on the function control, displaying the multimedia interface after the operation corresponding to that control has been performed. The invention solves the technical problem that, in the related art, an interface element with inserted multimedia cannot be edited according to user needs.

Owner:GUANGZHOU SHIYUAN ELECTRONICS CO LTD +1

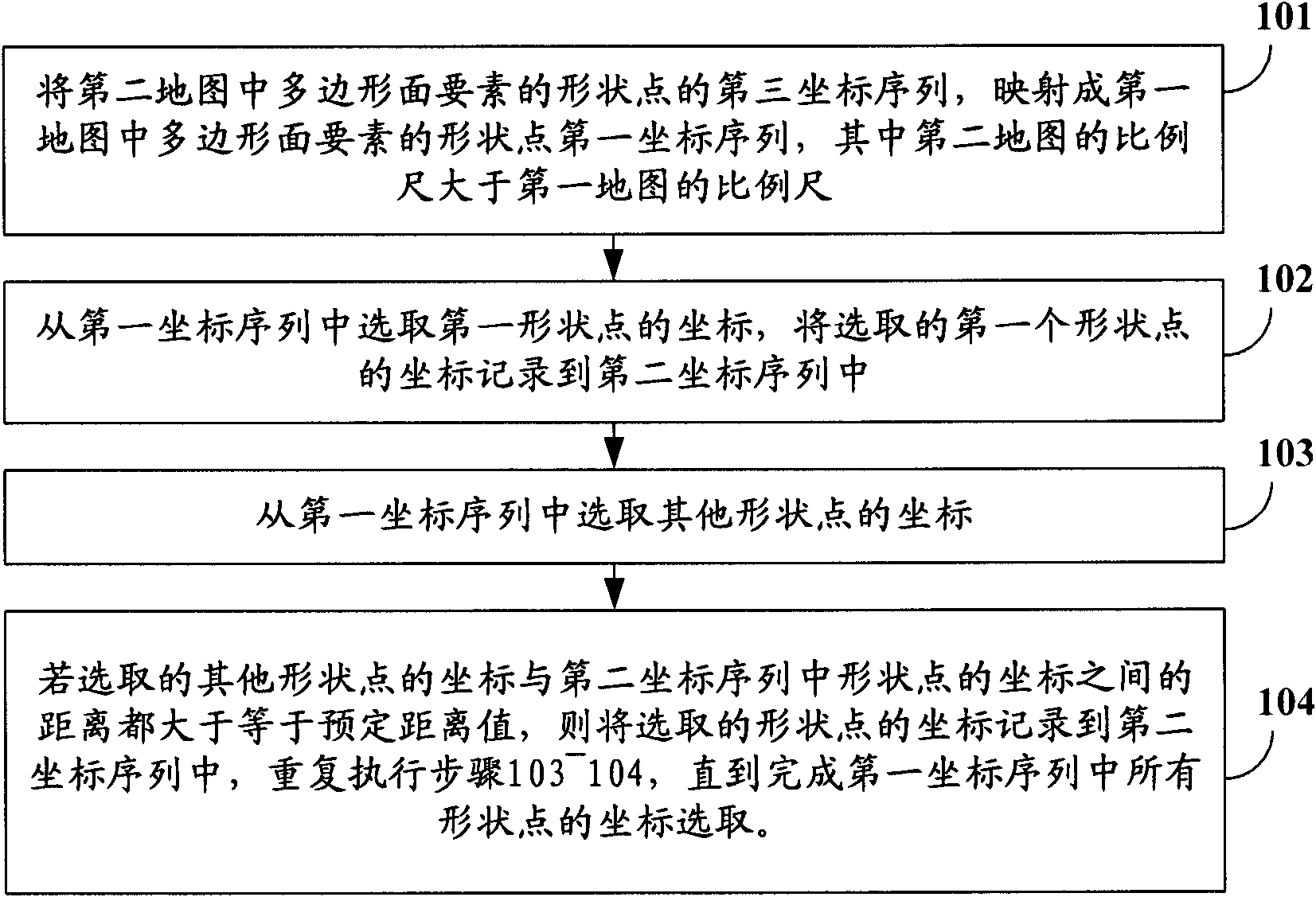

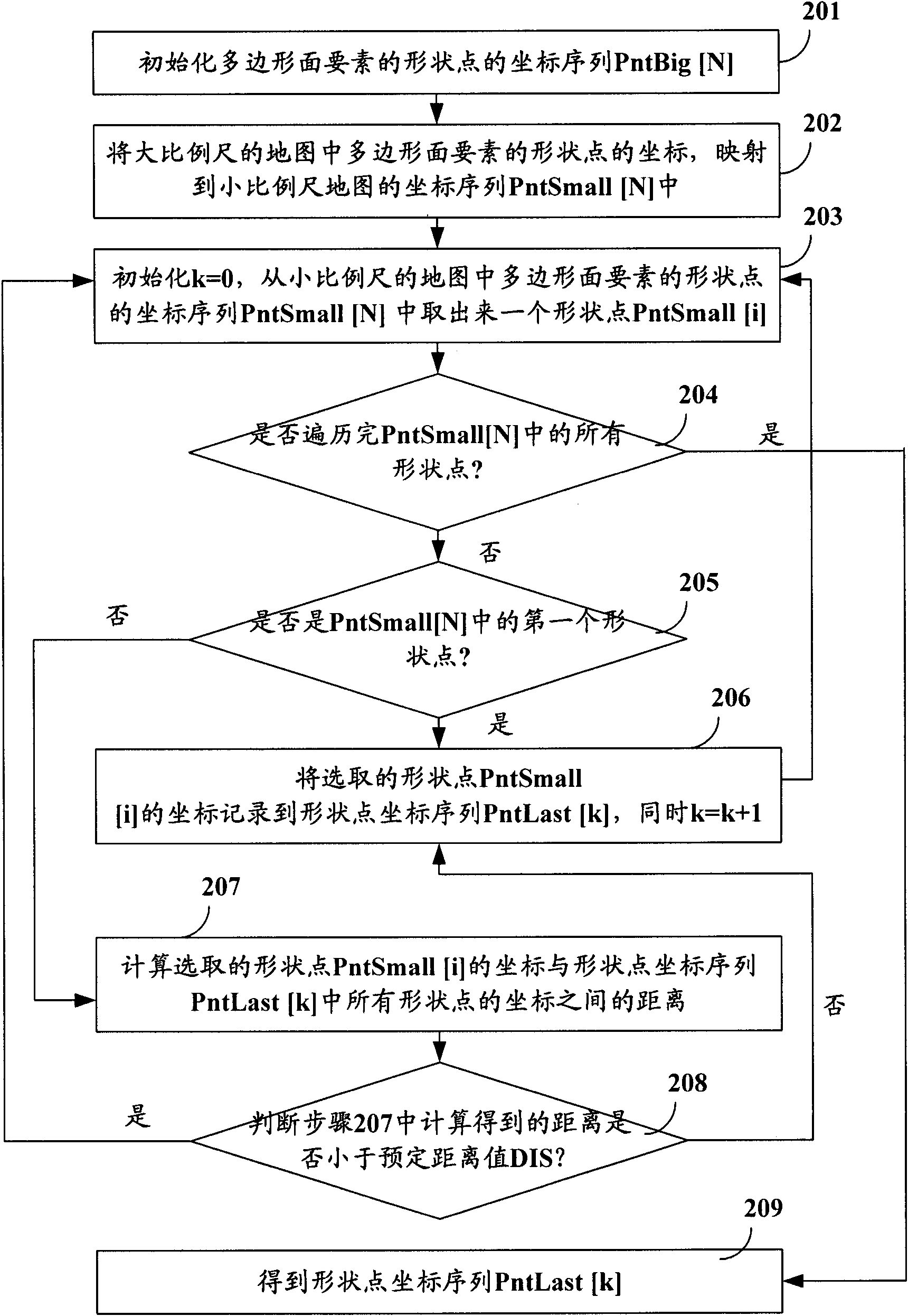

Method and device for automatically simplifying shape points of polygonal surface elements of electronic map

Active | CN102314798A | Improve work efficiency | Expressive | Instruments for road network navigation | Maps/plans/charts | Computer vision | Electronic map

The invention provides a method and a device for automatically simplifying the shape points of polygonal surface elements in an electronic map, and belongs to the technical field of digital mapping. The method comprises the following steps: (1) taking the coordinates of the first shape point from a first coordinate sequence and recording them in a second coordinate sequence; (2) taking the coordinates of the next shape point from the first coordinate sequence; (3) if the distances between that point and the shape points already in the second coordinate sequence are all greater than or equal to a preset distance value, recording its coordinates in the second coordinate sequence. Steps (2) and (3) are repeated until every shape point in the first coordinate sequence has been processed. The shape points retained by the invention do not cause the simplified polygonal surface elements to self-intersect, and the shape characteristics of the original polygonal surface elements are preserved.
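
The three steps above amount to a greedy distance filter. Here is a minimal sketch, assuming 2-D planar coordinates and Euclidean distance, and assuming that "the shape points in the second coordinate sequence" means every point retained so far. The abstract could also be read as comparing only against the most recently retained point. The function name is hypothetical.

```python
import math


def simplify_shape_points(points, min_dist):
    """Greedy shape-point filter following steps (1) to (3) of the abstract.

    points: first coordinate sequence, a list of (x, y) tuples.
    min_dist: the preset distance value.
    Returns the second coordinate sequence.
    """
    if not points:
        return []
    kept = [points[0]]                 # step 1: always keep the first point
    for p in points[1:]:               # step 2: visit the remaining points in order
        # step 3: keep p only if it is far enough from every retained point
        if all(math.dist(p, q) >= min_dist for q in kept):
            kept.append(p)
    return kept
```

One consequence of this reading is that a closing vertex that repeats the first point is always dropped, because its distance to the first retained point is zero. A caller that stores closed rings with a repeated endpoint would need to re-append it.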

Owner:NAVINFO

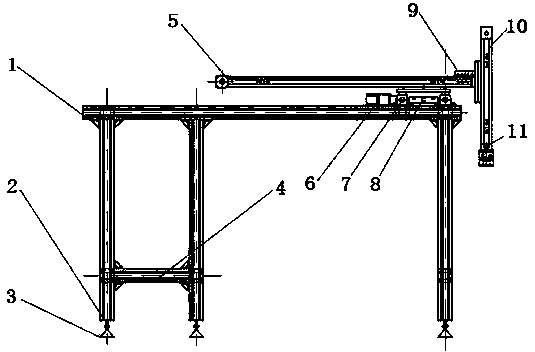

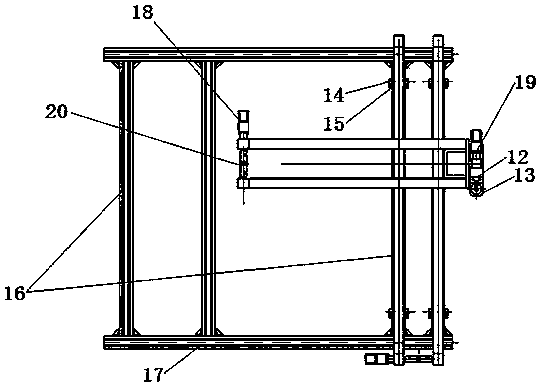

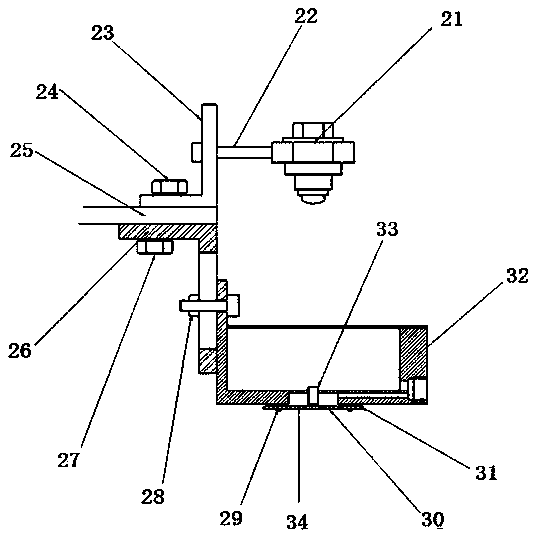

Automatic online mark spraying machine for steel plates

The invention discloses an automatic online mark spraying machine for steel plates. The machine comprises an equipment base frame on which a three-dimensional coordinate positioning mechanism is mounted. The positioning mechanism is fitted with an AC servo device connected to a computer, and the computer controls the servo device to position the mechanism along three axes. The positioning mechanism also carries a flow restraining device. Compared with the prior art, the machine addresses several problems of existing mark spraying equipment: it is bulky and occupies a large space; it imposes specific temperature requirements when marking steel plate surfaces; its spray printing quality is poor; it is incompatible with existing enterprise equipment; it is costly because the complete set must be imported; its spraying mechanism lacks a flow restraining device; and it leaves the working environment poor.

Owner:LIUZHOU IRON & STEEL

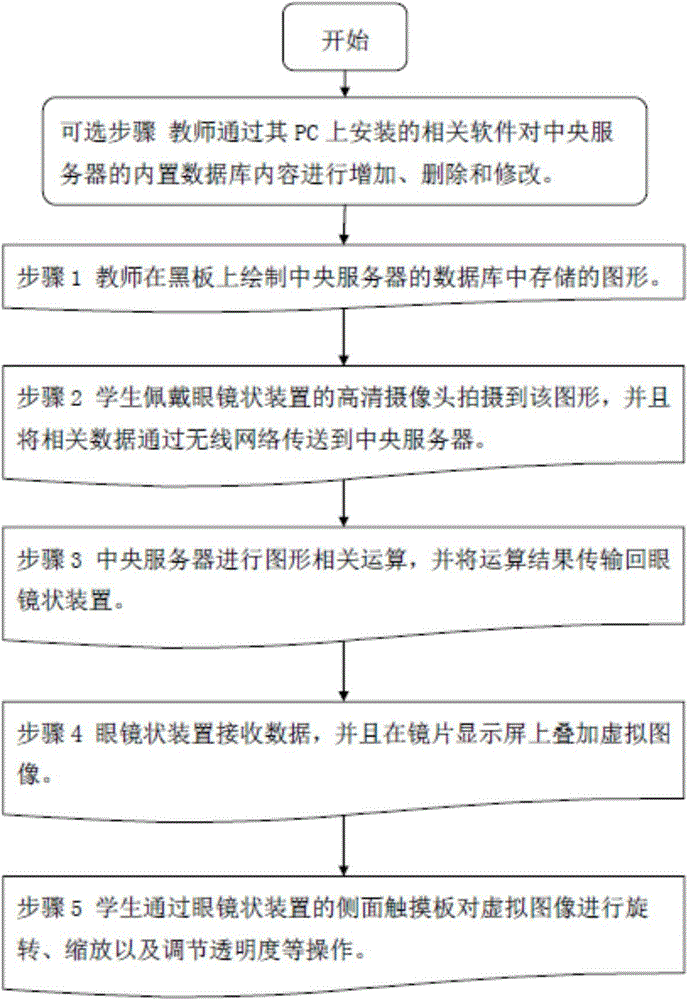

Augmented reality teaching system

Inactive | CN104464414A | Strong data transmission capability | Strong computing power | Electrical appliances | Optical elements | Graphics | Plane figure

The invention discloses a teaching system based on augmented reality technology, suited to emerging educational needs. With the help of a central processor in the classroom, students wearing spectacle-shaped devices interact fully with the teaching content and with teachers, which helps them become familiar with the content and develop an interest in it. When a teacher draws certain specific figures on the blackboard, the cameras on the students' spectacle-shaped devices send the captured figures to a server, which detects them and compares them against a database. For example, when the teacher draws 2D drawings of a dam on the blackboard, the spectacle-shaped devices send the figures to the server, the server returns data to the devices, and the devices overlay the result on their display screens so that the students see a three-dimensional figure of the dam. Using a touch pad on the right side, students can rotate and zoom the three-dimensional dam figure and adjust the transparency of the overlay.

Owner:NANJING DAWU EDUCATION TECH

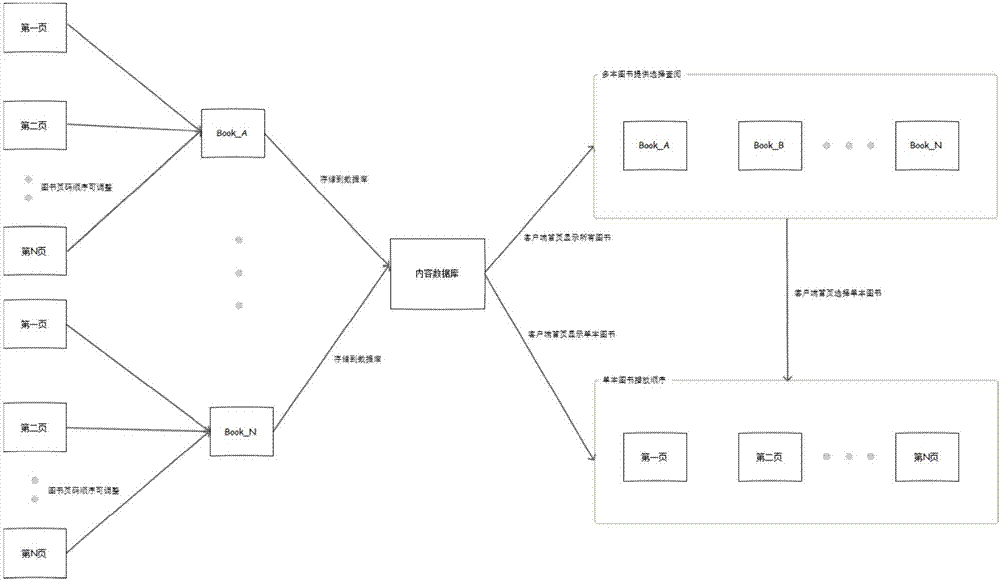

Interactive VR and AR experience system for books

Inactive | CN107193904A | Expressive | Attract the desire to continue reading | Still image data indexing | Metadata still image retrieval | Dynamic storage | Data profiling

The invention discloses an interactive VR and AR experience system for books. The system comprises a content database, an image recognition module, a dynamic storage module, a big data analysis module, and a dynamic display module. The content database stores all books, all book images, content input models, book data, VR and AR resource data, and similar content. The image recognition module identifies the basic information and feature attributes of book images and performs image recognition on them. The dynamic storage module processes each book image according to its feature attributes and stores the book data. The big data analysis module analyzes the common categories shared by images with identical feature attributes to form a general content input model. The dynamic display module retrieves matching book data and the content data of the content input model for display. Because books can be presented in several modes, such as VR and AR, the display options are more varied and the user experience improves. Digitizing paper media also makes reading more interactive and engaging, and it generalizes and standardizes the book data.

Owner:JINHUA VRSEEN TECH

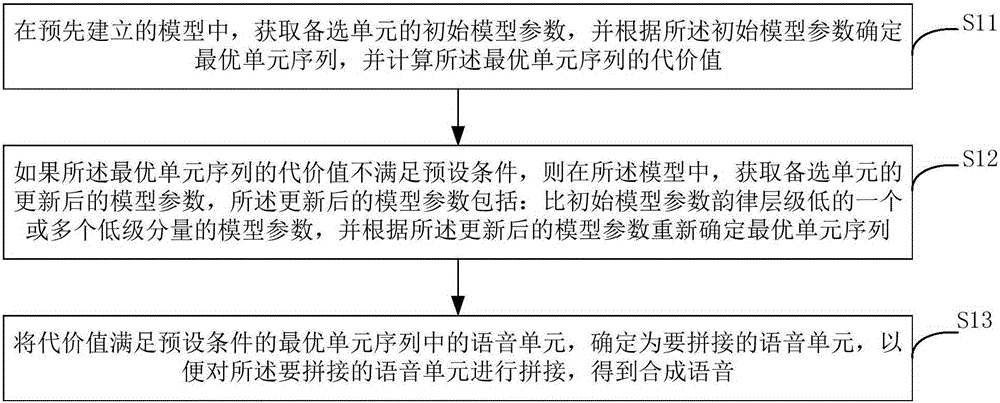

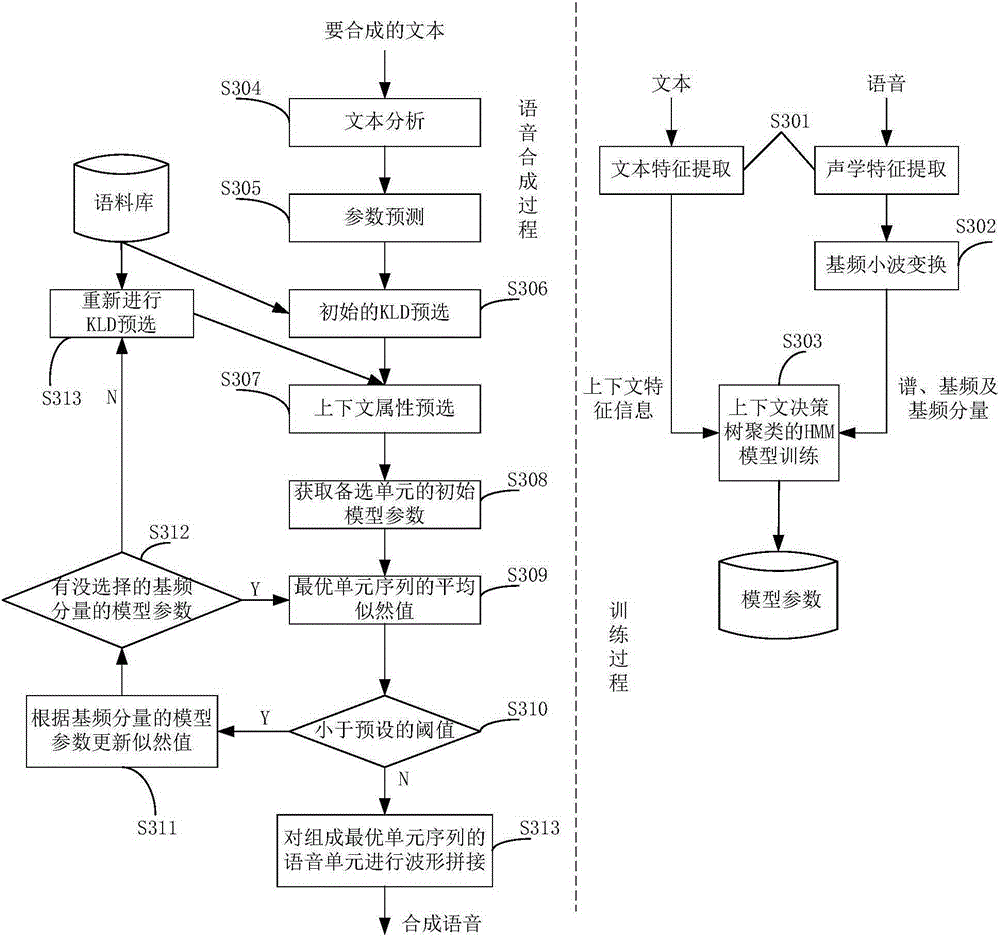

Speech synthesis method and device

The invention provides a speech synthesis method and device. The method comprises the following steps. Initial model parameters for candidate units are retrieved from a pre-built model, an optimal unit sequence is determined from these initial parameters, and the cost of that sequence is calculated. If the cost does not meet a preset condition, updated model parameters for the candidate units are retrieved from the model, and the optimal unit sequence is determined again from the updated parameters. The updated parameters are those of one or more components at prosodic levels lower than those of the initial model parameters. Once an optimal unit sequence whose cost meets the preset condition is found, its speech units are taken as the units to be concatenated, and concatenating them produces the synthesized speech. The method improves the accuracy of the selected speech units, so the synthesized speech sounds more natural and more expressive.
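
Below is a minimal sketch of the fallback loop, built on several assumptions. The sequence search is a Viterbi search over candidate units, using a per-unit target cost plus a join cost; the join cost here is the hypothetical absolute pitch difference at the unit boundary. The "preset condition" is read as a total cost at or below a threshold. `lookup(level)` is a hypothetical model query that returns the candidate lists for a given prosodic level, and the levels are tried from the initial level downward. If no level meets the threshold, the sketch returns the last result tried; that behavior is also an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    unit_id: str
    target_cost: float  # mismatch with the target specification (hypothetical)
    pitch: float        # boundary pitch used for the join cost (hypothetical)


def join_cost(a, b):
    return abs(a.pitch - b.pitch)


def best_sequence(candidates):
    """Viterbi search; candidates[i] is the list of Candidates for position i."""
    cost = [c.target_cost for c in candidates[0]]
    back = []  # back[i - 1][j]: best predecessor index for candidate j at position i
    for i in range(1, len(candidates)):
        prev = candidates[i - 1]
        new_cost, ptr = [], []
        for c in candidates[i]:
            j = min(range(len(cost)), key=lambda k: cost[k] + join_cost(prev[k], c))
            new_cost.append(cost[j] + join_cost(prev[j], c) + c.target_cost)
            ptr.append(j)
        cost = new_cost
        back.append(ptr)
    j = min(range(len(cost)), key=lambda k: cost[k])
    total = cost[j]
    path = [j]
    for ptr in reversed(back):  # trace the best path from the last position back
        j = ptr[j]
        path.append(j)
    path.reverse()
    return [candidates[i][k] for i, k in enumerate(path)], total


def select_units(lookup, levels, threshold):
    """Retry the search at successively lower prosodic levels until the cost is acceptable."""
    if not levels:
        raise ValueError("at least one prosodic level is required")
    for level in levels:
        seq, total = best_sequence(lookup(level))
        if total <= threshold:
            return level, seq, total
    return level, seq, total  # no level met the threshold: keep the lowest tried
```

Falling back to a lower prosodic level widens the pool of acceptable units, because lower-level components constrain fewer context features. This is why retrying there can lower the cost when the initial, more specific parameters find no good match.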

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

© 2025 PatSnap. All rights reserved.