451 results about "Scale-invariant feature transform" patented technology

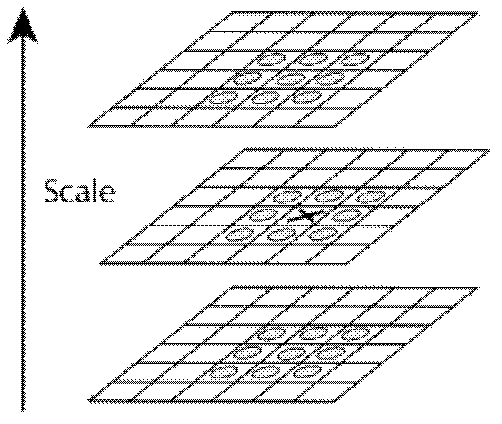

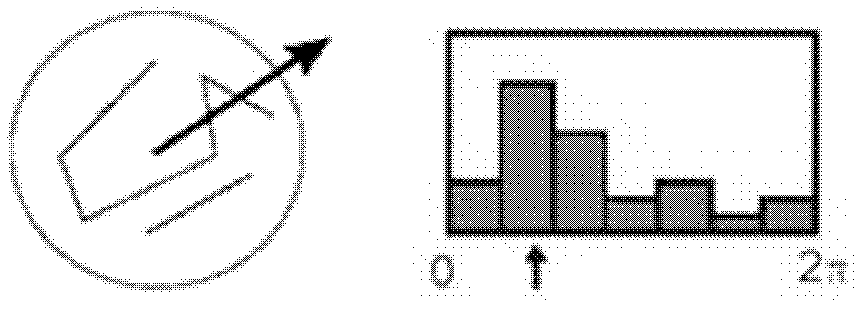

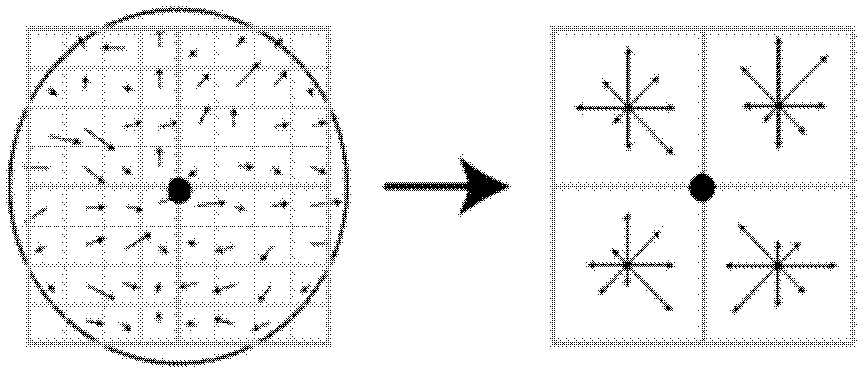

The scale-invariant feature transform (SIFT) is a computer vision algorithm that detects and describes local features in images. It was patented in Canada by the University of British Columbia and published by David Lowe in 1999. Applications include object recognition, robotic mapping and navigation, image stitching, 3D modeling, gesture recognition, video tracking, individual identification of wildlife, and match moving.

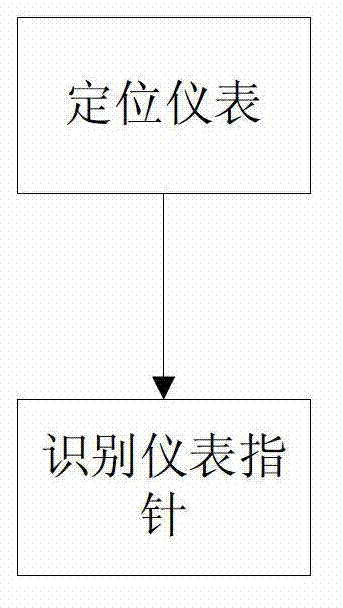

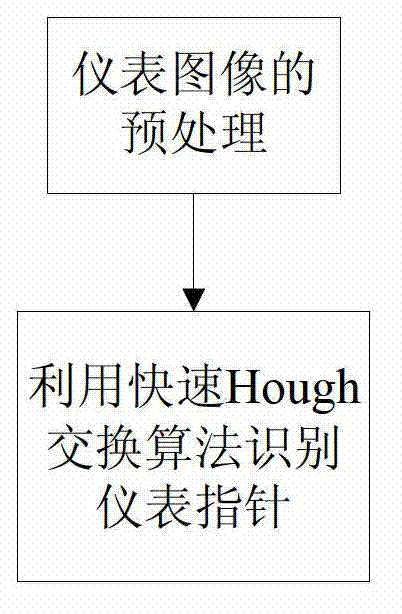

Improved multi-instrument reading identification method of transformer station inspection robot

Inactive | CN103927507A | Improve robustness | Meet the requirements of automatic detection and identification of readings | Character and pattern recognition | Hough transform | Scale-invariant feature transform

The invention discloses an improved multi-instrument reading identification method for a transformer station inspection robot. First, equipment templates are prepared for instrument images of different types, and the positions of the minimum and maximum scale marks of each instrument are stored in a template database. For an instrument image acquired in real time by the robot, the template image of the corresponding equipment is retrieved from a background service, and the dial area sub-image is extracted from the input image by matching with the scale-invariant feature transform (SIFT) algorithm. The dial sub-image is then binarized and the pointer is skeletonized; a fast Hough transform detects the pointer line and suppresses noise interference, so that the position and direction angle of the pointer are located accurately and the pointer reading is obtained. The algorithm was tested on site with the inspection robot of a domestic 500 kV intelligent transformer station: the overall recognition rate across instrument types exceeds 99%, the reading precision and robustness are high, and the requirements of on-site substation application are met.
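The last step of the abstract, turning a detected pointer direction into a reading using the stored minimum and maximum scale positions, can be sketched as a linear interpolation. This is only an illustration under assumptions that the abstract does not state: the dial scale is linear, angles are measured clockwise in image coordinates, and the function names `line_angle` and `pointer_reading` are hypothetical.

```python
import math


def line_angle(pivot, tip):
    """Angle in degrees of the pointer line from pivot to tip.

    Image coordinates are assumed (y grows downward), so the angle
    increases clockwise on screen, starting from the +x axis.
    """
    dx = tip[0] - pivot[0]
    dy = tip[1] - pivot[1]
    return math.degrees(math.atan2(dy, dx)) % 360.0


def pointer_reading(angle_deg, min_angle_deg, max_angle_deg, min_value, max_value):
    """Interpolate a reading from the pointer angle (assumes a linear scale).

    min_angle_deg and max_angle_deg are the angles of the minimum and maximum
    scale marks taken from the template; the dial sweeps clockwise from the
    minimum mark to the maximum mark.
    """
    span = (max_angle_deg - min_angle_deg) % 360.0
    if span == 0.0:
        raise ValueError("minimum and maximum scale marks coincide")
    offset = (angle_deg - min_angle_deg) % 360.0
    if offset > span:
        raise ValueError("pointer lies outside the scale arc")
    return min_value + (offset / span) * (max_value - min_value)


# Hypothetical gauge: 0 to 1.6 units over a 270 degree arc.
reading = pointer_reading(135.0, 45.0, 315.0, 0.0, 1.6)
print(round(reading, 4))
```

A non-linear scale would need a lookup table of mark angles instead of the two end points used here.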

Owner:STATE GRID INTELLIGENCE TECH CO LTD

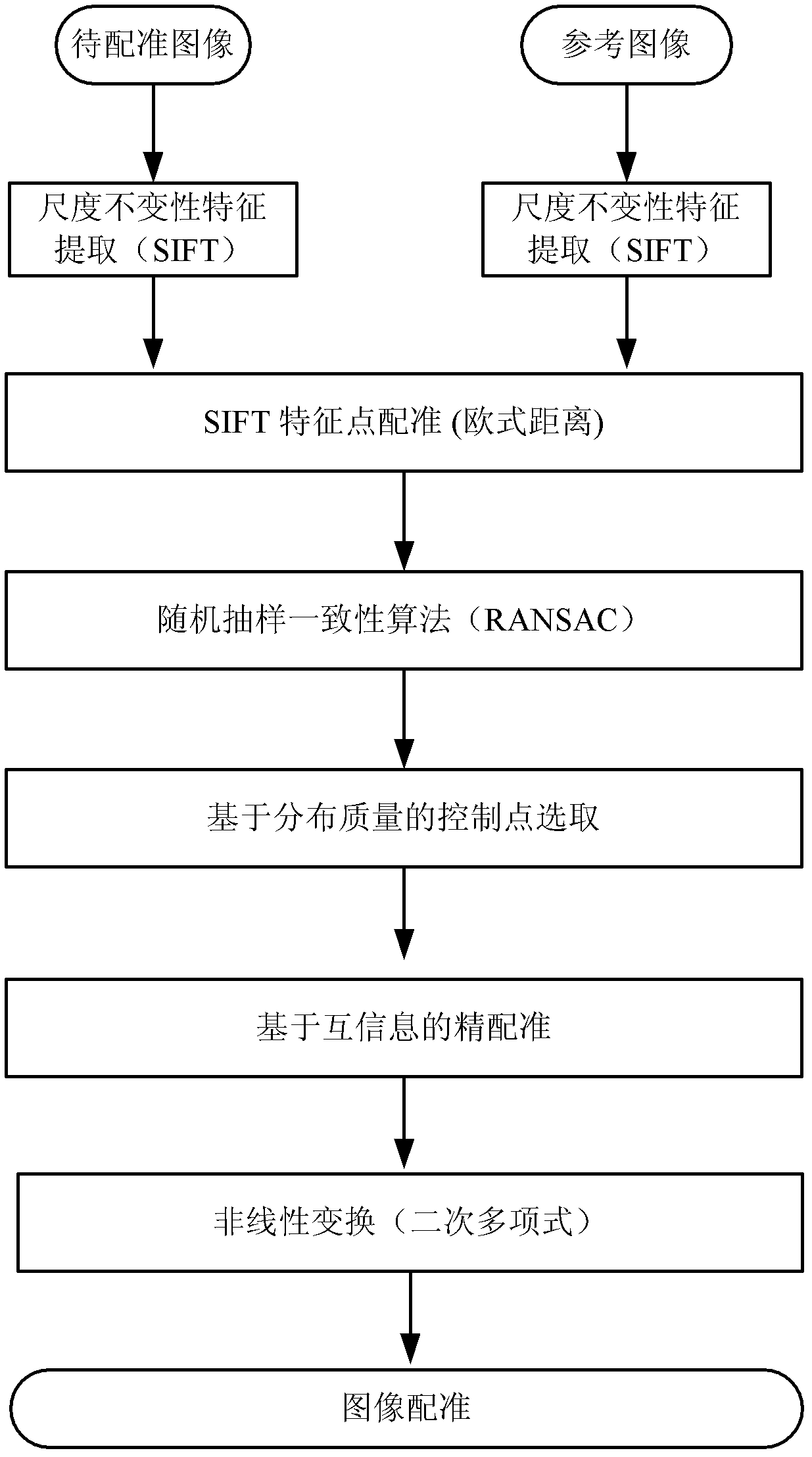

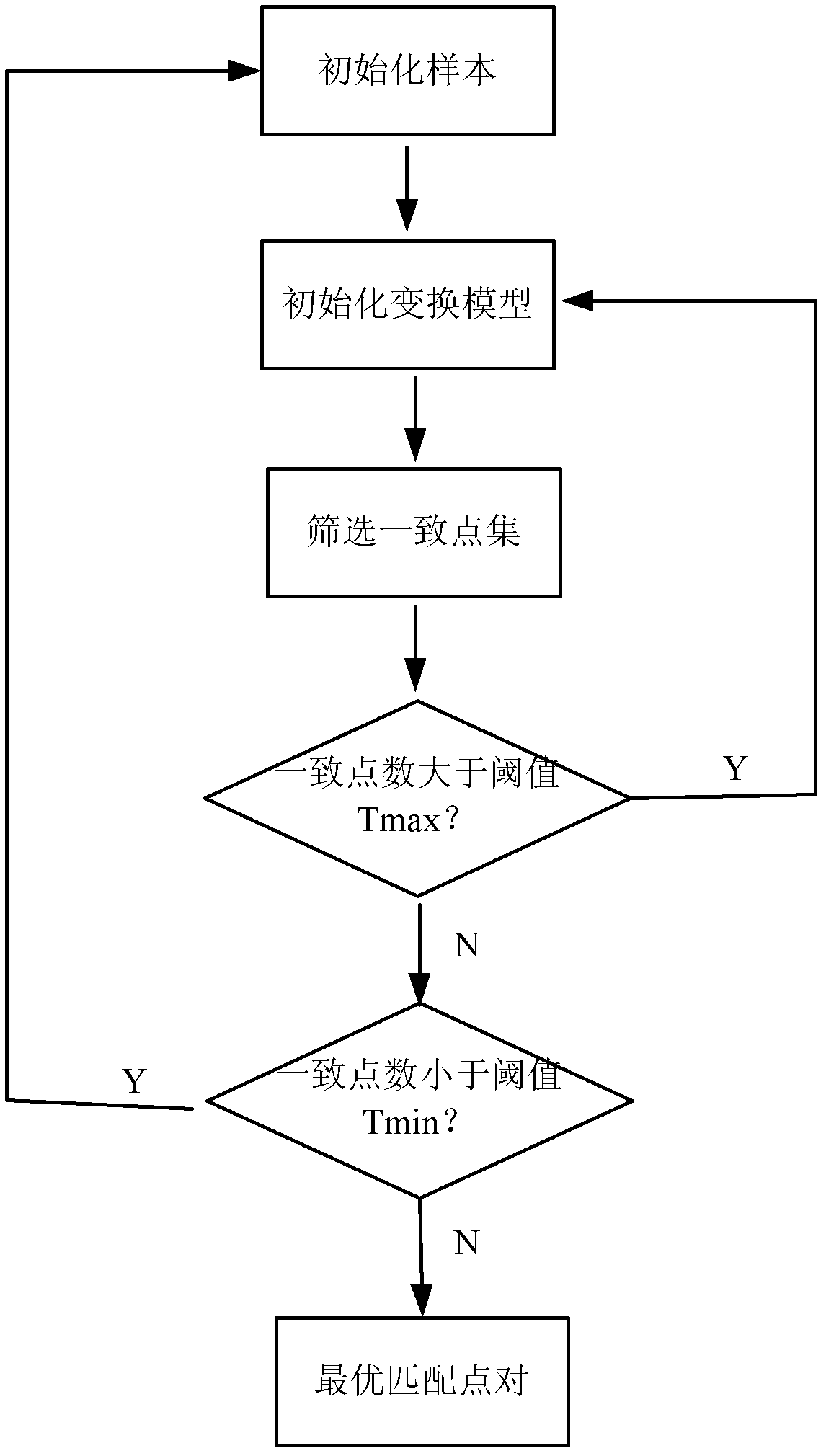

Remote sensing image registration method of multi-source sensor

Active | CN103020945A | Quick registration | Precise registration | Image analysis | Weight coefficient | Mutual information

The invention provides a remote sensing image registration method for multi-source sensors, relating to image processing technology. The method comprises the following steps: applying the scale-invariant feature transform (SIFT) to a reference image and an image to be registered and extracting feature points; calculating the nearest and second-nearest Euclidean distances between feature points of the image to be registered and the reference image, and screening the best matching point pairs according to the ratio of the two distances; rejecting mismatched points with a random sample consensus (RANSAC) algorithm to obtain the initial registration point pairs; calculating distribution quality parameters of the feature point pairs and selecting uniformly distributed effective control point pairs according to a feature point weight coefficient; searching for the optimal registration point among the control points of the image to be registered according to a mutual-information similarity criterion, thus obtaining the optimal registration point pairs of the control points; and obtaining the geometric deformation parameters of the image to be registered by polynomial parameter transformation, thus achieving accurate registration of the image to be registered with the reference image. The method offers high calculation speed and high registration precision, and can meet the registration requirements of multi-sensor, multi-temporal and multi-view remote sensing images.
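The distance-ratio screening described in the abstract can be sketched as follows. This is a minimal brute-force illustration, not the patented procedure: the function name `ratio_test_matches` is hypothetical, and the threshold of 0.8 is an assumption, since the abstract does not give a value.

```python
import math


def ratio_test_matches(desc_a, desc_b, ratio=0.8):
    """Keep a match only when the nearest neighbour is clearly closer
    than the second-nearest one (ratio is an assumed threshold)."""
    matches = []
    for i, da in enumerate(desc_a):
        # Euclidean distance from descriptor i to every candidate, sorted.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue  # the ratio is undefined with fewer than two candidates
        (nearest, j_best), (second, _) = dists[0], dists[1]
        if nearest < ratio * second:
            matches.append((i, j_best))
    return matches


# Toy 2-D "descriptors"; real SIFT descriptors have 128 dimensions.
image_to_register = [(0.0, 0.0), (5.0, 5.0)]
reference = [(0.0, 0.1), (10.0, 10.0), (5.1, 5.0), (4.9, 5.0)]
print(ratio_test_matches(image_to_register, reference))
```

The second descriptor has two equally close candidates, so the ratio test discards it as ambiguous, which is the point of comparing the two distances.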

Owner:济钢防务技术有限公司

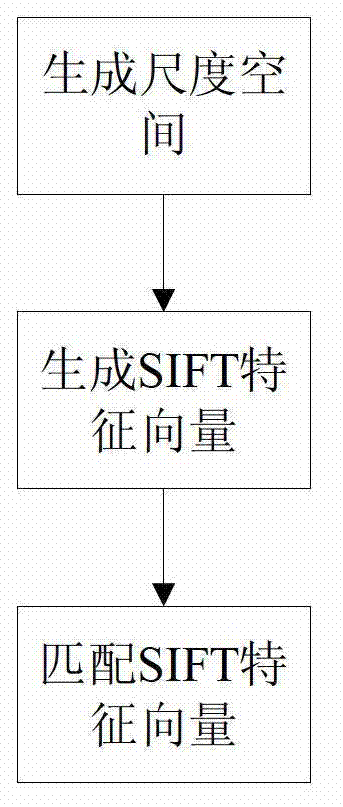

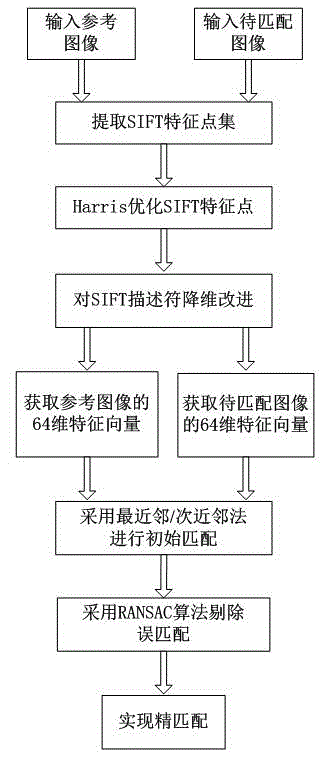

Efficient image matching method based on improved scale invariant feature transform (SIFT) algorithm

Inactive | CN102722731A | Improve real-time performance | Reduce overhead | Character and pattern recognition | Feature vector | Scale-invariant feature transform

The invention discloses an efficient image matching method based on an improved scale invariant feature transform (SIFT) algorithm. The method comprises the following steps: (1) extracting feature points of an input reference image and an image to be matched by using a SIFT operator; (2) refining the feature points extracted by the SIFT operator with a Harris operator, and keeping representative corner points as the final feature points; (3) performing dimensionality reduction on the SIFT feature descriptor to obtain 64-dimensional feature vector descriptors of the reference image and the image to be matched; and (4) initially matching the reference image and the image to be matched by using a nearest neighbor / second choice neighbor (NN / SCN) algorithm, and eliminating mismatches by using a random sample consensus (RANSAC) algorithm, so that the images are accurately matched. By selecting points that represent the image characteristics well, the method preserves matching accuracy while improving the real-time performance of SIFT matching.
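Step (4) removes mismatches with RANSAC. The sketch below shows the idea on a deliberately simplified motion model, a pure translation, whereas practical image matching usually fits a homography or affine model. The function name `ransac_translation`, the tolerance, the iteration count and the fixed seed are all assumptions chosen for illustration.

```python
import random


def ransac_translation(pairs, tol=2.0, iterations=100, seed=0):
    """Return the largest set of point pairs that agree on one translation.

    pairs: list of ((x, y), (u, v)) tentative matches.
    Simplified model (an assumption): (u, v) = (x + dx, y + dy).
    """
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        # One pair is the minimal sample for a translation hypothesis.
        (x, y), (u, v) = rng.choice(pairs)
        dx, dy = u - x, v - y
        inliers = [
            p for p in pairs
            if abs(p[1][0] - p[0][0] - dx) <= tol
            and abs(p[1][1] - p[0][1] - dy) <= tol
        ]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    return best_inliers


good = [((0, 0), (10, 5)), ((1, 2), (11, 7)), ((3, 1), (13, 6)),
        ((5, 5), (15, 10)), ((2, 7), (12, 12))]
bad = [((4, 4), (40, 0)), ((6, 1), (0, 30))]
kept = ransac_translation(good + bad)
print(len(kept), "of", len(good + bad), "matches kept")
```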

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

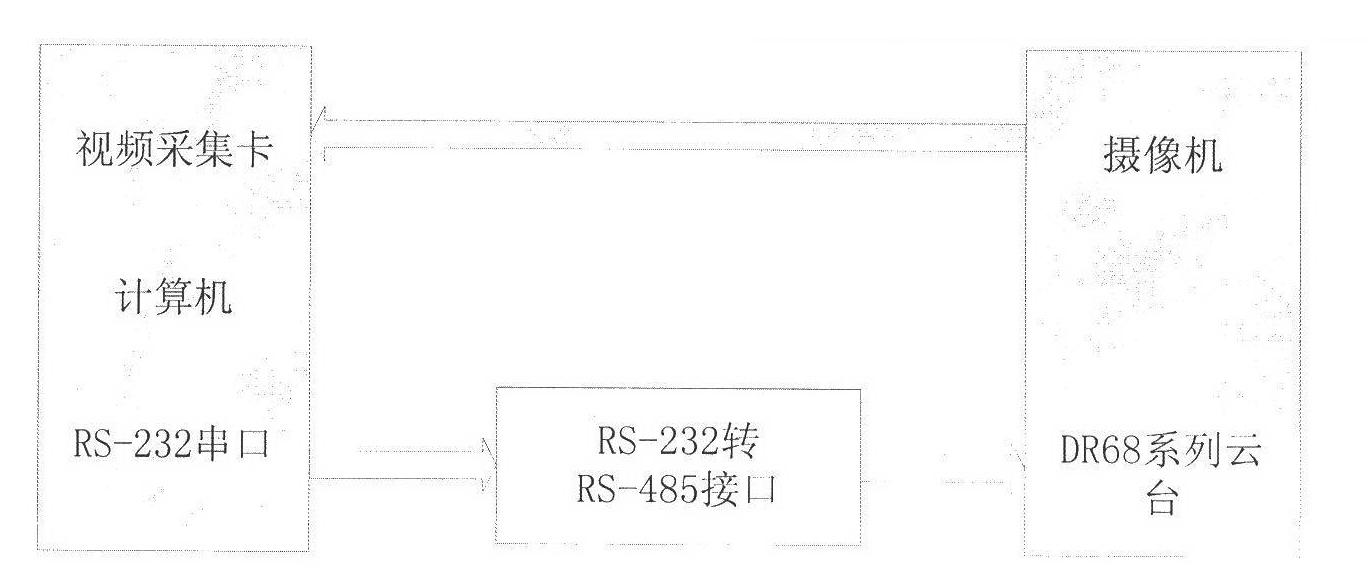

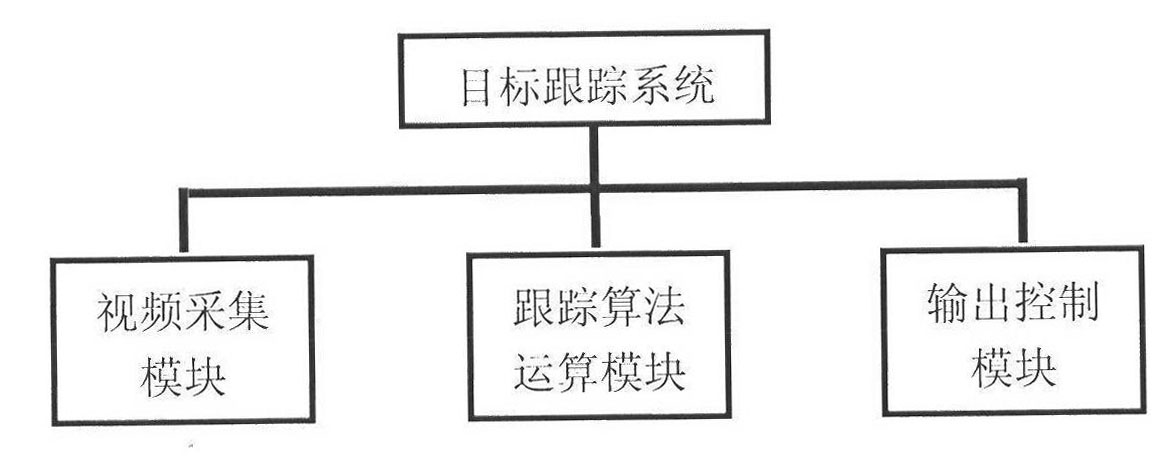

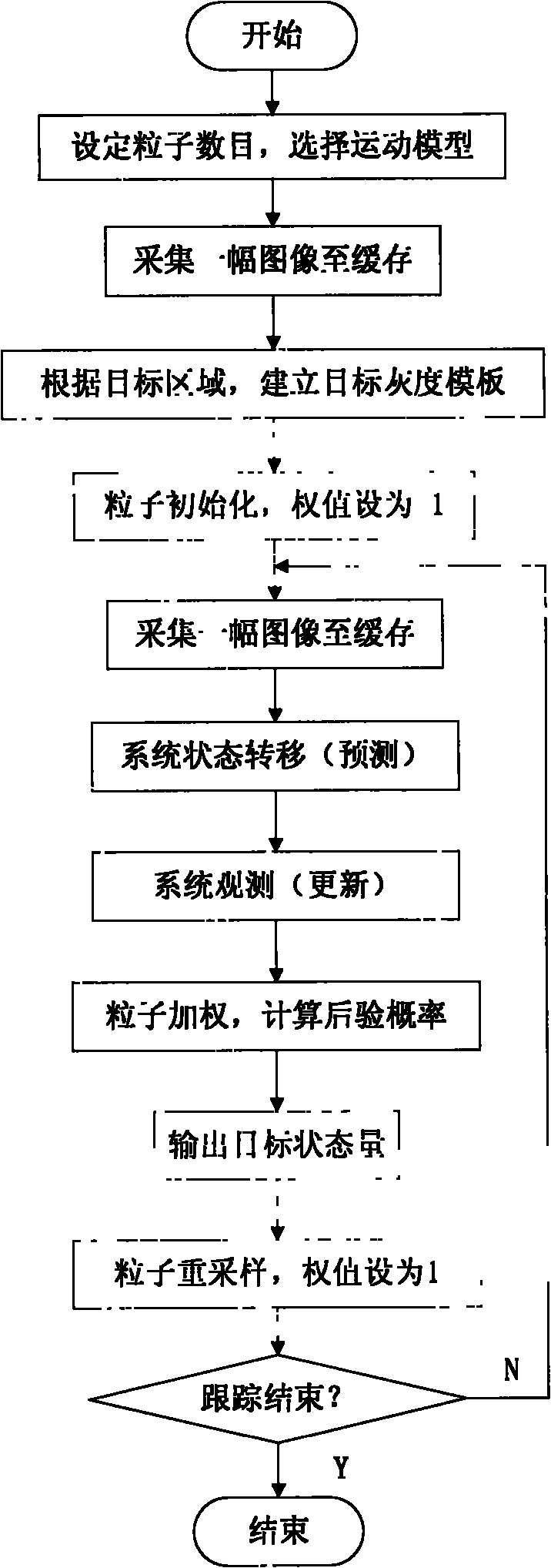

Automatic target tracking method and system by combining multi-characteristic matching and particle filtering

Inactive | CN102184551A | Television system details | Image analysis | Scale-invariant feature transform | Computer module

The invention relates to an automatic target tracking method and system combining multi-characteristic matching and particle filtering. The target tracking system comprises a video acquisition module, a tracking algorithm computation module and an output control module. The video acquisition module initializes the acquisition card and acquires images in real time. The tracking algorithm computation module provides three tracking modes: particle filter tracking based on gray template region matching, particle filter tracking based on color probability distribution, and particle filter tracking based on SIFT (scale invariant feature transform) feature matching, thereby tracking targets in both translation space and affine space. The output control module uses the center of the tracked target position as a control command and sends it to a pan-tilt unit, so that the camera follows the target object.

Owner:NORTHEASTERN UNIV

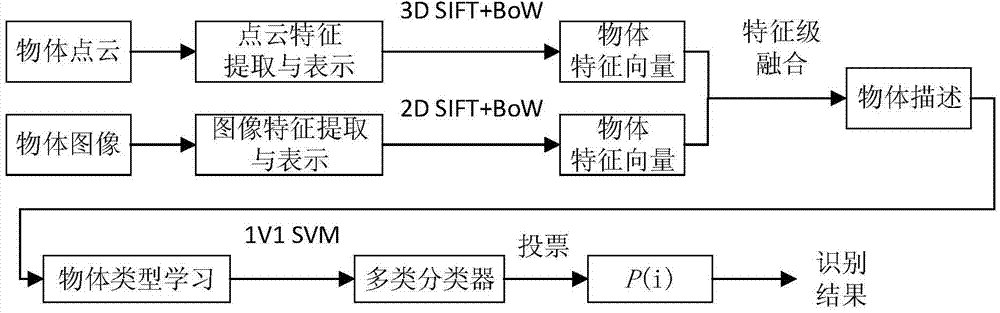

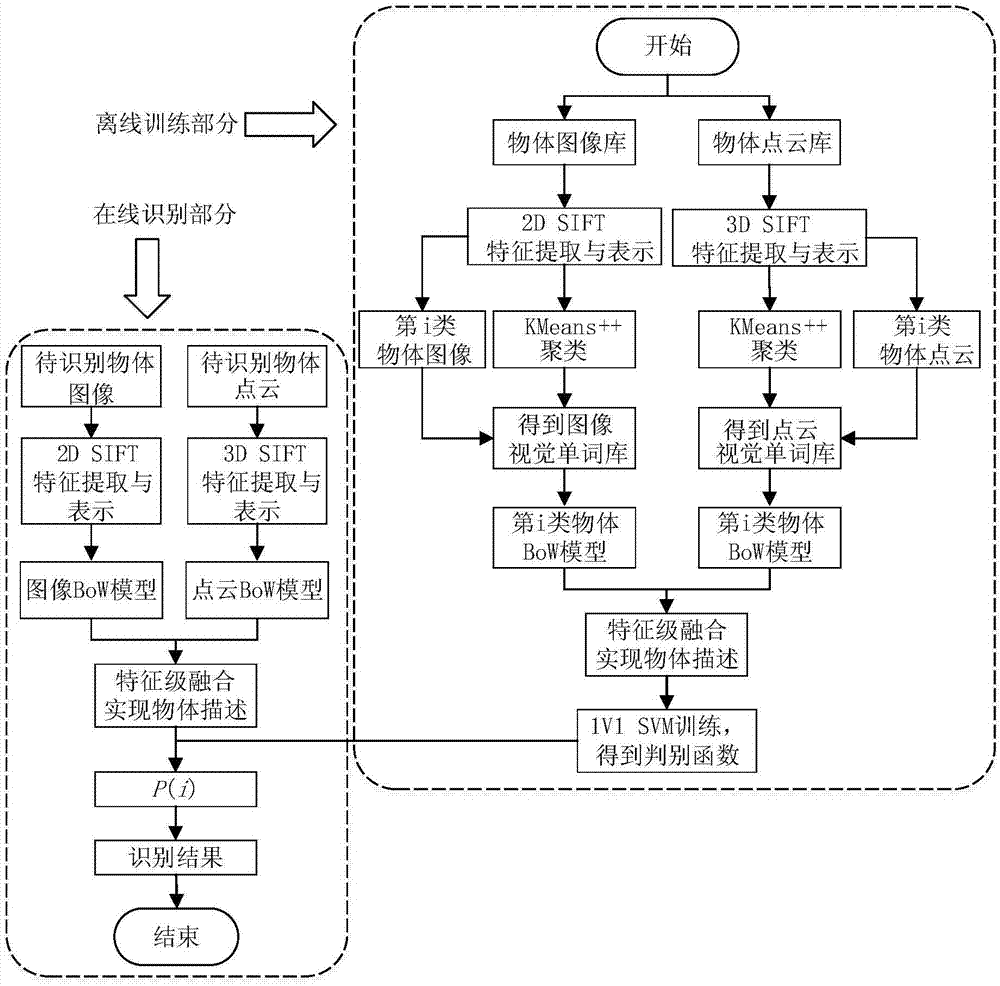

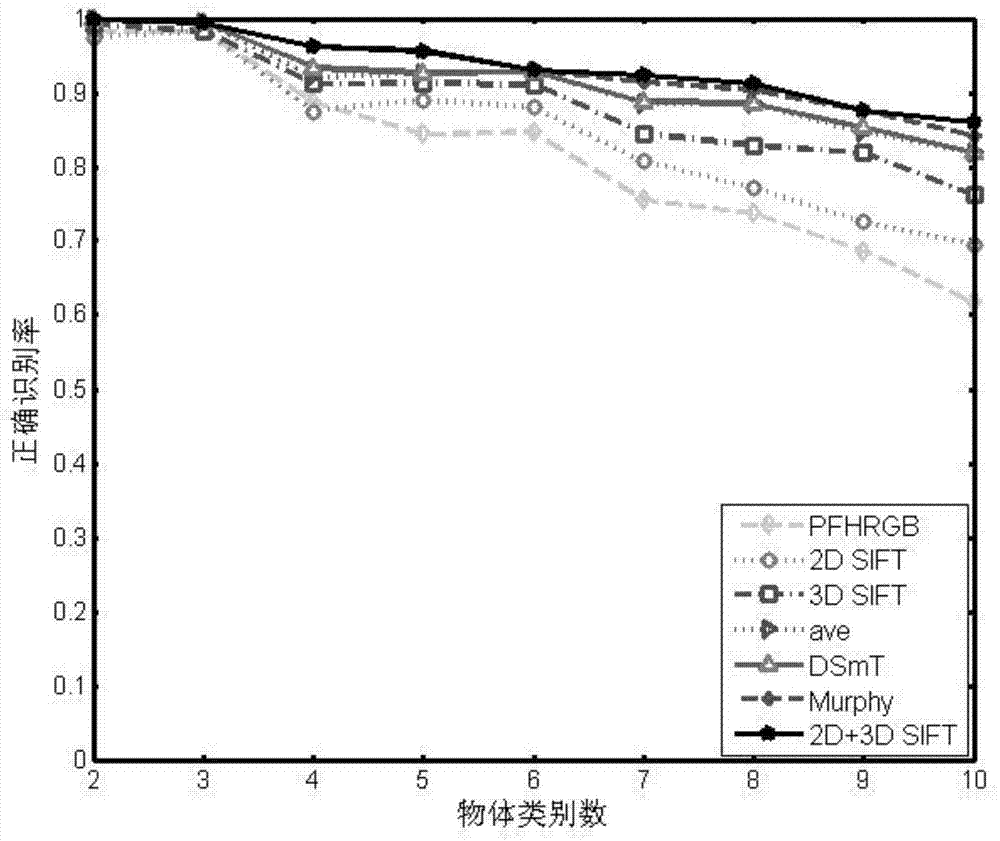

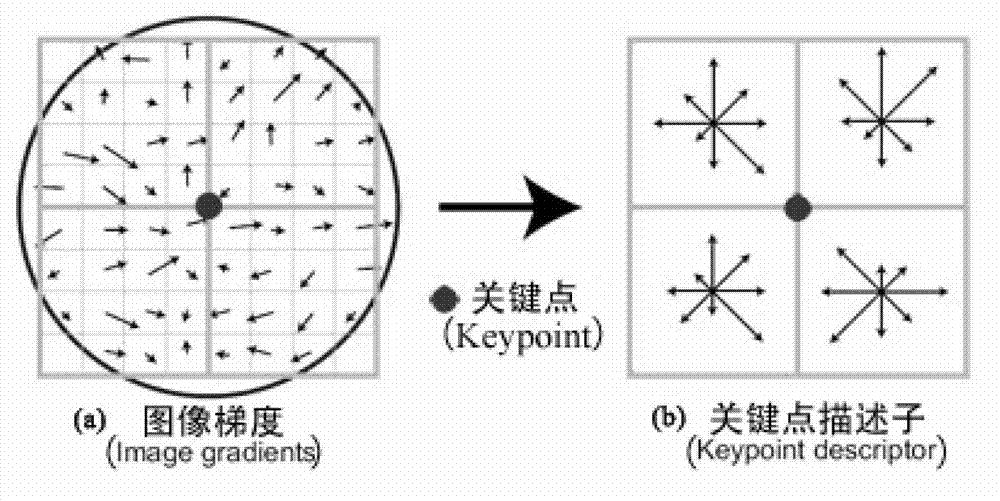

Ordinary object recognizing method based on 2D and 3D SIFT feature fusion

Active | CN104715254A | Solve the problem of low single feature recognition rate | Character and pattern recognition | Support vector machine | Feature vector

The invention discloses an ordinary object recognizing method based on 2D and 3D SIFT feature fusion, which aims to increase the accuracy of ordinary object recognition. A 3D SIFT feature descriptor based on a point cloud model is derived from the scale invariant feature transform (SIFT, here 2D SIFT), and the recognizing method is built on the fusion of the two. The method comprises the following steps: 1, the 2D SIFT feature descriptors of a two-dimensional image of the object and the 3D SIFT feature descriptors of its three-dimensional point cloud are extracted; 2, feature vectors of the object are obtained by means of a BoW (Bag of Words) model; 3, the two feature vectors are fused by feature-level fusion to form the description of the object; 4, classification is performed with a support vector machine (SVM), a supervised classifier, and the final recognition result is given.
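Steps 2 and 3 can be sketched as below. The abstract does not say how the vocabularies are built or how the fusion is carried out, so this sketch assumes that each visual vocabulary is already available (for example from clustering) and that feature-level fusion means concatenating the two normalized histograms. The names `bow_histogram` and `fuse_features` are hypothetical.

```python
import math


def bow_histogram(descriptors, vocabulary):
    """Normalized Bag-of-Words histogram: each descriptor votes for its
    nearest visual word (Euclidean distance)."""
    counts = [0] * len(vocabulary)
    for d in descriptors:
        nearest = min(range(len(vocabulary)),
                      key=lambda k: math.dist(d, vocabulary[k]))
        counts[nearest] += 1
    total = sum(counts) or 1  # avoid division by zero for empty input
    return [c / total for c in counts]


def fuse_features(hist_2d, hist_3d):
    """Feature-level fusion, assumed here to be plain concatenation."""
    return list(hist_2d) + list(hist_3d)


# Toy low-dimensional stand-ins for 2D SIFT and 3D SIFT descriptors.
vocab_2d = [(0.0, 0.0), (10.0, 10.0)]
vocab_3d = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (5.0, 5.0, 5.0)]
desc_2d = [(1.0, 1.0), (9.0, 9.0), (0.0, 2.0), (1.0, 0.0)]
desc_3d = [(0.9, 1.0, 1.0), (5.0, 5.0, 4.0)]

fused = fuse_features(bow_histogram(desc_2d, vocab_2d),
                      bow_histogram(desc_3d, vocab_3d))
print(fused)
```

The fused vector would then be passed to the SVM of step 4; that classifier is outside this sketch.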

Owner:SOUTHEAST UNIV

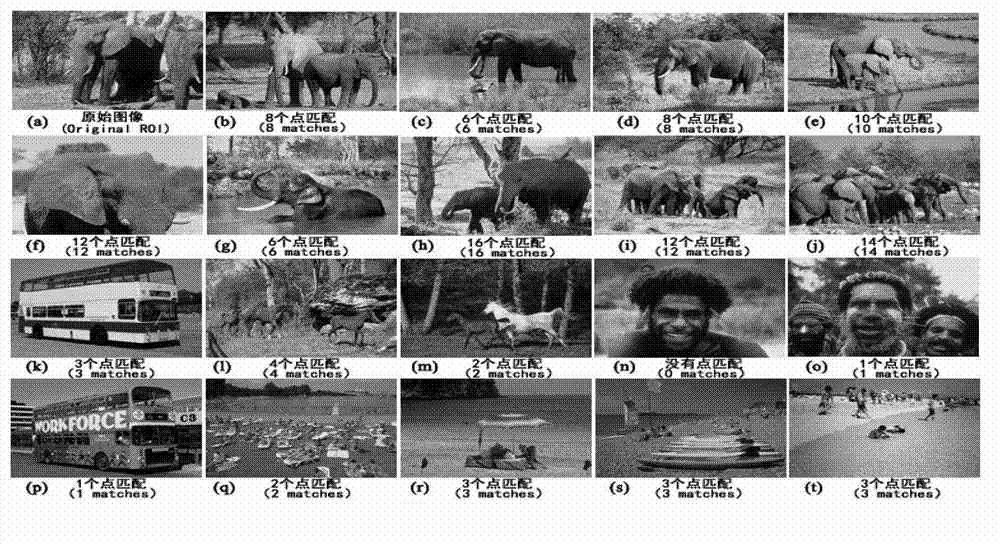

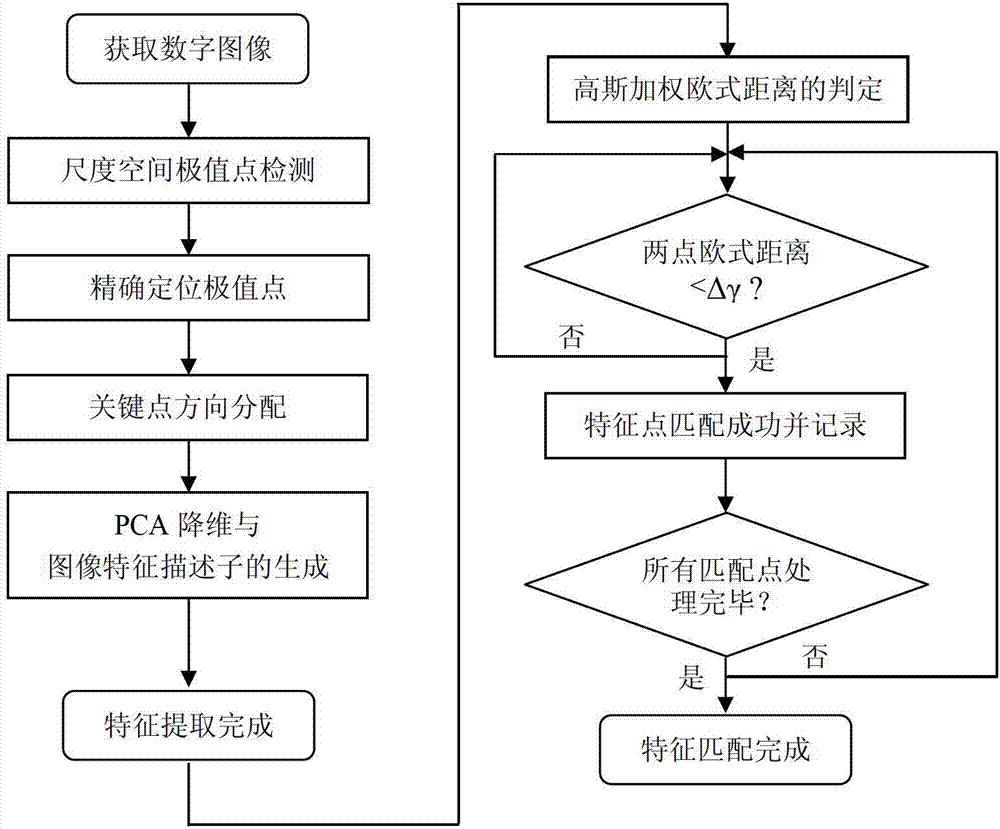

Feature extraction and matching method and device for digital image based on PCA (principal component analysis)

Active | CN103077512A | High precision | Improve matching speed | Image analysis | Digital video | Principal component analysis

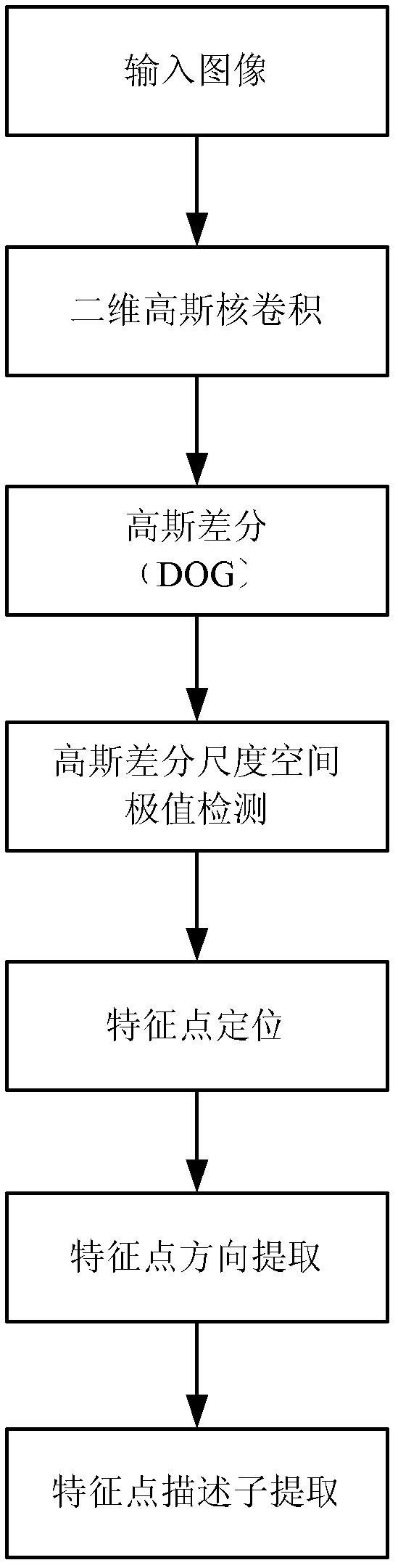

The invention provides a feature extraction and matching method and device for a digital image based on PCA (principal component analysis), belonging to the technical field of image analysis. The method comprises the following steps: 1) detecting scale-space extreme points; 2) locating the extreme points; 3) assigning orientations to the extreme points; 4) reducing dimensionality with PCA and generating image feature descriptors; and 5) evaluating the similarity measure and matching features. The device mainly comprises a numerical value preprocessing module, a feature point extraction module and a feature point matching module. Compared with the existing SIFT (Scale Invariant Feature Transform) feature extraction and matching algorithm, the method has higher accuracy and matching speed. The method and device can be applied directly in machine vision fields such as content-based digital image retrieval, content-based digital video retrieval, digital image fusion and super-resolution image reconstruction.

Owner:BEIJING UNIV OF TECH

Image retrieval method based on vocabulary tree level semantic model

Inactive | CN103020111A | Improve retrieval performance | The search result is valid | Special data processing applications | Scale-invariant feature transform | Image retrieval

The invention discloses an image retrieval method based on a vocabulary tree level semantic model. Firstly, SIFT (scale-invariant feature transform) features that include the color information of an image are extracted to construct the feature vocabulary tree of an image library, and a visual vocabulary describing the visual information of the images is generated. Secondly, on the basis of the generated visual vocabulary, Bayesian decision theory is used to map the visual vocabulary to semantic topic information, a level semantic model is constructed, and a content-based semantic image retrieval algorithm is completed on the basis of the model. Thirdly, according to relevance feedback from the user during retrieval, positive images can be added to expand the image retrieval library, and the high-level semantic mapping can be revised at the same time. Experimental results show that the retrieval method is stable in performance and that the retrieval quality improves noticeably as the number of feedback rounds increases.

Owner:SUZHOU UNIV

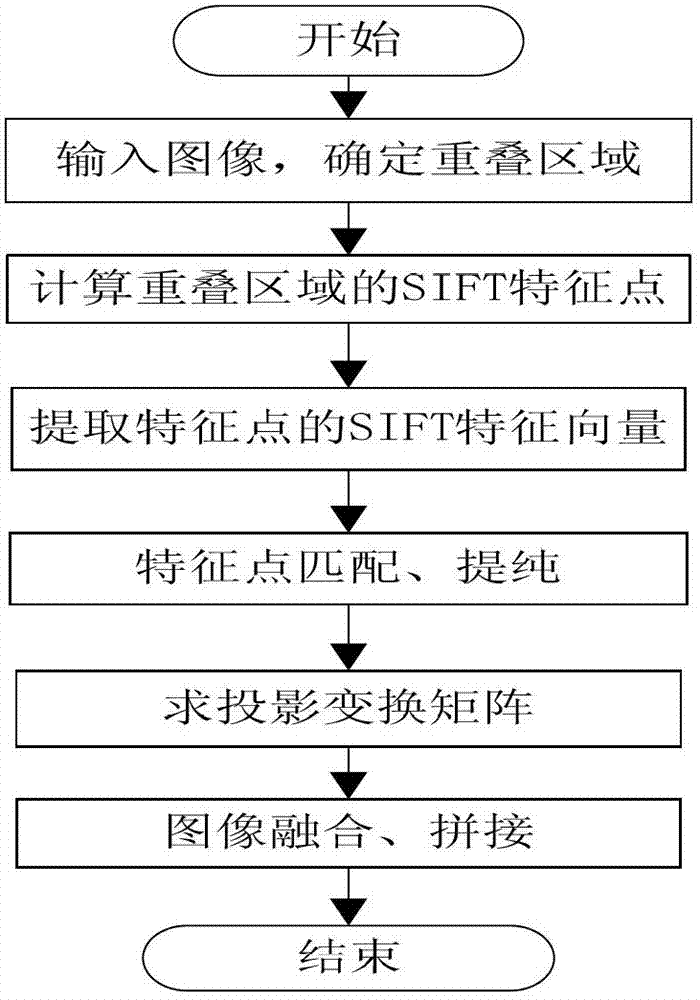

Image stitching method based on overlapping region scale-invariant feature transform (SIFT) feature points

Inactive | CN102968777A | Small amount of calculation | Reduce in quantity | Image enhancement | Geometric image transformation | Feature vector | Scale-invariant feature transform

The invention discloses an image stitching method based on overlapping region scale-invariant feature transform (SIFT) feature points and belongs to the technical field of image processing. Conventional feature-based image stitching algorithms extract features from the whole image, so the computation is heavy, and features from the non-overlapping regions easily cause subsequent matching errors and redundant computation. To address these problems, the invention provides an image stitching method based on SIFT feature points of the overlapping region. Only the feature points in the image overlapping region are extracted, which reduces the number of feature points and greatly reduces the computation; moreover, the feature points are represented with an improved SIFT feature vector extraction method, which further reduces the computation during feature point matching and lowers the mismatching rate. The invention also discloses a method for stitching images with optical imaging differences, which comprises the following steps: converting the two images to be stitched to a cylindrical coordinate space by projection transformation, and stitching them with the image stitching method based on the overlapping region SIFT feature points.

Owner:HOHAI UNIV

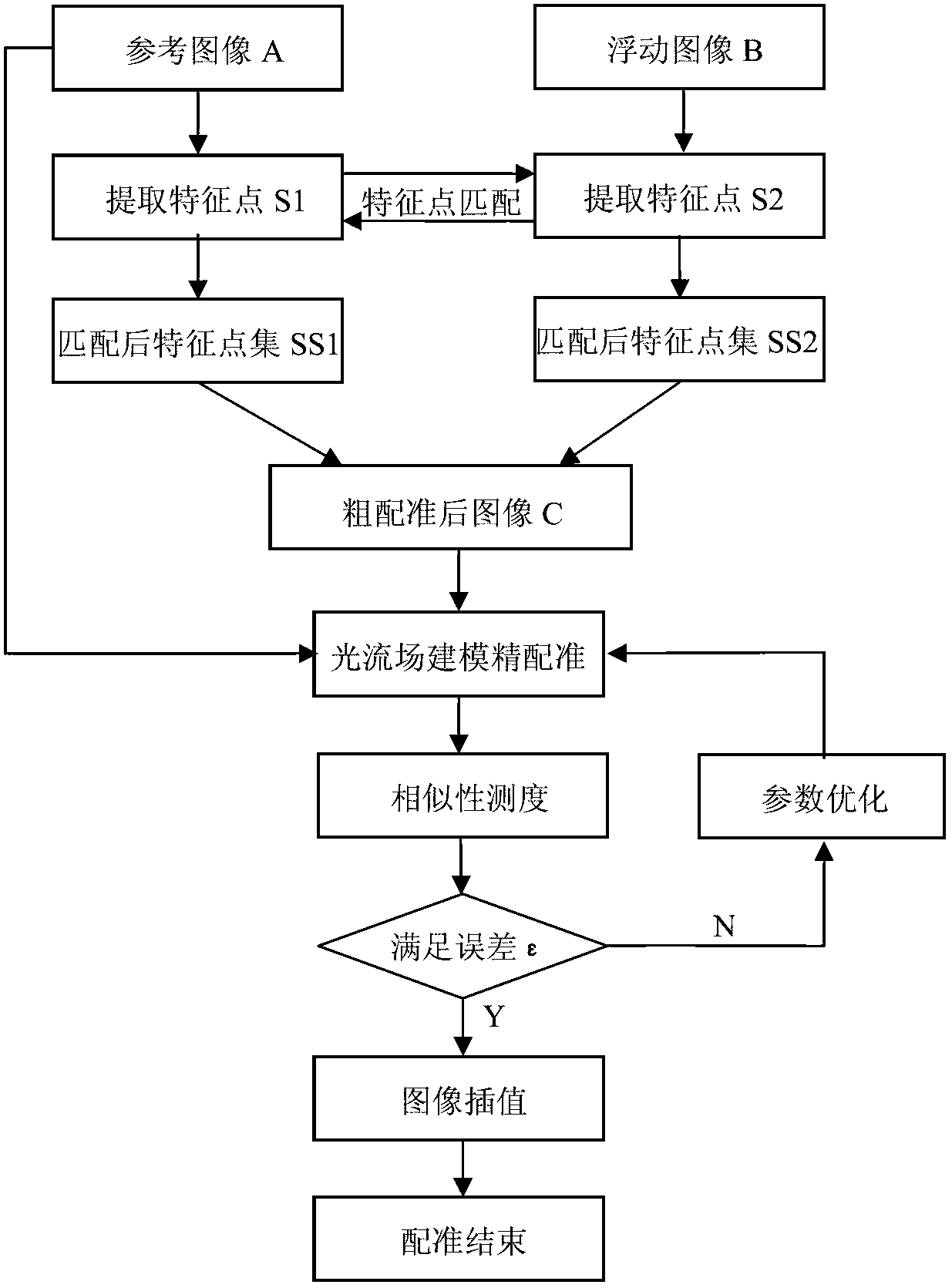

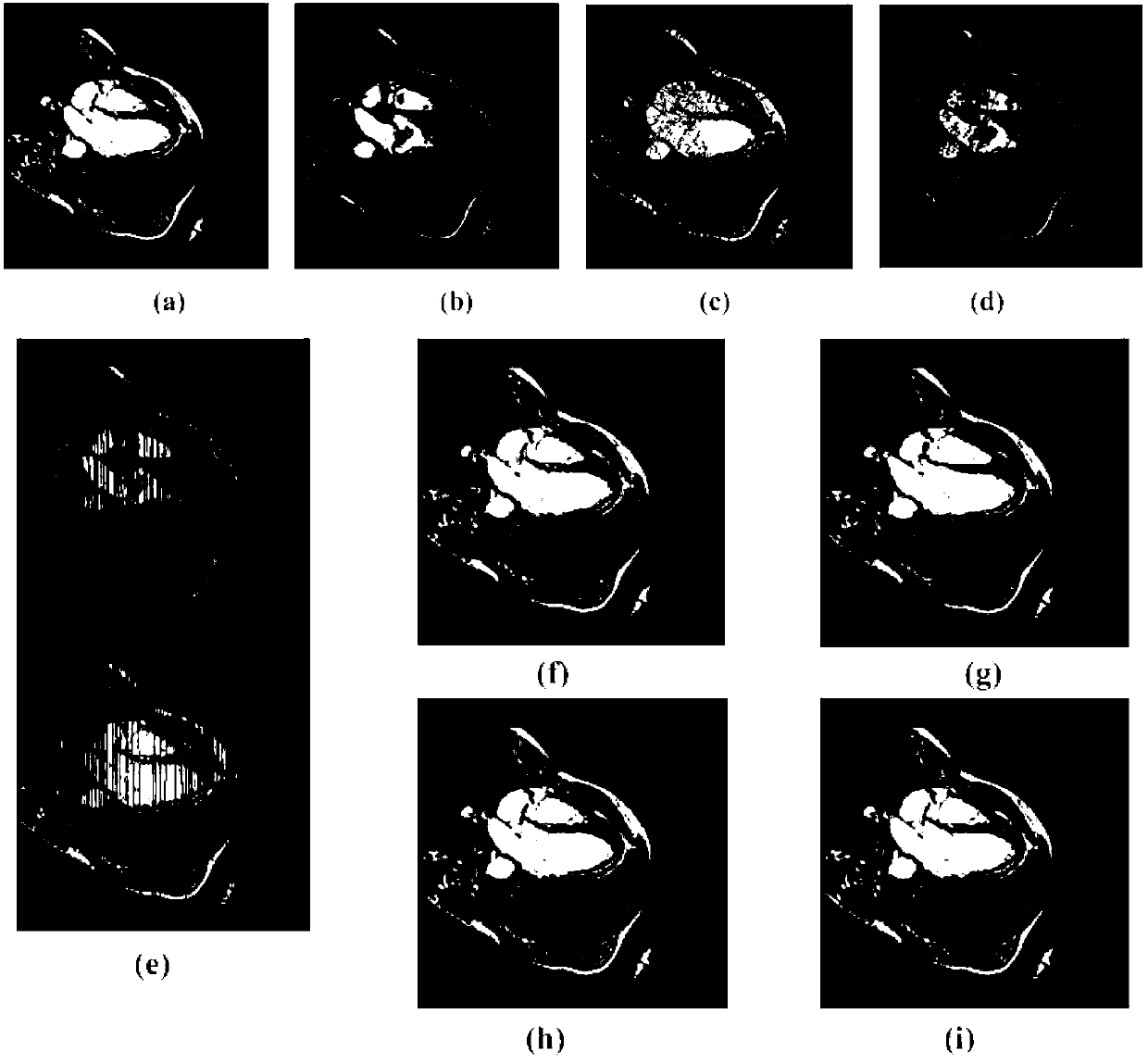

Non-rigid heart image grading and registering method based on optical flow field model

Active | CN102722890A | Strong anti-noise ability | Improve robustness | Image analysis | Geometric image transformation | Imaging processing | Scale-invariant feature transform

The invention discloses a non-rigid heart image grading and registering method based on an optical flow field model, which belongs to the technical field of image processing. The method comprises the following steps: obtaining affine transformation coefficients from the scale-invariant feature vectors of two images, and obtaining a coarse registration image through affine transformation; and obtaining the offset transformation of the coarse registration image by using an optical flow field method, and interpolating to obtain a fine registration image. The SIFT (Scale Invariant Feature Transform) feature method and the optical flow field method complement each other: the SIFT features prepare the ground for faster convergence of the optical flow field method, and the optical flow field method makes the registration result more accurate. The feature details of a heart image are better preserved, noise resistance and robustness are improved, and an accurate registration result is obtained. The interpolation method combines linear interpolation with central differences, and final registration is carried out with a multi-resolution strategy.

Owner:INNER MONGOLIA UNIV OF SCI & TECH

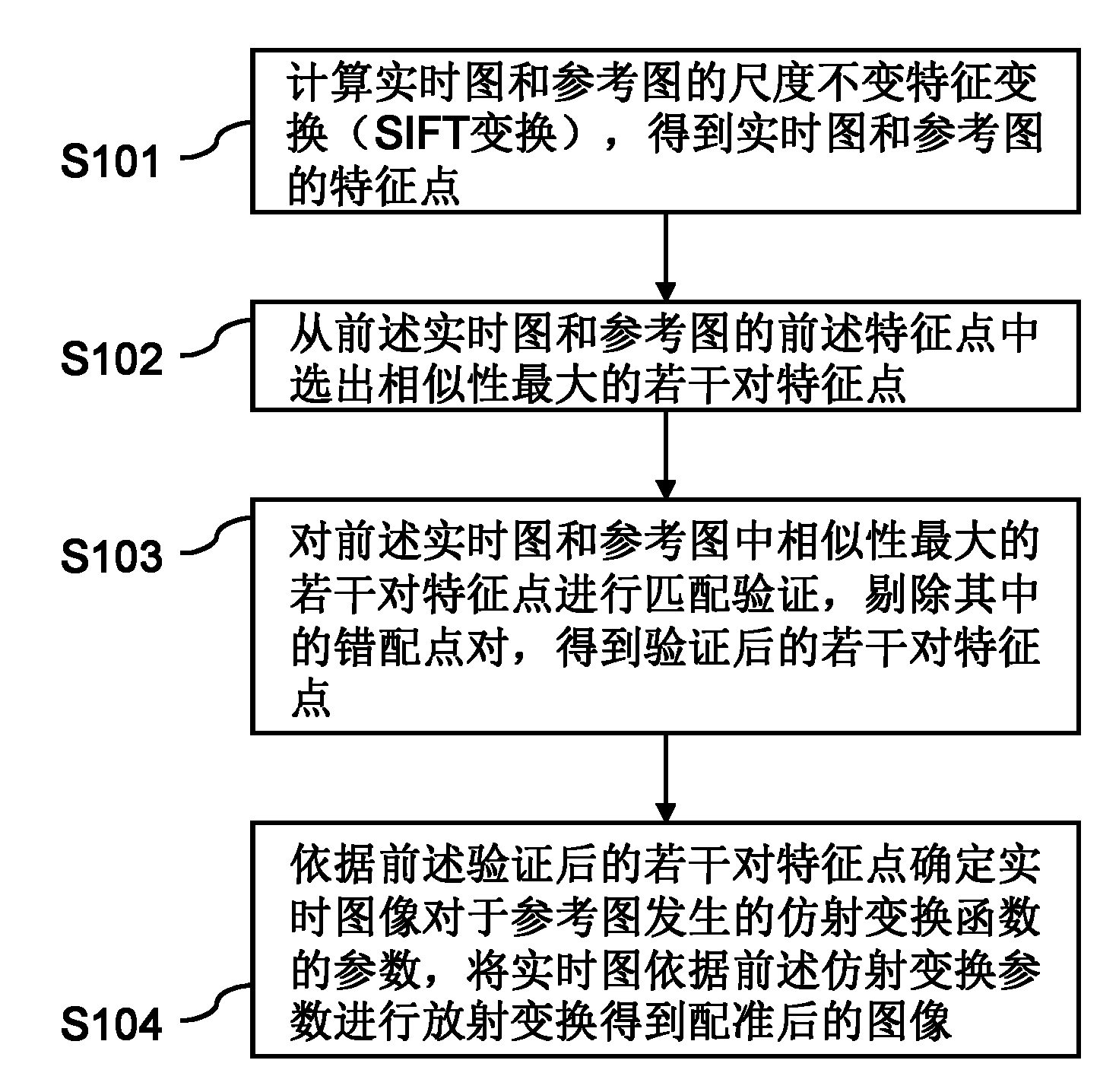

Image registration system and method thereof

Inactive | CN102005047A | Improve accuracy | Improve registration accuracy | Image analysis | Scale-invariant feature transform | Reference image

The invention provides an image registration system and a method thereof. The method comprises the following steps: first computing the scale invariant feature transform (SIFT) of a real-time image and a reference image to obtain the feature points of both images; selecting the pairs of feature points with maximum similarity from the feature points of the real-time image and the reference image; verifying the selected pairs and rejecting mismatched pairs to obtain a set of verified feature point pairs; determining, from the verified pairs, the parameters of an affine transformation function of the real-time image relative to the reference image; and applying the affine transform with those parameters to the real-time image to obtain the registered image. The system and the method are robust to interference and achieve high registration accuracy.
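Determining the affine parameters from verified point pairs can be illustrated with the minimal case of exactly three non-collinear correspondences, which fixes the six parameters of u = a·x + b·y + c, v = d·x + e·y + f. The abstract does not say how the parameters are solved; a practical system would typically use a least-squares fit over all verified pairs. The helper names below are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def solve3(m, rhs):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("source points are collinear or repeated")
    solution = []
    for col in range(3):
        replaced = [list(row) for row in m]
        for r in range(3):
            replaced[r][col] = rhs[r]
        solution.append(det3(replaced) / d)
    return solution


def affine_from_pairs(src, dst):
    """Affine parameters (a, b, c, d, e, f) mapping three src points to dst."""
    m = [[x, y, 1.0] for x, y in src]
    a, b, c = solve3(m, [u for u, _ in dst])
    d, e, f = solve3(m, [v for _, v in dst])
    return (a, b, c, d, e, f)


def apply_affine(params, point):
    a, b, c, d, e, f = params
    x, y = point
    return (a * x + b * y + c, d * x + e * y + f)


# Real-time image points and where they land in the reference image
# (toy data: scale by 2, then shift by (3, -1)).
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
dst = [(3.0, -1.0), (5.0, -1.0), (3.0, 1.0)]
params = affine_from_pairs(src, dst)
print(params, apply_affine(params, (2.0, 2.0)))
```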

Owner:江苏博悦物联网技术有限公司

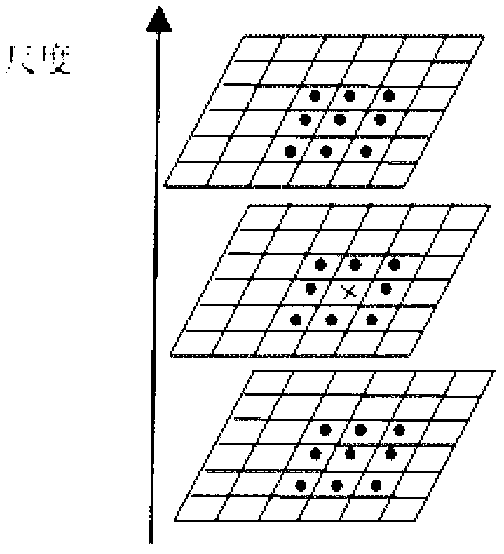

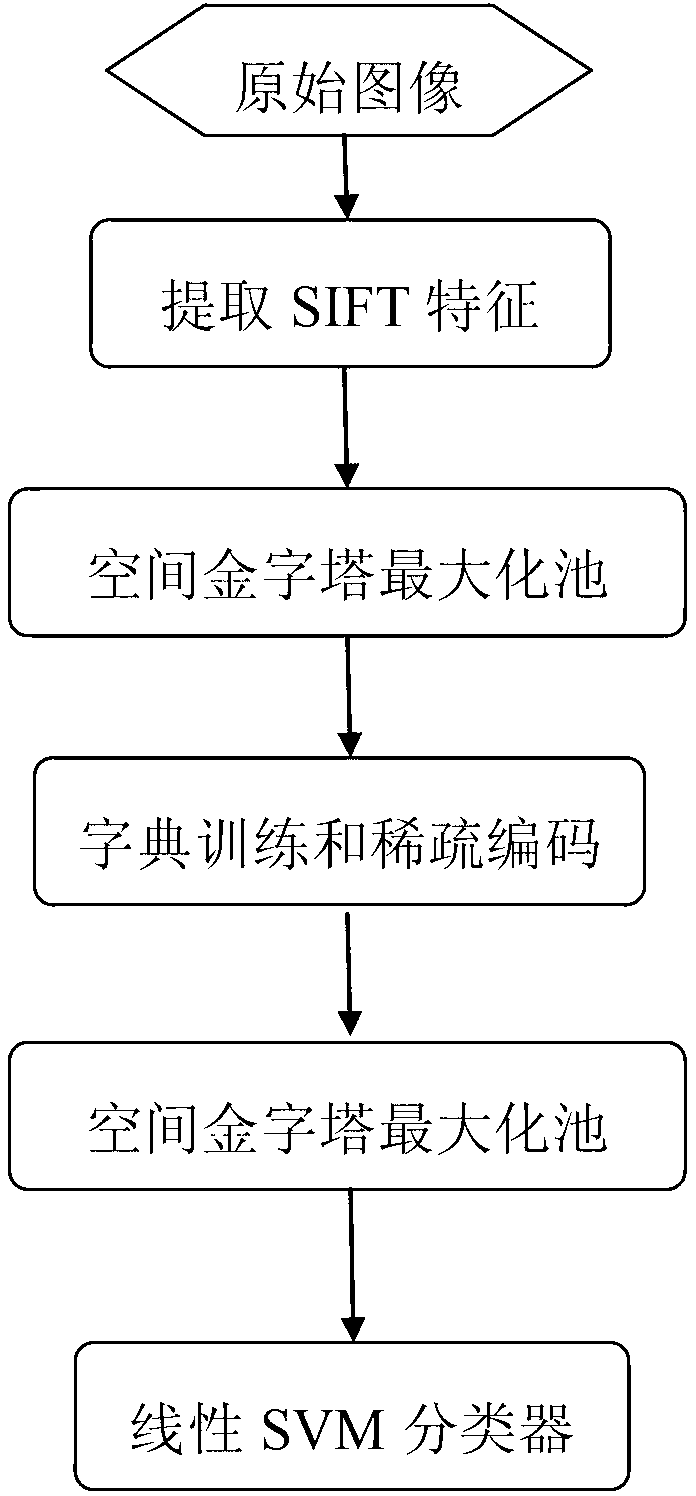

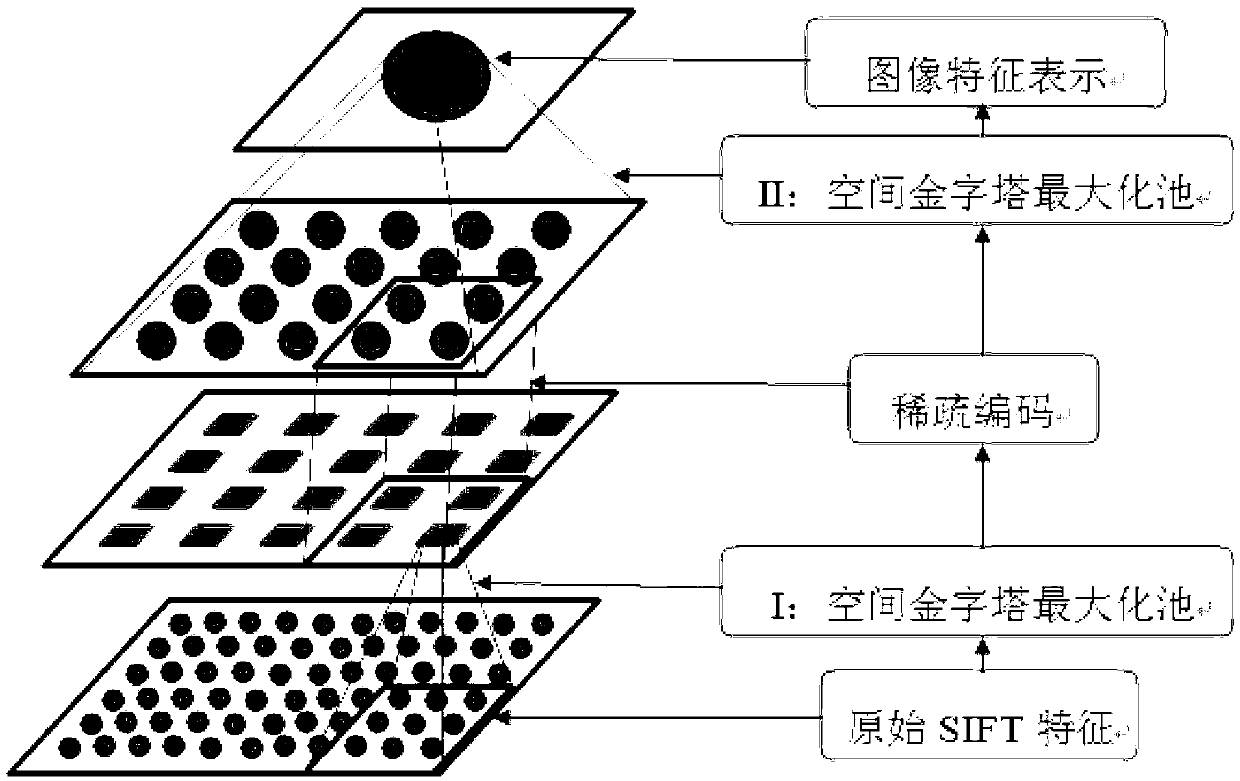

Image classification method based on hierarchical SIFT (scale-invariant feature transform) features and sparse coding

Inactive | CN103020647A | Reduce the dimensionality of SIFT features | High simulation | Character and pattern recognition | Singular value decomposition | Data set

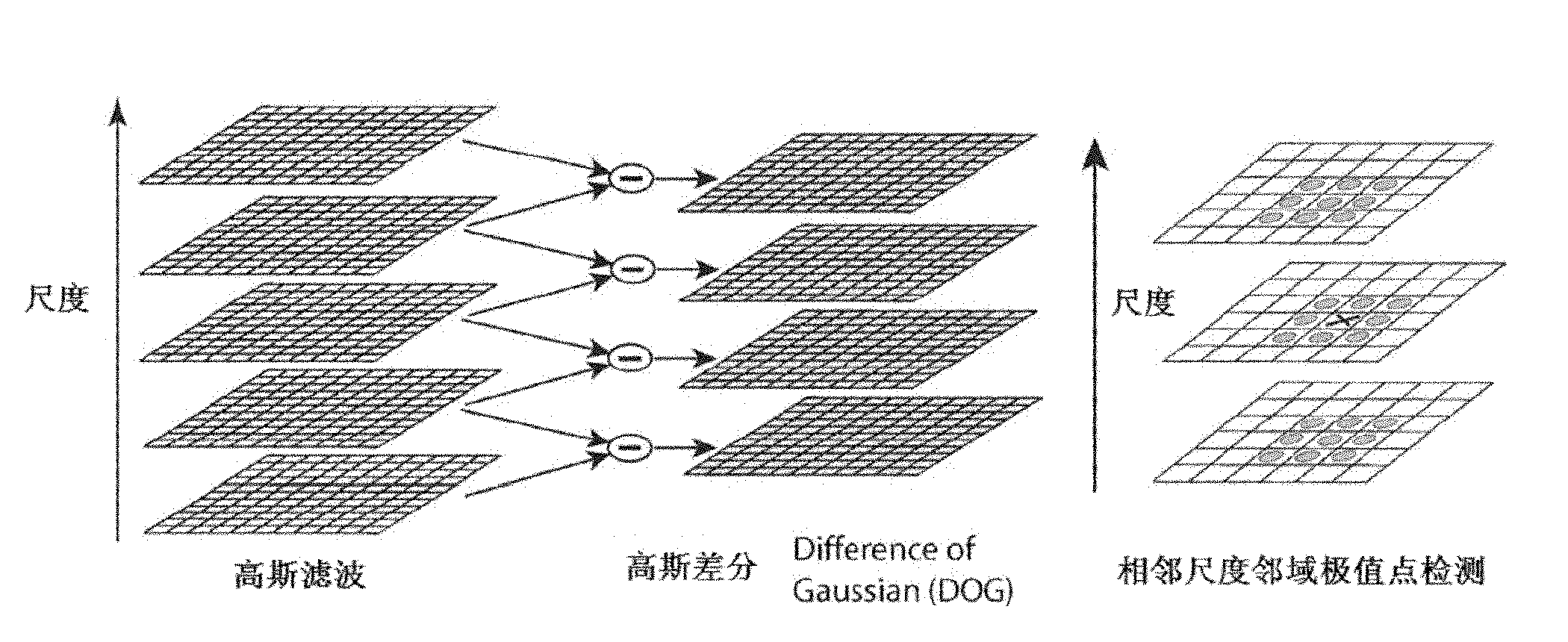

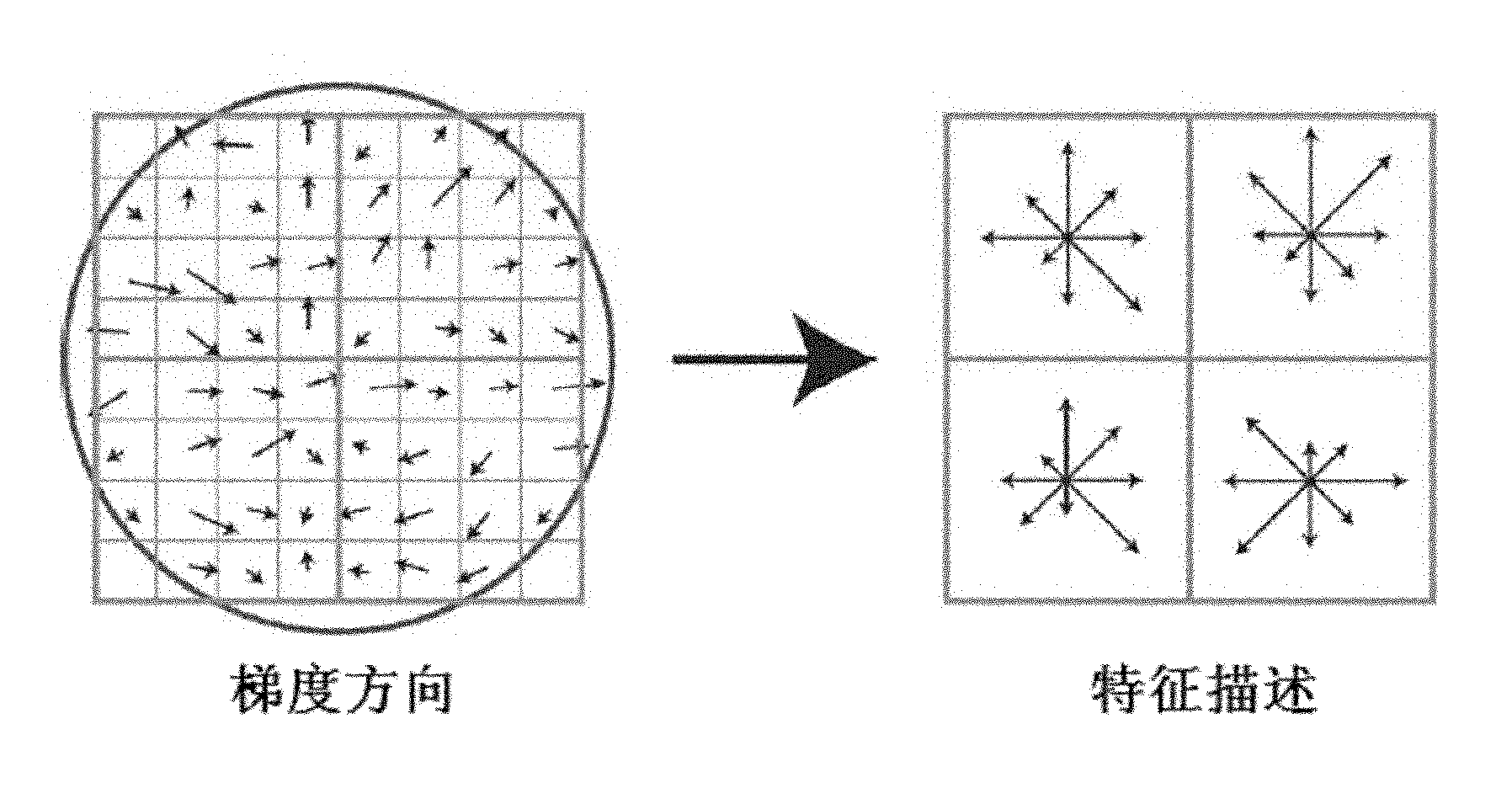

The invention discloses an image classification method based on hierarchical SIFT (scale-invariant feature transform) features and sparse coding. The method includes the following steps: (1) extracting 512-dimension scale-invariant SIFT features from each image in a data set with an 8-pixel step length and 32×32 pixel blocks; (2) applying spatial max pooling to the SIFT features of each image block so that a 168-dimension vector y is obtained; (3) randomly selecting several blocks from all 32×32 image blocks in the data set and training a dictionary D with the K-singular value decomposition (K-SVD) method; (4) computing a sparse representation over the dictionary D for the vectors y of all blocks in each image; (5) applying the method of step (2) to all sparse representations of each image so that a feature representation of the whole image is obtained; and (6) inputting the feature representations of the images into a linear SVM (support vector machine) classifier so that classification results of the images are obtained. The method captures local structural information of images, removes redundancy in low-level image features, and can be used for target identification.
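The pooling used in steps (2) and (5) can be sketched as an element-wise maximum over the feature vectors that fall into each cell of a spatial grid. The abstract does not specify the grid layout that yields its stated dimensions, so the 2×2 layout and the names `max_pool` and `spatial_max_pool` below are assumptions for illustration only.

```python
def max_pool(vectors):
    """Element-wise maximum over a list of equal-length feature vectors."""
    return [max(column) for column in zip(*vectors)]


def spatial_max_pool(grid, cells=2):
    """Split a 2-D grid of feature vectors into cells x cells regions,
    max-pool each region, and concatenate the results."""
    rows, cols = len(grid), len(grid[0])
    pooled = []
    for r in range(cells):
        for c in range(cells):
            r0, r1 = r * rows // cells, (r + 1) * rows // cells
            c0, c1 = c * cols // cells, (c + 1) * cols // cells
            region = [grid[i][j] for i in range(r0, r1) for j in range(c0, c1)]
            pooled.extend(max_pool(region))
    return pooled


# A 2x2 grid of toy 2-dimensional features.
grid = [[(1, 5), (2, 0)],
        [(0, 7), (3, 1)]]
print(spatial_max_pool(grid, cells=1))  # one region covering the whole grid
print(spatial_max_pool(grid, cells=2))  # four regions, one vector each
```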

Owner:XIDIAN UNIV

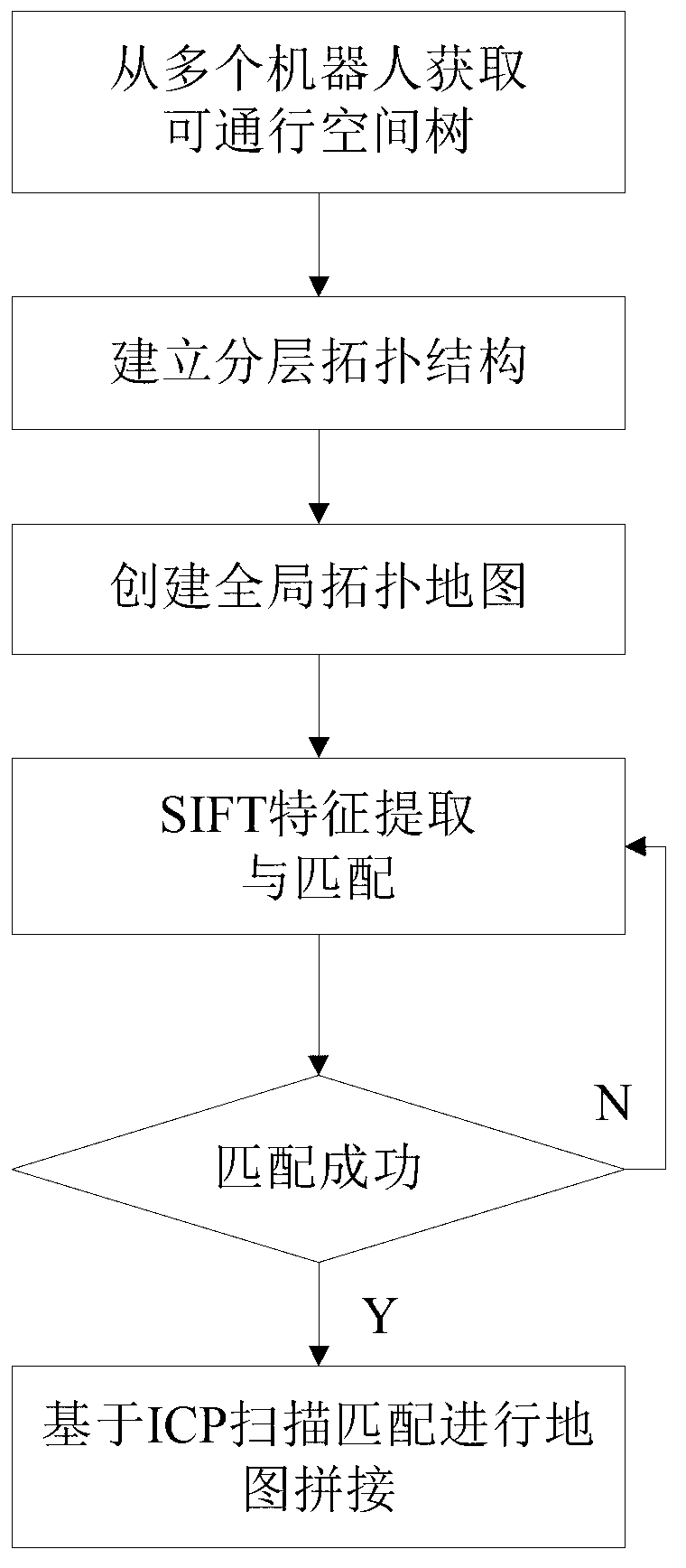

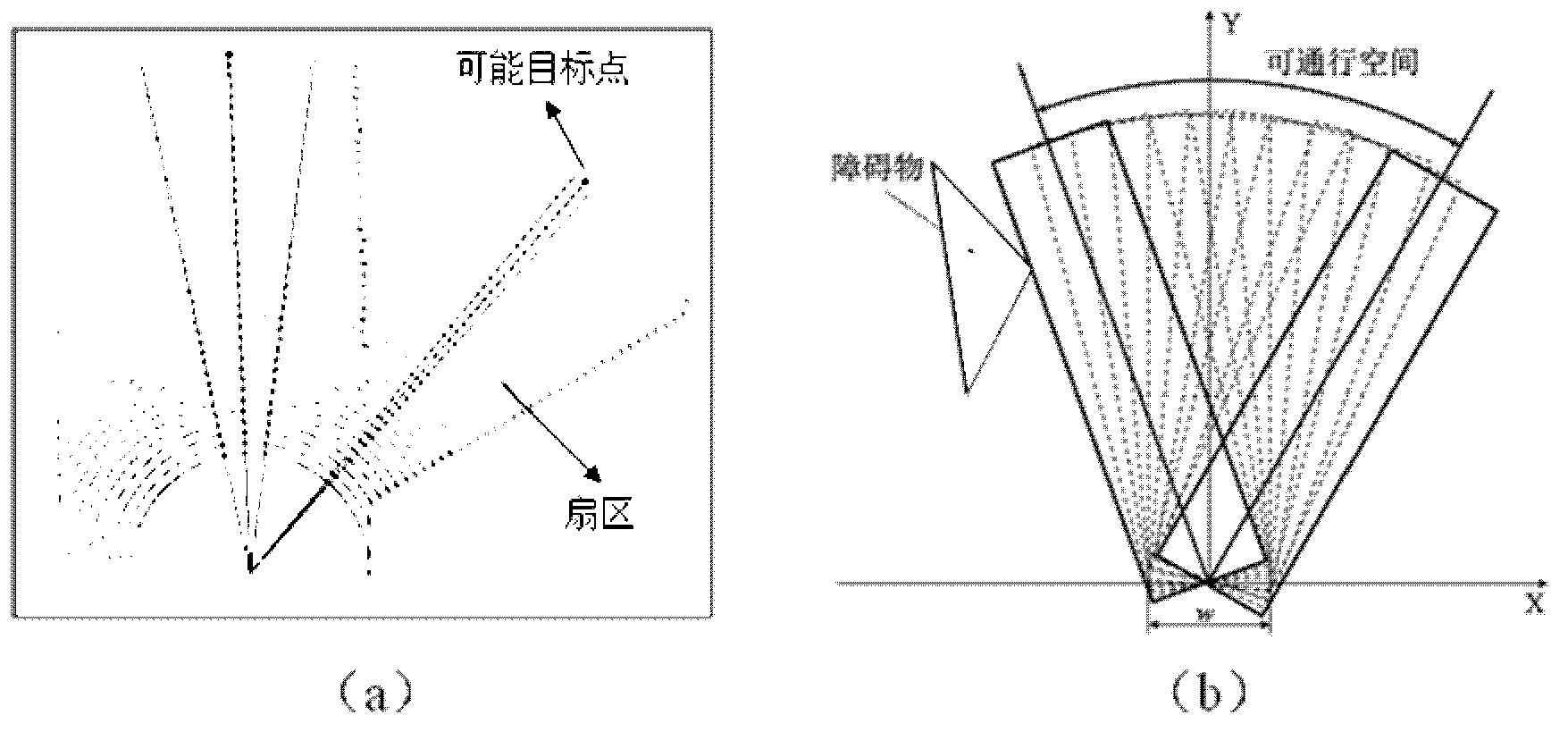

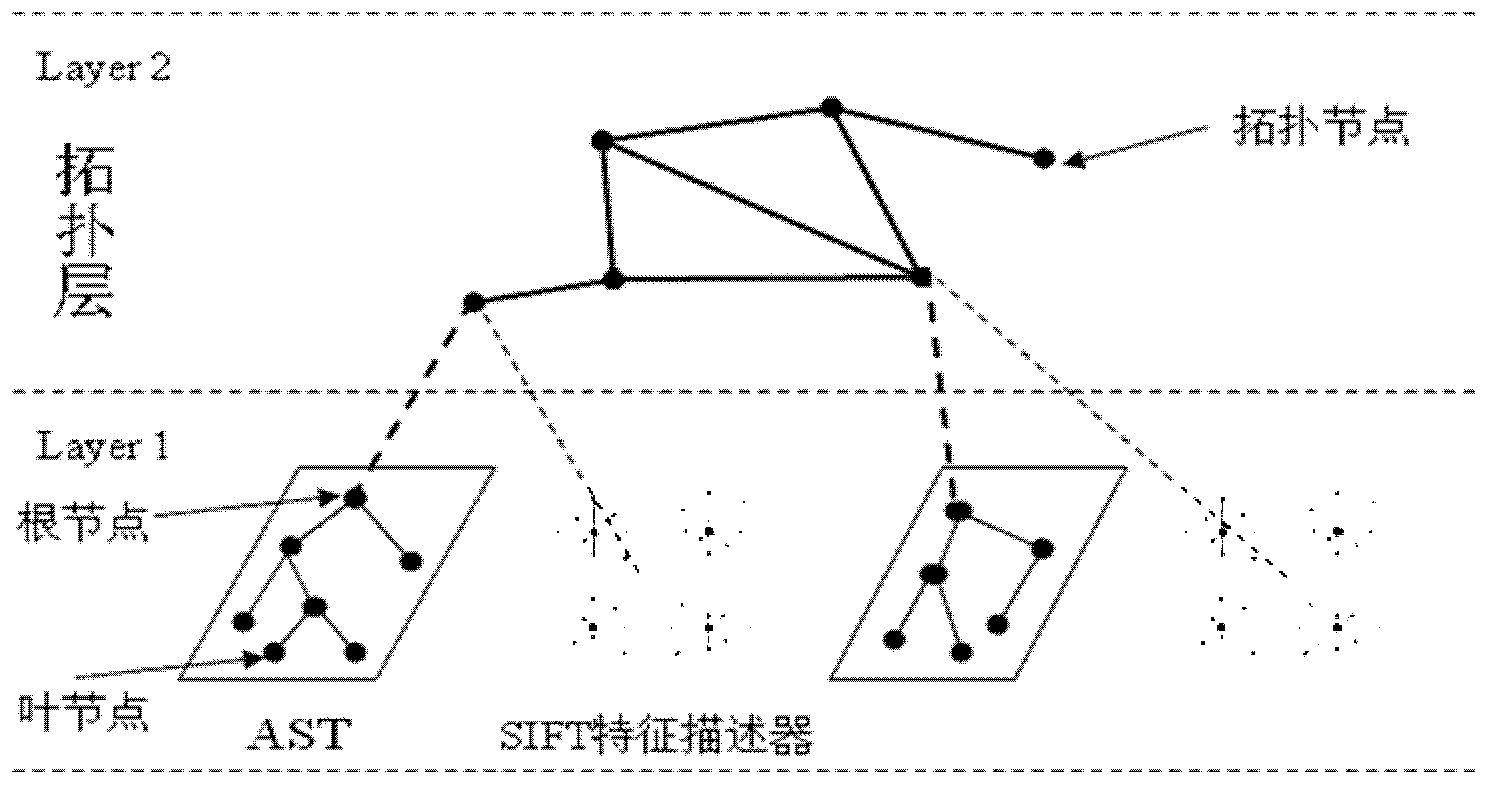

Layered topological structure based map splicing method for multi-robot system

Inactive | CN103247040A | Improve accuracy | Solve the problem of creating efficiency | Image enhancement | Scale-invariant feature transform | Multirobot systems

The invention belongs to the field of intelligent mobile robots. It discloses a layered topological structure based map splicing method for a multi-robot system in an unknown environment and solves the map splicing problem that arises when the robots' relative poses are unknown. The method comprises the following steps: acquiring an accessible space tree, building a layered topological structure, creating a global topological map, extracting SIFT (Scale Invariant Feature Transform) features and performing feature matching, and performing map splicing based on ICP (Iterative Closest Point) scan matching. For the case where the relative positions and poses of the robots are unknown, the invention provides a layered topological structure that incorporates SIFT features, creates the global topological map incrementally, and splices the maps of the multi-robot system in a large-scale unknown environment using the SIFT information among the nodes in combination with scan matching; the splicing accuracy and real-time performance are effectively improved. The method is therefore suitable for intelligent mobile robot applications involving map creation and map splicing.

Owner:BEIJING UNIV OF TECH

Pattern recognition method of substation switch based on infrared detection

Active | CN102289676A | Improve match stability | Improve noise immunity | Circuit arrangements | Character and pattern recognition | Pattern recognition | Smart substation

The invention discloses a method for identifying the state of a substation switch based on infrared detection. The method comprises the following steps: 1, inputting an image to be identified, locating the switch area of the image by image registration, and sequentially graying, binarizing and thinning the switch area; 2, detecting lines in the thinned switch area image by using a Hough transformation algorithm; and 3, evaluating the detection result and identifying the state of the switch by using a switch state determination condition. An H matrix between two images is obtained by matching scale invariant feature transform (SIFT) features, the switch area in the image is found, and the state of the switch is then identified. Experiments show that the method effectively solves the problem of identifying power switches, is significant for automatic monitoring of the power equipment of an intelligent substation, reduces the burden on substation inspectors, and greatly improves detection efficiency.
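Step 2 relies on the Hough transform to find straight lines in the thinned image. A minimal voting sketch in the normal form rho = x·cos(theta) + y·sin(theta) is shown below; it takes a list of foreground pixel coordinates and returns only the single strongest line. The function name `hough_strongest_line` and the one-degree and one-pixel bin sizes are assumptions, not details from the abstract.

```python
import math
from collections import Counter


def hough_strongest_line(points, theta_steps=180, rho_step=1.0):
    """Vote in (theta, rho) space and return the best-supported line.

    Returns (theta in degrees, rho, number of votes), where theta is the
    direction of the line's normal and rho its distance from the origin.
    """
    votes = Counter()
    for x, y in points:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(t, round(rho / rho_step))] += 1
    (t, rho_bin), count = votes.most_common(1)[0]
    return math.degrees(math.pi * t / theta_steps), rho_bin * rho_step, count


# Foreground pixels of a thinned vertical blade at x = 5.
vertical_blade = [(5, y) for y in range(100)]
theta_deg, rho, count = hough_strongest_line(vertical_blade)
print(theta_deg, rho, count)
```

The orientation of the detected line (the normal direction plus 90 degrees) could then be compared against a state determination condition such as an angle range for "open" and "closed"; that condition is not given in the abstract.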

Owner:STATE GRID INTELLIGENCE TECH CO LTD

Uncalibrated multi-viewpoint image correction method for parallel camera array

Inactive | CN102065313A | Freely adjust horizontal parallax | Increase the use range of multi-look correction | Image analysis | Stereoscopic systems | Parallax | Scale-invariant feature transform

The invention relates to an uncalibrated multi-viewpoint image correction method for a parallel camera array. The method comprises the steps of: first, extracting a set of feature points in the viewpoint images and determining matching point pairs for every two adjacent images; then introducing the RANSAC (Random Sample Consensus) algorithm to improve the matching precision of SIFT (Scale Invariant Feature Transform) feature points, and providing a block-based feature extraction method whose refined feature point positions serve as input to the subsequent correction, so as to calculate a correction matrix for uncalibrated stereoscopic image pairs; then projecting the several non-coplanar correction planes onto one common correction plane and calculating the horizontal distance between adjacent viewpoints on that plane; and finally, adjusting the positions of the viewpoints horizontally until the parallaxes are uniform, which completes the correction. The composite stereoscopic image obtained after the uncalibrated multi-viewpoint correction has a strong sense of width and breadth and a markedly enhanced stereoscopic effect compared with the image before correction, and the method can be applied to front-end signal processing in many 3DTV application devices.

Owner:SHANGHAI UNIV

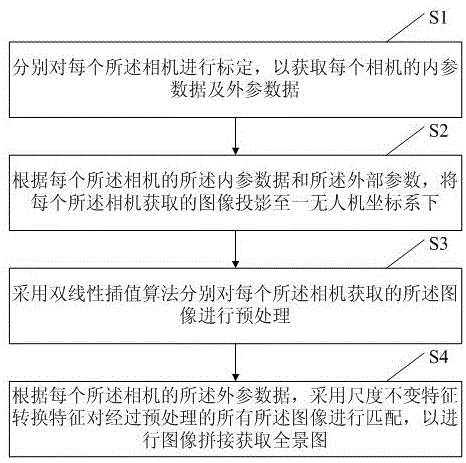

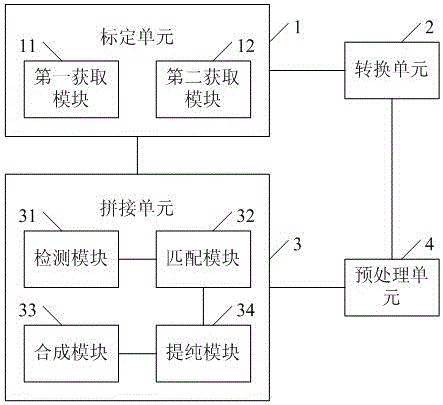

Panoramic image stitching method and system based on multiple cameras

Inactive | CN106157304A | Reduce the number of flights | Improve experience | Image enhancement | Image analysis | Vertical projection | Scale-invariant feature transform

The invention discloses a method and system for stitching panoramas based on multiple cameras. The method is applied to an unmanned aerial vehicle (UAV) that carries a plurality of cameras capturing different viewing angles, with the viewing angles of adjacent cameras partially overlapping. The method includes the following steps: S1. calibrating each camera separately to obtain its intrinsic and extrinsic parameters; S2. projecting the image acquired by each camera into a UAV coordinate system according to the intrinsic and extrinsic parameters of that camera, the UAV coordinate system taking the vertical projection point of the UAV on the ground as its origin; S3. preprocessing the images acquired by the cameras; S4. according to the extrinsic parameters of each camera, matching all the preprocessed images using scale-invariant feature transform features and stitching them to obtain a panorama.

Owner:CHENGDU TOPPLUSVISION TECH CO LTD

Method for filling indoor light detection and ranging (LiDAR) missing data based on Kinect

Inactive | CN102938142A | Integrity can be maintained | Realize collection work | Image enhancement | Image analysis | Missing data | Scale-invariant feature transform

The invention relates to a method for filling indoor LiDAR missing data based on Kinect. The method comprises extracting key frames during the Kinect scanning process to obtain sparse scanned data; using a scale invariant feature transform (SIFT) algorithm to perform feature extraction on the RGB-D images collected by a Kinect device, and rejecting abnormal feature matching points through a random sample consensus (RANSAC) operator; merging the extracted features; extracting features of a LiDAR image and coarsely matching them with the features from the Kinect device to obtain a transformation matrix; using an improved iterative closest point (ICP) algorithm to achieve fine matching of the LiDAR image with the RGB-D image of the Kinect; and fusing the missing data between the LiDAR model and the part scanned by the Kinect. The method has the advantages that the device cost is low, the acquisition process is flexible, scene depth and image information can be obtained, and partial or missing data of complex indoor scenes can be acquired and filled rapidly.

Owner:WUHAN UNIV

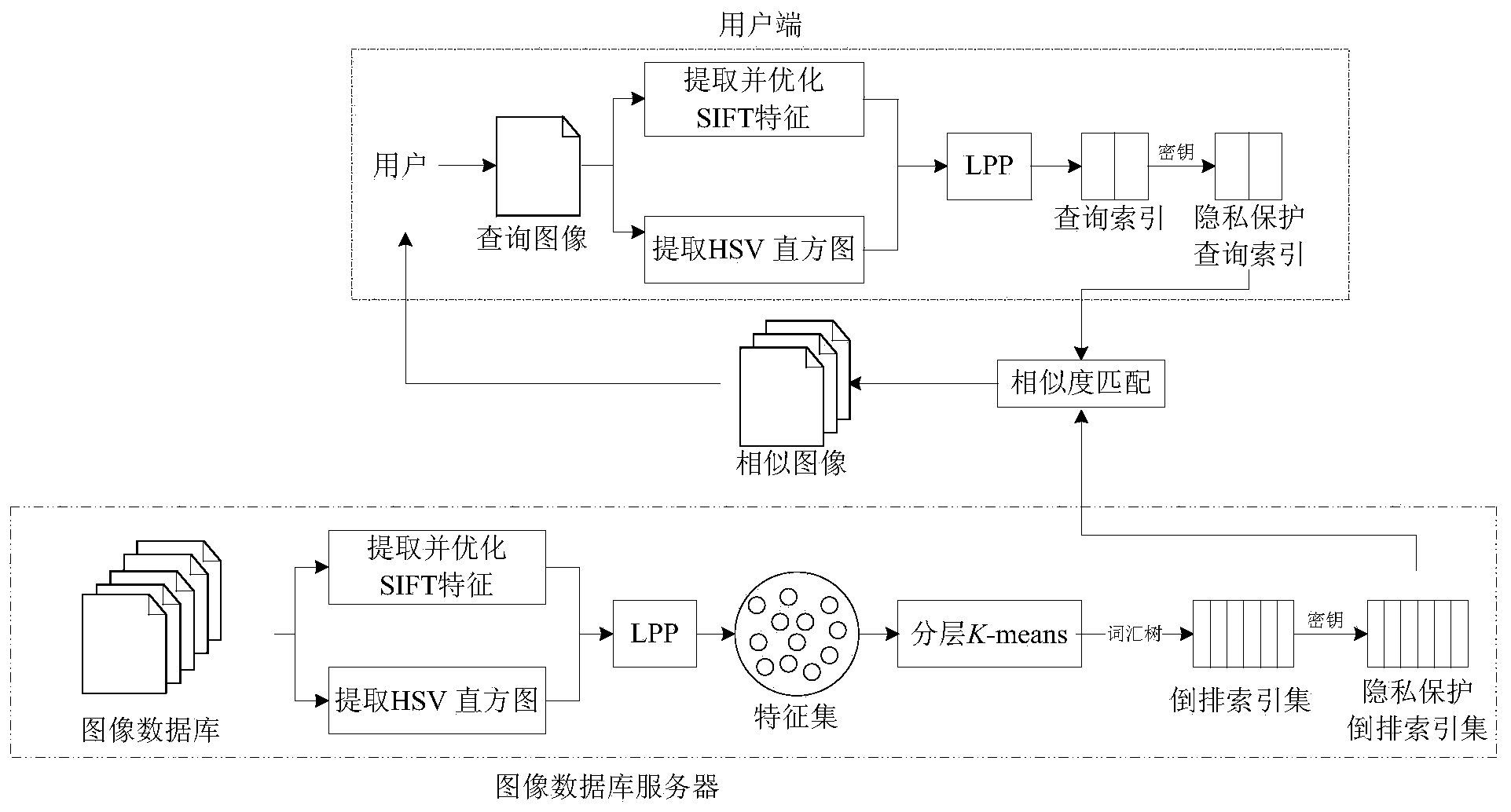

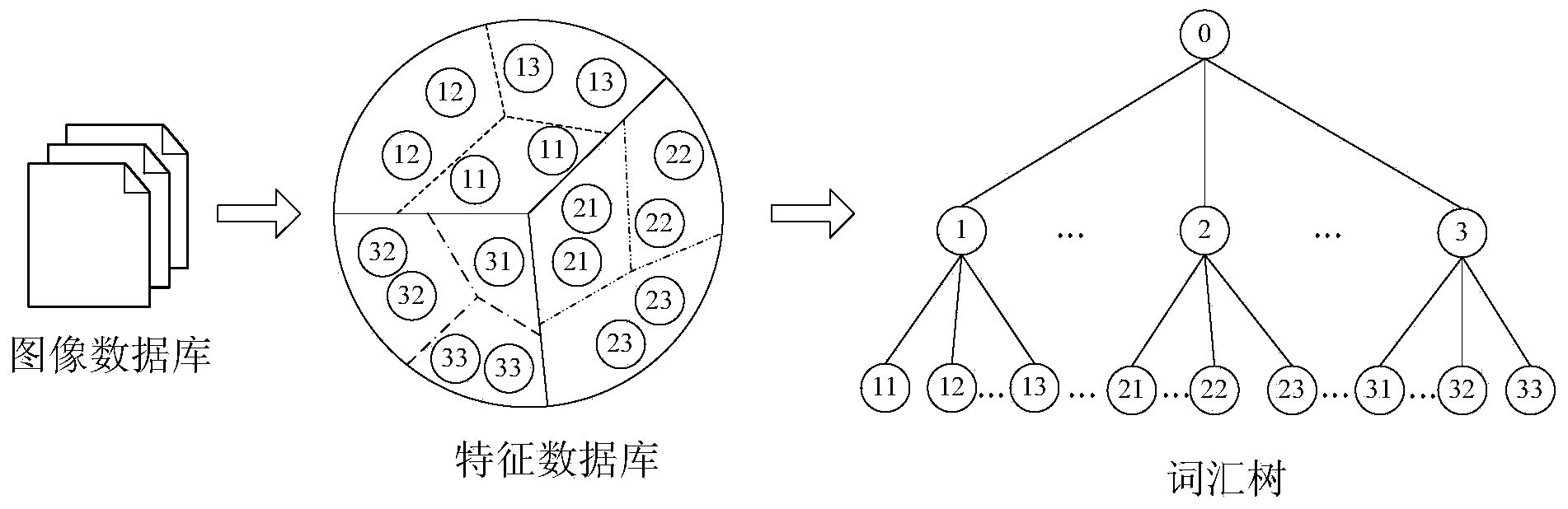

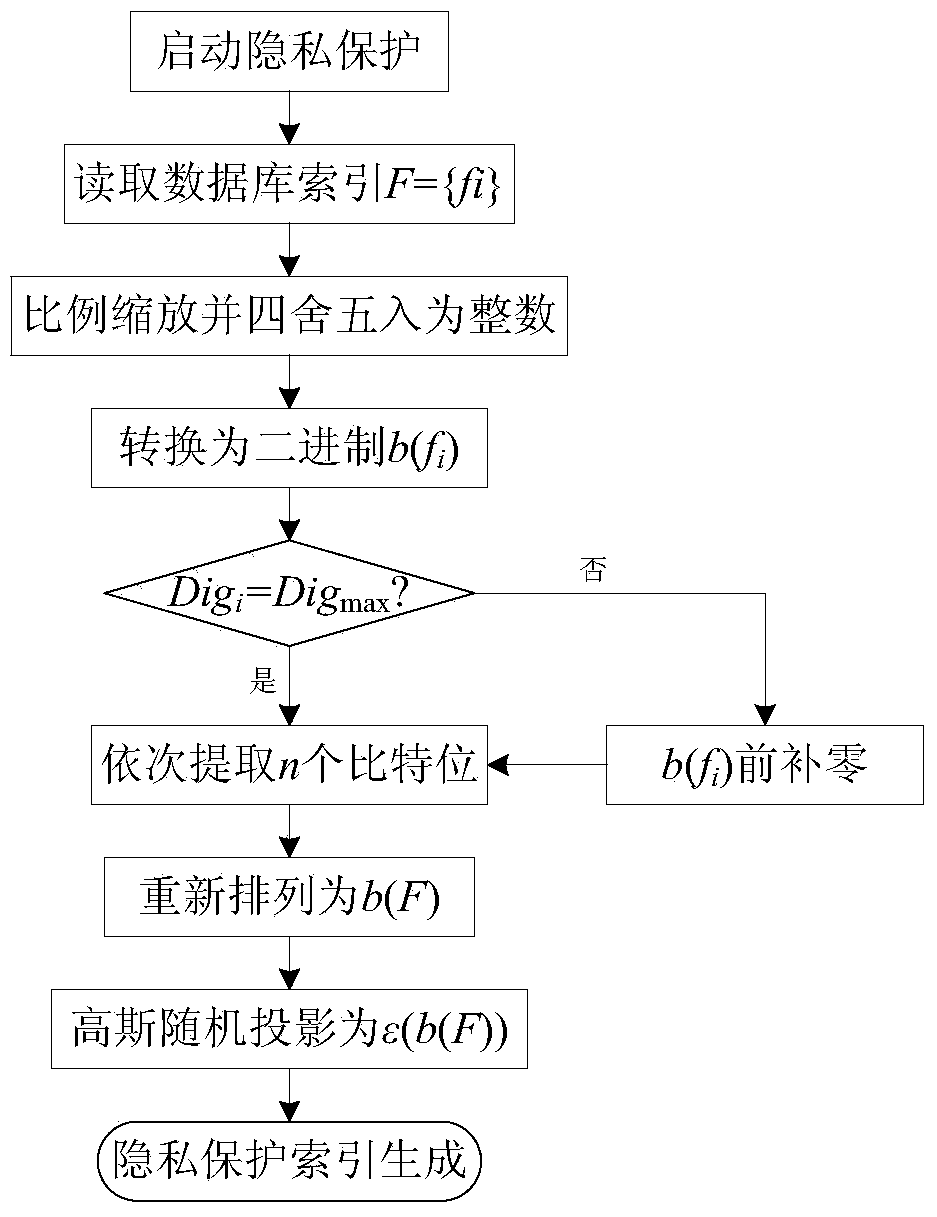

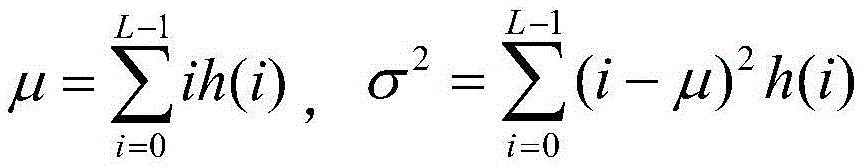

Privacy-protection index generation method for mass image retrieval

Active | CN104008174A | Improve performance | Reduce the number | Character and pattern recognition | Program/content distribution protection | Feature Dimension | Scale-invariant feature transform

The invention discloses a privacy-protection index generation method for mass image retrieval. It addresses the privacy protection problem in mass image retrieval by bringing privacy protection into the retrieval process. The method establishes an image index with privacy protection, so that the user's private information is kept safe while retrieval performance is maintained. The method comprises the steps of first extracting and optimizing SIFT (Scale Invariant Feature Transform) features and HSV (Hue, Saturation and Value) color histograms, performing feature dimension reduction with the manifold dimension reduction method of locality preserving projections, and constructing a vocabulary tree from the dimension-reduced feature data. The vocabulary tree is used to construct an inverted index structure; this reduces the number of features, increases the speed of plaintext-domain image retrieval and also improves retrieval performance. The method is characterized in that privacy protection is added on top of a plaintext-domain retrieval framework: the inverted index is doubly encrypted with binary random codes and random projections, which yields the image index with privacy protection.

Owner:数安信(北京)科技有限公司

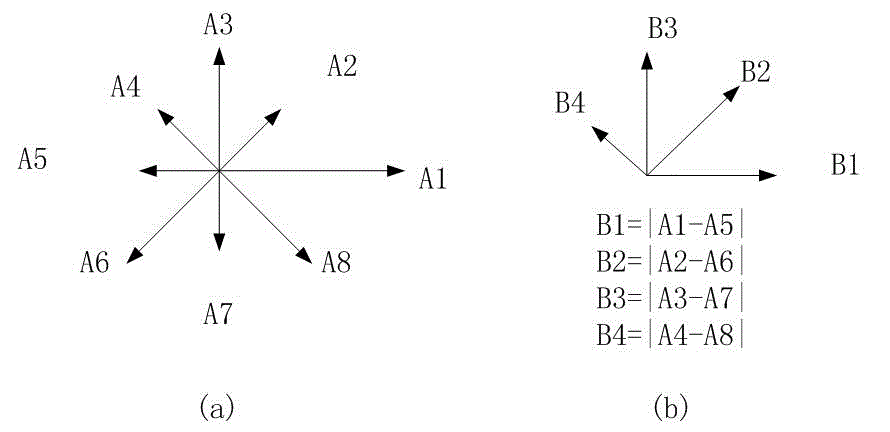

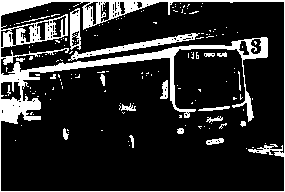

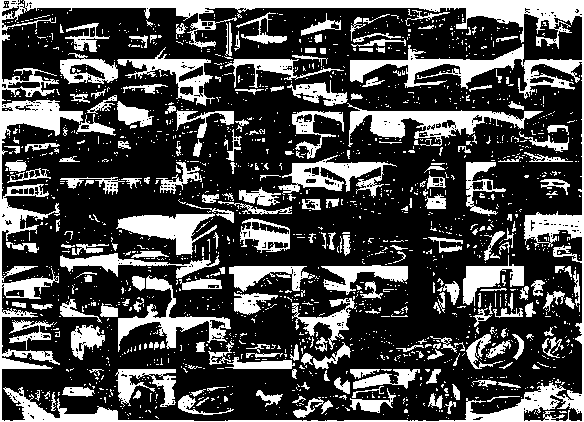

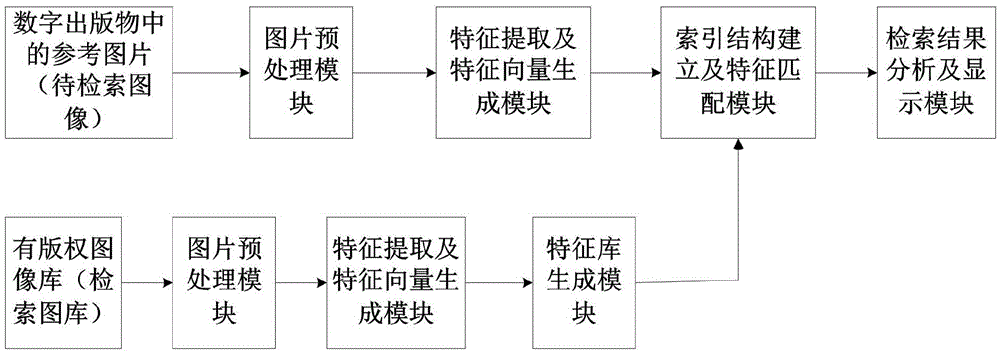

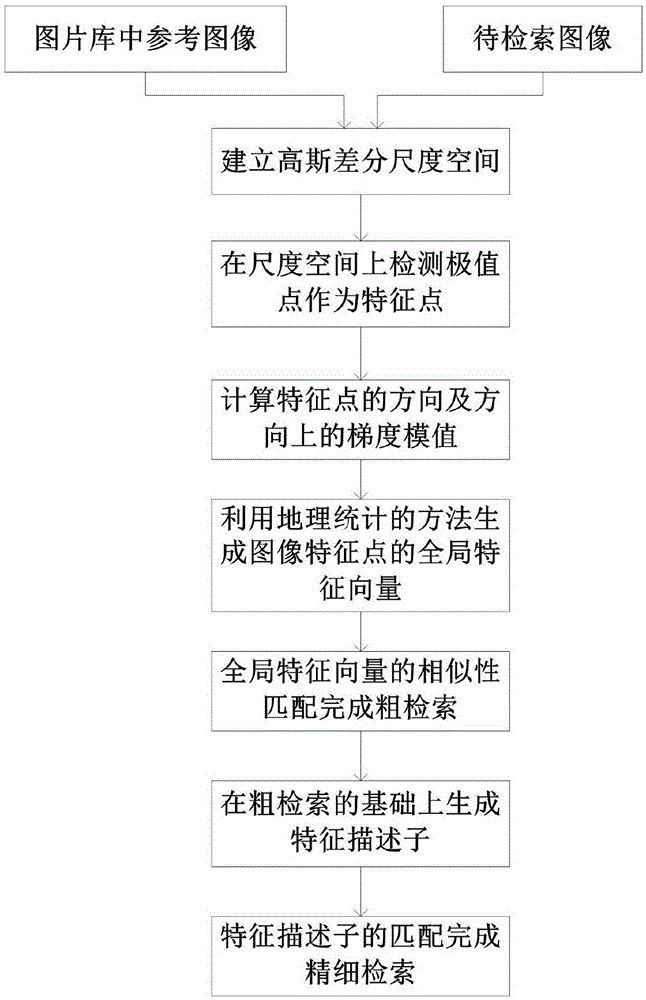

Efficient image retrieval method based on improved SIFT (scale invariant feature transform) feature

Active | CN105550381A | Applicable Image Infringement Review Requirements | Special data processing applications | Feature vector | Imaging processing

The invention discloses an efficient image retrieval method based on an improved SIFT (scale invariant feature transform) feature, relates to the field of image processing and computer vision, and belongs to the class of content-based image retrieval methods. The method comprises the steps of establishing a difference-of-Gaussians scale space, taking the extreme points detected in scale space as feature points, calculating the orientation of the feature points and the gradient magnitudes in that orientation, generating a global feature vector of the image feature points through a geographical statistical method, performing similarity matching on the global feature vectors to complete a coarse retrieval, generating feature descriptors on the basis of the coarse retrieval, and matching the feature descriptors to complete the precise retrieval. The novel image retrieval method is superior to the traditional SIFT algorithm and better suited than existing retrieval algorithms to the requirements of image infringement examination in digital prints.

Owner:BEIJING UNIV OF TECH

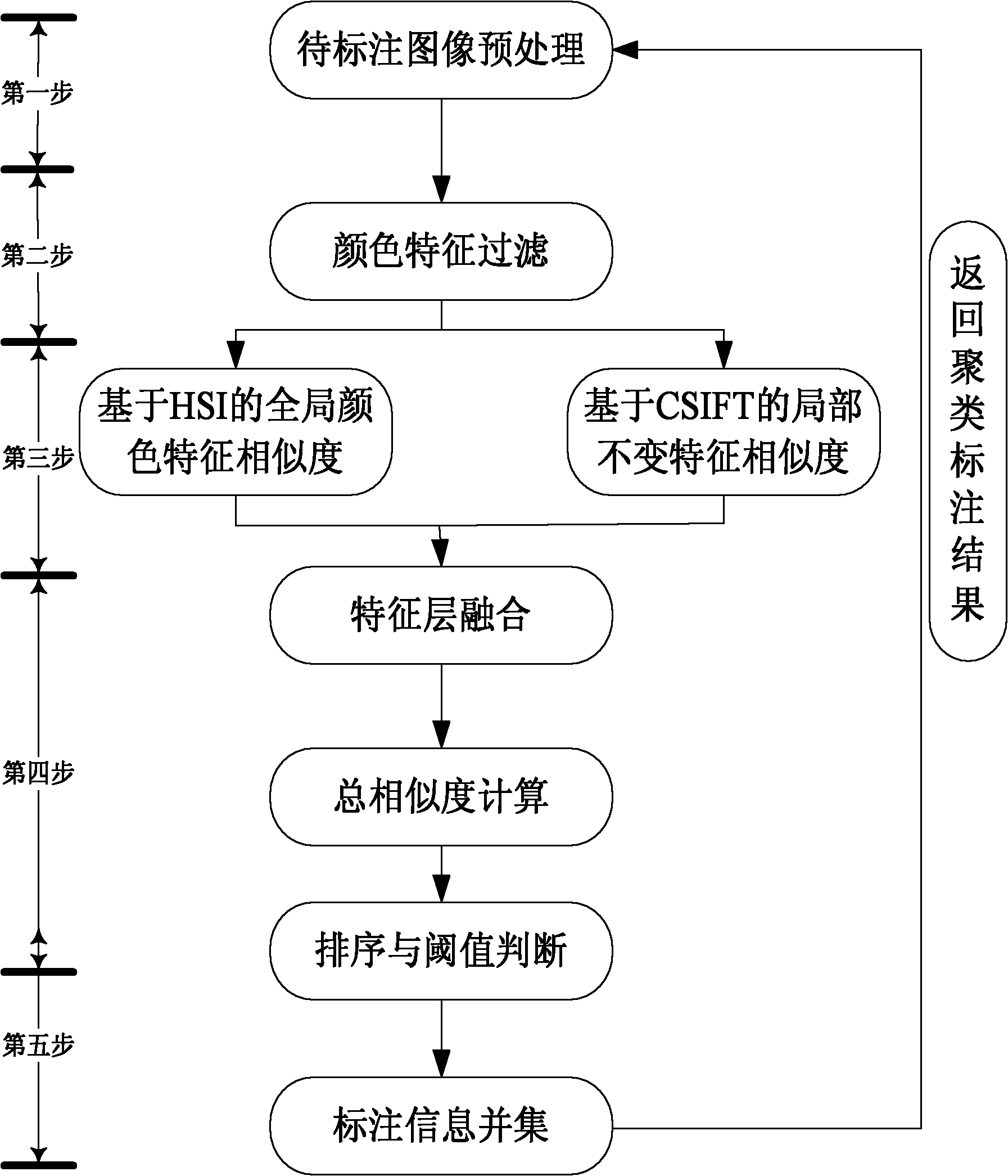

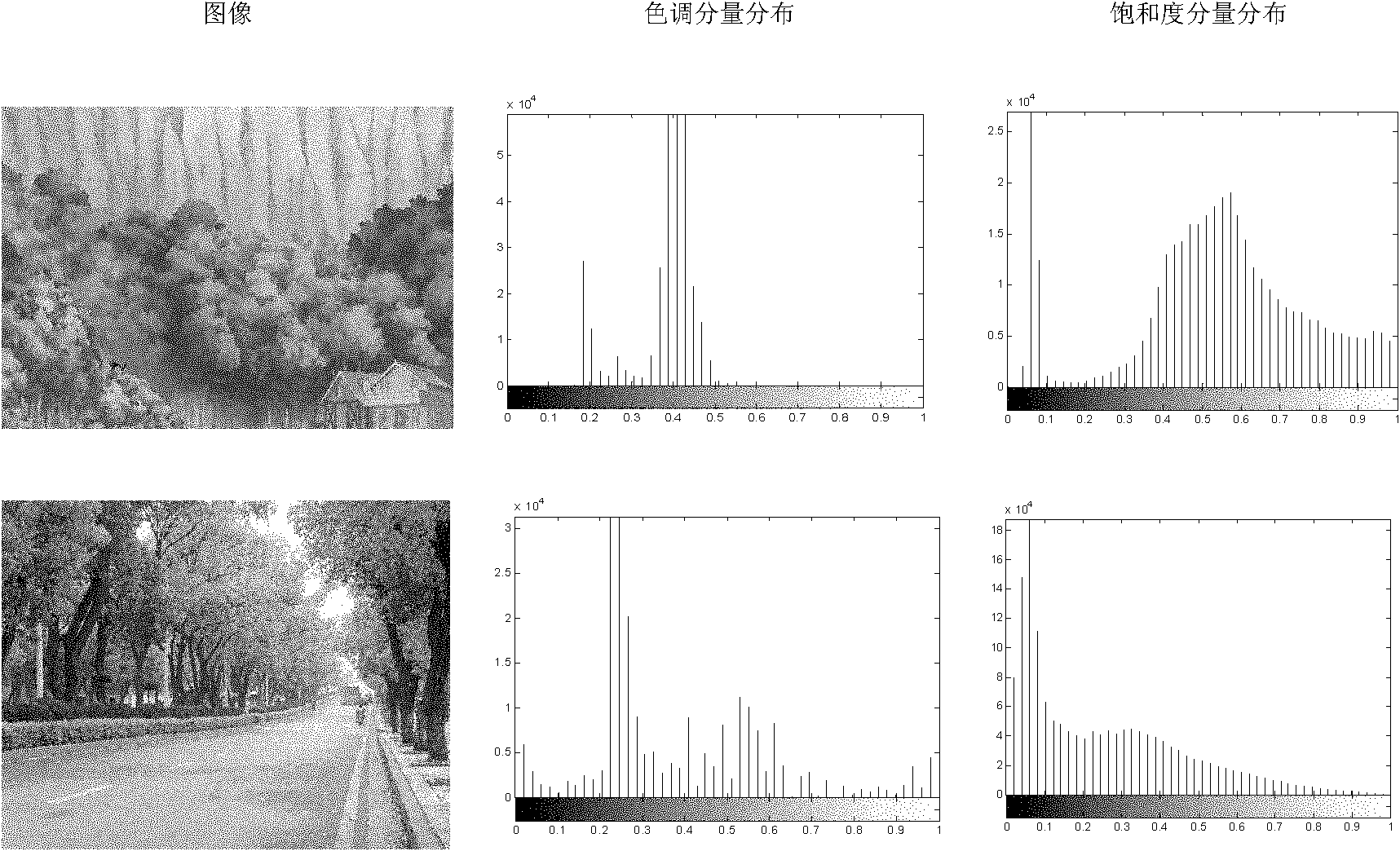

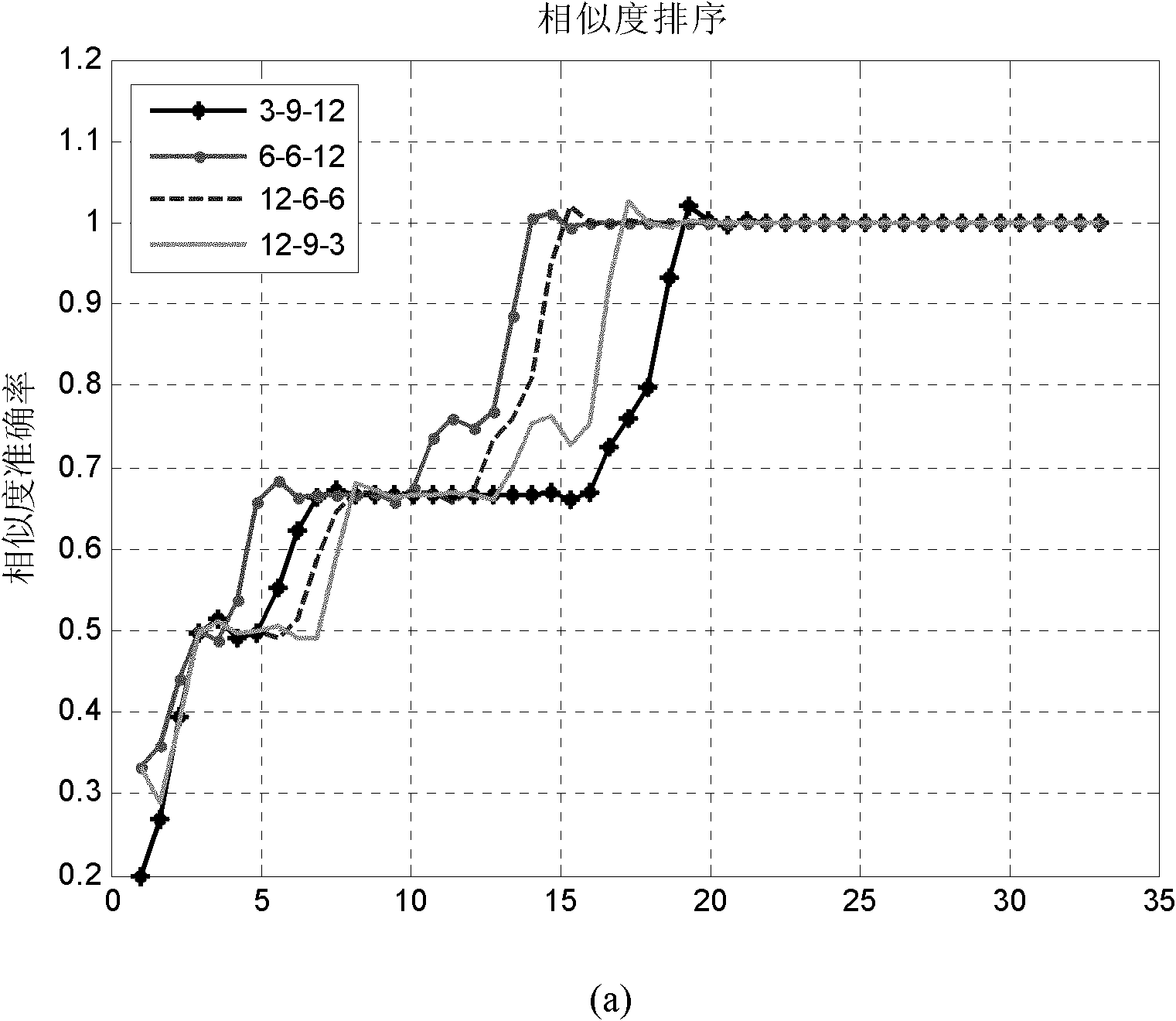

Method for automatically tagging animation scenes for matching through comprehensively utilizing overall color feature and local invariant features

Inactive | CN102012939A | High speed | Good auxiliary effect | Image analysis | Special data processing applications | Pattern recognition | Scale-invariant feature transform

The invention discloses a method for automatically tagging animation scenes for matching through comprehensively utilizing an overall color feature and local invariant features, which aims to improve the tagging accuracy and tagging speed of animation scenes through comprehensively utilizing overall color features and color-invariant-based local invariant features. The technical scheme is as follows: preprocessing a target image (namely, an image to be tagged), calculating an overall color similarity between the target image and images in an animation scene image library, and carrying out color feature filtering on the obtained result; after color feature filtering, extracting a matching image result and the colored scale invariant feature transform (CSIFT) feature of the target image, and calculating an overall color similarity and local color similarities between the matching image result and the CSIFT feature; fusing the overall color similarity and the local color similarities so as to obtain a final total similarity; and carrying out text processing and combination on the tagging information of the images in the matching result so as to obtain the final tagging information of the target image. By using the method provided by the invention, the matching accuracy and matching speed of an animation scene can be improved.

Owner:NAT UNIV OF DEFENSE TECH

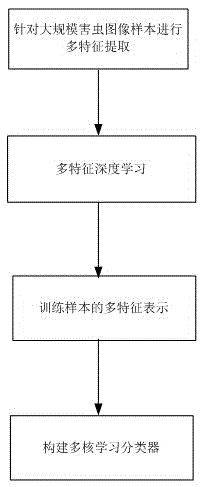

Agricultural pest image recognition method based on multi-feature deep learning technology

Active | CN105488536A | Improve accuracy | Improve recognition rate | Character and pattern recognition | Scale-invariant feature transform | Histogram of oriented gradients

The invention relates to an agricultural pest image recognition method based on a multi-feature deep learning technology. It addresses the poor pest image recognition performance of the prior art under complex environmental conditions. The method comprises the following steps: carrying out multi-feature extraction on large-scale pest image samples, extracting their color features, texture features, shape features, scale-invariant feature transform (SIFT) features and histogram of oriented gradients (HOG) features; carrying out multi-feature deep learning, in which unsupervised dictionary training is performed separately on each type of feature to obtain its sparse representation; carrying out multi-feature representation of the training samples, in which the different types of features are combined to construct a multi-feature sparse coding histogram for the pest image samples; and constructing a multiple-kernel learning classifier by learning from the sparse coding histograms of positive and negative pest image samples, in order to classify pest images. The method improves the accuracy of pest recognition.

Owner:HEFEI INSTITUTES OF PHYSICAL SCIENCE - CHINESE ACAD OF SCI

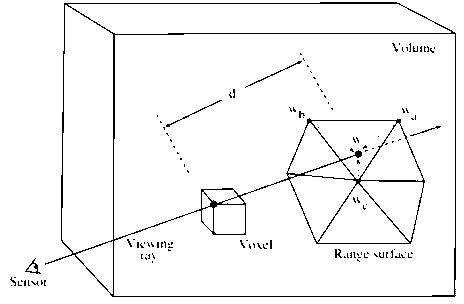

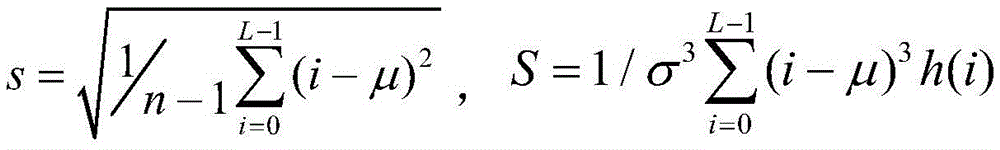

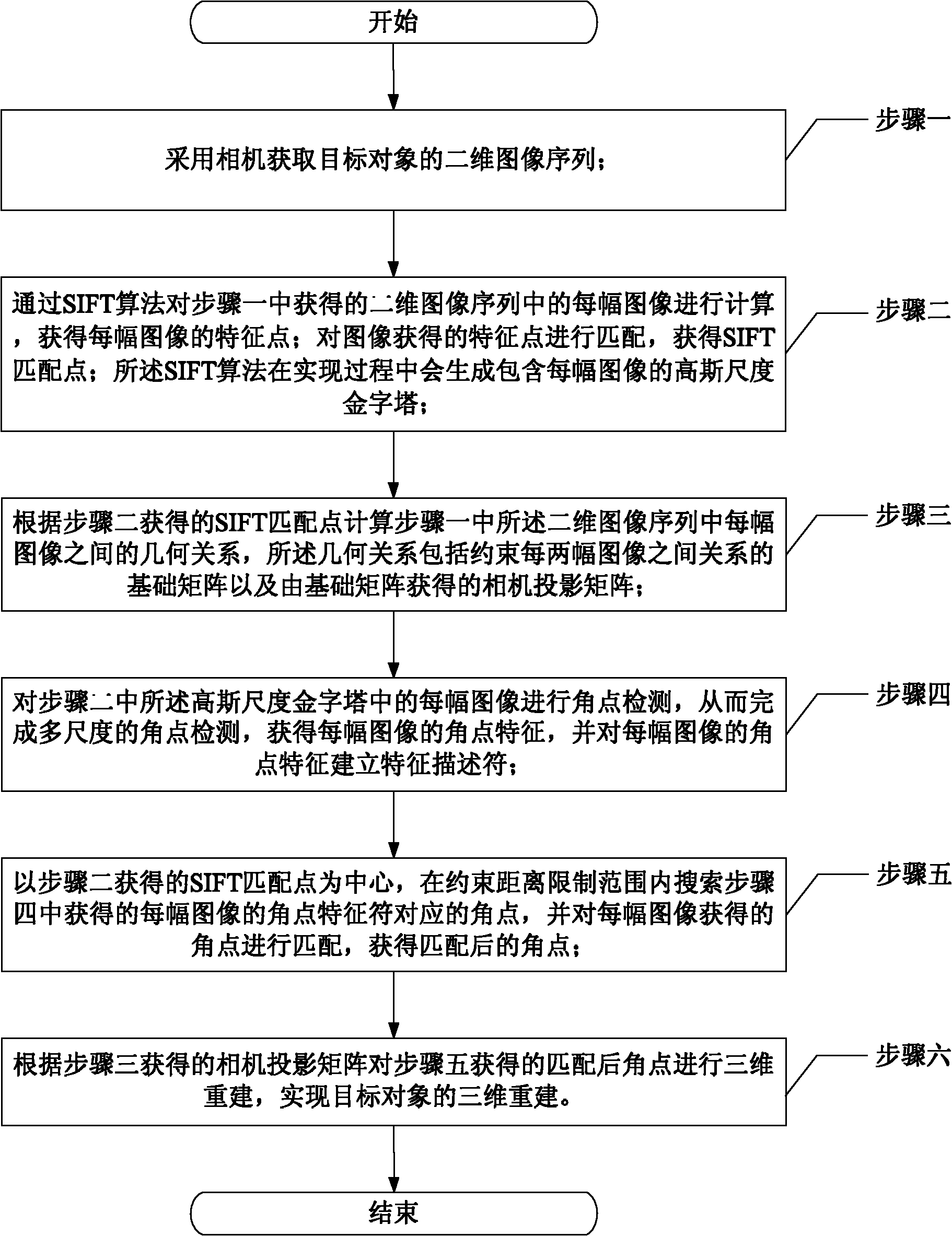

Two-dimensional image sequence based three-dimensional reconstruction method of target

Inactive | CN102074015A | Accurate description | Efficient 3D reconstruction | Image analysis | Hat matrix | Scale-invariant feature transform

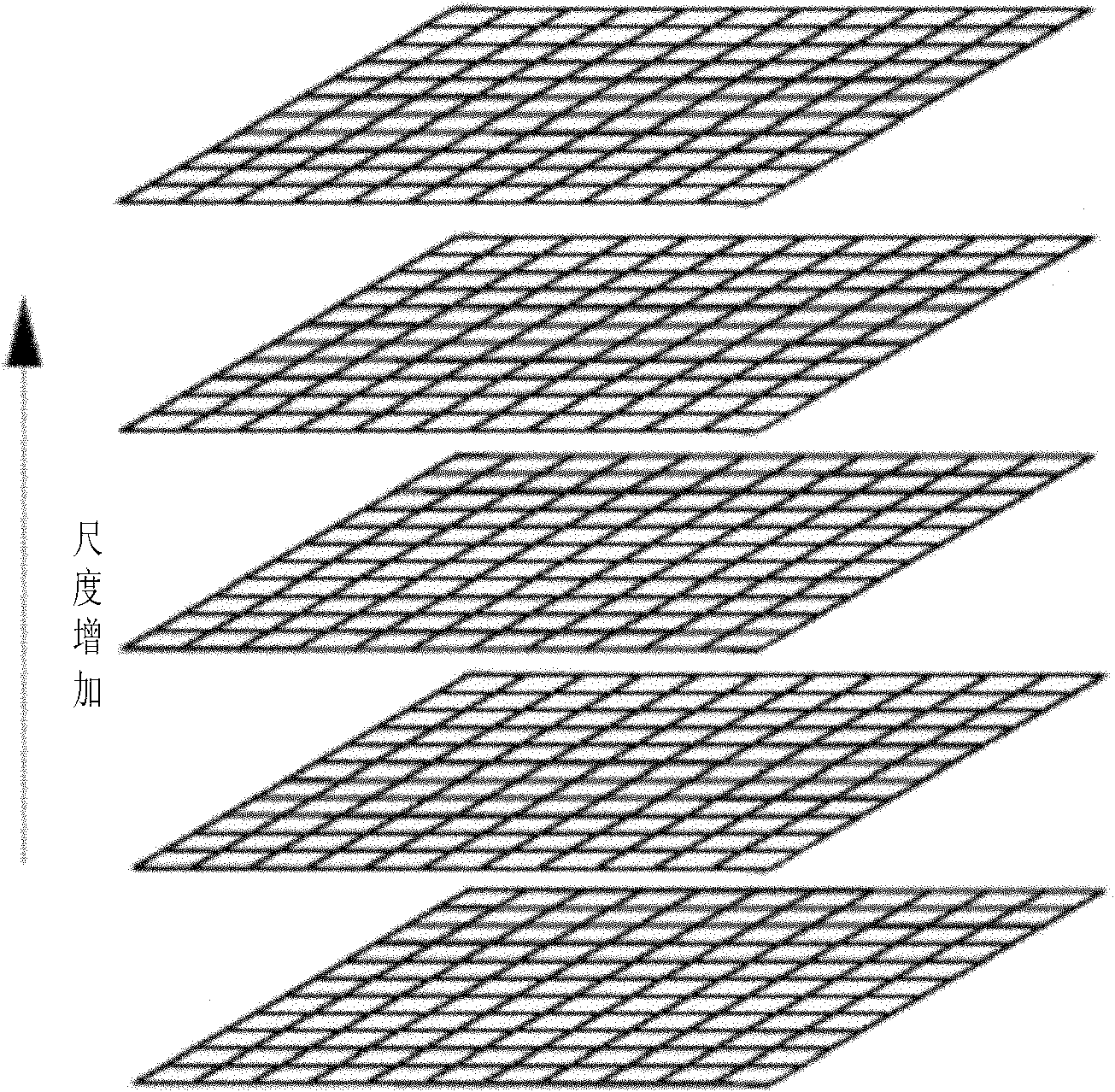

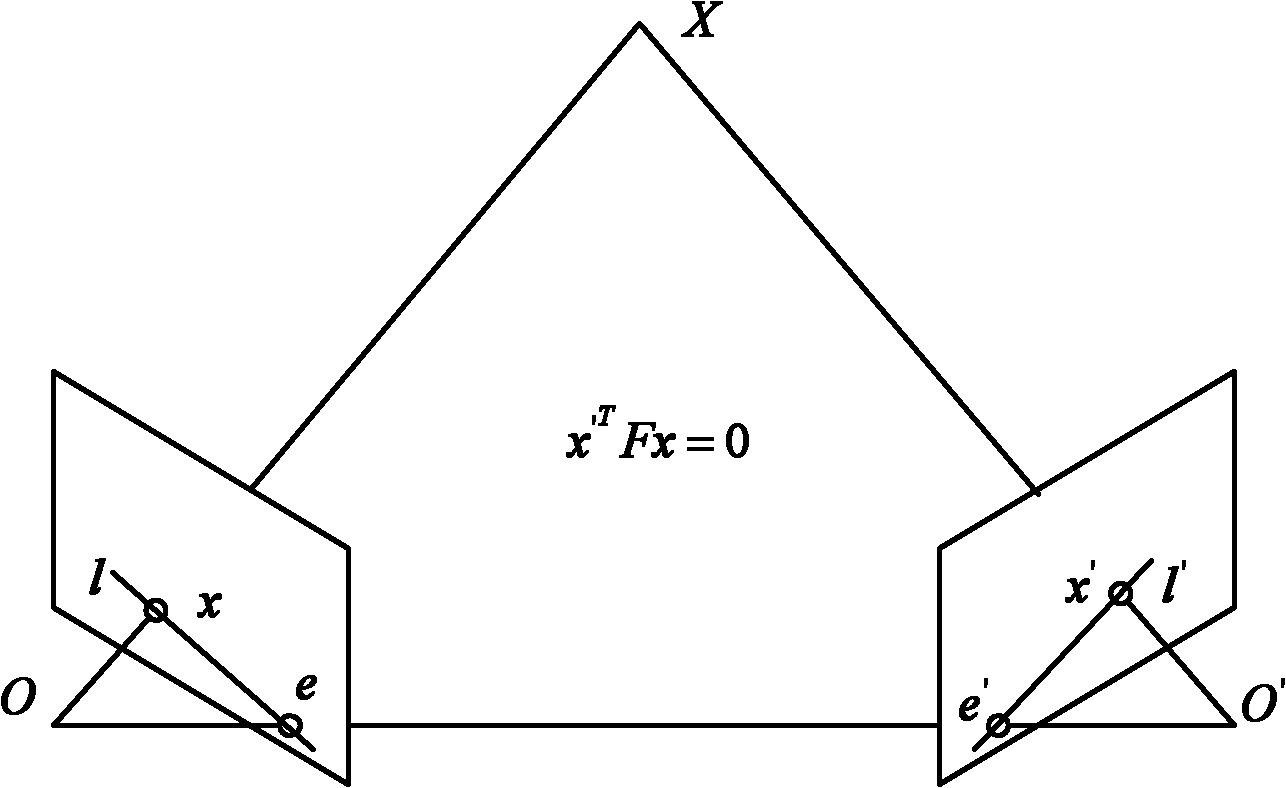

The invention discloses a three-dimensional reconstruction method of a target based on a two-dimensional image sequence. It addresses the problem that traditional image-based three-dimensional reconstruction methods achieve low precision because many points must be reconstructed and the computational load is large. The method comprises the following steps: using a camera to obtain a two-dimensional image sequence of the target, extracting and matching features of each image through a scale invariant feature transform (SIFT) algorithm, and calculating the geometric relationship between the images; carrying out corner detection for each image in the Gaussian scale pyramid generated while running the SIFT algorithm, obtaining the multi-scale corner features of the images; taking each SIFT matching point as a center, searching for the corresponding corners in each image within a limited distance range, and matching the corners found in each image to obtain matched corners; and reconstructing the matched corners in three dimensions according to the projection matrix of the camera, thereby realizing the three-dimensional reconstruction of the target. The method is applied to the three-dimensional reconstruction of targets.

Owner:HARBIN INST OF TECH

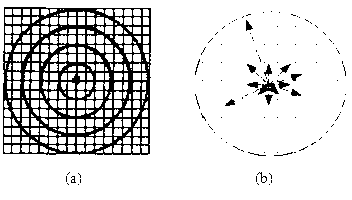

Improved scale invariant feature transform (SIFT) image feature matching algorithm

InactiveCN103136751AImprove execution efficiencyOvercoming the inability to fit in grayscaleImage analysisScale-invariant feature transformGray level

The invention discloses an improved scale invariant feature transform (SIFT) image feature matching algorithm. The algorithm comprises: step one, detecting scale-space extreme points; step two, generating feature descriptors; and step three, building a balanced binary k-d tree, searching the k-d tree for the nearest-neighbor feature point with best-bin-first (BBF) search, judging matched feature point pairs by Euclidean distance, and conducting a secondary matching after the Euclidean distance matching. According to the improved algorithm, the 128-dimensional descriptor is reduced to 48 dimensions, the execution efficiency of the algorithm is improved by two-thirds and reaches the speed of the integral-image-based speeded-up robust features (SURF) descriptor algorithm, and the shortcomings of the algorithm under gray-level changes and viewpoint changes between images are overcome.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA
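The matching stage in the abstract above can be illustrated with a small sketch. Several simplifications are assumed here: a brute-force nearest-neighbor search stands in for the k-d tree with BBF search, a distance-ratio test is used to judge Euclidean matches, and a mutual (cross-check) pass is used as one plausible form of "secondary matching". The abstract does not specify these details, so the `ratio` parameter and the cross-check are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def two_nearest(query, descriptors):
    """Return up to two (distance, index) pairs, nearest first (brute force)."""
    return sorted((euclidean(query, d), i) for i, d in enumerate(descriptors))[:2]


def ratio_matches(desc_a, desc_b, ratio=0.8):
    """Map each index in desc_a to its nearest index in desc_b.

    A match is kept only if the nearest distance is clearly smaller than
    the second-nearest distance (distance-ratio test).
    """
    matches = {}
    for i, query in enumerate(desc_a):
        nearest = two_nearest(query, desc_b)
        if not nearest:
            continue
        if len(nearest) == 1 or nearest[0][0] < ratio * nearest[1][0]:
            matches[i] = nearest[0][1]
    return matches


def cross_checked_matches(desc_a, desc_b, ratio=0.8):
    """Second pass: keep only pairs that also match in the reverse direction."""
    forward = ratio_matches(desc_a, desc_b, ratio)
    backward = ratio_matches(desc_b, desc_a, ratio)
    return sorted((i, j) for i, j in forward.items() if backward.get(j) == i)
```

The same functions work for 128-dimensional or 48-dimensional descriptors; the shorter descriptor simply makes each distance computation cheaper.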

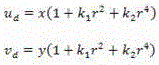

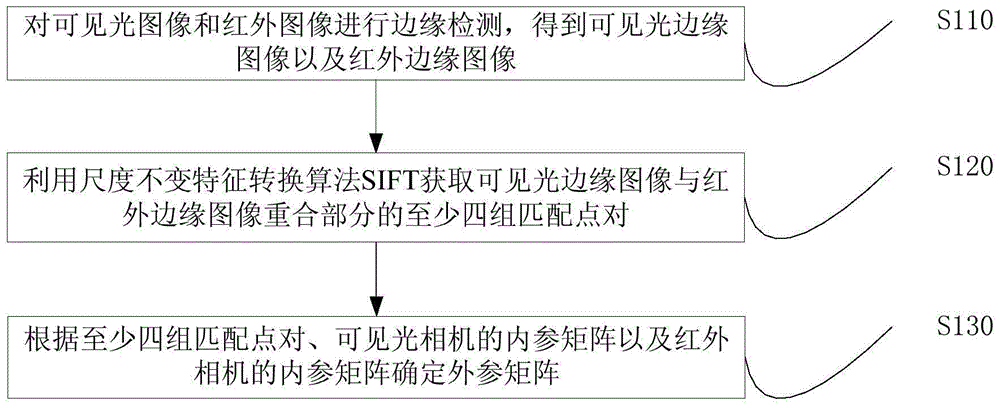

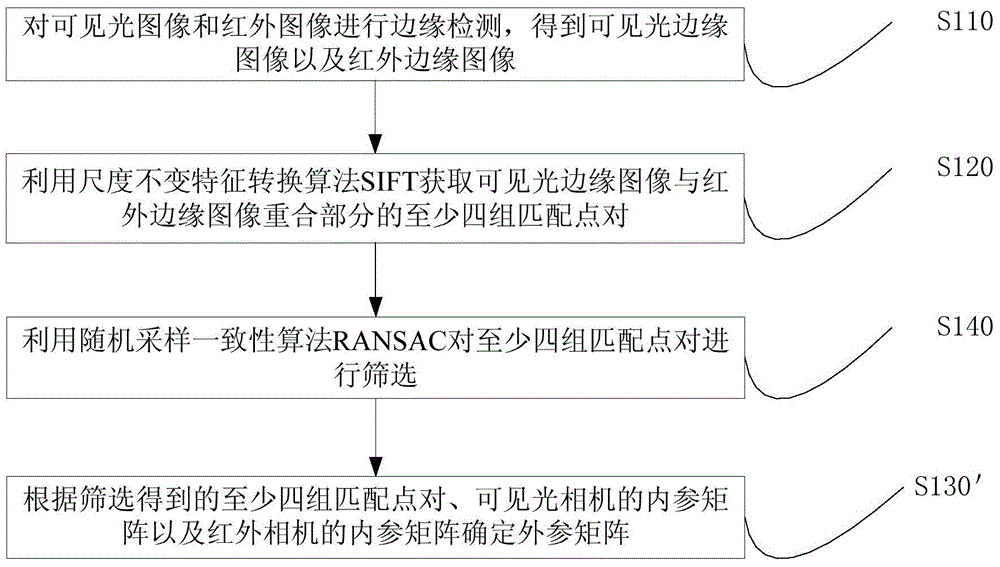

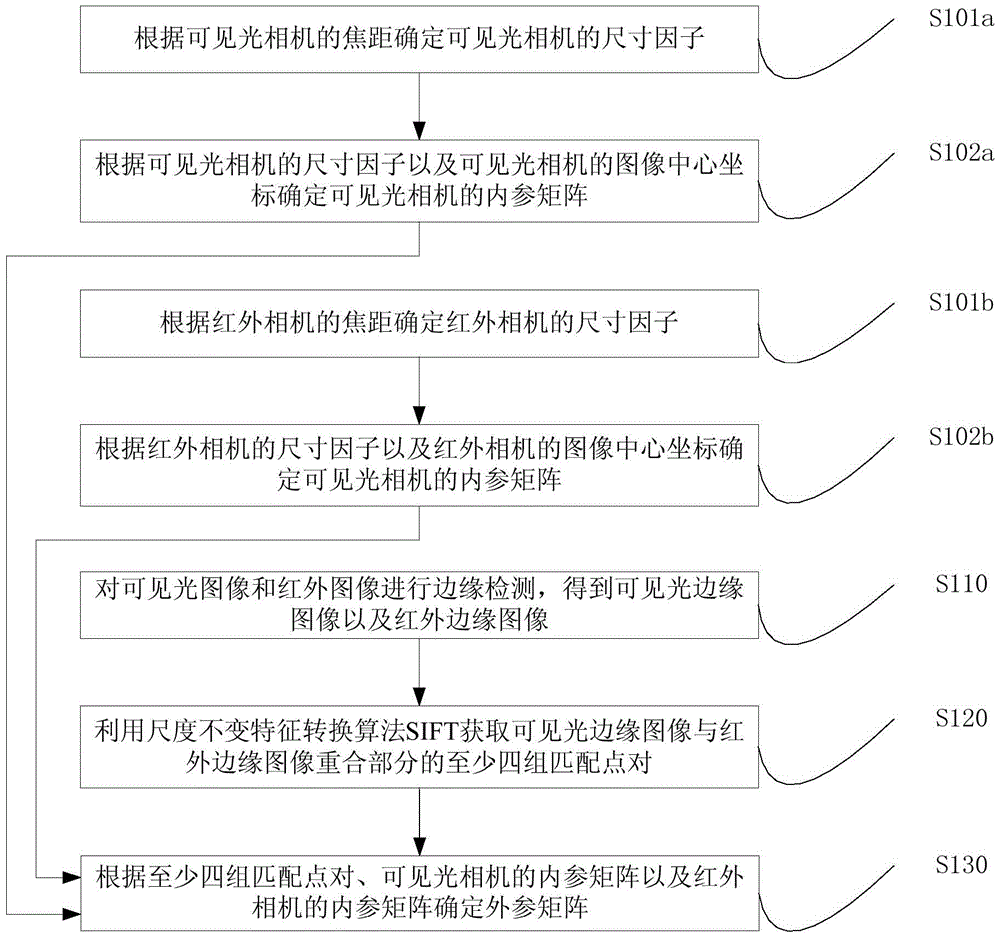

Method and device for jointly calibrating parameters of visible light camera and infrared camera

ActiveCN105701827AReduce calibration costsImprove calibration efficiencyImage enhancementImage analysisScale-invariant feature transformIntrinsics

The invention discloses a method and device for jointly calibrating the parameters of a visible light camera and an infrared camera. The method comprises steps of: detecting the edges of a visible light image and an infrared image to obtain a visible light edge image and an infrared edge image; acquiring at least four groups of matched point pairs of the visible light edge image and the infrared edge image by using a scale invariant feature transform (SIFT) algorithm; and determining an extrinsic parameter matrix according to the at least four groups of matched point pairs, the intrinsic parameter matrix of the visible light camera, and the intrinsic parameter matrix of the infrared camera. The method and the device may acquire the matched point pairs in the visible light edge image and the infrared edge image without a calibration board and determine the extrinsic parameter matrix without the calibration board so as to reduce the calibration cost of camera parameters. Further, when a focal length of the camera is changed, the extrinsic parameter matrix can be determined without camera position adjustment so that parameter calibration efficiency is increased.

Owner:CHINA FORESTRY STAR BEIJING TECH INFORMATION CO LTD

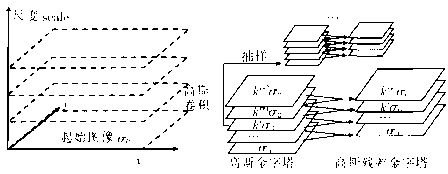

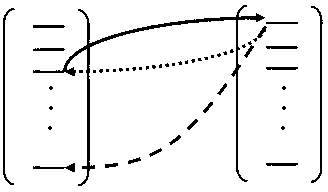

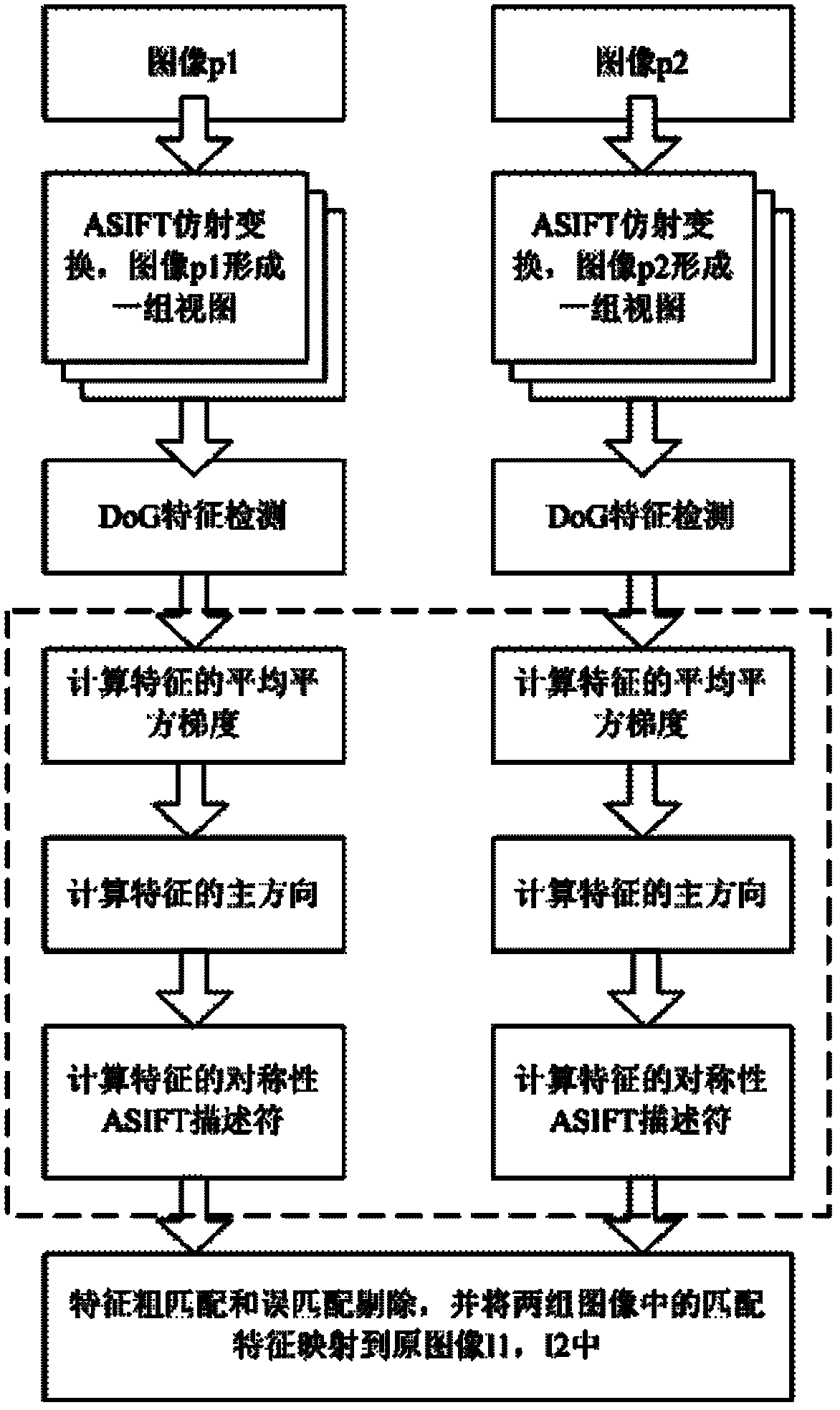

Multimodal image feature extraction and matching method based on ASIFT (affine scale invariant feature transform)

InactiveCN102231191AHas a completely affine invariant propertySolve the unsolvable affine invariance problemImage analysisCharacter and pattern recognitionFeature vectorScale-invariant feature transform

The invention discloses a multimodal feature extraction and matching method based on ASIFT (affine scale invariant feature transform). The method is mainly used for point feature extraction and matching in multimodal images, which the prior art cannot handle. The method is realized through the following steps: sampling the tilt and longitude parameters of the ASIFT affine transformation model to obtain two groups of views of the two input images; detecting the position and scale of the feature points on the two groups of views with a difference of Gaussians (DoG) feature detection method; setting the principal directions of the features with an average squared-gradient method and setting the feature vector amplitudes by a counting method; calculating the symmetric ASIFT descriptors of the features; and carrying out coarse matching on the symmetric ASIFT descriptors with a nearest-neighbor method, then removing mismatched features with an optimized random sampling method. With the invention, features can be extracted and matched in images acquired by various sensors; the method is fully affine invariant and can be applied to object recognition and tracking, image registration and similar fields.

Owner:XIDIAN UNIV
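The first step of the abstract above, sampling tilt and longitude to generate simulated views, can be sketched as below. The concrete values (tilts that are powers of the square root of 2, and a longitude step of 72 degrees divided by the tilt) follow the sampling convention commonly described for ASIFT; they are an assumption here and are not stated in the patent abstract. The function names are hypothetical.

```python
import math


def asift_samples(max_power=5, angle_step_deg=72.0):
    """Return (tilt, longitude_deg) pairs for view simulation.

    Tilts are sqrt(2)**k for k = 0..max_power (assumed convention).
    """
    samples = [(1.0, 0.0)]  # no tilt: a rotation alone adds no new view
    for k in range(1, max_power + 1):
        tilt = 2.0 ** (k / 2.0)
        step = angle_step_deg / tilt  # finer angular sampling at stronger tilts
        n = 0
        while n * step < 180.0:
            samples.append((tilt, n * step))
            n += 1
    return samples


def simulation_matrix(tilt, longitude_deg):
    """2x2 linear map: rotate by the longitude, then compress x by 1/tilt."""
    phi = math.radians(longitude_deg)
    c, s = math.cos(phi), math.sin(phi)
    return [[c / tilt, -s / tilt], [s, c]]
```

Each matrix would be applied to an input image to produce one simulated view, and feature detection then runs on every view of both images.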

Image search method based on CGCI-SIFT (consistence index-scale invariant feature transform) partial feature

InactiveCN102945289ATransform validChunking scienceSpecial data processing applicationsScale-invariant feature transformAlgorithm

The invention discloses an image search method based on a CGCI-SIFT (consistence index-scale invariant feature transform) partial feature. The image search method is realized with CGCI-SIFT and comprises the following steps: starting from the strength and distribution of the influence that neighborhood pixels have on a key point; establishing a peripheral partial feature descriptor from gray-level texture contrast strength information; and then establishing a central partial descriptor from direction gradient information, which describes the central feature point relatively well, so as to form the final descriptor. CGCI-SIFT uses the contrast property of a partial area together with the gradient information of the original SIFT algorithm, rather than storing only the gradient magnitude and direction as SIFT does, so that CGCI-SIFT has relatively comprehensive invariance to geometric and optical transformations. Because it uses gray-level texture contrast strength information, CGCI-SIFT is simple to compute, and is therefore relatively efficient and relatively well suited to real-time applications. Tests show that the search method performs stably, has a short search time, and achieves a remarkable improvement in search results.

Owner:SUZHOU SOUKE INFORMATION TECH

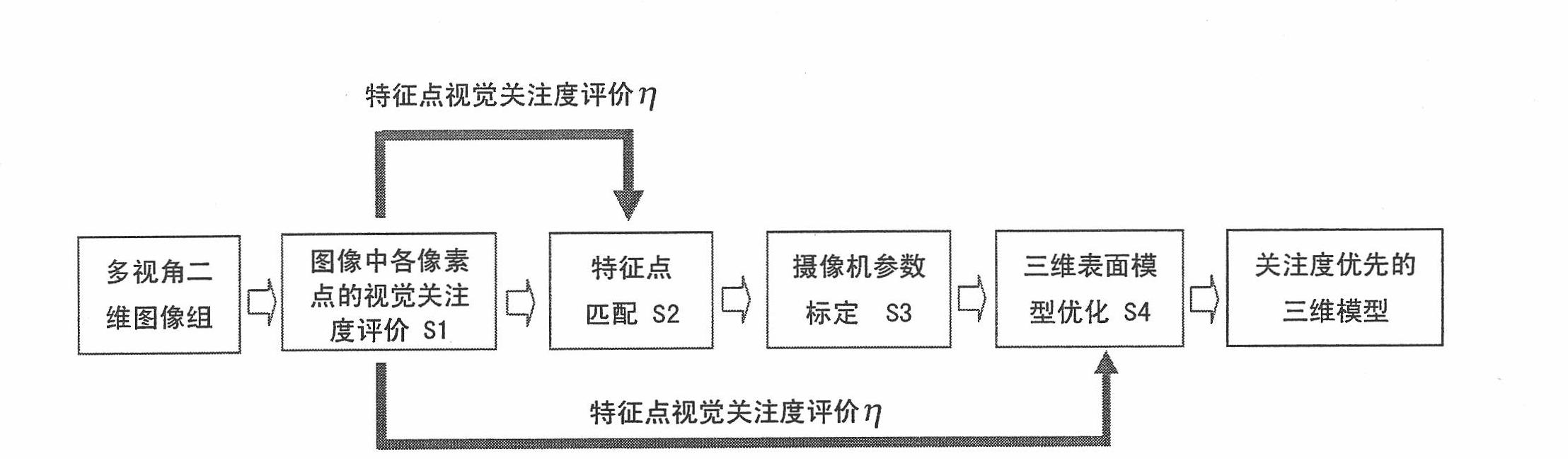

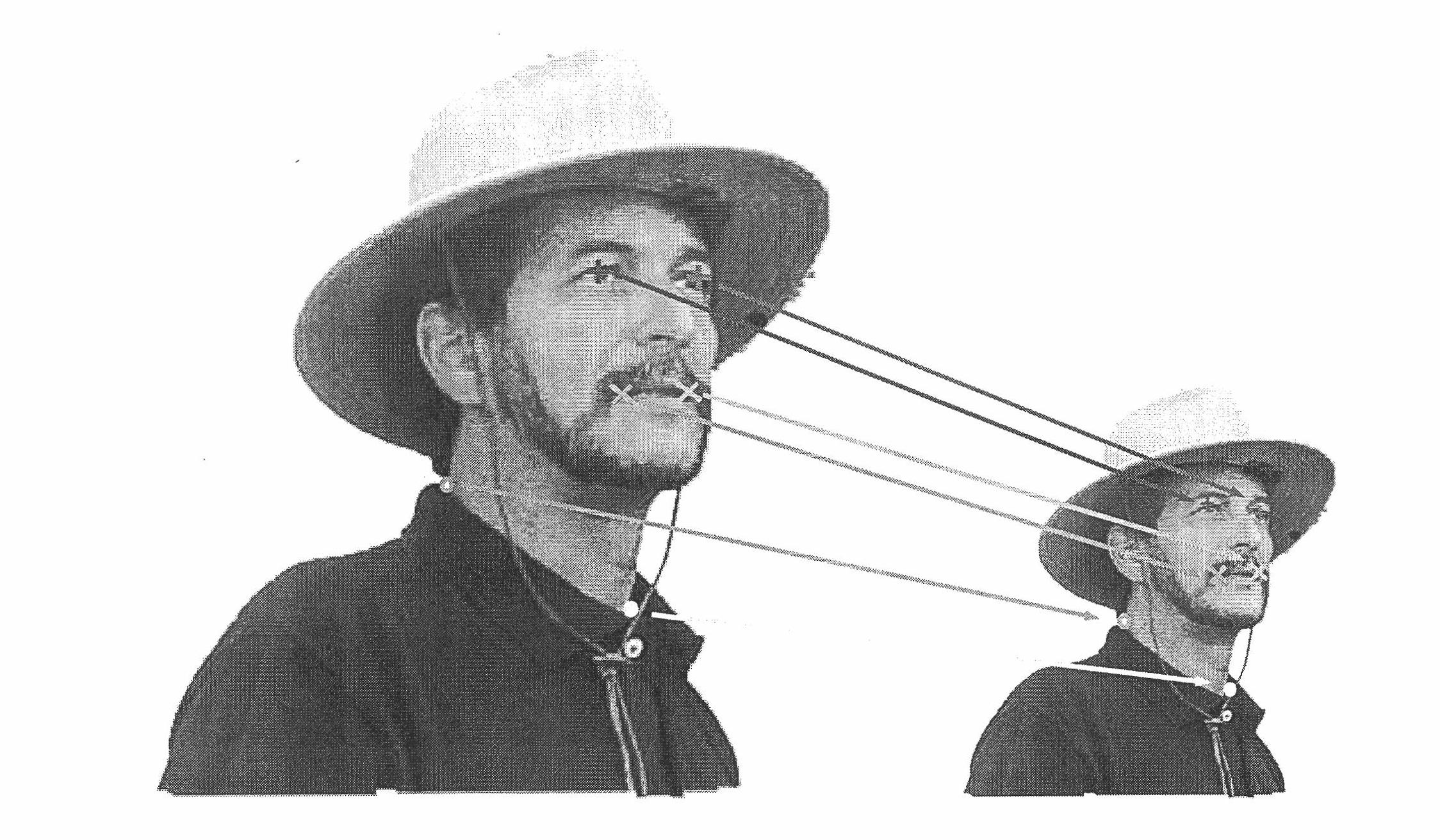

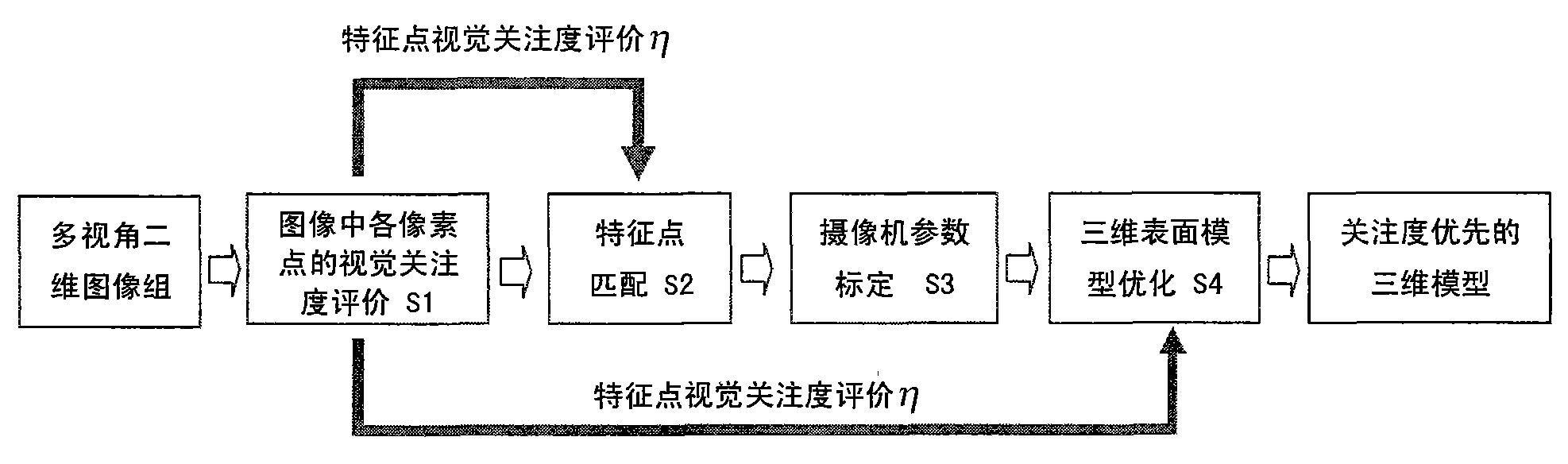

Three-dimensional scene reconstruction method of two-dimensional image group

InactiveCN101877143AReduce mistakesHigh precision3D-image rendering3D modellingScale-invariant feature transformCharacteristic space

The invention discloses a three-dimensional scene reconstruction method for a two-dimensional image group, and the method comprises the following steps: S1: inputting each image in the image group and calculating a visual attention evaluation for each pixel; S2: extracting scale-invariant feature transform feature points from the input images of the image group, then matching and selecting the feature points on any two images in the image group to obtain matched feature points, wherein the matching and selection criteria include the feature space similarity of a feature point pair and the visual attention corresponding to the feature points; S3: estimating camera parameters by using the obtained matched feature points; and S4: solving an optimized three-dimensional scene model by using the selected matched feature point pairs, the attention evaluation corresponding to the feature points and the estimated camera parameters.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

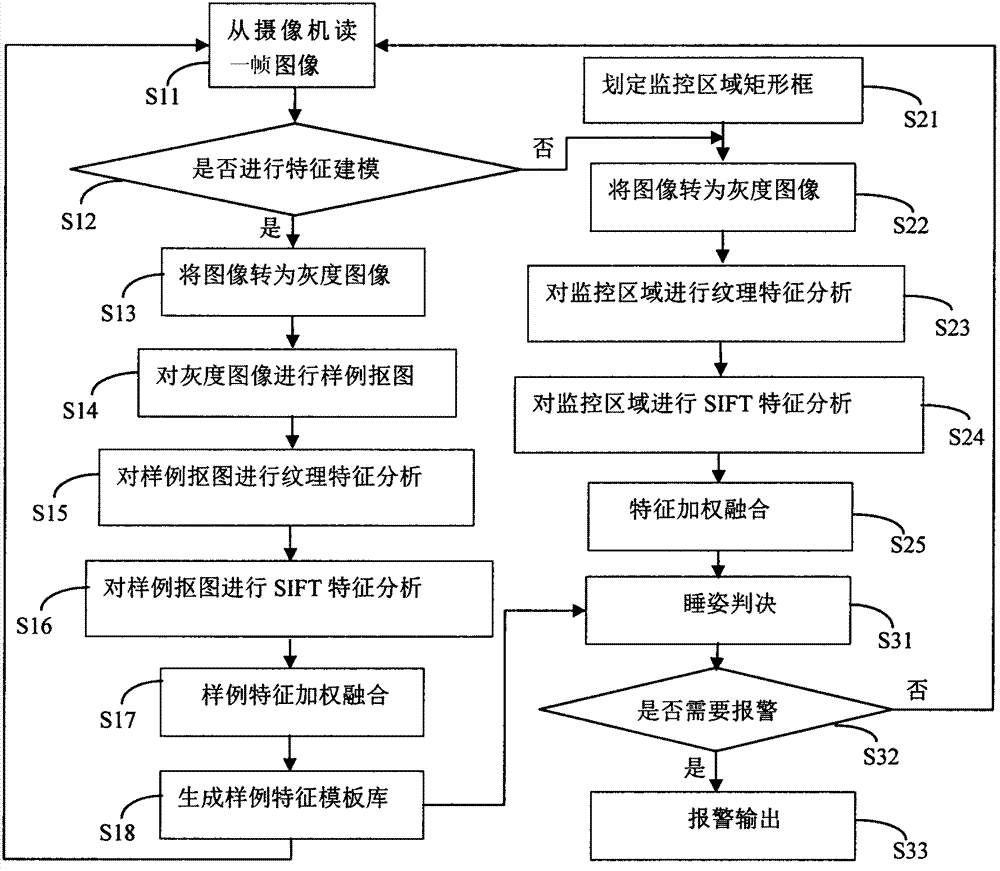

Intelligent identification method and device for baby sleep position

InactiveCN102789672ASolve coldSolve the problem of chokingCharacter and pattern recognitionAlarmsPhysical medicine and rehabilitationScale-invariant feature transform

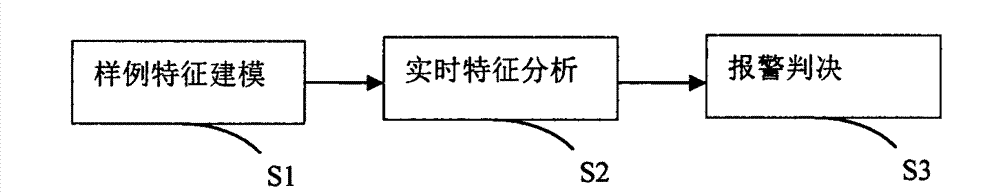

The invention discloses an intelligent identification method and device for a baby's sleep position. Video analysis and pattern recognition are used to identify the sleep position so that important events, such as the baby kicking off the quilt, the baby's face being covered by clothing, or the baby sleeping on its stomach, are discovered in time. The method consists of three parts: sample feature modeling, real-time feature analysis and alarm judgment. In sample feature modeling, textural features and SIFT (Scale Invariant Feature Transform) features of sample images are analyzed and fused to generate a sample feature template base. In real-time feature analysis, the features of a set monitoring area are analyzed and the sleep position is identified against the sample feature template base; the alarm type is then judged and the alarm information is output. With the method and device, a guardian does not have to watch the baby continuously by video or in person; in particular, while the guardian is asleep at night, important events can still be detected, identified and reported early.

Owner:深圳市瑞工科技有限公司

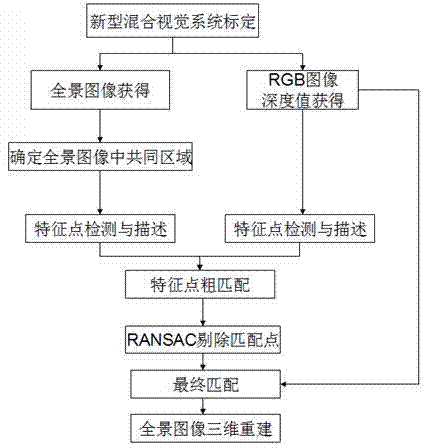

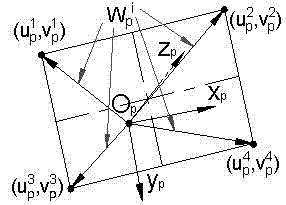

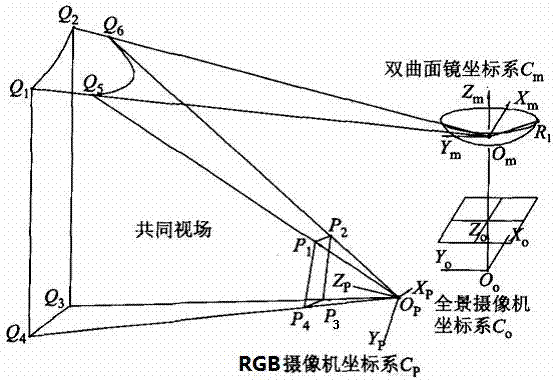

Three-dimensional reconstruction method of panoramic image in mixed vision system

ActiveCN103971378AImprove matching accuracyThe method is simple and effectiveImage analysisScale-invariant feature transformReconstruction method

The invention relates to a three-dimensional reconstruction method of a panoramic image in a mixed vision system. The mixed vision system comprises an RGB-D (Red Green Blue-Depth) camera and a panoramic camera. The method comprises the following steps: firstly, calibrating the mixed vision system to obtain its internal and external parameters; secondly, determining the common field of view of the mixed vision system according to the spatial relation and the internal and external parameters; then obtaining feature matching points in the common field of view on the basis of SIFT (Scale Invariant Feature Transform) + RANSAC (Random Sample Consensus); and finally, on the basis of the depth information of the RGB-D camera, carrying out three-dimensional reconstruction of the matched feature points in the panoramic camera. The advantages of the method are that three-dimensional information of the feature points in the common field of view of the panoramic image can be obtained, and that three-dimensional reconstruction of the panoramic image can be realized with low complexity and high quality.

Owner:福建旗山湖医疗科技有限公司
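The final step of the abstract above, lifting matched feature points to 3D using RGB-D depth, can be sketched as follows. The sketch assumes a pinhole model for the RGB-D camera with intrinsics `fx`, `fy`, `cx`, `cy`, and a rigid transform (`rotation`, `translation`) from the RGB-D frame to the panoramic camera frame obtained from calibration. These names and the pinhole assumption are illustrative and are not taken from the patent.

```python
def back_project(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with metric depth to a 3D point in the RGB-D frame."""
    if depth <= 0:
        raise ValueError("depth must be positive")
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def transform(point, rotation, translation):
    """Apply the rigid transform R * p + t; rotation is a 3x3 nested list."""
    return tuple(
        sum(rotation[row][col] * point[col] for col in range(3)) + translation[row]
        for row in range(3)
    )


def reconstruct_matches(matches, depth_lookup, intrinsics, rotation, translation):
    """Return 3D points in the panoramic frame for matched RGB-D pixels.

    matches: list of (u, v) pixel positions in the RGB-D image.
    depth_lookup: callable (u, v) -> depth in metres, or None if missing.
    intrinsics: (fx, fy, cx, cy) of the RGB-D camera.
    """
    fx, fy, cx, cy = intrinsics
    points = []
    for u, v in matches:
        depth = depth_lookup(u, v)
        if depth is None or depth <= 0:
            continue  # skip pixels without a valid depth reading
        p = back_project(u, v, depth, fx, fy, cx, cy)
        points.append(transform(p, rotation, translation))
    return points
```

Only the matched feature points are lifted, which keeps the amount of computation small compared with reconstructing every pixel.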

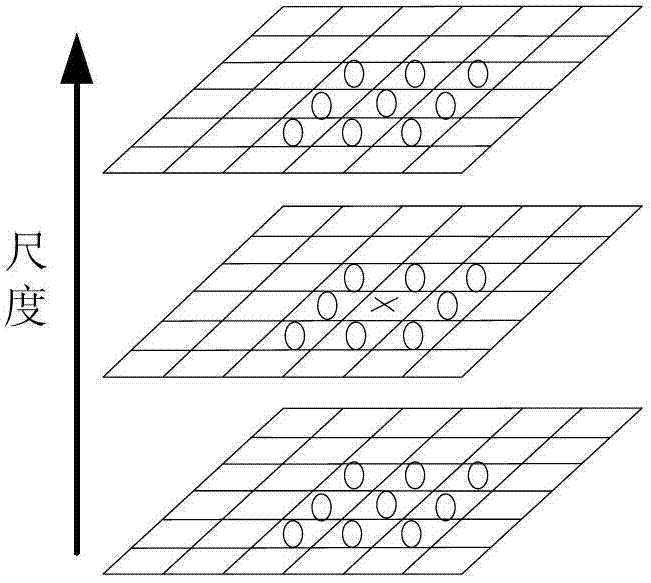

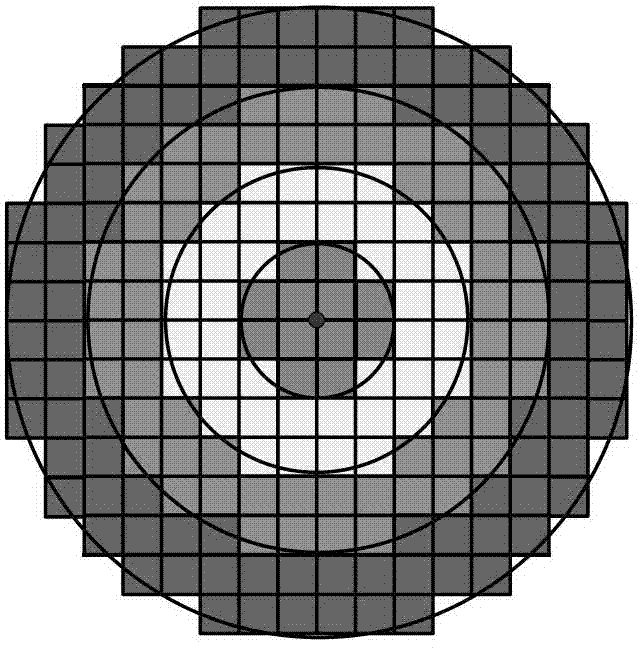

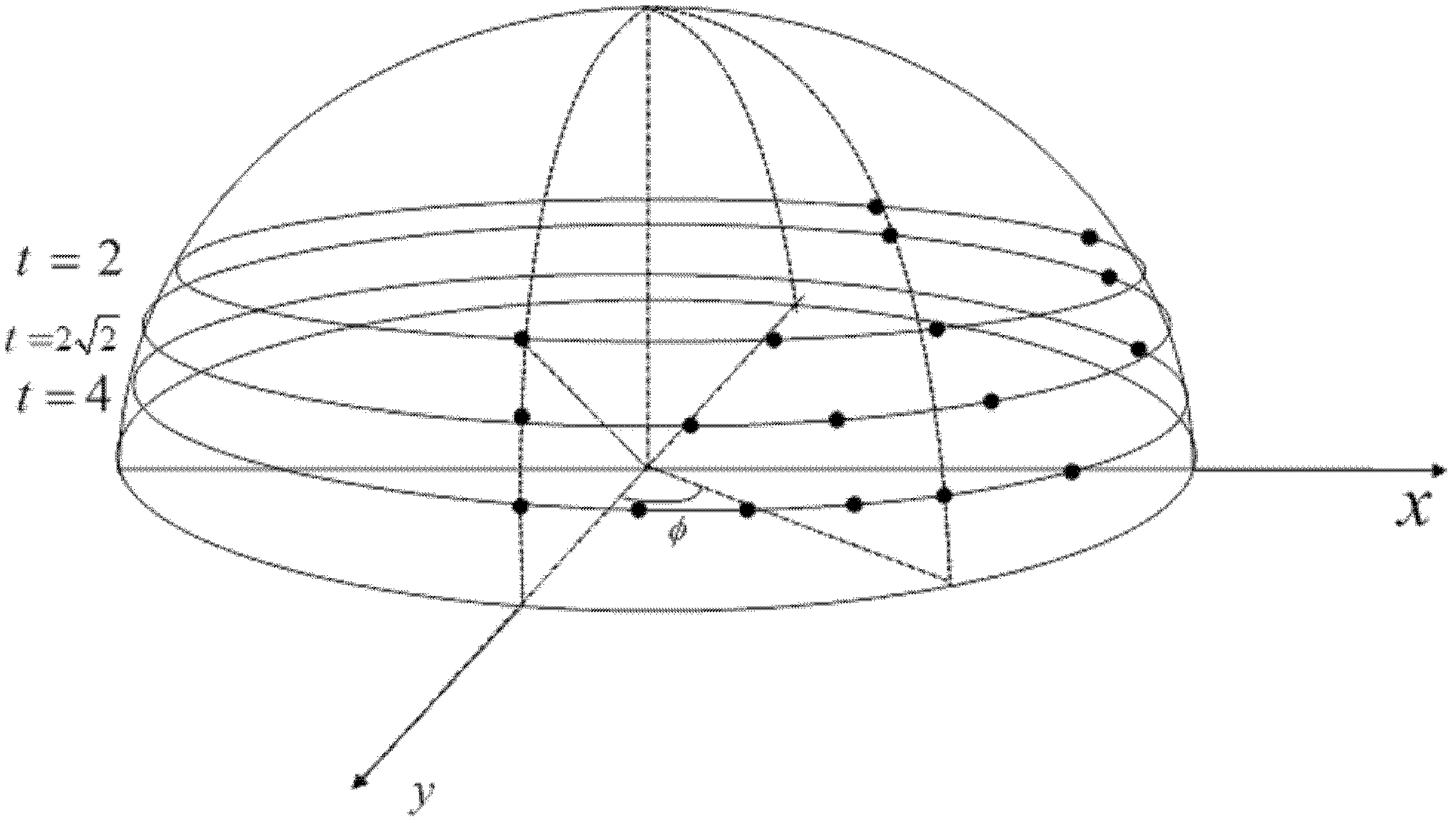

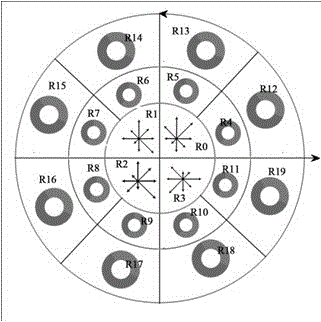

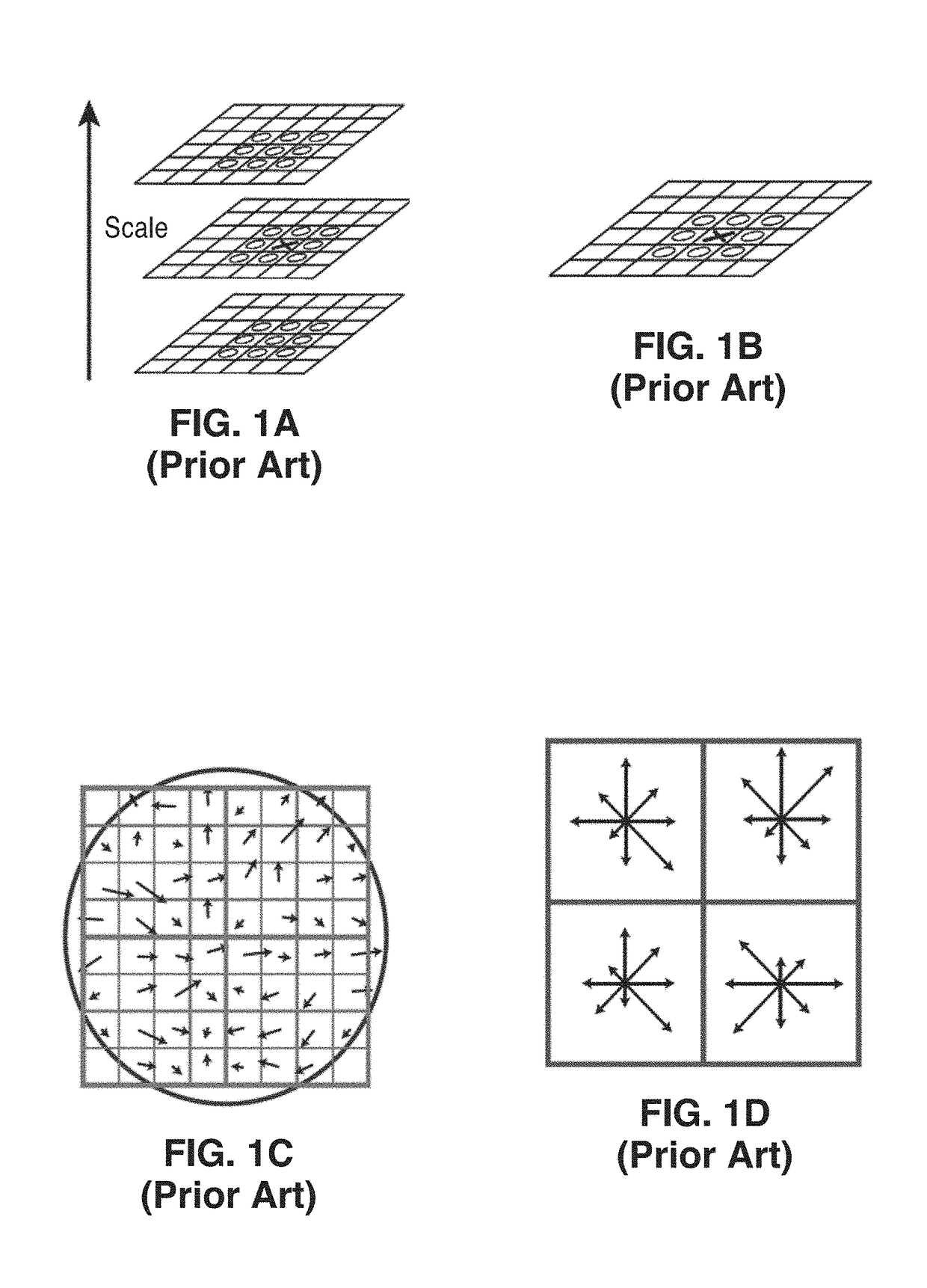

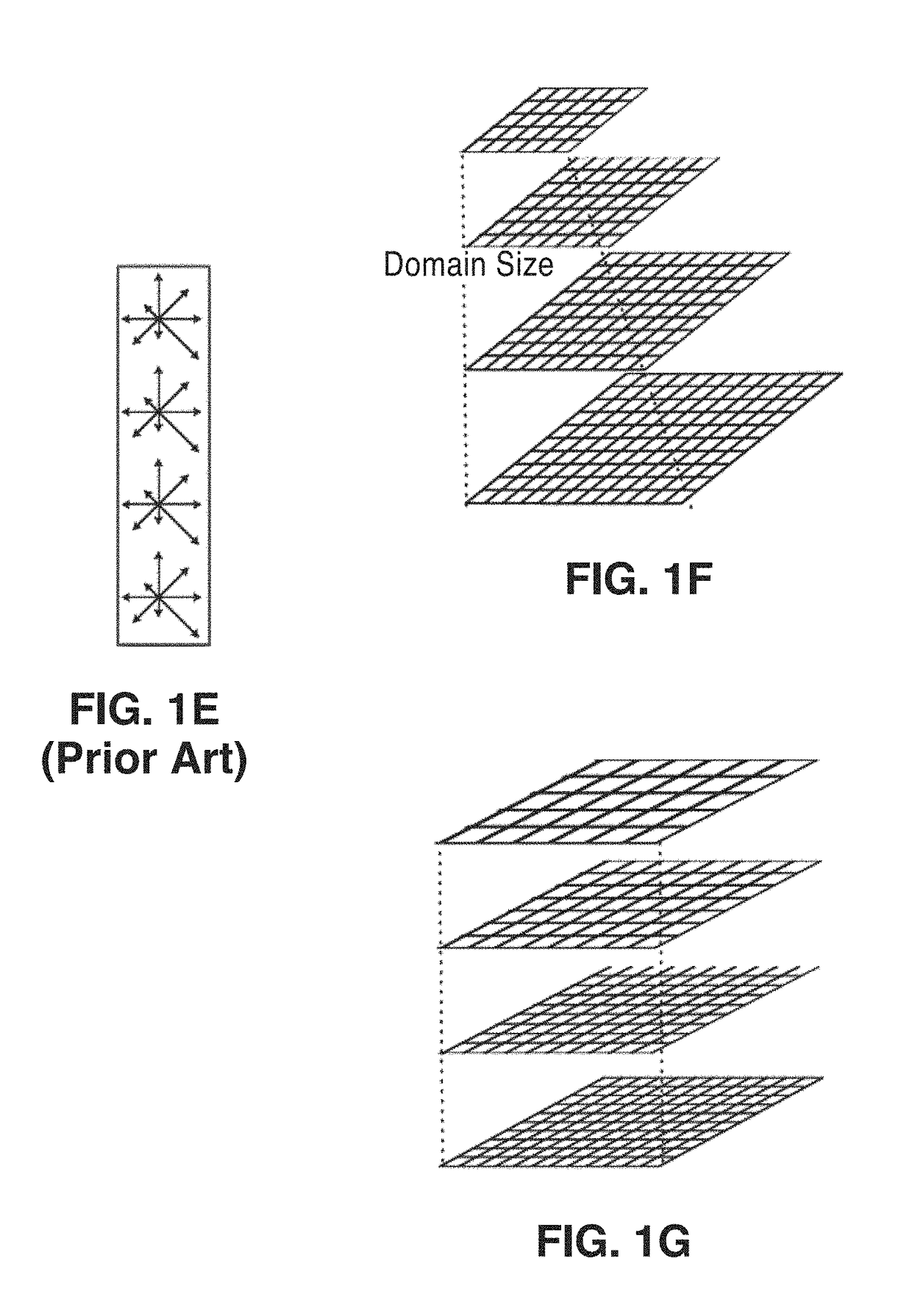

DSP-SIFT: domain-size pooling for image descriptors for image matching and other applications

A variation of scale-invariant feature transform (SIFT) based on pooling gradient orientations across different domain sizes, in addition to spatial locations. The resulting descriptor is called DSP-SIFT, and it outperforms other methods in wide-baseline matching benchmarks, including those based on convolutional neural networks, despite having the same dimension as SIFT and requiring no training. Problems of local representation of imaging data are also addressed as the computation of minimal sufficient statistics that are invariant to nuisance variability induced by viewpoint and illumination. A sampling-based and a point-estimate-based approximation of such representations are described.

Owner:RGT UNIV OF CALIFORNIA

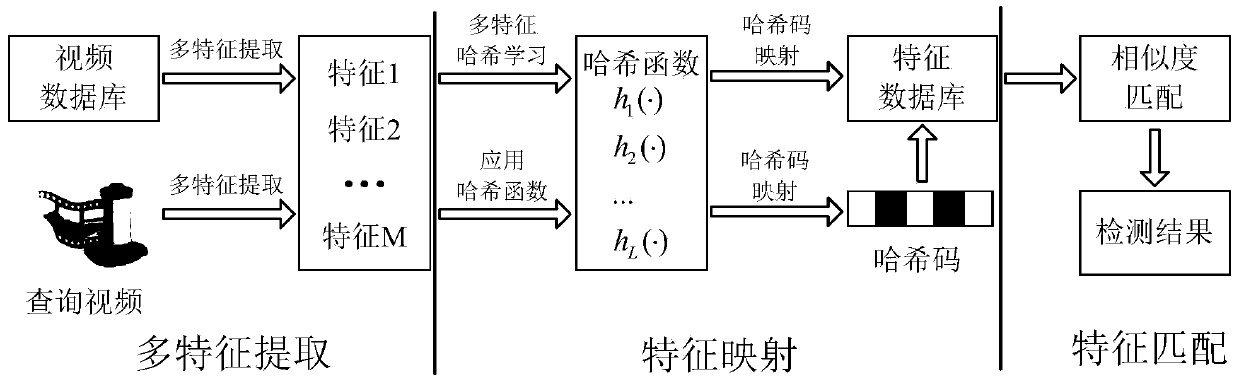

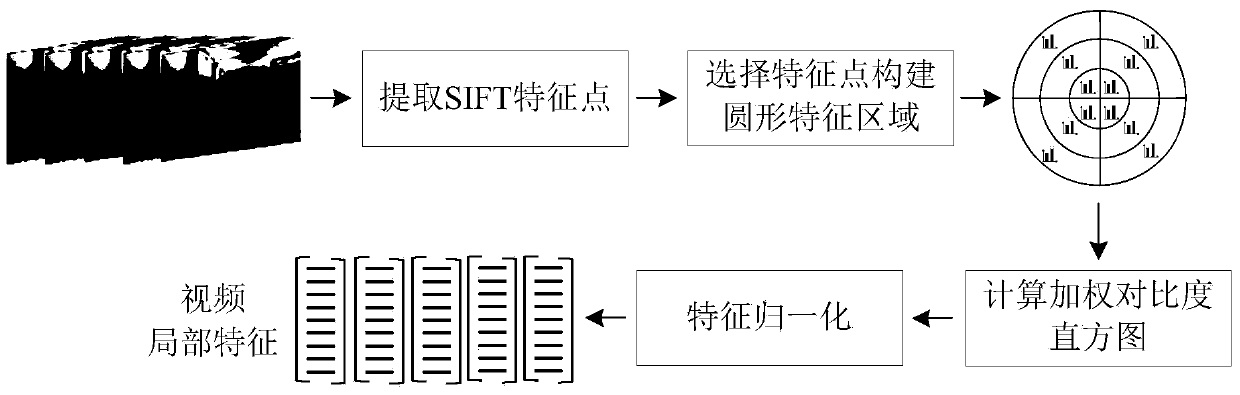

Video copy detection method based on multi-feature Hash

InactiveCN103744973AHigh precisionImprove robustnessCharacter and pattern recognitionSpecial data processing applicationsDigital videoHash function

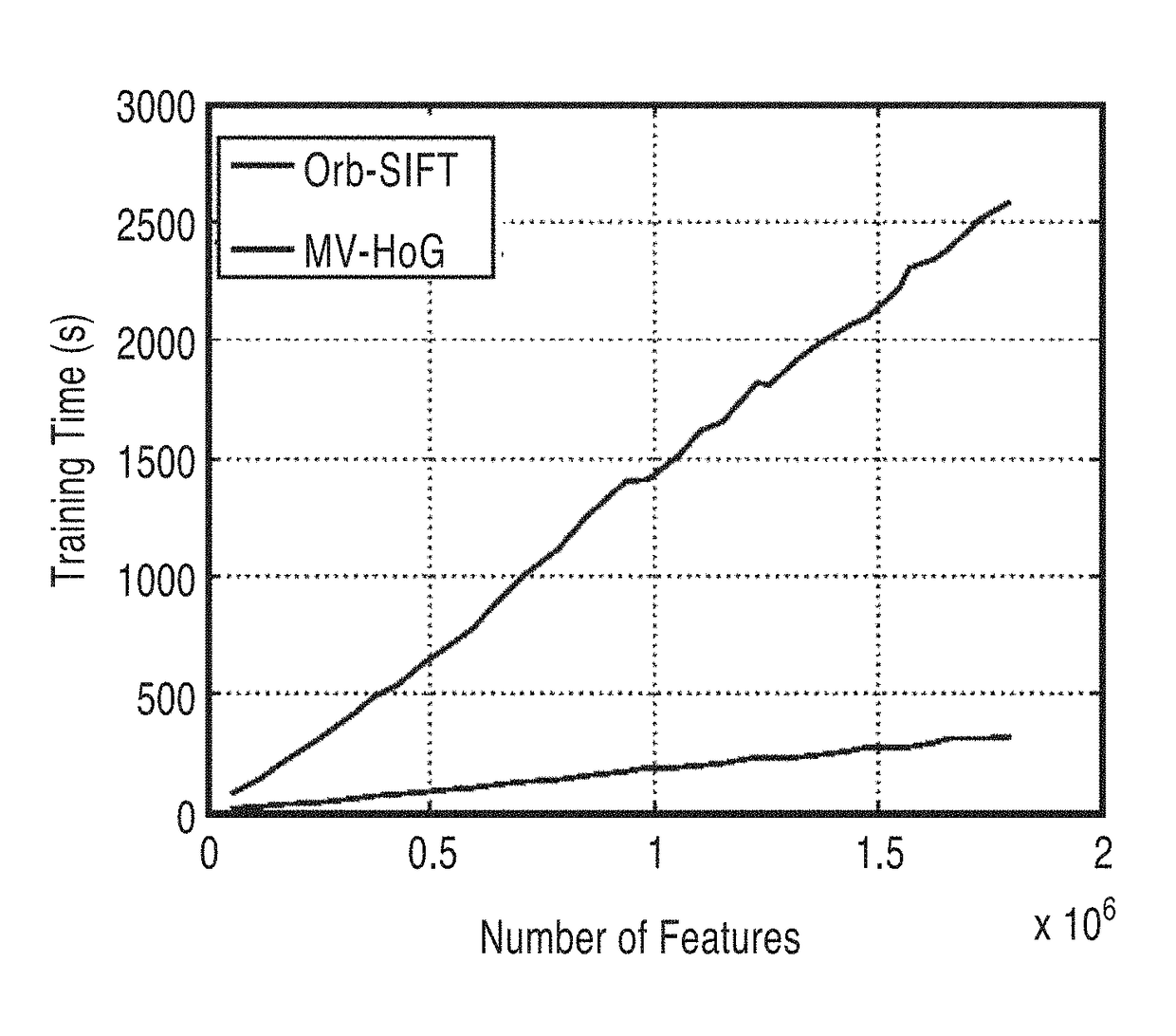

The invention discloses a video copy detection method based on multi-feature Hash, which mainly solves the problem that detection efficiency and detection accuracy cannot be effectively balanced in existing video copy detection algorithms. The method comprises the following steps: (1) extracting the pyramid histogram of oriented gradients (PHOG) of a key frame as the global feature of the key frame; (2) extracting a weighted contrast histogram based on scale invariant feature transform (SIFT) of the key frame as the local feature of the key frame; (3) establishing an objective function with the similarity-preserving multi-feature Hash learning (SPM2H) algorithm, and obtaining L Hash functions by solving the optimization; (4) mapping the key frames of the database videos and the key frames of the query video into L-dimensional Hash codes by means of the L Hash functions; (5) judging whether the query video is a copy through feature matching. The method is robust against multiple kinds of attacks and can be used for copyright protection, copy control and data mining of digital videos on the Internet.

Owner:XIDIAN UNIV
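Steps (4) and (5) of the abstract above, mapping key frames to L-bit codes and matching them, can be sketched as below. The sketch assumes that each Hash function is the sign of a linear projection of the key-frame feature vector and that codes are compared by Hamming distance. The learning of the projections by SPM2H is not reproduced, and `max_bit_distance` and `min_fraction` are hypothetical decision parameters.

```python
def hash_code(feature, projections, biases):
    """Map a feature vector to an L-bit integer code, one bit per hash function."""
    bits = 0
    for weights, bias in zip(projections, biases):
        activation = sum(w * x for w, x in zip(weights, feature)) + bias
        bits = (bits << 1) | (1 if activation >= 0 else 0)
    return bits


def hamming(a, b):
    """Number of differing bits between two integer codes."""
    return bin(a ^ b).count("1")


def is_copy(query_codes, reference_codes, max_bit_distance=1, min_fraction=0.5):
    """Declare a copy if enough query key frames have a close reference code."""
    if not query_codes:
        return False
    hits = sum(
        1
        for q in query_codes
        if any(hamming(q, r) <= max_bit_distance for r in reference_codes)
    )
    return hits / len(query_codes) >= min_fraction
```

Comparing short binary codes with XOR and a bit count is much cheaper than comparing the original real-valued features, which is where the efficiency of a hashing approach comes from.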
