5906 results about "Identification rate" patented technology

Identification rate. Definition: the identification rate is "[t]he rate at which a biometric subject in a database is correctly identified."
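As a minimal sketch of the definition above, the identification rate can be computed as the fraction of probes whose predicted identity matches the true identity. The function name `identification_rate` and the sample identities are illustrative assumptions, not part of any listed patent.

```python
def identification_rate(predicted_ids, true_ids):
    """Fraction of probes whose predicted identity equals the true identity."""
    if len(predicted_ids) != len(true_ids):
        raise ValueError("predicted_ids and true_ids must have the same length")
    if not true_ids:
        raise ValueError("at least one probe is required")
    correct = sum(p == t for p, t in zip(predicted_ids, true_ids))
    return correct / len(true_ids)


# Hypothetical example: three of four subjects are identified correctly.
rate = identification_rate(
    ["alice", "bob", "carol", "bob"],
    ["alice", "bob", "dave", "bob"],
)
print(rate)  # 0.75
```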

Method for personalized television voice wake-up by voiceprint and voice identification

Inactive | CN104575504A | Personalized | Realize TV voice wake-up function | Speech recognition | Selective content distribution | Personalization | Speech identification

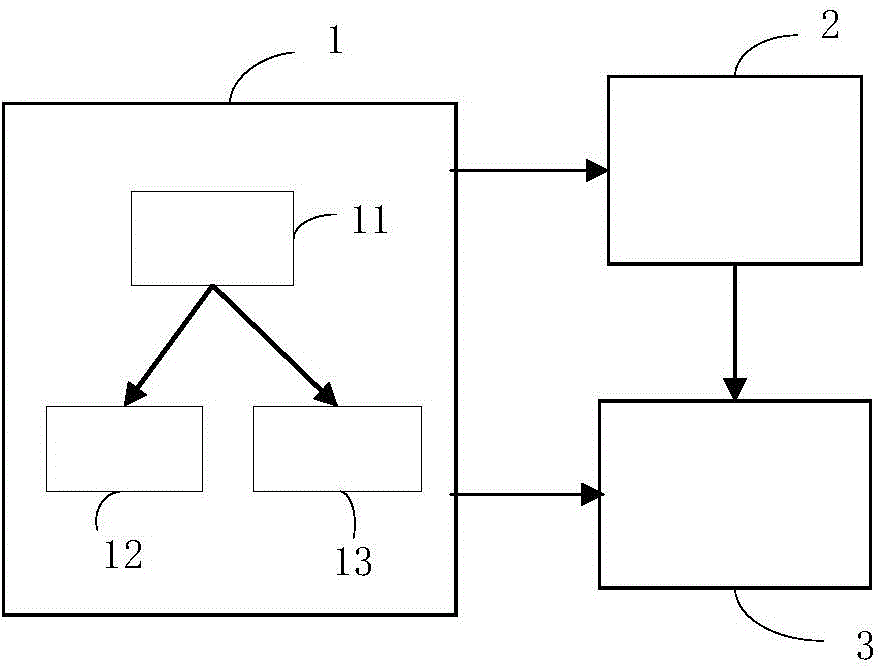

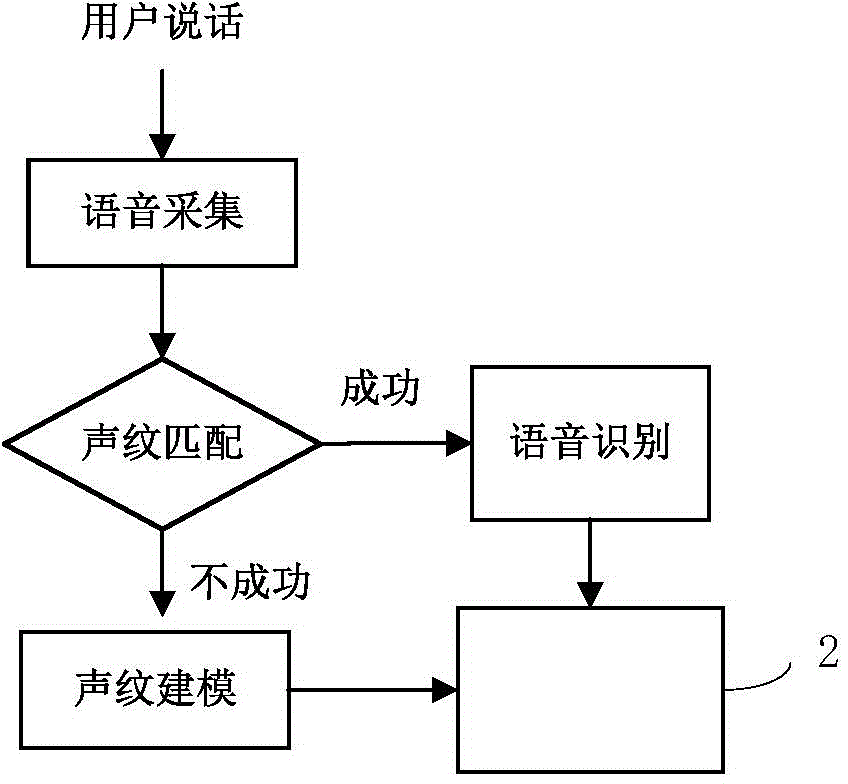

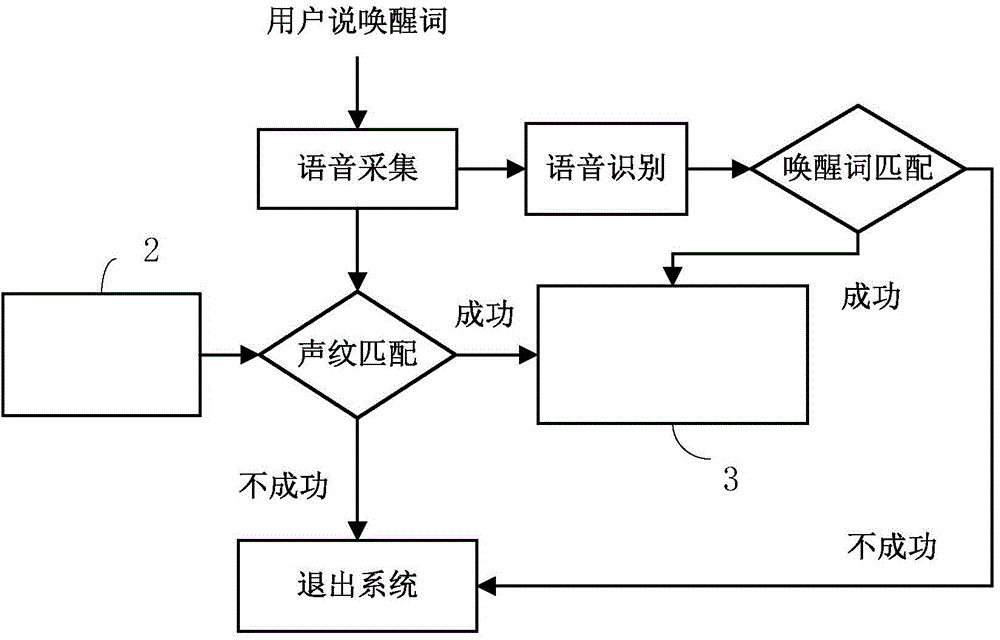

The invention discloses a method for personalized television voice wake-up by voiceprint and voice identification. The method confirms the identity of a television user through voiceprint identification, then controls the television to perform personalized voice wake-up using the confirmed identity and the voice identification result of the user's speech. The system comprises a voice control system (1), an information storage unit (2) and a television main controller (3), which are connected through electric signals. The method is characterized by short training time, very high voiceprint and voice identification speed, and a high identification rate. Voiceprint and voice identification can be completed with offline training and testing alone; identification results do not need to be sent to a cloud server, which makes the method convenient to use and protects the safety of family information. The method can also be applied to user-personalized automatic voice channel changing on the television, can be ported to a common high-speed DSP (digital signal processor) or chip, and can be widely applied in smart-home fields.

Owner:SHANGHAI NORMAL UNIVERSITY

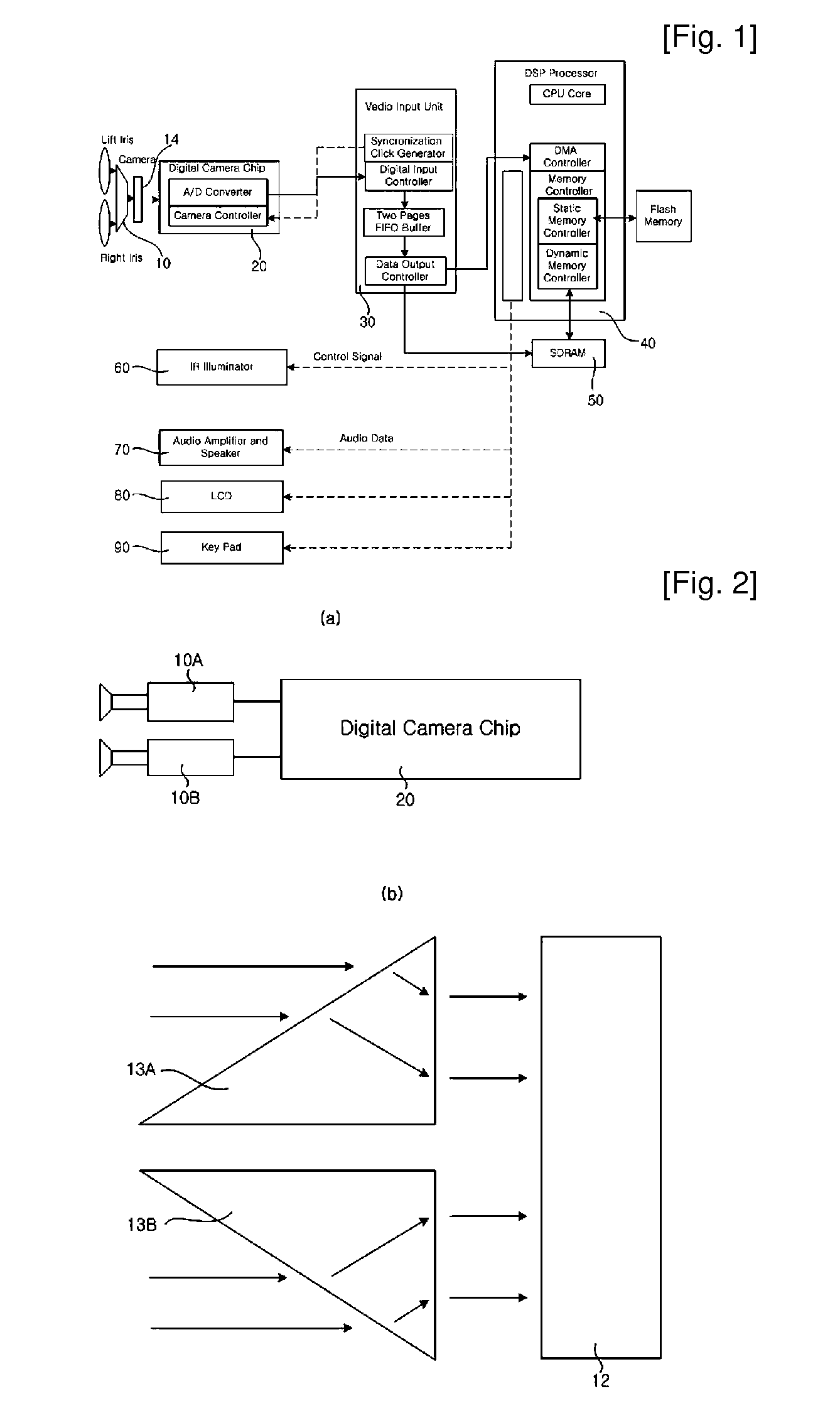

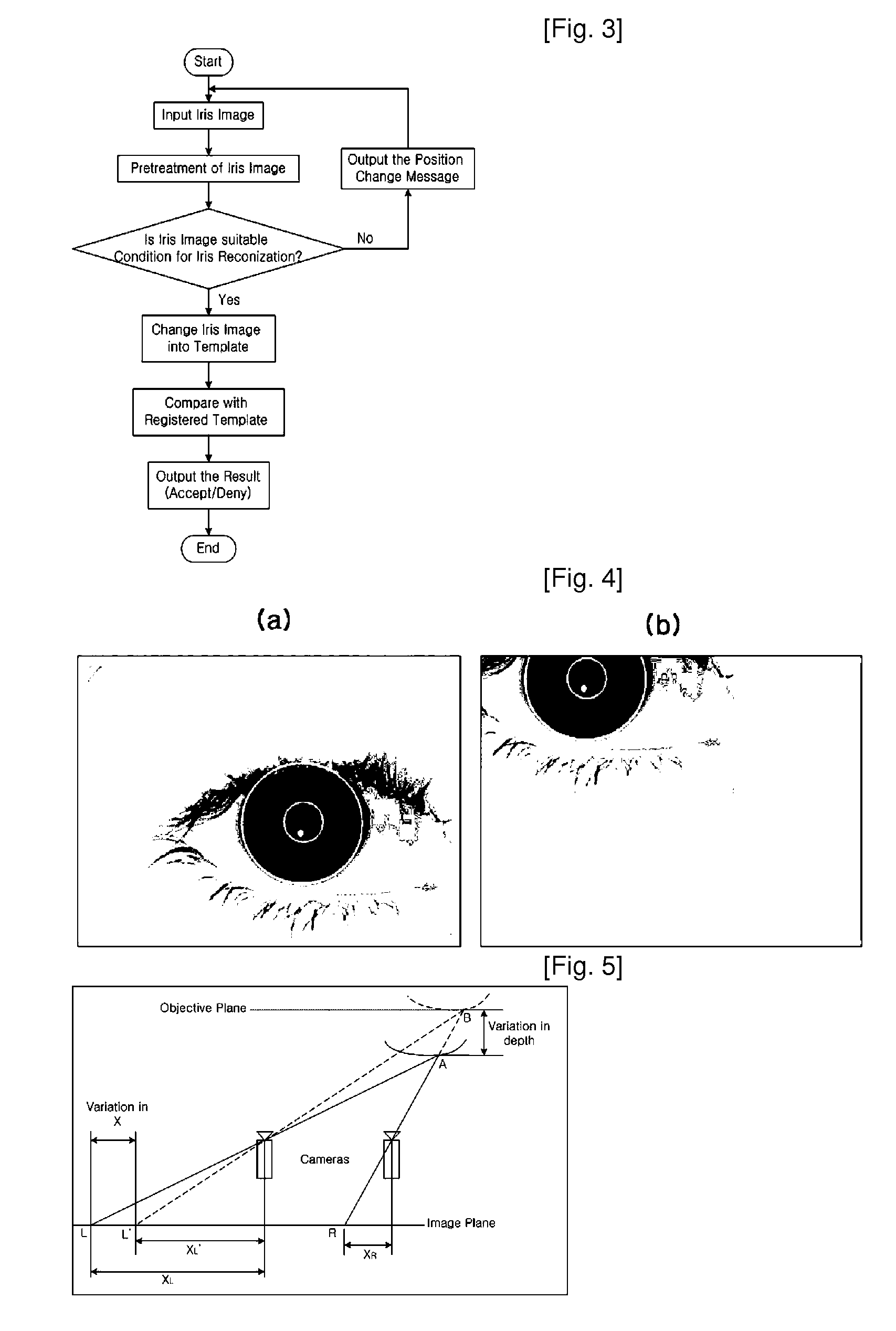

Iris Identification System and Method Using Mobile Device with Stereo Camera

Inactive | US20080292144A1 | Easy to get | Improve recognition rate | Lighting and heating apparatus | Acquiring/recognising eyes | Camera lens | Stereo cameras

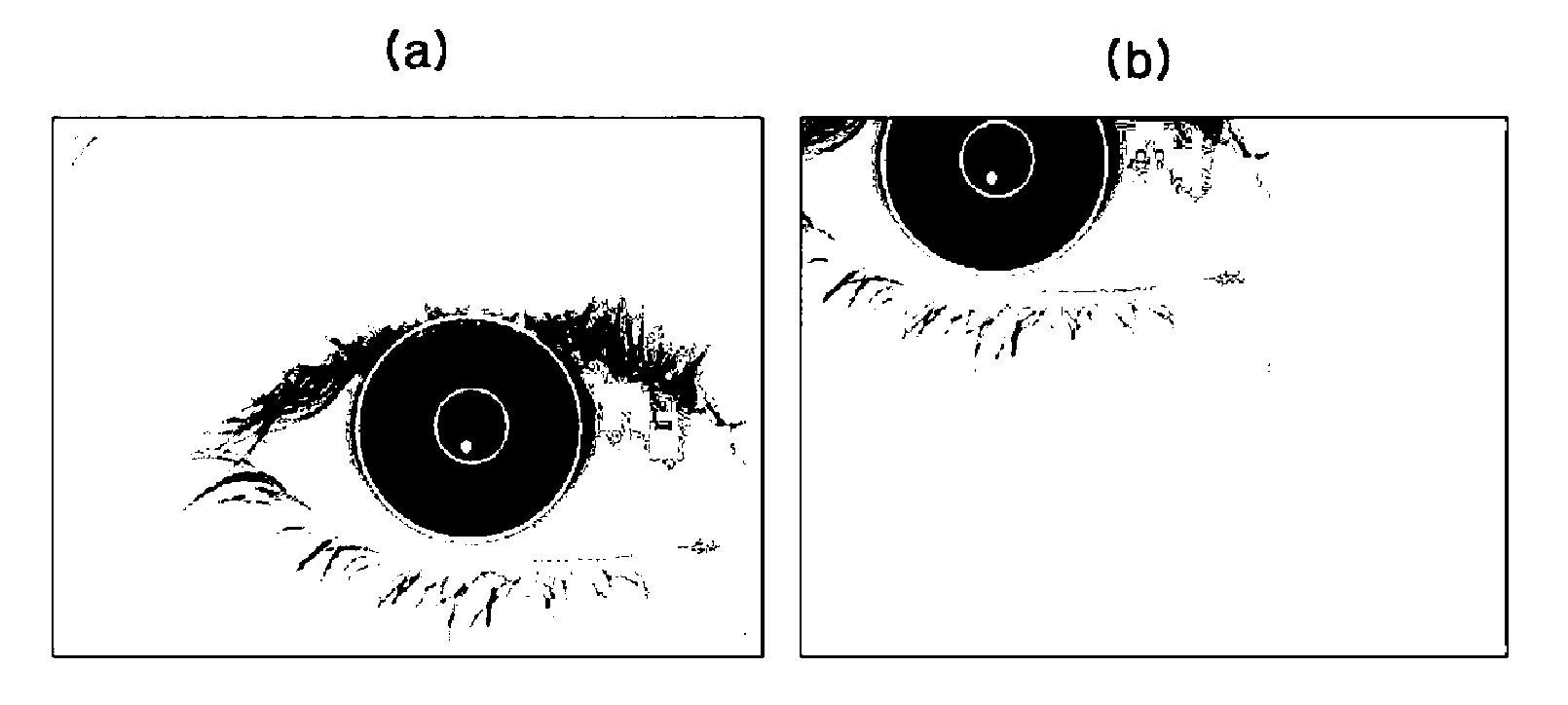

The present invention relates to a face recognition and/or iris recognition system and method using a mobile device equipped with a stereo camera, which acquire a stereo image of a user's face using at least two cameras, or a method corresponding thereto, and correct the size of the stereo image even when it varies with distance. The stereo camera uses a single-focus lens with a long depth of focus to acquire a focused iris image over a wider range. When the user is not located at a position suitable for iris recognition, a message is sent to the user so that an iris image suitable for recognition can be acquired. Furthermore, an iris image correction process according to distance is performed to prevent the recognition rate from decreasing even when the size of the iris image changes.

Owner:IRITECH

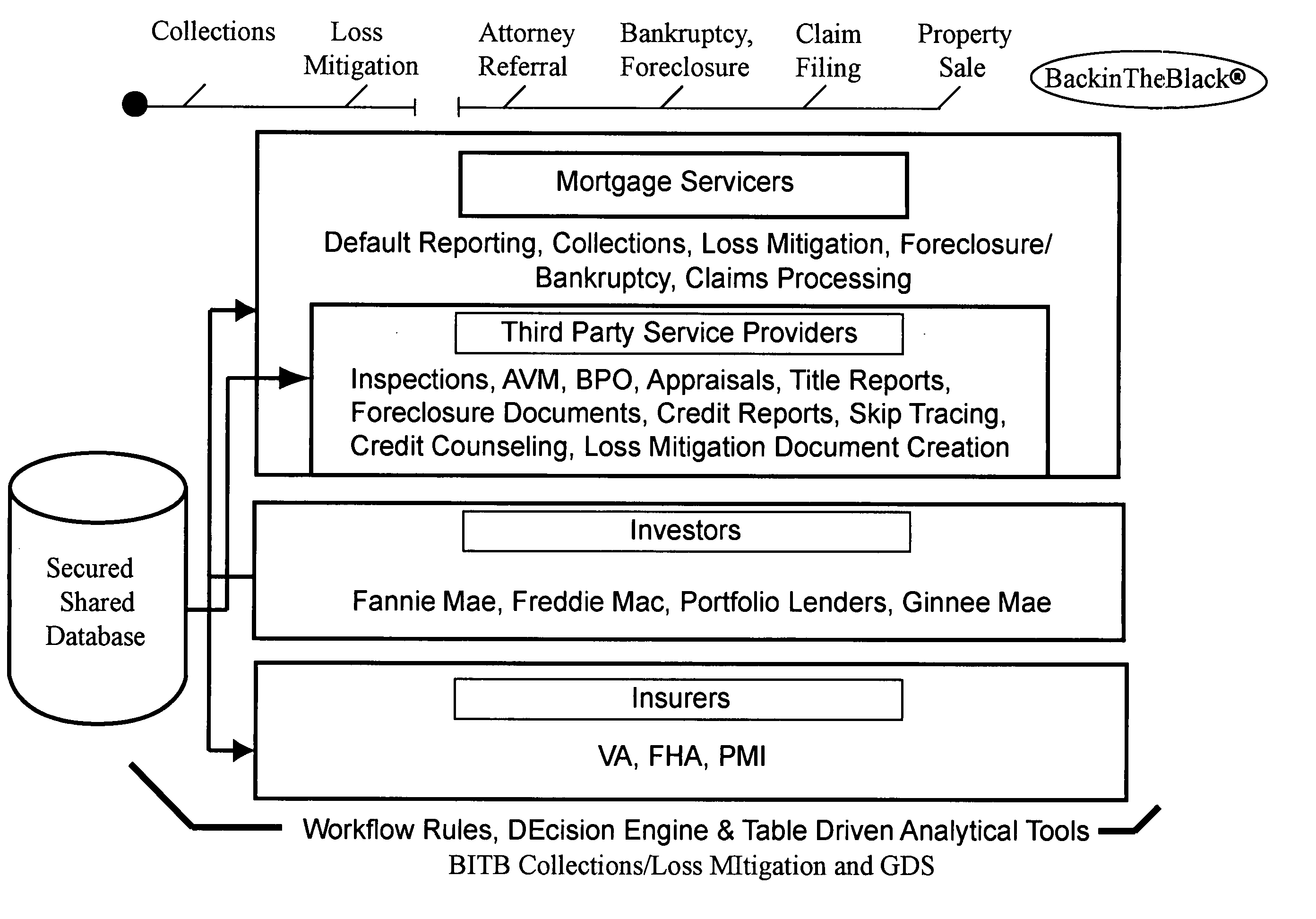

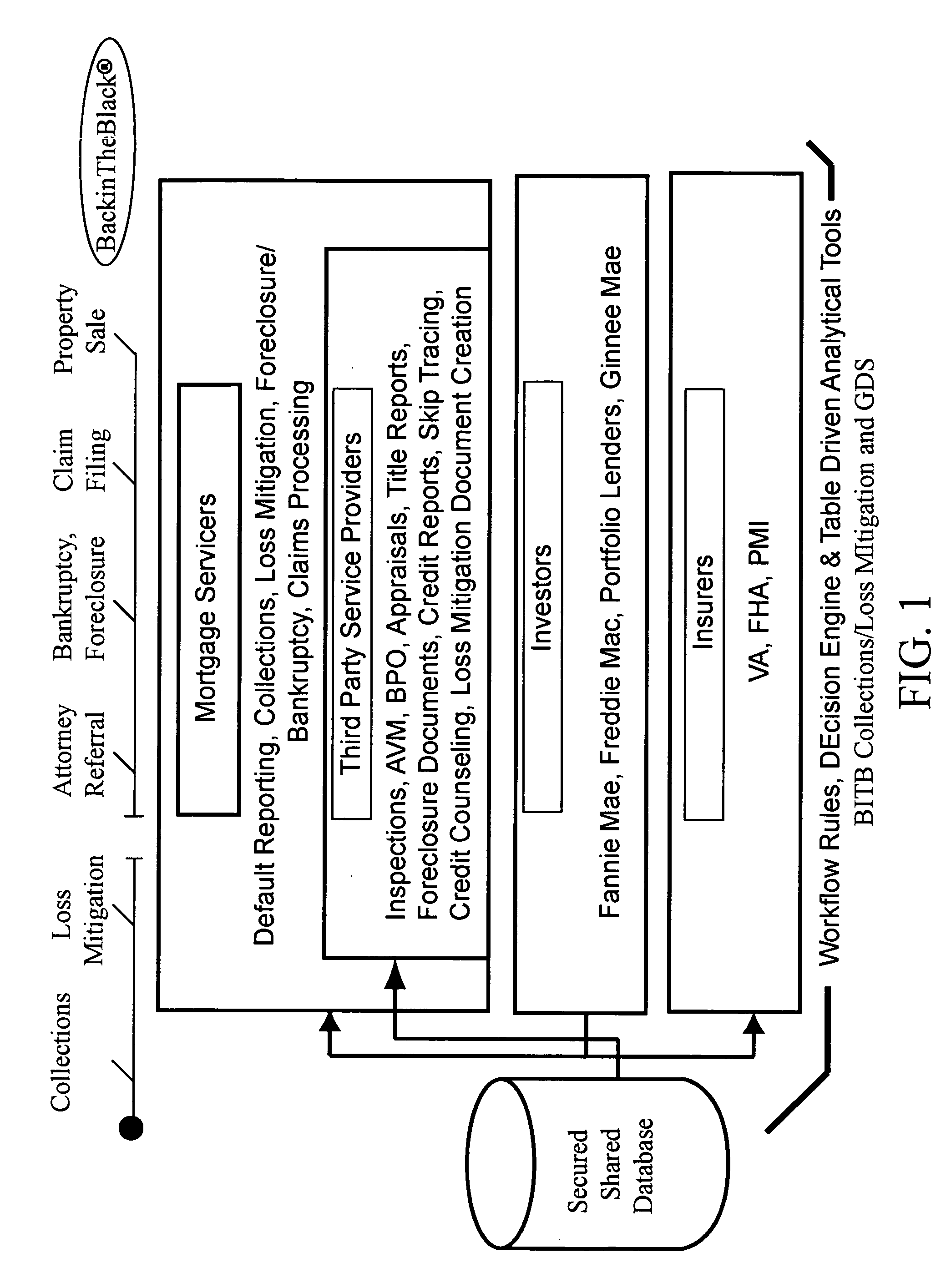

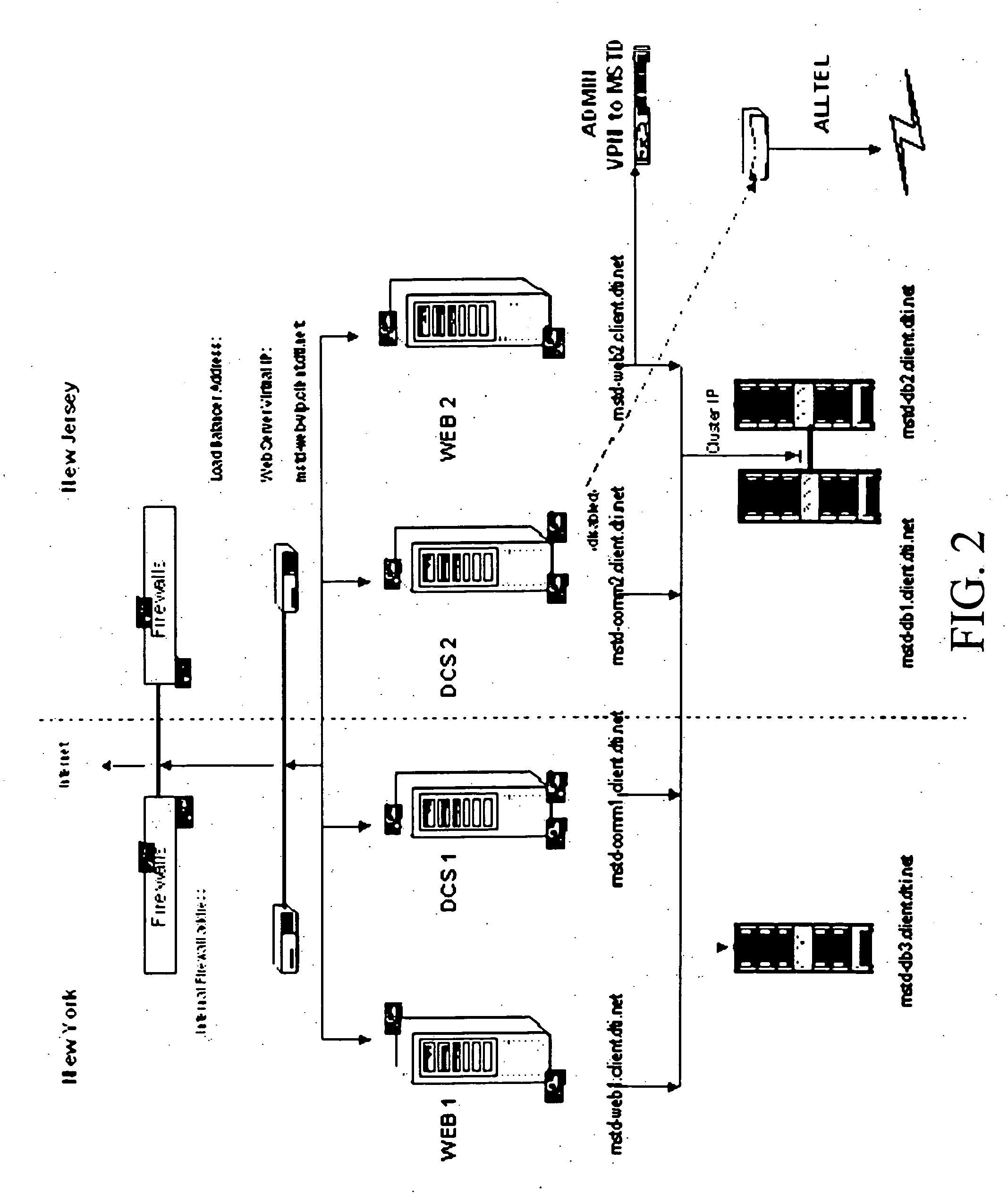

Software solution management of problem loans

Inactive | US20050278246A1 | Increasing availability of resource | Facilitate dialogue | Finance | Special data processing applications | Process loss | Computer science

An improved and automated ASP-delivered loan workout system, inclusive of communication architecture and software method, that assists a user in improving the dialogue and counseling sessions between user, borrower, and other involved parties. The system increases loan servicer efficiency while decreasing the time and expense necessary to process loss mitigation cases (including identification of an appropriate financial workout solution via logical decision-tree-based interviews), increases the rate of identification of viable loss mitigation options, and selects and manages the best loss mitigation solution, all in real time, within investor and lender requirements, within loan guarantor and insurer conditions, and in compliance with rapidly changing industry standards and government rules.

Owner:MSTD

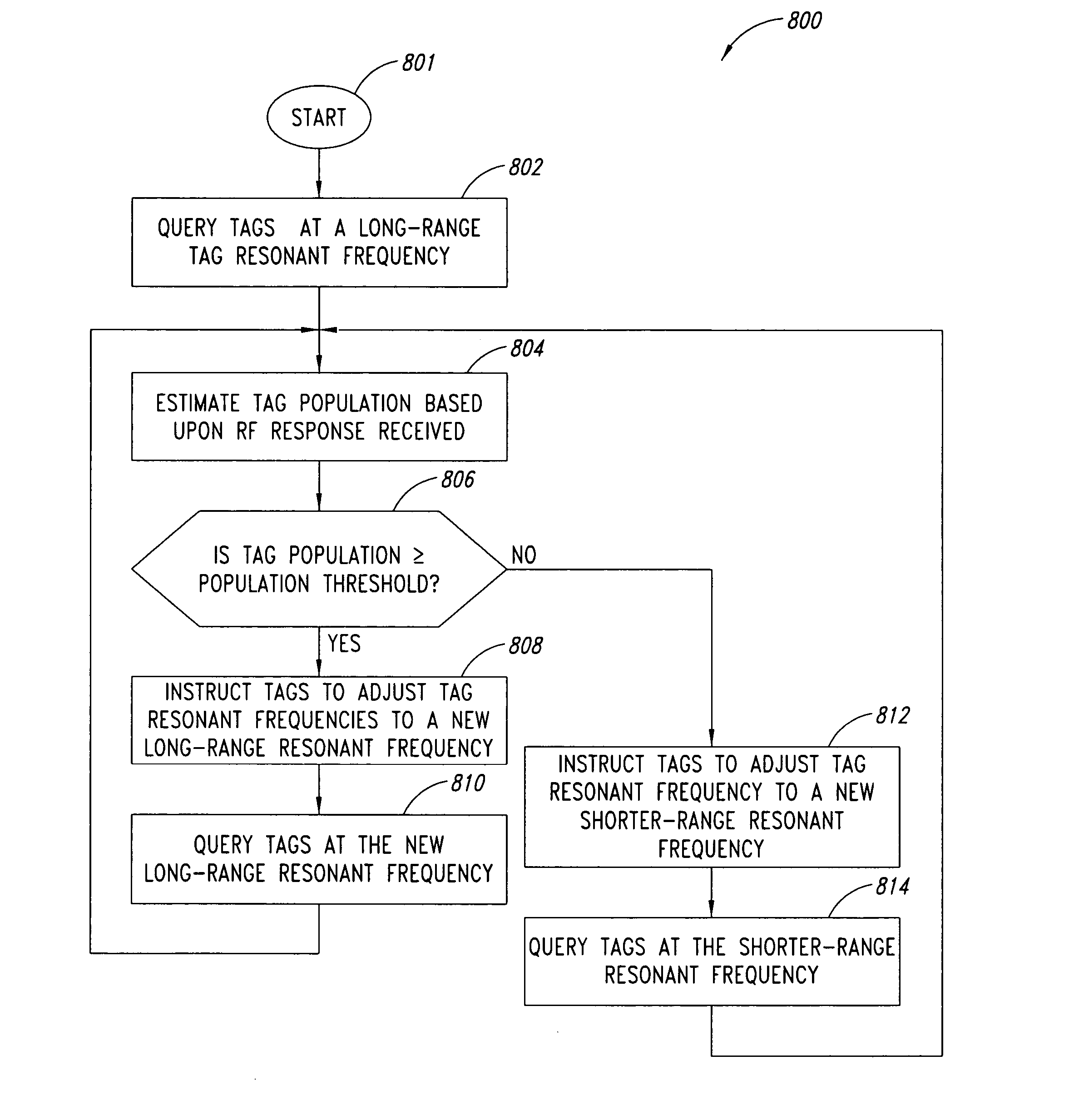

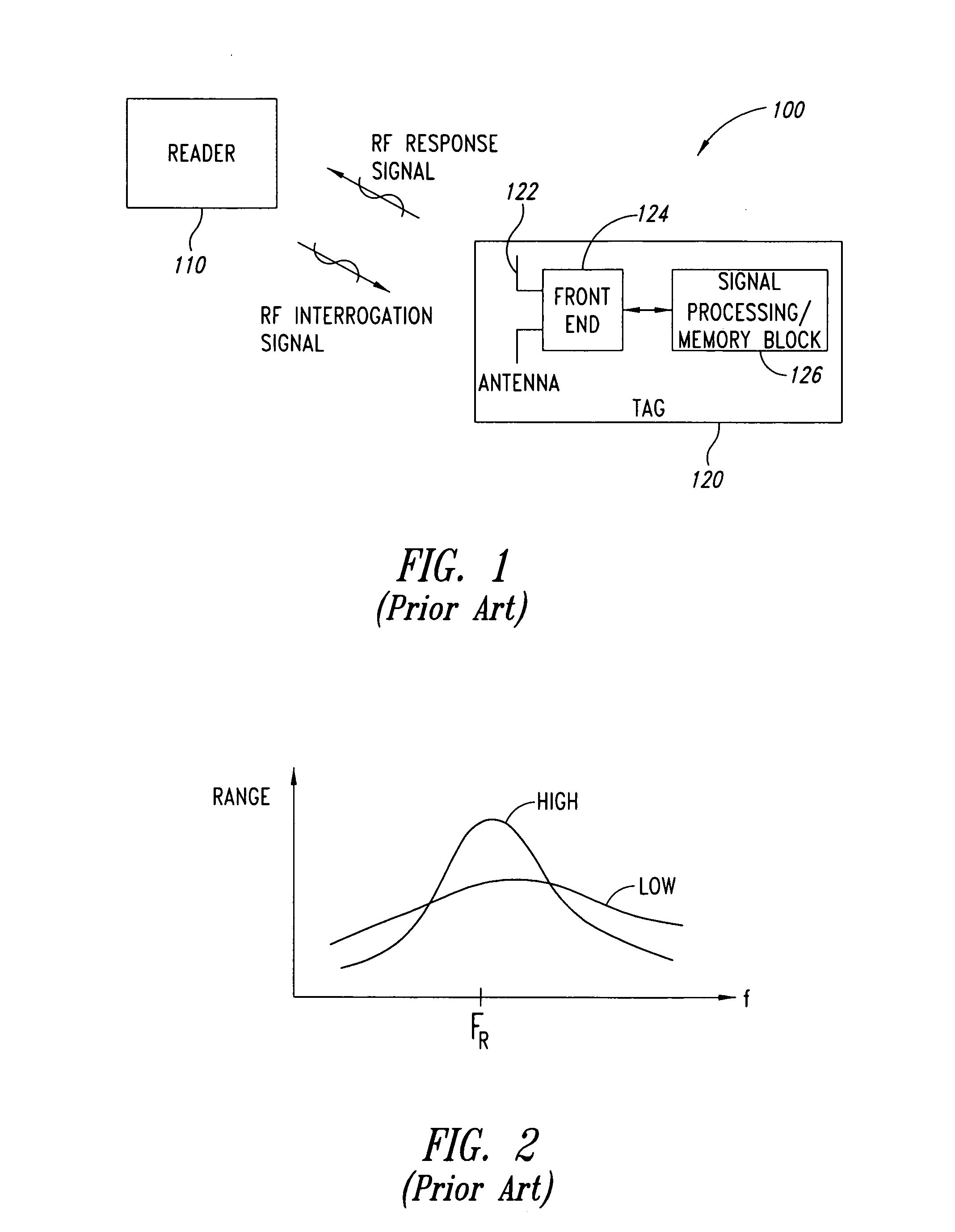

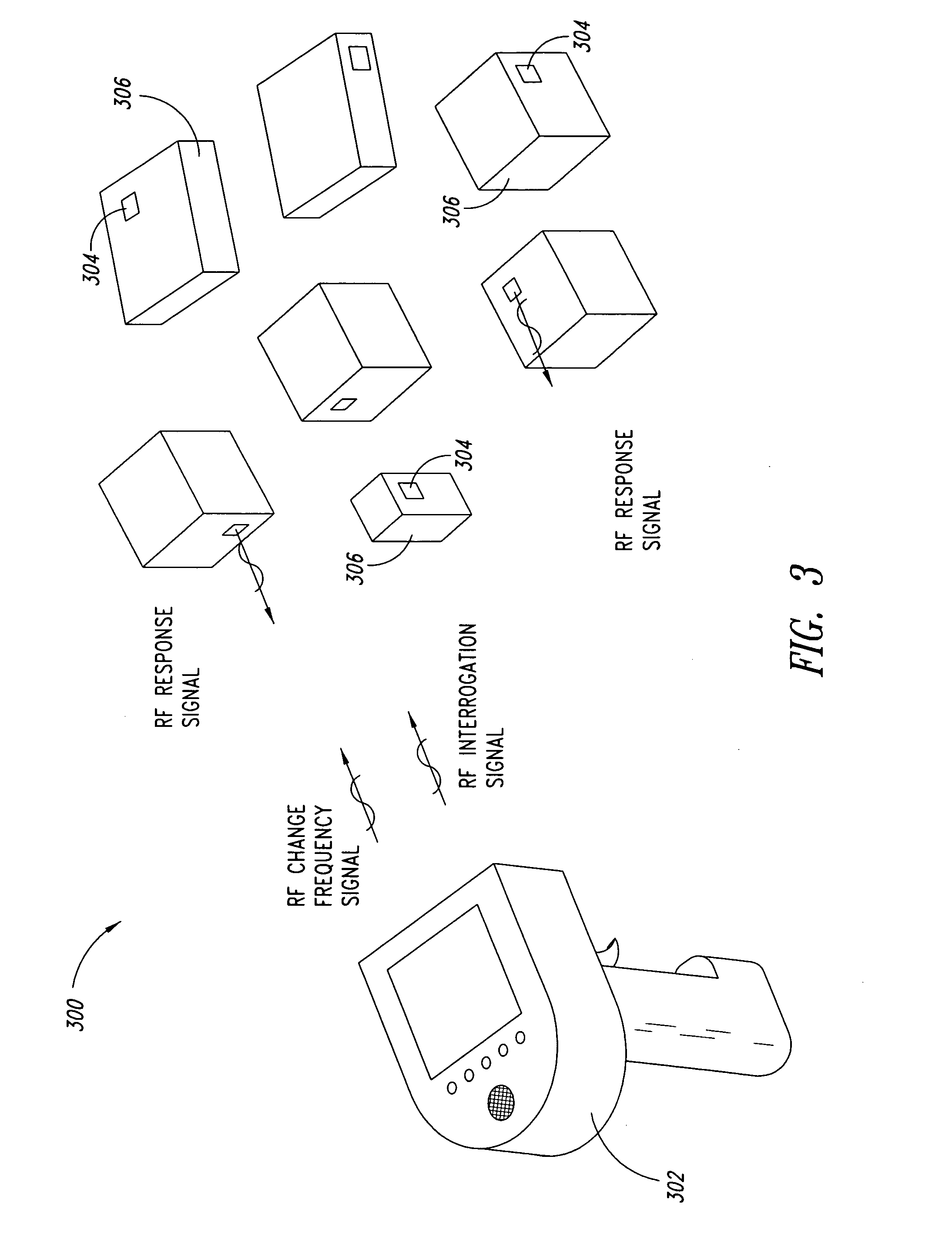

System and method of enhancing range in a radio frequency identification system

Active | US20070096881A1 | Enhance radio frequency identification tag identification rate | Electric signal transmission systems | Memory record carrier reading problems | Identification rate | Engineering

A radio frequency identification (RFID) system includes a plurality of RFID tags each having adjustable tag resonant frequency, and an RFID reader configured to transmit interrogation and change frequency RF signals. The interrogation and change frequency RF signals are transmitted at the current tag resonant frequency. The plurality of RFID tags modify the tag resonant frequency to a new tag resonant frequency in response to the change frequency RF signals. RFID tag identification rates may be enhanced by interrogating the plurality of RFID tags at the tag resonant frequency for a dwell time, receiving response RF signals, determining a new tag resonant frequency, and transmitting a change frequency RF signal that causes the RFID tags to change the tag resonant frequency to the new tag resonant frequency. The new resonant frequency may be based on the number of received responses.

Owner:INTERMEC IP

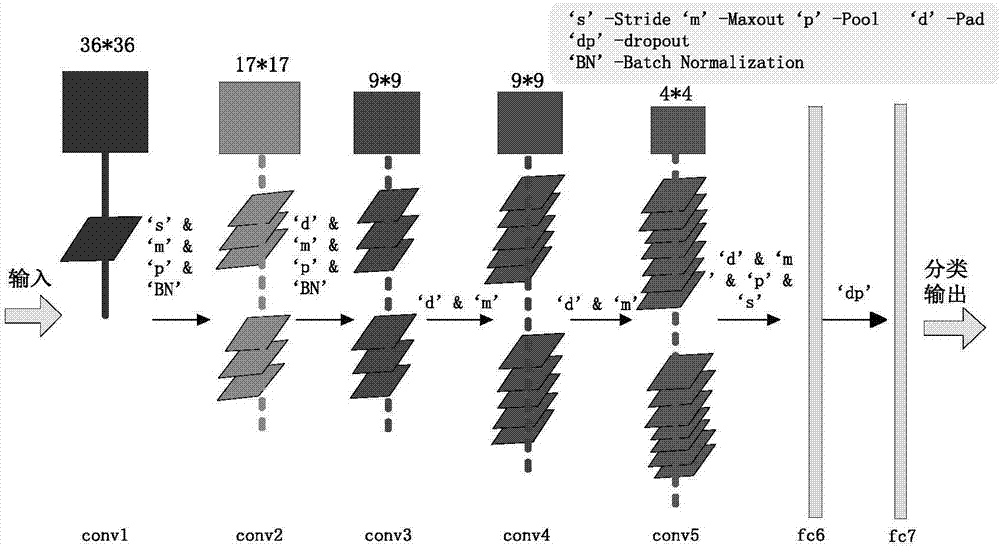

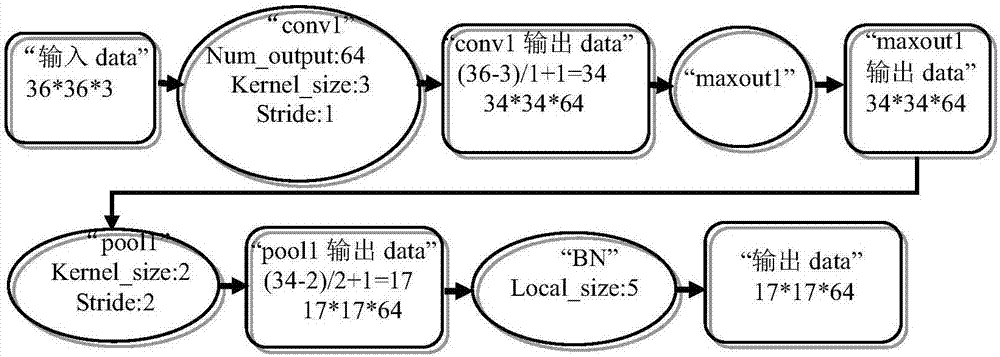

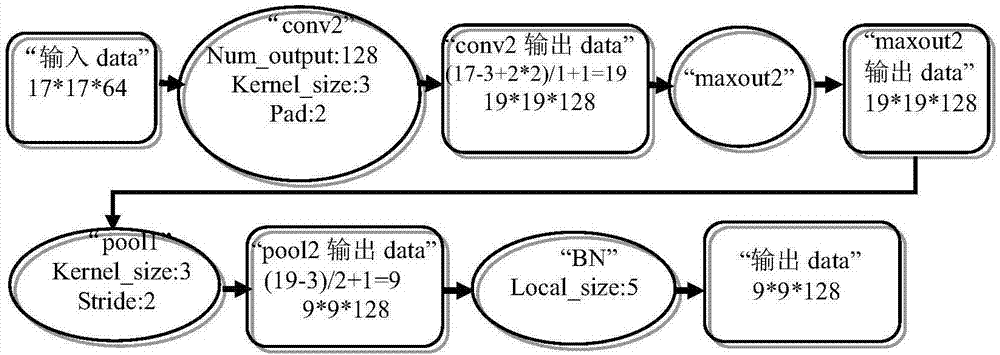

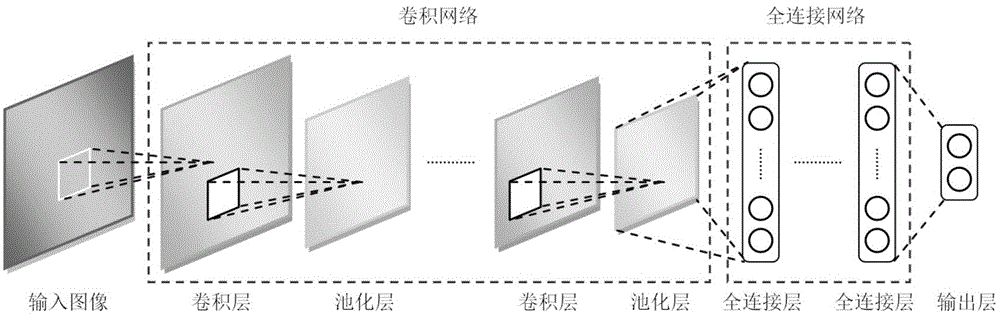

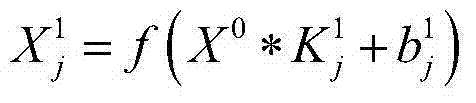

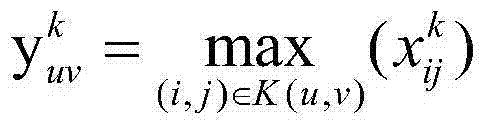

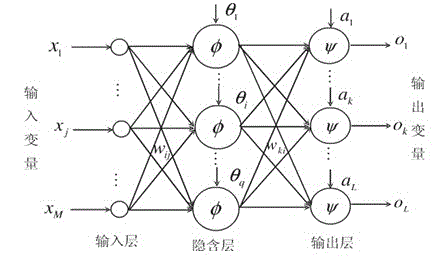

Image classification method based on convolution neural network

Pending | CN107341518A | Improve accuracy | Improve recognition rate | Character and pattern recognition | Neural learning methods | Classification methods | Network structure

The invention discloses an image classification method based on a convolutional neural network. The method comprises the following steps: constructing a deep convolutional neural network; improving the deep convolutional neural network; training and testing the deep convolutional neural network; and optimizing the network parameters. Because the method improves and optimizes both the network structure and multiple parameters of the convolutional neural network, the recognition rate of the deep convolutional neural network can be effectively improved, and the accuracy of image classification is improved.

Owner:EAST CHINA UNIV OF TECH

Improved multi-instrument reading identification method of transformer station inspection robot

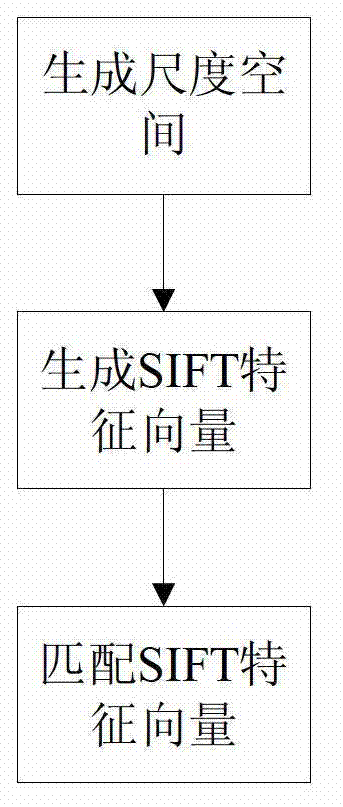

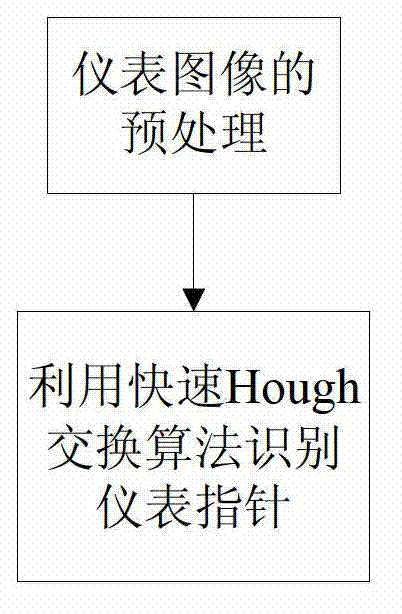

Inactive | CN103927507A | Improve robustness | Meet the requirements of automatic detection and identification of readings | Character and pattern recognition | Hough transform | Scale-invariant feature transform

The invention discloses an improved multi-instrument reading identification method for a transformer station inspection robot. First, equipment templates are prepared for instrument images of different types, and the positions of the minimum and maximum scale marks of each instrument are stored in a template database. For instrument images acquired in real time by the robot, the template image of the corresponding piece of equipment is retrieved from a background service, and the instrument dial area sub-image is extracted from the input image by matching with the scale-invariant feature transform (SIFT) algorithm. The dial sub-image is then binarized and the pointer is skeletonized; a fast Hough transform detects the pointer line and eliminates noise interference, so that the position and direction angle of the pointer are accurately located and the pointer reading is completed. In an on-site test on an inspection robot at a domestic 500 kV intelligent transformer station, the overall recognition rate across instrument types exceeded 99%, the precision and robustness of instrument reading were high, and the requirements for on-site application in a transformer station were fully satisfied.

Owner:STATE GRID INTELLIGENCE TECH CO LTD
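The final step of the abstract above, turning a detected pointer angle into a reading from the stored minimum and maximum scale positions, can be sketched as a linear interpolation. This assumes a linear dial scale, which the abstract does not state; the function name `pointer_reading` and all numeric values are hypothetical.

```python
def pointer_reading(angle_deg, min_angle_deg, max_angle_deg, min_value, max_value):
    """Map a pointer angle to a reading, assuming a linear dial scale.

    min_angle_deg / max_angle_deg are the angles of the minimum and maximum
    scale marks (as would be stored in a template database); min_value /
    max_value are the readings printed at those marks.
    """
    span = max_angle_deg - min_angle_deg
    if span == 0:
        raise ValueError("minimum and maximum scale angles must differ")
    fraction = (angle_deg - min_angle_deg) / span
    return min_value + fraction * (max_value - min_value)


# Hypothetical gauge: scale runs from 45 degrees (0.0 MPa) to 315 degrees (1.6 MPa).
print(pointer_reading(180.0, 45.0, 315.0, 0.0, 1.6))  # 0.8
```

In practice the pointer angle would come from the line detected by the Hough transform; that detection step is outside this sketch.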

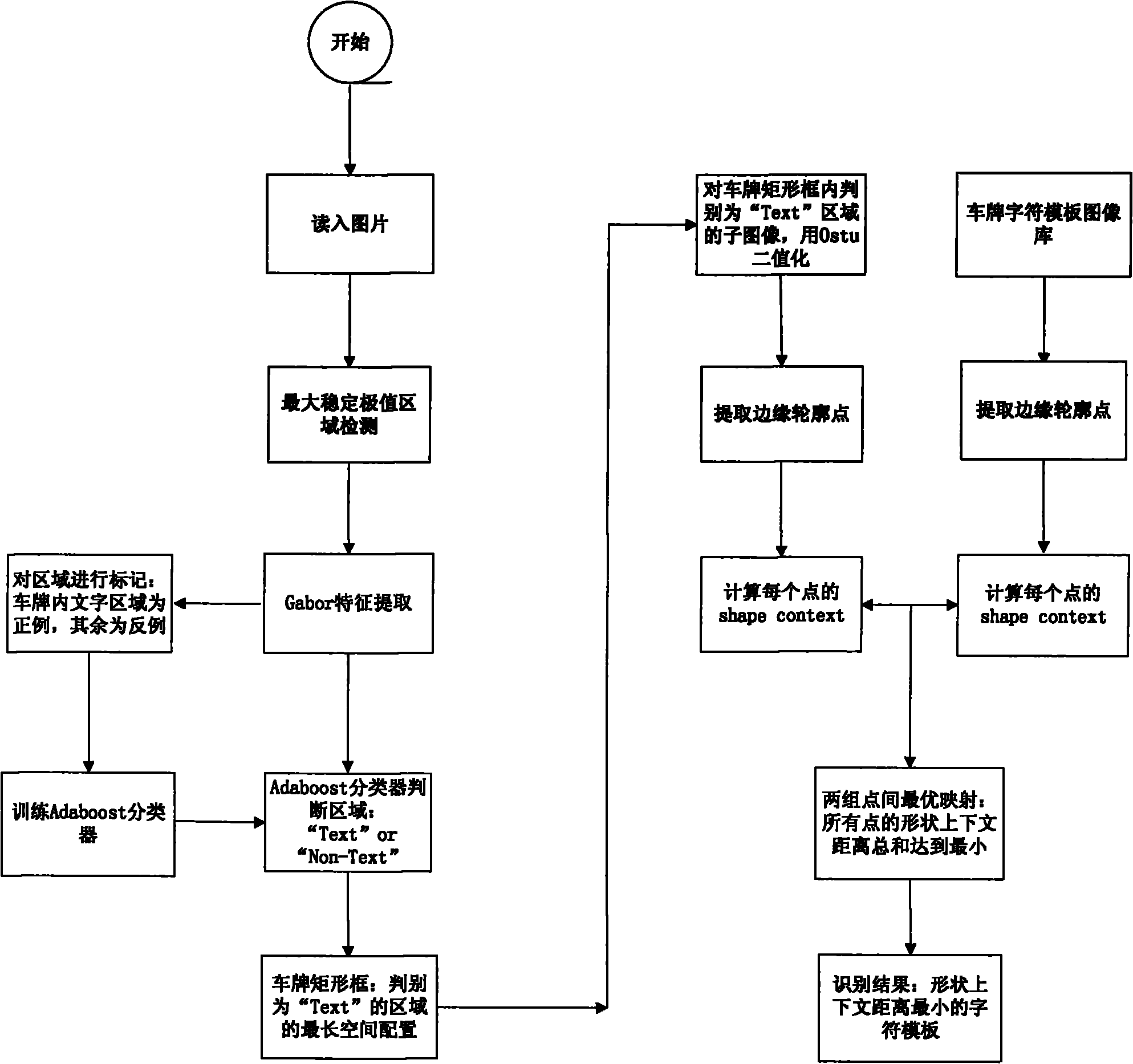

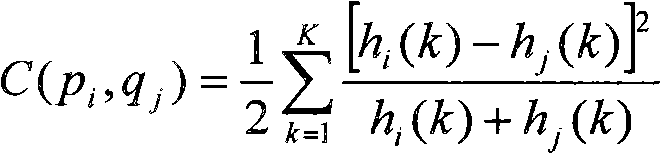

License plate detection and identification method based on maximum stable extremal region

Inactive | CN101859382A | The description is valid | Effective texture features | Image analysis | Character and pattern recognition | Template matching | Imaging processing

The invention belongs to the technical field of pattern recognition and image processing, and relates to a license plate detection and identification method based on the maximally stable extremal region (MSER). The method comprises the following steps: extracting maximally stable extremal regions to obtain the text regions of candidate license plates; describing each extremal region with an effective feature, classifying the extremal regions into 'text' and 'non-text' regions with a previously trained classifier, and extracting the license plate from the original image based on the structural characteristics of license plates; and describing characters with shape-context features and completing character identification through template matching. Because maximally stable extremal regions are affine invariant, highly stable and multi-scale, and because the regions are determined only by gray values and are insensitive to lighting, the method is applicable to complex backgrounds and offers good stability and a high identification rate.

Owner:FUDAN UNIV

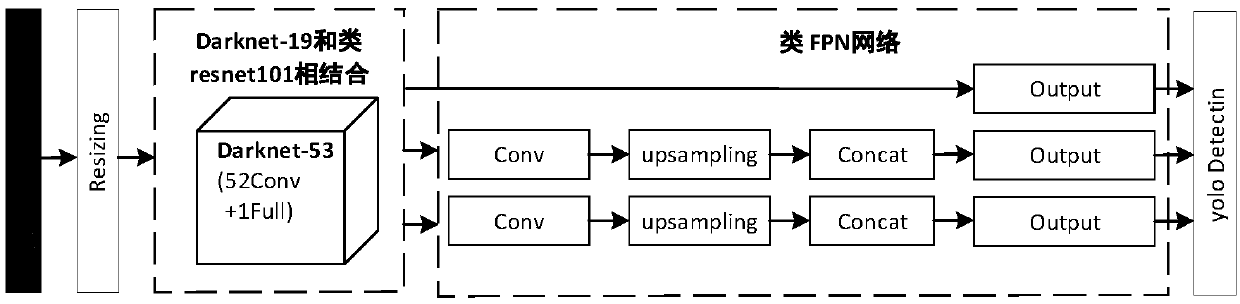

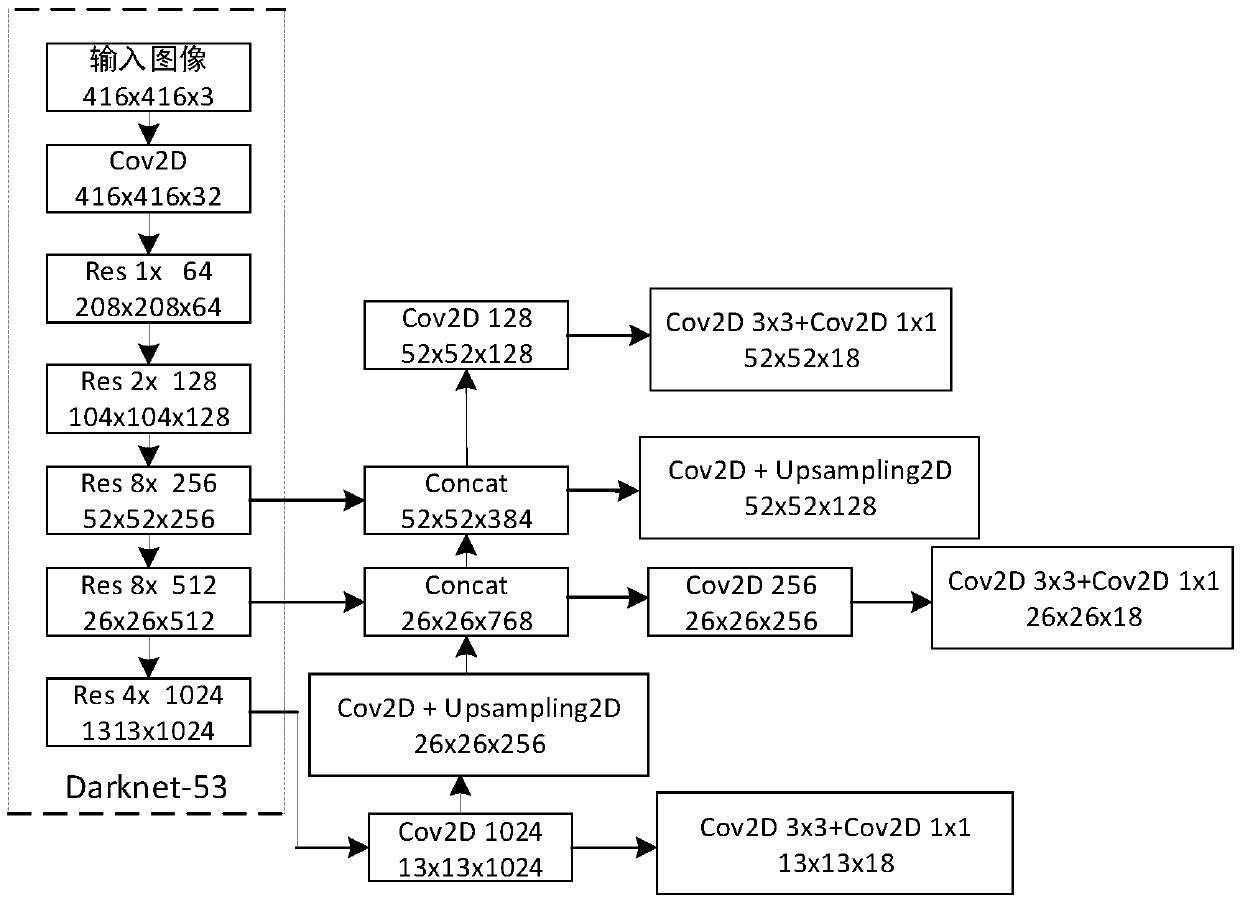

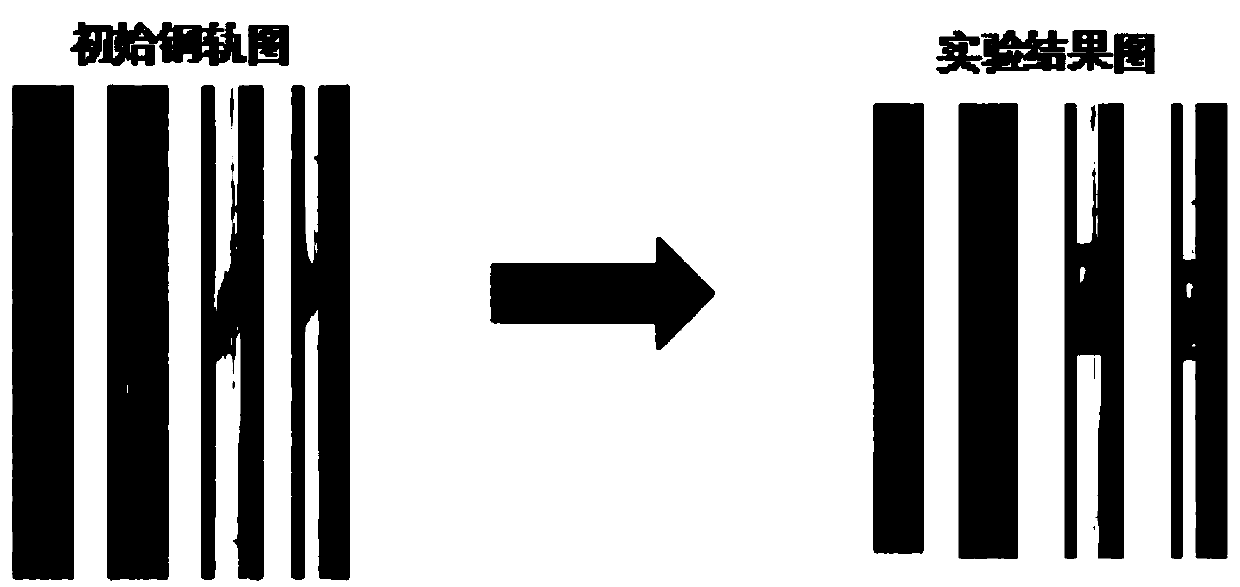

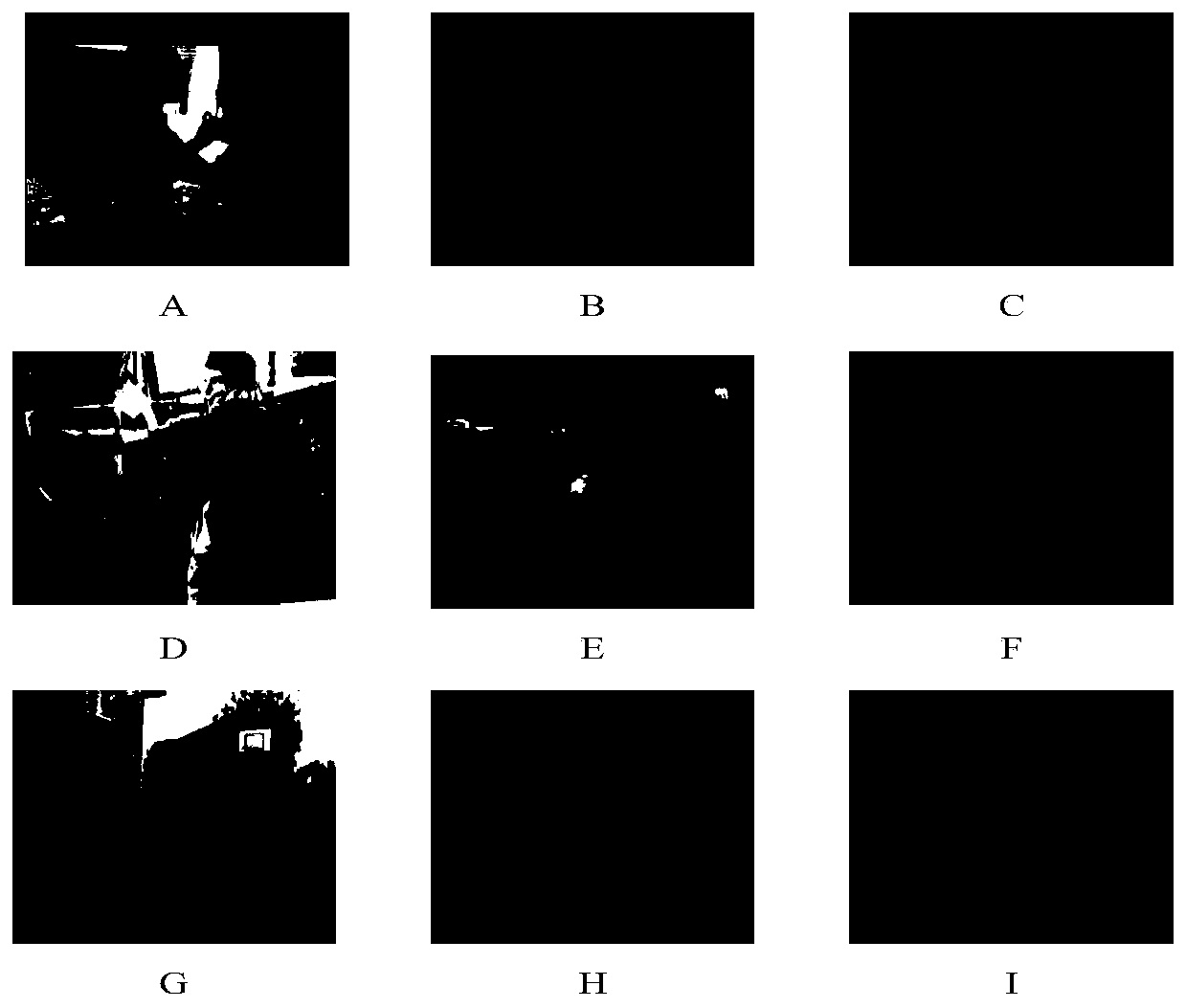

Rail surface defect detection method based on depth learning network

Inactive | CN109064461A | Resolve overlap | Targeting is accurate | Image analysis | Optically investigating flaws/contamination | Image extraction | Network model

The invention belongs to the field of deep learning and provides a rail surface defect detection method based on a deep learning network, aiming to solve problems in prior rail detection methods. The method first automatically resizes the input rail image to 416*416, then extracts and processes image features. Extraction is mainly performed by the Darknet-53 model, and the processing and output stage is mainly performed by an FPN-like network model. The rail image is first divided into cells. According to the position of a defect within the cells, the width, height and center-point coordinates of the defect are calculated by a dimension clustering method, and the coordinates are normalized. At the same time, logistic regression is used to predict the objectness score of each bounding box, binary cross-entropy loss is used to predict the categories contained in the bounding box, and the confidence level is calculated; convolution, up-sampling and network feature fusion are then applied in the output stage to obtain the prediction results. The invention can accurately identify defects and effectively improve the detection and identification rate of rail surface defects.

Owner:CHANGSHA UNIVERSITY OF SCIENCE AND TECHNOLOGY
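The coordinate normalization mentioned in the abstract above can be illustrated with a small sketch that converts a pixel-space bounding box into a normalized center, width and height relative to the 416*416 input. The function name `normalize_box` and the corner-based input format are assumptions for illustration; the patent's dimension clustering step is not reproduced here.

```python
def normalize_box(x_min, y_min, x_max, y_max, image_size=416):
    """Convert pixel corner coordinates to normalized (cx, cy, w, h) in [0, 1]."""
    if x_max <= x_min or y_max <= y_min:
        raise ValueError("box must have positive width and height")
    cx = (x_min + x_max) / 2 / image_size
    cy = (y_min + y_max) / 2 / image_size
    w = (x_max - x_min) / image_size
    h = (y_max - y_min) / image_size
    return cx, cy, w, h


# Hypothetical defect box centered in a 416*416 rail image.
print(normalize_box(104, 104, 312, 312))  # (0.5, 0.5, 0.5, 0.5)
```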

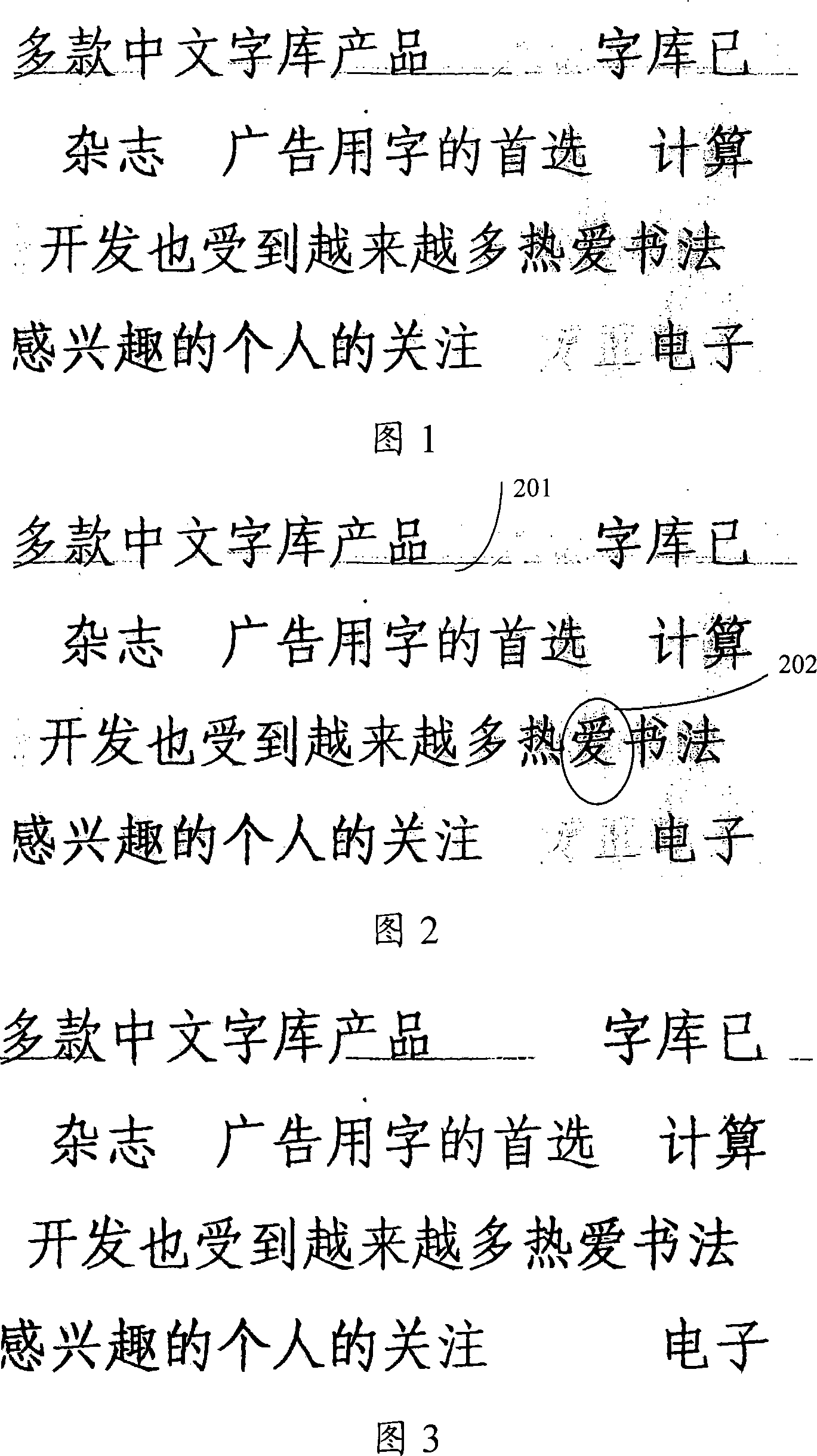

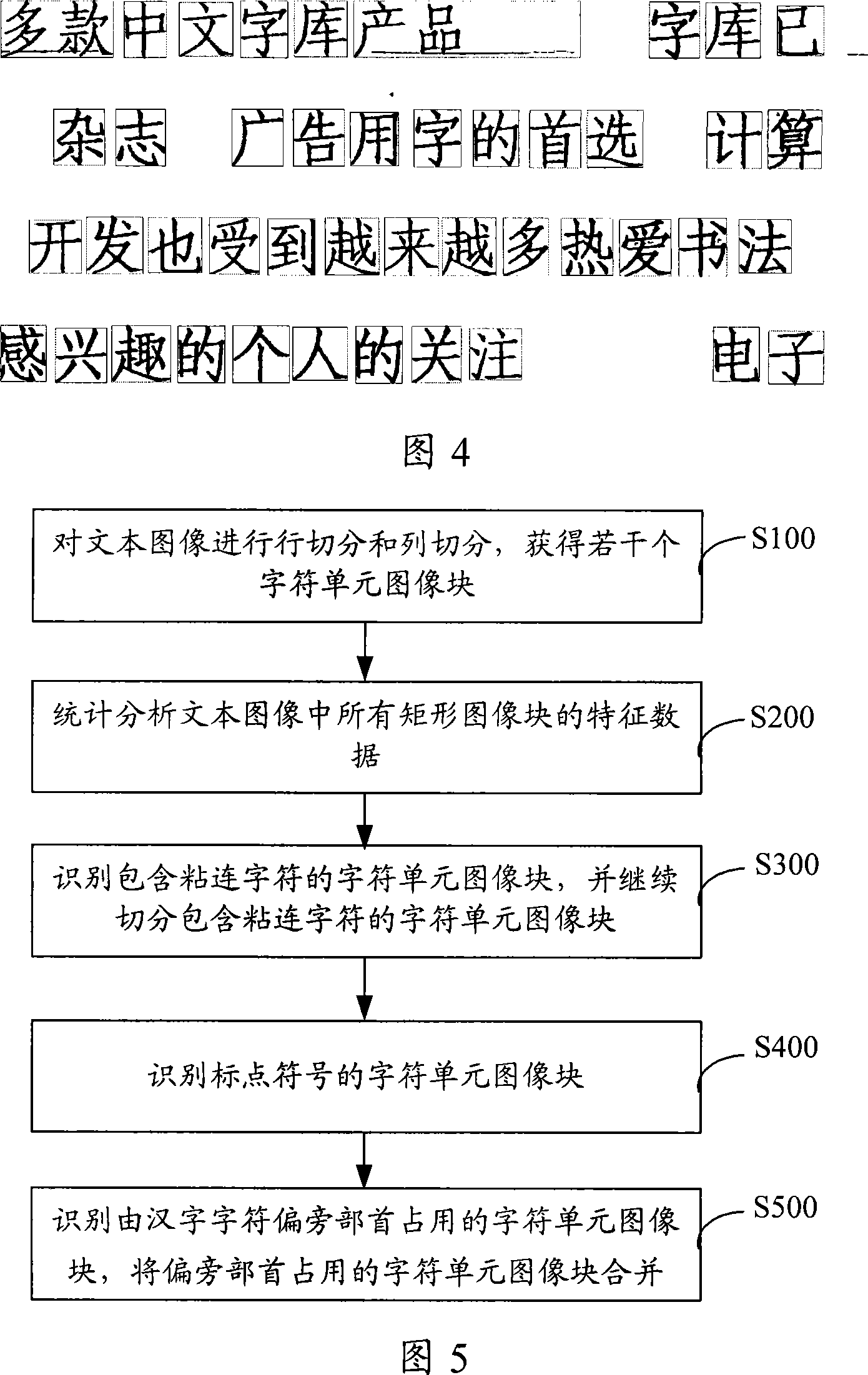

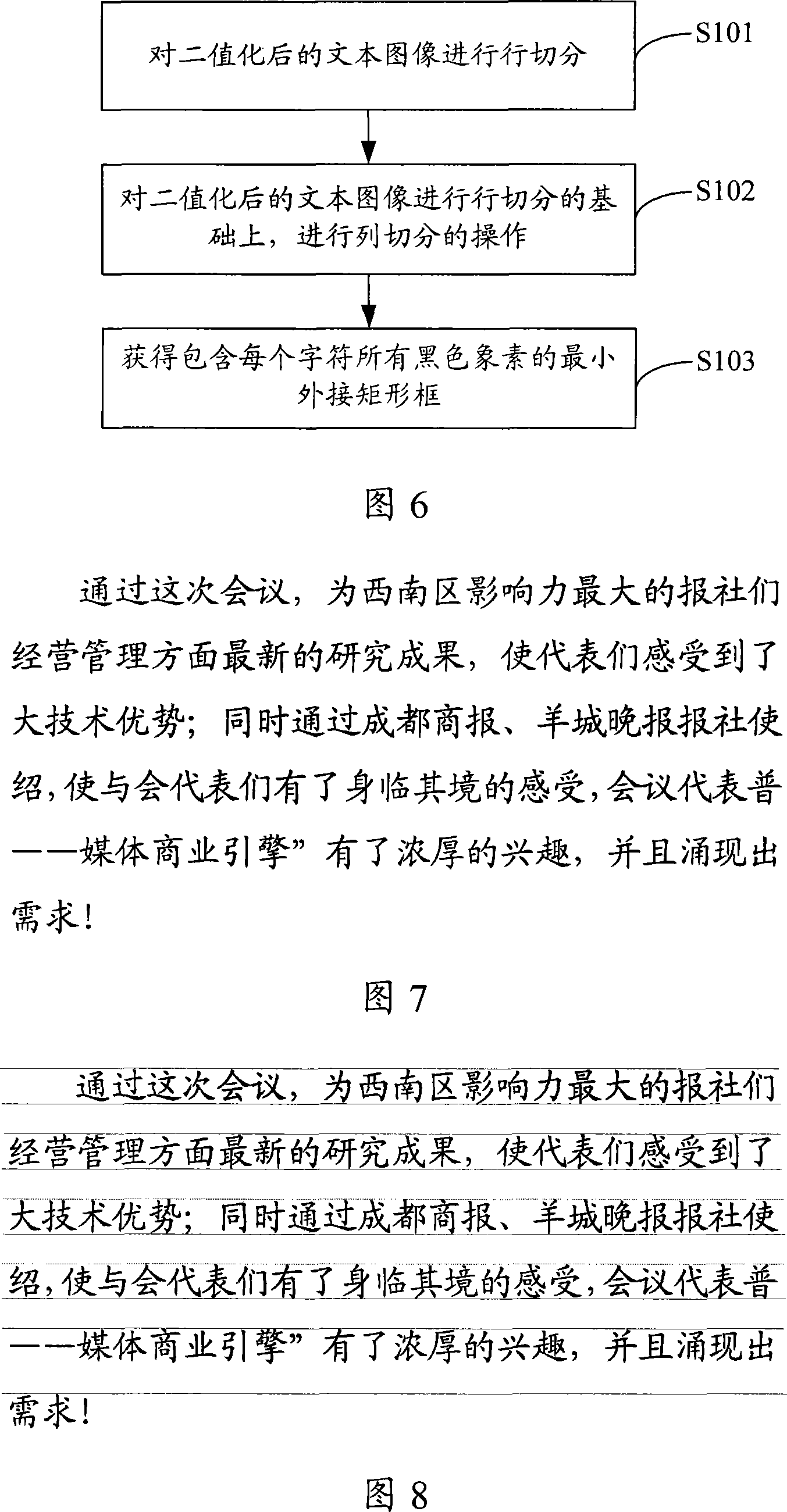

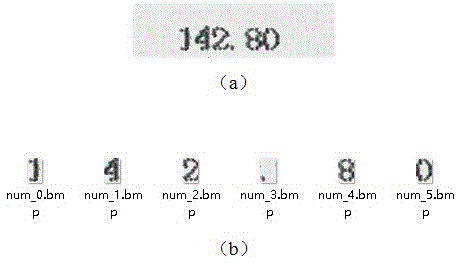

Method and apparatus for cutting character

Active | CN101251892A | Improve recognition rate | Character and pattern recognition | Chinese characters | English characters

The invention discloses a character segmentation method and a character segmentation device that can recognize character unit image blocks containing touching characters and character unit image blocks containing components and radicals, and so ensure the correctness of the character segmentation result. In the technical solution, a plurality of character unit image blocks are obtained by performing line segmentation and column segmentation on a text image; character unit image blocks containing touching characters are recognized and segmented further; Chinese character unit image block areas and English character unit image block areas are recognized; within the Chinese areas, character unit image blocks occupied by components and radicals of Chinese characters are recognized; and image blocks occupied by components and radicals of adjacent Chinese characters are merged into a single character unit image block. The invention ensures that the character segmentation result does not depend too heavily on a character recognition feedback mechanism, and further improves the recognition rate of the characters.

Owner:NEW FOUNDER HLDG DEV LLC +2
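The column segmentation step in the abstract above is not specified in detail. One common way to do it, shown here purely as an assumed illustration, is a vertical projection profile: columns with no ink separate character unit blocks. The function name `split_columns` and the binary-image format (rows of 0/1 values) are hypothetical.

```python
def split_columns(binary_rows):
    """Split a binarized text line into blocks separated by empty columns.

    binary_rows is a list of equal-length rows, where 1 marks an ink pixel.
    Returns a list of (start, end) column ranges, with end exclusive.
    """
    width = len(binary_rows[0])
    # A column "has ink" if any row has a set pixel in that column.
    ink = [any(row[x] for row in binary_rows) for x in range(width)]
    blocks, start = [], None
    for x, has_ink in enumerate(ink):
        if has_ink and start is None:
            start = x
        elif not has_ink and start is not None:
            blocks.append((start, x))
            start = None
    if start is not None:
        blocks.append((start, width))
    return blocks


line = [
    [1, 1, 0, 1, 0],
    [0, 1, 0, 1, 1],
]
print(split_columns(line))  # [(0, 2), (3, 5)]
```

A plain projection split cannot separate touching characters or rejoin the left and right parts of a Chinese character, which is the gap the abstract says the invention addresses.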

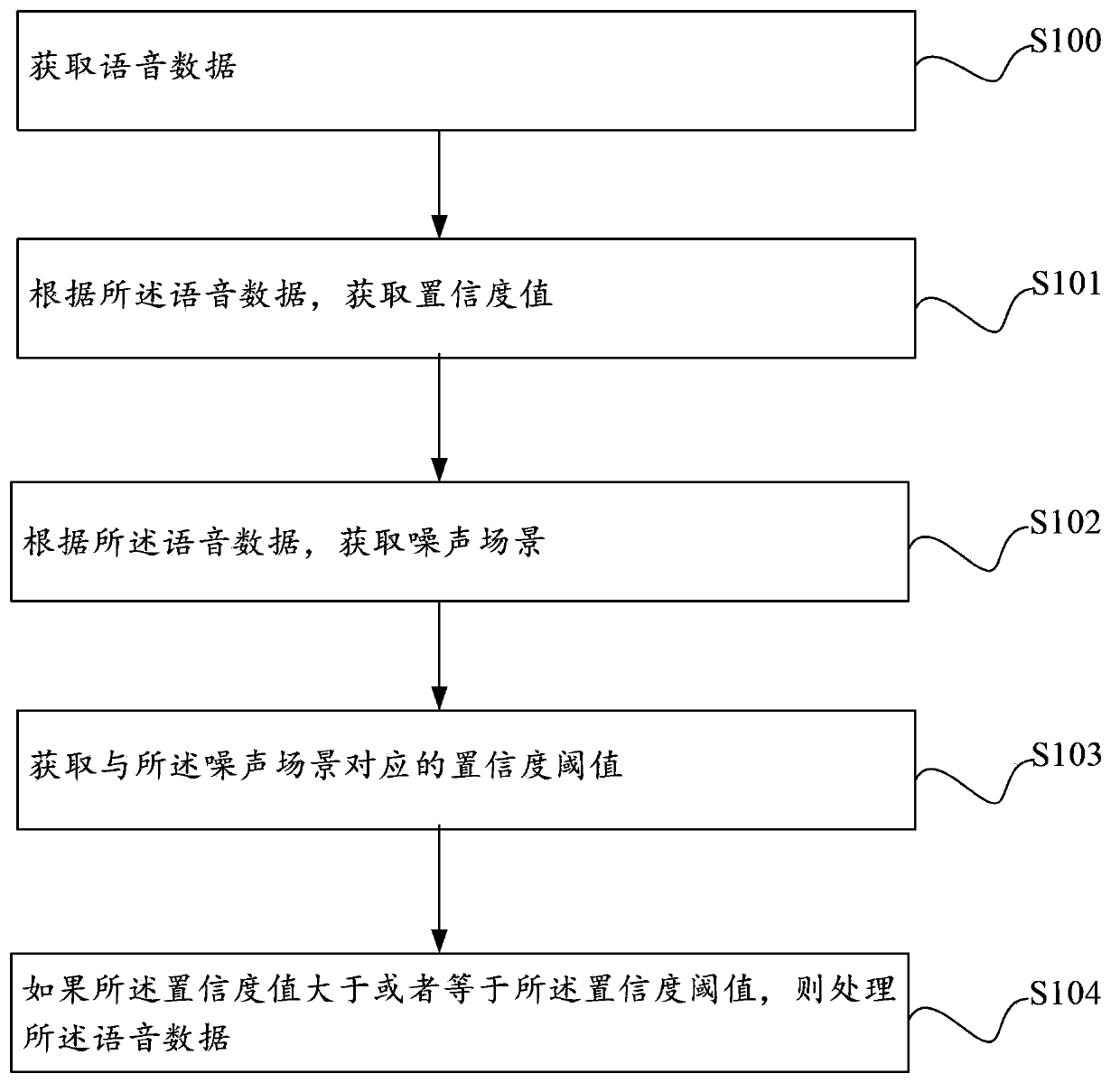

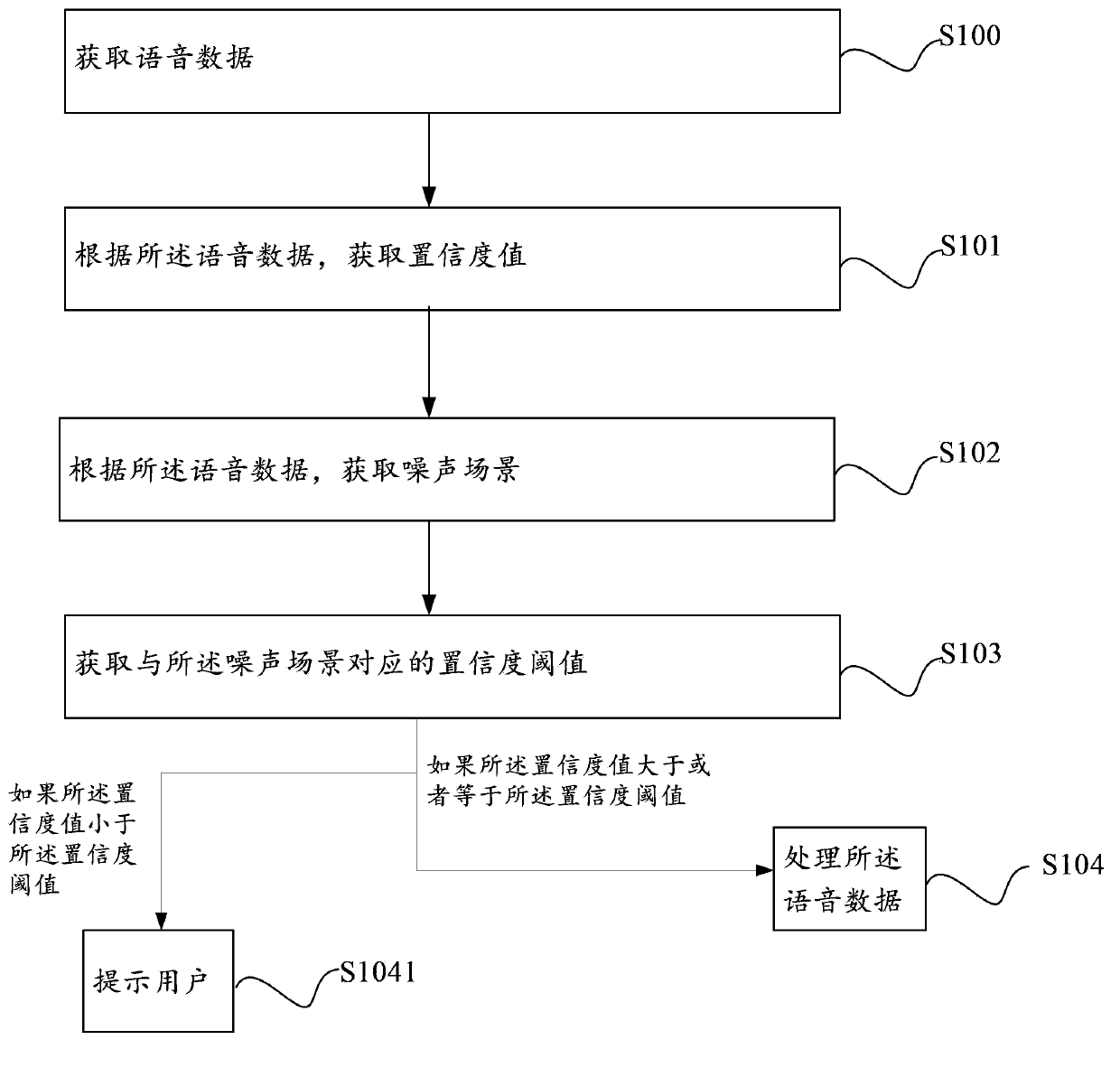

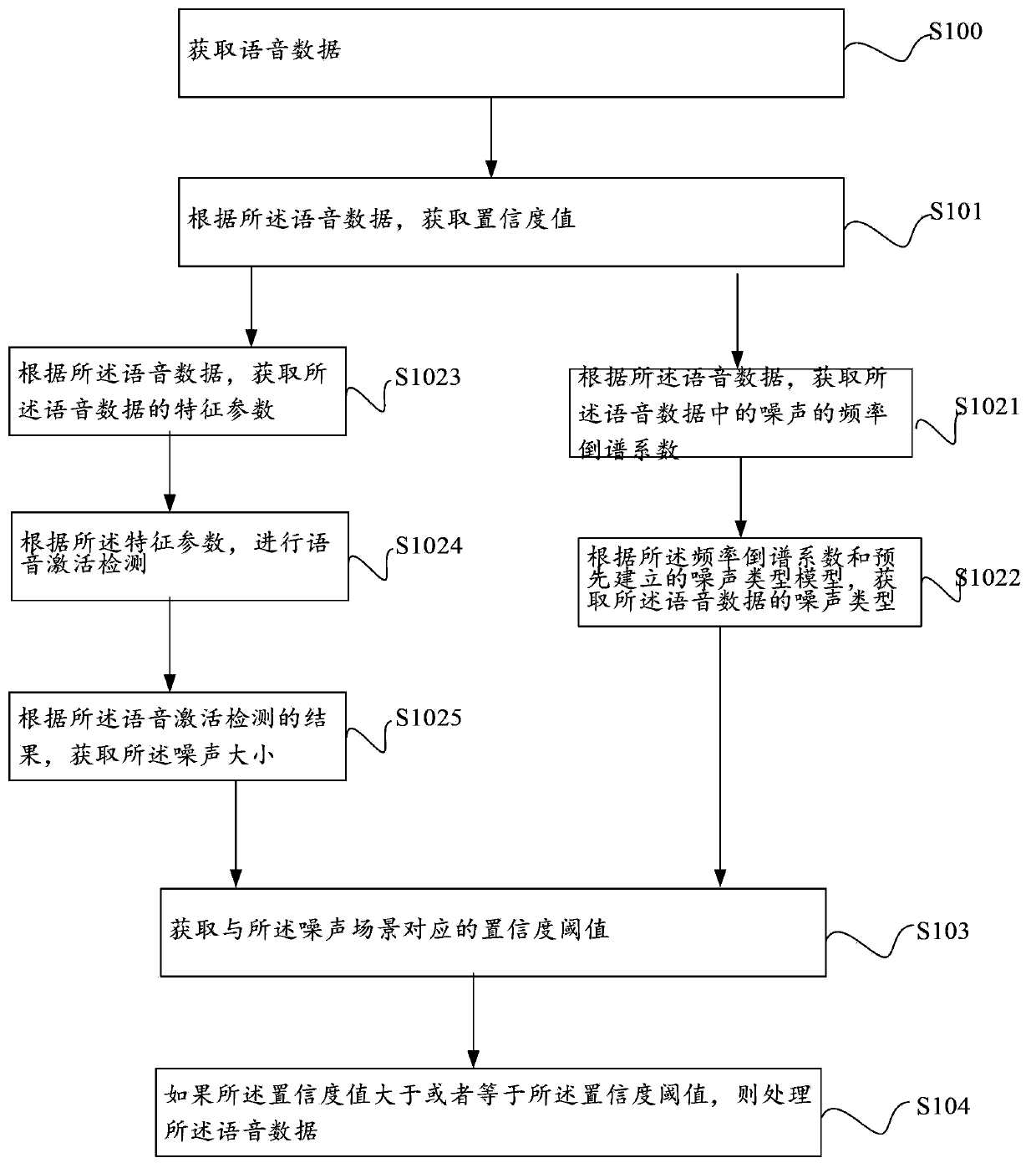

Method and device for recognizing voices

Active | CN103971680A | Confidence thresholds can be flexibly adjusted | Improve speech recognition rate | Speech recognition | Speech sound | Voice data

Embodiments of the present invention provide a voice identification method, which includes: obtaining voice data; obtaining a confidence value according to the voice data; obtaining a noise scenario according to the voice data; obtaining a confidence threshold corresponding to the noise scenario; and if the confidence value is greater than or equal to the confidence threshold, processing the voice data. An apparatus is also provided. The method and apparatus that flexibly adjust the confidence threshold according to the noise scenario greatly improve a voice identification rate under a noise environment.

Owner:HUAWEI DEVICE CO LTD
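The decision rule in the abstract above (process the voice data only if the confidence value reaches the threshold for the detected noise scenario) can be sketched as a lookup followed by a comparison. The scenario names, threshold values and default below are invented for illustration and do not come from the patent.

```python
# Hypothetical per-scenario confidence thresholds.
THRESHOLDS = {"quiet": 0.40, "office": 0.50, "street": 0.60}


def should_process(confidence, noise_scenario, thresholds=THRESHOLDS, default=0.50):
    """Return True if the voice data should be processed.

    The threshold is looked up by noise scenario; unknown scenarios fall
    back to the default threshold.
    """
    threshold = thresholds.get(noise_scenario, default)
    return confidence >= threshold


print(should_process(0.55, "office"))  # True
print(should_process(0.55, "street"))  # False
```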

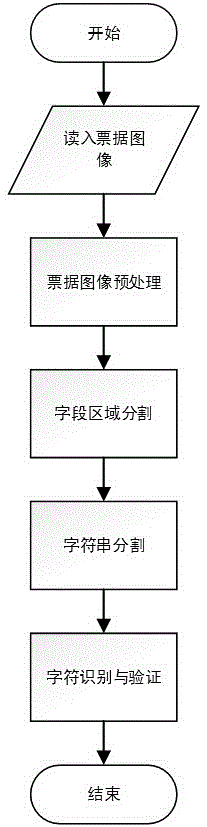

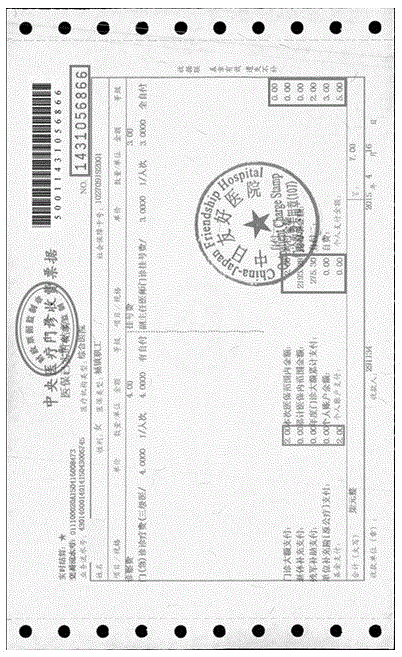

Automatic character extraction and recognition system and method for low-resolution medical bill image

Active | CN105654072A | Automatic extraction | Easy to identify | Character and pattern recognition | Image resolution | Imaging quality

The invention discloses an automatic character extraction and recognition system and method for low-resolution medical bill images. The system comprises an image preprocessing module, a field segmenting module, a single character segmenting module and a character recognizing module. The method comprises the steps of image preprocessing, field area recognition, character string segmentation, and character recognition and verification. The system and method are well suited to automatic character extraction and recognition for low-resolution medical bill images. Performing layout analysis on a bill allows its information to be fully used. For low-quality images that are strongly affected by noise and resolution, a character string is segmented into single characters using the semantics of each field area, so that recognition of the image is converted into recognition of single characters. For example, an invoice number composed purely of digits can be recognized by a method dedicated to digit-only images; the recognition range is then limited to the ten digits 0 to 9, and the recognition rate can therefore be greatly increased.

Owner:HARBIN INST OF TECH
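The invoice-number example in the abstract above, limiting the recognition range to the ten digits, can be sketched as choosing the best-scoring label from an allowed set. The score dictionary stands in for the output of some character classifier; it and the function name `recognize_restricted` are assumptions for illustration.

```python
DIGITS = set("0123456789")


def recognize_restricted(scores, allowed=DIGITS):
    """Pick the highest-scoring label among those the field is allowed to contain."""
    candidates = {label: score for label, score in scores.items() if label in allowed}
    if not candidates:
        raise ValueError("no allowed label has a score")
    return max(candidates, key=candidates.get)


# Hypothetical classifier scores for one blurry character.
scores = {"O": 0.48, "0": 0.45, "D": 0.05, "8": 0.02}
print(max(scores, key=scores.get))    # O  (unrestricted choice)
print(recognize_restricted(scores))   # 0  (digit-only field)
```

The unrestricted choice confuses the letter O with the digit 0; knowing from the field semantics that only digits can occur removes that confusion.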

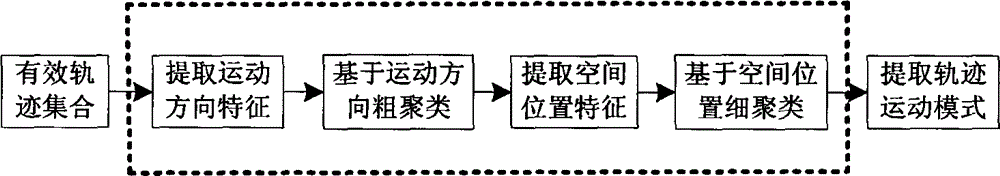

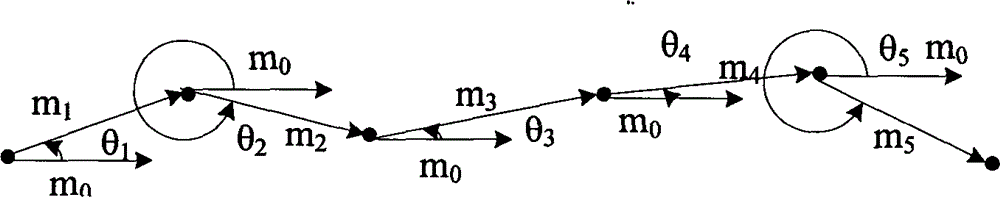

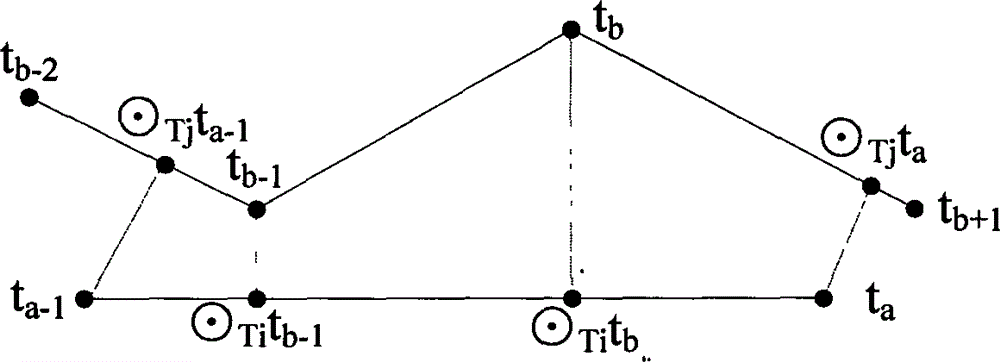

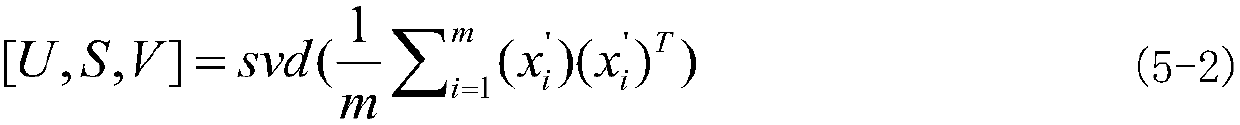

Learning and anomaly detection method based on multi-feature motion modes of vehicle traces

Active | CN103605362A | Position/course control in two dimensions | Anomaly detection | Dimensionality reduction

The invention provides a method for trace pattern learning and anomaly detection that uses multiple features of a trace. In the pattern learning phase, similarities in both motion direction and spatial position between traces are considered, and typical trace motion patterns are extracted by hierarchical agglomerative clustering with high clustering accuracy; time efficiency is greatly improved by constructing a Laplacian matrix and reducing its dimensionality. In the anomaly detection phase, the distribution area of scene starting points is learned with a GMM, a moving window is used as the basic unit of comparison, the differences in position and direction between a trace under test and a typical trace are measured by a defined position distance and direction distance, and an online classifier based on these two distances is established. A multi-feature anomaly detection algorithm determines online whether a trace exhibits a starting-point anomaly, a global anomaly or a local anomaly. Because starting-point, direction and position differences are considered together, and both global anomalies and local sub-segment anomalies are covered, the method achieves a higher anomaly recognition rate than traditional methods.

Owner:海之蝶(天津)科技有限公司

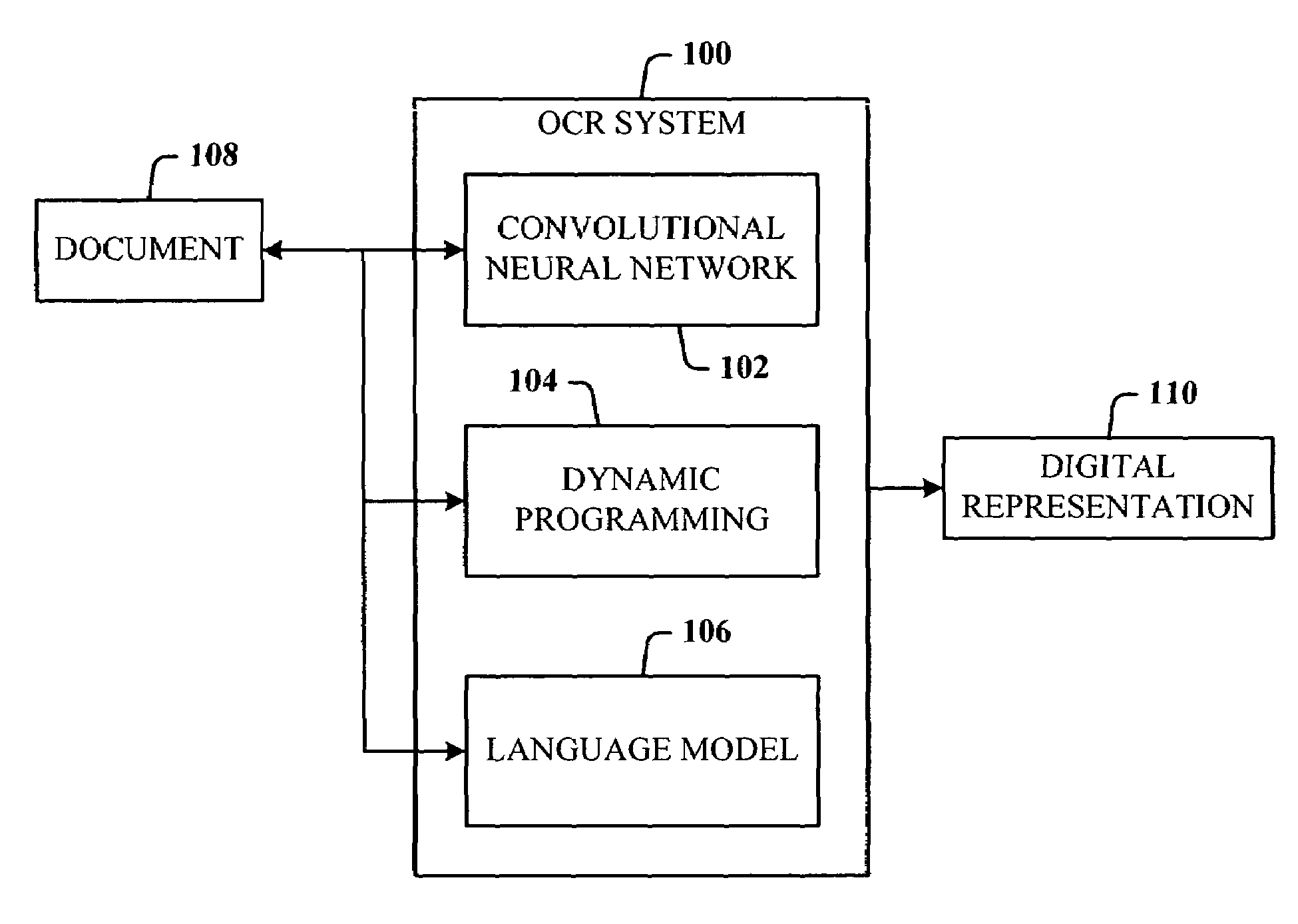

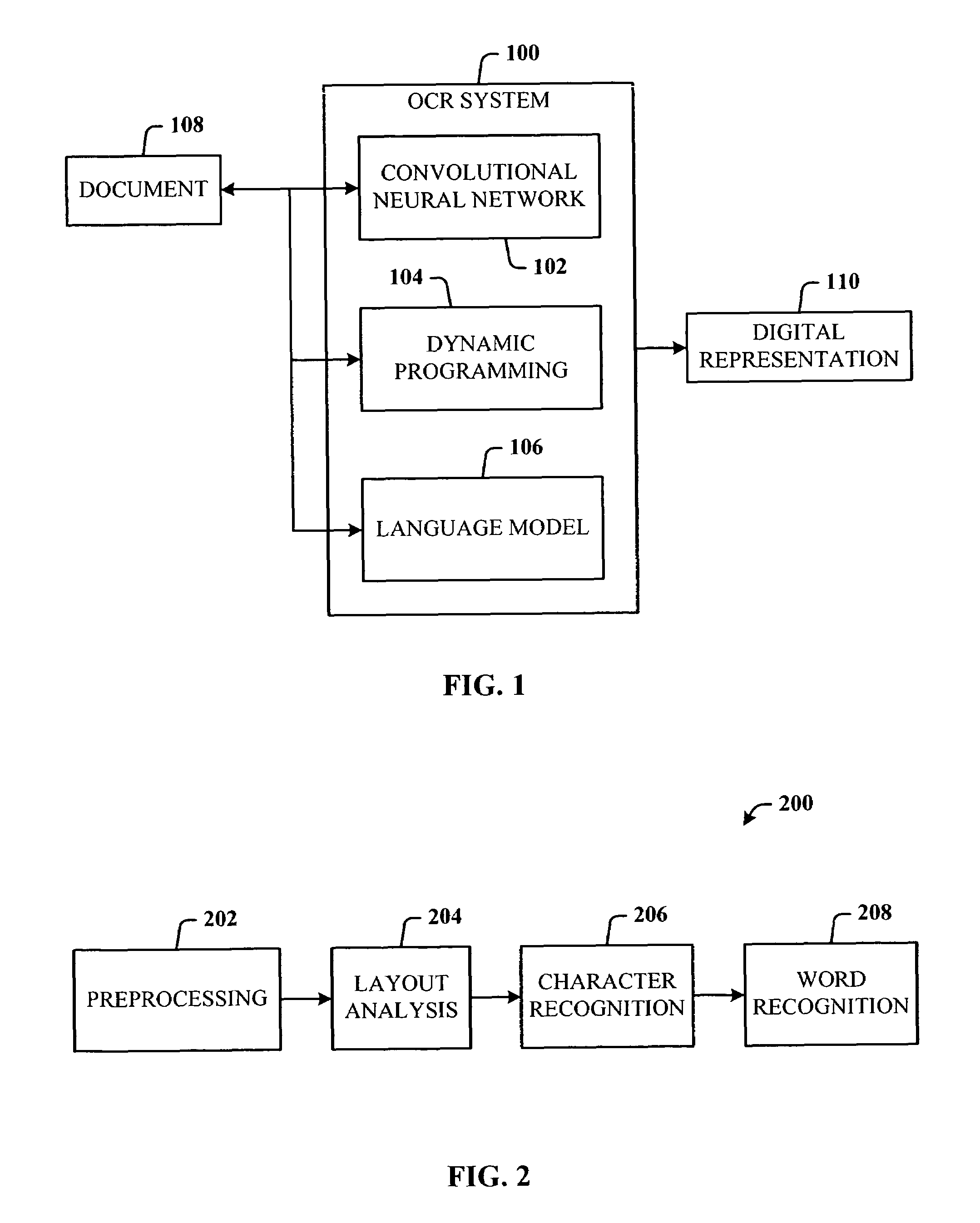

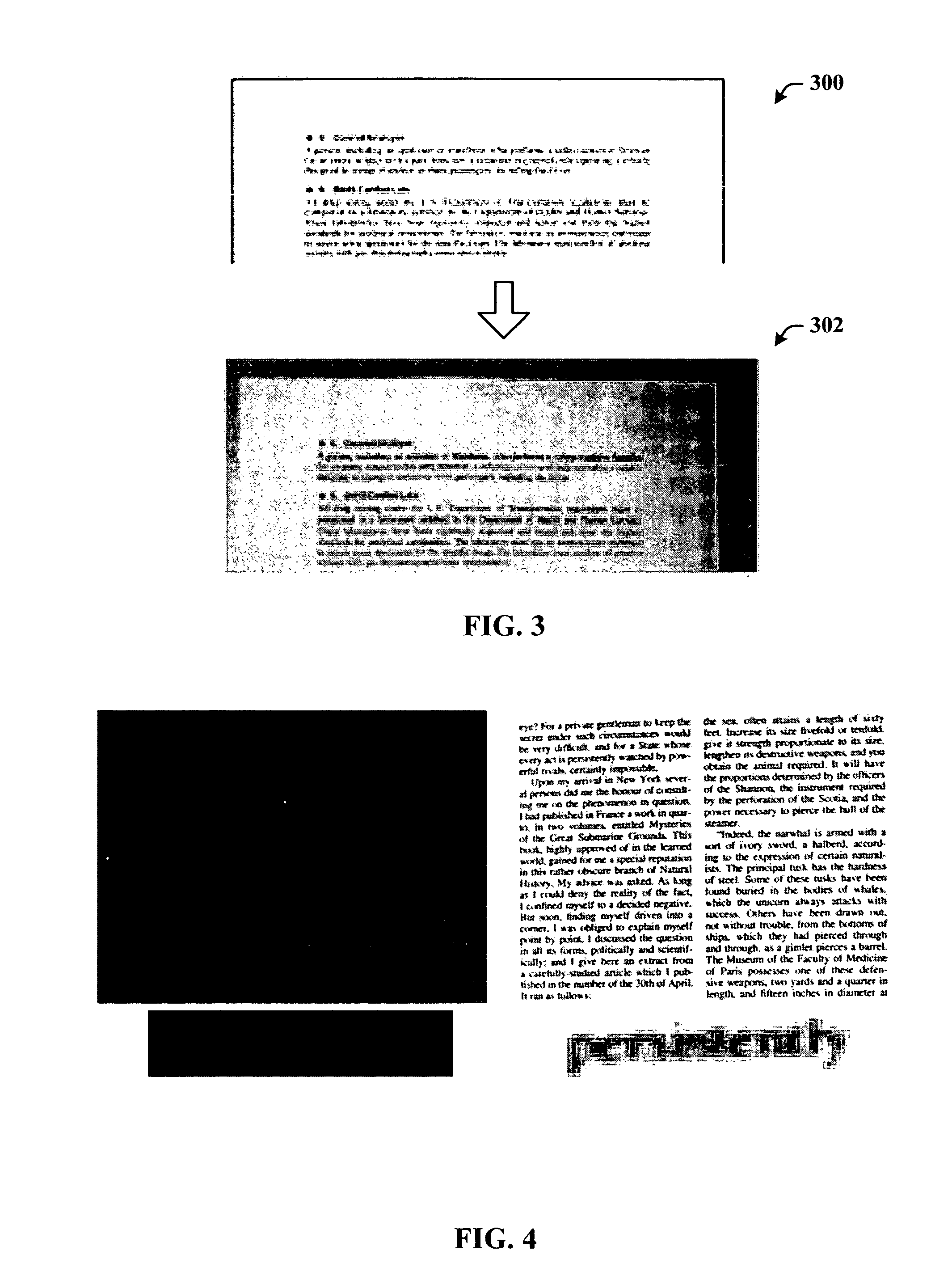

Low resolution OCR for camera acquired documents

Active | US7499588B2 | Low resolution OCR | Maximum robustness | Biological models | Character recognition | Single process | Grey level

Owner:MICROSOFT TECH LICENSING LLC

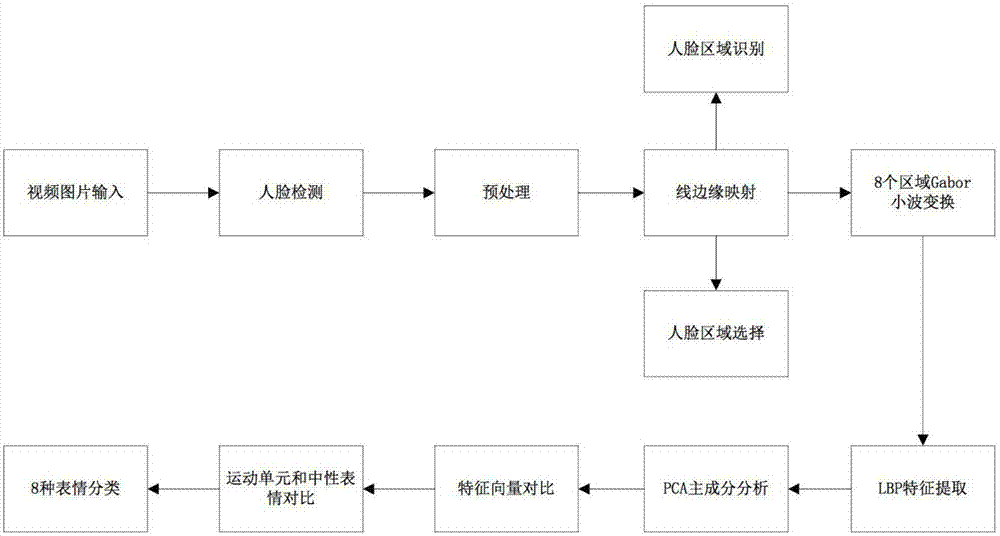

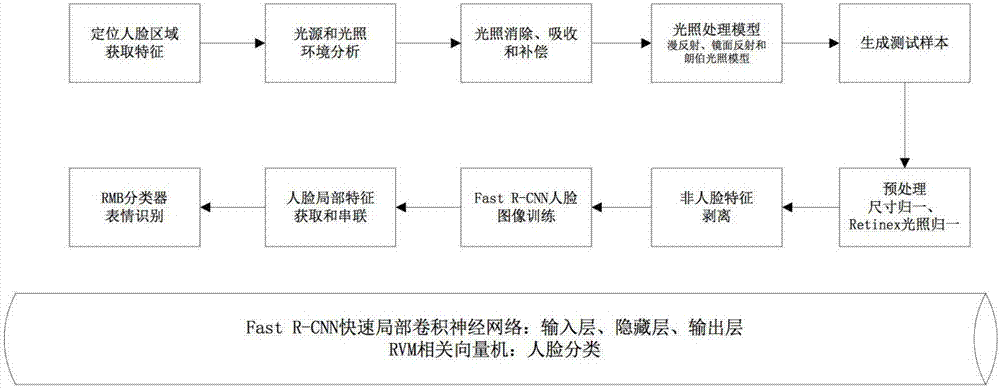

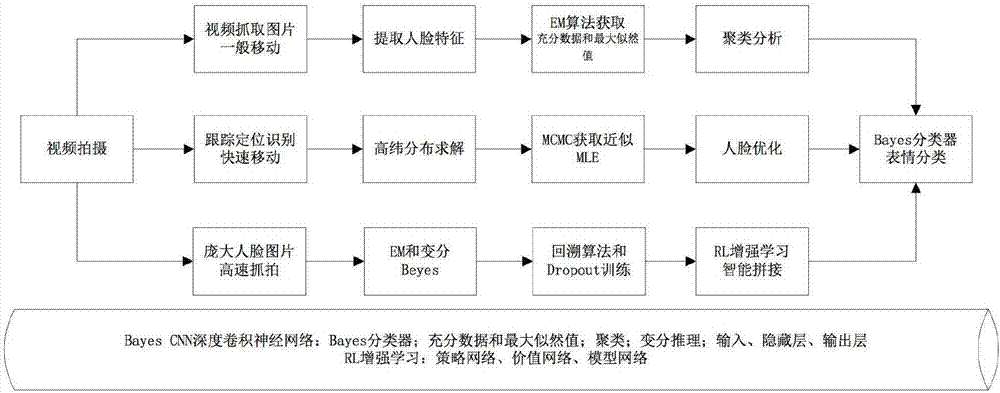

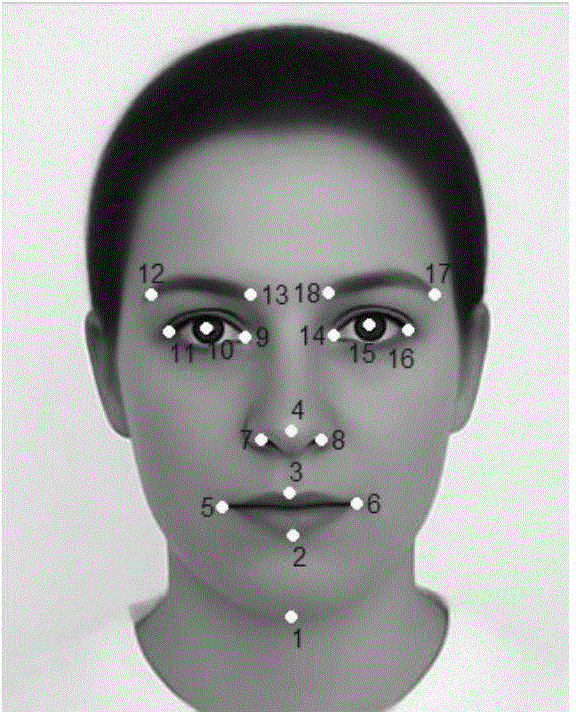

Human face emotion recognition method in complex environment

Inactive | CN107423707A | Real-time processing | Improve accuracy | Image enhancement | Image analysis | Nerve network | Facial region

The invention discloses a human face emotion recognition method for complex environments on mobile embedded platforms. In the method, a human face is divided into the main areas of forehead, eyebrows and eyes, cheeks, nose, mouth and chin, and is further divided into 68 feature points. Based on these feature points, and to achieve a good recognition rate, accuracy and reliability in various environments, different methods are used for different conditions: a face and expression feature classification method under normal conditions; a Faster R-CNN face-region convolutional neural network method under conditions of light, reflection and shadow; a method combining a Bayesian network, a Markov chain and variational inference under complex conditions of motion, jitter, shaking and movement; and a method combining a deep convolutional neural network, a super-resolution generative adversarial network (SRGAN), reinforcement learning, the backpropagation algorithm and the dropout algorithm under conditions of incomplete face display, multiple faces and noisy backgrounds. The effect, accuracy and reliability of facial expression recognition can thus be effectively improved.

Owner:深圳帕罗人工智能科技有限公司

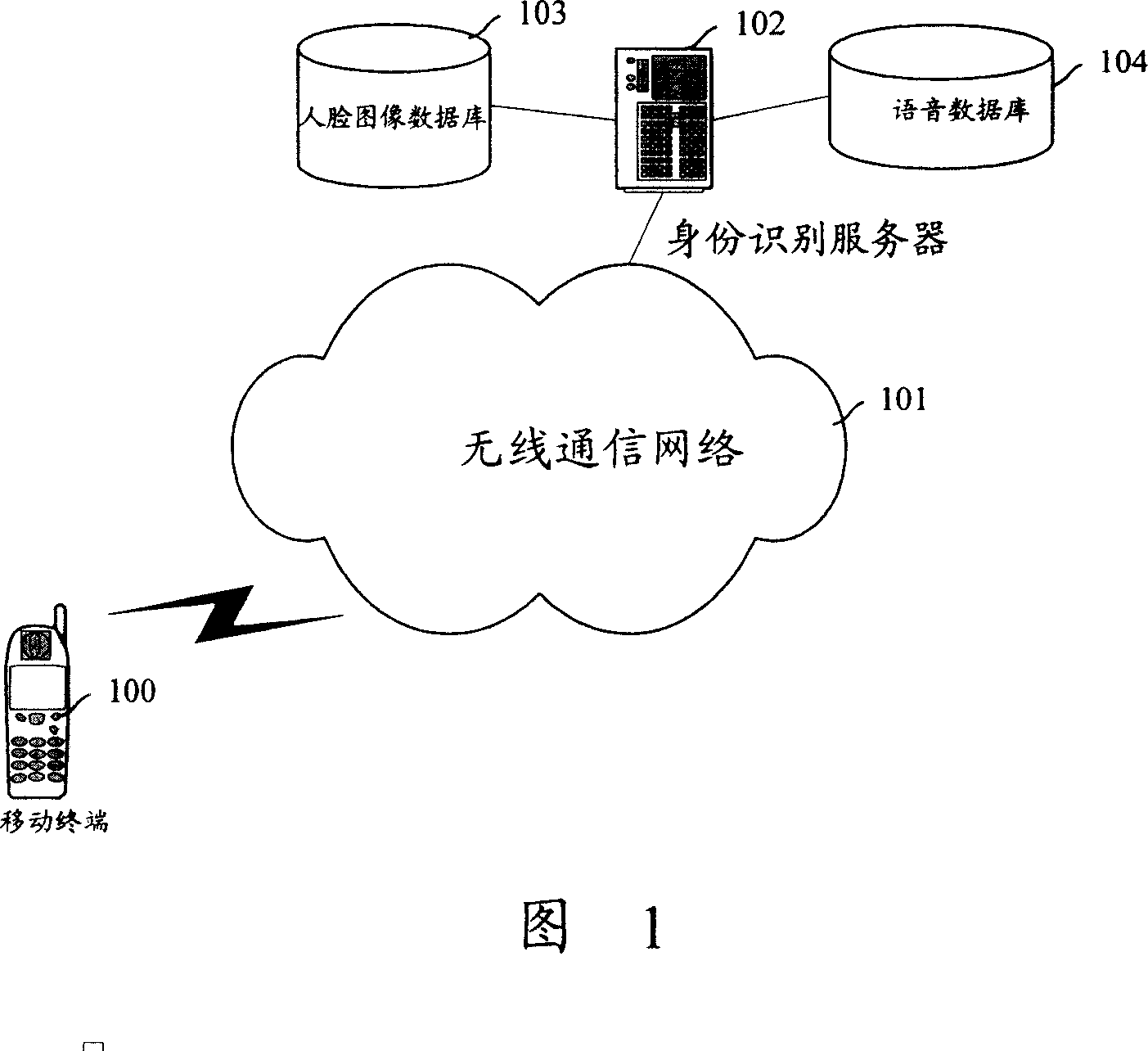

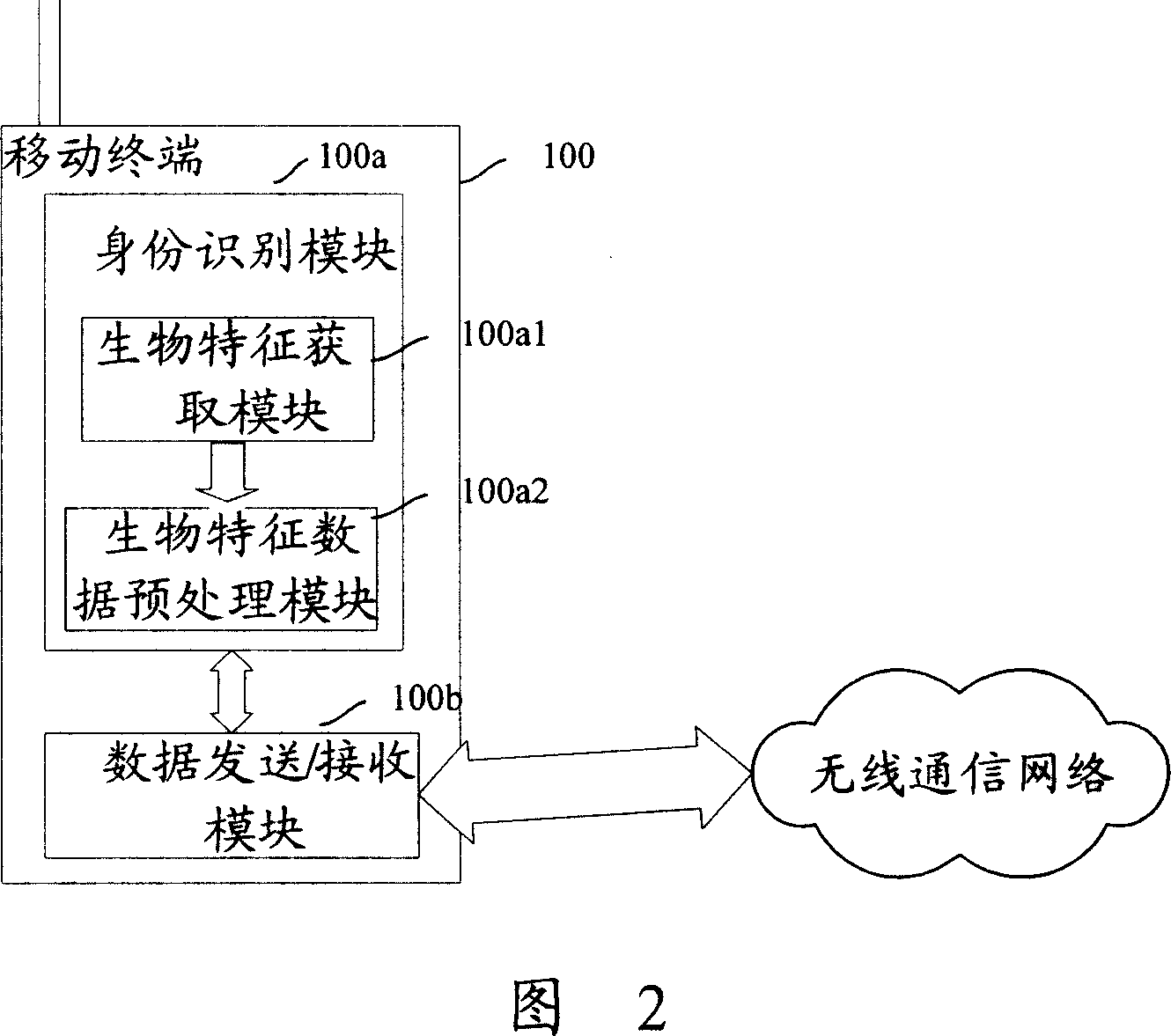

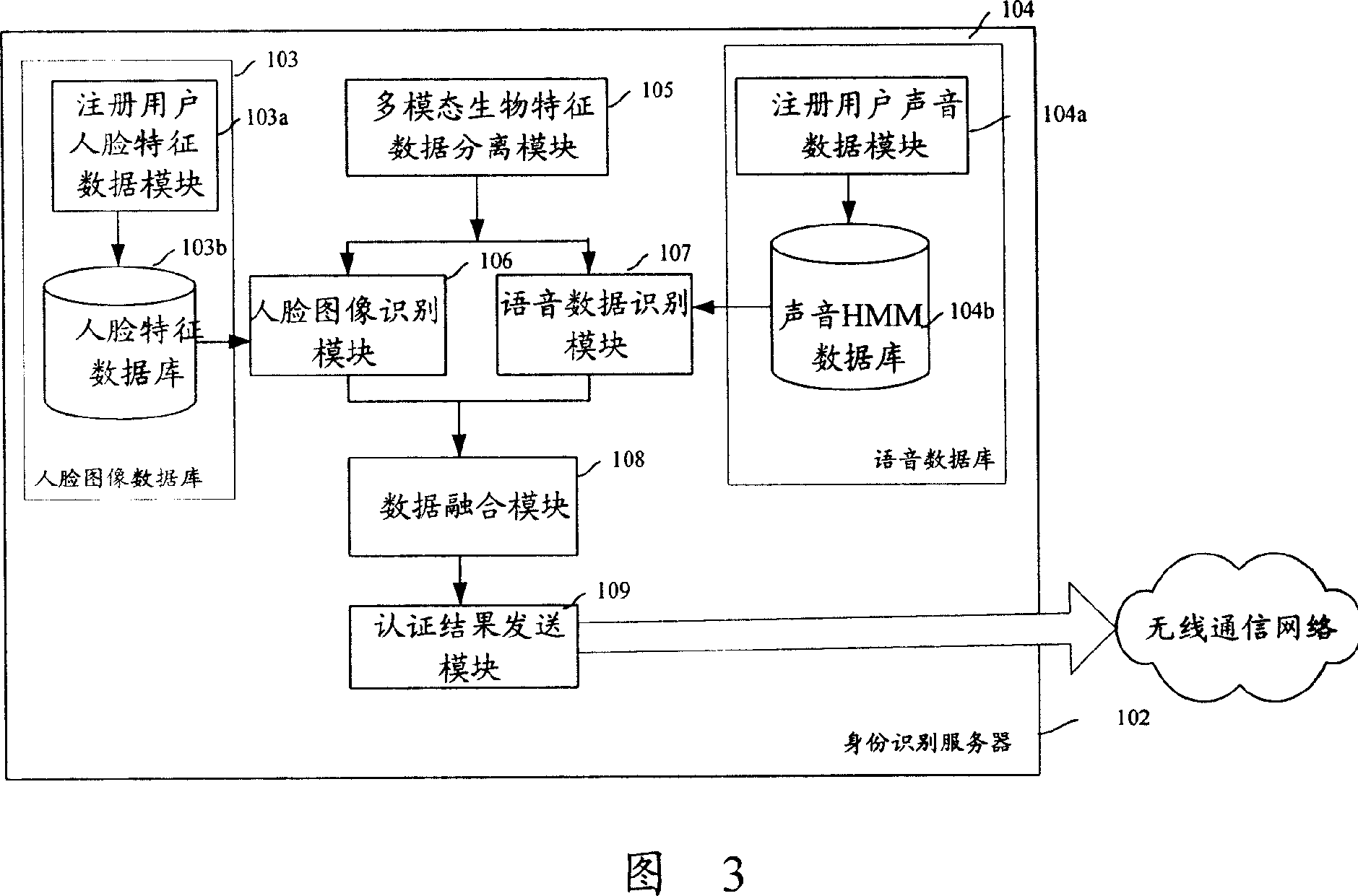

Long-distance identity-certifying system, terminal, servo and method

Inactive | CN101075868A | Improve recognition rate | Easy to identify | User identity/authority verification | Computer hardware | Authentication server

The system comprises a terminal with human-face image detection and voice data acquisition functions, a communication network, and an identity authentication server. The terminal embeds the acquired voice data into the detected human-face image and sends the face image with the embedded voice data to the identity authentication server. The server separates the voice data from the face image, authenticates the face image and the voice data separately, and then combines the results; the authentication result is sent back to the terminal.

Owner:GLOBAL INNOVATION AGGREGATORS LLC

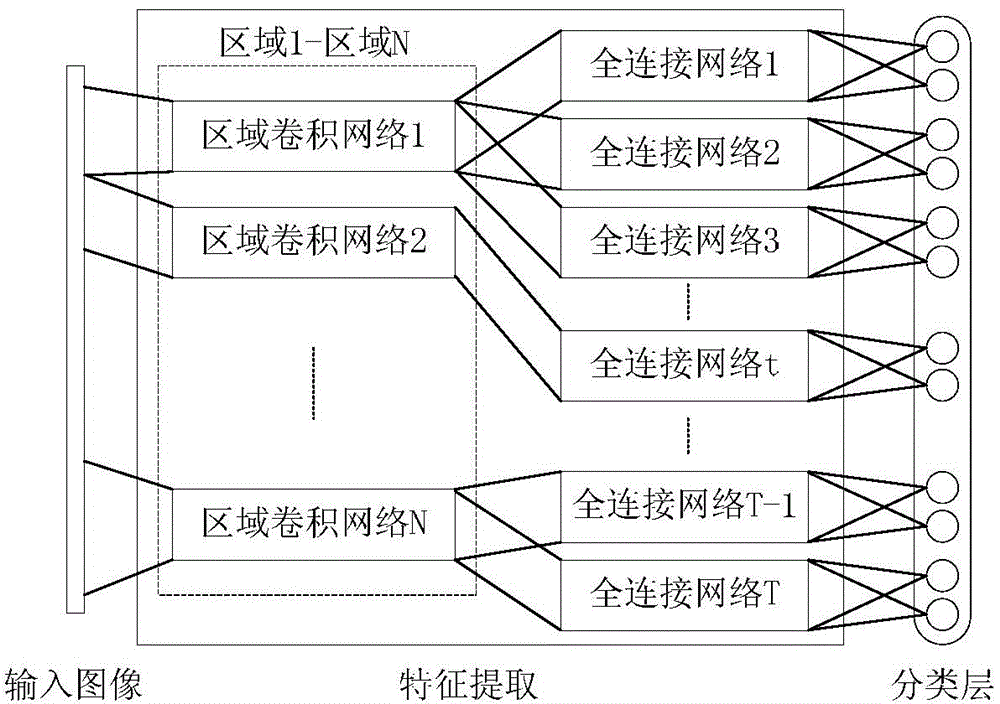

Face feature extraction method based on face feature point shape drive depth model

Active | CN104463172A | Avoid mismatch | Enhanced description ability | Character and pattern recognition | Feature extraction | Convolution

The invention relates to a face feature extraction method based on a face feature point shape drive depth model. The method comprises the steps that the face feature point shape drive depth model is set up, N depth convolution neural networks are utilized for extracting features of N face regions divided according to the positions of face feature points to obtain the discrimination feature and the attributive feature of each region, and then all the discrimination features and the attributive features are fused to obtain features higher in descriptive ability. According to the face feature extraction method based on the face feature point shape drive depth model, the problem of robustness under change conditions of illumination, angles, expressions, shielding and the like can be well solved, and the recognition rate of face recognition under these conditions is increased.

Owner:CHONGQING ZHONGKE YUNCONG TECH CO LTD

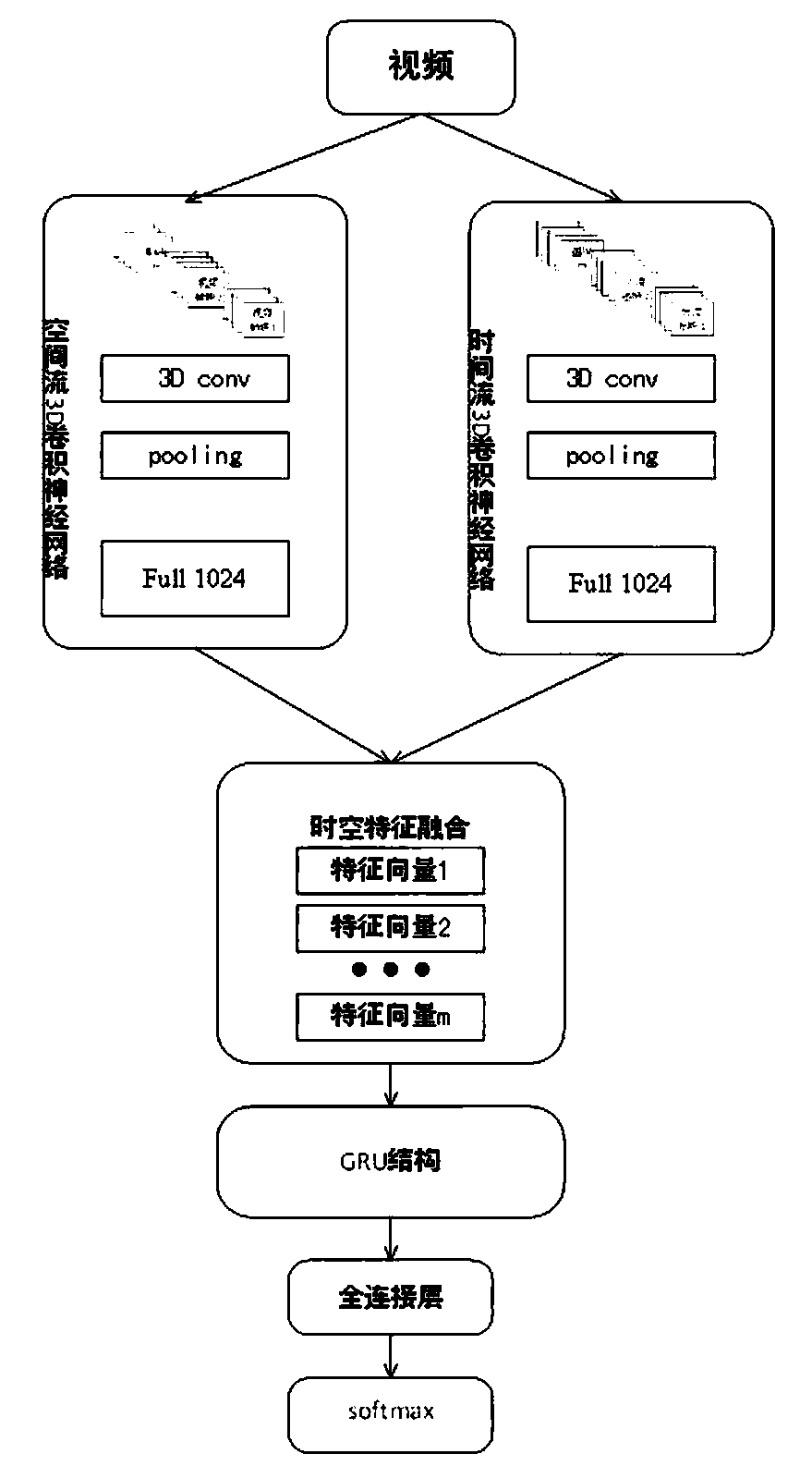

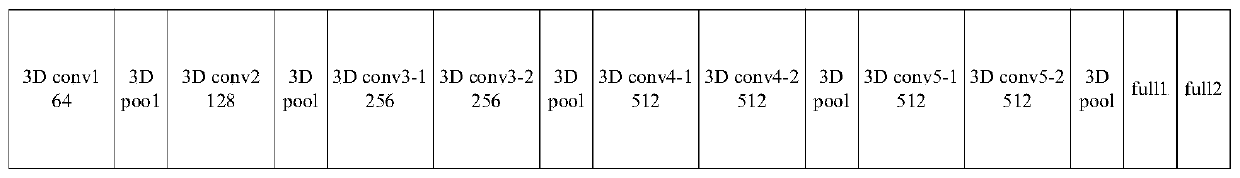

Behavior recognition technical method based on deep learning

Pending | CN110188637A | Good feature expression ability | Easy to use | Character and pattern recognition | Neural architectures | Video monitoring | Network model

The invention discloses a behavior recognition method based on deep learning, addressing the problem that the intelligence of existing video monitoring systems needs improvement. The method comprises the following steps: constructing a deeper spatio-temporal two-stream CNN-GRU neural network model by combining a two-stream convolutional neural network with a GRU network; extracting the temporal and spatial features of the video; extracting long-term sequential features from the spatio-temporal feature sequence using the GRU network's capacity to memorize information, and recognizing the behavior in the video with a softmax classifier; proposing a new related entropy-based loss function; and, drawing on the way the attention mechanism of the human visual system handles massive information, introducing an attention mechanism before the model performs spatio-temporal feature fusion. The accuracy of the proposed model is 61.5%, and the recognition rate is improved to a certain extent compared with an algorithm based on a two-stream convolutional neural network.

Owner:XIDIAN UNIV

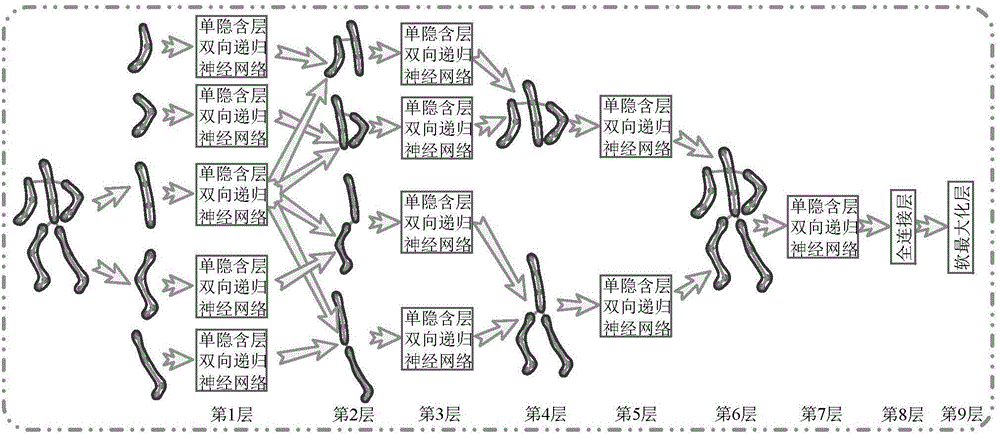

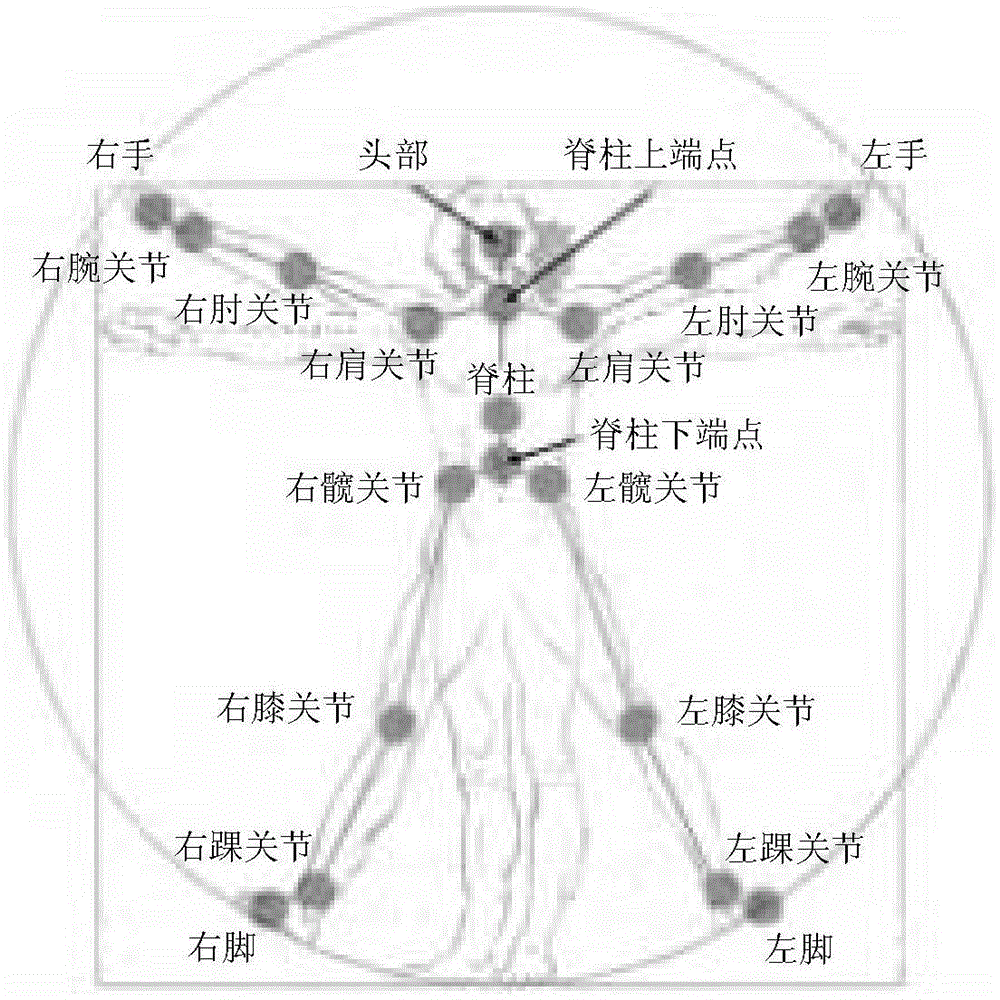

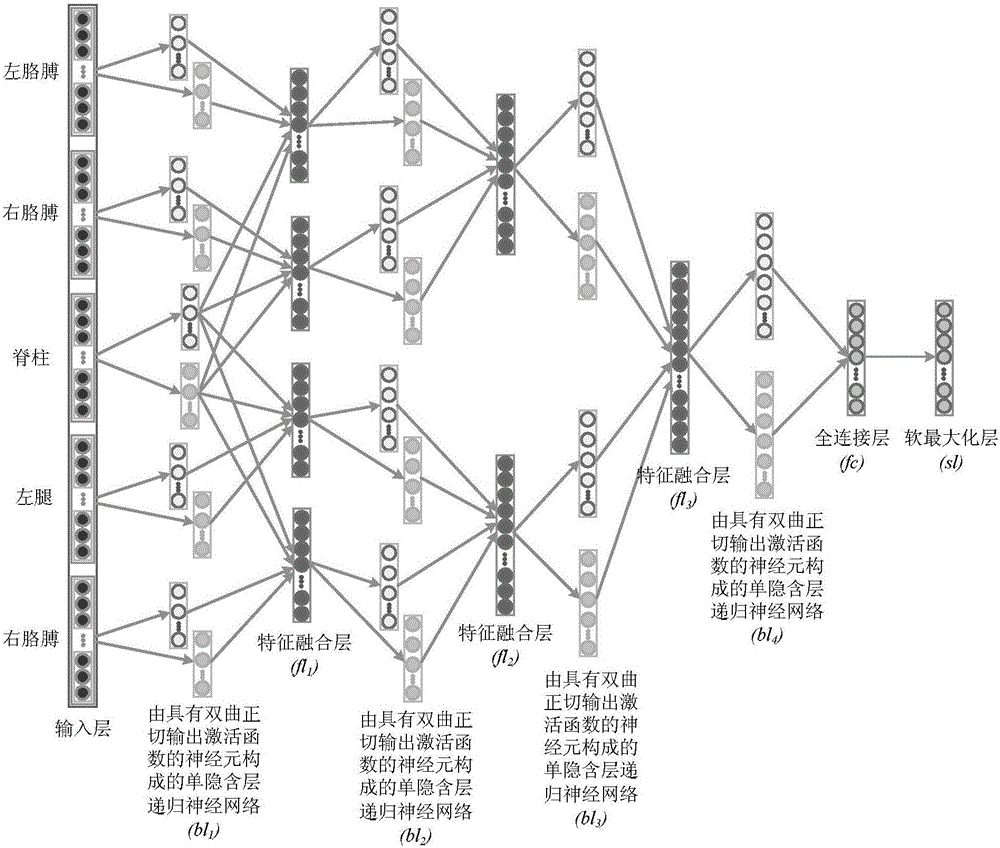

Behavior identification method based on recurrent neural network and human skeleton movement sequences

Active | CN104615983A | Easy access | High precision recognition rate | Character and pattern recognition | Neural architectures | Human body | Video monitoring

The invention discloses a behavior identification method based on a recurrent neural network and human skeleton movement sequences. The method comprises the following steps: normalizing the node coordinates of the extracted human skeleton posture sequences to eliminate the influence of the body's absolute spatial position on the identification process; filtering the skeleton node coordinates with a simple smoothing filter to improve the signal-to-noise ratio; and sending the smoothed data into a hierarchical bidirectional recurrent neural network for deep feature extraction and identification. The invention also provides a hierarchical unidirectional recurrent neural network model to meet practical requirements for real-time online analysis. The method's advantages are that it designs an end-to-end analysis mode according to the structural characteristics and motion relativity of the human body, achieves high-precision identification, and avoids complex computation, which makes it suitable for practical application. The method is significant for intelligent video monitoring based on depth camera technology, intelligent traffic management, smart cities and similar fields.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
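The two preprocessing steps named in the abstract above (coordinate normalization and a simple smoothing filter) can be sketched as follows. Subtracting a root joint and using a moving average are assumptions chosen for illustration; the abstract does not say which normalization or filter the patent uses. The names `center_on_root` and `moving_average` are hypothetical.

```python
def center_on_root(frame, root_index=0):
    """Express every joint relative to a root joint, removing absolute position.

    frame is a list of (x, y, z) joint coordinates for one time step.
    """
    rx, ry, rz = frame[root_index]
    return [(x - rx, y - ry, z - rz) for x, y, z in frame]


def moving_average(values, window=3):
    """Smooth a 1-D sequence with a centered moving average (shrinks at the edges)."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo = max(0, i - half)
        hi = min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


frame = [(1.0, 2.0, 3.0), (2.0, 4.0, 6.0)]
print(center_on_root(frame))            # [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0)]
print(moving_average([0.0, 3.0, 0.0]))  # [1.5, 1.0, 1.5]
```

In a full pipeline the smoothing would be applied per joint and per axis along the time dimension before the sequence is fed to the recurrent network.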

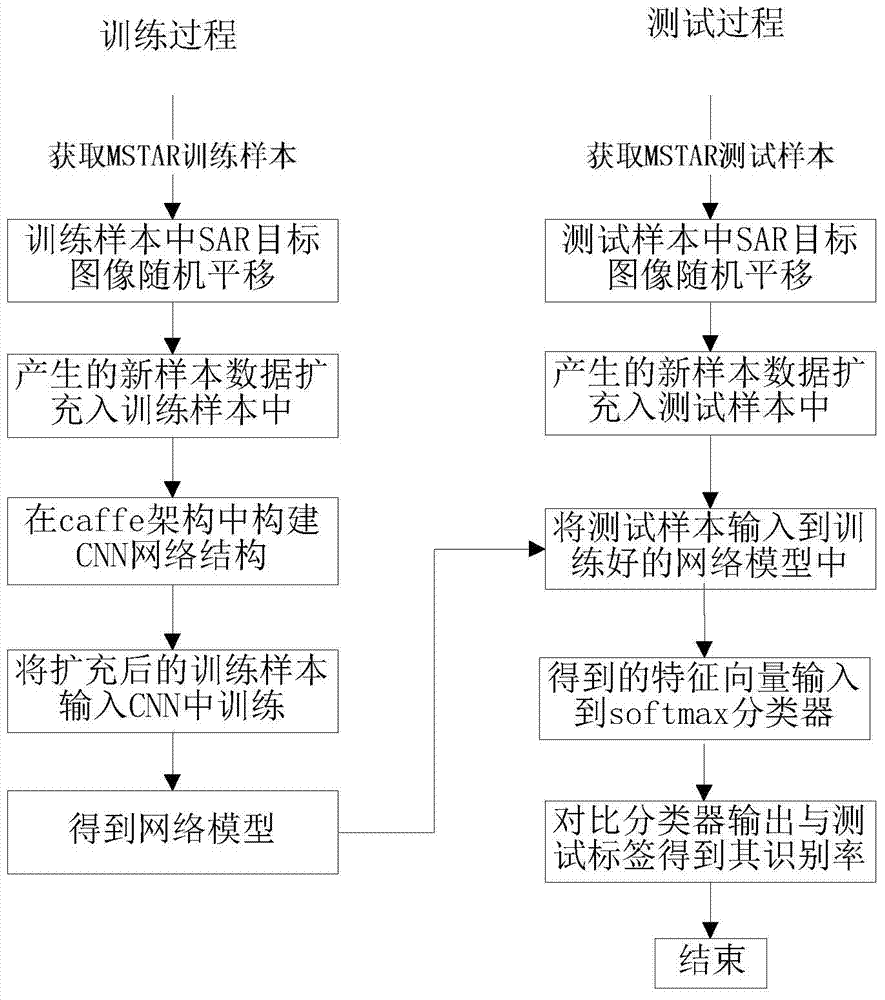

SAR target identification method based on CNN

Active | CN104732243A | Improve recognition rate | Comprehensive feature extraction | Character and pattern recognition | Neural learning methods | Network model | Sample image

The invention discloses an SAR target identification method based on a CNN. The steps are: 1. the target to be identified in each training image is subjected to multiple random translations to obtain new samples, which are given the labels of their original images and added to the training set; 2. a convolutional neural network (CNN) structure is built in the Caffe framework; 3. the expanded training samples are input into the CNN for training to obtain a trained network model; 4. each test sample is expanded by multiple translations to obtain expanded test samples; and 5. the expanded test samples are input into the trained CNN model for testing to obtain the recognition rate. The method achieves a high recognition rate and stable performance for a target at any position in a sample image, and solves the problem that existing SAR target recognition methods are strongly affected by the position of the target in the sample image.

Owner:XIDIAN UNIV
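Step 1 of the abstract above (expanding the training set with randomly translated copies that keep the original label) can be sketched in plain Python. Images are represented as lists of rows; the zero fill for vacated pixels, the shift range and the function names `translate` and `augment` are assumptions for illustration.

```python
import random


def translate(image, dx, dy, fill=0):
    """Shift a 2-D image by (dx, dy) pixels, filling vacated pixels with `fill`."""
    height, width = len(image), len(image[0])
    shifted = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width:
                shifted[ny][nx] = image[y][x]
    return shifted


def augment(image, label, copies, max_shift, rng=None):
    """Return `copies` randomly translated versions of image, each with the same label."""
    if rng is None:
        rng = random.Random(0)  # fixed seed so the sketch is reproducible
    samples = []
    for _ in range(copies):
        dx = rng.randint(-max_shift, max_shift)
        dy = rng.randint(-max_shift, max_shift)
        samples.append((translate(image, dx, dy), label))
    return samples


chip = [[1, 0], [0, 0]]
print(translate(chip, 1, 1))  # [[0, 0], [0, 1]]
print(len(augment(chip, "tank", copies=5, max_shift=1)))  # 5
```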

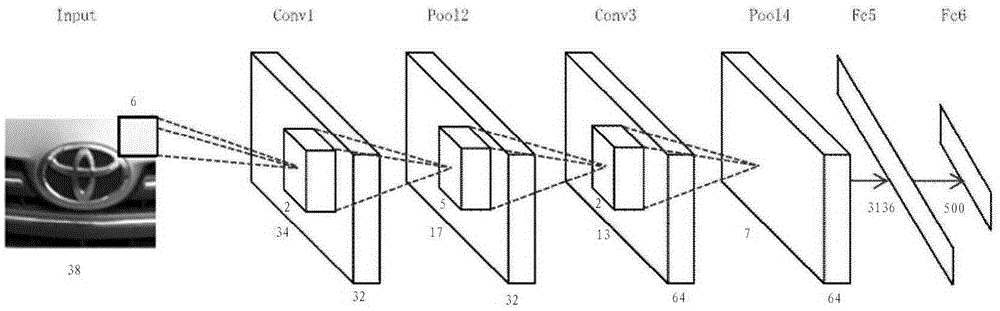

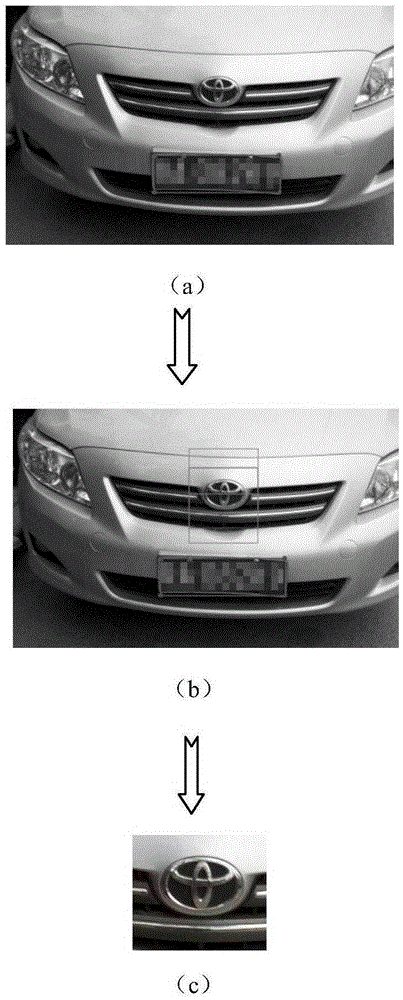

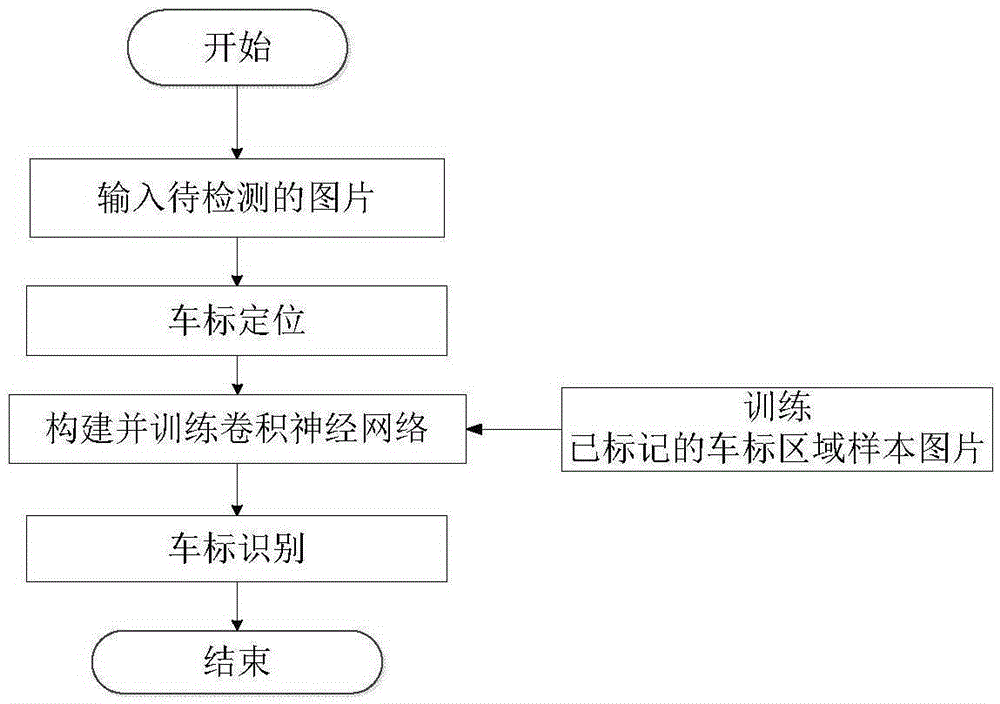

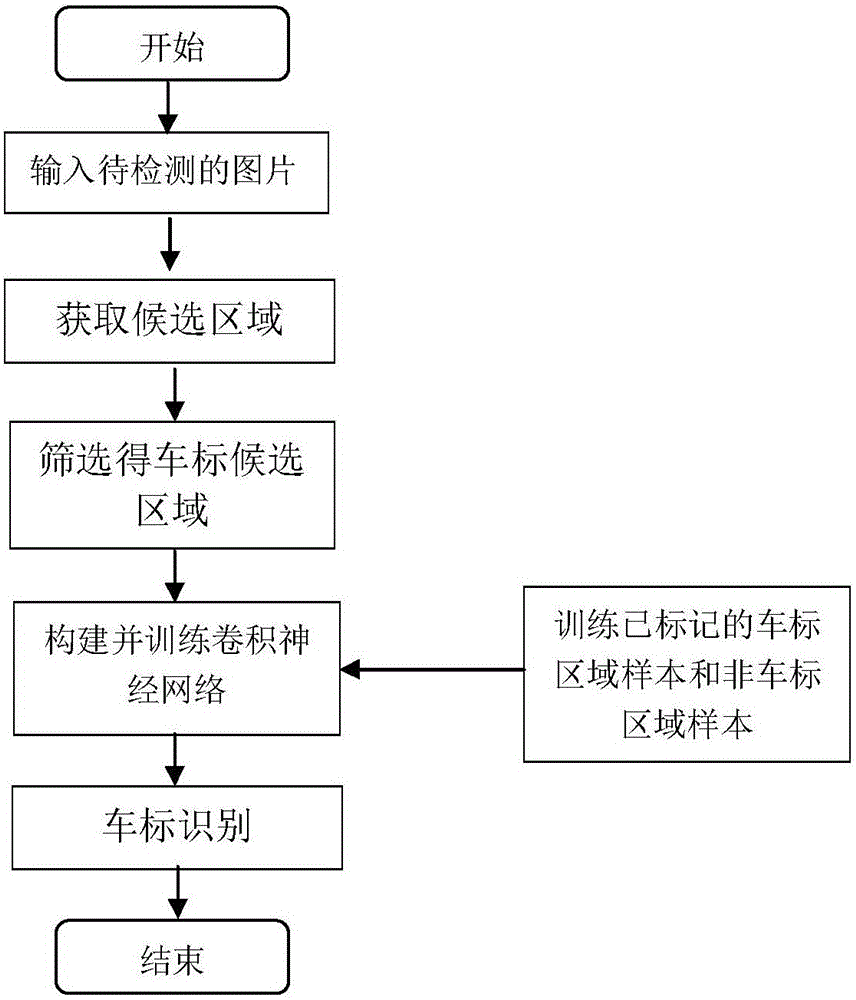

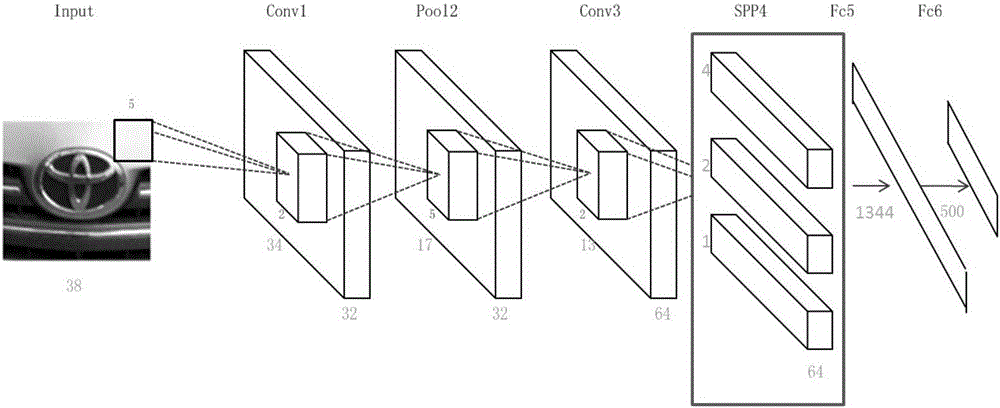

Convolutional neural network based vehicle logo identification method

Inactive | CN105354568A | Accurate extraction | Improve robustness | Character and pattern recognition | Neural learning methods | Algorithm | Traffic intersection

The invention provides a convolutional neural network based vehicle logo identification method. The method is specifically implemented by the following steps of: (1) inputting a to-be-detected picture shot by a high-resolution camera device in a traffic intersection; (2) positioning a vehicle logo; (3) constructing and training a convolutional neural network; and (4) identifying the vehicle logo. With the adoption of the convolutional neural network (CNN) based vehicle logo identification method, the shortcomings of complicated extraction feature operator, poor timeliness and complicated model in the prior art can be effectively overcome, and the calculation amount is effectively reduced; and features of CNN self-learning have higher robustness on environmental change, so that the identification rate of the vehicle logo is increased.

Owner:XIDIAN UNIV

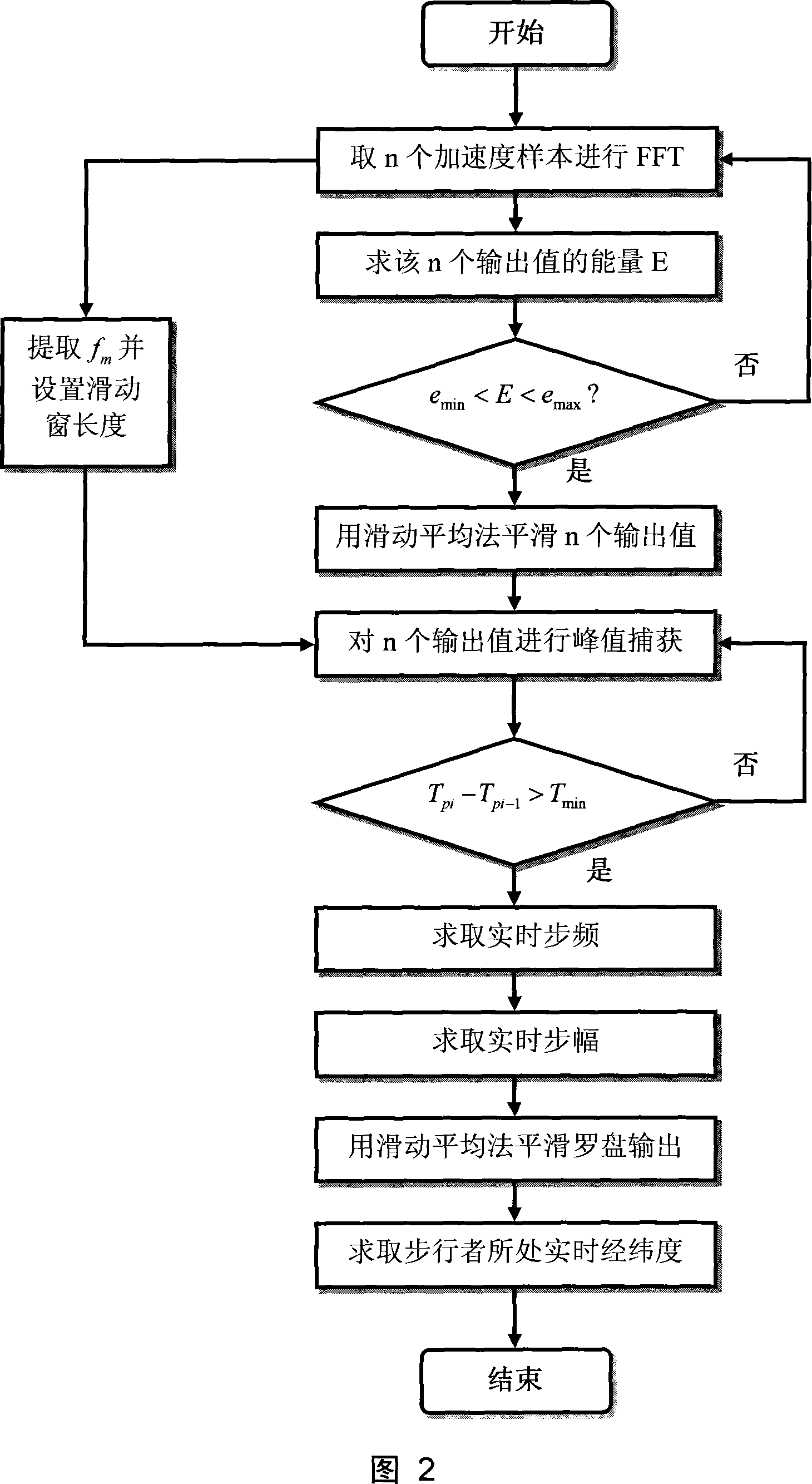

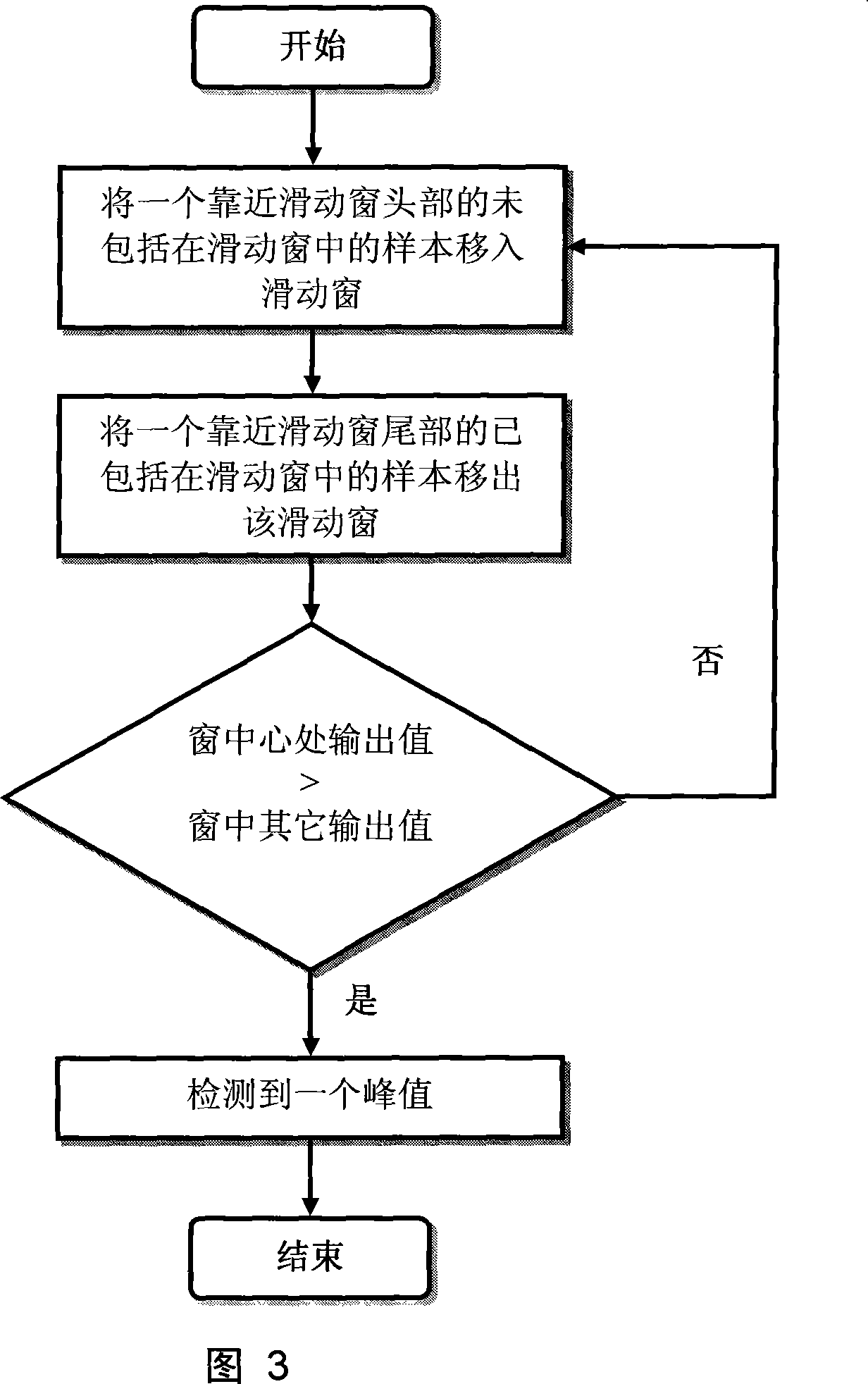

Method for locating walker

Inactive | CN101226061A | Low cost | Easy to implement | Navigation by speed/acceleration measurements | Compasses | Walking distance | Longitude

The invention relates to a positioning method suitable for walkers, belonging to the field of navigation, guidance and control. The method comprises three parts: identifying the motion state of the walker; calculating the walker's real-time stride; and working out the real-time latitude and longitude of the walker's location in combination with the course angle. A walker's change of location is mainly produced by the motion of the two legs, and walking makes the body vibrate regularly. The method measures and analyzes the features of this vibration, applies a peak capture method to the sampled acceleration to extract the walker's real-time stride frequency, and uses the relation between stride frequency and stride to work out the real-time stride. The real-time stride, taken as the walking distance between adjacent output values, is combined with the course angle output by an electromagnetic compass, and the real-time latitude and longitude are worked out using an Earth model. Before the stride is estimated, a judgment is made as to whether n acceleration output values represent walking motion; the judgment is based on the energy of the sampled acceleration. The method can judge with high accuracy whether the walker is walking, which effectively improves the accuracy of the walker's location.

Owner:SHANGHAI JIAO TONG UNIV
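The pipeline in the abstract above (energy-based walking check, peak capture, stride from stride frequency, position update from stride and heading) can be sketched as below. Several pieces are assumptions made only for illustration: signal variance as the "energy", a linear stride model with made-up coefficients, and a spherical Earth model. All function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6371000.0  # spherical Earth model (assumption)


def is_walking(accels, energy_threshold):
    """Judge walking from the energy (here: variance) of n acceleration samples."""
    mean = sum(accels) / len(accels)
    energy = sum((a - mean) ** 2 for a in accels) / len(accels)
    return energy > energy_threshold


def count_peaks(accels, min_height):
    """Count local maxima above min_height; each peak is treated as one step."""
    return sum(
        1
        for i in range(1, len(accels) - 1)
        if accels[i] > min_height and accels[i - 1] < accels[i] >= accels[i + 1]
    )


def stride_length(step_freq_hz, a=0.3, b=0.25):
    """Hypothetical linear relation between step frequency and stride (meters)."""
    return a + b * step_freq_hz


def advance(lat_deg, lon_deg, distance_m, heading_deg):
    """Move distance_m along heading_deg (0 = north, 90 = east) on a sphere."""
    heading = math.radians(heading_deg)
    dlat = distance_m * math.cos(heading) / EARTH_RADIUS_M
    dlon = distance_m * math.sin(heading) / (
        EARTH_RADIUS_M * math.cos(math.radians(lat_deg))
    )
    return lat_deg + math.degrees(dlat), lon_deg + math.degrees(dlon)


samples = [0.0, 2.0, 0.0, 2.0, 0.0]  # made-up vertical acceleration window, 1 s long
if is_walking(samples, energy_threshold=0.1):
    steps = count_peaks(samples, min_height=1.0)
    distance = steps * stride_length(step_freq_hz=steps / 1.0)
    print(advance(31.0, 121.0, distance, heading_deg=90.0))
```

The flat-step update in `advance` is only reasonable for short distances and away from the poles, which fits a per-step pedestrian update.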

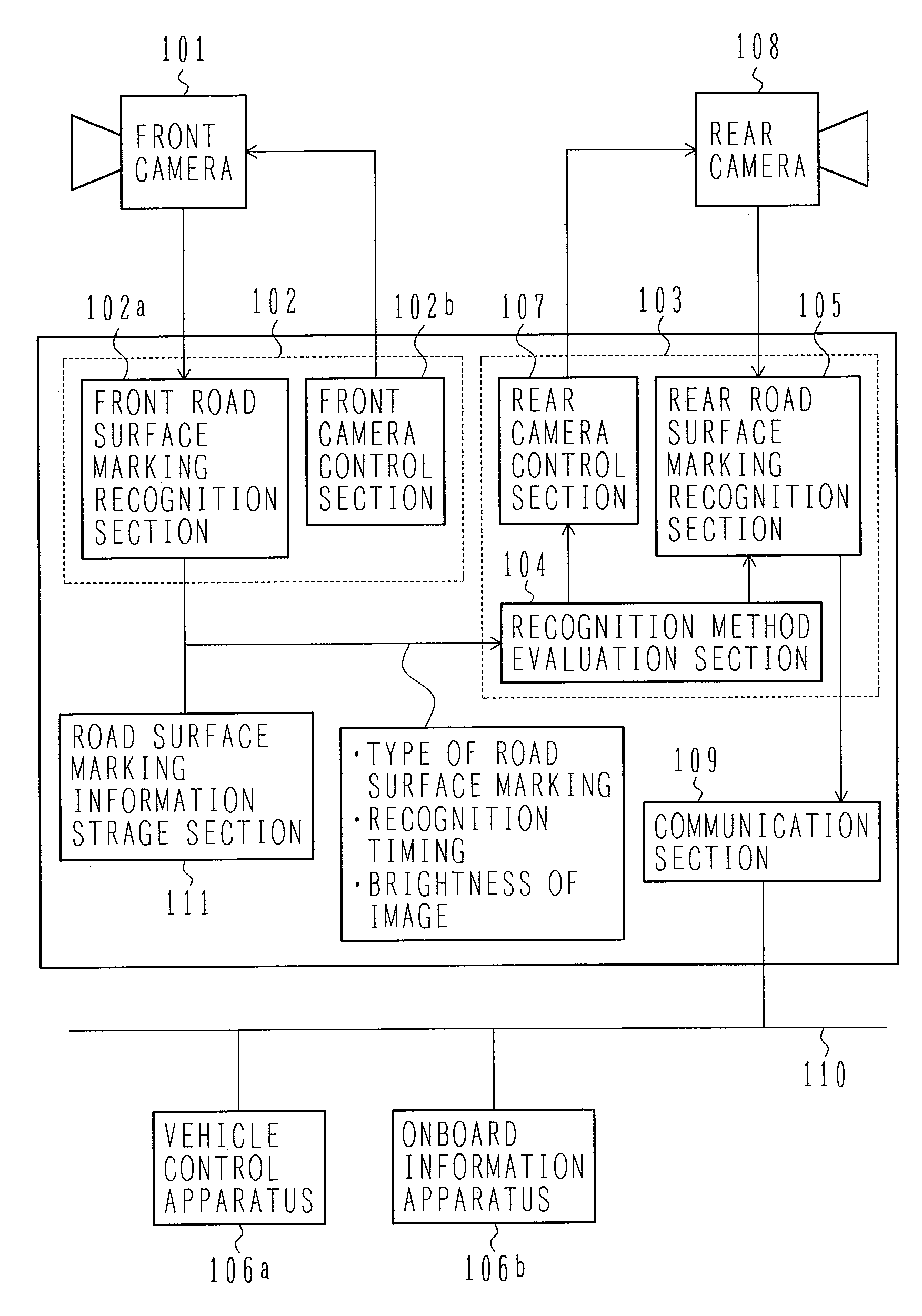

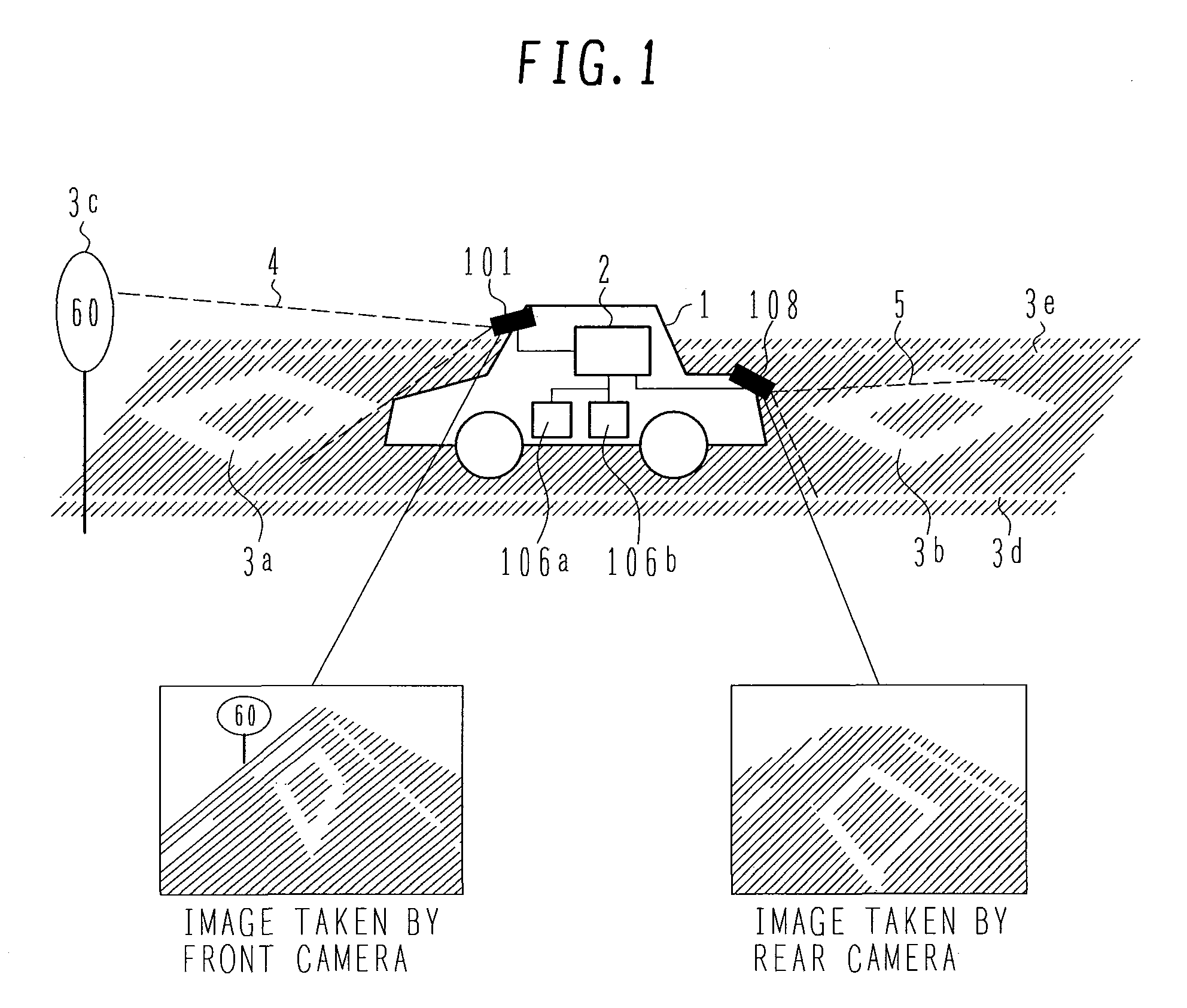

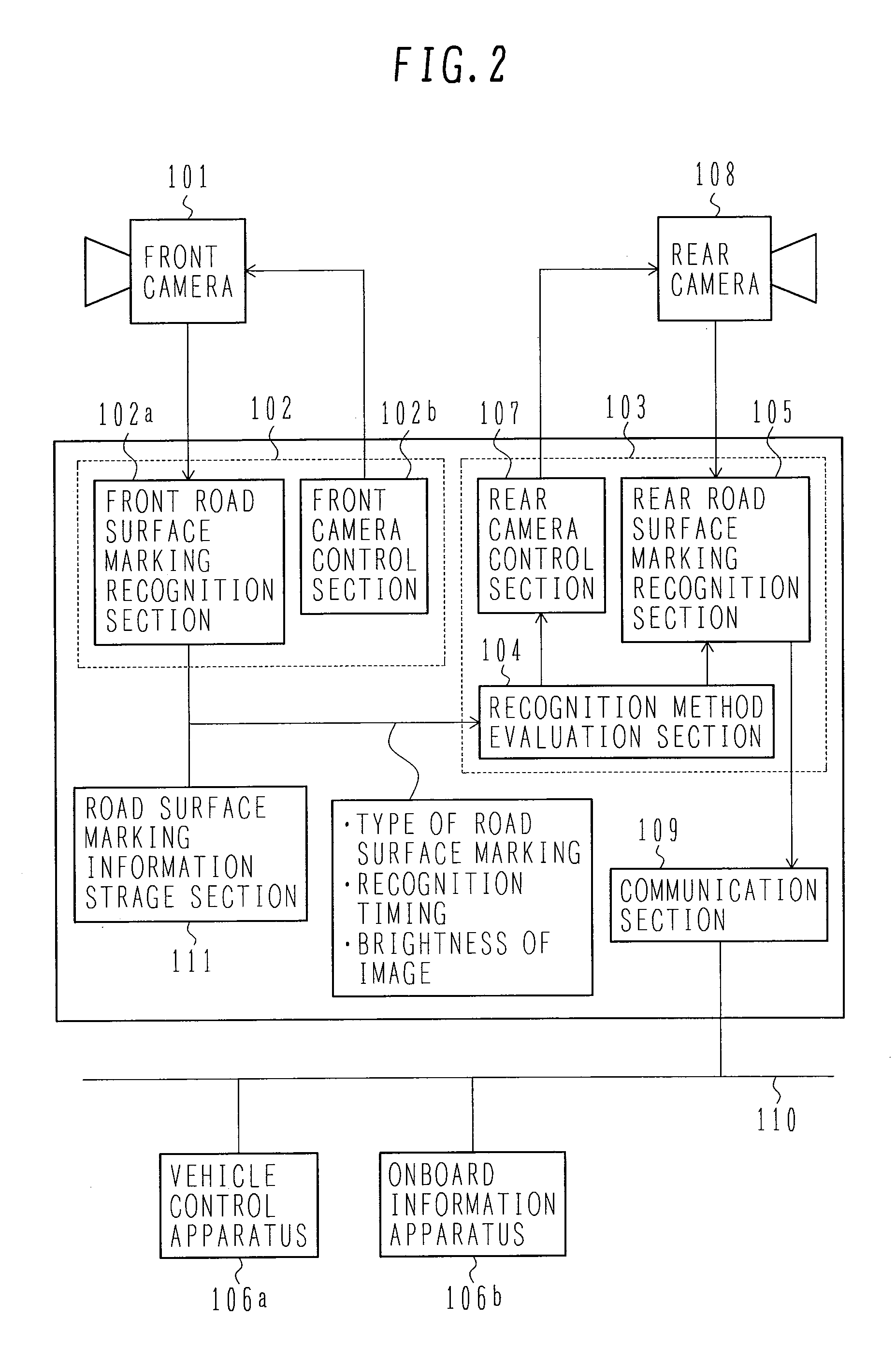

Apparatus and System for Recognizing Environment Surrounding Vehicle

Inactive | US20080013789A1 | Accurate recognition of object | Easy to adjust | Road vehicles traffic control | Character and pattern recognition | Engineering | Road surface

In conventional systems using an onboard camera disposed rearward of a vehicle for recognizing an object surrounding the vehicle, the object is recognized by the camera disposed rearward of the vehicle. In the image recognized by the camera, a road surface marking taken by the camera appears at a lower end of a screen of the image, which makes it difficult to predict a specific position in the screen from which the road surface marking appears. Further, an angle of depression of the camera is large, and it is a short period of time to acquire the object. Therefore, it is difficult to improve a recognition rate and to reduce false recognition. Results of recognition (type, position, angle, recognition time) made by a camera disposed forward of the vehicle, are used to predict a specific timing and a specific position of a field of view of a camera disposed rearward of the vehicle, at which the object appears. Parameters of recognition logic of the rearwardly disposed camera and processing timing are then optimally adjusted. Further, luminance information of the image from the forwardly disposed camera is used to predict possible changes to be made in luminance of the field of view of the rearwardly disposed camera. Gain and exposure time of the rearwardly disposed camera are then adjusted.

Owner:HITACHI LTD

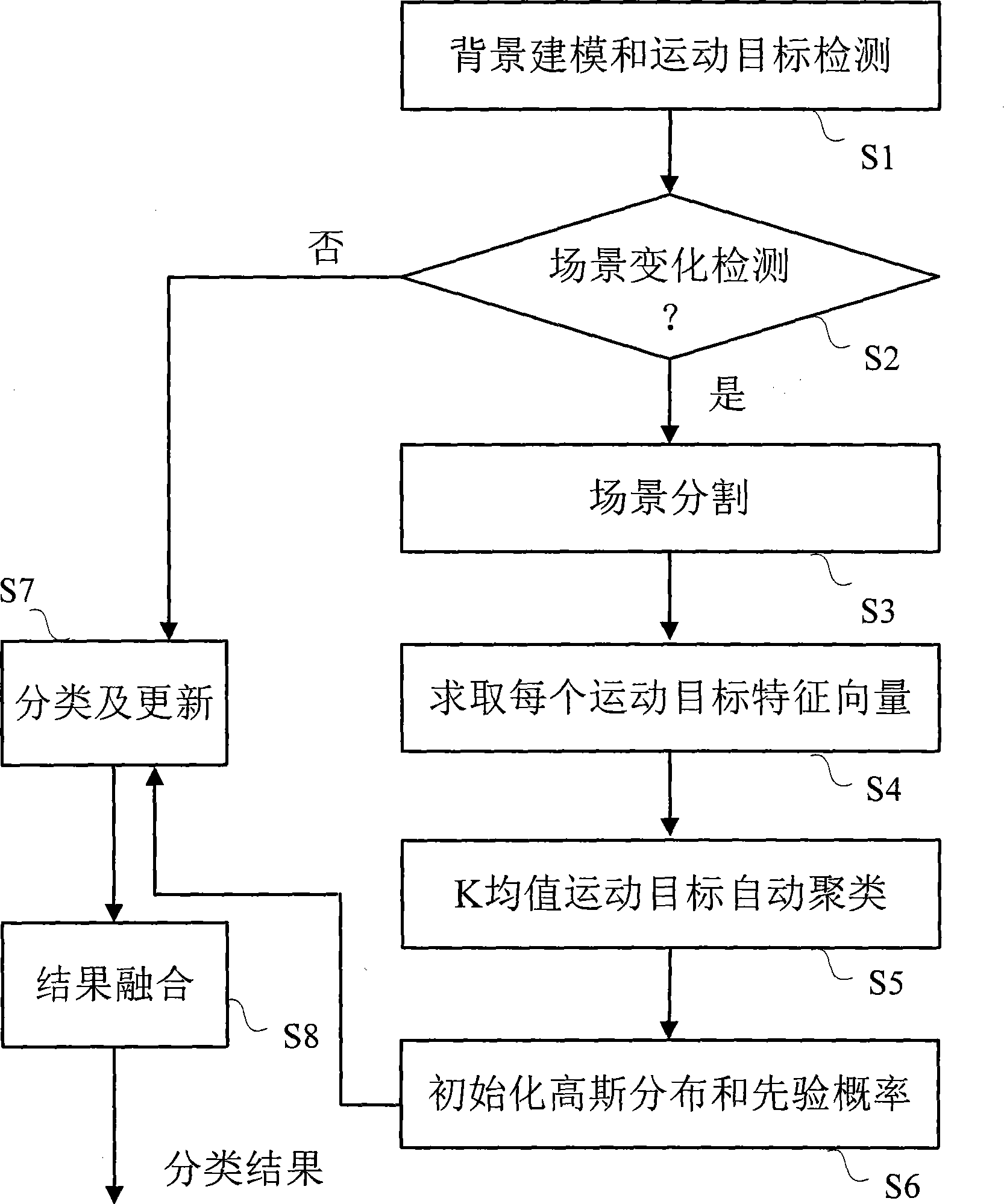

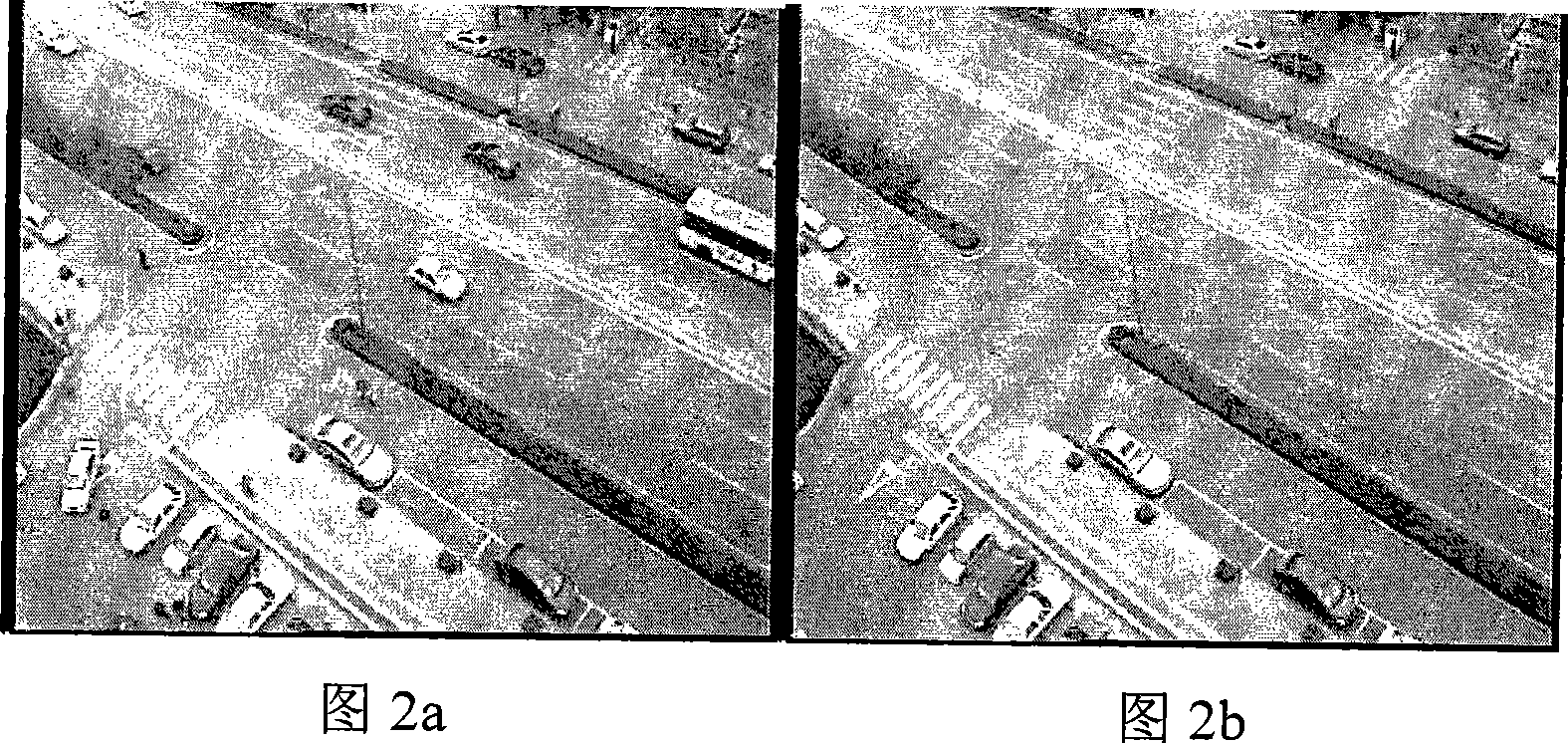

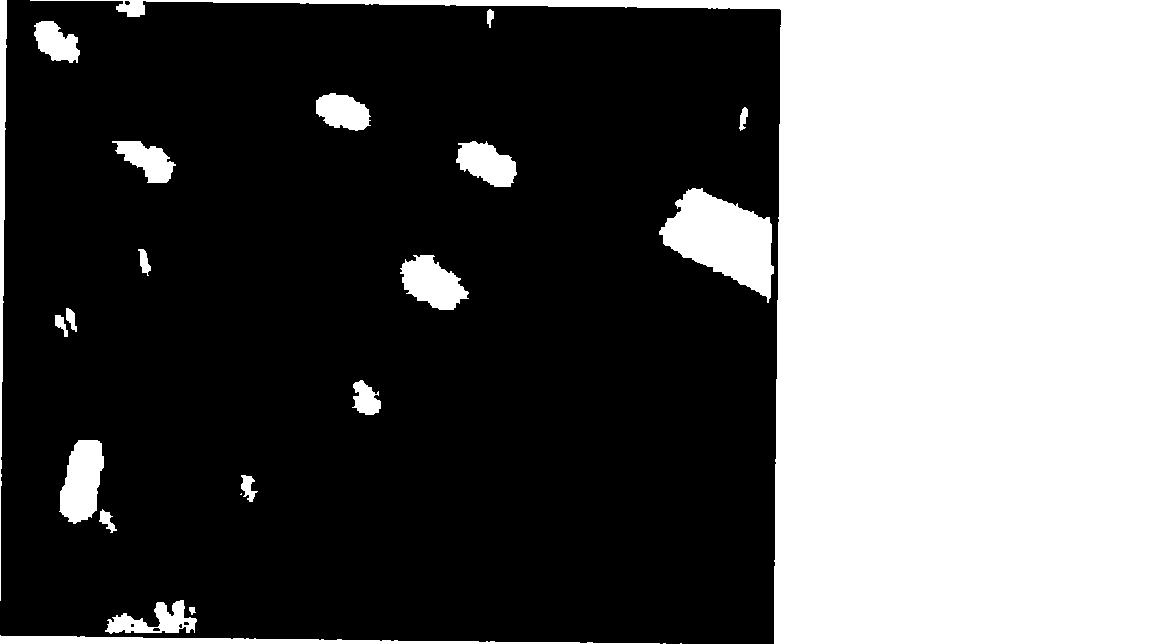

Moving target classification method based on on-line study

Inactive | CN101389004A | Automatic judgment | Algorithms are efficient | Image analysis | Closed circuit television systems | Classification methods | Image sequence

The invention relates to a method that automatically classifies moving targets through online learning. The method models the background of an image sequence and detects moving targets, scene changes and covered viewing angles; partitions the scene; extracts and clusters feature vectors; and marks region classes. Using the feature vectors of all moving-target regions that pass through a sub-region, the number of moving targets in the sub-region and a certain threshold value, it initializes the Gaussian distributions and prior probabilities to complete the initialization of a classifier. The moving targets in the sub-region are then classified, and the classifier parameters are iterated and optimized online. The classification results obtained while tracking a moving target are combined to output the final online-learned classification result for that target. The invention can be used for detecting abnormalities in monitored scenes, establishing rules for targets of various classes and enhancing the security of the monitoring system; for identifying objects in monitored scenes, reducing the complexity of the identification algorithm and improving the identification rate; and for semantic understanding of monitored scenes, identifying the classes of moving targets and aiding comprehension of the behavior events occurring in the scenes.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

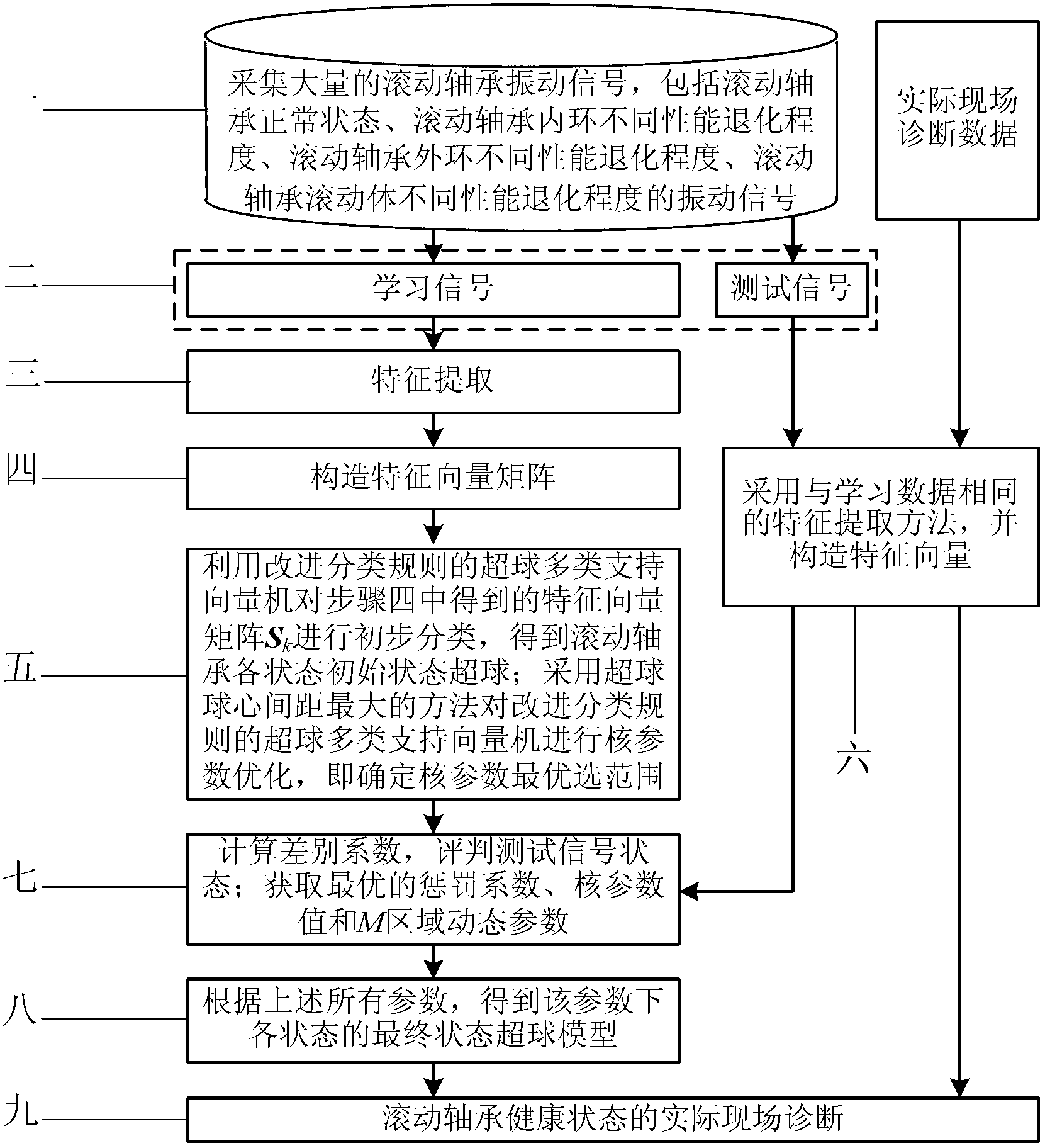

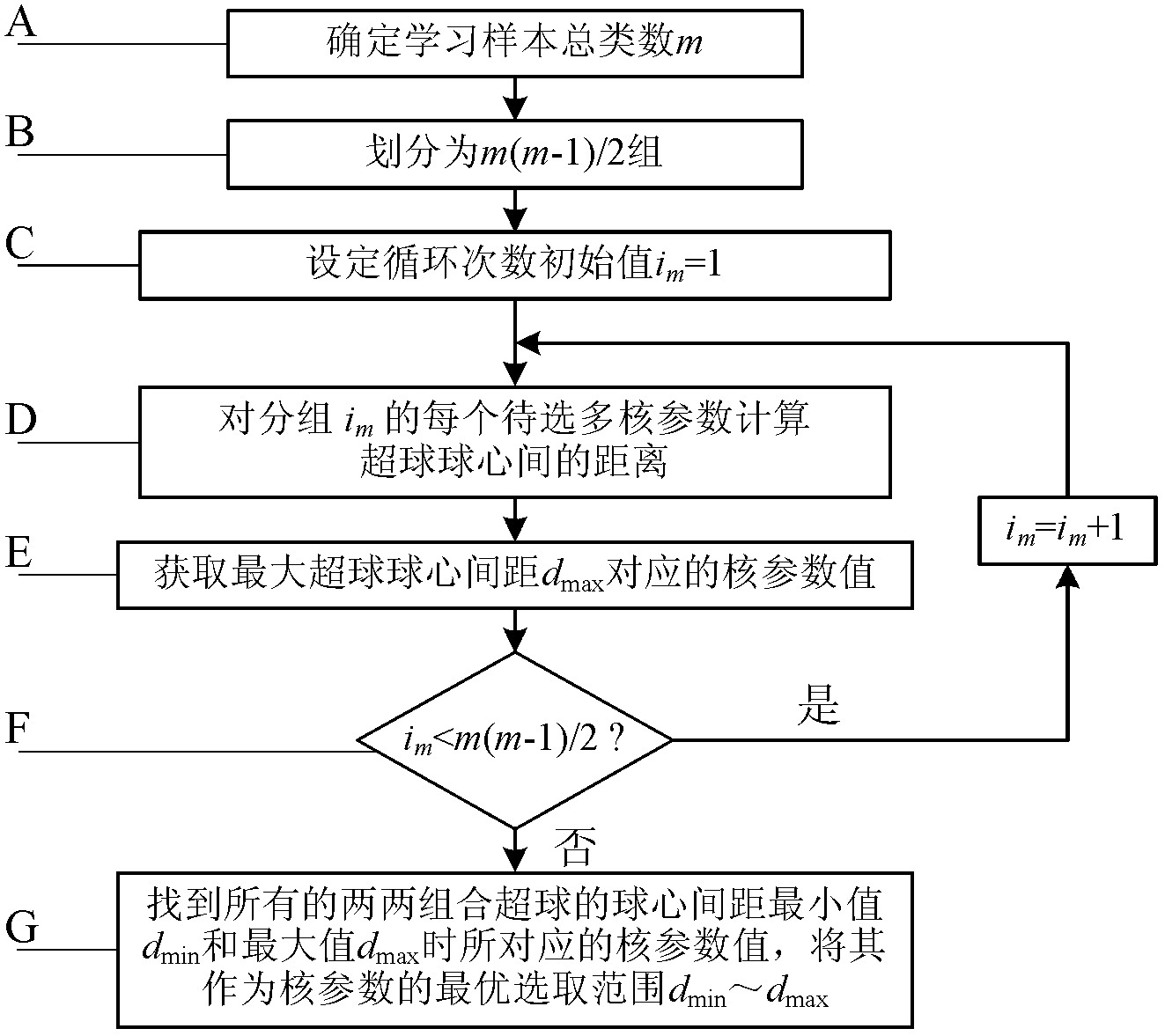

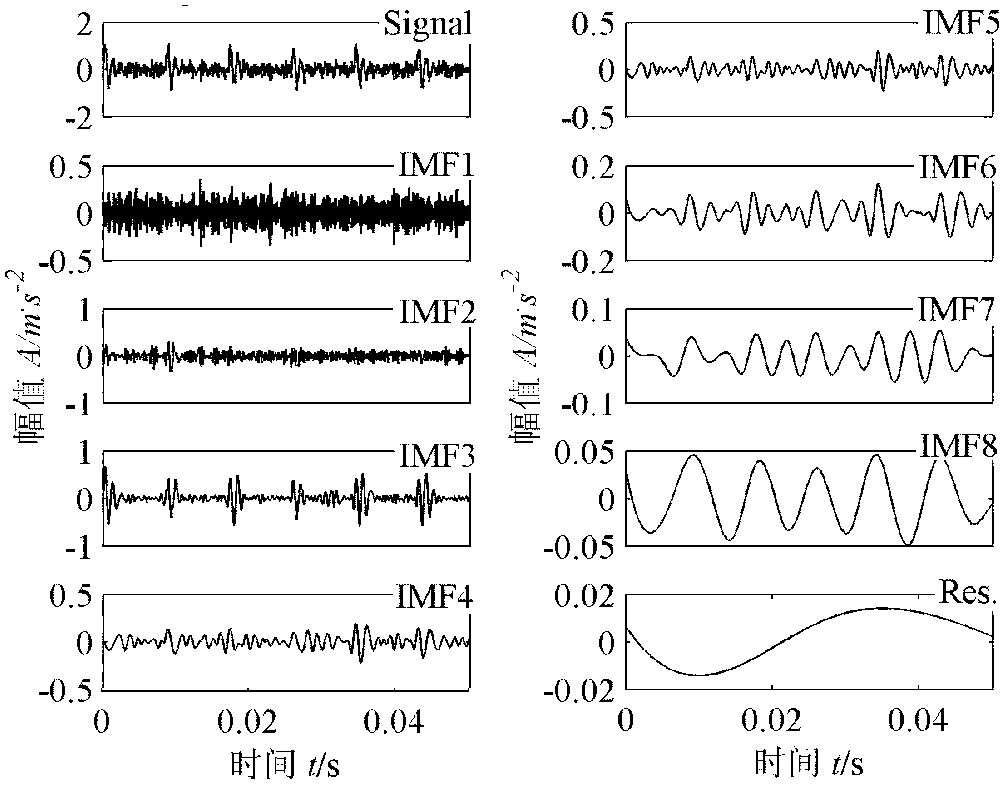

Diagnosis method for fault position and performance degradation degree of rolling bearing

Inactive | CN102854015A | Optimization determination method | Reduce consumption | Machine bearings testing | Singular value decomposition | Support vector machine

The invention discloses a diagnosis method for the fault position and the performance degradation degree of a rolling bearing. It belongs to the technical field of bearing fault diagnosis and addresses the low diagnostic accuracy for fault position and performance degradation degree, and the long training time, of prior-art intelligent diagnosis methods for rolling bearings. A white noise criterion is added to the disclosed ensemble empirical mode decomposition method, so that manual selection of the decomposition parameters is avoided and decomposition efficiency is increased. The disclosed kernel parameter optimization method, based on the distance between hypersphere centres, determines a small and effective search region for the kernel parameters in the multi-class case, so that training time is reduced, and the final-state hypersphere model of the classifier is obtained. The intelligent diagnosis method, which combines parameter-optimized ensemble empirical mode decomposition and singular value decomposition with a kernel-parameter-optimized hypersphere multi-class support vector machine based on the hypersphere centre distance, achieves a higher identification rate than existing diagnosis methods. The disclosed method is mainly applied to intelligent diagnosis of the fault position and the performance degradation degree of rolling bearings.

Owner:HARBIN UNIV OF SCI & TECH

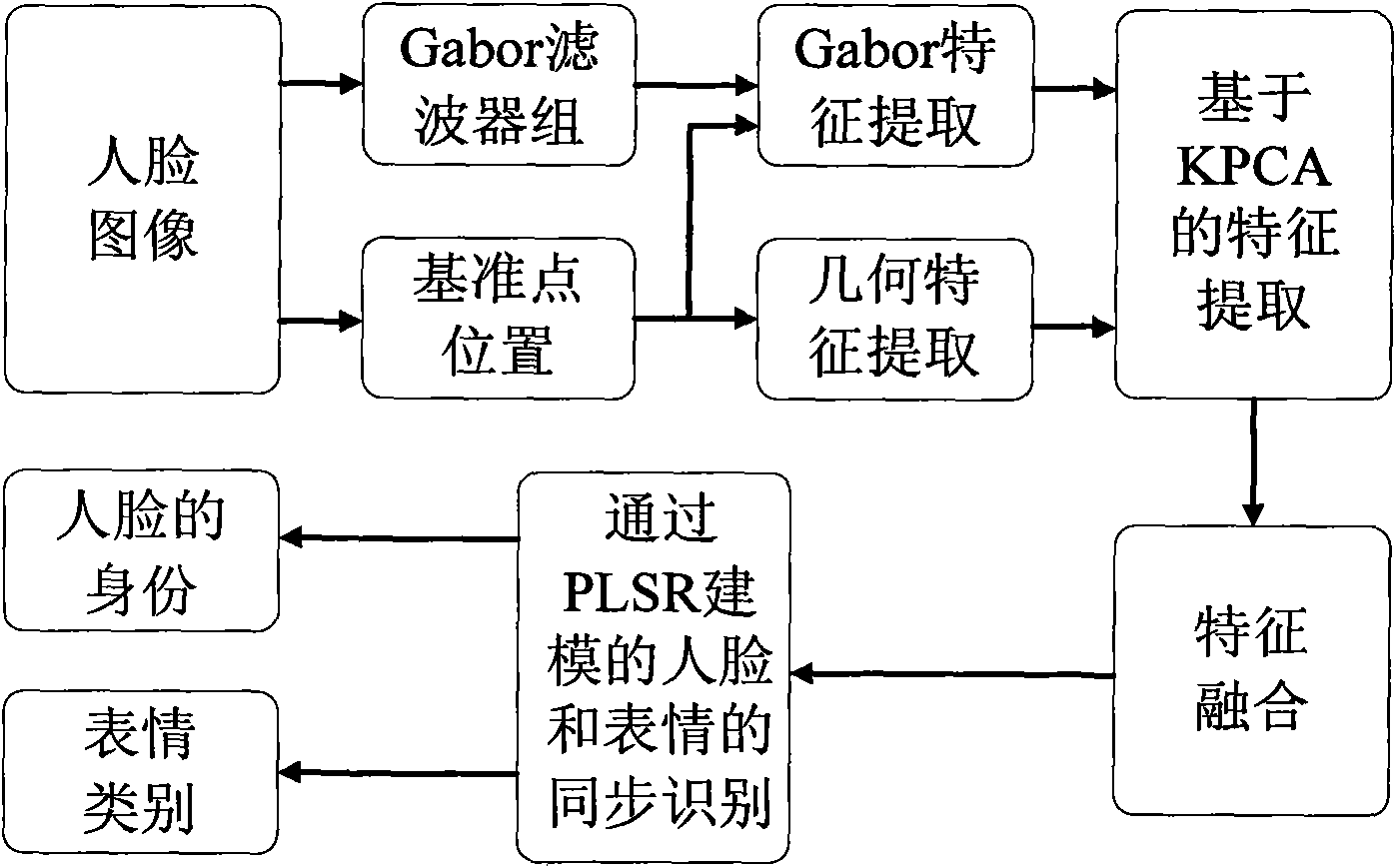

Method for synchronously recognizing identities and expressions of human faces

Inactive | CN101620669A | Full support for simultaneous recognition | Good recognition characteristics | Character and pattern recognition | Identification rate | Facial characteristic

The invention proposes a method for synchronously recognizing identities and expressions of human faces. The method comprises the steps of extracting facial features of each human-face image, defining corresponding semantic features for each image and adopting a feature fusion method of kernel principal component analysis (PCCA) for the facial features so as to enable input image features to have better recognition properties. On the basis, a model of the relation between the facial features and the semantic features is established by use of a partial least-squares regression (PLSR) method, and expression-identity recognition is performed on to-be-recognized human-face images by use of the model. Experiments show that the method proposed by the invention not only can synchronously recognize human faces and expressions, but also can improve the recognition rate of human-face expression recognition.

Owner:南京宇音力新电子科技有限公司

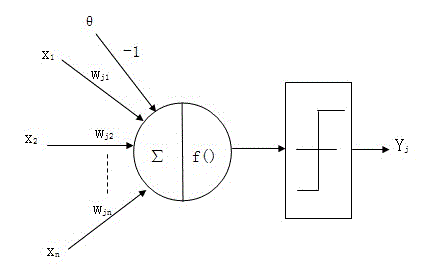

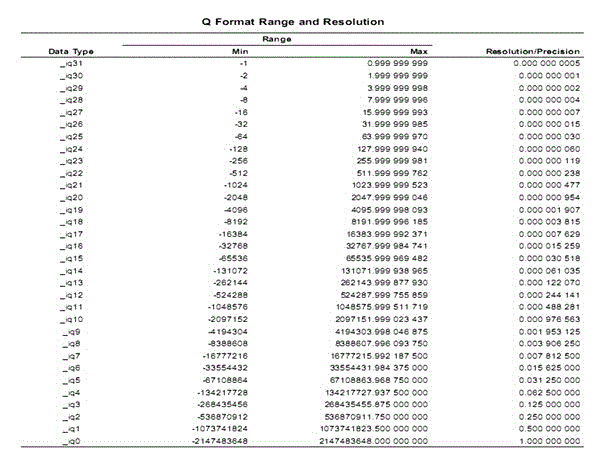

Neural network optimization method based on floating number operation inline function library

Inactive | CN102981854A | Improve recognition efficiency | Improve efficiency | Biological neural network models | Specific program execution arrangements | Nerve network | Network awareness

Provided is a neural network optimization method based on a floating-point operation inline function library, in which the neuron model is Y = 1 / (1 + exp(-Σ wi*xi)), where i ranges from 1 to n and n is the number of neural units. The floating-point operation inline functions are provided by a function library built into a dual-core chip, namely the IQmath Library. In the method, only the step _IQ(x[i]) is carried out inside the loop; all other steps are carried out outside the loop body, so the overall execution efficiency is greatly improved compared with floating-point arithmetic. The method optimizes the porting of a back propagation (BP) neural network to the TMS 3206464T, and the accuracy of the result is determined by an initial decimal calibration. Provided that the accuracy of the result does not affect the recognition rate, the recognition efficiency of the BP network is greatly improved.

Owner:天津市天祥世联网络科技有限公司
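
The neuron model Y = 1 / (1 + exp(-Σ wi*xi)) and the idea of converting only the inputs inside the loop can be sketched in plain Python. This is an emulation under assumptions, not the IQmath Library itself: the helper names (`to_fixed`, `fixed_mul`, `neuron_fixed`) and the choice of 16 fractional bits are hypothetical, and the final exponential is evaluated in ordinary floating point for simplicity.

```python
import math

Q = 16  # assumed number of fractional bits (illustrative choice)


def to_fixed(x, q=Q):
    """Convert a float to a fixed-point integer, playing the role of _IQ(x)."""
    return int(round(x * (1 << q)))


def fixed_mul(a, b, q=Q):
    """Multiply two fixed-point integers and rescale the product."""
    return (a * b) >> q


def to_float(a, q=Q):
    """Convert a fixed-point integer back to a float."""
    return a / (1 << q)


def neuron_fixed(weights, inputs, q=Q):
    """Evaluate Y = 1 / (1 + exp(-sum(w_i * x_i))) with a fixed-point sum."""
    # Weights are converted once, outside the loop.
    w_fixed = [to_fixed(w, q) for w in weights]
    acc = 0
    for w, x in zip(w_fixed, inputs):
        # Only the input conversion happens inside the loop.
        acc += fixed_mul(w, to_fixed(x, q), q)
    # The sigmoid itself uses floating point here (a simplification).
    return 1.0 / (1.0 + math.exp(-to_float(acc, q)))


y = neuron_fixed([0.5, -0.25, 1.0], [1.0, 2.0, -0.5])
print(y)
```

The number of fractional bits trades range against precision, which is presumably what the calibration step in the abstract decides; that reading is an interpretation rather than something the abstract states.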

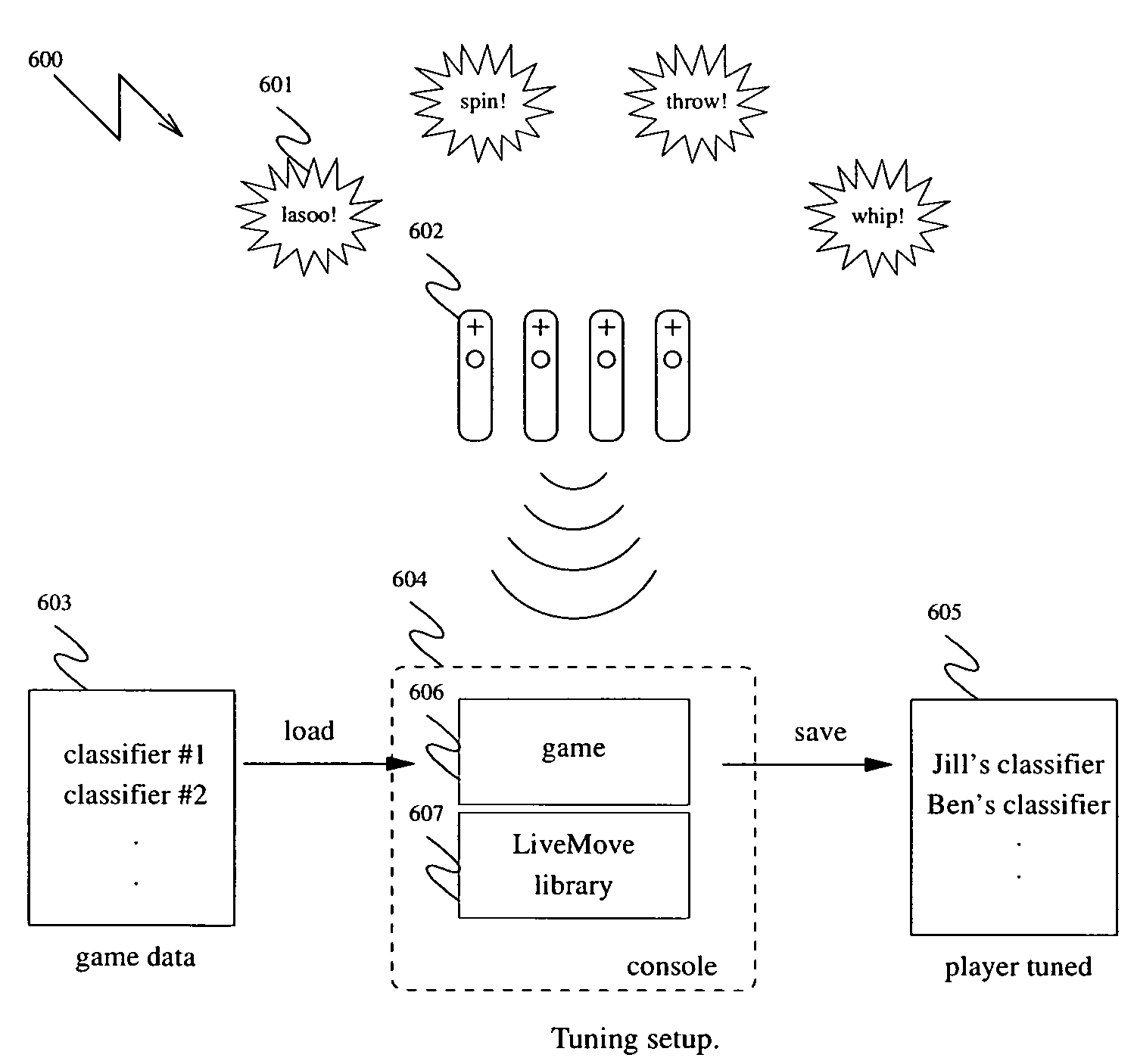

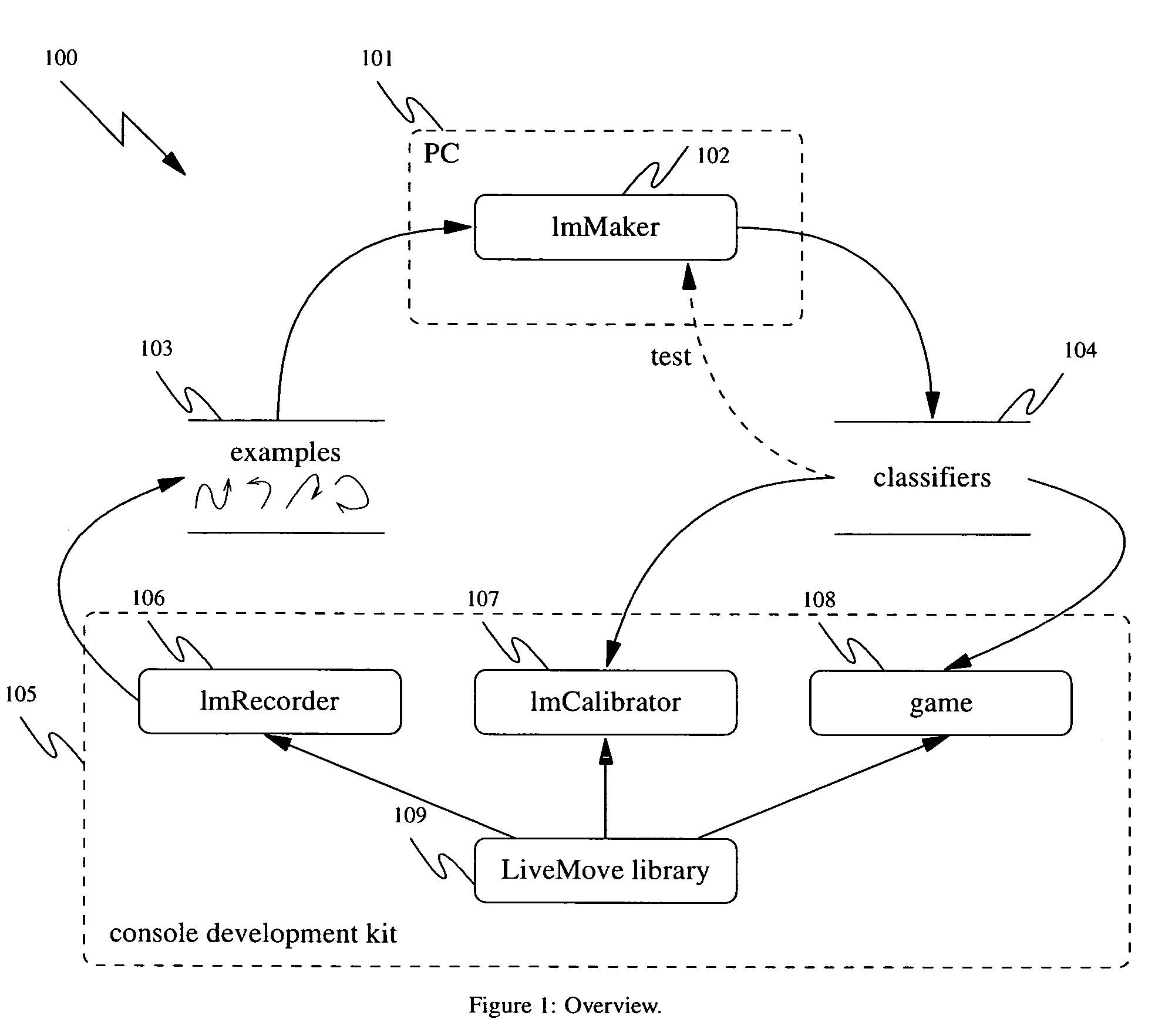

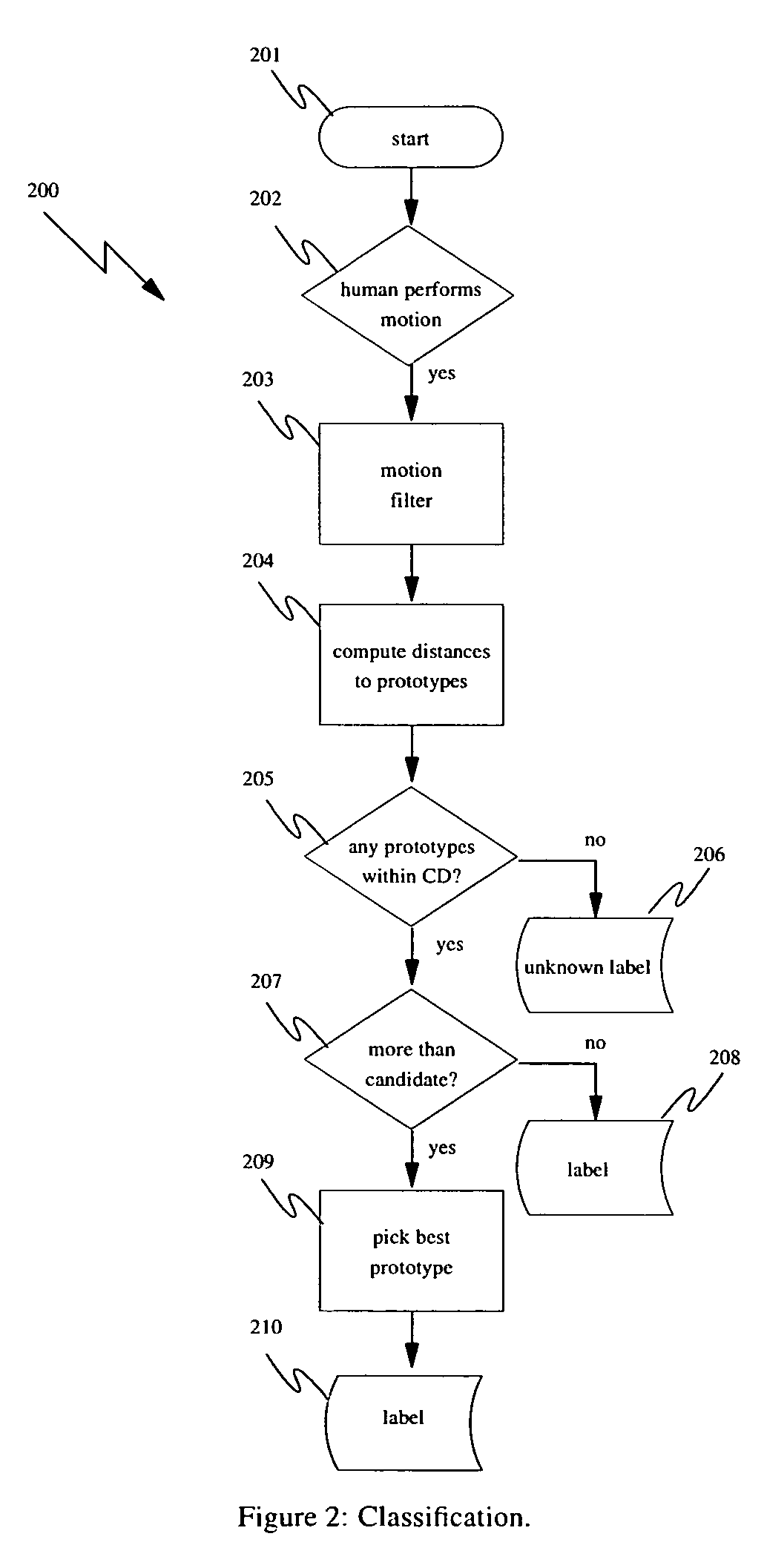

Generating motion recognizers for arbitrary motions for video games and tuning the motion recognizers to the end user

Active | US7702608B1 | Character and pattern recognition | Knowledge representation | Motion recognition | Computer science

Generating motion recognizers from example motions, without substantial programming, without limitation to any fixed set of well-known gestures, and without limitation to motions that occur substantially in a plane, or are substantially predefined in scope. From example motions for each class of motion to be recognized, a system automatically generates motion recognizers using machine learning techniques. Those motion recognizers can be incorporated into an end-user application, with the effect that when a user of the application supplies a motion, those motion recognizers will recognize the motion as an example of one of the known classes of motion. Motion recognizers incorporated into an end-user application can also be tuned to improve recognition rates for subsequent motions, and end-users can add new example motions.

Owner:YEN WEI

Human body movement recognition method based on convolutional neural network feature coding

Active | CN107169415A | Small amount of calculation | Improve accuracy | Character and pattern recognition | Human body | Feature coding

The invention provides a human body movement recognition method based on convolutional neural network feature coding, and mainly aims to solve the problems of complicated calculation and low accuracy in the prior art. In the implementation scheme, TV-L1 is used to obtain an optical flow map of the video; convolutional neural network coding, local feature accumulation coding, dimension-reducing whitening and VLAD vector processing are performed in sequence in the video spatial direction and in the optical flow motion direction, yielding spatial-direction VLAD vectors and motion-direction VLAD vectors; and the information from the video spatial direction and the optical flow motion direction is merged to obtain human body movement classification data, which is then classified. Because the convolutional features undergo local feature accumulation coding, the recognition rate is increased on data with complicated backgrounds and the amount of calculation is reduced. The features obtained by fusing the video VLAD vectors and the optical flow VLAD vectors have higher robustness to environmental changes, and the method can be used to detect and recognize human body movement in surveillance video in areas such as communities, shopping malls and private premises.

Owner:XIDIAN UNIV
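
The VLAD vector processing named in the abstract above can be illustrated with a generic VLAD encoder. This is a minimal sketch of standard VLAD, not the patented pipeline: it assumes a codebook of cluster centres is already available (for example from k-means), and the function name `vlad_encode` and the toy data are hypothetical.

```python
import math


def vlad_encode(descriptors, centers):
    """Encode local descriptors as one L2-normalized VLAD vector.

    descriptors: list of local feature vectors (lists of floats).
    centers: codebook of cluster centres, assumed to be learned beforehand.
    """
    k, d = len(centers), len(centers[0])
    residuals = [[0.0] * d for _ in range(k)]
    for x in descriptors:
        # Assign the descriptor to its nearest centre (squared Euclidean distance).
        nearest = min(
            range(k),
            key=lambda j: sum((xi - ci) ** 2 for xi, ci in zip(x, centers[j])),
        )
        # Accumulate the residual between the descriptor and that centre.
        for i in range(d):
            residuals[nearest][i] += x[i] - centers[nearest][i]
    # Concatenate the per-centre residual sums and L2-normalize the result.
    flat = [v for row in residuals for v in row]
    norm = math.sqrt(sum(v * v for v in flat))
    return [v / norm for v in flat] if norm > 0 else flat


centers = [[0.0, 0.0], [10.0, 10.0]]
descriptors = [[1.0, 0.0], [0.0, 1.0], [11.0, 10.0]]
print(vlad_encode(descriptors, centers))
```

In a two-stream setting, one such vector would be computed per stream and the two combined before classification; the abstract does not say how the merge is performed.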

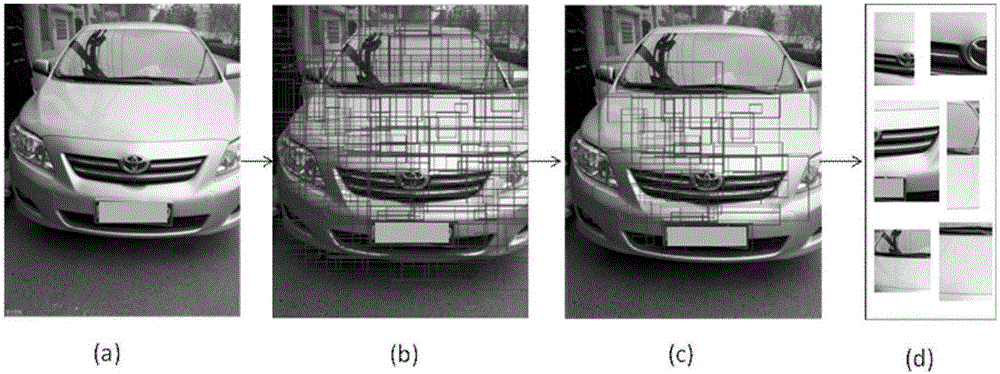

Selective search and convolutional neural network based vehicle logo recognition method

Inactive | CN105868774A | Accurate extraction | Simple process | Character and pattern recognition | Parking space | High definition

The invention proposes a vehicle logo recognition method based on selective search and a convolutional neural network, and mainly solves the problems of complicated calculation and poor timeliness in the prior art. In the implementation scheme, the method comprises the steps of 1) inputting a to-be-detected picture shot by a high-definition camera at a traffic intersection; 2) carrying out selective search on the to-be-detected picture to obtain candidate regions; 3) screening the candidate regions to obtain vehicle logo candidate regions; and 4) constructing and training a convolutional neural network (CNN) and inputting the vehicle logo candidate regions into the trained CNN for testing to obtain a vehicle logo recognition result. The method effectively reduces the amount of calculation and obtains the vehicle logo candidate regions quickly; the self-learning capability of the CNN provides greater robustness to environmental change, and the vehicle logo recognition rate is increased. The method can be used for quick detection of vehicles at freeway entrances and parking spaces.

Owner:XIDIAN UNIV

Moving target detecting method based on differential fusion and image edge information

Inactive | CN102184552A | Reliable detection | Excellent recognition | Image enhancement | Image analysis | Background image | Video image

The invention relates to a moving target detecting method based on differential fusion and image edge information. The method comprises the following steps: extracting a video image for preprocessing, and extracting the edges of the image to acquire continuous edge images; performing an inter-frame differential operation on the continuous edge images in the uint8 data format, and performing a background differential operation on the image in the intermediate frame; and fusing the detection results of the two differences to make a preliminary extraction of a moving pedestrian target. For the background difference, the automatic background extraction method is improved, and a background image extraction and updating method based on continuous edge images is provided. The moving target area in the image is extracted using a dynamic threshold based on an adaptive background model, and morphological filtering and connectivity detection are performed, so that the foreground target of a moving pedestrian is acquired. Under the complex condition of sudden lighting changes, a moving target can be detected accurately and reliably, and the method is superior to the three traditional methods in both identification rate and error detection rate.

Owner:UNIV OF SHANGHAI FOR SCI & TECH
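
The fusion of inter-frame and background differences described above can be sketched on small edge images held as nested lists. The abstract does not give the fusion rule, the threshold or the exact differencing scheme, so the three-frame AND, the OR fusion and the fixed `threshold=30` are all assumptions, and the function names are hypothetical. The dynamic threshold, morphological filtering and connectivity detection are omitted.

```python
def abs_diff_mask(a, b, threshold):
    """Mark pixels whose absolute difference exceeds the threshold.

    Plain Python ints are used, so no uint8 wraparound can occur; with
    uint8 arrays the subtraction would need a wider type or a saturating op.
    """
    return [
        [abs(pa - pb) > threshold for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def fuse_differences(prev_edges, cur_edges, next_edges, background_edges, threshold=30):
    """Fuse inter-frame and background differences for the intermediate frame.

    Assumed rule: a pixel is foreground if it changed against both
    neighbouring frames, or if it differs from the background edge image.
    """
    d_prev = abs_diff_mask(cur_edges, prev_edges, threshold)
    d_next = abs_diff_mask(next_edges, cur_edges, threshold)
    d_bg = abs_diff_mask(cur_edges, background_edges, threshold)
    return [
        [(m1 and m2) or mb for m1, m2, mb in zip(r1, r2, rb)]
        for r1, r2, rb in zip(d_prev, d_next, d_bg)
    ]


prev_edges = [[0, 0, 0]]
cur_edges = [[255, 0, 0]]
next_edges = [[0, 0, 255]]
background_edges = [[0, 0, 0]]
print(fuse_differences(prev_edges, cur_edges, next_edges, background_edges))
```

Combining the two masks lets the background difference catch targets that pause between frames, while the inter-frame difference reacts to motion even when the background model is stale; which combination the patent uses is not stated in the abstract.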
