474 results about "False recognition" patented technology

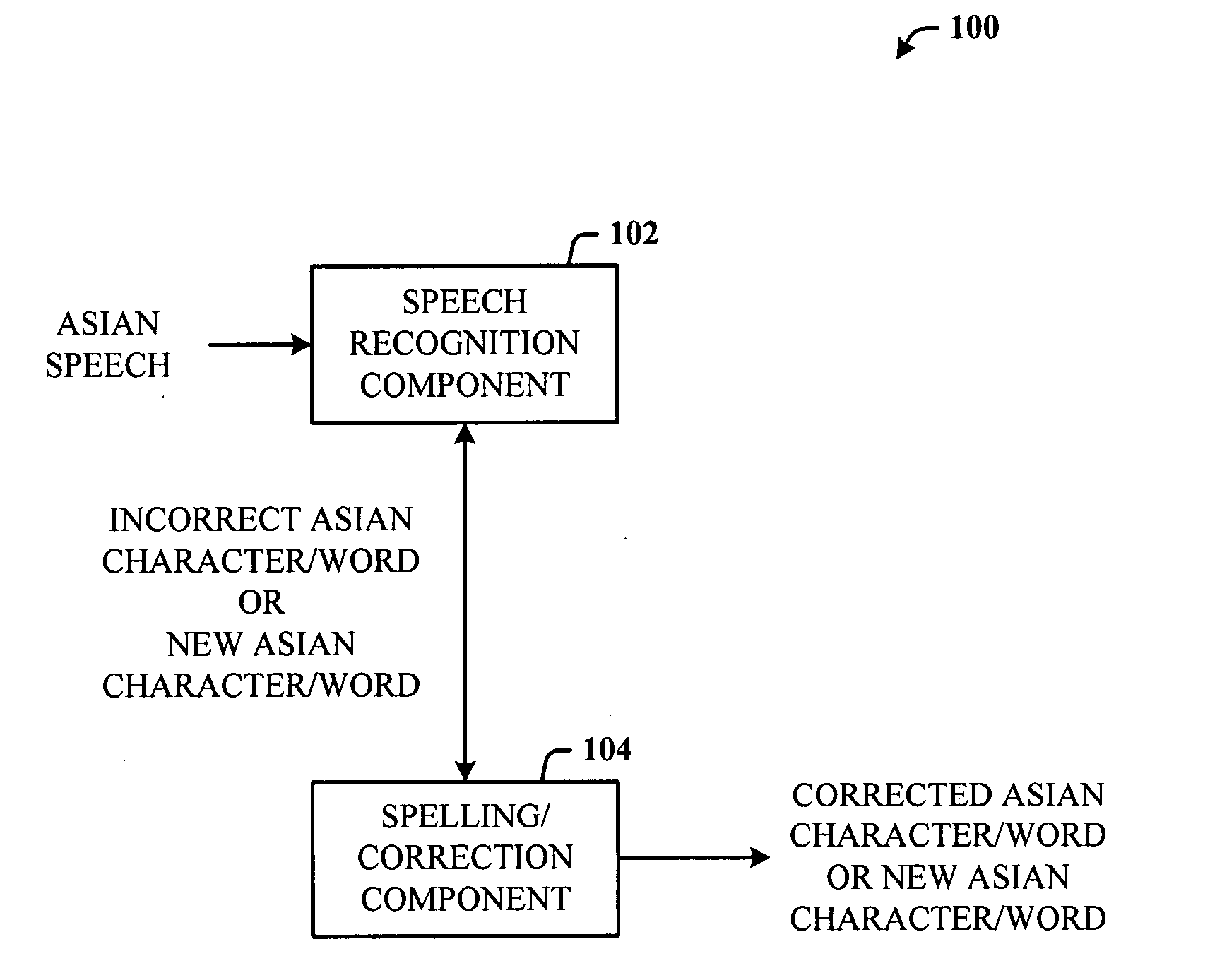

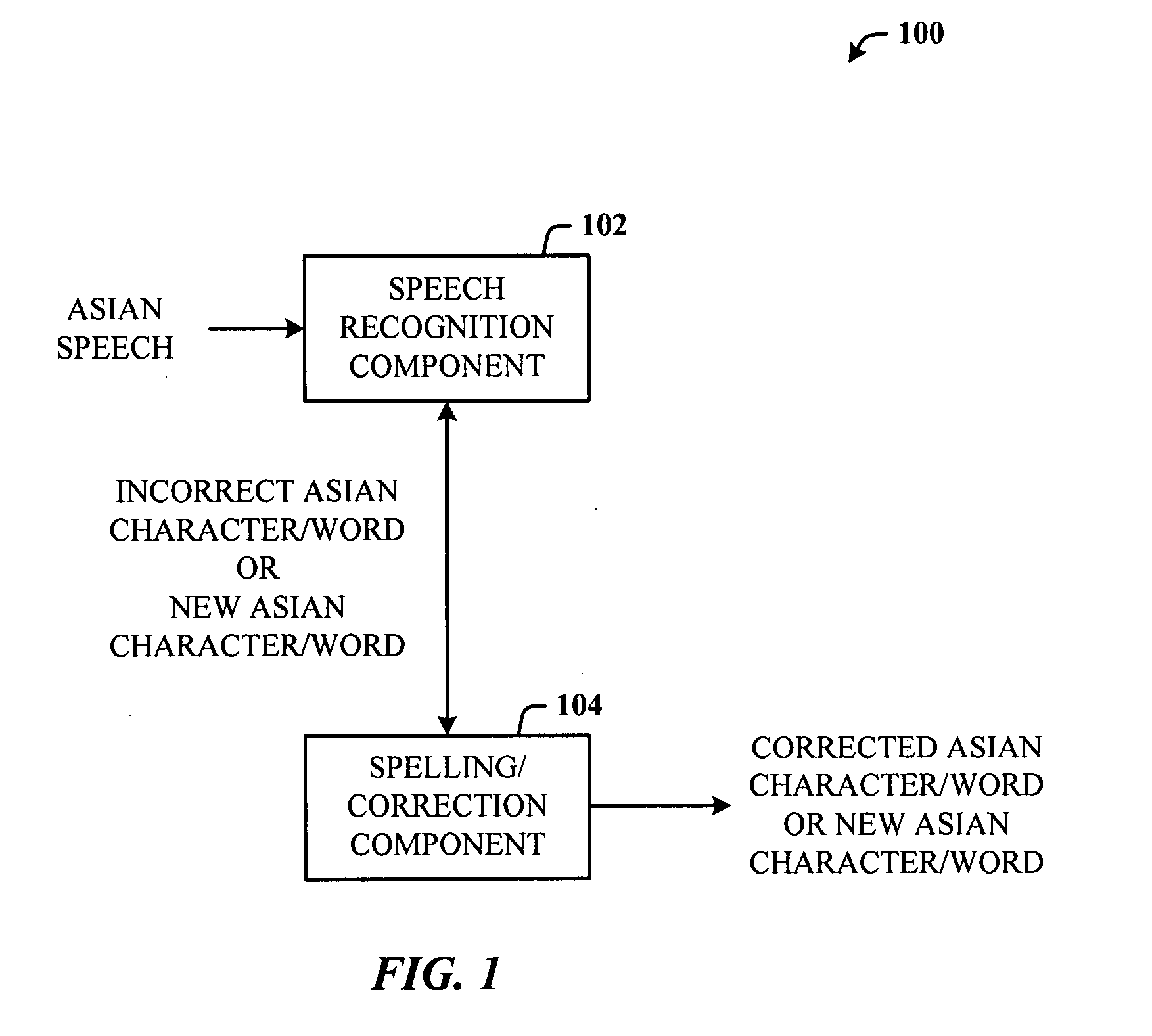

Recognition architecture for generating Asian characters

Active | US20080270118A1 | Easy to determine | Easy input | Natural language data processing | Speech recognition | Speech input | Language speech

Architecture for correcting incorrect recognition results in an Asian language speech recognition system. A spelling mode can be launched in response to receiving speech input, the spelling mode for correcting incorrect spelling of the recognition results or generating new words. Correction can be obtained using speech and/or manual selection and entry. The architecture facilitates correction in a single pass, rather than multiple times as in conventional systems. Words corrected using the spelling mode are corrected as a unit and treated as a word. The spelling mode applies to languages of at least the Asian continent, such as Simplified Chinese, Traditional Chinese, and/or other Asian languages such as Japanese.

Owner:MICROSOFT TECH LICENSING LLC

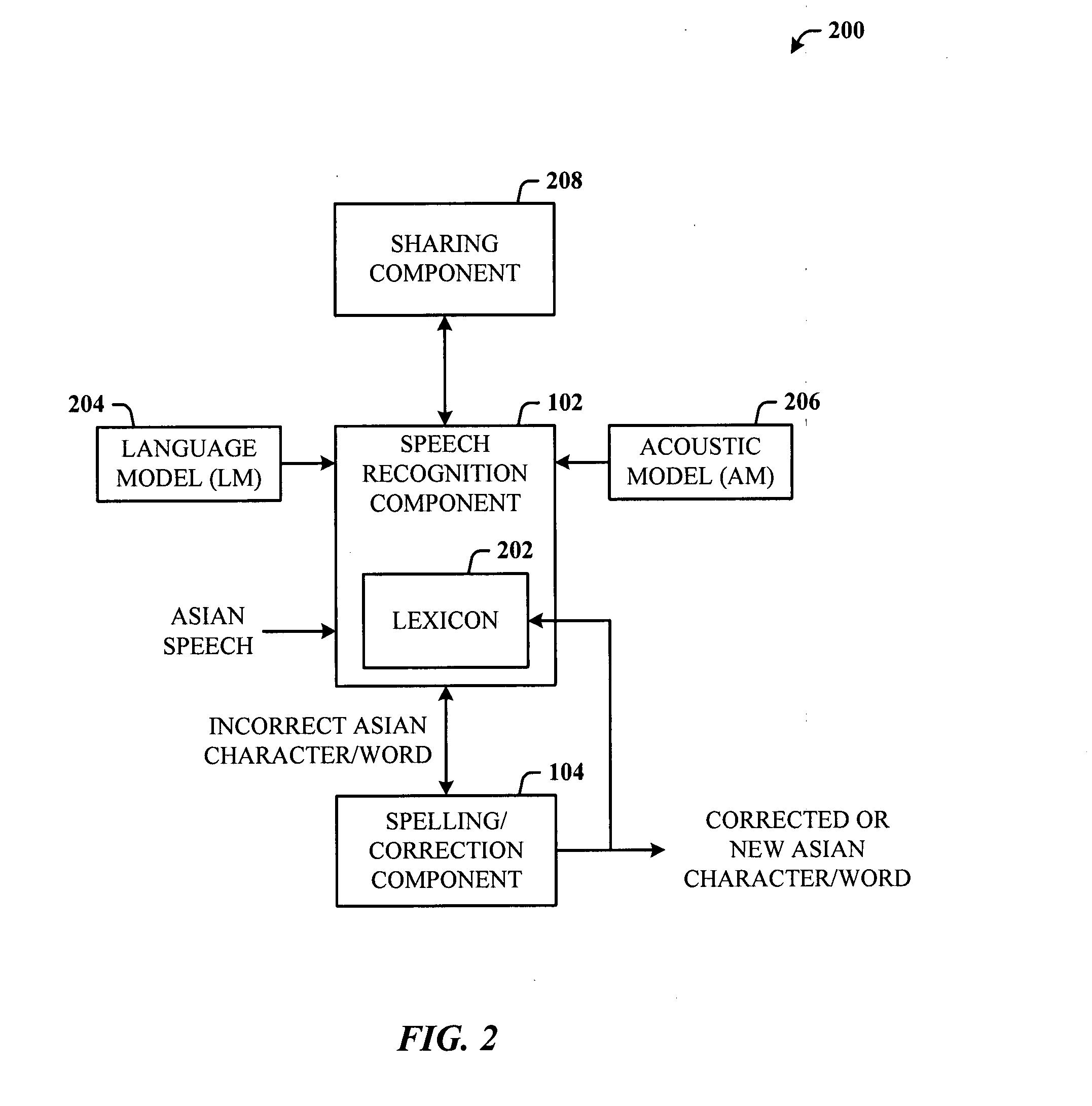

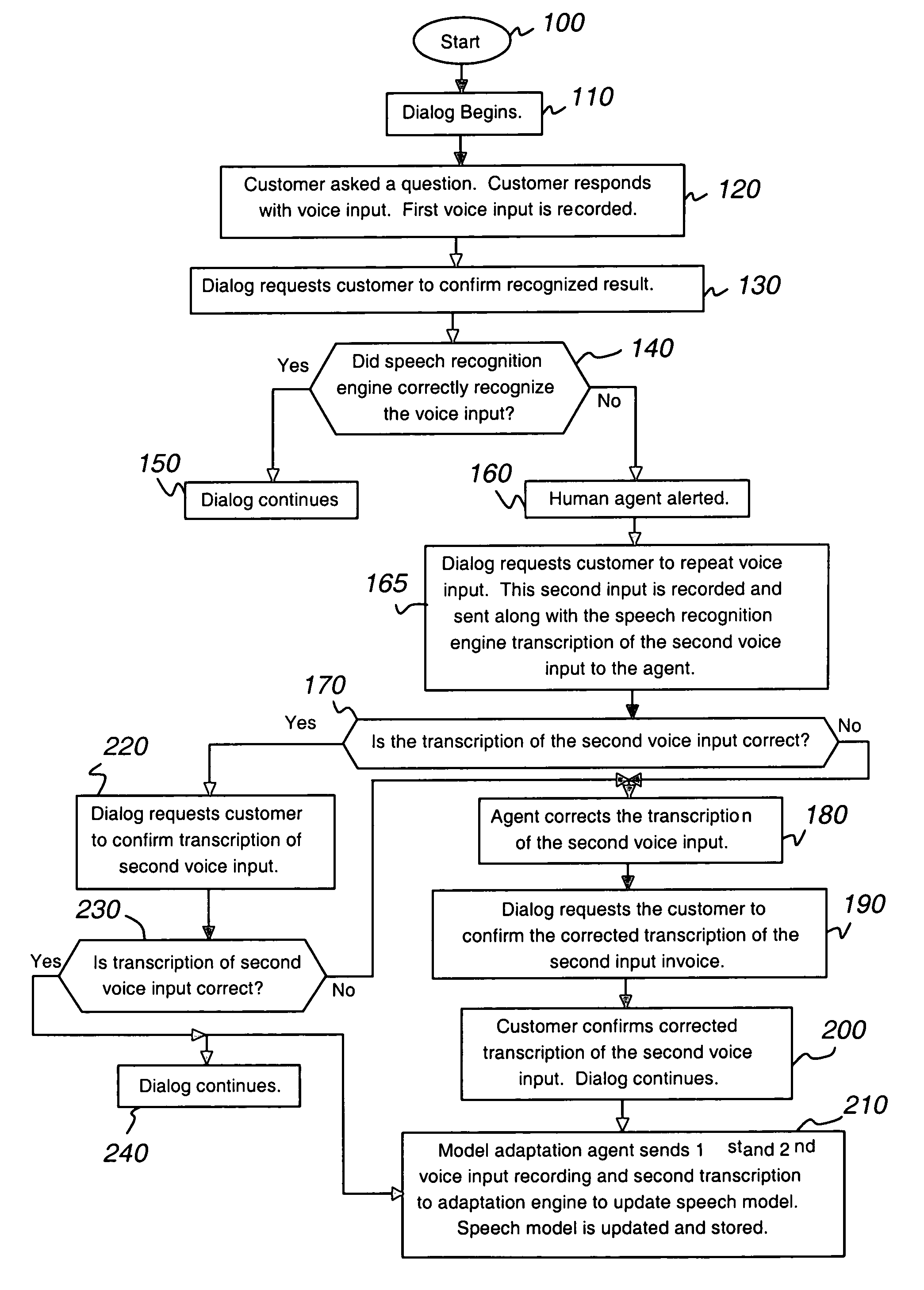

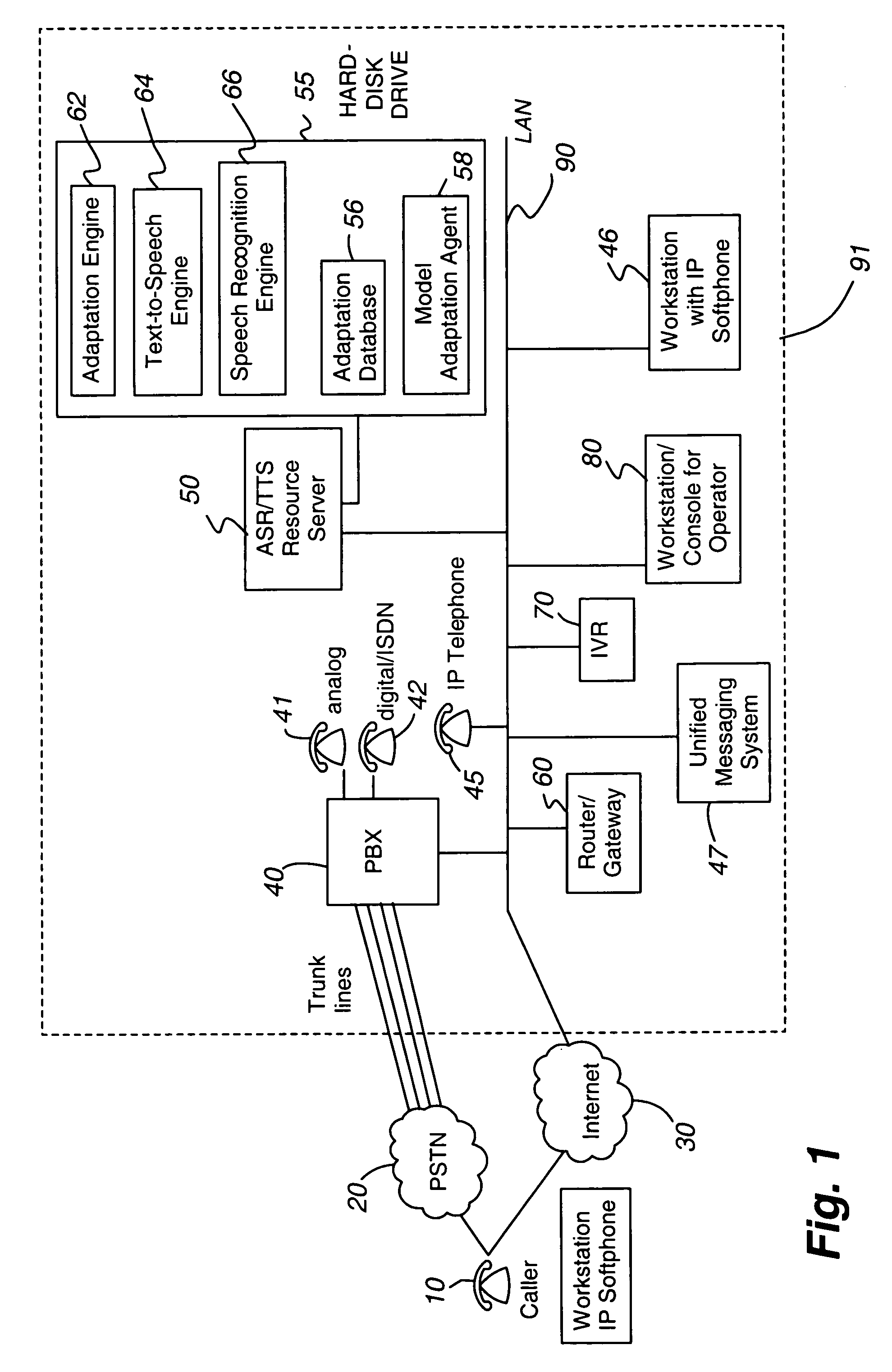

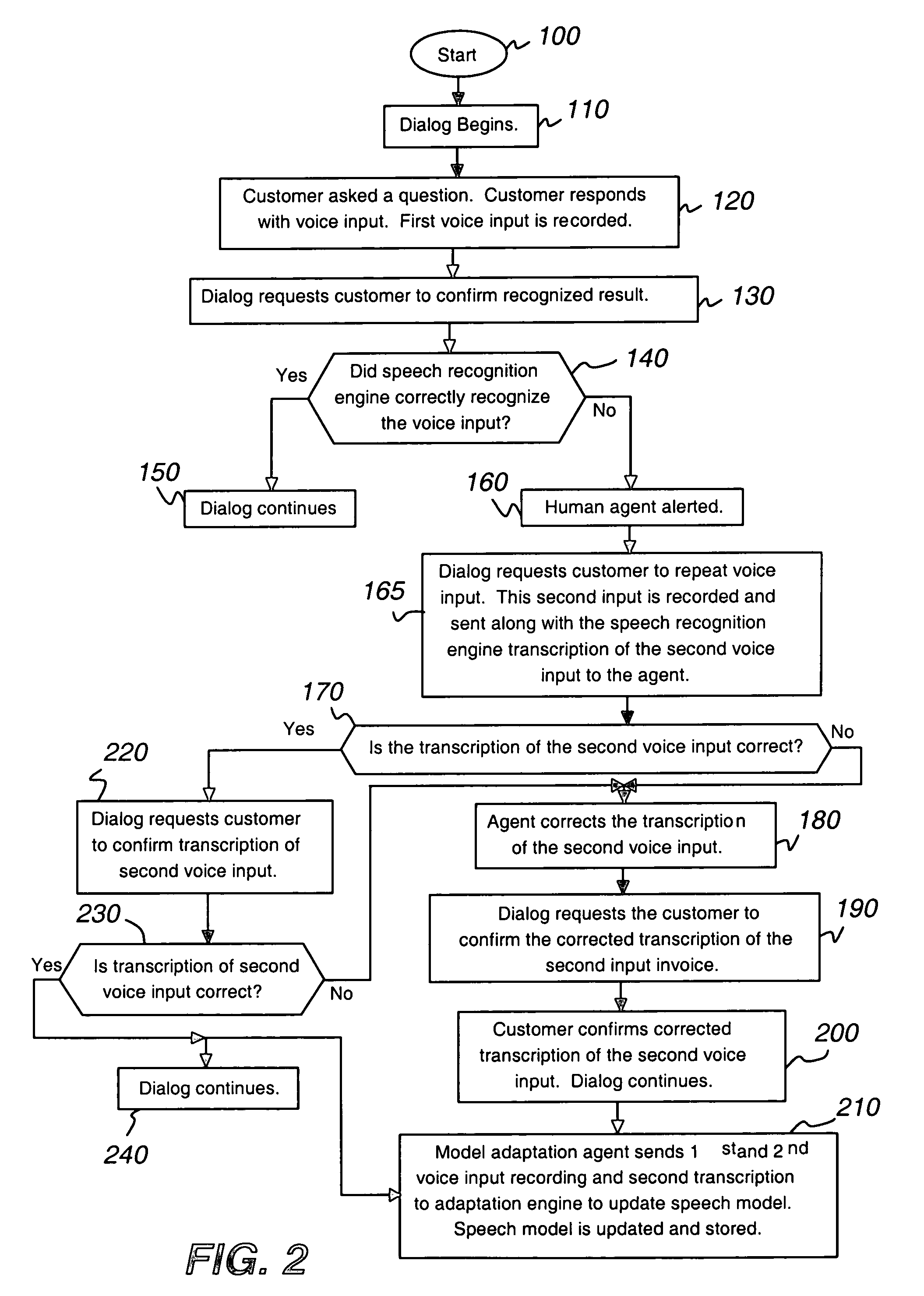

Transparent monitoring and intervention to improve automatic adaptation of speech models

Active | US7660715B1 | Improve speech recognition accuracy | Quality improvement | Speech recognition | Frustration | Mostly True

A system and method to improve the automatic adaptation of one or more speech models in automatic speech recognition systems. After a dialog begins, for example, the dialog asks the customer to provide spoken input and it is recorded. If the speech recognizer determines it may not have correctly transcribed the verbal response, i.e., voice input, the invention uses monitoring and, if necessary, intervention to guarantee that the next transcription of the verbal response is correct. The dialog asks the customer to repeat his verbal response, which is recorded and a transcription of the input is sent to a human monitor, i.e., agent or operator. If the transcription of the spoken input is correct, the human does not intervene and the transcription remains unmodified. If the transcription of the verbal response is incorrect, the human intervenes and the transcription of the misrecognized word is corrected. In both cases, the dialog asks the customer to confirm the unmodified or corrected transcription. If the customer confirms the unmodified or newly corrected transcription, the dialog continues and the customer does not hang up in frustration because most times only one misrecognition occurred. Finally, the invention uses the first and second customer recording of the misrecognized word or utterance along with the corrected or unmodified transcription to automatically adapt one or more speech models, which improves the performance of the speech recognition system.

Owner:AVAYA INC

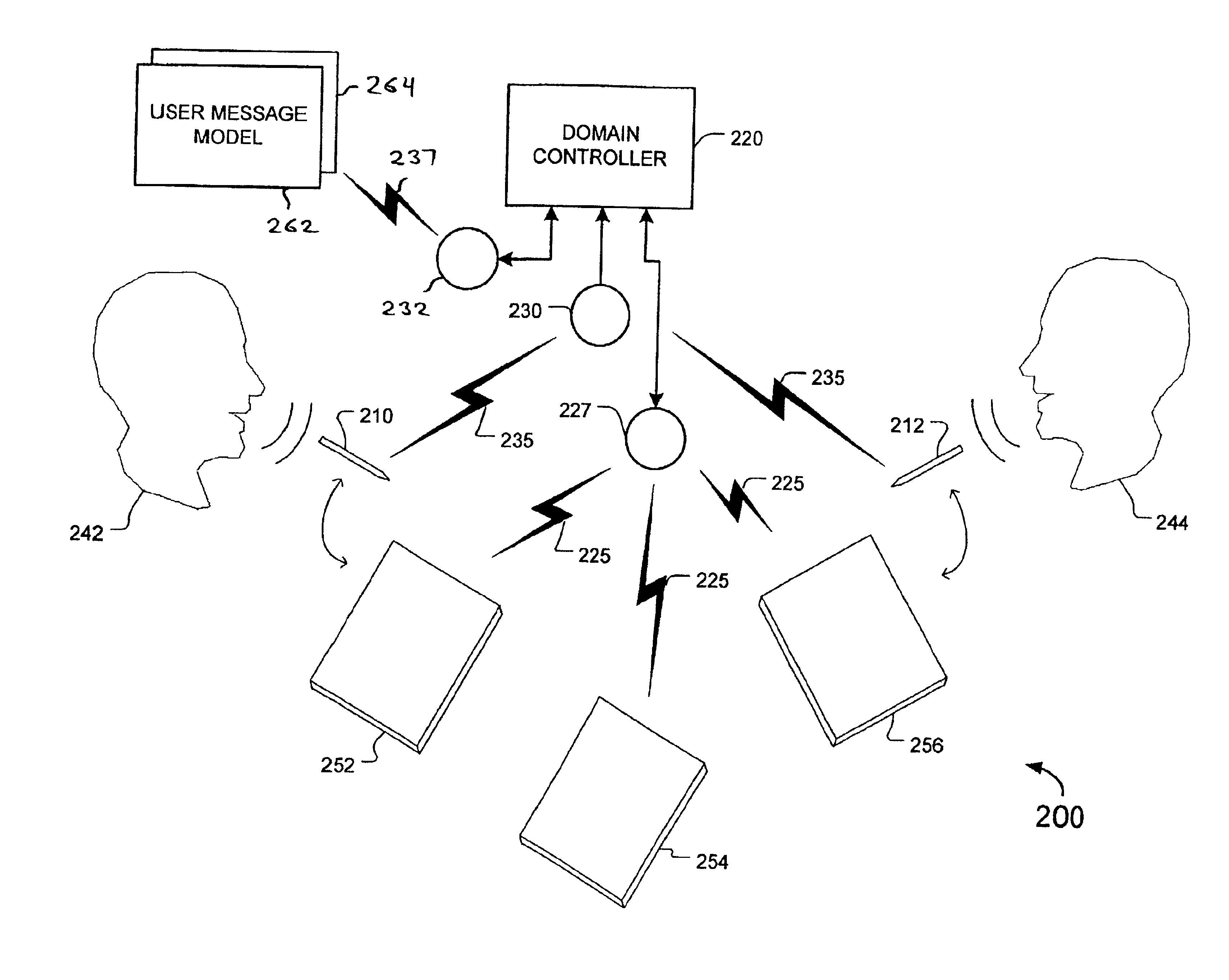

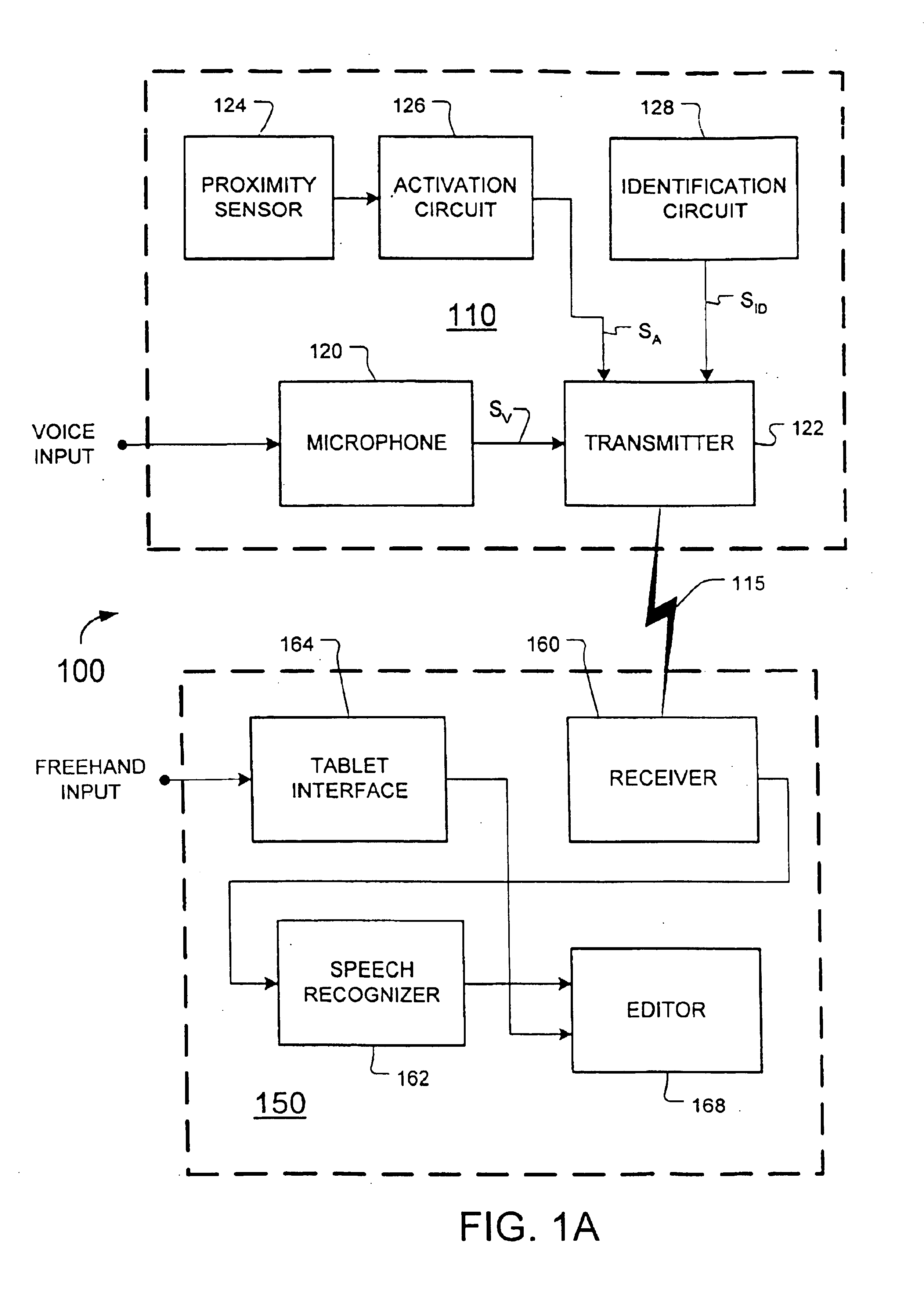

Message recognition using shared language model

Inactive | US6904405B2 | Speech recognition | Input/output processes for data processing | Handwriting | Acoustic model

Certain disclosed methods and systems perform multiple different types of message recognition using a shared language model. Message recognition of a first type is performed responsive to a first type of message input (e.g., speech), to provide text data in accordance with both the shared language model and a first model specific to the first type of message recognition (e.g., an acoustic model). Message recognition of a second type is performed responsive to a second type of message input (e.g., handwriting), to provide text data in accordance with both the shared language model and a second model specific to the second type of message recognition (e.g., a model that determines basic units of handwriting conveyed by freehand input). Accuracy of both such message recognizers can be improved by user correction of misrecognition by either one of them. Numerous other methods and systems are also disclosed.

Owner:BUFFALO PATENTS LLC

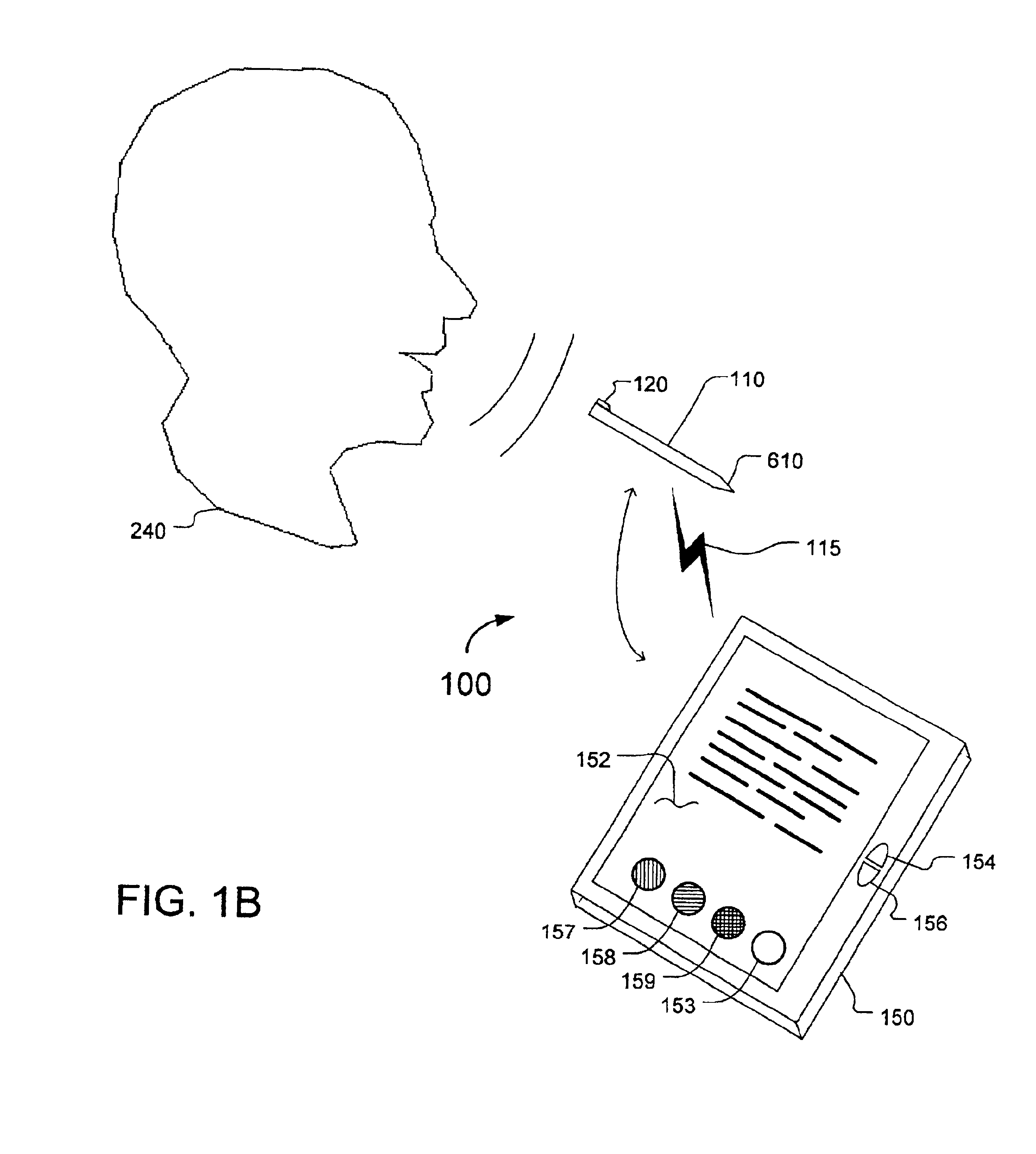

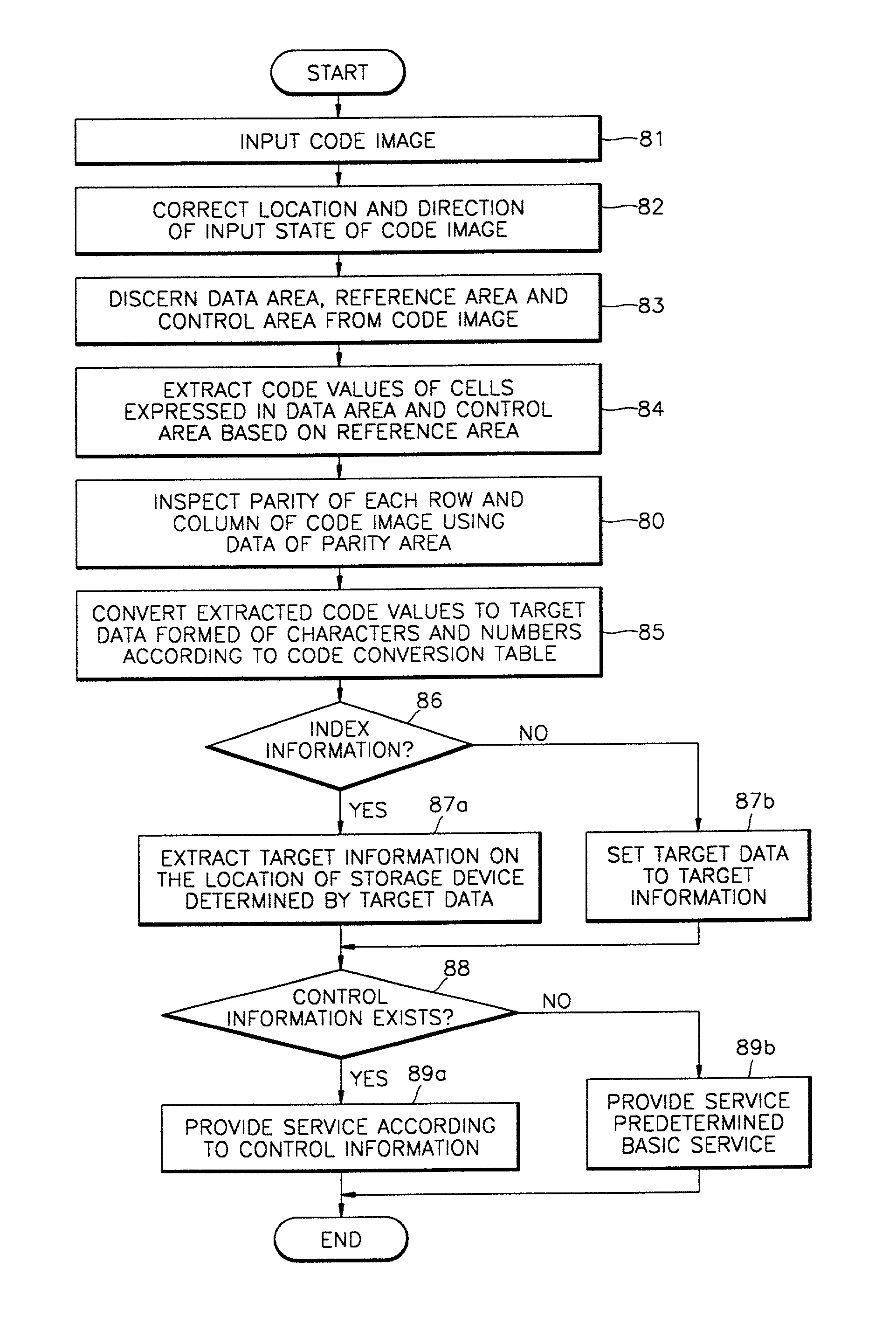

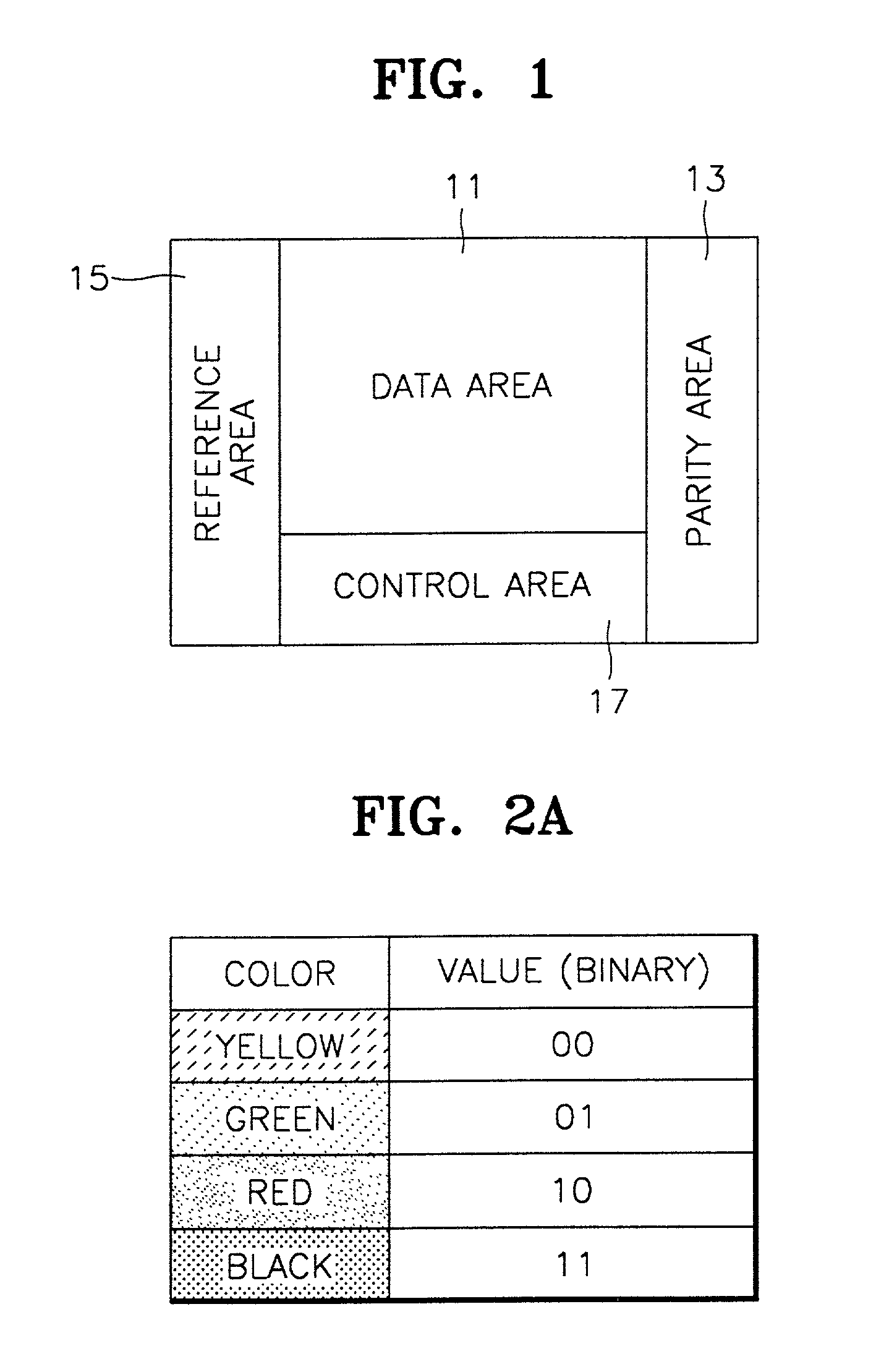

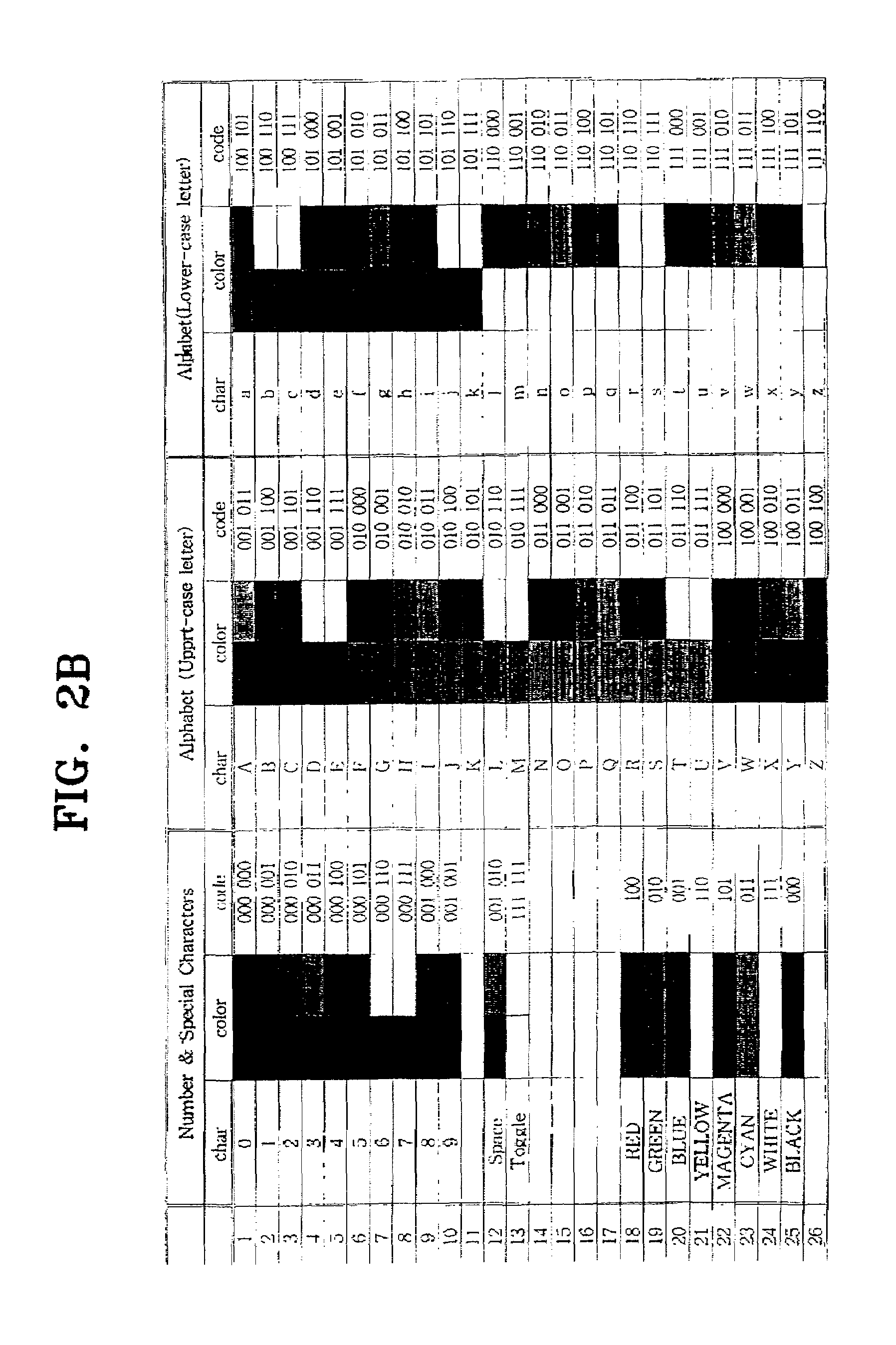

Machine readable code image and method of encoding and decoding the same

Inactive | US7020327B2 | Co-operative working arrangements | Character and pattern recognition | Computer graphics (images) | Coding decoding

A machine readable code, a code encoding method and device and a code decoding method and device are provided. This machine readable code includes a data area made up of at least one data cell, in which different colors or shades are encoded and expressed depending on the content of the information, and a parity area made up of at least one parity cell, the parity area provided to determine whether colors or shades expressed in the data cells have been properly expressed depending on the content of the information. Since this code image includes a parity area for parity inspection, mal-recognition of colors due to differences between input devices such as cameras or between the environments such as light can be easily detected and corrected.

Owner:COLORZIP MEDIA
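
The parity check described in the abstract above can be illustrated with a minimal sketch. The abstract does not state the palette or the parity rule, so the four-colour palette, the 2-bit mapping, and the XOR rule below are assumptions chosen for illustration only. The sketch also only detects a misread colour; the correction step the abstract mentions is not modelled.

```python
# Hypothetical 4-colour palette; the abstract does not fix a palette or a parity rule.
COLOR_TO_BITS = {"black": 0b00, "red": 0b01, "green": 0b10, "blue": 0b11}
BITS_TO_COLOR = {bits: color for color, bits in COLOR_TO_BITS.items()}


def parity_color(data_cells):
    """Return the parity-cell colour for one row: XOR of the 2-bit values of its data cells."""
    acc = 0
    for color in data_cells:
        acc ^= COLOR_TO_BITS[color]
    return BITS_TO_COLOR[acc]


def row_is_consistent(data_cells, parity_cell):
    """True when the decoded data cells agree with the decoded parity cell."""
    return parity_color(data_cells) == parity_cell


row = ["red", "green", "blue"]        # 01 ^ 10 ^ 11 = 00
parity = parity_color(row)            # parity cell printed next to the row
misread = ["red", "green", "green"]   # a camera mistook blue for green
```

With this rule a single misread data cell always changes the XOR, so `row_is_consistent(misread, parity)` flags the row as corrupted.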

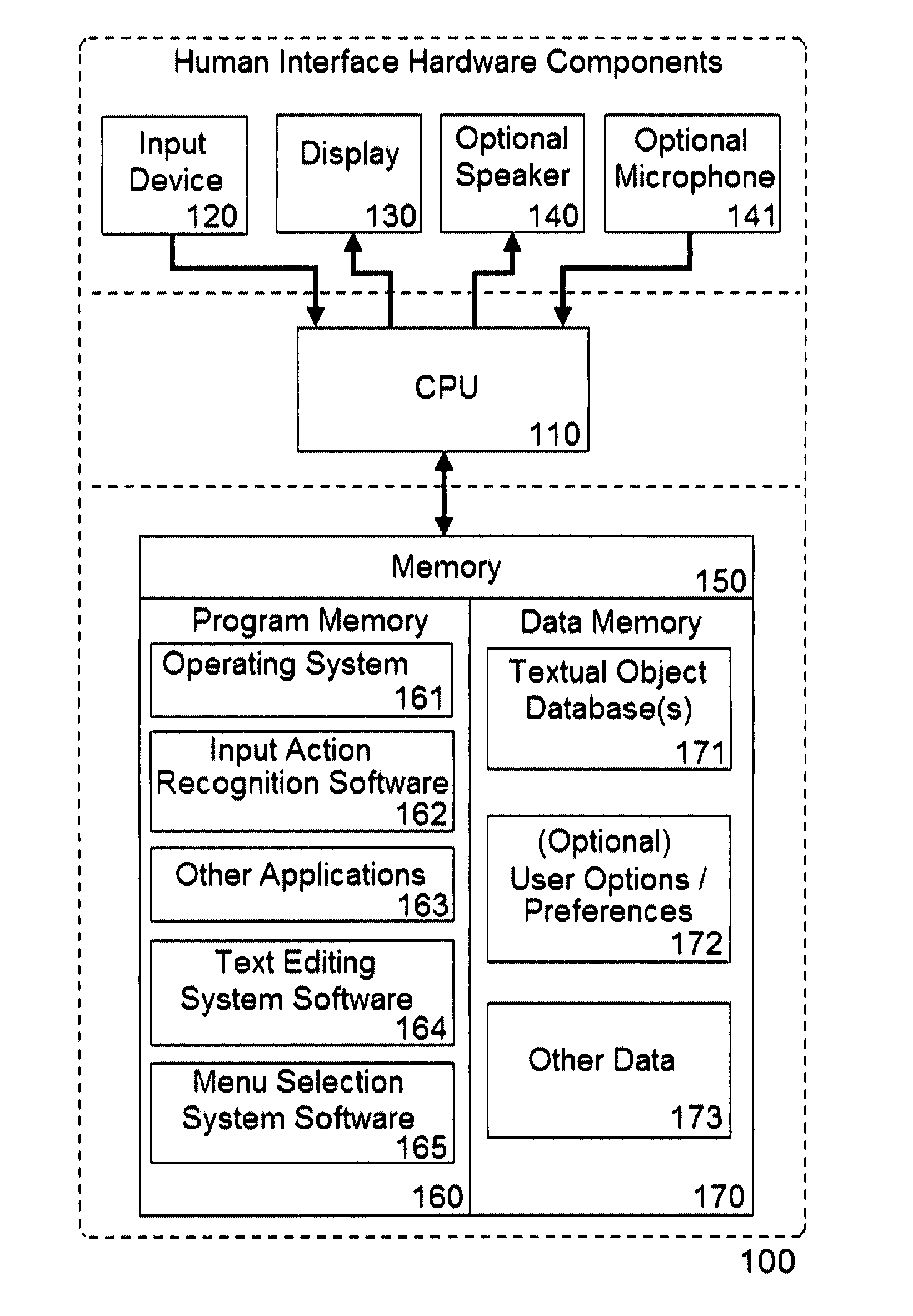

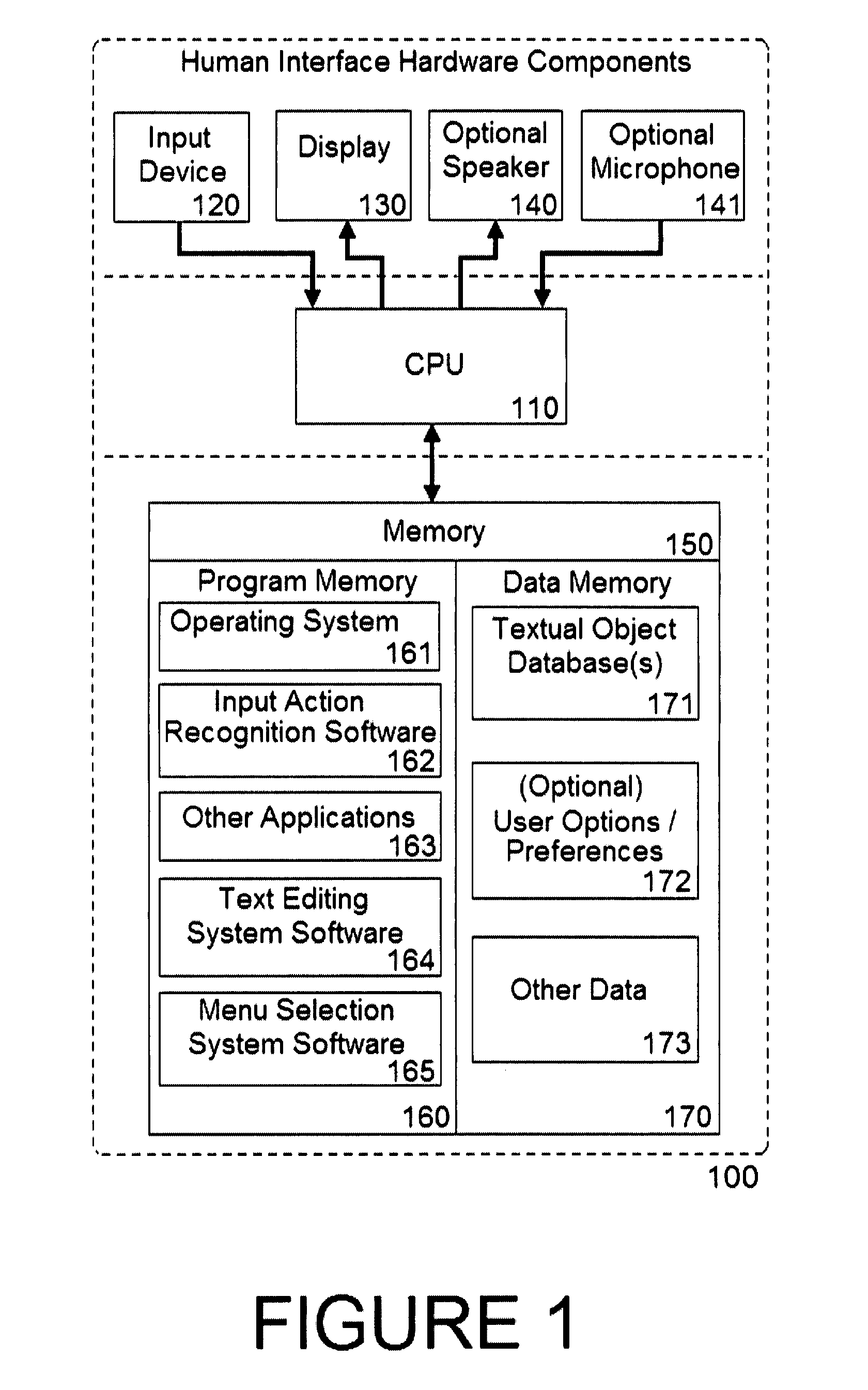

System and method for a user interface for text editing and menu selection

Active | US7542029B2 | Reduce speed | Improve efficiency | Input/output for user-computer interaction | 2D-image generation | Graphics | Text editing

Methods and system to enable a user of an input action recognition text input system to edit any incorrectly recognized text without re-locating the text insertion position to the location of the text to be corrected. The System also automatically maintains correct spacing between textual objects when a textual object is replaced with an object for which automatic spacing is generated in a different manner. The System also enables the graphical presentation of menu choices in a manner that facilitates faster and easier selection of a desired choice by performing a selection gesture requiring less precision than directly contacting the sub-region of the menu associated with the desired choice.

Owner:CERENCE OPERATING CO

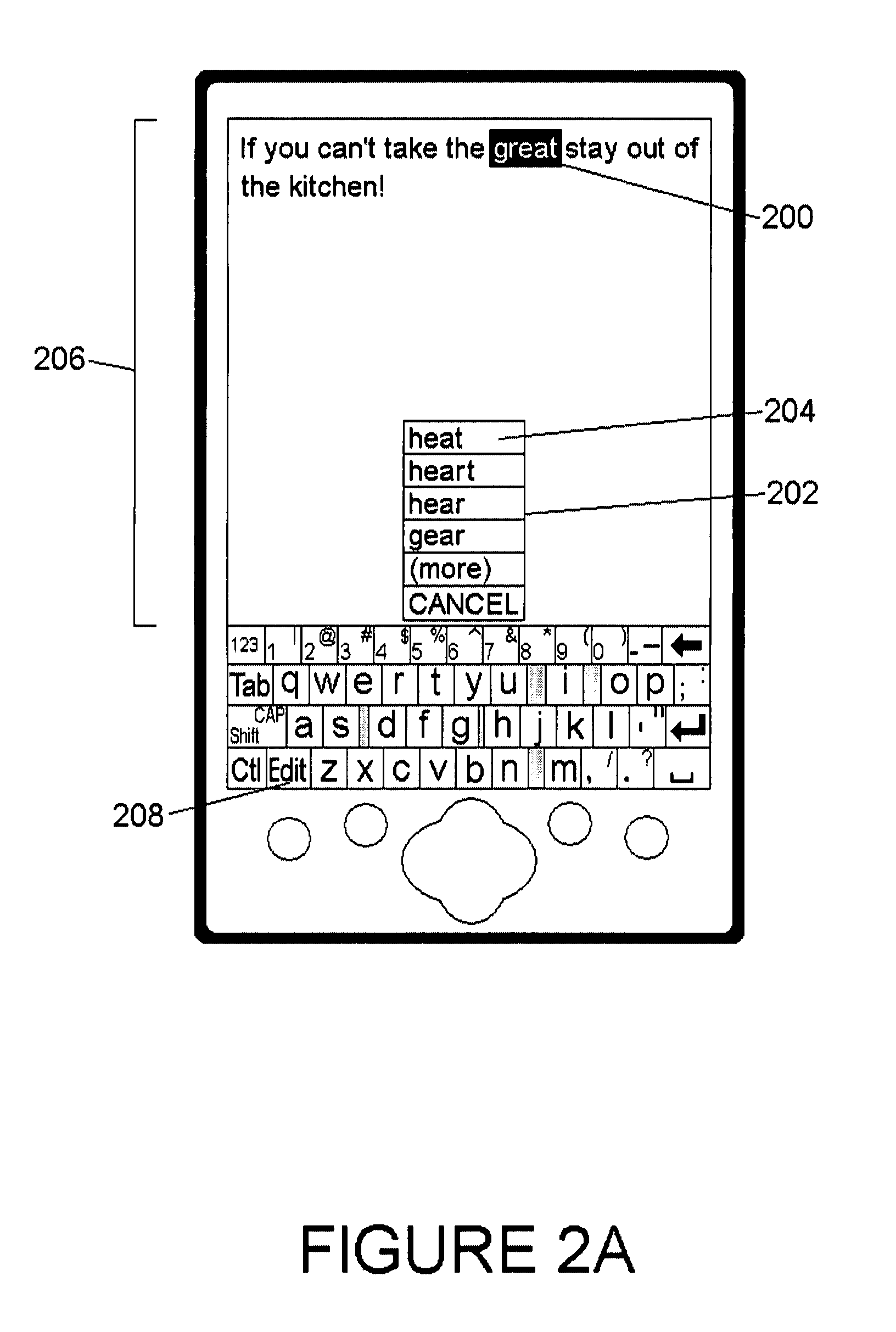

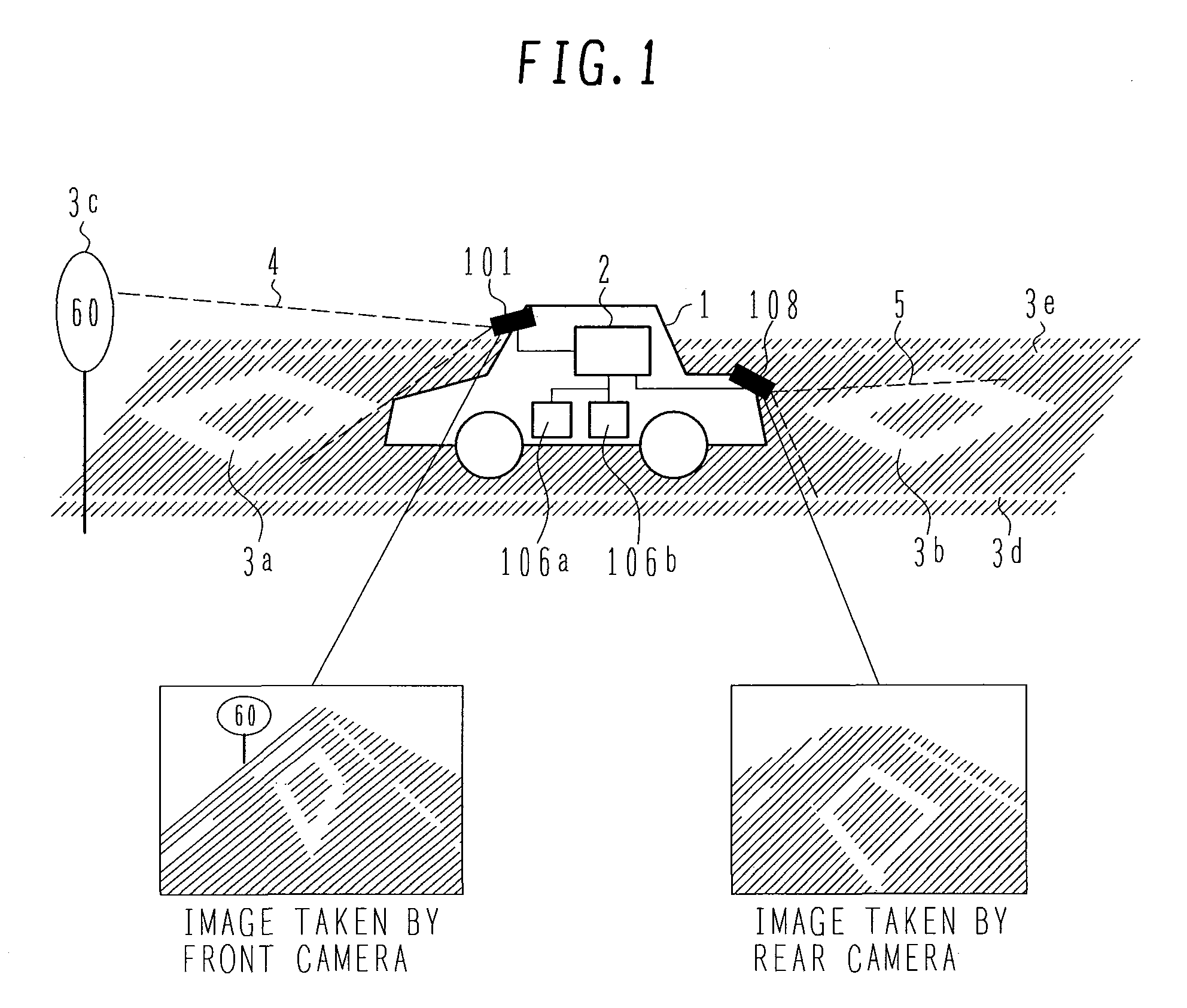

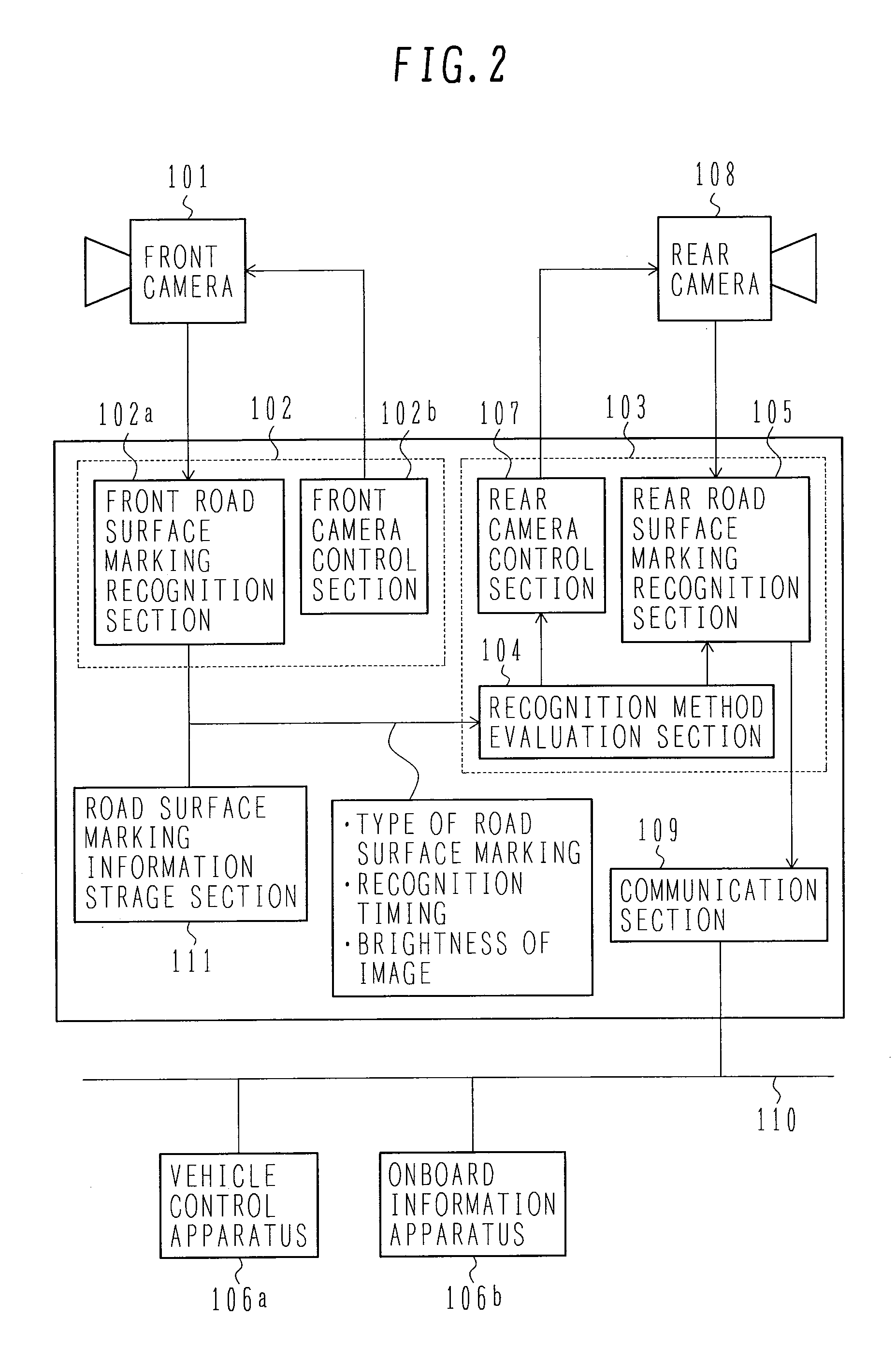

Apparatus and System for Recognizing Environment Surrounding Vehicle

Inactive | US20080013789A1 | Accurate recognition of object | Easy to adjust | Road vehicles traffic control | Character and pattern recognition | Engineering | Road surface

In conventional systems using an onboard camera disposed rearward of a vehicle for recognizing an object surrounding the vehicle, the object is recognized by the camera disposed rearward of the vehicle. In the image recognized by the camera, a road surface marking taken by the camera appears at a lower end of a screen of the image, which makes it difficult to predict a specific position in the screen from which the road surface marking appears. Further, the angle of depression of the camera is large, so the time available to acquire the object is short. Therefore, it is difficult to improve a recognition rate and to reduce false recognition. Results of recognition (type, position, angle, recognition time) made by a camera disposed forward of the vehicle are used to predict a specific timing and a specific position of a field of view of a camera disposed rearward of the vehicle, at which the object appears. Parameters of recognition logic of the rearwardly disposed camera and processing timing are then optimally adjusted. Further, luminance information of the image from the forwardly disposed camera is used to predict possible changes to be made in luminance of the field of view of the rearwardly disposed camera. Gain and exposure time of the rearwardly disposed camera are then adjusted.

Owner:HITACHI LTD

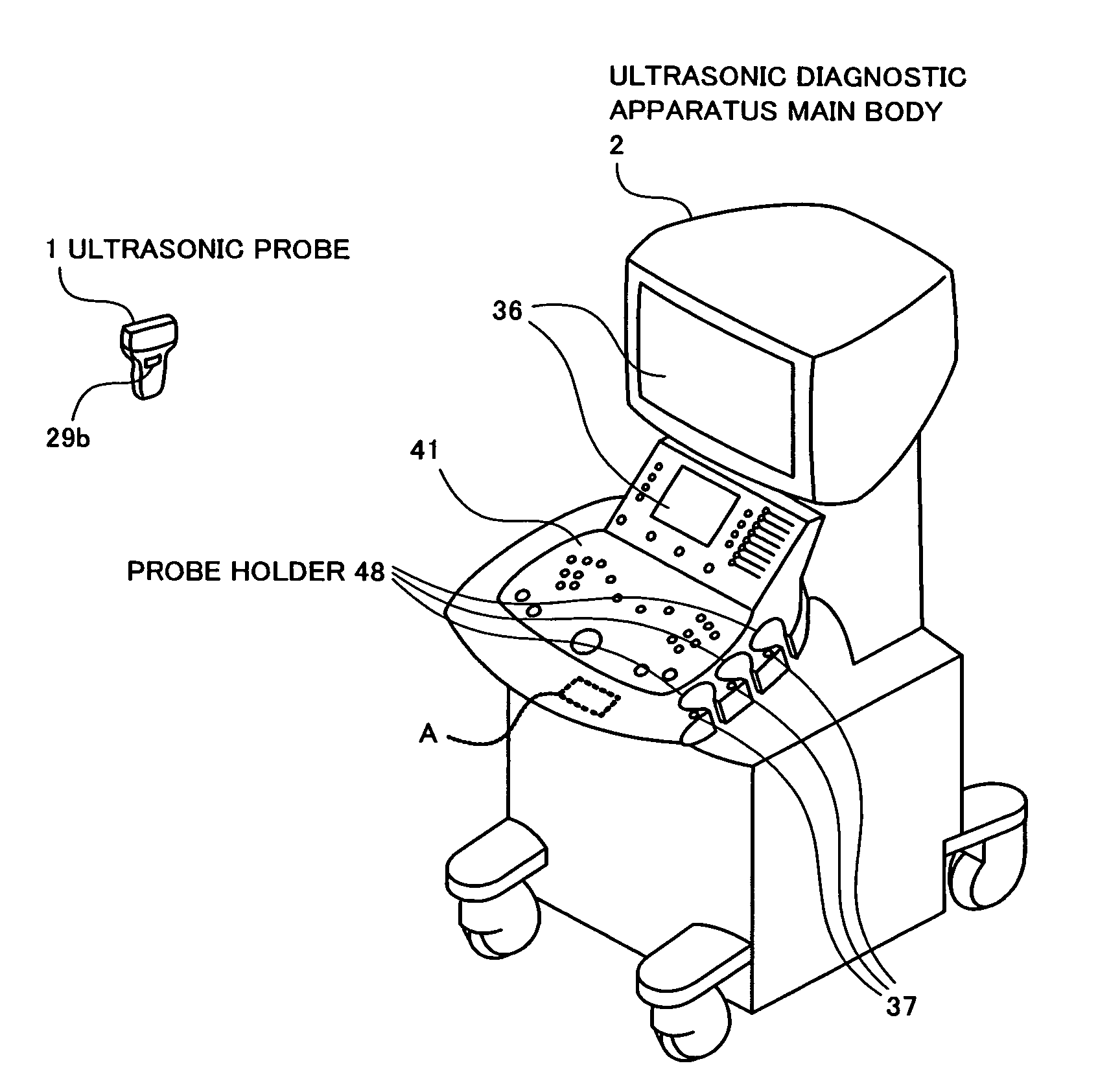

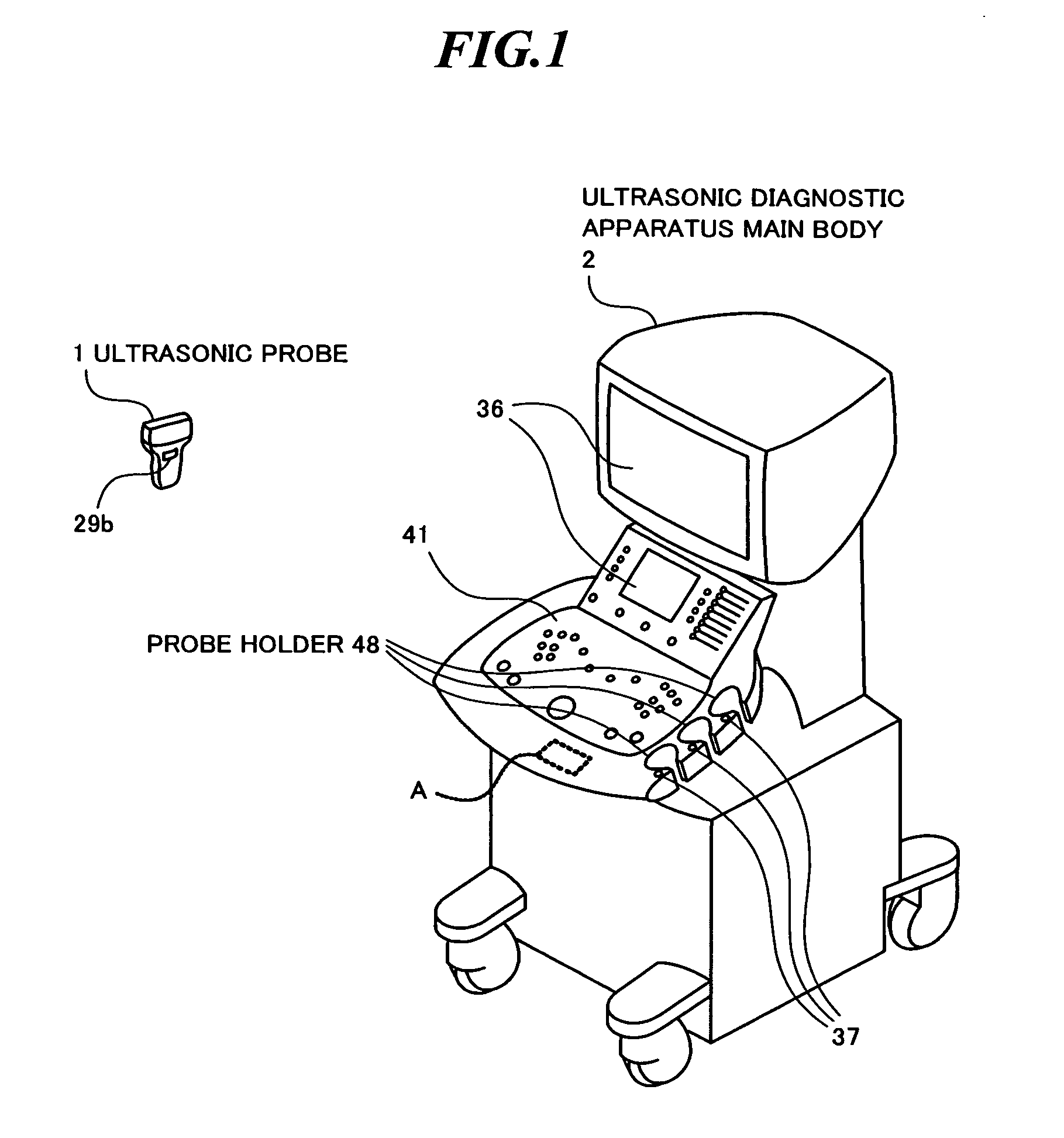

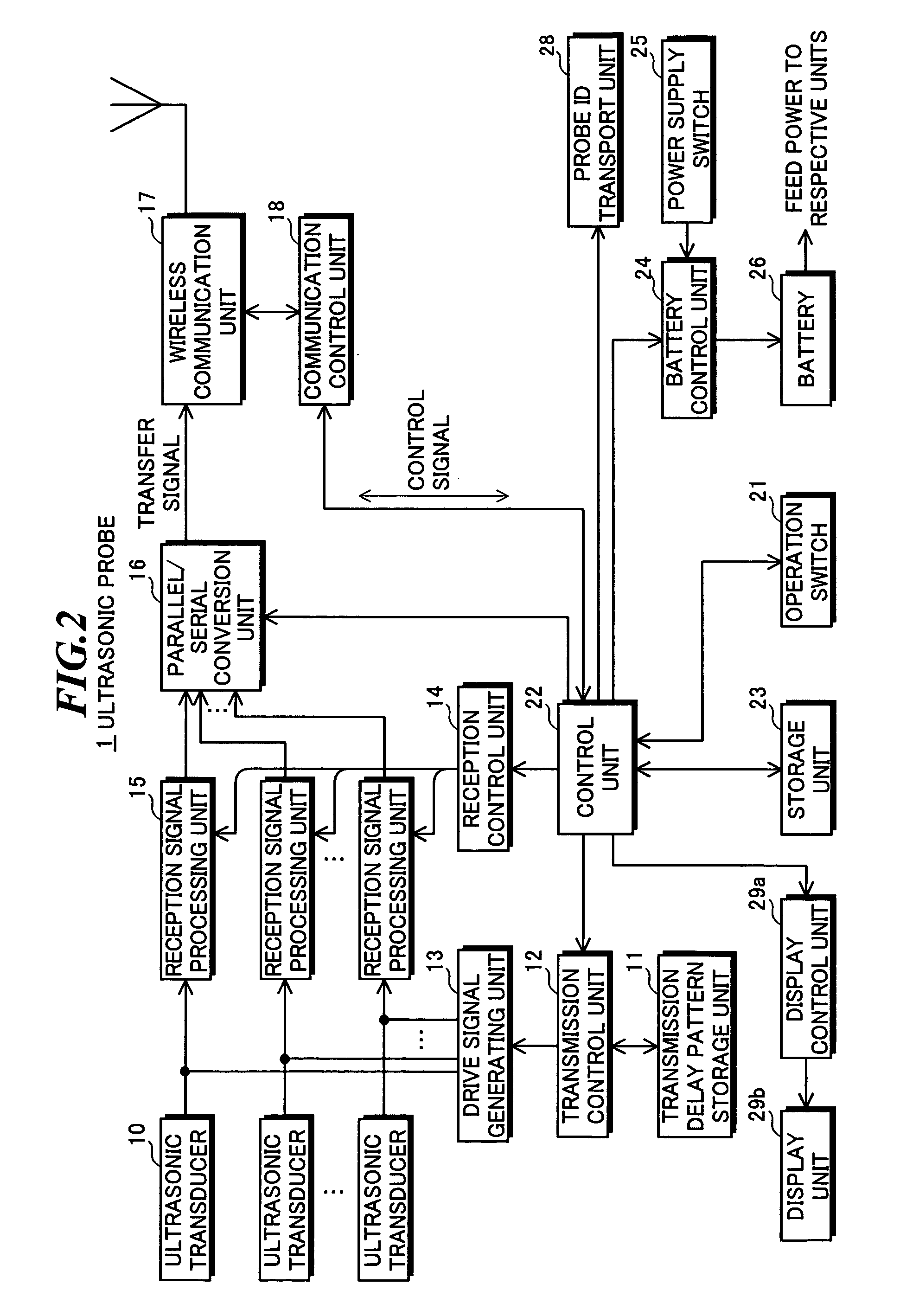

Ultrasonic diagnostic apparatus and ultrasonic probe

Active | US20100191121A1 | Wireless connection | Reduce misidentification | Diagnostic recording/measuring | Infrasonic diagnostics | Communication unit | False recognition

When a transfer signal according to ultrasonic echoes is wirelessly transmitted from an ultrasonic probe to an ultrasonic diagnostic apparatus main body, the main body and the probe are reliably connected without false recognition. An ultrasonic diagnostic apparatus includes an ultrasonic probe and an ultrasonic diagnostic apparatus main body, and the ultrasonic probe includes a probe ID transport unit having a transport distance shorter than that of a first wireless communication unit for transporting a probe ID for identification of itself in contact or noncontact to an outside, the ultrasonic diagnostic apparatus main body includes a probe ID acquiring unit for acquiring the probe ID transported from the probe ID transport unit, and a second wireless communication unit receives the transfer signal from the ultrasonic probe having the probe ID acquired by the probe ID acquiring unit.

Owner:FUJIFILM CORP

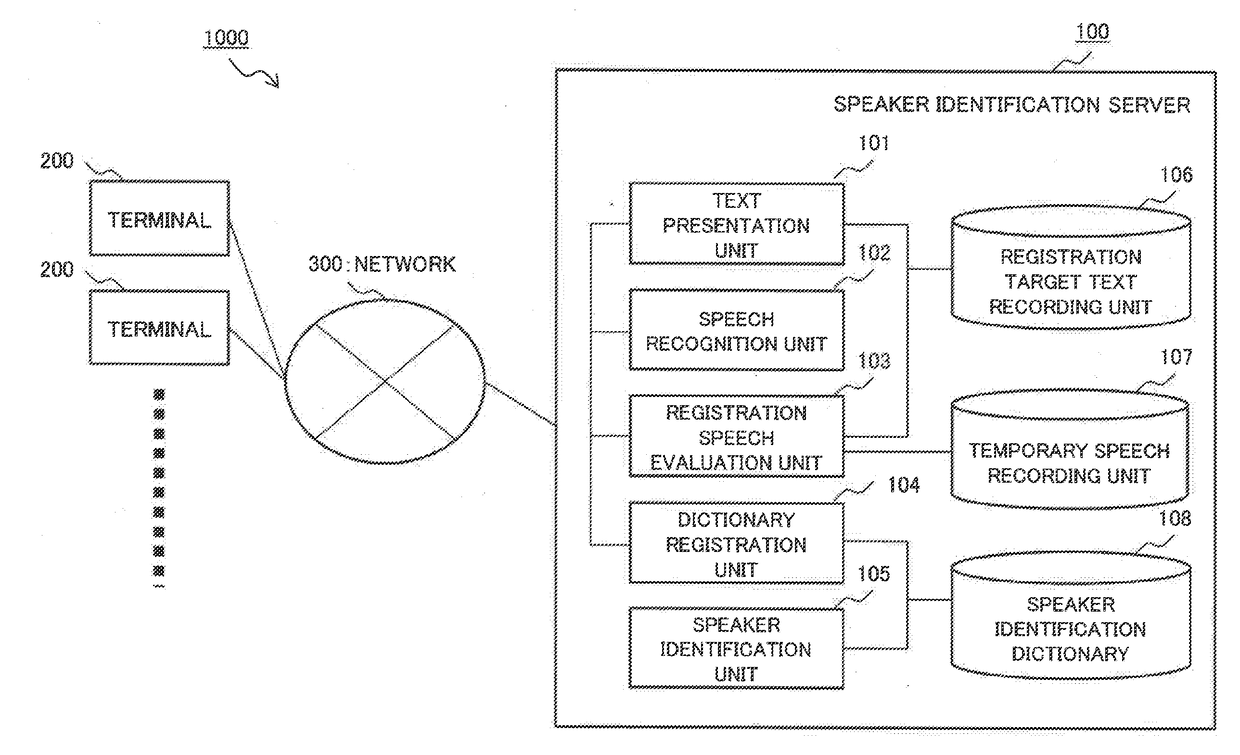

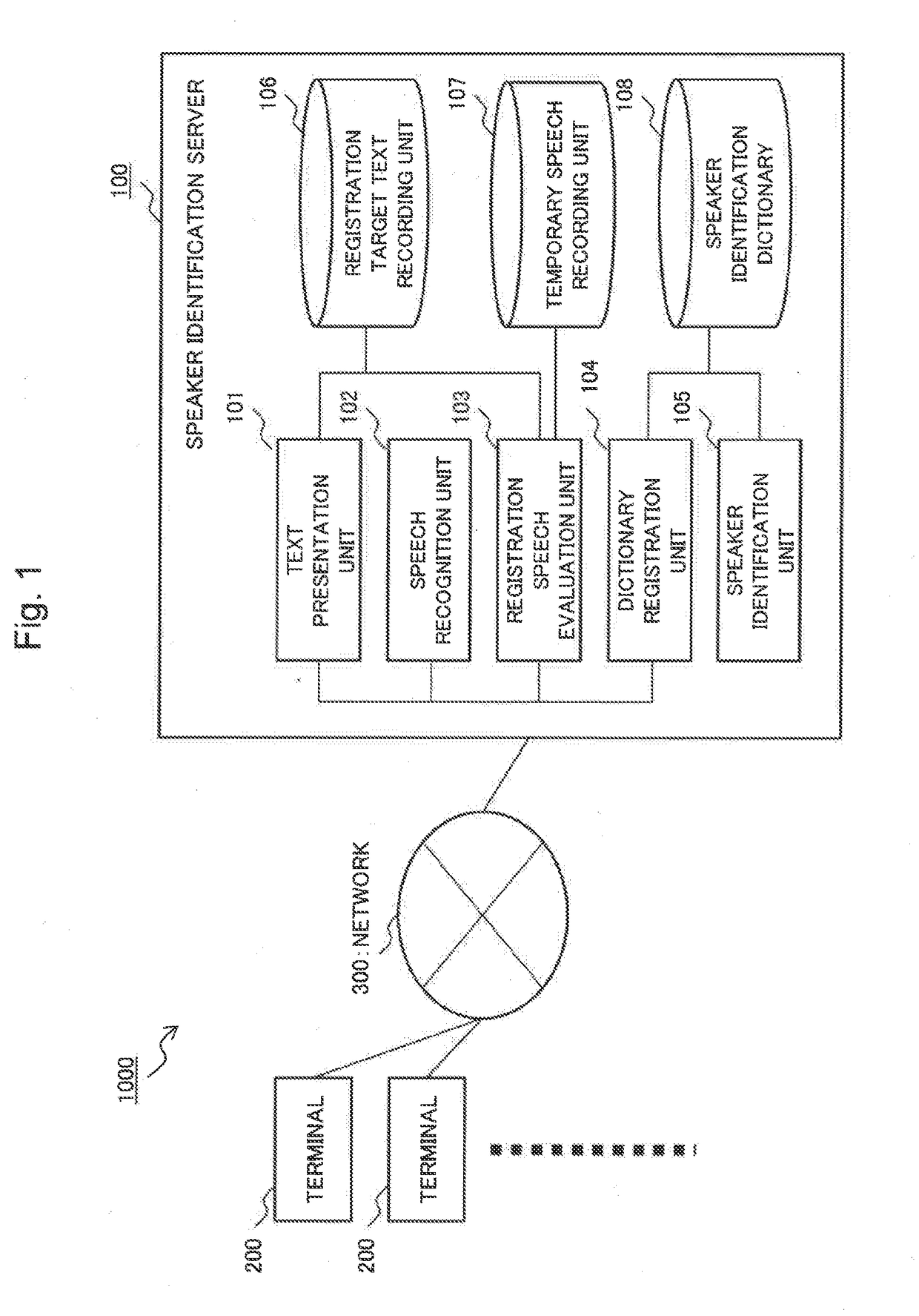

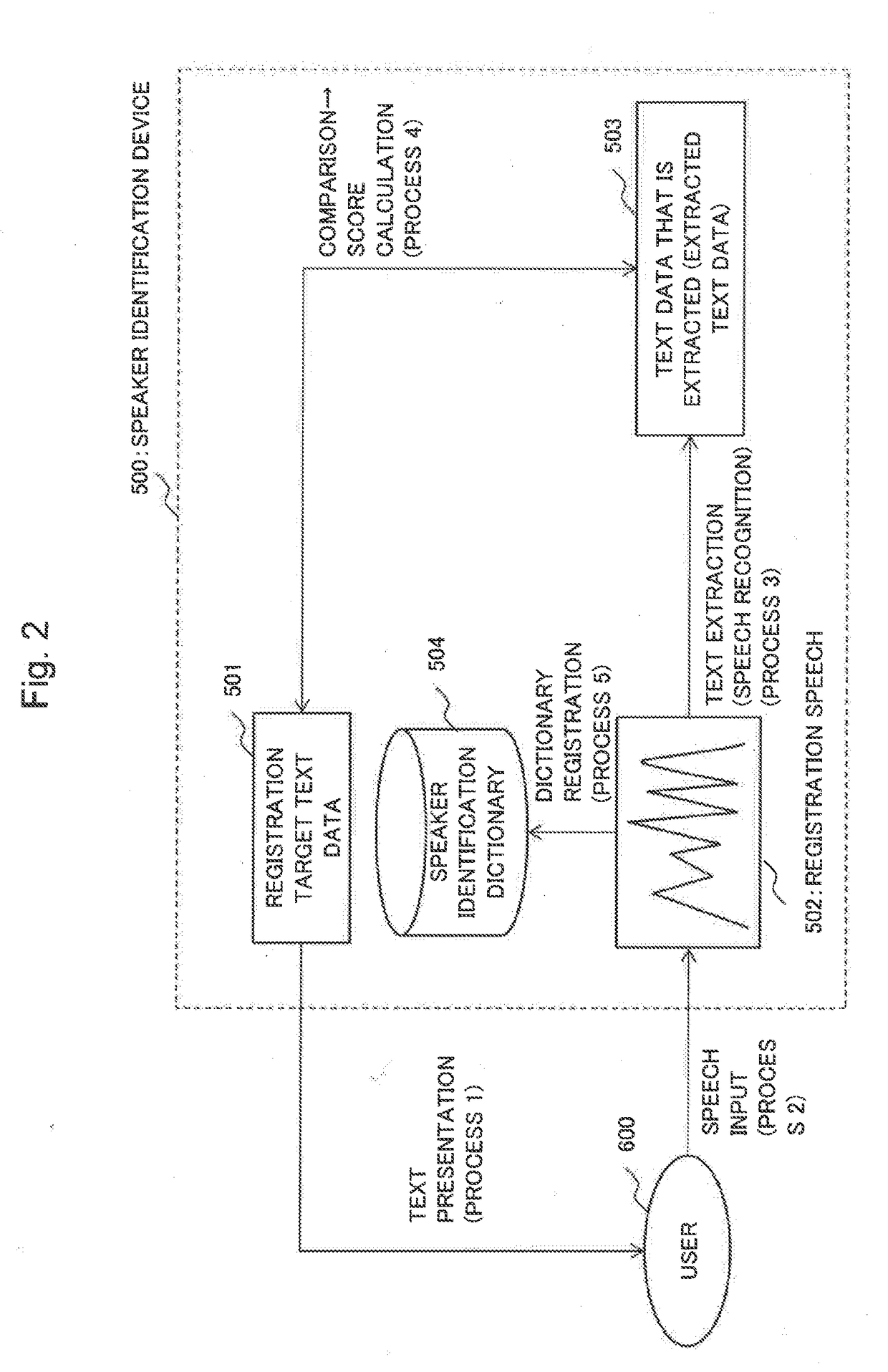

Speaker identification device and method for registering features of registered speech for identifying speaker

Inactive | US20170323644A1 | Stable and accurate identification | Suppress error | Speech analysis | Identification device | Speech input

[Problem] To suppress an erroneous identification resulting from registration speech, and identify the speaker stably and precisely. [Solving means] The speech recognition unit 102 extracts the text data corresponding to the registration speech, as the extracted text data. The registration speech is a speech input by a registration speaker reading aloud registration target text data that is preliminarily set text data. The registration speech evaluation unit 103 calculates a score representing a similarity degree between the extracted text data and the registration target text data (registration speech score) for each registration speaker. The dictionary registration unit 104 registers the feature value of the registration speech in the speaker identification dictionary 108 for registering the feature value of the registration speech for each registration speaker, according to the evaluation result by the registration speech evaluation unit 103.

Owner:NEC CORP
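
The registration flow in the abstract above (score the recognized text against the target text, then register the speech feature depending on that score) might be sketched as follows. The similarity measure (`difflib.SequenceMatcher`), the 0.8 threshold, and every function name are assumptions; the abstract does not say how the registration speech score is computed or what evaluation rule is applied.

```python
from difflib import SequenceMatcher


def registration_speech_score(extracted_text, target_text):
    """Similarity in [0, 1] between recognized text and the text the speaker was asked to read."""
    return SequenceMatcher(None, extracted_text.lower(), target_text.lower()).ratio()


def maybe_register(dictionary, speaker_id, feature, extracted_text, target_text, threshold=0.8):
    """Store the speaker's feature only when the registration speech score reaches the threshold."""
    score = registration_speech_score(extracted_text, target_text)
    if score >= threshold:
        dictionary[speaker_id] = feature
        return True
    return False


speaker_dictionary = {}
target = "the quick brown fox"
# Placeholder feature vectors; a real system would store acoustic features.
accepted = maybe_register(speaker_dictionary, "alice", [0.1, 0.2], "the quick brown fox", target)
rejected = maybe_register(speaker_dictionary, "bob", [0.3, 0.4], "completely different words", target)
```

Gating registration on the score keeps badly recorded or misread enrollment speech out of the dictionary, which is the error source the abstract aims to suppress.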

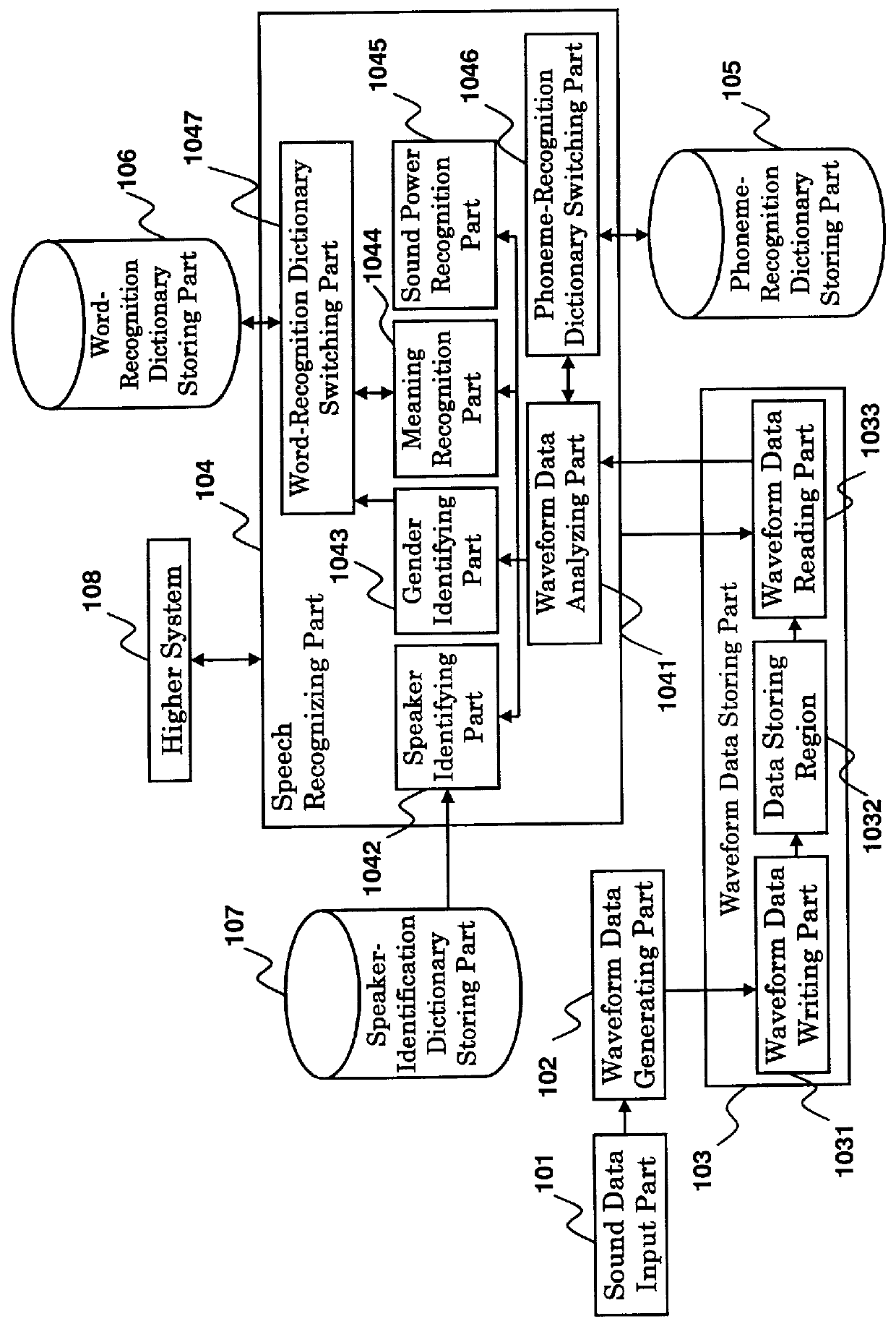

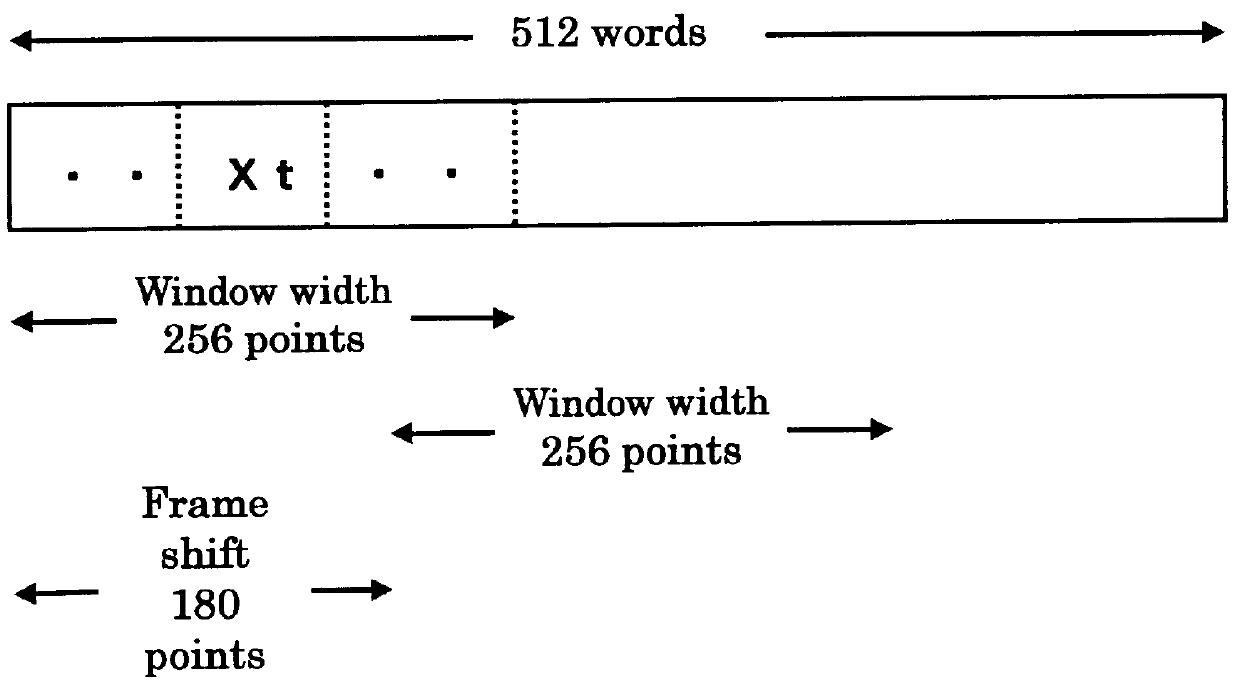

Speech recognizer using speaker categorization for automatic reevaluation of previously-recognized speech data

A speech recognizer includes storing means for storing speech data, and reevaluating means for reevaluating the speech data stored in the storing means in response to a request from a data processor utilizing the results of speaker categorization (the results of categorization with speaker categorization means such as a gender-dependent speech model or speaker identification). Thus, the present invention makes it possible to correct the speech data that has been wrongly recognized before.

Owner:FUJITSU LTD

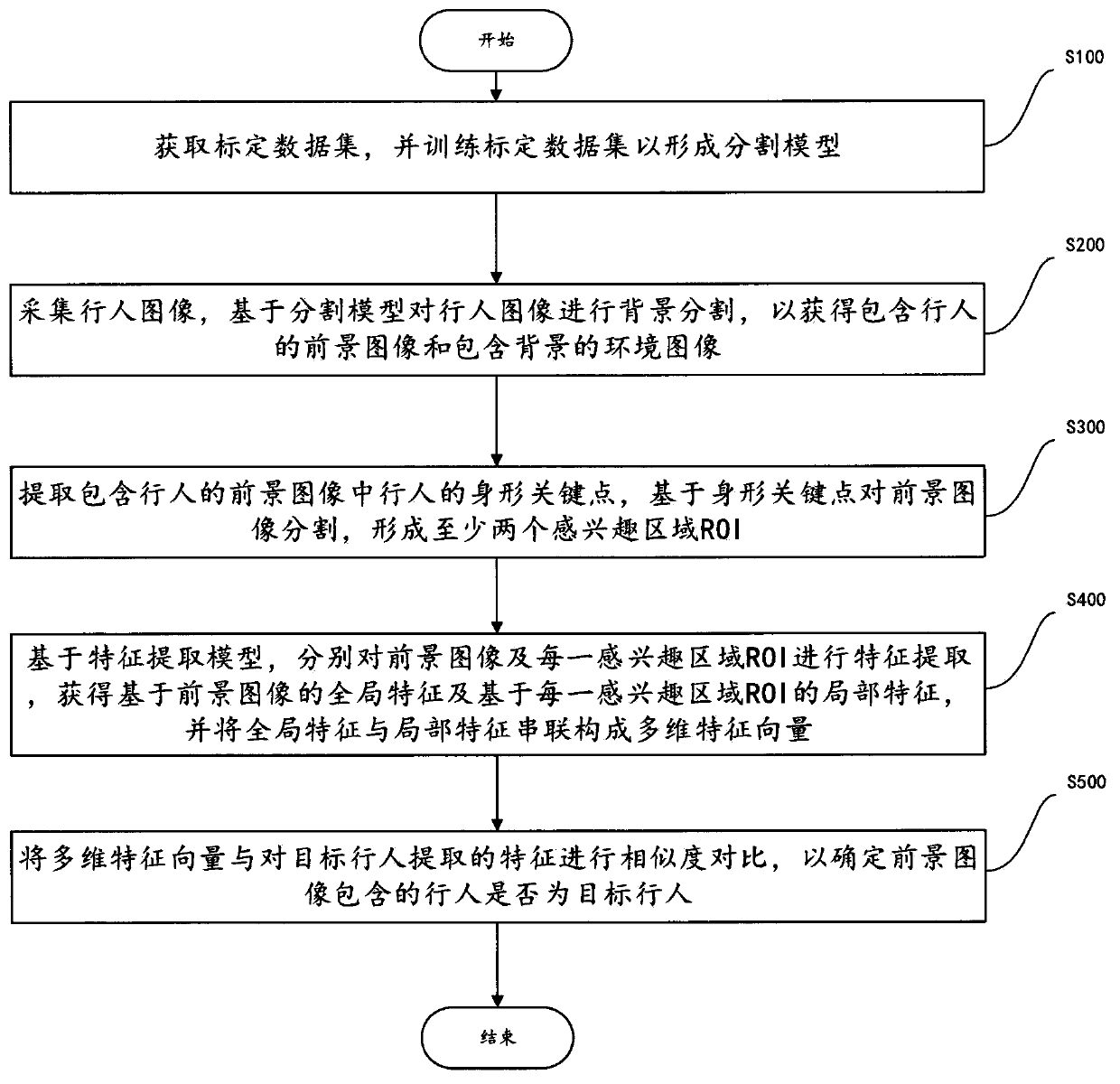

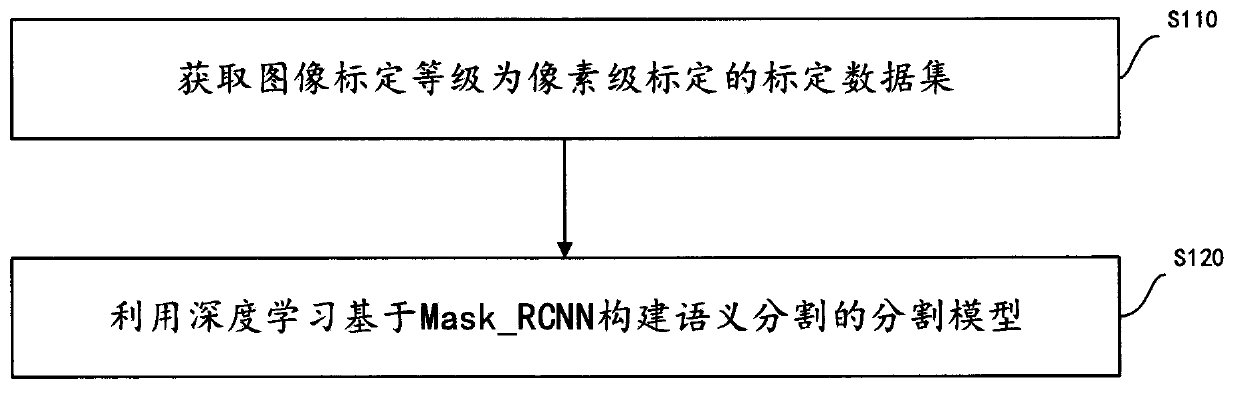

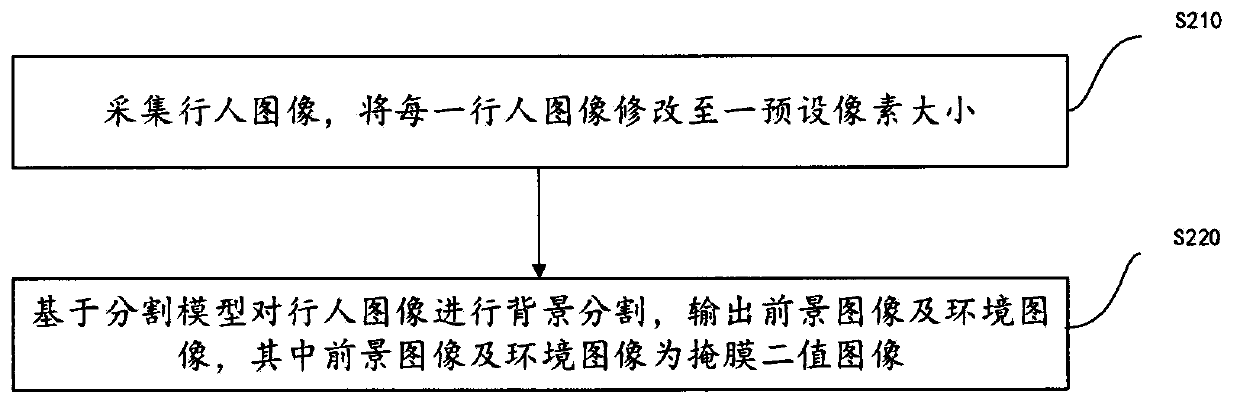

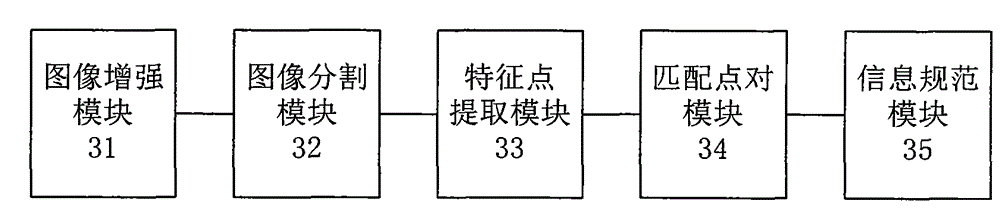

Pedestrian re-identification method and system and computer readable storage medium

Inactive | CN109934177A | Remove redundant features | Improve accuracy | Character and pattern recognition | Neural architectures | Feature vector | Data set

The invention provides a pedestrian re-identification method and system, and a computer readable storage medium. The pedestrian re-identification method comprises the following steps: obtaining a calibration data set, and training on the calibration data set to form a segmentation model; acquiring a pedestrian image, and segmenting the background of the pedestrian image to obtain a foreground image and an environment image; extracting body-shaped key points of pedestrians in the foreground image containing the pedestrians, and segmenting the foreground image based on the body-shaped key points to form a region of interest (ROI); extracting features of the foreground image and the ROI based on a feature extraction model to obtain global features and weighted features, and connecting the global features and the weighted features in series to form a multi-dimensional feature vector; and performing similarity comparison on the multi-dimensional feature vector and features extracted from the target pedestrian to determine whether the pedestrian is the target pedestrian. By removing background images of pedestrians captured under different cameras, redundant features during feature extraction are eliminated, recognition results of pedestrian re-identification are only based on pure features, and the occurrence of false recognition is reduced.

Owner:艾特城信息科技有限公司
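
The last two steps of the abstract above (joining global and weighted features in series, then comparing against the target pedestrian) can be sketched as below. Cosine similarity and the 0.9 threshold are assumptions; the abstract only says "similarity comparison". The feature values are made-up placeholders rather than outputs of a real feature extraction model.

```python
import math


def concat_features(global_features, weighted_features):
    """Join the two feature lists end to end into one multi-dimensional vector."""
    return list(global_features) + list(weighted_features)


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_target(query_vec, target_vec, threshold=0.9):
    """Decide whether the query pedestrian matches the target pedestrian."""
    return cosine_similarity(query_vec, target_vec) >= threshold


target = concat_features([1.0, 0.0], [0.5, 0.5])
same = concat_features([0.9, 0.1], [0.5, 0.4])    # same person, different camera
other = concat_features([0.0, 1.0], [0.0, 0.0])   # different person
```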

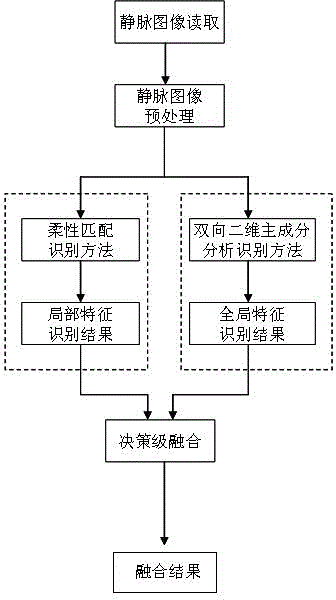

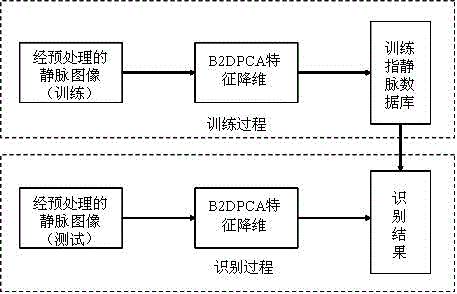

Finger vein recognition method fusing local features and global features

Inactive | CN103336945A | Identification helps | Overcome limitations | Character and pattern recognition | Vein | Finger vein recognition

The invention discloses a finger vein recognition method fusing local features and global features. At present, a number of vein recognition methods adopt the local features of a vein image, so that the recognition precision of the vein recognition methods is greatly affected by the quality of the image; the phenomena of rejection and false recognition are liable to appear. The finger vein recognition method provided by the invention comprises the following steps: firstly, performing pretreatment operations such as finger area extraction of a read-in finger vein image, binarization and the like; then, according to the point set of extracted detail features, realizing the matching of the local features within a certain angle and a certain radius by virtue of a flexible matching-based local feature recognition module; using a global feature recognition module for vein image recognition to realize the matching of the global features as the global feature recognition module is used for analyzing bidirectional two-dimensional principal components and can better display a two-dimensional image data set on the whole; finally, designing weights according to the correct recognition rates of the two recognition methods, performing decision-level fusion to the results of two classifiers, and taking the fused result as a final recognition result. The method is applied to finger vein recognition.

Owner:HEILONGJIANG UNIV
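
The decision-level fusion step in the abstract above weights the two classifiers by their correct recognition rates. A minimal sketch, assuming the weights are simply the rates normalized to sum to one and that each matcher returns a score in [0, 1] (neither detail is given in the abstract):

```python
def fusion_weights(local_rate, global_rate):
    """Normalize the two correct recognition rates so the weights sum to 1."""
    total = local_rate + global_rate
    return local_rate / total, global_rate / total


def fused_decision(local_score, global_score, local_rate, global_rate, threshold=0.5):
    """Weighted sum of the two matcher scores, then a threshold on the fused score."""
    w_local, w_global = fusion_weights(local_rate, global_rate)
    fused = w_local * local_score + w_global * global_score
    return fused, fused >= threshold


# Hypothetical rates: local matcher 90% correct, global matcher 60% correct.
w_local, w_global = fusion_weights(0.90, 0.60)
fused, accepted = fused_decision(0.8, 0.3, 0.90, 0.60)
```

The more reliable matcher dominates the fused score, so a poor-quality image that degrades one feature type has less influence on the final result.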

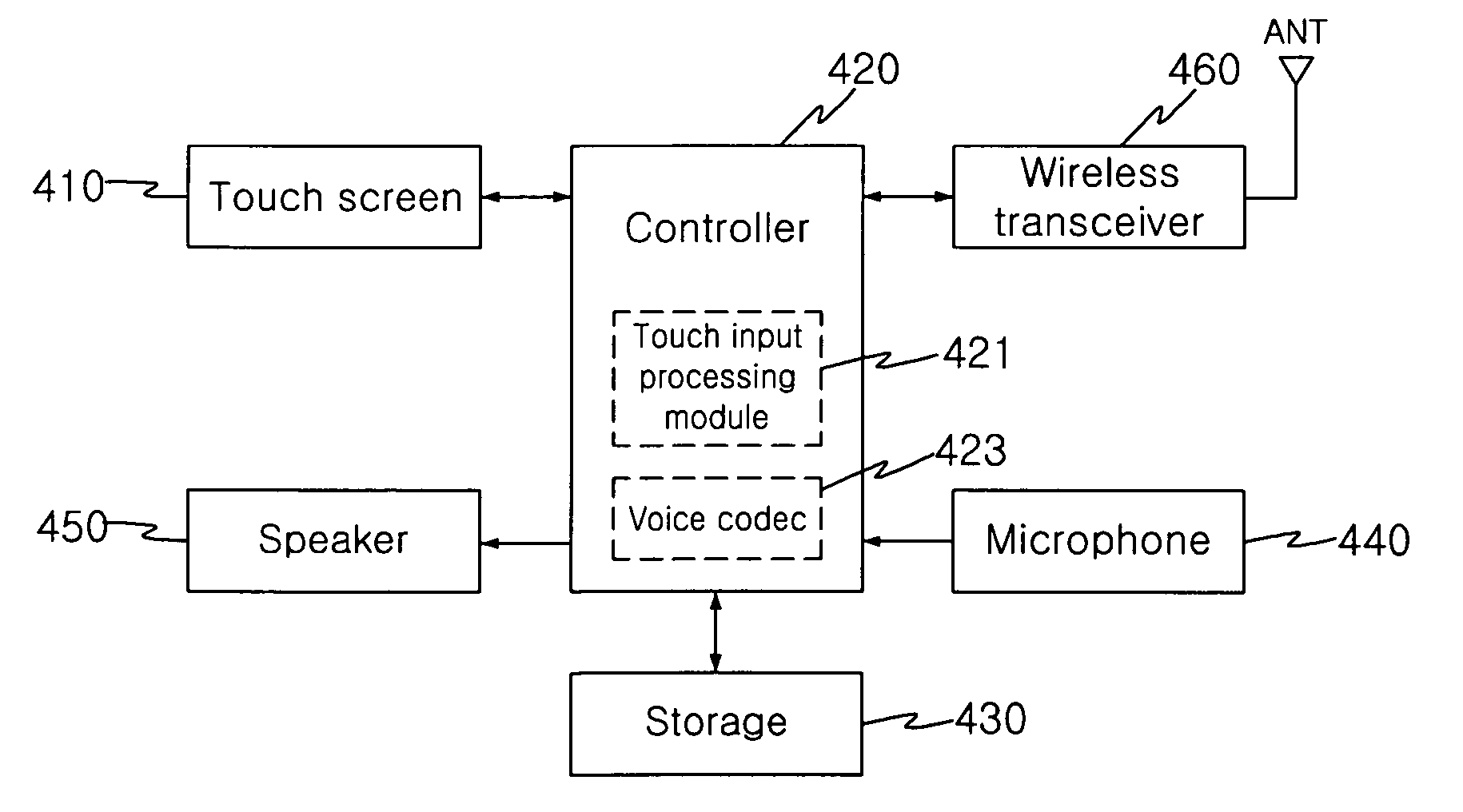

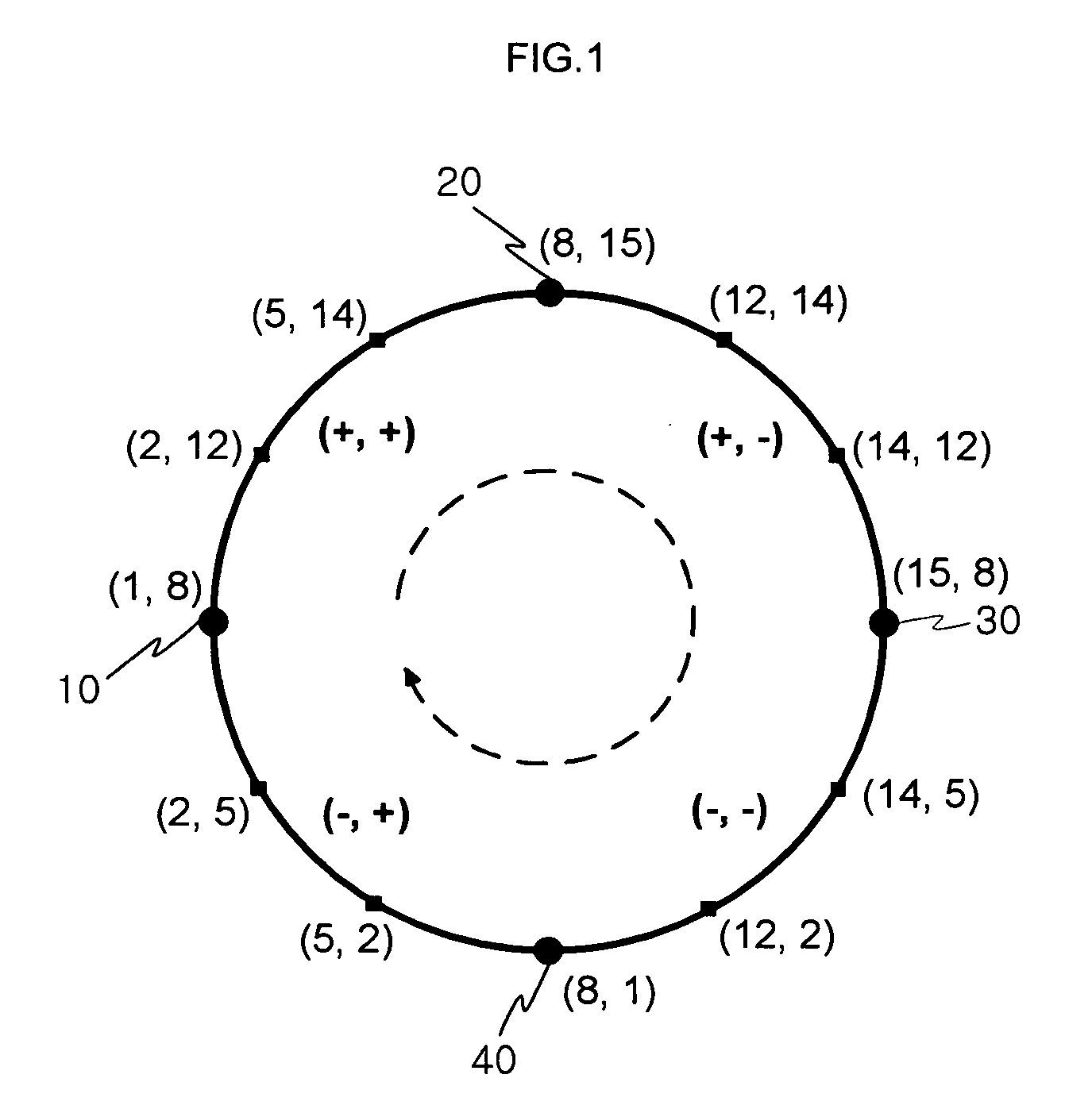

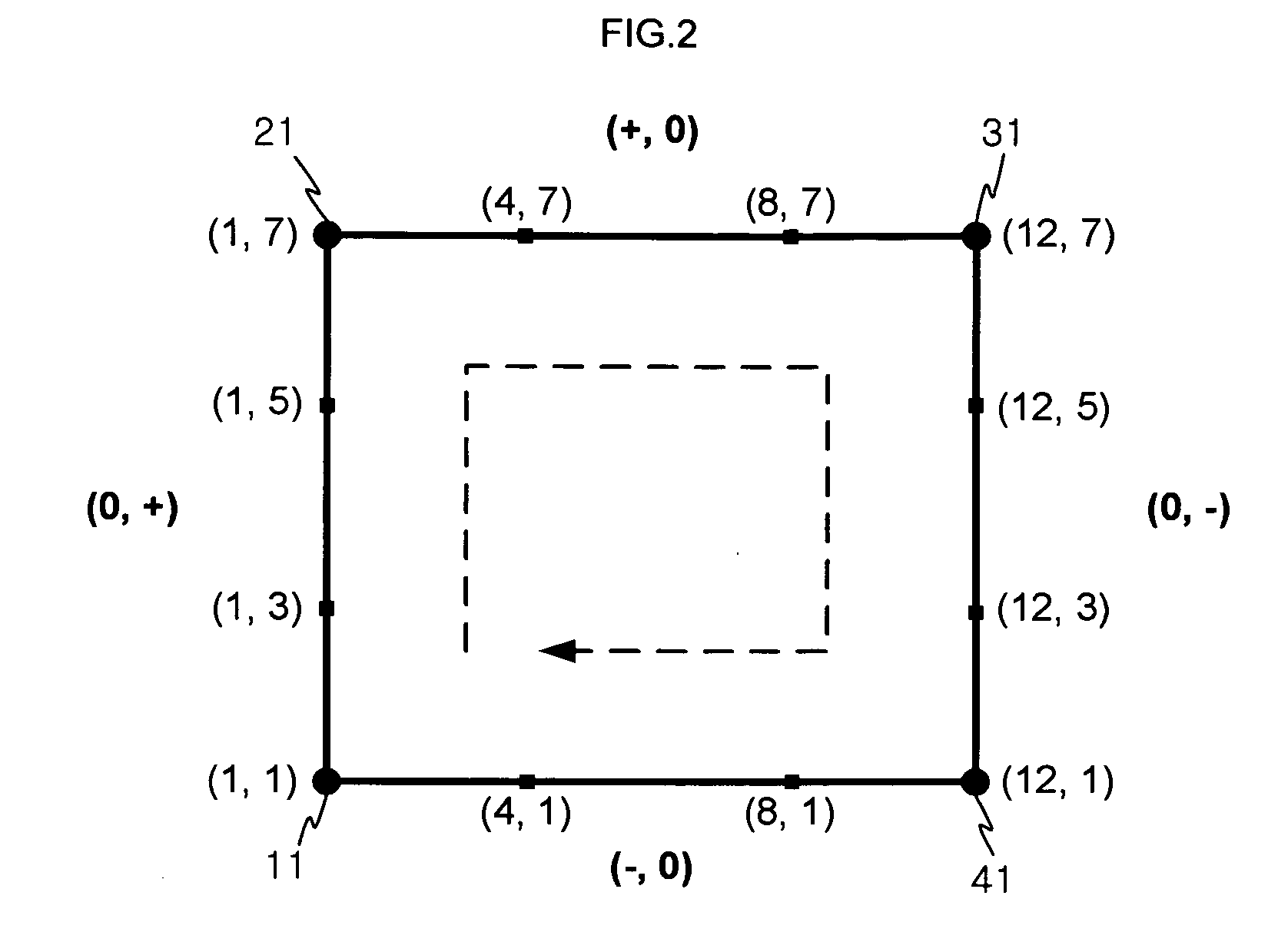

Touch input recognition methods and apparatuses

Inactive | US20090289905A1 | Improve accuracy | Reduce processing load | Input/output processes for data processing | Computer vision | False recognition

Touch input recognition methods and apparatuses can increase the accuracy of the recognition of touch input from a user and reduce processing load in recognizing the touch input. When at least one coordinate value corresponding to a touch trace is received at a given time interval, an inflection point based on the received at least one coordinate value is determined. When a predetermined coordinate value of the at least one coordinate value is a coordinate value of the inflection point, a symbol value is set based on an increment/decrement feature of at least one coordinate value provided before the predetermined coordinate value. Finally, an event corresponding to the set symbol value is retrieved. Thus, the event corresponding to a touch input from a user is retrieved and executed based on an increment/decrement feature of a coordinate value, thereby reducing a processing load and a false recognition rate.

Owner:KT TECH
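
One way to read the abstract above: sample the touch trace, treat a change in the increase/decrease trend of the coordinates as an inflection point, and emit a symbol describing the trend that led up to it. The sketch below follows that reading. The symbol encoding (a pair of signs for x and y) and the event table are hypothetical, and the sketch assumes screen coordinates where y grows downward.

```python
def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def trace_to_symbols(points):
    """Emit one symbol per segment that ends at an inflection point or at the end of the trace.

    A symbol is the (dx_sign, dy_sign) trend of the coordinates before that point.
    """
    symbols = []
    trend = None
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        step = (sign(x1 - x0), sign(y1 - y0))
        if step == (0, 0):
            continue                   # finger did not move between samples
        if trend is not None and step != trend:
            symbols.append(trend)      # (x0, y0) is an inflection point
        trend = step
    if trend is not None:
        symbols.append(trend)
    return symbols


# Hypothetical symbol-sequence-to-event table.
EVENTS = {((1, 0), (0, 1)): "right_then_down"}

trace = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]
symbols = trace_to_symbols(trace)
event = EVENTS.get(tuple(symbols))
```

Only sign comparisons are needed per sample, which is consistent with the reduced processing load the abstract claims.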

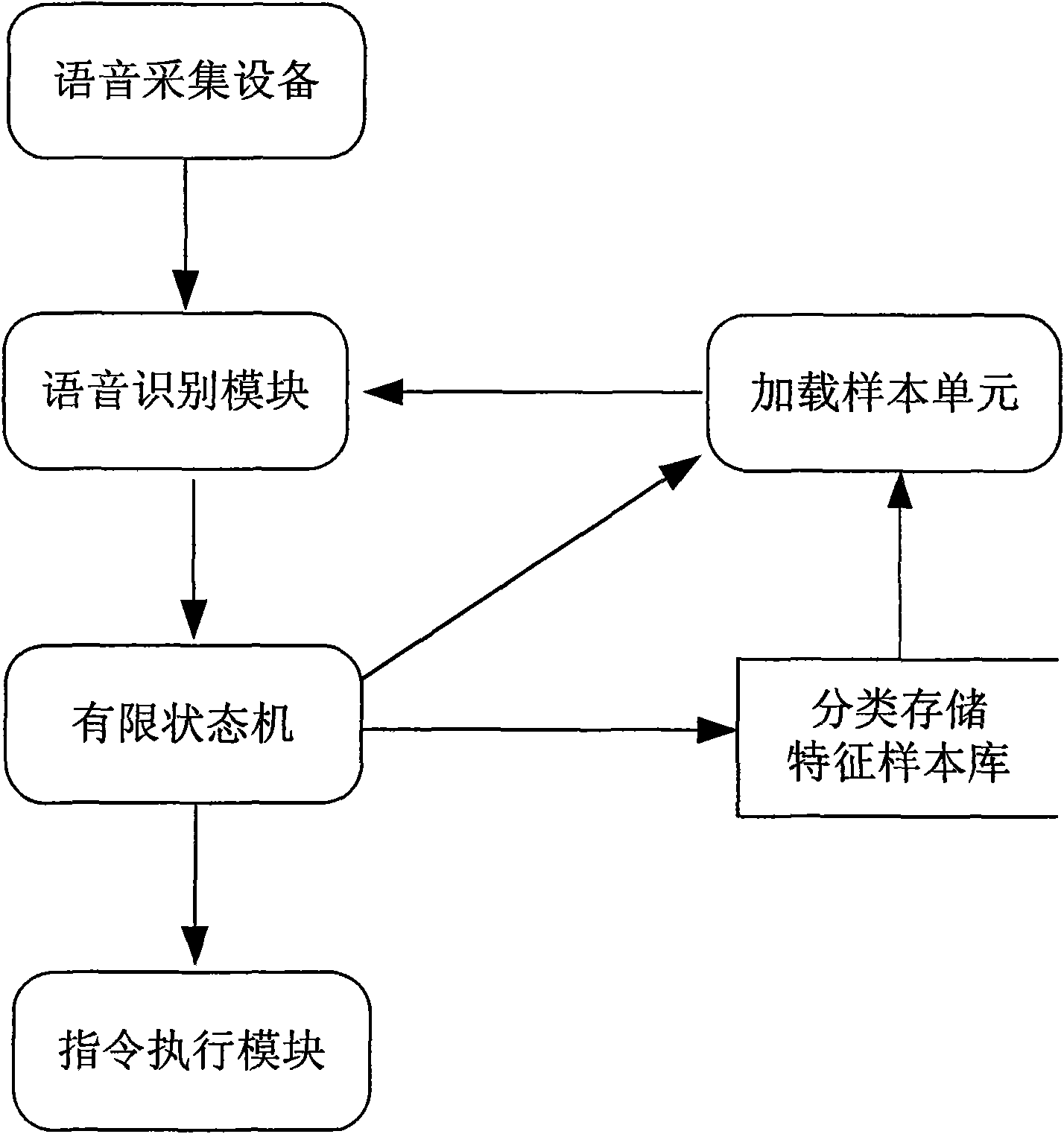

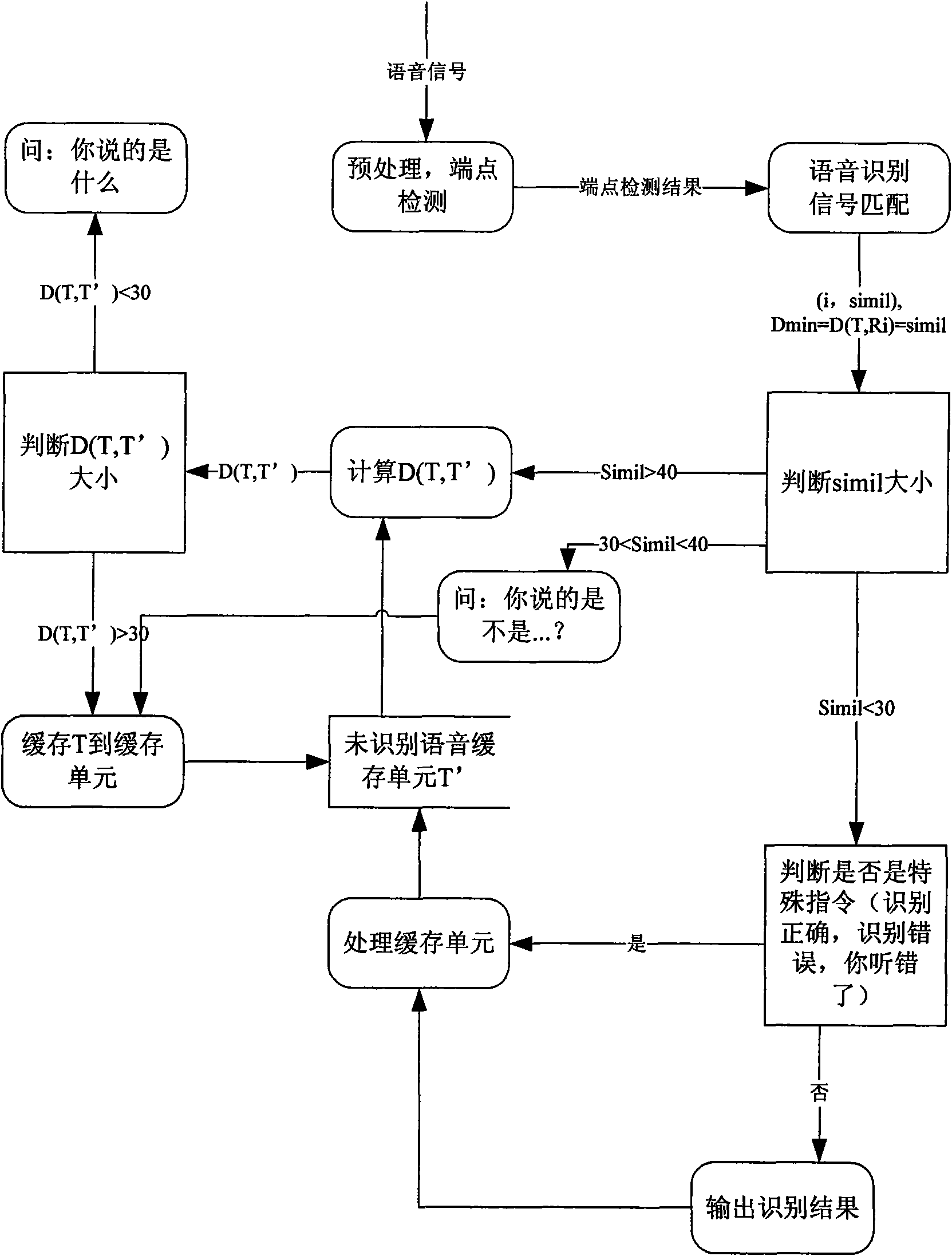

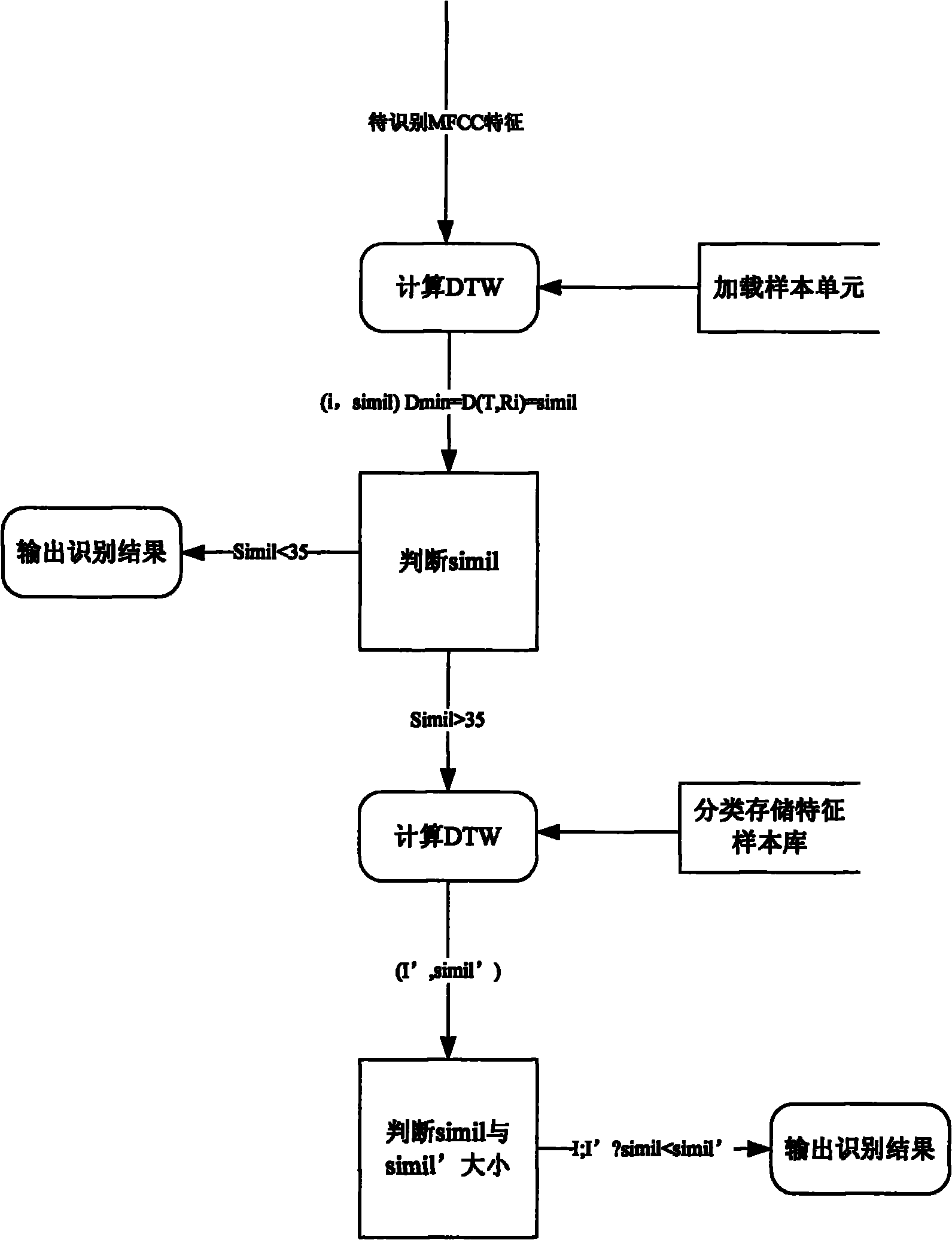

Extensible audio recognition method based on man-machine interaction

Inactive | CN101923857A | Reduced ability to recognize | Reduce overhead | Speech recognition | Finite-state machine | Speech identification

The invention belongs to the technical field of audio processing, and relates to an extensible audio recognition system based on man-machine interaction and a method thereof. The extensible audio recognition system comprises an audio acquisition device, a voice recognition module, a loading sample unit, a finite-state machine, a classification storage characteristic sample database and an instruction execution module. The audio recognition method builds on the high recognition rate of speaker-dependent isolated-word speech recognition. On the premise that the user has been fully trained, and after a process of man-machine interaction assisted by the user, the system stores voice segments that could not be recognized into the sample database in an online learning mode; in addition, the cost of recognition is reduced through divided module storage and loading. The core algorithm of the invention is based on voice signals, is not limited to the languages of speakers, and can support the recognition of mixed languages (for example, Chinese and English). The method has lower false recognition and non-recognition rates, and improves the reliability and adaptability of the system through dialogue interaction and online incremental training.

Owner:FUDAN UNIV

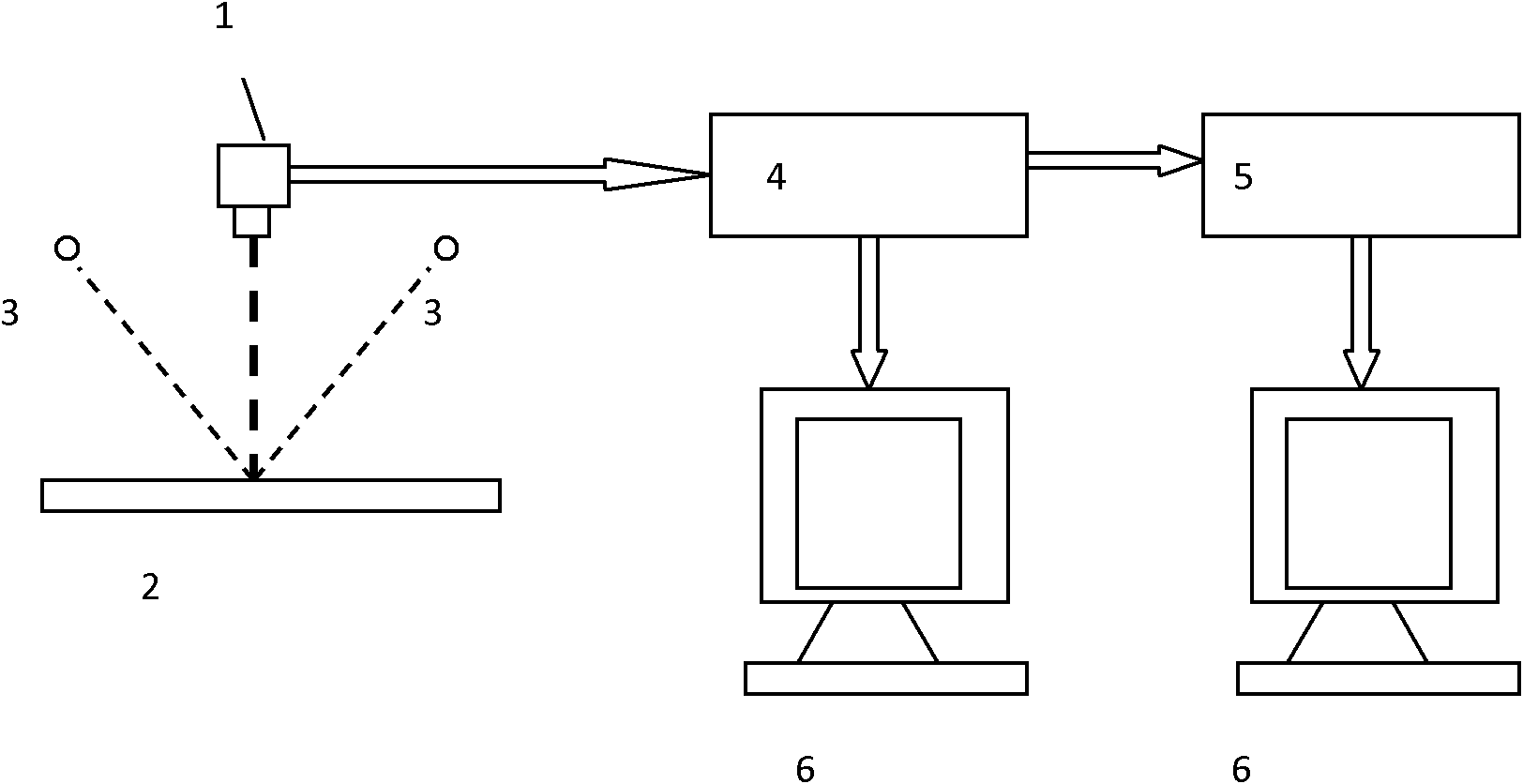

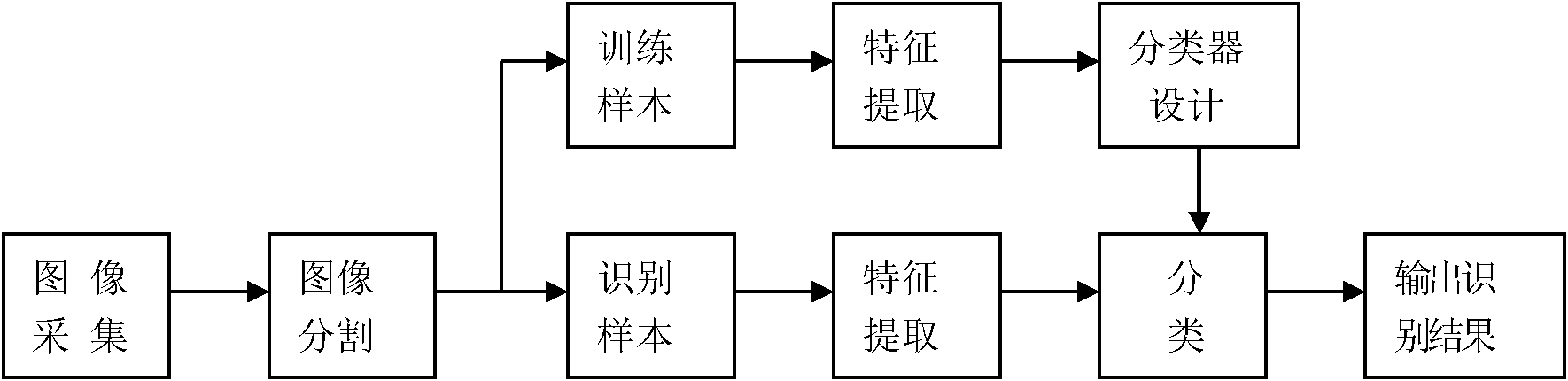

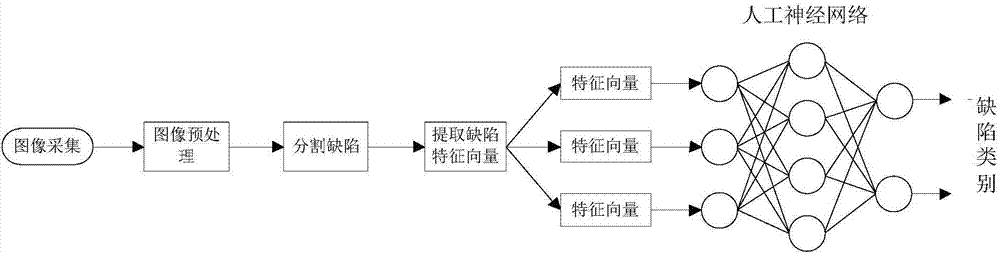

System and method for recognizing different defect types in paper defect visual detection

Inactive | CN102095731A | Take full advantage of data processing capabilities | Easy to use | Character and pattern recognition | Optically investigating flaws/contamination | Imaging processing | False recognition

The invention relates to a system and a method for recognizing different defect types in paper defect visual detection. The system and the method have high recognition rate and high speed, and can recognize different defect types in paper defect visual detection with the average recognition rate of 97.5% and false recognition rate of 2.5%, thereby substantially achieving exhaustive detection and recognition of defective images. The time for entire defect recognition process is less than 10 ms, and can substantially meet the time requirement for paper real-time detection under the actual production conditions. The system comprises an image pickup device matching with the paper sheet to be detected, and a light source matching with the paper sheet to be detected, wherein the image pickup device is connected with an image capture card; the image capture card transmits the obtained image to a computer and a DSP (digital signal processor) image processing unit; and the DSP image processing unit is connected with the computer.

Owner:QILU UNIV OF TECH

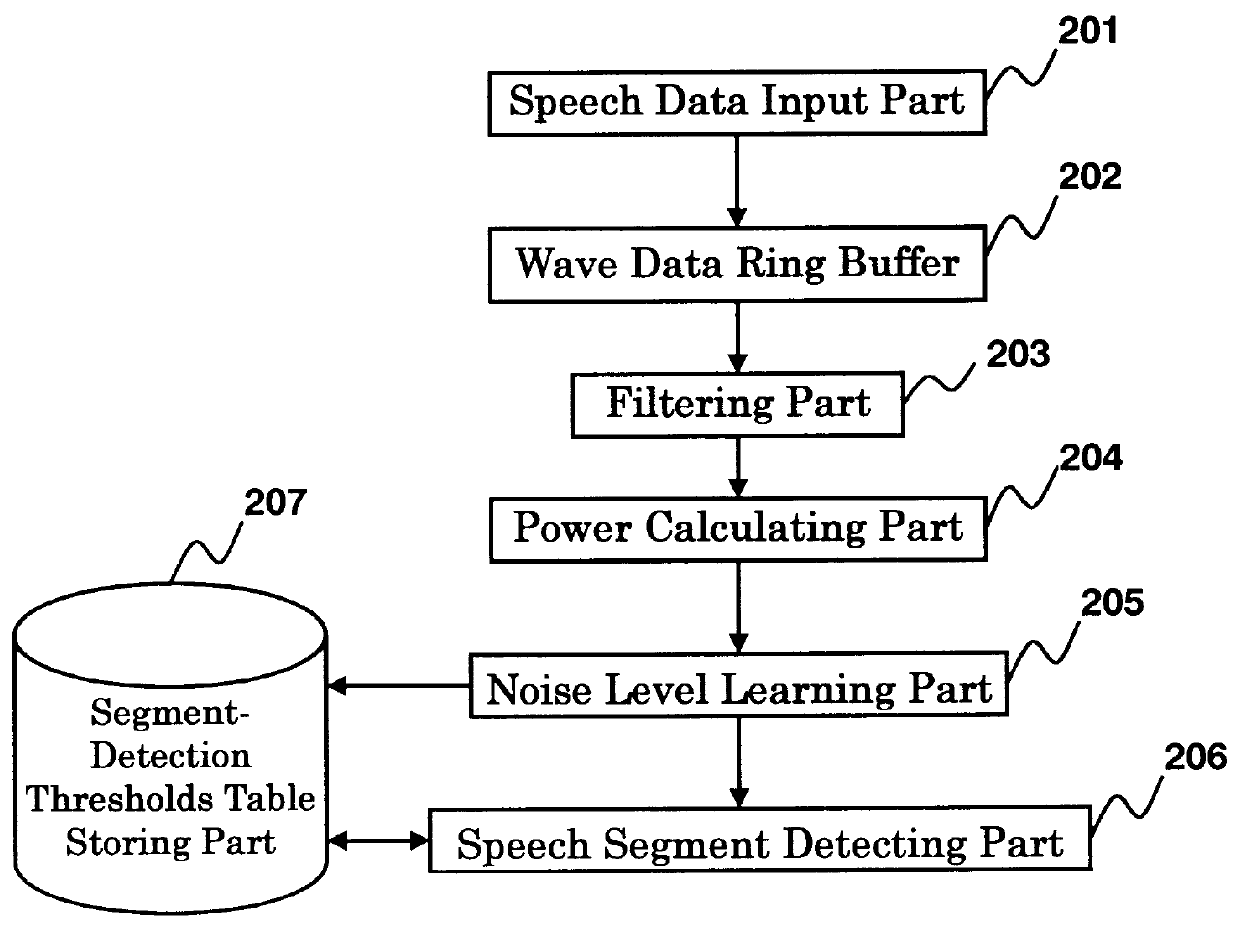

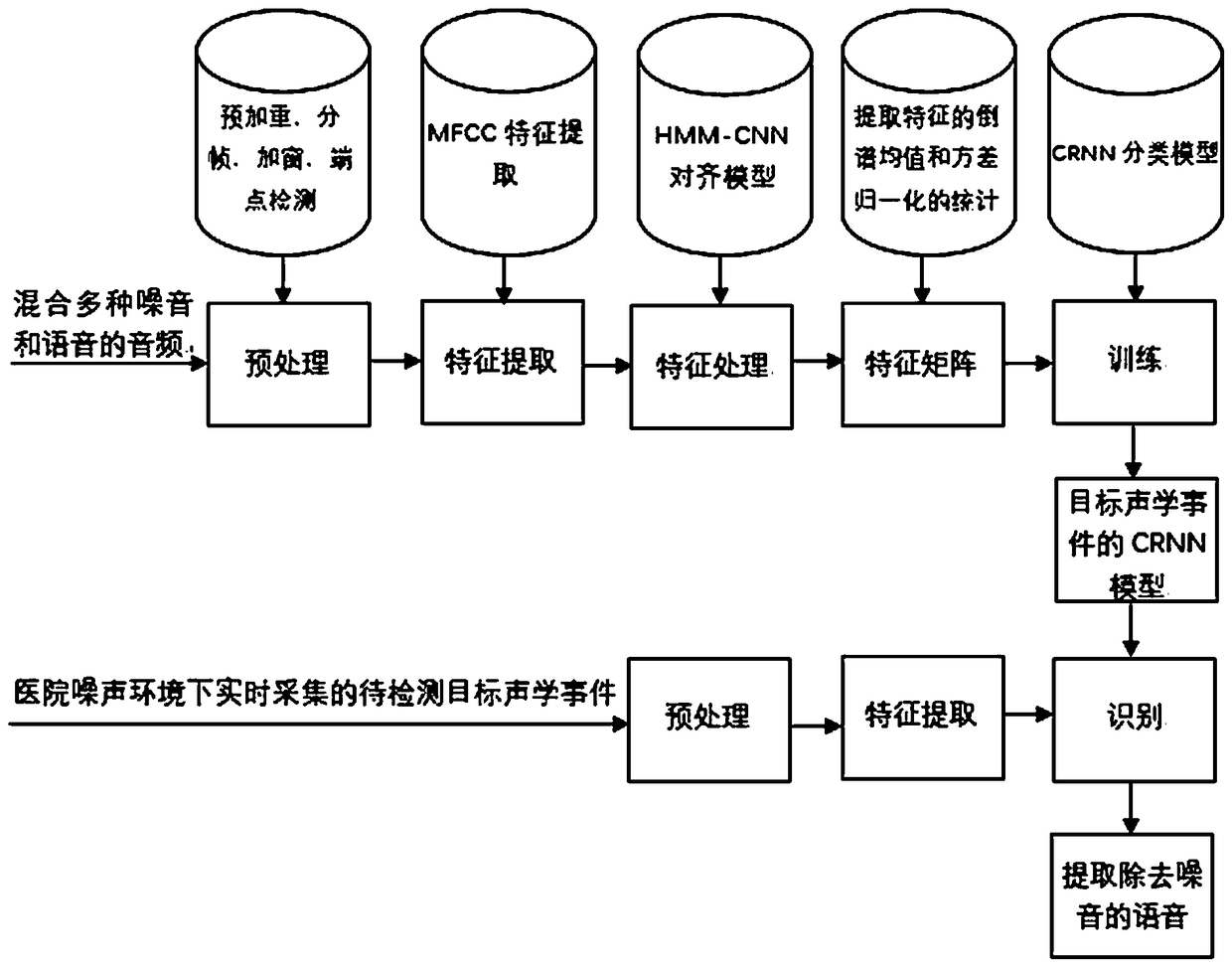

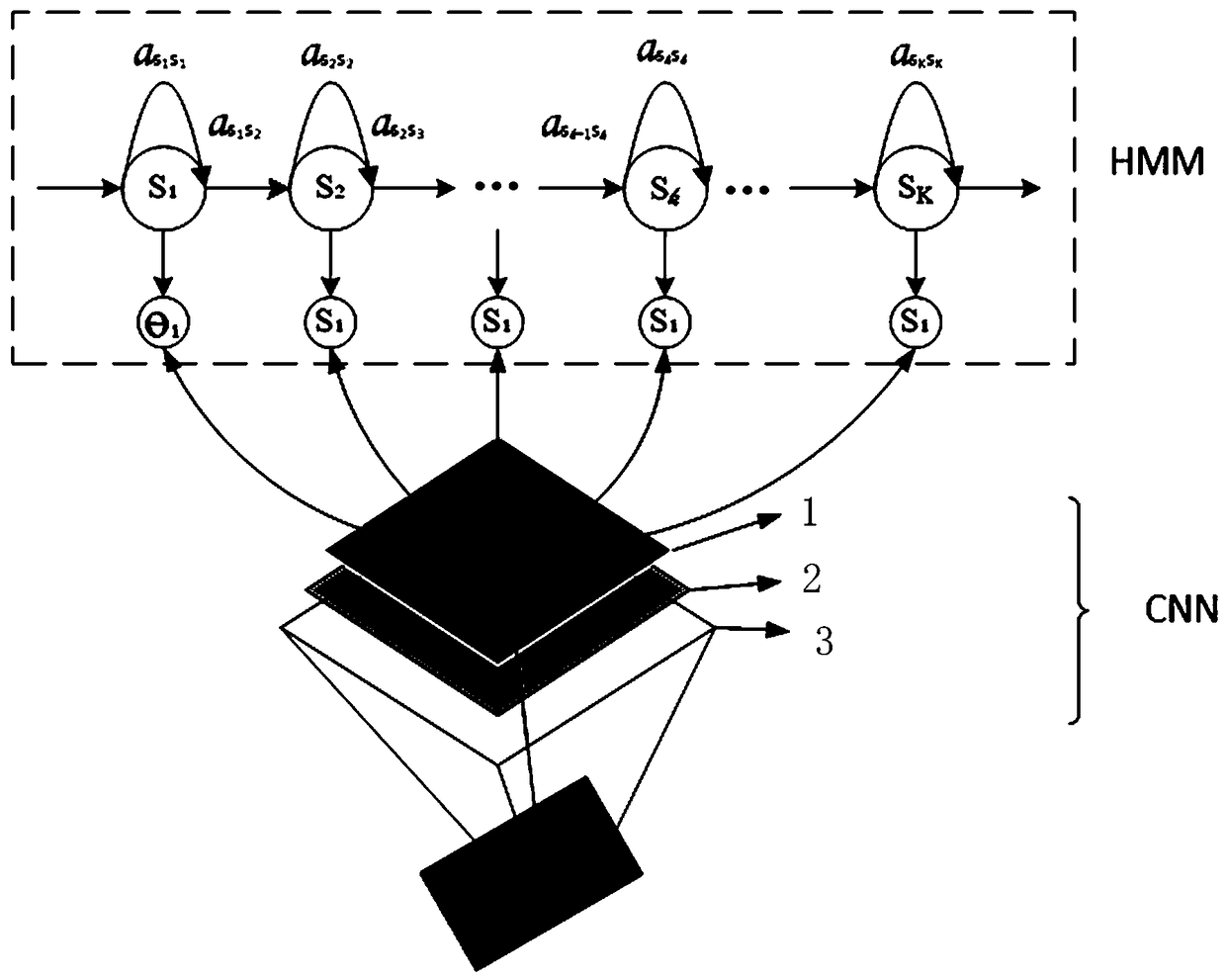

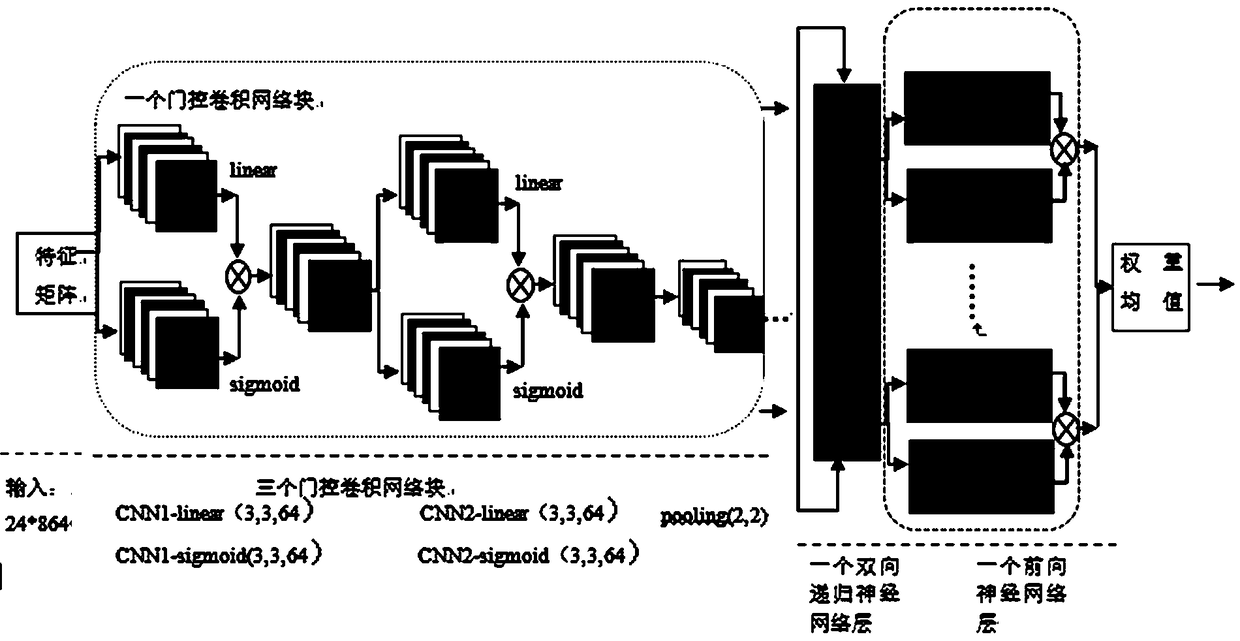

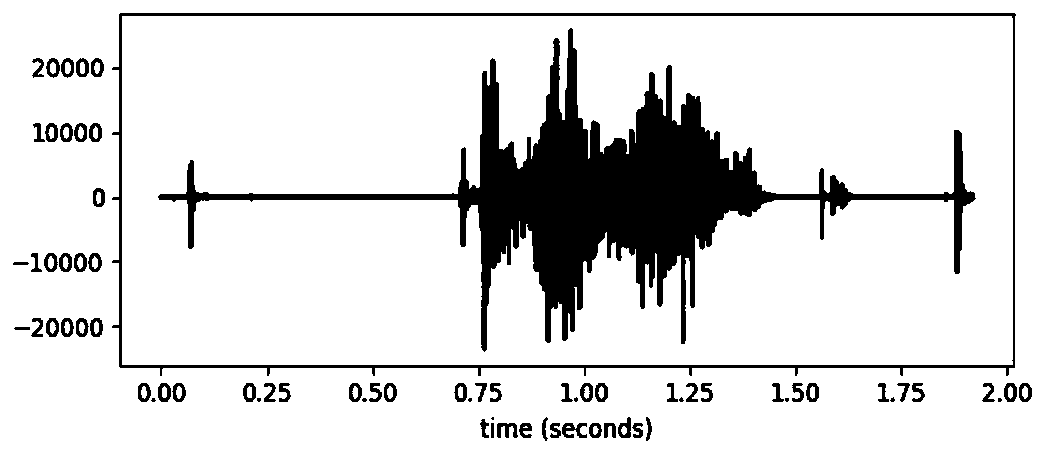

Acoustic event detecting method under hospital noise environment

Inactive | CN108648748A | Improve recognition rate | Achieve robustness | Speech recognition | Medical record | False recognition

The invention relates to an acoustic event detecting method, in particular to an acoustic event detecting method under the hospital noise environment. According to the acoustic event detecting method under the hospital noise environment, voice is accurately recognized to be text, the recognition rate of an electronic medical record input through the voice is increased, and the false recognition rate is decreased. The acoustic event detecting method comprises the following steps: 1, feature intercepting is conducted on an audio signal of each acoustic event, and audio clips of the audio signals are marked correspondingly; 2, an MFCC feature coefficient of each target acoustic event in audio is extracted; 3, voice phonemes are aligned; 4, feature matrixes of the voice are generated; 5, a CRNN model is established for each target acoustic event; 6, the audio signals of the to-be-detected target acoustic events collected in real time under the hospital noise environment are pre-processed, and then MFCC feature extraction is conducted; 7, the category of the to-be-detected target acoustic events is obtained; and 8, the audio clips irrelevant to the target acoustic events are filtered out.

Owner:SHENYANG POLYTECHNIC UNIV

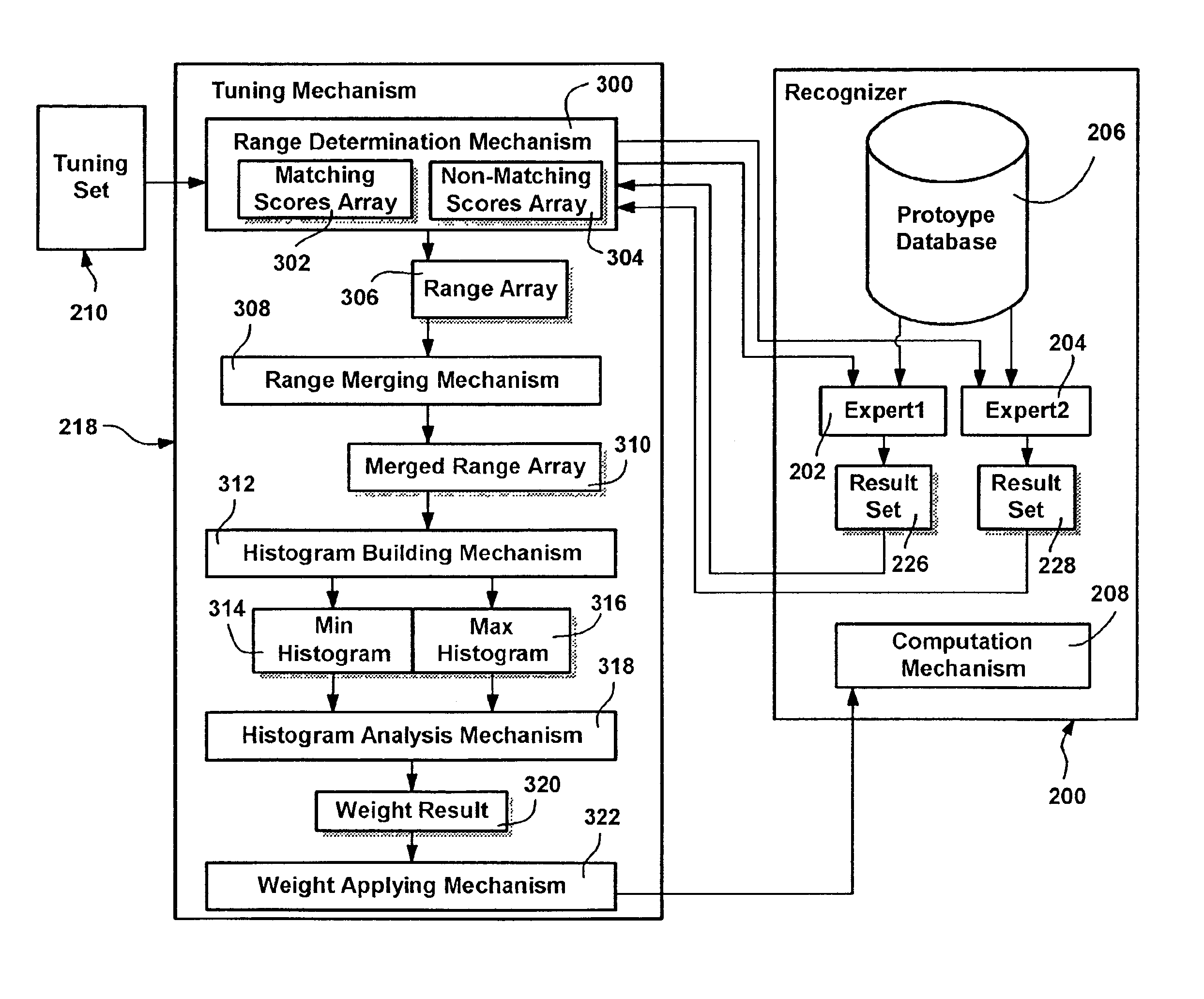

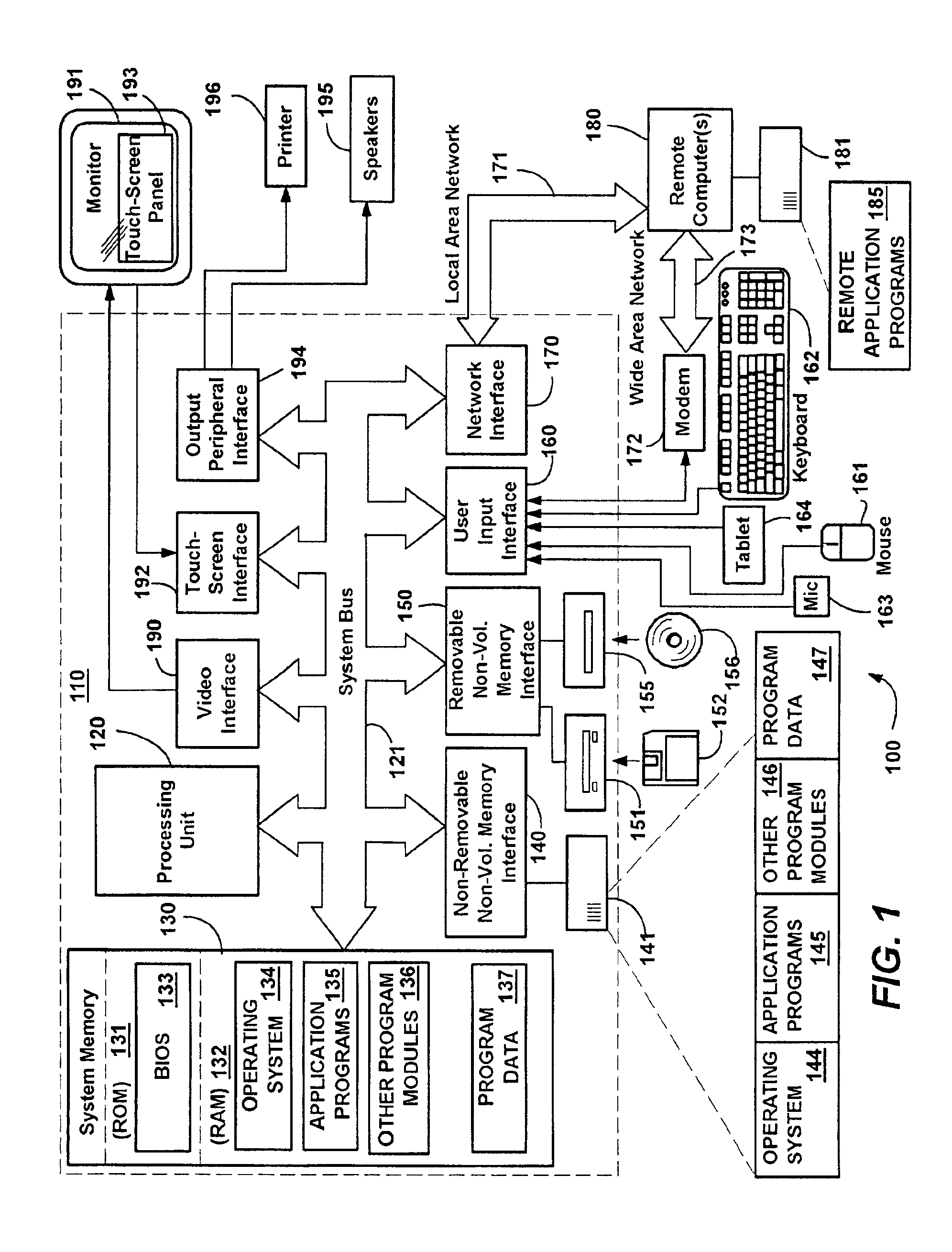

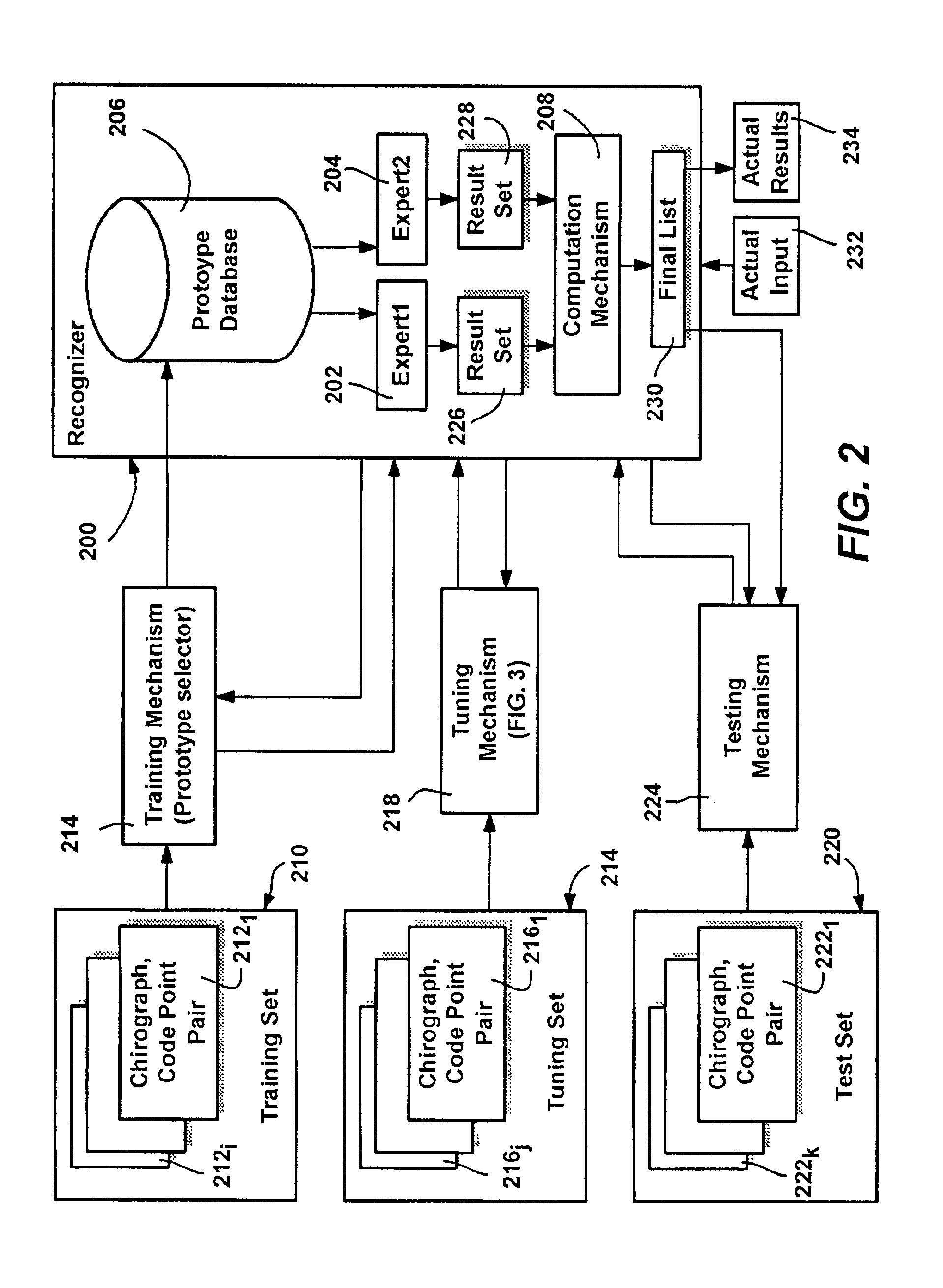

Efficient method and system for determining parameters in computerized recognition

Active | US6879718B2 | Increase value | Digital computer details | Character and pattern recognition | False recognition | Multiple experts

In computerized recognition having multiple experts, a method and system is described that obtains an optimum value for an expert tuning parameter in a single pass over sample tuning data. Each tuning sample is applied to two experts, resulting in scores from which ranges of parameters that correct incorrect recognition errors without changing correct results for that sample are determined. To determine the range data for a given sample, the experts return scores for each prototype in a database, the scores separated into matching and non-matching scores. The matching and non-matching scores from each expert are compared, providing upper and lower bounds defining ranges. Maxima and minima histograms track upper and lower bound range data, respectively. An analysis of the histograms based on the full set of tuning samples provides the optimum value. For tuning multiple parameters, each parameter may be optimized by this method in isolation, and then iterated.

Owner:MICROSOFT TECH LICENSING LLC
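
The single-pass idea in the abstract above can be illustrated with a simplified sketch. It assumes the parameter has been discretized into bins and that each tuning sample contributes one inclusive range of bins in which it is recognized correctly. Both are simplifications, and the patent's actual histogram analysis may differ. Lower bounds and upper bounds go into two histograms during one pass over the samples; a single sweep over the bins then finds the value covered by the most ranges.

```python
def best_parameter(ranges, num_bins):
    """ranges: (low, high) inclusive bin indices in which a sample is recognized correctly.

    Returns (bin index, number of samples recognized correctly at that bin).
    """
    lower_hist = [0] * num_bins
    upper_hist = [0] * num_bins
    for low, high in ranges:           # single pass over the tuning samples
        lower_hist[low] += 1
        upper_hist[high] += 1

    best_bin, best_count, active = 0, -1, 0
    for b in range(num_bins):
        active += lower_hist[b]        # ranges that start at bin b now cover it
        if active > best_count:
            best_bin, best_count = b, active
        active -= upper_hist[b]        # ranges that end at b do not cover b + 1
    return best_bin, best_count


# Hypothetical per-sample ranges over 10 parameter bins.
sample_ranges = [(0, 4), (2, 6), (3, 9), (5, 7)]
best_bin, correct = best_parameter(sample_ranges, num_bins=10)
```

Ties are resolved in favour of the lowest bin here; a real tuner might prefer the middle of the widest optimal interval instead.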

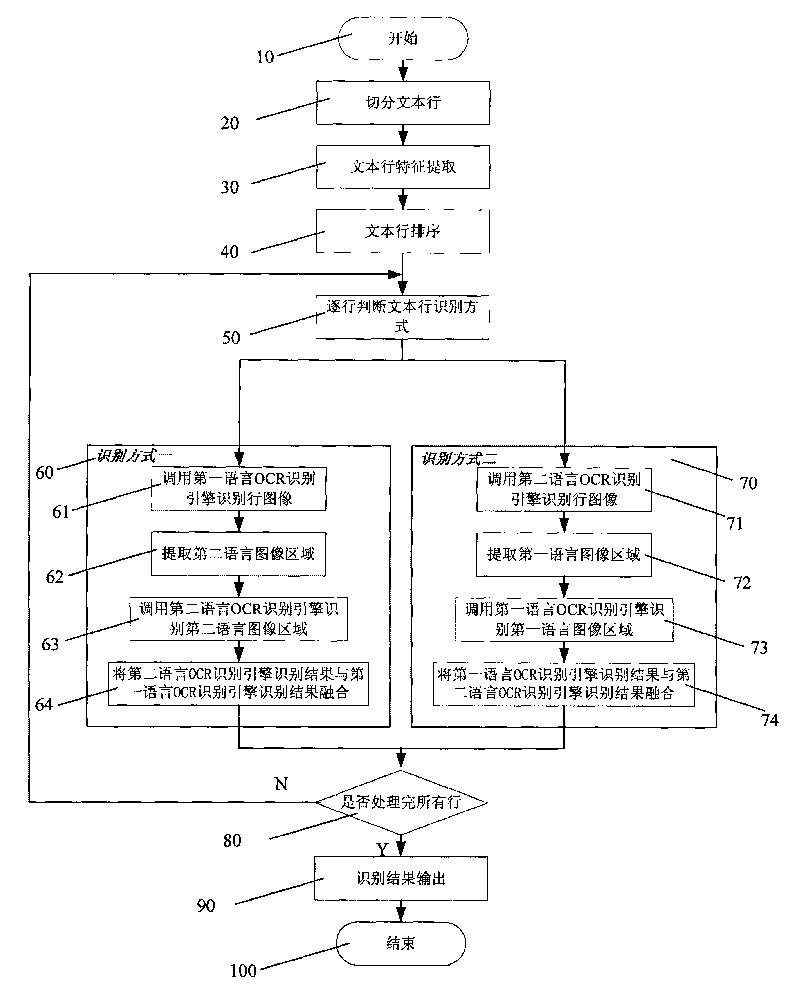

Quick text recognition method

Active | CN101751567A | Improve match | Reduce false recognition rate | Character and pattern recognition | Text recognition | False recognition

The invention discloses a quick text recognition method, belonging to the OCR technical field. In the method, an OCR recognition engine is used for recognizing the mixing character images of two languages; firstly, a text is segregated into text lines; next, the text lines are arrayed according to the number of the characters of a first language or a second language included in each text line; then a Chinese OCR recognition engine is used for recognition to extract an English doubt region, and an English OCR recognition engine is used for recognition. If the current-line recognition result is an English line, the OCR recognition strategy at the next line is that: firstly, the English OCR recognition engine is used for recognition to extract a Chinese doubt region; then, the Chinese OCR recognition engine is used for recognition; and finally, the recognition results are mixed. The method improves the recognition speed, reduces the character misrecognition rate and provides the high-efficient version for an embedded device.

Owner:HANVON CORP

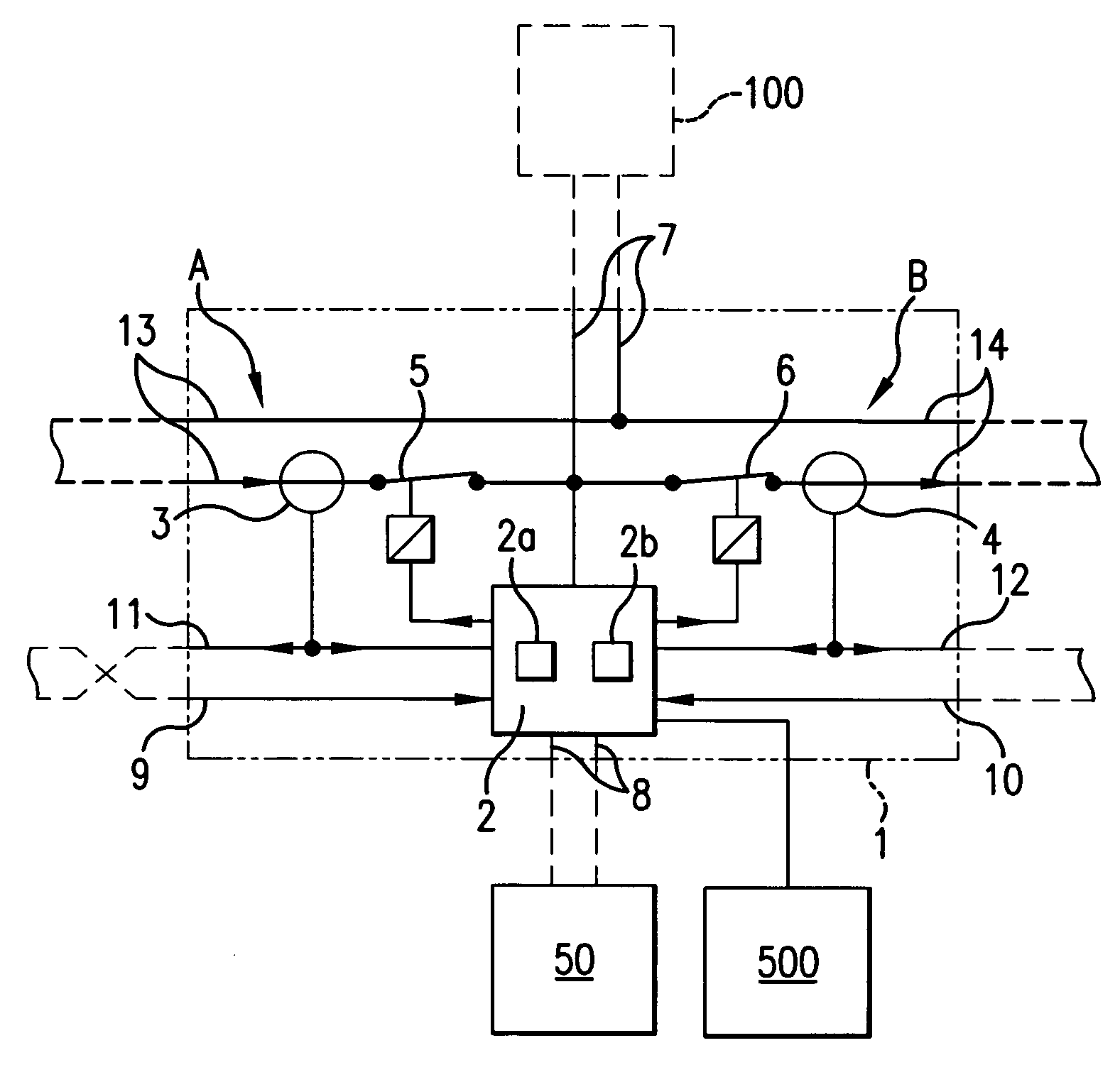

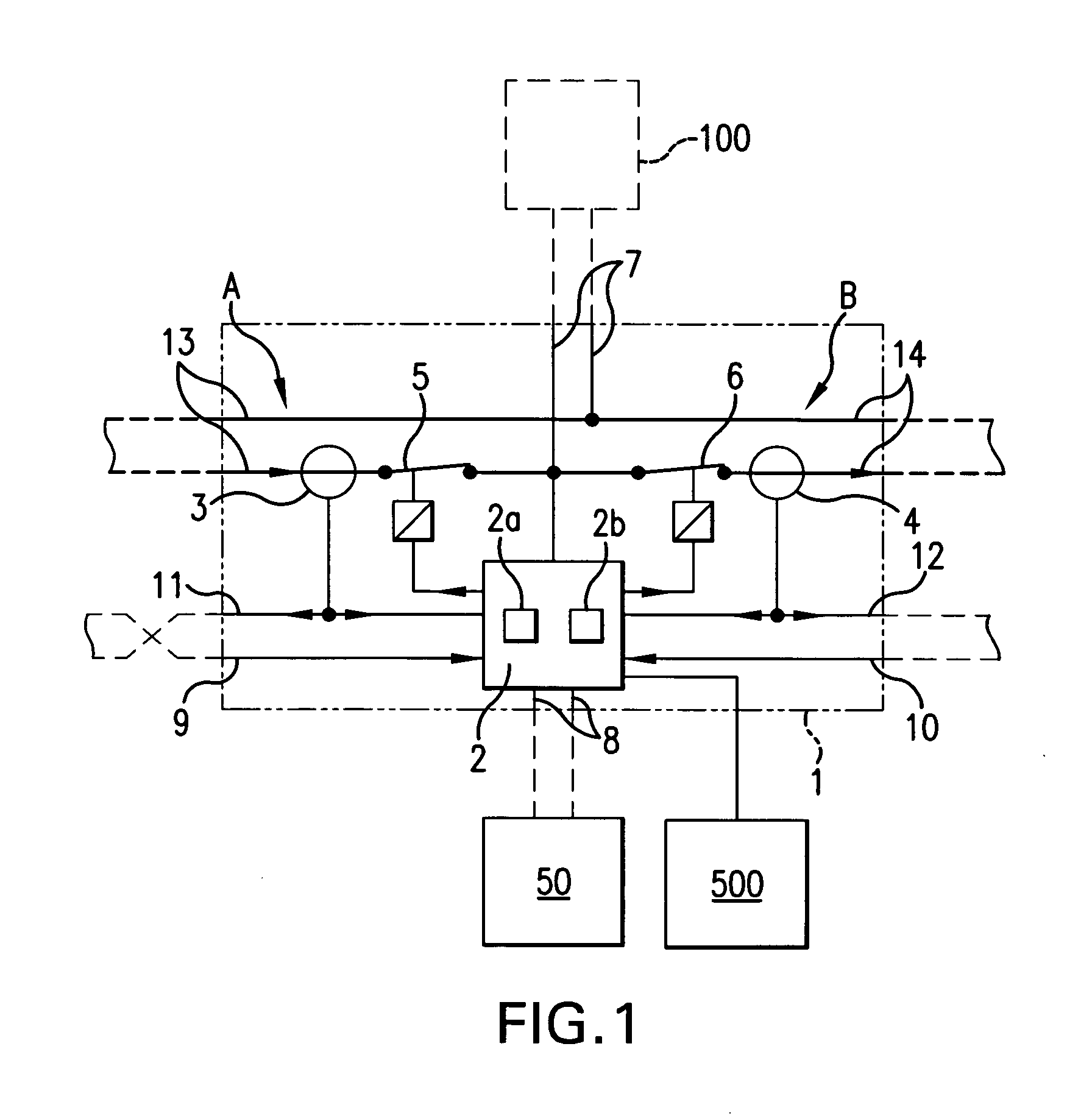

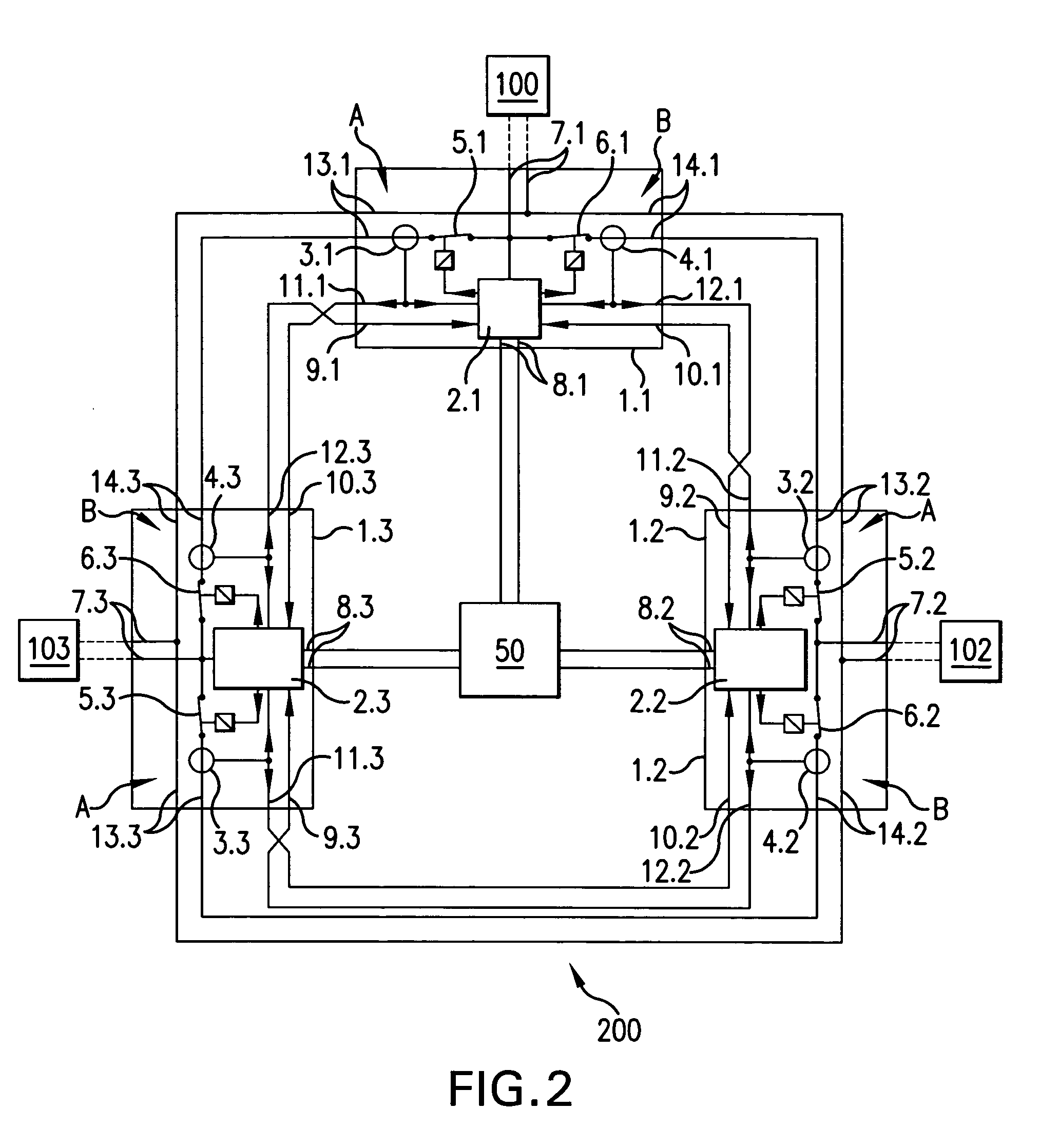

Error recognition for power ring

Inactive | US20050001431A1 | Long delay | Maintain stable transmission | Electric devices | Emergency protective circuit arrangements | Data connection | Ring device

A power ring device that includes: a ring conductor; a plurality of controllers, each controller being connected to the ring conductor and includes a first control unit; a plurality of paired pick ups, wherein each pair connects either an electrical consumer device or an electrical power supply to the ring conductor and to one of the plurality of controllers, wherein a first pair of pick ups of a first controller is connected to a first power supply; and a plurality of current sensor signal lines connecting each control unit to form a data connection; wherein each controller has a right and left side, a right sided and left sided switch elements; and a right sided and left sided current sensors connected so that a first current value measured by the left sided current sensor of the first controller is transmitted as data to the first control unit of a second controller.

Owner:RHEINMETALL LANDSYST

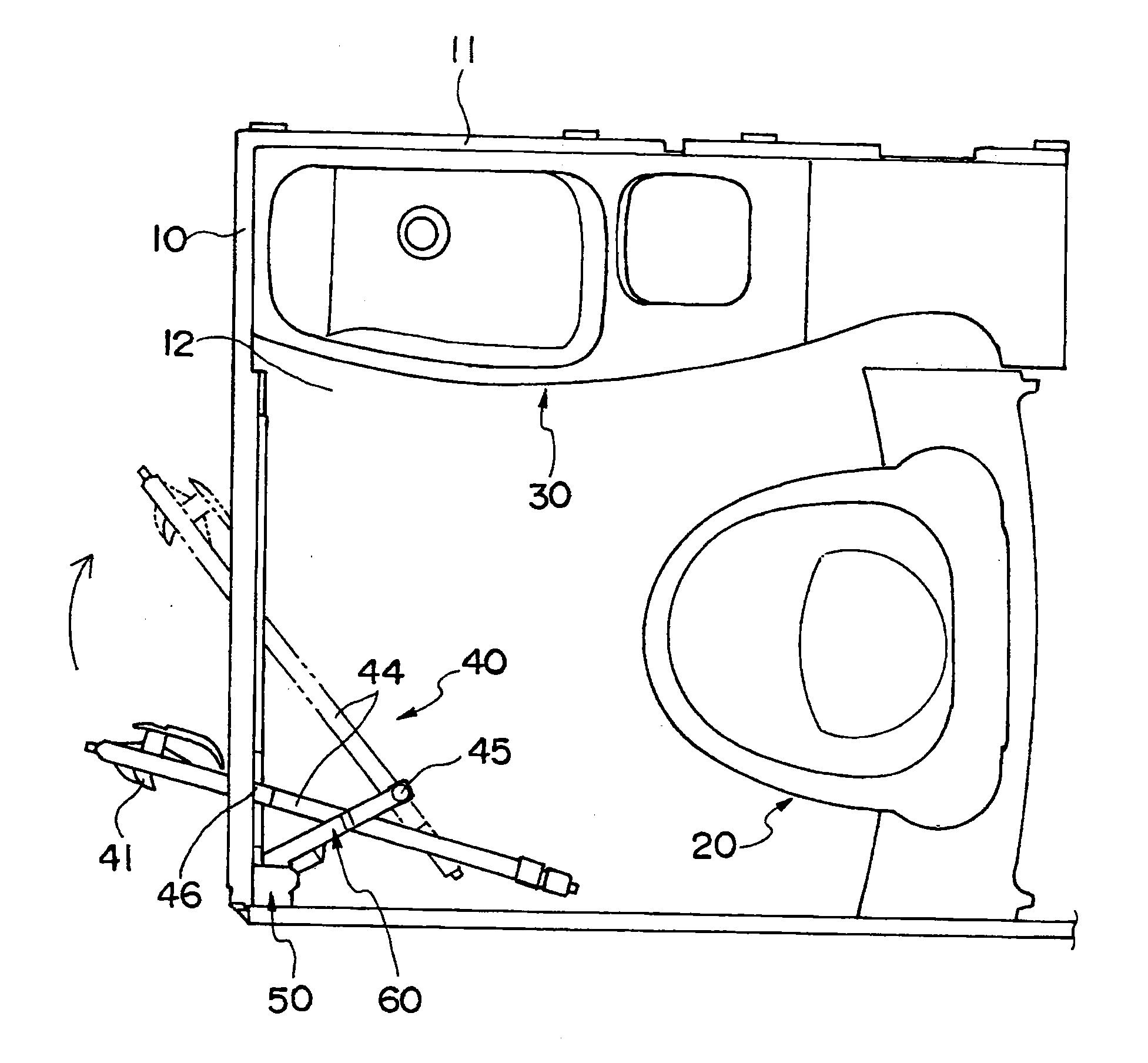

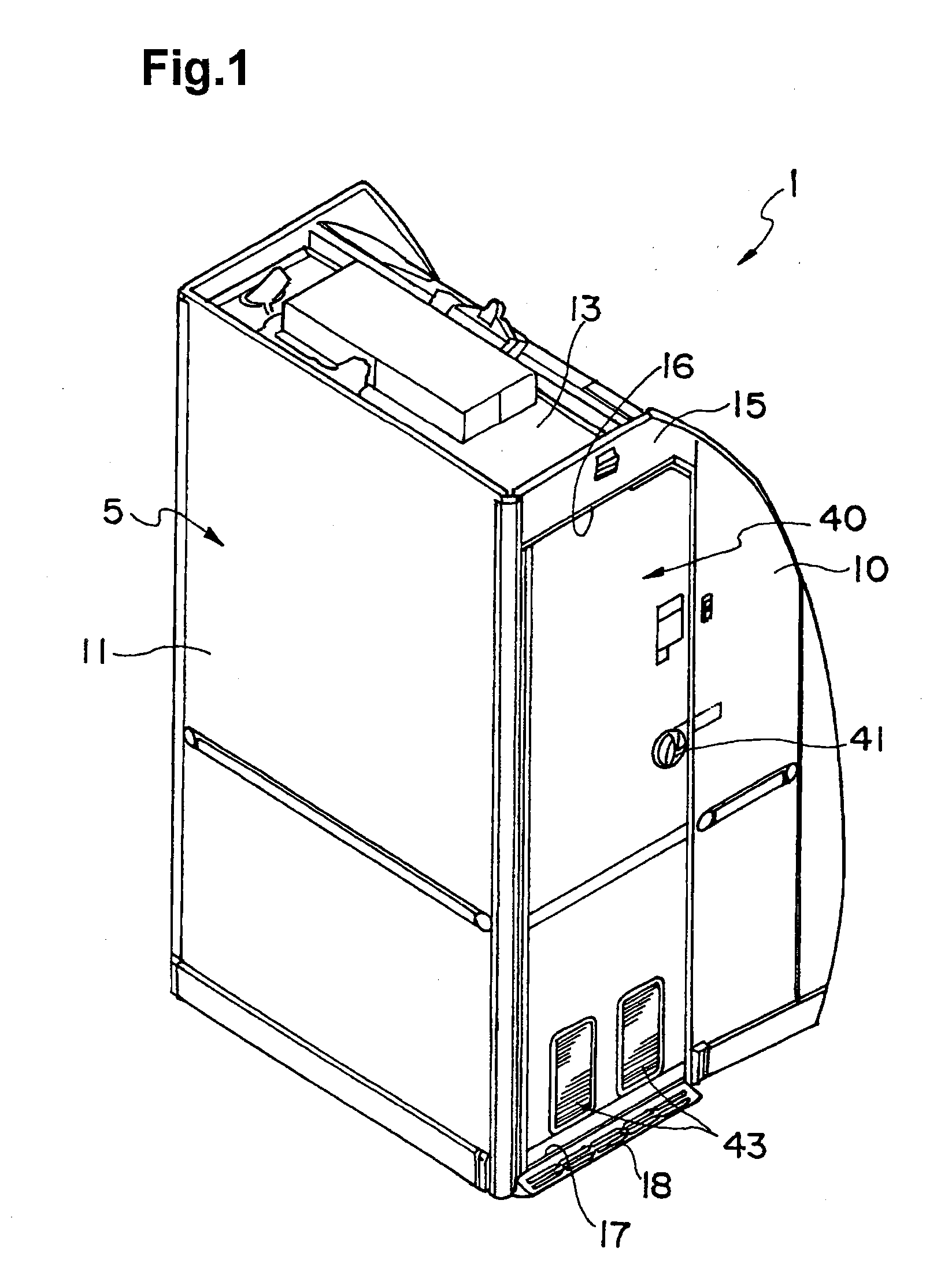

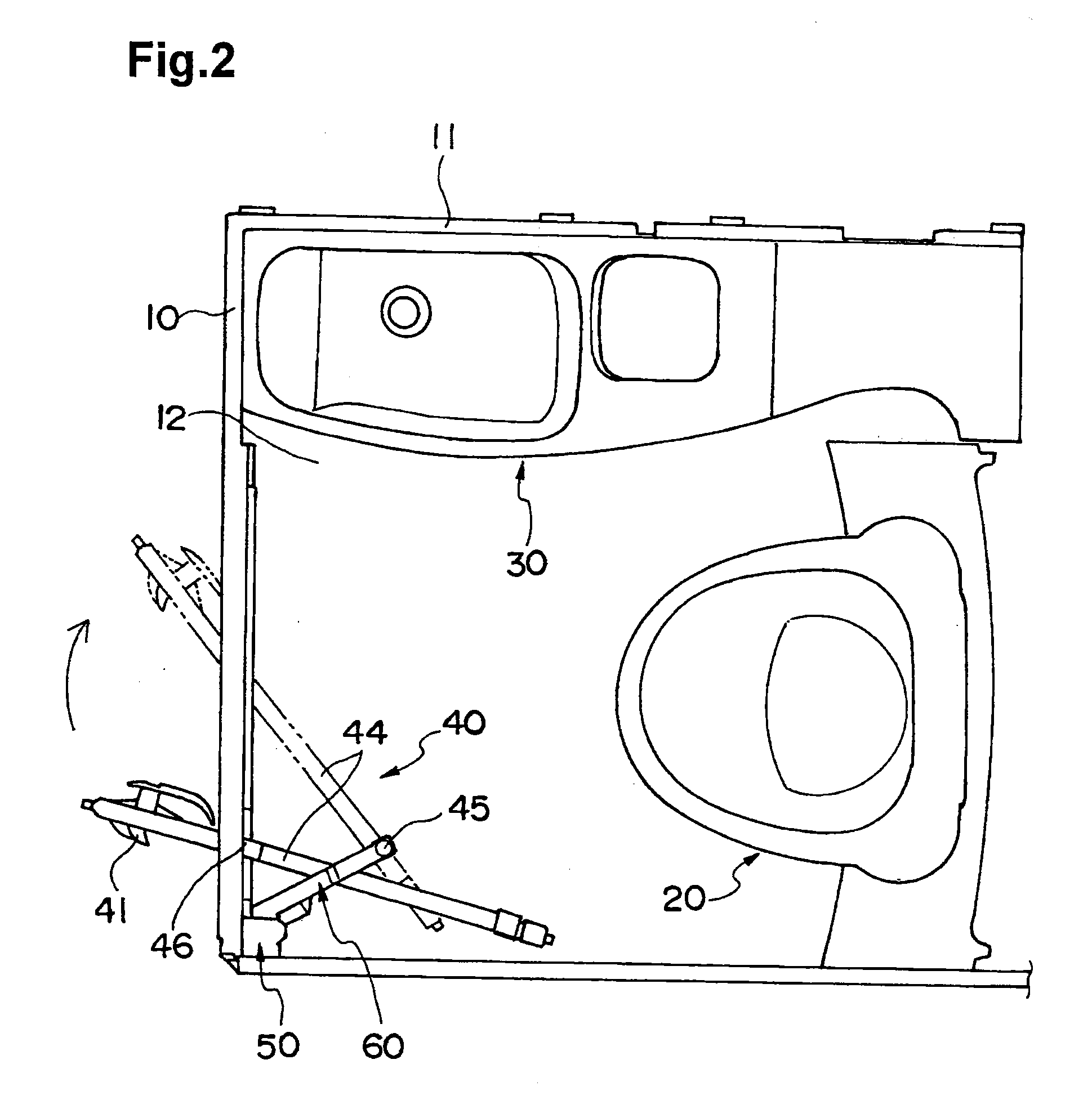

Door structure of aircraft restroom

Active | US20100237193A1 | Reducing turning speed of turned | Reduce noise level | Weight reduction | Aircraft doors | Engineering | Jamb

There is provided a space-saving and compact swing type door structure of an aircraft restroom, in which a door is automatically closed and which is easy to use for a passenger using the restroom. The swing type door (40) is slidably hung at its upper end edge (44) from a rail disposed at an upper portion of a door frame by a guide structure including a slide pin (46) and is turnably connected in a predetermined position different from the hung position to the door frame by a linkage (60). Swinging operation in opening the door (40) deforms a spring provided in an automatic closing device (50). When a hand is released from the door (40), the door (40) is constantly biased in a closing direction by spring resilience through the linkage (60) and automatically returns to a closed position. Therefore, the restroom becomes easy to use, an aisle in front of the restroom can be used in an emergency, and it is possible to avoid contingencies caused by false recognition of an escape hatch.

Owner:JAMCO

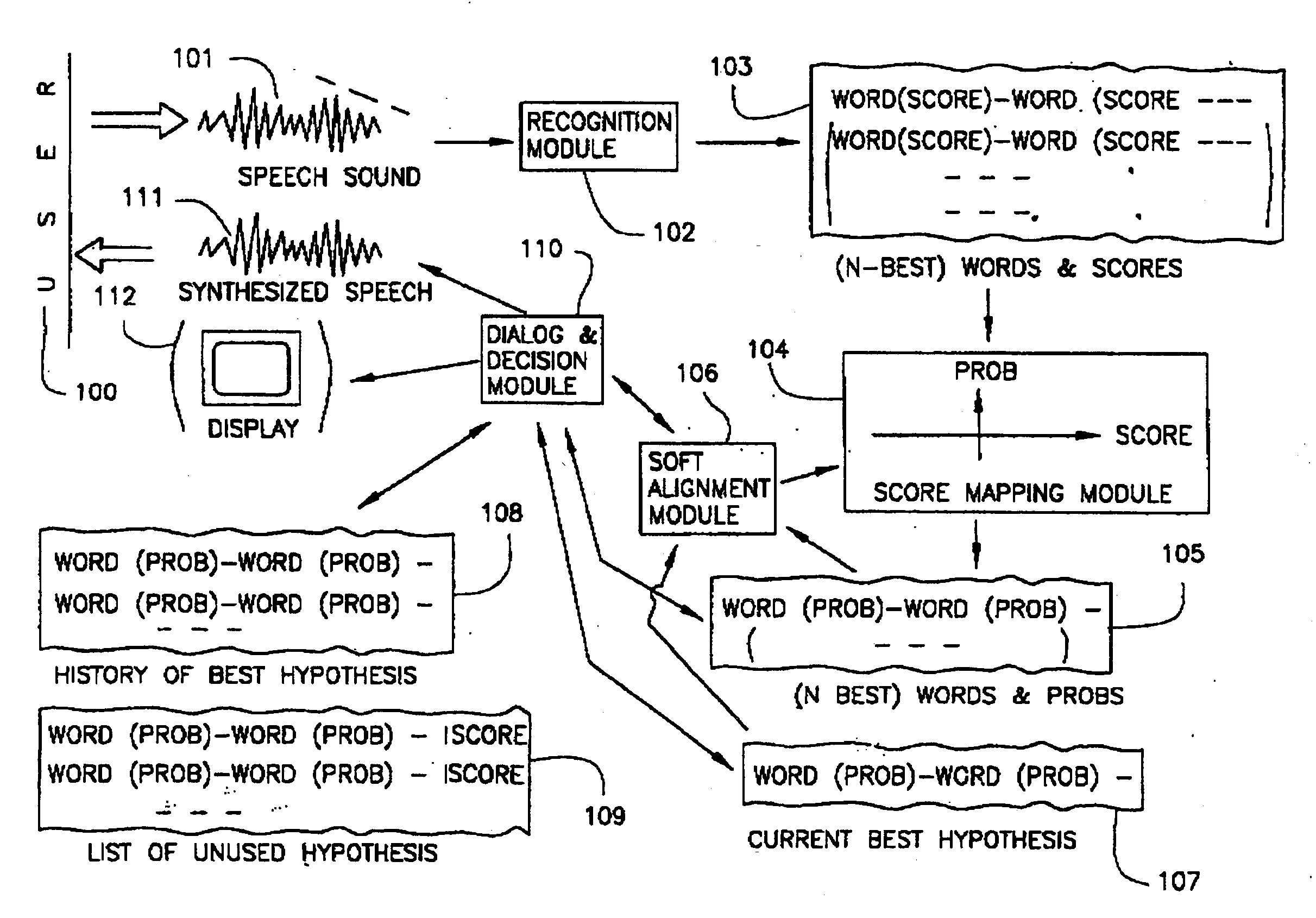

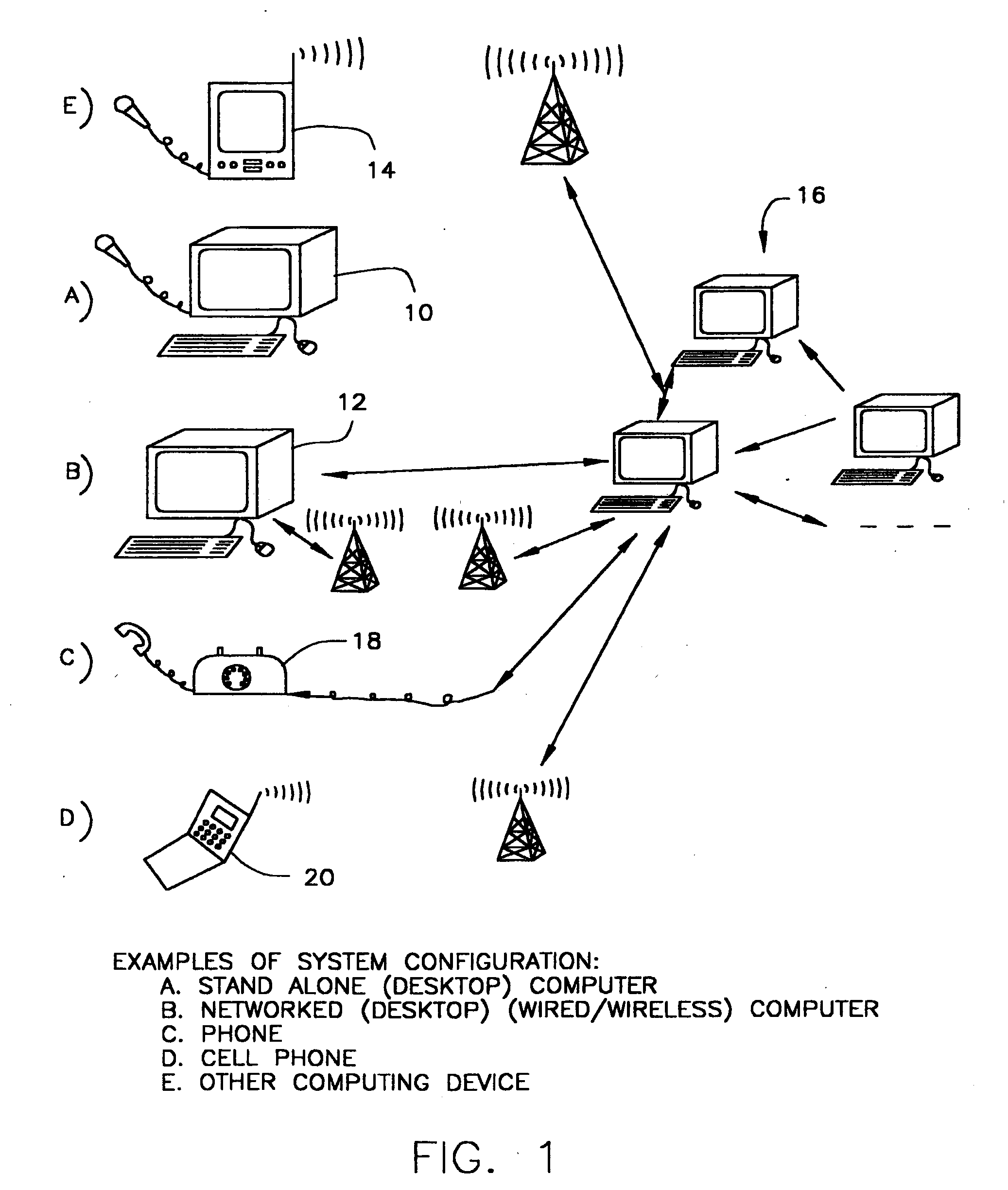

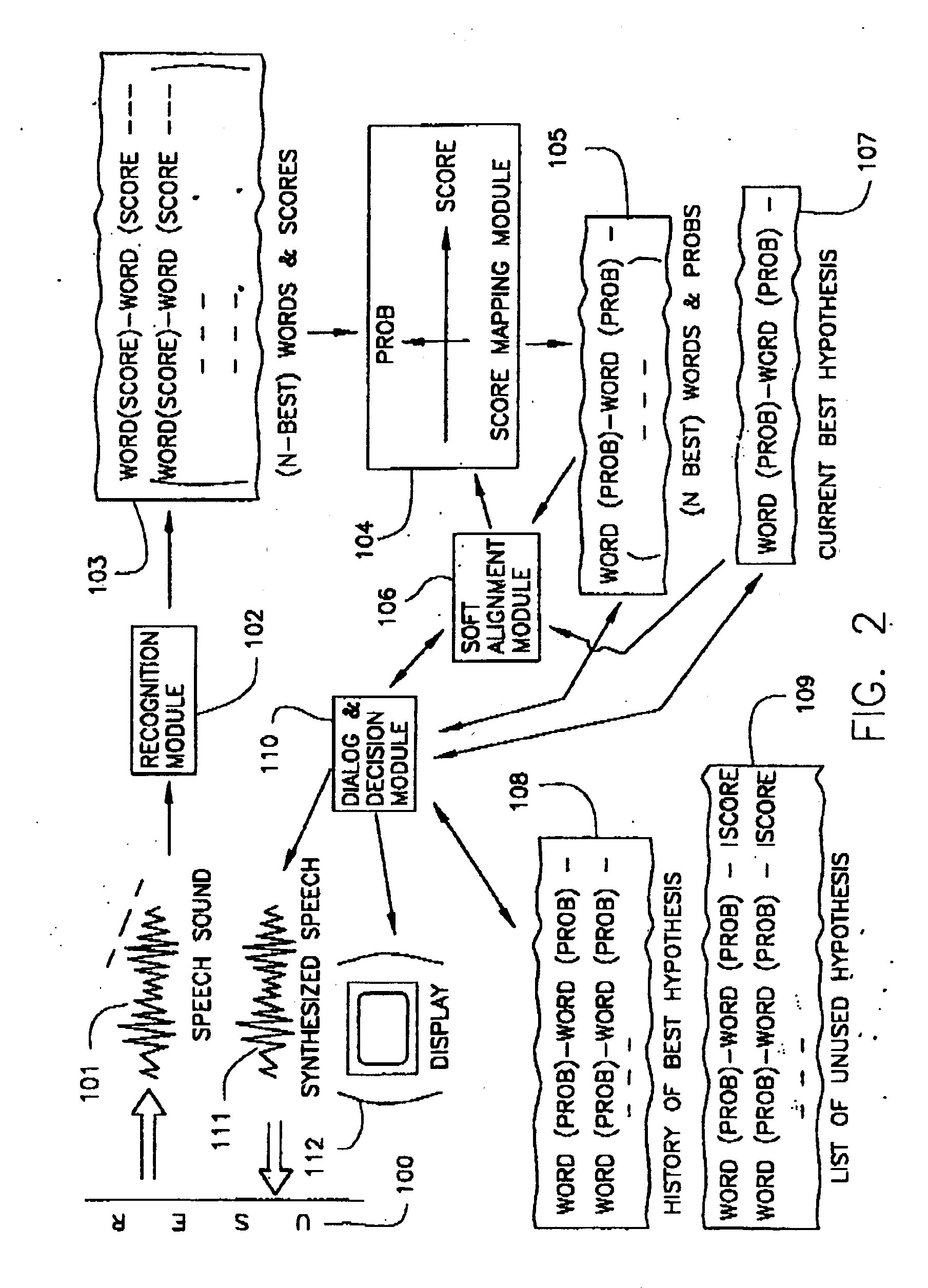

Natural error handling in speech recognition

A user interface, and associated techniques, that permit a fast and efficient way of correcting speech recognition errors, or of diminishing their impact. The user may correct mistakes in a natural way, essentially by repeating the information that was incorrectly recognized previously. Such a mechanism closely approximates what human-to-human dialogue would be in similar circumstances. Such a system fully takes advantage of all the information provided by the user, and on its own estimates the quality of the recognition in order to determine the correct sequence of words in the fewest number of steps.

Owner:NUANCE COMM INC

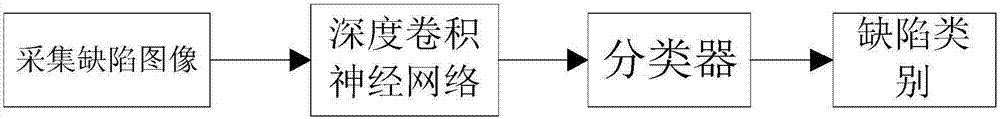

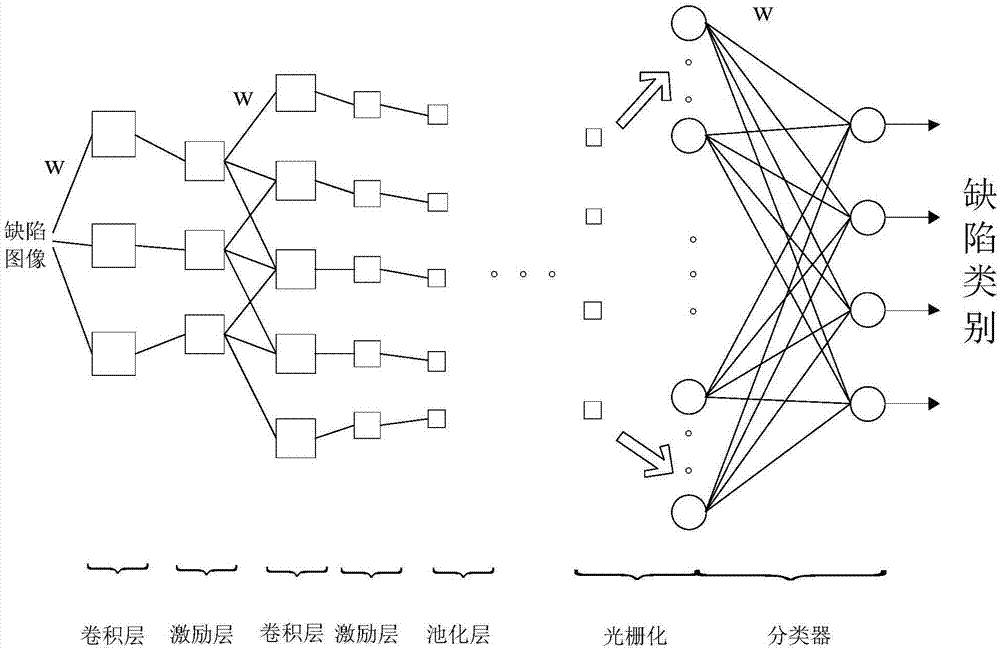

Hardware defect classification recognition method based on depth convolution neural network

Inactive | CN107481231A | Reduce training time | Improve recognition accuracy | Image enhancement | Image analysis | Imaging processing | False recognition

The invention discloses a hardware defect classification recognition method based on a depth convolution neural network, and the method comprises the steps: building a network: constructing the depth convolution neural network; training the network: classifying collected images into two classes: a training set and a test set, wherein the training set comprises 70% of the collected images, and the test set comprises 30% of the collected images; recognizing defects: inputting a hardware image in the test set into the trained sample, inspecting the output result, contrasting a recognition result and the label of the image, and carrying out the statistics of the correct recognition rate and wrong recognition rate. The method employs the depth convolution neural network, saves a complex image processing algorithm, achieves the extraction of more abstract features of defects through the adding of a network depth, is stronger in distinguishability between different defect classes, and is higher in recognition rate.

Owner:GUANGDONG UNIV OF TECH
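
The data handling in the abstract above (a 70%/30% split into training and test sets, then counting correct and wrong recognitions against the image labels) can be sketched without any network code. The shuffling, the fixed seed, and the sample predictions are assumptions for illustration; the abstract does not say how images are assigned to the two sets.

```python
import random


def split_dataset(samples, train_fraction=0.7, seed=0):
    """Shuffle a copy of the samples and cut it into training and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_fraction))
    return shuffled[:cut], shuffled[cut:]


def recognition_rates(predictions, labels):
    """Return (correct recognition rate, wrong recognition rate) over the test set."""
    correct = sum(1 for p, l in zip(predictions, labels) if p == l)
    total = len(labels)
    return correct / total, (total - correct) / total


images = [f"img_{i}" for i in range(10)]
train_set, test_set = split_dataset(images)

# Made-up predictions and labels standing in for the trained network's output.
correct_rate, wrong_rate = recognition_rates(["scratch", "dent", "ok"], ["scratch", "ok", "ok"])
```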

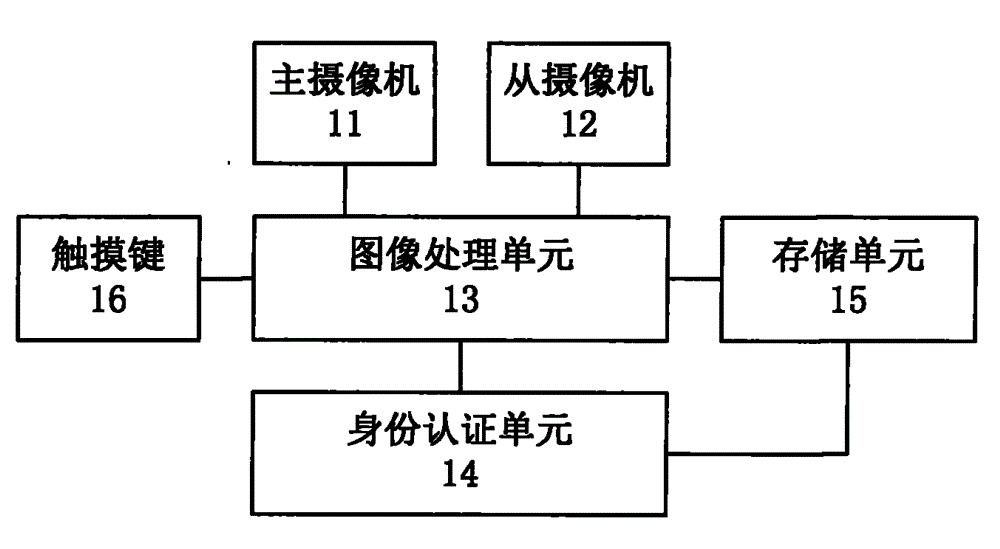

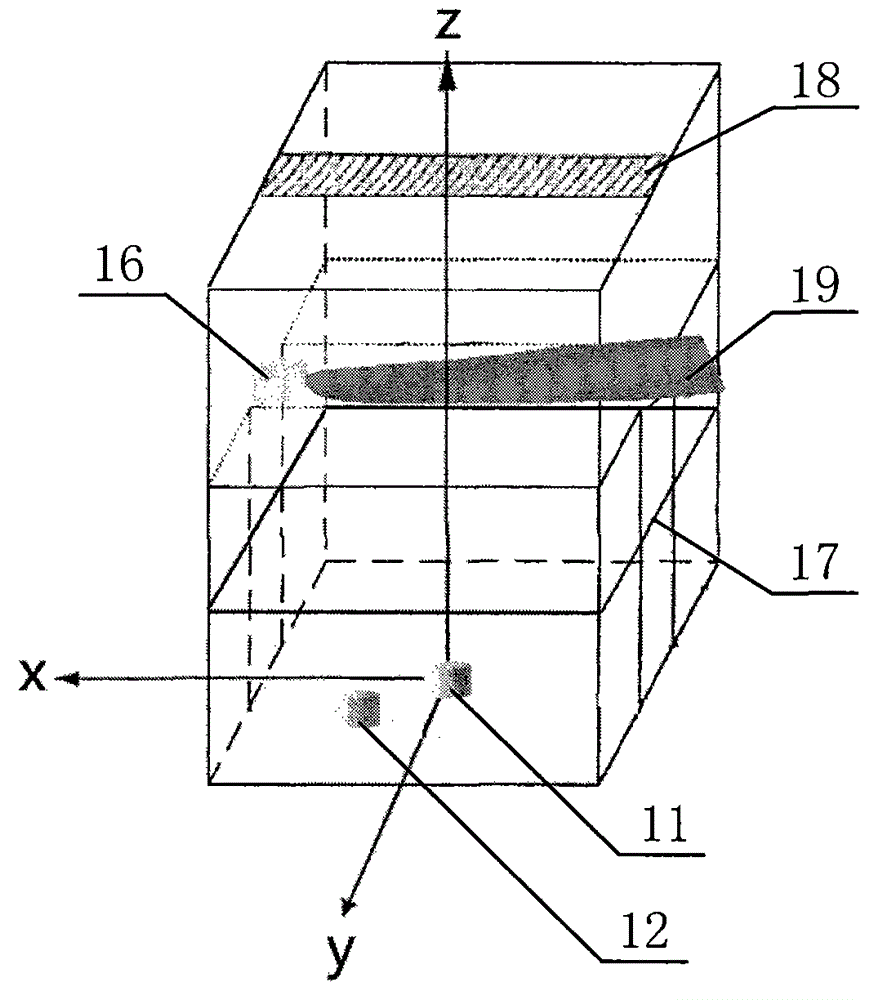

Identity recognition device and method based on finger veins

Active | CN104933389A | Achieve authentication | Improve accuracy | Character and pattern recognition | Digital data authentication | Vein | Imaging processing

The invention discloses an identity recognition device and method based on finger veins. The device comprises a main camera, a slave camera, an image processing unit, an identity authentication unit, a storage unit, a touch key, a glass filter, and an ultraviolet surface light source, wherein the main and slave cameras are used for respectively transmitting obtained main and slave finger vein images to the image processing unit. The image processing unit is used for extracting three-dimensional characteristic information of finger veins from the main and slave finger vein image signals, and carrying out normalizing, thereby obtaining the reference characteristic information of the finger veins. The identity authentication unit is used for comparing the obtained reference characteristic information of to-be-recognized finger veins with characteristic template information, and completing the identity recognition and authentication of the finger veins. Through the extraction and normalization of the three-dimensional characteristic information of the finger veins, the device and method effectively solve a problem of wrong recognition caused by the change of a finger posture, and remarkably improve the recognition accuracy and safety level.

Owner:北京细推科技有限公司

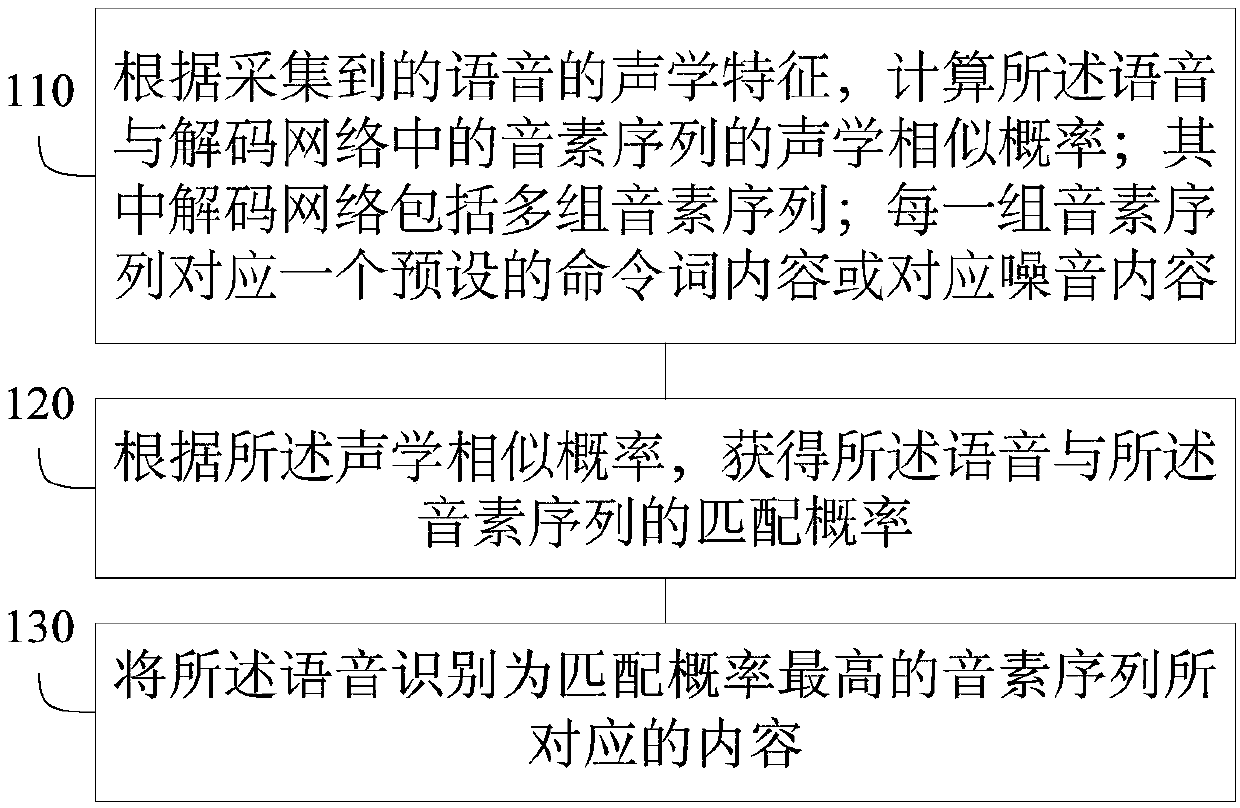

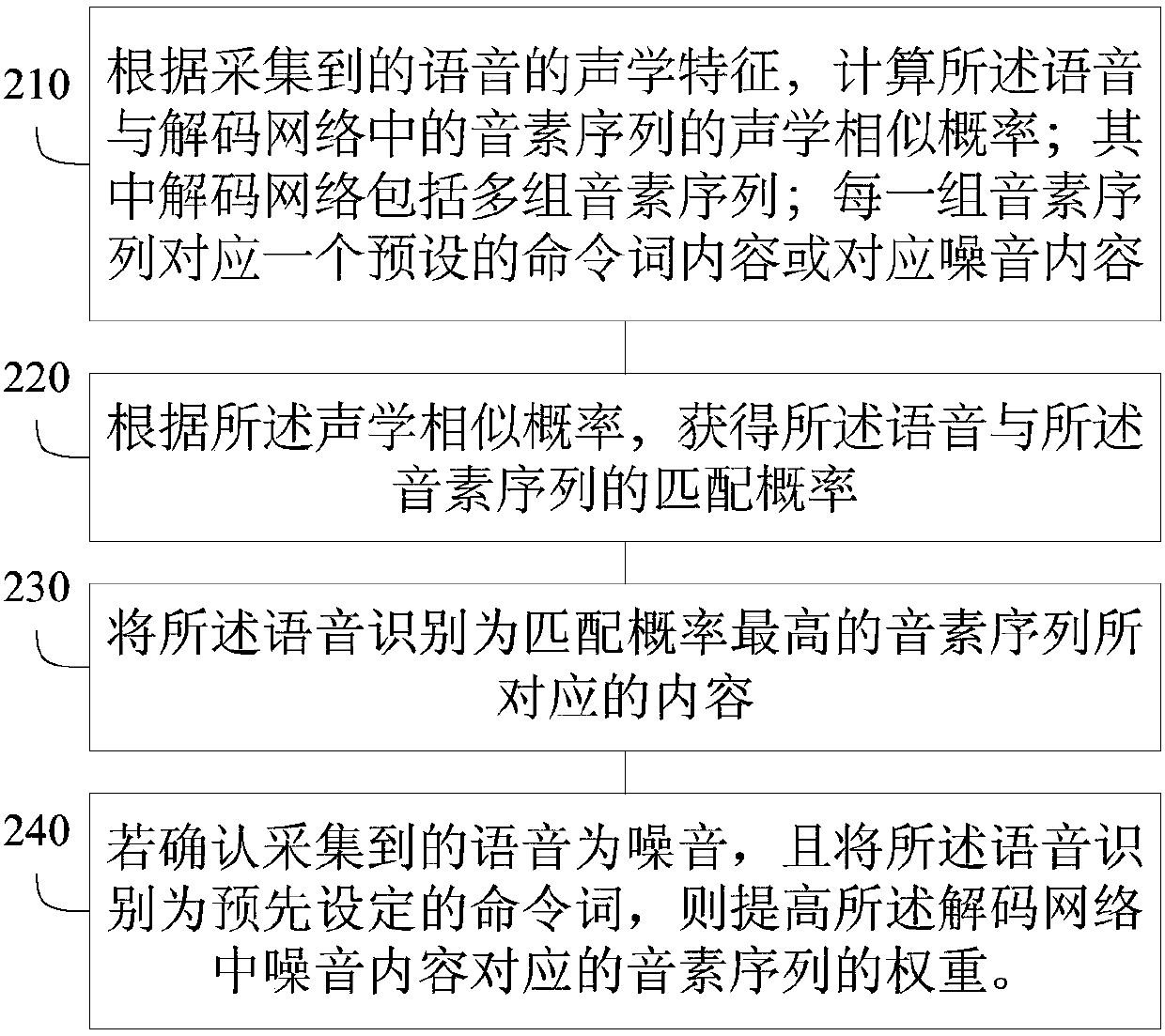

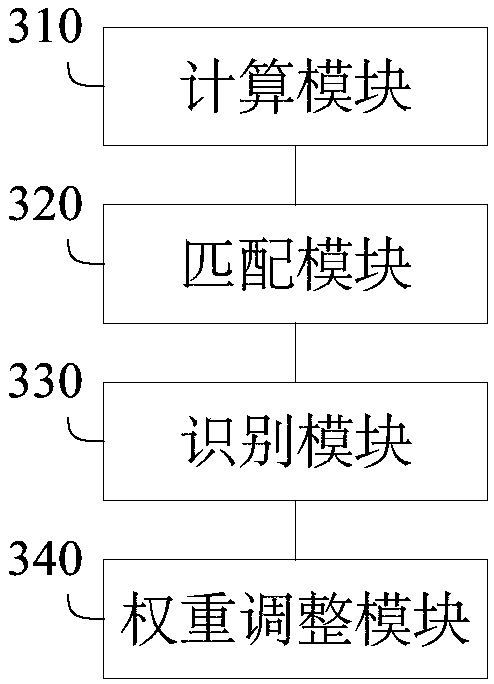

Speech recognition method and apparatus, terminal, and computer readable storage medium

Active | CN107644638A | Reduce false recognition rate | Solve the problem of high false recognition rate | Speech recognition | Computer terminal | False recognition

The invention discloses a speech recognition method. The method comprises: according to collected acoustic speech features, acoustic similarity probabilities of the speech with phoneme sequences in a decoding network are calculated, wherein the decoding network includes a plurality of phoneme sequence groups and each phoneme sequence group corresponds to one preset command word content or a noise content; on the basis of the acoustic similarity probabilities, matching probabilities between the speech and the phoneme sequences are obtained; and the speech is recognized as a content corresponding to the phoneme sequence with the highest matching probability. Correspondingly, the invention also discloses a speech recognition apparatus, a terminal, and a computer readable storage medium. Therefore, a phenomenon that the noise is recognized as a command word is avoided; and calculation of a confidence level after speech recognition is not needed, so that the false recognition rate is reduced.

Owner:北京如布科技有限公司
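
The key idea in the abstract above is that noise gets its own phoneme sequence group in the decoding network, so picking the best-matching sequence can yield "noise" directly, with no separate confidence check. A minimal sketch follows. The network contents, the phoneme strings, and the probabilities are all made up for illustration.

```python
NOISE = "<noise>"

# Hypothetical decoding network: content label -> phoneme sequences in its group.
DECODING_NETWORK = {
    "turn on": ["t er n aa n"],
    "turn off": ["t er n ao f"],
    NOISE: ["<cough>", "<music>"],
}


def recognize(sequence_probs):
    """Return the content whose group holds the highest-probability phoneme sequence."""
    best_sequence = max(sequence_probs, key=sequence_probs.get)
    for content, sequences in DECODING_NETWORK.items():
        if best_sequence in sequences:
            return content
    raise KeyError(best_sequence)


command = recognize({"t er n aa n": 0.7, "t er n ao f": 0.2, "<cough>": 0.05, "<music>": 0.05})
background = recognize({"t er n aa n": 0.3, "t er n ao f": 0.25, "<cough>": 0.05, "<music>": 0.4})
```

In the second call the music sequence wins, so the input is classified as noise rather than being forced onto the nearest command word.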

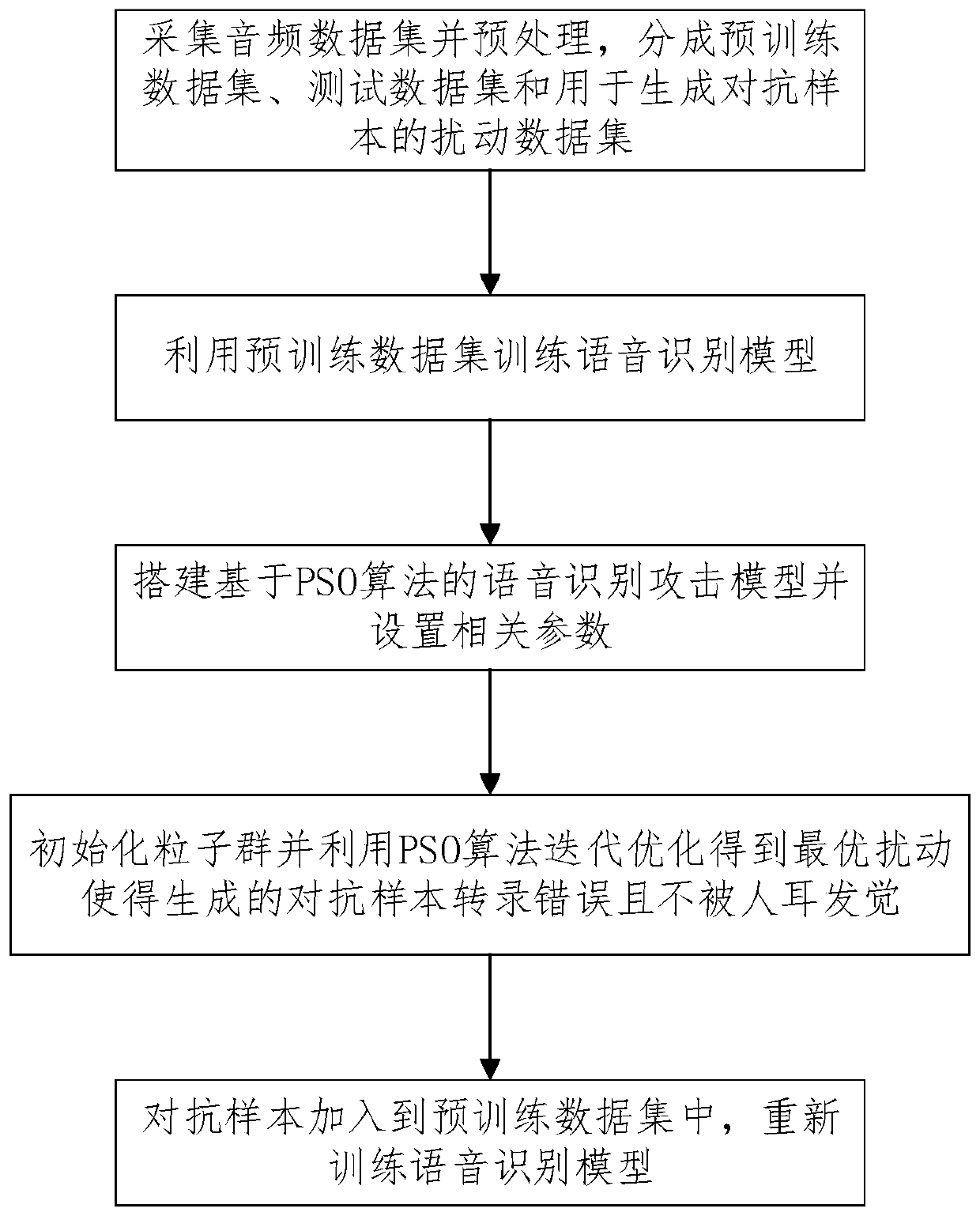

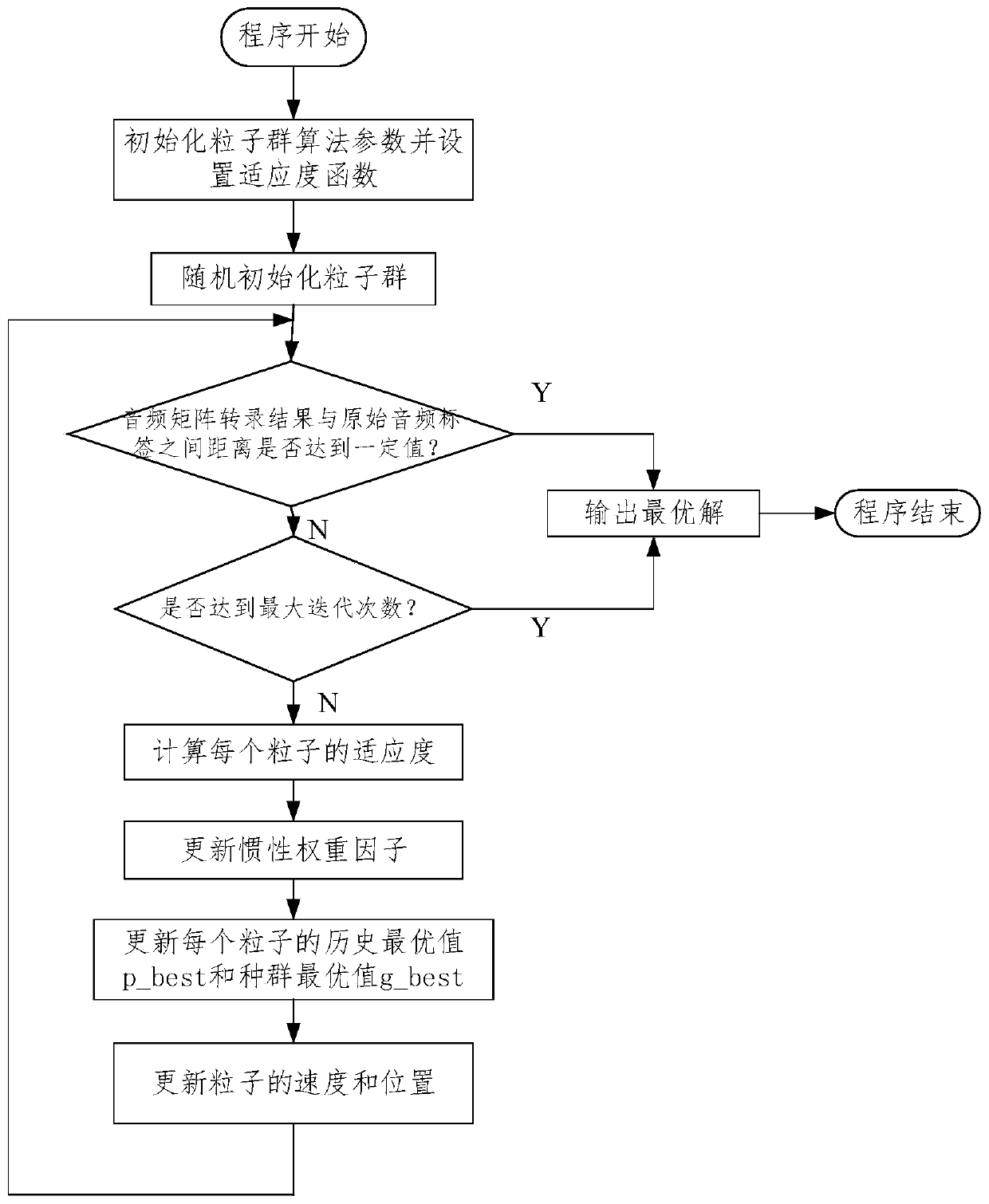

Speech recognition attack defense method based on PSO algorithm

Active | CN110767216A | Ability to defend against adversarial samples | Improve security | Speech recognition | Cryptographic attack countermeasures | Pattern recognition | Data set

The invention discloses a speech recognition attack defense method based on a PSO algorithm. The method comprises the following steps of (1) preparing an original audio data set, and dividing the original audio data set into a pre-training data set, a test data set and a disturbance data set used for generating an adversarial sample; (2) training a speech recognition model, wherein the speech recognition model is built, related parameters of the model are initialized, the speech recognition model is trained by using the pre-training data set, and the test data set is used to test the recognition accuracy of the model; (3) attacking the speech recognition model, wherein an attack method based on the PSO algorithm is built, a fitness function and related parameters of the PSO algorithm are set, and an optimal adversarial sample generated by the attack method can be recognized by mistake and is not recognized by the human ear; and (4) performing adversarial training on the speech recognition model, wherein the adversarial sample generated in the step (3) is added into the pre-training data set, and the speech recognition model is re-trained, so that the speech recognition model has the capability of defending attack of the adversarial sample and the safety and stability of the model are improved.

Owner:ZHEJIANG UNIV OF TECH

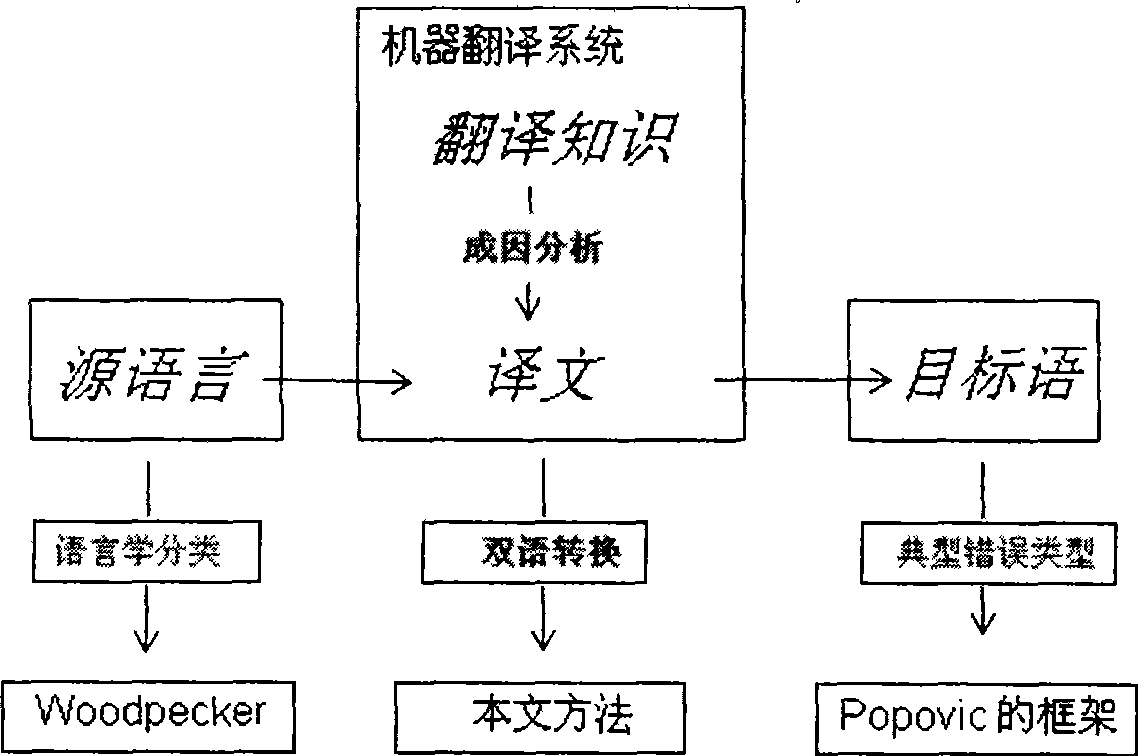

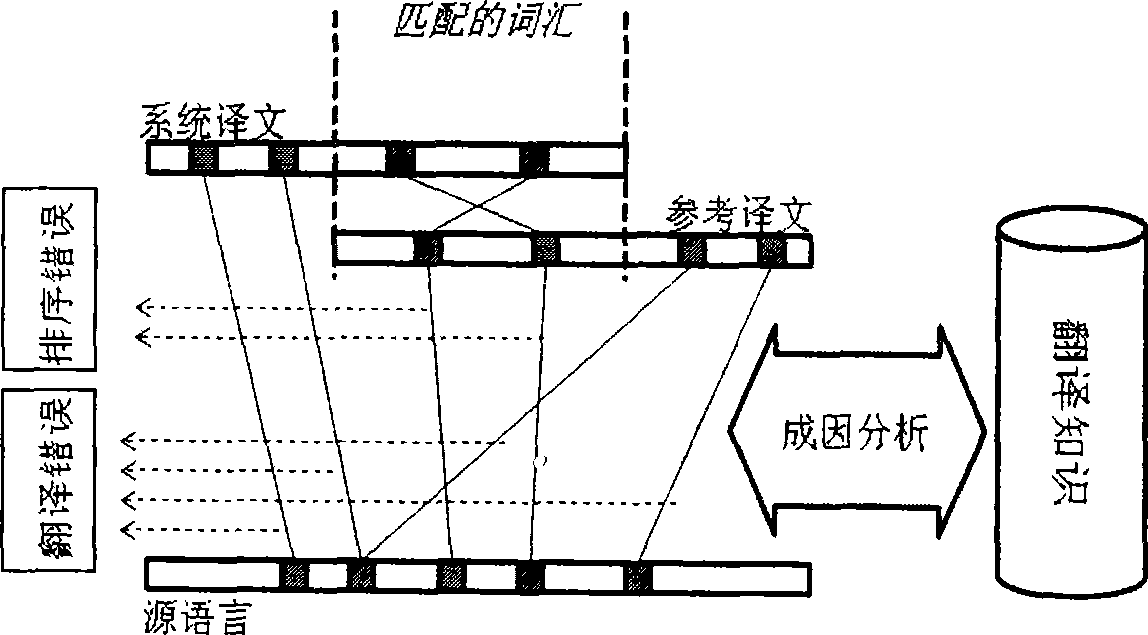

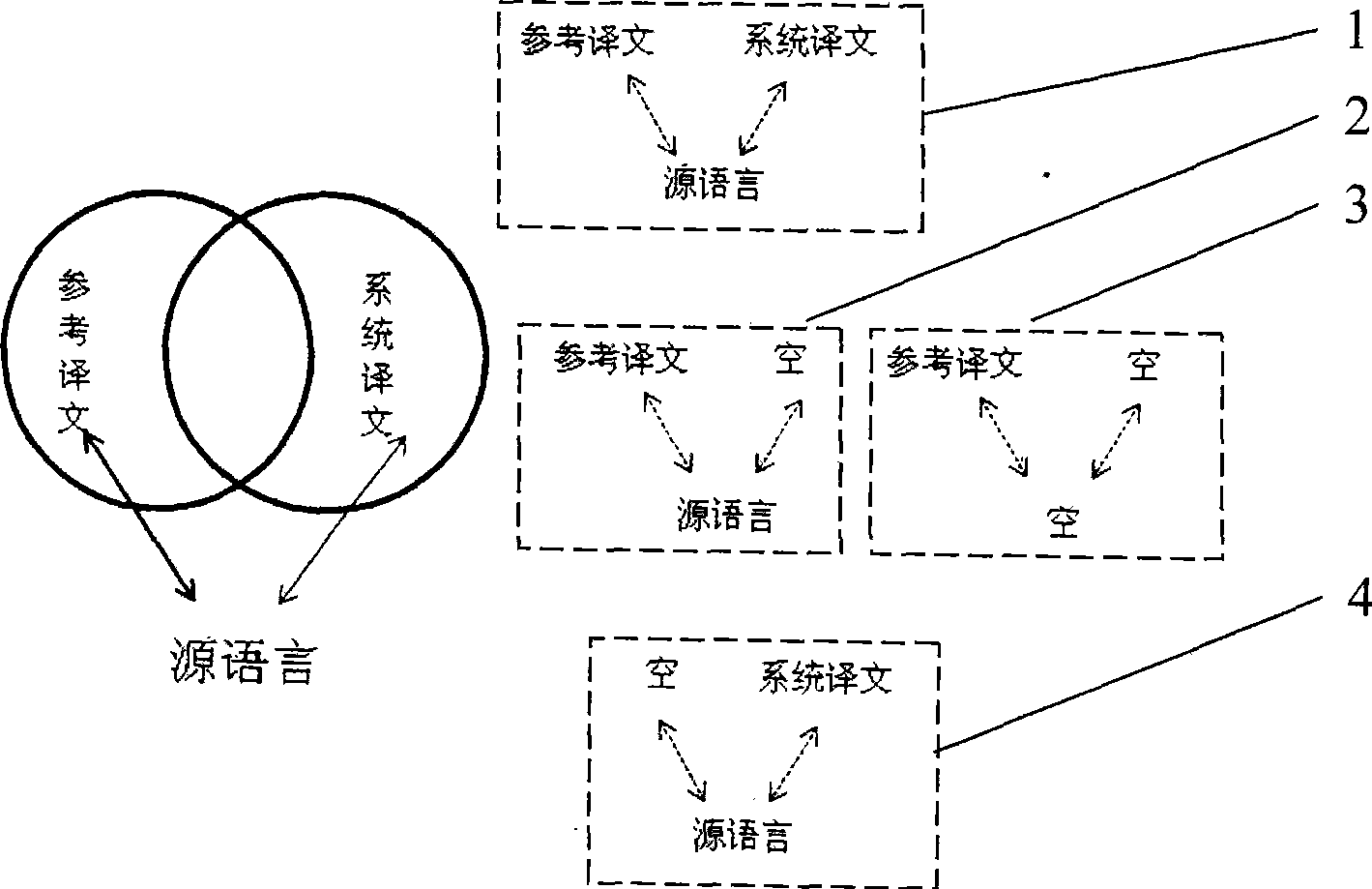

Automatic diagnosis and evaluation method for machine translation

The invention relates to an automatic diagnosis and evaluation method for machine translation, belonging to a machine translation evaluation technology and solving the problems that an evaluation method of the prior translation system can only test the processing capability of the translation system to a special monolingual phenomenon and can not obtain the defects of the translation system. The method comprises the following steps: firstly, matching words of a reference translation text and a systemic translation text and finding possible source language words for each object language word by utilizing translation knowledge; then, identifying errors and adopting the relation between source language and object language to judge a bilingual type of each error; and further utilizing the relation between the bilingual characteristics and the translation knowledge to judge the reasons of the errors. The automatic diagnosis and evaluation method for machine translation represents the bilingual errors by relative words in source language sentences, the reference translation text and the systemic translation text, induces the linguistics characteristics of the words in the diagnosis process and can more directly help developers to find and solve the inherent defects of the translation system.

Owner:HARBIN INST OF TECH

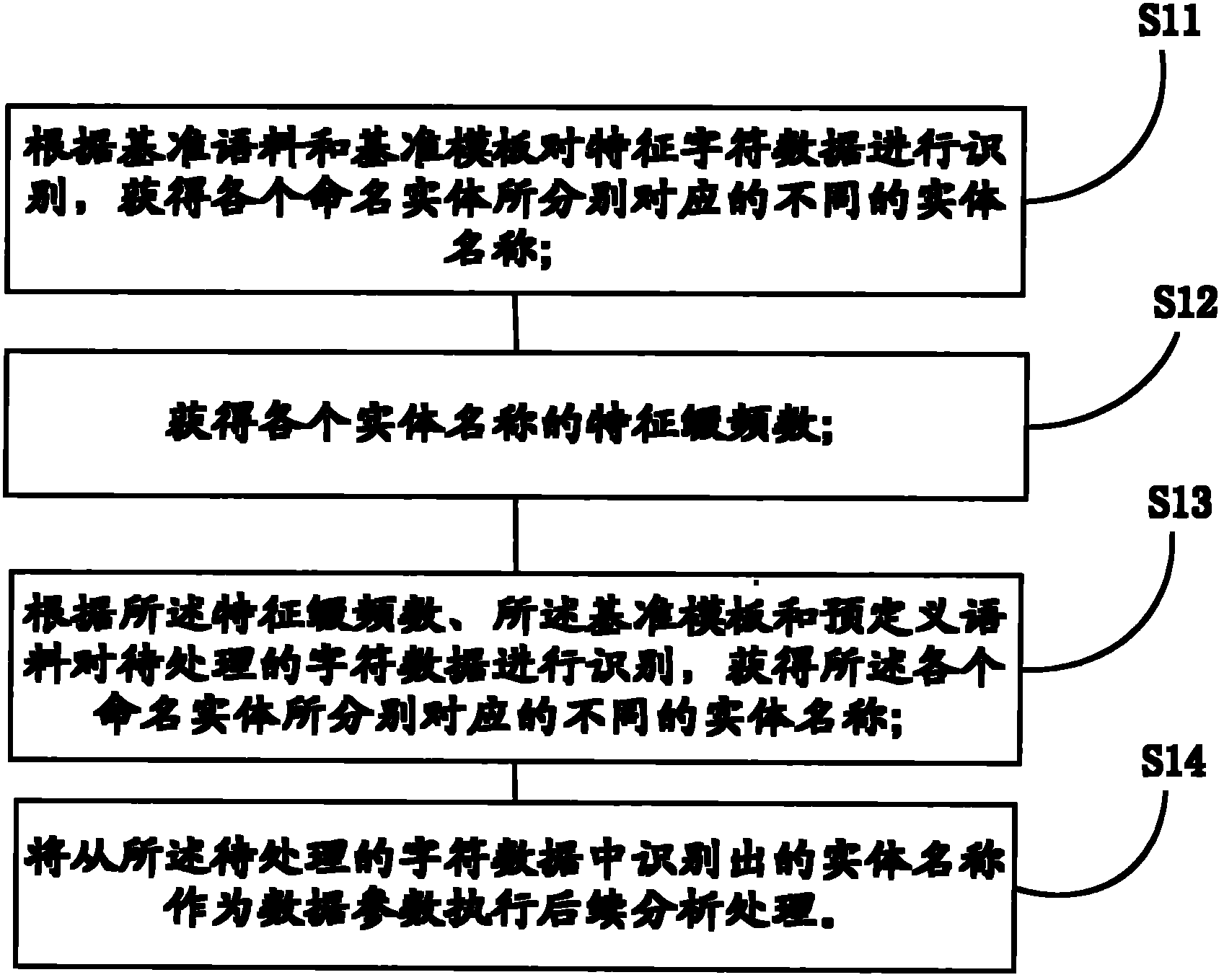

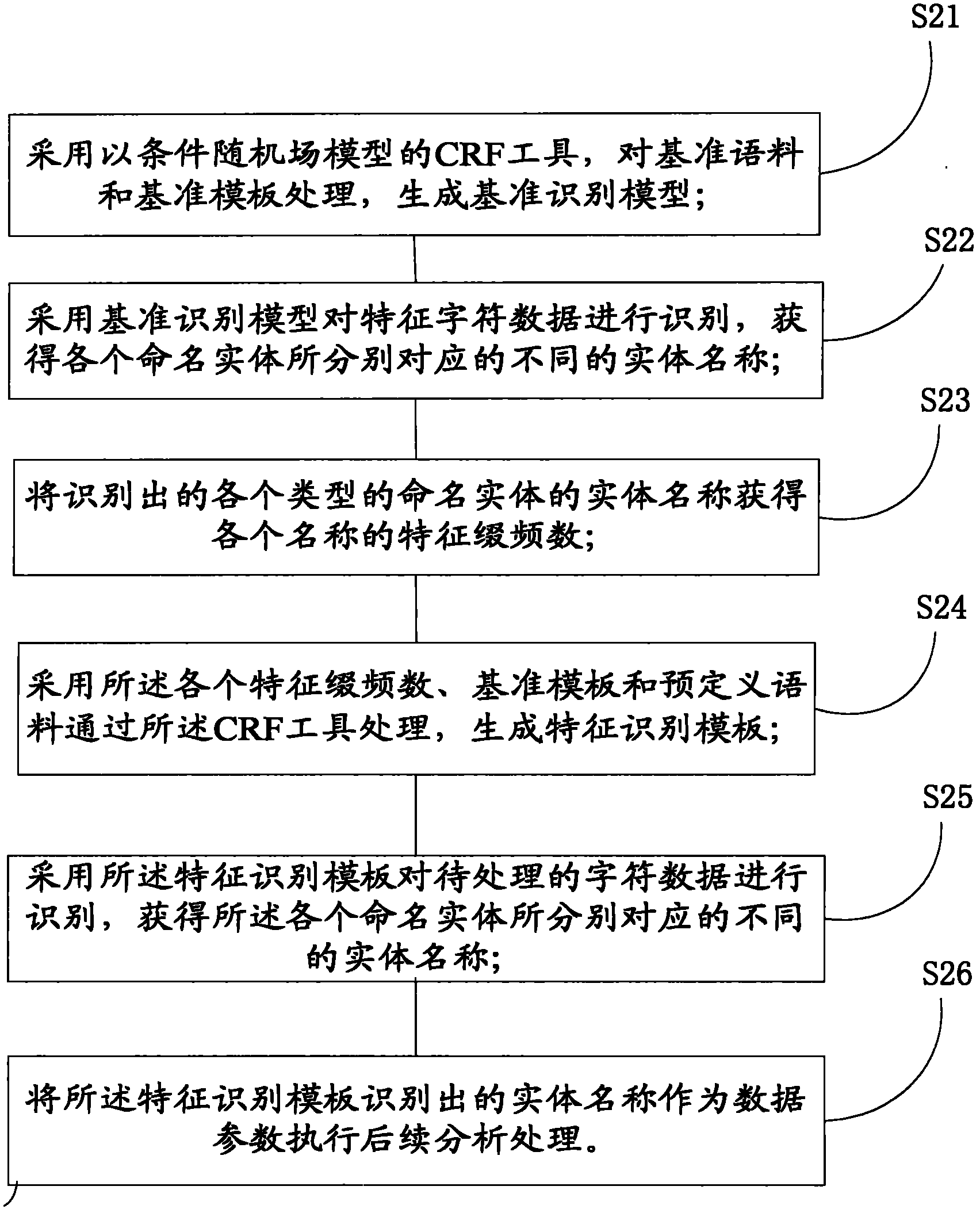

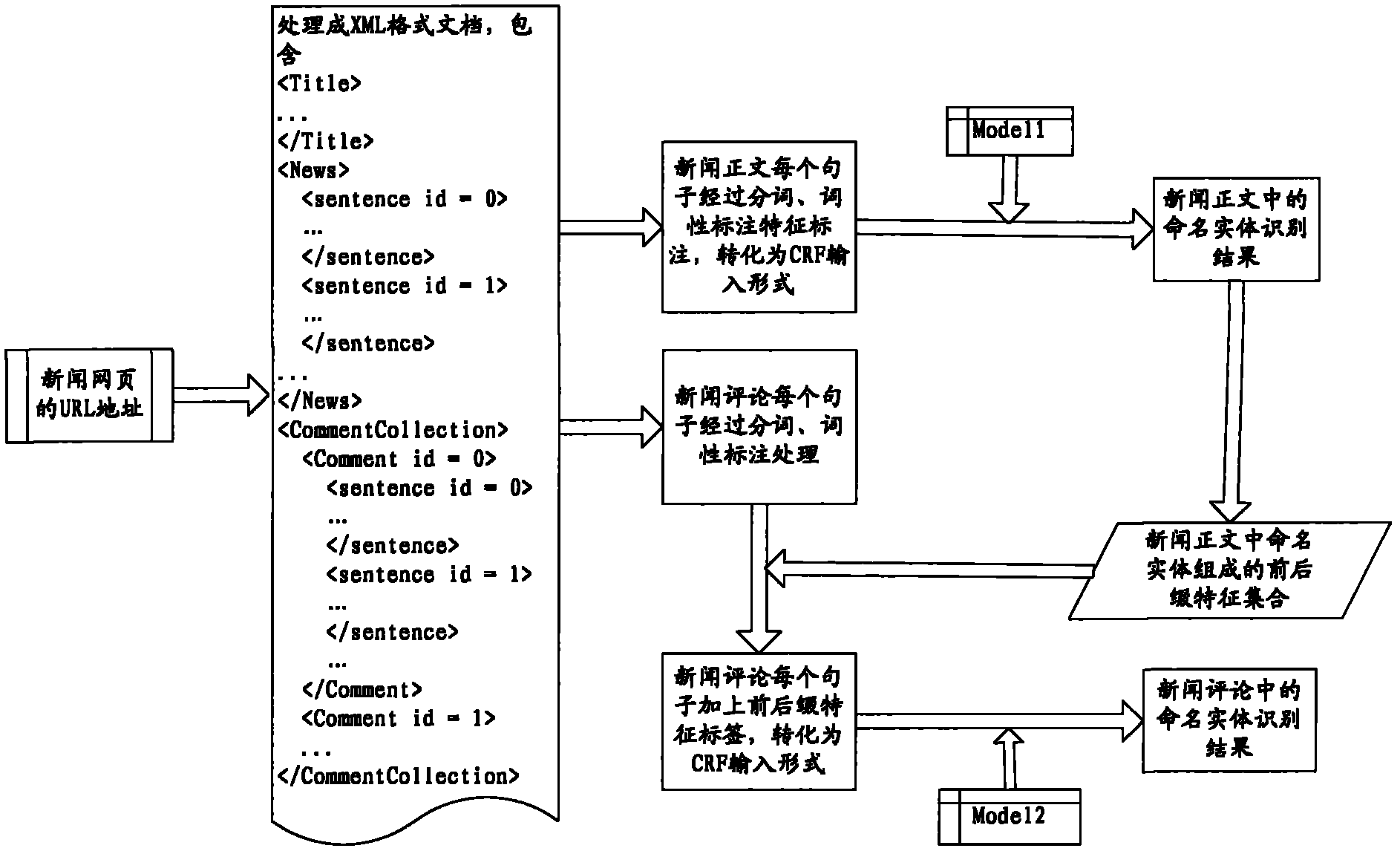

Character data recognition and processing method and device

InactiveCN102103594AImprove recognition accuracyOvercome the problem of large recognition errors of predefined character dataSpecial data processing applicationsAffixFalse recognition

The invention provides a character data recognition and processing method and device. The method comprises the following steps: recognizing featured character data according to reference linguistic data and a reference template to obtain the different entity names corresponding to each named entity; obtaining the feature affix frequency of each entity name; recognizing the character data to be processed according to the feature affix frequency, the reference template, and predefined linguistic data to obtain the different entity names corresponding to each named entity; and performing subsequent analysis using the entity names recognized from the character data to be processed as data parameters. Because the feature affixes form a recognition feature column, the method and device reduce the relatively large recognition errors that predefined character data cause in later retrieval and translation, improve the recognition accuracy of named entities, and prevent named entities that are expressed loosely or in non-standard form from being missed or misrecognized.

Owner:PEKING UNIV +3
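
The role of the feature affix frequency can be illustrated loosely as follows. This is a sketch under several assumptions that are not in the abstract: the affix is taken to be the final whitespace-separated token of an entity name, the text is English, and the threshold `min_freq` and span limit `max_len` are hypothetical. The abstract does not say how affixes are defined or how the reference template is applied.

```python
from collections import Counter


def affix_frequencies(entity_names):
    """Relative frequency of the final token across known entity names."""
    counts = Counter(name.split()[-1] for name in entity_names if name.split())
    total = sum(counts.values())
    return {affix: n / total for affix, n in counts.items()}


def find_candidates(tokens, freqs, min_freq=0.2, max_len=3):
    """Return spans that end in a frequent affix, extended leftwards over
    capitalized tokens up to max_len tokens in total."""
    found = []
    for i, tok in enumerate(tokens):
        if freqs.get(tok, 0.0) >= min_freq:
            start = i
            while (start > 0
                   and i - start + 1 < max_len
                   and tokens[start - 1][:1].isupper()):
                start -= 1
            found.append(" ".join(tokens[start:i + 1]))
    return found


known = ["Peking University", "Zhejiang University",
         "Toyota Motor Corporation", "Harbin Institute"]
freqs = affix_frequencies(known)
sentence = "She joined Tsinghua University after leaving Acme Corporation"
candidates = find_candidates(sentence.split(), freqs)
```

Neither "Tsinghua University" nor "Acme Corporation" appears in the known list; both are picked up only because their final tokens are frequent affixes, which is the kind of generalization the abstract attributes to the affix feature.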

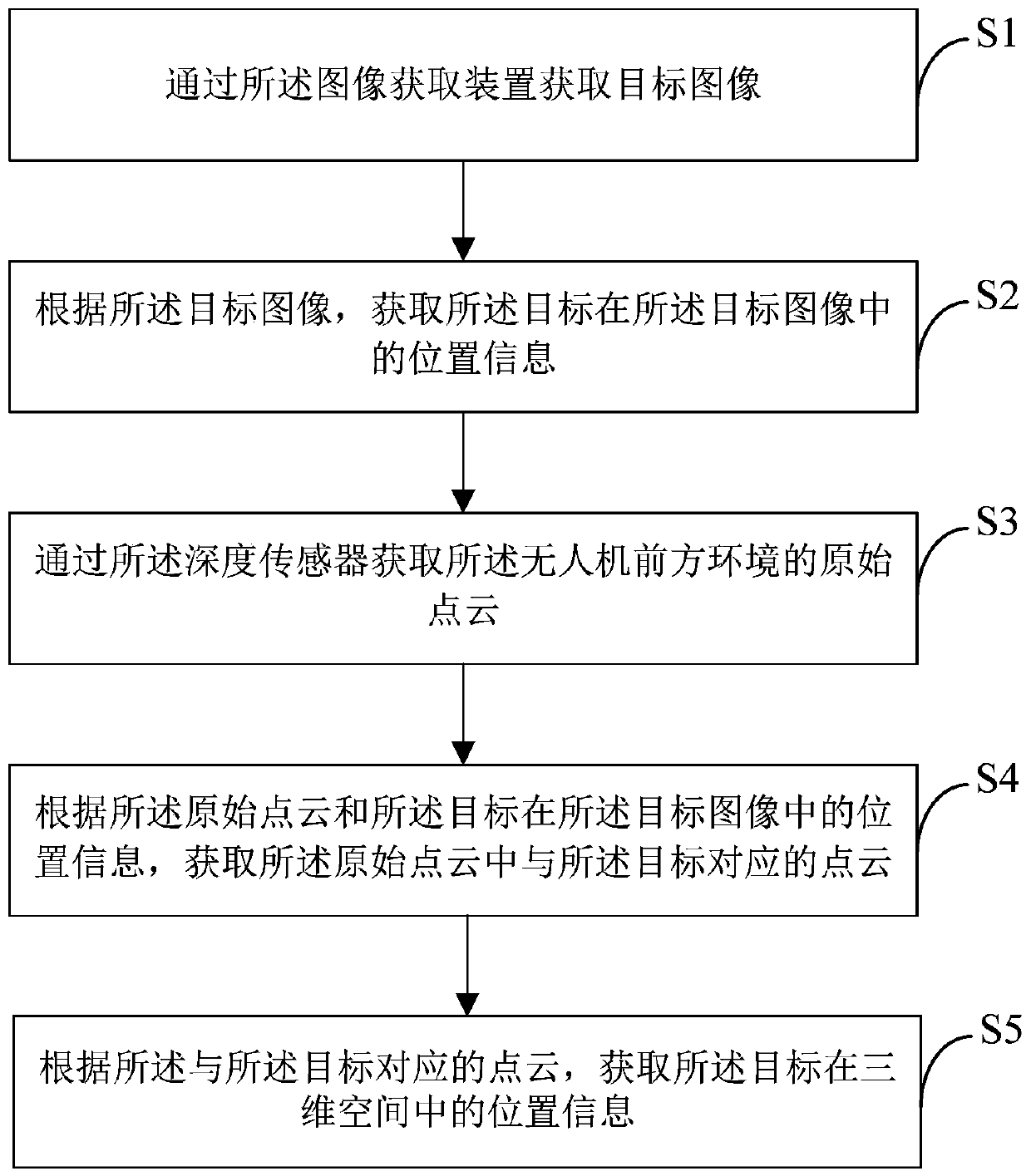

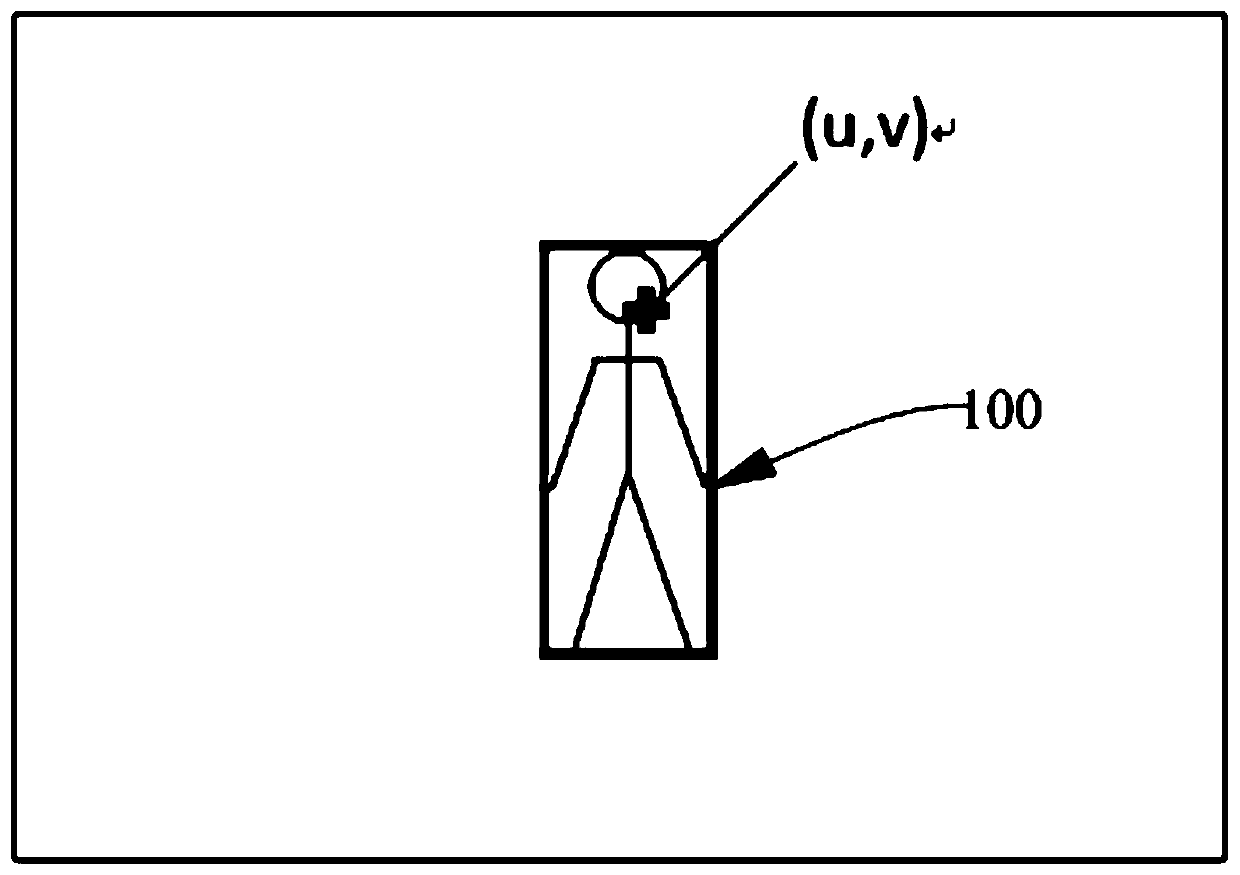

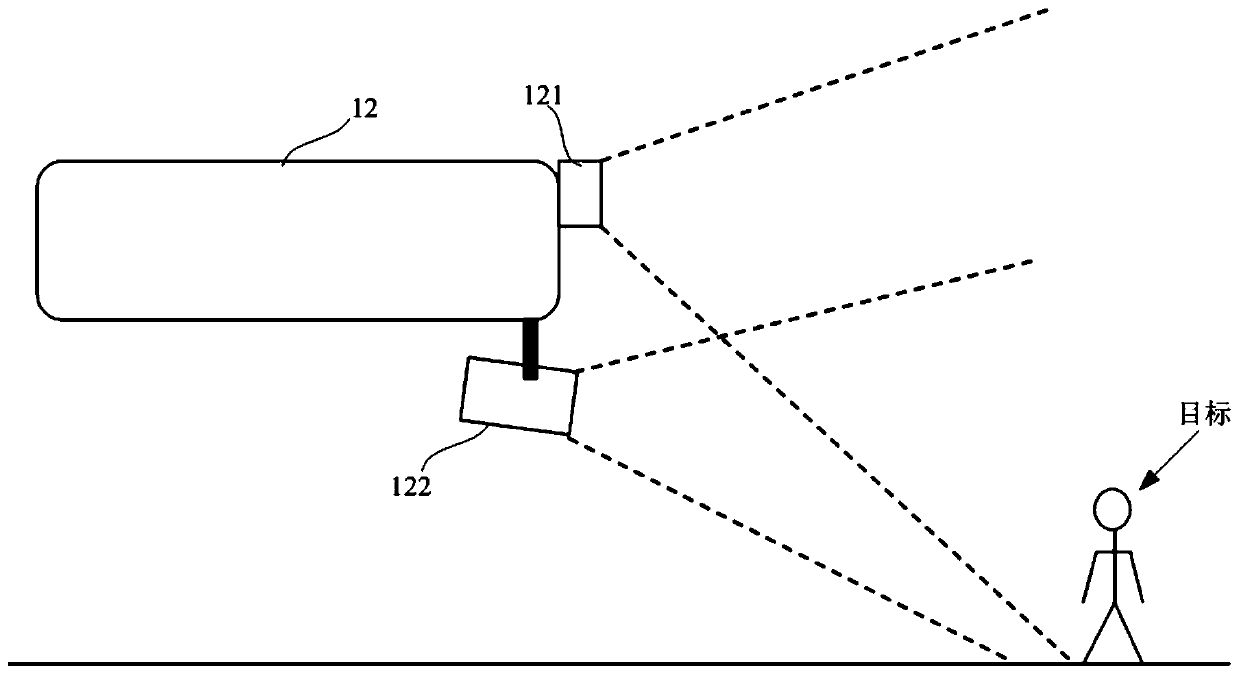

Target positioning method and device and unmanned aerial vehicle

PendingCN109767452AImprove stabilityHigh precisionImage enhancementImage analysisPoint cloudThree-dimensional space

The invention relates to a target positioning method and device and an unmanned aerial vehicle. The method comprises the steps of: acquiring a target image through an image acquisition device; acquiring position information of the target in the target image according to the target image; obtaining an original point cloud of the environment in front of the unmanned aerial vehicle through a depth sensor; obtaining the point cloud corresponding to the target from the original point cloud according to the original point cloud and the position information of the target in the target image; and acquiring position information of the target in three-dimensional space according to the point cloud corresponding to the target. By determining the target's position in three-dimensional space, the invention obtains the depth information of the target. It can also provide a more stable and more precise motion estimation model for target tracking, reduce the probability of false recognition and tracking loss, and achieve more accurate visualization of three-dimensional path planning and real-time three-dimensional reconstruction; in addition, a three-dimensional map containing the target object can be used for obstacle avoidance.

Owner:SHENZHEN AUTEL INTELLIGENT AVIATION TECH CO LTD
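
The steps in the abstract above can be sketched as follows. The sketch makes assumptions the abstract does not state: the point cloud is already expressed in the camera frame (z pointing forward), the camera follows a pinhole model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, the target's position in the image is a bounding box, and the 3D position is taken as the per-axis median of the selected points.

```python
from statistics import median


def locate_target(points, bbox, fx, fy, cx, cy):
    """Estimate a target's 3D position from a point cloud and a 2D box.

    points: iterable of (x, y, z) in the camera frame, z forward.
    bbox:   (u_min, v_min, u_max, v_max) in pixels.
    Returns the per-axis median of the points that project into the box,
    or None if no point does.
    """
    u_min, v_min, u_max, v_max = bbox
    inside = []
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera, cannot appear in the image
        # Pinhole projection of the 3D point onto the image plane.
        u = fx * x / z + cx
        v = fy * y / z + cy
        if u_min <= u <= u_max and v_min <= v <= v_max:
            inside.append((x, y, z))
    if not inside:
        return None
    return tuple(median(axis) for axis in zip(*inside))


cloud = [(0.0, 0.0, 2.0), (0.1, 0.0, 2.0), (-0.1, 0.0, 2.0),
         (2.0, 0.0, 2.0), (0.0, 0.0, -1.0)]
position = locate_target(cloud, bbox=(40, 40, 60, 60),
                         fx=100.0, fy=100.0, cx=50.0, cy=50.0)
```

The median is used here instead of the mean so that background points that happen to fall inside the box have less influence; this is a design choice of the sketch, not something the abstract specifies.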

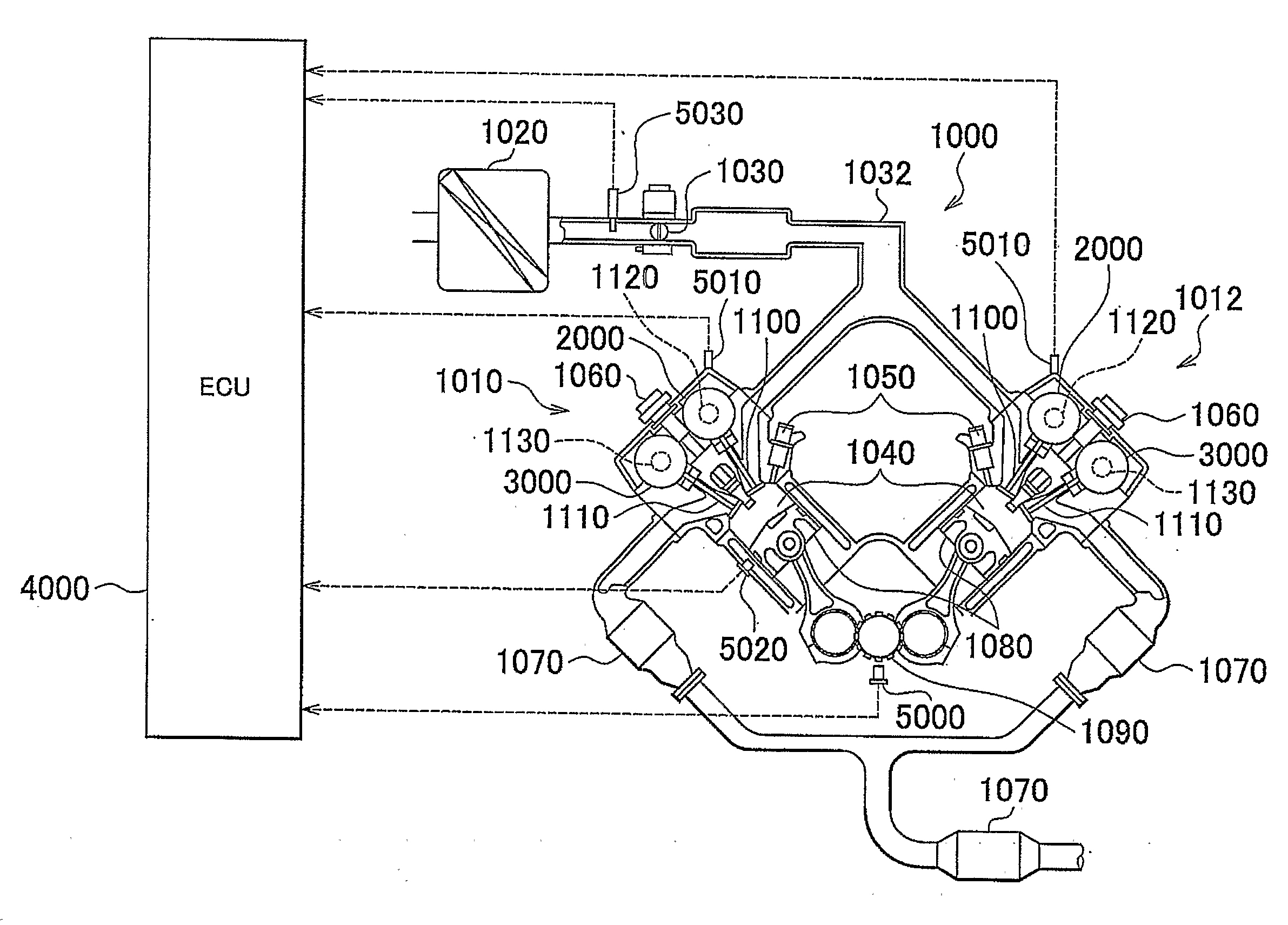

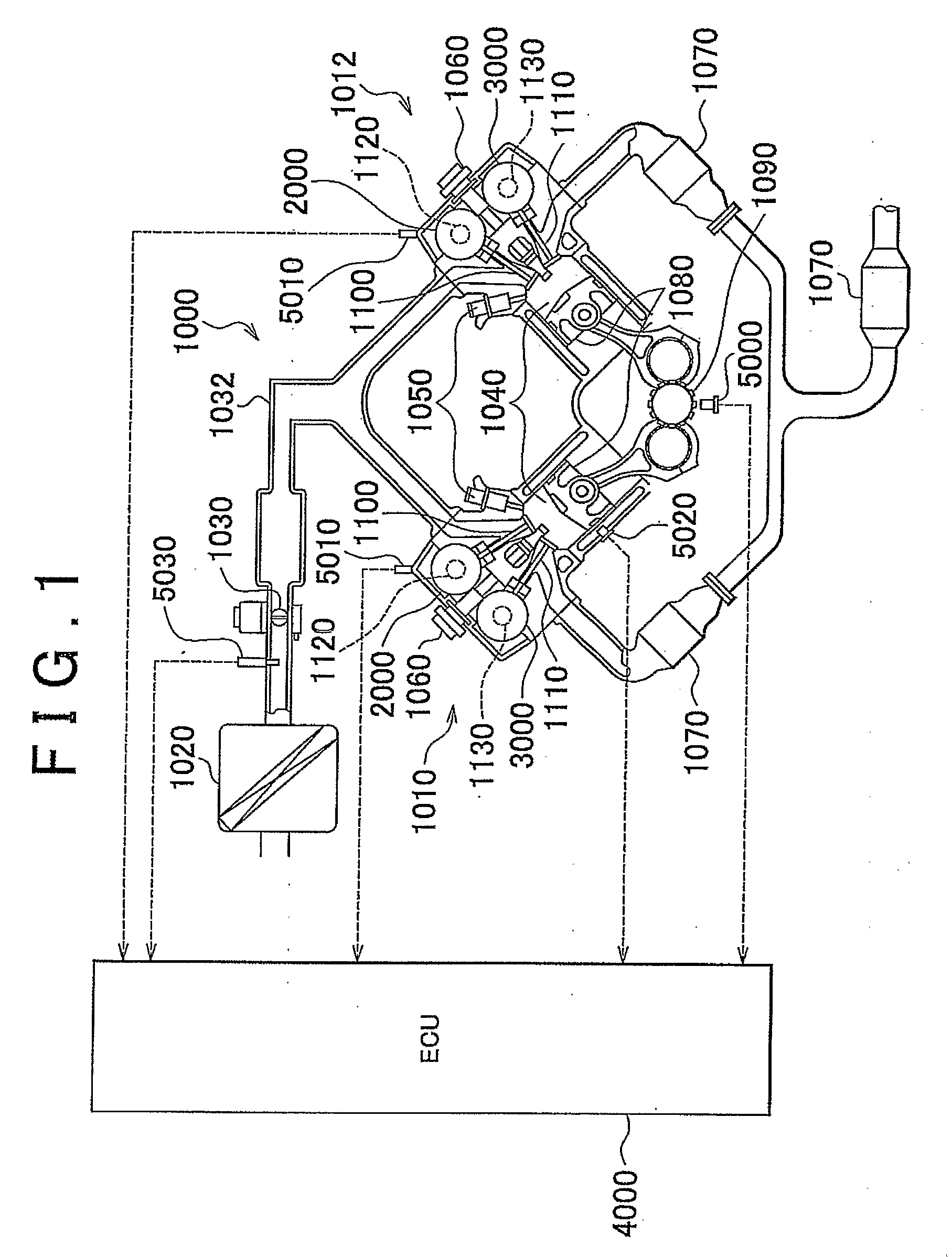

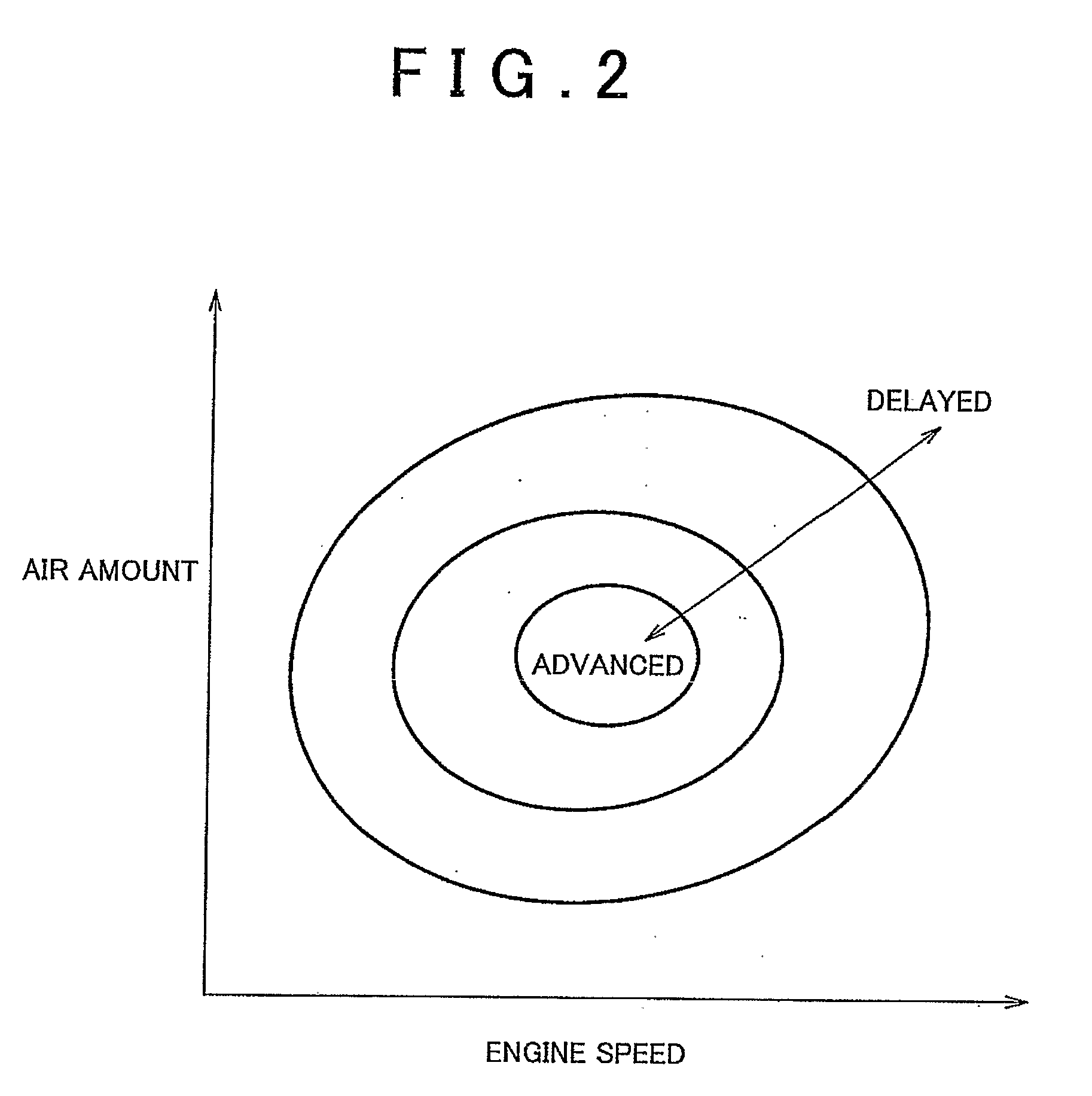

Variable valve timing system

InactiveUS20090101094A1Stable executionMinimization is negatively influencedElectrical controlInternal combustion piston enginesVariable valve timingActuator

An ECU transmits a pulsed operation command signal, which carries the operation commands for an electric motor used as a VVT actuator, to an electric-motor EDU. The electric-motor EDU recognizes the combination of the direction in which the actuator should be operated (the actuator operation direction) and the control mode from the duty ratio of the operation command signal, and recognizes the rotational speed command value from the frequency of the signal. The electric-motor EDU controls the electric motor according to these commands. The duty ratios that indicate each combination are assigned such that, even if the duty ratio is falsely recognized, false recognition of the actuator operation direction, which would cause the valve phase to change in an undesirable direction, is prevented; and even if the actuator operation direction is falsely recognized, the rate of change in the phase is restricted.

Owner:TOYOTA JIDOSHA KK
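
One way to read the encoding described above is sketched below. The band edges, the mode names, and the frequency-to-speed factor are all hypothetical; the abstract gives no numeric values. The sketch assumes the duty ratio bands are ordered so that neighbouring bands share a direction, and the two bands on either side of the central guard band both select a rate-restricted mode, so a small misreading either keeps the direction or lands in a restricted mode.

```python
from typing import NamedTuple, Optional


class Command(NamedTuple):
    direction: str      # "retard" or "advance"
    mode: str           # "normal" or "restricted" (rate-limited)
    speed_rpm: float    # rotational speed command value


# Hypothetical duty ratio bands: (low %, high %, direction, mode).
DUTY_BANDS = [
    (15.0, 25.0, "retard", "normal"),
    (30.0, 40.0, "retard", "restricted"),
    (60.0, 70.0, "advance", "restricted"),
    (75.0, 85.0, "advance", "normal"),
]
RPM_PER_HZ = 10.0  # hypothetical scale from signal frequency to speed command


def decode(duty_percent: float, frequency_hz: float) -> Optional[Command]:
    """Decode one pulsed command; return None for a duty ratio in a guard band."""
    for low, high, direction, mode in DUTY_BANDS:
        if low <= duty_percent <= high:
            return Command(direction, mode, frequency_hz * RPM_PER_HZ)
    return None


normal = decode(20.0, 100.0)
guard = decode(50.0, 100.0)
```

A duty ratio that falls between bands decodes to `None`, which a controller could treat as "hold the current phase"; that handling is also an assumption of the sketch.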

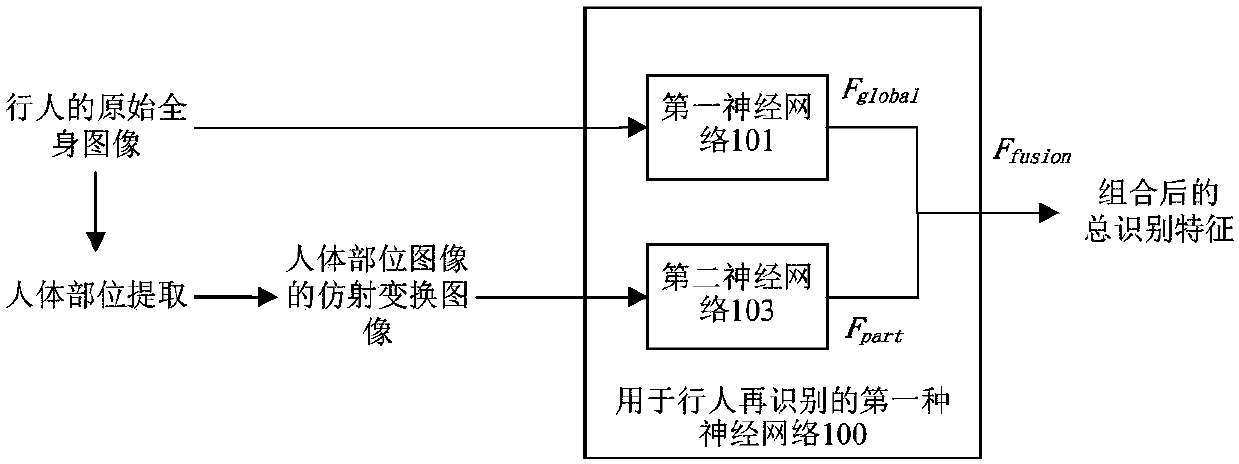

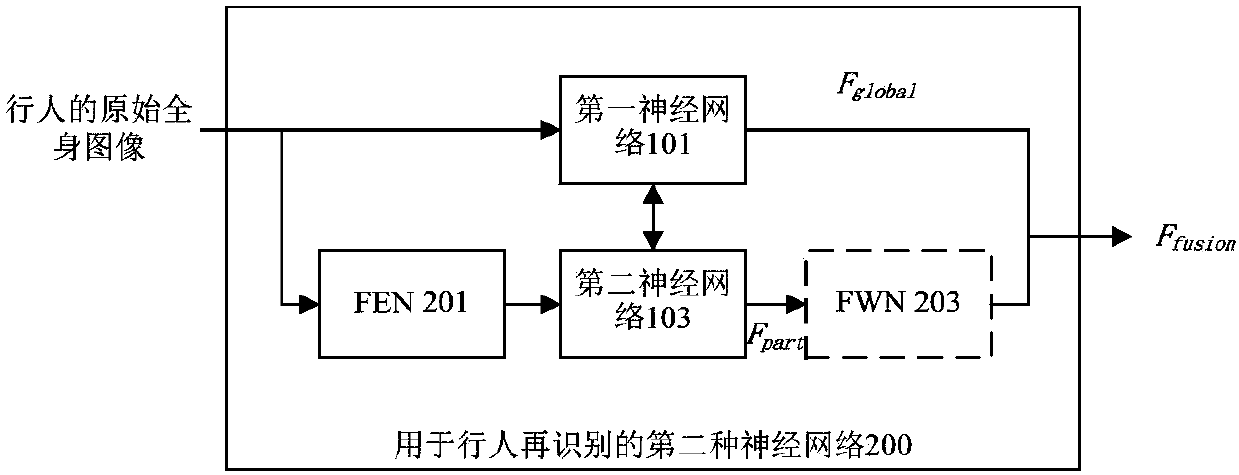

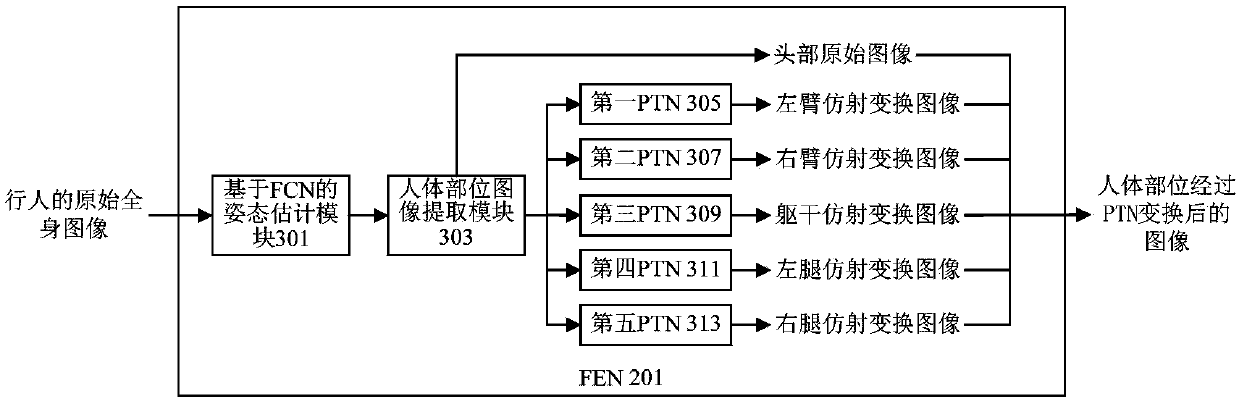

Neural network for pedestrian re-recognition and pedestrian re-recognition algorithm based on deep learning

ActiveCN107729805ARobust Pedestrian Feature Matching CapabilityImprove the correct recognition rateGeometric image transformationCharacter and pattern recognitionWhole bodyRecognition algorithm

The invention discloses a neural network for pedestrian re-recognition and a pedestrian re-recognition algorithm based on deep learning. The neural network comprises a first neural network, which takes an original full-body image of a pedestrian as a first input and outputs a first identification feature, and a second neural network, which takes an affine-transformed image of a body part extracted from the original full-body image as a second input and outputs a second identification feature. The body parts include at least a head, a trunk, and arms and legs. The first identification feature and the second identification feature are combined into an overall identification feature. This yields highly robust pedestrian feature matching, so that the correct recognition rate is improved and / or the false recognition rate is reduced.

Owner:PEKING UNIV
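
The combination of the two identification features can be sketched as below. The abstract does not say how the features are combined or matched; this sketch assumes each feature is L2-normalized, the two are concatenated, and gallery identities are ranked by cosine similarity. The feature vectors are made-up placeholders for the outputs of the two networks.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def combine(full_body_feat, body_part_feat):
    """Concatenate the normalized full-body and body-part features."""
    return l2_normalize(full_body_feat) + l2_normalize(body_part_feat)


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def best_match(query, gallery):
    """Return the gallery identity whose feature is most similar to the query."""
    return max(gallery, key=lambda pid: cosine(query, gallery[pid]))


query = combine([1.0, 0.0], [0.0, 1.0])
gallery = {
    "A": combine([1.0, 0.0], [0.0, 1.0]),
    "B": combine([0.0, 1.0], [1.0, 0.0]),
}
match = best_match(query, gallery)
```

Normalizing each feature before concatenation keeps either network from dominating the similarity score simply because its outputs have larger magnitude.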

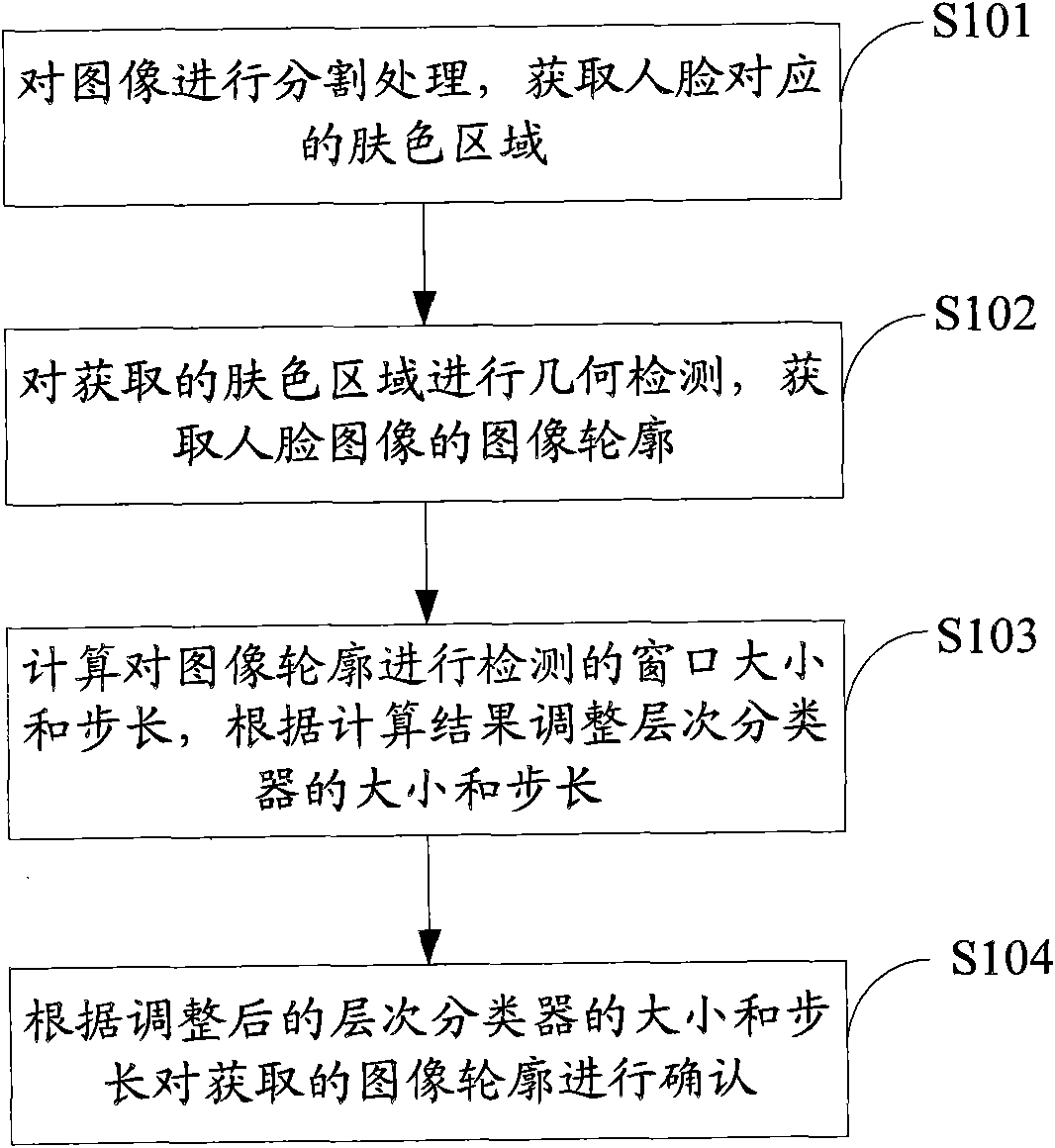

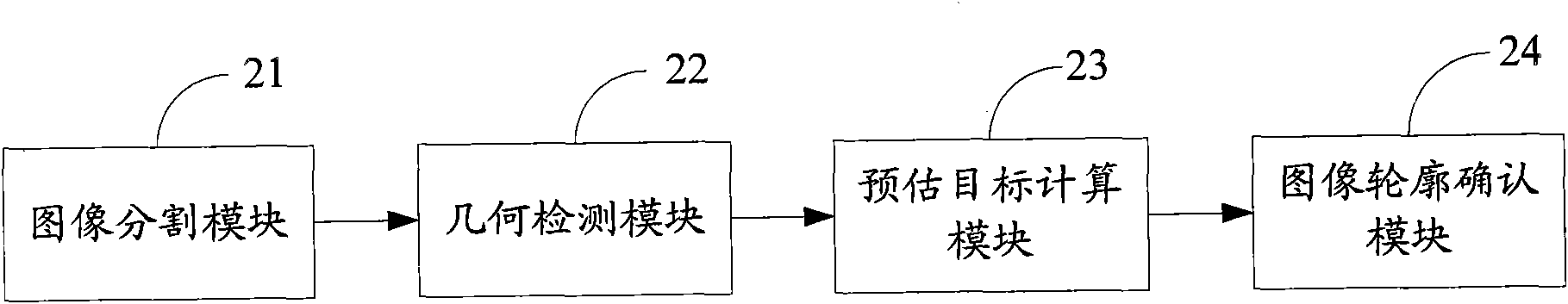

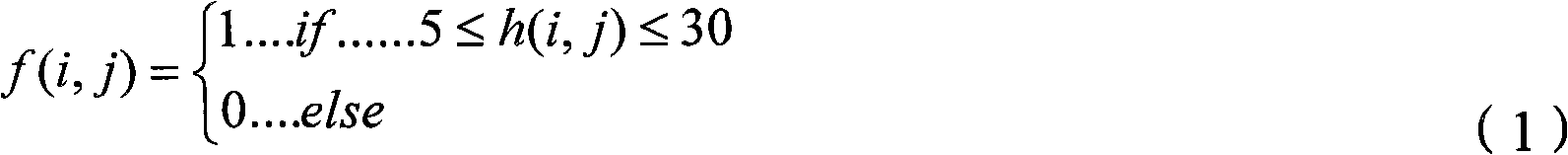

Mobile terminal as well as human face detection method and device thereof

InactiveCN101923637AThe detection process is fastImprove detection accuracyCharacter and pattern recognitionPattern recognitionFace detection

The invention belongs to the technical field of image processing and discloses a mobile terminal and a human face detection method and device thereof. The method comprises the following steps: partitioning a human face image to acquire the skin-color areas corresponding to the face; carrying out geometrical detection on the acquired skin-color areas to acquire an image contour of the face image; calculating the size and step length of the window used for detecting the image contour, and adjusting the size and step length of a level classifier according to the result; and confirming the acquired image contour according to the adjusted size and step length of the level classifier. The face detection method is convenient to use on a hand-held terminal; it reduces both the search time and the false recognition rate, which favors wider adoption on hand-held terminals.

Owner:SHENZHEN KONKA TELECOMM TECH

© 2025 PatSnap. All rights reserved.