3002 results about "Text recognition" patented technology

Handwritten word recognition based on geometric decomposition

A method of recognizing a handwritten word of cursive script comprising providing a template of previously classified words, and optically reading a handwritten word so as to form an image representation thereof comprising a bit map of pixels. The external pixel contour of the bit map is extracted and the vertical peak and minima pixel extrema on upper and lower zones respectively of this external contour are detected. Feature vectors of the vertical peak and minima pixel extrema are determined and compared to the template so as to generate a match between the handwritten word and a previously classified word. A method for classifying an image representation of a handwritten word of cursive script is also provided. Also provided is an apparatus for recognizing a handwritten word of cursive script. This apparatus comprises a template of previously classified words, a reader for optically reading a handwritten word, and a controller being linked thereto for generating a match between the handwritten word and the previously classified words. Two algorithms are provided for respectively correcting the skew and slant of a word image.

Owner:IMDS SOFTWARE

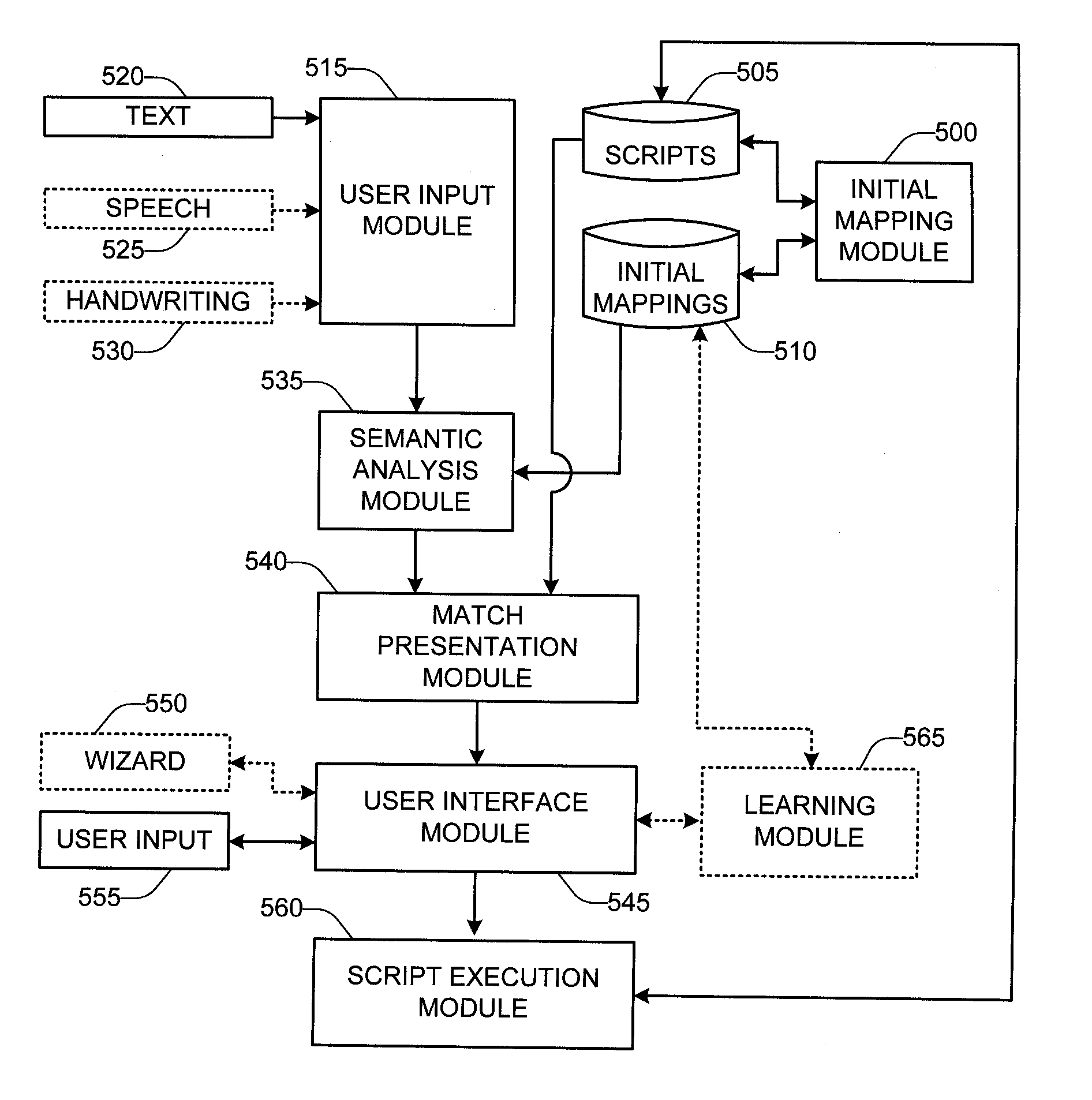

Natural language interface for driving adaptive scenarios

A “Natural Language Script Interface” (NLSI) provides an interface and query system for automatically interpreting natural language inputs to select, execute, and/or otherwise present one or more scripts or other code to the user for further user interaction. In other words, the NLSI manages a pool of scripts or code, available from one or more local and/or remote sources, as a function of the user's natural language inputs. The NLSI operates either as a standalone application, or as a component integrated into existing applications. Natural language inputs may be received from a variety of sources, and include, for example, computer-based text or voice input, handwriting or text recognition, or any other human or machine-readable input from one or more local or remote sources. In various embodiments, machine learning techniques are used to improve script selection and processing as a function of observed user interaction with selected scripts.

Owner:MICROSOFT TECH LICENSING LLC

On-Screen Guideline-Based Selective Text Recognition

Status: Active | Publication: US20110123115A1 | Tags: Television system details; Color television details; Text recognition; Stream capture

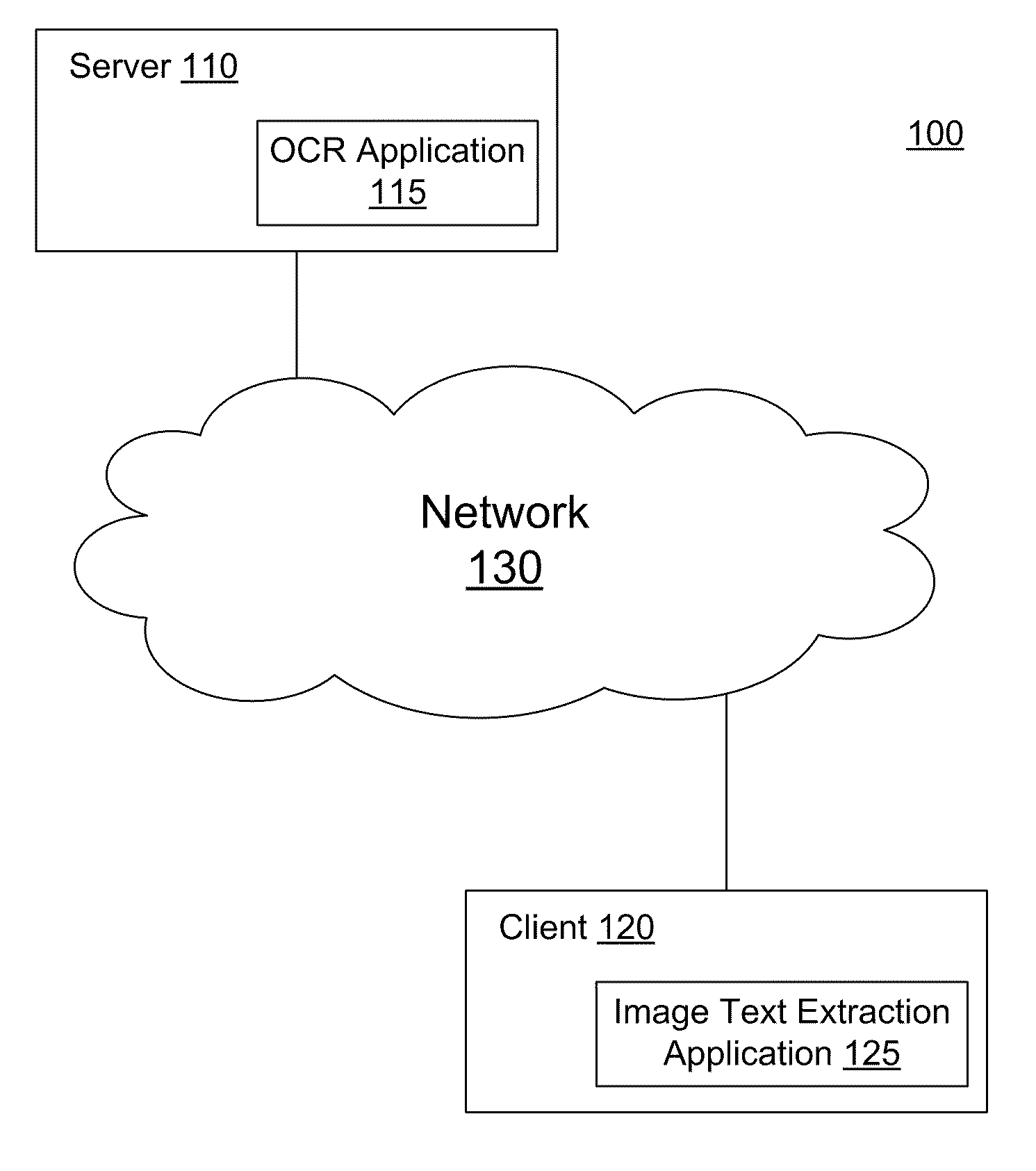

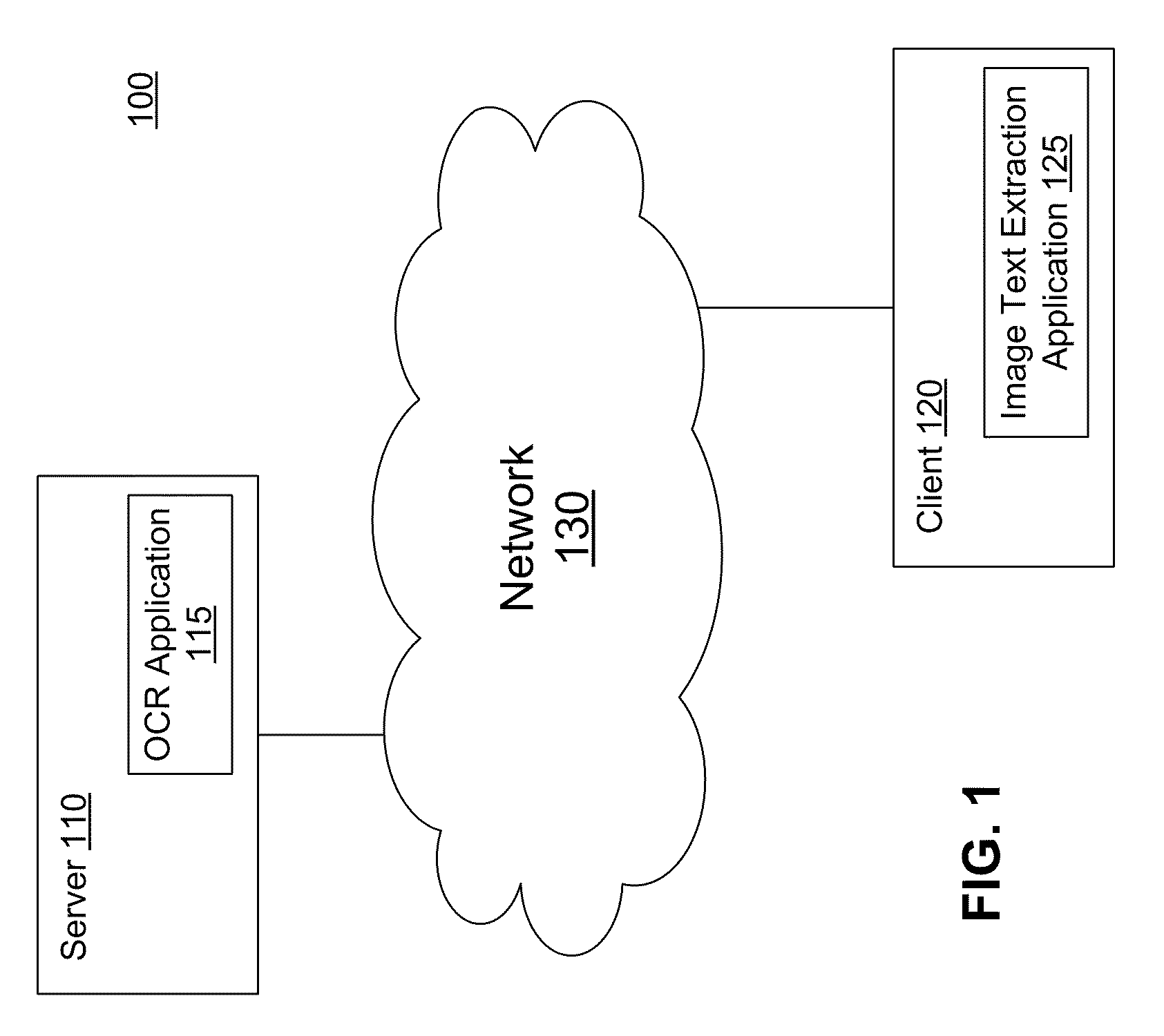

A live video stream captured by an on-device camera is displayed on a screen with an overlaid guideline. Video frames of the live video stream are analyzed for a video frame with acceptable quality. A text region is identified in the video frame approximate to the on-screen guideline and cropped from the video frame. The cropped image is transmitted to an optical character recognition (OCR) engine, which processes the cropped image and generates text in an editable symbolic form (the OCR'ed text). A confidence score is determined for the OCR'ed text and compared with a threshold value. If the confidence score exceeds the threshold value, the OCR'ed text is outputted.

Owner:GOOGLE LLC
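
The frame-selection and confidence check described in the abstract above can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: `OcrResult`, `first_confident_text`, the three callables and the default threshold of 0.8 are all hypothetical names and values, and moving on to the next frame when the confidence is too low is an assumption.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Optional


@dataclass
class OcrResult:
    text: str
    confidence: float  # assumed to lie in [0, 1]


def first_confident_text(
    frames: Iterable[Any],
    is_acceptable: Callable[[Any], bool],      # hypothetical frame-quality check
    crop_near_guideline: Callable[[Any], Any],  # hypothetical crop around the guideline
    run_ocr: Callable[[Any], OcrResult],        # hypothetical OCR engine wrapper
    threshold: float = 0.8,                     # assumed threshold value
) -> Optional[str]:
    """Return the first OCR'ed text whose confidence exceeds the threshold."""
    for frame in frames:
        if not is_acceptable(frame):
            continue  # skip frames of unacceptable quality
        result = run_ocr(crop_near_guideline(frame))
        if result.confidence > threshold:
            return result.text
    return None  # no frame produced sufficiently confident text
```

Passing the quality check, cropping and OCR steps in as callables keeps the sketch independent of any particular camera or OCR library.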

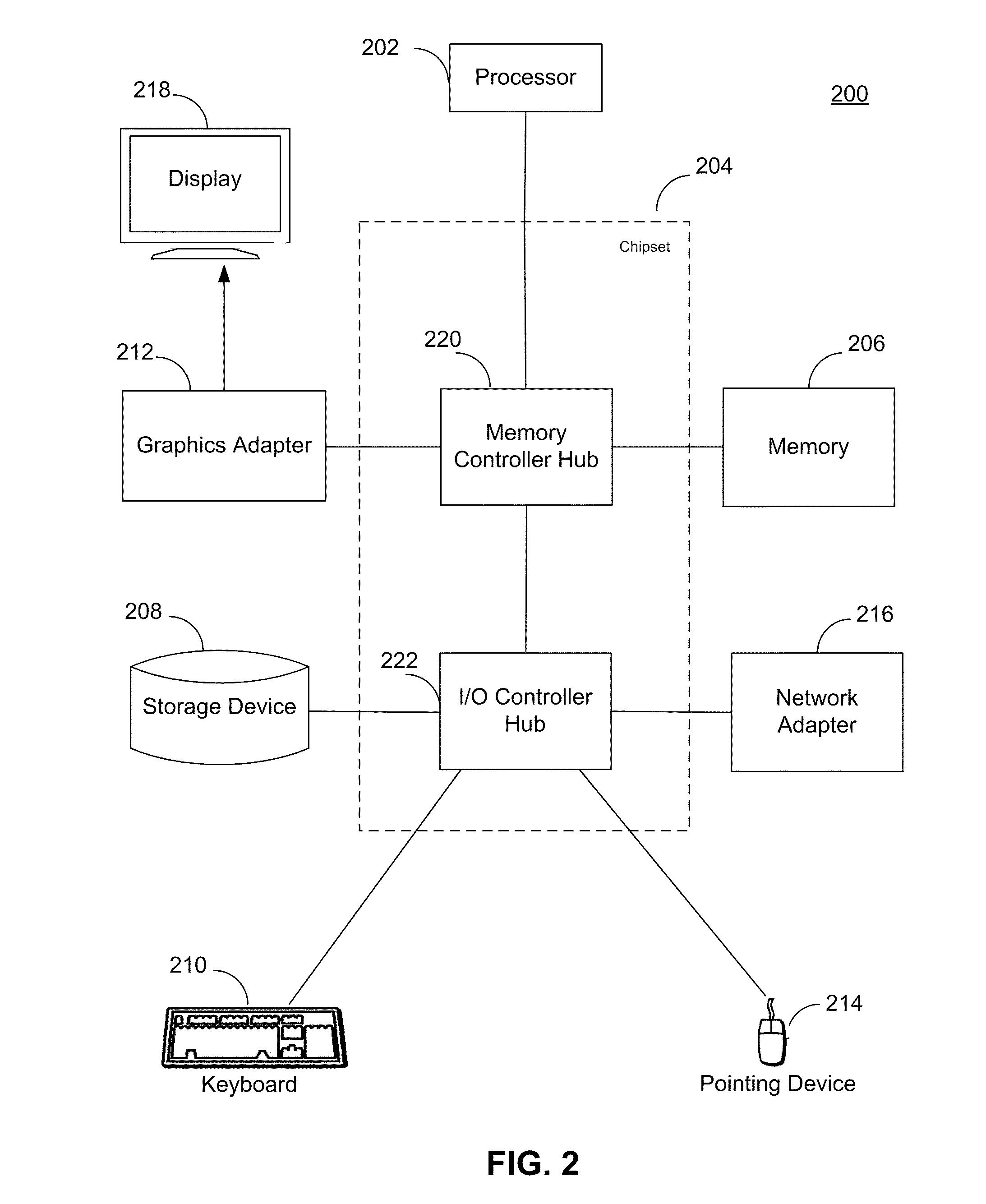

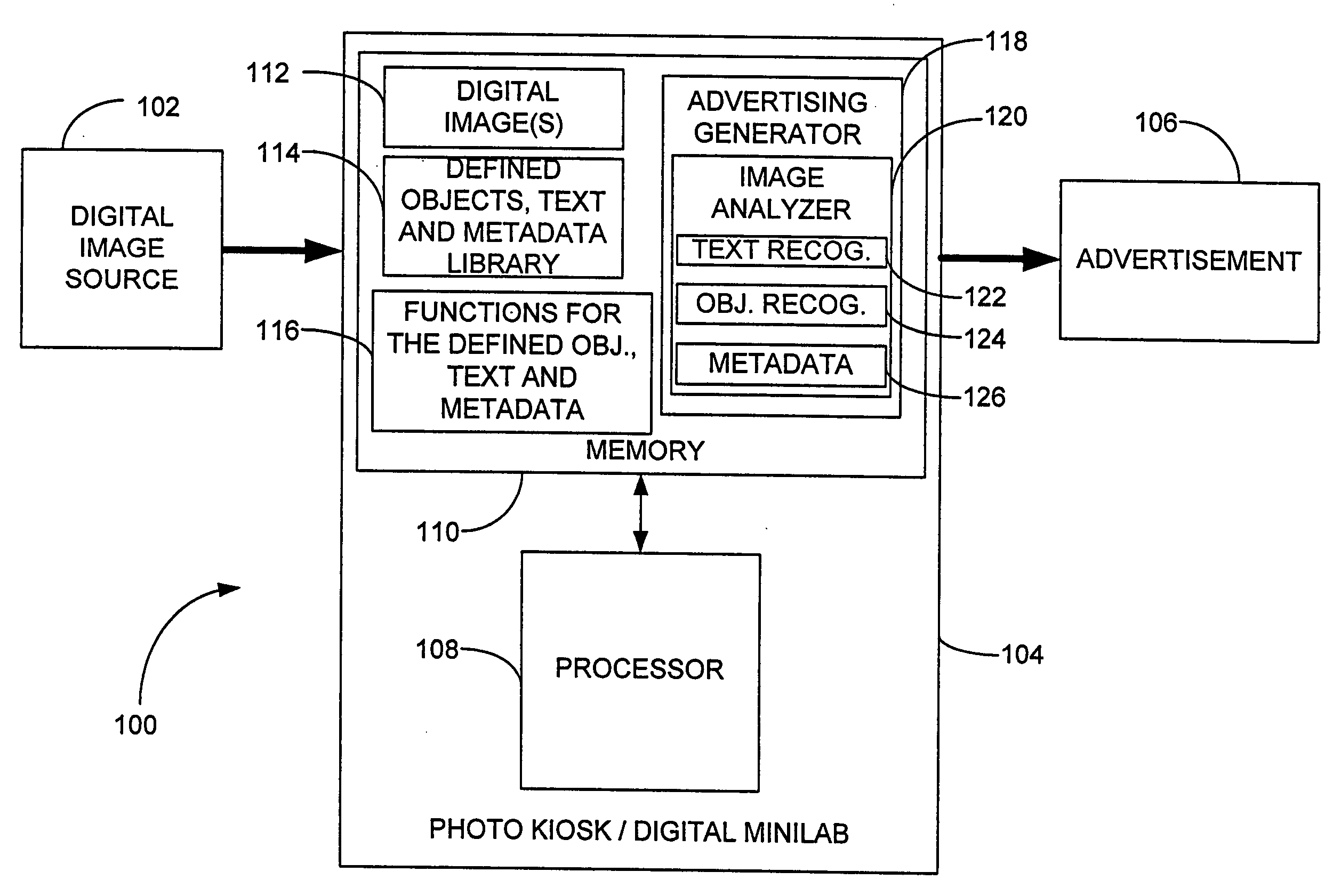

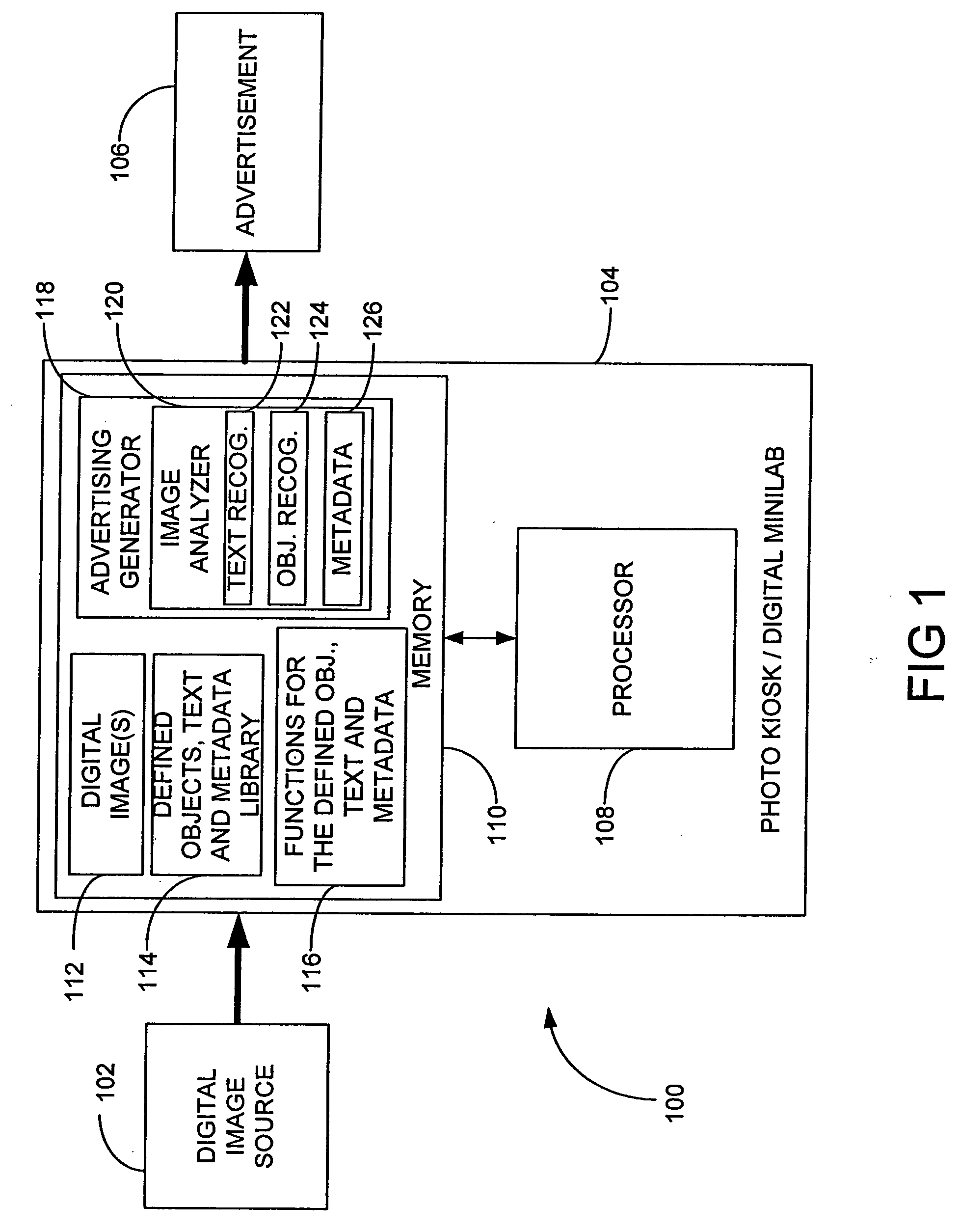

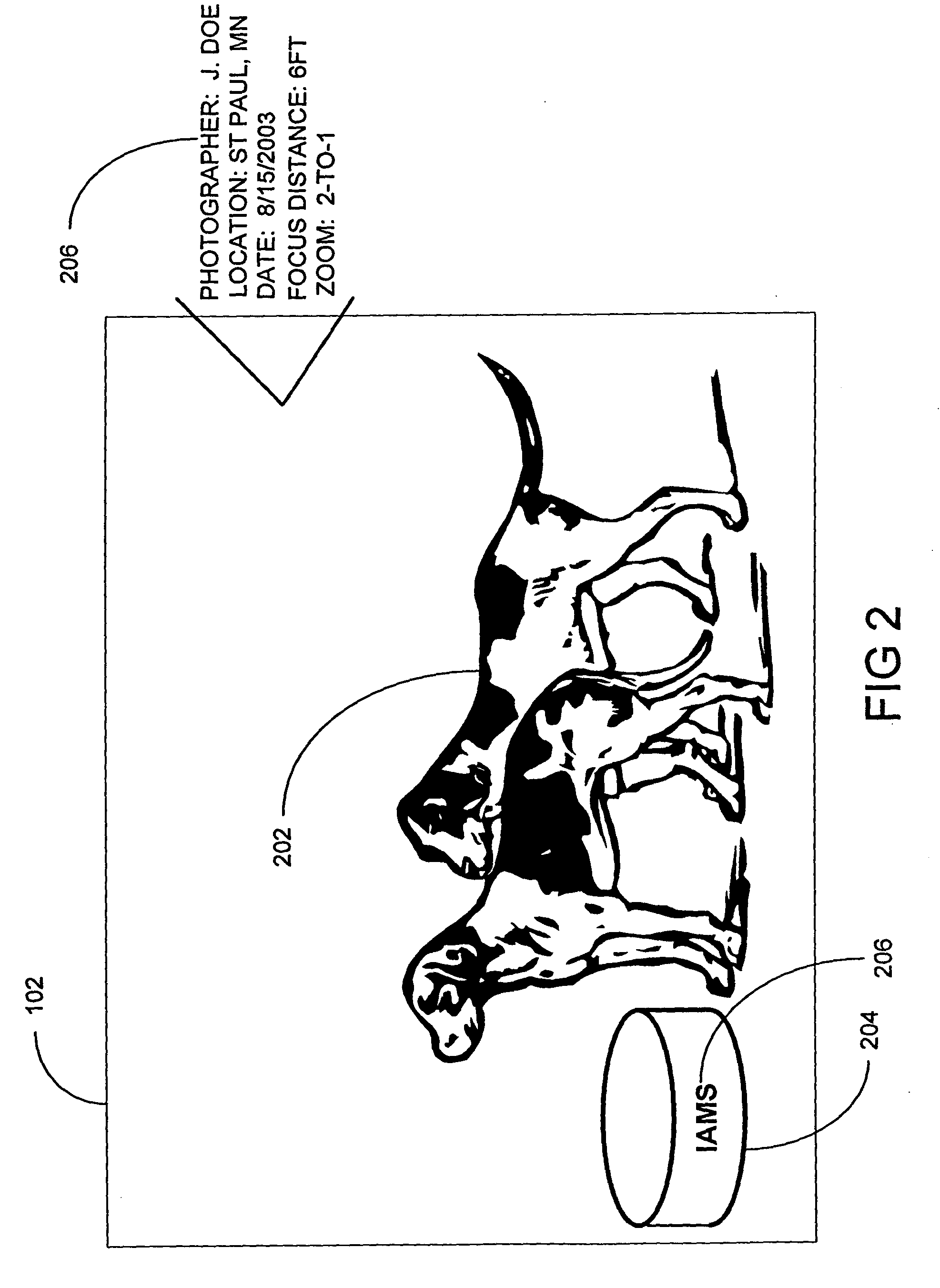

Apparatus and method to advertise to the consumer based off a digital image

Status: Inactive | Publication: US20050018216A1 | Tags: Digitally marking record carriers; Digital computer details; Text recognition; Computer vision

The present invention provides an apparatus, method and program product for analyzing a digital image for consumer identifying characteristics, and generating advertisements targeted to the consumer based on the identifying characteristics found in the digital image. The analysis of the digital image may involve object recognition, text recognition and/or metadata analysis of a selected digital image. The present invention may be implemented, for example, within a photo kiosk or digital minilab. The generated advertisements may utilize a variety of media, including on-screen displays on the photo kiosk, a customized coupon, or a photo jacket insert.

Owner:IBM CORP

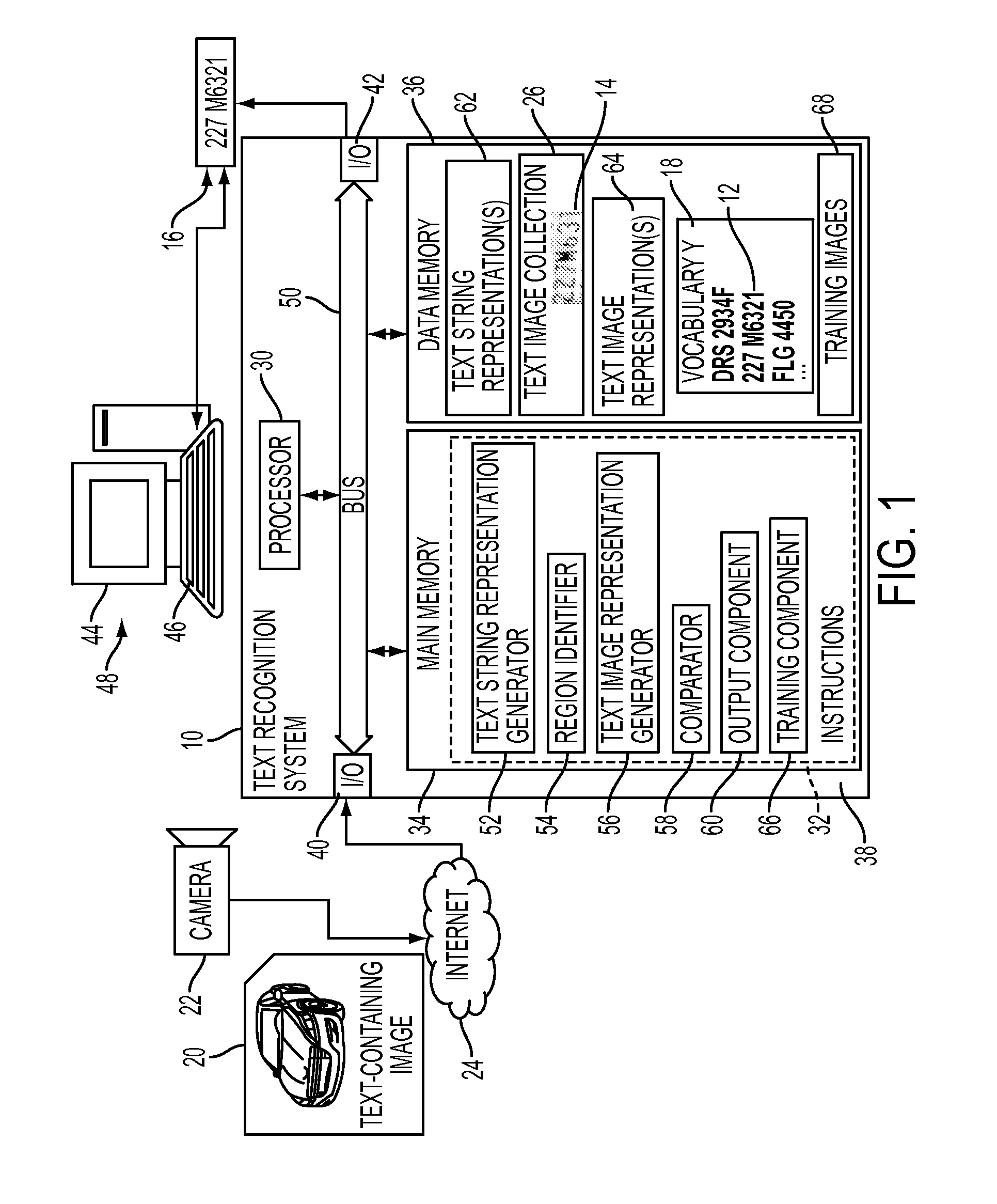

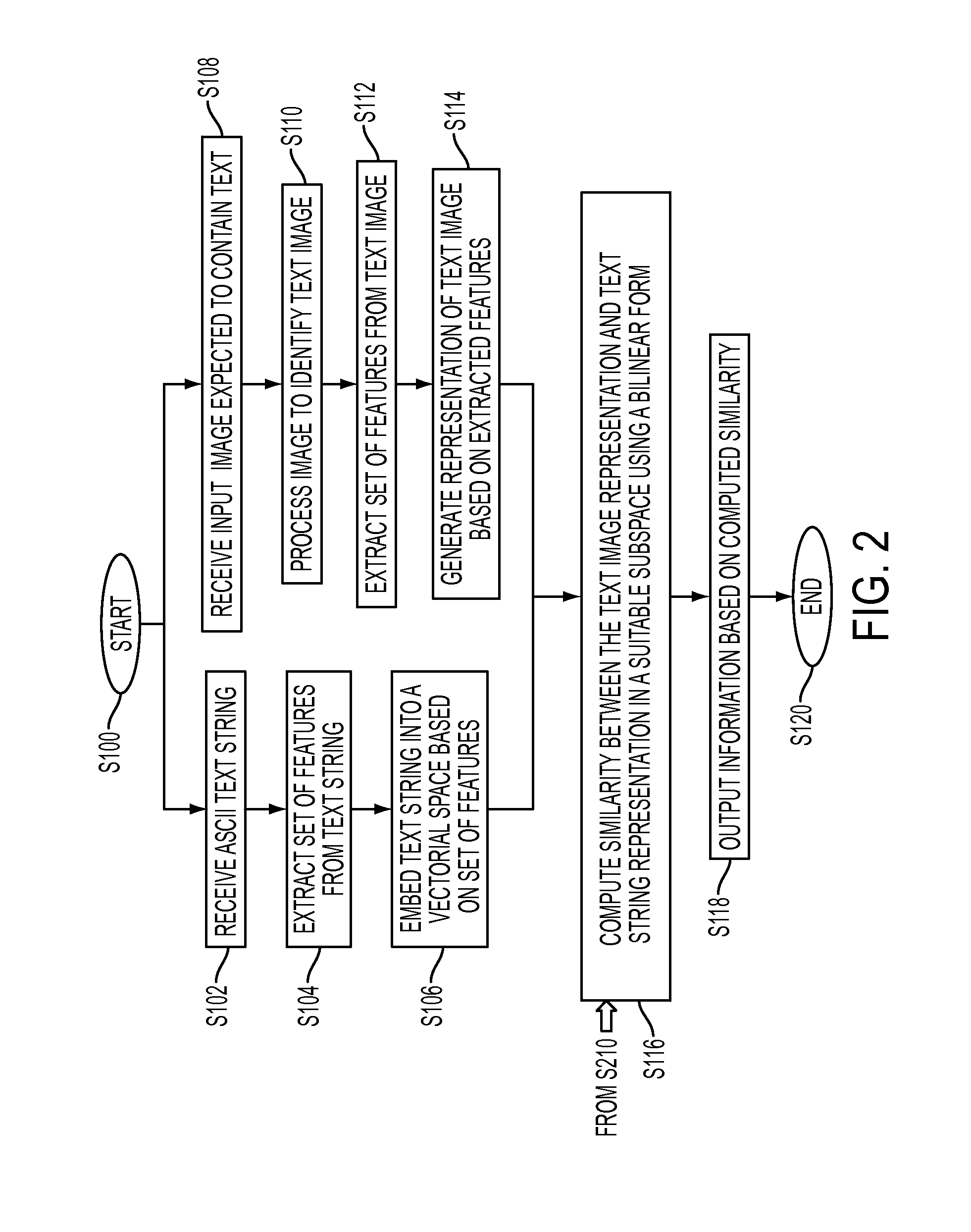

System and method for OCR output verification

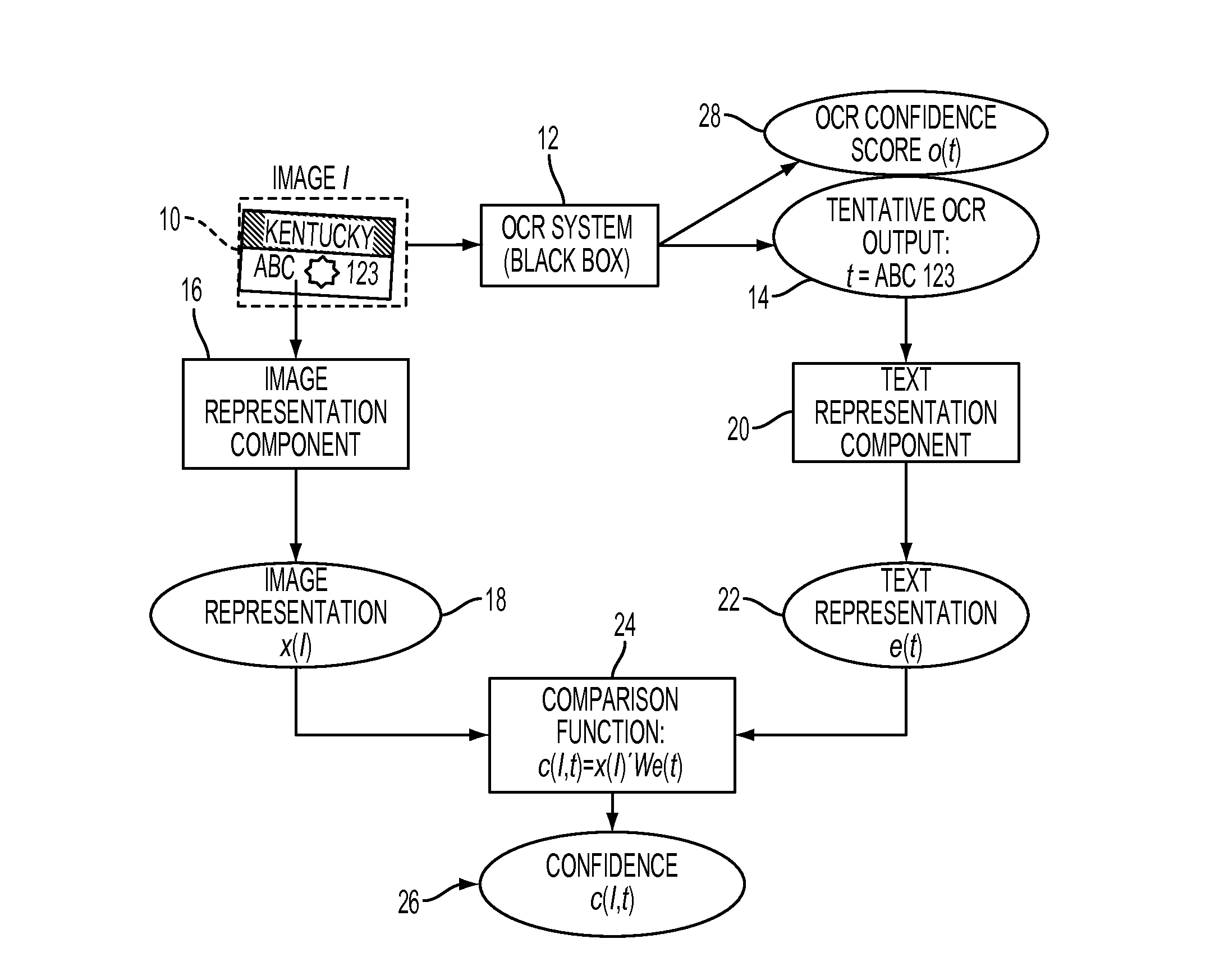

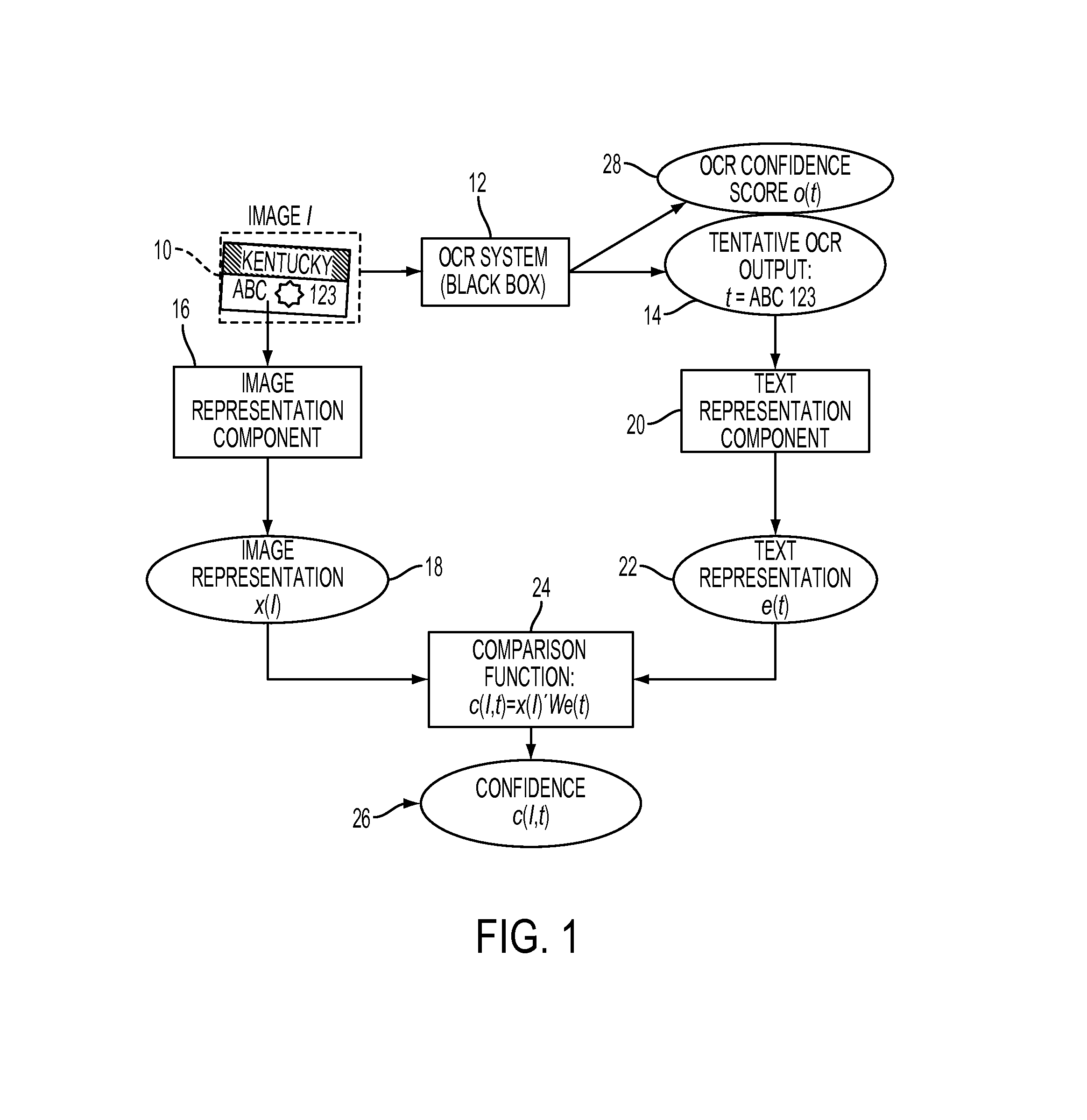

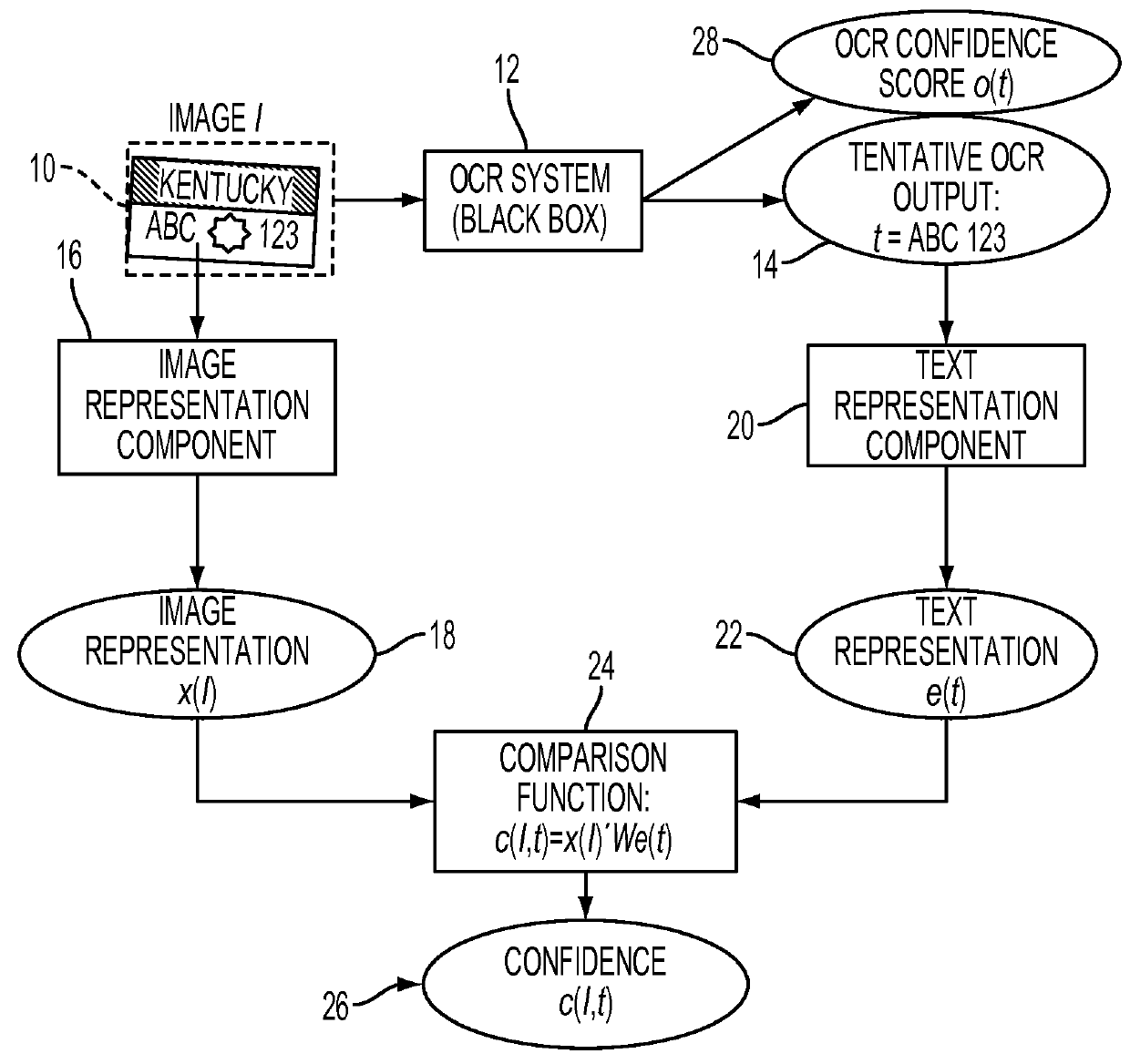

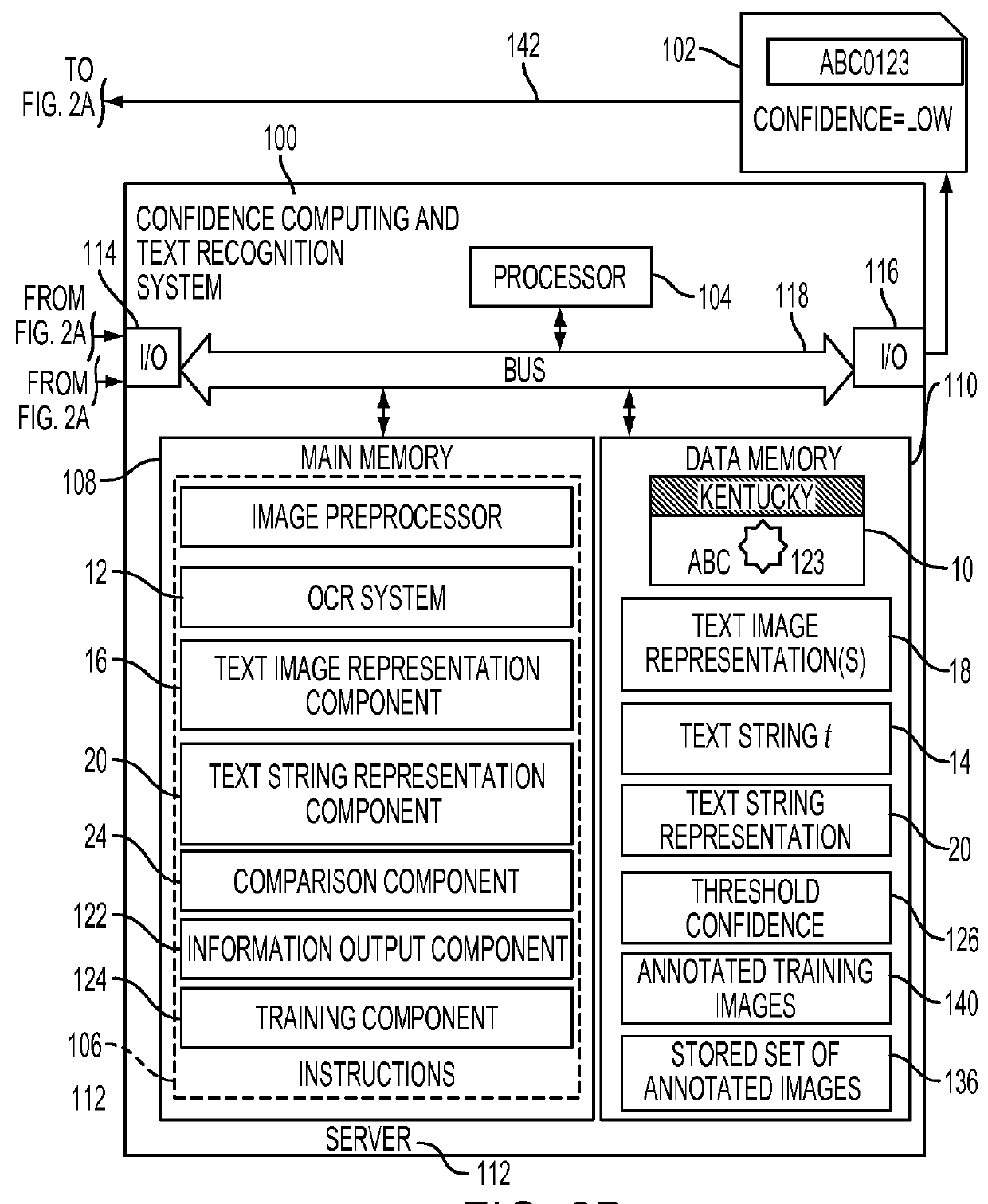

A system and method for computing confidence in an output of a text recognition system includes performing character recognition on an input text image with a text recognition system to generate a candidate string of characters. A first representation is generated, based on the candidate string of characters, and a second representation is generated based on the input text image. A confidence in the candidate string of characters is computed based on a computed similarity between the first and second representations in a common embedding space.

Owner:XEROX CORP
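
As a rough illustration of scoring an OCR candidate by similarity in a common embedding space, the sketch below compares two vectors that are assumed to have already been produced by a string embedding and an image embedding. The choice of cosine similarity, the rescaling to [0, 1], and the names `cosine_similarity` and `verification_confidence` are assumptions made for illustration; the abstract does not specify them.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must live in the same embedding space")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero embedding as carrying no evidence
    return dot / (norm_a * norm_b)


def verification_confidence(
    string_embedding: Sequence[float],  # assumed embedding of the candidate string
    image_embedding: Sequence[float],   # assumed embedding of the input text image
) -> float:
    """Map the similarity from [-1, 1] to a confidence in [0, 1]."""
    return 0.5 * (cosine_similarity(string_embedding, image_embedding) + 1.0)
```

A downstream system could accept the candidate string only when this confidence exceeds an application-specific threshold.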

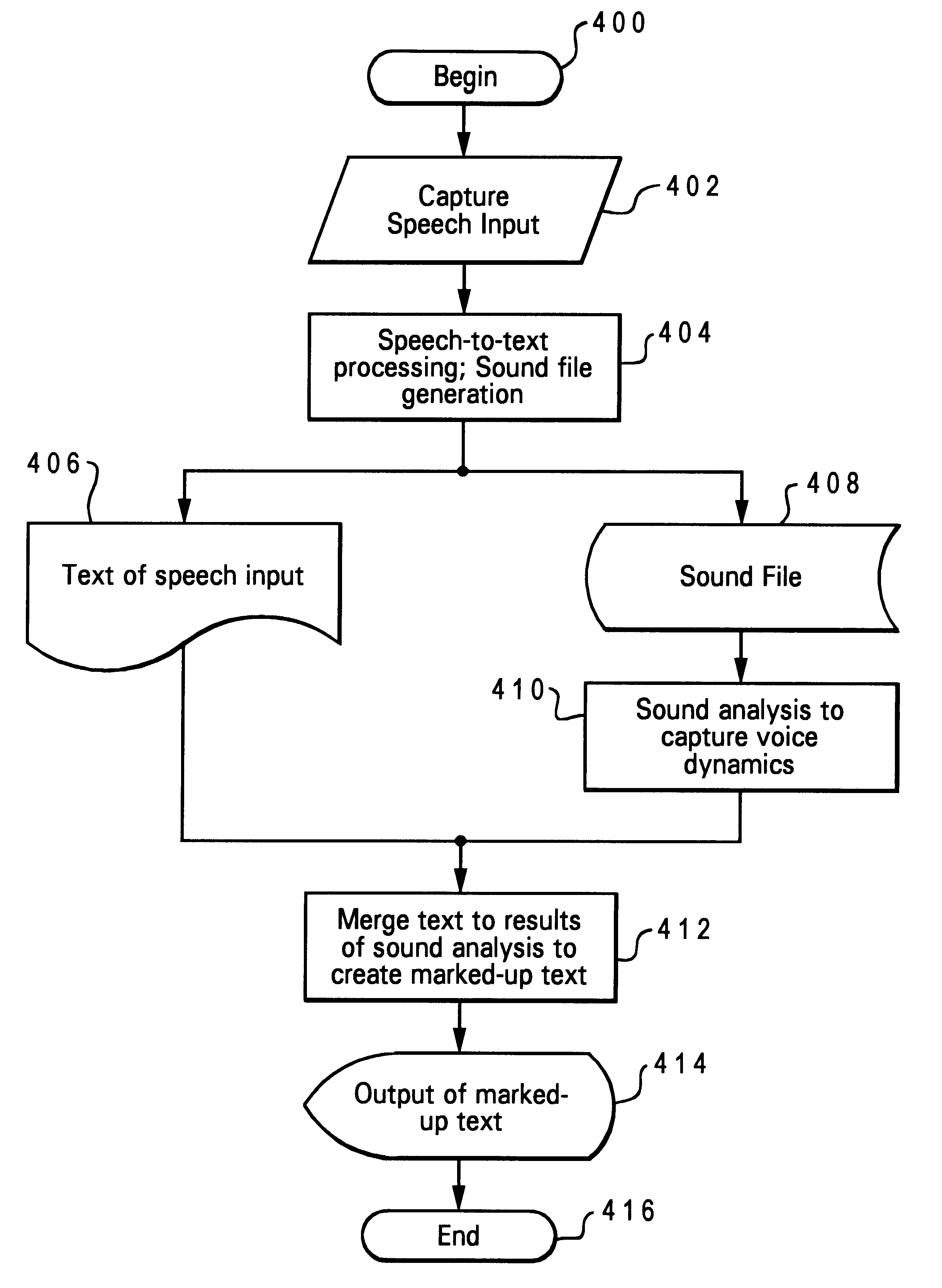

Capture and application of sender voice dynamics to enhance communication in a speech-to-text environment

A method for providing voice dynamics of human utterances converted to and represented by text within a data processing system. A plurality of predetermined parameters for recognition and representation of dynamics in human utterances are selected. An enhanced human speech recognition software program is created implementing the predetermined parameters on a data processing system. The enhanced software program includes an ability to monitor and record human voice dynamics and provide speech-to-text recognition. The dynamics in a human utterance are captured utilizing the enhanced human speech recognition software. The human utterance is converted into a textual representation utilizing the speech-to-text ability of the software. Finally, the dynamics are merged along with the textual representation of the human utterance to produce a marked-up text document on the data processing system.

Owner:NUANCE COMM INC

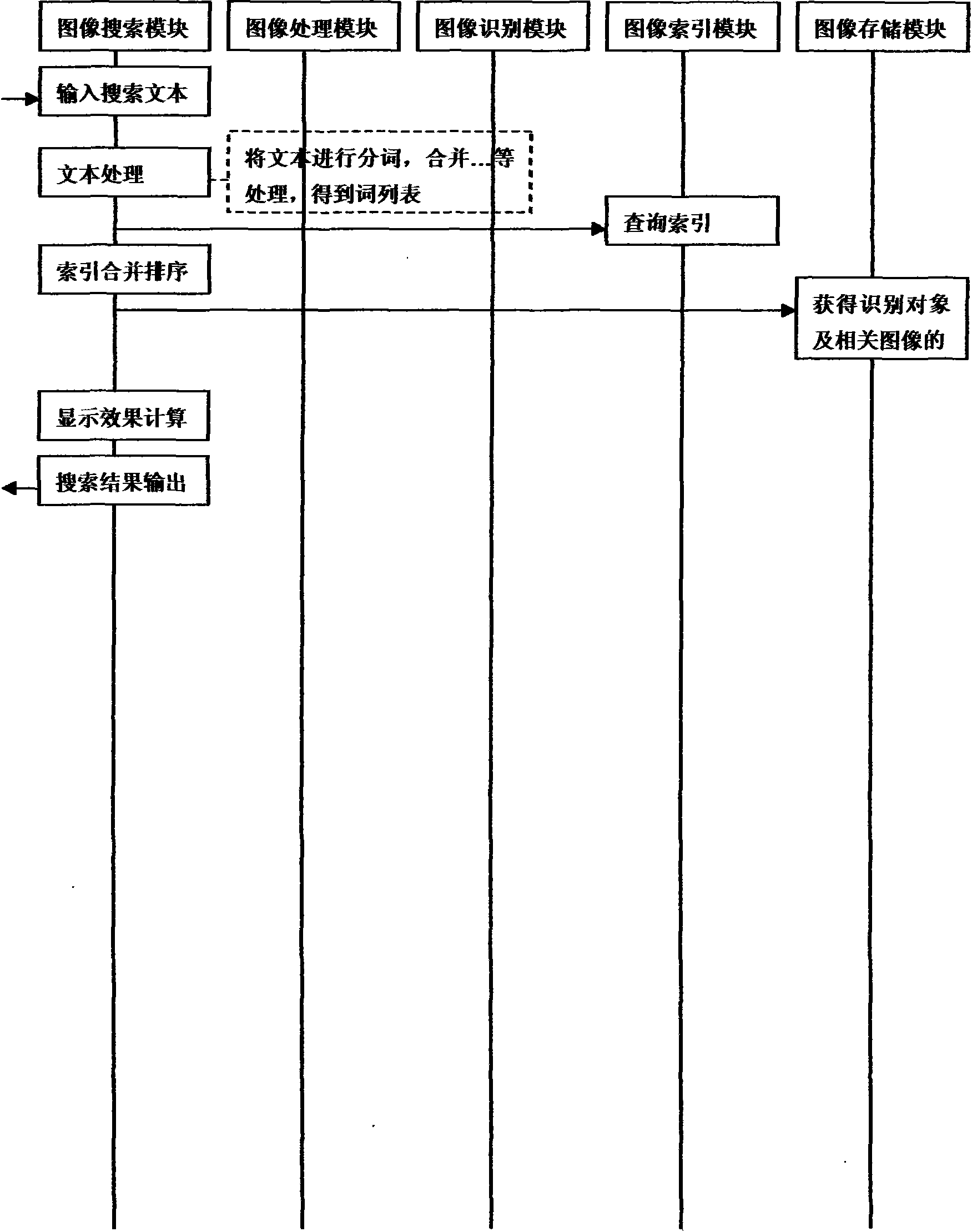

Realization method of image searching system based on image recognition

Status: Inactive | Publication: CN101571875A | Tags: Character and pattern recognition; Special data processing applications; Text recognition; Method of images

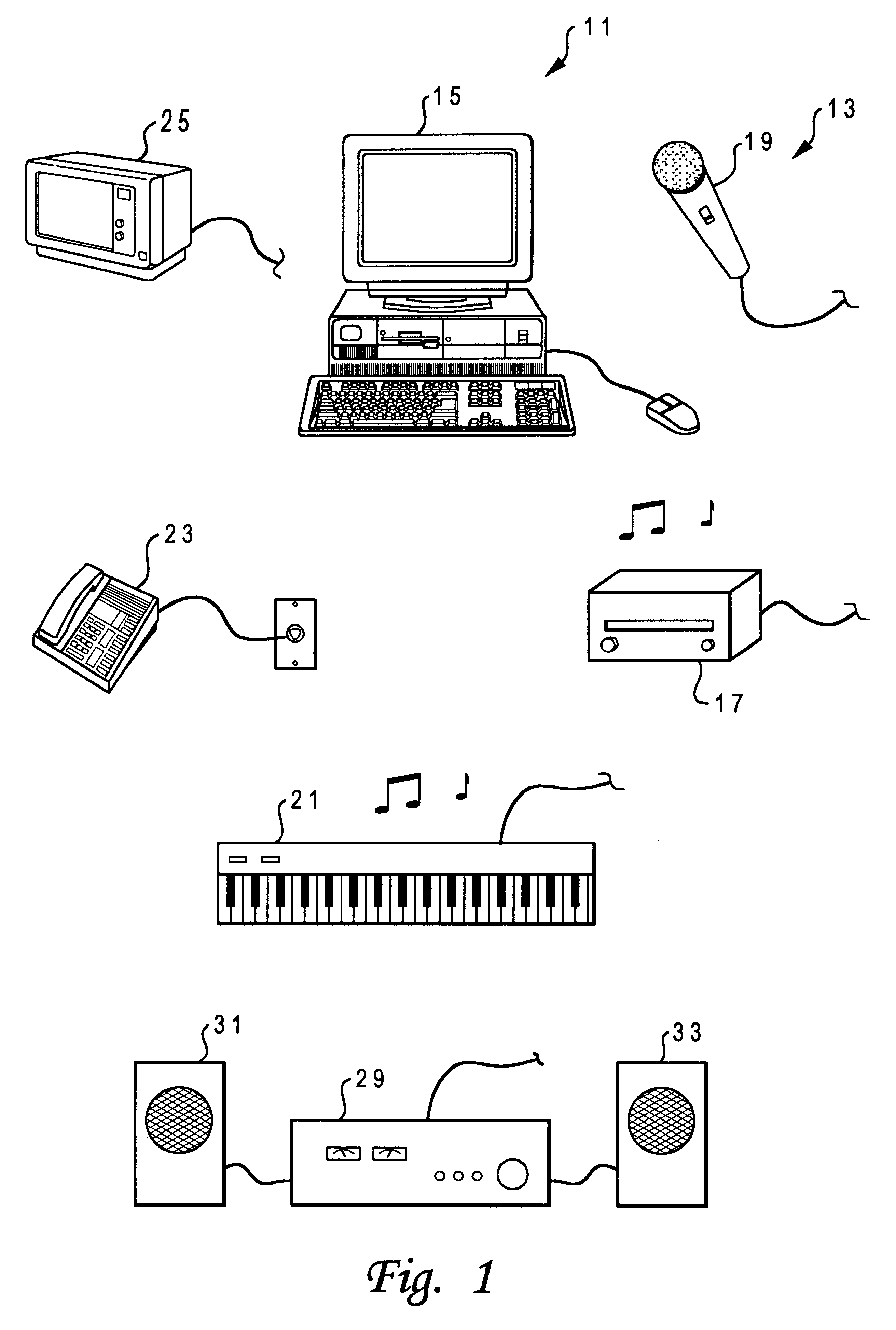

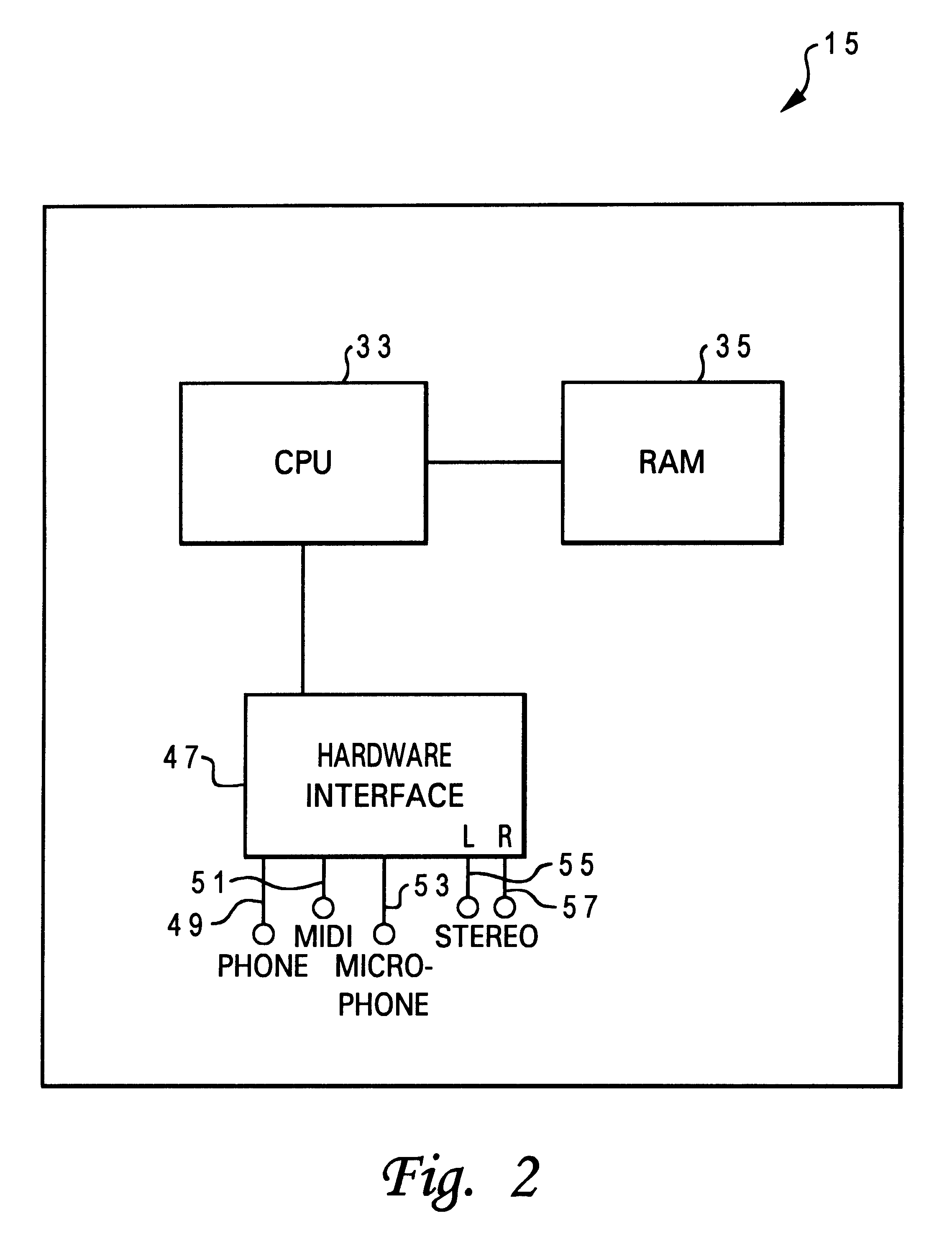

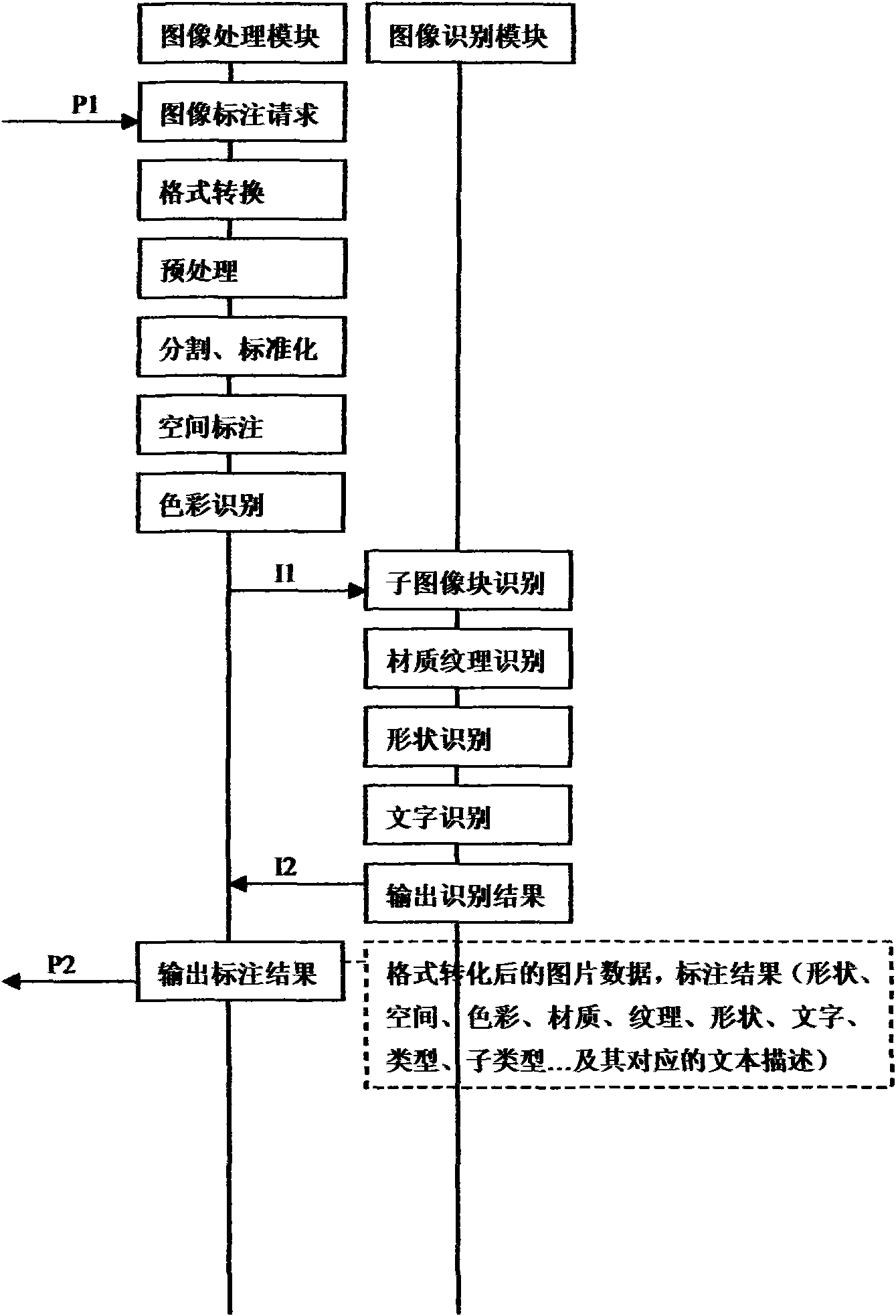

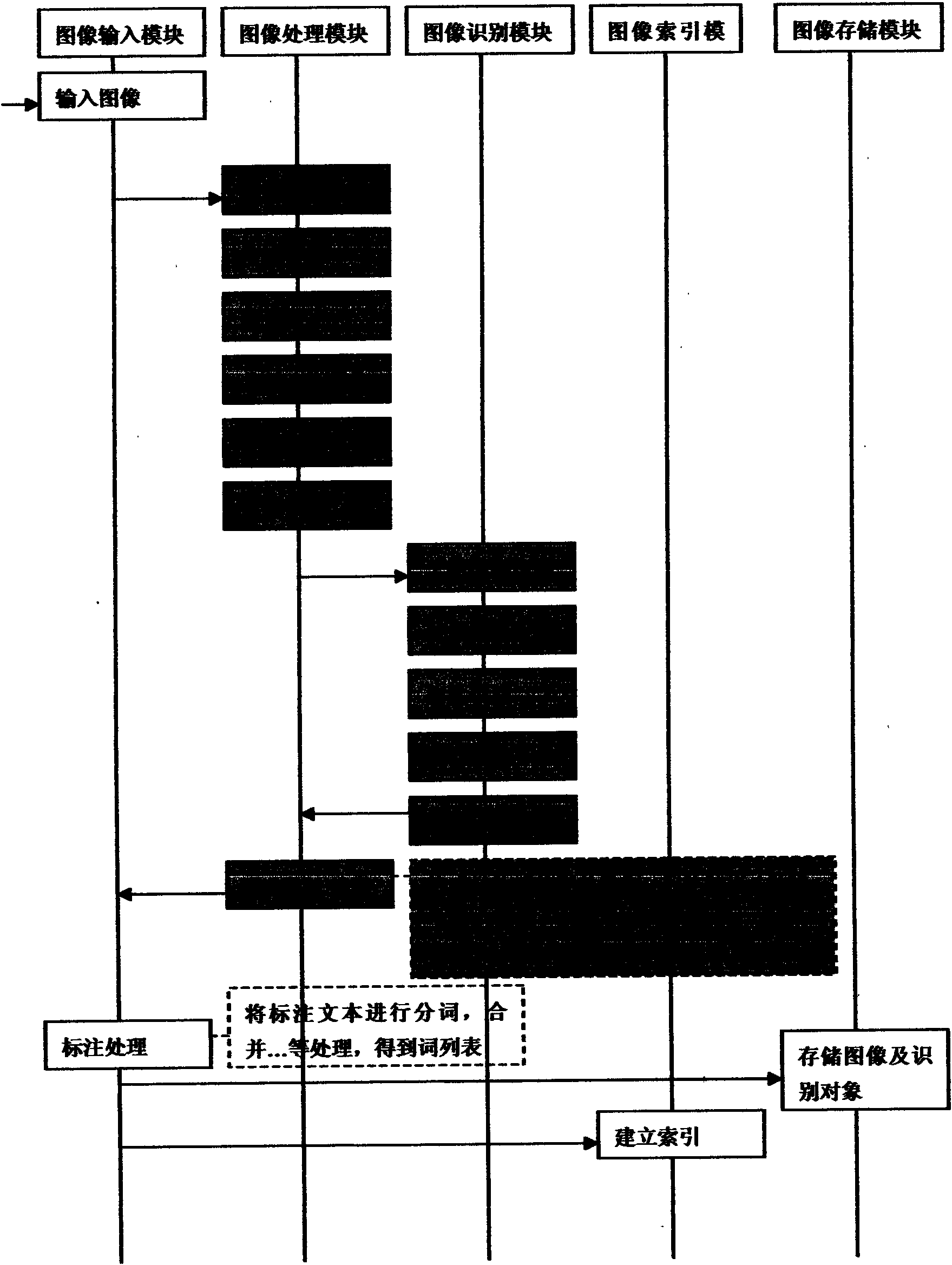

The invention discloses a realization method of an image searching system based on image recognition. When images are added, the method comprises the following steps: preprocessing the images, for example by format transformation; recursively cutting each image at multiple levels, based on uniform space, contrast grade, and similarity in color and texture, to obtain all potential character, portrait, object and object sub-image blocks; obtaining marking texts for position and color; carrying out texture and material recognition, character recognition, outline object recognition and text marking on the sub-image blocks; combining the marking results and segmenting them into words; and building an index over the word segmentation results and the related sub-image block information, and storing the related data of the sub-image blocks. When searching by image, the method first recognizes the query image and marks it with text, then segments that text into words to obtain the index data of a keyword sequence, and finally combines and ranks the index data of the keyword sequence to obtain the related data of the sub-image blocks and the images matching the result set, which are returned to the user. With the system and method of the invention, users can input characters and images to retrieve the content of images.

Owner:程治永

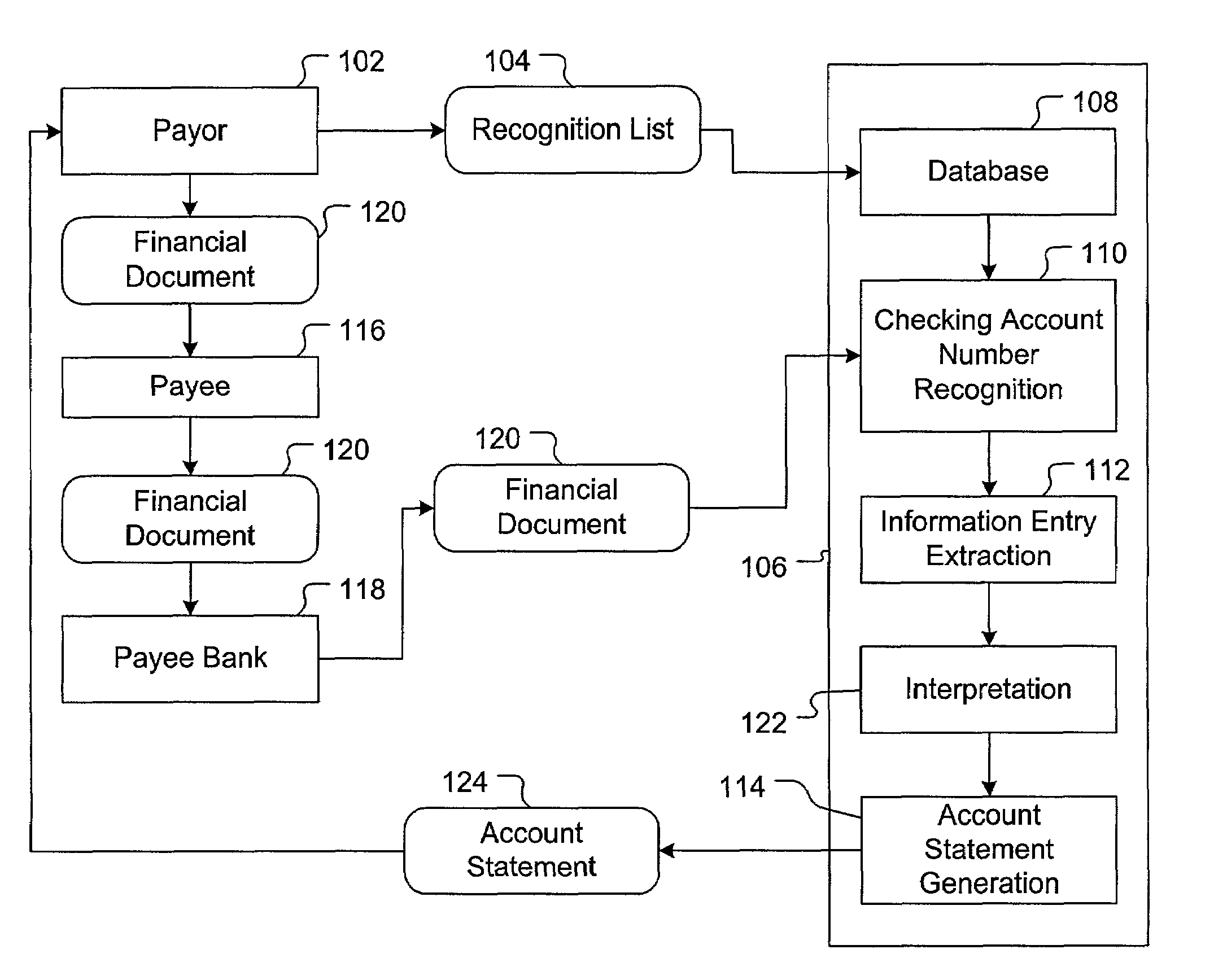

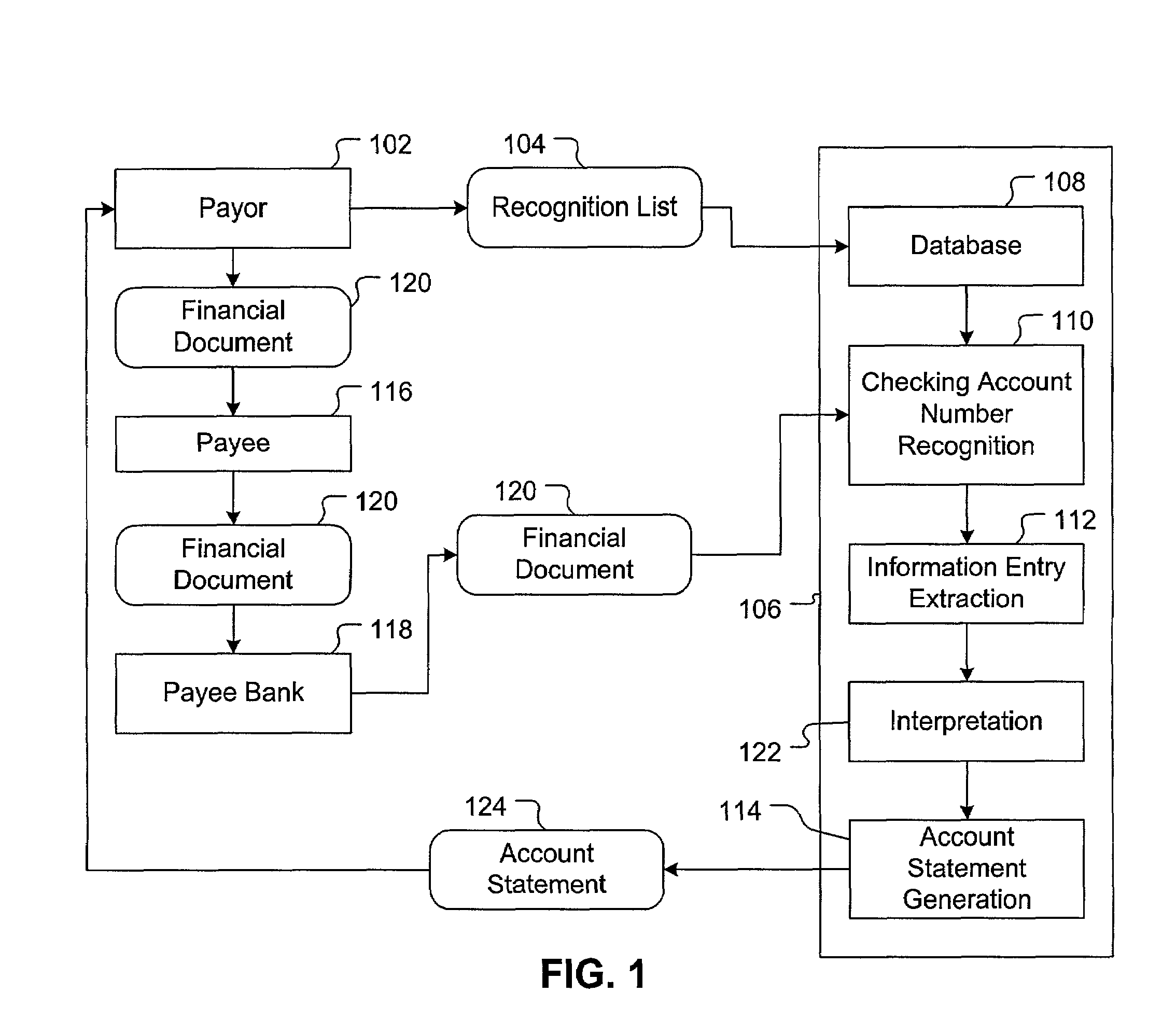

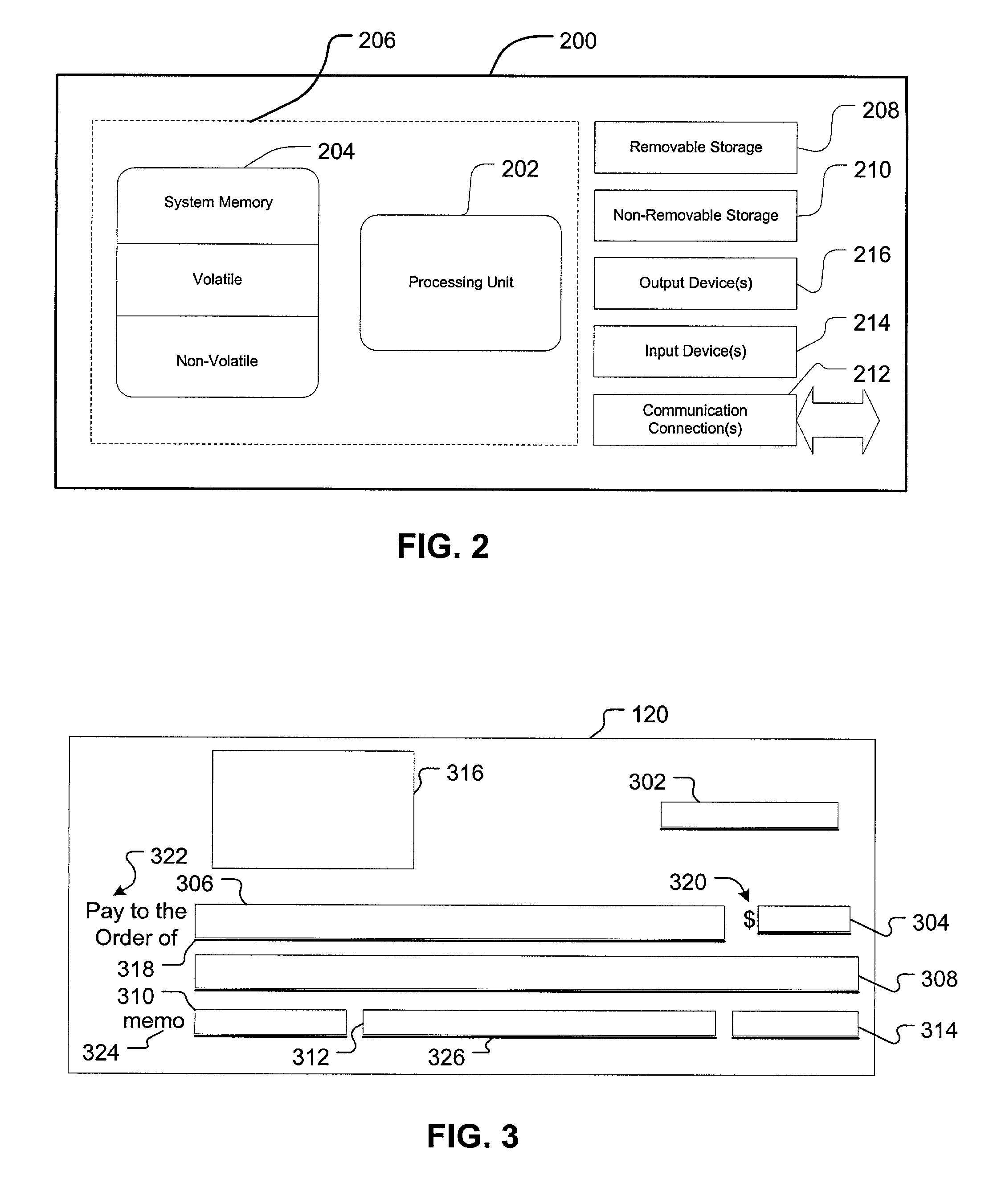

Extracting text written on a check

A check processing system for recognizing text on a check and processing the check using an interpretation for the recognized text is disclosed. The check processing system recognizes text on a check using a text recognition method wherein the text is compared to information entries in a customized recognition list stored in a restrictive lexicon. Thus, a process of creating restricted lexicons containing only customized recognition lists is disclosed. Like the text on the check, the information entries may be strings of characters, such as, without limitation, strings of numerical characters, alphabetic characters or a combination of alphabetic and numerical characters. Furthermore, the strings of characters for the text, as well as the information entries, may be a word or a phrase of words, such as payee names or memo categories. After the text is recognized, the check processing system processes the check using the interpretation by linking the check to the interpretation on an account statement.

Owner:PARASCRIPT
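
The idea of comparing recognized check text against a restricted lexicon can be sketched as below. This is only an illustration under stated assumptions: the abstract does not say how the comparison is done, so fuzzy string matching with Python's standard `difflib.get_close_matches` is swapped in here, and the function name `match_against_lexicon`, the cutoff of 0.75 and the sample payee names are hypothetical.

```python
import difflib
from typing import Iterable, Optional


def match_against_lexicon(
    recognized: str,
    lexicon: Iterable[str],
    cutoff: float = 0.75,  # assumed minimum similarity ratio
) -> Optional[str]:
    """Return the lexicon entry closest to the recognized text, if close enough."""
    # Compare case-insensitively but return the entry in its original spelling.
    normalized = {entry.upper(): entry for entry in lexicon}
    hits = difflib.get_close_matches(
        recognized.upper(), list(normalized), n=1, cutoff=cutoff
    )
    return normalized[hits[0]] if hits else None


# Hypothetical customized recognition list for one account holder.
payees = ["City Power & Light", "Acme Insurance", "Rent"]
print(match_against_lexicon("ACME lnsurance", payees))
```

Restricting the candidates to a short, customer-specific list is what lets a noisy reading such as a lowercase "l" in place of "I" still resolve to a single entry.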

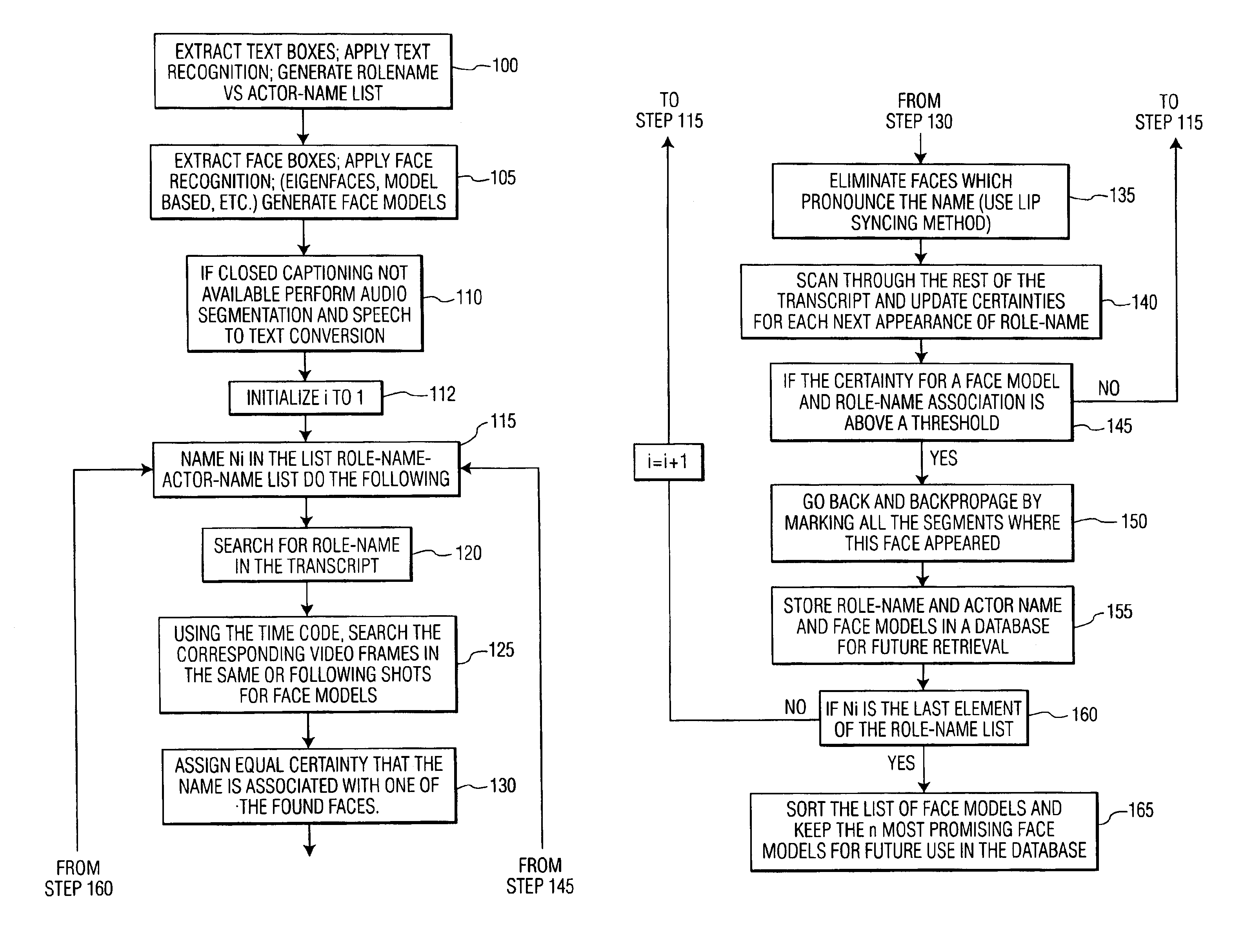

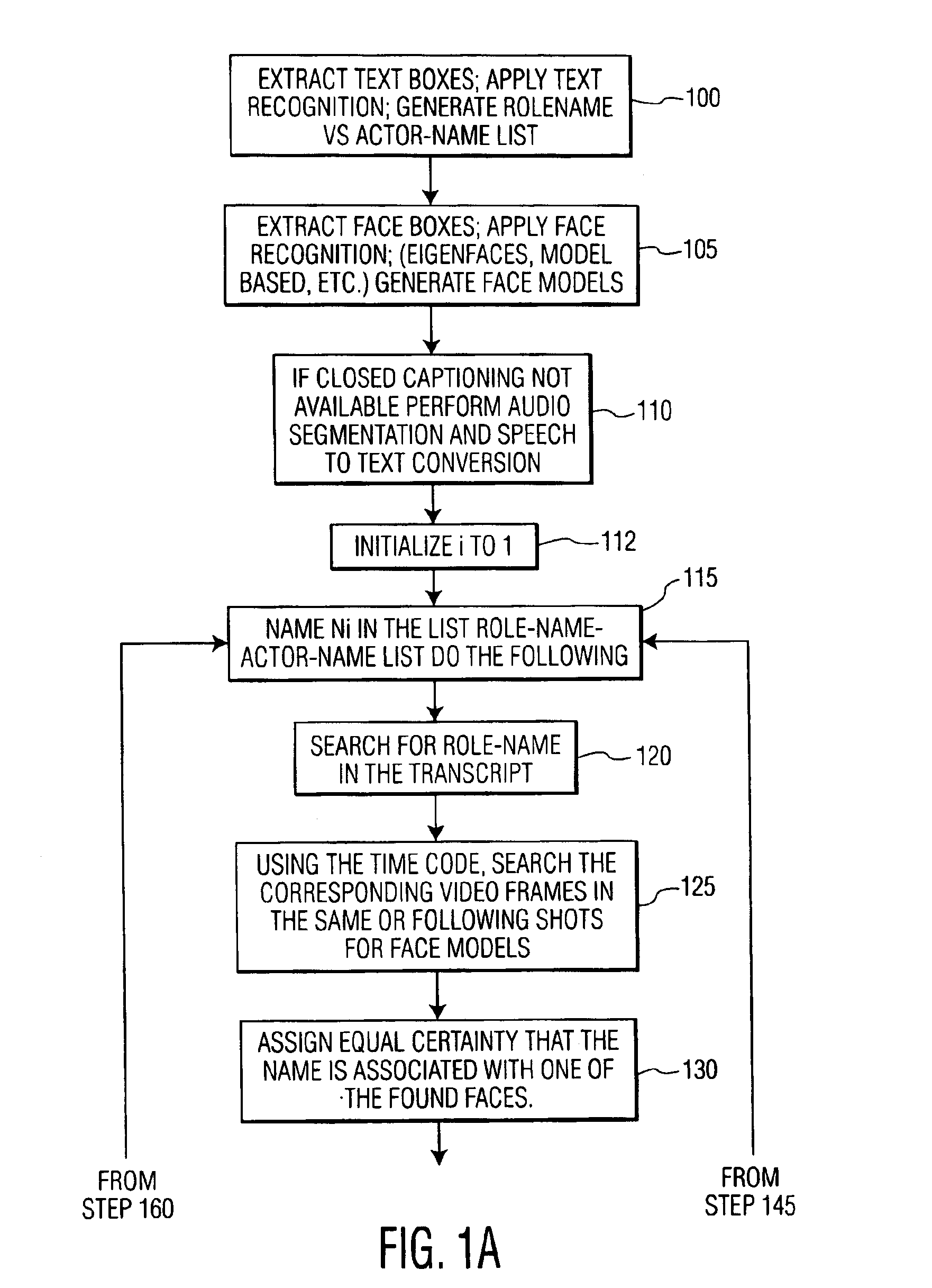

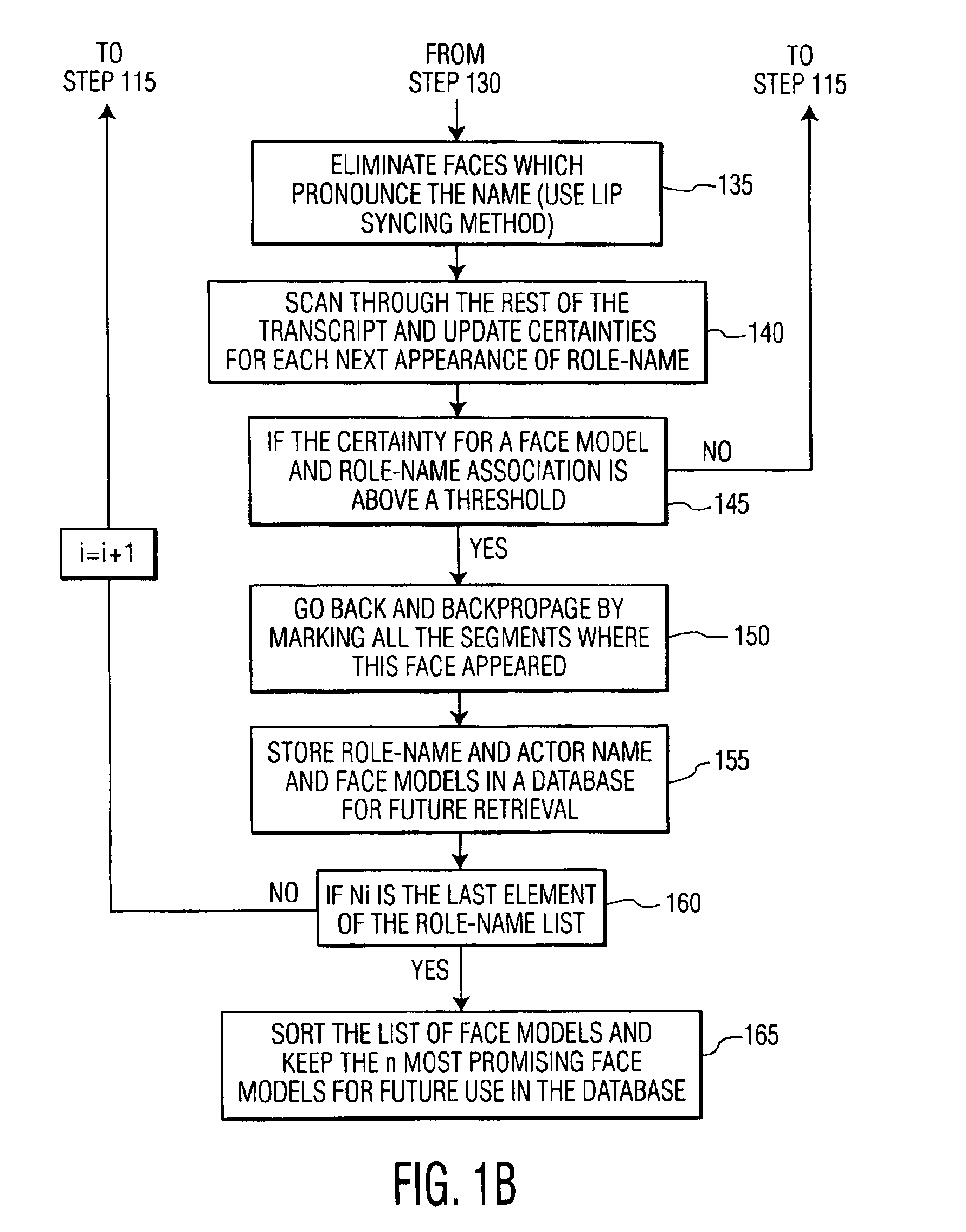

Method and system for name-face/voice-role association

Status: Inactive | Publication: US6925197B2 | Tags: Digital data information retrieval; Data processing applications; Text recognition; Video sequence

A method for providing name-face / voice-role association includes determining whether a closed captioned text accompanies a video sequence, providing one of text recognition and speech to text conversion to the video sequence to generate a role-name versus actor-name list from the video sequence, extracting face boxes from the video sequence and generating face models, searching a predetermined portion of text for an entry on the role-name versus actor-name list, searching video frames for face models / voice models that correspond to the text searched by using a time code so that the video frames correspond to portions of the text where role-names are detected, assigning an equal level of certainty for each of the face models found, using lip reading to eliminate face models found that pronounce a role-name corresponding to said entry on the role-name versus actor-name list, scanning a remaining portion of text provided and updating a level of certainty for said each of the face models previously found. Once a particular face model / voice model and role-name association has reached a threshold the role-name, actor name, and particular face model / voice model is stored in a database and can be displayed by a user when the threshold for the particular face model has been reached. Thus the user can query information by entry of role-name, actor name, face model, or even words spoken by the role-name as a basis for the association. A system provides hardware and software to perform these functions.

Owner:UNILOC 2017 LLC

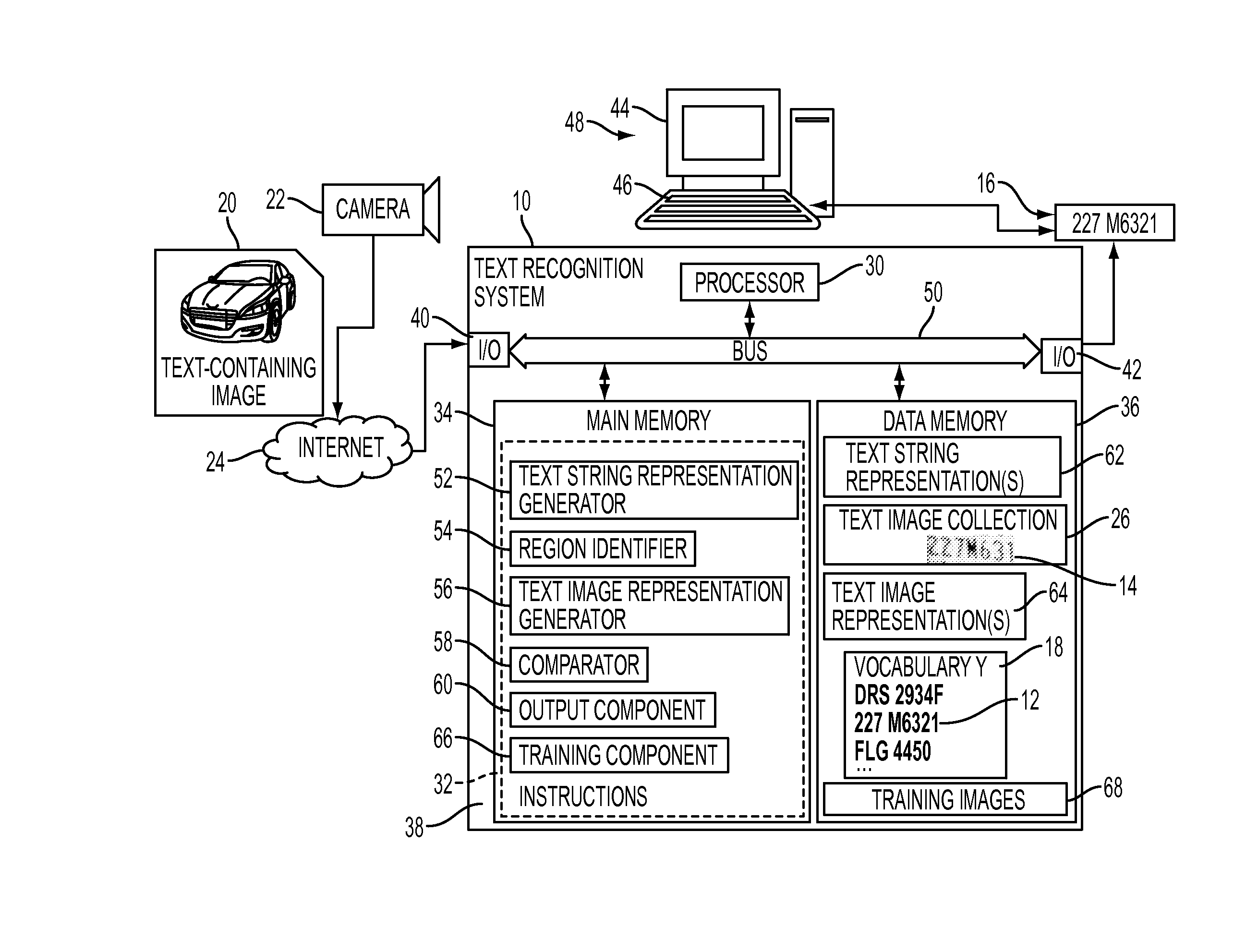

Label-embedding for text recognition

A system and method for comparing a text image and a character string are provided. The method includes embedding a character string into a vectorial space by extracting a set of features from the character string and generating a character string representation based on the extracted features, such as a spatial pyramid bag of characters (SPBOC) representation. A text image is embedded into a vectorial space by extracting a set of features from the text image and generating a text image representation based on the text image extracted features. A compatibility between the text image representation and the character string representation is computed, which includes computing a function of the text image representation and character string representation.

Owner:XEROX CORP
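
To make the spatial pyramid bag of characters (SPBOC) idea more concrete, here is a simplified sketch of the string side of the embedding. The alphabet, the number of pyramid levels, the fractional assignment of characters that straddle region boundaries and the final L2 normalization are all assumptions chosen for illustration; the function name `spboc` is hypothetical, and the image embedding and the compatibility function are not shown.

```python
import math
import string
from typing import List

ALPHABET = string.ascii_lowercase + string.digits  # assumed 36-symbol alphabet


def spboc(word: str, levels: int = 3, alphabet: str = ALPHABET) -> List[float]:
    """Concatenate per-region character histograms over a spatial pyramid."""
    index = {ch: i for i, ch in enumerate(alphabet)}
    word = word.lower()
    n = len(word)
    features: List[float] = []
    for level in range(levels):
        regions = 2 ** level  # level 0: whole word, level 1: halves, ...
        hist = [0.0] * (regions * len(alphabet))
        for pos, ch in enumerate(word):
            if ch not in index:
                continue  # ignore symbols outside the alphabet
            start, end = pos / n, (pos + 1) / n  # character span in [0, 1]
            for r in range(regions):
                r_start, r_end = r / regions, (r + 1) / regions
                overlap = min(end, r_end) - max(start, r_start)
                if overlap > 0:
                    # Fraction of this character that falls inside region r.
                    hist[r * len(alphabet) + index[ch]] += overlap * n
        features.extend(hist)
    norm = math.sqrt(sum(v * v for v in features))
    return [v / norm for v in features] if norm else features
```

With a single level the representation is an ordinary bag of characters, so "ab" and "ba" coincide; adding levels makes the embedding sensitive to where in the word each character appears.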

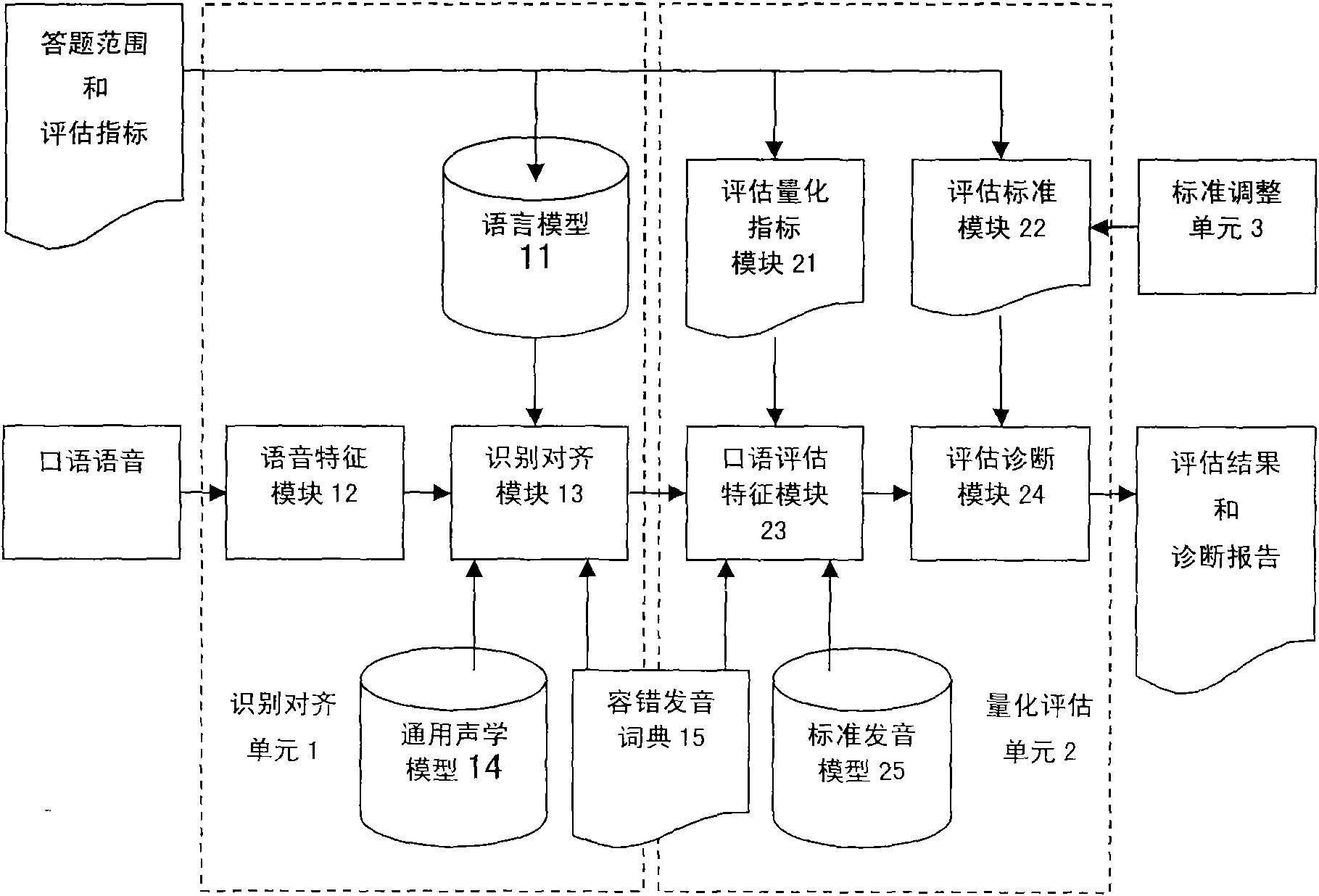

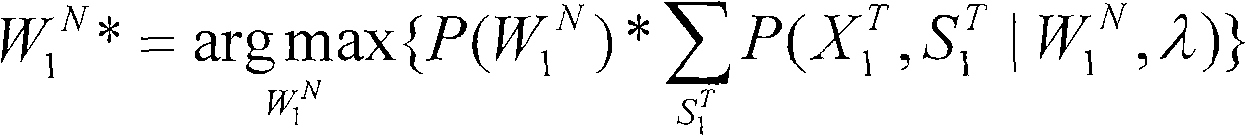

Objective standard based automatic oral evaluation system

Status: Active | Publication: CN101826263A | Tags: Improve professionalism; Improve notarization; Speech recognition; Electrical appliances; Evaluation result; Text recognition

The invention relates to an objective standard based automatic oral evaluation system comprising a recognition aligning unit, a quantization evaluation unit and a standard adjustment unit. The recognition aligning unit receives oral voice messages together with an answer range and evaluation index information, recognizes and aligns the input oral voice messages, generates characters from the oral voice messages and aligns the characters with the voices. The standard adjustment unit lets an examination organization adjust the quantization evaluation standard for specific examination objects, targets and demands, and generates and outputs a final quantization evaluation standard. The quantization evaluation unit is connected with both the recognition aligning unit and the standard adjustment unit; it receives the quantization evaluation index information, the quantization evaluation standard information output by the standard adjustment unit and the character recognition aligning information output by the recognition aligning unit, extracts oral evaluation features from these three kinds of information, carries out automatic evaluation and diagnosis, and generates evaluation results and diagnosis report information.

Owner:IFLYTEK CO LTD

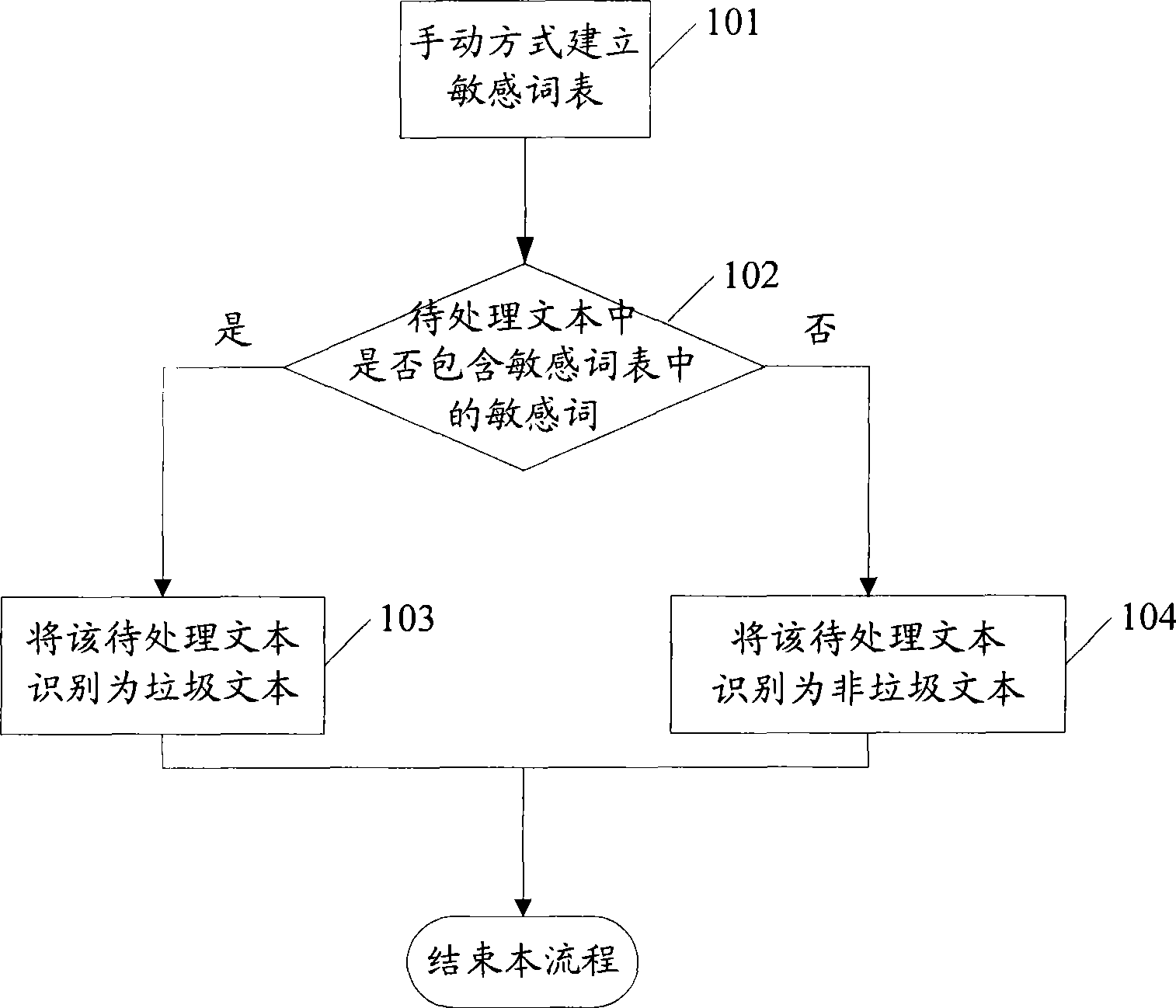

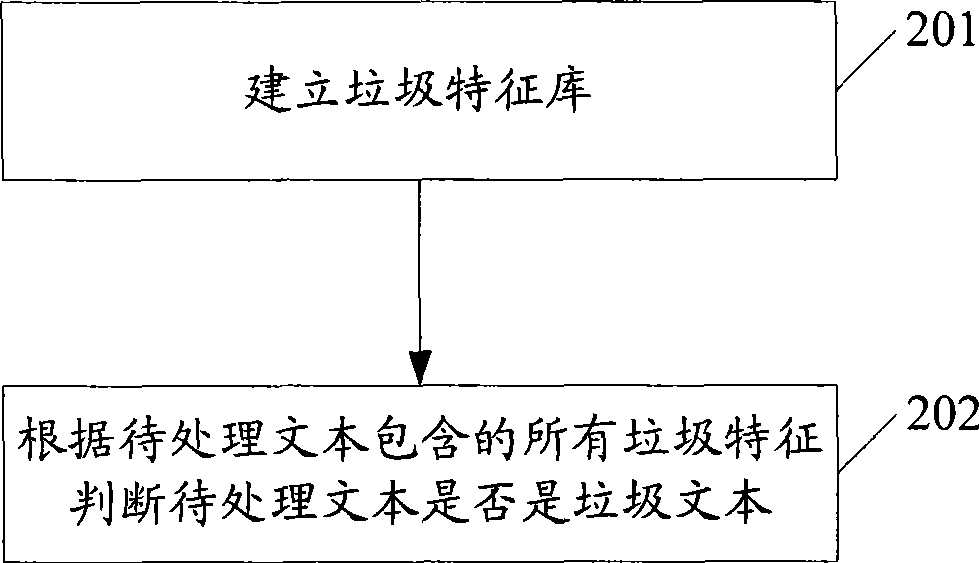

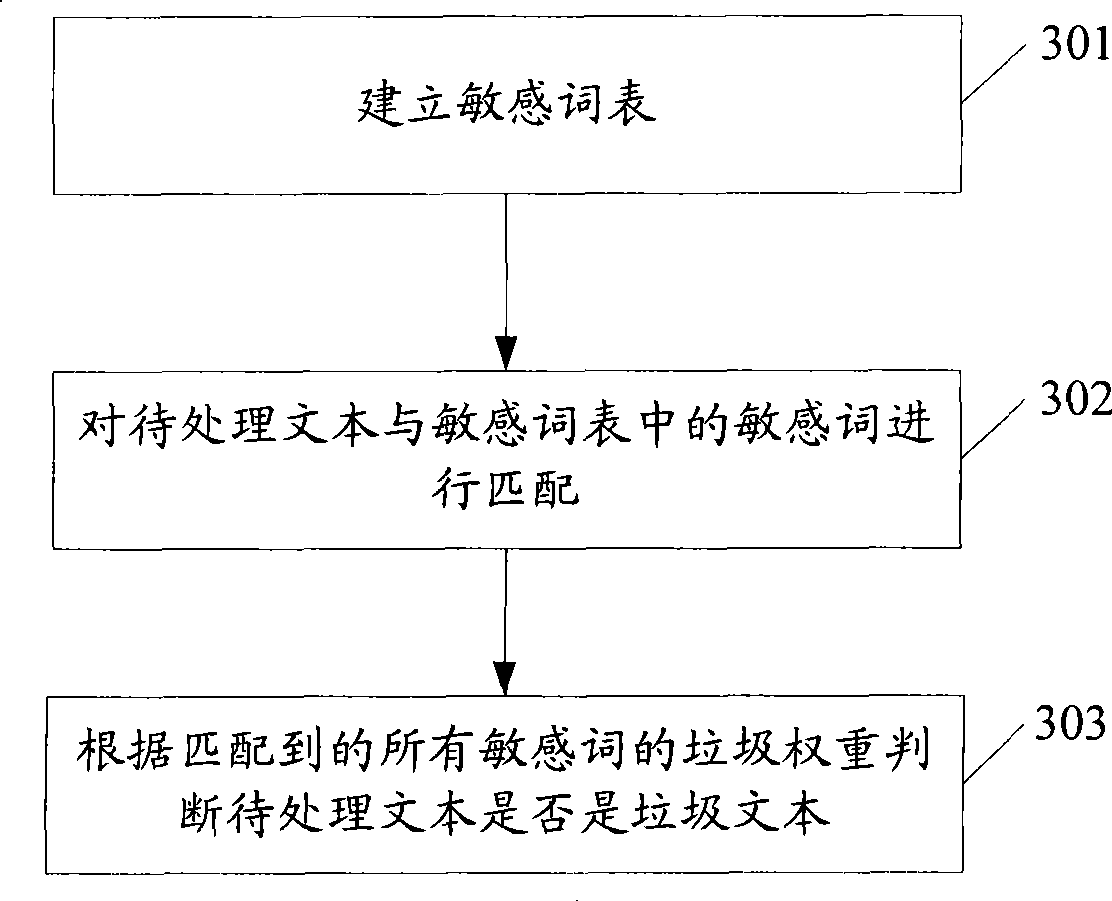

Rubbish text recognition method and system

Status: Active | Publication: CN101477544A | Tags: Improve accuracy; Digital data information retrieval; Data switching networks; Text recognition; Identification device

The invention discloses a method and a system for recognizing spam texts. The method comprises the following steps: extracting features of spam samples; selecting spam features from all the features of the spam samples according to the probability that a text containing a given feature is a spam text; assigning a spam weight to each spam feature and forming a spam feature database from all the weighted spam features; matching pending texts against the spam features in the spam feature database; and judging whether the pending texts are spam texts according to the spam weights of all the matched spam features. The system comprises the spam feature database and a spam text recognizing device, wherein the spam feature database stores the spam features endowed with spam weights, and the spam text recognizing device receives the pending texts, matches them against the spam features in the database and judges whether they are spam texts according to the spam weights of all the matched spam features. The invention can enhance the accuracy of recognizing spam texts.

Owner:SHENZHEN SHI JI GUANG SU INFORMATION TECH
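
A minimal sketch of the weighted spam-feature approach described above might look like this. Several details are assumptions, since the abstract does not fix them: features are taken to be whitespace-separated tokens, a feature's weight is the fraction of texts containing it that are spam, non-spam ("ham") samples are used to estimate that fraction, and the two thresholds are arbitrary. The function names are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable


def build_spam_feature_db(
    spam_texts: Iterable[str],
    ham_texts: Iterable[str],
    min_probability: float = 0.8,  # assumed cutoff for keeping a feature
) -> Dict[str, float]:
    """Keep tokens that mostly occur in spam, weighted by their spam probability."""
    # Count each token at most once per text (document frequency).
    spam_counts = Counter(tok for t in spam_texts for tok in set(t.lower().split()))
    ham_counts = Counter(tok for t in ham_texts for tok in set(t.lower().split()))
    db: Dict[str, float] = {}
    for tok, in_spam in spam_counts.items():
        probability = in_spam / (in_spam + ham_counts[tok])
        if probability >= min_probability:
            db[tok] = probability  # the probability doubles as the spam weight
    return db


def is_spam(text: str, db: Dict[str, float], score_threshold: float = 1.5) -> bool:
    """Sum the weights of matched spam features and compare with a threshold."""
    tokens = set(text.lower().split())
    score = sum(weight for tok, weight in db.items() if tok in tokens)
    return score >= score_threshold
```

In this toy form, a token such as "free" that also appears in legitimate texts falls below the cutoff and is left out of the database, which is the filtering effect the probability criterion is meant to achieve.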

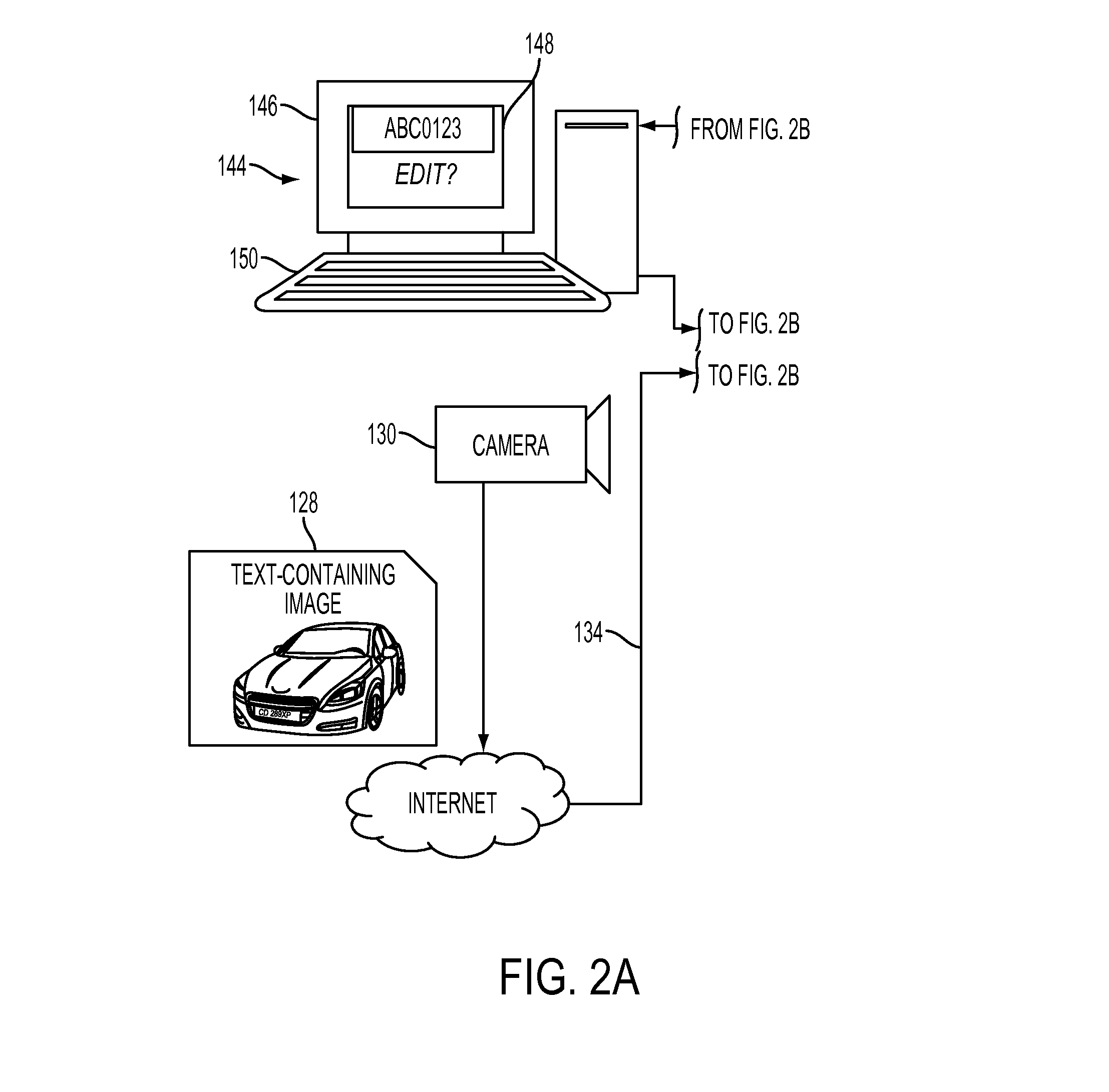

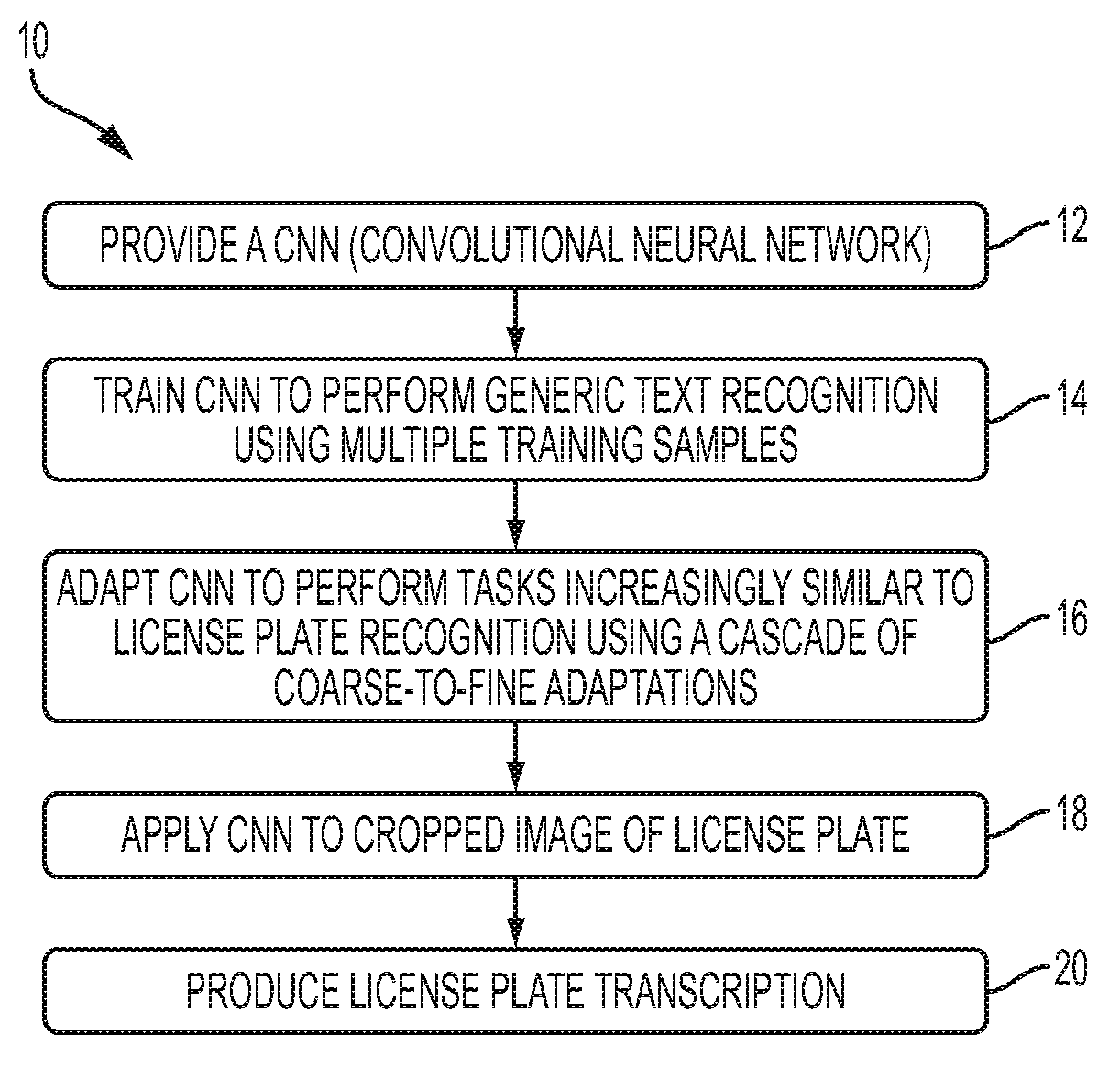

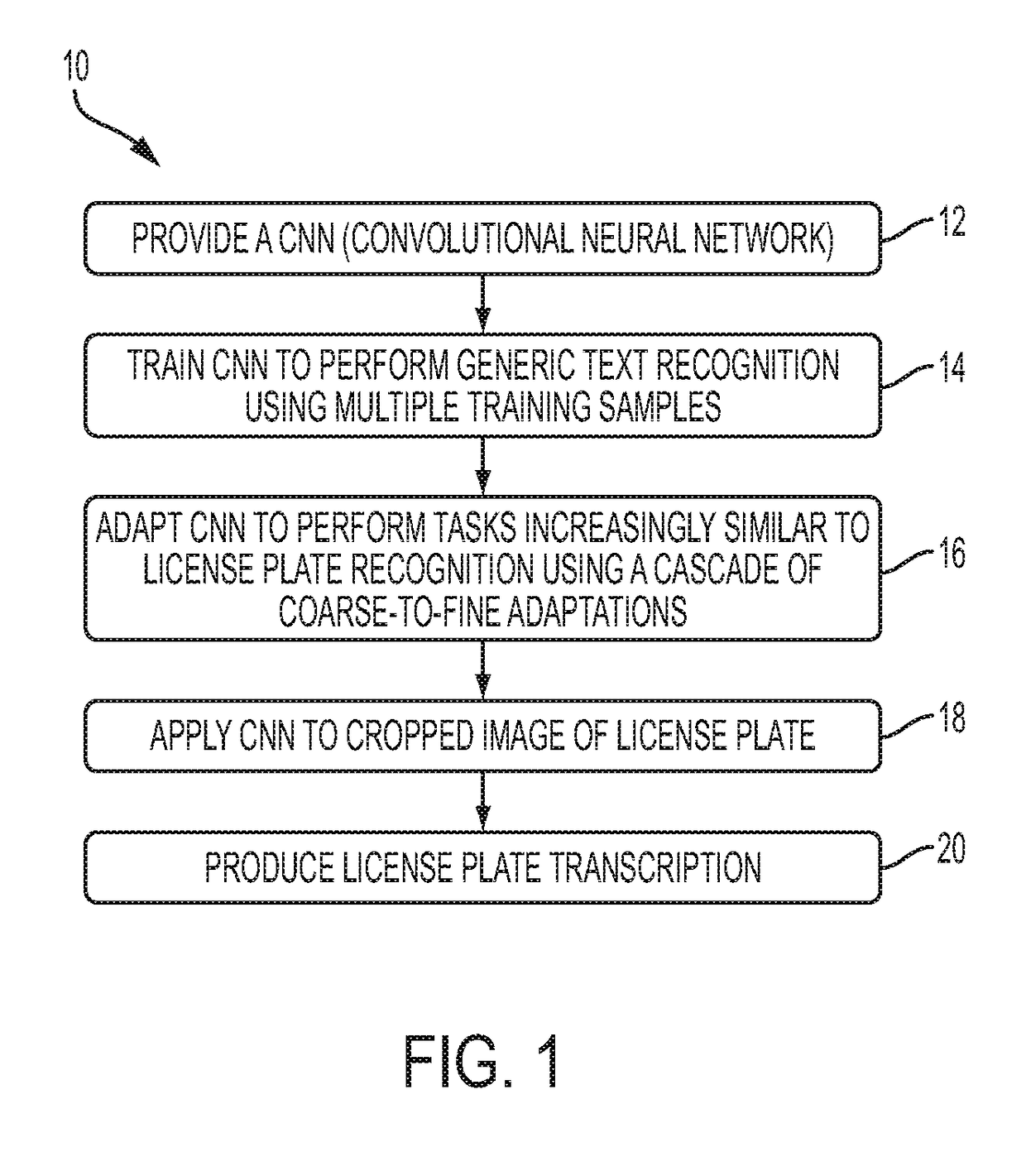

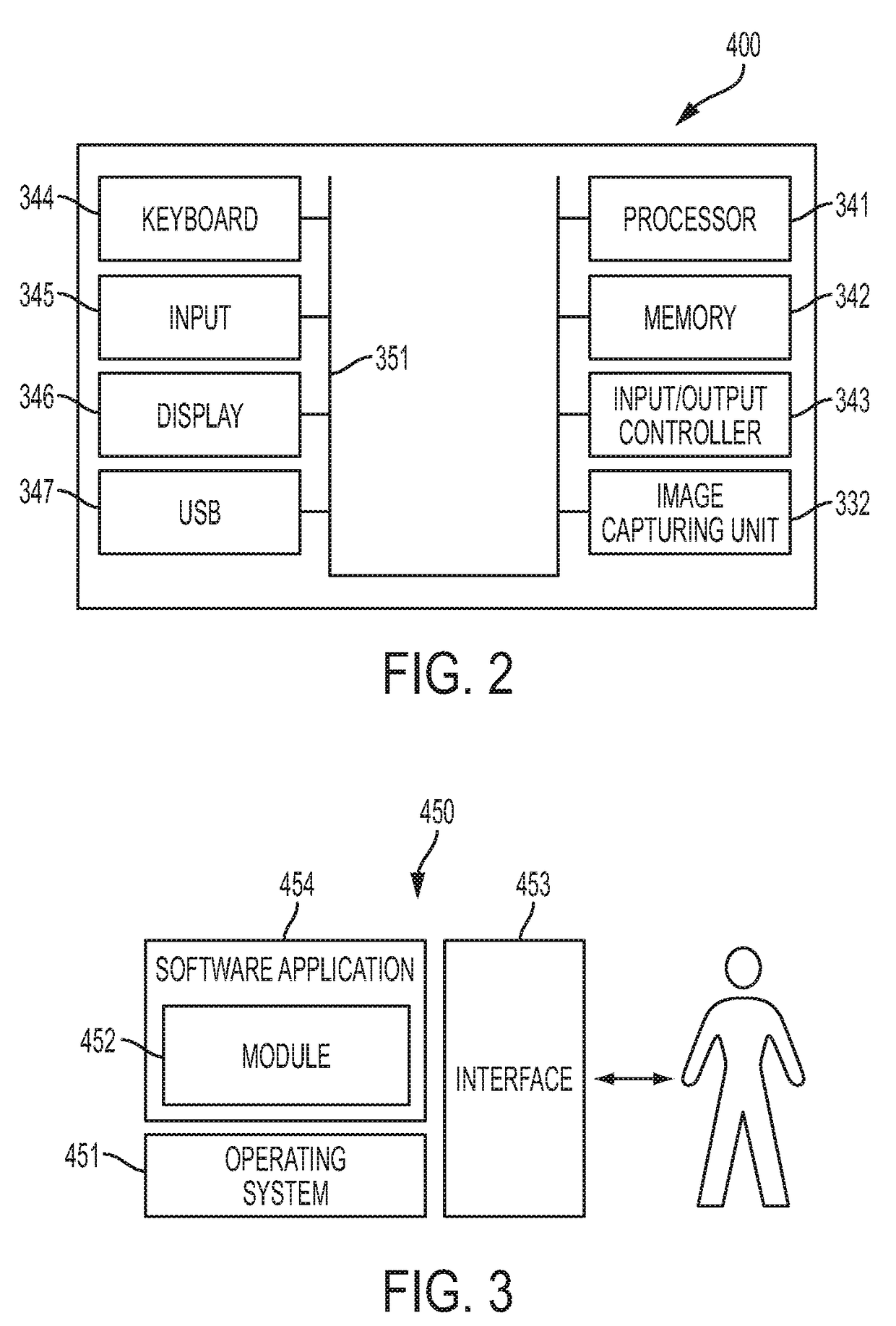

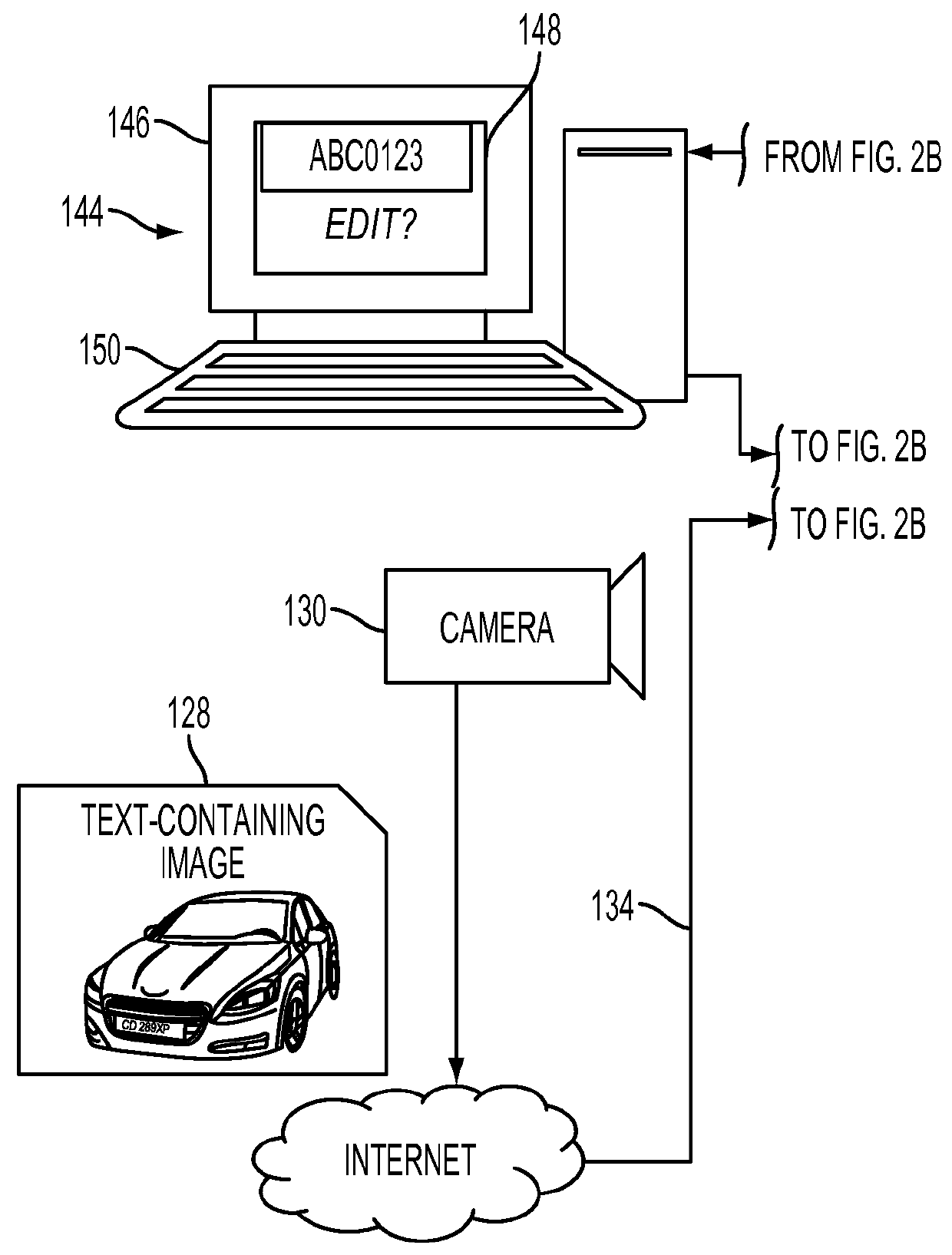

Coarse-to-fine cascade adaptations for license plate recognition with convolutional neural networks

Methods and systems for license plate recognition utilizing a trained neural network. In an example embodiment, a neural network can be subject to operations involving iteratively training and adapting the neural network for a particular task such as, for example, text recognition in the context of a license plate recognition application. The neural network can be trained to perform generic text recognition utilizing a plurality of training samples. The neural network can be applied to a cropped image of a license plate in order to recognize text and produce a license plate transcription with respect to the license plate. An example of such a neural network is a CNN (Convolutional Neural Network).

Owner:CONDUENT BUSINESS SERVICES LLC

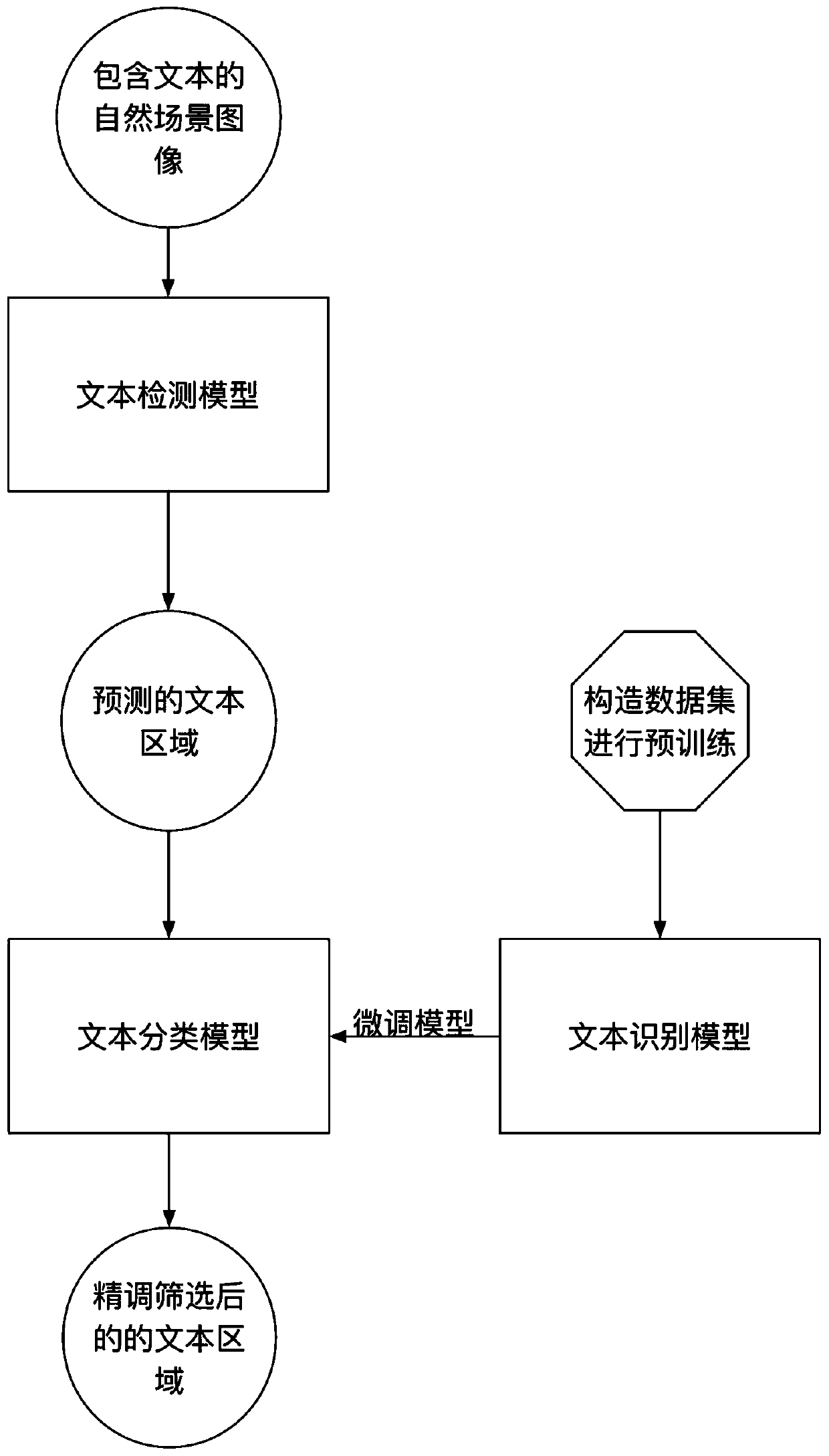

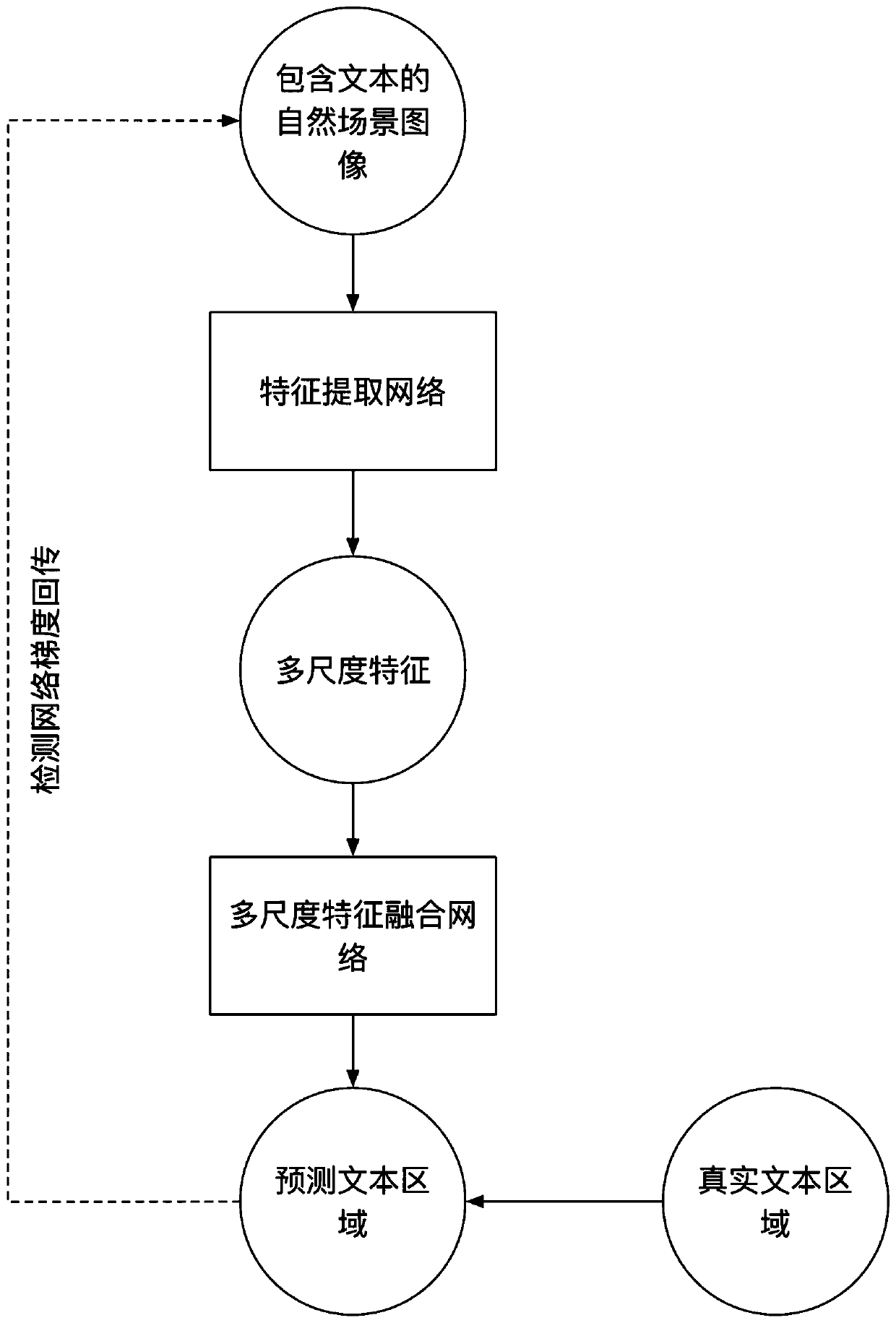

Natural scene text detection method and system

Status: Inactive | Publication: CN110097049A | Tags: No need to manually design features; Simple method; Character and pattern recognition; Neural architectures; Text recognition; Feature extraction

The invention provides a natural scene text detection method and system. The system comprises two neural network models: a text detection network based on multi-level semantic feature fusion and a detection screening network based on an attention mechanism. The text detection network is an FCN-based image feature extraction and fusion network that extracts multi-semantic-level information from the input data, fully fuses the multi-scale features, and finally predicts the position and confidence of text in a natural scene by applying a convolution operation to the fused multi-scale information. In the detection screening network, a trained convolutional recurrent neural network discriminates and scores the initial detection results output by the convolutional neural network of the first part, so that background that is easily confused with foreground characters is filtered out and the accuracy of natural scene text recognition is further improved.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

Text recognition using artificial intelligence

Status: Inactive | Publication: US20190180154A1 | Tags: Kernel methods; Digital computer details; Text recognition; Symbolic artificial intelligence

A method includes obtaining an image of text. The text in the image includes one or more words in one or more sentences. The method also includes providing the image of the text as first input to a set of trained machine learning models, obtaining one or more final outputs from the set of trained machine learning models, and extracting, from the one or more final outputs, one or more predicted sentences from the text in the image. Each of the one or more predicted sentences includes a probable sequence of words.

Owner:ABBYY PRODUCTION LLC

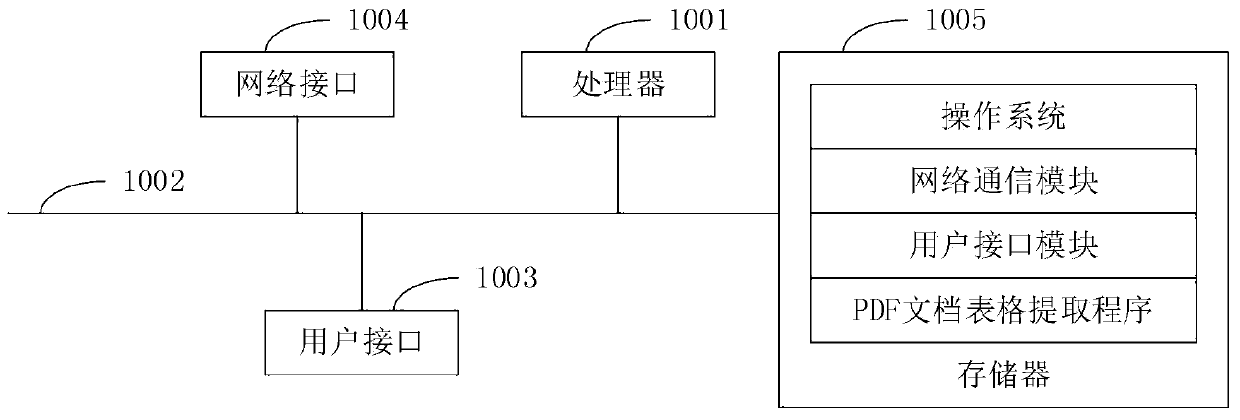

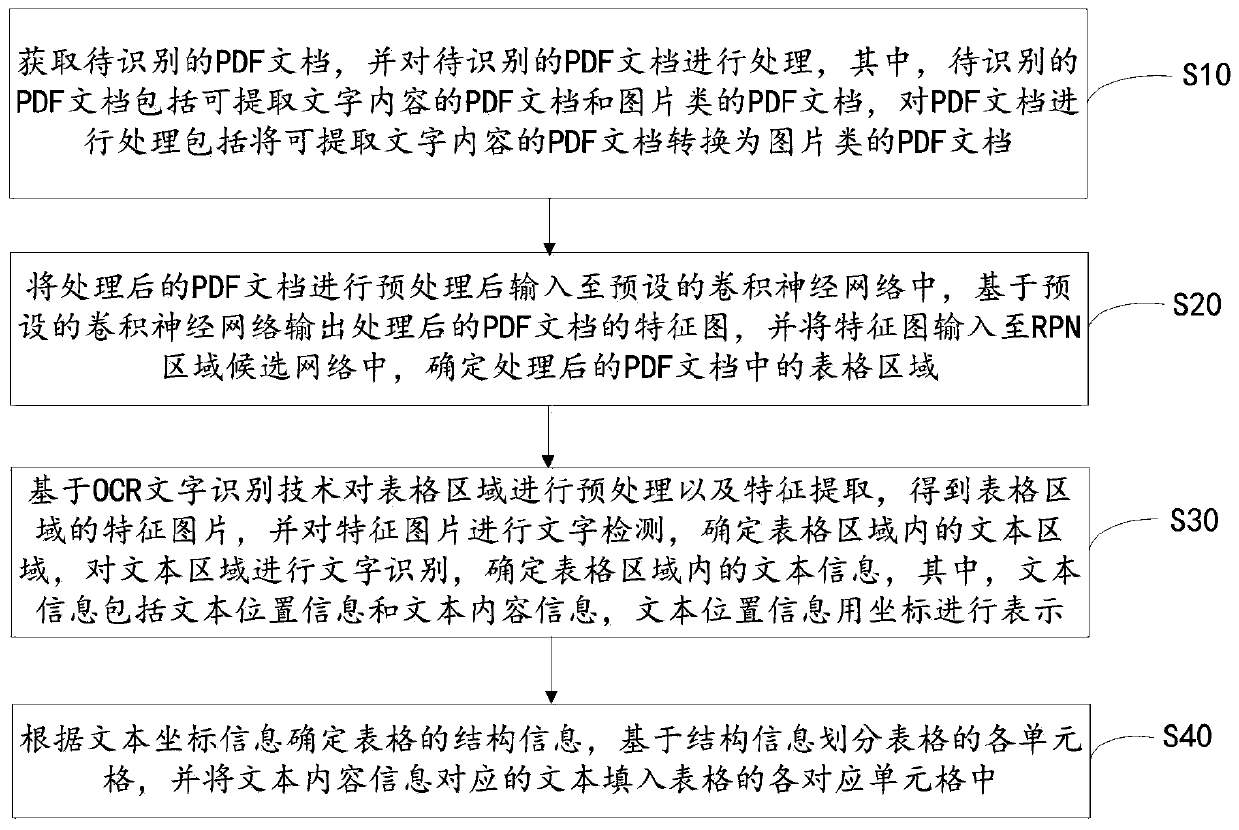

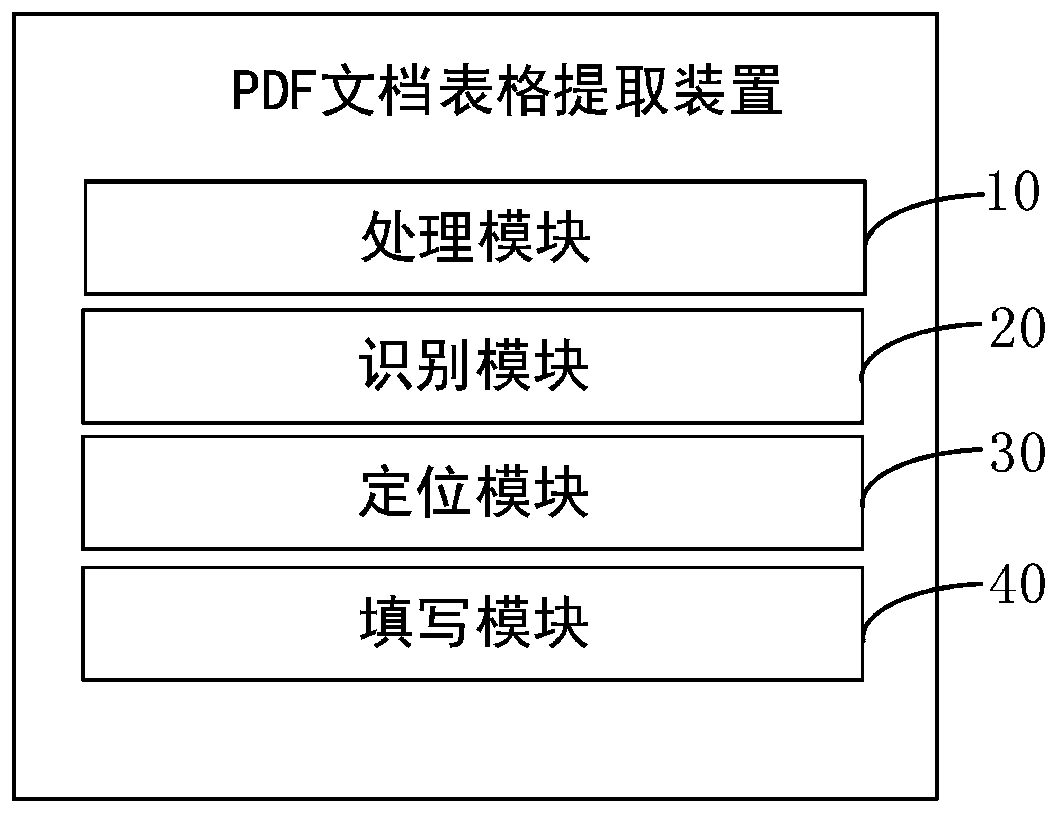

PDF document table extraction method, device and equipment and computer readable storage medium

Status: Active | Publication: CN110390269A | Tags: Improve accuracy; Precise positioning; Character recognition; Text recognition; Feature extraction

The invention relates to the technical field of artificial intelligence, and discloses a PDF document table extraction method and device, equipment and a computer readable storage medium. The method comprises the steps of: obtaining a to-be-identified PDF document and processing it; preprocessing the processed PDF document, inputting the preprocessed PDF document into a convolutional neural network, outputting a feature map, inputting the feature map into an RPN region candidate network, and determining a table region; carrying out preprocessing and feature extraction on the table area based on OCR character recognition technology to obtain a feature picture, carrying out character detection on the feature picture to determine a text area, and carrying out character recognition on the text area to determine text information, wherein the text information comprises text position information and text content information; and determining structure information of the table according to the text coordinate information, dividing the table into cells based on the structure information, and filling each corresponding cell of the table with the text corresponding to the text content information. According to the method and the device, the accuracy of PDF document table extraction is improved.

Owner:PING AN TECH (SHENZHEN) CO LTD

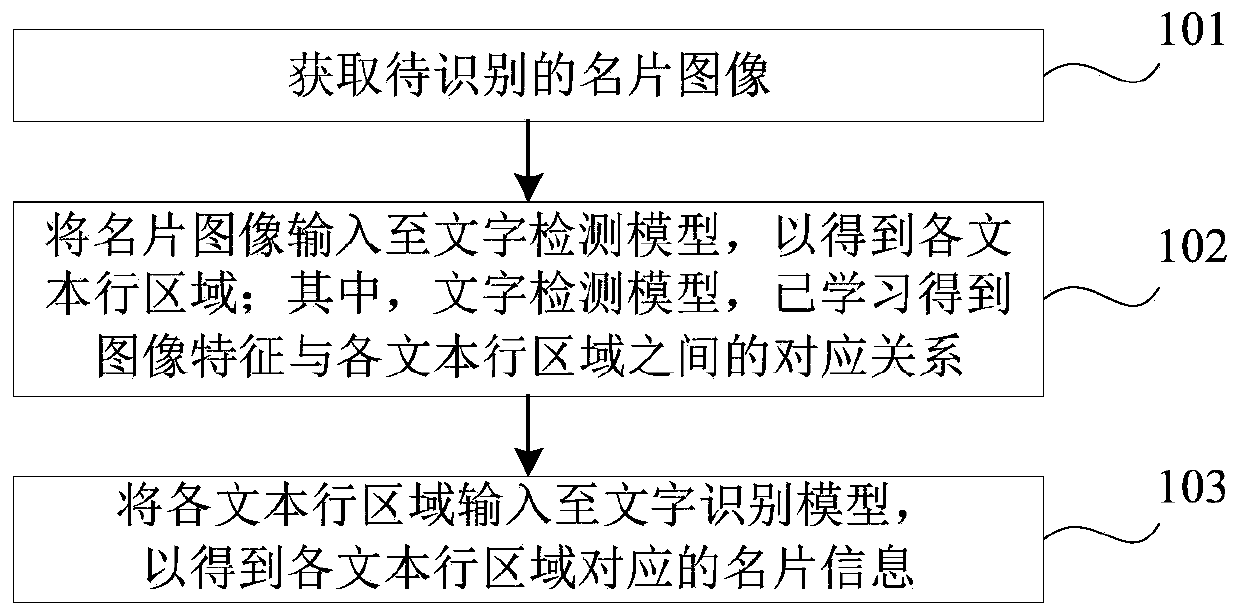

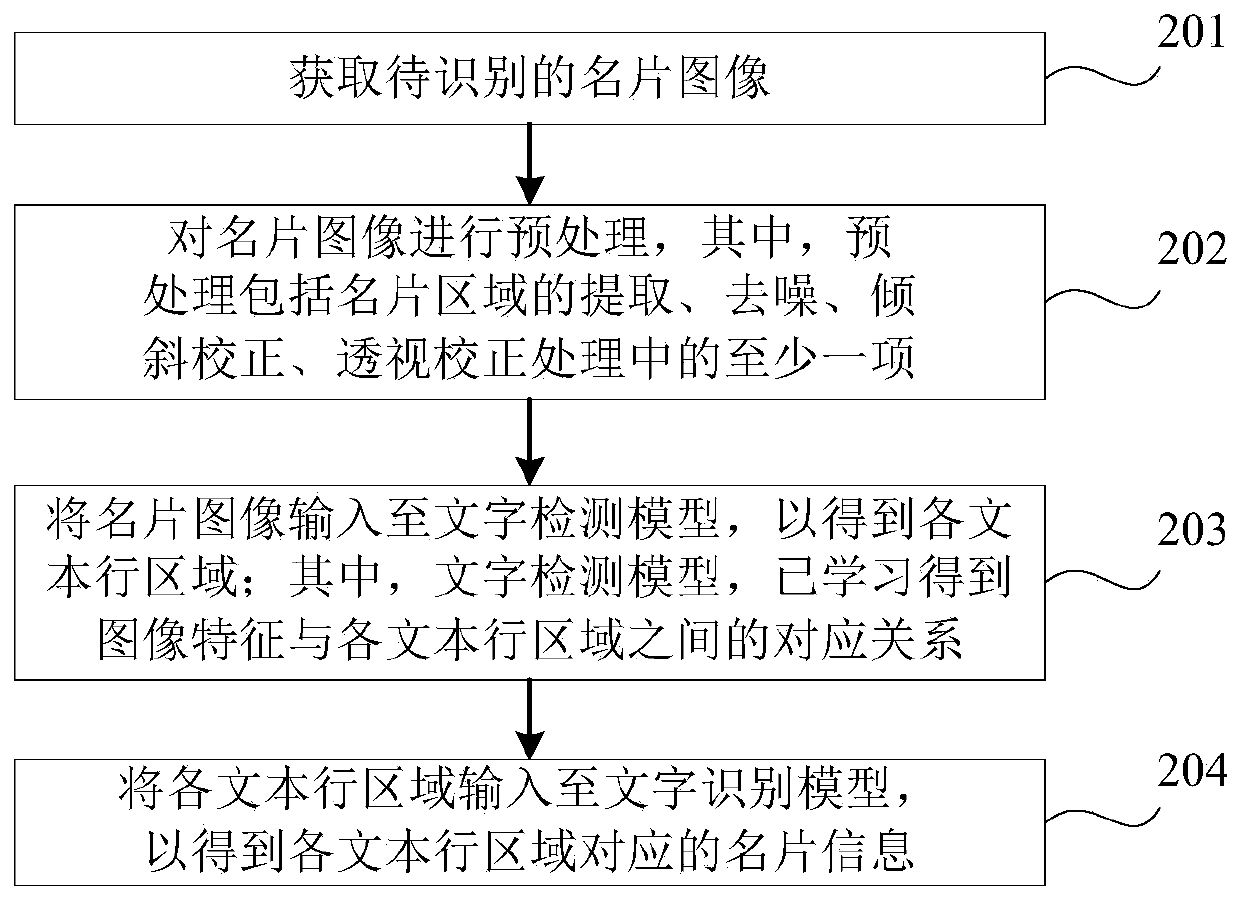

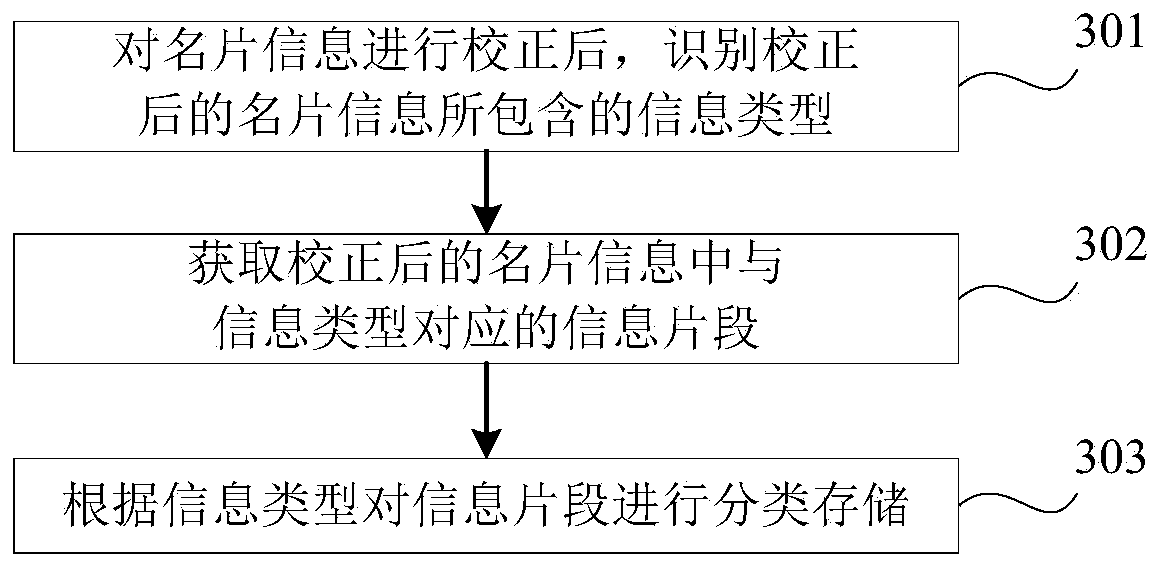

Business card identification method and device

Status: Active | Publication: CN110135411A | Tags: Reduce the impact of extraction; Improve robustness; Character and pattern recognition; Robustification; Business card

The invention provides a business card identification method and device. The method comprises the steps of: obtaining a to-be-identified business card image; inputting the business card image into a character detection model to obtain each text line area, wherein the character detection model has learned the correspondence between image features and text line areas; and inputting each text line area into a character recognition model to obtain the business card information corresponding to that text line area. Because the text line areas in the business card image are identified by a deep-learning-based text detection model, the method is robust and reduces the influence of low-quality and noisy data on text extraction, which improves its universality and range of application. Moreover, the text line areas are recognized end to end by the deep-learning-based character recognition model, so no single-character segmentation is needed, accuracy is higher, various complex variations are handled better, and the universality and recognition effect of the method are improved.

Owner:BEIJING UNIV OF POSTS & TELECOMM

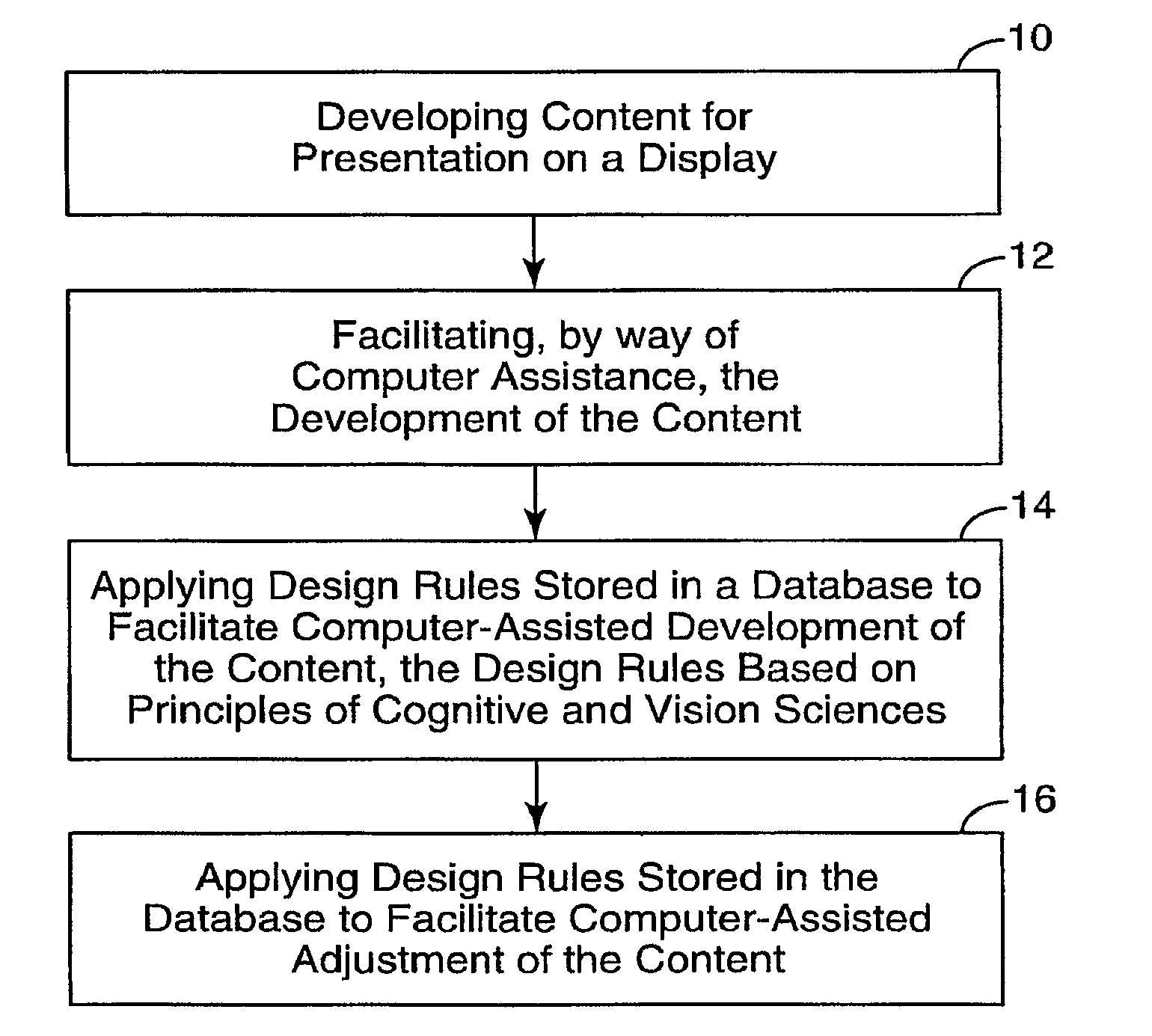

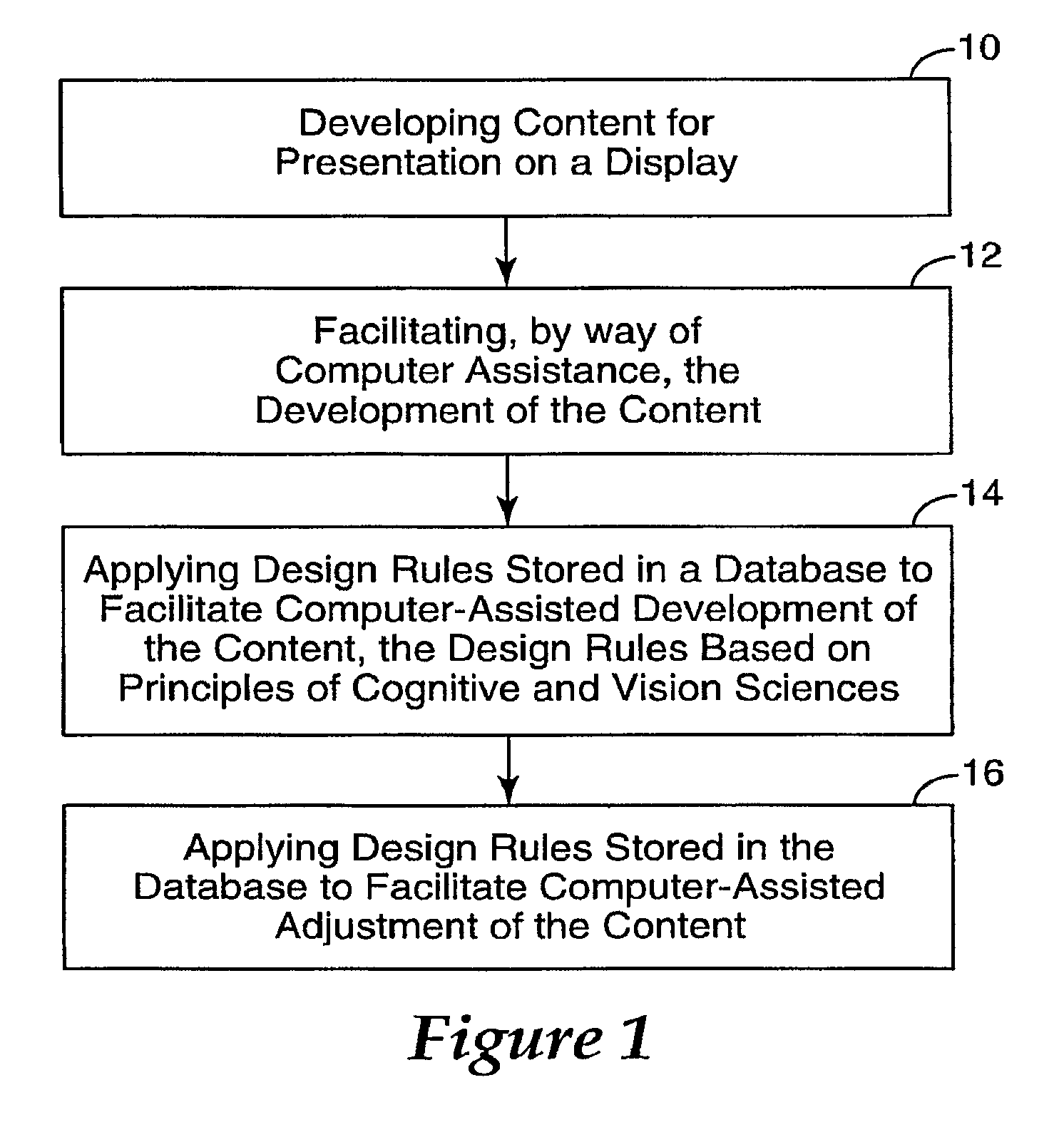

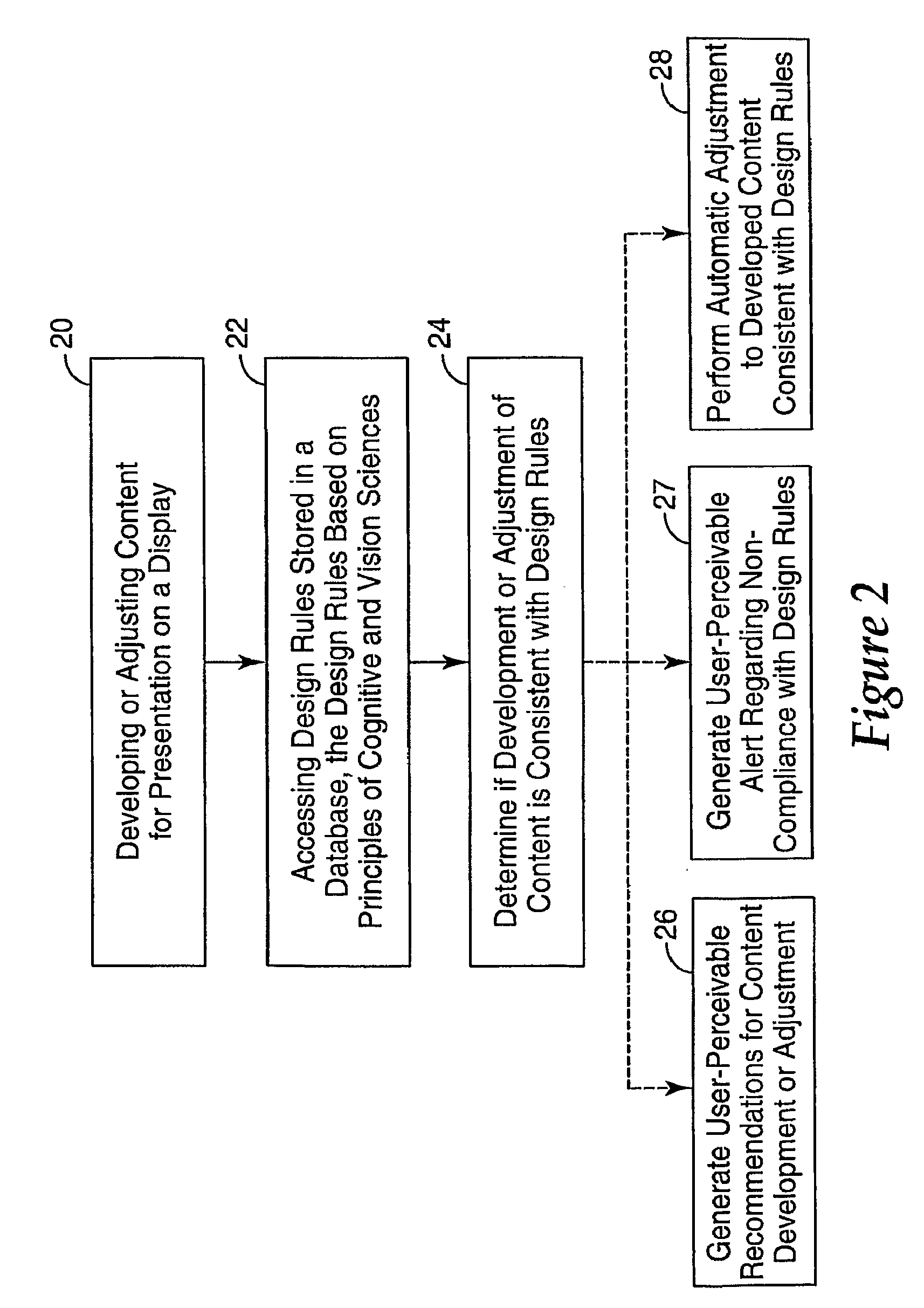

Content development and distribution using cognitive sciences database

Status: Inactive | Publication: US20090158179A1 | Tags: Maximizing memory retention and recall; Improve readability; Natural language data processing; Inference methods; Graphics; Text recognition

Computer implemented methods and systems facilitate development and distribution of content for presentation on a display or a multiplicity of networked displays, the content including content elements. The content elements may include graphics, text, video clips, still images, audio clips or web pages. The development of the content is facilitated using a database comprising design rules based on principles of cognitive and vision sciences. The database may include design rules based on visual attention, memory, and / or text recognition, for example.

Owner:3M INNOVATIVE PROPERTIES CO

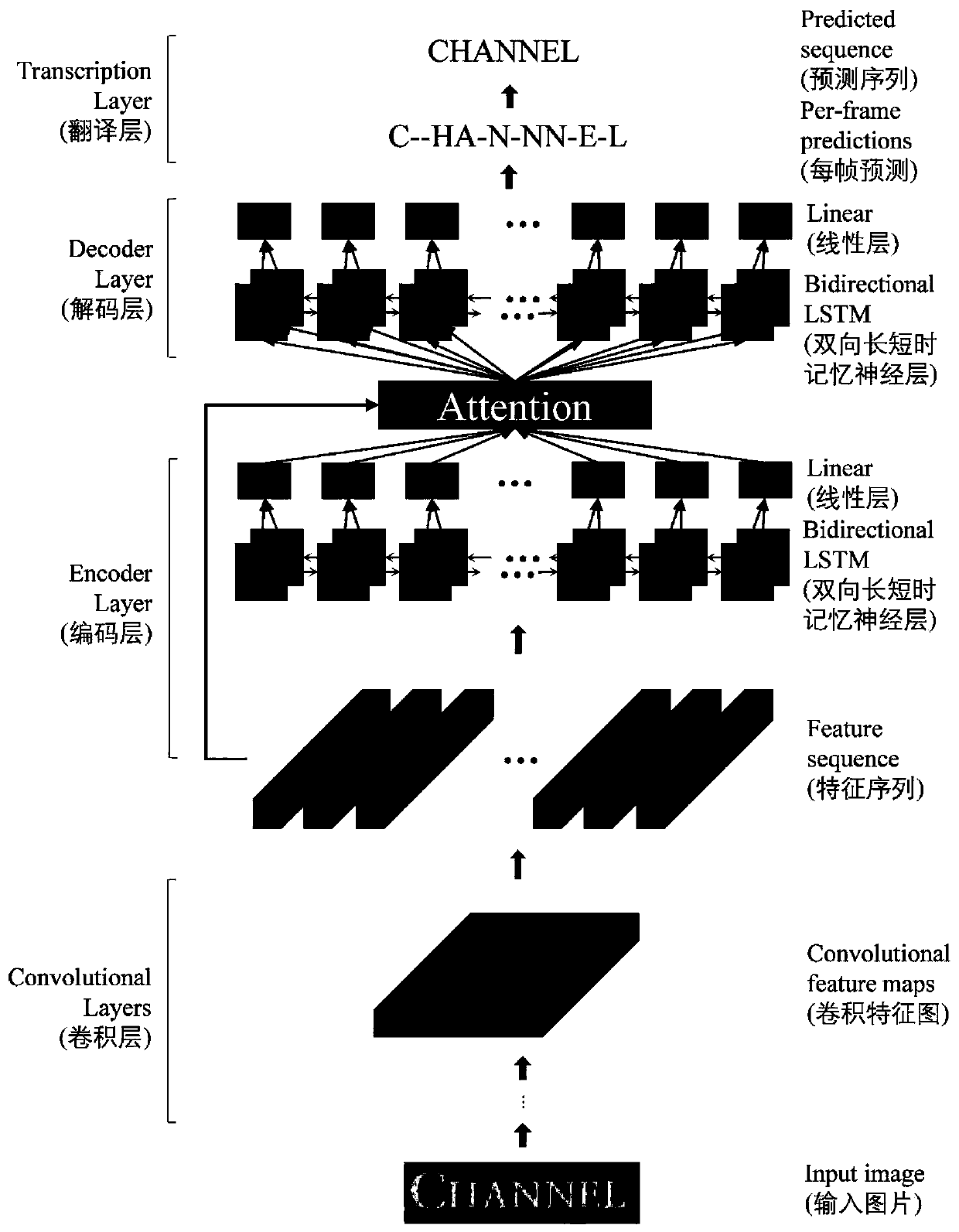

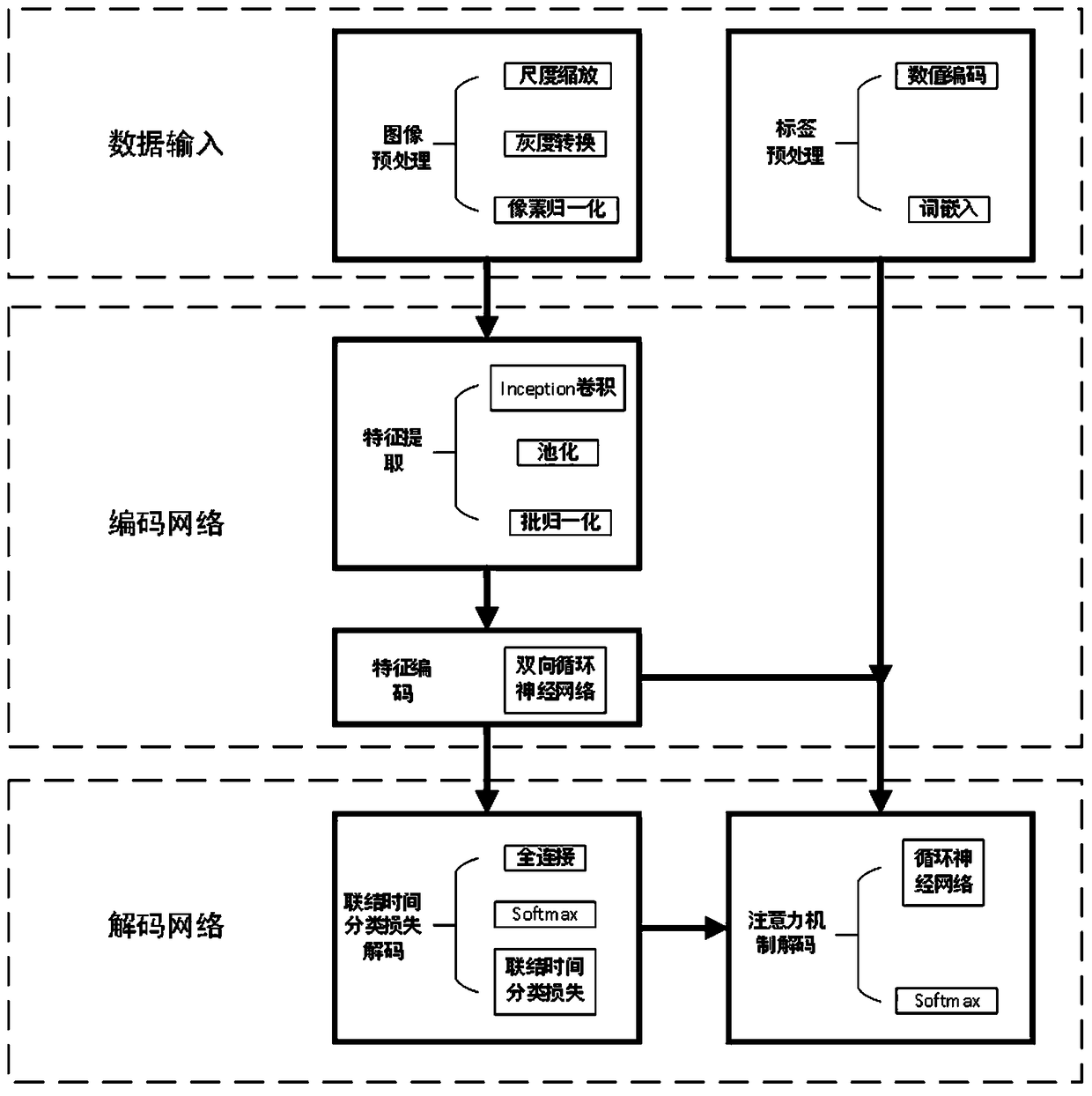

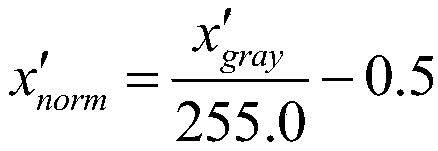

Character recognition method based on an attention mechanism and linkage time classification loss

Status: Inactive | Publication: CN109492679A | Tags: Dependencies get; Rich relevant information; Neural architectures; Character recognition; Gray level

The invention discloses a character recognition method based on an attention mechanism and linkage time classification loss (that is, connectionist temporal classification, CTC). The character recognition method comprises the following steps: S1, collecting a data set; S2, preprocessing the image samples, including scale zooming, gray level conversion and pixel normalization; S3, processing the tag sequence of each sample, including filling, coding and word embedding; S4, constructing a convolutional neural network and performing feature extraction on the text image processed in step S3; S5, encoding the features extracted in step S4 with a stacked bidirectional recurrent neural network to obtain encoded features; S6, inputting the encoded features obtained in step S5 into a connection time classification model to calculate a prediction probability; and S7, calculating weights for the different encoded features with an attention mechanism to obtain encoded semantic vectors.

Owner:HANGZHOU DIANZI UNIV
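
For readers unfamiliar with connectionist temporal classification, the sketch below shows the standard greedy (best-path) way of turning per-time-step CTC probabilities, such as those computed in step S6, into a character string. It is a generic illustration rather than the decoding procedure of this patent, which the abstract does not describe; reserving class index 0 for the blank symbol and the function name `ctc_greedy_decode` are assumptions.

```python
from typing import List, Sequence

BLANK = 0  # assumed index of the CTC blank symbol


def ctc_greedy_decode(probs: Sequence[Sequence[float]], alphabet: str) -> str:
    """Best-path CTC decoding: argmax per step, merge repeats, drop blanks.

    probs[t][k] is the probability of class k at time step t, where class 0
    is the blank and class k >= 1 corresponds to alphabet[k - 1].
    """
    best_path = [max(range(len(row)), key=row.__getitem__) for row in probs]
    decoded: List[str] = []
    previous = None
    for k in best_path:
        # Emit a character only when the class changes and is not the blank.
        if k != previous and k != BLANK:
            decoded.append(alphabet[k - 1])
        previous = k
    return "".join(decoded)
```

The blank between two runs of the same class is what allows genuinely doubled letters to survive the merging of repeated predictions.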

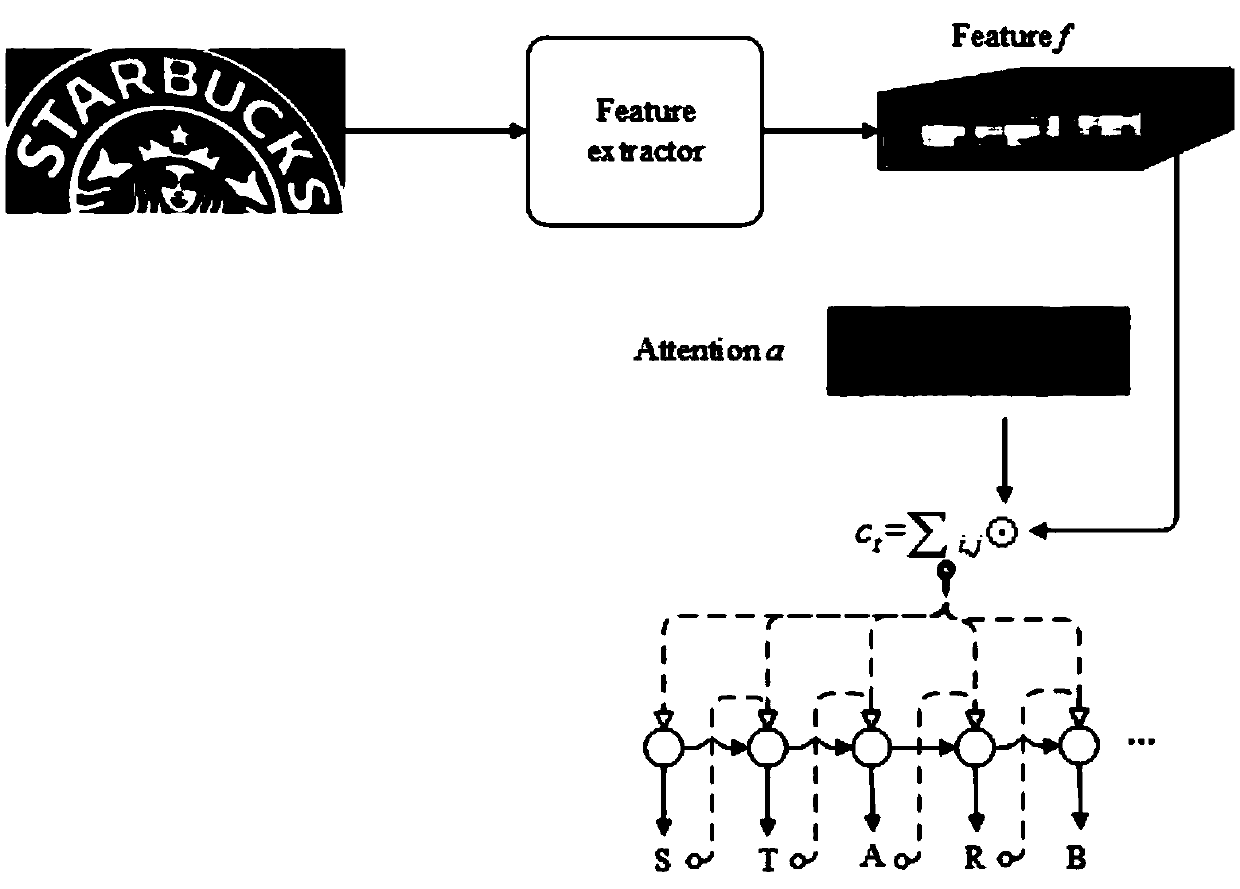

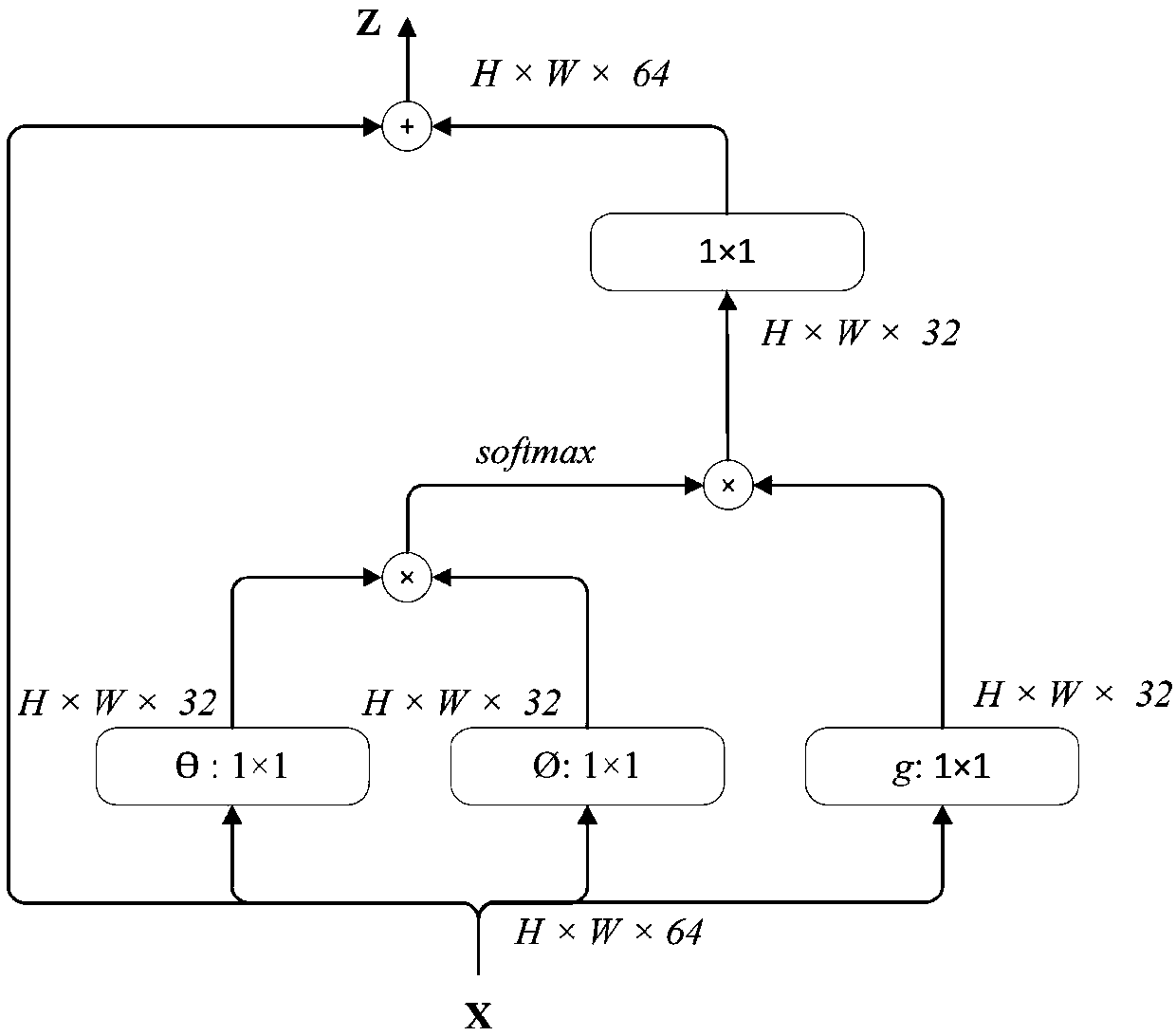

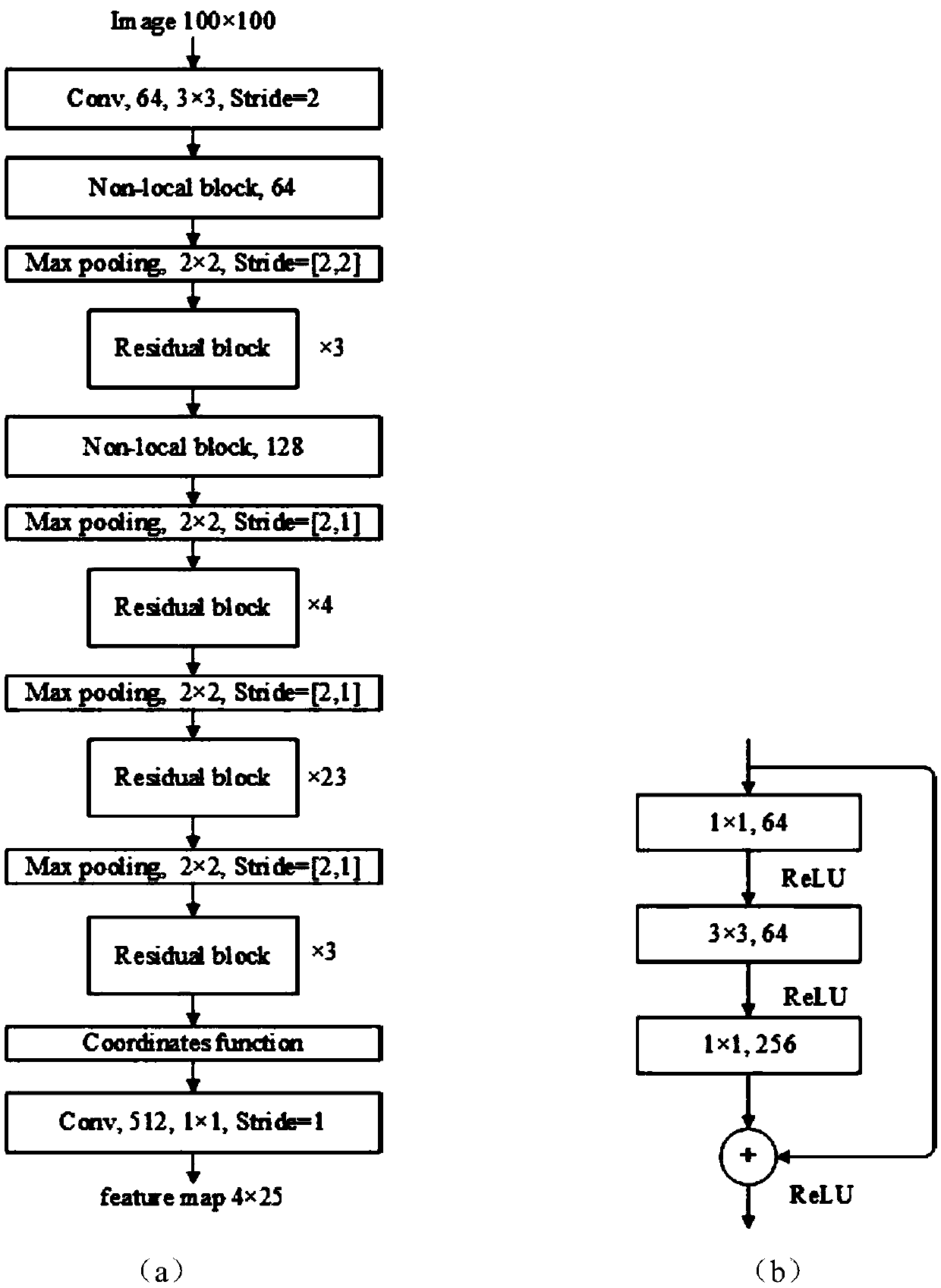

A text recognition method based on attention mechanism

The invention discloses a text recognition method based on an attention mechanism. The spatial-attention-based network (SAN) is an end-to-end text recognition model. The model comprises a feature extractor with a local neural network, a residual neural network and coordinate information, and a spatial decoder based on an attention mechanism. Because the model follows an encoding and decoding structure, it can also be understood as an encoder and a decoder. The encoder encodes the input image to obtain an encoded feature sequence that the decoder can recognize. The decoder decodes the encoded features produced by the encoder, thereby recognizing the text in the image. On the arc text CUTE80 dataset, the method reaches an accuracy of 77.43%, which the inventors report as superior to all existing methods. On other scene text datasets, the method also performs well.

Owner:BEIJING UNIV OF TECH

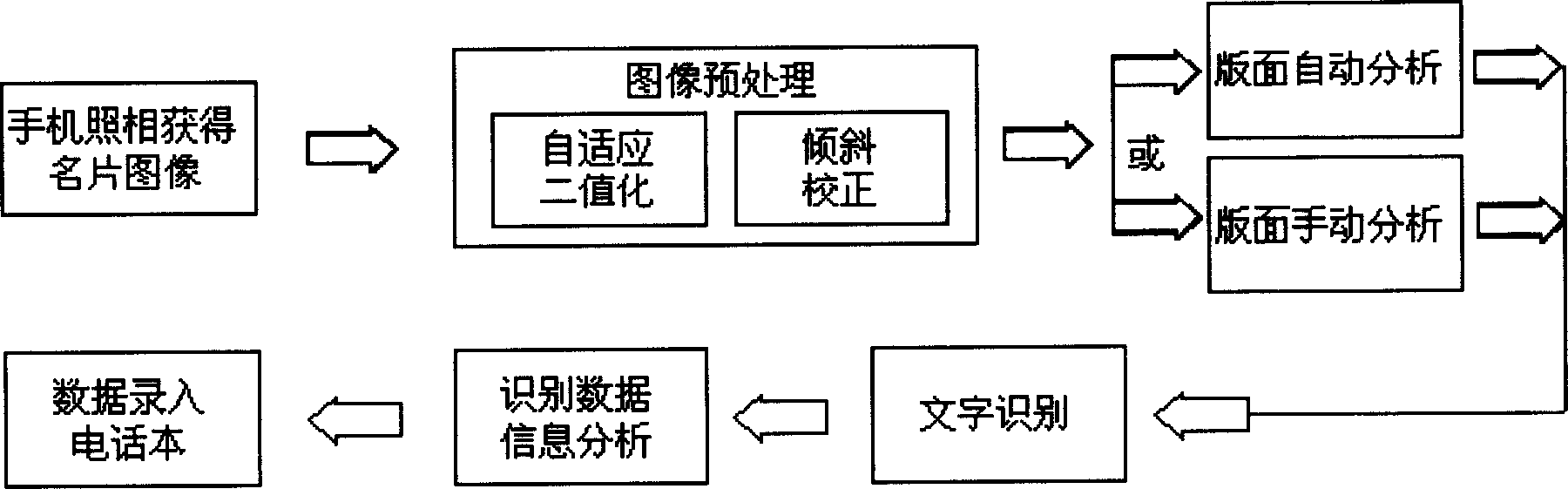

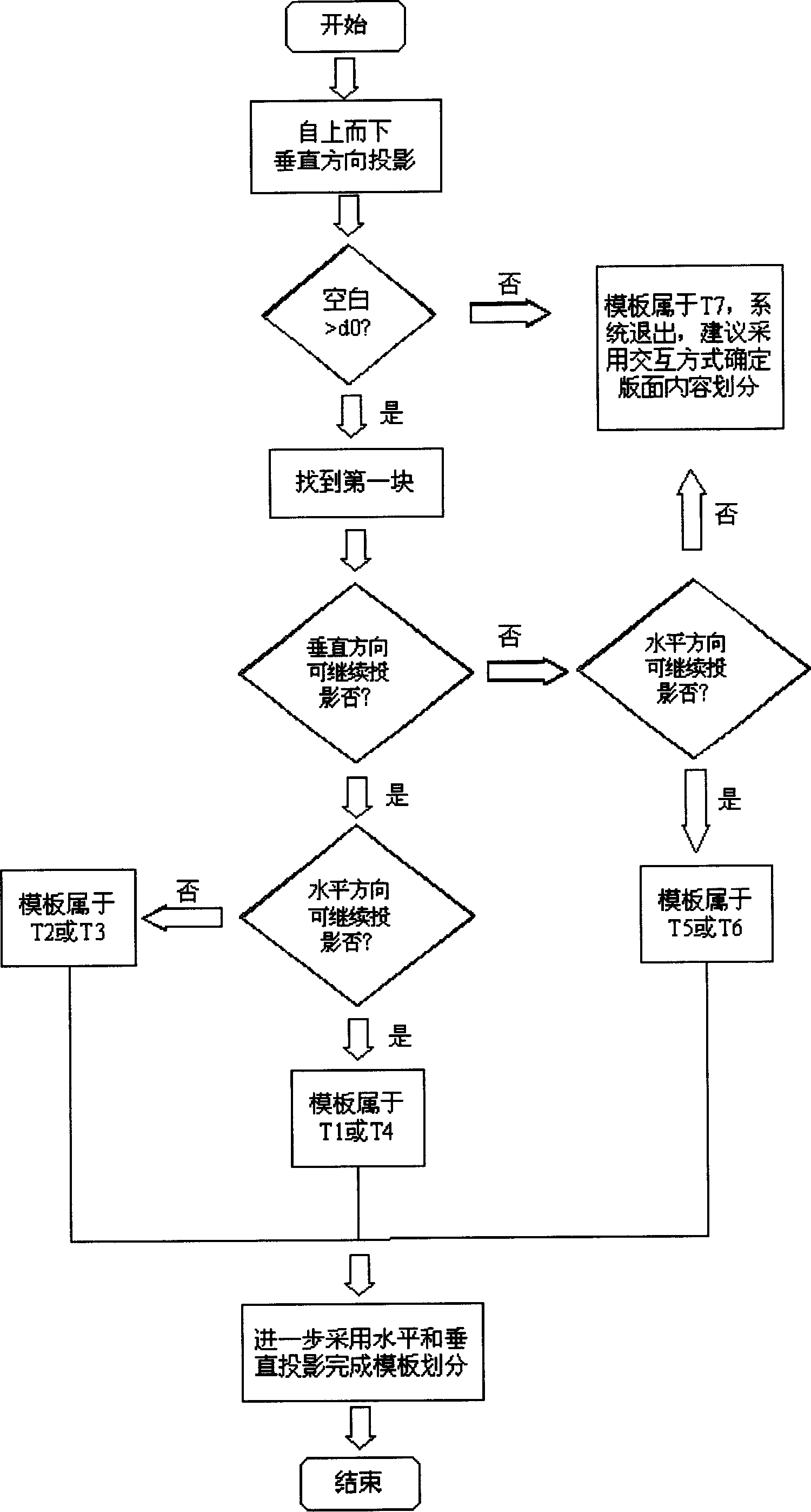

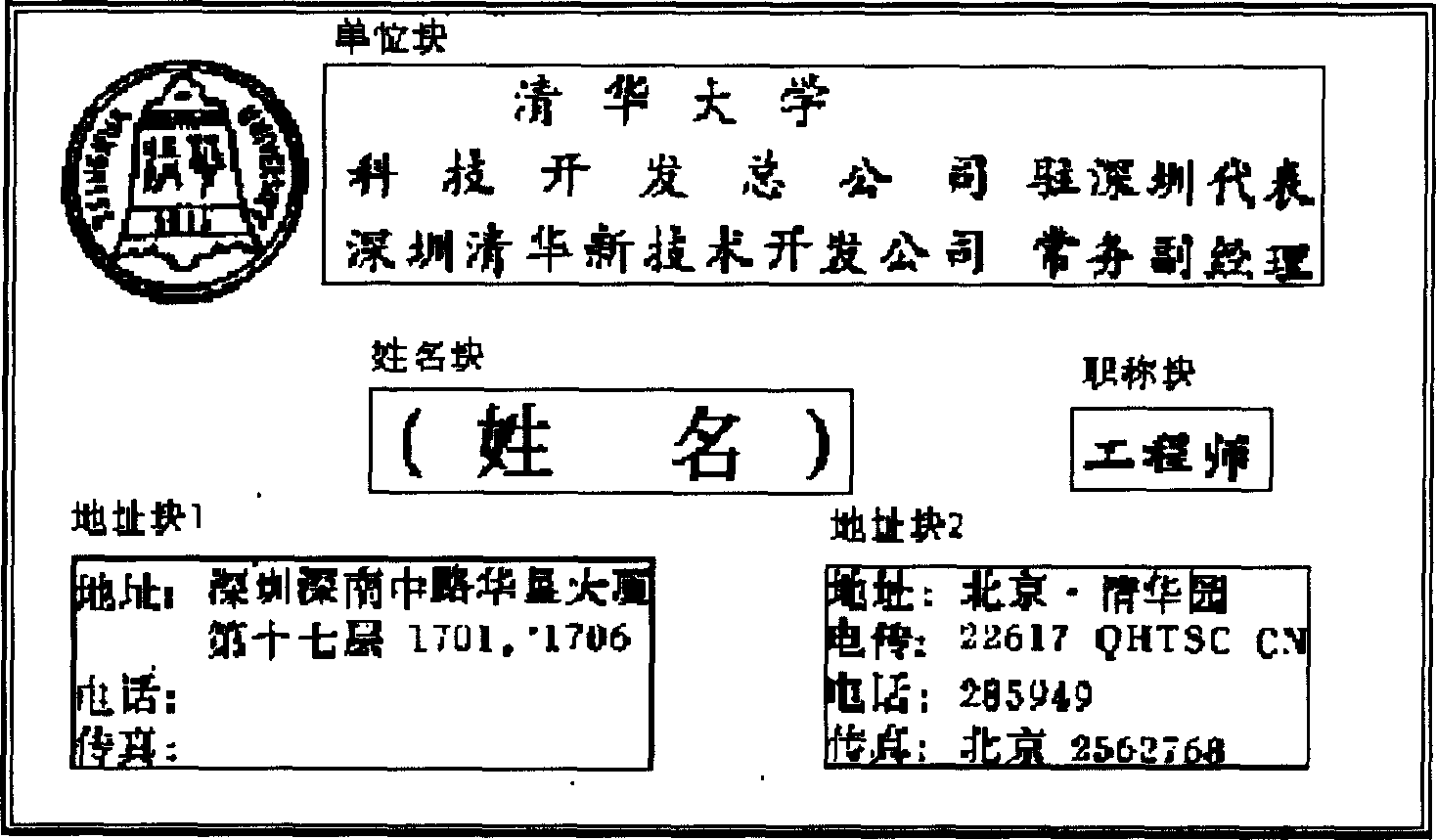

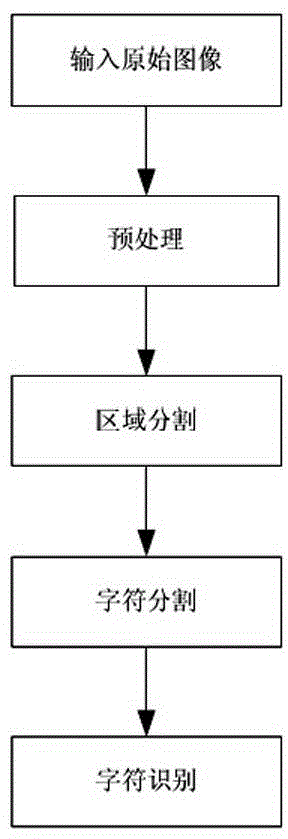

Method for gathering and recording business card information in mobile phone by using image recognition

Status: Active | Publication: CN1877598A | Tags: Easy to use; Solve the shortcomings of slow speed; Character and pattern recognition; Camera phone; Business card

The method collects and records business card information in a mobile phone by image recognition. It comprises: using the phone camera to capture the business card, preprocessing the image, analyzing the image layout, dividing the image into areas, performing character recognition on every area, recognizing the data, analyzing the information, and storing the data in the phone's telephone directory. The invention needs no additional device, improves speed, and is suitable for wide application.

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD

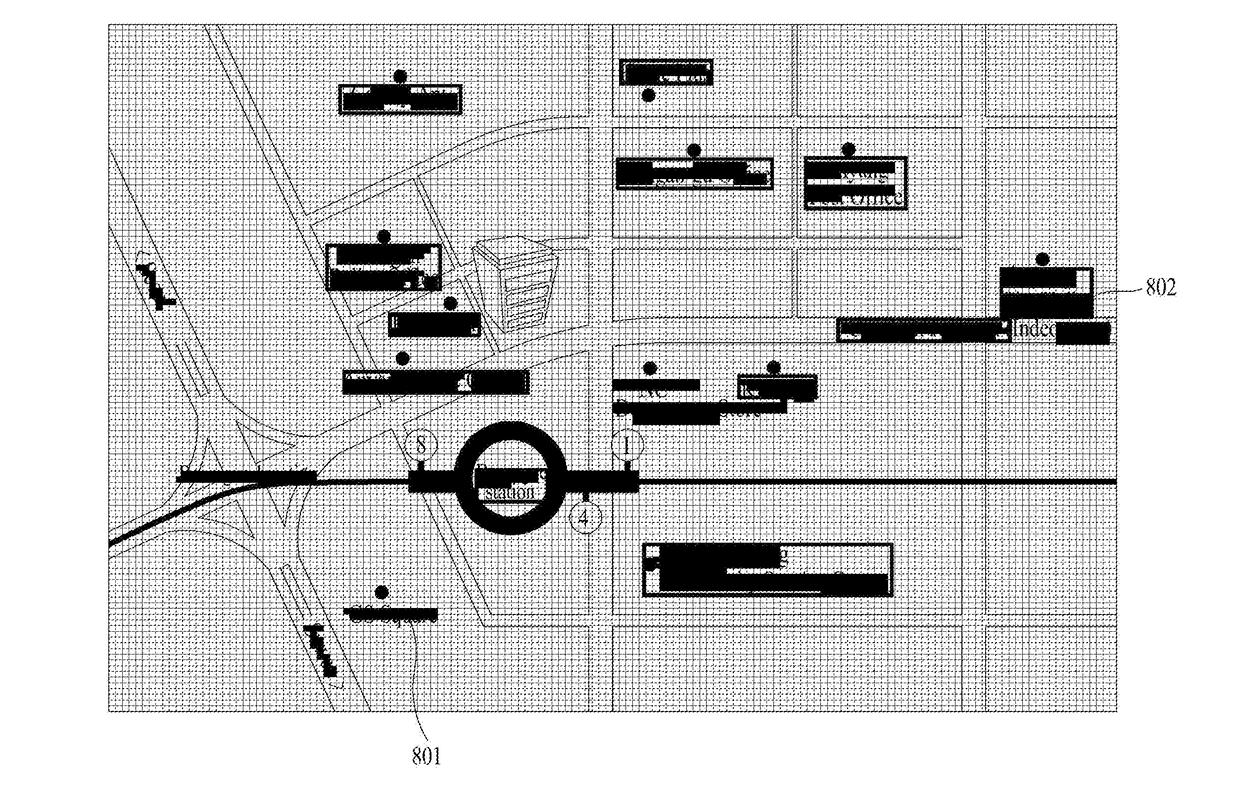

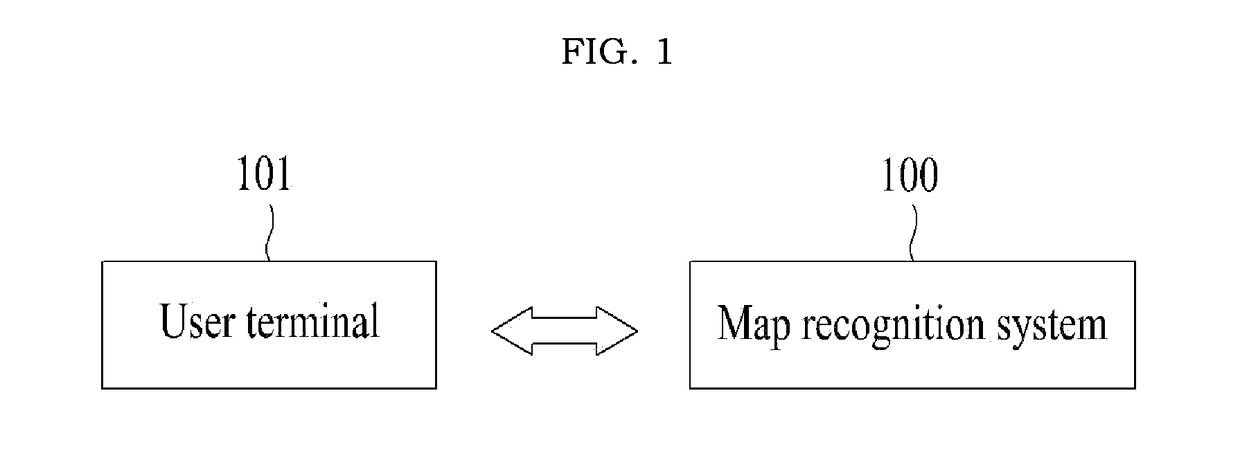

Ocr-based system and method for recognizing map image, recording medium and file distribution system

Disclosed are an OCR-based system and method for recognizing a map image. The map recognition system comprises a recognition unit for recognizing text in an input image by means of a text recognition technology; a search unit for searching, in a database comprising toponym data, for a toponym corresponding to the text; and a provision unit for providing map information comprising the toponym as a result of the recognition of the input image.

Owner:NAVER CORP
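
The search unit described above, which looks up recognized text in a toponym database, can be illustrated with a small sketch. Everything concrete here is hypothetical: the function name `find_toponyms`, the in-memory dictionary standing in for the database, exact case-insensitive matching, and the approximate sample coordinates.

```python
from typing import Dict, Iterable, List, Tuple

Coordinates = Tuple[float, float]  # (latitude, longitude)


def find_toponyms(
    ocr_tokens: Iterable[str],
    toponym_db: Dict[str, Coordinates],
) -> List[Tuple[str, Coordinates]]:
    """Return database toponyms that match any OCR'ed token, without repeats."""
    lookup = {name.casefold(): (name, coords) for name, coords in toponym_db.items()}
    matches: List[Tuple[str, Coordinates]] = []
    for token in ocr_tokens:
        hit = lookup.get(token.strip().casefold())
        if hit is not None and hit not in matches:
            matches.append(hit)
    return matches


# Illustrative toy database; coordinates are approximate.
toy_db = {"Seoul": (37.57, 126.98), "Busan": (35.18, 129.08)}
print(find_toponyms(["SEOUL", "Station", "busan "], toy_db))
```

A provision unit could then use the returned names and coordinates to select the map information to show for the input image.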

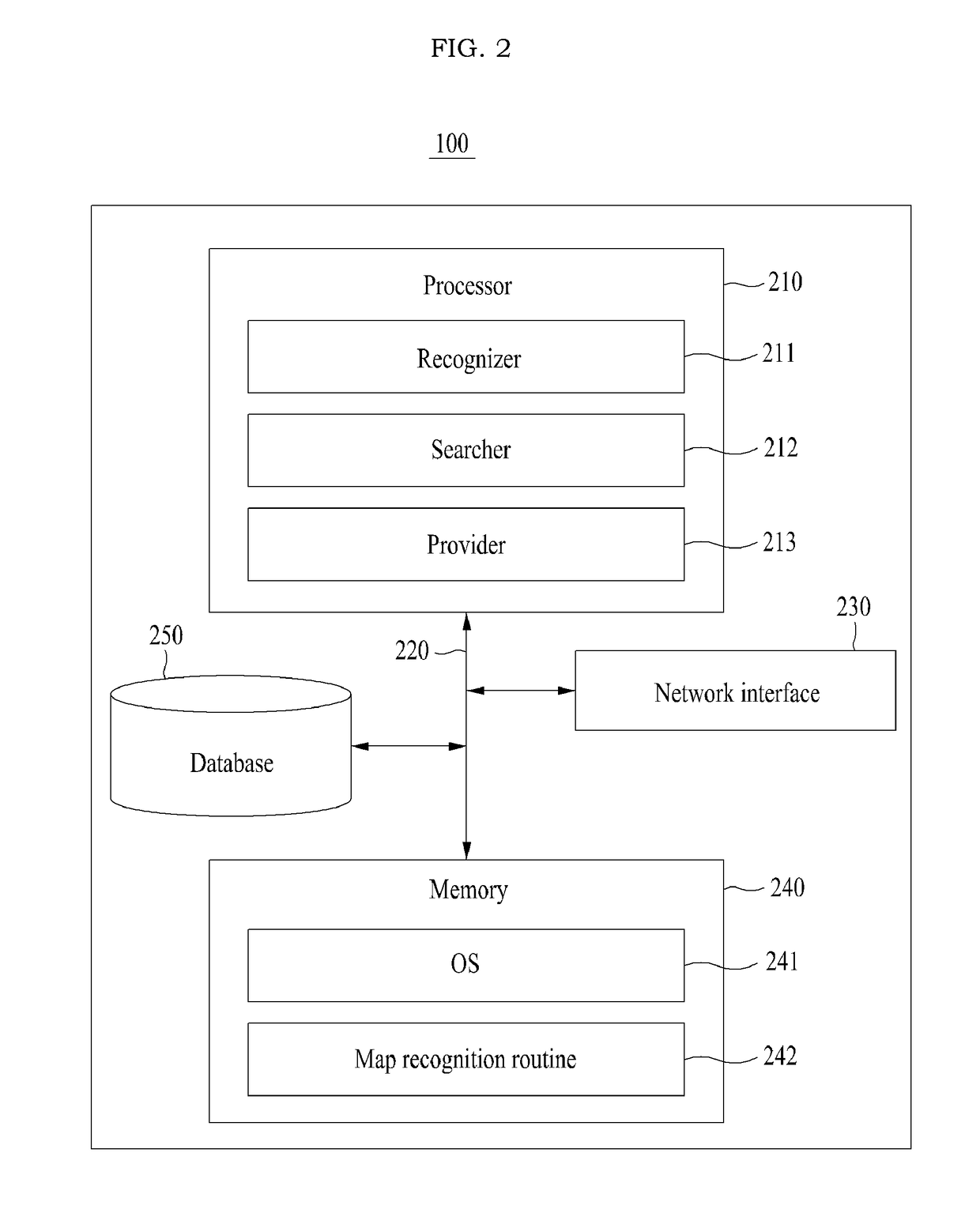

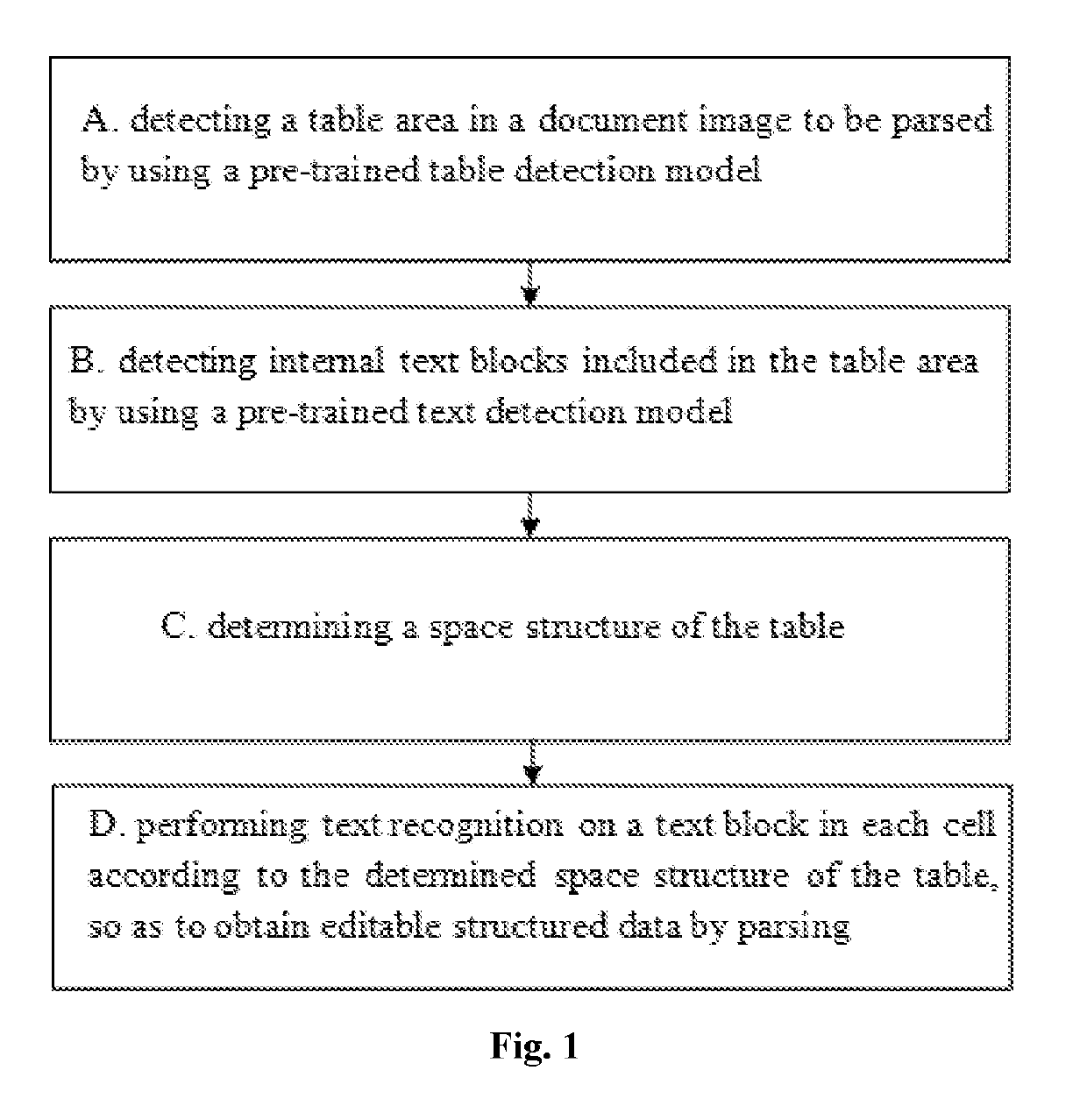

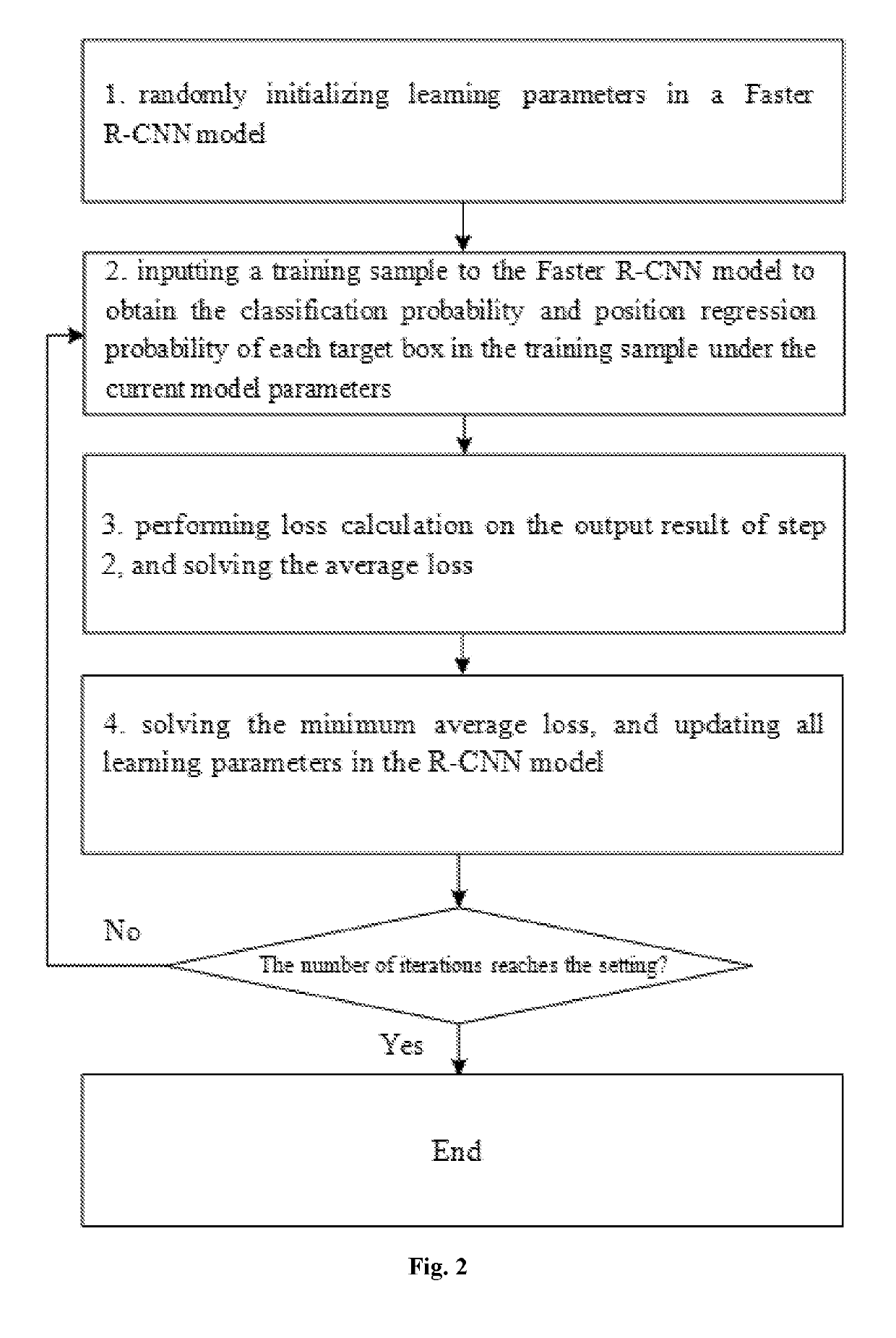

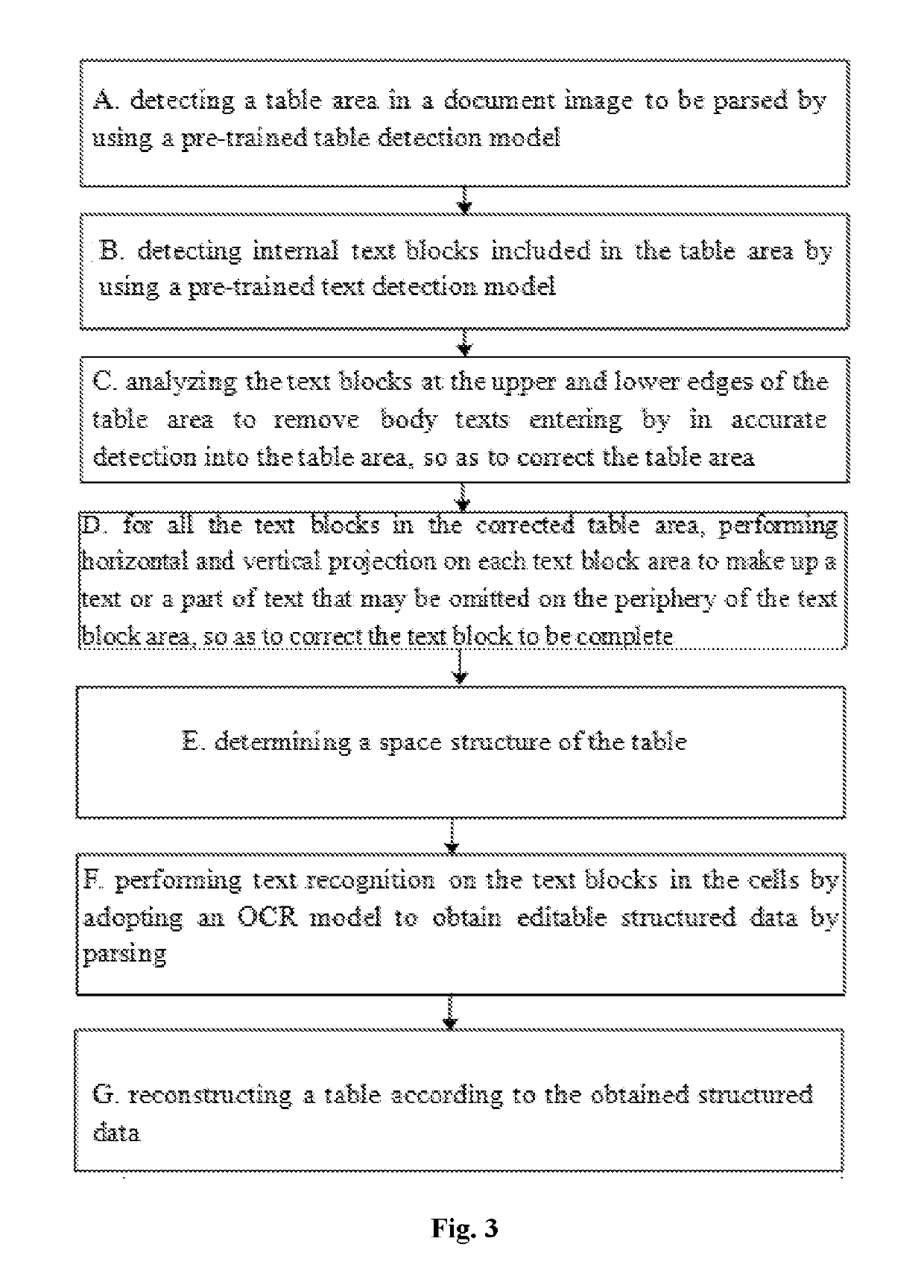

Method and device for parsing table in document image

Status: Active | Publication: US20190266394A1 | Tags: Efficient analysis; Easy to solve; Character recognition; Text detection; Text recognition

The present application relates to a method and a device for parsing a table in a document image. The method comprises the following steps: inputting a document image to be parsed which includes one or more table areas into the electronic device; detecting, by the electronic device, a table area in the document image by using a pre-trained table detection model; detecting, by the electronic device, internal text blocks included in the table area by using a pre-trained text detection model; determining, by the electronic device, a space structure of the table; and performing text recognition on a text block in each cell according to the space structure of the table, so as to obtain editable structured data by parsing. The method and the device of the present application can be applied to various tables such as line-including tables or line-excluding tables or black-and-white tables.

Owner:ABC FINTECH CO LTD

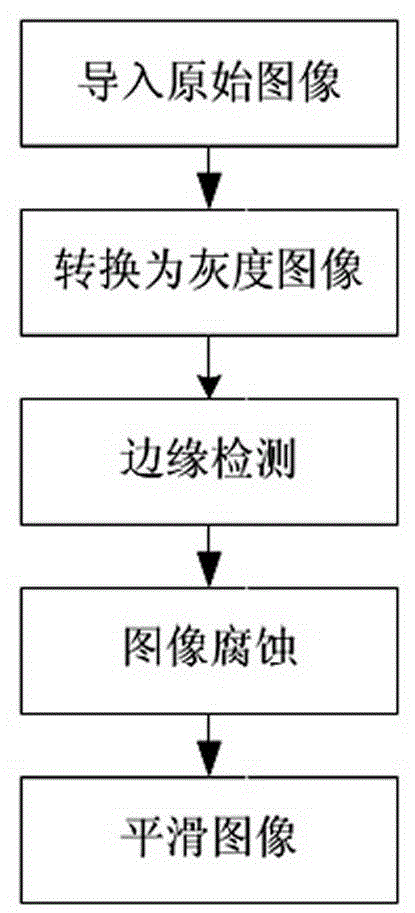

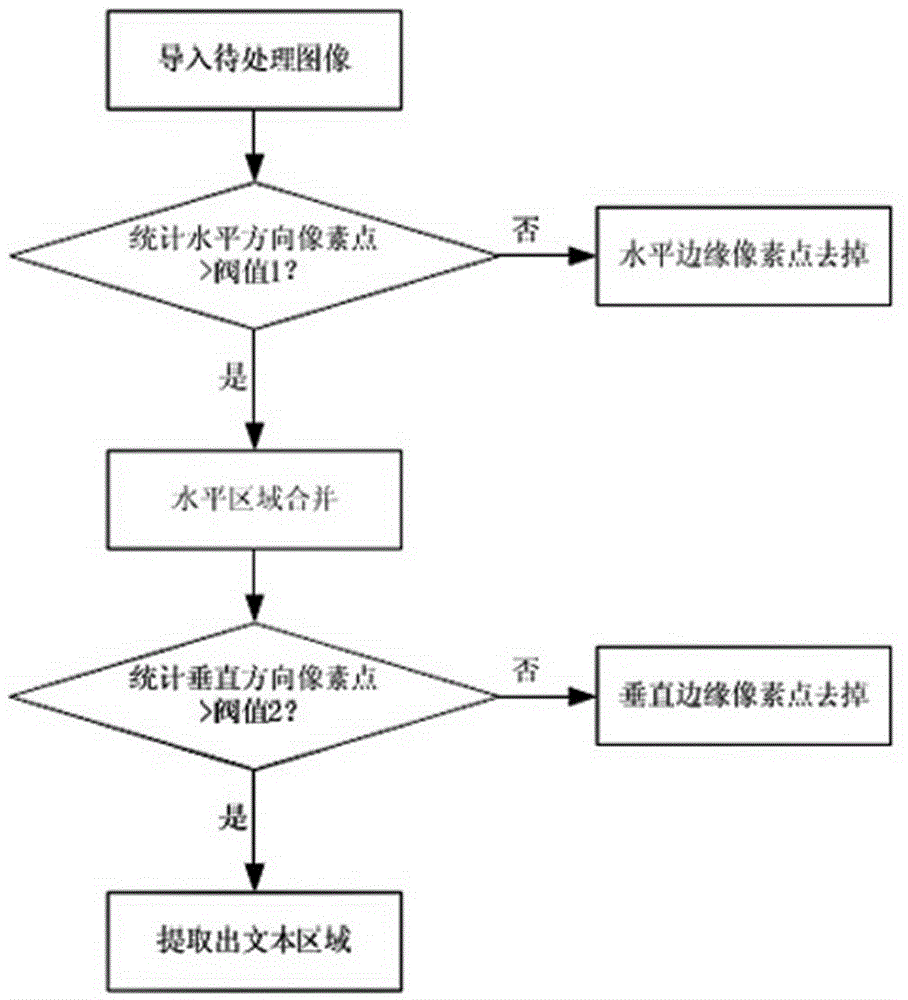

Character recognition method for underwater video images

Status: Inactive | Publication: CN104361336A | Tags: Convenient segmentation; Easy to implement; Character recognition; Imaging processing; Text recognition

The invention discloses a character recognition method for underwater video images. The method comprises the following steps: the video images are preprocessed according to the principles of morphological image processing, so that the contrast ratio is enhanced and noise is filtered out; based on the preprocessing results, the video character regions are segmented, with text regions located by a method combining edge detection and connected components; based on the region segmentation results, the characters are segmented by a binarization method combining a global threshold method and a local threshold method, and the segmented characters are normalized so that they are consistent in size with the characters in a template base; the template base is designed according to the characteristics of video characters, and the segmented characters are matched against the characters in the template base so that the characters can be recognized and stored as text.

Owner:HOHAI UNIV

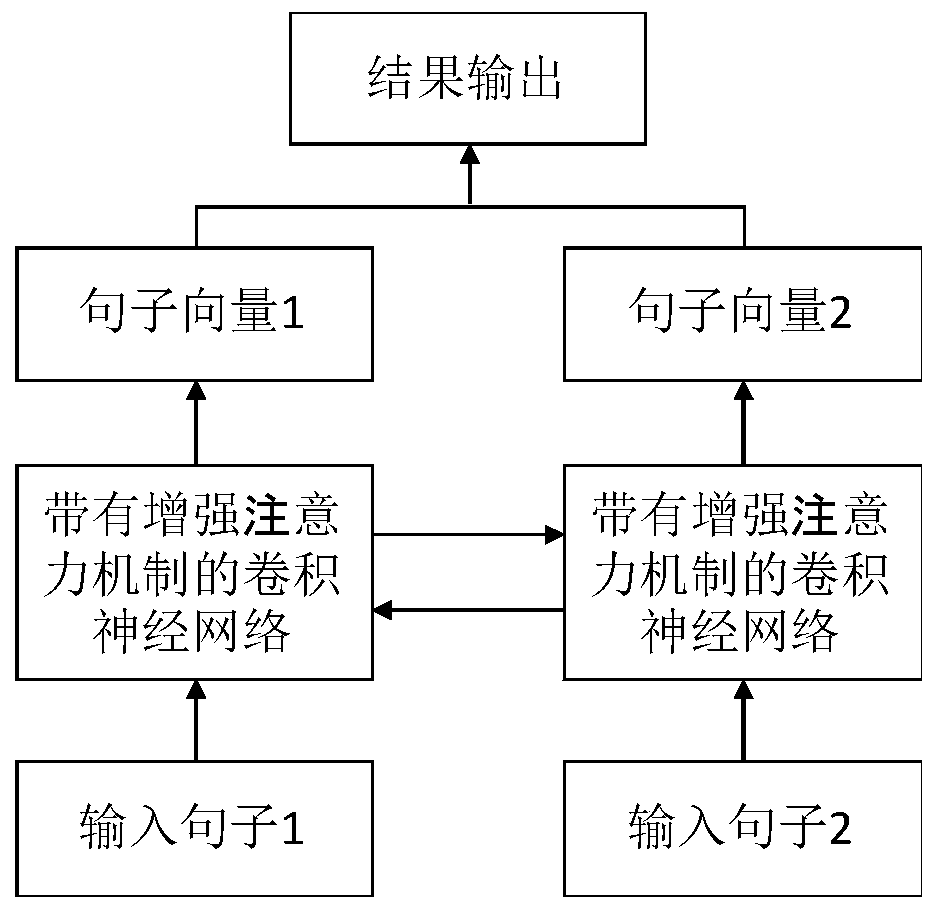

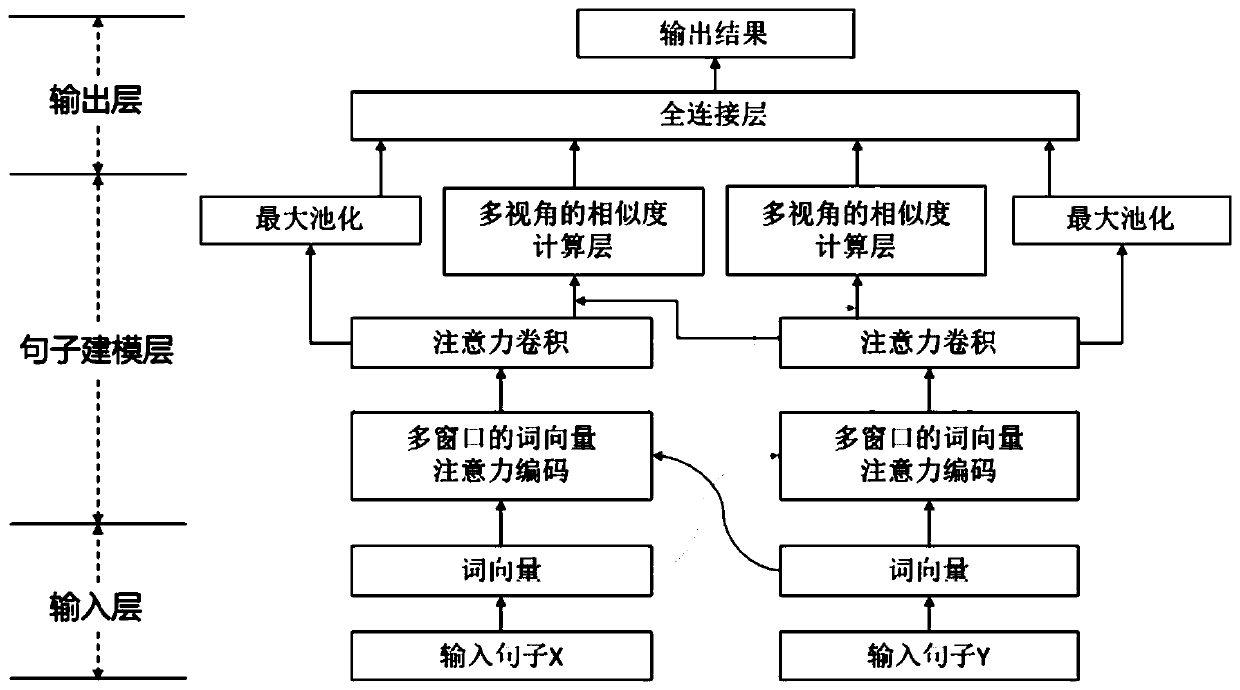

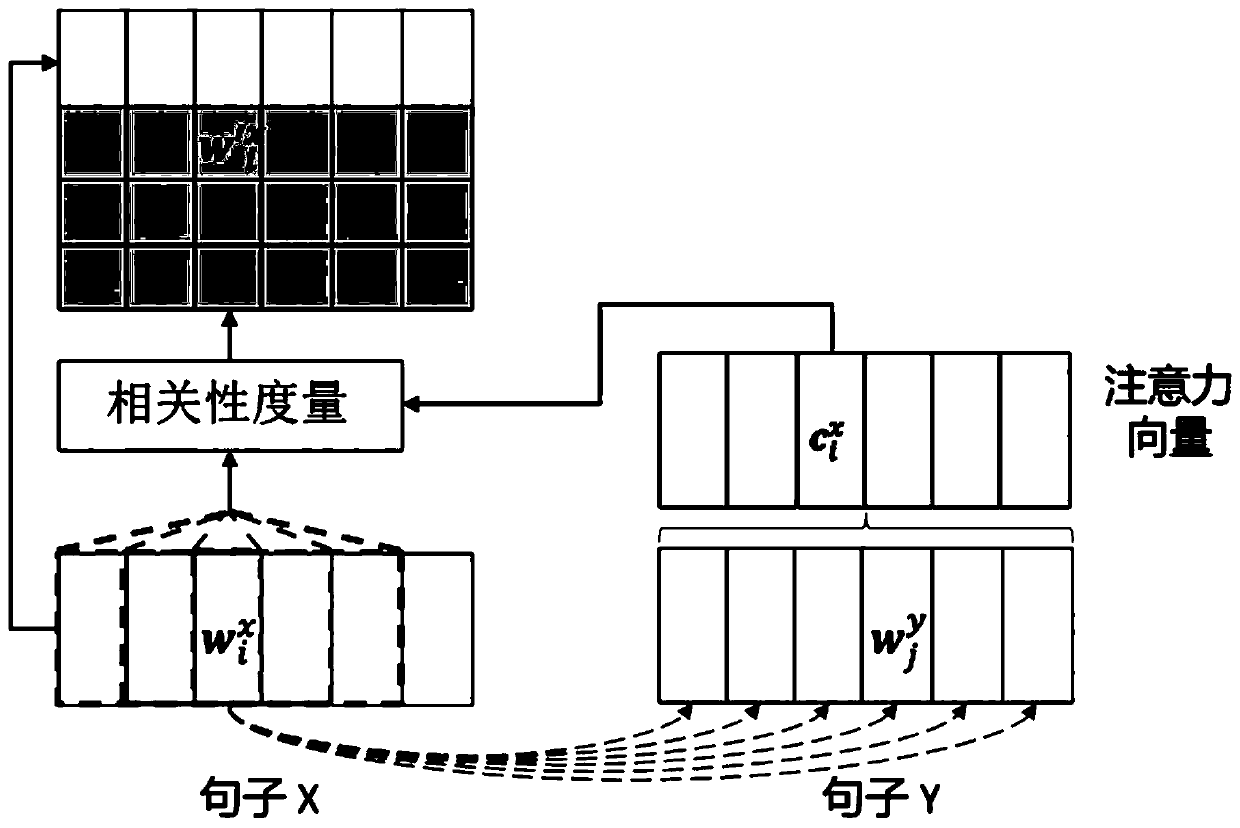

Convolutional neural network matching text recognition method based on attention enhancement mechanism

Status: Pending | Publication: CN110298037A | Tags: Reflect semantic features; Enhanced interaction; Semantic analysis; Neural architectures; Text recognition; Granularity

The invention relates to a convolutional neural network matching text recognition method based on an attention enhancement mechanism. The method comprises the steps of: 1, preprocessing an input text and pre-training on a text corpus to obtain initial word vectors; 2, converting the sentences in the input text into matrices composed of the initial word vectors; 3, encoding each matrix through a convolutional neural network with an attention enhancement mechanism to generate a low-dimensional sentence vector; and 4, obtaining the correlation of the low-dimensional sentence vectors corresponding to every two sentences, and identifying the overall text according to the correlation result. Compared with the prior art, the method avoids the defect that the two sentences are modeled completely independently: on top of the local context information acquired by the convolutional neural network, it adds the relevant attention information from the other sentence, so that the two sentences interact as early as possible, and it obtains multi-granularity information by combining convolution kernels of different sizes.

Owner:TONGJI UNIV

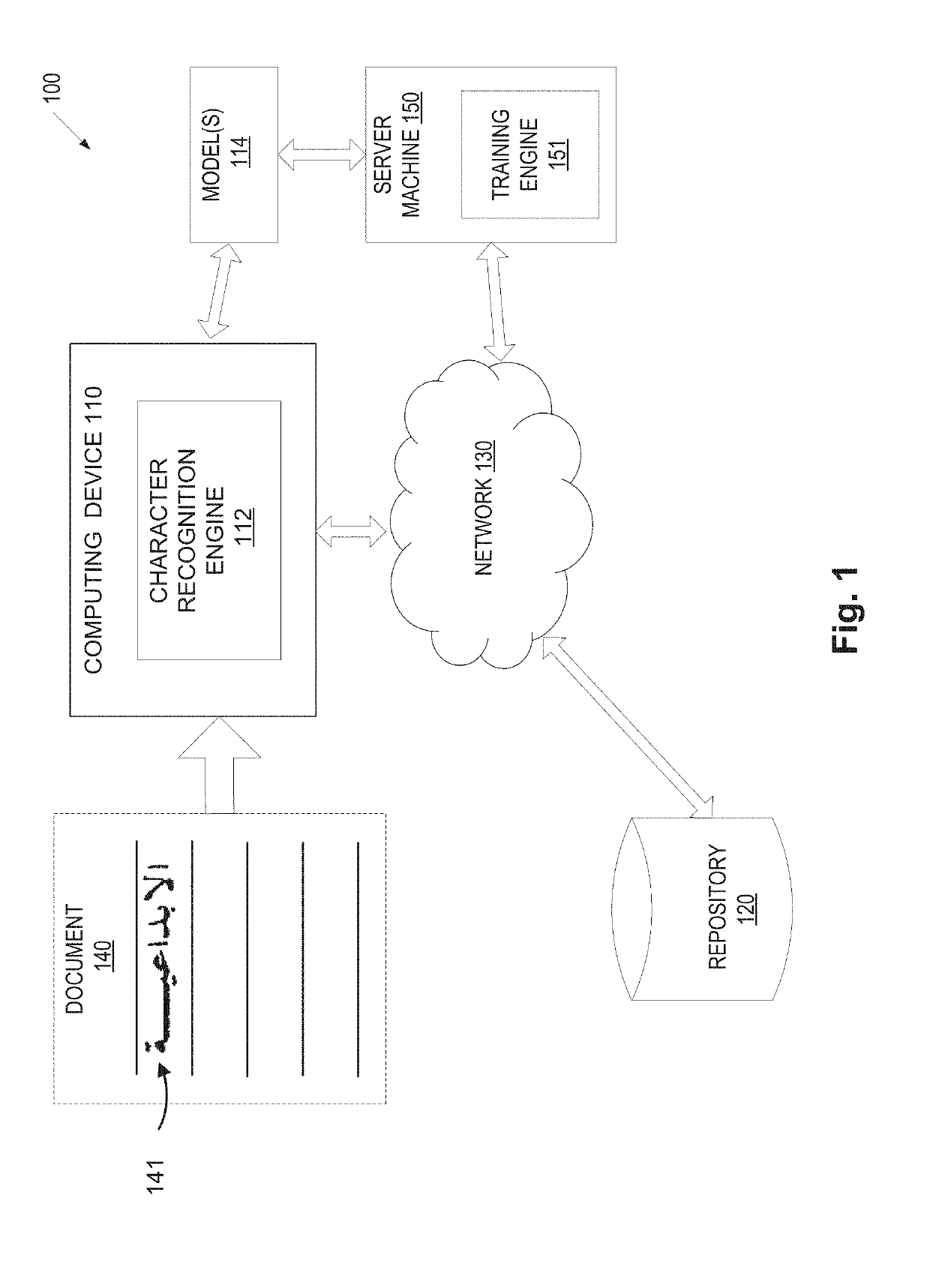

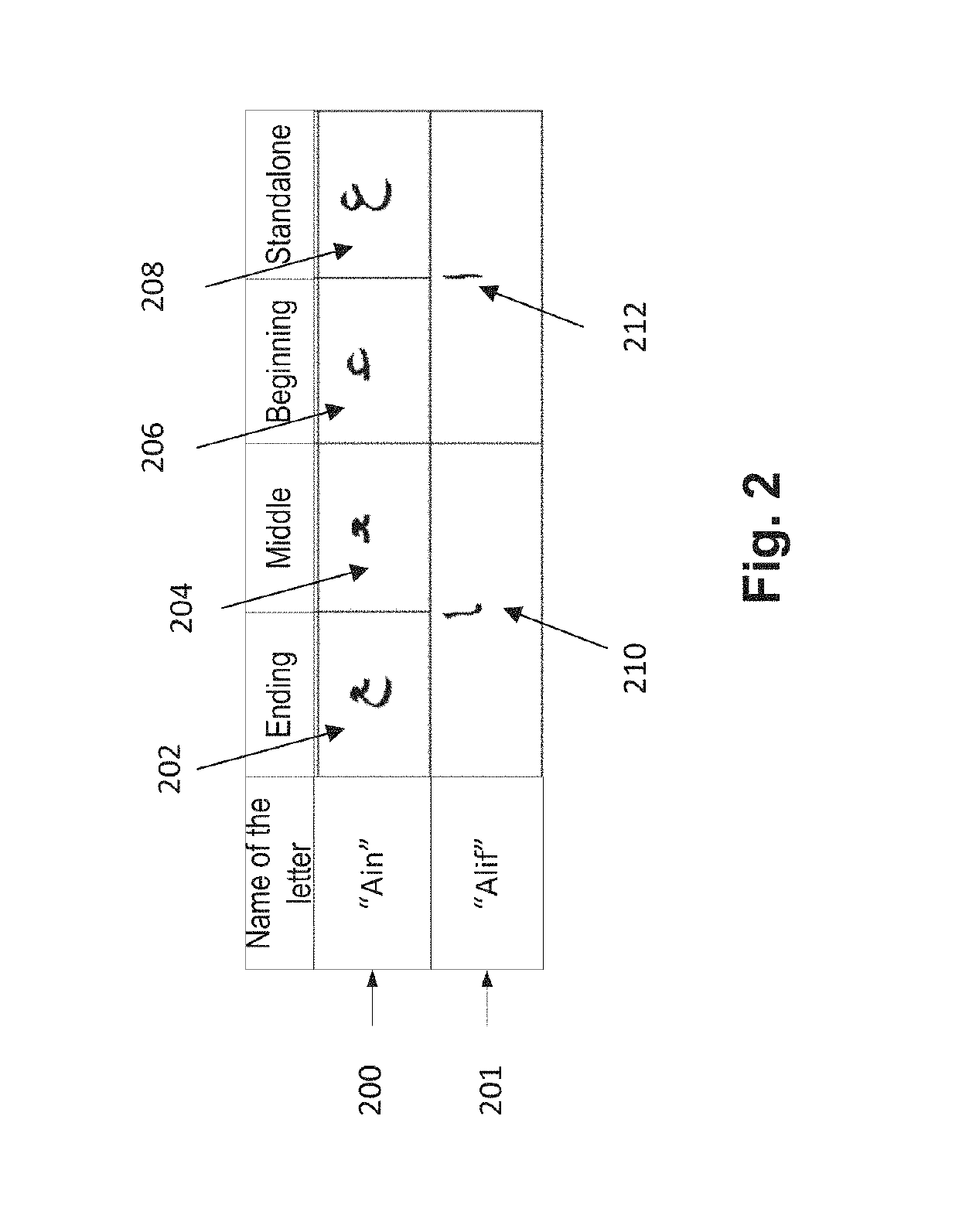

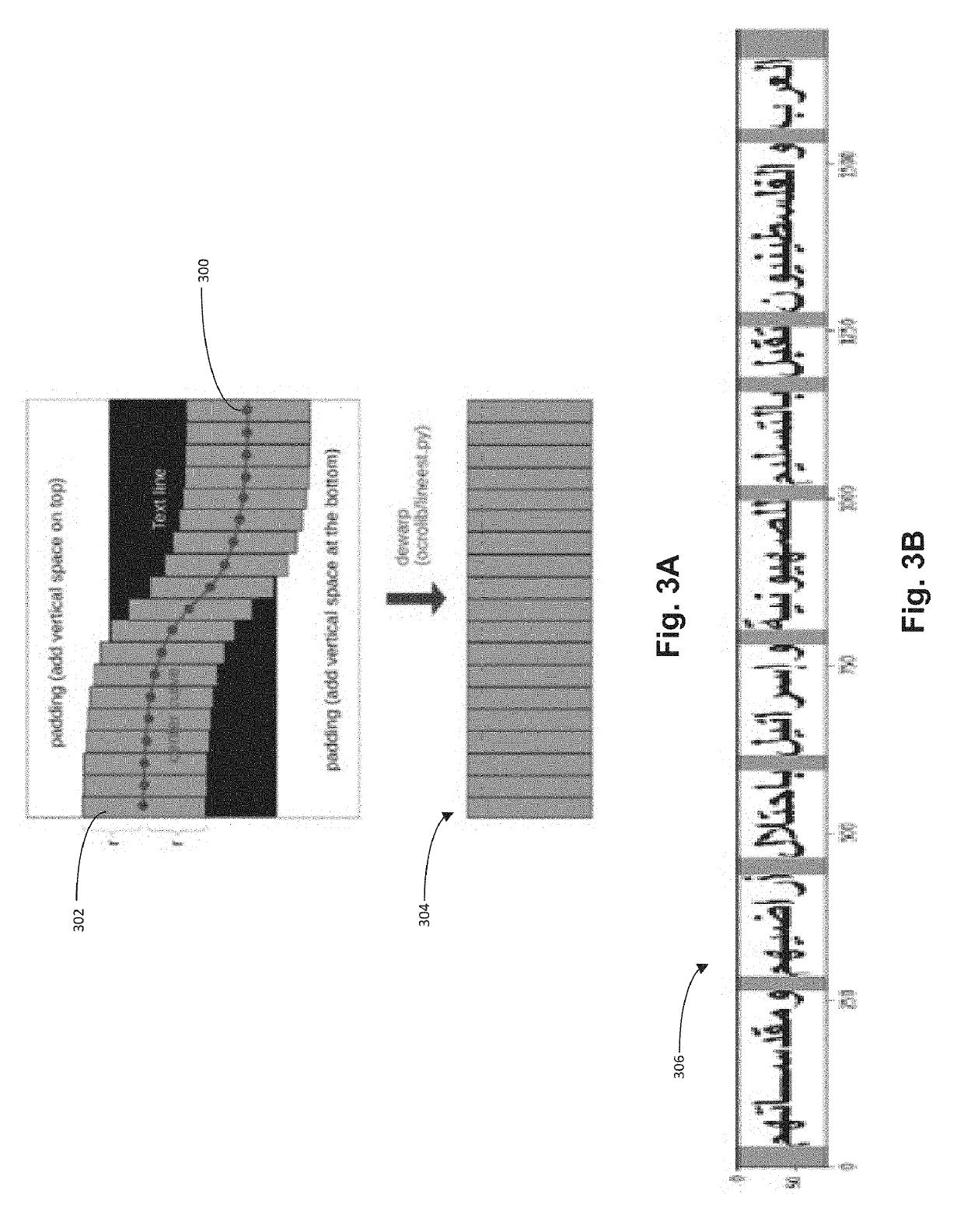

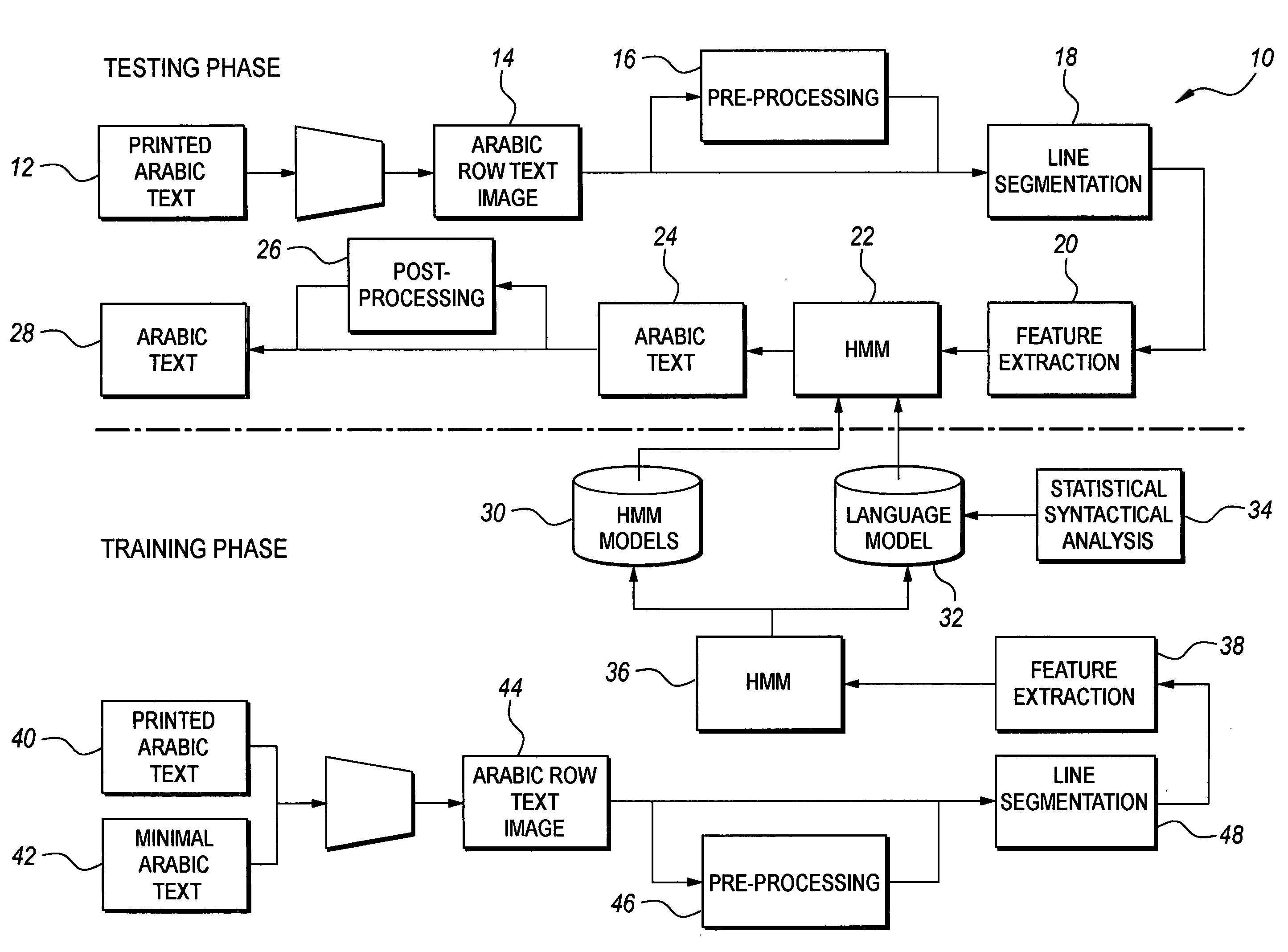

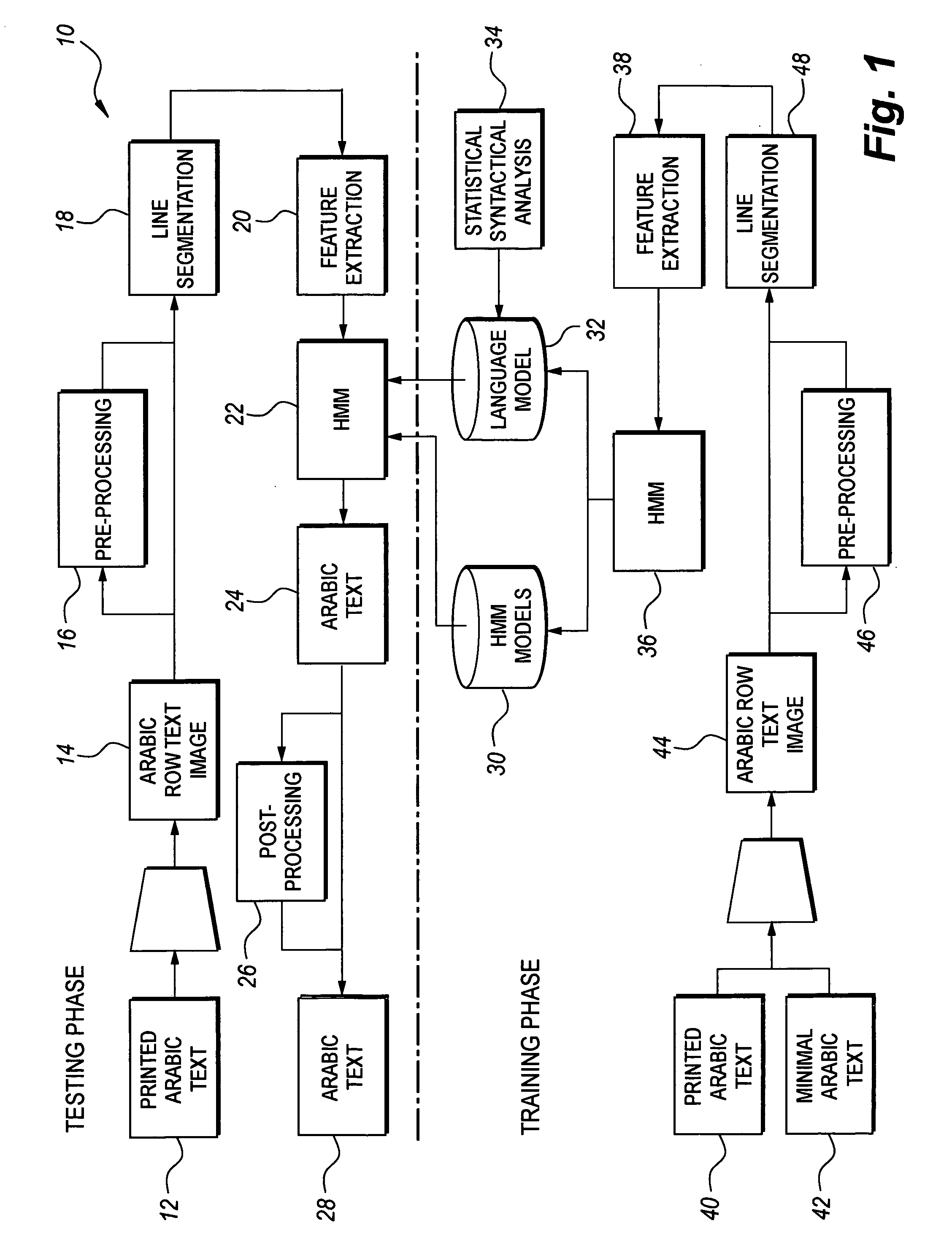

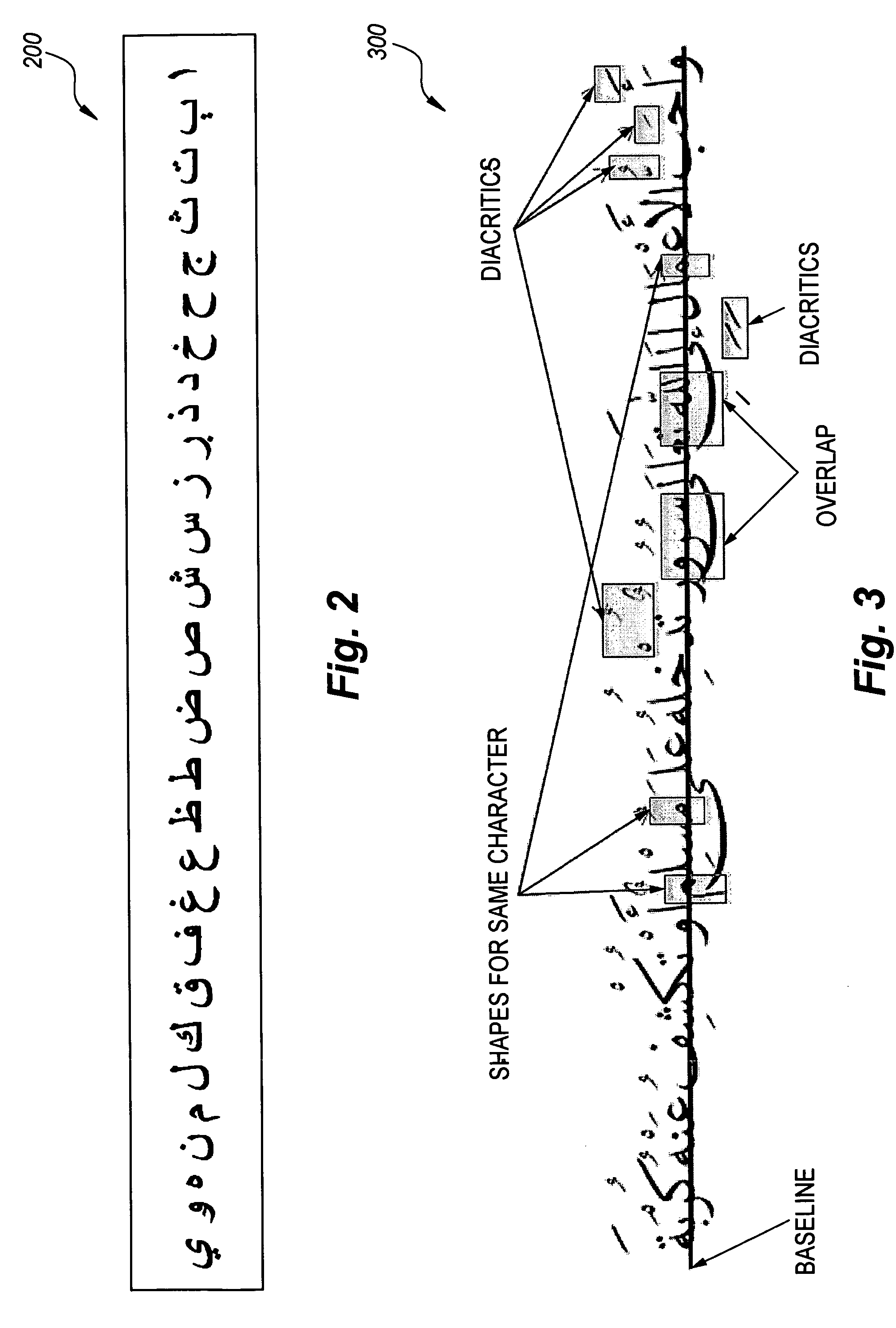

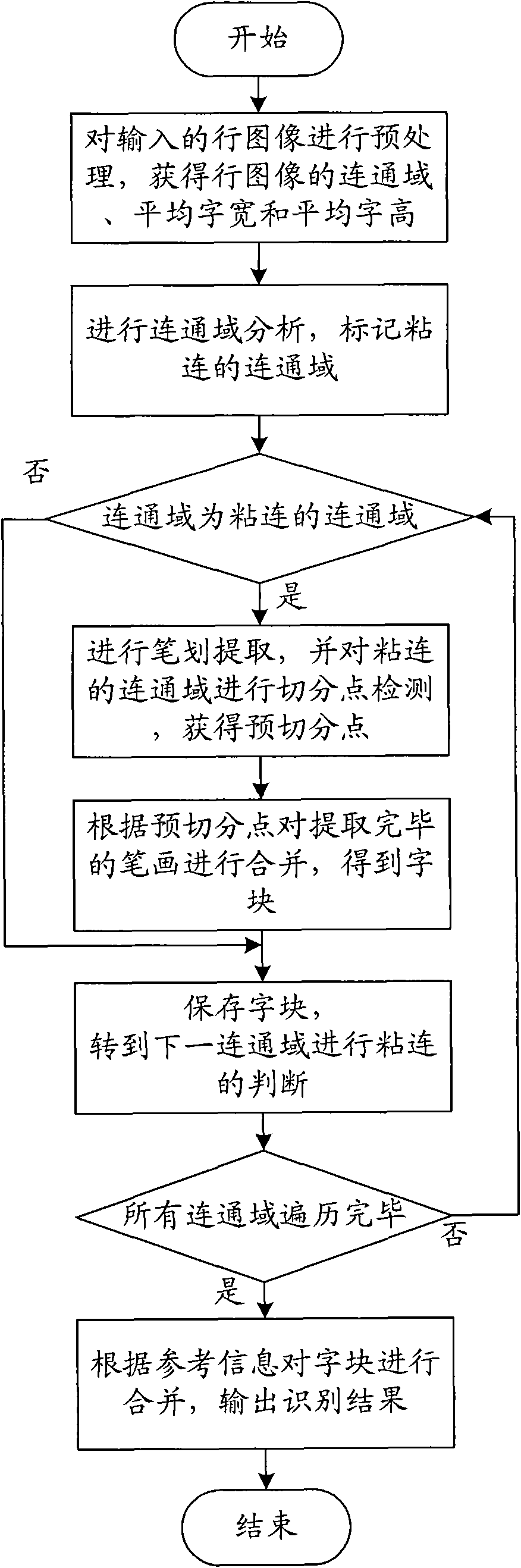

Automatic arabic text image optical character recognition method

Status: Inactive | Publication: US20100246963A1 | Tags: Improve recognition rate; Character and pattern recognition; Special data processing applications; Text recognition; Optical character recognition

The automatic Arabic text image optical character recognition method includes training a text recognition system using Arabic printed text, using the produced models to classify previously unseen scanned Arabic text, and generating the corresponding textual information. Scanned images of Arabic text and copies of minimal Arabic text are used in the training sessions. Each page is segmented into lines. Features of each line are extracted and input to a Hidden Markov Model (HMM). The features of all the training data are used. The HMM runs training algorithms to produce codebook and language models. In the classification stage, new Arabic text is input in scanned form and passes through line segmentation, where lines are extracted. In the feature stage, line features are extracted and input to the classification stage. In the classification stage the corresponding Arabic text is generated.

Owner:KING FAHD UNIVERSITY OF PETROLEUM AND MINERALS

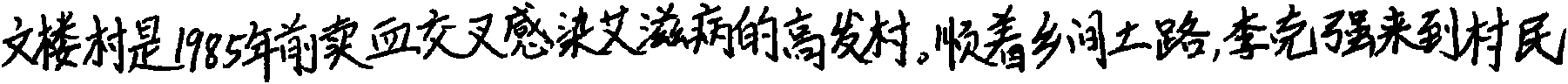

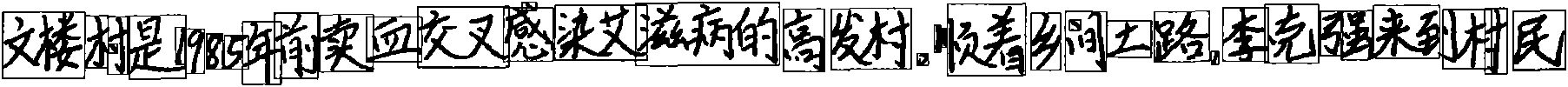

Method and device for touching character segmentation in character recognition

Status: Inactive | Publication: CN102169542A | Tags: Shorten operation time; Reduce storage space; Character and pattern recognition; Text recognition; Domain analysis

The present invention discloses a method and a device for touching character segmentation in character recognition, and belongs to the character recognition field. The method comprises the steps of: preprocessing a row image to obtain its connected domains, average character width and average character height; carrying out connected domain analysis and marking touching connected domains; for each selected touching connected domain, carrying out stroke extraction and segmentation point detection to obtain pre-segmentation points, while saving the character blocks of non-touching connected domains directly; merging the extracted strokes according to the pre-segmentation points to obtain character blocks; saving the character blocks and moving on to the next connected domain for touching determination, and outputting a character block sequence after all connected domains have been traversed; and merging the character blocks according to reference information and outputting the recognition result. Because the method and the device merge the strokes according to the pre-segmentation points to obtain the character blocks, segmentation points can be detected over a larger scope. They also use the segmentation points pre-detected from contour information as a parameter in merging, avoiding the errors that arise when correct segmentation points are merged.

Owner:HANVON CORP
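
The connected domain analysis and touching determination can be sketched as below. The abstract does not give the rule used to mark a connected domain as touching, so the width test here (wider than `ratio` times the average character width) is an assumption, as are the function names and the default `ratio`. Stroke extraction and segmentation point detection are not shown.

```python
from collections import deque

def connected_components(image):
    """Find 4-connected ink regions in a binary row image (1 = ink).

    Returns bounding boxes (left, top, right, bottom), sorted left to right.
    """
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if not image[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(y, x)])
            left = right = x
            top = bottom = y
            while queue:  # breadth-first flood fill of one region
                cy, cx = queue.popleft()
                left, right = min(left, cx), max(right, cx)
                top, bottom = min(top, cy), max(bottom, cy)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < height and 0 <= nx < width and image[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((left, top, right, bottom))
    return sorted(boxes)

def mark_touching(boxes, avg_width, ratio=1.5):
    """Assumed rule: a region much wider than the average character width
    probably holds several touching characters."""
    return [(box, (box[2] - box[0] + 1) > ratio * avg_width) for box in boxes]
```

Regions flagged `True` would go on to stroke extraction and segmentation point detection; the others can be saved as character blocks directly, as the abstract describes.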

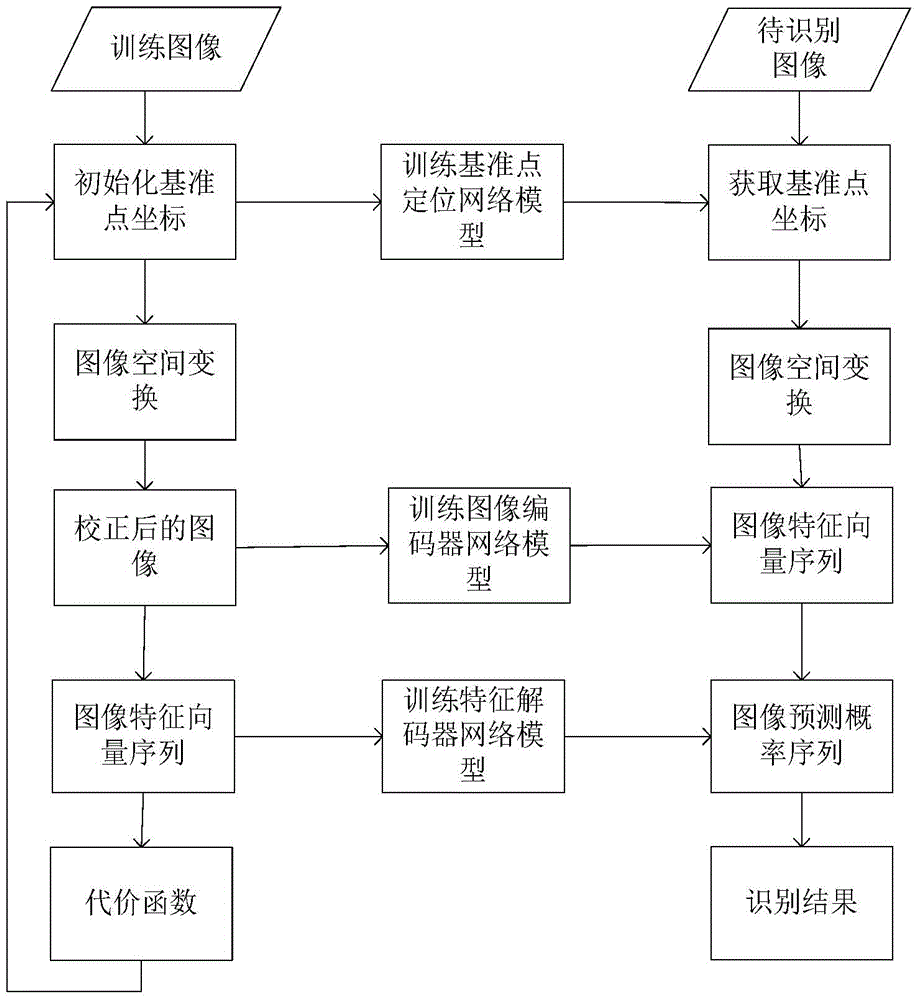

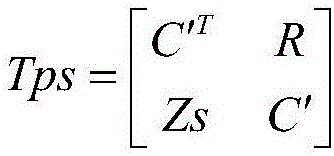

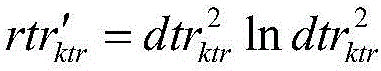

Text recognition method under natural scene on the basis of spatial transformation

Active | CN105740909A | Improve recognition accuracy | No need for manual annotation transformation | Character and pattern recognition | Feature vector | Text recognition

The invention discloses a text recognition method under a natural scene on the basis of spatial transformation. The method comprises the following steps: firstly, obtaining the text contents of a text image in a training image set, and training network models including a datum point positioning network, an image preprocessing network, an image encoder network, a feature encoder network and the like; then, utilizing the network models obtained by training to carry out spatial transformation on an image in a set of images to be identified to obtain the transformed image to be identified; and then, calculating the feature vector and a prediction probability sequence of the transformed image to be identified, and finally obtaining an image recognition result. The method is high in text recognition accuracy and can overcome the influence of adverse factors including irregular text arrangement and the like.

Owner:HUAZHONG UNIV OF SCI & TECH
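
The last step, turning a prediction probability sequence into a recognition result, could be done as in the sketch below. The abstract does not describe the decoding rule, so this assumes the simplest one: take the most probable symbol at each step and stop at an end-of-sequence symbol. The alphabet layout and the `<eos>` marker are hypothetical, and the networks that produce the probabilities are not shown.

```python
def greedy_decode(prob_sequence, alphabet, eos="<eos>"):
    """Pick the most probable symbol at each step until the end marker.

    prob_sequence: list of per-step probability lists, one entry per symbol.
    alphabet: symbols in the same order as the probabilities.
    """
    chars = []
    for probs in prob_sequence:
        best = max(range(len(probs)), key=probs.__getitem__)
        symbol = alphabet[best]
        if symbol == eos:
            break  # the assumed end marker terminates the word
        chars.append(symbol)
    return "".join(chars)
```

A beam search over the same probabilities is a common alternative when a lexicon or language model is available.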

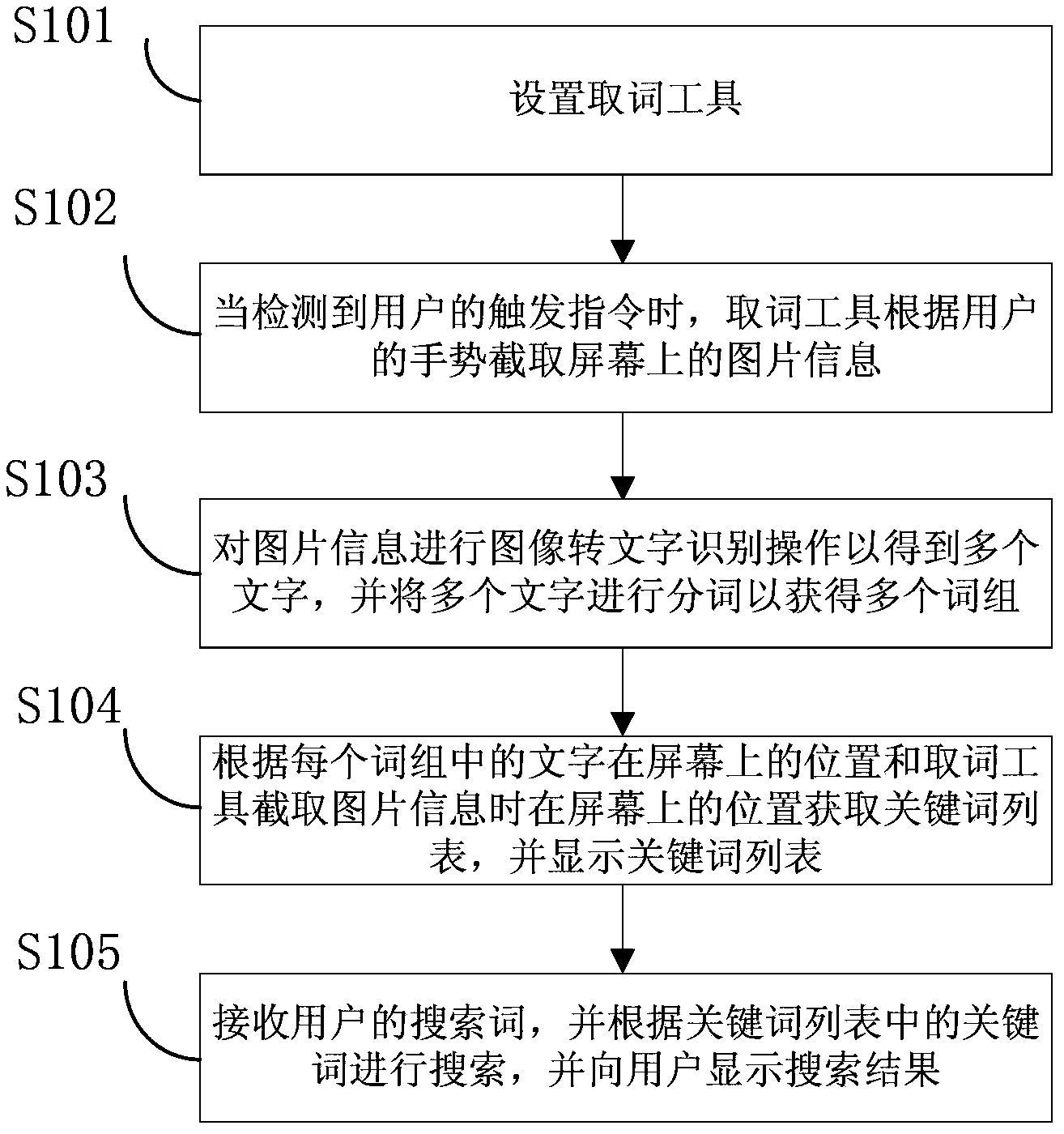

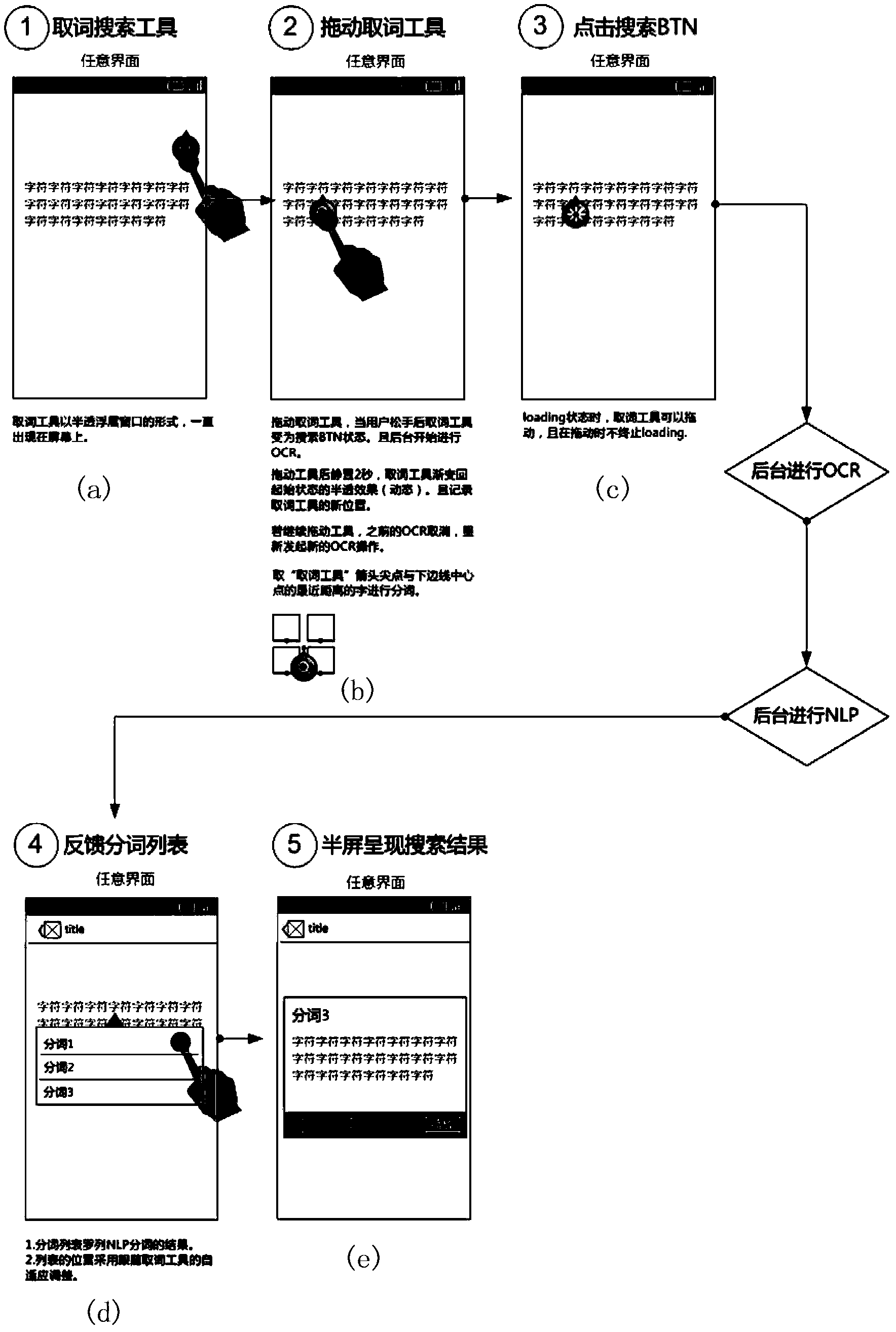

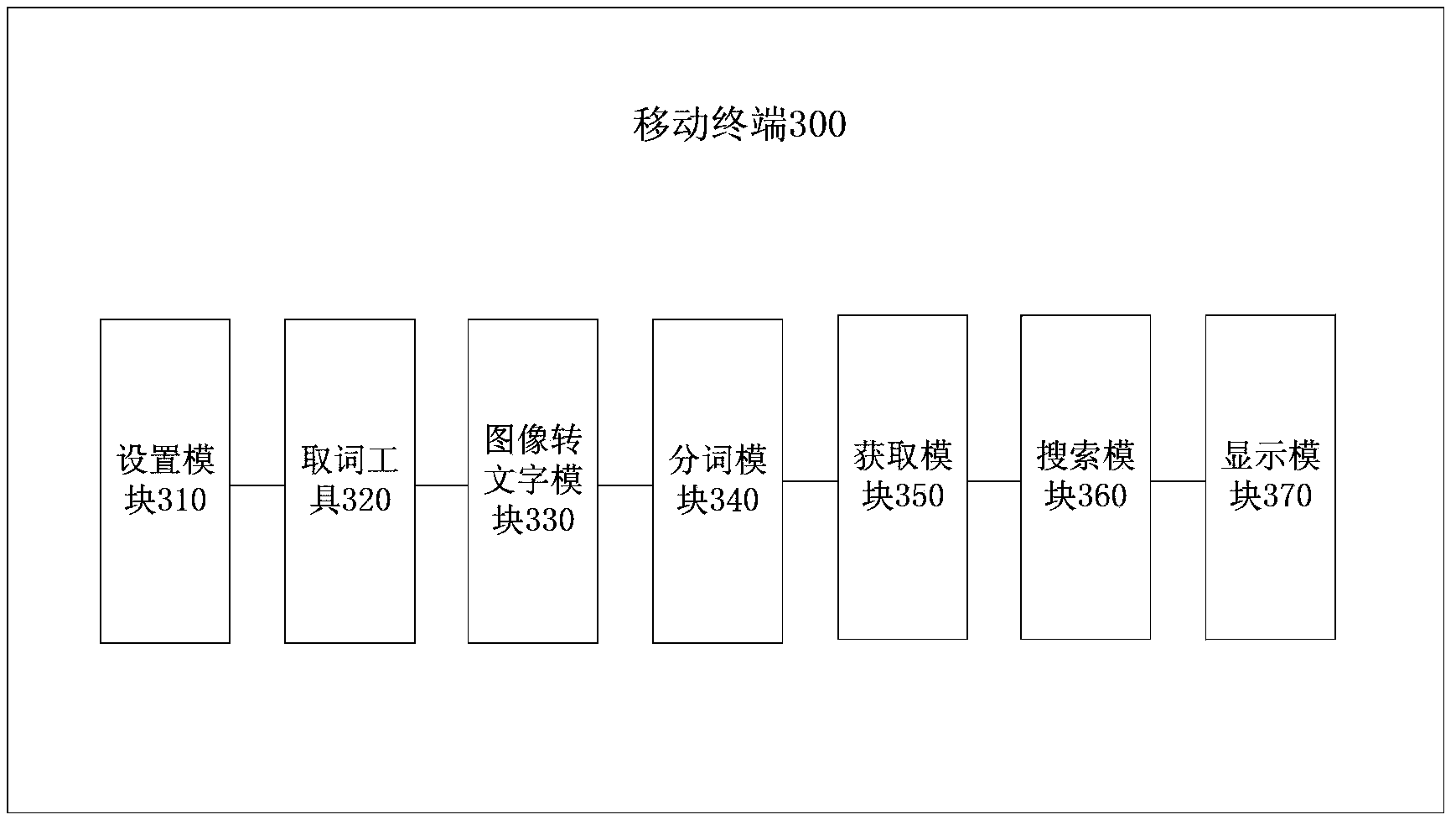

Method for searching for characters displayed in screen and based on mobile terminal and mobile terminal

Active | CN104239313A | Quick extraction | Has quickness | Web data indexing | Special data processing applications | Search words | Text recognition

The invention provides a mobile-terminal-based method for searching for characters displayed on a screen. The method includes: setting up a word fetching tool whose window rank is higher than that of the application programs of the mobile terminal; when a triggering command of the user is detected, using the word fetching tool to capture picture information from the screen according to the user's gesture; performing image-to-text recognition on the picture information to obtain a plurality of characters, and performing word segmentation on the characters to obtain a plurality of word groups; building a key word list from the on-screen positions of the characters in the word groups and the on-screen position of the word fetching tool at the time the picture information was captured, and displaying the key word list; and receiving a search word from the user, searching according to the key words in the key word list, and displaying the search result to the user. The method improves the user experience, increases the page views of the searched pages, and is fast, efficient and easy to use. The mobile terminal is further disclosed.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
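
The key word list step combines word positions with the position of the word fetching tool. The abstract does not state how the two are combined, so the sketch below assumes one plausible reading: the word groups closest to the tool come first. The data layout, the function name and the `limit` parameter are all hypothetical.

```python
import math

def keyword_list(word_groups, tool_pos, limit=3):
    """Order recognized word groups by distance from the word fetching tool.

    word_groups: list of (word, (x, y)) pairs, where (x, y) is the on-screen
    center of the word. tool_pos: (x, y) of the tool when the screen was captured.
    """
    def distance(group):
        _, (x, y) = group
        return math.hypot(x - tool_pos[0], y - tool_pos[1])

    nearest = sorted(word_groups, key=distance)[:limit]
    return [word for word, _ in nearest]
```

For example, with the tool at `(105, 205)`, a word centered at `(100, 200)` is listed before one centered at `(400, 50)`.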
