1160 results about "Text detection" patented technology

Methods and systems for text detection in mixed-context documents using local geometric signatures

Inactive | US7043080B1 | Quality and | Speed and | Character and pattern recognition | Visual presentation | Graphics | Imaging processing

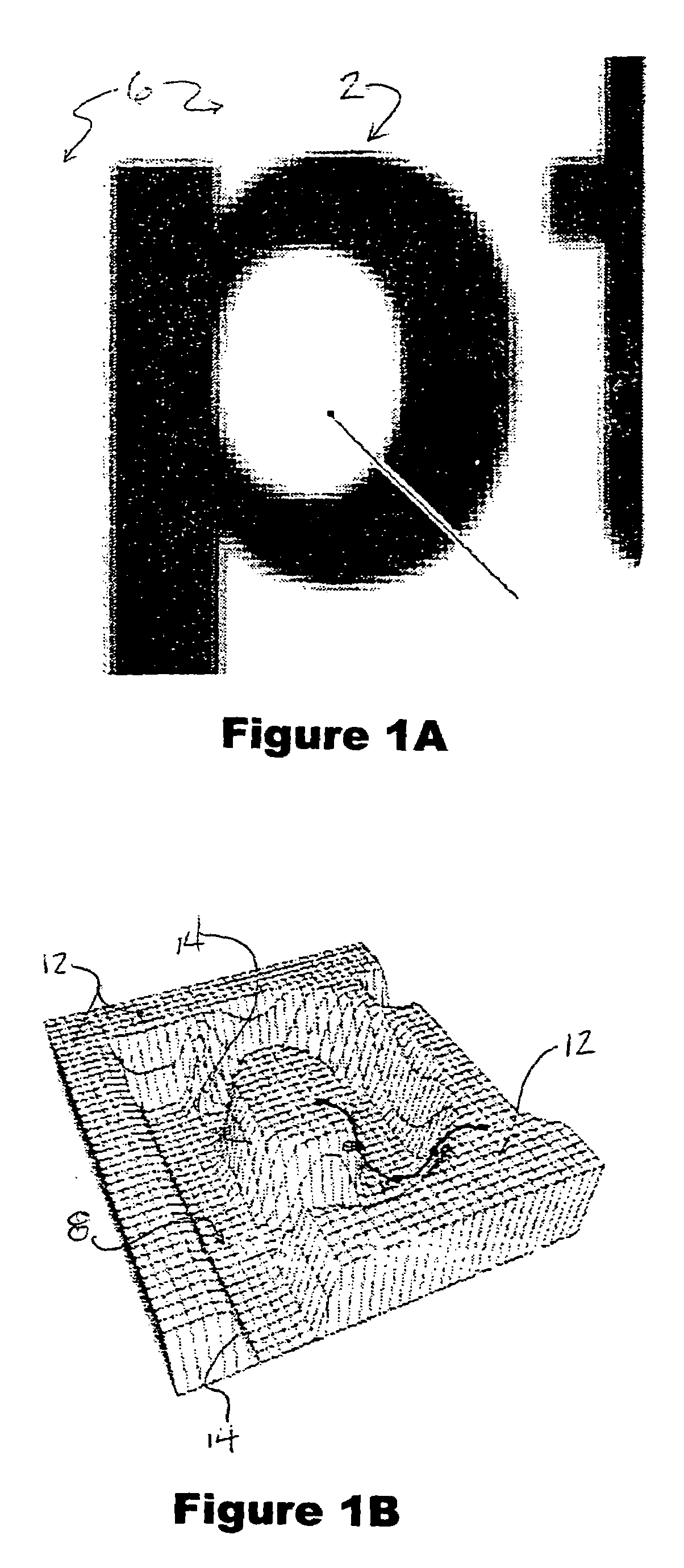

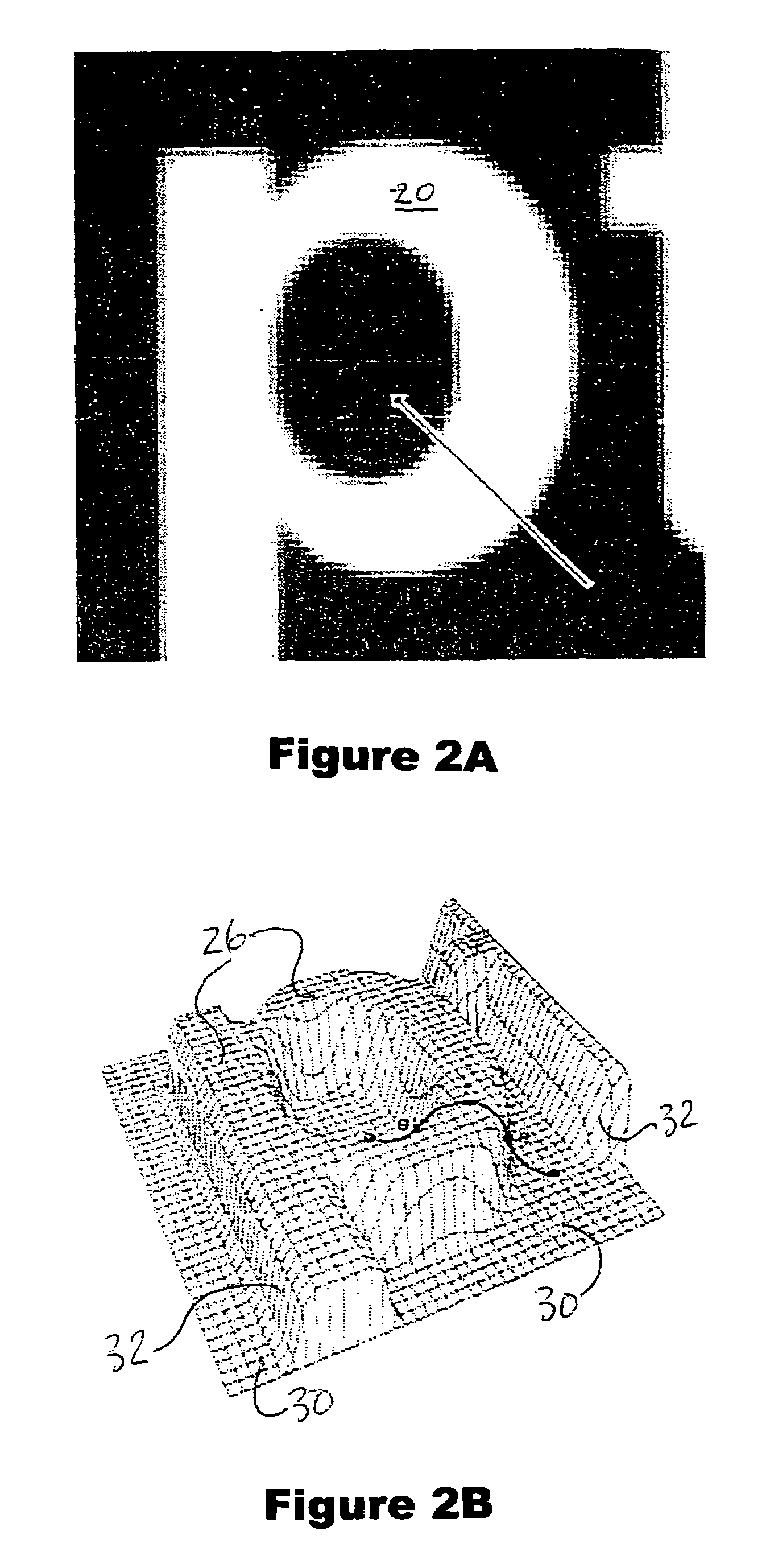

Embodiments of the present invention relate to methods and systems for detection and delineation of text characters in images which may contain combinations of text and graphical content. Embodiments of the present invention employ intensity contrast edge detection methods and intensity gradient direction determination methods in conjunction with analyses of intensity curve geometry to determine the presence of text and verify text edge identification. These methods may be used to identify text in mixed-content images, to determine text character edges and to achieve other image processing purposes.

Owner:SHARP KK
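
The abstract above mentions intensity contrast edge detection together with intensity gradient direction. As a rough illustration only (the patent's actual operators and its intensity-curve geometry analysis are not given here), the sketch below computes gradient magnitude and direction with central differences. The function names and the threshold are assumptions made for this example.

```python
import math

def gradient_field(img):
    """Return (magnitude, direction) grids from central differences.

    img is a list of rows of grayscale intensities.
    Border pixels are left at 0 because they lack two neighbours.
    """
    h, w = len(img), len(img[0])
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y][x + 1] - img[y][x - 1]) / 2.0
            gy = (img[y + 1][x] - img[y - 1][x]) / 2.0
            mag[y][x] = math.hypot(gx, gy)   # contrast strength
            ang[y][x] = math.atan2(gy, gx)   # direction in radians
    return mag, ang

def edge_pixels(img, threshold):
    """List (x, y) positions whose gradient magnitude reaches the threshold."""
    mag, _ = gradient_field(img)
    return [(x, y) for y, row in enumerate(mag)
            for x, m in enumerate(row) if m >= threshold]
```

On an image with a vertical dark-to-light step, the pixels next to the step get a large magnitude and a direction of 0 radians (pointing toward the brighter side).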

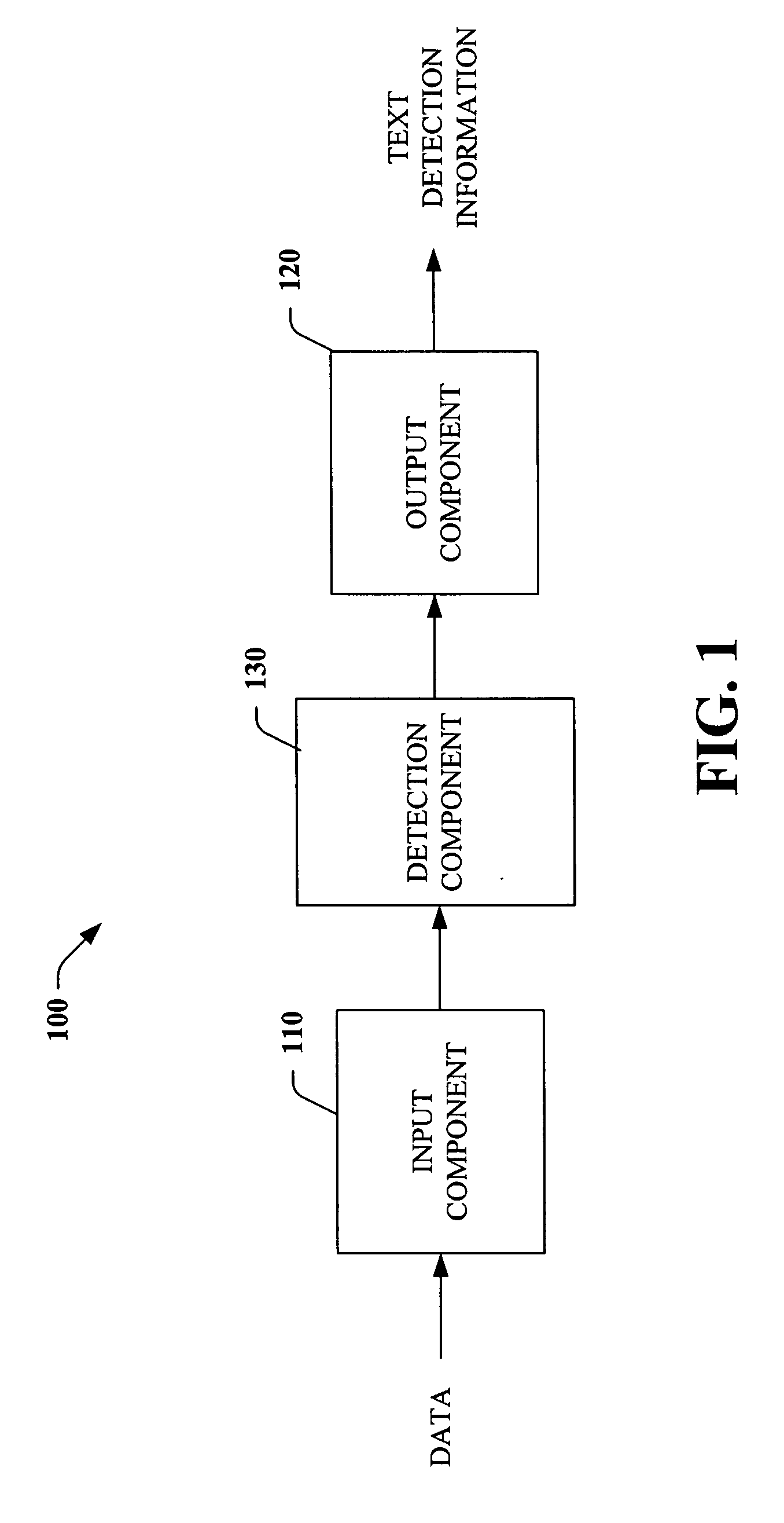

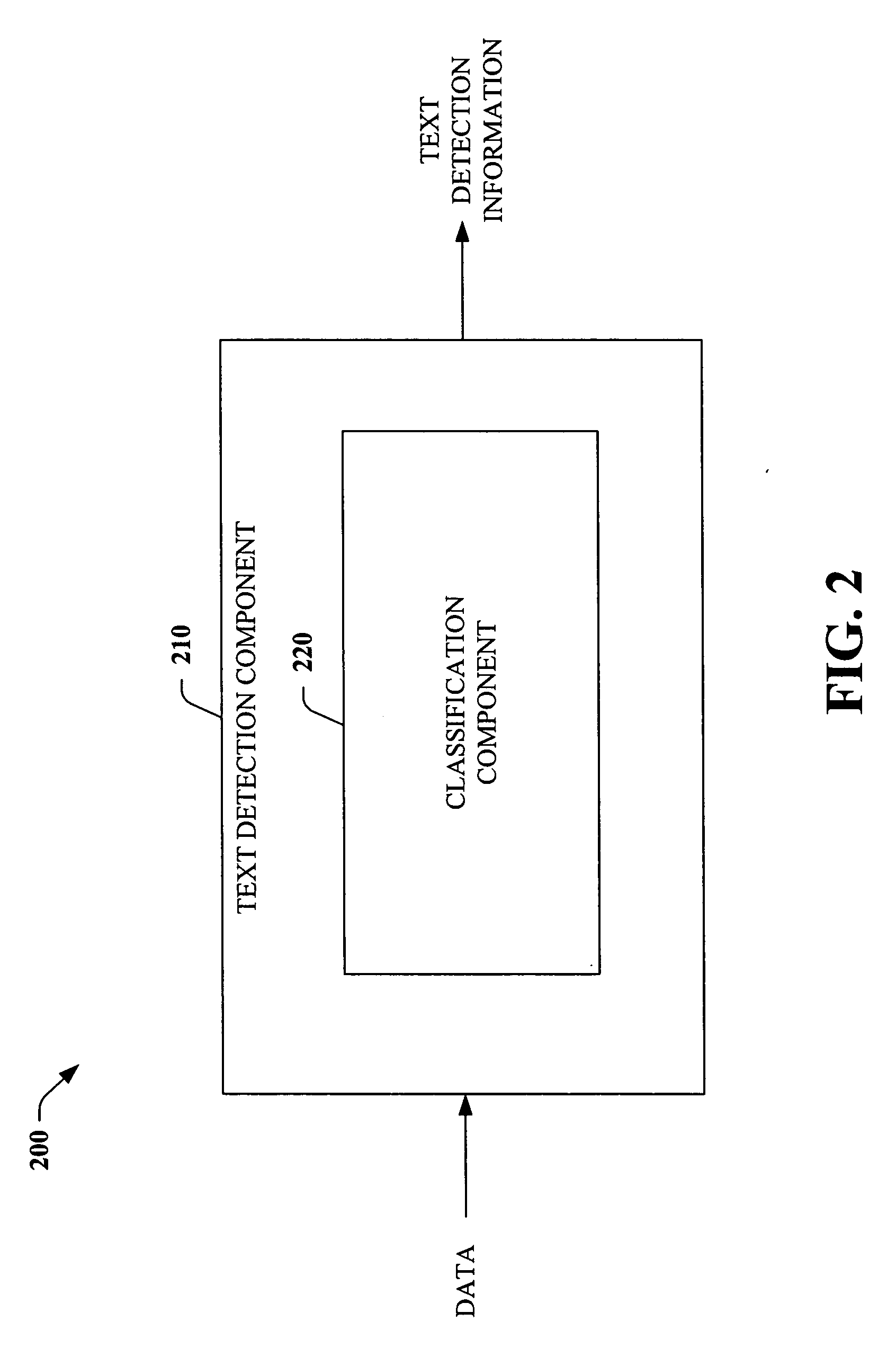

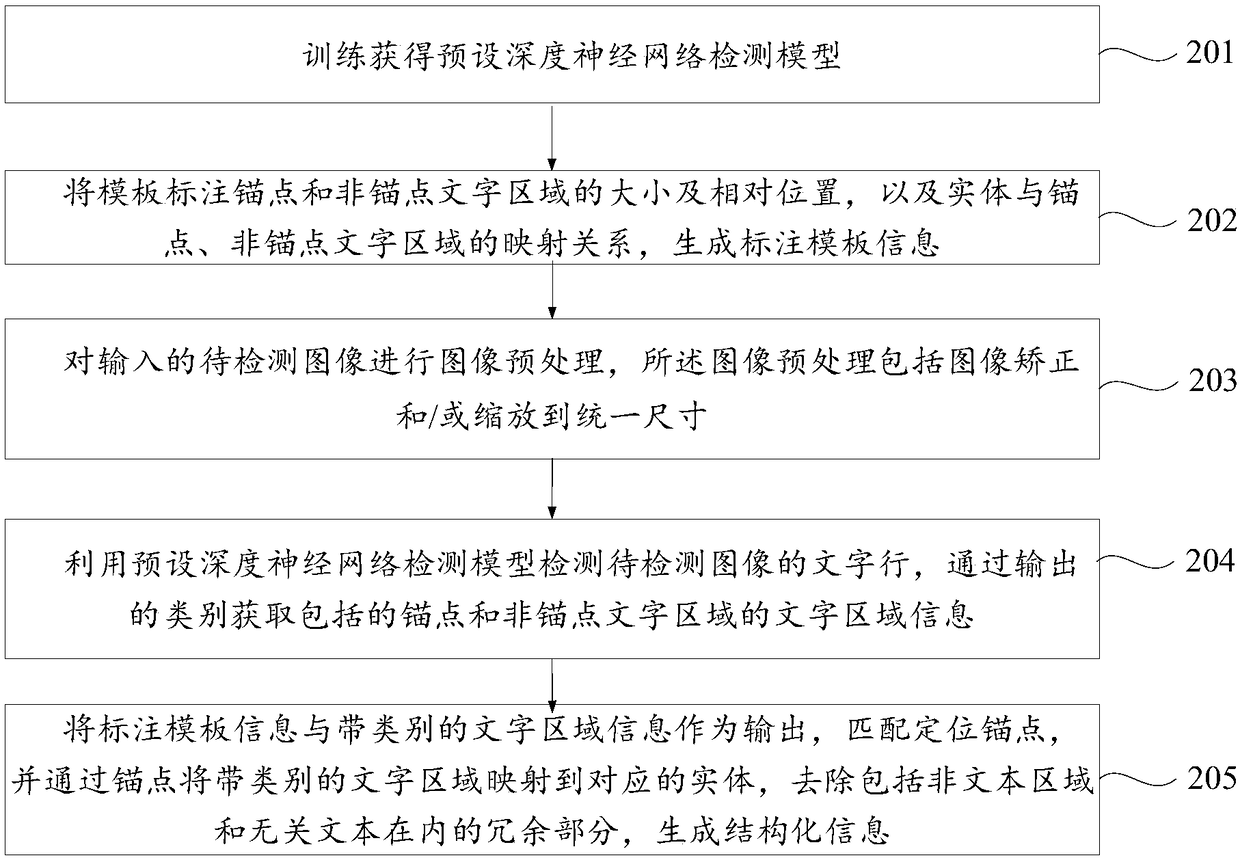

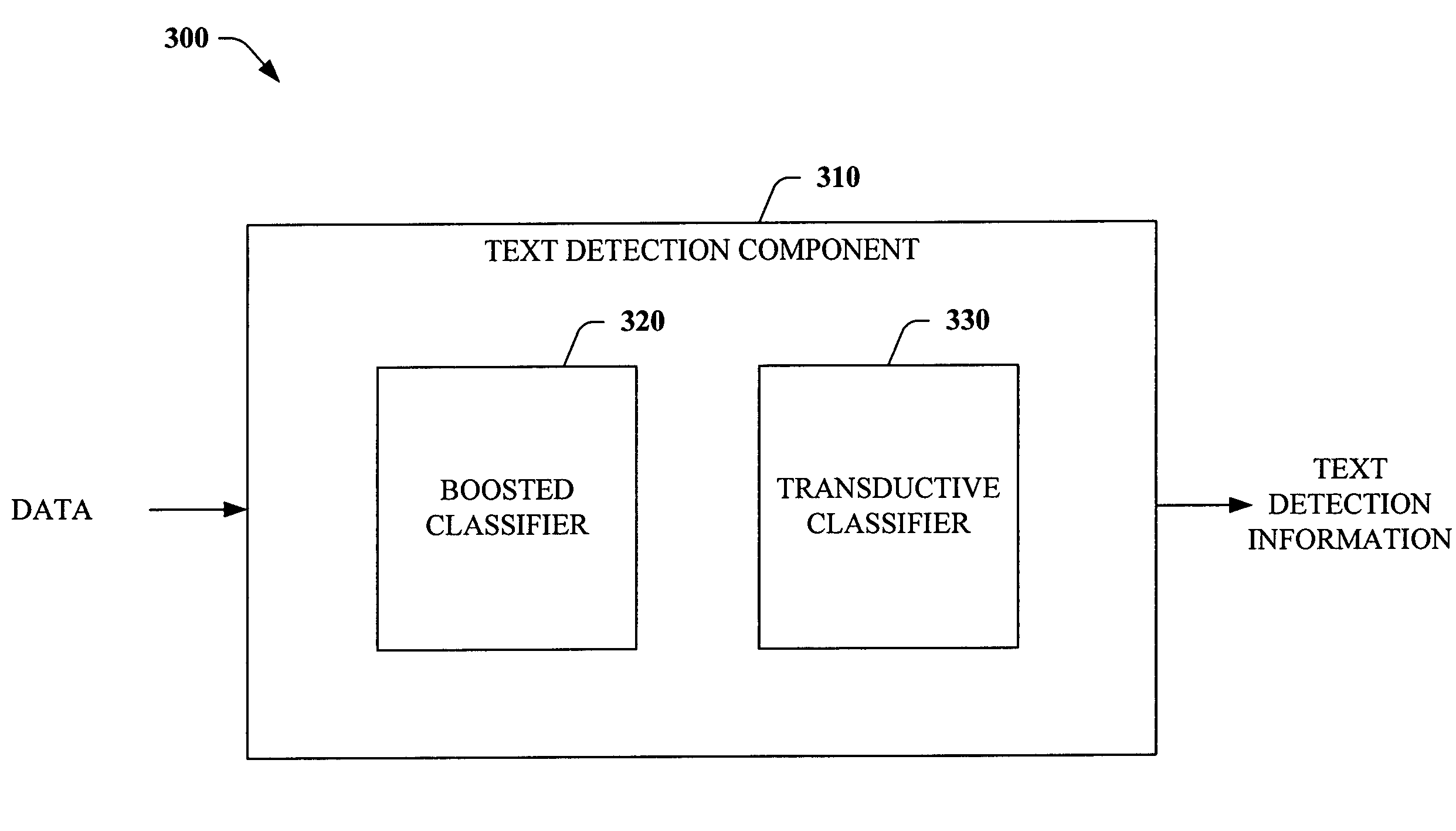

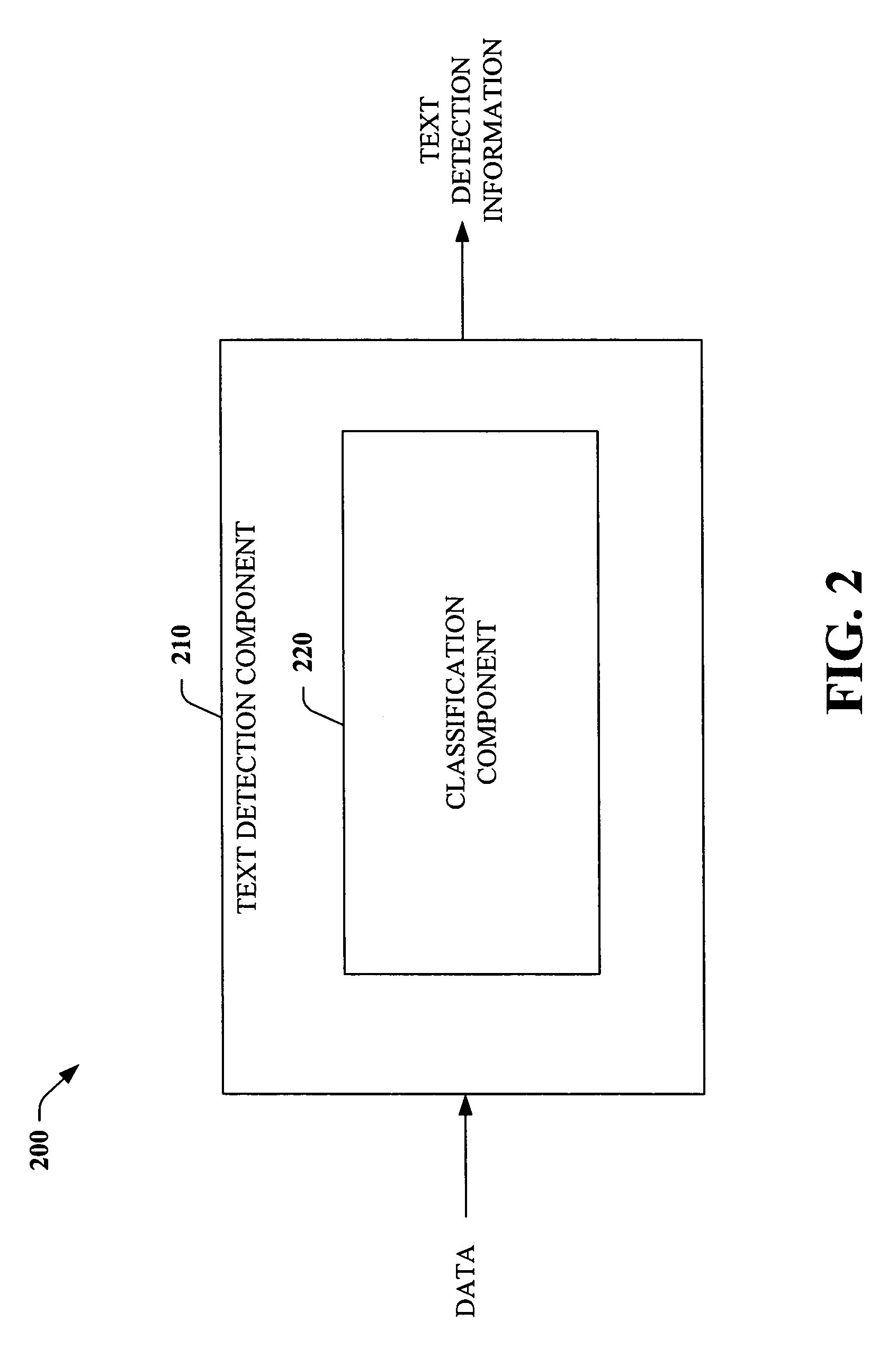

Systems and methods for detecting text

Inactive | US20060222239A1 | Easy to detect | Promote results | Character and pattern recognition | Feature vector | Text detection

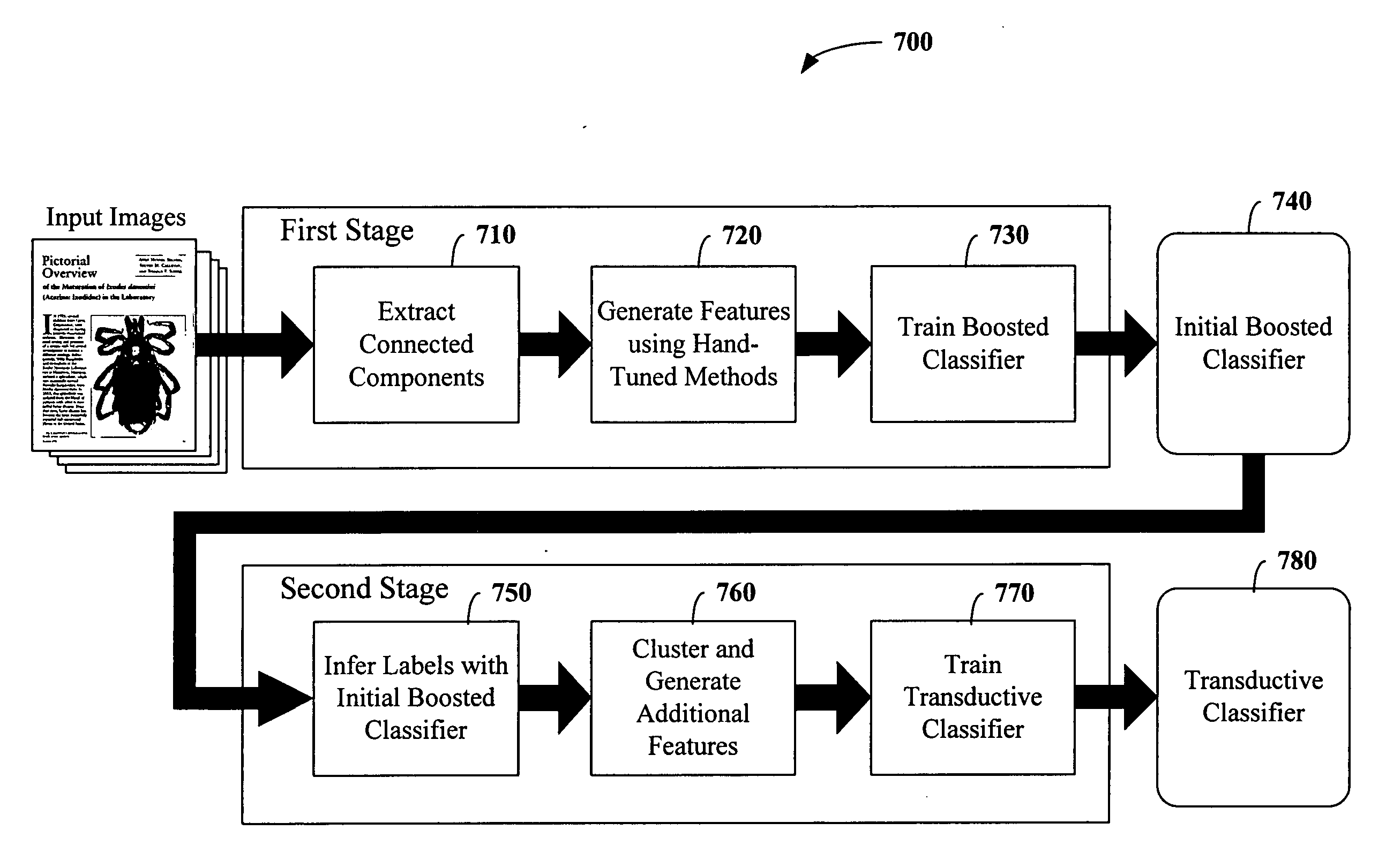

The subject invention relates to facilitating text detection. The invention employs a boosted classifier and a transductive classifier to provide accurate and efficient text detection systems and/or methods. The boosted classifier is trained on features generated from a set of training connected components and labels. The boosted classifier uses the features to classify the training connected components, and the inferred labels are conveyed to a transductive classifier, which generates additional properties. The initial set of features and the properties are used to train the transductive classifier. Once trained, the systems and/or methods can be used to detect text in unlabeled data: the data is received, connected components are extracted from it and used to generate corresponding feature vectors, and the feature vectors are used to classify the connected components with the initial boosted classifier. The inferred labels are used to generate properties, which are used along with the initial feature vectors to classify each connected component with the transductive classifier.

Owner:MICROSOFT TECH LICENSING LLC

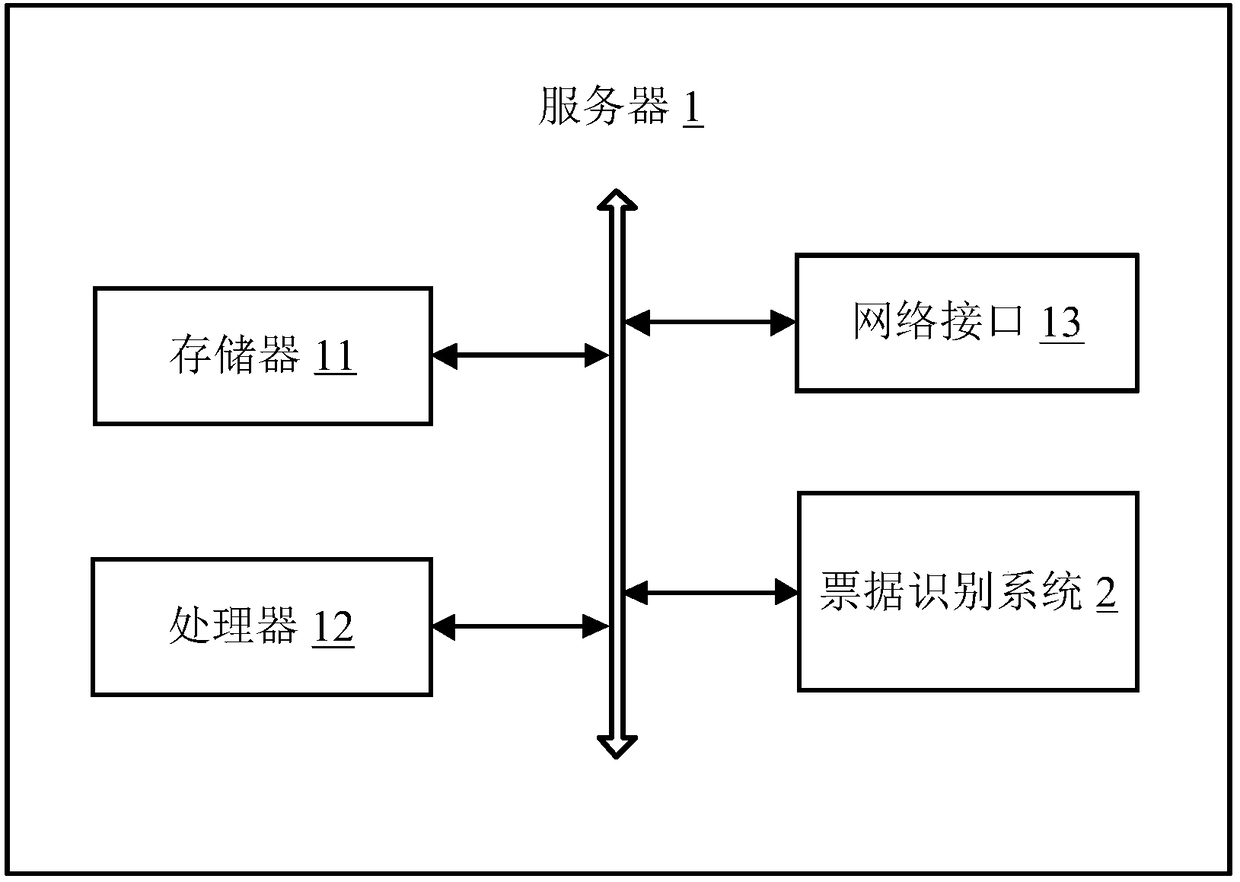

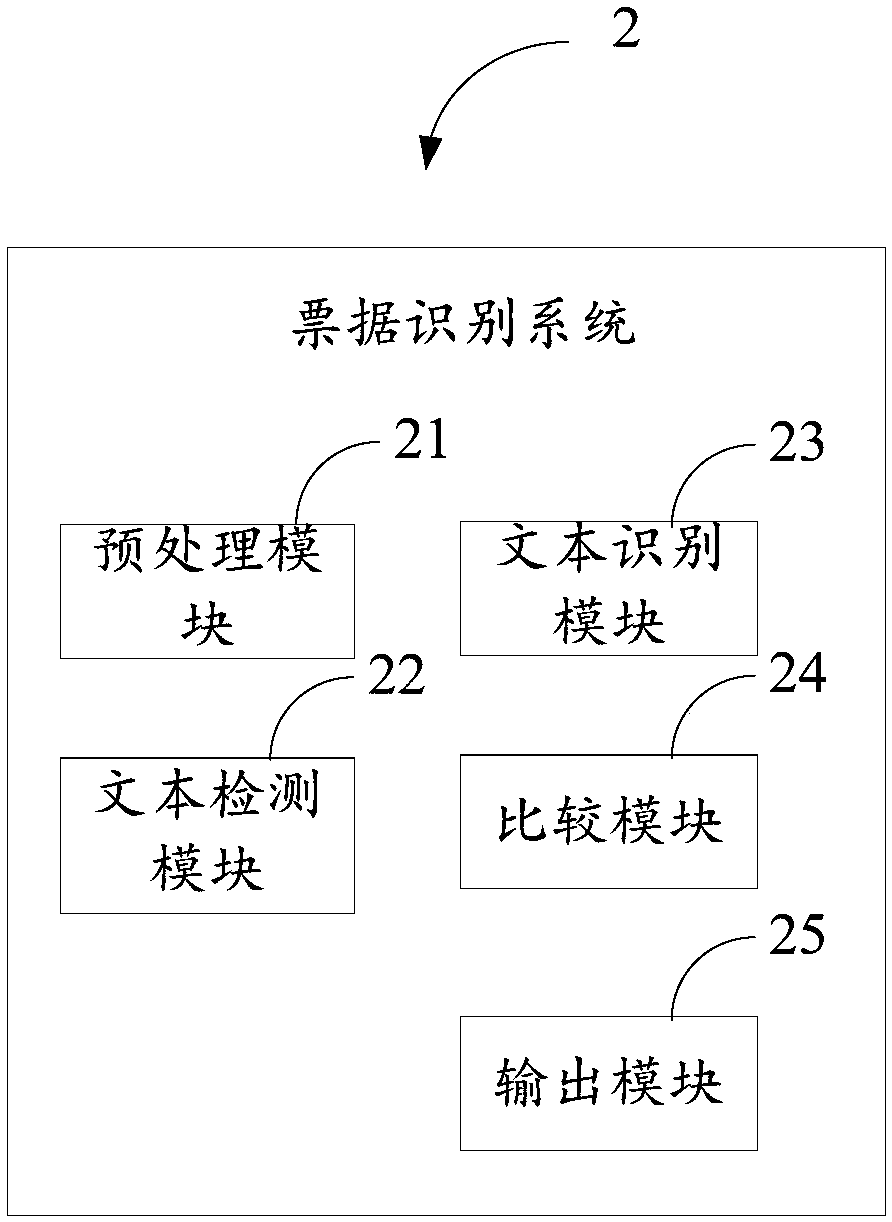

Bill identification method, server and computer readable storage medium

Inactive | CN108446621A | Improve digital efficiency | Improve accuracy | Finance | Billing/invoicing | Character recognition | Text detection

The invention discloses a bill identification method. The method comprises: receiving a bill picture to be identified; processing the bill picture with a pre-trained bill picture identification model; carrying out text detection on the bill picture using a pre-trained text detection model; determining, in the bill picture, a target character area that includes characters, together with the fields to be identified in that area; calling, for each field to be identified, a corresponding text identification model so that the character information contained in the field is identified from the target character area; and outputting an identification result. The invention also provides a server and a computer readable storage medium. The bill identification method, server and computer readable storage medium can improve the digitalization efficiency of bills, reduce the labor intensity of service staff, and make the data more accurate or refined.

Owner:PING AN TECH (SHENZHEN) CO LTD

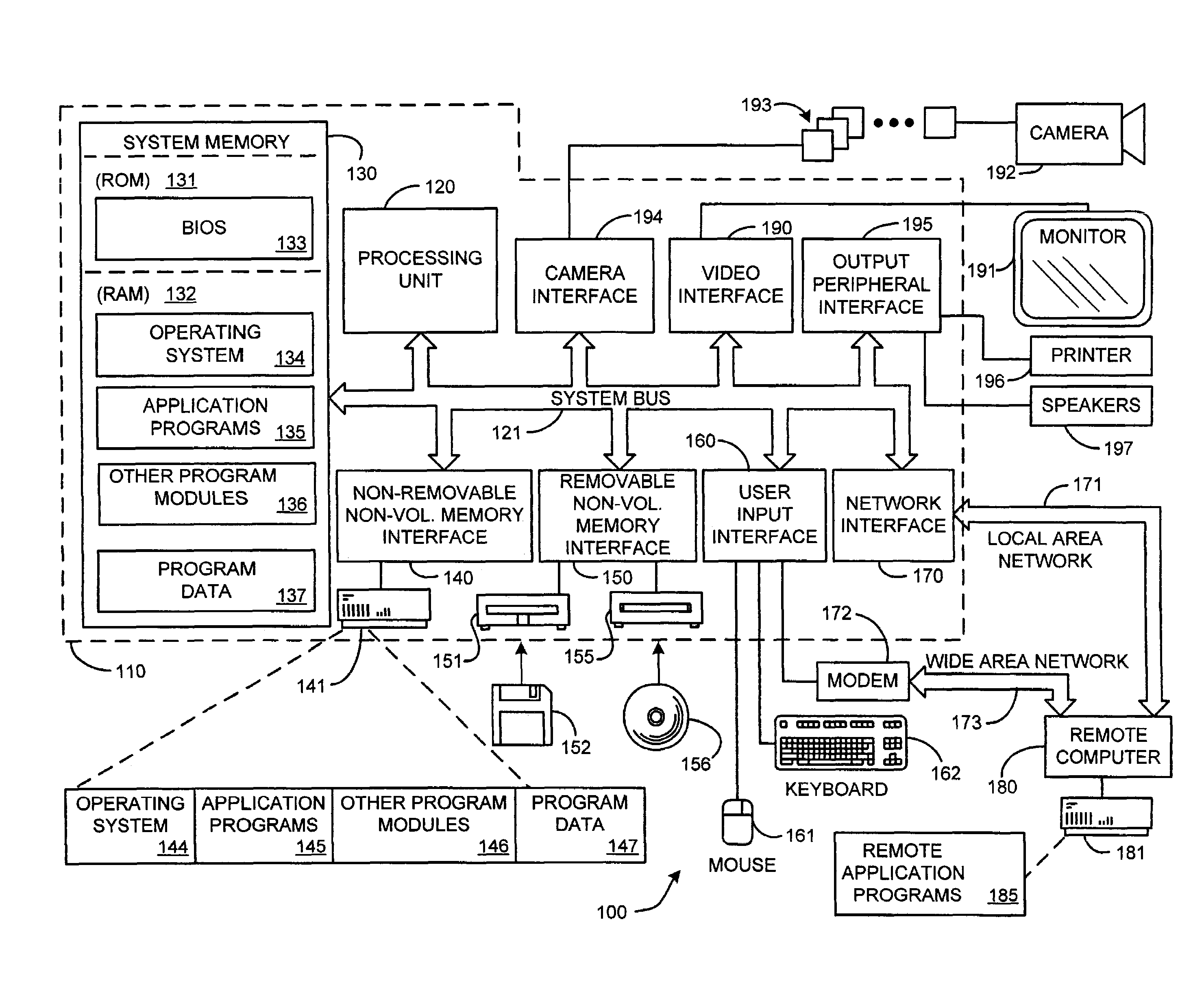

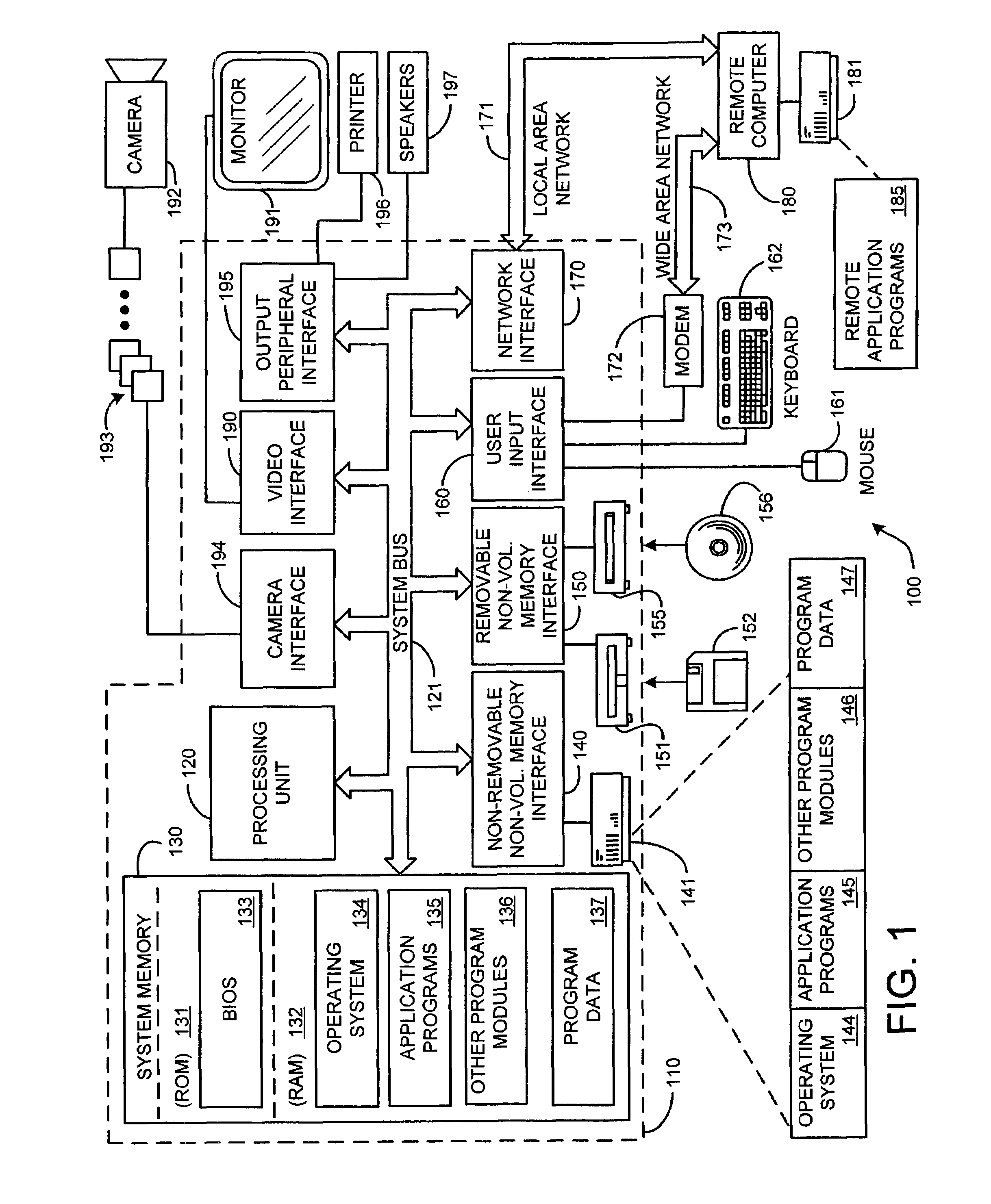

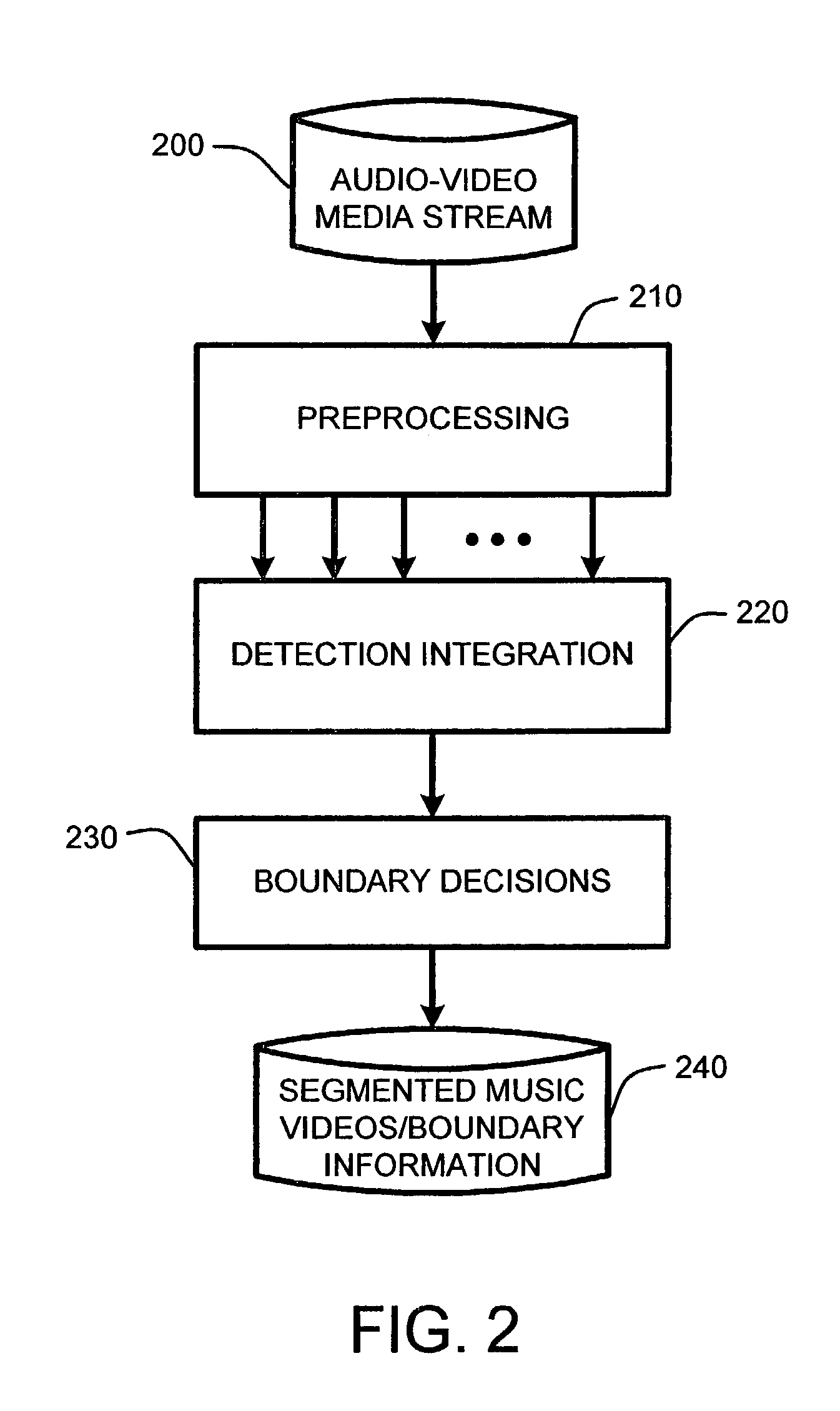

Automatic detection and segmentation of music videos in an audio/video stream

Inactive | US7336890B2 | Television system details | Specific information broadcast systems | Text detection | Boundary detection

A “music video parser” automatically detects and segments music videos in a combined audio-video media stream. Automatic detection and segmentation is achieved by integrating shot boundary detection, video text detection and audio analysis to automatically detect temporal boundaries of each music video in the media stream. In one embodiment, song identification information, such as, for example, a song name, artist name, album name, etc., is automatically extracted from the media stream using video optical character recognition (OCR). This information is then used in alternate embodiments for cataloging, indexing and selecting particular music videos, and in maintaining statistics such as the times particular music videos were played, and the number of times each music video was played.

Owner:MICROSOFT TECH LICENSING LLC

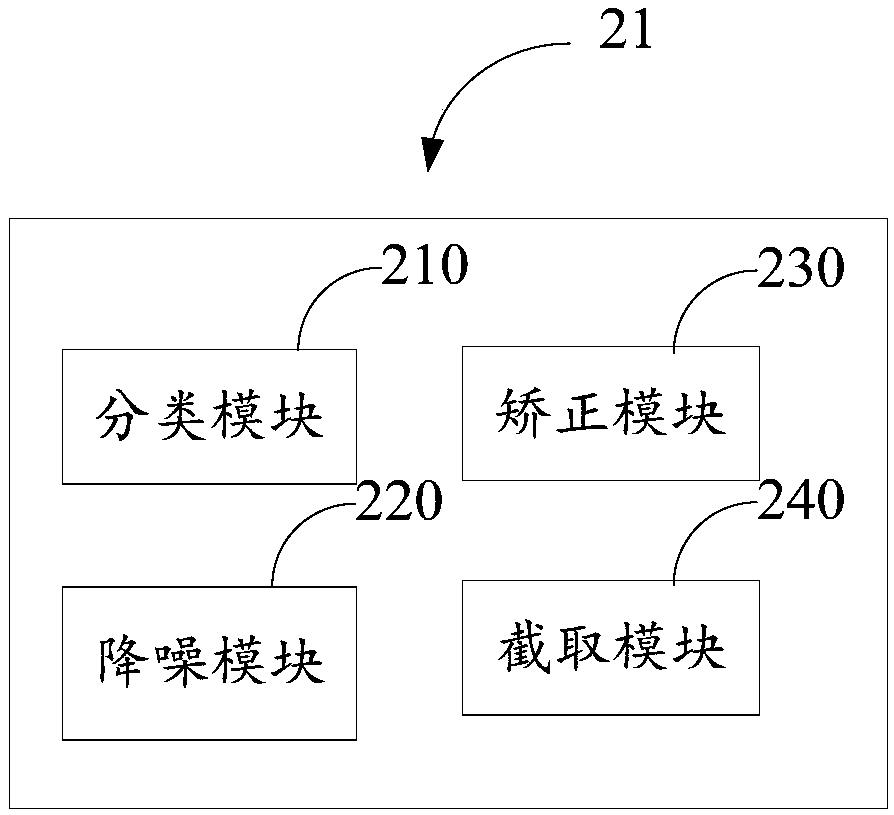

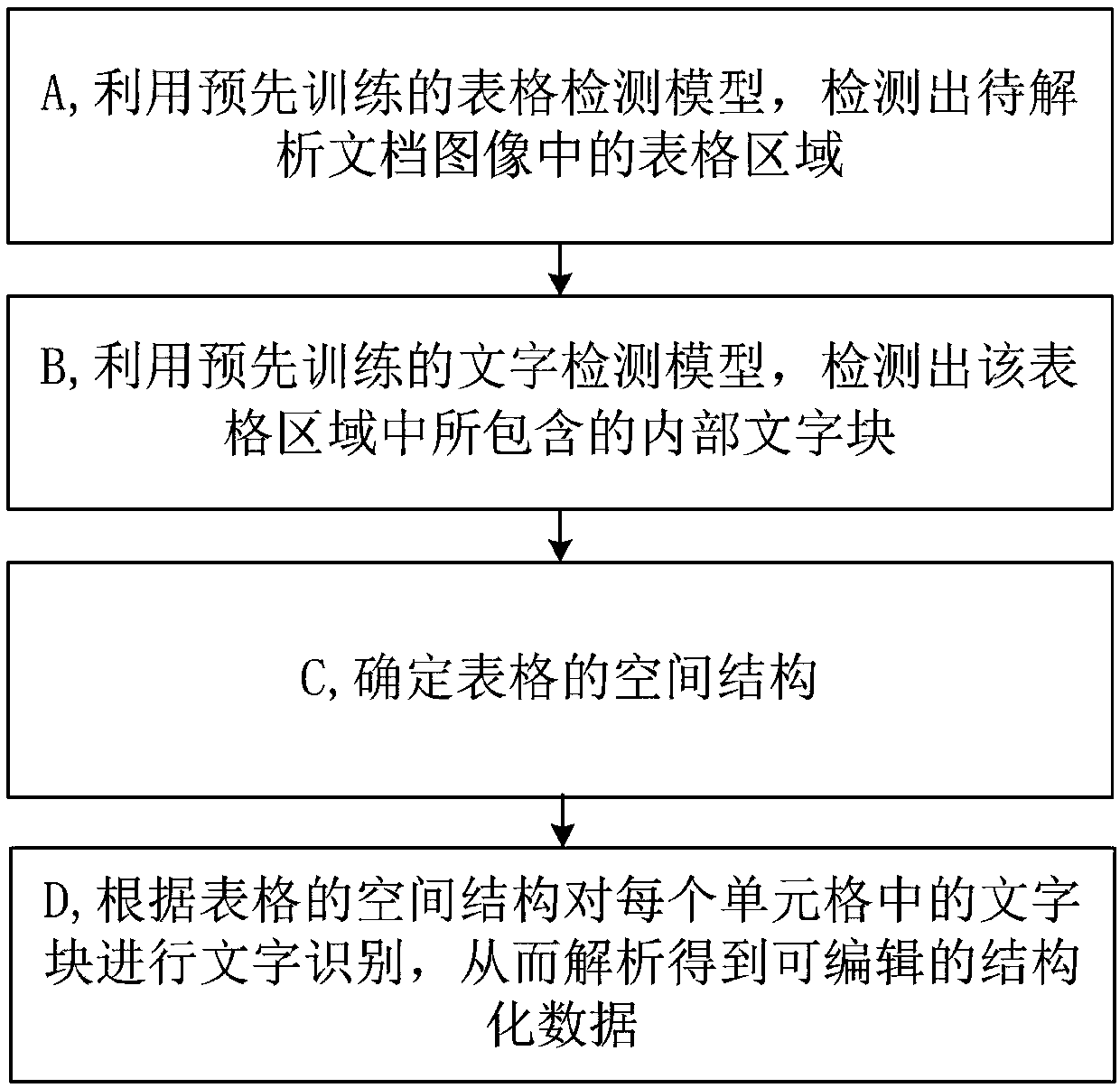

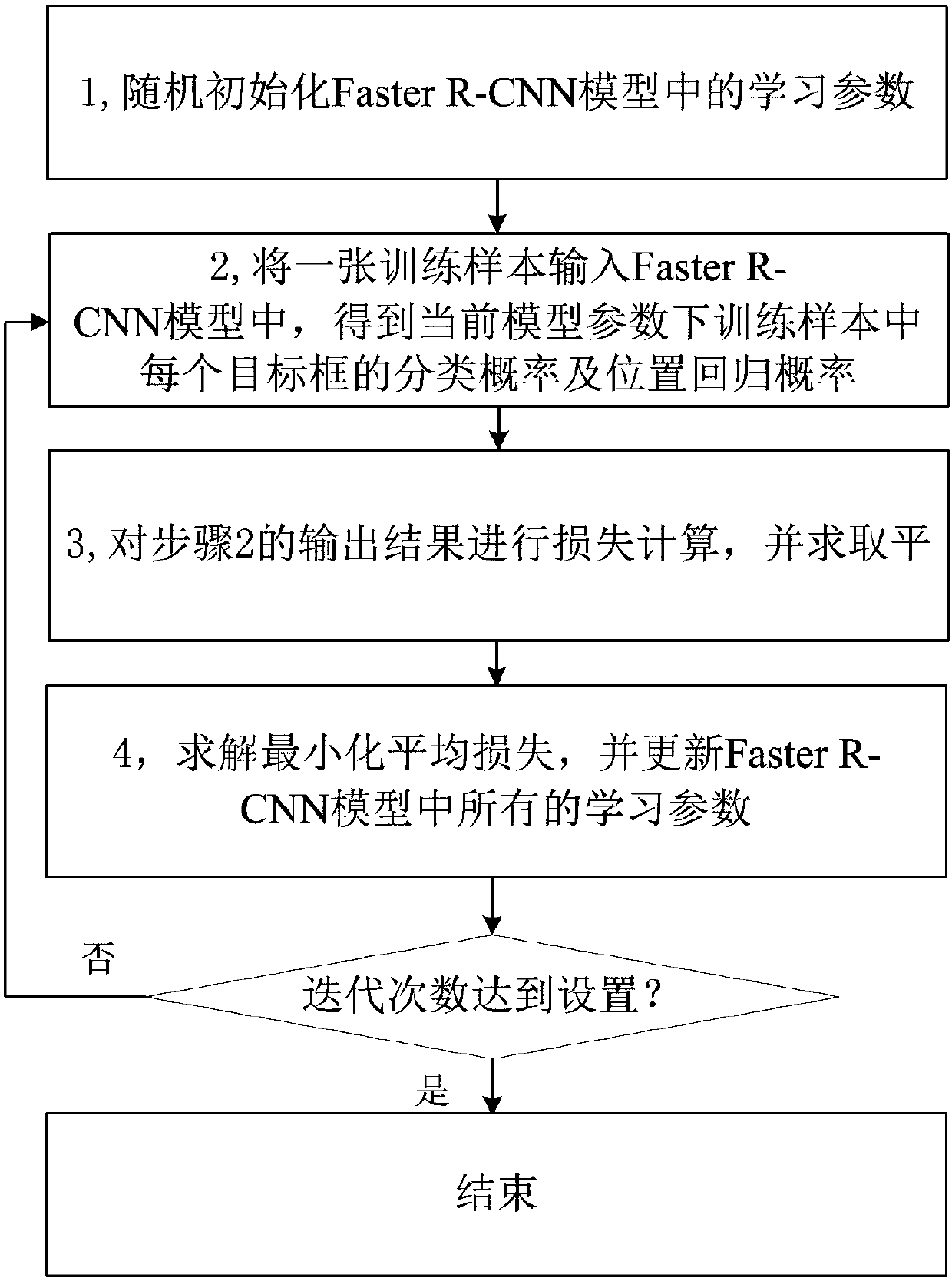

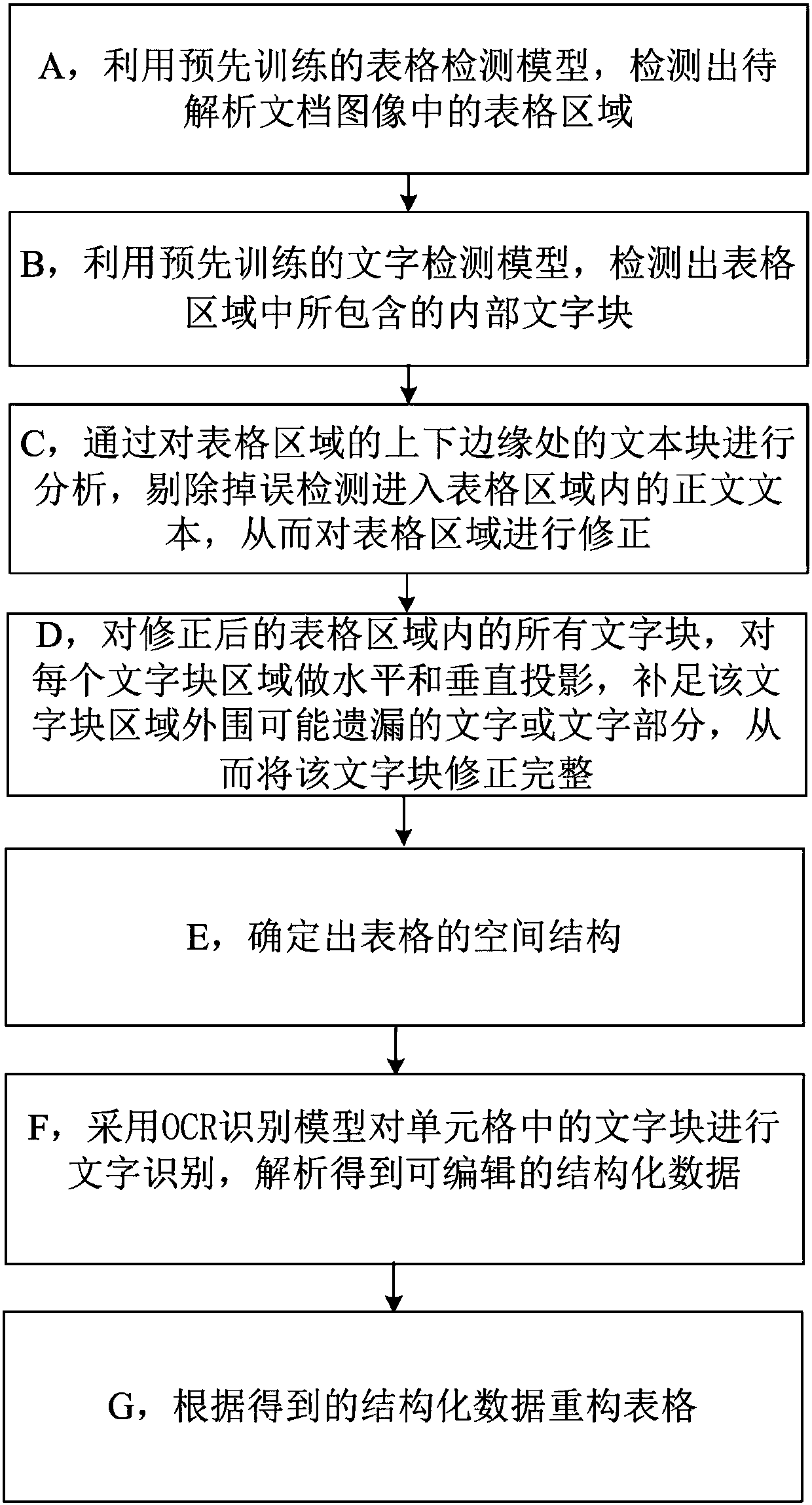

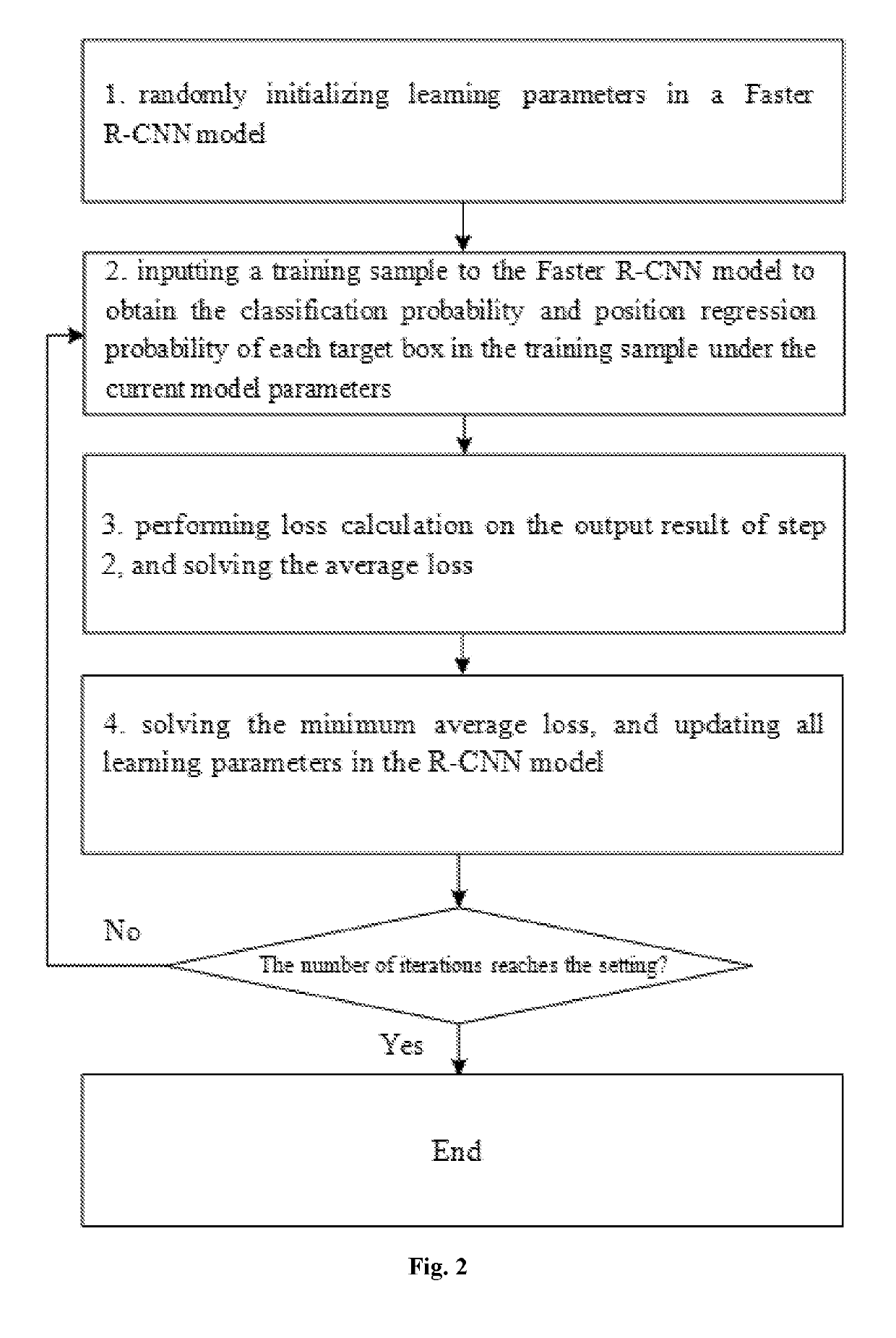

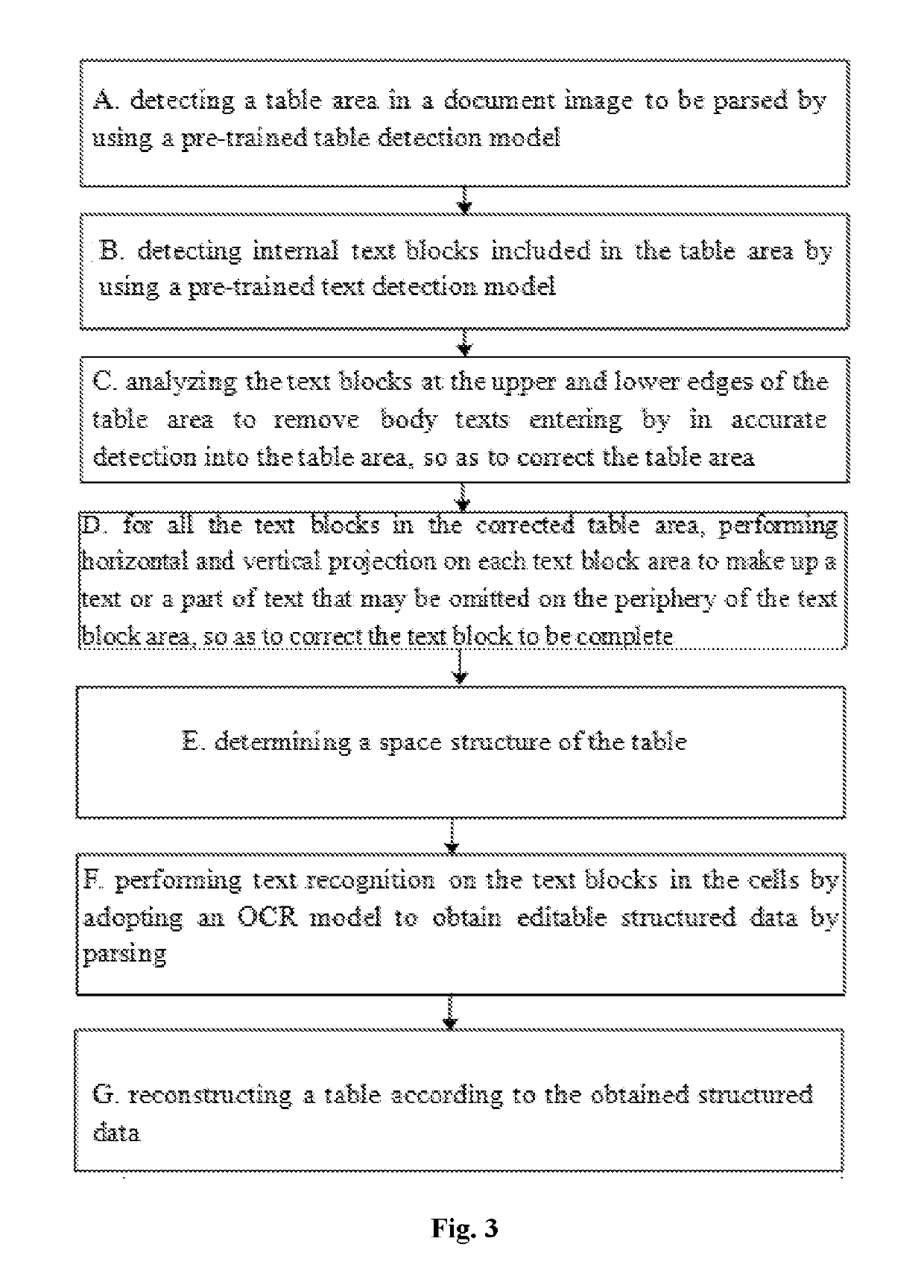

Table parsing method and device in document image

Active | CN108416279A | Improve analysis efficiency | Improve accuracy | Character recognition | Effective solution | Text detection

The invention relates to a table parsing method and device in a document image. The method comprises: detecting a table area in a to-be-parsed document image by using a pre-trained table detection model; detecting the internal character blocks included in the table area by using a pre-trained character detection model; determining a spatial structure of the table; and, according to the spatial structure of the table, carrying out character identification on the character block in each table cell to obtain editable structured data by parsing. The table parsing method and device can be applied to various tables, such as lined tables, line-free tables and black-and-white tables, and provide a simple and effective solution for table parsing in document images.

Owner:BEIJING ABC TECH CO LTD

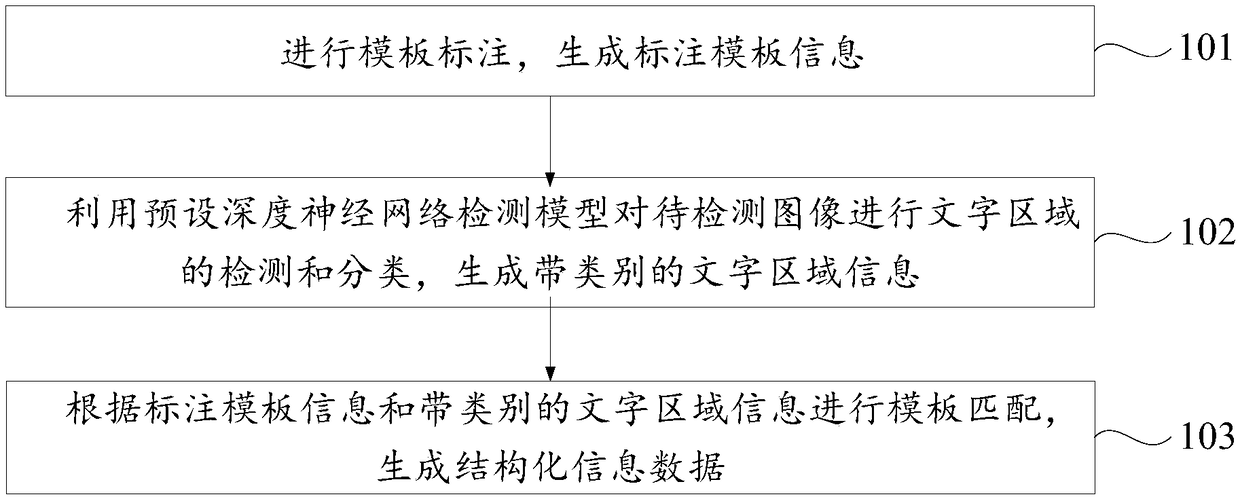

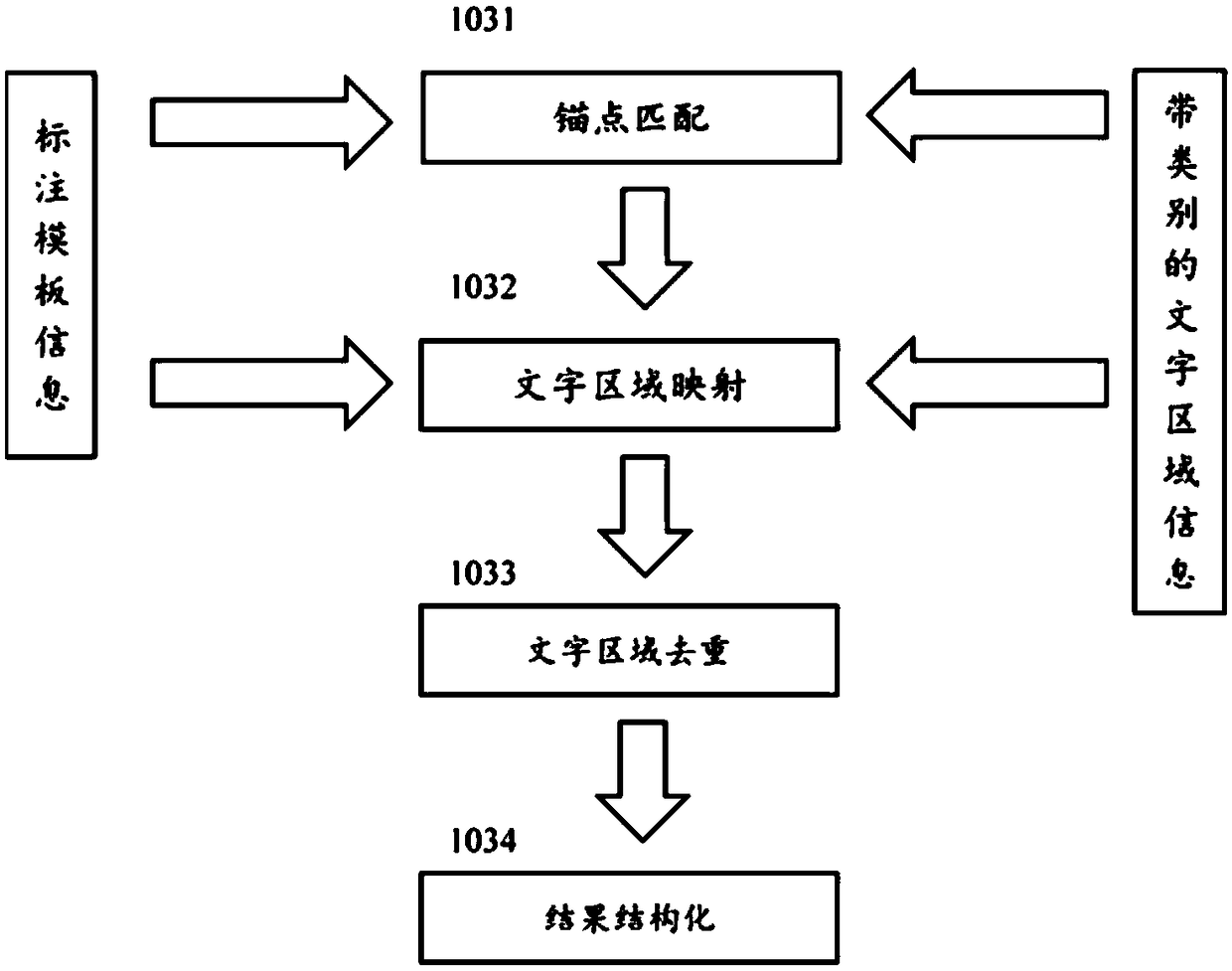

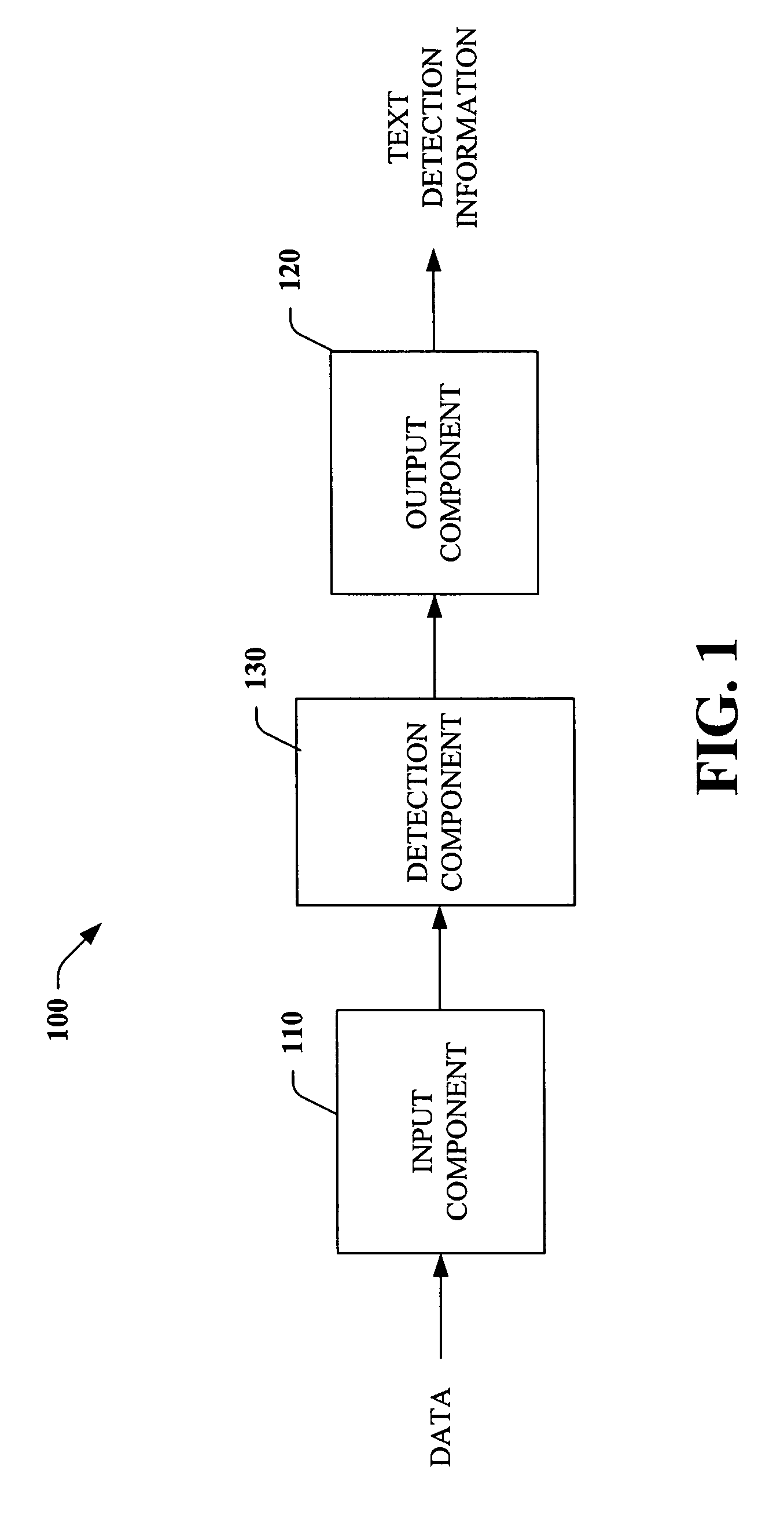

Method, device and equipment for text detection and analysis based on depth neural network

Active | CN109086756A | Exact match | Improve accuracy | Character and pattern recognition | Neural architectures | Template matching | Text detection

The invention discloses a text detection and analysis method, device and equipment based on a deep neural network, belonging to the technical field of deep learning and image processing. The method comprises the following steps: performing template labeling to generate labeling template information; using a preset deep neural network detection model to detect and classify the text regions of the image to be detected, generating text region information with categories; and performing template matching according to the labeling template information and the categorized text region information to generate structured information data. The invention enables fast and accurate detection and analysis of the various fields in a bill image; it is real-time, accurate, general, robust and extensible for the detection and analysis of document images, and can be widely applied to image text detection, analysis and recognition involving multiple texts.

Owner:ZHONGAN INFORMATION TECH SERVICES CO LTD
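
The template-matching step in the abstract above can be pictured as pairing each labeled template field with the detected text region of the same category that overlaps it best. The sketch below is one possible reading, not the patented procedure: the IoU criterion, the `min_iou` threshold, the data layout and the recognized `text` attached to each detection are all assumptions introduced for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match_template(template, detections, min_iou=0.3):
    """Fill template fields from categorized detections.

    template:   {field_name: (category, box)}      (hypothetical layout)
    detections: [(category, box, text), ...]       (hypothetical layout)
    Returns {field_name: text or None}.
    """
    result = {}
    for field, (category, tbox) in template.items():
        best_text, best_score = None, min_iou
        for dcat, dbox, text in detections:
            if dcat != category:
                continue  # only regions of the expected category compete
            score = iou(tbox, dbox)
            if score >= best_score:
                best_text, best_score = text, score
        result[field] = best_text
    return result
```

A field whose template box has no sufficiently overlapping detection of the right category is left as `None`, which makes missing fields easy to spot downstream.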

Systems and methods for detecting text

Inactive | US7570816B2 | Easy to detect | Promote results | Digital data processing details | Character and pattern recognition | Feature vector | Text detection

Owner:MICROSOFT TECH LICENSING LLC

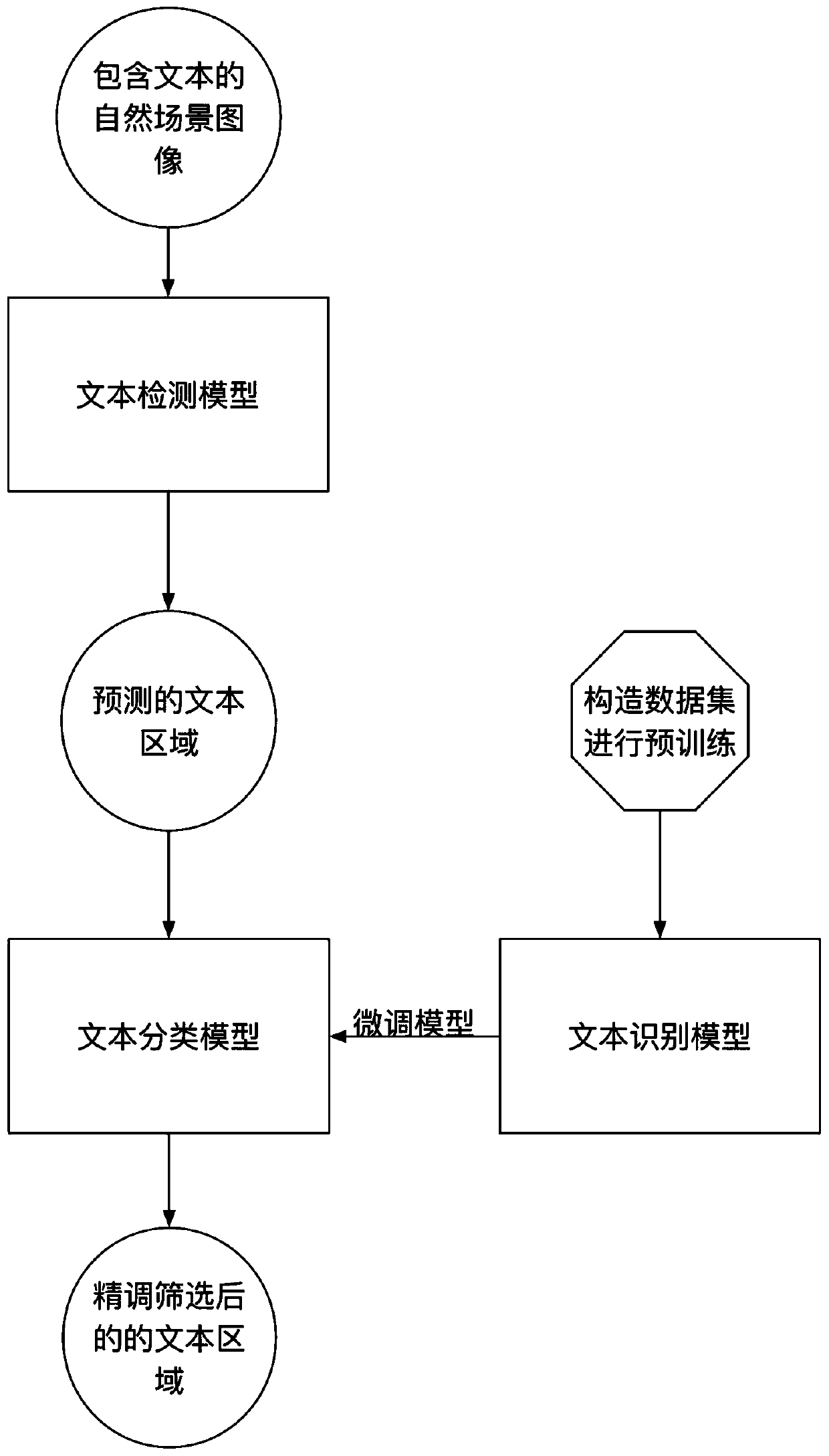

Natural scene text detection method and system

Inactive | CN110097049A | No need to manually design features | Simple method | Character and pattern recognition | Neural architectures | Text recognition | Feature extraction

The invention provides a natural scene text detection method and system. The system comprises two neural network models: a text detection network based on multi-level semantic feature fusion and a detection screening network based on an attention mechanism. The text detection network is an FCN-based image feature extraction and fusion network that extracts information at multiple semantic levels from the input data, fully fuses the multi-scale features, and finally predicts the position and confidence of text in a natural scene by applying a convolution operation to the fused multi-scale information. The detection screening network uses a trained convolutional recurrent neural network to discriminate and score the initial detection results output by the convolutional neural network of the first part, so that background easily confused with foreground characters is filtered out and the accuracy of natural scene text recognition is further improved.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

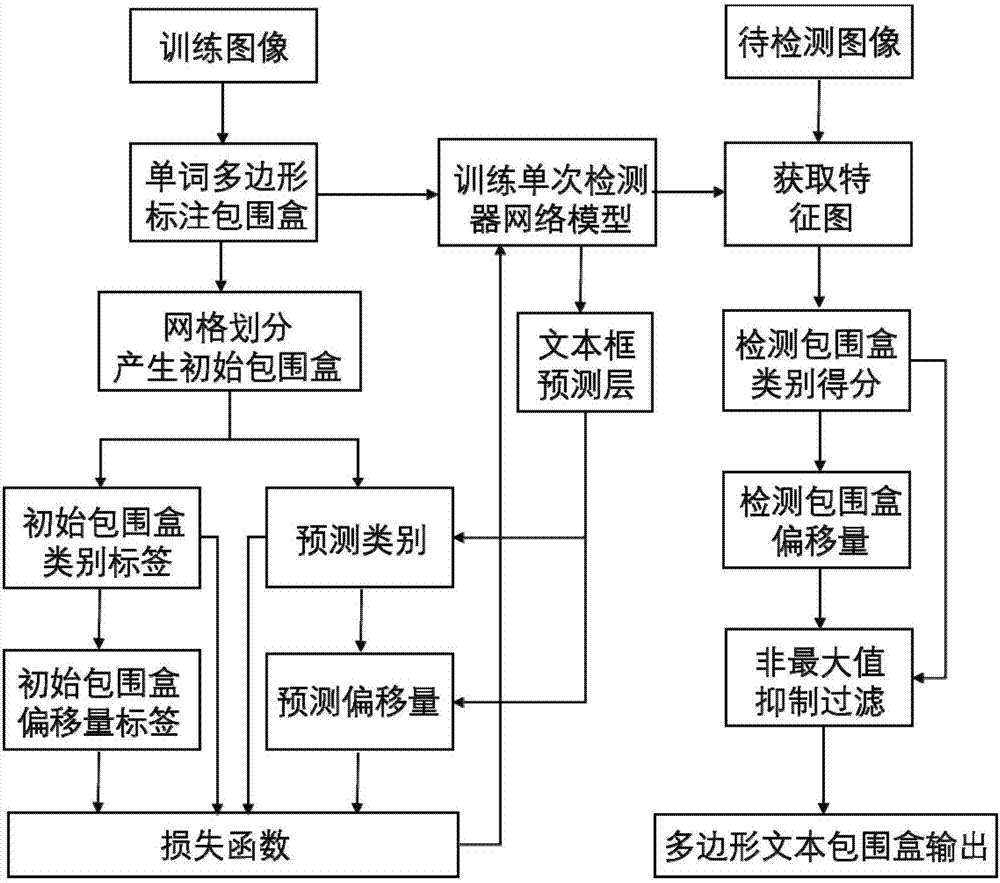

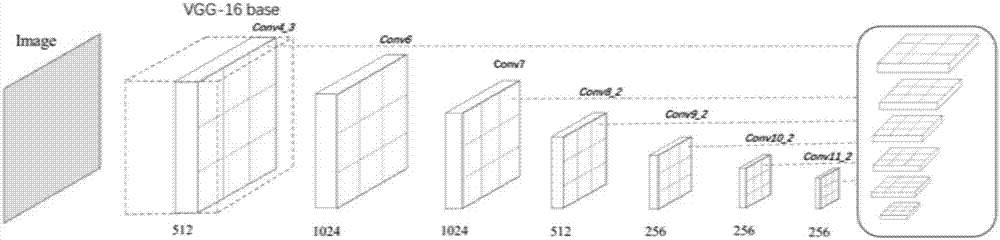

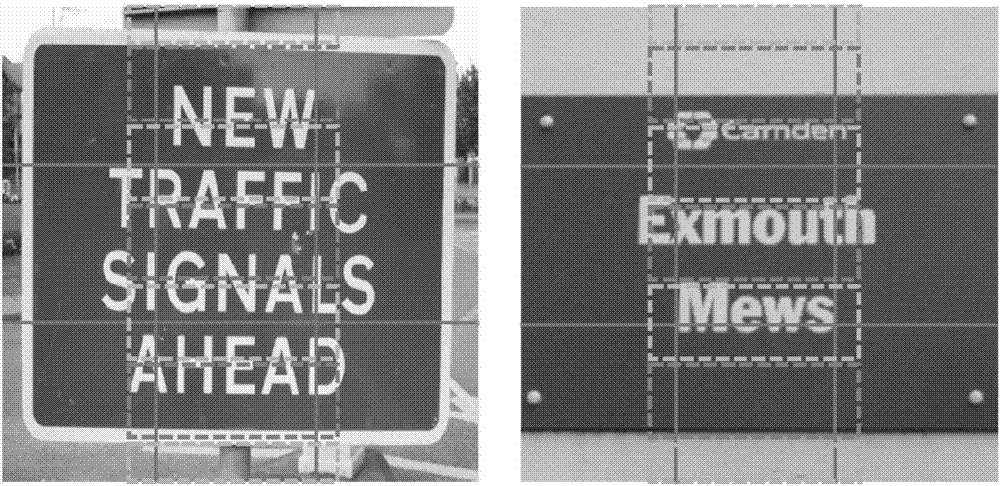

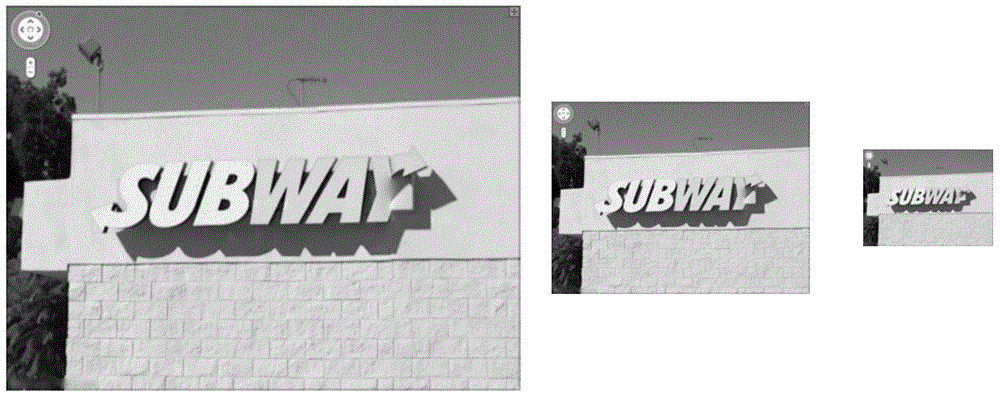

Single detection method for multi-direction scene based on fully convolutional network

Active | CN107977620A | Simple structure | Improve accuracy | Character and pattern recognition | Robustification | Text detection

The invention discloses a single detection method for a multi-direction scene based on the fully convolutional network. A fully convolutional single detection network model is constructed that can be trained end to end as a single network without multi-step processing. A multi-scale feature extraction layer is combined with a text box prediction layer to detect multi-direction natural scene characters of different sizes, aspect ratios and resolutions; polygonal bounding boxes fit the characters closely and so introduce less background interference; and the final text detection result is obtained with a simple non-maximum suppression operation. Compared with the prior art, the detection method has a simple and effective structure, improves accuracy, detection speed and robustness, and has high practical application value.

Owner:HUAZHONG UNIV OF SCI & TECH
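
Several abstracts on this page, including the one above, finish with non-maximum suppression (NMS). A minimal sketch of greedy NMS follows. It assumes axis-aligned boxes for brevity, whereas the patent above describes polygonal boxes, so the overlap test here is a simplification; the function names and default threshold are illustrative.

```python
def box_iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap a better box too much.
        if all(box_iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

For rotated or polygonal detections, `box_iou` would be swapped for a polygon intersection routine; the greedy loop itself stays the same.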

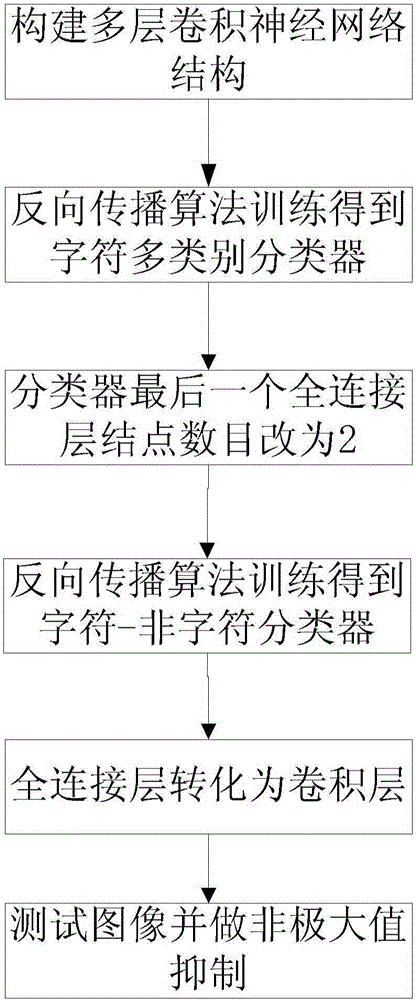

Character detection method and device based on deep learning

Active | CN105184312A | Find out quickly and efficiently | Robust | Character and pattern recognition | Neural learning methods | Feature extraction | Algorithm

The invention discloses a character detection method and device based on deep learning. The method comprises the steps of: designing a multilayer convolutional neural network structure in which each character serves as a class, forming a multi-class classification problem; training the convolutional neural network with the backpropagation algorithm to recognize single characters; minimizing the objective function of the network in a supervised manner to obtain a character recognition model; and finally using the front-end feature extraction layers for weight initialization, changing the number of nodes in the last fully connected layer to two so that the network becomes a two-class classification model, and training the network with character and non-character samples. Through these steps, a single character detection classifier can complete all operations. During testing, the fully connected layer is converted into a convolutional layer. A given input image is scanned with a multi-scale sliding window to obtain a character probability map, and the final character regions are obtained through non-maximum suppression.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1

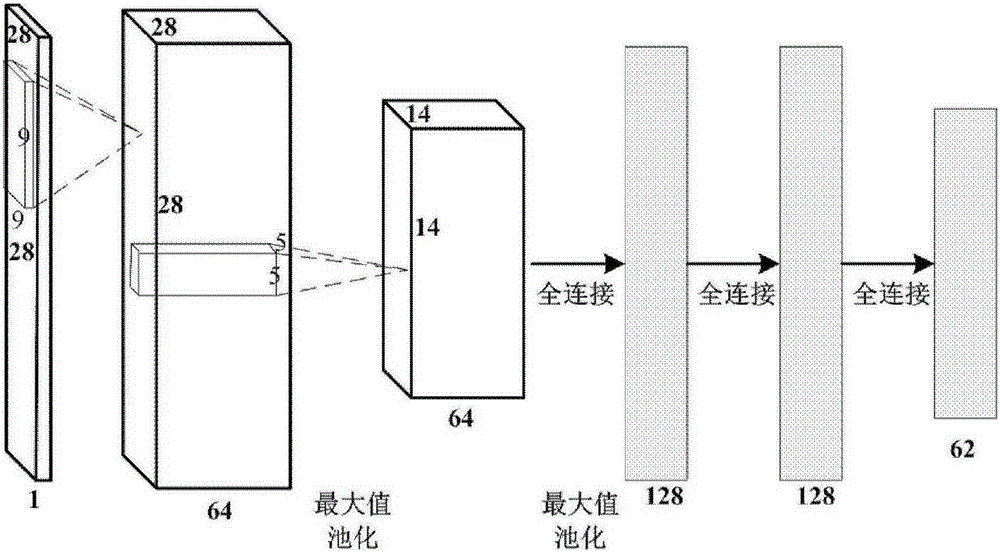

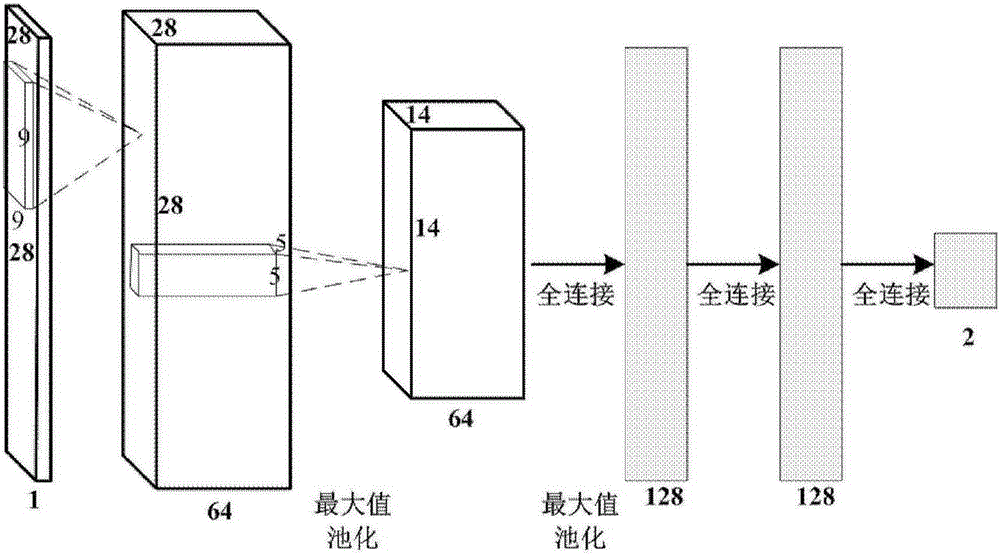

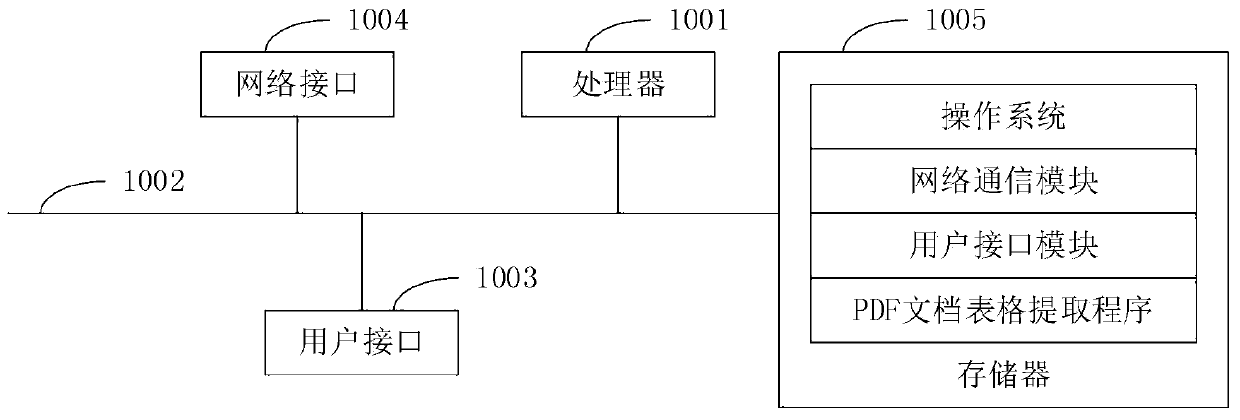

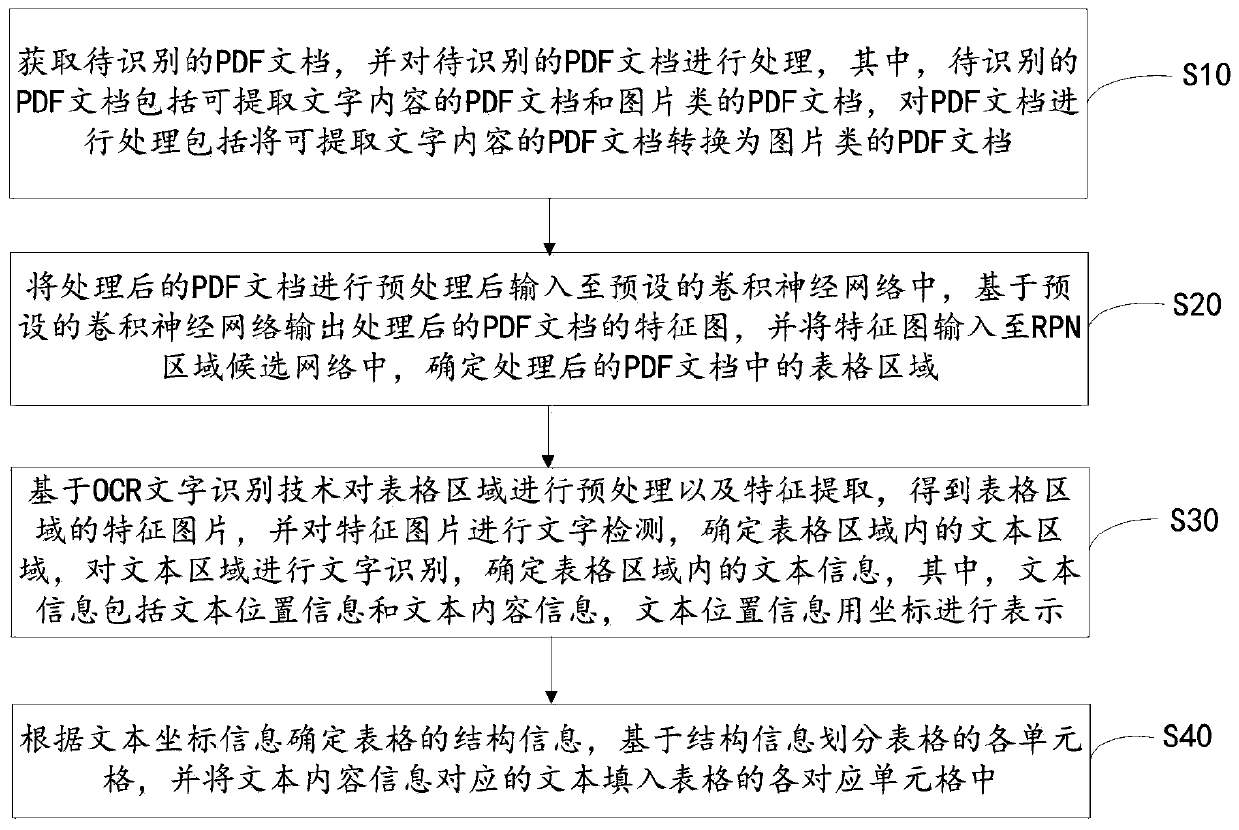

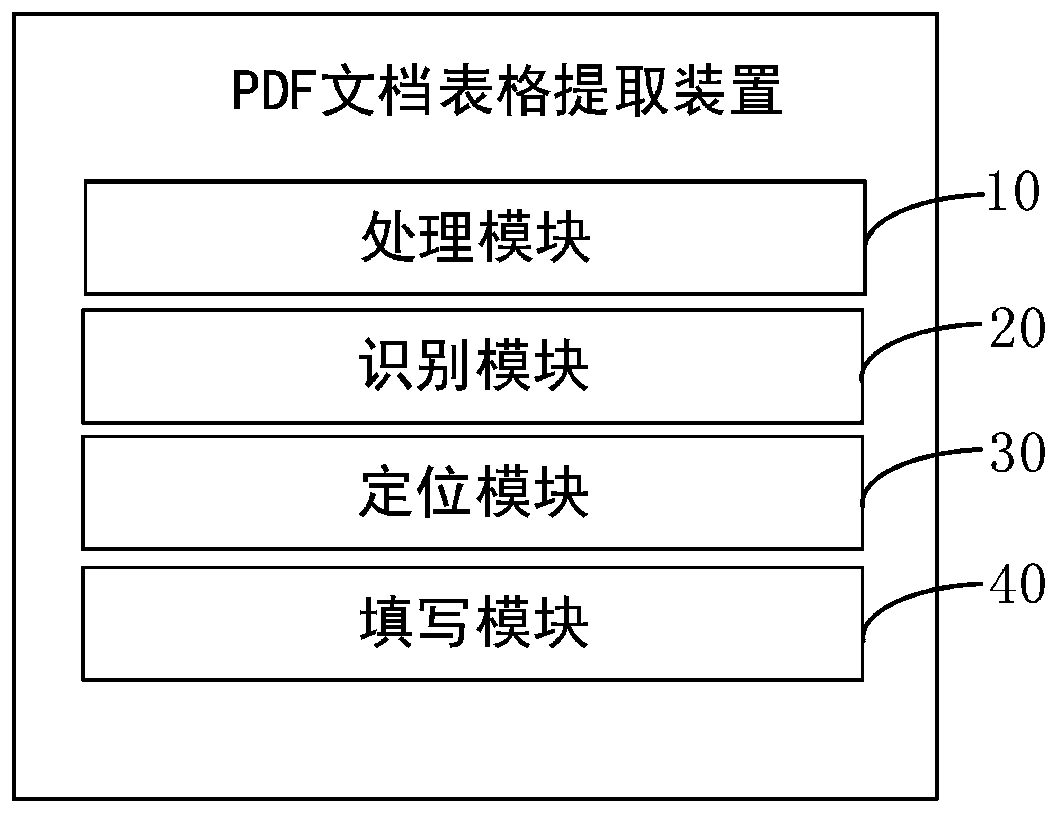

PDF document table extraction method, device and equipment and computer readable storage medium

Active | CN110390269A | Improve accuracy | Precise positioning | Character recognition | Text recognition | Feature extraction

The invention relates to the technical field of artificial intelligence, and discloses a PDF document table extraction method, device and equipment and a computer readable storage medium. The method comprises the steps of: obtaining a to-be-identified PDF document and processing it; preprocessing the processed PDF document, inputting it into a convolutional neural network to output a feature map, inputting the feature map into a region proposal network (RPN), and determining a table region; carrying out preprocessing and feature extraction on the table region based on OCR character recognition technology to obtain a feature picture, carrying out character detection on the feature picture to determine a text area, and carrying out character recognition on the text area to determine text information, wherein the text information comprises text position information and text content information; and determining structure information of the table according to the text coordinate information, dividing the table into cells based on the structure information, and filling each corresponding cell with the text corresponding to the text content information. The method and the device improve the accuracy of PDF document table extraction.

Owner:PING AN TECH (SHENZHEN) CO LTD
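
The last step of the abstract above derives the table structure from text coordinates and then fills the cells. One simple way to picture this, offered as an assumption rather than the patented algorithm, is to cluster the text box centers along each axis and place each text in its nearest row and column. The gap thresholds and function names below are hypothetical.

```python
def cluster_1d(values, gap):
    """Group values into clusters split wherever neighbours differ by more than gap.

    Returns the mean of each cluster, in ascending order.
    """
    centers, current = [], []
    for v in sorted(values):
        if current and v - current[-1] > gap:
            centers.append(sum(current) / len(current))
            current = []
        current.append(v)
    if current:
        centers.append(sum(current) / len(current))
    return centers

def nearest(centers, v):
    """Index of the cluster center closest to v."""
    return min(range(len(centers)), key=lambda i: abs(centers[i] - v))

def build_table(items, row_gap=10, col_gap=40):
    """items: [(text, (x1, y1, x2, y2)), ...] -> 2D list of cell strings."""
    xs = [(b[0] + b[2]) / 2 for _, b in items]
    ys = [(b[1] + b[3]) / 2 for _, b in items]
    rows, cols = cluster_1d(ys, row_gap), cluster_1d(xs, col_gap)
    table = [["" for _ in cols] for _ in rows]
    for (text, _), x, y in zip(items, xs, ys):
        r, c = nearest(rows, y), nearest(cols, x)
        table[r][c] = (table[r][c] + " " + text).strip()
    return table
```

This handles regular grids only; merged cells or wrapped text would need the richer structure information the abstract refers to.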

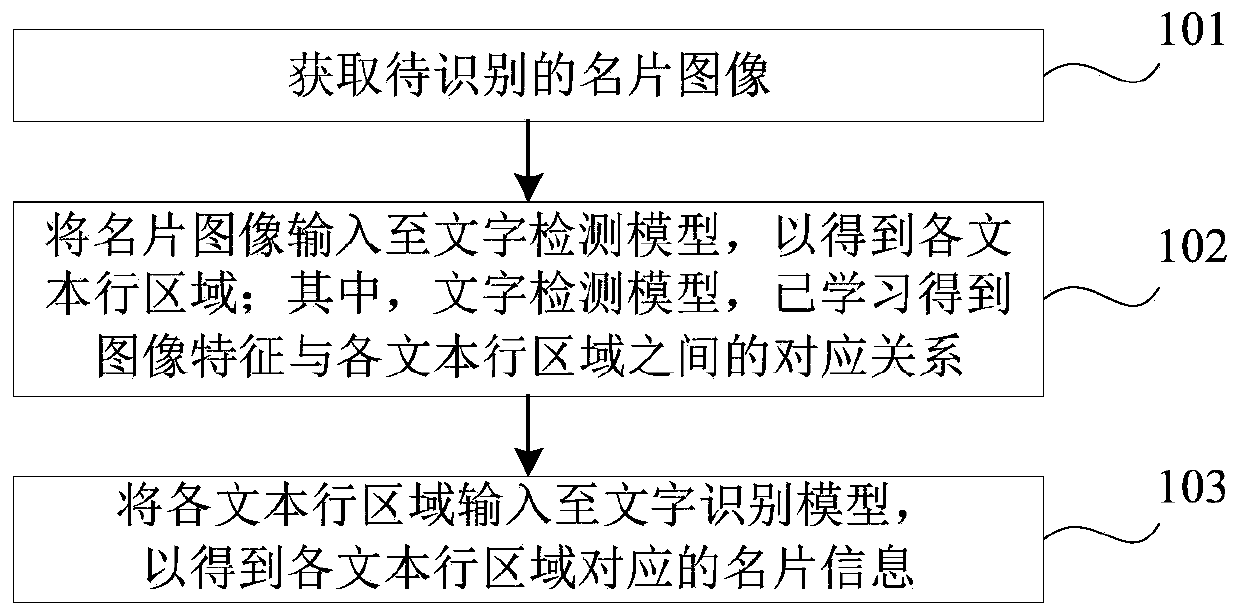

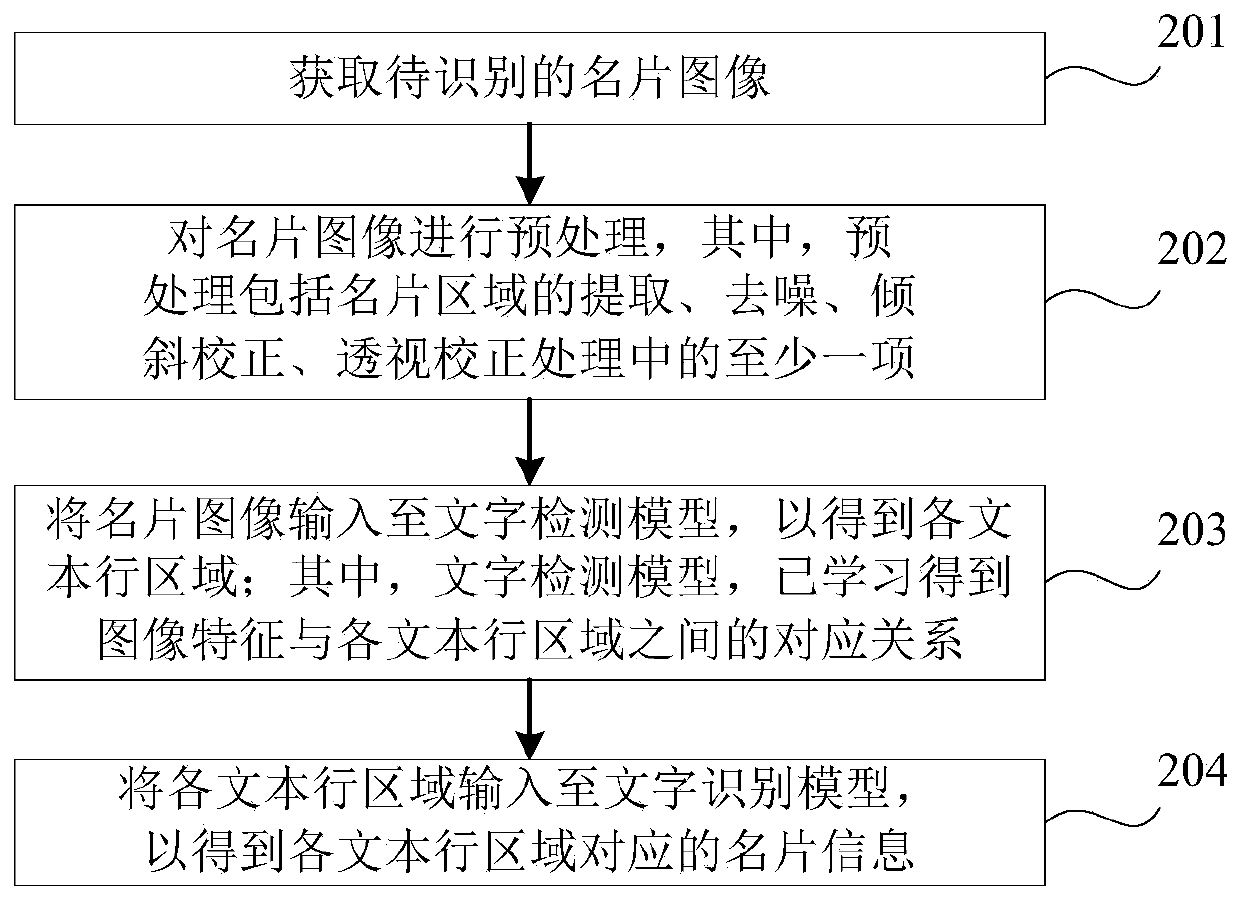

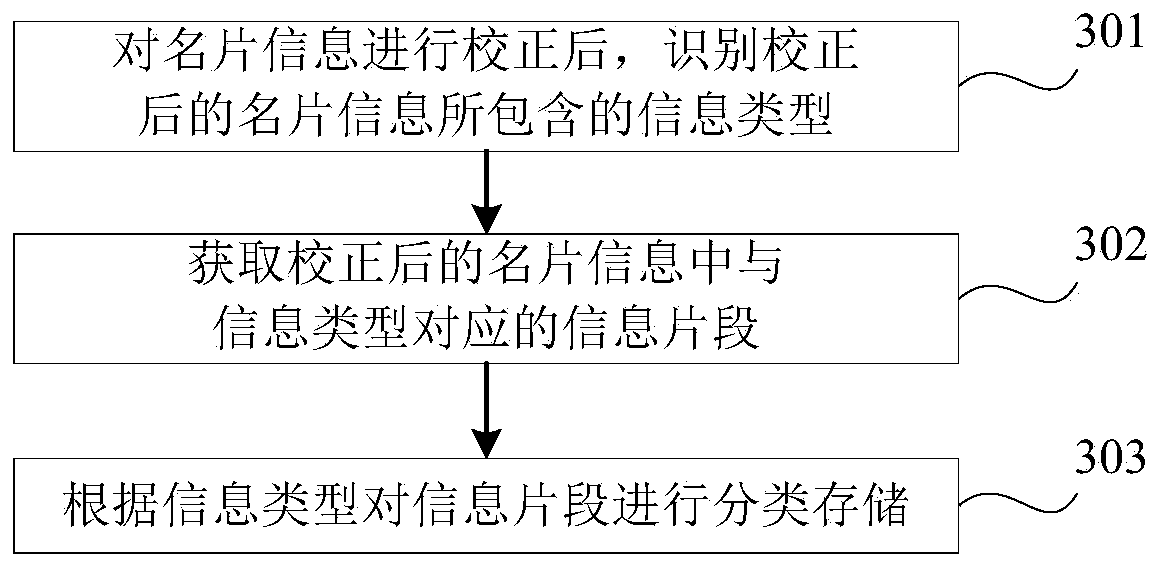

Business card identification method and device

Active | CN110135411A | Reduce the impact of extraction | Improve robustness | Character and pattern recognition | Robustification | Business card

The invention provides a business card identification method and device. The method comprises the steps of: obtaining a to-be-identified business card image; inputting the business card image into a character detection model to obtain each text line area, wherein the character detection model has learned the correspondence between image features and text line areas; and inputting each text line area into a character recognition model to obtain the business card information corresponding to that text line area. Because the text line areas in the business card image are identified by a text detection model based on deep learning, the method is robust and reduces the influence of low-quality and noisy data on text extraction, which widens its generality and range of application. Moreover, the text line areas are recognized end to end by a deep learning character recognition model, so single-character segmentation is not needed, accuracy is higher, and recognition copes better with various complex variations, improving the generality and recognition performance of the method.

Owner:BEIJING UNIV OF POSTS & TELECOMM

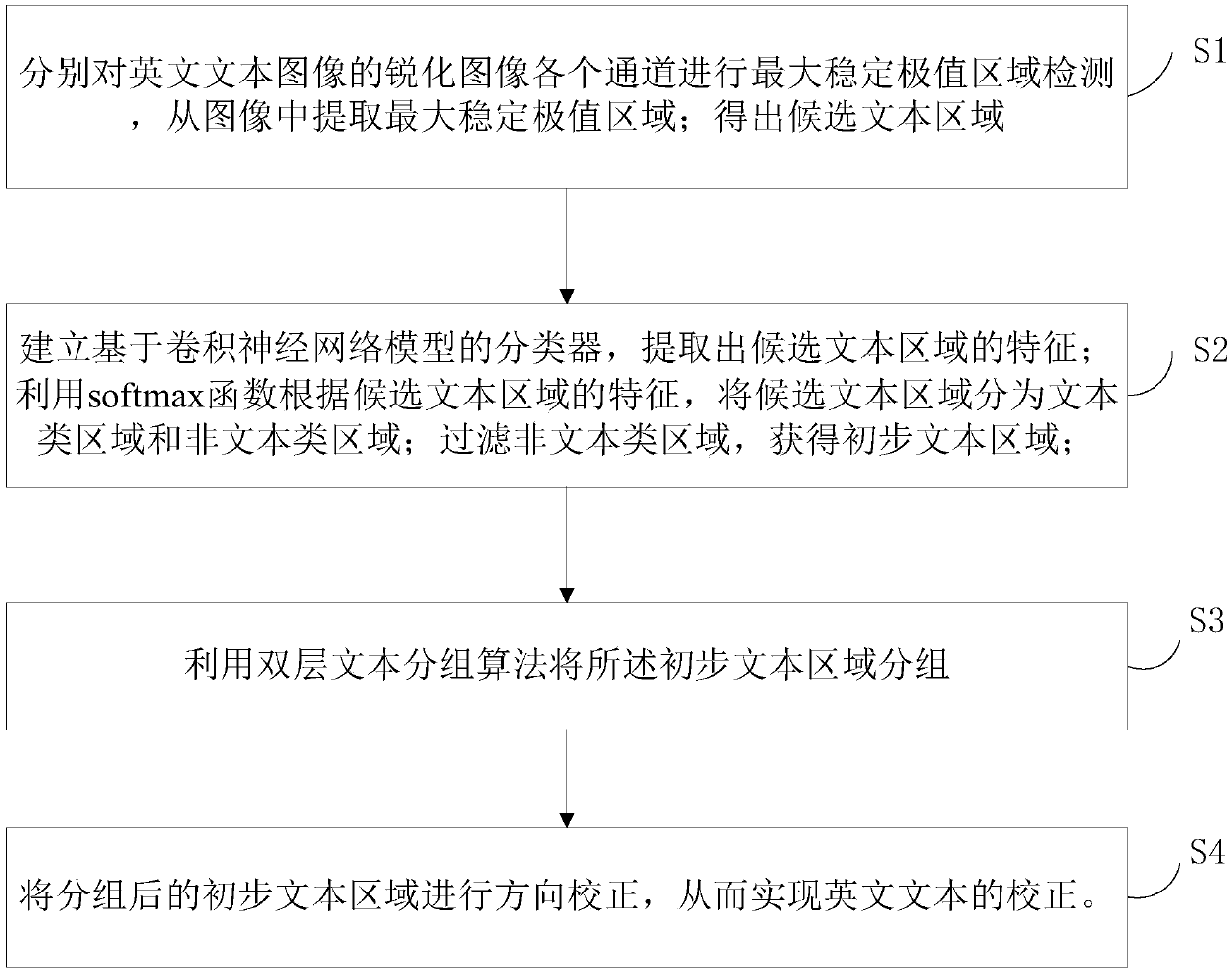

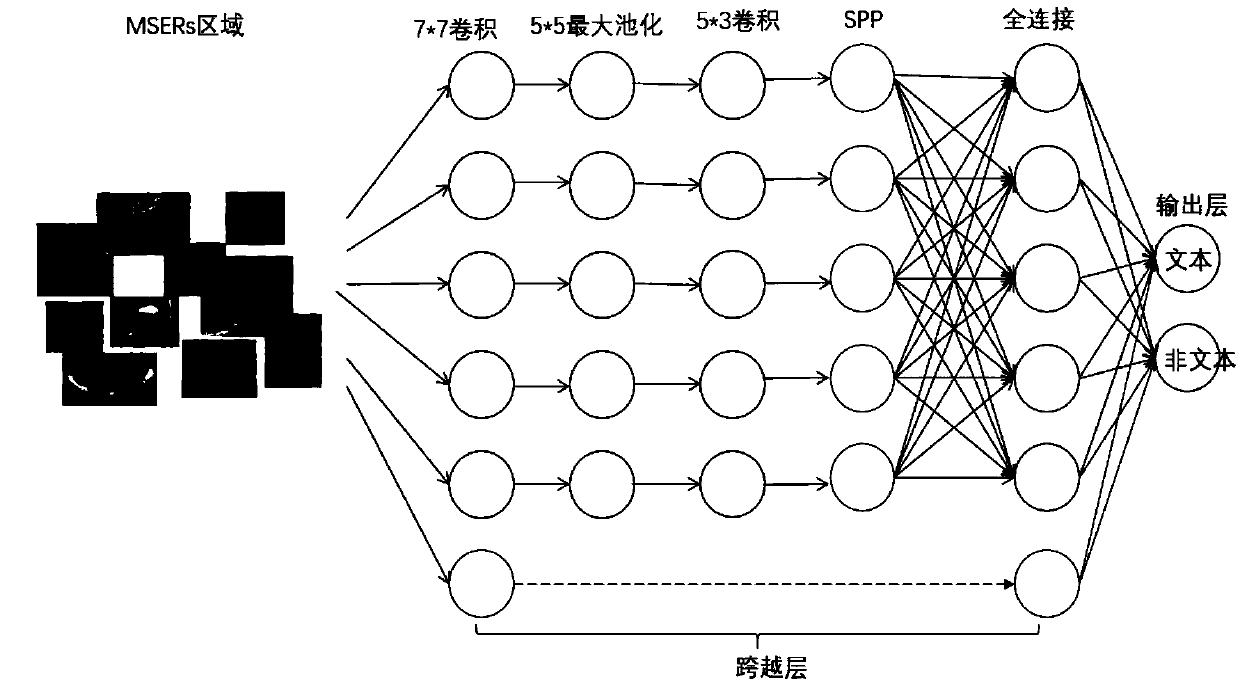

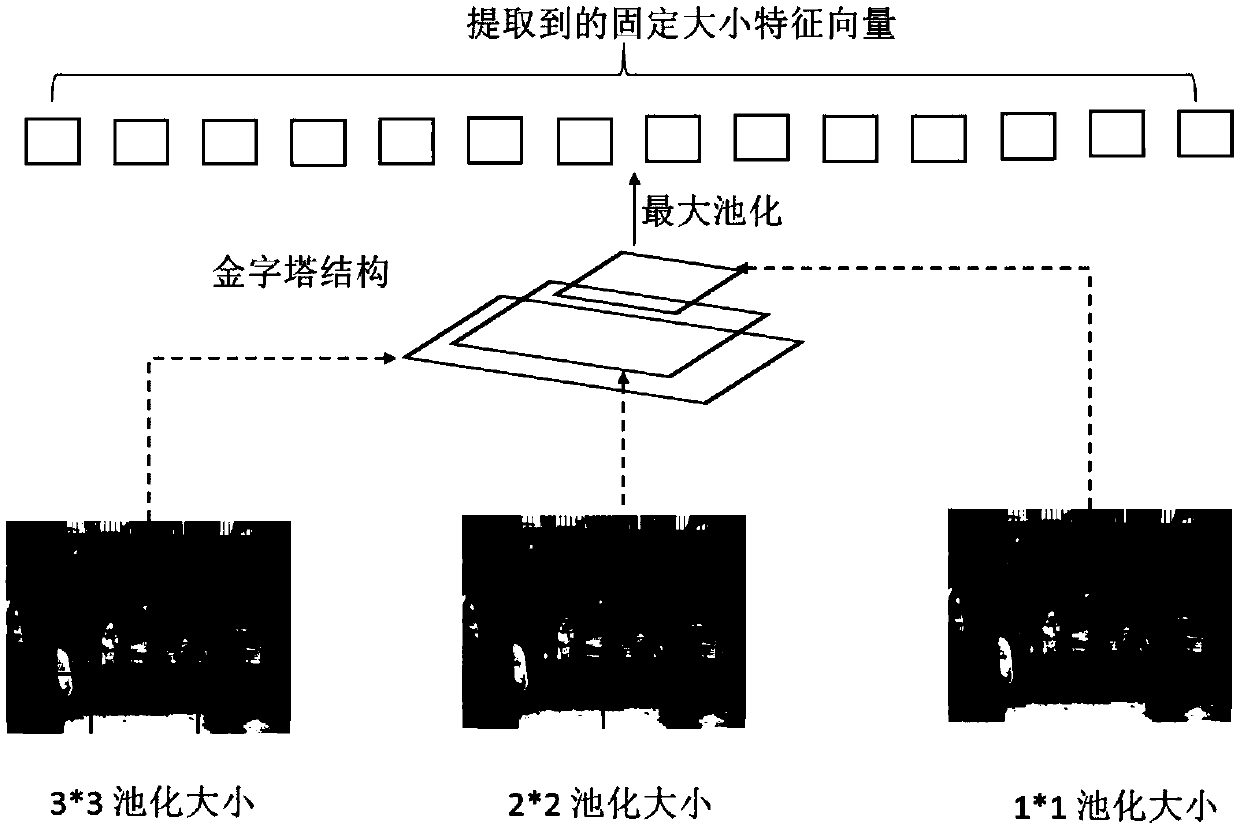

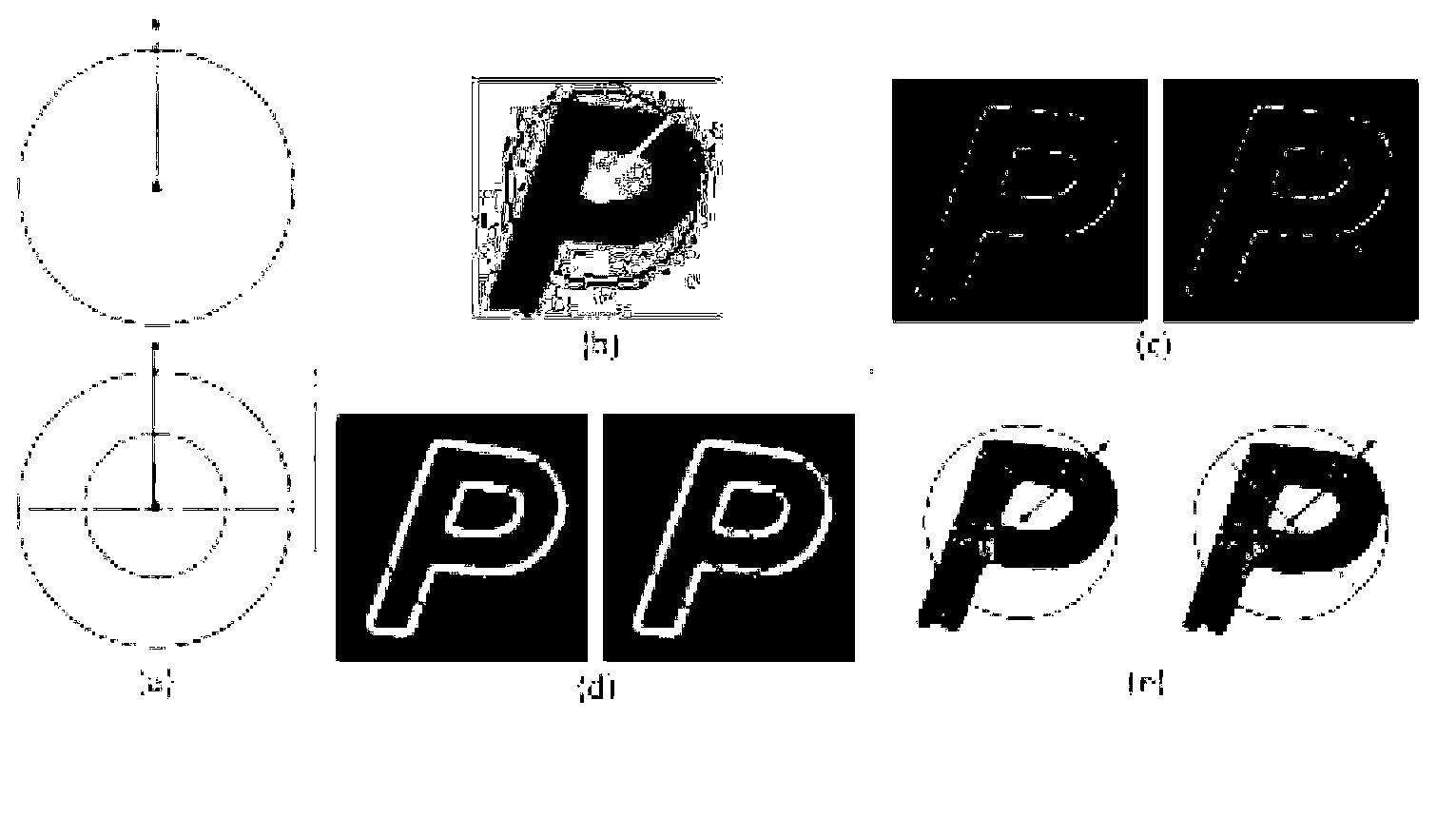

English text detection method with text direction correction function

Active | CN108647681A | Easy to distinguish | Achieve correction | Character recognition | Imaging processing | Text detection

The invention belongs to the technical field of image processing, and specifically relates to an English text detection method with a text direction correction function. The method comprises the following steps: carrying out maximally stable extremal region (MSER) detection on each channel of an English text image to obtain candidate text regions; building a classifier based on a convolutional neural network model and filtering out wrong candidate text regions to obtain preliminary text regions; grouping the preliminary text regions with a double-layer text grouping algorithm; and carrying out direction correction on the grouped preliminary text regions to obtain corrected text. The method uses an enhanced multichannel MSER model to obtain more refined text regions and introduces a parallel SPP-CNN classifier to better distinguish text regions from non-text regions. Images of any size can be processed, and pooled features can be extracted at multiple scales, so that more features can be learned from the multilayer spatial information of the source images. The method is capable of processing slightly inclined scene text.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

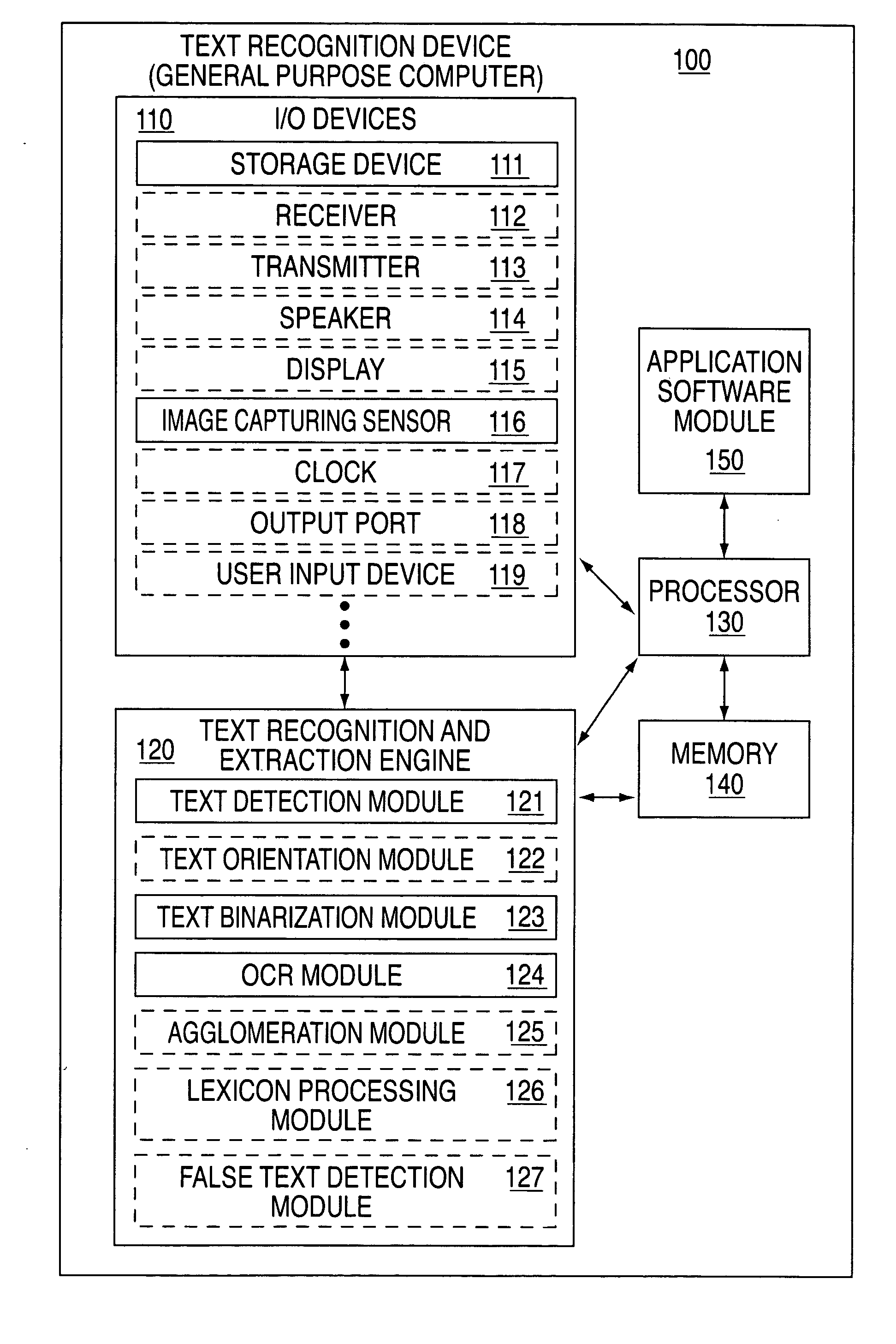

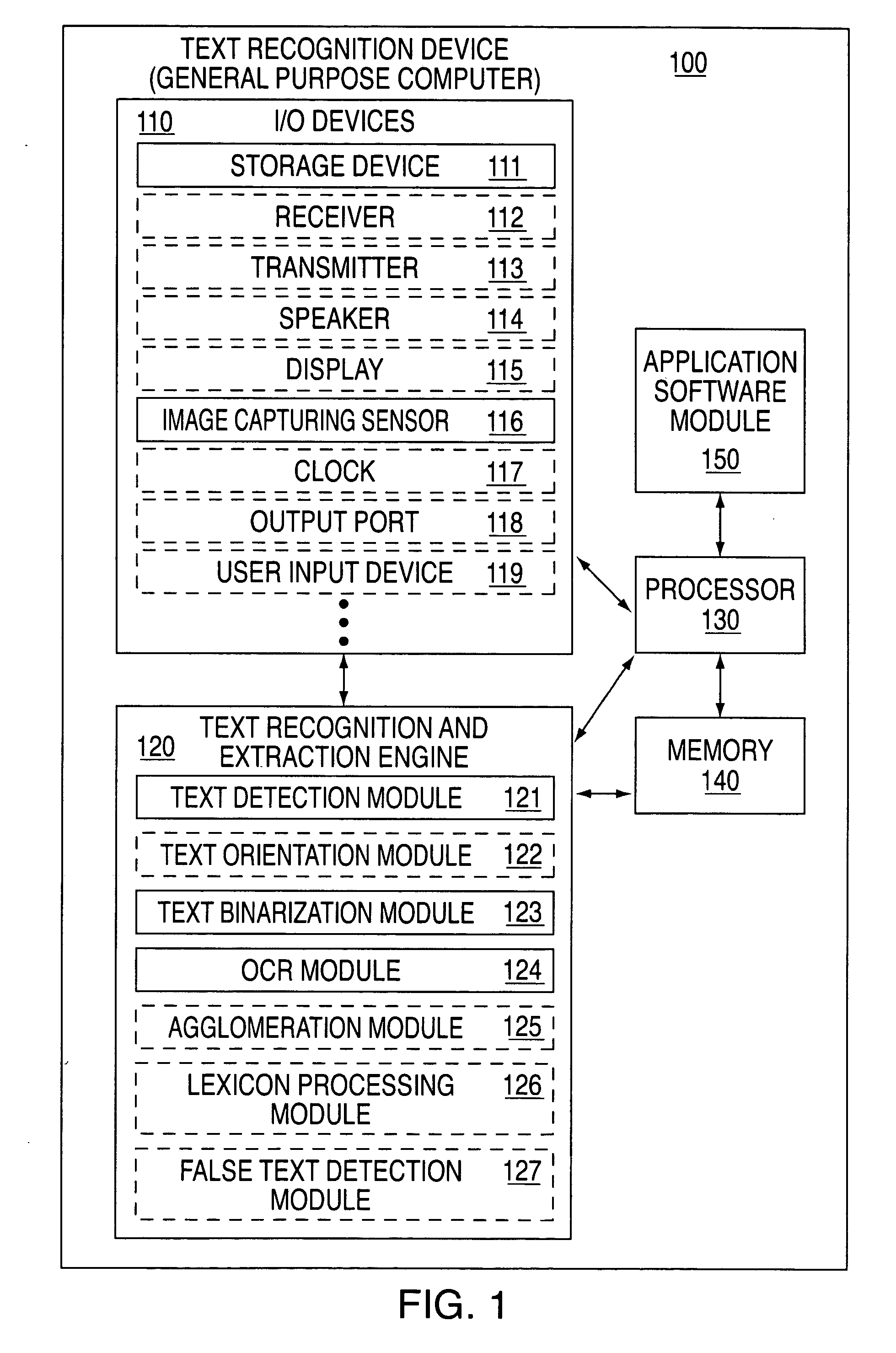

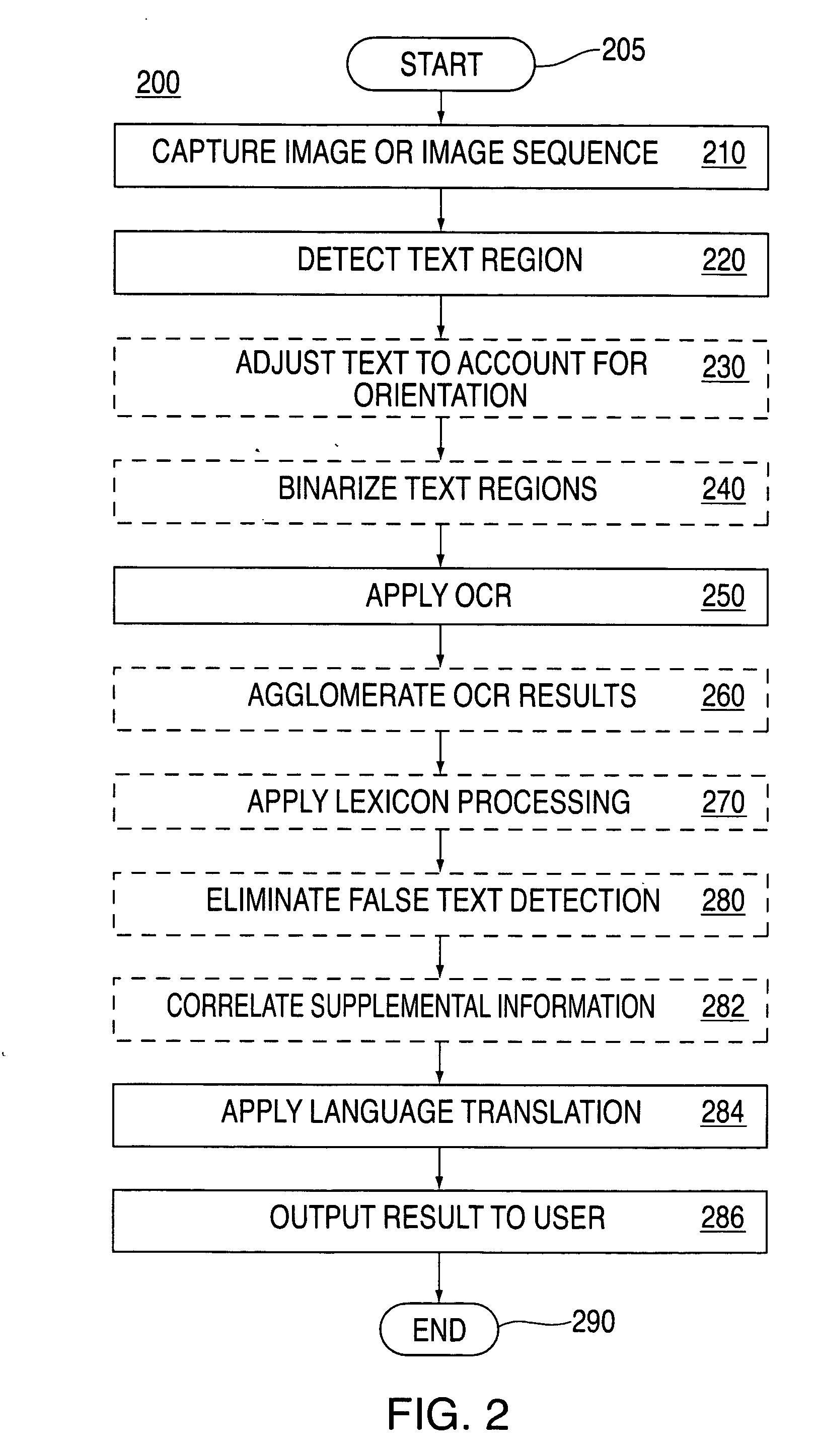

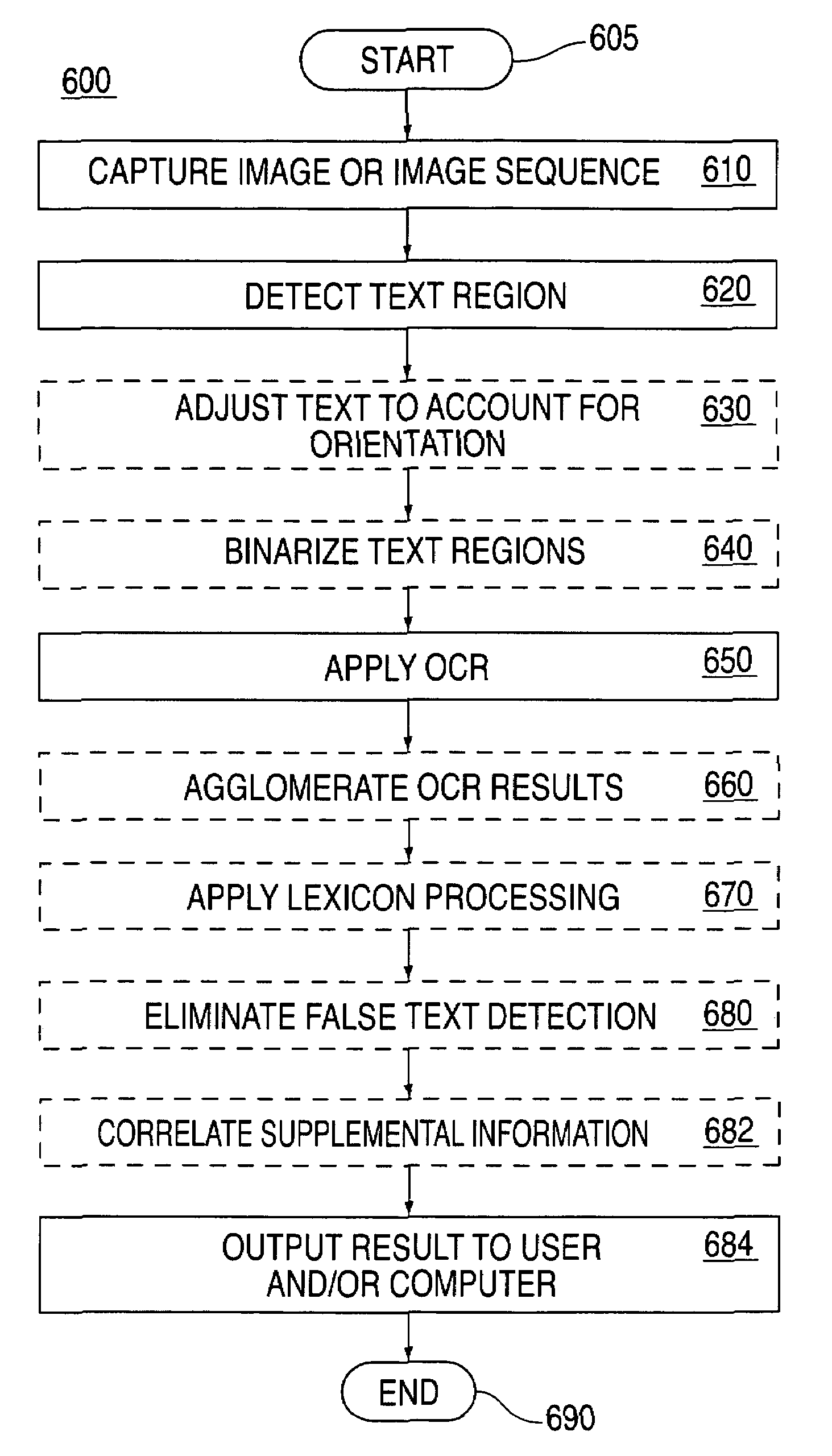

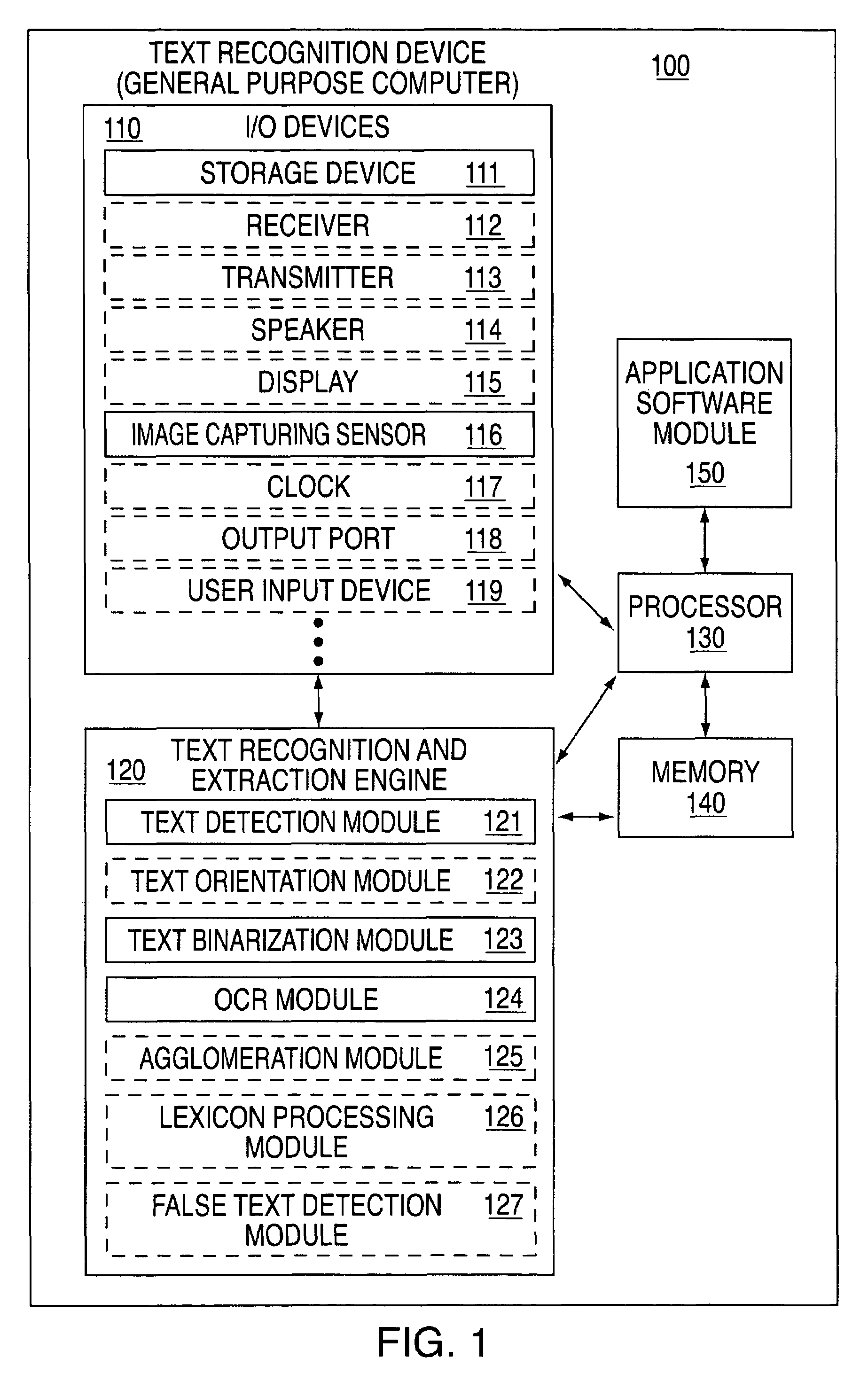

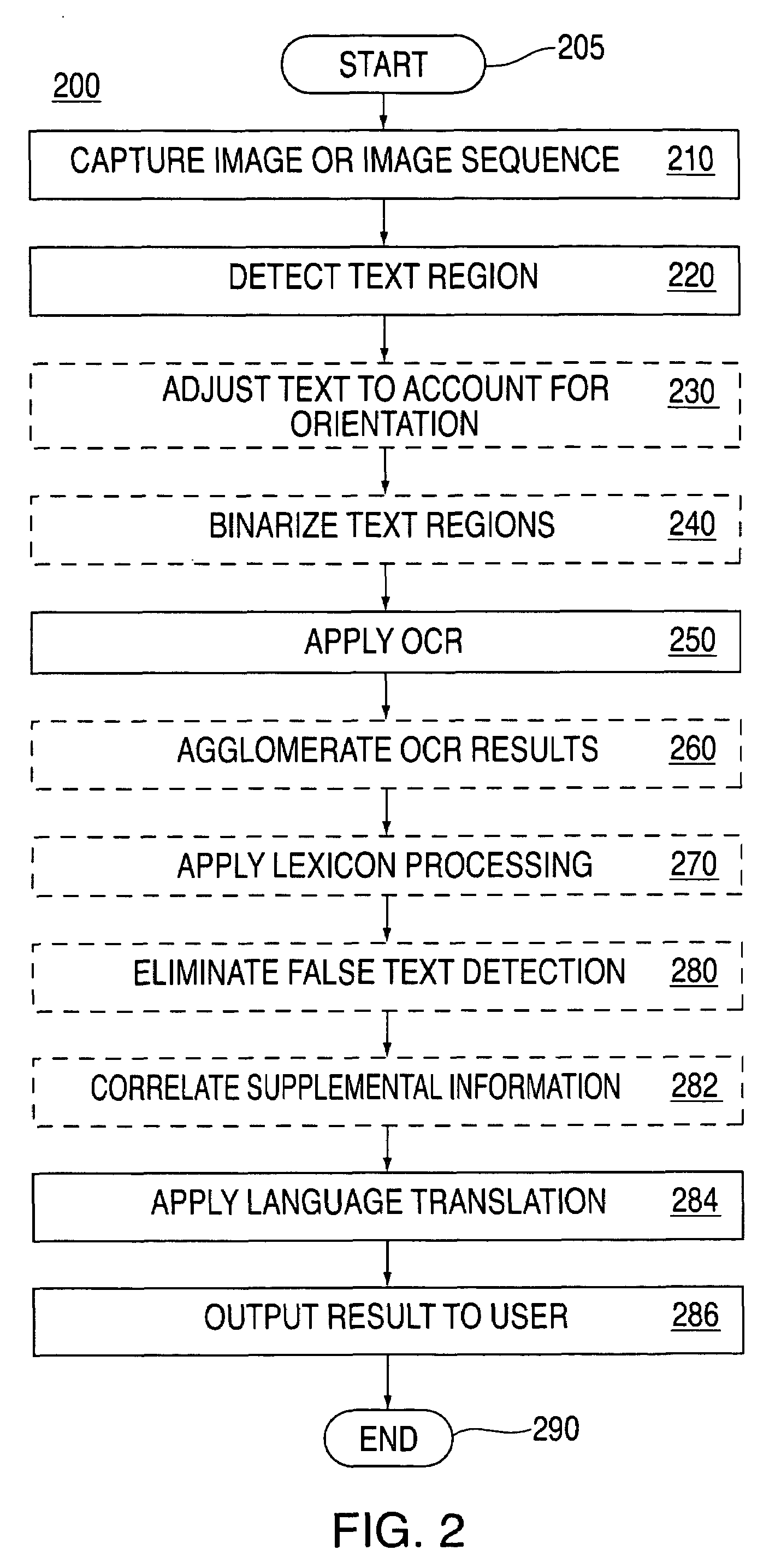

Method and apparatus for portably recognizing text in an image sequence of scene imagery

Inactive | US20050123200A1 | Road vehicles traffic control | Character recognition | Text detection | Computer graphics (images)

An apparatus and a concomitant method for portably detecting and recognizing text information in captured imagery. The present invention is a portable device that is capable of capturing imagery and of detecting and extracting text information from the captured imagery. The portable device contains an image capturing sensor, a text detection module, an OCR module, a storage device and means for presenting the output to the user or other devices.

Owner:SRI INTERNATIONAL

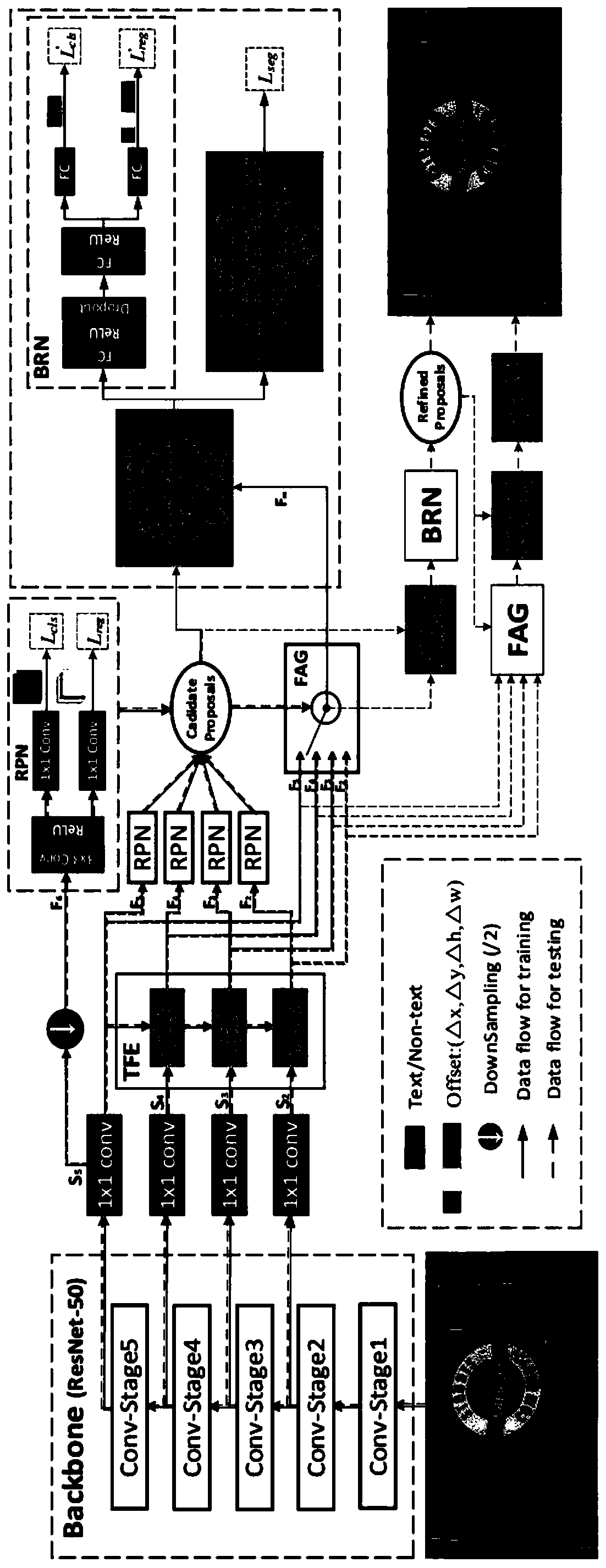

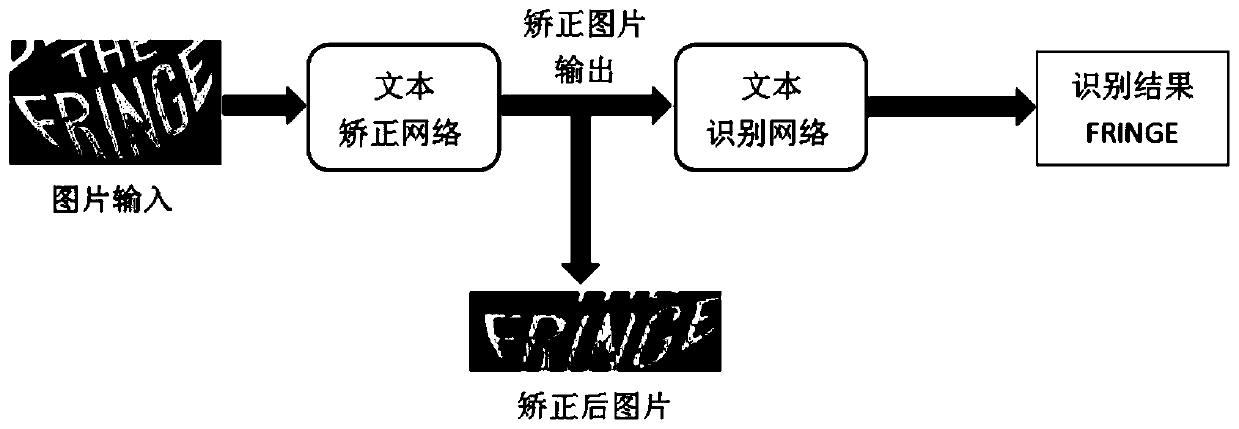

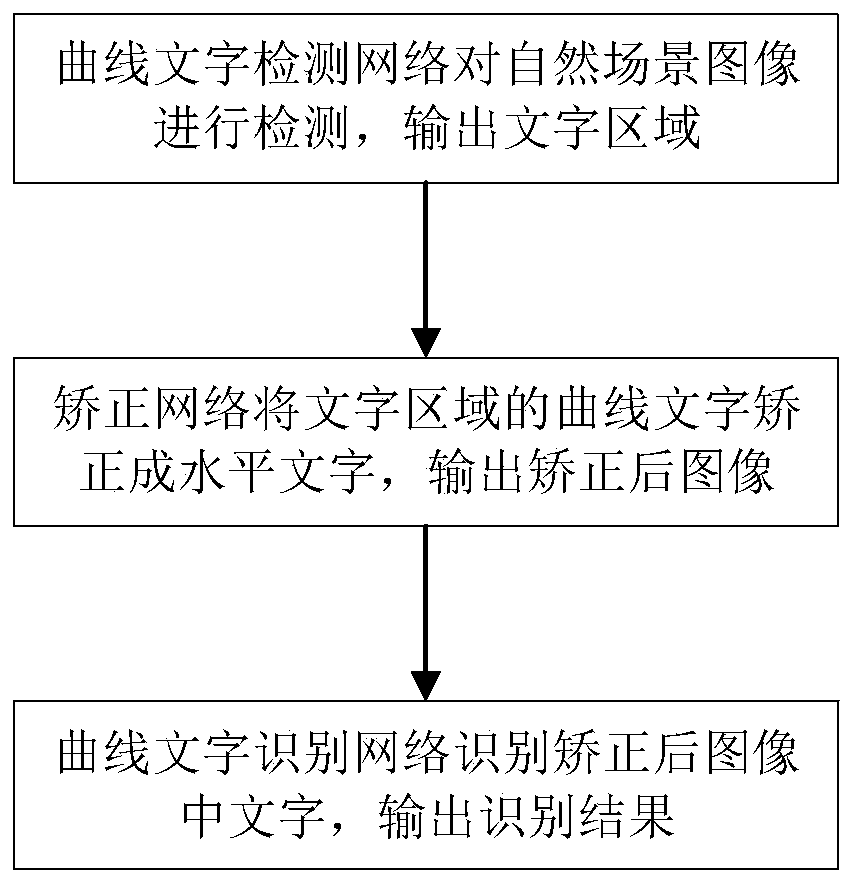

Method for detecting and identifying curve characters in natural scene image

Active | CN110287960A | Enhanced Representational Capabilities | Character recognition | Text detection | Computer science

The invention discloses a method for detecting and identifying curve characters in a natural scene image, which addresses the problems of fuzzy boundaries and low background contrast in curve character identification and improves curve character detection precision. The method mainly comprises the following steps: (1) training a curve character detection network based on a Mask R-CNN network, and using the trained network to detect the character regions in a natural scene image; (2) correcting the curve characters in each character region into horizontal characters with a correction network, and outputting a corrected image; and (3) training a curve character recognition network, extracting convolutional features of the corrected image with the trained recognition network, decoding the convolutional features, and recognizing the characters.

Owner:INST OF INFORMATION ENG CAS

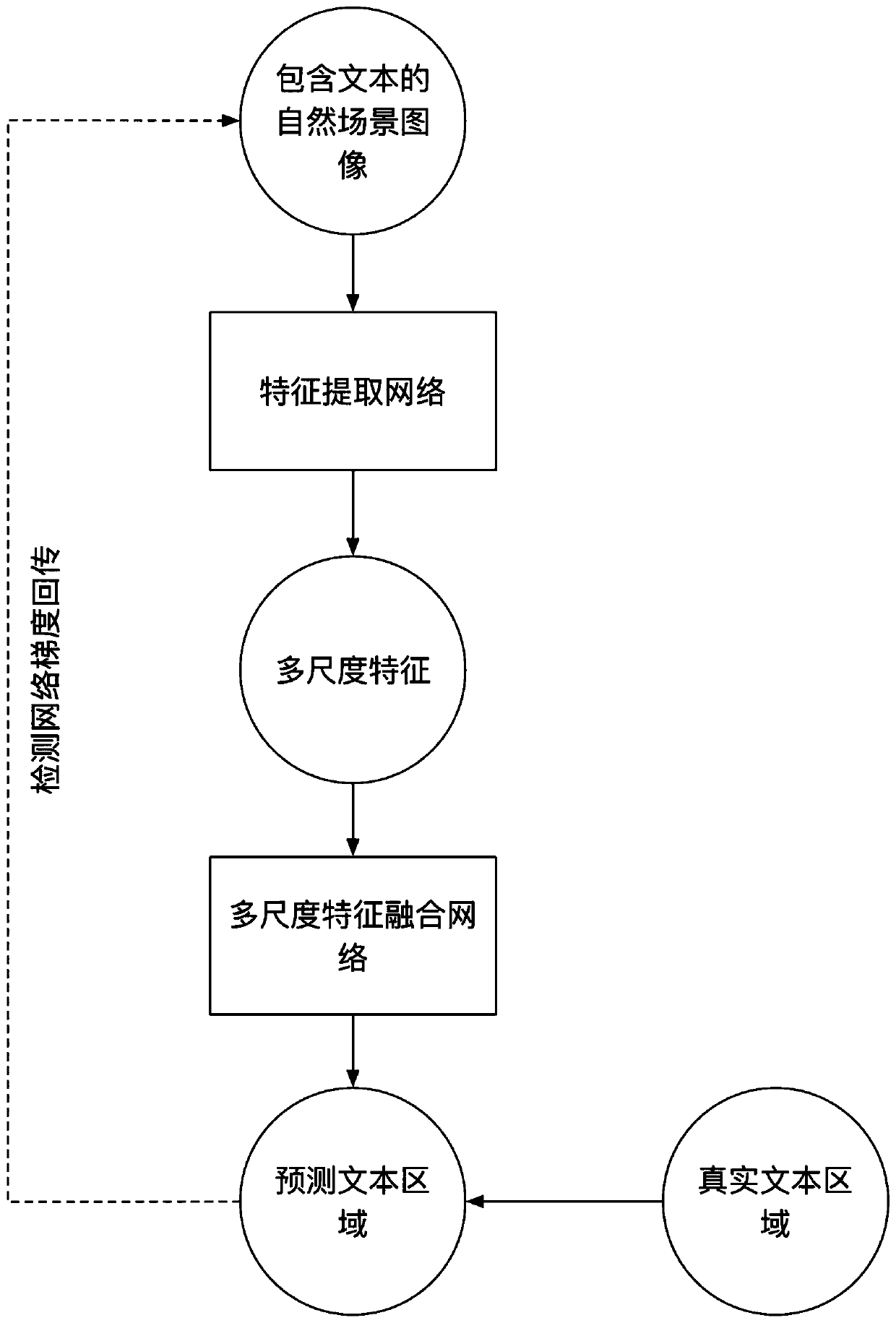

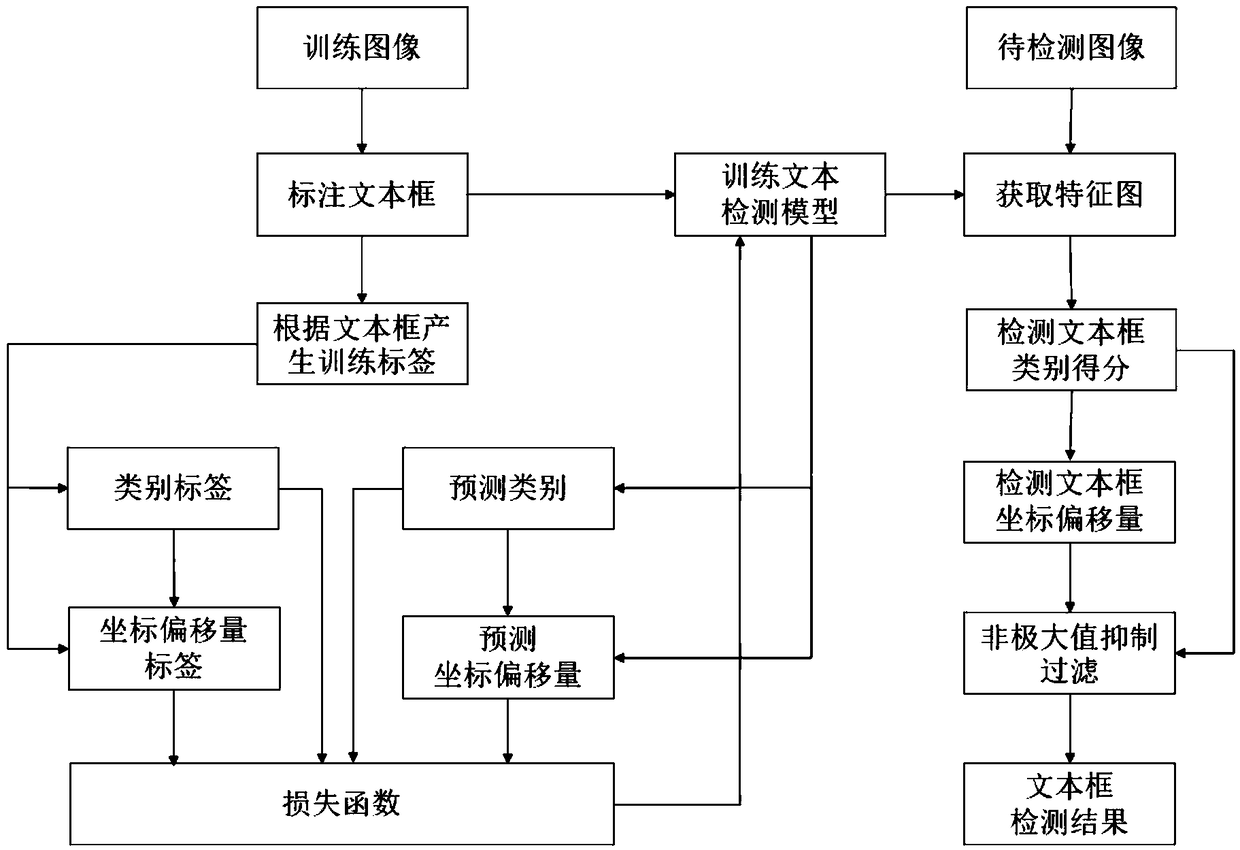

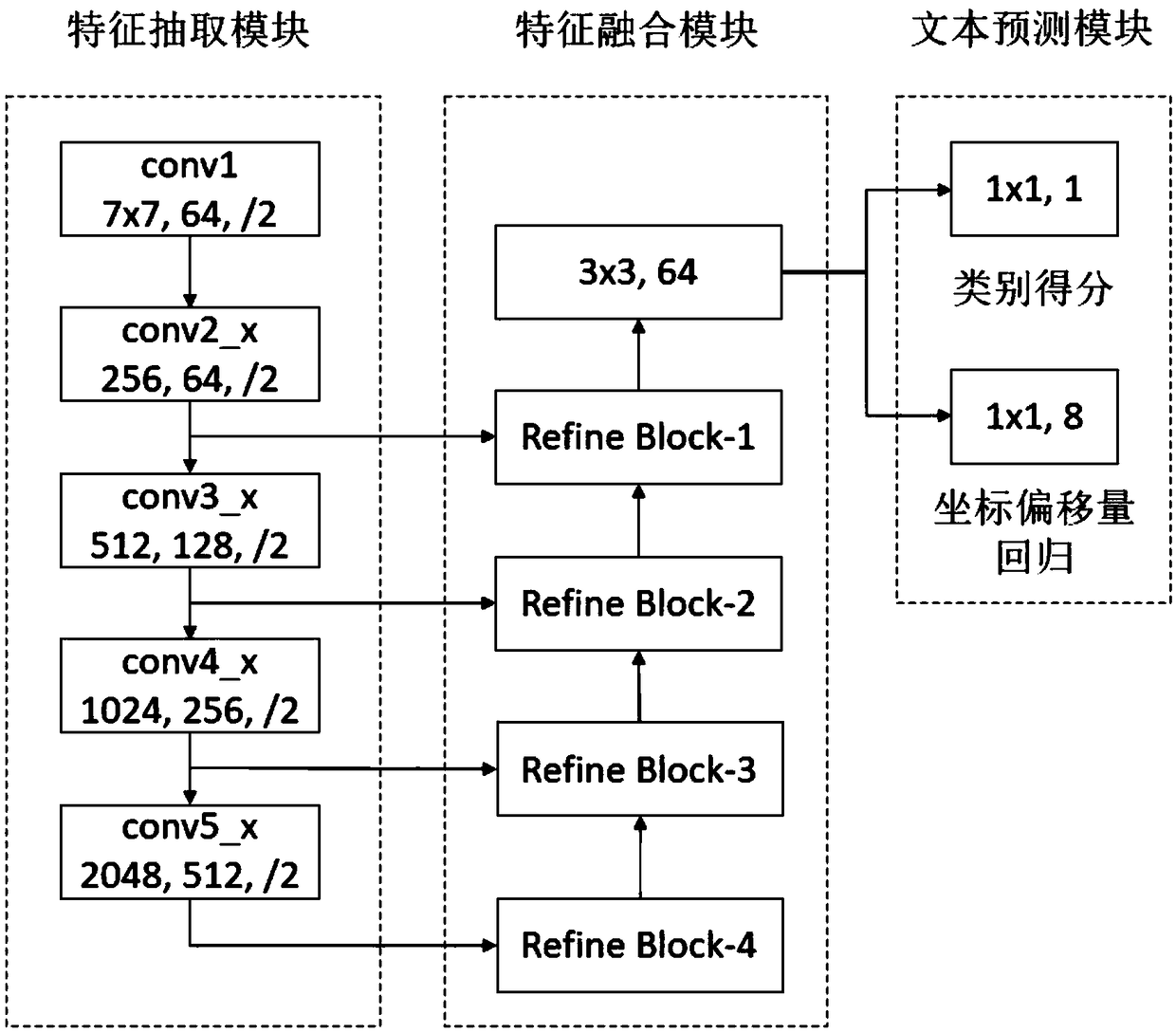

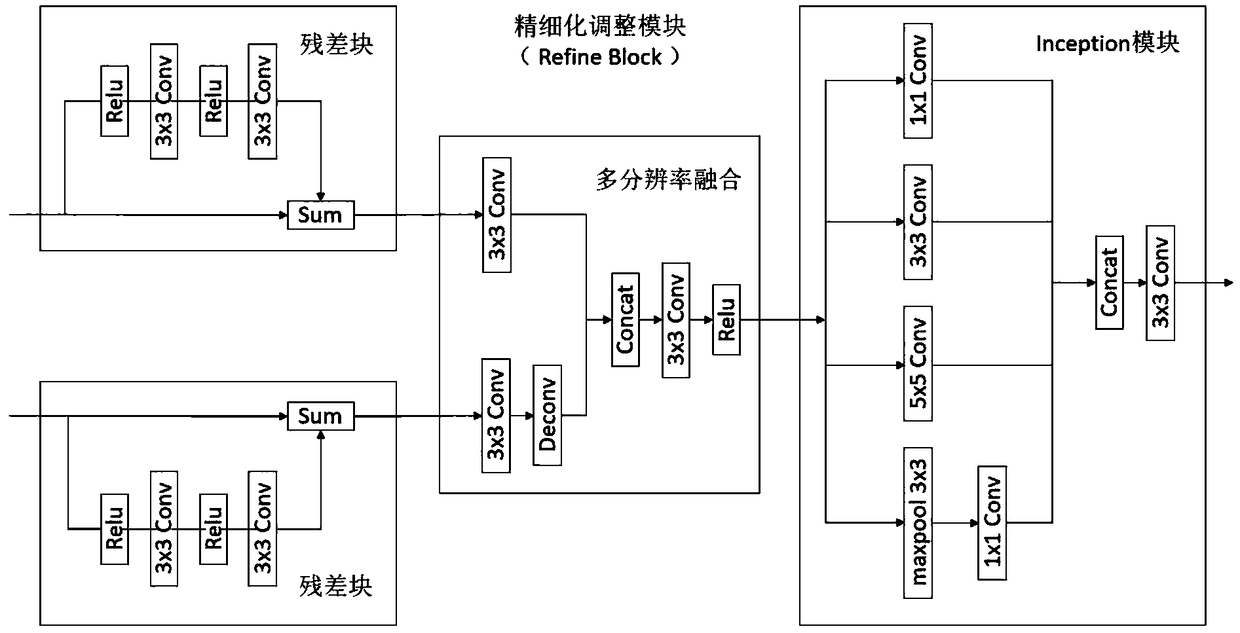

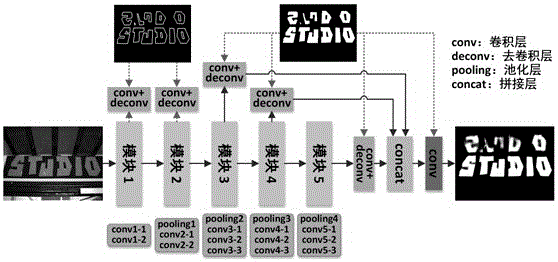

A natural scene text detection method based on full convolution neural network

Active | CN109299274A | Improve accuracy | Enhance expressive ability | Neural architectures | Text database clustering/classification | Text detection | Semantic feature

The invention discloses a natural scene text detection method based on a full convolution neural network. The method uses a CNN to extract a feature representation of text and adjusts that representation with a feature fusion module, which fuses the semantic features of high-level feature maps with the position information of low-level feature maps so that the extracted features have stronger representation ability; a text prediction module then directly predicts the candidate text objects. The method adopts an end-to-end training and prediction process, so the processing flow is simple and no multi-step hierarchical processing is needed. The final detection result is obtained by a simple NMS operation, with high accuracy and strong robustness. Multi-directional and multi-size text objects in natural scene images with complex backgrounds are detected well, and the detection performance on natural scene text is excellent.

Owner:NANJING UNIV

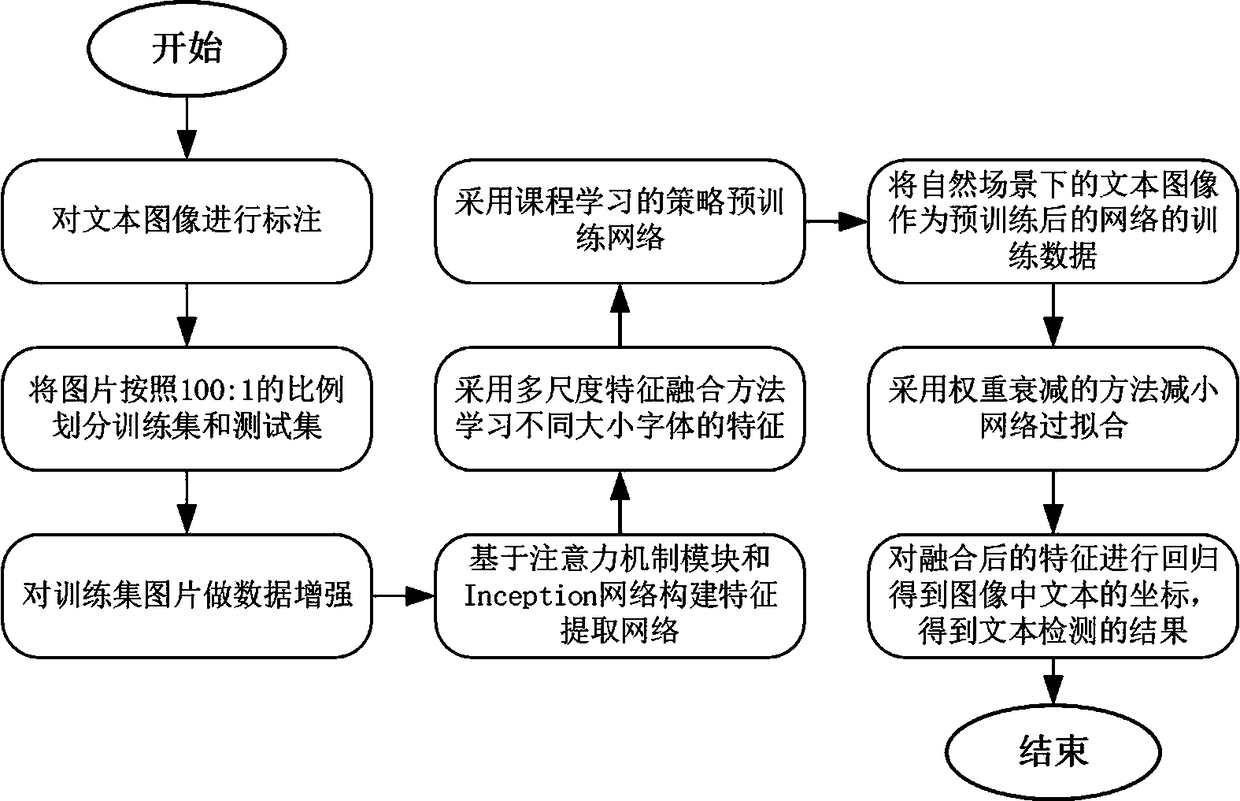

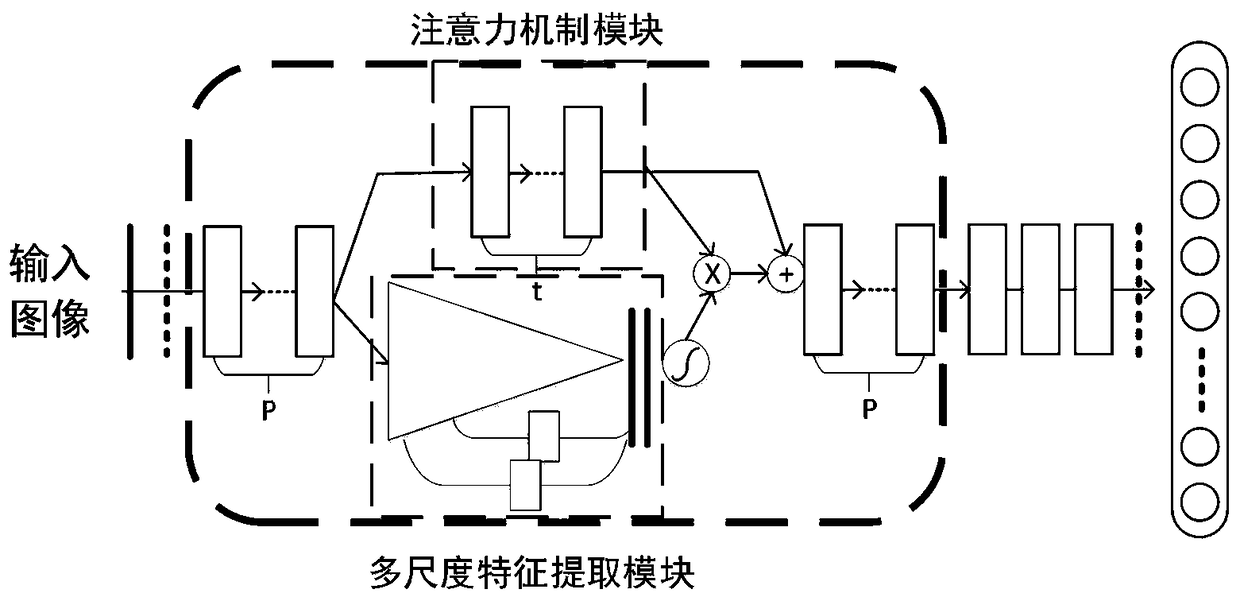

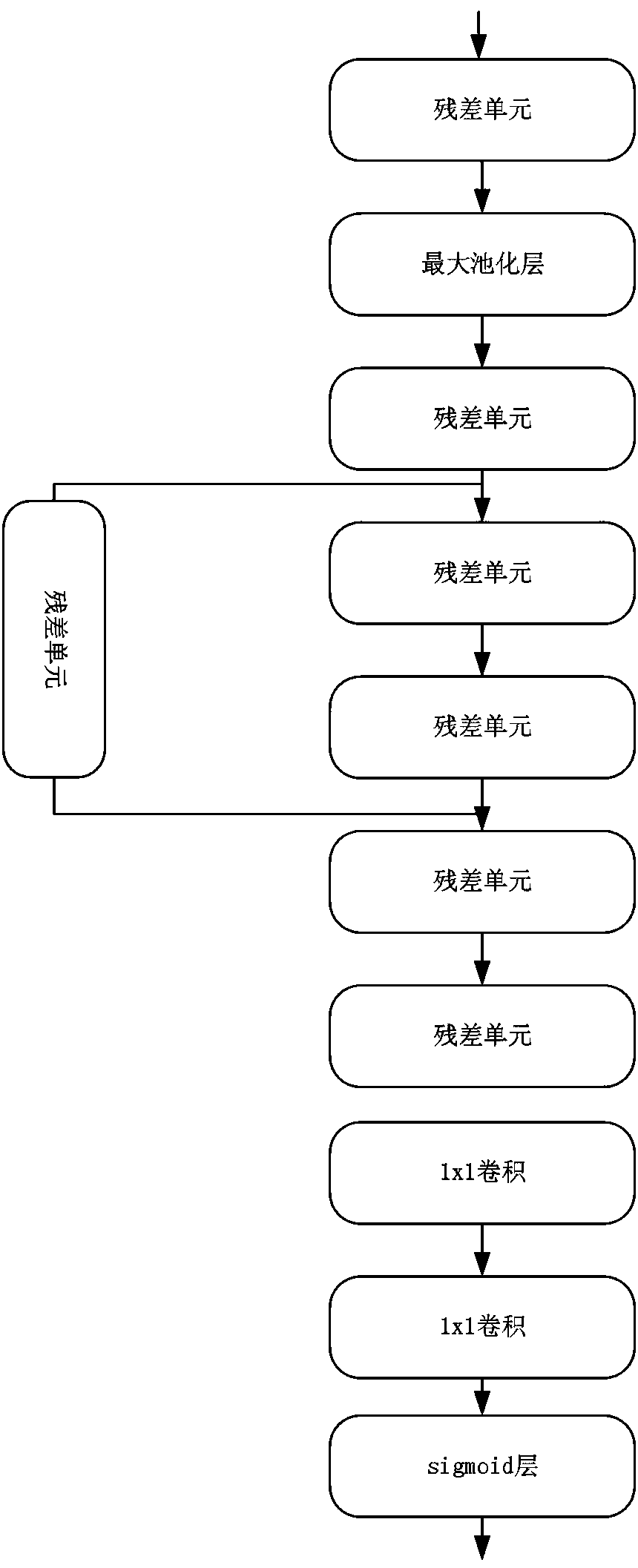

A natural scene text detection method based on an attention mechanism convolutional neural network

Active | CN109165697A | Solve the characteristics | Solve the lack of parameters that need to be adjusted | Neural architectures | Character recognition | Data set | Open data

The invention relates to a natural scene text detection method based on an attention mechanism convolutional neural network. First, image data containing text in natural scenes is labeled and divided into a training set and a test set. The text images are then augmented to produce the training data. A feature extraction network is constructed from an attention mechanism module and an Inception network, and the features of different fonts are learned through multi-scale feature fusion. The network is pre-trained using a curriculum learning strategy and then trained again on the natural scene text image data. The fused features are used to regress the coordinates of the text in the image, giving the text detection results. Finally, the validity of the trained neural network is verified on the test set and on other open data sets. The method addresses the low character recall rate and low recognition accuracy of current technology in complex natural environments, and has advantages in running speed.

Owner:FUZHOU UNIV

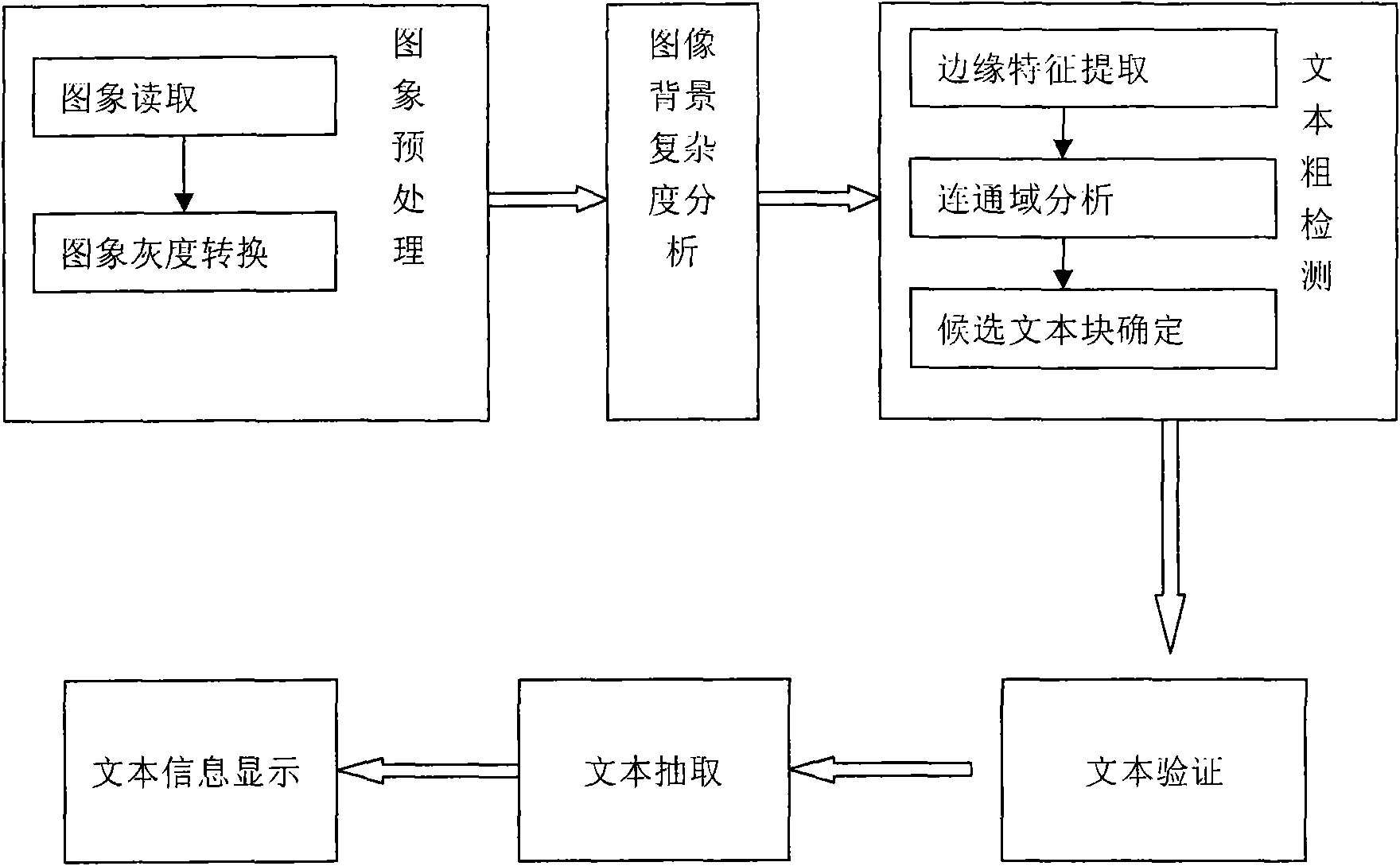

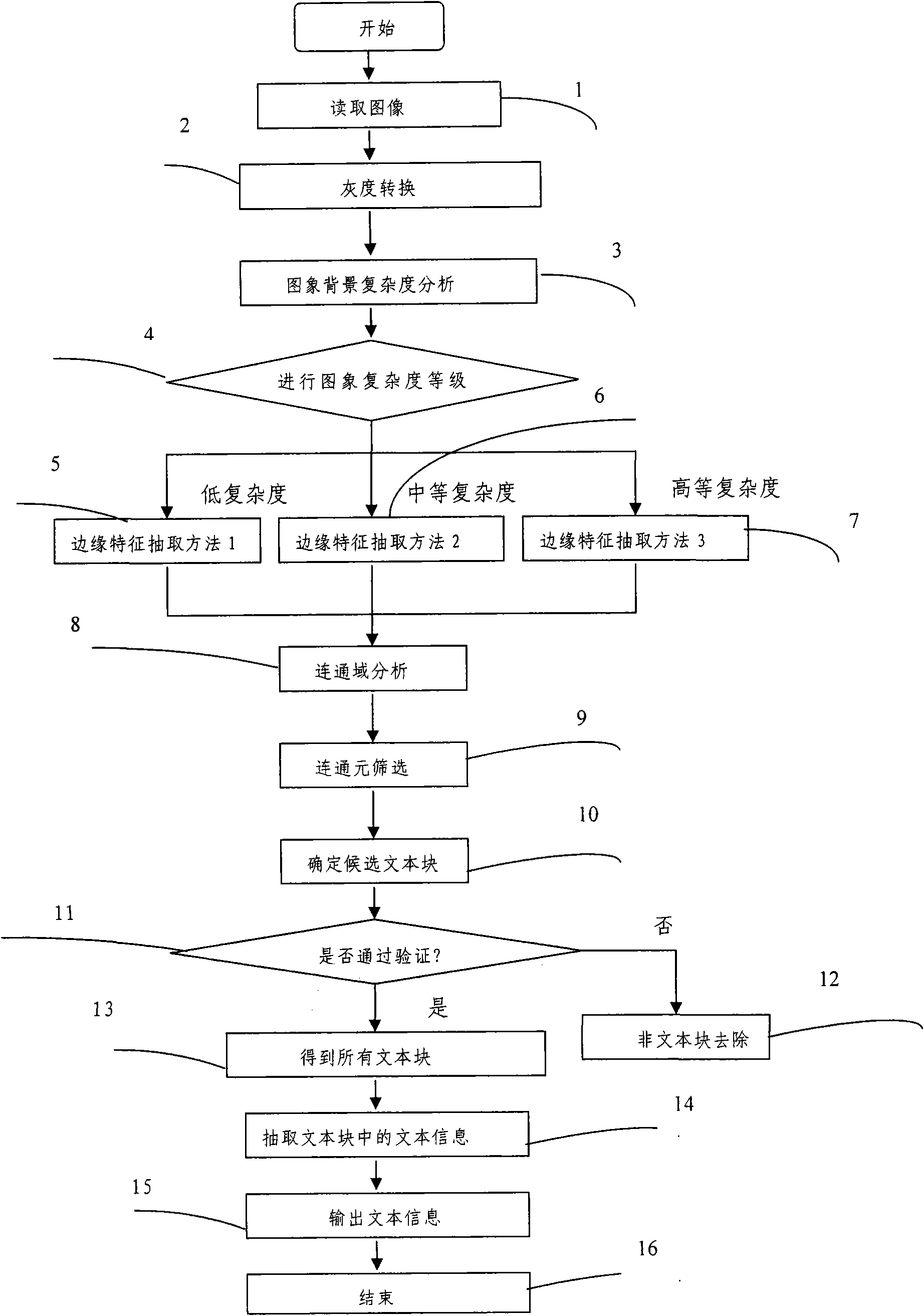

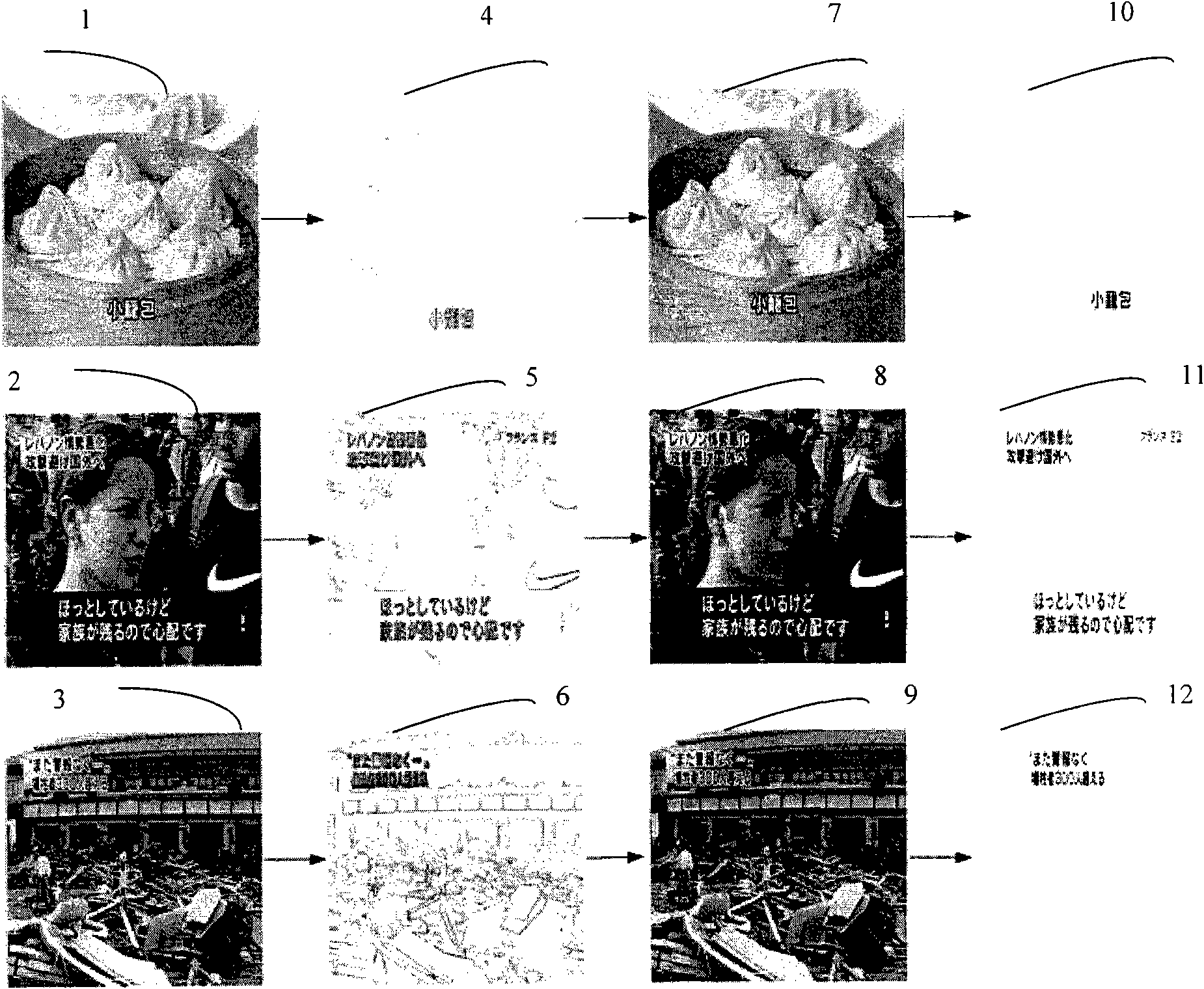

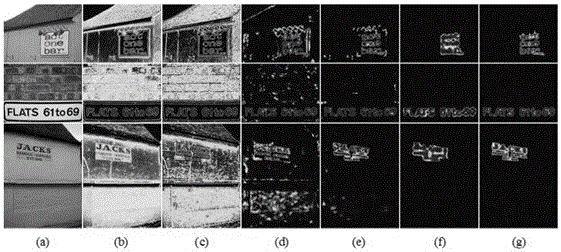

Method for extracting text information from adaptive images

Inactive | CN101615252A | Improve performance | The detection method is simple | Character and pattern recognition | Text detection | Calculation methods

The invention discloses a method for extracting text information from adaptive images, and relates to technology for extracting text information from images. The method comprises the following steps: 1) preprocessing the images; 2) analyzing complexities of the backgrounds of the images; 3) initially detecting texts; 4) verifying the texts; 5) extracting the texts; and 6) outputting or displaying the text information. The method adopts different text detection methods for the images with different complexities of the backgrounds by computing the complexities of the backgrounds of the images, reduces missing and false detection caused by a single text detection method, and improves the overall performance of a text extracting system. The computation method of the complexities of the backgrounds of the images is simple and effective. The method can detect text information in the images with different complexities of the backgrounds, and the text information detected by the method is free from the influence of type-font, word size and language; and the method has strong commonality.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

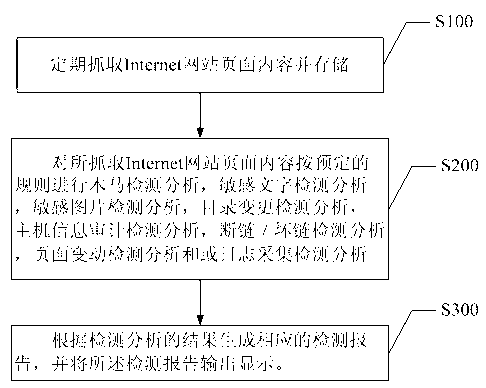

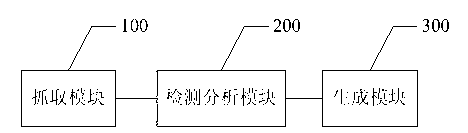

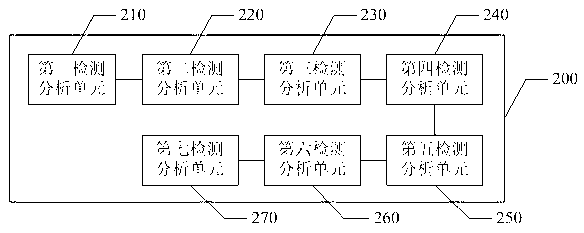

Method and system for detecting hostile attack on Internet information system

Active | CN103281177A | Comprehensive detection | Improve security | Multiple keys/algorithms usage | Platform integrity maintainance | Text detection | The Internet

The invention discloses a method and a system for detecting a hostile attack on an Internet information system. The method comprises the following steps: A, regularly crawling and saving the content of an Internet web page; B, conducting a Trojan detection analysis, a sensitive word detection analysis, a sensitive image detection analysis, a directory change detection analysis, a host information audit detection analysis, a broken link/wrong link detection analysis, a page change detection analysis and/or a log collection detection analysis on the crawled content of the Internet web page according to a predefined rule; and C, according to the results of the detection analyses, generating corresponding detection reports, and outputting and displaying the detection reports. The method has the advantages that the detection is comprehensive, security is increased, the workload and the human cost are reduced, and the method is convenient for users.

Owner:GUANGDONG POWER GRID CO LTD INFORMATION CENT

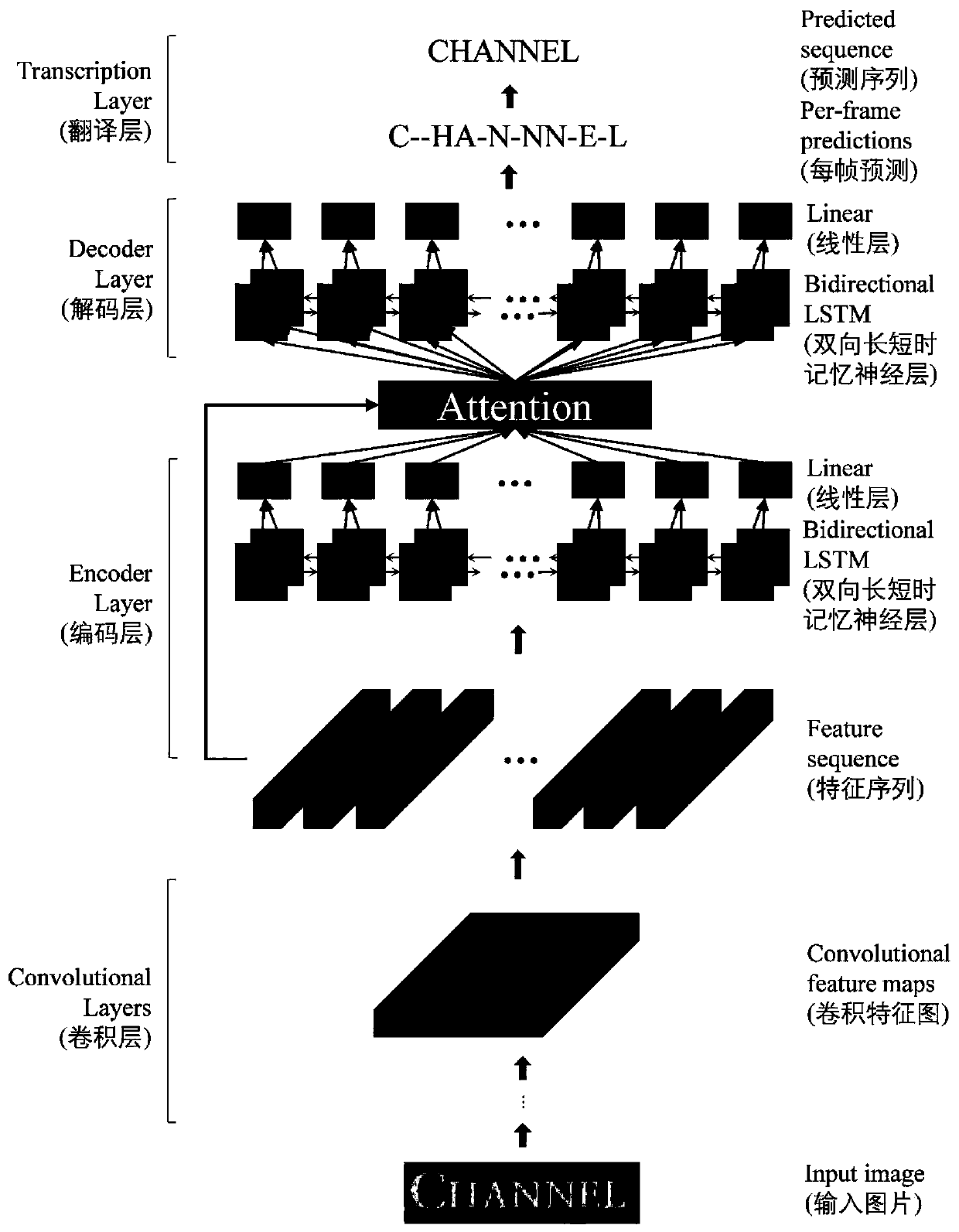

Text detection method of document image in natural scene

Active | CN107609549A | Rich scene | Avoid fit problems | Character and pattern recognition | Neural architectures | Text detection | Convolution

The invention discloses a text detection method of a document image in a natural scene. Commonly used Chinese characters are selected to make Chinese character pictures, forming dataset 1; labeled document images are randomly rotated and cropped and then fused with different background images by Poisson cloning, forming dataset 2. Dataset 1 is used to train a text classification model on a VGG16 network; after the model converges, the obtained parameters are used to initialize a fully convolutional neural network model, which is then trained on dataset 2. The trained fully convolutional neural network model processes the image, the class of each pixel is obtained by the maximum probability method, and a text/non-text binary image is formed. A connected-region method is used to obtain text regions, the original image is binarized, and only the text information inside the text regions of the text/non-text region binary image is extracted to obtain a text binary image. The image is corrected through a maximum variance method; projection is then carried out again on the corrected image, and the text/non-text region binary image is refined.

Owner:BEIJING UNIV OF TECH
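
Two steps in the abstract above lend themselves to a small illustration: choosing each pixel's class by maximum probability, and extracting text regions as connected regions of the resulting binary image. The sketch below assumes each pixel carries a `(p_background, p_text)` pair and uses 4-connectivity; both choices, like the function names, are assumptions for this example and are not taken from the patent.

```python
from collections import deque

def binarize(probs):
    """Per-pixel maximum-probability decision: 1 for text, 0 for non-text.

    probs is a grid of (p_background, p_text) pairs.
    """
    return [[1 if p_text > p_bg else 0 for (p_bg, p_text) in row] for row in probs]

def connected_regions(mask):
    """Return bounding boxes (x1, y1, x2, y2) of 4-connected regions of 1s."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            seen[y][x] = True
            queue = deque([(x, y)])
            x1 = x2 = x
            y1 = y2 = y
            while queue:  # breadth-first flood fill of one region
                cx, cy = queue.popleft()
                x1, x2 = min(x1, cx), max(x2, cx)
                y1, y2 = min(y1, cy), max(y2, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((x1, y1, x2, y2))
    return boxes
```

The boxes use inclusive pixel coordinates, so a single isolated pixel at `(3, 2)` yields `(3, 2, 3, 2)`.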

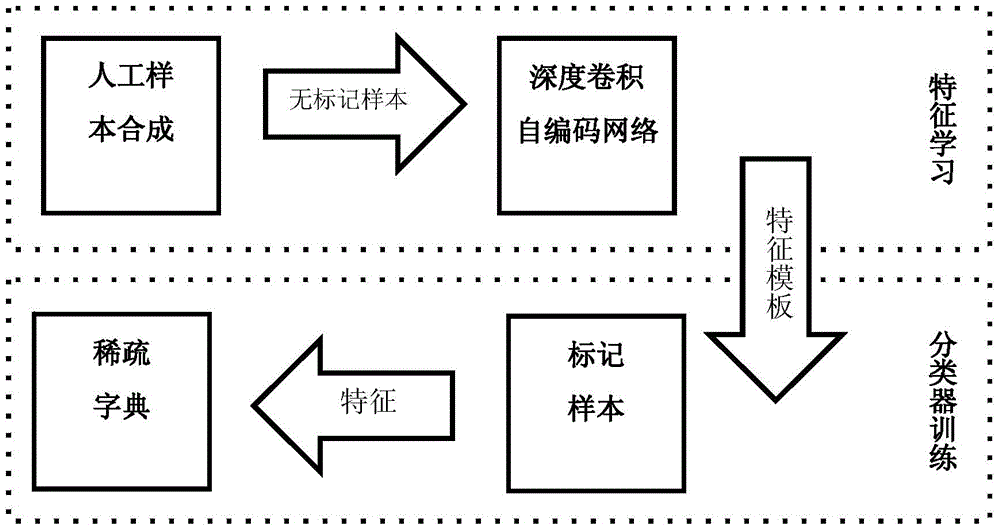

Graphic pattern text detection method based on deep learning

Active | CN104794504A | Solve problems | Solve overfitting | Image analysis | Character and pattern recognition | Graphics | Text detection

The invention discloses a graphic pattern text detection method based on deep learning. The method first trains a deep convolutional autoencoder network on combined graphic pattern text samples, then uses labeled samples and classifies through a sparse dictionary. The steps are: extracting graphic pattern texts from a sample library, rotating, shifting and transmitting them, and combining them with pure background graphics; using the combined sample set to build a deep convolutional autoencoder network and learning feature templates through layer-wise training followed by overall optimization; performing feature extraction on the acquired labeled samples with the feature templates learned by the deep network; resampling the extracted features to the size of the original graphics, taking single blocks as identification units, and training the sparse dictionary and a classifier; and, after training, performing multi-resolution decomposition on the graphics to be processed, using the feature templates to extract features, and using the sparse dictionary to obtain the classification results.

Owner:ZHEJIANG UNIV

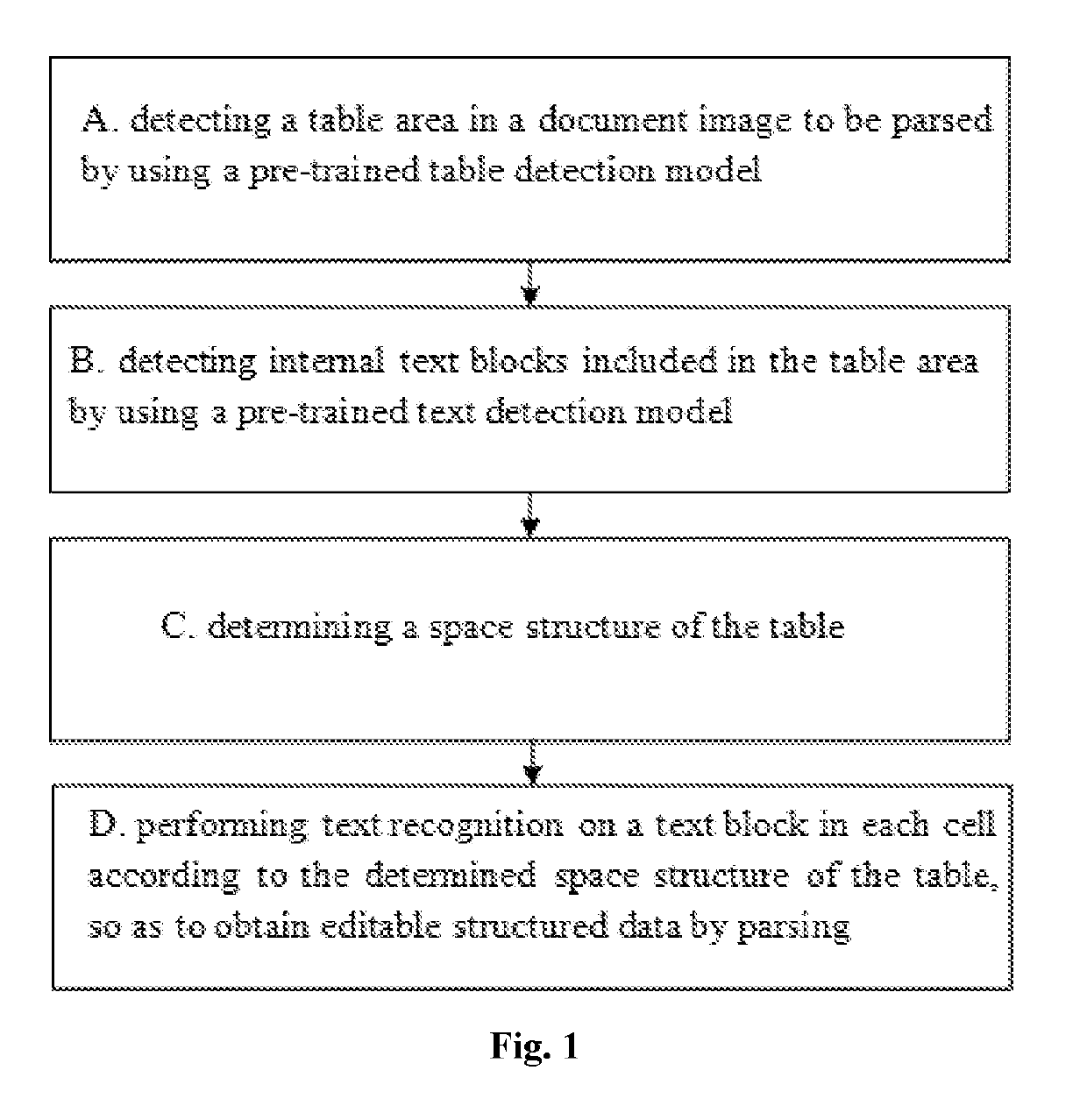

Method and device for parsing table in document image

Active | US20190266394A1 | Efficient analysis | Easy to solve | Character recognition | Text detection | Text recognition

The present application relates to a method and a device for parsing a table in a document image. The method comprises the following steps: inputting a document image to be parsed which includes one or more table areas into the electronic device; detecting, by the electronic device, a table area in the document image by using a pre-trained table detection model; detecting, by the electronic device, internal text blocks included in the table area by using a pre-trained text detection model; determining, by the electronic device, a space structure of the table; and performing text recognition on a text block in each cell according to the space structure of the table, so as to obtain editable structured data by parsing. The method and the device of the present application can be applied to various tables such as line-including tables or line-excluding tables or black-and-white tables.

Owner:ABC FINTECH CO LTD

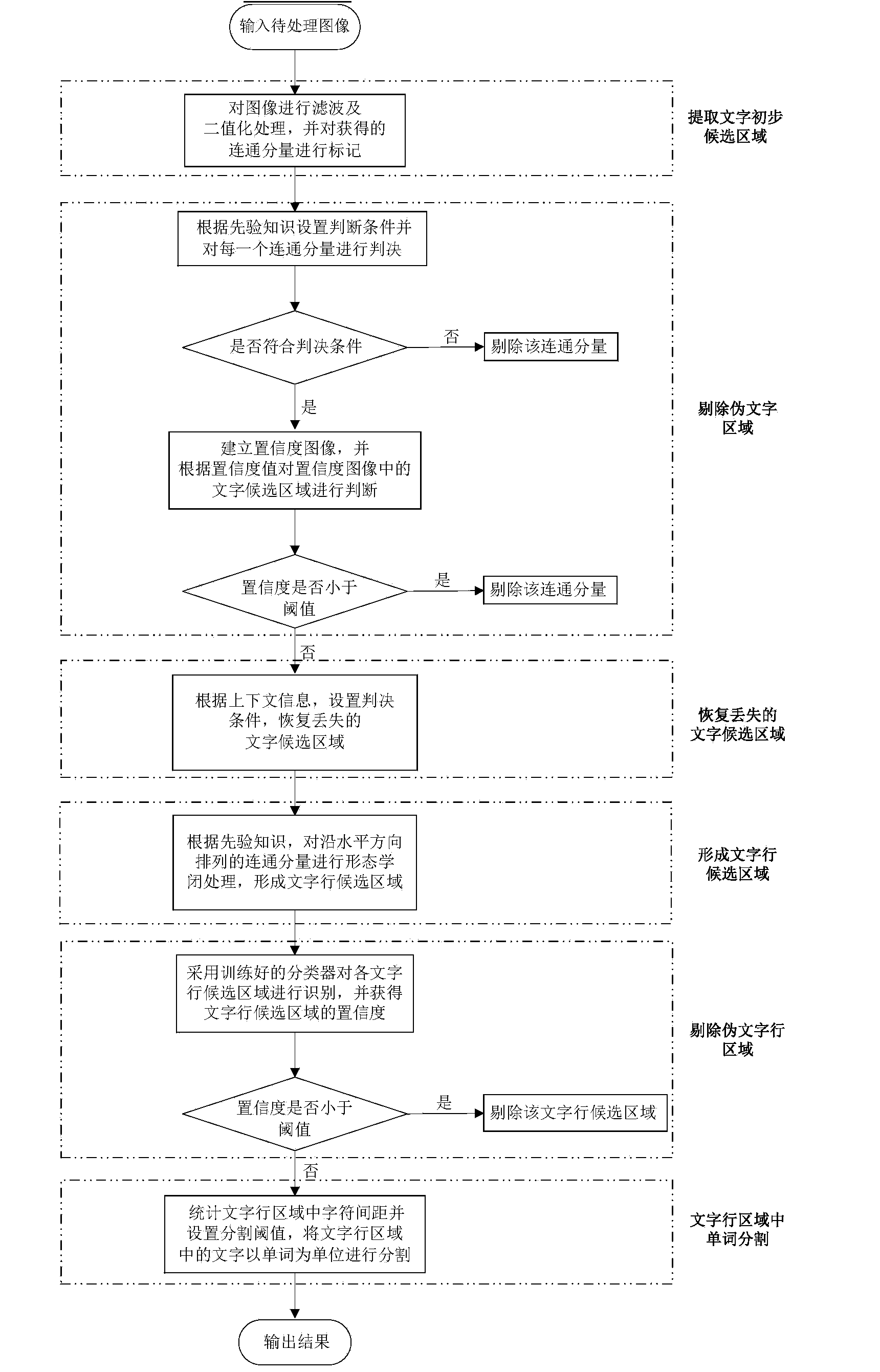

Natural scene character detection method and system

Inactive | CN104050471A | Improve robustness | Easy to handle | Character and pattern recognition | Text detection | Automation

The invention discloses a natural scene character detection method and system and belongs to the technical field of pattern recognition. The method comprises the steps of: performing binarization on an image to obtain preliminary character candidate regions, and applying a two-layer filtering mechanism based on judgment rules and a confidence map to remove pseudo character regions; treating the remaining character candidate regions as seed regions and, to recover characters that may have been lost in the earlier processing, restoring lost character candidate regions in the neighborhood of each seed according to contextual information; combining adjacent character regions arranged in the horizontal direction into character lines and judging them with a classifier to remove pseudo character lines; and finally segmenting the characters in the character lines into words. With the method and system, characters in complex natural scenes can be extracted effectively, which has high practical value for automating and making more intelligent the comprehension and analysis of natural scenes.

Owner:HUAZHONG UNIV OF SCI & TECH
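
The line-forming step in the abstract above, which combines horizontally adjacent character regions into character lines, can be sketched as a left-to-right sweep that attaches each box to a line whose last box is close by and vertically aligned. The gap and overlap thresholds and the function name are assumptions for illustration; the classifier that later removes pseudo lines is not shown.

```python
def group_into_lines(boxes, max_gap=15, min_v_overlap=0.5):
    """Group character boxes (x1, y1, x2, y2) into horizontal lines.

    A box joins a line when the horizontal gap to the line's last box is at
    most max_gap and their vertical overlap covers at least min_v_overlap of
    the shorter box. Otherwise it starts a new line.
    """
    lines = []
    for box in sorted(boxes, key=lambda b: b[0]):  # sweep left to right
        for line in lines:
            last = line[-1]
            gap = box[0] - last[2]
            overlap = min(box[3], last[3]) - max(box[1], last[1])
            shorter = min(box[3] - box[1], last[3] - last[1])
            if gap <= max_gap and shorter > 0 and overlap / shorter >= min_v_overlap:
                line.append(box)
                break
        else:
            lines.append([box])
    return lines
```

Because the sweep only looks at the last box of each line, it suits roughly horizontal text; slanted or curved text would need a different neighborhood test.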

Method and apparatus for portably recognizing text in an image sequence of scene imagery

Inactive | US7171046B2 | Road vehicles traffic control | Character recognition | Text detection | Computer graphics (images)

Owner:SRI INTERNATIONAL

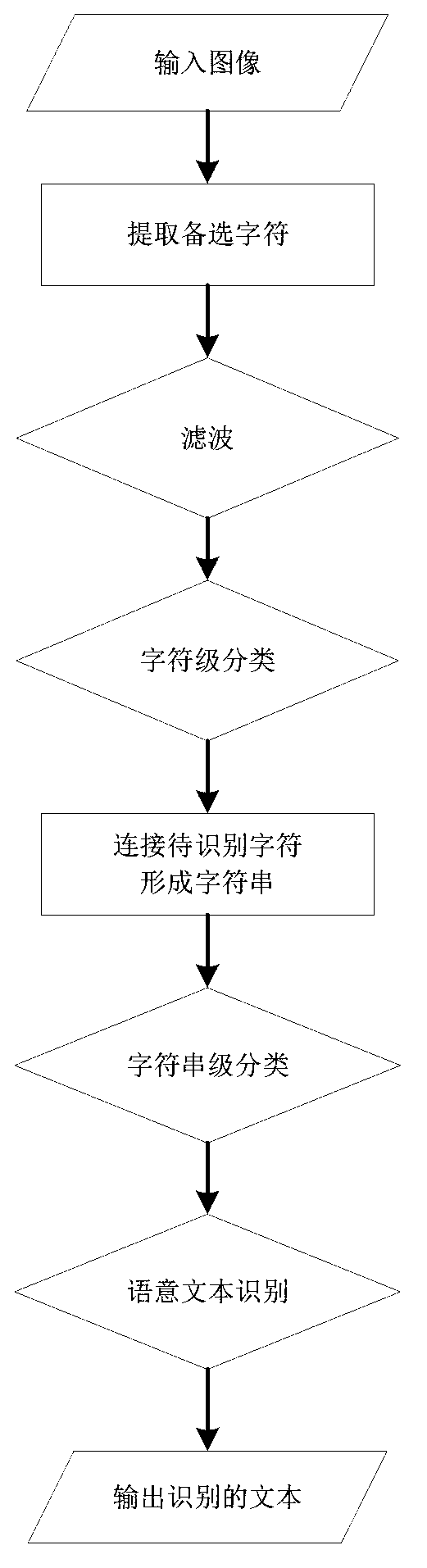

Text detection and recognition method combining character level classification and character string level classification

Active | CN103077389A | High accuracy of results | Guaranteed accuracy | Character and pattern recognition | Text detection | Scale invariance

The invention discloses a text detection and recognition method combining character level and character string level classification. In the method, pixel sets that possibly belong to the same character are extracted from images to form candidate characters; candidate characters that do not satisfy the statistical rules for geometric features are filtered out; a character level classifier based on rotation-invariant and scale-invariant character features classifies the candidate characters and determines the probability that each candidate is a certain character; the characters are combined two by two to form initial character strings; the similarity between every two character strings is calculated, and the two character strings with the highest similarity are merged into a new character string, repeating until no character strings can be merged; a character string level classifier based on character string structure features classifies the character strings to determine which carry semantic meaning; and the probabilities that the characters to be recognized are certain characters are used to recognize the character strings and obtain the semantic text. The method treats text detection and recognition as a whole and uses the interaction between detection and recognition to improve the precision of the result, while remaining simple and efficient.

Owner:HUAZHONG UNIV OF SCI & TECH
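
The string-forming step in the abstract above repeatedly merges the two most similar character strings until nothing more can be merged, which is a form of greedy agglomerative clustering. The sketch below illustrates that loop under stated assumptions: the stopping threshold `min_similarity` and the toy `proximity` measure (based on the Manhattan distance between character centers) are invented for this example, since the abstract does not define the similarity function.

```python
def merge_strings(strings, similarity, min_similarity):
    """Greedily merge the most similar pair until no pair exceeds min_similarity.

    strings: list of character strings, each a list of characters.
    similarity: function(string_a, string_b) -> float (hypothetical measure).
    """
    strings = [list(s) for s in strings]
    while len(strings) > 1:
        best, pair = min_similarity, None
        for i in range(len(strings)):
            for j in range(i + 1, len(strings)):
                s = similarity(strings[i], strings[j])
                if s > best:
                    best, pair = s, (i, j)
        if pair is None:
            break  # no remaining pair is similar enough to merge
        i, j = pair
        strings[i] = strings[i] + strings[j]
        del strings[j]
    return strings

def proximity(a, b):
    """Toy similarity: higher when the closest characters are nearer.

    Characters are represented by their (x, y) centers.
    """
    d = min(abs(p[0] - q[0]) + abs(p[1] - q[1]) for p in a for q in b)
    return 1.0 / (1.0 + d)
```

Each pass recomputes all pairwise similarities, which is quadratic per merge; that is acceptable for the handful of candidates in a typical image but would need caching at larger scale.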

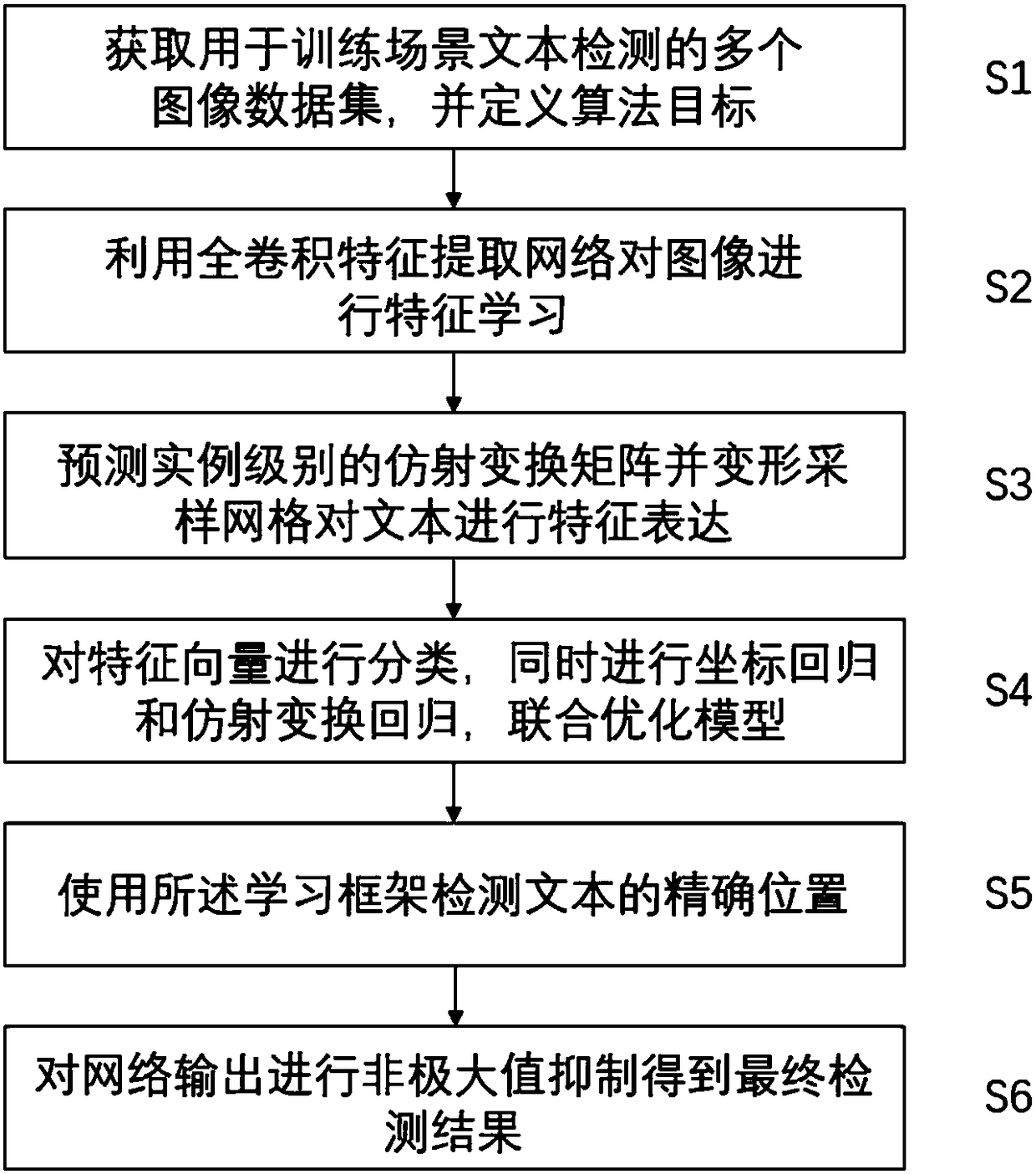

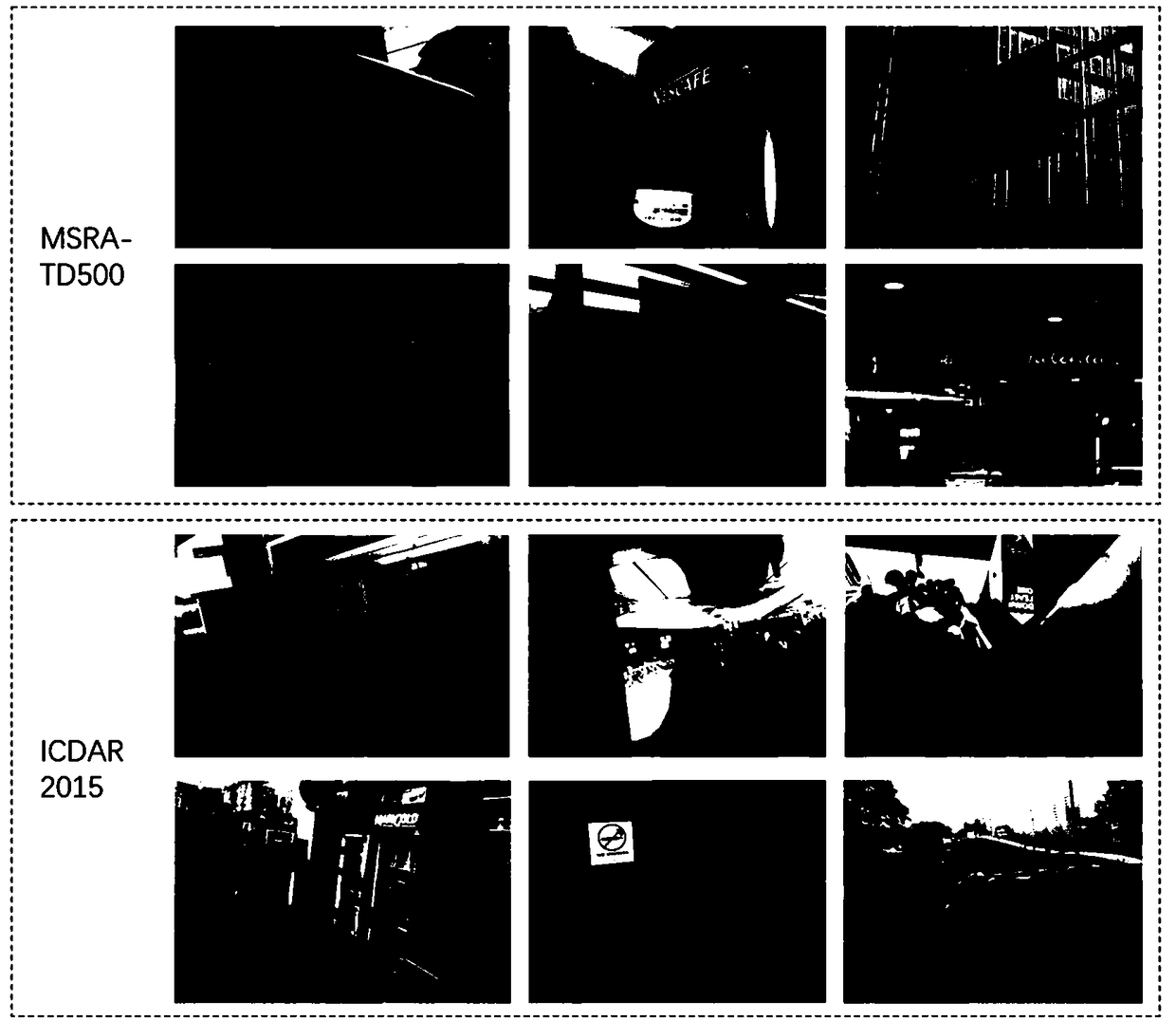

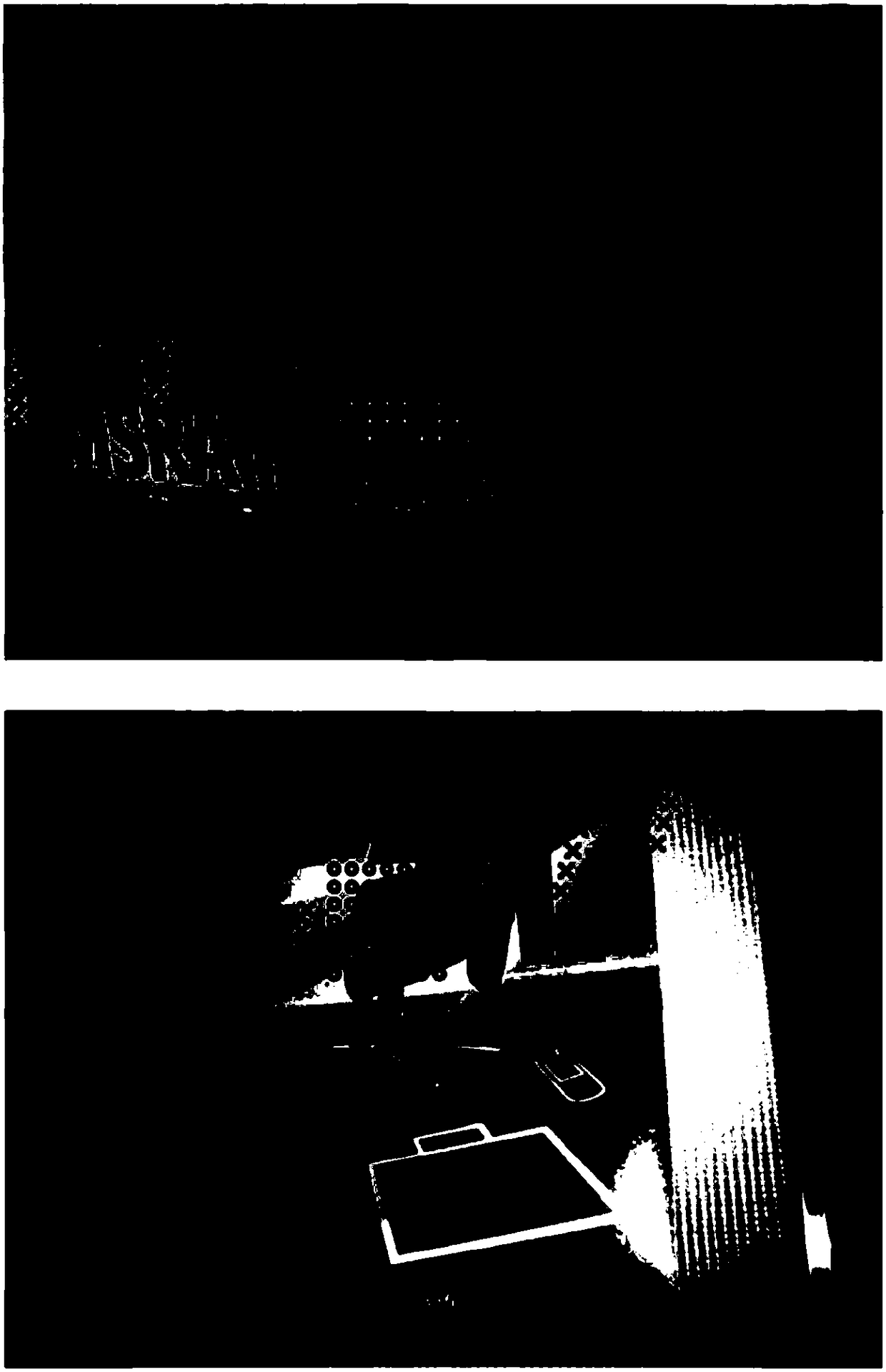

Scene text detection method based on end-to-end full convolutional neural network

Active | CN108288088A | Improve detection results | Robust Scene Text Detection Results | Neural architectures | Special data processing applications | Text detection | Computer science

The present invention discloses a scene text detection method based on an end-to-end fully convolutional neural network, which addresses the problem of locating multi-oriented text in an image of a natural scene. The method comprises the following steps: obtaining a plurality of image data sets for training scene text detection, and defining the algorithm target; carrying out feature learning on the image by using a fully convolutional feature extraction network; predicting an instance-level affine transformation matrix for each sample point on the feature map, and deforming the sampling grid according to the predicted affine transformation to obtain a feature representation of the text; classifying the feature vectors of candidate text, and carrying out coordinate regression and affine transformation regression to jointly optimize the model; using the learned framework to detect the precise position of the text; and carrying out non-maximum suppression on the set of bounding boxes output by the network to obtain the final text detection result. The method is used for scene text detection on real image data, and performs well and robustly in complicated situations such as multi-oriented, multi-scale, multi-lingual, and shape-distorted text.

Owner:ZHEJIANG UNIV
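
The final step in the abstract above, non-maximum suppression over the network's bounding boxes, can be sketched as follows. This is a simplified illustration that assumes axis-aligned boxes given as `(x1, y1, x2, y2)` and an IoU threshold of 0.5. The patent targets multi-oriented text and does not state its box format or threshold, so those details are assumptions.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes kept after greedy non-maximum suppression."""
    # Visit boxes from highest to lowest score.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

The second box overlaps the first heavily and has a lower score, so it is suppressed. Rotated or quadrilateral boxes would need a polygon intersection in place of `iou`.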

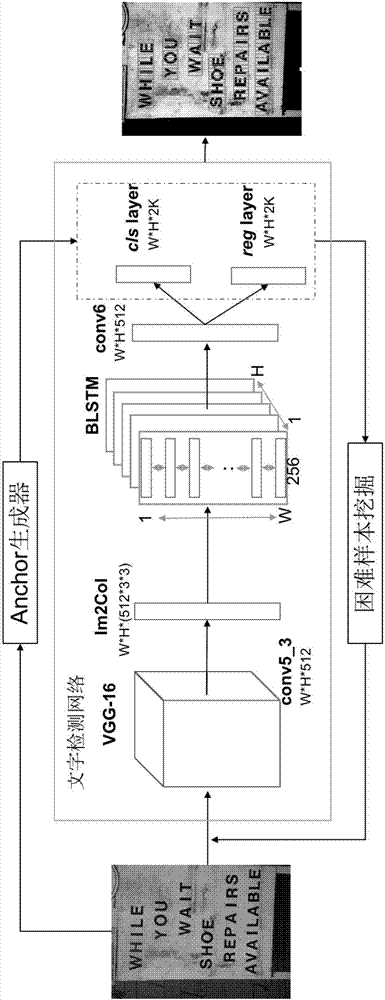

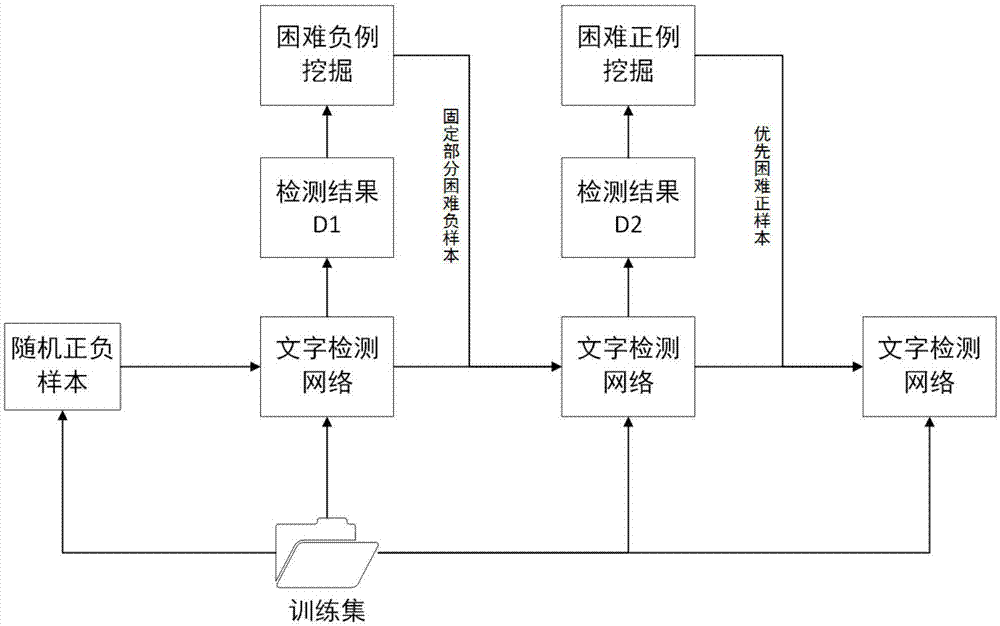

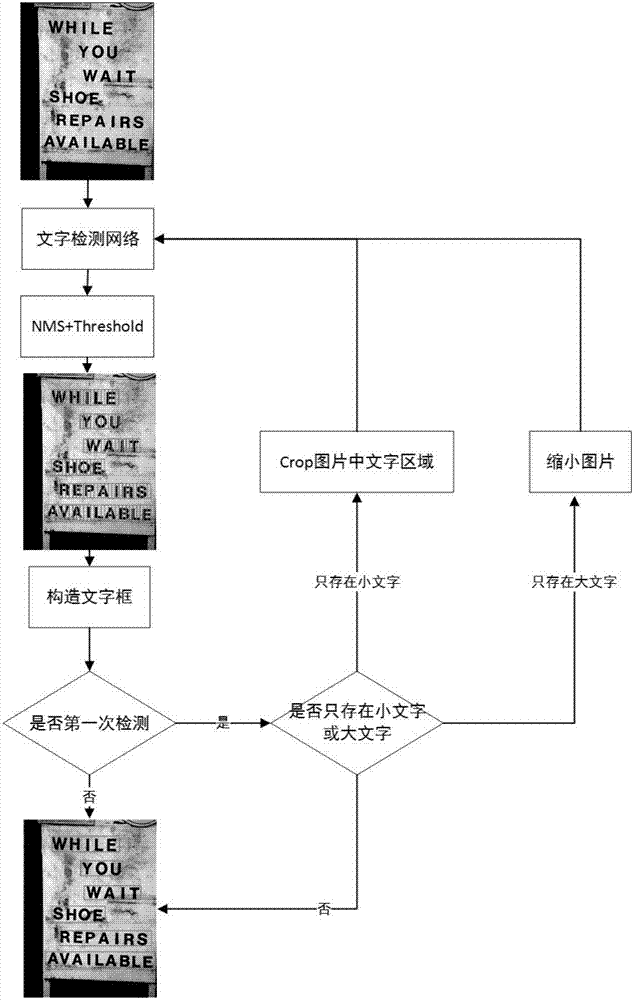

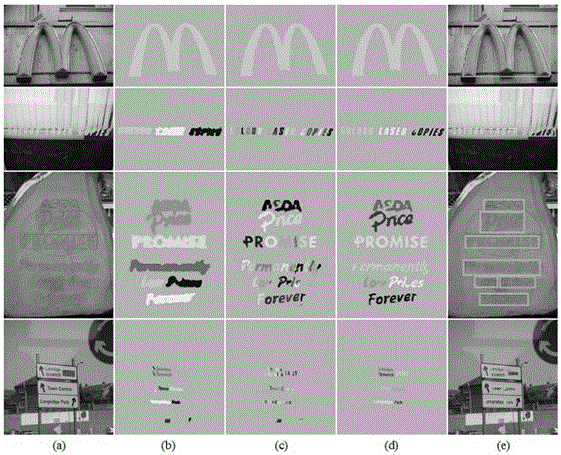

Text detection and localization method in natural scene based on deep learning

Active | CN107346420A | Easy to train | Promote migration | Character and pattern recognition | Text detection | False detection

The invention provides a deep-learning-based method for text detection and localization in natural scenes. The anchor sizes and the regression mode of the RPN (region proposal network) in Faster R-CNN are changed according to the characteristics of text. An RNN layer is added to analyze image context information, yielding a network capable of detecting text. In addition, the anchor sizes are set through clustering. In particular, cascaded training is carried out by mining hard samples, which reduces the false detection rate for text. At test time, a cascaded test method is employed. Together, these steps achieve accurate and efficient text localization.

Owner:INST OF INFORMATION ENG CHINESE ACAD OF SCI
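
The abstract above states that anchor sizes are set through clustering but does not name the algorithm or distance measure. As one plausible reading, the sketch below runs plain k-means with Euclidean distance over the (width, height) pairs of ground-truth text boxes. The function name `kmeans_anchors`, the deterministic initialization, and the sample box sizes are all hypothetical.

```python
def kmeans_anchors(sizes, k, iterations=50):
    """Cluster (width, height) pairs into k anchor sizes with plain k-means.

    Assumes 1 <= k <= len(sizes). Euclidean distance is an assumption;
    the patent does not specify the clustering details.
    """
    # Deterministic initialization: pick k boxes evenly spaced by area.
    ordered = sorted(sizes, key=lambda wh: wh[0] * wh[1])
    step = len(ordered) / k
    centers = [ordered[int(step * (i + 0.5))] for i in range(k)]

    for _ in range(iterations):
        # Assign every box to its nearest center.
        clusters = [[] for _ in range(k)]
        for w, h in sizes:
            nearest = min(
                range(k),
                key=lambda c: (w - centers[c][0]) ** 2 + (h - centers[c][1]) ** 2,
            )
            clusters[nearest].append((w, h))

        # Move each center to the mean of its members (keep it if empty).
        new_centers = []
        for c, members in enumerate(clusters):
            if members:
                mean_w = sum(w for w, _ in members) / len(members)
                mean_h = sum(h for _, h in members) / len(members)
                new_centers.append((mean_w, mean_h))
            else:
                new_centers.append(centers[c])

        if new_centers == centers:
            break  # converged
        centers = new_centers
    return sorted(centers)


# Made-up box sizes: three small square-ish boxes and three wide text lines.
sizes = [(10, 10), (12, 10), (11, 12), (100, 20), (104, 22), (96, 18)]
anchors = kmeans_anchors(sizes, k=2)
```

With this toy data the two resulting anchors separate the small boxes from the wide ones, which reflects why text detectors often favor elongated anchors.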

Text saliency-based scene text detection method

Active | CN106778757A | Improve robustness | Improve the ability to distinguish | Character and pattern recognition | Neural learning methods | Saliency map | Text detection

The invention discloses a text saliency-based scene text detection method. The method comprises three stages: initial text saliency detection, text saliency refinement, and text saliency region classification. In the initial text saliency detection stage, a CNN model is designed for text saliency detection; the model automatically learns features that represent the intrinsic attributes of text from an image and produces a text-aware saliency map. In the text saliency refinement stage, a refinement CNN model performs further text saliency detection on the coarse text saliency regions. In the text saliency region classification stage, a classification CNN model filters out non-text regions to obtain the final text detection result. By introducing saliency detection into the scene text detection process, text regions in a scene can be detected effectively, which improves the performance of the scene text detection method.

Owner:HARBIN INST OF TECH

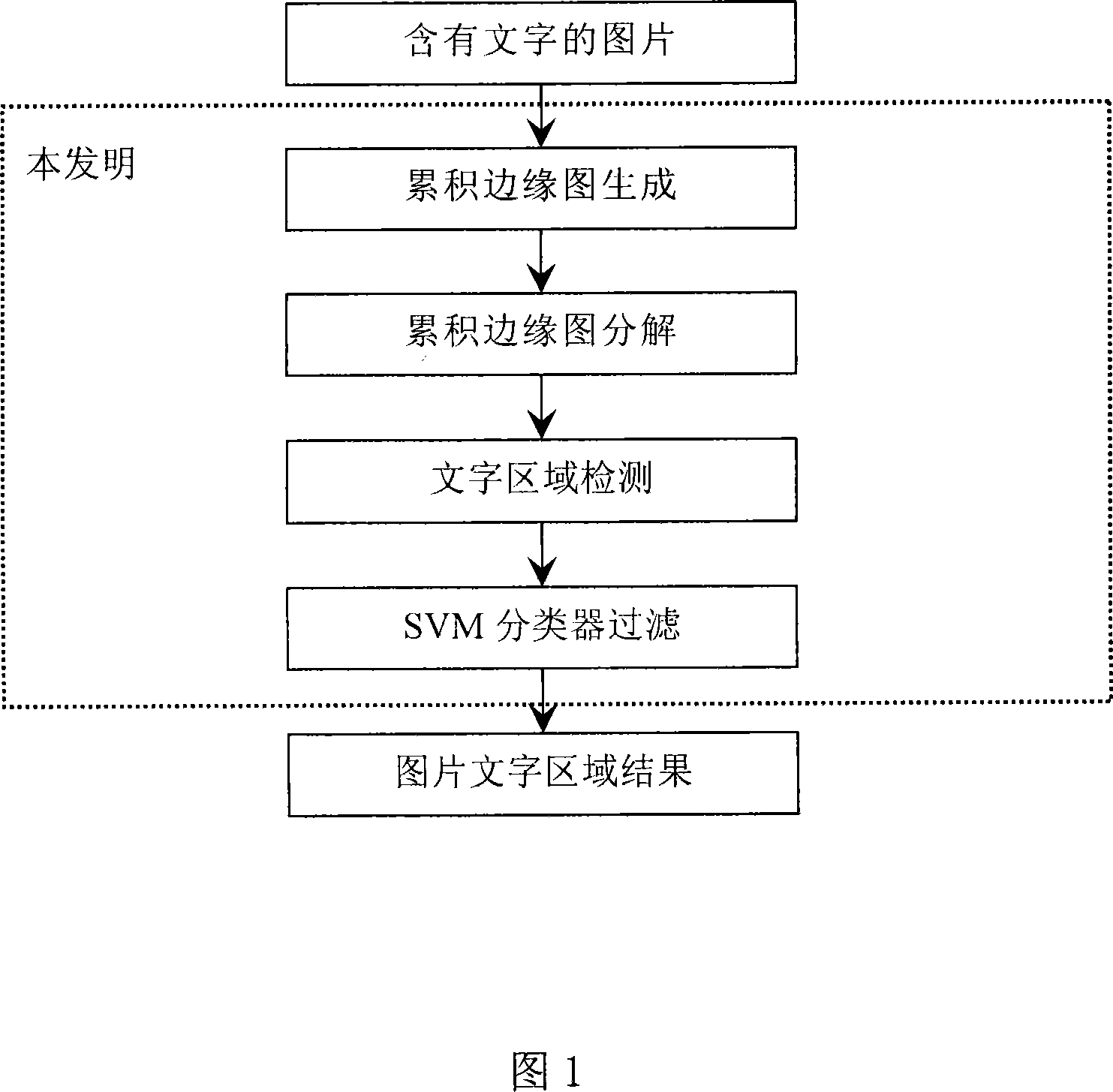

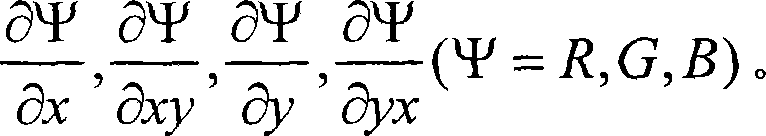

Picture words detecting method

Inactive | CN101122952A | Improve recall | Improve precision | Character and pattern recognition | Text detection | Horizontal and vertical

Owner:PEKING UNIV

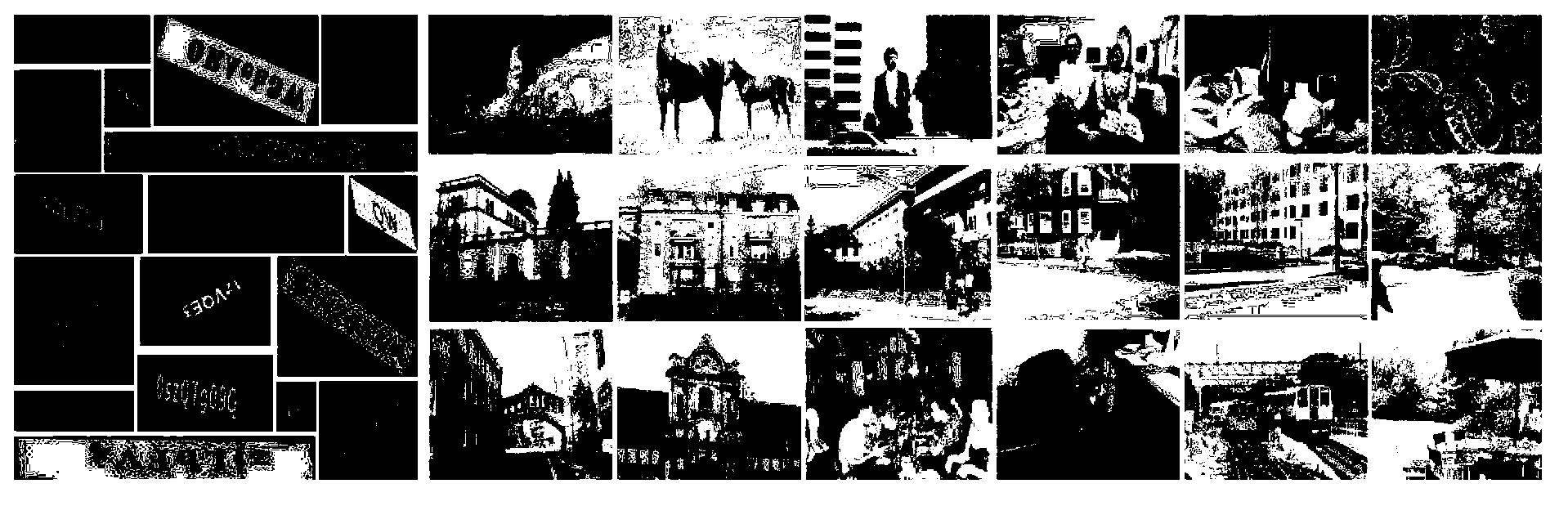

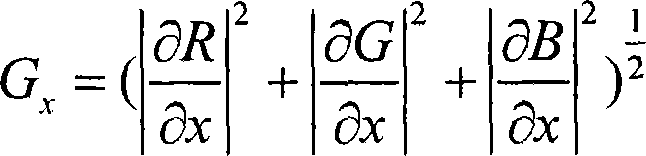

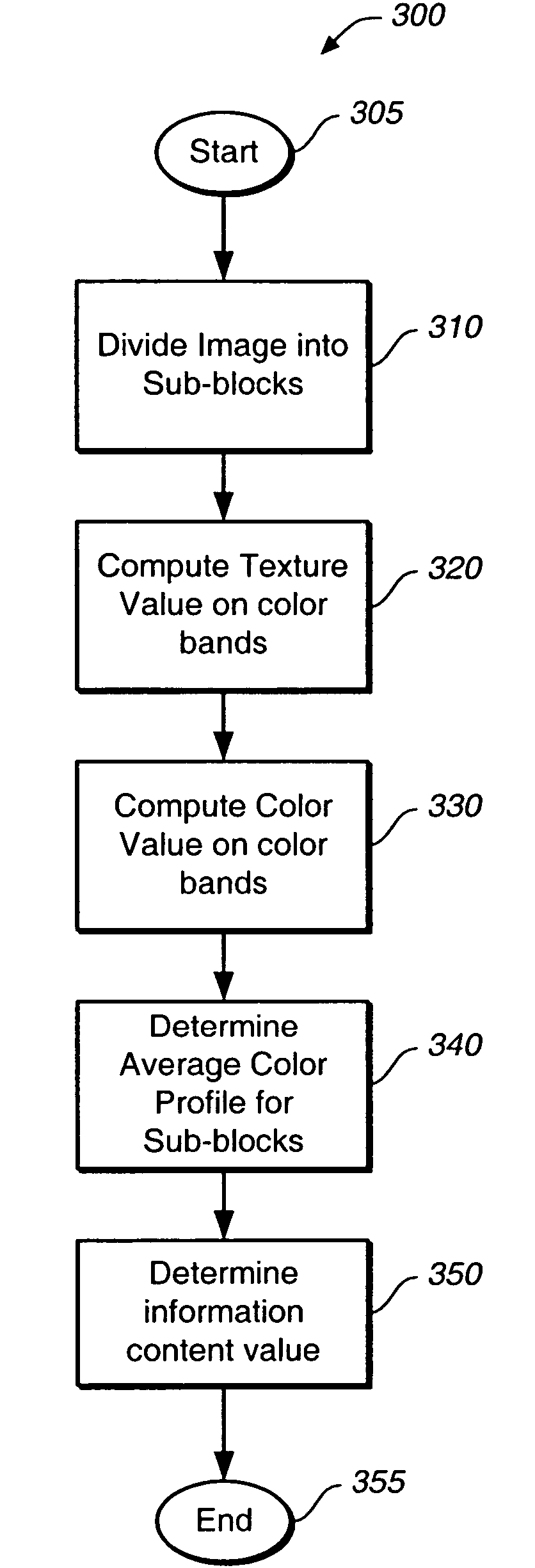

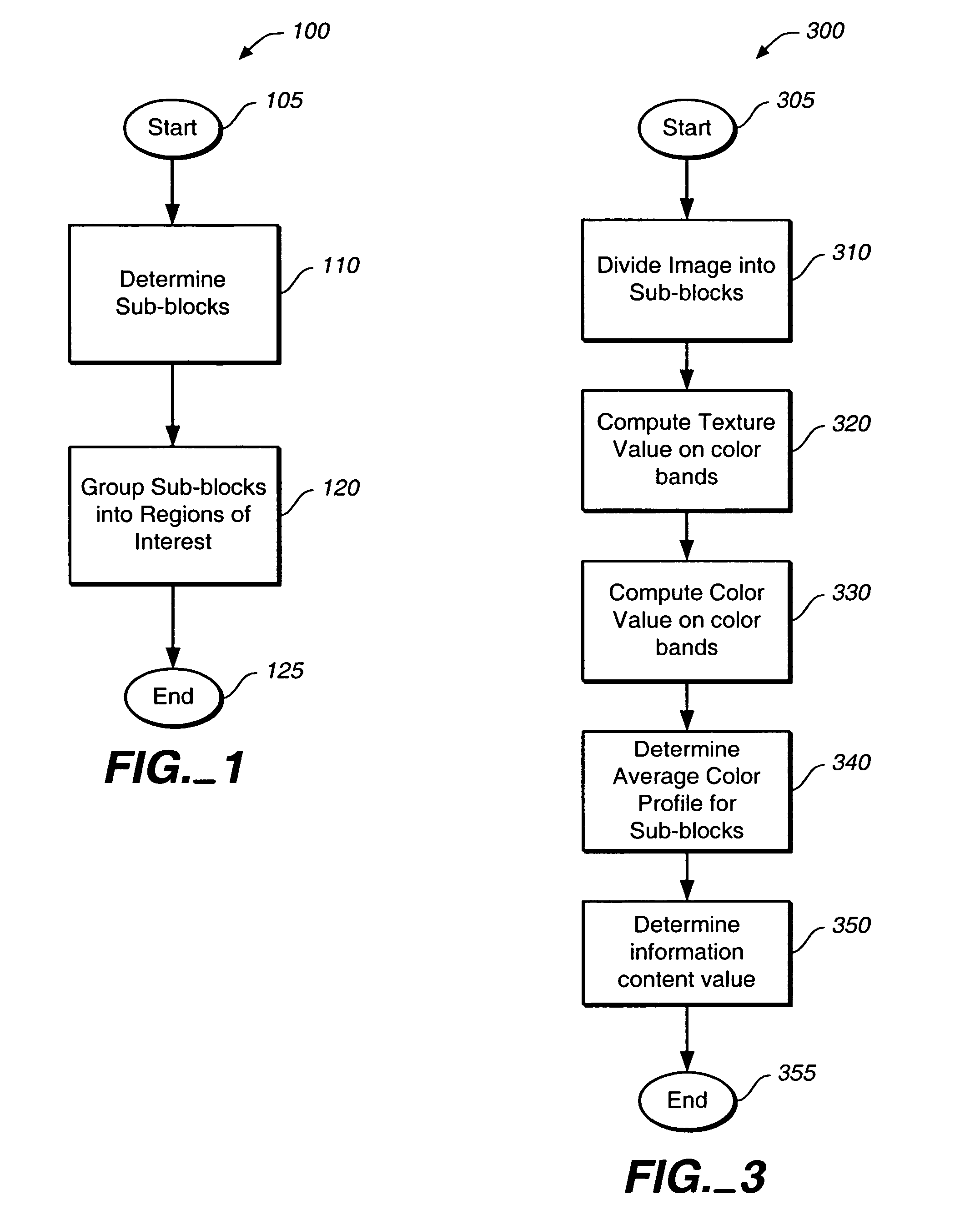

Determining regions of interest in synthetic images

An algorithm finds regions of interest (ROIs) in synthetic images using an information-driven approach in which sub-blocks of a set of synthetic images are analyzed for information content, or compressibility, based on textural and color features. A DCT may be used to analyze the textural features of a set of images, and a color histogram may be used to analyze the color features of the set of images. Sub-blocks of low compressibility are grouped into ROIs using a type of morphological technique. Unlike other algorithms that are geared toward highly specific types of ROI (e.g. OCR text detection), the method of the present invention is generally applicable to arbitrary synthetic images. The present invention can be used with several other image applications, including Stained-Glass collage presentations.

Owner:FUJIFILM BUSINESS INNOVATION CORP
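
The block-based idea in the abstract above can be sketched in a few lines. This simplified illustration scores each sub-block only by the Shannon entropy of its value histogram, standing in for the color-histogram feature, and omits the DCT texture feature. It also groups high-information blocks with a 4-connected flood fill, which is swapped in for the unspecified morphological technique. The block size, entropy threshold, and function names are assumptions.

```python
import math
from collections import Counter


def block_entropy(image, top, left, size):
    """Shannon entropy (bits) of the pixel values in one square sub-block."""
    counts = Counter(
        image[r][c]
        for r in range(top, top + size)
        for c in range(left, left + size)
    )
    total = size * size
    return -sum(n / total * math.log2(n / total) for n in counts.values())


def find_rois(image, size, min_entropy):
    """Group adjacent high-entropy blocks into ROIs.

    Returns bounding boxes as (top, left, bottom, right) in pixels.
    """
    rows, cols = len(image) // size, len(image[0]) // size
    busy = {
        (r, c)
        for r in range(rows)
        for c in range(cols)
        if block_entropy(image, r * size, c * size, size) >= min_entropy
    }
    rois, seen = [], set()
    for start in sorted(busy):
        if start in seen:
            continue
        # Flood fill over 4-connected neighbouring busy blocks.
        stack, region = [start], []
        seen.add(start)
        while stack:
            r, c = stack.pop()
            region.append((r, c))
            for nb in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if nb in busy and nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
        block_rows = [r for r, _ in region]
        block_cols = [c for _, c in region]
        rois.append((
            min(block_rows) * size,
            min(block_cols) * size,
            (max(block_rows) + 1) * size,
            (max(block_cols) + 1) * size,
        ))
    return rois


# 8x8 toy image: the top-left 4x4 block is varied, the rest is flat.
image = [[(r * 4 + c) if r < 4 and c < 4 else 0 for c in range(8)]
         for r in range(8)]
rois = find_rois(image, size=4, min_entropy=1.0)
```

Flat blocks compress well and score near zero entropy, so only the varied block is reported as an ROI.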
