4217 results for "Easy to train" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

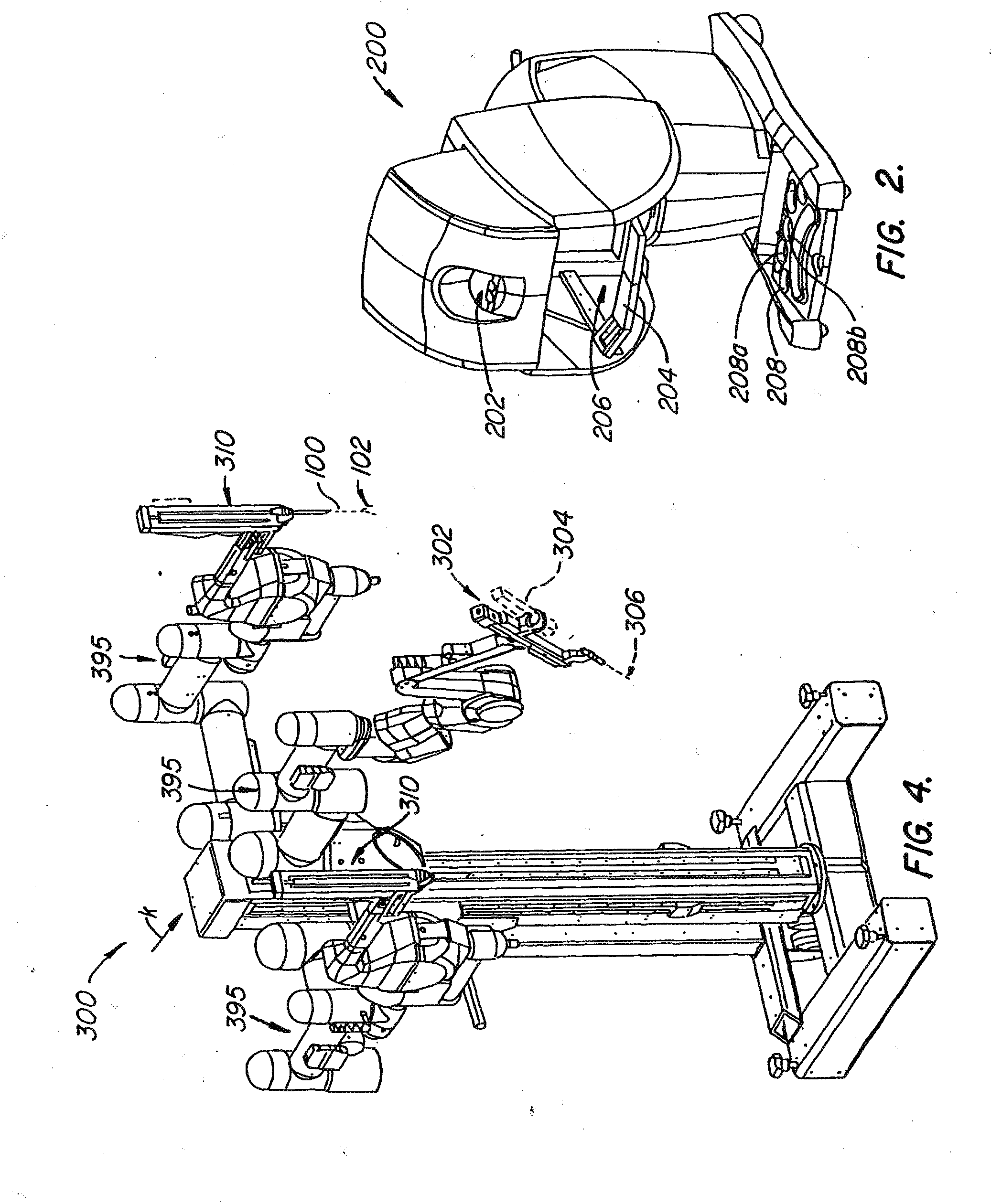

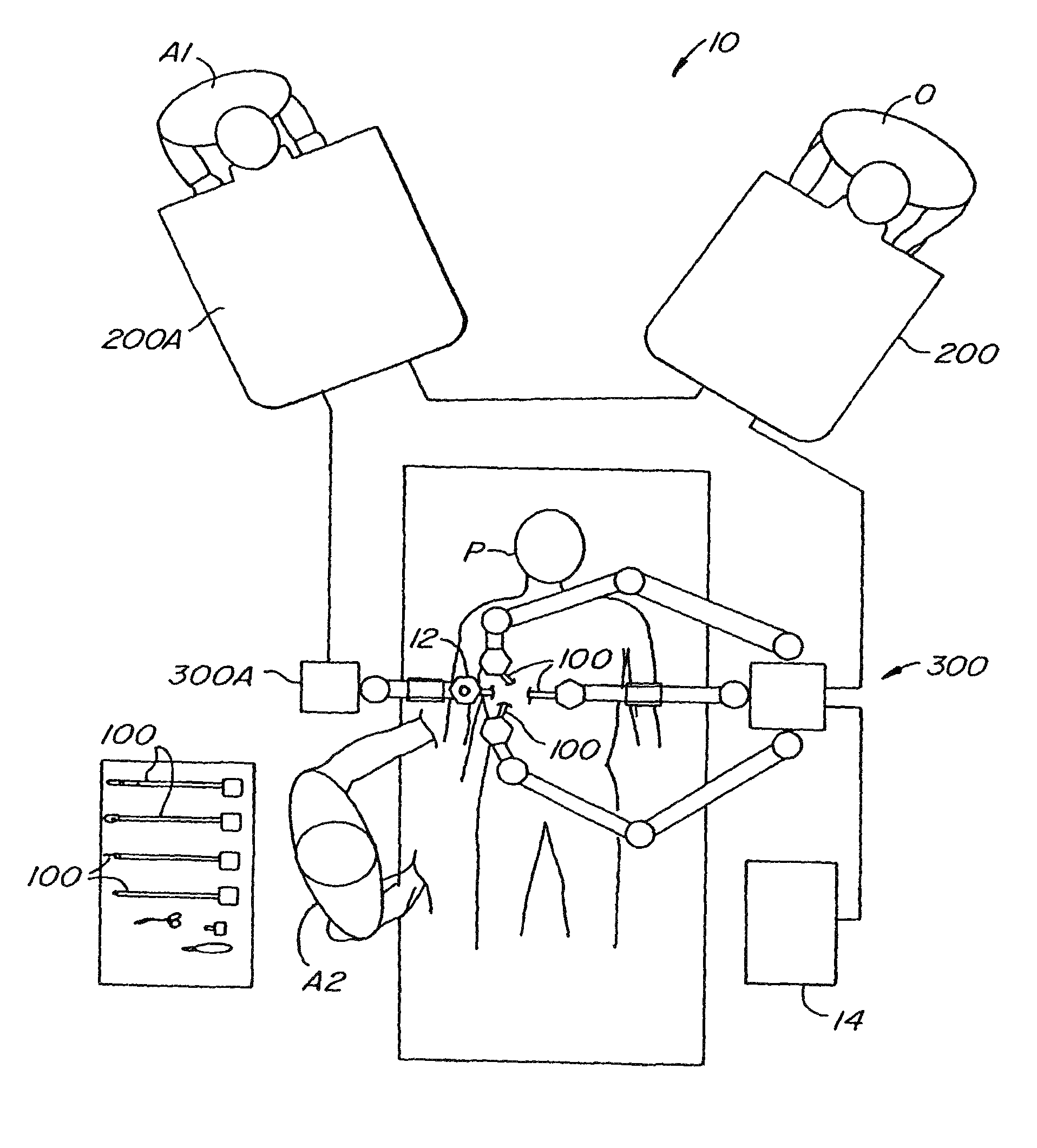

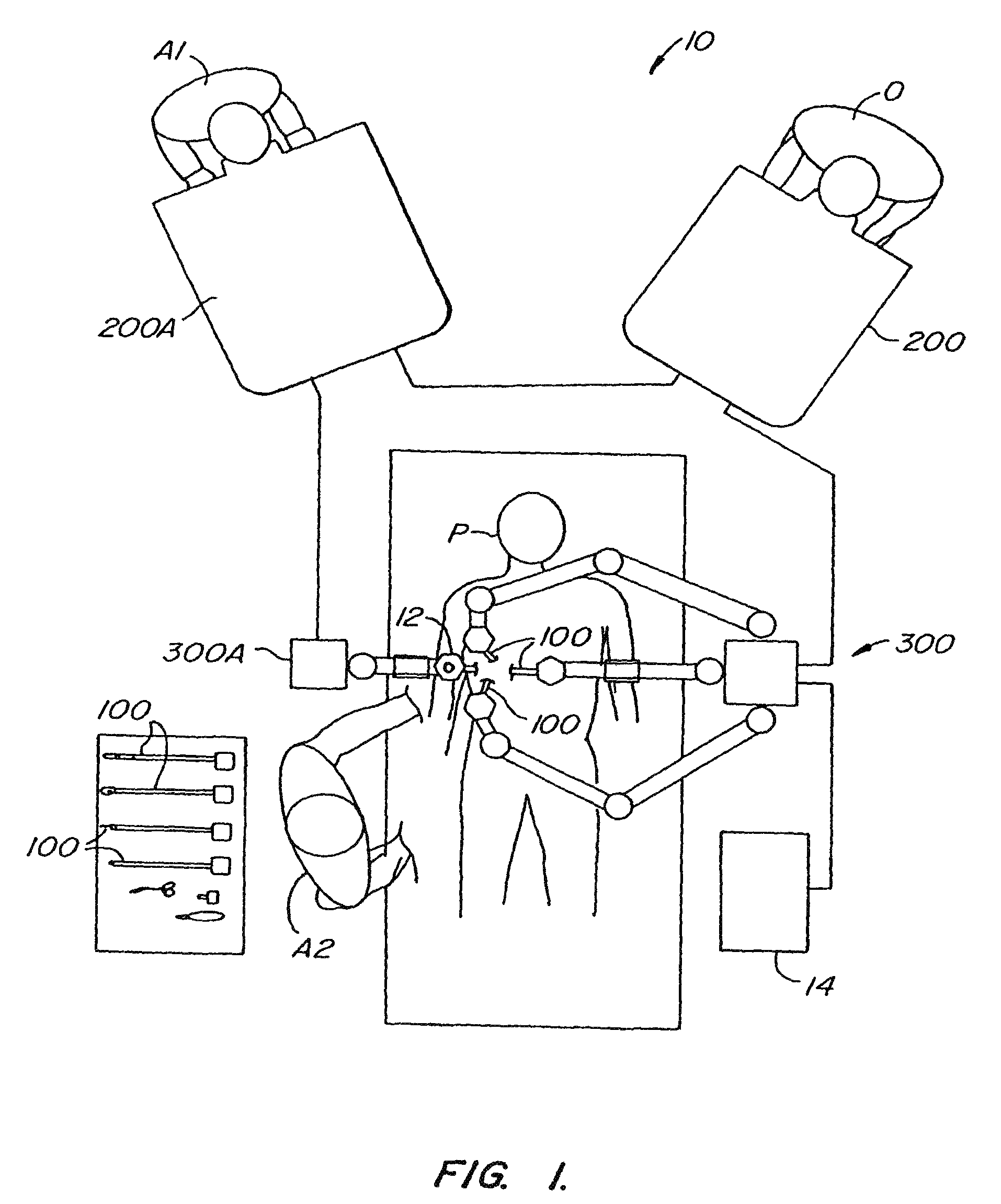

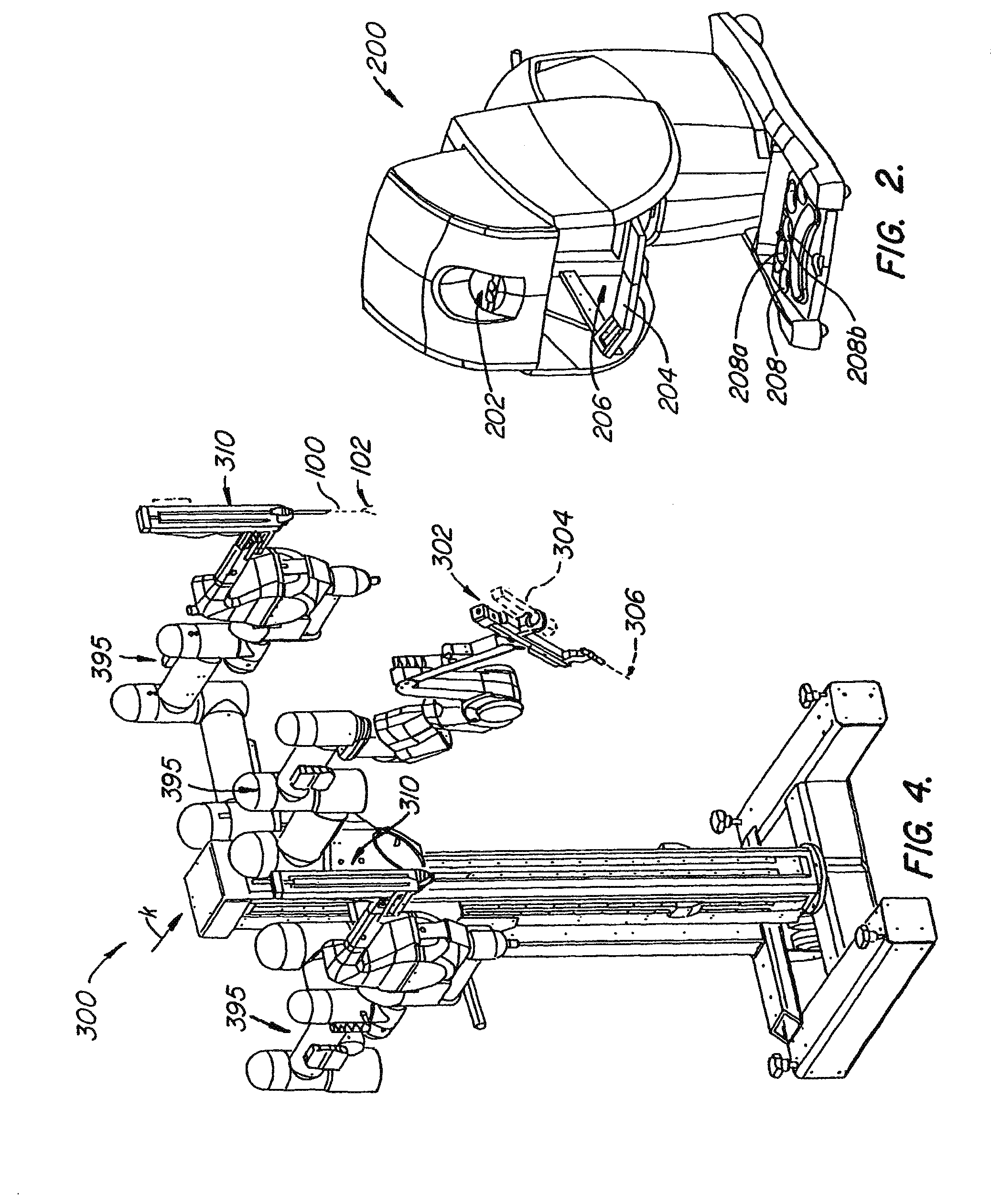

Multi-user medical robotic system for collaboration or training in minimally invasive surgical procedures

Status: Active | Publication: US20060178559A1 | Tags: Promote collaboration, Minimally invasive, Medical communication, Programme control, Less invasive surgery, Robotic systems

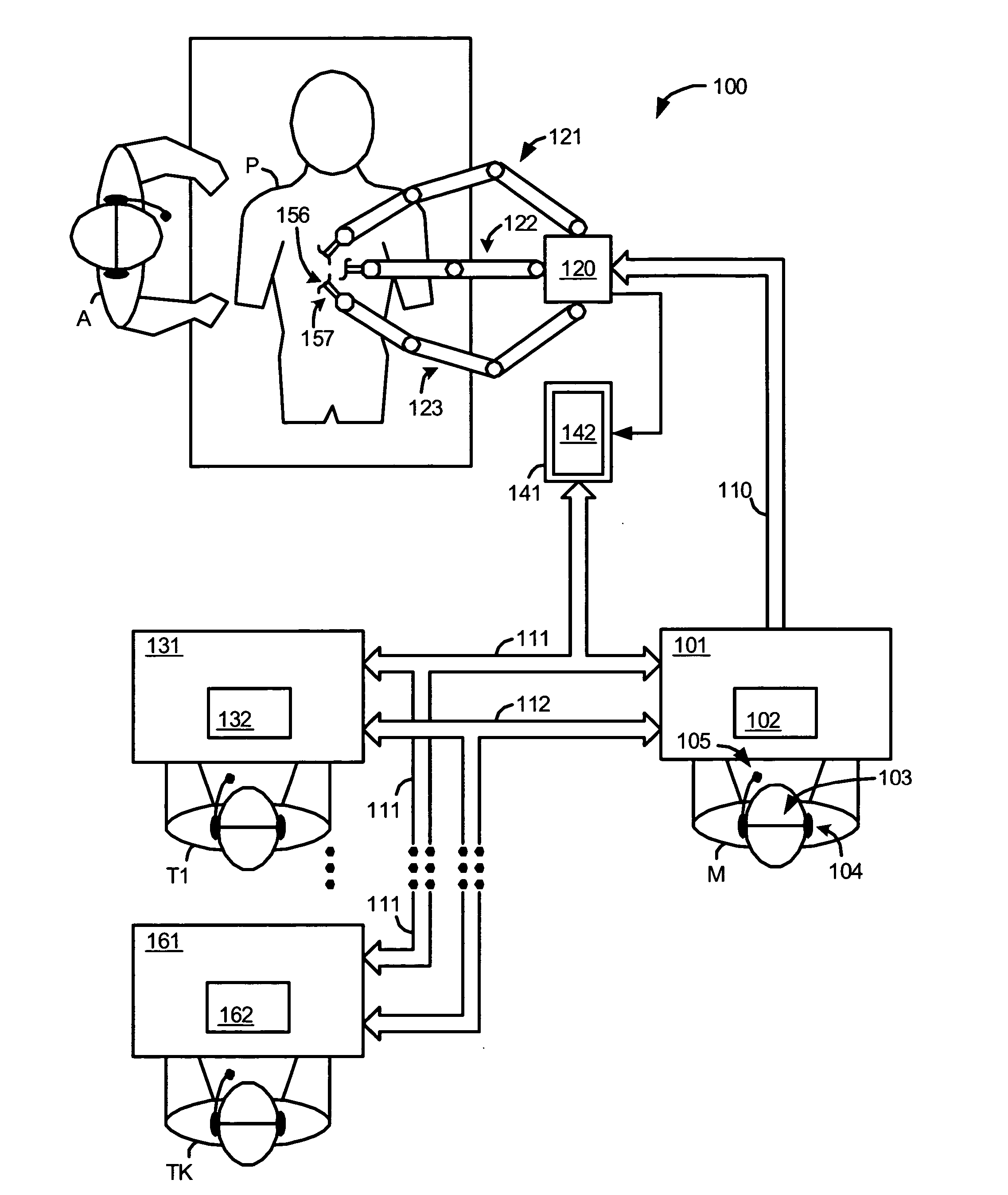

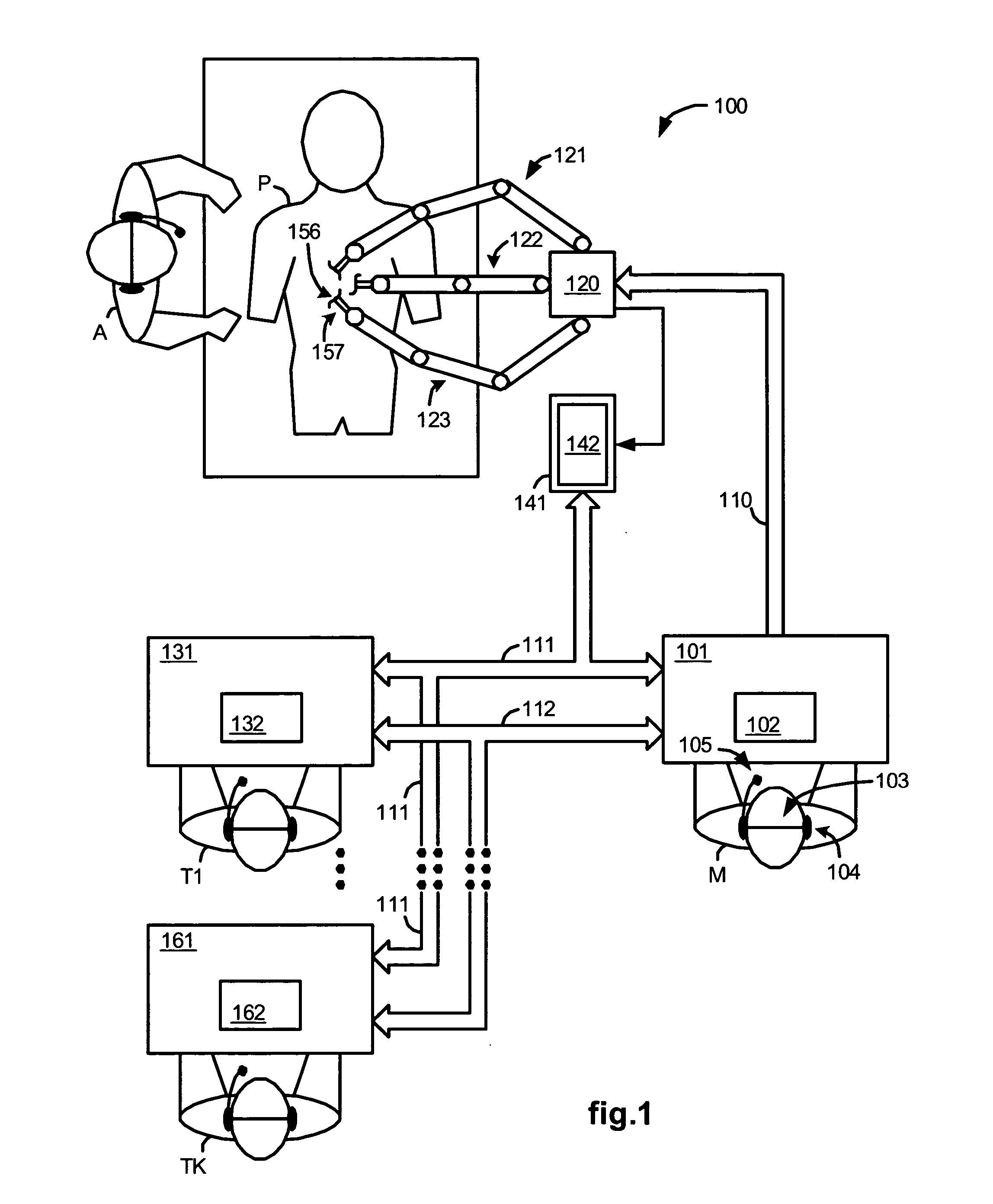

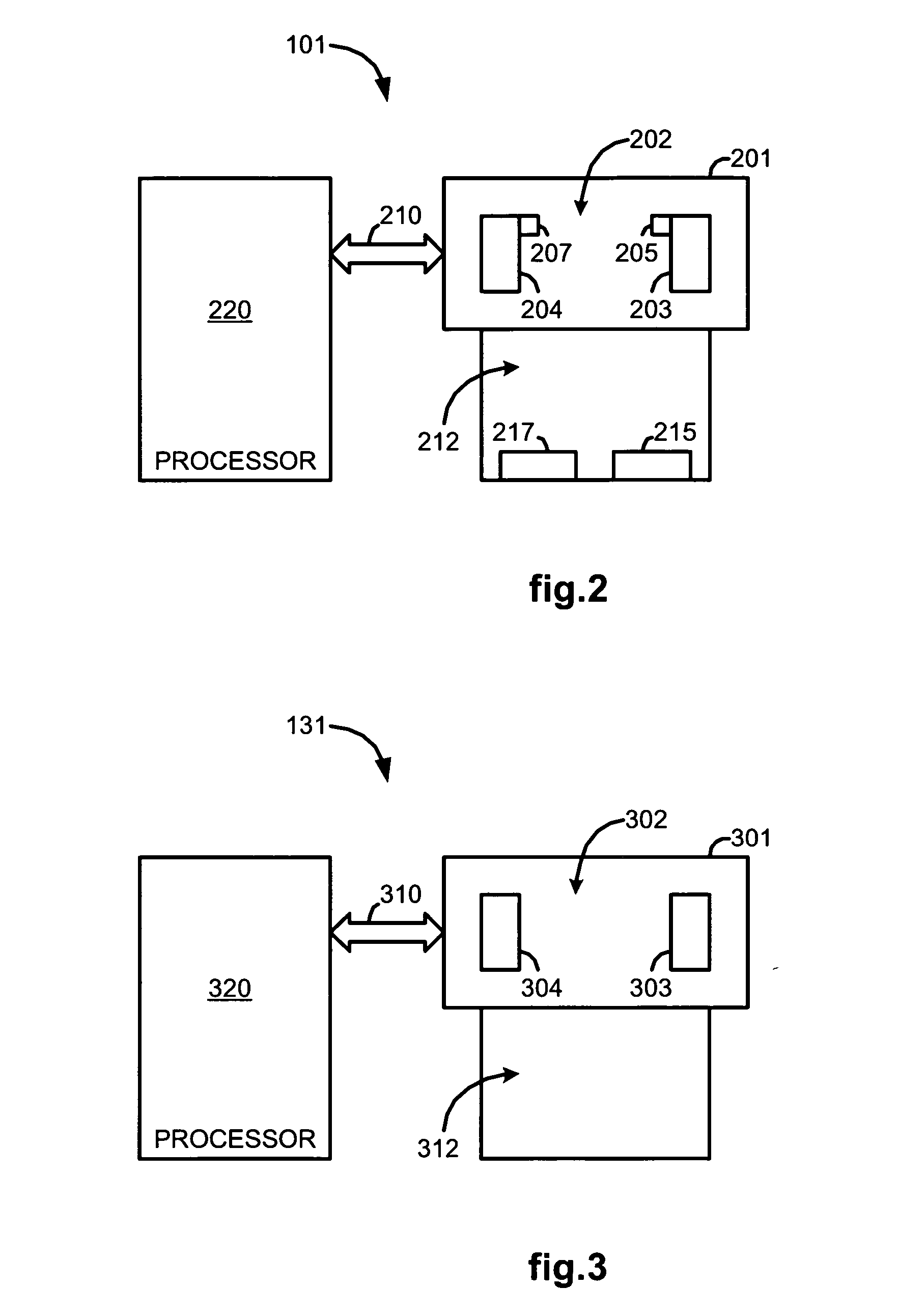

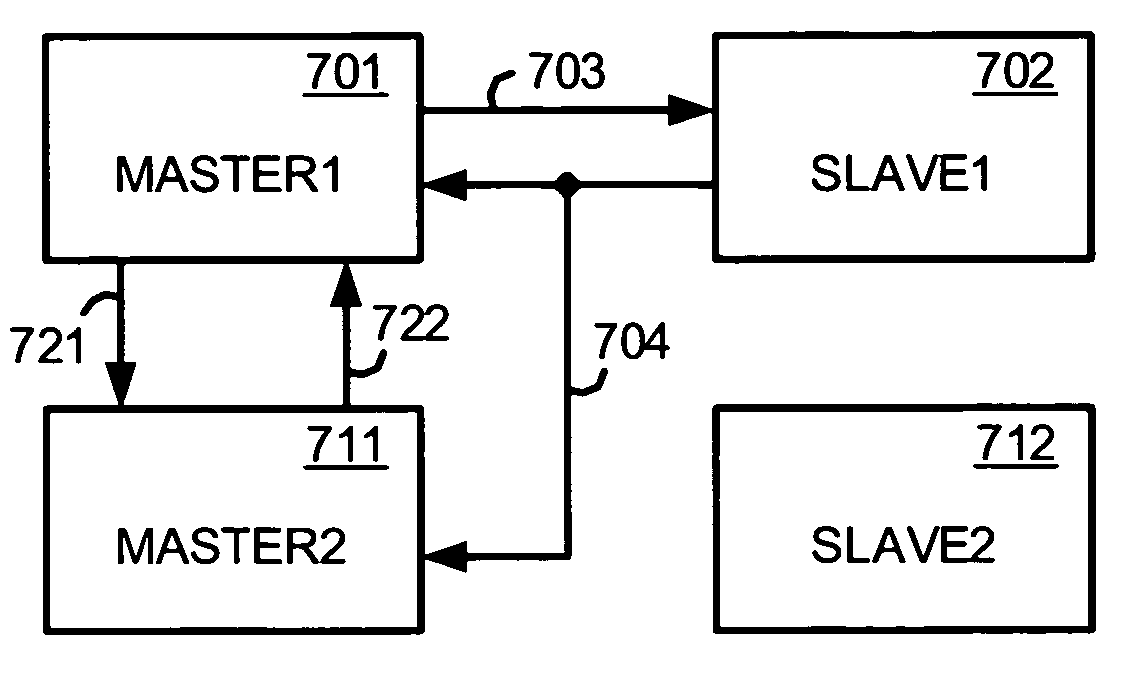

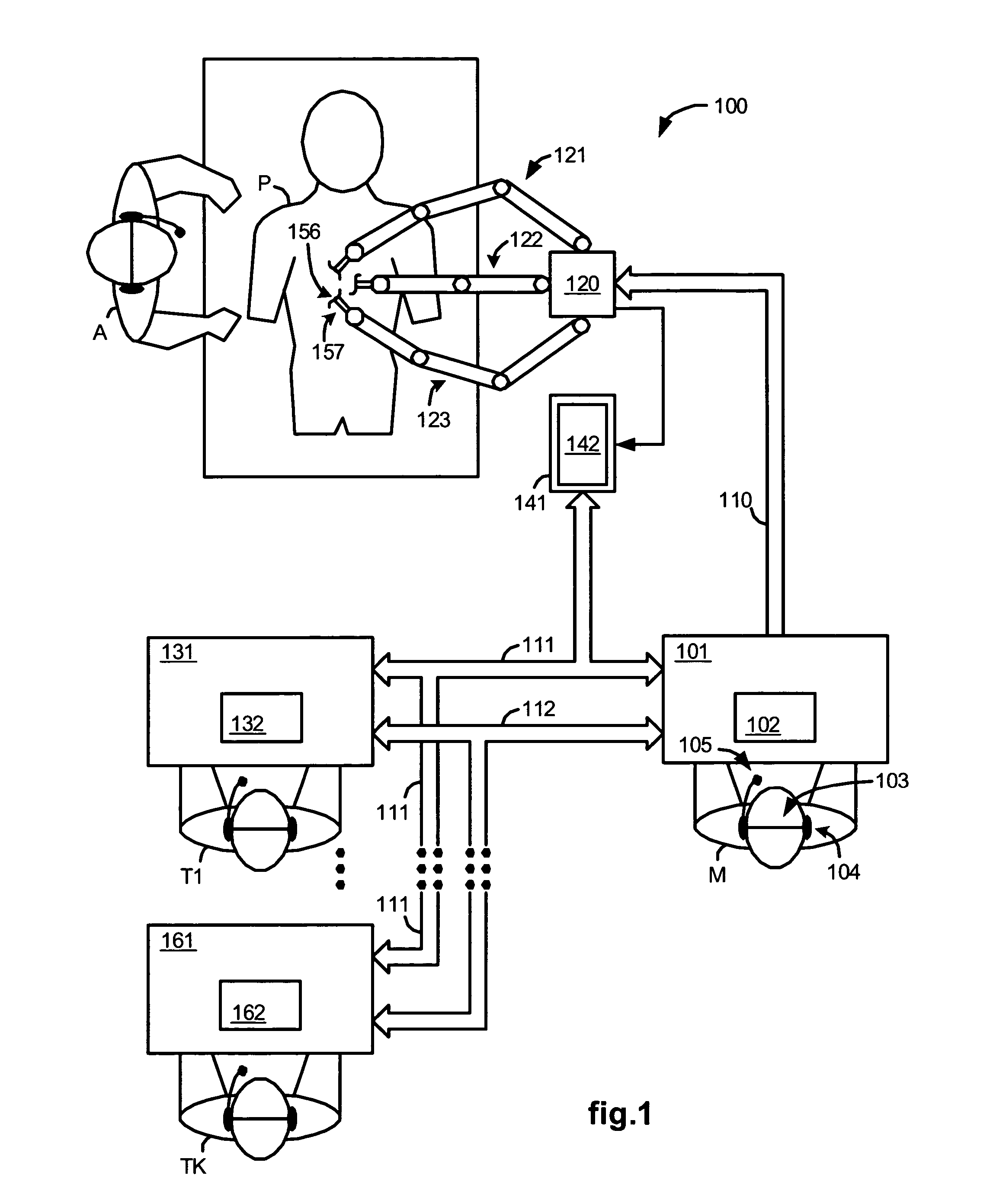

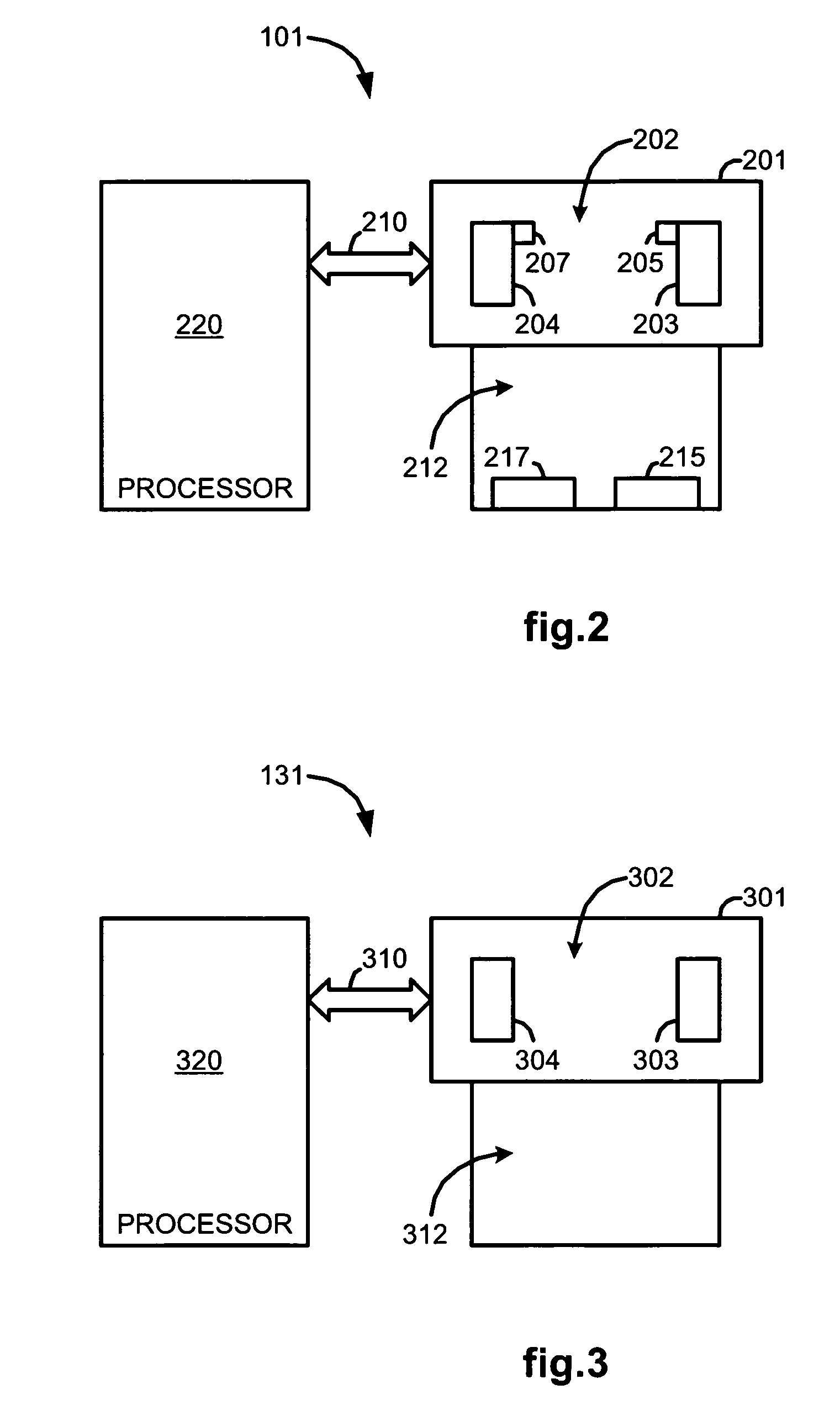

A multi-user medical robotic system for collaboration or training in minimally invasive surgical procedures includes first and second master input devices, a first slave robotic mechanism, and at least one processor configured to generate a first slave command for the first slave robotic mechanism by switchably using one or both of a first command indicative of manipulation of the first master input device by a first user and a second command indicative of manipulation of the second master input device by a second user. To facilitate the collaboration or training, both first and second users communicate with each other through an audio system and see the minimally invasive surgery site on first and second displays respectively viewable by the first and second users.

Owner:INTUITIVE SURGICAL OPERATIONS INC

Multi-user medical robotic system for collaboration or training in minimally invasive surgical procedures

Status: Active | Publication: US8527094B2 | Tags: Promote collaboration, Minimally invasive, Medical communication, Programme control, Less invasive surgery, Robotic systems

Owner:INTUITIVE SURGICAL OPERATIONS INC

Medical robotic system with operatively couplable simulator unit for surgeon training

Status: Inactive | Publication: US20100234857A1 | Tags: Easy to train, Cost effective, Computer control, Simulator control, Robotic arm, Medical robotics

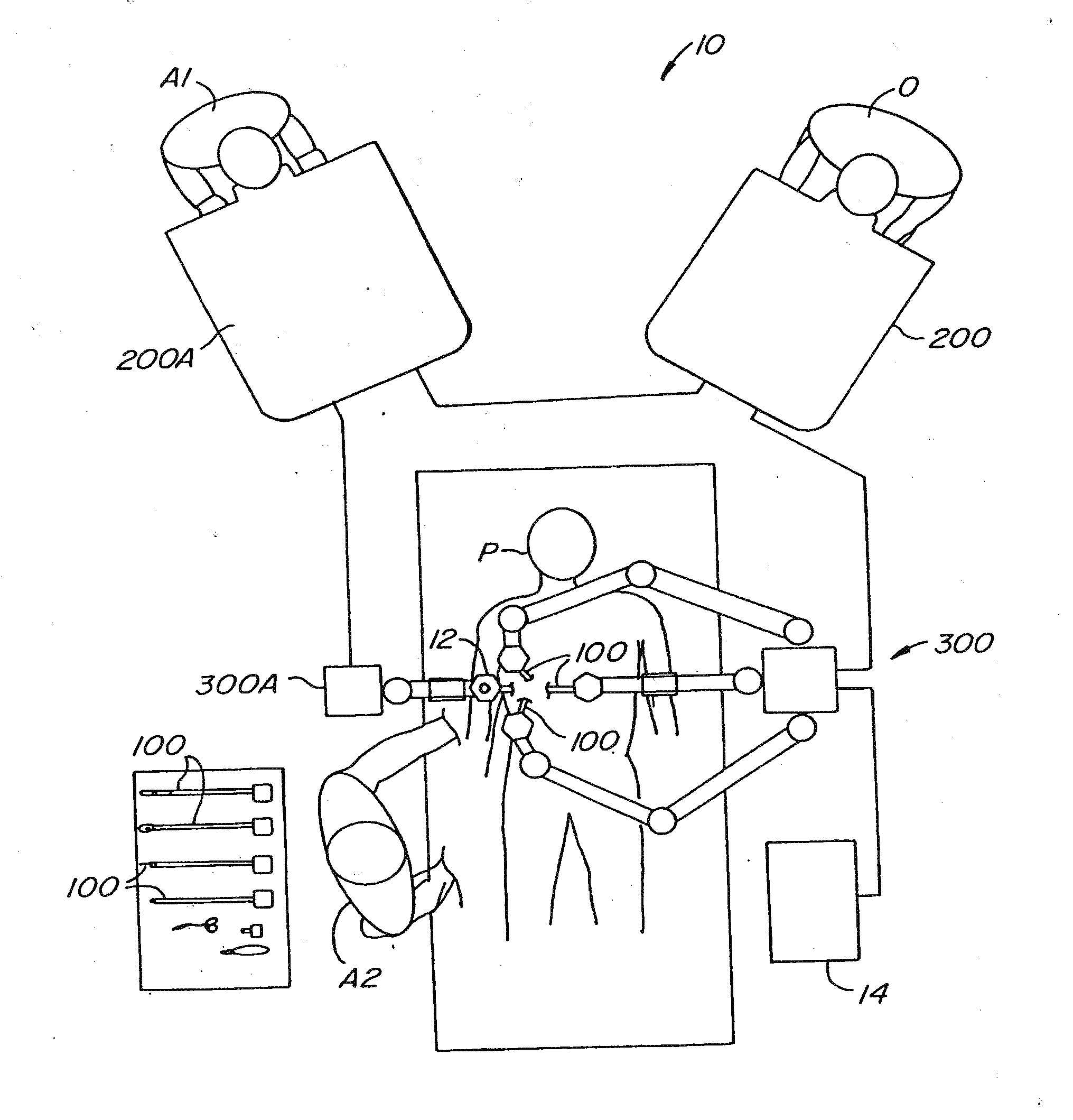

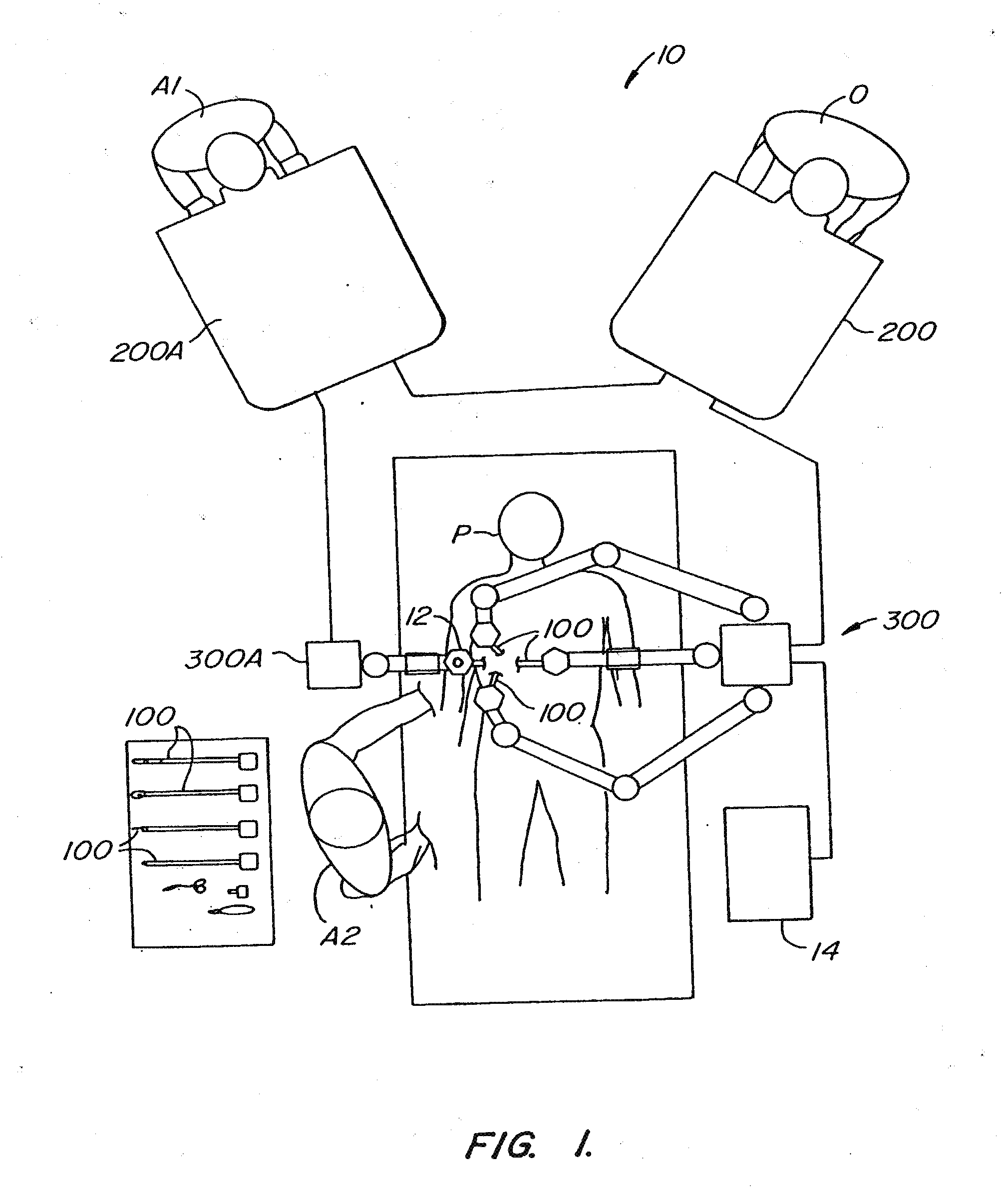

A medical robotic system has a surgeon console which is operatively couplable to a patient side unit for performing medical procedures or operatively couplable to a simulator unit for training purposes. The surgeon console has a monitor, input devices and foot pedals. The patient side unit has robotic arm assemblies coupled to instruments and an endoscope. When the surgeon console is coupled to the patient side unit, the instruments move in response to movement of the input devices to perform a medical procedure while captured images of the instruments are displayed on the monitor. When the surgeon console is coupled to the simulator unit, virtual instruments move in response to movement of the input devices to perform a user selected virtual procedure while virtual images of the virtual instruments are displayed on the monitor.

Owner:INTUITIVE SURGICAL OPERATIONS INC

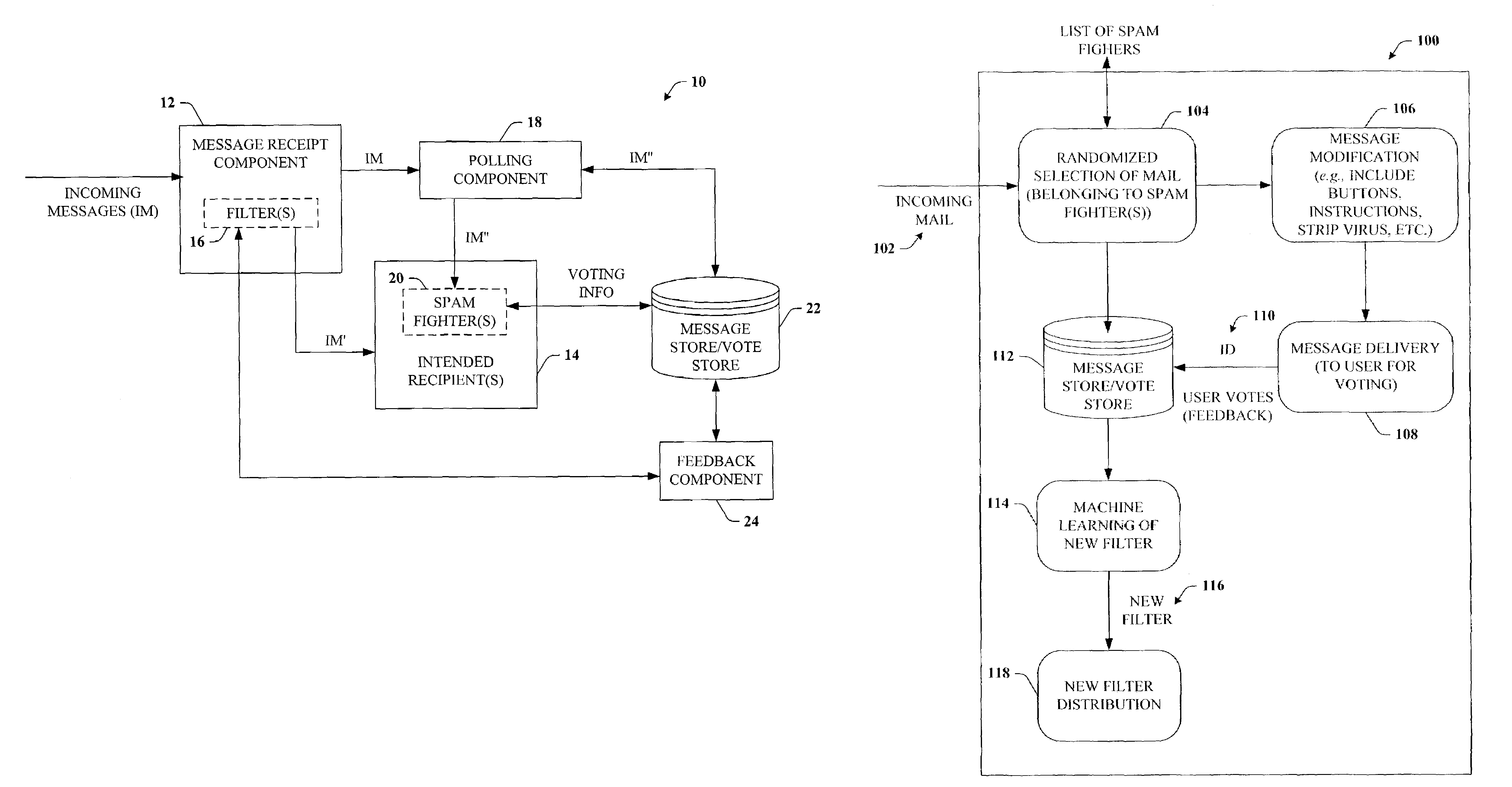

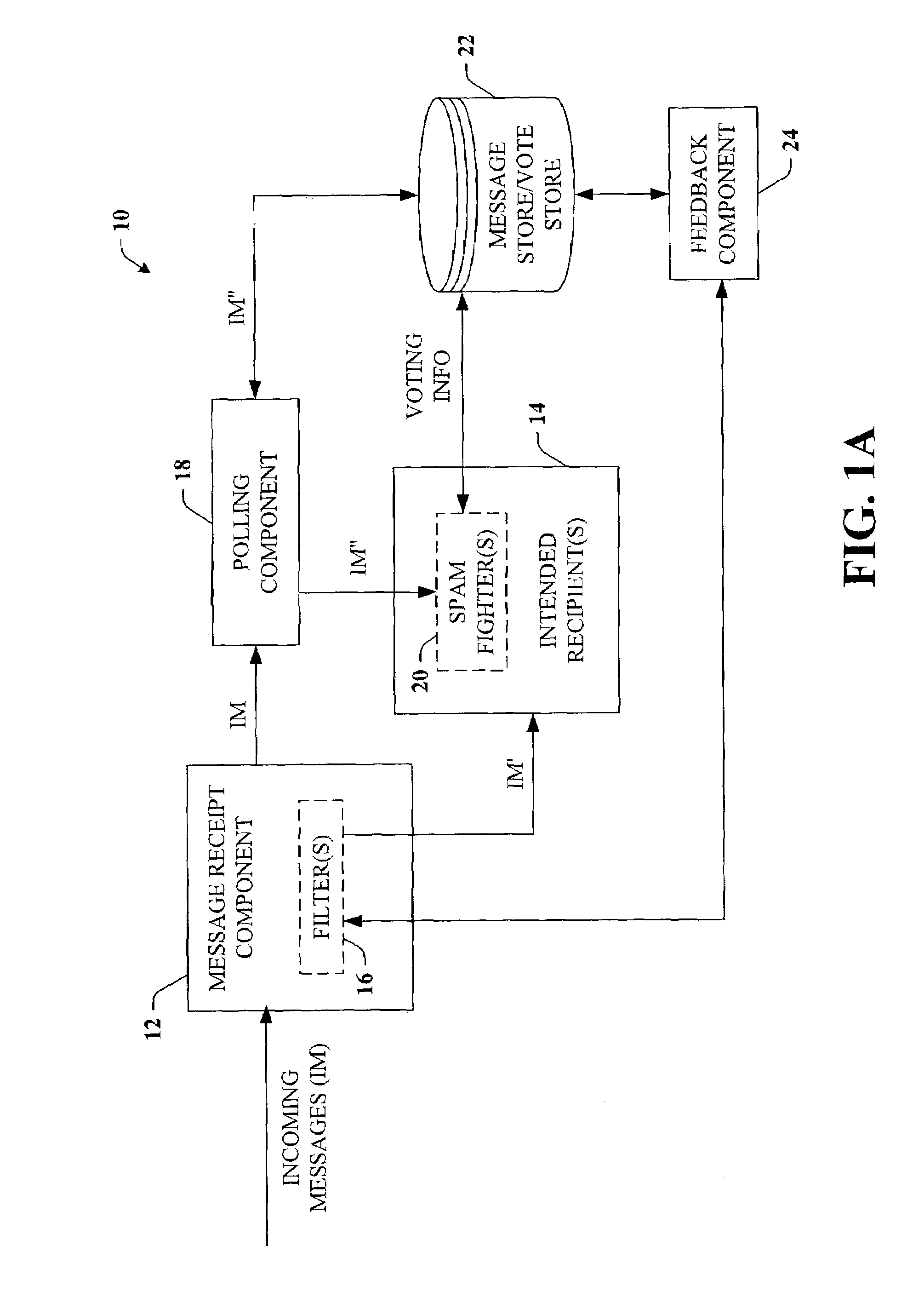

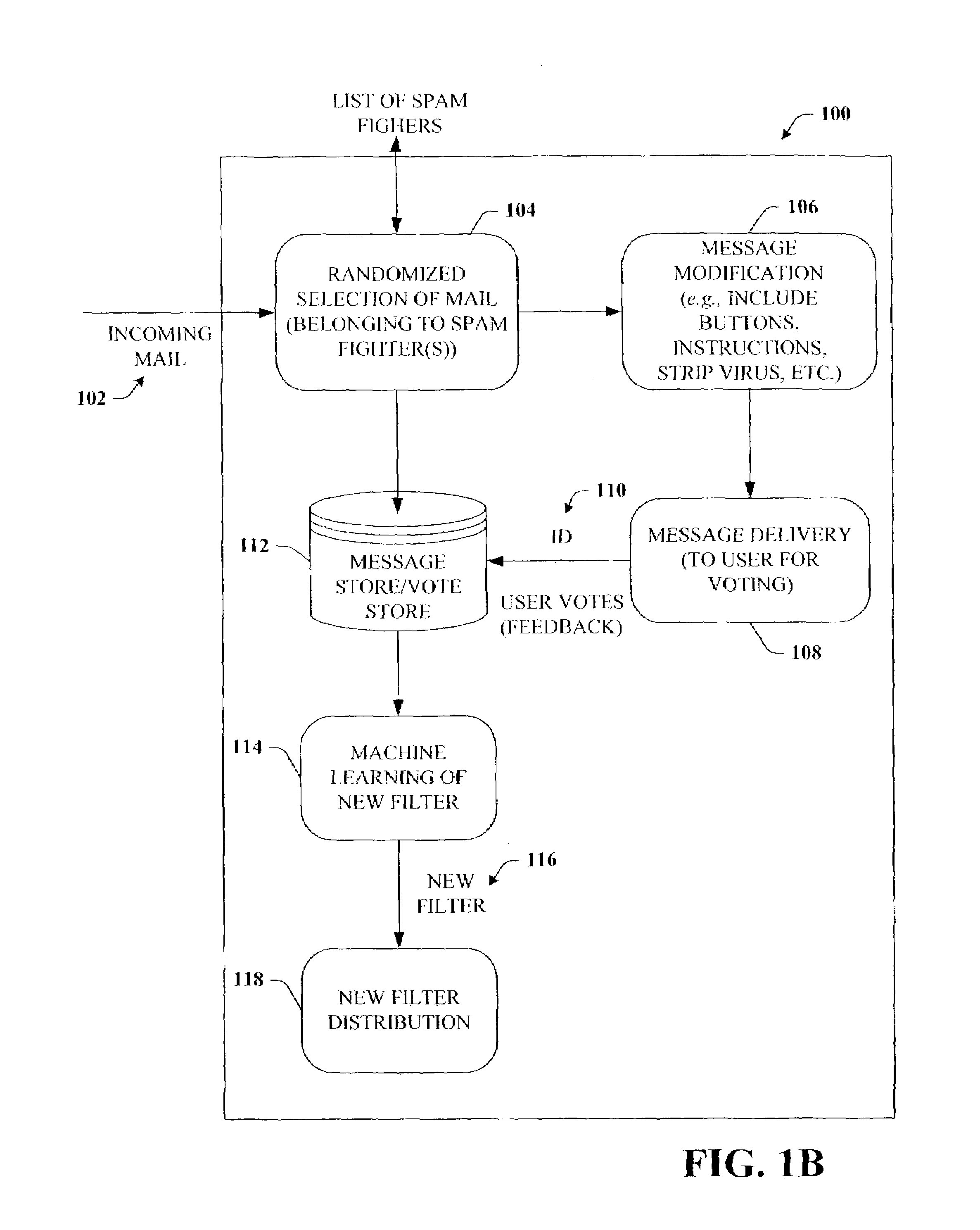

Feedback loop for spam prevention

Status: Inactive | Publication: US7219148B2 | Tags: Facilitate training, Improve performance, Digital data processing details, User identity/authority verification, Client-side, Training data sets

The subject invention provides for a feedback loop system and method that facilitate classifying items in connection with spam prevention in server and/or client-based architectures. The invention makes use of a machine-learning approach as applied to spam filters, and in particular, randomly samples incoming email messages so that examples of both legitimate and junk/spam mail are obtained to generate sets of training data. Users who are identified as spam-fighters are asked to vote on whether each of a selection of their incoming email messages is legitimate mail or junk mail. A database stores the properties for each mail and voting transaction, such as user information, message properties and content summary, and polling results for each message, to generate training data for machine learning systems. The machine learning systems facilitate creating improved spam filter(s) that are trained to recognize both legitimate mail and spam mail and to distinguish between them.

Owner:MICROSOFT TECH LICENSING LLC
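
The sampling and voting loop described in the spam-prevention abstract above can be illustrated with a minimal sketch. It is not the patented implementation: the function names, the `rate` parameter, and the majority-vote rule for merging several users' votes are all assumptions made for illustration.

```python
import random
from collections import Counter


def sample_for_polling(messages, rate, rng):
    """Randomly pick roughly a `rate` fraction of incoming messages to poll on.

    `rng` is a random.Random instance so the sampling is reproducible.
    """
    return [m for m in messages if rng.random() < rate]


def build_training_set(votes):
    """Collapse (message_id, label) votes into one label per message.

    Assumption: the label with the most votes wins; the abstract only says
    that polling results are stored, not how they are combined.
    """
    tallies = {}
    for message_id, label in votes:
        tallies.setdefault(message_id, Counter())[label] += 1
    return {mid: counts.most_common(1)[0][0] for mid, counts in tallies.items()}


if __name__ == "__main__":
    rng = random.Random(0)
    polled = sample_for_polling(["m1", "m2", "m3", "m4"], rate=0.5, rng=rng)
    print("polled:", polled)
    votes = [("m1", "spam"), ("m1", "spam"), ("m1", "legit"), ("m2", "legit")]
    print(build_training_set(votes))
```

Sampling uniformly at random, rather than relying only on user complaints, is what yields examples of both legitimate and junk mail for the training set.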

Personal digital assistant key for an electronic lock

Status: Inactive | Publication: US6937140B1 | Tags: Easy to train, Electric signal transmission systems, Digital data processing details, Embedded system, Remote computer

A mechanical interface (84) for a PDA (80) allows the PDA to be positioned in an operative relationship relative to an electronic lock or electronic lockbox (82). The mechanical interface allows the PDA to be used as a key (80) to actuate the lock, by transmitting signals from the PDA to the lock. The PDA retains its normal functionality as a general purpose computer, and the interface can also form part of a link between the PDA and a remote computer (88) and / or database (92).

Owner:GE SECURITY INC +1

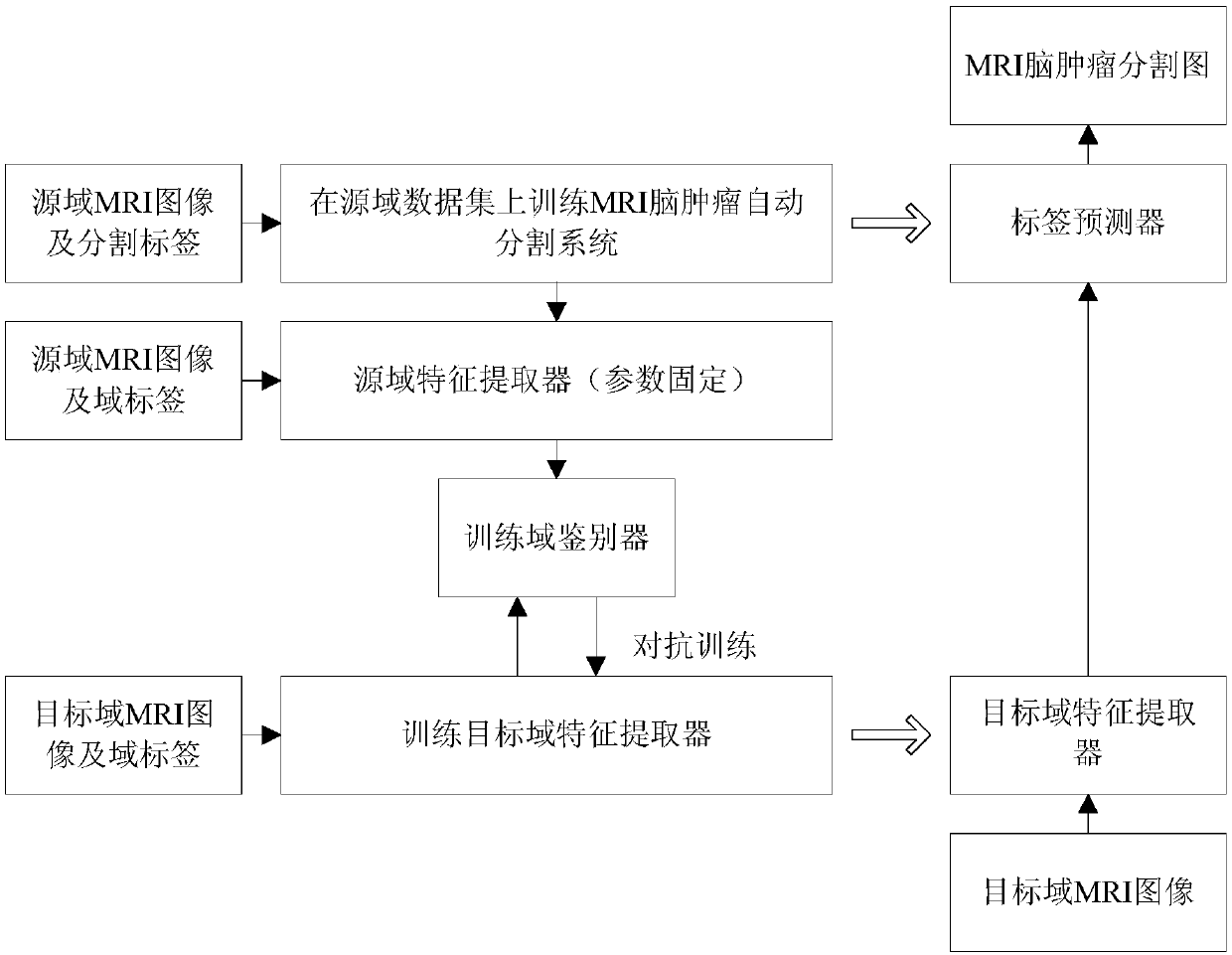

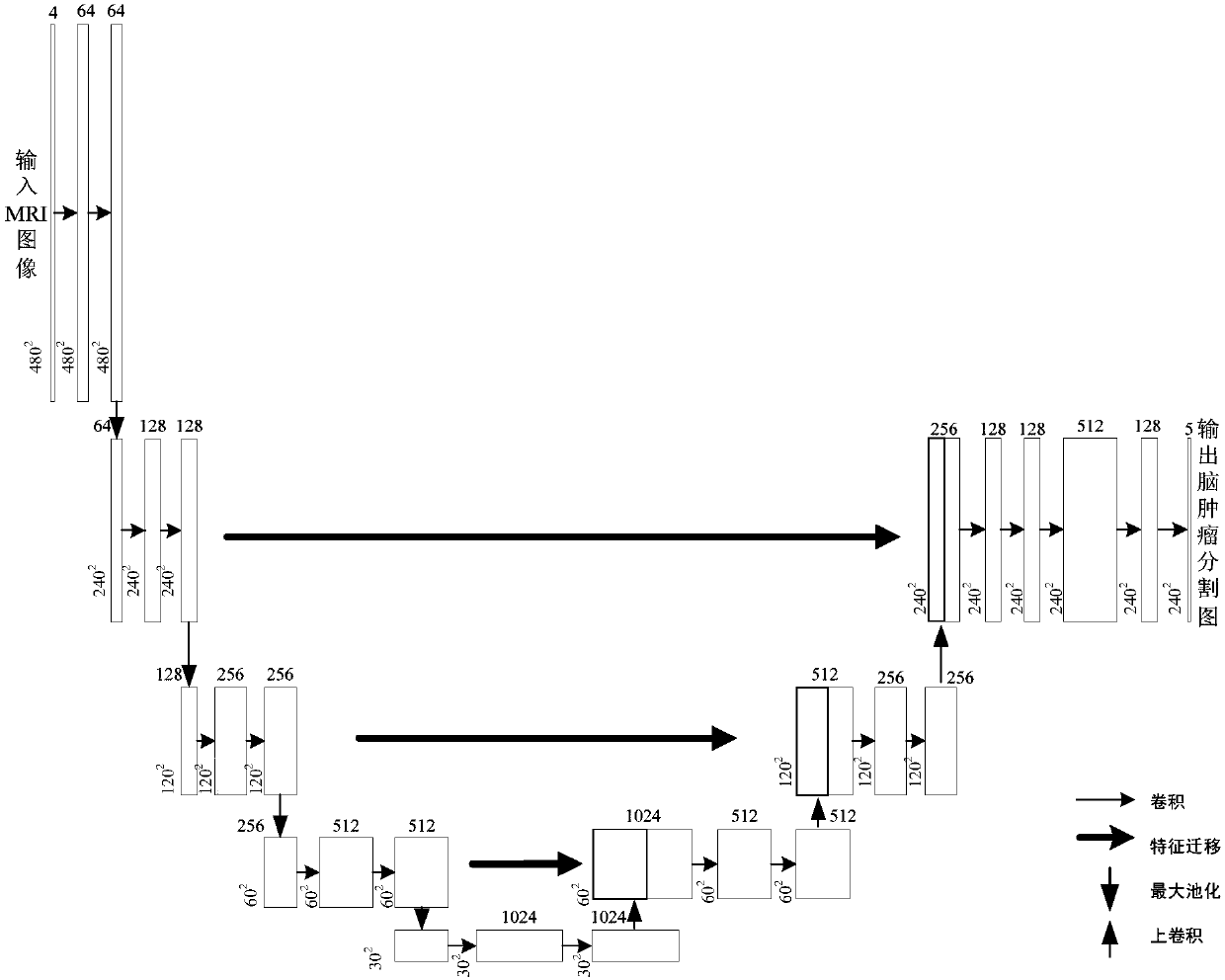

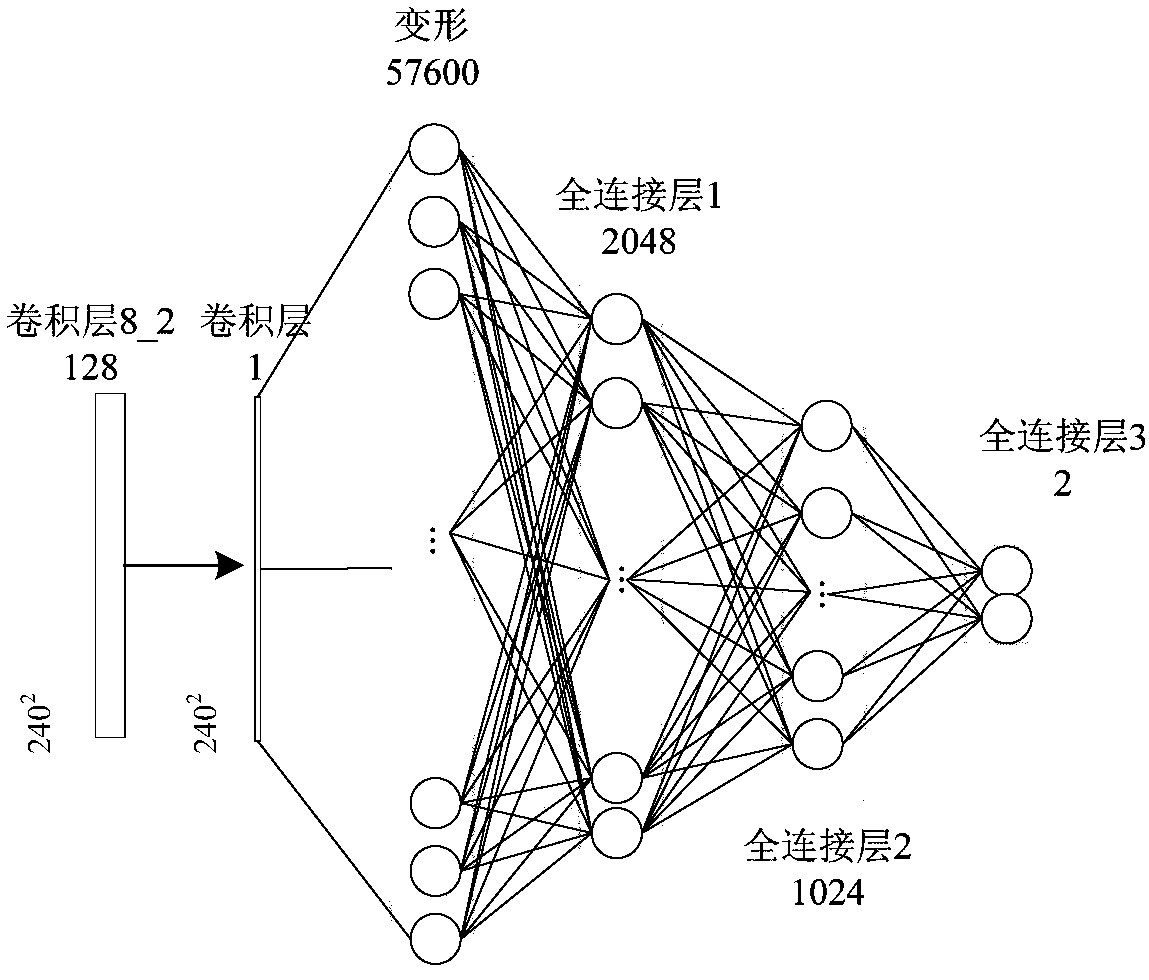

Unsupervised domain-adaptive brain tumor semantic segmentation method based on deep adversarial learning

Status: Inactive | Publication: CN108062753A | Tags: Accurate prediction, Easy to train, Image enhancement, Image analysis, Discriminator, Network model

The invention provides an unsupervised domain-adaptive brain tumor semantic segmentation method based on deep adversarial learning. The method comprises the steps of deep coding-decoding full-convolution network segmentation system model setup, domain discriminator network model setup, segmentation system pre-training and parameter optimization, adversarial training and target domain feature extractor parameter optimization and target domain MRI brain tumor automatic semantic segmentation. According to the method, high-level semantic features and low-level detailed features are utilized to jointly predict pixel tags by the adoption of a deep coding-decoding full-convolution network modeling segmentation system, a domain discriminator network is adopted to guide a segmentation model to learn domain-invariable features and a strong generalization segmentation function through adversarial learning, a data distribution difference between a source domain and a target domain is minimized indirectly, and a learned segmentation system has the same segmentation precision in the target domain as in the source domain. Therefore, the cross-domain generalization performance of the MRI brain tumor full-automatic semantic segmentation method is improved, and unsupervised cross-domain adaptive MRI brain tumor precise segmentation is realized.

Owner:CHONGQING UNIV OF TECH

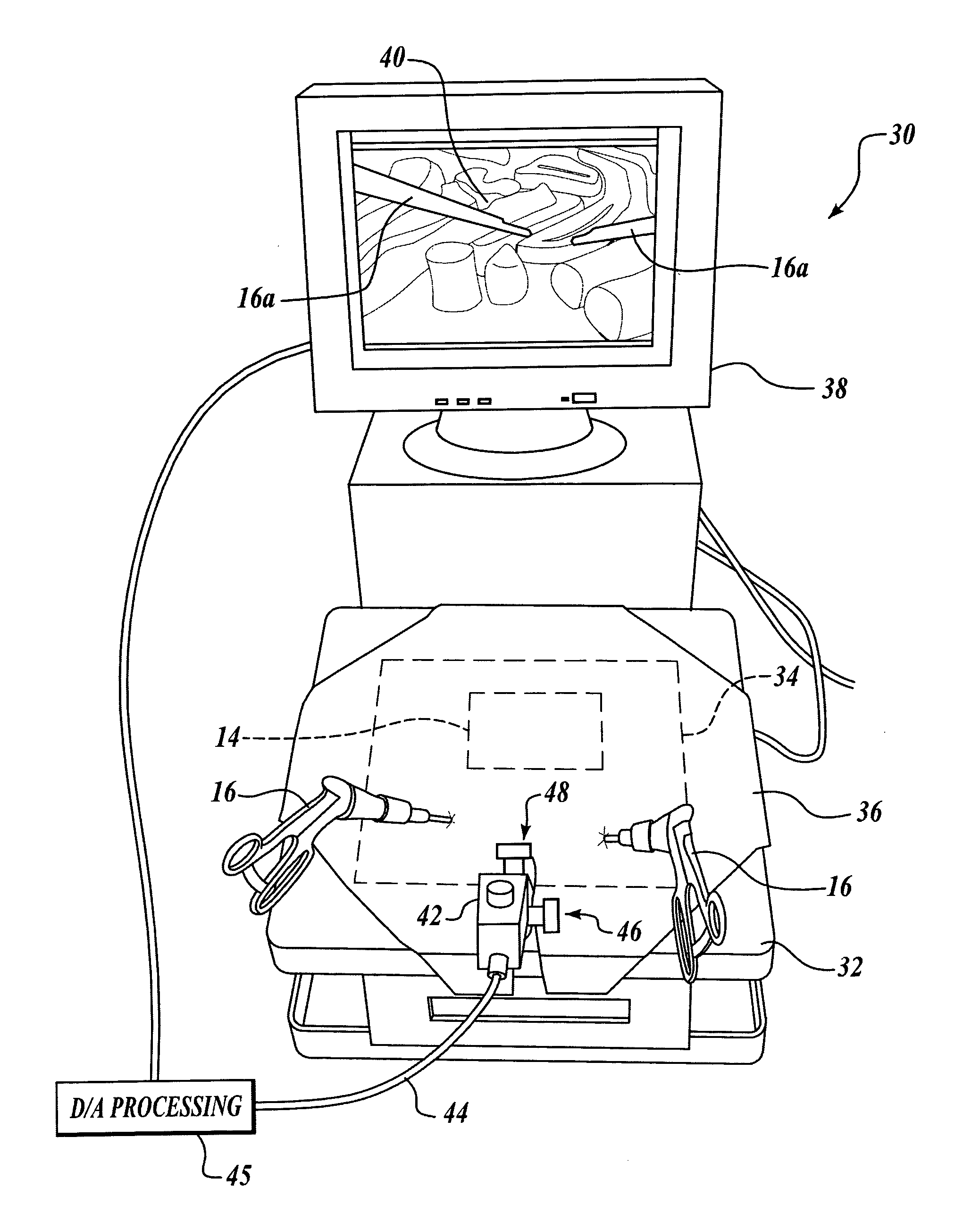

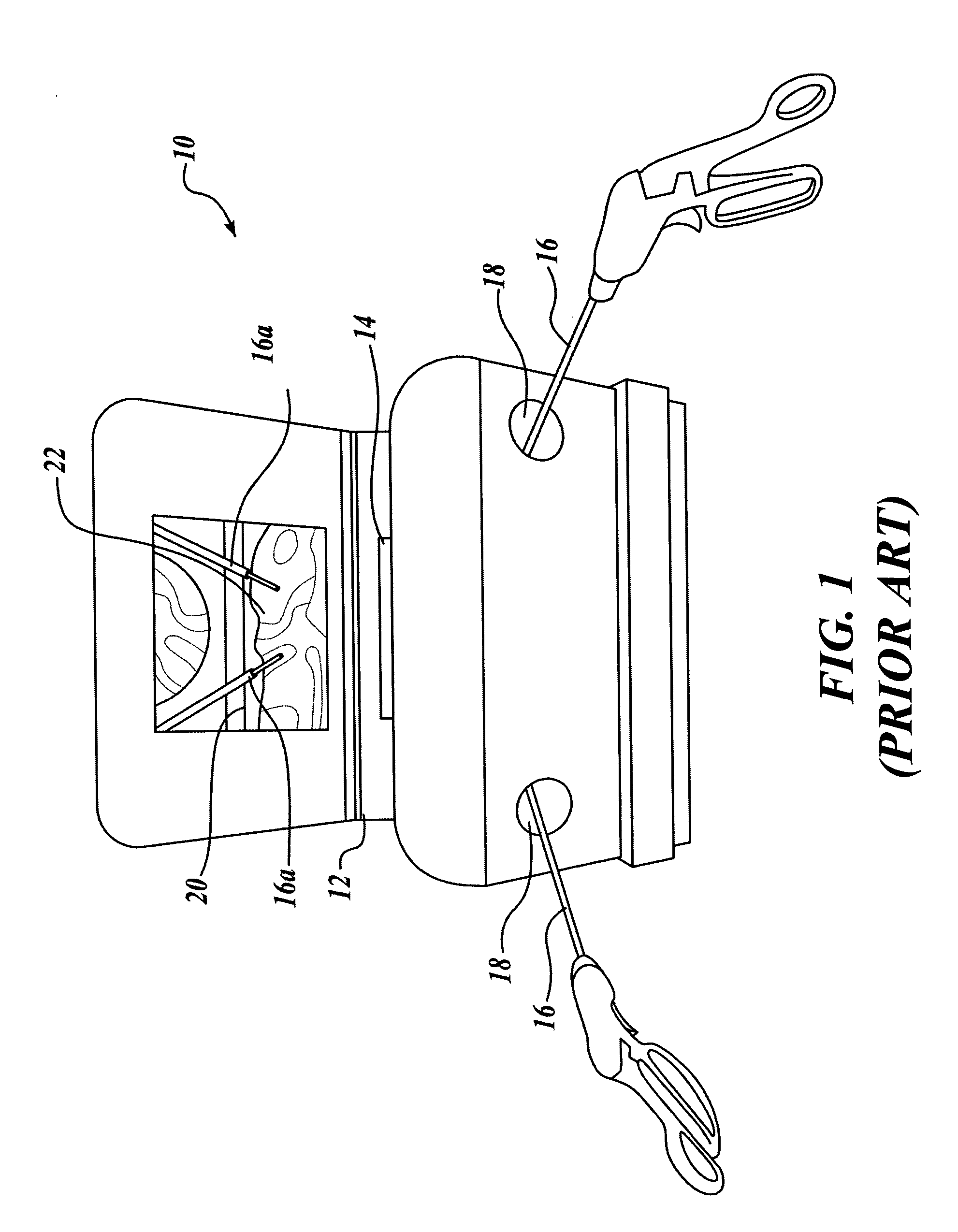

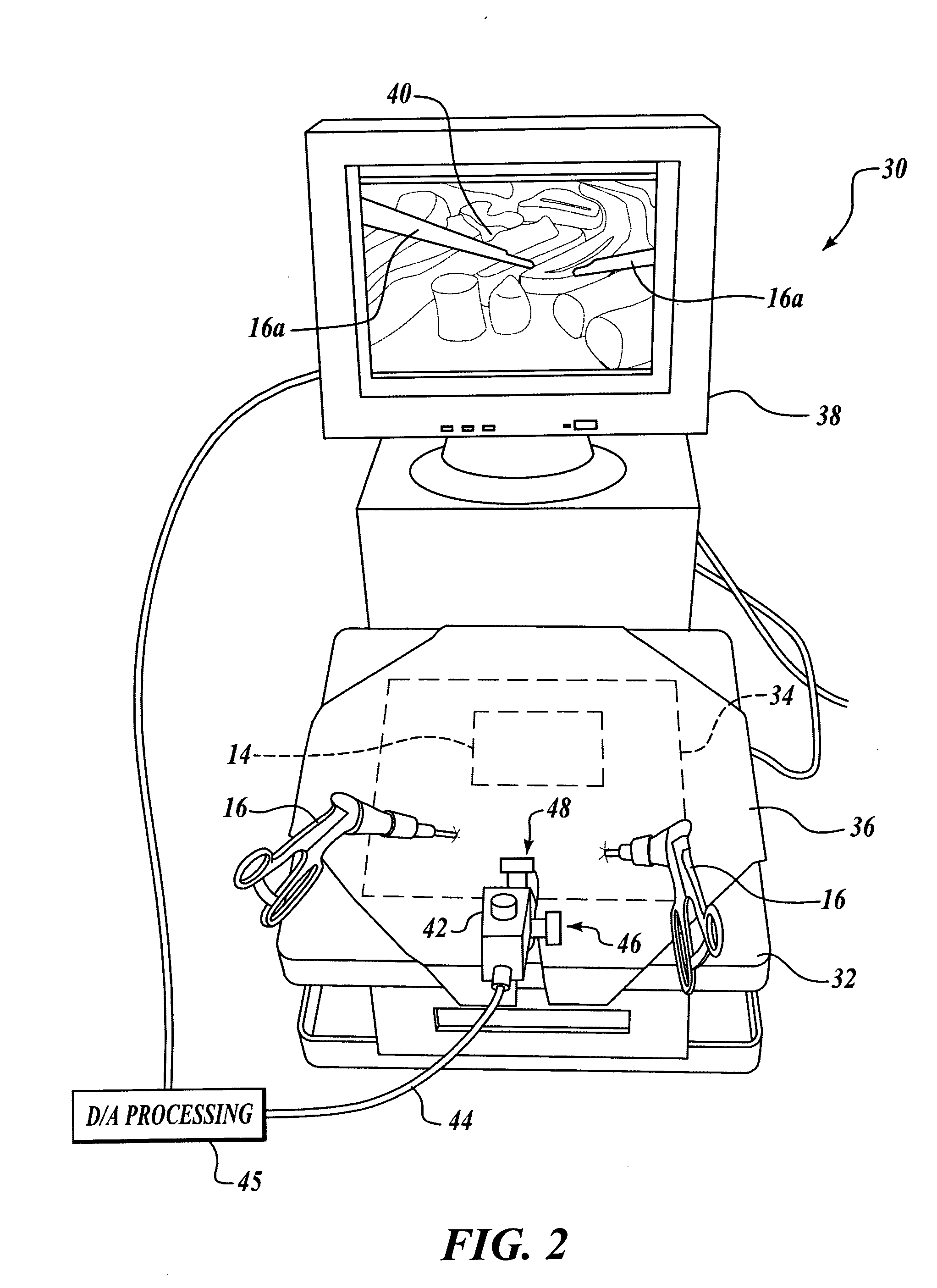

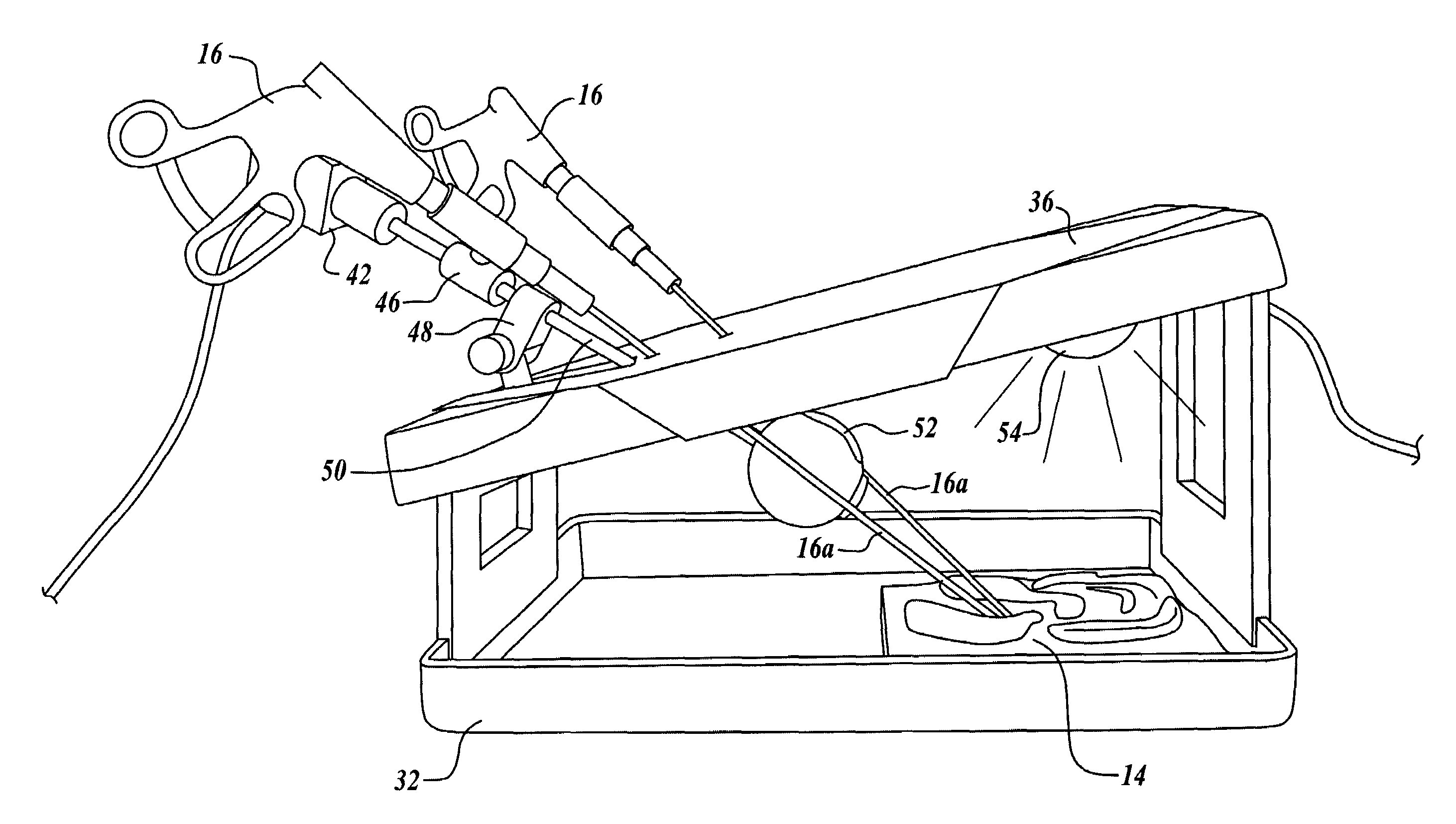

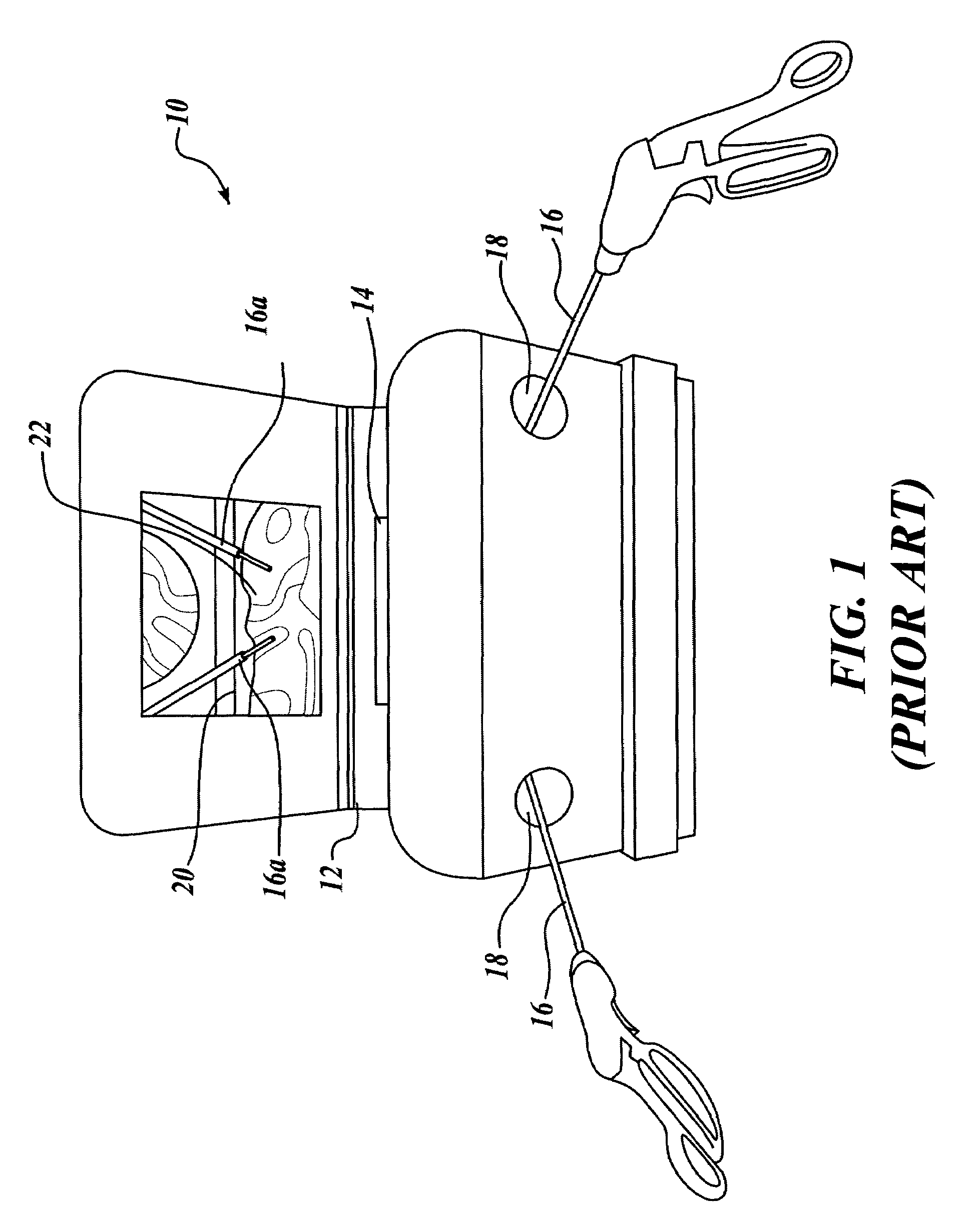

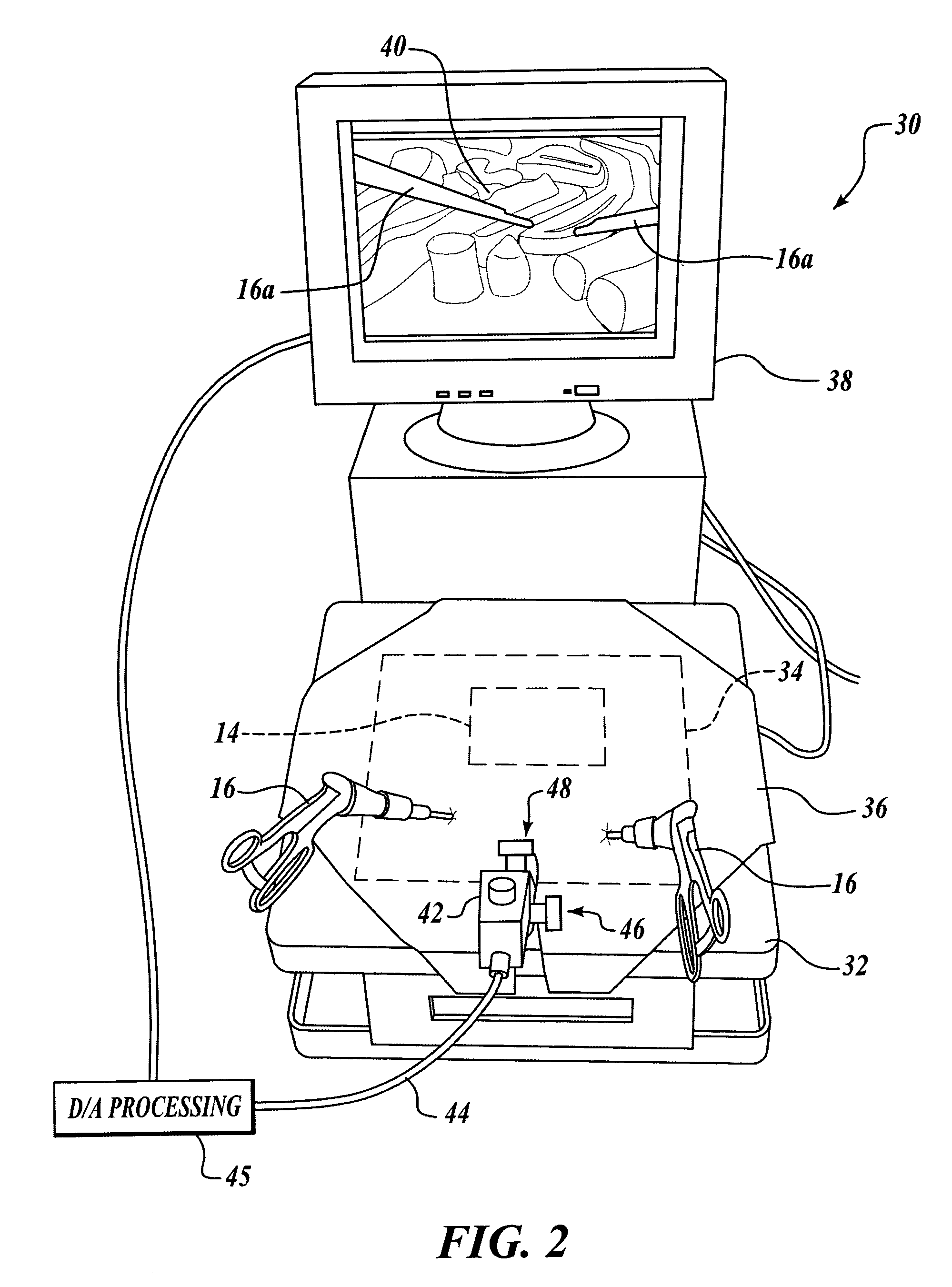

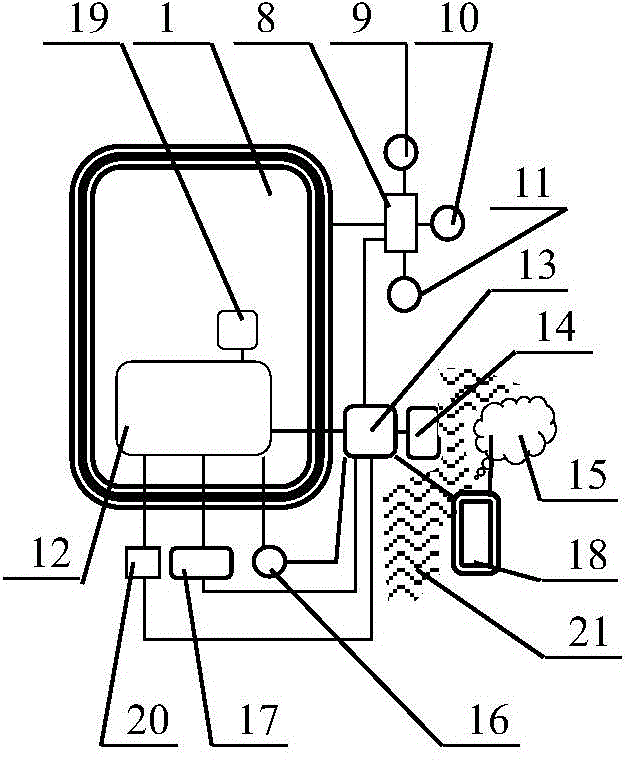

Laparoscopic and endoscopic trainer including a digital camera

Status: Active | Publication: US20050064378A1 | Tags: Enhance endoscopic skill training, Easy to train, Surgery, Educational models, Digital video, Anatomical structures

A videoendoscopic surgery training system includes a housing defining a practice volume in which a simulated anatomical structure is disposed. Openings in the housing enable surgical instruments inserted into the practice volume to access the anatomical structure. A digital video camera is disposed within the housing to image the anatomical structure on a display. The position of the digital video camera can be fixed within the housing, or the digital video camera can be positionable within the housing to capture images of different portions of the practice volume. In one embodiment the digital video camera is coupled to a boom, a proximal end of which extends outside the housing to enable positioning the digital video camera. The housing preferably includes a light source configured to illuminate the anatomical structure. One or more reflectors can be used to direct an image of the anatomical structure to the digital video camera.

Owner:TOLY CHRISTOPHER C

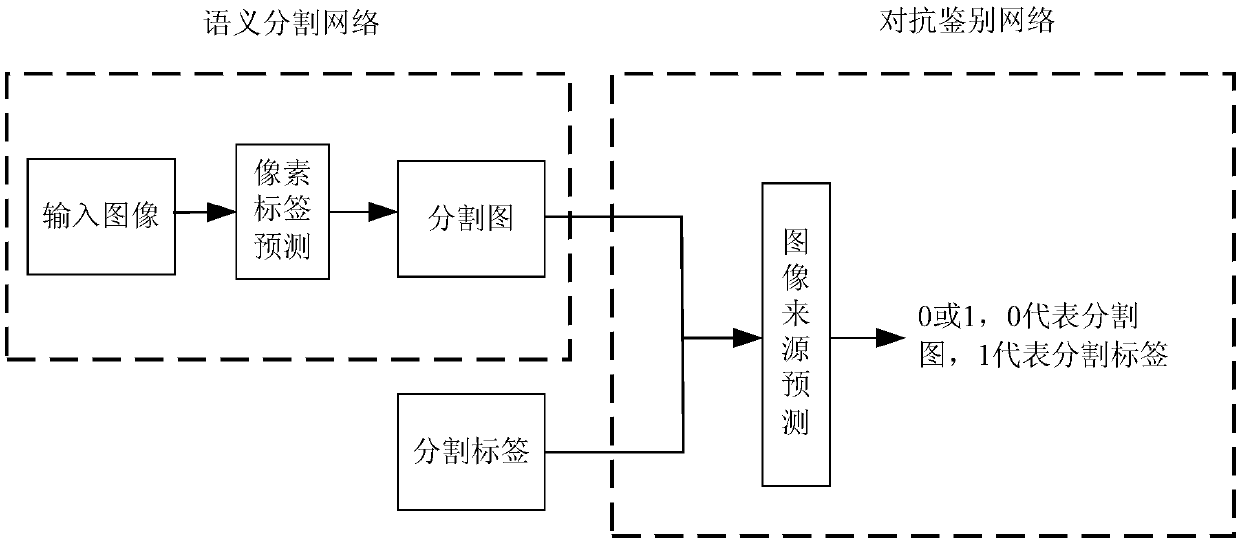

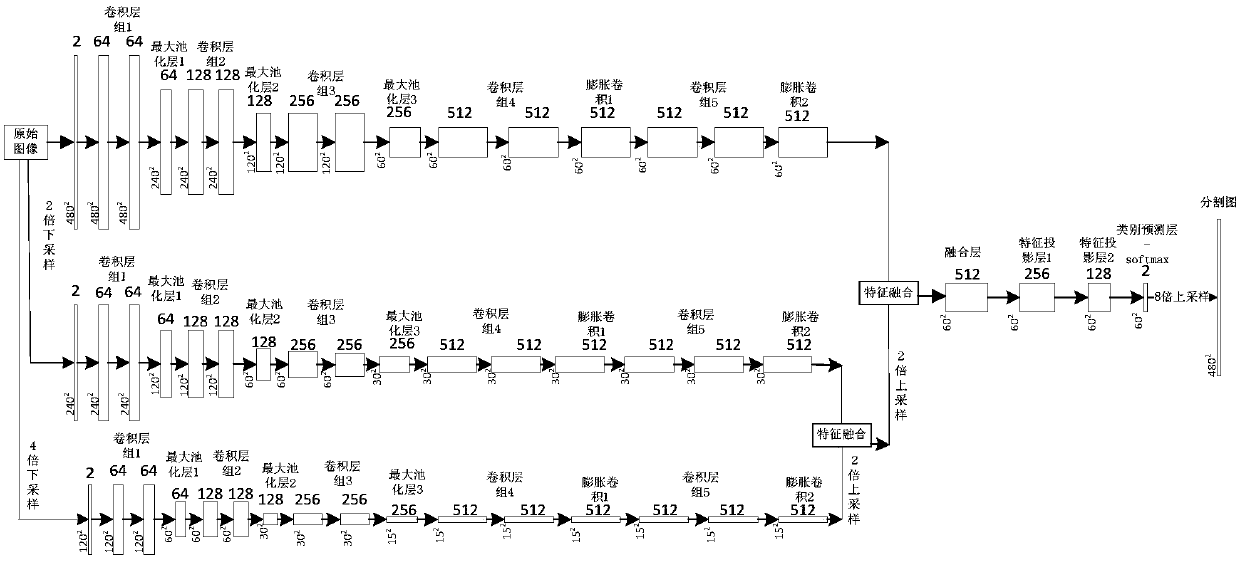

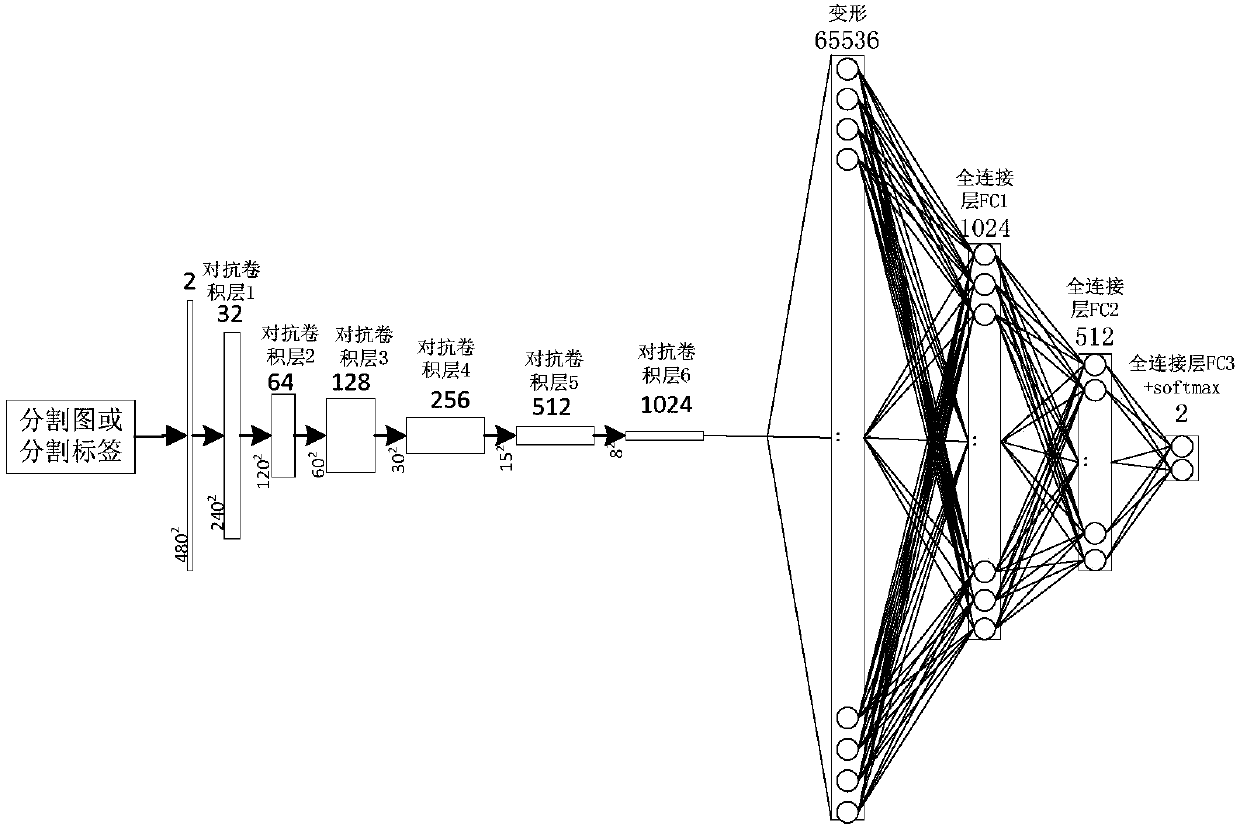

Multi-scale feature fusion ultrasonic image semantic segmentation method based on adversarial learning

Status: Active | Publication: CN108268870A | Tags: Improve forecast accuracy, Few parameters, Neural architectures, Recognition of medical/anatomical patterns, Pattern recognition, Automatic segmentation

The invention provides a multi-scale feature fusion ultrasonic image semantic segmentation method based on adversarial learning, and the method comprises the following steps: building a multi-scale feature fusion semantic segmentation network model, building an adversarial discrimination network model, carrying out the adversarial training and model parameter learning, and carrying out the automatic segmentation of a breast lesion. The method provided by the invention predicts the pixel class from the multi-scale features of input images with different resolutions, which improves the accuracy of pixel class label prediction; employs expanding convolution in place of part of the pooling so as to improve the resolution of the segmented image; uses an adversarial discrimination network to guide the segmentation network so that the segmented image it generates cannot be distinguished from a segmentation label, which guarantees the good appearance and spatial continuity of the segmented image; and obtains a more precise high-resolution ultrasonic breast lesion segmented image.

Owner:CHONGQING NORMAL UNIVERSITY

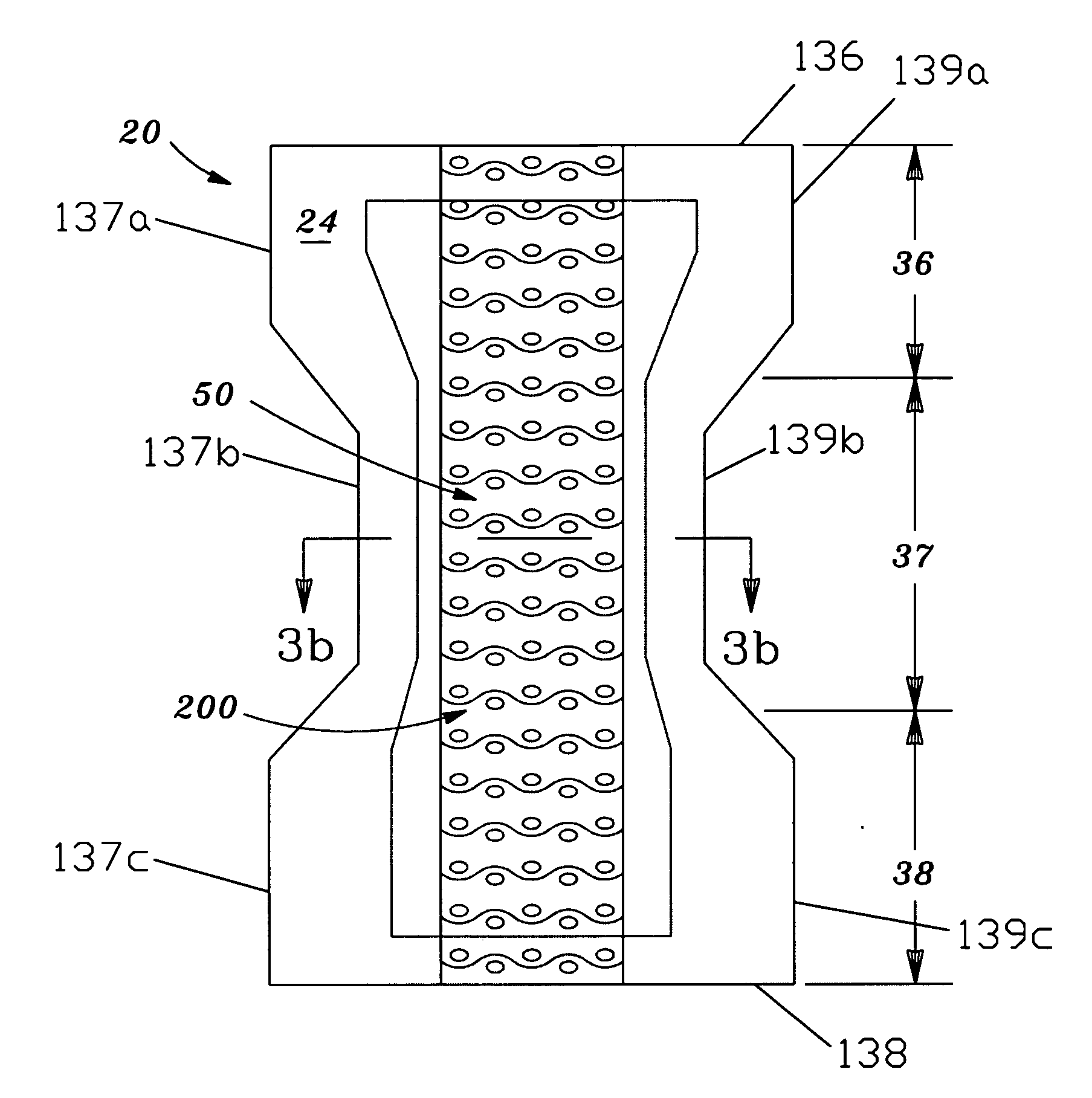

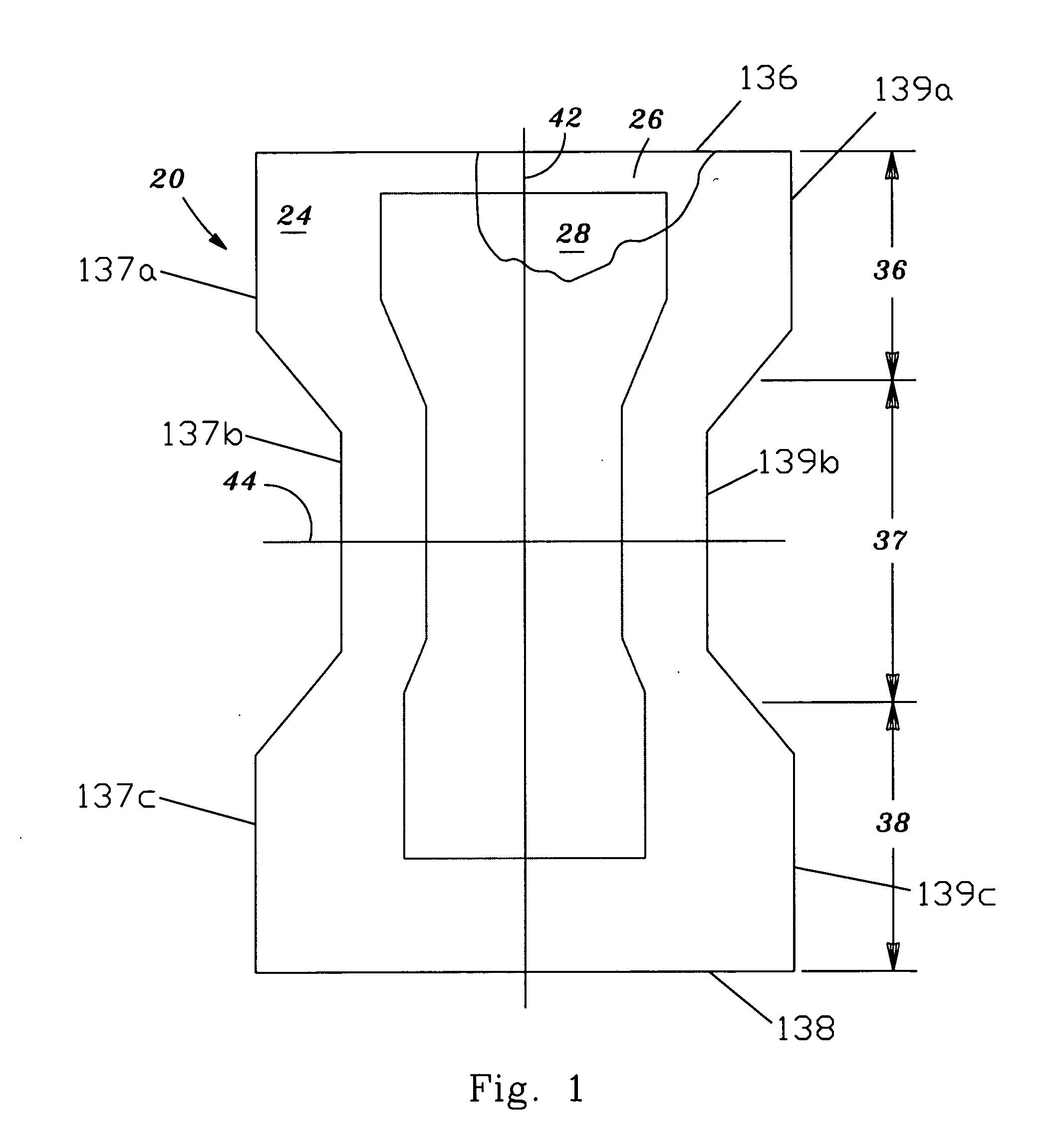

Disposable absorbent article having refastenable side seams and a wetness sensation member

A disposable absorbent article including features facilitating toilet training of a wearer. A wetness sensation member provides a wetness sensation on the wearer's skin upon urination. Highlighting that is visible when viewing a body-facing surface of the article may be associatively correlated with the concept of toilet training and indicates the presence of the wetness sensation member in the article while providing a visual reference and topic for conversation relevant to toilet training. Refastenable side seams enable the configuration, application, and removal of the article as a pair of training pants or as a diaper, while providing an appearance like training pants when the article is worn and allowing easy inspection of the interior of the article without the necessity of pulling the article downward. The synergistic effect of each feature in combination with one or more of the other features enhances the usefulness of the article in toilet training.

Owner:THE PROCTER & GAMBLE COMPANY

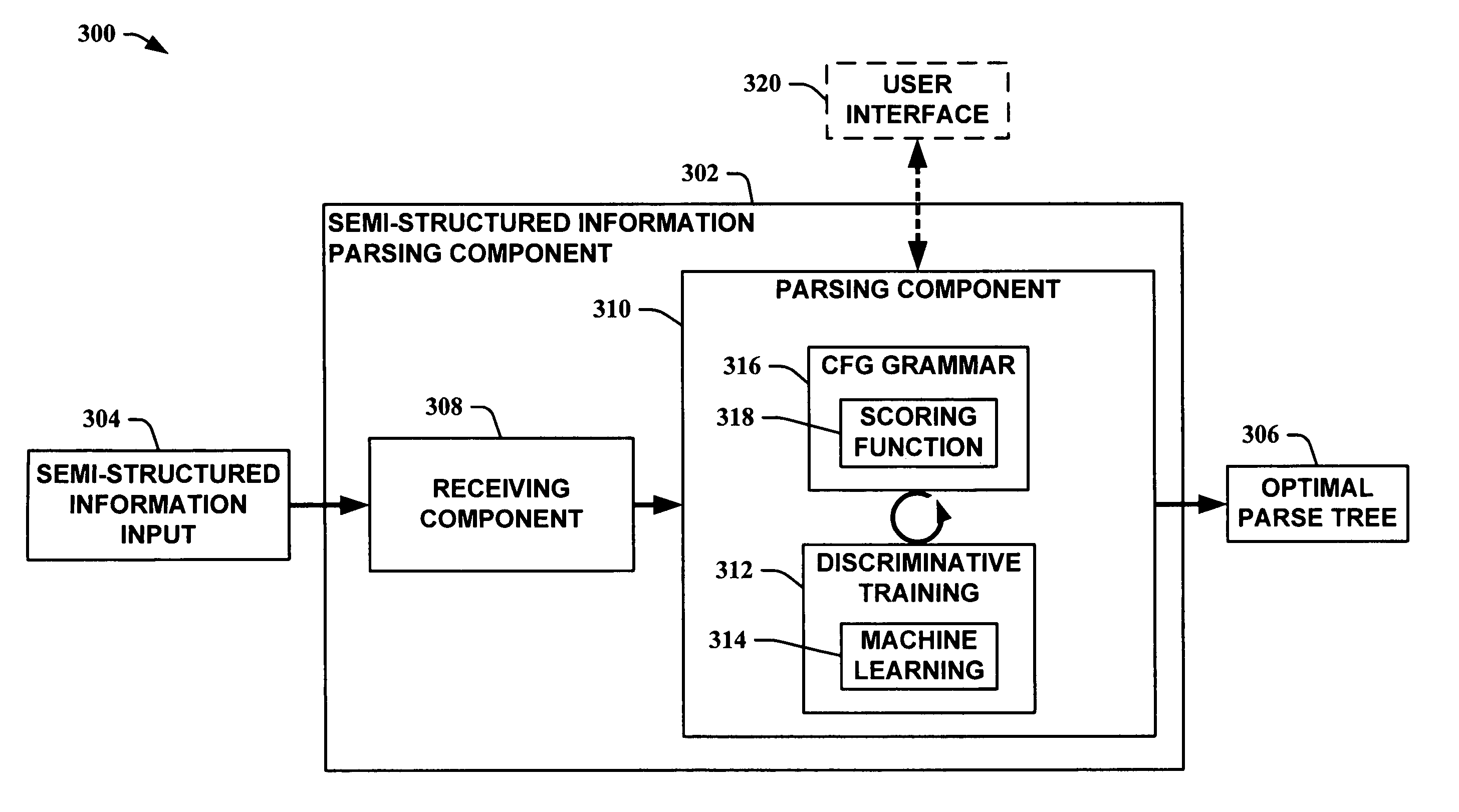

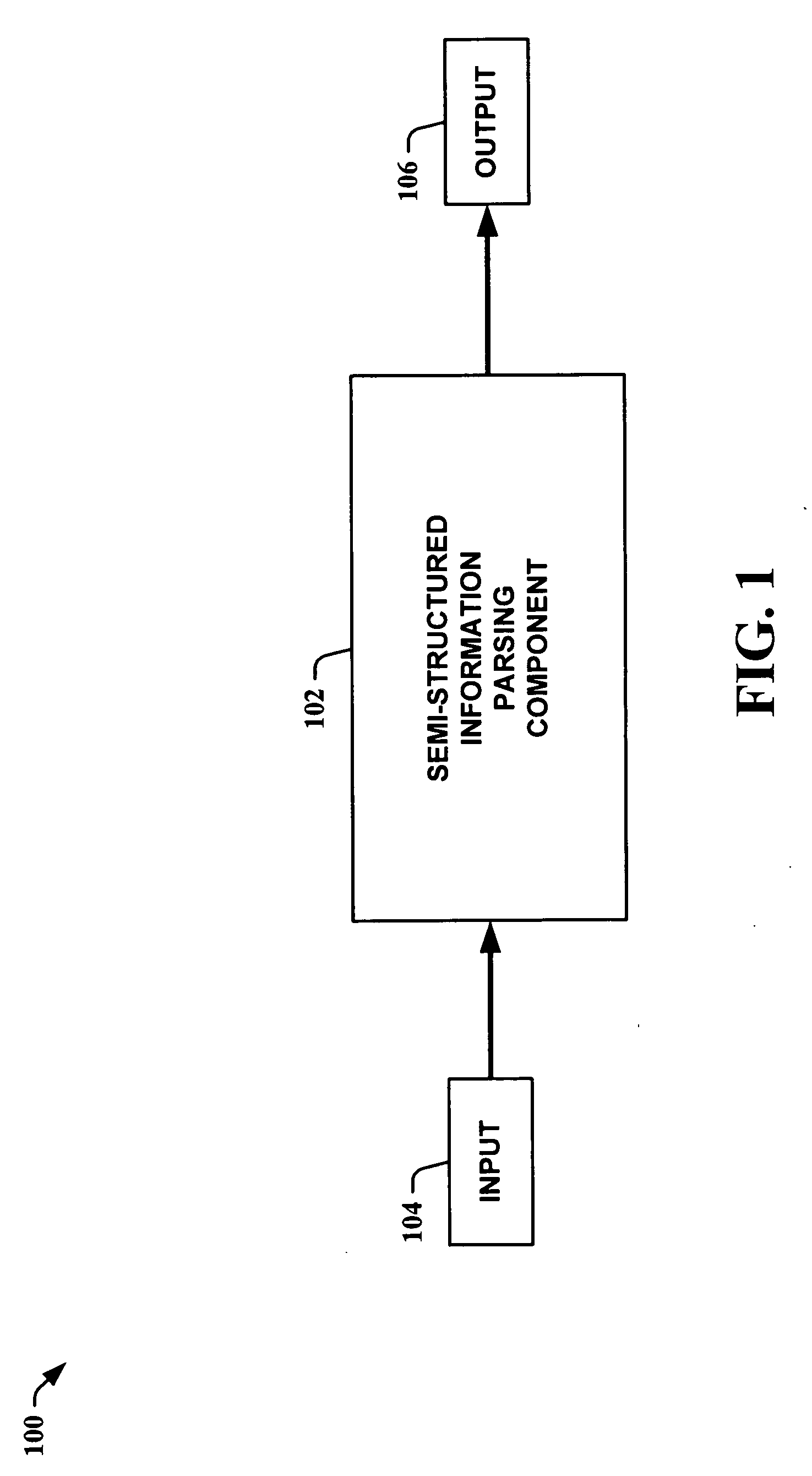

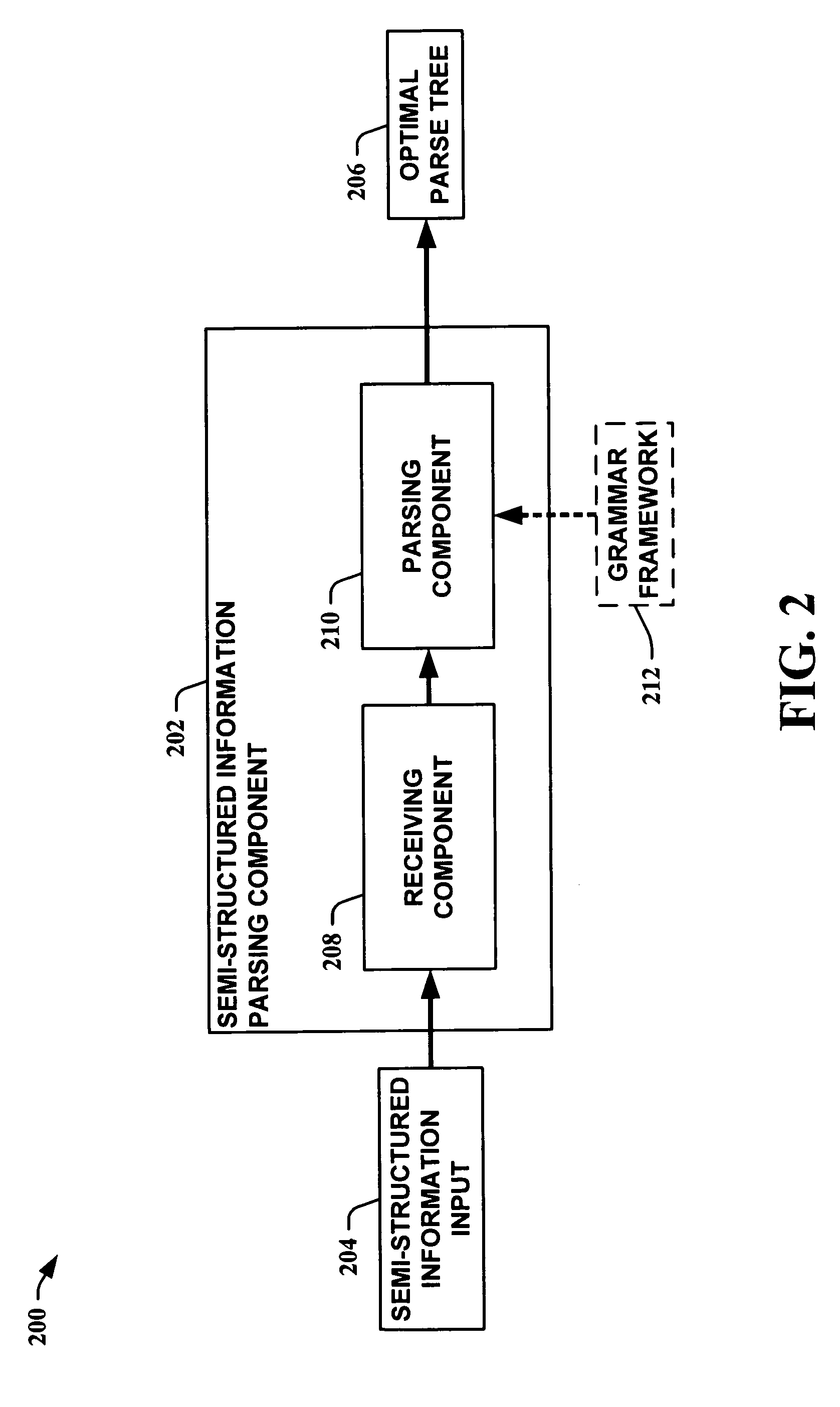

Extracting data from semi-structured information utilizing a discriminative context free grammar

Status: Inactive | Publication: US20060245641A1 | Tags: Easy to extract data, Improvement in error reduction, Character and pattern recognition, Natural language data processing, Discriminant, Context independent

A discriminative grammar framework utilizing a machine learning algorithm is employed to facilitate learning scoring functions for parsing of unstructured information. The framework includes a discriminative context free grammar that is trained based on features of an example input. The flexibility of the framework allows information features and/or features output by arbitrary processes to be utilized as the example input as well. Myopic inside scoring is circumvented in the parsing process because contextual information is utilized to facilitate scoring function training.

Owner:MICROSOFT TECH LICENSING LLC

Medical robotic system with operatively couplable simulator unit for surgeon training

Status: Inactive | Publication: US8600551B2 | Tags: Easy to train, Cost effective, Computer control, Simulator control, Robotic arm, Medical robotics

A medical robotic system has a surgeon console which is operatively couplable to a patient side unit for performing medical procedures or operatively couplable to a simulator unit for training purposes. The surgeon console has a monitor, input devices and foot pedals. The patient side unit has robotic arm assemblies coupled to instruments and an endoscope. When the surgeon console is coupled to the patient side unit, the instruments move in response to movement of the input devices to perform a medical procedure while captured images of the instruments are displayed on the monitor. When the surgeon console is coupled to the simulator unit, virtual instruments move in response to movement of the input devices to perform a user selected virtual procedure while virtual images of the virtual instruments are displayed on the monitor.

Owner:INTUITIVE SURGICAL OPERATIONS INC

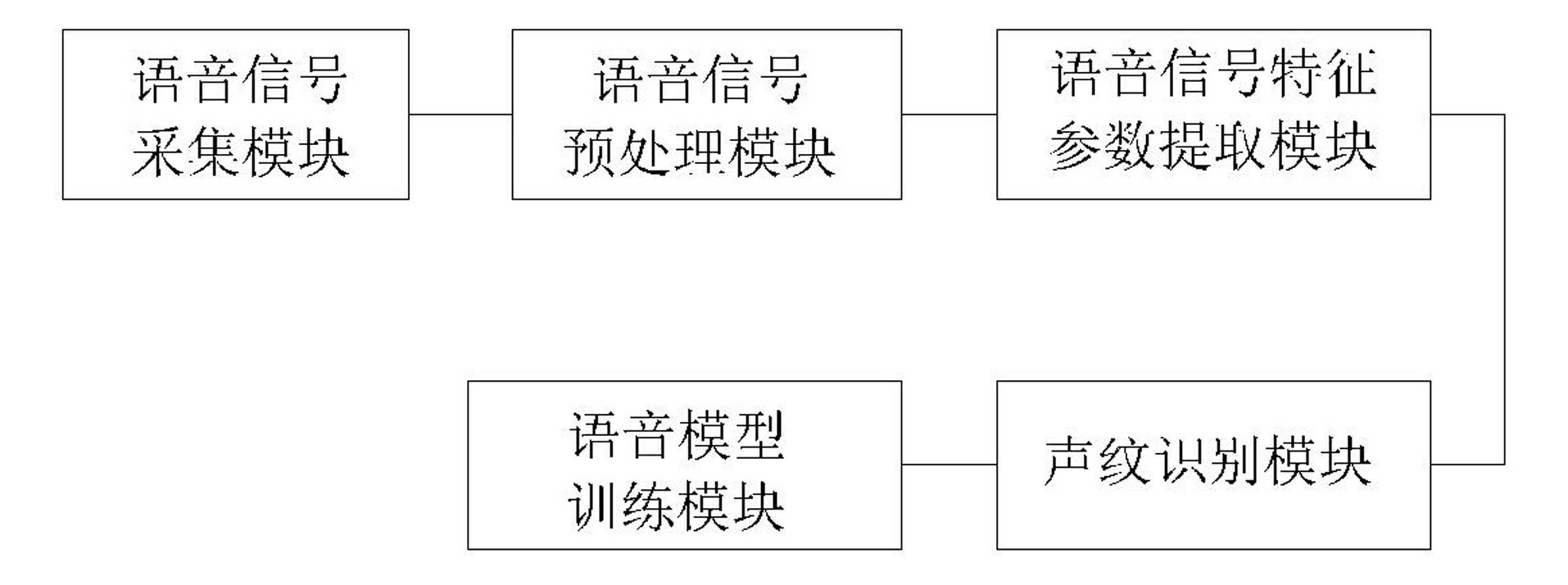

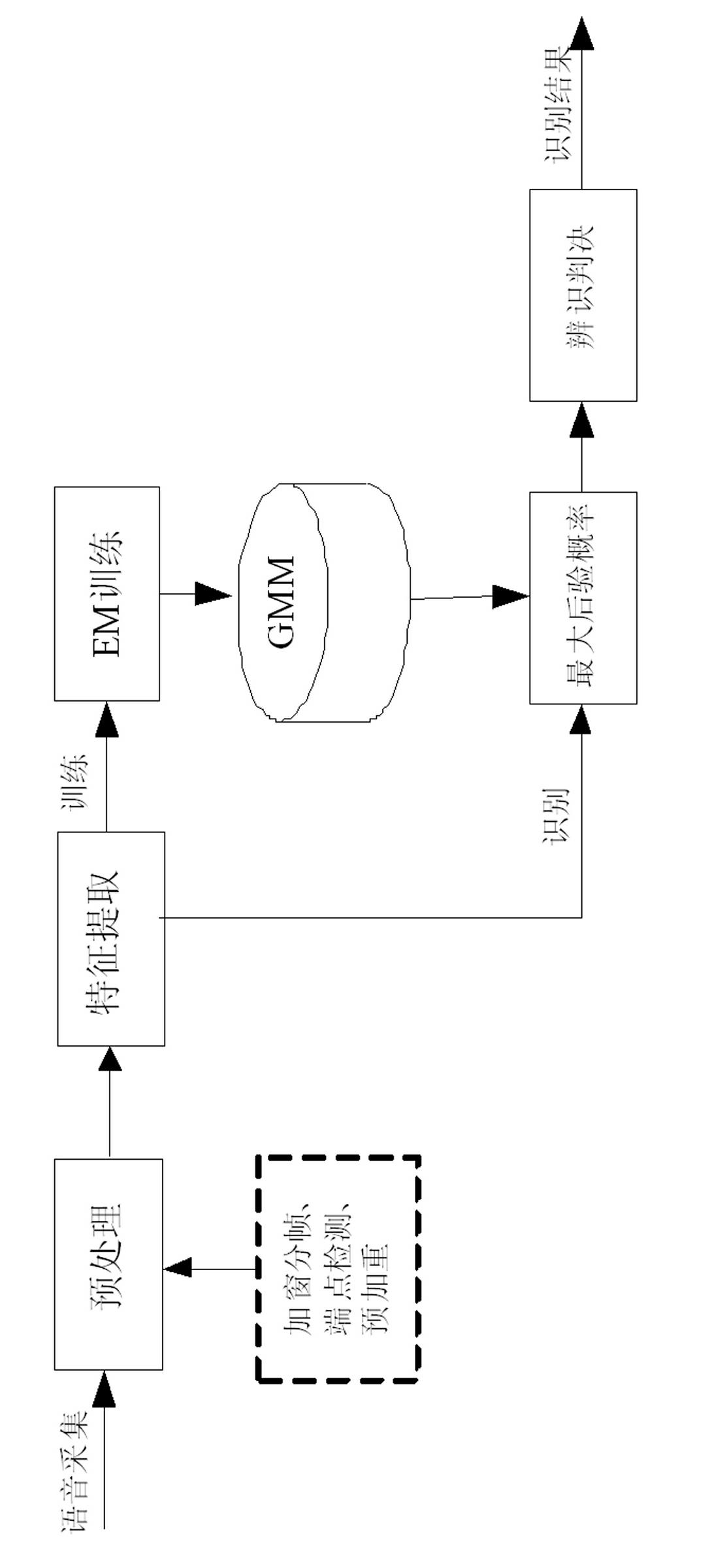

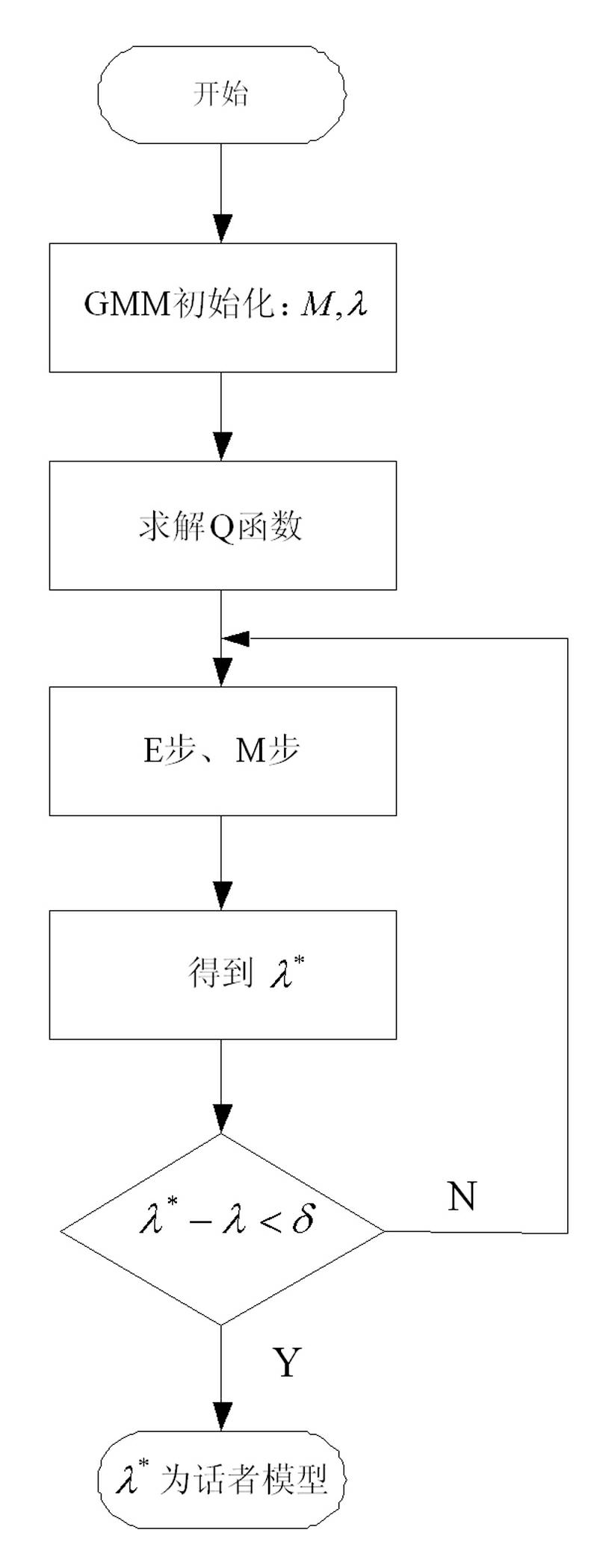

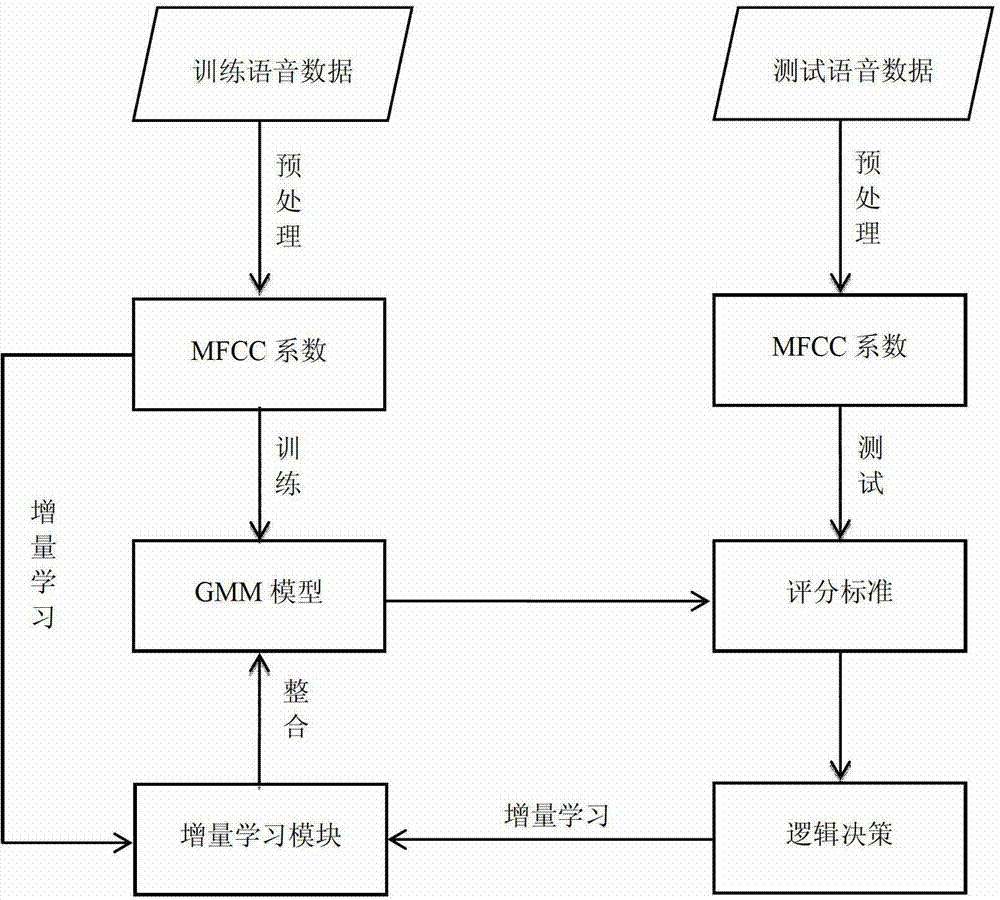

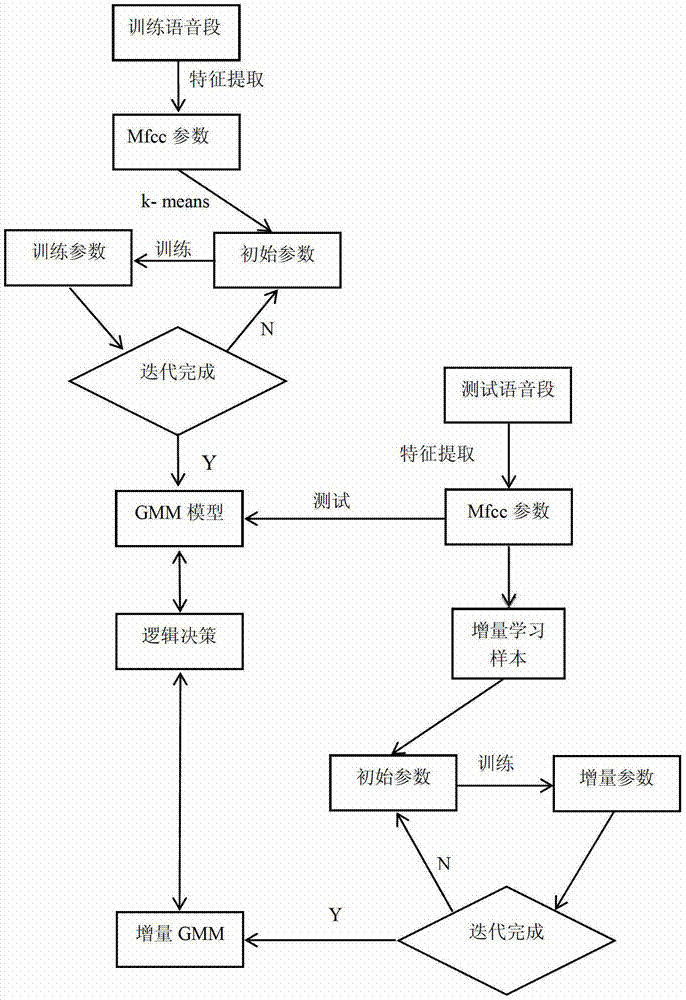

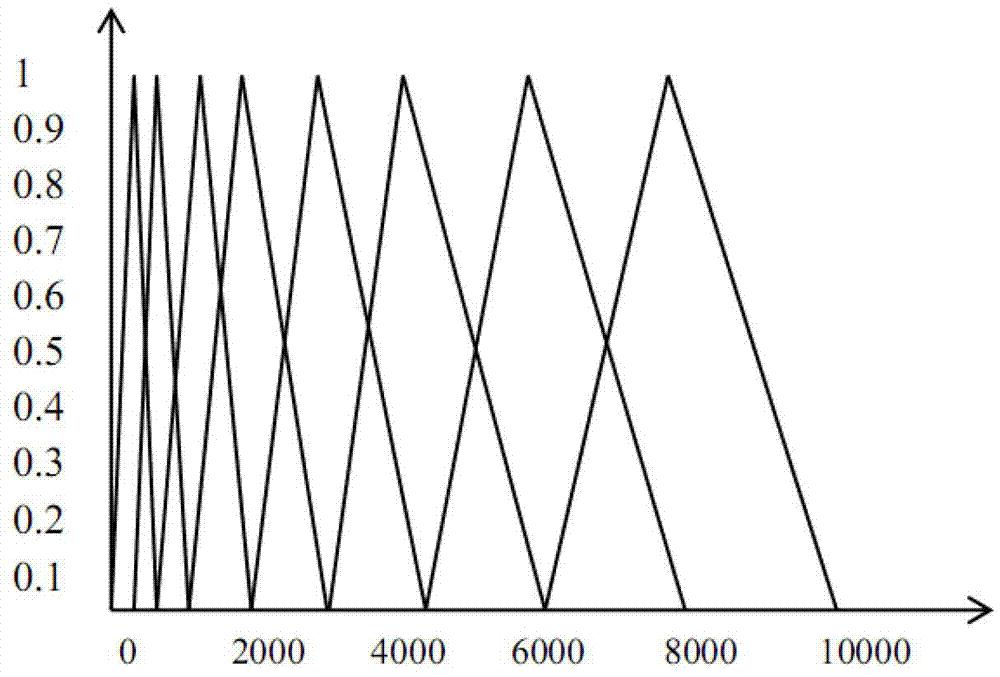

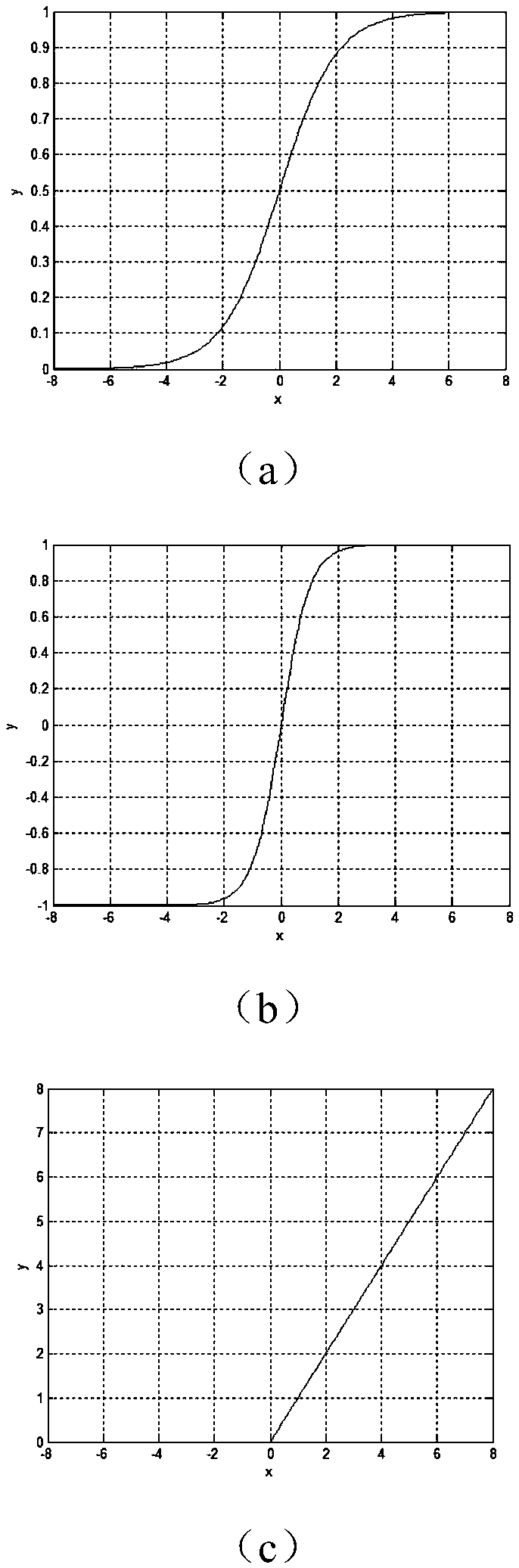

Voiceprint identification method based on Gauss mixing model and system thereof

Status: Inactive | Publication: CN102324232A | Tags: Good estimate, Easy to train, Speech recognition, Mel-frequency cepstrum, Normal density

The invention provides a voiceprint identification method based on a Gauss mixing model and a system thereof. The method comprises the following steps: voice signal acquisition; voice signal preprocessing; voice signal characteristic parameter extraction, employing Mel Frequency Cepstrum Coefficients (MFCC), wherein the order of the MFCC is usually 12-16; model training, employing an EM algorithm to train a Gauss mixing model (GMM) on the voice signal characteristic parameters of a speaker, wherein a k-means algorithm is selected as the parameter initialization method of the model; and voiceprint identification, comparing the collected voice signal characteristic parameters to be identified with the established speaker voice models and deciding according to a maximum posterior probability method: the speaker whose model gives the voice characteristic vector X to be identified the maximum posterior probability is identified as the speaker. According to the method, a Gauss mixing model based on probability statistics is employed, which reflects the characteristic distribution of the speaker in characteristic space well; the probability density function is a common one, the parameters of the model are easy to estimate and train, and the method has good identification performance and anti-noise capability.

Owner:LIAONING UNIVERSITY OF TECHNOLOGY
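
The identification step of the voiceprint abstract above (pick the speaker whose mixture model gives the feature vectors the highest posterior probability) can be sketched as follows. This is only an illustration under stated assumptions: the models are taken as already trained, so the EM training and k-means initialisation are omitted; covariances are assumed diagonal; priors default to uniform; and all names (`identify_speaker`, the `(weight, mean, var)` layout) are hypothetical.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of a Gaussian with diagonal covariance at point x."""
    return sum(
        -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, mean, var)
    )


def gmm_log_likelihood(frames, gmm):
    """Sum of per-frame log-likelihoods under a mixture.

    `gmm` is a list of (weight, mean, var) components.
    """
    total = 0.0
    for x in frames:
        logs = [math.log(w) + log_gaussian_diag(x, mean, var) for w, mean, var in gmm]
        peak = max(logs)  # log-sum-exp for numerical stability
        total += peak + math.log(sum(math.exp(v - peak) for v in logs))
    return total


def identify_speaker(frames, models, priors=None):
    """Return the speaker name with the largest log posterior score."""
    best_name, best_score = None, -math.inf
    for name, gmm in models.items():
        prior = priors[name] if priors else 1.0 / len(models)
        score = math.log(prior) + gmm_log_likelihood(frames, gmm)
        if score > best_score:
            best_name, best_score = name, score
    return best_name


if __name__ == "__main__":
    models = {
        "alice": [(1.0, [0.0, 0.0], [1.0, 1.0])],
        "bob": [(1.0, [5.0, 5.0], [1.0, 1.0])],
    }
    print(identify_speaker([[0.1, -0.2], [0.3, 0.0]], models))
```

In practice each frame would be an MFCC vector of the order mentioned in the abstract; two-dimensional toy vectors are used here only to keep the example short.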

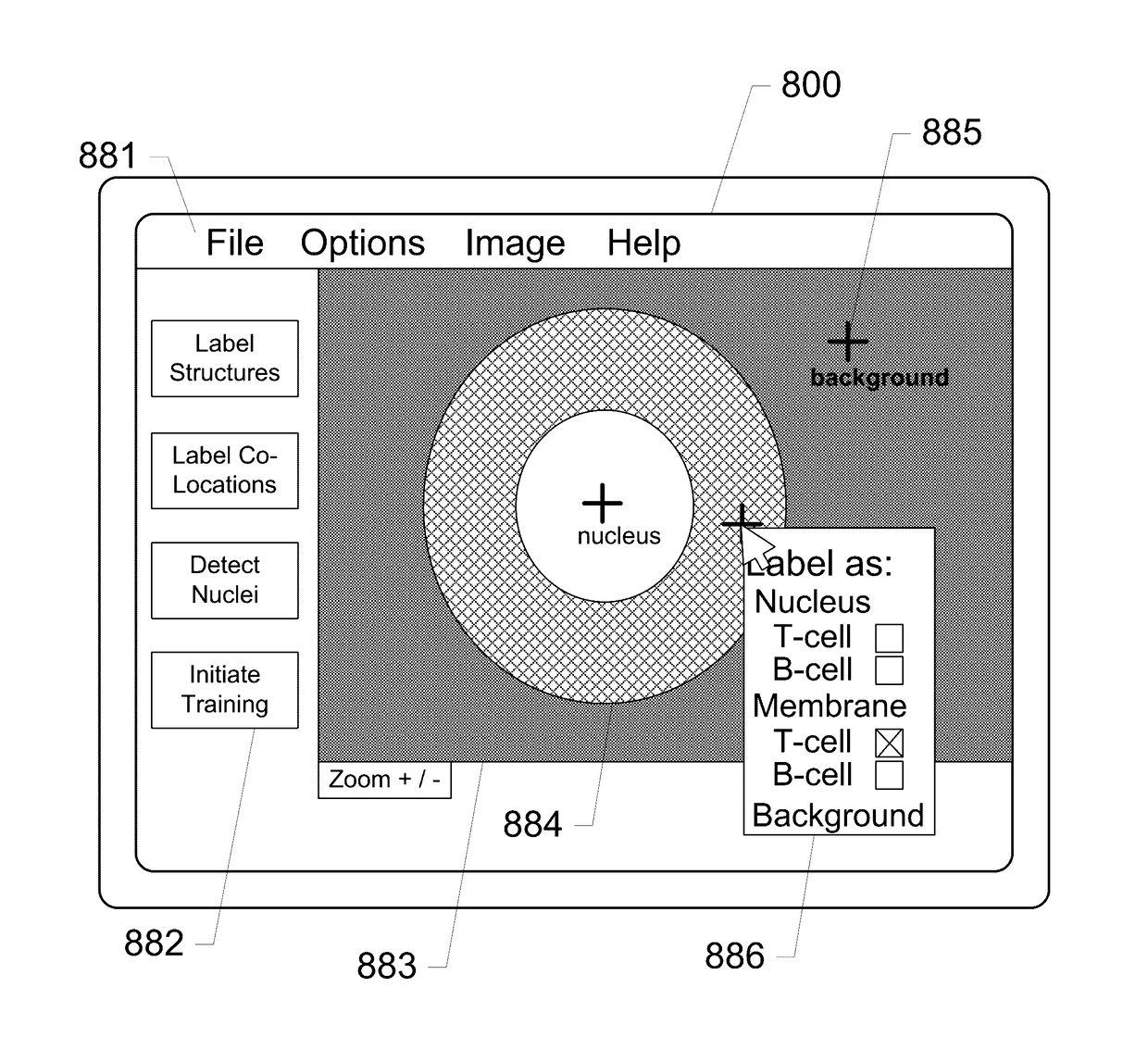

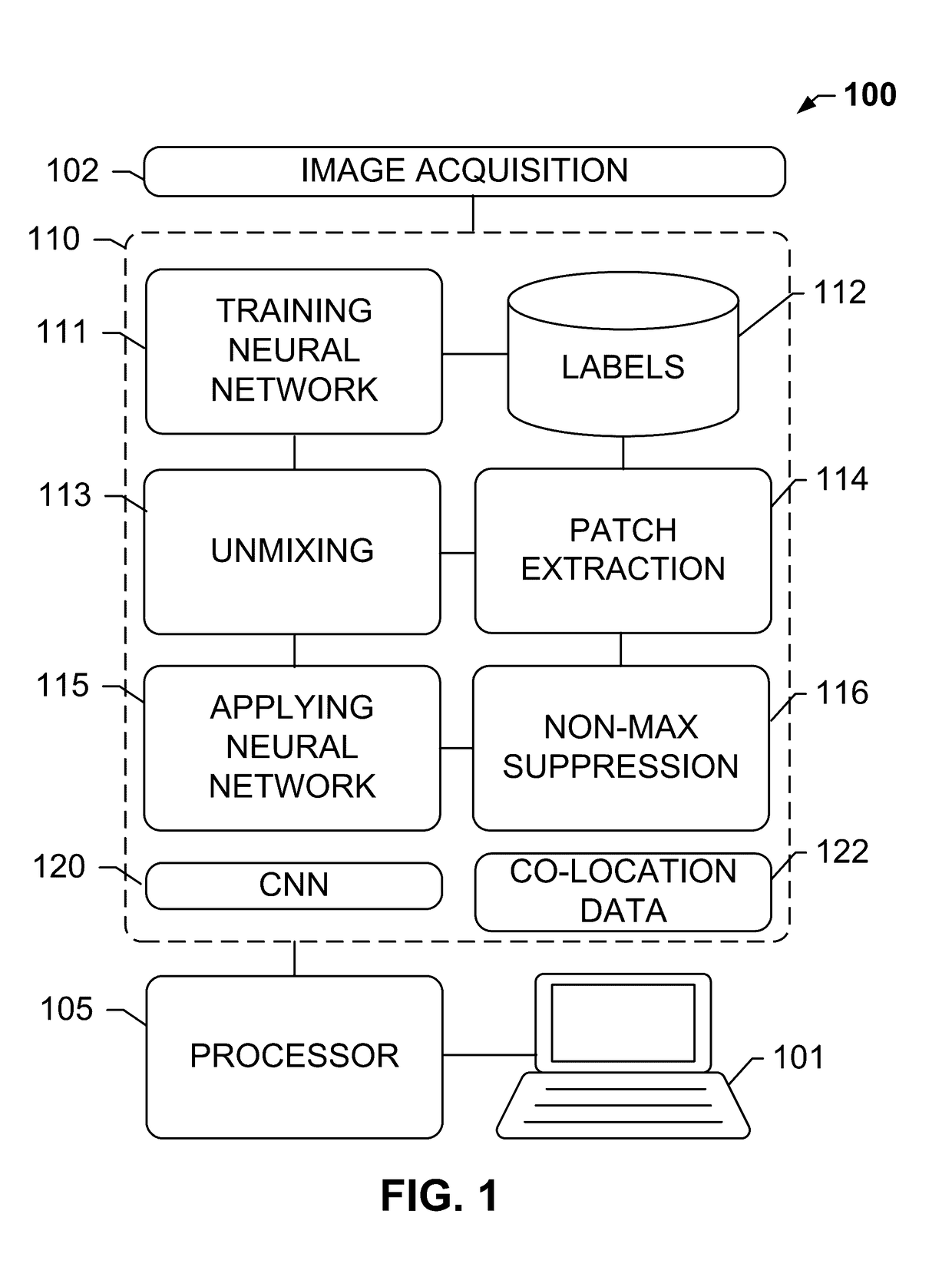

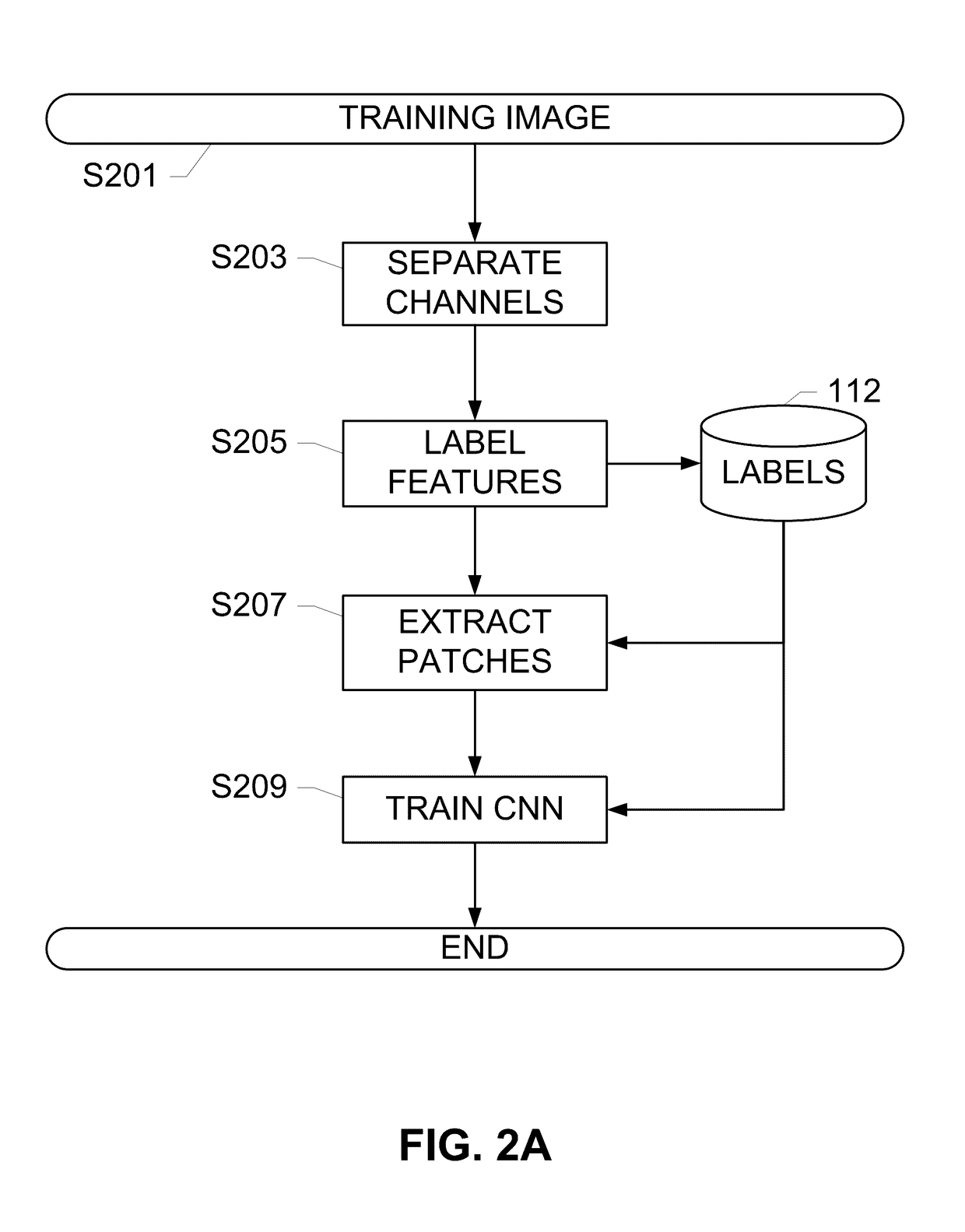

Systems and methods for detection of structures and/or patterns in images

Status: Active | Publication: US20170169567A1 | Tags: Lighten the computational burden, Improve stability, Image enhancement, Image analysis, RGB image, Non maximum suppression

The subject disclosure presents systems and computer-implemented methods for automatic immune cell detection that is of assistance in clinical immune profile studies. The automatic immune cell detection method involves retrieving a plurality of image channels from a multi-channel image such as an RGB image or biologically meaningful unmixed image. A cell detector is trained to identify the immune cells by a convolutional neural network in one or multiple image channels. Further, the automatic immune cell detection algorithm involves utilizing a non-maximum suppression algorithm to obtain the immune cell coordinates from a probability map of immune cell presence possibility generated from the convolutional neural network classifier.

Owner:VENTANA MEDICAL SYST INC
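
The last step of the immune cell detection abstract above, turning a probability map into cell coordinates with non-maximum suppression, can be sketched as a greedy procedure. The threshold, the suppression radius, and the use of Chebyshev (square-window) distance are assumptions chosen for illustration; the abstract does not specify them.

```python
def non_max_suppression(prob_map, threshold, radius):
    """Greedy non-maximum suppression on a 2-D probability map.

    Cells with probability >= threshold are visited from most to least
    probable; a cell is kept only if no already-kept cell lies within
    `radius` rows and columns of it. Returns a list of (row, col) peaks.
    """
    candidates = [
        (p, r, c)
        for r, row in enumerate(prob_map)
        for c, p in enumerate(row)
        if p >= threshold
    ]
    # Highest probability first; ties broken by position for determinism.
    candidates.sort(key=lambda t: (-t[0], t[1], t[2]))
    kept = []
    for _, r, c in candidates:
        if all(max(abs(r - kr), abs(c - kc)) > radius for kr, kc in kept):
            kept.append((r, c))
    return kept


if __name__ == "__main__":
    prob_map = [
        [0.1, 0.2, 0.1, 0.0, 0.0],
        [0.2, 0.9, 0.3, 0.0, 0.0],
        [0.1, 0.3, 0.2, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.6, 0.8],
    ]
    print(non_max_suppression(prob_map, threshold=0.5, radius=1))
```

In the example map the 0.6 cell is suppressed because it is adjacent to the stronger 0.8 cell, so only two detections remain.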

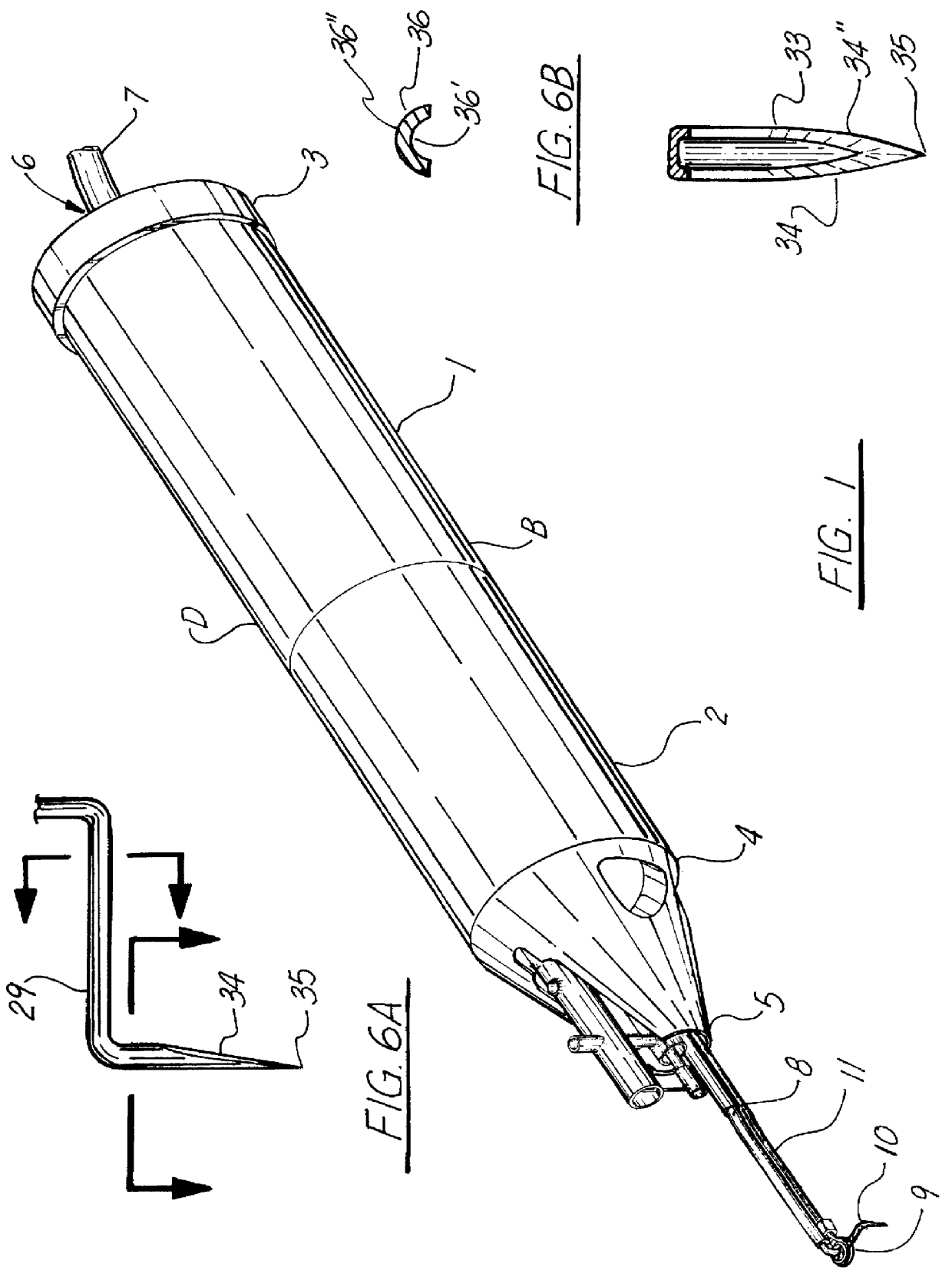

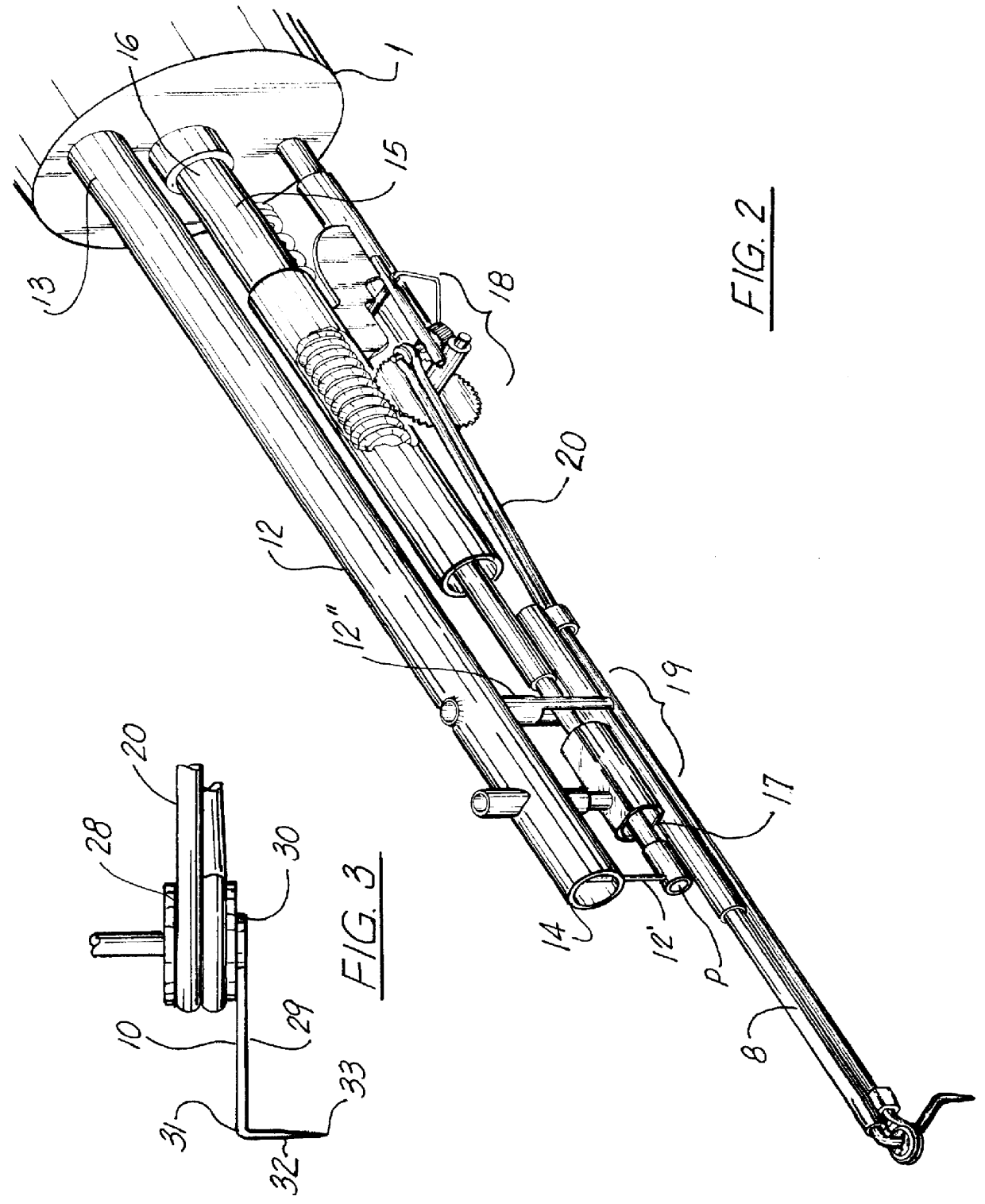

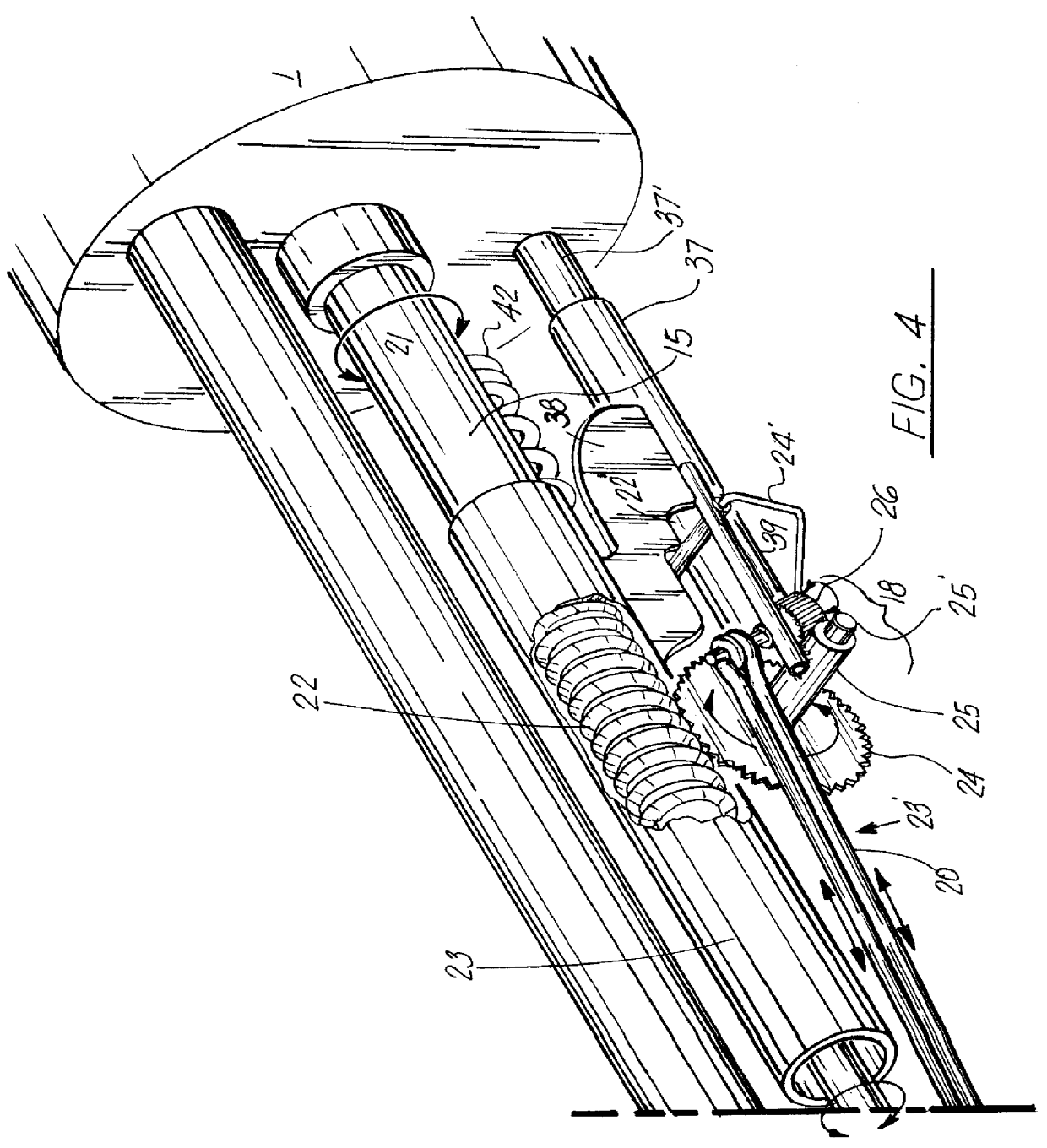

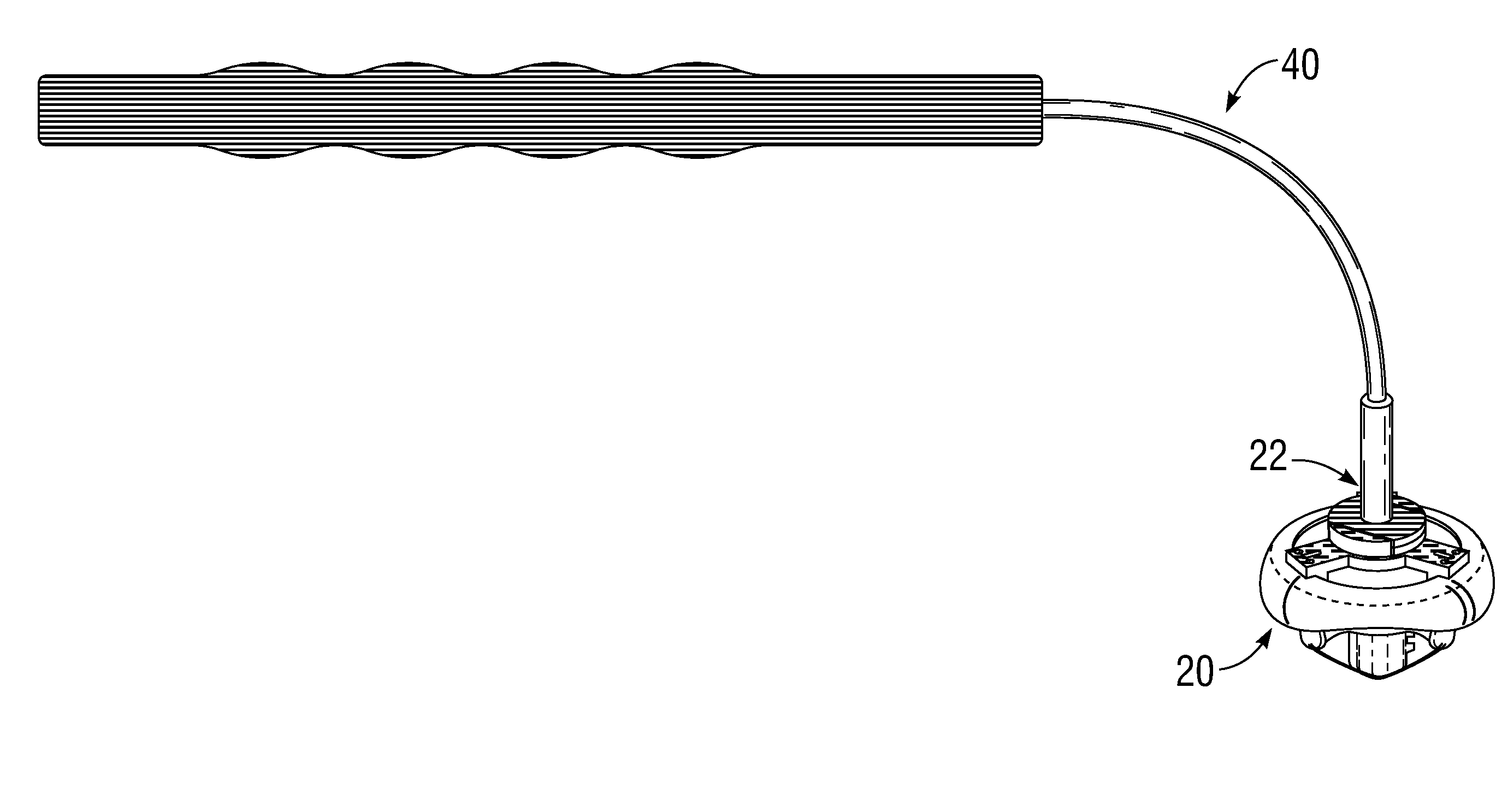

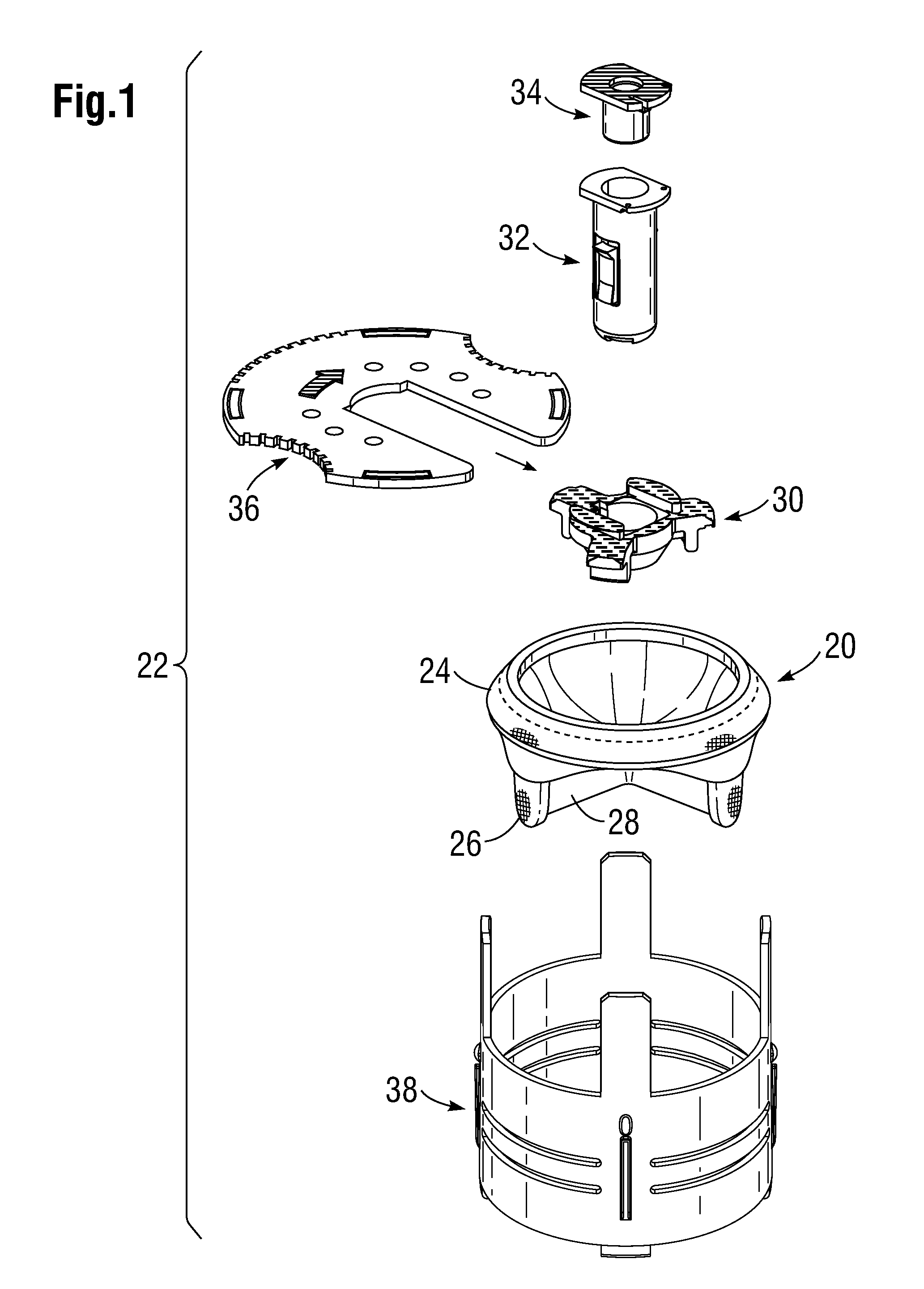

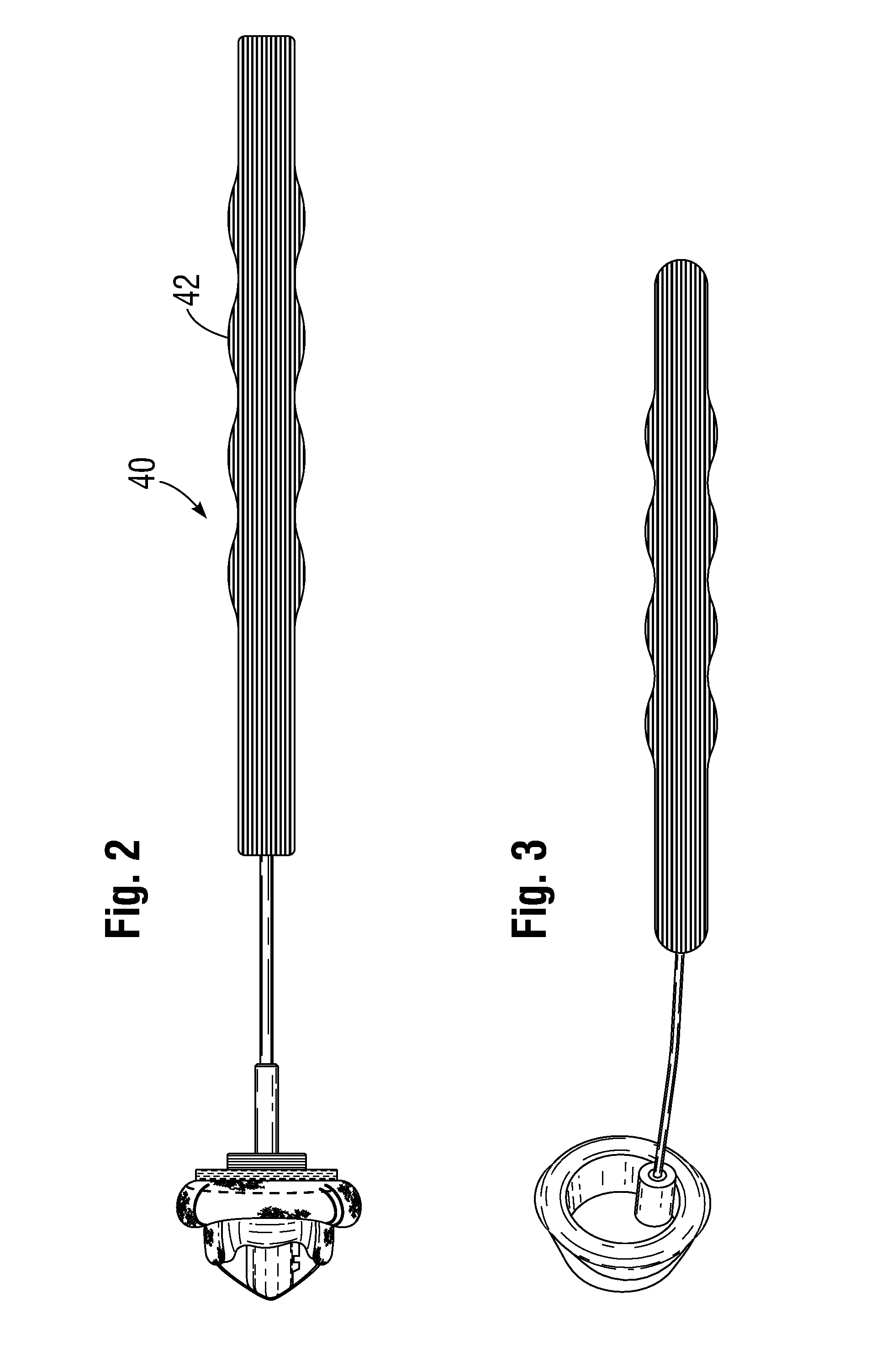

Capsulectomy device and method therefore

Status: Inactive | Publication: US6165190A | Tags: Easy to use, Cost-effective in manufacture and operation and maintenance, Eye surgery, Surgery, Hand held, Engineering

A surgical instrument for ophthalmic surgery, allowing the user to form a uniform circular incision of the anterior lens capsule of an eyeball, as part of an anterior capsulotomy. The capsulectomy device of the preferred embodiment of the present invention has first and second ends, with a rotor emanating from one end, the rotor having a cutting blade or pin situated at the distal end of the rotor, the rotor rotating in pivotal fashion up to 360 degrees, while simultaneously reciprocating the cutting blade at a consistent stroke so as to provide optimal incision edge and depth of the anterior lens capsule of the eyeball. The device is hand held and relatively compact, having provided therein a motor and gear reduction/transmission system for driving the rotor and providing the reciprocating action to the cutting blade or pin. The device further includes a power supply, which is illustrated as a separate component fed to the device via wire, as well as controls for initiating power and for varying the speed of the motor. Unlike the prior art systems, which generally have relied upon the skill of the surgeon to perform the radial incision by hand, the present system provides a relatively easy and uniform system for performing the radial incision which is believed to be safer, more uniform, and less time consuming than prior techniques.

Owner:NGUYEN NHAN

Laparoscopic and endoscopic trainer including a digital camera

Status: Active | Publication: US7594815B2 | Tags: Easy to train, Wide field of view, Surgery, Educational models, Anatomical structures, Digital video

Owner:TOLY CHRISTOPHER C

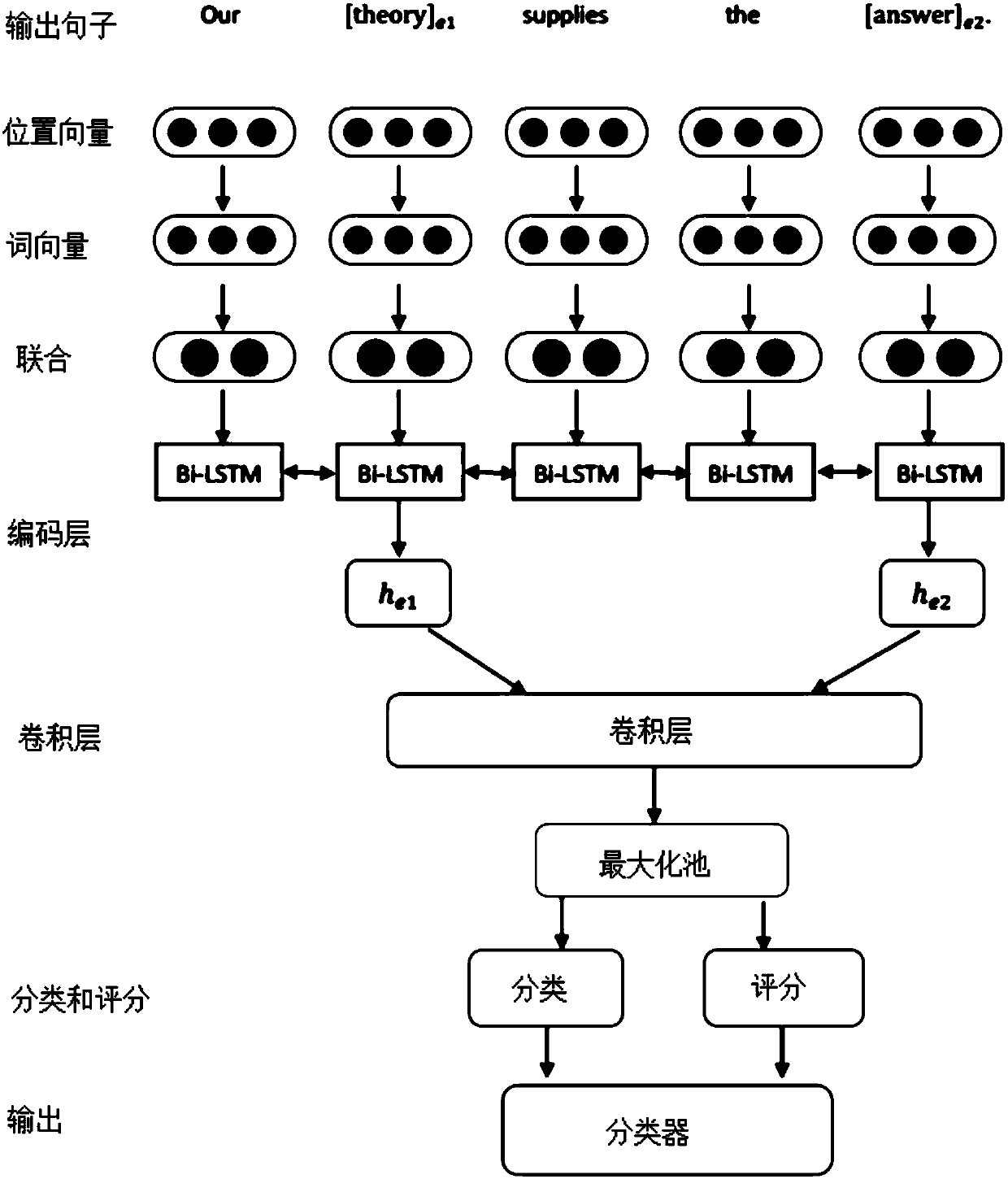

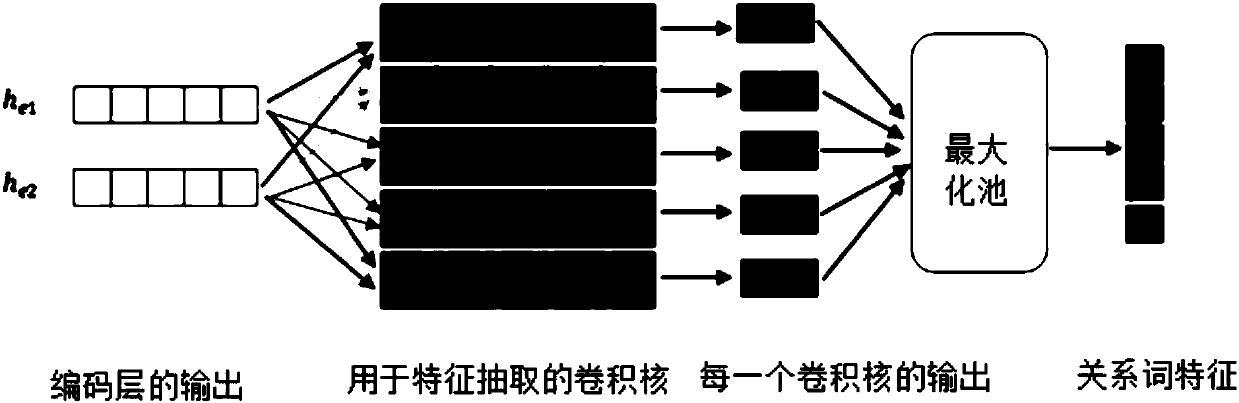

Method for relationship classification with LSTM and CNN joint model based on location

Status: Active | Publication: CN107832400A | Tags: Universal, Good effect, Natural language data processing, Neural architectures, Feature vector, High dimensional

The invention relates to a method for relationship classification with an LSTM and CNN joint model based on location. The method includes the steps of (1) preprocessing data; (2) training word vectors; (3) extracting location vectors: acquiring the location vector feature and high-dimensional location feature vector of each word in a training set, and cascading the word vector of each word with the high-dimensional location feature vector thereof to obtain a joint feature; (4) building a model for a specific task: encoding contextual information and semantic information of entities by use of bidirectional LSTM, outputting the vector of the location corresponding to the marked entities, inputting the output to CNN, outputting two entity nouns and their contextual information and relational word information, and inputting the entity nouns and their contextual information and relational word information into a classifier for classification; (5) training the model by use of a loss function. The method does not need to manually extract any features, the joint model does not need to use additional natural language processing tools to preprocess the data, the algorithm is simple and clear, and the best effect at present is achieved.

Owner:SHANDONG UNIV

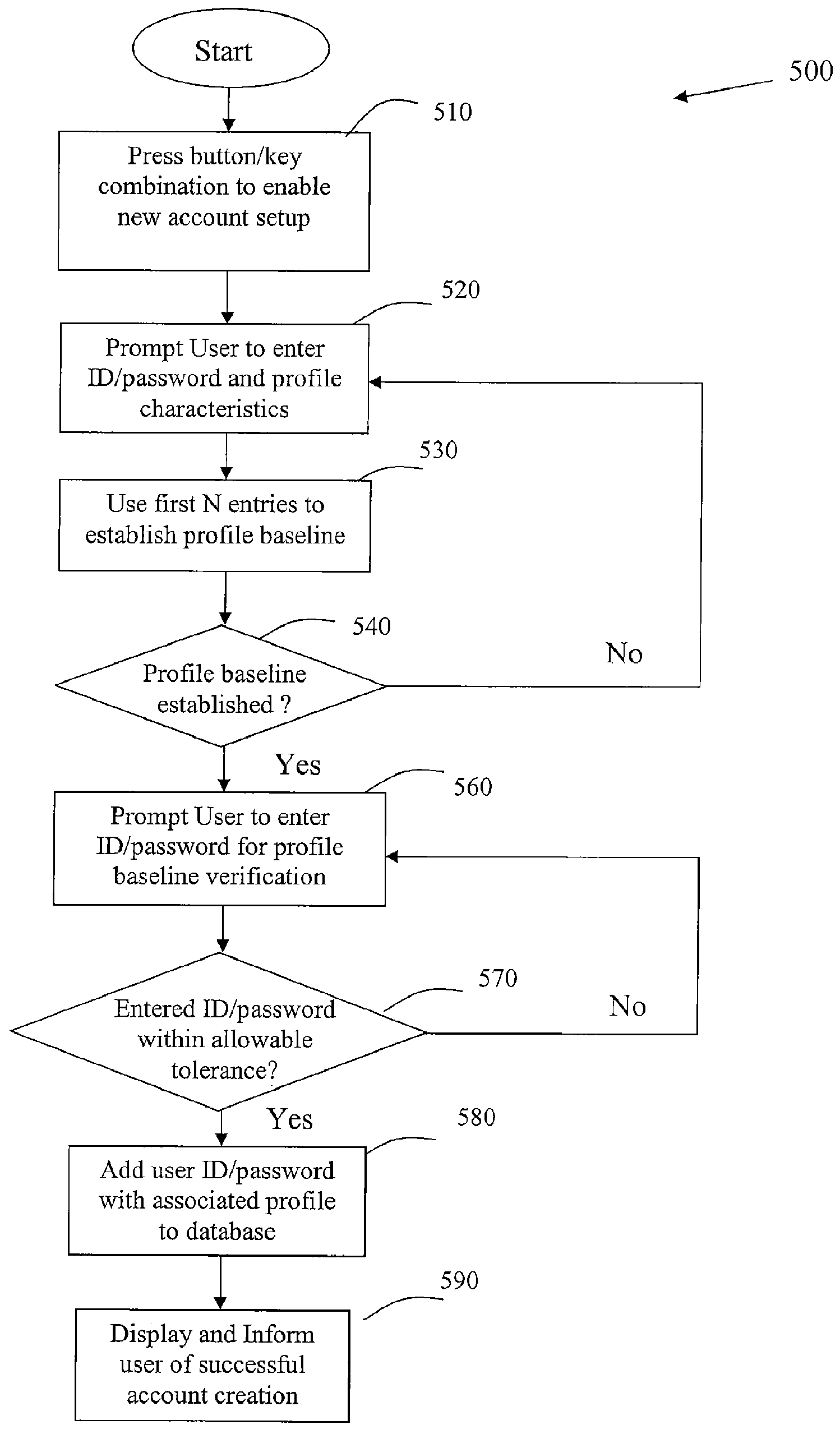

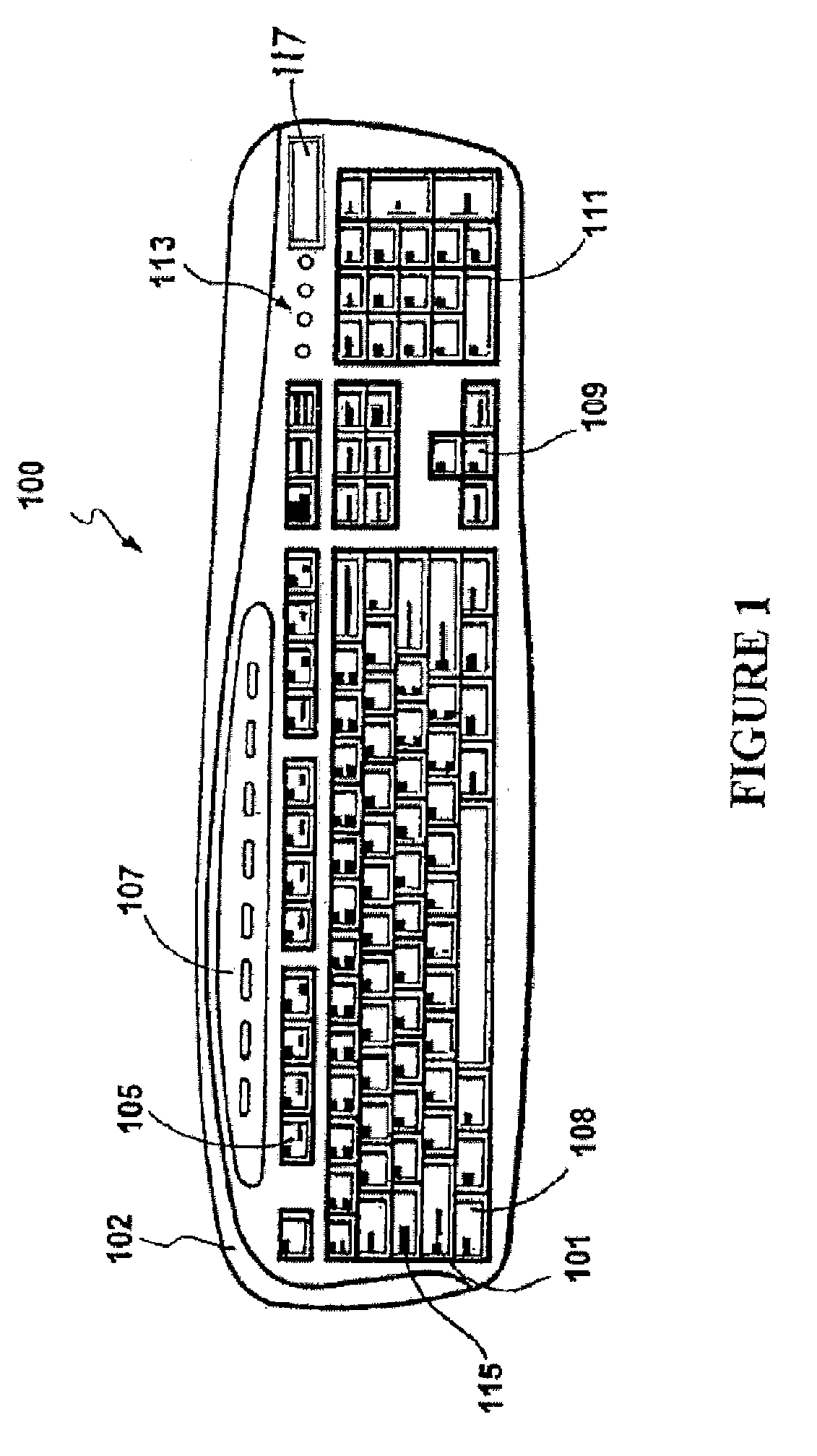

Method and system for biometric keyboard

Status: Inactive | Publication: US20090134972A1 | Tags: Disabling connection, Tightens tolerance, Electric signal transmission systems, Digital data processing details, Display device, Flight time

A method for training a computing system using keyboard biometric information. The method includes depressing two or more keys on a keyboard input device for a first sequence of keys. The method then determines a key press time for each of the two or more keys to provide a key press time characteristic in the first sequence of keys. The method also determines a flight time between a first key and a second key to provide a flight time characteristic in the first sequence of keys, the first key being within the two or more keys. The method includes storing the key press time characteristic and the flight time characteristic for the first sequence of keys, and displaying indications associated with the first sequence of keys on a display device provided on a portion of the keyboard input device.

Owner:MINEBEA CO LTD
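
The two timing characteristics named in the biometric keyboard abstract above can be computed from raw key events as in the sketch below. The event layout `(key, press_time, release_time)` and the definition of flight time as the gap between releasing one key and pressing the next are assumptions; the abstract does not state which definition the patent uses.

```python
def keystroke_features(events):
    """Compute key press (dwell) times and flight times for a key sequence.

    `events` is a list of (key, press_time, release_time) tuples in typing
    order, with times in milliseconds.
    Returns (dwell_times, flight_times).
    """
    # Key press time: how long each key is held down.
    dwell = [release - press for _, press, release in events]
    # Flight time (assumed definition): release of one key to press of the next.
    flight = [
        events[i + 1][1] - events[i][2]
        for i in range(len(events) - 1)
    ]
    return dwell, flight


if __name__ == "__main__":
    sequence = [("p", 0, 90), ("a", 150, 230), ("s", 300, 370)]
    dwell, flight = keystroke_features(sequence)
    print("key press times:", dwell)
    print("flight times:", flight)
```

Storing these two lists for an enrolment sequence gives the profile against which later typing of the same sequence could be compared.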

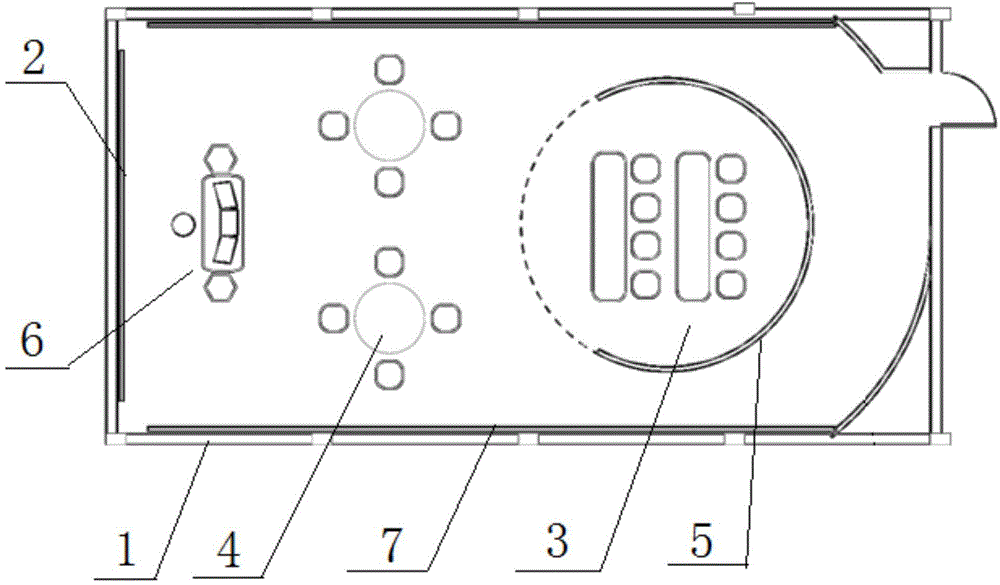

Experiential digitalized multi-screen seamless cross-media interactive opening teaching laboratory

Status: Active | Publication: CN104575142A | Tags: Supports real-time processing, Realize analysis, Electrical appliances, Physical space, Virtual space

An experiential digitalized multi-screen seamless cross-media interactive opening teaching laboratory is integrated in testing, researching and analyzing. Experiment and data analysis are performed in a real teaching environment; under support of the multi-screen interactive technology, the laboratory comprises a laboratory functional partition, an operation support system, a data working system, an experiment information acquisition system and an audio and video input and output device; a screen jilting function among multiple mobile terminals is realized; the data working system comprises a server, a database, education resource cloud, a U-teaching system, a learning analysis and evaluation system, a mobile device, a cross-screen management module, a recording and broadcasting system and an Internet; learning space for cross-media interactive learning is provided, technologies of holographic imaging, multi-screen interaction, learning analysis and the like are integrated, and seamless fusion of the physical space and the virtual space is realized; seamless fusion of supporting technologies from formal learning to informal learning, multiple learning modes, cross-terminal, cross-media and the like is realized, and good learning experience is provided for learners.

Owner:SHANGHAI OPEN UNIVERSITY

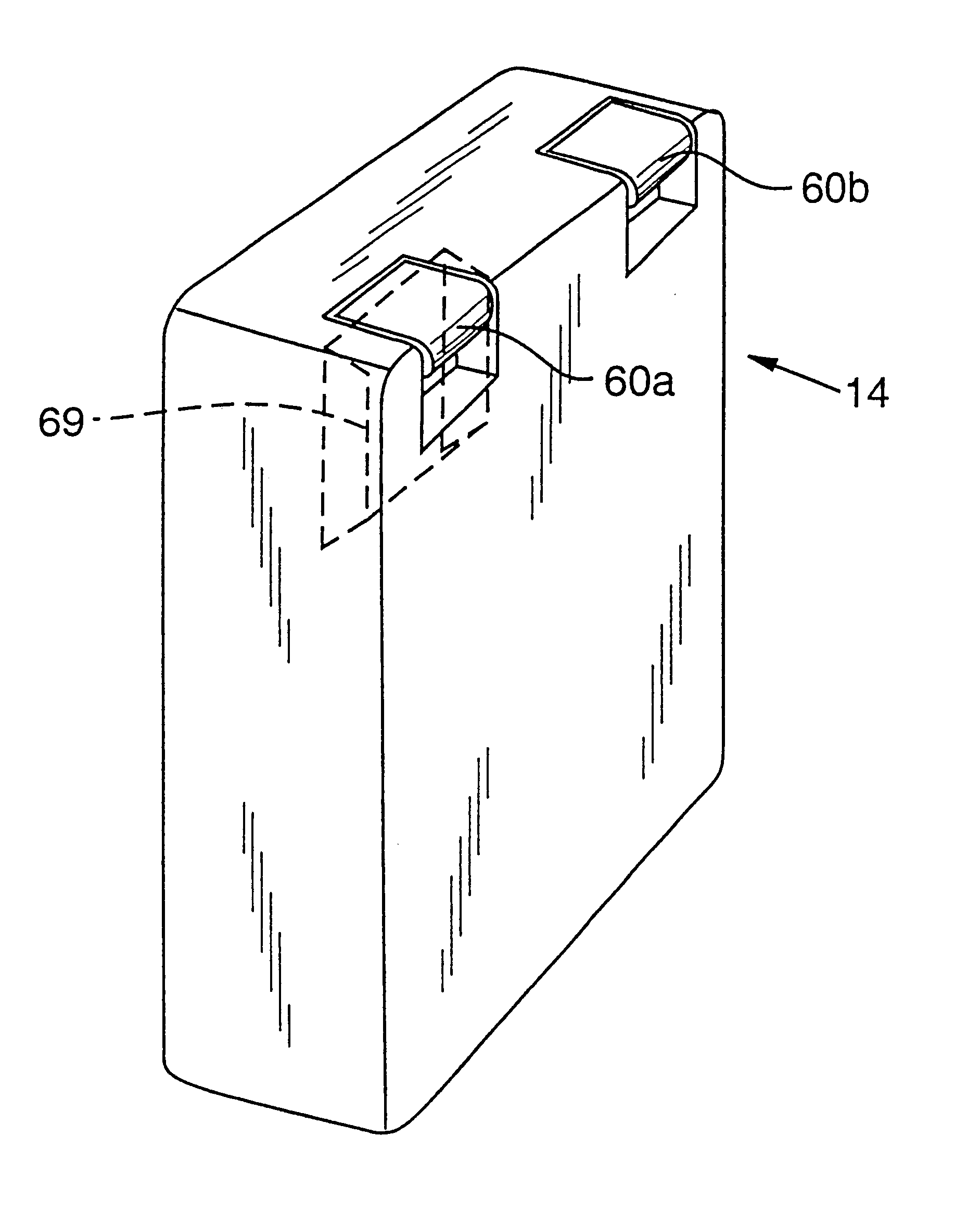

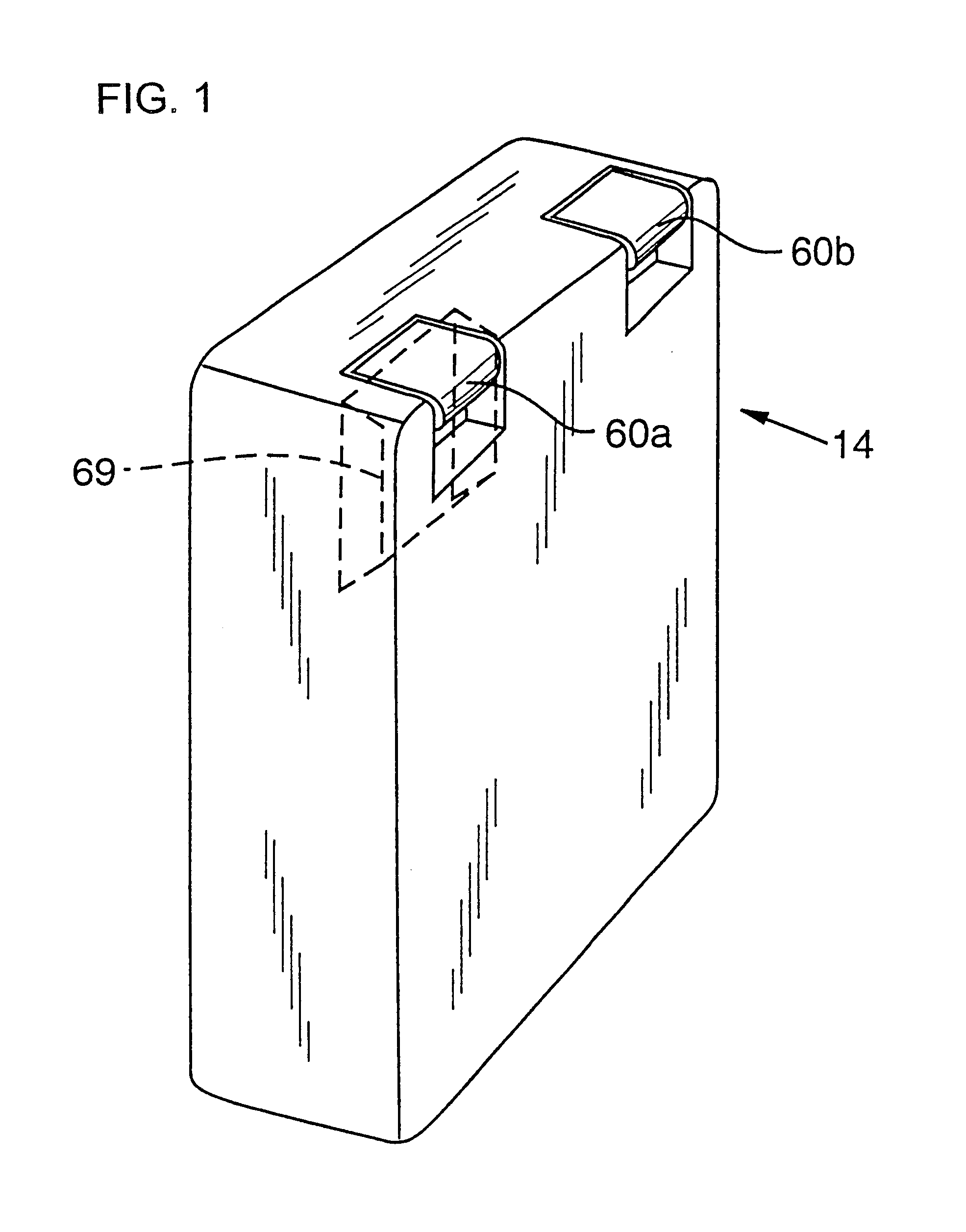

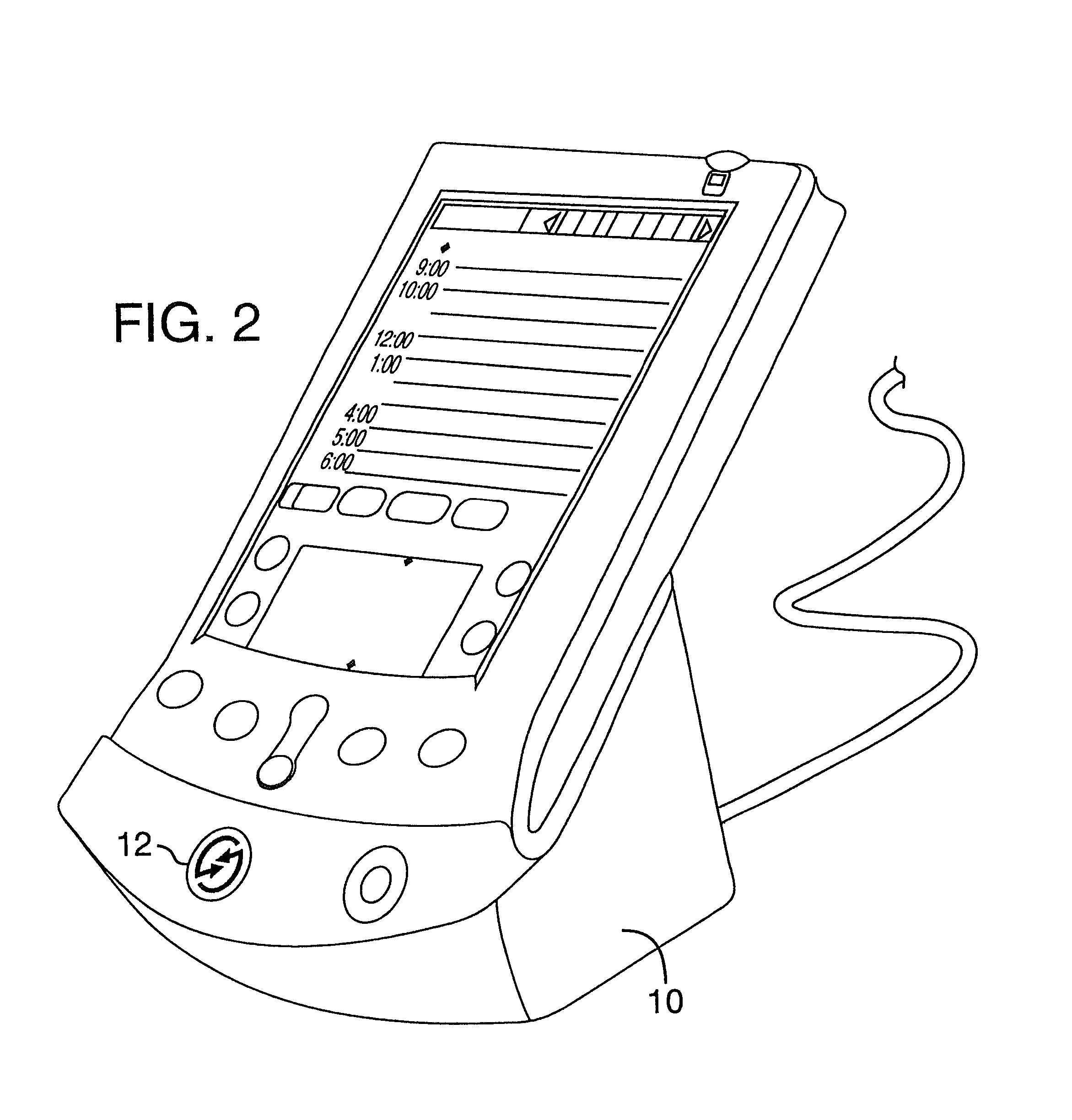

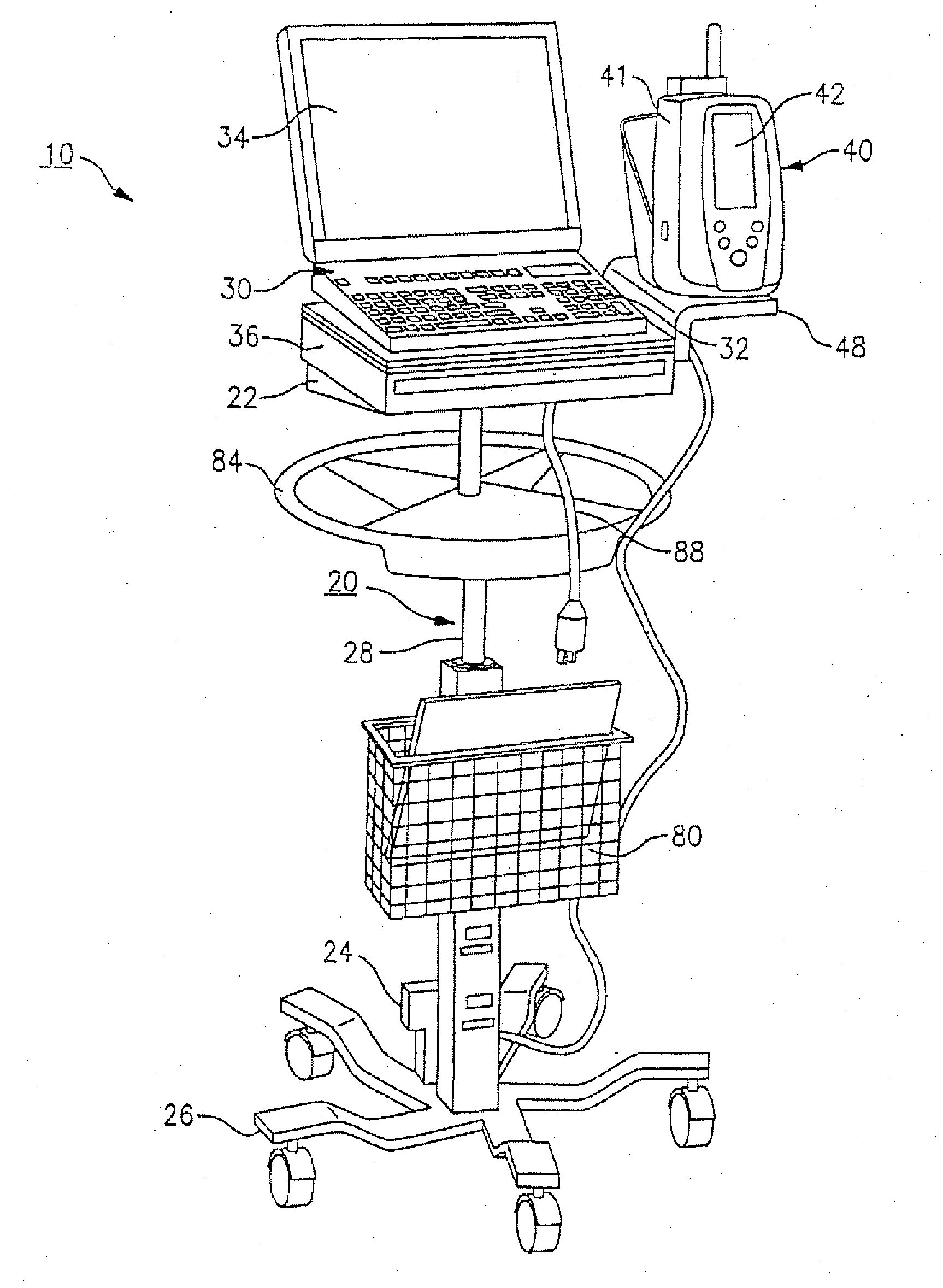

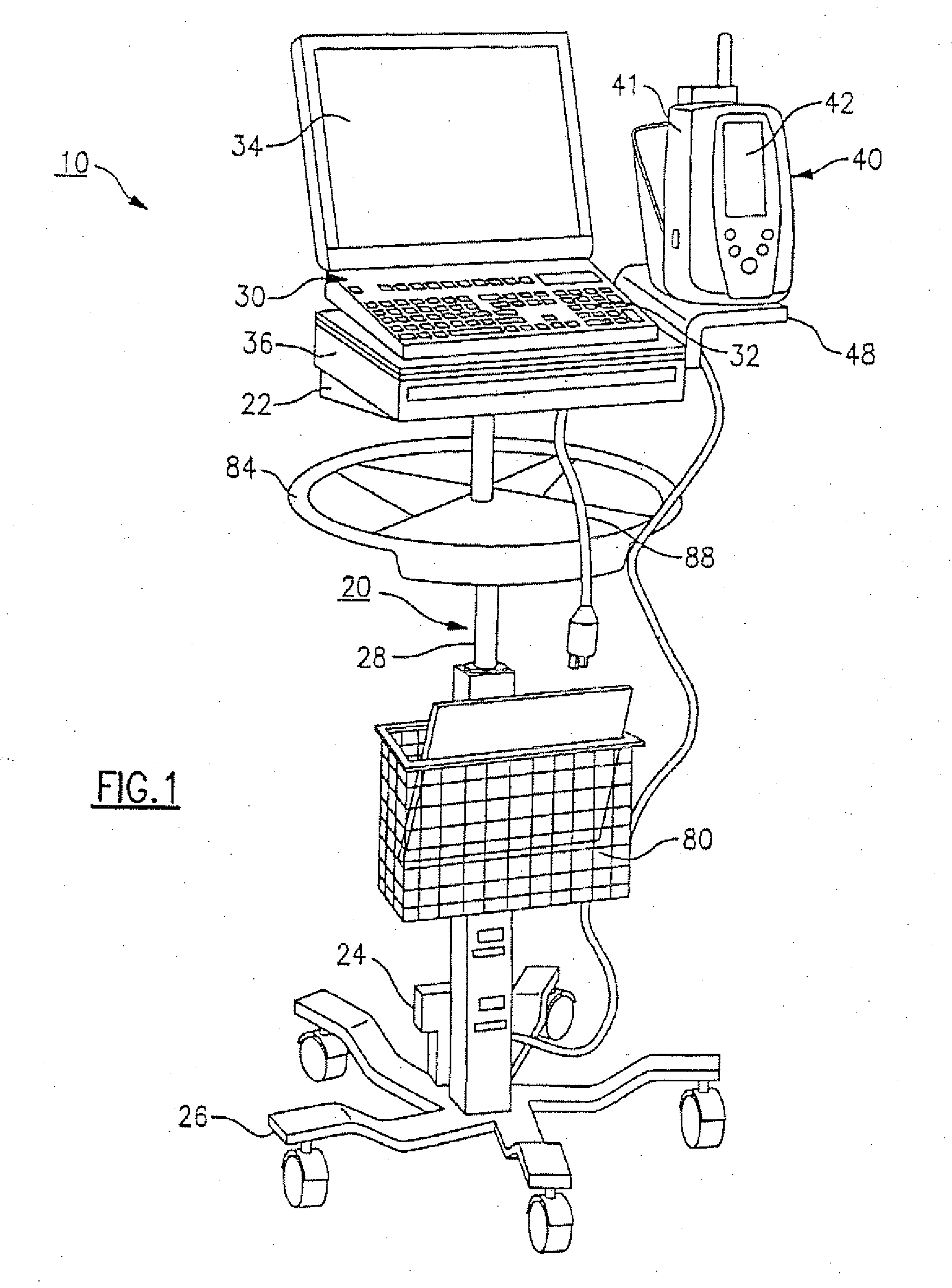

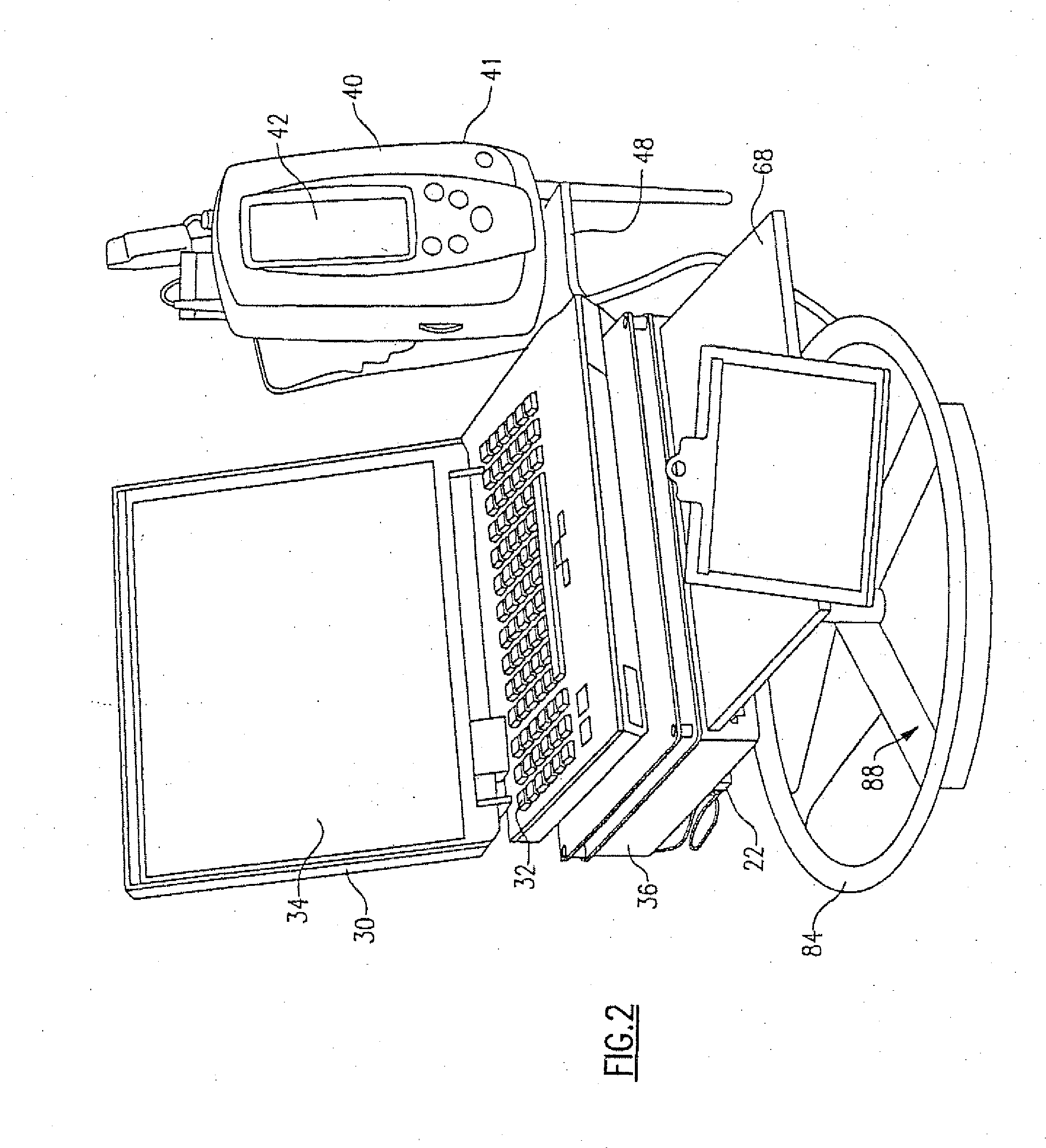

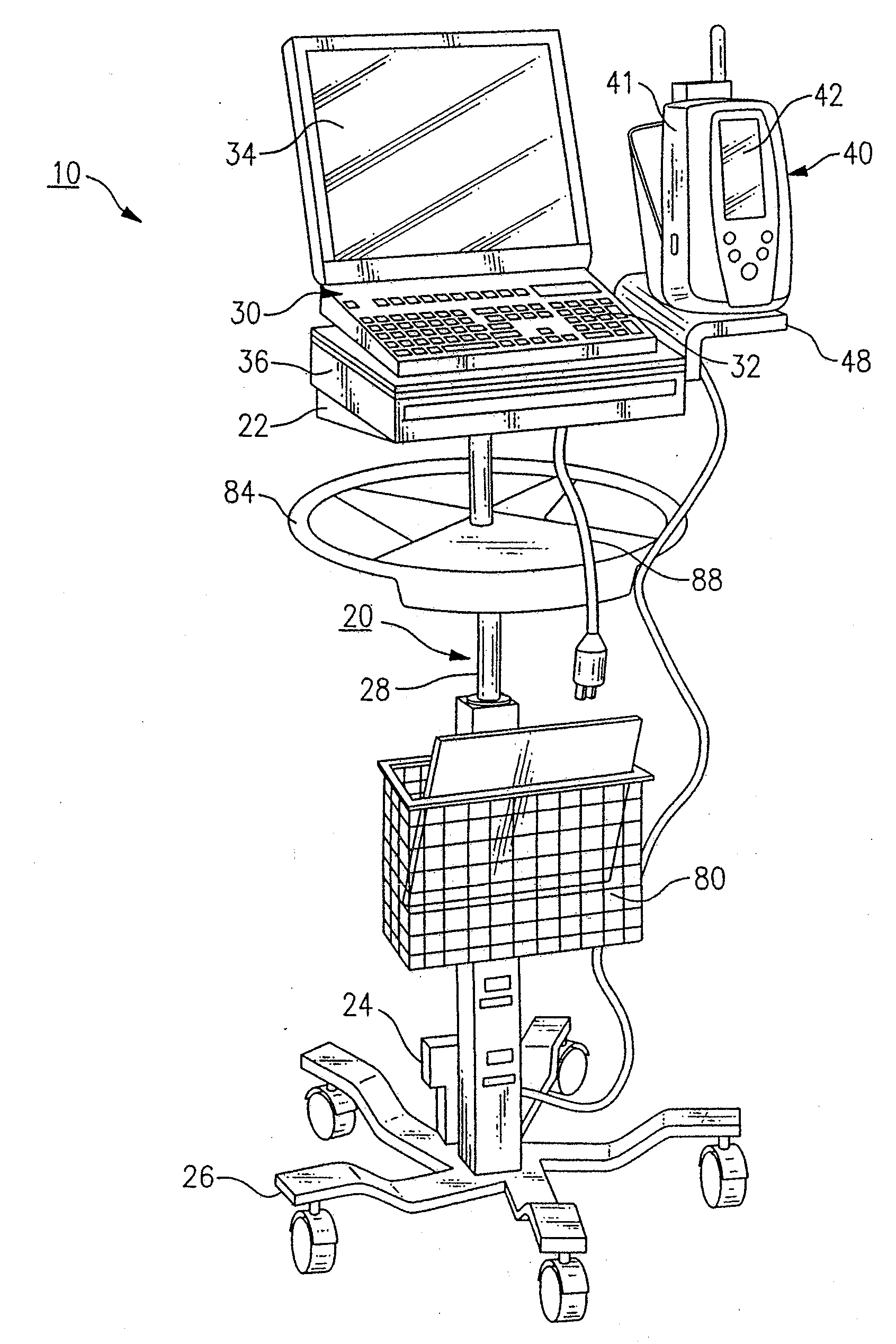

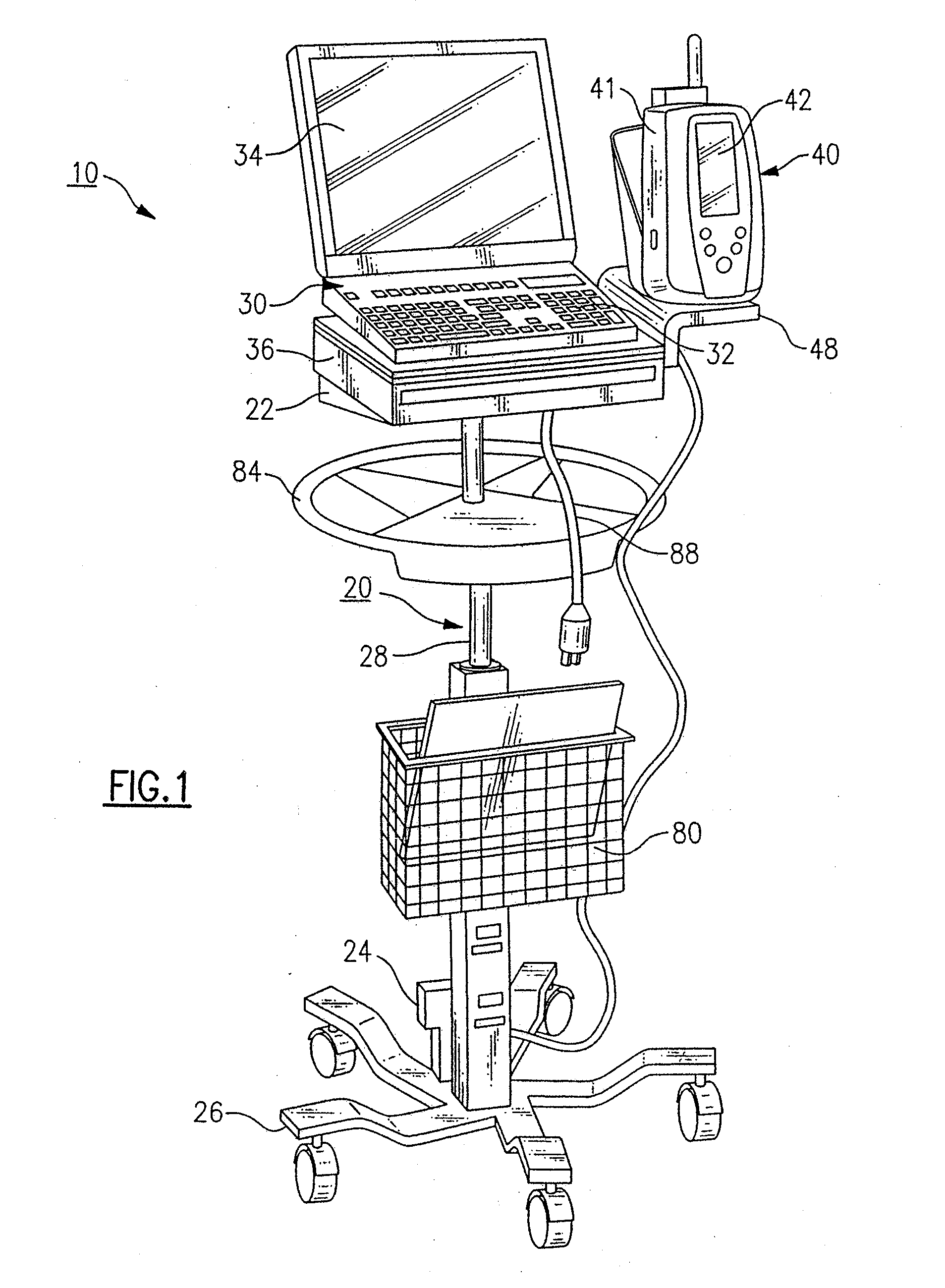

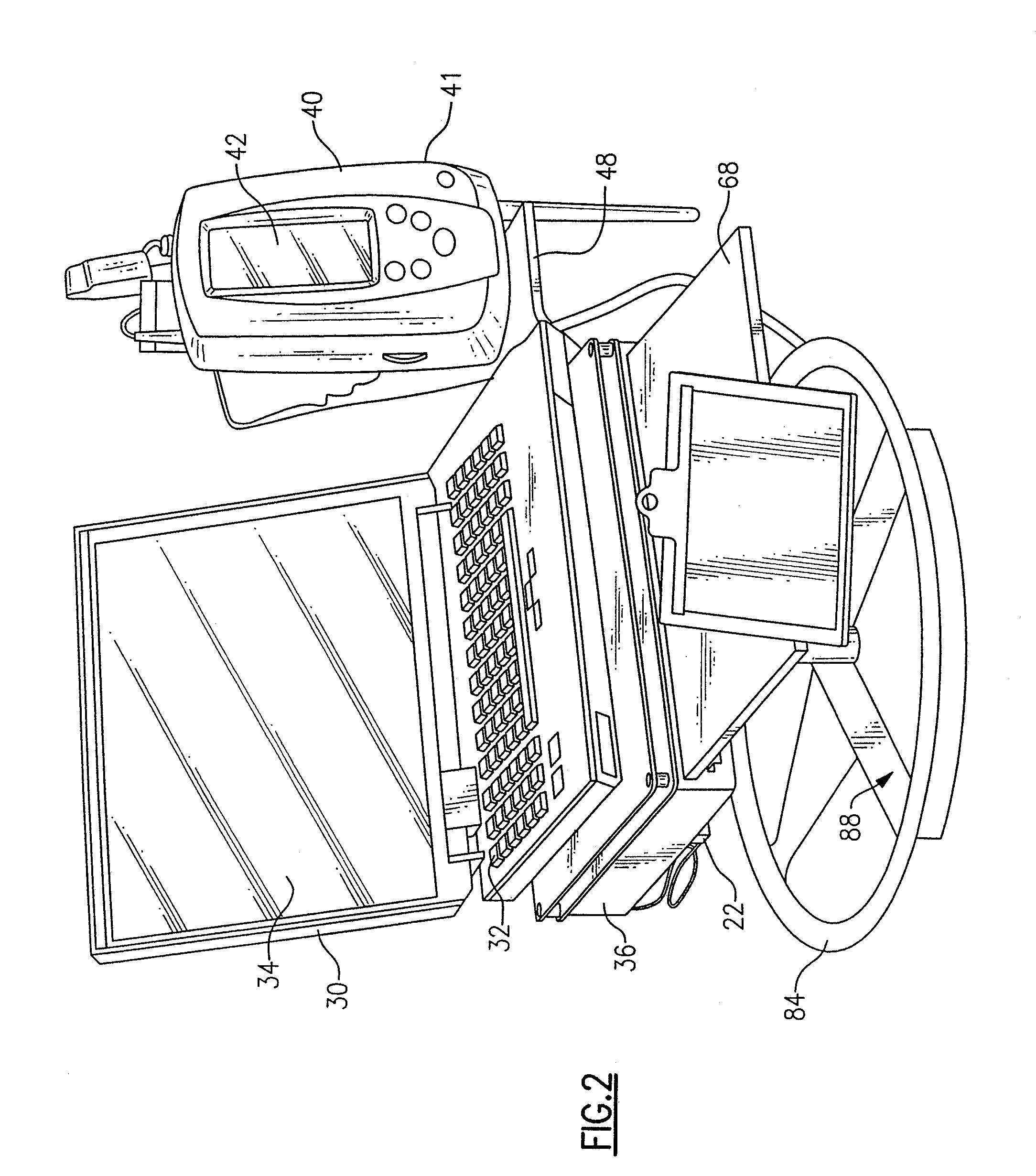

Diagnostic instrument workstation

Status: Inactive | Publication: US20090221880A1 | Tags: More comprehensive, More efficient, Person identification, Endoscopes, Barcode, Workstation

An integrated medical workstation for use in patient clinical encounters includes an input device such as a bar code scanner that is interconnected to a computing device. At least one device capable of obtaining at least one physiological parameter is either attached directly to the workstation or is in communication therewith. Preferably, the input scanning device controls at least substantial overall operation of the medical workstation that can be placed, for example, into a network.

Owner:WELCH ALLYN INC

Diagnostic instrument workstation

Status: Inactive | Publication: US20080281167A1 | Tags: More comprehensive, More efficient, Person identification, Endoscopes, Workstation, Diagnostic instrument

An integrated medical workstation for use in patient clinical encounters includes an input device such as a bar code scanner that is interconnected to a computing device. At least one device capable of obtaining at least one physiological parameter is either attached directly to the workstation or is in communication therewith. Preferably, the input scanning device controls at least substantial overall operation of the medical workstation that can be placed, for example, into a network.

Owner:WELCH ALLYN INC

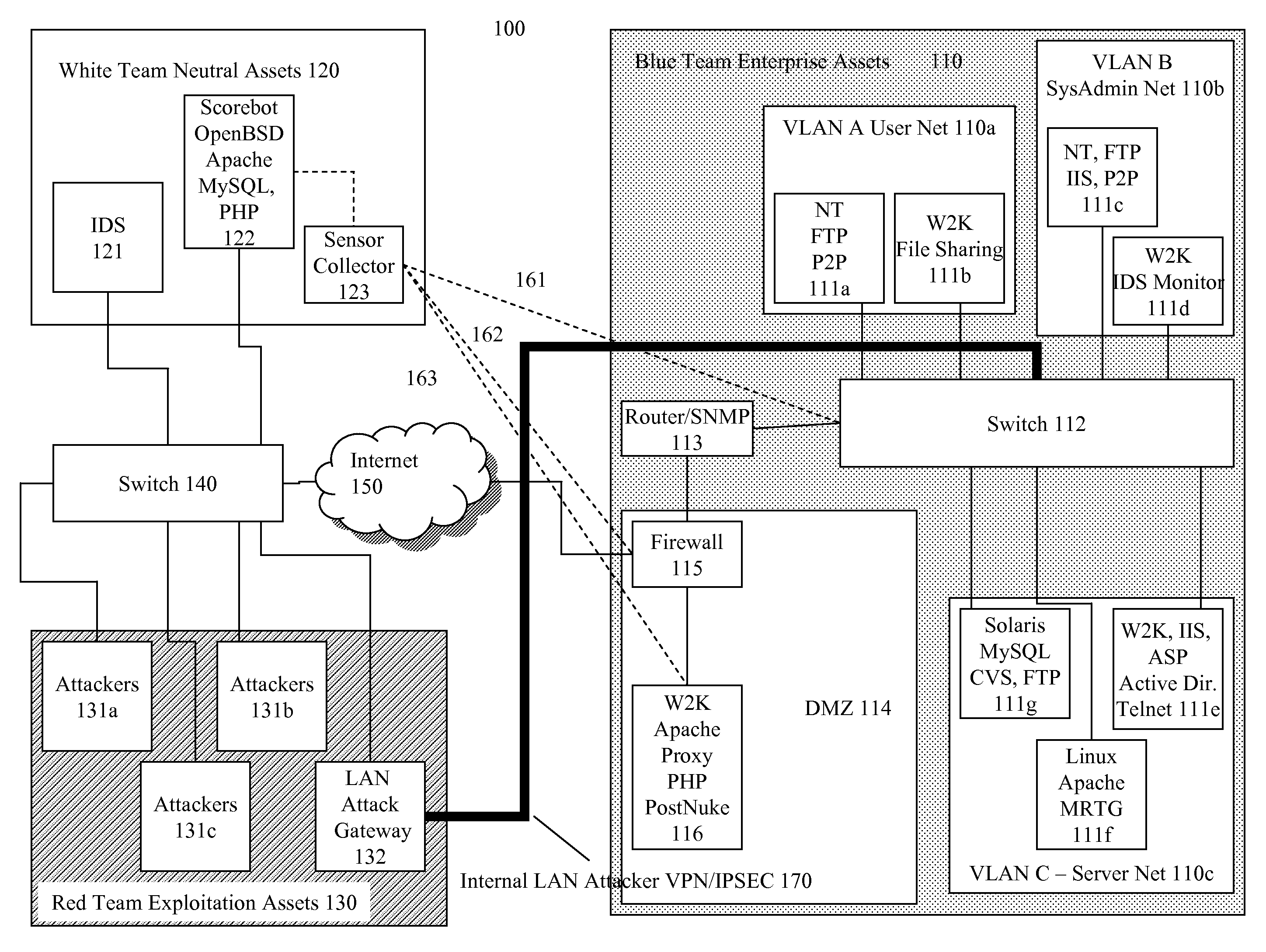

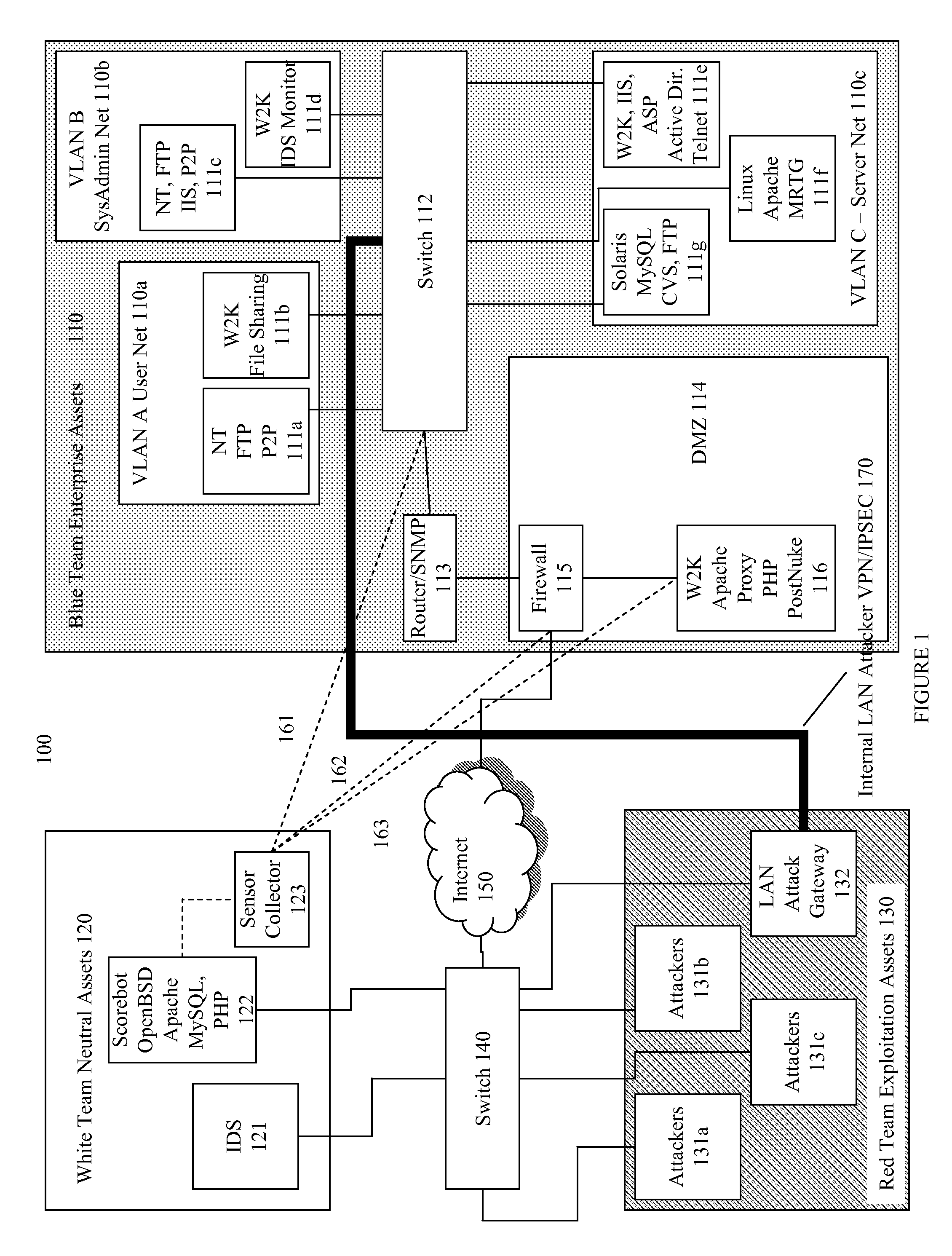

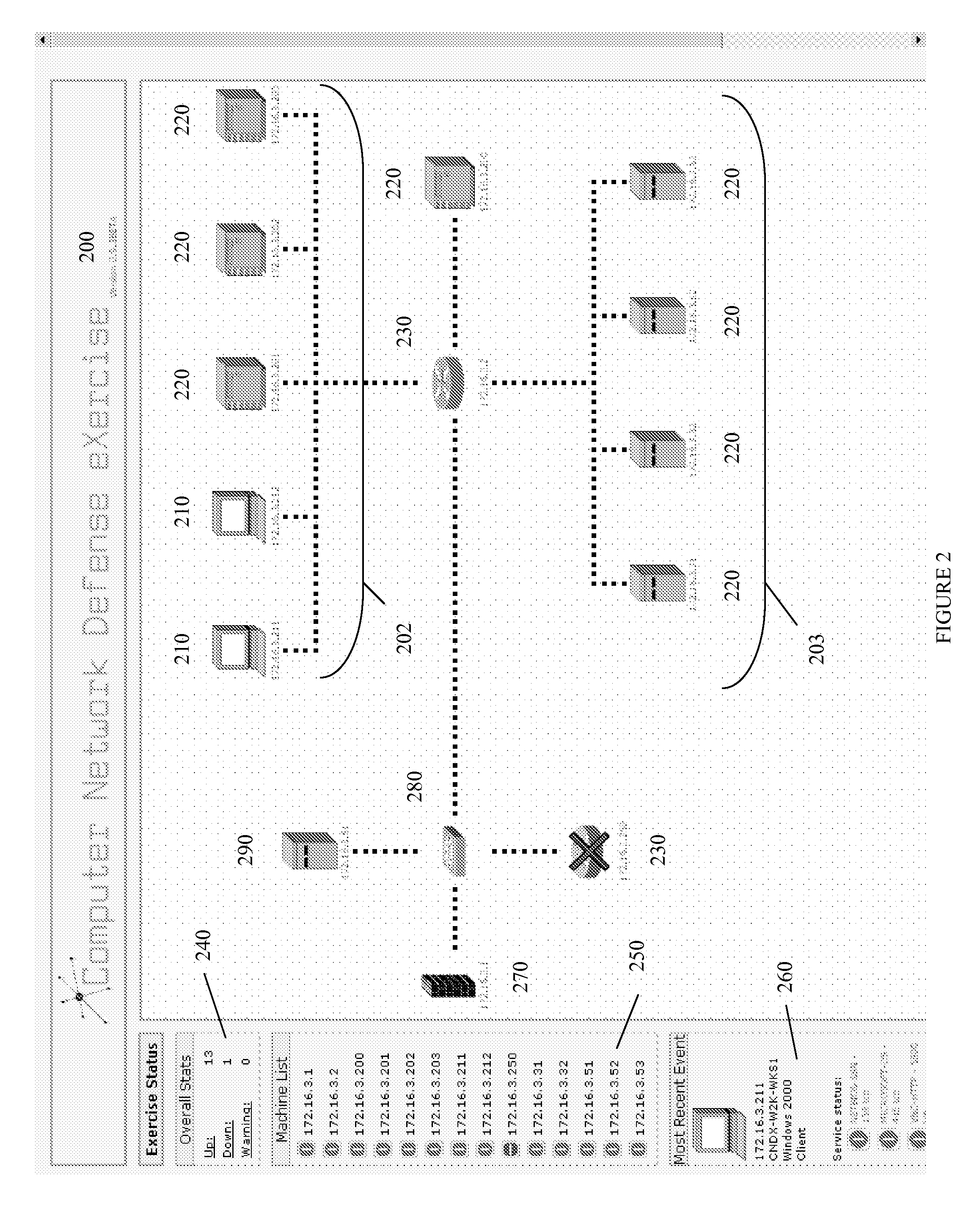

Systems and methods for implementing and scoring computer network defense exercises

Status: Active | Publication: US8250654B1 | Tags: Easy to train, Memory loss protection, Unauthorized memory use protection, Operating system, Client system

A process for facilitating a client system defense training exercise implemented over a client-server architecture includes designated modules and hardware for protocol version identification message; registration; profiling; health reporting; vulnerability status messaging; storage; access and scoring. More particularly, the server identifies a rule-based vulnerability profile to the client and scores client responses in accordance with established scoring rules for various defensive and offensive asset training scenarios.

Owner:LEIDOS

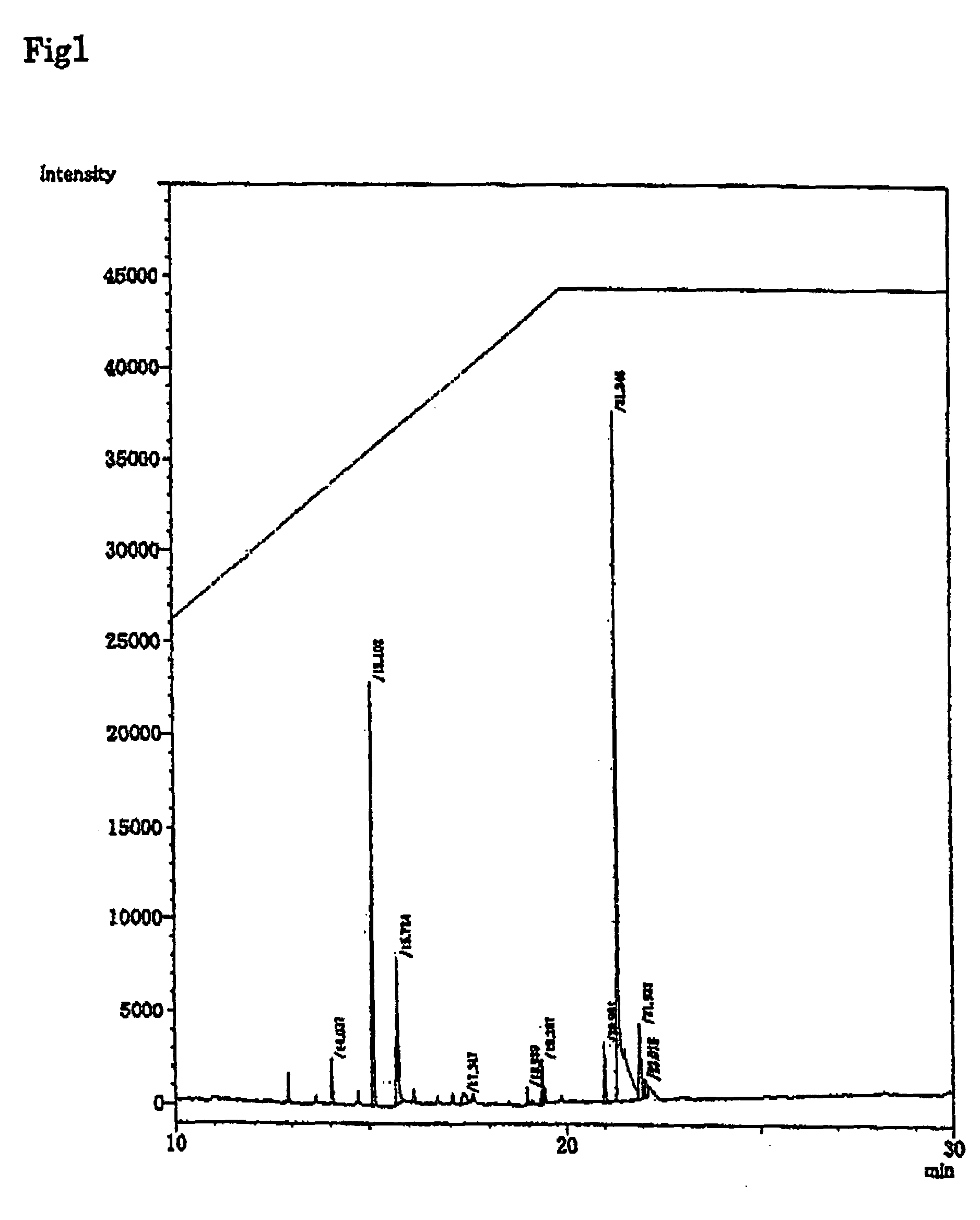

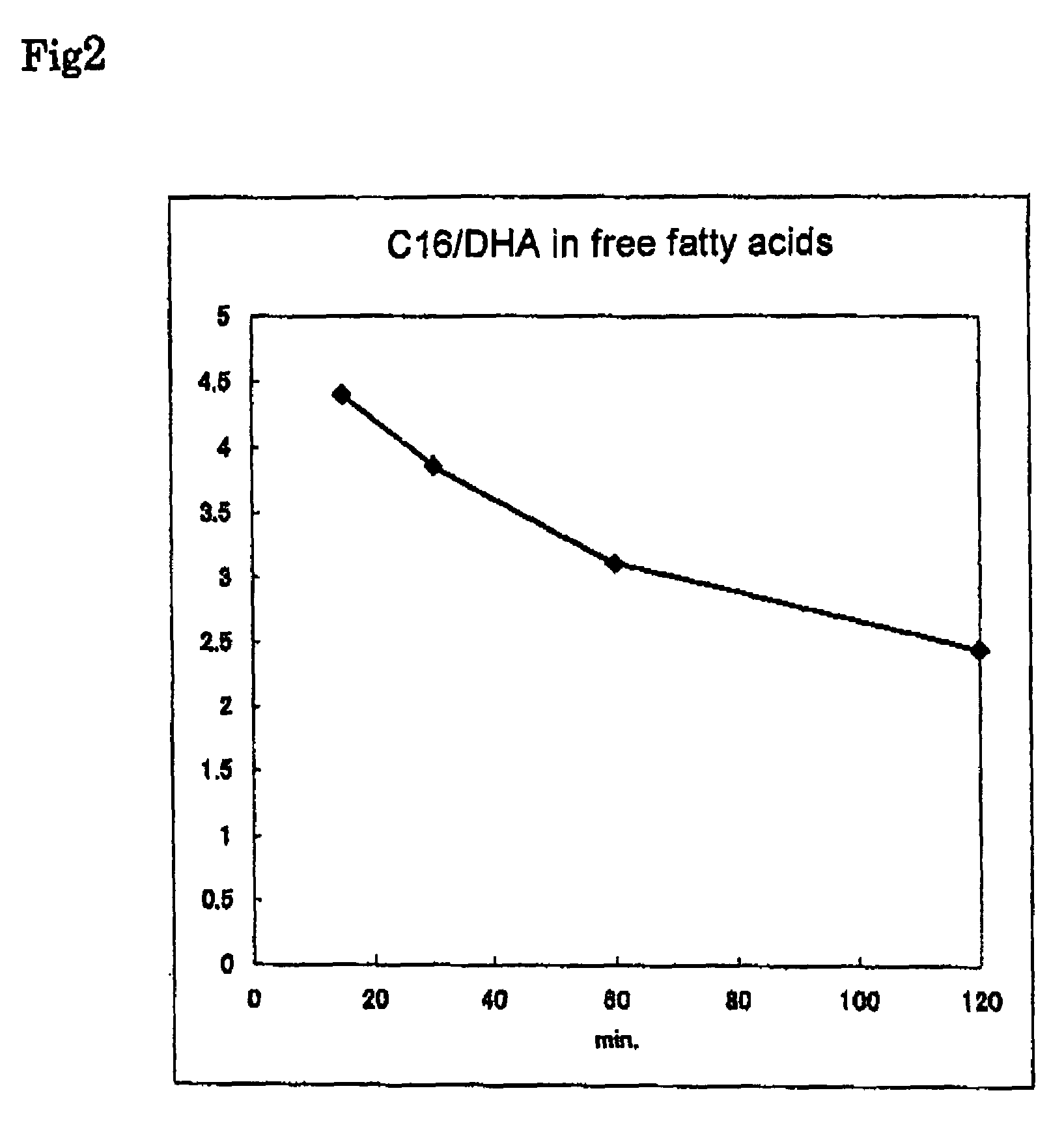

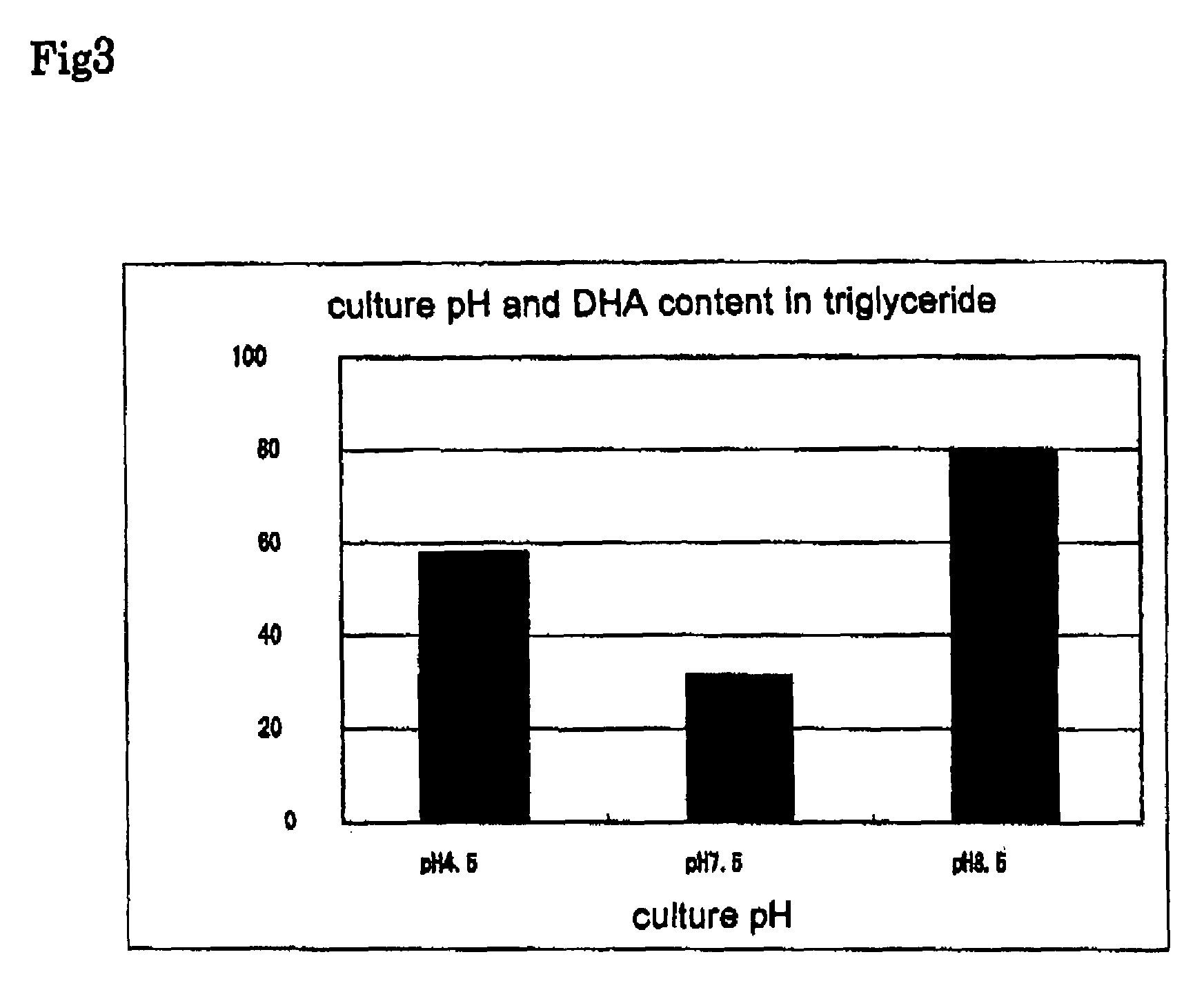

Microorganism having an ability of producing docosahexaenoic acid and use thereof

Status: Active | Publication: US7259006B2 | Tags: High ability of producing docosahexaenoic acid, Efficient production, Biocide, Fatty acid hydrogenation, Docosahexaenoic acid, Microorganism

An object of the present invention is to provide a microorganism which has a high ability of producing docosahexaenoic acid. The present invention provides a Thraustochytrium strain which has an ability of producing docosahexaenoic acid, and use thereof.

Owner:FUJIFILM HLDG CORP +1

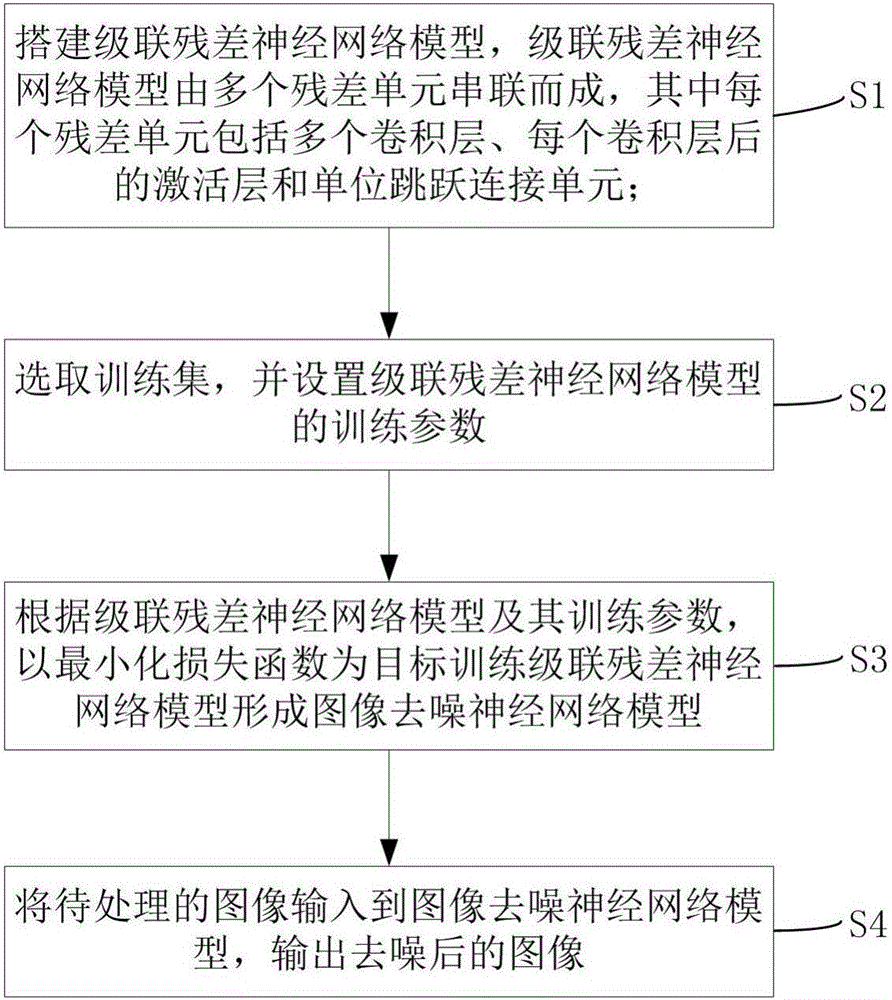

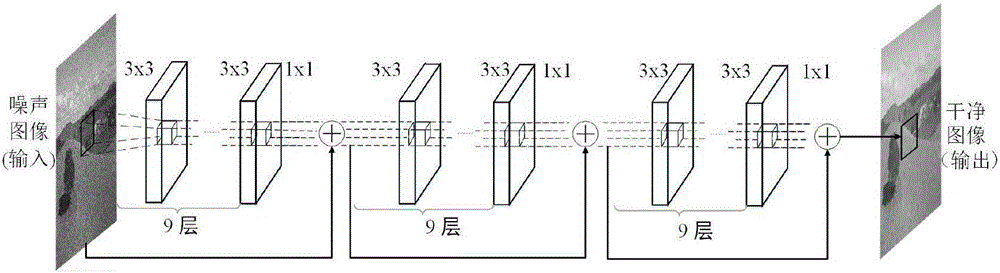

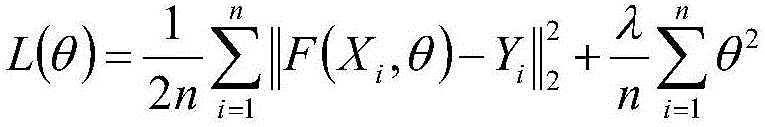

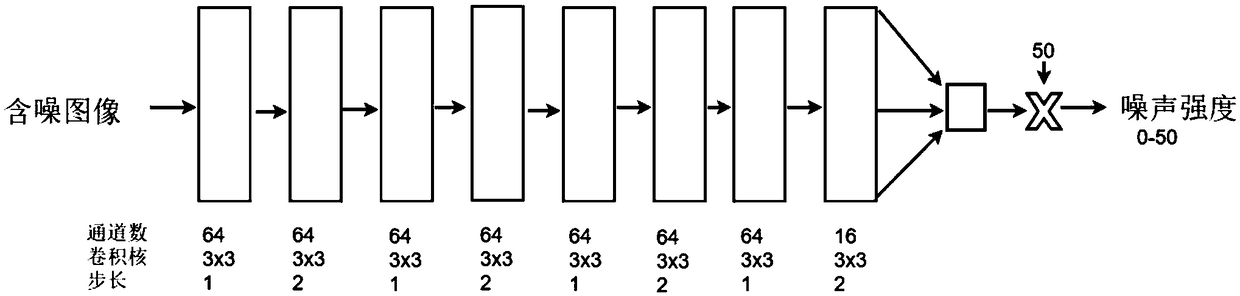

Cascaded residual error neural network-based image denoising method

Status: Active | Publication: CN106204467A | Tags: Enhanced Feature Extraction, Improve convergence speed, Image enhancement, Image analysis, Learning abilities, Machine learning

The invention discloses a cascaded residual error neural network-based image denoising method. The method comprises the following steps of building a cascaded residual error neural network model, wherein the cascaded residual error neural network model is formed by connecting a plurality of residual error units in series, and each residual error unit comprises a plurality of convolutional layers, active layers after the convolutional layers and unit jump connection units; selecting a training set, and setting training parameters of the cascaded residual error neural network model; training the cascaded residual error neural network model by taking a minimized loss function as a target according to the cascaded residual error neural network model and the training parameters of the cascaded residual error neural network model to form an image denoising neural network model; and inputting a to-be-processed image to the image denoising neural network model, and outputting a denoised image. According to the cascaded residual error neural network-based image denoising method disclosed by the invention, the learning ability of the neural network is greatly enhanced, accurate mapping from noisy images to clean images is established, and real-time denoising can be realized.

Owner:SHENZHEN INST OF FUTURE MEDIA TECH +1

Voiceprint identification method

The invention discloses a voiceprint identification method. The voiceprint identification method comprises the following steps of: 1, preprocessing segmented speech data of each speaker in a training speech set to form a group of sample sets corresponding to each speaker; 2, extracting Mel-frequency cepstrum coefficients from each sample in all sample sets; 3, selecting a sample set one by one and randomly selecting the Mel-frequency cepstrum coefficients of part samples of the sample set, and training a Gaussian mixture model for the sample set; 4, performing incremental learning on the samples which are not selected and trained in the step 3 and the Gaussian mixture model of the sample set corresponding to the sample set one by one to obtain all optimized Gaussian mixture models, and optimizing a model library by utilizing all optimized Gaussian mixture models; and 5, inputting and identifying test voice data, identifying the Gaussian mixture model of the sample set corresponding to the test voice data by utilizing the optimized model library in the step 4, and adding the test voice data to the sample set corresponding to the speaker.

Owner:NANJING UNIV

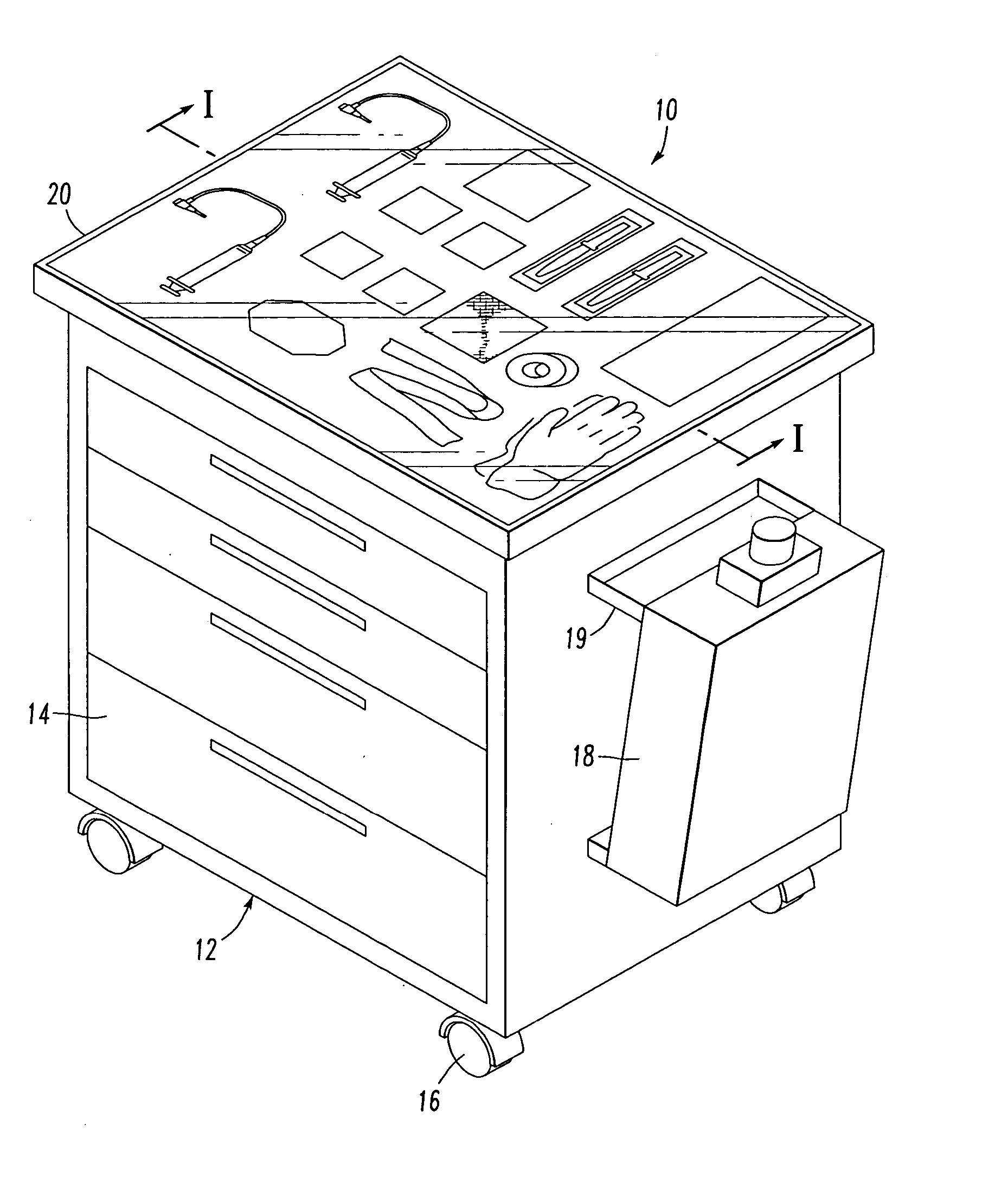

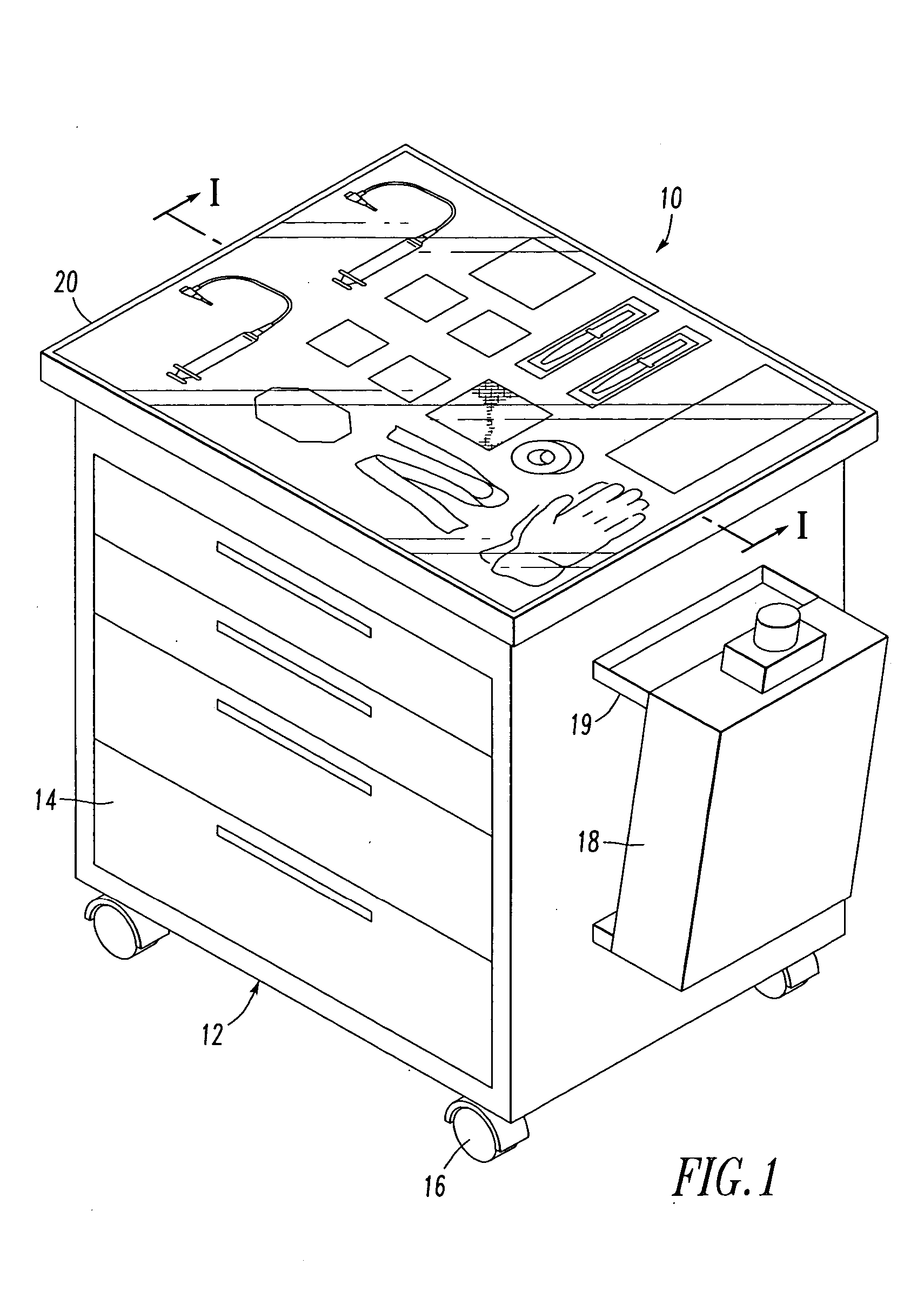

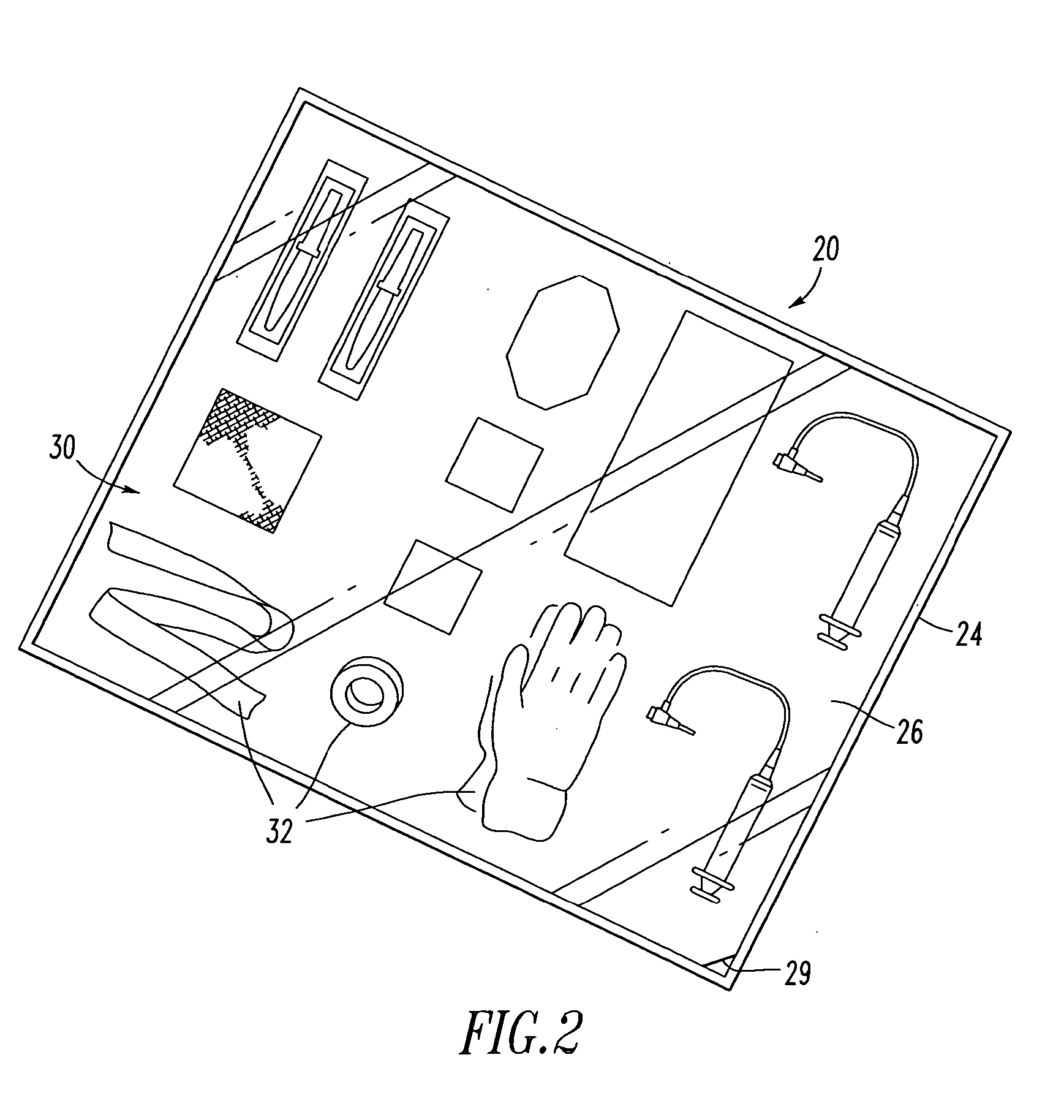

Medical procedure cart and method of customizing the same

Status: Inactive | Publication: US20050236940A1 | Tags: Improve organization, Readily available, Surgical furniture, Sledges, Equipment Operator, Engineering

A medical procedure cart includes a housing having a working surface disposed on a top thereof. The working surface forms a tray and includes a transparent top removably disposed above the tray. A height adjustment mechanism adjusts the height of the working surface. The template includes a plurality of figures depicting various different materials, instruments and equipment an operator prefers for a particular procedure. The operator can place the instruments, supplies and equipment on the transparent top of the working surface above a corresponding figure to enable the working surface to be stocked for a particular procedure.

Owner:CHILDRENS MEDICAL CENT CORP

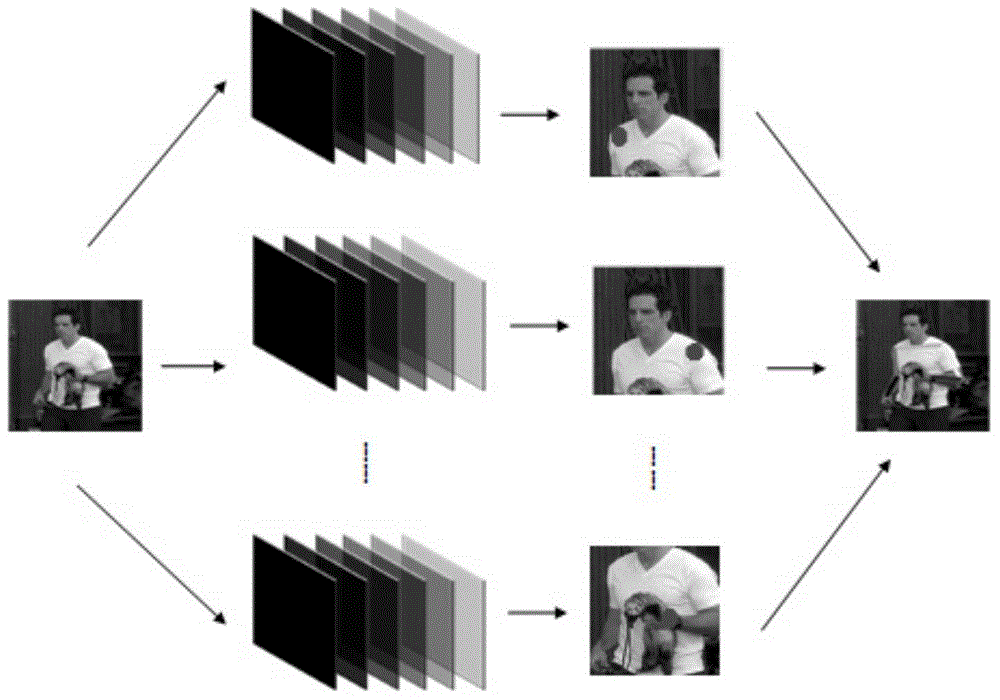

Image denoising method based on generative adversarial networks

Status: Inactive | Publication: CN108765319A | Tags: Easy to train, Evenly distributed, Image enhancement, Image analysis, Generative adversarial network, Image denoising

The invention provides an image denoising method based on generative adversarial networks, and belongs to the technical field of computer vision. The method comprises the following steps: (1) designing a neural network that estimates the noise intensity of an image containing noise; (2) adding noise of the estimated intensity to image blocks from an image library and using the results as samples for training the networks; (3) in network training, designing a new generation network and discrimination network, and alternately fixing the generation network to train the discrimination network and fixing the discrimination network parameters to train the generation network, so that the networks carry out adversarial training; and (4) using the trained generation network as a denoising network, and selecting a network parameter according to the result obtained by the noise recognition network to denoise the image containing noise. The method has the effects and advantages that the visual quality of the denoised image is improved without manual intervention to adjust the parameter, and the texture details of the image can be better restored.

Owner:DALIAN UNIV OF TECH

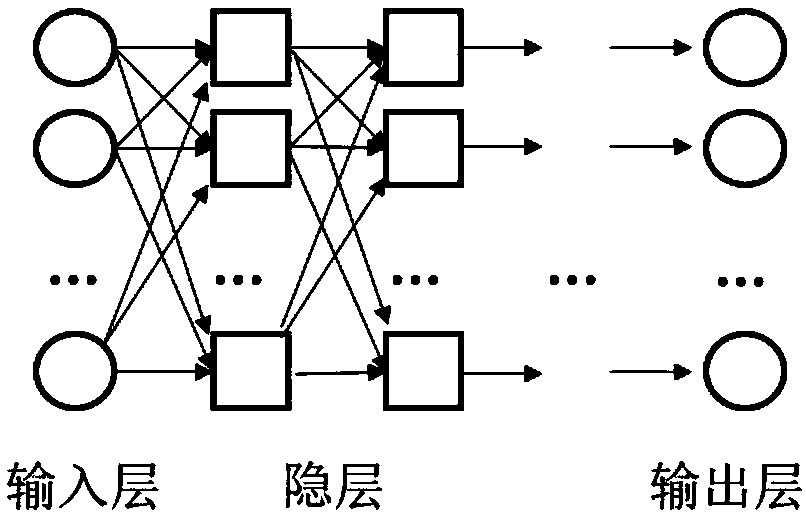

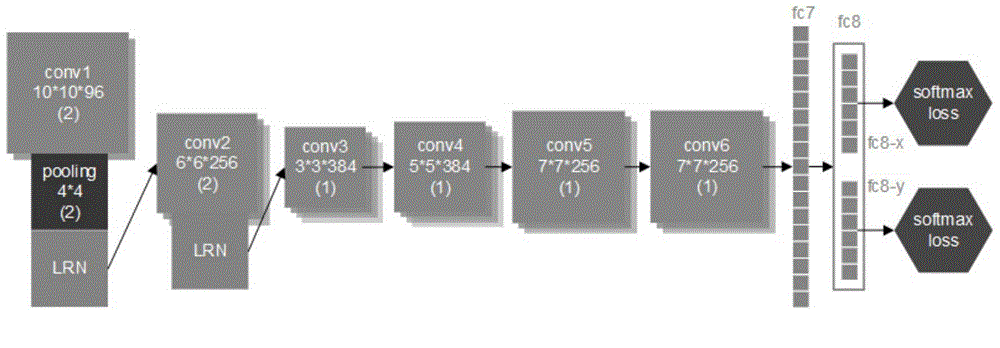

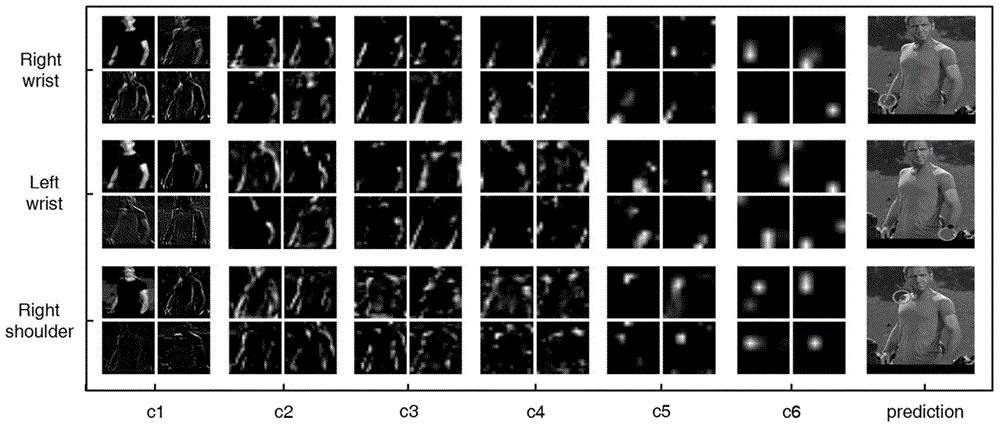

Human body gesture identification method based on depth convolution neural network

Status: Inactive | Publication: CN105069413A | Tags: Overcome limitations, Easy to train, Biometric pattern recognition, Human body, Information processing

The invention discloses a human body gesture identification method based on a depth convolution neural network, belongs to the technical field of mode identification and information processing, relates to behavior identification tasks in the aspect of computer vision, and in particular relates to a human body gesture estimation system research and implementation scheme based on the depth convolution neural network. The neural network comprises independent output layers and independent loss functions, which are designed for positioning human body joints. ILPN consists of an input layer, seven hidden layers and two independent output layers. The first to sixth hidden layers are convolution layers and are used for feature extraction. The seventh hidden layer (fc7) is a full connection layer. The output layers consist of two independent parts, fc8-x and fc8-y. The fc8-x is used for predicting the x coordinate of a joint. The fc8-y is used for predicting the y coordinate of the joint. When model training is carried out, each output is provided with an independent softmax loss function to guide the learning of the model. The human body gesture identification method has the advantages of simple and fast training, small computation amount and high accuracy.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
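
The two independent output heads in the gesture identification abstract above (fc8-x and fc8-y, each with its own softmax loss) can be illustrated with a small loss computation. This sketch assumes that each coordinate is discretised into bins so that a softmax over bins makes sense, and that the two losses are simply reported side by side; the function names and the binning are hypothetical and not taken from the patent.

```python
import math


def softmax_cross_entropy(logits, target):
    """Cross-entropy of a softmax over `logits` against class index `target`."""
    peak = max(logits)  # subtract the max for numerical stability
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_norm - logits[target]


def joint_location_losses(logits_x, logits_y, target_x, target_y):
    """Independent losses for the x head and the y head of one joint.

    Returns (loss_x, loss_y); each head is penalised only for its own
    coordinate, mirroring the independent output layers in the abstract.
    """
    return (
        softmax_cross_entropy(logits_x, target_x),
        softmax_cross_entropy(logits_y, target_y),
    )


if __name__ == "__main__":
    # Four bins per axis; the x head is confident and right, the y head is unsure.
    loss_x, loss_y = joint_location_losses(
        logits_x=[0.0, 0.0, 5.0, 0.0],
        logits_y=[0.0, 0.0, 0.0, 0.0],
        target_x=2,
        target_y=1,
    )
    print(f"x loss: {loss_x:.4f}, y loss: {loss_y:.4f}")
```

Keeping the two losses separate means an error in the x prediction does not directly change the gradient signal for the y head, and vice versa.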

Multi modality brain mapping system (MBMS) using artificial intelligence and pattern recognition

Status: Active | Publication: US20160035093A1 | Tags: Easy to train, Ultrasonic/sonic/infrasonic diagnostics, Image enhancement, Pattern recognition, Brain mapping

A Multimodality Brain Mapping System (MBMS), comprising one or more scopes (e.g., microscopes or endoscopes) coupled to one or more processors, wherein the one or more processors obtain training data from one or more first images and/or first data, wherein one or more abnormal regions and one or more normal regions are identified; receive a second image captured by one or more of the scopes at a later time than the one or more first images and/or first data and/or captured using a different imaging technique; and generate, using machine learning trained using the training data, one or more viewable indicators identifying one or more abnormalities in the second image, wherein the one or more viewable indicators are generated in real time as the second image is formed. One or more of the scopes display the one or more viewable indicators on the second image.

Owner:INT BRAIN MAPPING & INTRA OPERATIVE SURGICAL PLANNING FOUND +1

Color-Coded Prosthetic Valve System and Methods for Using the Same

A color-coded bioprosthetic valve system having a valve with an annular sewing ring, and a valve holder system with a holder sutured to the ring of the valve, a post operatively connected to the holder, and an adapter sutured to the post and having a color associated with the valve model and / or size. For example, the adapter may be blue to indicate that the valve of the system is a mitral valve of a particular type and / or size. The system may also include a flex handle that is configured to engage with the adapter. The handle has a color associated with the adapter such that a user is able to visually determine that the handle color matches the valve model. For example, the handle may have a grip that is colored blue to match the blue color of the adapter. Accordingly, the color-coded system enables users to confirm easily that the correct accessories such as the sizer or flex handle are being used with the correct valve.

Owner:EDWARDS LIFESCIENCES CORP

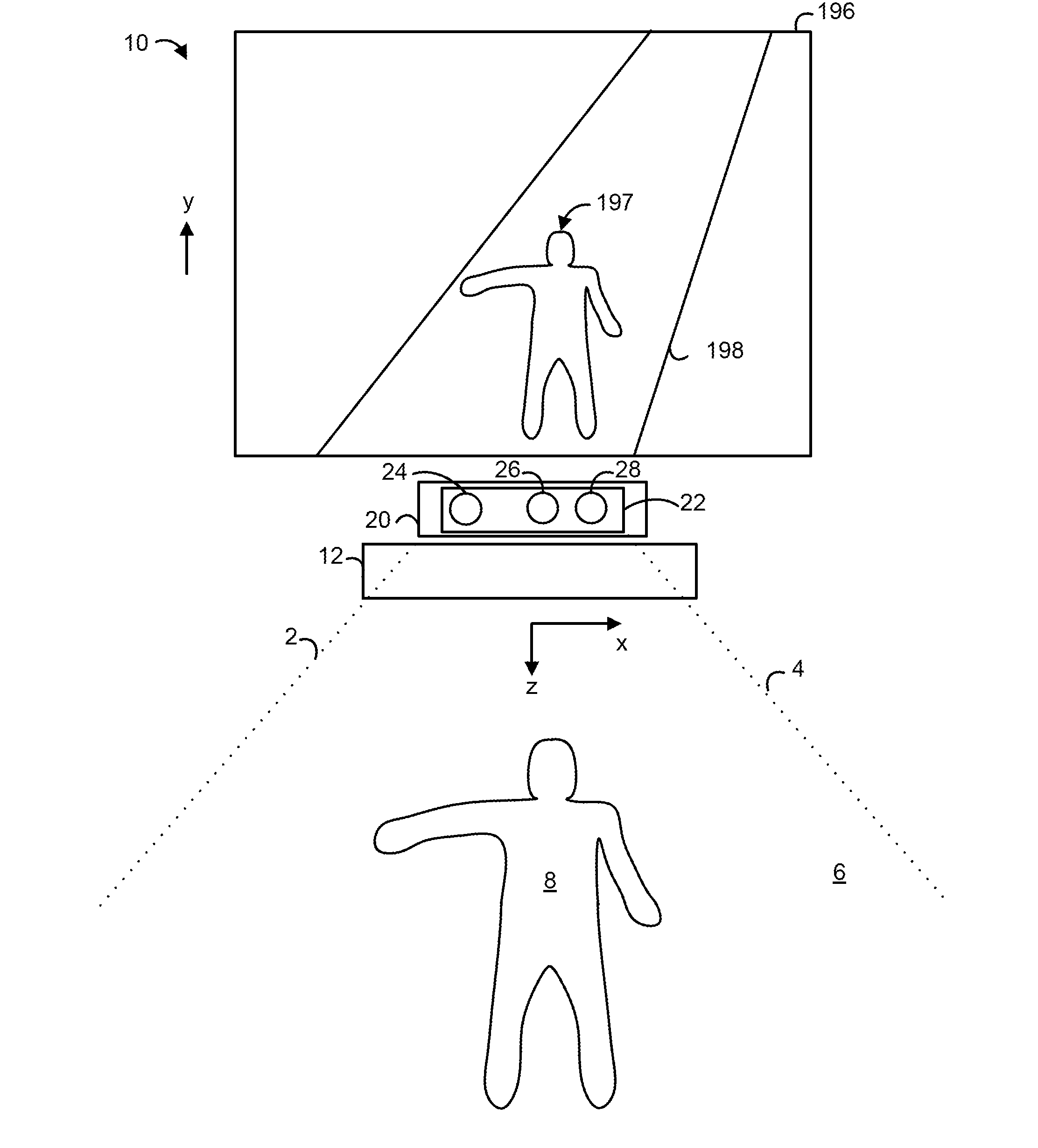

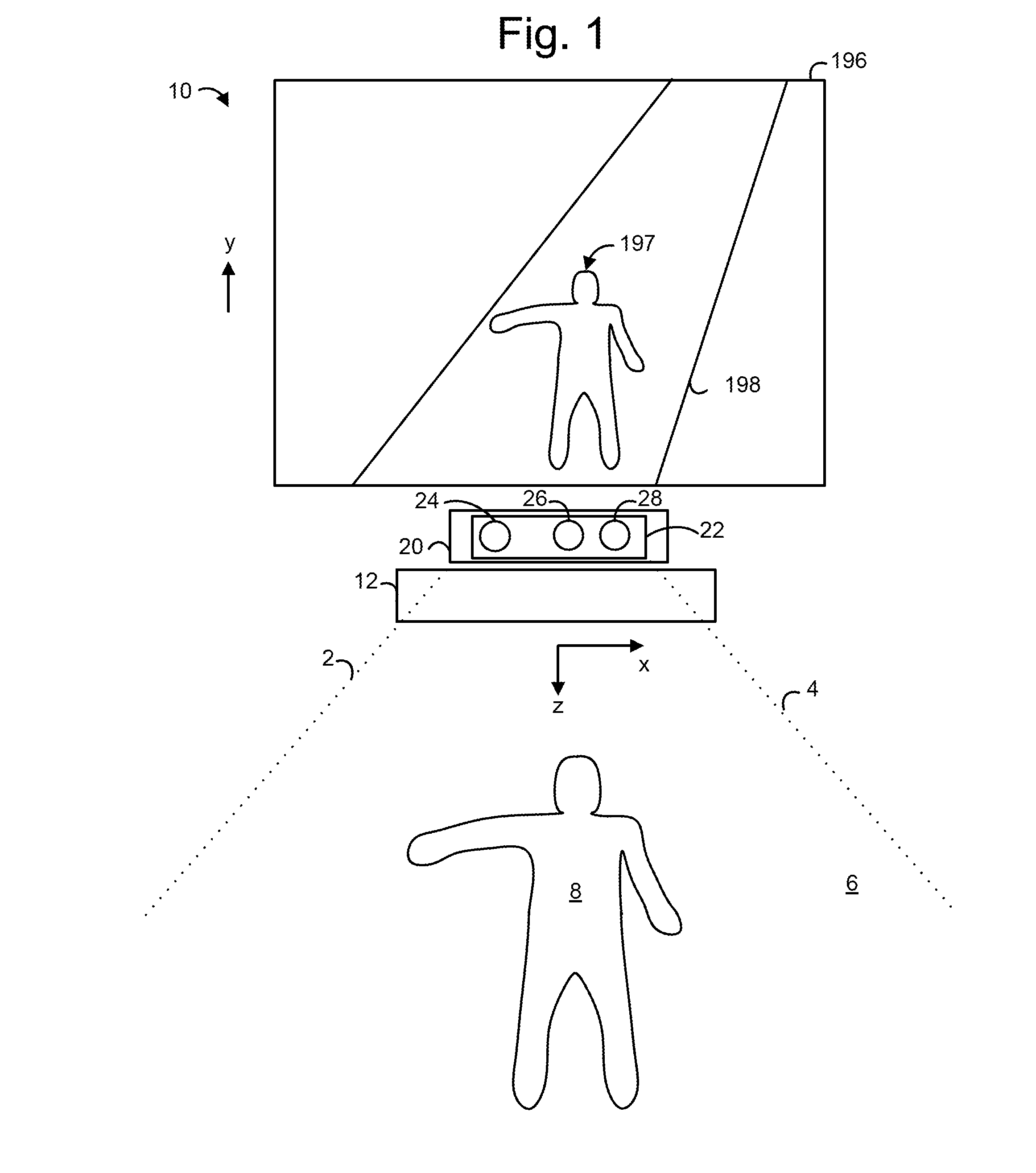

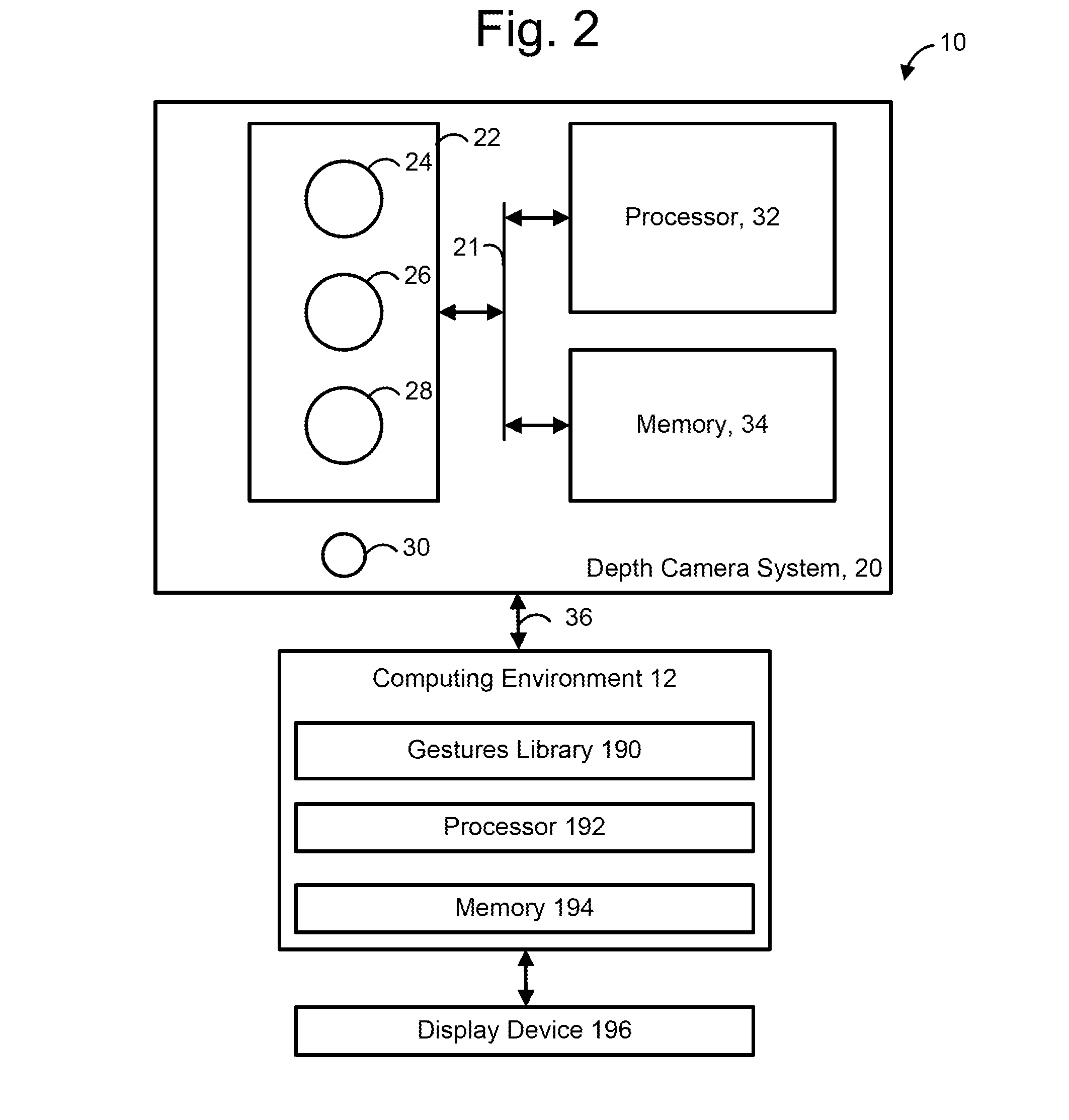

Proxy training data for human body tracking

Status: Inactive | Publication: US20110228976A1 | Tags: Easy to train, Increase variability, Character and pattern recognition, Human body, Body joints

Synthesized body images are generated for a machine learning algorithm of a body joint tracking system. Frames from motion capture sequences are retargeted to several different body types, to leverage the motion capture sequences. To avoid providing redundant or similar frames to the machine learning algorithm, and to provide a compact yet highly variegated set of images, dissimilar frames can be identified using a similarity metric. The similarity metric is used to locate frames which are sufficiently distinct, according to a threshold distance. For realism, noise is added to the depth images based on noise sources which a real world depth camera would often experience. Other random variations can be introduced as well. For example, a degree of randomness can be added to retargeting. For each frame, the depth image and a corresponding classification image, with labeled body parts, are provided. 3-D scene elements can also be provided.

Owner:MICROSOFT TECH LICENSING LLC
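
The frame-selection idea in the proxy training data abstract above (keep only frames that are sufficiently distinct according to a threshold distance) can be sketched as a greedy filter. The metric used here, the largest Euclidean distance between corresponding joints, and the greedy first-come order are assumptions for illustration; the abstract only says that a similarity metric and a threshold are used.

```python
import math


def pose_distance(a, b):
    """Largest Euclidean distance between corresponding joints of two poses.

    Each pose is a list of (x, y, z) joint positions in the same joint order.
    """
    return max(math.dist(p, q) for p, q in zip(a, b))


def select_dissimilar(frames, threshold):
    """Greedily keep frames that differ from every kept frame by more than threshold."""
    kept = []
    for frame in frames:
        if all(pose_distance(frame, other) > threshold for other in kept):
            kept.append(frame)
    return kept


if __name__ == "__main__":
    frames = [
        [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
        [(0.0, 0.0, 0.0), (1.01, 0.0, 0.0)],  # nearly identical to the first
        [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
    ]
    subset = select_dissimilar(frames, threshold=0.1)
    print(len(subset), "of", len(frames), "frames kept")
```

A larger threshold gives a smaller, more varied training set; a smaller one keeps more near-duplicate poses.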
