2684 results about "Rgb image" patented technology

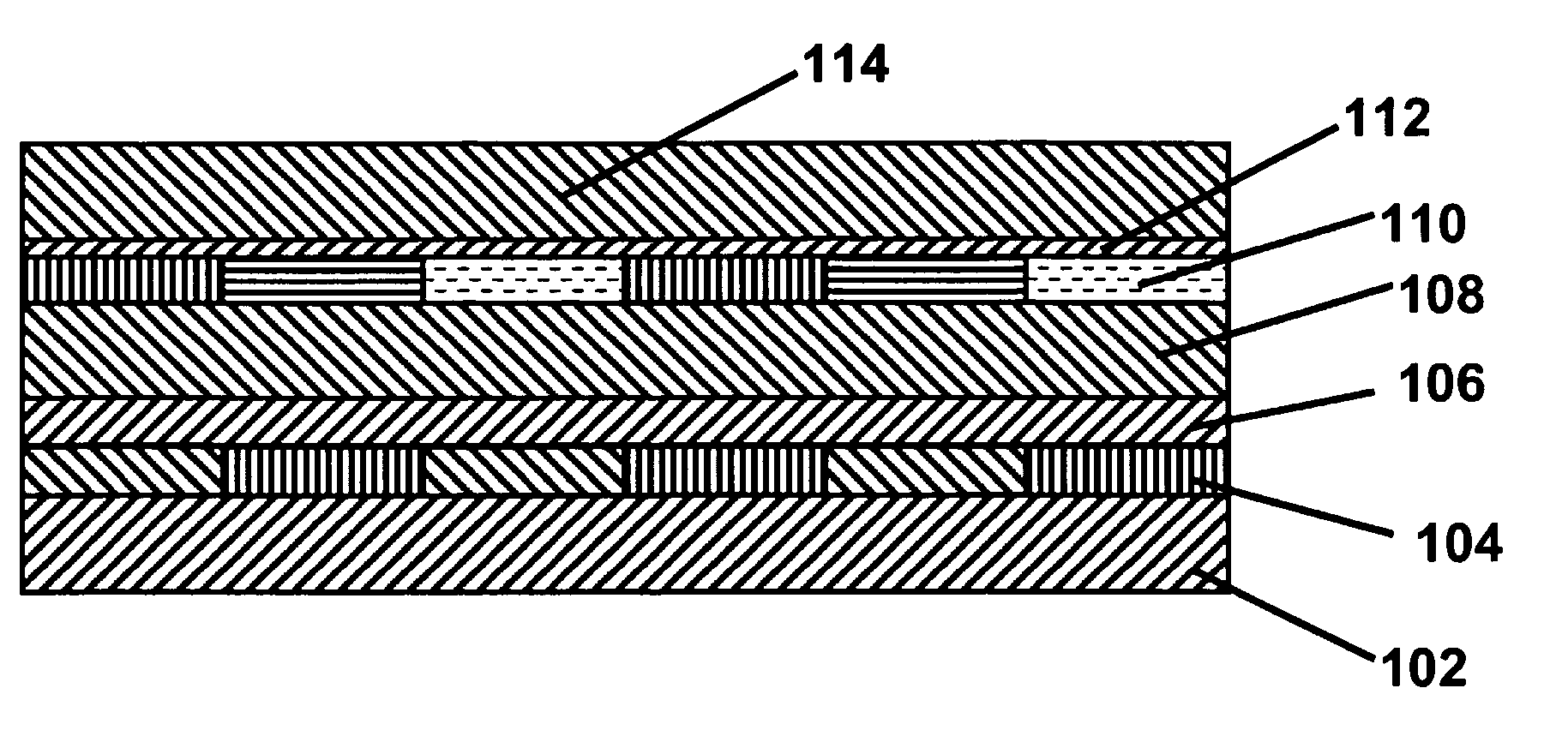

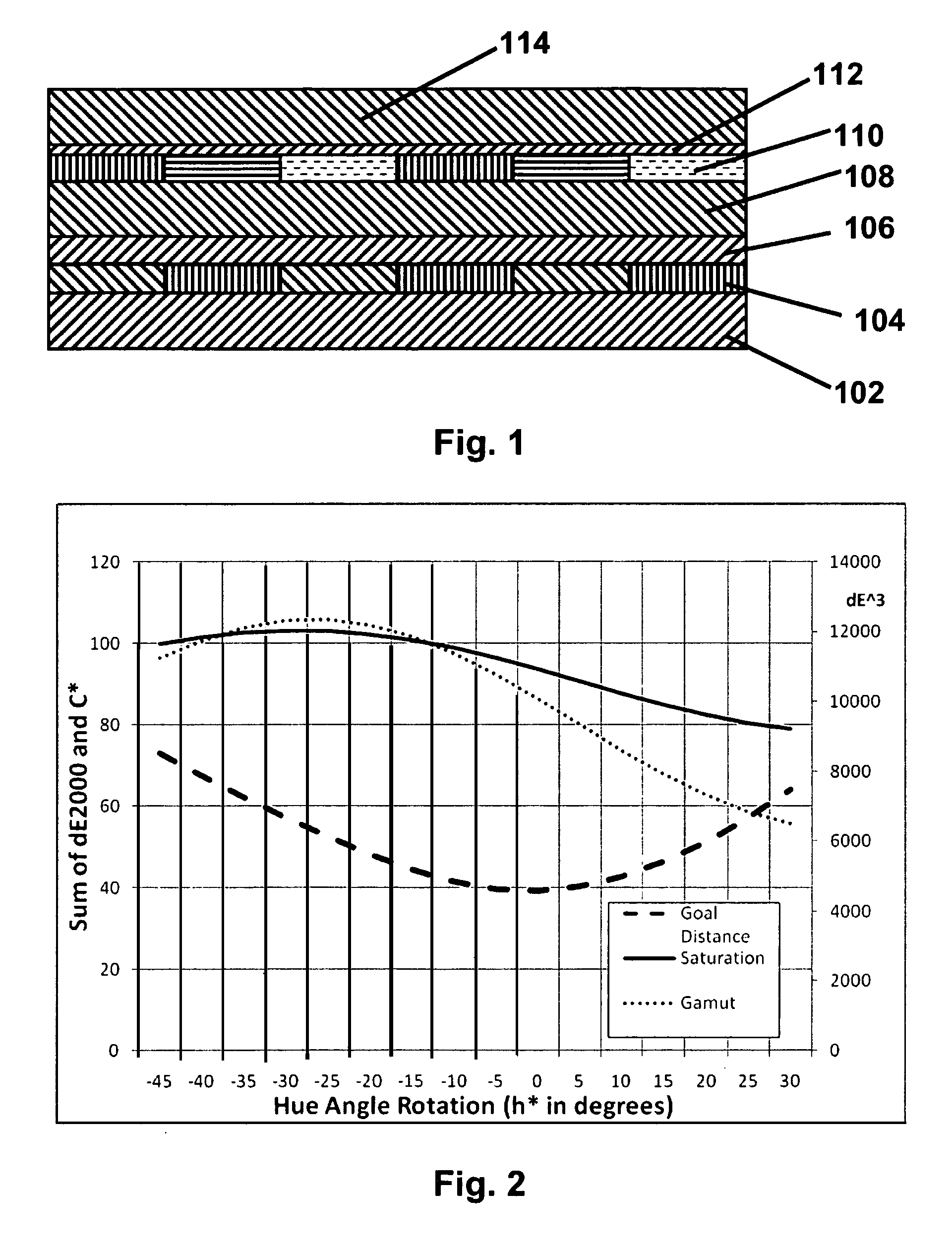

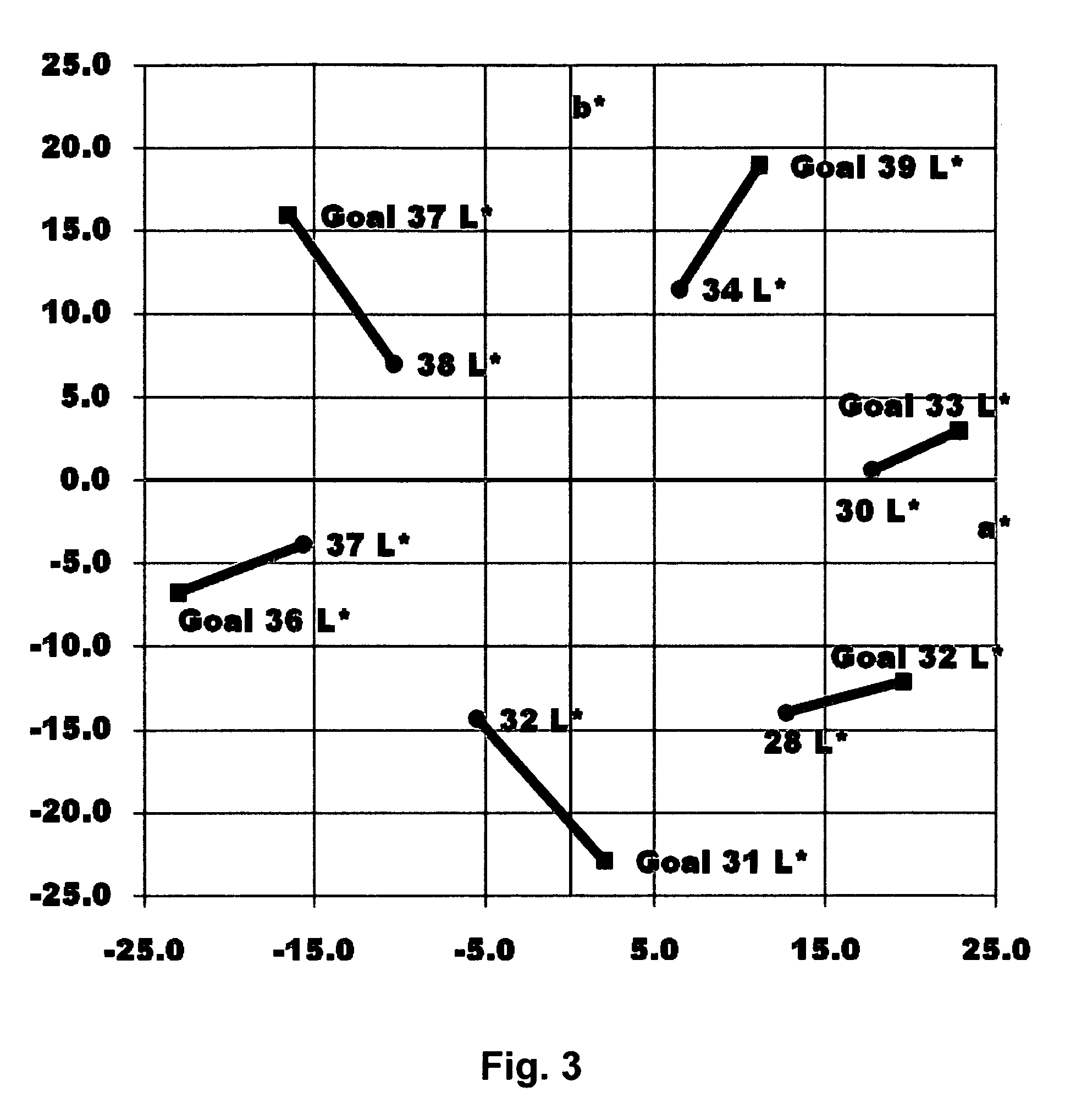

Electro-optic displays, and color filters for use therein

Inactive | US8054526B2 | Good looking | Solve lack of contrast | Non-linear optics | Optical elements | RGB image | Display device

A color filter array comprises orange, lime and purple sub-pixels, optionally with the addition of white sub-pixels. The color filter array is useful in electro-optic displays, especially reflective electro-optic displays. A method is provided for converting RGB images for use with the new color filter array.

Owner:E INK CORPORATION

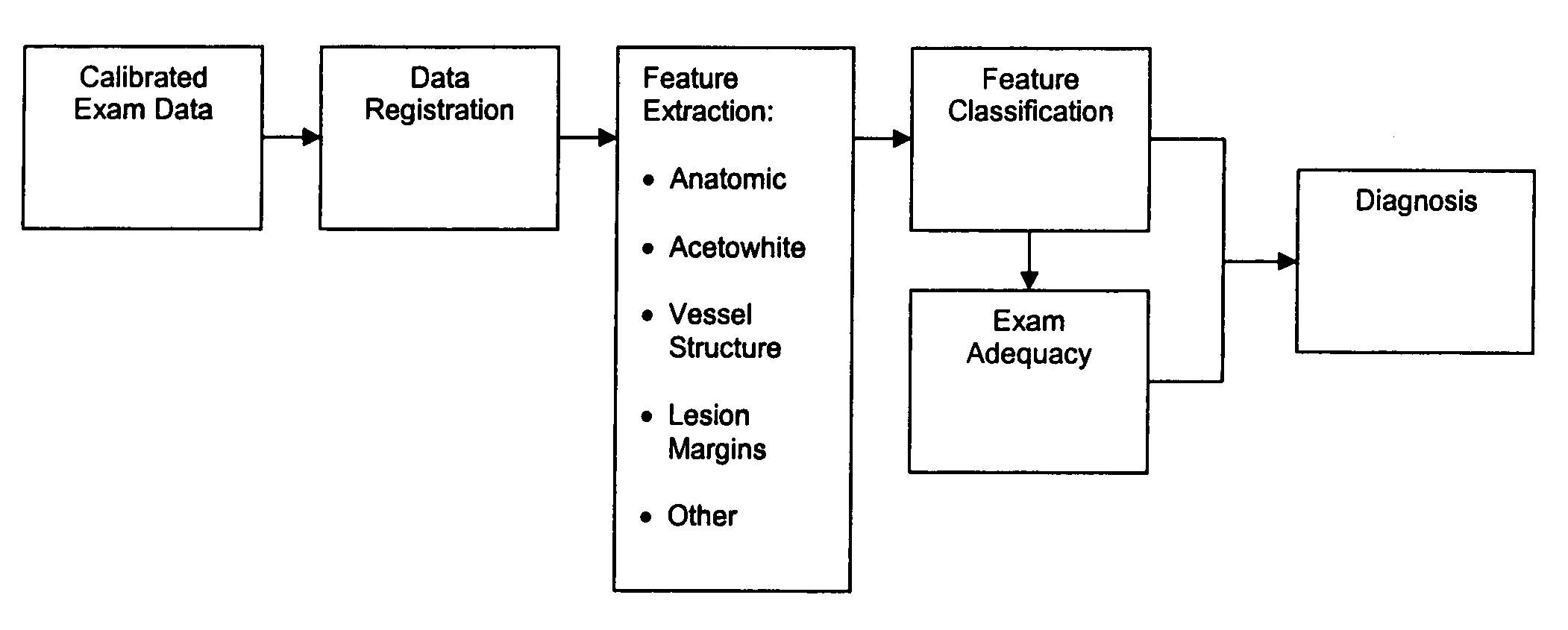

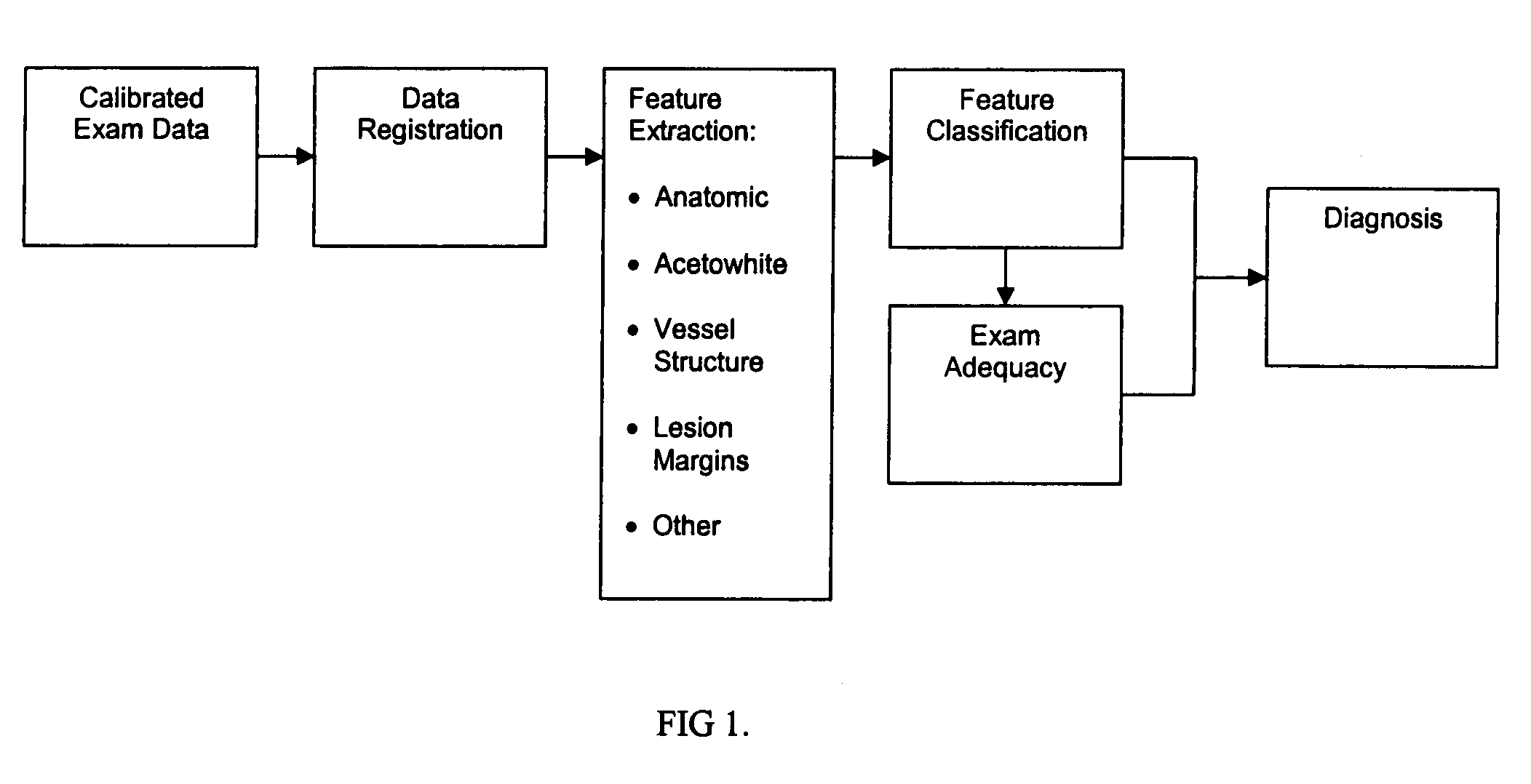

Uterine cervical cancer computer-aided-diagnosis (CAD)

Uterine cervical cancer Computer-Aided-Diagnosis (CAD) according to this invention consists of a core processing system that automatically analyses data acquired from the uterine cervix and provides tissue and patient diagnosis, as well as an assessment of the adequacy of the examination. The data can include, but is not limited to, color still images or video, reflectance and fluorescence multi-spectral or hyper-spectral imagery, coherent optical tomography imagery, and impedance measurements, taken with and without the use of contrast agents like 3-5% acetic acid, Lugol's iodine, or 5-aminolevulinic acid. The core processing system is based on an open, modular, and feature-based architecture, designed for multi-data, multi-sensor, and multi-feature fusion. The core processing system can be embedded in different CAD system realizations. For example, a CAD system for cervical cancer screening could, in a very simple version, consist of a hand-held device that acquires only one digital RGB image of the uterine cervix after application of 3-5% acetic acid and automatically provides a patient diagnosis. A CAD system used as a colposcopy adjunct could provide all functions that are related to colposcopy and that can be provided by a computer, from automation of the clinical workflow to automated patient diagnosis and treatment recommendation.

Owner:STI MEDICAL SYST

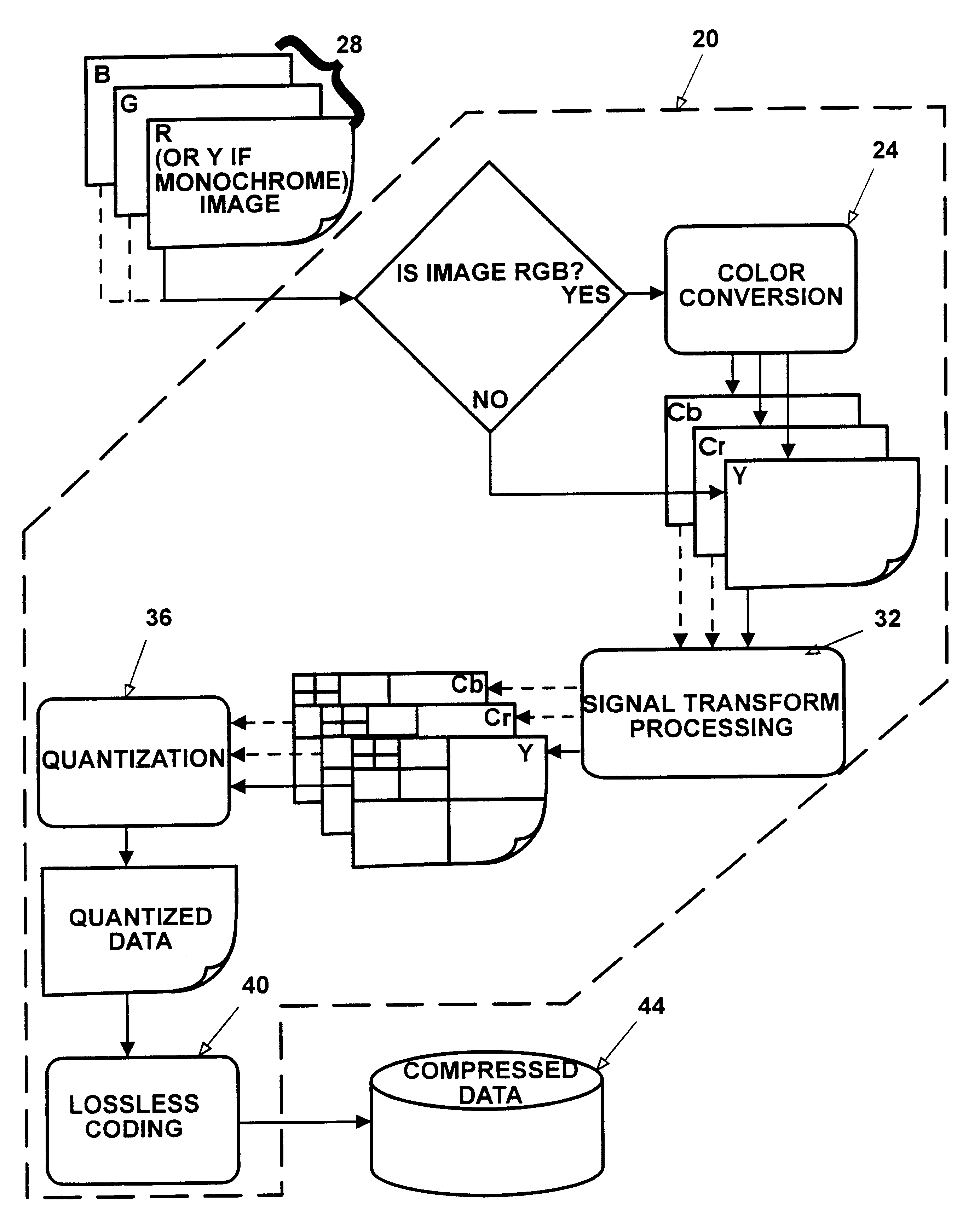

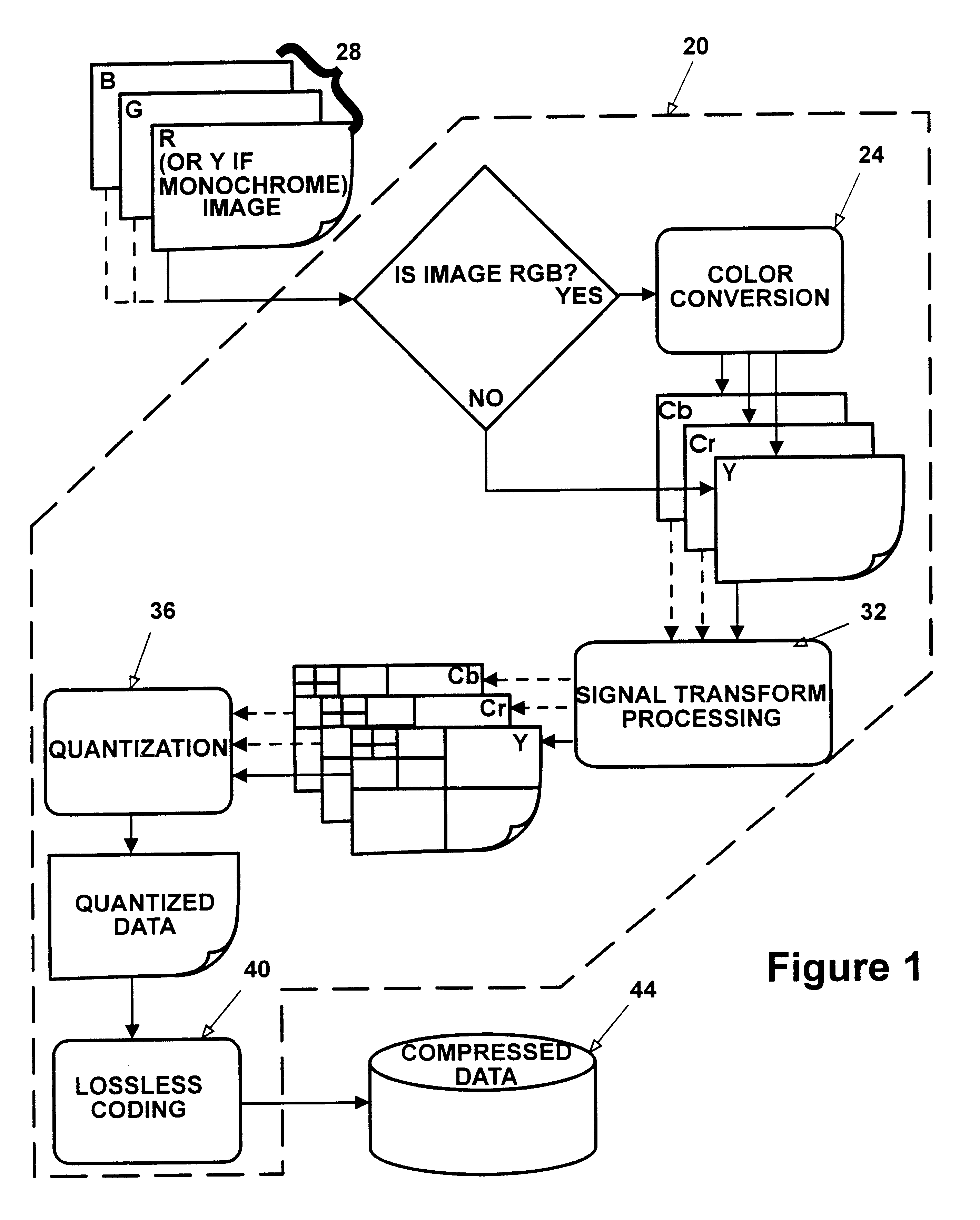

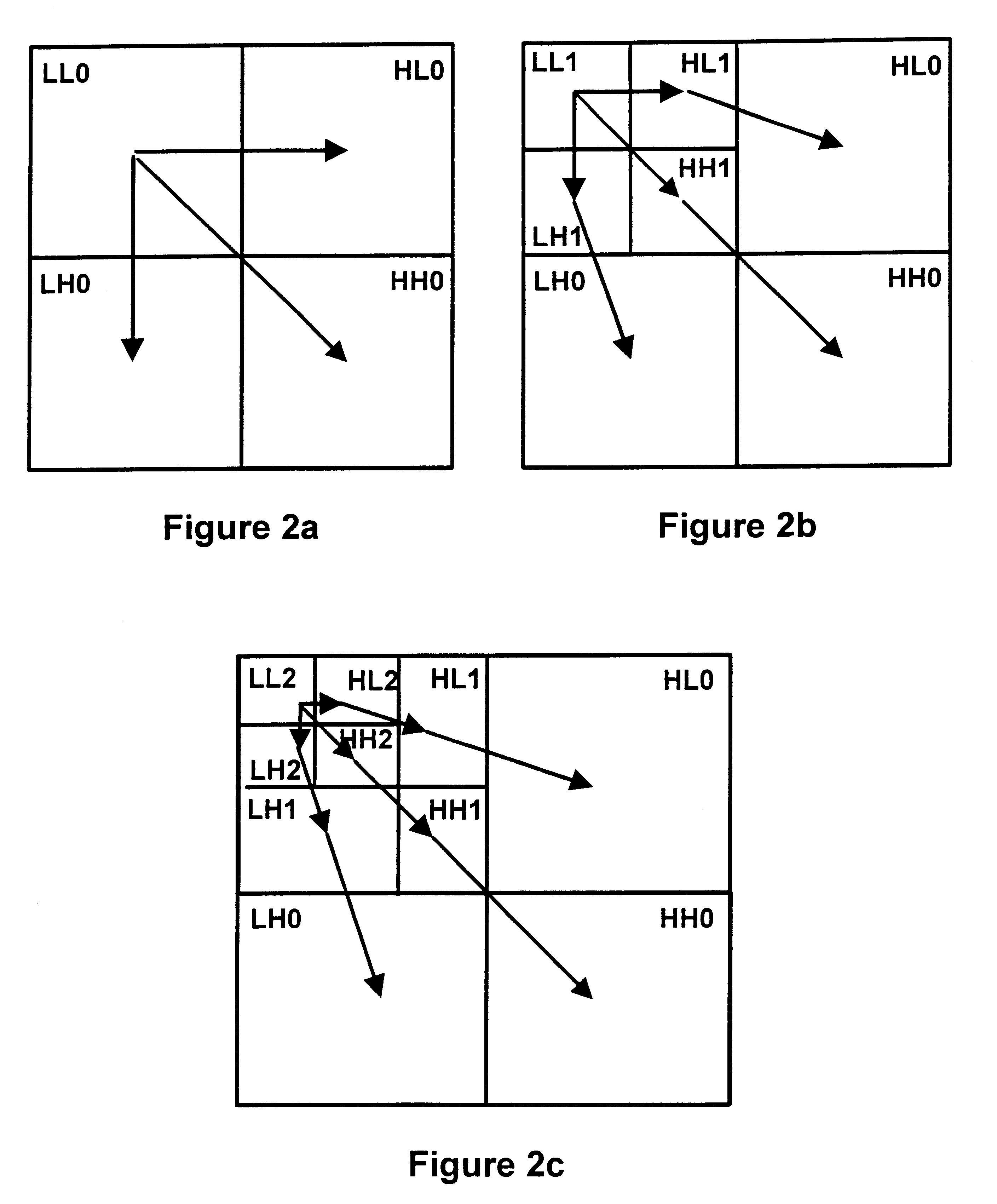

Method apparatus and system for compressing data that wavelet decomposes by color plane and then divides by magnitude range non-dc terms between a scalar quantizer and a vector quantizer

Inactive | US6865291B1 | Color television with pulse code modulation | Color television with bandwidth reduction | Data compression | Imaging quality

An apparatus and method for image data compression performs a modified zero-tree coding on a range of image bit plane values from the largest to a defined smaller value; a vector quantizer codes the remaining values, and lossless coding is performed on the results of the two coding steps. The defined smaller value can be adjusted iteratively to meet a preselected compressed image size criterion or to meet a predefined level of image quality, as determined by any suitable metric. If the image to be compressed is in RGB color space, the apparatus converts the RGB image to a less redundant color space before commencing further processing.

Owner:WDE
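
The abstract above does not name the "less redundant color space". As a minimal sketch, assuming the full-range BT.601 YCbCr transform used by JPEG (an assumption for illustration, not something the patent states), the conversion step could look like the following. The name `rgb_to_ycbcr` is hypothetical.

```python
import numpy as np

# Full-range BT.601 coefficients as used by JPEG/JFIF. This choice is an
# assumption: the abstract only says "a less redundant color space".
_M = np.array([
    [0.299, 0.587, 0.114],
    [-0.168736, -0.331264, 0.5],
    [0.5, -0.418688, -0.081312],
])
_OFFSET = np.array([0.0, 128.0, 128.0])


def rgb_to_ycbcr(rgb):
    """Convert an (..., 3) RGB array with values in 0..255 to YCbCr."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Each output channel is a weighted sum of R, G and B plus an offset.
    return rgb @ _M.T + _OFFSET
```

Most of the signal energy ends up in the Y plane, which is why a per-plane wavelet coder typically benefits from converting before decomposition.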

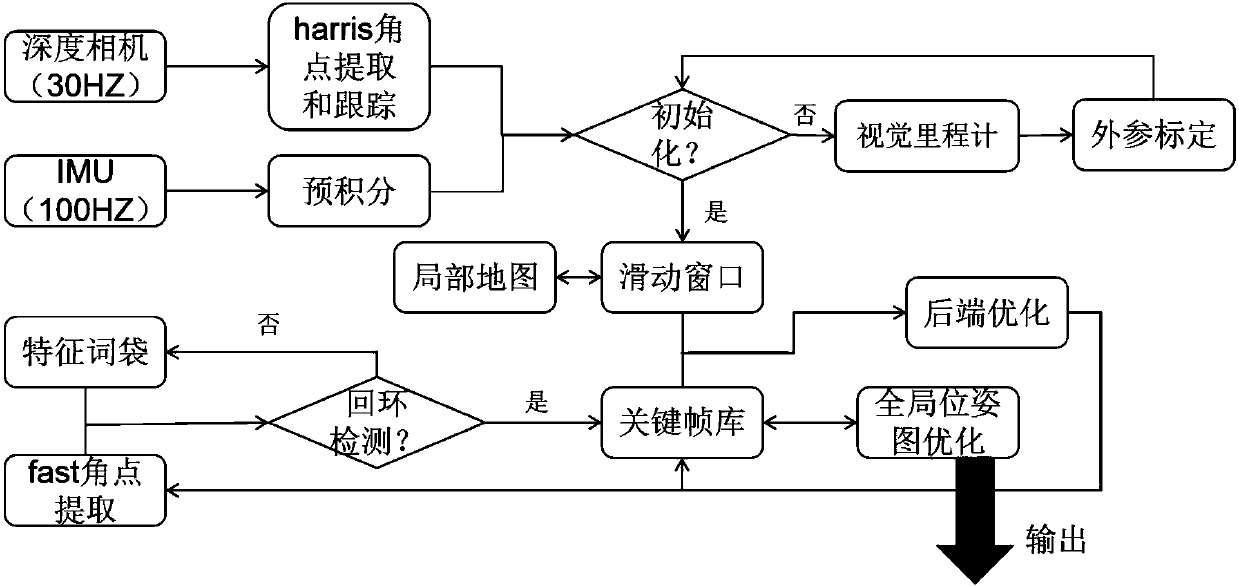

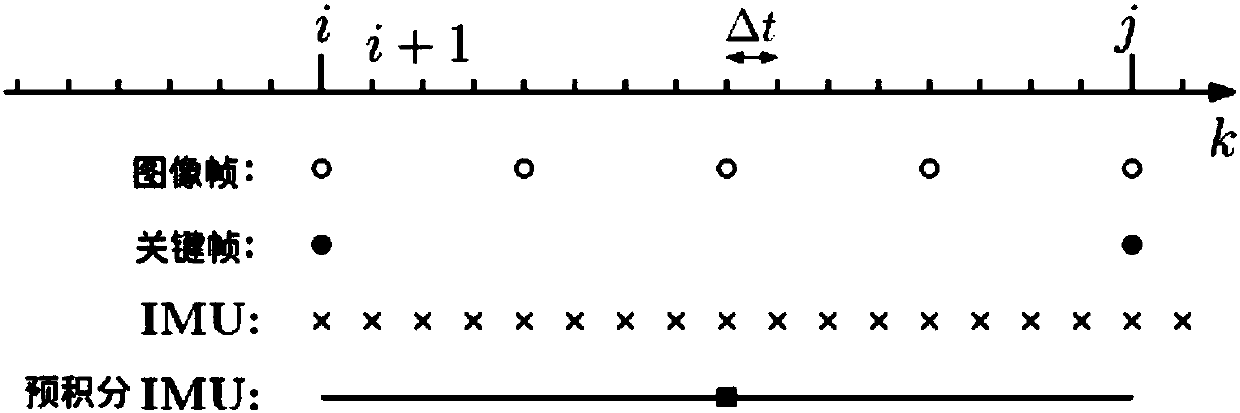

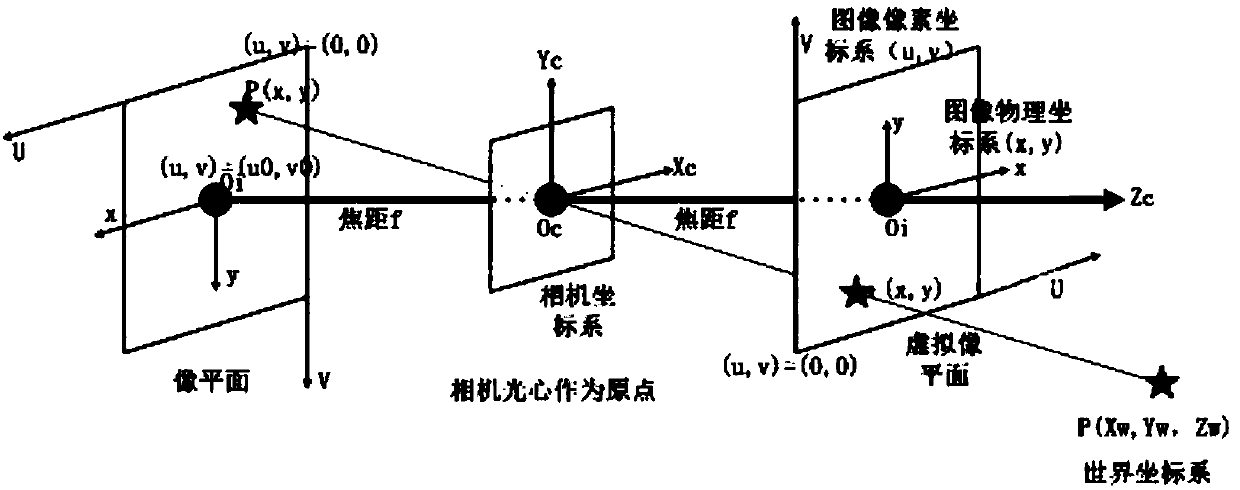

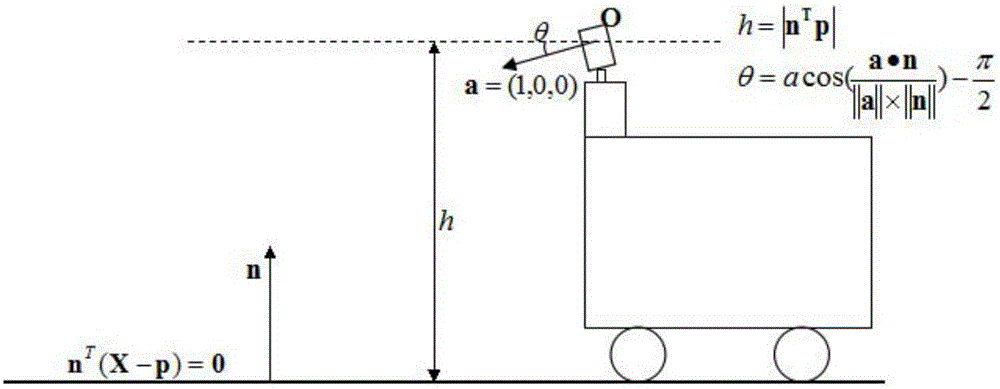

Positioning method and system based on visual inertial navigation information fusion

Active | CN107869989A | Easy to assemble | Easy to disassemble | Navigational calculation instruments | Navigation by speed/acceleration measurements | RGB image | Vision based

The invention discloses a positioning method and system based on visual inertial navigation information fusion. The method comprises the following steps: acquired sensor information is preprocessed, wherein the sensor information comprises an RGB image and depth image information from a depth vision sensor and IMU (inertial measurement unit) data; external parameters of the system to which the depth vision sensor and the IMU belong are acquired; the preprocessed sensor information and external parameters are processed with an IMU pre-integration model and a depth camera model, and pose information is acquired; the pose information is corrected on the basis of loop detection, and the corrected, globally consistent pose information is acquired. The method is robust during positioning and improves positioning accuracy.

Owner:NORTHEASTERN UNIV

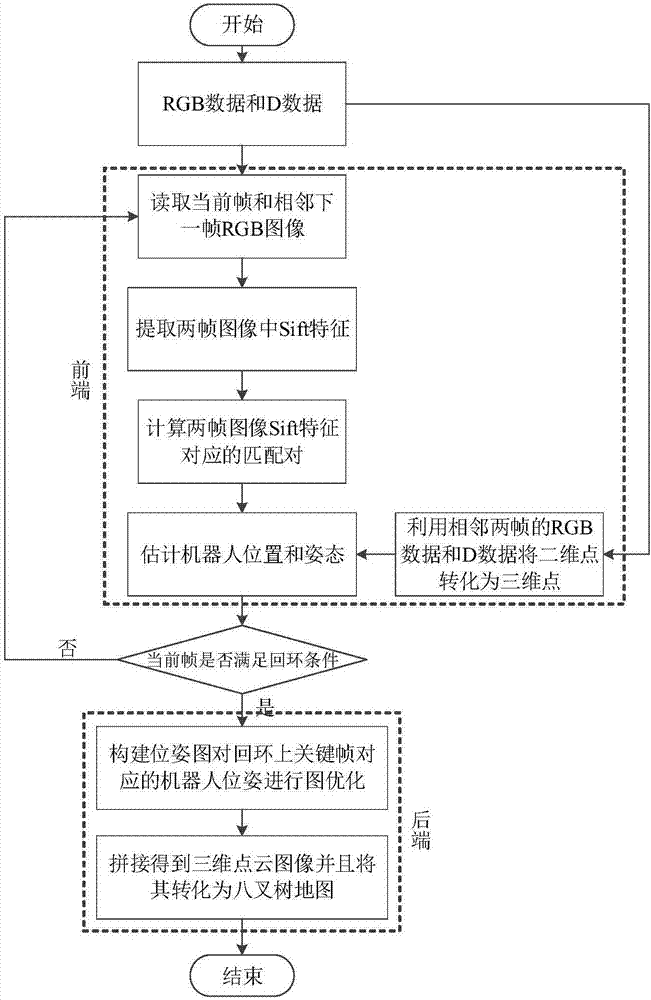

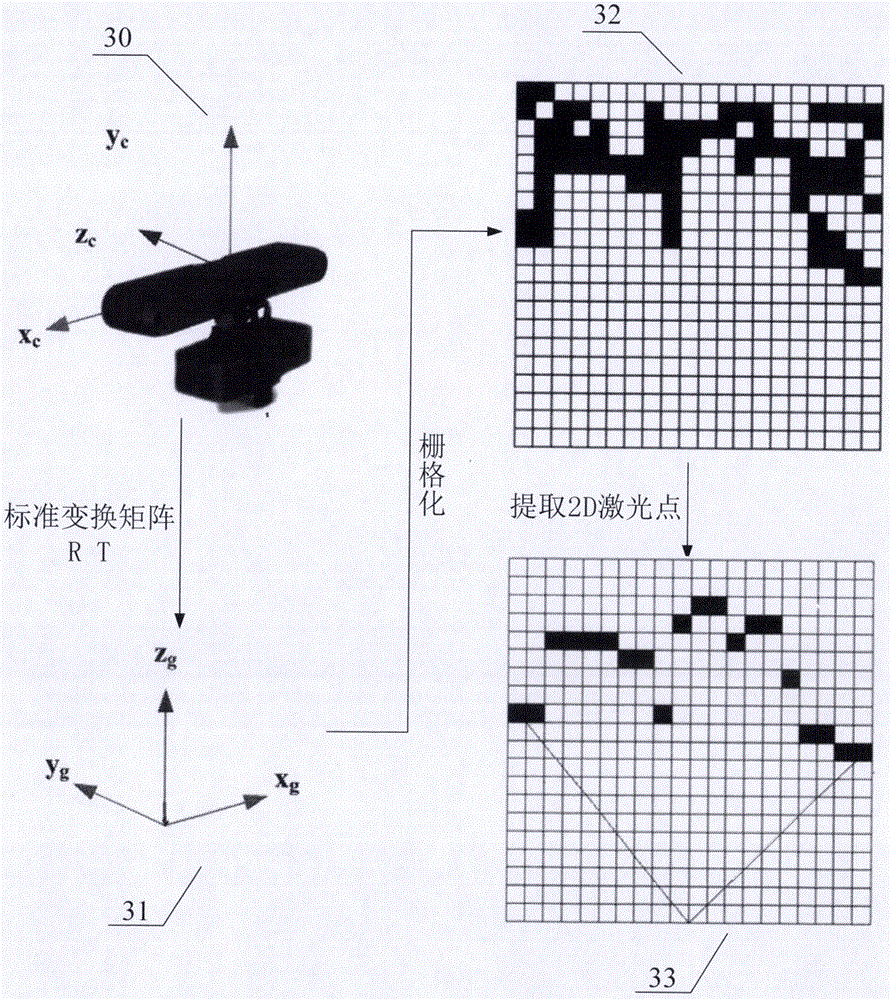

Kinect-based robot self-positioning method

Active | CN105045263A | Realize self-positioning | Independent positioning and stability | Position/course control in two dimensions | Point cloud | RGB image

The invention discloses a Kinect-based robot self-positioning method. The method includes the following steps: the RGB image and depth image of an environment are acquired through the Kinect, the relative motion of a robot is estimated by fusing visual information with a physical speedometer, and pose tracking is realized from the pose of the robot at the previous time point. Depth information is converted into a three-dimensional point cloud, a ground surface is extracted from the point cloud, and the height and pitch angle of the Kinect relative to the ground surface are automatically calibrated according to the ground surface. The three-dimensional point cloud can then be projected onto the ground surface to obtain a two-dimensional point cloud similar to laser data, which is matched with a pre-constructed environment raster map so that accumulated errors in the robot tracking process can be corrected and the pose of the robot can be estimated accurately. Because the Kinect replaces a laser for positioning, cost is low; because image and depth information are fused, the method can achieve high precision; and because the method is compatible with a laser map and the mounting height and pose of the Kinect need not be calibrated in advance, it is convenient to use and can satisfy the requirements of autonomous positioning and navigation of the robot.

Owner:HANGZHOU JIAZHI TECH CO LTD
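
The depth-to-point-cloud and ground-projection steps in the abstract above can be sketched roughly as follows. This is an illustrative sketch only: it assumes a pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`), zero pitch and a known camera height, whereas the patent calibrates height and pitch automatically from the extracted ground plane. All function and parameter names are hypothetical.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (metres) into camera-frame 3D points."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy  # camera y axis points down
    points = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]  # drop pixels with no depth reading


def flatten_to_ground(points, cam_height, min_h=0.1, max_h=2.0):
    """Keep points in a height band above the floor and drop the vertical axis.

    Assumes zero pitch and a known camera height (a simplification).
    """
    height = cam_height - points[:, 1]
    band = (height > min_h) & (height < max_h)
    return points[band][:, [0, 2]]  # (lateral x, forward z) pairs
```

The resulting 2D points play the role of the "two-dimensional point cloud similar to laser data"; matching them against a grid map is a separate step not shown here.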

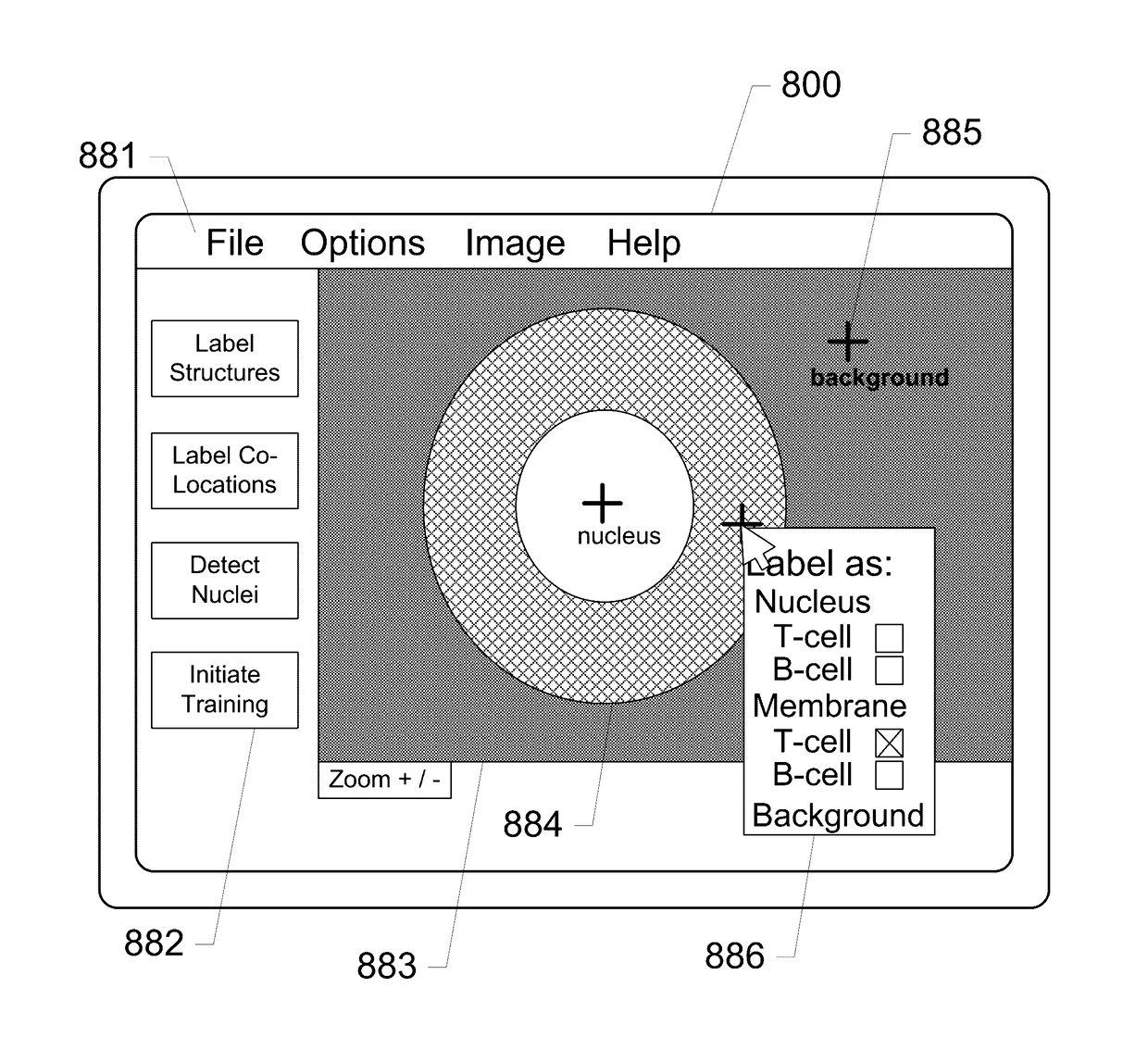

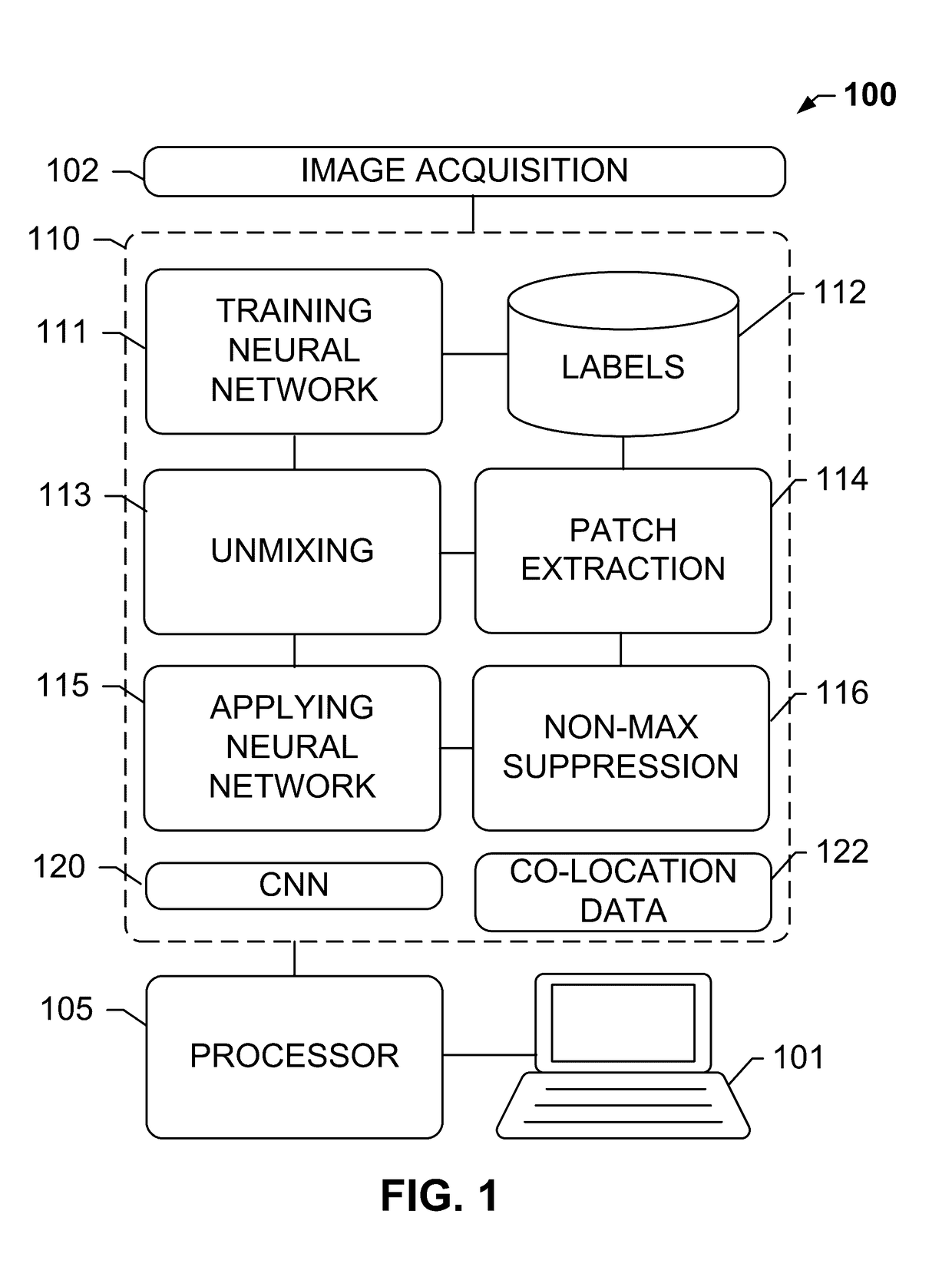

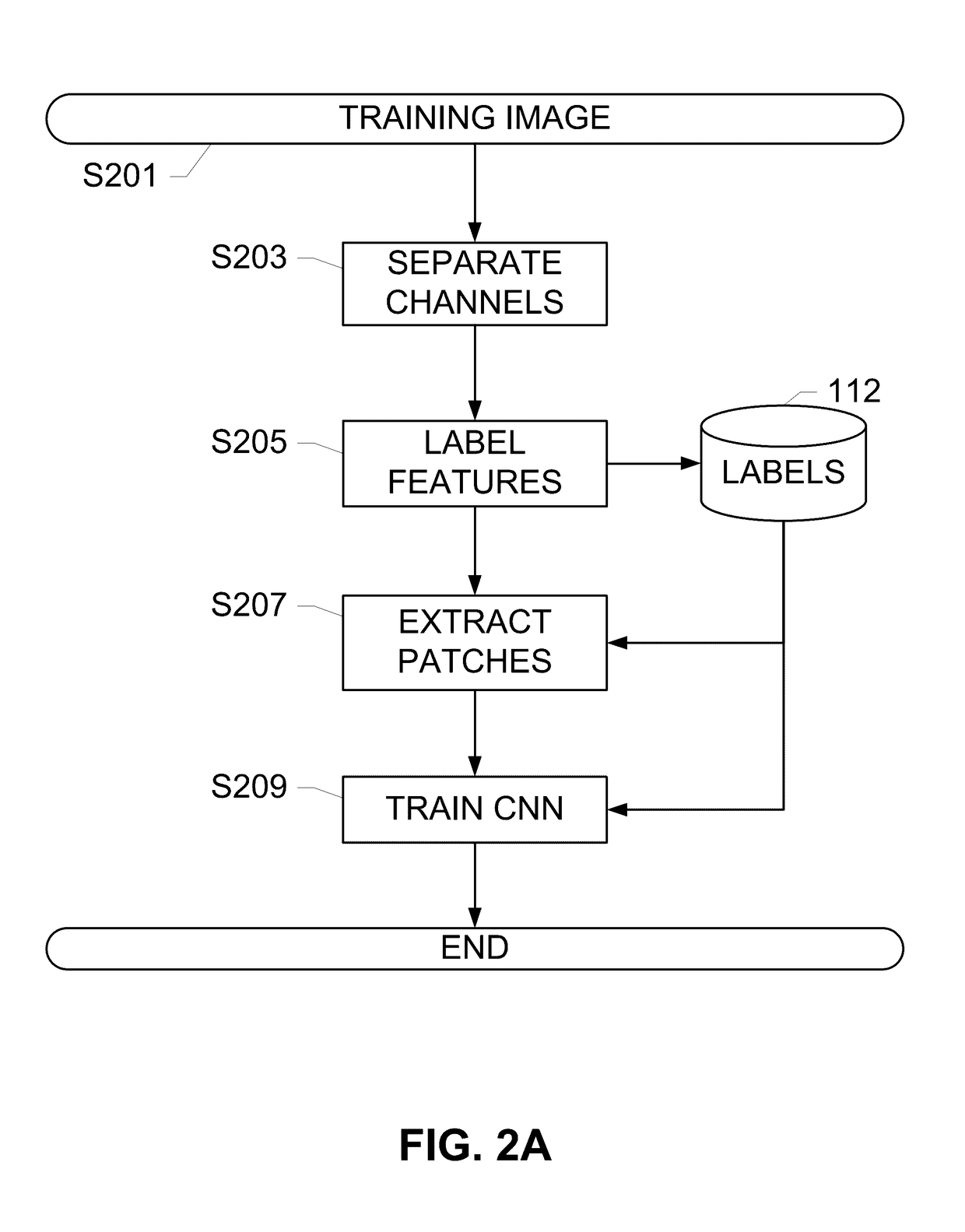

Systems and methods for detection of structures and/or patterns in images

Active | US20170169567A1 | Lighten the computational burden | Improve stability | Image enhancement | Image analysis | RGB image | Non maximum suppression

The subject disclosure presents systems and computer-implemented methods for automatic immune cell detection that is of assistance in clinical immune profile studies. The automatic immune cell detection method involves retrieving a plurality of image channels from a multi-channel image such as an RGB image or biologically meaningful unmixed image. A cell detector is trained to identify the immune cells by a convolutional neural network in one or multiple image channels. Further, the automatic immune cell detection algorithm involves utilizing a non-maximum suppression algorithm to obtain the immune cell coordinates from a probability map of immune cell presence possibility generated from the convolutional neural network classifier.

Owner:VENTANA MEDICAL SYST INC
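
To illustrate the non-maximum suppression step mentioned in the abstract above, here is a minimal sketch that extracts peak coordinates from a 2D probability map. The window radius and probability threshold are assumed parameters, and `nms_peaks` is a hypothetical name; the patent does not specify these details.

```python
import numpy as np


def nms_peaks(prob, radius=1, threshold=0.5):
    """Return (row, col) coordinates of local maxima in a probability map."""
    prob = np.asarray(prob, dtype=np.float64)
    h, w = prob.shape
    # Pad with -inf so border pixels are compared only to real neighbours.
    padded = np.pad(prob, radius, mode="constant", constant_values=-np.inf)
    local_max = np.full((h, w), -np.inf)
    # Sliding-window maximum over a (2*radius+1)^2 neighbourhood.
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            local_max = np.maximum(local_max, padded[dy:dy + h, dx:dx + w])
    keep = (prob >= local_max) & (prob >= threshold)
    return np.argwhere(keep)
```

A pixel survives only if no neighbour in the window has a higher probability; on a flat plateau every tied pixel is kept, which a production detector would usually resolve with an extra tie-break.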

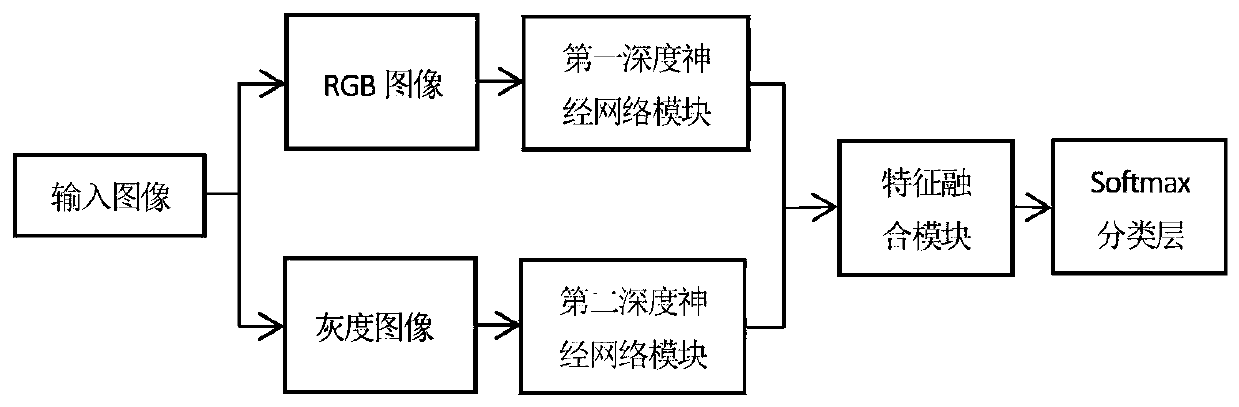

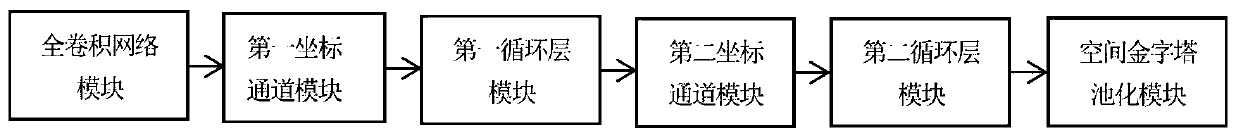

An image semantic segmentation method based on deep learning

Active | CN109711413A | Rich coordinate features | Improve generalization ability | Internal combustion piston engines | Character and pattern recognition | Data set | RGB image

The invention discloses an image semantic segmentation method based on deep learning. The method comprises four parts: data set processing, deep semantic segmentation network construction, deep semantic segmentation network training and parameter learning, and semantic segmentation of a test image. The RGB image and the gray level image of the input image are used as the input of the network model, so the edge information of the gray level image is fully utilized and the richness of the input features is effectively increased; a convolutional neural network and a bidirectional threshold recursion unit are combined, and more context dependency relationships and global feature information are captured on the basis of learning image local features; coordinate information is added to the feature map through the first coordinate channel module and the second coordinate channel module, so the coordinate features of the model are enriched, the generalization ability of the model is improved, and a semantic segmentation result with high resolution and accurate boundaries is generated.

Owner:SHAANXI NORMAL UNIV
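
The abstract above does not say how its coordinate channel modules are built. One common way to add coordinate information to a feature map is to append normalized row and column index channels; the sketch below shows that idea under this assumption, with the hypothetical name `add_coord_channels`.

```python
import numpy as np


def add_coord_channels(feat):
    """Append normalized y and x coordinate channels to a (C, H, W) feature map."""
    c, h, w = feat.shape
    # Coordinates are scaled to [-1, 1] so they do not depend on resolution.
    ys = np.repeat(np.linspace(-1.0, 1.0, h)[:, None], w, axis=1)
    xs = np.repeat(np.linspace(-1.0, 1.0, w)[None, :], h, axis=0)
    return np.concatenate([feat, ys[None], xs[None]], axis=0)
```

A convolution applied after this step can condition on where in the image a feature occurs, which is the kind of "coordinate feature" the abstract refers to.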

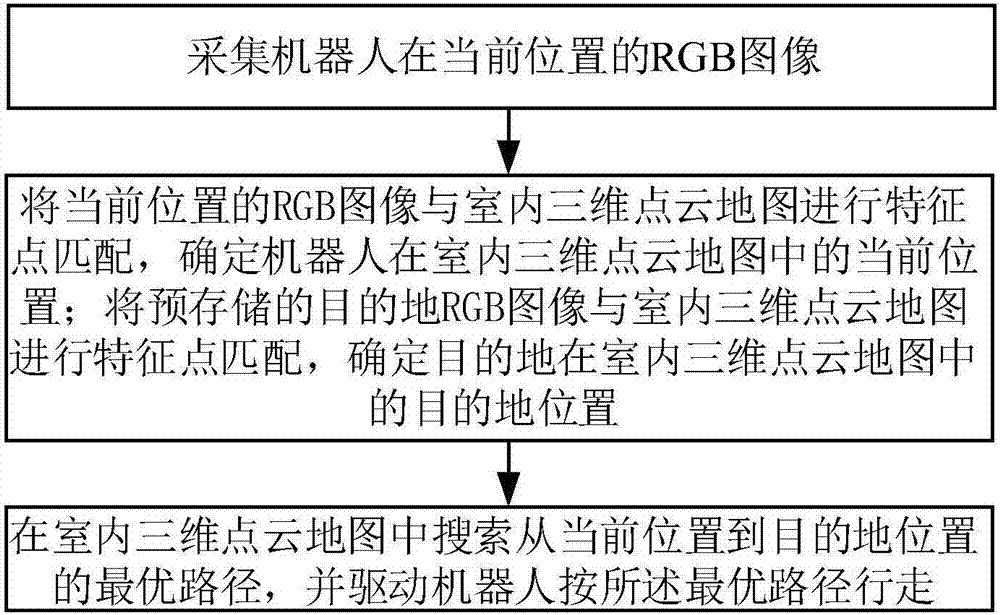

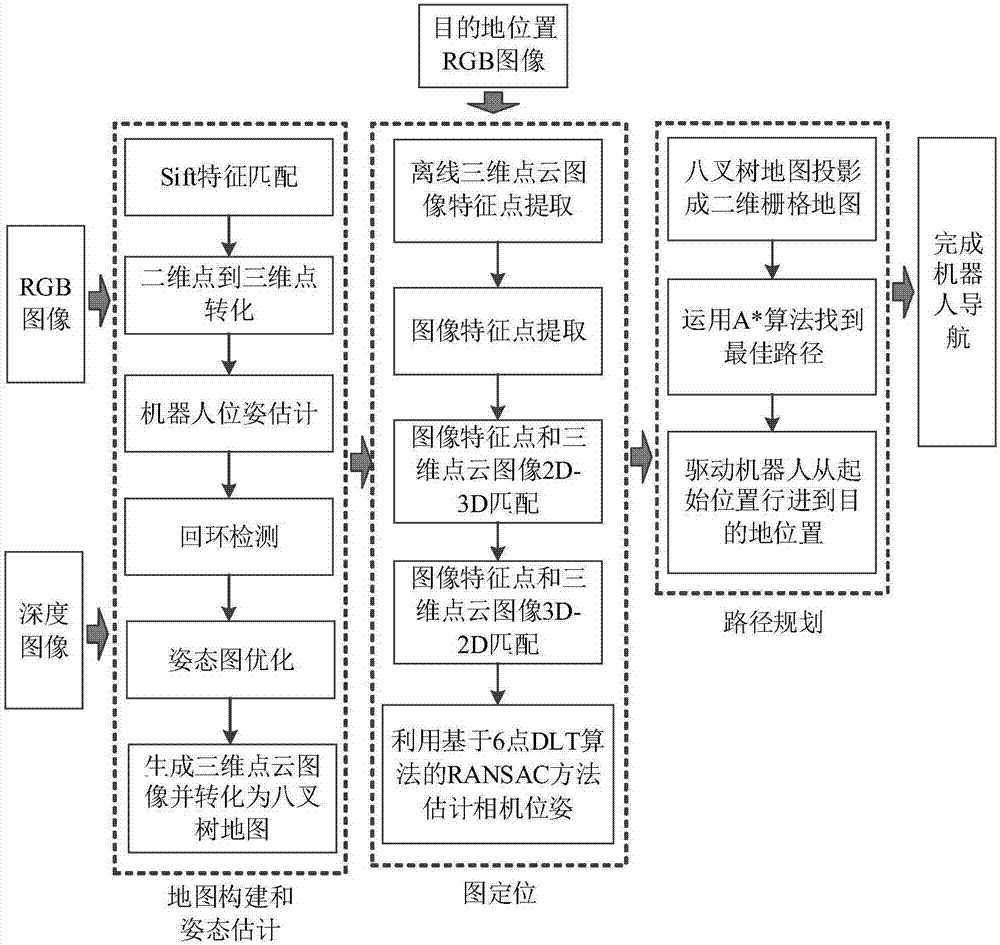

Autonomous location and navigation method and autonomous location and navigation system of robot

Active | CN106940186A | Automate processing | Fair price | Navigational calculation instruments | Point cloud | RGB image

The invention discloses an autonomous location and navigation method and an autonomous location and navigation system of a robot. The method comprises the following steps: acquiring the RGB image of the robot in the current position; carrying out characteristic point matching on the RGB image of the current position and an indoor three-dimensional point cloud map, and determining the current position of the robot in the indoor 3D point cloud map; carrying out characteristic point matching on a pre-stored RGB image of a destination and the indoor three-dimensional point cloud map, and determining the position of the destination in the indoor 3D point cloud map; and searching an optimal path from the current position to the destination position in the indoor 3D point cloud map, and driving the robot to run according to the optimal path. The method and the system have the advantages of completion of autonomous location and navigation through using a visual sensor, simple device structure, low cost, simplicity in operation, and high path planning real-time property, and can be used in the fields of unmanned driving and indoor location and navigation.

Owner:HUAZHONG UNIV OF SCI & TECH

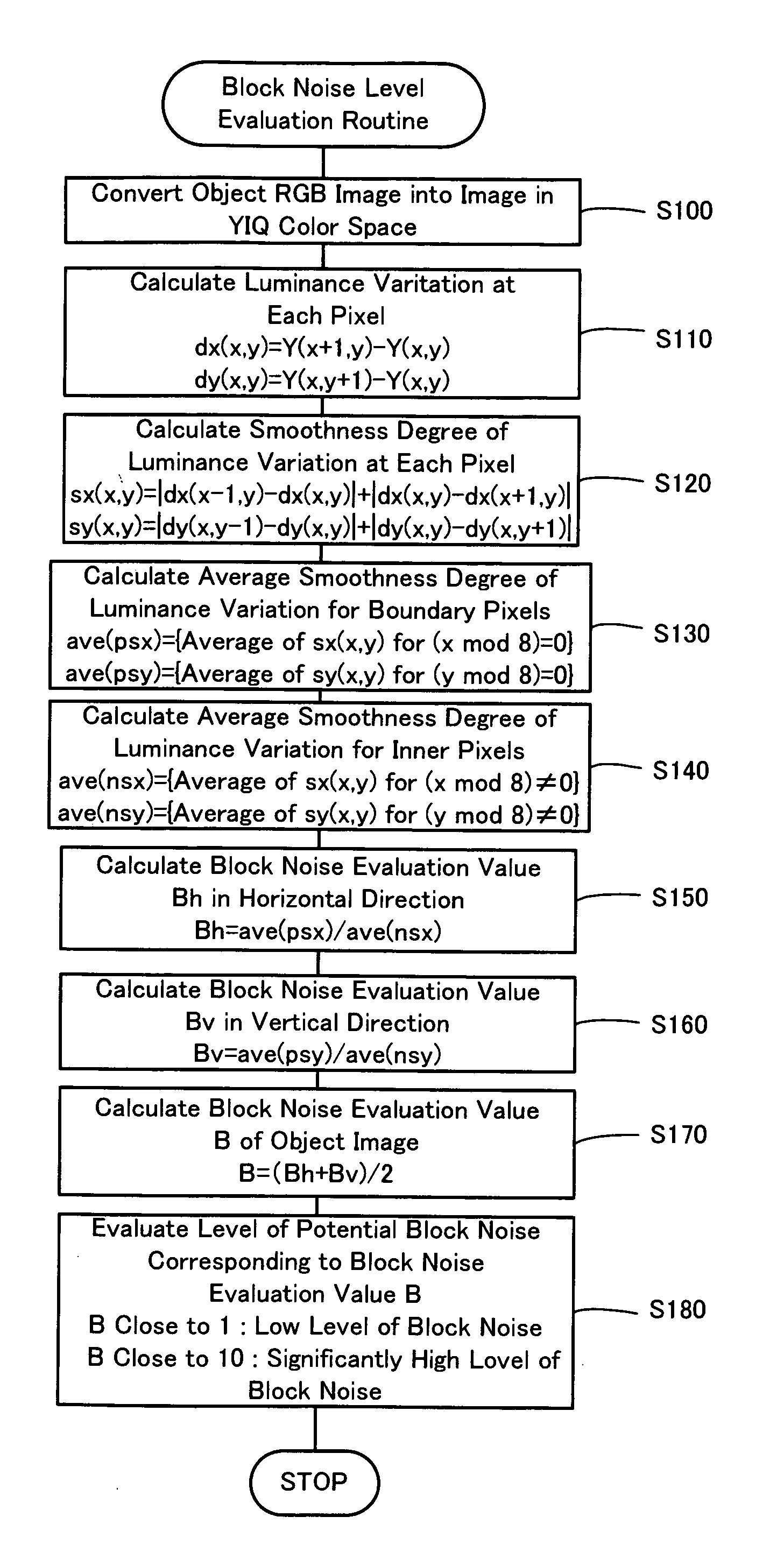

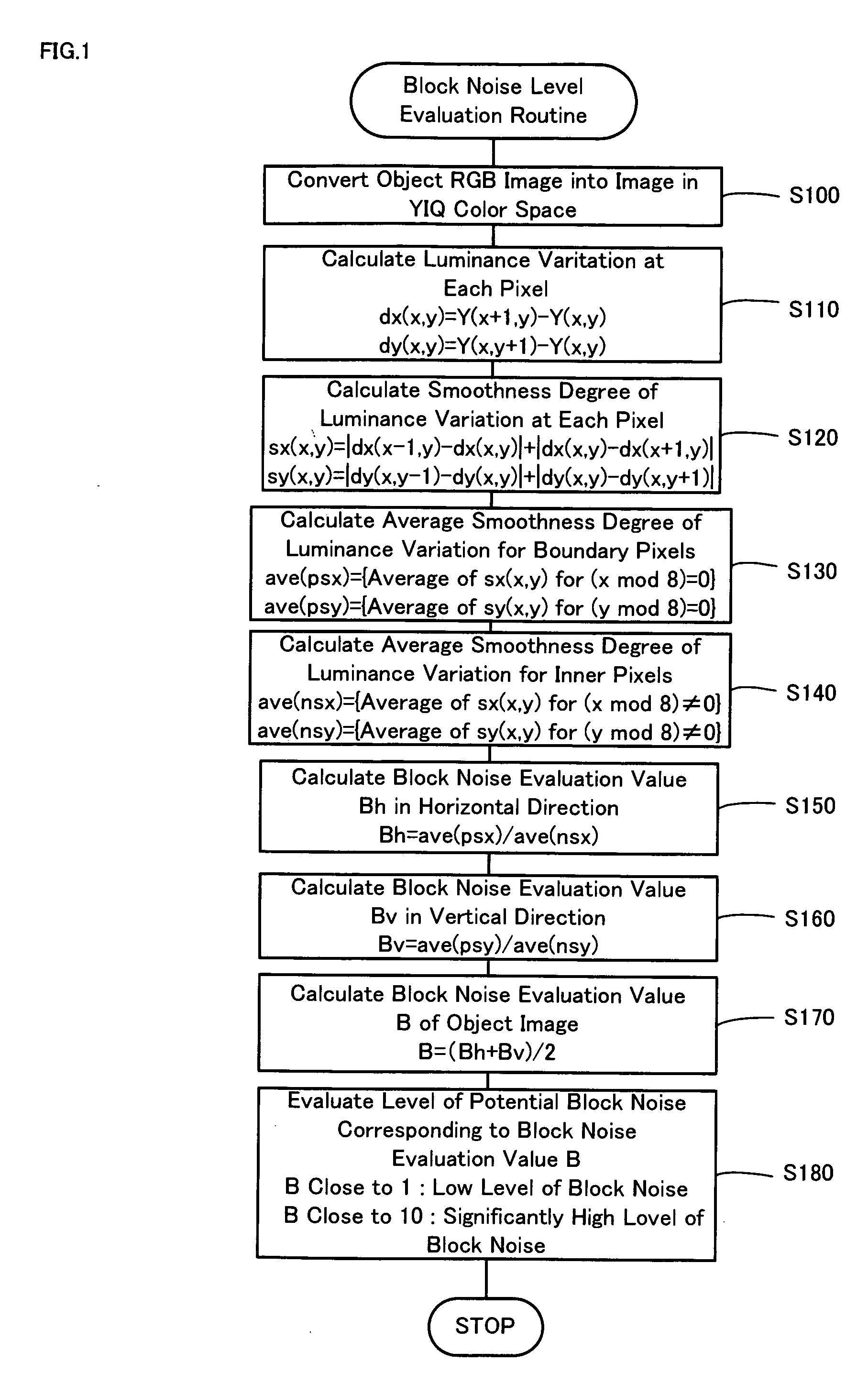

Block noise level evaluation method for compressed images and control method of imaging device utilizing the evaluation method

Inactive | US20060034531A1 | Reducing influence level of noise | Reduce in quantity | Image enhancement | Image analysis | Pattern recognition | Noise level

The technique of the invention converts an object RGB image into an image in a YIQ color space, calculates a luminance variation at each target pixel from Y channel values of the target pixel and an adjacent pixel adjoining to the target pixel, and computes a smoothness degree of luminance variation at the target pixel as summation of absolute values of differences between luminance variations at the target pixel and adjacent pixels. A block noise evaluation value B is obtained as a ratio of an average smoothness degree ave(psx), ave(psy) of luminance variation for boundary pixels located on each block boundary to an average smoothness degree ave(nsx), ave(nsy) of luminance variation for inner pixels not located on the block boundary. The block noise evaluation value B closer to 1 gives an evaluation result of a lower level of block noise, whereas the block noise evaluation value B closer to 10 gives an evaluation result of a higher level of block noise.

Owner:SEIKO EPSON CORP
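
The block noise evaluation described in the abstract above can be sketched for the horizontal direction as follows. This is an illustration under assumptions: an 8-pixel block size, the standard NTSC luma weights for the Y channel of YIQ, and a "boundary" variation defined as the one that crosses a block edge. The name `block_noise_x` is hypothetical, and the sketch makes no attempt to reproduce the value range quoted in the abstract.

```python
import numpy as np


def block_noise_x(rgb, block=8):
    """Ratio of mean boundary smoothness to mean inner smoothness along x."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Y channel of YIQ (NTSC luma weights).
    y = 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]
    # Luminance variation between each pixel and its right-hand neighbour.
    d = y[:, 1:] - y[:, :-1]
    # Smoothness degree: summed absolute differences between the variation
    # at a pixel and the variations at its left and right neighbours.
    s = np.abs(d[:, 1:-1] - d[:, :-2]) + np.abs(d[:, 2:] - d[:, 1:-1])
    j = np.arange(1, d.shape[1] - 1)  # column index of each entry in s
    on_boundary = (j % block) == block - 1  # variation crosses a block edge
    ps = s[:, on_boundary].mean()
    ns = s[:, ~on_boundary].mean()
    return ps / ns  # no guard for perfectly flat images, where ns == 0
```

On unstructured noise the ratio stays near 1, while step discontinuities aligned to the block grid push it well above 1.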

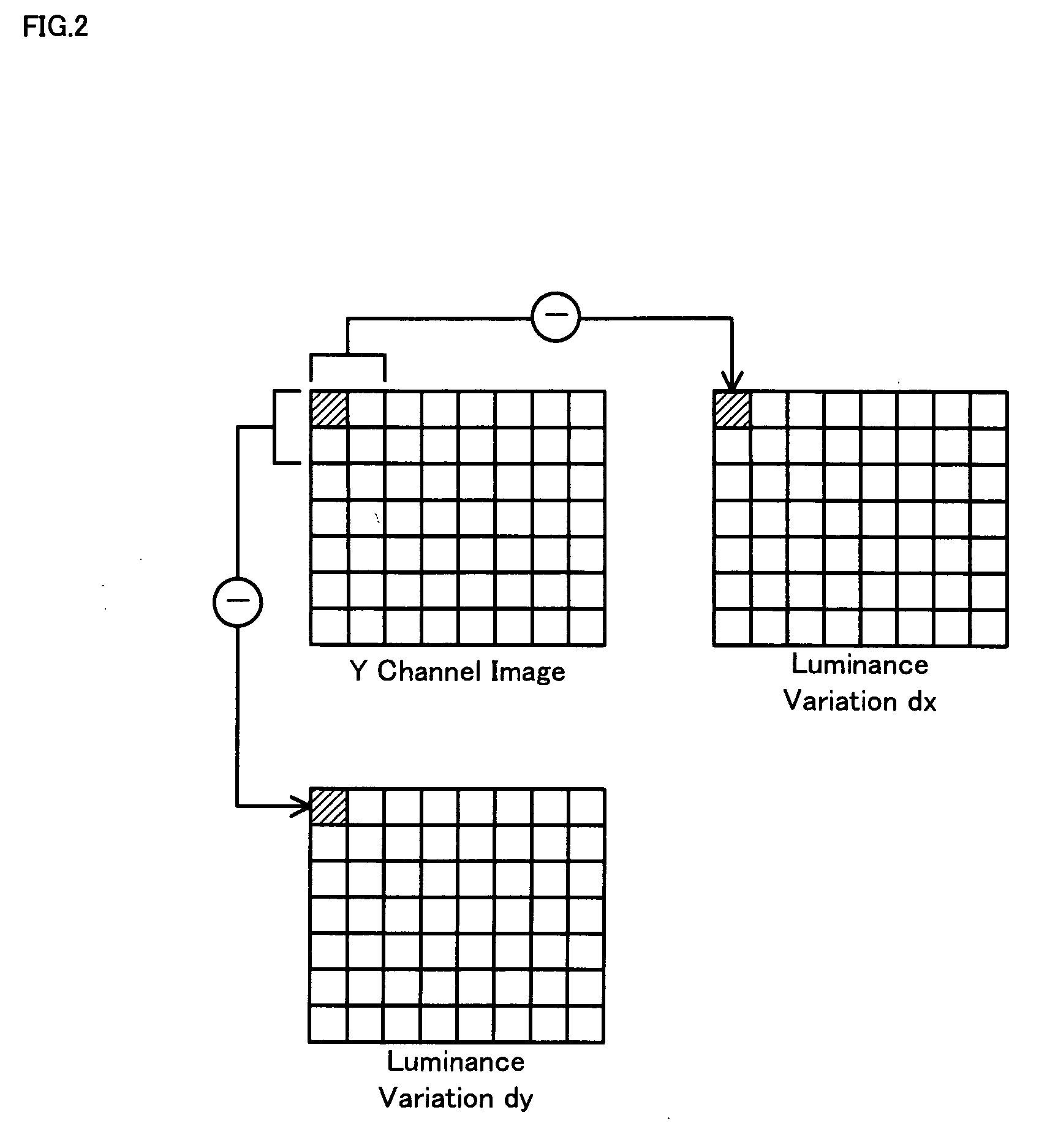

Full convolution neural network (FCN)-based monocular image depth estimation method

Active | CN107578436A | Reduce the amount of parameters | Simple structure | Image analysis | Character and pattern recognition | Pattern recognition | Stochastic gradient descent

The invention discloses a full convolution neural network (FCN)-based monocular image depth estimation method. The method comprises the steps of acquiring training image data; inputting the training image data into a full convolution neural network (FCN), and sequentially outputting through pooling layers to obtain a characteristic image; subjecting each characteristic image outputted by a last pooling layer sequentially to amplification treatment to obtain a new characteristic image with the same dimensions as a characteristic image outputted by a previous pooling layer, and fusing the two characteristic images; sequentially fusing the outputted characteristic image of each pooling layer from back to front so as to obtain a final prediction depth image; training the parameters of the full convolution neural network (FCN) by stochastic gradient descent (SGD) during training; acquiring an RGB image required for depth prediction, and inputting the RGB image into the trained full convolution neural network (FCN) so as to obtain a corresponding prediction depth image. The method addresses the problem that the resolution of an output image is low in the convolution process. Because the network is fully convolutional, the fully connected layer is removed and the number of parameters in the network is effectively reduced.

Owner:NANJING UNIV OF POSTS & TELECOMM

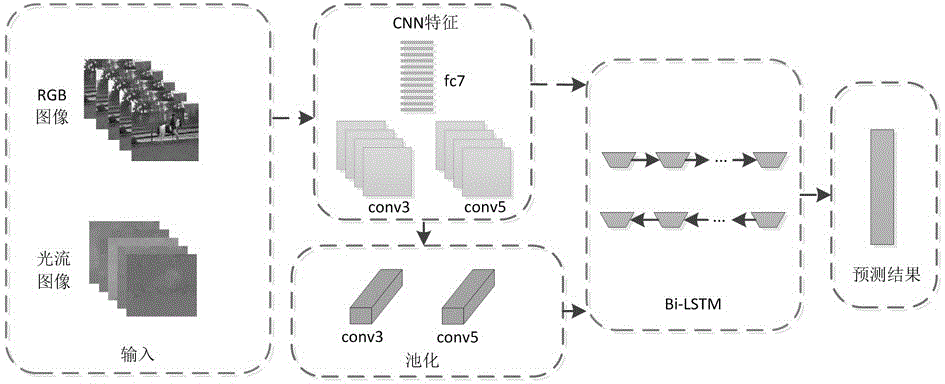

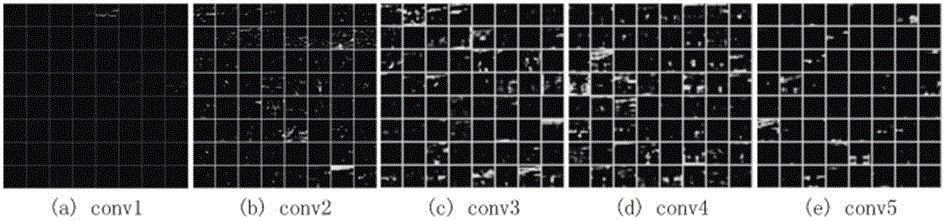

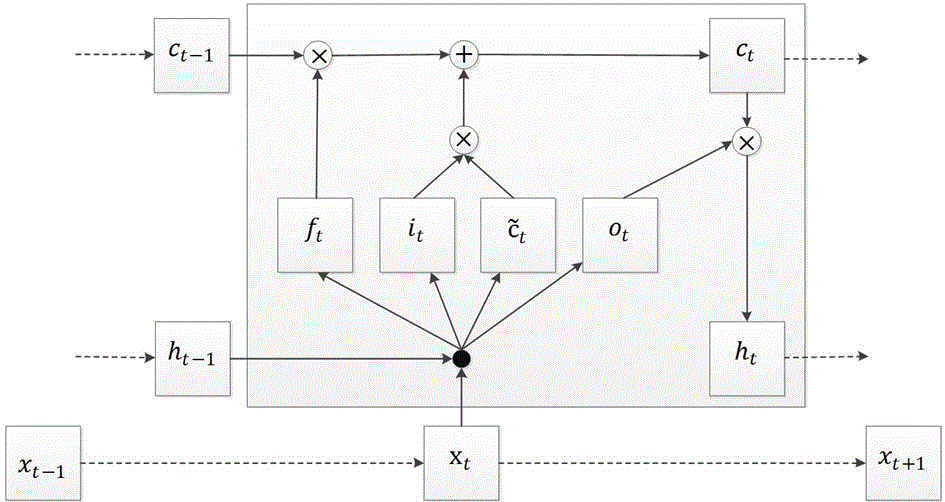

Bidirectional long short-term memory unit-based behavior identification method for video

Inactive | CN106845351A | Improve accuracy | Guaranteed accuracy | Character and pattern recognition | Neural architectures | Time domain | Temporal information

Owner:SUZHOU UNIV

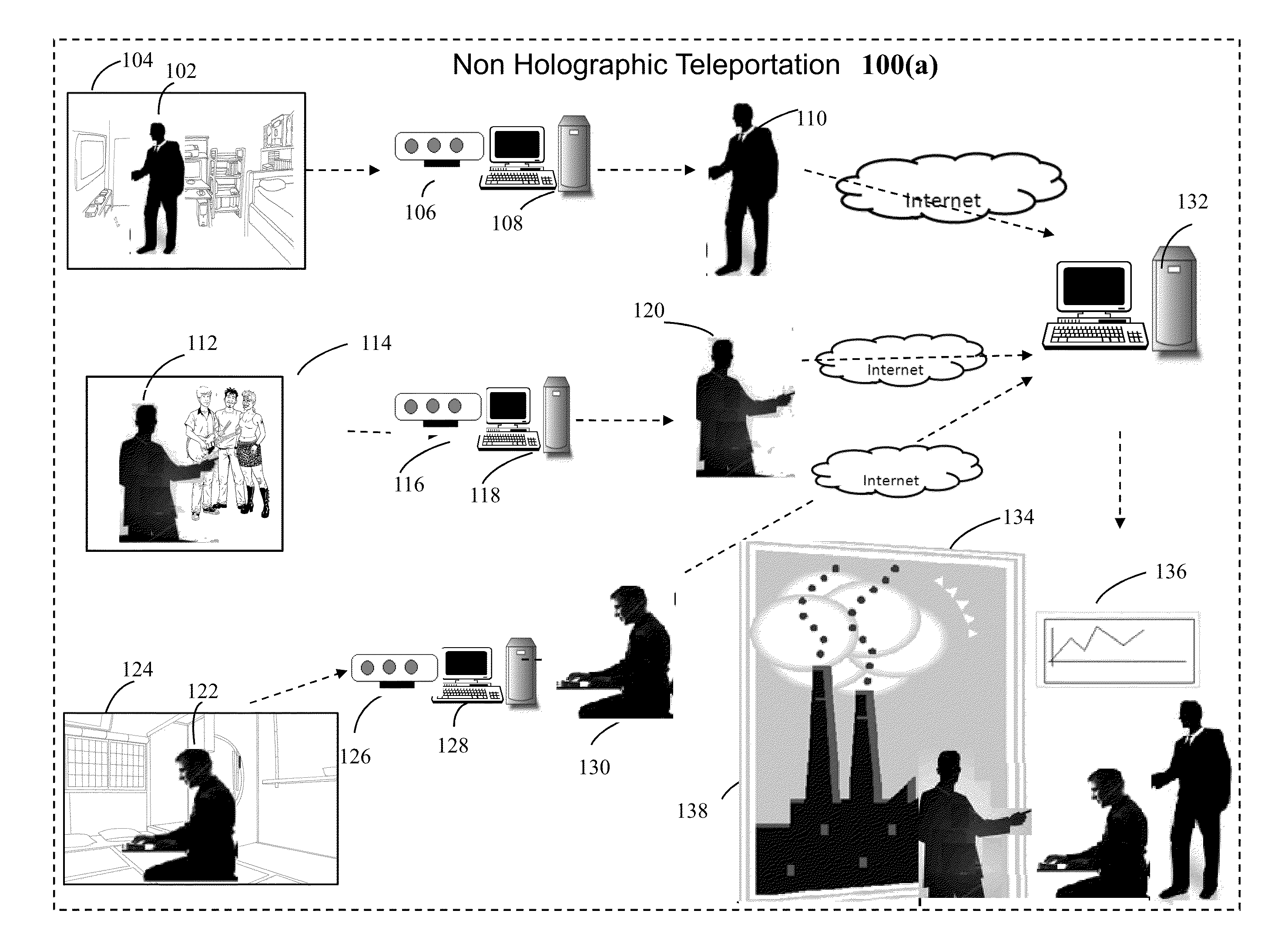

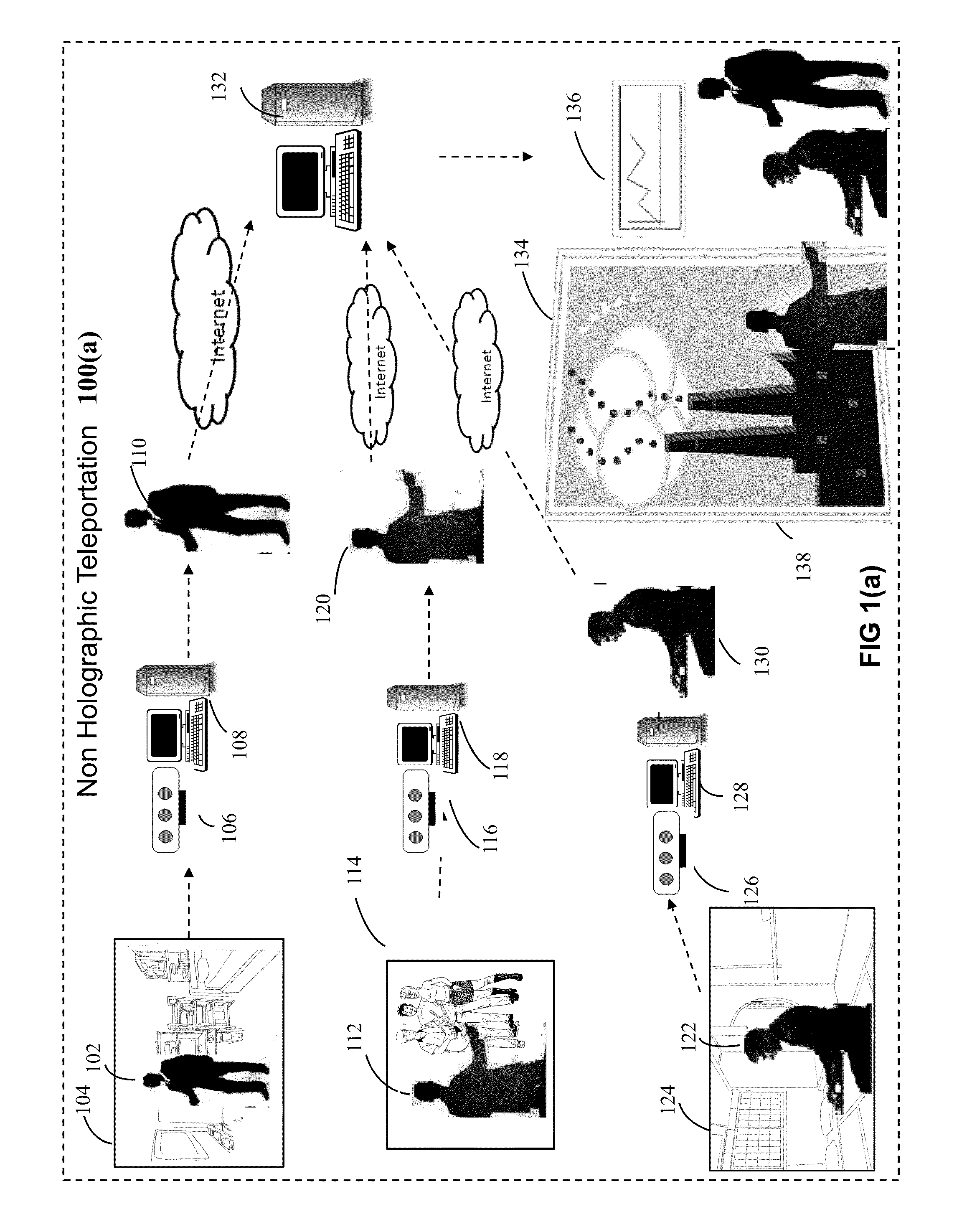

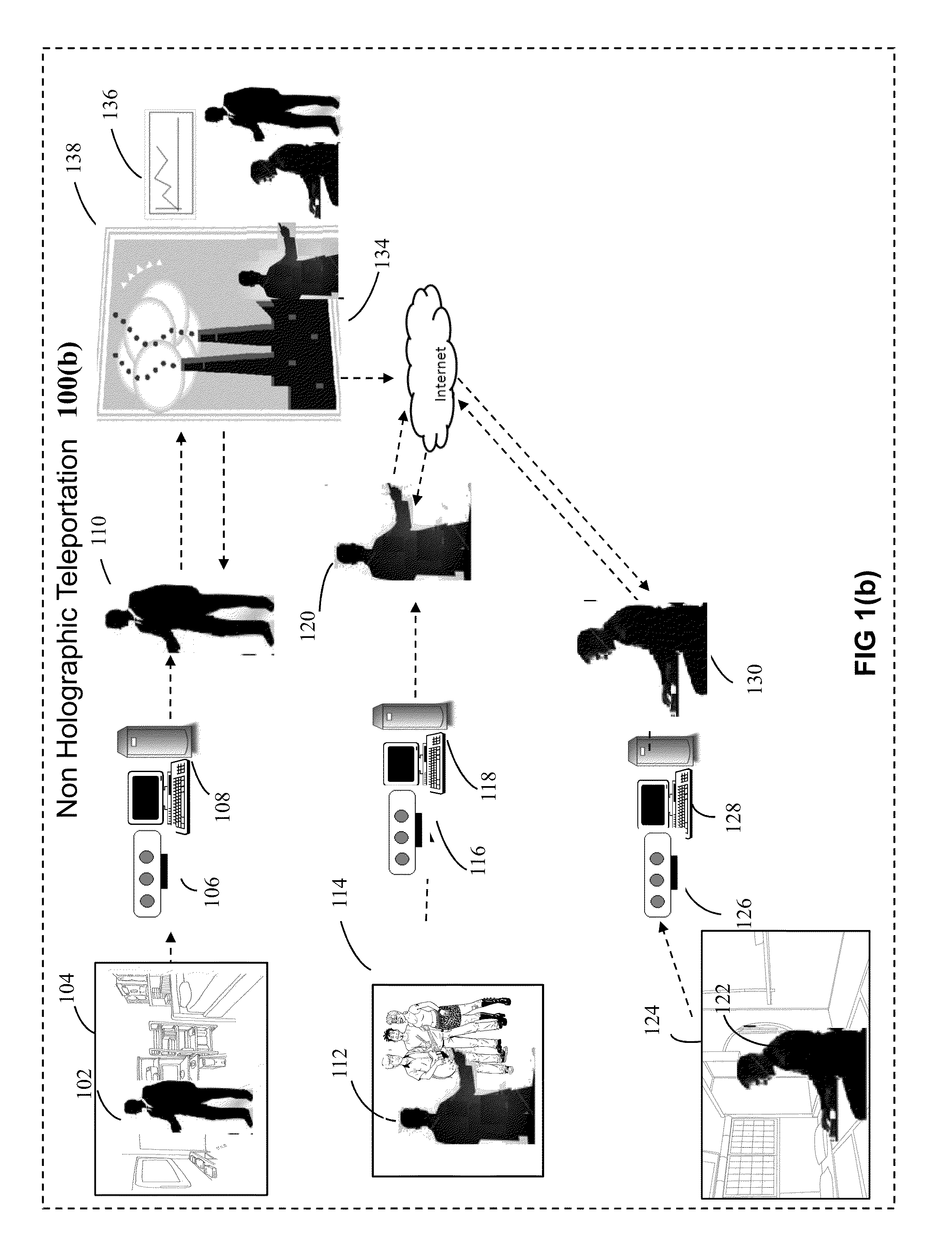

System and method for non-holographic teleportation

Inactive | US20150091891A1 | Easy to install | Effective and accessible for mass | Amusements | Television systems | Virtual training | RGB image

The present invention discloses a system and method for non-holographic virtual teleportation of one or more remote objects to a designated three-dimensional space around a user in realtime. This is achieved by using a plurality of participating teleportation terminals, located at geographically diverse locations, that capture the RGB and depth data of their respective environments, extract RGB images of target objects from their corresponding environment, and transmit the alpha channeled object images via Internet for integration into a single composite scene in which layers of computer graphics are added in the foreground and in the background. The invention thus creates a virtual 3D space around a user in which almost anything imaginable can be virtually teleported for user interaction. This invention has application in intuitive computing, perceptual computing, entertainment, gaming, virtual meetings, conferencing, gesture based interfaces, advertising, ecommerce, social networking, virtual training, education, so on and so forth.

Owner:DUMEDIA

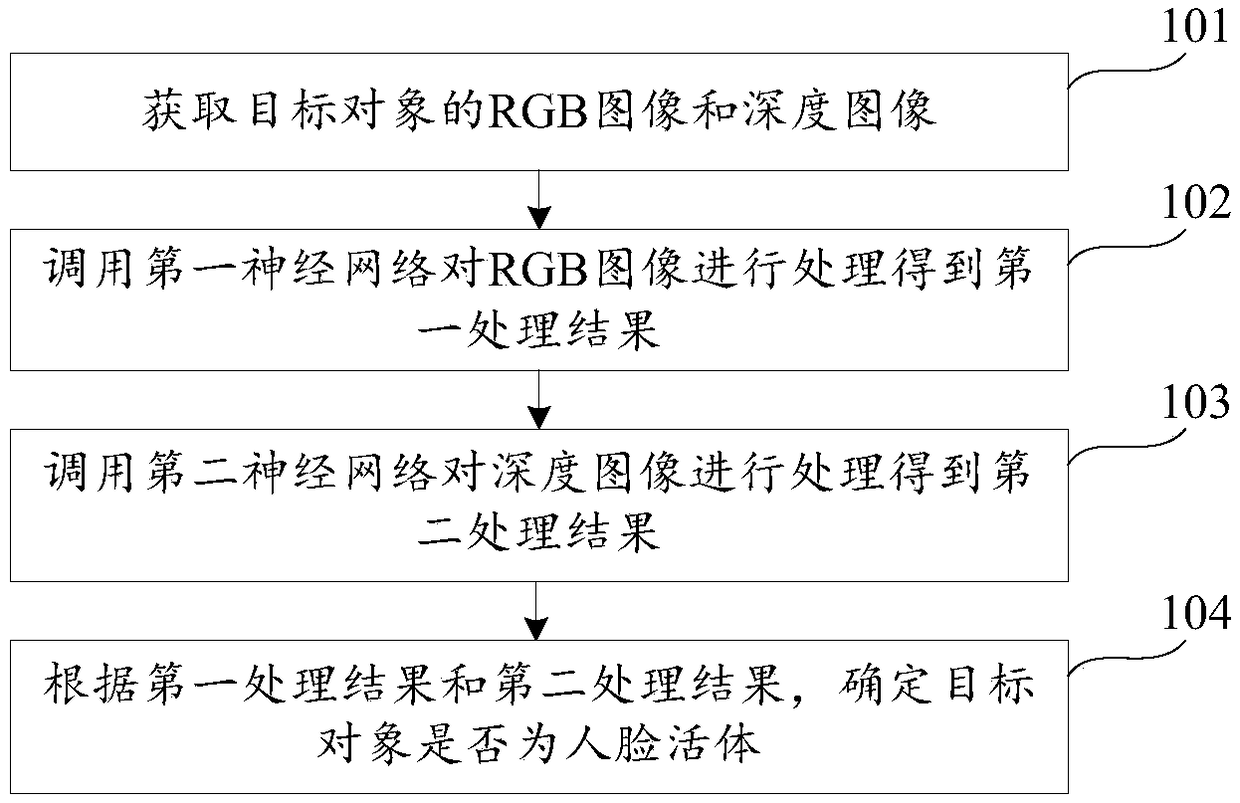

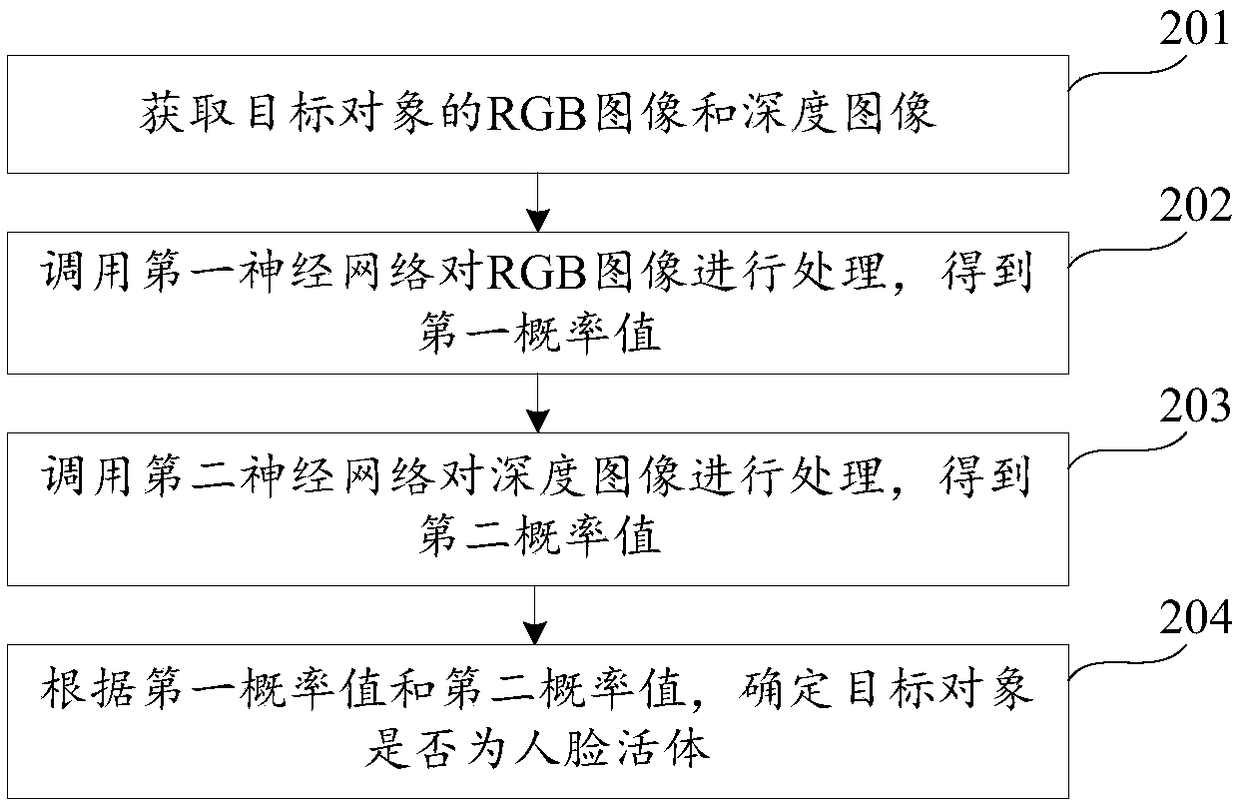

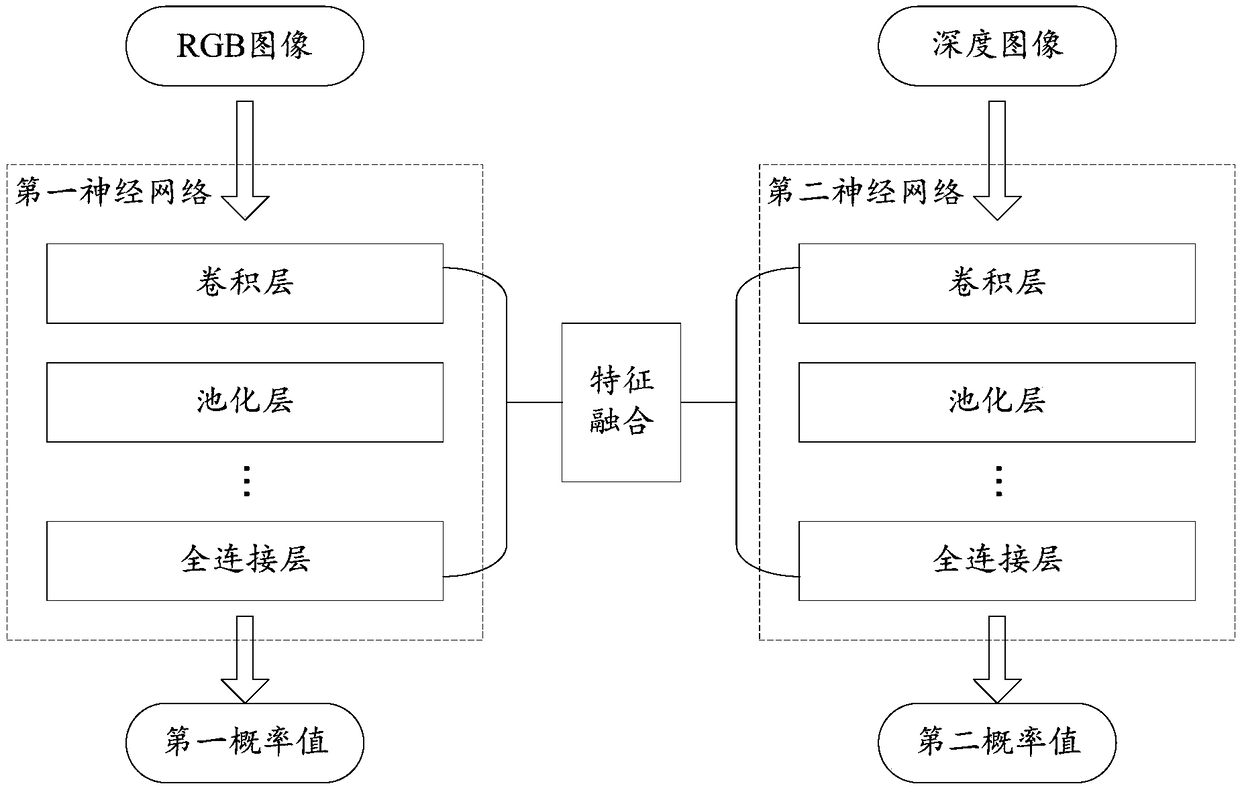

Face-in-vivo detection method, device, equipment and storage medium

Active | CN109034102A | Improve experience | Effective interception | Spoof detection | Pattern recognition | RGB image

An embodiment of the present application discloses a method, a device, equipment and a storage medium for detecting a human face in vivo. The method comprises the following steps: acquiring an RGB image and a depth image of a target object; calling a first neural network to process the RGB image to obtain a first processing result; invoking a second neural network to process the depth image to obtain a second processing result; and determining, according to the first processing result and the second processing result, whether or not the target object is a living human face. By obtaining an RGB image and a depth image of the target object and combining the two images, the embodiment uses both face texture clues and 3D face structure clues to detect the face in vivo, which improves accuracy and can effectively intercept attacks such as printed paper faces, faces replayed on high-definition screens, synthesized face videos, face masks and 3D prosthetic models. Moreover, capturing RGB images and depth images requires no user interaction, which is simpler and more efficient.

Owner:TENCENT TECH (SHENZHEN) CO LTD

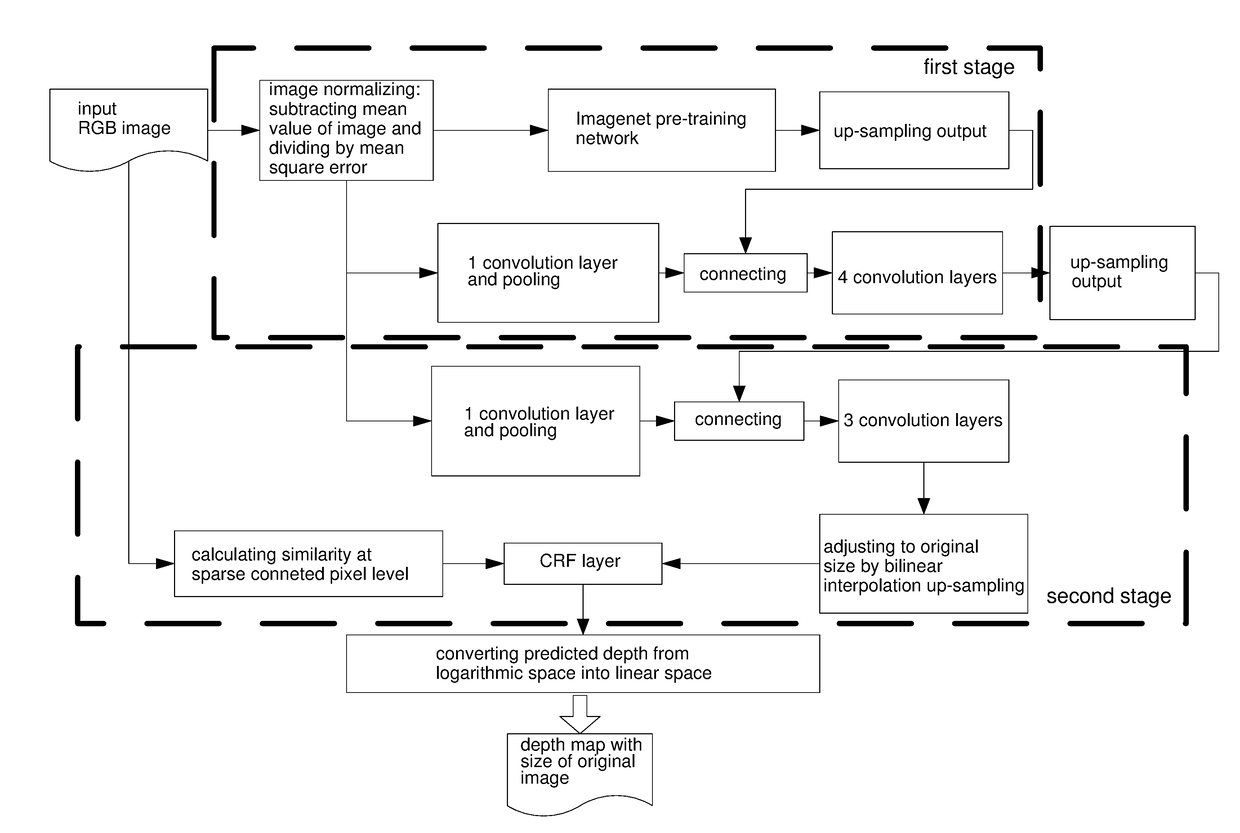

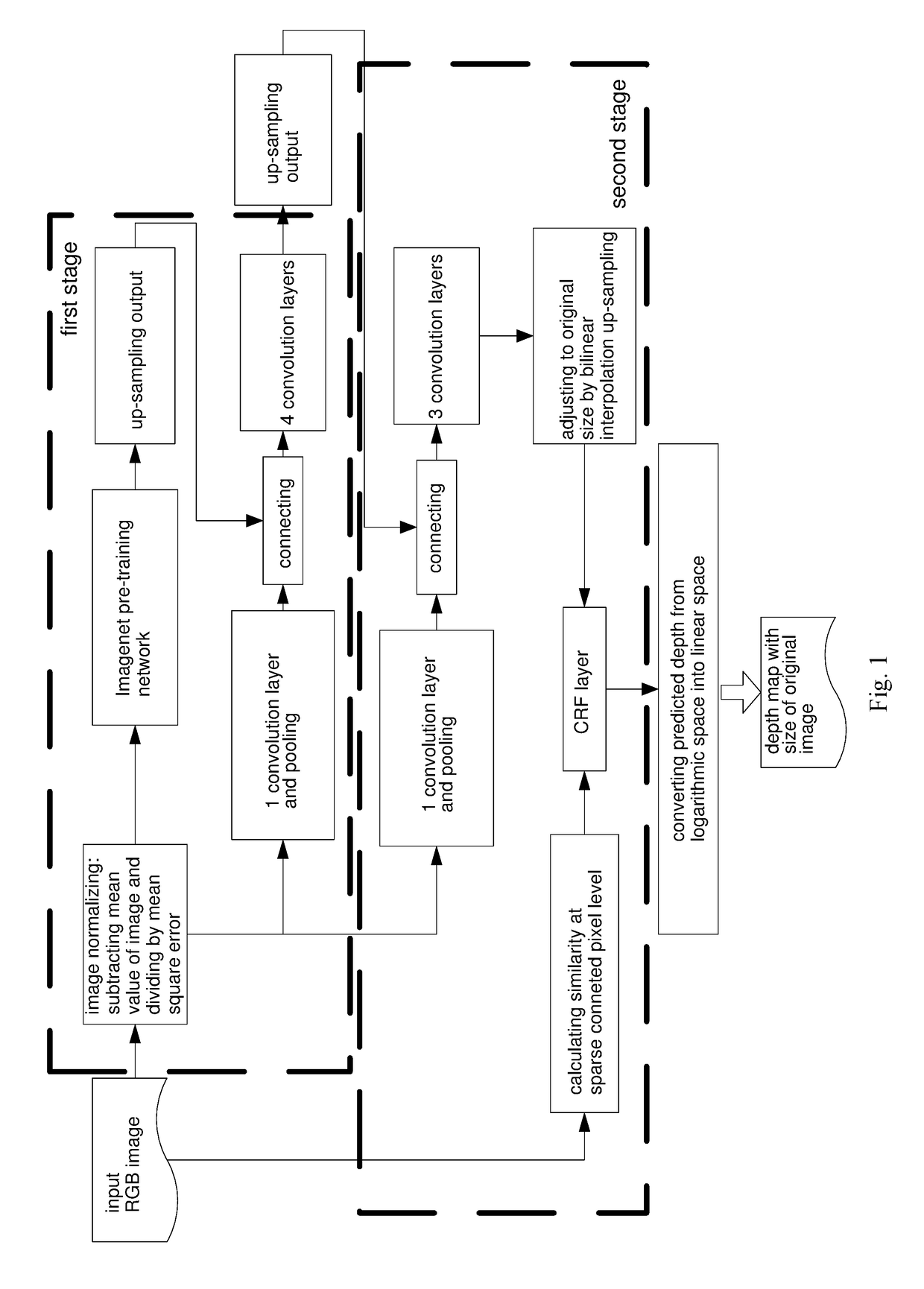

Depth estimation method for monocular image based on multi-scale CNN and continuous CRF

Active | US20180231871A1 | Improve accuracy | Clear outline | Image enhancement | Mathematical models | RGB image | Maximum a posteriori estimation

A depth estimation method for a monocular image based on a multi-scale CNN and a continuous CRF is disclosed in this invention. A CRF module is adopted to calculate a unary potential energy according to the output depth map of a DCNN, and the pairwise sparse potential energy according to input RGB images. MAP (maximum a posteriori estimation) algorithm is used to infer the optimized depth map at last. The present invention integrates optimization theories of the multi-scale CNN with that of the continuous CRF. High accuracy and a clear contour are both achieved in the estimated depth map; the depth estimated by the present invention has a high resolution and detailed contour information can be kept for all objects in the scene, which provides better visual effects.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

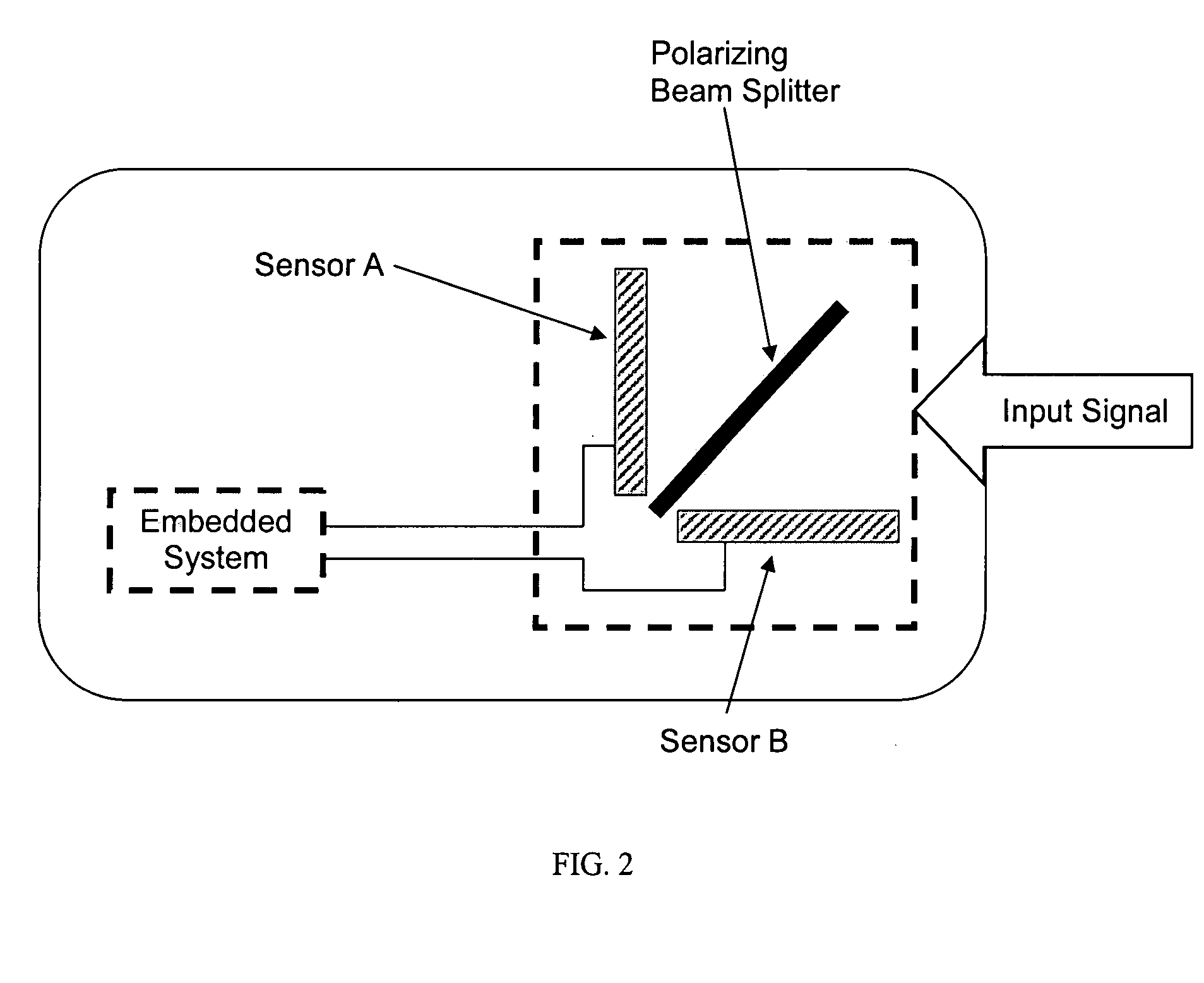

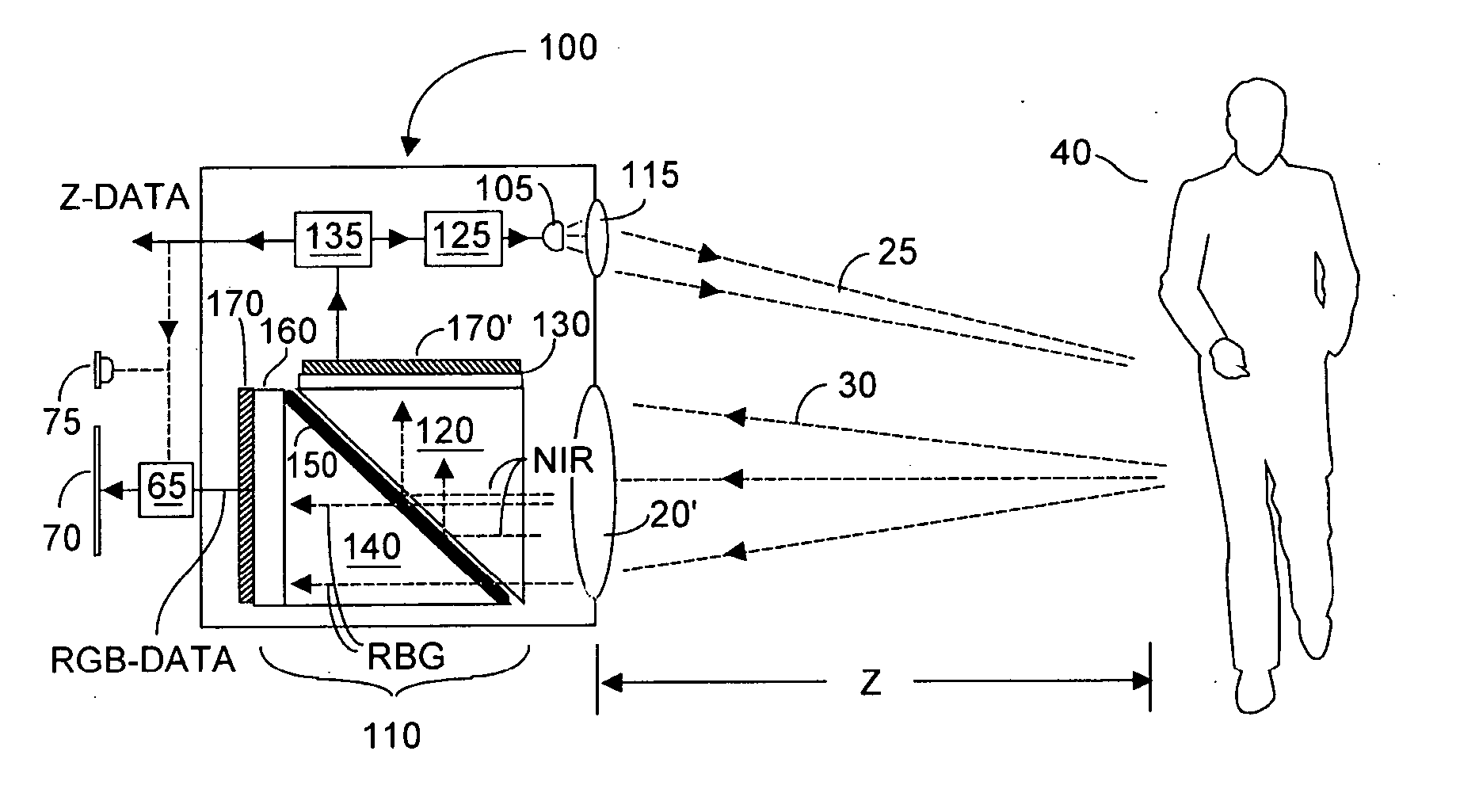

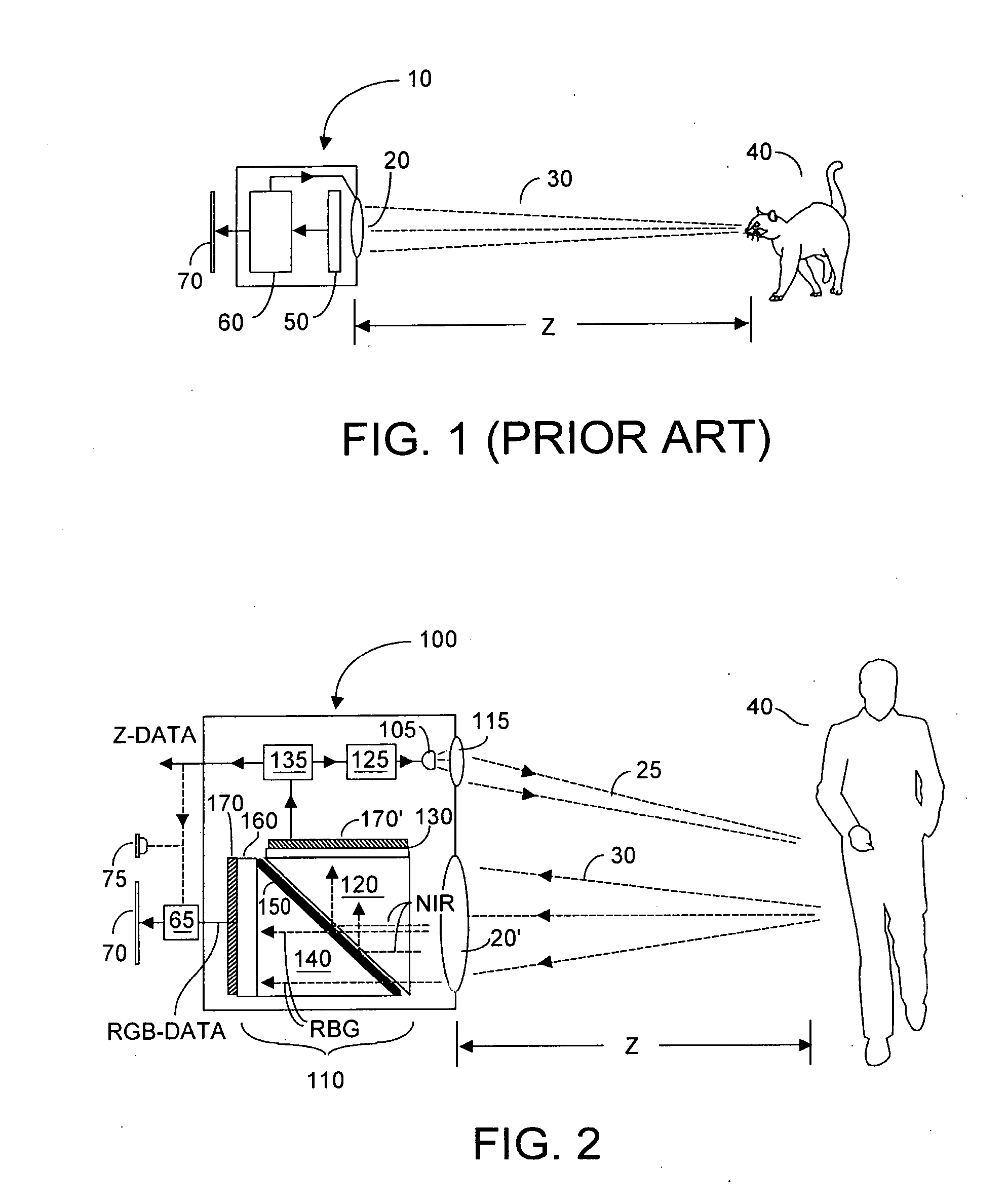

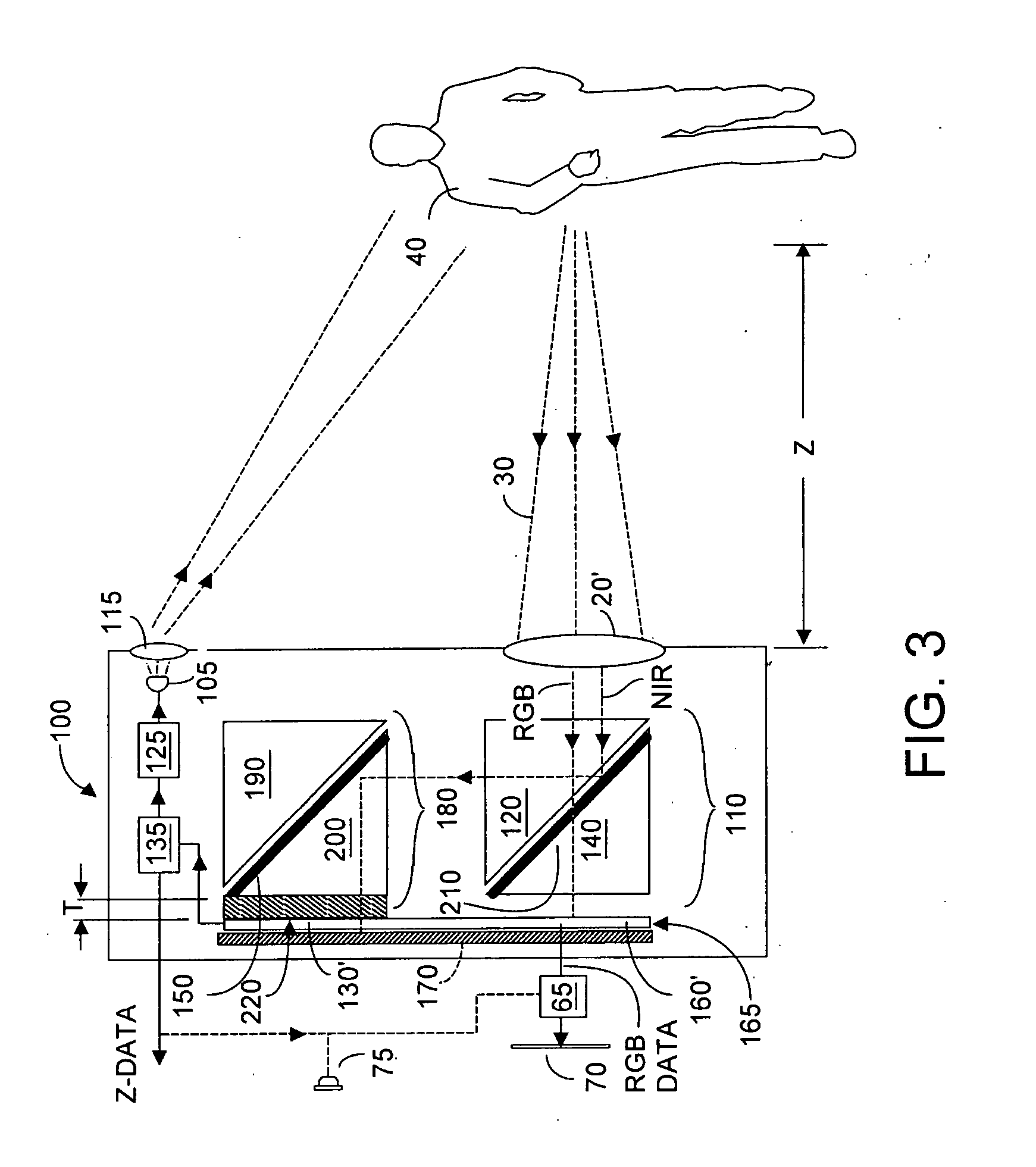

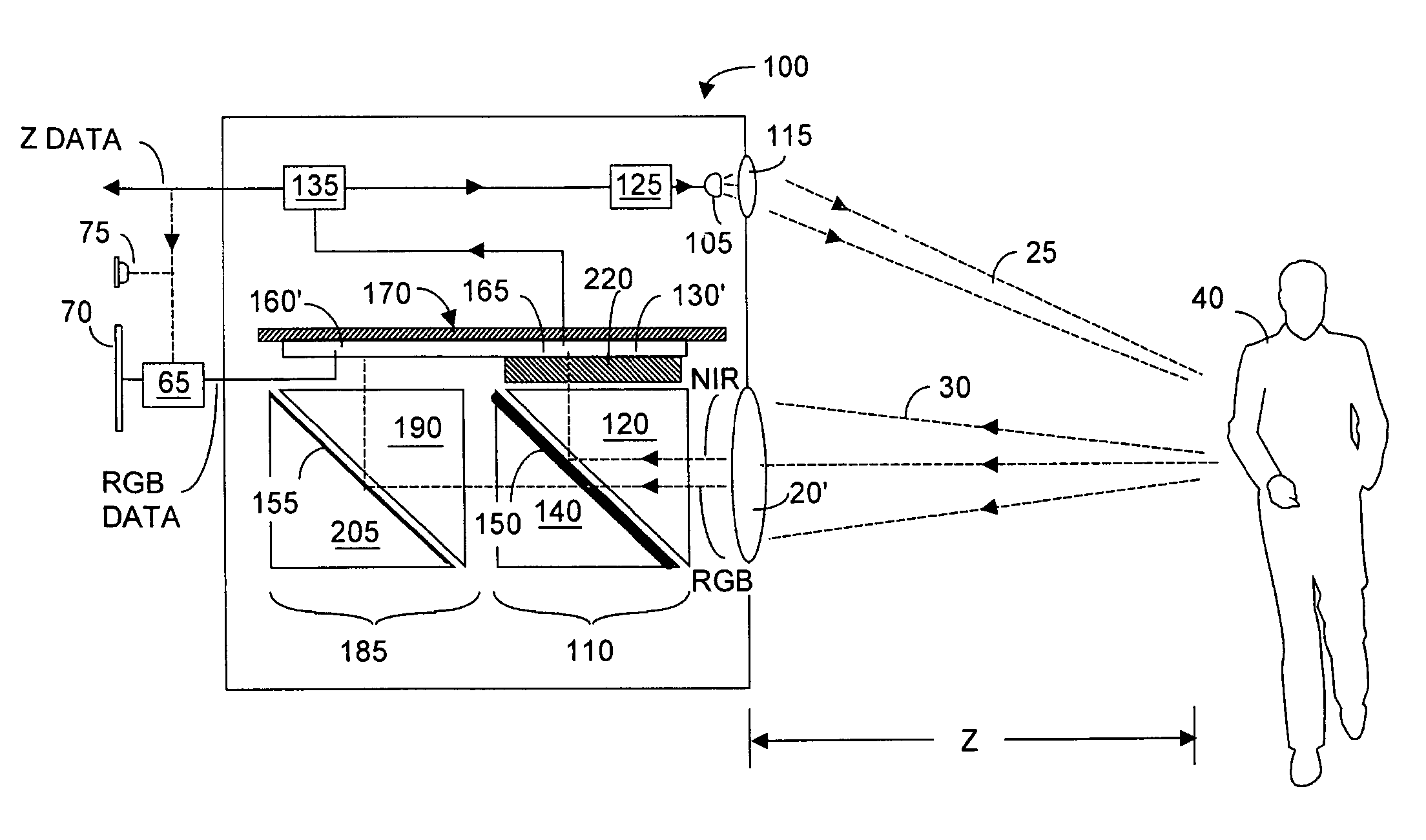

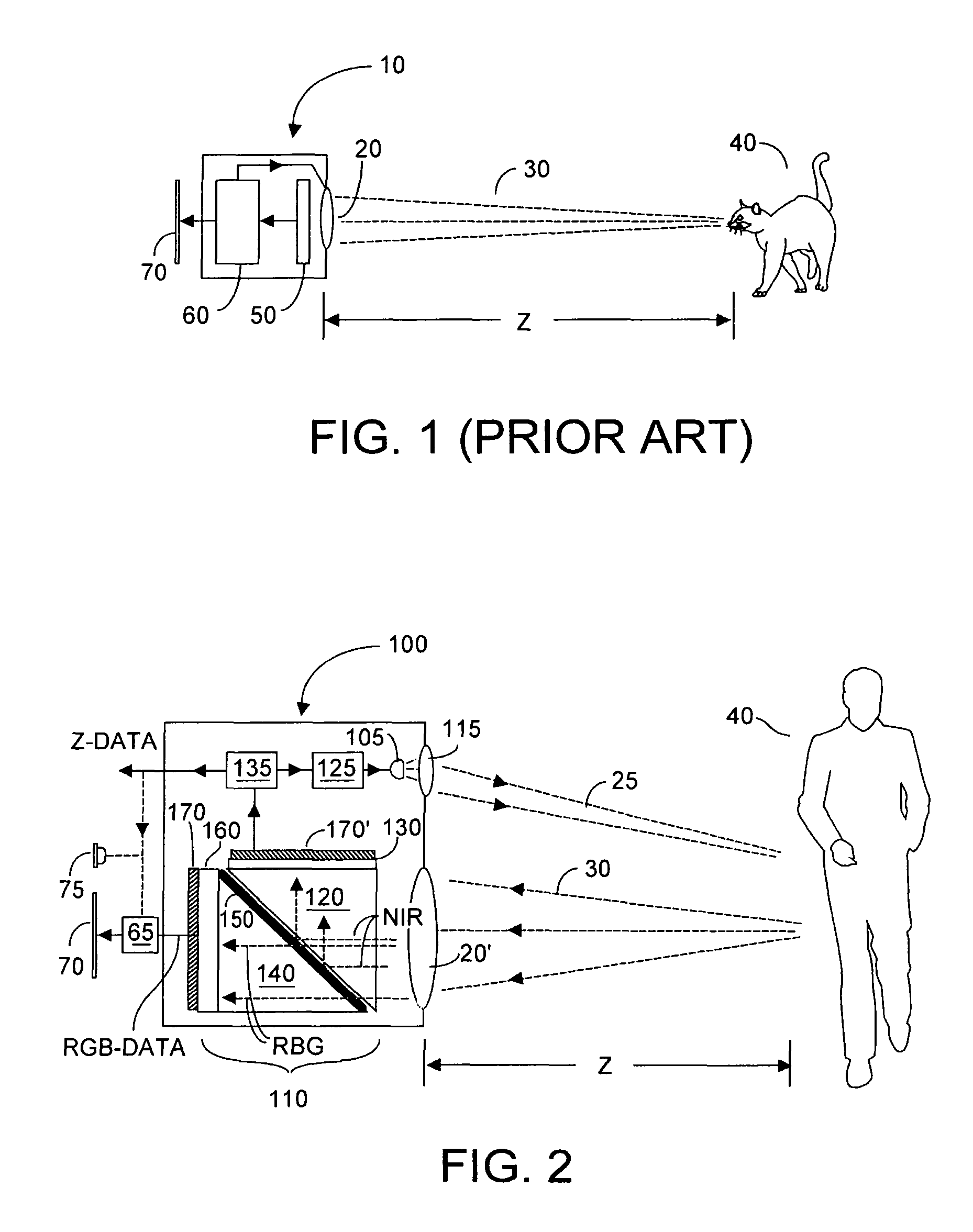

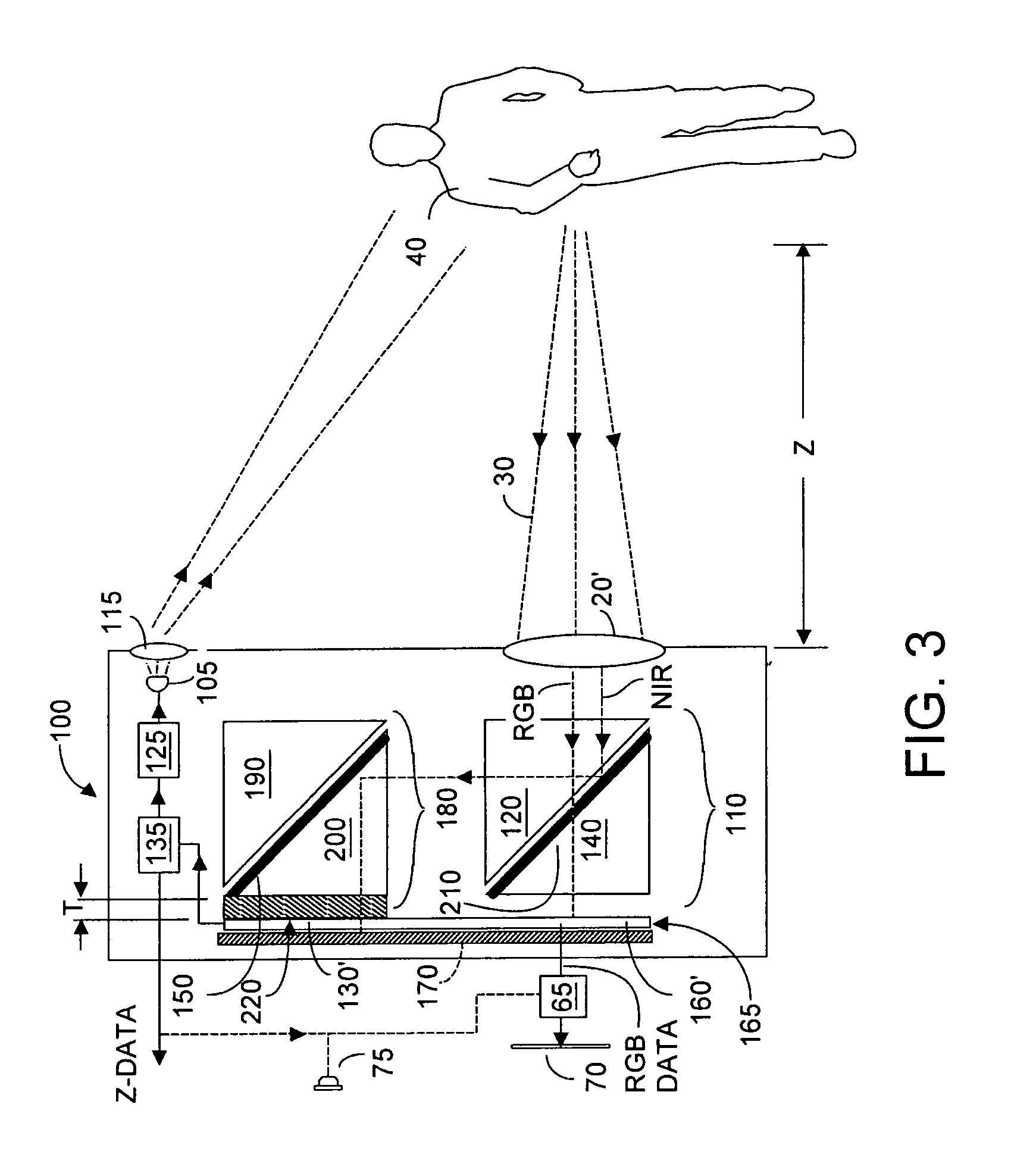

Single chip red, green, blue, distance (RGB-Z) sensor

Active | US20050285966A1 | Quick identification | High resolution | Television system details | Optical rangefinders | Beam splitter | Spectral bands

An RGB-Z sensor is implementable on a single IC chip. A beam splitter such as a hot mirror receives and separates incoming first and second spectral band optical energy from a target object into preferably RGB image components and preferably NIR Z components. The RGB image and Z components are detected by respective RGB and NIR pixel detector array regions, which output respective image data and Z data. The pixel size and array resolutions of these regions need not be equal, and both array regions may be formed on a common IC chip. A display using the image data can be augmented with Z data to help recognize a target object. The resultant structure combines optical efficiency of beam splitting with the simplicity of a single IC chip implementation. A method of using the single chip red, green, blue, distance (RGB-Z) sensor is also disclosed.

Owner:MICROSOFT TECH LICENSING LLC

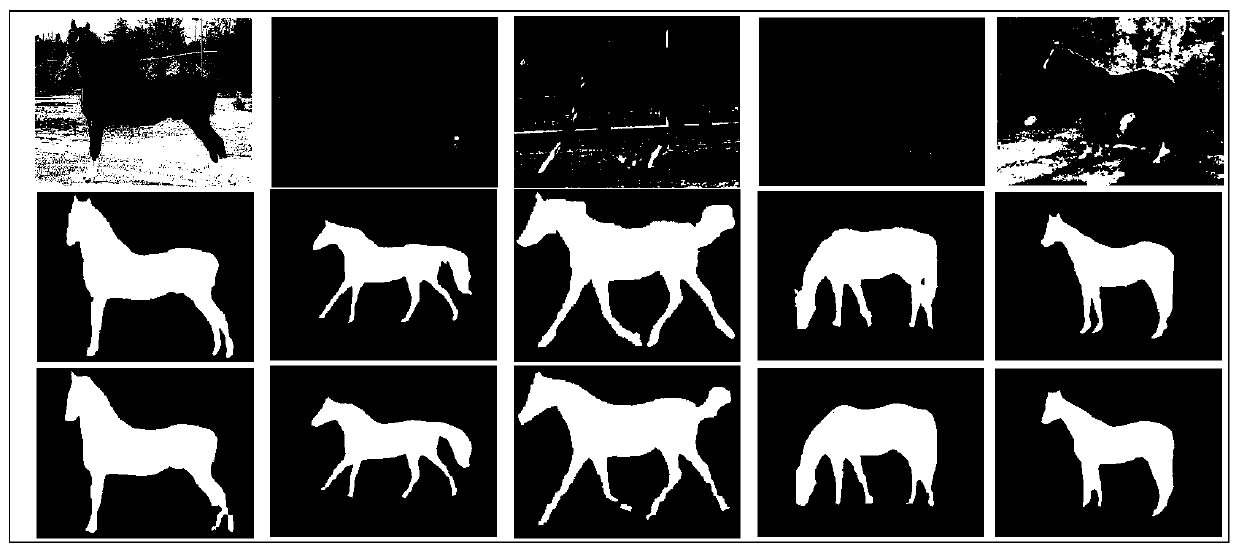

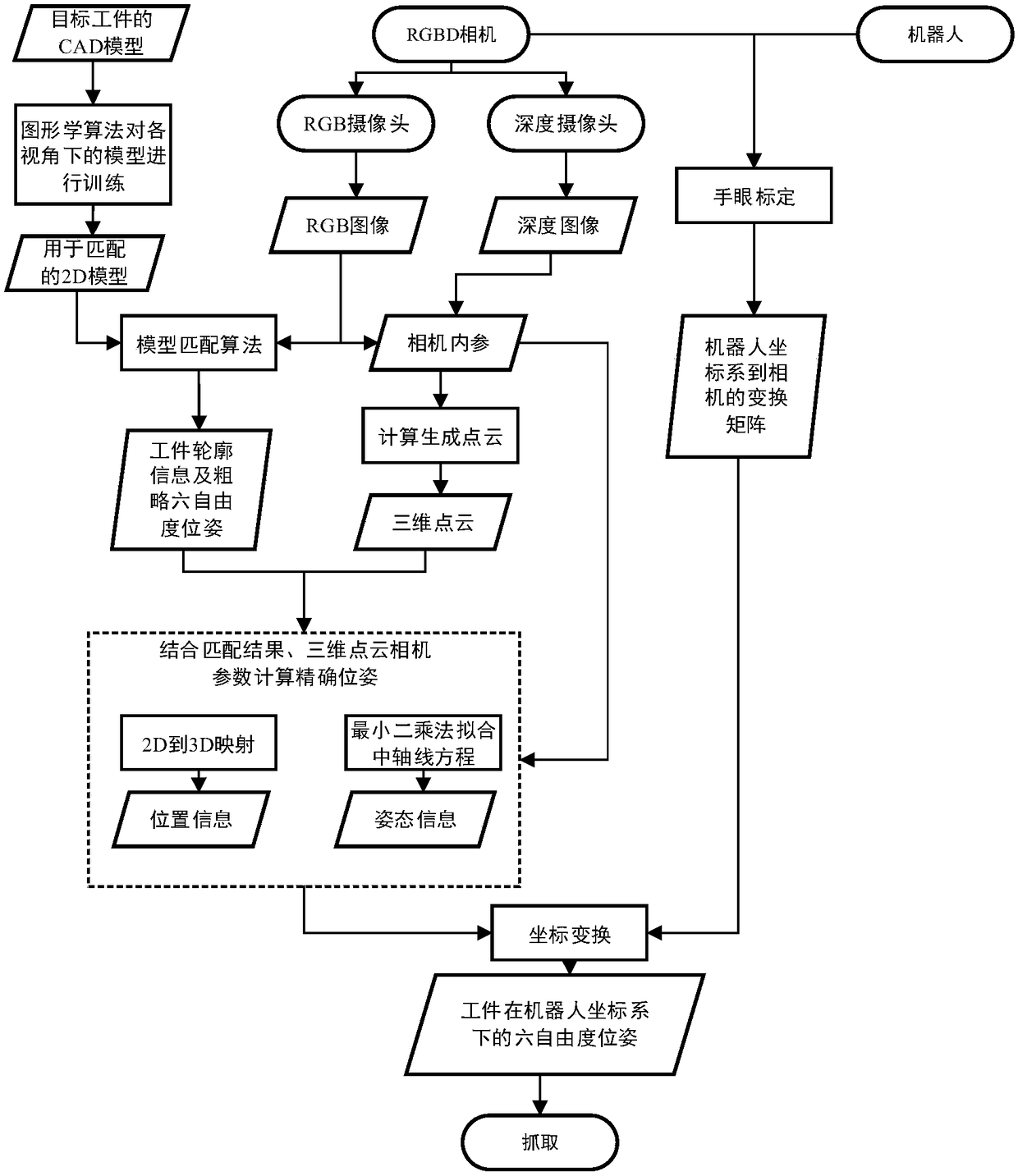

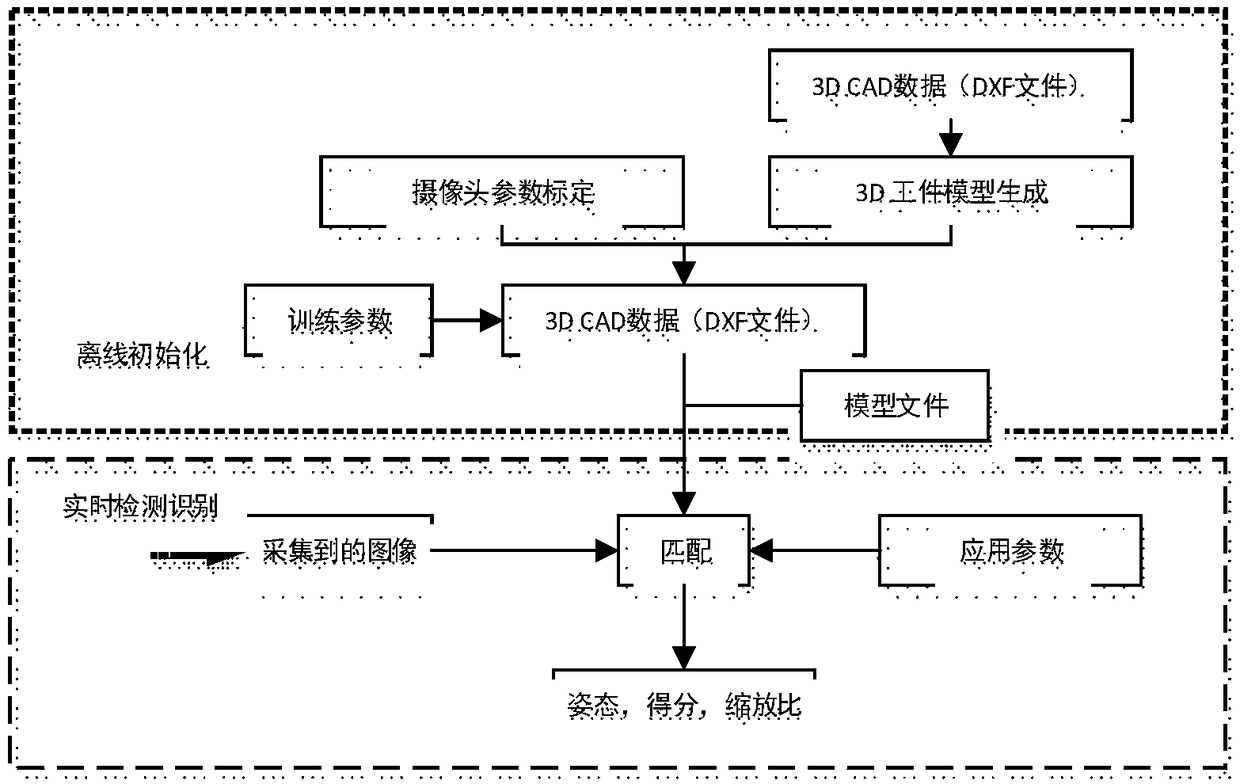

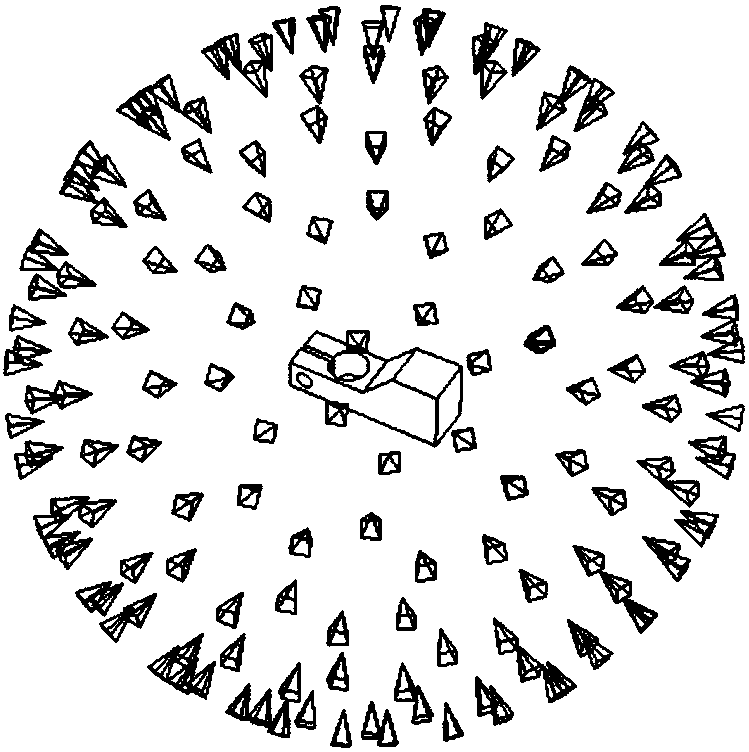

Stacked workpiece posture recognizing and picking method based on RGBD camera

Active | CN108555908A | Short processing time | Rapid positioning | Programme-controlled manipulator | Image analysis | RGB image | Computer vision

The invention relates to a stacked workpiece posture recognizing and picking method based on an RGBD camera. The method includes the following steps: 1) parameters in the RGBD camera are calibrated; 2) training is carried out according to a pre-obtained 3D model of a to-be-captured workpiece, and a 2D model for matching is generated; 3) an RGB image and a depth image of the to-be-recognized workpiece are obtained through the RGBD camera, and contour information of the to-be-captured workpiece is obtained; 4) two-dimensional position information of the to-be-captured workpiece in an image pixel coordinate system and a six-freedom-degree pose under a camera coordinate system are obtained; 5) a six-freedom-degree pose of the to-be-captured workpiece under a robot coordinate system is obtained; and 6) a six-axis robot is controlled to pick the to-be-captured workpiece. Compared with the prior art, the method uses a low-cost RGBD camera and combines RGB and depth information to recognize and capture the postures of various types of disorderly stacked workpieces; it is high in precision, low in cost and high in adaptability, and can meet the requirements of industrial production.

Owner:TONGJI UNIV

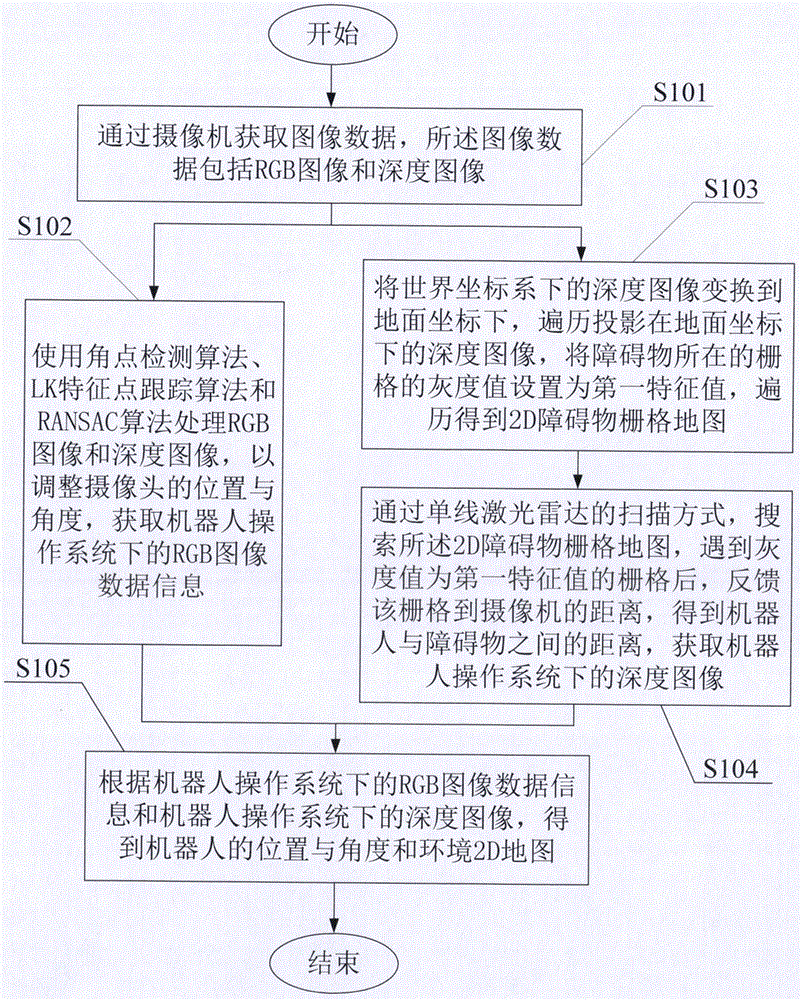

Indoor robot SLAM method and system

Active | CN106052674A | Meet indoor navigation requirements | Does not affect appearance structure | Navigational calculation instruments | Operational system | RGB image

The invention discloses an indoor robot SLAM method. The method comprises the following steps: obtaining image data through a camera, wherein the image data comprises RGB images and depth images; using a corner detection algorithm, an LK characteristic point tracing algorithm, and a RANSAC algorithm to process the RGB images and depth images to adjust the position and angle of the camera so as to obtain the RGB image data information under a robot operation system; converting the depth images in a world coordinate system to a ground coordinate, traversing the depth images that are projected on the ground coordinate, setting the gray value of the grid where a barrier stays as a first characteristic value, carrying out traversing to obtain a 2D barrier grid map; through a single line laser radar scanning mode, searching the 2D barrier grid map, when the grid with a grey value equal to the first characteristic value is found, feeding back the distance between the grid and the camera to obtain the distance between a robot and the barrier, obtaining the depth images under a robot operation system, and obtaining an environment 2D map.

Owner:青岛克路德智能科技有限公司

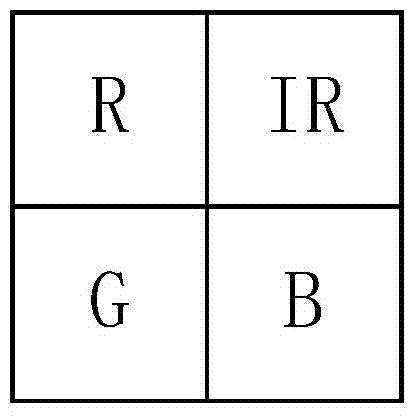

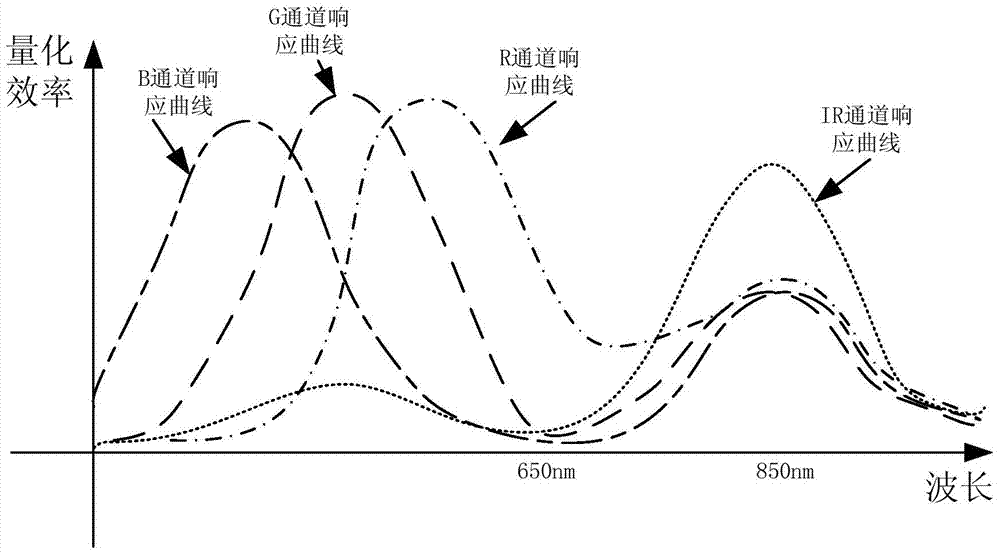

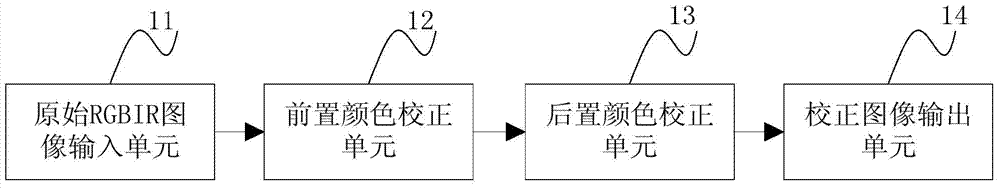

Method and device for correcting color based on RGBIR (red, green and blue, infra red) image sensor

Active | CN103686111A | Eliminate the effects of | Color signal processing circuits | Imaging processing | RGB image

The invention discloses a method and a device for correcting color based on an RGBIR (red, green and blue, infra red) image sensor. The method comprises the following steps: inputting an RGBIR image; interpolating the image, and calculating the channel values of the RGB (red, green and blue) channels and the IR (infra red) channel at each pixel position; calculating the channel statistical values of the RGB channels and the IR channel in a particular area; according to the statistical values, looking up a table to obtain the correction coefficients of the current scene; configuring the correction coefficients into a correction matrix, and separating the RGB channels and the IR channel; and carrying out color correction on the separated RGB channels to obtain the corrected RGB image. A pre-correcting unit eliminates the effect of invisible light on the RGB channels of the original image, and the output utilizes a back-correcting unit to eliminate the effect of wavelength change of visible light on the RGB channels. The method not only can correct the color of the RGBIR image sensor, but also can divide the color correction into two relatively independent parts, and is compatible with the color correction method in the existing image processing module.

Owner:SHANGHAI FULLHAN MICROELECTRONICS
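
The channel-separation step in the abstract above can be pictured as a 3x4 matrix applied to each interpolated [R, G, B, IR] pixel. The sketch below assumes a simple linear leakage model in which each color channel picks up a fixed fraction of the IR signal; the coefficients and the name `separate_rgb_ir` are hypothetical, and the table lookup that selects coefficients per scene is left out.

```python
import numpy as np


def separate_rgb_ir(pixels, ir_leak):
    """Remove IR contamination from interpolated [R, G, B, IR] pixels.

    pixels:  (..., 4) array of per-pixel channel values.
    ir_leak: three assumed coefficients, the fraction of IR leaking into R, G, B.
    """
    pixels = np.asarray(pixels, dtype=np.float64)
    k = np.asarray(ir_leak, dtype=np.float64)
    # 3x4 correction matrix: identity on RGB, minus the IR leakage column.
    m = np.hstack([np.eye(3), -k[:, None]])
    return pixels @ m.T
```

In the patent's two-stage scheme this would correspond to the pre-correction part; a conventional 3x3 color correction matrix would then be applied to the cleaned RGB values.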

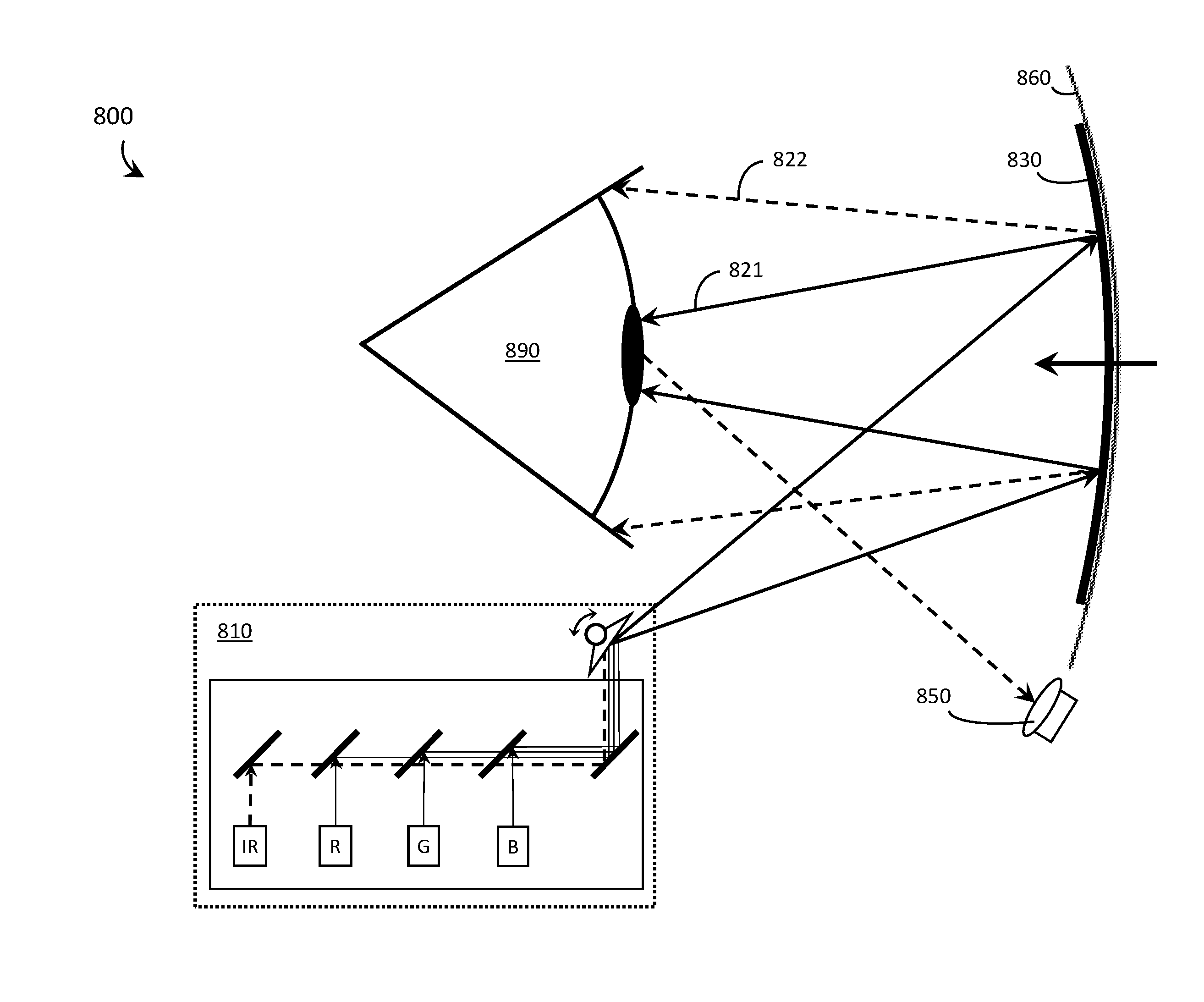

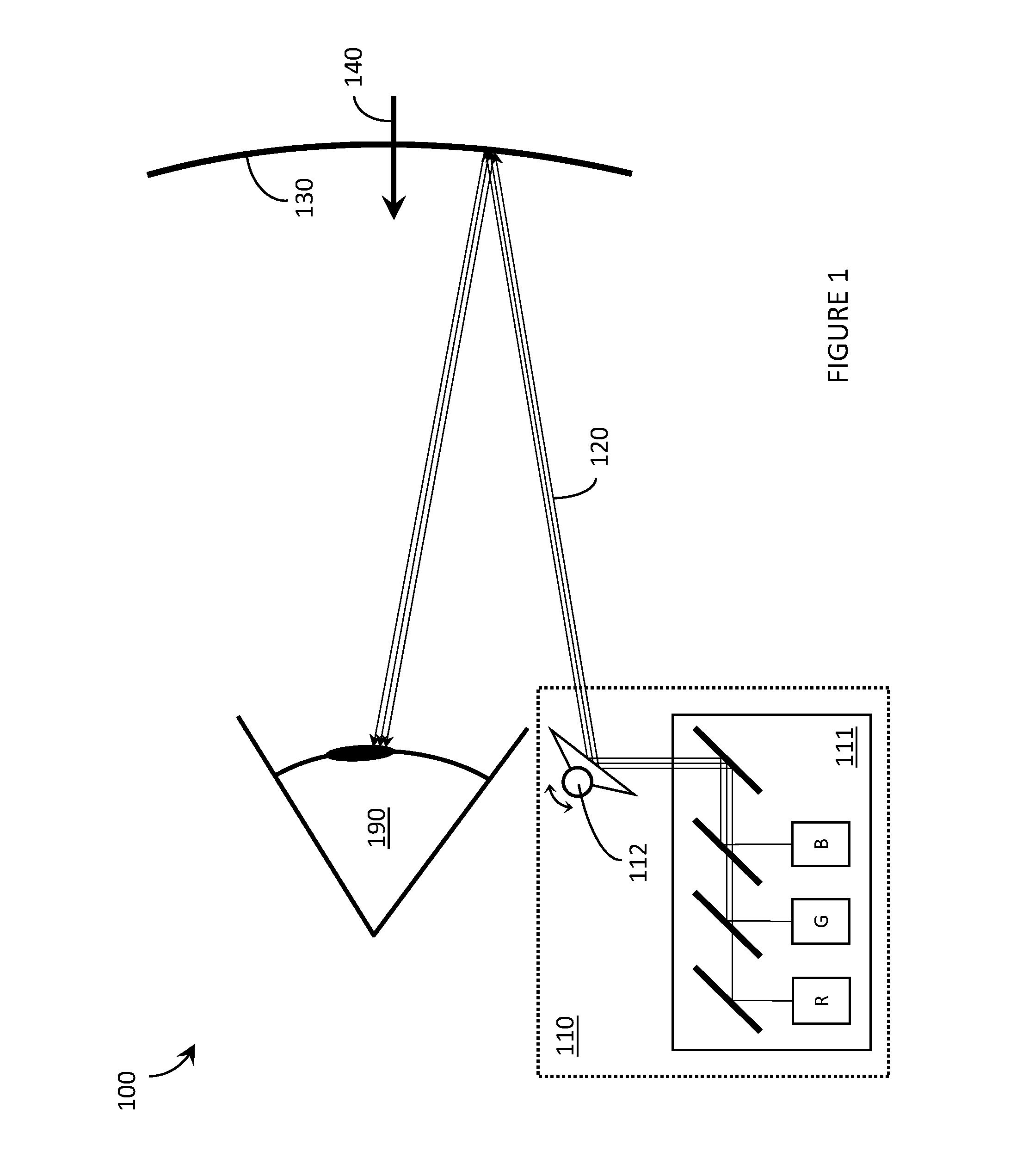

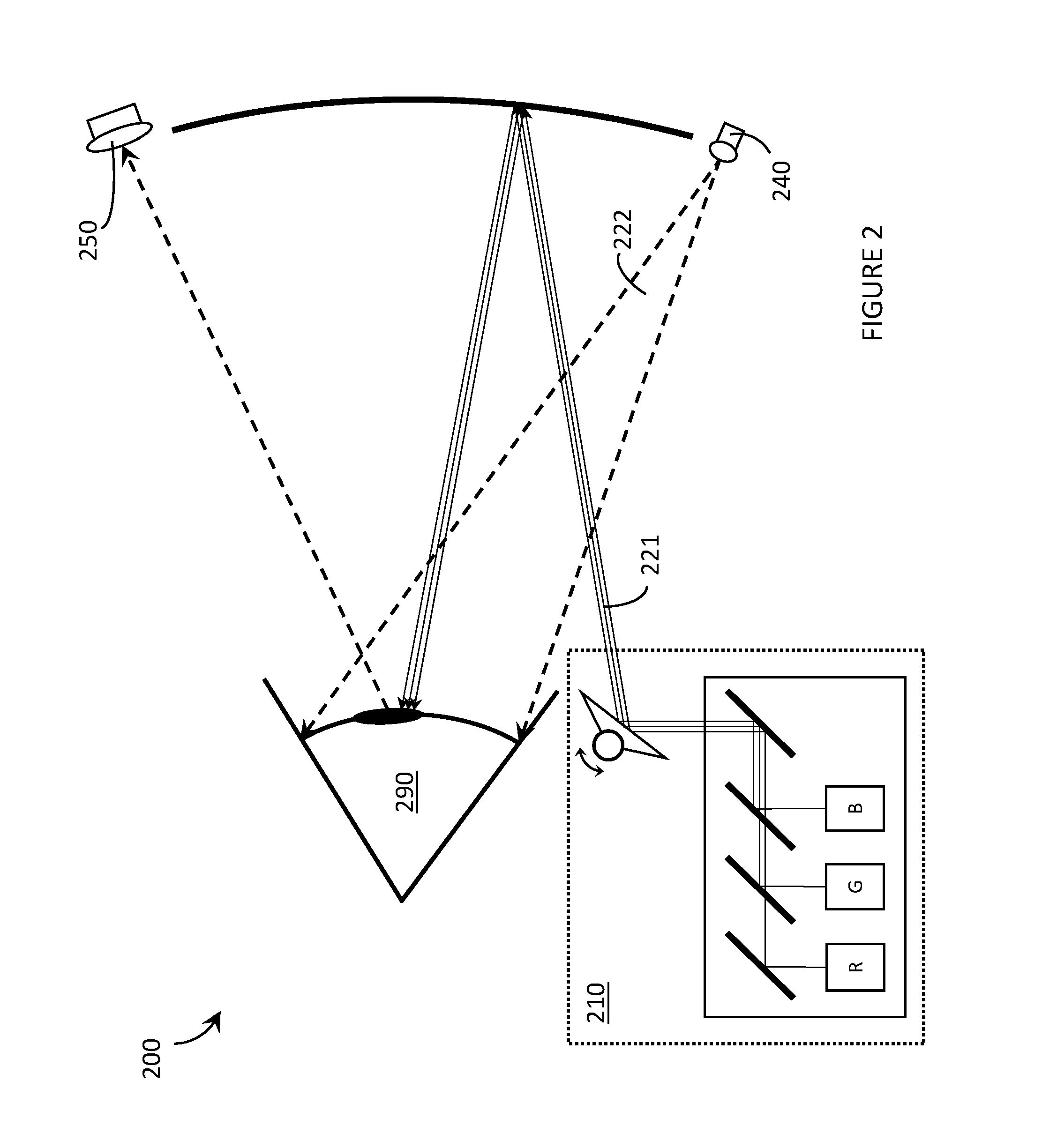

Systems, devices, and methods that integrate eye tracking and scanning laser projection in wearable heads-up displays

Active | US20160349515A1 | Picture reproducers using projection devices | Input/output processes for data processing | Head-up display | Photodetector

Systems, devices, and methods that integrate eye tracking capability into scanning laser projector (“SLP”)-based wearable heads-up displays are described. At least one narrow waveband laser diode is used in an SLP to define one or more portion(s) of a visible image. At least one corresponding narrow waveband photodetector is aligned to detect reflections of the portion(s) of the image from features of the eye. A holographic optical element (“HOE”) may be used to combine the image and environmental light into the user's “field of view.” Three narrow waveband photodetectors each responsive to a respective one of three narrow wavebands output by the RGB laser diodes of an RGB SLP are aligned to detect reflections of a projected RGB image from features of the eye.

Owner:GOOGLE LLC

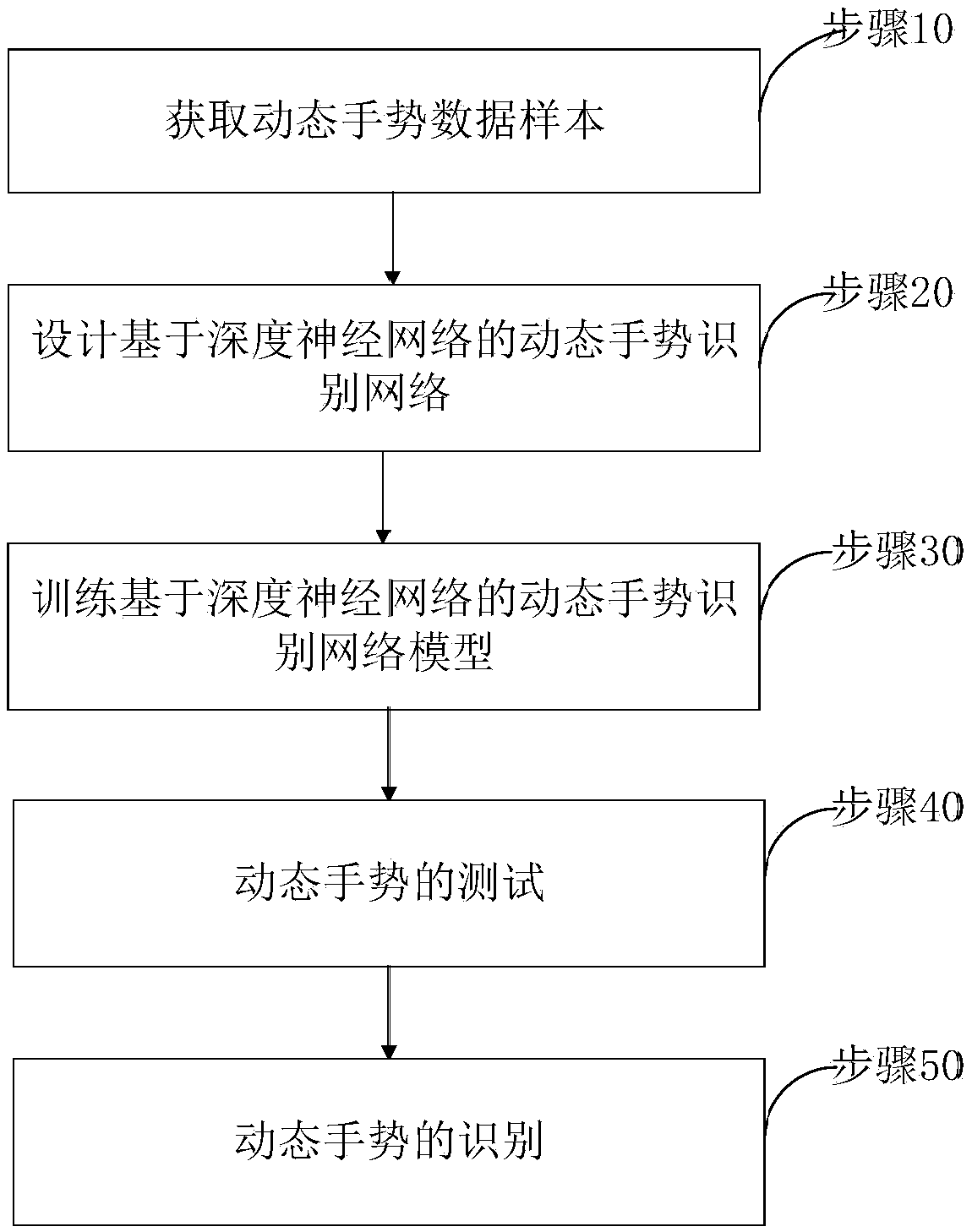

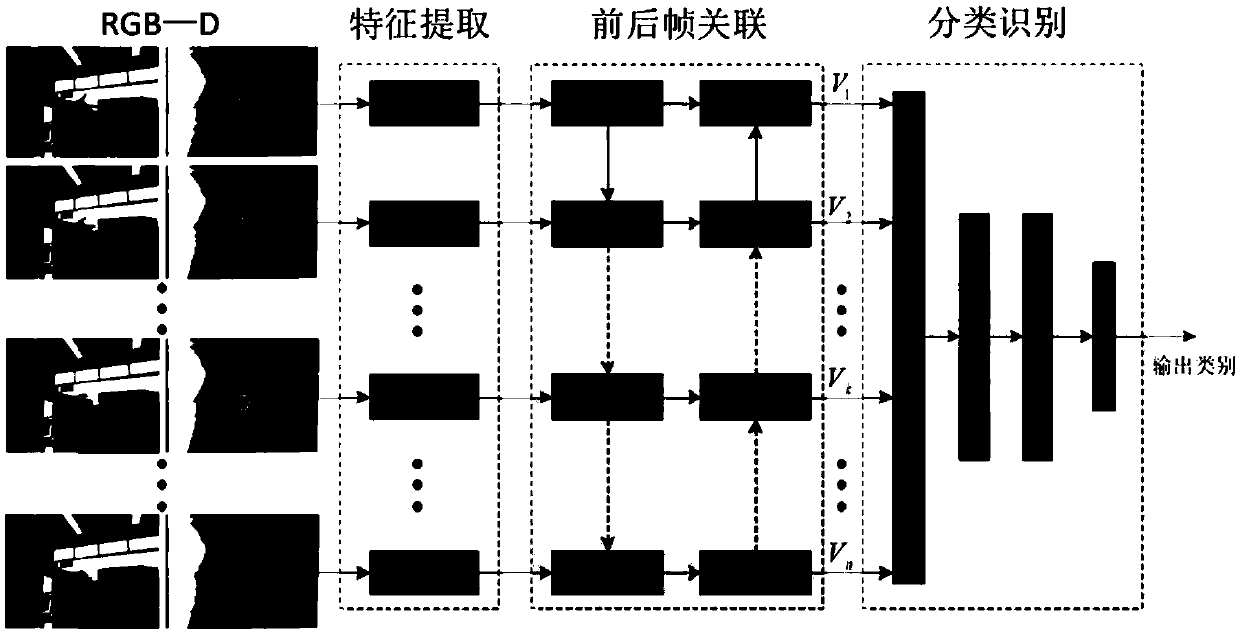

Dynamic gesture recognition method and system based on deep neural network

Active | CN108932500A | Improve recognition rate | Character and pattern recognition | Neural architectures | Feature vector | Data set

The invention discloses a dynamic gesture recognition method and system based on a deep neural network. The dynamic gesture recognition method comprises the steps of collecting dynamic gesture video clips with different gesture meanings to generate a training sample data set, wherein the sample data includes RGB images and depth information; designing a dynamic gesture recognition network model based on the deep neural network, and training the model by using the training samples; and performing dynamic gesture testing and recognition by using the trained dynamic gesture recognition model. The dynamic gesture recognition network model is composed of a feature extraction network, a front and back frame association network and a classification recognition network, wherein the front and back frame association network is used for performing front and back time frame association mapping on feature vectors obtained through the feature extraction network of the samples of each gesture meaning and merging the feature vectors into a fusion feature vector of the gesture meaning. According to the invention, a bidirectional LSTM model is introduced into the network model to understand the correlation between continuous gesture postures, thereby greatly improving the recognition rate of dynamic gestures.

Owner:广州智能装备研究院有限公司

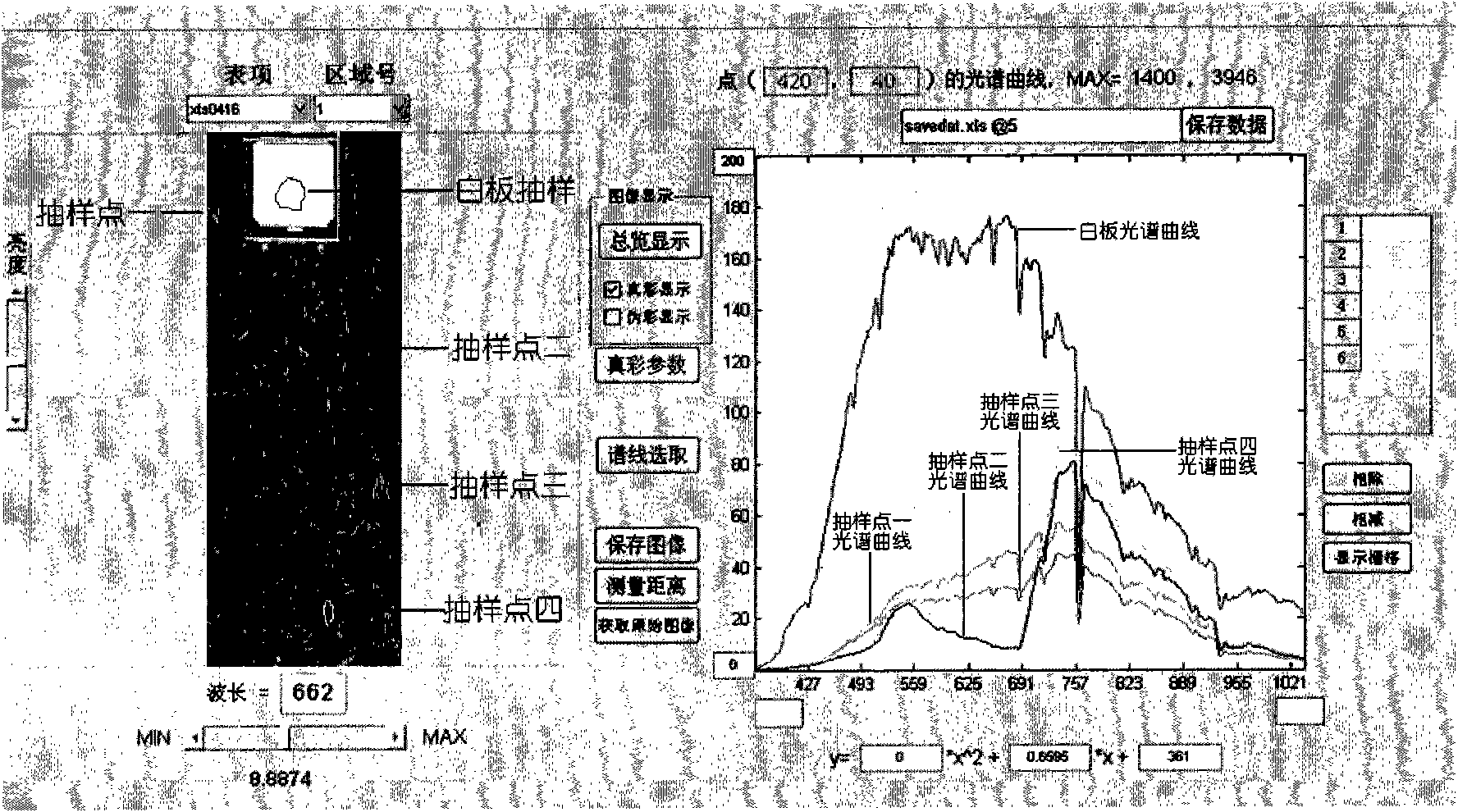

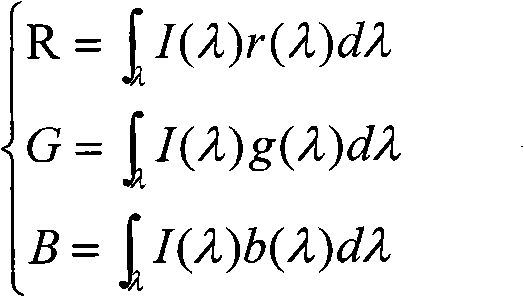

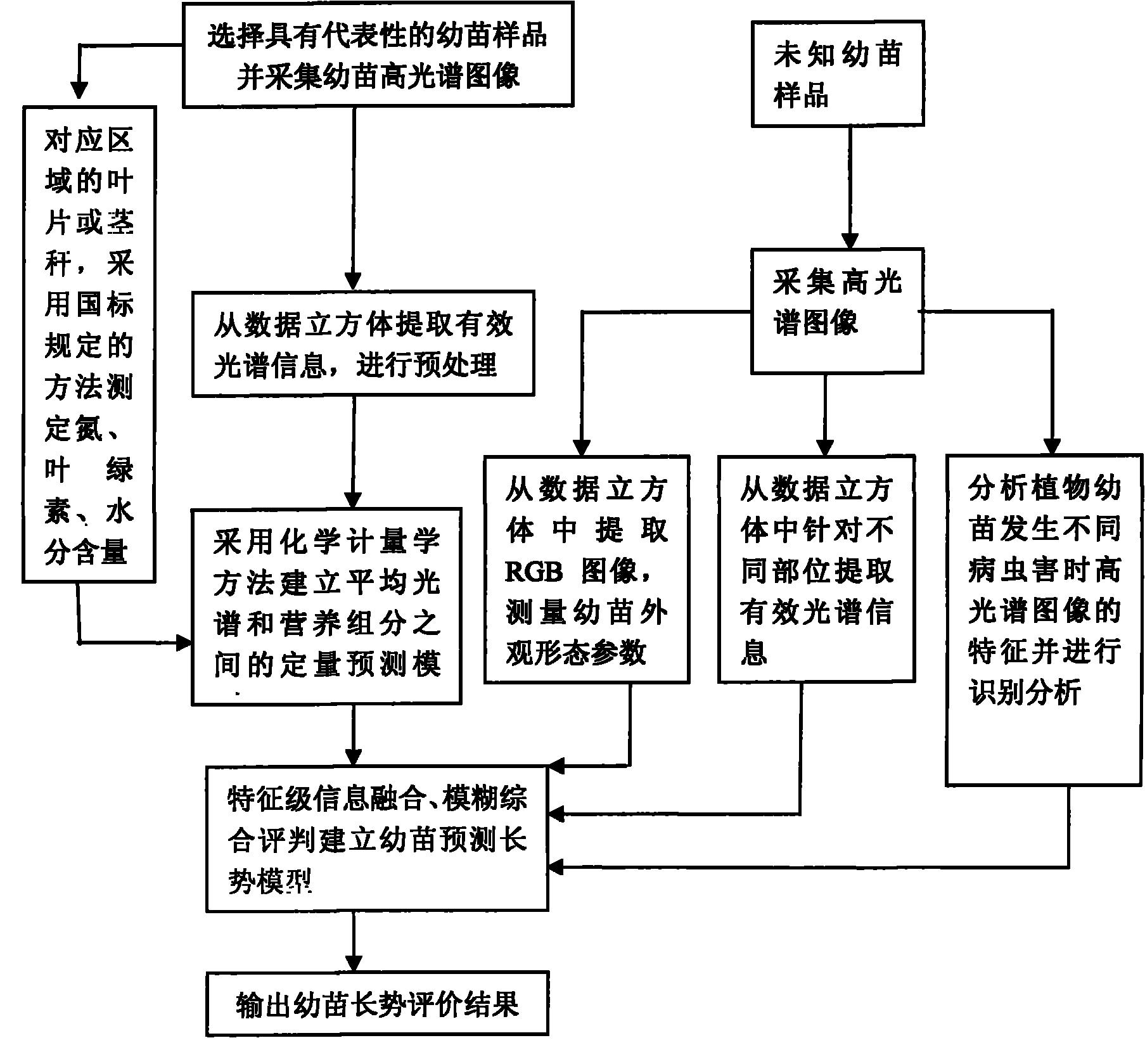

Nondestructive detection method for comprehensive character living bodies of plant seedlings

Active | CN101881726A | Realize evaluation | Avoid errors | Color/spectral properties measurements | Disease | Content distribution

The invention relates to a nondestructive detection method for comprehensive character living bodies of plant seedlings, which comprises the following steps of: performing high-spectrum imaging on the plant seedlings, extracting RGB images and a spectrum of a specific area from a high-spectrum data cube, and acquiring morphological parameter, component content distribution and disease and insect pest information of the seedlings by image processing and spectrum analysis; and establishing a growth prediction model of the plant seedlings by adopting a character-level information fusion method and a fuzzy comprehensive judgment method. The method can realize comprehensive judgment on characters of appearance, nutritional component and disease and insect pest information of the seedlings, and overcome the error caused by un-uniformity during component measurement.

Owner:BEIJING RES CENT OF INTELLIGENT EQUIP FOR AGRI

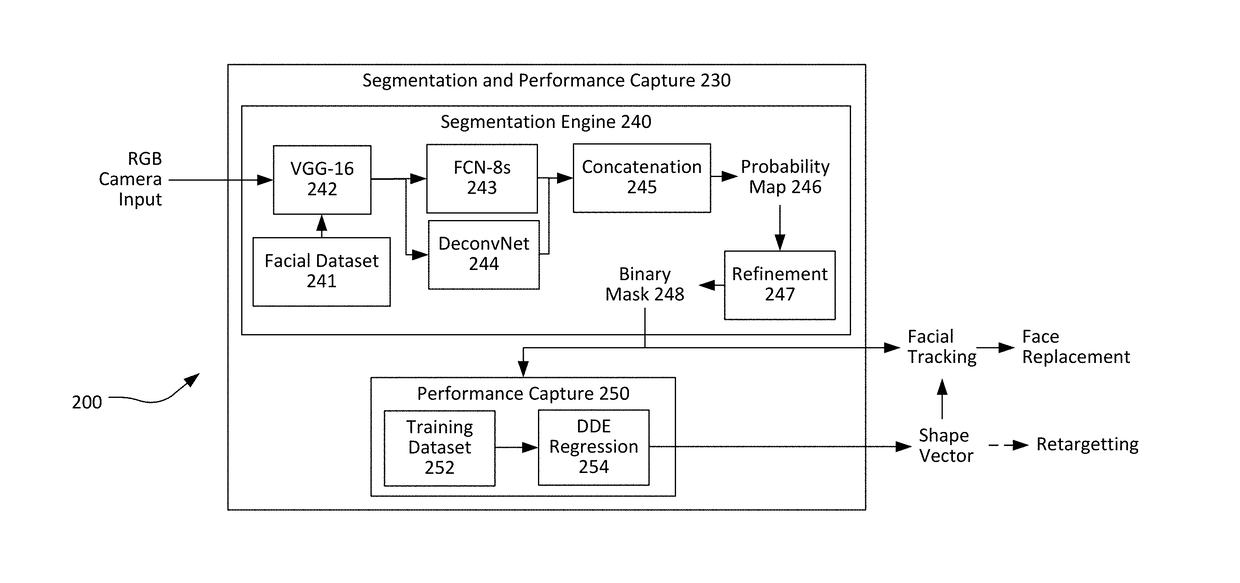

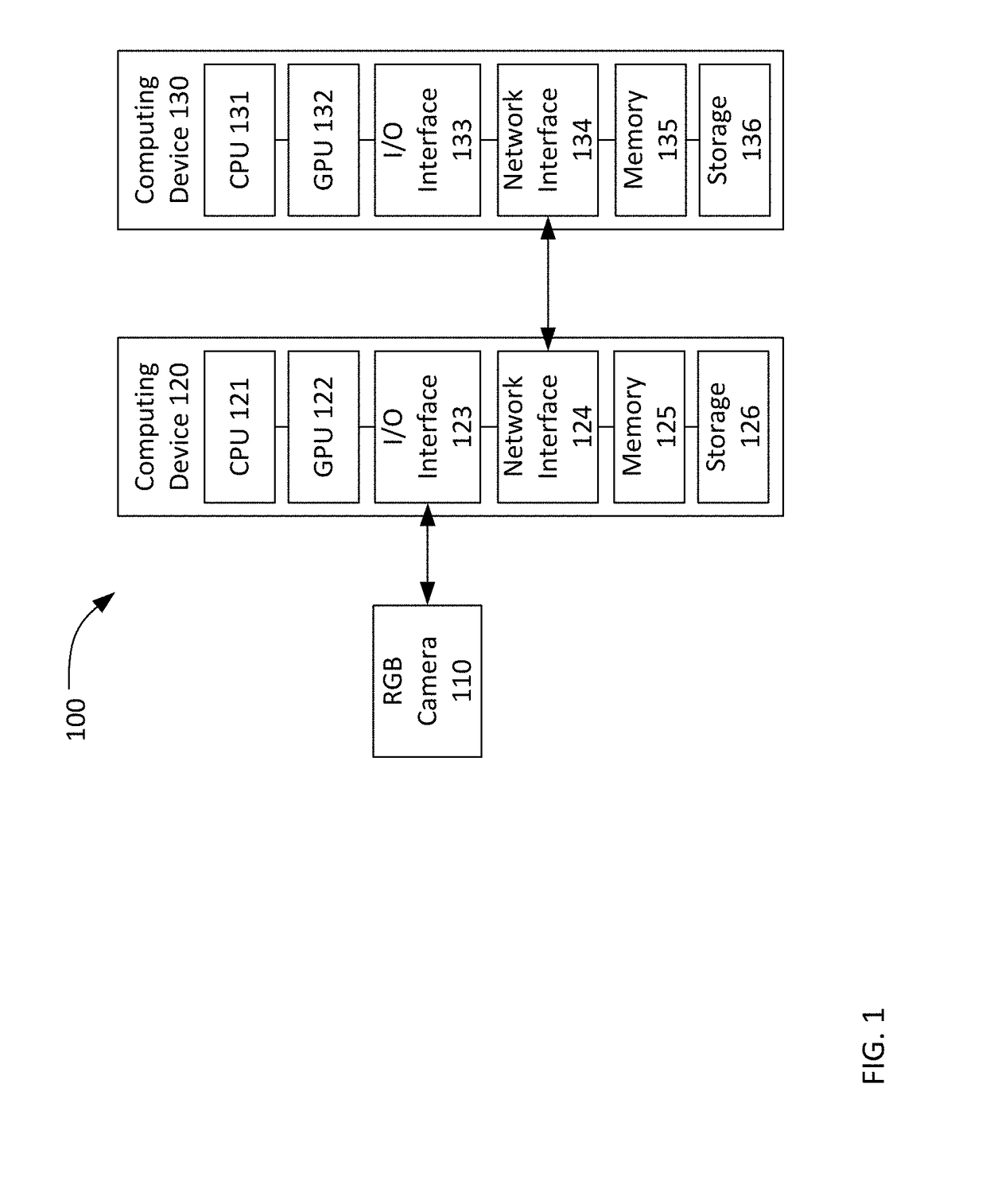

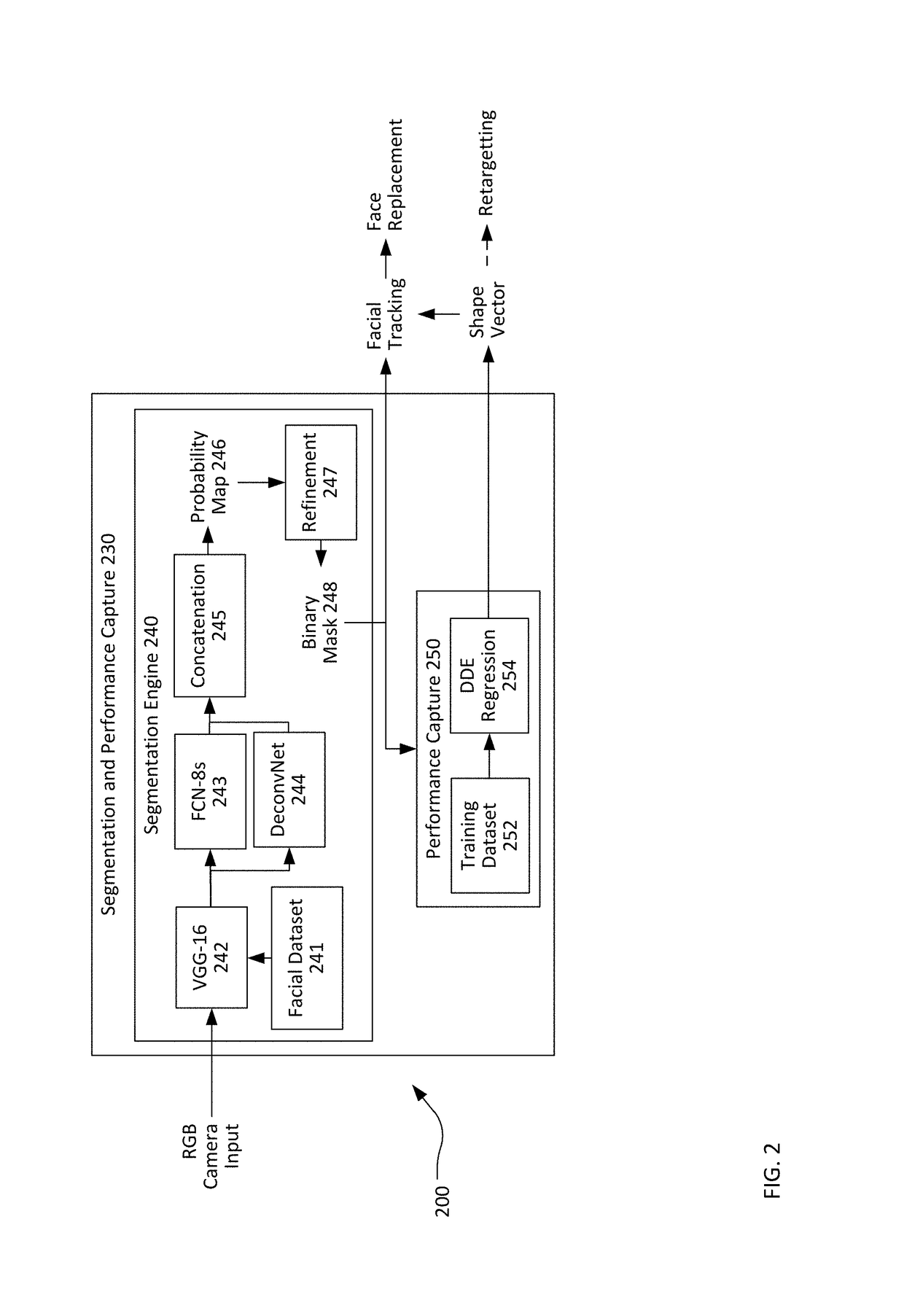

Real-time facial segmentation and performance capture from RGB input

There is disclosed a system and method of performing facial recognition from RGB image data. The method includes generating a lower-resolution image from the RGB image data, performing a convolution of the lower-resolution image data to derive a probability map identifying probable facial regions and probable non-facial regions, and performing a first deconvolution on the lower-resolution image using a bilinear interpolation layer to derive a set of coarse facial segments. The method further includes performing a second deconvolution on the lower-resolution image using a series of unpooling, deconvolution, and rectification layers to derive a set of fine facial segments, concatenating the set of coarse facial segments to the set of fine facial segments to create an image matrix made up of a set of facial segments, and generating a binary facial mask identifying probable facial regions and probable non-facial regions from the image matrix.

Owner:PINSCREEN INC

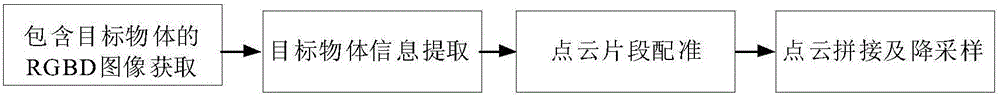

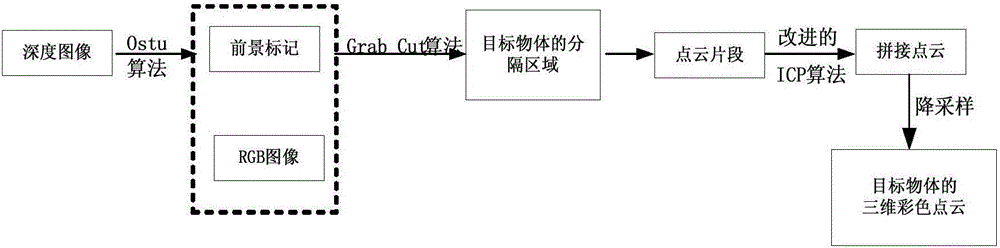

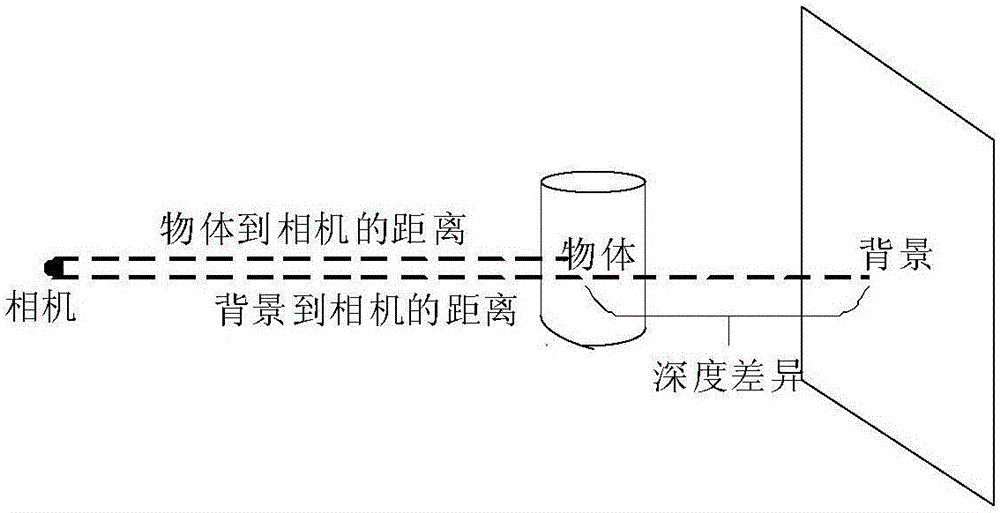

Target object three-dimensional color point cloud generation method based on KINECT

Inactive | CN105989604A | Reduce memory space requirements | Low price | Image enhancement | Image analysis | Point cloud | RGB image

The invention discloses a target object three-dimensional color point cloud generation method based on Kinect. First, a set of RGBD images is photographed around a target object so that the set includes complete information of the target object. Then, for each RGBD image, Otsu segmentation is performed on the depth image to acquire a foreground mark, which acts as the input of the GrabCut algorithm; the RGB image is segmented again to acquire the accurate area of the target object, and background information is removed. Registration is performed on adjacent point cloud fragments by using an improved ICP algorithm so that a transformation relation matrix between the point cloud fragments is acquired. Finally, the point clouds are spliced by using the transformation relation matrix between the point cloud fragments, and down-sampling is performed to reduce redundancy so that the complete three-dimensional color point cloud data of the target object can be acquired.

Owner:HEFEI UNIV OF TECH
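
As a rough illustration of the first segmentation step in the abstract above, the sketch below computes an Otsu threshold over a depth image and marks the nearer pixels as foreground. It assumes the target object is closer to the camera than the background and that depth is given in metres; `otsu_threshold` is a hypothetical helper, and the GrabCut refinement on the RGB image is not shown.

```python
import numpy as np


def otsu_threshold(values, bins=256):
    """Return the threshold that maximizes between-class variance."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist)              # pixel count at or below each bin
    w1 = w0[-1] - w0                  # pixel count above each bin
    sum0 = np.cumsum(hist * centers)
    mu0 = sum0 / np.maximum(w0, 1)    # mean of the lower class
    mu1 = (sum0[-1] - sum0) / np.maximum(w1, 1)  # mean of the upper class
    between = w0 * w1 * (mu0 - mu1) ** 2
    # Use the upper edge of the best bin so that bin falls in the lower class.
    return edges[1:][np.argmax(between)]


# Usage: a near object (0.8 m) in front of a far wall (3.0 m).
depth = np.full((20, 20), 3.0)
depth[5:15, 5:15] = 0.8
foreground = depth <= otsu_threshold(depth)
```

The resulting mask could then seed a color-based segmentation of the RGB image, as the abstract describes.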

Single chip red, green, blue, distance (RGB-Z) sensor

Active | US8139141B2 | High resolution | Quick identification | Television system details | Optical rangefinders | Beam splitter | Spectral bands

An RGB-Z sensor is implementable on a single IC chip. A beam splitter such as a hot mirror receives and separates incoming first and second spectral band optical energy from a target object into preferably RGB image components and preferably NIR Z components. The RGB image and Z components are detected by respective RGB and NIR pixel detector array regions, which output respective image data and Z data. The pixel size and array resolutions of these regions need not be equal, and both array regions may be formed on a common IC chip. A display using the image data can be augmented with Z data to help recognize a target object. The resultant structure combines optical efficiency of beam splitting with the simplicity of a single IC chip implementation. A method of using the single chip red, green, blue, distance (RGB-Z) sensor is also disclosed.

Owner:MICROSOFT TECH LICENSING LLC

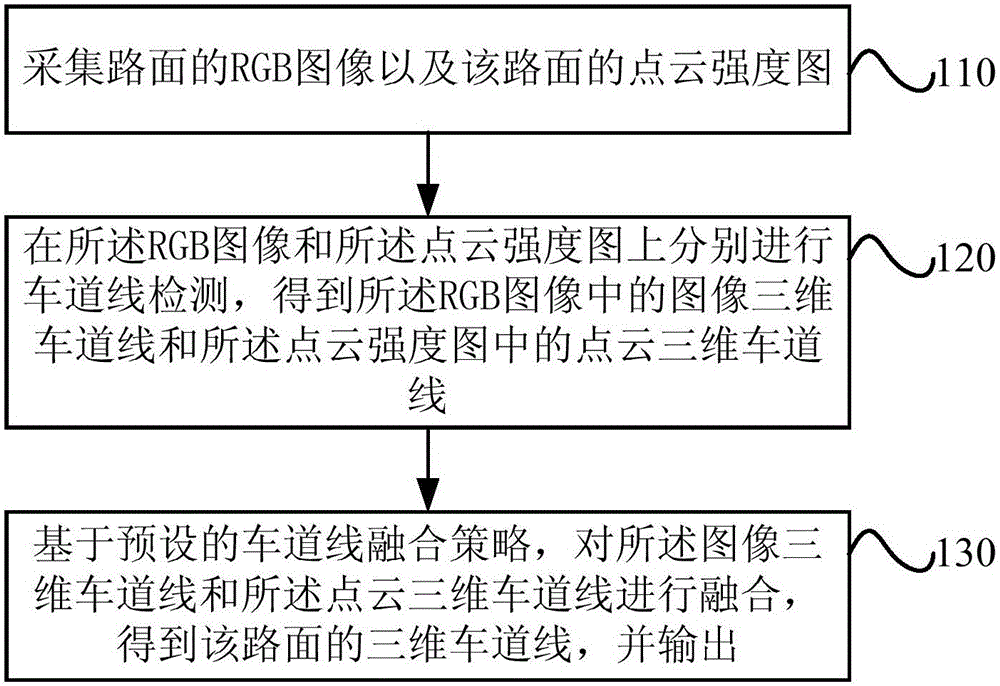

Method and device for detecting lane lines on road surface

Active | CN105701449A | Against occlusion | Anti-wear | Character and pattern recognition | Using optical means | Point cloud | RGB image

An embodiment of the invention provides a method and device for detecting lane lines on a road surface. The method includes the steps of: collecting an RGB image of a road surface and a point cloud intensity map of the road surface; performing lane line detection on the RGB image and the point cloud intensity map respectively, thereby obtaining image three-dimensional lane lines in the RGB image and point cloud three-dimensional lane lines in the point cloud intensity map; and based on a preset lane line fusing strategy, fusing the image three-dimensional lane lines and the point cloud three-dimensional lane lines, thereby obtaining three-dimensional lane lines of the road surface, and outputting the three-dimensional lane lines. By fusion of a lane line detection result of the RGB image and a lane line detection result of the point cloud intensity map, shielding of the road surface lane lines by other vehicles and wear of the lane lines on the road surface and road surface direction arrows can be effectively resisted in most cases, integrity and precision of geometric outlines of the obtained three-dimensional lane lines of the road surface are improved, and in addition, manpower cost is saved.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

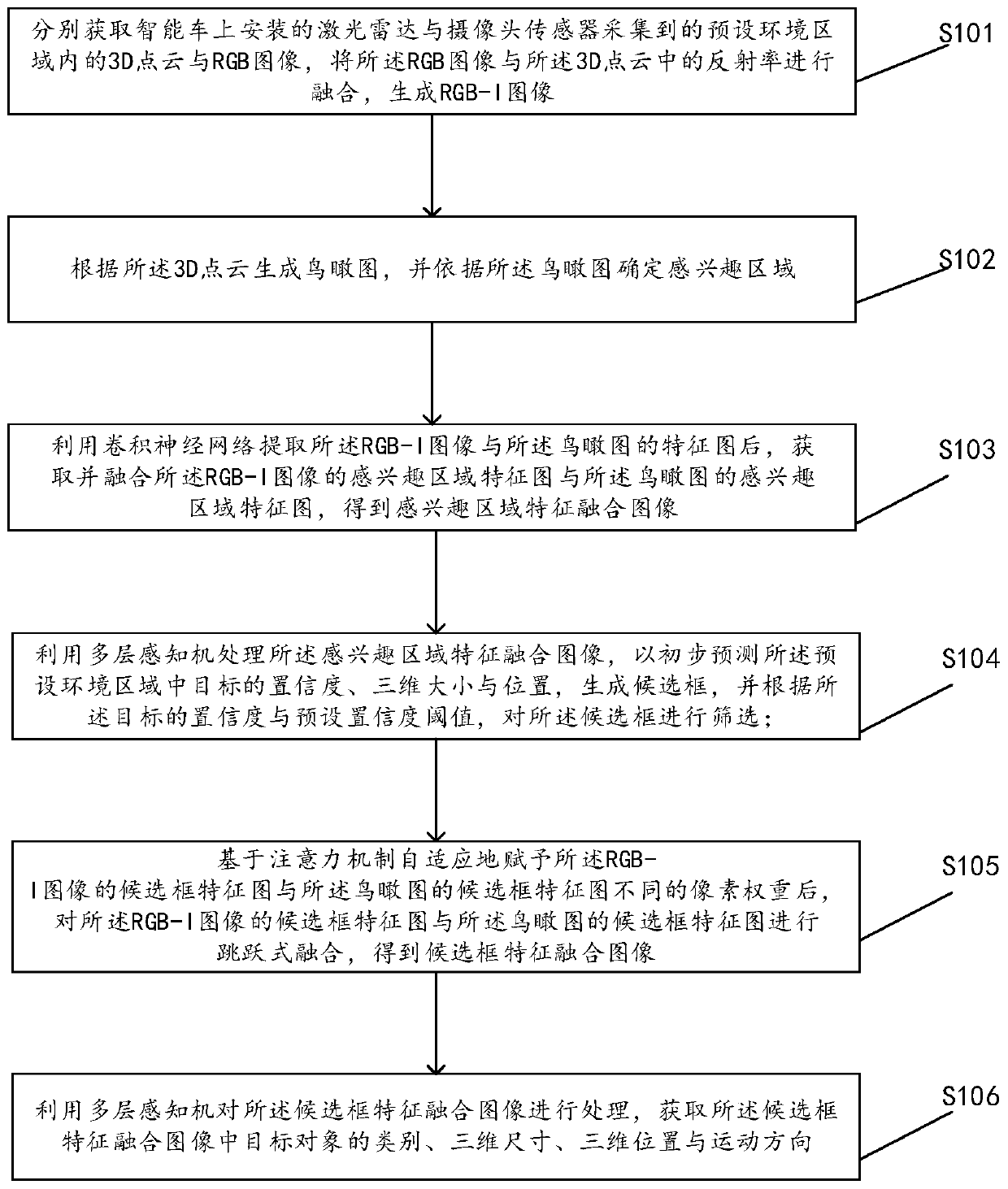

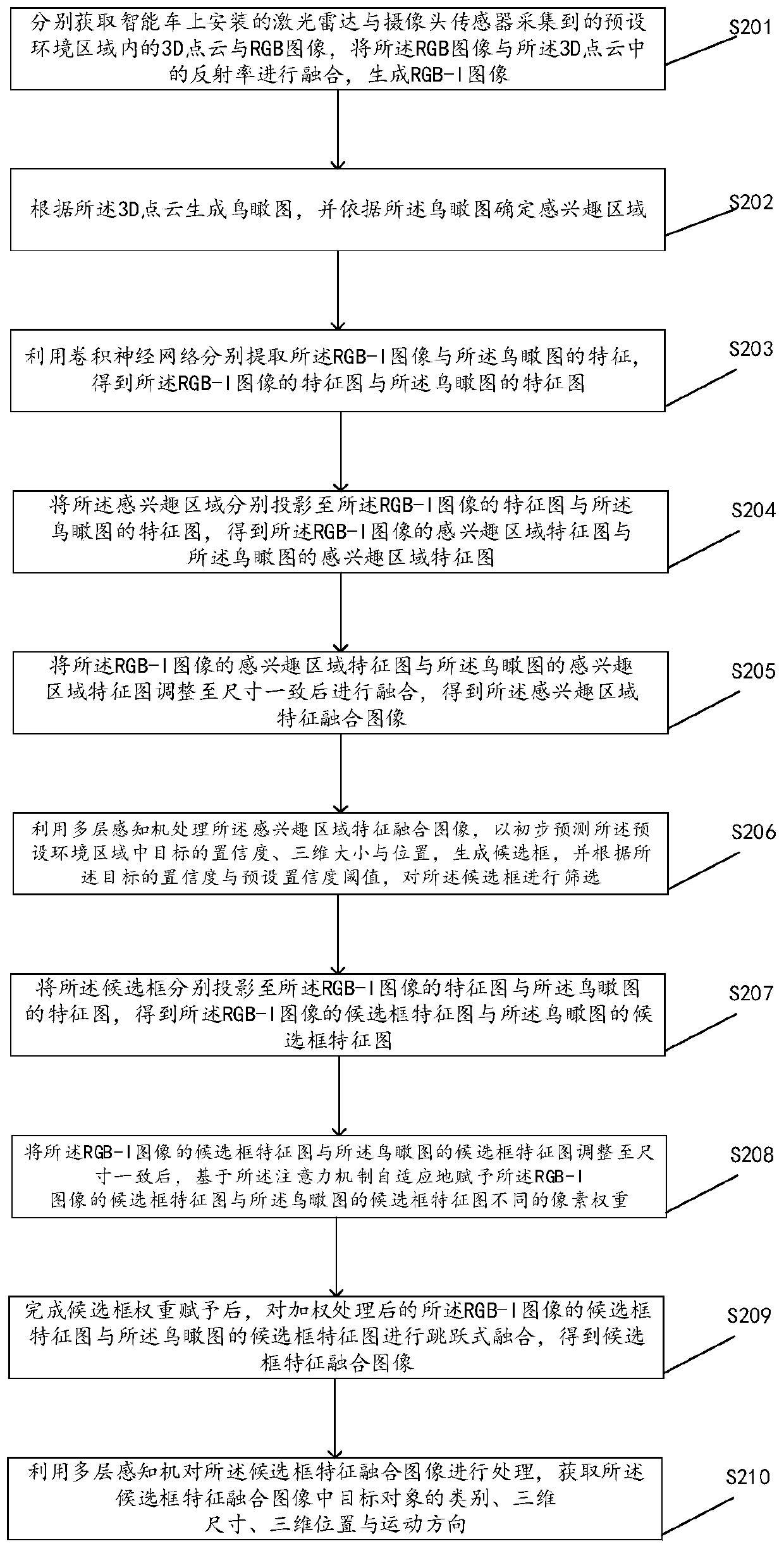

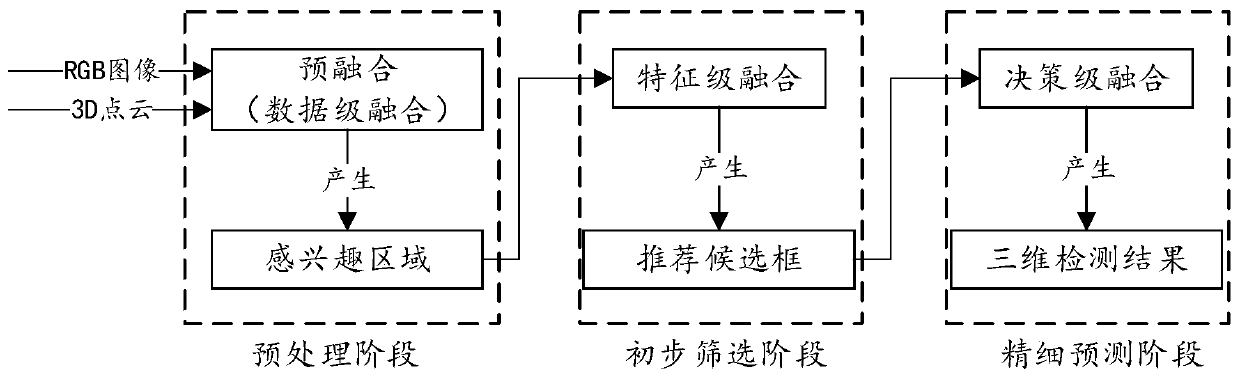

Three-dimensional target detection method and device based on multi-sensor information fusion

Active | CN110929692A | Easy to identify | High positioning accuracy | Character and pattern recognition | View based | Rgb image

The invention discloses a three-dimensional target detection method, apparatus and device based on multi-sensor information fusion, and a computer readable storage medium. The three-dimensional target detection method comprises the steps: fusing a 3D point cloud and an RGB image collected by a laser radar and a camera sensor, and generating an RGB-I image; generating a multi-channel aerial view according to the 3D point cloud so as to determine a region of interest; respectively extracting and fusing region-of-interest features of the RGB-I image and the aerial view based on a convolutional neural network; utilizing a multi-layer perceptron to fuse the confidence coefficient, the approximate position and the size of the image prediction target based on the features of the region of interest, and determining a candidate box; adaptively assigning different pixel weights to different sensor candidate box feature maps based on an attention mechanism, and carrying out skip fusion; and processing the candidate frame feature fusion image by using a multi-layer perceptron, and outputting a three-dimensional detection result. According to the three-dimensional target detection method, apparatus and device, and the computer readable storage medium provided by the invention, the target recognition rate is improved, and the target can be accurately positioned.

Owner:CHANGCHUN INST OF OPTICS FINE MECHANICS & PHYSICS CHINESE ACAD OF SCI
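The abstract does not say how the RGB-I image is built. As a minimal sketch of one plausible reading, the snippet below projects lidar points onto the camera image with a pinhole model and appends the lidar intensity as a fourth channel. The function name `make_rgbi`, the intrinsics `fx, fy, cx, cy`, and the assumption that the points are already expressed in the camera frame are all illustrative and not taken from the patent.

```python
def make_rgbi(rgb, points, fx, fy, cx, cy):
    """Build a 4-channel RGB-I image (hypothetical helper).

    rgb:    H x W nested list of (r, g, b) tuples.
    points: iterable of (x, y, z, intensity), assumed to be in the camera frame.
    fx, fy, cx, cy: assumed pinhole intrinsics.
    """
    h, w = len(rgb), len(rgb[0])
    # Pixels with no lidar return keep intensity 0.0.
    rgbi = [[(r, g, b, 0.0) for (r, g, b) in row] for row in rgb]
    nearest = [[float("inf")] * w for _ in range(h)]
    for x, y, z, intensity in points:
        if z <= 0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        # Keep only the closest return that lands on each pixel.
        if 0 <= u < w and 0 <= v < h and z < nearest[v][u]:
            nearest[v][u] = z
            r, g, b, _ = rgbi[v][u]
            rgbi[v][u] = (r, g, b, intensity)
    return rgbi
```

A real pipeline would also need the lidar-to-camera extrinsic calibration and some way to densify the sparse intensity channel; both are left out here.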

A man-machine cooperation oriented real-time posture detection method for hand-held objects

Active | CN109255813A | The test result is accurate | Image enhancement | Image analysis | Interaction systems | Color image

The invention provides a man-machine cooperation oriented real-time posture detection method for hand-held objects, belonging to the technical field of human-machine cooperative interaction systems and the position and posture sensing of a hand-held working object by an industrial robot. Depth images of each part of the object to be detected are captured by a 3D stereoscopic camera, and the local point clouds are aligned and merged into a complete three-dimensional point cloud model of the object. Real-time RGB color images and depth images containing 3D point cloud information of the scene are obtained. The RGB image is segmented automatically to get the pixels representing the object in the image. The corresponding point cloud in the depth image is fused with the pixels to get an RGB-D image with color information of the object in the scene. Using the ICP algorithm, the RGB-D image is matched with the complete 3D point cloud image of the object to obtain the position and posture of the hand-held object in the scene. The method overcomes the problem of obtaining the exact posture of the hand-held object at the current time, and can be used in a variety of scenes.

Owner:DALIAN UNIV OF TECH
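The step that fuses segmented RGB pixels with the depth image can be illustrated as a back-projection of the masked pixels into a colored point cloud, which could then be handed to an ICP routine. This is a sketch under assumptions: a pinhole camera with intrinsics `fx, fy, cx, cy`, a depth image registered to the RGB image, and a boolean segmentation `mask`. The helper name is hypothetical, and the ICP matching itself is not shown.

```python
def masked_colored_points(rgb, depth, mask, fx, fy, cx, cy):
    """Back-project masked depth pixels to (x, y, z, r, g, b) points.

    rgb:   H x W nested list of (r, g, b) tuples.
    depth: H x W nested list of depths; values <= 0 mean "no measurement".
    mask:  H x W nested list of booleans from the (assumed) segmentation step.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if not mask[v][u] or z <= 0:
                continue  # skip background pixels and invalid depth
            # Invert the pinhole projection u = fx * x / z + cx.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, *rgb[v][u]))
    return points
```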

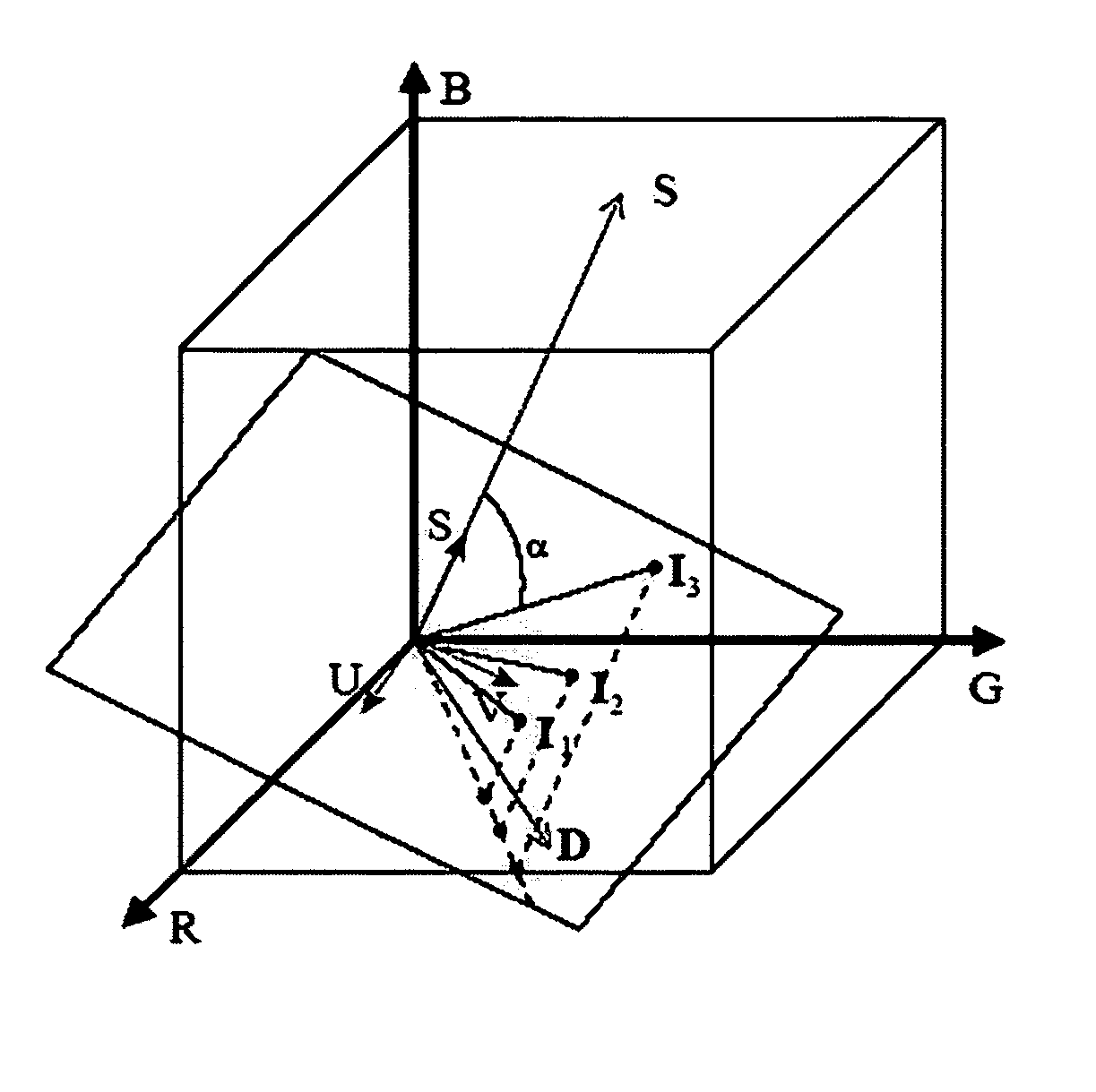

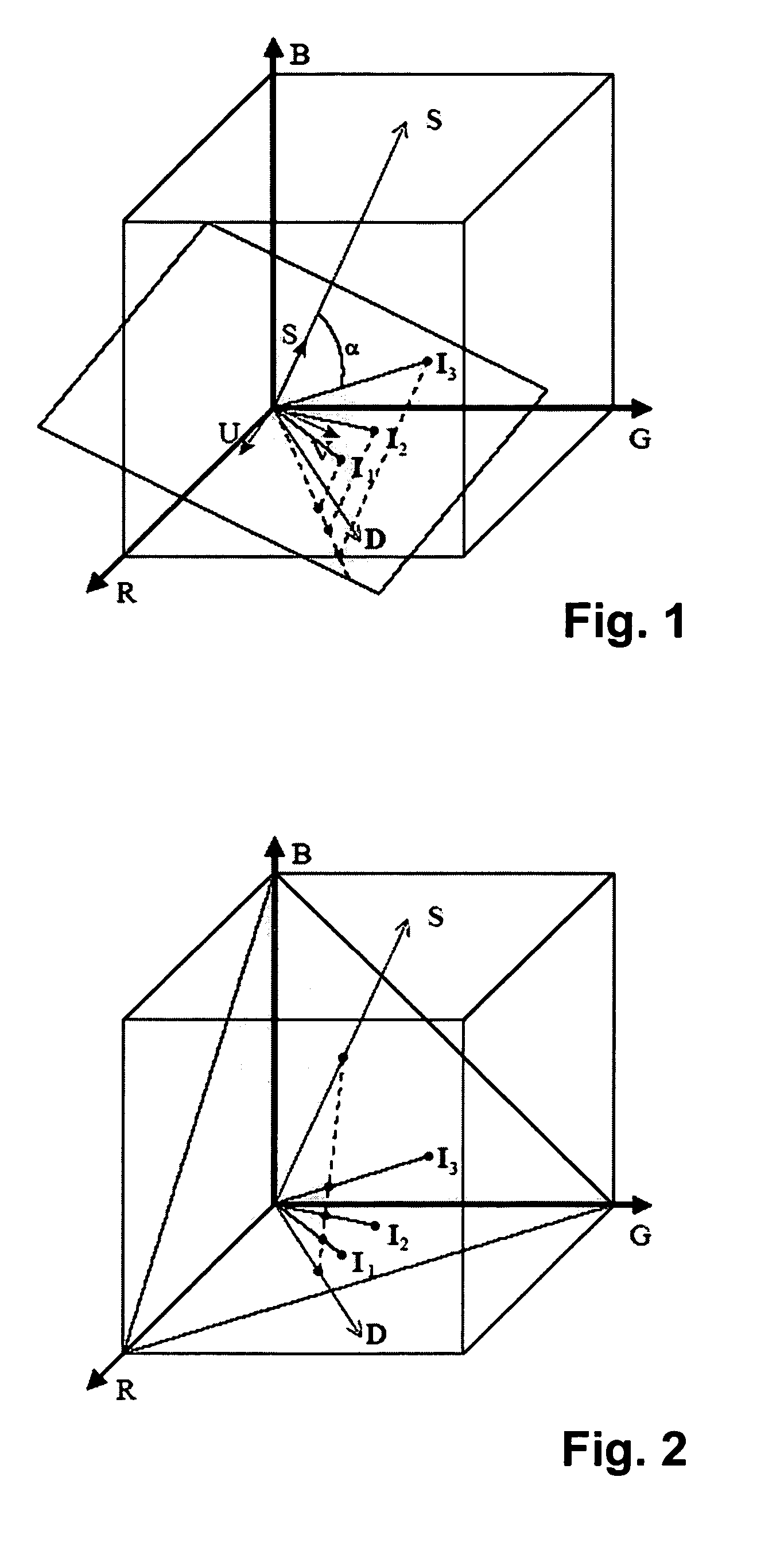

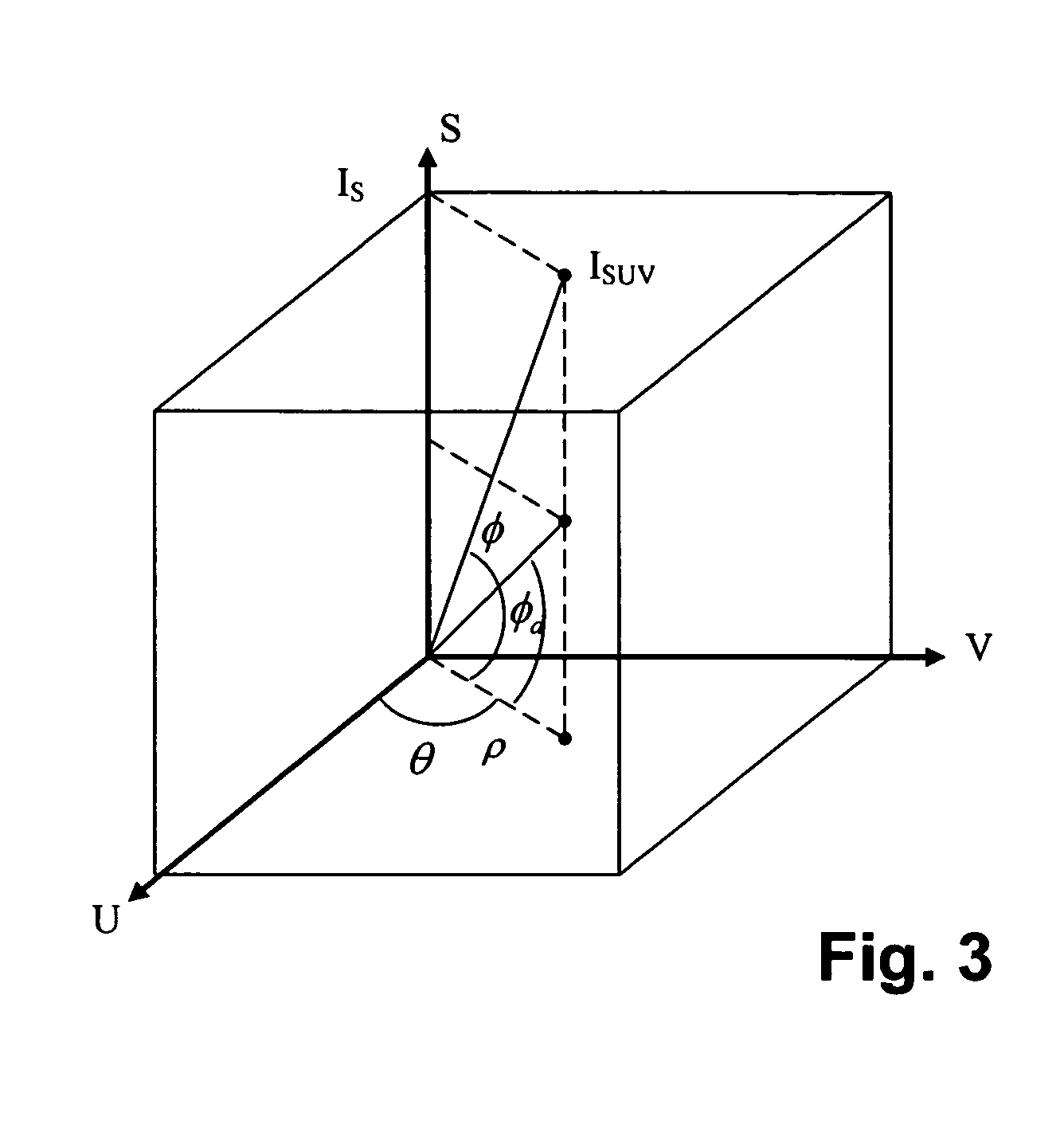

Methods for identifying, separating and editing reflection components in multi-channel images and videos

Inactive | US20070132759A1 | Preserve texture | Reduce specular reflection | Image enhancement | Image analysis | Structure from motion | Rgb image

The present invention presents a framework for separating specular and diffuse reflection components in images and videos. Each pixel of an M-channel input image illuminated by N light sources is linearly transformed into a new color space having (M-N) channels. For an RGB image with one light source, the new color space has two color channels (U,V) that are free of specularities and a third channel (S) that contains both specular and diffuse components. When used with multiple light sources, the transformation may be used to produce a specular invariant image. A diffuse RGB image can be obtained by applying a non-linear partial differential equation to an RGB image to iteratively erode the specular component at each pixel. An optional third dimension of time may be added for processing video images. After the specular and diffuse components are separated, dichromatic editing may be used to independently process the diffuse and the specular components to add or suppress visual effects. The (U,V) channels of images can be used as input to 3-D shape estimation algorithms including shape-from-shading, photometric stereo, binocular and multinocular stereopsis, and structure-from-motion.

Owner:RGT UNIV OF CALIFORNIA
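For the single-light-source RGB case, one linear transform with the stated property is a rotation whose first axis points along the light source color. Any specular contribution is then a multiple of that axis and lands only in S, leaving U and V unchanged. The abstract does not give the matrix, so the construction below (Gram-Schmidt plus a cross product) is only an illustrative sketch, and the function names are hypothetical.

```python
import math

def suv_basis(source_rgb):
    """Return three orthonormal rows; the first is aligned with the source color."""
    n = math.sqrt(sum(c * c for c in source_rgb))
    r1 = tuple(c / n for c in source_rgb)
    # Helper axis: the coordinate axis least aligned with r1.
    helper = [0.0, 0.0, 0.0]
    helper[min(range(3), key=lambda i: abs(r1[i]))] = 1.0
    # Gram-Schmidt: remove the r1 component from the helper axis.
    dot = sum(h * a for h, a in zip(helper, r1))
    r2 = [h - dot * a for h, a in zip(helper, r1)]
    n2 = math.sqrt(sum(c * c for c in r2))
    r2 = tuple(c / n2 for c in r2)
    # The cross product completes the right-handed basis.
    r3 = (r1[1] * r2[2] - r1[2] * r2[1],
          r1[2] * r2[0] - r1[0] * r2[2],
          r1[0] * r2[1] - r1[1] * r2[0])
    return r1, r2, r3

def rgb_to_suv(pixel, basis):
    """Project an RGB pixel onto the basis rows, giving (S, U, V)."""
    return tuple(sum(b * p for b, p in zip(row, pixel)) for row in basis)
```

Adding any multiple of the source color to a pixel changes only its S value, which is the invariance the abstract attributes to the (U,V) channels.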

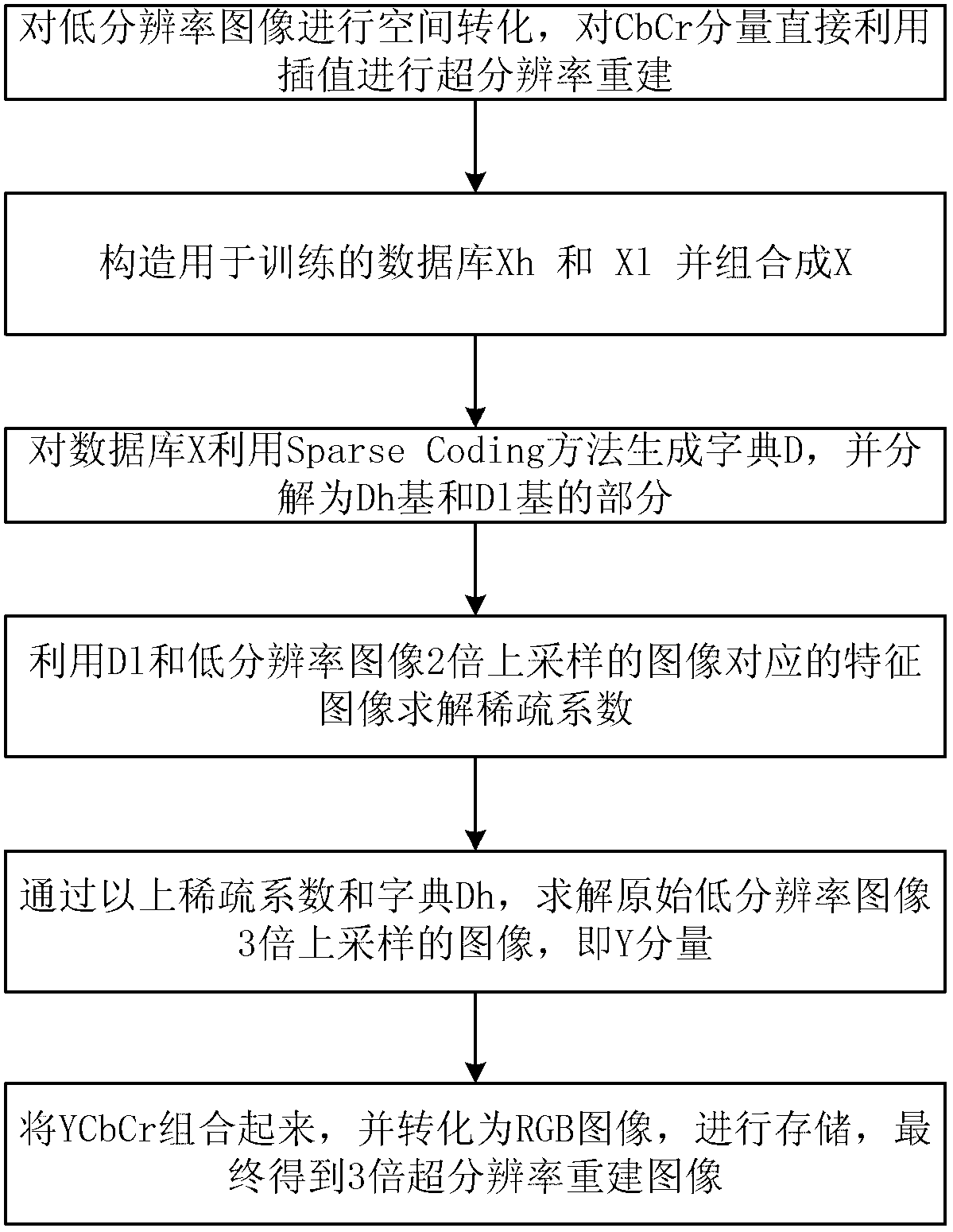

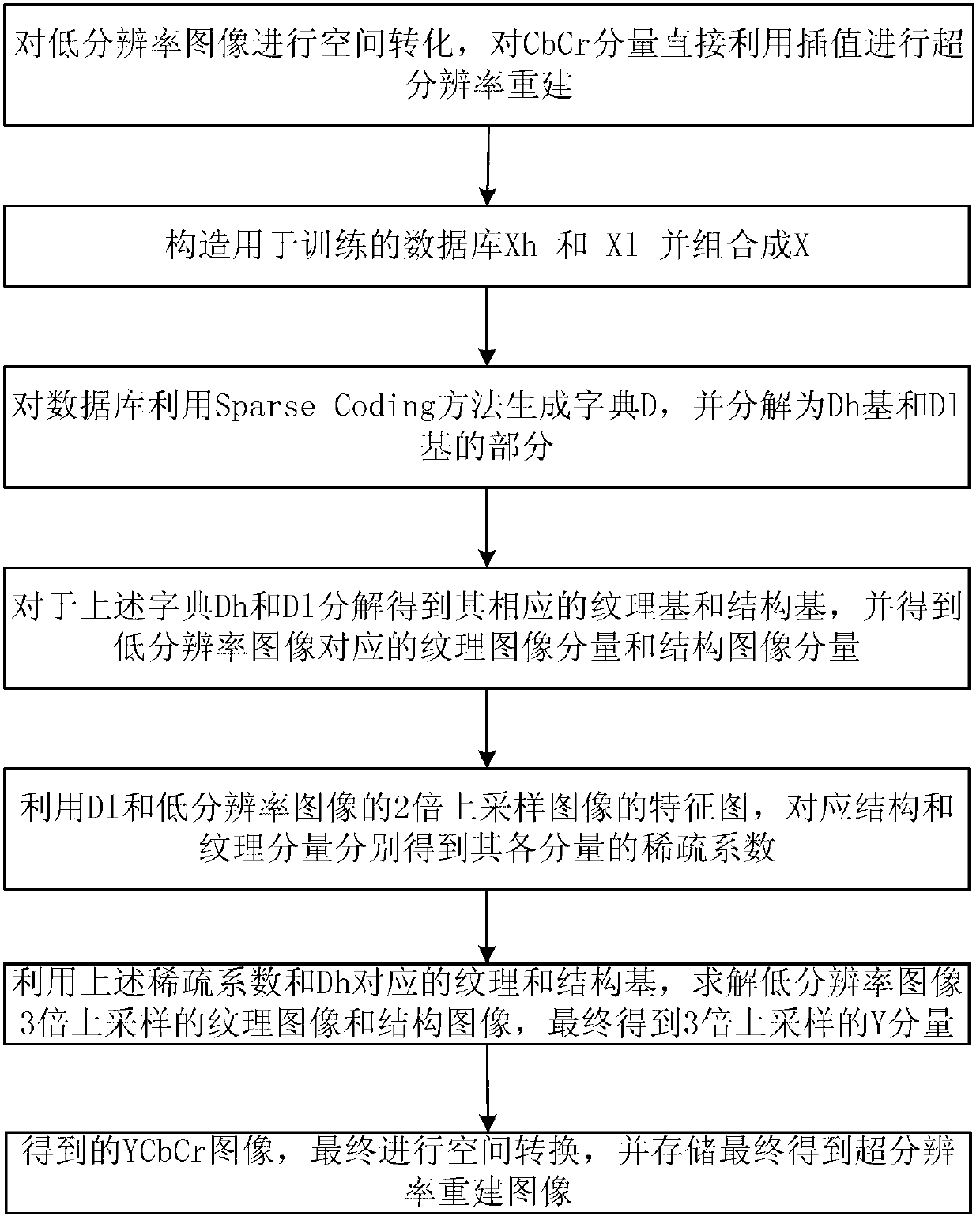

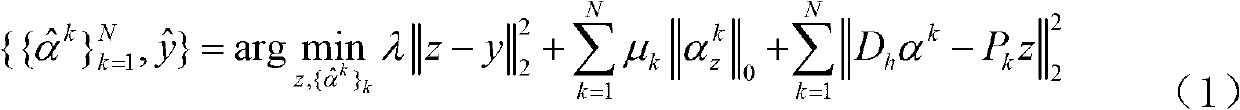

Super-resolution sparse representation method

Active | CN102722865A | High resolution | Image enhancement | Character and pattern recognition | Color image | Pattern recognition

A super-resolution sparse representation method is provided for acquiring a high-resolution image without requiring an external high-resolution image library. The method comprises the steps of: (1) carrying out space conversion on a given low-resolution color image to obtain the YCbCr space image of the color image, and reconstructing the Cb and Cr components by using an interpolation method; (2) constructing a database used for training, namely, a high-resolution image block Xh and a low-resolution image block Xl, and combining the two image blocks into a database X; (3) generating a dictionary D from the database X by using a sparse coding method, and decomposing the dictionary D into a high-resolution image dictionary Dh and a low-resolution image dictionary Dl; (4) solving a sparse coefficient by using Dl and the characteristic images corresponding to an image obtained by upsampling the low-resolution image by 2 times; (5) solving an image of upsampling the original low-resolution image by 3 times through the sparse coefficient and Dh; and (6) combining Y, Cb, and Cr to obtain a YCbCr image, converting the YCbCr image into an RGB image, and storing the RGB image to obtain the final super-resolution representation image.

Owner:BEIJING UNIV OF TECH
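Steps (1) and (6) rely on converting between RGB and YCbCr. The abstract does not state which YCbCr variant is used; the sketch below assumes the full-range BT.601 form used by JPEG/JFIF, with channel values in 0 to 255. The function names are illustrative.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JFIF) conversion; an assumed choice of variant."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr, up to rounding of the published coefficients."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return r, g, b
```

In the described pipeline, only the Y channel would go through the sparse-coding reconstruction, while Cb and Cr would be enlarged by ordinary interpolation before the inverse conversion.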

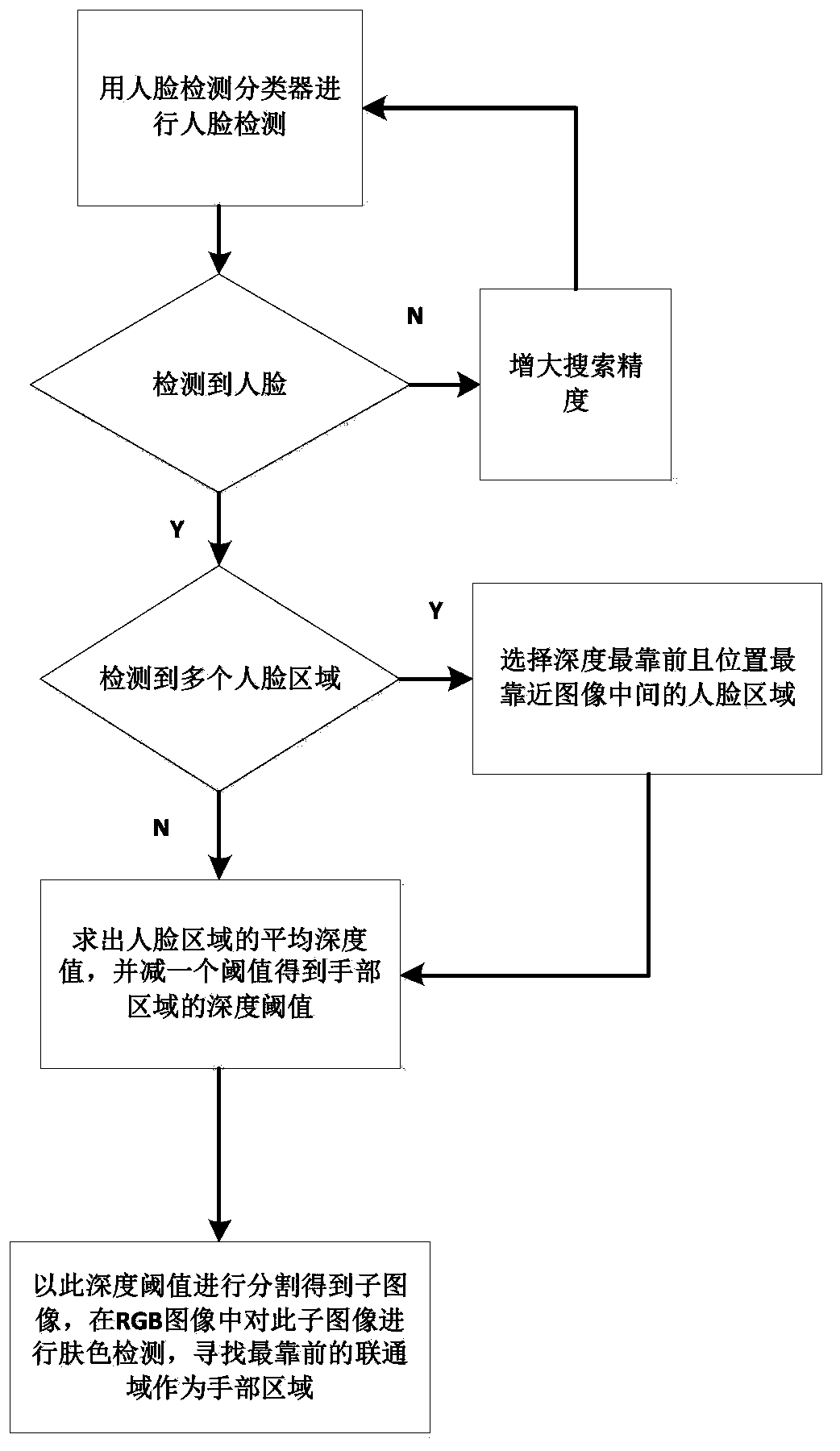

3D dynamic gesture identification method for intelligent home system

Inactive | CN103353935A | Meet the requirements of interaction | Easy to operate | Input/output for user-computer interaction | Character and pattern recognition | Human body | Face detection

The invention relates to the technical field of computer vision and man-machine interaction, and particularly to a 3D dynamic gesture identification method for an intelligent home system. The method comprises the following steps: acquiring a depth image and an RGB image with a Kinect camera connected to a computer; preprocessing the depth image; performing human face detection on the RGB image; extracting the human face depth; separating the image of the human hand area; searching for the palm area; and storing palm position information. The method can be used to control the intelligent home system in place of conventional switch and keyboard control: hand motion is transmitted to a central system, so a person does not have to get up and go to each household device to adjust it, because the computer handles the adjustment and the operation is simple.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
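The abstract lists the face-depth and hand-separation steps without saying how they are computed. One simple reading, assuming the gesturing hand is held in front of the face, is to take the median depth inside the detected face box and keep pixels that are closer than that by some margin. The helper names, the box format `(x0, y0, x1, y1)`, the millimetre units, and the 150 mm default margin are all assumptions for illustration.

```python
from statistics import median

def face_depth(depth, box):
    """Median of valid depths inside a face box (x0, y0, x1, y1), end-exclusive."""
    x0, y0, x1, y1 = box
    values = [depth[y][x]
              for y in range(y0, y1)
              for x in range(x0, x1)
              if depth[y][x] > 0]  # 0 is treated as "no measurement"
    return median(values)

def hand_mask(depth, face_z, margin=150):
    """Mark valid pixels that are at least `margin` closer than the face."""
    return [[0 < z < face_z - margin for z in row] for row in depth]
```

The palm search described in the abstract would then operate on the connected region selected by this mask.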


© 2025 PatSnap. All rights reserved.