1663 patent results for "Stereopsis"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

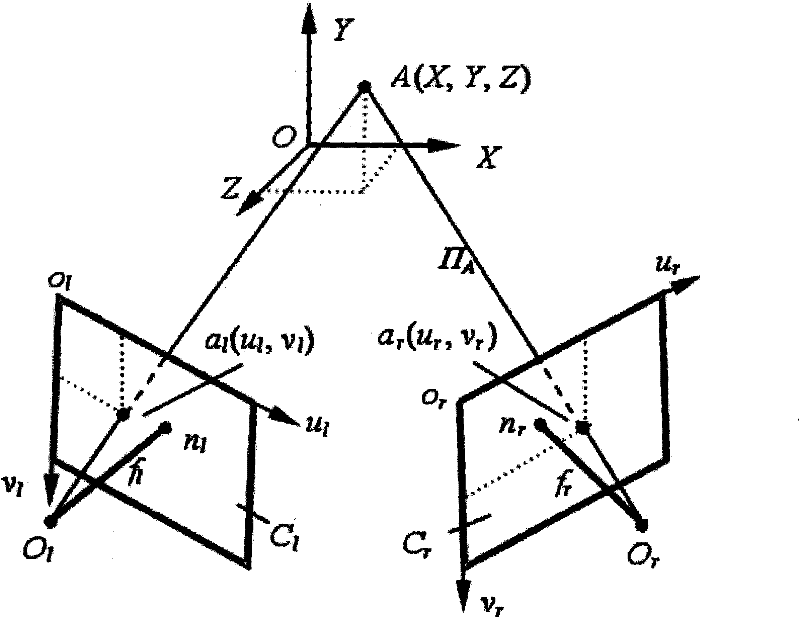

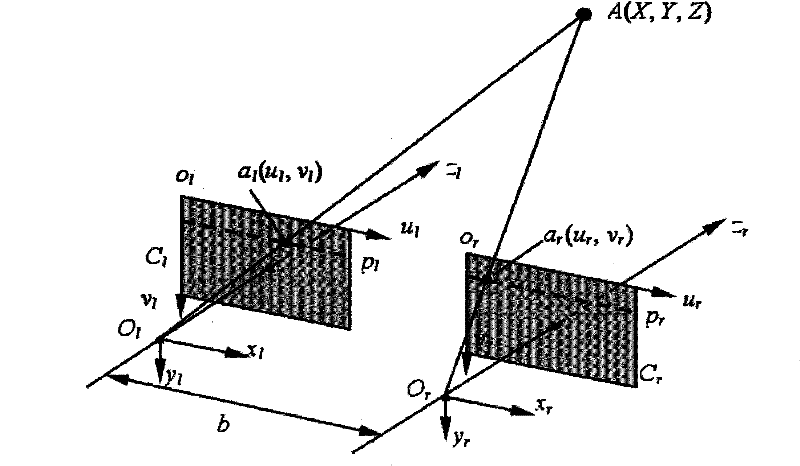

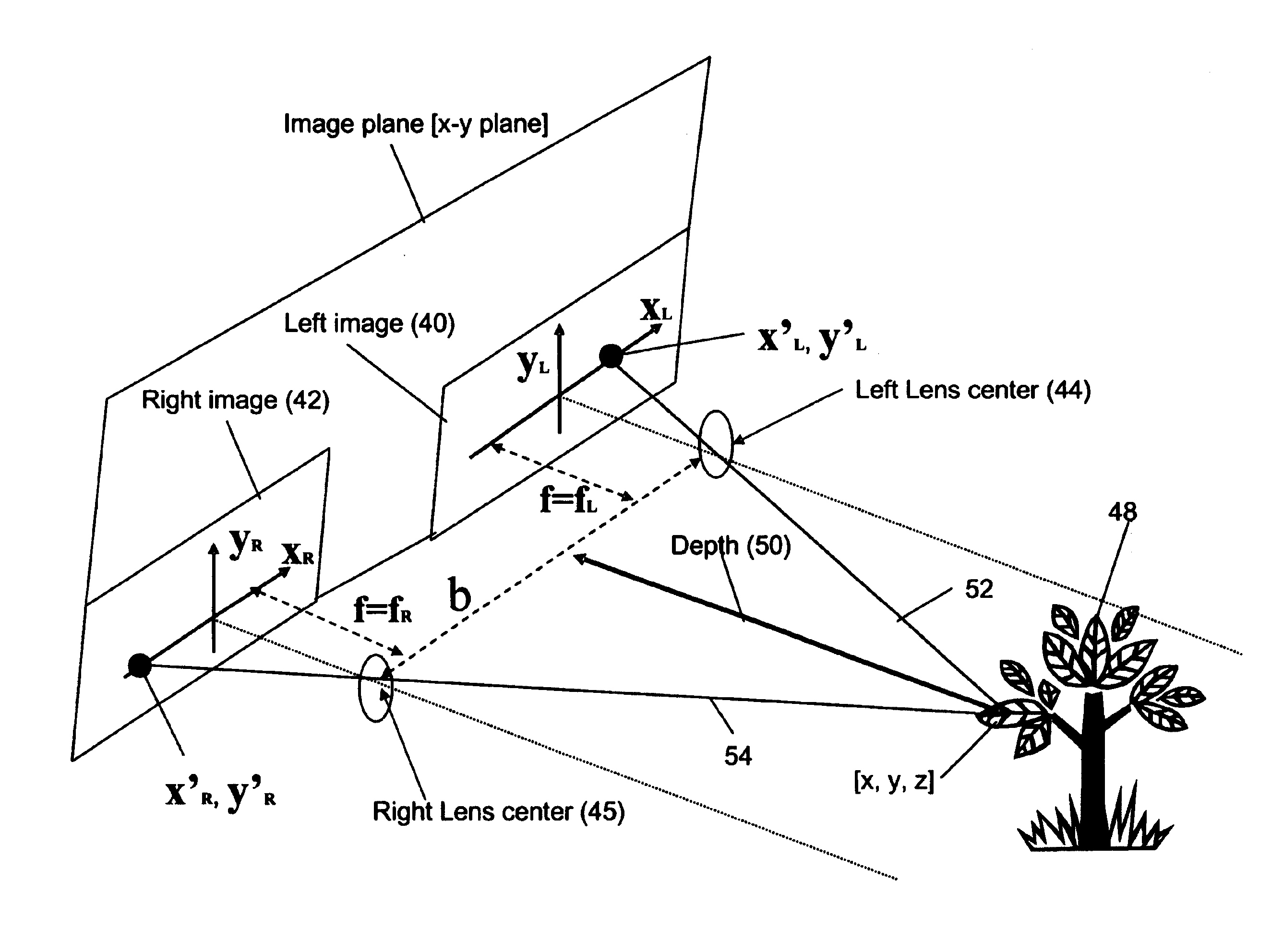

Stereopsis (from the Greek στερεο- stereo- meaning "solid", and ὄψις opsis, "appearance, sight") is a term that is most often used to refer to the perception of depth and 3-dimensional structure obtained on the basis of visual information deriving from two eyes by individuals with normally developed binocular vision. Because the eyes of humans, and many animals, are located at different lateral positions on the head, binocular vision results in two slightly different images projected to the retinas of the eyes. The differences are mainly in the relative horizontal position of objects in the two images. These positional differences are referred to as horizontal disparities or, more generally, binocular disparities. Disparities are processed in the visual cortex of the brain to yield depth perception. While binocular disparities are naturally present when viewing a real 3-dimensional scene with two eyes, they can also be simulated by artificially presenting two different images separately to each eye using a method called stereoscopy. The perception of depth in such cases is also referred to as "stereoscopic depth".
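
Many of the machine stereo-vision patents below rely on the same disparity geometry. For an idealized rectified pinhole camera pair (a computer-vision model, not a model of cortical processing), depth is Z = f·B / d, where f is the focal length in pixels, B the baseline between the cameras and d the horizontal disparity in pixels. The minimal sketch below assumes that model; the numeric values in the usage loop are illustrative only.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair, Z = f * B / d.

    focal_px: focal length in pixels; baseline_m: camera separation in metres;
    disparity_px: horizontal offset of the point between the left and right images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Larger disparity means a nearer point: depth is inversely proportional to disparity.
for d in (84.0, 42.0, 21.0):
    print(f"disparity {d:5.1f} px -> depth {depth_from_disparity(700.0, 0.12, d):.2f} m")
```

Halving the disparity doubles the estimated depth, which is also why depth resolution degrades with distance.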

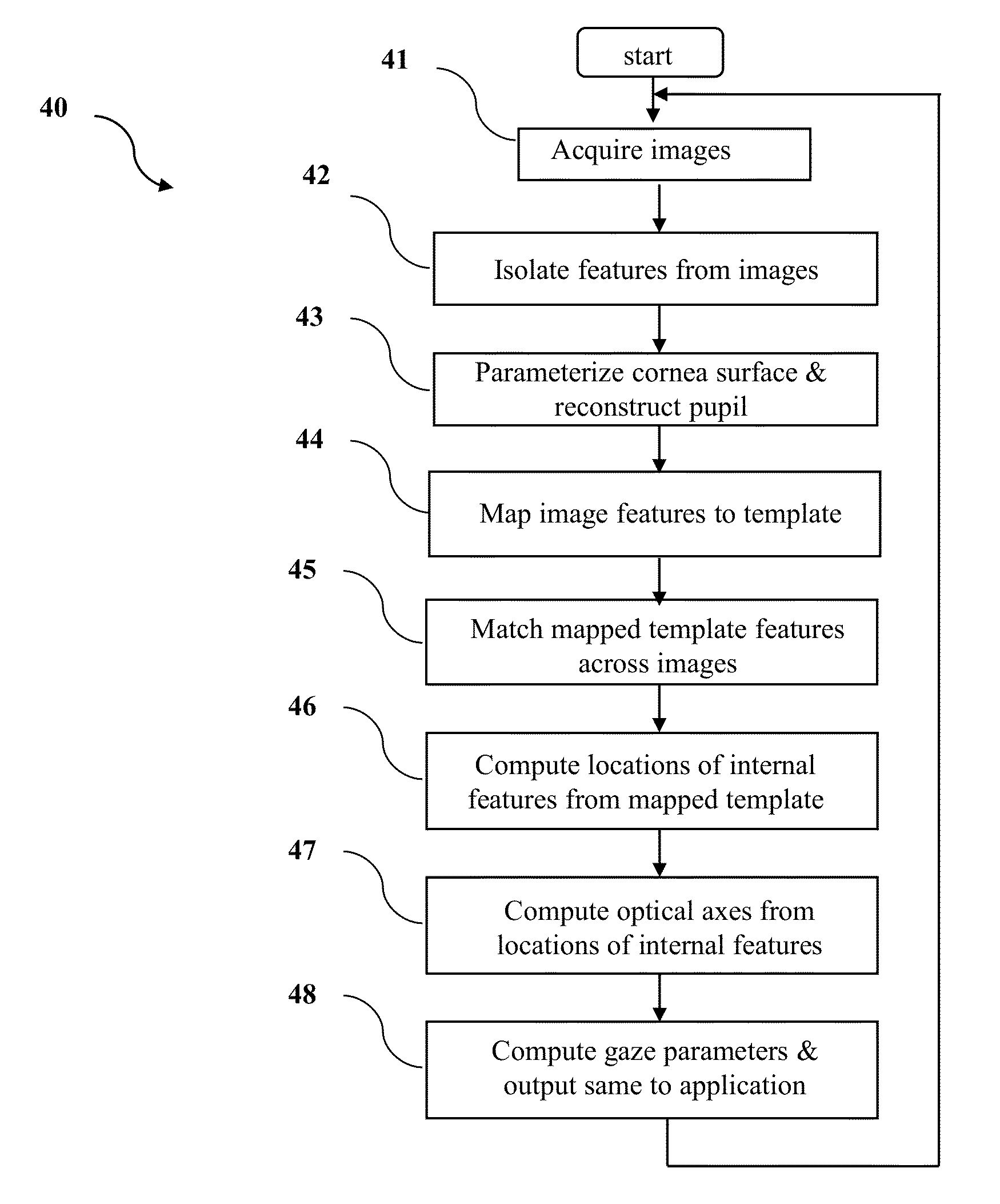

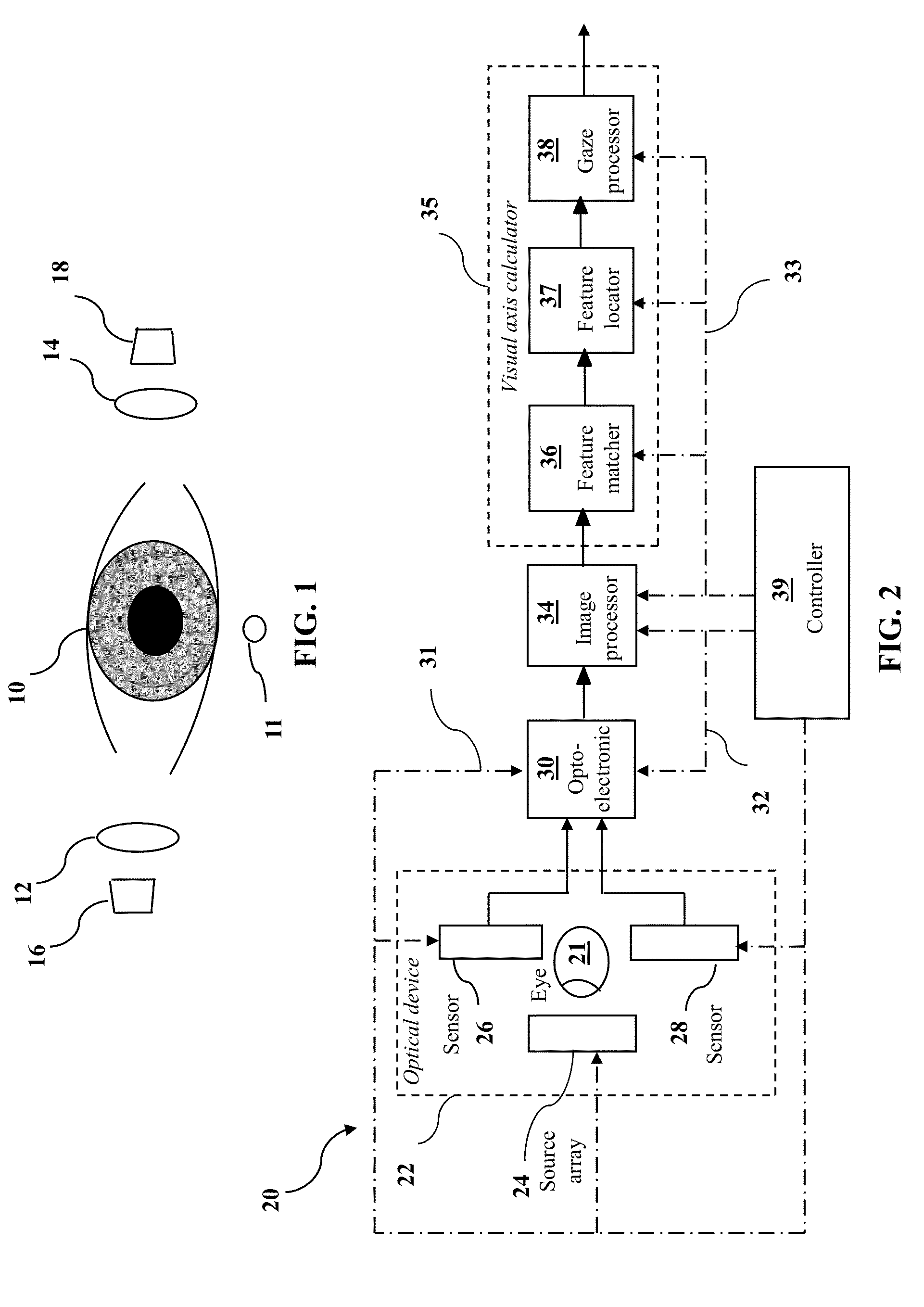

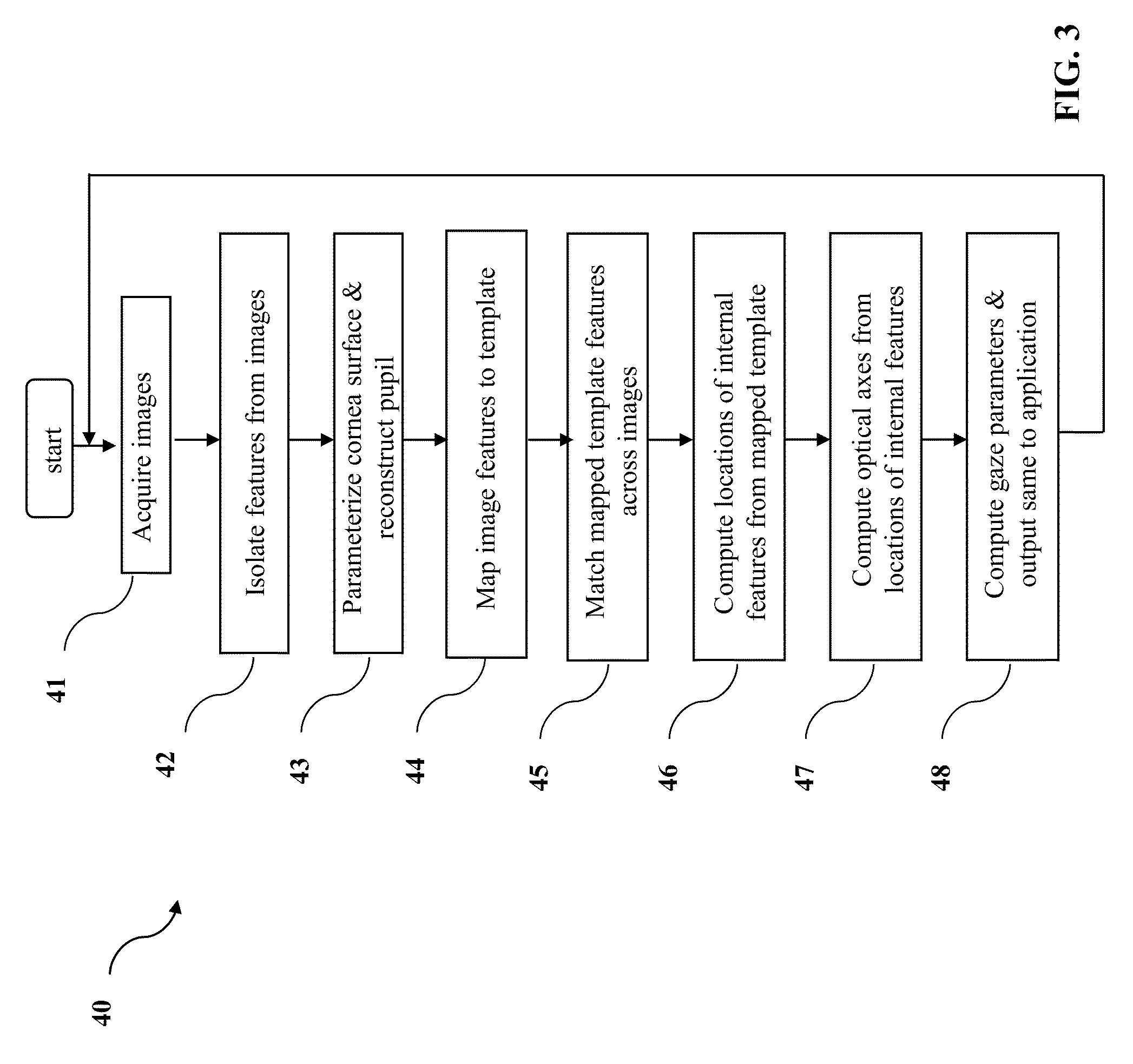

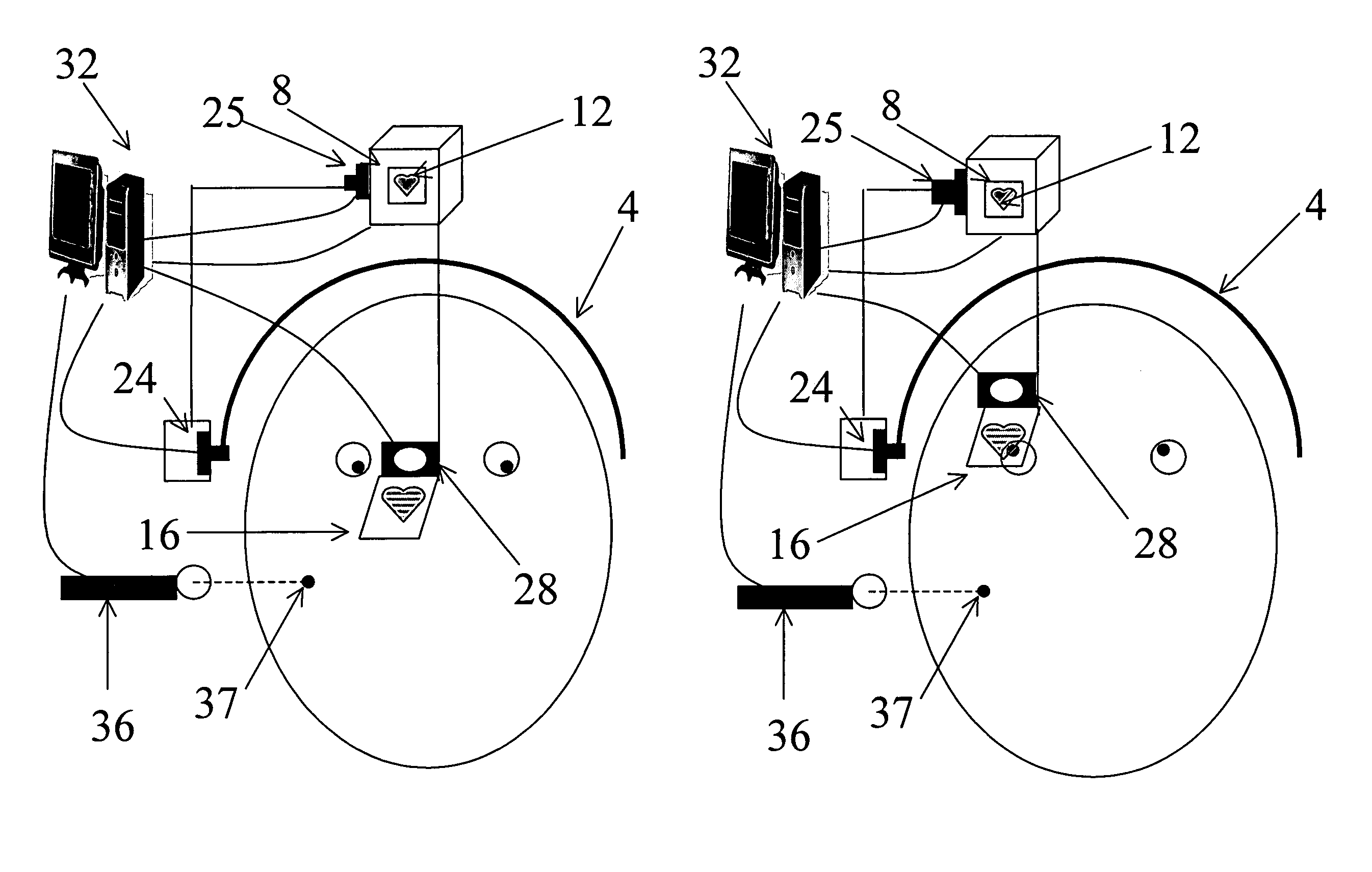

Apparatus and method for determining eye gaze from stereo-optic views

Inactive | US8824779B1 | Improve accuracy; Improve image processing capabilities; Image enhancement; Image analysis; Wide field; Optical axis

The invention, exemplified as a single lens stereo optics design with a stepped mirror system for tracking the eye, isolates landmark features in the separate images, locates the pupil in the eye, matches landmarks to a template centered on the pupil, mathematically traces refracted rays back from the matched image points through the cornea to the inner structure, and locates these structures from the intersection of the rays for the separate stereo views. Having located in this way structures of the eye in the coordinate system of the optical unit, the invention computes the optical axes and from that the line of sight and the torsion roll in vision. Along with providing a wider field of view, this invention has an additional advantage since the stereo images tend to be offset from each other and for this reason the reconstructed pupil is more accurately aligned and centered.

Owner:CORTICAL DIMENSIONS LLC

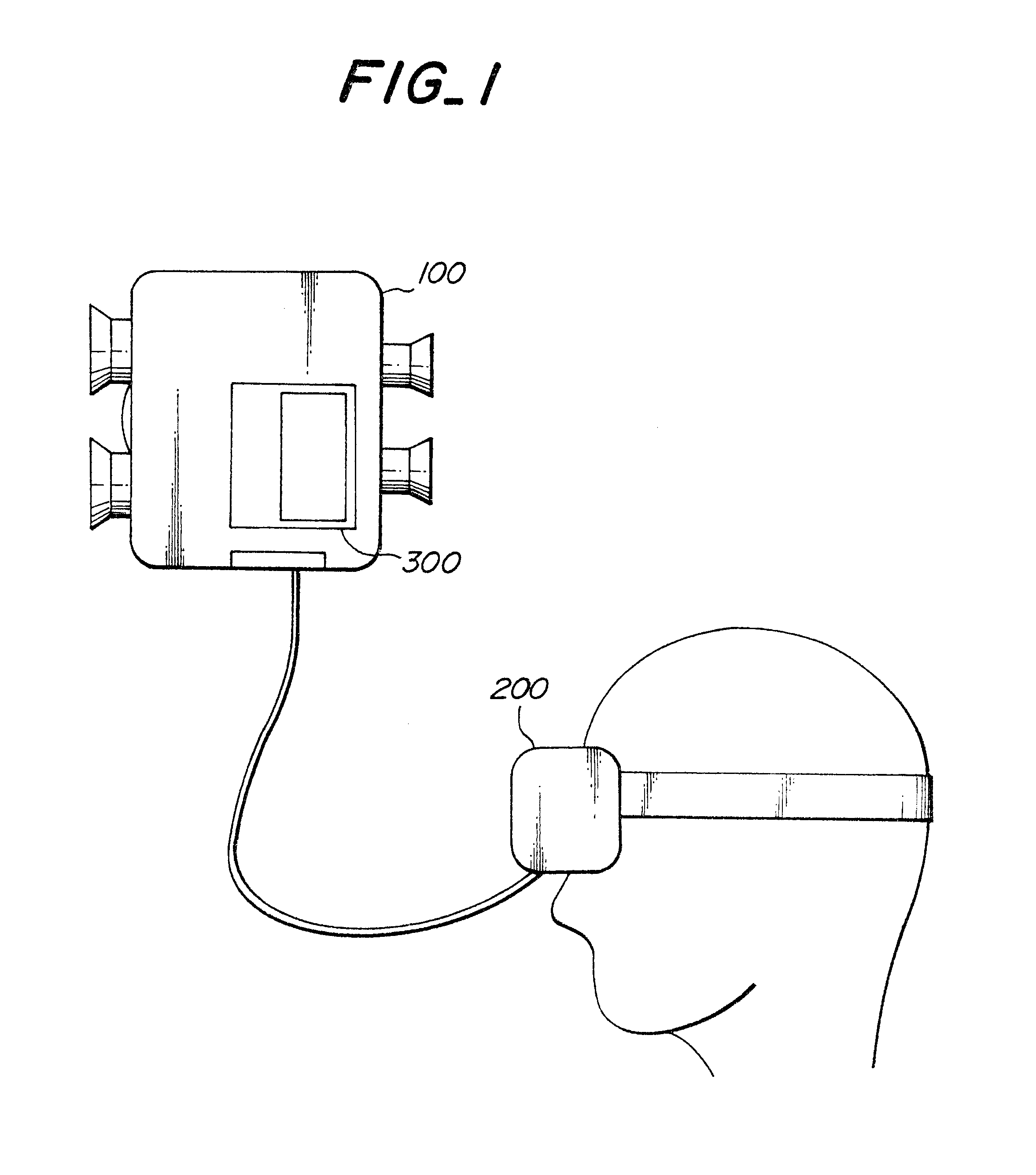

Eye tracking head mounted display

Inactive | US7542210B2 | Not salient; Improve matching characteristics; Cathode-ray tube indicators; Optical elements; Beam splitter; Centre of rotation

A head-mounted display device has a mount that attaches the device to a user's head, a beam-splitter attached to the mount with movement devices, an image projector that projects images onto the beam-splitter, an eye-tracker that tracks the gaze of the user's eye, and one or more processors. The device uses the eye-tracker and movement devices, along with an optional head-tracker, to move the beam-splitter about the eye's center of rotation, keeping the beam-splitter in the eye's direct line of sight. The user simultaneously views the image and the environment behind the image. A second beam-splitter, eye-tracker, and projector can be used for the user's other eye to create a stereoptic virtual environment. The display can match the resolving power of the human eye. The invention presents a high-resolution image wherever the user looks.

Owner:TEDDER DONALD RAY

Eye tracking head mounted display

Inactive | US20080002262A1 | Not salient; Improve matching characteristics; Cathode-ray tube indicators; Optical elements; Beam splitter; Display device

A head-mounted display device has a mount that attaches the device to a user's head, a beam-splitter attached to the mount with movement devices, an image projector that projects images onto the beam-splitter, an eye-tracker that tracks the gaze of the user's eye, and one or more processors. The device uses the eye-tracker and movement devices, along with an optional head-tracker, to move the beam-splitter about the eye's center of rotation, keeping the beam-splitter in the eye's direct line of sight. The user simultaneously views the image and the environment behind the image. A second beam-splitter, eye-tracker, and projector can be used for the user's other eye to create a stereoptic virtual environment. The display can match the resolving power of the human eye. The invention presents a high-resolution image wherever the user looks.

Owner:TEDDER DONALD RAY

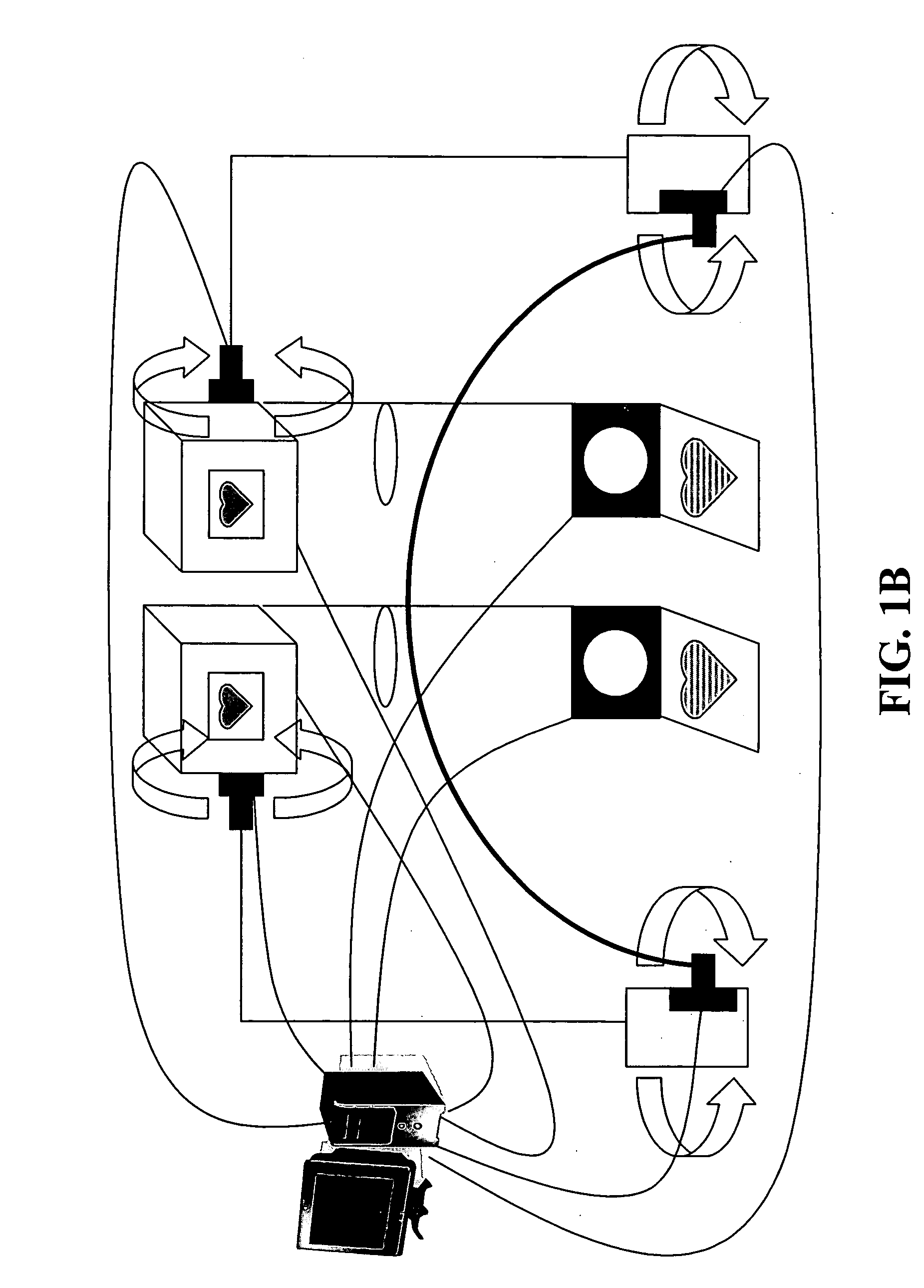

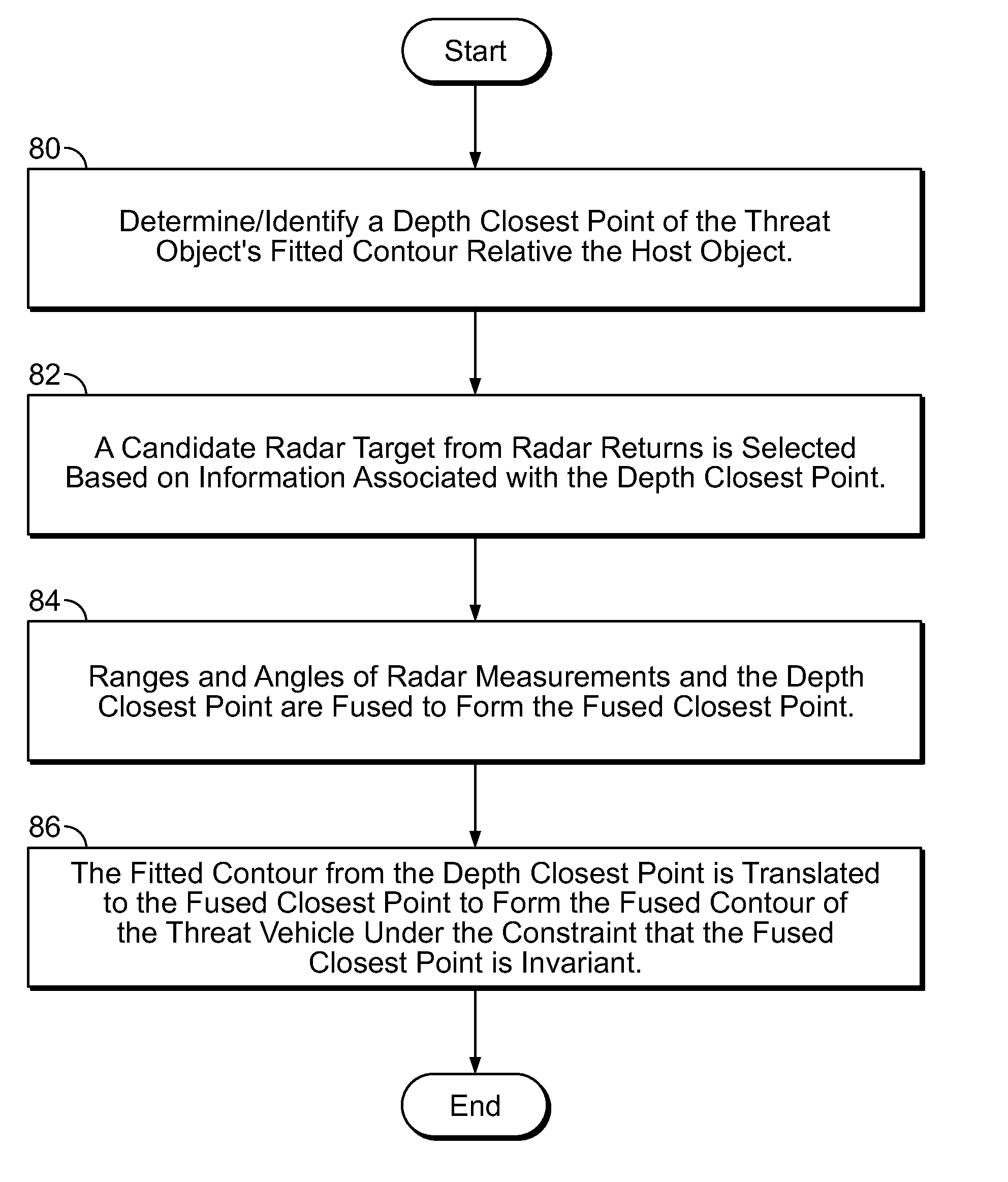

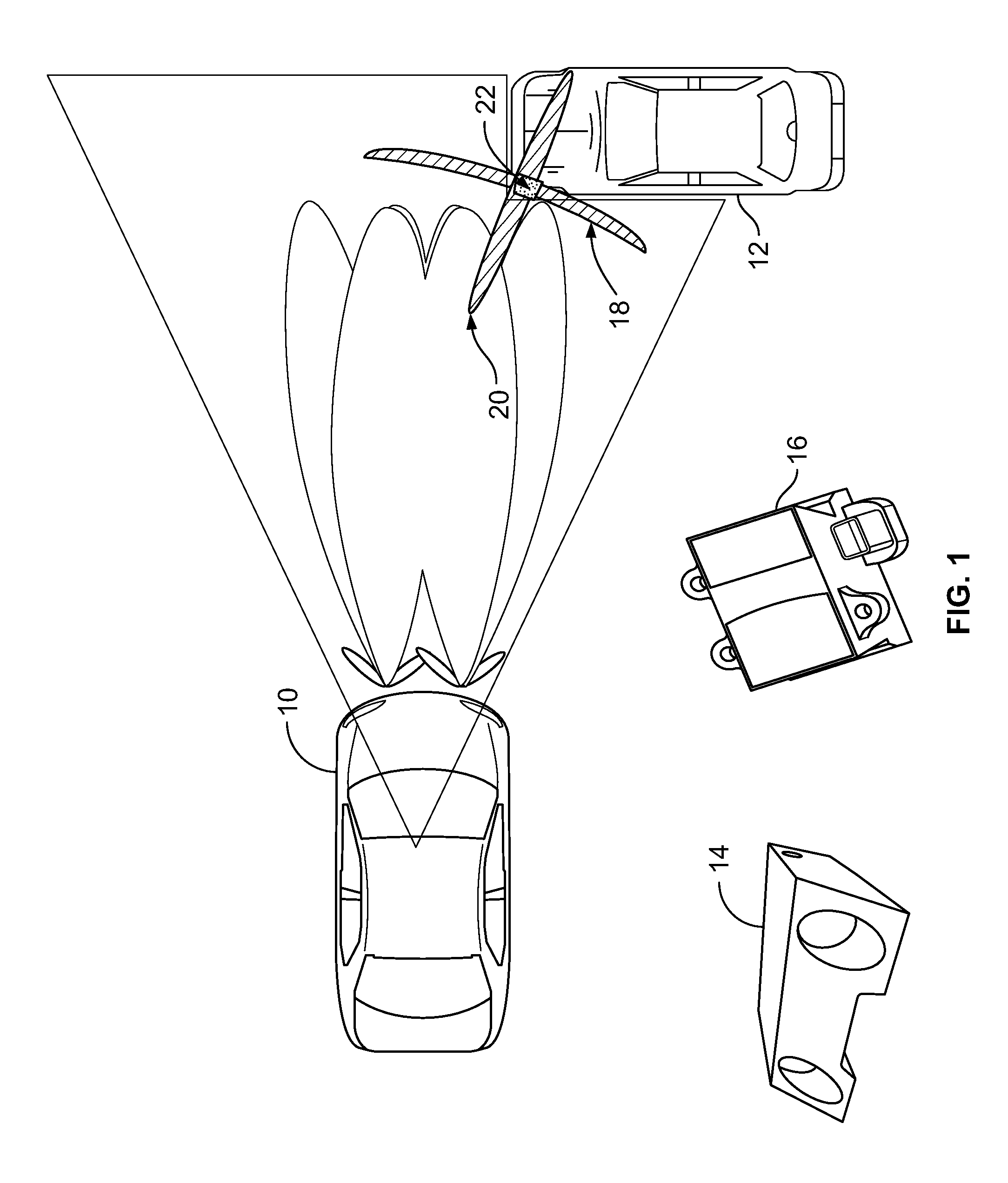

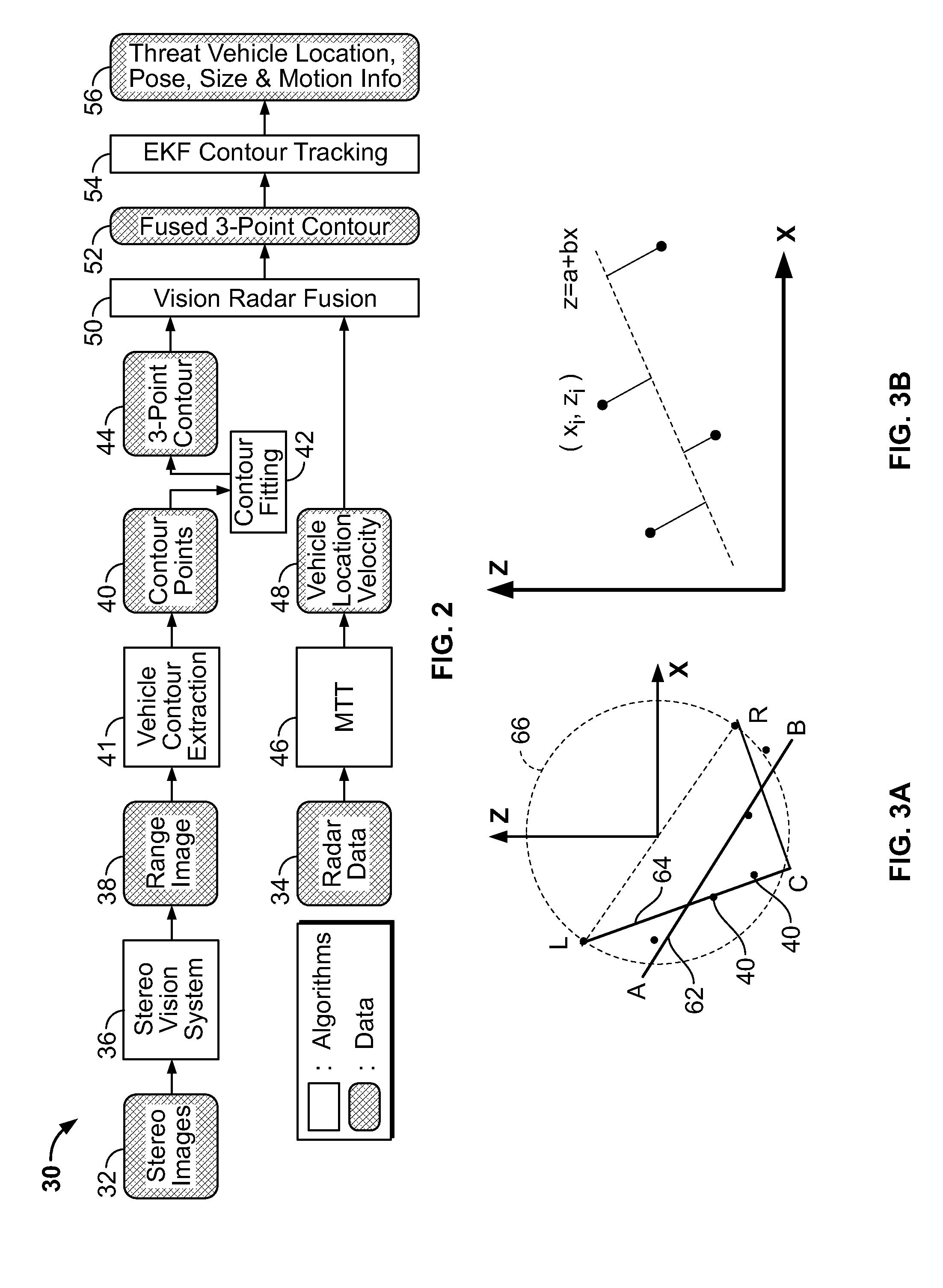

Collision avoidance method and system using stereo vision and radar sensor fusion

A system and method for fusing depth and radar data to estimate at least the position of a threat object relative to a host object is disclosed. At least one contour is fitted to a plurality of contour points corresponding to a plurality of depth values of the threat object. A depth closest point, relative to the host object, is identified on the at least one contour. A radar target is selected based on information associated with the depth closest point. The at least one contour is then fused with the radar data of the selected radar target, based on the depth closest point, to produce a fused contour. The position of the threat object relative to the host object is estimated from the fused contour. More generally, a method is provided for aligning two possibly disparate sets of 3D points.

Owner:SARNOFF CORP
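
The fusion steps in the abstract above can be pictured with the hypothetical sketch below: find the contour point nearest the host (placed at the origin), select the radar return nearest that point, and translate the stereo contour so its closest point lands on the radar position. The translation-only alignment, the 2D (lateral, forward) coordinates and all function names are assumptions for illustration, not the patented method.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # (lateral x, forward z) in metres, host at the origin


def closest_point(contour: List[Point]) -> Point:
    """Contour point with the smallest Euclidean distance to the host."""
    return min(contour, key=lambda p: math.hypot(p[0], p[1]))


def select_radar_target(radar: List[Point], anchor: Point) -> Optional[Point]:
    """Radar return nearest to the depth closest point, or None if there are no returns."""
    if not radar:
        return None
    return min(radar, key=lambda r: math.dist(r, anchor))


def fuse_contour(contour: List[Point], radar: List[Point]) -> List[Point]:
    """Shift the stereo contour so its closest point coincides with the selected radar target."""
    anchor = closest_point(contour)
    target = select_radar_target(radar, anchor)
    if target is None:
        return list(contour)  # no radar support: keep the stereo-only contour
    dx, dz = target[0] - anchor[0], target[1] - anchor[1]
    return [(x + dx, z + dz) for x, z in contour]


contour = [(1.0, 10.0), (0.0, 9.0), (-1.0, 10.0)]
radar = [(5.0, 20.0), (0.2, 8.5)]
print(fuse_contour(contour, radar))
```

Radar typically measures range more precisely than stereo at long distances, so anchoring the contour to the radar return is one plausible reason to fuse this way; a real system would likely also weight the two sources by their uncertainties.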

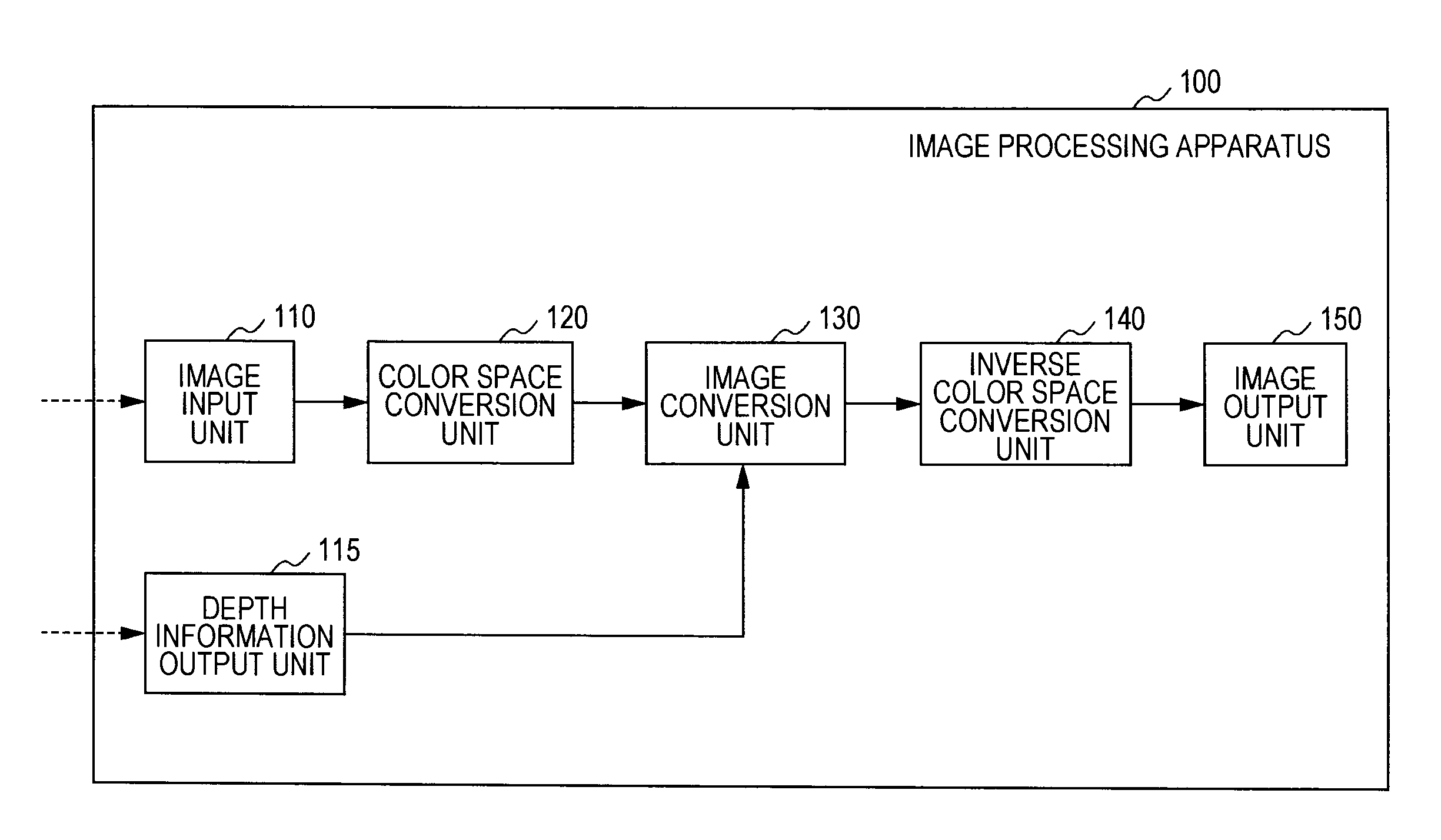

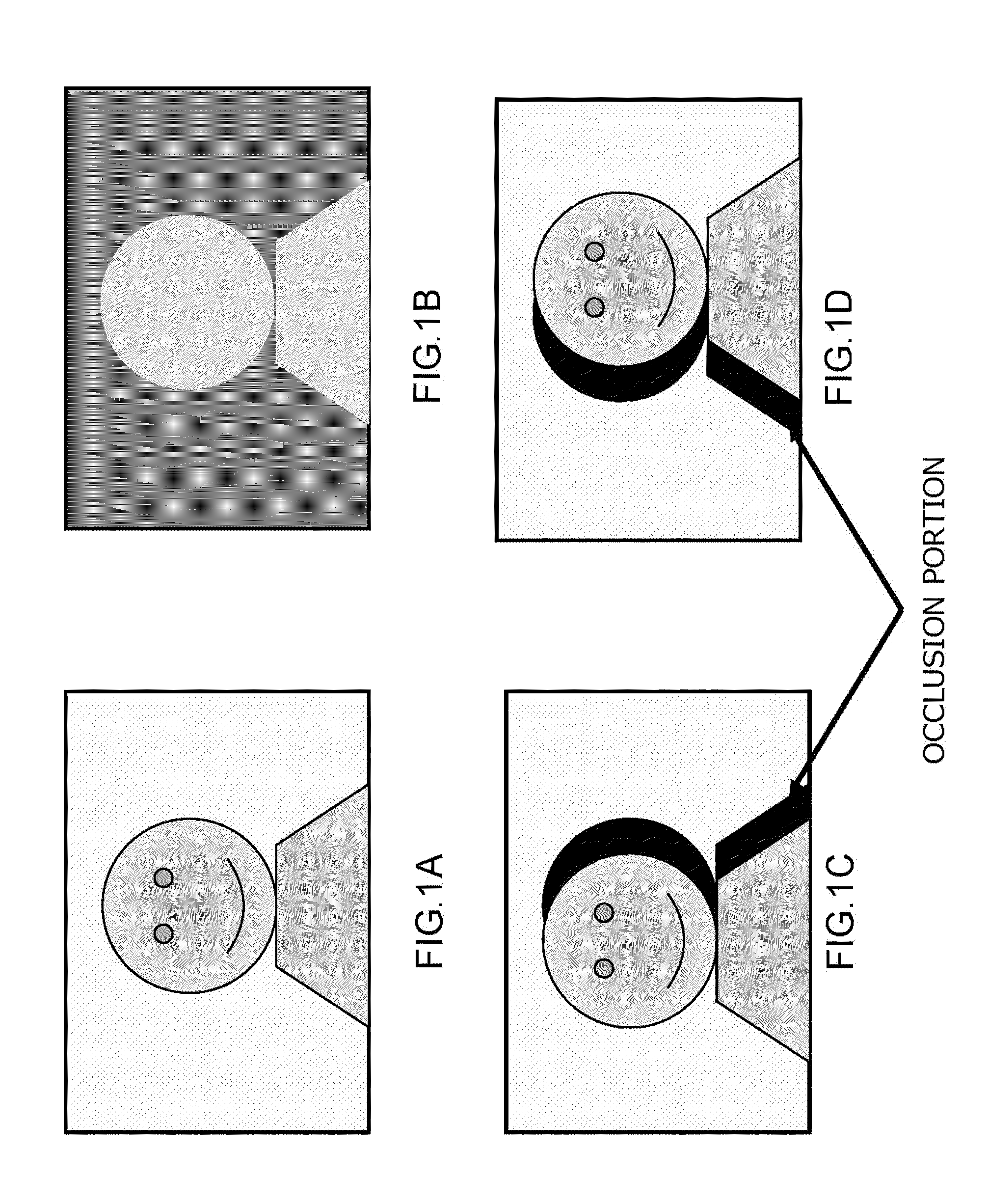

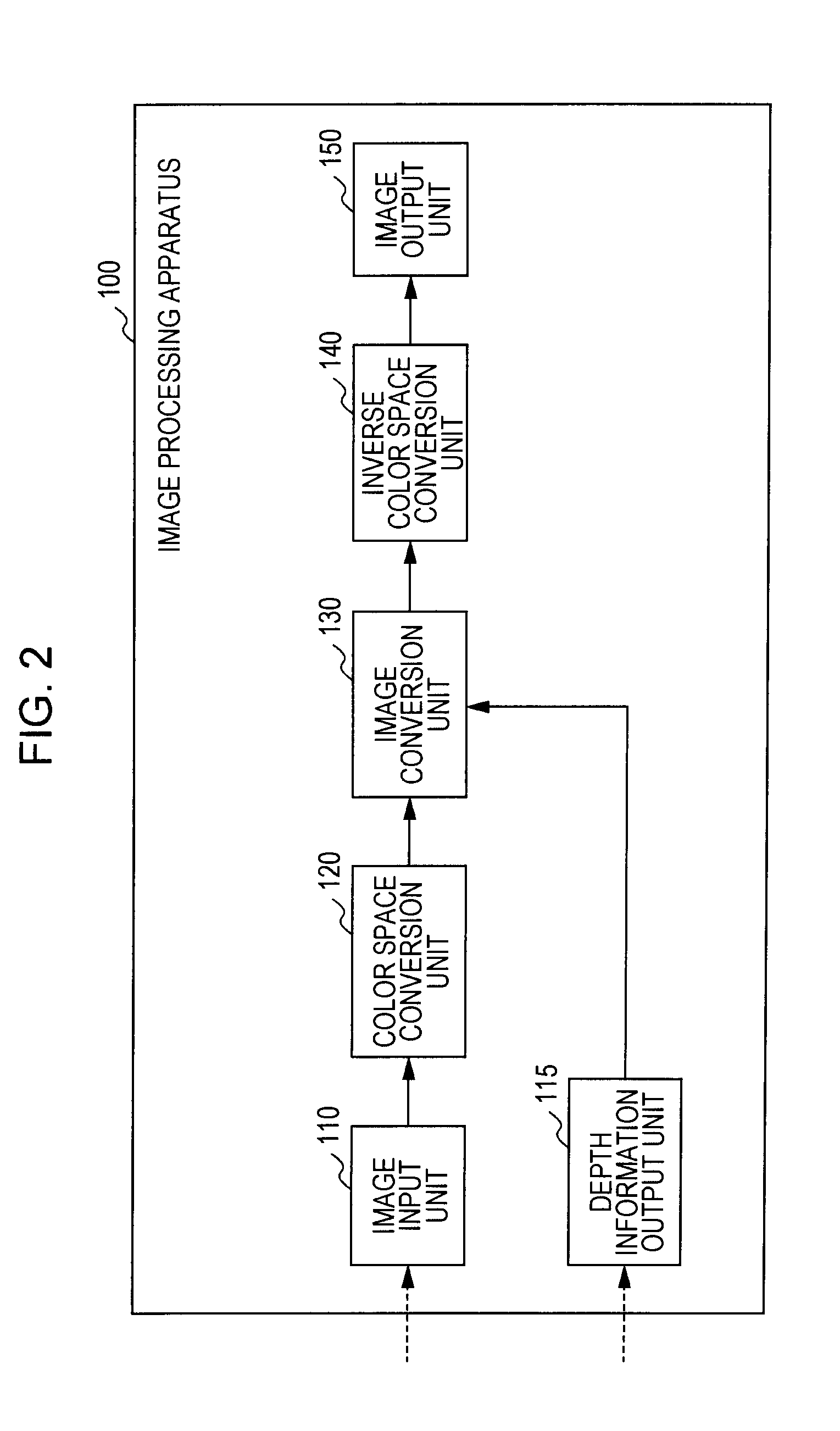

Image processing apparatus, image processing method and program

An image processing apparatus includes an image input unit that inputs a two-dimensional image signal, a depth information output unit that inputs or generates depth information of image areas constituting the two-dimensional image signal, an image conversion unit that receives the image signal and the depth information from the image input unit and the depth information output unit, and generates and outputs a left eye image and a right eye image for realizing binocular stereoscopic vision, and an image output unit that outputs the left and right eye images. The image conversion unit extracts a spatial feature value of the input image signal, and performs an image conversion process including an emphasis process applying the feature value and the depth information with respect to the input image signal, thereby generating at least one of the left eye image and the right eye image.

Owner:SATURN LICENSING LLC

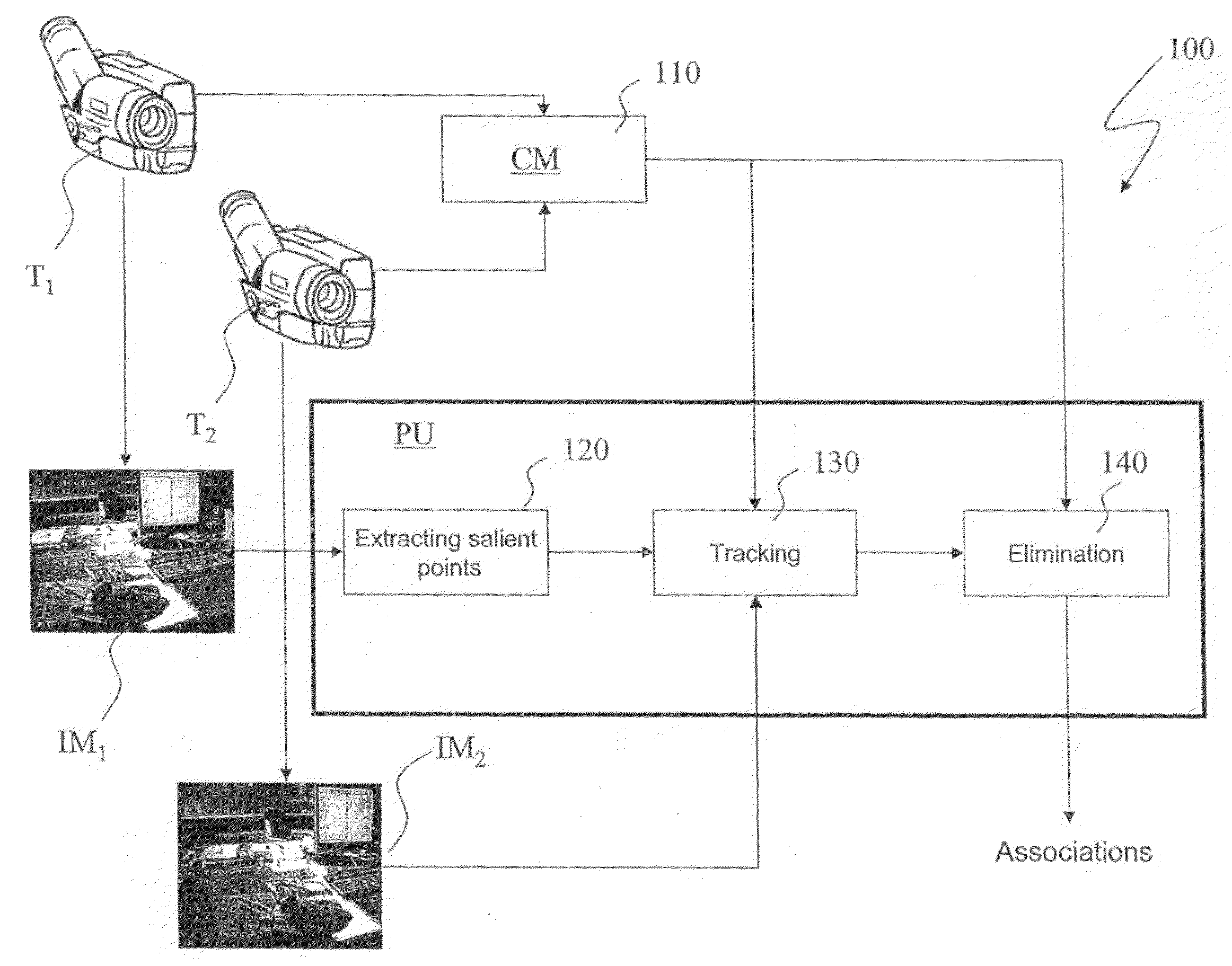

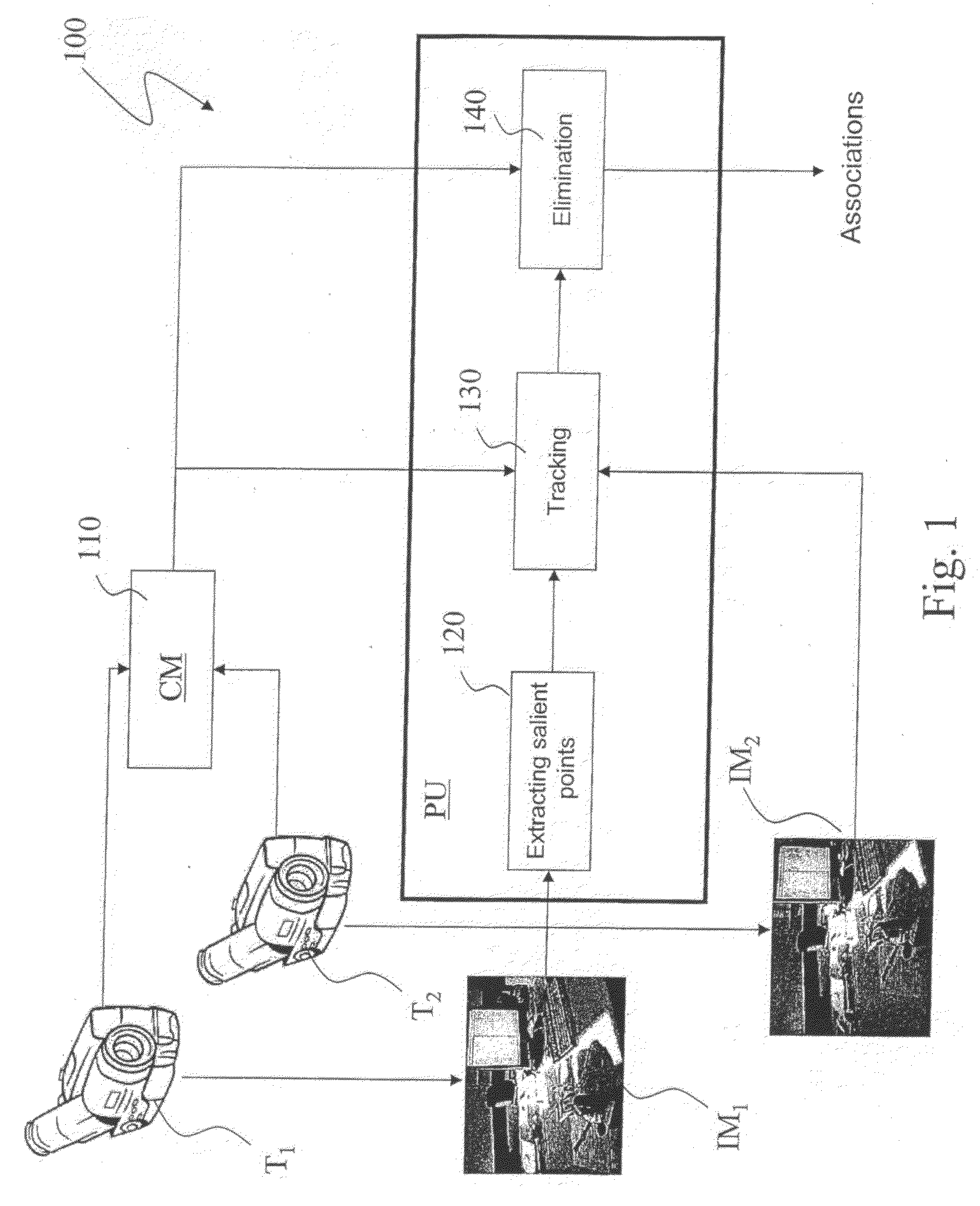

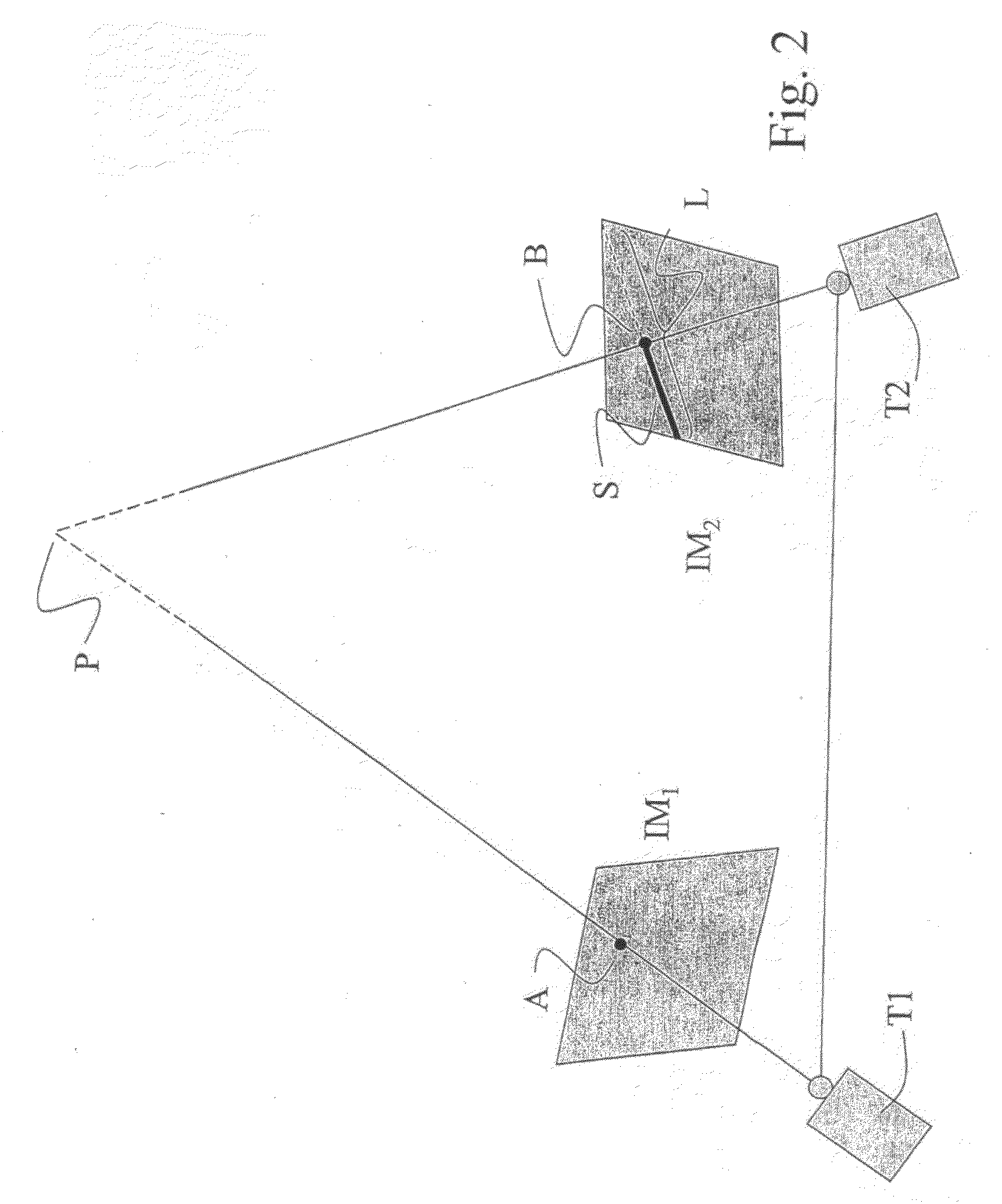

Method for Determining Scattered Disparity Fields in Stereo Vision

Active | US20090207235A1 | Quality improvement; Compensating for such error; Image enhancement; Image analysis; Computer science; Visual perception

In a stereo vision system including two cameras shooting the same scene, a method is performed for determining scattered disparity fields when the epipolar geometry is known. The method includes the steps of: capturing, through the two cameras, first and second images of the scene from two different positions; selecting at least one pixel in the first image, the pixel being associated with a point of the scene, where the second image contains a point also associated with that scene point; and computing the displacement from the pixel to the point in the second image that minimises a cost function. The cost function includes a term that depends on the difference between the first and second images and a term that depends on the distance of the point in the second image from an epipolar straight line. A subsequent check determines whether the point belongs to an allowability area around a subset of the epipolar straight line, in which the presence of the point is allowed, in order to take into account errors or uncertainties in calibrating the cameras.

Owner:TELECOM ITALIA SPA
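
The cost function described in this abstract can be illustrated with a brute-force sketch on small grayscale images stored as nested lists. Assumptions (not taken from the patent): the image-difference term is a sum of squared differences over a square window of radius `r`, the epipolar term is the point-to-line distance weighted by a hypothetical factor `lam`, and the allowability area is modelled as a band of half-width `band` around the epipolar line. The selected pixel in the first image is assumed to lie far enough from the border for its window to fit.

```python
import math
from typing import List, Optional, Tuple

Image = List[List[float]]  # img[y][x]


def line_distance(x: float, y: float, line: Tuple[float, float, float]) -> float:
    """Distance from (x, y) to the line a*x + b*y + c = 0."""
    a, b, c = line
    return abs(a * x + b * y + c) / math.hypot(a, b)


def window_ssd(img1: Image, img2: Image, p: Tuple[int, int], q: Tuple[int, int], r: int) -> float:
    """Sum of squared differences between (2r+1)x(2r+1) windows centred on p and q."""
    (x1, y1), (x2, y2) = p, q
    total = 0.0
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            diff = img1[y1 + dy][x1 + dx] - img2[y2 + dy][x2 + dx]
            total += diff * diff
    return total


def match_pixel(img1: Image, img2: Image, p: Tuple[int, int], epiline: Tuple[float, float, float],
                search: int = 4, r: int = 1, lam: float = 1.0,
                band: float = 2.0) -> Optional[Tuple[int, int]]:
    """Displacement (dx, dy) minimising SSD + lam * epipolar distance; None if outside the band."""
    x0, y0 = p
    h, w = len(img2), len(img2[0])
    best = None  # (cost, displacement, distance to the epipolar line)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            qx, qy = x0 + dx, y0 + dy
            if not (r <= qx < w - r and r <= qy < h - r):
                continue  # the window would fall outside the second image
            dist = line_distance(qx, qy, epiline)
            cost = window_ssd(img1, img2, p, (qx, qy), r) + lam * dist
            if best is None or cost < best[0]:
                best = (cost, (dx, dy), dist)
    if best is None or best[2] > band:
        return None  # the best match lies outside the allowability area
    return best[1]


img1 = [[0.0] * 12 for _ in range(12)]
img2 = [[0.0] * 12 for _ in range(12)]
img1[5][5] = 10.0
img2[5][8] = 10.0  # the same feature, shifted 3 px to the right
print(match_pixel(img1, img2, (5, 5), (0.0, 1.0, -5.0)))  # epipolar line y = 5
```

Keeping the epipolar distance as a soft penalty, rather than searching only along the line, lets a slightly miscalibrated match still be found; the band check then rejects matches that stray too far.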

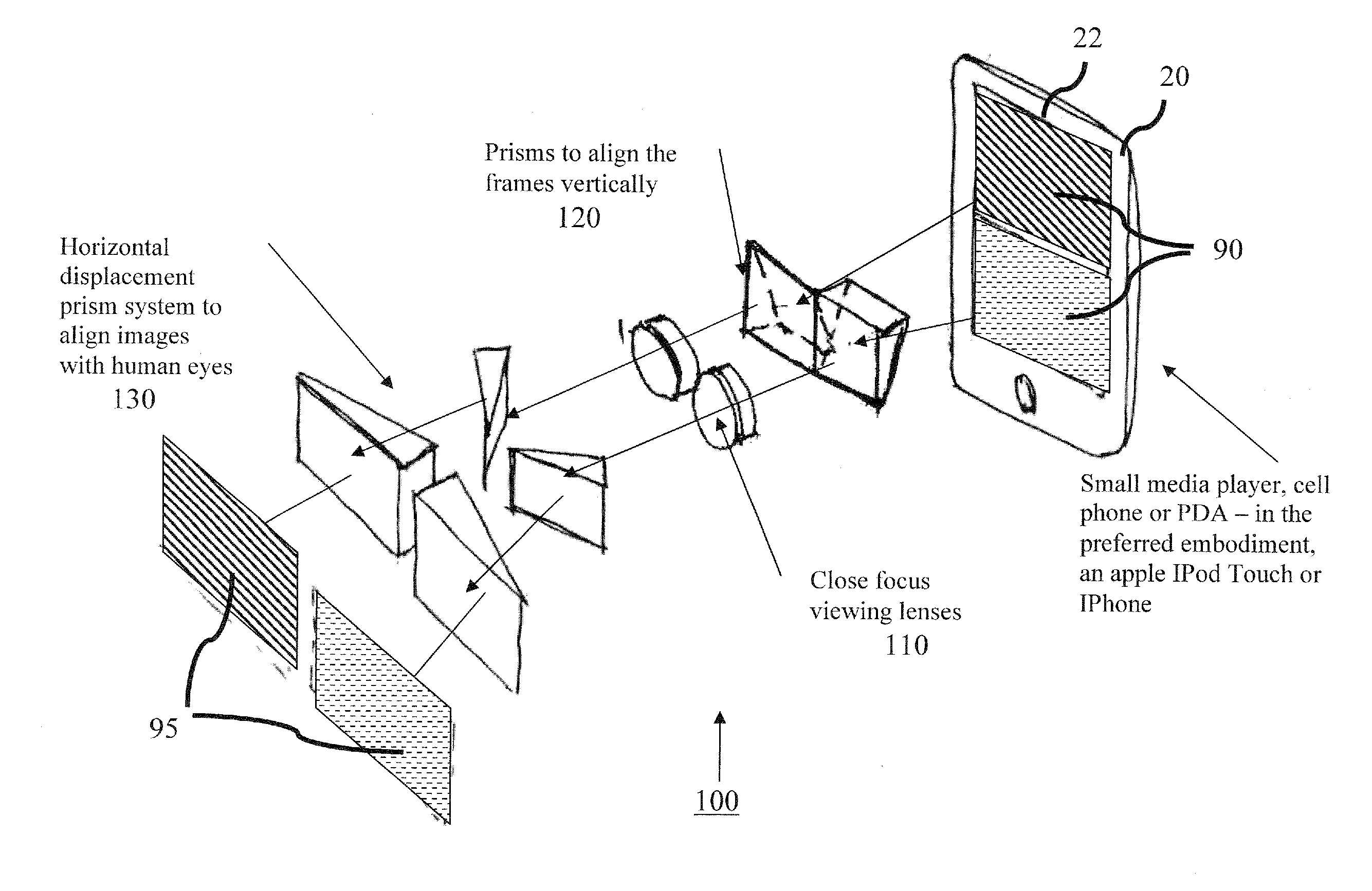

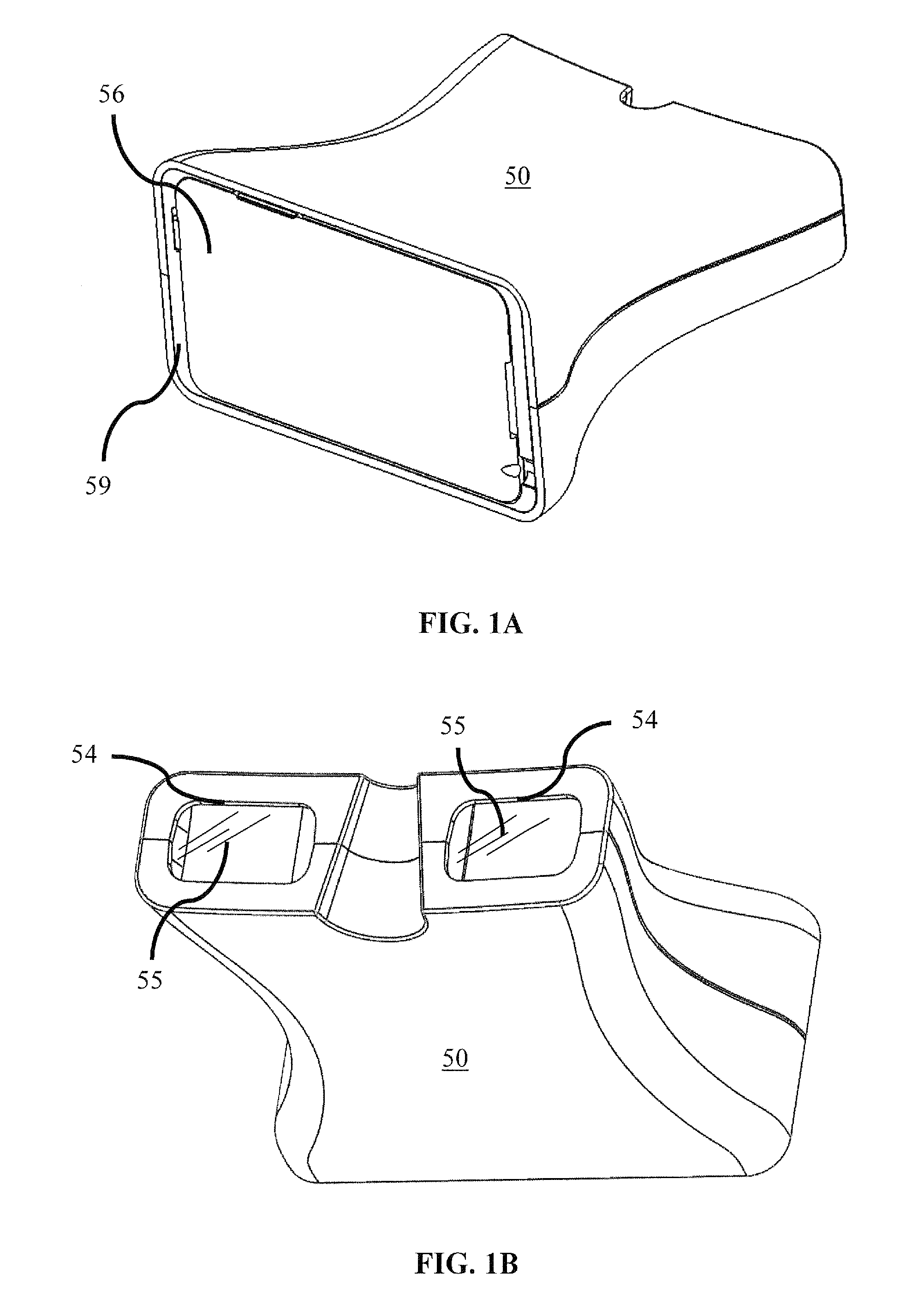

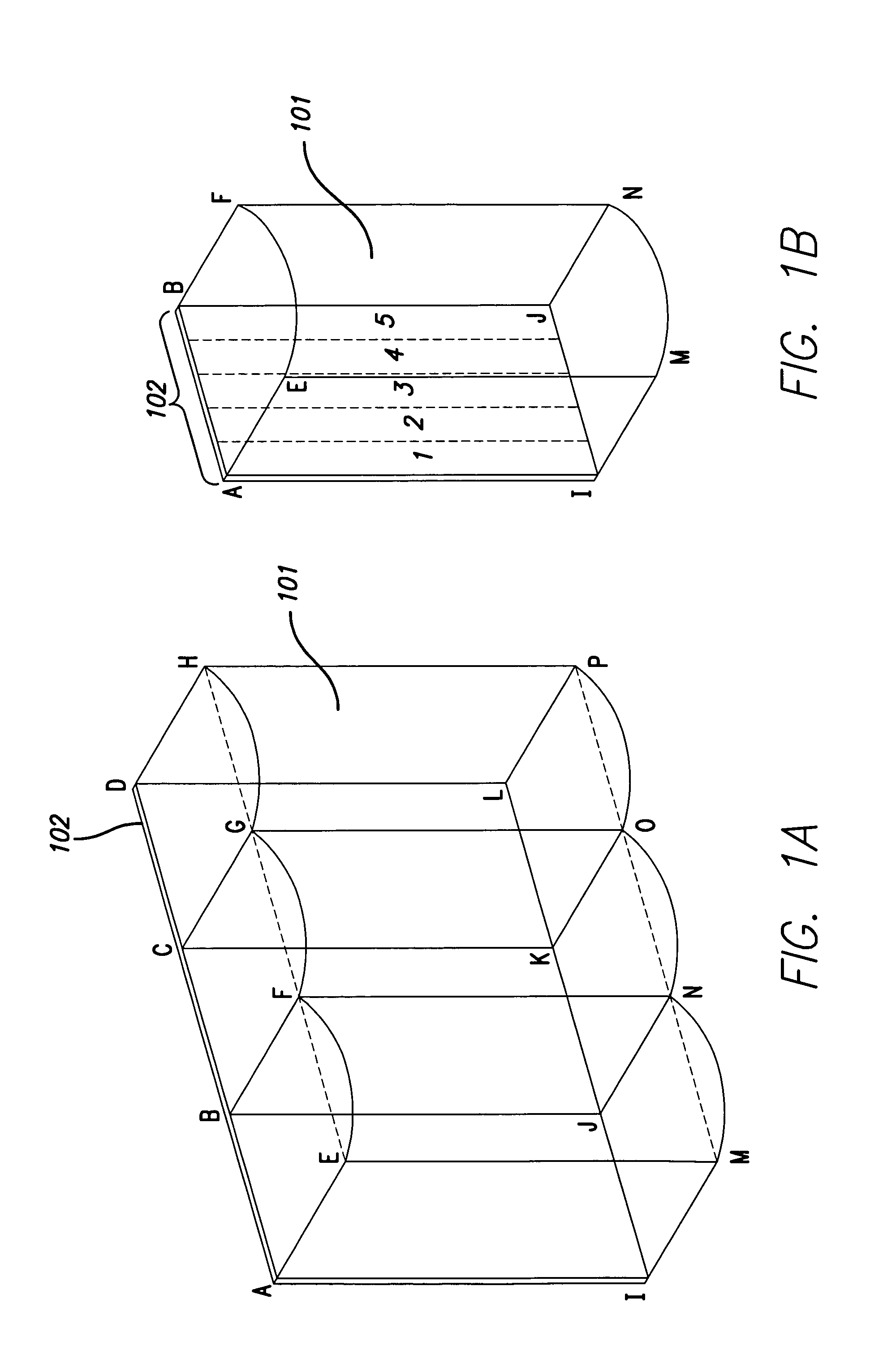

Method and apparatus for providing a 3D image via a media device

Inactive | US20100277575A1 | Maximize number of pixels; Difficult to eliminate; Color television details; Stereoscopic systems; Parallax; Computer graphics (images)

Embodiments of the subject invention relate to a method and apparatus for providing a 3D image and/or 3D video. Specific embodiments can provide a 3D image and/or 3D video via a handheld media player. Examples of handheld media players via which embodiments can provide a 3D image and/or 3D video include, but are not limited to, an iPod®, a personal digital assistant (PDA), a cell phone, or an iPhone®. In accordance with specific embodiments of the invention, the problem of viewing three-dimensional (3D) images on personal or handheld media devices is solved by the use of a compatible 3D viewer. Embodiments of the viewer can provide a 3D experience to a user from images shown on the small screen of a personal or handheld media device. Specific embodiments described herein provide a 3D viewer that can present two stereoscopic images (i.e., two-dimensional (2D) images having horizontal disparity) to a viewer's eyes, such that each eye sees only one of the two stereoscopic images. Through stereopsis, the brain combines the images into a single 3D image, giving the sensation, or perception, of depth and/or distance.

Owner:TETRACAM

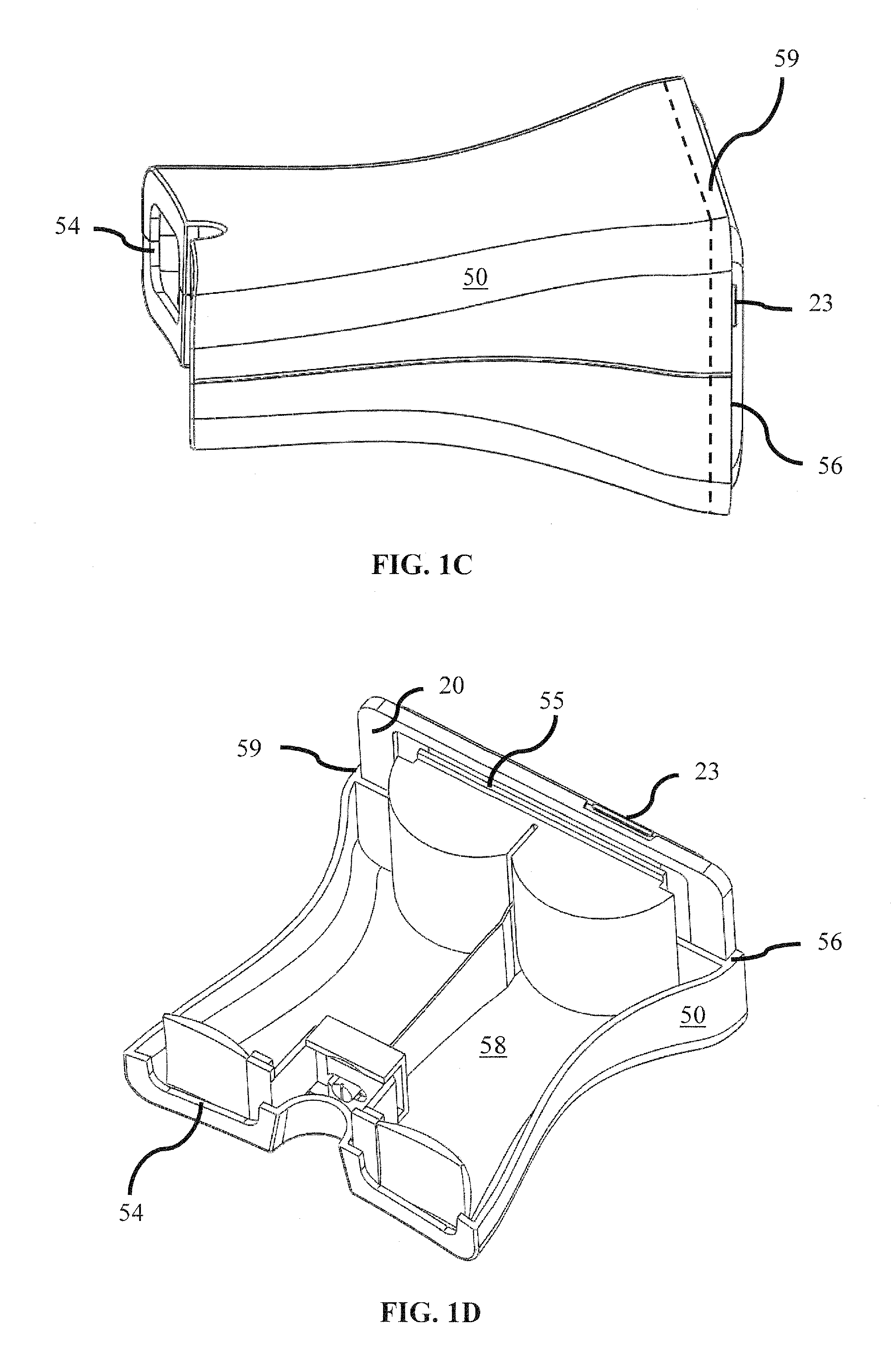

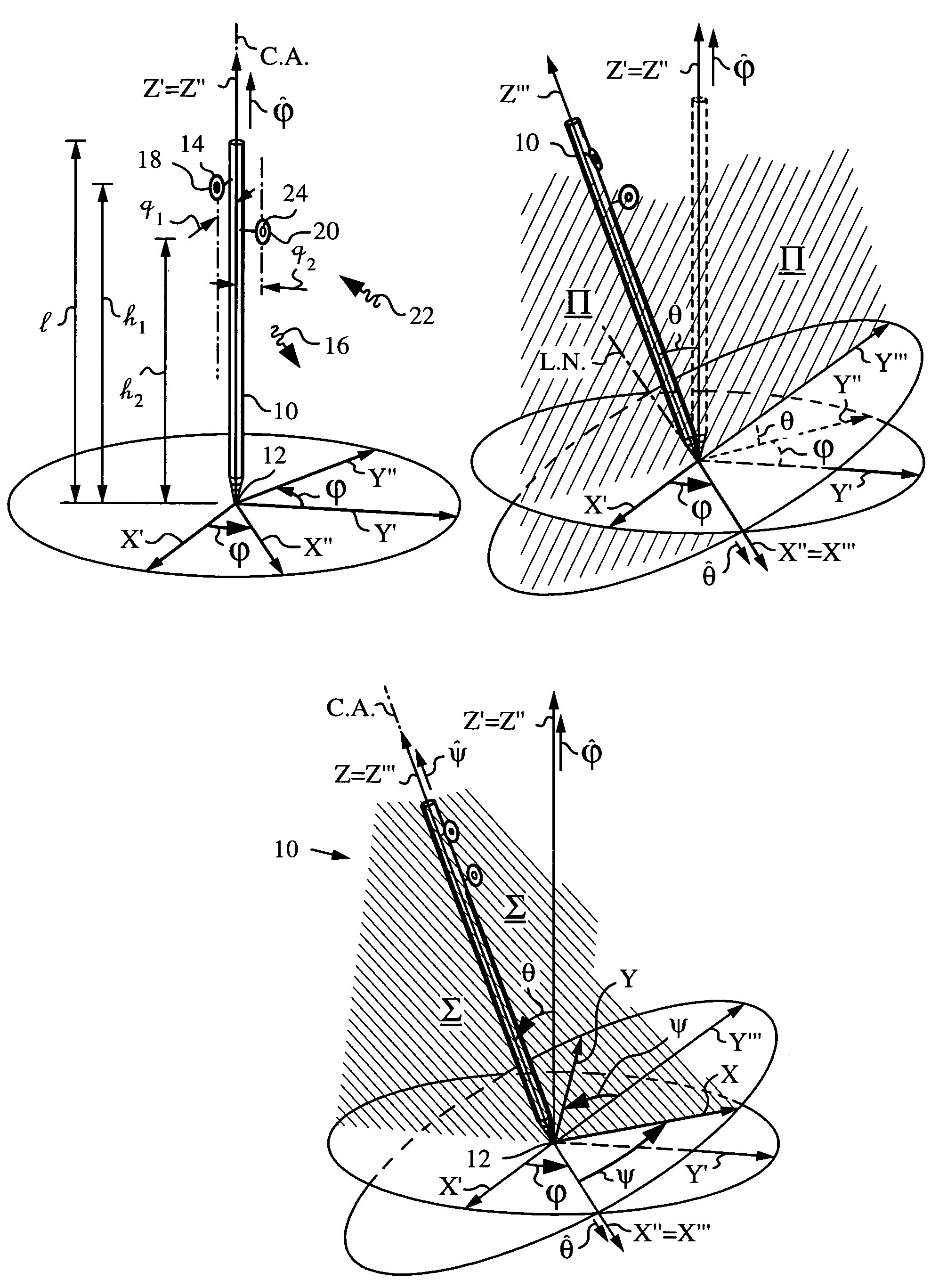

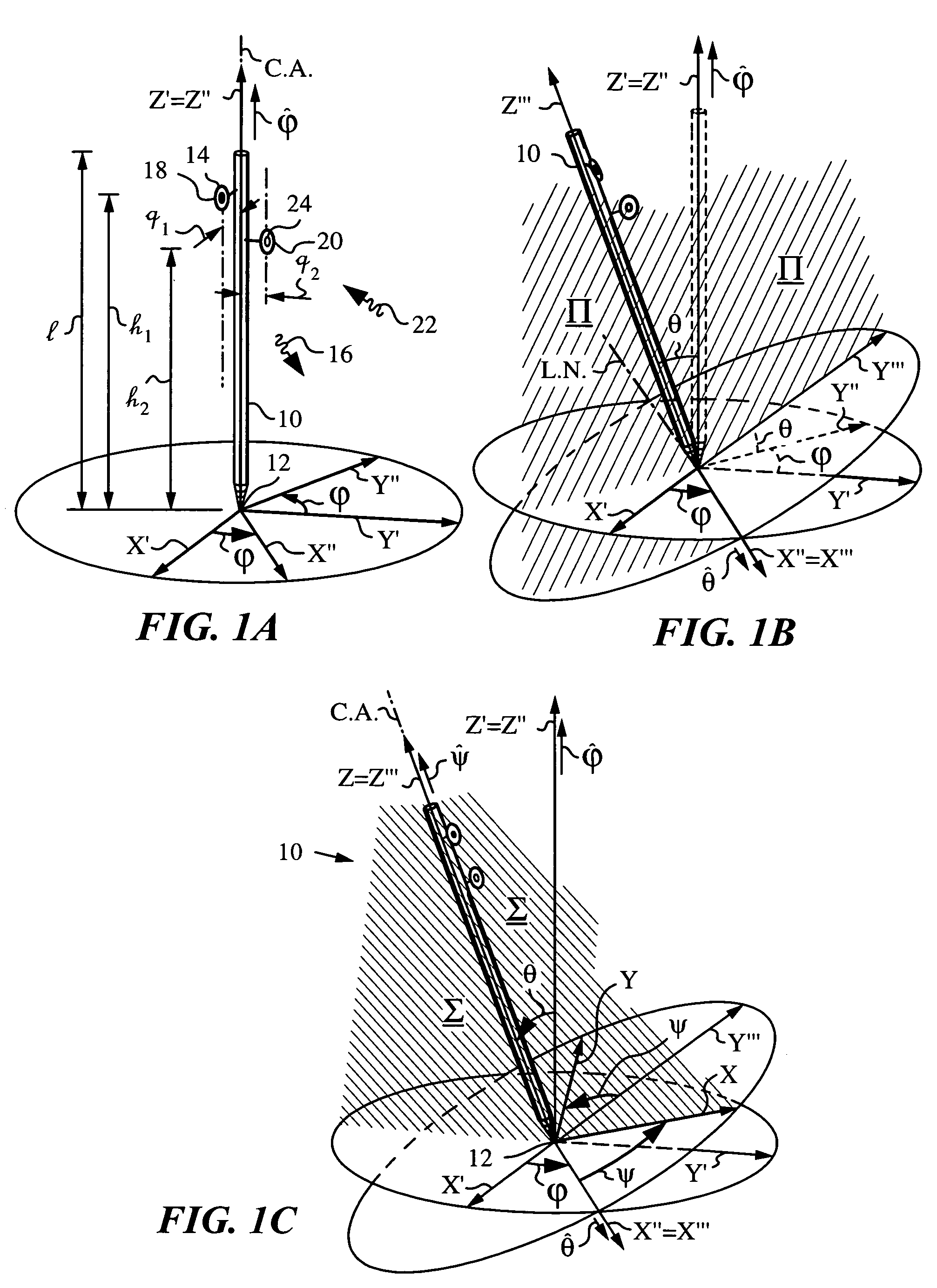

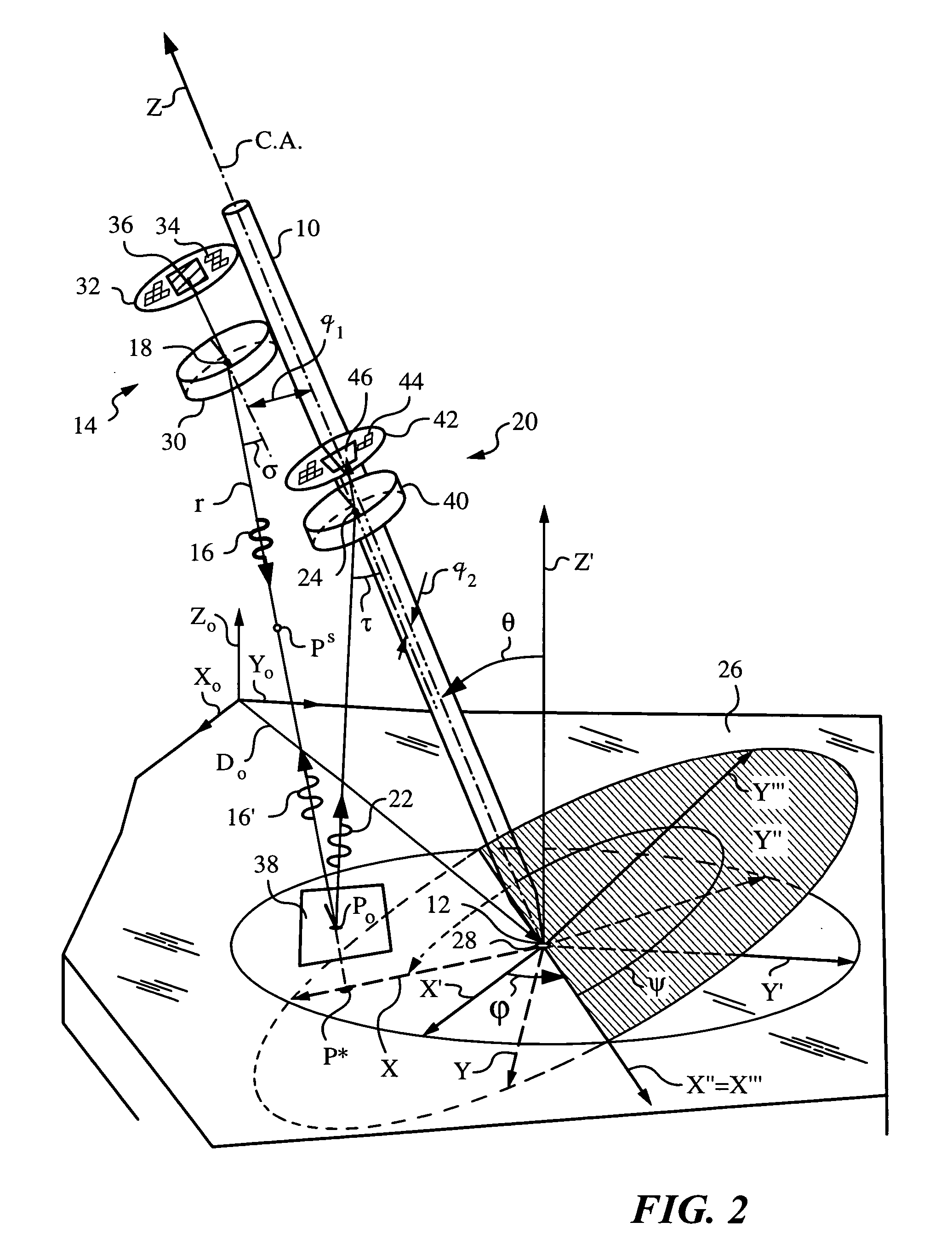

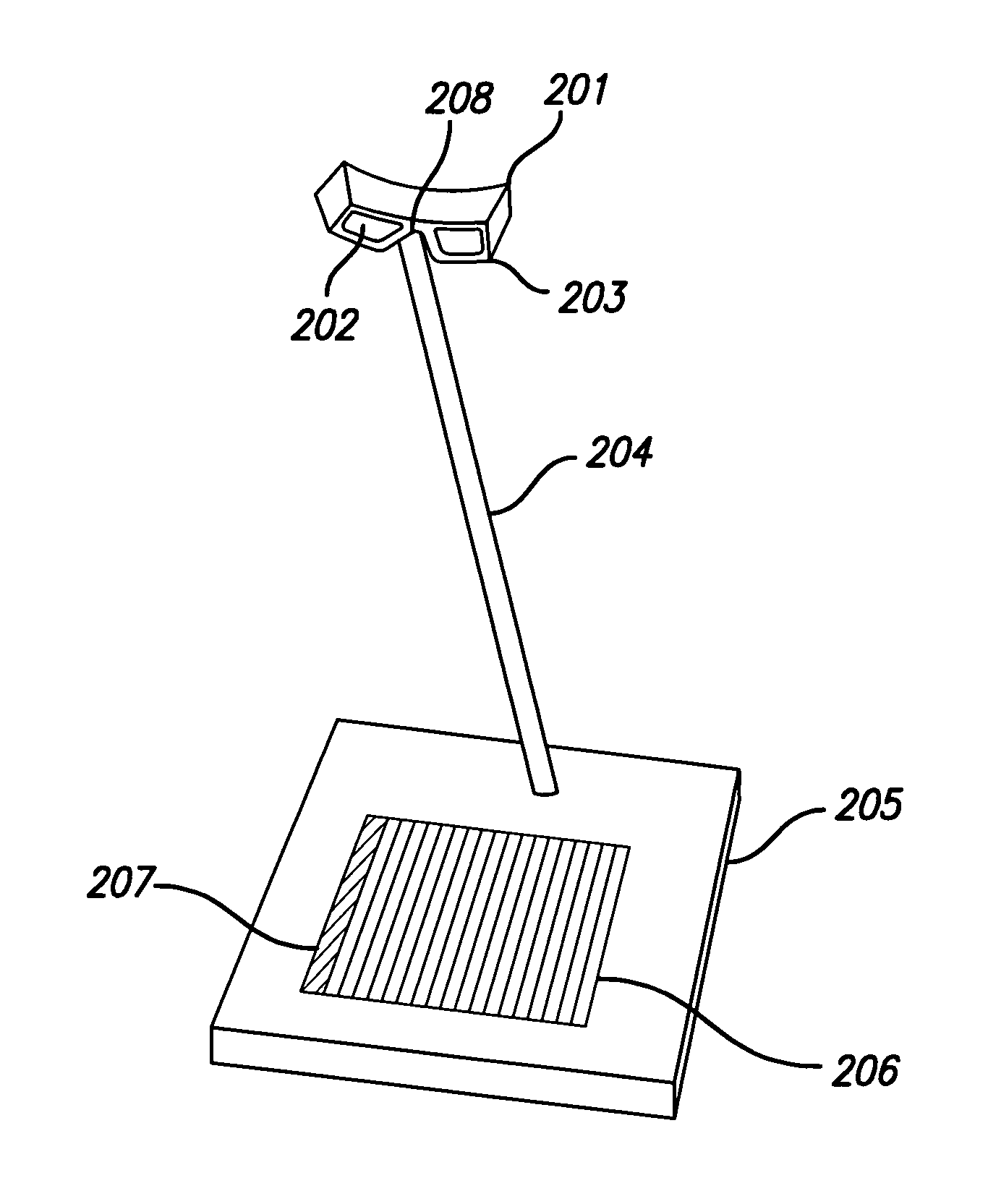

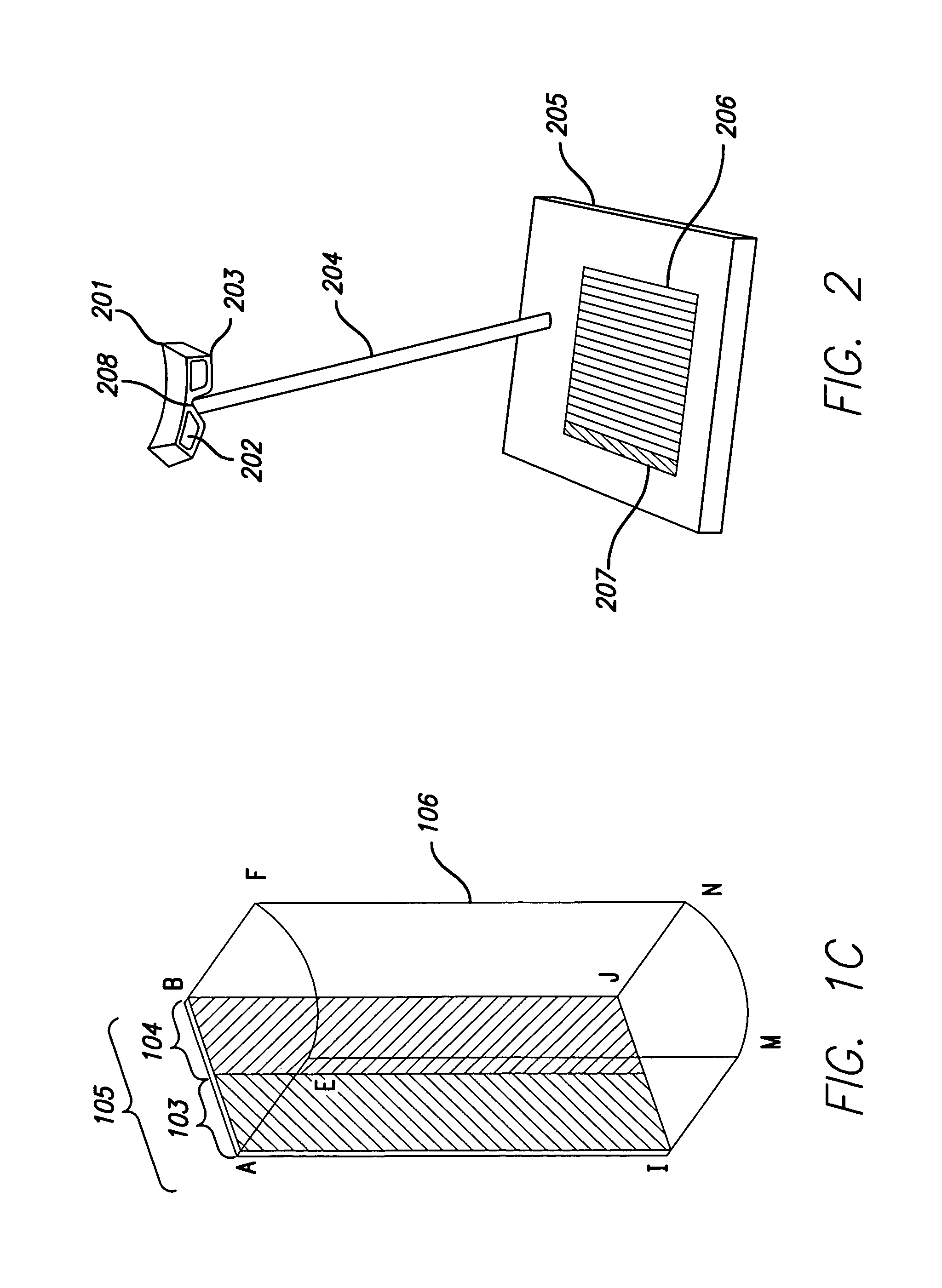

Apparatus and method for determining orientation parameters of an elongate object

An apparatus and method employing principles of stereo vision for determining one or more orientation parameters and especially the second and third Euler angles θ, ψ of an elongate object whose tip is contacting a surface at a contact point. The apparatus has a projector mounted on the elongate object for illuminating the surface with a probe radiation in a known pattern from a first point of view and a detector mounted on the elongate object for detecting a scattered portion of the probe radiation returning from the surface to the elongate object from a second point of view. The orientation parameters are determined from a difference between the projected and detected probe radiation such as the difference between the shape of the feature produced by the projected probe radiation and the shape of the feature detected by the detector. The pattern of probe radiation is chosen to provide information for determination of the one or more orientation parameters and can include asymmetric patterns such as lines, ellipses, rectangles, polygons or the symmetric cases including circles, squares and regular polygons. To produce the patterns the projector can use a scanning arrangement or a structured light optic such as a holographic, diffractive, refractive or reflective element and any combinations thereof. The apparatus is suitable for determining the orientation of a jotting implement such as a pen, pencil or stylus.

Owner:ELECTRONICS SCRIPTING PRODS

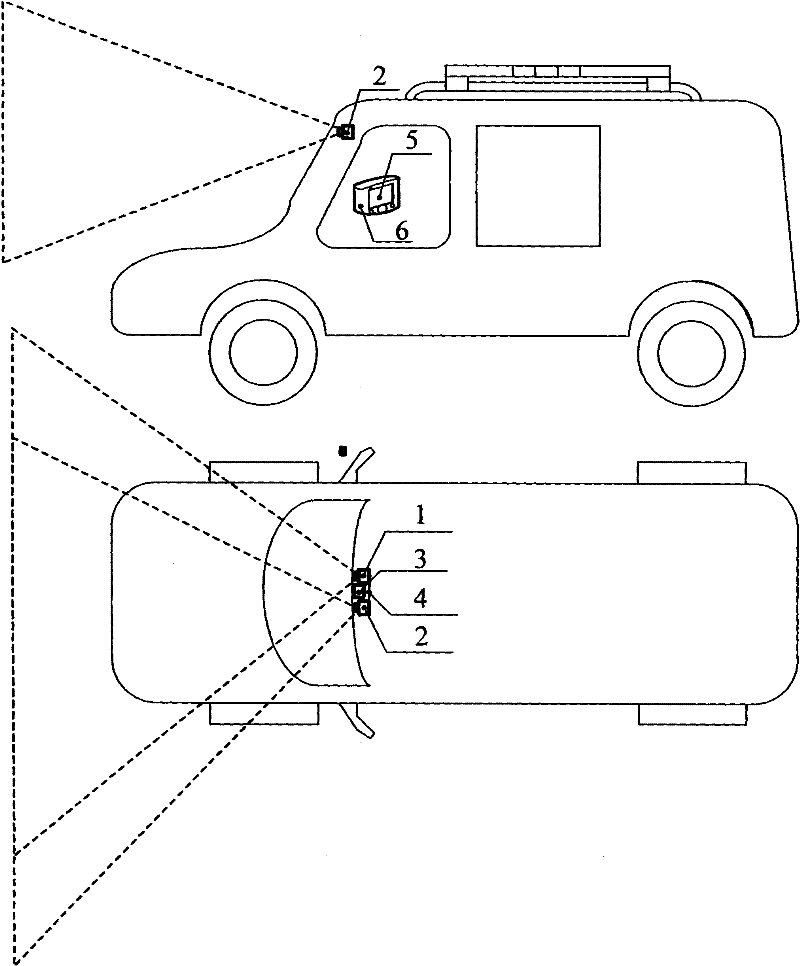

Active safety type assistant driving method based on stereoscopic vision

Inactive | CN102685516A | Avoid tailgating; Preventing accidents such as frontal collisions; Image enhancement; Image analysis; Active safety; Driver/operator

The invention discloses an active safety type assistant driving method based on stereoscopic vision. The active safety type assistant driving system comprehensively utilizes OME information technology and consists of a stereoscopic vision subsystem, a real-time image processing subsystem and a safety assistant driving subsystem. It comprises two sets of high-resolution CCD (charge-coupled device) cameras, an ambient light sensor, a two-channel video capture card, a synchronous controller, a data transmission circuit, a power supply circuit, a real-time image processing algorithm library, a voice reminder module, a screen display module and an active safety driving control module. With this method, lane separation lines and parameters such as the relative distance, relative speed and relative acceleration of dangerous objects such as vehicles, bicycles and pedestrians ahead can be accurately identified in real time on sunny and cloudy days, at night, and under severe weather conditions such as sleet and dense fog. The system can then prompt the driver by voice to take countermeasures and can perform automatic deceleration and emergency braking in emergencies, ensuring safe travel around the clock.

Owner:李慧盈 +2

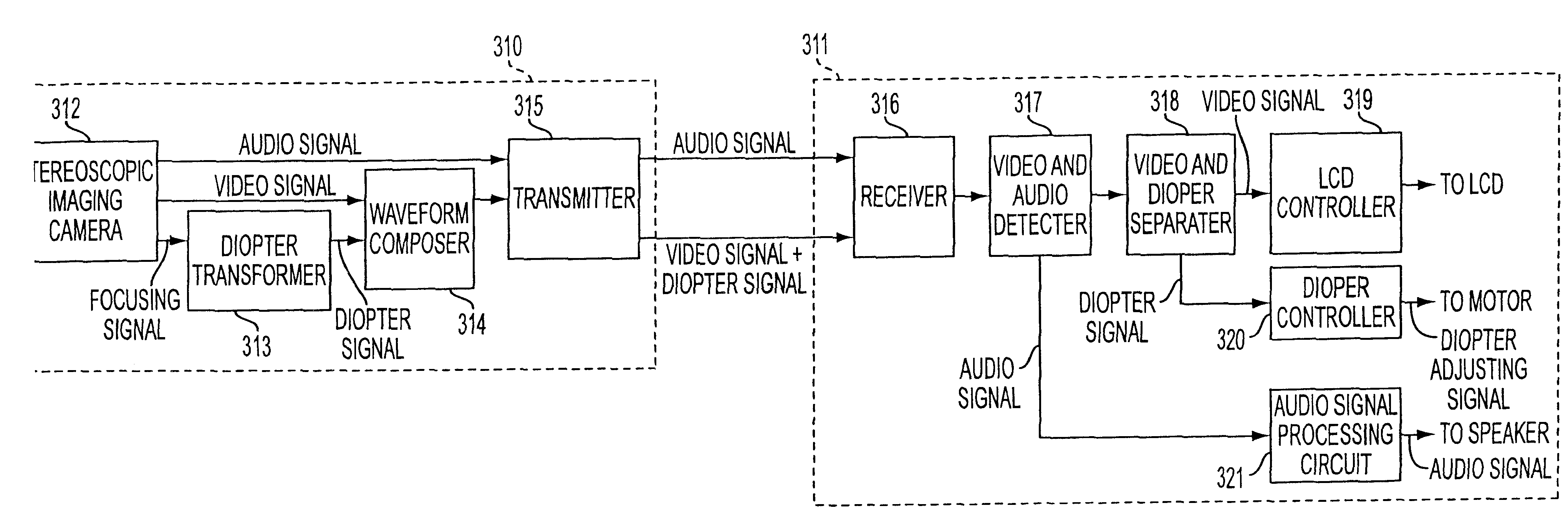

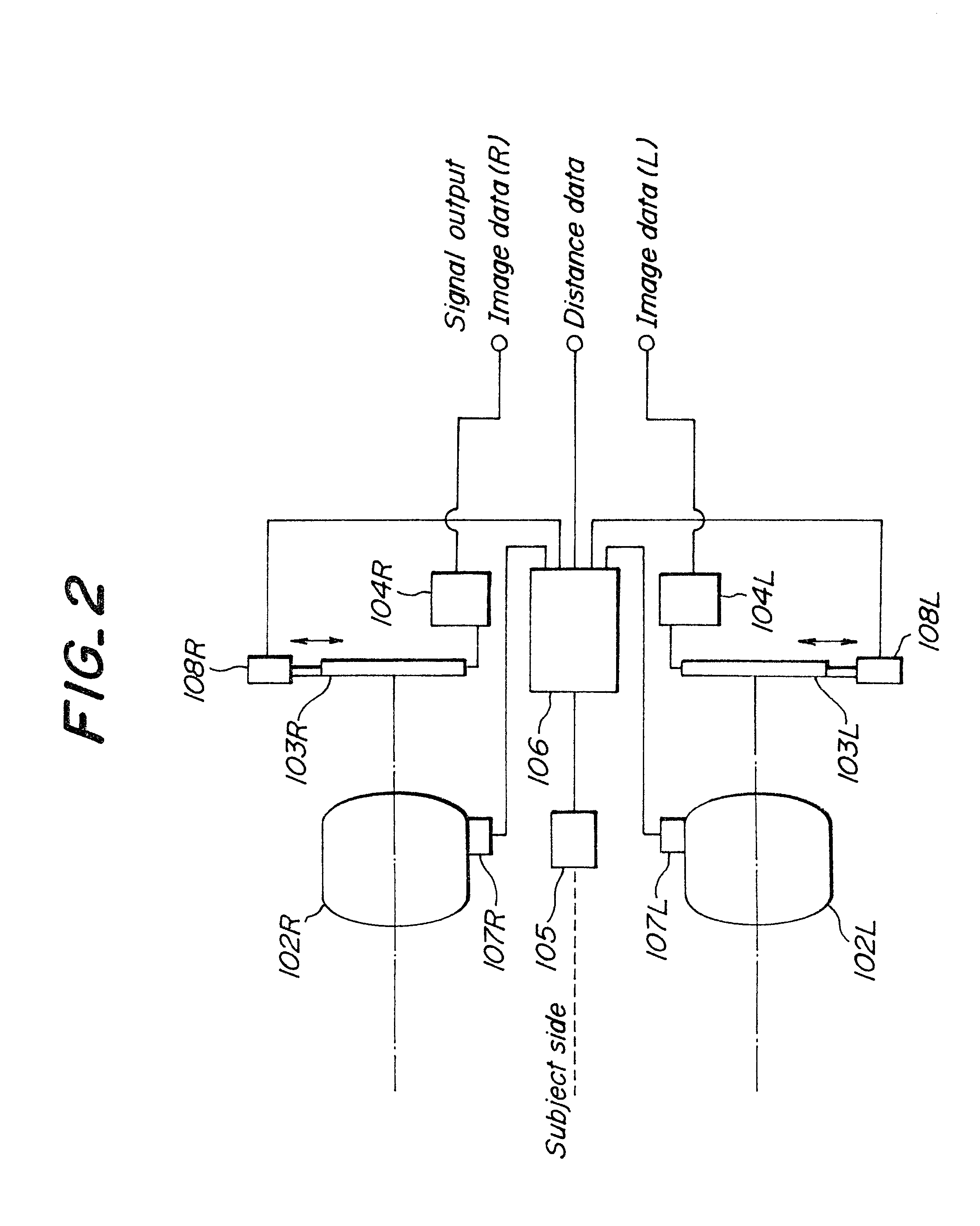

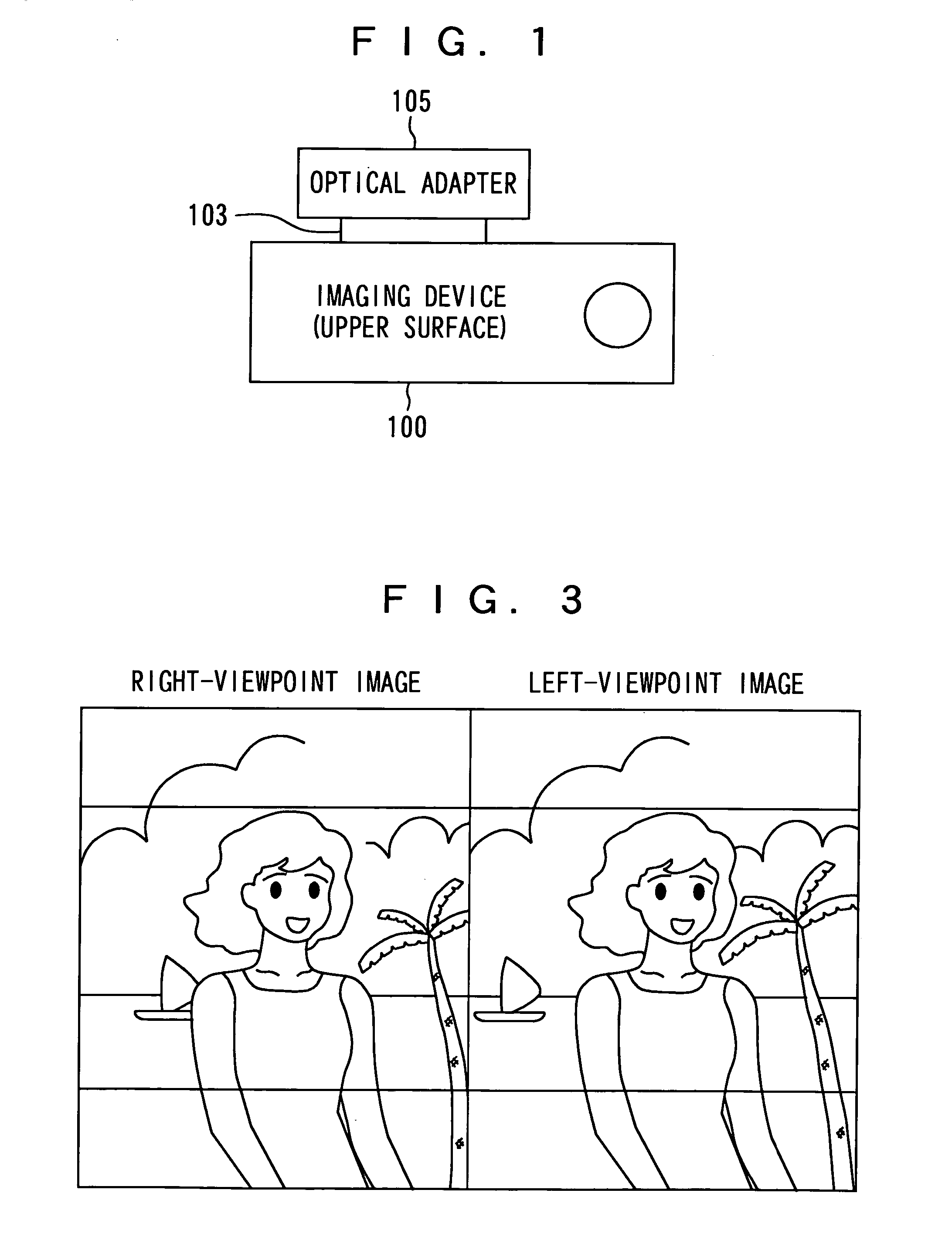

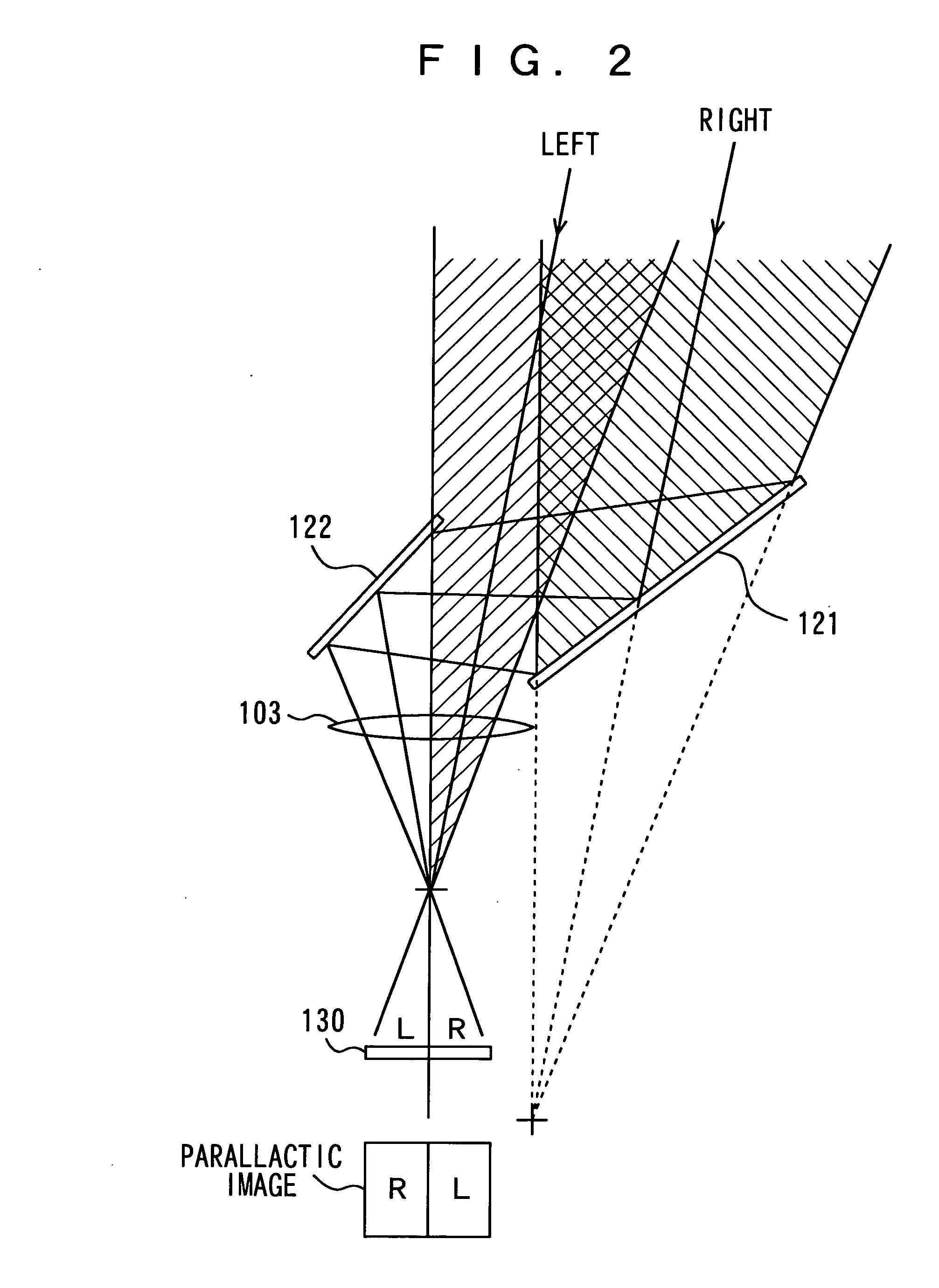

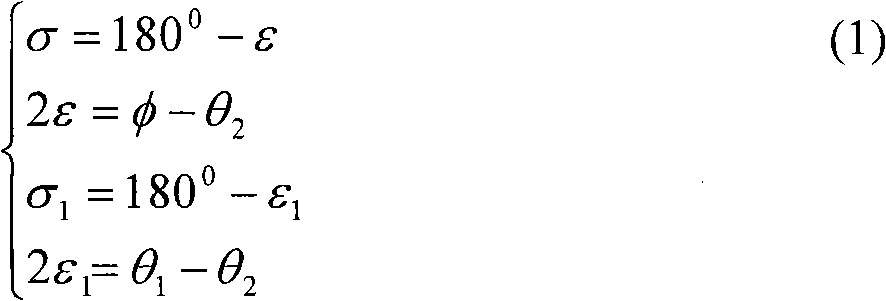

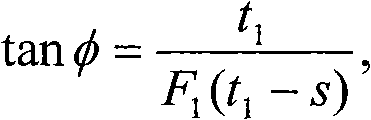

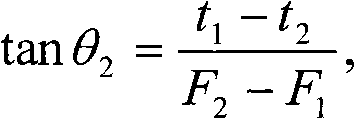

Imaging apparatus, image display apparatus and image recording and/or reproducing apparatus

A stereoscopic image display apparatus has right and left display elements for displaying input stereoscopic image signals; right and left display optical systems for guiding the images displayed on the right and left display elements; a setting information element that outputs setting information signals for stereoscopic image display, based on stereoscopic vision information signals about the input imaging distance, in synchronization with the stereoscopic image signals; and a control element that controls the position of the images displayed on the right and left display elements relative to at least the optical axes of the right and left display optical systems, based on the setting information signals.

Owner:OLYMPUS OPTICAL CO LTD

Method and apparatus for optimizing the viewing distance of a lenticular stereogram

Inactive | US7375886B2 | Easy to view; Color television details; Stereoscopic systems; Computer graphics (images); Data memory

A method and apparatus for optimizing viewing distance for a stereogram system. In a stereogram, an image is held in close juxtaposition with a lenticular screen. In the invention, a data store is used to store optimum pitch values for specified viewing distances. An interdigitation program then acts on the table values and creates a mapping of interdigitated views for each viewing distance. The user can then select or specify a desired viewing distance, and the optimum mapping of views is automatically chosen for display.

Owner:LEDOR LLC +1
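
The table-driven workflow in this abstract (store optimum pitch per viewing distance, build an interdigitation mapping for each, then pick one when the user chooses a distance) can be sketched as follows. The pitch values, the simple "phase under the lenticule" view assignment and the function names are hypothetical; real interdigitation also accounts for slanted lenticules and sub-pixel layout.

```python
from typing import Dict, List

# Hypothetical data store: viewing distance (mm) -> optimum lenticular pitch (screen pixels).
PITCH_TABLE: Dict[float, float] = {500.0: 4.02, 1000.0: 4.01, 2000.0: 4.0}


def view_map(columns: int, pitch_px: float, n_views: int) -> List[int]:
    """Index of the source view shown in each output pixel column for a given pitch."""
    mapping = []
    for x in range(columns):
        phase = (x % pitch_px) / pitch_px  # position under the current lenticule, in [0, 1)
        mapping.append(min(int(phase * n_views), n_views - 1))
    return mapping


def build_mappings(table: Dict[float, float], columns: int, n_views: int) -> Dict[float, List[int]]:
    """Precompute one interdigitation mapping per stored viewing distance."""
    return {dist: view_map(columns, pitch, n_views) for dist, pitch in table.items()}


def select_mapping(mappings: Dict[float, List[int]], viewing_distance_mm: float) -> List[int]:
    """Mapping for the stored distance nearest to the user's chosen viewing distance."""
    nearest = min(mappings, key=lambda d: abs(d - viewing_distance_mm))
    return mappings[nearest]


mappings = build_mappings(PITCH_TABLE, columns=8, n_views=4)
print(select_mapping(mappings, 1800.0))
```

Precomputing the mappings means that changing the viewing distance at display time is a lookup rather than a recomputation.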

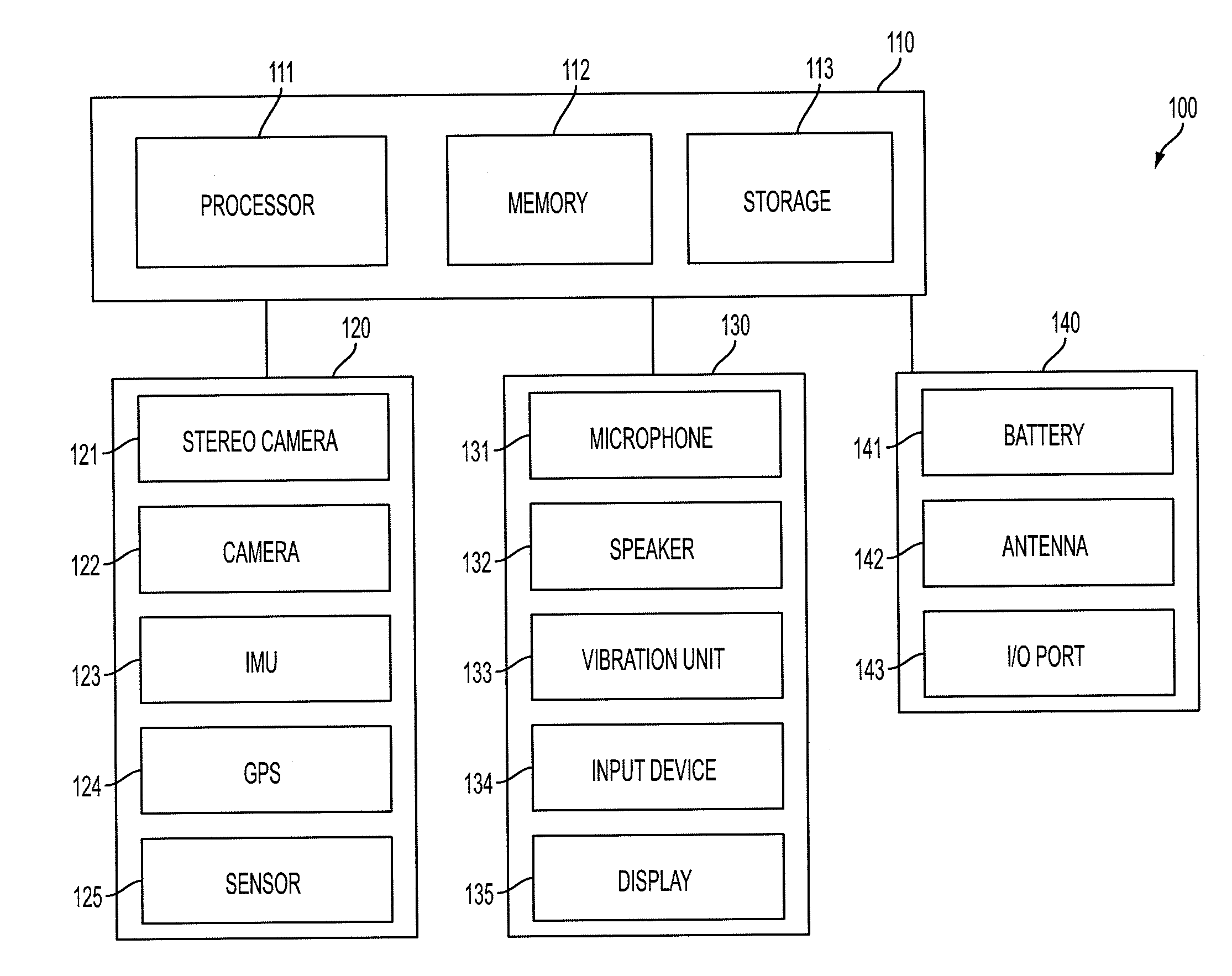

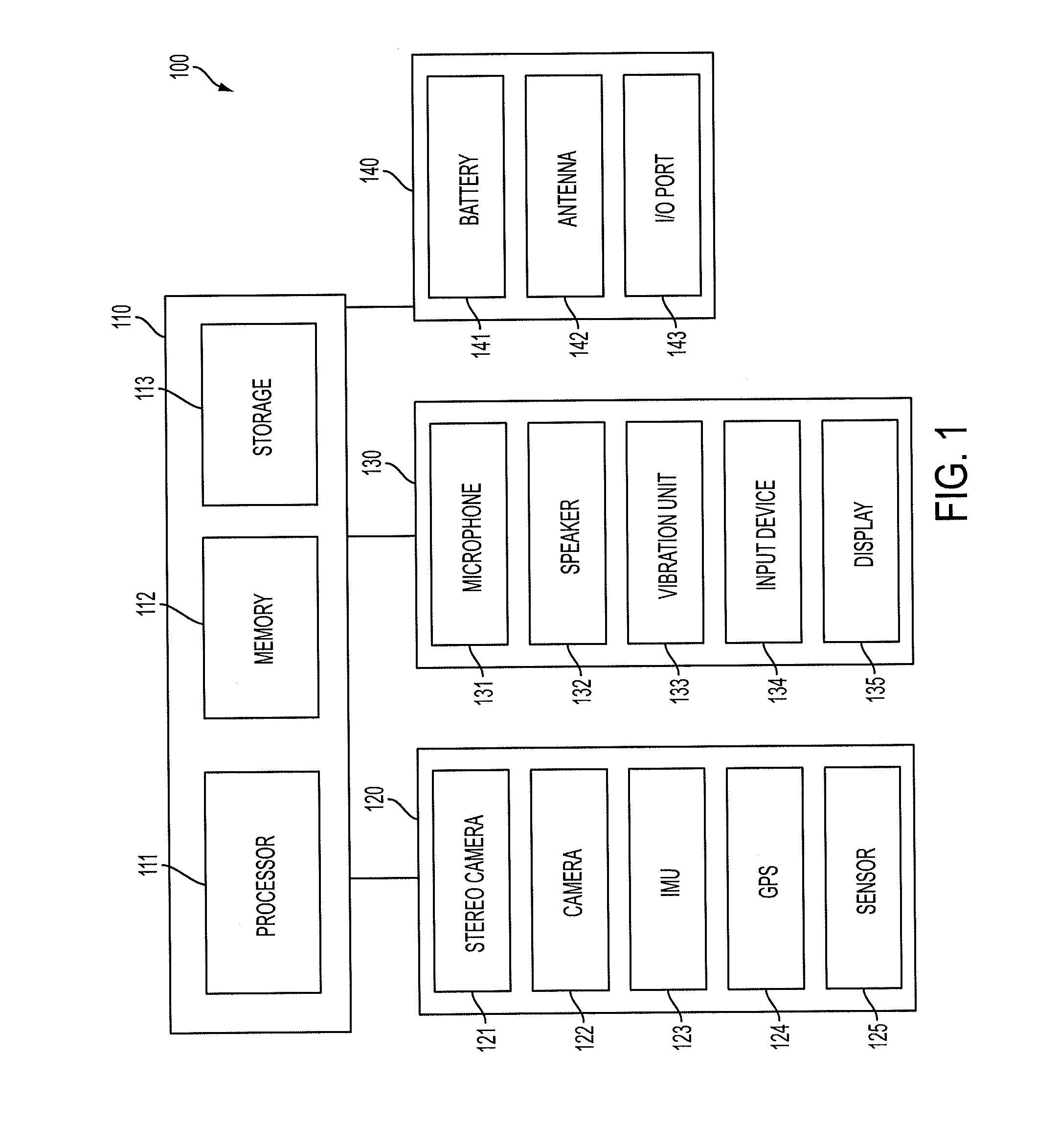

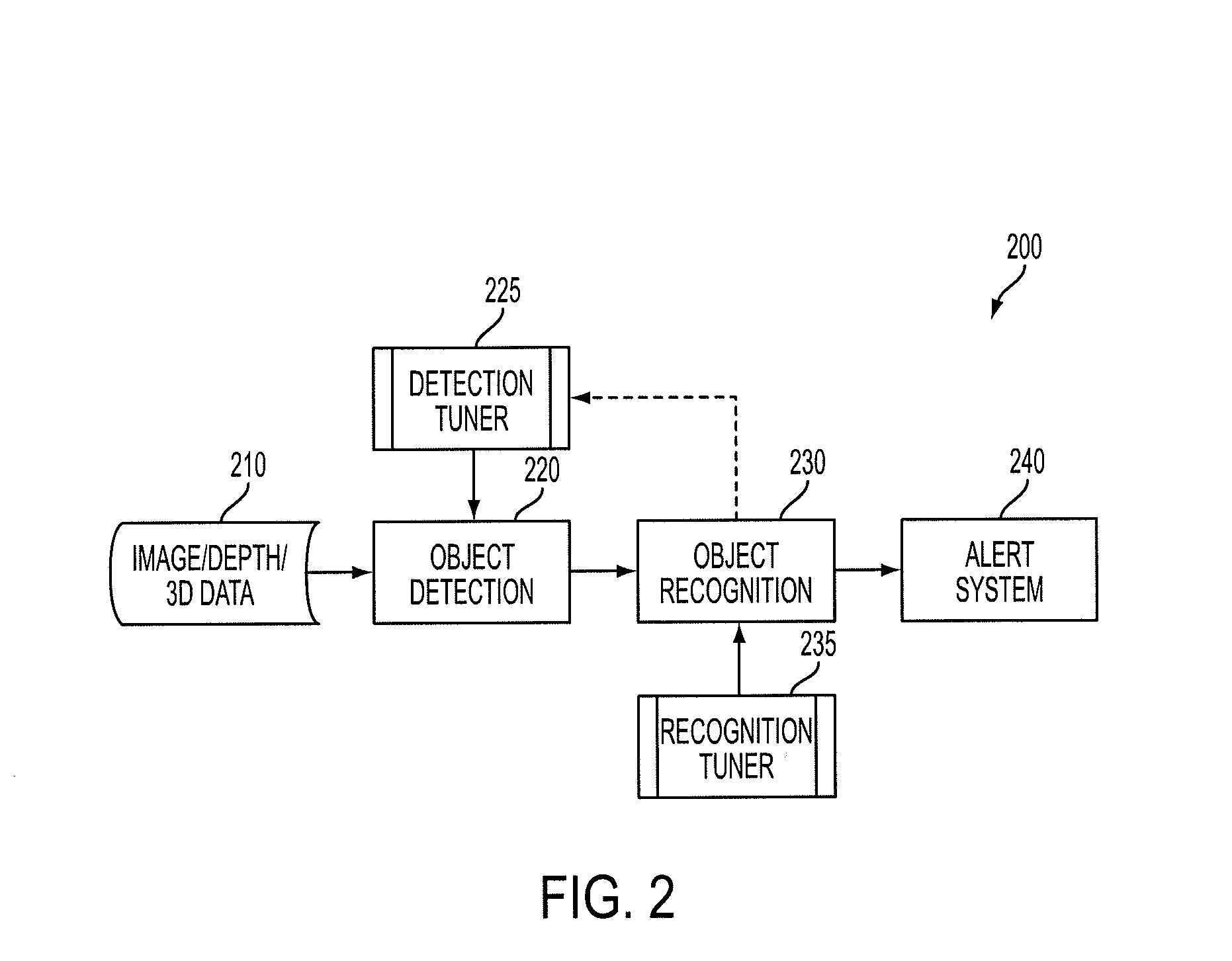

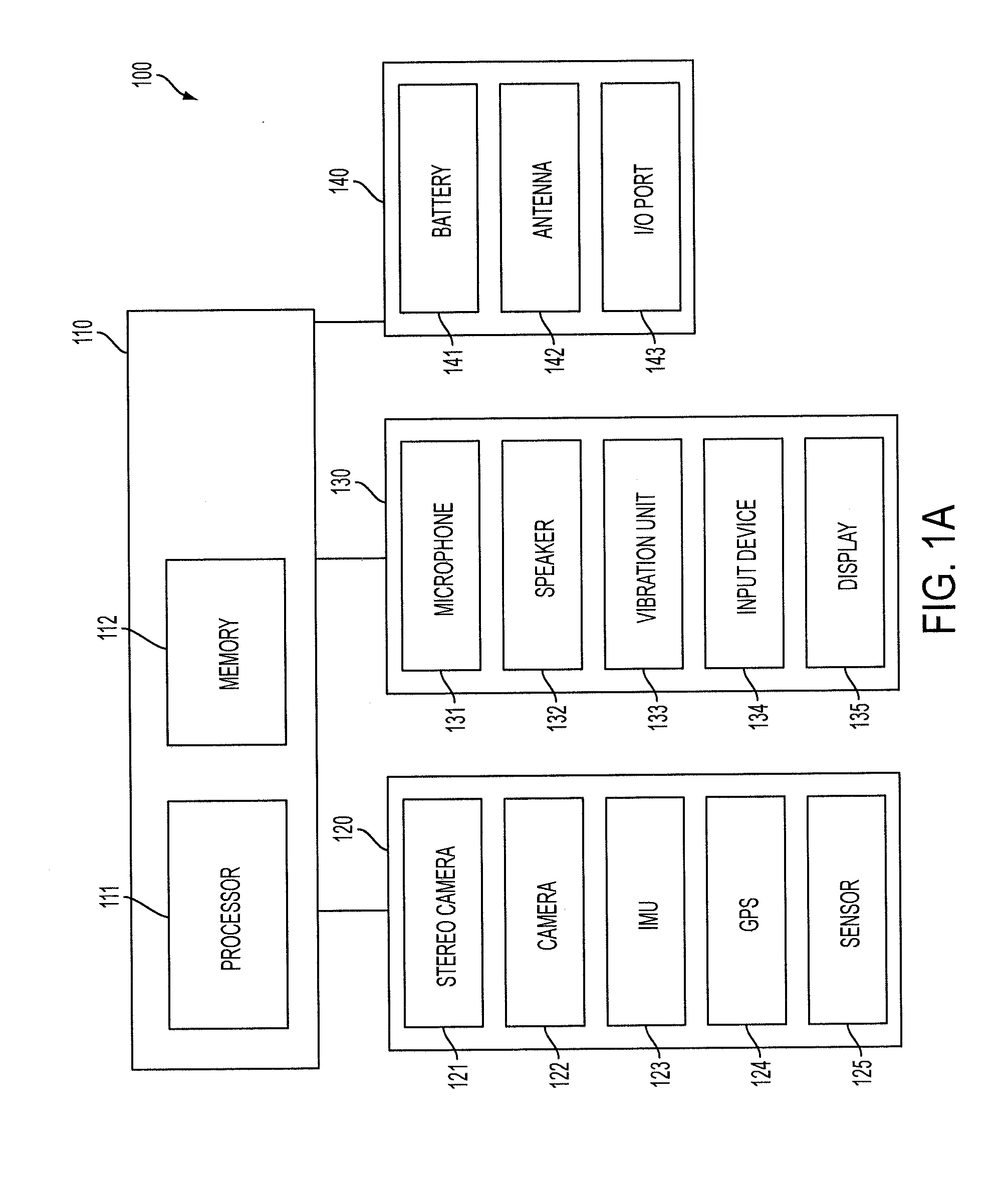

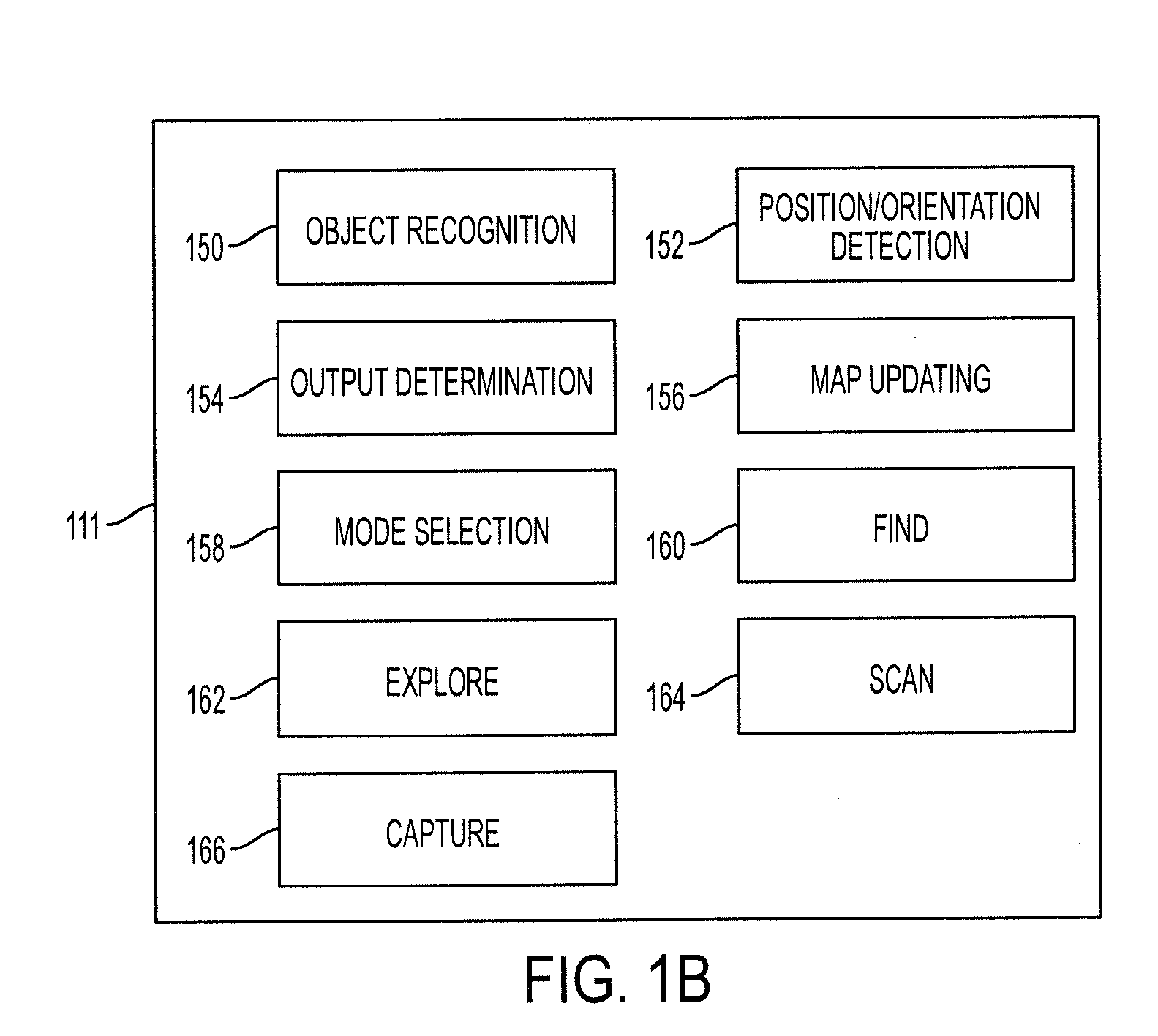

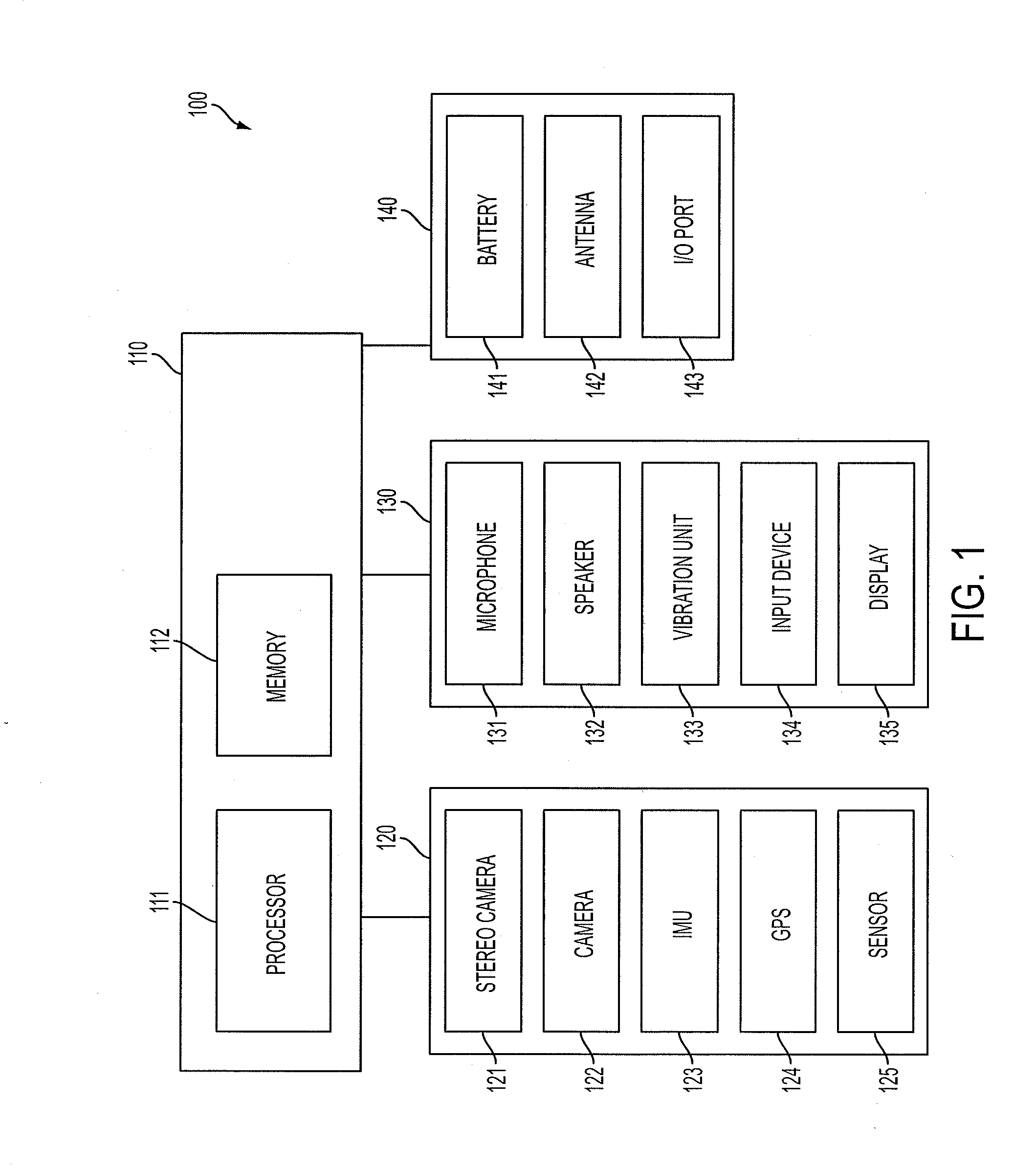

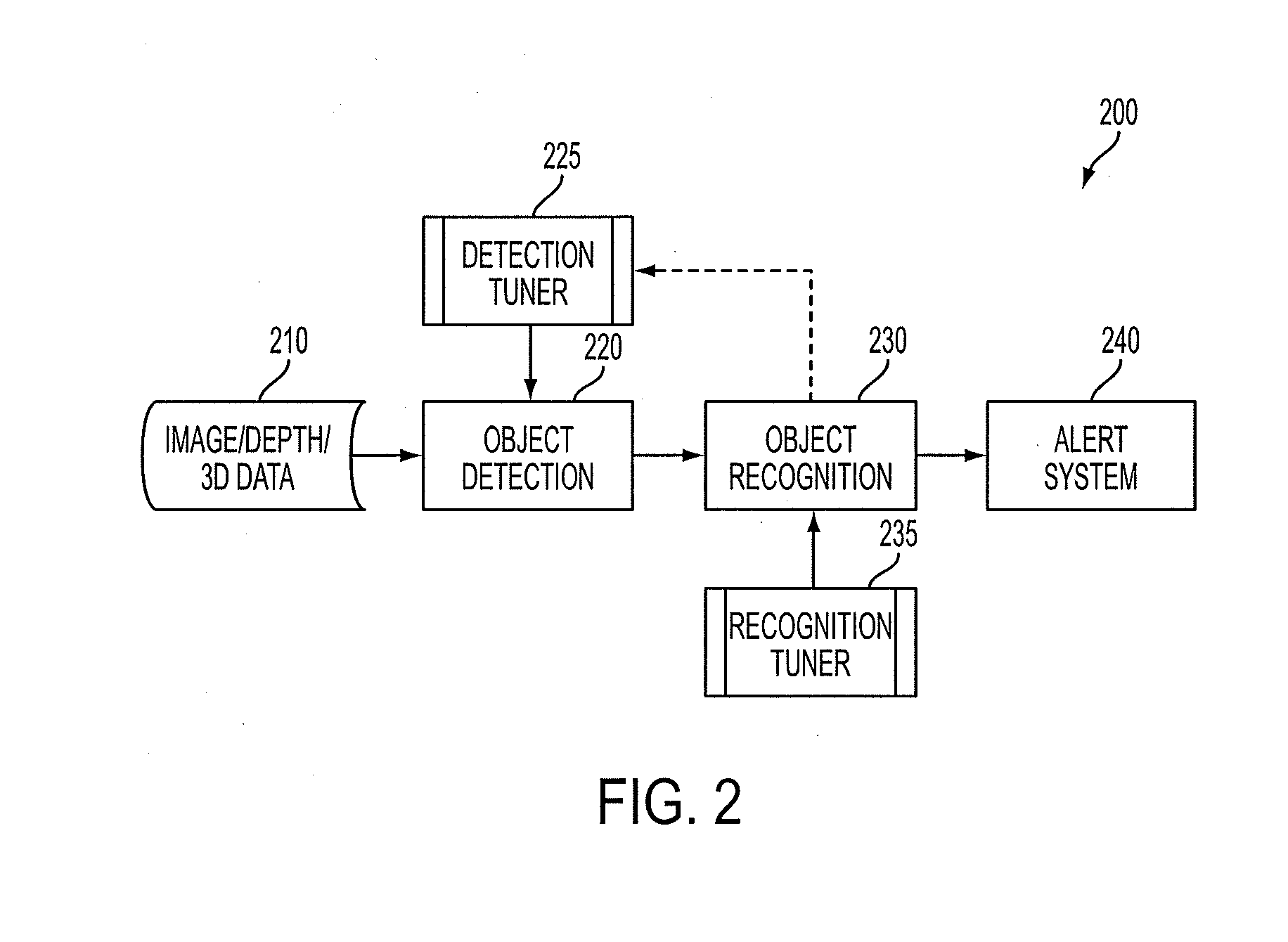

Smart necklace with stereo vision and onboard processing

Active | US20150201181A1 | Enhance environmental awareness; Increased obstacle avoidance; Input/output for user-computer interaction; Image enhancement; Touch Perception; Stereo camera

A wearable neck device for providing environmental awareness to a user, the wearable neck device includes a flexible tube. A first stereo pair of cameras is encased in a left portion of the flexible tube and a second stereo pair of cameras is encased in a right portion of the flexible tube. A vibration motor within the flexible tube provides haptic and audio feedback to the user. A processor in the flexible tube recognizes objects from the first stereo pair of cameras and the second stereo pair of cameras. The vibration motor provides haptic and audio feedback of the items or points of interest to the user.

Owner:TOYOTA JIDOSHA KK

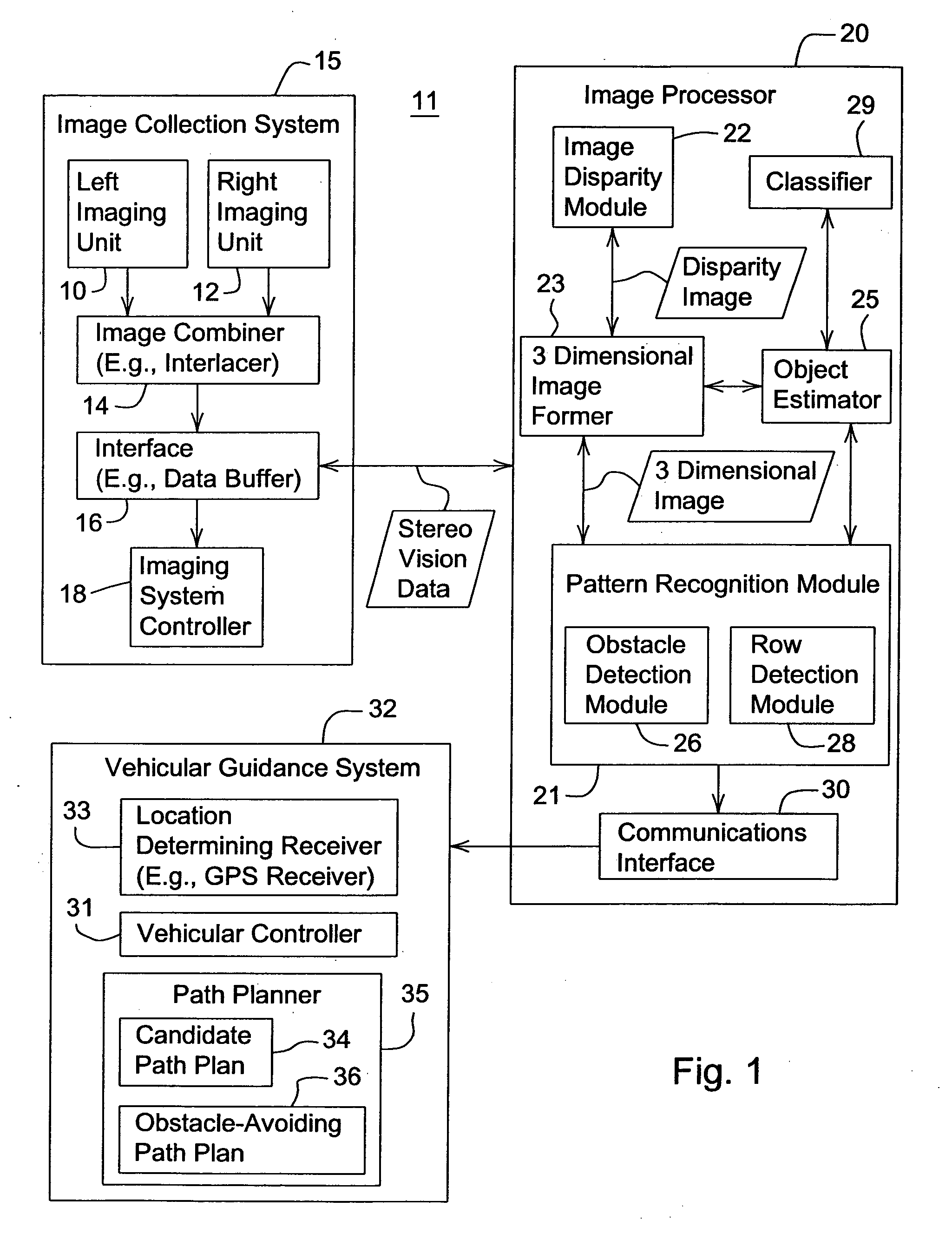

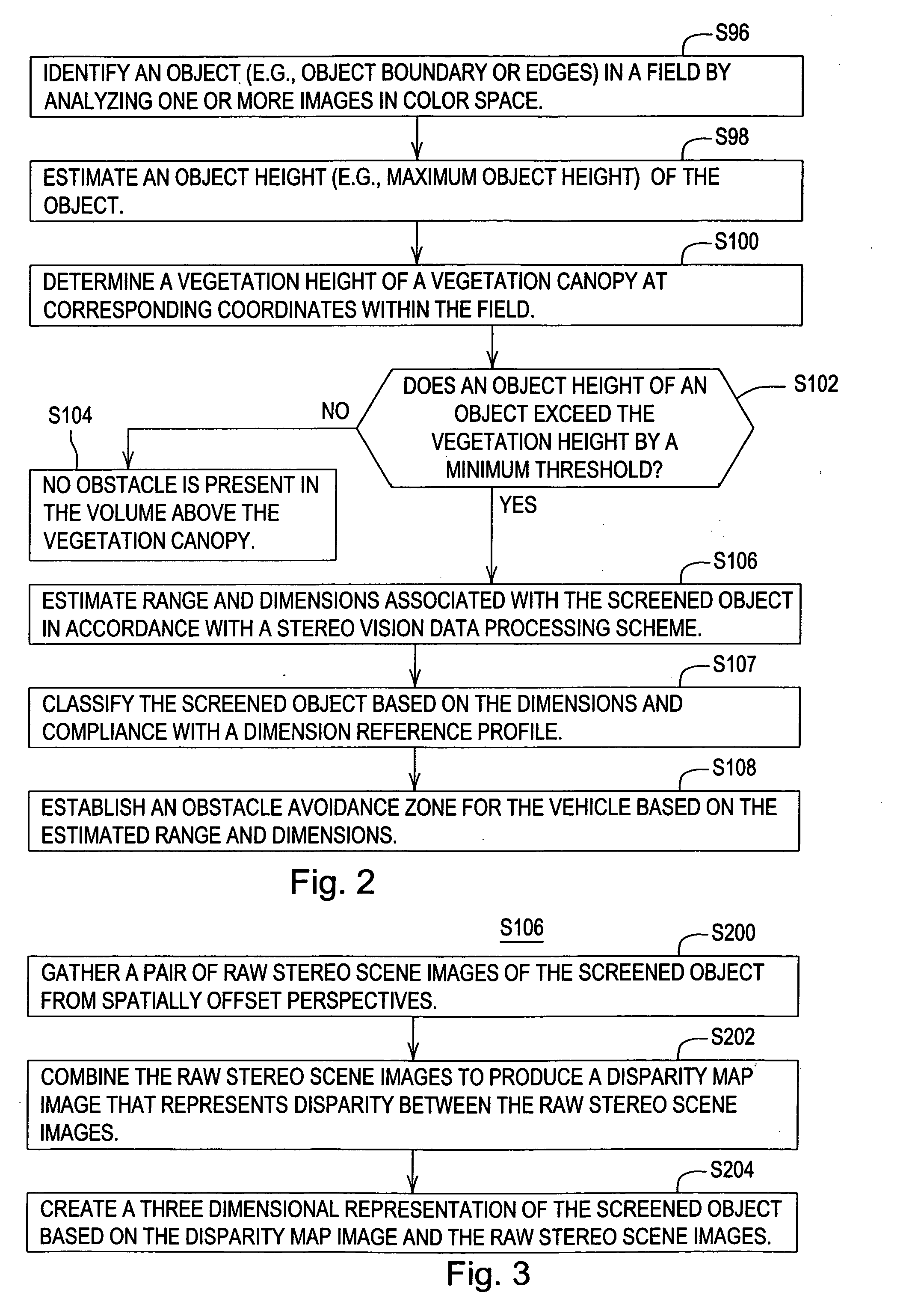

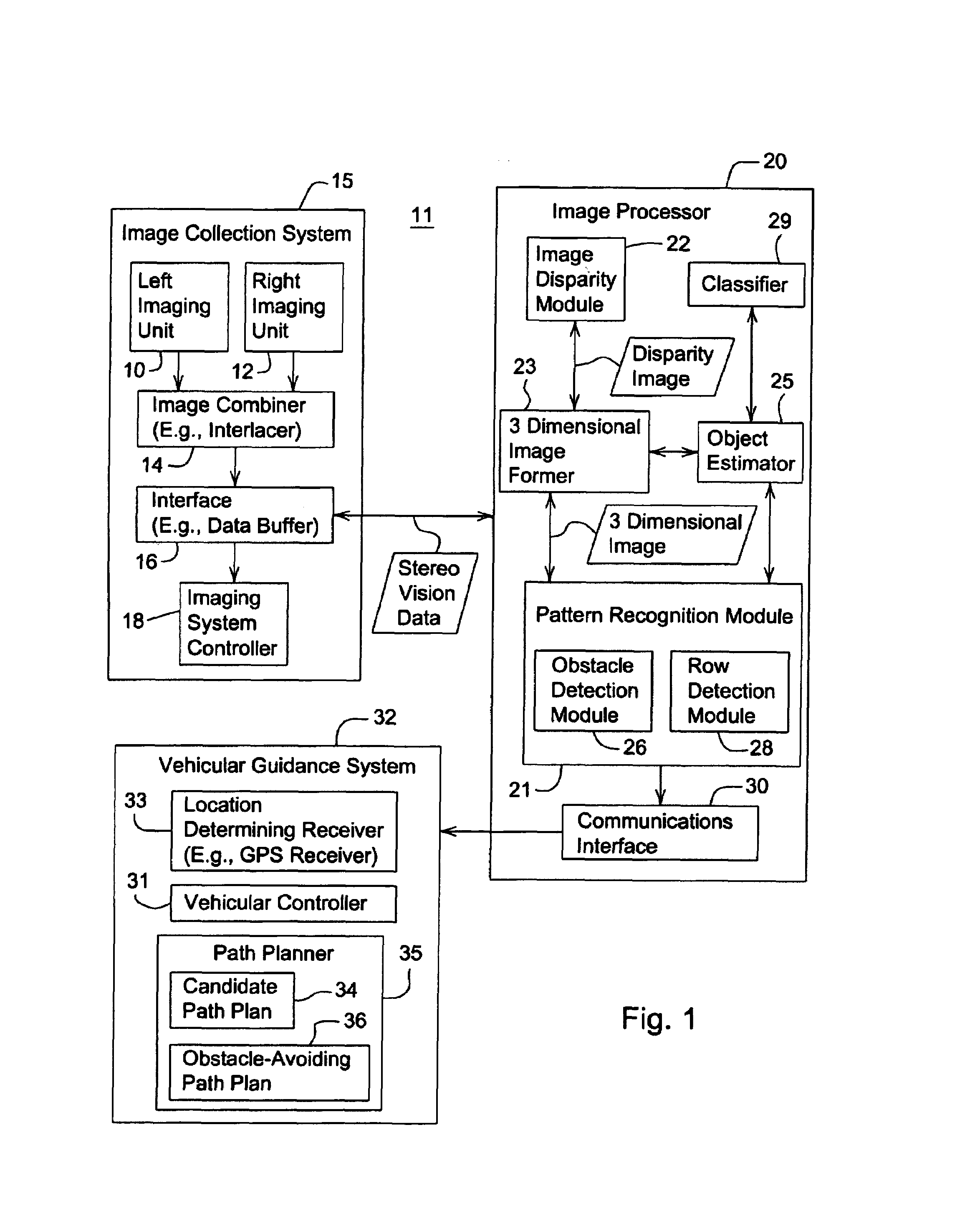

Obstacle detection using stereo vision

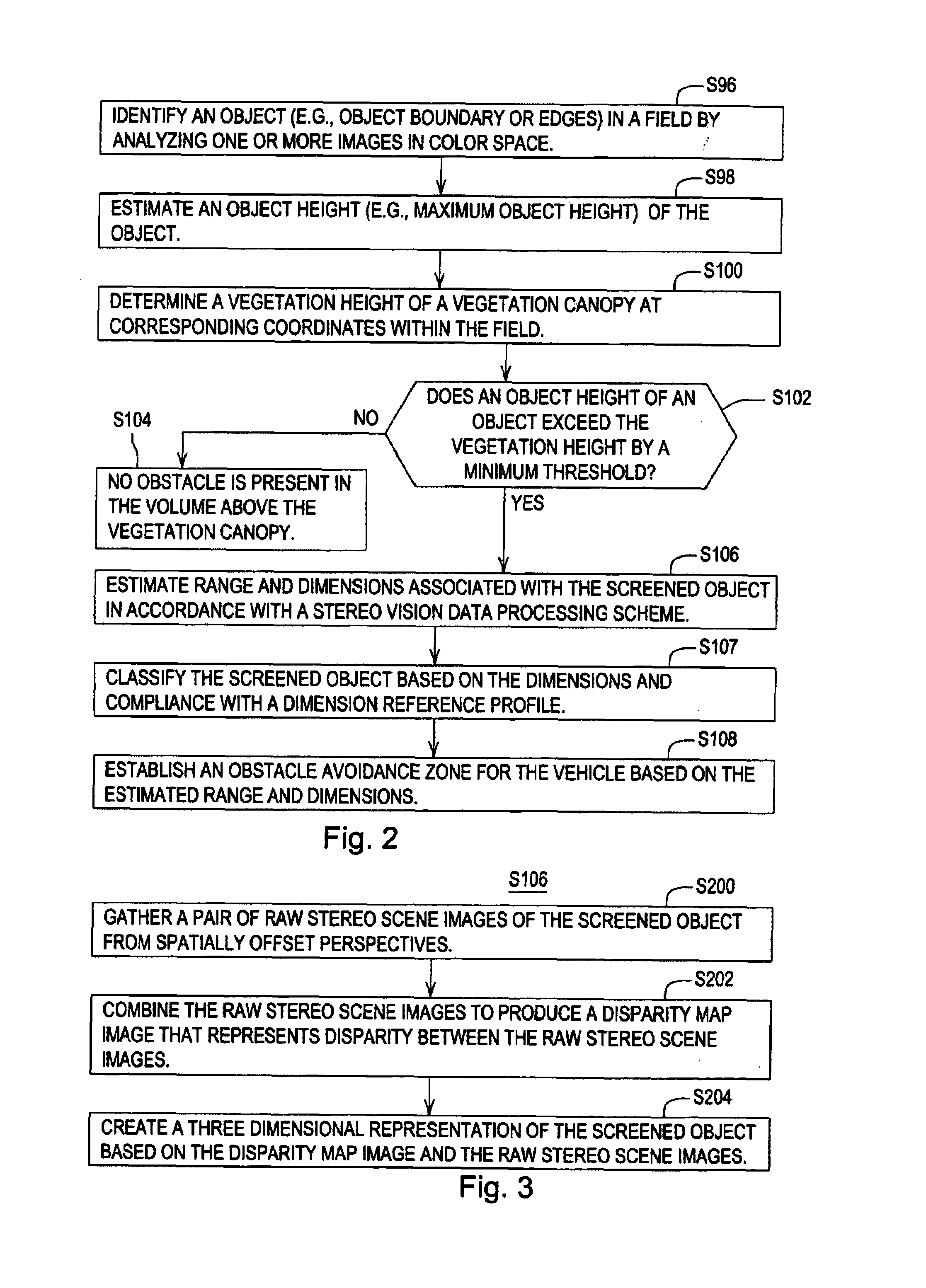

Active | US20060095207A1 | Analogue computers for vehicles; Analogue computers for traffic; Vegetation height; Obstacle avoidance

A system and method for detecting an obstacle comprise determining the vegetation height of a vegetation canopy at corresponding coordinates within a field. An object height is detected, where the height of an object exceeds the vegetation height. A range of the object is estimated. The range of the object may be referenced to a vehicle location, a reference location, or absolute coordinates. Dimensions associated with the object are estimated. An obstacle avoidance zone is established for the vehicle based on the estimated range and dimensions.

Owner:DEERE & CO
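
The decision rule in this abstract (flag an object only where its height exceeds the local canopy height, then size an avoidance zone from its estimated dimensions) can be sketched as below. The grid-based canopy map, the `clearance_m` safety margin and the circular zone are hypothetical choices for illustration; the range and dimensions are assumed to have already been estimated, for example from stereo depth.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    col: int          # grid cell of the object within the field map
    row: int
    top_m: float      # detected object height above the ground
    width_m: float    # estimated object dimensions
    length_m: float
    range_m: float    # estimated range from the vehicle


@dataclass
class AvoidanceZone:
    col: int
    row: int
    radius_m: float
    range_m: float


def avoidance_zones(canopy_m: List[List[float]], detections: List[Detection],
                    clearance_m: float = 1.0) -> List[AvoidanceZone]:
    """Keep detections taller than the local vegetation canopy and wrap each in a circular zone."""
    zones = []
    for d in detections:
        if d.top_m <= canopy_m[d.row][d.col]:
            continue  # does not exceed the local canopy height: not flagged as an obstacle
        radius = max(d.width_m, d.length_m) / 2.0 + clearance_m
        zones.append(AvoidanceZone(d.col, d.row, radius, d.range_m))
    return zones


canopy = [[0.5, 0.5], [0.5, 1.2]]  # canopy height (m) per grid cell
detections = [Detection(0, 0, 1.0, 0.6, 0.4, 12.0), Detection(1, 1, 1.0, 0.6, 0.4, 8.0)]
print(avoidance_zones(canopy, detections))
```

Comparing against a per-cell canopy height, rather than a single global threshold, lets a 1 m object count as an obstacle in short stubble while being ignored inside a taller crop.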

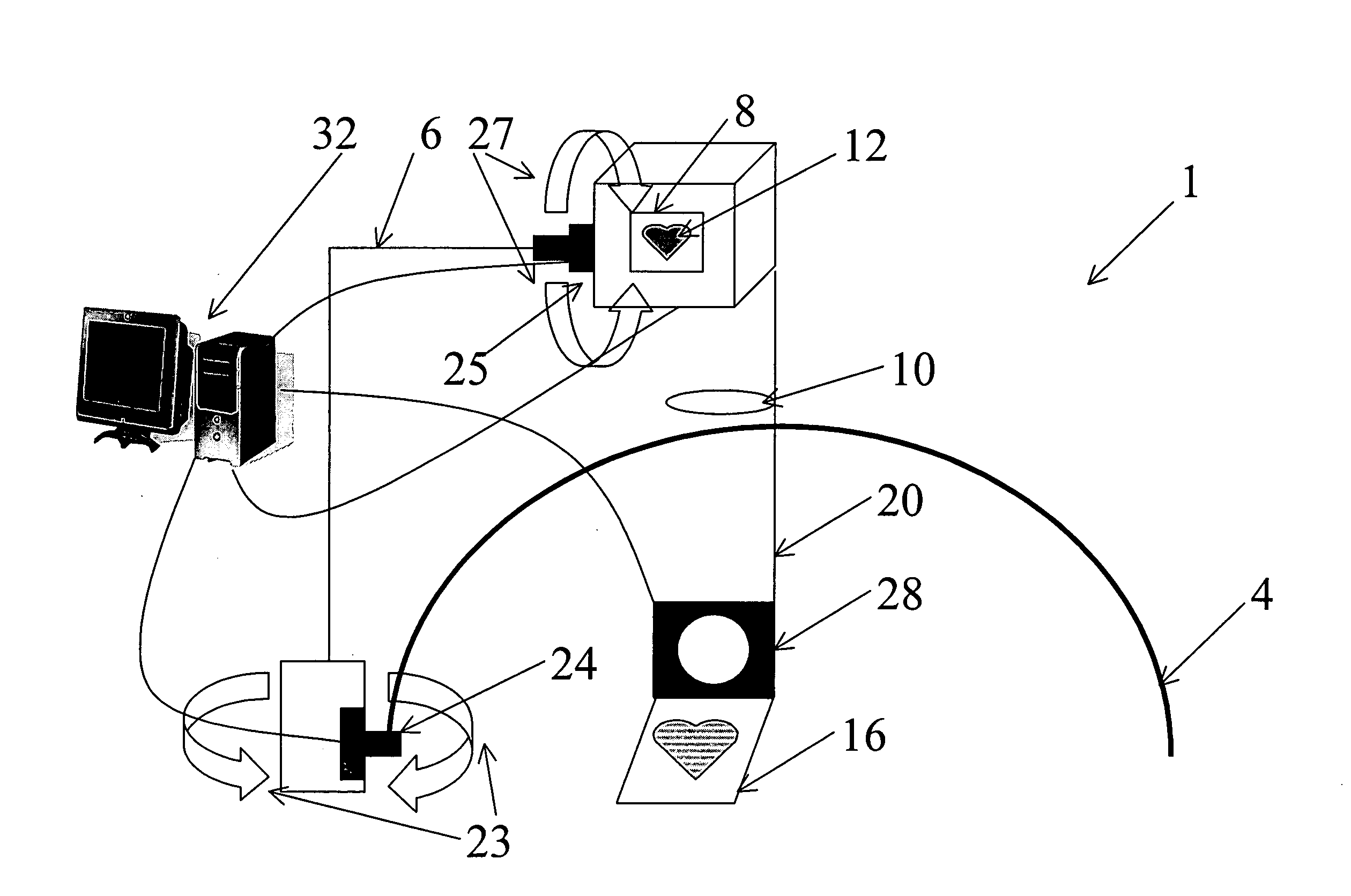

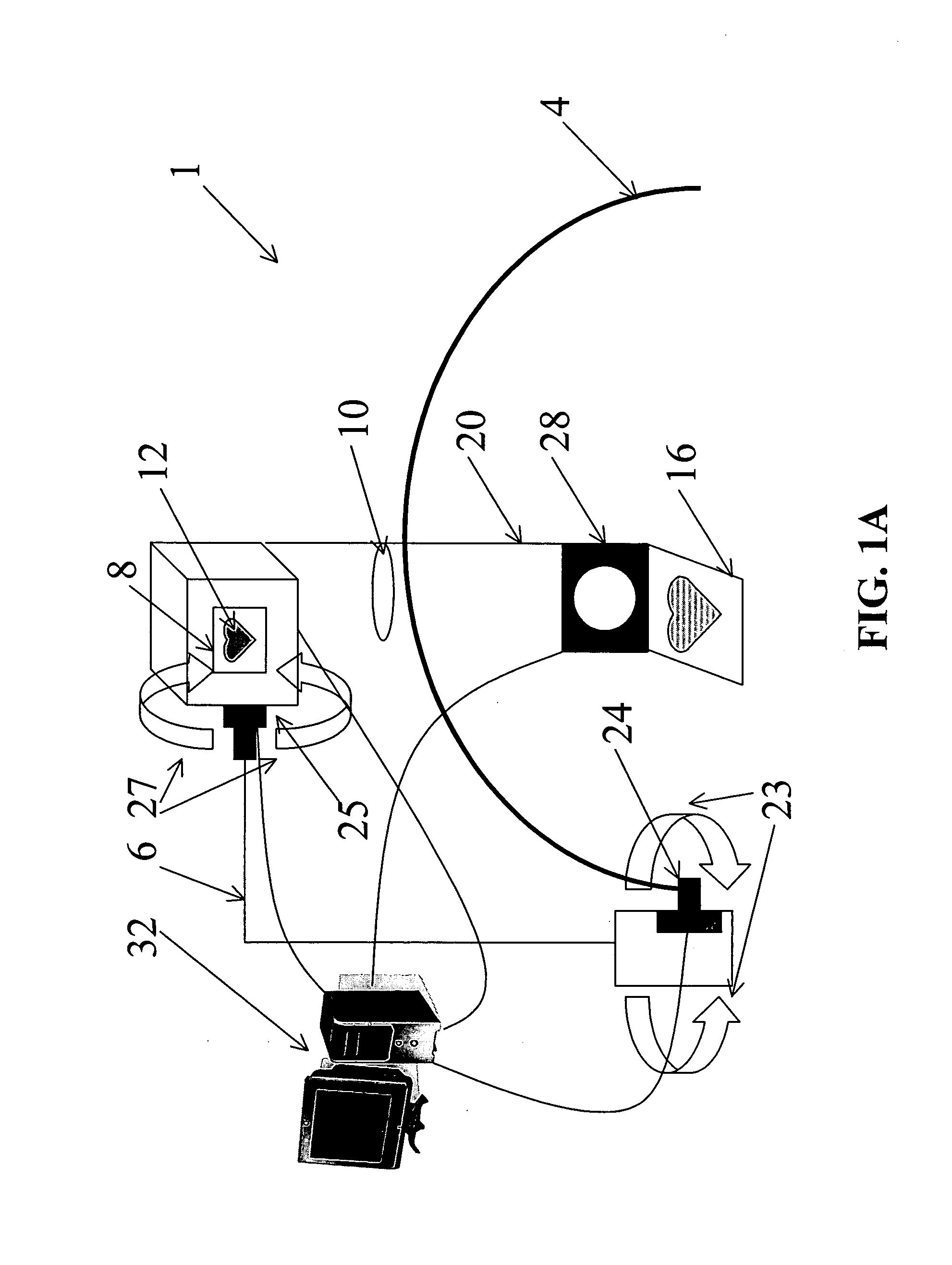

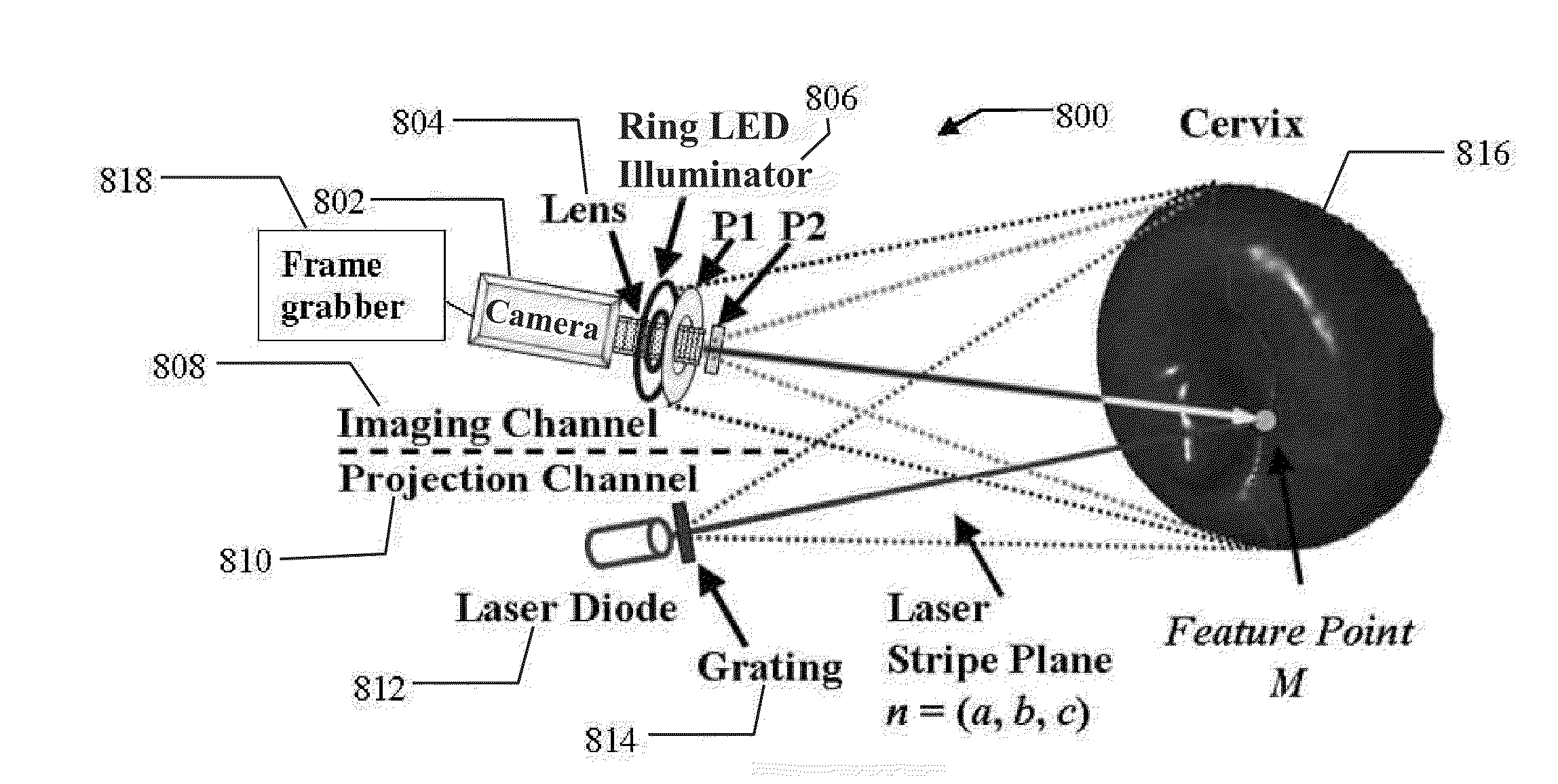

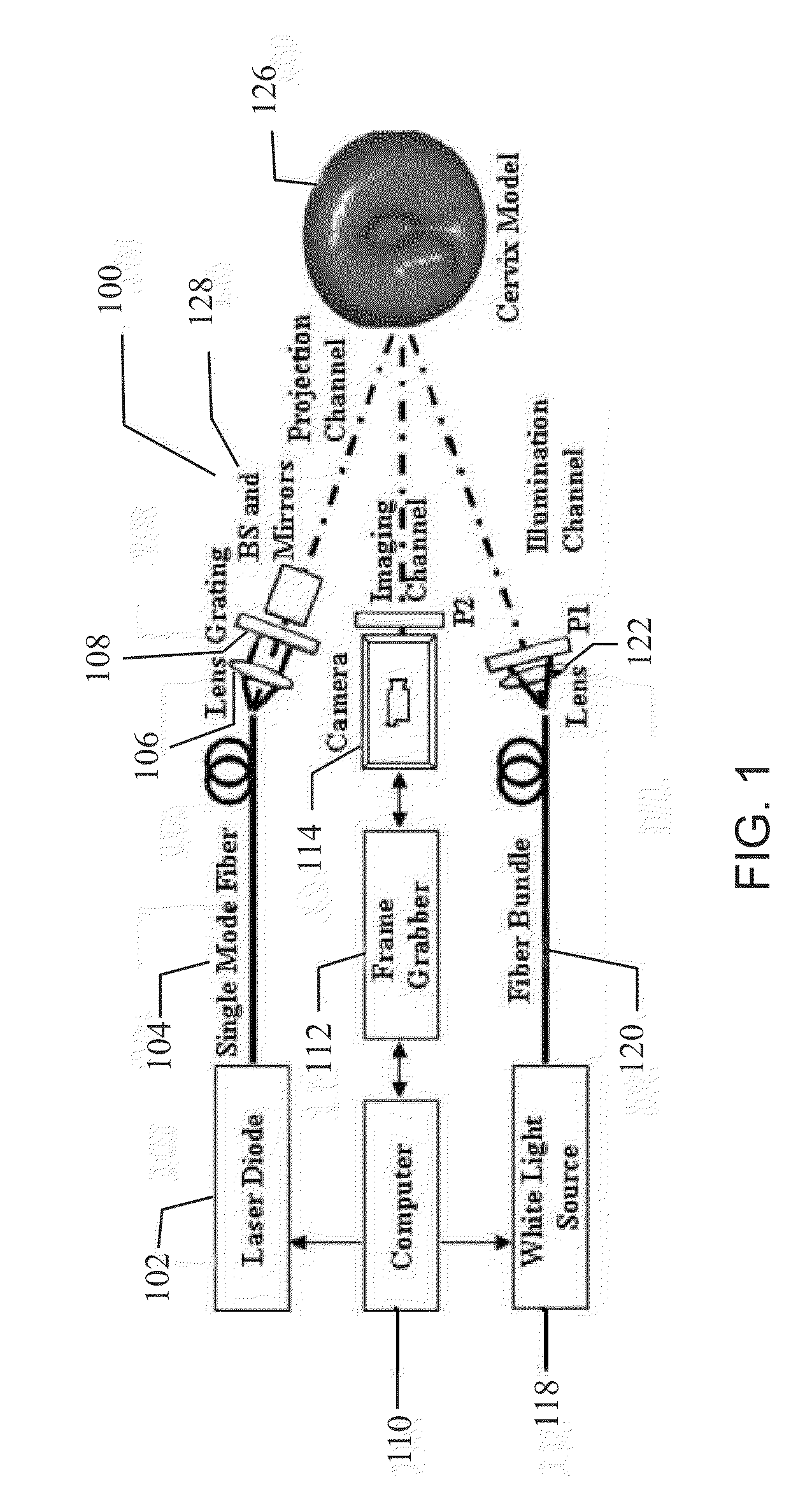

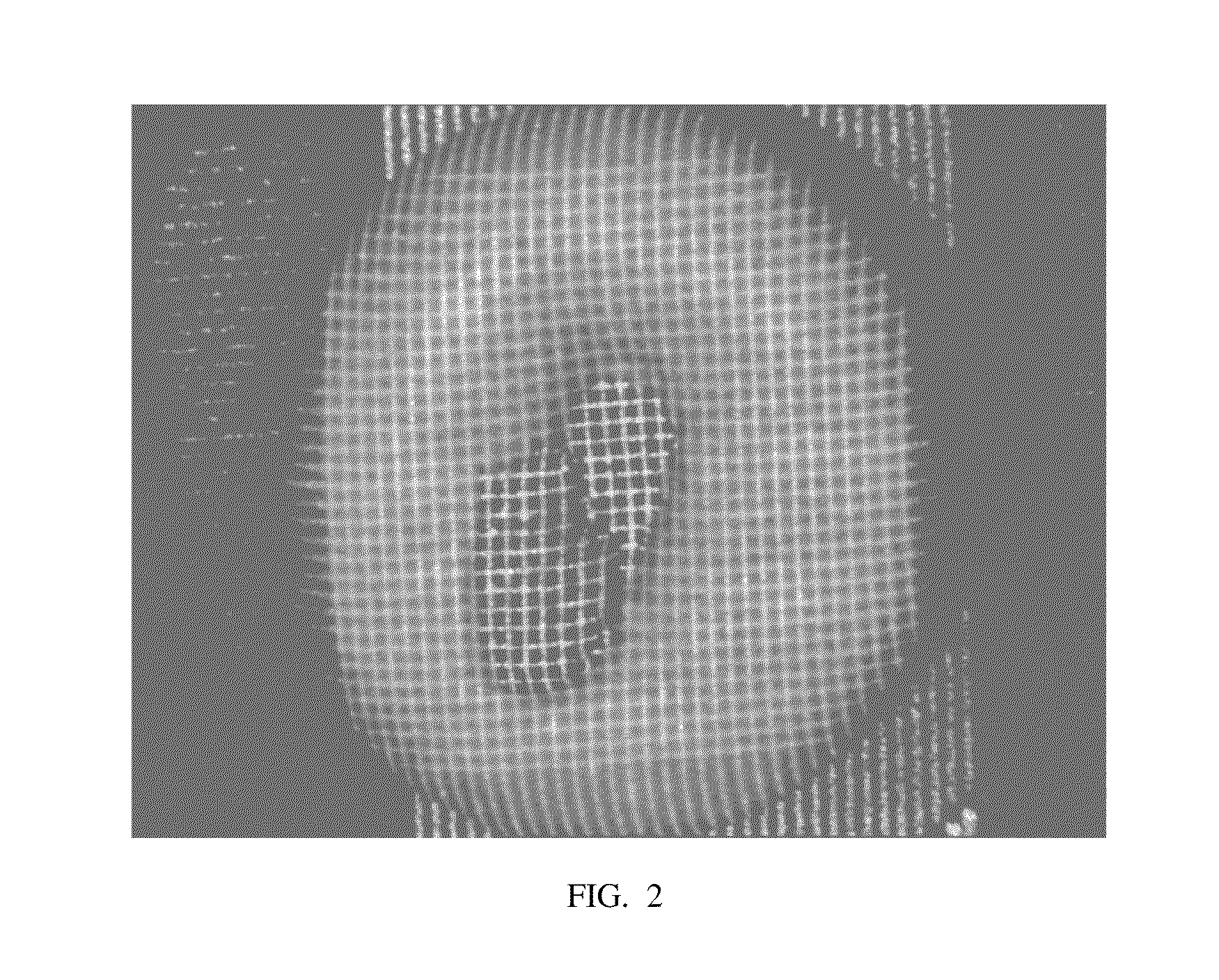

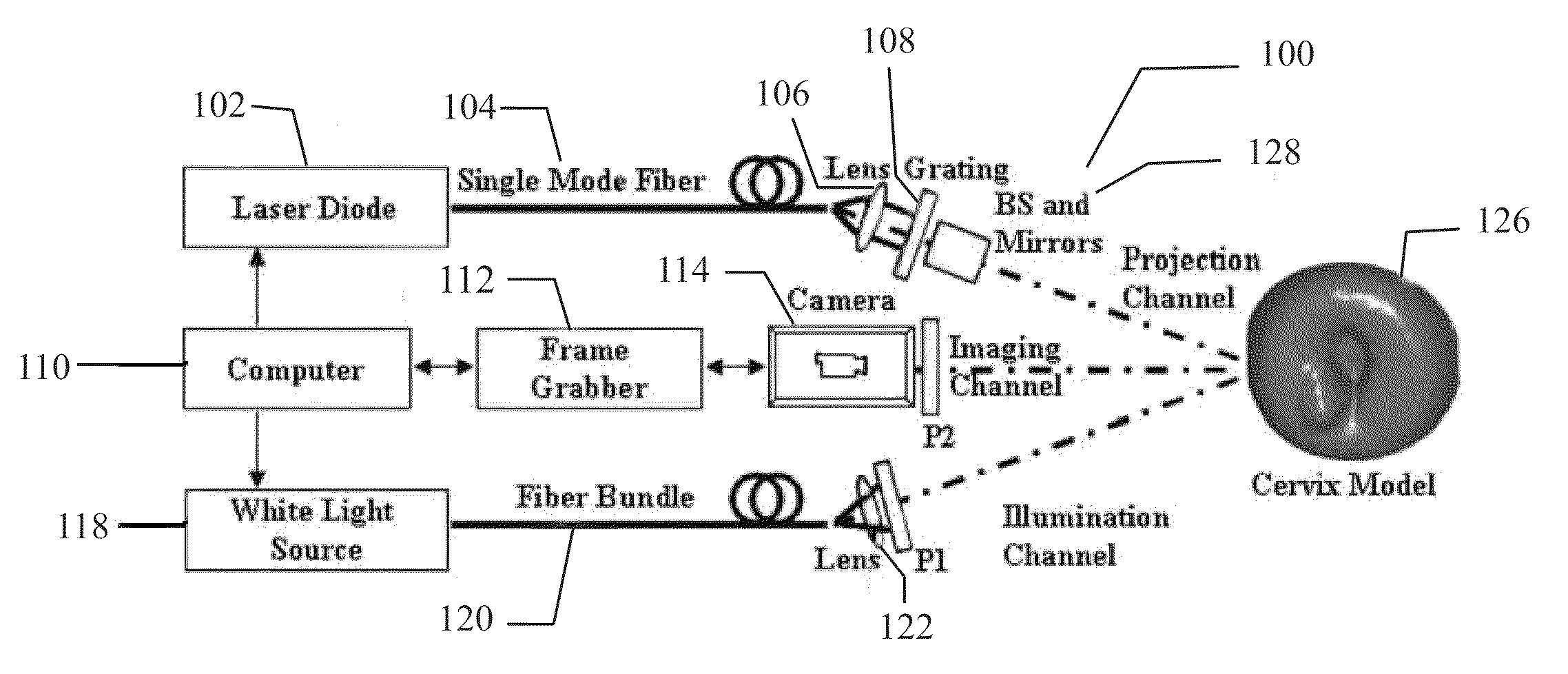

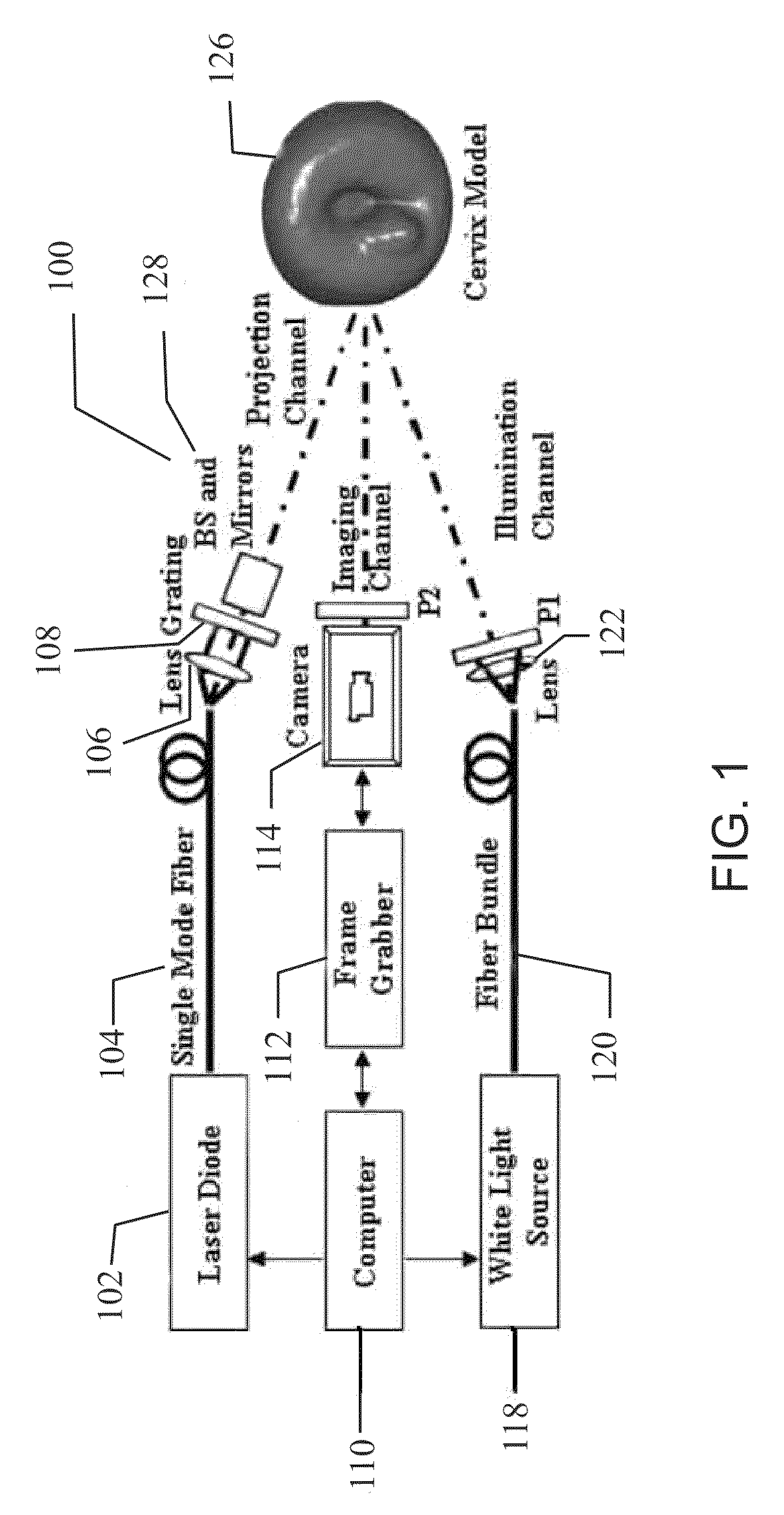

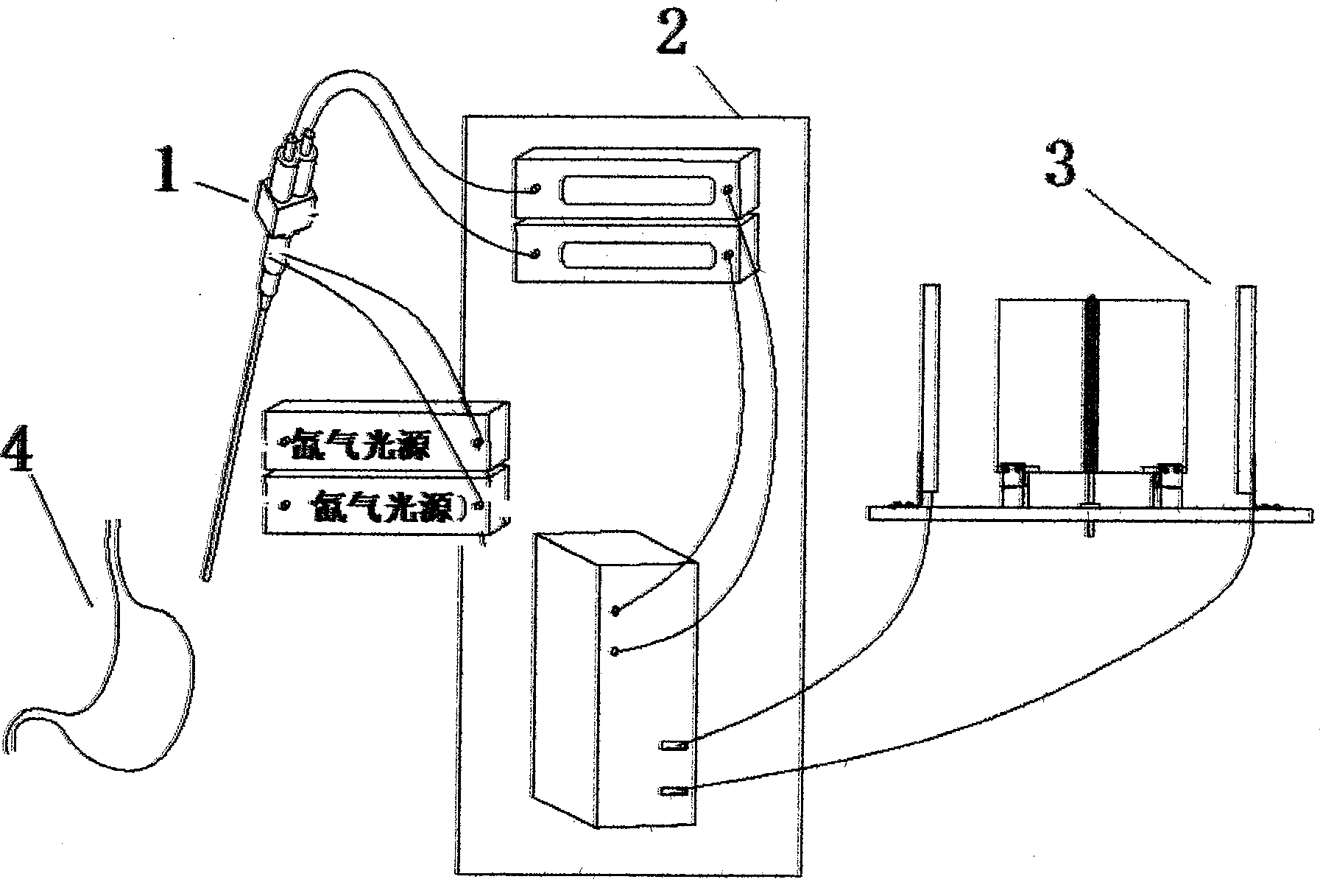

Apparatus and method of optical imaging for medical diagnosis

Active | US20100149315A1 | Reduce complexity; Improves shape reconstruction; Television system details; Surgery; Medical diagnosis; Visual perception

Described herein is a novel 3-D optical imaging system based on active stereo vision and motion tracking, for tracking the motion of the patient and registering the time-sequenced images of suspicious lesions recorded during endoscopic or colposcopic examinations. The system quantifies the acetic acid-induced optical signals associated with early cancer development. The system includes at least one illuminating light source for generating light illuminating a portion of an object, at least one structured light source for projecting a structured light pattern on the portion of the object, at least one camera for imaging the portion of the object and the structured light pattern, and means for generating a quantitative measurement of an acetic acid-induced change of the portion of the object.

Owner:THE HONG KONG UNIV OF SCI & TECH

Obstacle detection using stereo vision

Active | US7248968B2 | Analogue computers for vehicles; Analogue computers for traffic; Vegetation height; Obstacle avoidance

Owner:DEERE & CO

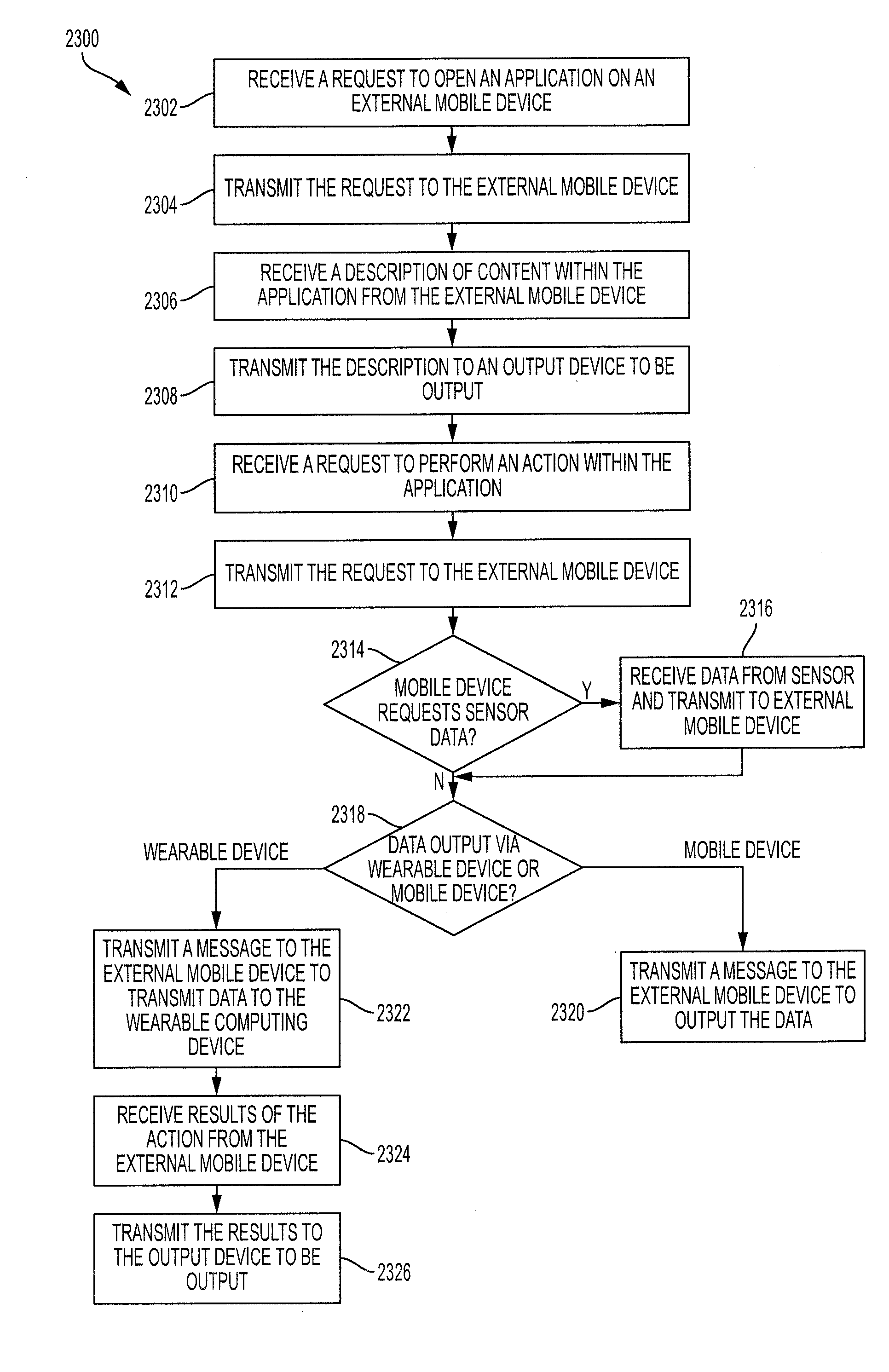

Smart necklace with stereo vision and onboard processing

A wearable computing device includes an input / output port for communicating with an external mobile device, a microphone for receiving speech data, a speaker for outputting audio feedback data, and a mobile processor. The mobile processor is designed to receive detected speech data corresponding to a request to open an application on the external mobile device, transmit the request to the external mobile device, and receive a description of content within the application from the external mobile device. The mobile processor is also designed to transmit the description to the speaker to be output, receive detected speech data corresponding to a request for the external mobile device to perform an action within the application, and transmit the request to the external mobile device. The mobile processor is also designed to receive results of the action from the external mobile device and transmit the results to the speaker to be output.

Owner:TOYOTA MOTOR ENGINEERING & MANUFACTURING NORTH AMERICA

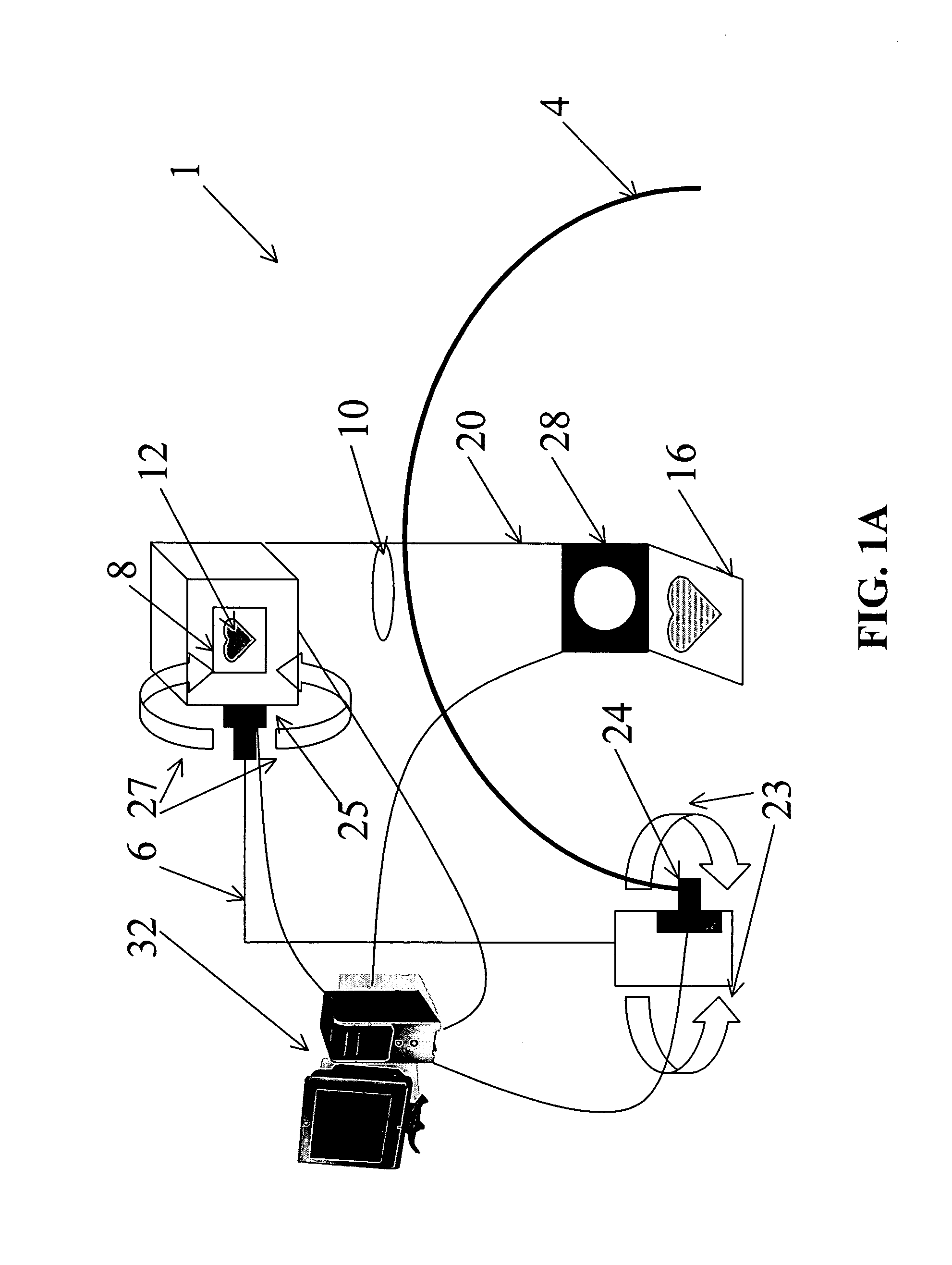

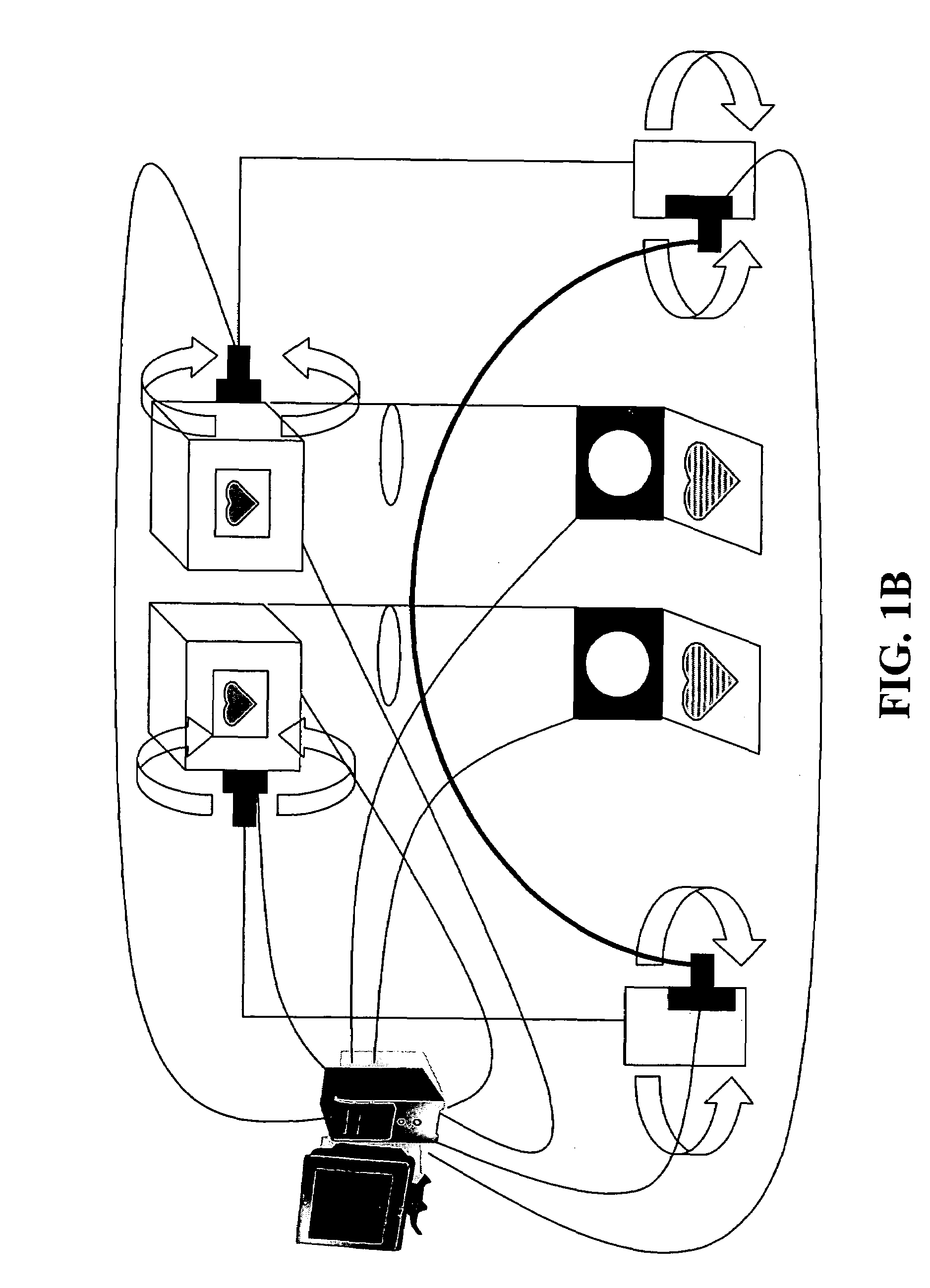

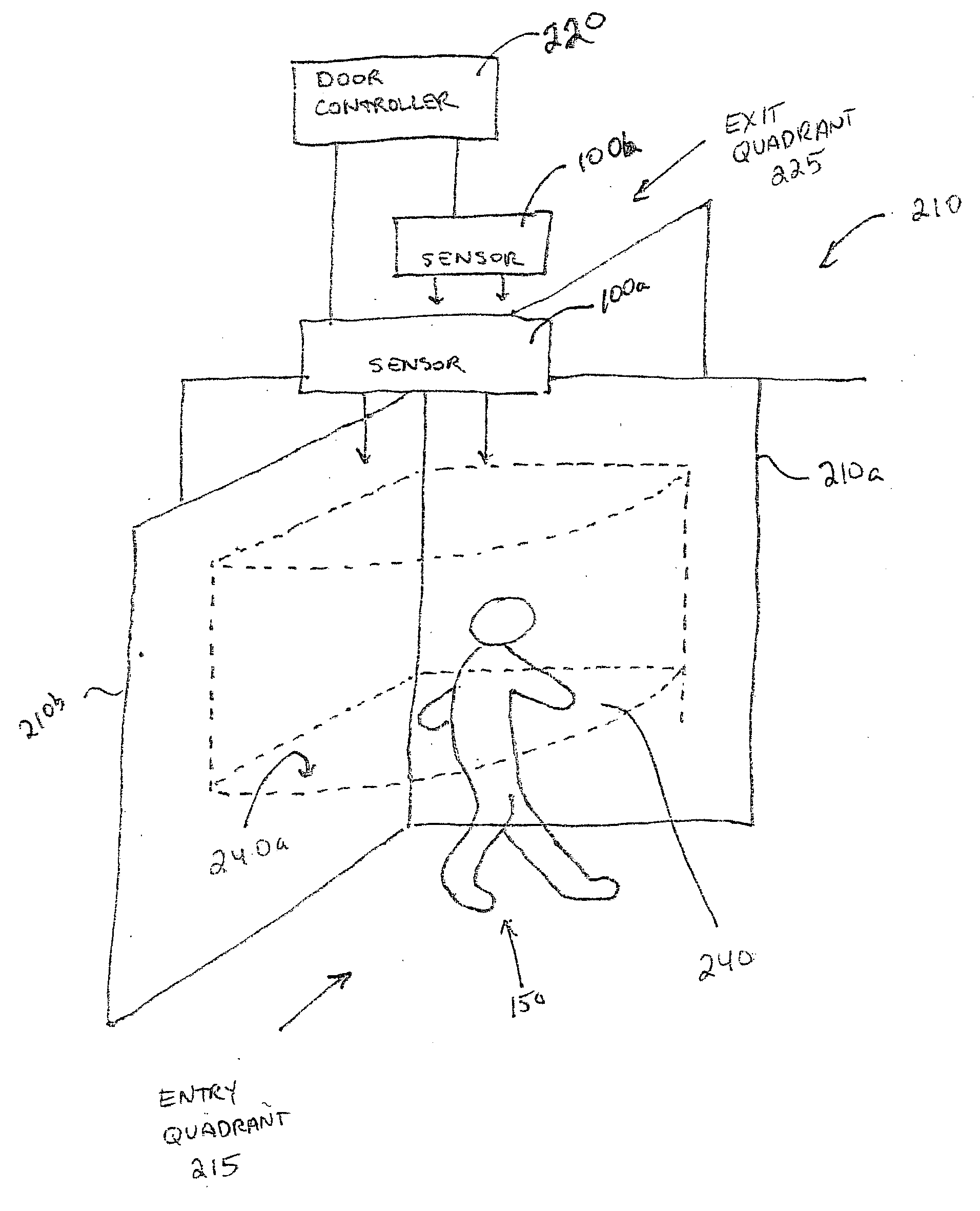

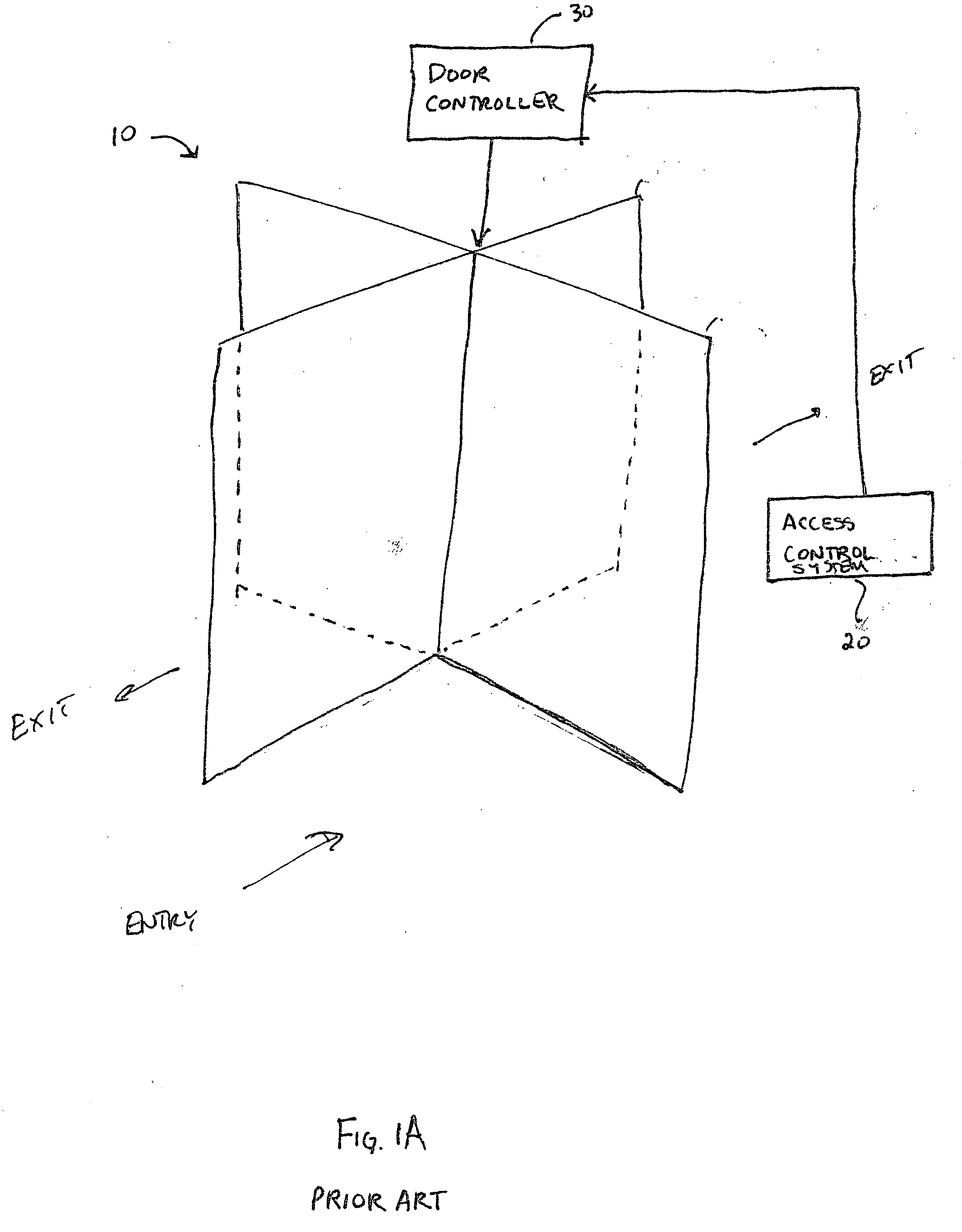

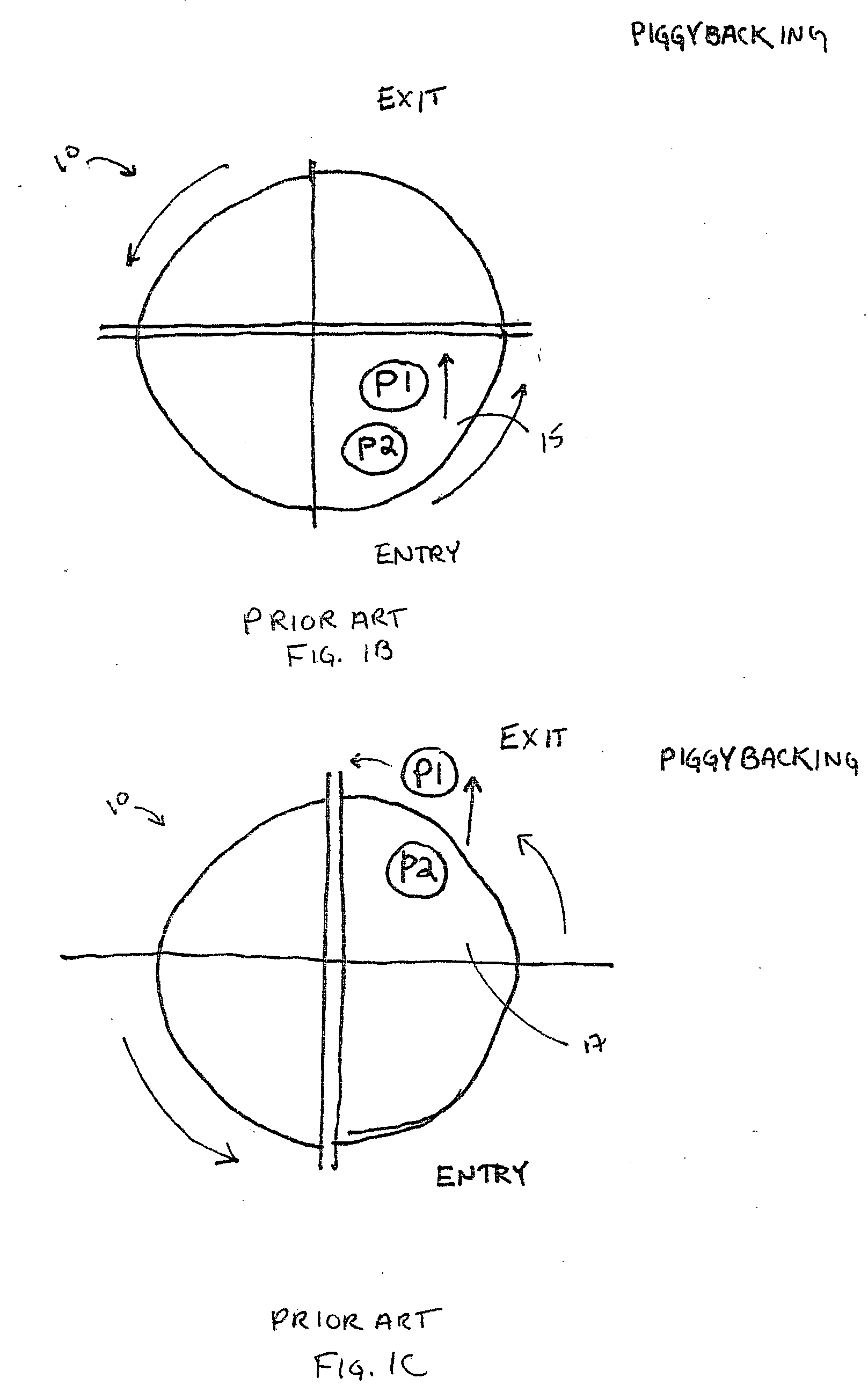

Method and system for enhanced portal security through stereoscopy

Enhanced portal security is provided through stereoscopy, including a stereo door sensor for detecting and optionally preventing access violations, such as piggybacking and tailgating. A portal security system can include a 3D imaging system that generates a target volume from plural 2D images of a field of view about a portal; and a processor that detects and tracks people candidates moving through the target volume to detect a portal access event.

Owner:COGNEX TECH & INVESTMENT

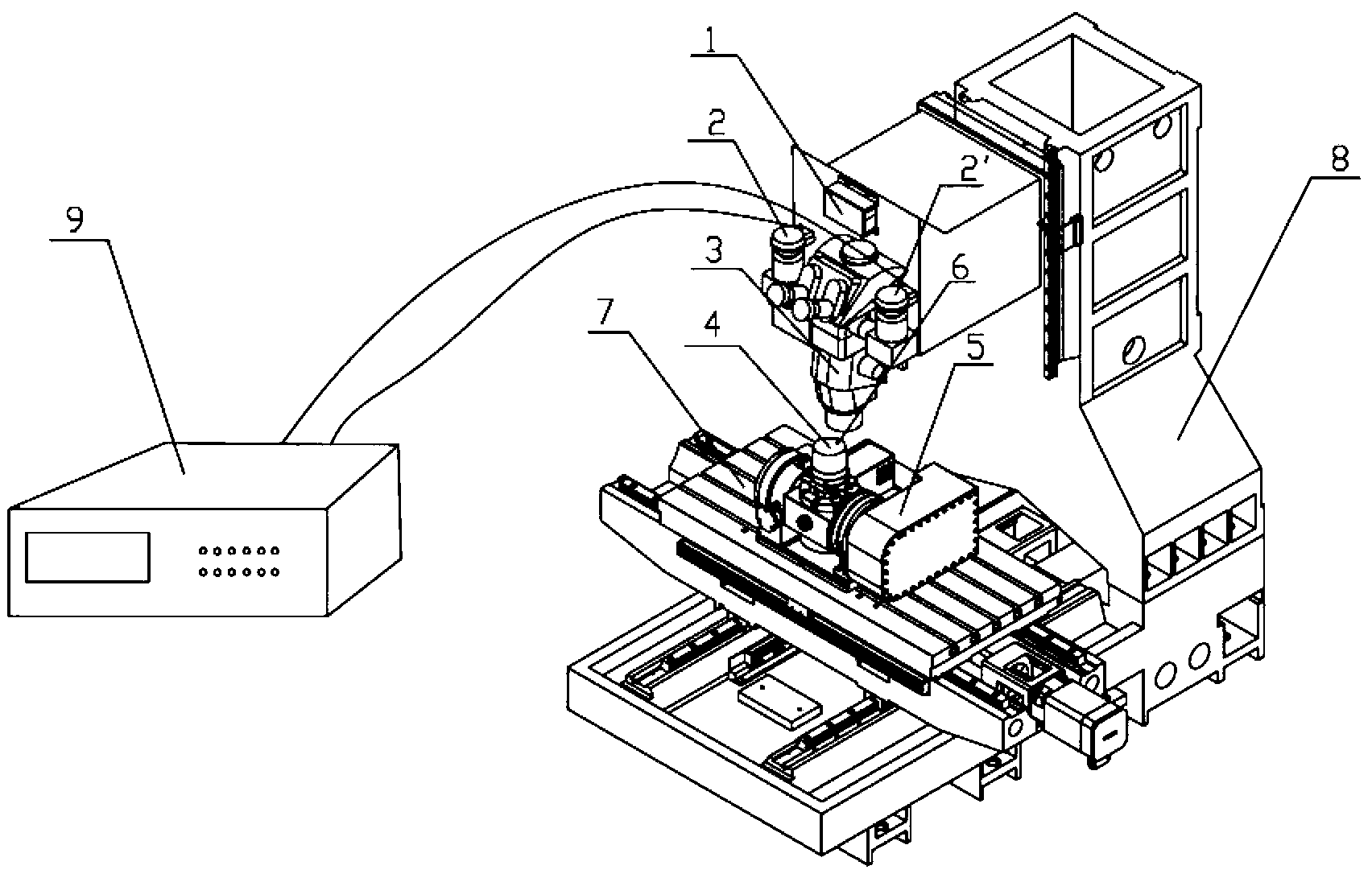

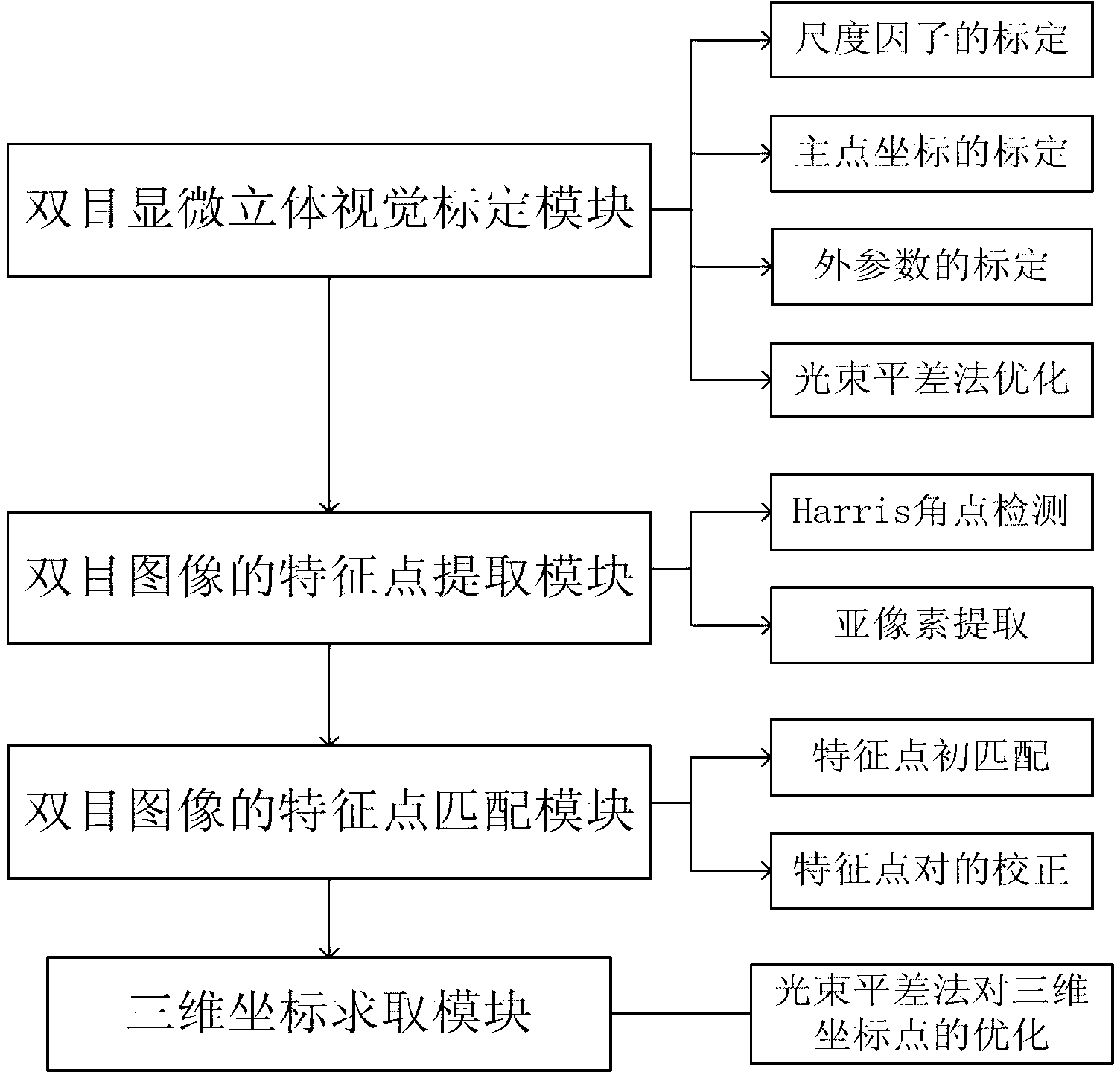

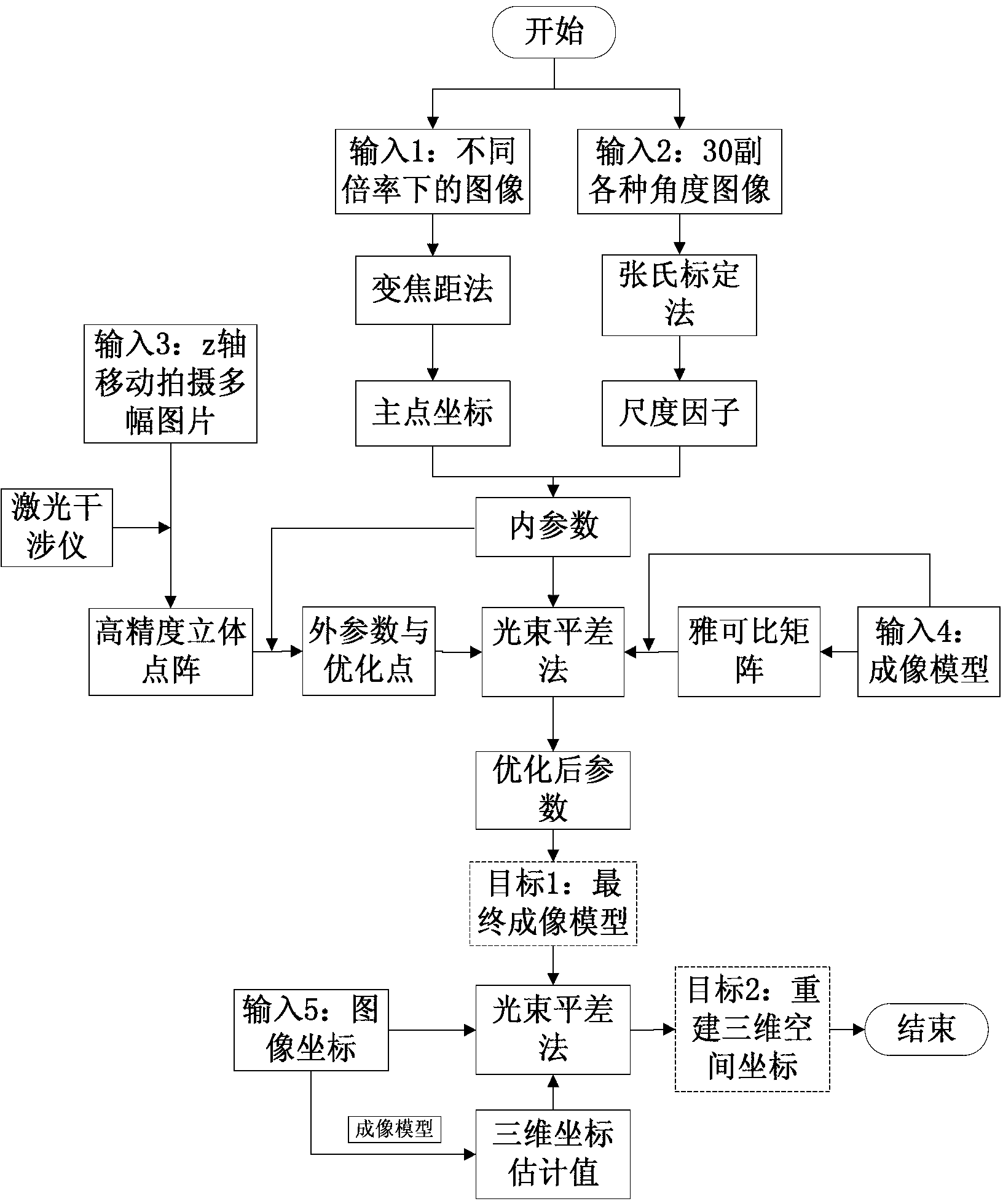

Accurate part positioning method based on binocular microscopy stereo vision

The invention discloses an accurate part positioning method based on binocular microscopy stereo vision, belonging to the technical field of computer vision measurement. A binocular microscopy stereo vision system is used: two CCD (charge-coupled device) cameras acquire images of the measured part, the image information in the area to be measured is magnified by a stereo microscope, a checkerboard calibration board is used to calibrate the two CCD cameras, and a Harris corner detection algorithm and a sub-pixel extraction algorithm are used to extract feature points. The extracted feature points undergo initial matching and correction of the matched point pairs, and their image coordinates are input to the calibrated system to obtain their actual spatial coordinates. The method addresses the measurement difficulties posed by the small size of the area to be measured, high positioning requirements, the need for non-contact measurement and the like, and achieves accurate positioning of precision parts through non-contact binocular microscopy stereo vision measurement.

Owner:DALIAN UNIV OF TECH

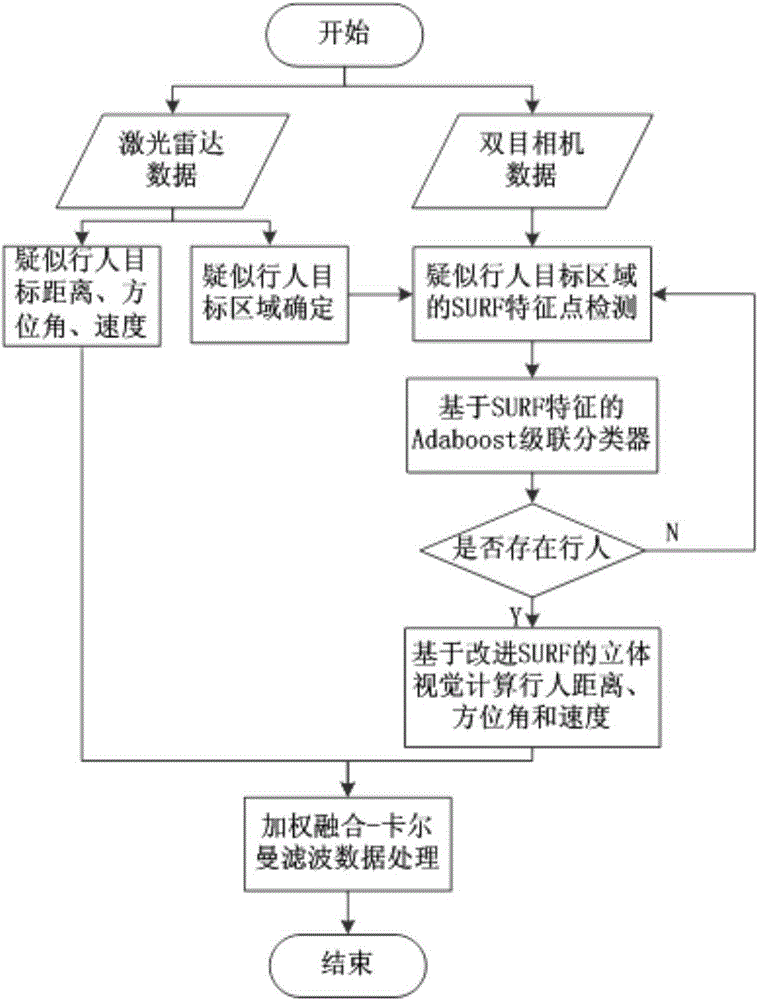

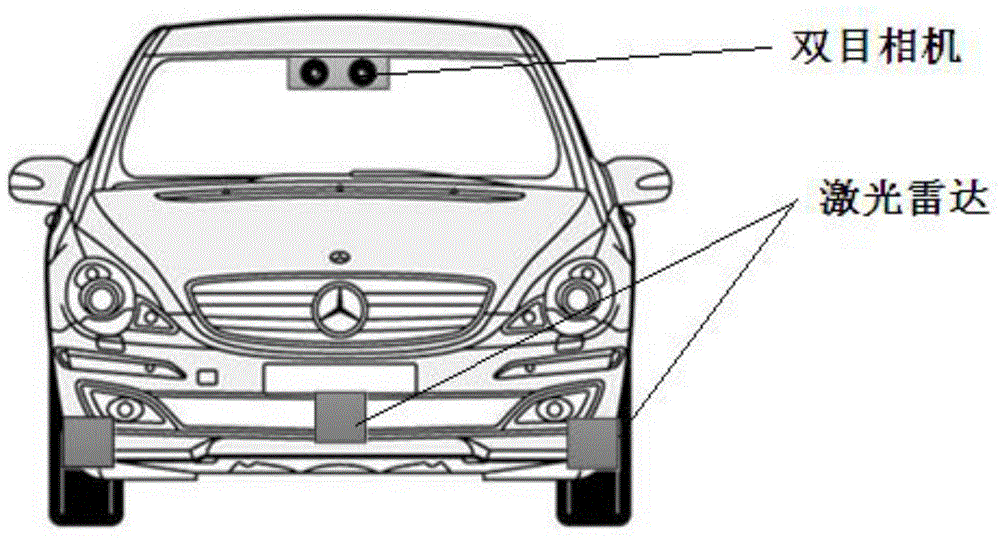

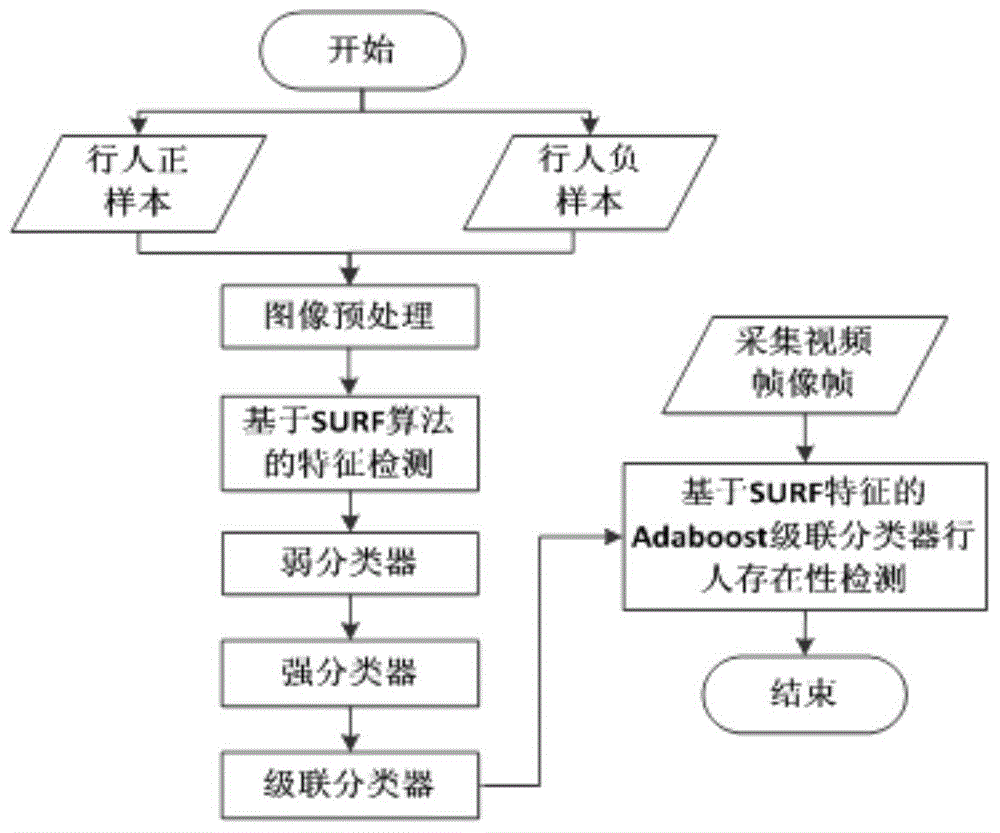

Detection method and system, based on laser radar and binocular camera, for pedestrian in front of vehicle

Active | CN104573646A | High measurement accuracy; The data is accurate and complete; Character and pattern recognition; Active safety; Visual perception

The invention belongs to the field of vehicle active safety and specifically discloses a detection method and system, based on laser radar and a binocular camera, for pedestrians in front of a vehicle. The method comprises the following steps: collecting data in front of the vehicle through the laser radar and the binocular camera; separately processing the data collected by the laser radar and the binocular camera to obtain the distance, azimuth angle and speed of the pedestrian relative to the vehicle; and correcting the pedestrian information with a Kalman filter. The method combines stereoscopic vision with remote sensing technology and fuses laser radar and binocular camera information, giving high measurement accuracy and pedestrian detection accuracy, and can effectively reduce the incidence of traffic accidents.

Owner:CHANGAN UNIV
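
The abstract does not specify the Kalman filter it uses. As a generic illustration only, the sketch below assumes a one-dimensional constant-velocity model that smooths noisy pedestrian distance readings and estimates closing speed; the state layout, the noise parameters `q` and `r`, and the class name are hypothetical.

```python
from typing import Iterable, List, Tuple


class ConstantVelocityKalman:
    """1-D constant-velocity Kalman filter over the state (distance, speed)."""

    def __init__(self, first_distance: float, dt: float, q: float = 0.01, r: float = 0.25):
        self.x = [first_distance, 0.0]        # state: distance (m), speed (m/s)
        self.P = [[10.0, 0.0], [0.0, 10.0]]   # initial state uncertainty
        self.dt, self.q, self.r = dt, q, r    # time step, process noise, measurement noise

    def predict(self) -> None:
        dt, P = self.dt, self.P
        self.x = [self.x[0] + dt * self.x[1], self.x[1]]
        # P = F P F^T + Q with F = [[1, dt], [0, 1]] and Q = diag(q, q).
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + self.q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + self.q
        self.P = [[p00, p01], [p10, p11]]

    def update(self, measured_distance: float) -> None:
        P = self.P
        s = P[0][0] + self.r                  # innovation variance (H = [1, 0])
        k0, k1 = P[0][0] / s, P[1][0] / s     # Kalman gain
        innovation = measured_distance - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P = (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


def track(distances: Iterable[float], dt: float) -> List[Tuple[float, float]]:
    """Filter a non-empty sequence of distance readings; returns (distance, speed) per step."""
    it = iter(distances)
    kf = ConstantVelocityKalman(next(it), dt)
    out = [(kf.x[0], kf.x[1])]
    for z in it:
        kf.predict()
        kf.update(z)
        out.append((kf.x[0], kf.x[1]))
    return out


estimates = track([30.0 - k for k in range(30)], dt=1.0)  # pedestrian approaching at 1 m/s
print(estimates[-1])  # (distance, speed) after the last reading
```

In a fused lidar/stereo setup, the same update step could be run once per sensor reading, each with its own measurement noise `r`.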

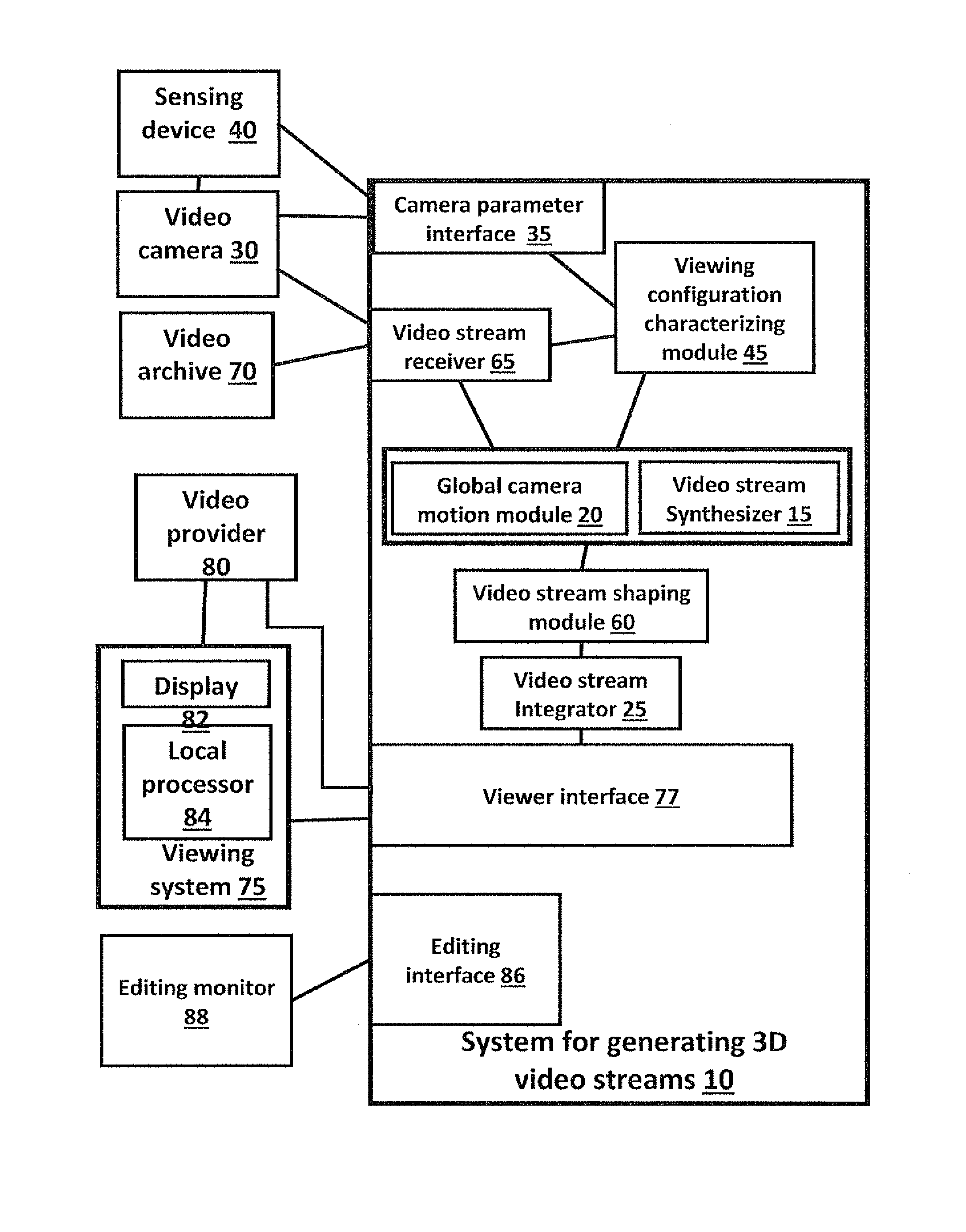

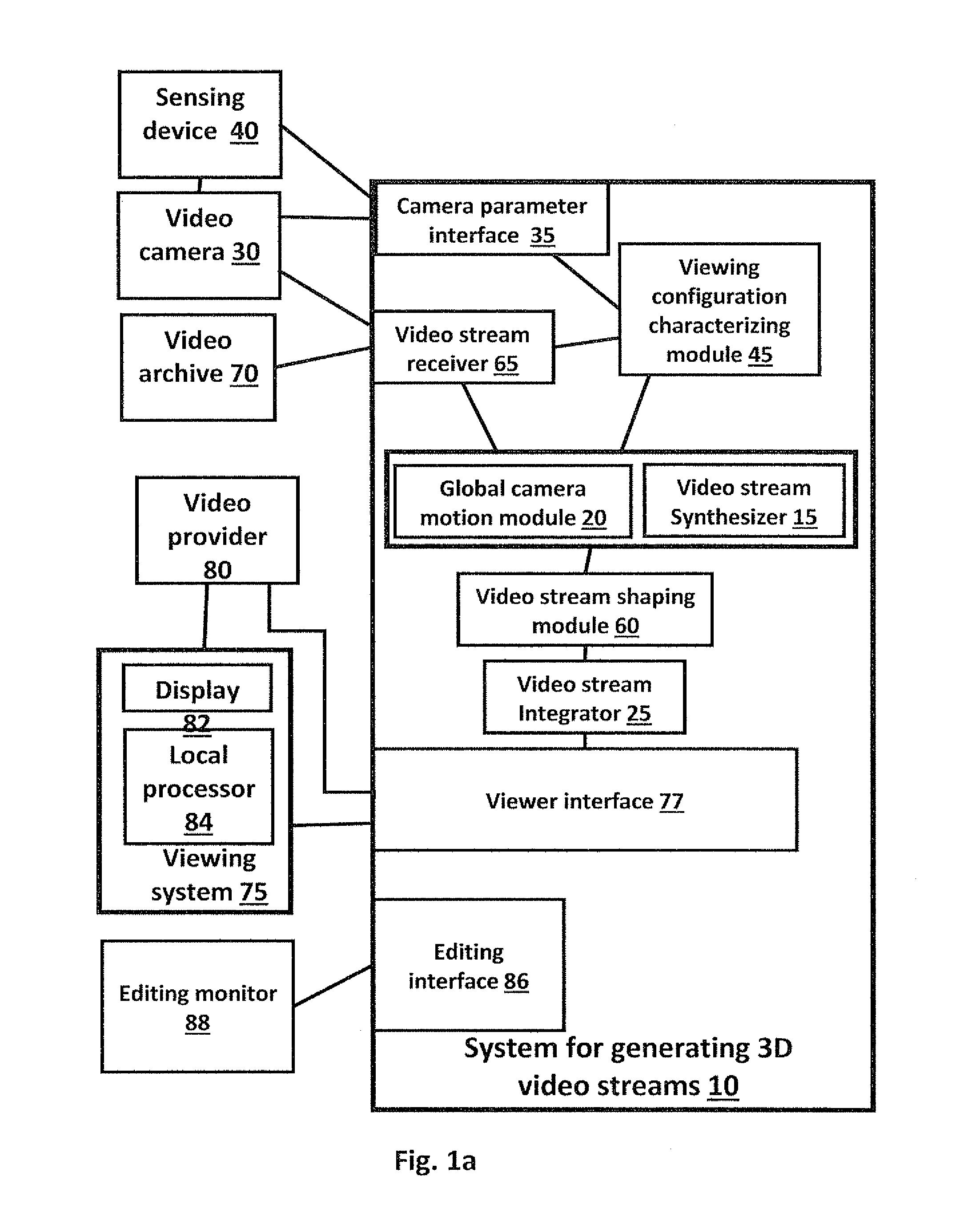

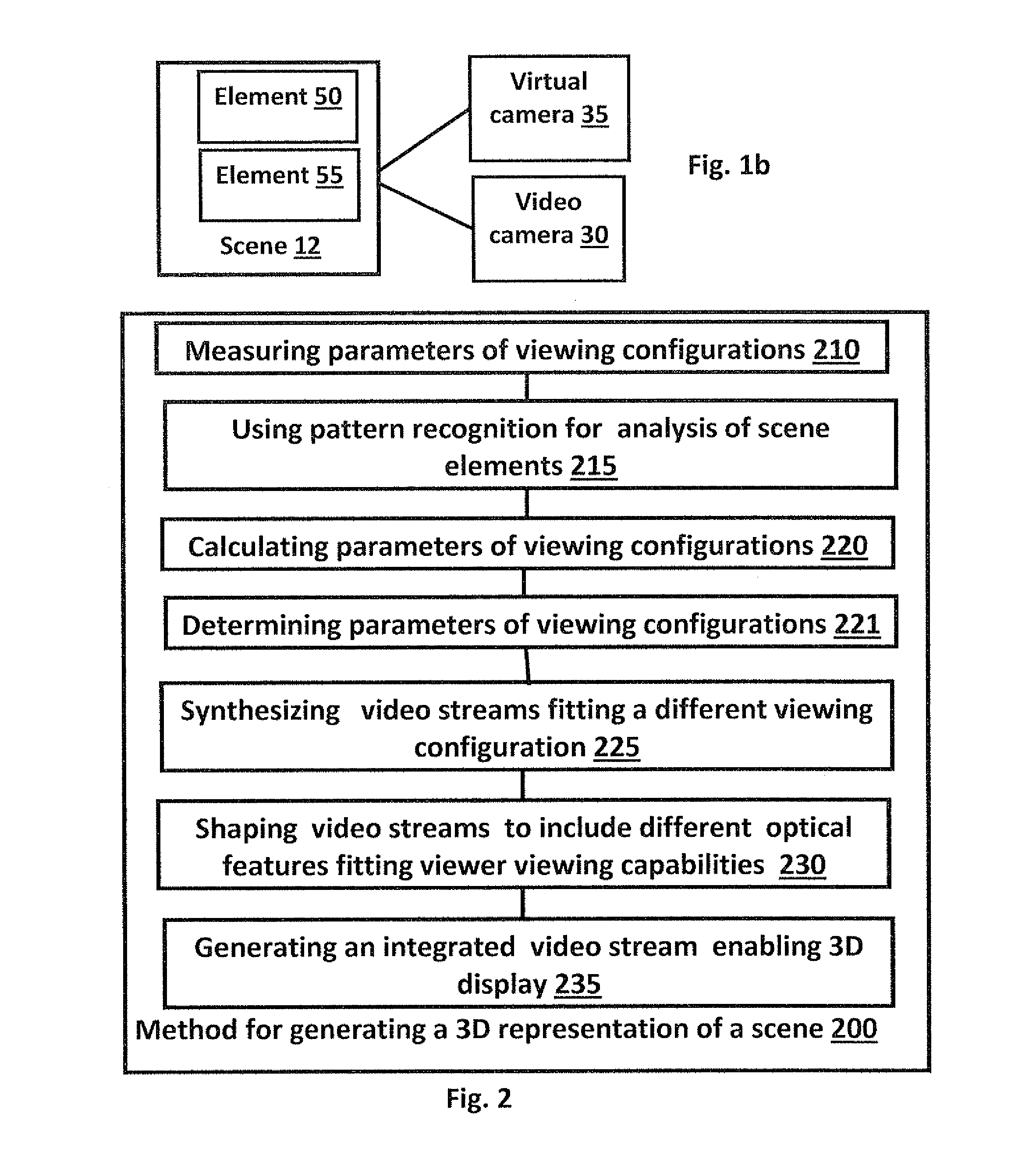

Method and system for creating three-dimensional viewable video from a single video stream

Generating 3D representations of a scene represented by a first video stream captured by video cameras. Identifying a transition between cameras, retrieving parameters of a first set of viewing configurations, providing 3D video representations representing the scene at several sets of viewing configurations different from the first set of viewing configurations, and generating an integrated video stream enabling 3D display of the scene by integration of at least two video streams having respective sets of viewing configurations, which are mutually different. Another provided process is for synthesizing an image of an object from a first image, captured by a certain camera at a first viewing configuration. Assigning a 3D model to a portion of a segmented object, calculating a modified image of the portion of the object from a viewing configuration different from the first viewing configuration, and embedding the modified image in a frame for stereoscopy.

Owner:STERGEN HI TECH

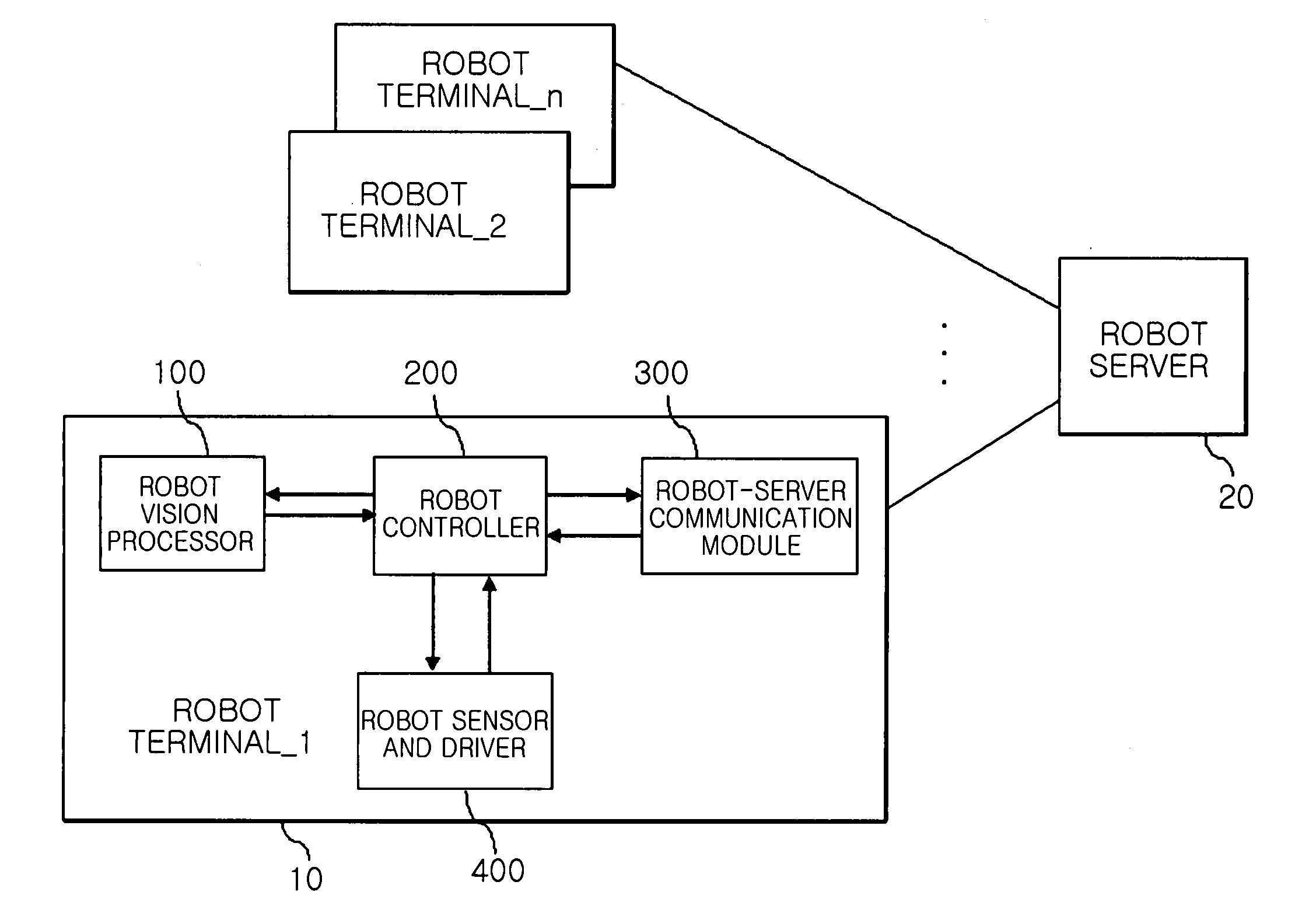

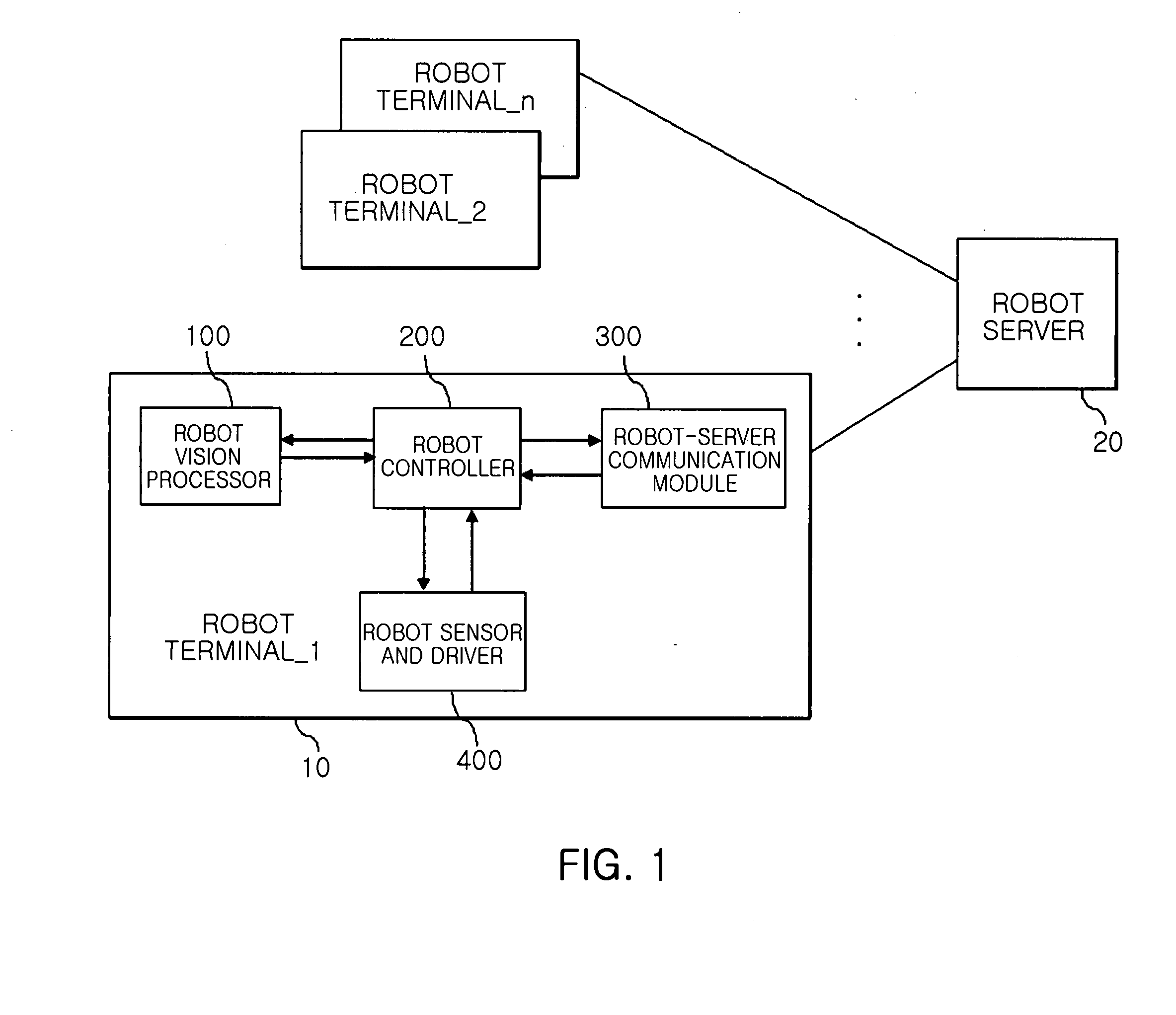

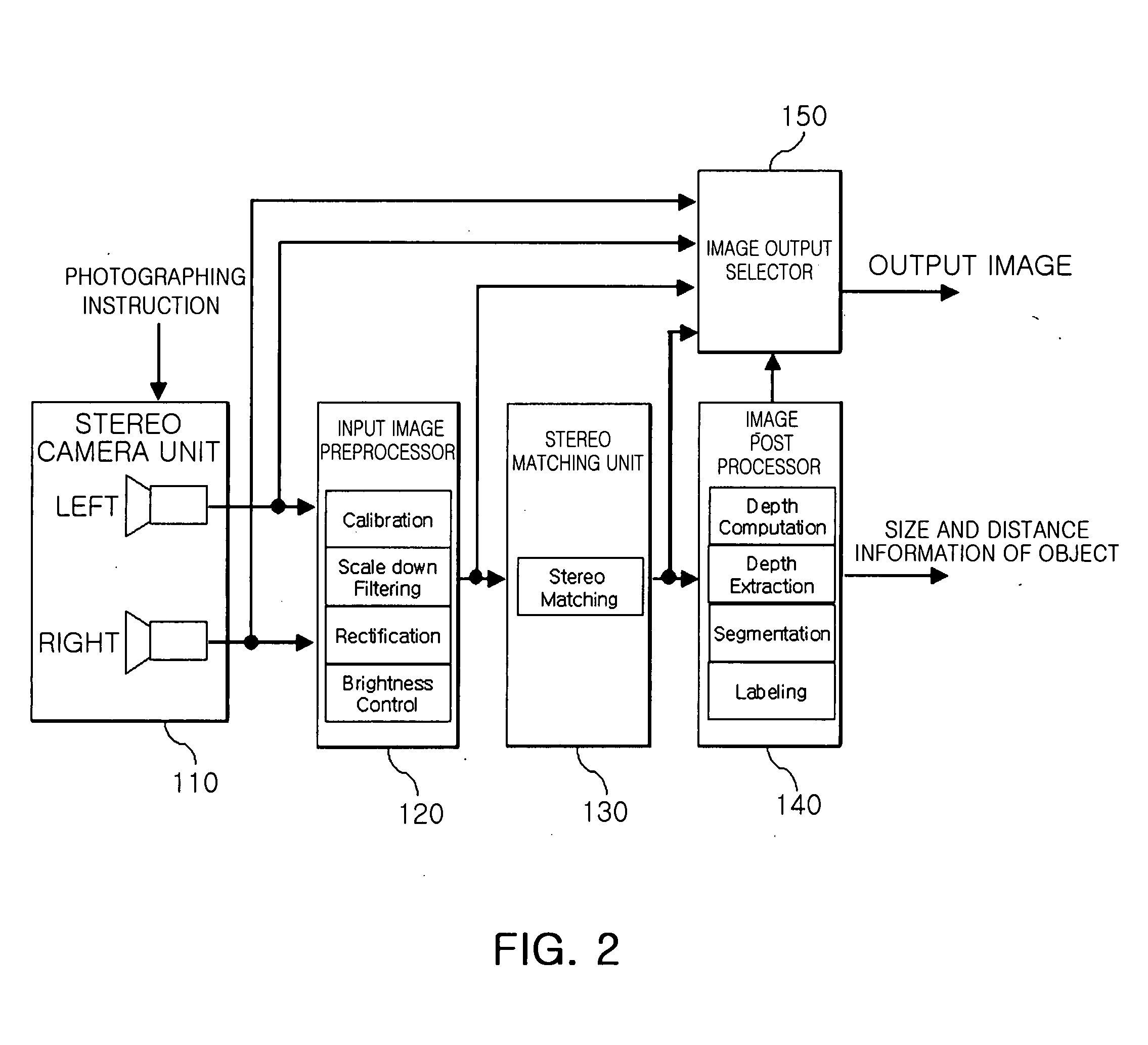

Method for searching target object and following motion thereof through stereo vision processing and home intelligent service robot using the same

Inactive | US20080215184A1 | Reduce computing load; Reduce network traffic; Programme-controlled manipulator; Computer control; Vision processing; Driver/operator

A home intelligent service robot that recognizes a user and follows the user's motion, and a method thereof, are provided. The home intelligent service robot includes a driver, a vision processor, and a robot controller. The driver moves the intelligent service robot according to an input movement instruction. The vision processor captures images through two or more cameras in response to a capturing instruction for following a target object, minimizes the amount of information in the captured images, and classifies the objects in the images as the target object or obstacles. When instruction information is collected from outside, the robot controller provides the vision processor with the capturing instruction for following the target object in the direction from which the instruction information was collected, and controls the intelligent service robot to follow the target object while avoiding obstacles, based on the classification information from the vision processor.

Owner:ELECTRONICS & TELECOMM RES INST

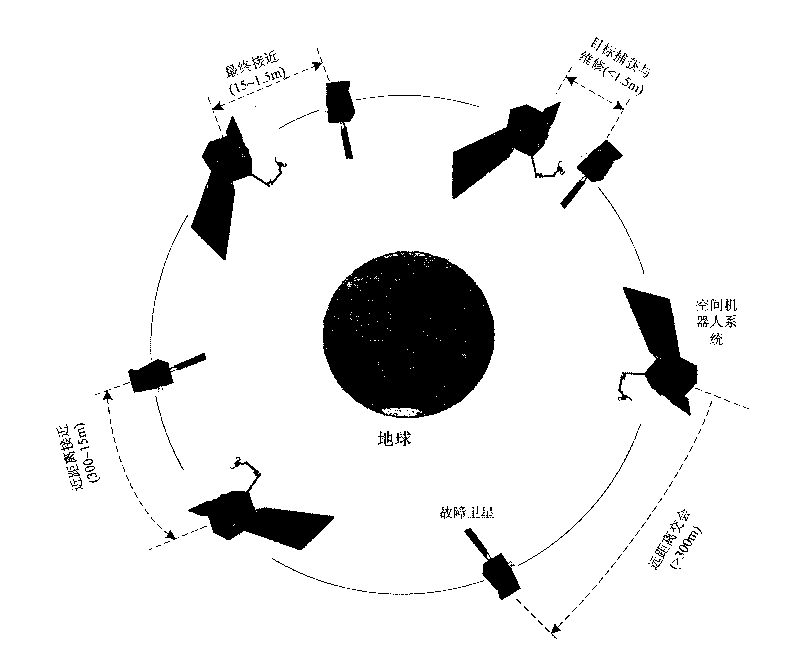

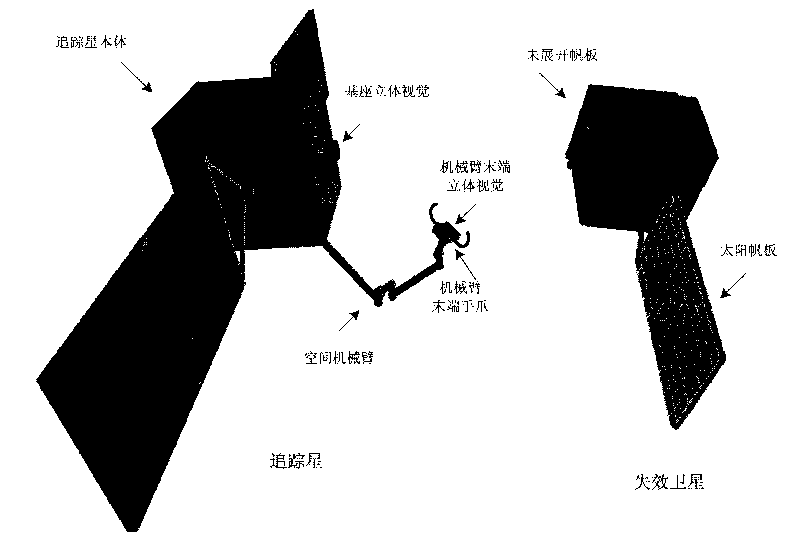

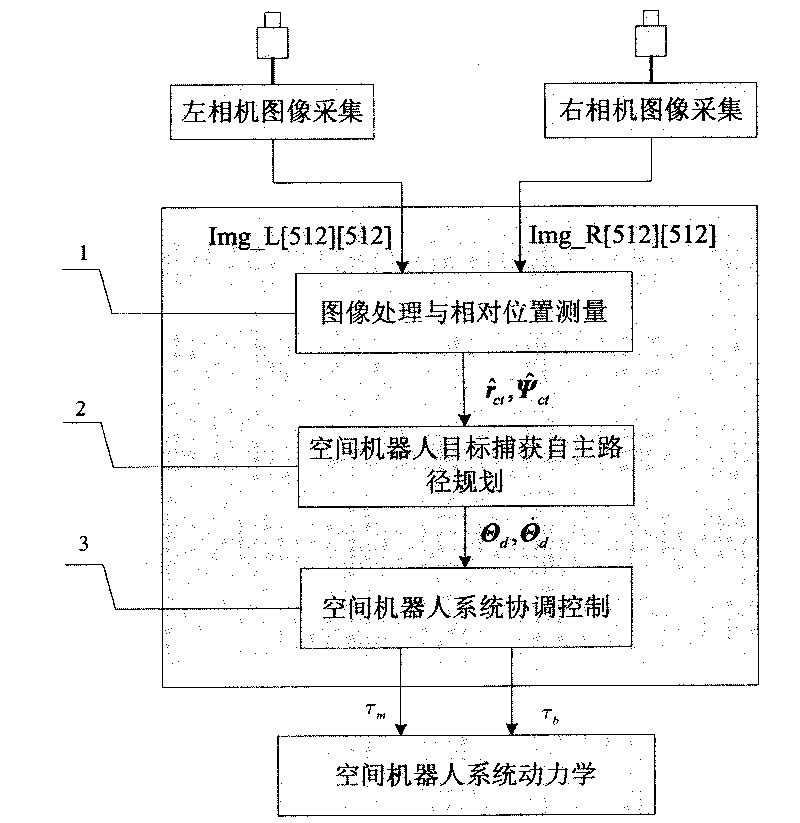

Autonomously identifying and capturing method of non-cooperative target of space robot

Inactive | CN101733746A | Real-time prediction of motion status; Predict interference in real time; Programme-controlled manipulator; Tools; Kinematics; Target capture

The invention relates to a method by which a space robot autonomously identifies and captures a non-cooperative target. Its main steps include (1) pose measurement based on stereoscopic vision, (2) autonomous path planning for target capture by the space robot, and (3) coordinated control of the space robot system. The stereoscopic-vision pose measurement processes images from a left camera and a right camera in real time, including smoothing filtering, edge detection and line extraction, and computes the pose of the non-cooperative target satellite relative to the base and the end effector. The autonomous path planning for target capture plans the joint motion trajectories in real time from the pose measurement results. The coordinated control of the space robot system coordinates the manipulator arms and the base to achieve optimal control performance for the whole system. In this method, a part of the target spacecraft itself is used directly as the object to be identified and captured, without installing a marker or a corner reflector on the target satellite or knowing the object's geometric dimensions, and the planned path can effectively avoid dynamic and kinematic singularities.

Owner:HARBIN INST OF TECH

Apparatus and method of optical imaging for medical diagnosis

Active | US8334900B2 | Reduce complexity; Improves shape reconstruction; Surgery; Endoscopes; Medical diagnosis; Visual perception

Described herein is a novel 3-D optical imaging system based on active stereo vision and motion tracking, for tracking the motion of the patient and registering the time-sequenced images of suspicious lesions recorded during endoscopic or colposcopic examinations. The system quantifies the acetic acid-induced optical signals associated with early cancer development. The system includes at least one illuminating light source for generating light illuminating a portion of an object, at least one structured light source for projecting a structured light pattern on the portion of the object, at least one camera for imaging the portion of the object and the structured light pattern, and means for generating a quantitative measurement of an acetic acid-induced change of the portion of the object.

Owner:THE HONG KONG UNIV OF SCI & TECH

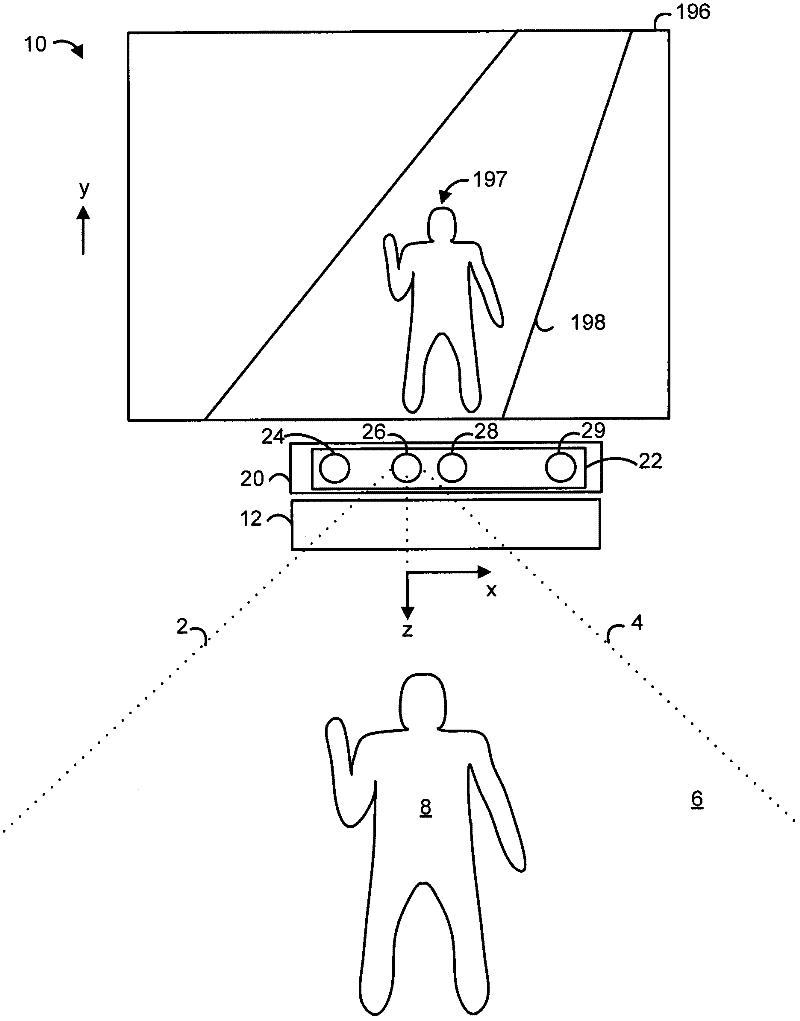

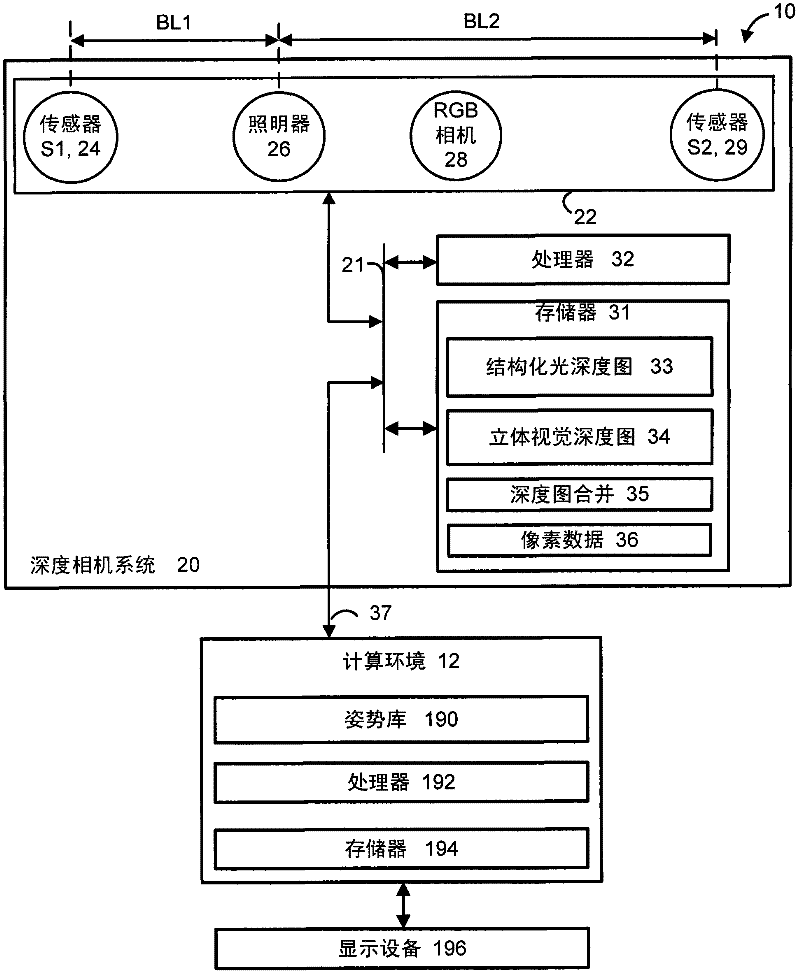

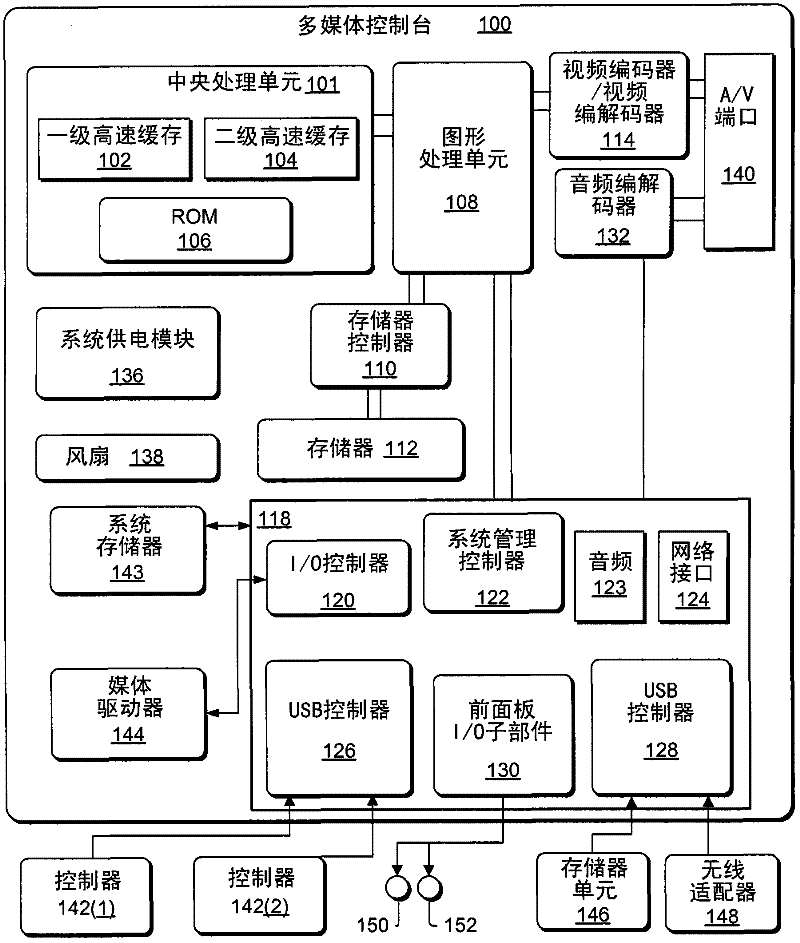

Depth camera based on structured light and stereo vision

Inactive | CN102385237A | Change display; Image enhancement; Television system details; Visual field loss; Stereo matching

The invention discloses a depth camera based on structured light and stereo vision. A depth camera system uses a structured light illuminator and multiple sensors, such as infrared light detectors, for example in a system that tracks the motion of a user in a field of view. One sensor can be optimized for shorter-range detection while another is optimized for longer-range detection. The sensors can have different baseline distances from the illuminator, as well as different spatial resolutions, exposure times and sensitivities. In one approach, depth values are obtained from each sensor by matching to the structured light pattern, and the depth values are merged to obtain a final depth map, which is provided as input to an application. The merging can involve unweighted averaging, weighted averaging, accuracy measures and/or confidence measures. In another approach, additional depth values included in the merging are obtained by stereoscopic matching among the pixel data of the sensors.

Owner:MICROSOFT TECH LICENSING LLC
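
The weighted-averaging option mentioned in this abstract can be sketched per pixel: each sensor contributes its depth weighted by a confidence value, and pixels that no sensor measured stay empty. Assumptions: the depth maps are already registered to a common pixel grid, a depth of 0.0 marks "no measurement", and the confidence maps are supplied by some unspecified upstream measure. Equal confidences reduce this to an unweighted average.

```python
from typing import List, Optional

DepthMap = List[List[float]]  # 0.0 marks "no depth value" at that pixel


def merge_depth_maps(depths: List[DepthMap], confidences: List[DepthMap]) -> List[List[Optional[float]]]:
    """Confidence-weighted average of several registered depth maps, skipping invalid pixels."""
    rows, cols = len(depths[0]), len(depths[0][0])
    merged: List[List[Optional[float]]] = []
    for y in range(rows):
        row: List[Optional[float]] = []
        for x in range(cols):
            num = den = 0.0
            for dmap, cmap in zip(depths, confidences):
                d, w = dmap[y][x], cmap[y][x]
                if d > 0.0 and w > 0.0:
                    num += w * d
                    den += w
            row.append(num / den if den > 0.0 else None)  # None: no sensor saw this pixel
        merged.append(row)
    return merged


near = [[2.0, 0.0, 0.0]]  # short-range sensor
far = [[4.0, 5.0, 0.0]]   # long-range sensor
print(merge_depth_maps([near, far], [[[1.0, 1.0, 1.0]], [[3.0, 1.0, 1.0]]]))
```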

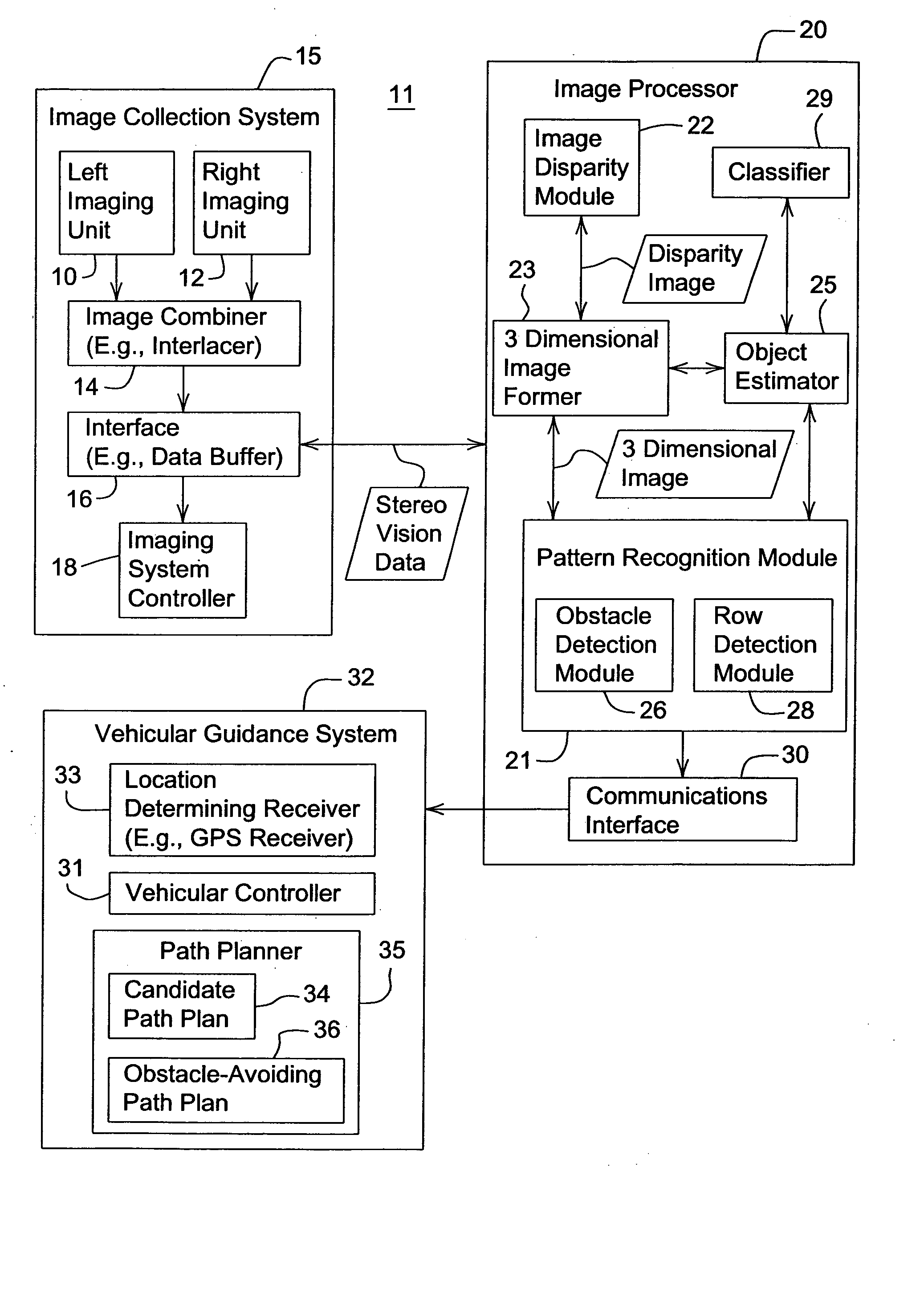

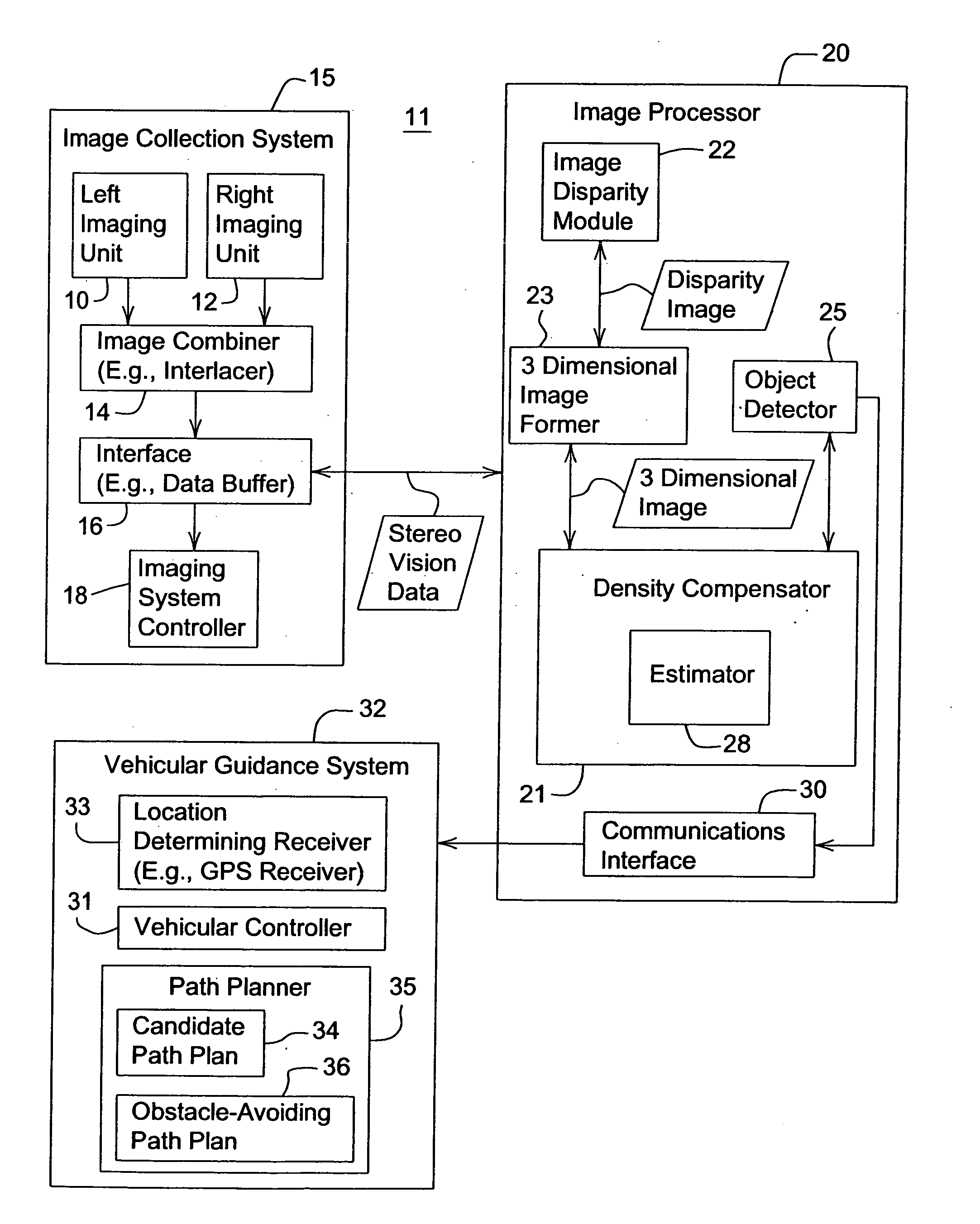

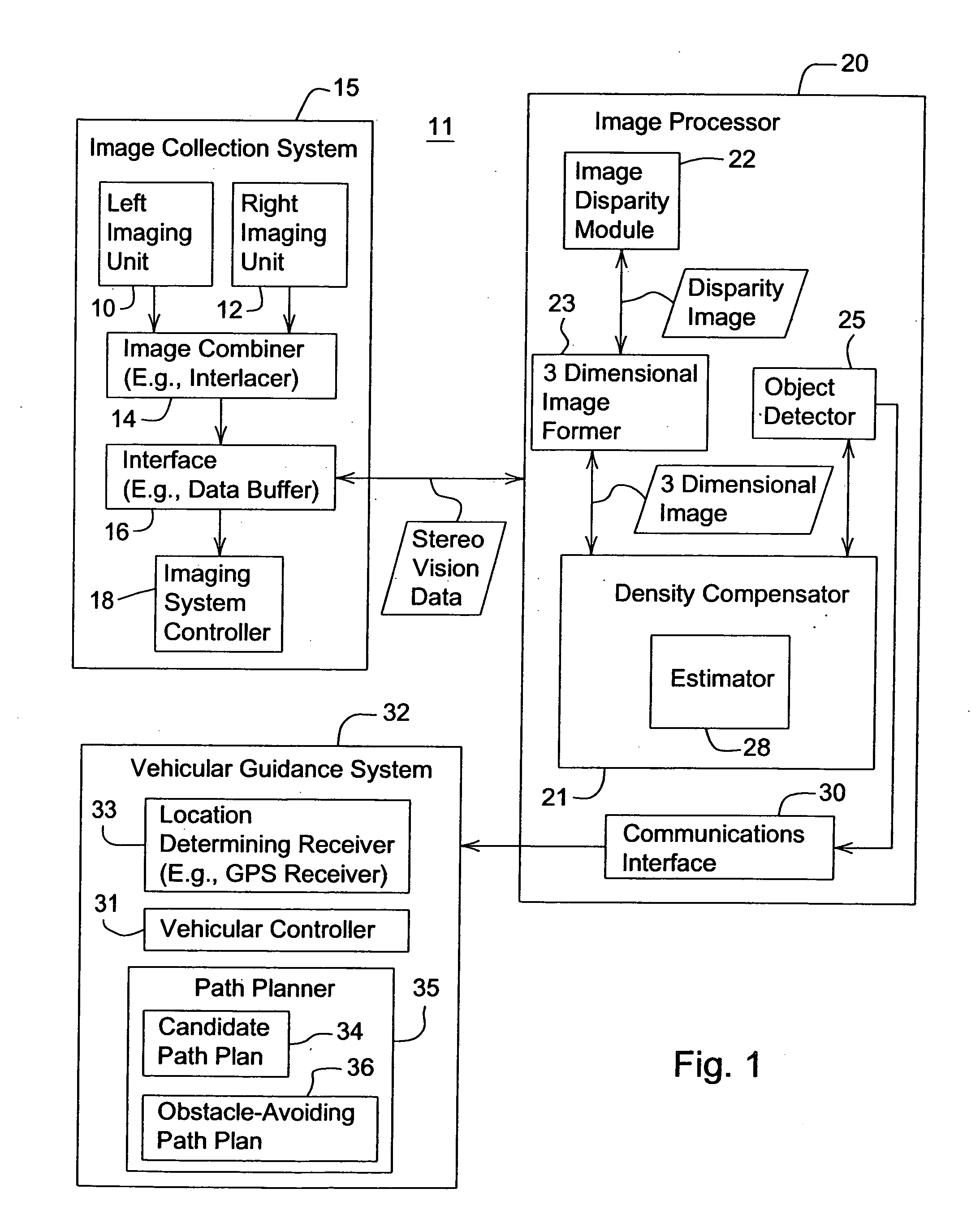

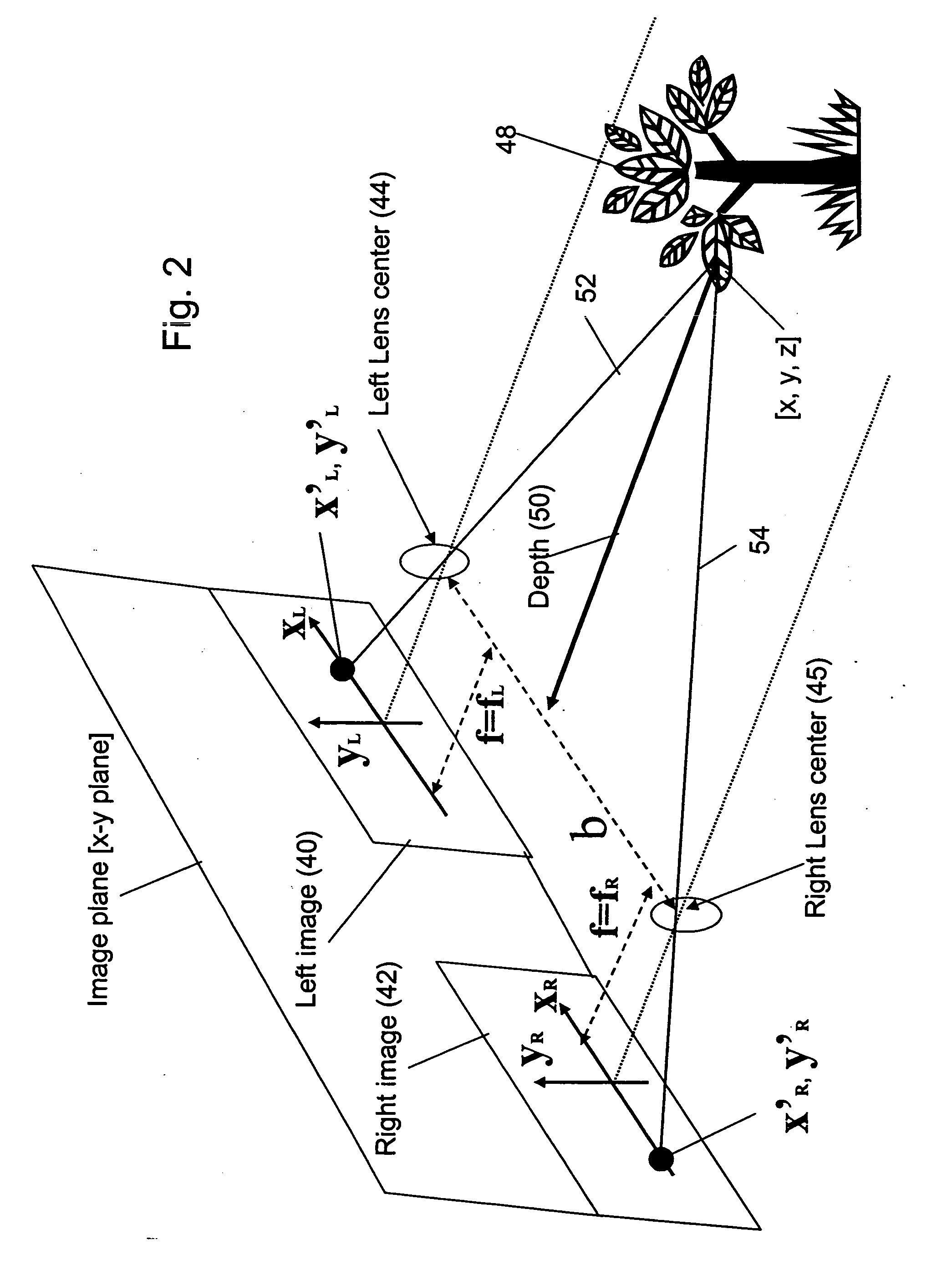

Method for processing stereo vision data using image density

An image collection system collects stereo image data comprising left image data and right image data of a particular scene. An estimator estimates a reference three-dimensional image density rating for a corresponding reference volumetric cell within the scene at a reference range from an imaging unit. An image density compensator compensates for the change in image density rating between the reference volumetric cell and an evaluated volumetric cell by using a compensated three-dimensional image density rating based on the range or relative displacement between the two cells. An obstacle detector identifies the presence of an obstacle in a particular compensated volumetric cell of the scene if that cell meets or exceeds a minimum three-dimensional image density rating, and its absence otherwise.

Owner:DEERE & CO +1
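
A range-based density compensation like the one in this abstract can be sketched under one stated assumption: for a fixed-size volumetric cell seen by a pinhole camera, the number of image pixels (and hence stereo points) it covers falls roughly with the inverse square of range, so raw density is rescaled to the reference range before thresholding. The inverse-square exponent, the threshold value and the function names are hypothetical, not taken from the patent.

```python
def compensated_density(raw_density: float, cell_range_m: float, reference_range_m: float,
                        exponent: float = 2.0) -> float:
    """Rescale a cell's point density to what it would be at the reference range.

    Assumes density falls off as range ** -exponent (inverse-square by default).
    """
    return raw_density * (cell_range_m / reference_range_m) ** exponent


def is_obstacle(raw_density: float, cell_range_m: float, reference_range_m: float,
                min_density: float) -> bool:
    """Obstacle if the compensated density meets or exceeds the minimum rating."""
    return compensated_density(raw_density, cell_range_m, reference_range_m) >= min_density


# The same 25-point cell is an obstacle at 10 m but not at the 5 m reference range.
print(is_obstacle(25.0, 10.0, 5.0, 80.0), is_obstacle(25.0, 5.0, 5.0, 80.0))
```

Without compensation, a single fixed threshold would tend to miss distant obstacles (few points) or flag nearby clutter (many points).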

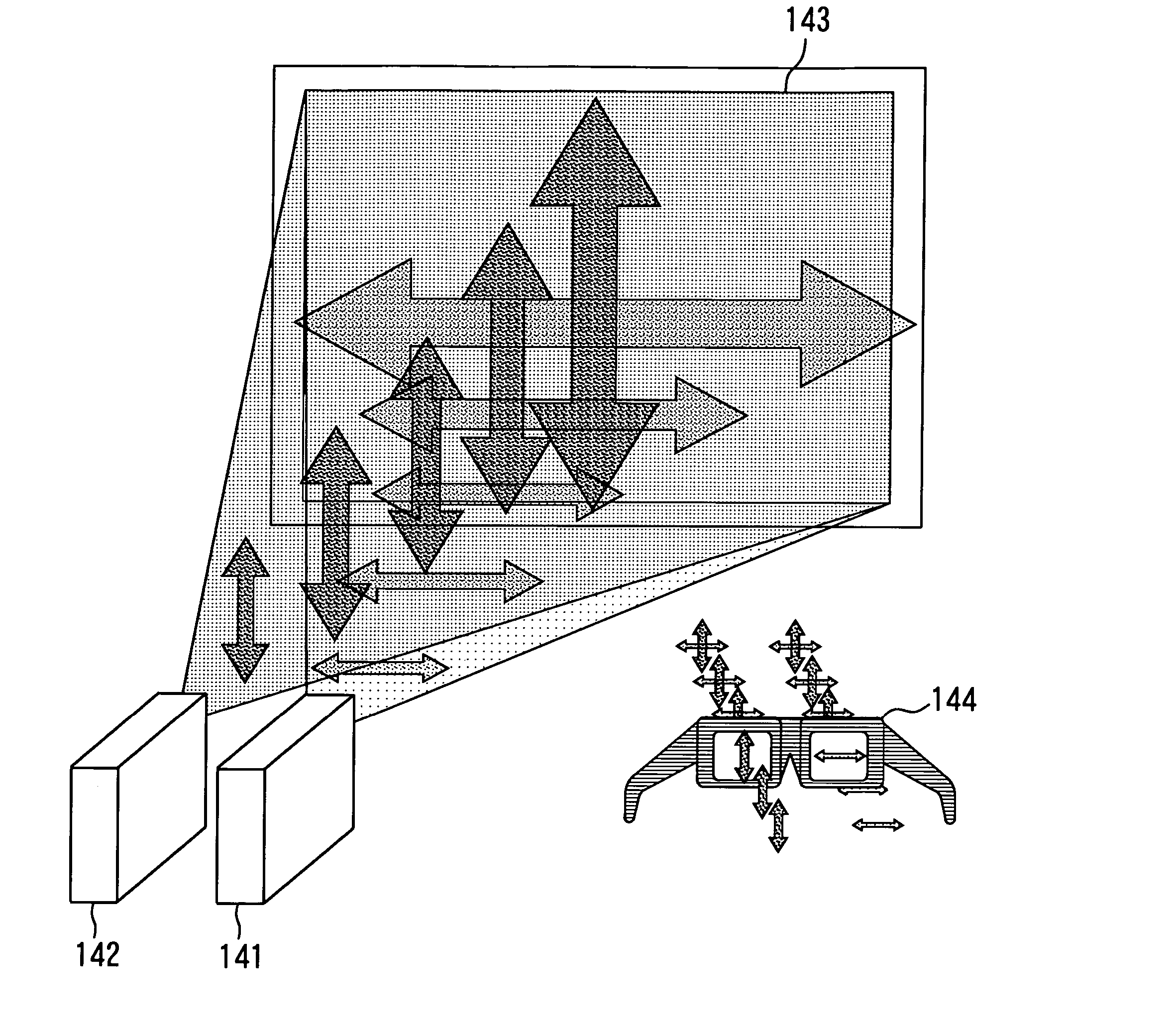

Stereoscopic-Vision Image Processing Apparatus, Stereoscopic-Vision Image Providing Method, and Image Display Method

Inactive | US20070257902A1 | Valid choice; Efficient management; Projectors; Cathode-ray tube indicators; Imaging processing; Computer graphics (images)

This invention provides a stereoscopic-vision image processing apparatus, a stereoscopic-vision image providing method, and an image display method that can manage the specification of the display unit on which a stereoscopic-vision image is intended to be displayed as accessory information (assumed display information). The assumed display information includes the type and display size of that display unit. Combining this information with the stereoscopic-vision image, and scaling the image up or down at display time according to the type or display size of the display unit, allows an appropriate stereoscopic-vision image to be obtained.

Owner:SHARP KK +1
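
One reason display size matters, sketched hypothetically below: the same pixel disparity produces a larger physical on-screen parallax on a wider display, and background parallax beyond roughly the adult interocular distance would force the eyes to diverge. The sketch computes a uniform scale factor from assumed display information; the 65 mm limit, the `AssumedDisplay` fields and the scaling rule are illustrative assumptions, not the patent's method.

```python
from dataclasses import dataclass


@dataclass
class AssumedDisplay:
    kind: str         # display type, e.g. "lcd" or "projector" (hypothetical labels)
    width_mm: float   # physical width of the picture area
    width_px: int     # horizontal resolution


def parallax_mm(disparity_px: float, display: AssumedDisplay) -> float:
    """Physical on-screen separation of a left/right point pair."""
    return disparity_px * display.width_mm / display.width_px


def scale_for_display(max_disparity_px: float, display: AssumedDisplay,
                      limit_mm: float = 65.0) -> float:
    """Uniform scale factor (at most 1) keeping the largest screen parallax within limit_mm."""
    p = parallax_mm(max_disparity_px, display)
    return 1.0 if p <= limit_mm else limit_mm / p


tv = AssumedDisplay("lcd", 1000.0, 1920)
wall = AssumedDisplay("projector", 2000.0, 1920)
print(scale_for_display(100.0, tv), scale_for_display(100.0, wall))
```

Scaling the image by a factor s on the same display scales pixel disparity, and therefore physical parallax, by the same factor.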

Stereo vision measuring apparatus based on binocular omnidirectional visual sense sensor

Disclosed is a stereo vision measuring device based on a binocular omnidirectional vision sensor. Each omnidirectional vision sensor (ODVS) making up the binocular sensor uses an average angular resolution design. The parameters of the two image collection cameras are identical and highly symmetric, allowing point-to-point matching to be achieved quickly. During the collection, processing, description and representation of spatial objects, the device uses a unified spherical coordinate system centered on the human observer in visual space, and expresses each feature point by its distance, direction and color. This reduces computational complexity, eliminates camera calibration, simplifies feature extraction and stereo image matching, and ultimately achieves efficient, real-time and accurate stereo vision measurement. The device can be applied in many fields, including industrial inspection, object identification, automatic robot guidance, astronautics, aeronautics and military affairs.

Owner:汤一平

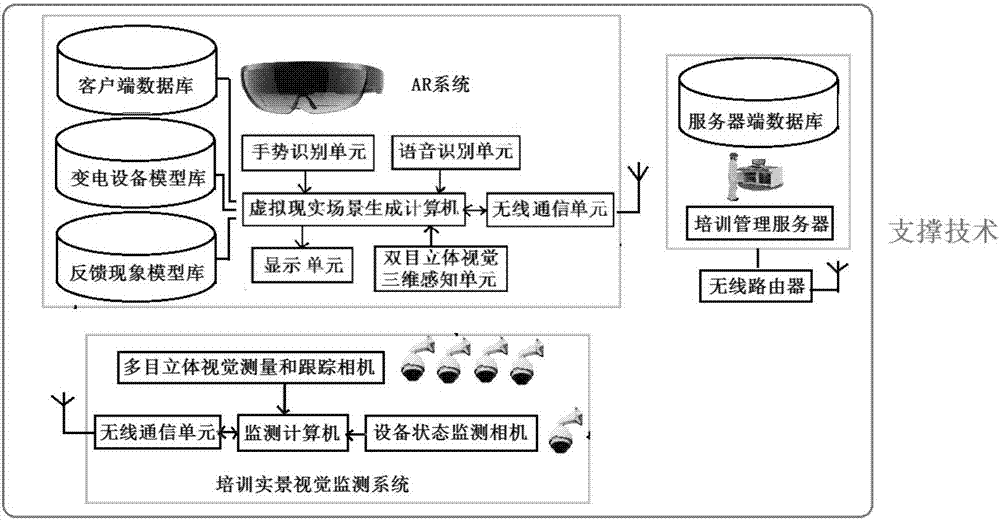

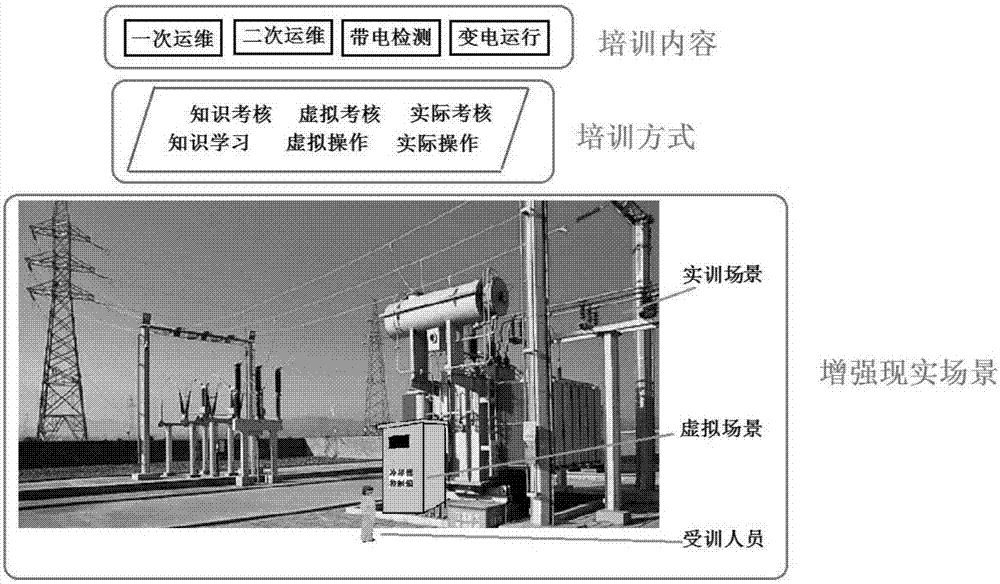

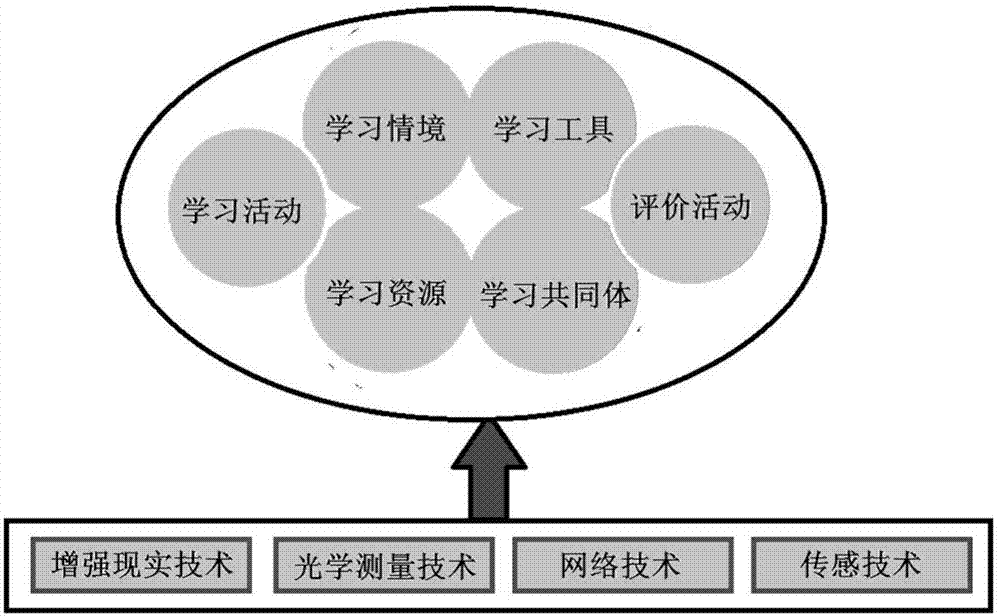

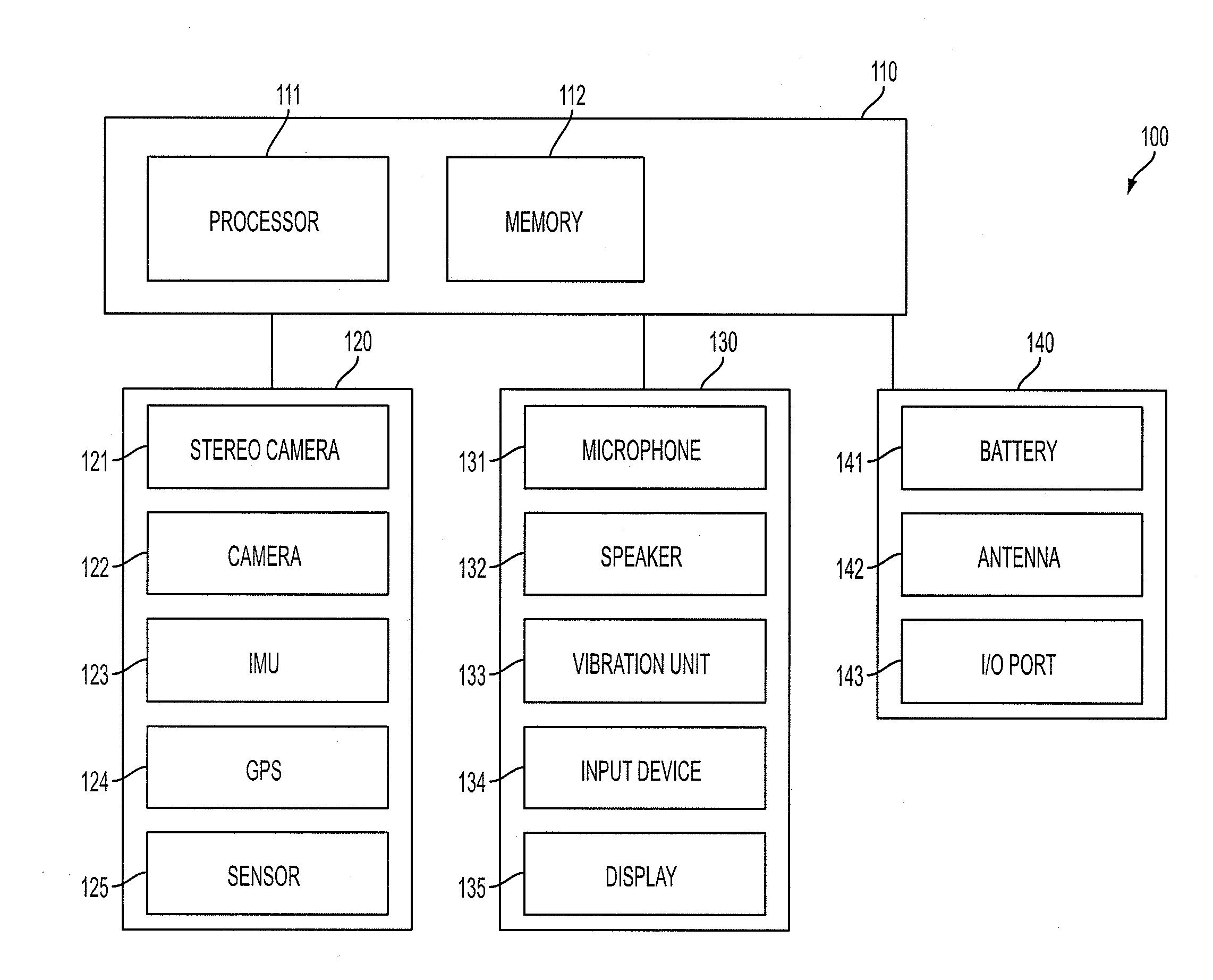

Substation equipment operation and maintenance simulation training system and method based on augmented reality

Inactive | CN107331220A | Cosmonautic condition simulations | Electrical appliances | Live action | Pattern perception

The invention provides a substation equipment operation and maintenance simulation training system and method based on augmented reality (AR). The system comprises a training management server, an AR subsystem and a training live-action visual monitoring subsystem. The AR subsystem comprises a binocular stereoscopic vision three-dimensional perception unit for identifying, tracking and registering target equipment; a display unit that overlays the virtual scene on the actual scene; a gesture recognition unit for recognizing the user's actions; and a voice recognition unit for recognizing the user's voice. The training live-action visual monitoring subsystem comprises a multi-view stereoscopic vision measurement and tracking camera and an equipment state monitoring video camera, both installed in the actual scene. The equipment state monitoring video camera recognizes the working condition of the substation equipment, while the multi-view stereoscopic vision measurement and tracking camera obtains the three-dimensional coordinates of the substation equipment in the actual scene, measures the three-dimensional coordinates of trainees on the training site, and tracks their walking trajectories.
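The abstract names stereo measurement of 3D coordinates and walking-trajectory tracking without detail. A minimal sketch for the simplest case, a single rectified pinhole stereo pair, uses the standard relation Z = f·B/d (focal length f in pixels, baseline B, disparity d); the parameter names and calibration values are assumptions, and the patent's multi-view camera may well use a different method.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def pixel_to_3d(u: float, v: float, disparity_px: float, focal_px: float,
                baseline_m: float, cx: float, cy: float) -> Point3:
    """Back-project a left-image pixel with known disparity (rectified pair).

    Depth follows Z = f * B / d; X and Y follow from the pinhole model with
    principal point (cx, cy).
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = focal_px * baseline_m / disparity_px
    return ((u - cx) * z / focal_px, (v - cy) * z / focal_px, z)


def path_length(points: Sequence[Point3]) -> float:
    """Total length of a trajectory given as successive 3D positions."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))
```

With a 700 px focal length and a 0.12 m baseline, a 35 px disparity corresponds to a depth of 2.4 m; a trainee's walking trajectory is then the sequence of such points over time, and `path_length` sums the distances between them.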

Owner:JINZHOU ELECTRIC POWER SUPPLY COMPANY OF STATE GRID LIAONING ELECTRIC POWER SUPPLY +3

Smart necklace with stereo vision and onboard processing

Active | US20150199566A1 | Character and pattern recognition | Navigation instruments | Documentation | Loudspeaker

A wearable neck device and a method of operating the wearable neck device are provided for outputting optical character recognition information to a user. The wearable neck device has at least one camera, and a memory storing optical character or image recognition processing data. A processor detects a document in the surrounding environment and adjusts the field of view of the at least one camera such that the detected document is within the adjusted field of view. The processor analyzes the image data within the adjusted field of view using the optical character or image recognition processing data. The processor determines output data based on the analyzed image data. A speaker of the wearable neck device provides audio information to the user based on the output data.
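How the necklace "adjusts the field of view" is not specified; it might steer, zoom or crop. Purely as an illustration, the sketch below assumes a steerable pinhole camera with known horizontal and vertical fields of view and computes the pan and tilt that would centre a detected document's bounding box, plus a simple check for a box that touches the frame edge and is therefore probably cut off. The functions and the 2-pixel margin are hypothetical.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def focal_length_px(size_px: float, fov_deg: float) -> float:
    """Pinhole focal length in pixels from image size and field of view."""
    return (size_px / 2) / math.tan(math.radians(fov_deg) / 2)


def recenter_angles(box: Box, image_w: int, image_h: int,
                    hfov_deg: float, vfov_deg: float) -> Tuple[float, float]:
    """Pan and tilt in degrees that bring the box centre to the image centre.

    Positive pan turns right; positive tilt turns up (image y grows downward).
    """
    box_cx = (box[0] + box[2]) / 2
    box_cy = (box[1] + box[3]) / 2
    fx = focal_length_px(image_w, hfov_deg)
    fy = focal_length_px(image_h, vfov_deg)
    pan = math.degrees(math.atan((box_cx - image_w / 2) / fx))
    tilt = -math.degrees(math.atan((box_cy - image_h / 2) / fy))
    return pan, tilt


def touches_border(box: Box, image_w: int, image_h: int, margin: float = 2) -> bool:
    """True if the box reaches the frame edge, suggesting the document is cut off."""
    return (box[0] <= margin or box[1] <= margin
            or box[2] >= image_w - margin or box[3] >= image_h - margin)
```

In this sketch a device would re-aim whenever `touches_border` is true, then pass the re-centred frame on to character recognition.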

Owner:TOYOTA JIDOSHA KK

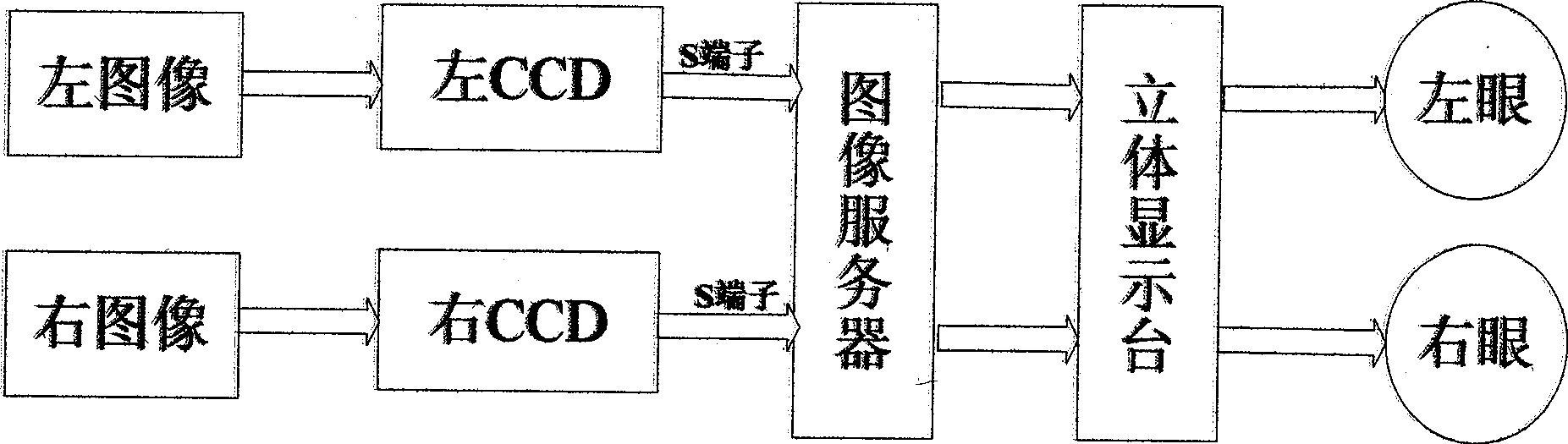

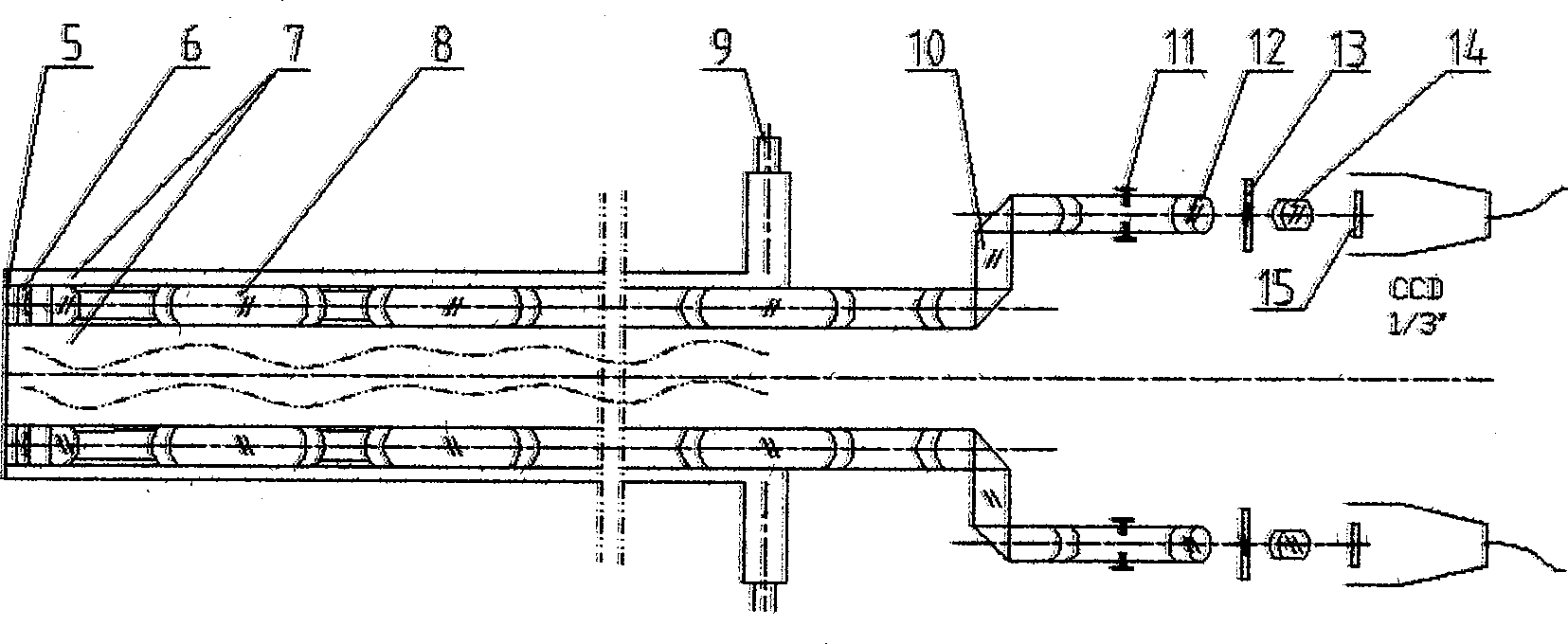

Binocular endoscope operation visual system

Inactive | CN101518438A | Meet the needs of 3D stereo vision | Stereoscopic effect is good | Laparoscopes | Endoscopes | Video memory | Laparoscopy

A binocular endoscope operation visual system mainly comprises a binocular endoscope, an image server and an endoscope stereo display unit. The binocular endoscope captures two channels of clear abdominal-cavity video; the two video streams enter the memory or video memory of the image processing server through a dual-channel image capture card, and the processed left and right images are shown on a left display and a right display, respectively. A flat mirror directs the contents of the left and right displays into the observer's left and right eyes, respectively, producing the stereoscopic effect. The system is suited to medical endoscopic surgery, can fully meet the stereoscopic vision requirements of laparoscopic procedures performed by a minimally invasive surgical robot, and provides real-time binocular stereoscopic display during laparoscopic surgery. It offers reliable performance, a simple structure and a high level of commercialization, and is adapted to the sterile operating environment.

Owner:NANKAI UNIV

© 2025 PatSnap. All rights reserved.