4120 results about "Characteristic point" patented technology

First, the characteristic point, expressed as an object point, is stored in the database in hash-table form, which holds a large amount of information because the point is recorded under geometric transformations. On a bone, for example, one end (such as the top end) may carry a characteristic point that makes it easy to distinguish between species.

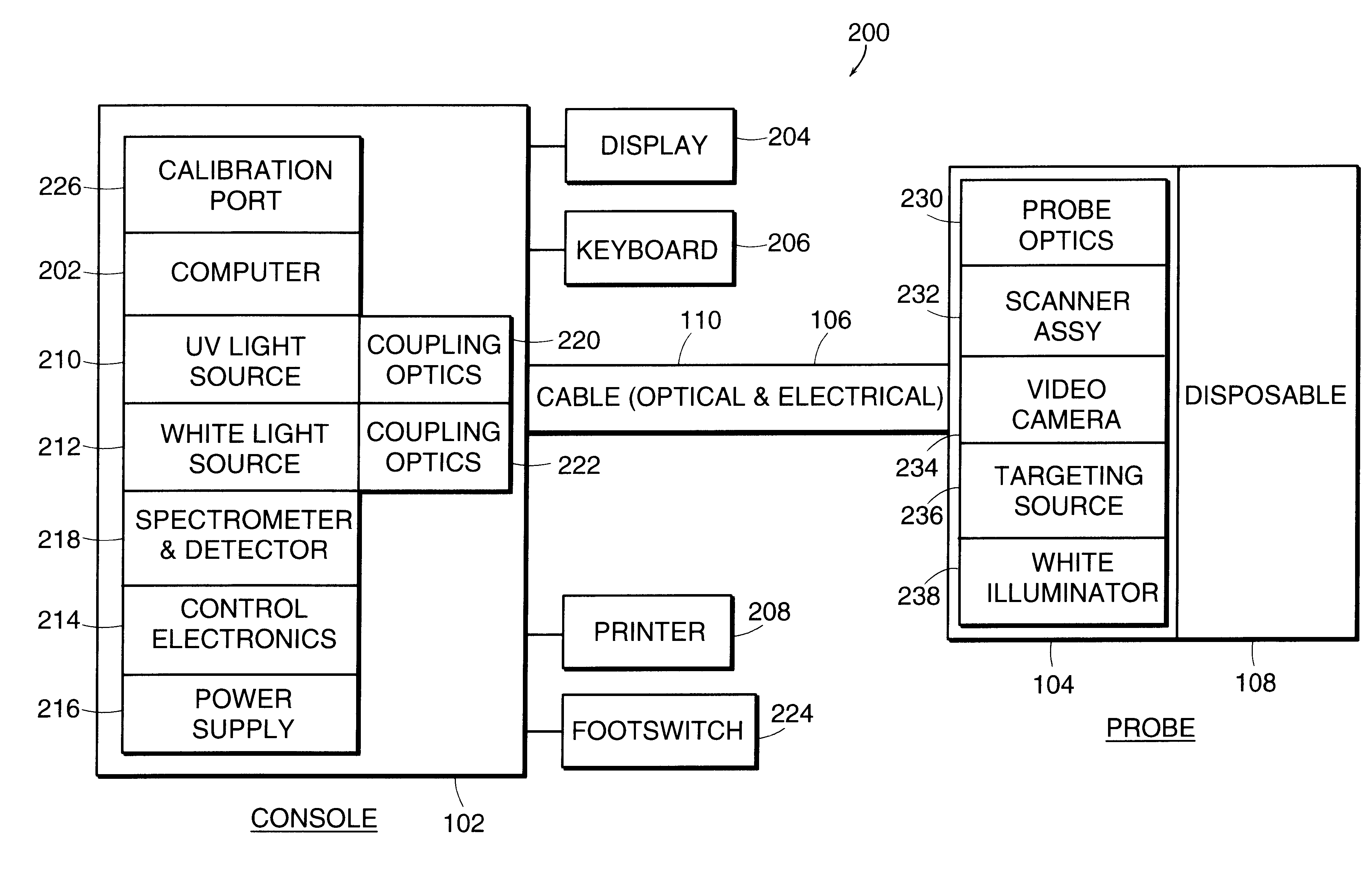

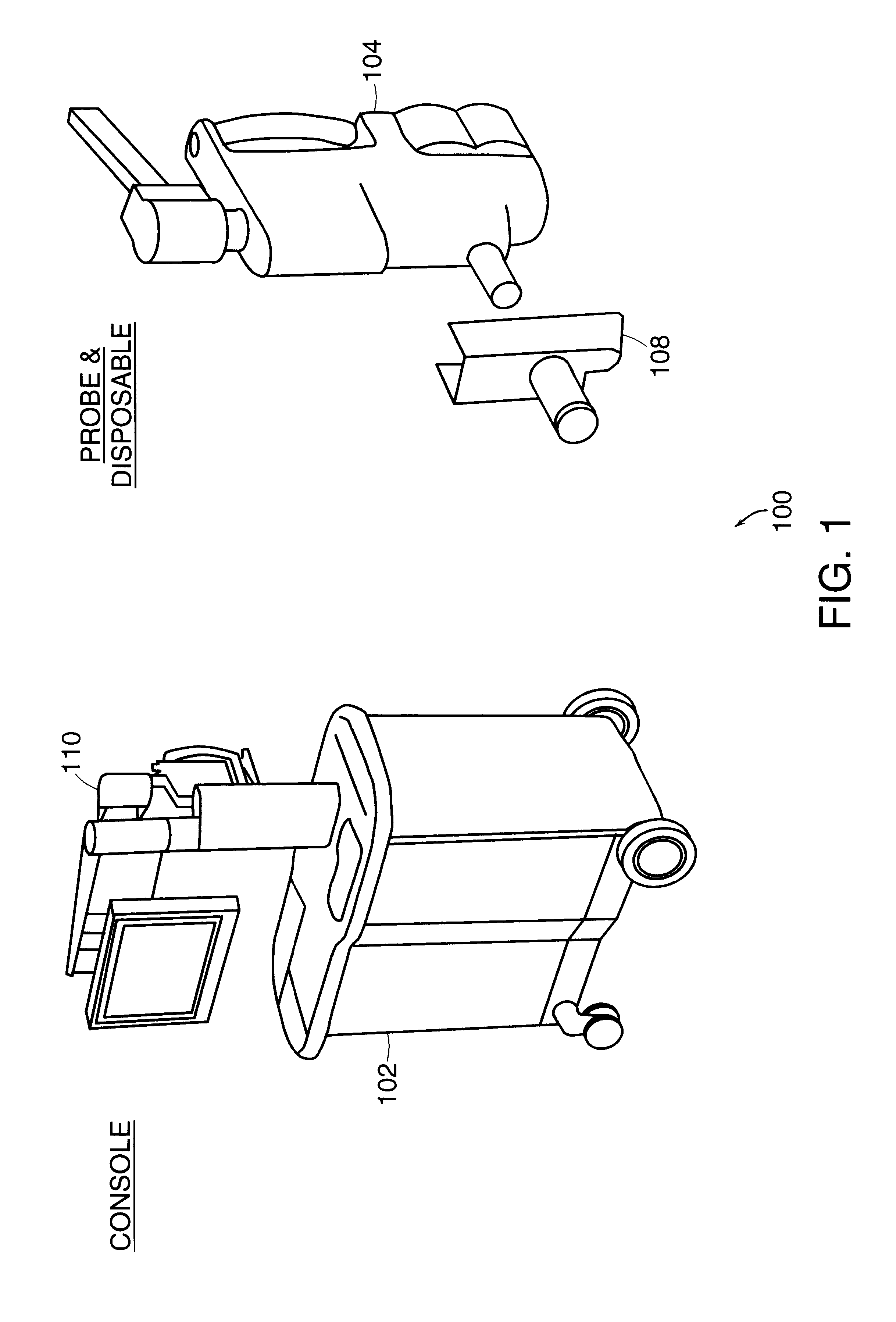

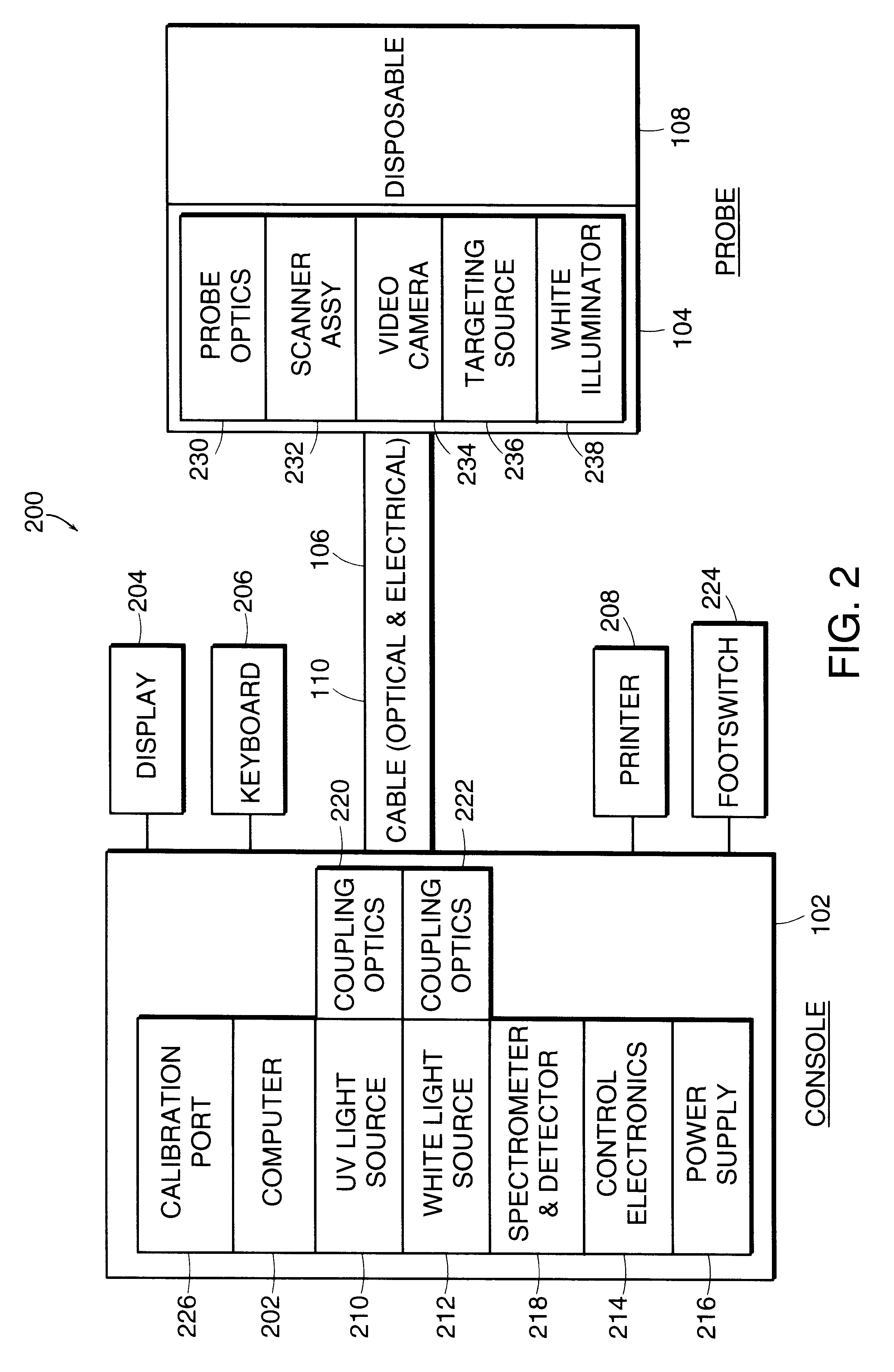

Spectral data classification of samples

A system and method for classifying tissue by application of discriminant analysis to spectral data. Spectra are recorded as amplitudes at a series of discrete wavelengths. Pluralities of reference spectra are recorded for specimens having known conditions. The reference spectra are subjected to discriminant analysis to determine wavelength regions of interest for the analysis. A plurality of amplitudes are selected for the analysis, and are plotted in an N-dimensional space. For each plurality of reference spectra corresponding to a specific known condition, a characteristic point is determined and plotted, the characteristic point representative of the known condition. A test spectrum is recorded from a test specimen, and the plurality of amplitudes corresponding in wavelength to the wavelength regions of interest are selected. A characteristic point in N-dimensional space is determined for the test spectrum. The distance of the characteristic point of the test spectrum from each of the plurality of characteristic points representative of known conditions is determined. The test specimen is assigned the condition corresponding to the characteristic point of a plurality of reference spectra, based on a distance relationship with at least two distances, provided that at least one distance is less than a pre-determined maximum distance. In some embodiments, the test specimen can comprise human cervical tissue, and the known conditions can include normal health, metaplasia, CIN I and CIN II / III.

Owner:LUMA IMAGING CORP
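
The classification rule in this abstract can be sketched in a few lines of Python. This is a minimal sketch, not LUMA's implementation: it assumes each condition's characteristic point is the mean of its reference amplitudes at the selected wavelengths and that distances are Euclidean, and it reduces the "distance relationship" to nearest-point assignment with a maximum-distance cutoff. The names `characteristic_point`, `classify`, `regions` and `max_distance`, and the sample data, are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence


def characteristic_point(spectra: List[Sequence[float]], regions: Sequence[int]) -> List[float]:
    """Characteristic point of a group of reference spectra, assumed here to be
    the mean amplitude at each selected wavelength index."""
    return [sum(s[i] for s in spectra) / len(spectra) for i in regions]


def classify(
    test_spectrum: Sequence[float],
    references: Dict[str, List[Sequence[float]]],
    regions: Sequence[int],
    max_distance: float,
) -> Optional[str]:
    """Assign the condition whose characteristic point lies nearest to the test
    point, or None if even the nearest one is not closer than max_distance."""
    test_point = [test_spectrum[i] for i in regions]
    distances = {
        condition: math.dist(test_point, characteristic_point(spectra, regions))
        for condition, spectra in references.items()
    }
    nearest = min(distances, key=distances.__getitem__)
    return nearest if distances[nearest] < max_distance else None


references = {
    "normal": [[1.0, 0.2, 0.9, 0.1], [1.1, 0.2, 0.8, 0.1]],
    "CIN I": [[0.4, 0.2, 0.3, 0.1], [0.5, 0.3, 0.4, 0.1]],
}
regions = [0, 2]  # hypothetical wavelength indices picked by discriminant analysis
print(classify([1.0, 0.0, 0.9, 0.0], references, regions, max_distance=0.5))
```

A spectrum whose nearest characteristic point is still at or beyond `max_distance` returns `None`, mirroring the abstract's requirement that at least one distance fall below a pre-determined maximum.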

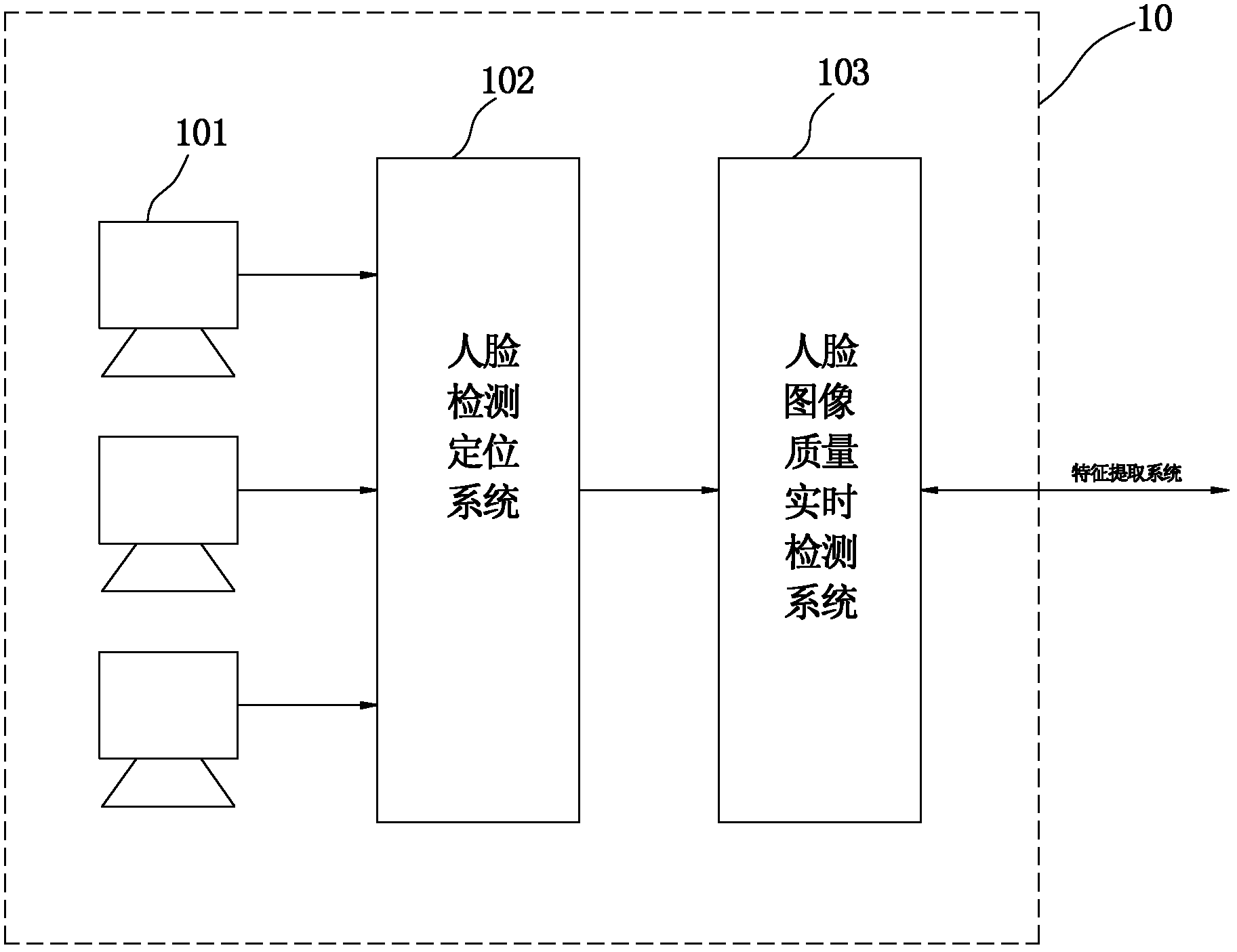

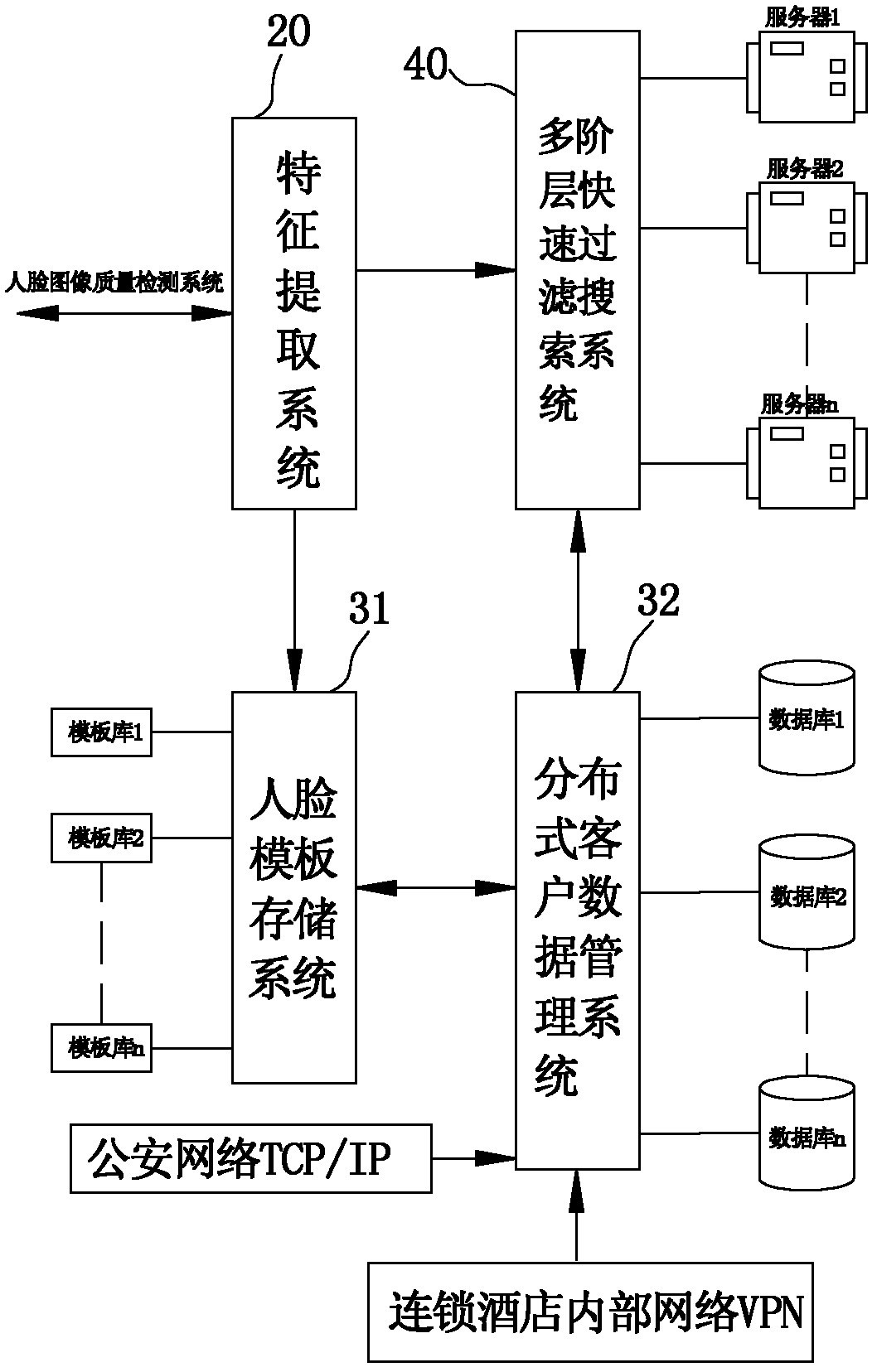

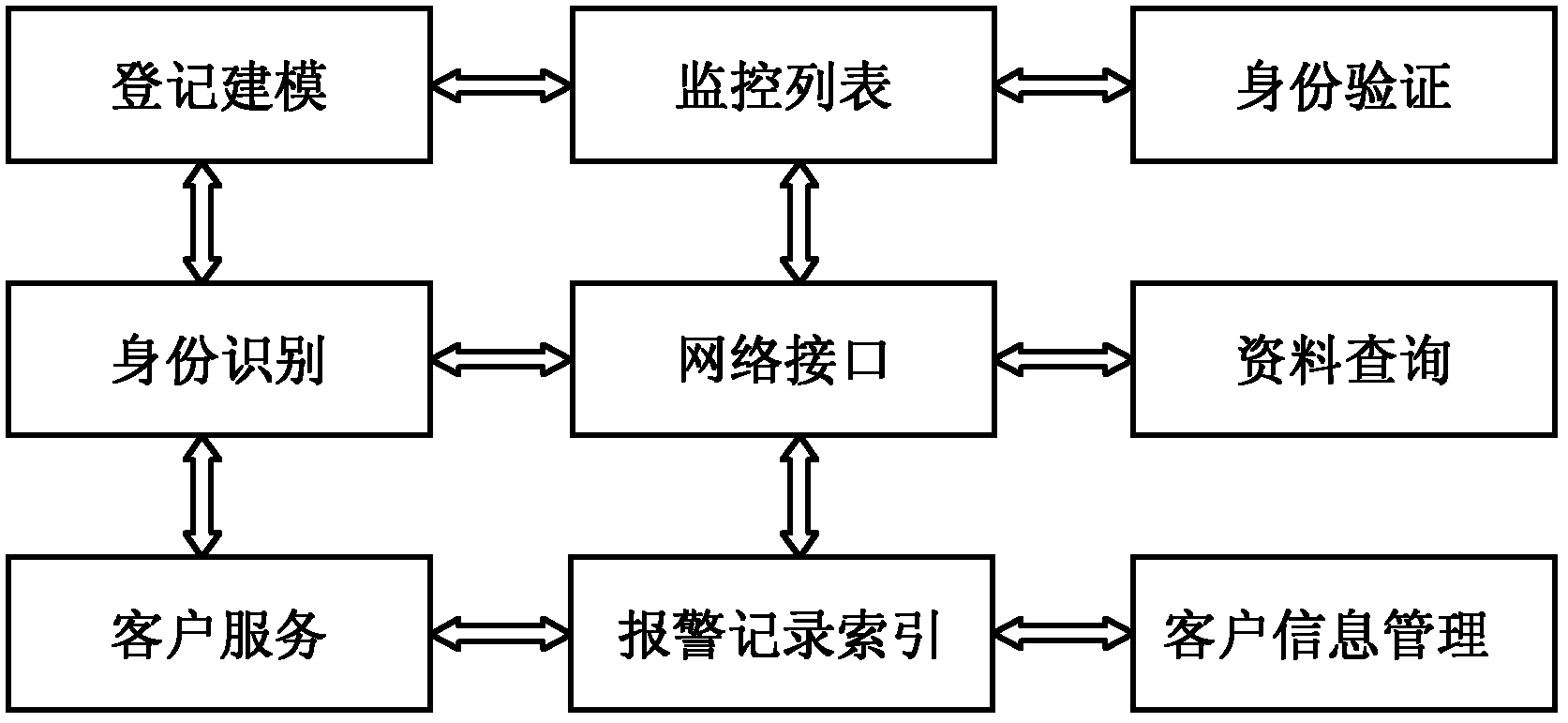

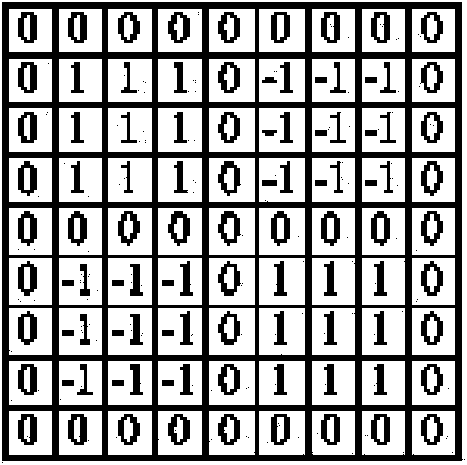

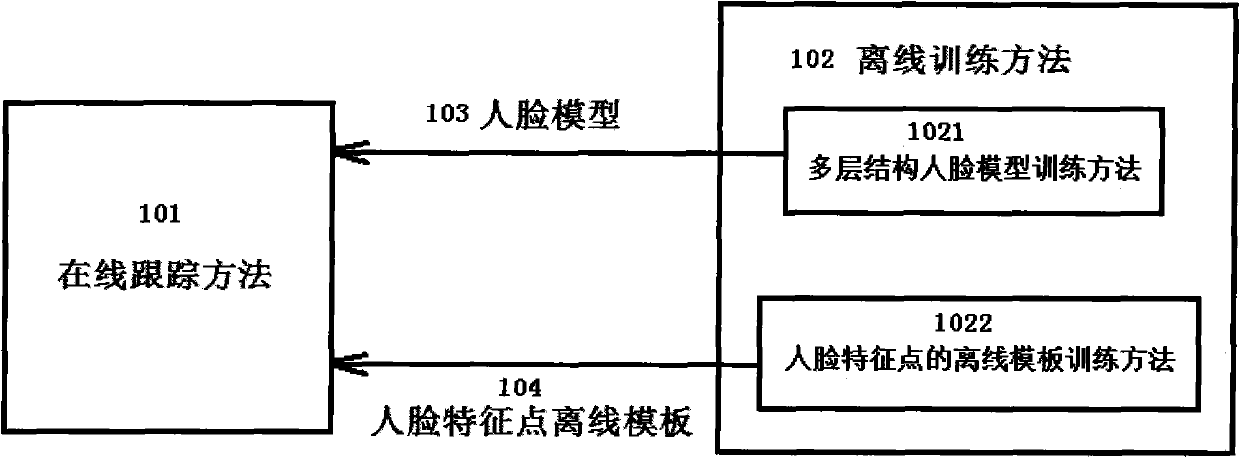

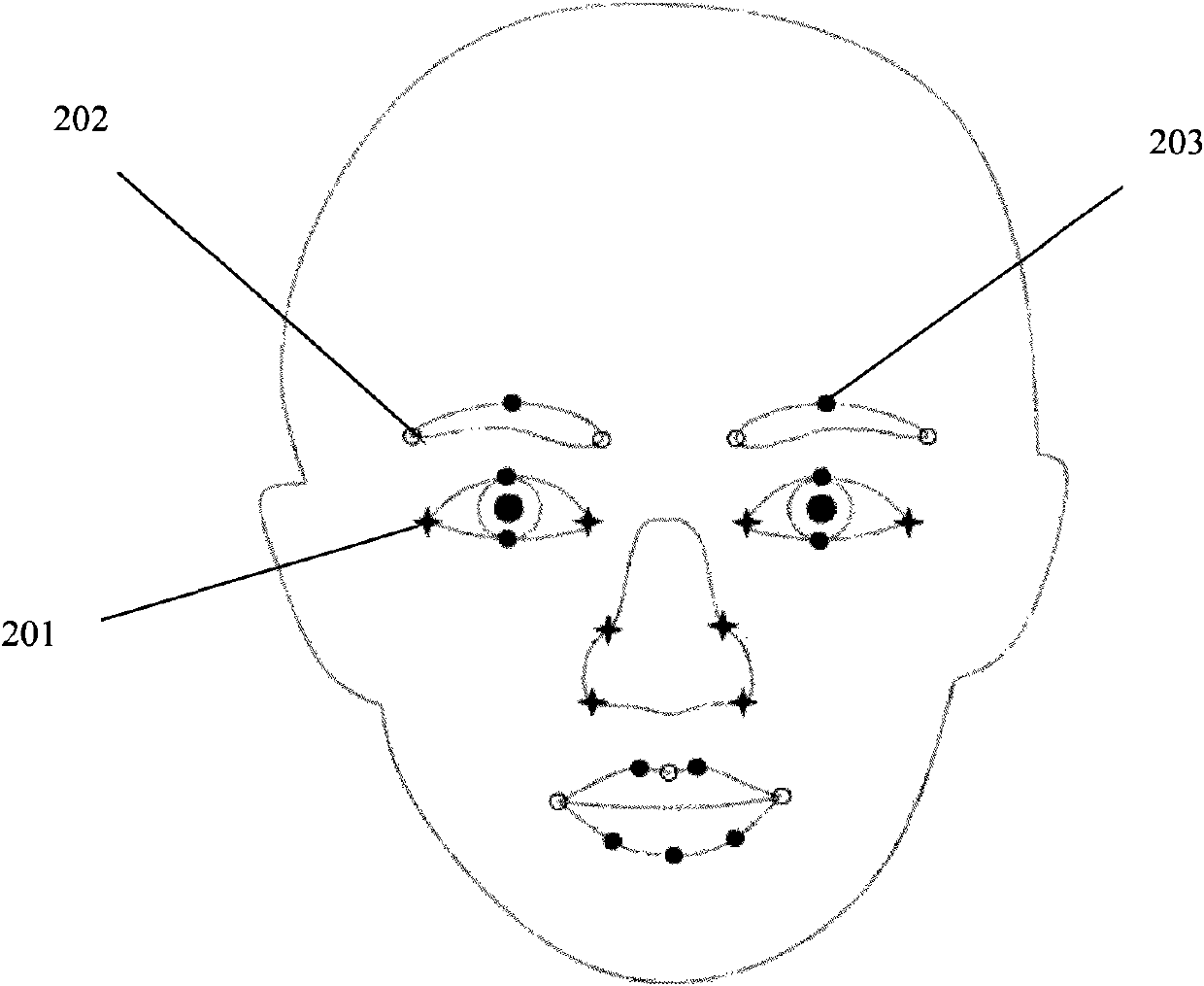

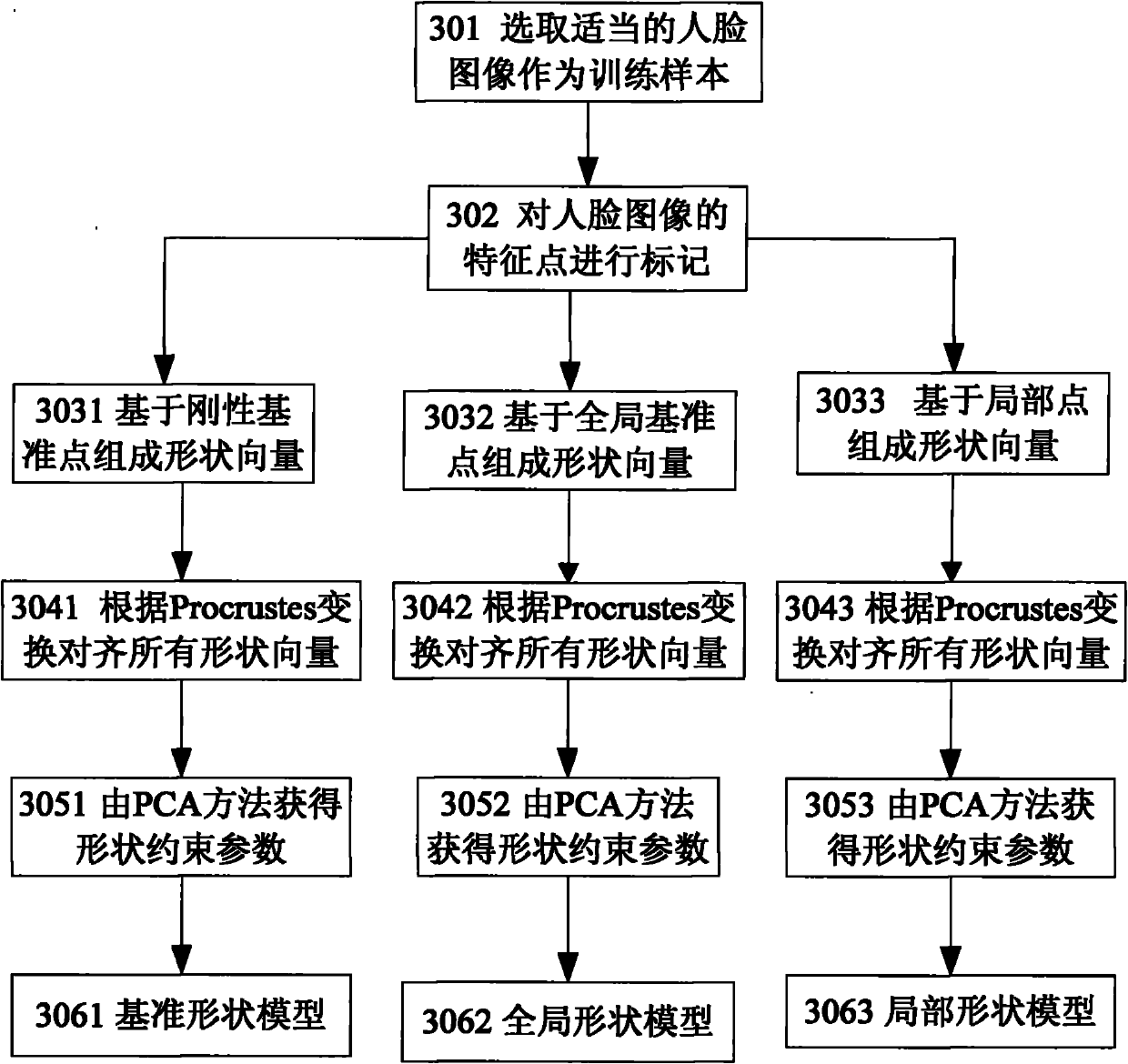

Intelligent safety monitoring system and method based on multilevel filtering face recognition

Status: Inactive | Publication: CN102201061A | Tags: Improve recognition accuracy; Improve search efficiency; Character and pattern recognition; Special data processing applications; Face detection; Pattern recognition

The invention discloses a face recognition method based on multilevel filtering. The method comprises the following steps: collecting a face image of the person being identified through an image collection system on a user terminal; automatically detecting and segmenting the exact position of the face in the collected image with a face detection and positioning system, while an automatic, real-time face image quality detection system provides intelligent guidance and real-time quality monitoring during image collection; extracting characteristic points from the face image on the user terminal according to an image quality detection threshold, and generating the corresponding target face templates; and, on a background server, comparing in real time the face detected by the client against a known face database using a multilevel filter search algorithm, finding the face template with the highest matching score, judging the result against a preset system threshold, and determining the identity of the photographed person in real time. The invention also provides an intelligent identity recognition and safety monitoring system based on multilevel face filtering and search technology, with high reliability and flexibility.

Owner:CHANGZHOU RUICHI ELECTRONICS TECH
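
The abstract does not describe the multilevel filter itself. One plausible reading, sketched below purely as an assumption, is a coarse-to-fine cascade: each level compares only a prefix of every face template's feature vector and keeps the closest candidates, and only the survivors reach the full comparison and the preset threshold. The function name, the `(dims, keep)` level format and the distance-to-score conversion are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def multilevel_search(
    query: Sequence[float],
    gallery: Dict[str, Sequence[float]],
    levels: List[Tuple[int, int]],
    threshold: float,
) -> Tuple[Optional[str], float]:
    """Coarse-to-fine filtering: at each level, compare only the first `dims`
    feature components and keep the `keep` closest templates."""
    candidates = list(gallery)
    for dims, keep in levels:
        candidates.sort(key=lambda name: math.dist(query[:dims], gallery[name][:dims]))
        candidates = candidates[:keep]
    best = candidates[0]
    # Convert the full-vector distance into a matching score in (0, 1].
    score = 1.0 / (1.0 + math.dist(query, gallery[best]))
    return (best, score) if score >= threshold else (None, score)


gallery = {"a": [0, 0, 0, 0], "b": [1, 1, 1, 1], "c": [5, 5, 5, 5]}  # toy templates
print(multilevel_search([0.9, 1.0, 1.1, 1.0], gallery, levels=[(2, 2), (4, 1)], threshold=0.5))
```

The cheap early levels shrink the candidate set, so the full comparison runs on only a few templates.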

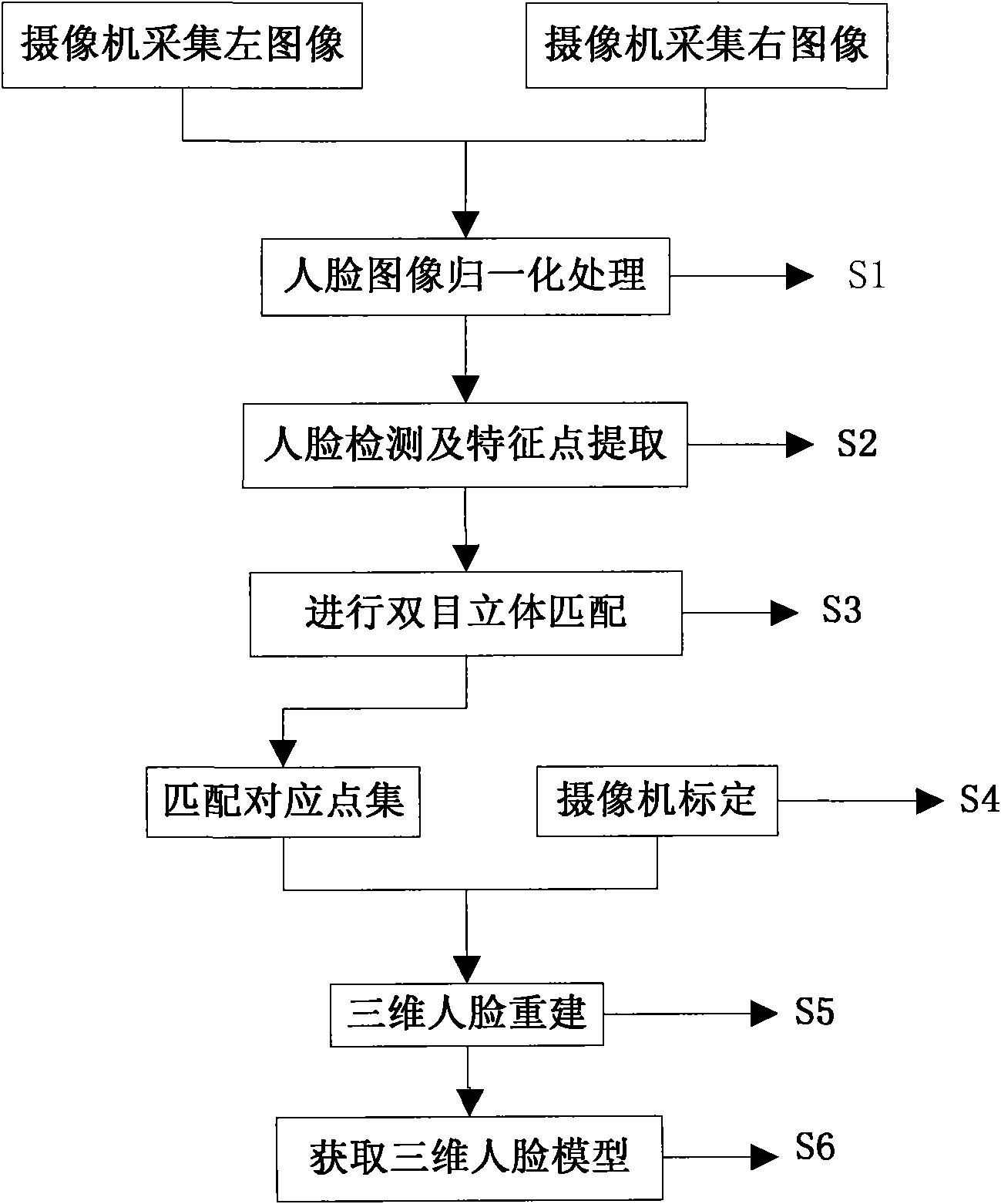

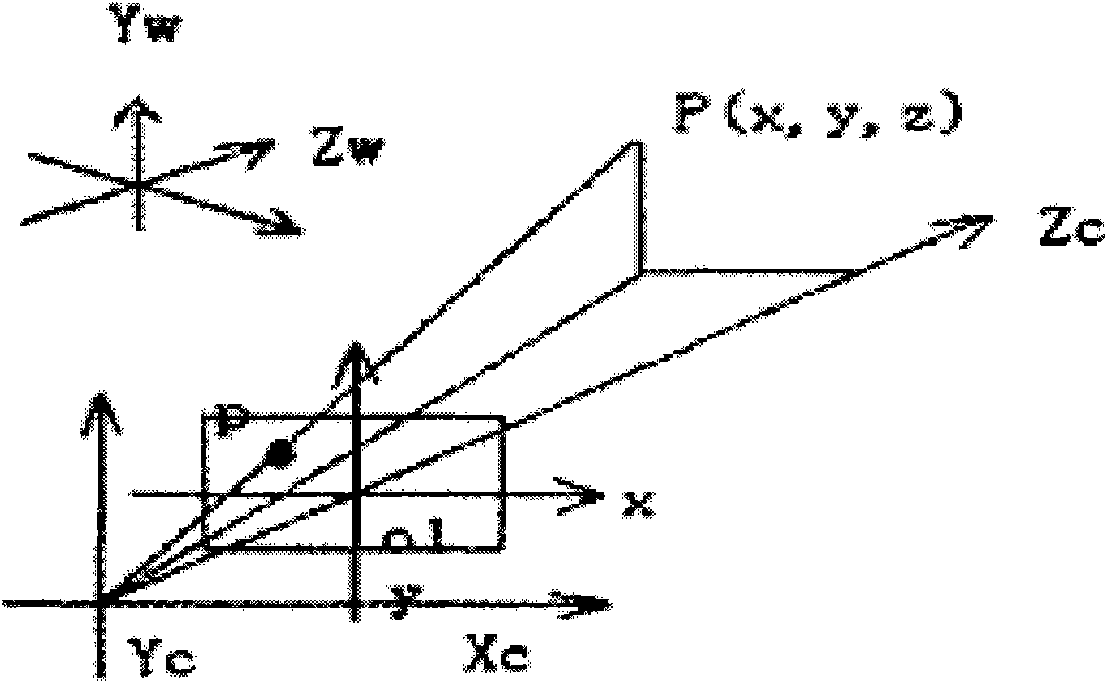

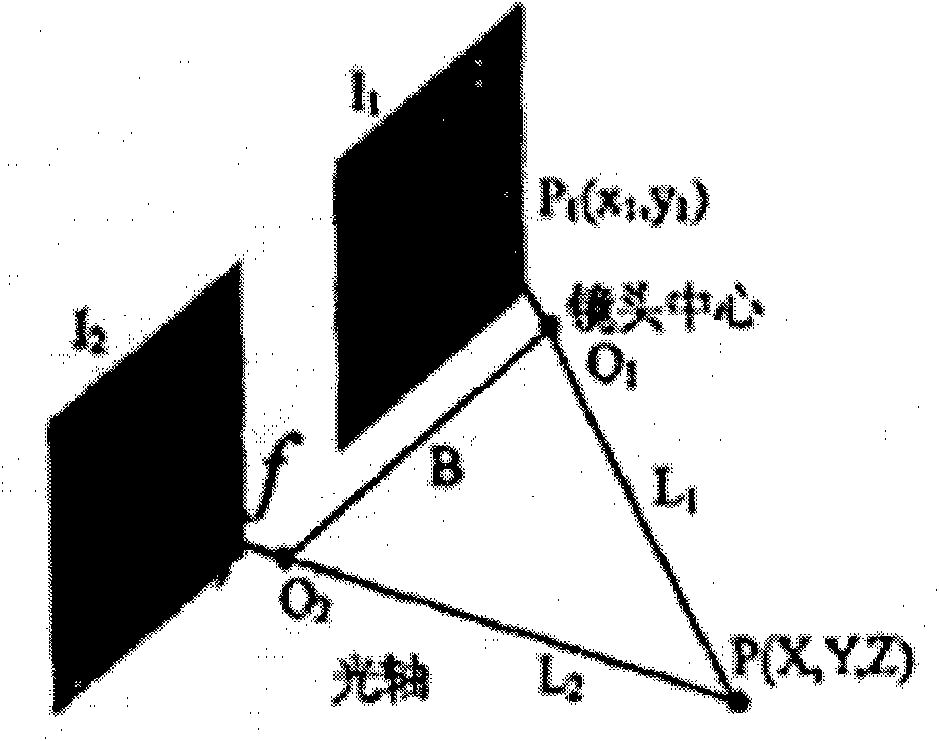

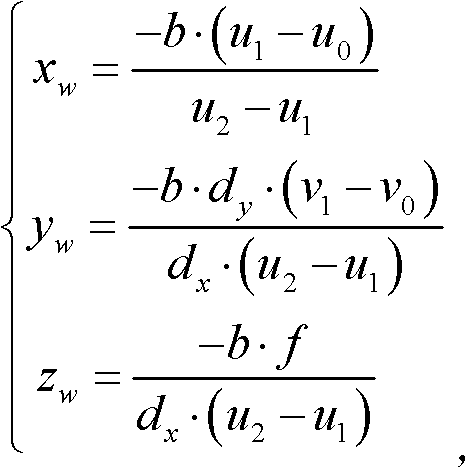

Binocular stereo vision based intelligent three-dimensional face reconstruction method and system

The invention discloses a binocular stereo vision based intelligent three-dimensional face reconstruction method and system. The method comprises: preprocessing a face image, including image normalization, brightness normalization and image rectification; locating the face region in the preprocessed image and extracting facial characteristic points; computing the projection matrix to obtain the intrinsic and extrinsic parameters of the cameras; based on the facial characteristic points, extending the gray-level cross-correlation matching operator to color information, and computing the disparity map by stereo matching under the epipolar constraint, the face-region constraint and facial geometric conditions; and computing the three-dimensional coordinates of a scattered point cloud of the face from the camera calibration result and the disparity map, so as to generate a three-dimensional face model. With these steps, the invention reconstructs a smoother and more lifelike three-dimensional face model.

Owner:BEIJING JIAOTONG UNIV
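
The step from a disparity map to 3D face coordinates uses standard rectified-stereo triangulation, Z = f * B / d. The sketch below assumes a rectified pair with identical focal length `f` (in pixels), baseline `baseline`, and principal point (`cx`, `cy`); these parameter names are illustrative and are not taken from the patent.

```python
from typing import Tuple


def triangulate(u: float, v: float, disparity: float, f: float, baseline: float,
                cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project a left-image pixel with known disparity into left-camera
    coordinates, assuming a rectified pair with equal focal length f (pixels)."""
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    z = f * baseline / disparity  # depth shrinks as disparity grows
    x = (u - cx) * z / f
    y = (v - cy) * z / f
    return x, y, z


# A point 80 px right of the principal point with 40 px disparity
print(triangulate(400.0, 240.0, 40.0, f=800.0, baseline=0.1, cx=320.0, cy=240.0))
```

Applying this to every valid pixel of the disparity map yields the scattered face point cloud that the abstract then turns into a mesh.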

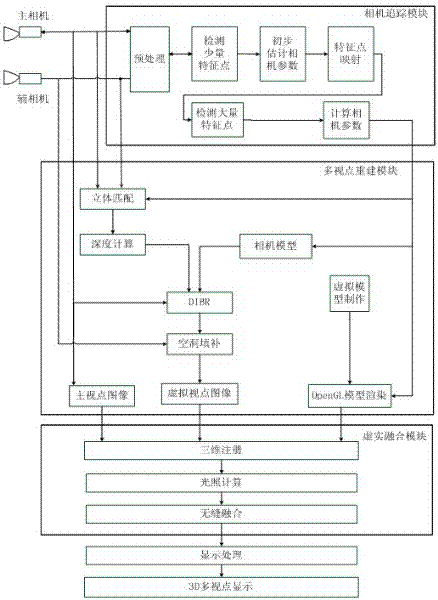

Method for realizing three-dimensional augmented reality on a multi-viewpoint autostereoscopic display

Status: Active | Publication: CN102568026A | Tags: Realistic; Achieve consistency; Stereoscopic systems; 3D-image rendering; Viewpoints; Display device

The invention discloses a method for realizing three-dimensional augmented reality on a multi-viewpoint autostereoscopic display, comprising the following steps: 1) shooting a natural scene stereoscopically with a binocular camera; 2) extracting and matching characteristic points in the main camera's image, generating a three-dimensional point cloud of the natural scene in real time, and computing the camera parameters; 3) computing the depth image corresponding to the main camera's image, rendering virtual viewpoint images and their depth images, and filling holes; 4) building a three-dimensional virtual model with 3D modeling software and using a virtual-real fusion module to fuse it with the multi-viewpoint images; 5) combining the multiple fused images appropriately; and 6) presenting the multi-viewpoint stereo display on a 3D display device. Because the method shoots stereoscopically with a binocular camera and uses a feature extraction and matching technique with good real-time performance, no markers are needed in the natural scene; the virtual-real fusion module achieves consistent illumination and seamless fusion of the virtual and real scenes; and the 3D display device provides a naked-eye, multi-viewpoint stereo effect for multiple users at multiple angles.

Owner:万维显示科技(深圳)有限公司

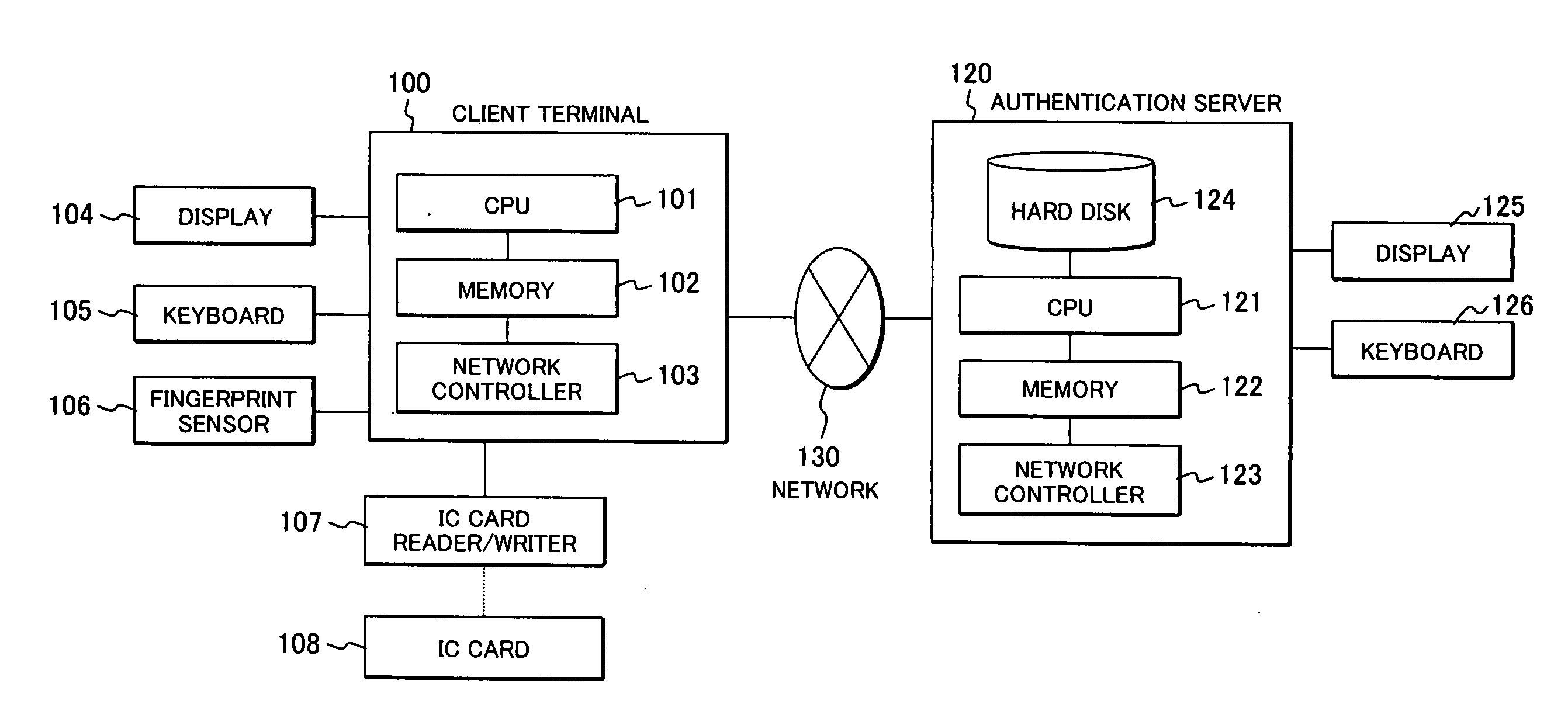

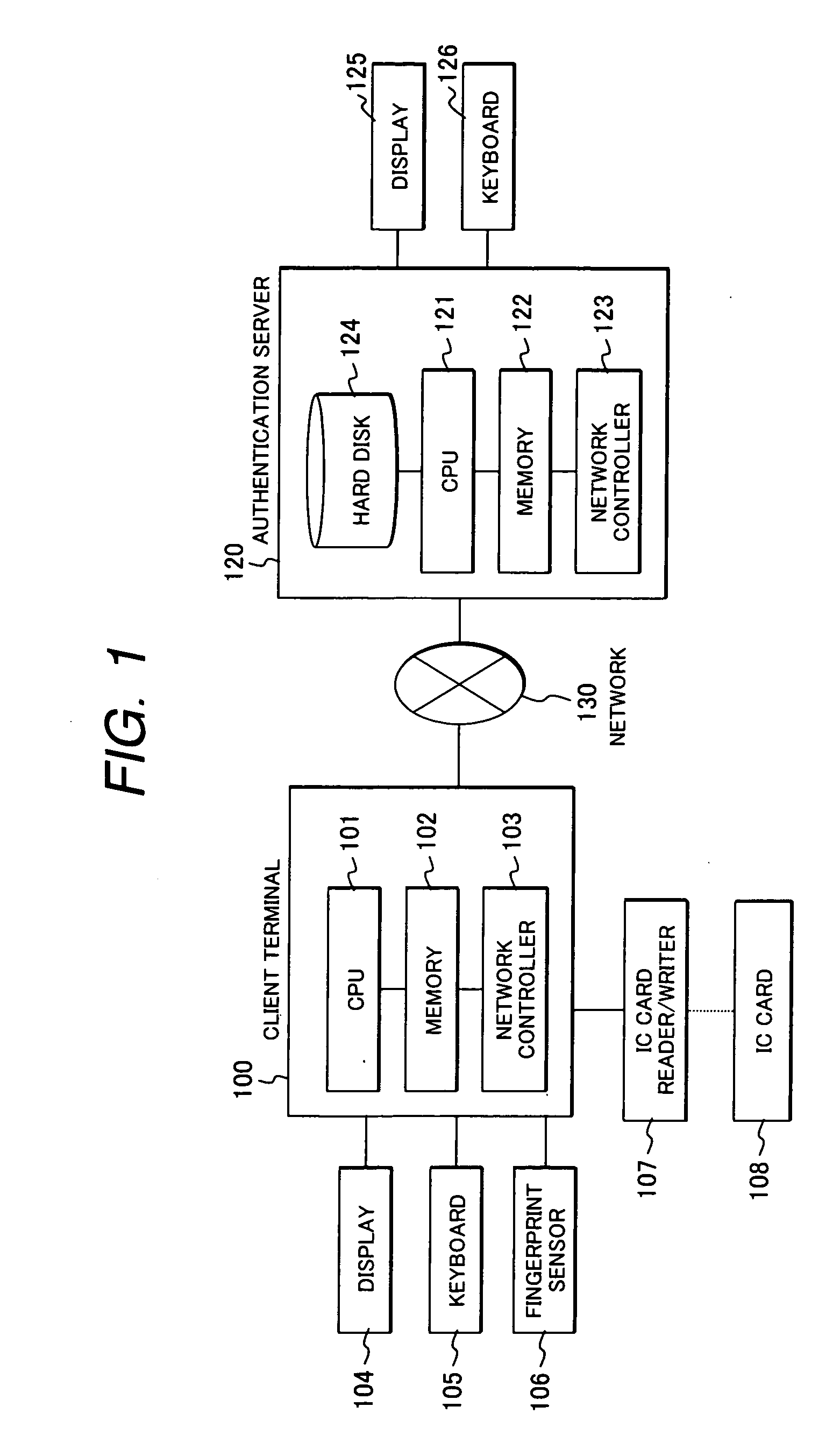

Method for generating an encryption key using biometrics authentication and restoring the encryption key and personal authentication system

Status: Inactive | Publication: US20080072063A1 | Tags: Electric signal transmission systems; Digital data processing details; Computer hardware; Authentication system

A personal authentication system using biometric information. When a characteristic element of the biometric information (such as a characteristic point in a fingerprint) can be expressed with two types of information (for instance, the coordinate values of the characteristic point and a local partial image), the system orders the characteristic points using one type of information (for instance, the local partial image) as label information, and outputs the other type of information (such as the coordinate values) as key information in that order.

Owner:HITACHI LTD
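
A minimal sketch of the ordering idea, assuming each characteristic point already carries a sortable label computed from its local partial image (how that label is computed is not stated in the abstract). The SHA-256 step in `derive_key` is a hypothetical addition that turns the ordered coordinates into a fixed-length key; it is not described in the patent.

```python
import hashlib
from typing import List, Tuple

# (label derived from the local partial image, (x, y) coordinate of the point)
Minutia = Tuple[str, Tuple[int, int]]


def key_information(minutiae: List[Minutia]) -> List[Tuple[int, int]]:
    """Order the characteristic points by their label and emit the coordinates
    in that order as key information."""
    return [coord for _, coord in sorted(minutiae, key=lambda m: m[0])]


def derive_key(minutiae: List[Minutia]) -> bytes:
    """Hypothetical extra step: hash the ordered coordinates into a 256-bit key."""
    encoded = ",".join(f"{x}:{y}" for x, y in key_information(minutiae))
    return hashlib.sha256(encoded.encode("ascii")).digest()


points = [("c", (5, 6)), ("a", (1, 2)), ("b", (3, 4))]
print(key_information(points))
```

Because the output order depends only on the labels, the same key results regardless of the order in which the points were detected. A cryptographic hash changes completely when any coordinate changes, so a practical system would likely need coordinate quantization or error correction before hashing; the sketch does not attempt this.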

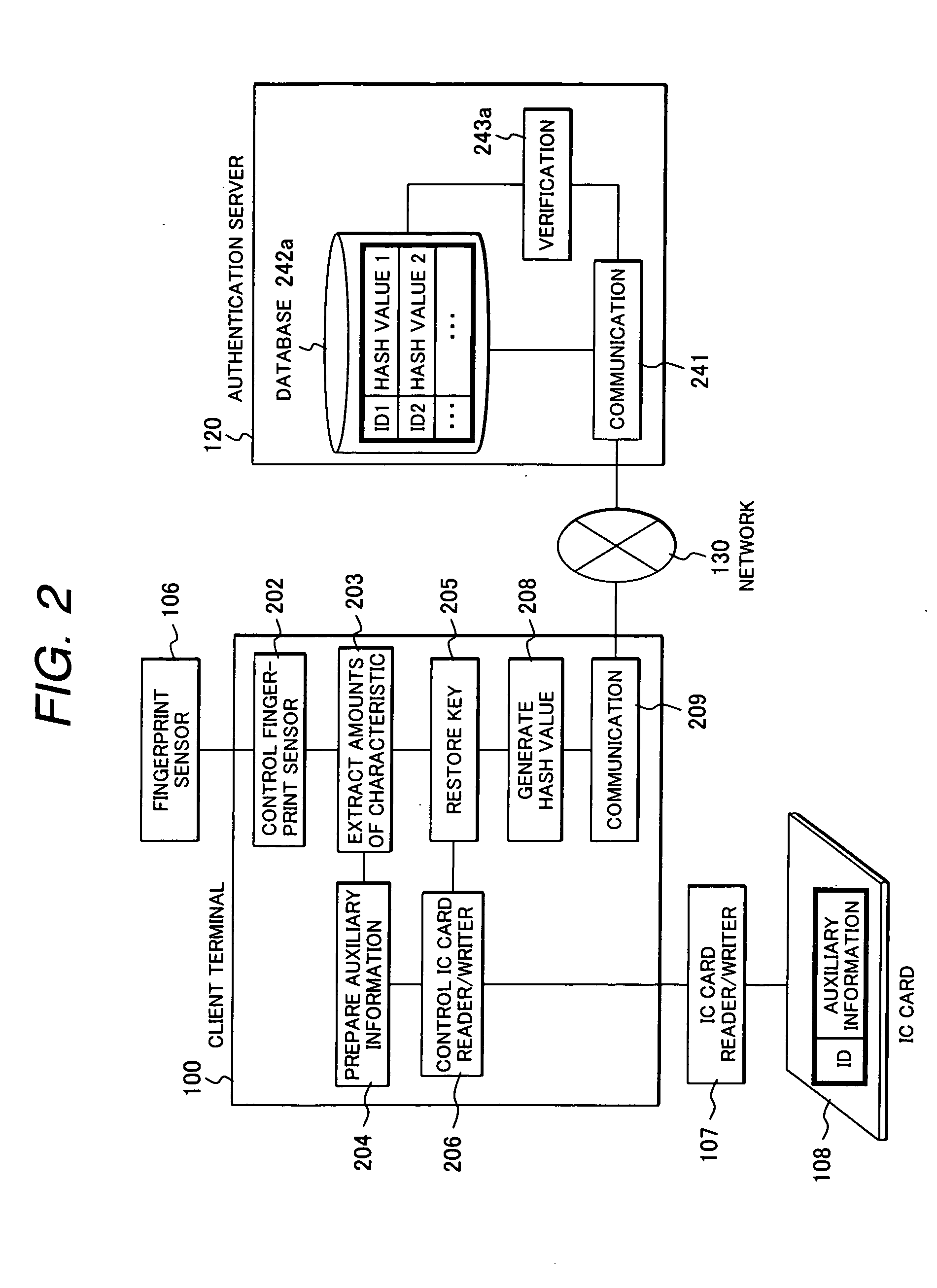

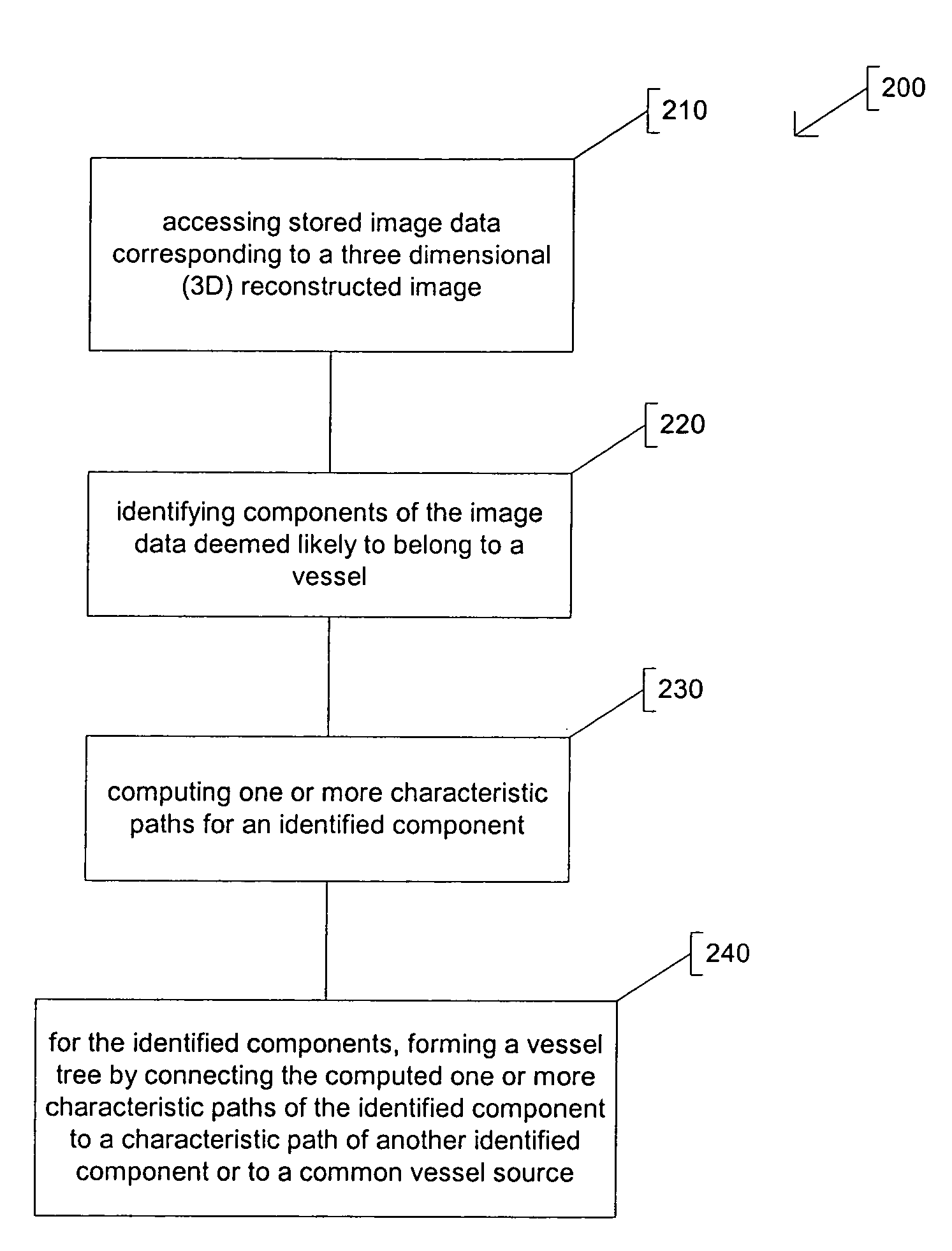

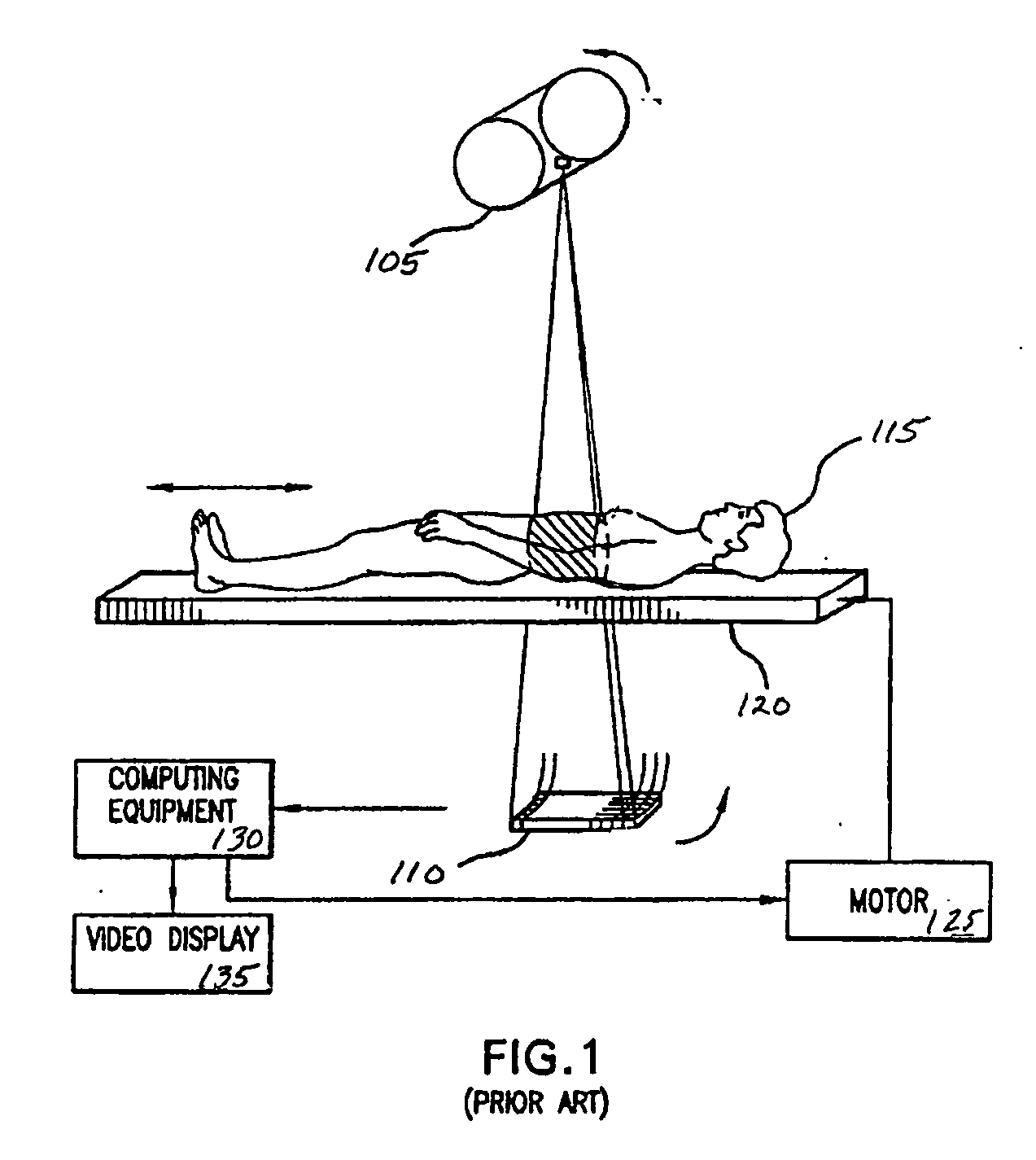

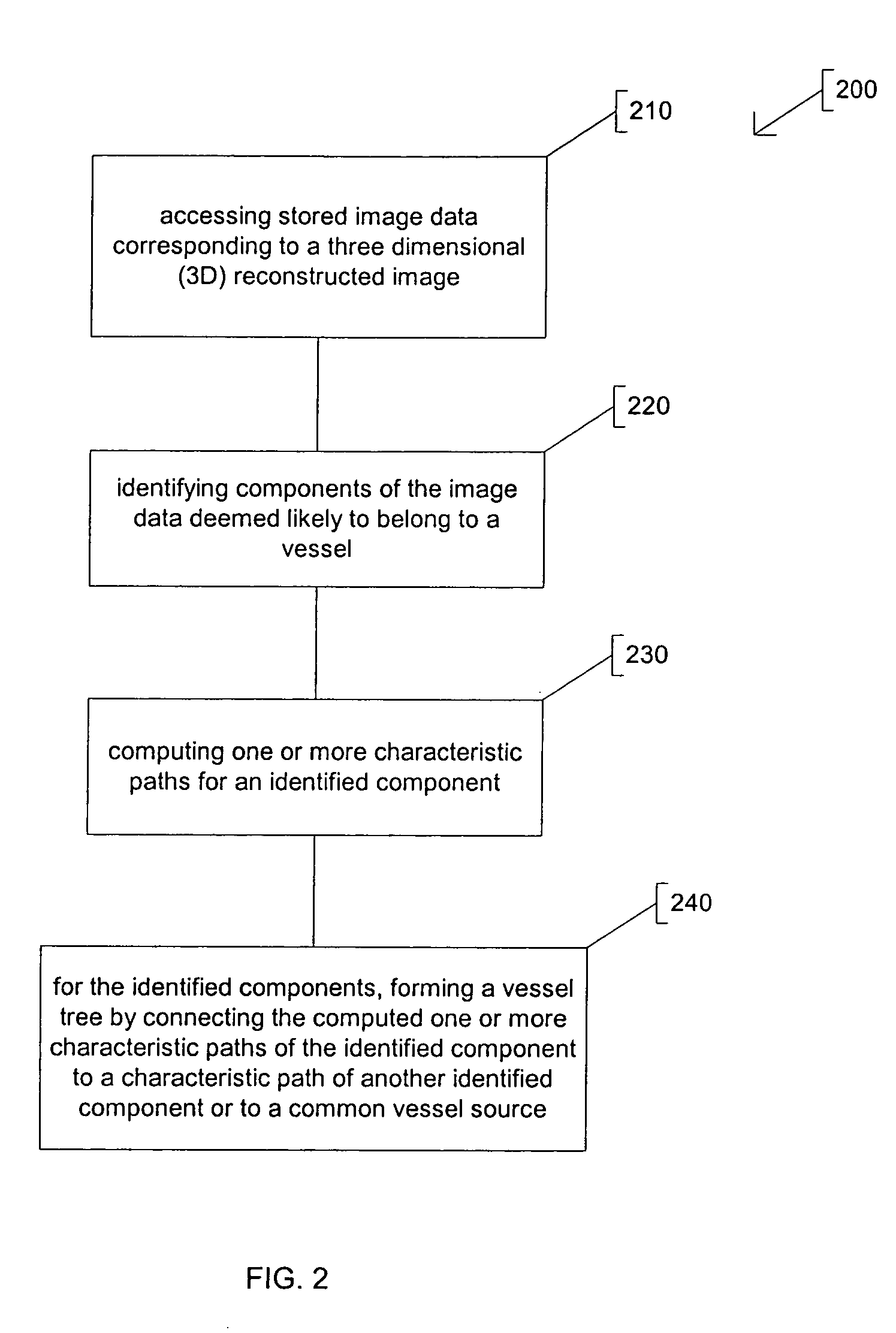

Fully automatic vessel tree segmentation

A system including a memory to store image data corresponding to a three dimensional (3D) reconstructed image, and a processor that includes an automatic vessel tree extraction module. The automatic vessel tree extraction module includes a load data module to access the stored image data, a component identification module to identify those components of the image data deemed likely to belong to a vessel, a characteristic path computation module to compute one or more characteristic paths for an identified component having a volume greater than a specified threshold volume value and discard an identified component having a volume less than the specified threshold volume value, and a connection module to connect the computed one or more characteristic paths of the identified component to a characteristic path of another identified component or to a common vessel source until the identified components are connected or discarded.

Owner:VITAL IMAGES
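
The component-size filter can be illustrated with a breadth-first search over voxels. This sketch assumes the "likely vessel" voxels have already been identified and are given as a set of integer coordinates, uses 6-connectivity, and measures volume as a voxel count; the characteristic-path computation and connection steps are not shown. Function and parameter names are hypothetical.

```python
from collections import deque
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def keep_large_components(vessel_voxels: Set[Voxel], min_volume: int) -> List[Set[Voxel]]:
    """Split candidate vessel voxels into 6-connected components and keep only
    those whose voxel count exceeds min_volume; smaller ones are discarded."""
    remaining = set(vessel_voxels)
    kept = []
    while remaining:
        seed = remaining.pop()
        component = {seed}
        queue = deque([seed])
        while queue:  # breadth-first flood fill from the seed voxel
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                if nb in remaining:
                    remaining.remove(nb)
                    component.add(nb)
                    queue.append(nb)
        if len(component) > min_volume:
            kept.append(component)
    return kept


mask = {(0, 0, 0), (1, 0, 0), (2, 0, 0), (5, 5, 5)}  # a 3-voxel segment and an isolated voxel
print(keep_large_components(mask, min_volume=2))
```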

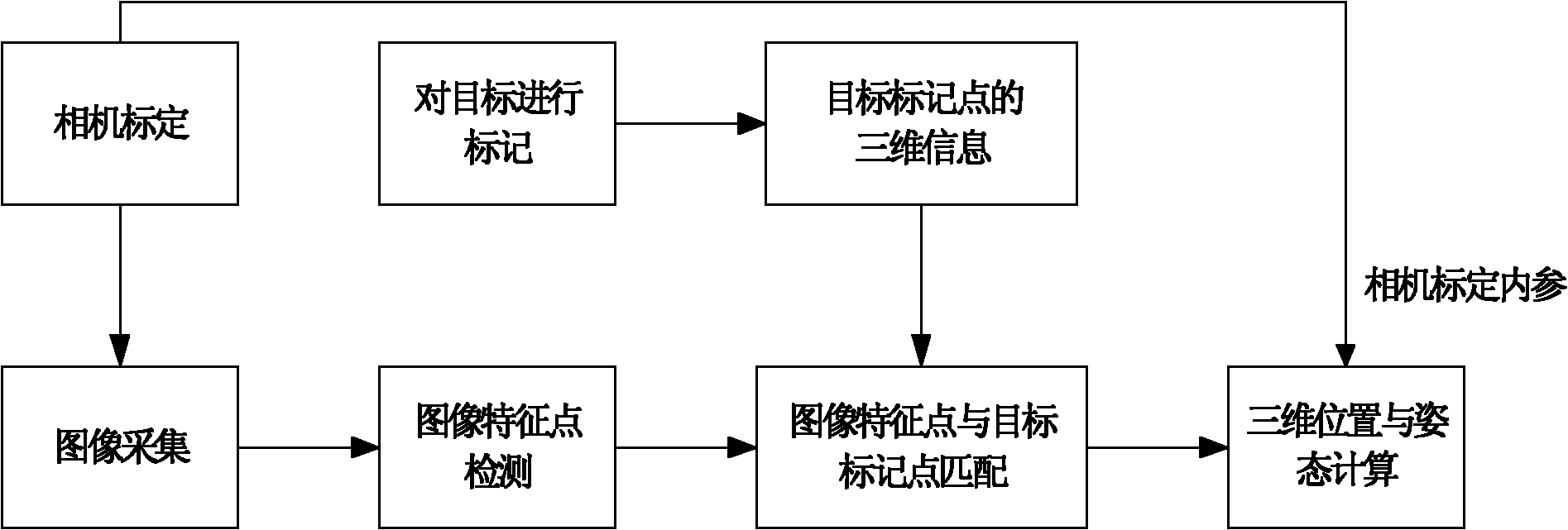

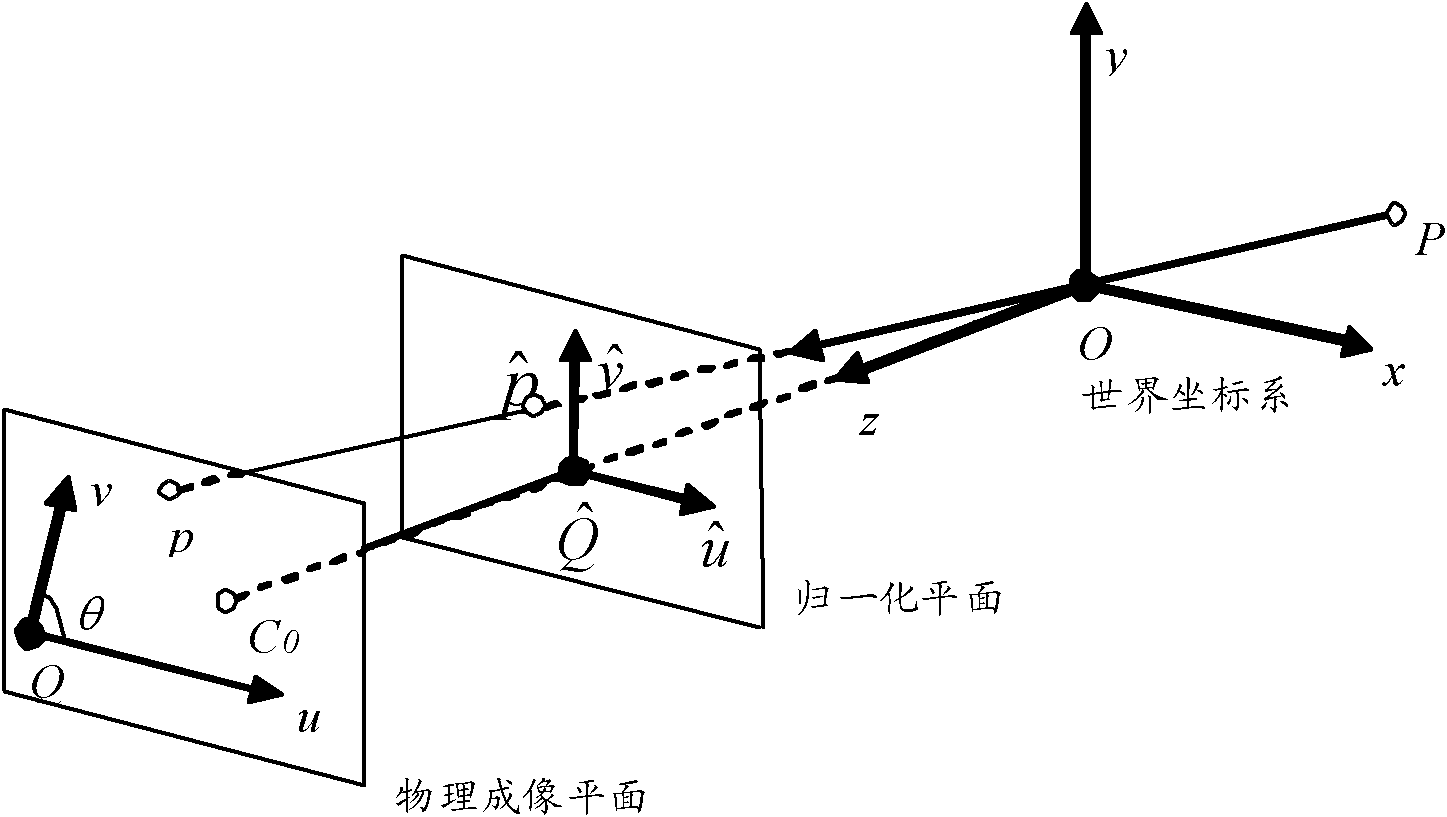

Method for measuring the three-dimensional position and pose of an object with a single camera

Status: Inactive | Publication: CN101839692A | Tags: Reduce cost of measurement; Reduce volume; Using optical means; Picture interpretation; Three-dimensional space; Measurement point

The invention discloses a method for measuring the three-dimensional position and pose of an object with a single camera. An image of the target is acquired with a single camera, and the target's real-time three-dimensional position and pose are determined by accurately identifying marking points on it. The steps are: selecting a suitable camera for the detection scene and range, and calibrating it to obtain its intrinsic and extrinsic parameters; designing target marking points for the object to be measured and arranging them sensibly; detecting the target, identifying characteristic points in the image captured by the camera, and matching the detected characteristic points to the marking points; and finally solving for the target's three-dimensional position and pose from the correspondence between the measured points and the object's marking points. The method can also detect whether a non-rigid object has deformed. Because a single camera performs the three-dimensional measurement, the method obtains the target's information in three-dimensional space (spatial geometric parameters, position, pose and the like) while reducing the cost and size of the measuring system and simplifying operation.

Owner:XI AN JIAOTONG UNIV
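
Solving for position and pose from marker correspondences is usually posed as minimizing reprojection error (the Perspective-n-Point problem). The sketch below shows only the forward model such a solver would evaluate, assuming an ideal pinhole camera without lens distortion, a single focal length `f` in pixels, and a rotation `R` and translation `t` from the object frame to the camera frame. The abstract does not say which solver the patent uses, and the names here are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def project(point: Vec3, R: Mat3, t: Vec3, f: float, cx: float, cy: float) -> Tuple[float, float]:
    """Pinhole projection of a target marking point (object frame) into the image."""
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    return f * xc[0] / xc[2] + cx, f * xc[1] / xc[2] + cy


def reprojection_error(points: List[Vec3], pixels: List[Tuple[float, float]],
                       R: Mat3, t: Vec3, f: float, cx: float, cy: float) -> float:
    """Root-mean-square pixel distance between detected and projected marking points."""
    sq = [math.dist(project(p, R, t, f, cx, cy), uv) ** 2 for p, uv in zip(points, pixels)]
    return math.sqrt(sum(sq) / len(sq))


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(project((0.5, 0.0, 0.0), identity, (0.0, 0.0, 5.0), 1000.0, 320.0, 240.0))
```

A pose solver (for example, a nonlinear least-squares routine) would adjust `R` and `t` until `reprojection_error` is minimal.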

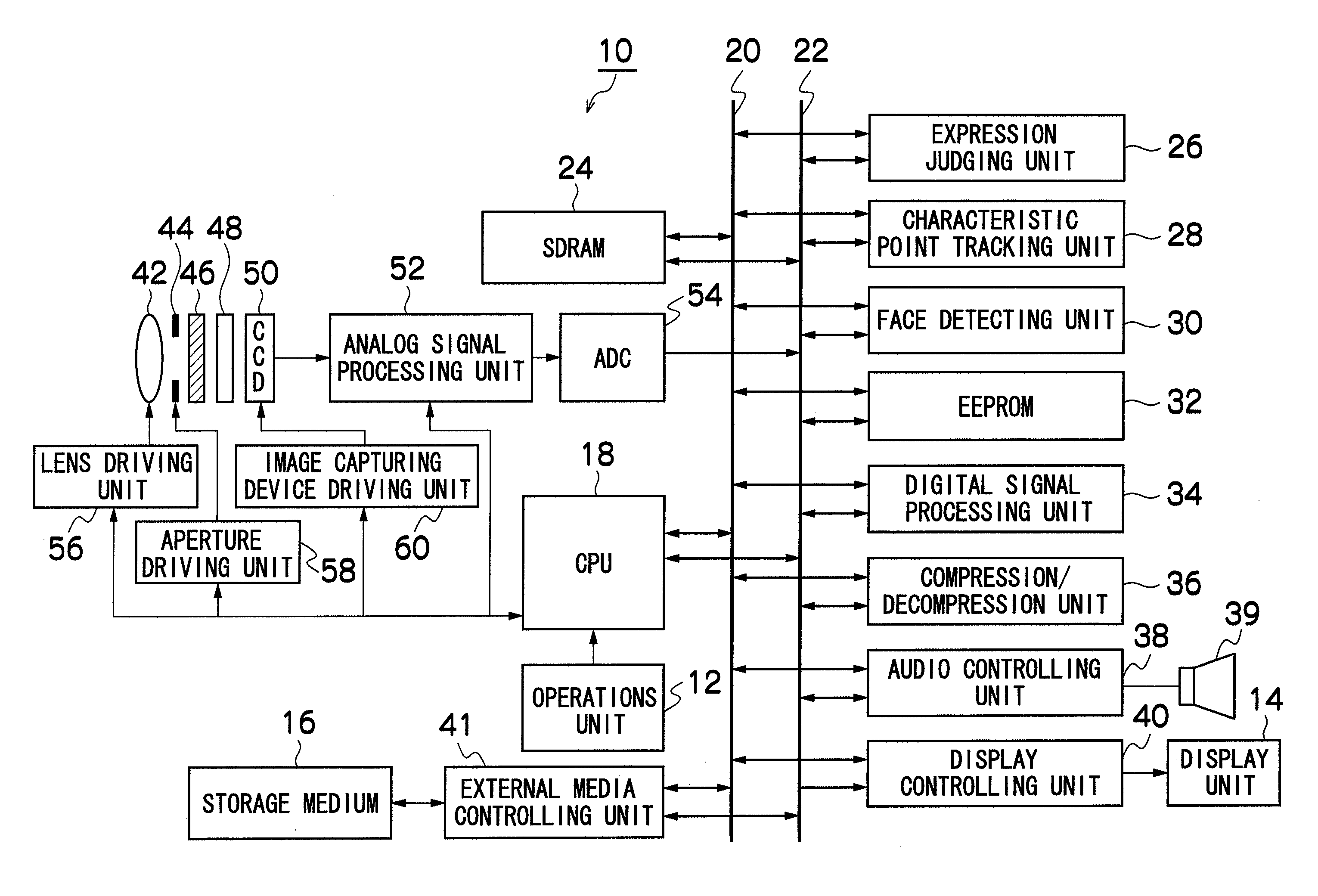

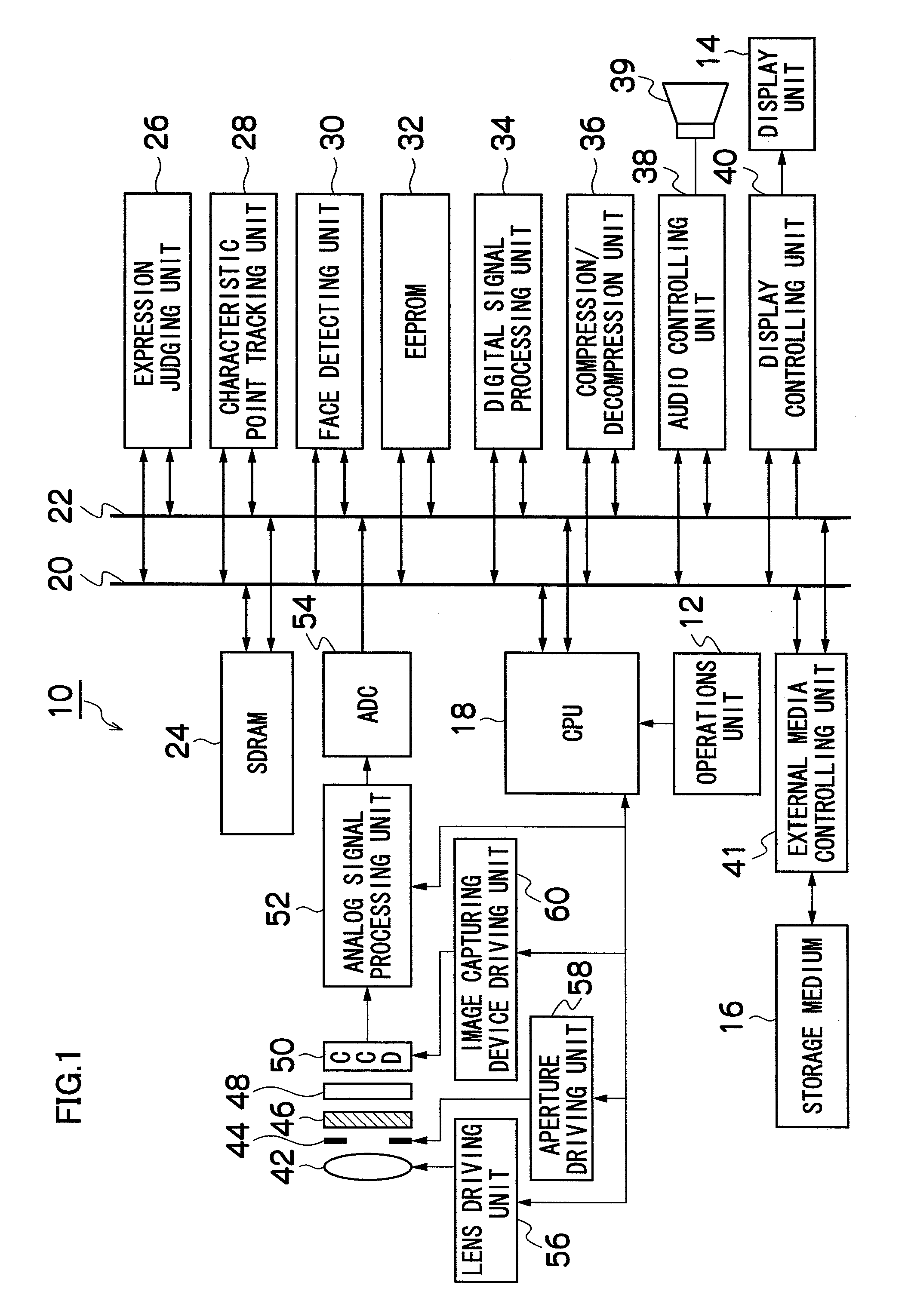

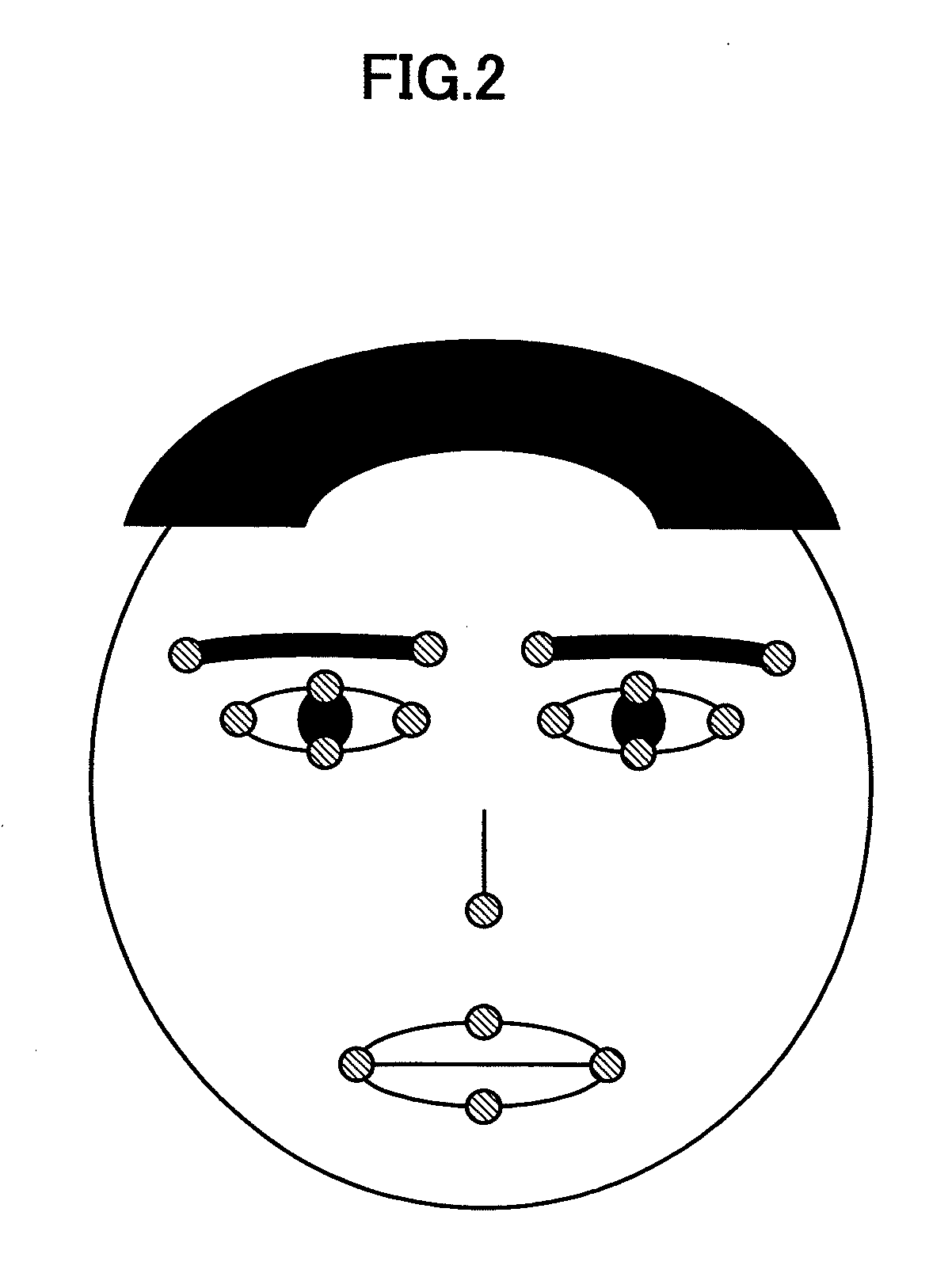

Image processing apparatus, image capturing apparatus, image processing method and recording medium

Status: Active | Publication: US20090087099A1 | Tags: Enhance the image; Significant change; Television system details; Character and pattern recognition; Imaging processing; Image capture

Frame images captured in a continuous manner are acquired, and temporarily stored. Characteristic points of faces in the acquired frame images are extracted. A sum (expression change amount) of distances between characteristic points of the face (face parts) in a current frame and the characteristic points of a preceding frame is calculated. The target frame image in which the expression change amount is largest, and m frame images preceding and following the target frame image in which the expression change amount is largest are extracted as best image candidates. A best shot image is extracted from the best image candidates and stored in a storage medium. Thus, only an image (best shot image) which contains a face which a user wishes to record can be efficiently extracted from among images captured in a continuous manner, and stored.

Owner:FUJIFILM CORP
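
The expression change amount and candidate selection described above map directly onto a short function. This sketch assumes each frame is given as a list of facial characteristic points in a fixed order, and assigns the first frame a change of zero because it has no preceding frame; the final best-shot selection among the candidates is not shown. Names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def expression_change(prev: Sequence[Point], curr: Sequence[Point]) -> float:
    """Sum of distances between corresponding facial characteristic points."""
    return sum(math.dist(p, c) for p, c in zip(prev, curr))


def best_image_candidates(frames: List[Sequence[Point]], m: int) -> List[int]:
    """Indices of the frame with the largest expression change plus up to m frames on each side."""
    changes = [0.0] + [expression_change(frames[i - 1], frames[i]) for i in range(1, len(frames))]
    target = max(range(len(frames)), key=changes.__getitem__)
    return list(range(max(0, target - m), min(len(frames), target + m + 1)))


neutral = [(0.0, 0.0), (1.0, 0.0)]
smile = [(0.0, 3.0), (1.0, 4.0)]
print(best_image_candidates([neutral, neutral, neutral, smile, smile], m=1))
```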

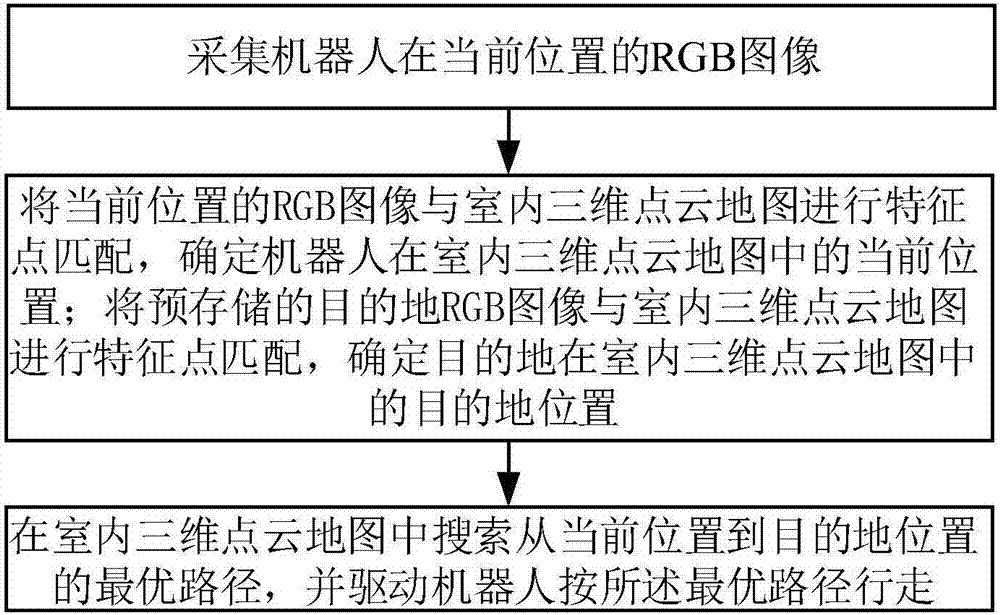

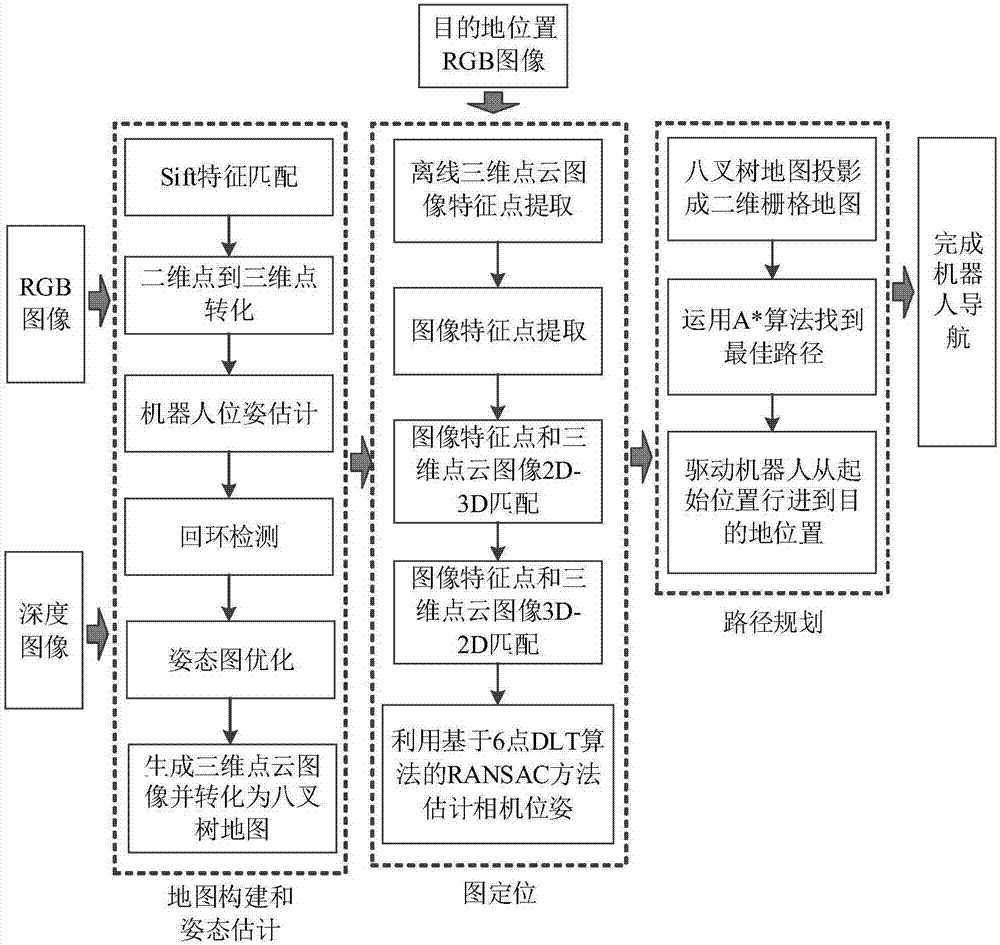

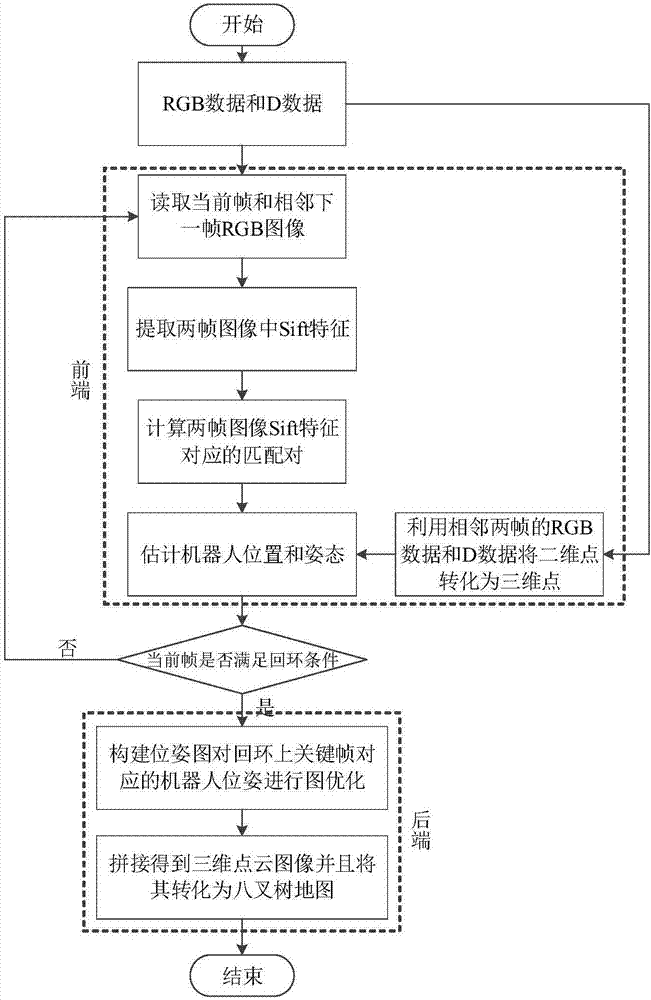

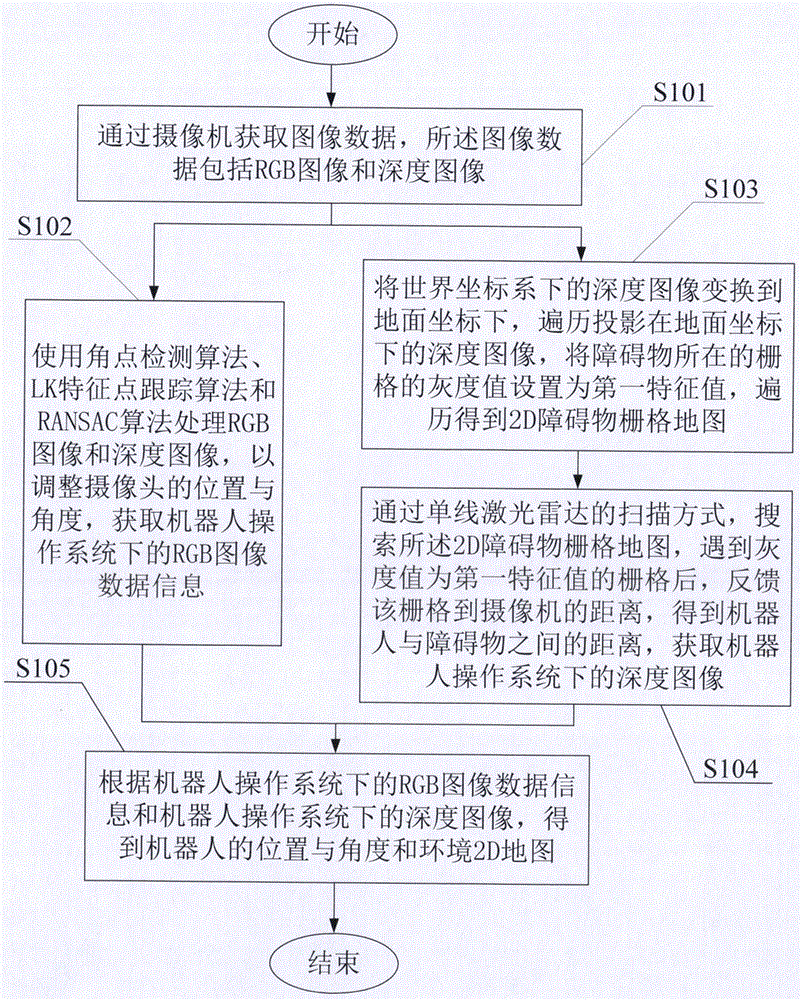

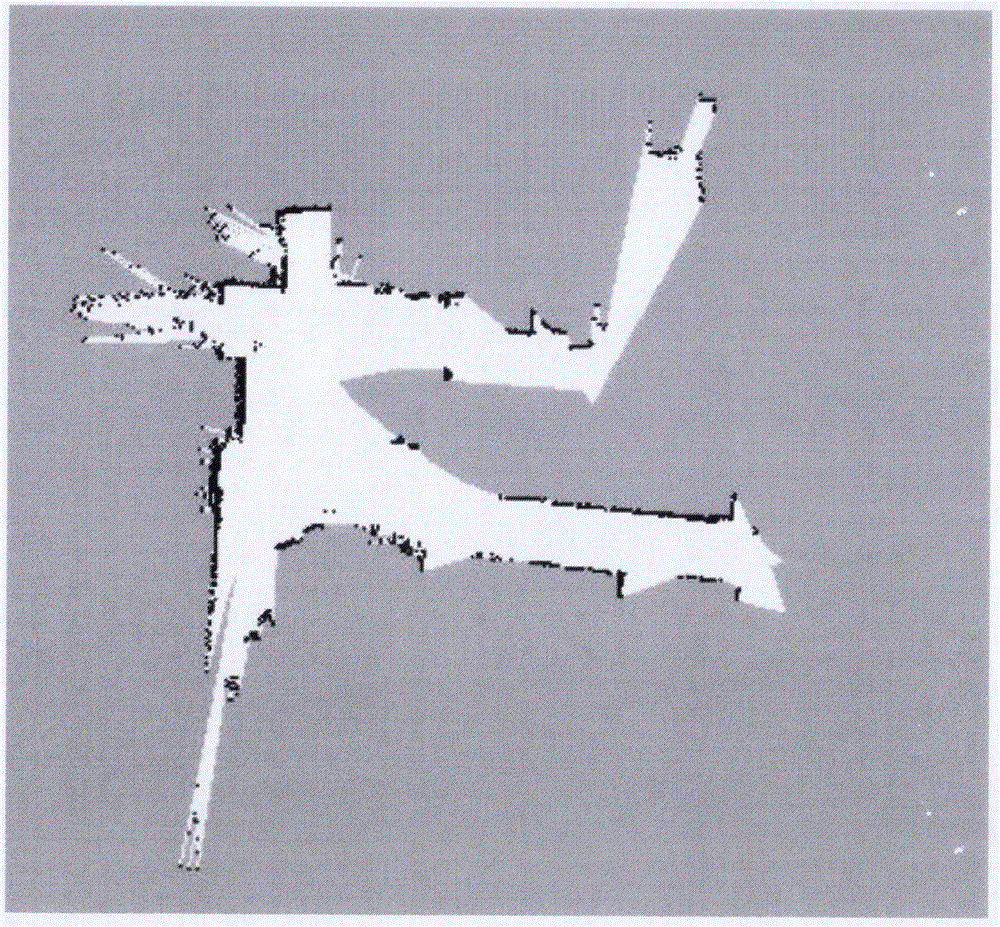

Autonomous location and navigation method and autonomous location and navigation system of robot

Status: Active | Publication: CN106940186A | Tags: Automate processing; Fair price; Navigational calculation instruments; Point cloud; Rgb image

The invention discloses an autonomous localization and navigation method and system for a robot. The method comprises the following steps: acquiring an RGB image at the robot's current position; matching characteristic points between this RGB image and an indoor three-dimensional point cloud map to determine the robot's current position in the map; matching characteristic points between a pre-stored RGB image of the destination and the map to determine the destination's position in the map; and searching for an optimal path from the current position to the destination in the map, then driving the robot along that path. The method and system perform autonomous localization and navigation with a visual sensor, offer a simple device structure, low cost, easy operation and real-time path planning, and can be used in unmanned driving and indoor localization and navigation.

Owner:HUAZHONG UNIV OF SCI & TECH
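
The abstract says only that an optimal path is searched in the point cloud map. As an assumption, the sketch projects the map onto a 2D occupancy grid (0 = free, 1 = occupied) and uses A* with a Manhattan-distance heuristic, a common choice for this step; the patent may use a different planner, and the grid and function names are hypothetical.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied)."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:  # Manhattan distance: admissible for unit-cost 4-moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    g: Dict[Cell, int] = {start: 0}
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    heap = [(h(start), 0, start)]
    while heap:
        _, cost, cell = heapq.heappop(heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk the parent links back to start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > g[cell]:
            continue  # stale heap entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, new_cost + 1):
                    g[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable


grid = [[0, 0, 0],
        [1, 1, 0],
        [0, 0, 0]]
print(astar(grid, (0, 0), (2, 0)))
```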

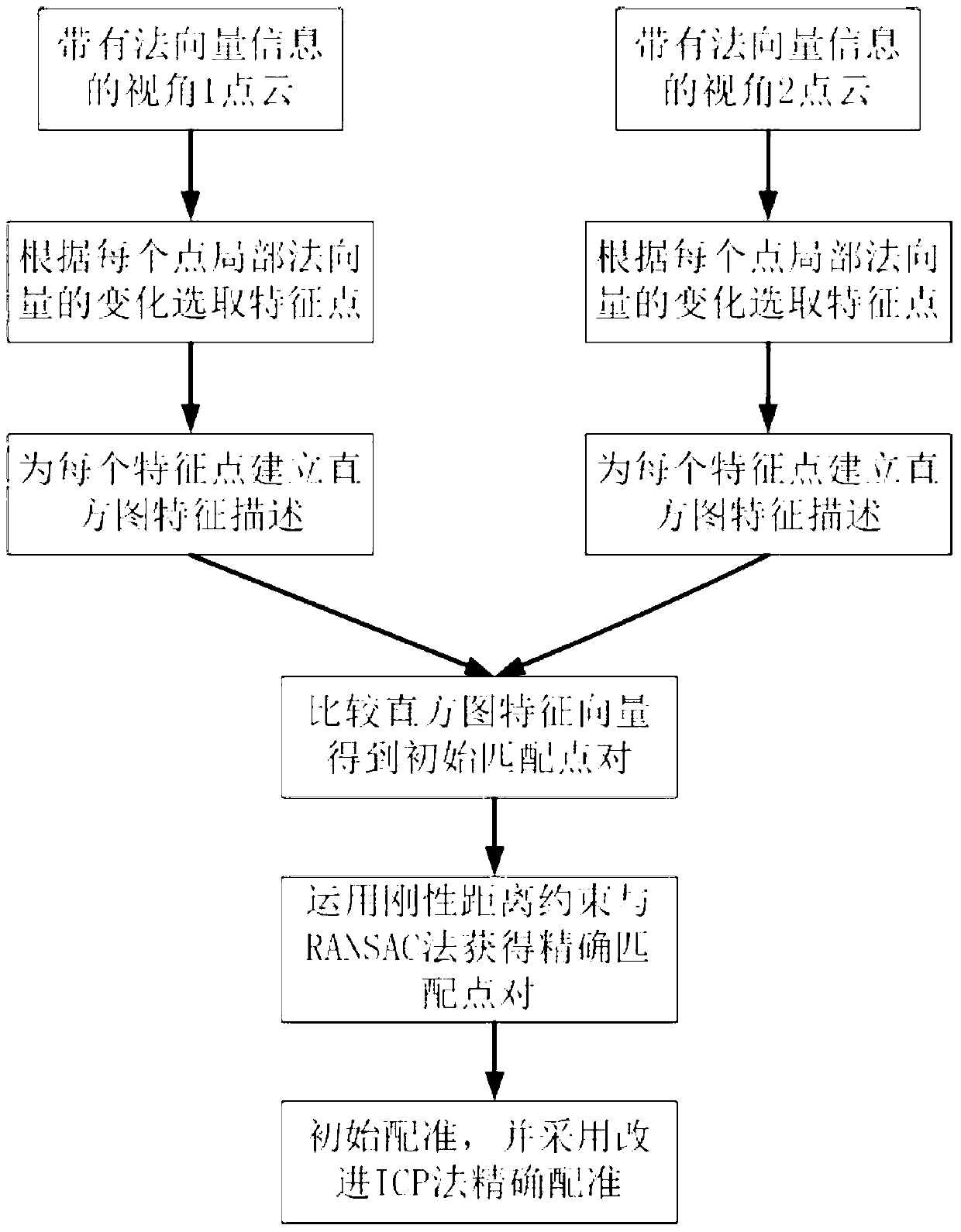

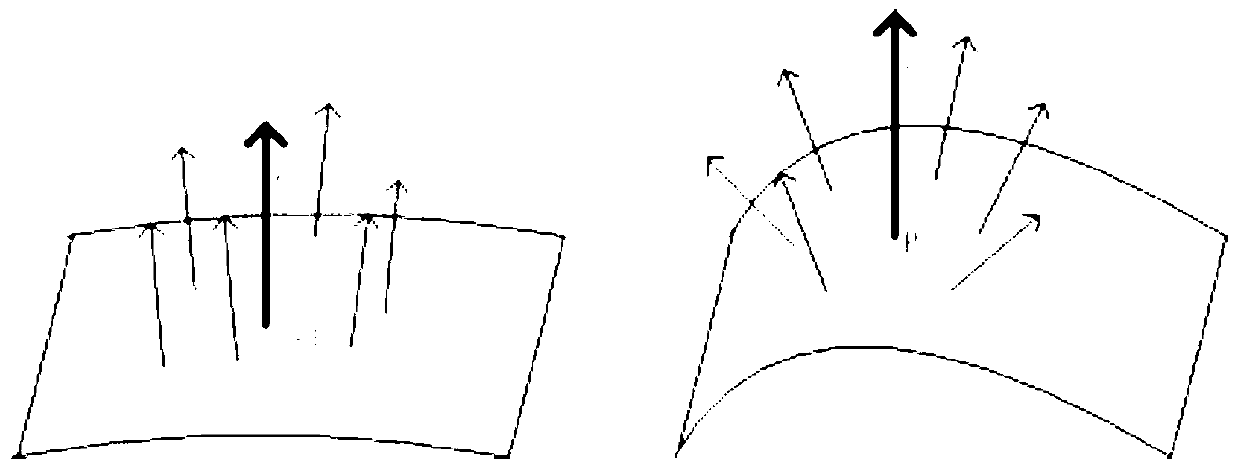

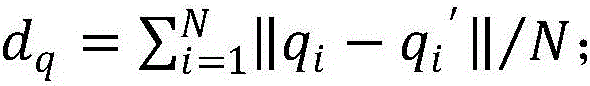

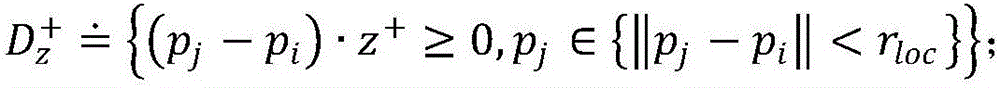

Point cloud automatic registration method based on normal vector

Status: Active | Publication: CN103236064A | Tags: Meet registration requirements; Realize automatic registration; Image analysis; Feature vector; Exact match

The invention relates to an automatic point cloud registration method based on normal vectors. The method processes two or more pieces of three-dimensional point cloud data, where every two adjacent pieces overlap. It comprises the following steps: (1) selecting feature points according to changes in the local normal vectors of the point cloud; (2) designing a histogram feature to describe each selected feature point; (3) obtaining initial matching point pairs by comparing the histogram feature vectors of the feature points; (4) obtaining precise matching point pairs by applying a rigid distance constraint combined with the RANSAC (random sample consensus) algorithm, and computing the initial registration parameters with the quaternion method; and (5) performing precise point cloud registration with an improved ICP (iterative closest point) algorithm. Following these steps, the point clouds are registered automatically. The feature description is simple and highly distinctive, robustness is high, and registration accuracy and speed are improved to some extent.

Owner:SOUTHEAST UNIV
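
Step (4) computes the initial registration parameters with a quaternion method. The sketch below follows Horn's closed-form quaternion solution, one standard quaternion method: build a 4 x 4 symmetric matrix from the cross-covariance of the centred correspondences, take the eigenvector of its largest eigenvalue as the rotation quaternion, then recover the translation from the centroids. It assumes the RANSAC-filtered point pairs are already available and uses a small Jacobi eigen-solver so that no third-party library is needed; it is an illustration, not the patent's code.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def jacobi_eigen(a: List[List[float]], sweeps: int = 50):
    """Eigen-decomposition of a small symmetric matrix by cyclic Jacobi rotations.
    Returns (eigenvalues, V); column k of V is the unit eigenvector for eigenvalue k."""
    n = len(a)
    a = [row[:] for row in a]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < 1e-24:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                c = 1.0 / math.sqrt(t * t + 1.0)
                s = t * c
                for k in range(n):  # A <- A J (columns p and q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A (rows p and q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # V <- V J accumulates the eigenvectors
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    return [a[i][i] for i in range(n)], v


def horn_quaternion(src: List[Vec3], dst: List[Vec3]):
    """Rotation R (3x3) and translation t minimizing sum |R p + t - q|^2 over pairs (p, q)."""
    m = len(src)
    cs = [sum(p[i] for p in src) / m for i in range(3)]
    cd = [sum(q[i] for q in dst) / m for i in range(3)]
    S = [[0.0] * 3 for _ in range(3)]  # cross-covariance of the centred pairs
    for p, q in zip(src, dst):
        for i in range(3):
            for j in range(3):
                S[i][j] += (p[i] - cs[i]) * (q[j] - cd[j])
    (sxx, sxy, sxz), (syx, syy, syz), (szx, szy, szz) = S
    N = [
        [sxx + syy + szz, syz - szy, szx - sxz, sxy - syx],
        [syz - szy, sxx - syy - szz, sxy + syx, szx + sxz],
        [szx - sxz, sxy + syx, -sxx + syy - szz, syz + szy],
        [sxy - syx, szx + sxz, syz + szy, -sxx - syy + szz],
    ]
    values, vectors = jacobi_eigen(N)
    k = max(range(4), key=values.__getitem__)
    w, x, y, z = (vectors[r][k] for r in range(4))  # unit quaternion of the best rotation
    R = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]
    t = [cd[i] - sum(R[i][j] * cs[j] for j in range(3)) for i in range(3)]
    return R, t
```

The quaternion and its negation give the same rotation matrix, so the eigenvector's sign does not matter. The resulting `R` and `t` would serve as the starting point for the ICP refinement in step (5).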

Automatic registration method for three-dimensional point cloud data

Status: Inactive | Publication: CN106780459A | Tags: Reduce manual paste; Eliminate workload; Image enhancement; Image analysis; Point cloud; System transformation

The invention discloses an automatic registration method for three-dimensional point cloud data. The two point clouds to be registered are sampled to obtain feature points; rotation-invariant feature factors of the feature points are computed and matched between the two clouds to obtain an initial correspondence between feature points. A random sample consensus (RANSAC) algorithm then identifies and removes mismatched points from the initial matching set, yielding an optimized feature point correspondence, from which a rough rigid transformation between the two point clouds is computed to achieve rough registration. A rigid transformation consistency detection algorithm is proposed that uses the local coordinate system transformations between matched feature points to check the rough registration result and verify its correctness. Finally, an ICP algorithm refines the rigid transformation between the point clouds to achieve automatic precise registration.

Owner:HUAZHONG UNIV OF SCI & TECH
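
The RANSAC step that removes mismatched correspondences can be sketched as follows. To stay short and dependency-free, this version is a planar (2D) simplification: each hypothesis is a rigid rotation plus translation fitted to two sampled correspondences, whereas the patent works with 3D point clouds. The iteration count, tolerance and names are hypothetical.

```python
import math
import random
from typing import List, Set, Tuple

Point = Tuple[float, float]
Model = Tuple[float, float, float]  # (rotation angle, tx, ty)


def fit_two_pairs(p1: Point, p2: Point, q1: Point, q2: Point) -> Model:
    """Rigid 2D transform that maps p1 to q1 and aligns direction p1->p2 with q1->q2."""
    ang = math.atan2(q2[1] - q1[1], q2[0] - q1[0]) - math.atan2(p2[1] - p1[1], p2[0] - p1[0])
    c, s = math.cos(ang), math.sin(ang)
    return ang, q1[0] - (c * p1[0] - s * p1[1]), q1[1] - (s * p1[0] + c * p1[1])


def apply_model(model: Model, p: Point) -> Point:
    ang, tx, ty = model
    c, s = math.cos(ang), math.sin(ang)
    return c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty


def ransac_inliers(src: List[Point], dst: List[Point], iterations: int = 200,
                   tol: float = 1e-3, seed: int = 0) -> Set[int]:
    """Indices of the correspondences consistent with the best sampled rigid model."""
    rng = random.Random(seed)
    best: Set[int] = set()
    for _ in range(iterations):
        i, j = rng.sample(range(len(src)), 2)
        if math.dist(src[i], src[j]) < 1e-9:
            continue  # degenerate sample: direction undefined
        model = fit_two_pairs(src[i], src[j], dst[i], dst[j])
        inliers = {k for k in range(len(src)) if math.dist(apply_model(model, src[k]), dst[k]) < tol}
        if len(inliers) > len(best):
            best = inliers
    return best
```

The returned inlier set plays the role of the optimized correspondence; a full pipeline would then re-estimate the transform from all inliers before ICP.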

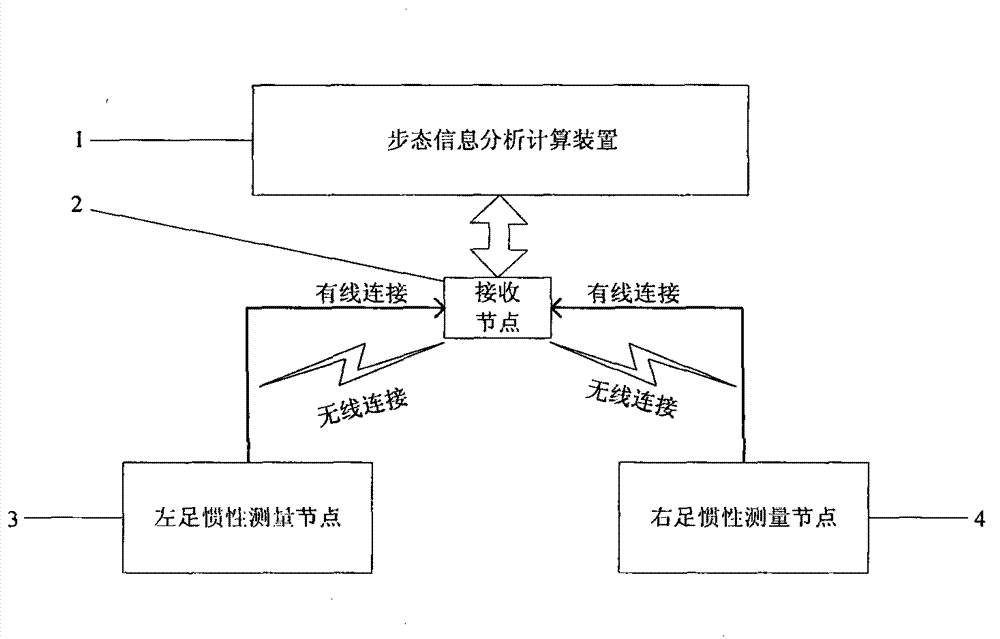

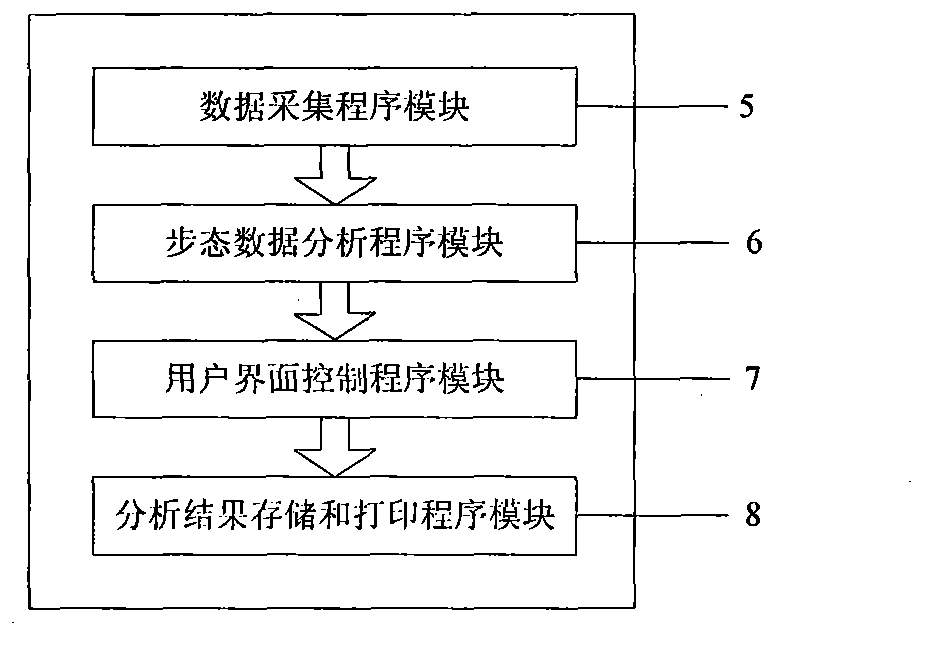

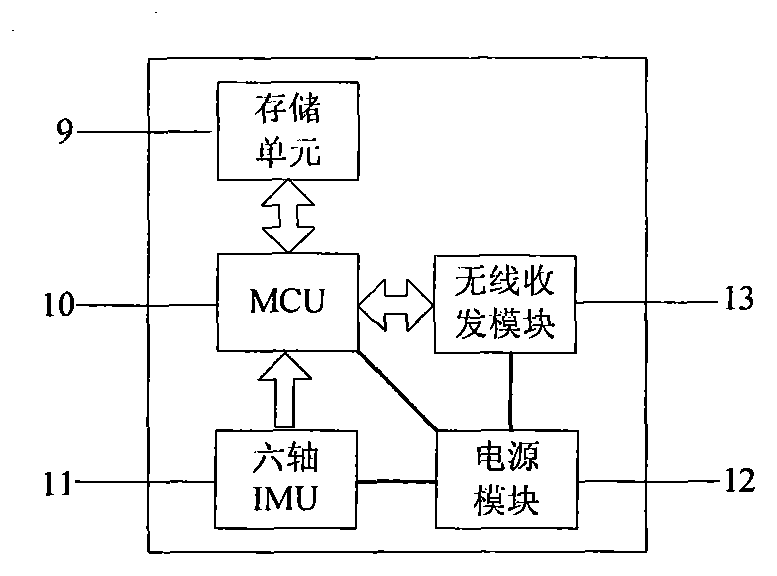

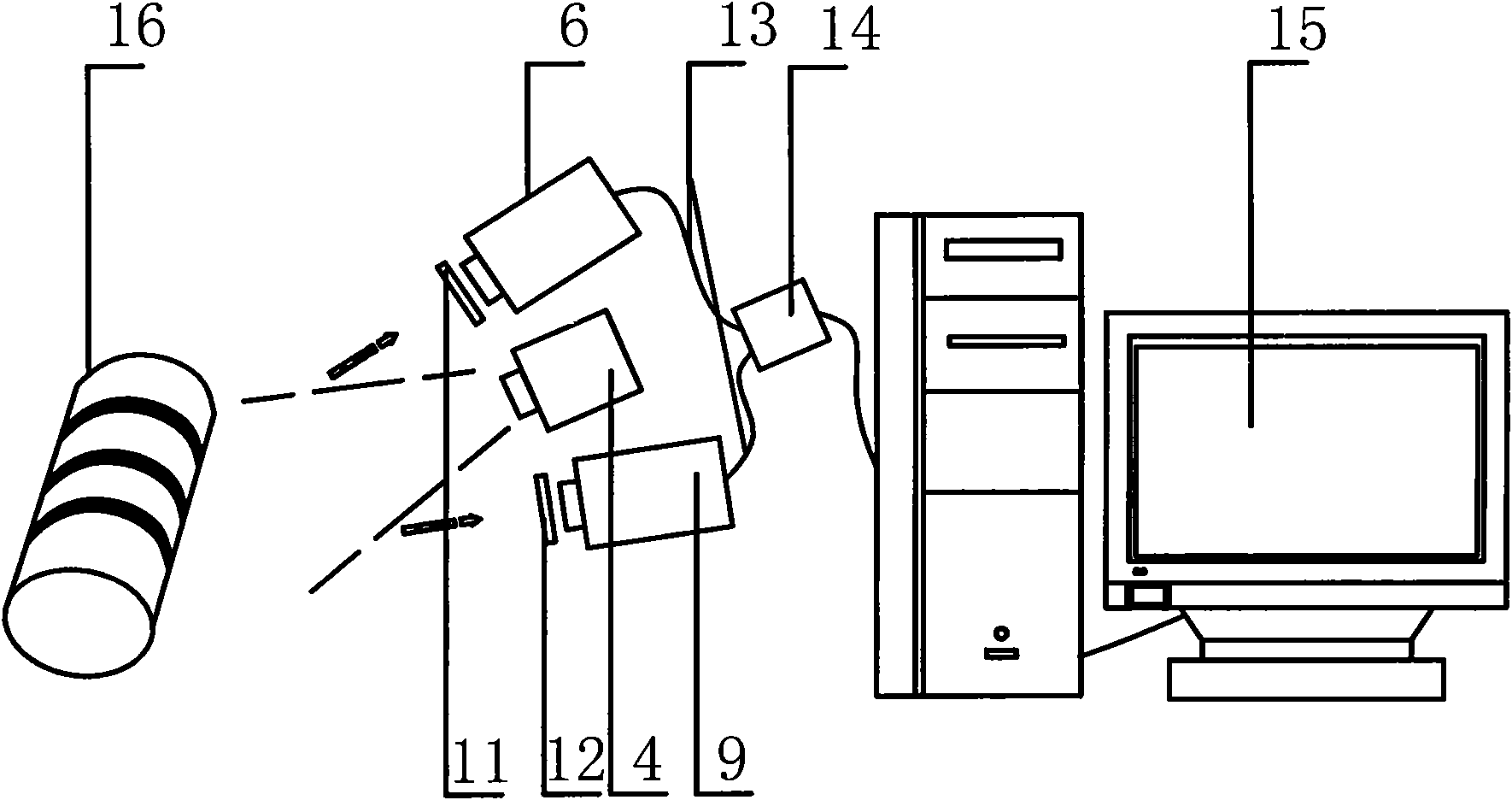

Three-dimensional human body gait quantitative analysis system and method

Status: Active | Publication: CN102824177A | Tags: Accurate collection; High speed acquisition; Diagnostic recording/measuring; Sensors; NODAL; Observational error

The invention discloses a three-dimensional human gait quantitative analysis system and method. The method uses two inertial measurement nodes, one on each foot, and fuses their data to measure gait parameters more accurately and to obtain information that cannot be measured from one foot alone. During walking, the collected data are first stored in the nodes' storage units and, after walking ends, transmitted to an analysis and calculation device by wire or wirelessly. All gait information during walking can thus be acquired at high speed, and no characteristic gait information point is missed. A dedicated foot binding device for the inertial measurement nodes eliminates the measurement error caused by the nodes shifting from their mounting positions during walking, and keeps the mounting positions consistent across repeated measurements. A gait analysis and calculation program module uses a moving-window search method to define the characteristic points of the gait information, so that gait diagnostic information can be extracted more accurately.

Owner:王哲龙
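
A moving-window search for characteristic points can be sketched as a sliding-window maximum test: a sample is a characteristic point if it is the first occurrence of the largest value in the window centred on it. Which gait signal is searched, and whether minima are also used, is not stated in the abstract; the window size and names here are assumptions.

```python
from typing import List, Sequence


def characteristic_points(signal: Sequence[float], half_window: int) -> List[int]:
    """Indices i where signal[i] is the first maximum of signal[i - w : i + w + 1]."""
    points = []
    for i in range(len(signal)):
        lo = max(0, i - half_window)
        hi = min(len(signal), i + half_window + 1)
        window = list(signal[lo:hi])
        if window.index(max(window)) == i - lo:  # first occurrence breaks plateau ties
            points.append(i)
    return points


print(characteristic_points([0, 1, 3, 1, 0, 2, 5, 2, 0], half_window=2))
```

A larger `half_window` suppresses small ripples between steps at the cost of possibly merging closely spaced peaks.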

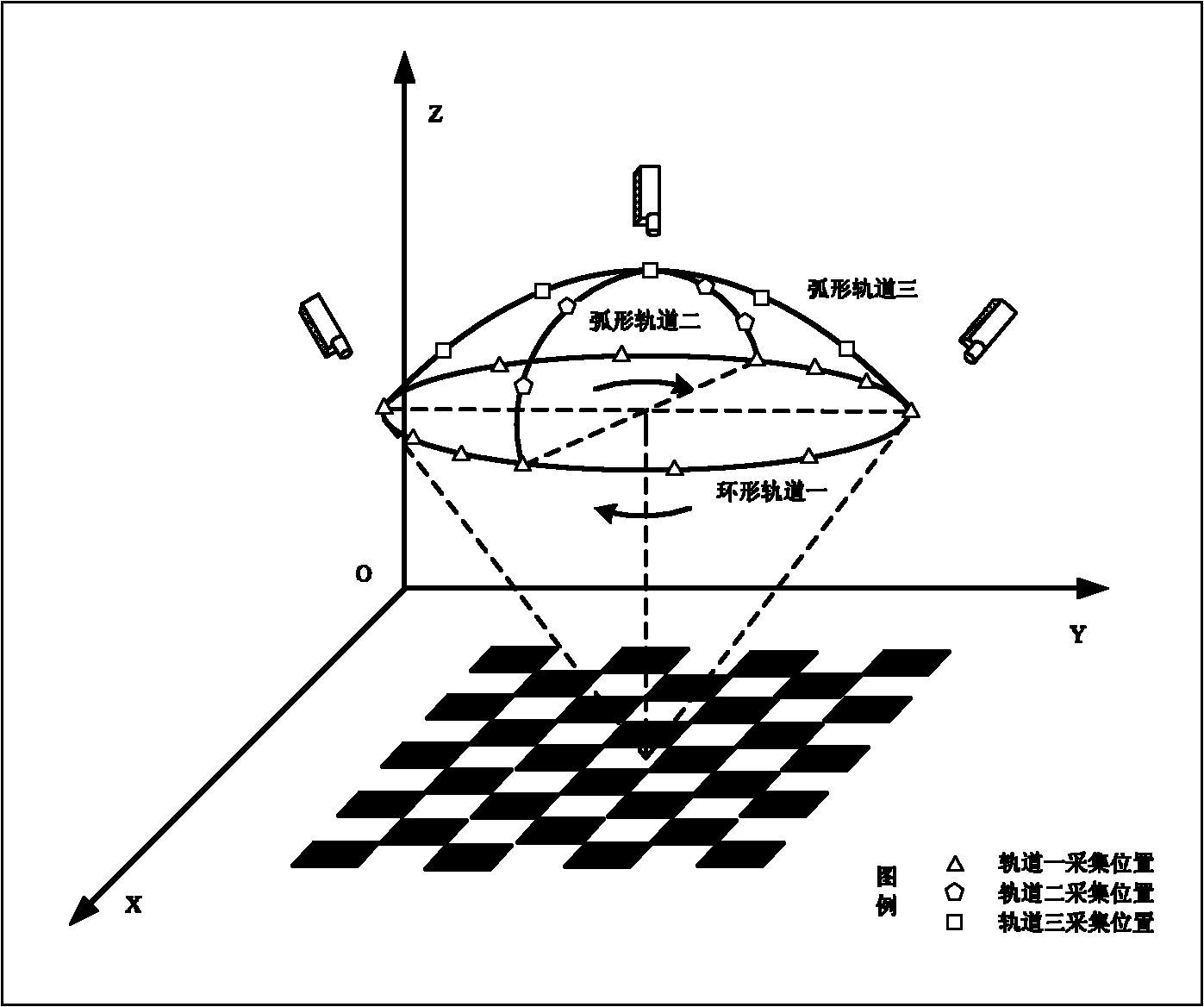

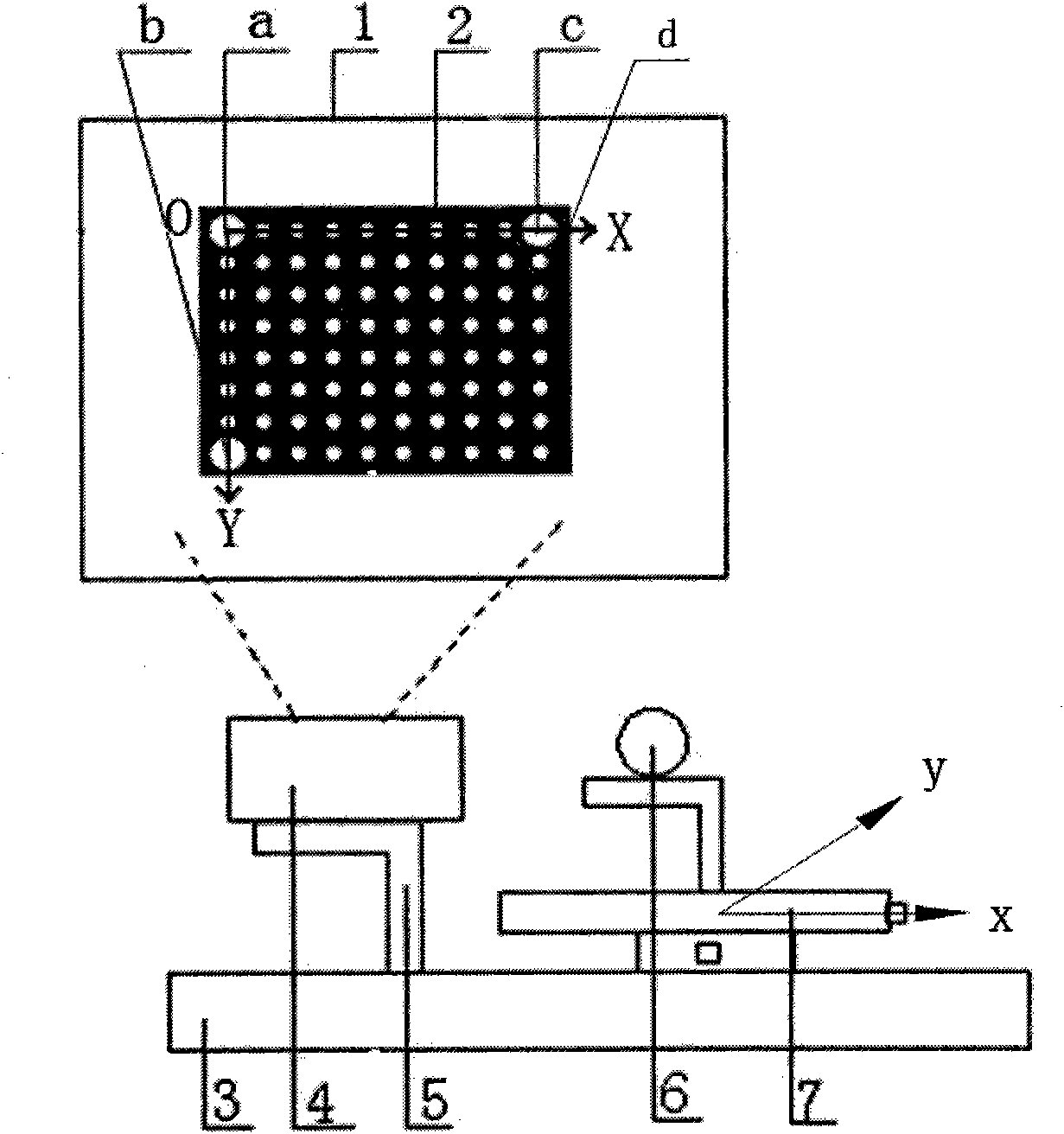

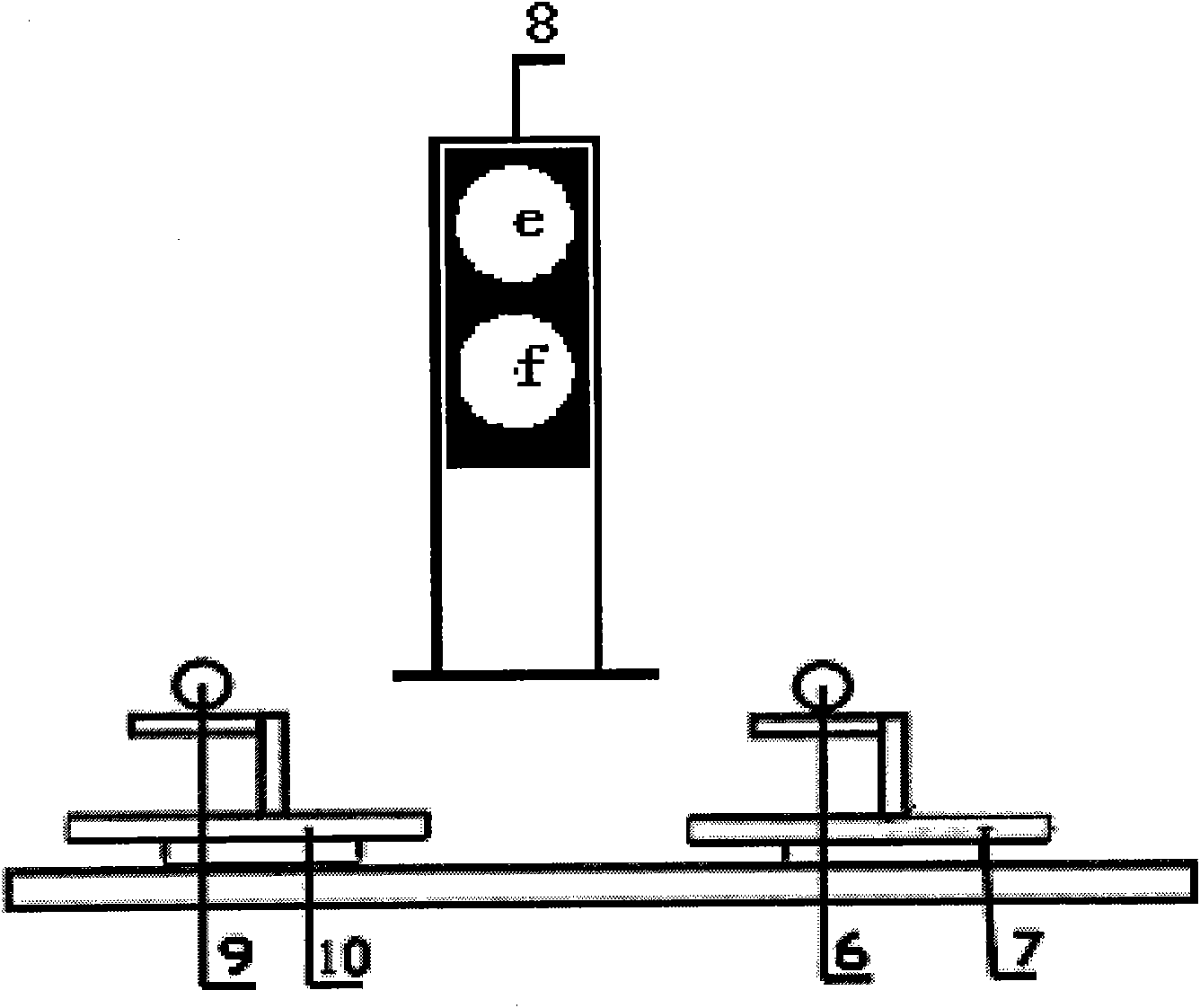

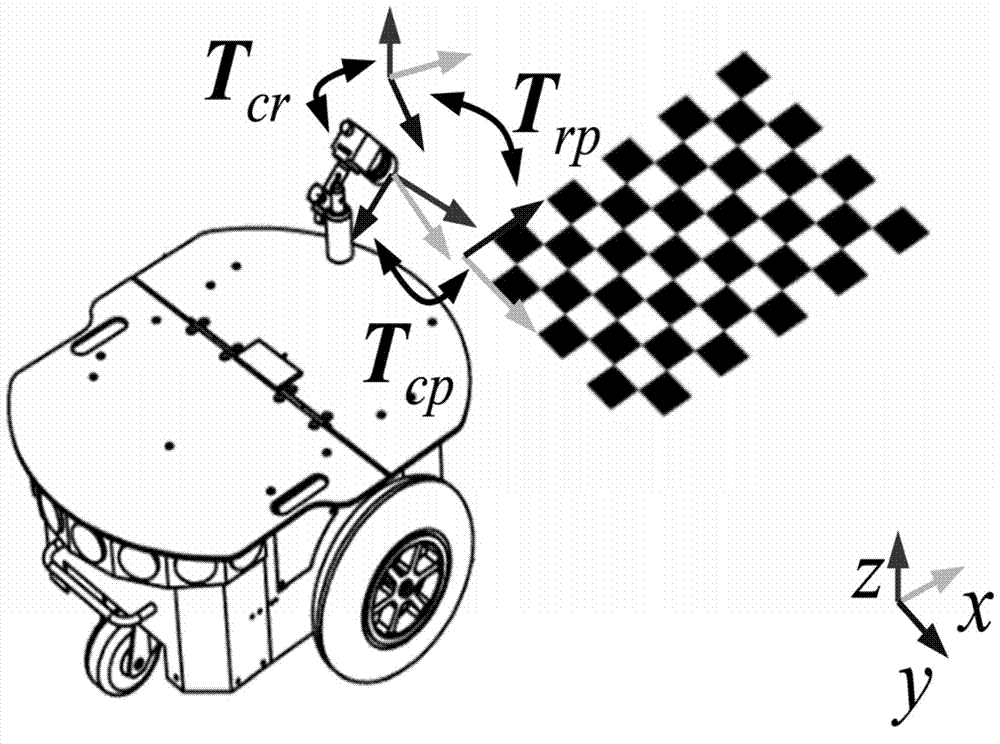

On-site camera calibration method in a measuring system

Status: Active | Publication: CN101876532A | Tags: Easy extraction; Overcome the adverse effects of opaque imaging; Image analysis; Using optical means; Theodolite; Size measurement

The invention discloses an on-site camera calibration method in a measuring system. It belongs to the field of computer vision inspection and specifically concerns on-site calibration of the intrinsic and extrinsic camera parameters in a dimension measuring system for large forgings. The measuring system has two cameras and one projector. The calibration method comprises: making intrinsic and extrinsic parameter calibration targets for the cameras; projecting the intrinsic-parameter targets and capturing images; extracting image characteristic points with an image processing algorithm in Matlab; formulating equations to solve for the intrinsic parameters of the cameras; processing the images captured simultaneously by the left and right cameras; and measuring the actual distance between target circle centers with a left and a right theodolite, solving for a scale factor, and from it the actual extrinsic parameters. The method adapts well to on-site conditions. By projecting the targets with the projector, it overcomes the unclear, hard-to-read images that arise when a filter plate blocks infrared light in a binocular vision measuring system for large forgings, and it suits large scenes with complex backgrounds.

Owner:DALIAN UNIV OF TECH
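
The final calibration step converts an up-to-scale reconstruction into real units. Assuming the extrinsic translation is first known only up to scale (for example, when it is recovered from image correspondences alone), the scale factor is the theodolite-measured distance between two target circle centres divided by their distance in the reconstruction. The names below are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def scale_factor(center_a: Vec3, center_b: Vec3, measured_distance: float) -> float:
    """Ratio between the theodolite-measured distance of two target circle centres
    and the same distance in the up-to-scale reconstruction."""
    return measured_distance / math.dist(center_a, center_b)


def metric_translation(t_unit: Vec3, scale: float) -> Vec3:
    """Scale an up-to-scale translation vector to real units."""
    return tuple(scale * c for c in t_unit)


s = scale_factor((0.0, 0.0, 0.0), (0.0, 0.0, 2.0), 500.0)  # e.g. 500 mm measured
print(s, metric_translation((1.0, 0.0, 0.0), s))
```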

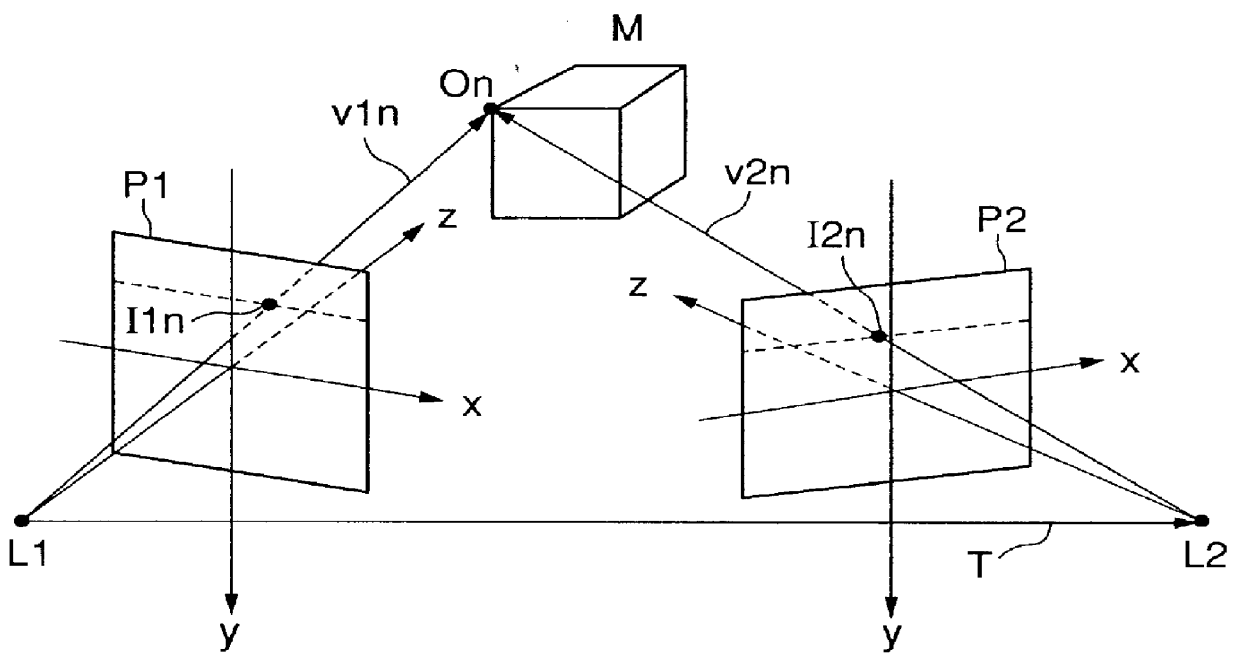

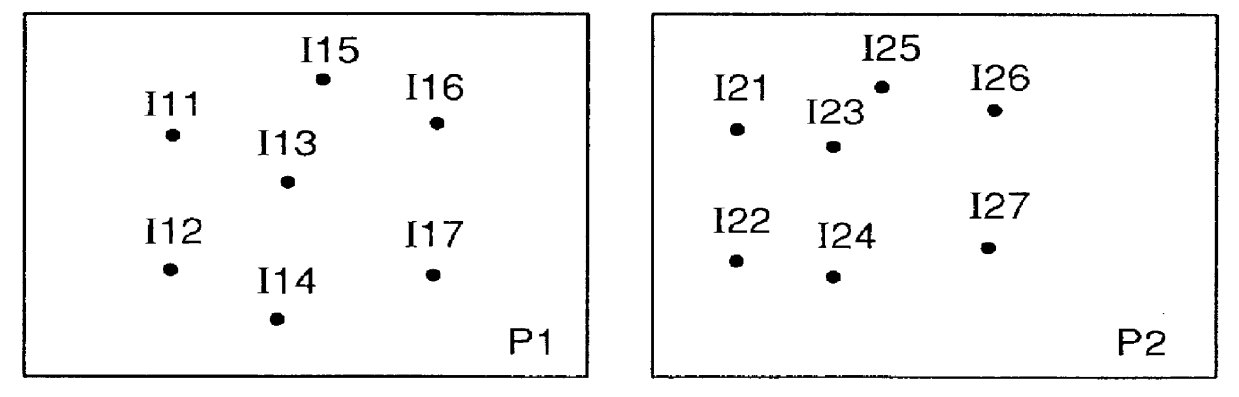

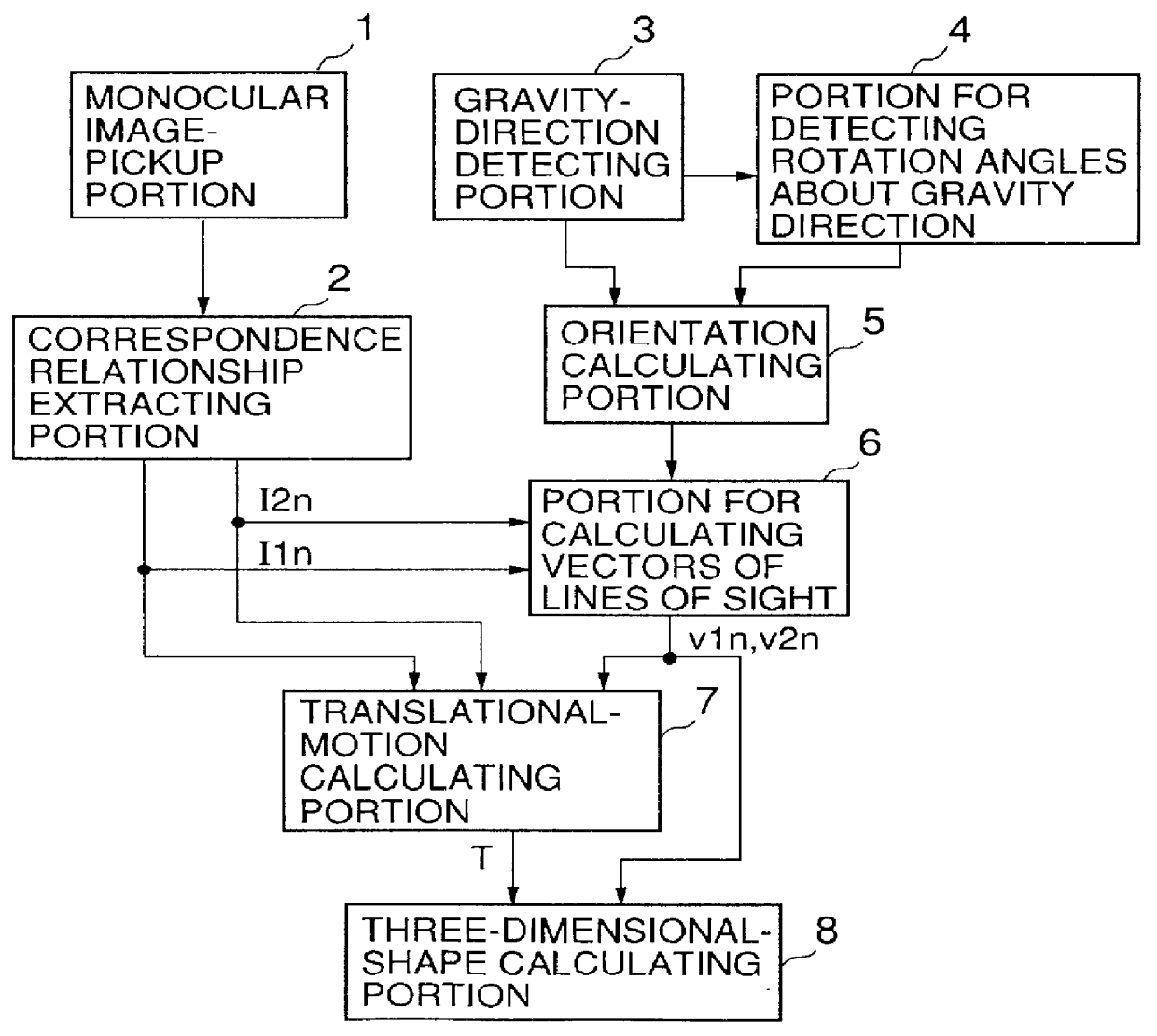

Three-dimensional measuring apparatus and method, image pickup apparatus, and apparatus and method for inputting image

Status: Inactive | Publication: US6038074A | Tags: Ultrasonic/sonic/infrasonic diagnostics; Angle measurement; Object point; Three dimensional measurement

Owner:RICOH KK

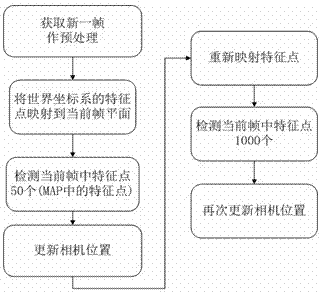

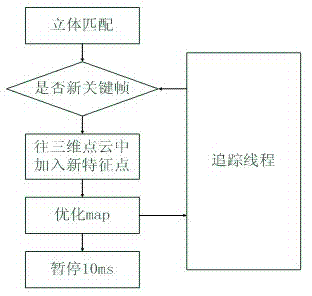

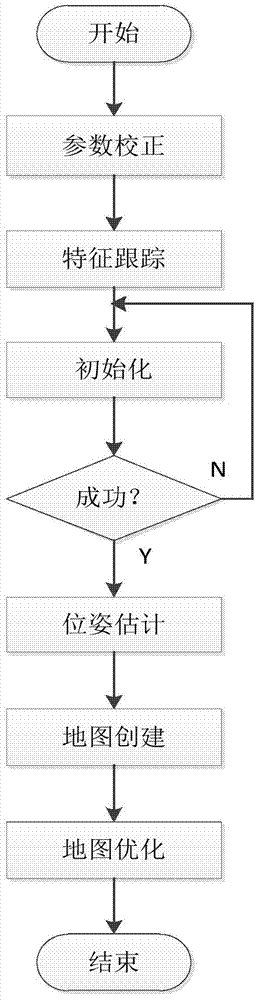

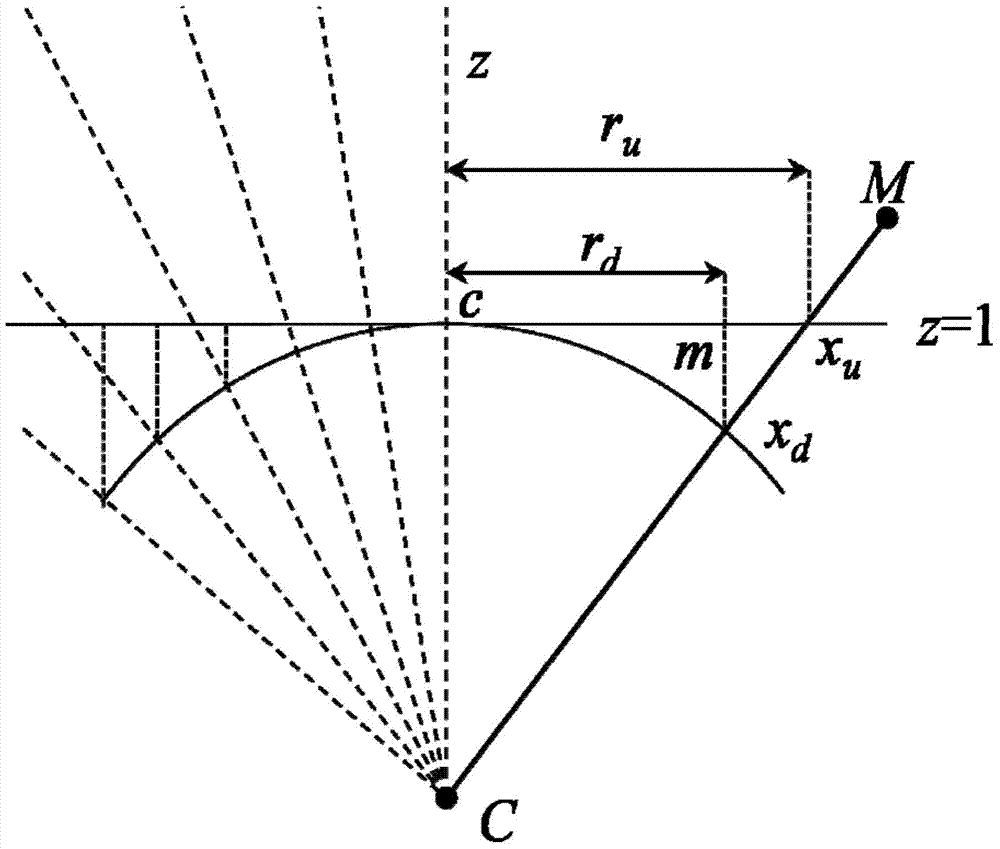

PTAM improvement method based on ground characteristics of intelligent robot

Status: Inactive | Publication: CN104732518A | Tags: Lift strict restrictions; Easy to initialize; Image analysis; Navigation instruments; Line search; Key frame

The invention discloses an improved PTAM method based on ground features for an intelligent robot. First, parameter correction is completed, including parameter definition and camera calibration. Second, texture information of the current environment is captured by the camera, a four-layer Gaussian image pyramid is built, features in the current image are extracted with the FAST corner detection algorithm, data association between corner features is established, and a pose estimation model is obtained. In the initial map-building stage, two key frames are acquired with the camera mounted on the mobile robot: the robot starts moving during initialization while the camera captures corner information in the current scene and establishes associations. After the three-dimensional sparse map is initialized, the key frames are updated, sub-pixel-accurate correspondences between characteristic points are established by epipolar line search and block matching, and the camera is accurately relocalized based on the pose estimation model. Finally, the matched points are projected into space to build a three-dimensional map of the whole current environment.

Owner:BEIJING UNIV OF TECH
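
The FAST corner test mentioned in the abstract compares 16 pixels on a radius-3 circle with the centre pixel. The sketch below implements the basic segment test (FAST-9 by default) on a grayscale image stored as nested lists; it omits the high-speed pre-test, non-maximum suppression and the image pyramid, and assumes the candidate pixel is at least 3 pixels from the border. The threshold value is illustrative.

```python
from typing import List

# Bresenham circle of radius 3 around the candidate pixel, as (dx, dy), in order
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]


def is_fast_corner(img: List[List[int]], x: int, y: int, t: int = 20, n: int = 9) -> bool:
    """Segment test: n contiguous circle pixels all brighter than I_p + t or all darker than I_p - t."""
    p = img[y][x]
    ring = [img[y + dy][x + dx] for dx, dy in CIRCLE]
    for sign in (1, -1):  # 1: look for a brighter arc, -1: a darker arc
        flags = [sign * (v - p) > t for v in ring]
        run = 0
        for f in flags + flags:  # concatenate so an arc may wrap past index 15
            run = run + 1 if f else 0
            if run >= n:
                return True
    return False


img = [[0] * 7 for _ in range(7)]
img[3][3] = 100  # an isolated bright pixel: every circle pixel is darker
print(is_fast_corner(img, 3, 3))
```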

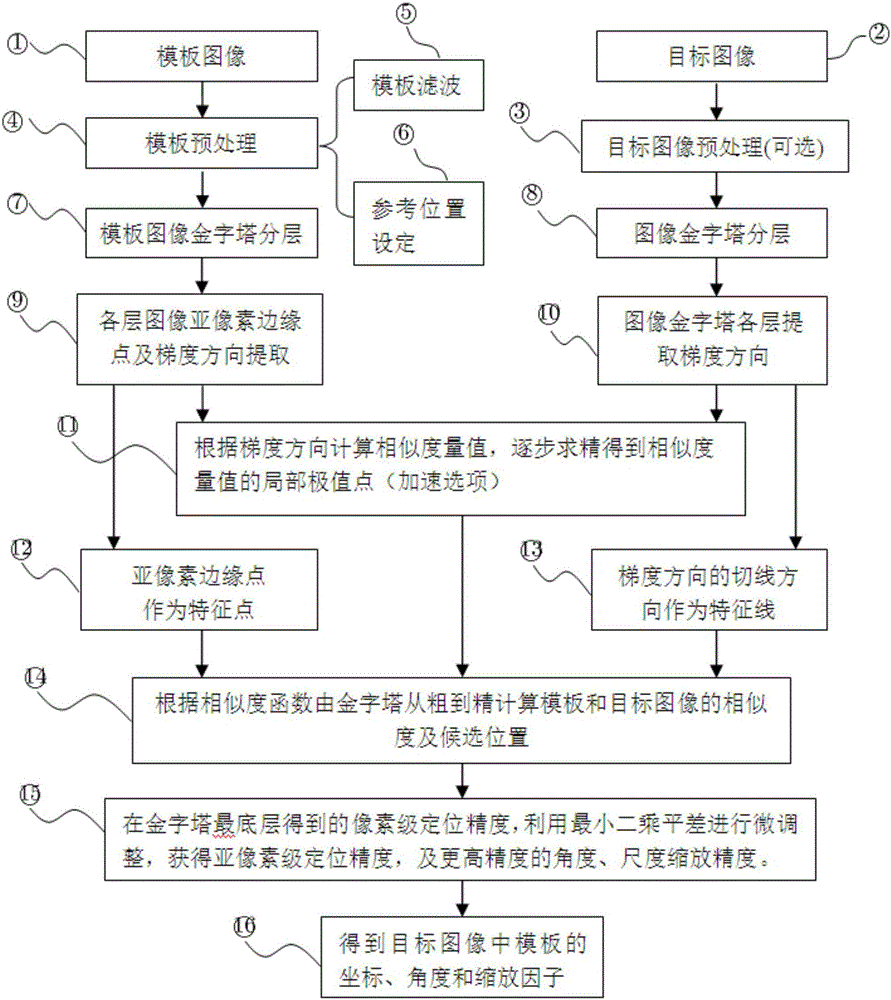

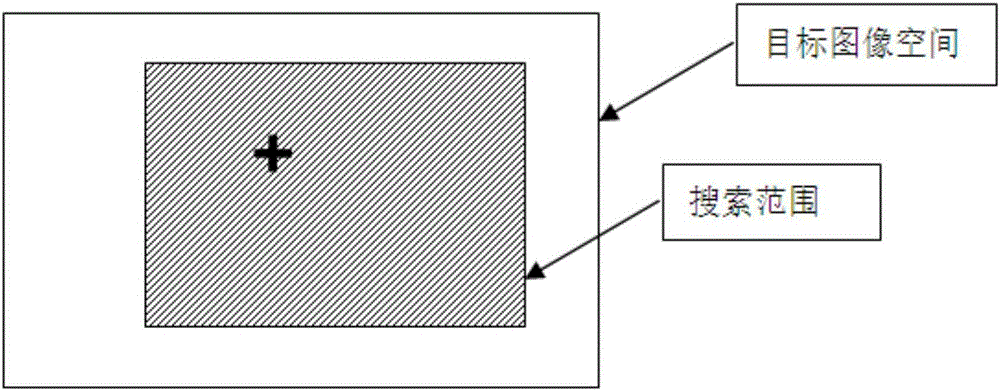

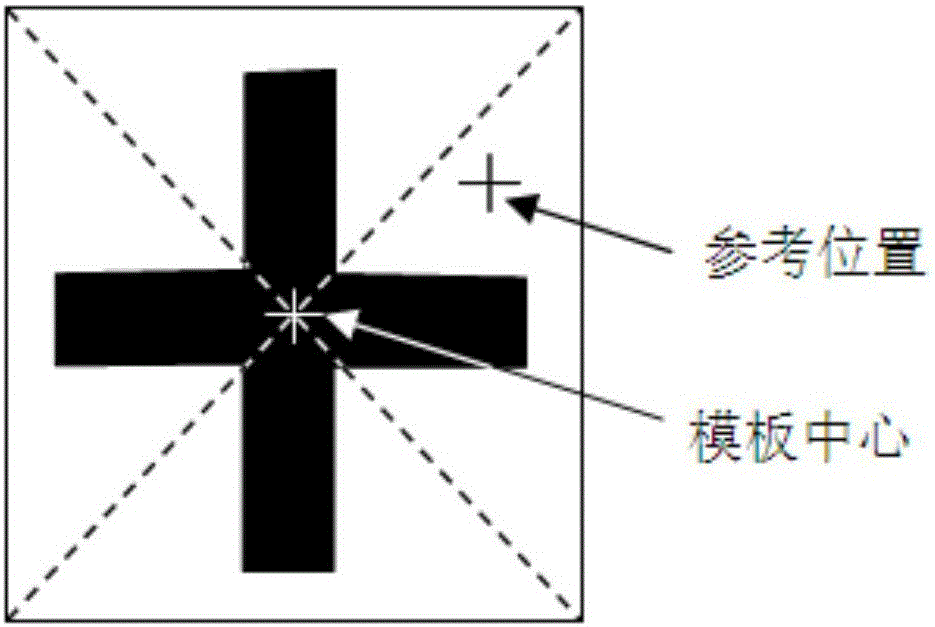

Fast high-precision geometric template matching method enabling rotation and scaling functions

Status: Active | Publication: CN105930858A | Tags: Precise positioning; Guaranteed stability; Character and pattern recognition; Template matching; Machine vision

The present invention provides a fast, high-precision geometric template matching method that supports rotation and scaling. Based on image edge information, it uses the sub-pixel edge points of the template image as feature points and the tangent directions of the target image's gradient directions as feature lines; guided by the local extremum points of the similarity value, it computes the similarity and the candidate positions of the template in the target image from coarse to fine using an image pyramid and a similarity function. Pixel-level positioning accuracy is obtained at the bottom layer of the pyramid, and a least-squares fine adjustment then achieves sub-pixel positioning accuracy and higher-precision angle and scale estimates. The method can quickly, stably and precisely locate and identify target images that are translated, rotated, scaled or partially occluded, including under changing or uneven illumination and cluttered backgrounds. It can be applied wherever machine vision is required for target positioning and identification.

Owner:吴晓军
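
A common similarity measure for edge-based geometric matching averages the cosine of the angle between template and target gradient directions, which makes it insensitive to changes in gradient magnitude caused by illumination. The patent's exact similarity function is not given in the abstract; this sketch assumes that measure, a template given as edge points with gradient vectors, and a target gradient field stored as a dictionary. The pyramid search and least-squares refinement are not shown, and all names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Model point: (x, y, gx, gy), with (gx, gy) the template gradient at that edge point
ModelPoint = Tuple[int, int, float, float]


def similarity(model: List[ModelPoint], target_grad: Dict[Tuple[int, int], Tuple[float, float]],
               ox: int, oy: int) -> float:
    """Mean cosine between template and target gradient directions at offset (ox, oy).
    1.0 means every direction agrees; pixels with no target gradient contribute 0."""
    total = 0.0
    for x, y, dx, dy in model:
        ex, ey = target_grad.get((x + ox, y + oy), (0.0, 0.0))
        norm = math.hypot(dx, dy) * math.hypot(ex, ey)
        if norm > 0:
            total += (dx * ex + dy * ey) / norm
    return total / len(model)


model = [(0, 0, 1.0, 0.0), (1, 0, 0.0, 1.0)]
target = {(5, 5): (2.0, 0.0), (6, 5): (0.0, 3.0)}  # same directions, different magnitudes
print(similarity(model, target, 5, 5))
```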

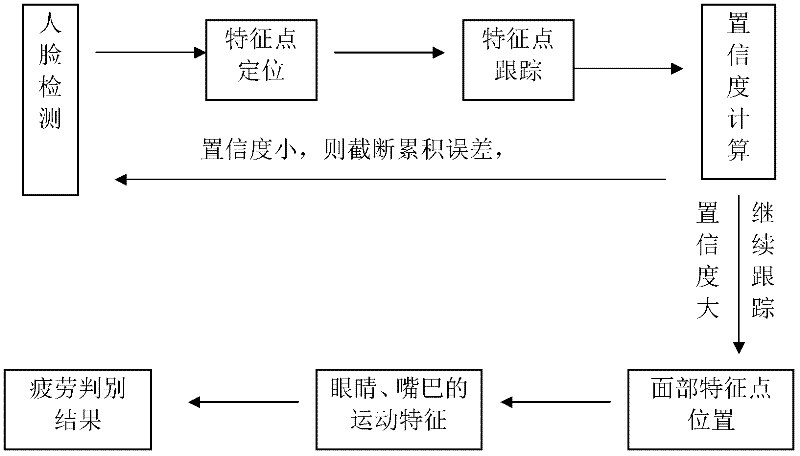

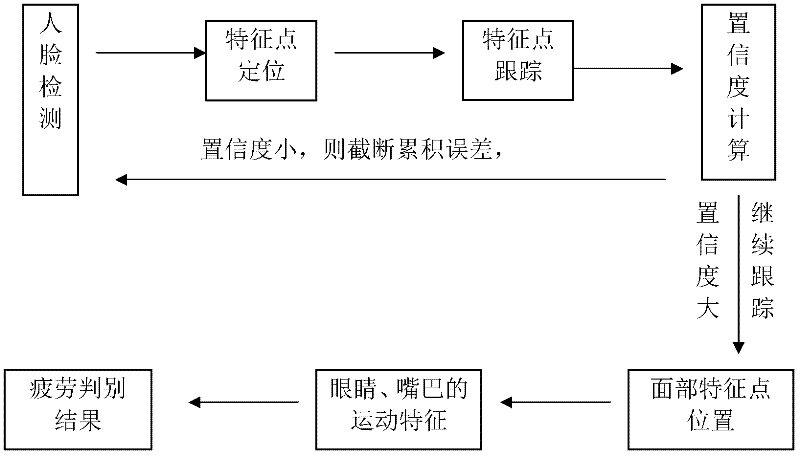

Driver fatigue detection method based on face video analysis

Status: Active | Publication: CN102254151A | Tags: Detection speed; Improve reliability; Character and pattern recognition; Active safety; Pattern recognition

The invention relates to a driver fatigue detection method based on face video analysis, in the field of vehicle active safety and pattern recognition. The method performs face detection on the face image to roughly locate local organs such as the eyes, nose and mouth; then obtains accurately positioned facial characteristic points; and finally, from the accurately positioned characteristic points over multiple frames, quantitatively describes facial motion characteristics and derives the driver's fatigue detection result from facial motion statistics. The method is highly reliable, has no special hardware requirements, involves no complex computation, and runs fast enough for real-time use in practice. It is robust to eyeglasses, face angle, uneven illumination and similar factors, and suits many vehicle types, warning drivers when they are fatigued so that traffic accidents are avoided.

Owner:TSINGHUA UNIV

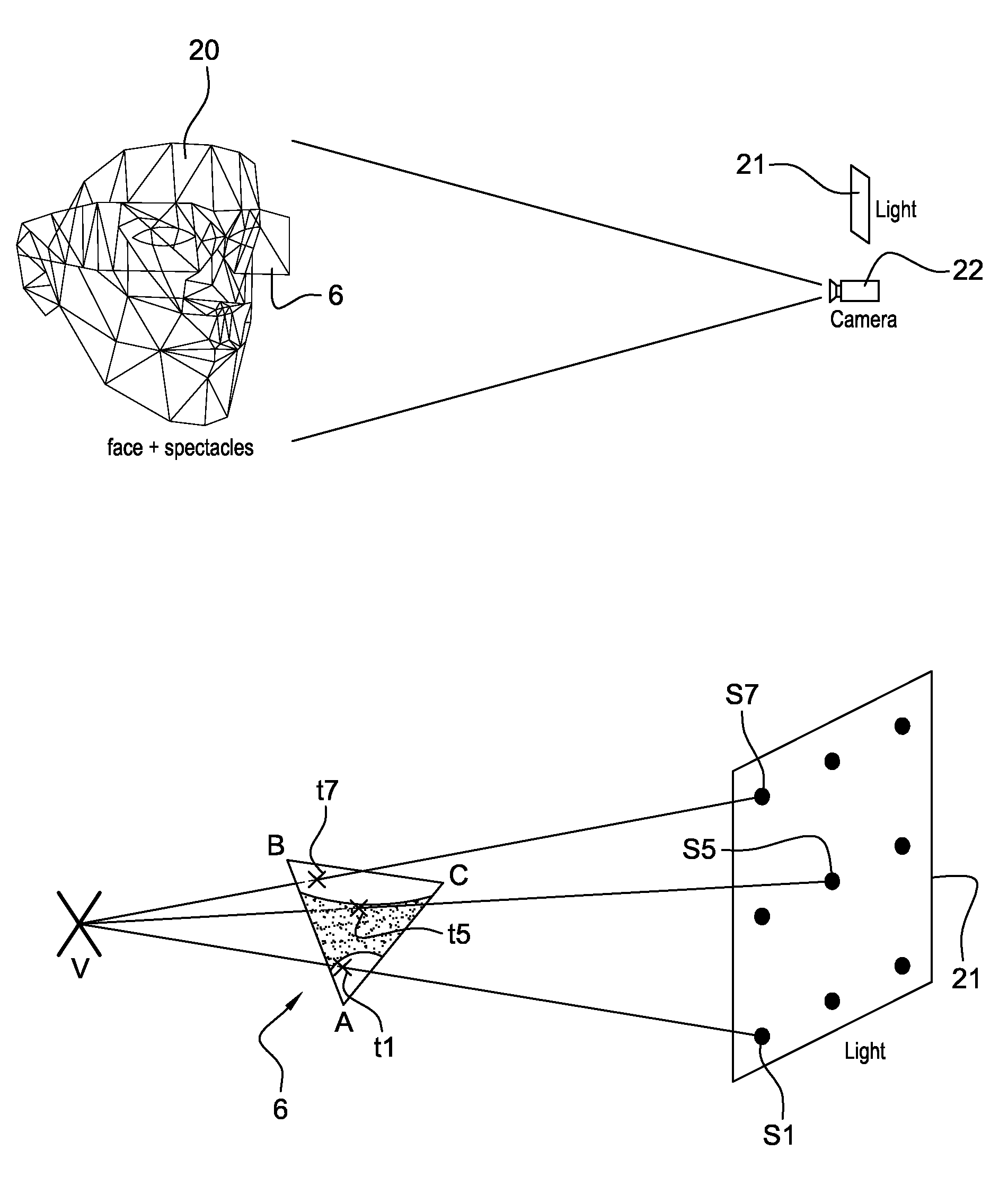

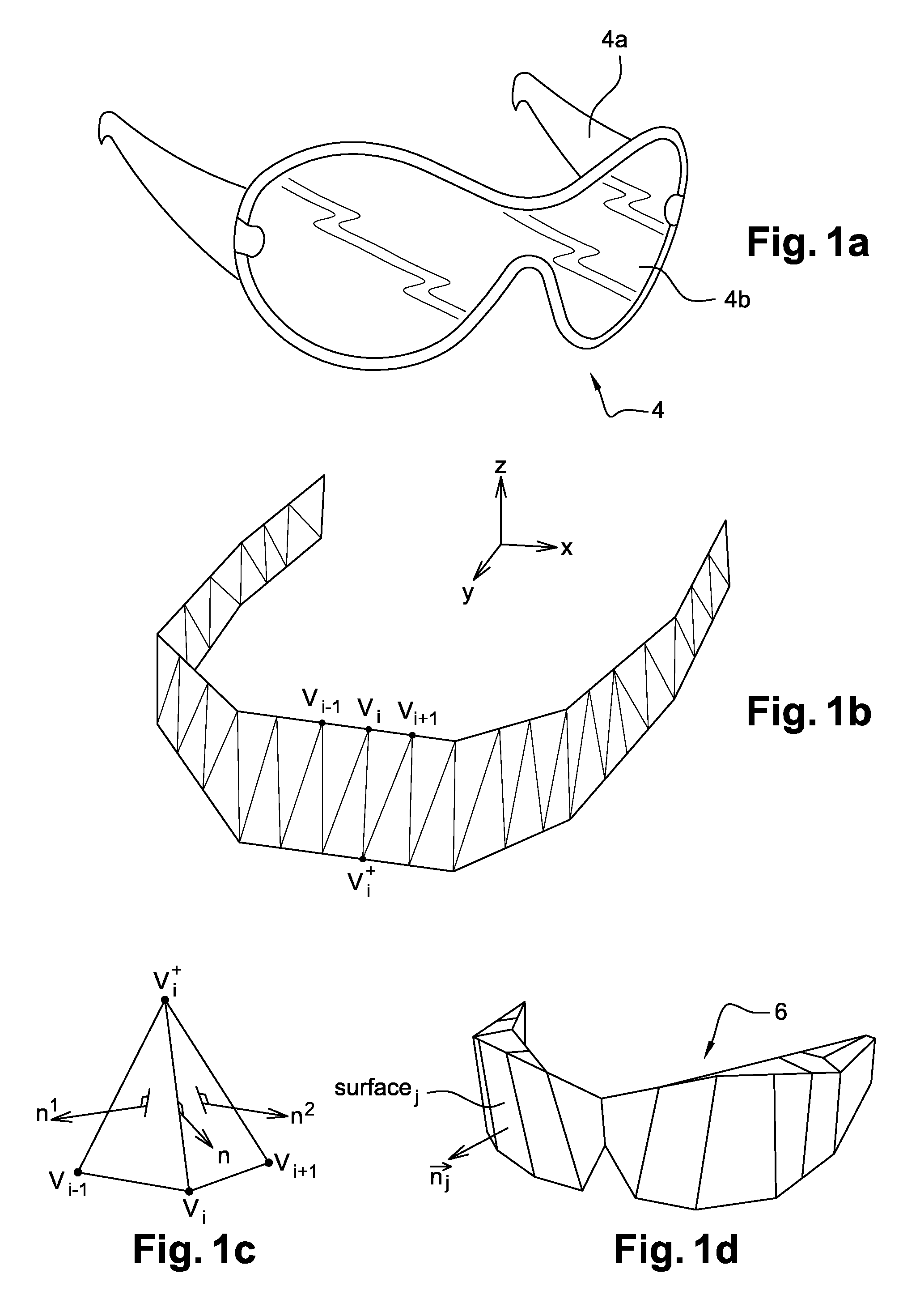

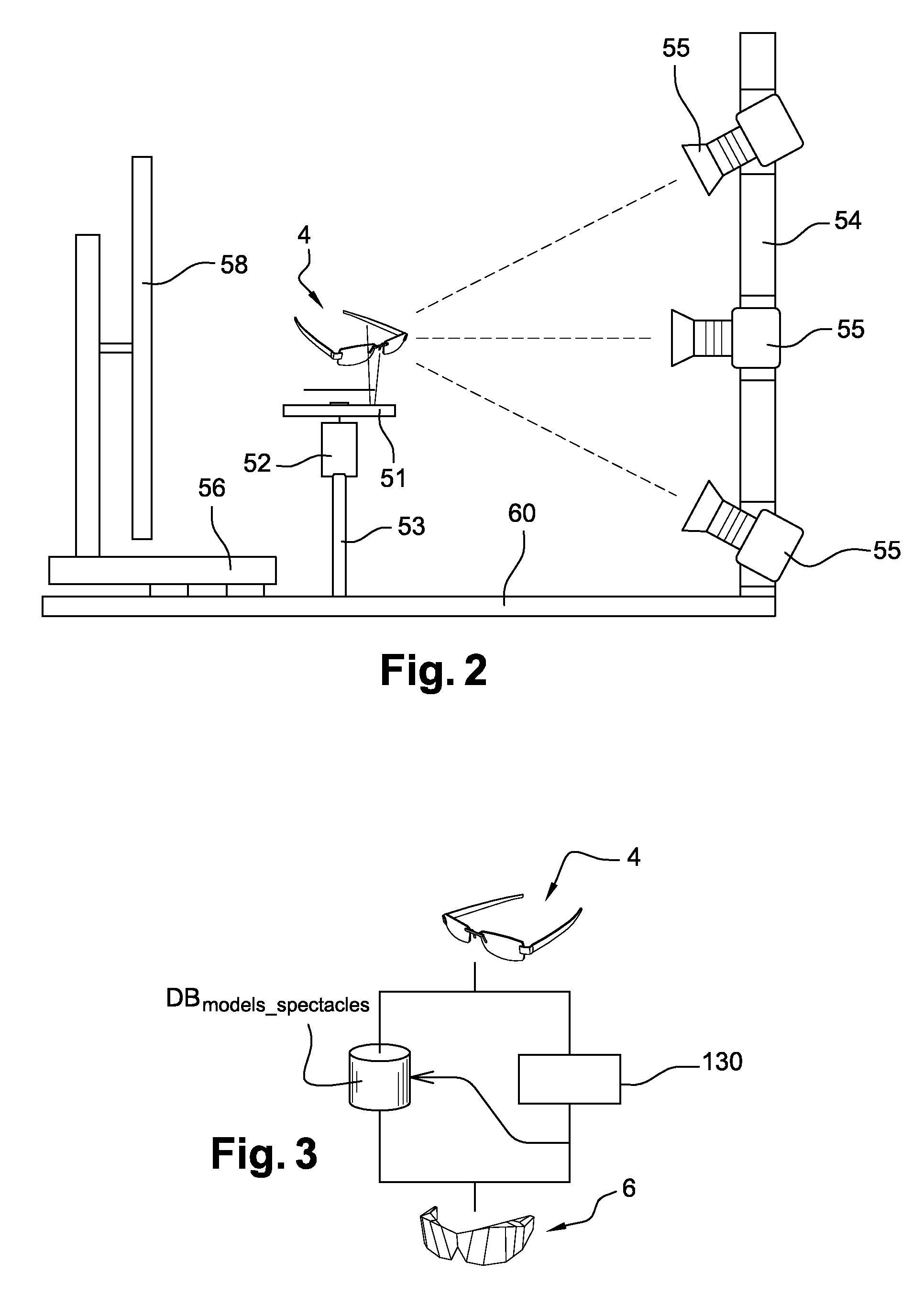

Augmented reality method applied to the integration of a pair of spectacles into an image of a face

Method for creating a final real-time photorealistic image of a virtual object, corresponding to a real object arranged on an original photo of a user, in a realistic orientation related to the user's position, includes: detecting the presence of an area for the object in the photo; determining the position of characteristic points of the area for the object in the photo; determining the 3D orientation of the face, the angles Φ and Ψ of the camera having taken the photo relative to the principal plane of the area; selecting the texture to be used for the virtual object, in accordance with the angle-of-view, and generating the view of the virtual object in 3D; creating a first layered rendering in the correct position consistent with the position of the placement area for the object in the original photo; obtaining the photorealistic rendering by adding overlays to obtain the final image.

Owner:FITTINGBOX

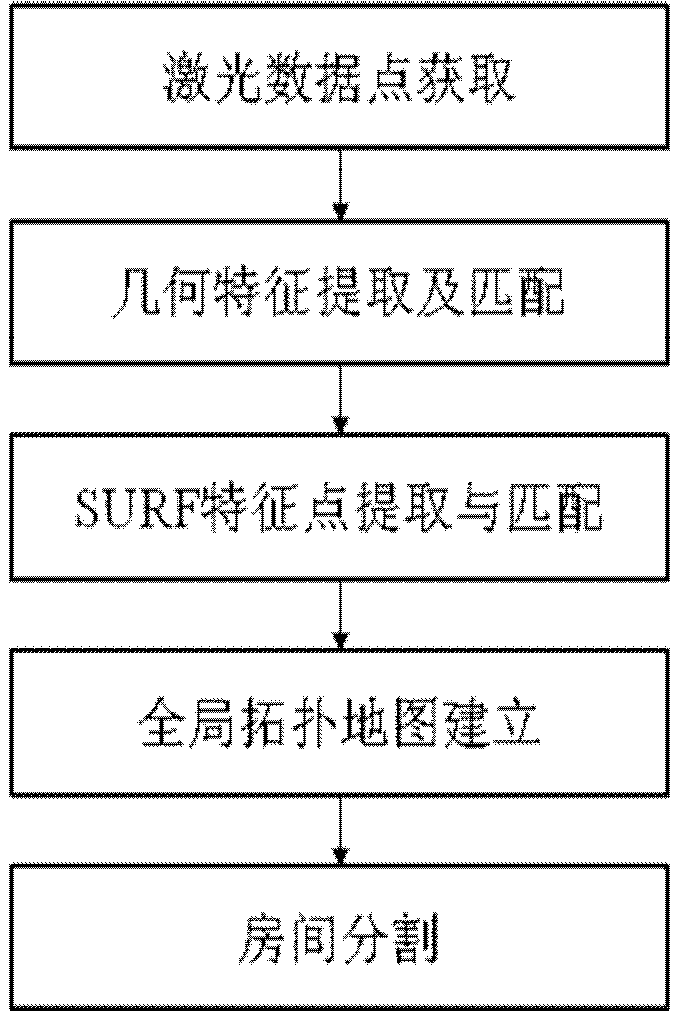

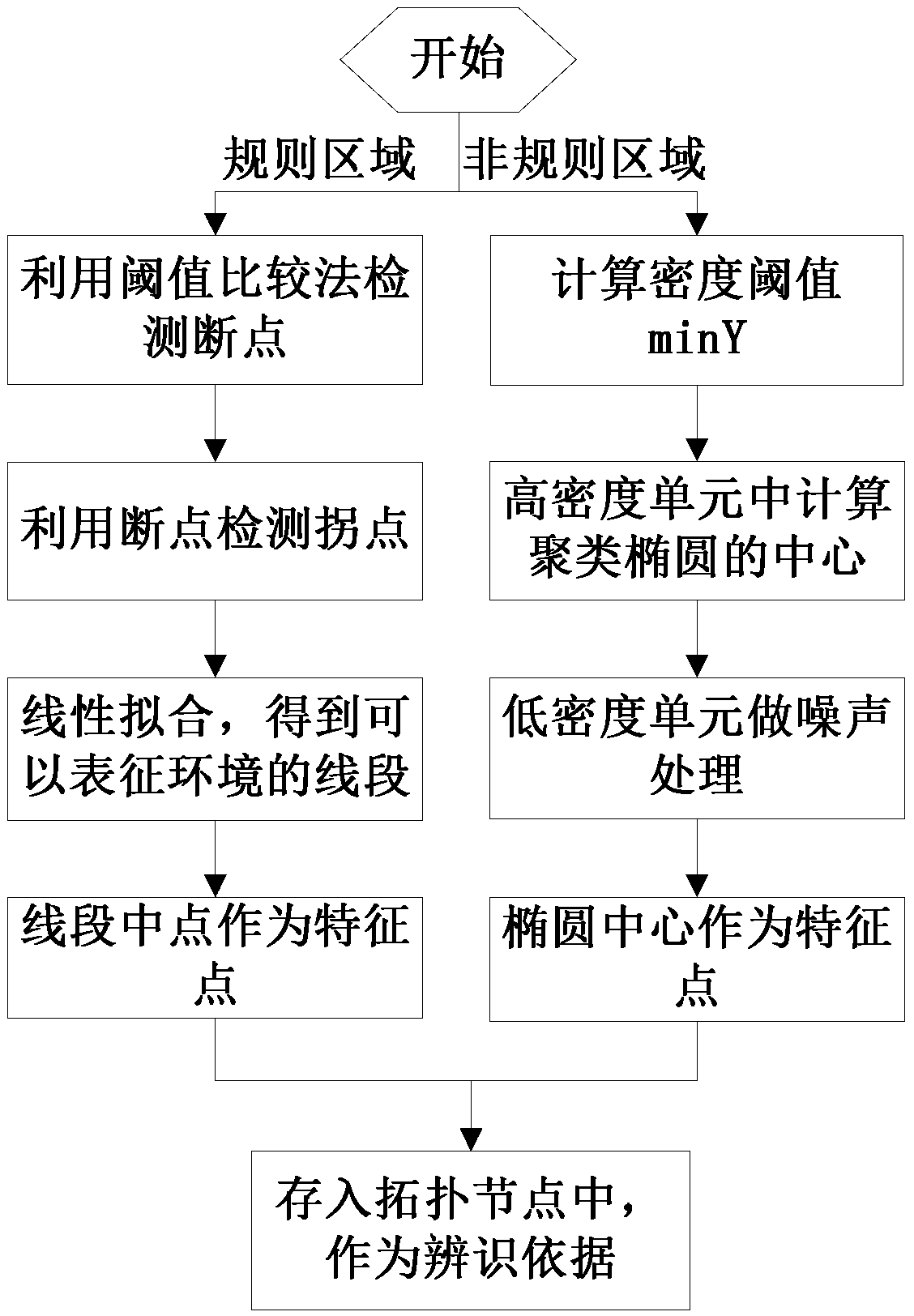

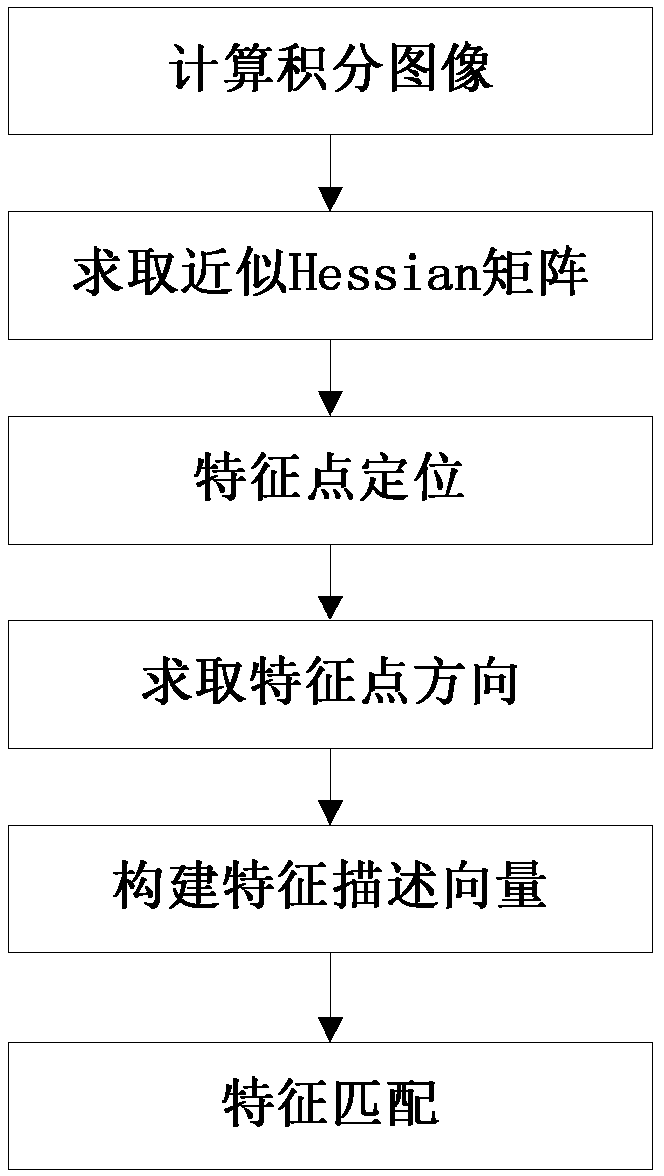

Cascaded map-building method for mobile robots based on mixed features

Status: Inactive | Publication: CN103268729A | Tags: Fix the defect created; Rich room information; Image enhancement; Image analysis; Topological graph; Laser data

The invention belongs to the field of intelligent mobile robots and discloses a cascaded map-building method for mobile robots based on mixed features. The method overcomes the shortcomings of building a single map and solves the problem that a single map cannot supply a large amount of service information. It comprises: acquiring laser data points, extracting geometric features and matching them; extracting and matching SURF feature points; building a cascaded map; and segmenting rooms. A laser sensor acquires environmental data from which geometric features are extracted, while a visual sensor extracts SURF features; a global topological graph is created, and an undirected weighted graph is constructed to segment the rooms. This effectively overcomes the weaknesses of traditional topological maps, whose nodes contain little geometric environment information and cannot support precise localization, and provides rich room information. The method is suitable for service robots and other fields involving mobile robot map building.

Owner:BEIJING UNIV OF TECH

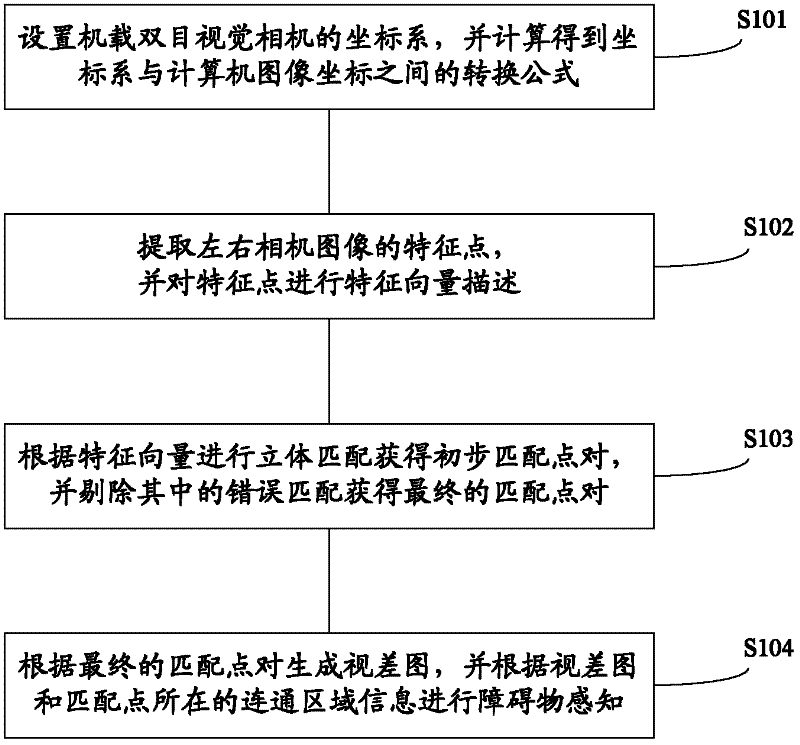

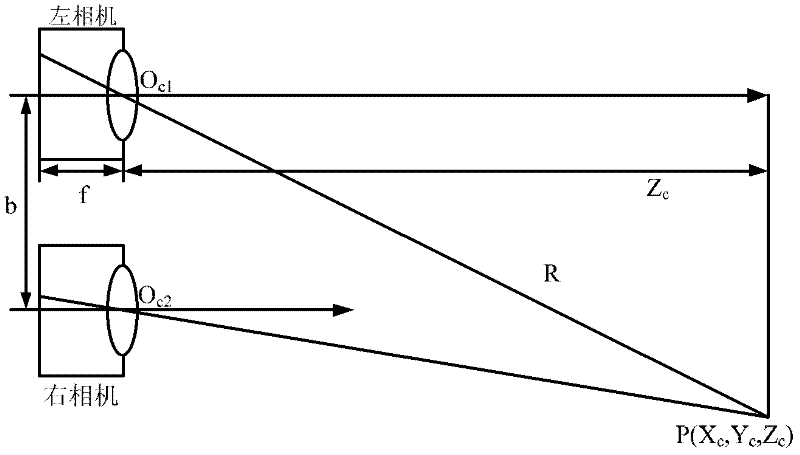

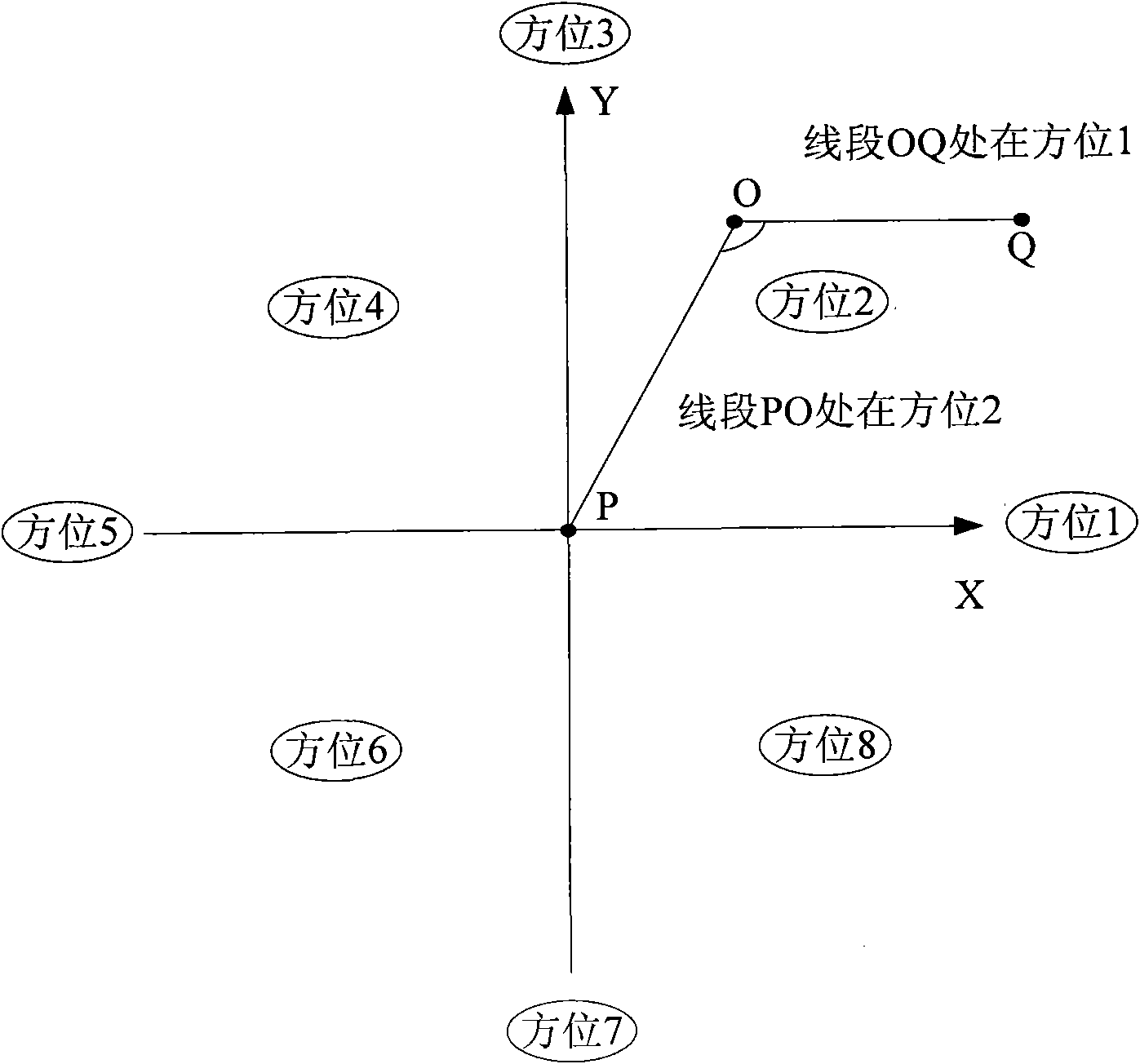

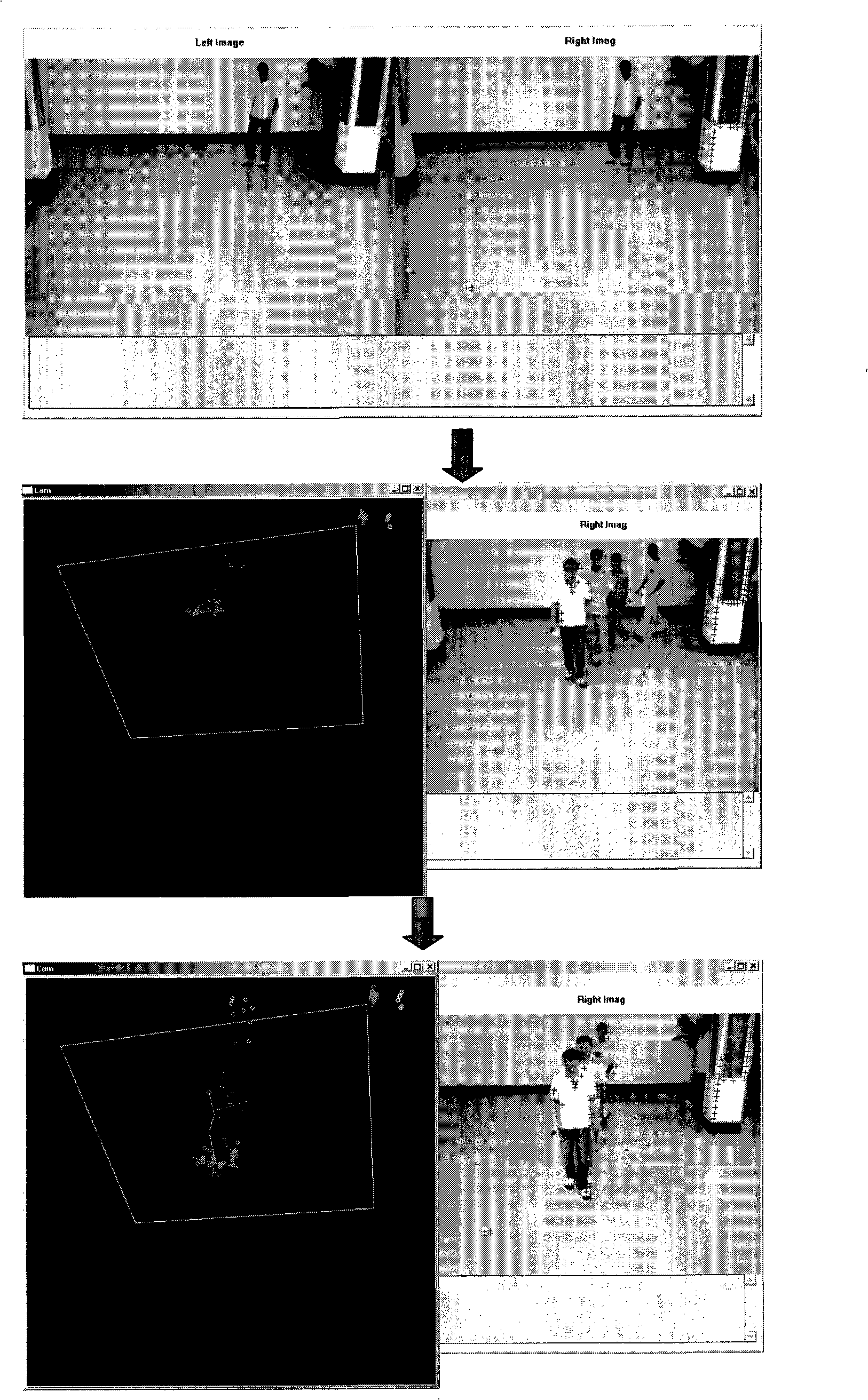

Method for obstacle perception based on airborne binocular vision

The invention provides a method for obstacle perception based on airborne binocular vision. The method comprises: setting up the coordinate system of the airborne binocular vision camera, which comprises a left camera and a right camera, and deriving from it the conversion between that coordinate system and the computer image coordinates of the captured images; extracting characteristic points from the images and describing each with a feature vector; stereo-matching the left and right images by these feature vectors to obtain preliminary matching point pairs; eliminating mismatches among the preliminary pairs to obtain the final matching point pairs; building a disparity map from the final pairs; and perceiving obstacles from the disparity map. The method offers strong adaptability, good real-time performance and good concealment.

Owner:SHENZHEN AUTEL INTELLIGENT AVIATION TECH CO LTD
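
Mismatch elimination after preliminary matching can take several forms, and the abstract does not say which is used. As an assumption, the sketch keeps only mutual nearest-neighbour matches between left and right feature descriptors that also satisfy the rectified-stereo constraints (same image row within a tolerance, positive disparity). Names and the tolerance are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

# (x, y, descriptor) for one characteristic point in a rectified image
Feature = Tuple[float, float, Sequence[float]]


def _compatible(left: Feature, right: Feature, row_tol: float) -> bool:
    """Rectified-stereo constraints: same image row (within row_tol) and positive disparity."""
    return abs(left[1] - right[1]) <= row_tol and left[0] - right[0] > 0


def _nearest(f: Feature, others: List[Feature], row_tol: float, f_is_left: bool) -> Optional[int]:
    """Index of the compatible feature in `others` with the closest descriptor."""
    best, best_d = None, math.inf
    for j, g in enumerate(others):
        ok = _compatible(f, g, row_tol) if f_is_left else _compatible(g, f, row_tol)
        if ok:
            d = math.dist(f[2], g[2])
            if d < best_d:
                best, best_d = j, d
    return best


def match_stereo(left: List[Feature], right: List[Feature],
                 row_tol: float = 1.0) -> List[Tuple[int, int, float]]:
    """Keep only mutual nearest-neighbour matches; returns (left idx, right idx, disparity)."""
    pairs = []
    for i, f in enumerate(left):
        j = _nearest(f, right, row_tol, True)
        if j is not None and _nearest(right[j], left, row_tol, False) == i:
            pairs.append((i, j, f[0] - right[j][0]))
    return pairs
```

The disparities in the returned triples are what the abstract's disparity map would be built from.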

Method and device for reconstructing three-dimensional face based on single face image

Status: Inactive | Publication: CN102054291A | Tags: Reduce modeling time; Low cost; Neural learning methods; 3D modelling; Pattern recognition; Computer graphics (images)

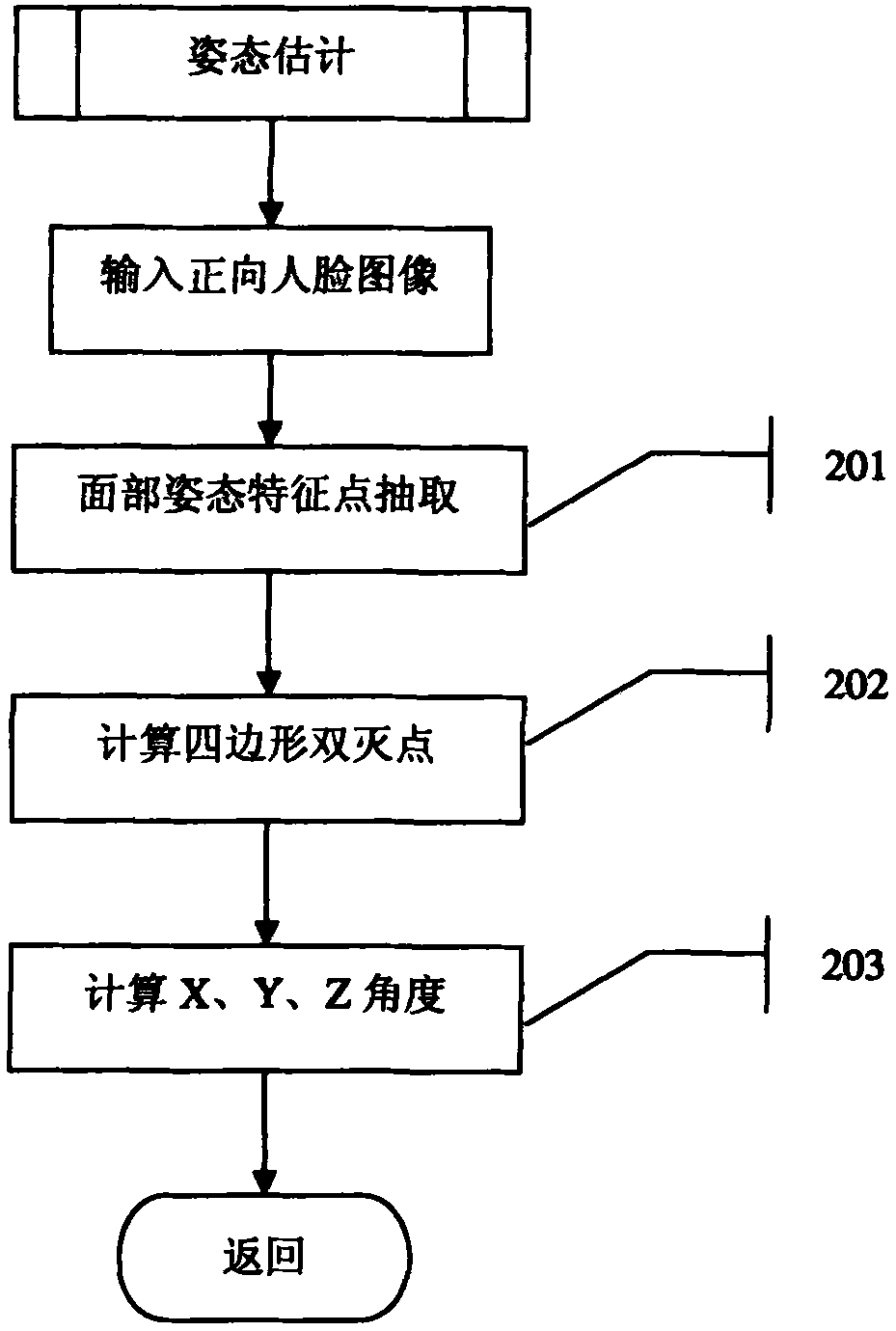

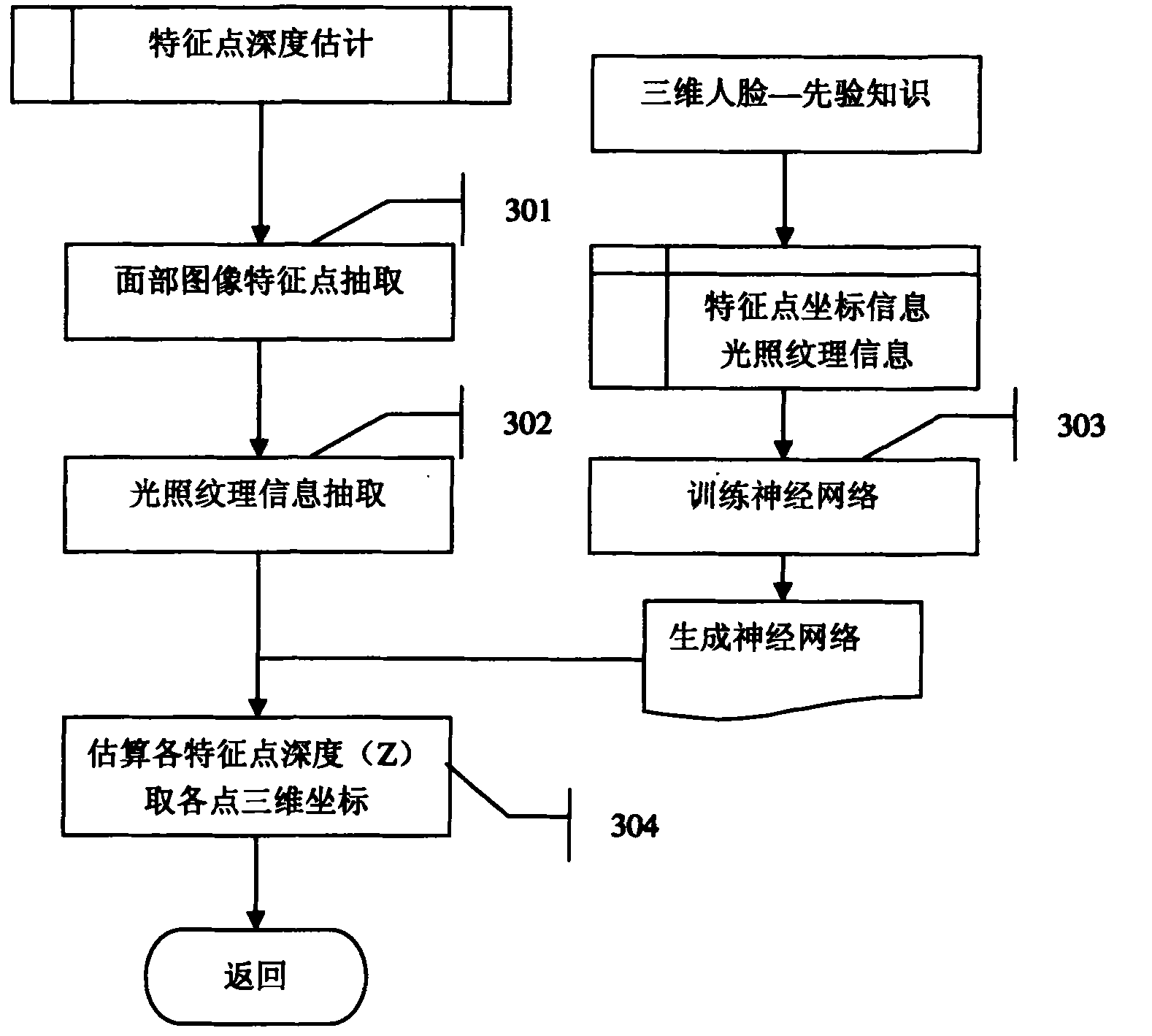

The invention discloses a method and a device for reconstructing a three-dimensional face from a single face image. The method comprises: recognizing the pose of the face image using prior knowledge of facial structure, and estimating the rotation direction and angle of the face plane by combining facial measurements with projective geometry, so as to set the rotation angle of the three-dimensional face; estimating the depth of the two-dimensional characteristic points on the face image with an artificial neural network to obtain their three-dimensional coordinates; deforming a generic three-dimensional face model into a person-specific model with the Dirichlet free-form deformation algorithm; and mapping the three-dimensional face model using characteristic points extracted from the two-dimensional image. A realistic three-dimensional model is thus built from a single face image, improving modeling accuracy while shortening modeling time and reducing cost.

Owner:XIAMEN MEIYA PICO INFORMATION

Depth camera-based visual odometry design method

Status: Active | Publication: CN107025668A | Tags: Effective tracking; Effective estimate; Image enhancement; Image analysis; Color image; Frame based

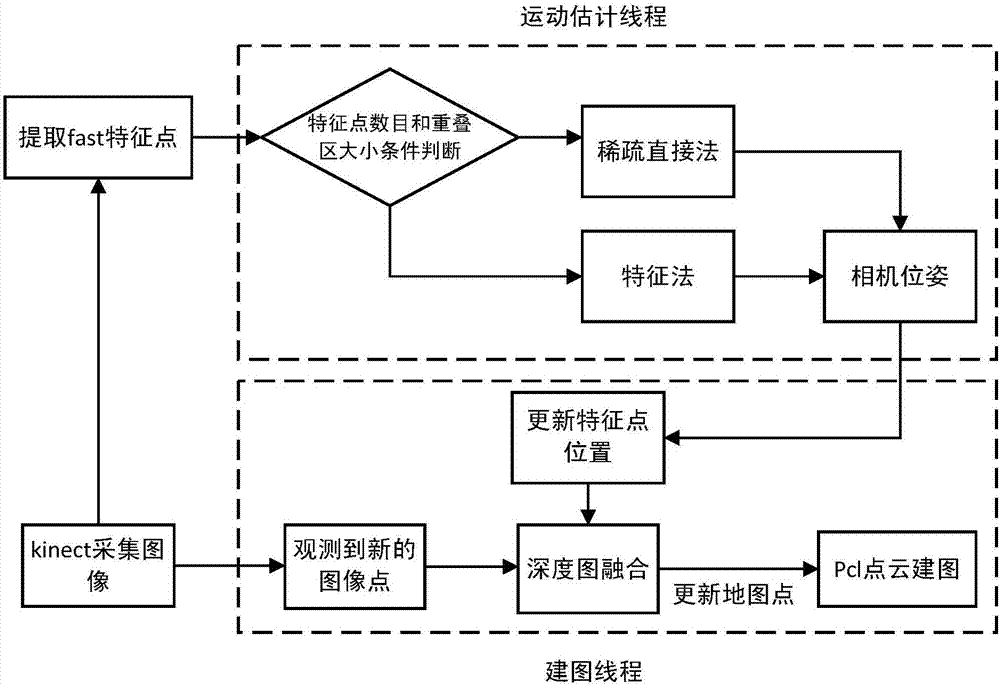

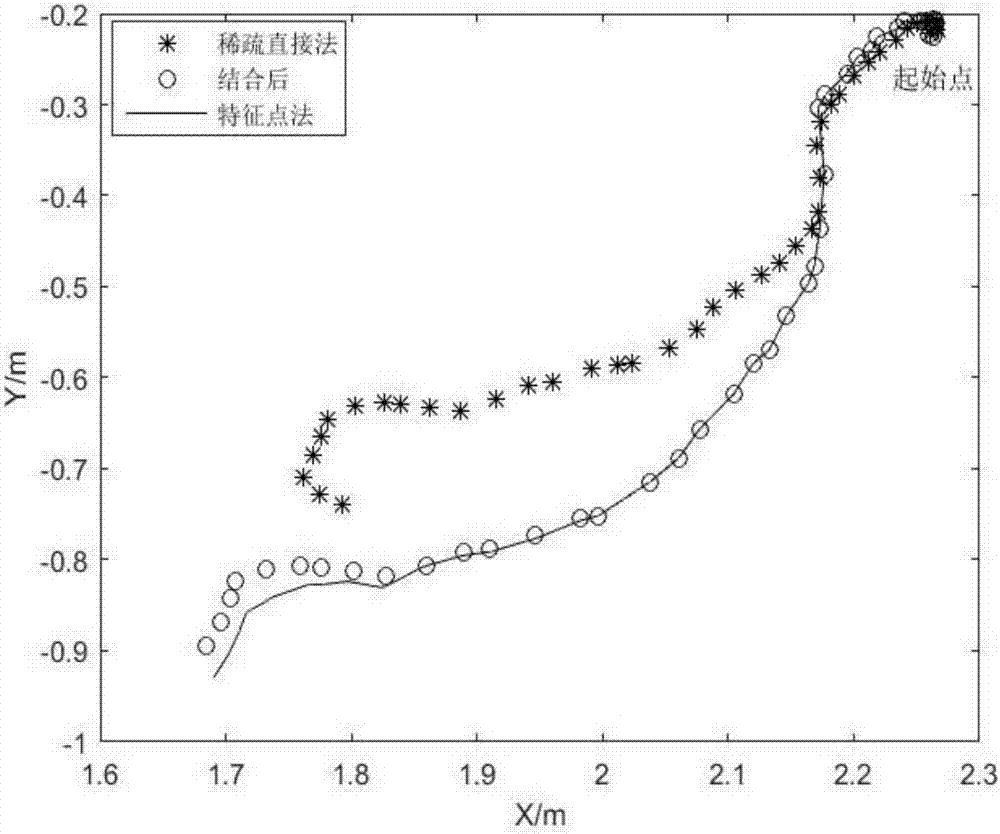

The invention discloses a depth camera-based visual odometry design method. The method comprises: acquiring color and depth image information of the environment with a depth camera; extracting feature points in an initial key frame and in all subsequent image frames; tracking the position of each feature point in the current frame with the optical flow method to find feature point pairs; depending on the number of actual feature points and the size of the overlapping region of feature points in two successive frames, selecting either the sparse direct method or the feature point method to compute the relative position and orientation between the two frames; using the depth information of the depth image, combined with the relative poses between frames, to compute the 3D coordinates of the key frame's feature points in the world coordinate system; and, in a separate process, stitching the key-frame point clouds to build a map. By combining the sparse direct method with the feature point method, the method improves the real-time performance and robustness of the visual odometry.

Owner:SOUTH CHINA UNIV OF TECH

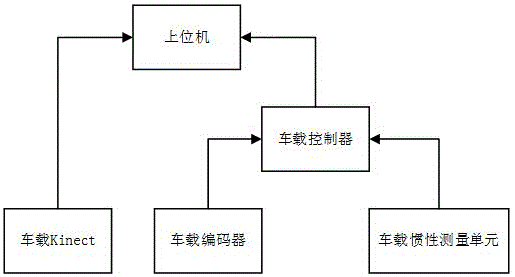

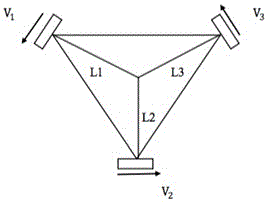

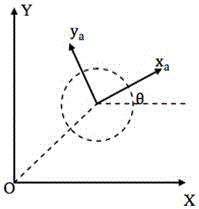

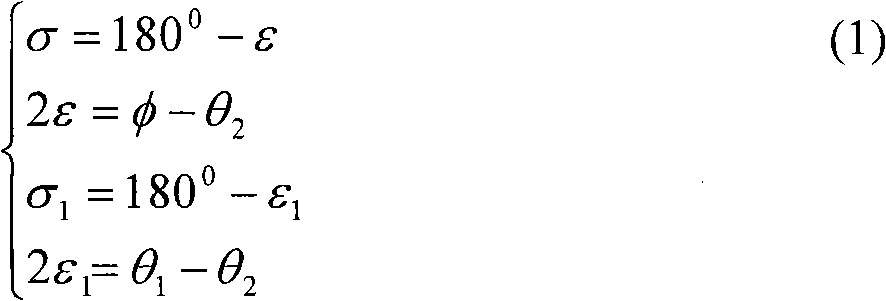

SLAM device integrating multiple vehicle-mounted sensors and control method of device

Inactive | CN105783913A | Precise positioning | Improve mapping performance | Navigation by speed/acceleration measurements | Simultaneous localization and mapping | Multiple sensor

The invention relates to simultaneous localization and mapping (SLAM) for three-wheeled omnidirectional mobile robots that integrate multiple vehicle-mounted sensors. In particular, it relates to a SLAM device integrating multiple vehicle-mounted sensors and a control method for the device.

The device comprises the vehicle-mounted sensors, a vehicle-mounted encoder, a vehicle-mounted inertial measurement unit (IMU), a vehicle-mounted controller, and a host computer. The encoder and the IMU are connected to the vehicle-mounted controller, which in turn is connected to the host computer.

The invention improves the robots' localization and mapping. When SLAM relies on an RGB-D sensor alone, missing depth values or scarce characteristic points cause localization and mapping errors; the invention solves this problem, improving the robustness and accuracy of SLAM.

Owner:SUN YAT SEN UNIV

Stereo vision measuring apparatus based on binocular omnidirectional visual sense sensor

Disclosed is a stereo vision measuring device based on a binocular omnidirectional vision sensor. Each omnidirectional vision sensor (ODVS) in the binocular pair uses an average (uniform) angular resolution design. The parameters of the two image-capture cameras are identical and highly symmetric, so point-to-point matching can be achieved quickly.

When collecting, processing, describing, and representing data about objects in space, the device uses a unified, human-centered spherical coordinate system. It expresses each characteristic point by its distance, direction, and color. This approach:

- reduces computational complexity;
- eliminates camera calibration;
- simplifies feature extraction;
- makes stereo image matching easy.

The result is efficient, real-time, and accurate stereo vision measurement. The device can be applied in many fields, including industrial inspection, object identification, automatic robot guidance, astronautics, aeronautics, and military applications.
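The sketch below shows one way a binocular ODVS pair could recover the distance of a characteristic point. It assumes something the abstract does not state: the two sensors are mounted coaxially, one above the other, with a known vertical baseline. Under that assumption a matched point has the same azimuth in both panoramas, and only its two elevation angles differ. The function names and the layout of the distance/direction/color descriptor are hypothetical.

```python
import math


def triangulate_vertical_pair(elev_lower, elev_upper, baseline):
    """Locate a point seen by two omnidirectional sensors stacked on one vertical axis.

    elev_lower / elev_upper: elevation angles (radians, positive above the
    horizontal) of the point as seen from the lower and the upper sensor.
    baseline: vertical spacing between the two sensors' viewpoints.
    Because tan(elev_lower) = h / r and tan(elev_upper) = (h - baseline) / r,
    the horizontal distance is r = baseline / (tan(elev_lower) - tan(elev_upper)).
    Returns (horizontal_distance, height, slant_distance) from the lower sensor.
    """
    denom = math.tan(elev_lower) - math.tan(elev_upper)
    if denom <= 0:
        raise ValueError("rays do not intersect in front of the sensors")
    horizontal = baseline / denom
    height = horizontal * math.tan(elev_lower)
    return horizontal, height, math.hypot(horizontal, height)


def to_spherical_feature(azimuth, elev_lower, elev_upper, baseline, rgb):
    """Describe a characteristic point by distance, direction, and color (hypothetical layout)."""
    _, _, distance = triangulate_vertical_pair(elev_lower, elev_upper, baseline)
    return {"distance": distance, "azimuth": azimuth, "elevation": elev_lower, "color": rgb}


# Usage: a point 2 m away horizontally and 0.5 m above the lower sensor, baseline 1 m.
r, h, s = triangulate_vertical_pair(math.atan(0.25), math.atan(-0.25), 1.0)
```

With a shared azimuth, matching reduces to a one-dimensional search along the same radial line in both images. This would be consistent with the fast point-to-point matching the abstract claims.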

Owner:Tang Yiping (汤一平)

Method for splicing video in real time based on multiple cameras

Inactive | CN102006425A | Reduce the amount of splicing calculations | Splicing speed is fast | Television system details | Color television details | Color image | Near neighbor

The invention discloses a method for stitching (splicing) video in real time from multiple cameras. The method comprises the following steps:

- acquiring synchronized multi-channel video data;
- preprocessing the frame images captured at the same moment: converting each color image to grayscale, then enhancing it by expanding its grayscale dynamic range with histogram equalization;
- extracting the characteristic points of corresponding frames with the speeded-up robust features (SURF) algorithm;
- finding matched characteristic point pairs between corresponding frame images with nearest-neighbor matching and the random sample consensus (RANSAC) algorithm;
- solving for the homography matrices of the first k frames of the video, and determining the overlapping stitching regions from the matched characteristic point pairs;
- taking the homography matrix of the frame whose overlapping region has the highest similarity as the optimal homography matrix, and using it to stitch subsequent video frames;
- outputting the stitched video.

The method reduces the computation needed to stitch individual video frames and increases the stitching speed for traffic-monitoring video, achieving real-time processing.
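The grayscale-conversion and histogram-equalization preprocessing steps can be sketched in plain Python as follows. Two choices here are assumptions, because the abstract does not say which variants the patent uses:

- the BT.601 luma weights for grayscale conversion;
- the standard CDF-based equalization formula.

The SURF, RANSAC, and homography-selection stages are not shown.

```python
def to_grayscale(rgb_pixels):
    """Convert (r, g, b) tuples to 8-bit gray using ITU-R BT.601 luma weights."""
    return [min(255, round(0.299 * r + 0.587 * g + 0.114 * b)) for r, g, b in rgb_pixels]


def equalize_histogram(gray, levels=256):
    """CDF-based histogram equalization of a flat list of integer gray levels."""
    hist = [0] * levels
    for g in gray:
        hist[g] += 1
    # Cumulative distribution of gray levels.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    n = len(gray)
    cdf_min = next((c for c in cdf if c > 0), 0)
    if n == cdf_min:  # empty or single-level image: nothing to stretch
        return list(gray)
    scale = (levels - 1) / (n - cdf_min)
    # Map each level so the occupied levels spread over the full 0..levels-1 range.
    return [round((cdf[g] - cdf_min) * scale) for g in gray]


# Usage: a low-contrast patch is stretched to use the full 0..255 range.
stretched = equalize_histogram([50, 50, 100, 150])  # [0, 0, 128, 255]
```

Equalization spreads the occupied gray levels across the full 0 to 255 range, which is what "expanding the dynamic range" refers to. In practice a library routine such as OpenCV's `cv2.equalizeHist` would normally be used instead.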

Owner:RES INST OF HIGHWAY MINIST OF TRANSPORT

Human face comparison method

Inactive | CN101964064A | Improve accuracy | Improve robustness | Image analysis | Character and pattern recognition | Pattern recognition | Multiple frame

The invention discloses a human face characteristic comparison method. The method comprises the following steps:

- tracking a human face to acquire characteristic points, and extracting detailed facial characteristic data;
- comparing faces: the facial characteristic data is compared with the characteristic data of each face in a face database to obtain similarities;
- judging whether a matching face has been found, with δ as the similarity threshold. If the maximum similarity Smax is greater than δ, the input face is judged to match face k' in the database.
- judging whether the facial expression has changed significantly, by analyzing the facial characteristic points over consecutive frames. These include, but are not limited to, the opening and closing of the mouth and of the eyes.
- outputting the compared face.

The method belongs to the technical field of biometric identification. It applies to face tracking and comparison and can be used in a wide range of face comparison systems.
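The threshold test (match only if Smax > δ) can be illustrated with a short sketch. Cosine similarity over fixed-length feature vectors is an assumption made for illustration; the abstract does not specify the feature representation or the similarity measure. `match_face` is a hypothetical name.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def match_face(query, database, delta):
    """Find the most similar stored face.

    Returns (best_id, s_max) if s_max > delta, otherwise (None, s_max).
    """
    best_id, s_max = None, -math.inf
    for face_id, features in database.items():
        s = cosine_similarity(query, features)
        if s > s_max:
            best_id, s_max = face_id, s
    return (best_id, s_max) if s_max > delta else (None, s_max)


# Usage with toy 3-dimensional feature vectors.
db = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
face_k, s_max = match_face([0.9, 0.1, 0.0], db, delta=0.8)  # face_k == "alice"
```

Raising δ trades missed matches for fewer false matches. The abstract leaves the value of δ unspecified.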

Owner:SHANGHAI YINGSUI NETWORK TECH

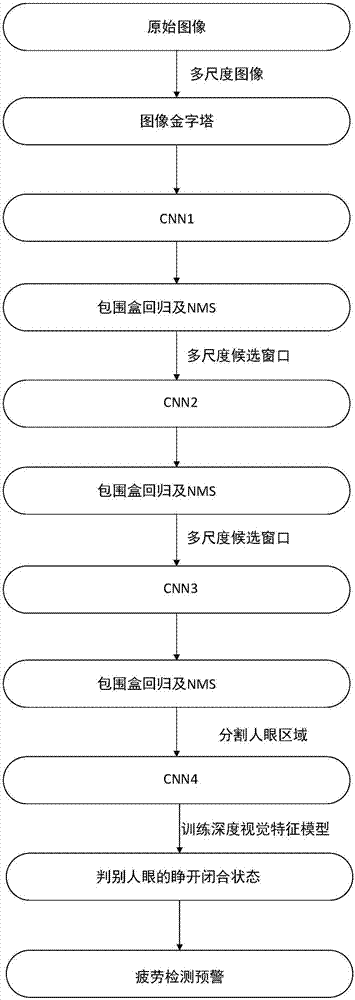

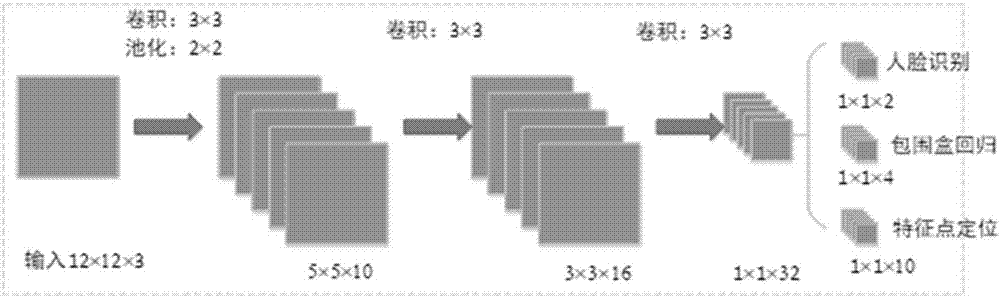

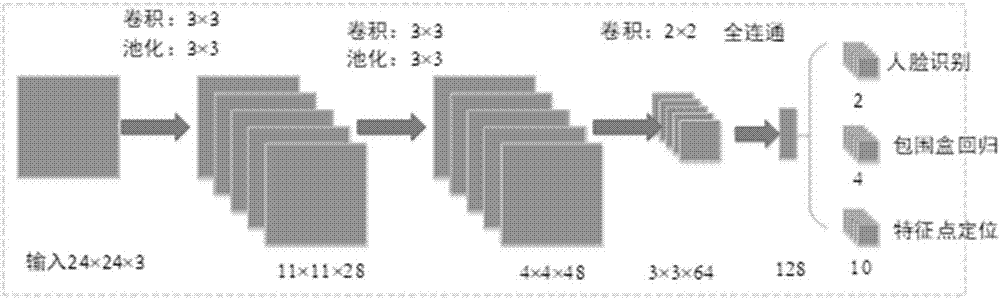

Automobile fatigue driving prediction method

Inactive | CN107194346A | Improve detection accuracy | Improve robustness | Character and pattern recognition | Alarms | Visual assessment | Visual perception

The invention discloses an automobile fatigue driving prediction method. The method comprises the following steps:

- constructing four cascaded convolutional neural networks (CNN1 to CNN4);
- inputting an image and using the first-stage network to obtain candidate face windows and the corresponding bounding-box regression vectors. Highly overlapping candidate windows are merged through the first- and second-stage networks.
- for the remaining candidate windows, using the third-stage network with facial characteristic point (landmark) annotations to predict and locate the eye region;
- segmenting the eye region according to the eye characteristic points and feeding it into the fourth-stage network. This network is trained with a deep learning algorithm to obtain a deep visual feature model of the eye image.
- passing video captured by a camera through CNN1, CNN2, CNN3, and CNN4 in turn to determine whether the eyes are closed;
- computing the driver-fatigue visual assessment parameter PERCLOS. When PERCLOS exceeds 40%, the driver is judged to be beginning to feel fatigued or already fatigued, and an early-warning signal is output.

The method can detect the driver's fatigue state under varying illumination, head pose, and facial expression. Its detection results are highly robust, and it effectively overcomes the influence of these factors on driver fatigue detection.
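PERCLOS is the proportion of time within a window during which the eyes are closed. The sketch below computes it over a sliding window of per-frame eye states and applies the 40% threshold from the abstract. It assumes:

- the per-frame `eye_closed` flags come from the eye-state classifier (CNN4);
- the window length `window_frames` is a hypothetical parameter, since the abstract does not give it.

```python
from collections import deque


class PerclosMonitor:
    """Sliding-window PERCLOS with a fatigue threshold (default 40%, as in the abstract)."""

    def __init__(self, window_frames, threshold=0.40):
        # Oldest frames drop out automatically once the window is full.
        self.window = deque(maxlen=window_frames)
        self.threshold = threshold

    def perclos(self):
        """Fraction of frames in the current window classified as eyes-closed."""
        return sum(self.window) / len(self.window) if self.window else 0.0

    def update(self, eye_closed):
        """Add one frame's eye state; return True if a fatigue warning should be raised."""
        self.window.append(bool(eye_closed))
        return self.perclos() > self.threshold


# Usage: 6 open frames then 4 closed frames gives PERCLOS = 0.4 (not above 40%).
monitor = PerclosMonitor(window_frames=10)
alarms = [monitor.update(closed) for closed in [False] * 6 + [True] * 4]
```

In a deployed system the window would usually be defined in seconds and converted to frames using the camera's frame rate.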

Owner:FUJIAN NORMAL UNIV

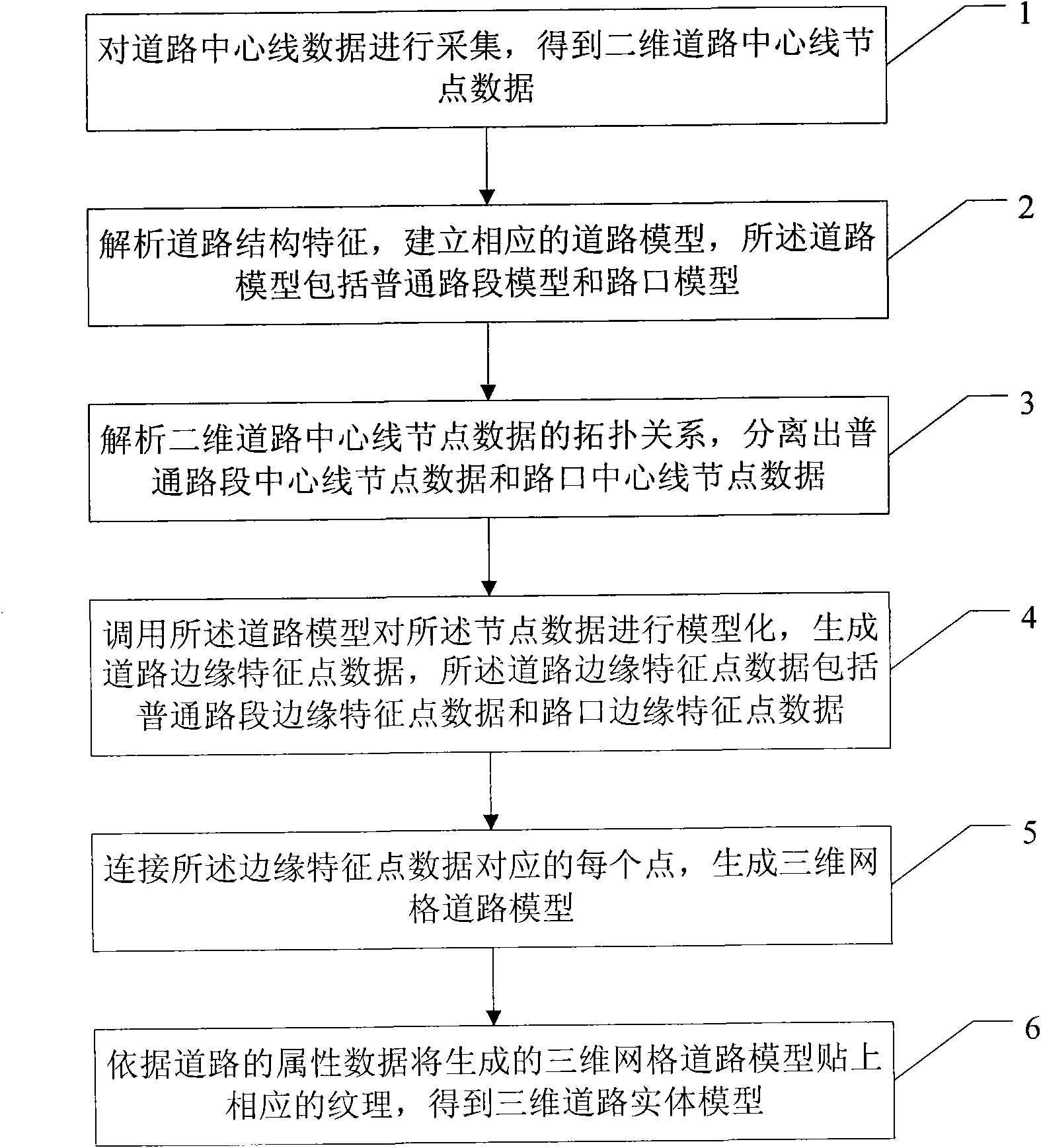

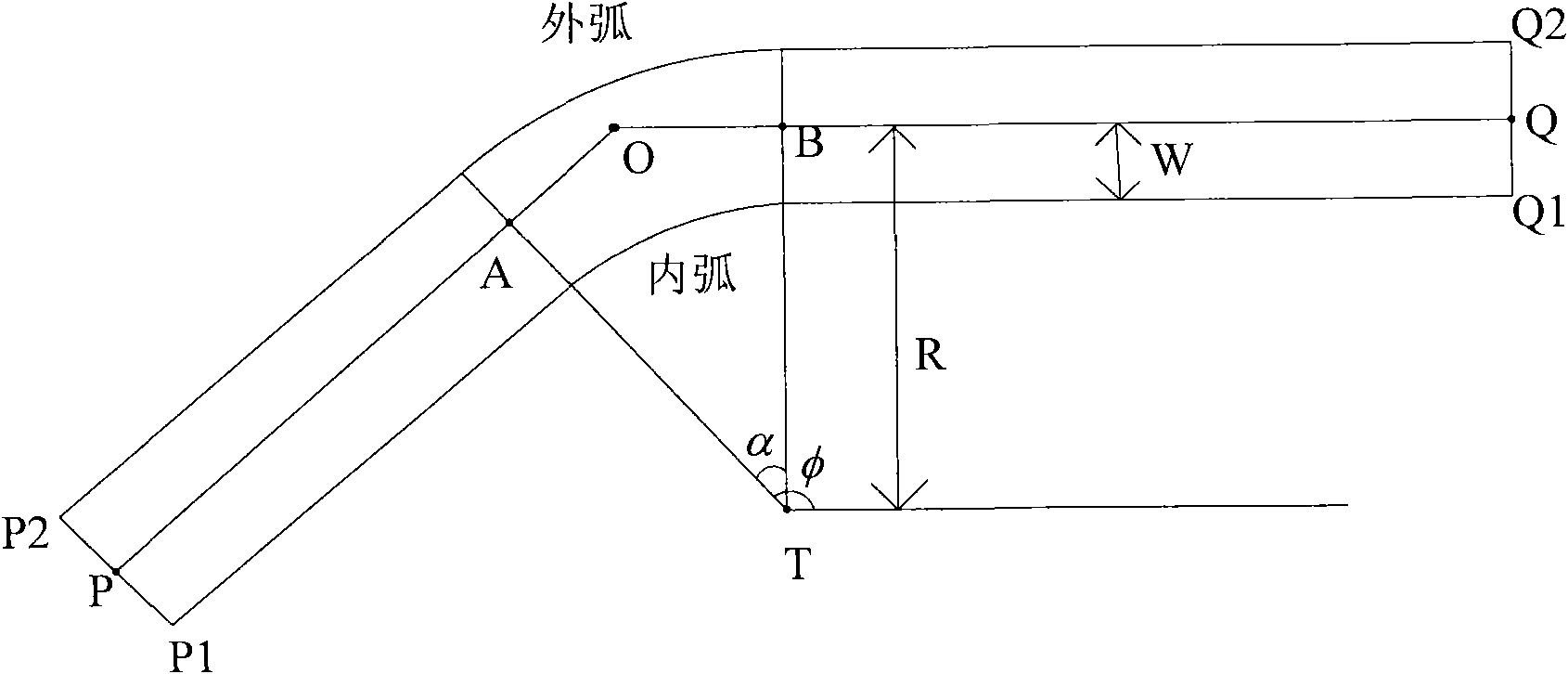

Method and system for generating three-dimensional road model

Active | CN102436678A | Avoid manual intervention | Improve modeling efficiency | 3D modelling | Dimensional modeling | Fully automatic

The invention relates to a method for generating a three-dimensional road model and belongs to the field of three-dimensional road modeling. The method comprises the following steps:

(1) collecting road centerline data to obtain two-dimensional centerline node data;
(2) analyzing the structural characteristics of the road and constructing a road model;
(3) separating the centerline node data of ordinary road sections from that of intersections;
(4) modeling the node data by invoking the road model, generating characteristic point data for the edges of ordinary road sections and intersections;
(5) connecting the points given by the edge characteristic point data to generate a three-dimensional mesh road model;
(6) applying the corresponding texture to the mesh according to the road's attribute data, to obtain a three-dimensional solid model of the road.

The invention also provides a system for generating the three-dimensional road model. The method generates the model fully automatically, avoiding manual intervention and improving modeling efficiency.
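Steps (4) and (5) for an ordinary road section can be sketched in two parts: offset the centerline by half the road width along its normals, then join the resulting edge points into a triangle strip. This is a minimal illustration under these assumptions:

- a constant road width;
- a flat 2D centerline;
- no intersection handling.

`road_edges` and `road_mesh` are hypothetical names, not the patent's road model.

```python
import math


def road_edges(centerline, width):
    """Offset a 2D centerline polyline by +/- width/2 along per-node normals.

    Returns (left_edge, right_edge) as lists of (x, y) characteristic points.
    """
    if len(centerline) < 2:
        raise ValueError("need at least two centerline nodes")
    half = width / 2.0
    n = len(centerline)
    left, right = [], []
    for i, (x, y) in enumerate(centerline):
        # Tangent from the neighbouring nodes (one-sided at the two ends).
        x0, y0 = centerline[max(i - 1, 0)]
        x1, y1 = centerline[min(i + 1, n - 1)]
        tx, ty = x1 - x0, y1 - y0
        length = math.hypot(tx, ty)
        if length == 0:
            raise ValueError("degenerate tangent at node %d" % i)
        nx, ny = -ty / length, tx / length  # unit normal pointing to the left
        left.append((x + half * nx, y + half * ny))
        right.append((x - half * nx, y - half * ny))
    return left, right


def road_mesh(centerline, width):
    """Interleave edge points (l0, r0, l1, r1, ...) and index them as a triangle strip."""
    left, right = road_edges(centerline, width)
    vertices = [p for pair in zip(left, right) for p in pair]
    triangles = []
    for i in range(len(centerline) - 1):
        l0, r0, l1, r1 = 2 * i, 2 * i + 1, 2 * i + 2, 2 * i + 3
        triangles += [(l0, r0, l1), (l1, r0, r1)]
    return vertices, triangles


# Usage: a straight 10 m segment of a 4 m wide road.
vertices, triangles = road_mesh([(0.0, 0.0), (10.0, 0.0)], width=4.0)
```

Elevation could be added by attaching a z value to each centerline node. Texture coordinates for step (6) could be derived from the distance along the centerline.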

Owner:Beijing Zhonghengfengtai Technology Co., Ltd. (北京中恒丰泰科技有限公司)

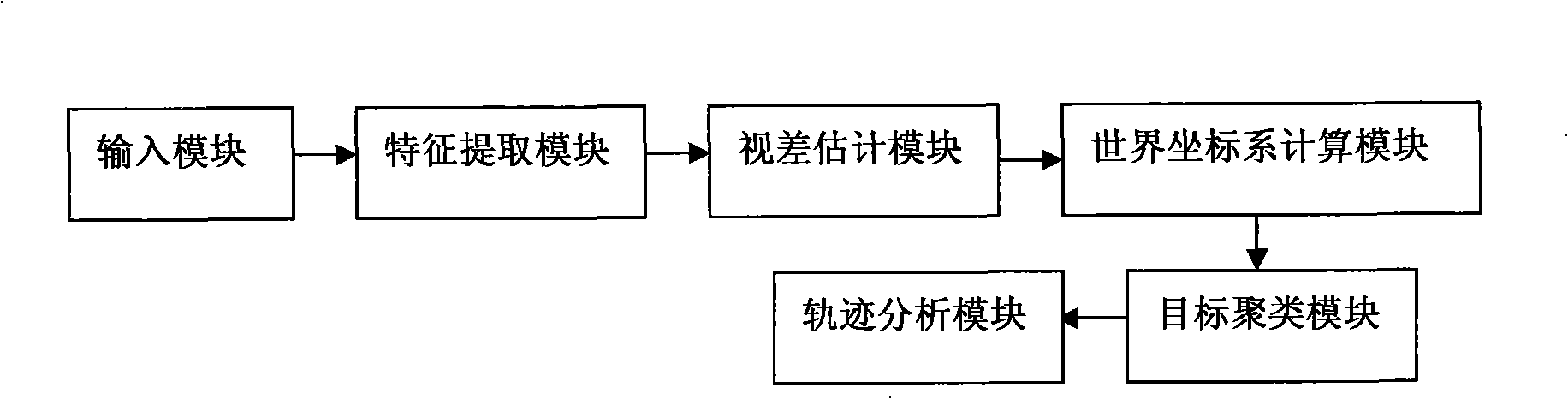

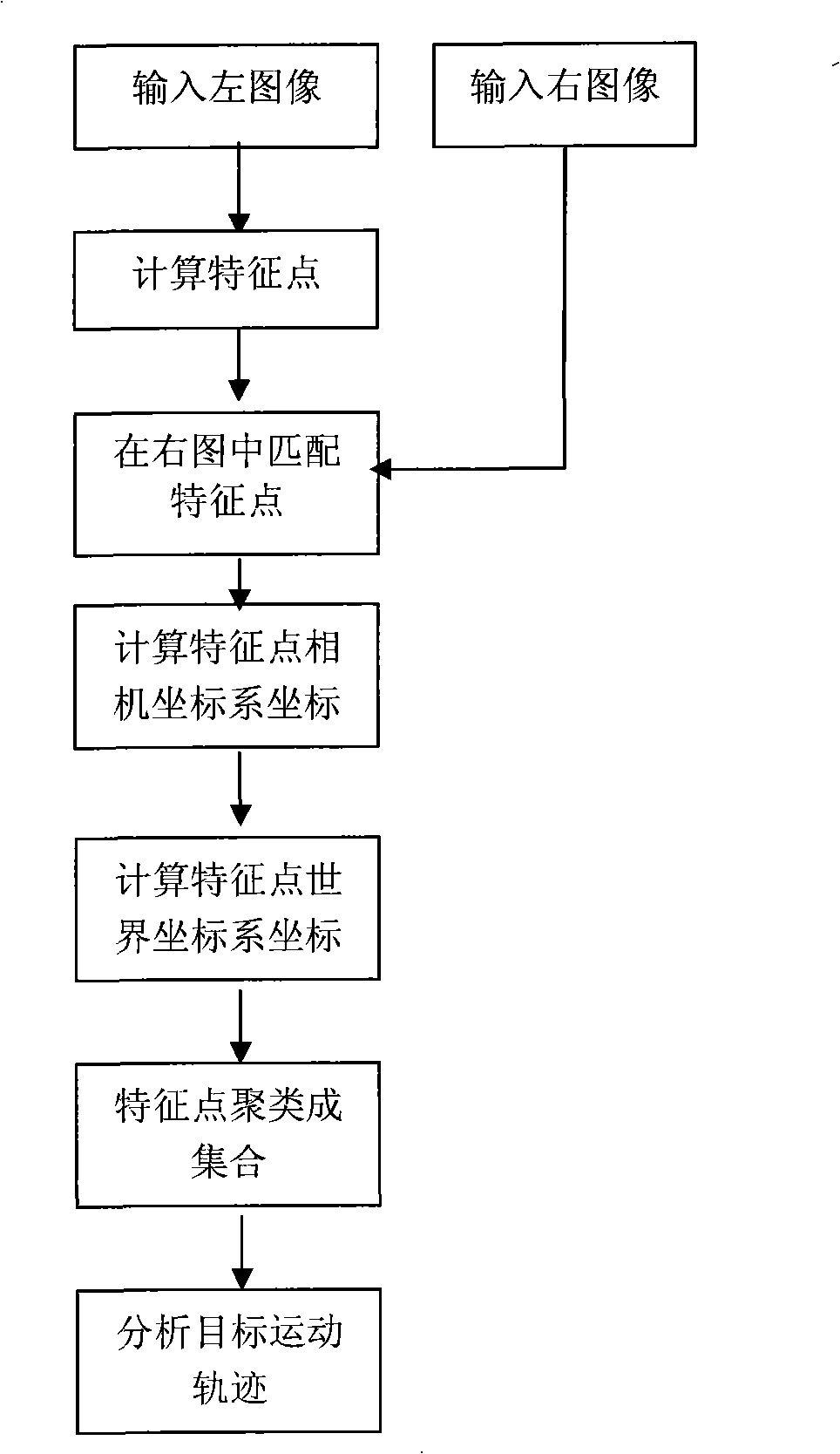

Tracking system based on binocular camera shooting

Inactive | CN101344965A | Acquisition stable | Image analysis | Closed circuit television systems | Parallax | Feature extraction

The invention relates to a fully automatic target detection and tracking system in the field of computer vision. It works as follows:

- An input module collects digital images captured by a binocular camera as the system input.
- A feature extraction module analyzes one of the images to obtain a number of characteristic points for subsequent processing.
- The characteristic points are matched between the two images, and the disparity between the images is computed. Combined with the known intrinsic and extrinsic camera parameters, this gives the coordinates of the characteristic points in the camera coordinate system.
- The relationship between the world and camera coordinate systems then gives the points' coordinates in the world coordinate system.
- A clustering module groups the characteristic points into clusters that represent target positions.
- A trajectory analysis module estimates the target positions over time to obtain each target's motion trajectory.

The system can effectively and stably detect targets in a designated area, track them, and compute their motion trajectories.
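The disparity-to-coordinates and clustering stages can be sketched as follows. The sketch assumes:

- a rectified stereo pair, with the same focal length `f` in pixels for both cameras, a horizontal `baseline` in metres, and principal point `cx`, `cy`;
- a simple radius-based grouping, standing in for whatever clustering the patent actually uses.

All names and parameters here are illustrative.

```python
import math


def triangulate_rectified(u_left, v, u_right, f, baseline, cx, cy):
    """Camera-frame (X, Y, Z) of a point matched across a rectified stereo pair.

    Depth follows Z = f * baseline / disparity, then X and Y follow from the pinhole model.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("non-positive disparity")
    z = f * baseline / disparity
    return ((u_left - cx) * z / f, (v - cy) * z / f, z)


def cluster_points(points, radius):
    """Group 3D points linked by chains of neighbours closer than `radius`.

    Returns one centroid per cluster as an estimate of each target's position.
    """
    unvisited = set(range(len(points)))
    centroids = []
    while unvisited:
        seed = unvisited.pop()
        members, frontier = [seed], [seed]
        while frontier:
            i = frontier.pop()
            near = [j for j in unvisited if math.dist(points[i], points[j]) <= radius]
            for j in near:
                unvisited.remove(j)
            members += near
            frontier += near
        centroids.append(
            tuple(sum(points[m][k] for m in members) / len(members) for k in range(3))
        )
    return centroids


# Usage: one matched point, then two well-separated groups of points.
p = triangulate_rectified(420.0, 240.0, 400.0, f=500.0, baseline=0.1, cx=320.0, cy=240.0)
targets = cluster_points([(0, 0, 0), (0.1, 0, 0), (5, 5, 5), (5.1, 5, 5)], radius=0.5)
```

The camera-frame points would then be transformed into the world frame with the camera's extrinsic parameters before clustering. Tracking the cluster centroids over successive frames gives the target trajectories.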

Owner:SHANGHAI JIAO TONG UNIV

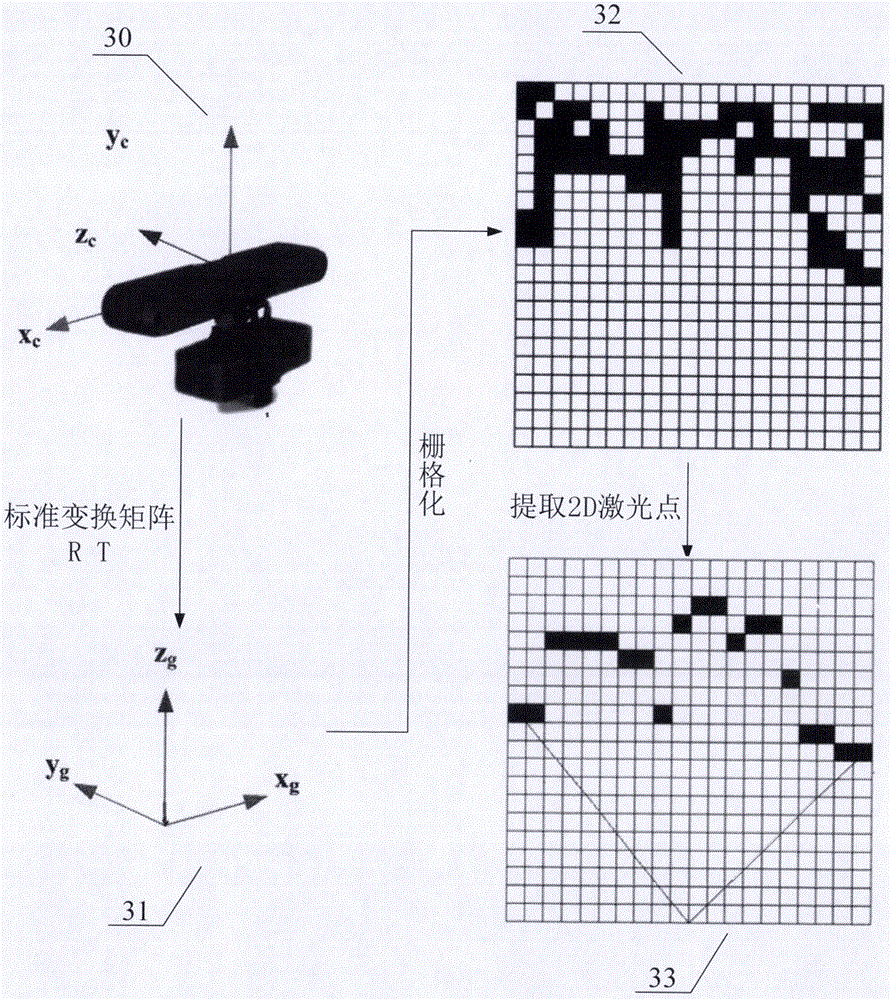

Indoor robot SLAM method and system

Active | CN106052674A | Meet indoor navigation requirements | Does not affect appearance structure | Navigational calculation instruments | Operational system | RGB image

The invention discloses an indoor robot SLAM method. The method comprises the following steps:

- obtaining image data, comprising RGB images and depth images, through a camera;
- processing the RGB and depth images with a corner detection algorithm, the LK (Lucas-Kanade) characteristic point tracking algorithm, and the RANSAC algorithm. This adjusts the position and angle of the camera, so as to obtain RGB image data in the robot operating system.
- converting the depth images from the world coordinate system to a ground coordinate system, and traversing the depth images projected onto the ground. The gray value of each grid cell occupied by an obstacle is set to a first characteristic value, yielding a 2D obstacle grid map.
- searching the 2D obstacle grid map in the manner of a single-line lidar scan. Whenever a cell whose gray value equals the first characteristic value is found, the distance between that cell and the camera is returned as the distance between the robot and the obstacle.
- thereby obtaining the depth images under the robot operating system and a 2D map of the environment.
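The grid-map and scan stages can be sketched as below. Points are projected onto a ground-plane occupancy grid, and obstacle cells receive a marker gray value. A virtual single-line lidar then marches rays across the grid and reports the distance to the first obstacle. These details are hypothetical choices:

- the height band;
- the grid size and resolution;
- the value 255 for the "first characteristic value".

The points are assumed to be already expressed in a ground frame centred on the robot.

```python
import math

FREE, OBSTACLE = 0, 255  # hypothetical gray values; OBSTACLE is the "first characteristic value"


def build_obstacle_grid(points, size, resolution, min_h=0.05, max_h=1.5):
    """Project 3D points (x, y, z in metres, z up, robot at the origin) onto a
    size x size grid centred on the robot. Cells containing points inside the
    [min_h, max_h] height band are marked as obstacles."""
    grid = [[FREE] * size for _ in range(size)]
    origin = size // 2
    for x, y, z in points:
        if not (min_h <= z <= max_h):
            continue  # floor or overhead points are not obstacles for the base
        col = origin + math.floor(x / resolution)
        row = origin + math.floor(y / resolution)
        if 0 <= row < size and 0 <= col < size:
            grid[row][col] = OBSTACLE
    return grid


def virtual_scan(grid, resolution, angles, max_range):
    """Emulate a single-line lidar: march along each ray from the grid centre and
    return the distance to the first OBSTACLE cell (max_range if none is hit)."""
    size = len(grid)
    origin = size // 2
    step = resolution / 2.0
    ranges = []
    for theta in angles:
        hit = max_range
        for k in range(int(max_range / step) + 1):
            r = k * step  # integer stepping avoids accumulating float error
            col = origin + math.floor(r * math.cos(theta) / resolution)
            row = origin + math.floor(r * math.sin(theta) / resolution)
            if not (0 <= row < size and 0 <= col < size):
                break  # ray left the map without hitting anything
            if grid[row][col] == OBSTACLE:
                hit = r
                break
        ranges.append(hit)
    return ranges


# Usage: one obstacle 1 m ahead; a floor point at z = 0 is ignored.
grid = build_obstacle_grid([(1.0, 0.0, 0.5), (0.0, 0.0, 0.0)], size=50, resolution=0.1)
ranges = virtual_scan(grid, 0.1, [0.0, math.pi / 2], max_range=2.0)
```

Reporting distances per ray angle mimics the output format of a real single-line lidar. This would let existing 2D laser SLAM or navigation components consume the depth camera's data unchanged.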

Owner:Qingdao Kelude Intelligent Technology Co., Ltd. (青岛克路德智能科技有限公司)

© 2025 PatSnap. All rights reserved.