Patents

Literature

395 results about "Simultaneous localization and mapping" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

In navigation, robotic mapping and odometry for virtual reality or augmented reality, simultaneous localization and mapping (SLAM) is the computational problem of constructing or updating a map of an unknown environment while simultaneously keeping track of an agent's location within it. While this initially appears to be a chicken-and-egg problem there are several algorithms known for solving it, at least approximately, in tractable time for certain environments. Popular approximate solution methods include the particle filter, extended Kalman filter, Covariance intersection, and GraphSLAM.

Systems and methods for vslam optimization

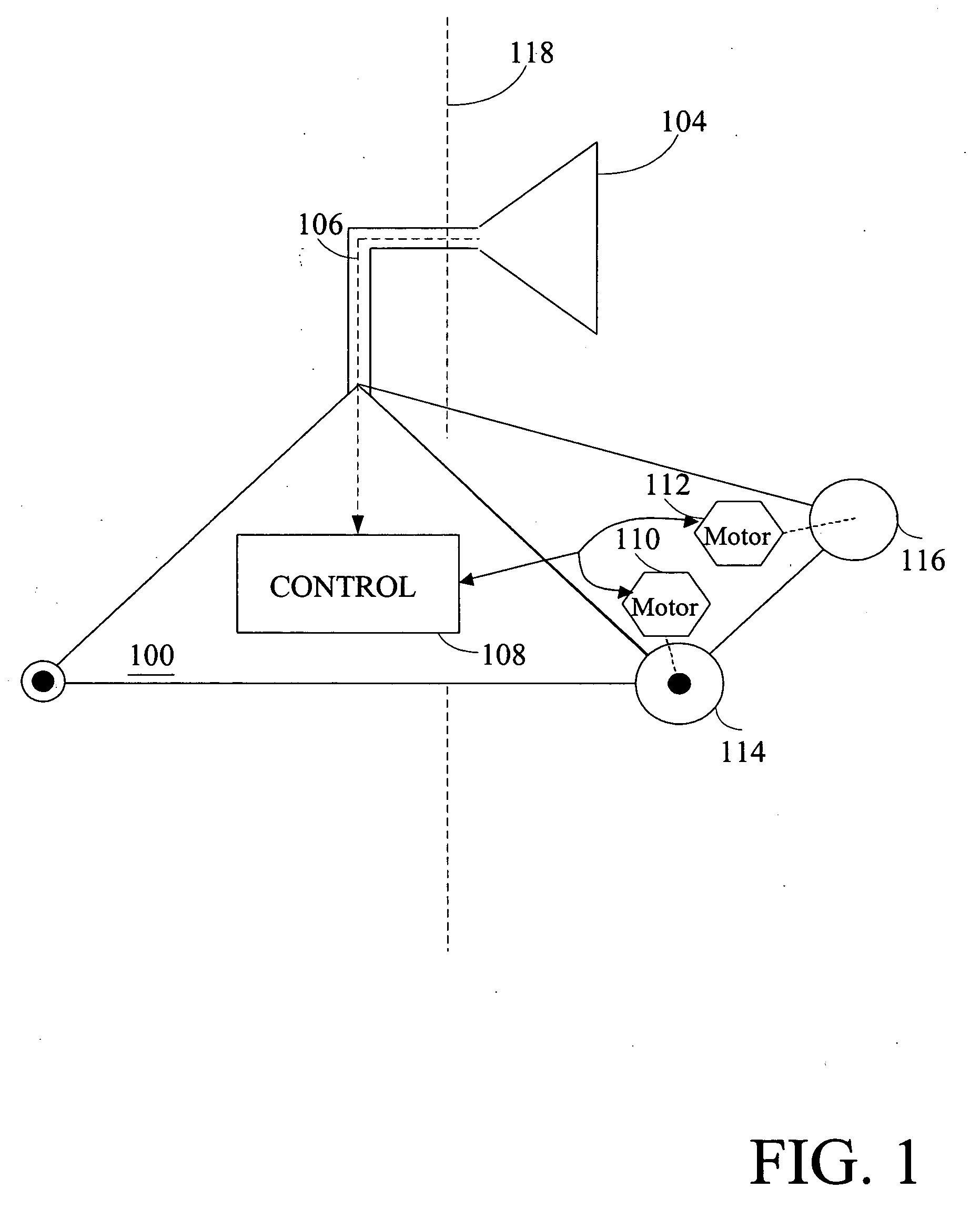

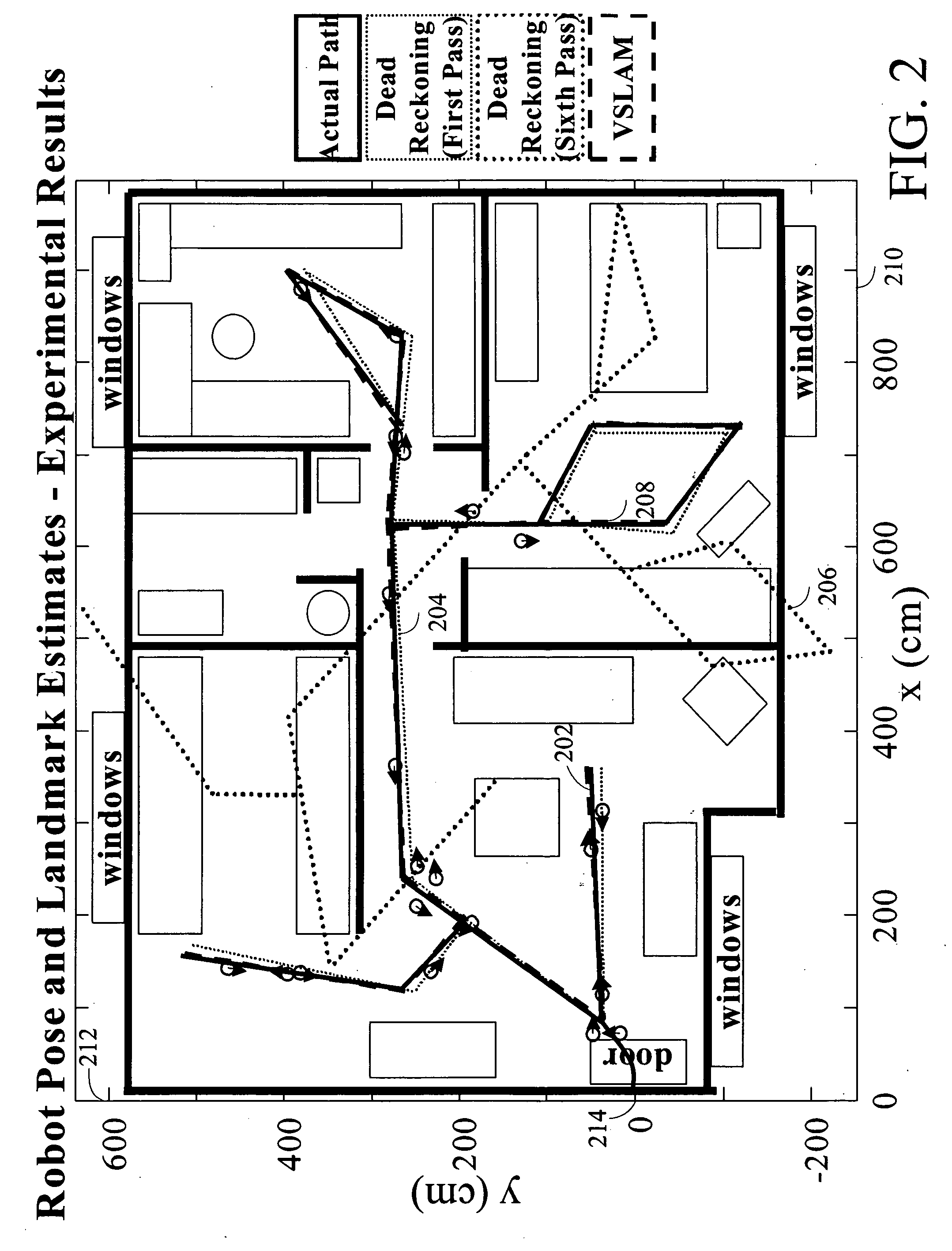

ActiveUS20120121161A1Database queryingCharacter and pattern recognitionSimultaneous localization and mappingLandmark matching

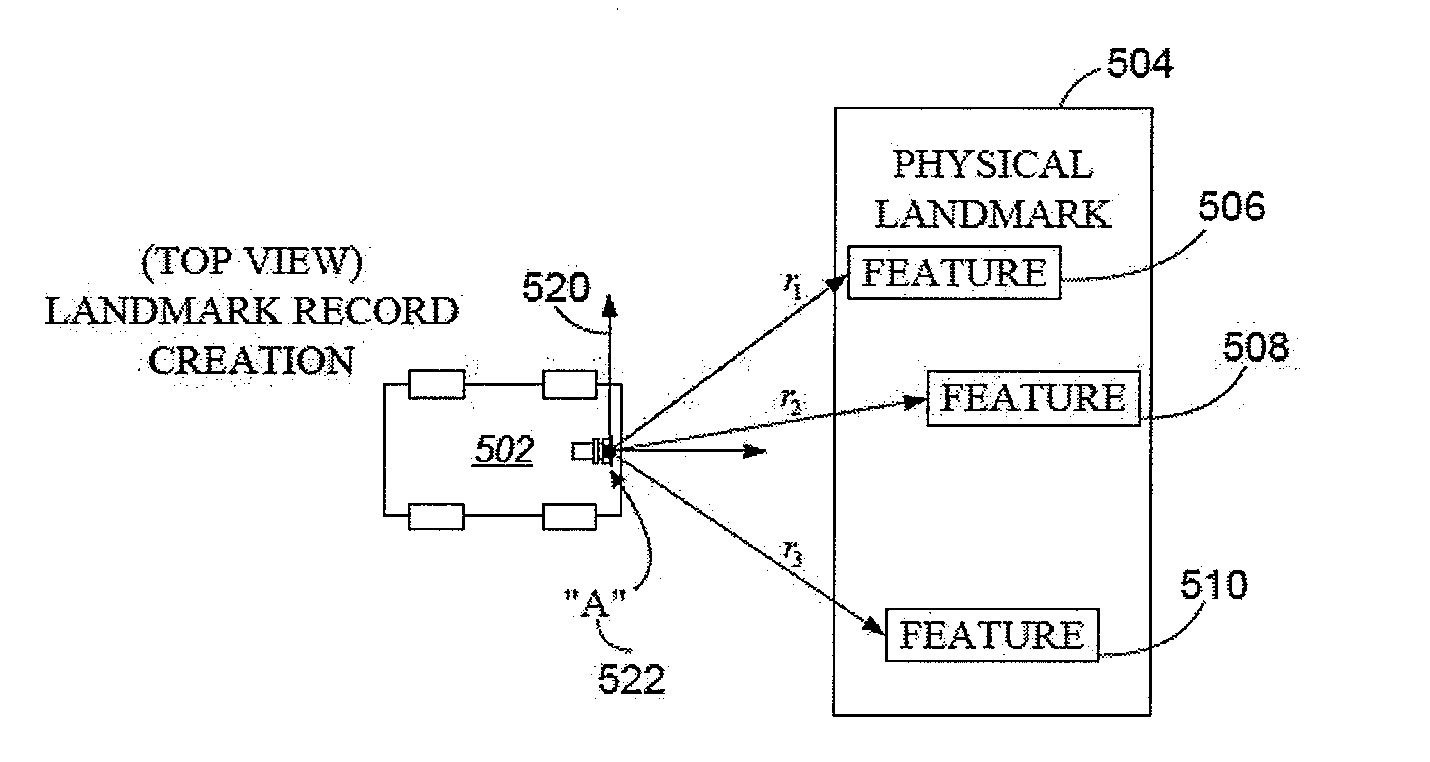

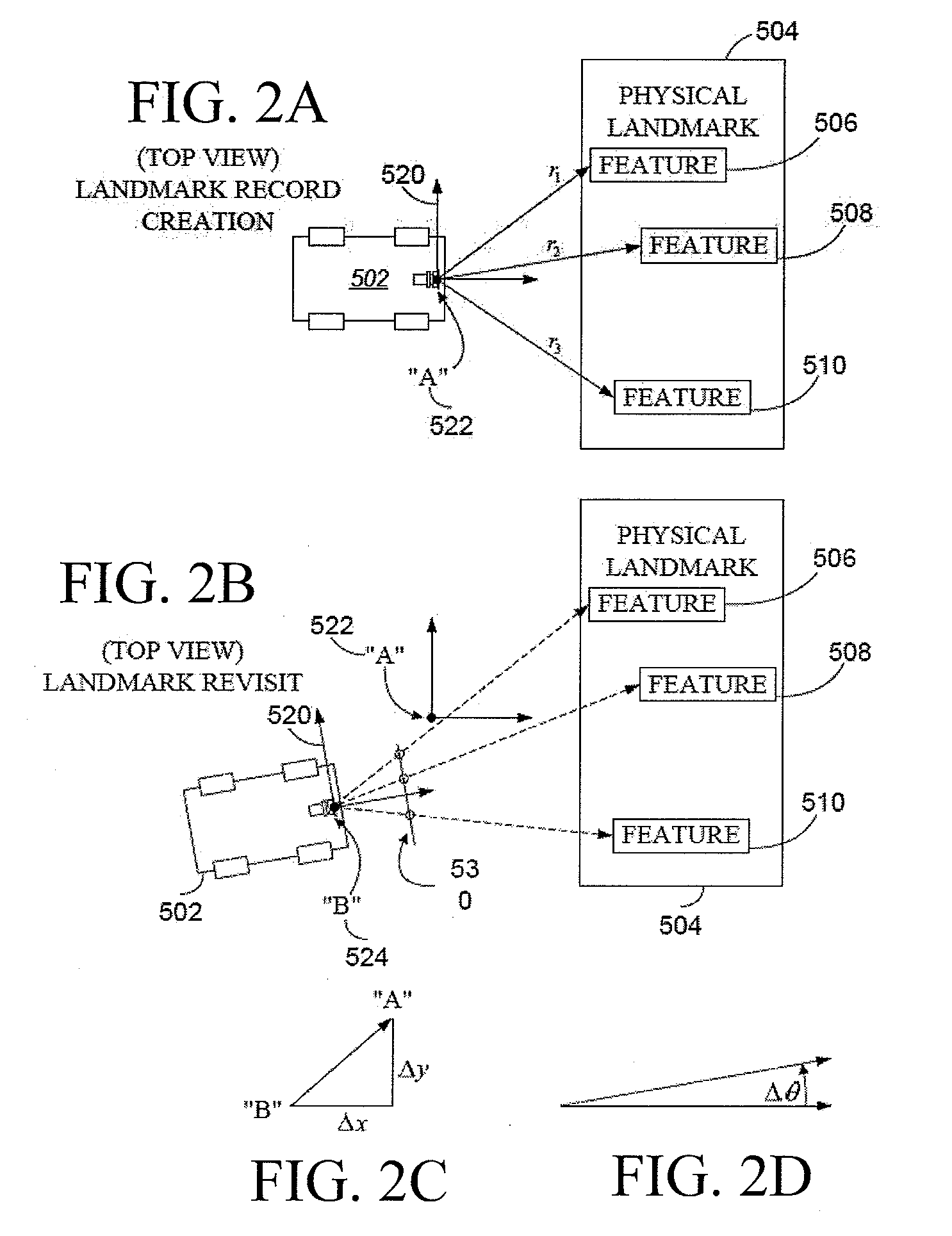

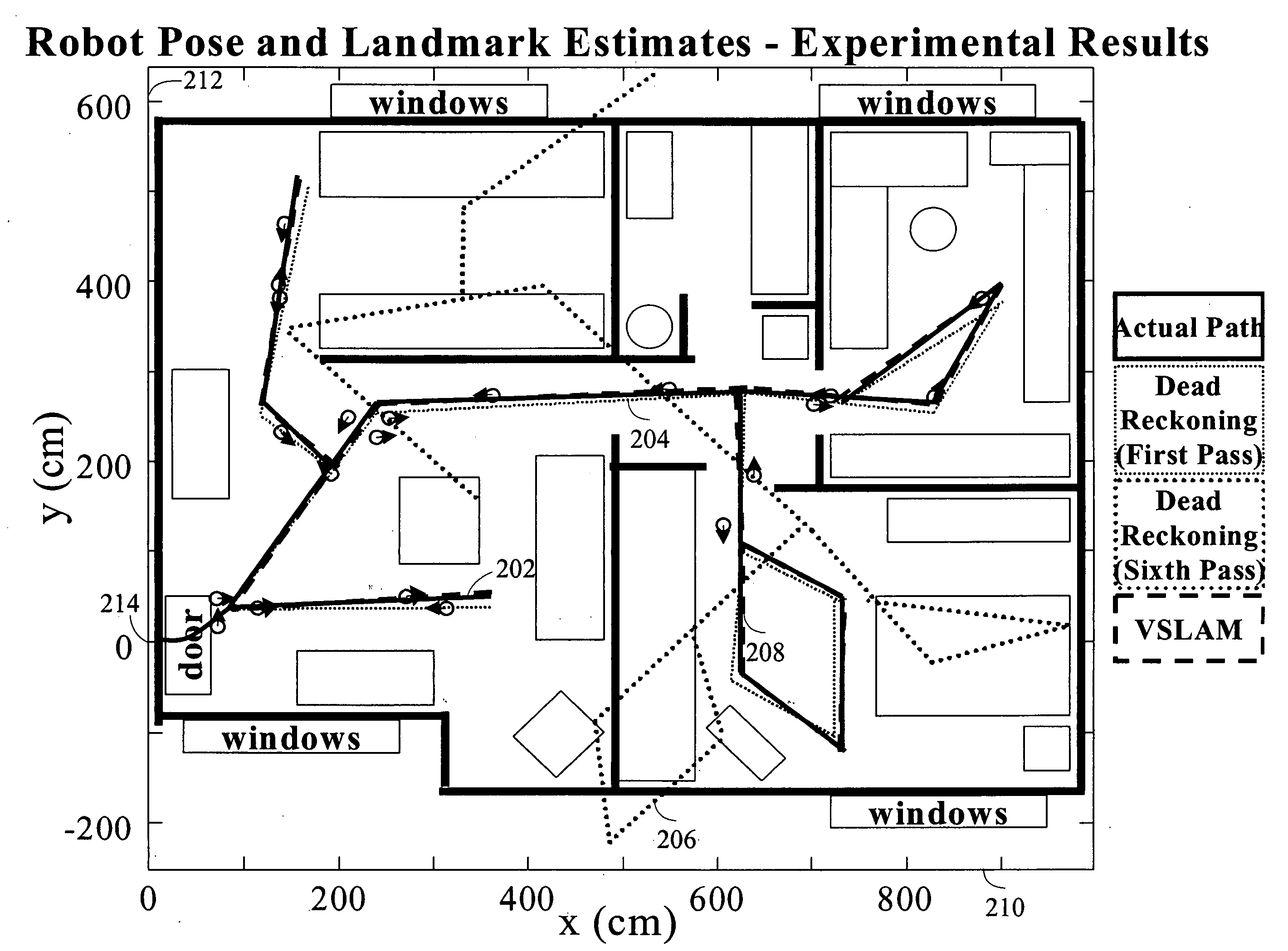

The invention is related to methods and apparatus that use a visual sensor and dead reckoning sensors to process Simultaneous Localization and Mapping (SLAM). These techniques can be used in robot navigation. Advantageously, such visual techniques can be used to autonomously generate and update a map. Unlike with laser rangefinders, the visual techniques are economically practical in a wide range of applications and can be used in relatively dynamic environments, such as environments in which people move. Certain embodiments contemplate improvements to the front-end processing in a SLAM-based system. Particularly, certain of these embodiments contemplate a novel landmark matching process. Certain of these embodiments also contemplate a novel landmark creation process. Certain embodiments contemplate improvements to the back-end processing in a SLAM-based system. Particularly, certain of these embodiments contemplate algorithms for modifying the SLAM graph in real-time to achieve a more efficient structure.

Owner:IROBOT CORP

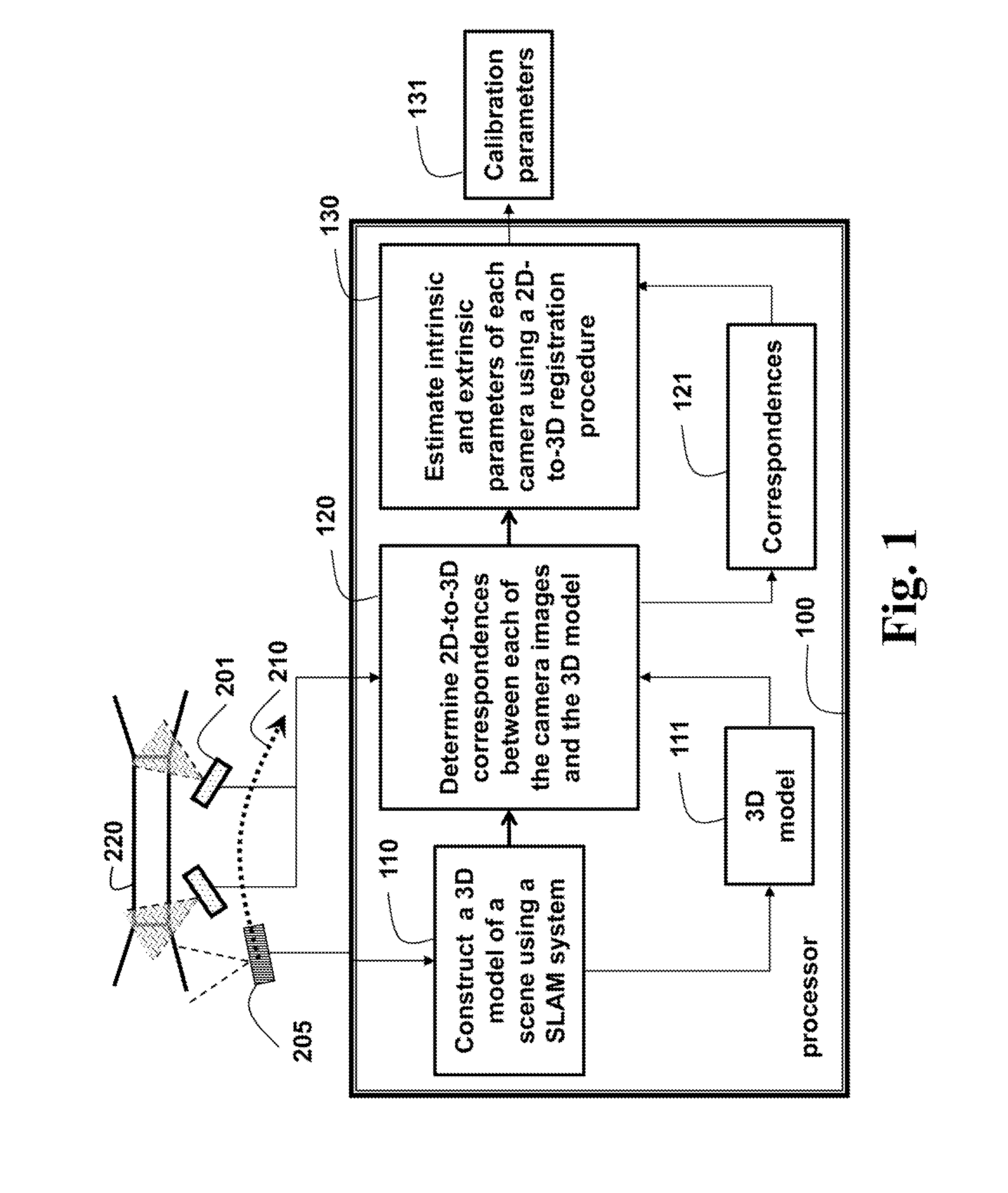

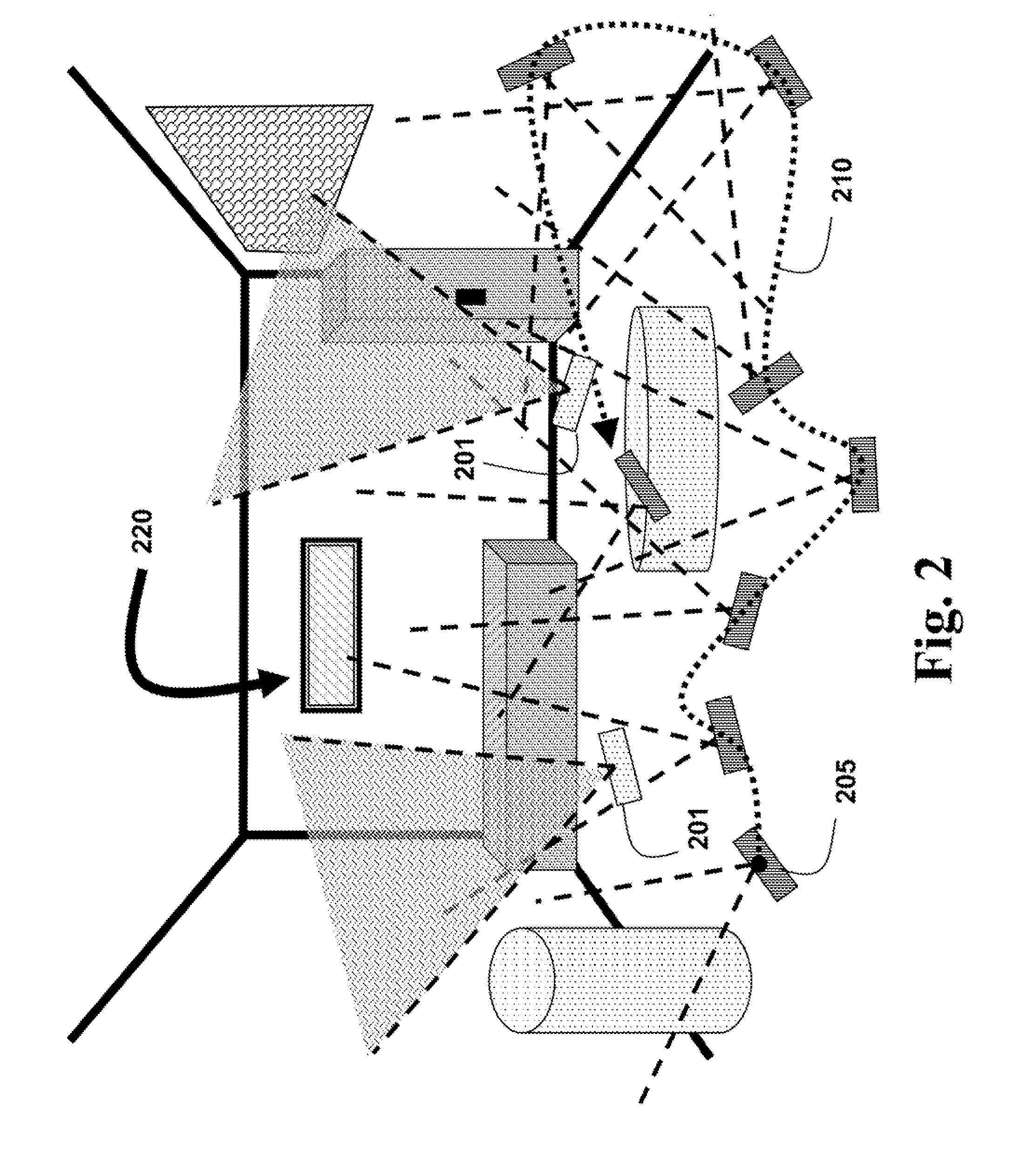

Method for Calibrating Cameras with Non-Overlapping Views

ActiveUS20160012588A1Few degeneracy problemImage enhancementImage analysisSimultaneous localization and mappingViewpoints

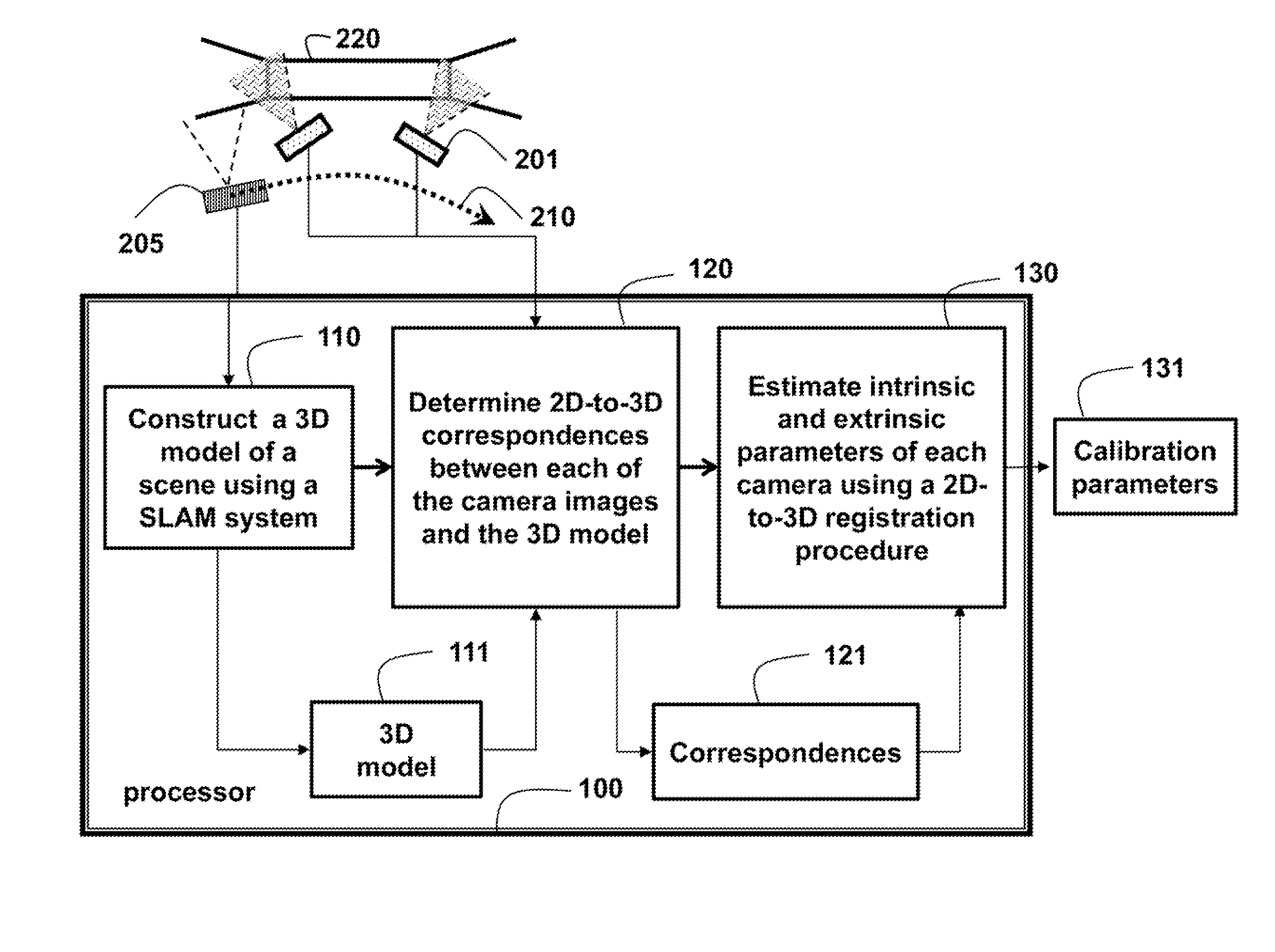

A method calibrates one or more cameras, wherein the one or more cameras acquire images of a scene for different viewpoints, and wherein the images are non-overlapping, by first constructing a 3D model of the scene using a calibration camera that is independent of the one or more cameras, and a. simultaneous localization and mapping (SLAM) procedure. 2D-to-3D correspondences between each of the images and the 3D model are determined. Then, calibration parameters of each of the one or more cameras are estimated using a 2D-to-3D registration procedure.

Owner:MITSUBISHI ELECTRIC CORP

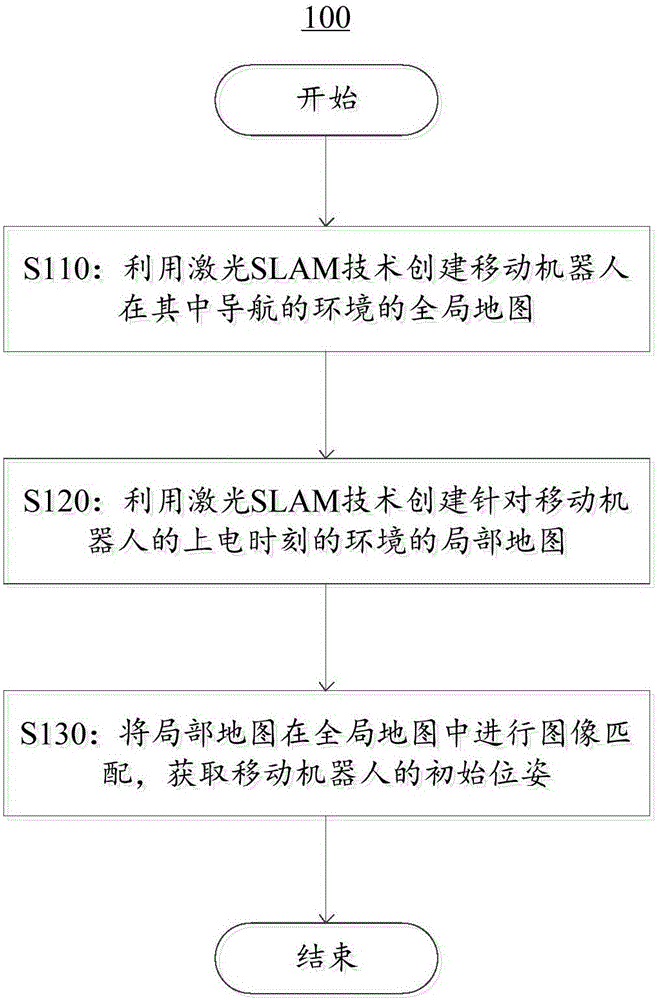

Determination method and apparatus for position and orientation of mobile robot

ActiveCN105928505AImplement initializationTimely detection of pose errorsNavigation by speed/acceleration measurementsPicture interpretationSimultaneous localization and mappingElectricity

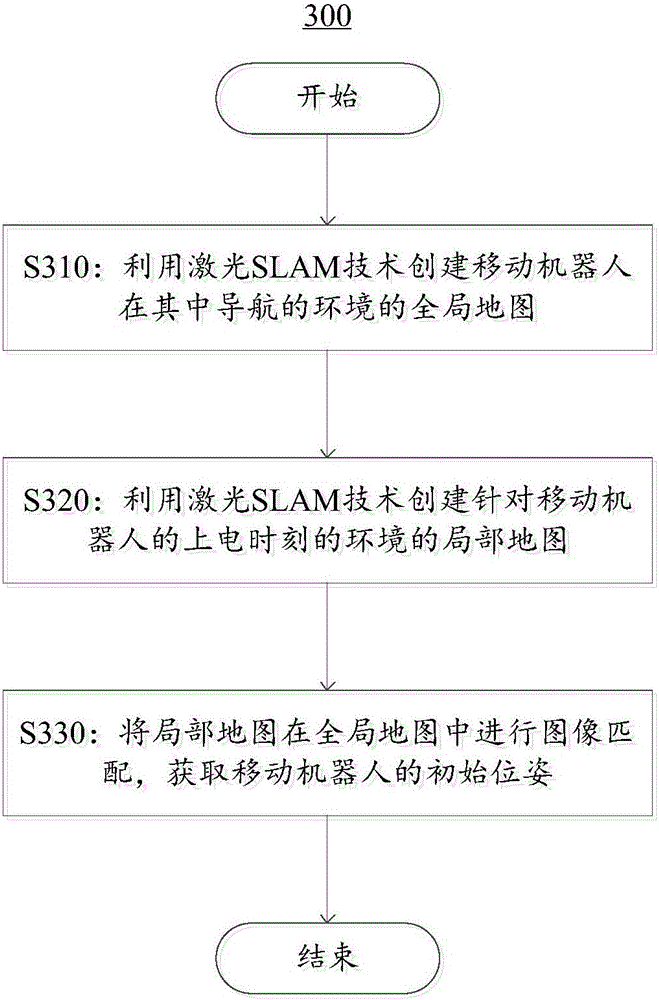

Embodiments of the invention relate to a determination method and apparatus for the position and orientation of a mobile robot. The method comprises the following steps: in virtue of simultaneous localization and mapping (SLAM) technology, establishing a global map of an environment where the mobile robot plays a navigation role by using a laser scanner; when the mobile robot is energized, establishing a local map of an environment in the energization moment of the mobile robot by using a laser scanner in virtue of SLAM technology; and subjecting the local map to image matching in the global map so as to obtain the initial position and orientation of the mobile robot in the global map. According to the embodiments of the invention, the absolute position and orientation of the mobile robot in the global map in the moment the mobile robot is energized and started can be obtained, thereby realizing initialization of the position and orientation of the mobile robot; moreover, determined position and orientation of the mobile robot can be further corrected.

Owner:SMART DYNAMICS CO LTD

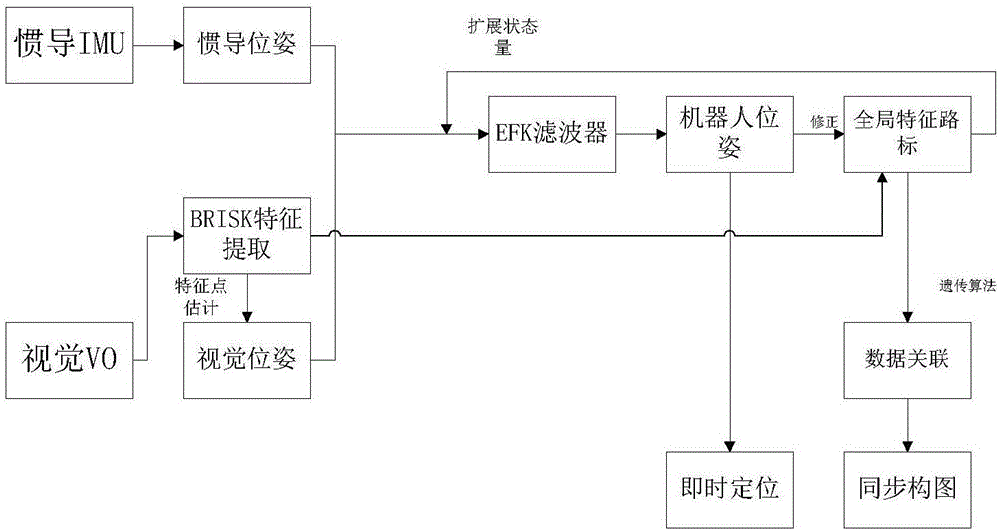

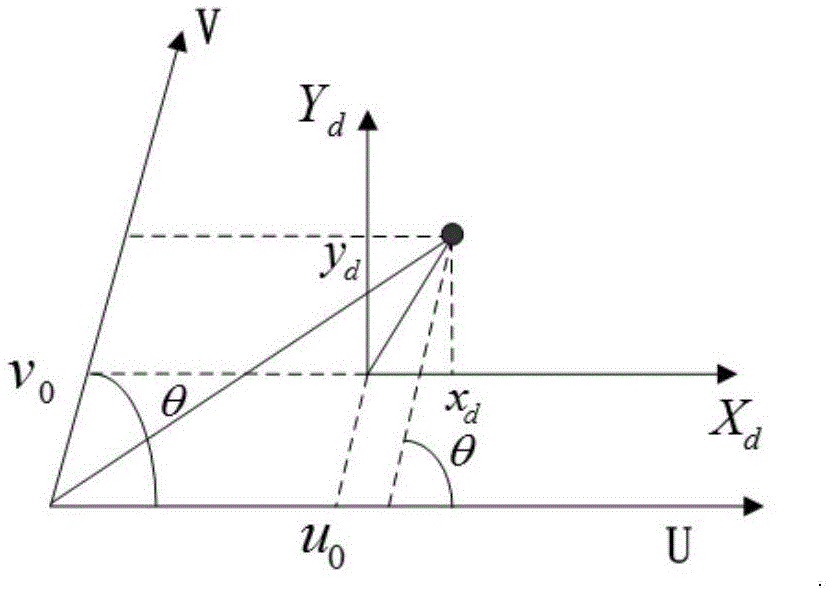

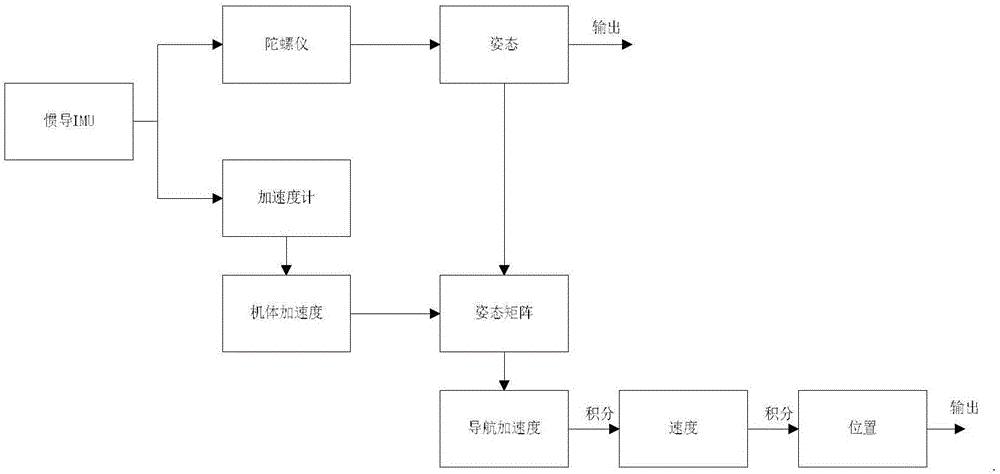

Vision-inertia integrated SLAM (Simultaneous Localization and Mapping) method based on genetic algorithm

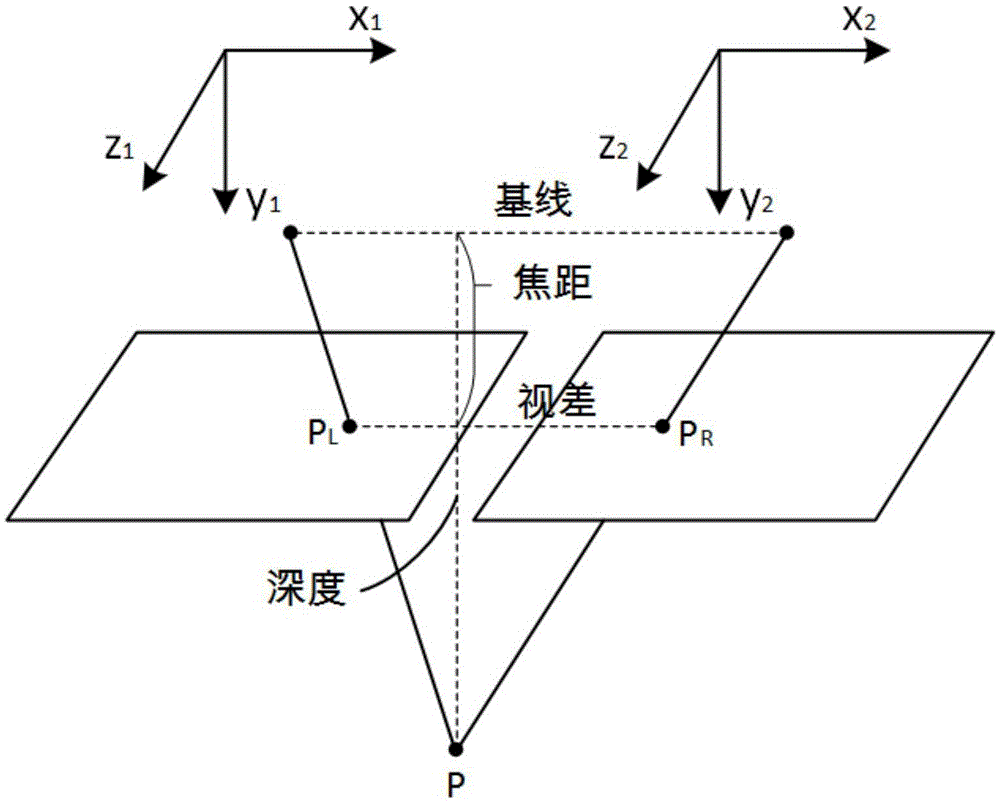

ActiveCN106679648AHigh precisionNavigation by speed/acceleration measurementsSimultaneous localization and mappingKaiman filter

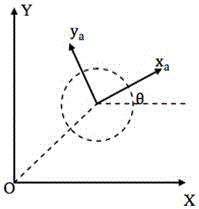

The invention discloses a vision-inertia integrated SLAM (Simultaneous Localization and Mapping) method based on a genetic algorithm. The method comprises the following steps: integrating a vision navigation coordinate system with an inertia navigation coordinate system, and calibrating parameters of a binocular camera so as to solve a three-dimensional space coordinate according to an image pixel coordinate; independently calculating by inertia navigation; calculating by vision navigation; integrating vision navigation information with inertia navigation information by using an extended Kalman filter, and building a system filter model; and observing global feature point road signs by using the binocular camera by taking localization locality into account, carrying out data association on map features based on the genetic algorithm, and feeding extended state vectors back to a filter. The method is capable of carrying out long-time and high-accuracy localization; the genetic algorithm is added for improving the data association of the map, so that the simultaneous mapping accuracy is greatly improved.

Owner:SOUTHEAST UNIV

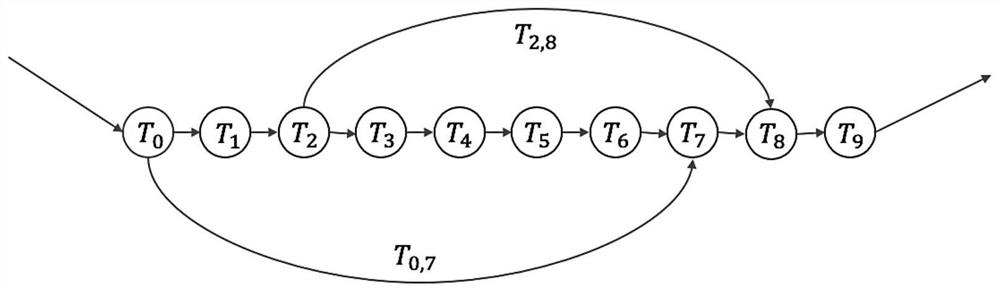

Graph-based vision SLAM (simultaneous localization and mapping) method

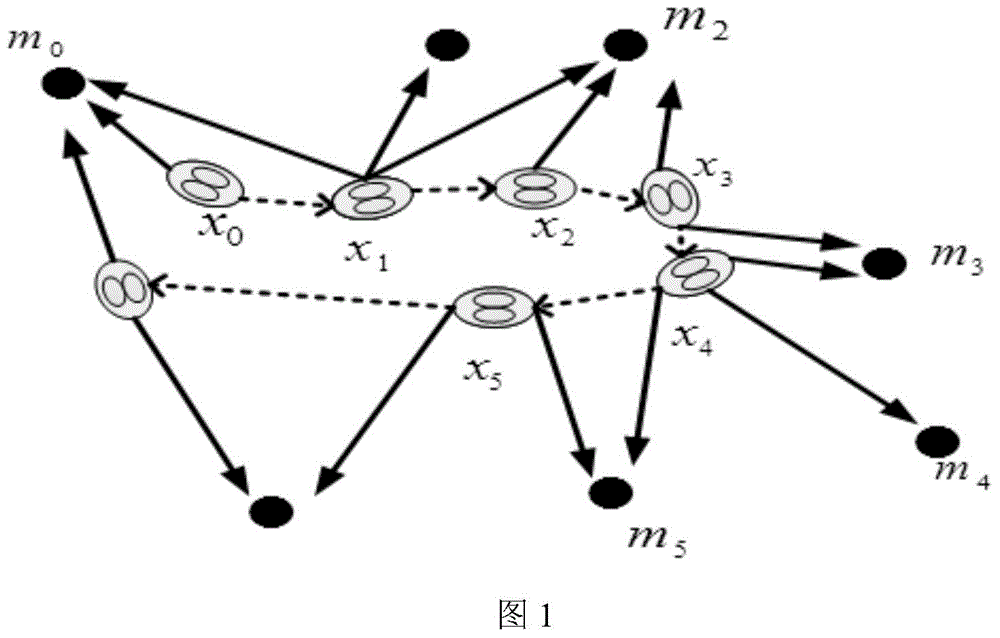

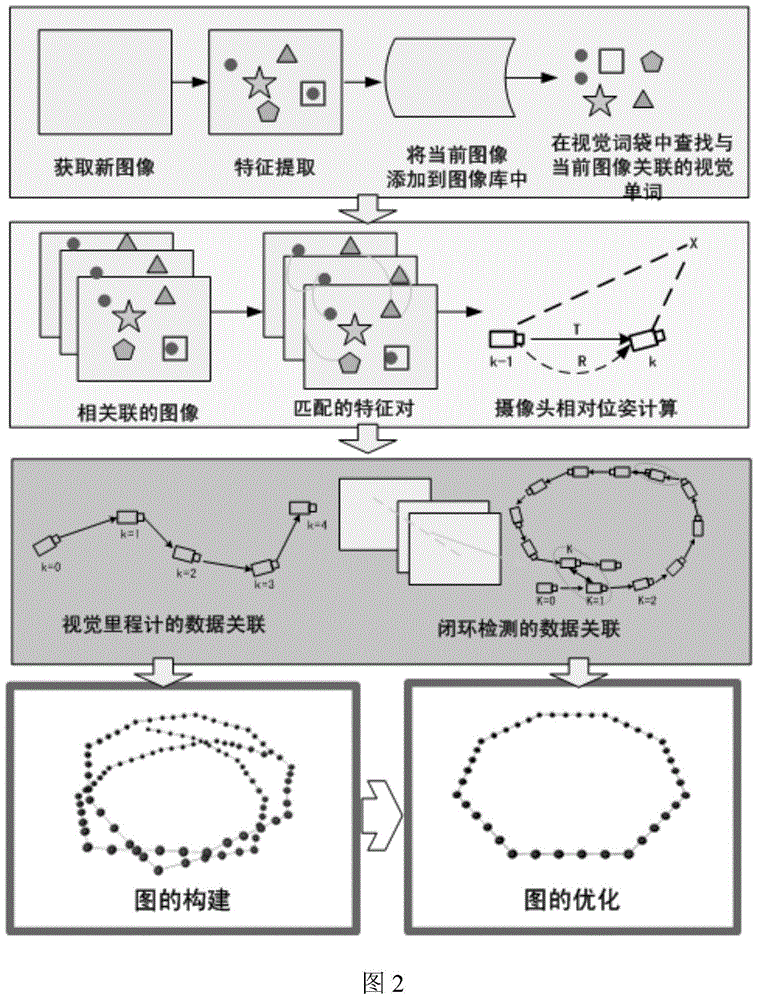

InactiveCN104374395AProof of validityInstruments for road network navigationSimultaneous localization and mappingStochastic gradient descent

The invention discloses a graph-based vision SLAM (simultaneous localization and mapping) method. According to the method, the matching relation between an image and visual feature can be obtained based on the natural feature probability vector representation of the image, and the relative pose between two interframes can be calculated by utilizing the space geometry relation of images. Data association of visual odometry is obtained by utilizing the corresponding relation of continuous images, so that all constraints in an image sequence can be obtained. The camera relative pose is taken as a node in a map, the space constrained relation of image interframes is taken as an edge, so that an estimated track map based on the camera relative pose is constructed. Finally, a maximum likelihood method is employed for optimizing the map, and optimized pose estimation is obtained through a random gradient descent method. Related experiments are performed in the laboratory environment based on the provided method, also the moving track of a robot is displayed, and the validity of the algorithm is confirmed.

Owner:NANJING UNIV OF POSTS & TELECOMM

Systems and methods for incrementally updating a pose of a mobile device calculated by visual simultaneous localization and mapping techniques

ActiveUS20060012493A1Image enhancementInstruments for road network navigationPattern recognitionSimultaneous localization and mapping

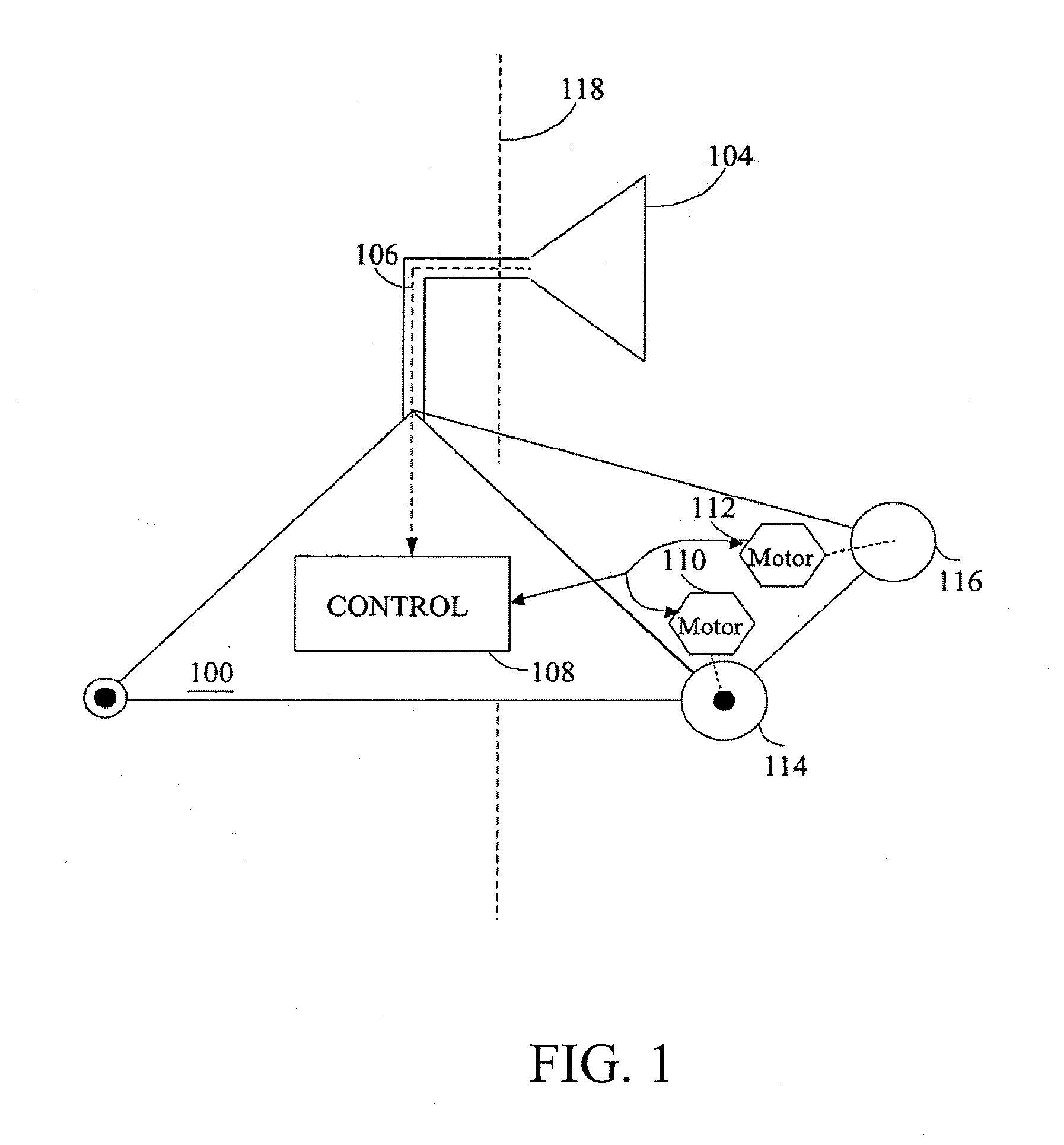

The invention is related to methods and apparatus that use a visual sensor and dead reckoning sensors to process Simultaneous Localization and Mapping (SLAM). These techniques can be used in robot navigation. Advantageously, such visual techniques can be used to autonomously generate and update a map. Unlike with laser rangefinders, the visual techniques are economically practical in a wide range of applications and can be used in relatively dynamic environments such as environments in which people move. One embodiment further advantageously uses multiple particles to maintain multiple hypotheses with respect to localization and mapping. Further advantageously, one embodiment maintains the particles in a relatively computationally-efficient manner, thereby permitting the SLAM processes to be performed in software using relatively inexpensive microprocessor-based computer systems.

Owner:IROBOT CORP

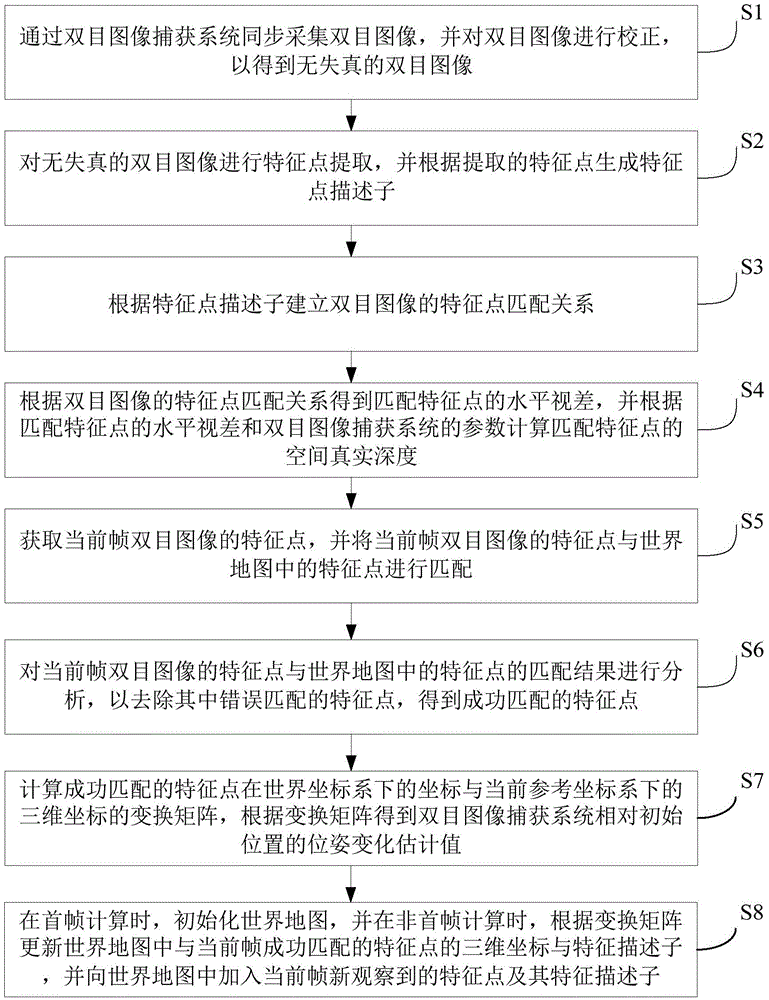

Visual ranging-based simultaneous localization and map construction method

ActiveCN105469405AReduce computational complexityEliminate accumulationImage enhancementImage analysisSimultaneous localization and mappingComputation complexity

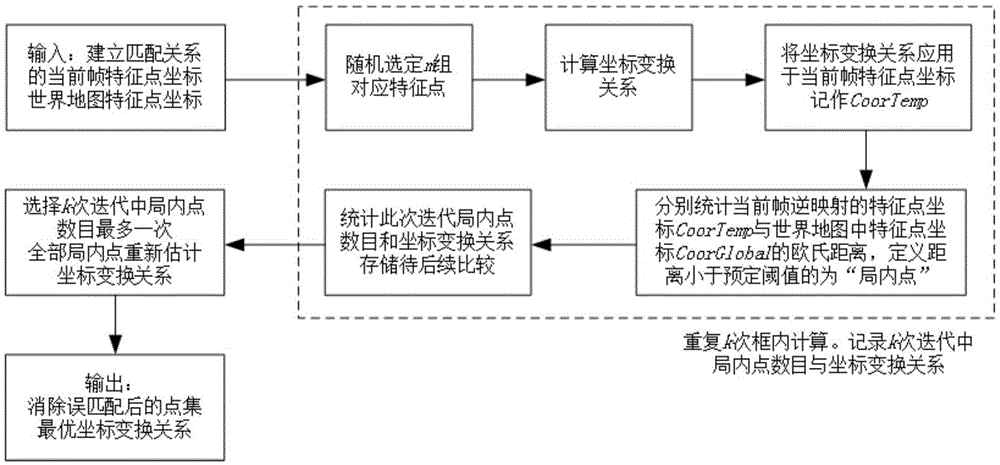

The invention provides a visual ranging-based simultaneous localization and map construction method. The method includes the following steps that: a binocular image is acquired and corrected, so that a distortion-free binocular image can be obtained; feature extraction is performed on the distortion-free binocular image, so that feature point descriptors can be generated; feature point matching relations of the binocular image are established; the horizontal parallax of matching feature points is obtained according to the matching relations, and based on the parameters of a binocular image capture system, real space depth is calculated; the matching results of the feature points of a current frame and feature points in a world map are calculated; feature points which are wrongly matched with each other are removed, so that feature points which are successfully matched with each other can be obtained; a transform matrix of the coordinates of the feature points which are successfully matched with each other under a world coordinate system and the three-dimension coordinates of the feature points which are successfully matched with each other under a current reference coordinate system is calculated, and a pose change estimated value of the binocular image capture system relative to an initial position is obtained according to the transform matrix; and the world map is established and updated. The visual ranging-based simultaneous localization and map construction method of the invention has low computational complexity, centimeter-level positioning accuracy and unbiased characteristics of position estimation.

Owner:北京超星未来科技有限公司

Determination method and apparatus for poses of mobile robot

ActiveCN105953798AImprove applicabilityIncrease flexibilityNavigation by speed/acceleration measurementsVehicle position/course/altitude controlSimultaneous localization and mappingComputer graphics (images)

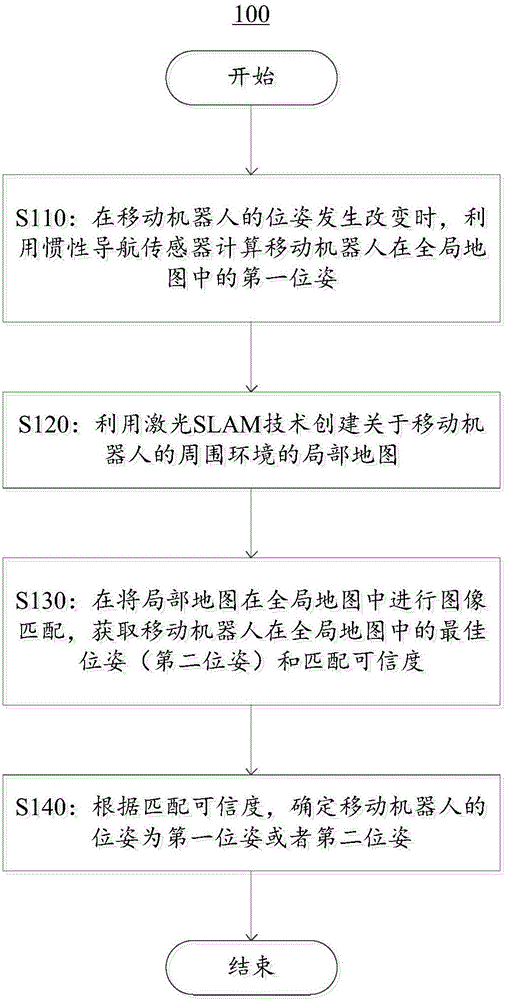

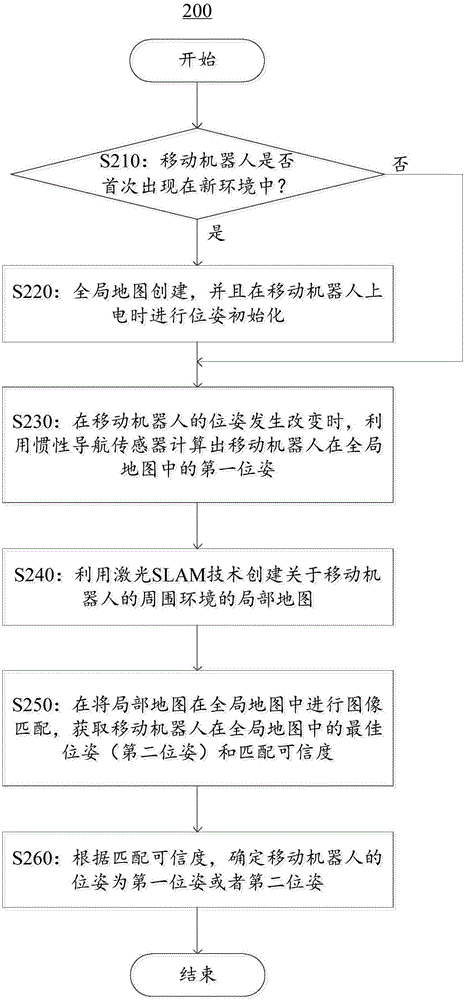

Embodiments of the invention relate to a determination method and apparatus for poses of a mobile robot. The method comprises the following steps: when the pose of the mobile robot changes, calculating the first pose of the mobile robot in a global map by using an inertial navigation sensor; establishing a local map of a surrounding environment where the mobile robot is located by using a laser scanner in virtue of SLAM technology; subjecting the local map to image matching in the global map so as to obtain the second pose of the mobile robot in the global map and matching credibility, wherein the second pose is an optimal pose of the mobile robot in the global map obtained through image matching; and determining the pose of the mobile robot to be the first pose or the second pose according to matching credibility. According embodiments in the invention, determination of the poses of the mobile robot can be improved, and errors in pose determination can be reduced.

Owner:SMART DYNAMICS CO LTD

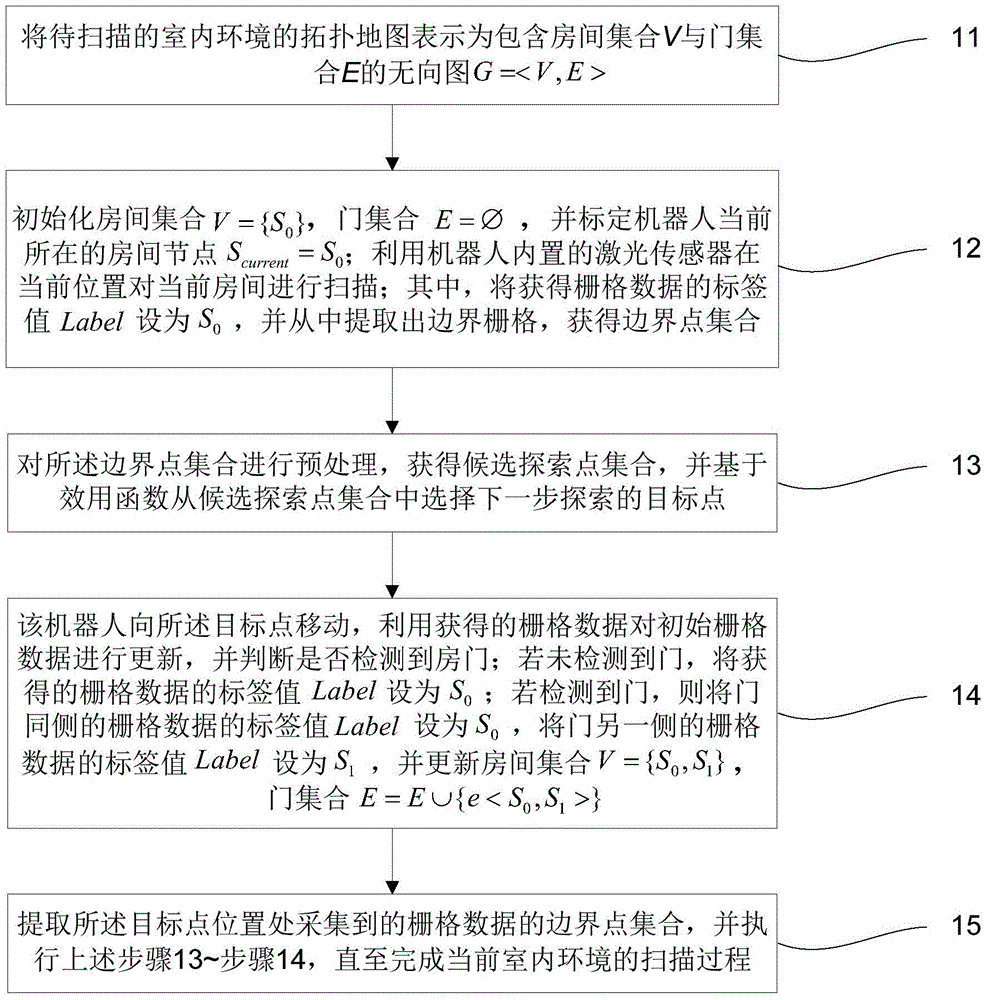

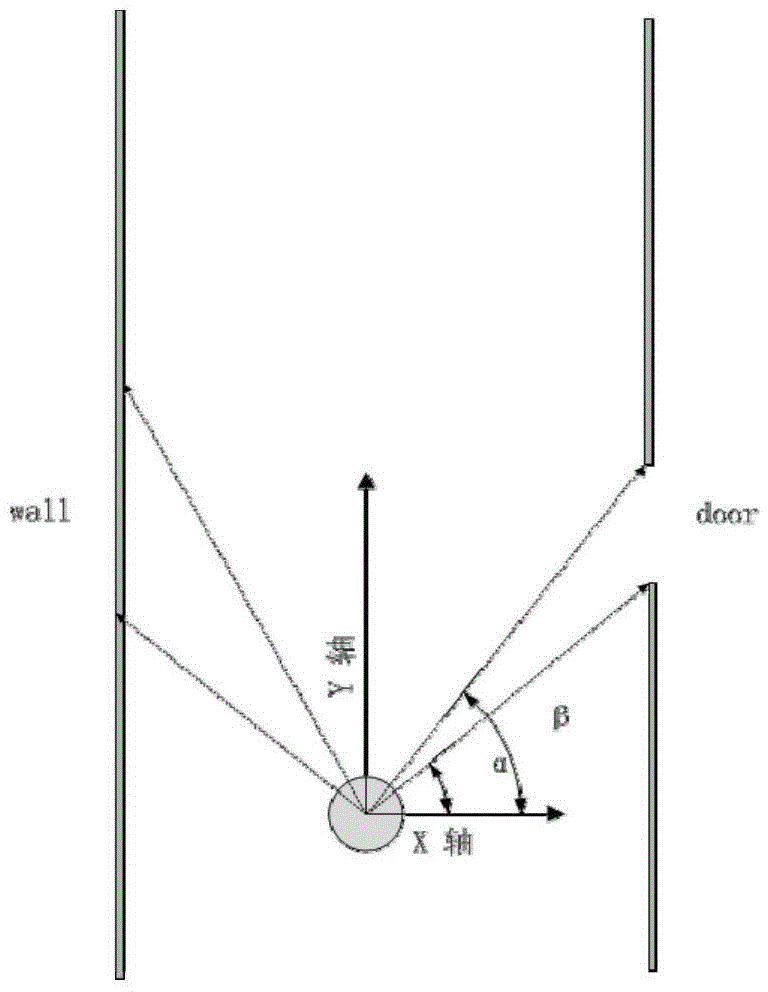

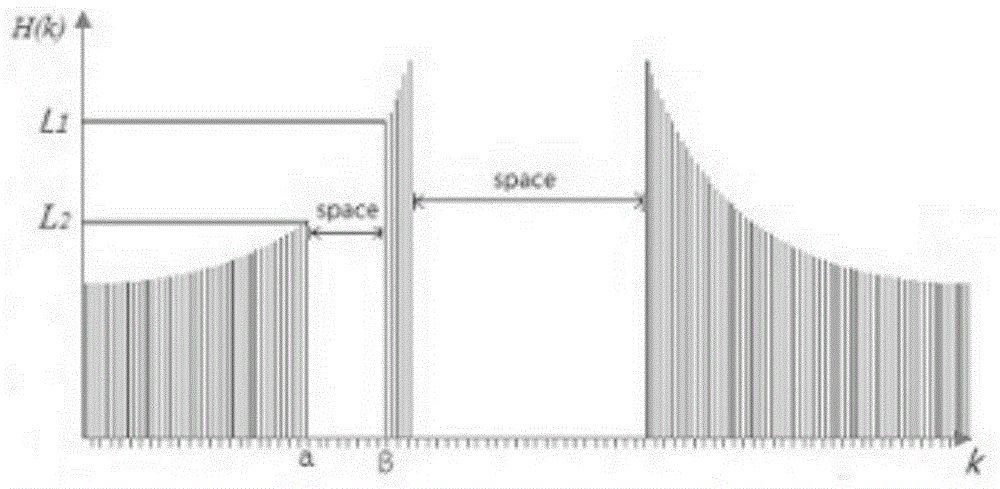

Indoor map building method for improving robot path planning efficiency

ActiveCN104898660AAvoid planningPosition/course control in two dimensionsSimultaneous localization and mappingTopological order

The invention discloses an indoor map building method for improving robot path planning efficiency. The method combines a topological map and a grid map, the planning problem happens in space represented by topological nodes, and planning of global space is avoided. In addition, laser is used for identifying a door in the indoor environment, a topological relationship between rooms is built, the process is merged in particle filter SLAM (simultaneous localization and mapping) algorithm, the grid map represented by the environment and the topological map based on the grid map can be obtained finally, and the path planning algorithm in robot navigation is simpler and more efficient.

Owner:UNIV OF SCI & TECH OF CHINA

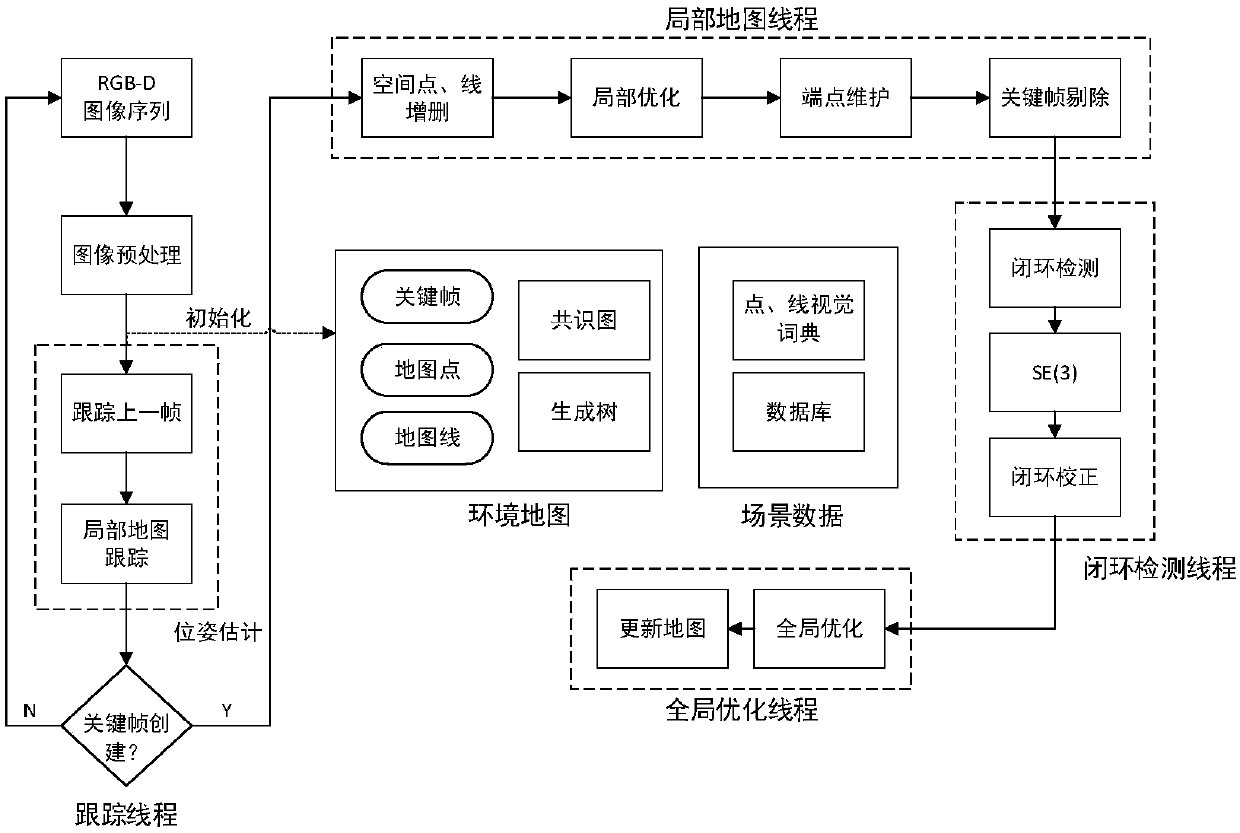

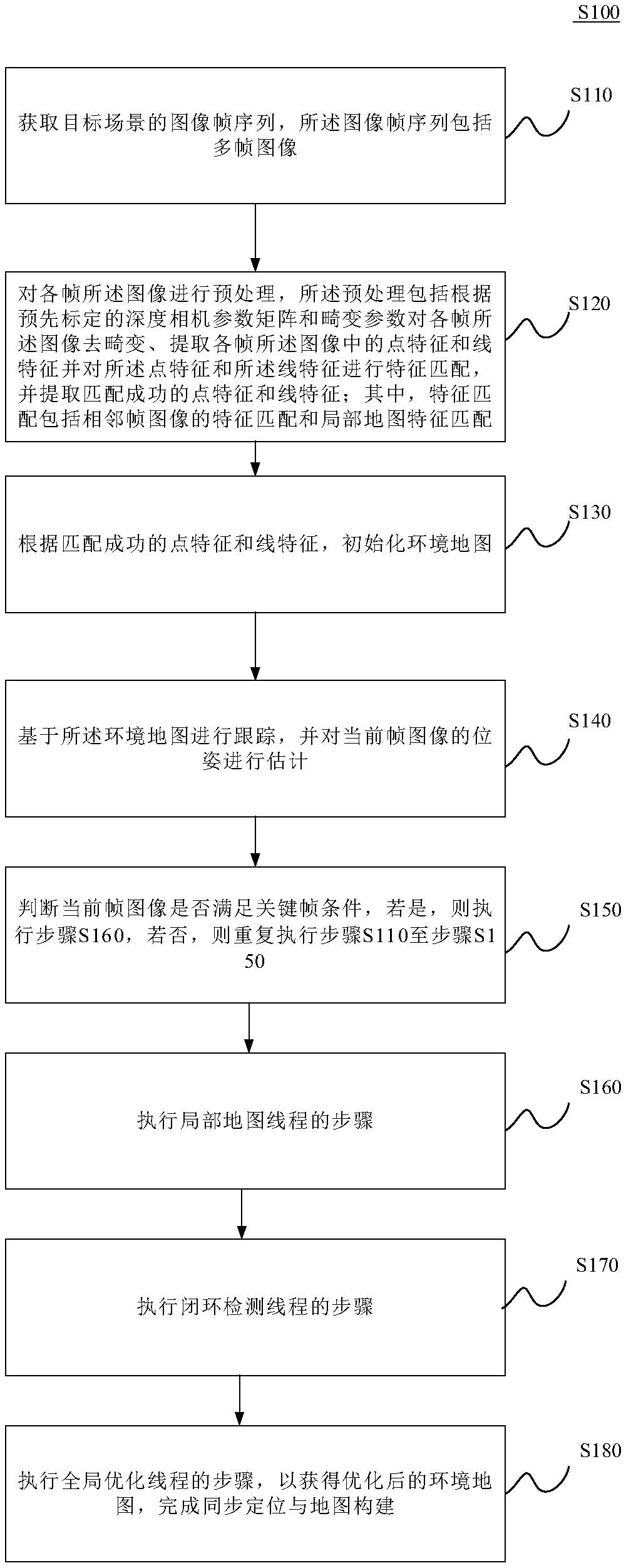

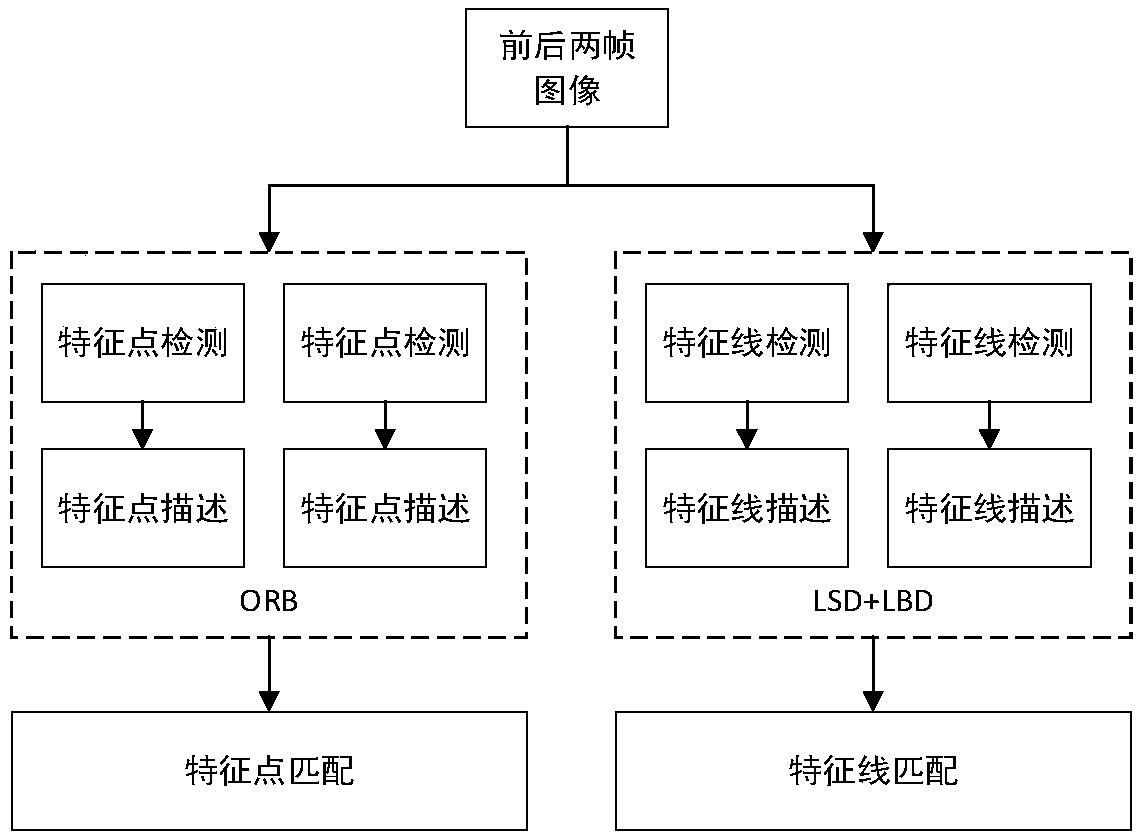

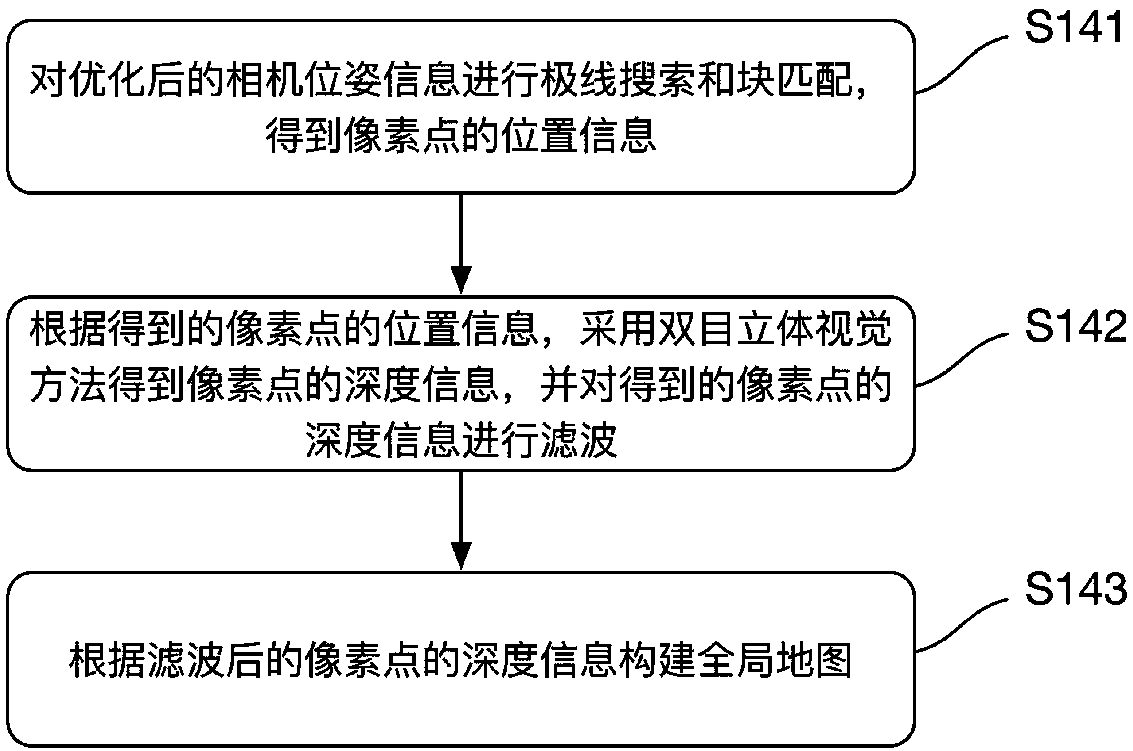

vSLAM implementation method and system based on point and line feature fusion

InactiveCN108682027AImprove accuracyReliable loopback detection functionImage enhancementImage analysisSimultaneous localization and mappingClosed loop

The invention discloses a vSLAM implementation method and system based on point and line feature fusion. The method includes Step S110, obtaining an image frame sequence of a target scene; Step S120,preprocessing each frame of image; Step S130, according to successfully matched point features and lie features, initializing an environmental map; Step S140, tracking based on the environmental map,and estimating the pose of a current frame of image; Step S150, judging whether the current frame of image meets a key frame condition, if yes, executing Step S160, otherwise repeatedly executing StepS110 to Step S150; Step S160, executing a step of a local map thread; Step S170, executing a step of a closed-loop detection thread; and Step S180, executing a step of a global optimization thread toobtain an optimized environmental map and complete simultaneous localization and mapping. The extraction and matching processes of line features are improved, so as to improve the accuracy of data association in the front end, and thus the defects of vSLAM which exist in a complicated low-texture scene can be effectively overcome.

Owner:BEIJING HUAJIE IMI TECH CO LTD

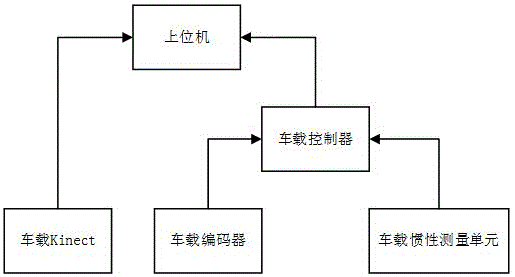

SLAM device integrating multiple vehicle-mounted sensors and control method of device

InactiveCN105783913APrecise positioningImprove mapping performanceNavigation by speed/acceleration measurementsSimultaneous localization and mappingMultiple sensor

The invention relates to the technical field of simultaneous localization and mapping methods of three-wheel omnidirectional mobile robots integrating multiple vehicle-mounted sensors, and particularly relates to an SLAM device integrating the multiple vehicle-mounted sensors and a control method of the device.The SLAM device integrating the multiple vehicle-mounted sensors comprises the vehicle-mounted sensors, a vehicle-mounted encoder, a vehicle-mounted inertia measurement unit, a vehicle-mounted controller and an upper computer; the vehicle-mounted encoder and the vehicle-mounted inertia measurement unit are connected with the vehicle-mounted controller, and the vehicle-mounted controller is connected with the upper computer.The localization and mapping effects of the robots can be improved, the problem of localization and mapping errors brought by depth value deletion or characteristic point scarcity which are generated when SLAM is conducted only by relying on an RGB-D sensor is solved, and therefore the robustness and accuracy of SLAM are improved.

Owner:SUN YAT SEN UNIV

Visual sense simultaneous localization and mapping method based on dot and line integrated features

ActiveCN106909877AImprove clustering effectCharacter and pattern recognitionSimultaneous localization and mappingClosed loop

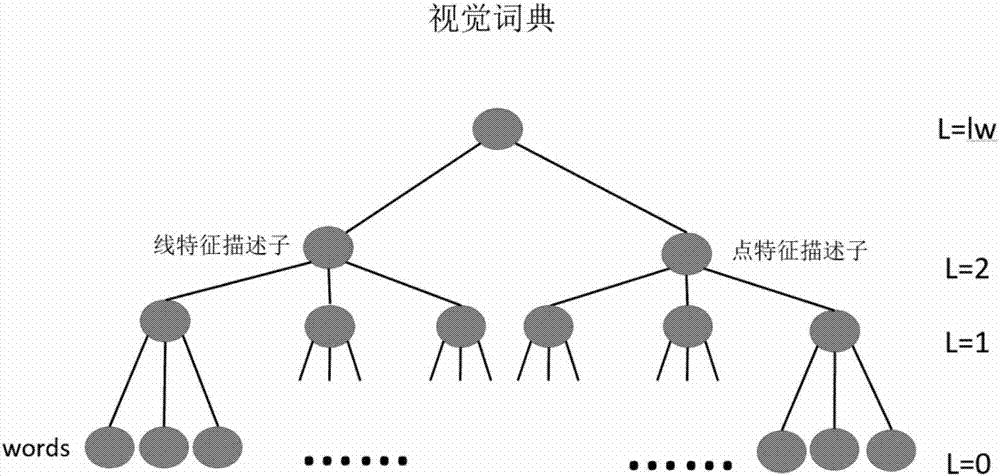

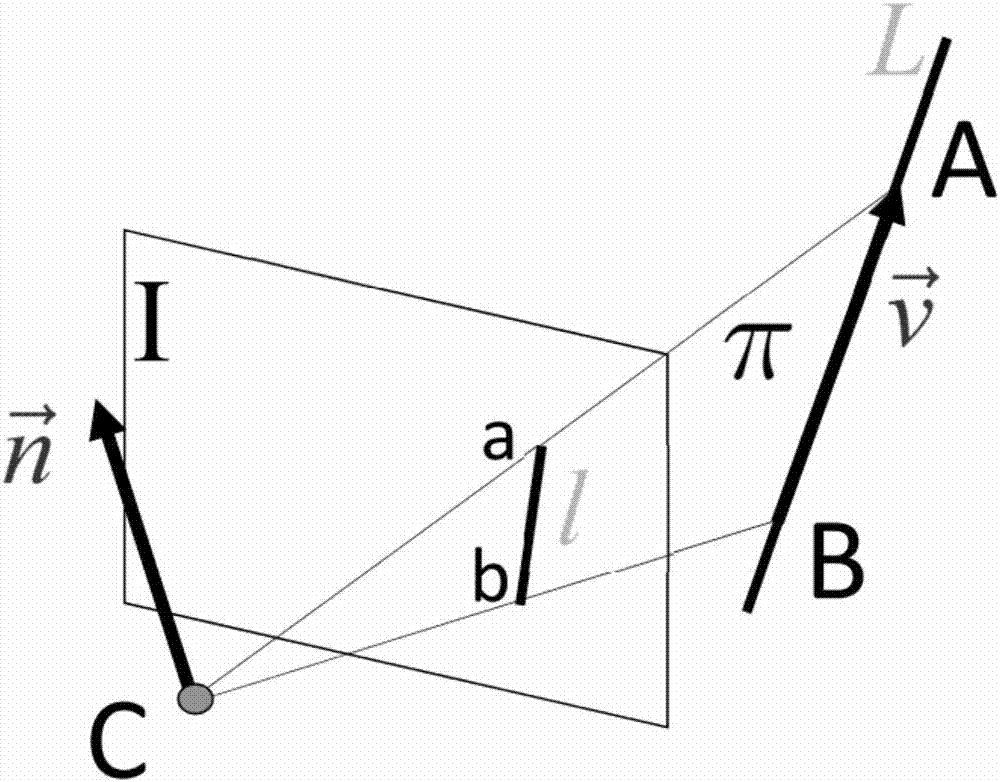

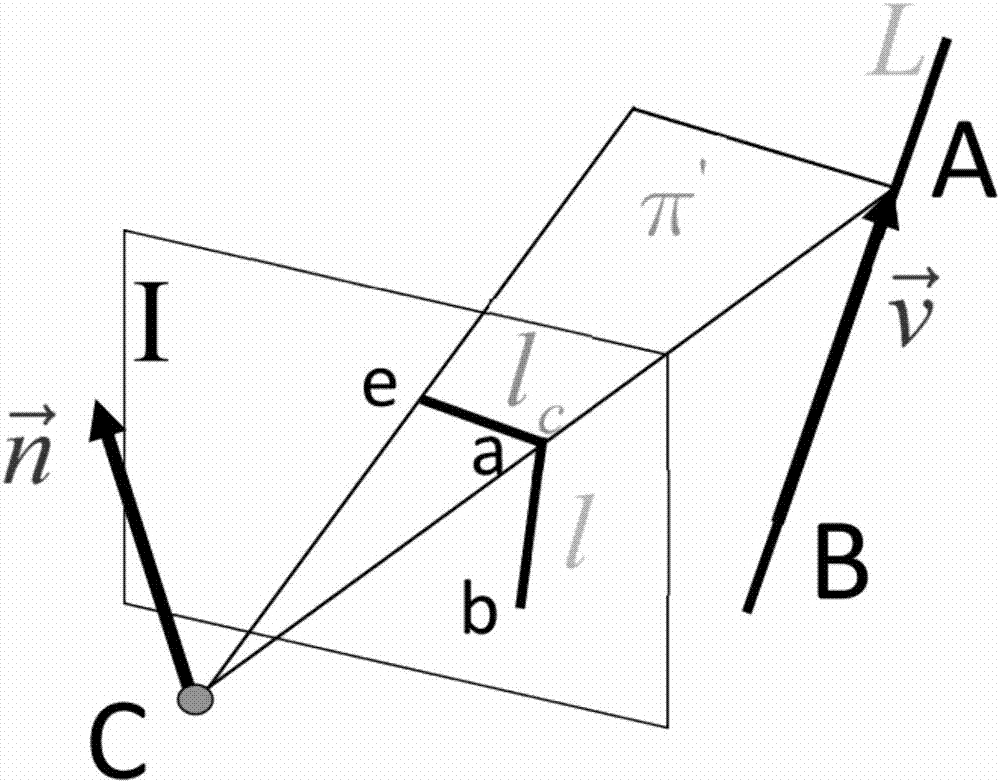

The invention discloses a visual sense simultaneous localization and mapping method based on dot and line integrated features. The method comprehensively utilizes the line features and the dot features extracted and obtained from a binocular camera image and is able to be used for the positioning and the attitude estimation of a robot in both an external environment and an internal environment. As the dot features and the line features are integrated for use, the system becomes more robust and more accurate. For the parameterization of linear features, the Pluck coordinates are used for straight line calculations, including geometric transformations, 3D reconstructions, and etc. In the back-end optimization, the orthogonal representation of the straight line is used to minimize the number of the parameters of the straight line. The off-line established visual dictionary for the dot and line integrated features is used for closed loop detections; and through the method of adding zone bits, the dot characteristics and the line characteristics are treated differently in the visual dictionary and when an image database is created and image similarity is calculated. The invention can be applied to the construction of a scene image both indoors and outdoors. The constructed map integrates the feature dots and the feature lines, therefore, able to provide even richer information.

Owner:ZHEJIANG UNIV

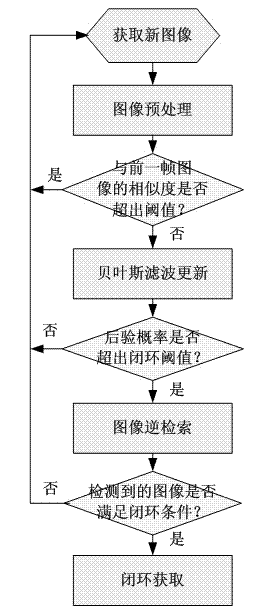

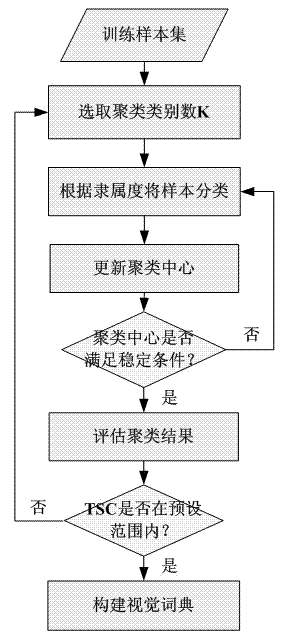

Image appearance based loop closure detecting method in monocular vision SLAM (simultaneous localization and mapping)

InactiveCN102831446AReduce the amount of calculationLower requirementCharacter and pattern recognitionPosition/course control in two dimensionsSimultaneous localization and mappingVisual perception

The invention discloses an image appearance based loop closure detecting method in monocular vision SLAM (simultaneous localization and mapping). The image appearance based loop closure detecting method includes acquiring images of the current scene by a monocular camera carried by a mobile robot during advancing, and extracting characteristics of bag of visual words of the images of the current scene; preprocessing the images by details of measuring similarities of the images according to inner products of image weight vectors and rejecting the current image highly similar to a previous history image; updating posterior probability in a loop closure hypothetical state by a Bayesian filter process to carry out loop closure detection so as to judge whether the current image is subjected to loop closure or not; and verifying loop closure detection results obtained in the previous step by an image reverse retrieval process. Further, in a process of establishing a visual dictionary, the quantity of clustering categories is regulated dynamically according to TSC (tightness and separation criterion) values which serve as an evaluation criterion for clustering results. Compared with the prior art, the loop closure detecting method has the advantages of high instantaneity and detection precision.

Owner:NANJING UNIV OF POSTS & TELECOMM

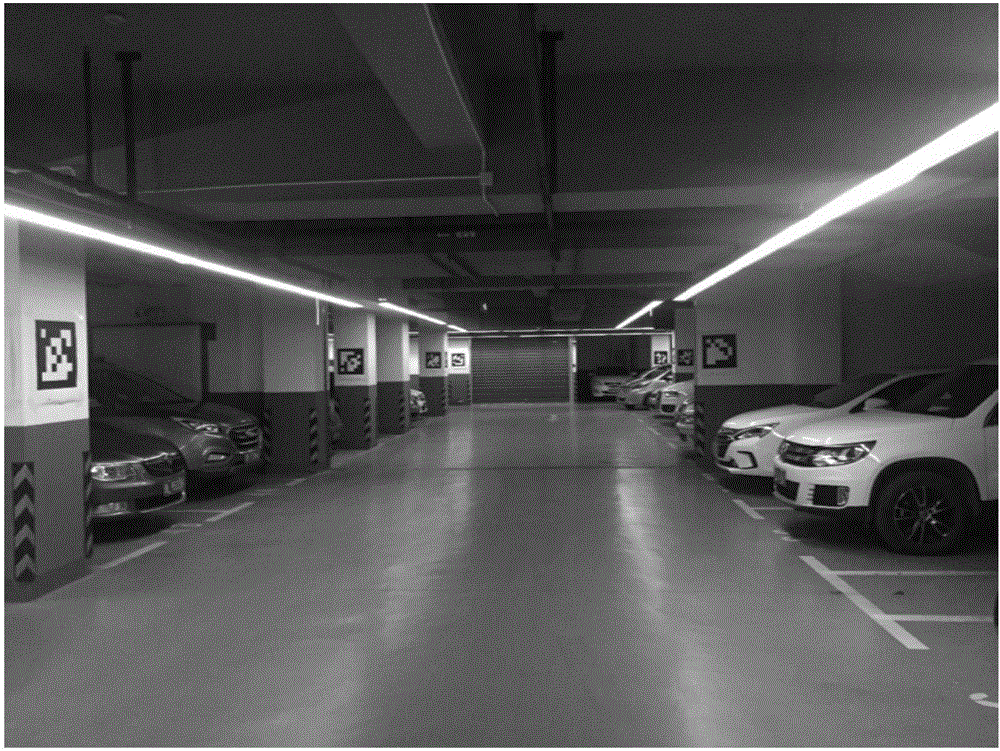

Parking lot automatic mapping and high-precision localization method based on parking locations and two-dimensional codes

InactiveCN107180215ARemove accumulated noiseOptimal Mapping2D-image generationNavigation by speed/acceleration measurementsSimultaneous localization and mappingMarine navigation

Owner:TONGJI UNIV

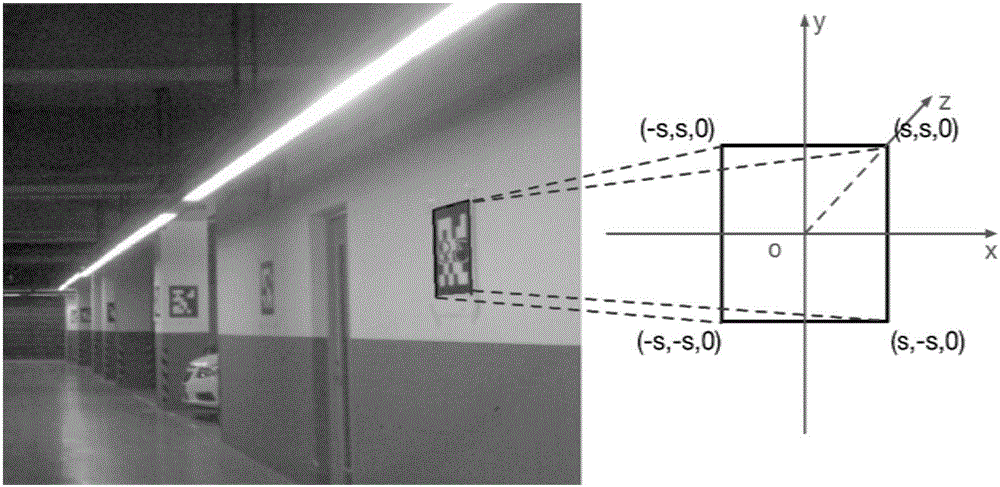

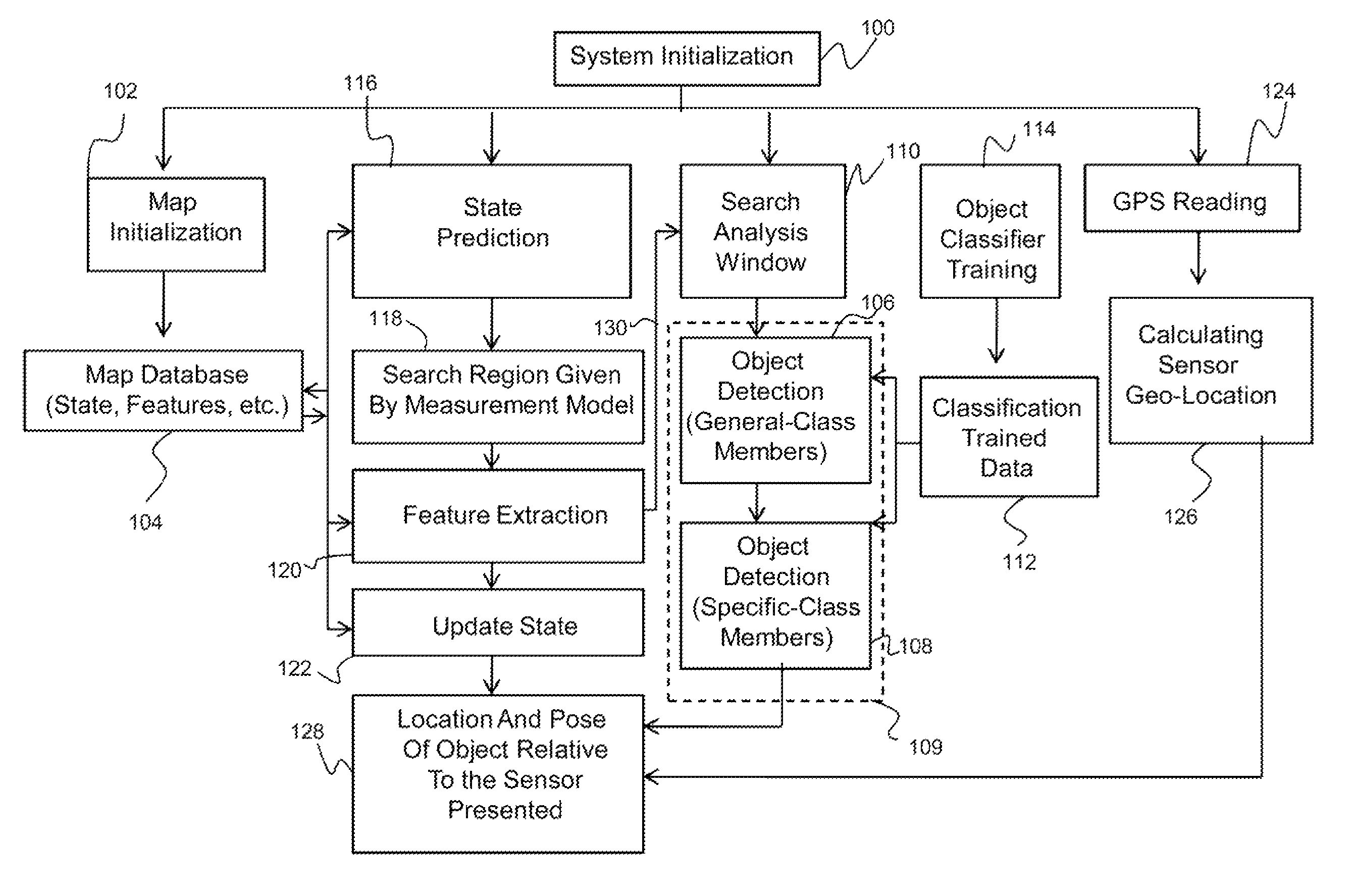

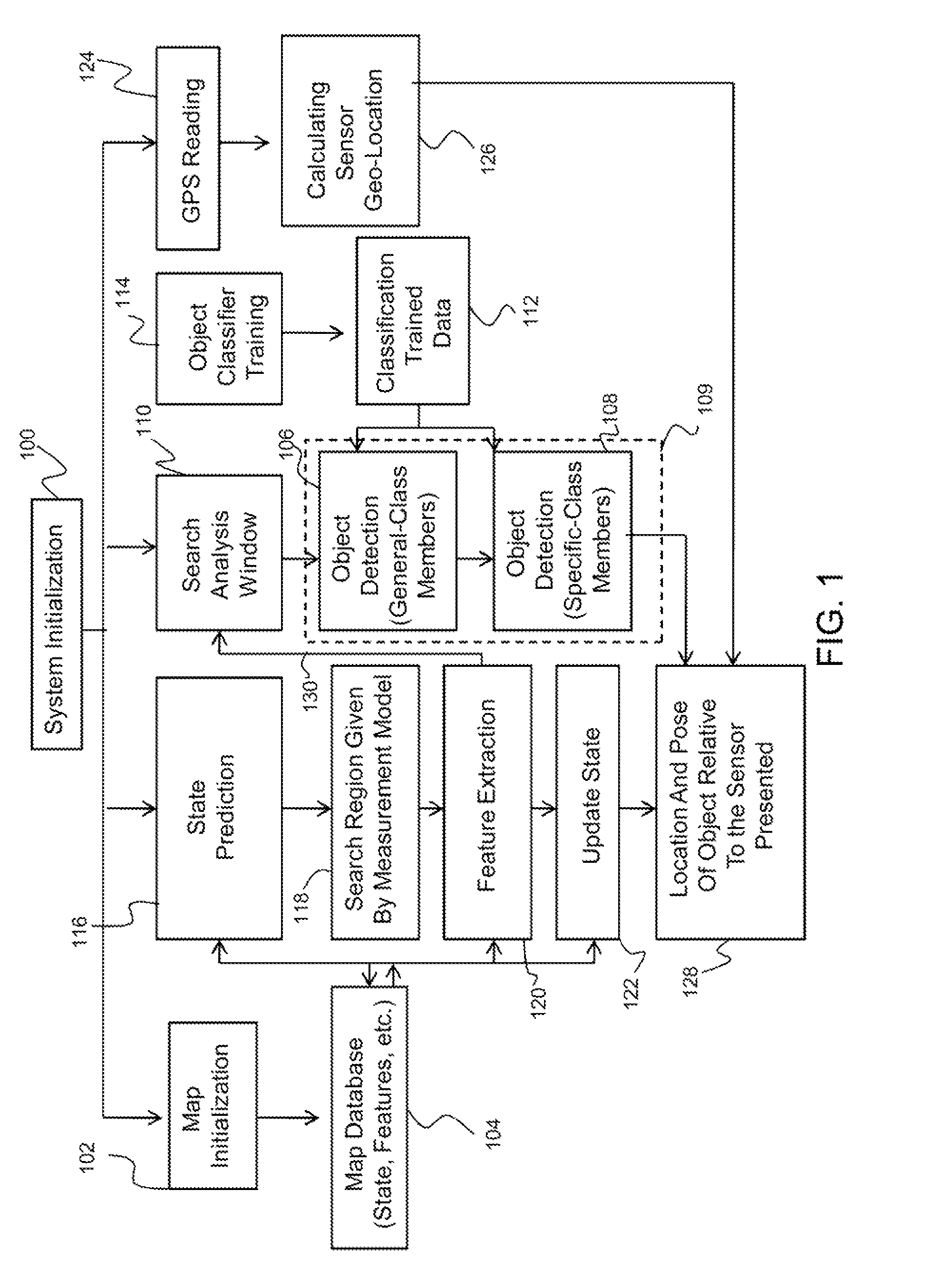

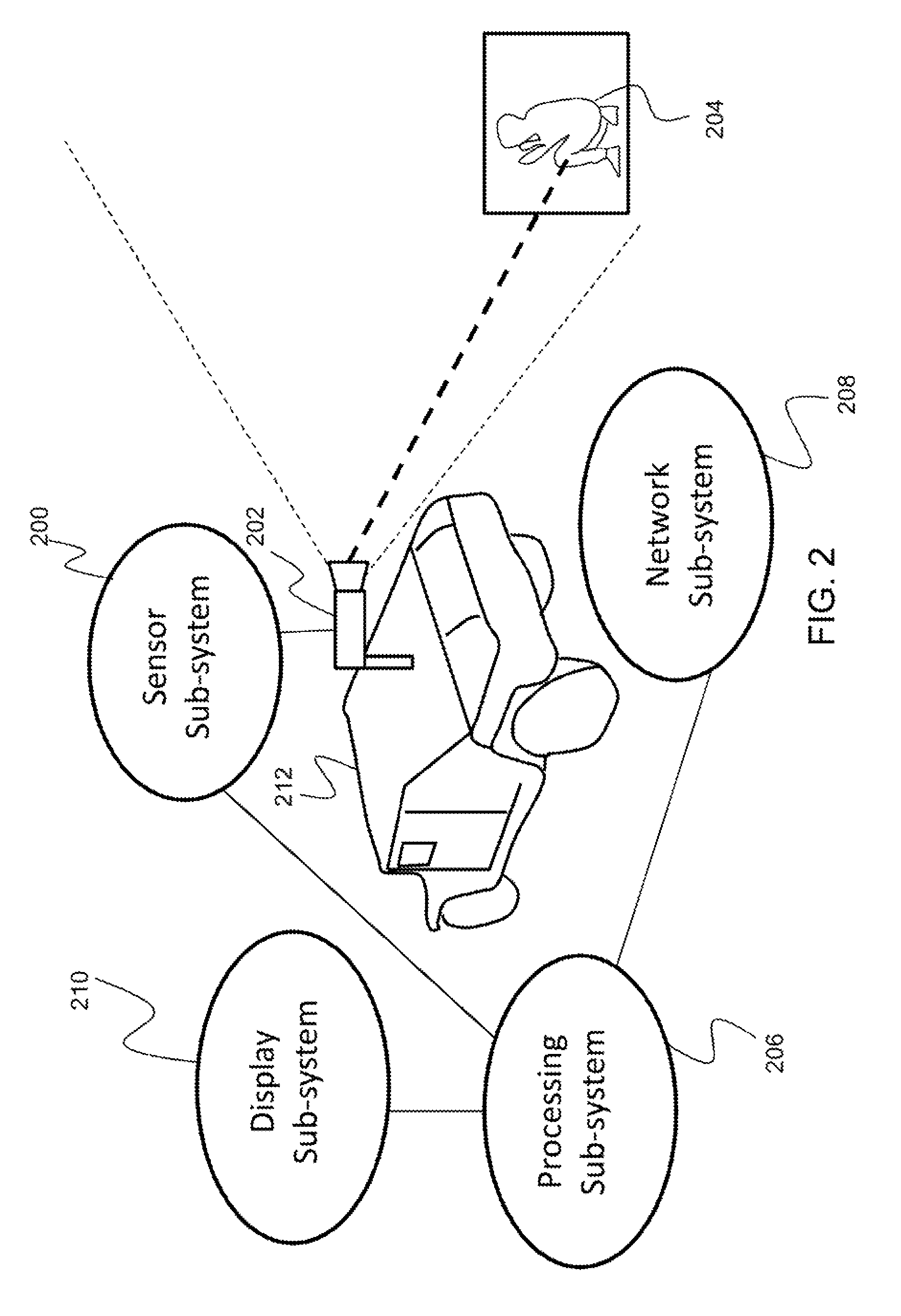

System for automatic object localization based on visual simultaneous localization and mapping (SLAM) and cognitive swarm recognition

InactiveUS8649565B1Character and pattern recognitionNavigation instrumentsSimultaneous localization and mappingVision based

Described is a system for automatic object localization based on visual simultaneous localization and mapping (SLAM) and cognitive swarm recognition. The system is configured to detect a set of location data corresponding to a current location of a sensor positioned on a platform. A map model of an environment surrounding the sensor is generated based on an input image from the sensor and the location data. In a desired aspect, a cognitive swarm object detection module is used to search for and detect an object of interest. The three-dimensional location of the object of interest relative to the platform is then estimated based on the map model and the location data regarding the sensor. The system described allows for real-time, continuous three-dimensional location updating for moving objects of interest from a mobile platform. A computer-implemented method and computer program product are also described.

Owner:HRL LAB

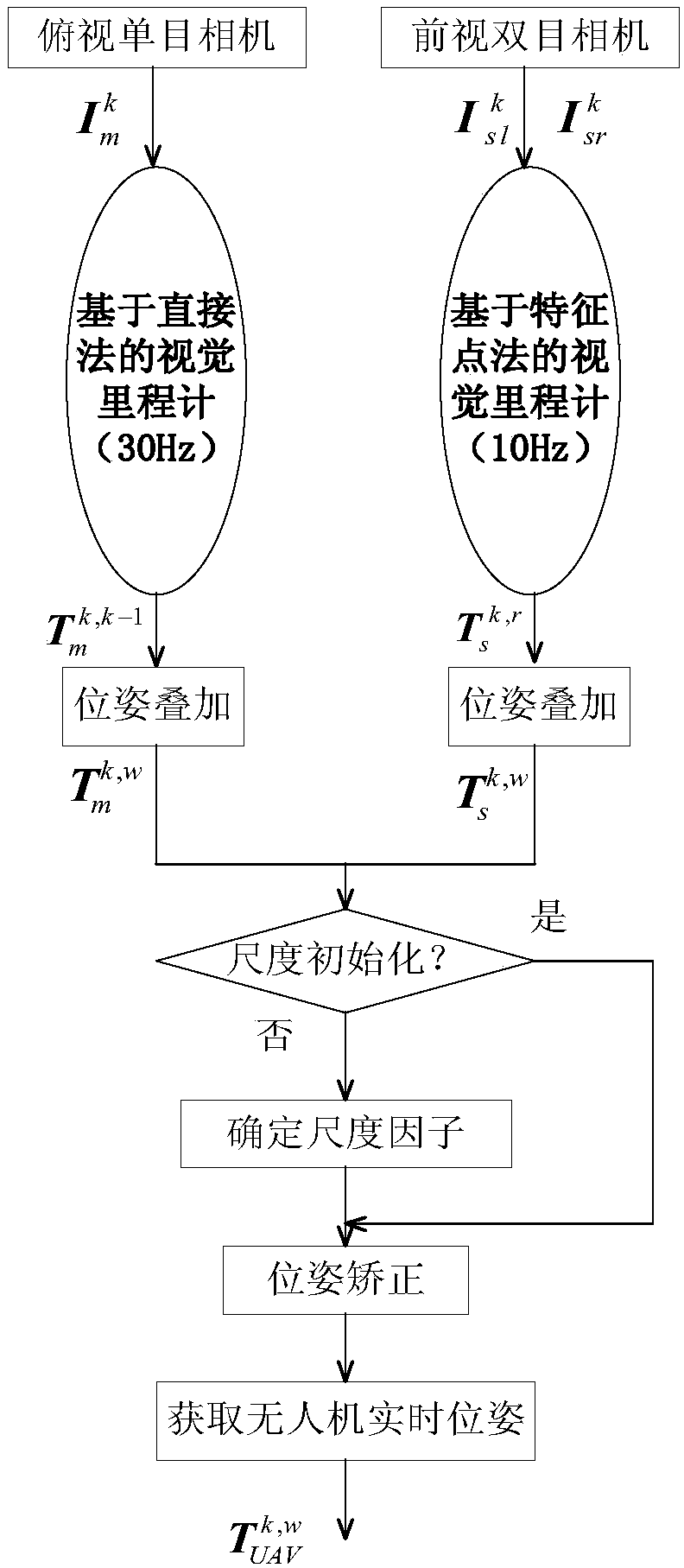

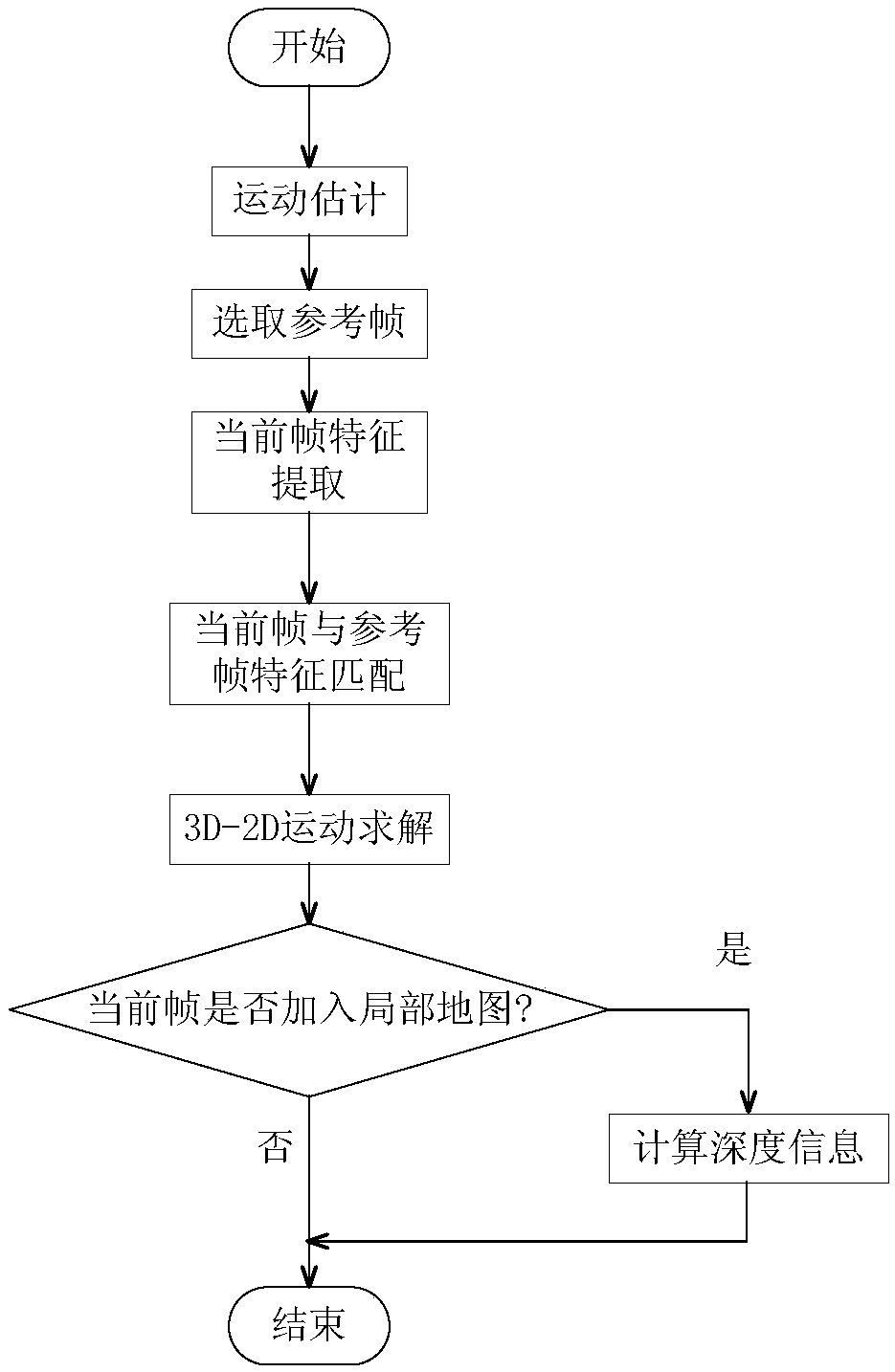

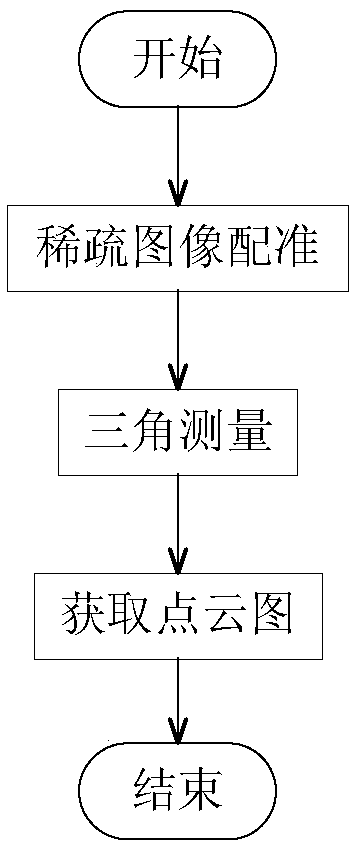

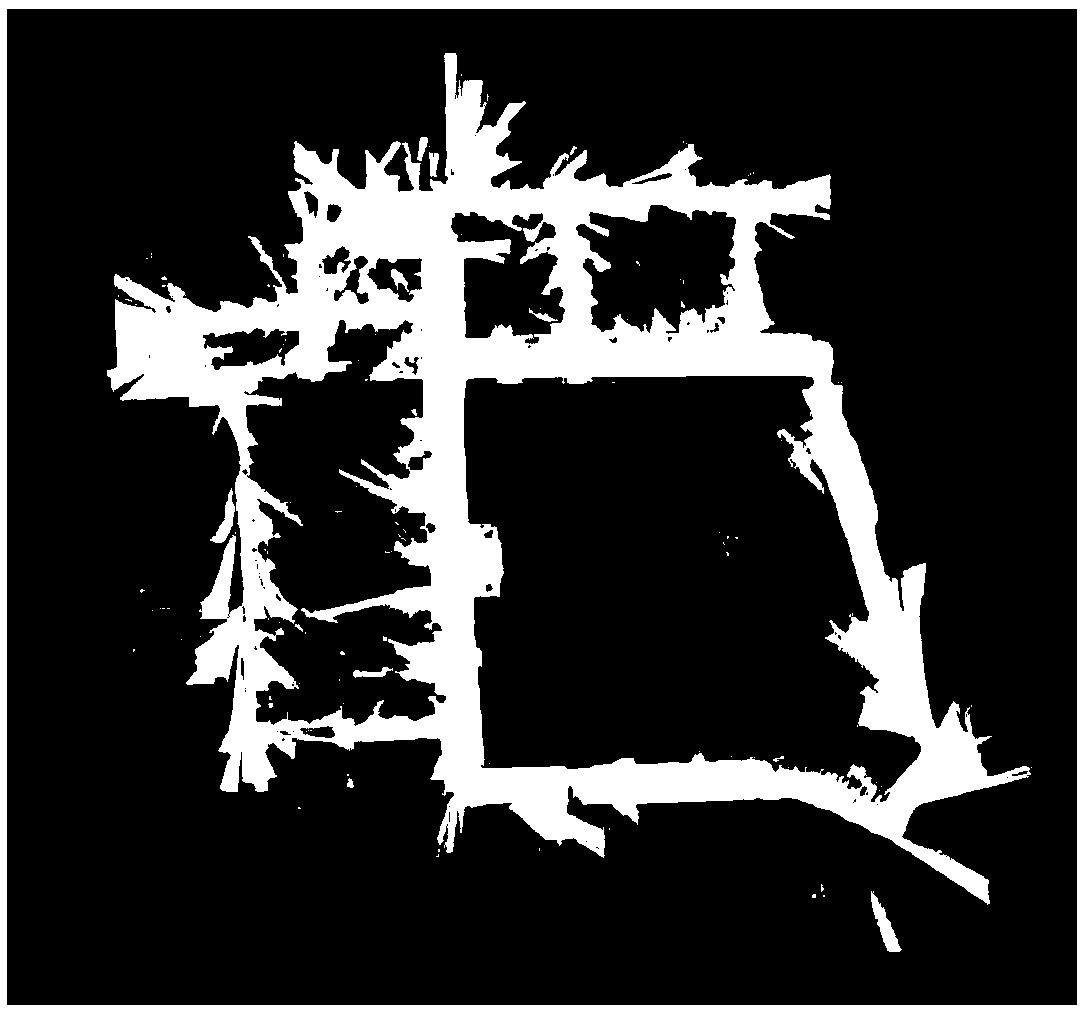

Simultaneous localization and mapping (SLAM) method for unmanned aerial vehicle based on mixed vision odometers and multi-scale map

ActiveCN109029417AReal-time accurate and reliable positioningShorten operation timeNavigational calculation instrumentsSimultaneous localization and mappingEnvironmental perception

The invention discloses a simultaneous localization and mapping (SLAM) method for an unmanned aerial vehicle based on mixed vision odometers and a multi-scale map, and belongs to the technical field of autonomous navigation of unmanned aerial vehicles. According to the SLAM method, an overlooking monocular camera, a foresight binocular camera and an airborne computer are carried on an unmanned aerial vehicle platform; the monocular camera is used for the visual odometer based on a direct method, and binocular camera is used for the visual odometer based on feature point method; the mixed visual odometers conduct information fusion on output of the two visual odometers to construct the local map for positioning, and the real-time posture of the unmanned aerial vehicle is obtained; then theposture is fed back to a flight control system to control the position of the unmanned aerial vehicle; and the airborne computer transmits the real-time posture and collected images to a ground station, the ground station plans the flight path in real time according to the constructed global map and sends waypoint information to the unmanned aerial vehicle, and thus autonomous flight of the unmanned aerial vehicle is achieved. Real-time posture estimation and environmental perception of the unmanned aerial vehicle under the non-GPS environment are achieved, and the intelligent level of the unmanned aerial vehicle is greatly increased.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

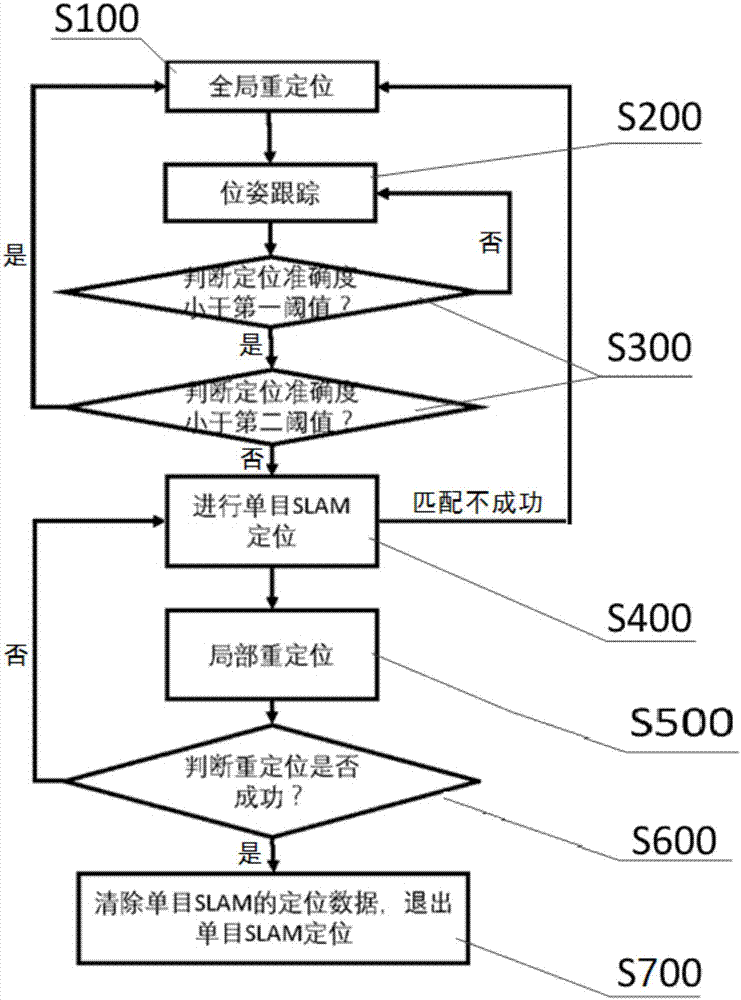

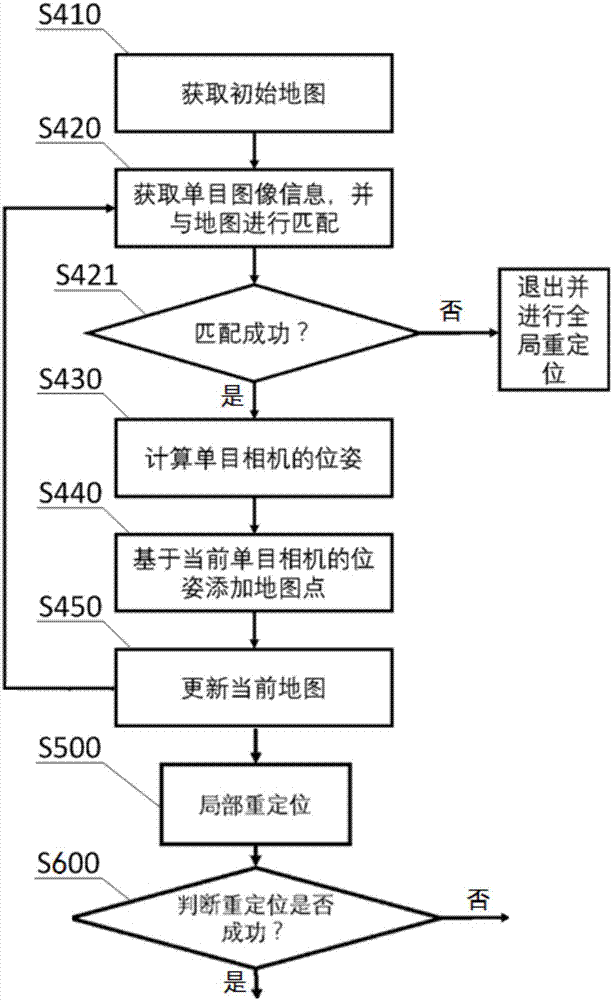

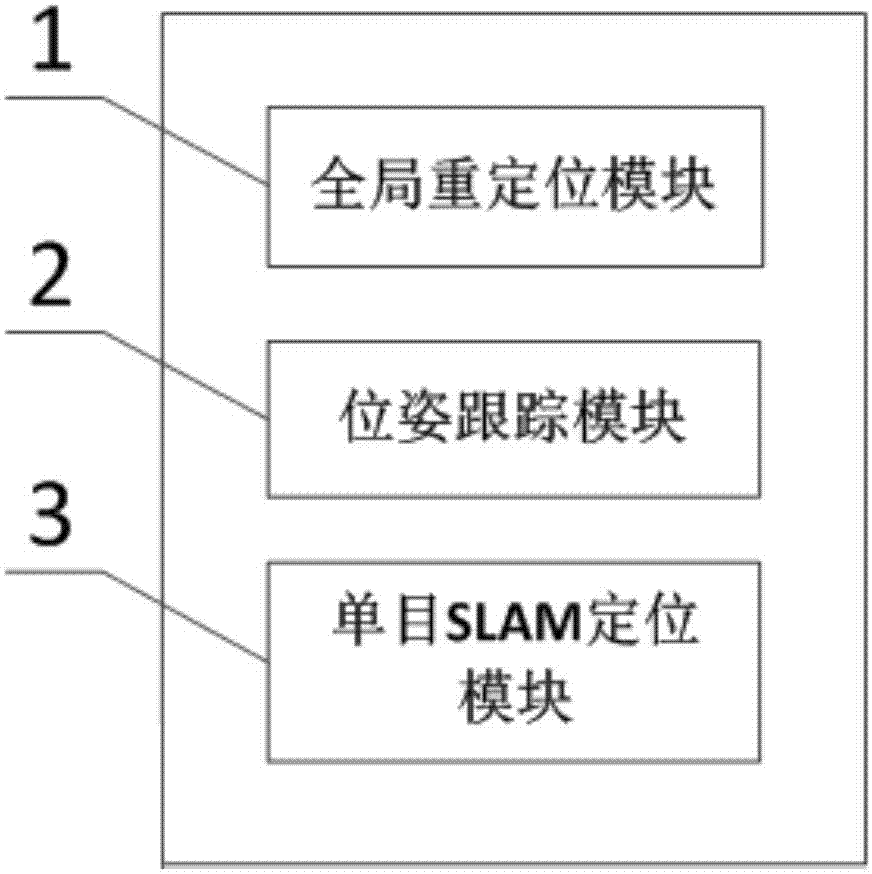

SLAM (Simultaneous Localization and Mapping)-based positioning method and system

ActiveCN107990899AImprove accuracyImprove positioning efficiencyImage enhancementImage analysisSimultaneous localization and mappingComputer science

The invention discloses an SLAM (Simultaneous Localization and Mapping)-based positioning method and system. The method disclosed by the invention comprises the following steps: calculating an initialposition of a camera in a global map, wherein the global map refers to a binocular SLAM global map; extracting a local map from the global map according to the initial position of the camera; matching a current frame and a local map point; based on a matching result of the current frame and the local map point, triggering monocular SLAM positioning, wherein the monocular SLAM positioning is usedfor generating a new map point through a monocular camera and positioning. The SLAM-based positioning method and system can be used for triggering the monocular SLAM positioning to supplement the mappoint when the positioning quality is poor, the positioning accuracy is increased and the positioning efficiency is improved; under the condition that the positioning quality is good and positioning is lost, basic map positioning is carried out.

Owner:UISEE TECH BEIJING LTD

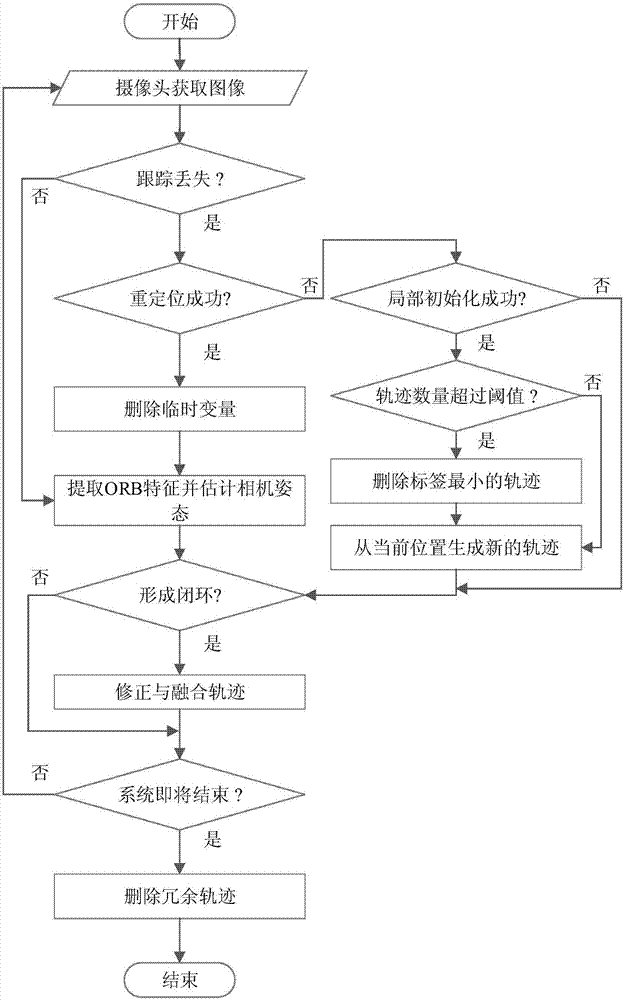

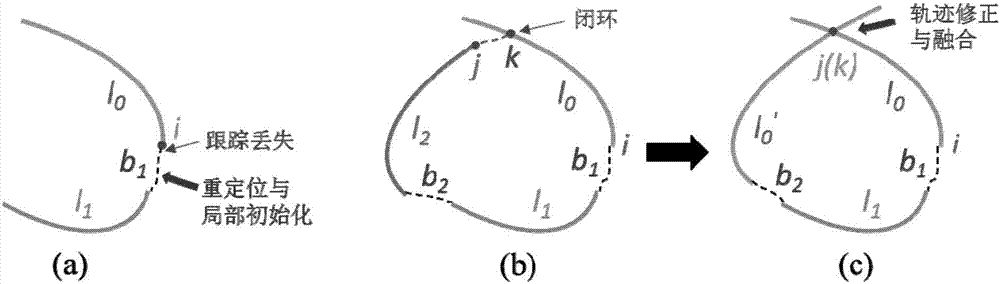

Simultaneous localization and mapping method of monocular vision robot based on retracking strategy

ActiveCN106885574AImprove completenessThe solution cannot be estimatedNavigational calculation instrumentsPhotogrammetry/videogrammetryTemporary variableSimultaneous localization and mapping

The invention discloses a simultaneous localization and map construction method of a monocular vision robot based on a retracking strategy. The method comprises the following steps: 1) extracting ORB characteristics of each picture; 2) tracking camera postures by utilizing characteristic matching of the adjacent pictures; 3) carrying out relocation and retracking strategy on pictures which are not tracked; 4) if the relocation is successfully executed, estimating postures of a current camera; stopping the retracking strategy and deleting a temporary variable generated by the retracking strategy; 5) if the retracking strategy is successfully executed, generating a new track; 6) judging the quantity of the generated tracks; if the quantity is more than a threshold value, eliminating an initial track; 7) carrying out closed-loop detection on each key frame and carrying out track fusion after the detection is successful; 8) when a localization system is finished, screening the tracks to obtain one track with the accurate posture. The simultaneous localization and map construction method disclosed by the invention has the advantages that the complete camera track can be located under the conditions of rapidness in movement, shielding, insufficient textures and illumination changes.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL +1

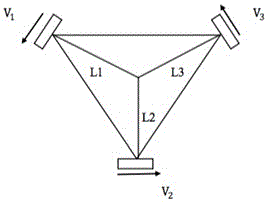

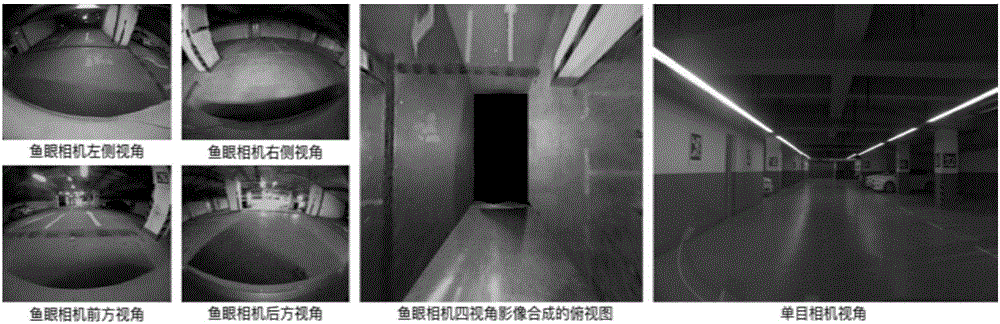

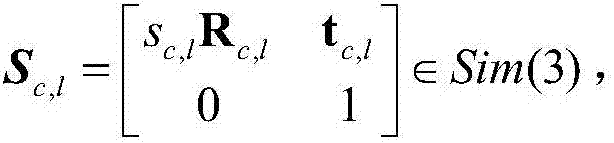

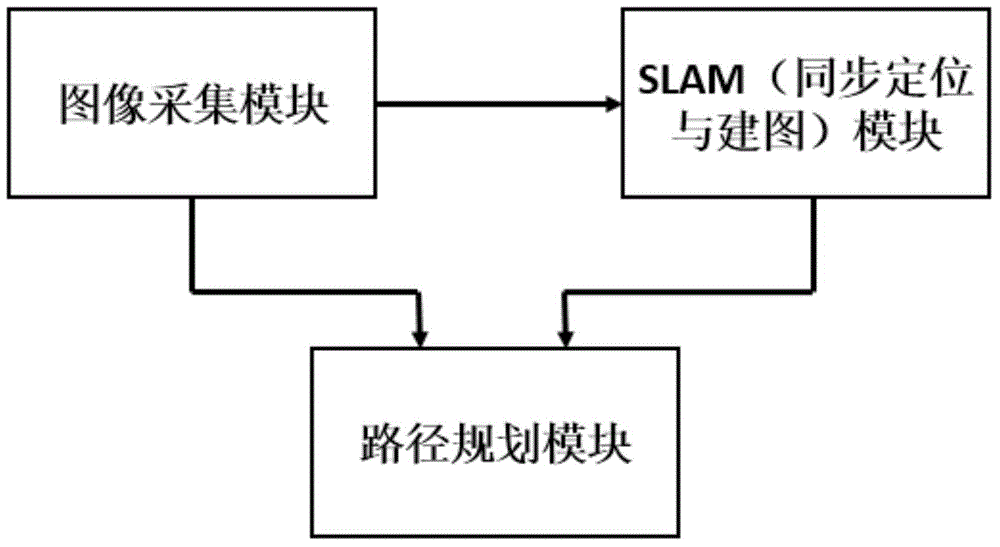

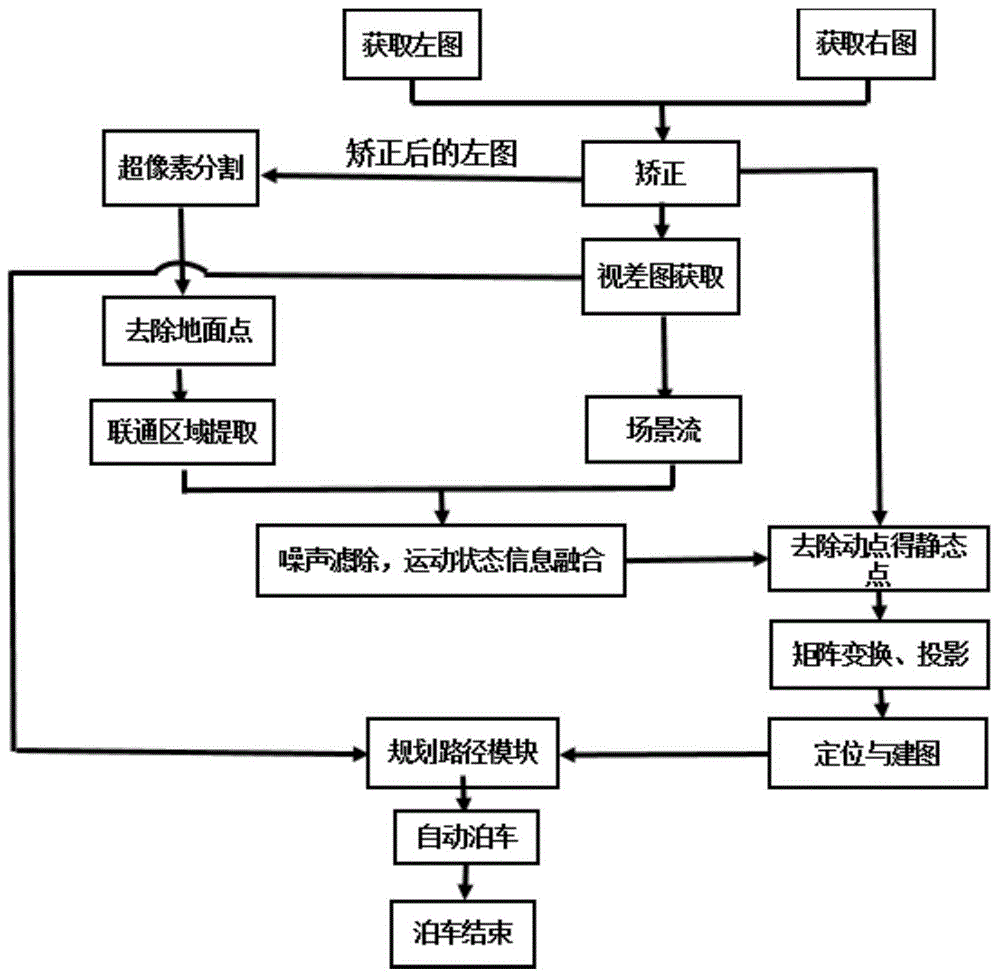

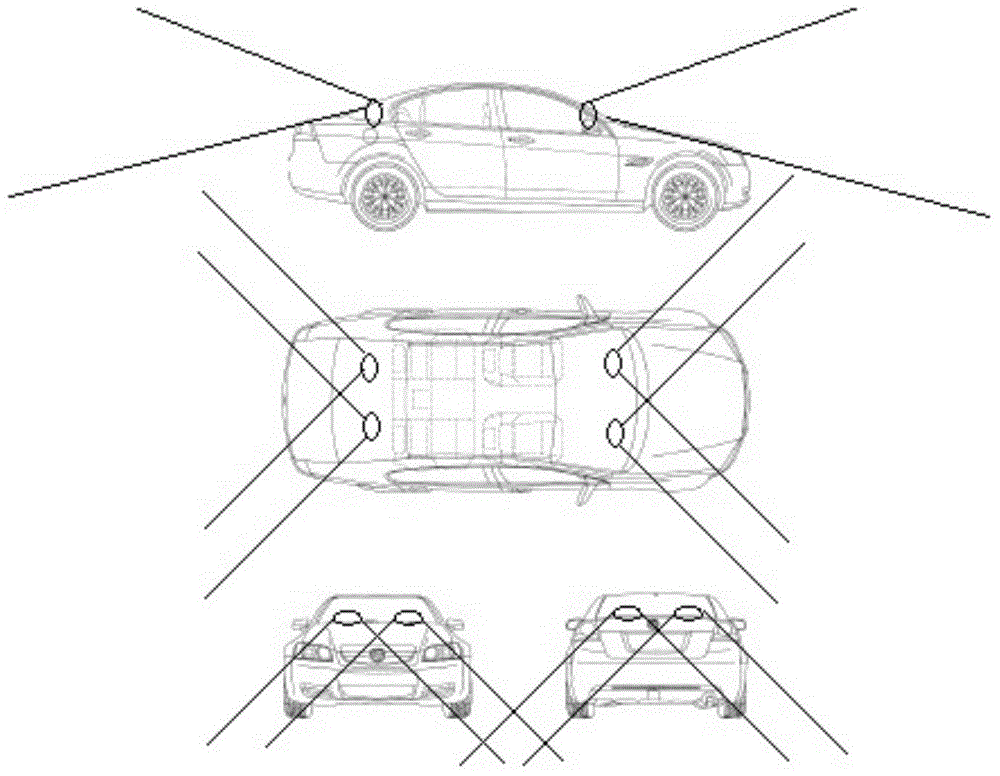

Automatic parking system and method based on stereoscopic vision localization and mapping

ActiveCN105015419ALow costIntuitive Image VisualizationOptical viewingSimultaneous localization and mappingColor image

The invention discloses an automatic parking system and method based on stereoscopic vision localization and mapping. The automatic parking system comprises an image acquisition module, an SLAM (Simultaneous Localization and Mapping) module and a route planning module, wherein the image acquisition module corrects an image acquired by a binocular camera calibrated in advance and obtains a disparity image, so that distance information between a vehicle and surrounding objects is obtained; scene-flow motion detection is realized through the matching of the image of a front frame and the image of a rear frame, so that dynamic points in the image are obtained; the SLAM module integrates acquired color images and obtained three-dimensional information, so that the position of the vehicle is located, and a map is established; the route planning module plans a route to find a parking position according to the established map; after the position of the vehicle and a target position are obtained, whether the space of the parking position is enough or not is judged, if parking conditions are met, an optimal parking track is planned to control the vehicle, so that automatic parking is realized. The automatic parking system is simple in operation process, easy to implement, low in cost and wide in application range.

Owner:SUN YAT SEN UNIV

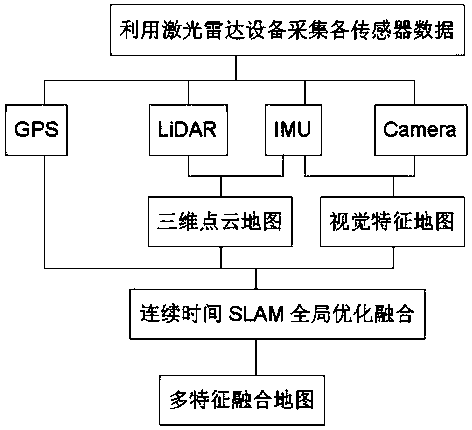

Manufacturing method of multi-feature fusion map facing autonomous vehicle

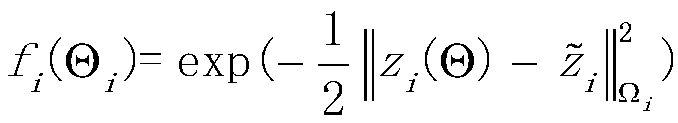

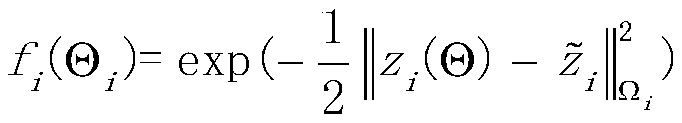

ActiveCN109341706ARich in attributesImprove securityInstruments for road network navigationSimultaneous localization and mappingPoint cloud

The invention discloses a manufacturing method of a multi-feature fusion map facing an autonomous vehicle. The method comprises the following steps: collecting data of each sensor by utilizing an on-board laser radar device; generating a three-dimensional point cloud map by utilizing IMU(Inertial Measurement Unit) data and laser measurement data; generating a visual feature map by utilizing the IMU data and camera data; preprocessing GPS data, and converting a geodetic coordinates into a spatial rectangular coordinates; performing global optimization fusion by utilizing a continuous time SLAM(Simultaneous Localization and Mapping) algorithm; and generating the multi-feature fusion map. According to the manufacturing method of the multi-feature fusion map, various sensor data of a laser radar, an IMU, a camera and a GPS, etc., are integrated, so that the stability and the accuracy are greatly improved; the data are processed by fusing a visual SLAM algorithm and a laser SLAM algorithm,thereby obtaining a better composition effect than a single visual SLAM algorithm or a single laser SLAM algorithm; and the method is simple, practical and low in cost, and the map produced is accurate and rich in attributes, thereby improving the safety of the driving process of the autonomous vehicle.

Owner:深圳市智绘科技有限公司

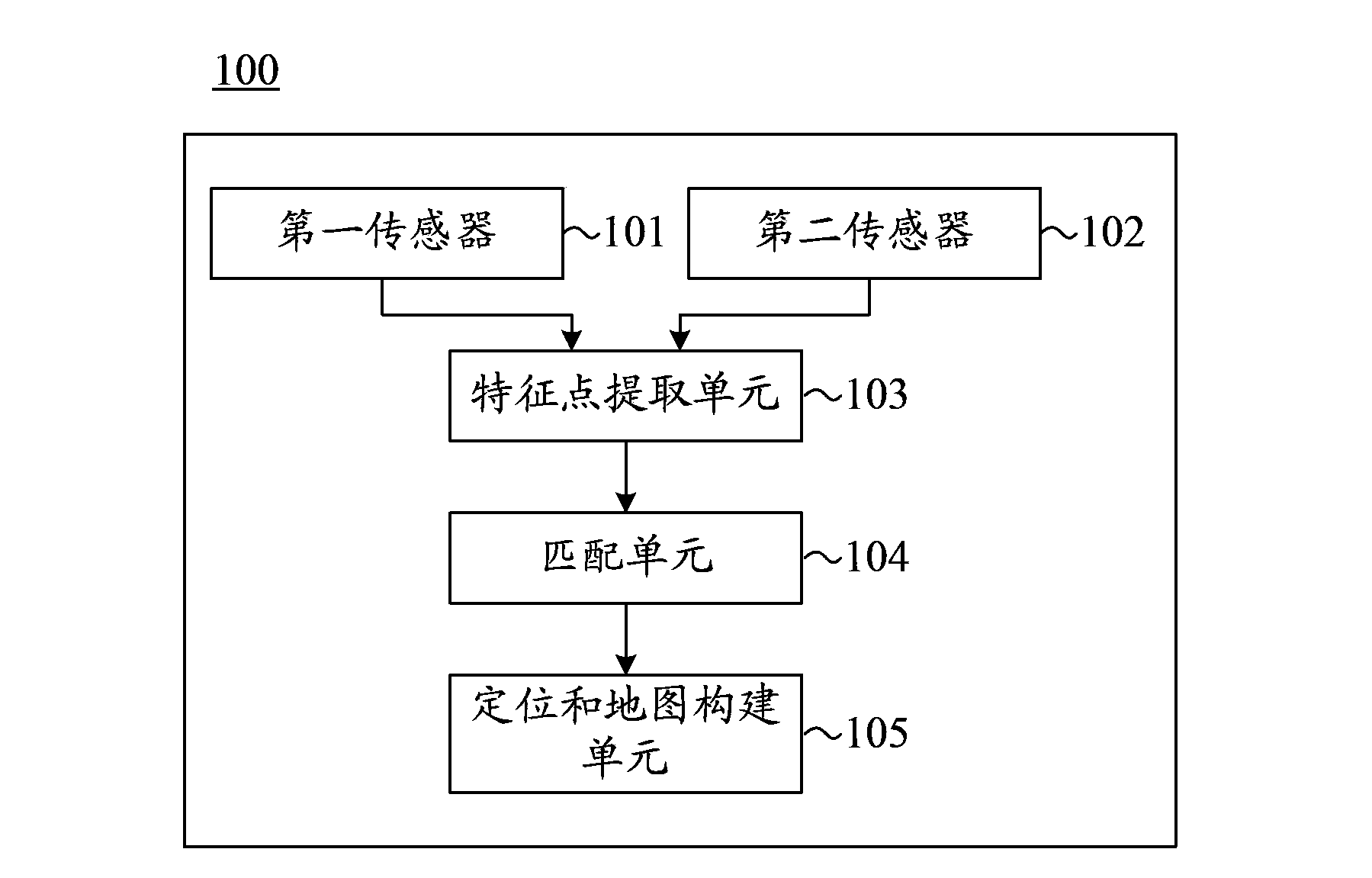

Device and method used for real-time positioning and map building

ActiveCN103900583AIncrease the number of feature pointsPrecise positioningInstruments for road network navigationPattern recognitionSimultaneous localization and mapping

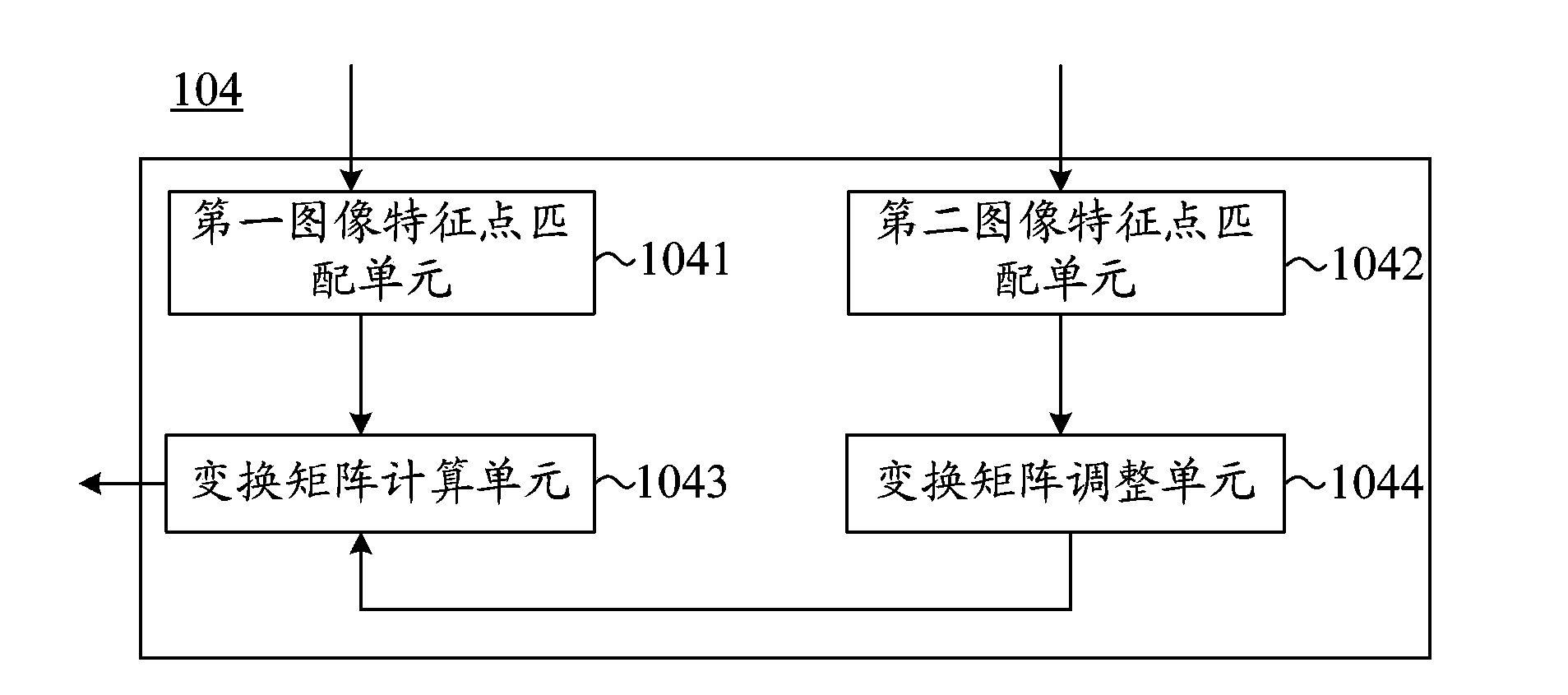

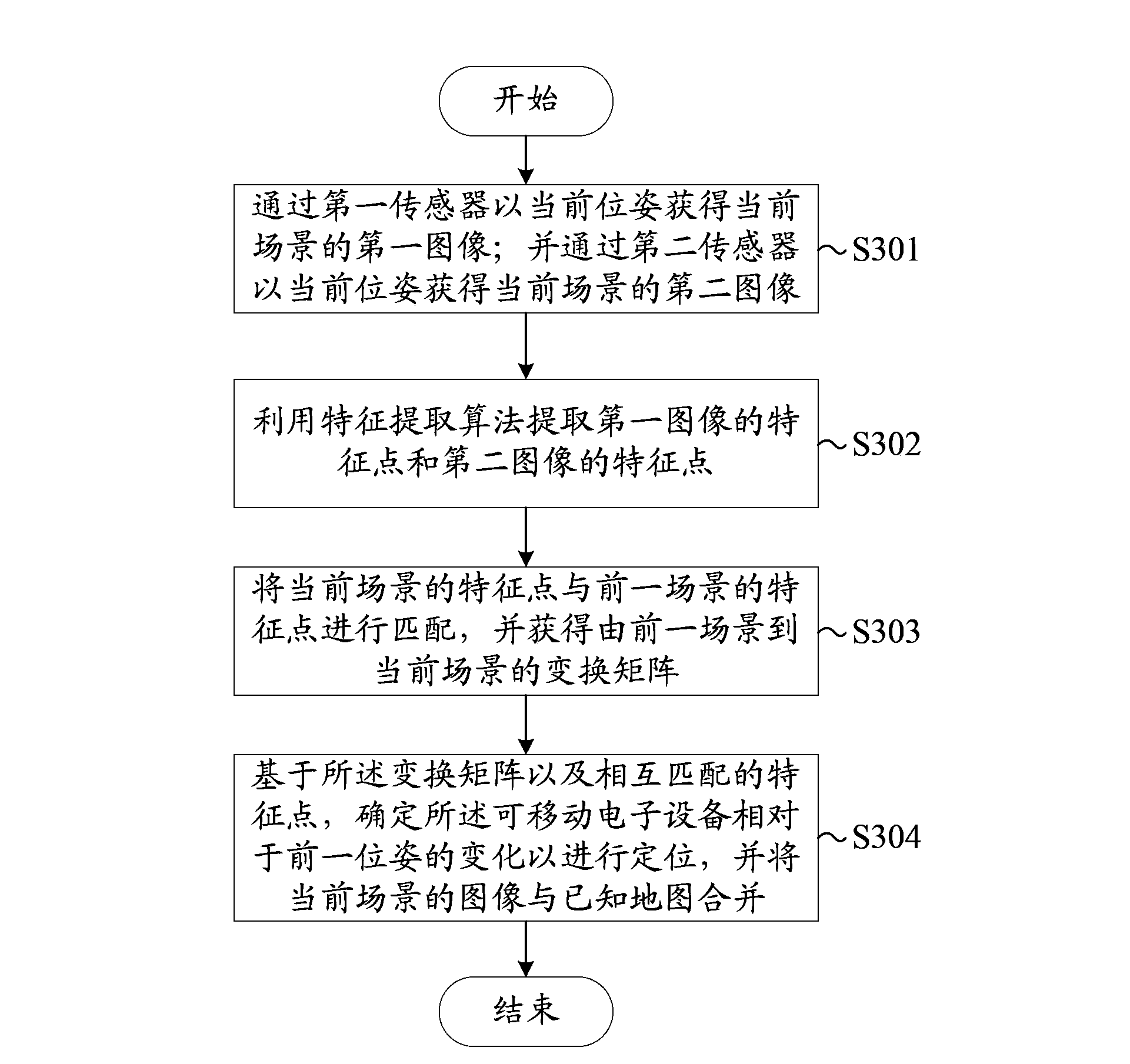

A mobile electron device is used for positioning and map building in an unknown environment. The mobile electron device comprises: a first sensor, a second sensor, a feature point extraction unit, a matching unit, and a positioning and map building unit, wherein the first sensor is used for acquiring a first image of the current scene with the current position and orientation; the second sensor is used for acquiring a second image of the current scene with the current position and orientation; the feature point extraction unit is used for extracting feature points of the first image and feature points of the second image by utilization of a feature extraction algorithm; the matching unit is used for matching the feature points of the current scene and feature points of a previous scene, and acquiring a transformation matrix from the previous scene to the current scene; and the positioning and map building unit is used for, based on the transformation matrix and the matched feature points, determining changes on position and orientation of the mobile electron device relative to the previous position and orientation so as to perform positioning, and is also used for combining the images of the current scene with a known map.

Owner:LENOVO (BEIJING) CO LTD

Path planning method, device and mobile device

ActiveCN109541634AReduce resolutionImprove path planning efficiencyImage enhancementImage analysisSimultaneous localization and mappingMobile device

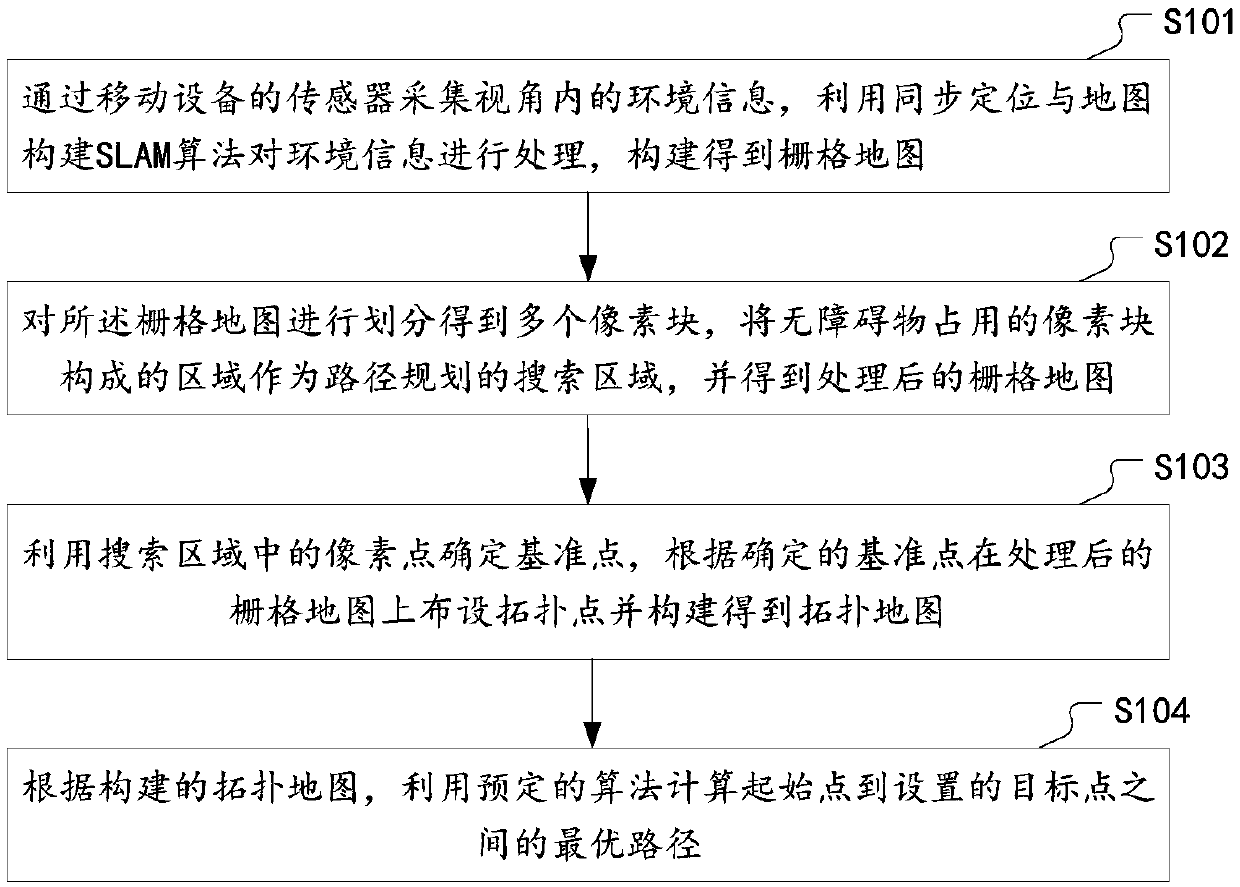

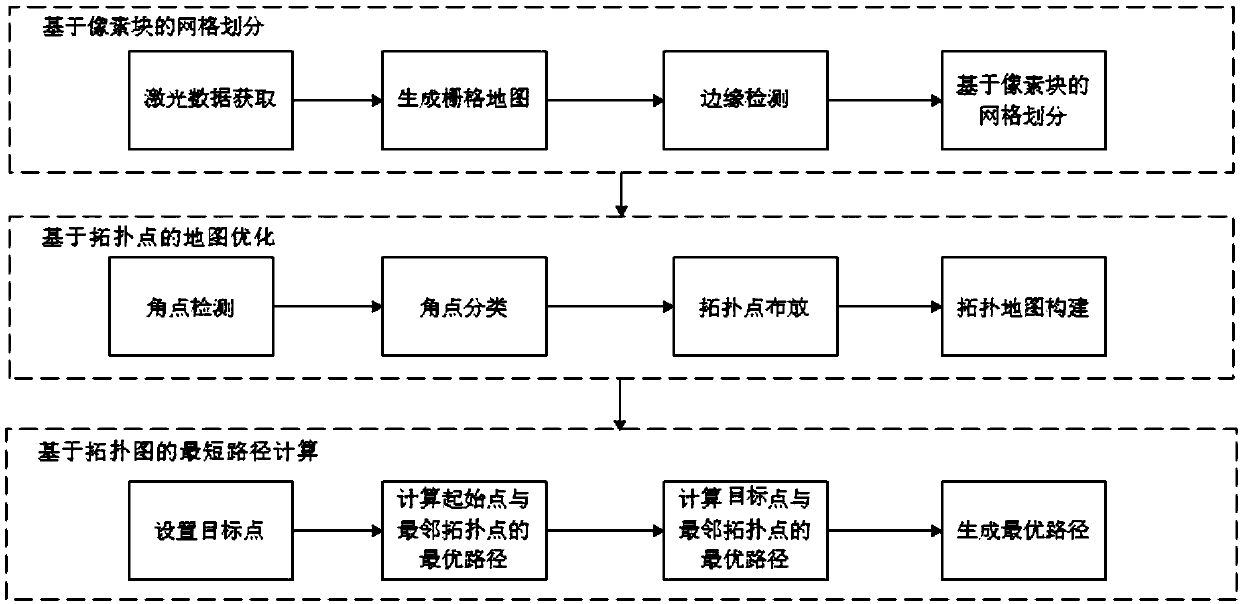

The invention discloses a path planning method, a device and a mobile device. The method comprises: collecting environment information within the perspective by a sensor of the mobile device, using the simultaneous localization and mapping(SLAM) algorithm to process the environment information to obtain a grid map; dividing the grid map into pixel blocks, taking the area composed of the pixel blocks having no obstacles as a search area of the path planning, and obtaining a processed grid map; determining a reference point based on pixel points in the search area, arranging topological points on the processed grid map according to the determined reference point, and constructing a topological map; and calculating the optimal path between a starting point and a target point using a predetermined algorithm based on the constructed topological map. The embodiments of the invention improve the path planning efficiency and save storage resources.

Owner:GOERTEK INC

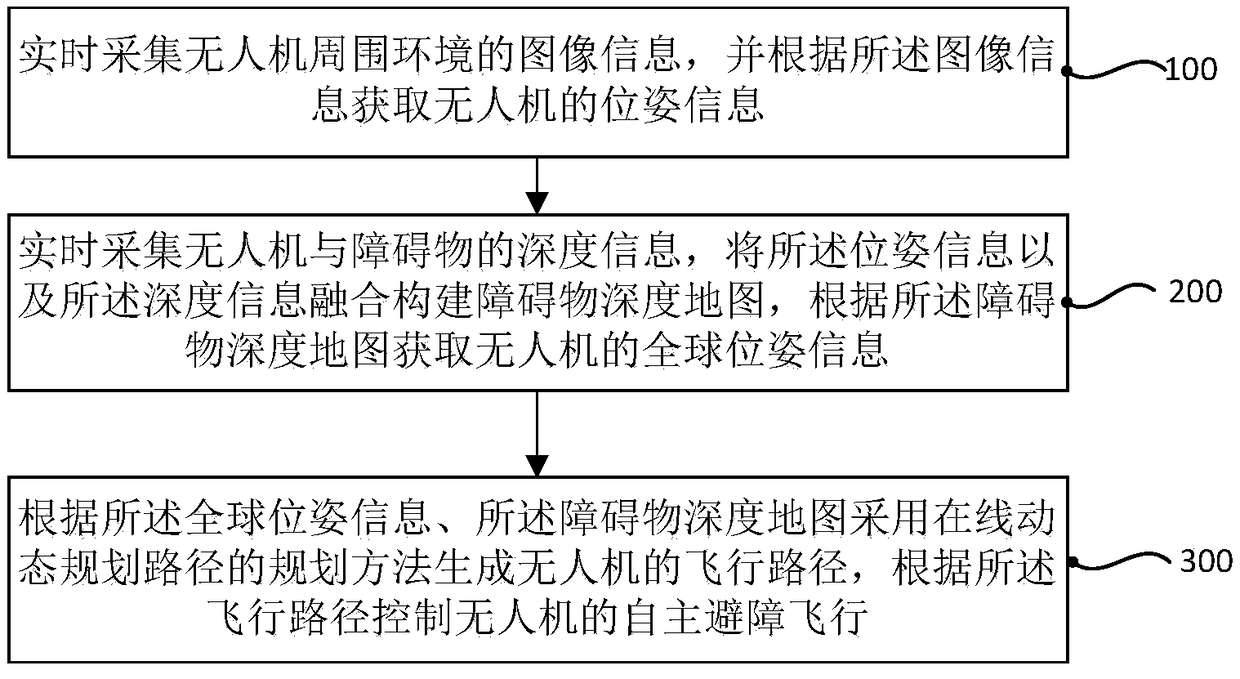

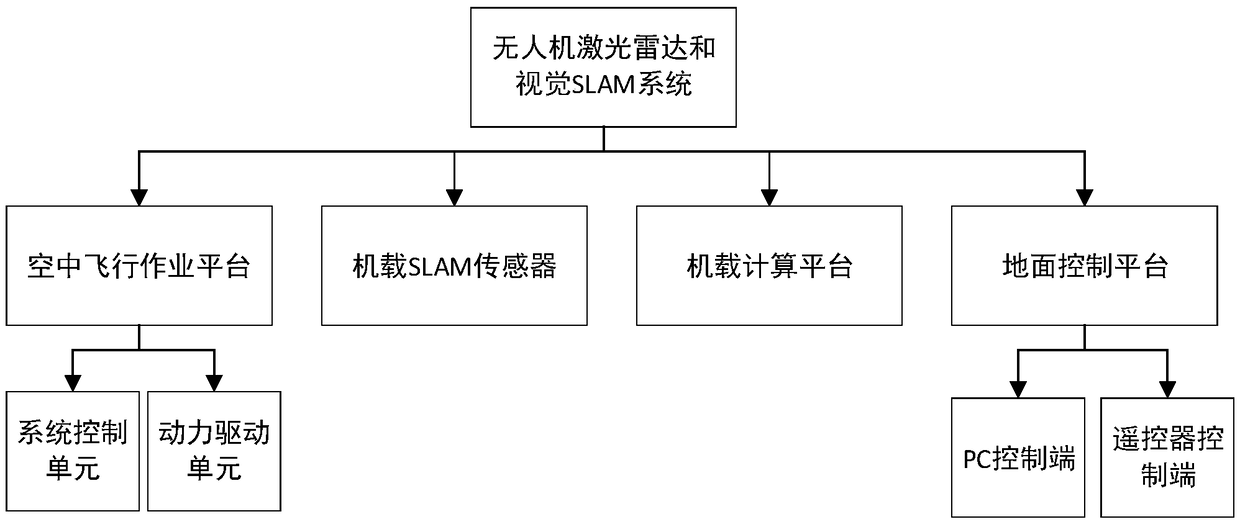

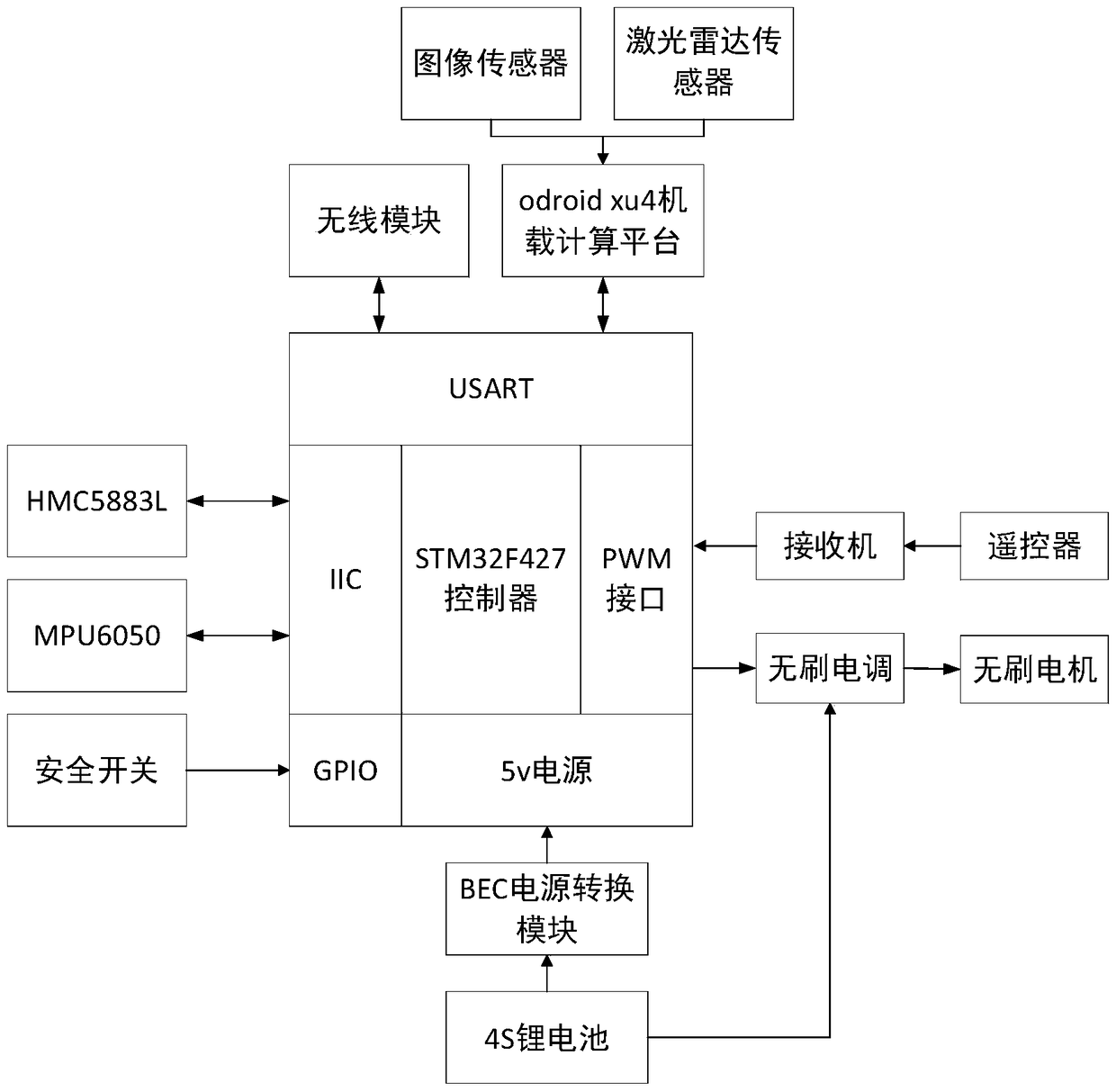

Multisensor fusion-based nmanned aerial vehicle SLAM (simultaneous localization and mapping) navigation method and system

ActiveCN108827306AImprove completenessImprove navigation accuracyNavigational calculation instrumentsSimultaneous localization and mappingUncrewed vehicle

The invention discloses a multisensor fusion-based unmanned aerial vehicle SLAM (simultaneous localization and mapping) navigation method and a multisensor fusion-based unmanned aerial vehicle SLAM navigation system. The multisensor fusion-based unmanned aerial vehicle SLAM navigation method comprises the following steps: acquiring image information of the surrounding environment of an unmanned aerial vehicle in real time, and acquiring pose information of the unmanned aerial vehicle according to the image information; acquiring depth information of the unmanned aerial vehicle and an obstaclein real time, fusing the pose information and the depth information to construct an obstacle depth map, and acquiring global position information of the unmanned aerial vehicle according to the obstacle depth map; according to the global pose information and the obstacle depth map, generating a flight path of the unmanned aerial vehicle by an online dynamic path planning method, and controlling autonomous obstacle-avoiding flight of the unmanned aerial vehicle according to the flight path. Through the multisensor fusion-based unmanned aerial vehicle SLAM navigation method and the multisensor fusion-based unmanned aerial vehicle SLAM navigation system, real-time localization and mapping of the unmanned aerial vehicle in a complex environment can be achieved; compared with the conventional unmanned aerial vehicle navigation technology, the navigation technology adopted by the invention has the advantages as follows: real-time localization and mapping and autonomous navigation are achieved, and the intelligence degree and the navigation accuracy of the unmanned aerial vehicle are improved.

Owner:BEIJING FORESTRY UNIVERSITY

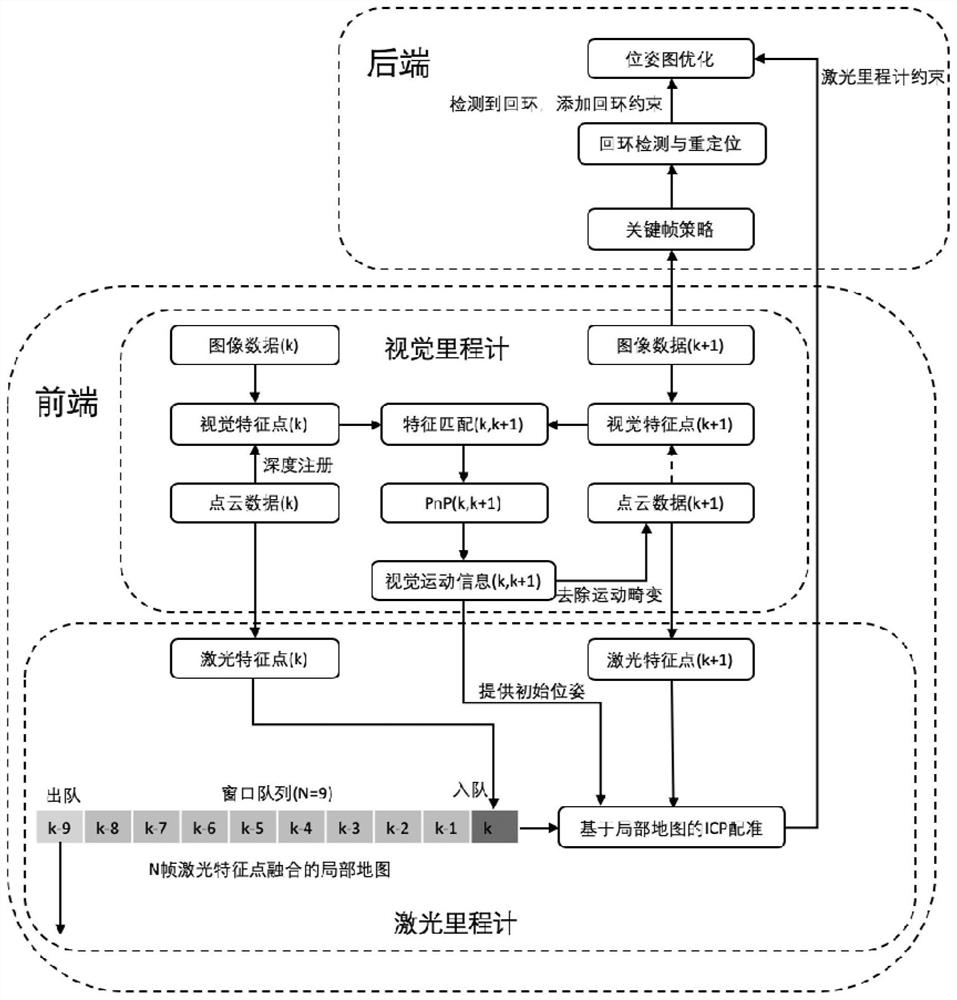

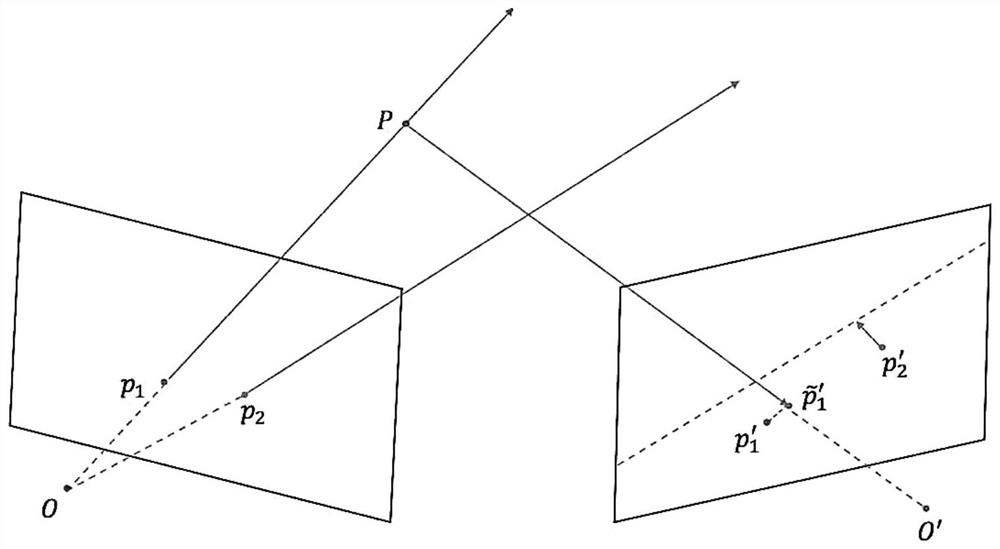

Simultaneous localization and mapping method based on vision and laser radar

InactiveCN112258600AAccurate initial poseIterative convergence is fastImage enhancementReconstruction from projectionSimultaneous localization and mappingPoint cloud

The invention discloses a simultaneous localization and mapping method based on vision and laser radar, and belongs to the field of SLAM. According to the method, the laser odometer and the visual odometer are operated at the same time, the visual odometer can assist the laser point cloud in better removing motion distortion, and meanwhile the point cloud with motion distortion removed can be projected to the image to serve as depth information for motion calculation of the next frame. After the laser odometer obtains a good initial value, the laser odometer is prevented from falling into a degradation scene due to the defects of a single sensor, and the odometer achieves higher positioning precision due to the addition of visual information. A loop detection and repositioning module is achieved through a visual word bag, and a complete visual laser SLAM system is formed; after loop is detected, the system performs pose graph optimization according to loop constraints, accumulated errors after long-time movement are eliminated to a certain extent, and high-precision positioning and point cloud map construction can be completed in a complex environment.

Owner:ZHEJIANG UNIV

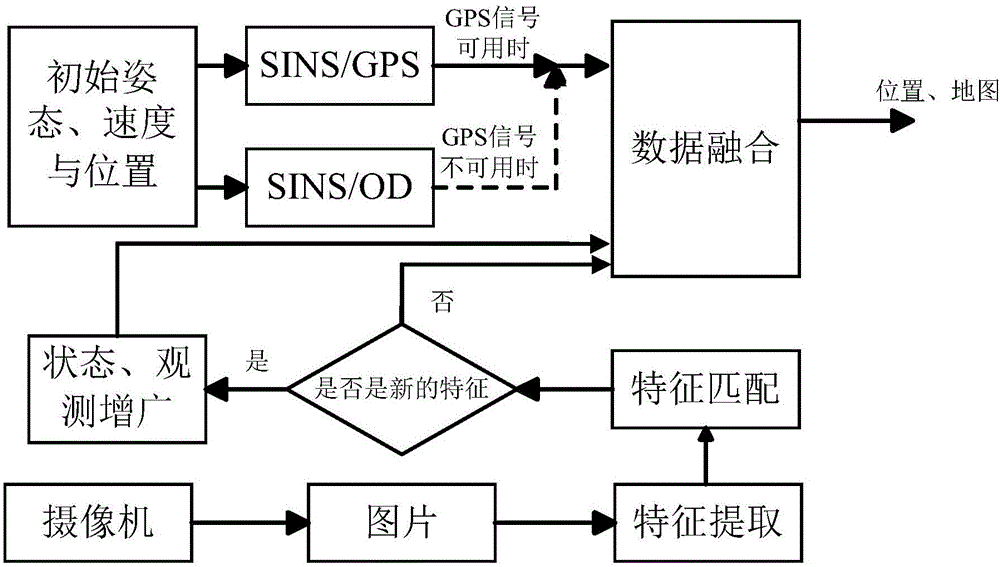

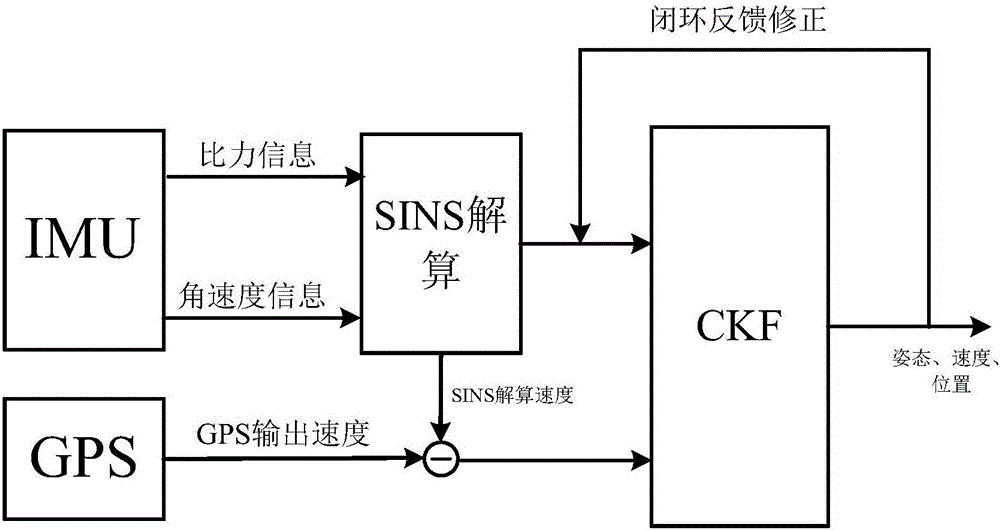

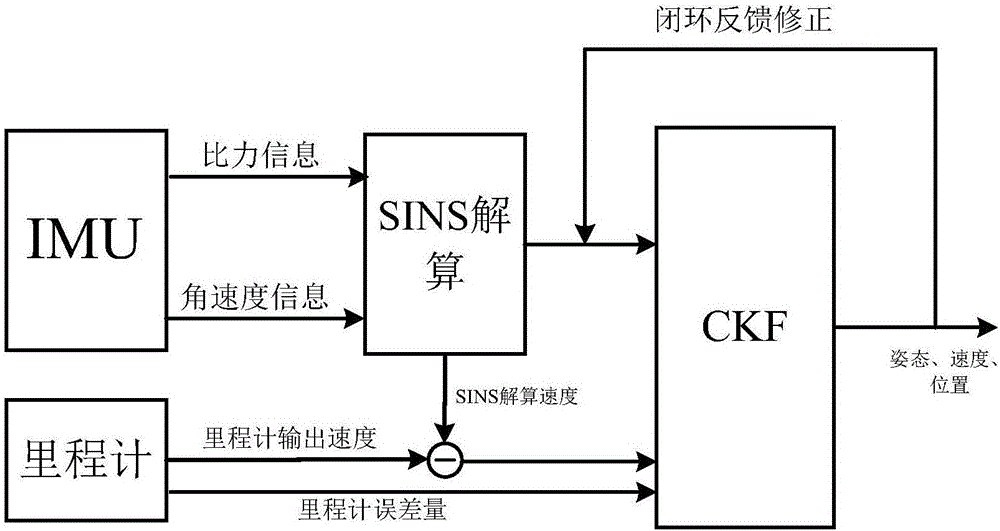

Visual SLAM (simultaneous localization and mapping) method based on SINS (strapdown inertial navigation system)/GPS (global positioning system) and speedometer assistance

ActiveCN106780699AOvercome the problem of a single application environmentHigh precisionInstruments for road network navigationGeographical information databasesSimultaneous localization and mappingGlobal Positioning System

The invention discloses a visual SLAM (simultaneous localization and mapping) method based on SINS (strapdown inertial navigation system) / GPS (global positioning system) and speedometer assistance. The method comprises the following steps: when GPS signals are available, performing data fusion on output information of the GPS and the SINS to obtain information including attitudes, speeds, positions and the like; when the GPS signals are unavailable, performing data fusion on output information of a speedometer and the SINS to obtain information including attitudes, speeds, positions and the like; shooting environmental images by using a binocular camera, and performing feature extraction and feature matching on the environmental images; and achieving positioning and map building by using the obtained transcendental attitude, speed and position information and environmental features, thereby completing a visual SLAM algorithm. According to the visual SLAM method disclosed by the invention, visual SLAM is assisted by using the SINA, the GPS and the speedometer, the positioning and map building under indoor and outdoor environments can be achieved, the application range is wide, and the precision and robustness of positioning can be improved.

Owner:SOUTHEAST UNIV

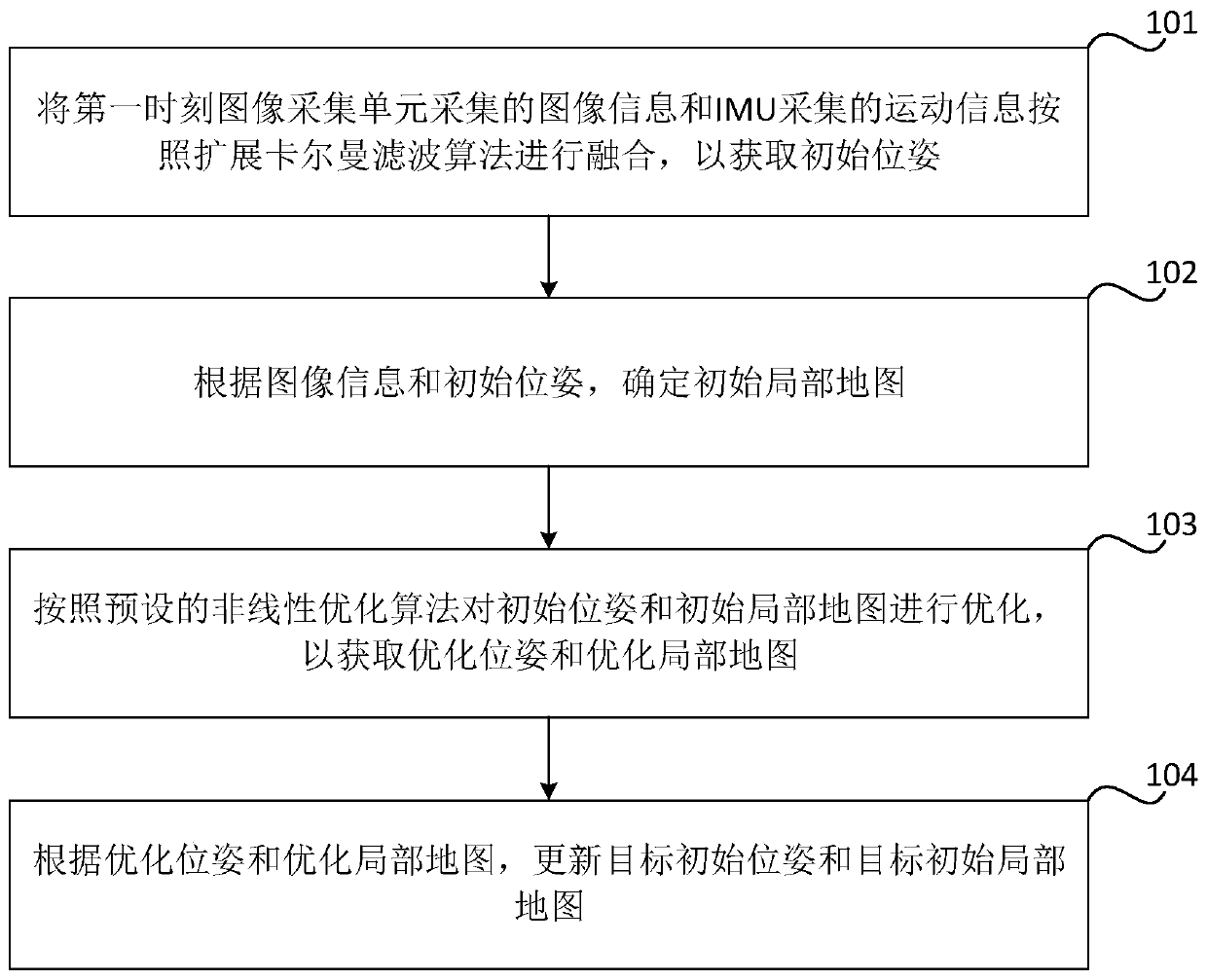

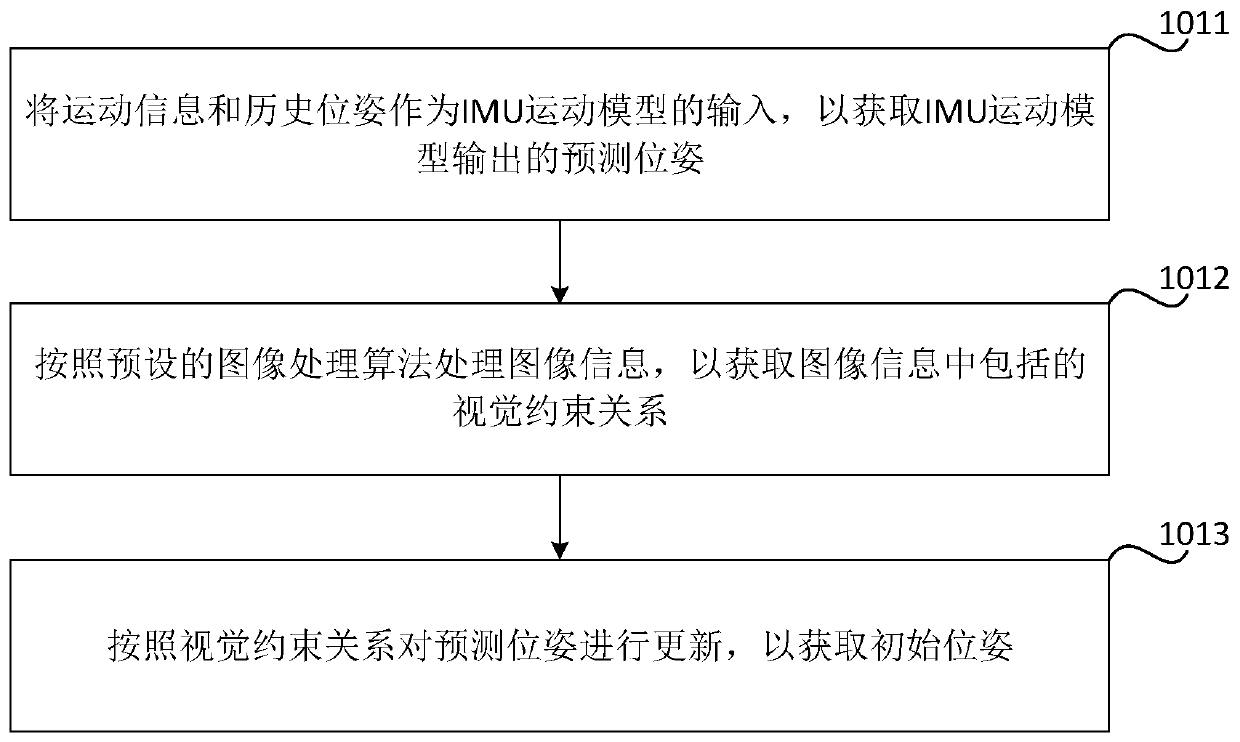

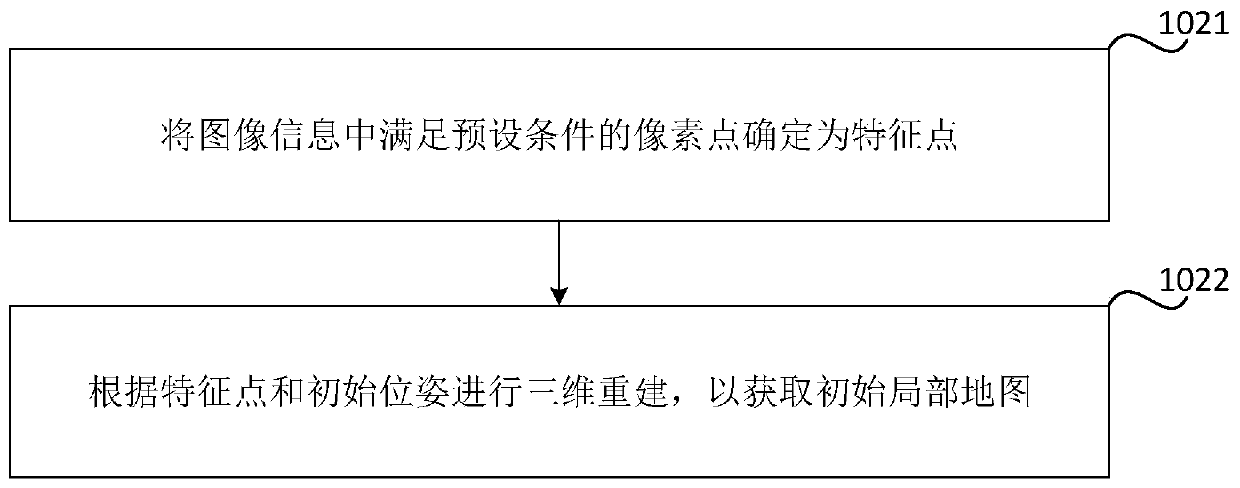

SLAM (Simultaneous Localization and Mapping) method and device based on visual inertia, storage medium and equipment

ActiveCN110118554AImprove computing efficiencyHigh positioning accuracyNavigational calculation instrumentsNavigation by speed/acceleration measurementsSimultaneous localization and mappingPattern recognition

The invention relates to an SLAM (Simultaneous Localization and Mapping) method and device based on visual inertia, a storage medium and equipment, and relates to the technical field of wireless locating. The method comprises the steps of: fusing image information acquired by an image acquisition unit at the first time and motion information acquired by an inertial measurement unit IMU according to an extended Kalman filtering algorithm, so that the initial posture is obtained, determining an initial local map according to the image information and the initial posture, optimizing the initial posture and the initial local map according to a pre-set nonlinear optimization algorithm, so that the optimized posture and the optimized local map are obtained, and updating the target initial posture and the target initial local map according to the optimized posture and the optimized local map. The SLAM calculation efficiency and locating precision can be increased.

Owner:CLOUDMINDS SHANGHAI ROBOTICS CO LTD

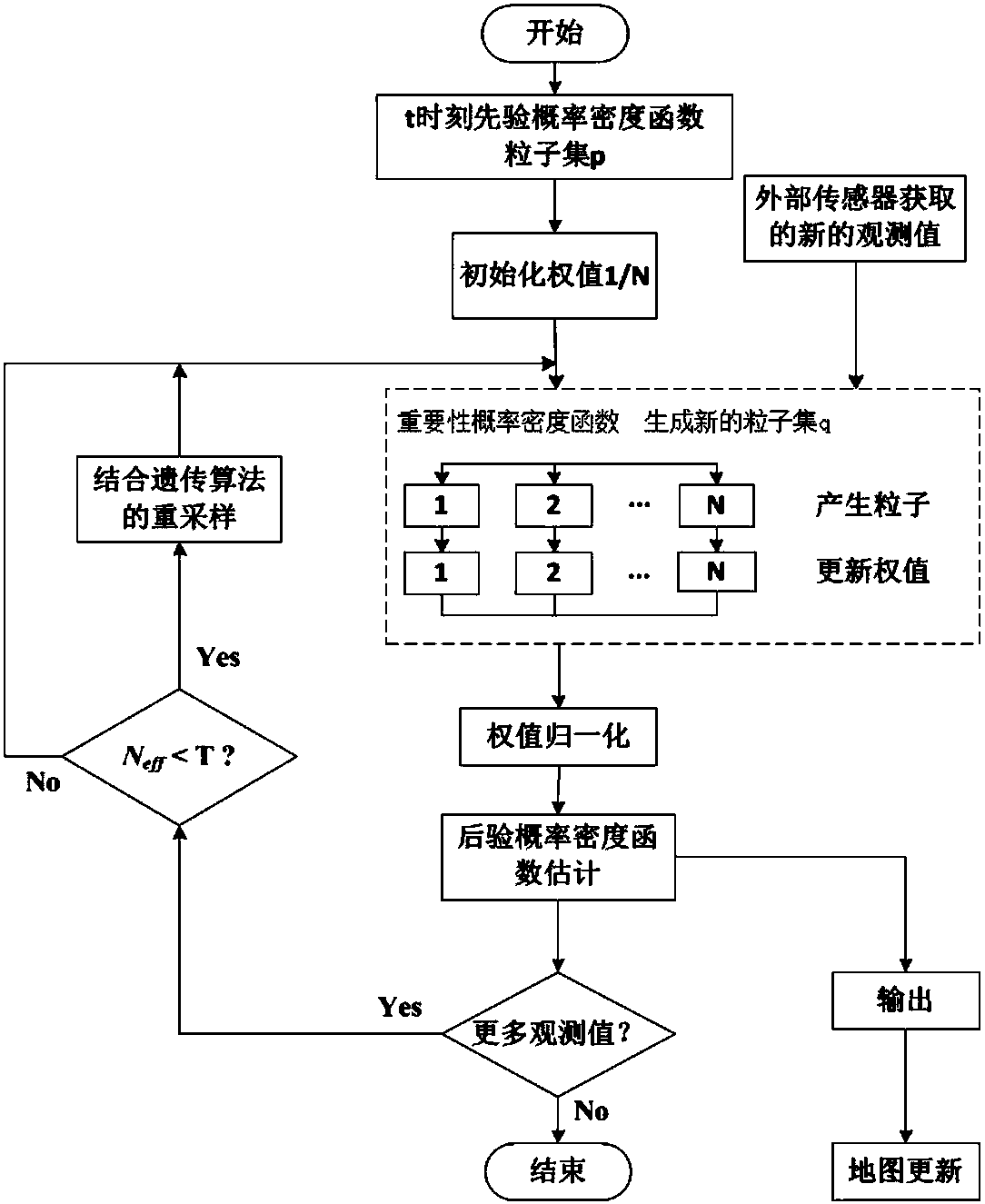

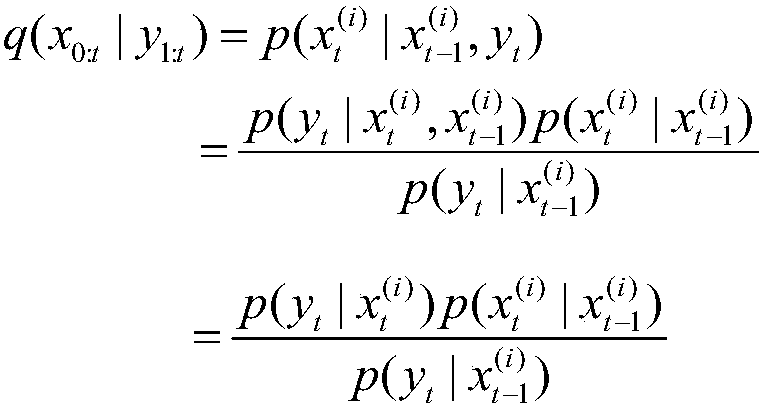

Method for simultaneous localization and mapping of mobile robot based on improved particle filter

InactiveCN107246873AEasy to keepReduce the impactNavigational calculation instrumentsPosterior probability densitySimultaneous localization and mapping

The invention discloses a method for simultaneous localization and mapping of a mobile robot based on an improved particle filter. The method comprises the following steps: initializing an initial-moment pose of a robot; obtaining a t-moment prior probability density function according to the pose information at a t-1 moment, and generating a sampling particle set p; initializing the weights of particles; selecting an importance probability density function, generating a new sampling particle set q, calculating the weights of particles, updating the weights of the particles, and normalizing the weights; calculating the weighted sum of random sample particles at current moment t to express posterior probability density, and obtaining the moving pose and environmental map information; judging whether a new observed value is input; if so, returning; otherwise, ending the cycle; before returning, judging whether resampling is needed or not. According to the difference of the system state, a dynamic threshold is set for judgment, and a genetic algorithm is combined. According to the method disclosed by the invention, influence of a problem of particle degeneration on SLAM is reduced, and the calculated amount of the SLAM problem is reduced.

Owner:HARBIN ENG UNIV

Information processing method, mobile electronic device, guidance device, and server

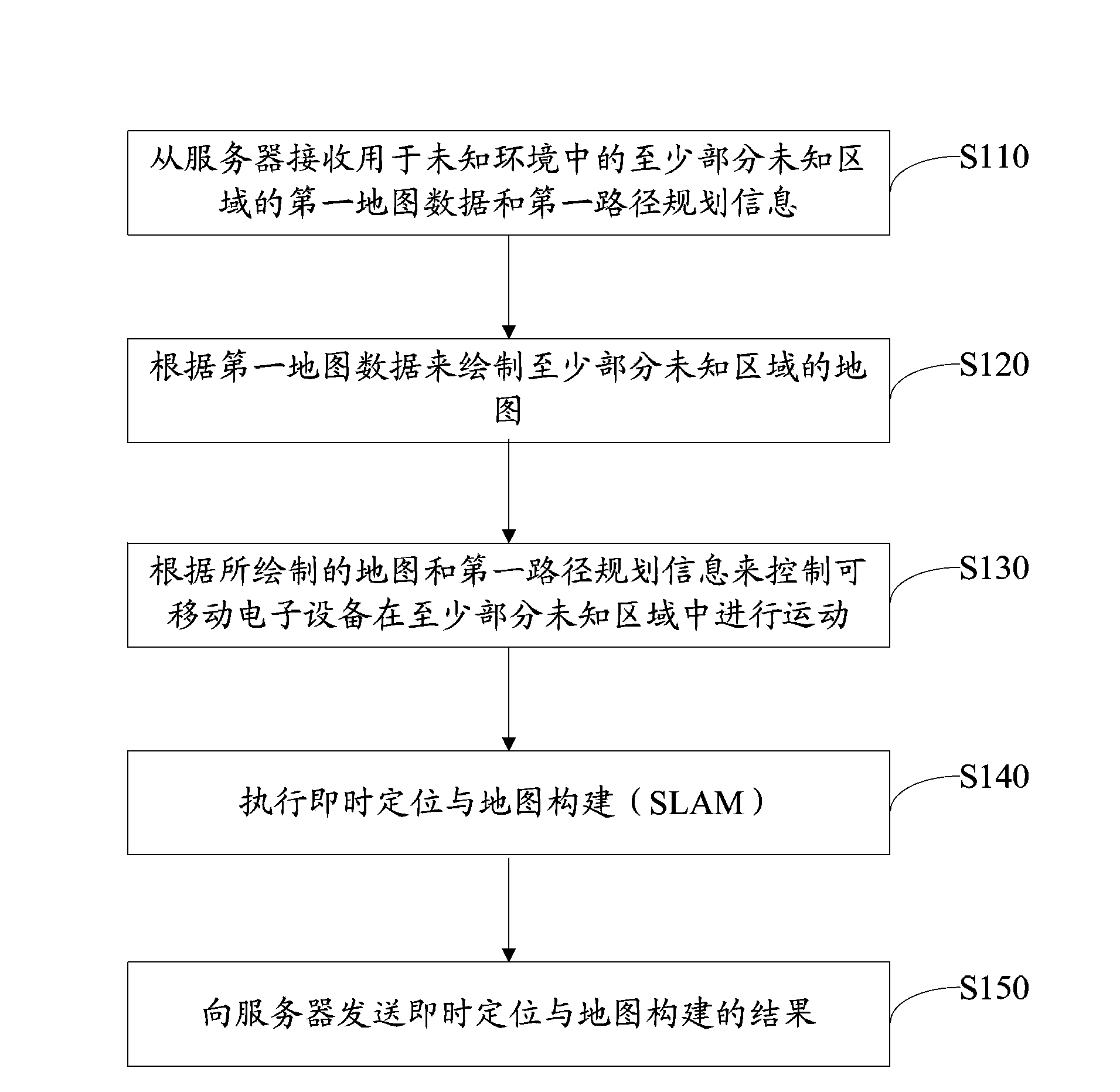

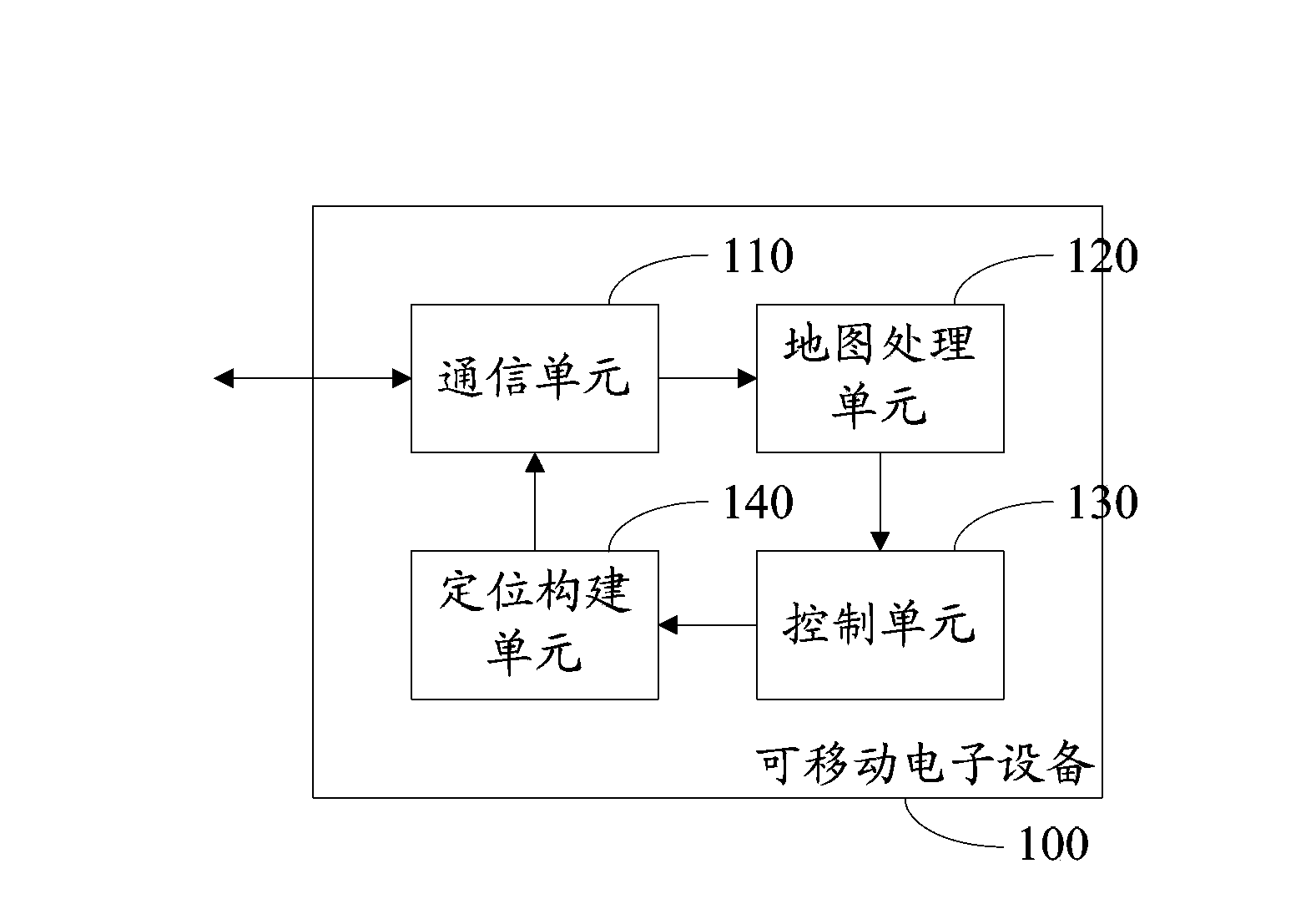

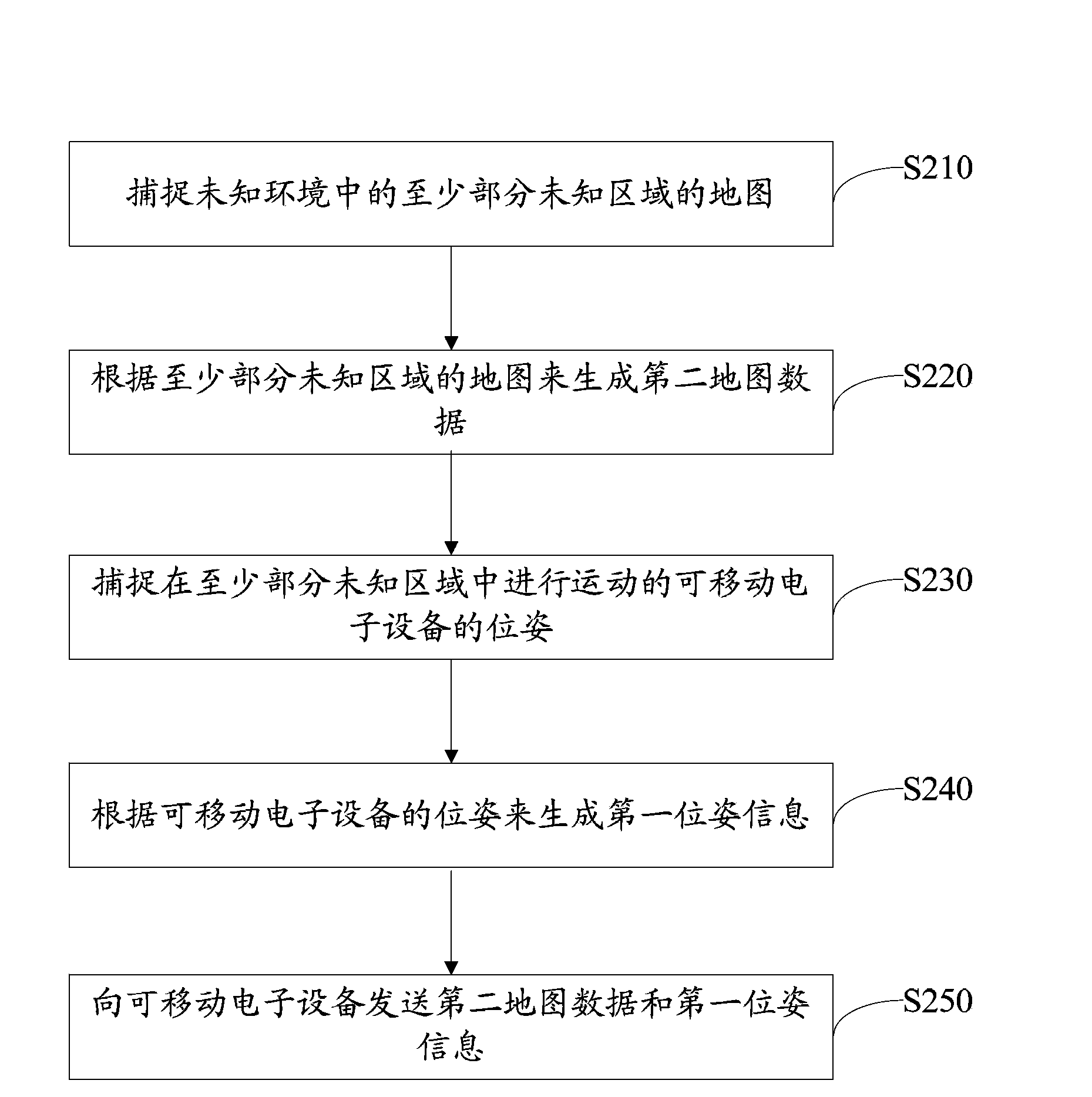

ActiveCN103884330AReduce complexityFast operationNavigation instrumentsInformation processingSimultaneous localization and mapping

The present invention discloses an information processing method, a mobile electronic device, a guidance device and a server. The method is applied in the mobile electronic device in an information processing system at least comprising the server and the mobile electronic device, is provided for providing navigation information for the mobile electronic device moving in an unknown environment, and comprises: receiving first map data for at least partial unknown region in the unknown environment and first path planning information, drawing the map of the at least partial unknown region according to the first map data, controlling the mobile electronic device to move in the at least partial unknown region according to the drawn map and the first path planning information, performing simultaneous localization and mapping (SLAM), and transmitting the SLAM result to the server. According to the present invention, the multi-level control structure is adopted, such that the complexity of each level of the structure is low, the operation speed is fast, and the deployment use is easily achieved.

Owner:LENOVO (BEIJING) LTD

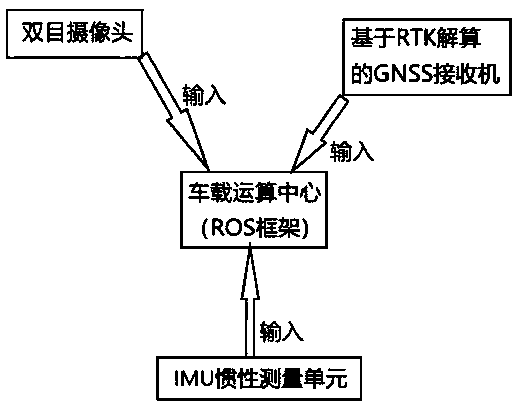

Mapping method and system based on GPS, IMU and binocular vision

PendingCN109991636AAchieve correctionRealize SupplementInstruments for road network navigationSatellite radio beaconingSimultaneous localization and mappingRobotic systems

The invention discloses a mapping method and system based on a GPS, an IMU and binocular vision. A map is corrected through the IMU and the binocular vision, and vision navigation is used in places, where GPS signals do not exist or are weak, such as tunnels. The problems in vision simultaneous localization and mapping (SLAM) can be solved well, and robustness of an SLAM system is improved; and avision information and IMU information fused method is adopted, various sensor data are fused, a new algorithm structure is provided, a stable and effective frame is constructed, stable and effectiveautonomous localization and mapping are conducted on an unmanned vehicle or a robot, positioning information of the GPS is corrected and supplemented, and the mapping method and system are applied tounmanned vehicle and robot systems on a large scale.

Owner:QIMING INFORMATION TECH

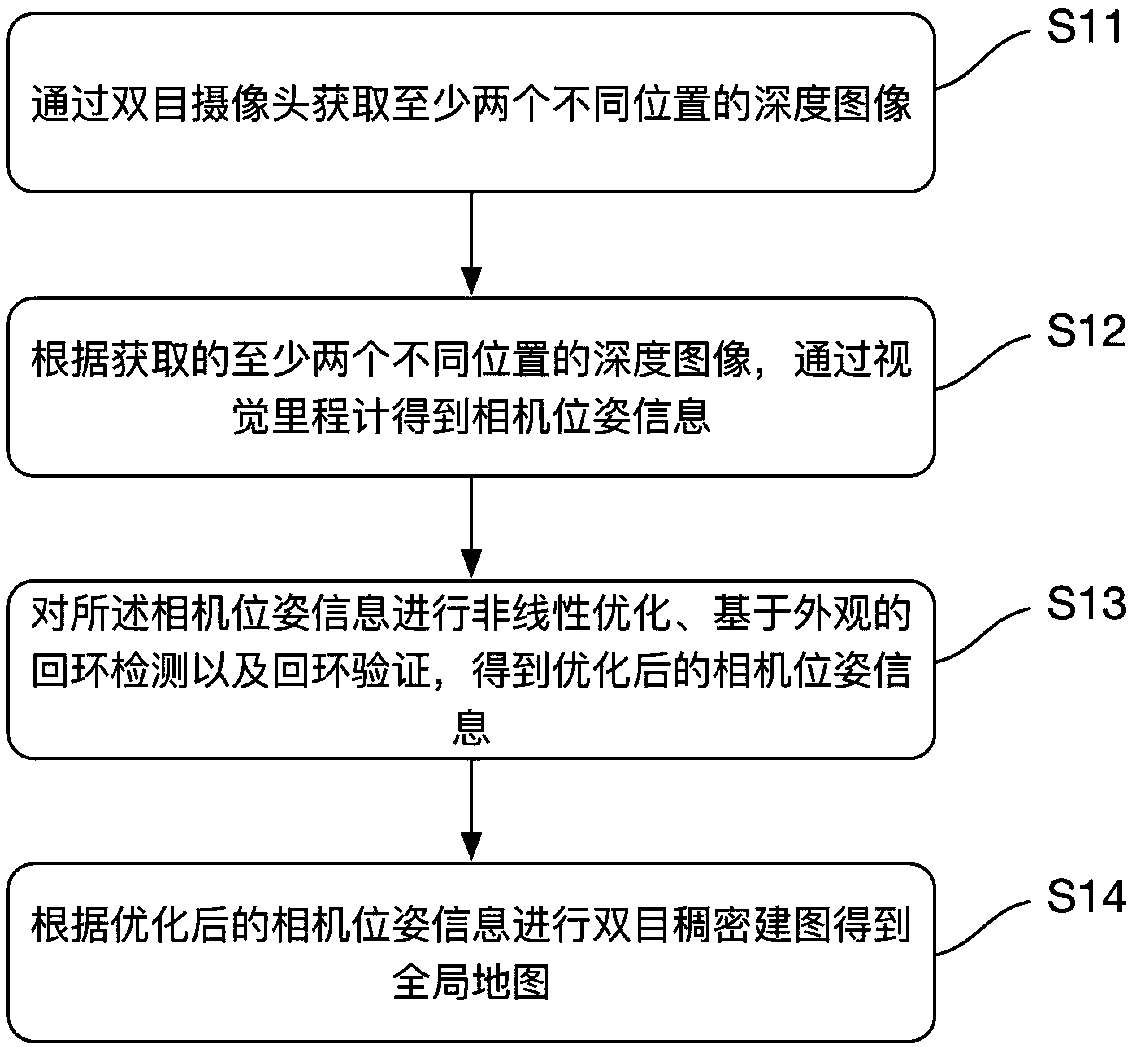

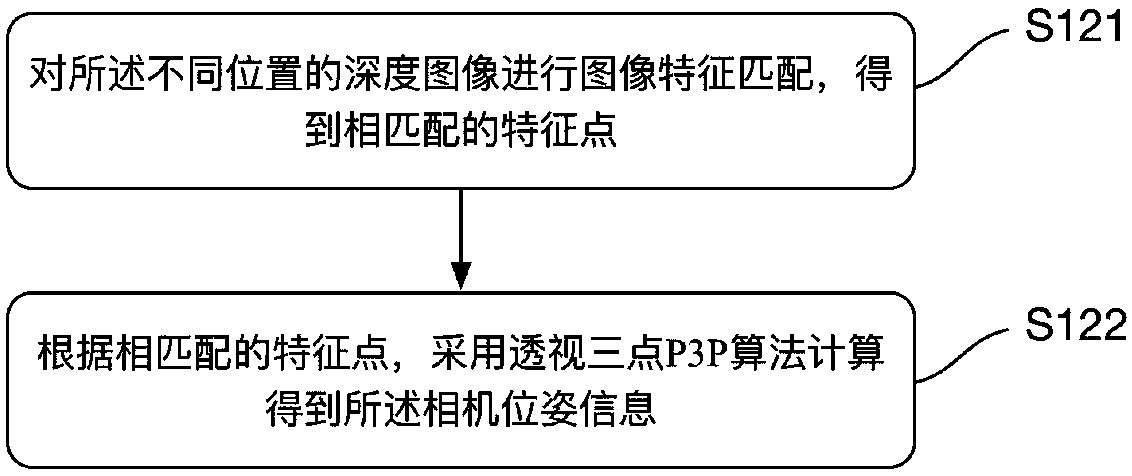

Unmanned-aerial-vehicle visual-SLAM (Simultaneous Localization and Mapping) method based on binocular camera, unmanned aerial vehicle and storage medium

ActiveCN107808407AResolve interferencePrecise positioningImage enhancementImage analysisSimultaneous localization and mappingUncrewed vehicle

The invention discloses an unmanned-aerial-vehicle visual-SLAM (Simultaneous Localization and Mapping) method based on a binocular camera, an unmanned aerial vehicle and a computer-readable storage medium. The method includes the steps of: acquiring depth images of at least two different locations through the binocular camera; obtaining camera pose information through a visual odometer according to the acquired depth images of the at least two different locations; carrying out nonlinear optimization, appearance-based circle loop detection and circle loop verification on the camera pose information to obtain optimized camera pose information; and carrying out binocular dense mapping according to the optimized camera pose information to obtain a global map. According to the method, the depthimages of the different locations are acquired through the binocular camera, and binocular dense mapping is carried out after use of the visual odometer, nonlinear optimization, circle loop detectionand circle loop verification to obtain the global map; and on the one hand, the interference problem existing with adopting of RGB-D cameras can be solved, and on the other hand, more precise localization can be realized, and the more precise map is established.

Owner:EHANG INTELLIGENT EQUIP GUANGZHOU CO LTD

Features

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

Why Patsnap Eureka

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Social media

Patsnap Eureka Blog

Learn More Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com