2766 results for "Key frame" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

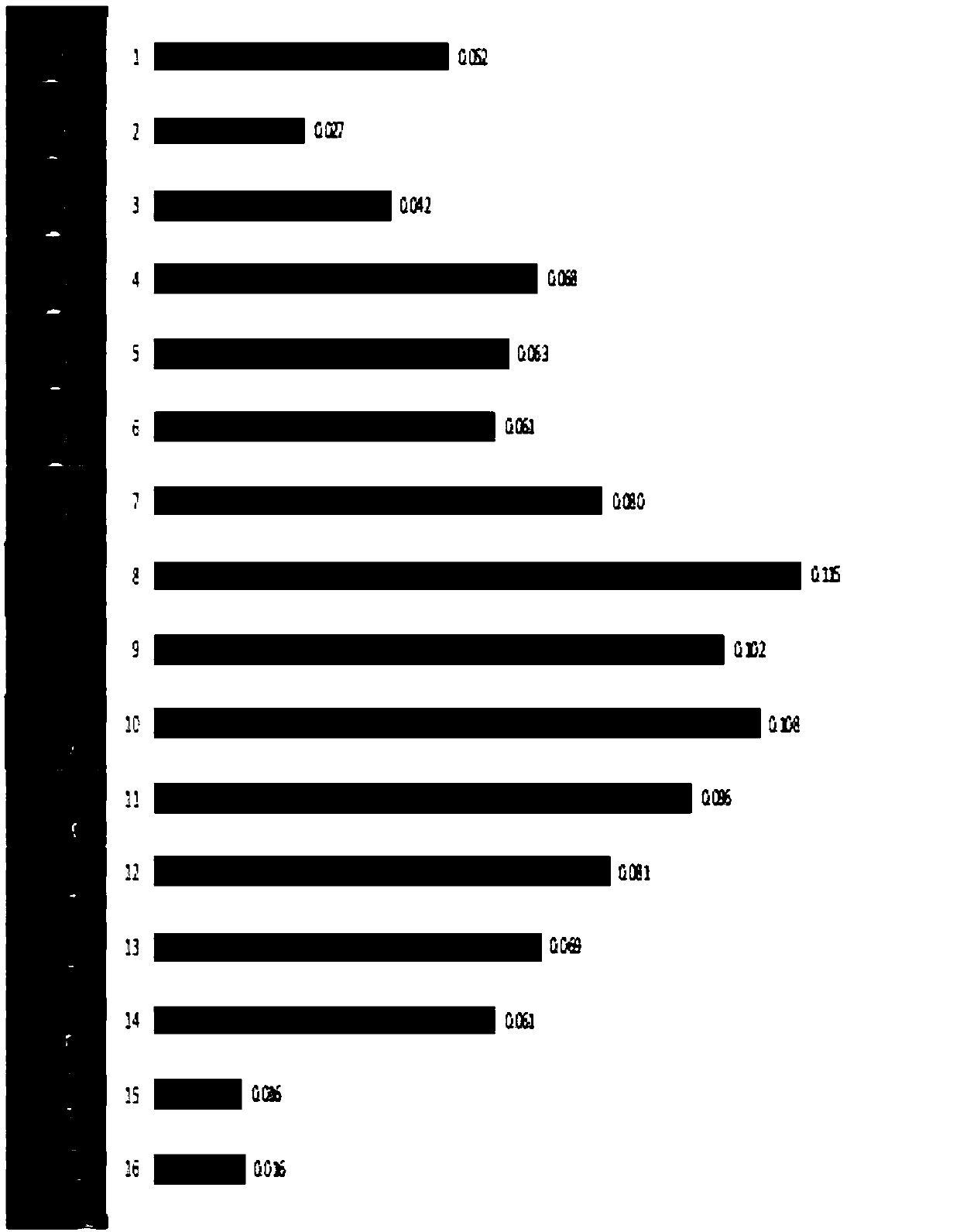

Application Year

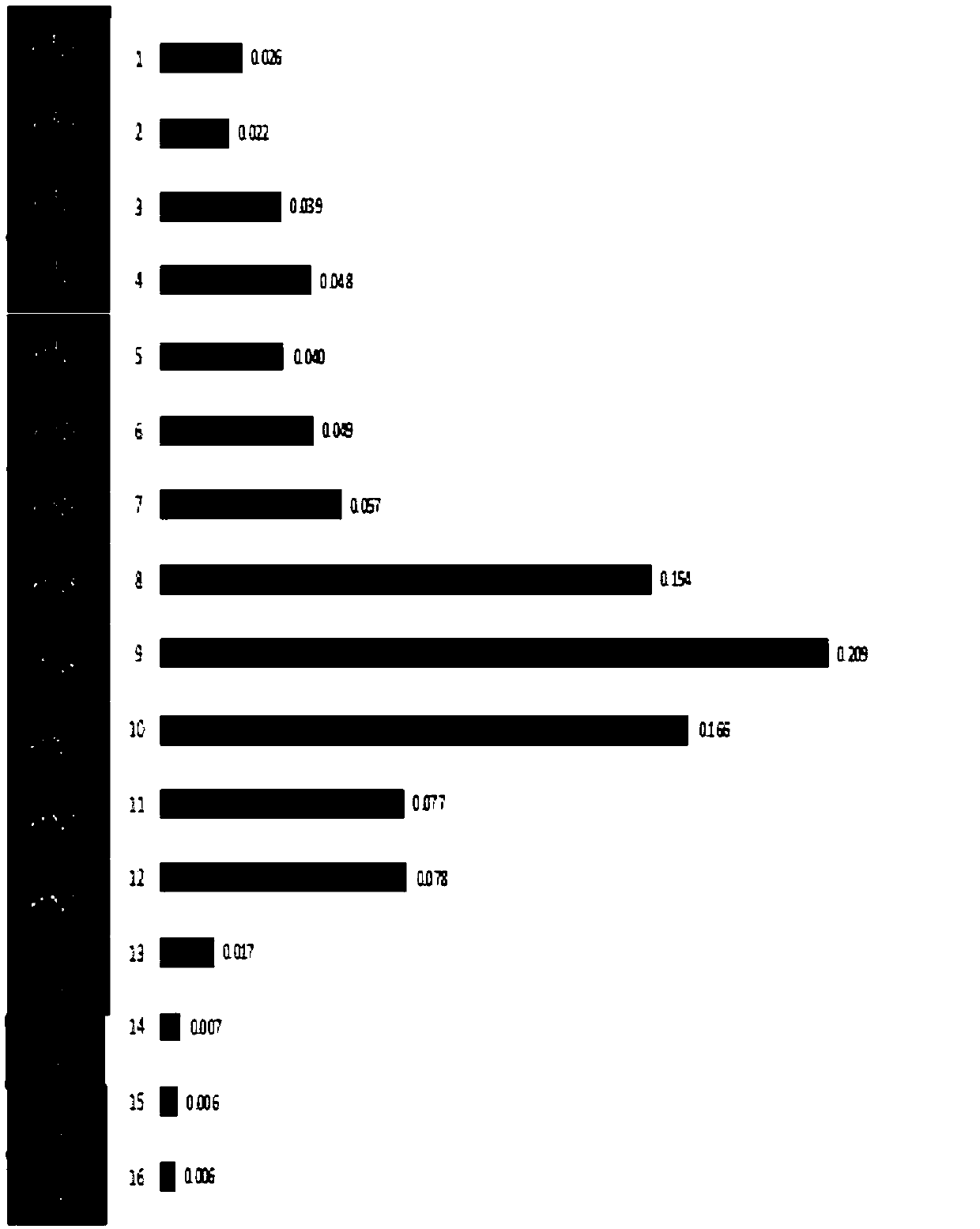

Inventor

A keyframe in animation and filmmaking is a drawing that defines the starting and ending points of any smooth transition. The drawings are called "frames" because their position in time is measured in frames on a strip of film. A sequence of keyframes defines which movement the viewer will see, whereas the position of the keyframes on the film, video, or animation defines the timing of the movement. Because only two or three keyframes over the span of a second do not create the illusion of movement, the remaining frames are filled with inbetweens.
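In its simplest form, filling the inbetweens is linear interpolation between the two keyframes that bracket a given frame. The sketch below is a minimal illustration. It assumes 2D positions and constant-speed (linear) timing, whereas animation tools commonly offer eased or spline interpolation instead. The function name `inbetween` is hypothetical.

```python
from bisect import bisect_right

def inbetween(keyframes, frame):
    """Position at `frame`, linearly interpolated between the two keyframes
    that bracket it.

    keyframes: list of (frame_number, (x, y)) sorted by frame_number.
    Frames before the first or after the last keyframe hold that keyframe's pose.
    """
    frames = [f for f, _ in keyframes]
    if frame <= frames[0]:
        return keyframes[0][1]
    if frame >= frames[-1]:
        return keyframes[-1][1]
    i = bisect_right(frames, frame)              # index of the next keyframe
    (f0, (x0, y0)), (f1, (x1, y1)) = keyframes[i - 1], keyframes[i]
    t = (frame - f0) / (f1 - f0)                 # 0.0 at f0, approaching 1.0 at f1
    return (x0 + t * (x1 - x0), y0 + t * (y1 - y0))

# Three keyframes spanning one second at 24 fps; the other 22 frames are inbetweens.
keys = [(0, (0.0, 0.0)), (12, (120.0, 60.0)), (24, (120.0, 0.0))]
path = [inbetween(keys, f) for f in range(25)]
print(path[6], path[18])  # (60.0, 30.0) (120.0, 30.0)
```

Moving a keyframe along the list changes the timing of the motion. Changing its (x, y) value changes the motion itself.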

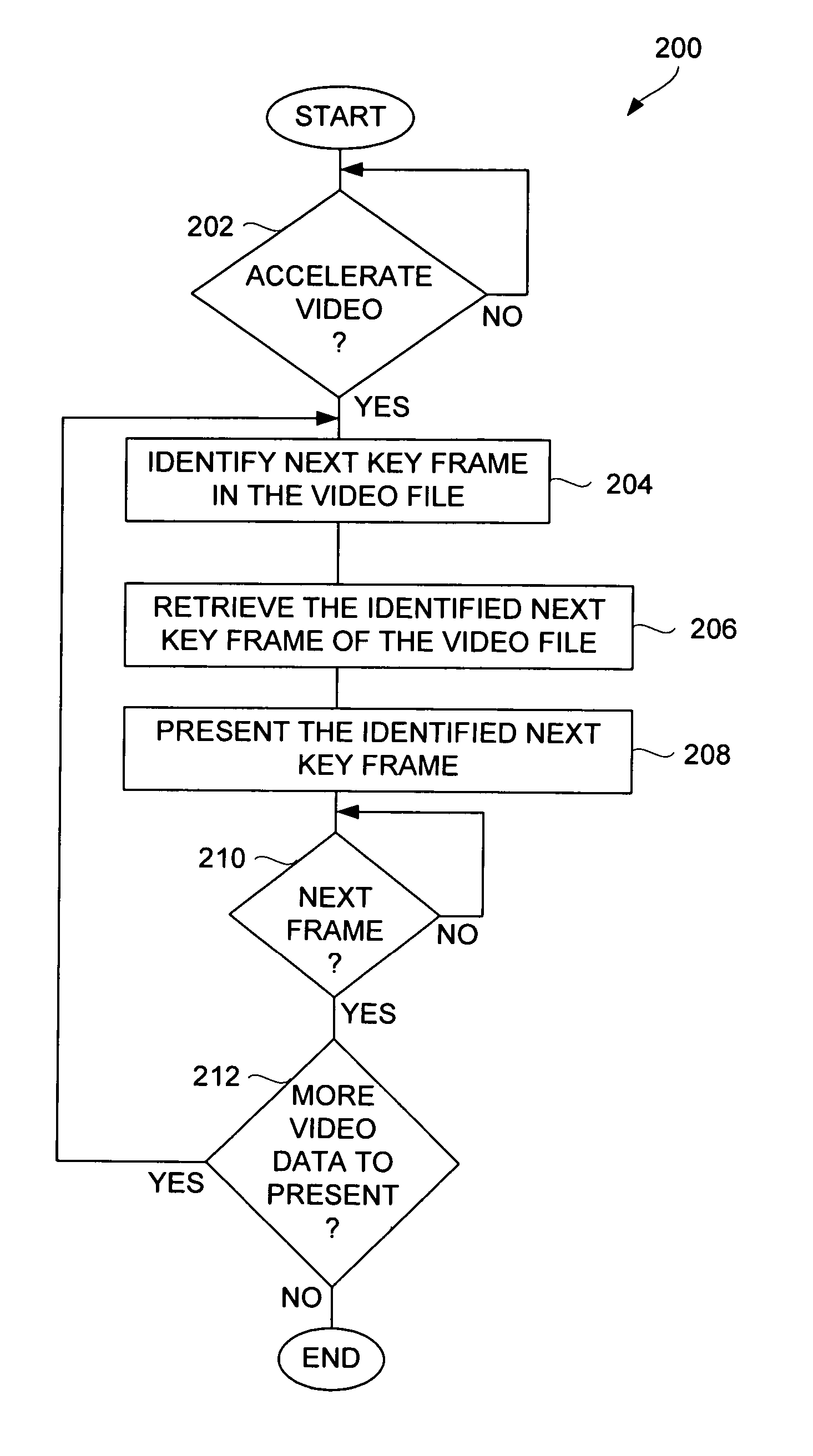

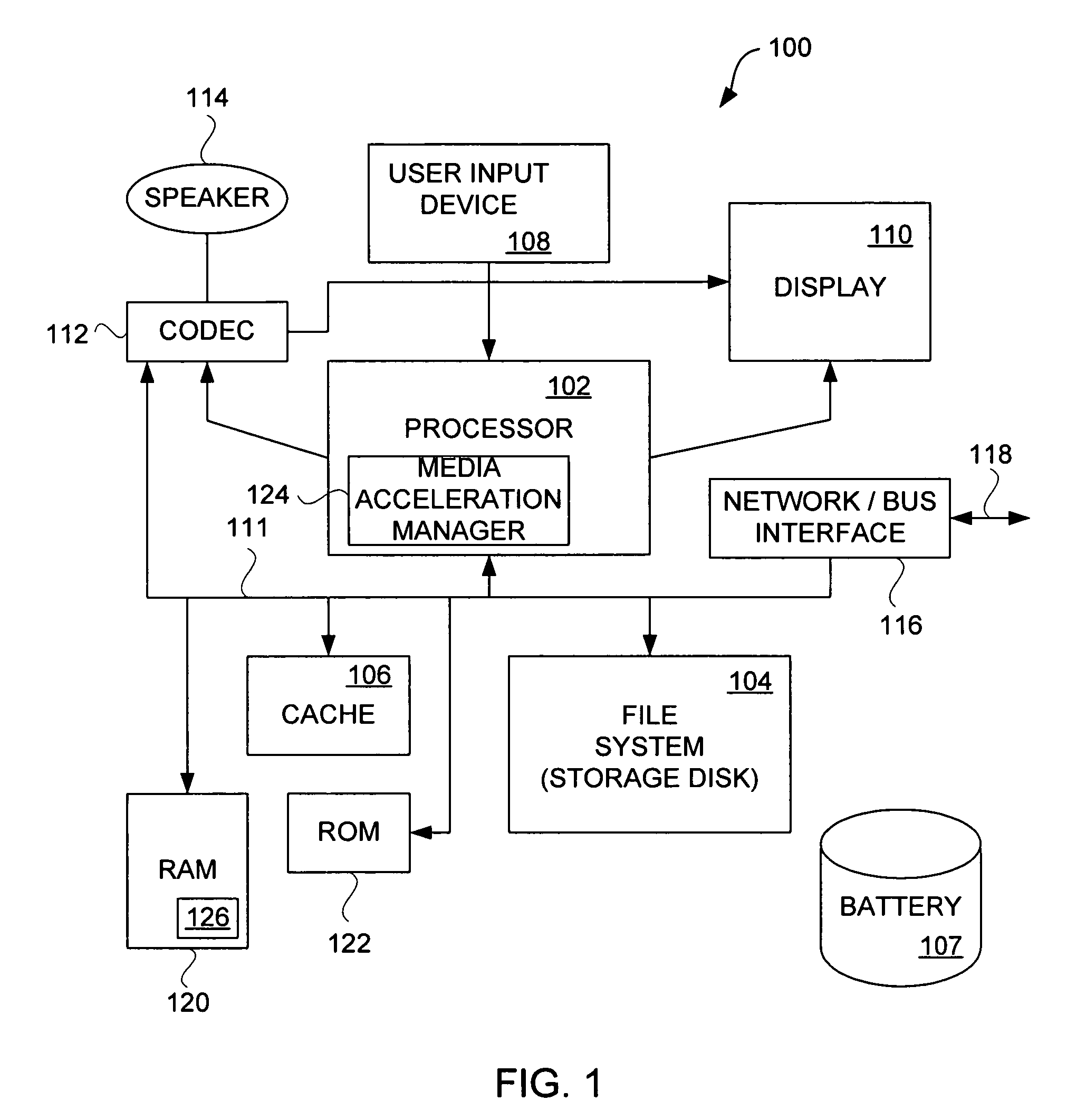

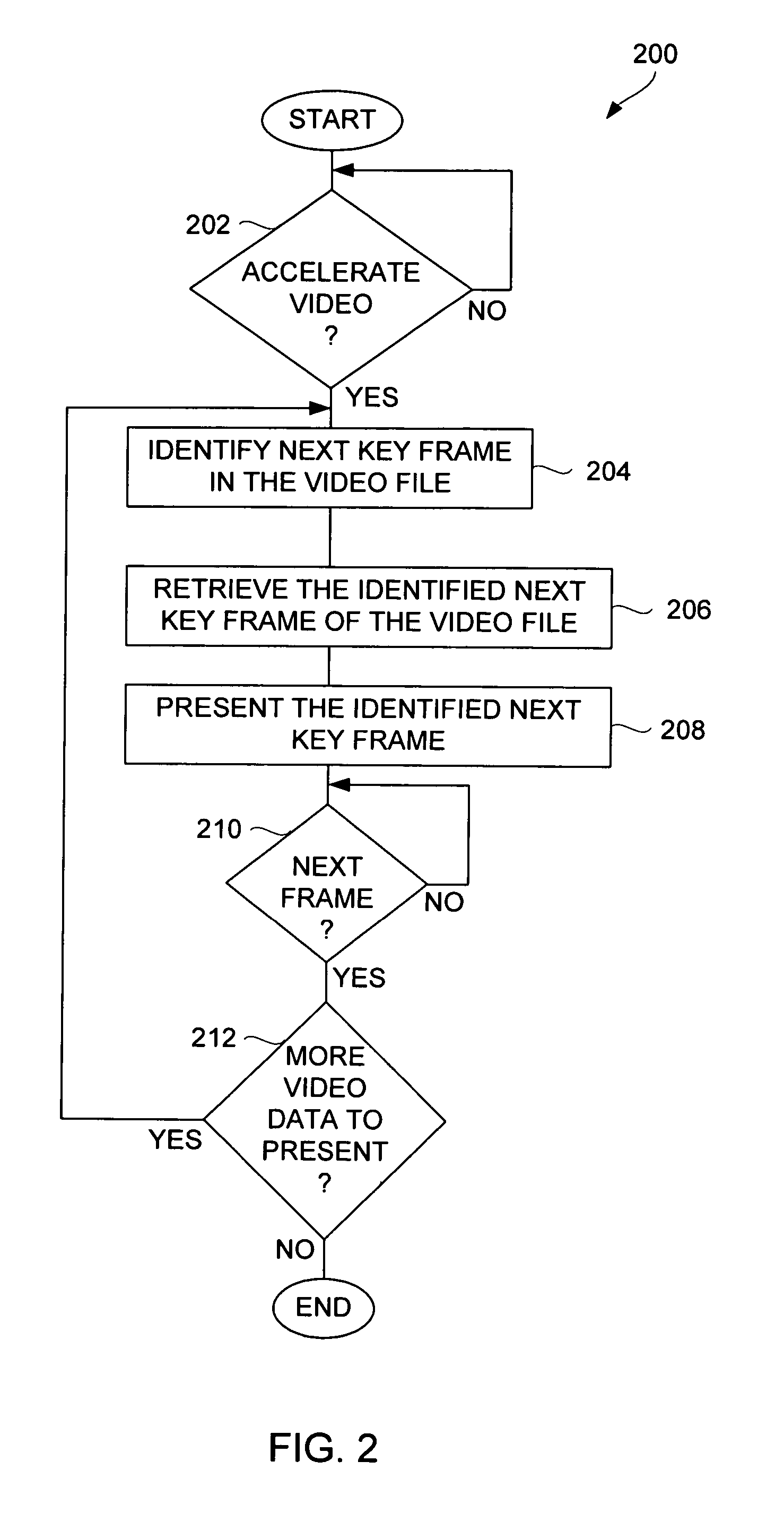

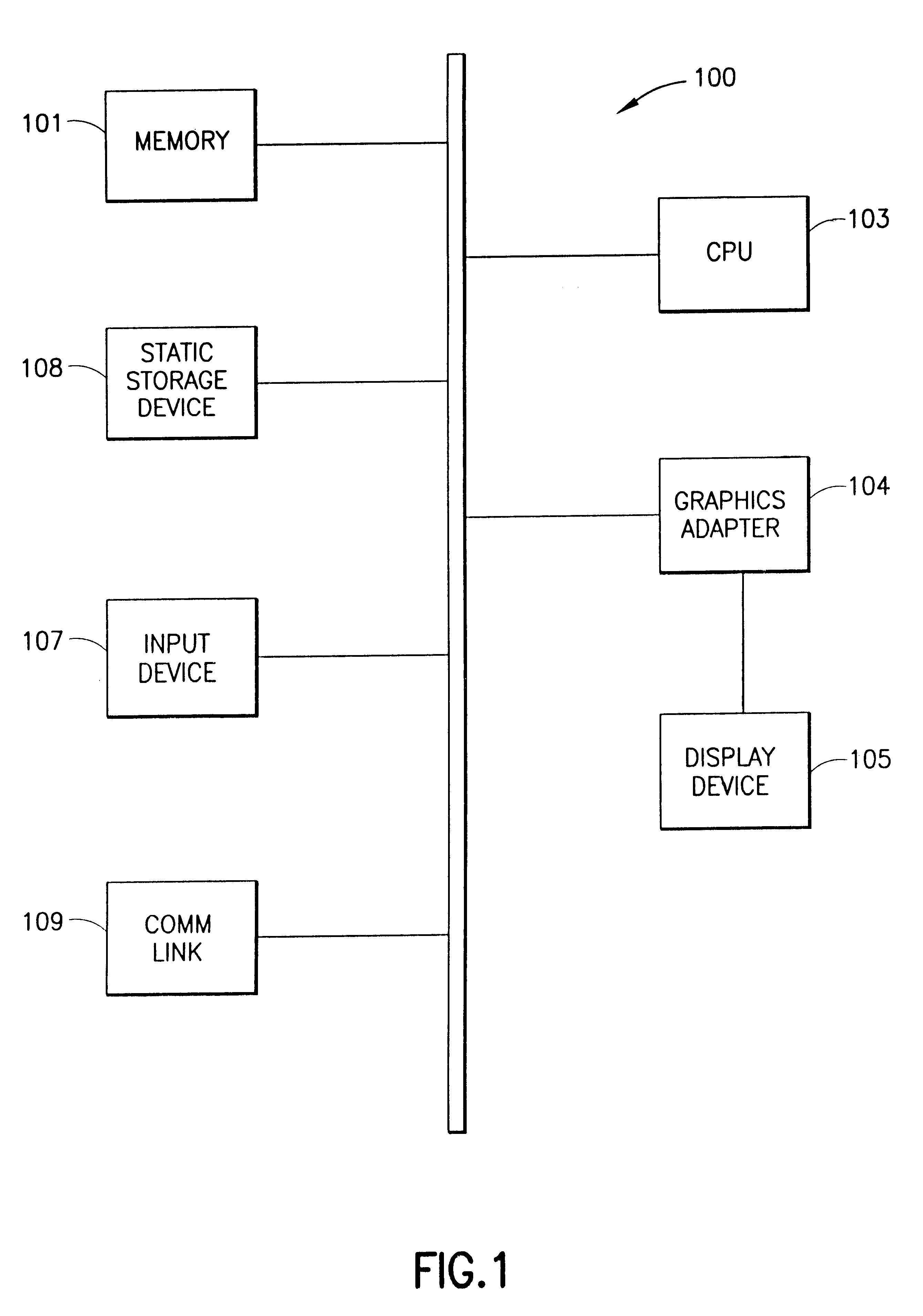

Portable media device with video acceleration capabilities

Active | US7673238B2 | Control direction, Control rate, Television system details, Recording carrier details, Key frame, Acceleration Unit

Owner:APPLE INC

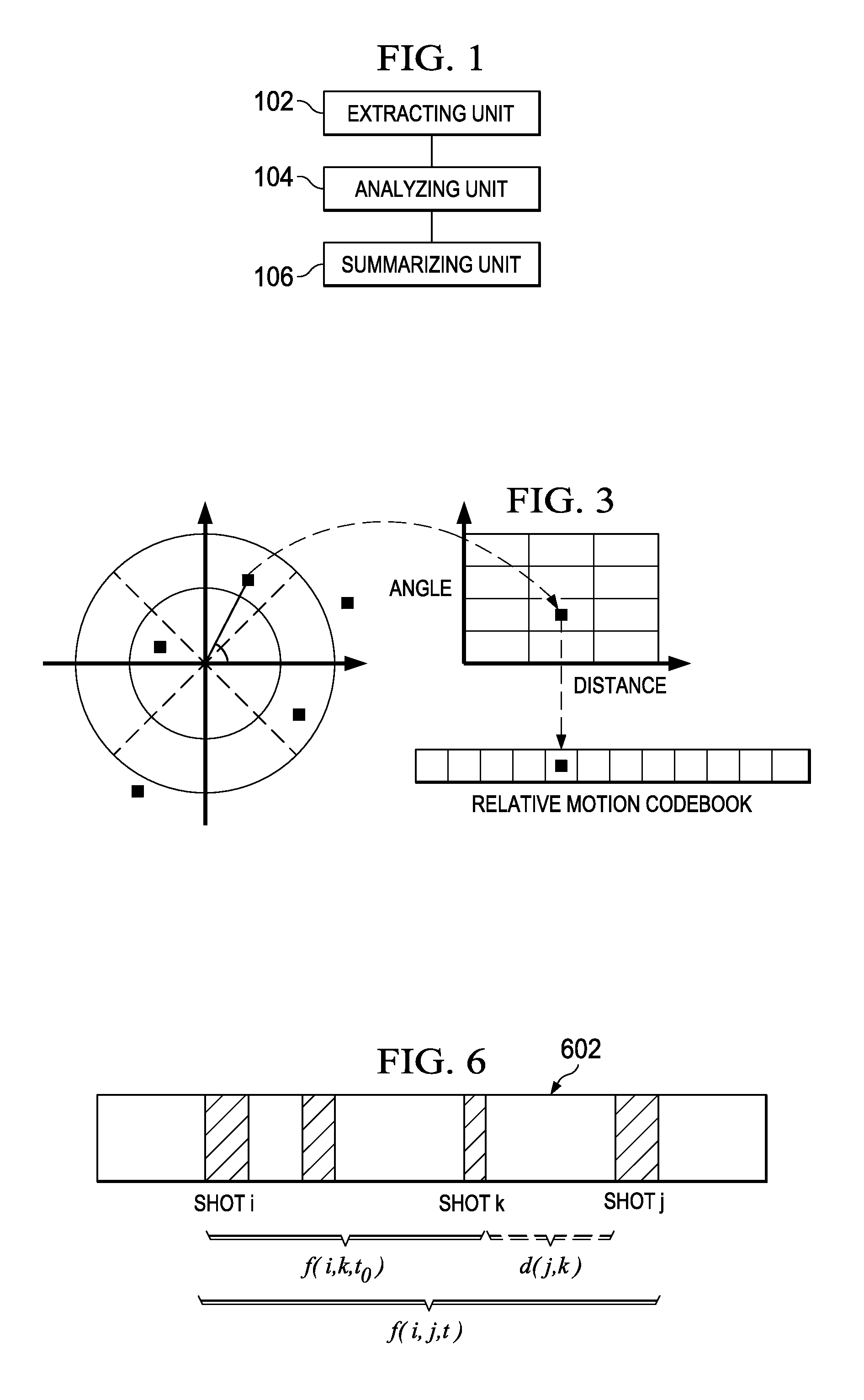

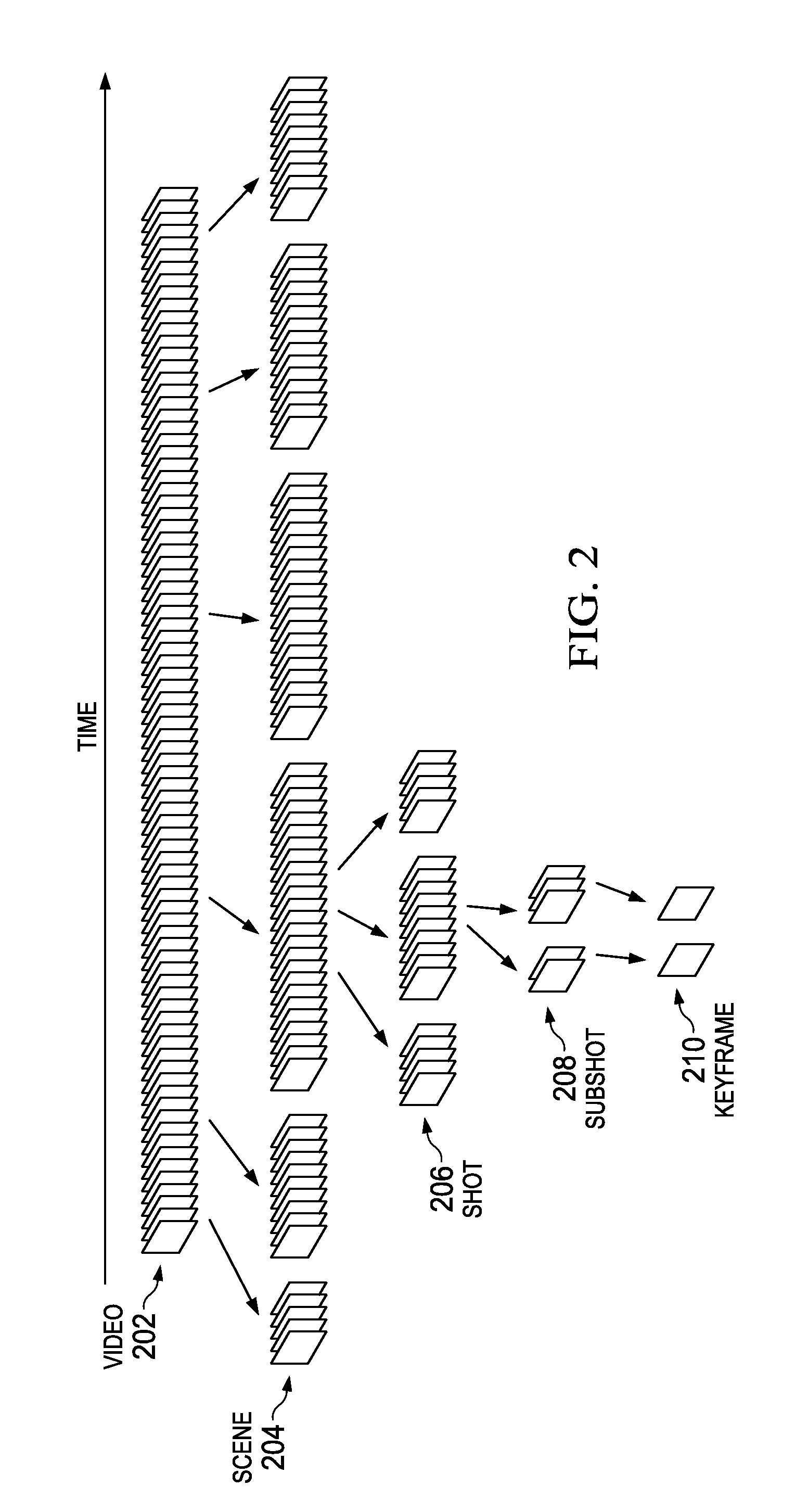

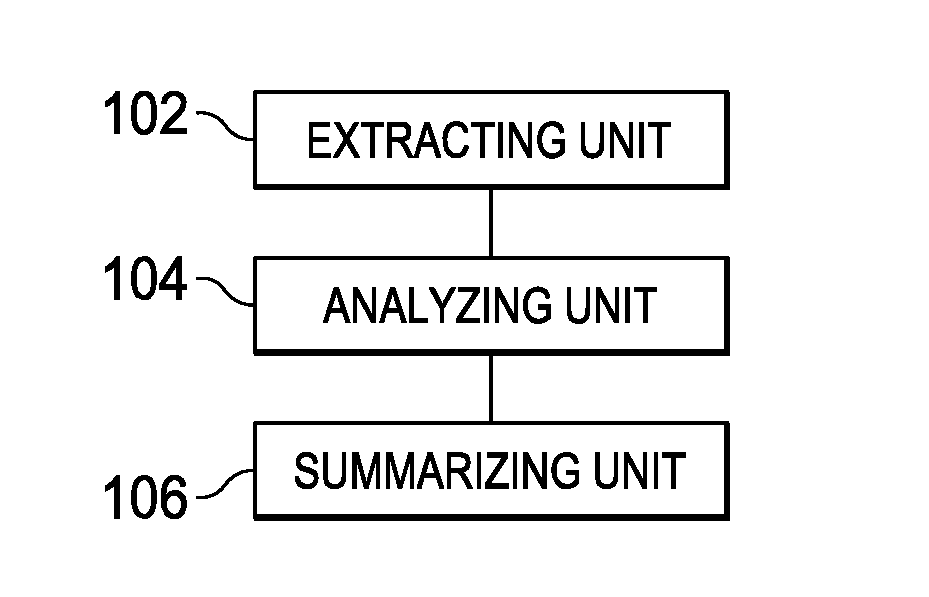

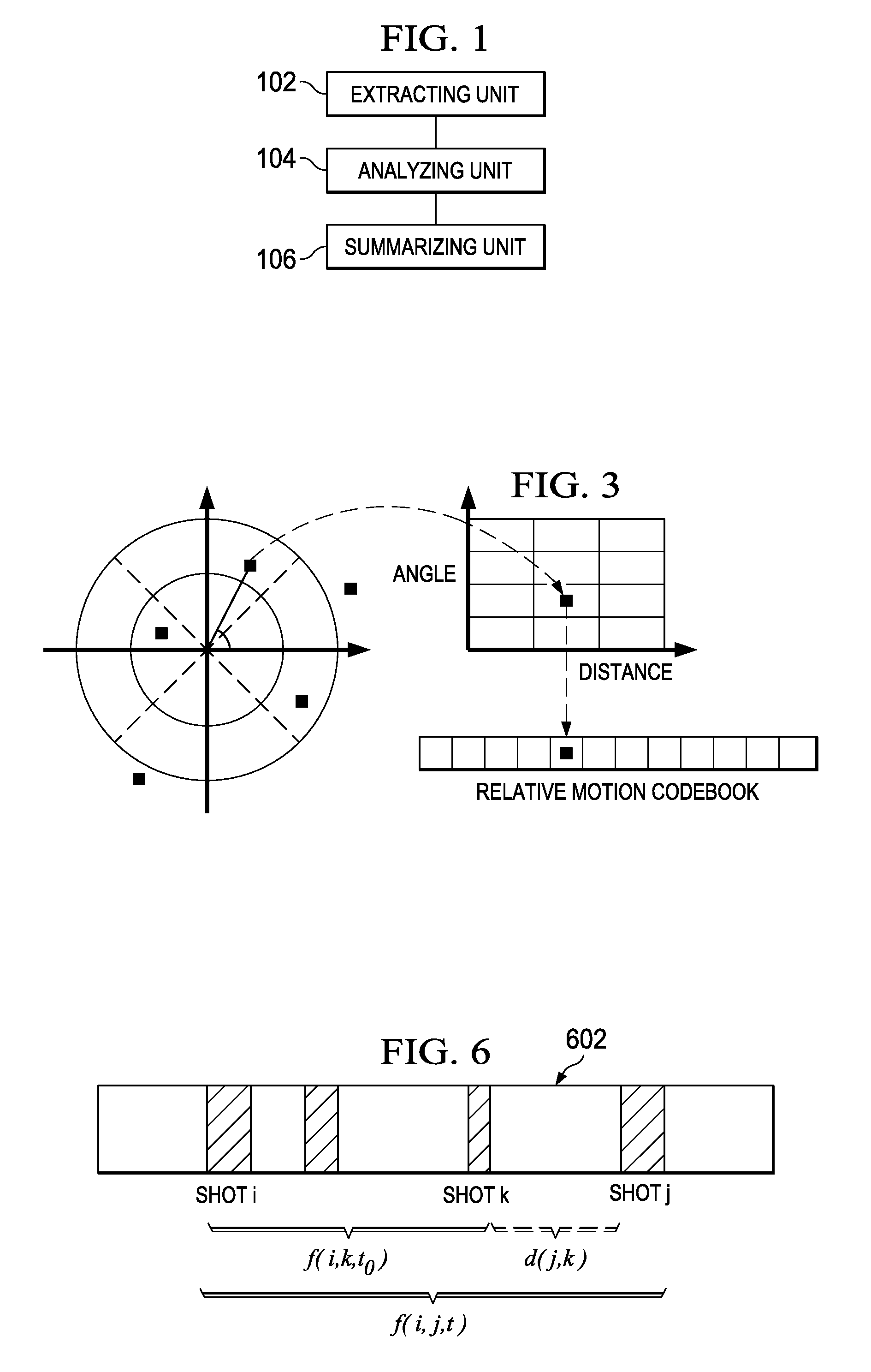

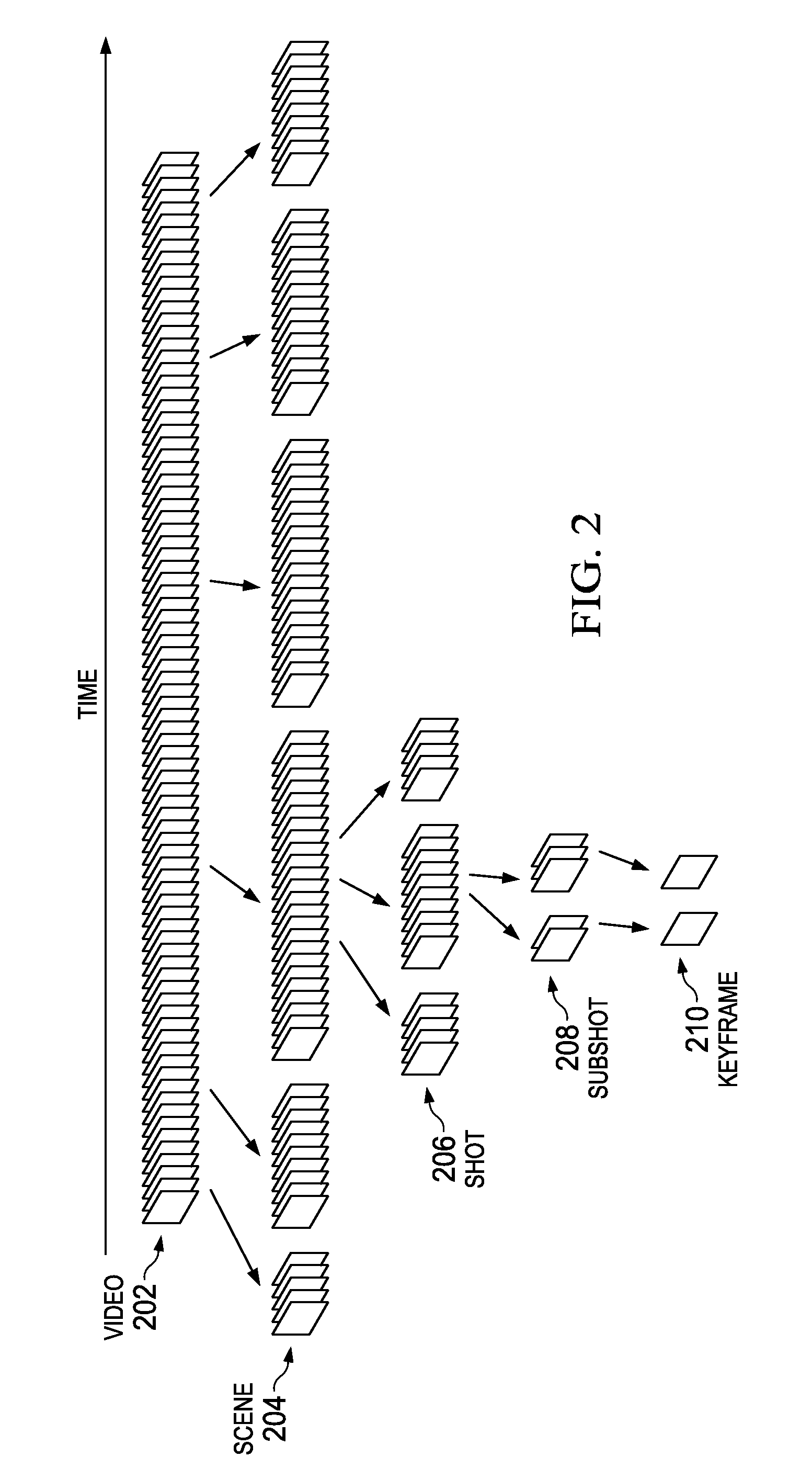

Method and system for video summarization

Active | US20120123780A1 | Easy to use, Character and pattern recognition, Speech recognition, Pattern recognition, Key frame

A video summary method comprises dividing a video into a plurality of video shots, analyzing each frame in a video shot from the plurality of video shots, determining a saliency of each frame of the video shot, determining a key frame of the video shot based on the saliency of each frame of the video shot, extracting visual features from the key frame and performing shot clustering of the plurality of video shots to determine concept patterns based on the visual features. The method further comprises fusing different concept patterns using a saliency tuning method and generating a summary of the video based upon a global optimization method.

Owner:FUTUREWEI TECH INC
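The per-shot key-frame step of this method picks the frame with the highest saliency in each shot. It can be sketched as follows. This minimal illustration assumes that shot boundaries and per-frame saliency scores are already available. It does not reproduce the patent's saliency measure, shot clustering, saliency tuning or global optimization. The name `key_frames_by_saliency` is hypothetical.

```python
def key_frames_by_saliency(saliency, shot_boundaries):
    """Pick one key frame per shot: the frame with the highest saliency.

    saliency: list of per-frame saliency scores (any scalar score works here).
    shot_boundaries: sorted start index of each shot, beginning with 0.
    Returns the global frame index of each shot's key frame.
    """
    ends = shot_boundaries[1:] + [len(saliency)]
    keys = []
    for start, end in zip(shot_boundaries, ends):
        # Ties resolve to the earliest frame, since max() keeps the first maximum.
        keys.append(max(range(start, end), key=lambda i: saliency[i]))
    return keys

scores = [0.1, 0.7, 0.3, 0.2, 0.9, 0.4, 0.5]
print(key_frames_by_saliency(scores, [0, 3, 5]))  # [1, 4, 6]
```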

Method of selecting key-frames from a video sequence

Inactive | US7184100B1 | Reducing online storage requirement, Reduce search time, Television system details, Color signal processing circuits, Graphics, Video sequence

A method selects key-frames (230) from a video sequence (210, 215) by checking each frame for redundancy. Each frame is compared with its preceding and subsequent key-frames, and the comparison involves region and motion analysis. The video sequence is optionally pre-processed to detect graphic overlays. The key-frame set is optionally post-processed (250) to optimize the resulting set for face or other object recognition.

Owner:ANXIN MATE HLDG
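Below is a simplified sketch of redundancy-based key-frame selection. It compares each frame only with the most recent key frame, not with the subsequent one as well. It also replaces the patent's region and motion analysis with a mean absolute pixel difference. The threshold and the function name are assumptions for illustration.

```python
def select_key_frames(frames, threshold):
    """Greedy selection: a frame becomes a new key frame when it differs
    enough from the most recent key frame.

    frames: list of equal-length sequences of grayscale pixel values.
    threshold: minimum mean absolute pixel difference that counts as new content.
    """
    def mean_abs_diff(a, b):
        return sum(abs(p - q) for p, q in zip(a, b)) / len(a)

    keys = [0]                                   # the first frame is always kept
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[keys[-1]]) > threshold:
            keys.append(i)                       # not redundant: keep it
    return keys

video = [[10, 10, 10], [11, 10, 10], [90, 90, 90], [91, 89, 90], [10, 10, 12]]
print(select_key_frames(video, threshold=20))  # [0, 2, 4]
```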

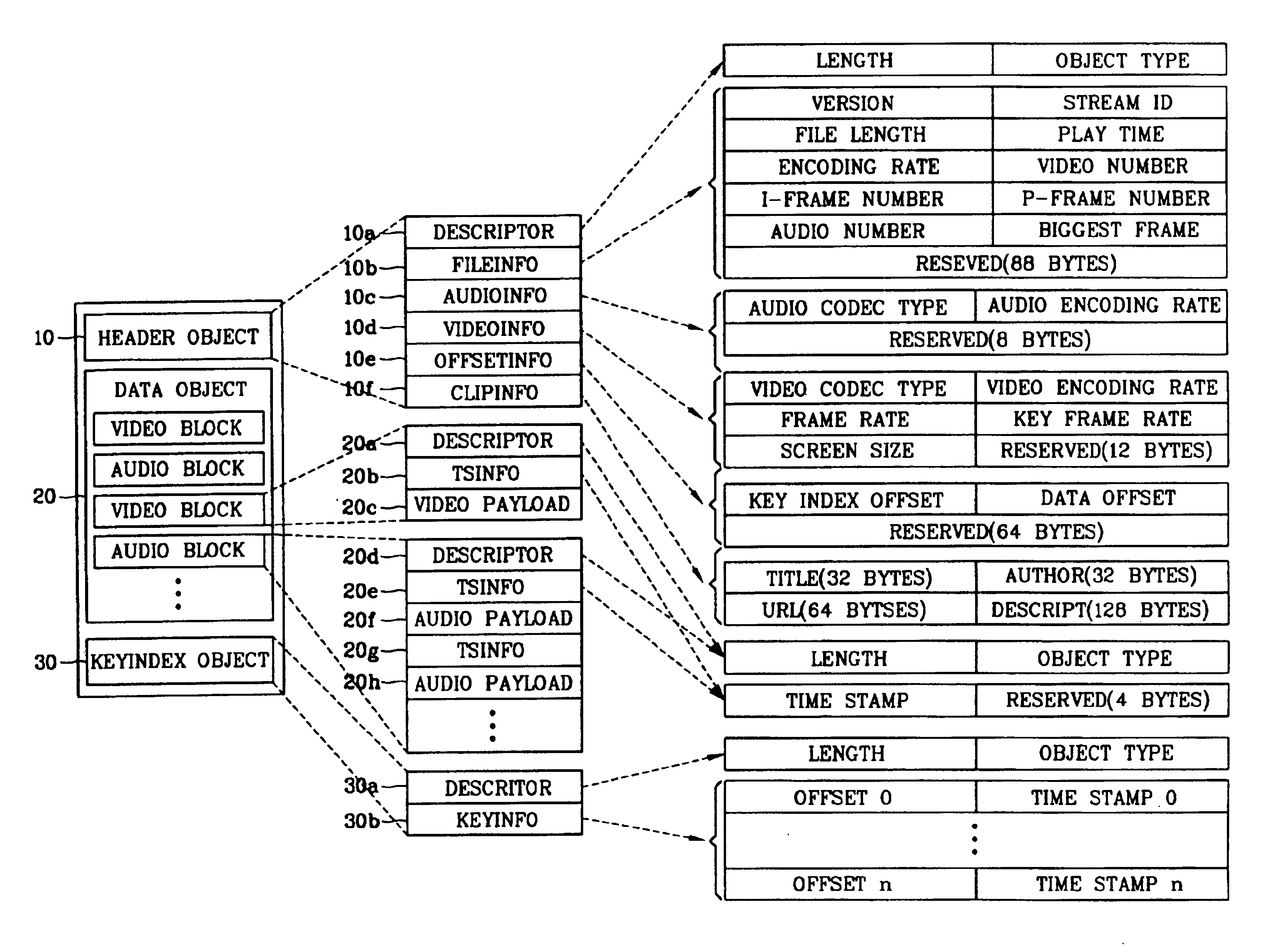

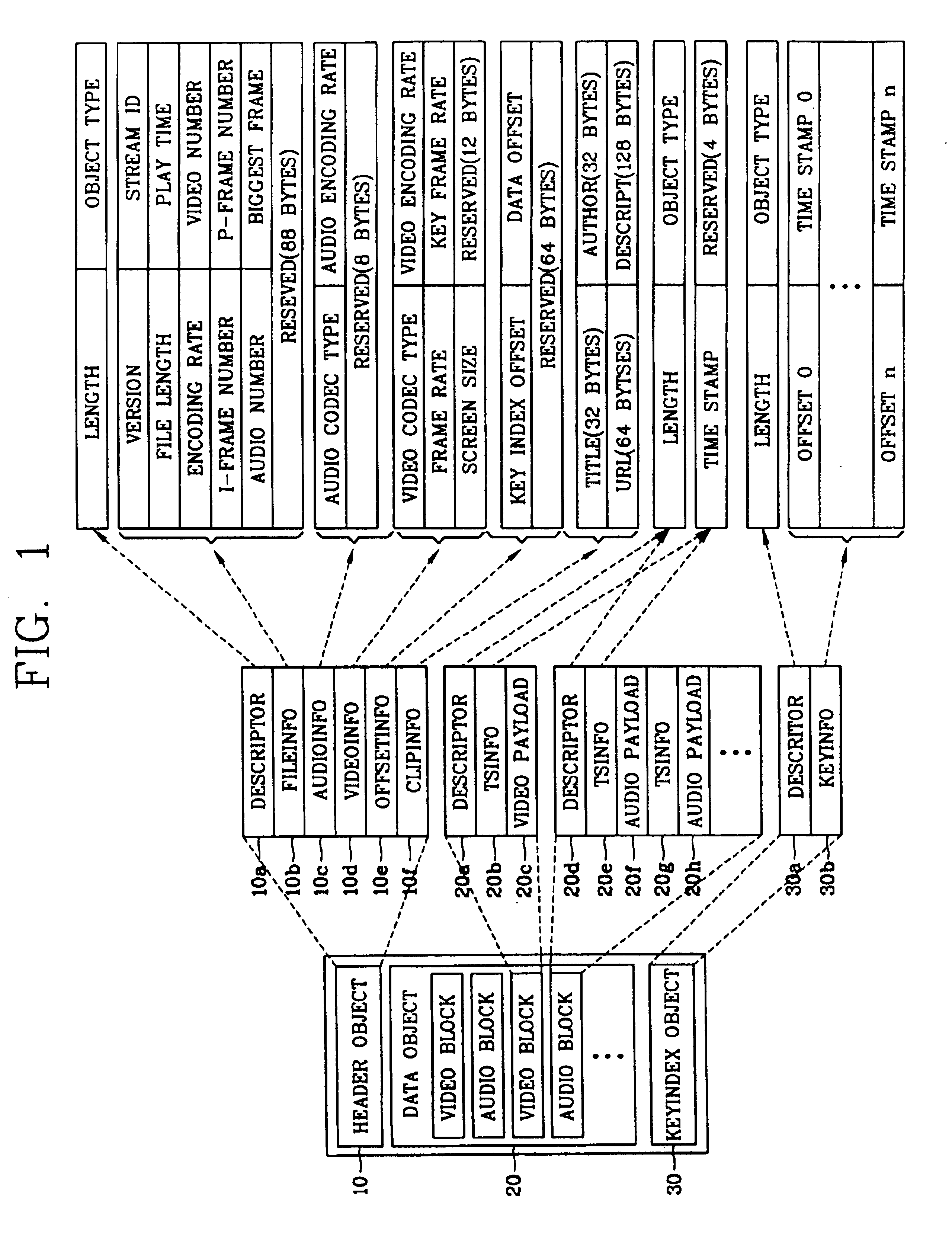

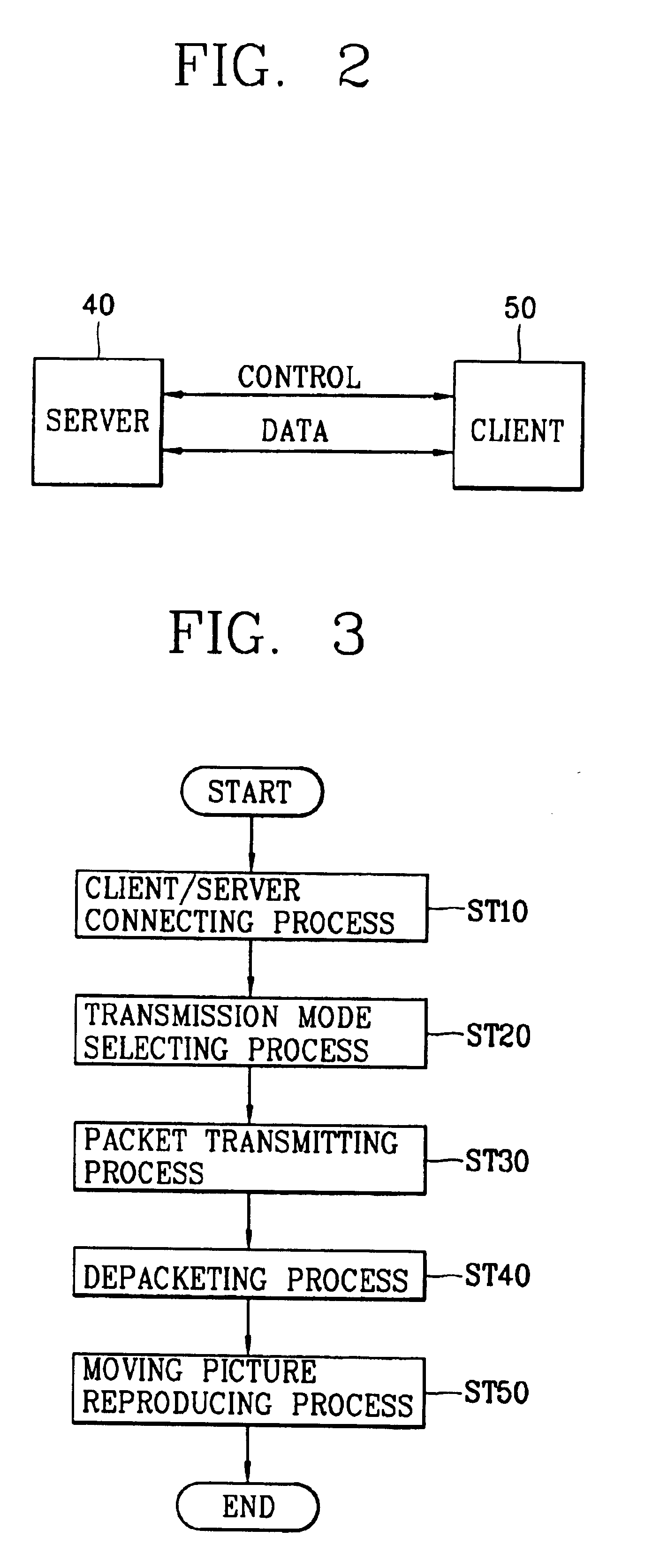

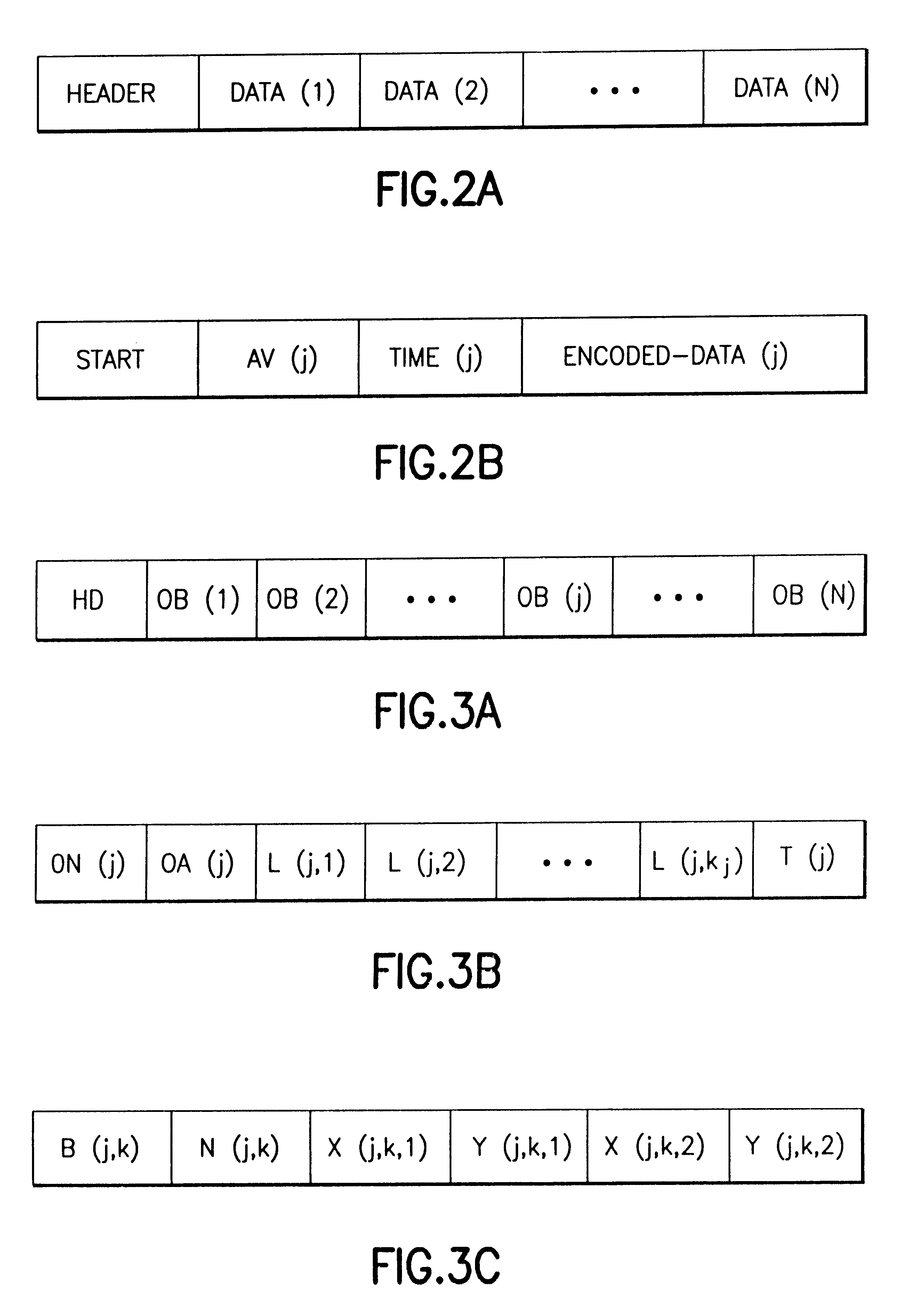

Apparatus and method for providing file structure for multimedia streaming service

Inactive | US6856997B2 | Reduce bandwidth waste, Fast data transfer, Data processing applications, Pulse modulation television signal transmission, Data synchronization, Temporal information

The invention covers a file structure for a streaming service and a method for providing a streaming service. The file structure includes three objects:

- a header object holding basic information about the file and information for an application service;
- a data object that synchronizes multimedia data with temporal information and stores it;
- a key index object that stores the offset and temporal information of each video block containing a key frame. These serve as reference points on the time axis for random access and playback.

Because no unnecessary additional data is included, data can be transmitted quickly. The range of applications can be expanded by including media files other than video and audio files. Because the key index information enables random access and random playback, the structure supports playback functions such as fast play, reverse play and random playback.

Owner:LG ELECTRONICS INC
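Random access through a key index like the one described can be sketched as a sorted table of (timestamp, byte offset) pairs for key-frame blocks, searched with binary search. The entry layout and names below are hypothetical and do not reflect the patent's actual file format. The point is that playback must begin at a key frame at or before the requested time.

```python
from bisect import bisect_right
from typing import List, NamedTuple

class KeyIndexEntry(NamedTuple):
    timestamp_ms: int   # presentation time of the key-frame block
    offset: int         # byte offset of that block in the file

def seek(index: List[KeyIndexEntry], target_ms: int) -> KeyIndexEntry:
    """Return the last key-frame block at or before target_ms.

    Decoding must start at a key frame, so random access jumps here and then
    decodes forward to the exact target time. `index` is sorted by timestamp.
    """
    times = [e.timestamp_ms for e in index]
    i = bisect_right(times, target_ms) - 1
    return index[max(i, 0)]                      # clamp targets before the first entry

index = [KeyIndexEntry(0, 512), KeyIndexEntry(2000, 48_000), KeyIndexEntry(4000, 97_500)]
print(seek(index, 3100))  # KeyIndexEntry(timestamp_ms=2000, offset=48000)
```

Fast play and reverse play can reuse the same index by stepping forward or backward through its entries instead of decoding every frame.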

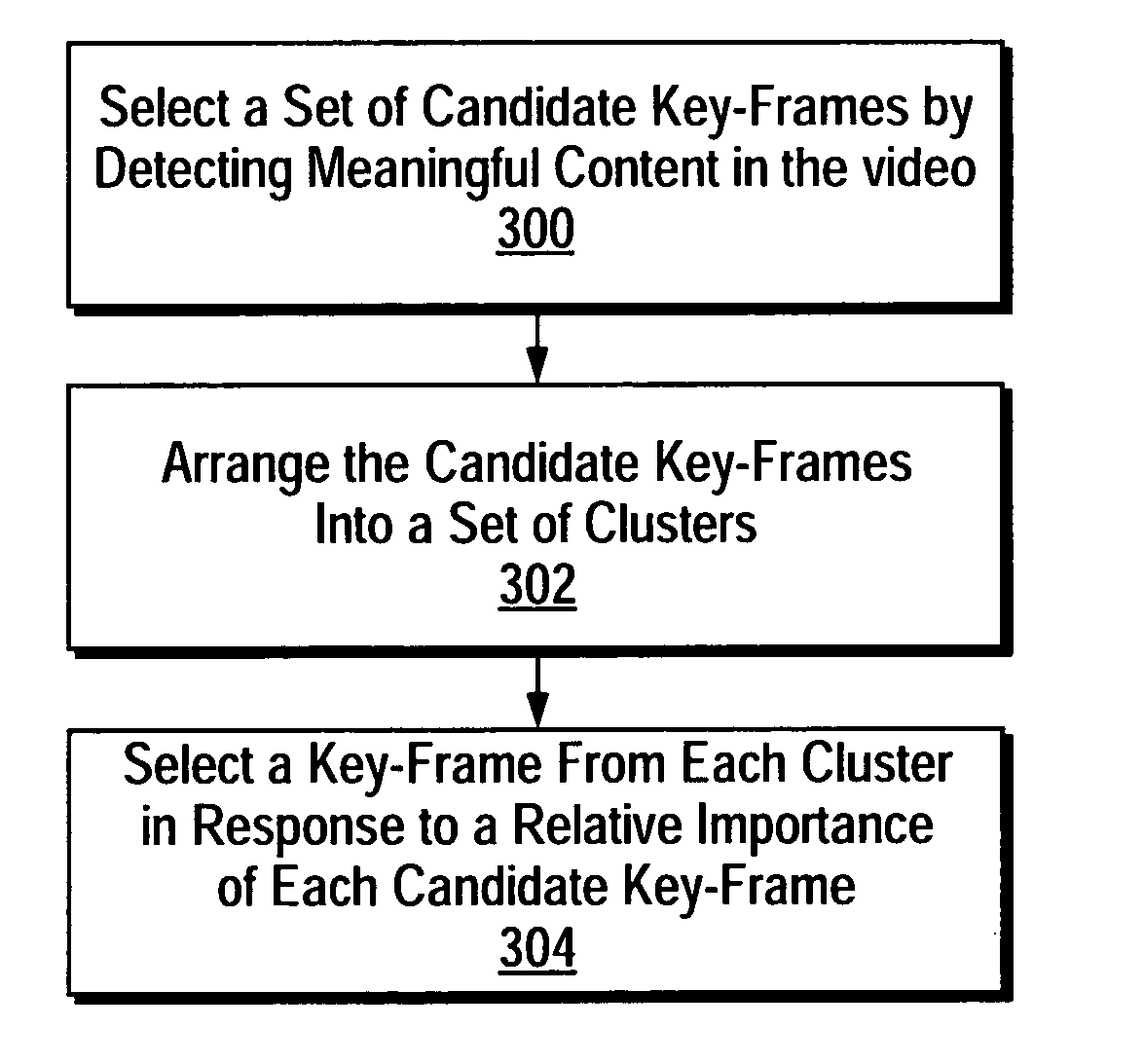

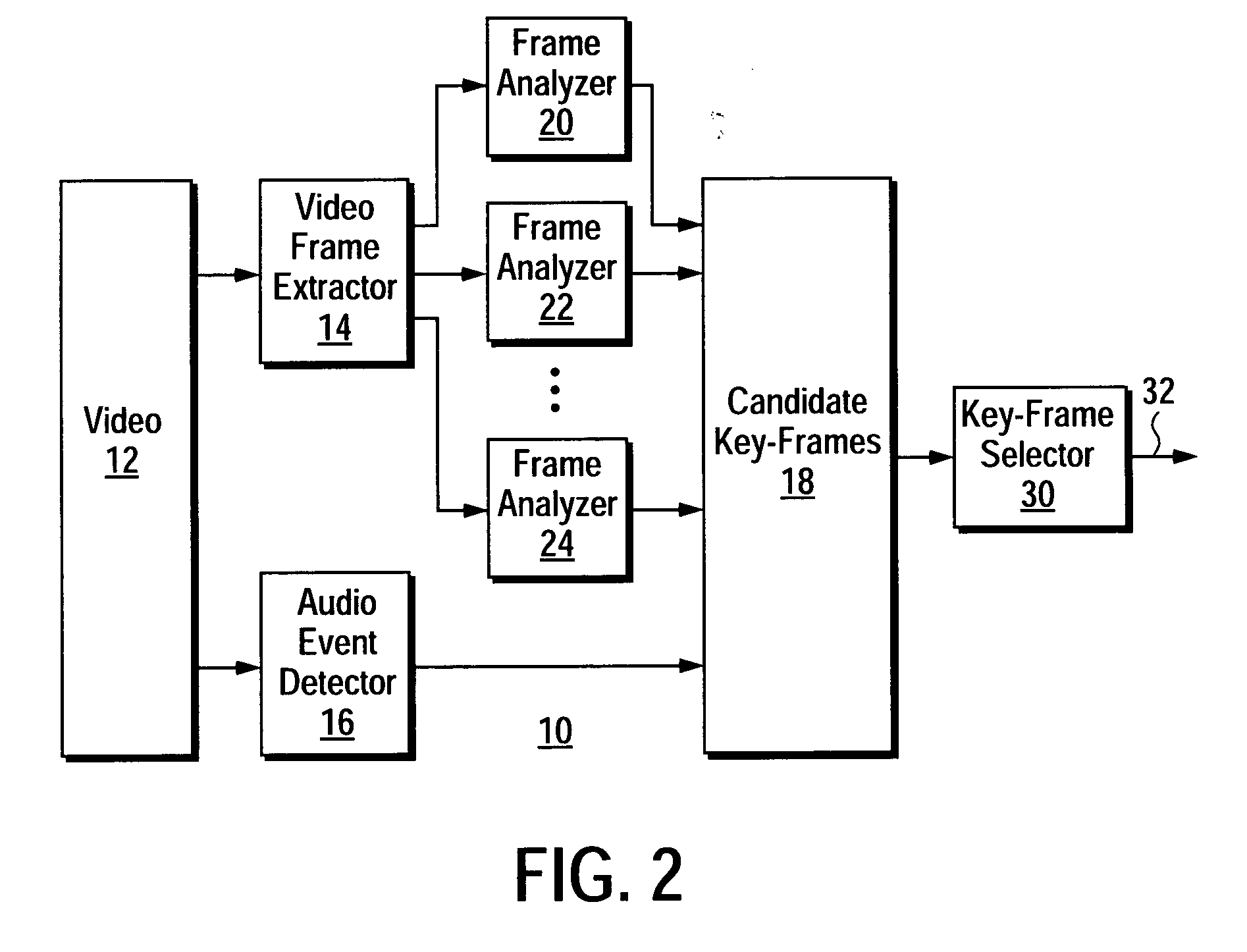

Intelligent key-frame extraction from a video

A method for intelligent extraction of key-frames from a video that yields key-frames that depict meaningful content in the video. A method according to the present techniques includes selecting a set of candidate key-frames from among a series of video frames in a video by performing a set of analyses on each video frame. Each analysis is selected to detect a corresponding type of meaningful content in the video. The candidate key-frames are then arranged into a set of clusters and a key-frame is then selected from each cluster in response to its relative importance in terms of depicting meaningful content in the video.

Owner:HEWLETT PACKARD DEV CO LP
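The cluster-then-select step can be sketched as below. The sketch rests on two assumptions the abstract does not state. First, candidates are clustered by temporal proximity. Second, each candidate already carries a single importance score summarizing the content analyses. The names and the gap parameter are hypothetical.

```python
def pick_from_clusters(candidates, max_gap):
    """Group candidate key-frames into temporal clusters and keep the most
    important candidate from each cluster.

    candidates: list of (frame_index, importance) sorted by frame_index.
    max_gap: candidates at most this many frames apart join the same cluster.
    """
    if not candidates:
        return []
    clusters = [[candidates[0]]]
    for cand in candidates[1:]:
        if cand[0] - clusters[-1][-1][0] <= max_gap:
            clusters[-1].append(cand)        # close to the previous candidate
        else:
            clusters.append([cand])          # gap too large: start a new cluster
    return [max(cluster, key=lambda c: c[1])[0] for cluster in clusters]

cands = [(3, 0.2), (5, 0.8), (7, 0.4), (40, 0.5), (44, 0.9), (90, 0.3)]
print(pick_from_clusters(cands, max_gap=10))  # [5, 44, 90]
```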

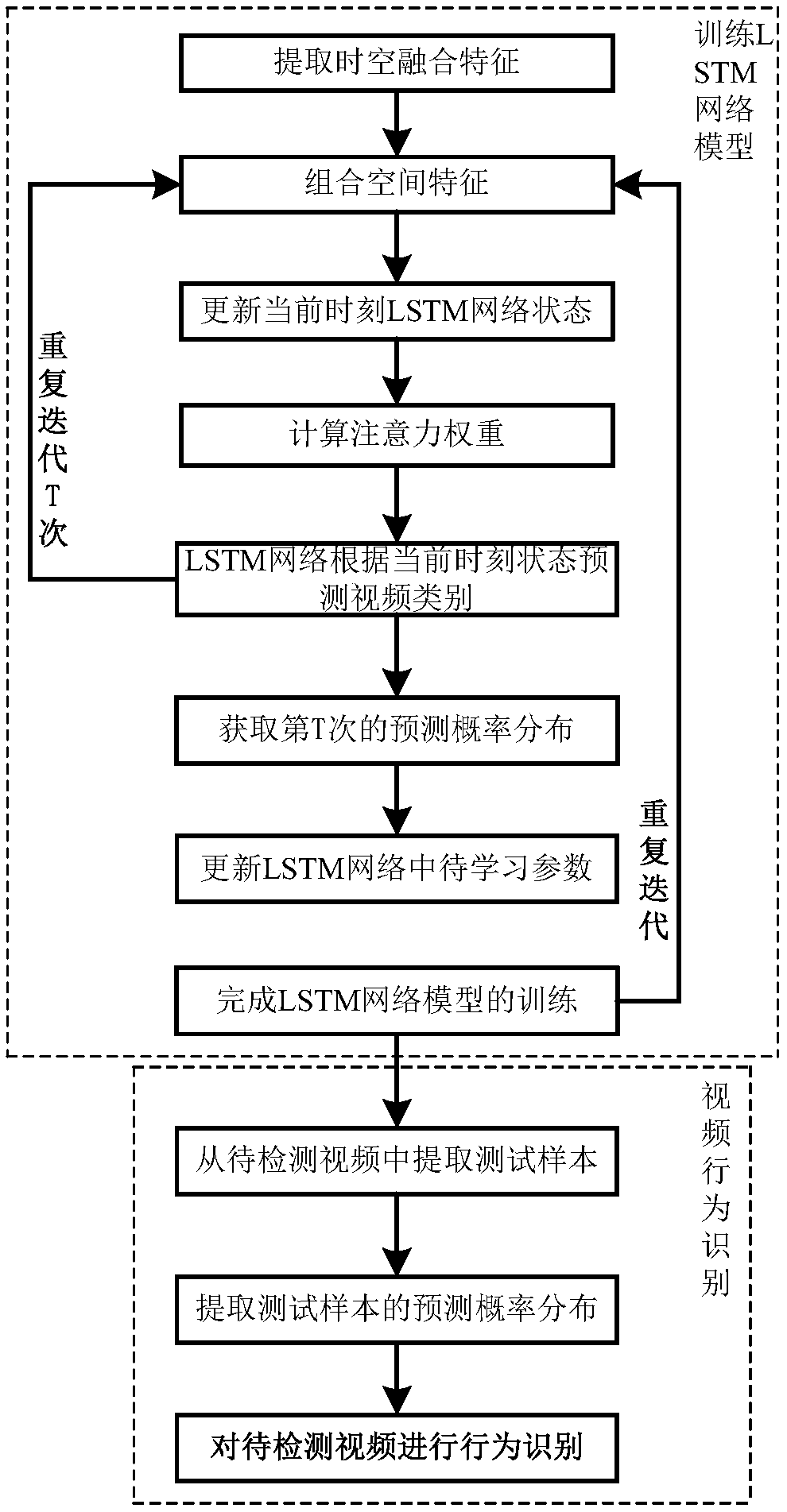

A video behavior recognition method based on spatio-temporal fusion features and attention mechanism

Active | CN109101896A | Improve accuracy, Efficient use of, Character and pattern recognition, Neural architectures, Frame sequence, Key frame

The invention discloses a video behavior recognition method based on spatio-temporal fusion features and an attention mechanism. The convolutional neural network Inception V3 extracts the spatio-temporal fusion features of the input video. These features are then combined with an attention mechanism modeled on the human visual system. The network can thus automatically allocate weights according to the video content, extract the key frames in the video frame sequence, and recognize the behavior from the video as a whole. This eliminates the interference of redundant information on recognition and improves the accuracy of video behavior recognition.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
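Letting a network weight frames by their content can be illustrated with generic dot-product soft attention over per-frame feature vectors. This sketch is not the patent's architecture. It assumes the features (for example from Inception V3) are precomputed. The query vector would be learned in practice and is fixed here. Reading the highest-weight frame as a key frame is an illustrative interpretation.

```python
import math

def attention_pool(features, query):
    """Soft attention over frames.

    features: per-frame feature vectors (lists of floats, same length as query).
    query: a learned vector in a real model; fixed here for illustration.
    Returns (weights, pooled): softmax weight per frame and the weighted sum
    of features that a downstream classifier would consume.
    """
    scores = [sum(f * q for f, q in zip(feat, query)) for feat in features]
    m = max(scores)                               # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    pooled = [sum(w * feat[d] for w, feat in zip(weights, features))
              for d in range(len(query))]
    return weights, pooled

feats = [[0.1, 0.0], [2.0, 1.0], [0.2, 0.1]]
w, pooled = attention_pool(feats, query=[1.0, 1.0])
key_frame = max(range(len(w)), key=w.__getitem__)  # index of the highest-weight frame
print(key_frame)  # 1
```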

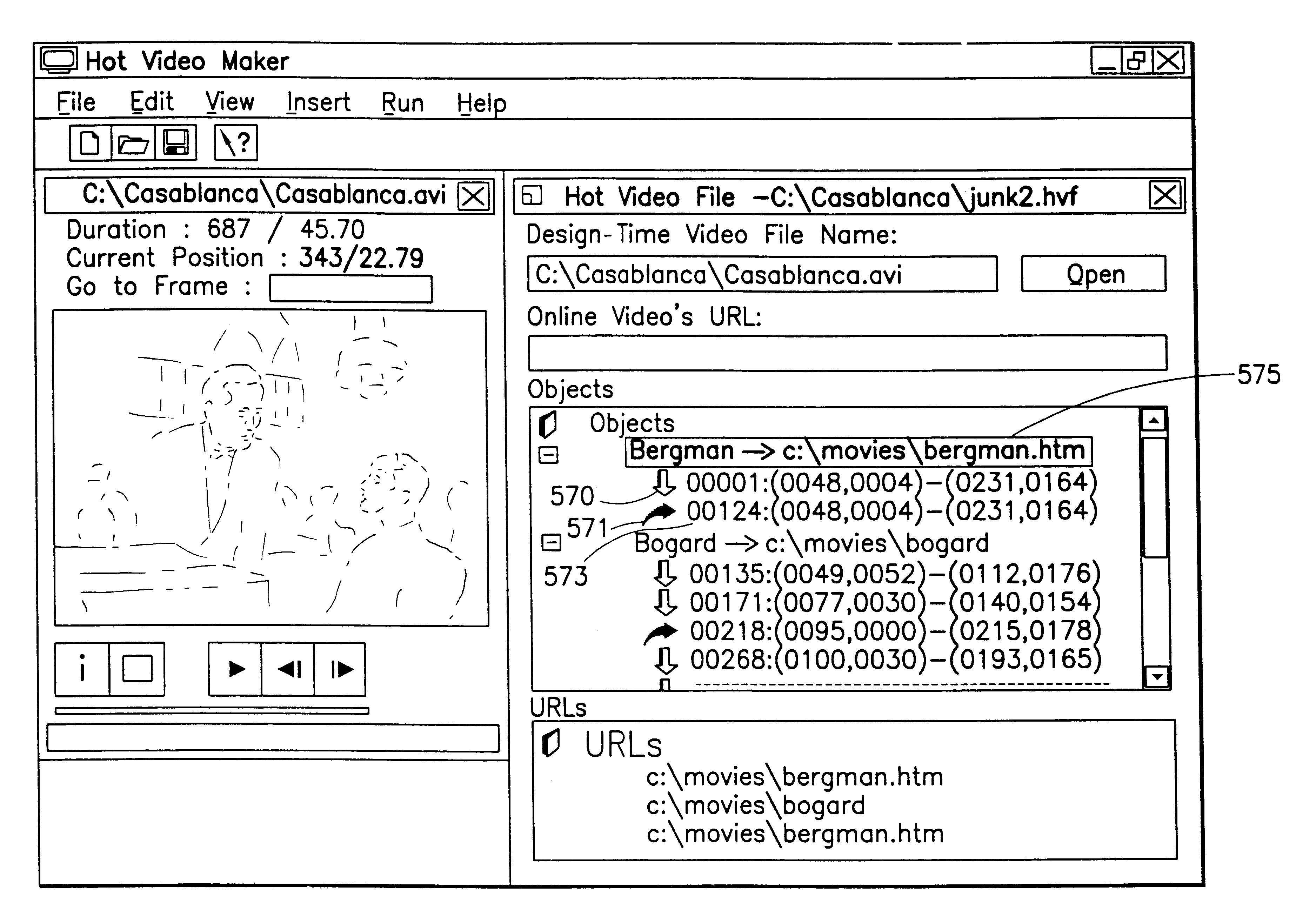

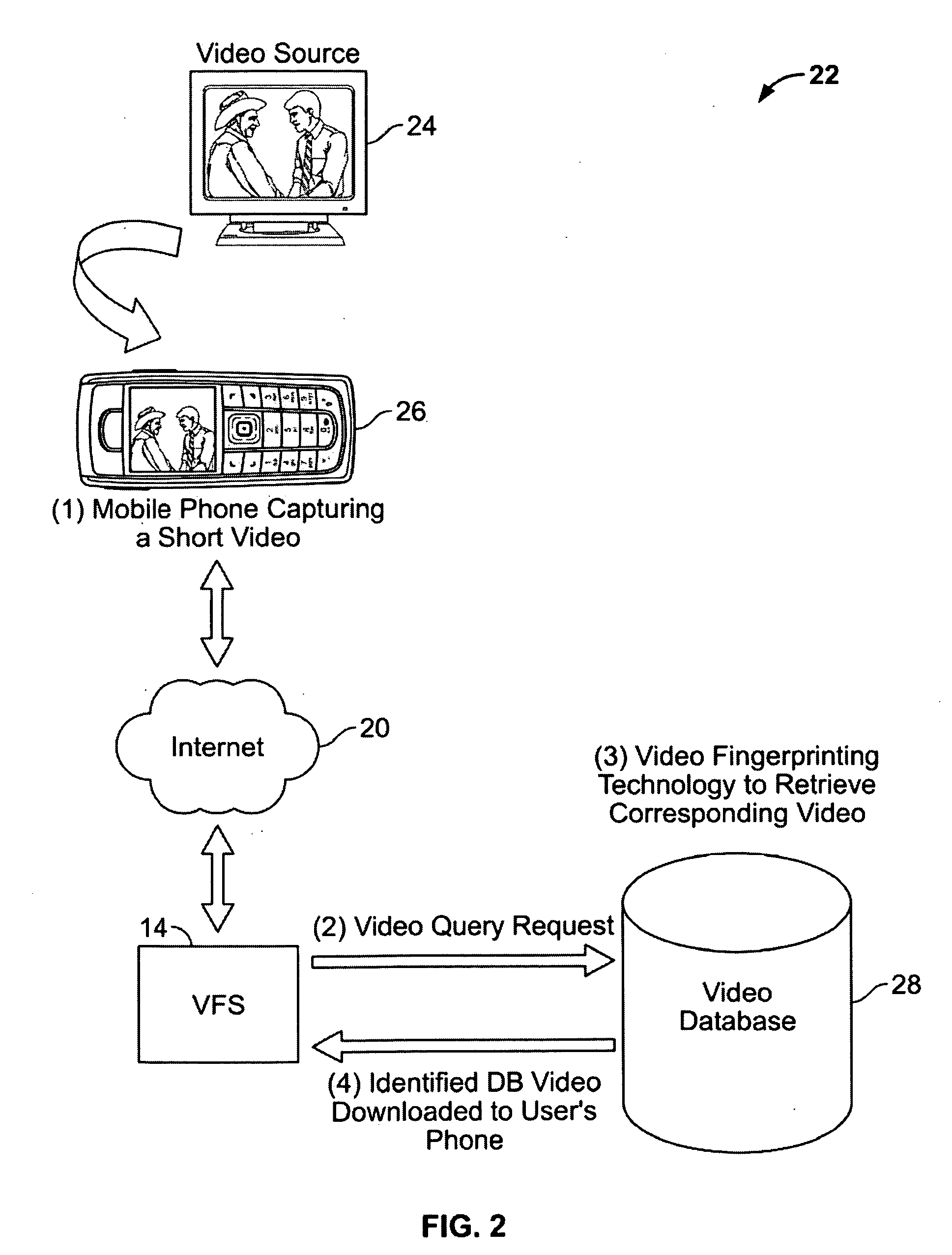

Method and apparatus for integrating hyperlinks in video

Inactive | US6912726B1 | Reduce amount, Process can be speeded, Television system details, Digital data information retrieval, Computer hardware, Hyperlink

Hypervideo data is encoded with two distinct portions, a first portion which contains the video data and a second portion, typically much smaller than the first, which contains hyperlink information associated with the video data. Preferably, the first and second portions are stored in separate and distinct files. The encoding of the hyperlink information is preferably made efficient by encoding only key frames of the video, and by encoding hot link regions of simple geometries. A hypervideo player determines the hot link regions in frames between key frames by interpolating the hot link regions in key frames which sandwich those frames.

Owner:IBM CORP
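Interpolating a hot-link region between the two key frames that sandwich a frame can be sketched with axis-aligned rectangles and linear interpolation. The rectangle shape, the linear interpolation and the names here are assumptions. They stand in for the patent's "simple geometries".

```python
from typing import NamedTuple

class Rect(NamedTuple):
    x: float
    y: float
    w: float
    h: float

    def contains(self, px: float, py: float) -> bool:
        """True if the point (px, py) lies inside the rectangle."""
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h

def region_at(frame, key_a, rect_a, key_b, rect_b):
    """Linearly interpolate a hot-link region for a frame lying between two
    key frames (key_a <= frame <= key_b)."""
    t = (frame - key_a) / (key_b - key_a)
    return Rect(*(a + t * (b - a) for a, b in zip(rect_a, rect_b)))

r = region_at(15, 10, Rect(0, 0, 40, 20), 20, Rect(100, 50, 40, 20))
print(r)                   # Rect(x=50.0, y=25.0, w=40.0, h=20.0)
print(r.contains(60, 30))  # True
```

A player would run `contains` on the interpolated region for the current frame to decide whether a click follows the hyperlink.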

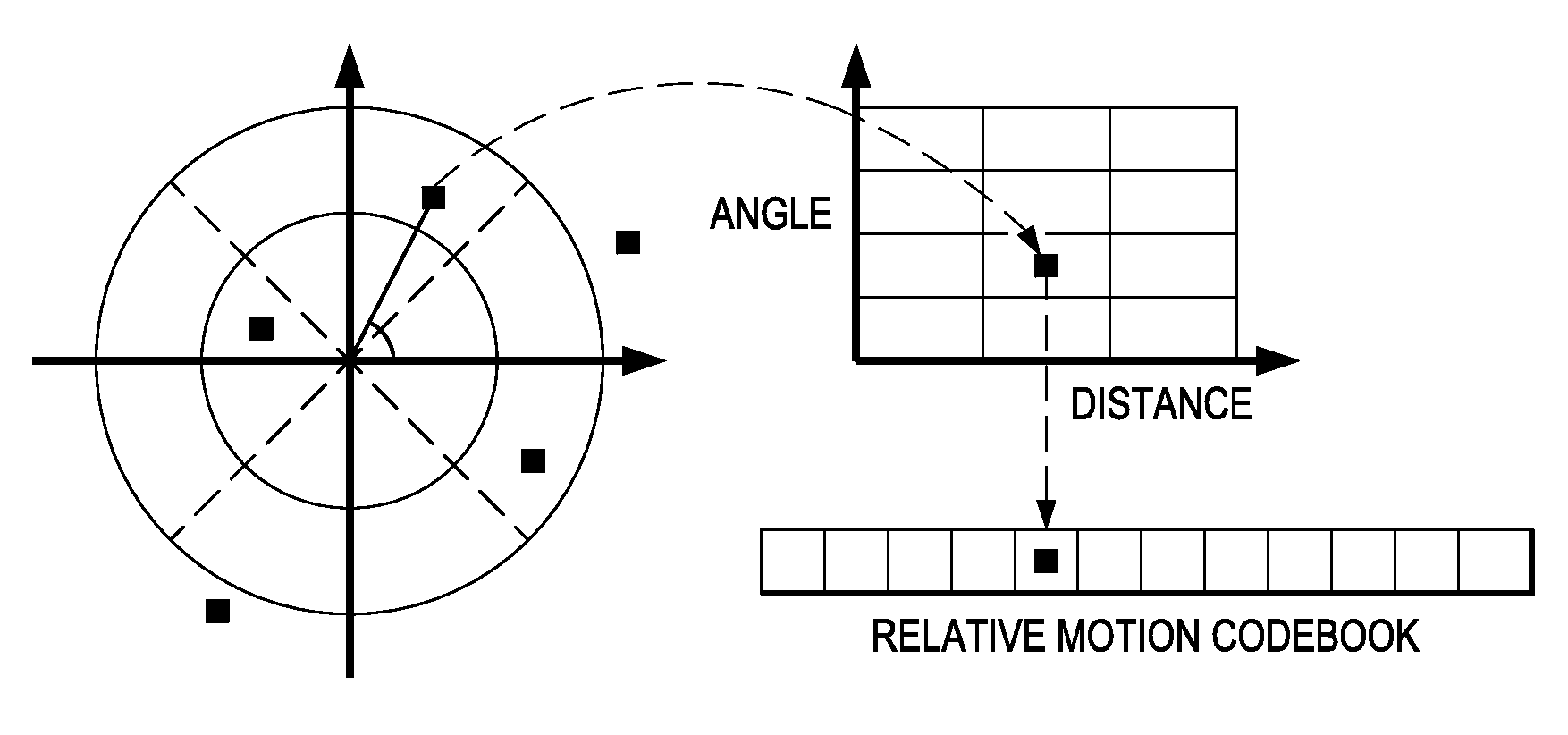

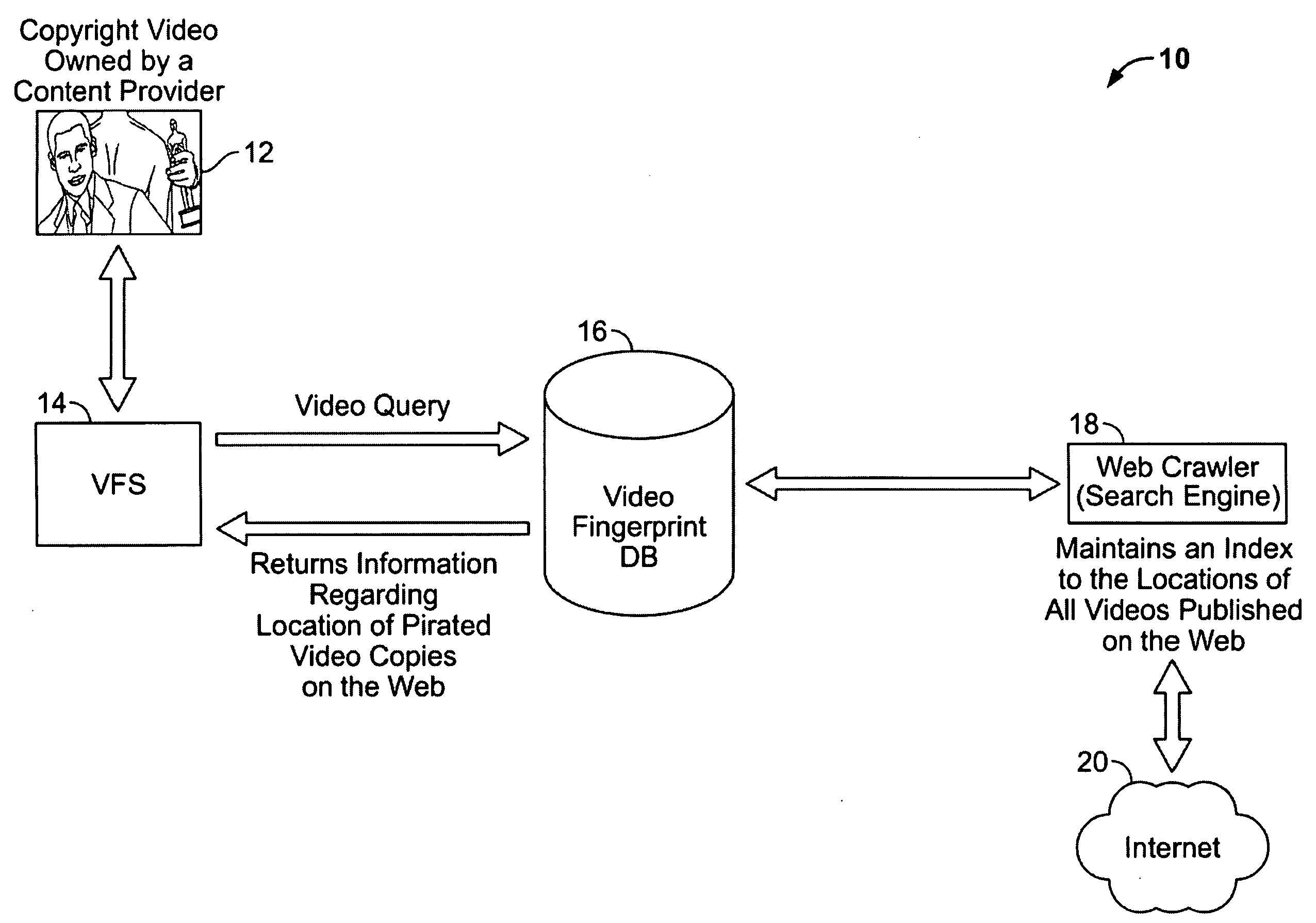

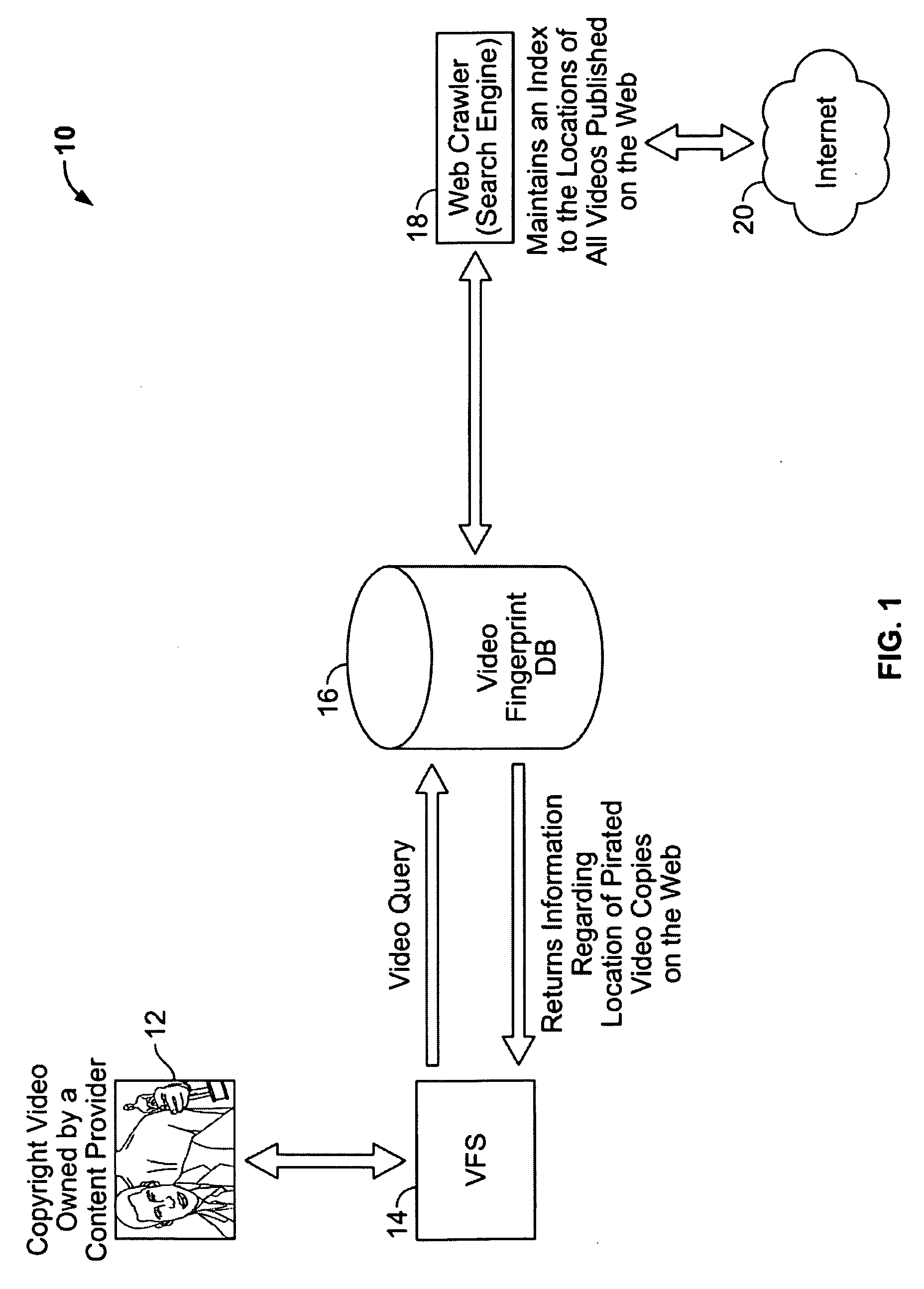

Content-based matching of videos using local spatio-temporal fingerprints

Active | US20100049711A1 | Poor discrimination, Improve discrimination, Digital data processing details, Picture reproducers using cathode ray tubes, Data matching, Pattern recognition

A computer-implemented method for deriving a fingerprint from video data is disclosed. It comprises the steps of:

1. receiving a plurality of frames from the video data;
2. selecting at least one key frame from the plurality of frames, the at least one key frame being selected from two consecutive frames that exhibit a maximal cumulative difference in at least one spatial feature;
3. detecting at least one 3D spatio-temporal feature within the at least one key frame;
4. encoding a spatio-temporal fingerprint based on the mean luminance of the at least one 3D spatio-temporal feature.

The at least one spatial feature can be intensity. The at least one 3D spatio-temporal feature can be at least one Maximally Stable Volume (MSV).

Also disclosed is a method for matching video data to a database containing a plurality of video fingerprints of the type described above. It comprises the steps of:

1. calculating at least one fingerprint representing at least one query frame from the video data;
2. indexing into the database using the at least one calculated fingerprint to find a set of candidate fingerprints;
3. applying a score to each candidate fingerprint;
4. selecting a subset of candidate fingerprints as proposed frames by rank-ordering the candidate fingerprints;
5. attempting to match at least one fingerprint of at least one proposed frame based on a comparison of gradient-based descriptors associated with the at least one query frame and the at least one proposed frame.

Owner:SRI INTERNATIONAL
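The key-frame selection rule can be sketched as follows. It picks the consecutive pair of frames with the largest cumulative difference in a spatial feature, here intensity. Frames are assumed to be flat lists of grayscale intensities. Returning the later frame of the winning pair is an assumption. MSV detection and fingerprint encoding are not shown.

```python
def key_frame_by_max_difference(frames):
    """Index of the later frame in the consecutive pair whose summed absolute
    intensity difference is largest.

    frames: list of equal-length sequences of pixel intensities (at least two).
    """
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    best_i, best_diff = 1, -1
    for i in range(1, len(frames)):
        # Cumulative difference in intensity between frame i-1 and frame i.
        diff = sum(abs(a - b) for a, b in zip(frames[i], frames[i - 1]))
        if diff > best_diff:
            best_i, best_diff = i, diff
    return best_i

print(key_frame_by_max_difference([[0, 0], [1, 1], [9, 9], [10, 9]]))  # 2
```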

Method and system for video summarization

Active | US9355635B2 | Easy to use, Character and pattern recognition, Speech recognition, Pattern recognition, Key frame

Owner:FUTUREWEI TECH INC

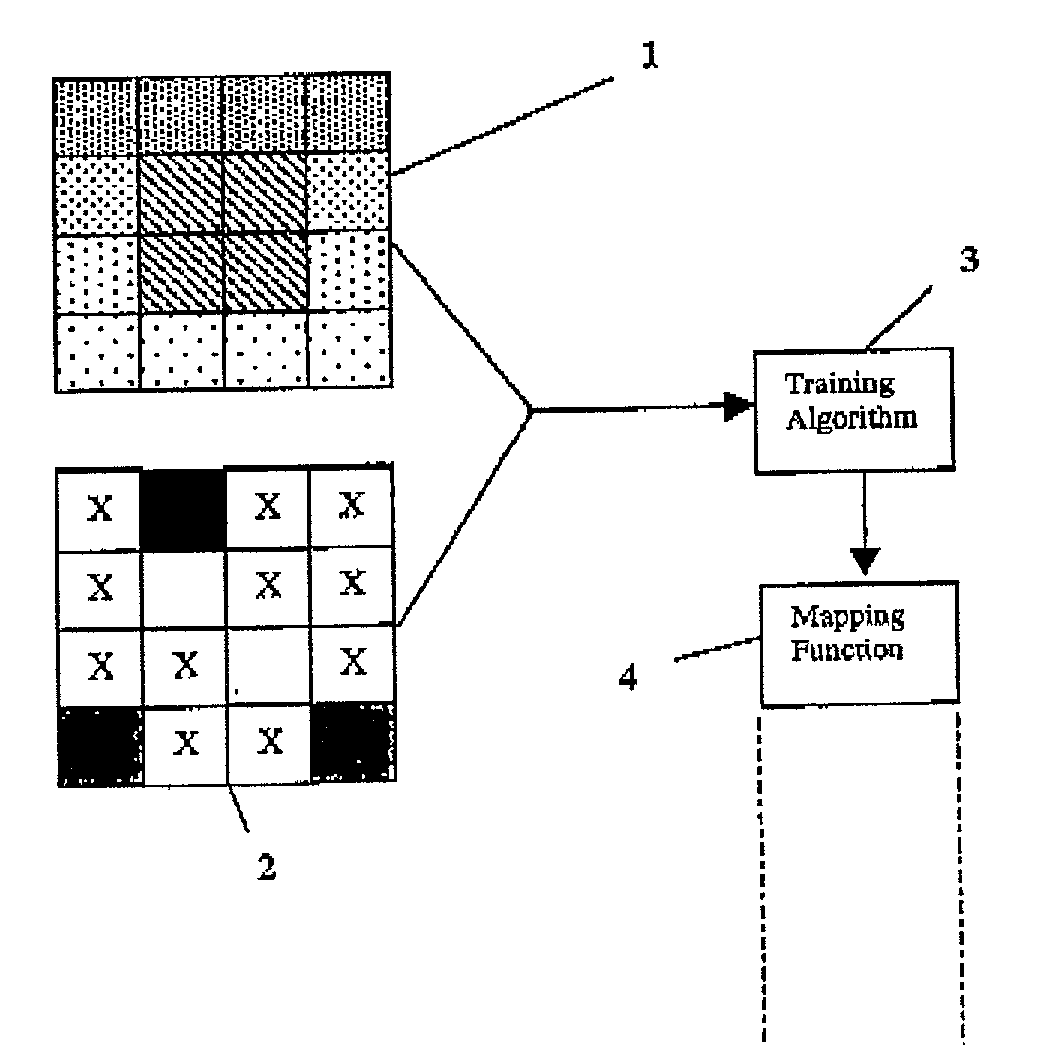

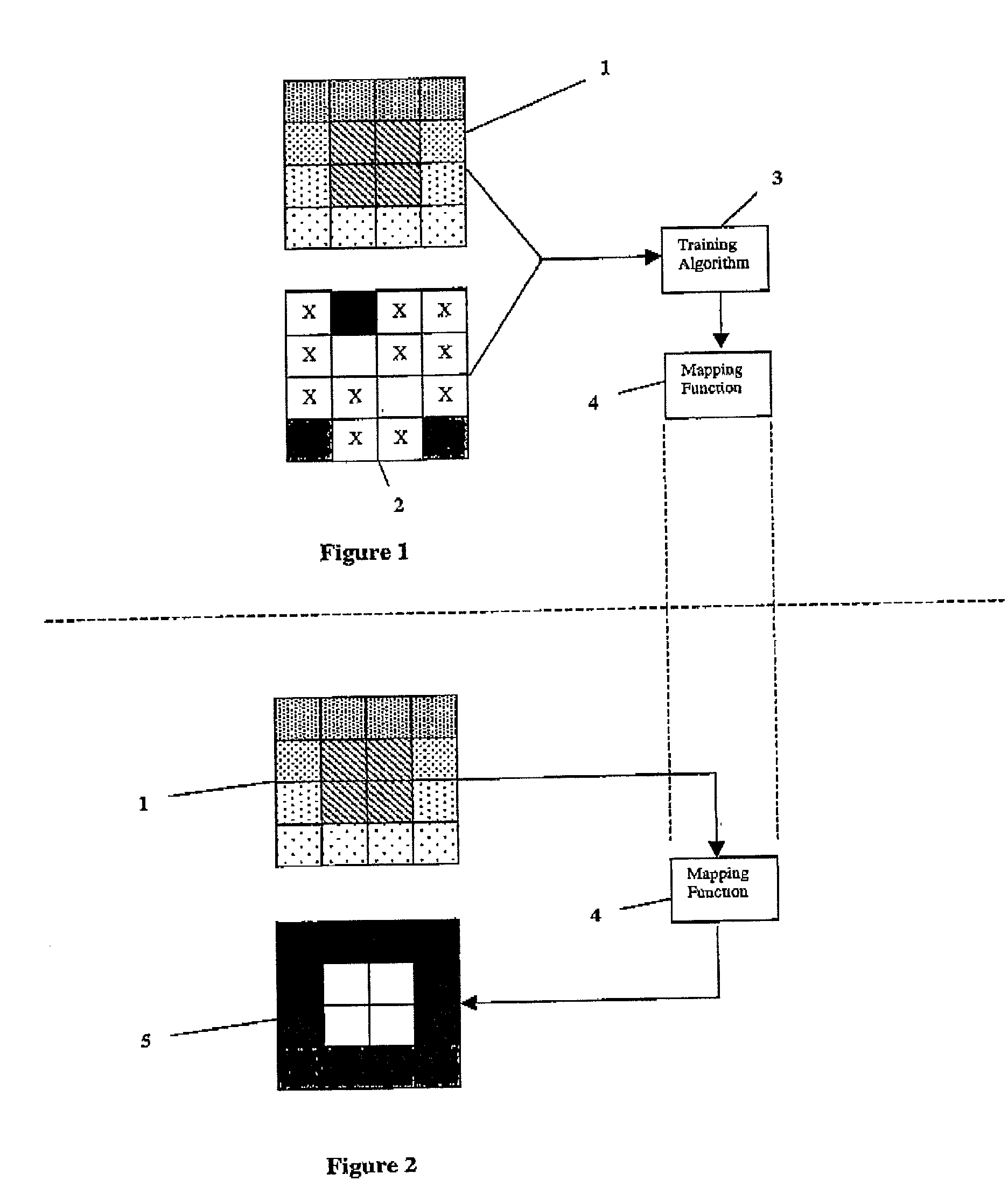

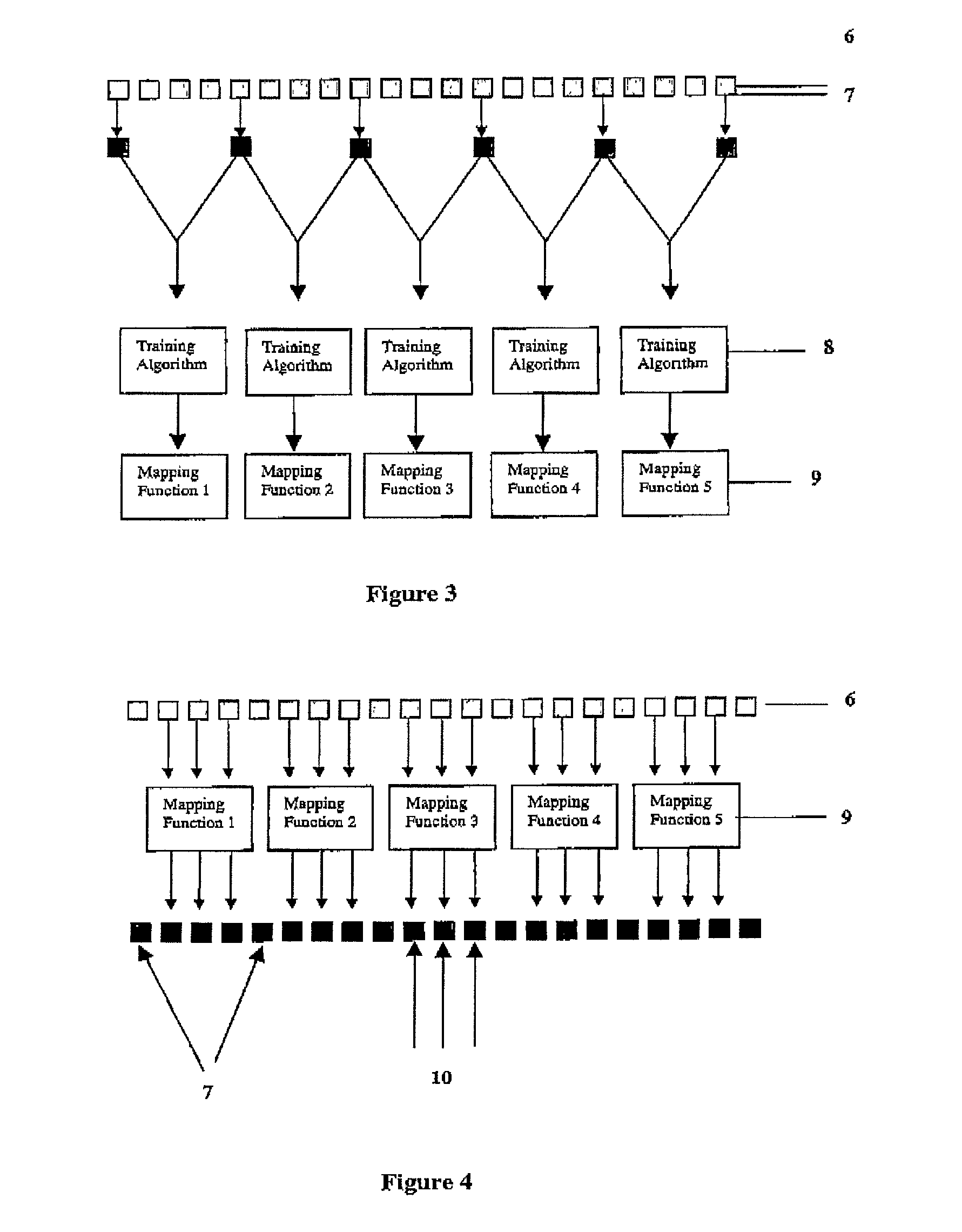

Image conversion and encoding techniques

Inactive | US20020048395A1 | Reduce in quantity, Reducing time commitment, Image enhancement, Image analysis, Pattern recognition, Key frame

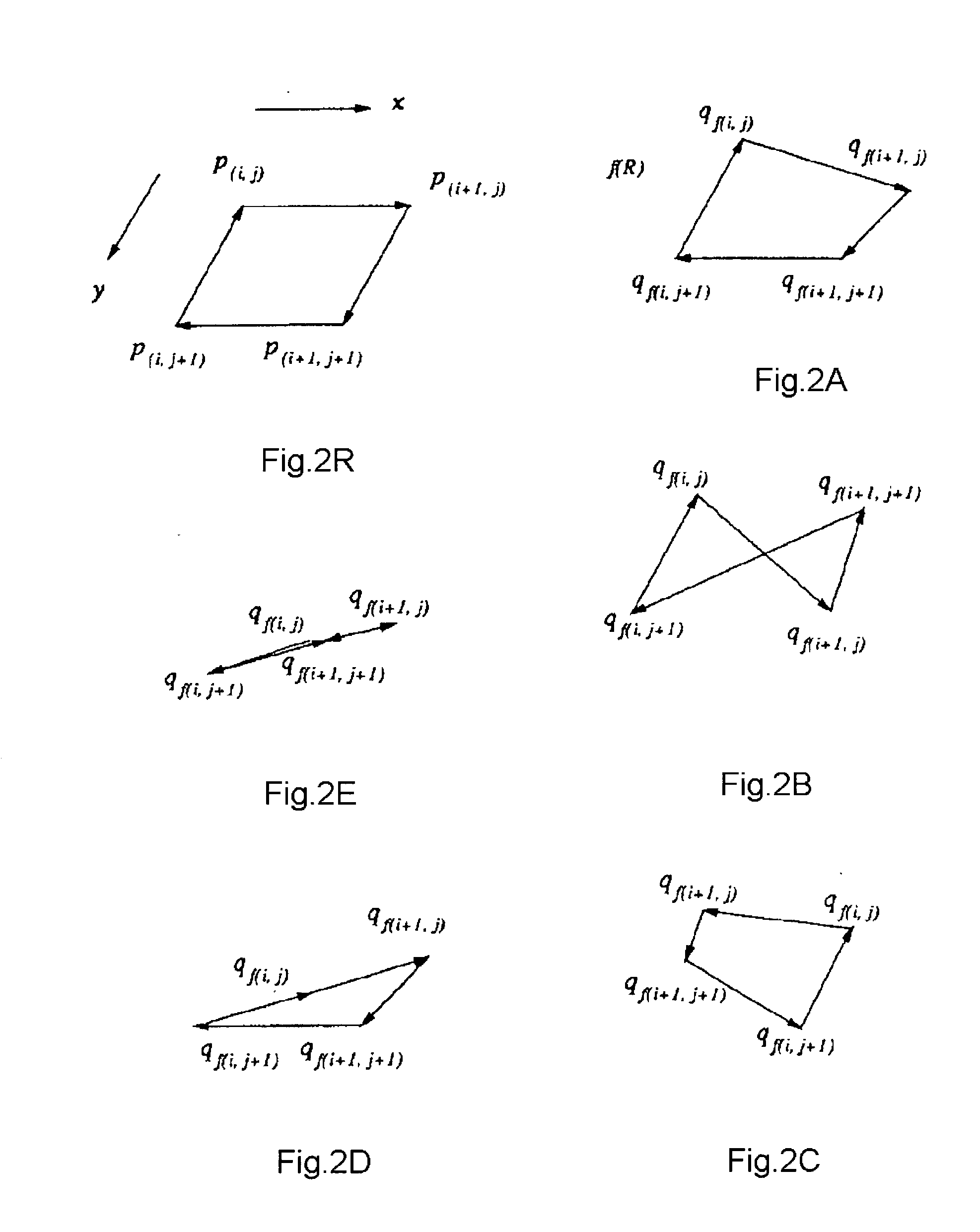

A method of creating a depth map includes the following steps:

1. assigning a depth to at least one pixel or portion of an image;
2. determining the relative location and image characteristics of each such pixel or portion;
3. utilising the depth(s), image characteristics and respective locations to determine an algorithm that ascertains depth characteristics as a function of relative location and image characteristics;
4. utilising said algorithm to calculate a depth characteristic for each pixel or portion of the image. These depth characteristics form a depth map for the image.

In a second phase of processing, said depth maps form key frames for generating depth maps for non-key frames using relative location, image characteristics and distance to the key frame(s).

Owner:DYNAMIC DIGITAL DEPTH RES
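In the second phase, depth maps for non-key frames are derived from key-frame depth maps. In its simplest form this can be illustrated by blending the two bracketing key-frame depth maps according to temporal distance. This is only a stand-in. The patent's algorithm also uses relative location and image characteristics, which this sketch ignores. The function name is hypothetical.

```python
def propagate_depth(depth_a, frame_a, depth_b, frame_b, frame):
    """Estimate a depth map for a non-key frame between two key frames by
    blending their depth maps, weighting the nearer key frame more heavily.

    depth_a, depth_b: key-frame depth maps as flat lists of equal length.
    """
    t = (frame - frame_a) / (frame_b - frame_a)   # 0.0 at key frame a, 1.0 at b
    return [(1 - t) * da + t * db for da, db in zip(depth_a, depth_b)]

print(propagate_depth([1.0, 2.0], 0, [3.0, 6.0], 4, 1))  # [1.5, 3.0]
```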

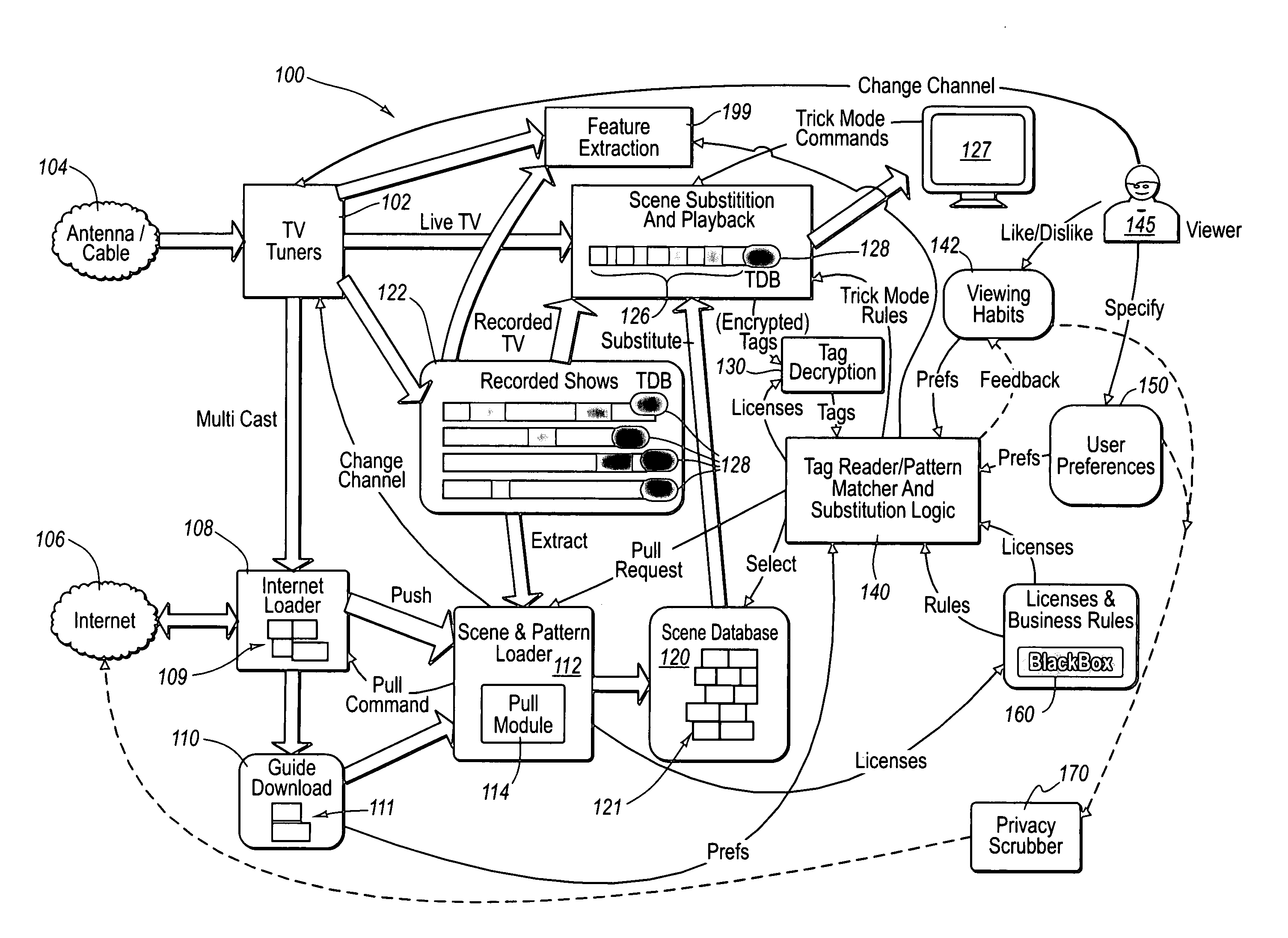

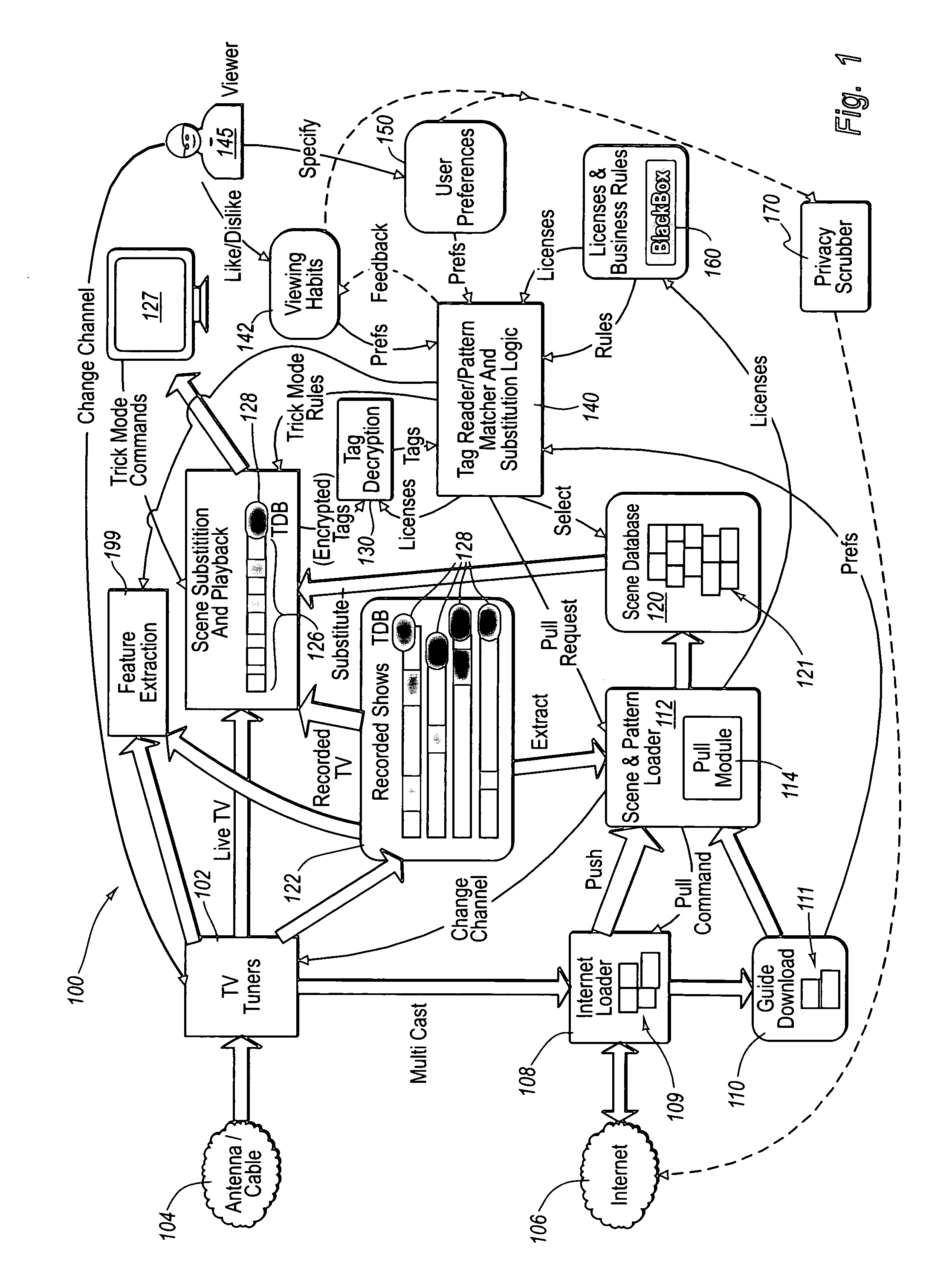

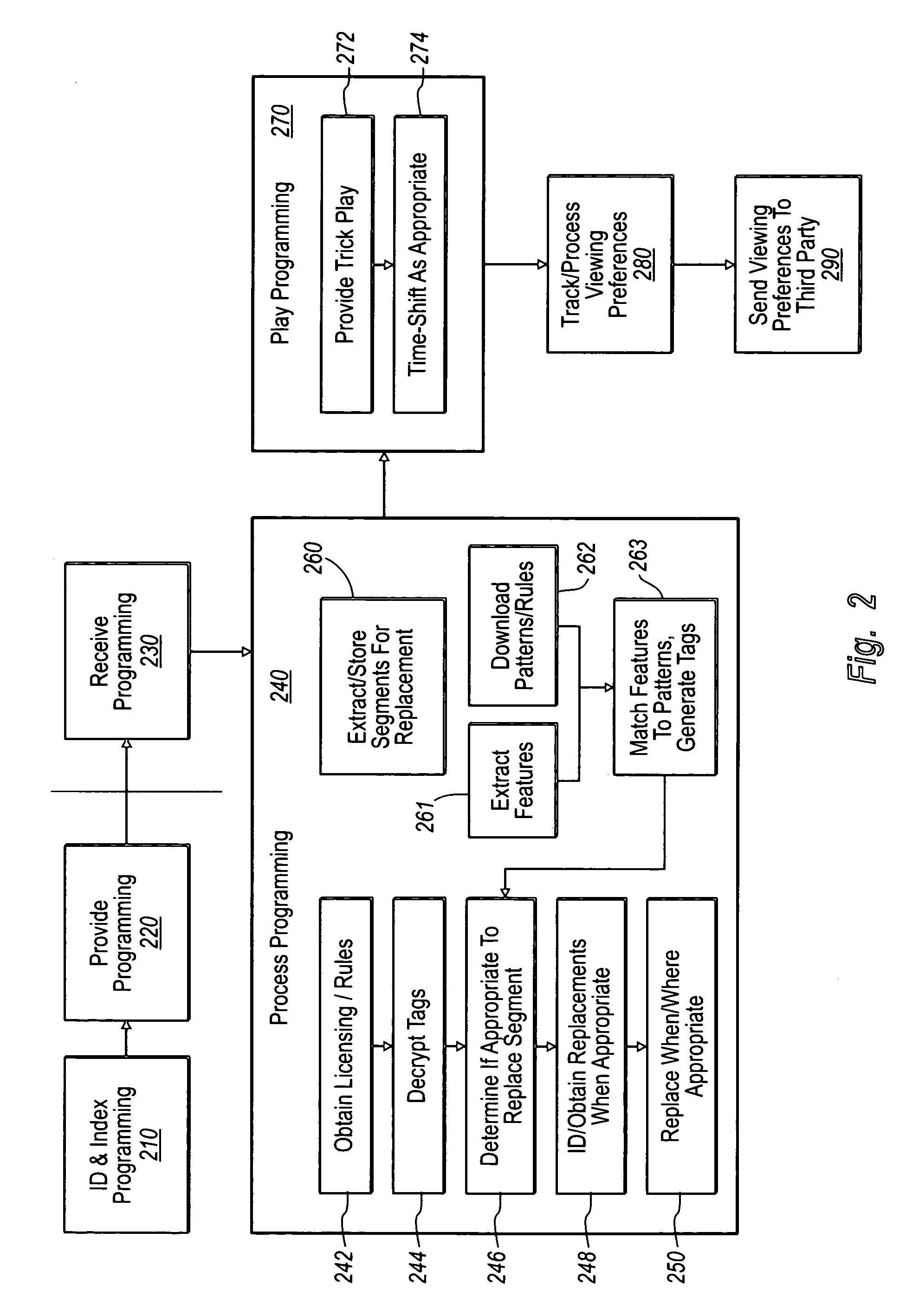

Extensible content identification and indexing

Inactive | US20060218617A1 | Improve relevance, Improve targeting, Television system details, Analogue secrecy/subscription systems, Key frame, Targeted advertising

Innovative techniques for identifying and distinguishing content, such as commercials, can be used with means for marking key frames within the commercials to facilitate replacement of commercials and other programming segments in such a way as to provide improved focus and relevance for targeted advertising based on known and / or dynamic conditions. The identification of commercial features can also be used to provide improved trick play functionality while the commercial or other programming is rendered.

Owner:MICROSOFT TECH LICENSING LLC

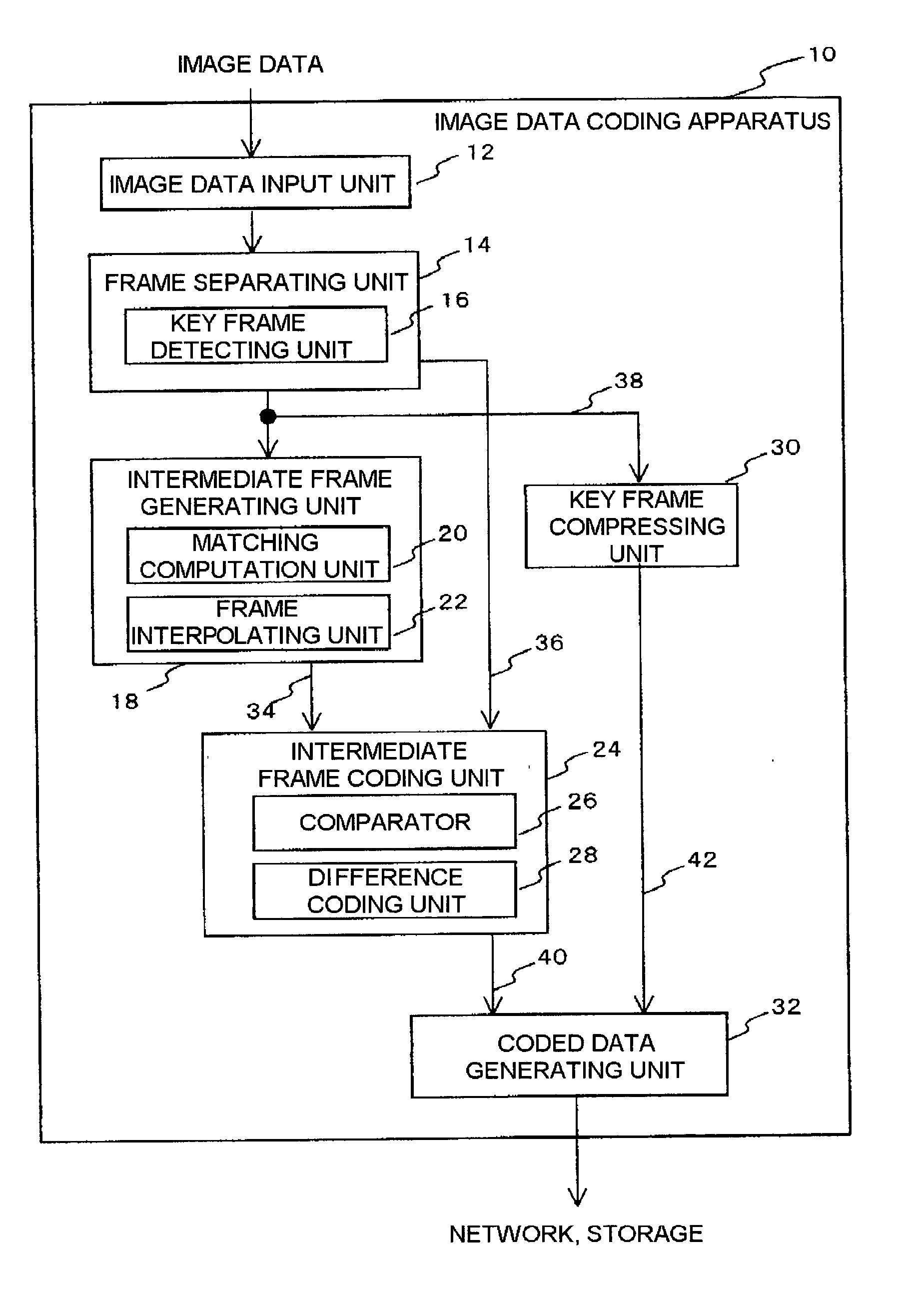

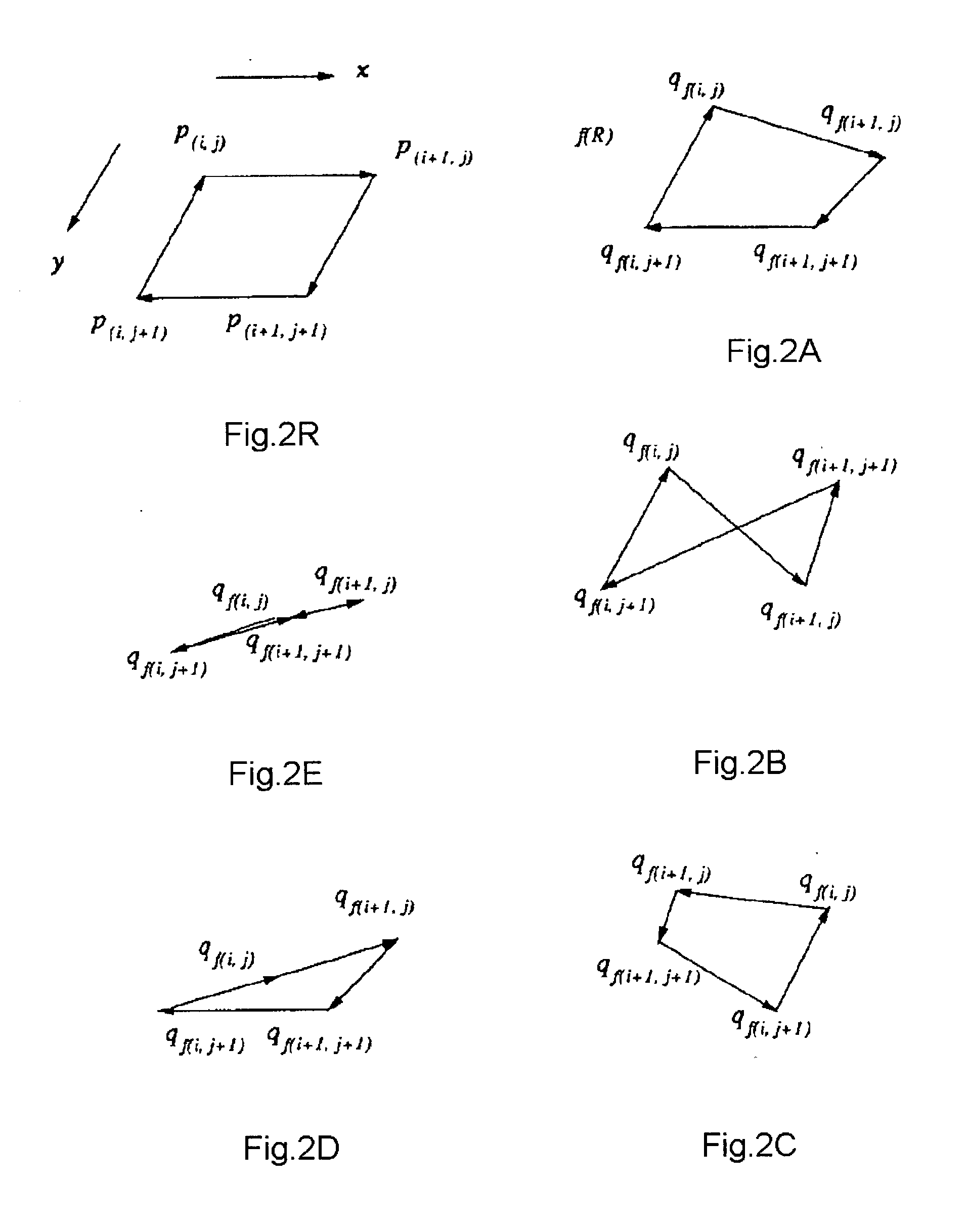

Method and apparatus for coding and decoding image data

Inactive | US20030076881A1 | Television system details, Picture reproducers using cathode ray tubes, Key frame, Imaging data

Owner:MONOLITH

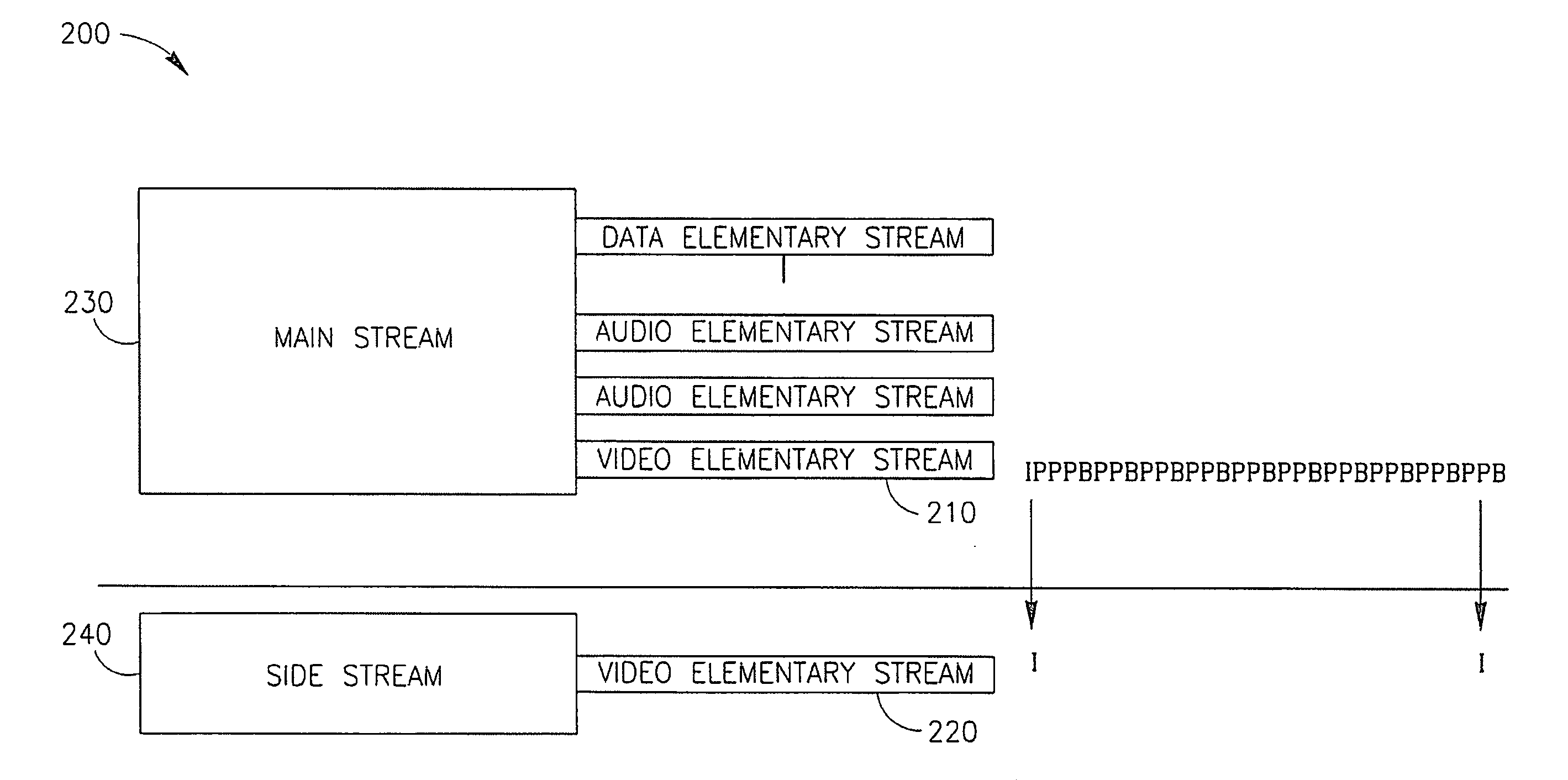

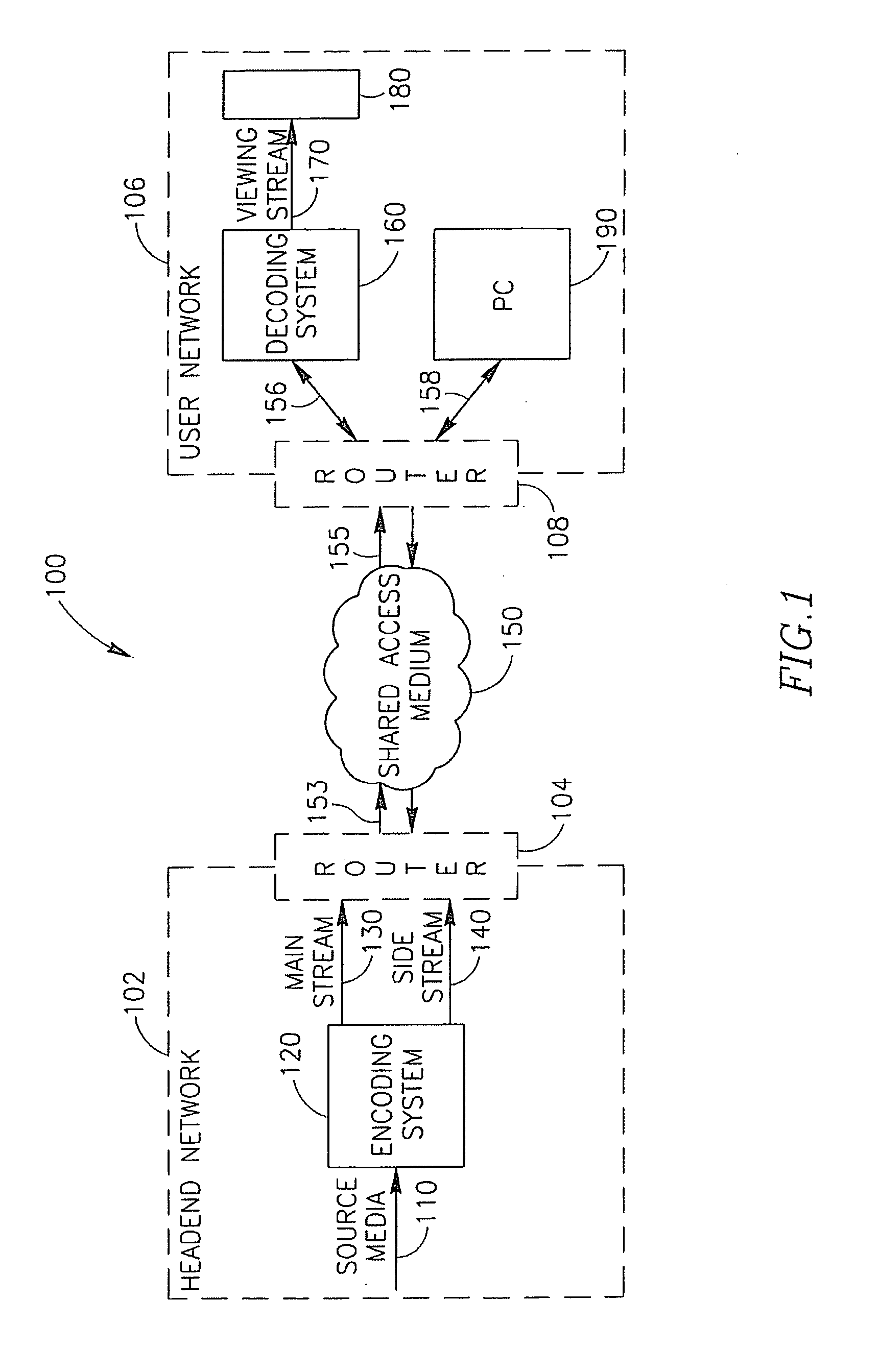

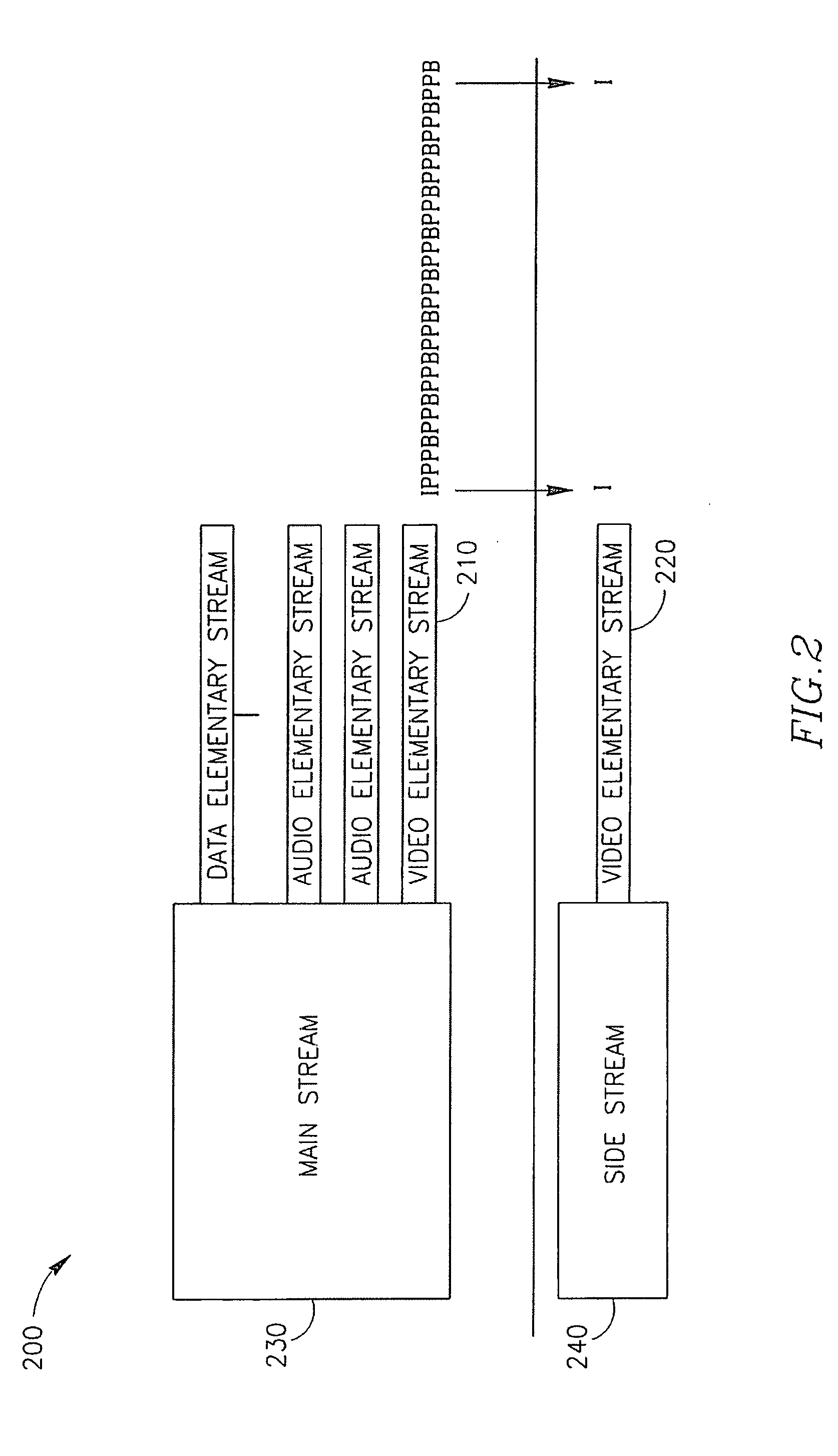

Method, apparatus, and system of fast channel hopping between encoded video streams

Inactive | US20070174880A1 | Two-way working systems, Digital video signal modification, Key frame, Uncompressed video

A method, apparatus, and system for rapid switching between encoded video streams while introducing a reduced amount of additional information during the switch. For example, a method of encoding uncompressed video frames in accordance with embodiments of the invention includes producing a first stream having a first key frame, a second key frame, and a delta frame therebetween; and producing a second stream having said first key frame, said second key frame, and a third key frame therebetween, wherein said third key frame corresponds with said delta frame. Other features are described and claimed.

Owner:OPTIBASE
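Below is a minimal sketch of the two-stream frame-type layout and one plausible switching rule. The rule is an interpretation, not taken from the patent. It assumes that stream 2's extra key frame corresponds to (decodes to the same picture as) stream 1's delta frame at that position. A receiver joining at that frame could then take the single key frame from stream 2 and continue with stream 1's following delta frames. The function names are hypothetical and no real encoder is involved.

```python
def build_streams(n_frames, gop):
    """Frame types for the two encodings: 'K' = key frame, 'D' = delta frame.

    Stream 1 places a key frame every `gop` frames with delta frames between.
    Stream 2 shares those key frames and encodes every in-between position as
    a key frame too, so a viewer can join at any frame.
    """
    s1 = ['K' if i % gop == 0 else 'D' for i in range(n_frames)]
    s2 = ['K'] * n_frames
    return s1, s2

def frames_after_switch(s1, s2, join_at):
    """(source, frame type) for each frame sent after joining at `join_at`:
    one key frame (from stream 2 unless stream 1 already has one there),
    then stream 1 from the next frame on."""
    first = ('s1', 'K') if s1[join_at] == 'K' else ('s2', s2[join_at])
    return [first] + [('s1', s1[i]) for i in range(join_at + 1, len(s1))]

s1, s2 = build_streams(6, gop=3)
print(s1)                              # ['K', 'D', 'D', 'K', 'D', 'D']
print(frames_after_switch(s1, s2, 1))
```

Only one extra key frame is sent per switch. This matches the stated goal of keeping the additional information introduced during the switch small.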

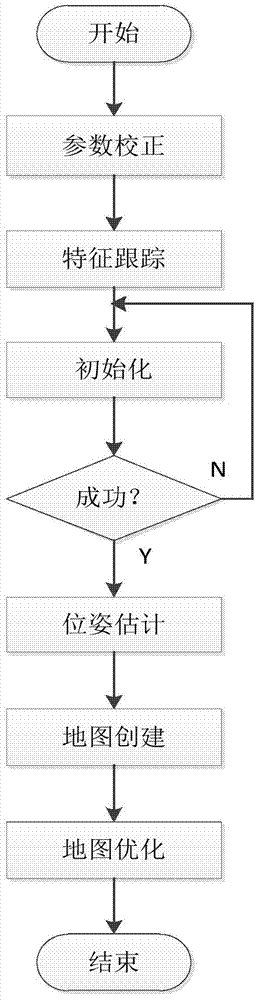

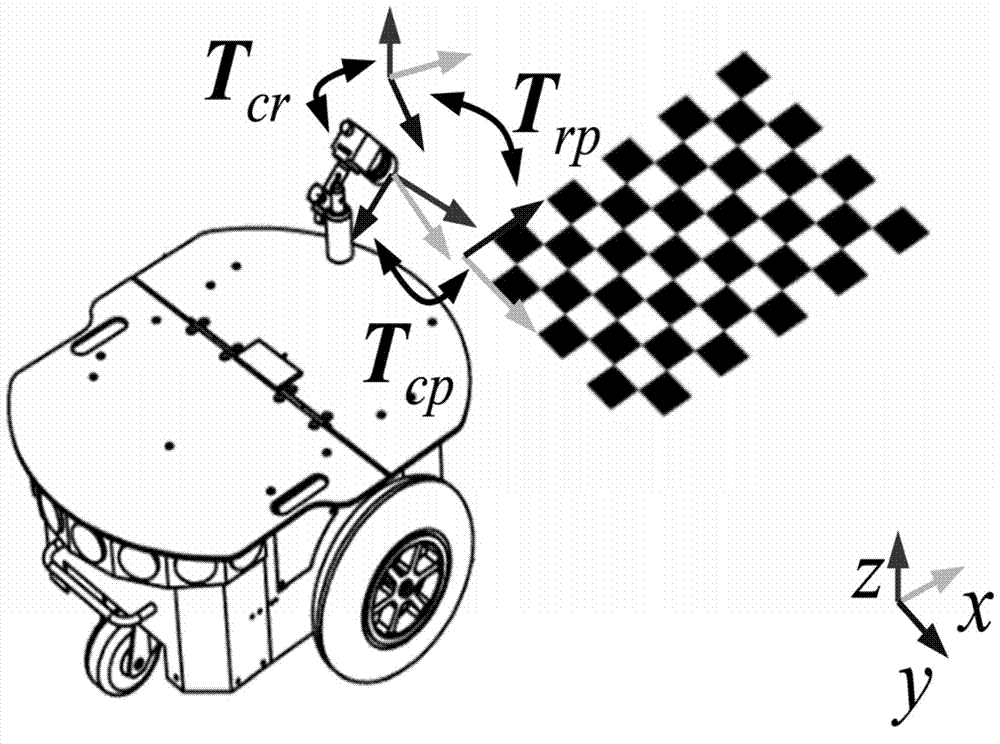

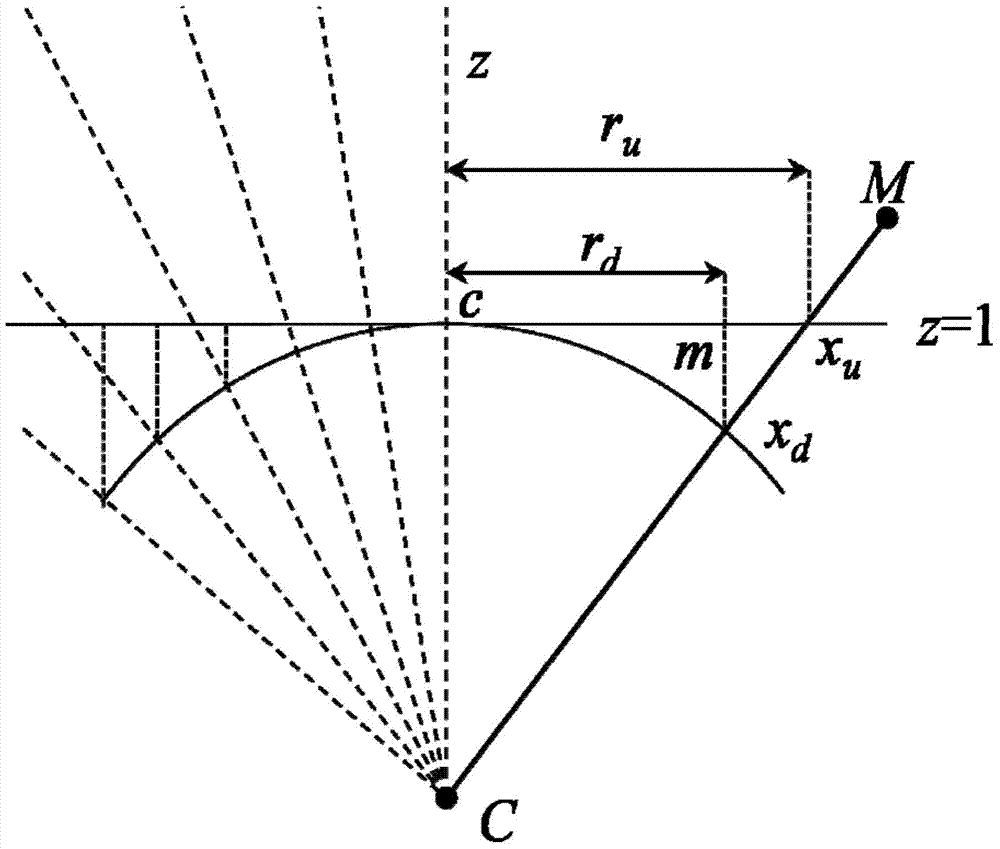

PTAM improvement method based on ground characteristics of intelligent robot

Inactive | CN104732518A | Lift strict restrictions, Easy to initialize, Image analysis, Navigation instruments, Line search, Key frame

The invention discloses an improved PTAM method for an intelligent robot based on ground characteristics. The method comprises the following steps:

1. Parameter correction is completed, including parameter definition and camera calibration.
2. The camera obtains texture information of the current environment, and a four-level Gaussian image pyramid is constructed. The FAST corner detection algorithm extracts feature information from the current image, data association between corner features is established, and a pose estimation model is obtained.
3. In the initial mapping stage, two key frames are obtained with the camera mounted on the mobile robot. The robot starts moving during initialization while the camera captures corner information in the current scene and establishes associations.
4. After the three-dimensional sparse map is initialized, the key frames are updated. Sub-pixel-accurate correspondences between feature points are established by epipolar line search and block matching, and the camera is accurately relocalized based on the pose estimation model.
5. Finally, the matched points are projected into space, establishing a three-dimensional map of the current overall environment.

Owner:BEIJING UNIV OF TECH

Method and apparatus for coding and decoding image data

A matching processor detects correspondence information between key frames. A judging unit detects motion vectors from the correspondence information to determine variation of a field of view between the key frames. When variation of the field of view is sufficiently large, added information is generated related to the variation of the field of view. A stream generator generates a coded data stream by incorporating the added information. An intermediate image generator (for example, at a decoding side) detects the added information and trims edges of a generated intermediate image to account for the variation in the field of view, so that an unnatural invalidated region is cut out.

Owner:JBF PARTNERS

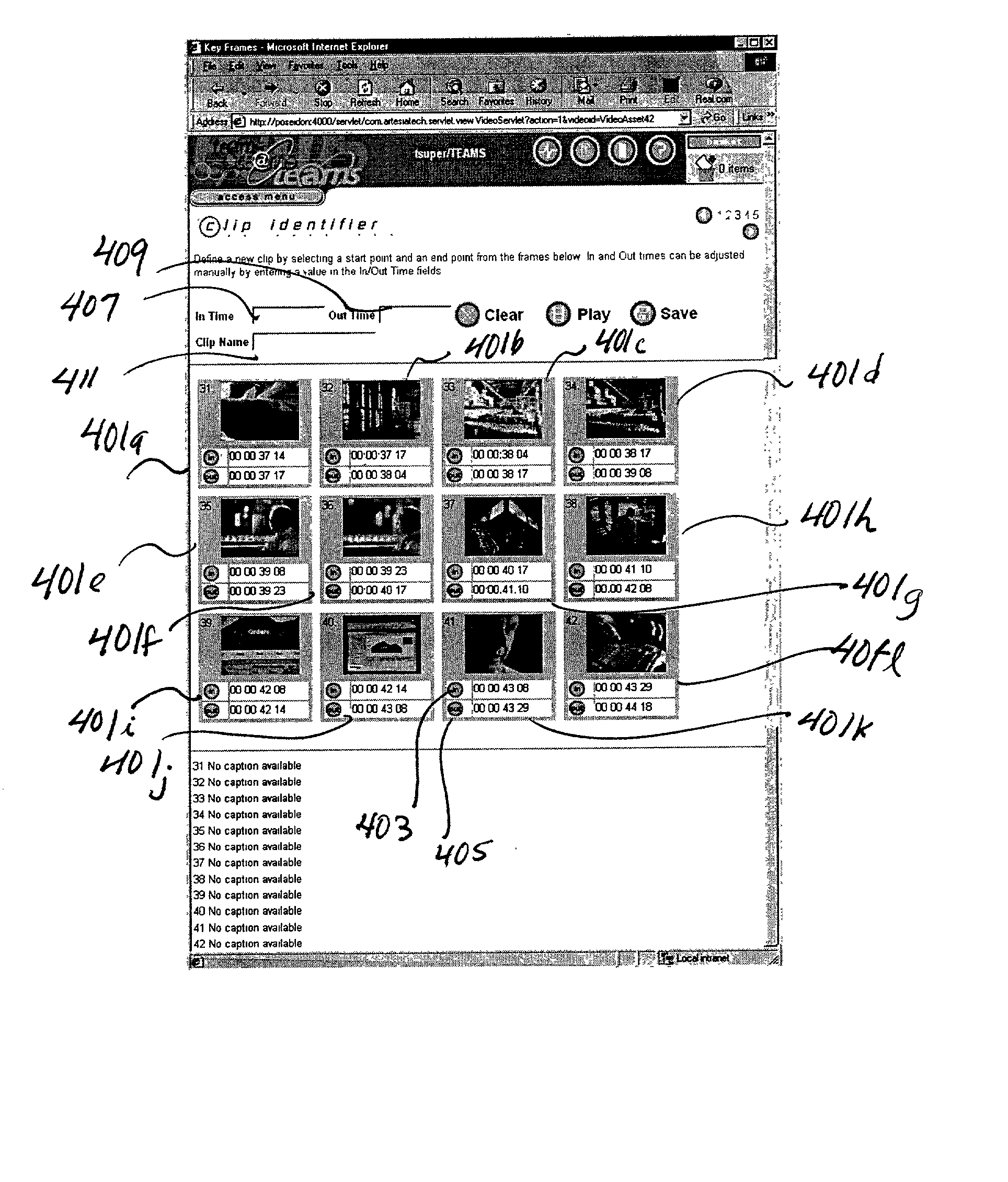

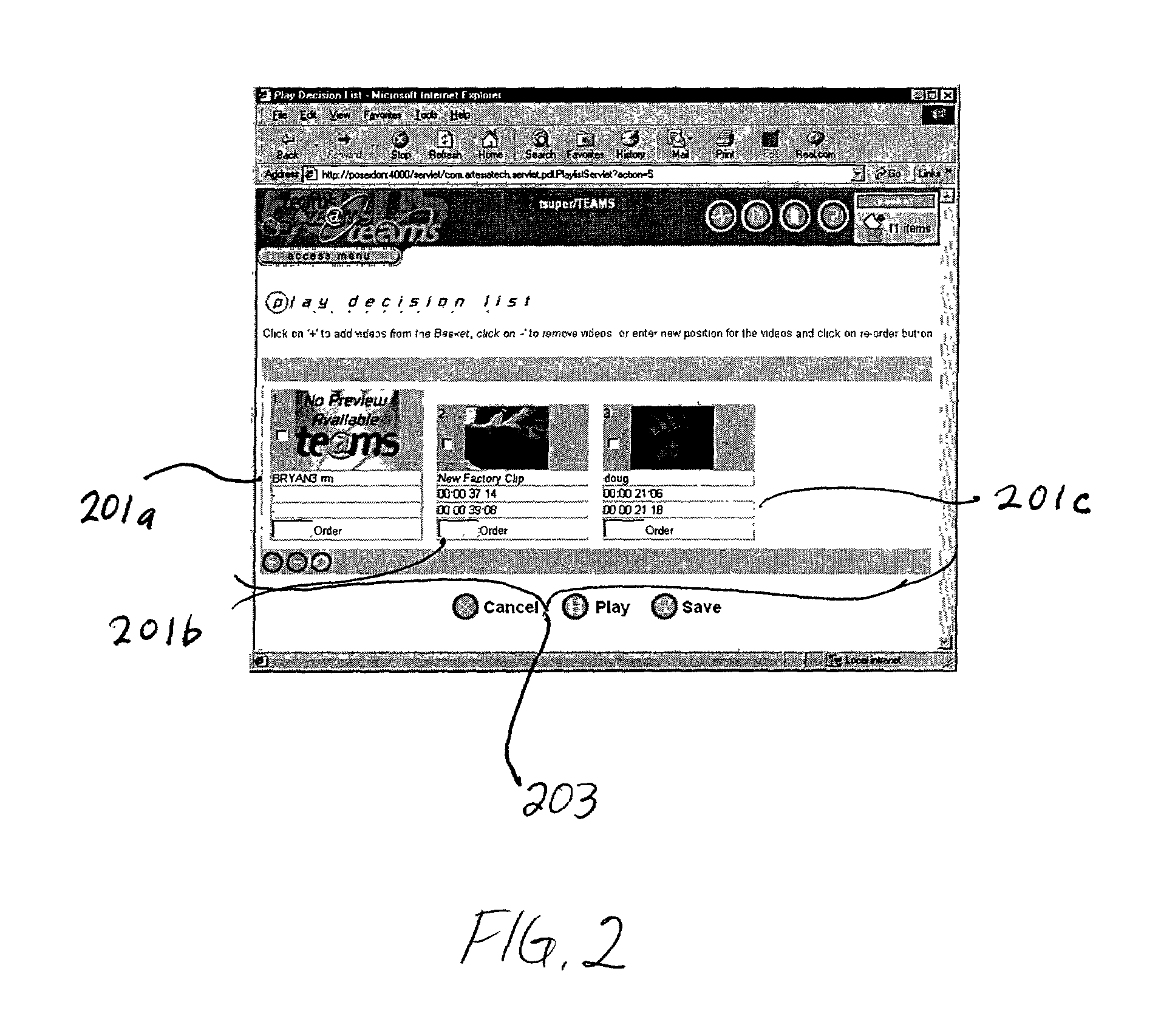

Method and system for streaming media manager

Inactive | US20020175917A1 | Electronic editing digitised analogue information signals, Cathode-ray tube indicators, Digital video, Storyboard

A computer-implemented or computer-enabled method and system is provided for working with streaming media, such as digital video clips and entire videos. Clips can be grouped together, and snippets of video can be re-ordered into a rough-cut assemblage of a video storyboard. Later, the video storyboard and the final video scene may be fine-tuned. The invention is not limited to digital video. It may also be used with other digital assets, for example audio, animation, logos and text.

Accordingly, computer-enabled storyboarding of digital assets includes providing storage that holds digital assets. The digital assets include at least one digital clip, and each digital clip has frames including a key frame corresponding to that clip. Digital clips are selected for inclusion in a storyboard. The storyboard is displayed with an image of the key frame corresponding to each digital clip in it. Preferably, the image is a low-resolution image representing the key frame.

The storyboard may be modified and saved. Modifications include adding parts of digital assets, deleting digital clips, and re-ordering the clips. The digital clips can be edited or adjusted, including adjusting the in and/or out time of each clip. The storyboard may be played, that is, each digital clip in the storyboard is played in sequence.

Owner:ARTESIA TECH
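The storyboard operations listed above (add, delete, re-order, adjust in/out times, play in sequence) map onto a small ordered-list data structure. This sketch is hypothetical. It models clips only by name, in/out times and key-frame time. It does not handle media storage, low-resolution thumbnails or streaming.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

@dataclass
class Clip:
    name: str
    in_s: float         # in point (seconds)
    out_s: float        # out point (seconds)
    key_frame_s: float  # time of the key frame shown as the storyboard thumbnail

@dataclass
class Storyboard:
    clips: List[Clip] = field(default_factory=list)

    def add(self, clip: Clip, position: Optional[int] = None) -> None:
        """Append a clip, or insert it at a given position."""
        self.clips.insert(len(self.clips) if position is None else position, clip)

    def delete(self, name: str) -> None:
        self.clips = [c for c in self.clips if c.name != name]

    def move(self, old: int, new: int) -> None:
        """Re-order: move the clip at index `old` to index `new`."""
        self.clips.insert(new, self.clips.pop(old))

    def trim(self, name: str, in_s: float, out_s: float) -> None:
        """Adjust the in and out times of the named clip."""
        for c in self.clips:
            if c.name == name:
                c.in_s, c.out_s = in_s, out_s

    def playlist(self) -> List[Tuple[str, float, float]]:
        """Clips in playback order as (name, in, out)."""
        return [(c.name, c.in_s, c.out_s) for c in self.clips]

sb = Storyboard()
sb.add(Clip("intro", 0, 5, 1.0))
sb.add(Clip("interview", 10, 70, 12.0))
sb.add(Clip("outro", 0, 4, 2.0))
sb.move(2, 0)                  # outro, intro, interview
sb.trim("interview", 15, 60)
sb.delete("intro")
print(sb.playlist())           # [('outro', 0, 4), ('interview', 15, 60)]
```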

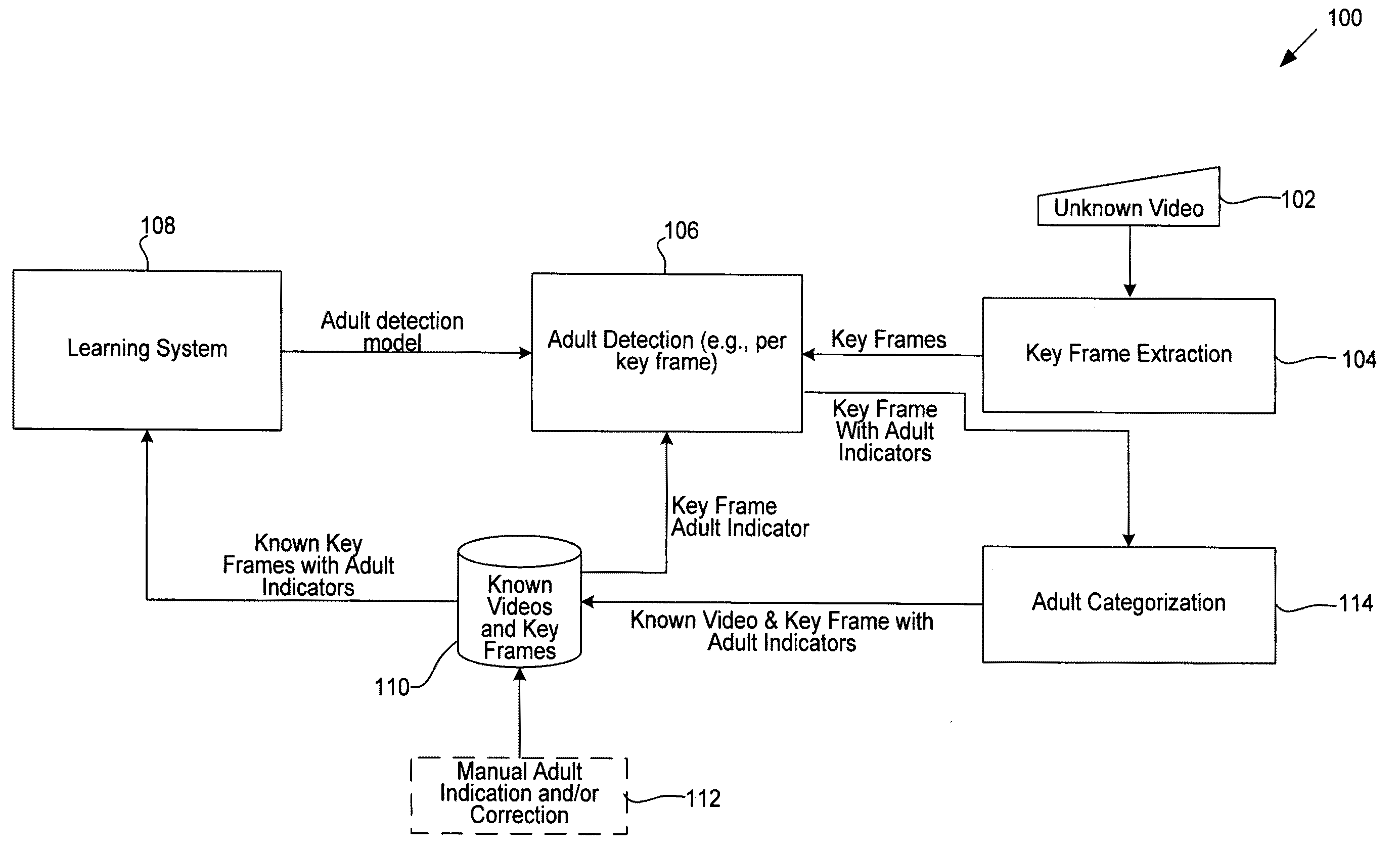

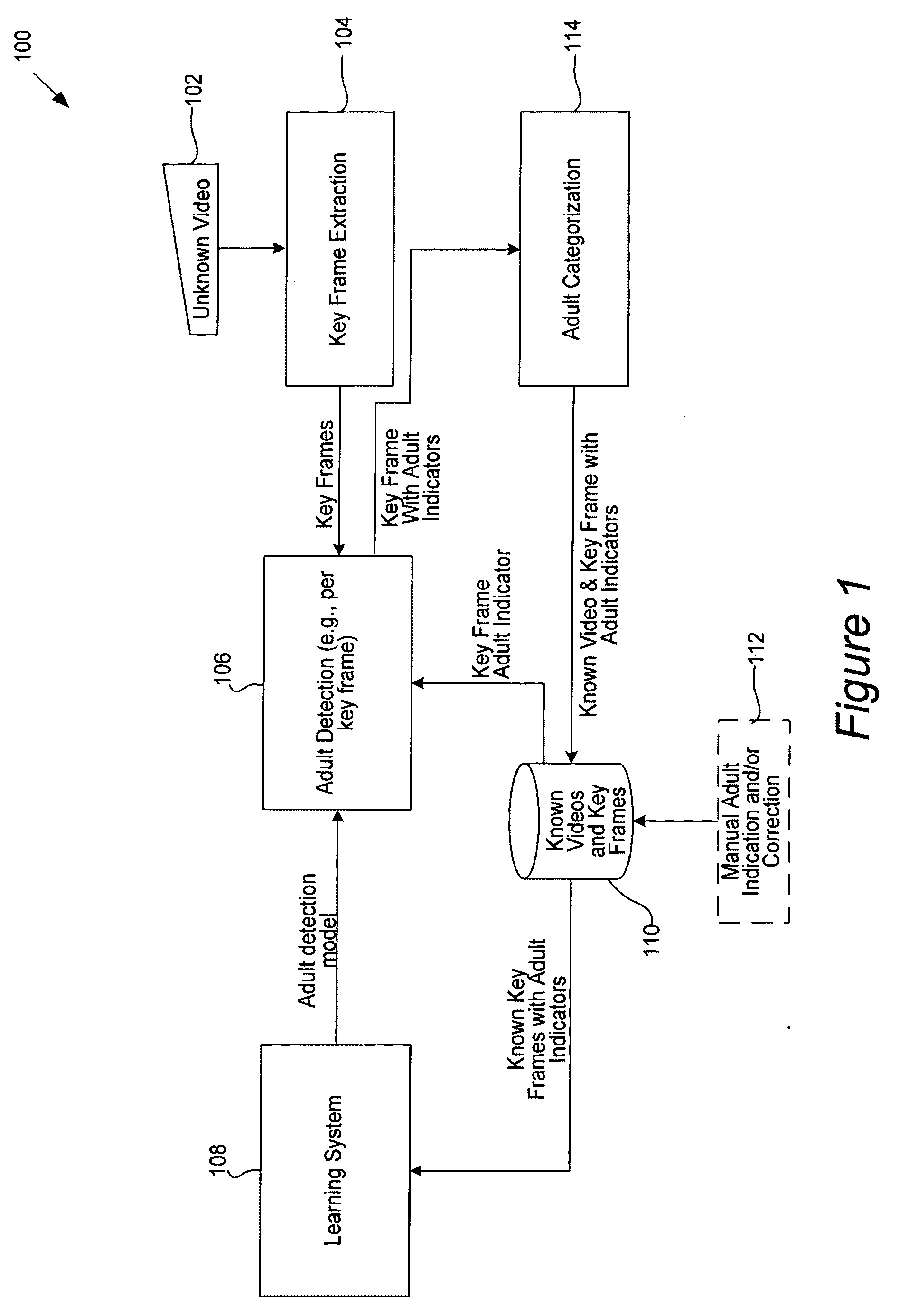

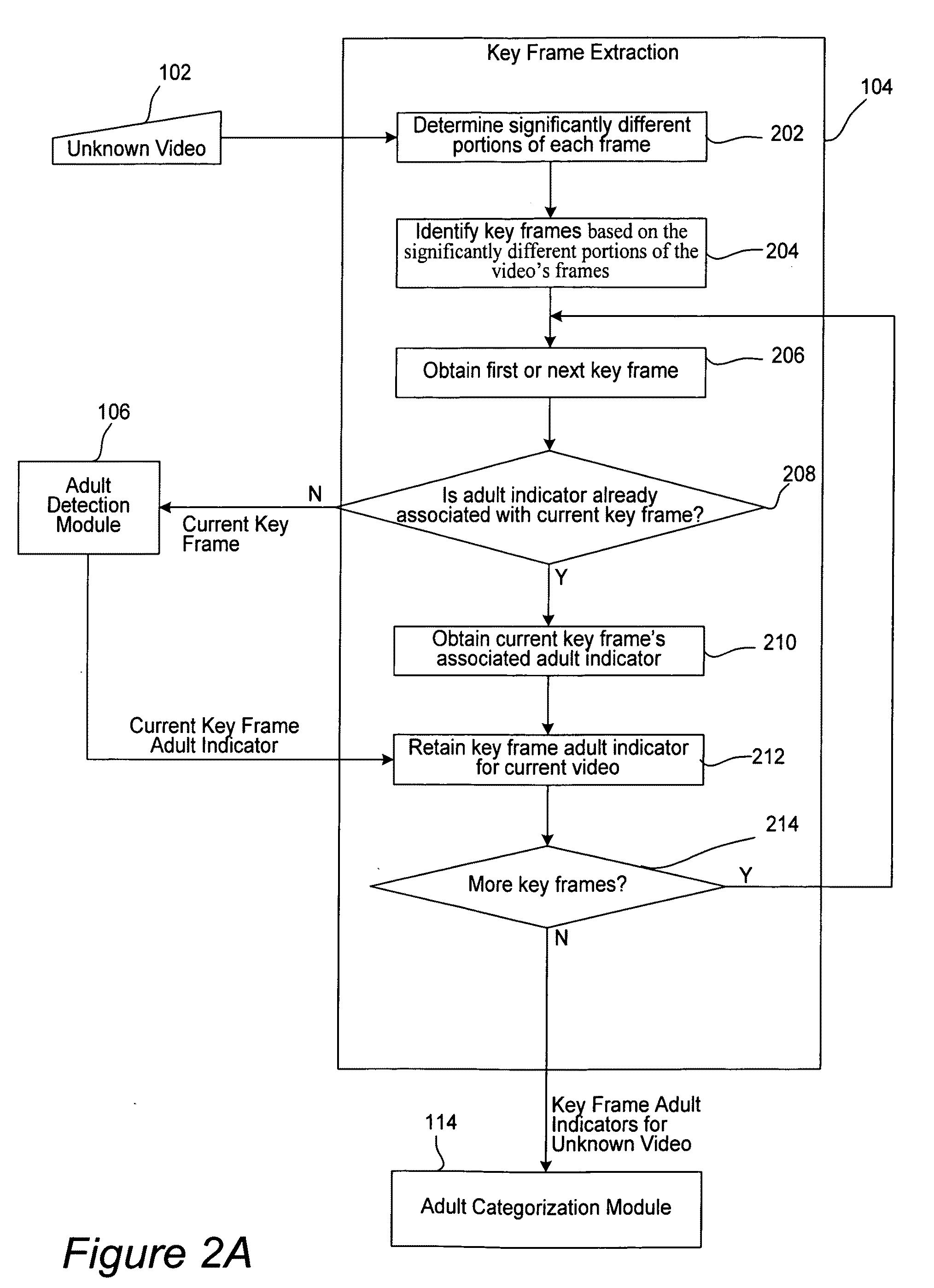

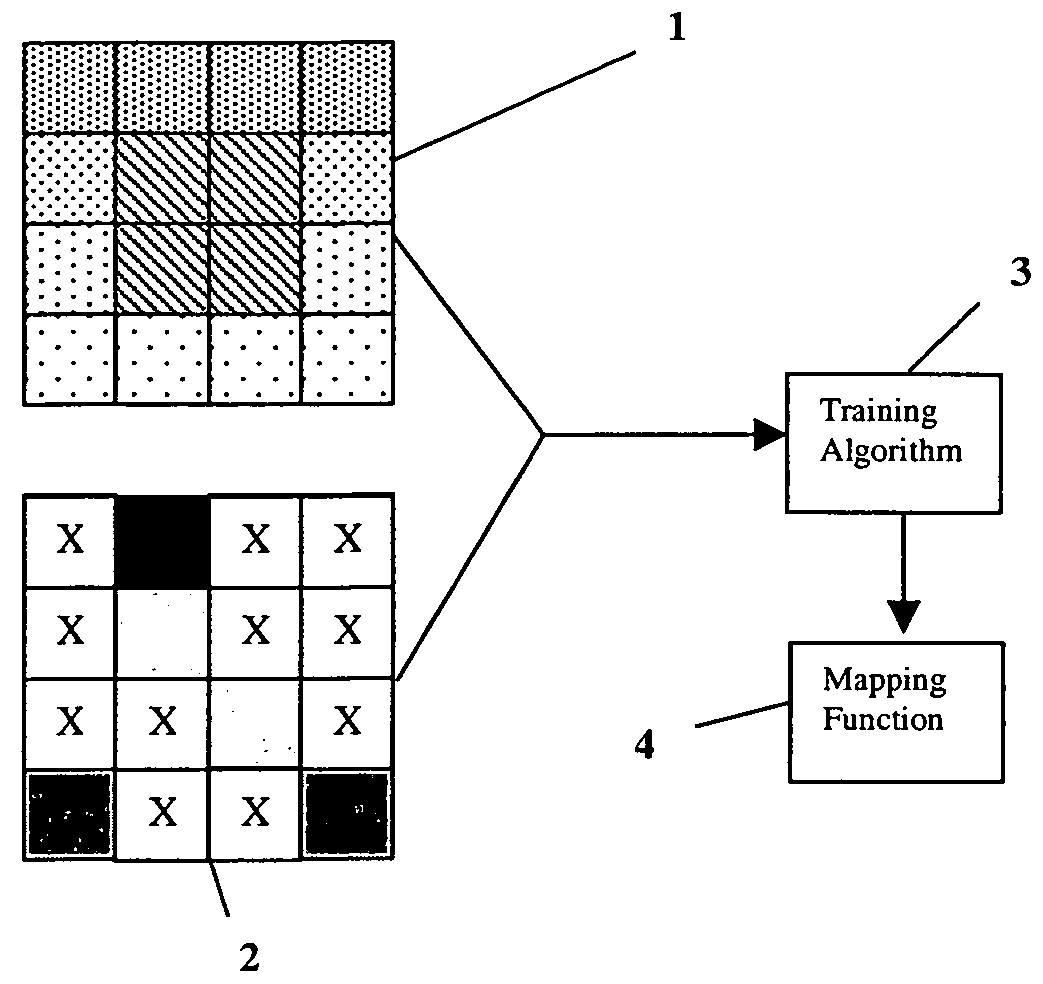

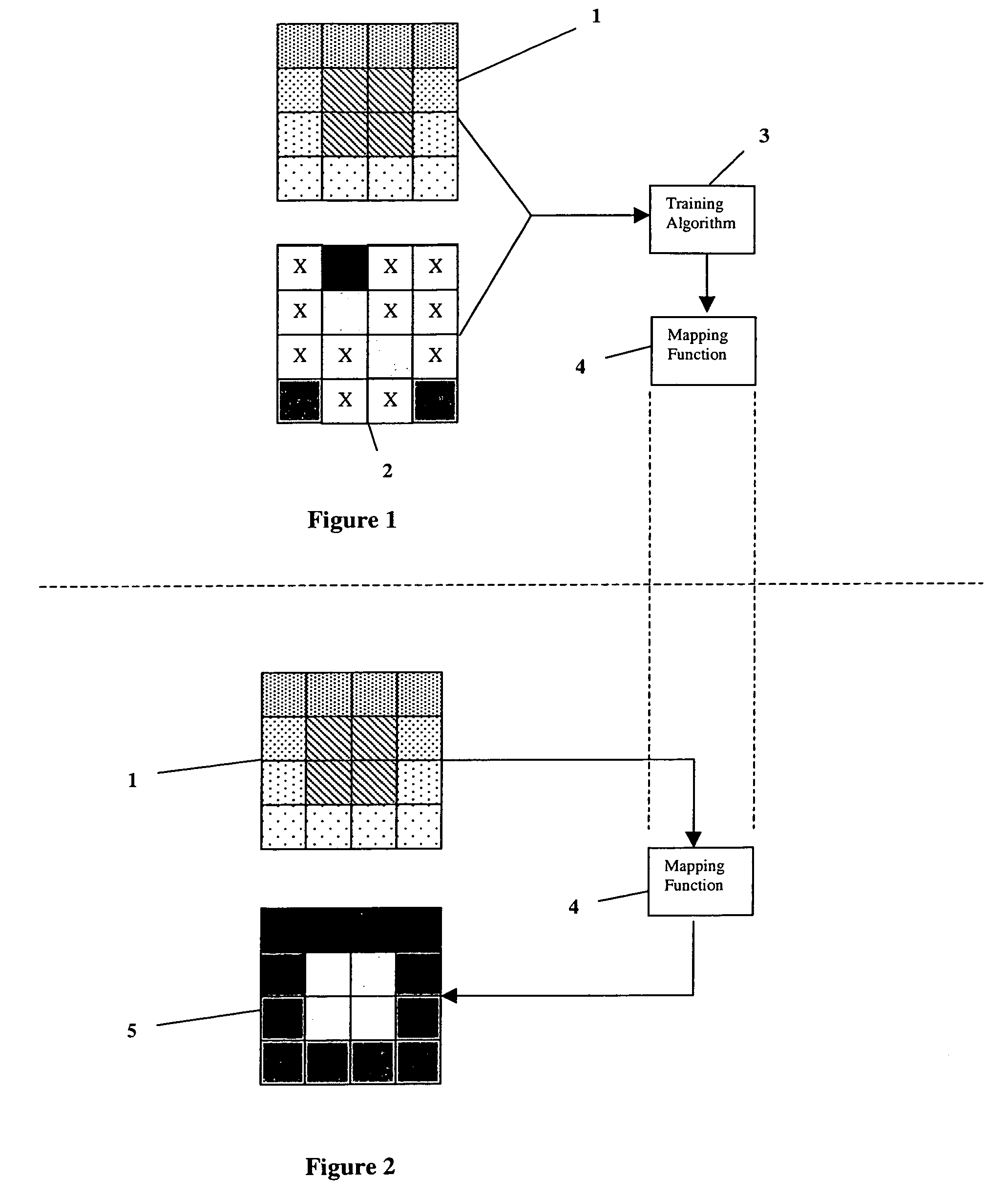

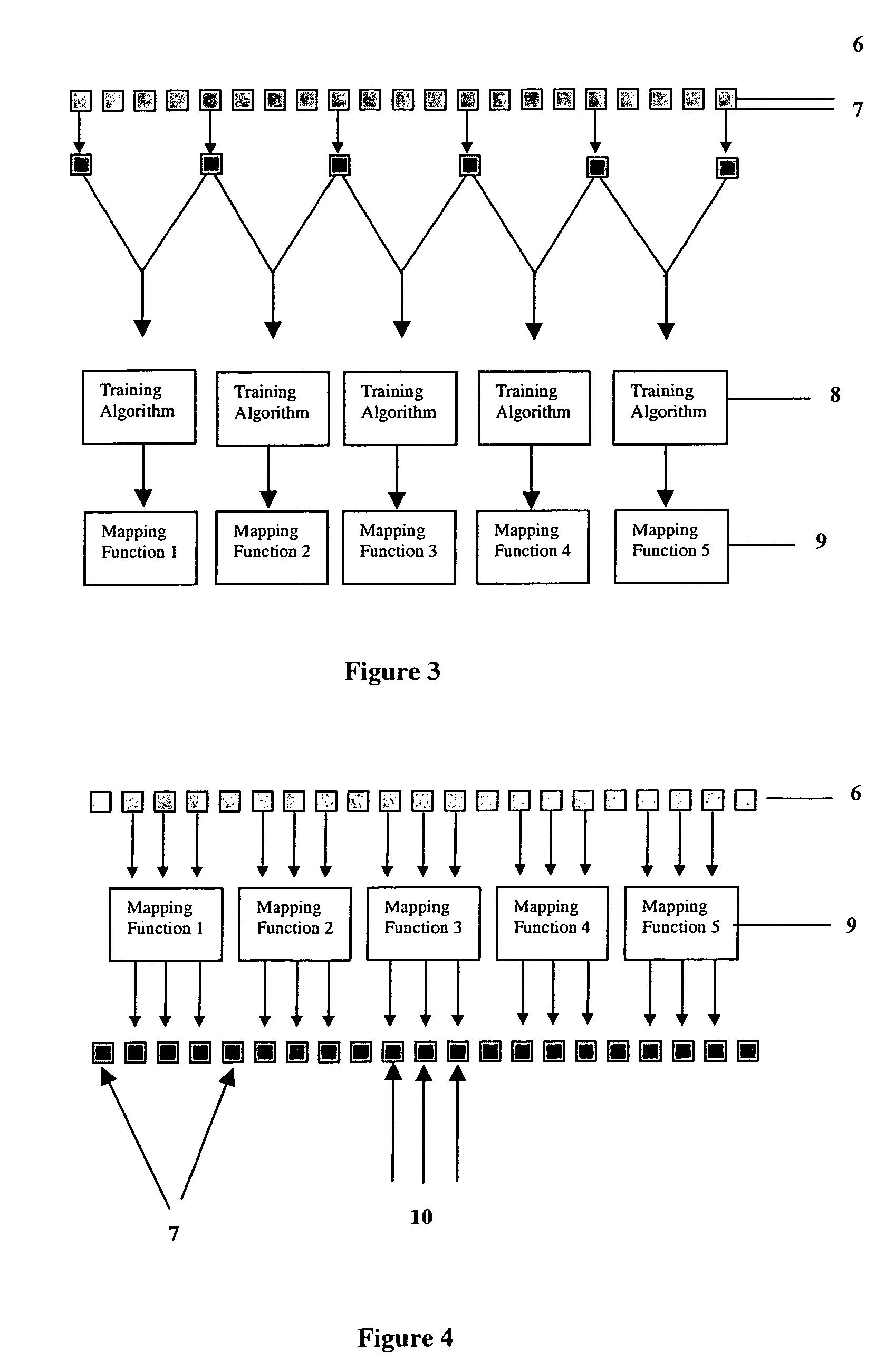

Apparatus and methods for detecting adult videos

Disclosed are apparatus and methods for detecting whether a video is adult or non-adult. In certain embodiments, a learning system is operable to generate one or more models for adult video detection. The model is generated based on a large set of known videos that have been defined as adult or non-adult. Adult detection is then based on this adult detection model. This adult detection model may be applied to selected key frames of an unknown video. In certain implementations, these key frames can be selected from the frames of the unknown video. Each key frame may generally correspond to a frame that contains key portions that are likely relevant for detecting pornographic or adult aspects of the unknown video. By way of examples, key frames may include moving objects, skin, people, etc. In alternative embodiments, a video is not divided into key frames and all frames are analyzed by a learning system to generate a model, as well as by an adult detection system based on such model.

Owner:VERIZON PATENT & LICENSING INC

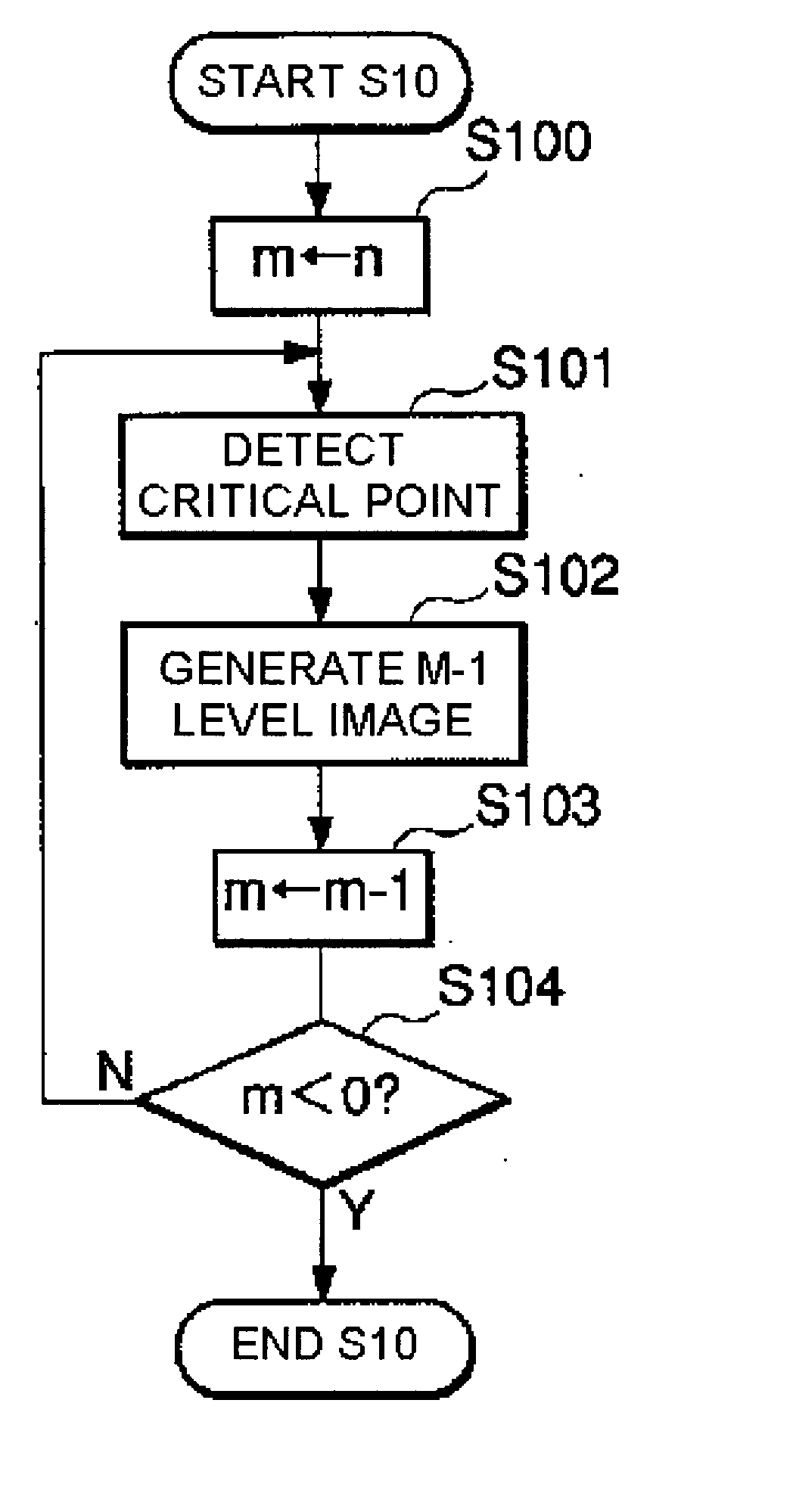

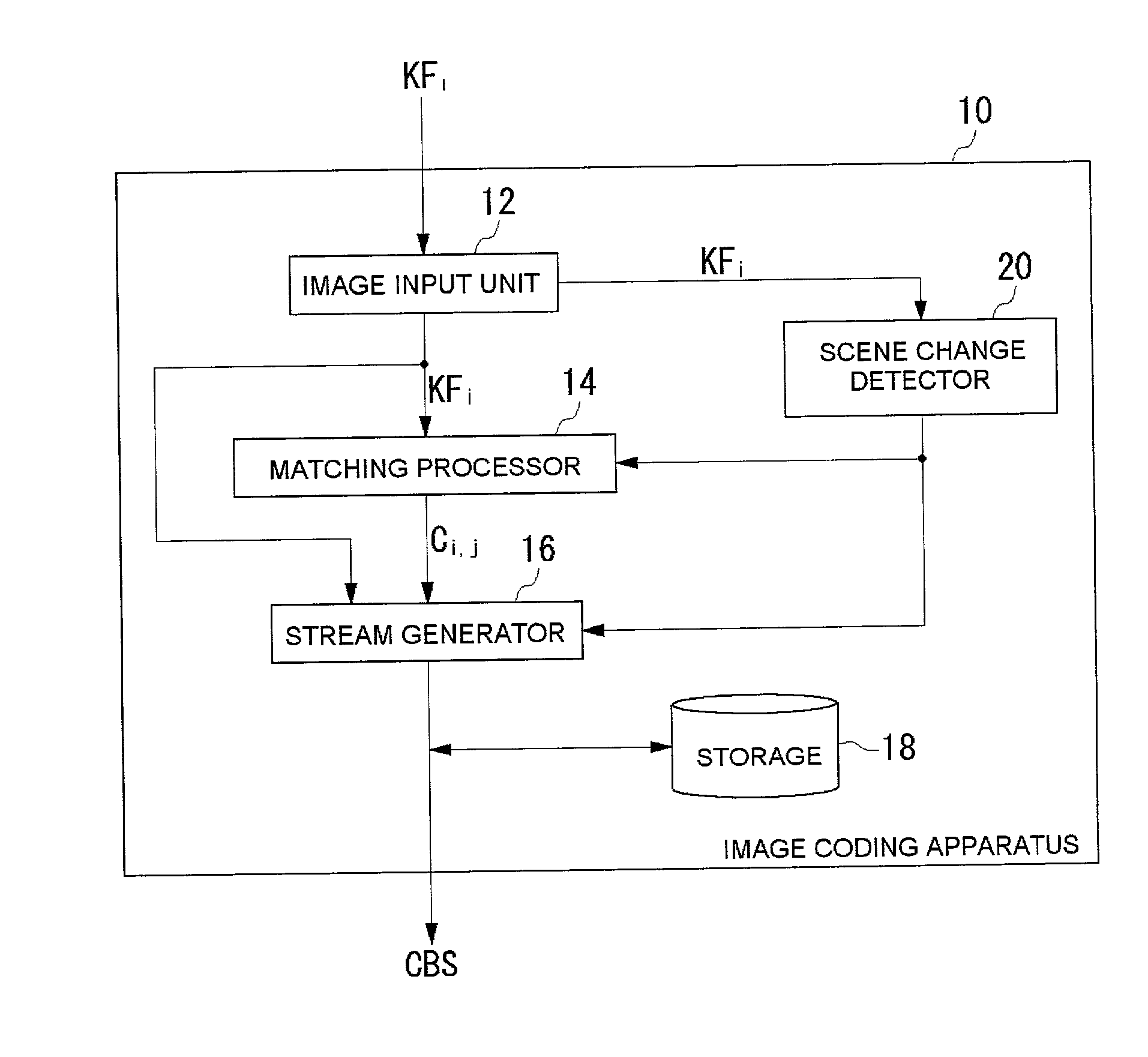

Image coding method and apparatus and image decoding method and apparatus

Inactive | US20020191112A1 | Television system details, Picture reproducers using cathode ray tubes, Decoding methods, Data stream

An image coding and decoding technology for compressing motion pictures uses critical point filter technology and detects scene changes to provide more accurate decoding. An image input unit acquires key frames. A scene change detector detects when there is a scene change between key frames and notifies a matching processor, which then skips generating correspondence data between those key frames. A stream generator generates a coded data stream in which each key frame is accompanied by one of two kinds of data. When there is no scene change, it is accompanied by its correspondence data. When there is a scene change, it is accompanied by correspondence disable data. This allows a decoder to decode the data stream more accurately, taking scene changes into account.

Owner:JBF PARTNERS

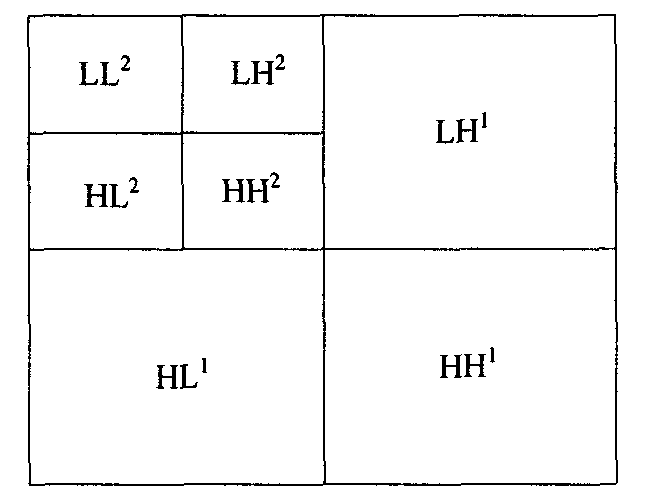

Method for segmenting and indexing scenes by combining captions and video image information

Inactive | CN101719144A | Improve accuracy, Avoid Manual Labeling, Television system details, Color television details, Pattern recognition, Crucial point

The invention relates to a method for segmenting and indexing scenes by combining captions and video image information. Its distinguishing feature is that the set of video frames within the duration of each caption serves as the minimum unit for scene clustering. The method comprises the following steps:

1. After obtaining the minimum units, at least three non-consecutive video frames are extracted from each to form the key frame set for that caption.
2. The similarity of the key frames of adjacent minimum units is compared with a bidirectional SIFT keypoint matching method. An initial assignment of captions to scenes is established using a caption-related transition graph.
3. For consecutive minimum units judged dissimilar, their relationship to the corresponding captions is used to decide whether they can be merged.
4. The video scenes are extracted according to the resulting caption-to-scene assignments.

Each extracted video scene segment is indexed using forward and reverse indexes generated from the caption text it contains.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

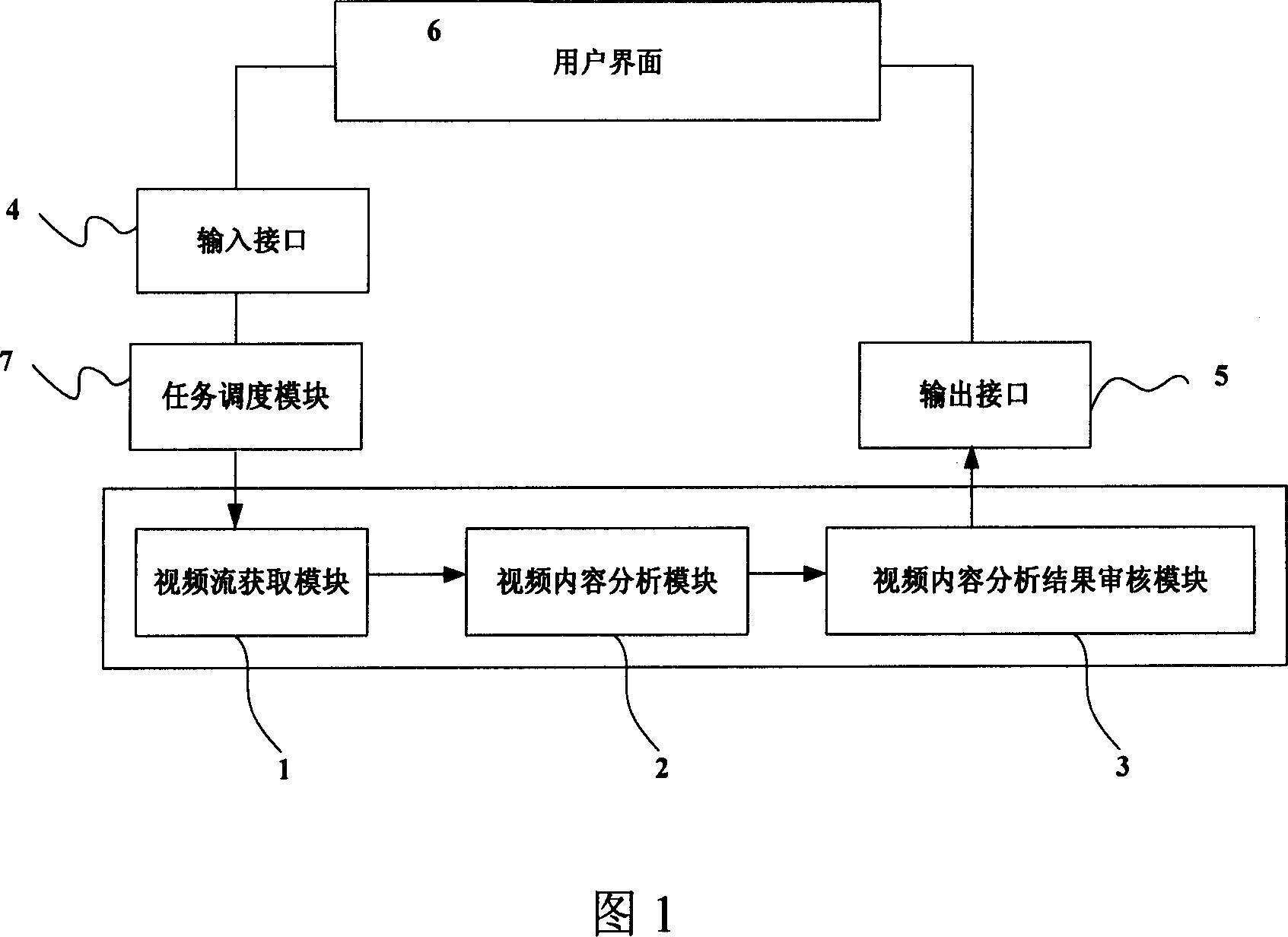

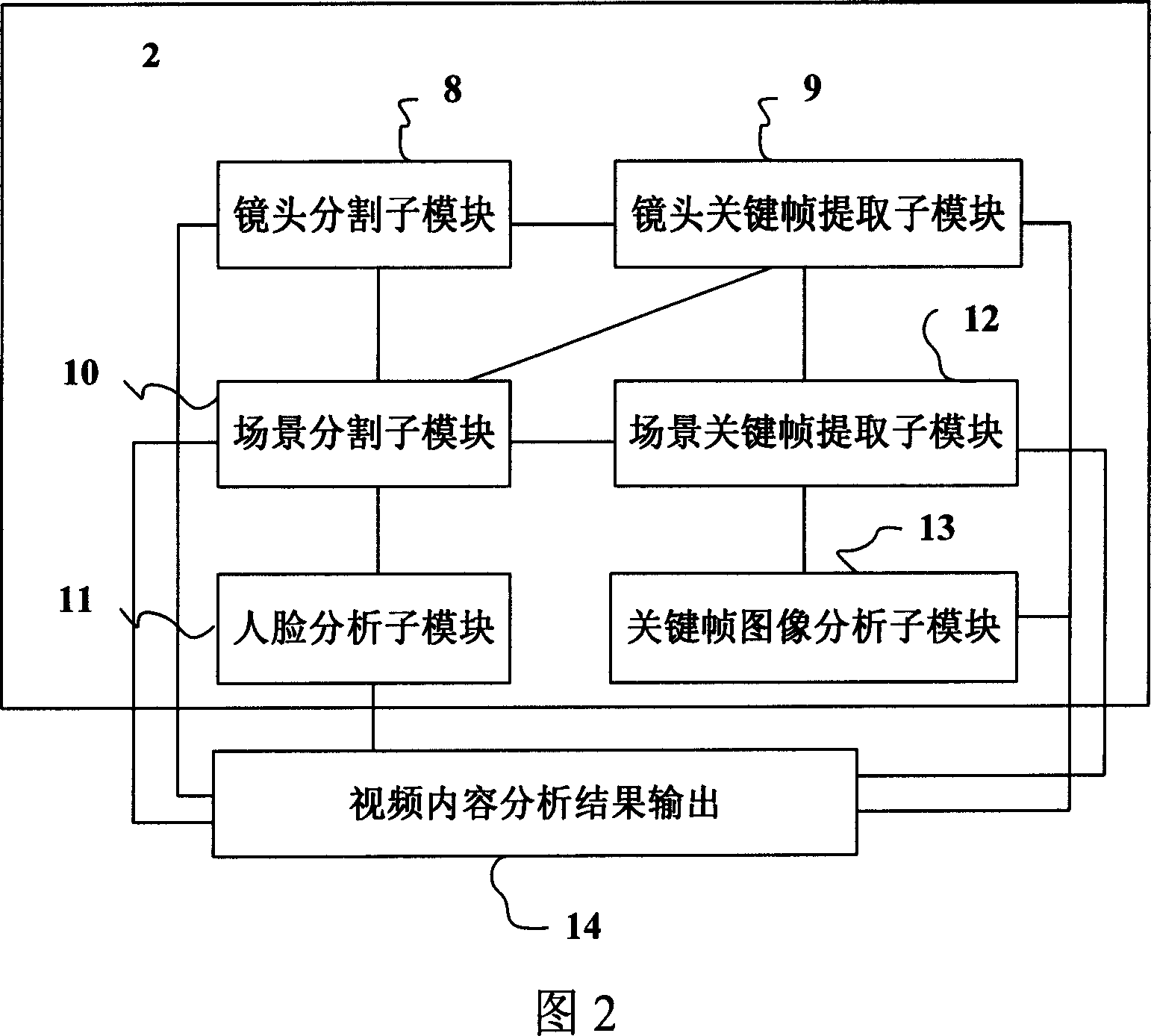

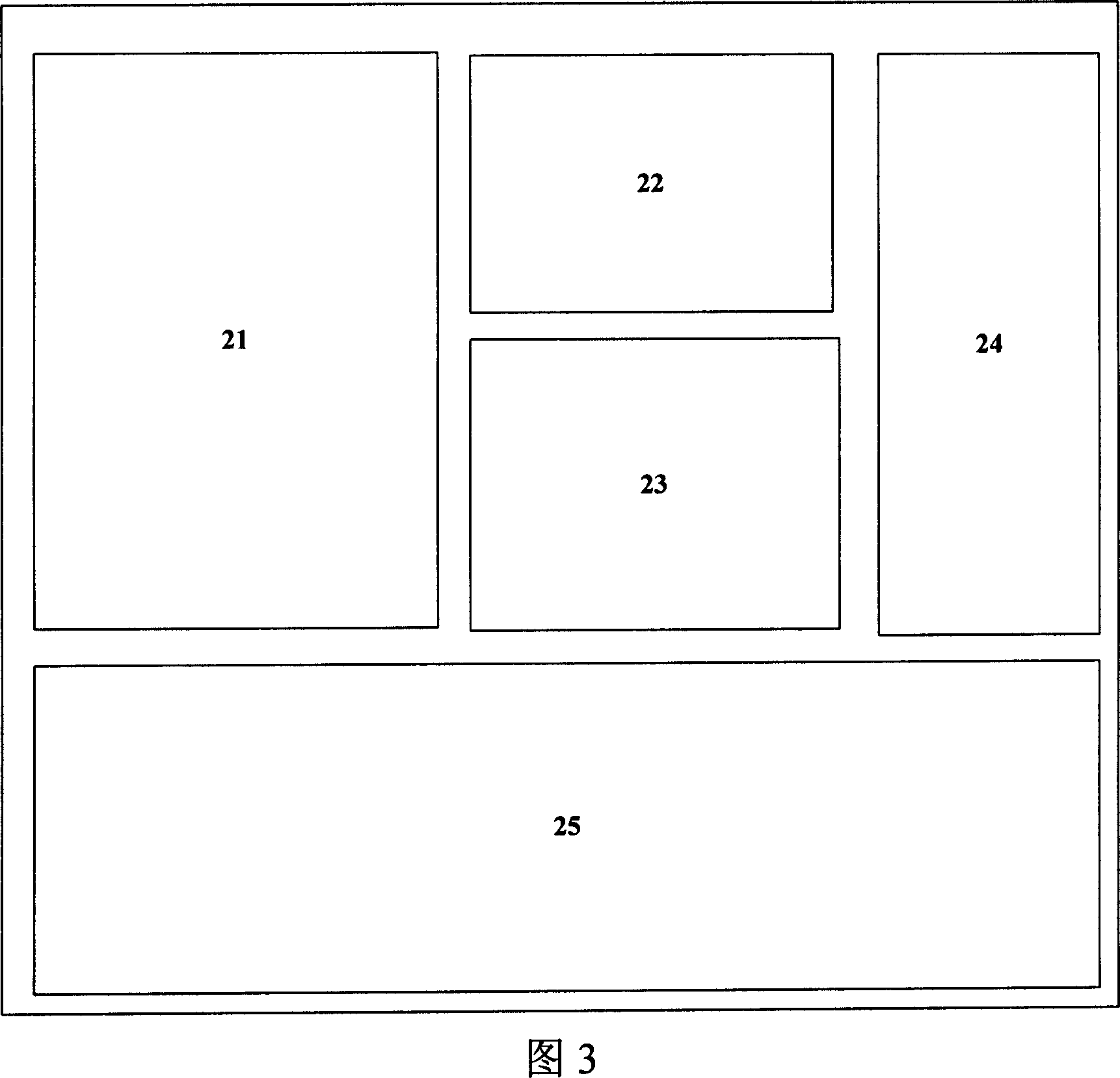

Video content analysis system

This invention relates to a video content analysis system with the following components: an input interface, a task dispatch module, a video stream acquisition module, a video content analysis module, a module for checking video analysis results, an output interface and a UI. The system works as follows:

1. It receives a video analysis instruction from the network or locally.
2. The task dispatch module decides the execution order of tasks.
3. The video stream acquisition module decodes the video to be analyzed.
4. The content analysis module analyzes the video and extracts shot information, shot key frame information, scene information, key frame information, and the associated image and face information.
5. The results are checked as needed, stored as XML files, and uploaded through the output interface to the video information database.

The system supports content-based video search services.

Owner:北京新岸线网络技术有限公司

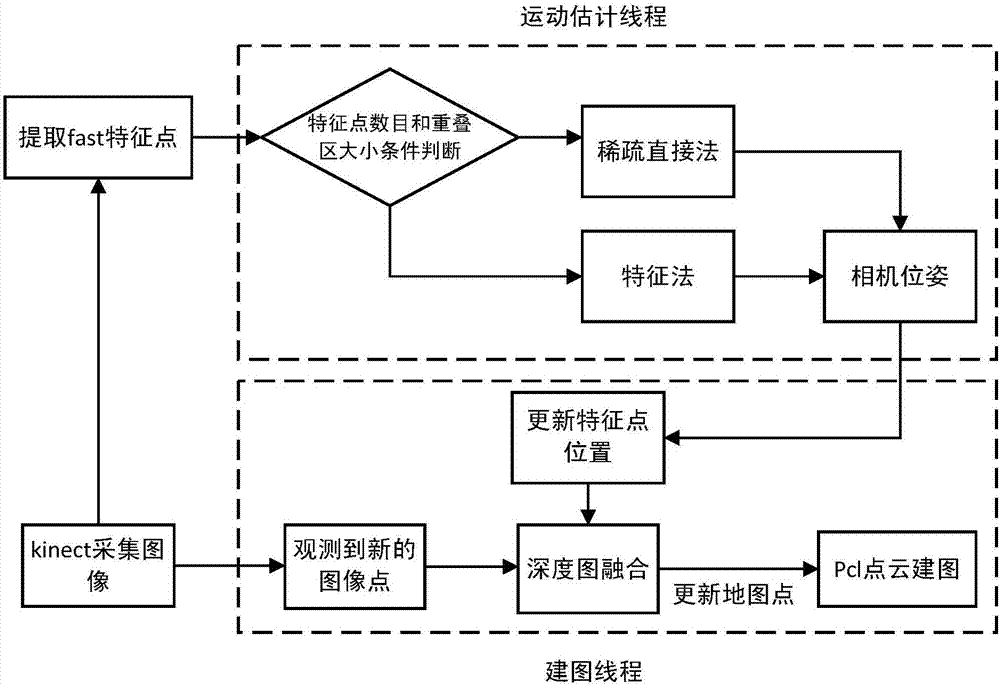

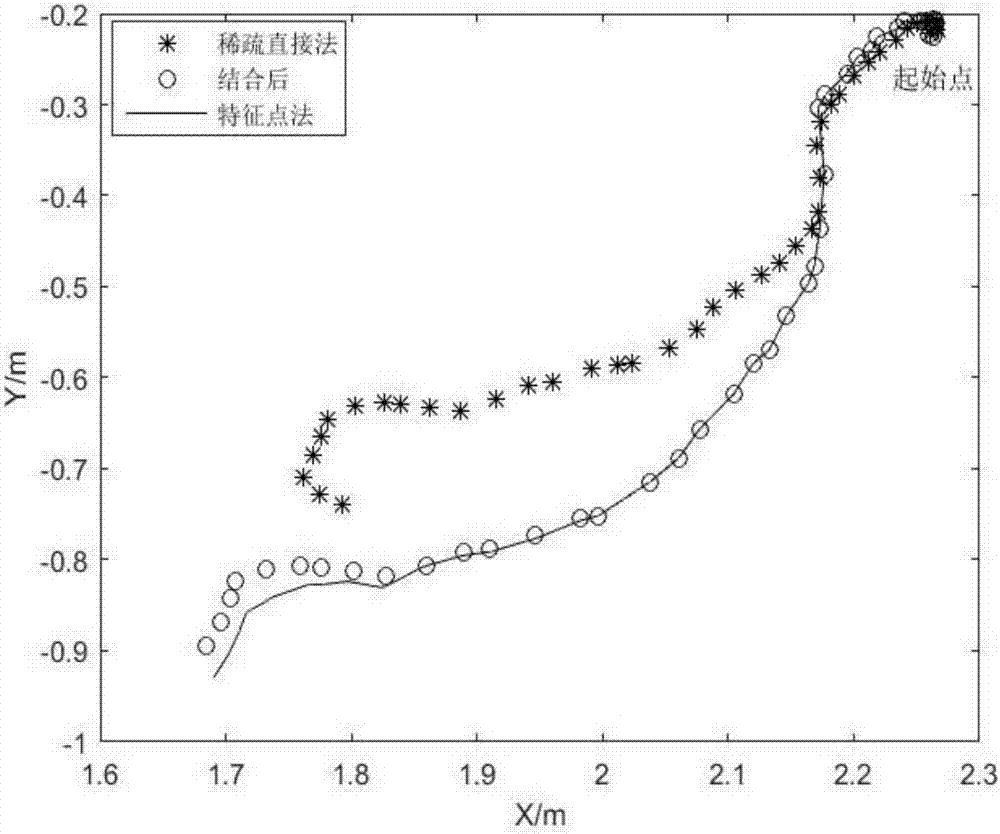

Depth camera-based visual odometer design method

Active | CN107025668A | Effective tracking, Effective estimate, Image enhancement, Image analysis, Color image, Frame based

The invention discloses a depth camera-based visual odometer design method. The method comprises the following steps:

1. A depth camera acquires color and depth images of the environment.
2. Feature points are extracted in an initial key frame and in all remaining image frames.
3. The optical flow method tracks the position of each feature point in the current frame to find feature point pairs.
4. The relative pose between two successive frames is computed with either the sparse direct method or the feature point method. The choice depends on the number of actual feature points and on the size of the overlapping region of feature points in the two frames.
5. The depth information from the depth image and the relative poses between frames are combined to compute the 3D coordinates of the key frame's feature points in the world coordinate system.
6. The key-frame point clouds are stitched together in a separate process to construct a map.

By combining the sparse direct method with the feature point method, the method improves the real-time performance and robustness of the visual odometer.

Owner:SOUTH CHINA UNIV OF TECH
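The step that computes 3D coordinates of key-frame feature points from depth can be sketched with standard pinhole back-projection followed by a camera-to-world pose transform. In this sketch the intrinsics (fx, fy, cx, cy) and the pose are illustrative values, not taken from the patent. The pose is assumed to be given as a rotation matrix R and a translation t.

```python
def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection: pixel (u, v) with metric depth -> 3D point
    in the camera frame."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)

def to_world(p_cam, R, t):
    """Apply a camera-to-world pose: p_world = R @ p_cam + t, with R given as
    a 3x3 nested list."""
    return tuple(sum(R[i][j] * p_cam[j] for j in range(3)) + t[i] for i in range(3))

# Illustrative intrinsics and pose; depth in metres.
p = back_project(u=420, v=240, depth=2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(to_world(p, identity, t=[0.0, 0.0, 1.0]))  # (0.4, 0.0, 3.0)
```

Applying this to every feature point of each key frame, with that key frame's estimated pose, yields the world-frame points that are later stitched into a map.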

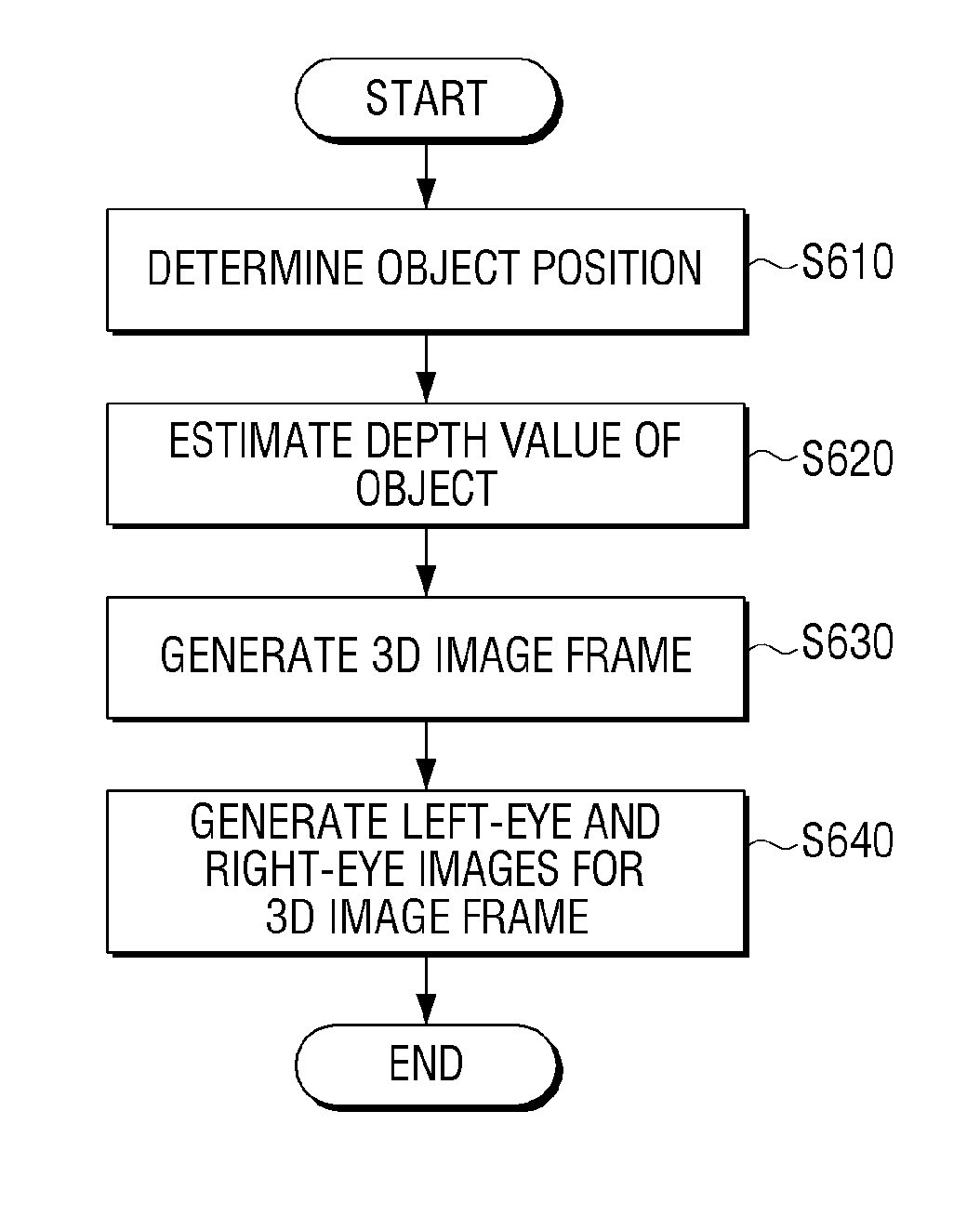

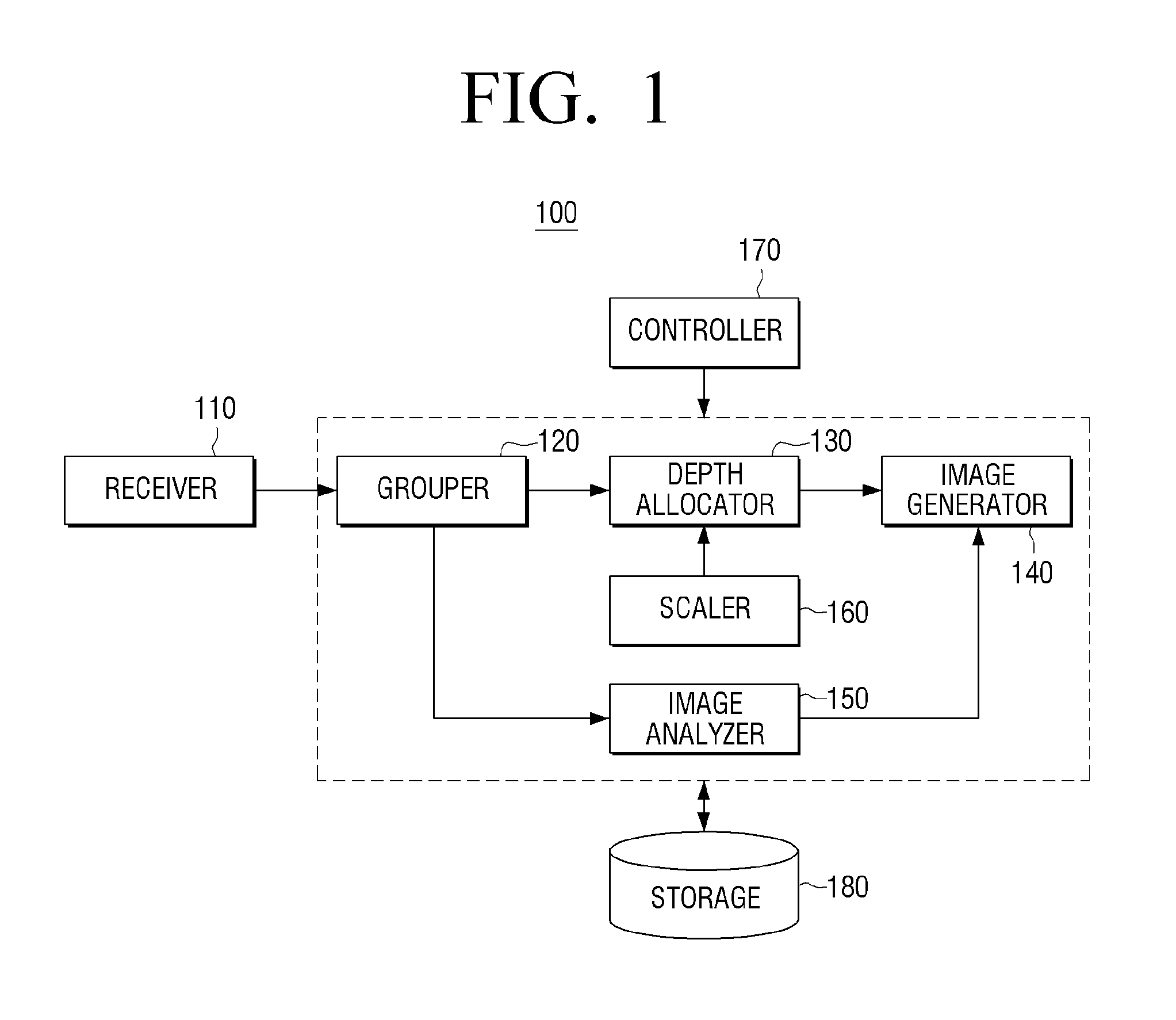

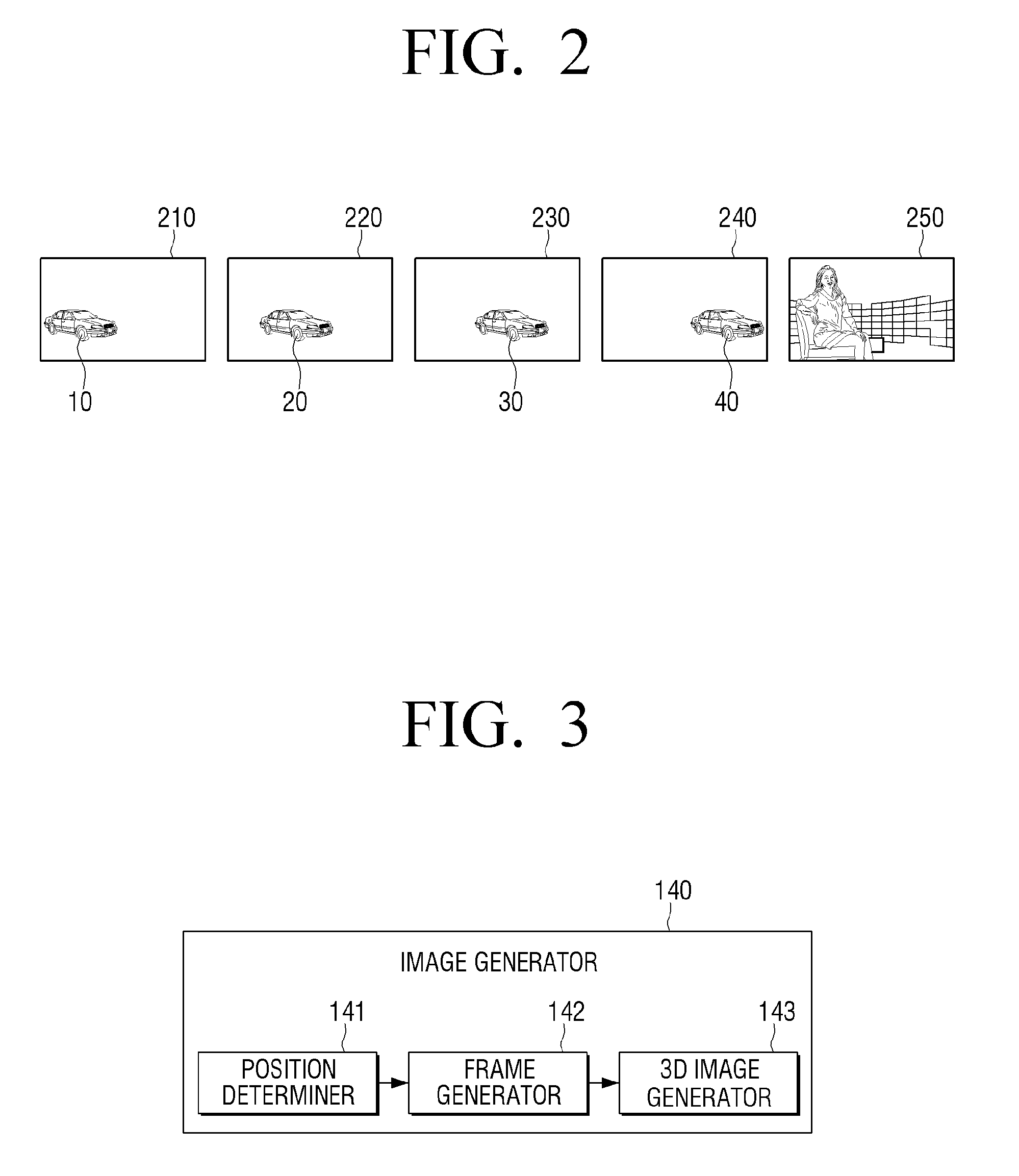

Apparatus and method for display

Inactive | US20140063005A1 | Minimize time, Valid conversion, Stereoscopic systems, 3D-image rendering, 3D image, Key frame

Display apparatus and method are provided. The display apparatus may include:

- a receiver for receiving an image;
- a grouper for analyzing the received image and grouping a plurality of frames of the received image based on the analysis;
- a depth allocator for determining at least two key frames from the frames grouped into at least one group, and allocating a depth to each object in the determined key frames;
- an image generator for generating a 3D image for the remaining, non-key frames based on the depth values allocated to the key frames.

As a result, the display apparatus can allocate higher-quality depth values to objects in the frames of the received image.

Owner:SAMSUNG ELECTRONICS CO LTD

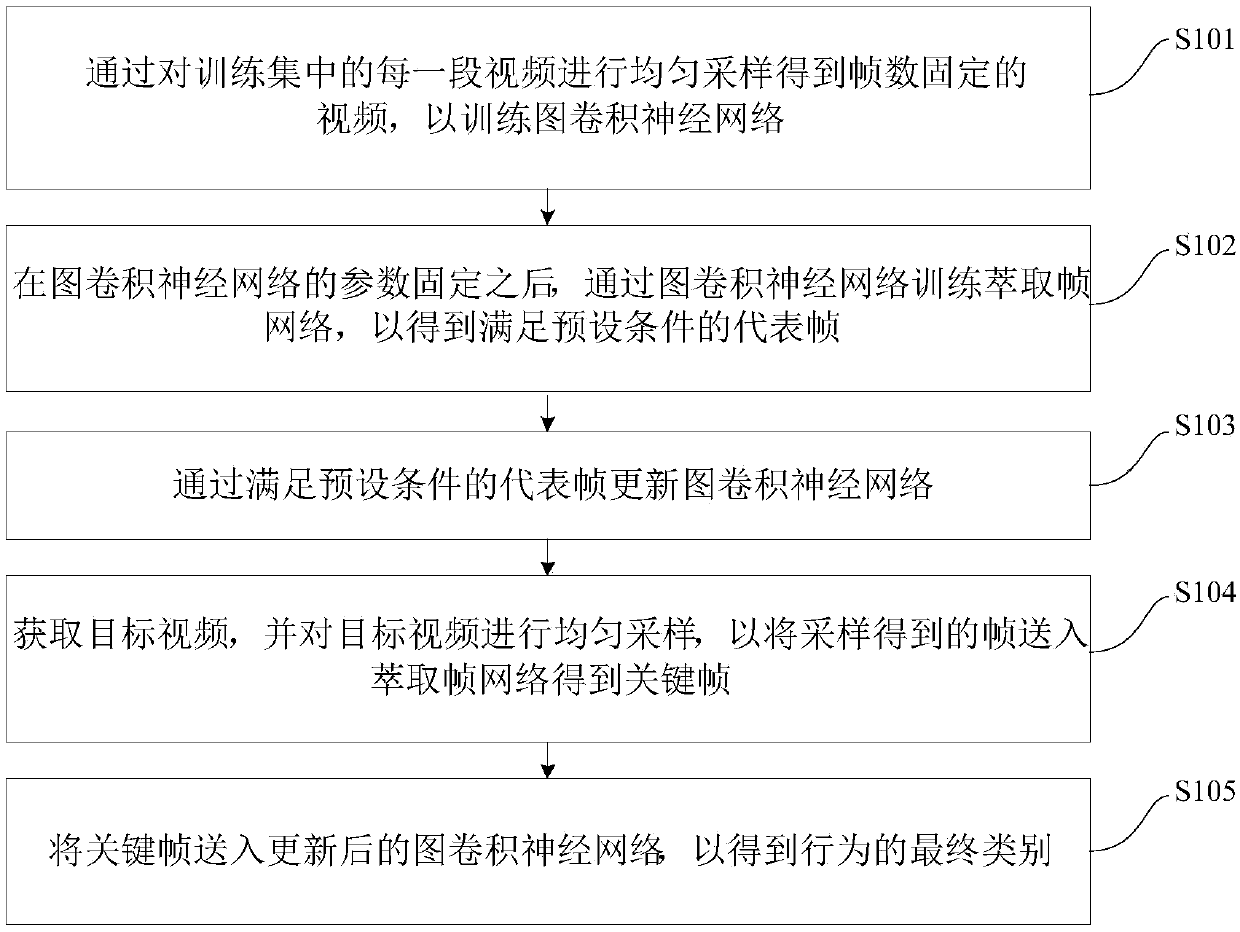

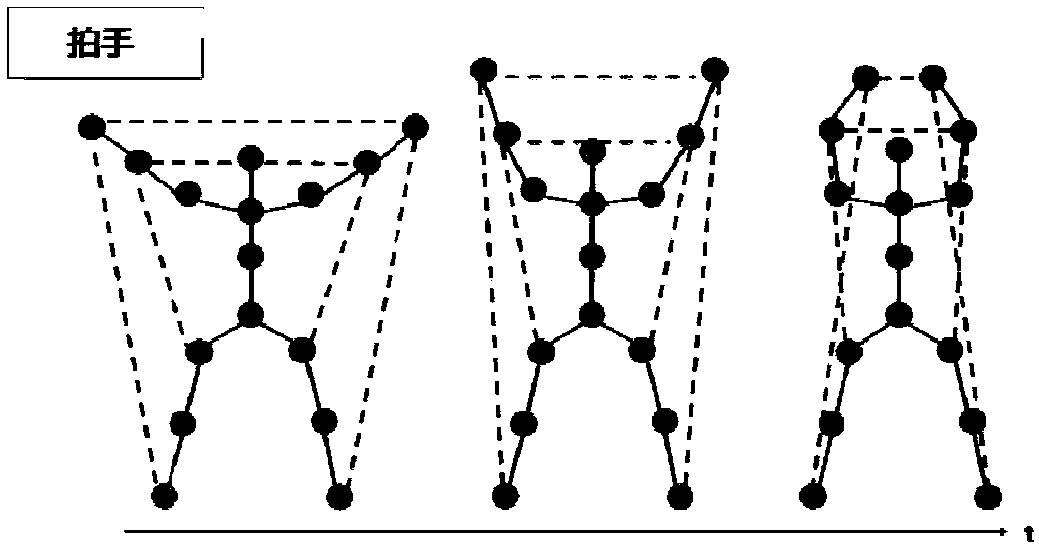

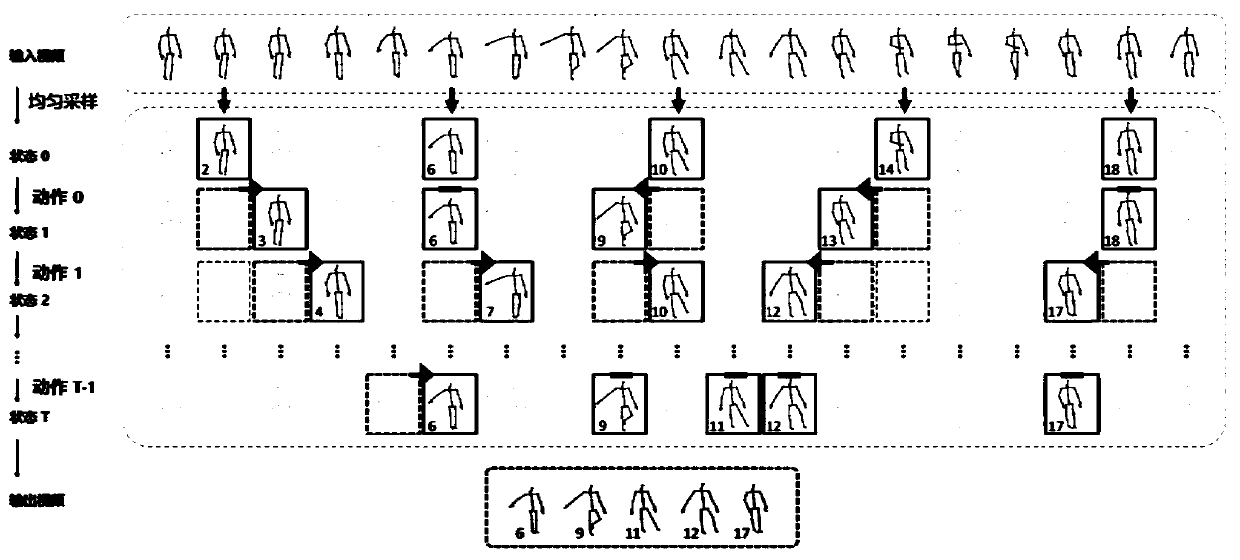

Human skeleton behavior recognition method and device based on deep reinforcement learning

Active | CN108304795A | Improve discrimination ability, Easy to identify, Character and pattern recognition, Neural architectures, Fixed frame, Key frame

The invention discloses a human skeleton behavior recognition method and device based on deep reinforcement learning. The method comprises the following steps:

1. Each video segment in a training set is uniformly sampled to obtain videos with a fixed number of frames, which are used to train a graph convolutional neural network.
2. After the parameters of the graph convolutional neural network are fixed, it is used to train a frame extraction network that outputs representative frames meeting a preset condition.
3. The graph convolutional neural network is updated with those representative frames.
4. A target video is obtained and uniformly sampled, and the sampled frames are sent to the frame extraction network to obtain key frames.
5. The key frames are sent to the updated graph convolutional neural network to obtain the final behavior category.

This enhances the discriminability of the selected frames, removes redundant information, improves recognition performance, and reduces computation at the test phase. In addition, by fully exploiting the topological relationships of the human skeleton, the method further improves behavior recognition performance.

Owner:TSINGHUA UNIV
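Uniform sampling to a fixed number of frames, used above for both training and target videos, can be sketched as taking the centre frame of k equal-length segments. The centre-of-segment choice is an assumption. Other schemes, such as taking segment starts, are equally common.

```python
def uniform_sample(num_frames, k):
    """Indices of k frames spread evenly over a clip of num_frames frames,
    taking the centre of each of k equal-length segments. If num_frames < k,
    some indices repeat, which pads short clips to the fixed length."""
    return [int((i + 0.5) * num_frames / k) for i in range(k)]

print(uniform_sample(100, 8))  # [6, 18, 31, 43, 56, 68, 81, 93]
```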

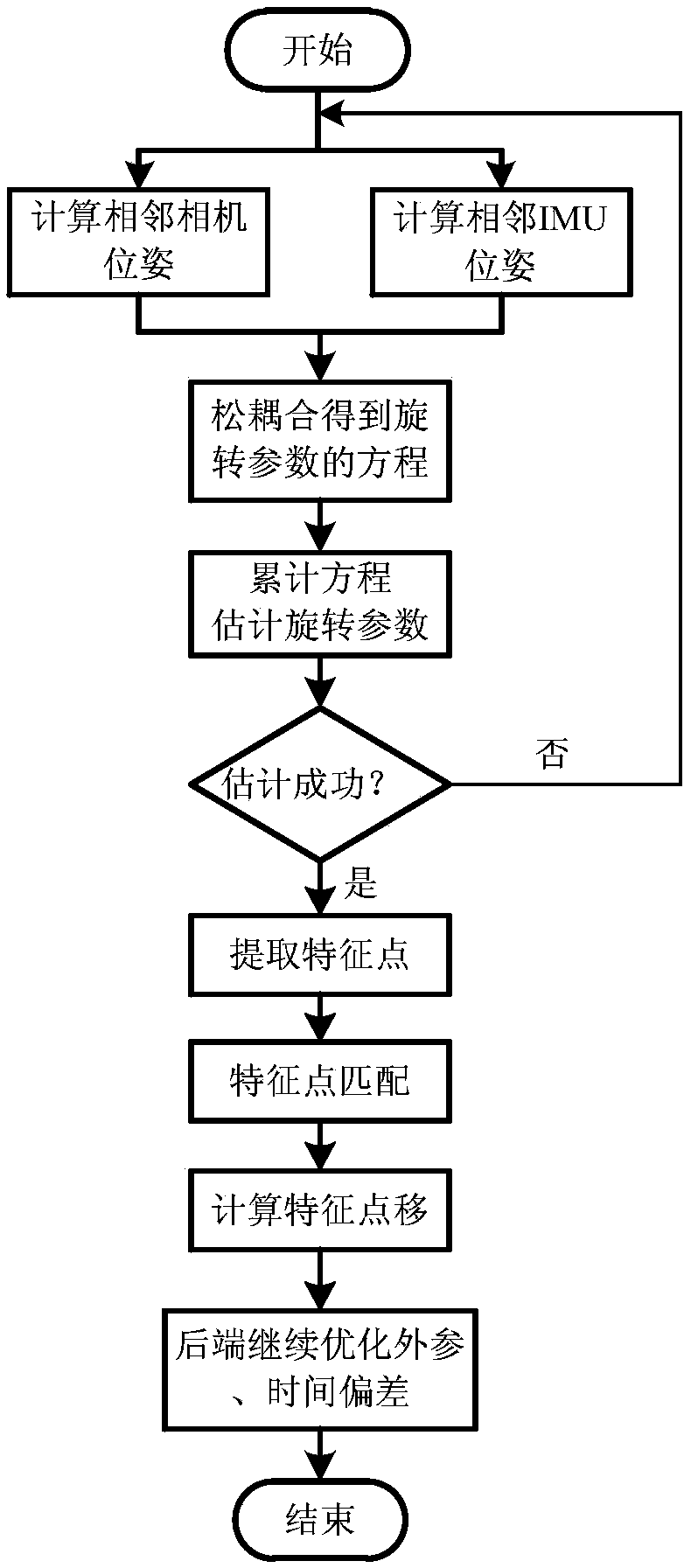

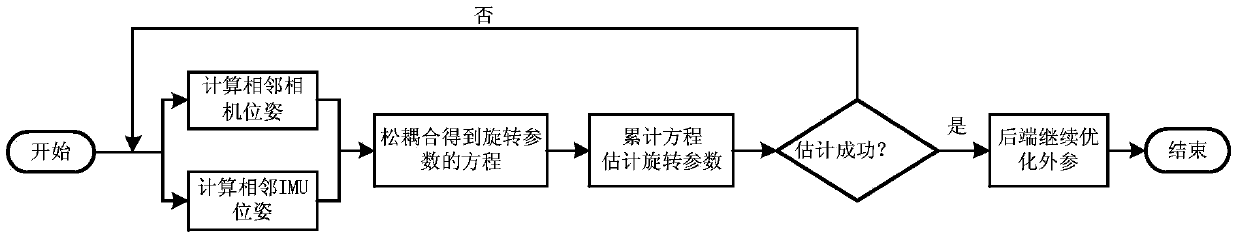

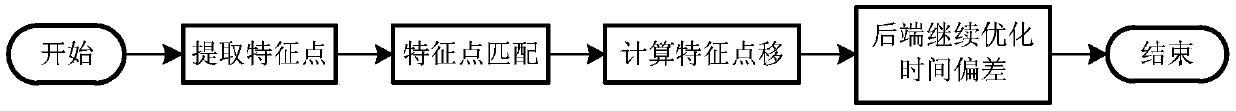

Visual and inertial navigation fusion SLAM-based external parameter and time sequence calibration method on mobile platform

Active | CN109029433A | Reduce mistakes, Excellent trajectory accuracy, Navigational calculation instruments, Navigation by speed/acceleration measurements, End stages, Vision based

The invention discloses a method for calibrating extrinsic parameters and time sequence on a mobile platform, based on visual-inertial fusion SLAM. The method comprises three stages:

1. Initialization stage: the relative rotations between two frames, estimated separately by the camera and the IMU, are aligned in a loosely coupled manner. This yields an estimate of the relative rotation between the camera and the IMU.
2. Front-end stage: the front end acts as a visual odometer. The pose of the current camera frame in the world coordinate system is roughly estimated from the poses estimated for the previous several frames. This estimate serves as the initial value for back-end optimization.
3. Back-end stage: key frames are selected from all frames, the variables to be optimized are set, and a unified objective function is established. Optimization is then performed under the corresponding constraints, yielding accurate extrinsic parameters.

With this method, the error of the estimated extrinsic parameters is relatively low, and the trajectory accuracy after time-sequence calibration is high.

Owner:SOUTHEAST UNIV

Image conversion and encoding techniques

Inactive | US7035451B2 | Reduce in quantity, Reduce time investment, Image enhancement, Image analysis, Key frame, Image conversion

A method of creating a depth map includes the following steps:

1. assigning a depth to at least one pixel or portion of an image;
2. determining the relative location and image characteristics of each such pixel or portion;
3. utilizing the depth(s), image characteristics and respective locations to determine an algorithm that ascertains depth characteristics as a function of relative location and image characteristics;
4. utilizing said algorithm to calculate a depth characteristic for each pixel or portion of the image. These depth characteristics form a depth map for the image.

In a second phase of processing, said depth maps form key frames for generating depth maps for non-key frames using relative location, image characteristics and distance to the key frame(s).

Owner:DYNAMIC DIGITAL DEPTH RES

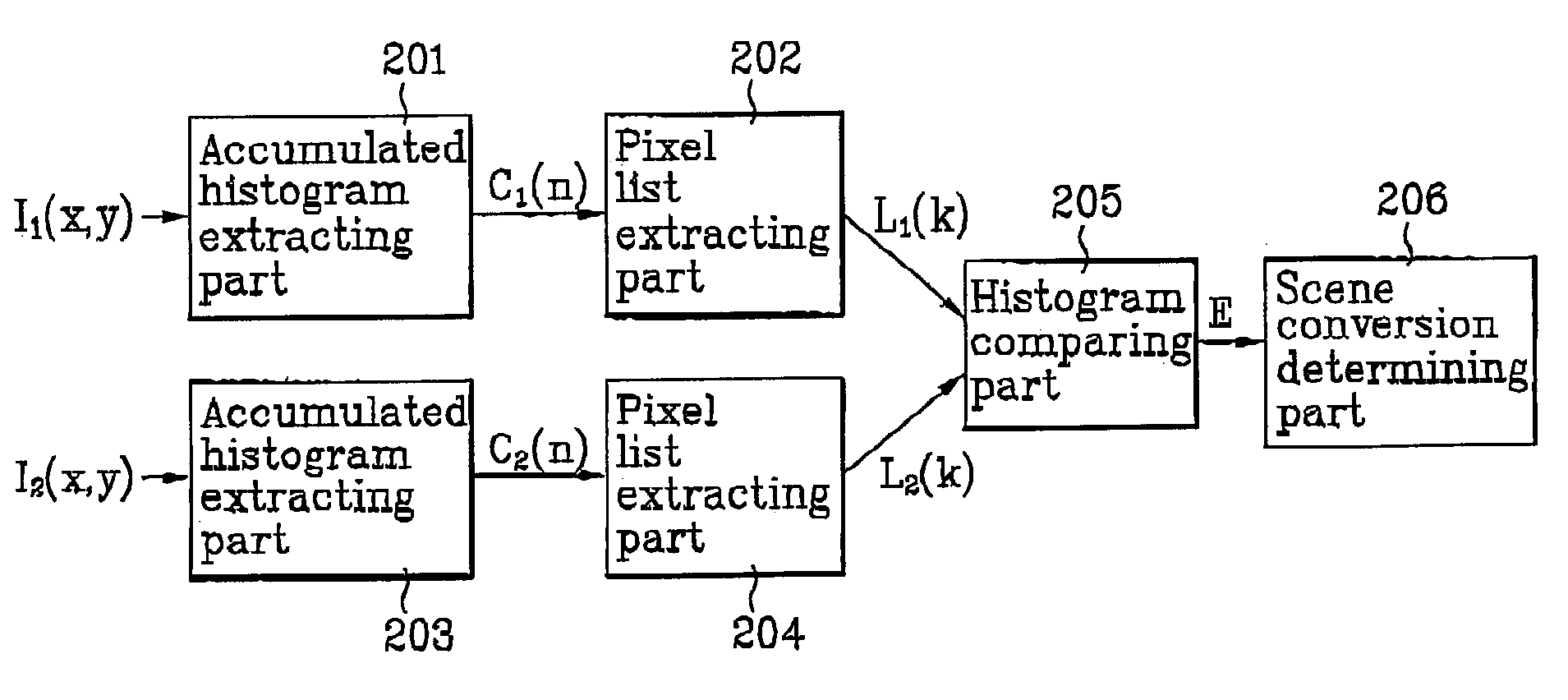

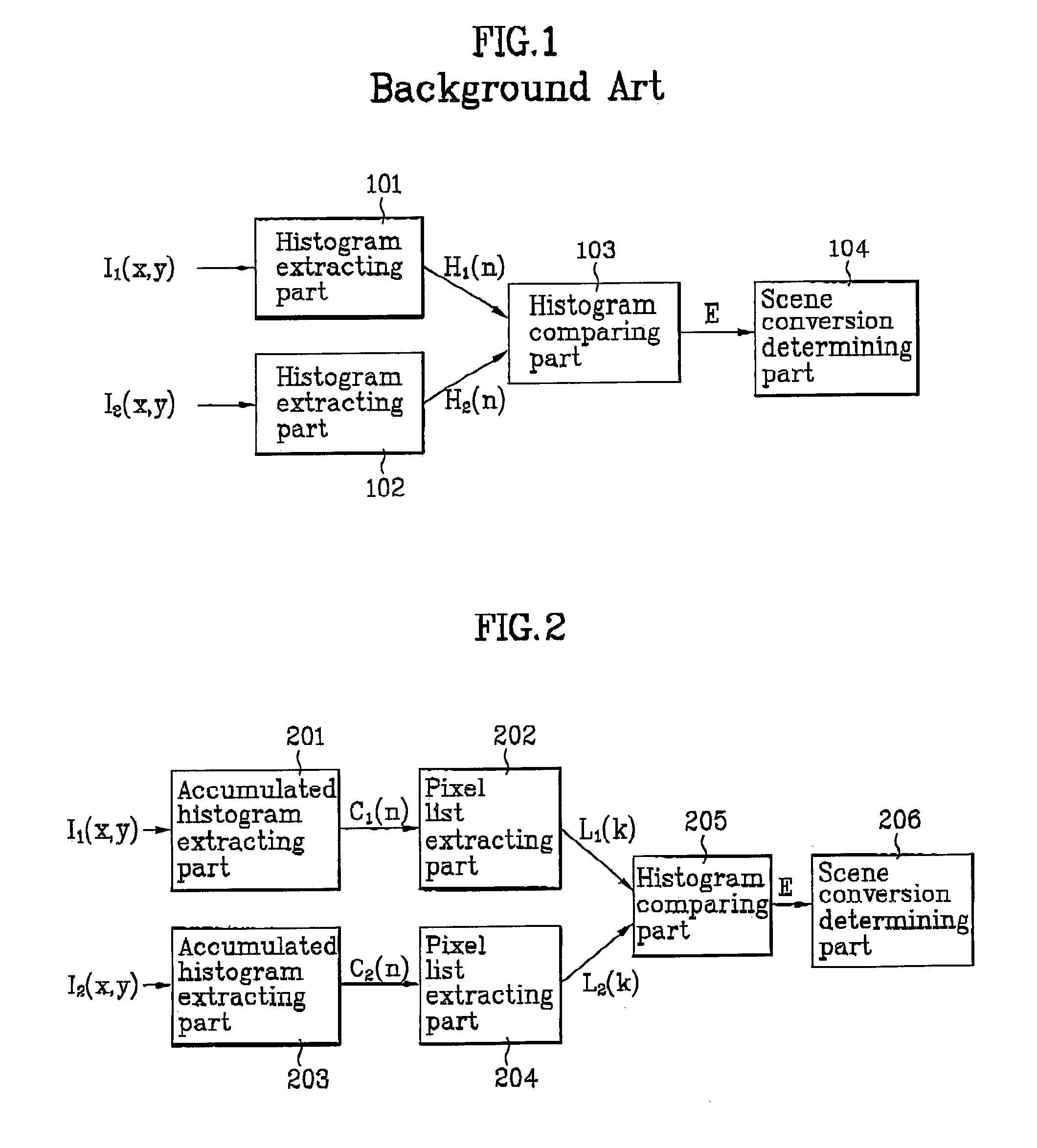

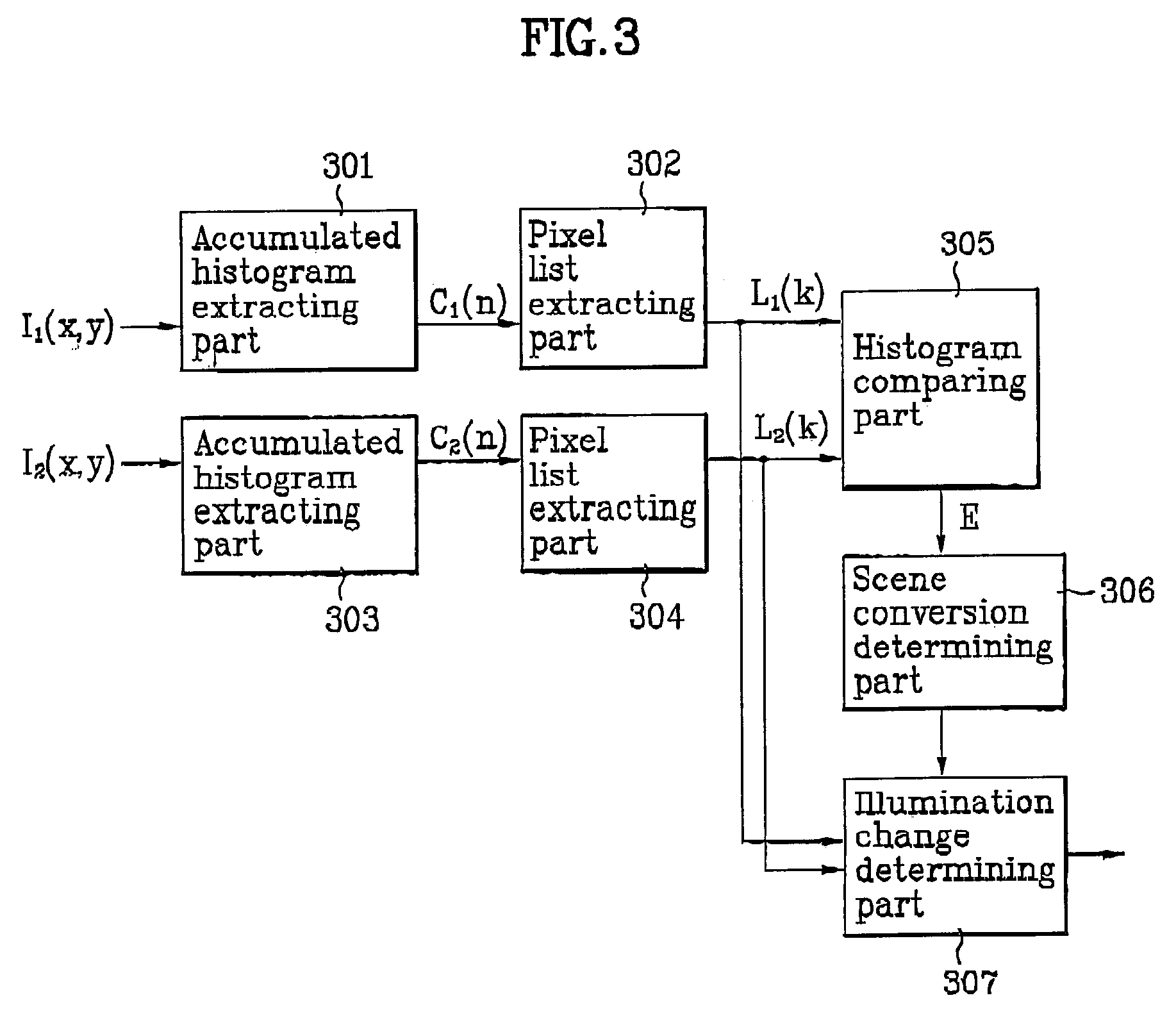

Scene change detection apparatus

Inactive | US7158674B2 | Eliminate the problem, Image enhancement, Television system details, Key frame, Flash light

An apparatus for detecting a scene change is disclosed. It supports functions such as nonlinear browsing of a video, video indexing and key frame generation in a personal video recorder or video database. In the apparatus, cumulative histograms are extracted from two received frames. For each cumulative histogram, the pixel values corresponding to specific cumulative-distribution levels are stored. The scene change is then detected accurately by comparing the difference between these pixel value lists.

An illumination change determining part is also included. It receives the first and second pixel lists from the first and second pixel list extracting parts and determines whether a brightness change in the image is due to changed illumination conditions. As a result, scene changes can be detected without interference from illumination changes, camera flashes or other optical effects.

Owner:LG ELECTRONICS INC
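A sketch of the cumulative-histogram comparison follows. The pixel value at which the cumulative histogram reaches a given level is a quantile, so each frame reduces to a short list of quantile values. Several parts are assumptions for illustration: the chosen levels, the thresholds, and above all the illumination rule. That rule treats a large but nearly uniform shift of all quantiles as a lighting change. It is not the patent's illumination change determining part.

```python
def quantile_values(pixels, levels=(0.1, 0.25, 0.5, 0.75, 0.9), bins=256):
    """For each cumulative-distribution level, the first pixel value at which
    the frame's cumulative histogram reaches that level.

    pixels: flat list of integer pixel values in range(bins).
    """
    hist = [0] * bins
    for p in pixels:
        hist[p] += 1
    cumulative, running = [], 0
    for count in hist:
        running += count
        cumulative.append(running / len(pixels))
    return [next(v for v, c in enumerate(cumulative) if c >= level) for level in levels]

def is_scene_change(frame_a, frame_b, threshold=30, spread_tol=8):
    """Compare the frames' pixel-value lists, treating a large but uniform
    shift as an illumination change rather than a cut."""
    qa, qb = quantile_values(frame_a), quantile_values(frame_b)
    shifts = [b - a for a, b in zip(qa, qb)]
    if sum(abs(s) for s in shifts) / len(shifts) <= threshold:
        return False                       # value lists are close: same scene
    # Hypothetical illumination test: a brightness change (e.g. a flash) moves
    # every level by about the same amount; a real cut reshapes the distribution.
    return max(shifts) - min(shifts) > spread_tol

dark = list(range(50, 100)) * 2
print(quantile_values(dark))                         # [54, 62, 74, 87, 94]
print(is_scene_change(dark, [v + 60 for v in dark])) # False (uniform brightening)
print(is_scene_change(dark, [0] * 50 + [200] * 50))  # True
```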

Monocular vision SLAM algorithm based on semi-direct method and sliding window optimization

Inactive | CN107610175A | Small amount of calculation, Guaranteed uptime, Image analysis, Color image, Slide window

The invention discloses a monocular vision SLAM algorithm based on a semi-direct method and sliding window optimization. The algorithm comprises the following steps:

1. Color image frames acquired by a monocular color camera are uploaded to a computer through a third-party image acquisition interface.
2. The algorithm is initialized. The camera pose transformation between the two initial frames is estimated and initial map points are created. The two initial frames are then inserted as key frames, together with the initial map points, into the map and the sliding window.
3. The map points observed in the previous frame are projected into the current frame. Bundle adjustment based on photometric error is then performed between the two frames to obtain the camera pose transformation between them and thereby track the camera's motion.

The algorithm runs fast, and the required equipment is simple and easy to calibrate, giving it high practical value and a wide range of applications.

Owner:SOUTH CHINA UNIV OF TECH

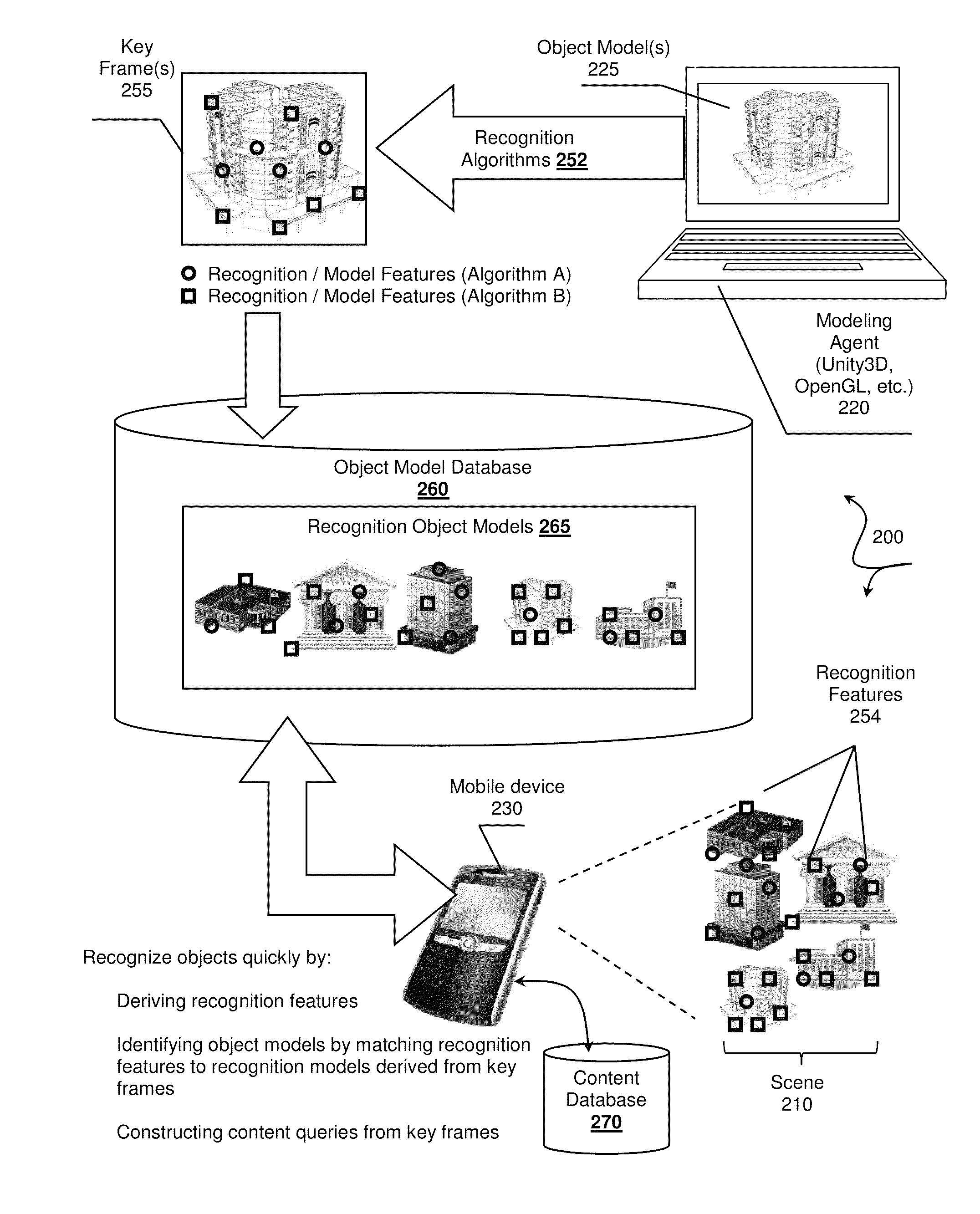

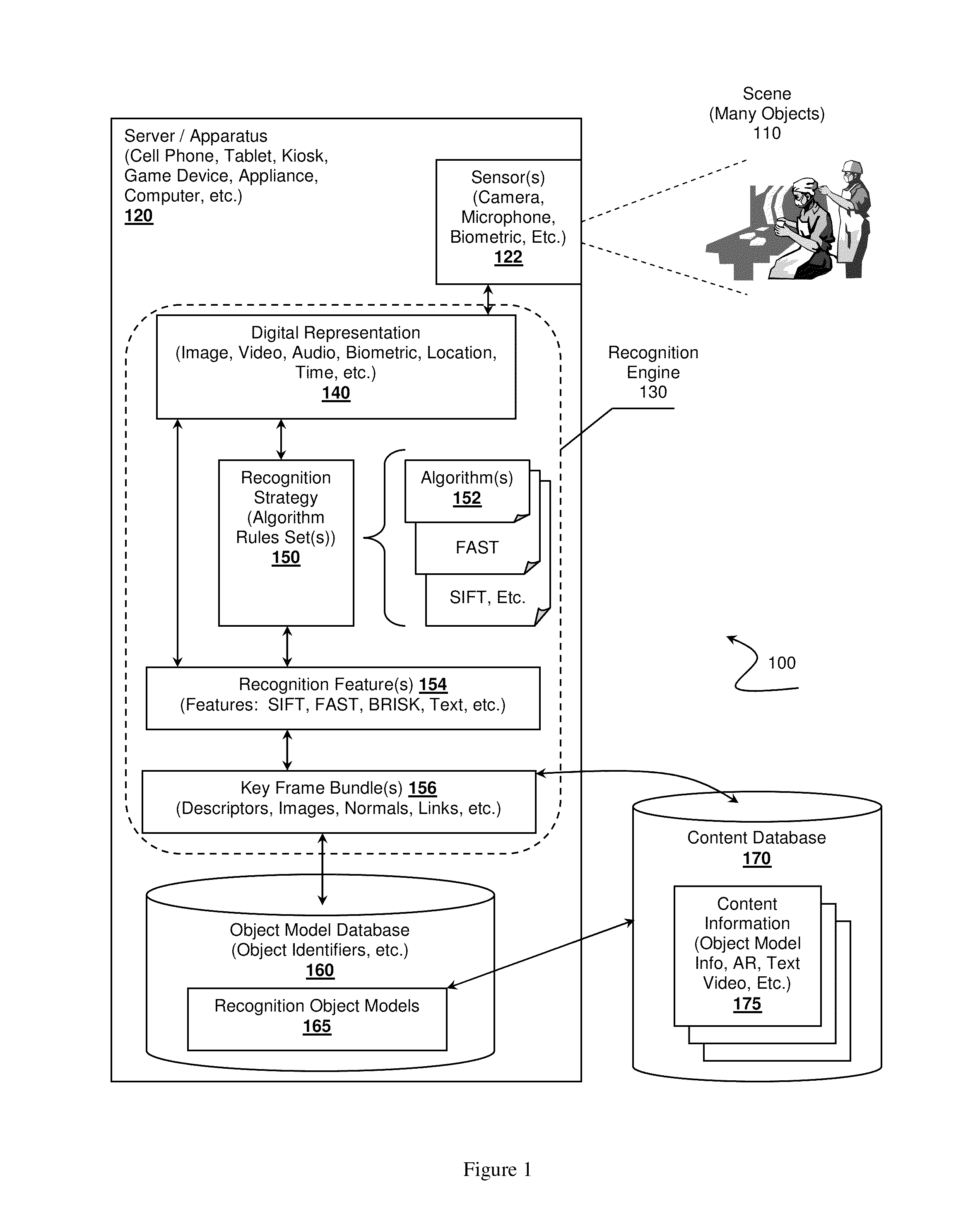

Fast recognition algorithm processing, systems and methods

Systems and methods of quickly recognizing or differentiating many objects are presented. Contemplated systems include an object model database storing recognition models associated with known modeled objects. The object identifiers can be indexed in the object model database based on recognition features derived from key frames of the modeled object. Such objects are recognized by a recognition engine at a later time. The recognition engine can construct a recognition strategy based on a current context where the recognition strategy includes rules for executing one or more recognition algorithms on a digital representation of a scene. The recognition engine can recognize an object from the object model database, and then attempt to identify key frame bundles that are contextually relevant, which can then be used to track the object or to query a content database for content information.

Owner:NANTMOBILE +1

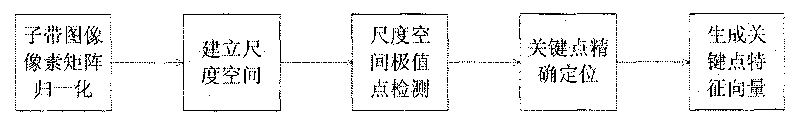

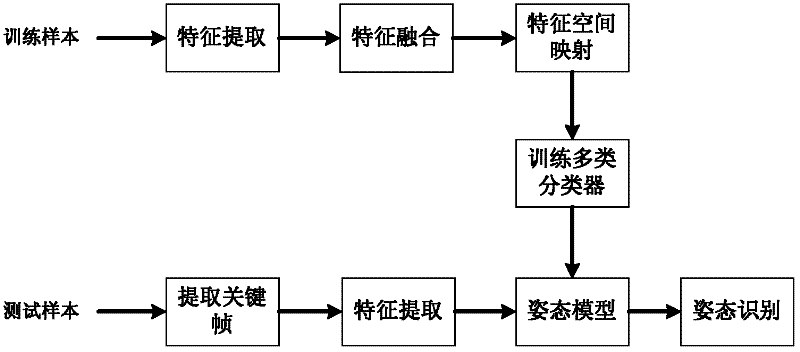

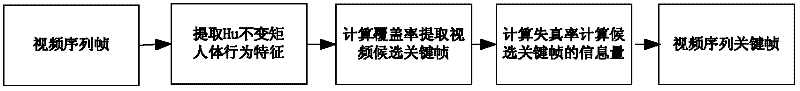

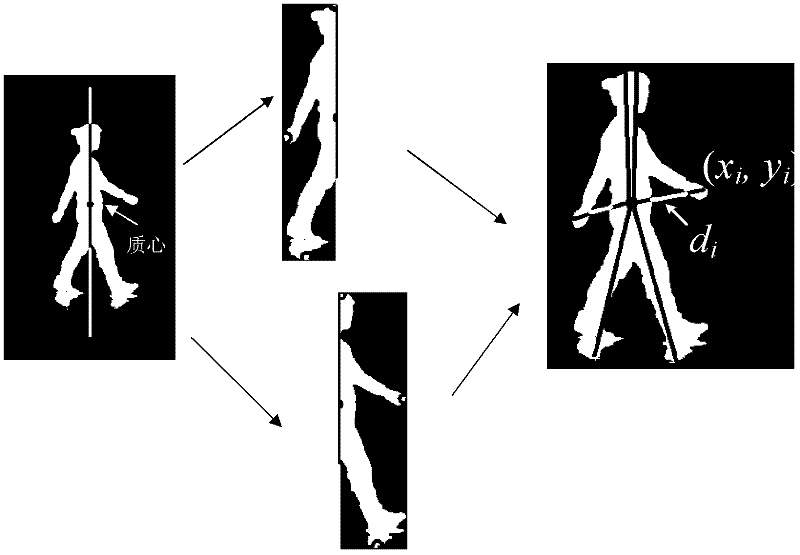

Human body posture identification method based on multi-characteristic fusion of key frame

Active | CN102682302A | Describe well, Efficient analysis, Image analysis, Character and pattern recognition, Human body, Feature vector

The invention provides a human body posture recognition method based on multi-feature fusion of key frames. It comprises the following steps:

1. Hu invariant moment features are extracted from the video images and the coverage rate of the image sequence is calculated. The frames with the highest coverage percentage become candidate key frames. The distortion rate of the candidates is then calculated, and the one with the lowest distortion percentage is taken as the key frame.
2. The foreground is extracted from the key frame to obtain the foreground image of the moving human body.
3. Feature information is extracted from the key frame, including a six-planet model, six-planet angles and eccentricity, giving a multi-feature fused image feature vector.
4. The posture is recognized with a one-vs-one trained classification model, namely a posture classifier based on an SVM (support vector machine).

The method offers simplified computation, good stability and good robustness.

Owner:ZHEJIANG UNIV OF TECH

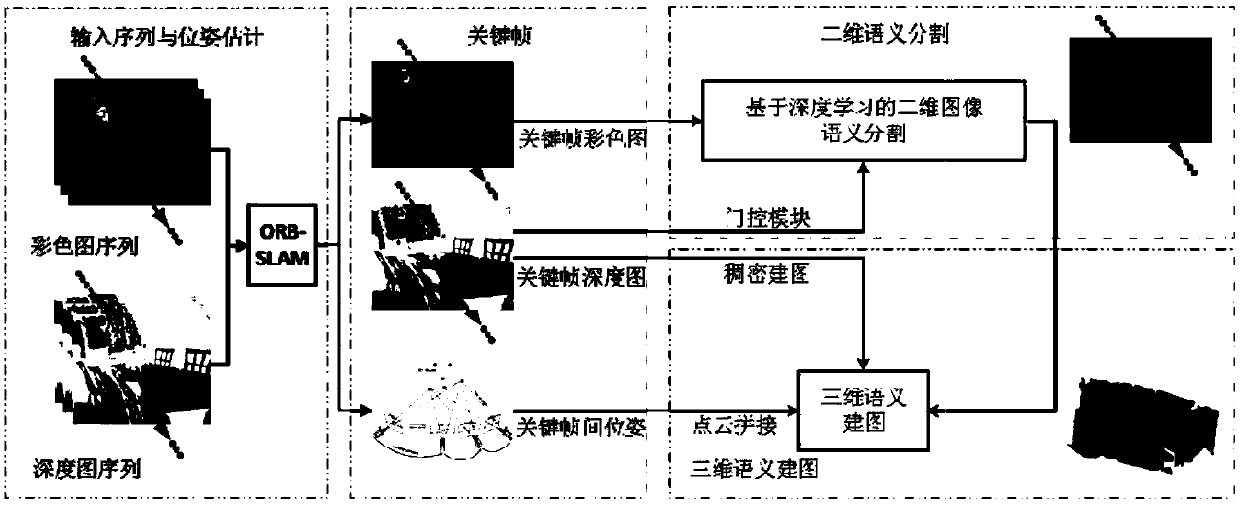

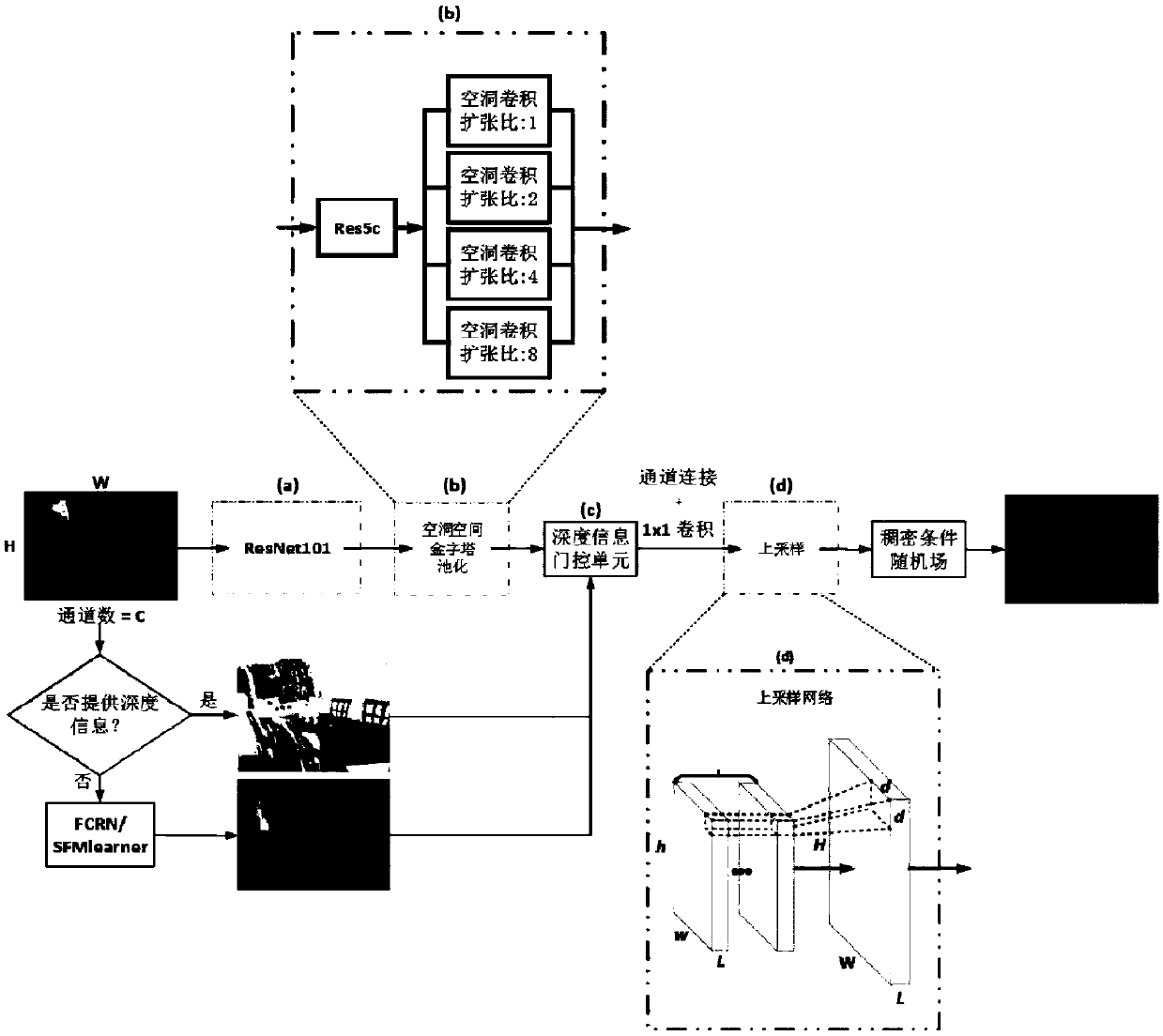

Environment semantic mapping method based on deep convolutional neural network

Active | CN109636905A | Produce global consistency, High precision, Image enhancement, Image analysis, Key frame, Image segmentation

The invention provides an environmental semantic mapping method based on a deep convolutional neural network. It combines the strengths of deep learning in scene recognition with the autonomous localization strengths of SLAM to build an environmental map containing object category information. Specifically:

- ORB-SLAM screens key frames and estimates inter-frame poses for the input image sequence.
- Two-dimensional semantic segmentation is performed with an improved method based on DeepLab.
- An upsampling convolutional layer is introduced after the last layer of the convolutional network.
- Depth information serves as a threshold signal that controls the selection of different convolution kernels.
- The segmented image is aligned with the depth map, and a three-dimensional dense semantic map is constructed using the spatial correspondence between adjacent key frames.

The scheme improves image segmentation precision and achieves higher mapping efficiency.

Owner:NORTHEASTERN UNIV

© 2025 PatSnap. All rights reserved.