135 results about "Video content analysis" patented technology

Video content analysis (also video content analytics, VCA) is the capability of automatically analyzing video to detect and determine temporal and spatial events. This technical capability is used in a wide range of domains including entertainment, health-care, retail, automotive, transport, home automation, flame and smoke detection, safety and security. The algorithms can be implemented as software on general purpose machines, or as hardware in specialized video processing units.

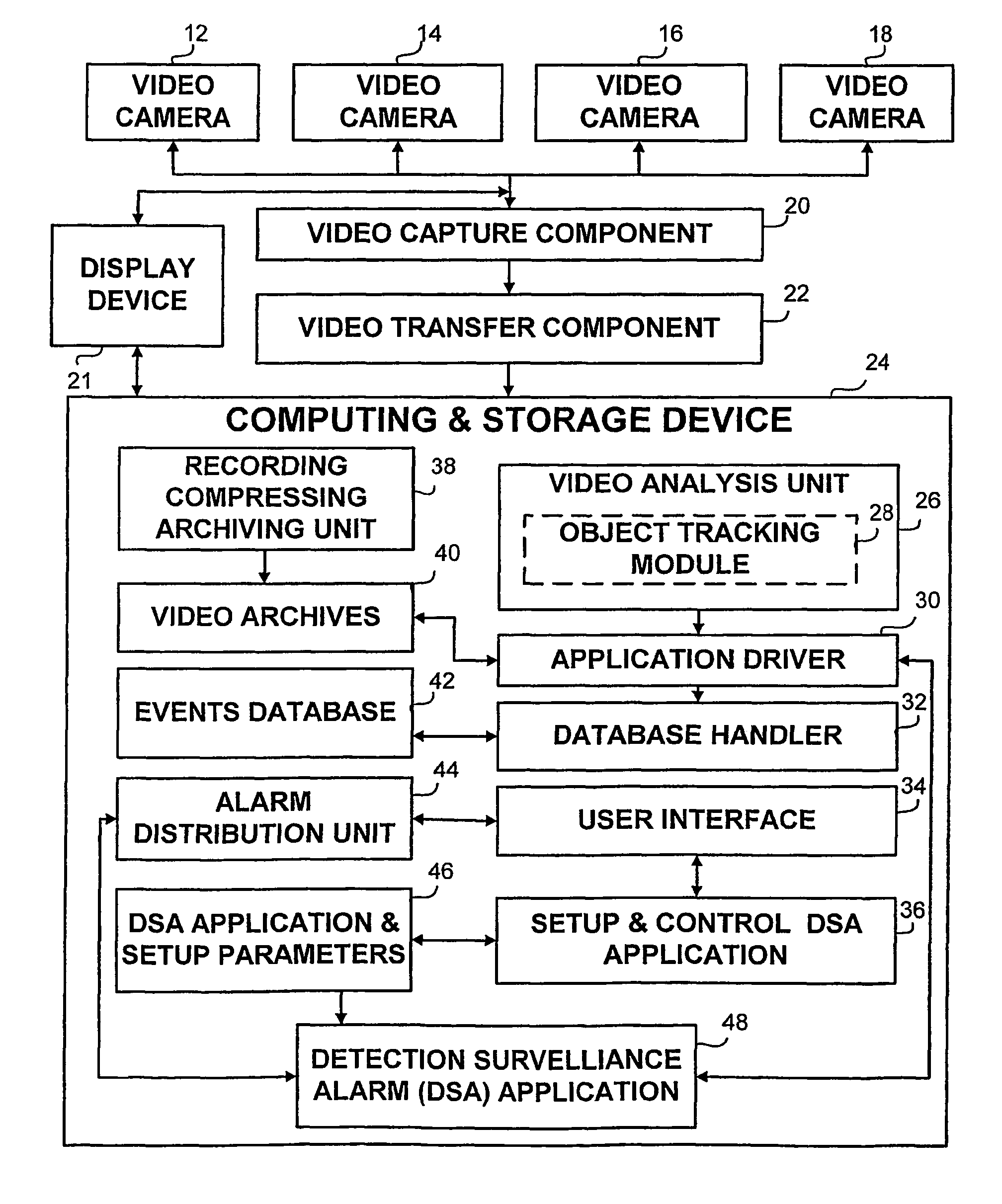

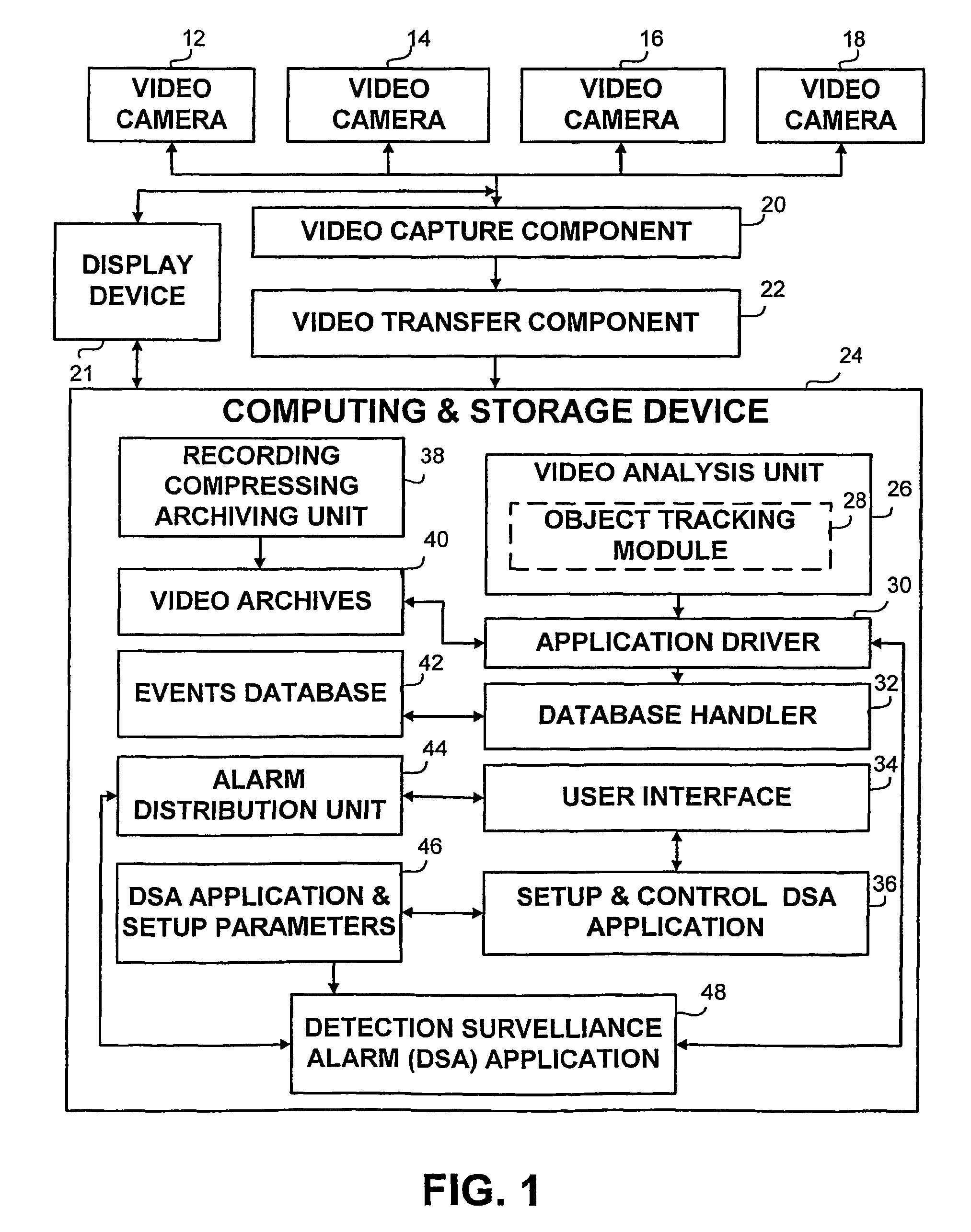

System and method for video content analysis-based detection, surveillance and alarm management

A surveillance system and method for the automatic detection of potential alarm situations through analysis of recorded surveillance content, and for the management of detected unattended-object situations. The system and method capture surveillance content, analyze the captured content, and provide in real time a set of alarm messages to a set of diverse devices. The system provides event-based debriefing based on objects captured by one or more cameras covering different scenes. The invention is implemented in the context of unattended objects (such as luggage, vehicles or persons), parking or driving in restricted zones, controlling access of persons into restricted zones, preventing loss of objects such as luggage or persons, and counting of persons.

Owner:QOGNIFY
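
The unattended-object case in the abstract above can be illustrated with a simple dwell-time rule. This is a hypothetical sketch, not the patented method: it assumes an upstream detector and tracker already supply per-frame object and person positions, and the thresholds (`STATIONARY_RADIUS`, `OWNER_RADIUS`, `ALARM_SECONDS`) and the `update` function are invented for illustration.

```python
import math
from dataclasses import dataclass

# Hypothetical thresholds, chosen for illustration only.
STATIONARY_RADIUS = 5.0   # pixels: max drift for an object to count as stationary
OWNER_RADIUS = 50.0       # pixels: a person this close counts as attending the object
ALARM_SECONDS = 30.0      # seconds an object may stay unattended before an alarm

@dataclass
class Track:
    track_id: int
    anchor: tuple           # position where the object came to rest
    since: float            # time the object was last attended or came to rest
    alarmed: bool = False

def update(tracks, objects, persons, now):
    """Update stationary-object tracks and return ids that should raise an alarm.

    objects: dict id -> (x, y) for non-person objects seen in this frame
    persons: list of (x, y) person positions in this frame
    """
    alarms = []
    for oid, pos in objects.items():
        t = tracks.get(oid)
        if t is None or math.dist(pos, t.anchor) > STATIONARY_RADIUS:
            # New object, or it moved: restart the dwell timer at the new position.
            tracks[oid] = Track(oid, pos, now)
            continue
        attended = any(math.dist(pos, p) <= OWNER_RADIUS for p in persons)
        if attended:
            t.since = now  # someone is nearby, so the object is not unattended
        elif not t.alarmed and now - t.since >= ALARM_SECONDS:
            t.alarmed = True
            alarms.append(oid)
    return alarms
```

A real deployment would also have to cope with occlusion and tracker identity switches, which this sketch ignores.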

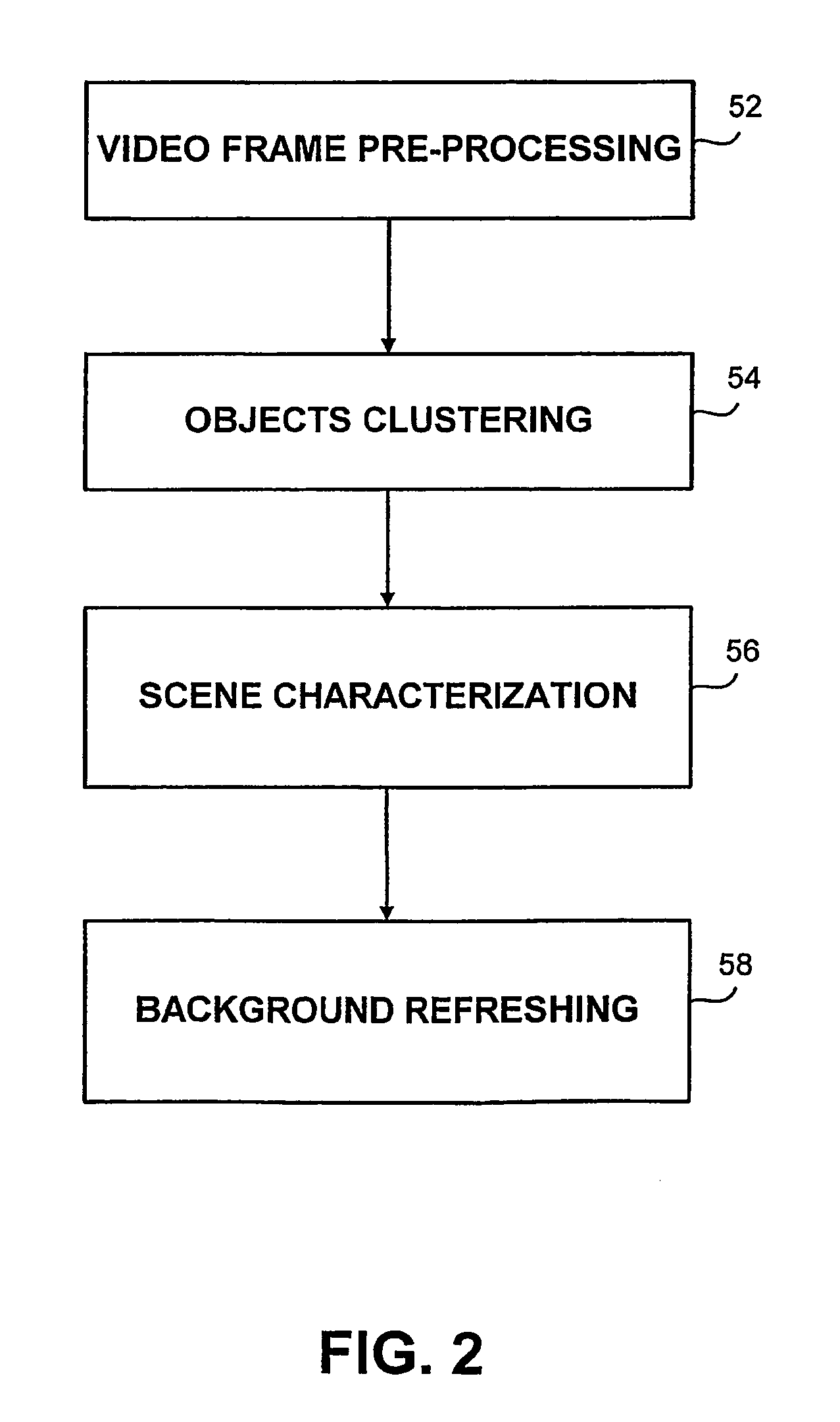

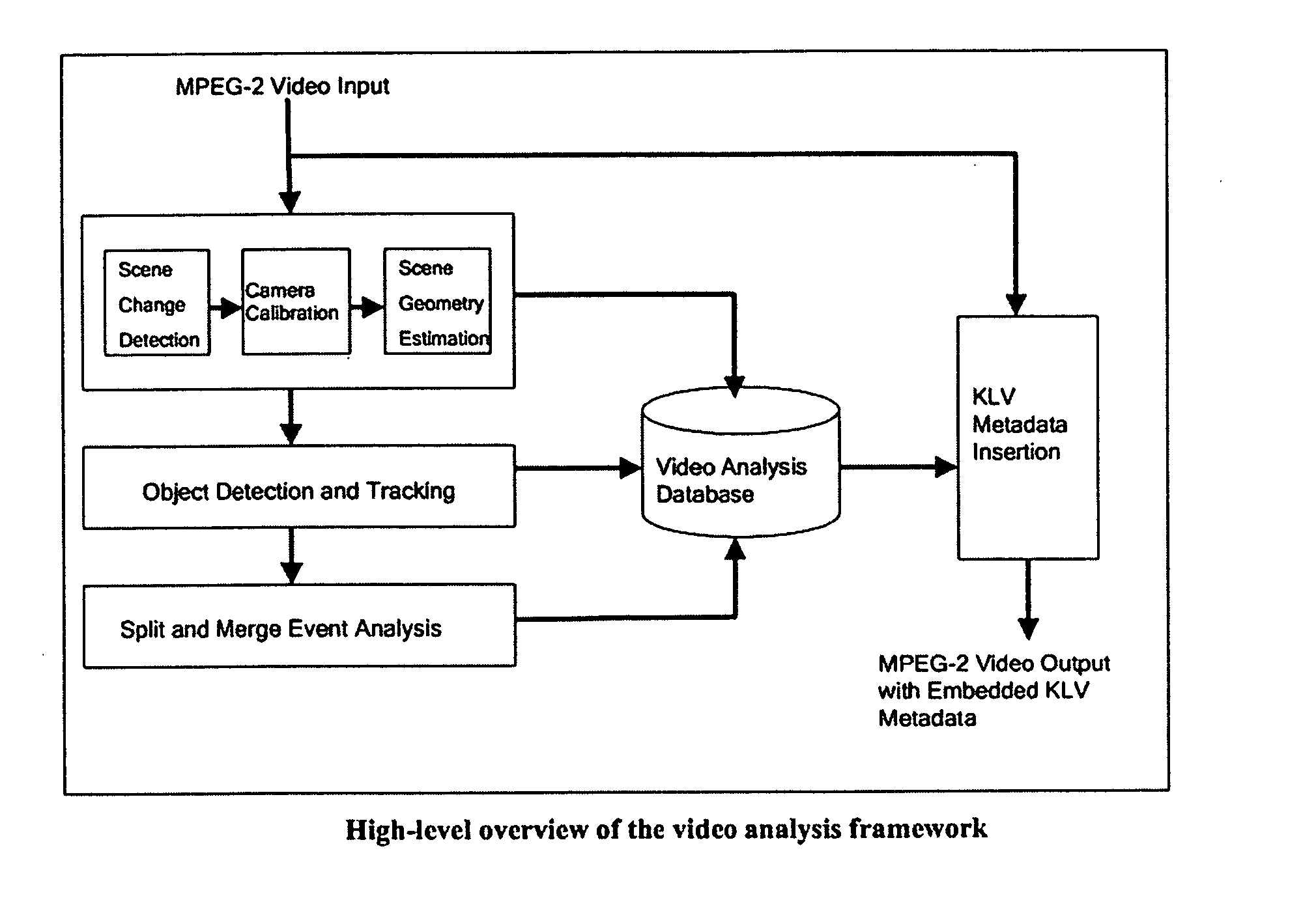

Split and merge behavior analysis and understanding using Hidden Markov Models

Inactive | US20040113933A1 | Shorten the time; Improve accuracy and productivity; Image analysis; Character and pattern recognition; Process support; Hidden Markov model

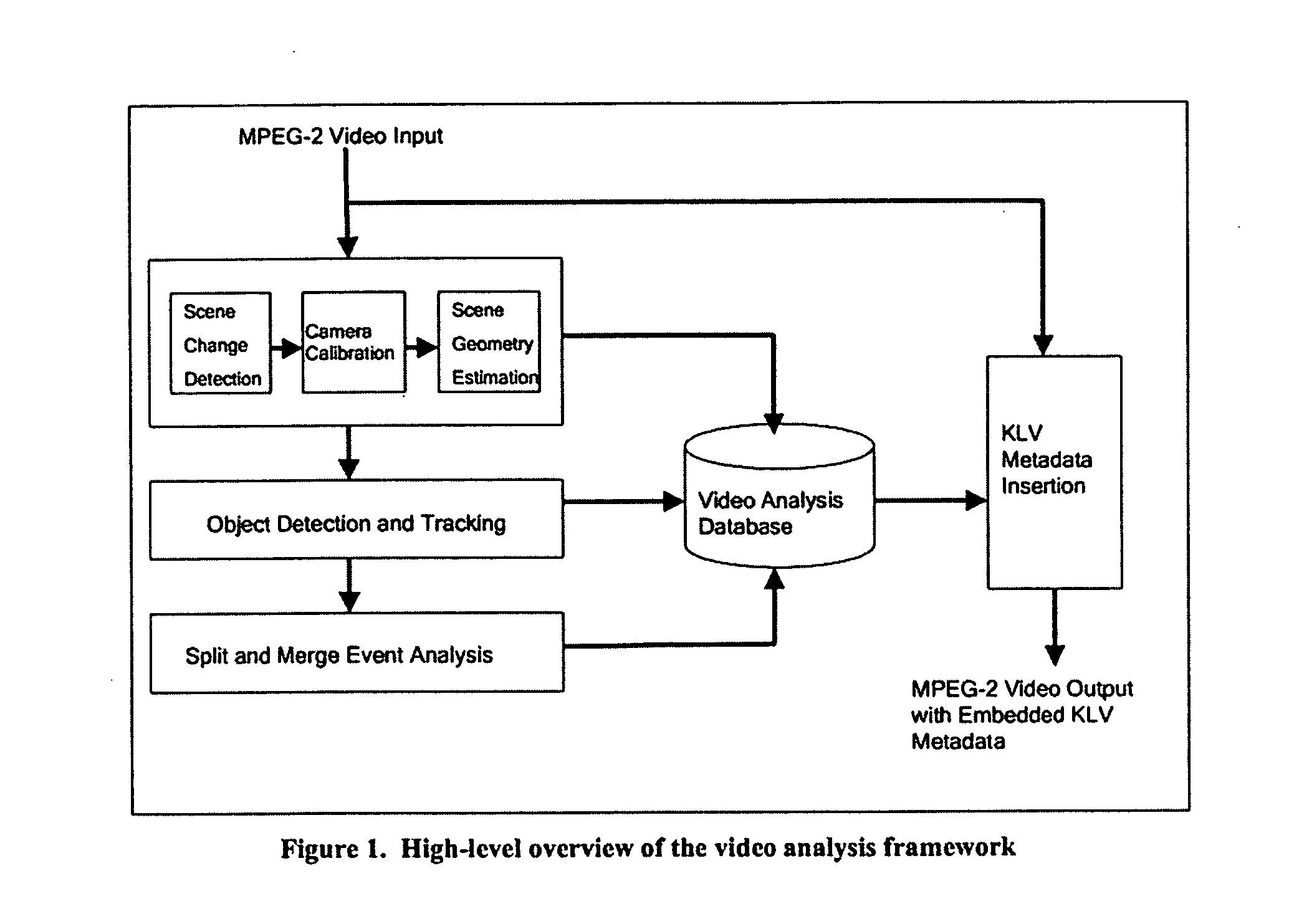

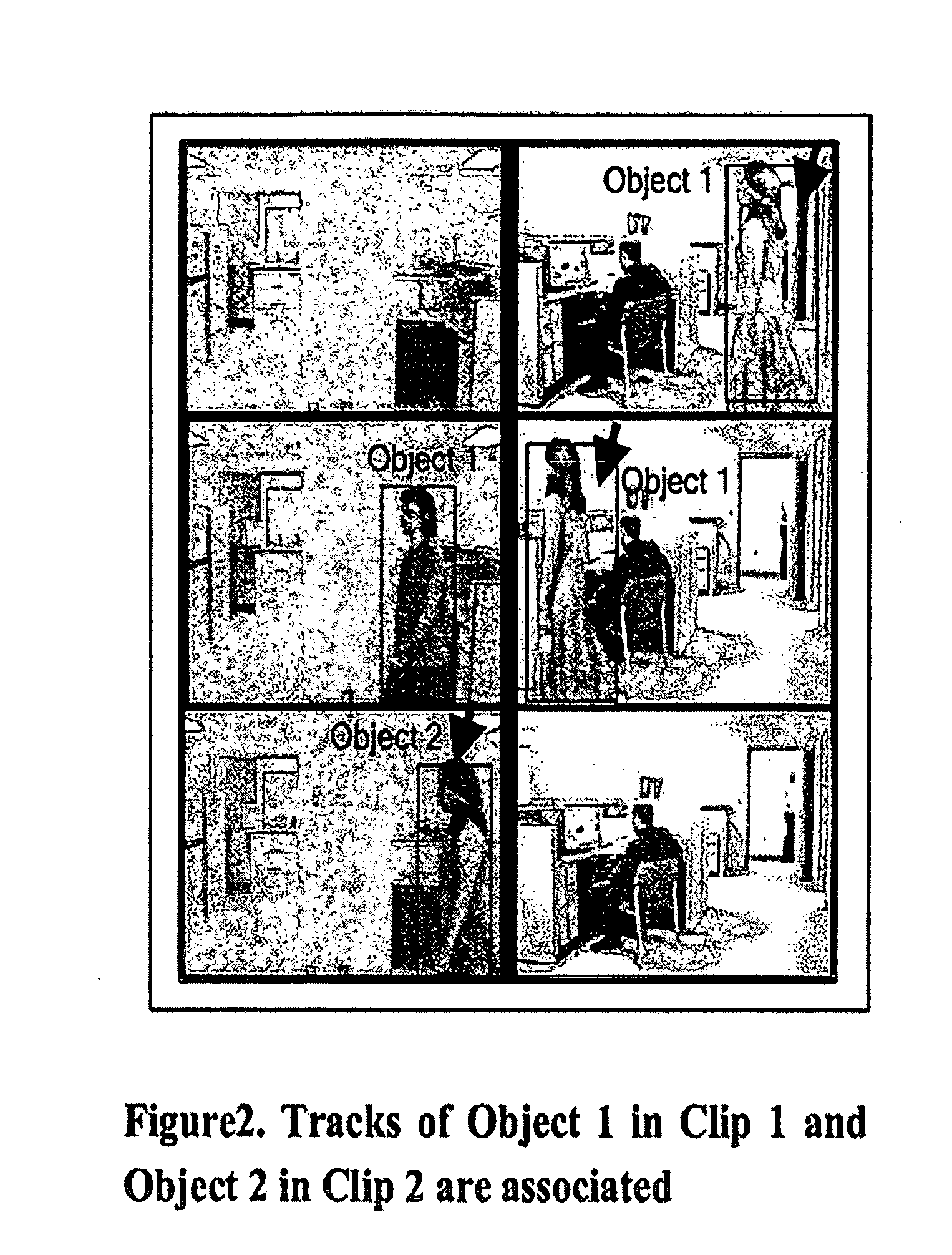

A process for video content analysis that enables productive surveillance, intelligence extraction, and timely investigations using large volumes of video data. The process includes automatic detection of key split and merge events in video streams typical of area security and surveillance environments, and efficient coding and insertion of the necessary analysis metadata into the video streams. The process supports analysis of both live and archived video from multiple streams, detecting and tracking objects so as to extract the key split and merge behaviors that signal events. Information about the camera, scene, objects and events, whether measured or inferred, is embedded in the video stream as metadata so that it stays intact when the original video is edited, cut, and repurposed.

Owner:NORTHROP GRUMAN CORP
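
To illustrate how a hidden Markov model can turn noisy per-frame measurements into split and merge events, the sketch below runs Viterbi decoding over a hypothetical two-state model ("merged" vs "split") for a pair of tracked blobs, where each observation is a quantized inter-blob distance ("near" or "far"). The states, probabilities, and quantization are invented for illustration; the abstract does not describe its model structure.

```python
import math

STATES = ("merged", "split")
# Hypothetical model parameters; log-probabilities are used below to avoid underflow.
START = {"merged": 0.5, "split": 0.5}
TRANS = {
    "merged": {"merged": 0.9, "split": 0.1},
    "split": {"merged": 0.1, "split": 0.9},
}
EMIT = {
    "merged": {"near": 0.8, "far": 0.2},
    "split": {"near": 0.2, "far": 0.8},
}

def viterbi(observations):
    """Return the most likely hidden-state sequence for a non-empty observation list."""
    # best[s] = (log-probability of the best path ending in s, that path)
    best = {s: (math.log(START[s]) + math.log(EMIT[s][observations[0]]), [s])
            for s in STATES}
    for obs in observations[1:]:
        new_best = {}
        for s in STATES:
            prev = max(STATES, key=lambda p: best[p][0] + math.log(TRANS[p][s]))
            score = best[prev][0] + math.log(TRANS[prev][s]) + math.log(EMIT[s][obs])
            new_best[s] = (score, best[prev][1] + [s])
        best = new_best
    return max(best.values(), key=lambda v: v[0])[1]

def events(states):
    """Report (frame_index, 'split' or 'merge') at each hidden-state transition."""
    out = []
    for i in range(1, len(states)):
        if states[i - 1] == "merged" and states[i] == "split":
            out.append((i, "split"))
        elif states[i - 1] == "split" and states[i] == "merged":
            out.append((i, "merge"))
    return out
```

The high self-transition probability is what suppresses single-frame noise: one isolated "far" reading inside a run of "near" readings is not enough to report a split.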

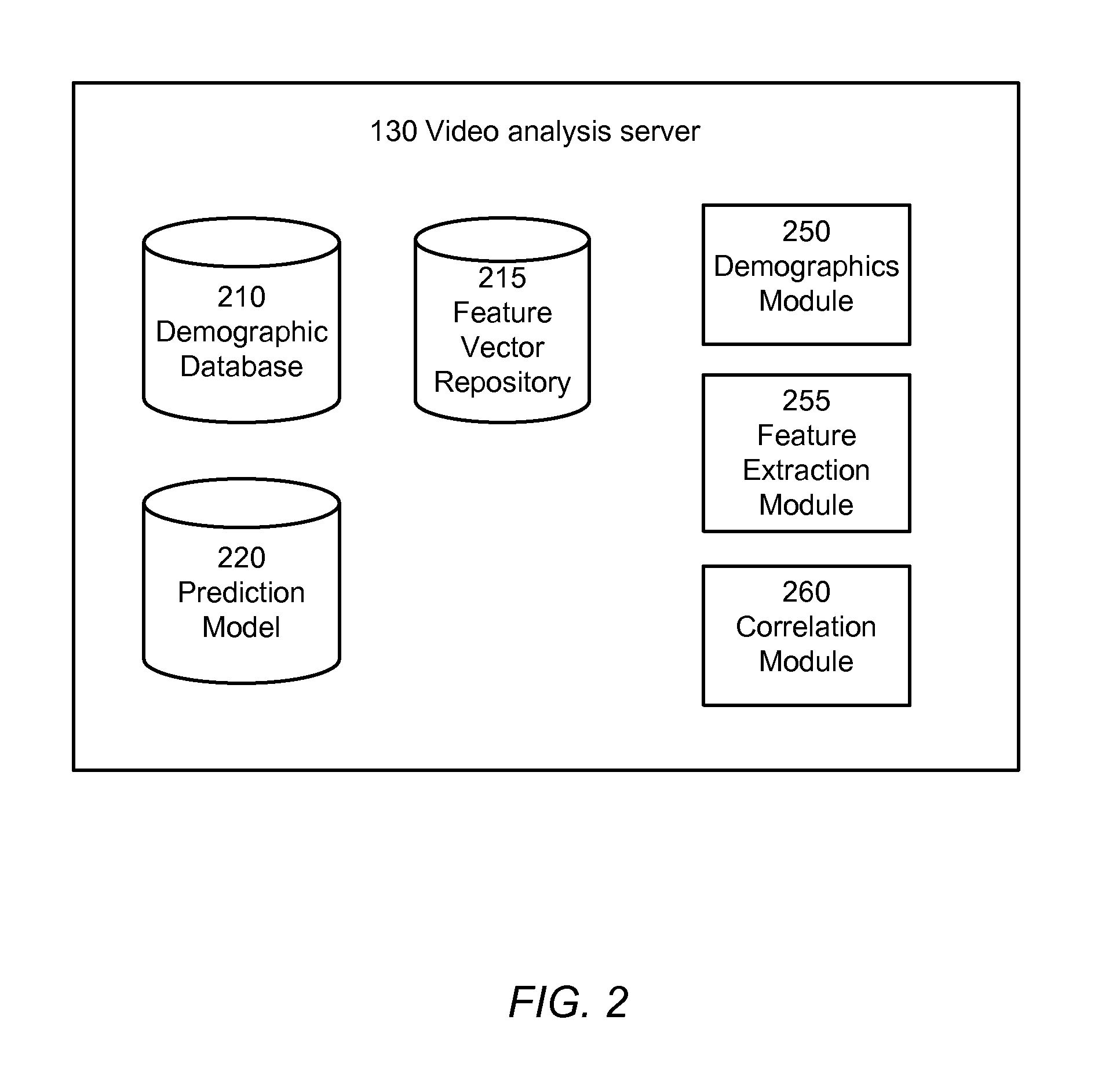

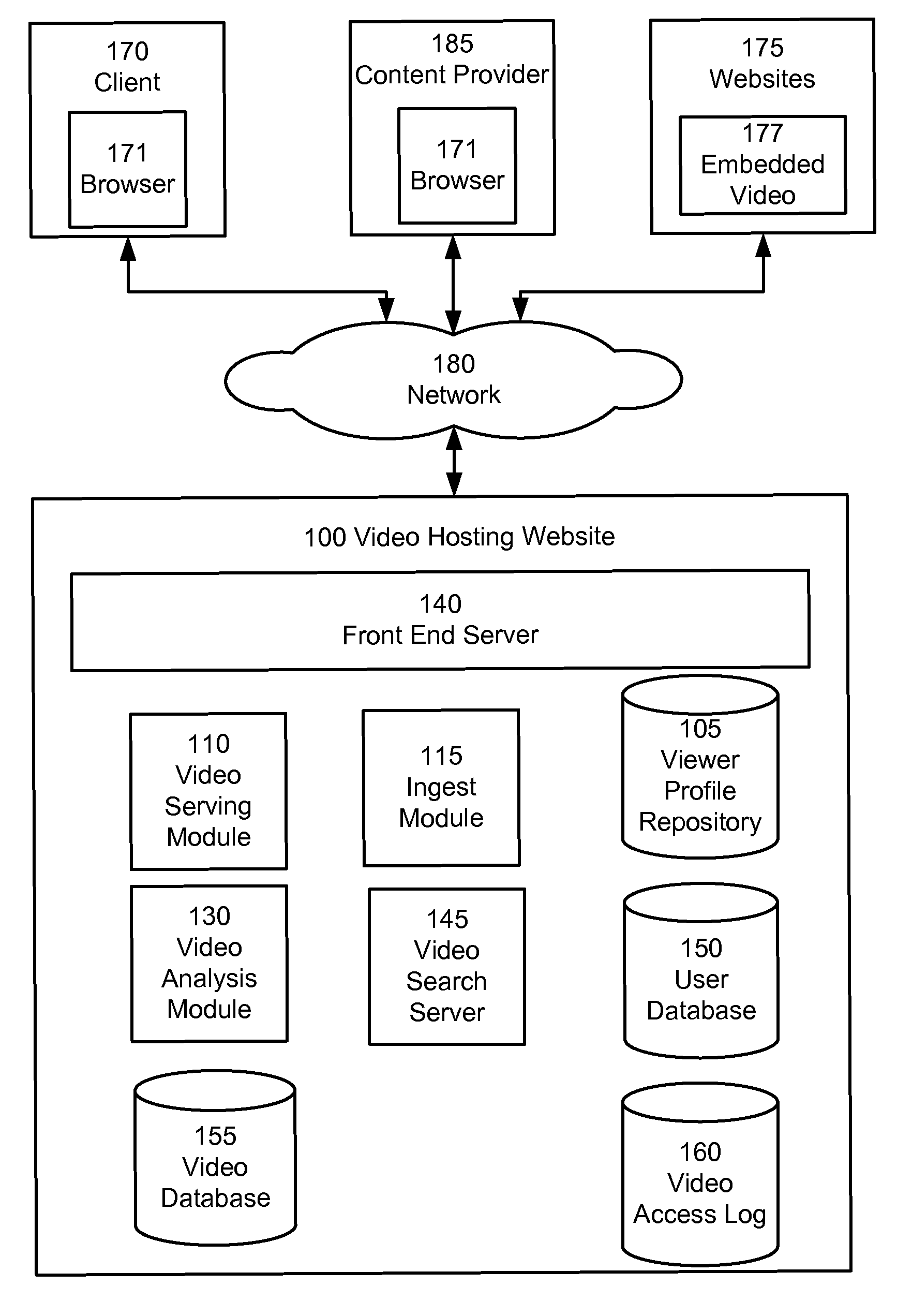

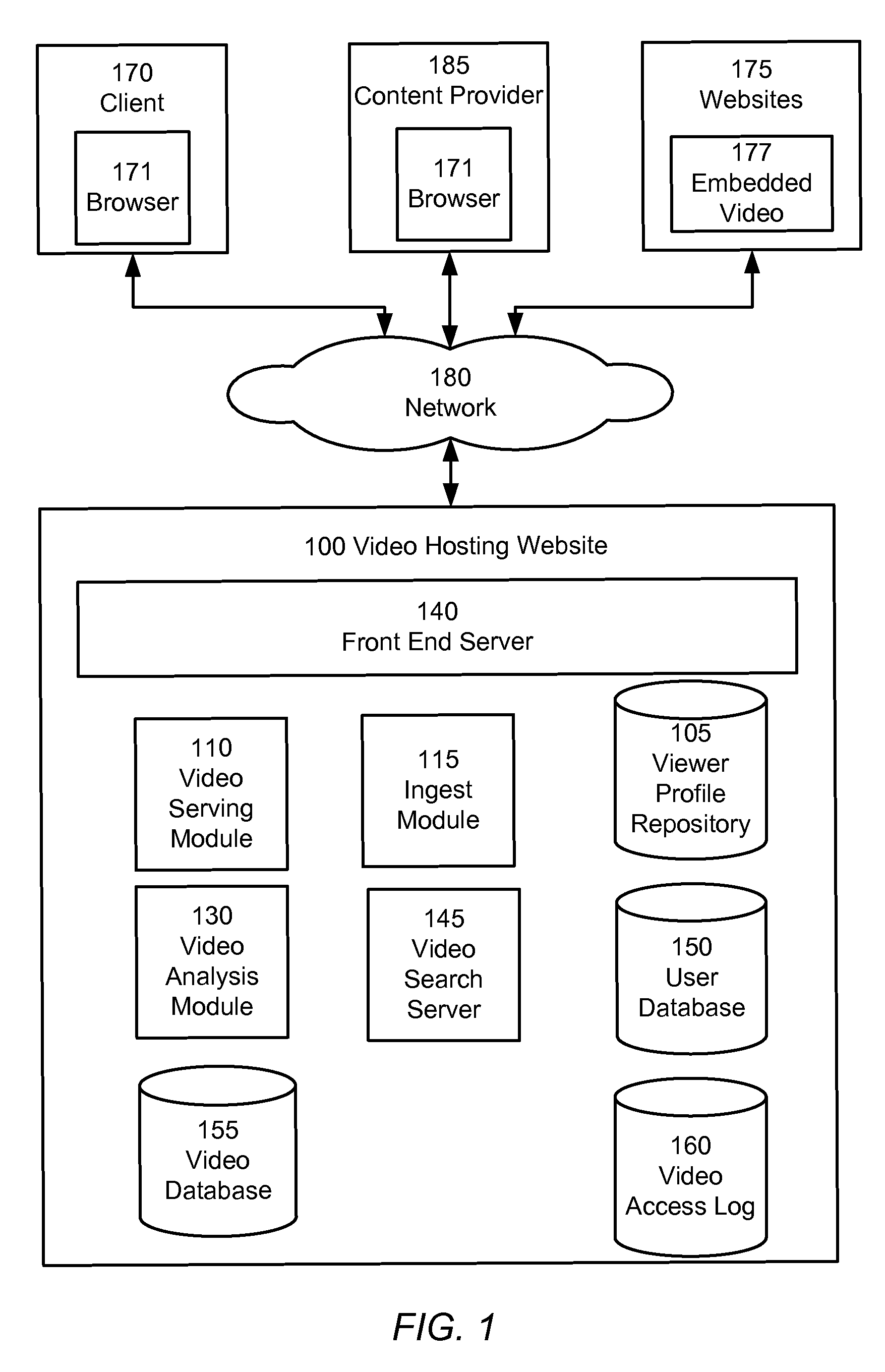

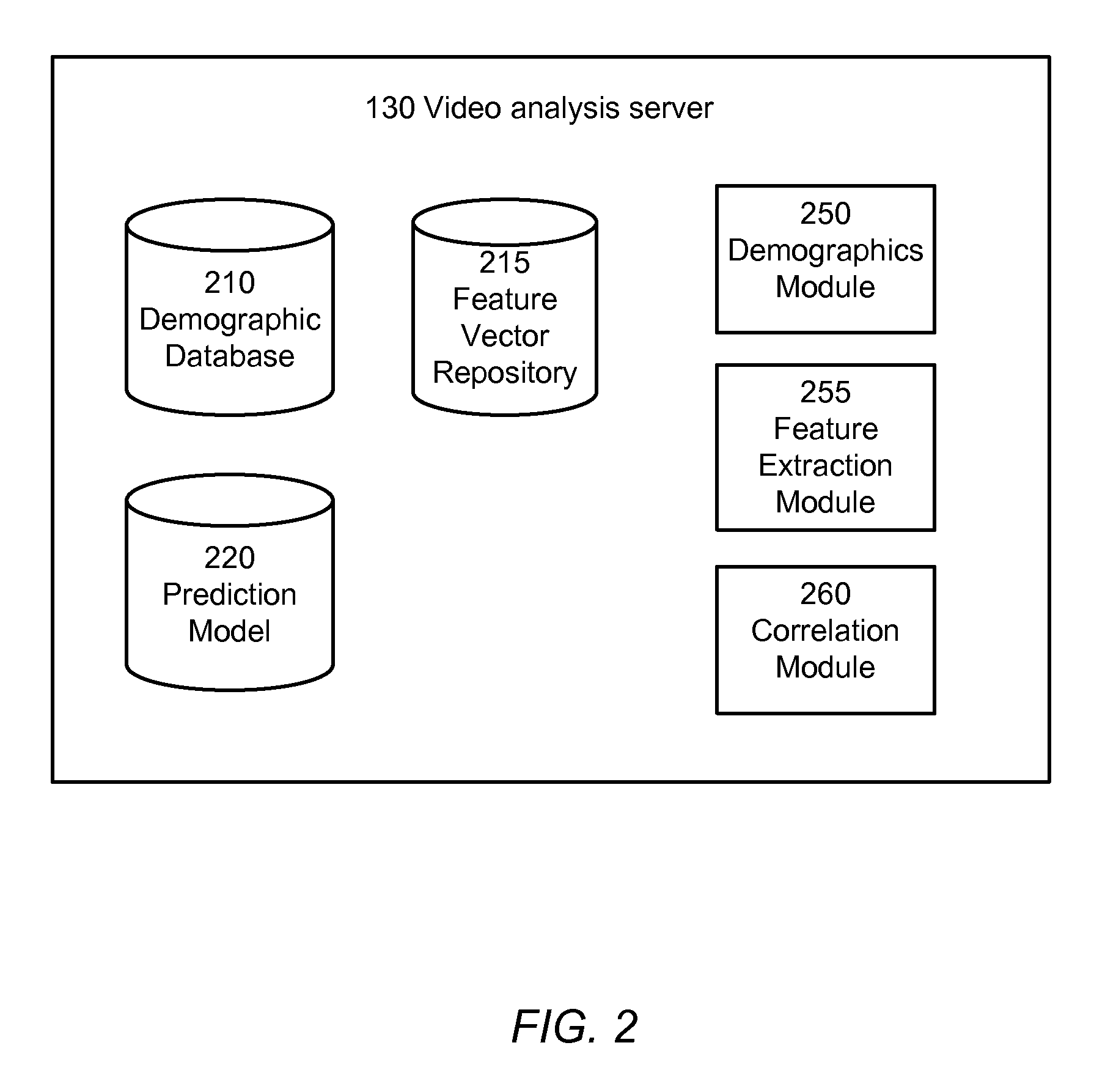

Video content analysis for automatic demographics recognition of users and videos

Inactive | US20100191689A1 | Metadata video data retrieval; Character and pattern recognition; Video content analysis; Demographic data

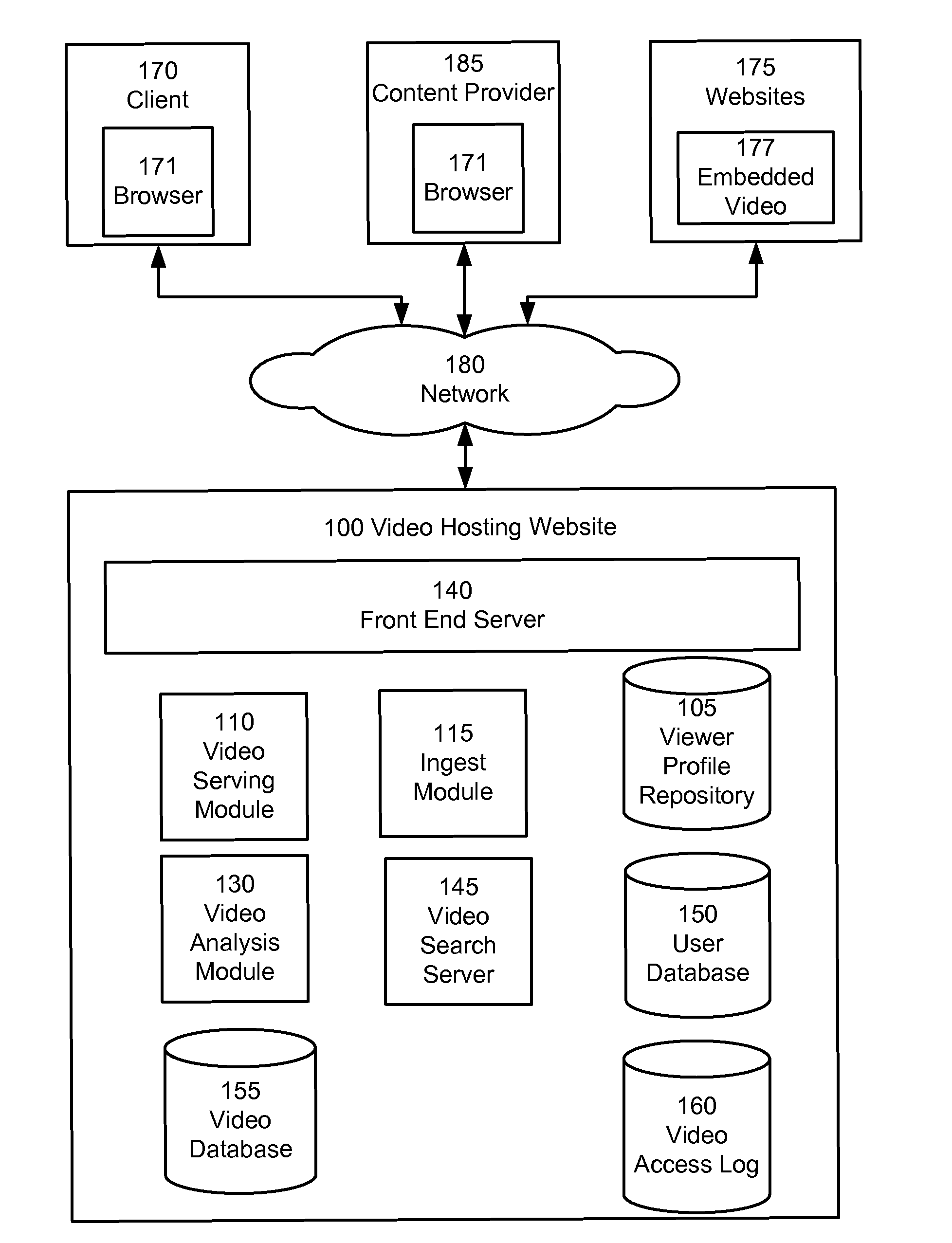

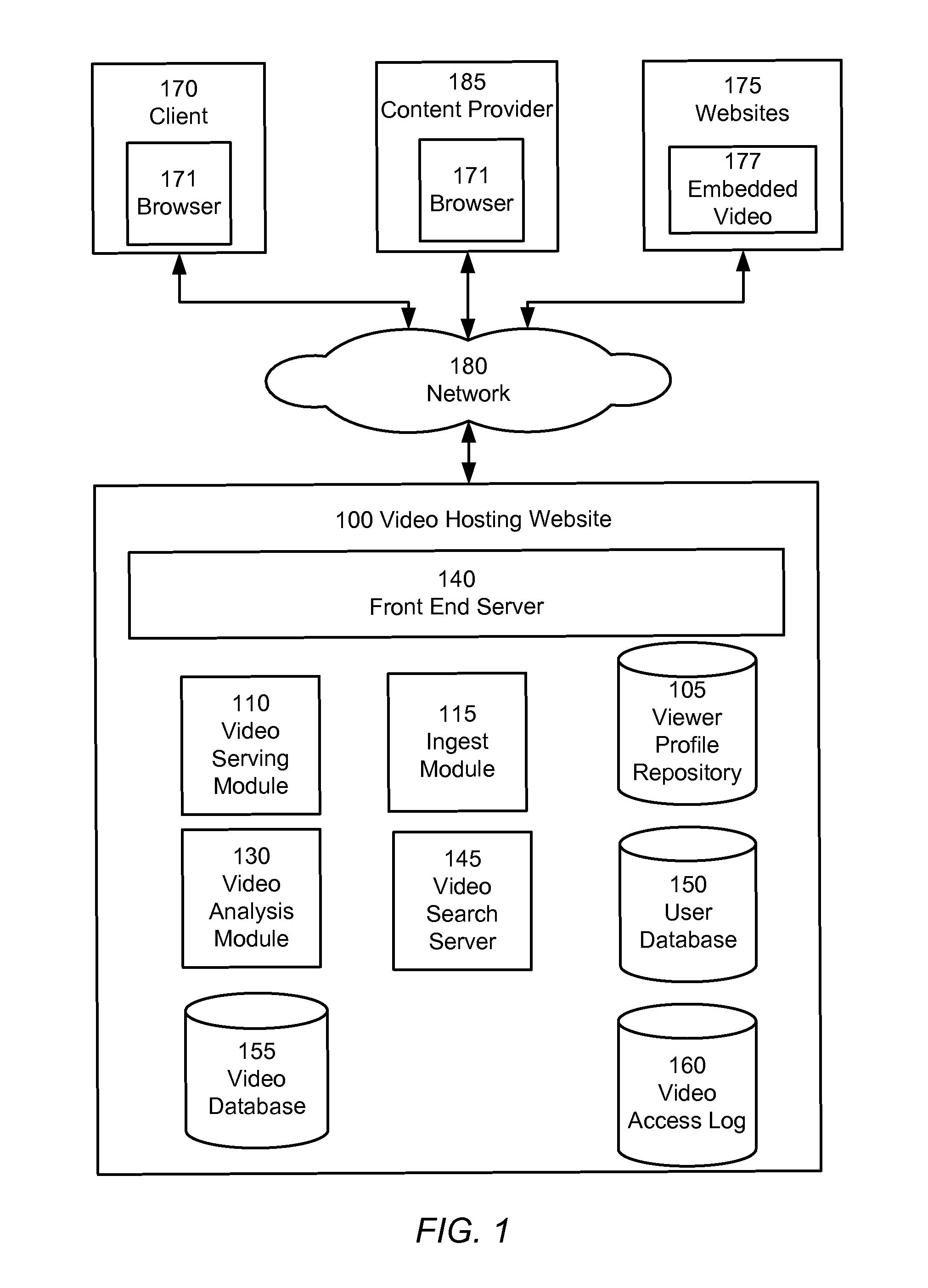

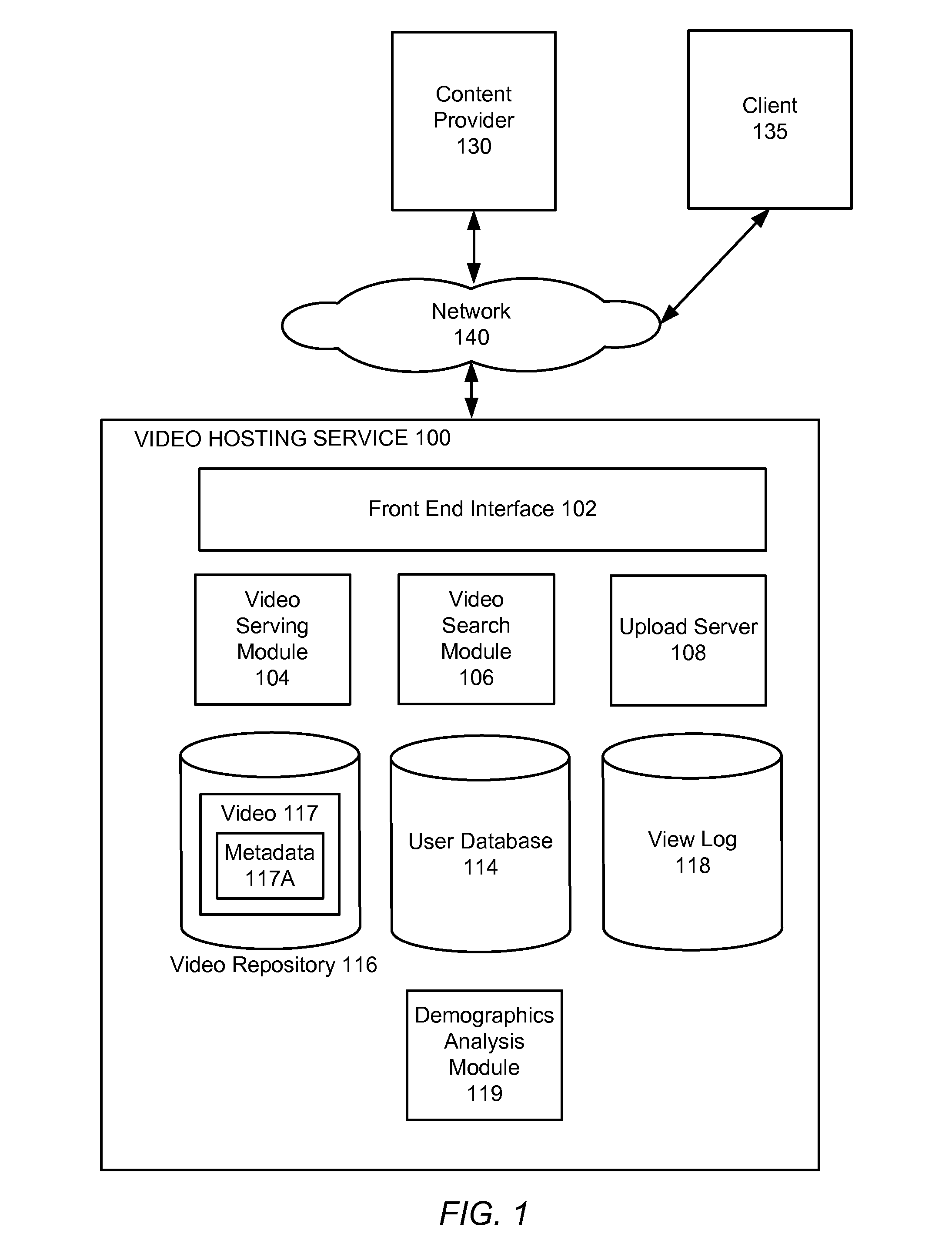

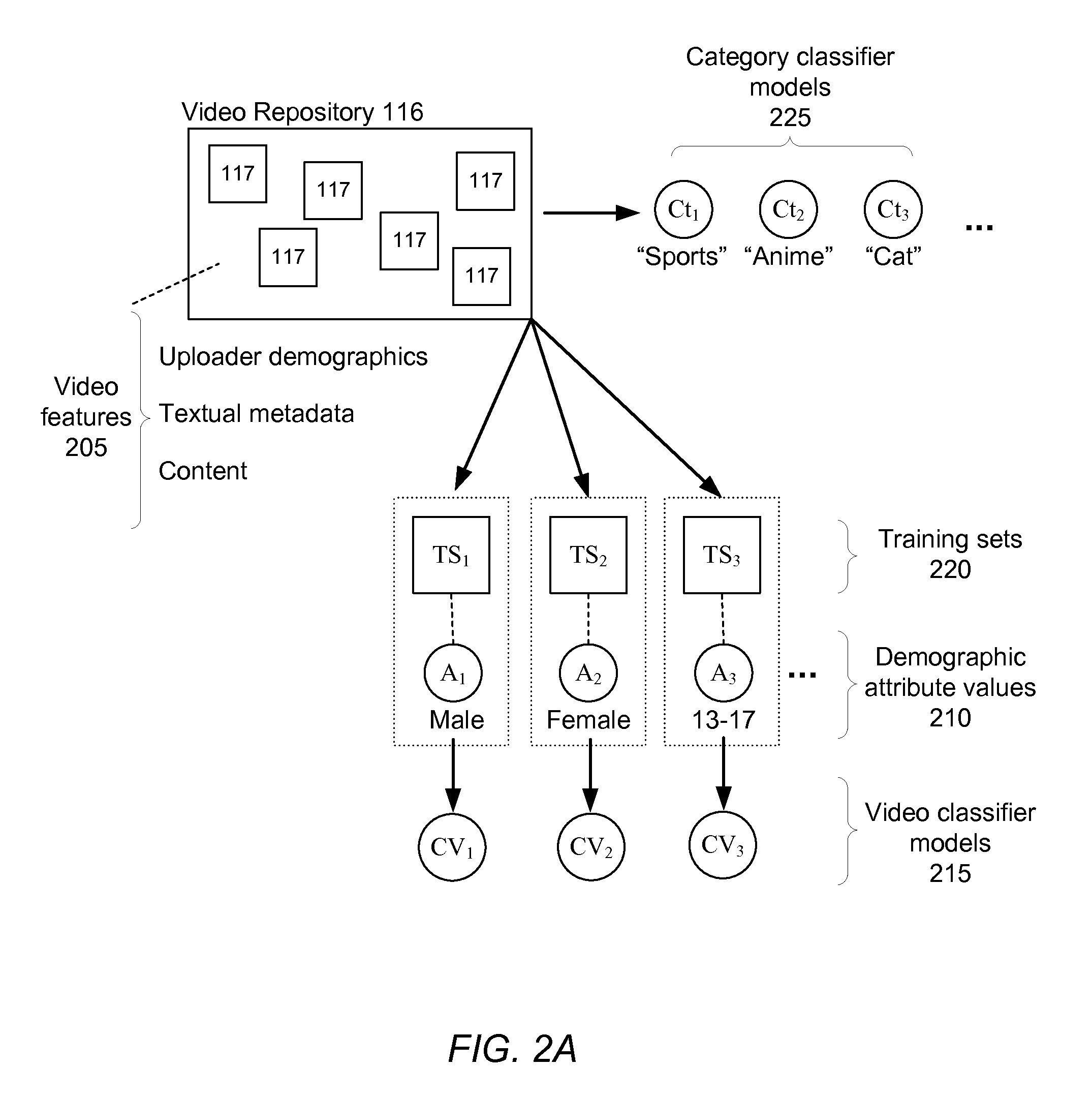

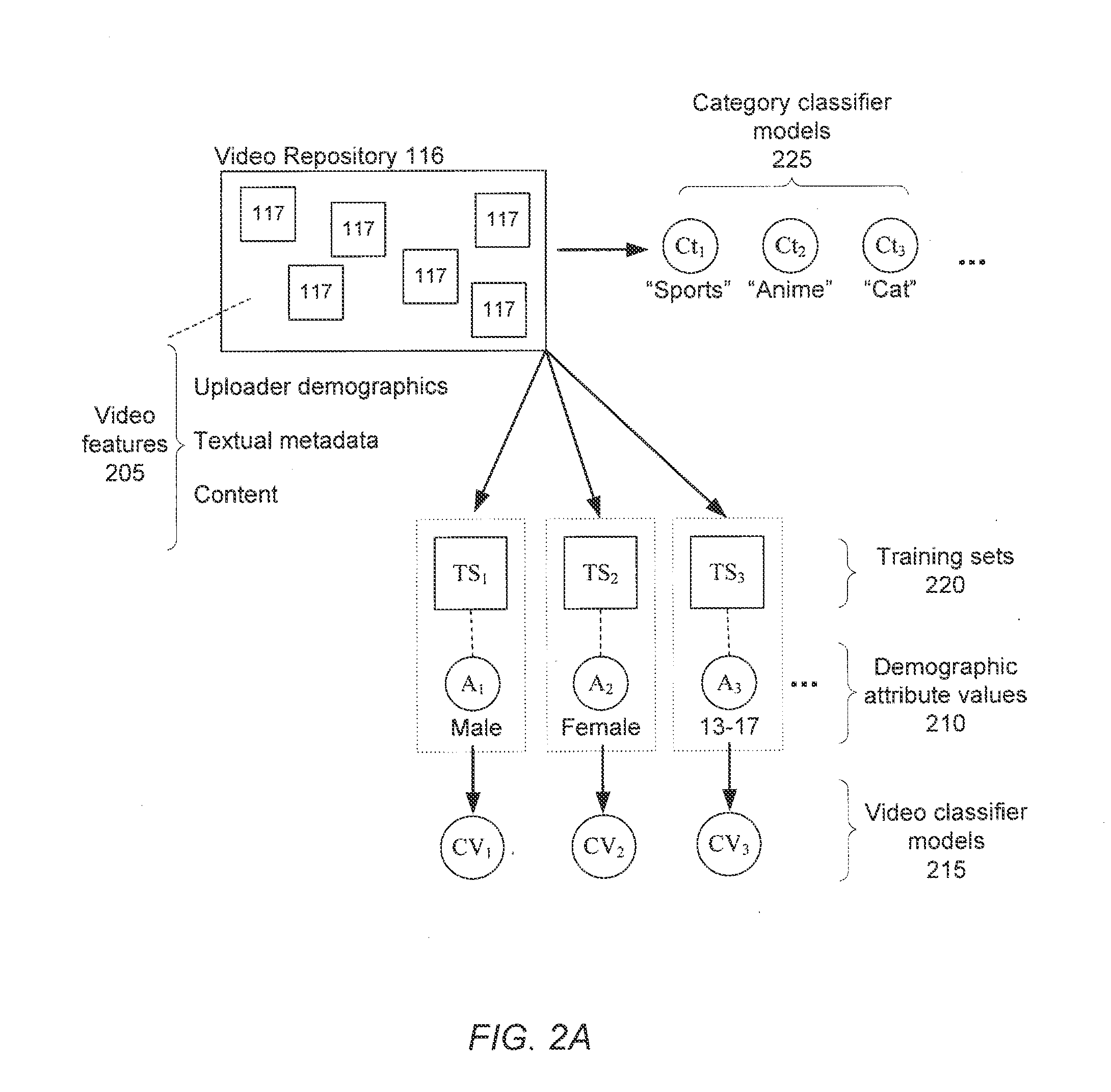

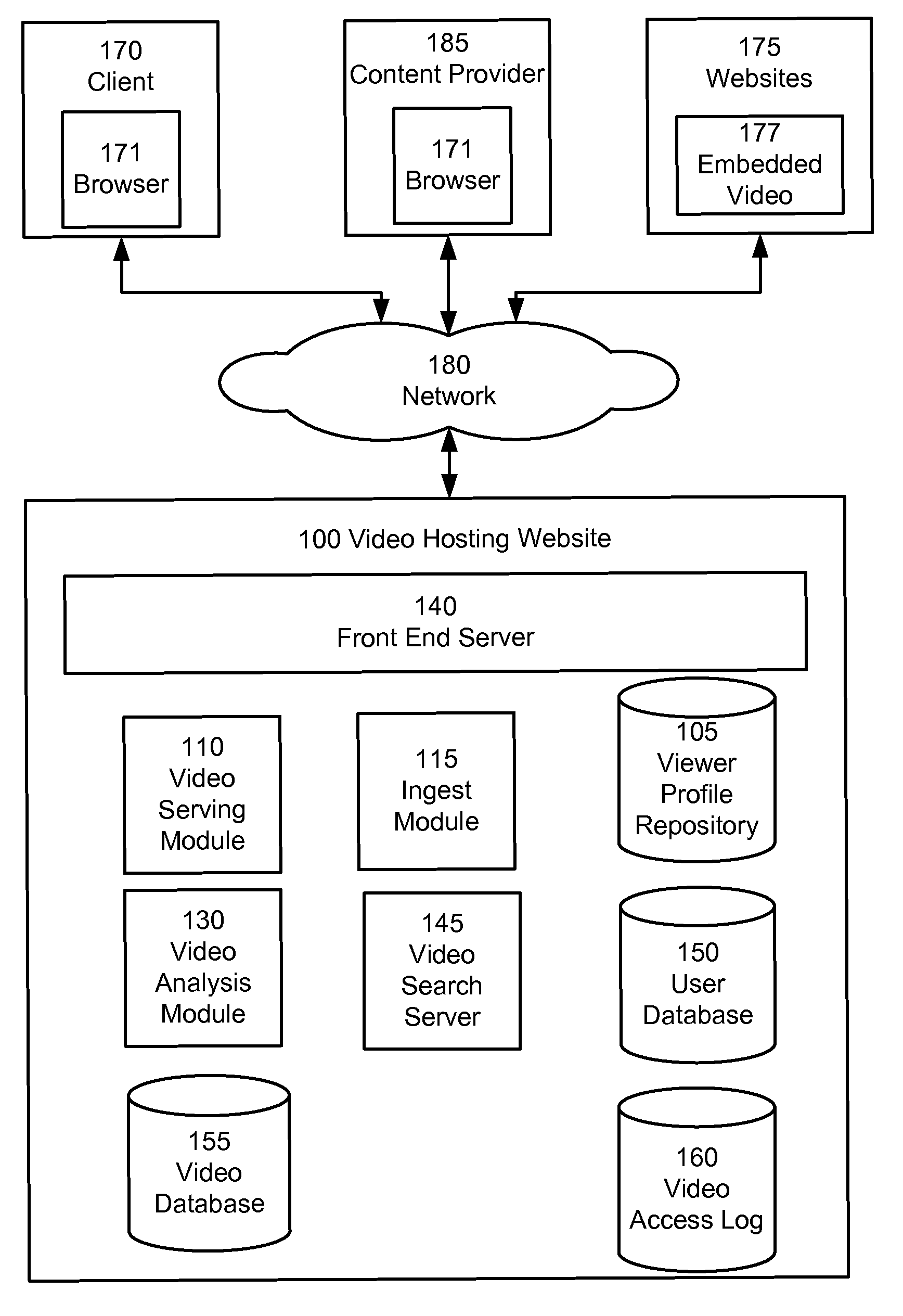

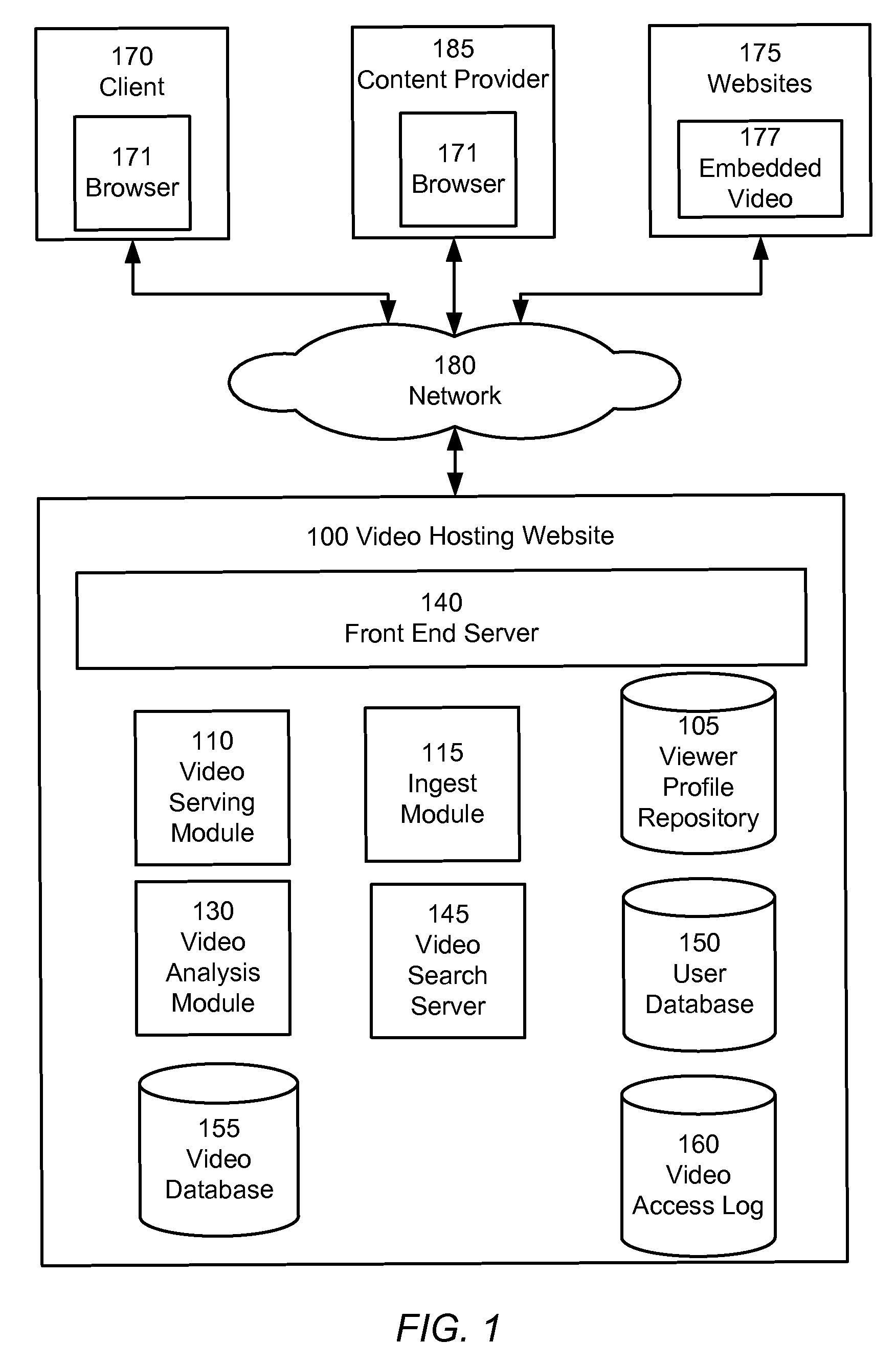

A video demographics analysis system selects a training set of videos to use to correlate viewer demographics and video content data. The video demographics analysis system extracts demographic data from viewer profiles related to videos in the training set and creates a set of demographic distributions, and also extracts video data from videos in the training set. The video demographics analysis system correlates the viewer demographics with the video data of videos viewed by that viewer. Using the prediction model produced by the machine learning process, a new video about which there is no a priori knowledge can be associated with a predicted demographic distribution specifying probabilities of the video appealing to different types of people within a given demographic category, such as people of different ages within an age demographic category.

Owner:GOOGLE LLC
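
The training and prediction flow in the US20100191689A1 abstract (build a demographic distribution for each training video from its viewers' profiles, then predict a distribution for a new video) can be sketched as follows. This is a hypothetical simplification: the abstract leaves the machine-learning model unspecified, so the sketch substitutes k-nearest-neighbour averaging over cosine similarity of video feature vectors, and the age buckets and feature vectors are invented.

```python
import math
from collections import Counter

AGE_BUCKETS = ("13-17", "18-24", "25-34", "35+")  # hypothetical category values

def demographic_distribution(viewer_ages):
    """Normalize the viewers' age buckets into a probability distribution."""
    counts = Counter(viewer_ages)
    total = sum(counts.values())
    return {b: counts[b] / total for b in AGE_BUCKETS}

def cosine(a, b):
    """Cosine similarity of two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def predict(training, new_features, k=2):
    """Average the distributions of the k training videos most similar to a new video.

    training: list of (feature_vector, distribution) pairs
    """
    nearest = sorted(training, key=lambda t: cosine(t[0], new_features), reverse=True)[:k]
    return {b: sum(dist[b] for _, dist in nearest) / len(nearest) for b in AGE_BUCKETS}
```

Because each neighbour's distribution sums to 1, their average does too, so the prediction is itself a valid distribution over the category.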

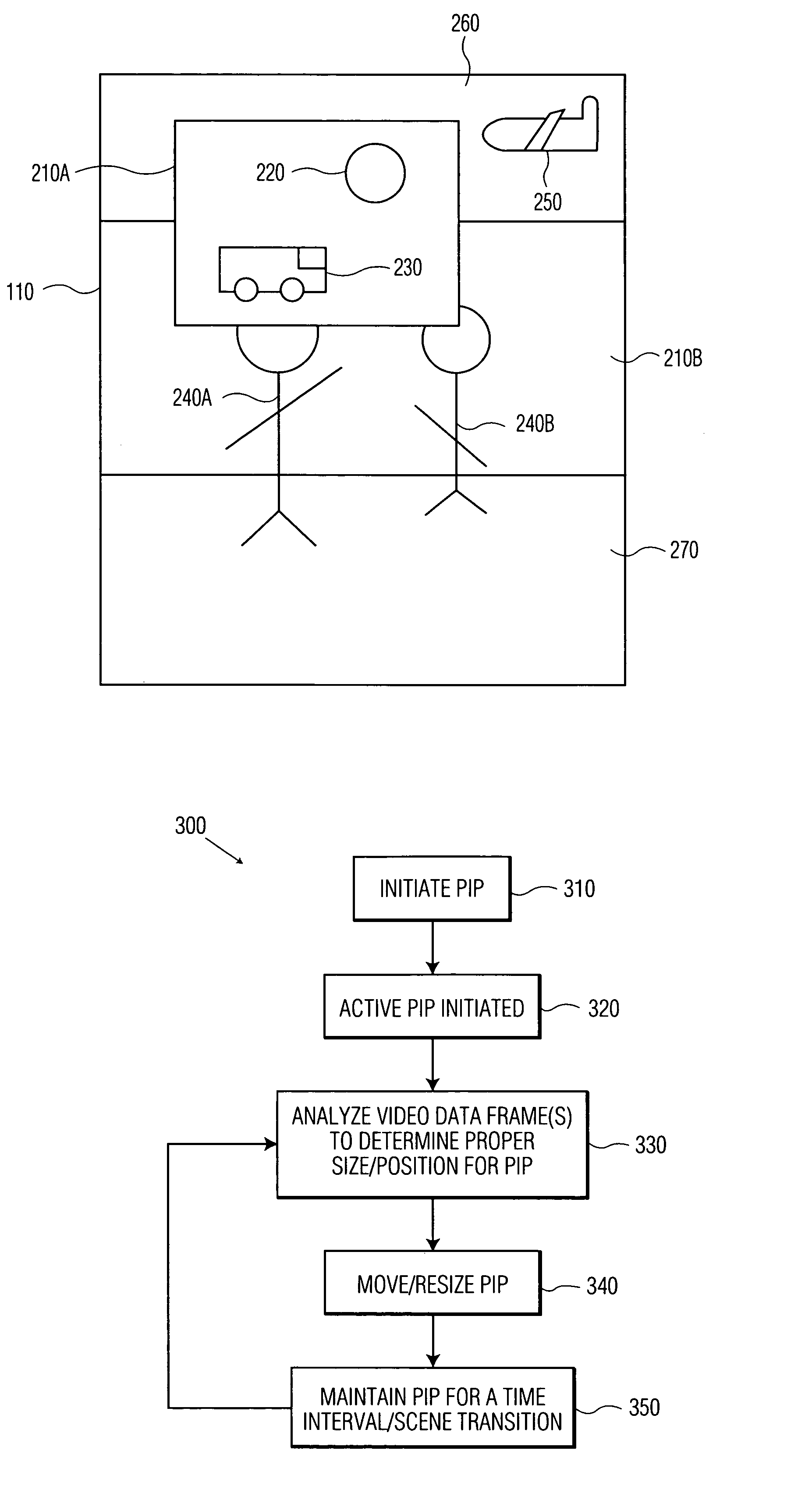

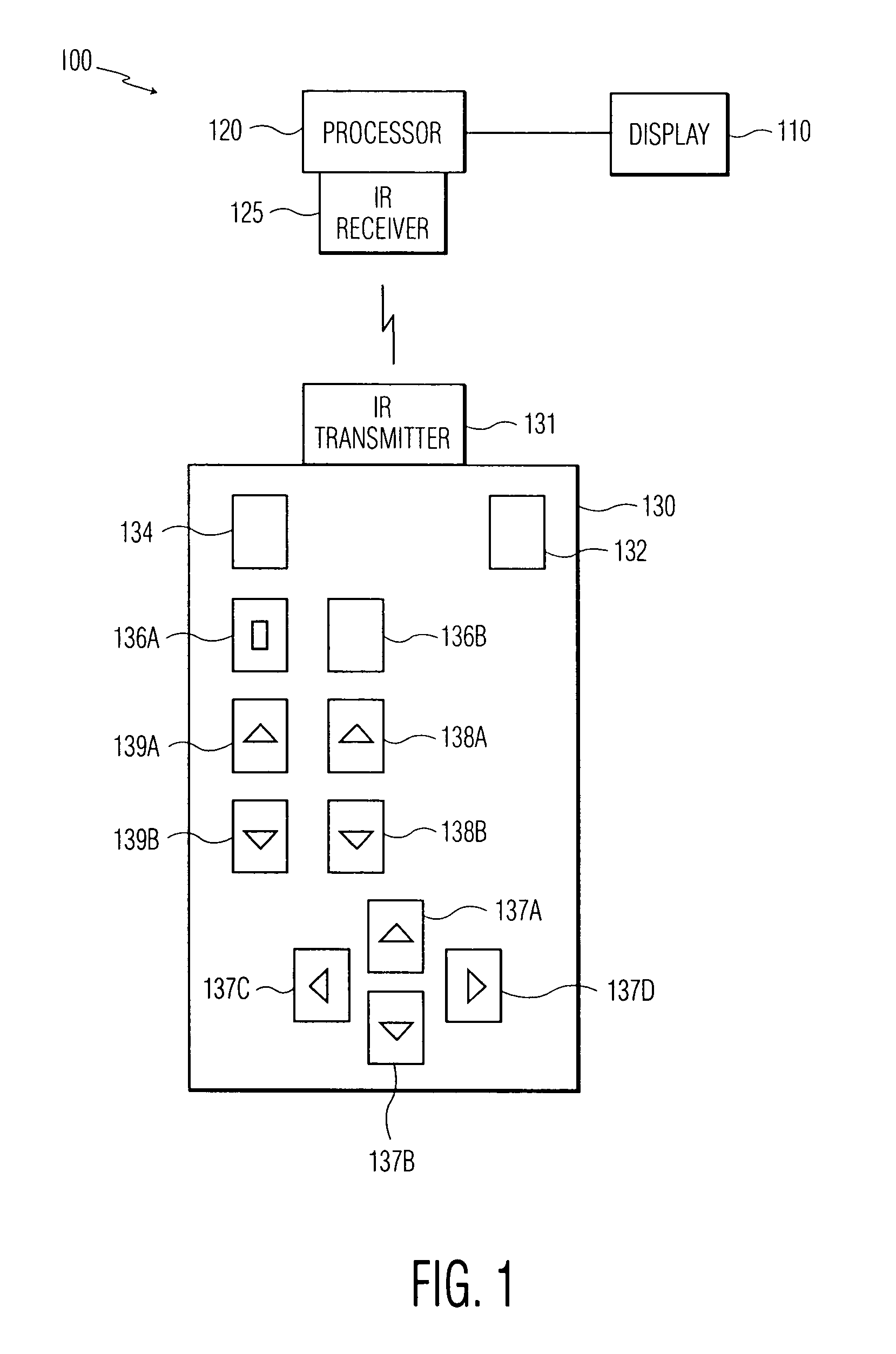

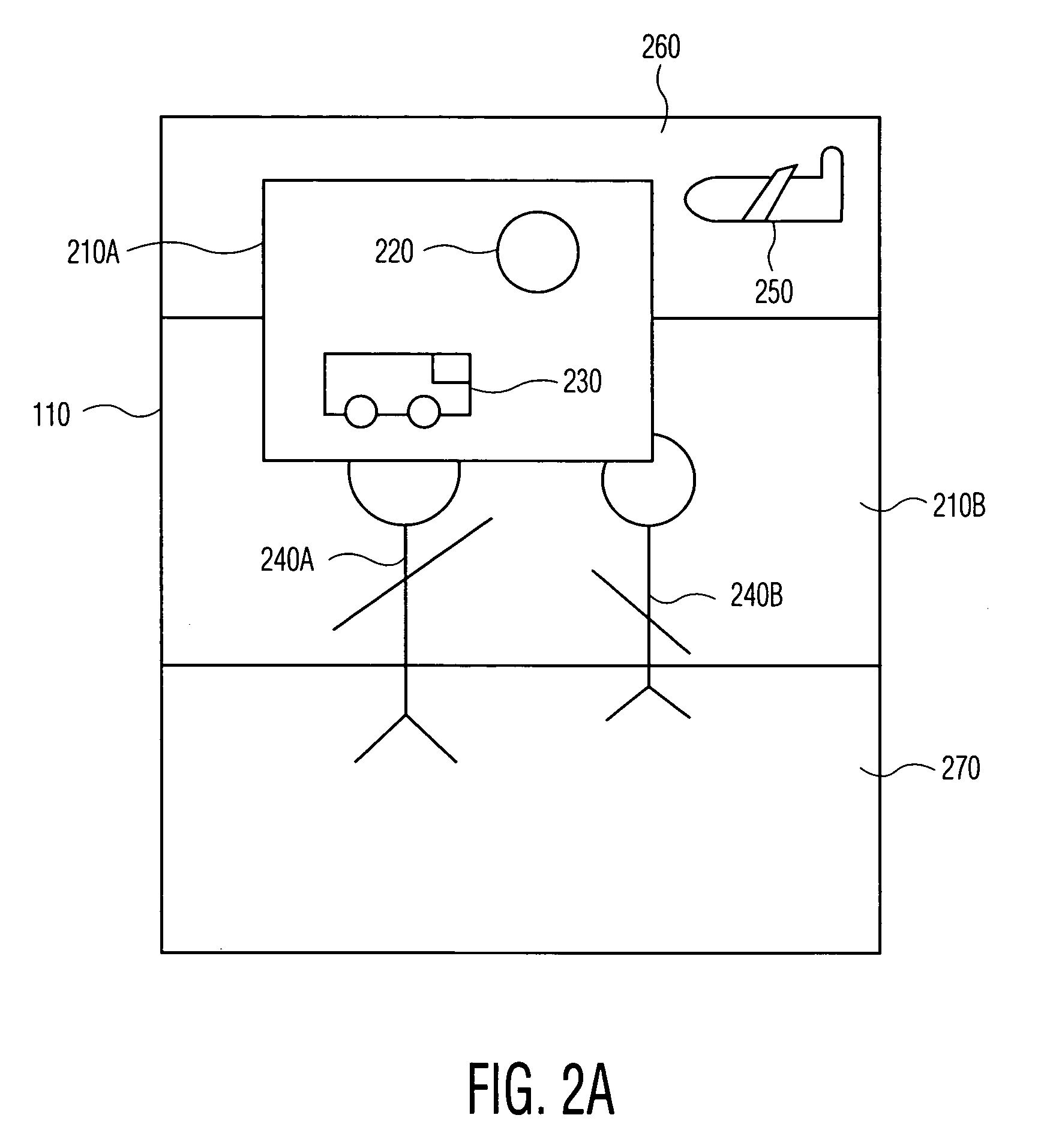

Picture-in-picture repositioning and/or resizing based on video content analysis

Active | US7206029B2 | Television system details; Character and pattern recognition; Video content analysis; Display device

A video display device, such as a television, having a picture-in-picture (PIP) display and a processor. The processor detects cues, such as color, texture, events, and behaviors, present in the primary display image that the PIP overlays. The processor uses these cues to determine important and relatively unimportant portions of the primary display image. The processor then determines whether a change in a display characteristic of the PIP would make the PIP obscure less of an important portion of the primary display image, and if so, changes that display characteristic. Display characteristics of the PIP that may be changed by the processor include the PIP position, size, and transparency. The processor may also use a combination of the detected cues to determine important and relatively unimportant portions of the primary display image, and may change combinations of display characteristics of the PIP.

Owner:ARRIS ENTERPRISES LLC
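
The repositioning decision described above (move the PIP to where it obscures the least important part of the primary image) can be sketched by scoring candidate positions against an importance map. The sketch assumes the importance map is already computed, for example from the color, texture, or event cues the abstract mentions, and considers only the four corners; both are assumptions for illustration.

```python
def region_importance(importance, top, left, height, width):
    """Sum the importance values inside a rectangle of the primary image."""
    return sum(
        importance[r][c]
        for r in range(top, top + height)
        for c in range(left, left + width)
    )

def best_pip_corner(importance, pip_h, pip_w):
    """Return the corner whose PIP placement covers the least total importance."""
    rows, cols = len(importance), len(importance[0])
    corners = {
        "top-left": (0, 0),
        "top-right": (0, cols - pip_w),
        "bottom-left": (rows - pip_h, 0),
        "bottom-right": (rows - pip_h, cols - pip_w),
    }
    return min(
        corners,
        key=lambda name: region_importance(importance, *corners[name], pip_h, pip_w),
    )
```

The same scoring function could also compare candidate sizes or transparency levels, weighting covered importance by opacity, which mirrors the other display characteristics the abstract lists.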

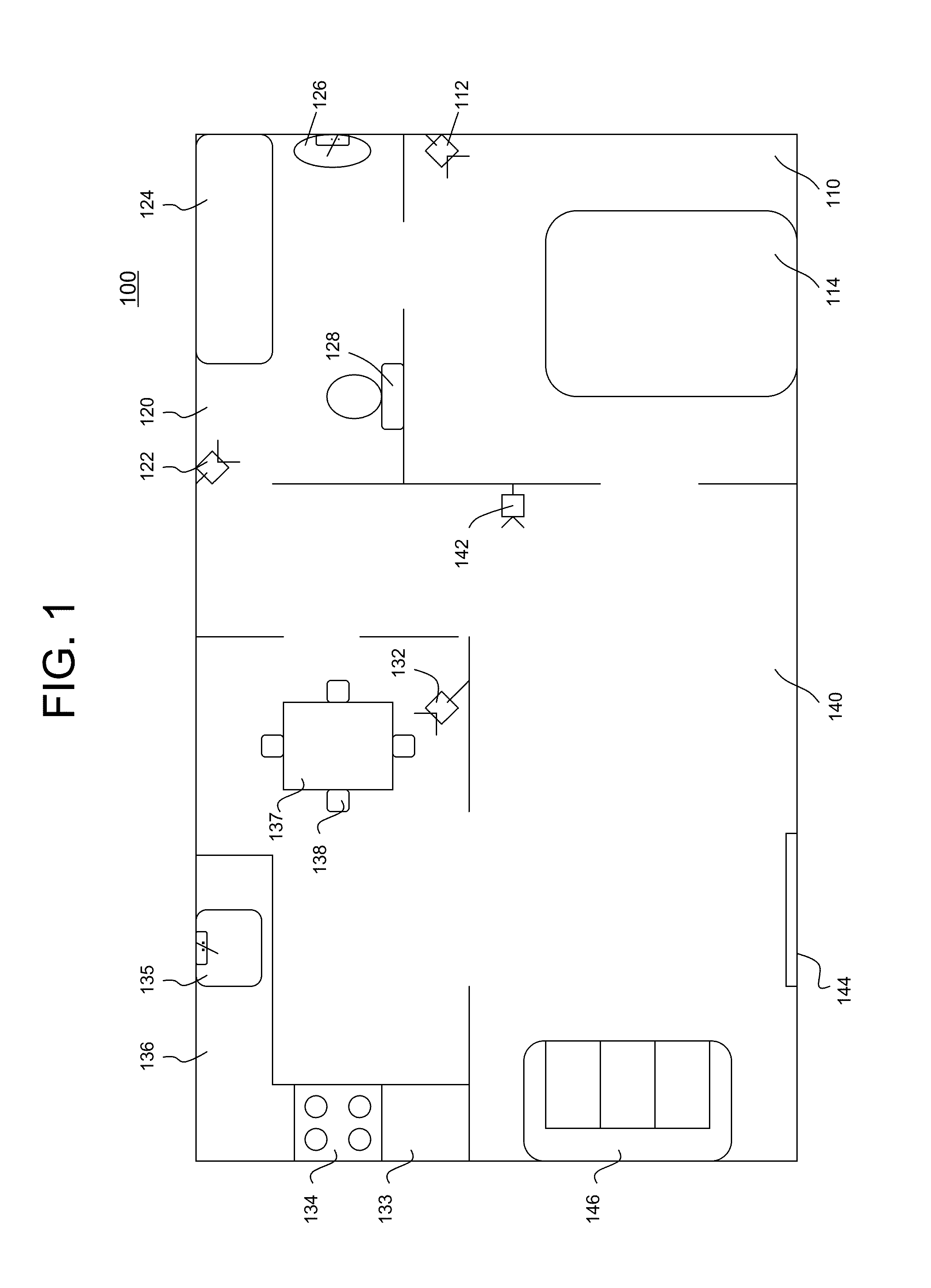

System and method for home health care monitoring

A home health care monitoring method and system are disclosed. In one embodiment, a method includes: capturing a plurality of video sequences from a plurality of respective cameras disposed in different locations within a patient's home, including capturing two-dimensional image data and depth data for each video sequence; defining a plurality of events to monitor associated with the patient, the events including, during a predetermined time period, at least (1) the patient's body being in a particular position for at least a predetermined amount of time; performing depth-enhanced video content analysis on the plurality of video sequences to determine whether the event (1) has occurred. The video content analysis may include: for each of at least two cameras: automatically detecting a human object based on the two-dimensional image data; using the depth data to confirm whether the human object is in the particular position; and based on the confirmation, tracking an amount of time that the human object is in the particular position; and then determining that the event (1) has occurred based on the collective tracked amount of time.

Owner:MOTOROLA SOLUTIONS INC
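
The final step of the method above (deciding that the event occurred from the time tracked collectively across cameras) can be illustrated on its own. The sketch assumes each camera already reports intervals in which it confirmed, using depth data, that the person was in the monitored position; the interval format, the threshold, and the choice to merge overlapping intervals are assumptions.

```python
def merge_intervals(intervals):
    """Merge overlapping (start, end) intervals so simultaneous sightings count once."""
    merged = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged

def event_occurred(per_camera_intervals, window, min_seconds):
    """Decide whether the position event happened within the monitoring window.

    per_camera_intervals: dict camera_id -> list of (start, end) seconds in which
        that camera confirmed the person was in the monitored position
    window: (start, end) of the predetermined time period
    """
    w_start, w_end = window
    clipped = [
        (max(s, w_start), min(e, w_end))
        for intervals in per_camera_intervals.values()
        for s, e in intervals
        if e > w_start and s < w_end
    ]
    total = sum(e - s for s, e in merge_intervals(clipped))
    return total >= min_seconds
```

Merging before summing matters when two cameras see the patient at the same moment; a plain sum of per-camera durations would double-count that overlap.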

Method And System For Semantically Segmenting Scenes Of A Video Sequence

Active | US20070201558A1 | Easy to browse; Laborious process; Television system details; Picture reproducers using cathode ray tubes; Cluster algorithm; Video content analysis

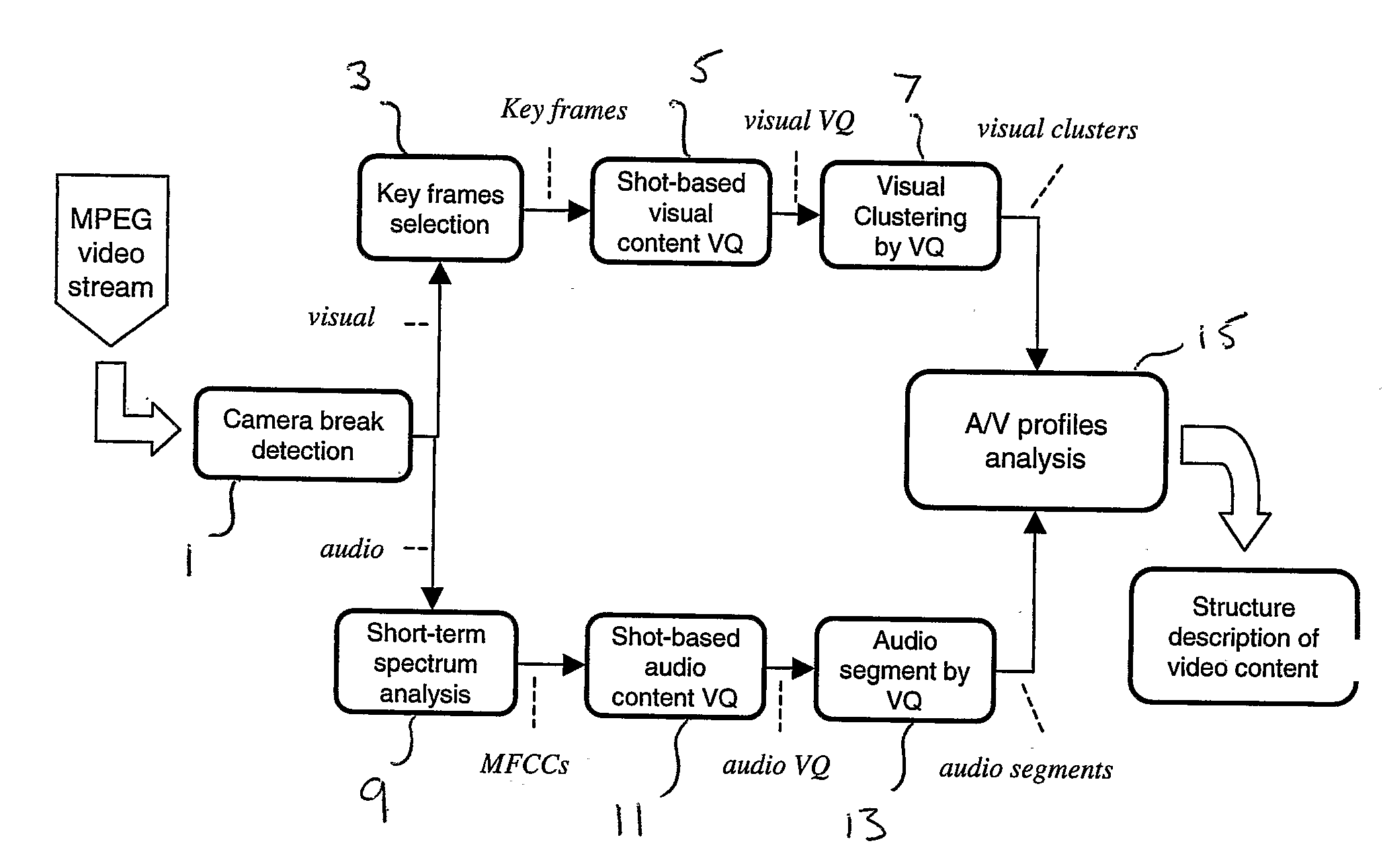

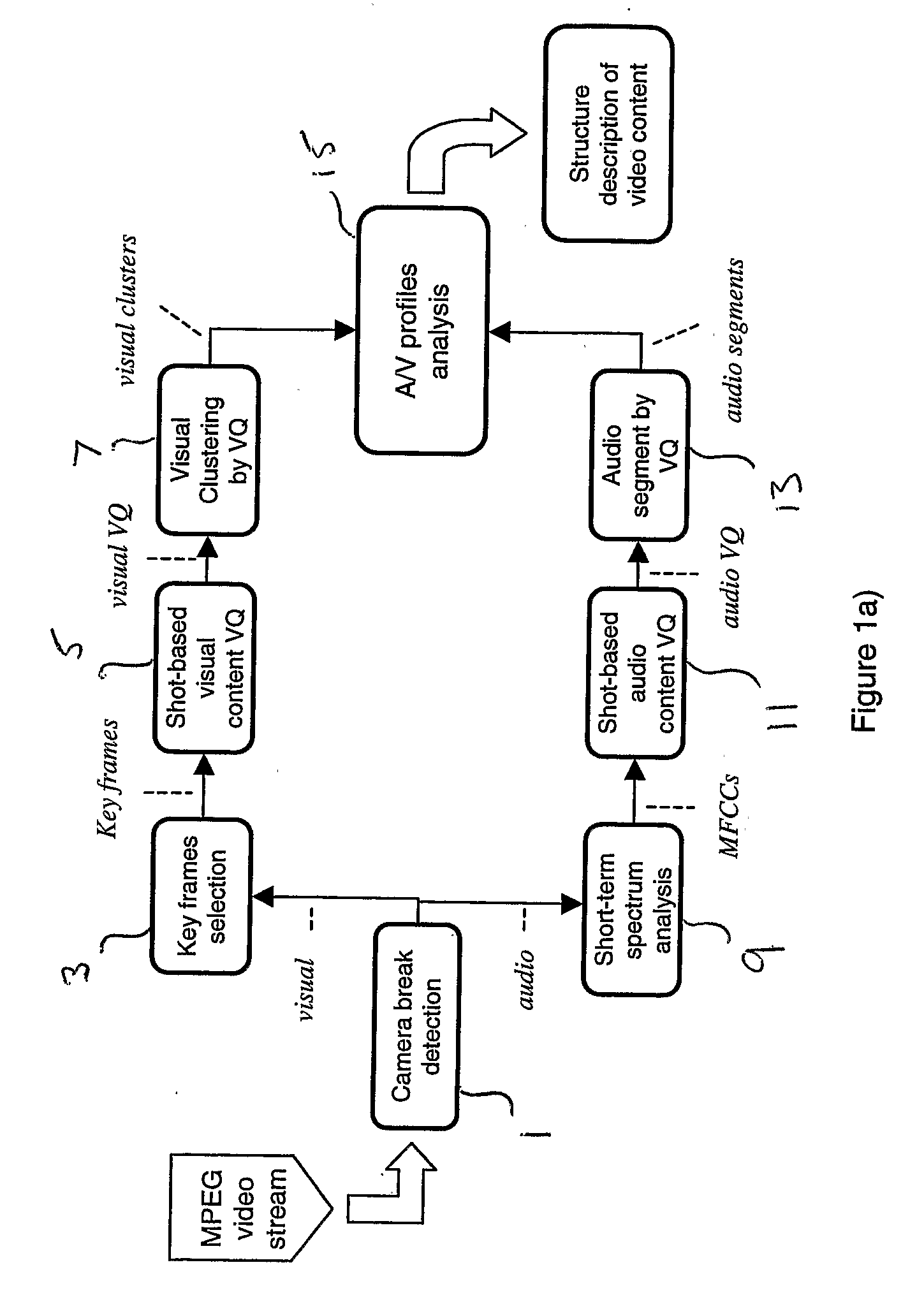

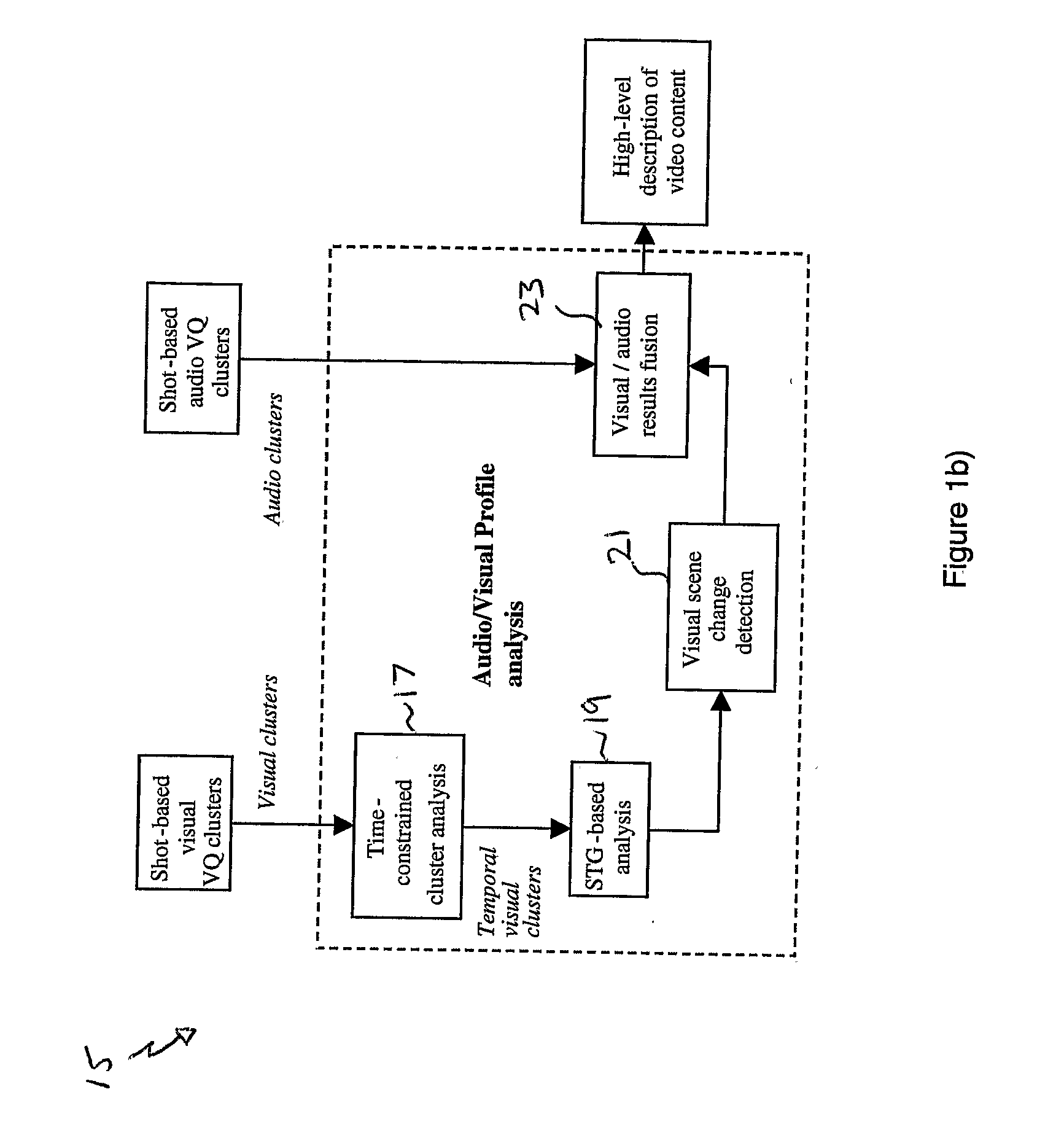

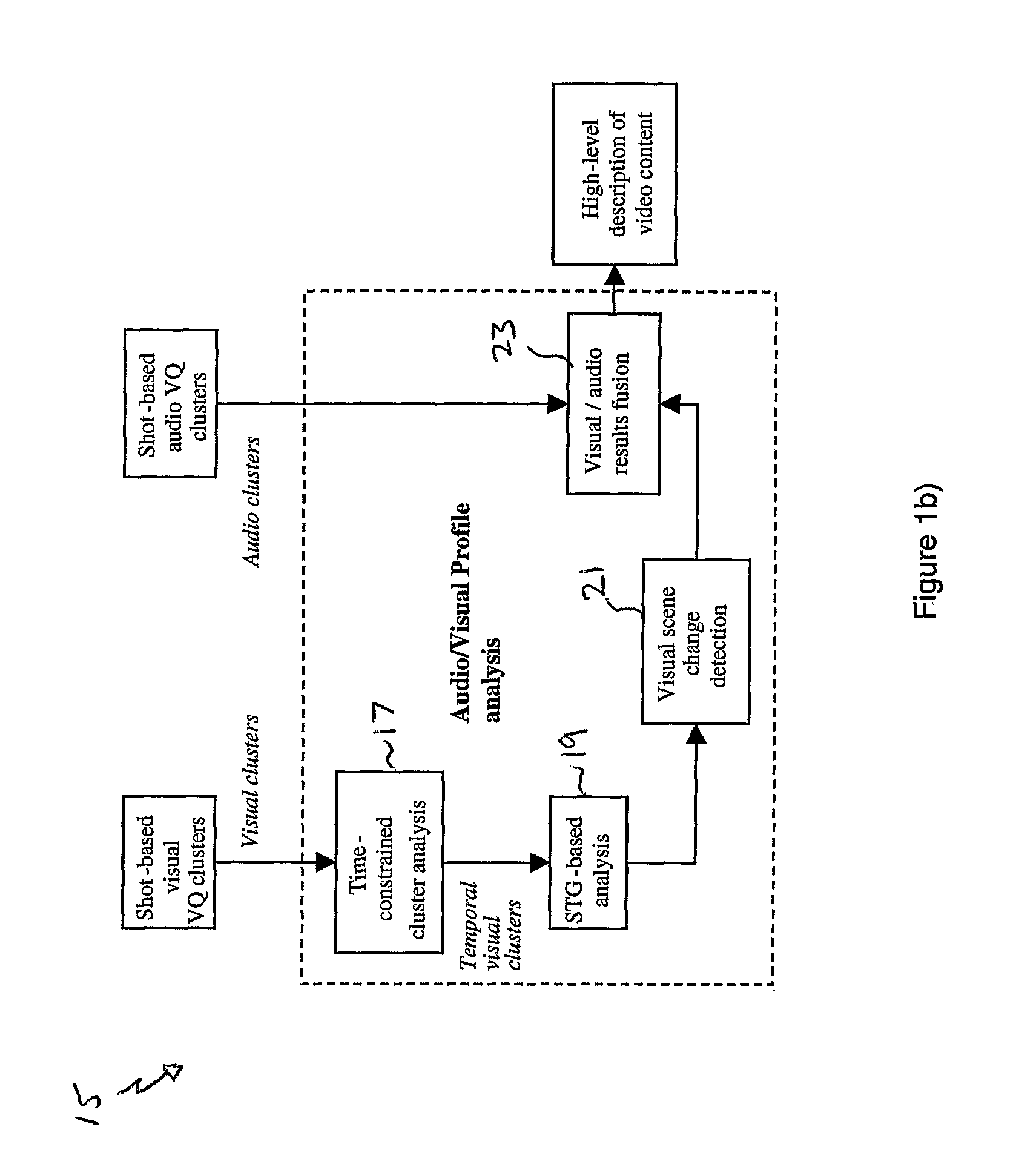

A shot-based video content analysis method and system is described for providing automatic recognition of logical story units (LSUs). The method employs vector quantization (VQ) to represent the visual content of a shot, following which a shot clustering algorithm is employed together with automatic determination of merging and splitting events. The method provides an automated way of performing the time-consuming and laborious process of organising and indexing increasingly large video databases such that they can be easily browsed and searched using natural query structures.

Owner:BRITISH TELECOMM PLC
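
The shot representation and grouping described above can be sketched as follows: each frame's feature vector is quantized to its nearest codeword, each shot becomes a normalized histogram of codeword indices, and consecutive shots whose histograms are close are grouped into one logical story unit. The codebook, the L1 distance, and the threshold are assumptions for illustration; the actual method's clustering and its automatic merge/split decisions are more elaborate than this single pass.

```python
def nearest_codeword(vector, codebook):
    """Index of the codebook entry with the smallest squared Euclidean distance."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((v - c) ** 2 for v, c in zip(vector, codebook[i])),
    )

def shot_histogram(frames, codebook):
    """Normalized histogram of codeword indices over a shot's frames."""
    hist = [0.0] * len(codebook)
    for f in frames:
        hist[nearest_codeword(f, codebook)] += 1
    return [h / len(frames) for h in hist]

def group_shots(shots, codebook, threshold=0.5):
    """Group consecutive shots into story units when their histograms are similar.

    Returns a list of story units, each a list of shot indices.
    """
    hists = [shot_histogram(s, codebook) for s in shots]
    units = [[0]]
    for i in range(1, len(shots)):
        l1 = sum(abs(a - b) for a, b in zip(hists[i - 1], hists[i]))
        if l1 <= threshold:
            units[-1].append(i)
        else:
            units.append([i])
    return units
```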

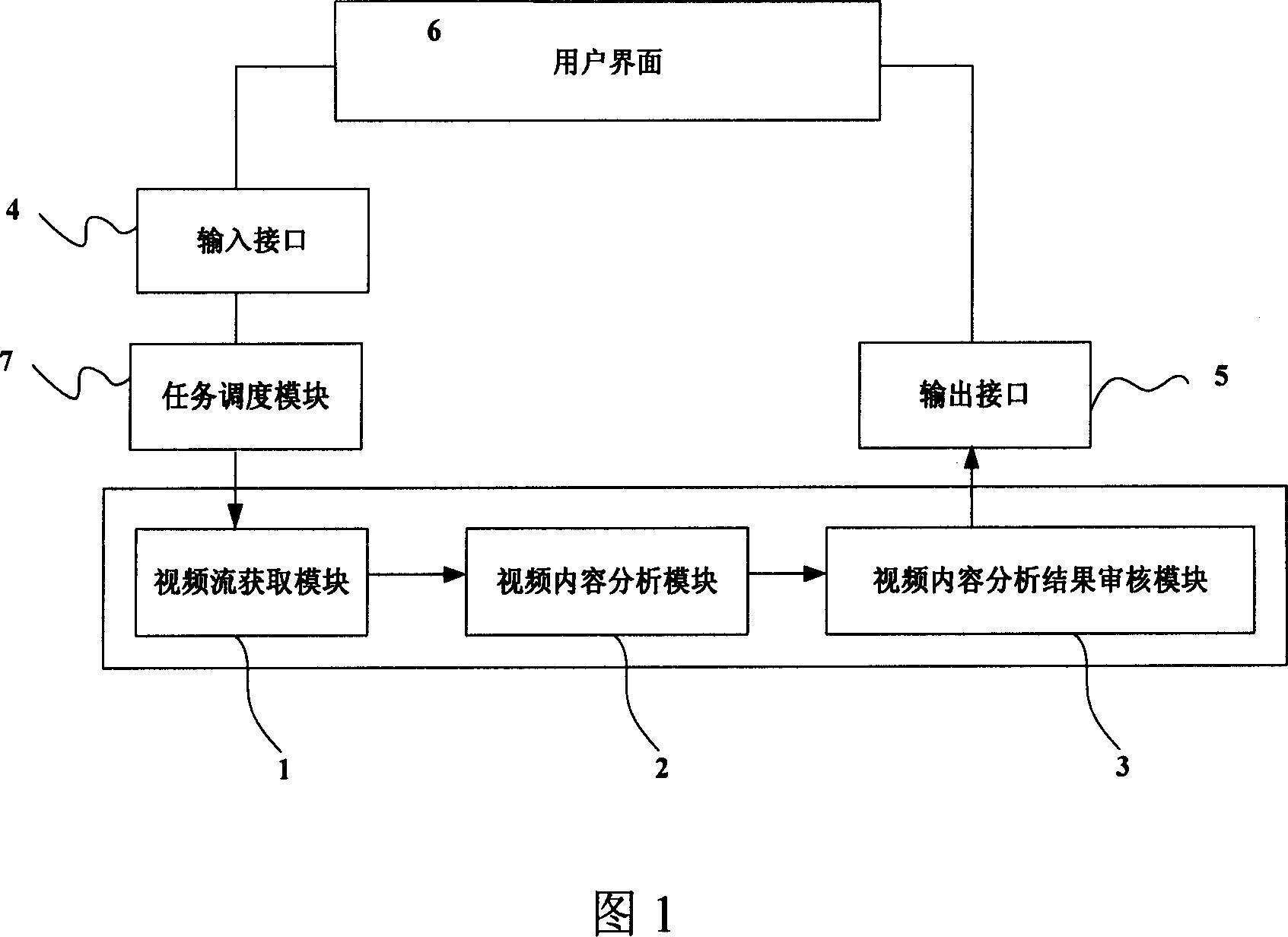

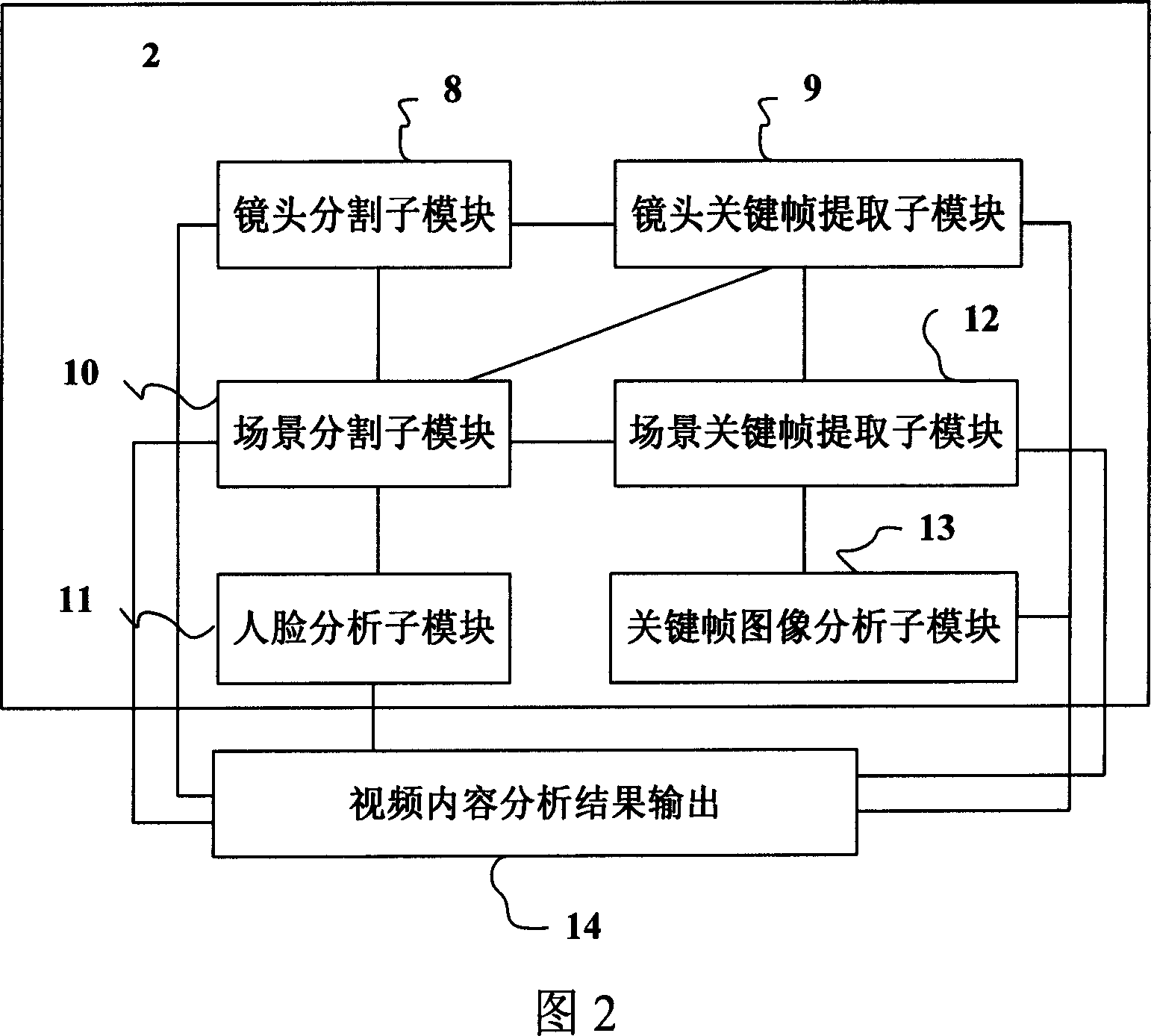

Video content analysis system

This invention relates to a video content analysis system including an input interface, a task dispatch module, a video stream obtaining module, a video content analysis module, a video analysis result check module, an output interface, and a UI. The system flow is as follows: the system receives a video analysis instruction from the network or locally; the task dispatch module decides the execution order of tasks; the video stream obtaining module decodes the video to be analyzed; and the content analysis module analyzes it and extracts shot information, shot key-frame information, scene information, key-frame information and its image information, and face information, which is checked as needed, stored as an XML file, and uploaded to the video information database via the output interface. The system is used to support content-based video search services.

Owner:Beijing Nufront Network Technology Co., Ltd. (北京新岸线网络技术有限公司)
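
The abstract says analysis results (shot, key-frame, scene, image, and face information) are stored as an XML file before upload. A minimal sketch of that serialization step with Python's standard `xml.etree.ElementTree` follows; the element names, attribute names, and input dictionary keys are hypothetical, since the abstract does not give a schema.

```python
import xml.etree.ElementTree as ET

def results_to_xml(video_id, shots):
    """Serialize per-shot analysis results to an XML string.

    shots: list of dicts with hypothetical keys
        "start", "end" (seconds), "key_frames" (frame indices), "faces" (labels)
    """
    root = ET.Element("video", id=video_id)
    for i, shot in enumerate(shots):
        el = ET.SubElement(root, "shot", index=str(i),
                           start=str(shot["start"]), end=str(shot["end"]))
        for frame in shot["key_frames"]:
            ET.SubElement(el, "keyframe", frame=str(frame))
        for face in shot["faces"]:
            ET.SubElement(el, "face").text = face
    return ET.tostring(root, encoding="unicode")
```

Passing `encoding="unicode"` makes `ET.tostring` return a `str` rather than `bytes`, which is convenient if the result is handed to an upload client as text.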

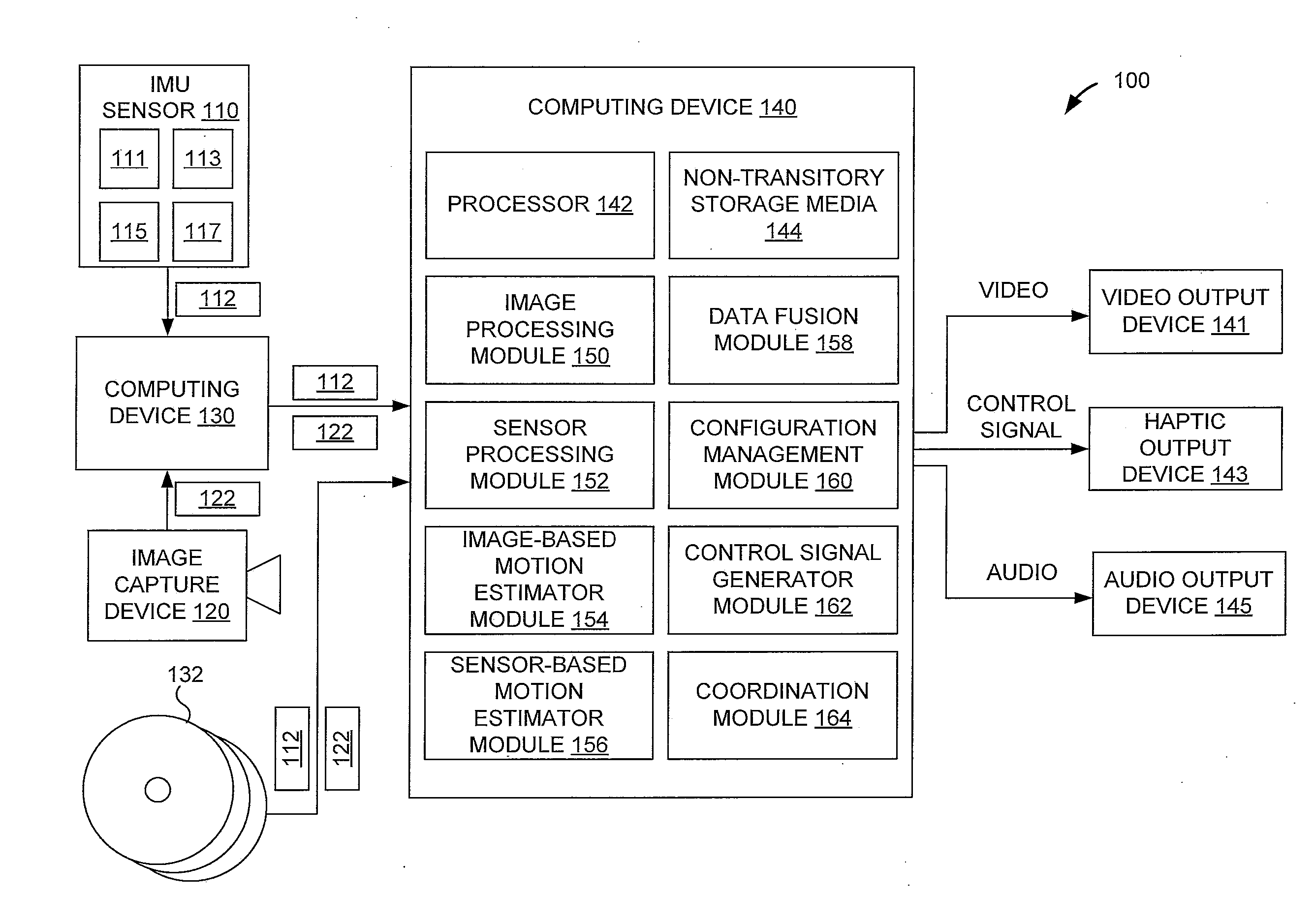

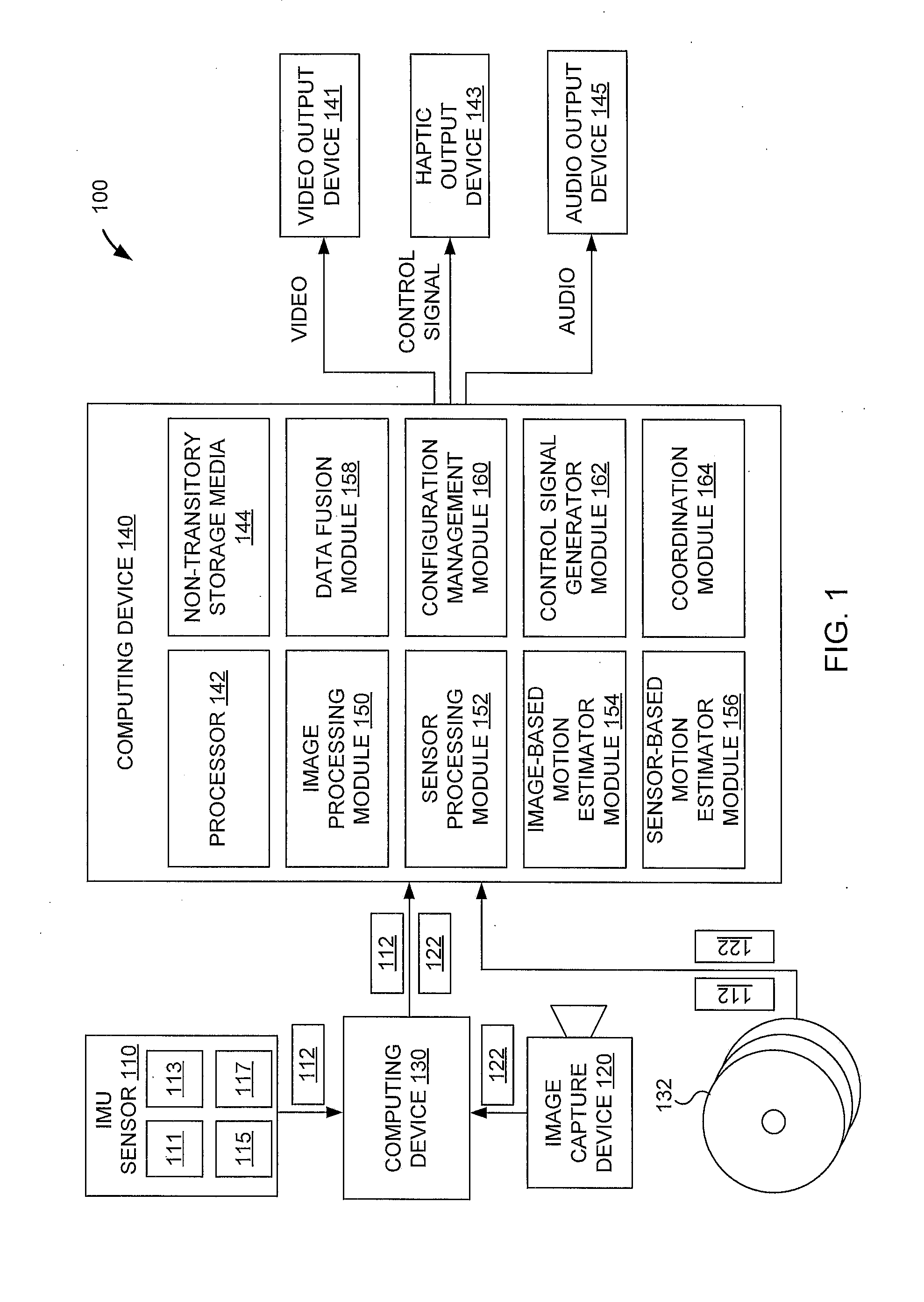

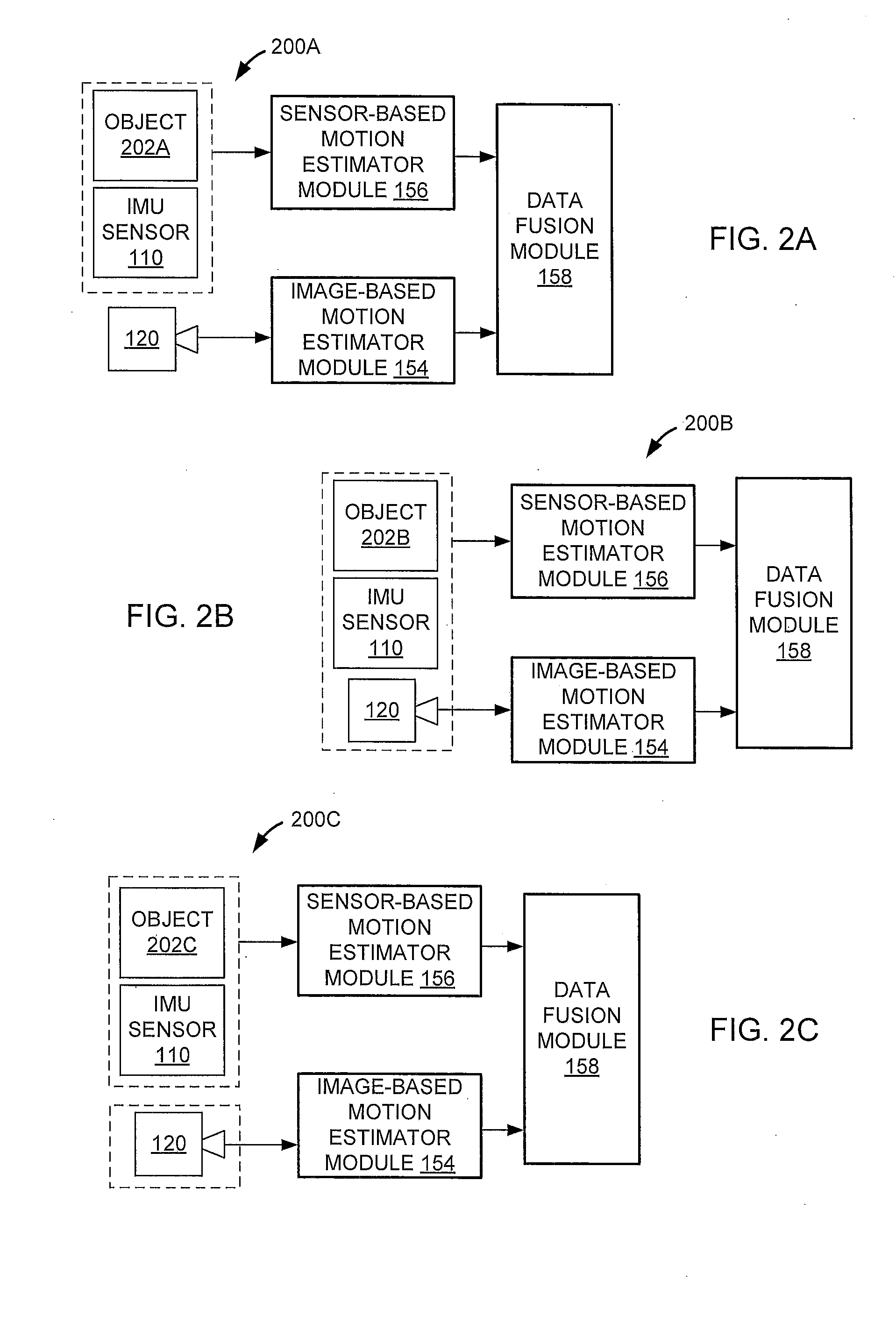

Method and apparatus to generate haptic feedback from video content analysis

Active | US20140267904A1 | Improve user experience; Facilitates real-time haptic feedback; Television system details; Image analysis; Imaging processing; Touch perception

The disclosure relates to generating haptic feedback based on video content analysis, sensor information that includes one or more measurements of motion of one or more objects in a video, and/or sensor information that includes one or more measurements of motion of one or more image capture devices. The video content analysis may include image processing of the video. The system may identify one or more events based on the image processing and cause a haptic feedback based on the one or more events. The haptic feedback may vary based on an estimated acceleration of an object in a scene of the video, where the estimated acceleration is based on the image processing. The estimated acceleration may be made more accurate at high speeds using the sensor information, and an estimated acceleration based on the sensor information may be made more accurate at lower speeds based on the image processing.

Owner:IMMERSION CORPORATION
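
The acceleration-estimation idea in the abstract (sensor-based estimates favored at high speeds, image-based estimates favored at low speeds) can be sketched as a speed-dependent blend followed by a mapping from acceleration to haptic intensity. The finite-difference estimator, the blending curve with its `crossover_speed` parameter, and the linear intensity mapping are all assumptions for illustration, not the patented method.

```python
def image_acceleration(positions, dt):
    """Second finite difference of the last three tracked positions (units/s^2)."""
    p0, p1, p2 = positions[-3:]
    return (p2 - 2 * p1 + p0) / (dt * dt)

def blended_acceleration(image_acc, sensor_acc, speed, crossover_speed=5.0):
    """Weight the sensor estimate more heavily as speed increases.

    At speed == crossover_speed both estimates get equal weight;
    crossover_speed must be positive.
    """
    w_sensor = speed / (speed + crossover_speed)
    return w_sensor * sensor_acc + (1.0 - w_sensor) * image_acc

def haptic_intensity(acceleration, max_acc=20.0):
    """Map acceleration magnitude linearly onto a [0, 1] actuator intensity."""
    return min(abs(acceleration) / max_acc, 1.0)
```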

Video content analysis for automatic demographics recognition of users and videos

Active | US8924993B1 | Analogue secrecy/subscription systems; Character and pattern recognition; Video content analysis; Demographics

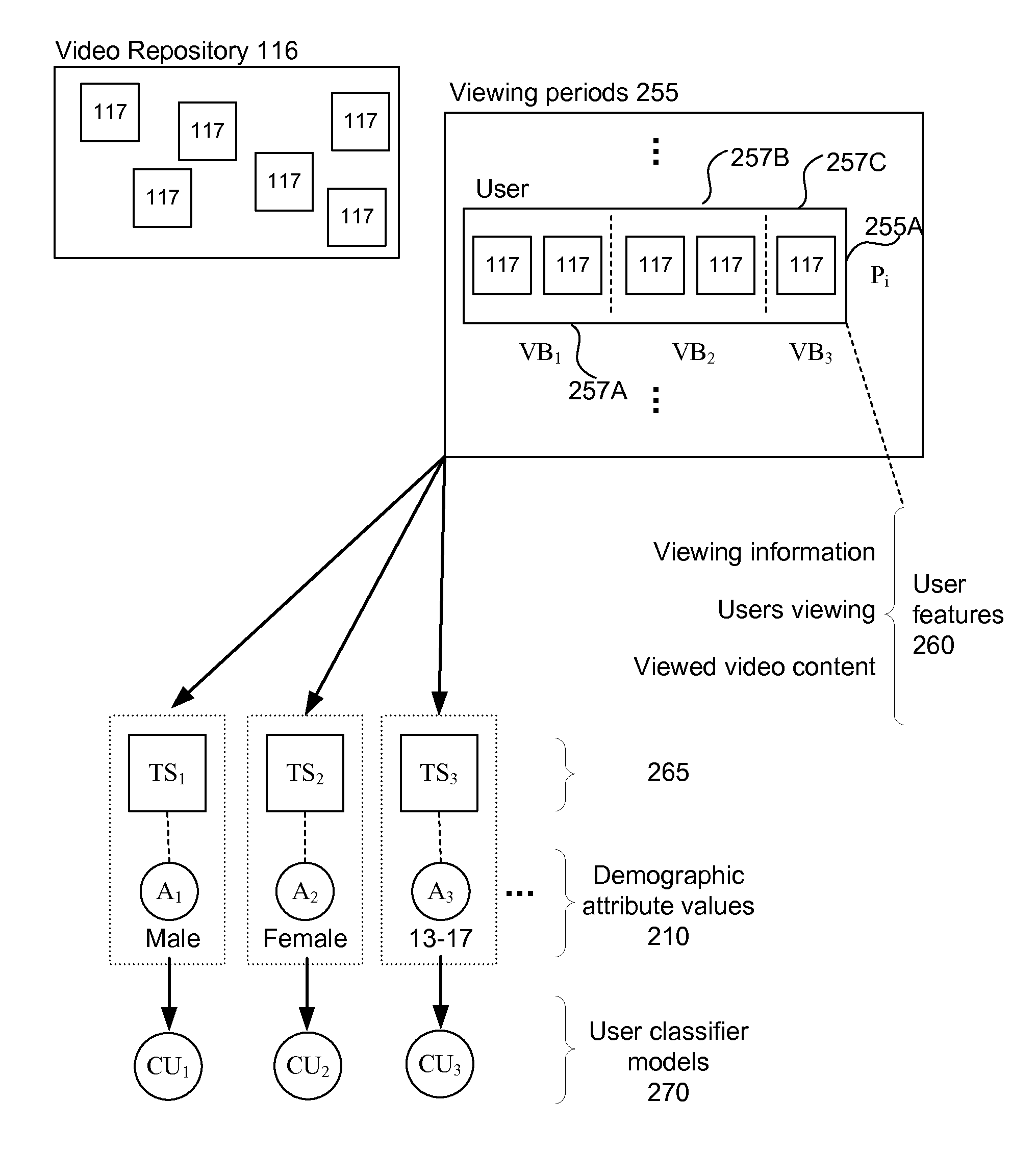

A demographics analysis system trains classifier models for predicting demographic attribute values of videos and users not already having known demographics. In one embodiment, the demographics analysis system trains classifier models for predicting demographics of videos using video features such as demographics of video uploaders, textual metadata, and/or audiovisual content of videos. In one embodiment, the demographics analysis system trains classifier models for predicting demographics of users (e.g., anonymous users) using user features based on prior video viewing periods of users. For example, viewing-period based user features can include individual viewing period statistics such as total videos viewed. Further, the viewing-period based features can include distributions of values over the viewing period, such as distributions in demographic attribute values of video uploaders, and/or distributions of viewings over hours of the day, days of the week, and the like.

Owner:GOOGLE LLC
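
The viewing-period features named in the US8924993B1 abstract (total videos viewed, and distributions of viewings over hours of the day and days of the week) can be computed from a viewing log as sketched below. The log format (Unix timestamps) and the use of UTC are assumptions; the resulting vector would then feed a classifier, which is not shown.

```python
from datetime import datetime, timezone

def viewing_features(view_timestamps):
    """Build a feature vector from one user's viewing period.

    Returns [total_views] + 24 hour-of-day fractions + 7 day-of-week fractions.
    """
    total = len(view_timestamps)
    hours = [0.0] * 24
    days = [0.0] * 7
    for ts in view_timestamps:
        t = datetime.fromtimestamp(ts, tz=timezone.utc)
        hours[t.hour] += 1
        days[t.weekday()] += 1  # Monday == 0
    if total:
        hours = [h / total for h in hours]
        days = [d / total for d in days]
    return [float(total)] + hours + days
```

Normalizing the hour and day counts keeps heavy and light viewers comparable; the raw total is kept as a separate feature so that volume information is not lost.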

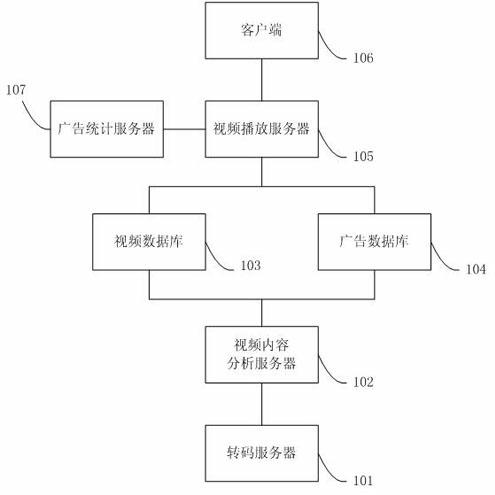

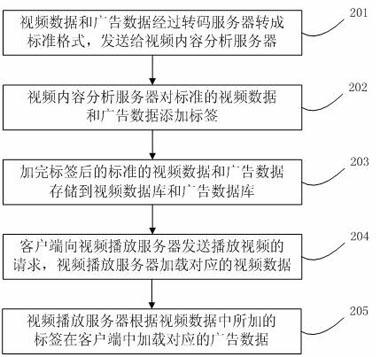

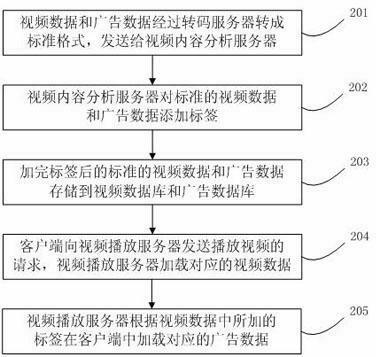

Network video advertisement placing method and system

Inactive | CN102685550A | Convenient statistics; Favorable charges; Selective content distribution; Marketing; Video content analysis; Client-side

The invention discloses a network video advertisement placing method and system. Video data and advertisement data are converted into a standard format by a transcoding server and sent to a video content analysis server, which adds tags to them; the tagged video data and advertisement data are stored in a video database and an advertisement database, respectively. A client sends a request to play a video to a video playing server, which loads the corresponding video data into the client according to the request and loads the corresponding advertisement data according to the tags added to the video data. With this scheme, advertisements are placed without affecting the viewing of the video, and different types of advertisements are placed according to the relevant content of each video, so advertisement placement is accurate and effective. In addition, because network videos and advertisements are not bound together, advertisement placement statistics can be conveniently compiled, which facilitates billing by network media.

Owner:TVMINING BEIJING MEDIA TECH
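
The tag-based selection step (loading advertisements according to the tags the analysis server attached to a video) could be as simple as ranking ads by tag overlap. The sketch below uses Jaccard similarity; that scoring rule, the `limit` parameter, and the tag sets are assumptions, since the abstract does not say how tags are compared.

```python
def jaccard(a, b):
    """Jaccard similarity between two tag sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def select_ads(video_tags, ad_catalog, limit=2):
    """Return ids of the ads whose tags best match the video's tags.

    ad_catalog: dict ad_id -> set of tags; ads with no overlap are skipped.
    """
    scored = [(jaccard(video_tags, tags), ad_id) for ad_id, tags in ad_catalog.items()]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: (-s[0], s[1]))  # best score first, ties broken by id
    return [ad_id for _, ad_id in scored[:limit]]
```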

Video content analysis for automatic demographics recognition of users and videos

Active | US20120272259A1 | Metadata video data retrieval; Analogue secrecy/subscription systems; Video content analysis; Demographic data

A video demographics analysis system selects a training set of videos to use to correlate viewer demographics and video content data. The video demographics analysis system extracts demographic data from viewer profiles related to videos in the training set and creates a set of demographic distributions, and also extracts video data from videos in the training set. The video demographics analysis system correlates the viewer demographics with the video data of videos viewed by that viewer. Using the prediction model produced by the machine learning process, a new video about which there is no a priori knowledge can be associated with a predicted demographic distribution specifying probabilities of the video appealing to different types of people within a given demographic category, such as people of different ages within an age demographic category.

Owner:GOOGLE LLC

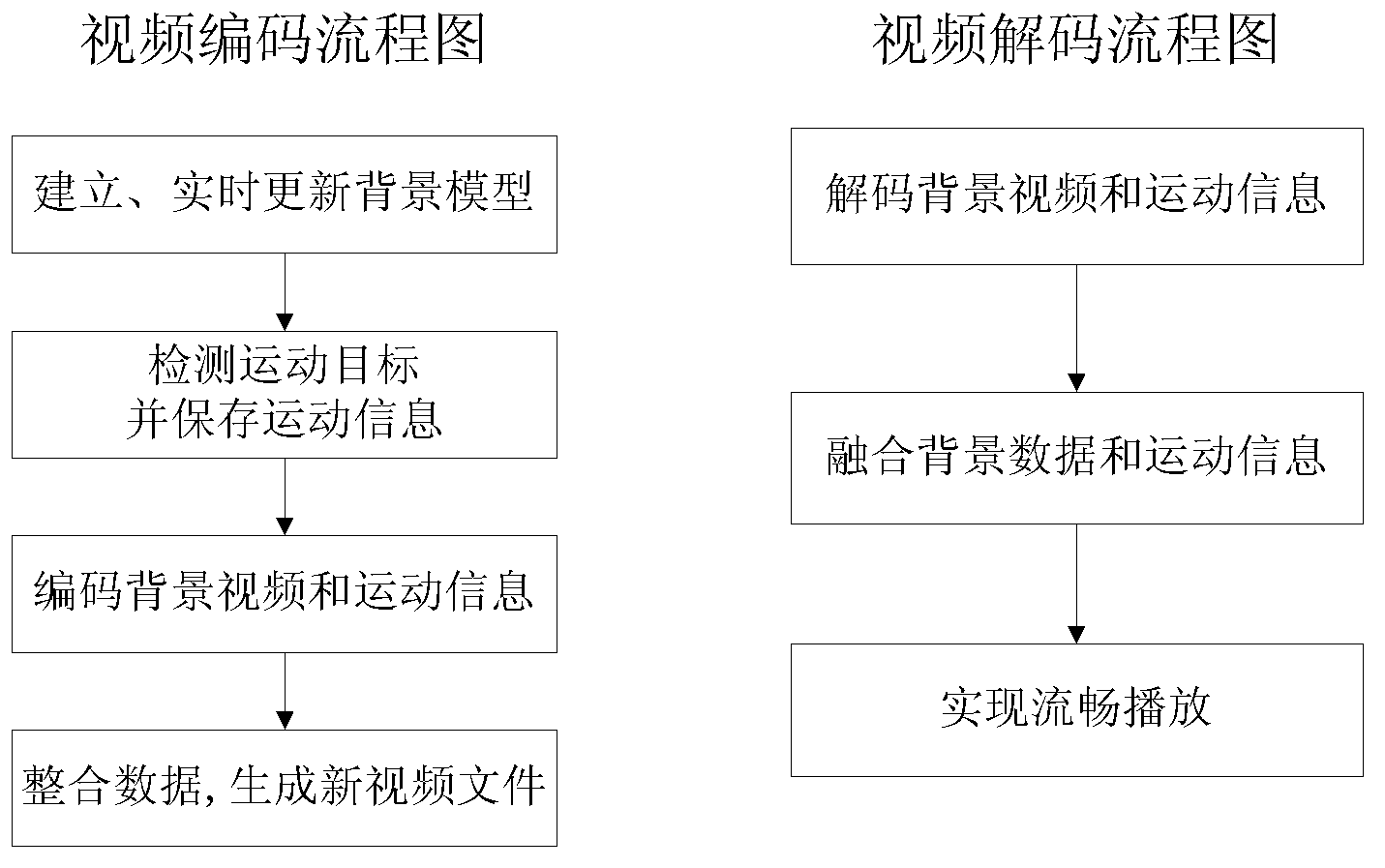

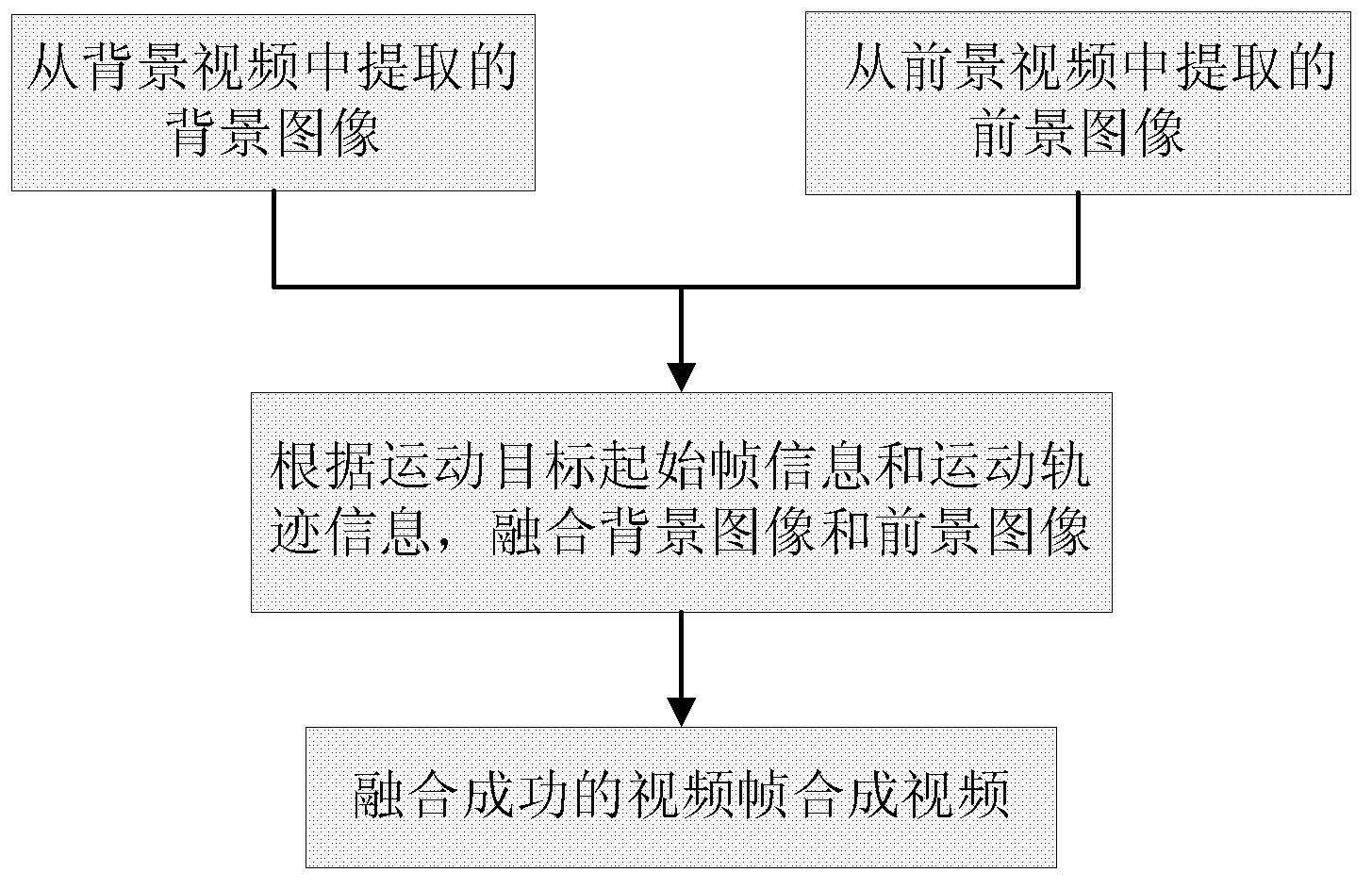

Video compression coding and decoding method and device

Inactive | CN103179402A | Improve timeliness; Save storage space; Television system details; Color television details; Relevant information; Video encoding

The invention discloses a video compression coding and decoding method based on video content analysis. The method comprises the following steps: establishing background models of the video in real time and compression-coding the background video according to the background models; detecting moving foreground targets, storing the relevant information about them, and compression-coding the foreground video data according to the detected targets; combining the compression-coded background and foreground video data into new video files; and decoding the new video files and playing the video after combining the compression-coded background and foreground video data. The method builds on a general coding and decoding scheme, generating the new video files by separating the background and foreground video data and coding them separately. The resulting video files greatly reduce storage space, improving the compression ratio and extending the storage cycle of video equipment. Finally, smooth video playback is achieved through video encoding and data integration.

Owner:INST OF SEMICONDUCTORS - CHINESE ACAD OF SCI
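
The separation step in this method (maintaining a background model in real time and detecting moving foreground targets against it) is commonly done with a running-average background and a difference threshold. The sketch below shows that on small grayscale frames stored as nested lists; the running-average model, the update rate `alpha`, and the threshold are assumptions, and the actual encoding of the two layers is not shown.

```python
def update_background(background, frame, alpha=0.1):
    """Exponential running average: bg = (1 - alpha) * bg + alpha * frame."""
    return [
        [(1 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]

def foreground_mask(background, frame, threshold=25):
    """Mark pixels that differ from the background model by more than threshold."""
    return [
        [abs(f - b) > threshold for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]

def foreground_pixels(frame, mask):
    """Sparse list of (row, col, value) for foreground pixels only.

    Storing only these alongside a rarely refreshed background is one way to
    obtain the kind of storage saving the abstract describes.
    """
    return [
        (r, c, frame[r][c])
        for r, row in enumerate(mask)
        for c, is_fg in enumerate(row)
        if is_fg
    ]
```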

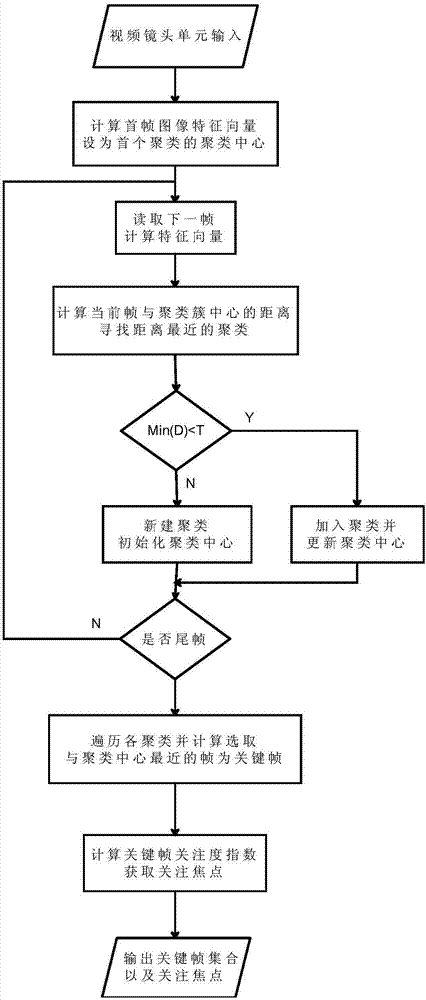

Video key frame extraction method based on multi-characteristic fusion shot clustering

Inactive | CN107220585A | Improve universality; Flexible extraction; Character and pattern recognition; Video content analysis; Self-adaptive

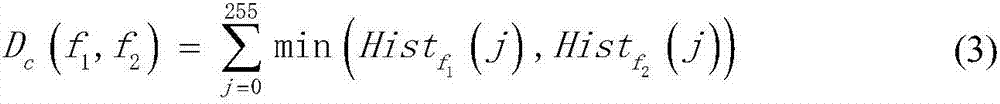

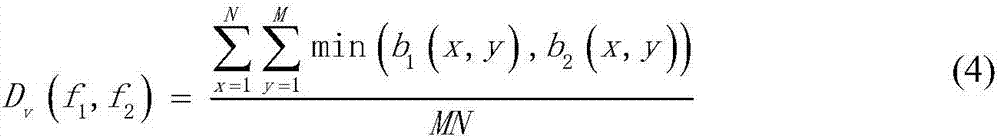

The invention discloses a video key frame extraction method based on multi-feature fusion shot clustering, in the field of video content analysis. The method builds on video shot segmentation and comprises the following steps: Step 1, extract the set of image frames in a video shot, extract an HSV histogram feature and a Canny edge-contour feature from each frame, and weight them to form the feature information used for key frame clustering; Step 2, use an adaptive mean clustering method to assign each frame in turn to its cluster, updating the center of each cluster in real time; Step 3, take the frame nearest each cluster center as that cluster's key frame, and arrange the key frames in time order to form a key frame set; and Step 4, compute an attention index for each key frame and take the frame with the highest attention index as the representative frame of the video. The method addresses the low flexibility and low fidelity of traditional key frame extraction algorithms.

Owner:NANJING UNIV OF POSTS & TELECOMM
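
Steps 2 and 3 of this method (clustering frames with running updates of each cluster center, then picking the frame nearest each center) can be sketched as below. The sketch assumes each frame has already been reduced to a weighted feature vector as in Step 1, and it replaces the patent's adaptive mean clustering with a simple single-pass rule: a frame joins the nearest cluster if it lies within a hypothetical `radius`, otherwise it starts a new cluster. Step 4's attention index is not shown.

```python
import math

def cluster_frames(features, radius):
    """Single-pass clustering with incrementally updated centres.

    Returns (centres, members) where members[k] lists frame indices in cluster k.
    """
    centres, members = [], []
    for i, f in enumerate(features):
        if centres:
            k = min(range(len(centres)), key=lambda j: math.dist(f, centres[j]))
            if math.dist(f, centres[k]) <= radius:
                members[k].append(i)
                n = len(members[k])
                # Incremental mean update of the cluster centre.
                centres[k] = [c + (x - c) / n for c, x in zip(centres[k], f)]
                continue
        centres.append(list(f))
        members.append([i])
    return centres, members

def key_frames(features, radius):
    """Pick the frame nearest each cluster centre, returned in time order."""
    centres, members = cluster_frames(features, radius)
    picks = [
        min(m, key=lambda i: math.dist(features[i], centres[k]))
        for k, m in enumerate(members)
    ]
    return sorted(picks)
```

A single-pass rule like this depends on frame order and on the chosen radius; that sensitivity is one motivation for the adaptive clustering the patent describes.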

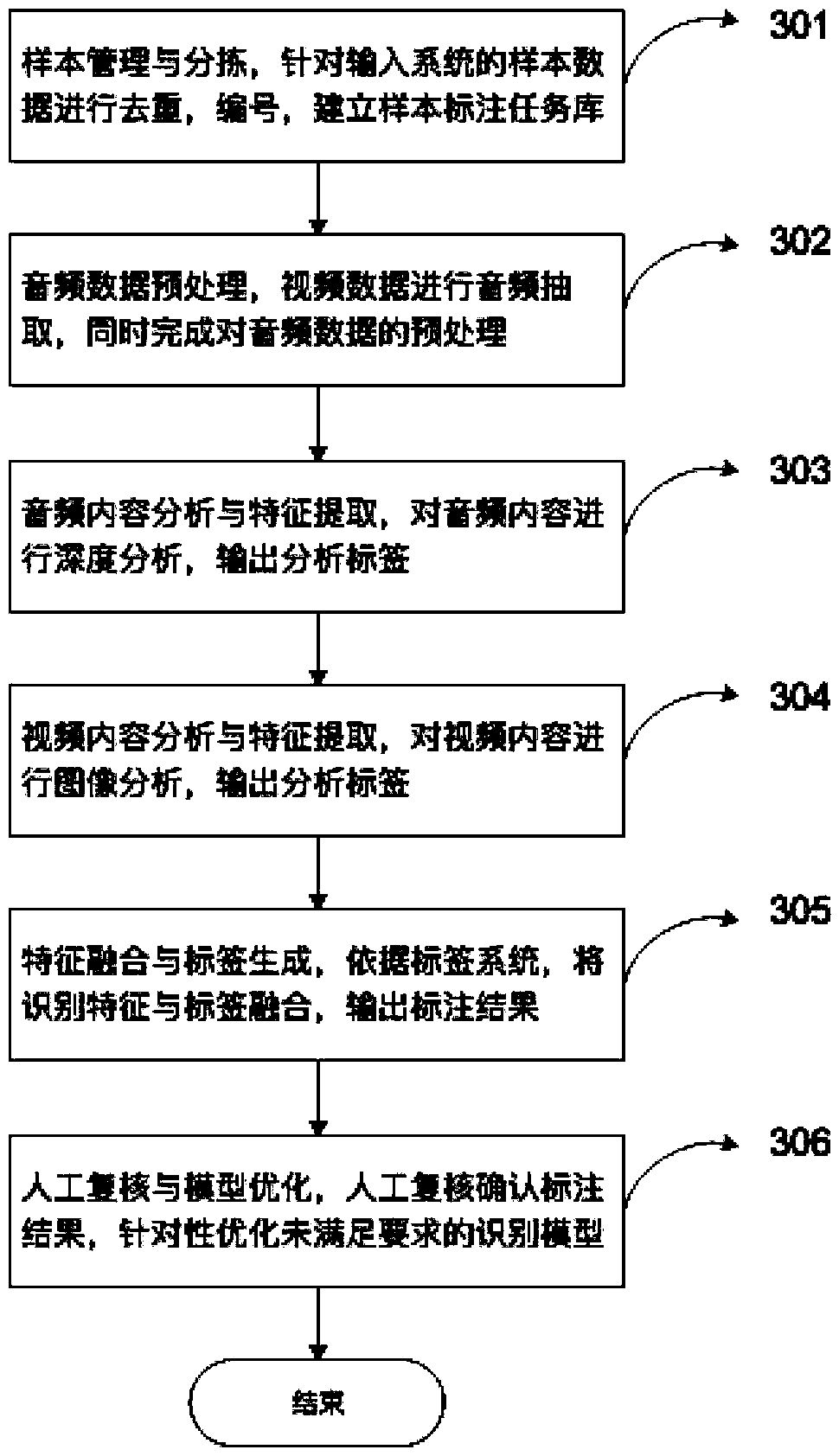

Multi-dimension labelling and model optimization method for audio and video

Inactive | CN108806668A | Realize closed-loop operation; Improve compatibility; Speech recognition; Imaging analysis; Video content analysis

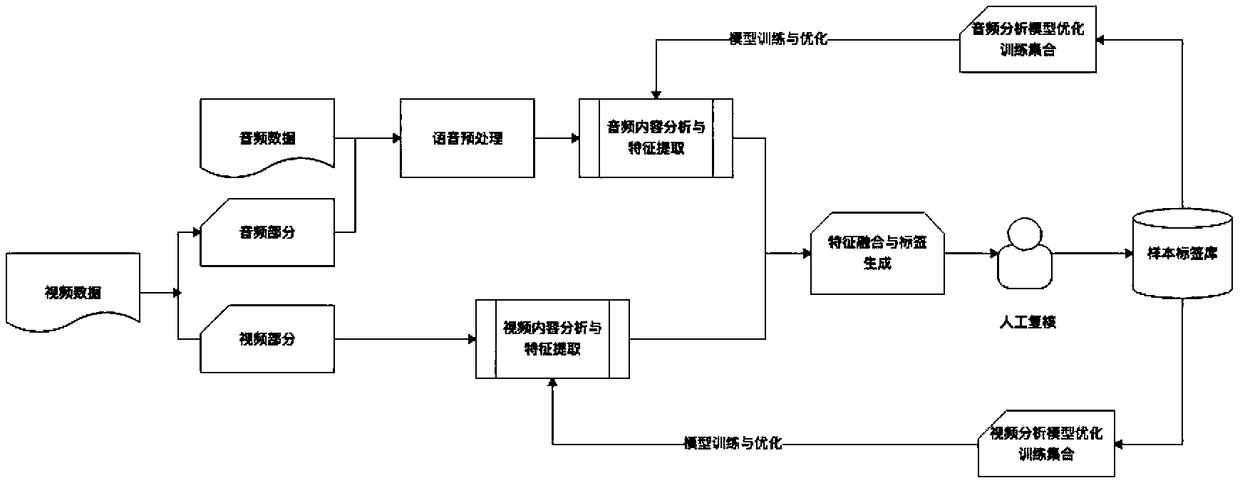

The invention discloses a multi-dimension labelling and model optimization method for audio and video. The method comprises the following steps: first, sample management and sorting, in which the sample data input to the system is de-duplicated and numbered and a sample labelling task library is established; at the audio preprocessing stage, audio is extracted from the video data in the task library and the audio data is preprocessed; at the audio content analysis and feature extraction stage, once audio preprocessing is complete, deep analysis is performed according to a standardized labelling system configured in the background, and label data is output; S304, at the video content analysis and feature extraction stage, image analysis is performed on the video content, deep analysis is performed according to the standardized labelling system configured in the background, and label data is output; S305, feature fusion and label generation, in which the recognized features and label information are fused and the label result of the sample is output; and finally, manual rechecking and model optimization, in which the label result data generated by the system can be manually rechecked and confirmed.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT +1

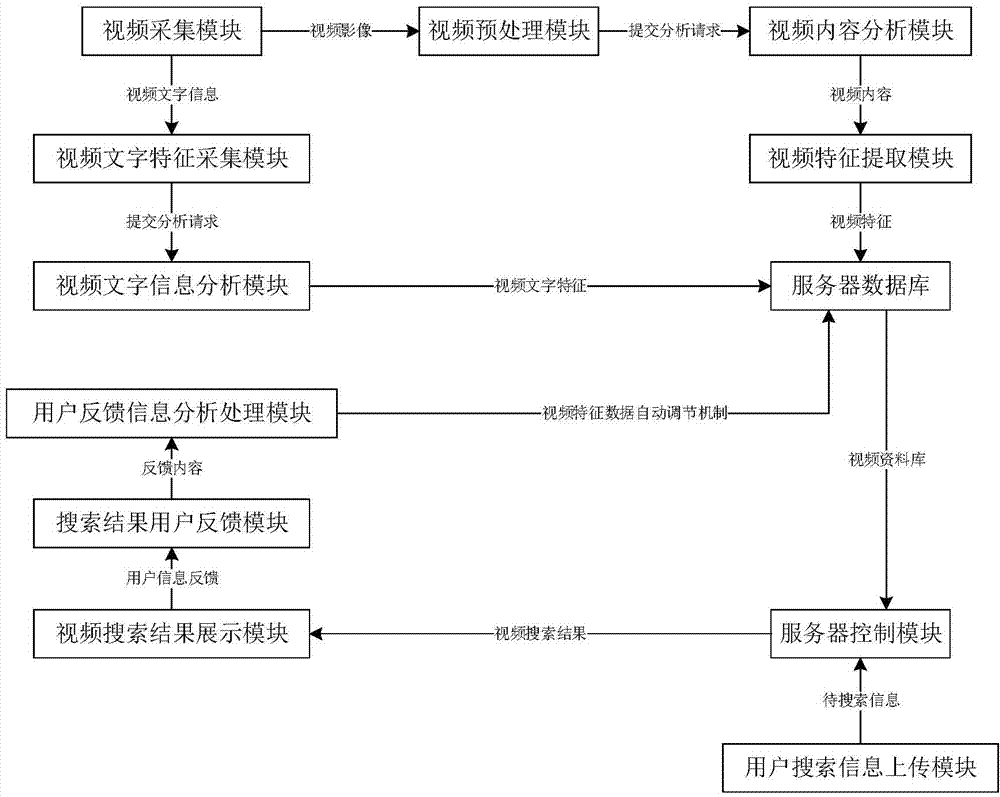

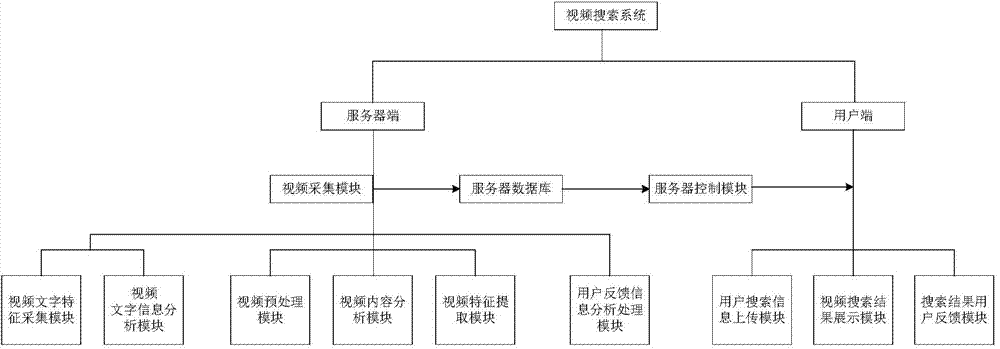

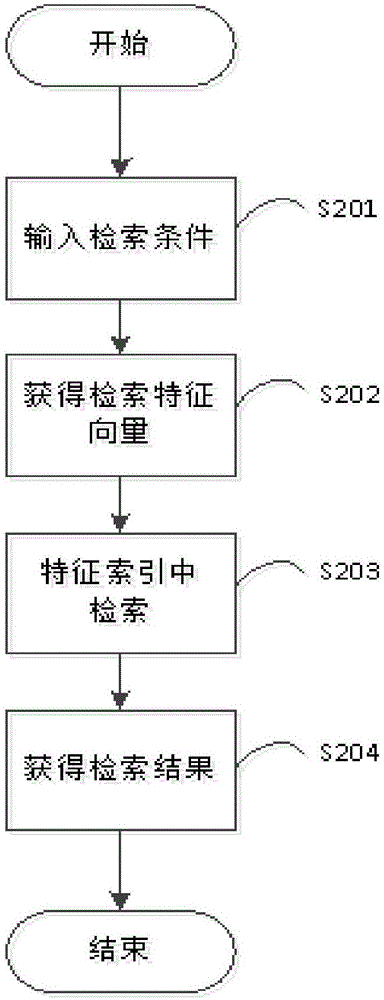

Video search system based on image recognition and matching

Active | CN103942337A | Increase content; Rich relevant information; Special data processing applications; Information analysis; Feature extraction

A video search system based on image recognition and matching comprises a server side and a user side. The server side comprises a video collecting module, a video character feature collecting module, a video character information analysis module, a video preprocessing module, a video content analysis module, a video feature extraction module, a server database module, an image feature extraction module, a server control module, and a user feedback information analysis and processing module. The user side comprises a user search information uploading module, a video search result displaying module, and a search result user feedback module. The invention provides a method for searching for videos by image, with a good user experience: by uploading a single image, a user can obtain information about video resources related to the content of that image; the returned search results are accurate and rich in information, and the massive video resources on the Internet can be fully exploited.

Owner:Zhuhai Haoteng Zhisheng Technology Co., Ltd. (珠海市颢腾智胜科技有限公司)

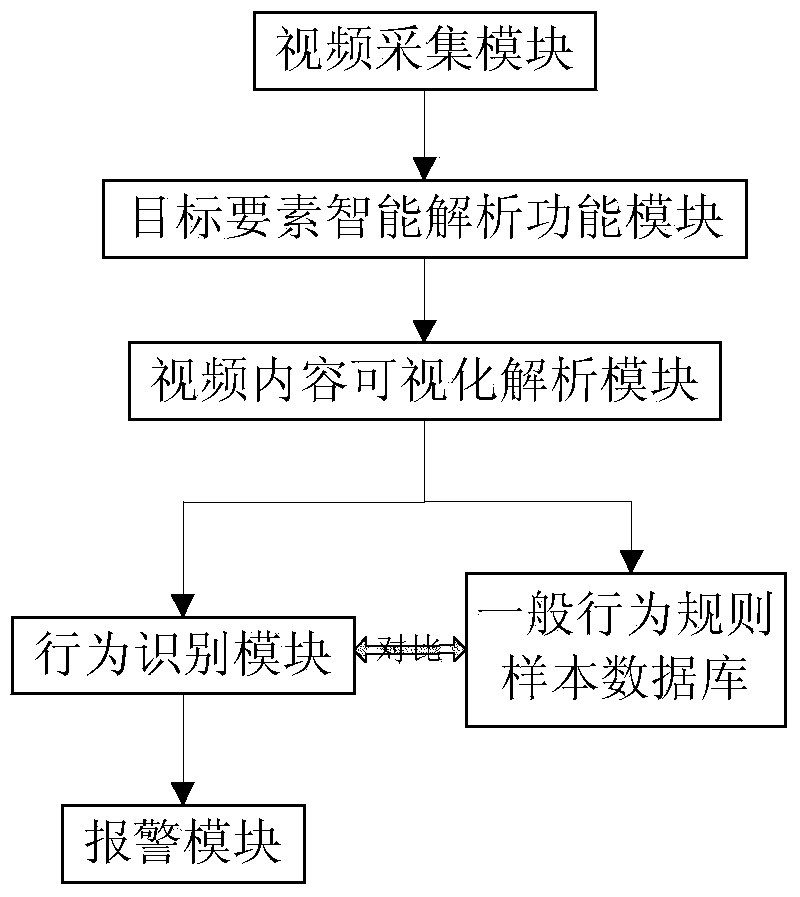

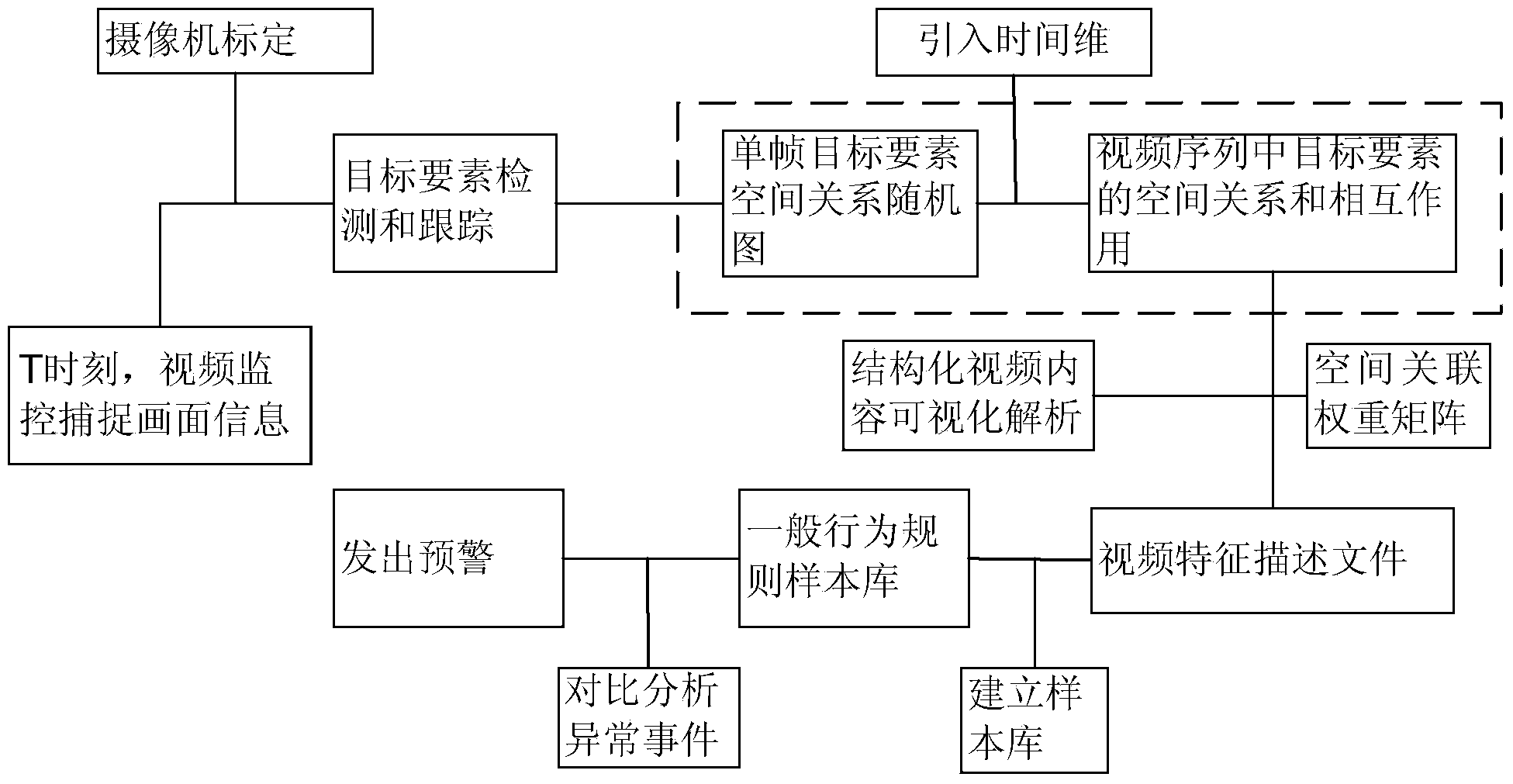

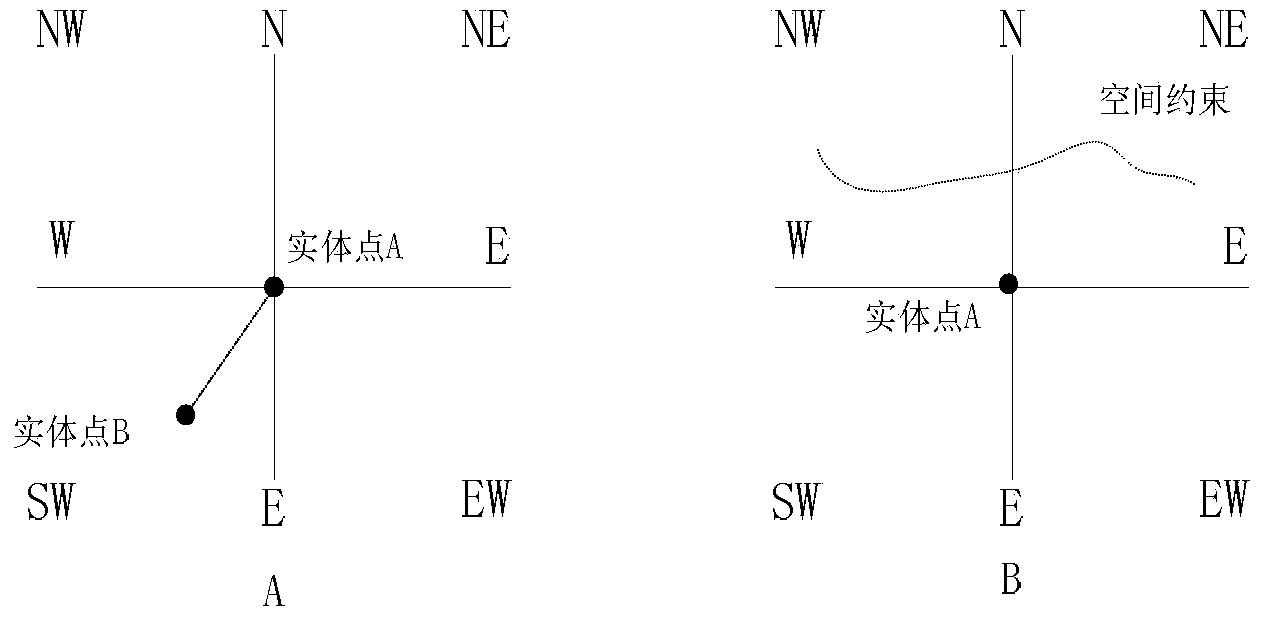

Video monitoring intelligent early-warning system and method on basis of target space relation constraint

Active | CN103530995A | Efficient detection; Rapid positioning; Closed circuit television systems; Alarms; Video monitoring; Early warning system

The invention discloses a video monitoring intelligent early-warning system and method based on target spatial relation constraints. The system comprises a video acquisition module, a target component intelligent analysis module, a video content visual analysis module, a general behavior rule sample database, and an alarm module. Building on behavior understanding of target components and focusing on the spatial correlation between targets, the system performs behavior feature analysis, evolution, and classification, establishes a sample library of spatial correlation patterns, and detects and identifies abnormal behaviors. It can identify and judge emergencies more effectively and accurately, and can pinpoint the point at which an anomaly was triggered to provide evidence for post-mortem forensics. By introducing target spatial relation constraints and dividing the video scene into regions to define the related concepts of a video's target components, the system reduces ambiguity and errors in spatial semantic information, addresses the incompleteness and inaccuracy of video content analysis, and detects abnormal events within the monitored area more accurately and efficiently.

Owner:Bola Network Co., Ltd. (博拉网络股份有限公司)

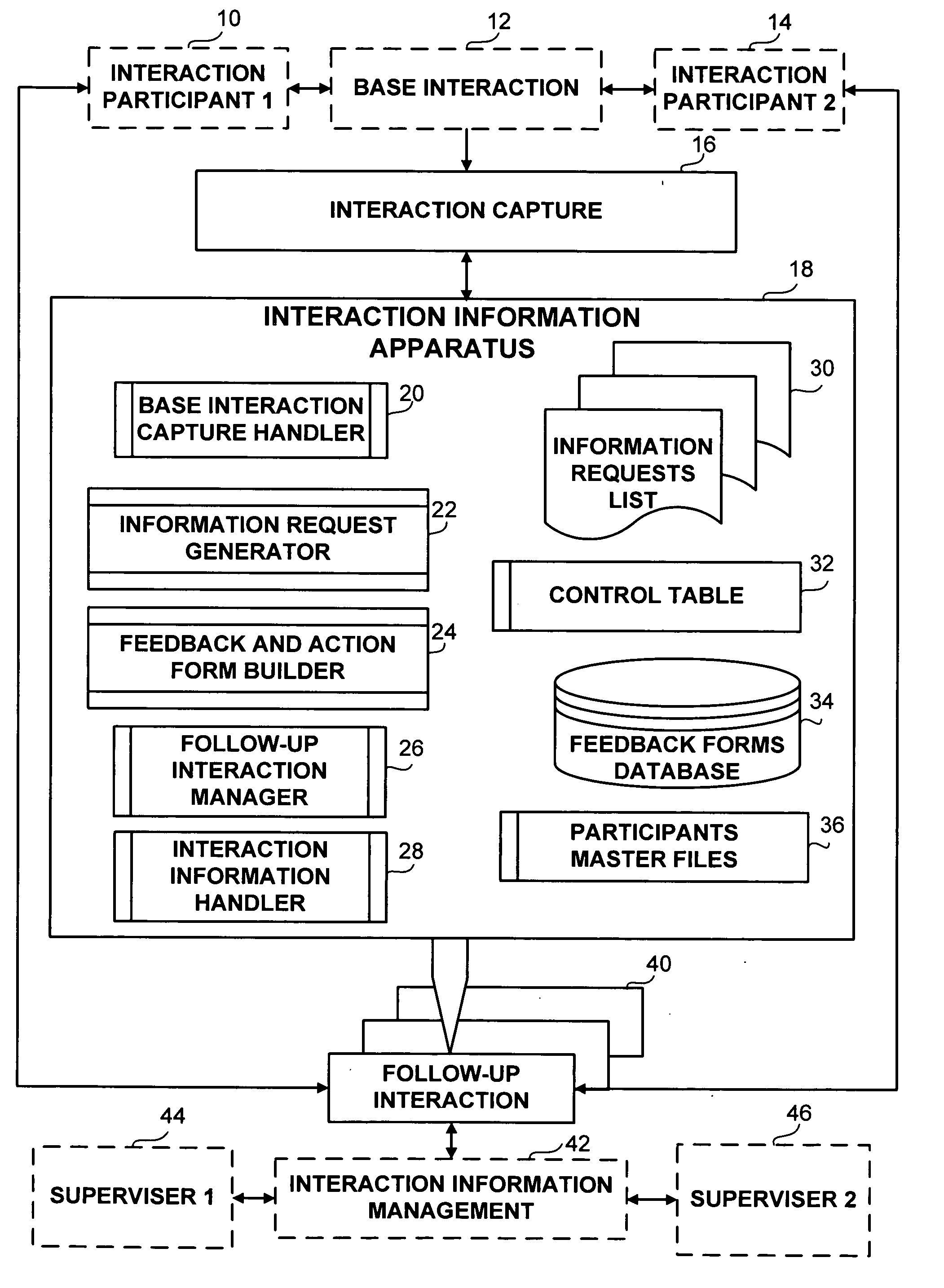

System and method for video content analysis-based detection, surveillance and alarm management

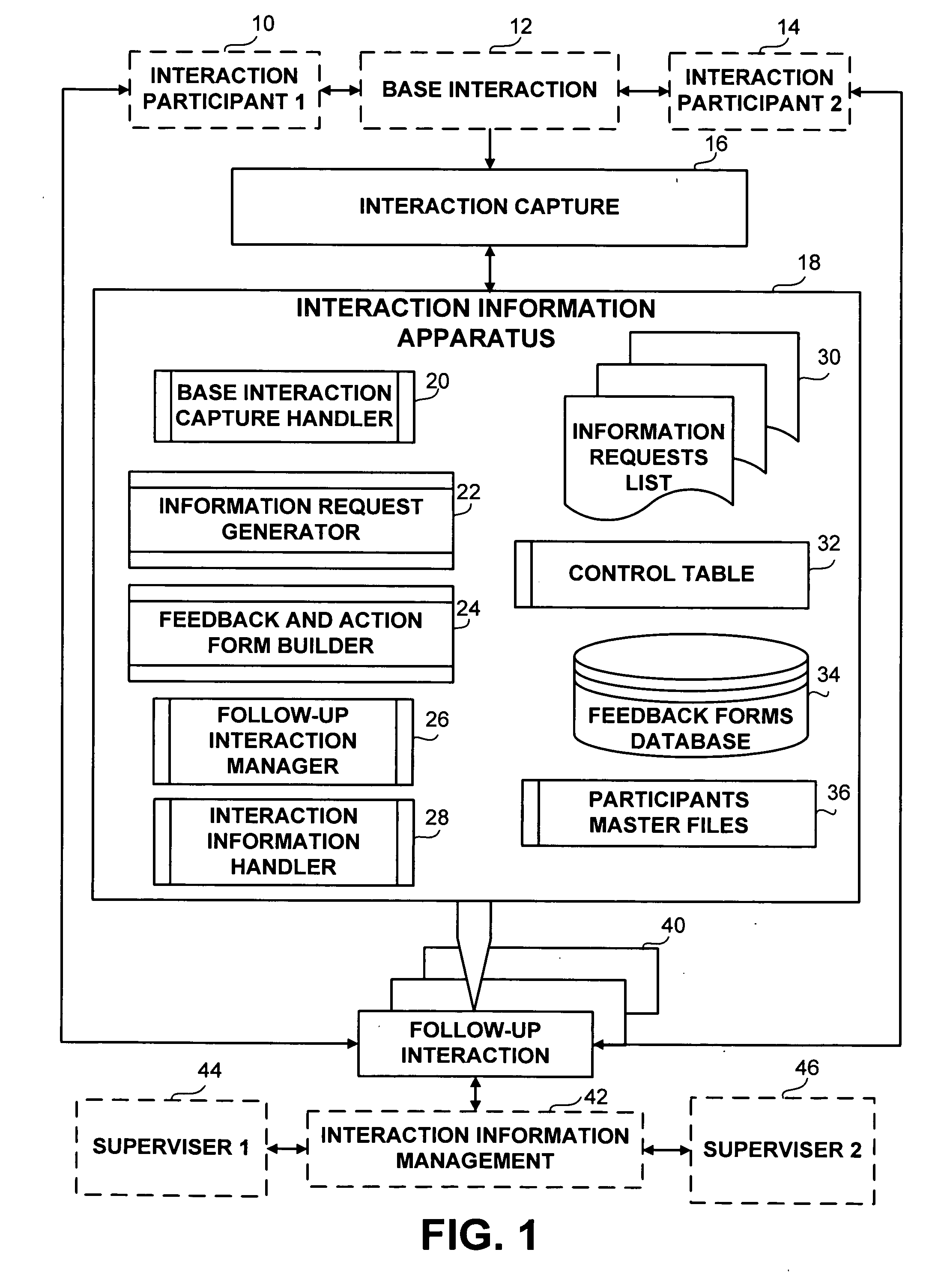

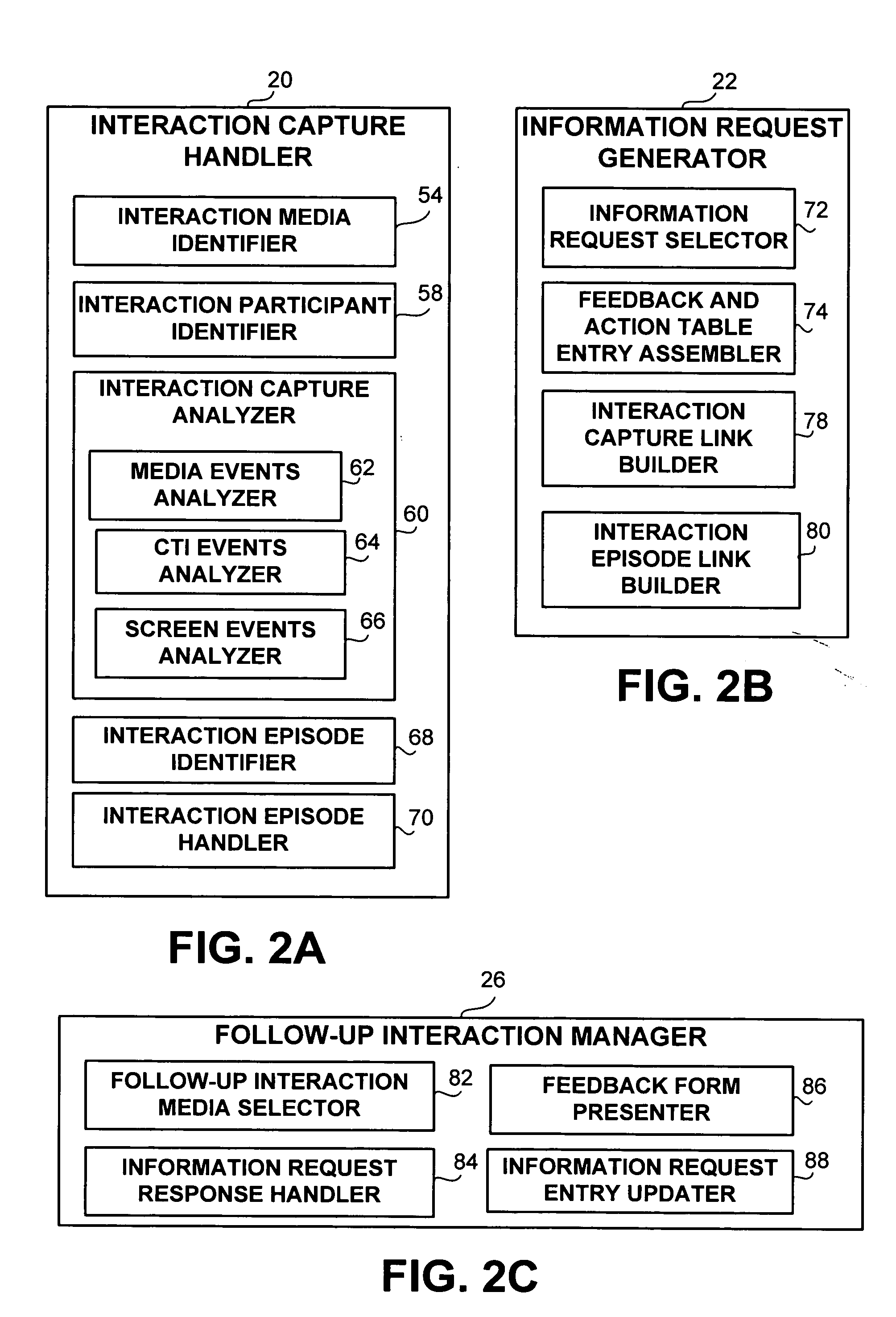

Inactive | US20050204378A1 | Television system details; Market predictions; Video content analysis; Human–computer interaction

An apparatus, system, and method for the creation of interaction requests during or after a base interaction. The interaction requests are put into feedback and action forms and are based on content of the base interaction, created in order to be used as drivers for the execution of follow up interactions associated with the base interaction.

Owner:NICE SYSTEMS

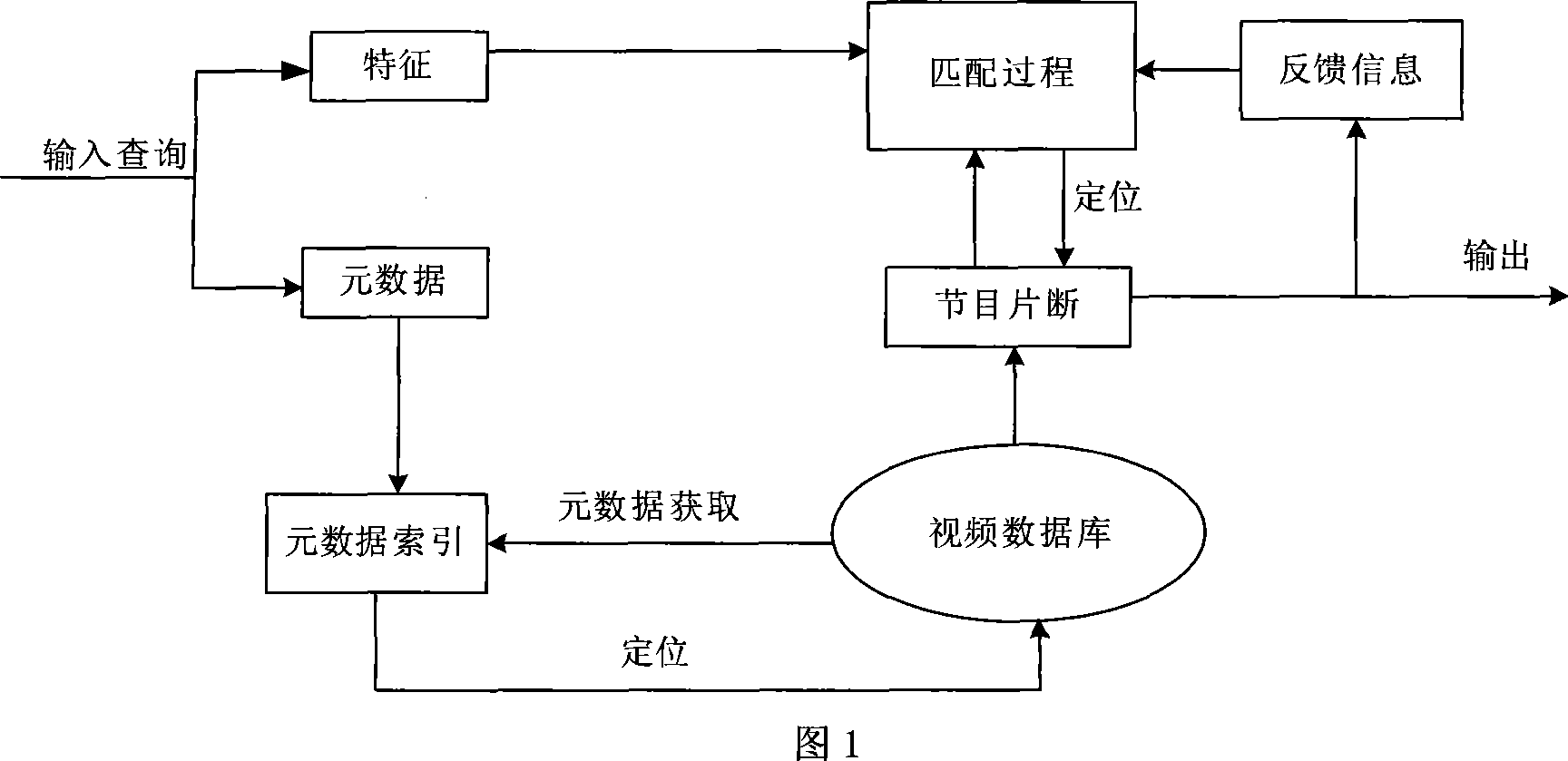

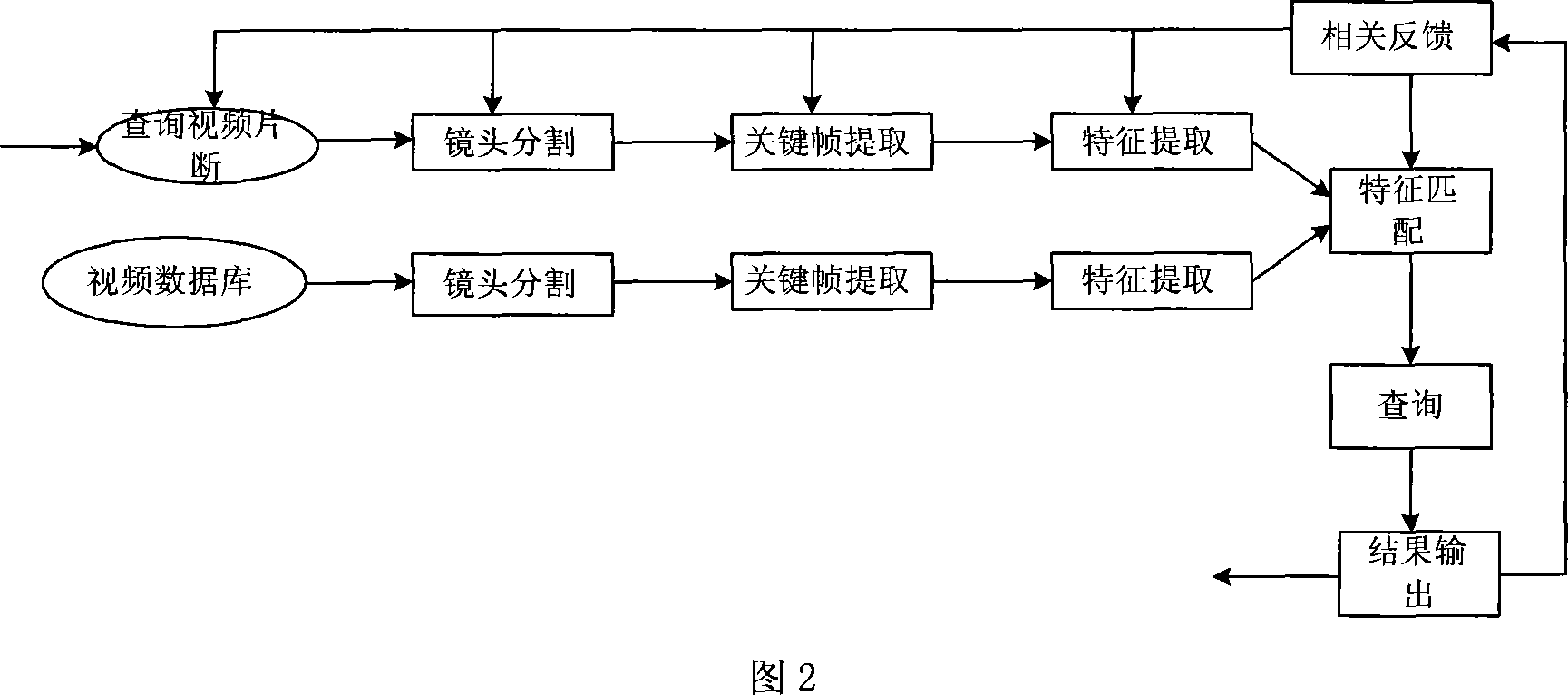

Time-shifted television video matching method combining program content metadata and content analysis

Active | CN101064846A | Fast matching; Improve accuracy; Two-way working systems; Special data processing applications; Content analytics; Video sequence

A time-shifted television video matching method combining program content metadata and content analysis, in the field of electronic information technology. The steps are as follows: (1) metadata capture: extracting video program metadata by packet identifier, parsing it according to the digital broadcasting service information rules, and building a metadata index for the query module; (2) video matching in the compressed bitstream: first segmenting the video sequence into scenes and selecting key frames within each scene, then extracting motion features and statistical features of the key frames to build a video structure library and a feature library, and finally searching by the user's query features and returning ranked results to the user. The invention makes full use of existing techniques, adds high-level semantic features from the metadata, takes user feedback into account, and improves the precision of the results.

Owner:SHANGHAI JIAO TONG UNIV
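
Step (2) begins by segmenting the video sequence into scenes and selecting key frames. The abstract performs this in the compressed bitstream; the sketch below instead uses a simpler pixel-domain technique, cutting wherever the L1 distance between consecutive frame histograms exceeds a threshold and taking each segment's middle frame as its key frame. The input histograms, the threshold, and the middle-frame rule are assumptions for illustration.

```python
def histogram_distance(h1, h2):
    """L1 distance between two normalized histograms (0 = identical, 2 = disjoint)."""
    return sum(abs(a - b) for a, b in zip(h1, h2))

def segment_and_pick_keyframes(histograms, cut_threshold=0.5):
    """Split frames into segments at large histogram changes.

    Returns a list of (start, end_exclusive, key_frame_index) tuples, with the
    key frame chosen as the middle frame of each segment.
    """
    boundaries = [0]
    for i in range(1, len(histograms)):
        if histogram_distance(histograms[i - 1], histograms[i]) > cut_threshold:
            boundaries.append(i)
    boundaries.append(len(histograms))
    return [
        (start, end, (start + end - 1) // 2)
        for start, end in zip(boundaries, boundaries[1:])
    ]
```

Features extracted from these key frames would then populate the feature library that user queries are matched against.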

Video Content Analysis For Automatic Demographics Recognition Of Users And Videos

Active | US20150081604A1 | Digital computer details; Character and pattern recognition; Video content analysis; Demographics

A demographics analysis system trains classifier models for predicting demographic attribute values of videos and users not already having known demographics. In one embodiment, the demographics analysis system trains classifier models for predicting demographics of videos using video features such as demographics of video uploaders, textual metadata, and/or audiovisual content of videos. In one embodiment, the demographics analysis system trains classifier models for predicting demographics of users (e.g., anonymous users) using user features based on prior video viewing periods of users. For example, viewing-period based user features can include individual viewing period statistics such as total videos viewed. Further, the viewing-period based features can include distributions of values over the viewing period, such as distributions in demographic attribute values of video uploaders, and/or distributions of viewings over hours of the day, days of the week, and the like.

Owner:GOOGLE LLC

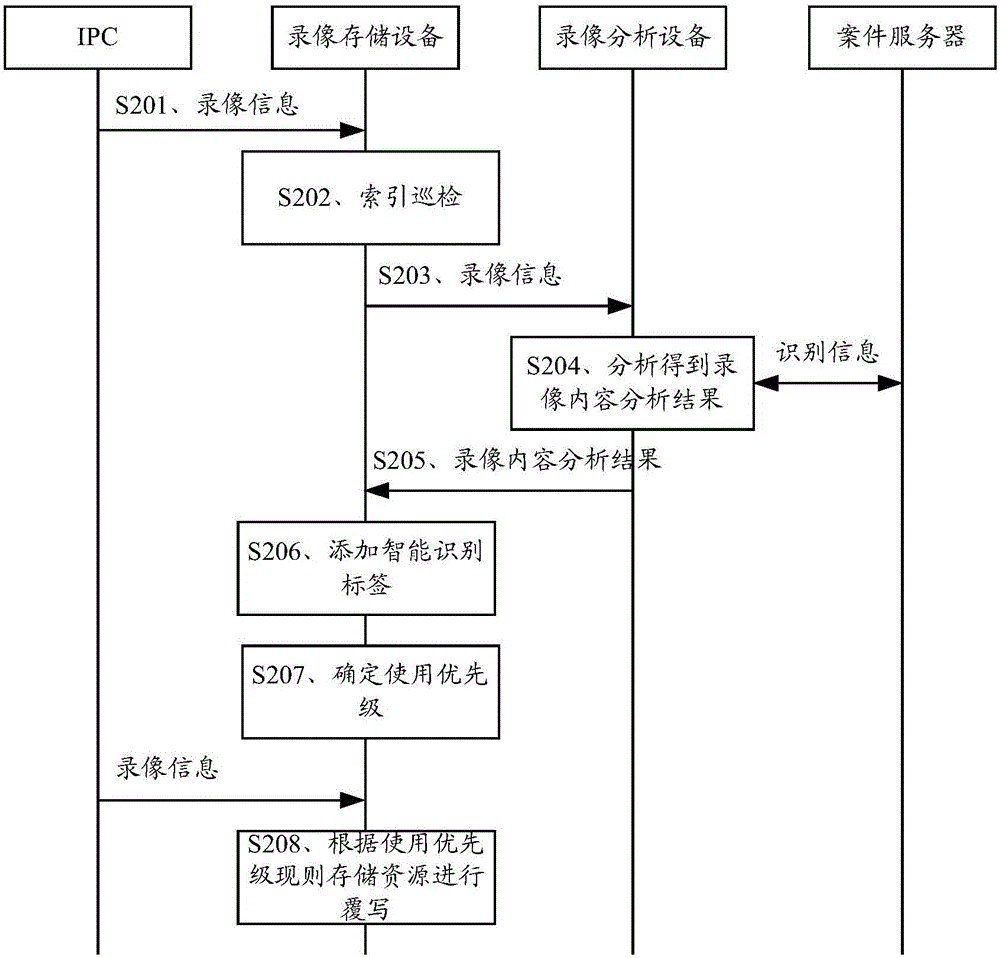

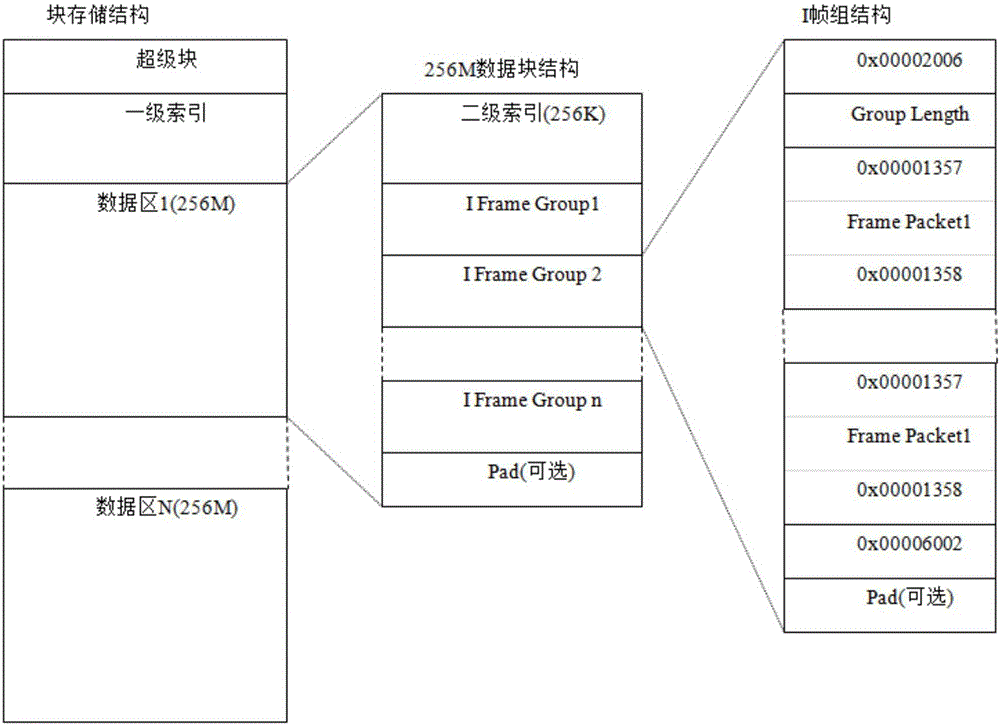

Video storage method, device and system

Active | CN106060442A | Safe storage; Guaranteed preservation; Television system details; Character and pattern recognition; Video storage; Computer graphics (images)

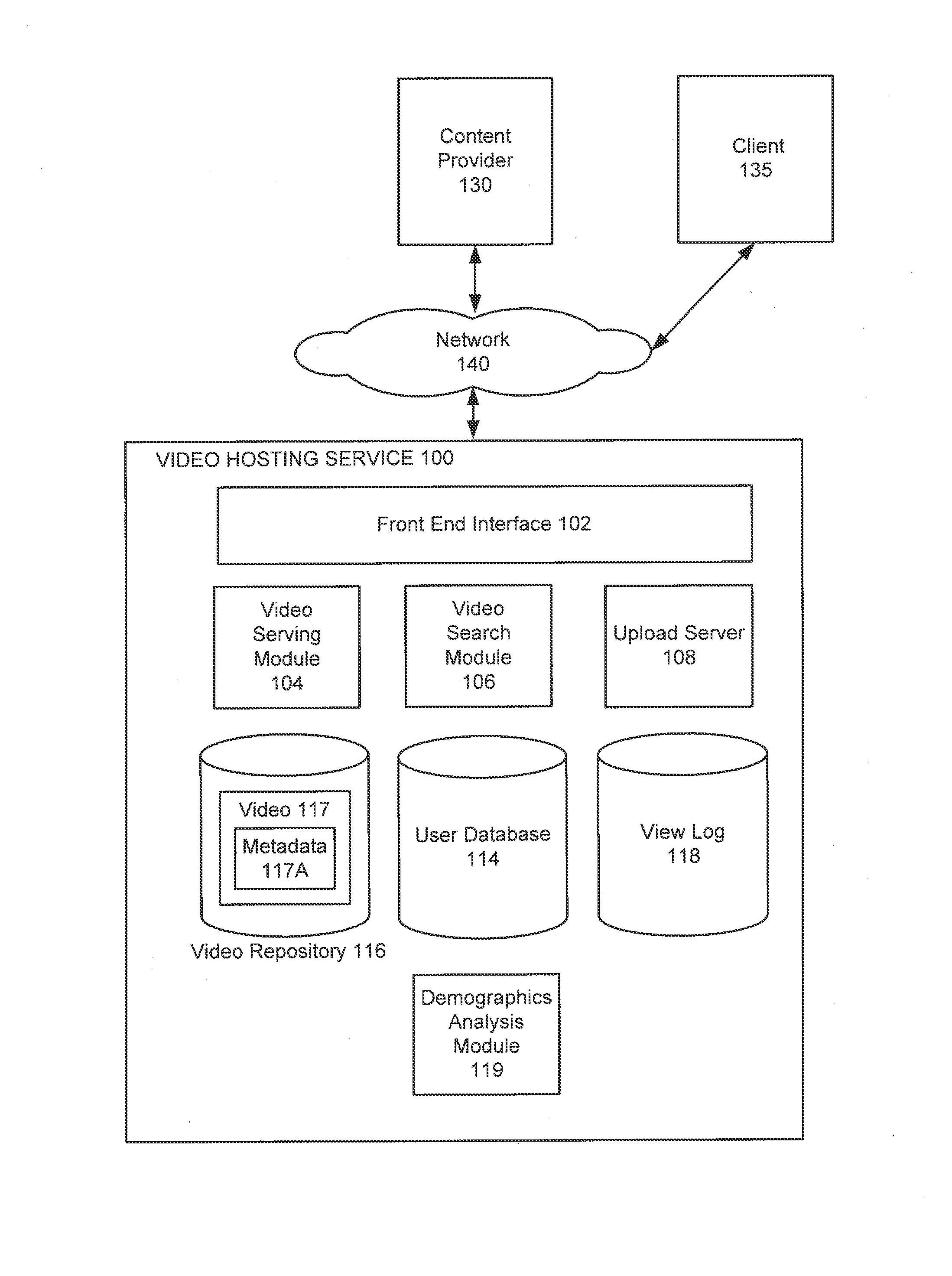

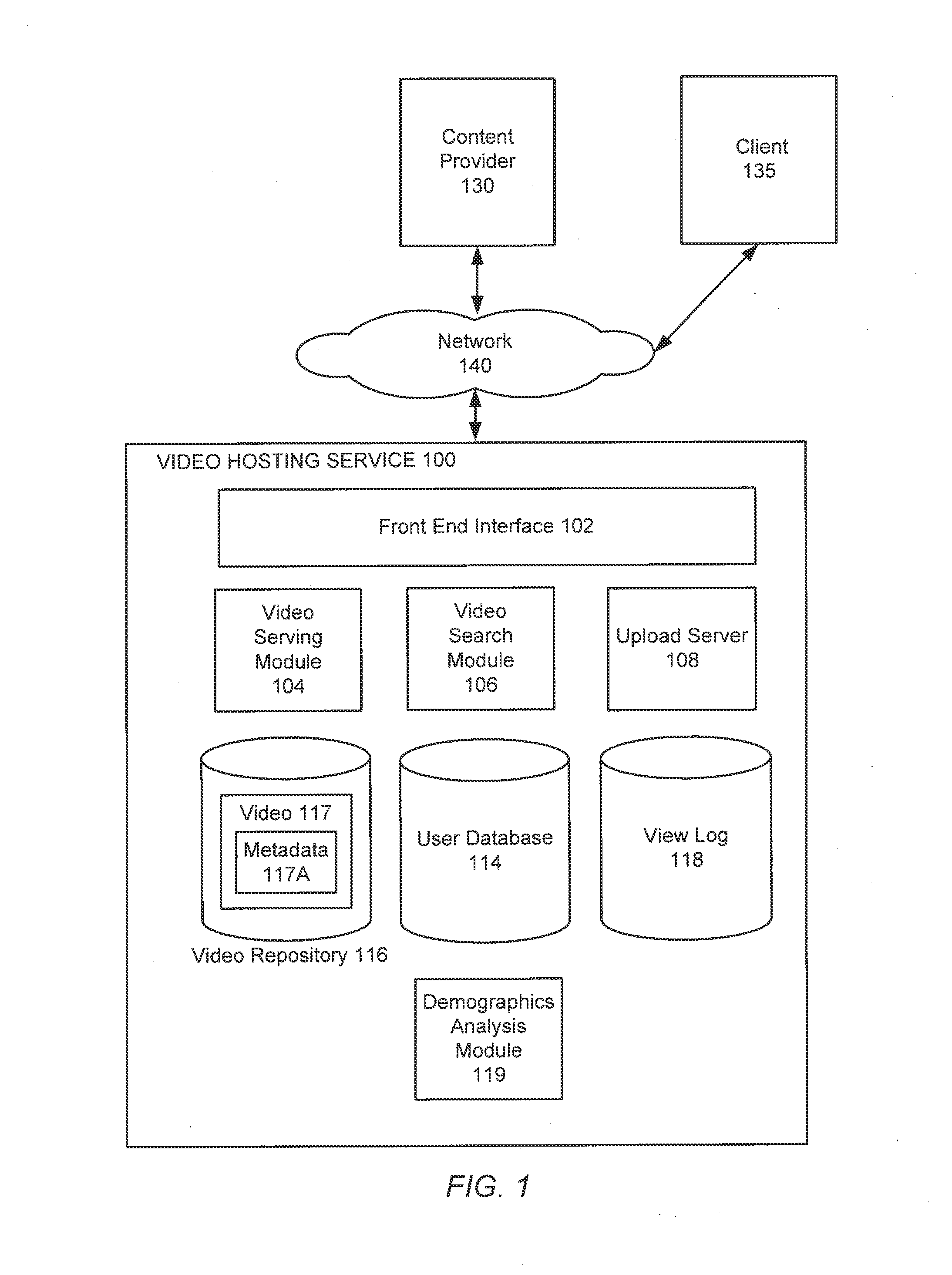

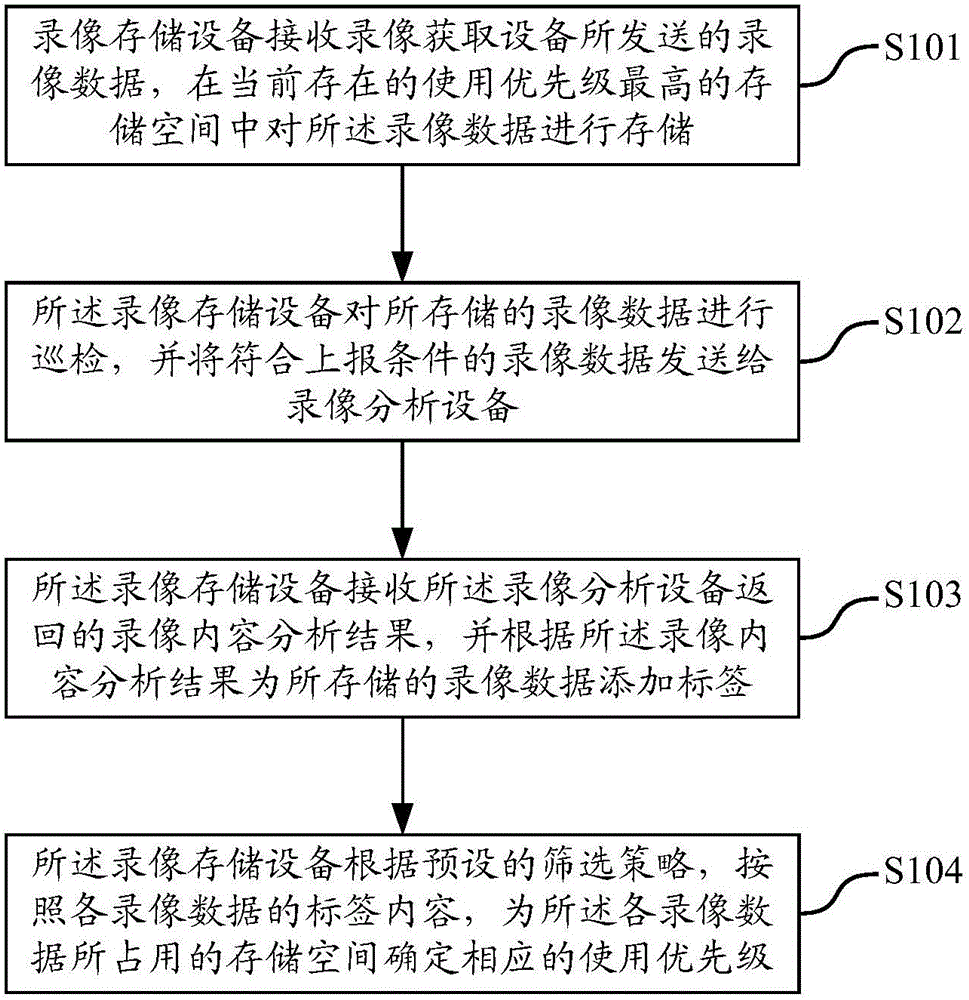

The embodiment of the application discloses a video storage method, device and system. The video storage device selects a storage space according to the usage priorities of the storage spaces and stores video data there; when reporting conditions are met, it sends the video data to a video analysis device for content analysis, adds a label to the video data based on the analysis result, and adjusts the usage priority of the corresponding storage space according to the label contents. The scheme can determine video importance from video content analysis, flexibly adjust the life cycle of stored video data according to differences in importance, preferentially retain video data containing important information, and preferentially overwrite video data containing no important information or less important information. Within the same storage space, important video data is preferentially preserved so as to maximize the usefulness of the stored video.

Owner:ZHEJIANG UNIVIEW TECH CO LTD
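
The retention policy described above (keep video that analysis labels as important, overwrite unimportant video first) can be sketched as an eviction rule over labelled clips. Note the substitution: the patent adjusts the priority of storage spaces, whereas this sketch ranks individual clips, and the label-to-priority table and the oldest-first tie-break are assumptions for illustration.

```python
# Hypothetical mapping from analysis labels to retention priority (higher = keep longer).
LABEL_PRIORITY = {"none": 0, "motion": 1, "person": 2, "vehicle_plate": 3}

def choose_victims(clips, bytes_needed):
    """Pick clips to overwrite, least important first and then oldest first.

    clips: list of dicts with keys "id", "label", "recorded_at", "size"
    Returns the ids to overwrite, freeing at least bytes_needed if possible.
    """
    order = sorted(
        clips, key=lambda c: (LABEL_PRIORITY.get(c["label"], 0), c["recorded_at"])
    )
    victims, freed = [], 0
    for clip in order:
        if freed >= bytes_needed:
            break
        victims.append(clip["id"])
        freed += clip["size"]
    return victims
```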

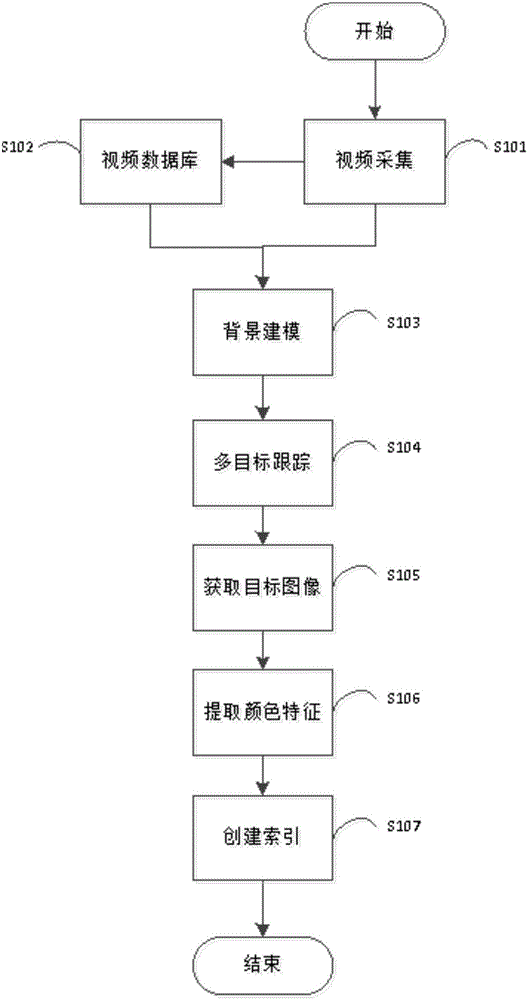

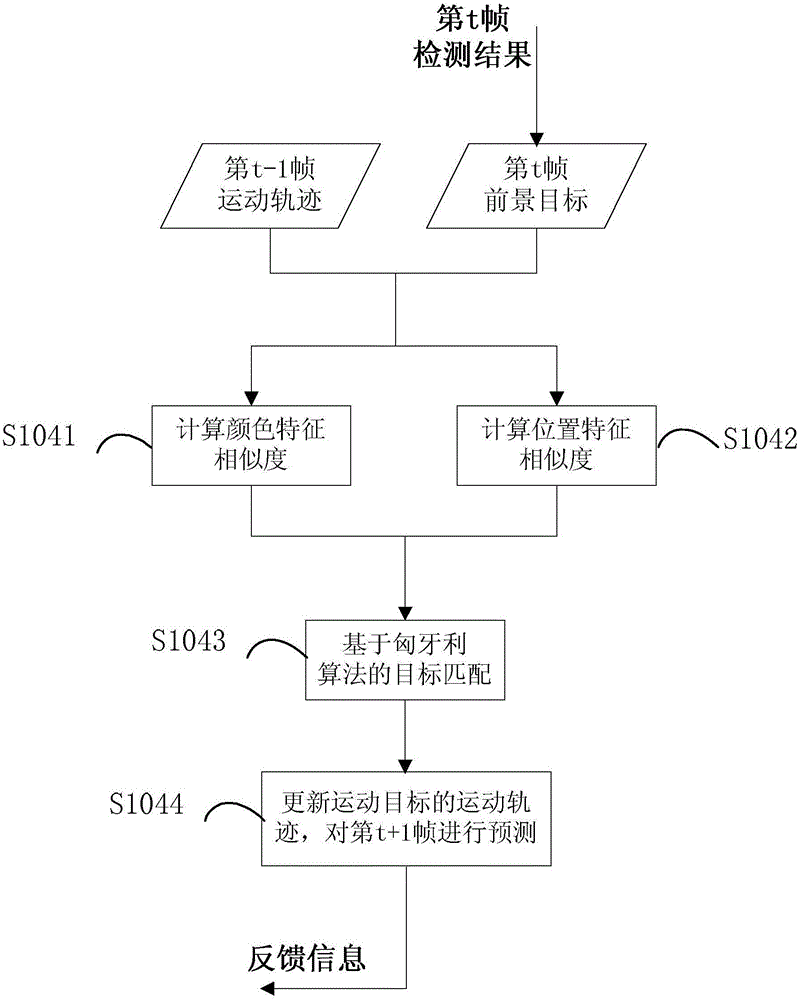

Method for retrieving object in video based on color information

Active | CN105141903A | Guaranteed reliability; Closed circuit television systems; Special data processing applications; Feature vector; Multi-target tracking

The invention discloses a method for retrieving an object in a video based on color information. For surveillance video of complex scenes, candidate moving objects in the original video are extracted through video content analysis and then distinguished by multi-object tracking; a color feature is extracted from every confirmed moving object to build an index, the similarity between the input image's feature and each feature vector in the index is computed, and retrieval results are obtained by sorting on similarity. The method lets a user quickly find an object of interest in a long surveillance video, saving the time the user spends watching the video and increasing its utilization.

Owner:Beijing Zhongke Shentan Technology Co., Ltd. (北京中科神探科技有限公司)
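
The indexing and retrieval steps above (a color feature per tracked object, then ranking by similarity to the query image's feature) can be sketched with a coarsely quantized RGB histogram and histogram intersection. Both the quantization (2 levels per channel by default) and the intersection measure are assumptions; the abstract does not name its color feature or similarity function.

```python
def color_histogram(pixels, levels=2):
    """Normalized histogram over RGB quantized to `levels` bins per channel.

    Assumes `levels` divides 256 (e.g. 2, 4, 8) so every bin index is valid.
    """
    hist = [0.0] * (levels ** 3)
    step = 256 // levels
    for r, g, b in pixels:
        idx = (r // step) * levels * levels + (g // step) * levels + (b // step)
        hist[idx] += 1
    return [h / len(pixels) for h in hist]

def intersection(h1, h2):
    """Histogram intersection: 1.0 for identical normalized histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))

def retrieve(index, query_pixels, top_k=3):
    """Rank indexed objects by color similarity to the query image.

    index: dict object_id -> histogram built with color_histogram (default levels)
    """
    q = color_histogram(query_pixels)
    ranked = sorted(index.items(), key=lambda item: intersection(q, item[1]), reverse=True)
    return [obj_id for obj_id, _ in ranked[:top_k]]
```

Coarse bins make the feature tolerant of lighting changes between cameras, at the cost of confusing similar hues; a finer `levels` value trades the other way.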

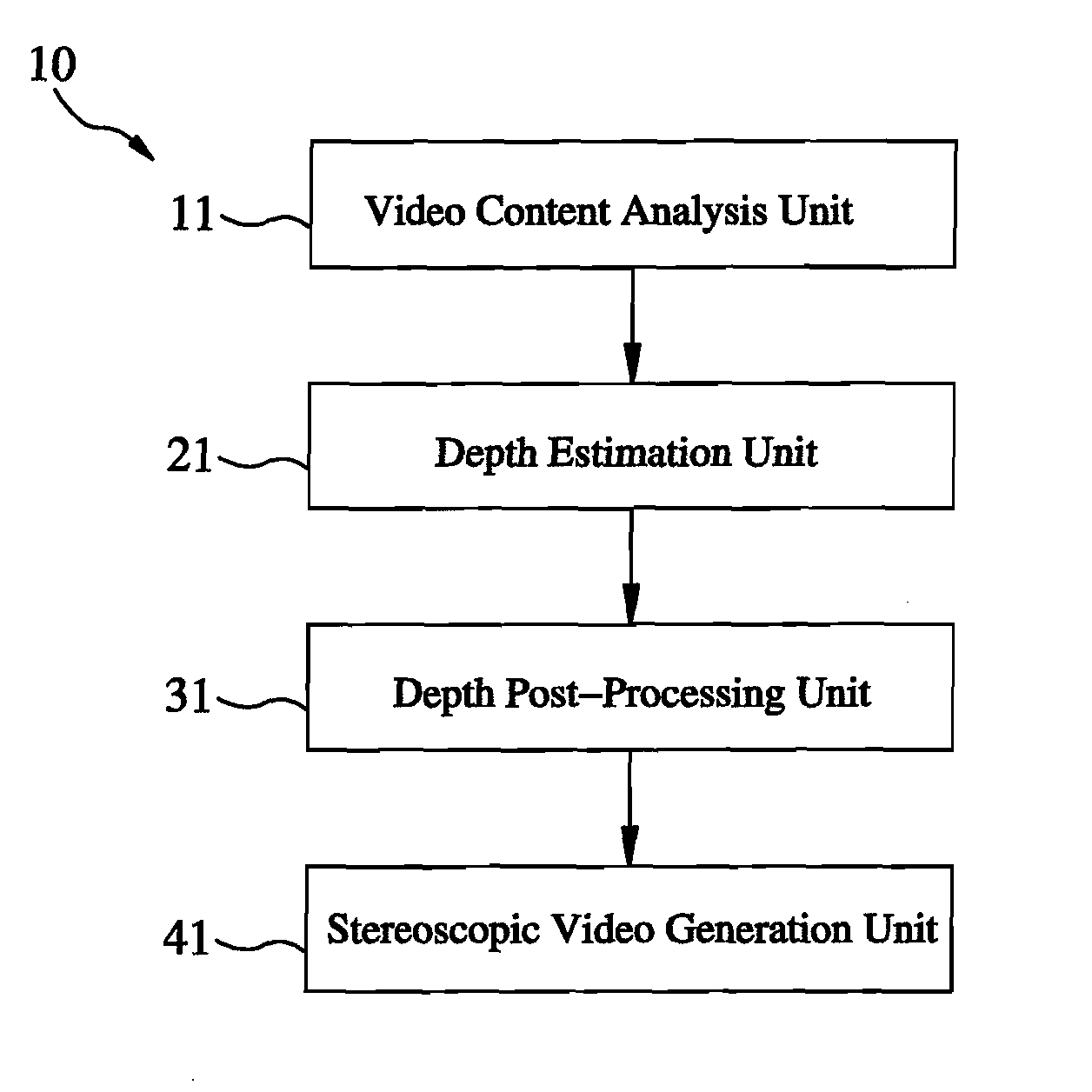

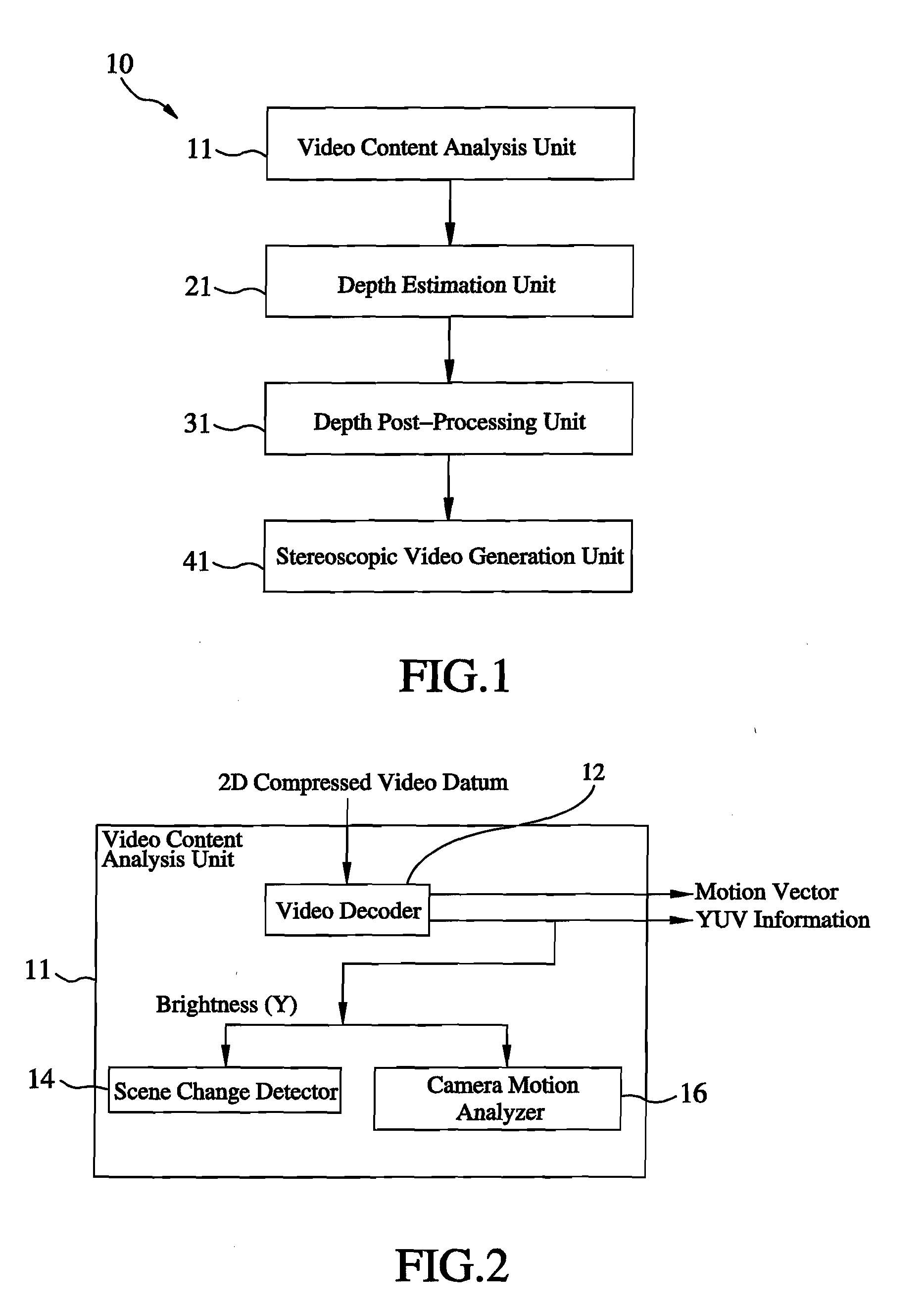

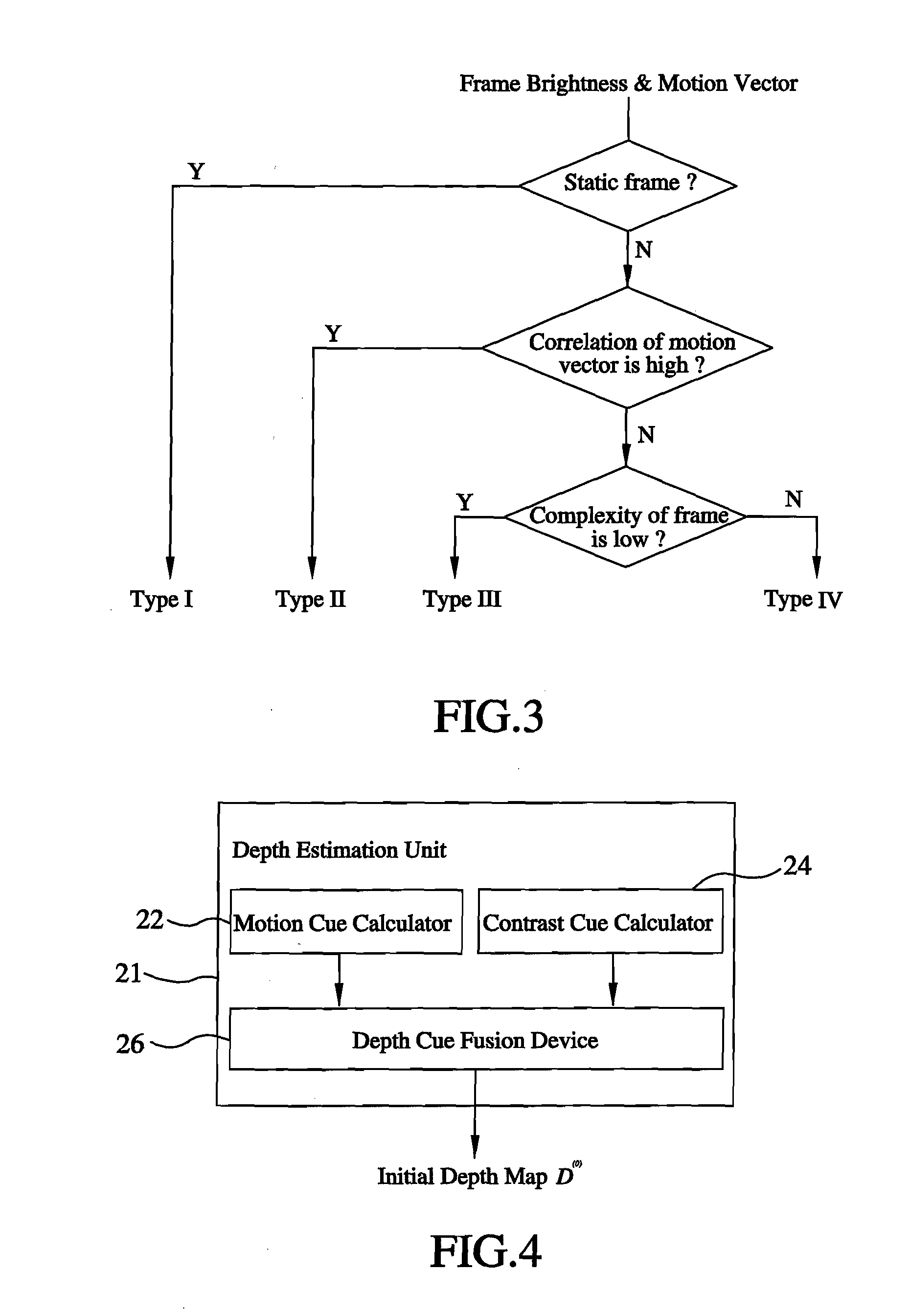

System for converting 2D video into 3D video

Active | US20130162768A1 | Quality improvement; Enhance depth continuity; Image enhancement; Image analysis; Stereoscopic video; Time domain

A 2D-to-3D video conversion system includes a video content analysis unit, a depth estimation unit, a post-processing unit, and a stereoscopic video generation unit. The video content analysis unit analyzes 2D video data and extracts useful information, including motion and color, for depth estimation. The depth estimation unit receives this information, calculates a motion cue and a contrast cue for initial depth estimation, and generates an initial depth map. The post-processing unit corrects the initial depth map in the spatial and temporal domains to increase spatial accuracy and depth continuity between adjacent time instances, and processes captions in the video to generate a final depth map. The stereoscopic video generation unit synthesizes 3D video data from the final depth map and the 2D video data.

Owner:NATIONAL CHUNG CHENG UNIV
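
The depth-estimation and stereo-synthesis stages can be illustrated on a single image row. The sketch fuses a motion cue and a contrast cue with a fixed weight into a per-pixel depth, converts depth to horizontal disparity, and shifts pixels to form a second view, a basic form of depth-image-based rendering. The weight, `max_disparity`, the "larger value = nearer" convention, and the naive hole filling are assumptions; the patent's spatial and temporal post-processing is omitted.

```python
def fuse_depth(motion, contrast, w_motion=0.7):
    """Per-pixel depth in [0, 1] (1 = nearest) from normalized motion and contrast cues."""
    return [w_motion * m + (1 - w_motion) * c for m, c in zip(motion, contrast)]

def render_right_view(row, depth, max_disparity=2):
    """Shift each pixel left by its disparity to synthesize a right-eye row.

    Nearer pixels (higher depth) shift more. When two pixels land on the same
    position, the nearer one wins; holes copy the filled pixel to their left
    (or the first filled pixel, at the start of the row).
    """
    width = len(row)
    out = [None] * width
    out_depth = [-1.0] * width
    for x, (value, d) in enumerate(zip(row, depth)):
        nx = x - round(d * max_disparity)
        if 0 <= nx < width and d > out_depth[nx]:
            out[nx], out_depth[nx] = value, d
    for x in range(width):
        if out[x] is None:
            out[x] = out[x - 1] if x > 0 else next(v for v in out if v is not None)
    return out
```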

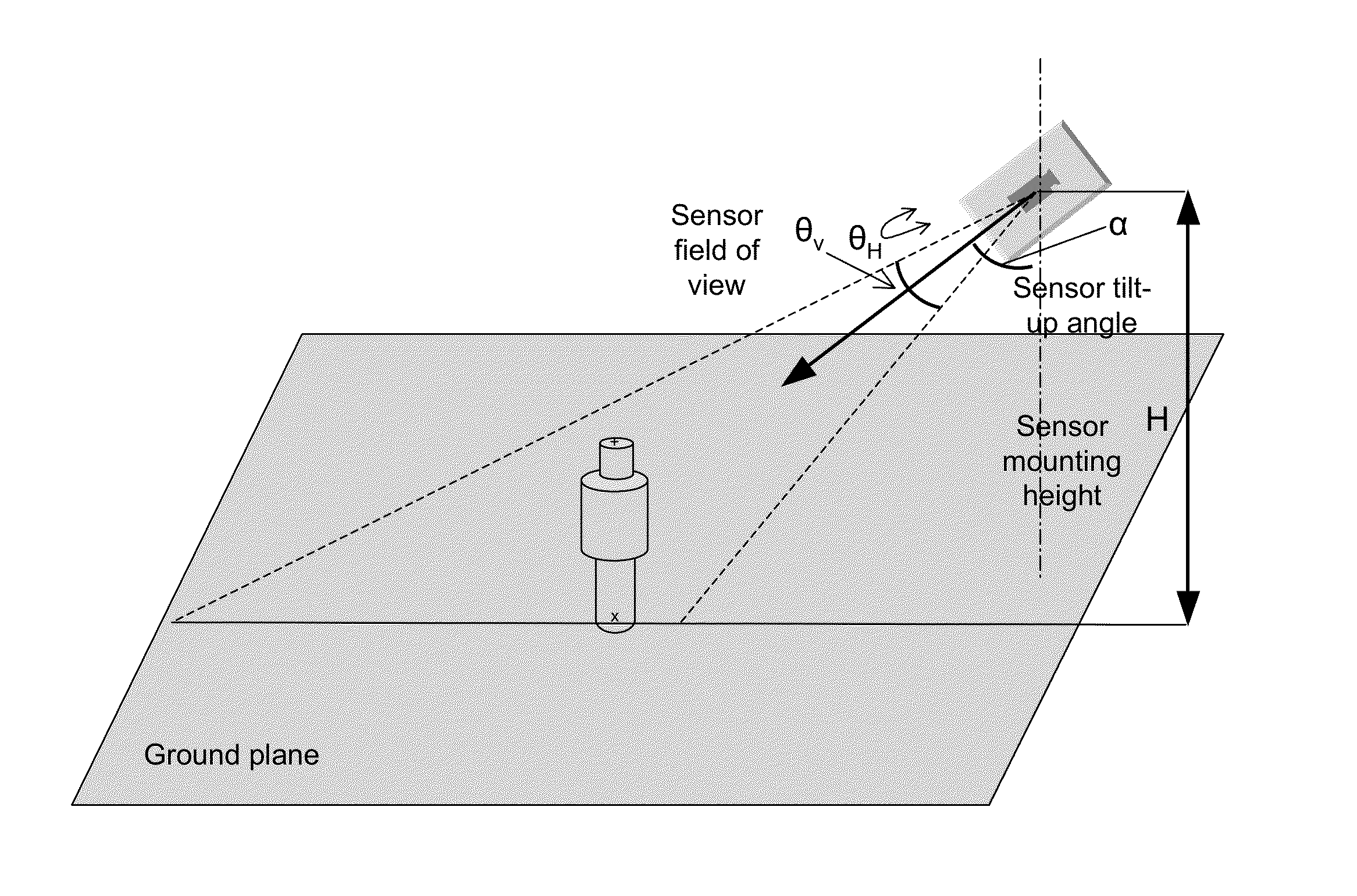

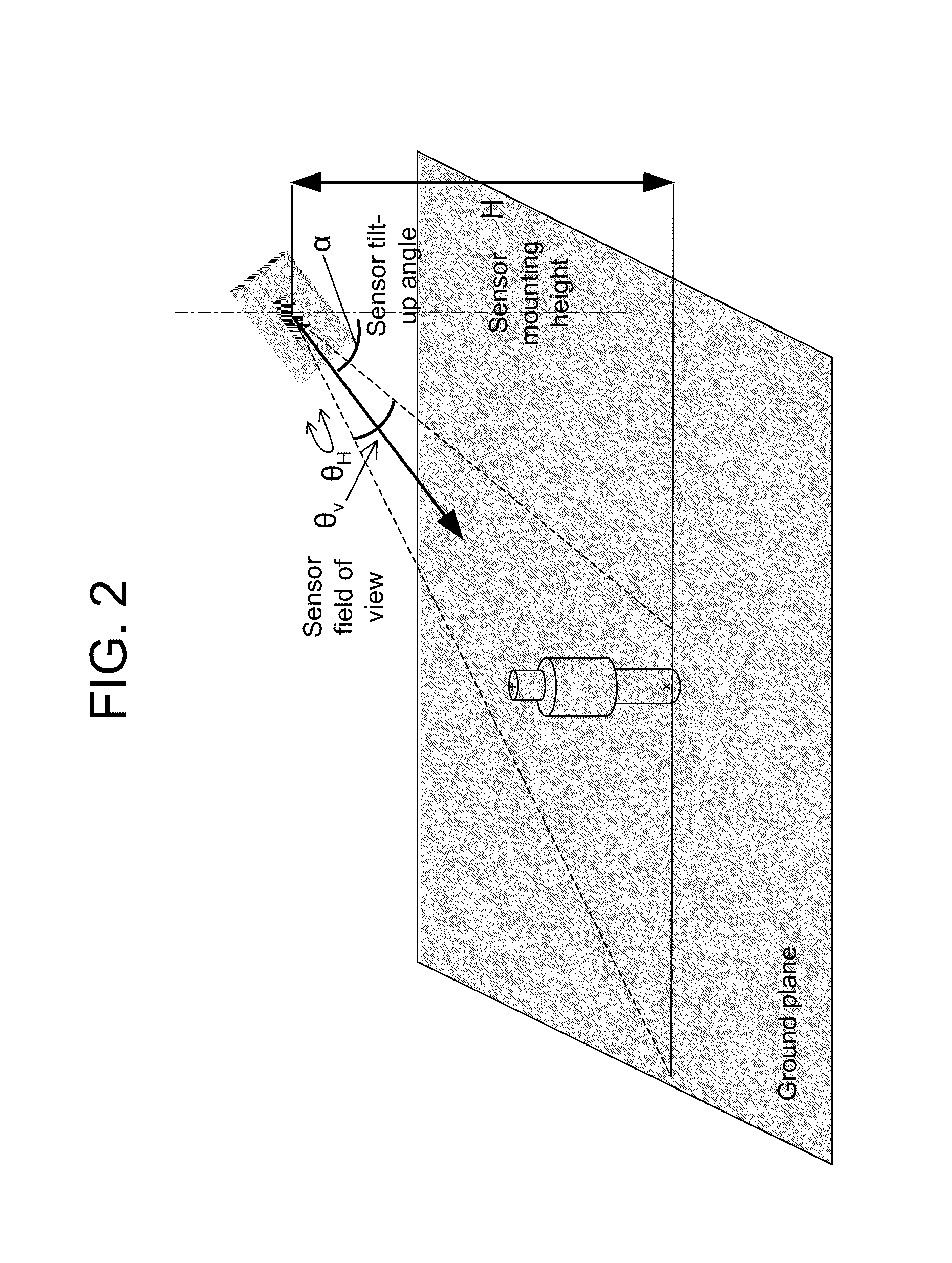

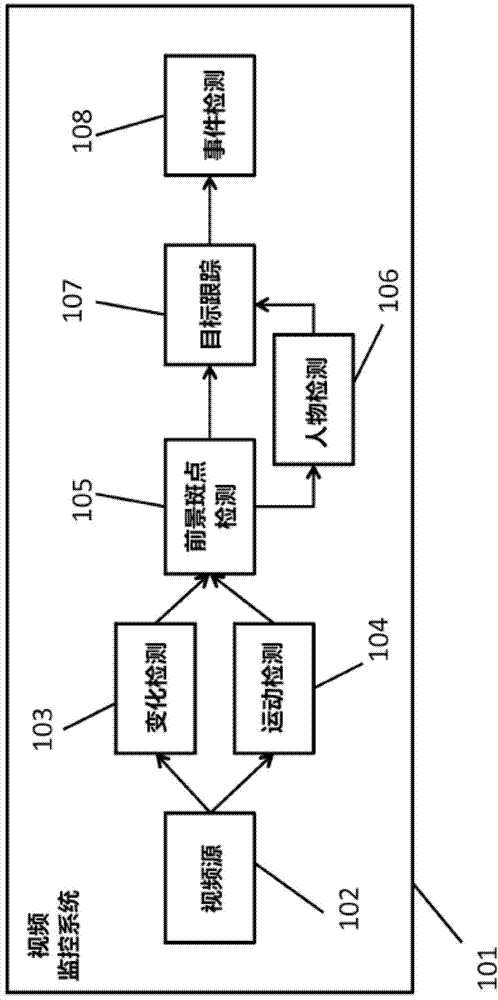

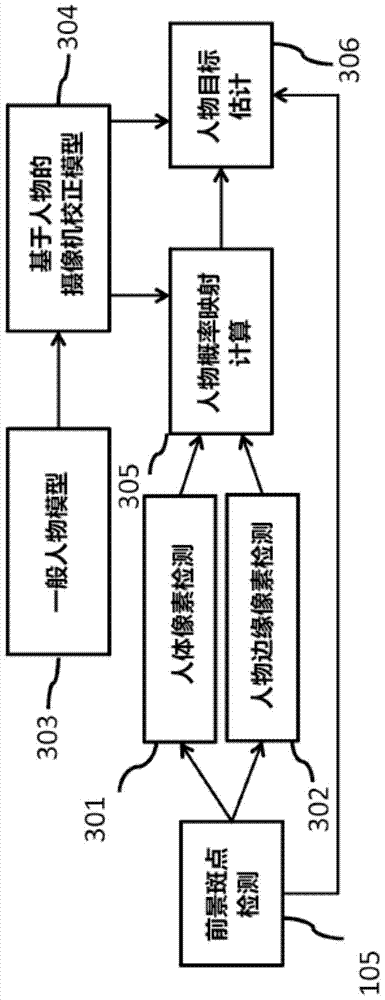

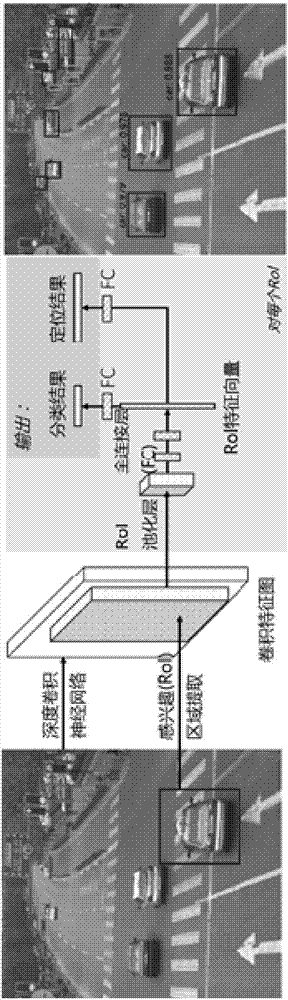

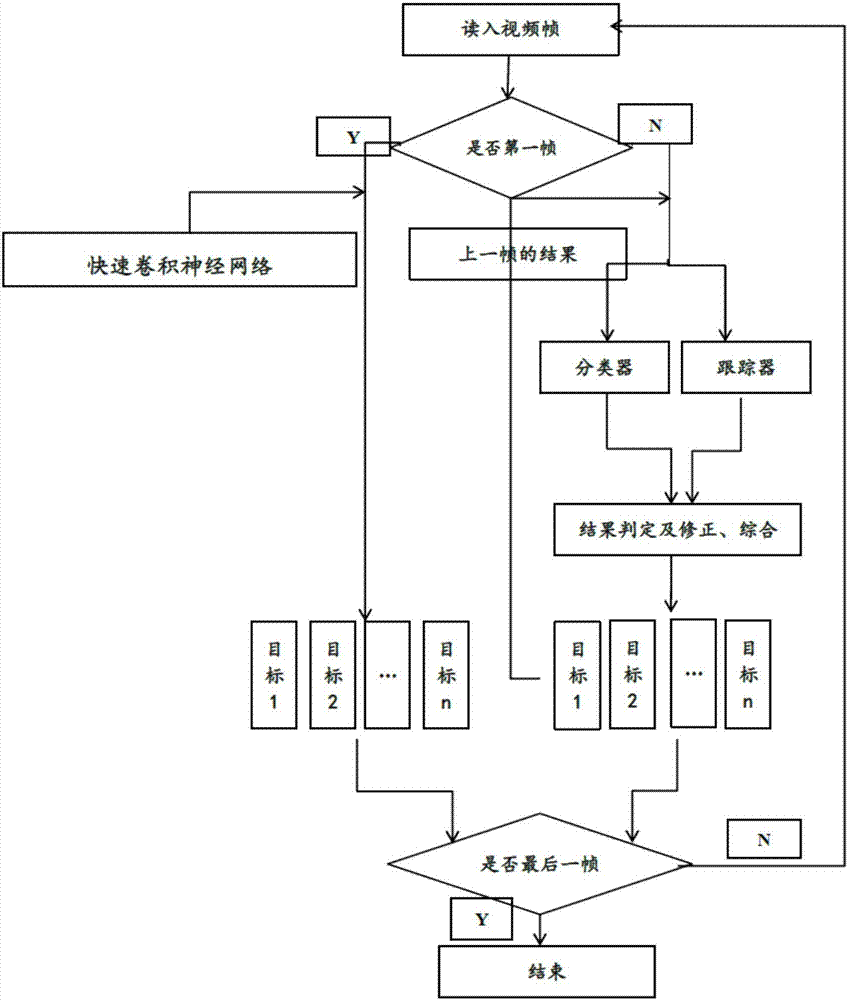

Methods, devices and systems for detecting objects in a video

Methods, devices and systems for performing video content analysis to detect humans or other objects of interest in a video image are disclosed. The detection of humans may be used to count the number of humans, to determine the location of each human, and/or to perform crowd analyses of monitored areas.

Owner:MOTOROLA SOLUTIONS INC

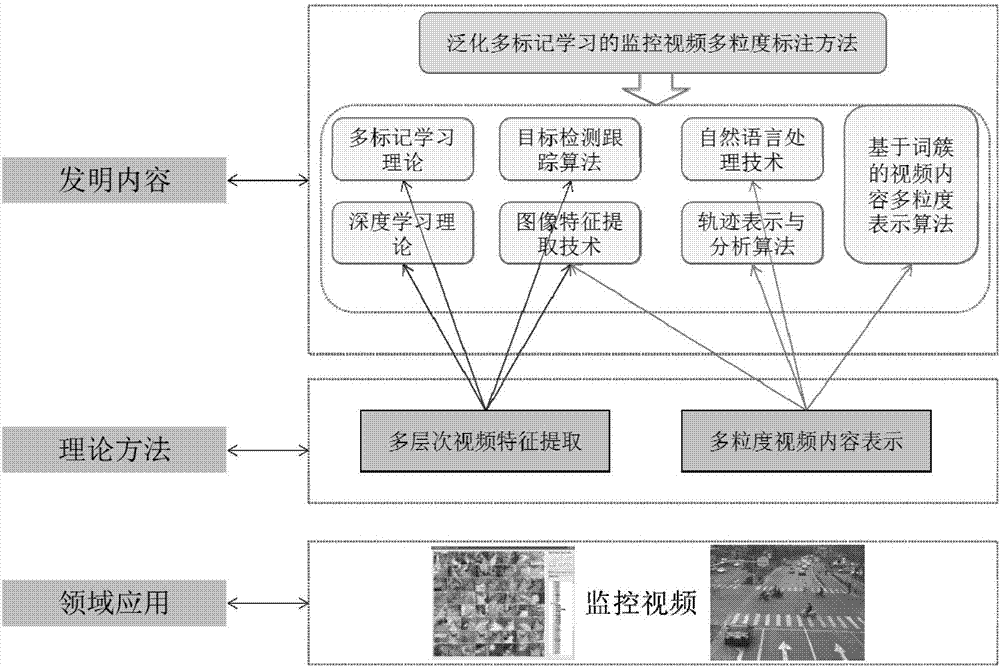

Monitoring video multi-granularity marking method based on generalized multi-labeling learning

Active | CN107133569A | Effective theory; Efficient method; Character and pattern recognition; Video monitoring; Language understanding

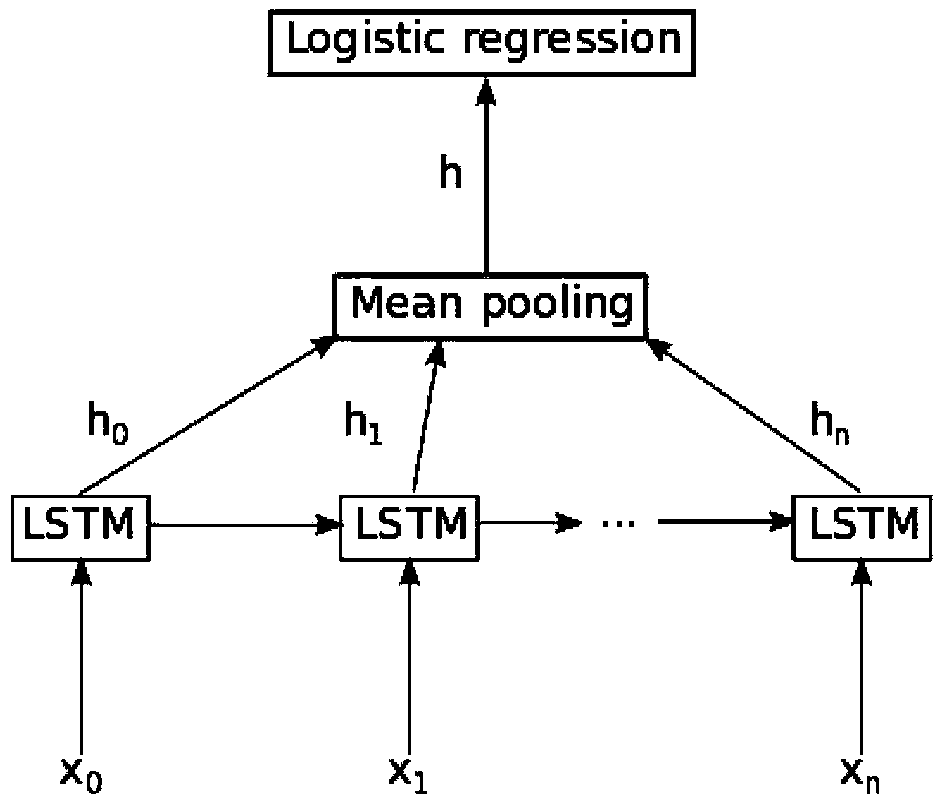

The invention discloses a monitoring video multi-granularity labeling method based on generalized multi-label learning. Set against the backdrop of content analysis for public security video monitoring, the method studies theories and methods for the multi-layer acquisition of video features and their multi-granularity representation. It comprises: analyzing and extracting features of objects in a video at different layers based on multi-label learning theory and deep learning theory; constructing a generalized multi-label classification algorithm based on multi-label learning theory and deep learning theory; and building a multi-granularity representation model of video information based on granular computing theory and natural language understanding technology. Targeting the monitoring video content domain, the method carries out an in-depth, systematic study, constructs a multi-label learning algorithm using deep learning theory, and provides an effective theory and method for multi-layer video information. By simulating the way humans recognize and describe images, the method establishes a multi-granularity video representation theory and method, offers a new approach to video content analysis, and lays theoretical and practical foundations for making future video monitoring more intelligent.

Owner:TONGJI UNIV

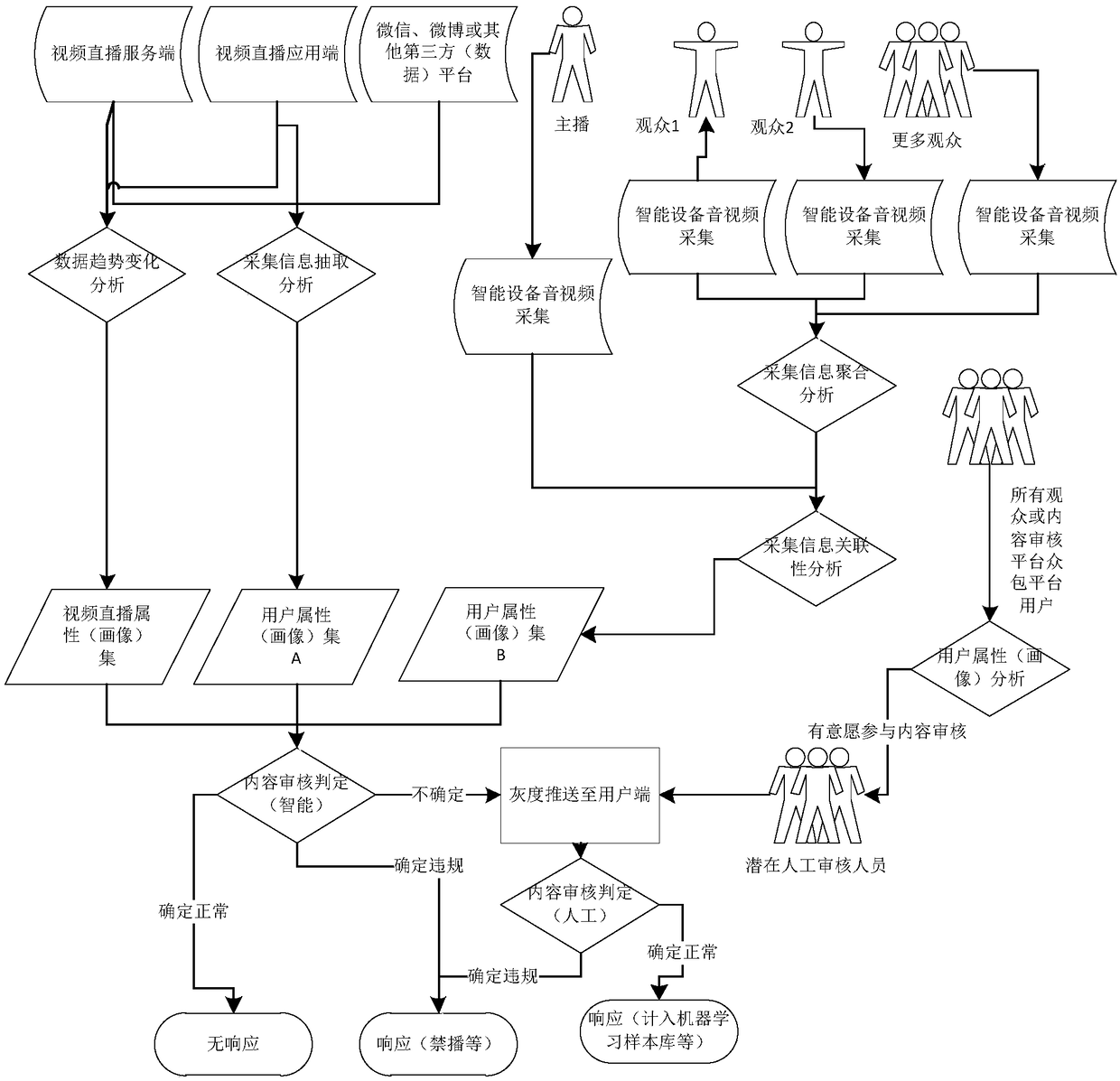

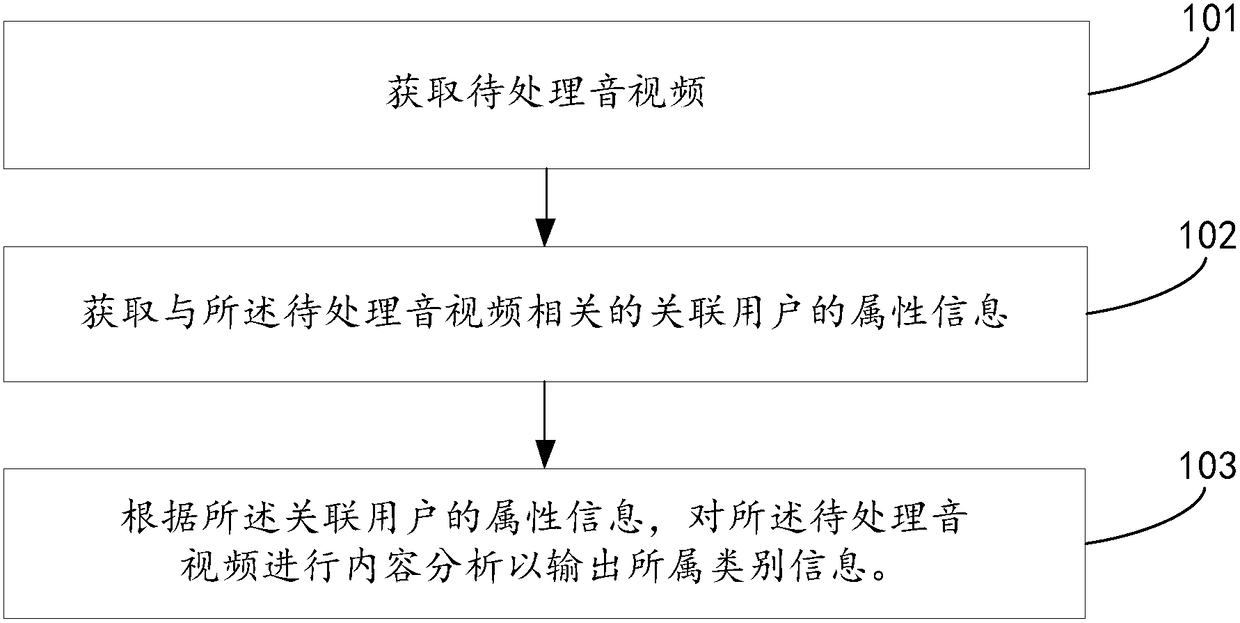

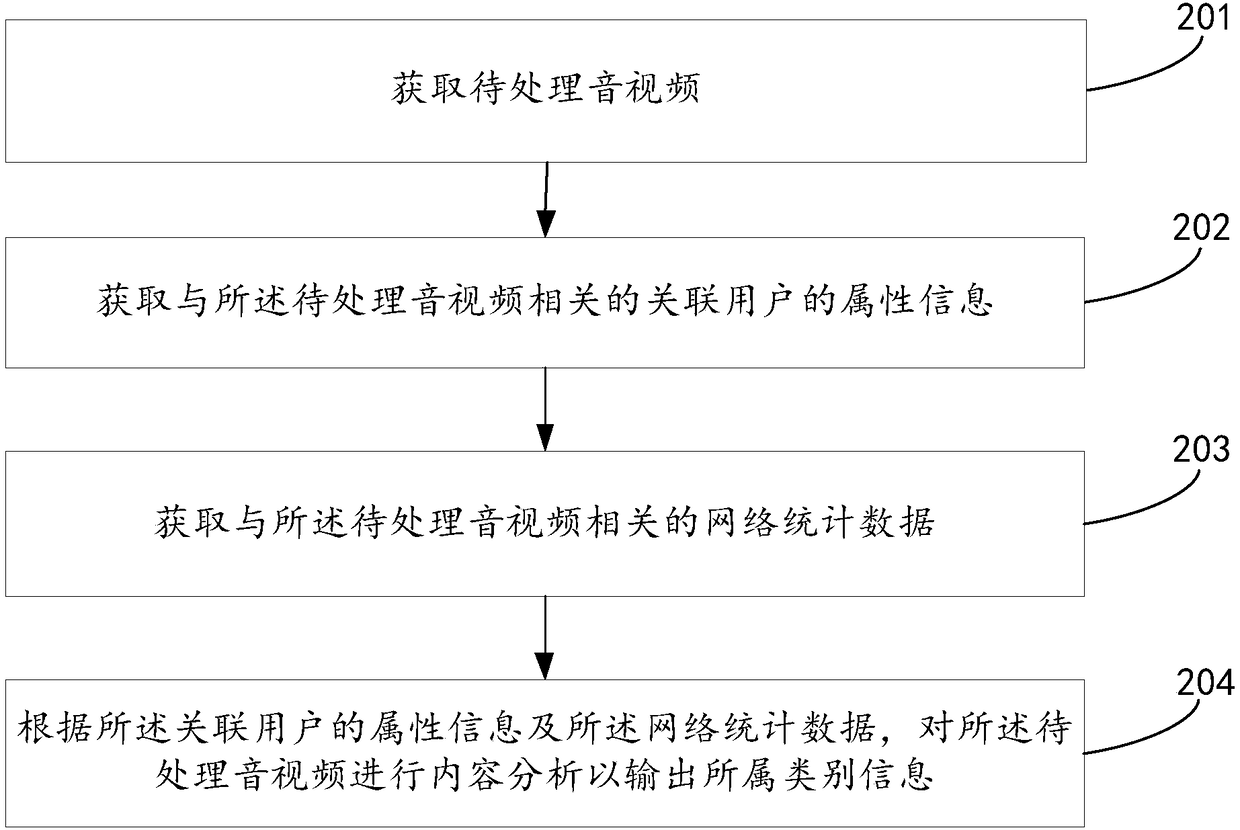

Audio and video content analyzing method and apparatus

Inactive | CN108932451A | Improve audit accuracy; Advance the process of review automation; Character and pattern recognition; Video content analysis; Multimedia

An embodiment of the present invention provides an audio and video content analyzing method and apparatus. The method includes: acquiring an audio and video file to be processed; acquiring attribute information of an associated user related to the file; and performing content analysis on the file according to the attribute information of the associated user to output category information. In the technical solution provided by this embodiment, content review of the audio and video file is based on both the file itself and the attribute information of the associated user, which includes a large amount of data related to the video content that can be utilized and mined. Performing auxiliary classification of videos based on this data can effectively improve review accuracy and help advance the automation of video review.

Owner:BEIJING KINGSOFT CLOUD NETWORK TECH CO LTD +1

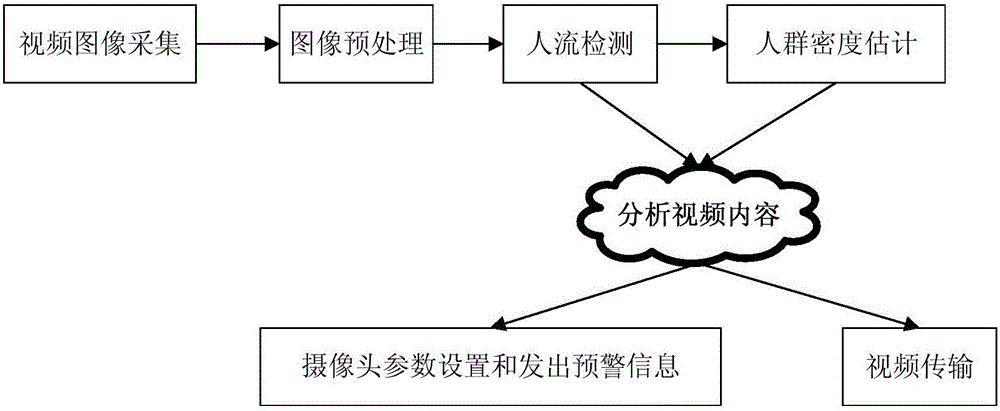

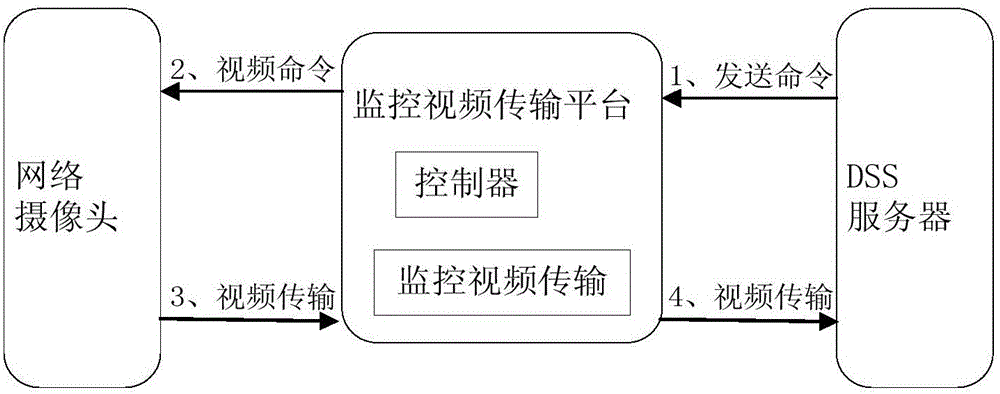

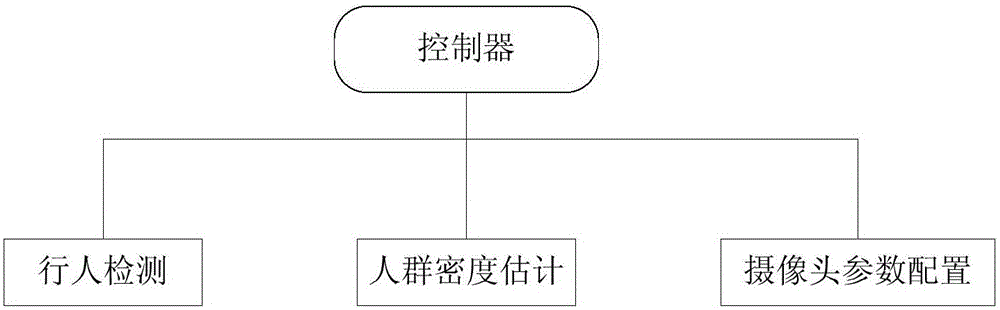

Surveillance video transmission method based on video content analysis

Inactive | CN105791774A | Shielding differences | Guaranteed real-time | Image enhancement | Television system details | Video transmission | Video content analysis

The invention discloses a surveillance video transmission method based on video content analysis. The method is characterized in that it comprises an image acquisition module, an image preprocessing module, a pedestrian flow detection module, a crowd density estimation module, a camera parameter setting and warning module, and a video transmission module. The pedestrian flow detection module detects and counts pedestrians in a surveillance video image. The crowd density estimation module estimates the density of pedestrian flow in the current video image using a crowd density estimation algorithm. The camera parameter setting and warning module sends a camera parameter modification command and crowd event warning information when the pedestrian detection and crowd density results reach a preset level. In the invention, the crowd density in a video is estimated using a pedestrian detection algorithm and a crowd density estimation algorithm, and the camera configuration is modified and the corresponding surveillance video is transmitted according to the estimation result. In addition, 'problematic' videos can be transmitted actively or passively, and warning information can be sent.
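
The decision logic of the camera parameter setting and warning module might be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation: it assumes a pedestrian count has already been produced by some detector, and the density bands, level names, the `decide` function and the `"increase_resolution"` command string are all hypothetical placeholders, since the abstract gives none of them.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical density bands in people per square metre (upper bound, name).
# The patent does not publish its thresholds; these are placeholders.
LEVELS = [(0.5, "low"), (2.0, "medium"), (float("inf"), "high")]
LEVEL_ORDER = [name for _, name in LEVELS]


@dataclass
class Action:
    camera_command: Optional[str]  # hypothetical camera parameter command
    warning: Optional[str]         # crowd event warning text, if any
    transmit: bool                 # actively push this video to the monitoring side


def density_level(pedestrian_count: int, area_m2: float) -> str:
    """Map a pedestrian count over a monitored area to a coarse density level."""
    density = pedestrian_count / area_m2
    for upper, name in LEVELS:
        if density < upper:
            return name
    return LEVEL_ORDER[-1]


def decide(pedestrian_count: int, area_m2: float, alarm_level: str = "high") -> Action:
    """Issue a camera command, a warning and active transmission once the
    estimated density reaches the preset alarm level; otherwise do nothing
    (passive, on-request transmission is not modelled here)."""
    level = density_level(pedestrian_count, area_m2)
    if LEVEL_ORDER.index(level) >= LEVEL_ORDER.index(alarm_level):
        return Action(
            camera_command="increase_resolution",  # hypothetical command name
            warning=f"crowd density {level}: {pedestrian_count} people",
            transmit=True,
        )
    return Action(camera_command=None, warning=None, transmit=False)


if __name__ == "__main__":
    print(decide(300, 100.0))
```

Expressing the preset level as a named band rather than a raw number keeps the alarm threshold configurable per camera without touching the detection code.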

Owner:BEIJING UNIV OF TECH

Video content analysis for automatic demographics recognition of users and videos

Active | US8301498B1 | Sampled-variable control systems | Metadata video data retrieval | Video content analysis | Demographic data

Owner:GOOGLE LLC

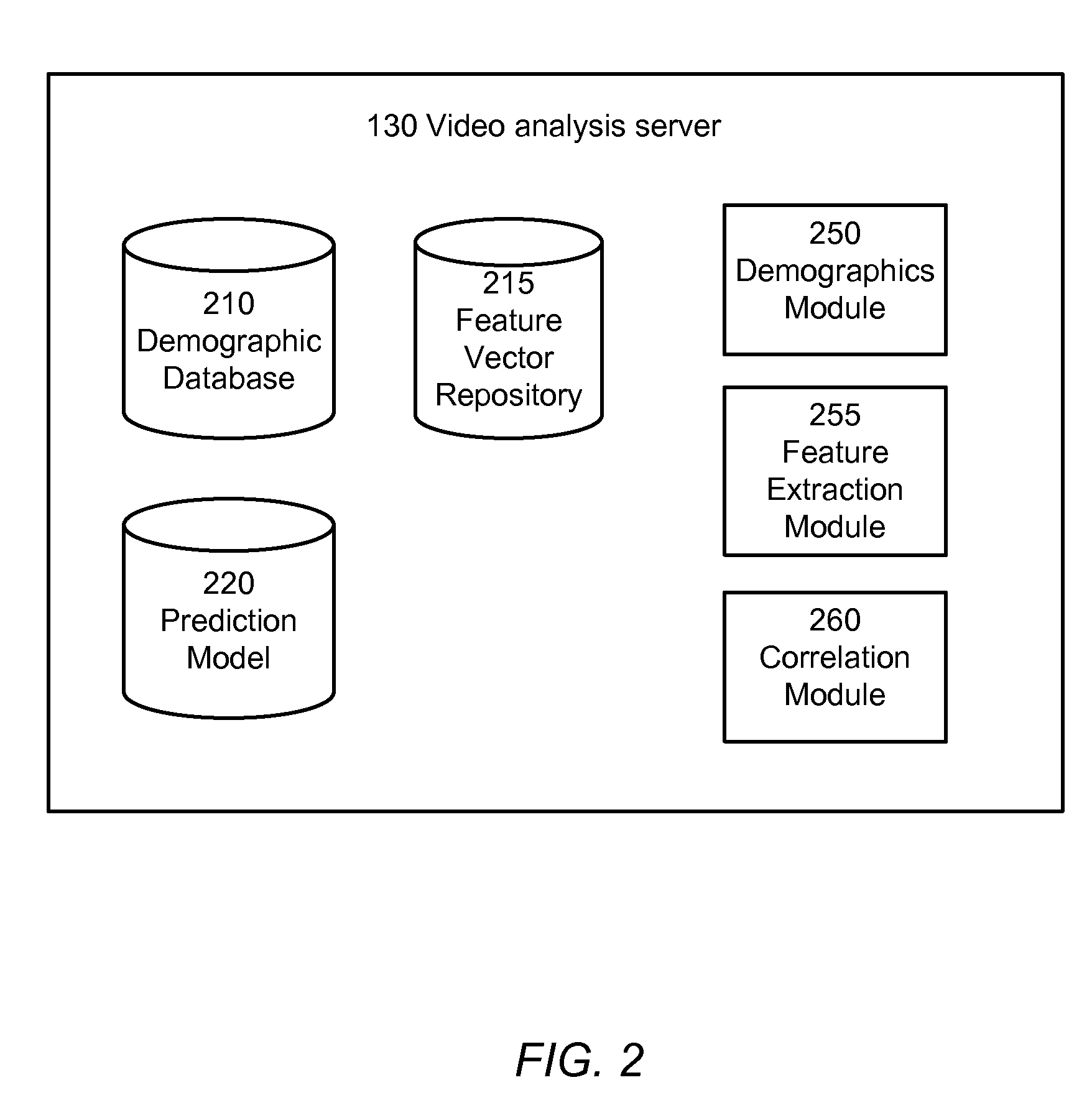

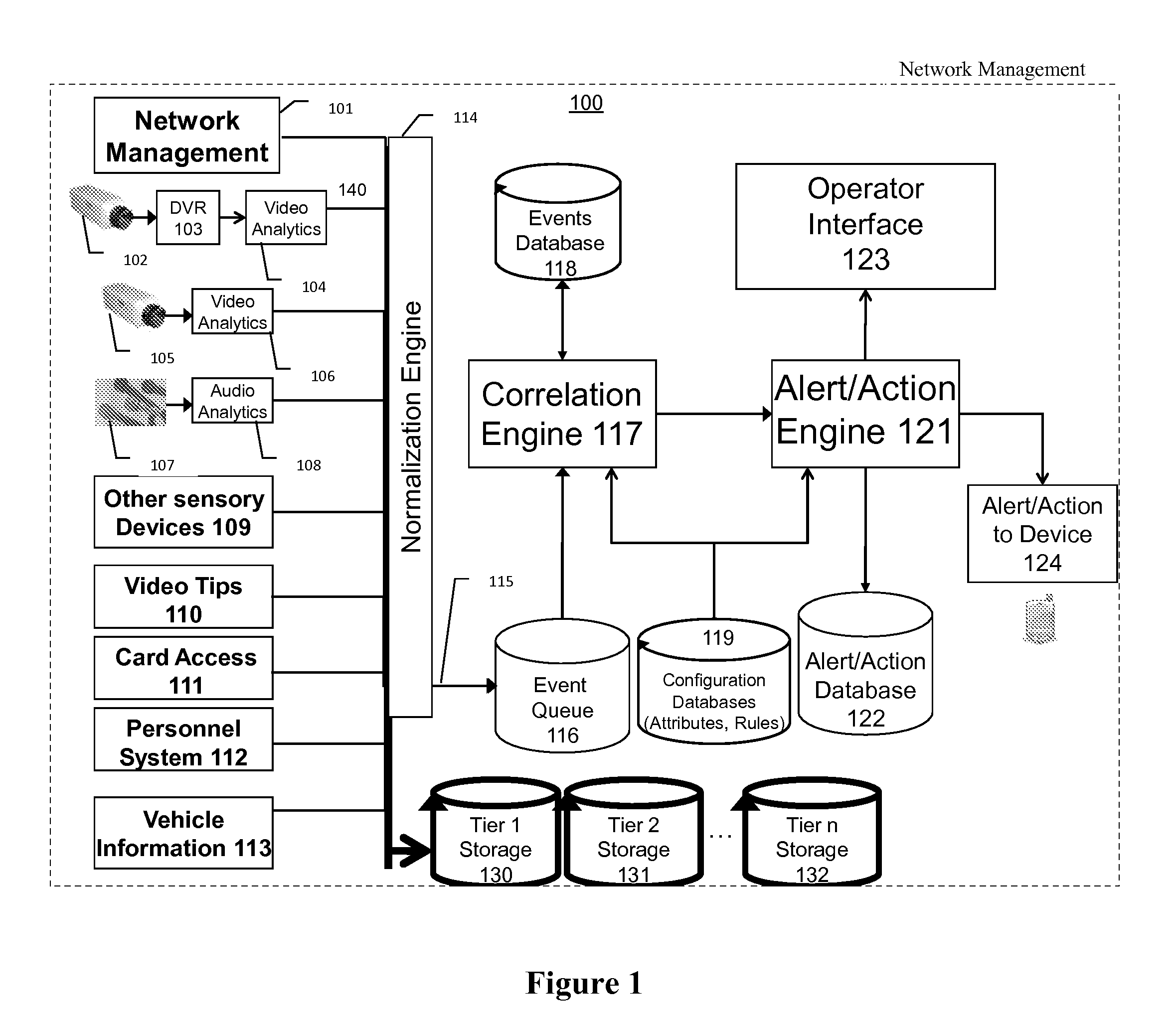

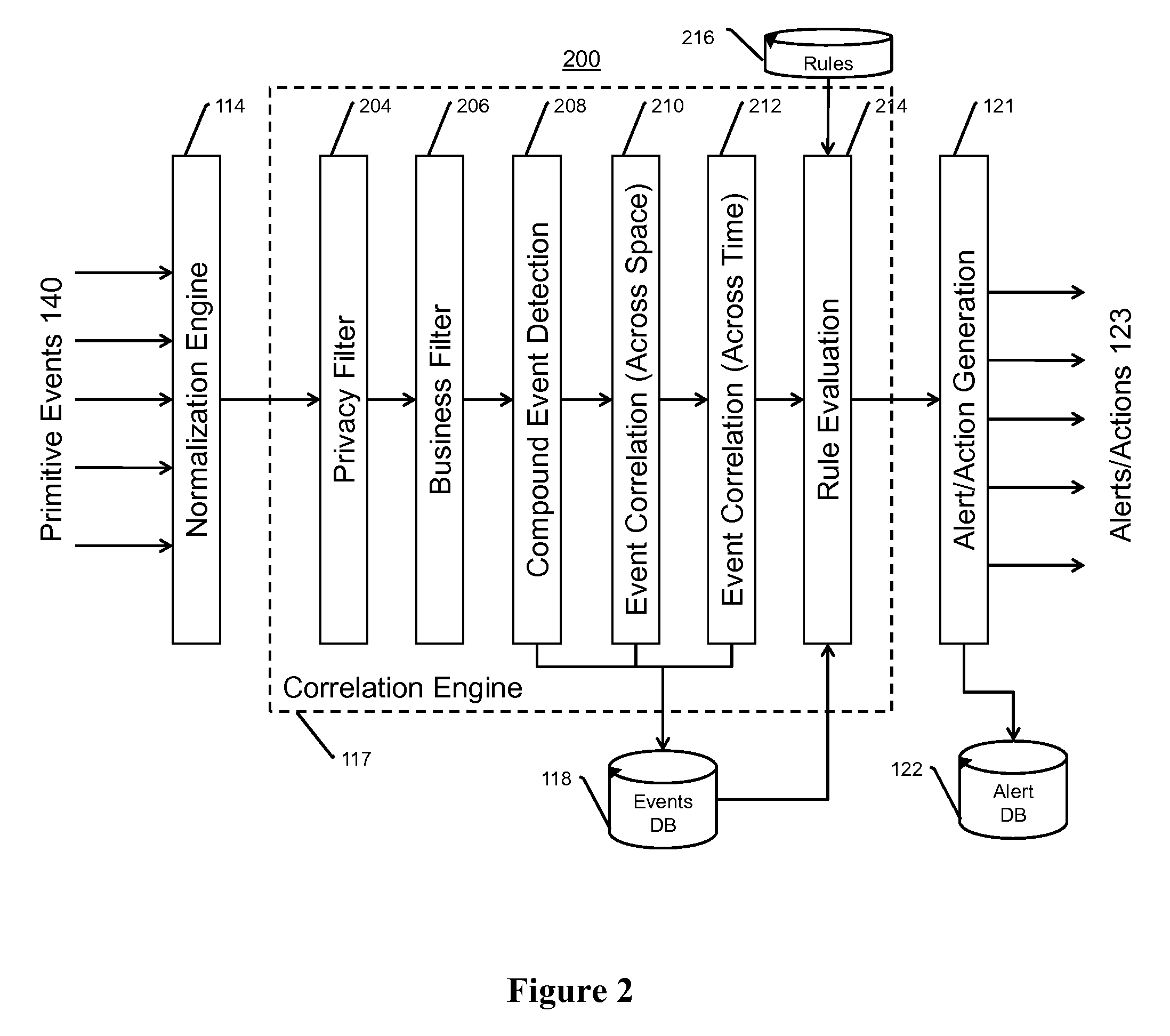

Audio-video tip analysis, storage, and alerting system for safety, security, and business productivity

Inactive | US7999847B2 | Color television details | Closed circuit television systems | Video content analysis | Network management

An audio surveillance, storage, and alerting system, including the following components. One or more audio sensory devices capture audio data having attribute data that represents the importance of the audio sensory devices. One or more audio analytics devices process the audio data to detect audio events. A network management module monitors the network status of the audio sensory devices and generates network events reflecting the network status of all subsystems. A correlation engine correlates two or more events, weighted by the attribute data of the device used to capture the data. Finally, an alerting engine generates one or more alerts and performs one or more actions based on the correlation performed by the correlation engine. This invention may be used to help fight crime and help ensure that safety procedures are followed.
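
A correlation engine of this kind might be sketched as below, assuming each event carries a device ID and a timestamp, and each device has a numeric importance weight taken from its attribute data. The `Event` type, the `correlate` and `alert` functions, the fixed time window and the summed-weight threshold are illustrative assumptions; the abstract does not say how events are grouped or how the weights are combined.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Event:
    device_id: str
    kind: str         # e.g. an audio event or a network-status event
    timestamp: float  # seconds


def correlate(events: List[Event], weights: Dict[str, float],
              window_s: float = 10.0, threshold: float = 1.5) -> List[List[Event]]:
    """Group time-ordered events that fall within window_s of a group's first event,
    and keep groups of two or more events whose summed device weight reaches threshold."""
    ordered = sorted(events, key=lambda e: e.timestamp)
    alerts: List[List[Event]] = []
    i = 0
    while i < len(ordered):
        start = ordered[i].timestamp
        # Because the list is sorted, this is a contiguous run starting at index i.
        group = [e for e in ordered[i:] if e.timestamp - start <= window_s]
        score = sum(weights.get(e.device_id, 0.0) for e in group)
        if len(group) >= 2 and score >= threshold:
            alerts.append(group)
        i += len(group)
    return alerts


def alert(groups: List[List[Event]]) -> List[str]:
    """Stand-in for the alerting engine: turn each correlated group into a message."""
    return [" + ".join(f"{e.kind}@{e.device_id}" for e in g) for g in groups]
```

With weights such as `{"lobby_mic": 1.0, "door_mic": 0.8, "yard_mic": 0.2}`, a glass-break and a shout heard by the two important microphones a few seconds apart raise an alert, while repeated barking picked up only by the low-weight yard microphone does not.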

Owner:SECURENET SOLUTIONS GRP

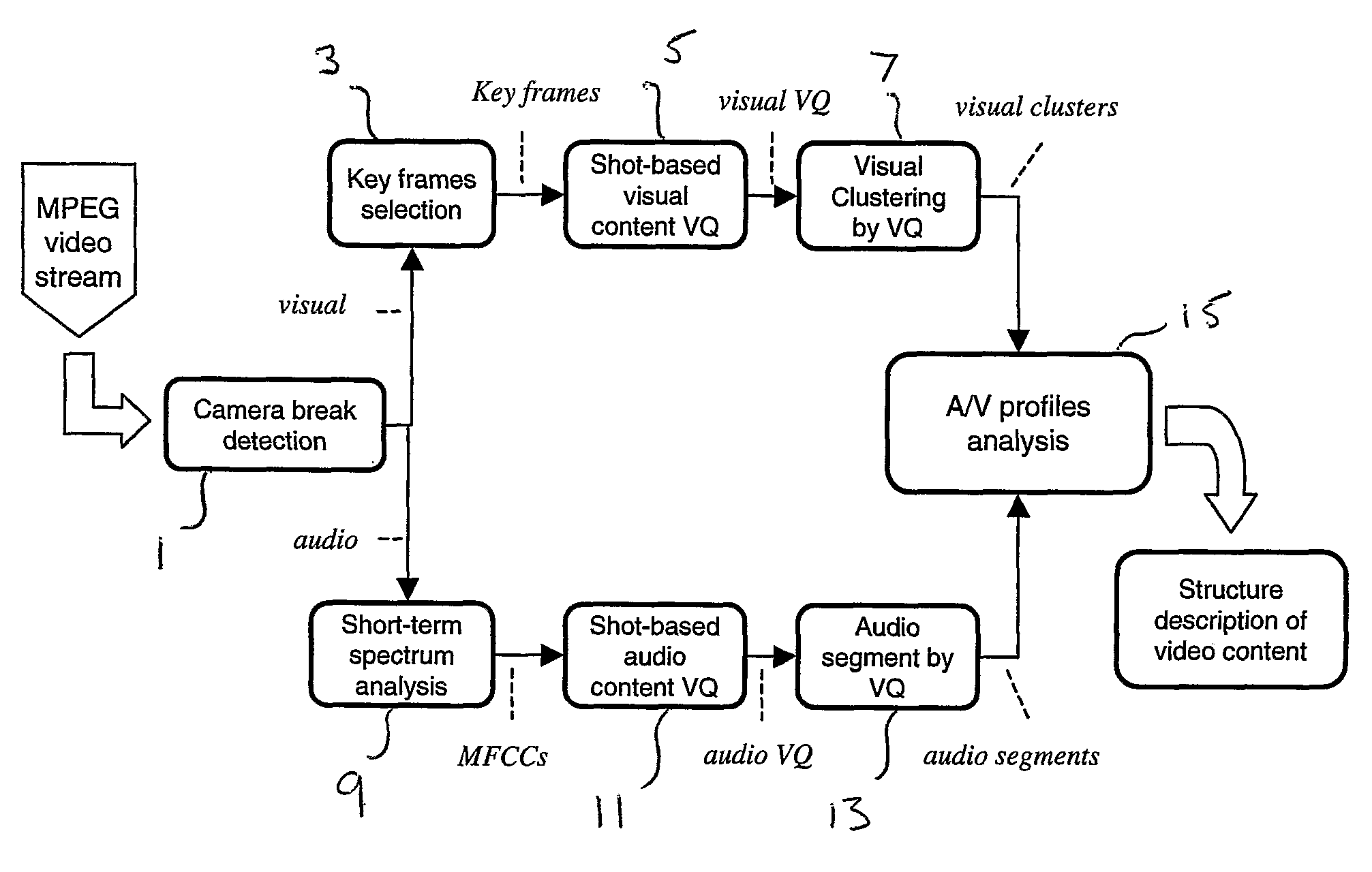

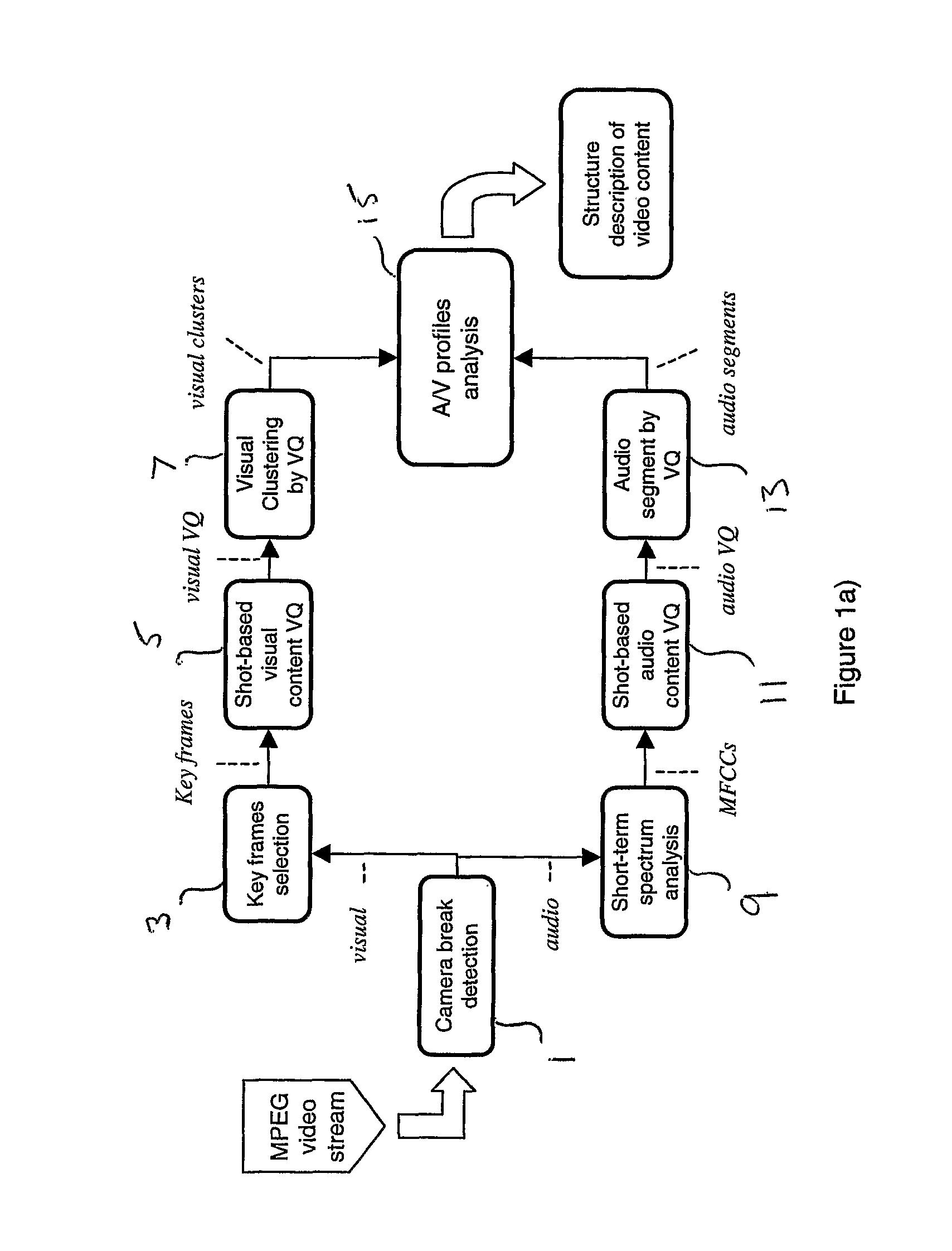

Method and system for semantically segmenting scenes of a video sequence

Active | US7949050B2 | Easy to browse | Laborious process | Television system details | Picture reproducers using cathode ray tubes | Cluster algorithm | Video content analysis

A shot-based video content analysis method and system is described for providing automatic recognition of logical story units (LSUs). The method employs vector quantization (VQ) to represent the visual content of a shot, following which a shot clustering algorithm is employed together with automatic determination of merging and splitting events. The method provides an automated way of performing the time-consuming and laborious process of organising and indexing increasingly large video databases such that they can be easily browsed and searched using natural query structures.
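
The VQ shot signature, and one simple way of grouping shots into story units, can be illustrated as follows. This sketch assumes per-frame feature vectors and a precomputed codebook are already available. The histogram-intersection distance, the look-back linking rule (used here in place of the patent's clustering with merge and split decisions), and the `threshold` and `lookback` values are all hypothetical choices.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def nearest_codeword(v: Vector, codebook: List[Vector]) -> int:
    """Vector quantization: index of the codeword closest (Euclidean) to v."""
    return min(range(len(codebook)), key=lambda k: math.dist(v, codebook[k]))


def shot_histogram(frames: List[Vector], codebook: List[Vector]) -> List[float]:
    """Represent a (non-empty) shot by the normalized frequency of each codeword."""
    counts = [0] * len(codebook)
    for f in frames:
        counts[nearest_codeword(f, codebook)] += 1
    return [c / len(frames) for c in counts]


def vq_distance(h1: List[float], h2: List[float]) -> float:
    """1 - histogram intersection: 0 for identical codeword usage, 1 for disjoint."""
    return 1.0 - sum(min(a, b) for a, b in zip(h1, h2))


def segment_lsus(shots: List[List[Vector]], codebook: List[Vector],
                 threshold: float = 0.5, lookback: int = 3) -> List[int]:
    """Assign an LSU label to each shot. If shot i is similar to an earlier shot j
    within `lookback` shots, every shot from j to i is kept in the same LSU."""
    hists = [shot_histogram(s, codebook) for s in shots]
    n = len(hists)
    bridged = [False] * n  # bridged[k]: no LSU boundary just before shot k
    for i in range(1, n):
        for j in range(max(0, i - lookback), i):
            if vq_distance(hists[i], hists[j]) < threshold:
                for k in range(j + 1, i + 1):
                    bridged[k] = True
                break  # the earliest similar shot gives the widest span
    labels, current = [], 0
    for k in range(n):
        if k > 0 and not bridged[k]:
            current += 1  # start a new logical story unit
        labels.append(current)
    return labels
```

The bridging step reflects the intuition behind LSUs: an alternating A, B, A pattern (for example, a dialogue cut between two speakers) is kept together as one unit, while a shot unlike anything in the recent window starts a new one.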

Owner:BRITISH TELECOMM PLC

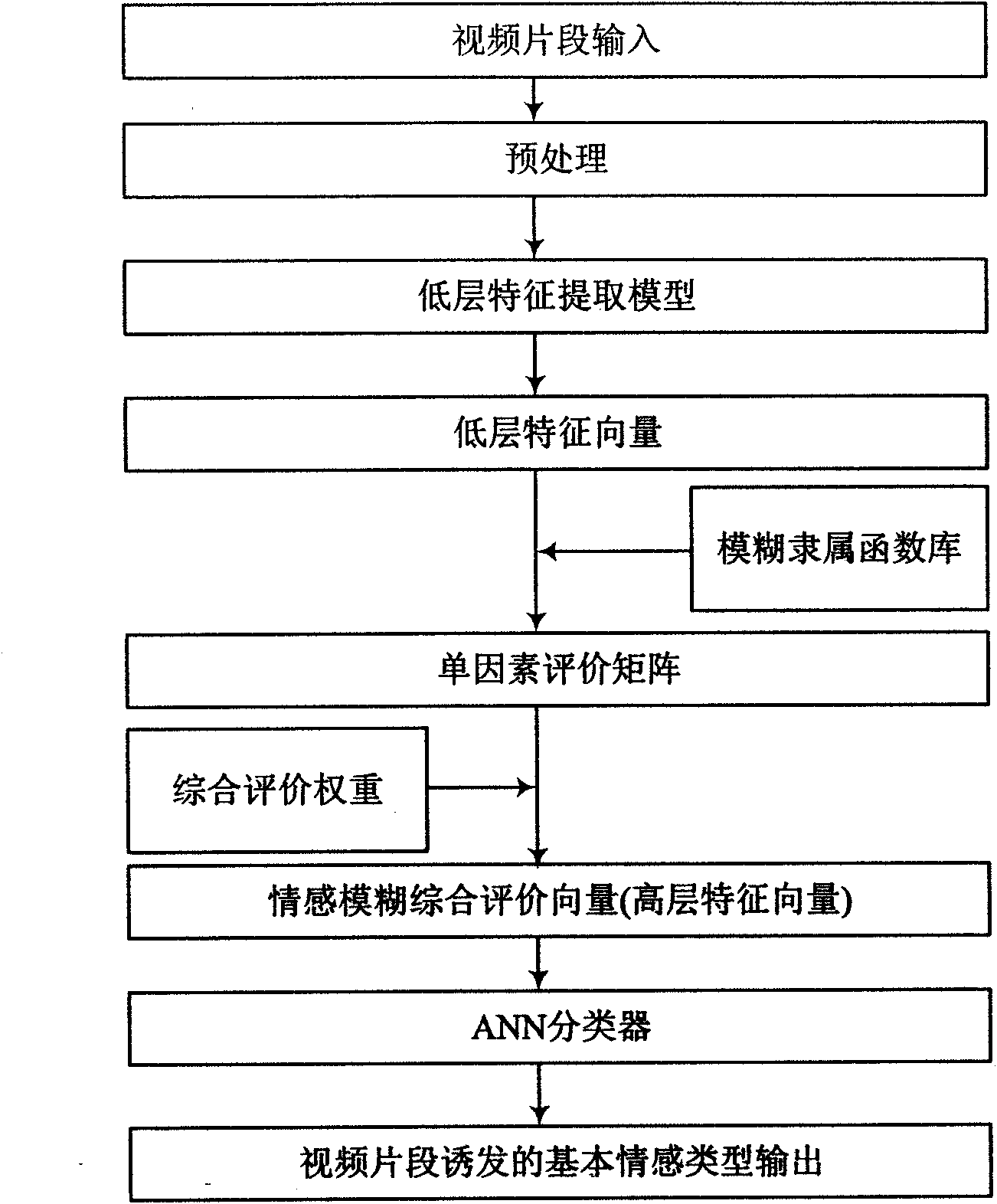

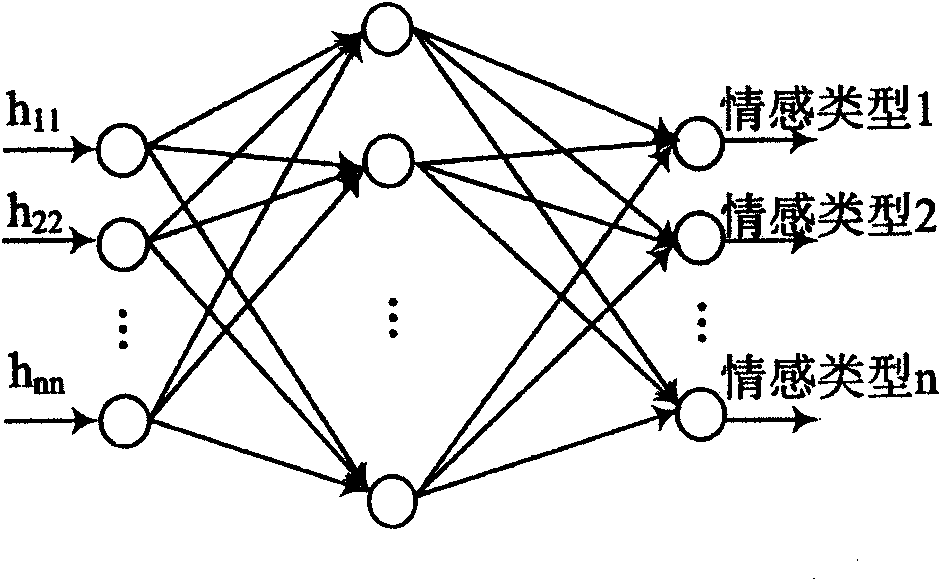

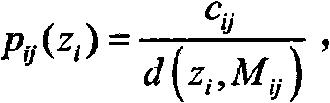

Video feeling content identification method based on fuzzy comprehensive evaluation

Inactive | CN101593273A | Improve recognition accuracy | High recognition rate | Character and pattern recognition | Feature vector | Video content analysis

The invention belongs to the field of video content analysis, and in particular relates to a method for identifying the affective (feeling) content of video based on fuzzy comprehensive evaluation. Existing methods for identifying affective content in video do not adequately account for the fuzzy nature of feelings. To address this shortcoming of the prior art, the method first applies the fuzzy comprehensive evaluation model from fuzzy theory to video affective content identification. Compared with the prior art, the method fully takes into account the fuzziness of video affective content: based on the fuzzy comprehensive evaluation model, it expresses the content of a video clip as a high-level feature vector closely related to feelings, and performs affective content identification at this high level. The method then uses an artificial neural network (ANN) to simulate the human affective response system and identify the basic feeling types that the video clip induces in its audience. Experimental results verify the effectiveness and feasibility of the method for video affective content identification.
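
In the common weighted-average form of fuzzy comprehensive evaluation, a factor weight vector A = (a_1, ..., a_m) is composed with a membership matrix R = (r_ij), rows being factors and columns being evaluation grades, to give an evaluation vector B with b_j = Σ_i a_i·r_ij. The sketch below computes such a vector for one clip. The emotion labels, factors and numbers are hypothetical, and the final step uses the simple maximum-membership rule as a stand-in for the ANN that the abstract describes.

```python
from typing import List, Sequence

# Hypothetical evaluation set (basic feeling types); not taken from the patent.
EMOTIONS = ["joy", "sadness", "fear", "anger"]


def fuzzy_evaluate(weights: Sequence[float],
                   membership: Sequence[Sequence[float]]) -> List[float]:
    """Weighted-average fuzzy composition B = A o R, normalized to sum to 1.

    weights:    A, one weight per low-level factor (e.g. motion, colour, audio energy)
    membership: R, rows = factors, columns = feeling types, each r_ij in [0, 1]
    """
    if len(weights) != len(membership):
        raise ValueError("need one weight per factor row")
    n_cols = len(membership[0])
    b = [sum(a * row[j] for a, row in zip(weights, membership)) for j in range(n_cols)]
    total = sum(b)
    return [x / total for x in b] if total > 0 else b


def max_membership(b: Sequence[float], labels: Sequence[str] = EMOTIONS) -> str:
    """Maximum-membership principle: pick the grade with the largest b_j."""
    return labels[max(range(len(b)), key=b.__getitem__)]


if __name__ == "__main__":
    A = [0.5, 0.3, 0.2]
    R = [[0.8, 0.1, 0.1, 0.0],
         [0.6, 0.2, 0.1, 0.1],
         [0.2, 0.2, 0.4, 0.2]]
    B = fuzzy_evaluate(A, R)
    print(B, max_membership(B))
```

In the scheme the abstract describes, a vector like `B` would be the high-level, feeling-related feature passed to the classifier rather than the final decision itself.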

Owner:BEIJING UNIV OF POSTS & TELECOMM

© 2025 PatSnap. All rights reserved.