515 results about "Crucial point" patented technology

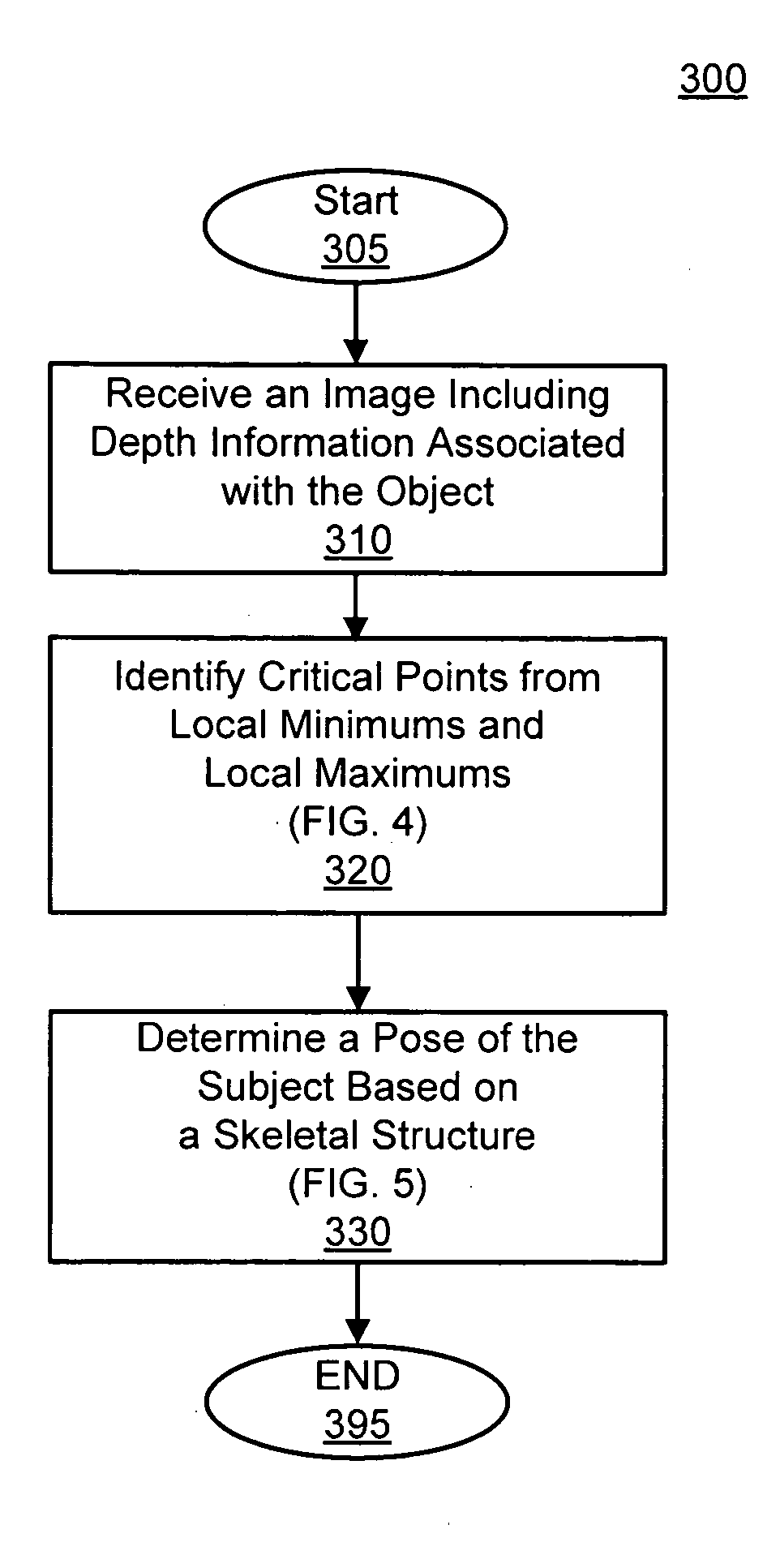

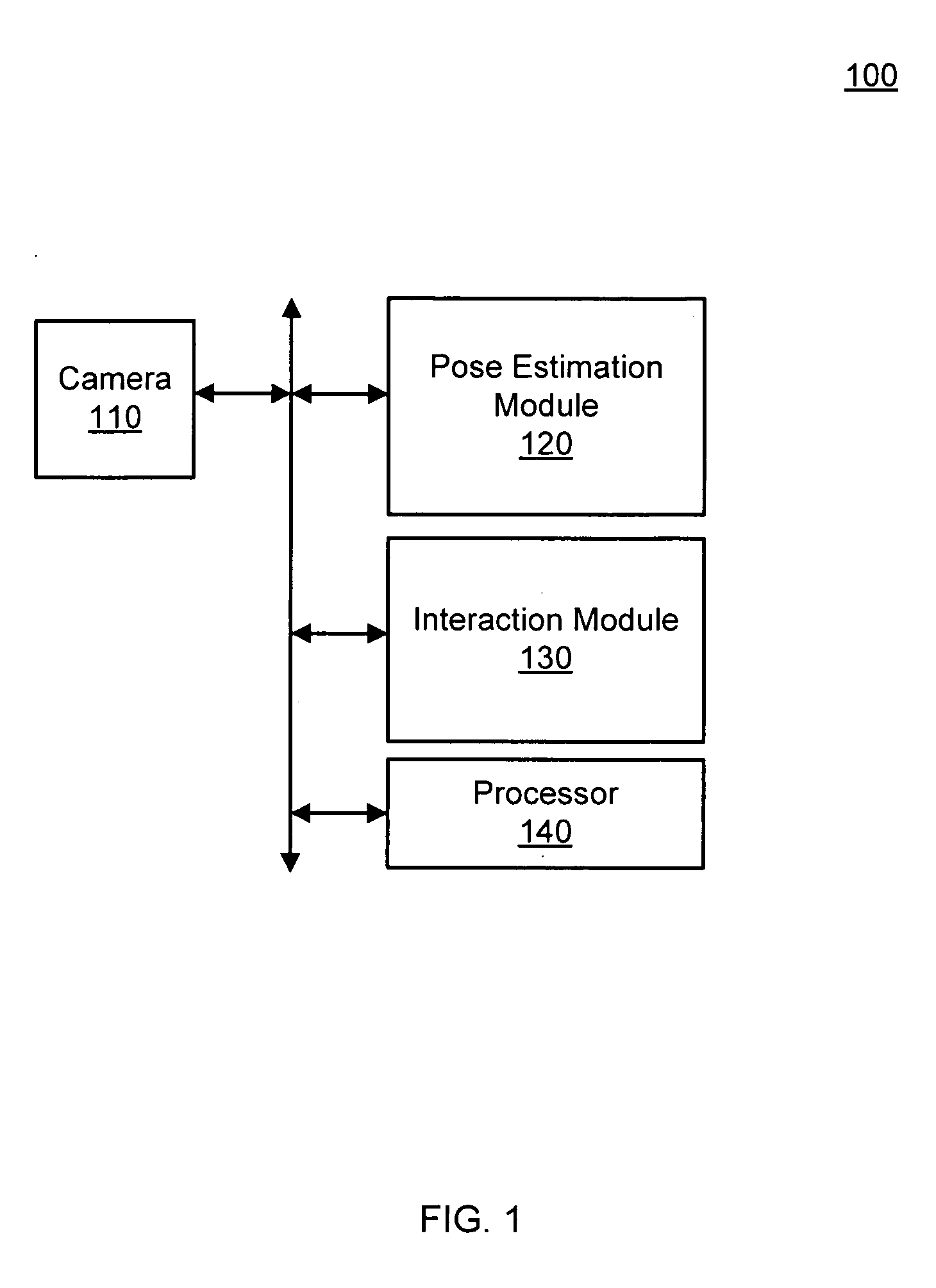

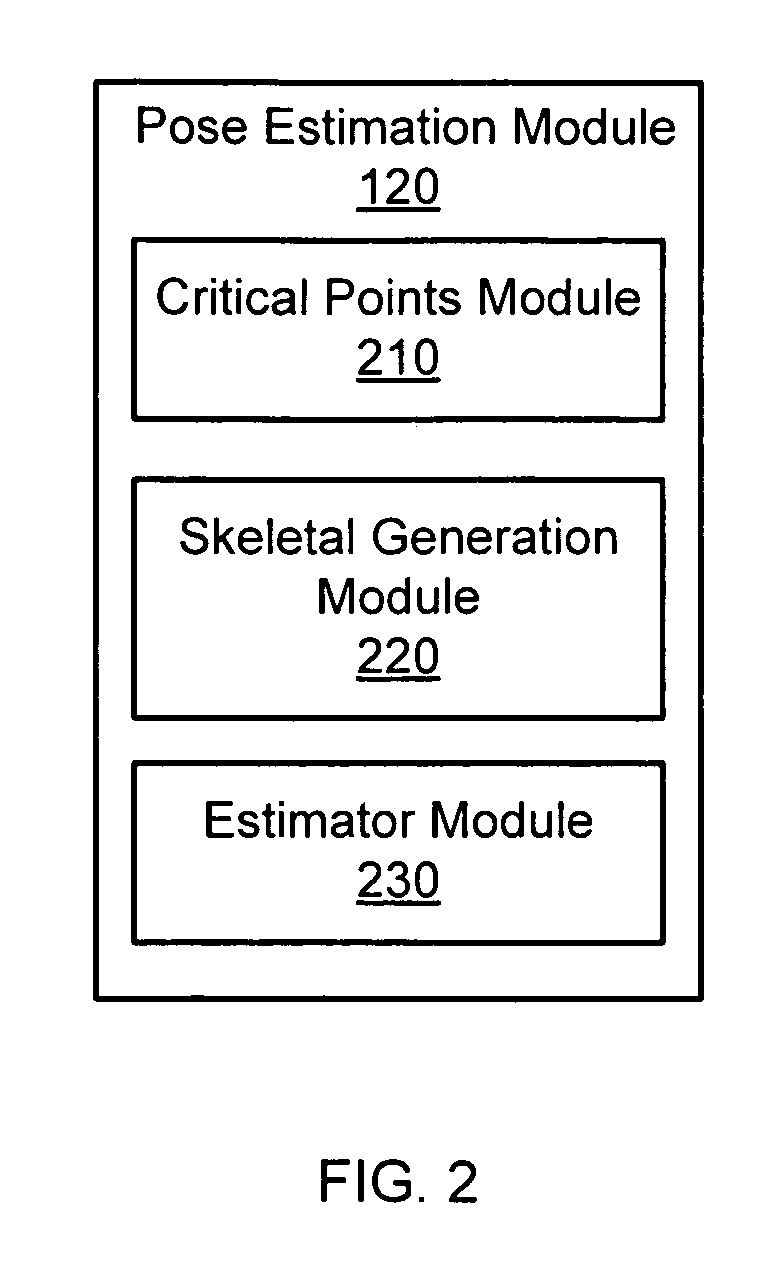

Pose estimation based on critical point analysis

Owner:THE OHIO STATE UNIV RES FOUND +1

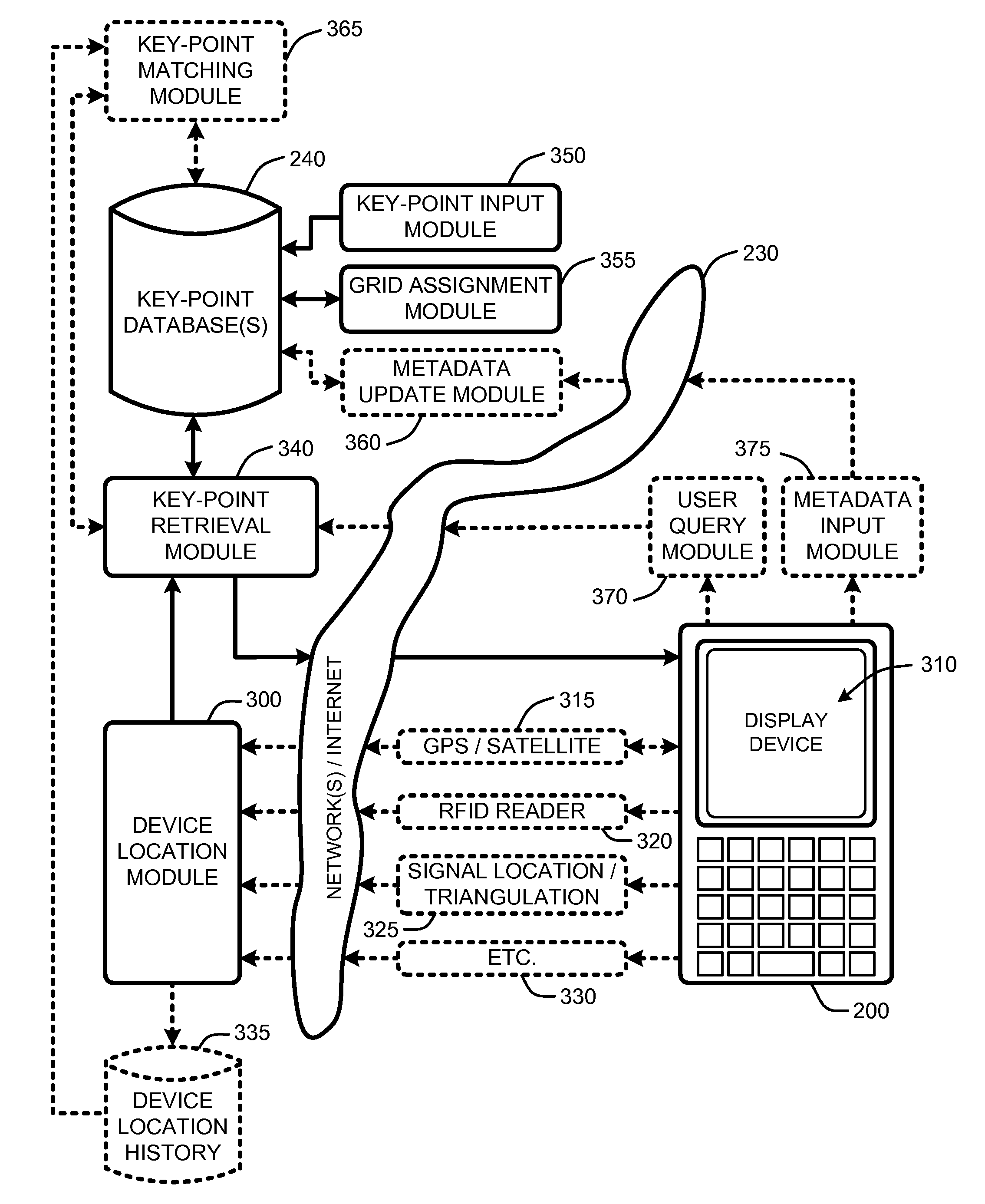

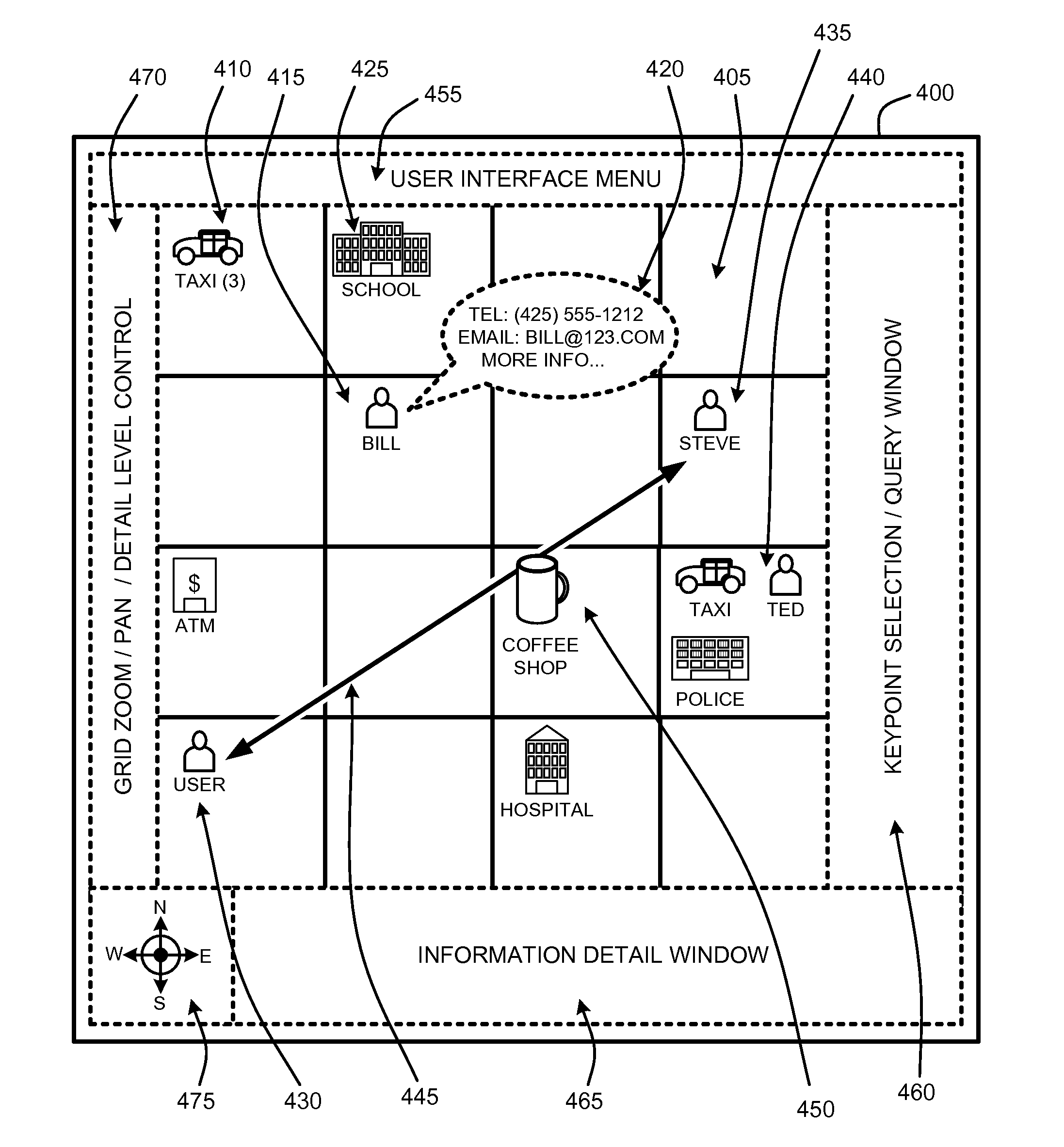

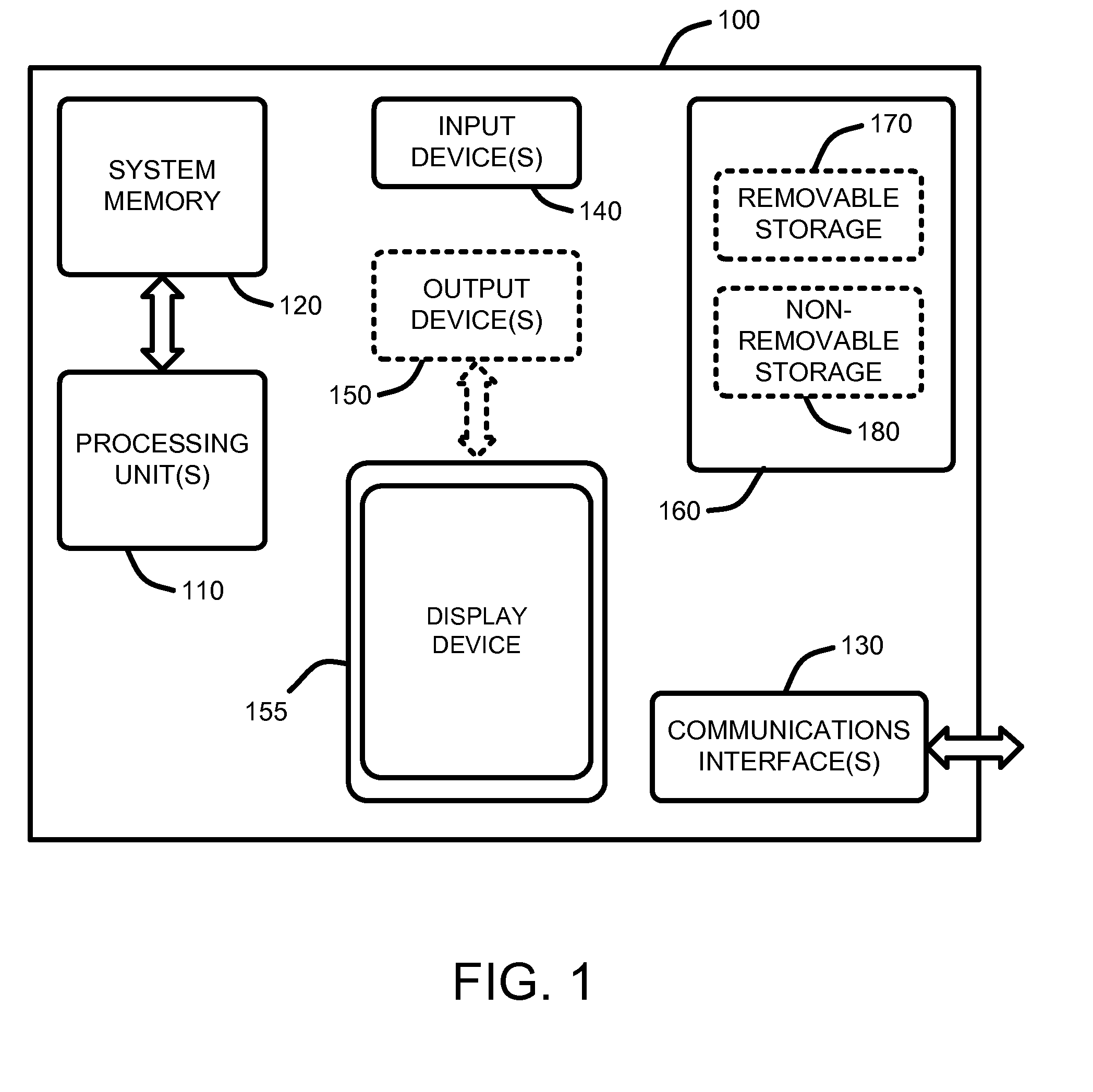

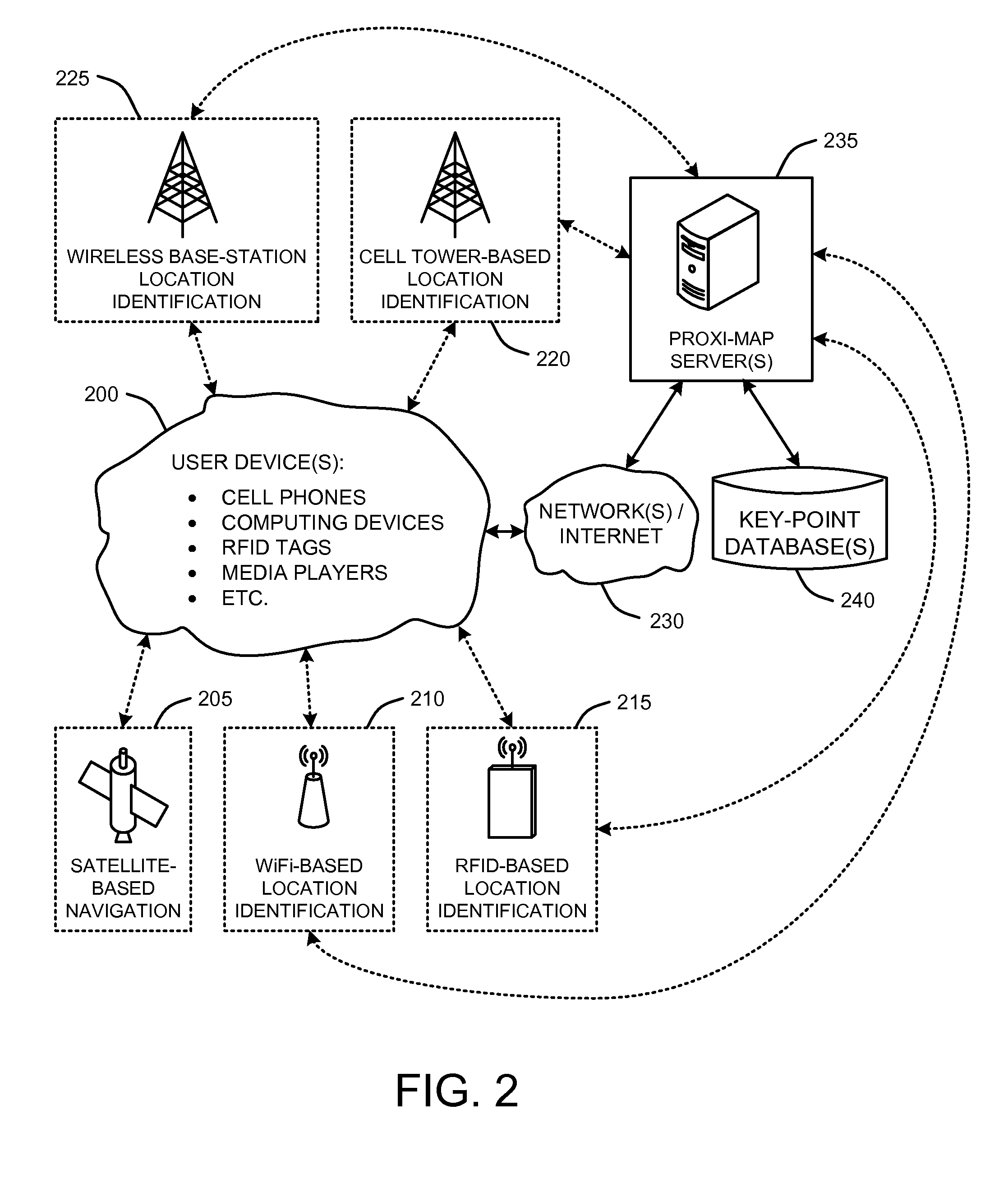

Location mapping for key-point based services

Active | US20080172173A1 | Minimizes bandwidth requirement | Maximize user experience | Instruments for road network navigation | Road vehicles traffic control | Crucial point | User device

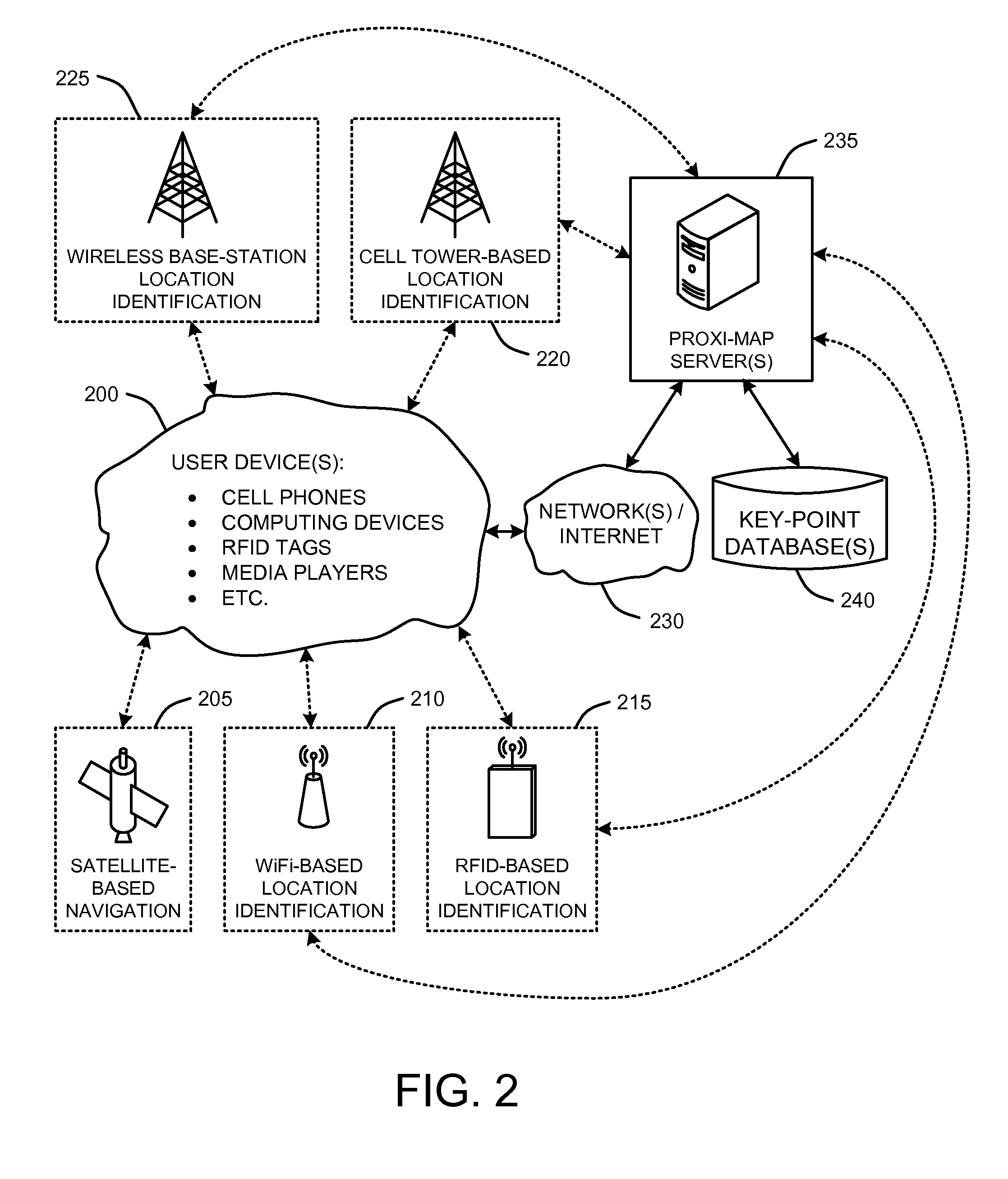

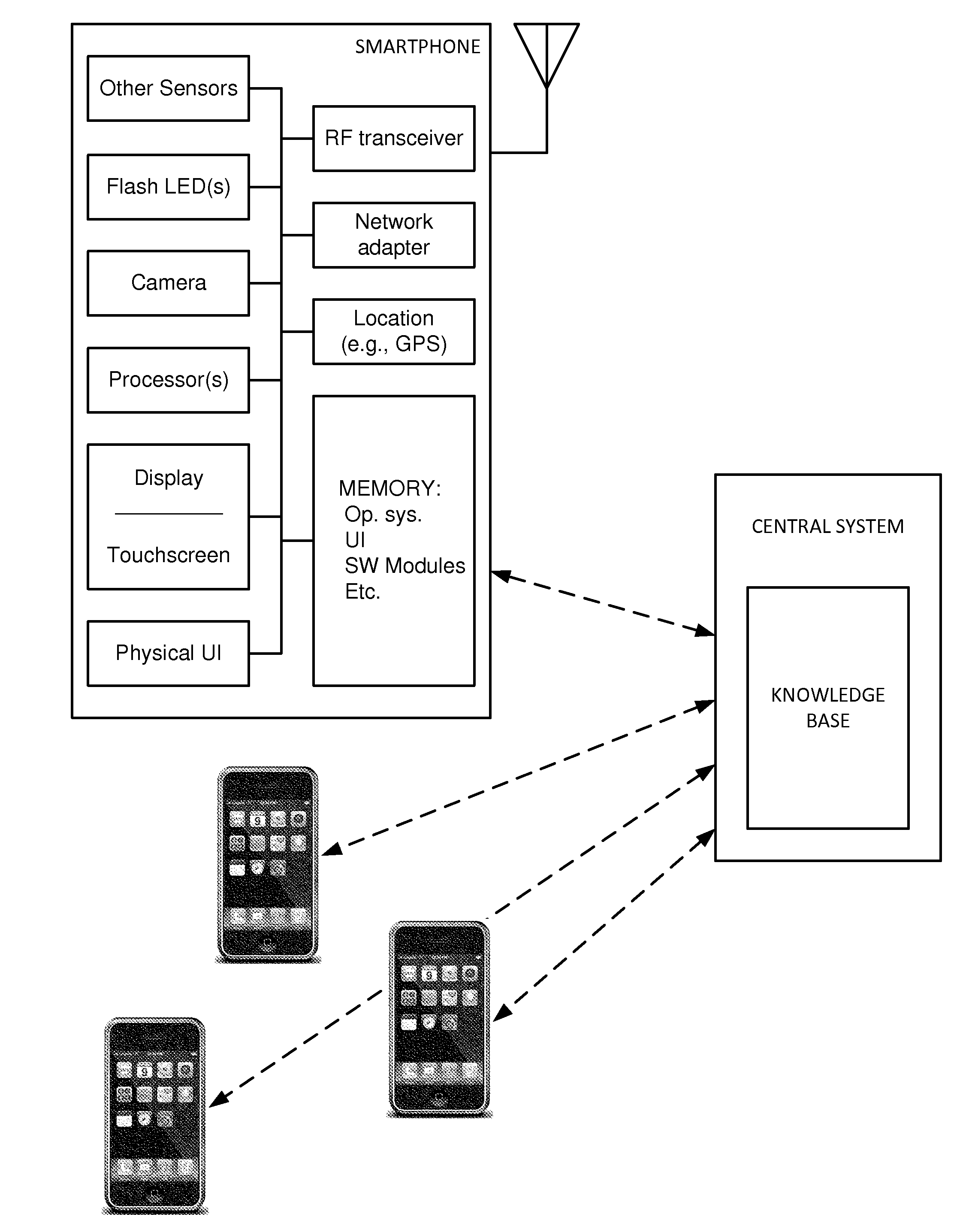

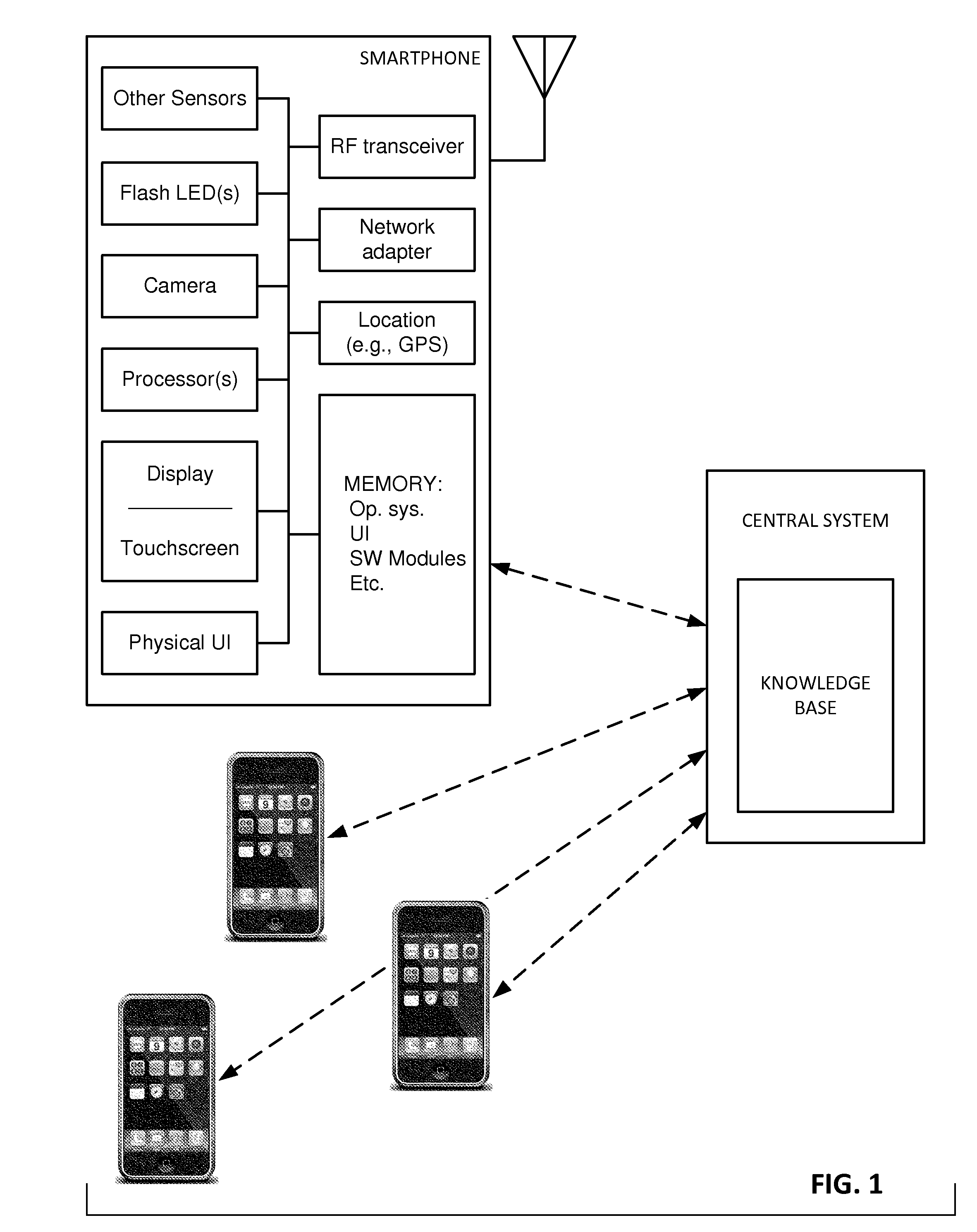

A “Proxi-Mapper” combines location based services (LBS), local searching capabilities, and relative mapping in a way that minimizes bandwidth requirements and maximizes user experience. The Proxi-Mapper automatically determines approximate locations of one or more local user devices (cell phones, PDA's, media players, portable computing devices, etc.) and returns a lightweight model of local entities (“key-points”) representing businesses, services or people to those devices. Key-points are maintained in one or more remote databases in which key-points are assigned to predetermined grid sections based on the locations of the corresponding entities. Metadata associated with the key-points provides the user with additional information relating to the corresponding entities. In various embodiments, user query options allow the Proxi-Mapper to pull or push relevant local key-point based information to user devices via one or more wired or wireless networks.
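The grid-section assignment described above can be illustrated with a minimal sketch. It assumes a uniform latitude/longitude grid; the cell size `CELL_DEG`, the dictionary layout of a key-point, and the function names are hypothetical and are not taken from the patent.

```python
import math
from collections import defaultdict

CELL_DEG = 0.01  # hypothetical grid cell size in degrees (assumption)

def cell_of(lat, lon, cell=CELL_DEG):
    """Return the integer grid cell that contains a latitude/longitude pair."""
    return (math.floor(lat / cell), math.floor(lon / cell))

def build_index(key_points):
    """Group key-points (dicts with 'name', 'lat', 'lon') by grid cell."""
    index = defaultdict(list)
    for kp in key_points:
        index[cell_of(kp["lat"], kp["lon"])].append(kp)
    return index

def nearby(index, lat, lon, ring=1):
    """Return key-points in the user's cell and the surrounding ring of cells."""
    row, col = cell_of(lat, lon)
    found = []
    for dr in range(-ring, ring + 1):
        for dc in range(-ring, ring + 1):
            found.extend(index.get((row + dr, col + dc), []))
    return found
```

Because a lookup only touches a few cells around the approximate device location, the response stays small regardless of how many key-points the database holds, which is one plausible reading of the "lightweight model" in the abstract.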

Owner:UBER TECH INC

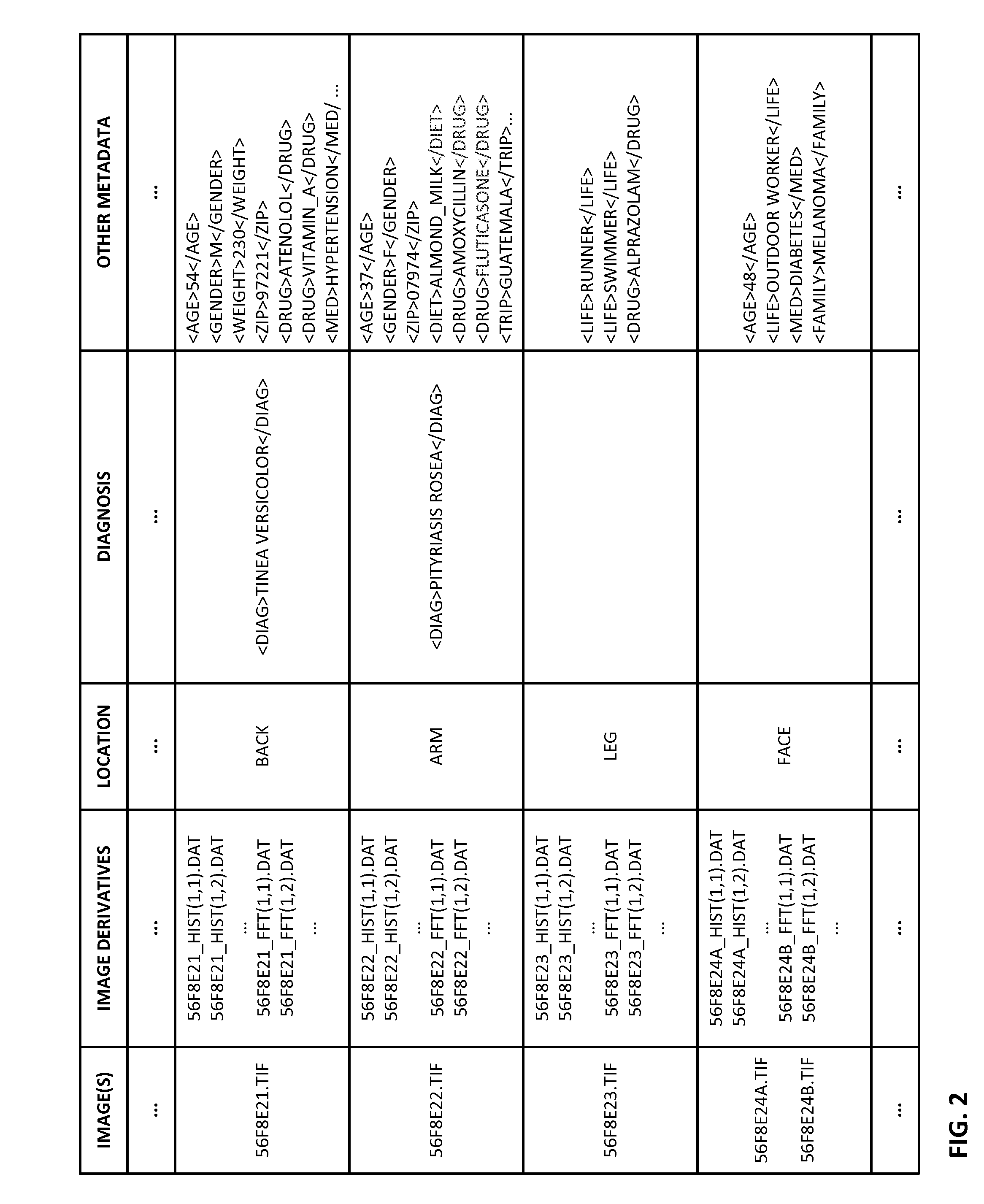

Longitudinal dermoscopic study employing smartphone-based image registration

Inactive | US20140313303A1 | Lower cost of care | Image enhancement | Television system details | Hypopigmentation | Pattern recognition

The evolution of a skin condition over time can be useful in its assessment. In an illustrative arrangement, a user captures skin images at different times, using a smartphone. The images are co-registered, color-corrected, and presented to the user (or a clinician) for review, e.g., in a temporal sequence, or as one image presented as a ghosted overlay atop another. Image registration can employ nevi, hair follicles, wrinkles, pores, and pigmented regions as keypoints. With some imaging spectra, keypoints from below the outermost layer of skin can be used. Hair may be removed for image registration, and restored for image review. Transformations in addition to rotation and affine transforms can be employed. Diagnostic correlations with reference image sequences can be made, employing machine learning in some instances. A great variety of other features and arrangements are also detailed.

Owner:DIGIMARC CORP

Location mapping for key-point based services

Active | US7751971B2 | Minimization requirements | Maximize user experience | Instruments for road network navigation | Road vehicles traffic control | Crucial point | User device

A “Proxi-Mapper” combines location based services (LBS), local searching capabilities, and relative mapping in a way that minimizes bandwidth requirements and maximizes user experience. The Proxi-Mapper automatically determines approximate locations of one or more local user devices (cell phones, PDA's, media players, portable computing devices, etc.) and returns a lightweight model of local entities (“key-points”) representing businesses, services or people to those devices. Key-points are maintained in one or more remote databases in which key-points are assigned to predetermined grid sections based on the locations of the corresponding entities. Metadata associated with the key-points provides the user with additional information relating to the corresponding entities. In various embodiments, user query options allow the Proxi-Mapper to pull or push relevant local key-point based information to user devices via one or more wired or wireless networks.

Owner:UBER TECH INC

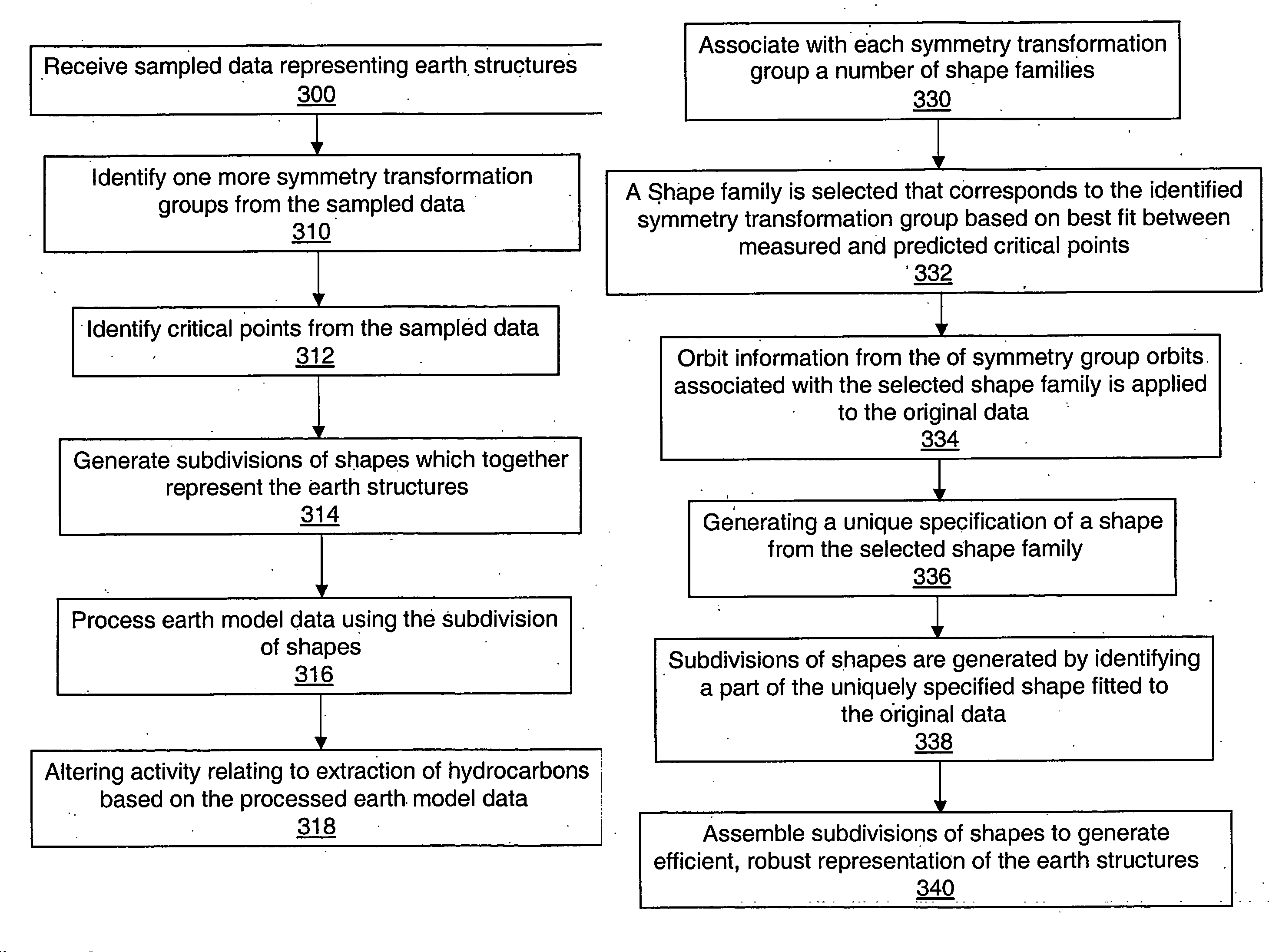

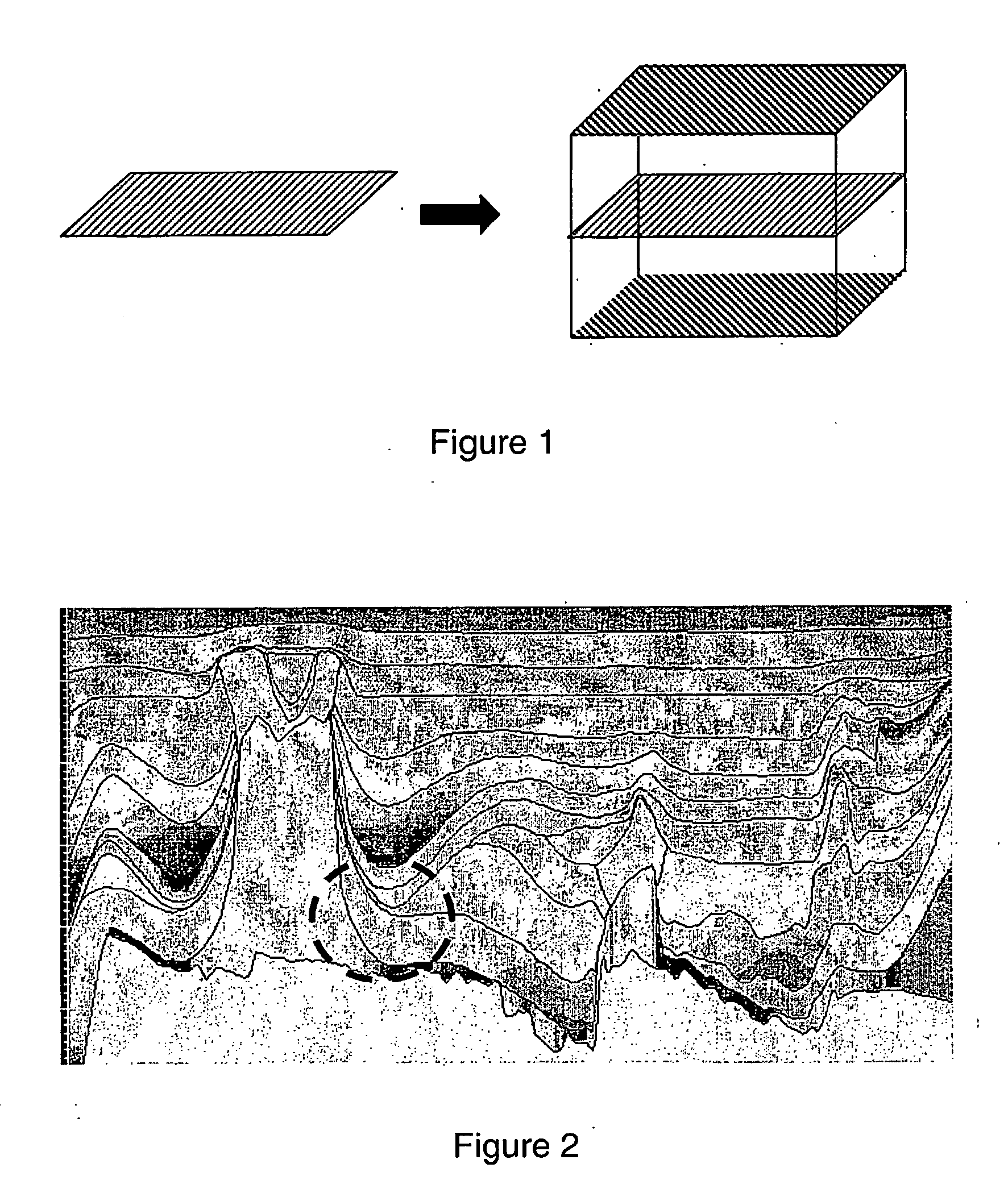

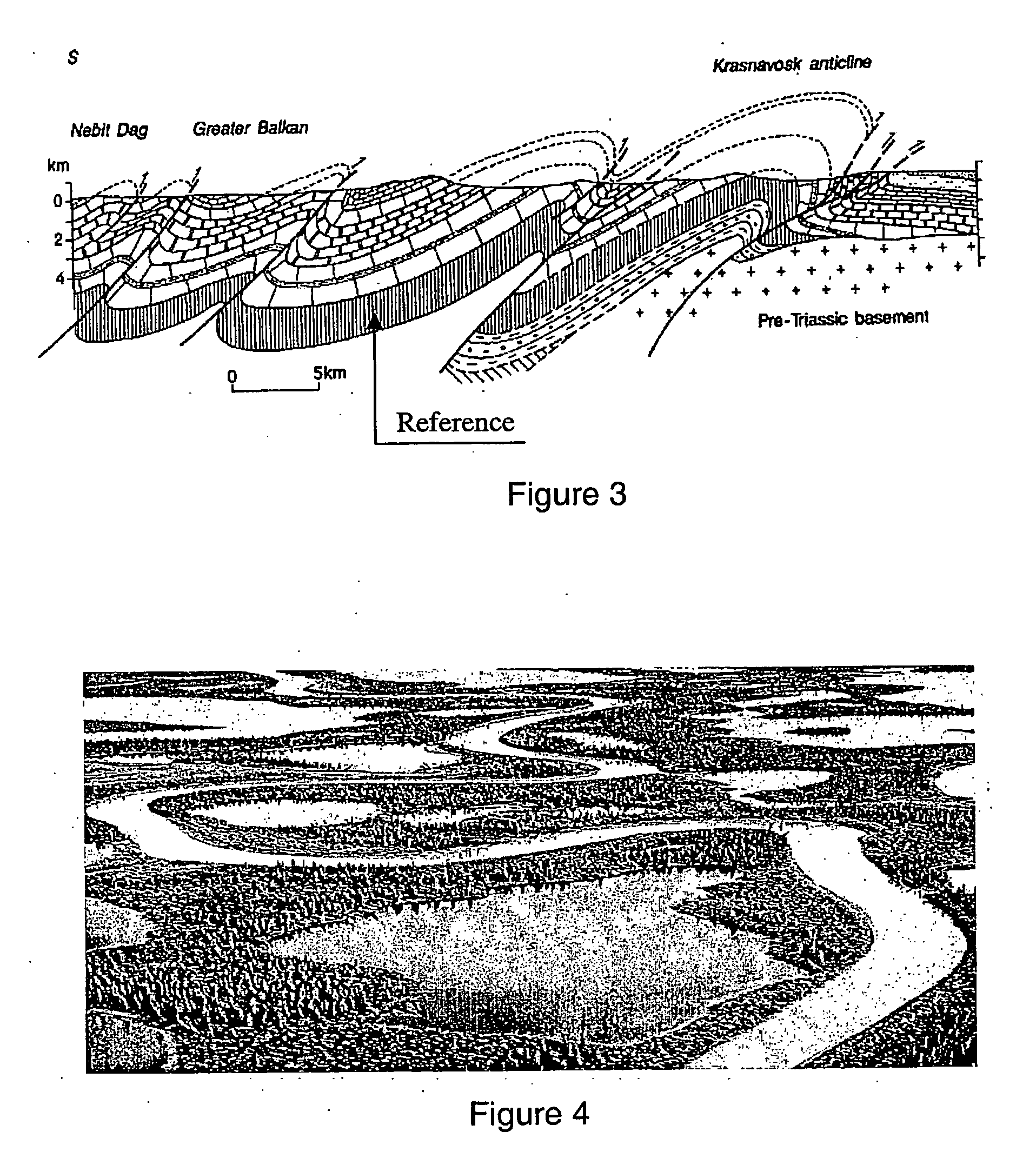

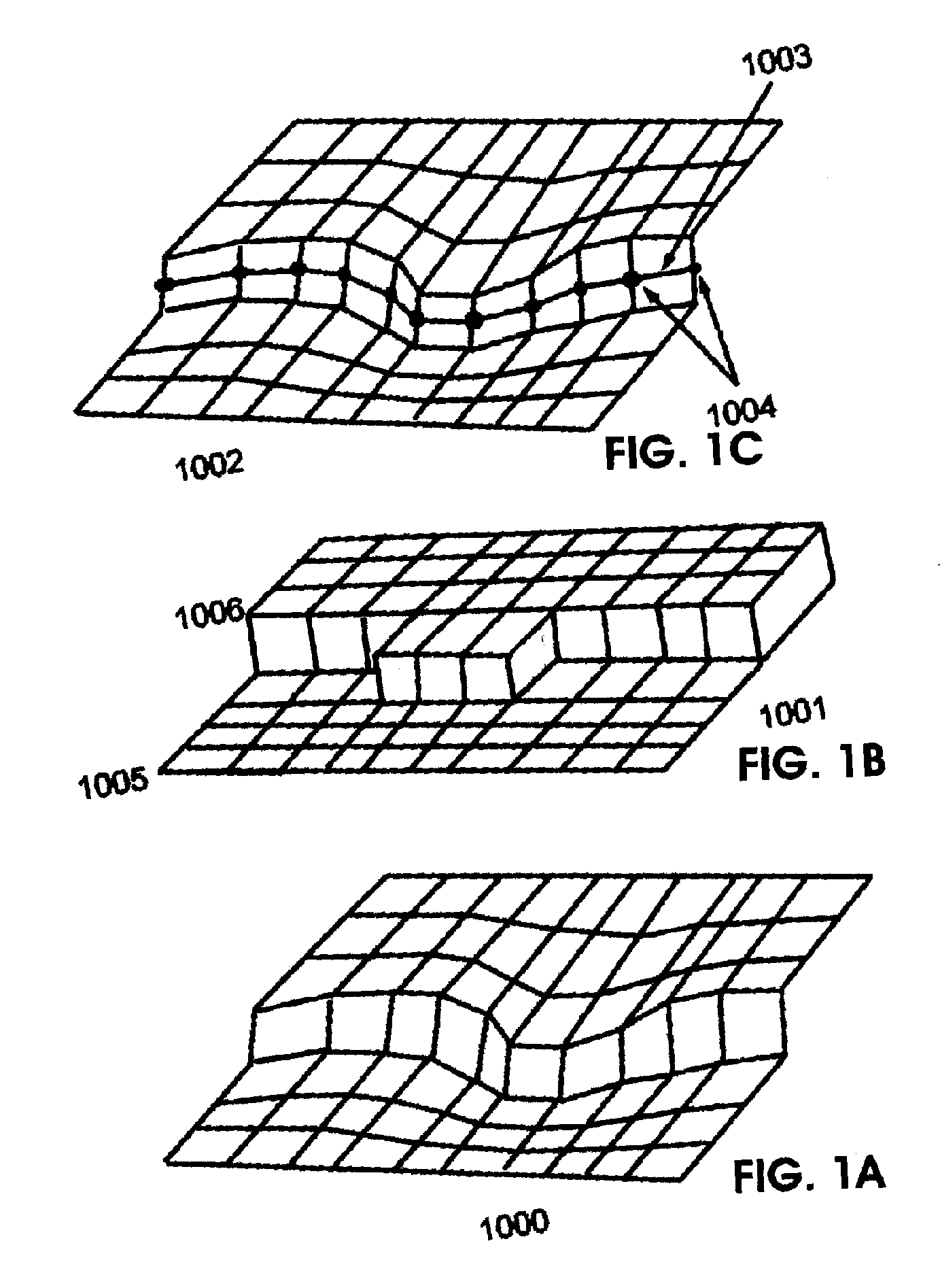

System and method for representing and processing and modeling subterranean surfaces

Inactive | US20060235666A1 | Enhance memory | Improve efficiency | Geological measurements | Analogue processes for specific applications | Crucial point | Hydrocotyle bowlesioides

Methods and systems are disclosed for processing data used for hydrocarbon extraction from the earth. Symmetry transformation groups are identified from sampled earth structure data. A set of critical points is identified from the sampled data. Using the symmetry groups and the critical points, a plurality of subdivisions of shapes is generated, which together represent the original earth structures. The symmetry groups correspond to a plurality of shape families, each of which includes a set of predicted critical points. The subdivisions are preferably generated such that a shape family is selected according to a best fit between the critical points from the sampled data and the predicted critical points of the selected shape family.

Owner:SCHLUMBERGER TECH CORP

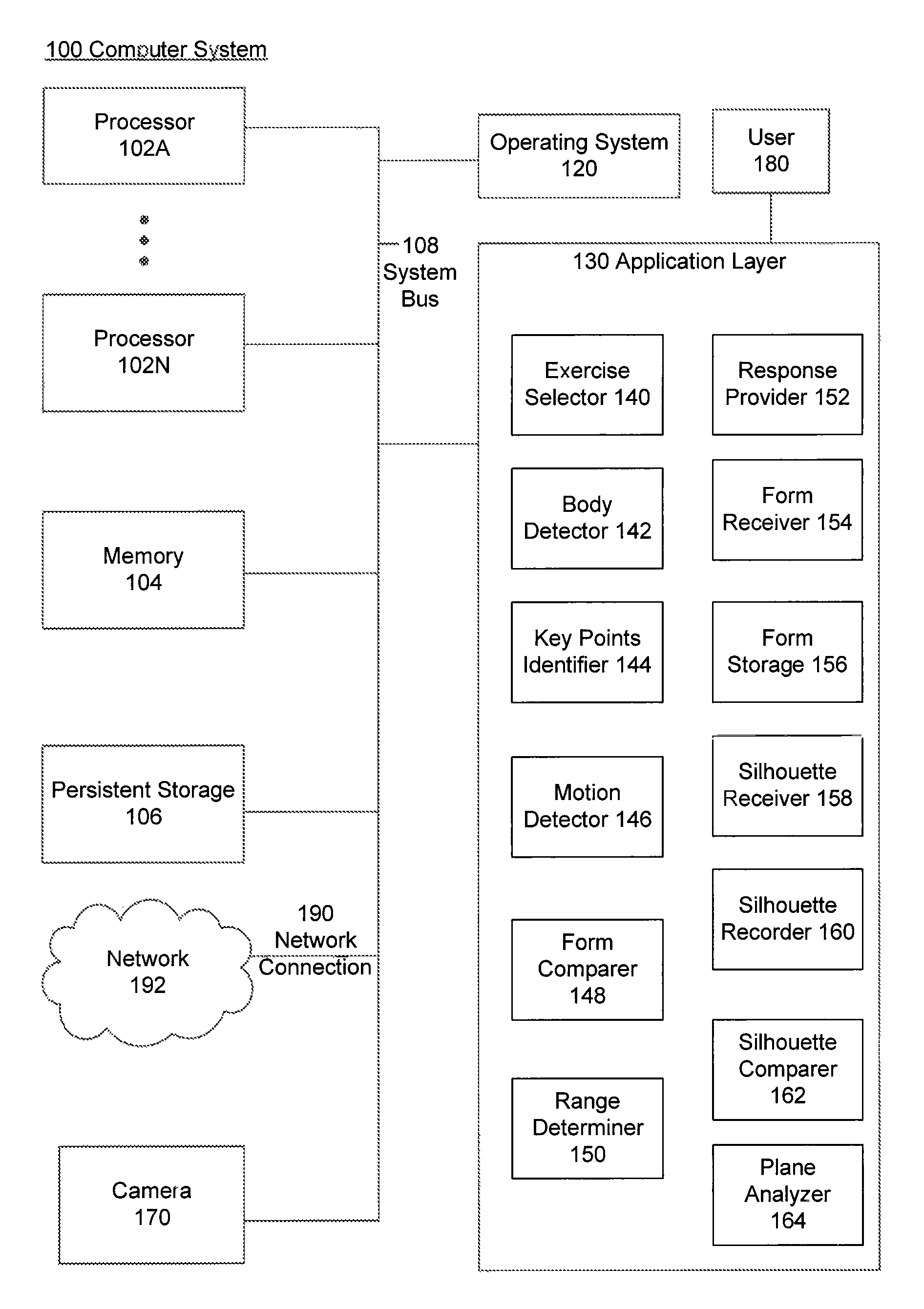

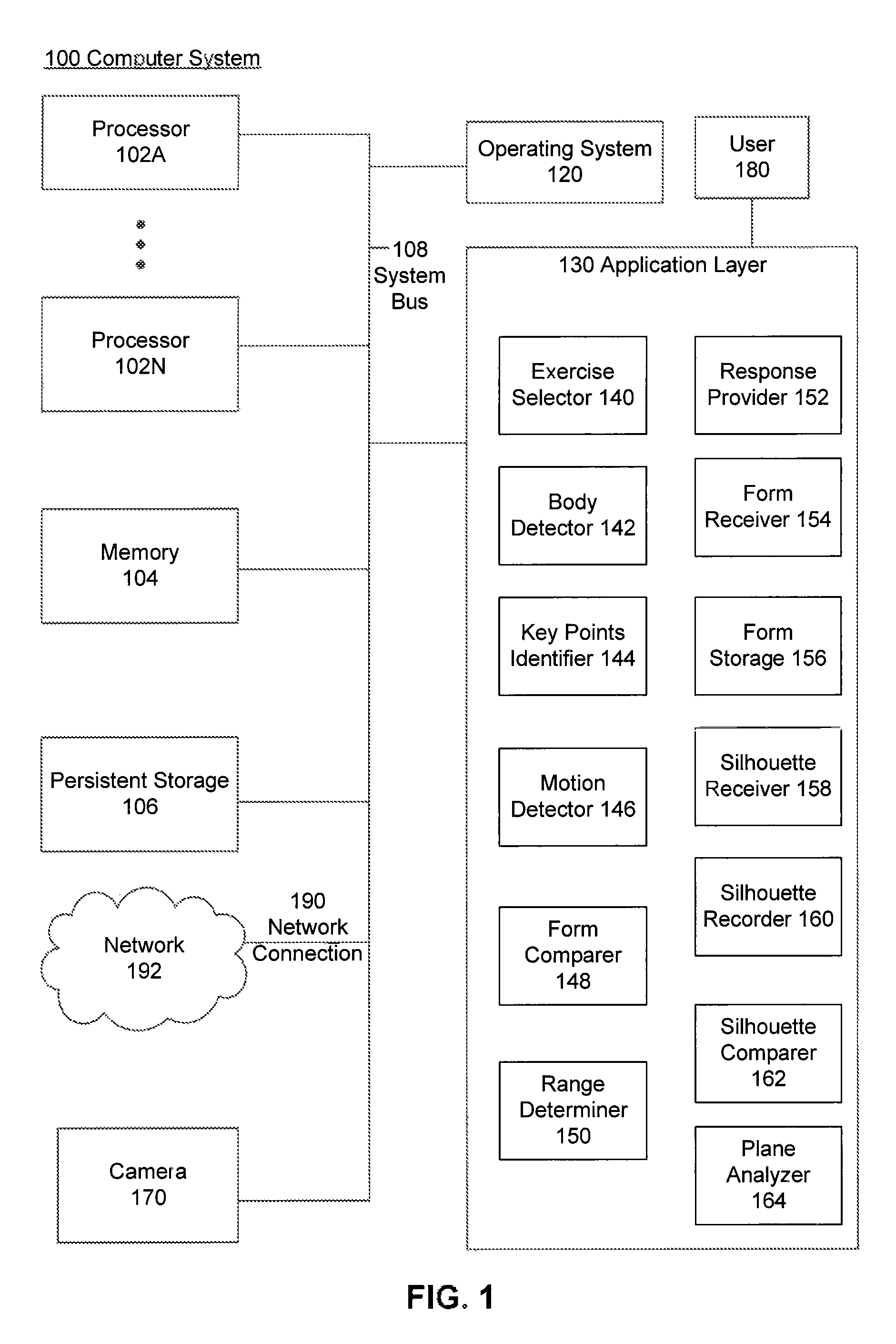

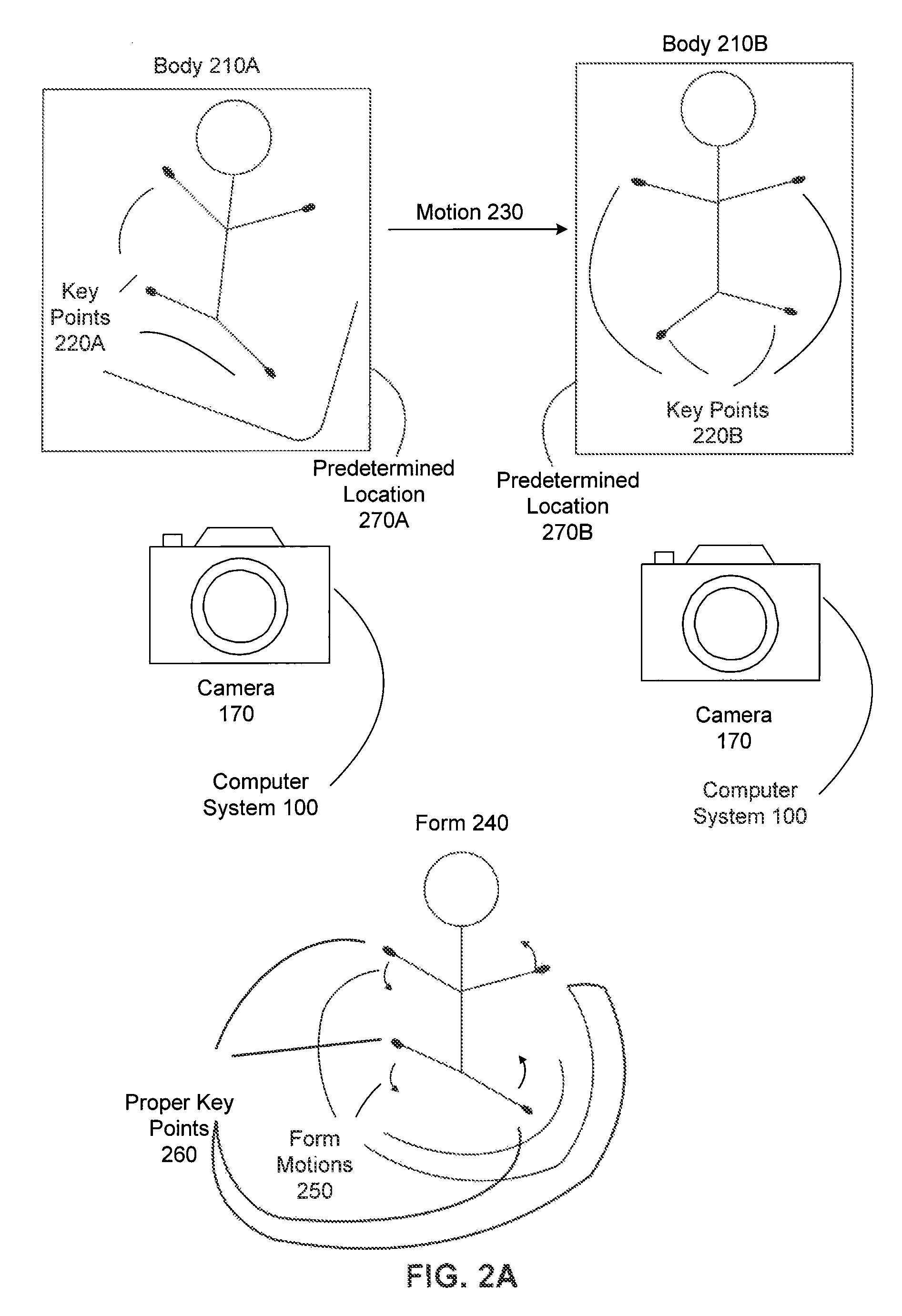

Physical training assistant system

Inactive | US9154739B1 | Physical therapies and activities | Image enhancement | Crucial point | Human–computer interaction

A computer-implemented method, a system and a computer-readable medium provide useful feedback for a user involved in exercise. A camera is used to track user motion by using image processing techniques to identify key points on a user's body and track their motion. The tracked points are compared to proper form for an exercise, and an embodiment gives feedback based on the relationship between the actual movement of the user and the proper form. Alternatively, silhouette information may be used in a similar manner in another embodiment.
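One simple way to compare tracked key points against proper form is to measure a joint angle from three body key points and check it against a target. The sketch below is only an illustration under that assumption; the function names, the target angle, and the tolerance are hypothetical and are not described in the patent.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at key point b, formed by segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("key points must not coincide")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against rounding just outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))

def within_form(angle, target, tolerance=10.0):
    """True if the measured angle is within a tolerance of the target (assumed values)."""
    return abs(angle - target) <= tolerance
```

For example, `joint_angle(shoulder, elbow, wrist)` on image coordinates gives the elbow angle for a frame, and `within_form` decides whether feedback is needed.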

Owner:GOOGLE LLC

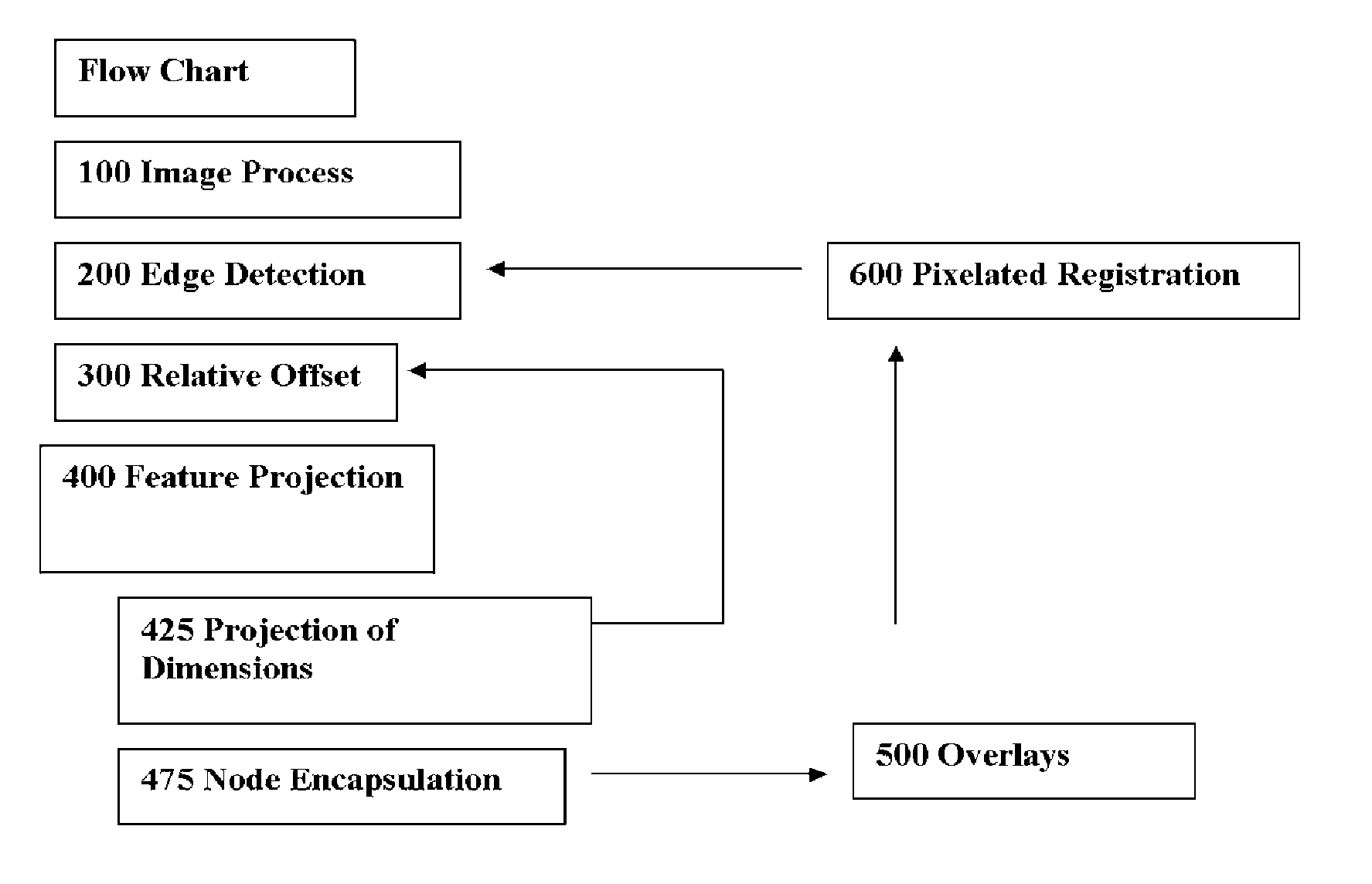

Method and apparatus for determining offsets of a part from a digital image

Inactive | US20060056732A1 | Improve good performance | High resolution | Image enhancement | Image analysis | Graphics | Crucial point

A method for image recognition of a material object that utilizes graphical modeling of the corner points of a vertex which includes projecting a point on a digital display to an inward depth, a one half pixel distance in the plane of the display, with a conic to a digital display, and a square block containing one half size child blocks that are scaled to depth, projecting the corner points of a vertex and replacing the bisecting points of edge features detected in a digital display scaled at an increasing rate of congruency to the dimensions of an object. The method may further include producing a digital image of the material object, providing a central processing unit, providing memory associated with a central processing unit; providing a display associated with a central processing unit; loading the digital image into the memory; defining the edges of features within the digital image; and a finding fight crucial points from registrations projected on to an edge feature display.

Owner:HOLMES DAVID

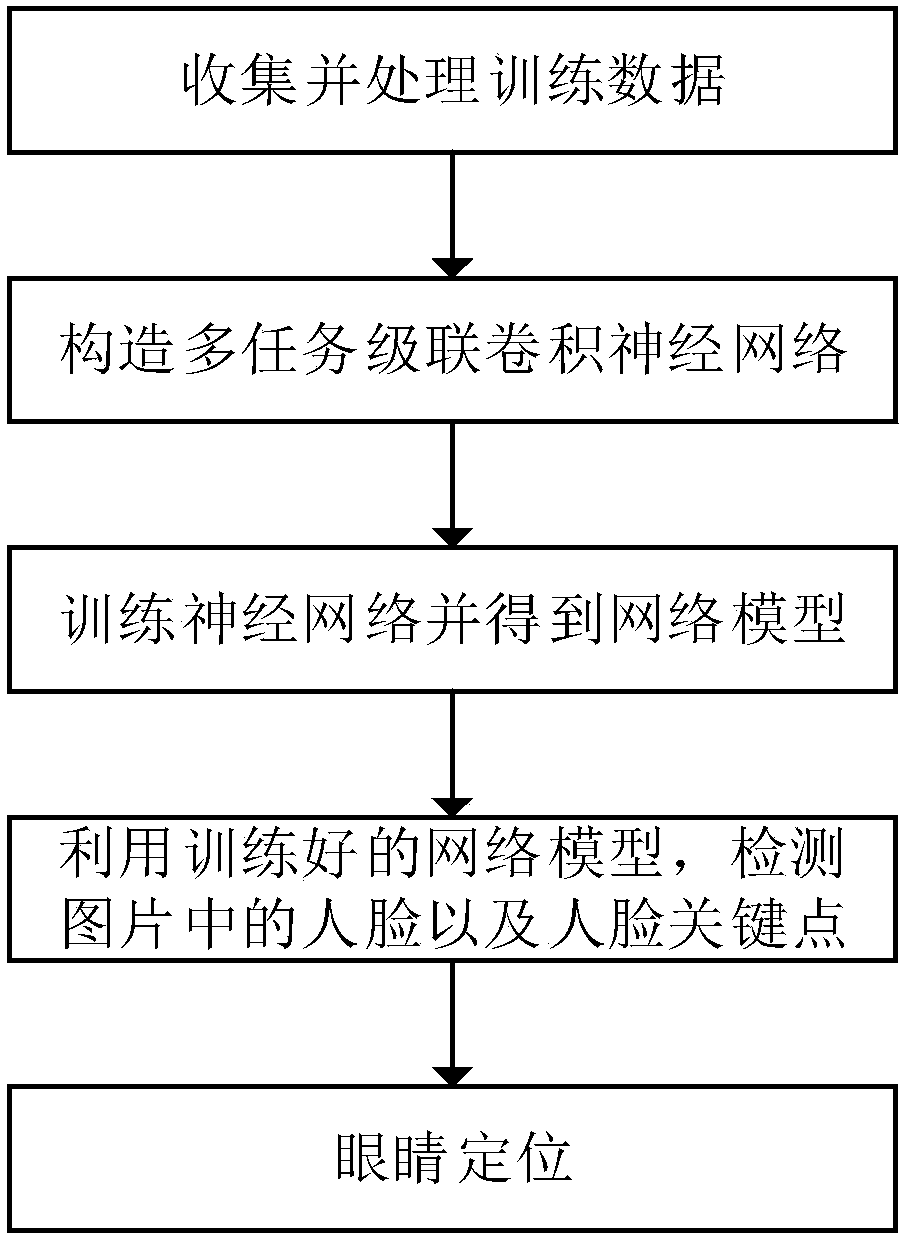

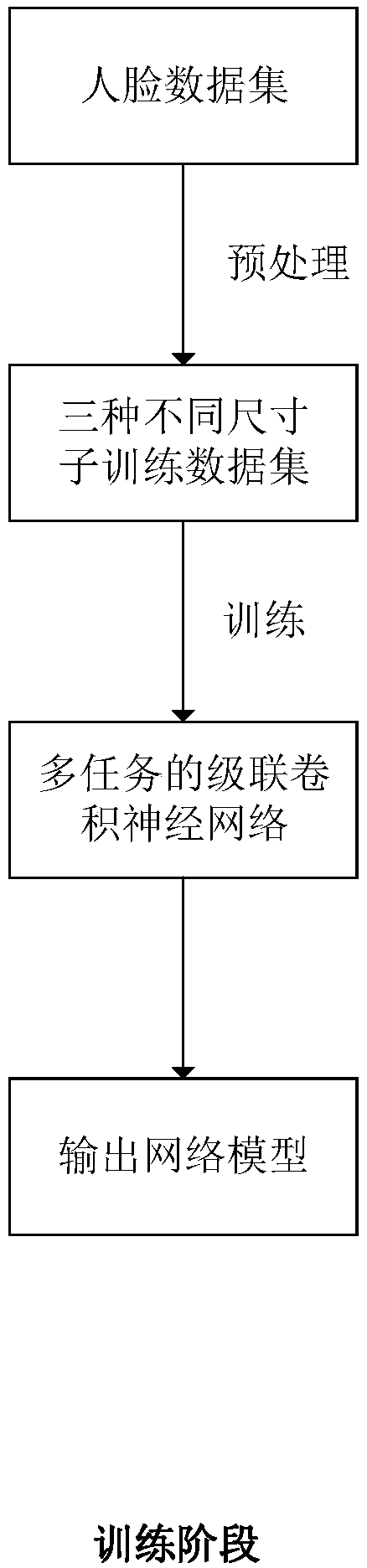

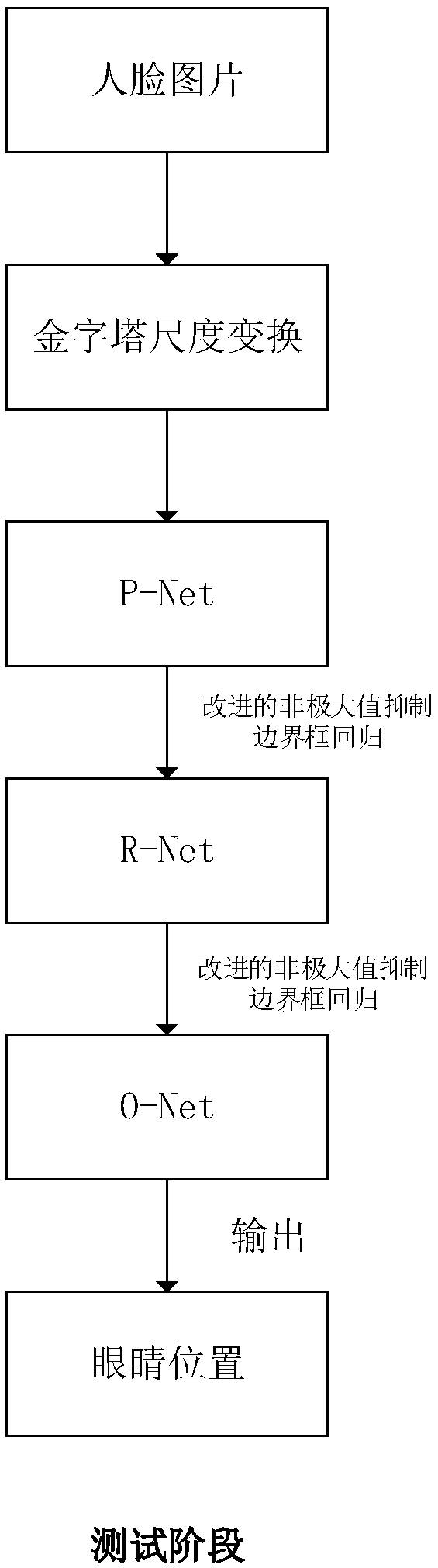

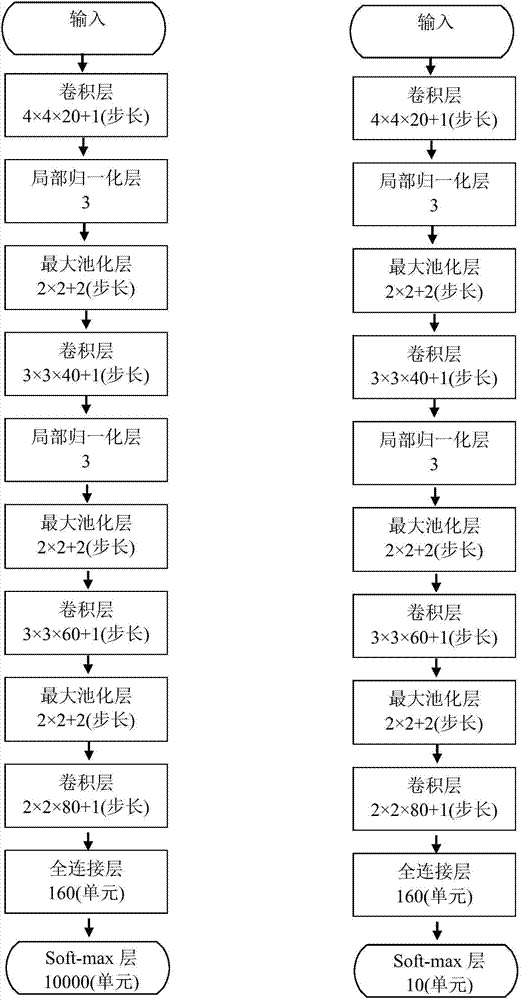

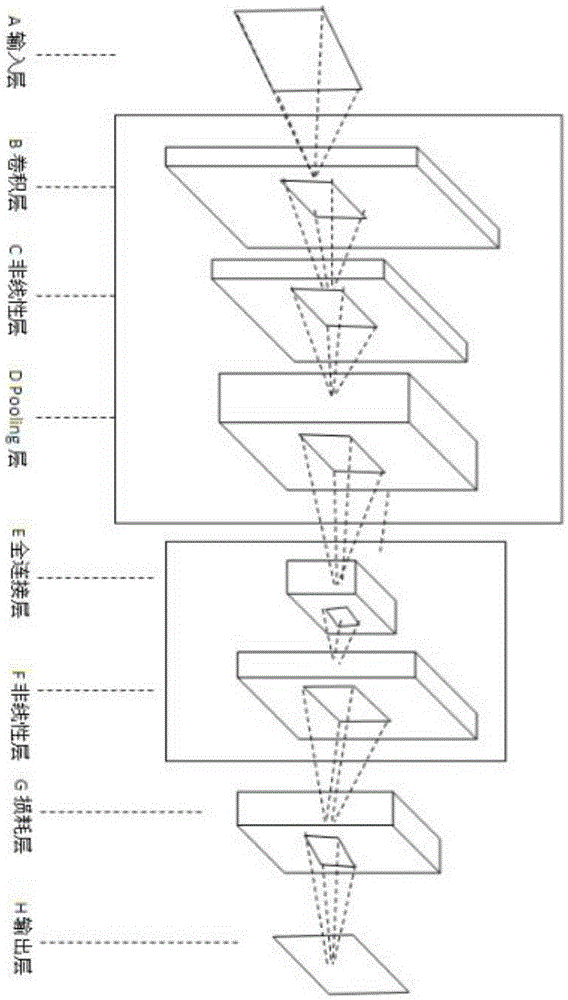

Multi-pose eye positioning algorithm based on cascaded convolutional neural network

Inactive | CN107748858A | Good effect | Efficient multi-pose face detection | Character and pattern recognition | Neural learning methods | Face detection | Nerve network

The invention discloses a multi-pose eye positioning algorithm based on a cascaded convolutional neural network. It belongs to the field of machine learning and computer vision and is suitable for intelligent systems such as face recognition, gaze tracking and driver fatigue detection. The method comprises the following steps: face pictures annotated with various types of information are collected to form a training data set; a multi-task cascaded convolutional neural network is constructed; the training data set is used to train the network and obtain a network model; and lastly, the network model is used to detect the faces and face key points in the pictures, and the smallest rectangular box containing the eye key points is selected as the eye positioning result. The method is advantageous in that the multi-task cascaded convolutional neural network performs both face detection and face key point detection, so the multi-pose eye positioning effect is markedly improved.
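The final step, selecting the smallest rectangle that contains the eye key points, can be sketched as follows. The sketch assumes an axis-aligned box and key points given as `(x, y)` pixel coordinates; the function name `eye_box` is hypothetical.

```python
def eye_box(eye_points):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) containing all points."""
    if not eye_points:
        raise ValueError("at least one eye key point is required")
    xs = [x for x, _ in eye_points]
    ys = [y for _, y in eye_points]
    return (min(xs), min(ys), max(xs), max(ys))
```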

Owner:SOUTH CHINA UNIV OF TECH

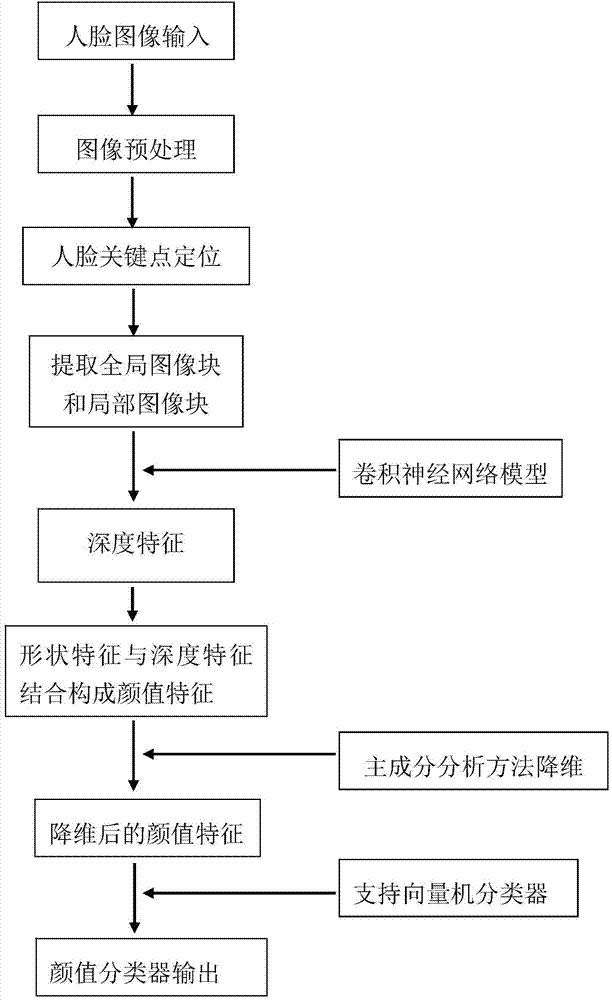

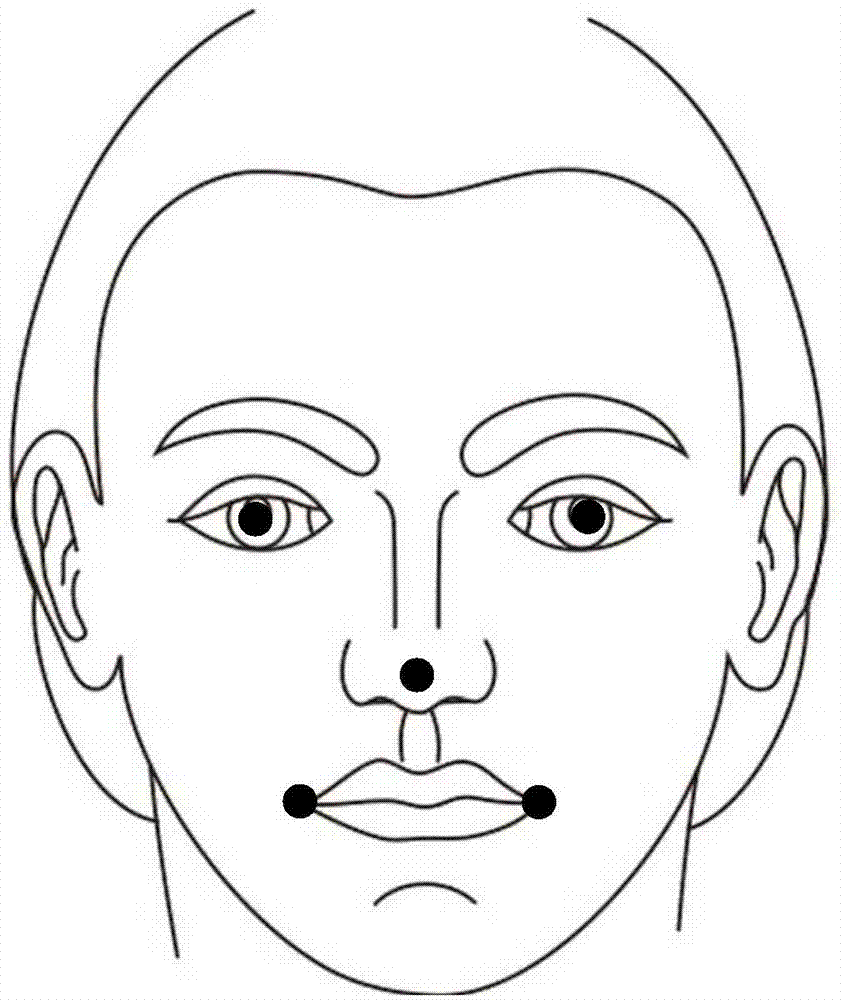

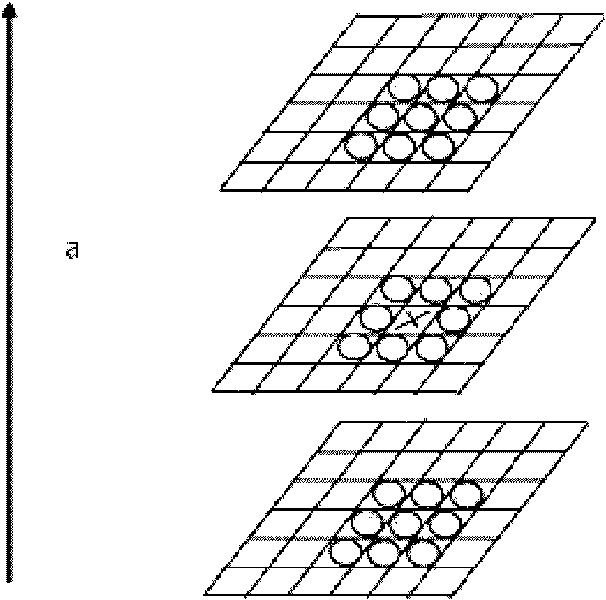

Facial image face score calculating method based on convolutional neural network

Active | CN104850825A | High class discriminative | Improve robustness | Character and pattern recognition | Crucial point | Face shape

The present invention discloses a facial image face score calculating method based on convolutional neural networks. The method comprises acquiring facial images with and without face score labels; preprocessing the images to acquire the key points of each face and extract overall and partial face image blocks; pre-training and then fine-tuning the convolutional neural networks, and extracting and combining deep features and shape features of the face to serve as face score features; inputting the face score features into a classifier for training to obtain a face score classifier; and performing the above steps in turn on the facial images to be evaluated to obtain their face score features, and applying the face score classifier to those features to obtain the respective face scores. In the calculating method provided by the present invention, the convolutional neural networks are used to extract deep features of the overall and partial facial images, and by combining them with the face shape features, the uncertainty of face score calculation in complex situations is overcome, robustness is high, and good results are achieved in engineering applications.

Owner:CHINA JILIANG UNIV

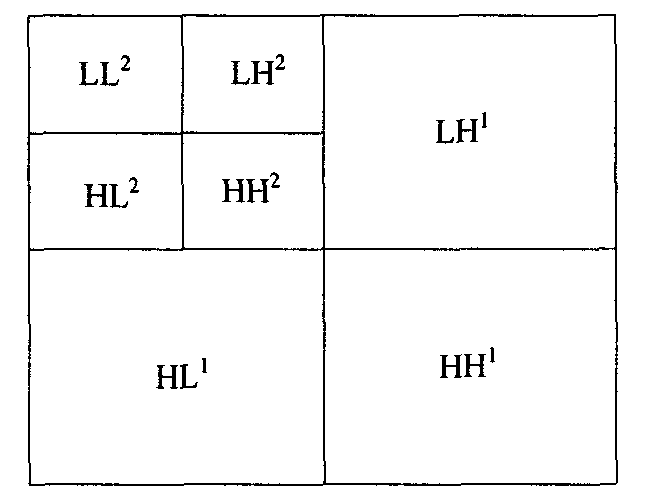

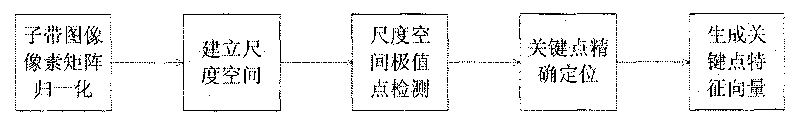

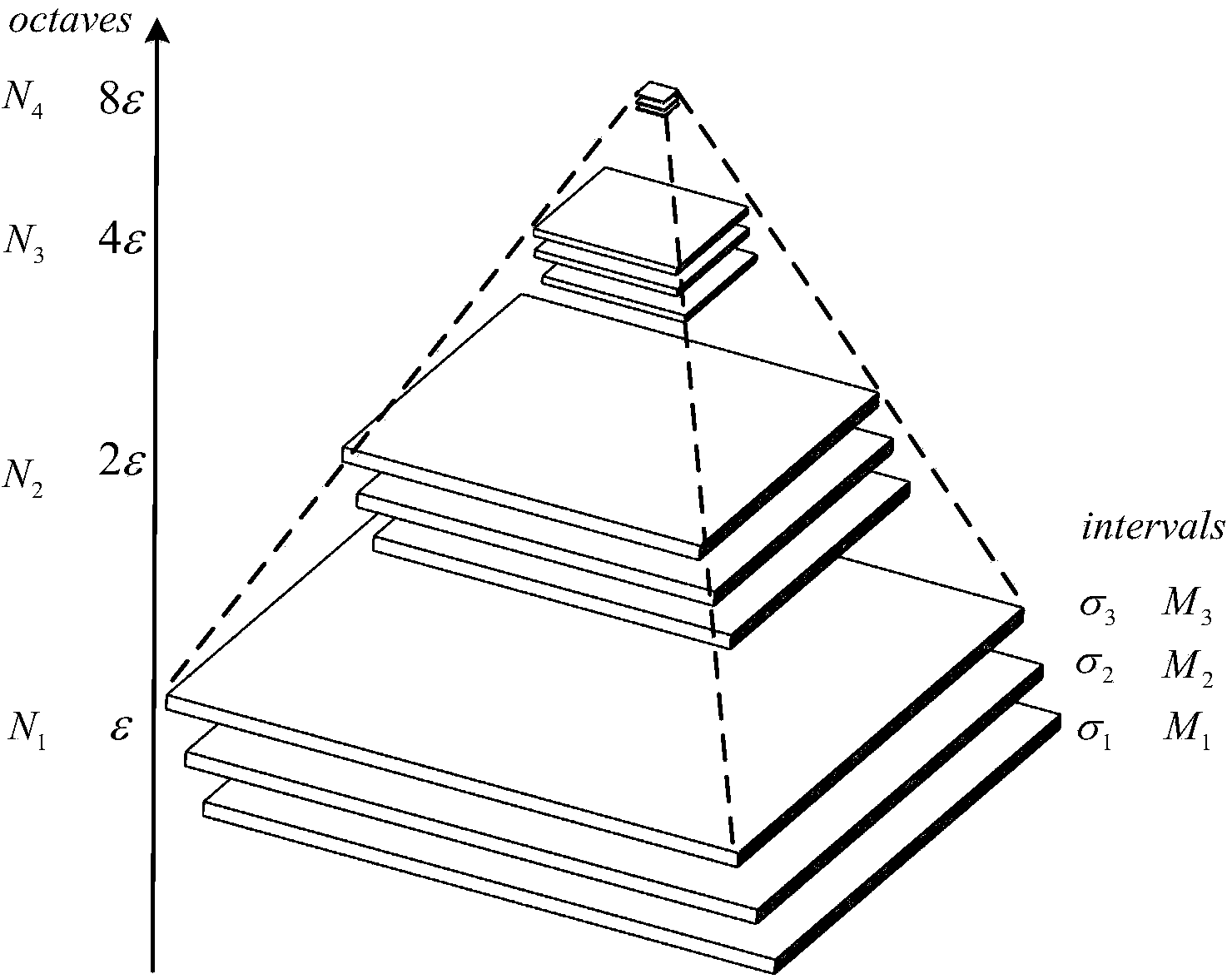

Registering control point extracting method combining multi-scale SIFT and area invariant moment features

Inactive | CN101714254A | Make up for defects that are susceptible to factors such as noise | Image analysis | Feature vector | Imaging processing

The invention discloses a registration control point extraction method combining multi-scale SIFT and area invariant moment features, relating to the field of image processing. The invention solves the technical problem of how to extract stable and reliable feature points during image registration. The method comprises the following steps: first, continuously filtering the images with Gaussian kernel functions, combined with downsampling, to generate the DoG scale space, and finding the space and scale coordinates of the local extrema; then forming the feature vector of each key point from directional gradient information and obtaining initially matched key point pairs through the Euclidean distance; and then calculating local-area Hu invariant moment features centred on the initially selected key points, and screening out the final accurate and effective registration control points in combination with the Euclidean distance. The method combines the multi-scale features of the SIFT algorithm with the grayscale invariant moment features of local image areas, thereby effectively improving the stability and reliability of extracting registration control point pairs from multisensor images.
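The two-stage screening can be sketched in a few lines, assuming the descriptors and the invariant moment vectors have already been computed by some other routine. The function names and the threshold `max_dist` are hypothetical; the patent does not specify how the second Euclidean test is parameterised.

```python
import math

def nearest_matches(desc_a, desc_b):
    """Stage 1: pair each descriptor in desc_a with its nearest one in desc_b."""
    return [
        (i, min(range(len(desc_b)), key=lambda j: math.dist(d, desc_b[j])))
        for i, d in enumerate(desc_a)
    ]

def screen_by_moments(matches, moments_a, moments_b, max_dist):
    """Stage 2: keep pairs whose local invariant moment vectors are also close."""
    return [
        (i, j) for i, j in matches
        if math.dist(moments_a[i], moments_b[j]) <= max_dist
    ]
```

A pair survives only if it is the nearest neighbour in descriptor space and its local-area moments agree, which is what makes the second stage a filter rather than a new matcher.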

Owner:HARBIN INST OF TECH

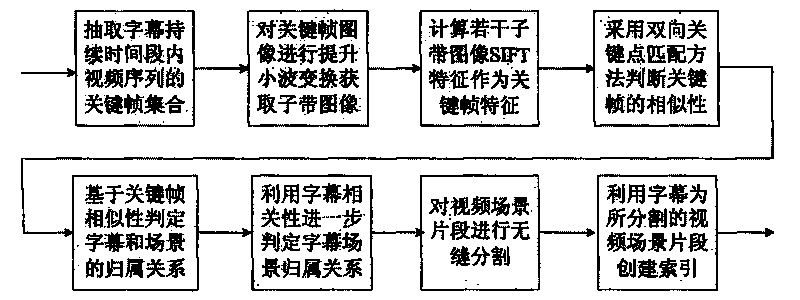

Method for segmenting and indexing scenes by combining captions and video image information

Inactive | CN101719144A | Improve accuracy | Avoid Manual Labeling | Television system details | Color television details | Pattern recognition | Crucial point

The invention relates to a method for segmenting and indexing scenes by combining captions and video image information. The method is characterized in that the set of video frames within the duration of each caption is used as the minimum unit of scene clustering. The method comprises the steps of: after obtaining the minimum unit of scene clustering, extracting at least three discontinuous video frames to form the video key frame set of that caption; comparing the similarity of the key frames of a plurality of adjacent minimum units by using a bidirectional SIFT key point matching method, and establishing an initial attribution relationship between the captions and the scenes by combining a caption-related transition diagram; for consecutive minimum cluster units judged to be dissimilar, further judging whether they can be merged according to the relationship between the units and their corresponding captions; and extracting the video scenes according to the determined attribution relationships between the captions and the scenes. For the extracted video scene segments, the forward and reverse indexes generated from the caption texts contained in the segments are used as the basis for indexing the video segments.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI

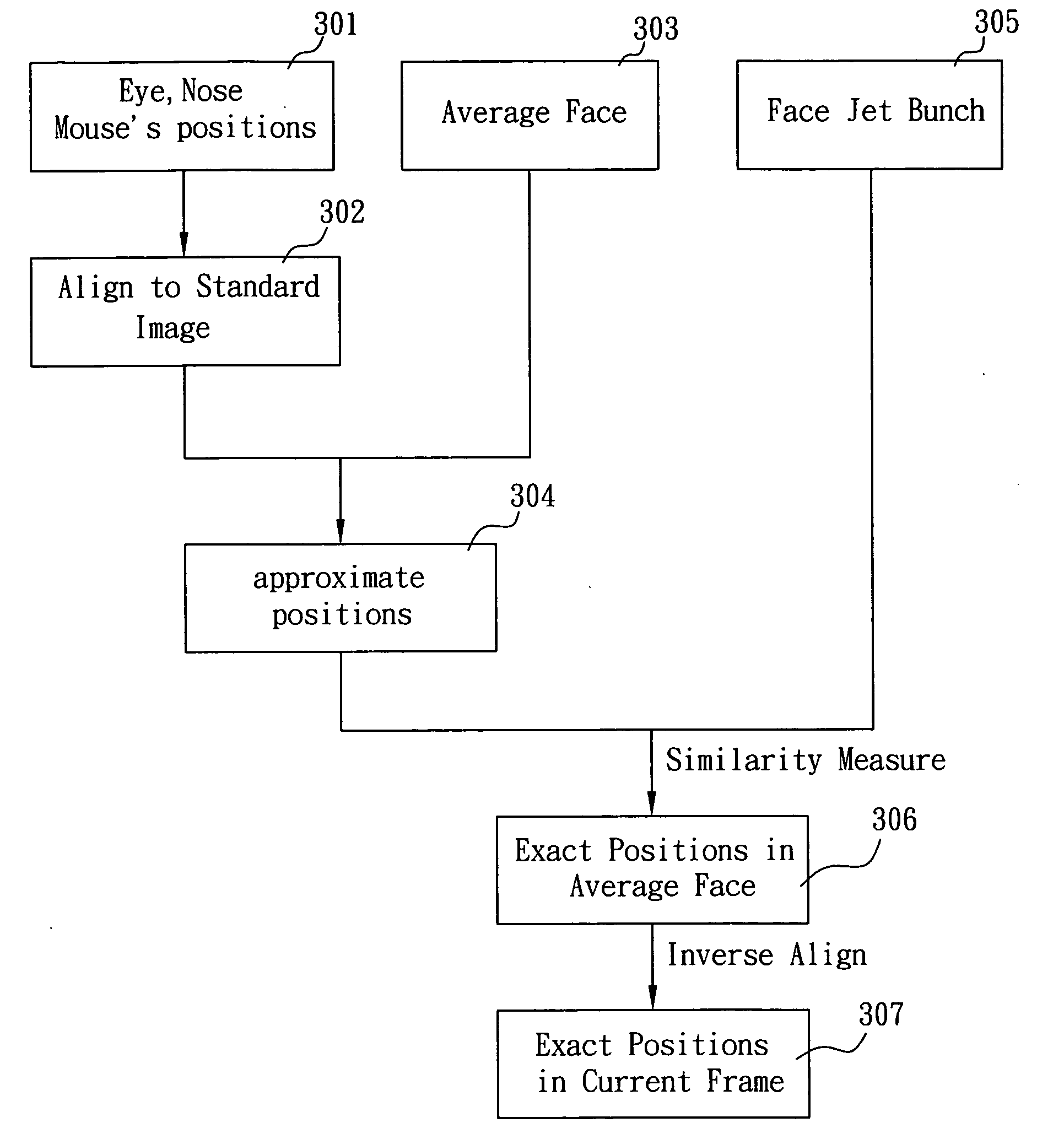

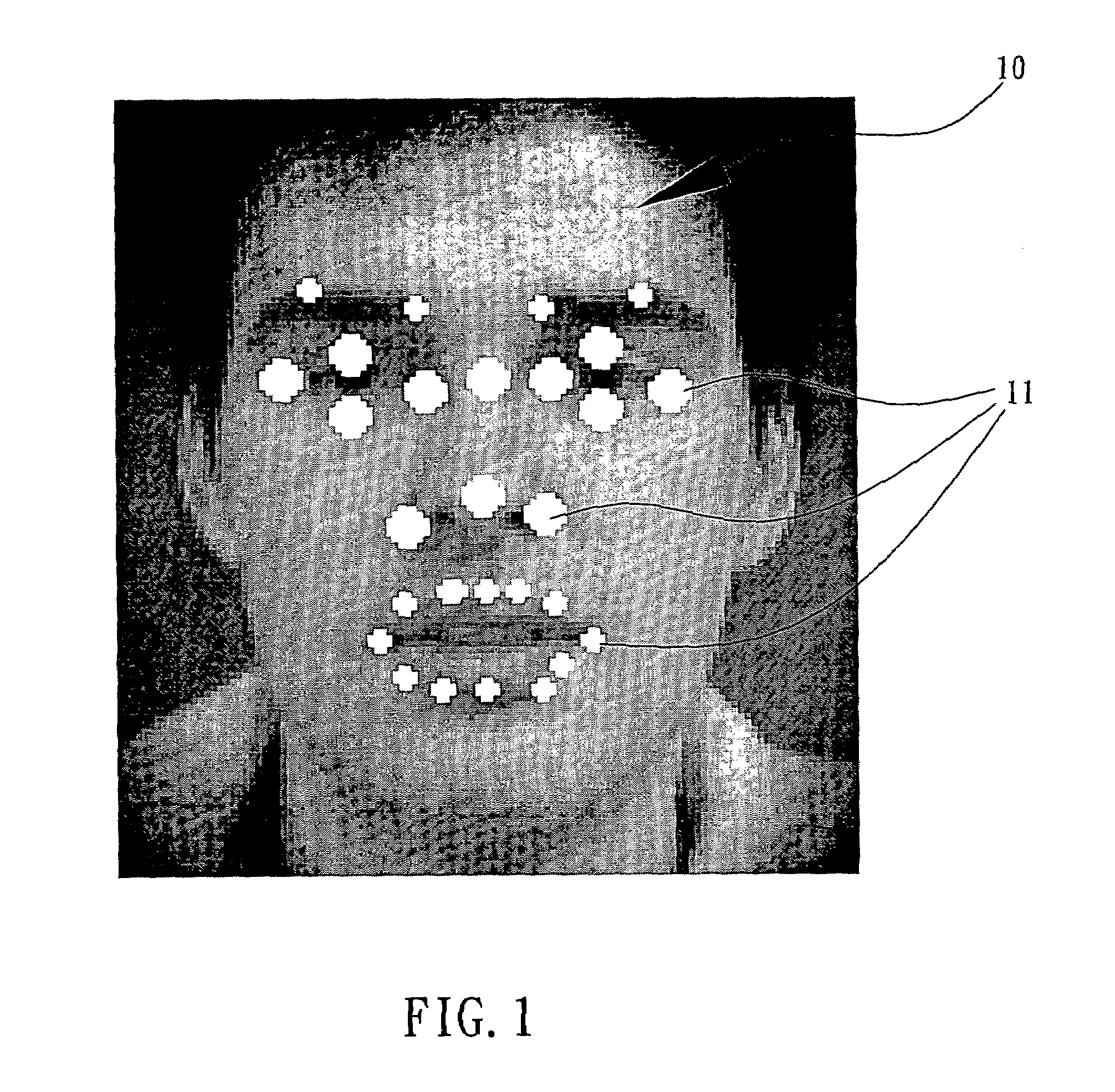

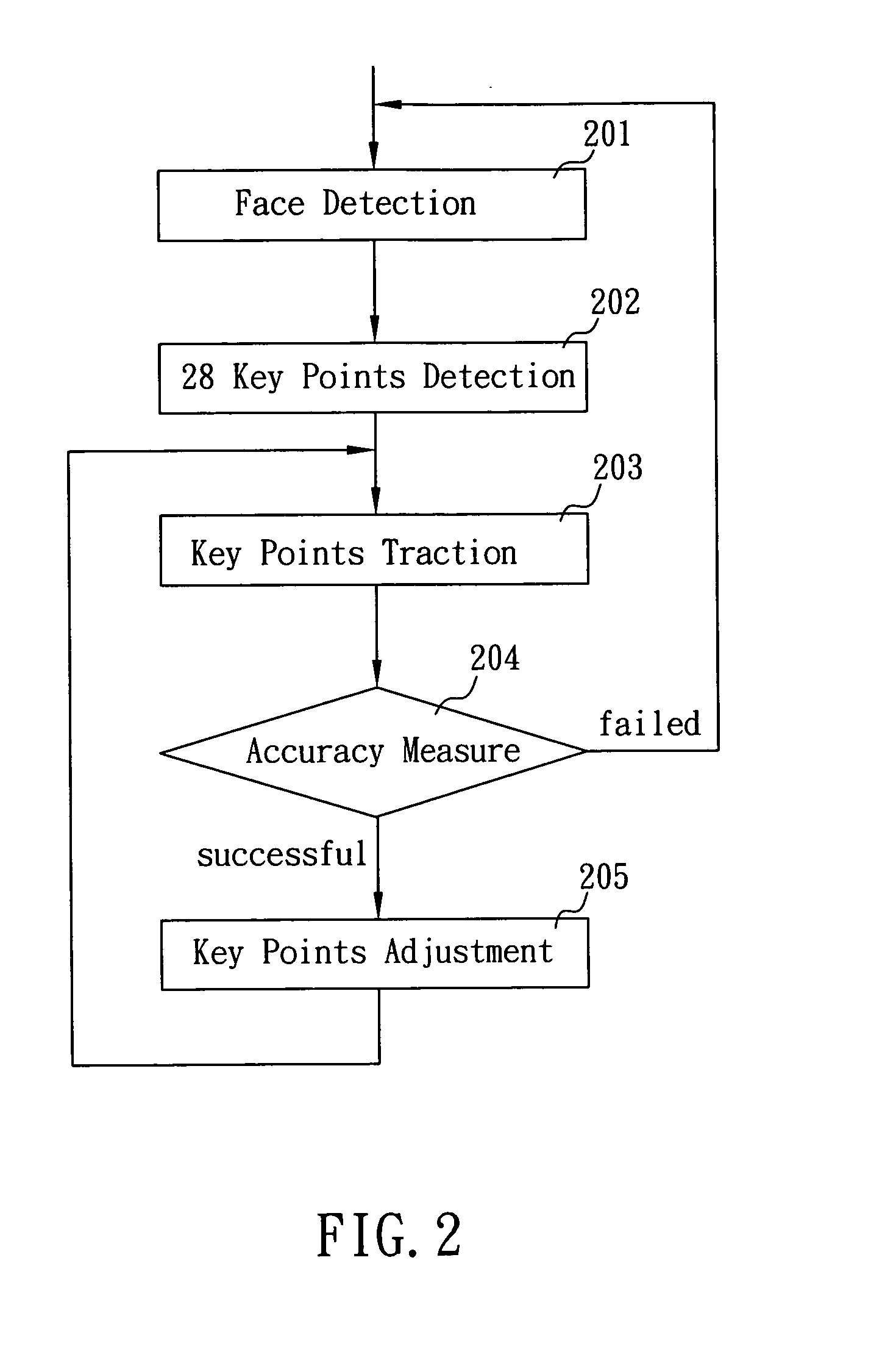

Method for driving virtual facial expressions by automatically detecting facial expressions of a face image

Active | US20080037836A1 | Precise positioning | Character and pattern recognition | Animation | Pattern recognition | Crucial point

A method for driving virtual facial expressions by automatically detecting facial expressions of a face image is applied to a digital image capturing device. The method includes the steps of detecting a face image captured by the image capturing device and images of a plurality of facial features with different facial expressions to obtain a key point position of each facial feature on the face image; mapping the key point positions to a virtual face as the key point positions of corresponding facial features on the virtual face; dynamically tracking the key point of each facial feature on the face image; estimating the key point positions of each facial feature of the current face image according to the key point positions of each facial feature on a previous face image; and correcting the key point positions of the corresponding facial features on the virtual face.

Owner:ARCSOFT

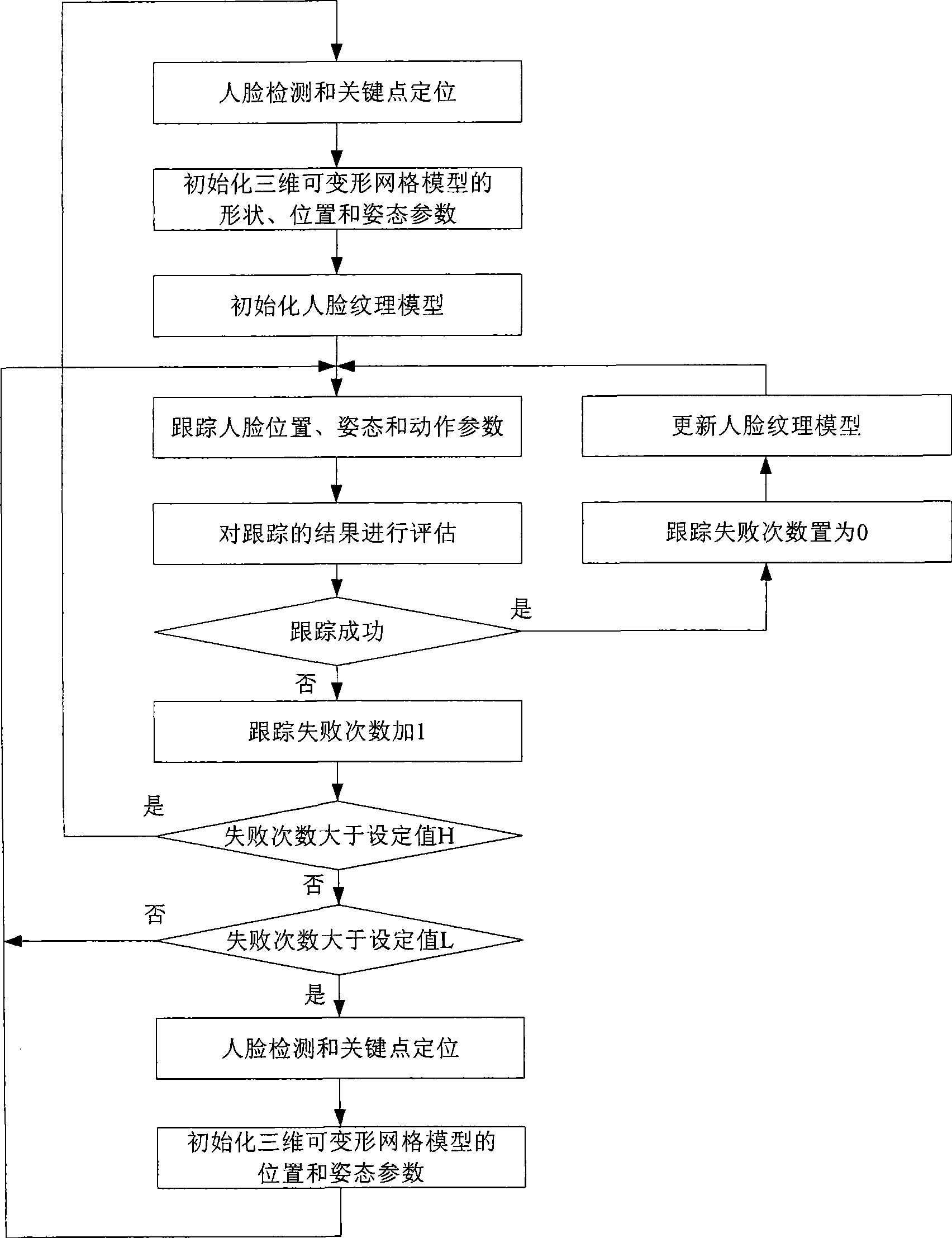

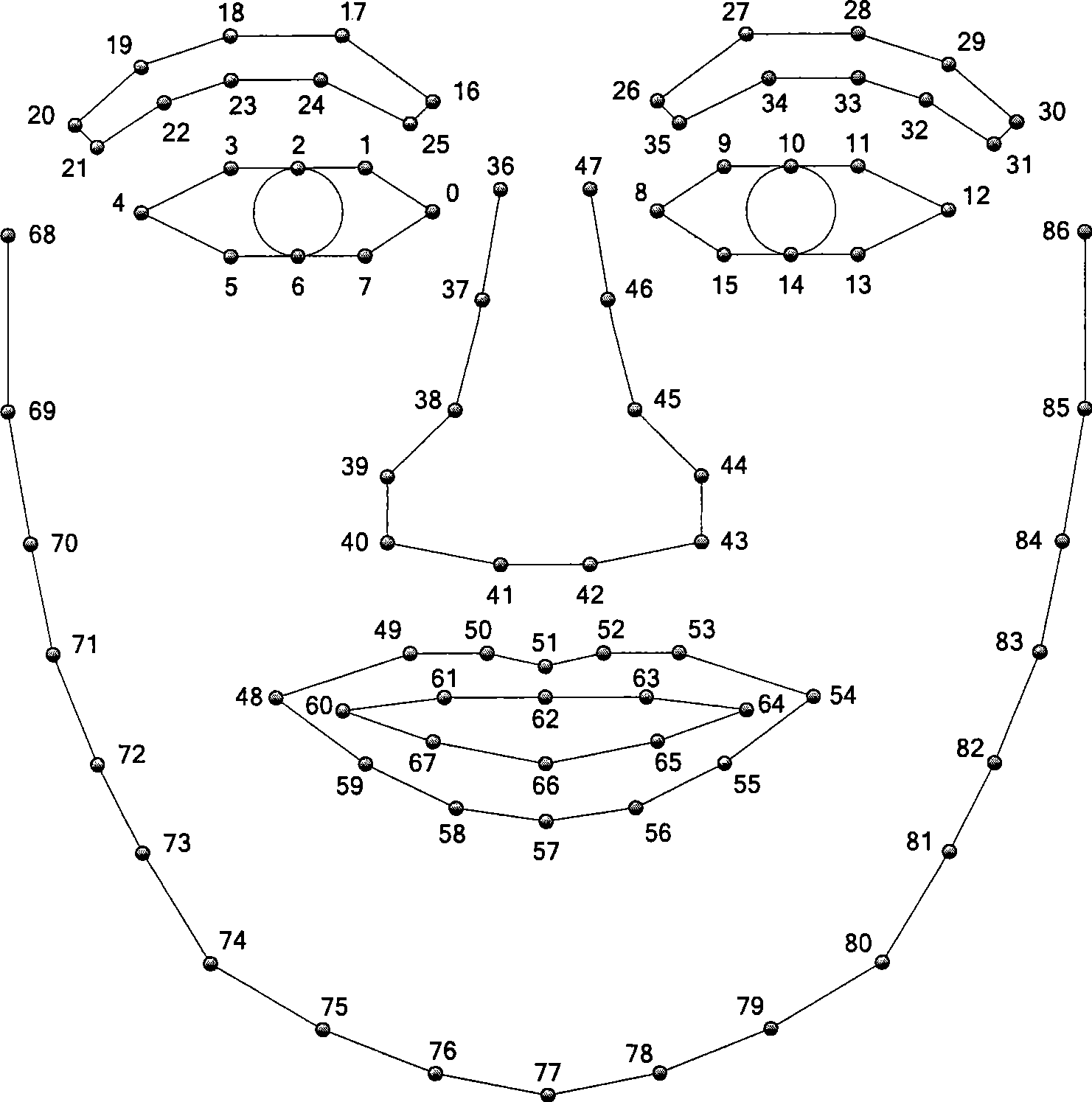

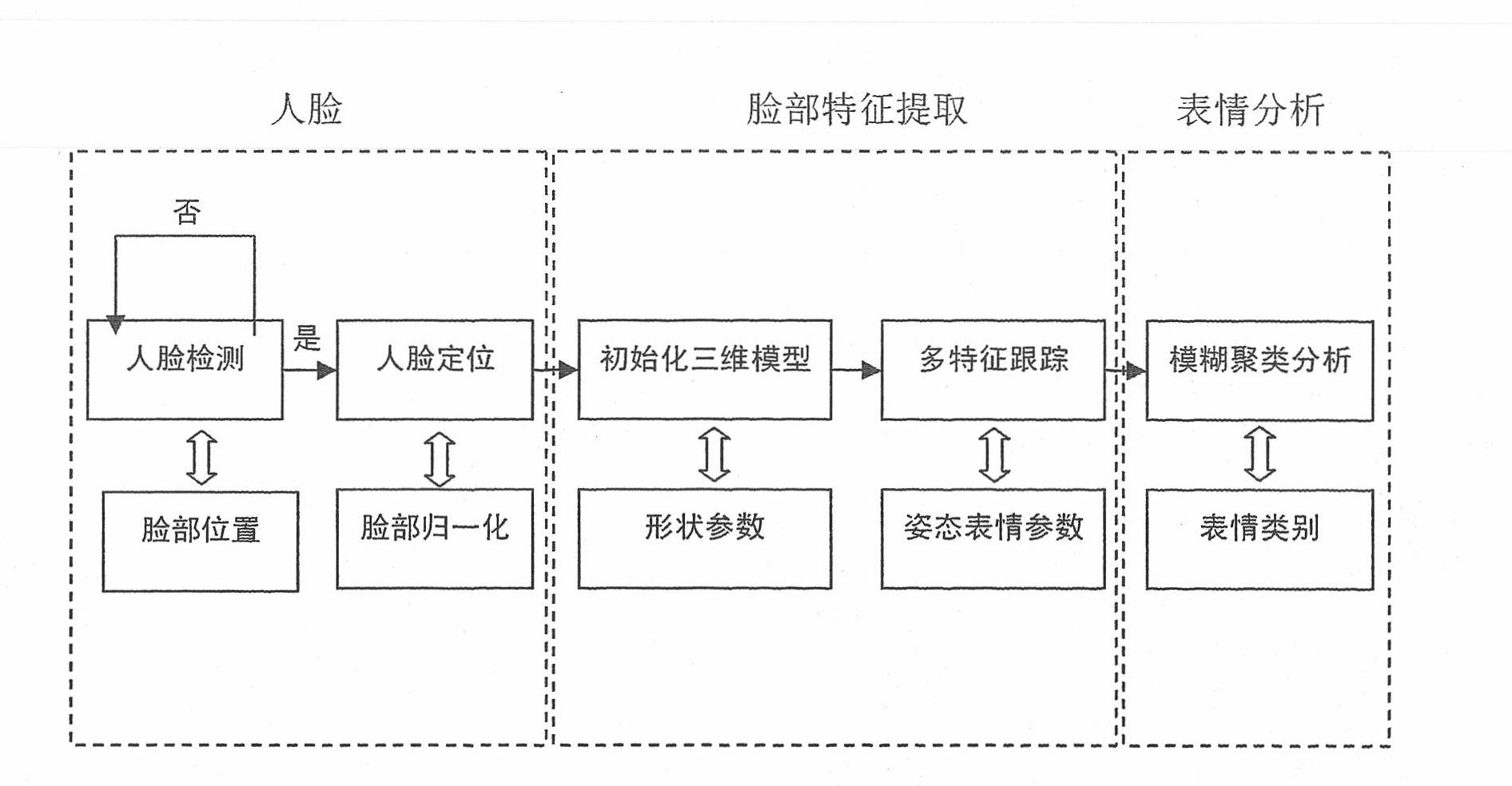

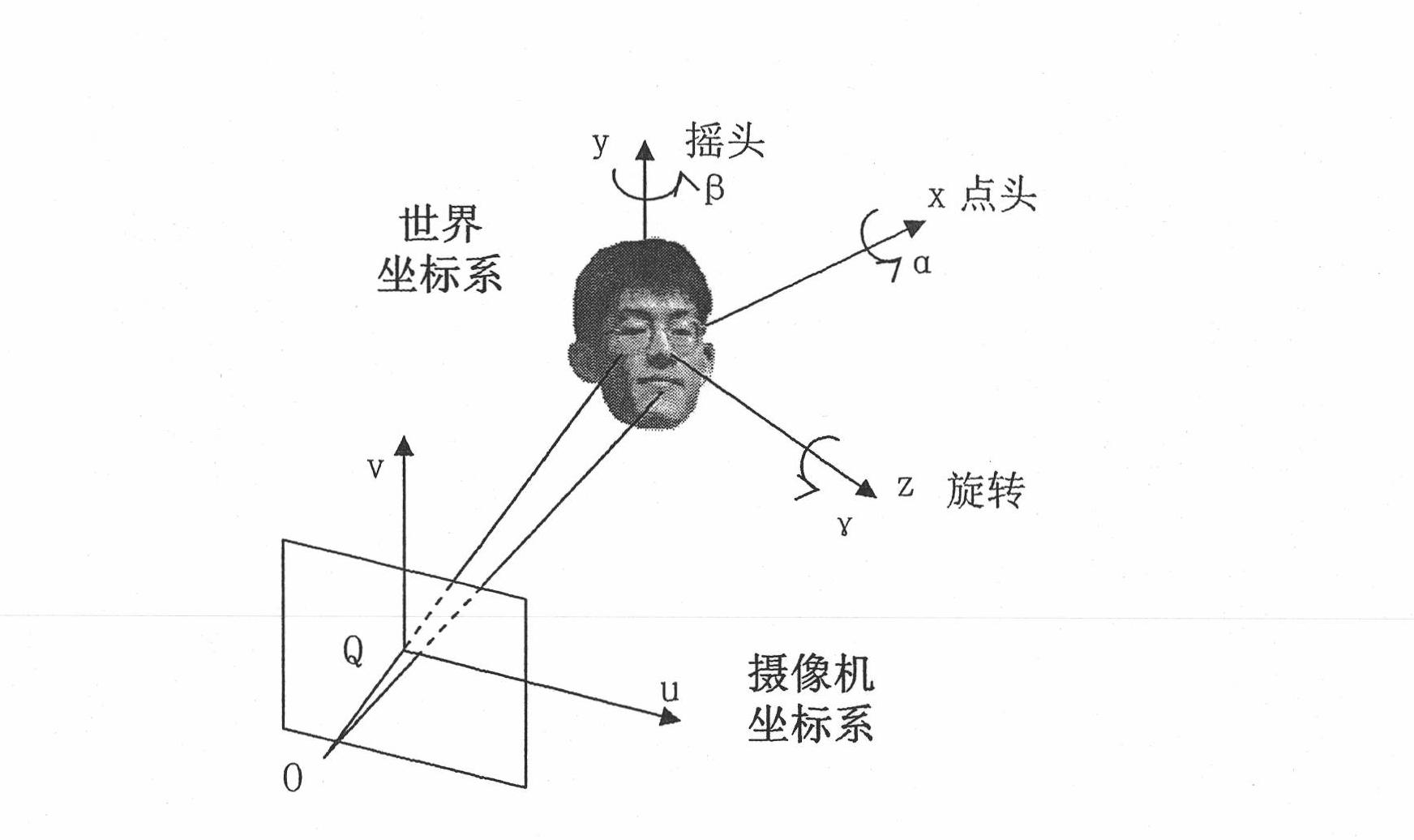

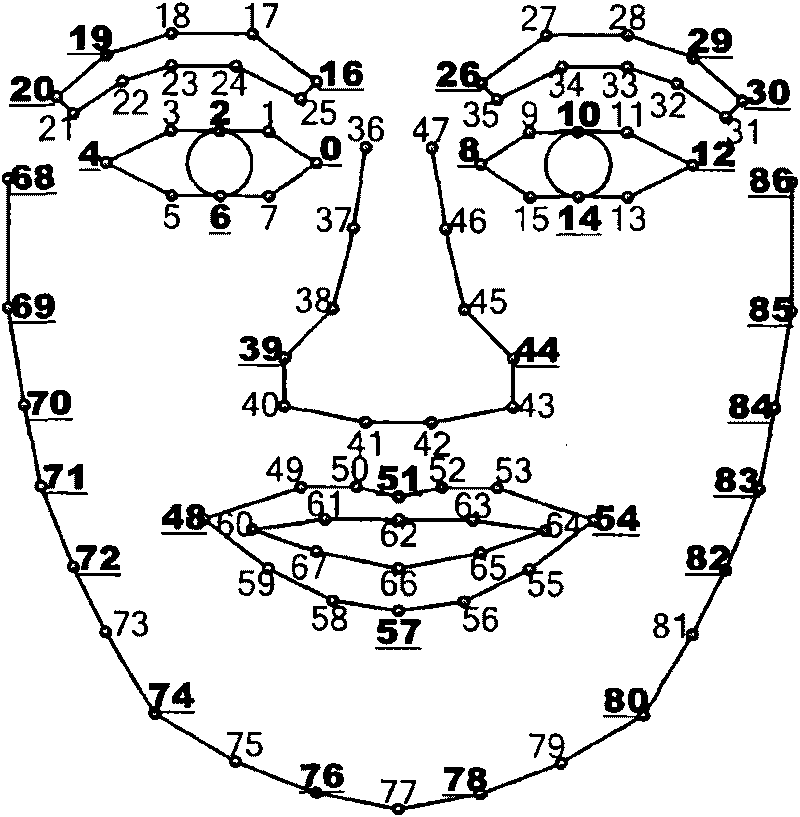

Three-dimensional human face action detecting and tracing method based on video stream

Inactive | CN101499128A | Implement automatic detection | Guaranteed robustness | Character and pattern recognition | Pattern recognition | Crucial point

The invention provides a method for detecting and tracking three-dimensional face actions based on a video stream. The method includes the following steps: detecting the face and the key point positions on the face; initializing the three-dimensional deformable face mesh model and the face texture model used for tracking; tracking the face position, pose and face actions continuously and in real time in subsequent video images by image registration with the two models; and evaluating the detection, localization and tracking results by using a PCA face subspace and, if tracking is found to be interrupted, taking measures to restore tracking automatically. The method requires no training for a specific user, has a wide head pose tracking range and accurate face action detail, and is reasonably robust to illumination and occlusion. The method has practical value and wide application prospects in fields such as human-computer interaction, expression analysis and gaming.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1

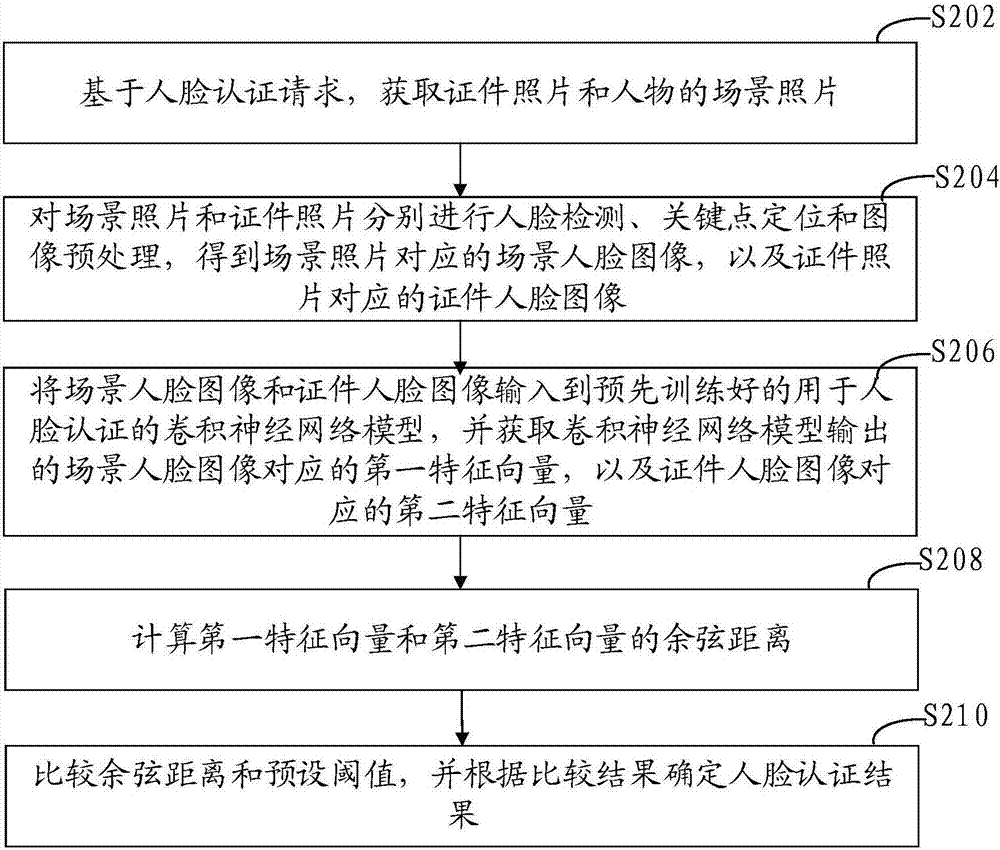

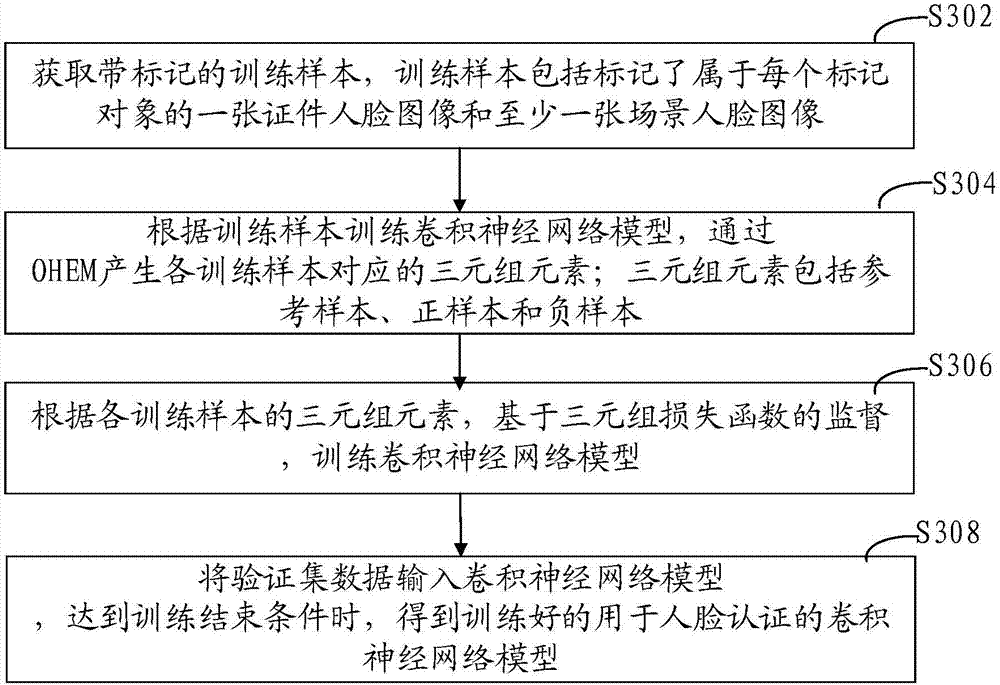

Face verification method and device based on Triplet Loss, computer device and storage medium

Active | CN108009528A | Improve reliability | Conform to the distribution properties | Character and pattern recognition | Face detection | Feature vector

The present invention relates to a face verification method and device based on the Triplet Loss, a computer device and a storage medium. The method comprises the steps of: based on a face verification request, obtaining a certificate photo and a figure scene photo; performing face detection, key point positioning and image preprocessing of the scene photo and the certificate photo, and obtaining a scene face image corresponding to the scene photo and a certificate face image corresponding to the certificate photo; inputting the scene face image and the certificate face image into a pre-trained convolutional neural network model used for face verification, obtaining a first feature vector, corresponding to the scene face image, output by the convolutional neural network model and a second feature vector, corresponding to the certificate face image, output by the convolutional neural network model; calculating a cosine distance of the first feature vector and the second feature vector; and comparing the cosine distance with a preset threshold, and determining a face verification result according to a comparison result. The reliability of face verification is improved by the method.
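The last two steps, computing a cosine distance and comparing it with a preset threshold, can be sketched as below. The sketch assumes that cosine distance means one minus cosine similarity and that a smaller distance indicates the same person; the abstract states neither convention, and the threshold value is a placeholder.

```python
import math

def cosine_distance(u, v):
    """1 - cosine similarity of two equal-length feature vectors (assumed convention)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("feature vectors must be non-zero")
    return 1.0 - dot / (norm_u * norm_v)

def verify(scene_vec, certificate_vec, threshold=0.4):
    """Accept the pair if the distance is below the threshold (placeholder value)."""
    return cosine_distance(scene_vec, certificate_vec) < threshold
```

In practice the threshold would be tuned on held-out pairs to balance false accepts against false rejects.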

Owner:GRG BANKING EQUIP CO LTD

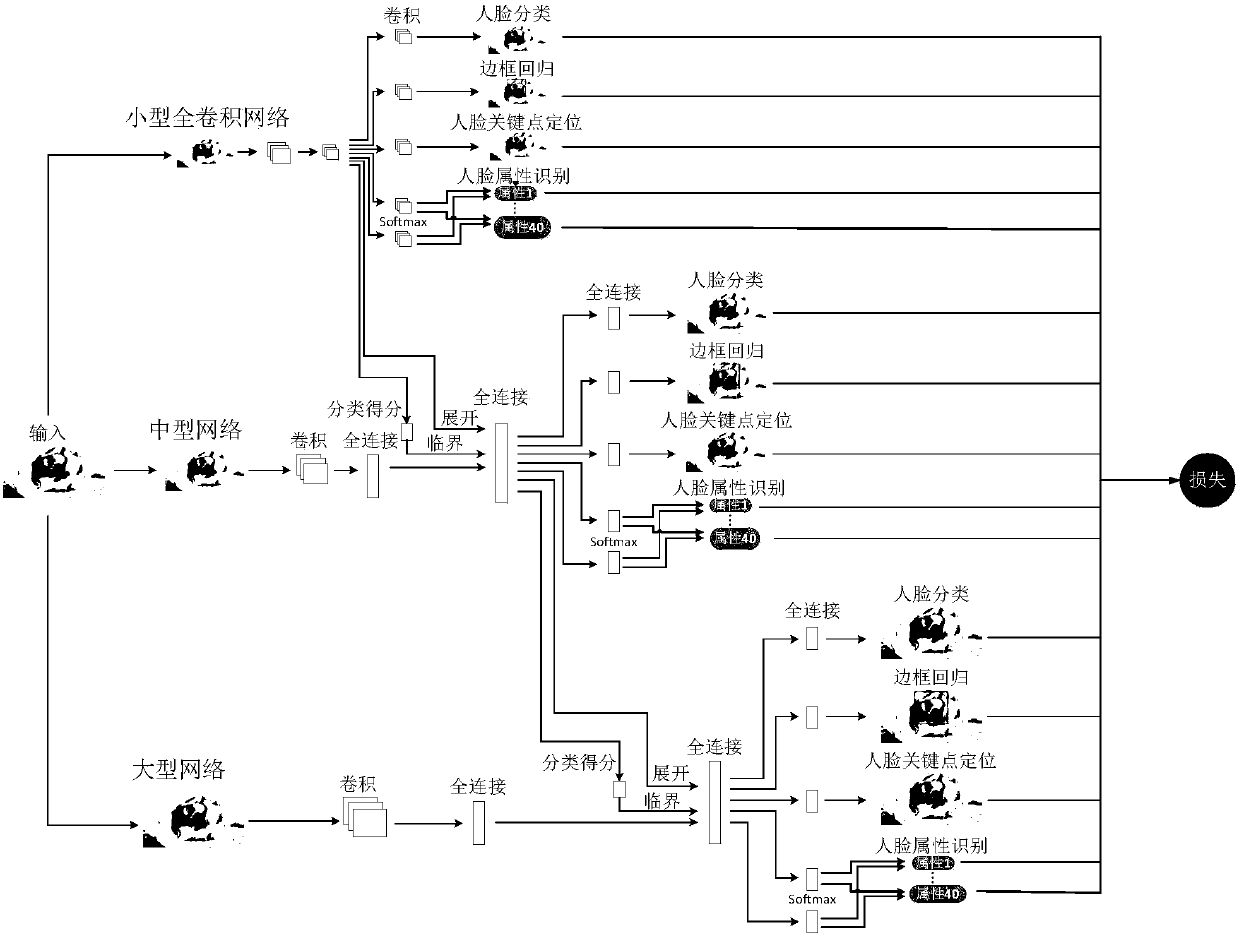

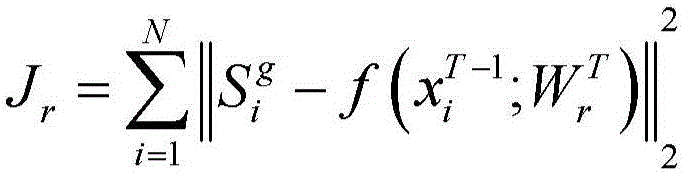

Face attribute recognition method of deep neural network based on cascaded multi-task learning

Active | CN108564029A | Promote results | The result of face attribute recognition is improved | Character and pattern recognition | Neural architectures | Visual technology | Crucial point

The invention provides a face attribute recognition method of a deep neural network based on cascaded multi-task learning and relates to computer vision technology. First, a cascaded deep convolutional neural network is designed. Multi-task learning is then used for each cascaded sub-network, so that four tasks (face classification, border regression, face key point detection and face attribute analysis) are learned simultaneously. Next, a dynamic loss weighting mechanism is used in the deep convolutional neural network based on cascaded multi-task learning to calculate the loss weights of the face attributes. Finally, based on the trained network model, the face attribute recognition result of the last cascaded sub-network is used as the final face attribute recognition result. The cascading method jointly trains three different sub-networks, achieving end-to-end training and optimizing the face attribute recognition result; unlike the use of fixed loss weights in a loss function, the invention takes the differences between face attributes into account.

Owner:XIAMEN UNIV

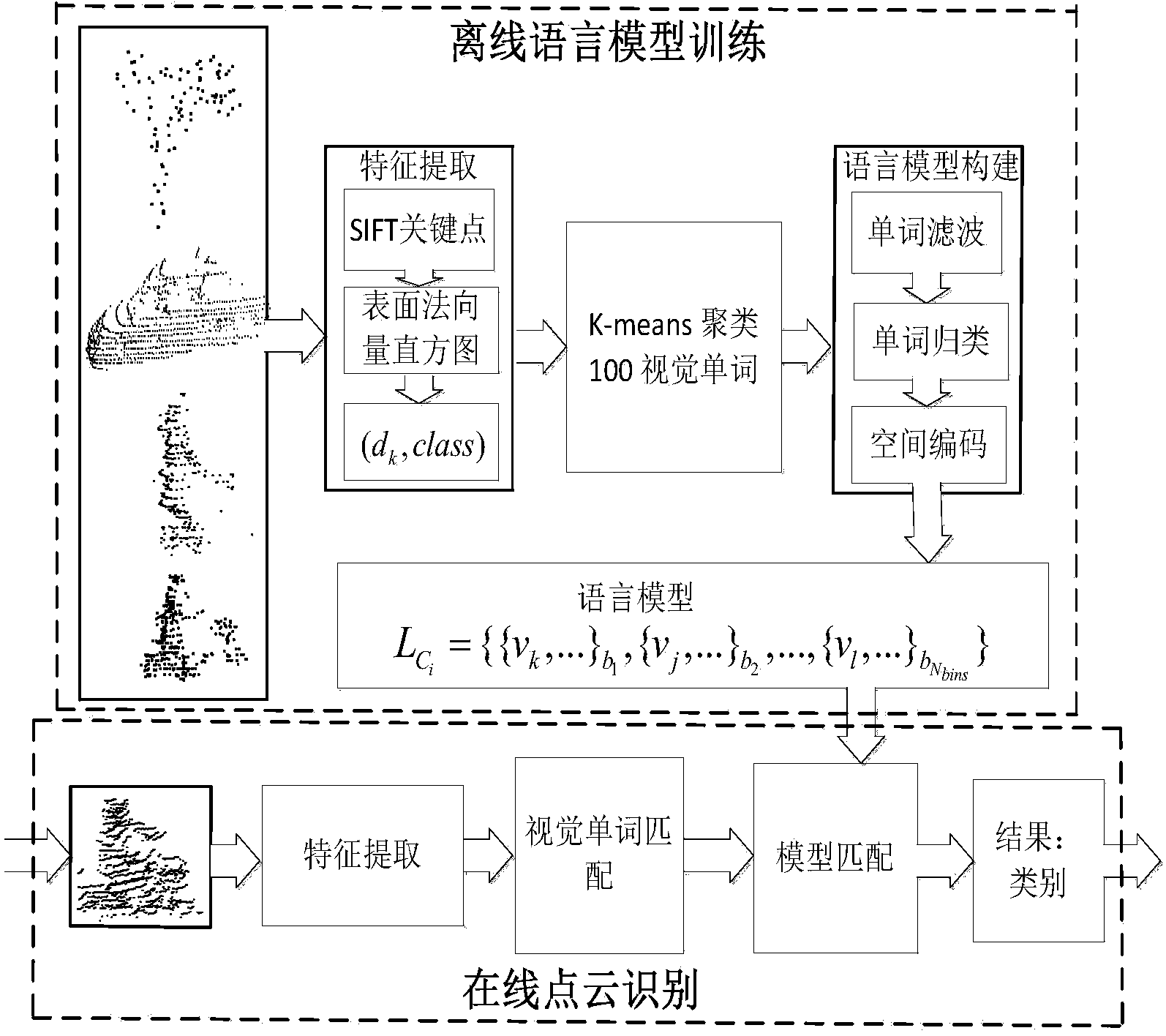

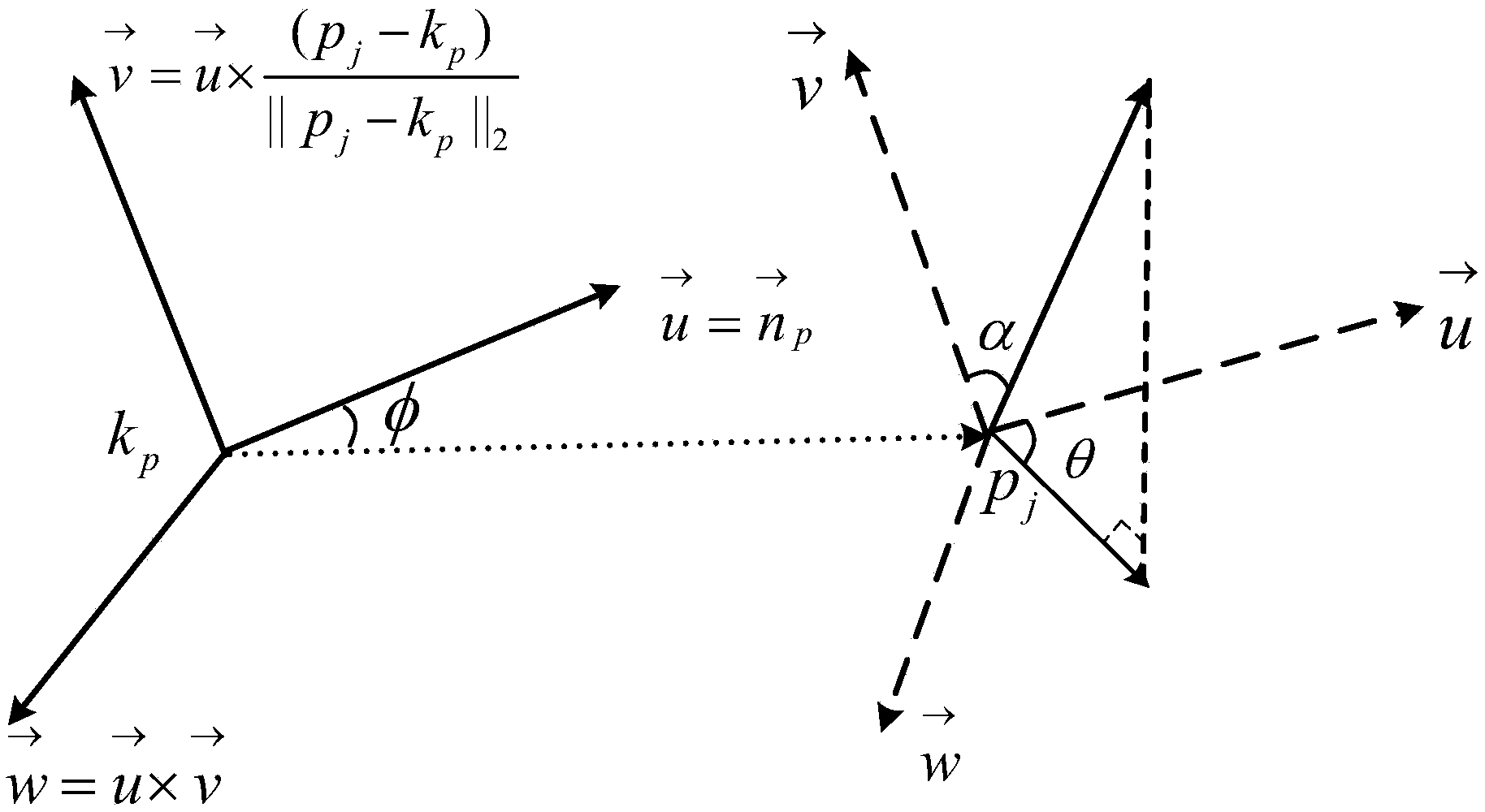

Method for identifying objects in 3D point cloud data

Active | CN104298971A | Features are stable and reliable | Accurate modeling | Character and pattern recognition | Point cloud | Crucial point

The invention discloses a method for identifying objects in 3D point cloud data. 2D SIFT features are extended to a 3D scene, SIFT key points and a surface normal vector histogram are combined to achieve scale-invariant local feature extraction of 3D depth data, and the features are stable and reliable. A provided language model overcomes the shortcoming that a traditional visual word bag model is not accurate and is easily influenced by noise when using local features to describe global features, and the accuracy of target global feature description based on the local features is greatly improved. By means of the method, the model is accurate, and identification effect is accurate and reliable. The method can be applied to target identification in all outdoor complicated or simple scenes.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

Method for analyzing facial expressions on basis of motion tracking

Inactive | CN101777116A | Realize automatic detection and positioning | Improve performance | Image analysis | Character and pattern recognition | Face detection | Study methods

The invention relates to a method for analyzing facial expressions on the basis of motion tracking, in particular to a technique for face multi-feature tracking and expression recognition. The method comprises the following steps: pre-processing an input video image, and carrying out face detection and facial key point localization to determine and normalize the position of the face; modeling the face and expressions by using a three-dimensional parametric face mesh model, extracting robust features and tracking the positions, poses and expressions of the face in the input video image in combination with an online learning method, so as to achieve rapid and effective face multi-feature tracking; taking the tracked expression parameters as the features for expression analysis; and carrying out the expression analysis by using an improved fuzzy clustering algorithm based on Gaussian distance measurement, so as to provide a fuzzy description of the expression.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

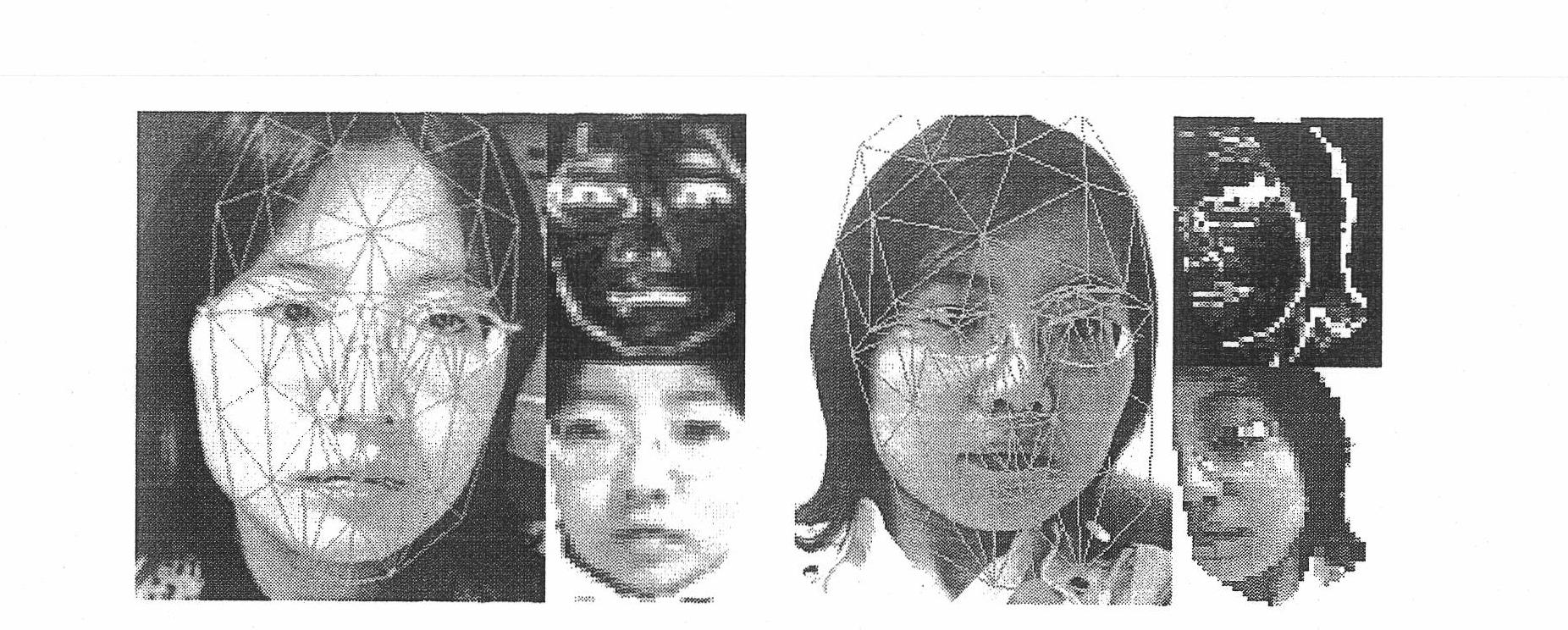

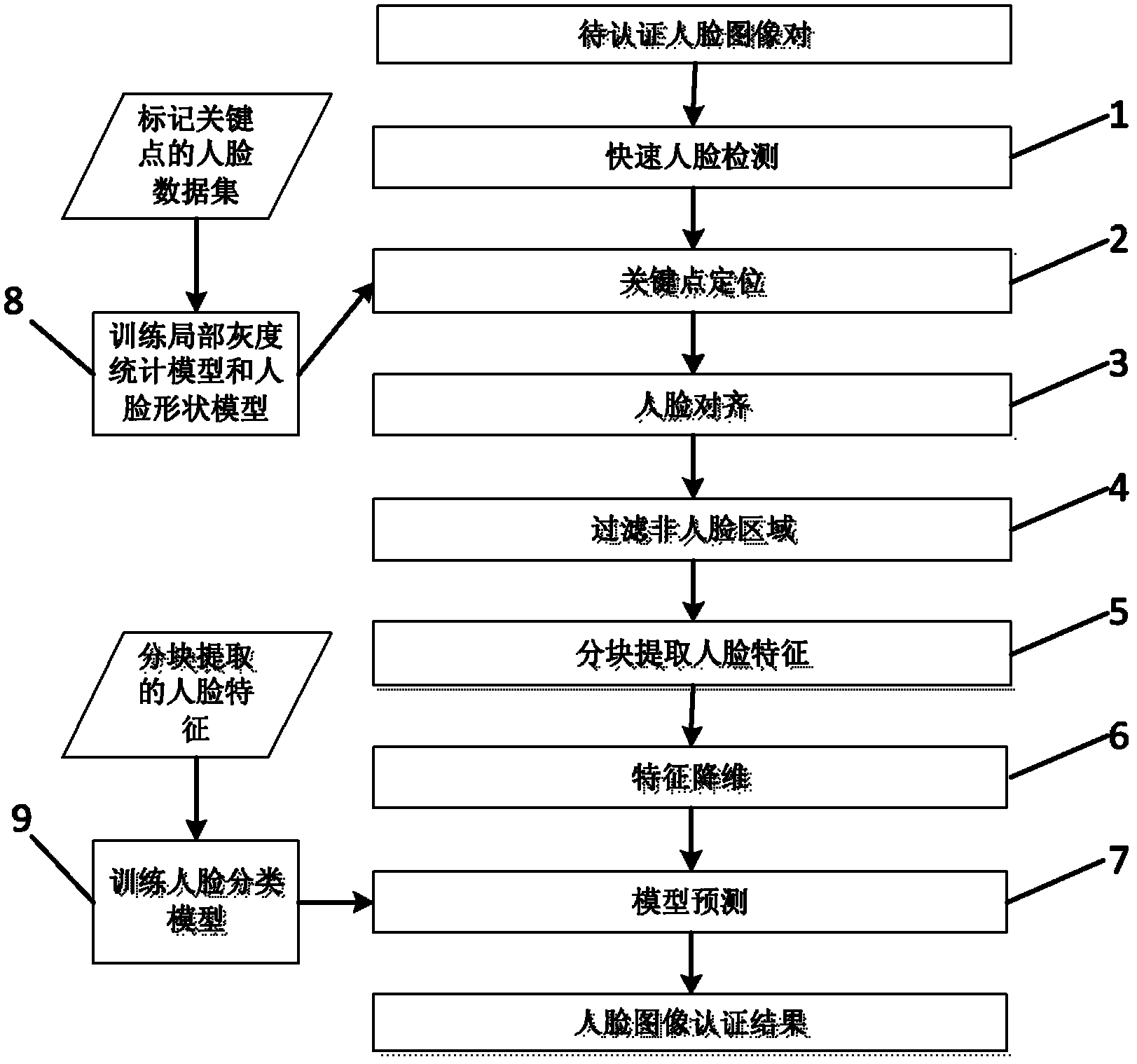

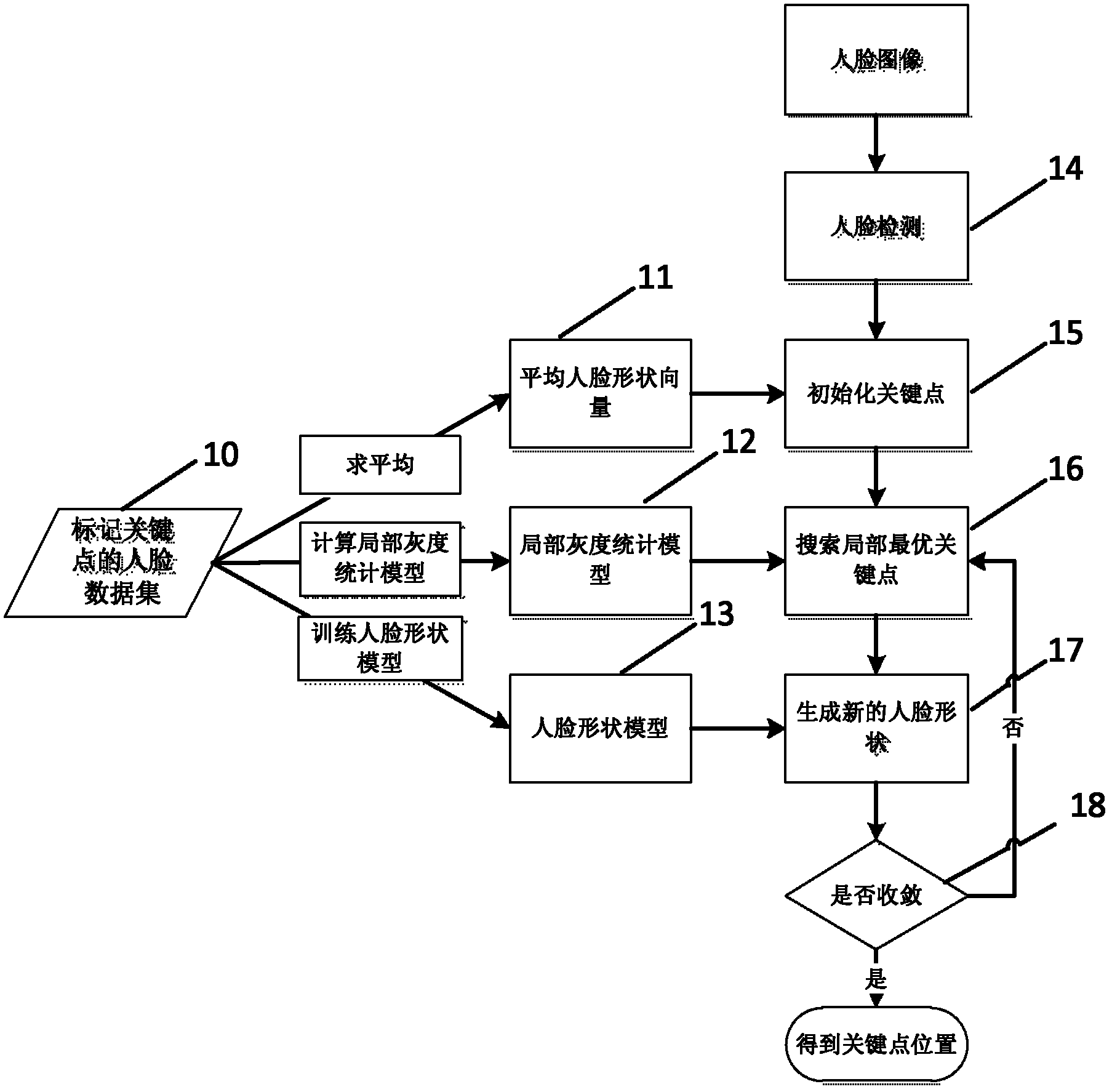

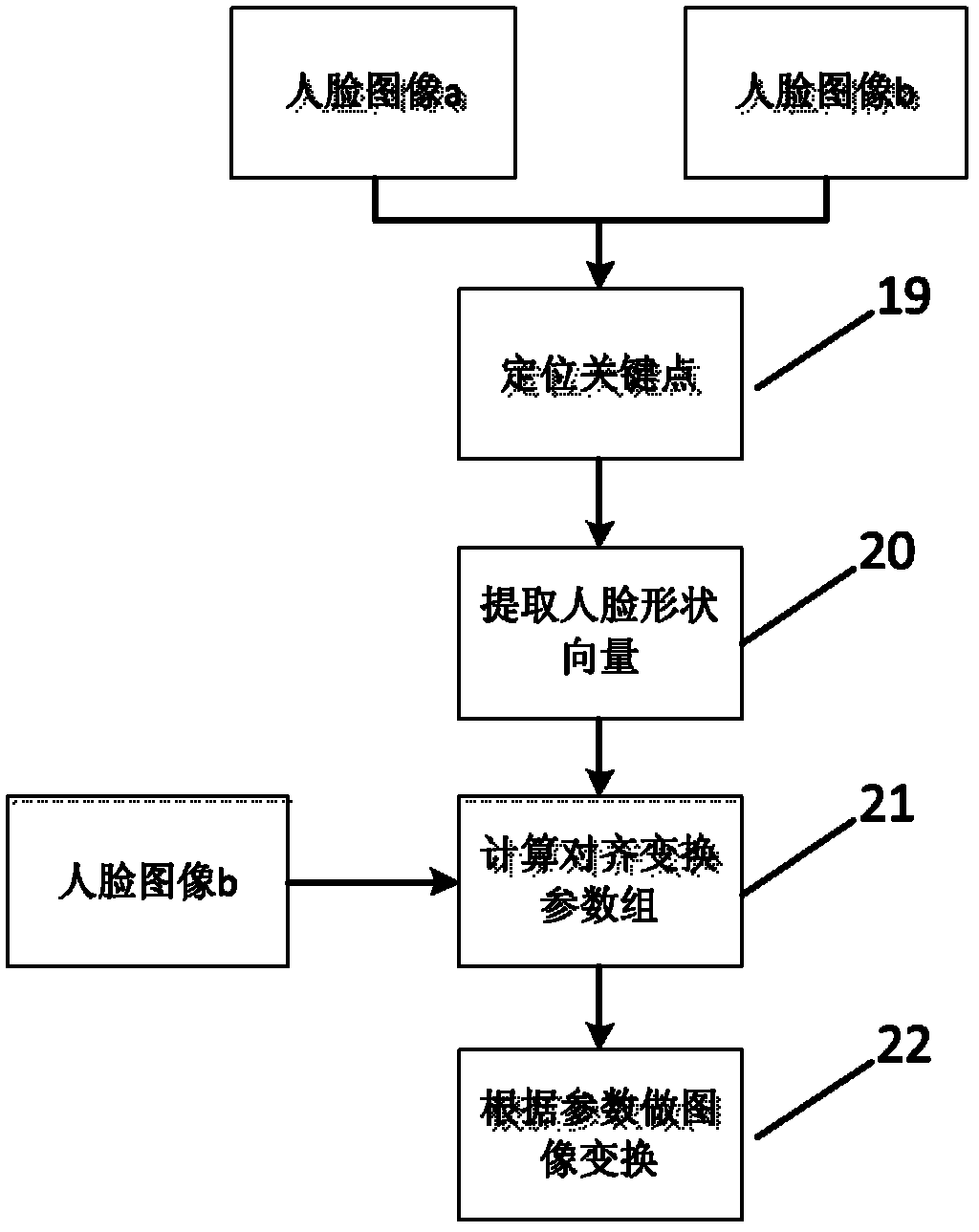

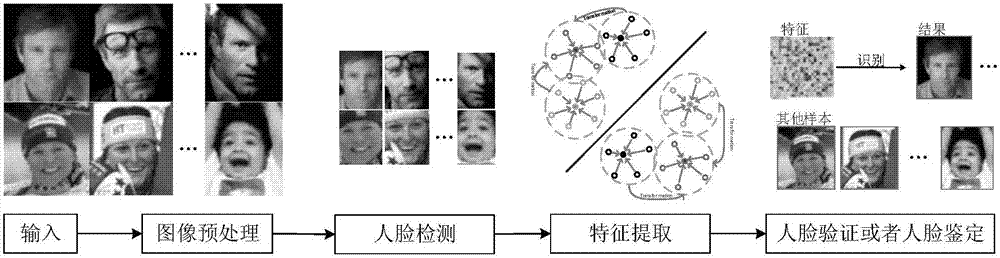

Multi-gesture and cross-age oriented face image authentication method

Inactive | CN102663413A | The location correspondence is clear | Eliminate the effects of | Character and pattern recognition | Face detection | Feature Dimension

The invention discloses a multi-gesture and cross-age oriented face image authentication method. The method comprises the following steps: rapidly detecting a face, performing key point positioning, performing face alignment, performing non-face area filtration, extracting the face features by blocks, performing feature dimension reduction and performing model prediction. The method provided by the invention can perform face alignment, automatically correct multi-gesture face images, and improve the accuracy of the algorithm. Furthermore, the feature extraction and dimension reduction modules provided by the invention are robust to age-related changes of the face and therefore have high practical value.

Owner:中盾信安科技(江苏)有限公司

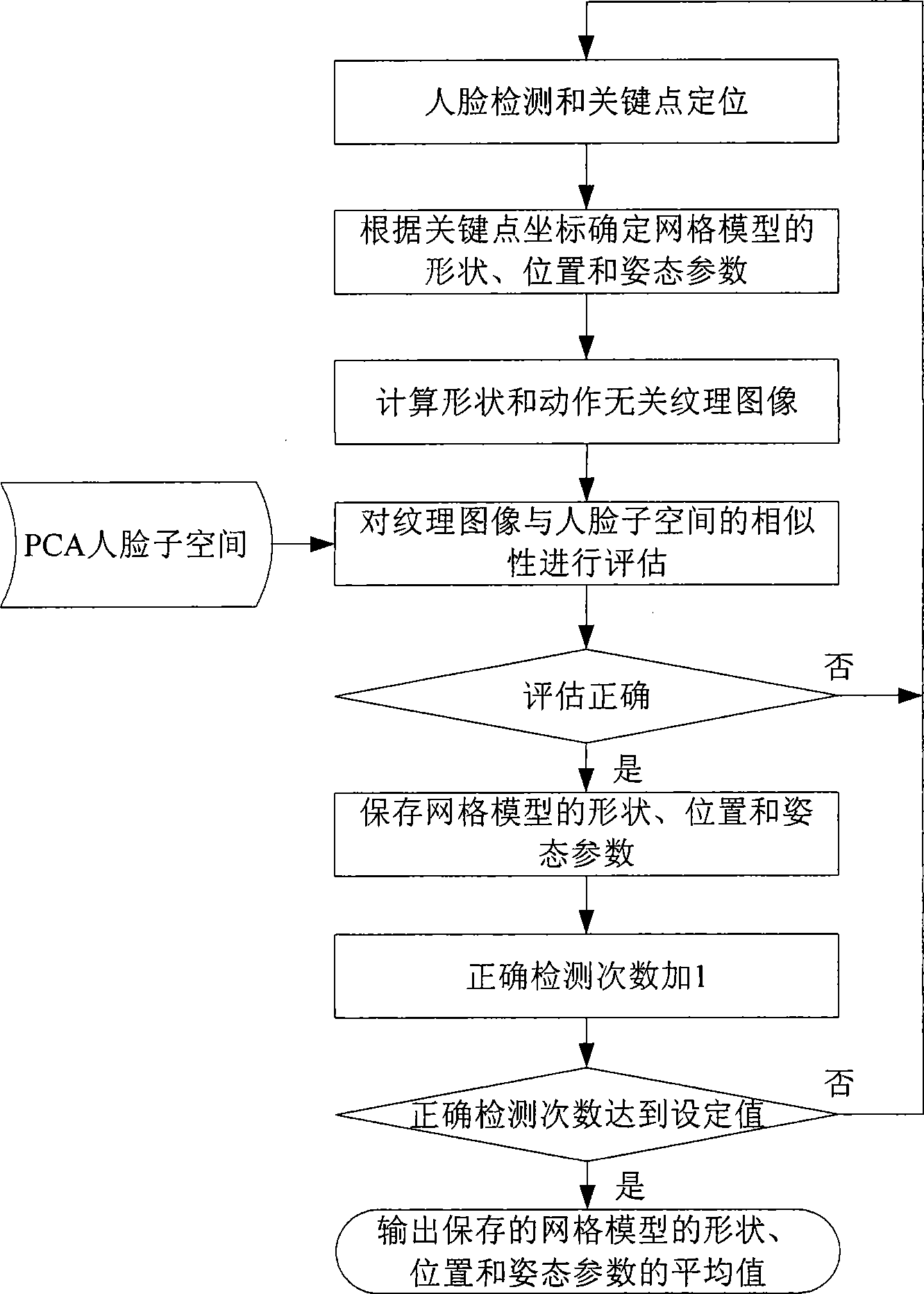

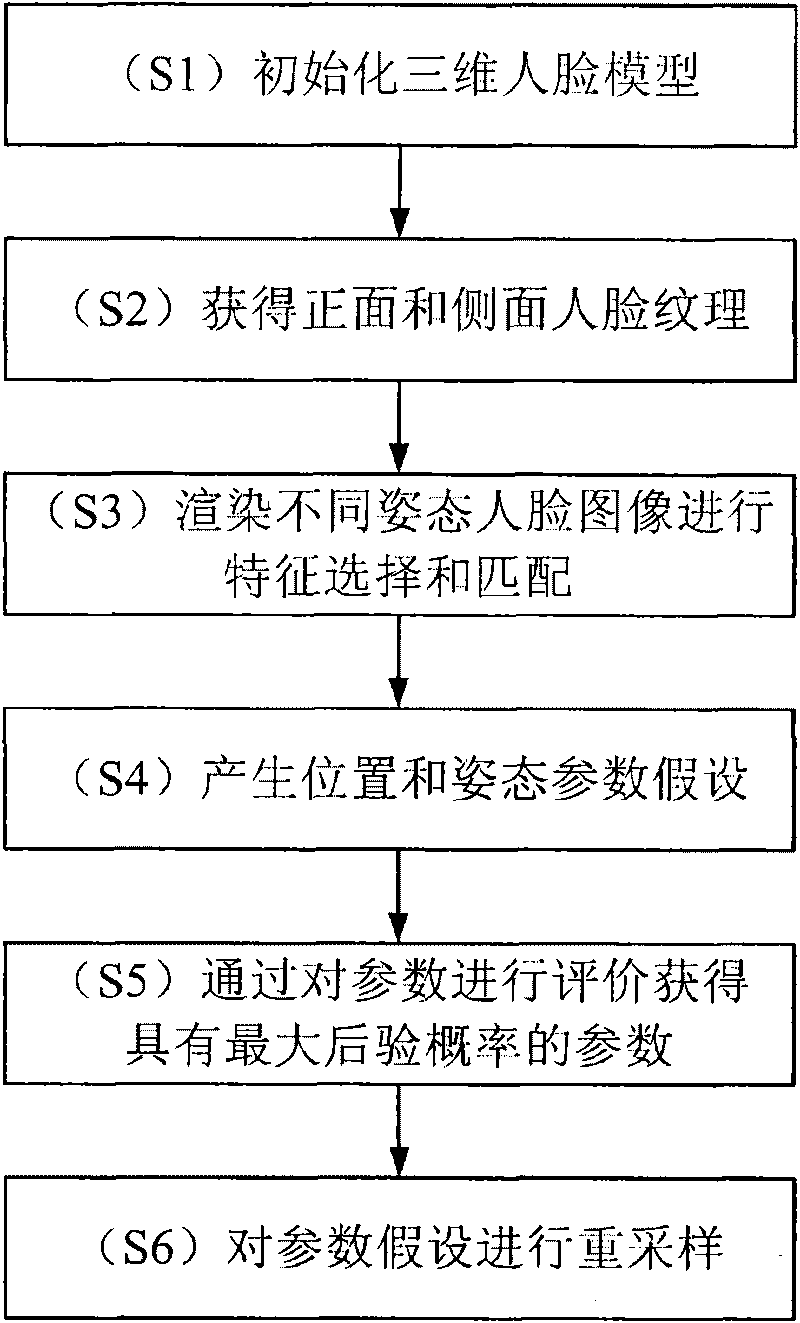

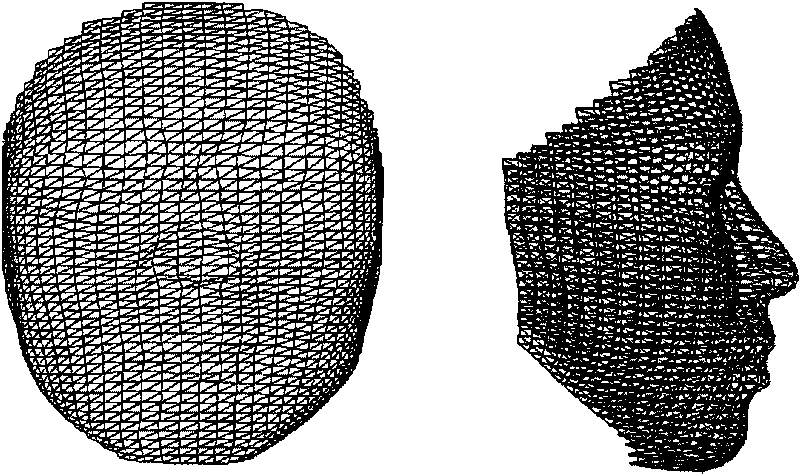

Method for tracing position and pose of 3D human face in video sequence

Inactive | CN101763636A | Improve tracking performance | Improve accuracy | Image analysis | Crucial point | Principal component analysis

The invention provides a method for tracking the position and pose of a 3D human face in a video sequence. In the method, a deformable 3D mesh model based on principal component analysis is fitted to the shape of the user's head by minimizing the distance between key points on the mesh model and the corresponding key points on an input image; the face texture is obtained with the 3D model at an initial phase, so as to render face images under different poses; feature points are selected in the rendered images and their corresponding positions are searched for on the input image; mismatches are removed by random sampling; the model pose change parameters are then estimated from the correspondences between the feature points, so as to update a hypothesis state; finally, the distance between the rendered image and the actual image is calculated with the average normalized correlation algorithm, so as to calculate the hypothesis weight. Experiments show that the method can effectively track the pose of the 3D head in the video.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1

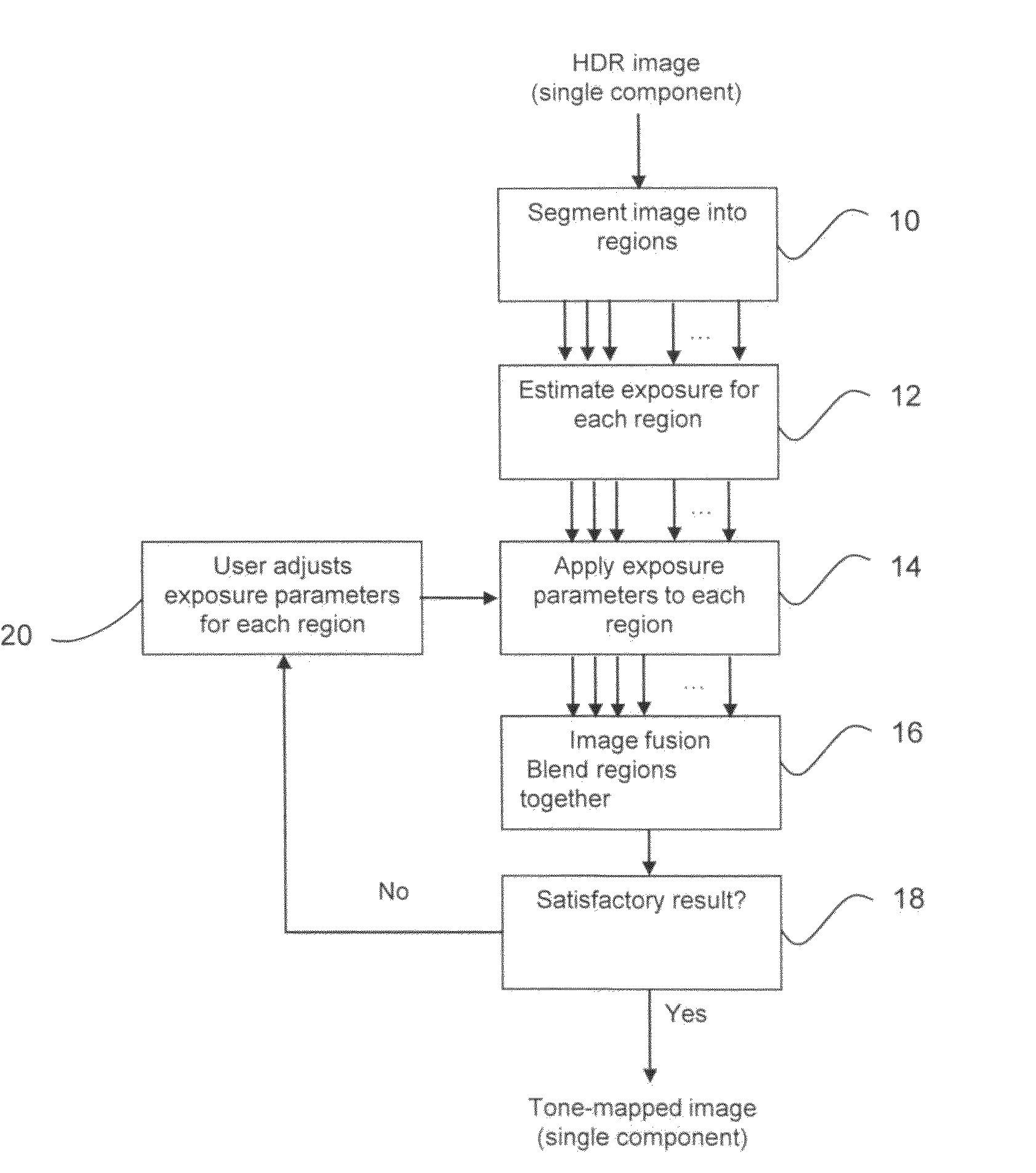

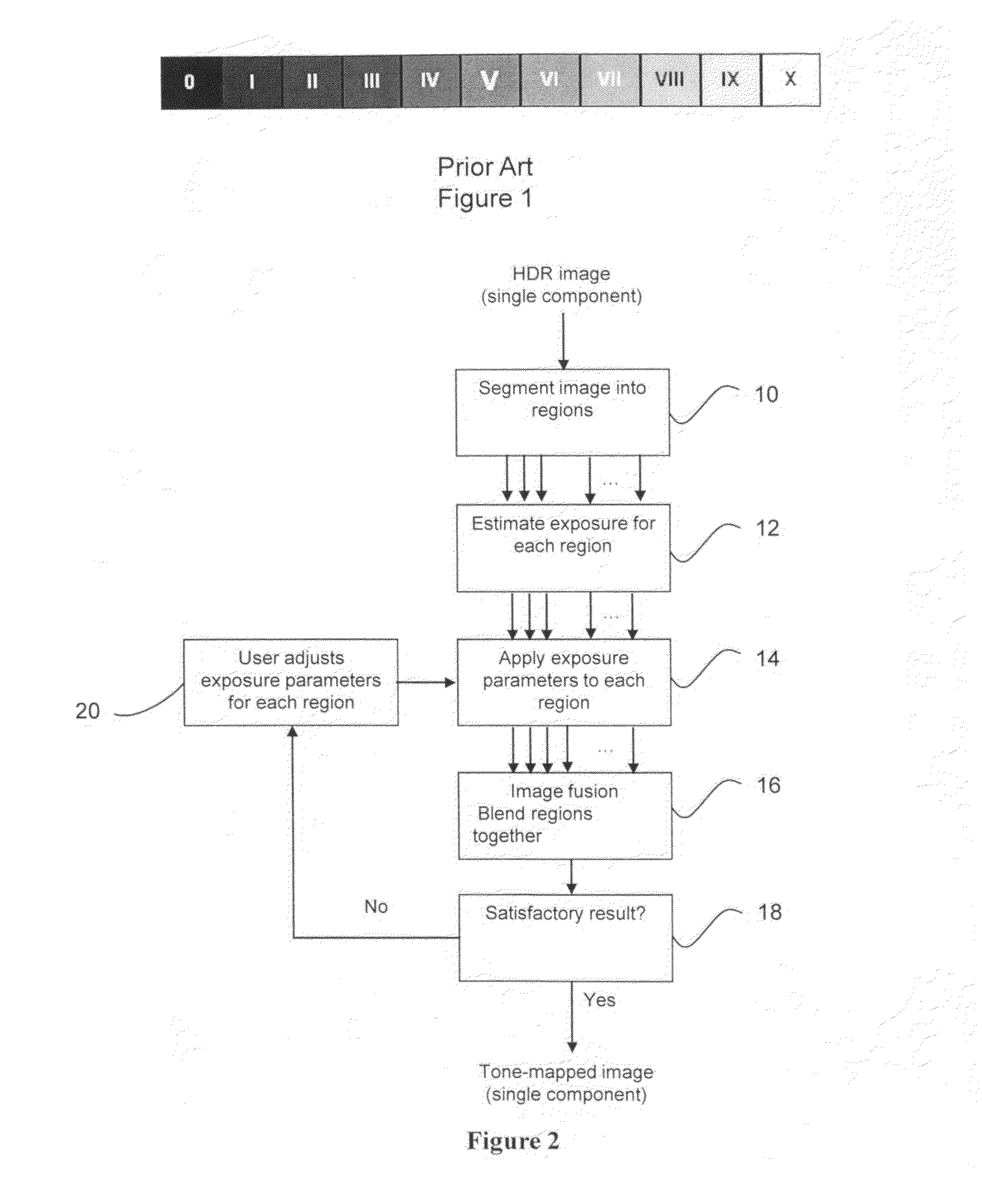

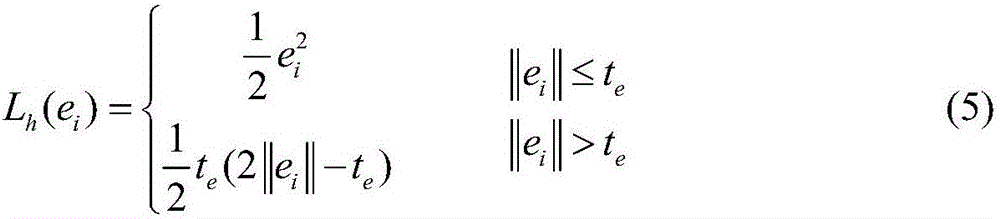

Automatic exposure estimation for HDR images based on image statistics

A method for tone mapping high dynamic range images for display on low dynamic range displays wherein high dynamic range images are first accessed. The high dynamic range images are divided into different regions such that each region is represented by a matrix, where each element of the matrix is a weight or probability of a pixel value. An exposure of each region is determined or calculated by estimating an anchor point in each region such that most pixels in each region are mapped to mid grey and the anchor points are adjusted to a key of the images to preserve overall brightness. The regions are then placed or mapped to zones and exposure values are applied to the regions responsive to the weight or probability. The regions are fused together to obtain a final tone mapped image.

Owner:INTERDIGITAL MADISON PATENT HLDG

3D point cloud FPFH characteristic-based real-time three dimensional space positioning method

Active | CN106296693A | Reduce time and space complexity | Image enhancement | Image analysis | Crucial point | Point cloud

The present invention relates to a real-time three-dimensional space positioning method based on 3D point cloud FPFH features. The method comprises: step 1) obtaining 3D point cloud data from a depth camera; step 2) selecting point cloud key frames; step 3) pre-processing the point cloud; step 4) feature description, in which an ISS algorithm is used to obtain the point cloud key points and the FPFH features of the key points are computed; step 5) point cloud registration, in which a sample consensus initial registration algorithm first performs FPFH-based initial registration of two point clouds and an ICP algorithm then performs secondary registration on the initial result; step 6) coordinate transformation, in which the change matrix of the three-dimensional coordinates of a mobile robot is obtained and the coordinates of the current point cloud are transformed to the initial position via a transformation matrix; and step 7) repeating steps 1) to 6) and calculating the coordinates of the robot relative to the initial position as the robot moves. The method offers better accuracy for real-time positioning of the mobile robot under poor illumination or in complete darkness.
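The repeated coordinate transformation in steps 6) and 7) amounts to chaining the rigid transforms produced by successive registrations. The sketch below assumes each registration yields a 4x4 homogeneous matrix that maps the current frame into the previous one, and that the pose is updated as `pose = pose * step`; the helper names are hypothetical, and the registration itself (ISS, FPFH, ICP) is not implemented here.

```python
import math

def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def identity():
    """4x4 identity matrix, used as the pose at the initial position."""
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

def rigid_z(theta, tx, ty, tz):
    """Homogeneous transform: rotation by theta about z plus a translation."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def accumulate(pose, step):
    """Chain one registration result onto the pose relative to the start frame."""
    return matmul(pose, step)

def position(pose):
    """Robot position relative to the initial position."""
    return (pose[0][3], pose[1][3], pose[2][3])
```

Because every step multiplies onto the running pose, registration errors accumulate over time, which is why the quality of each pairwise registration matters for long trajectories.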

Owner:ZHEJIANG UNIV OF TECH

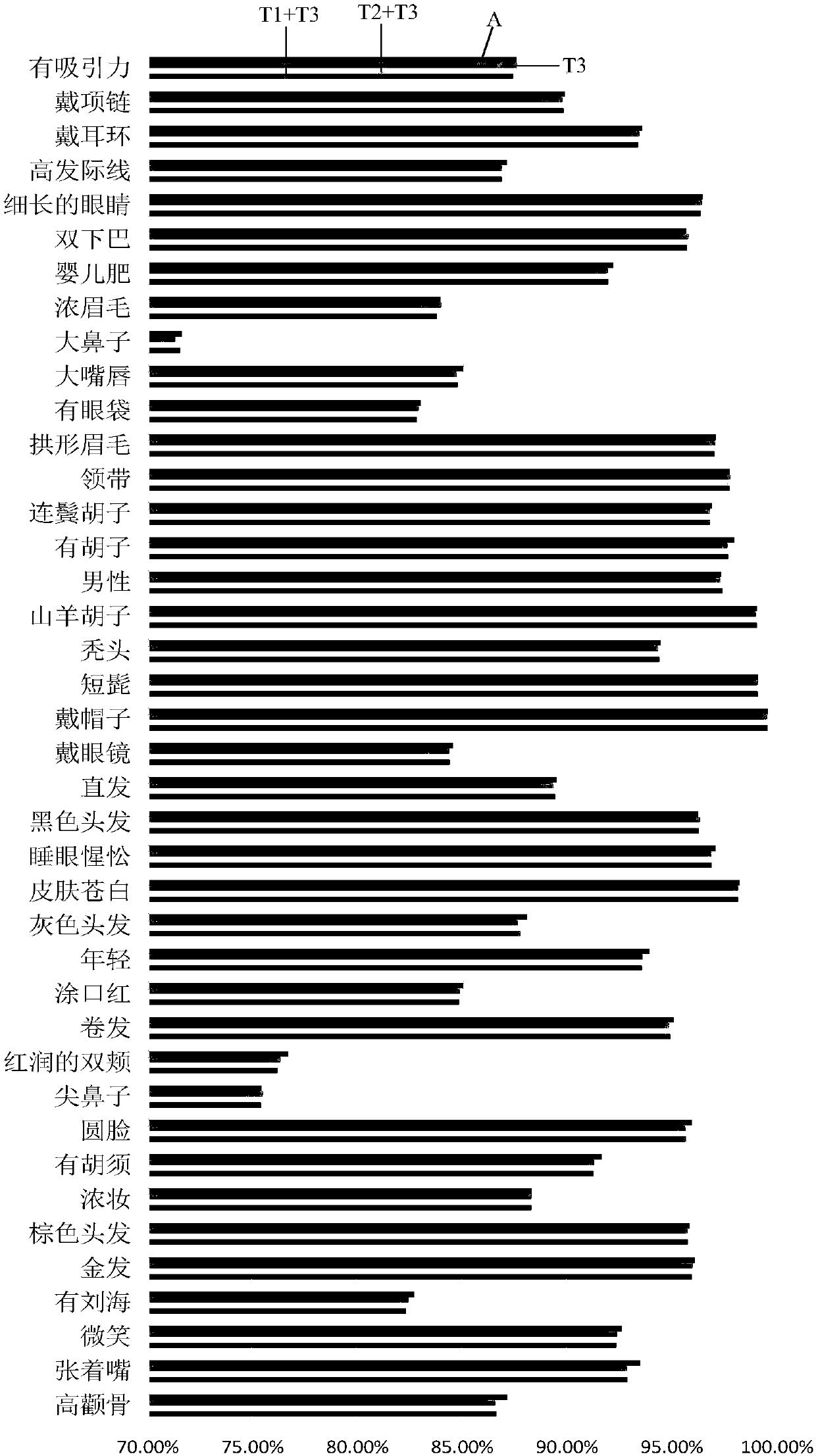

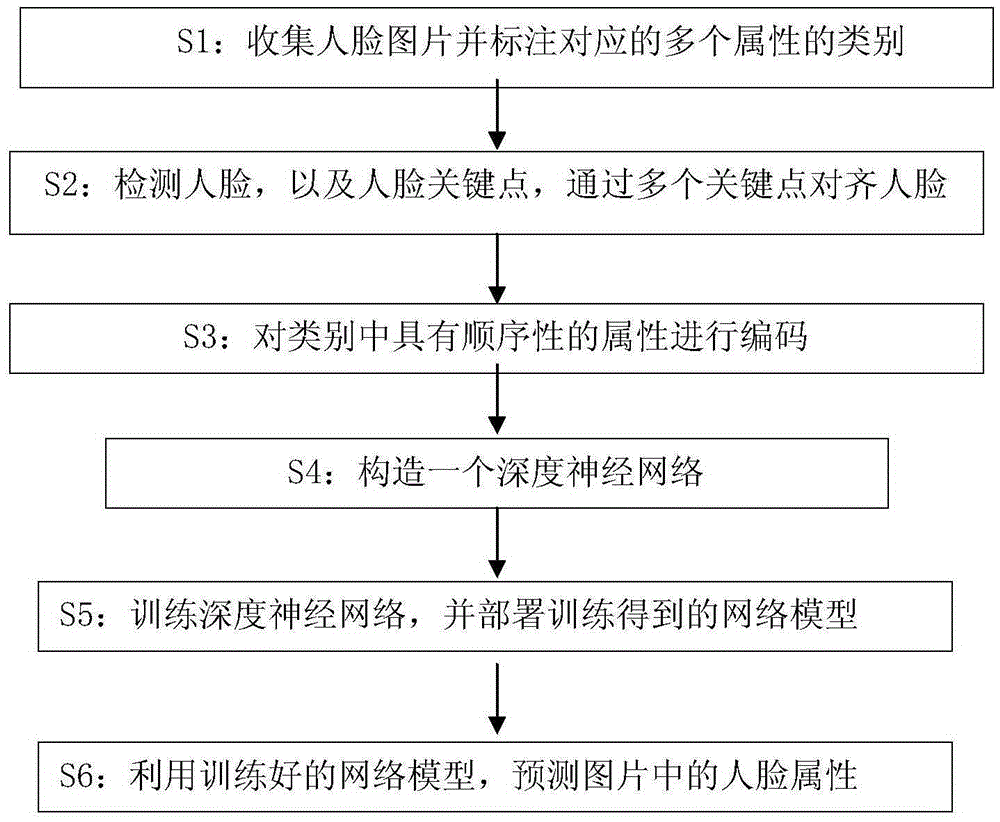

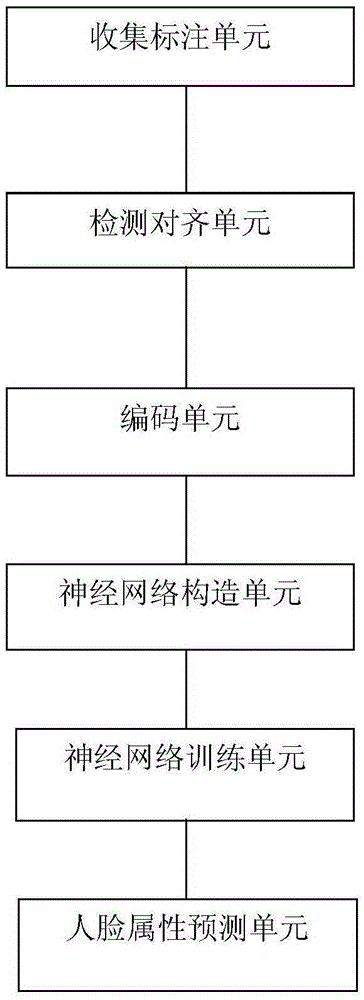

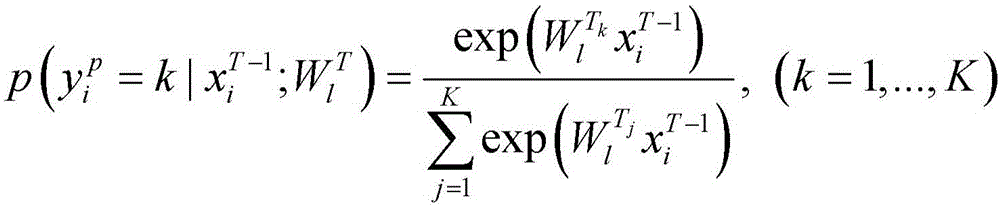

Human face attribute prediction method and apparatus based on deep learning and multi-task learning

Inactive | CN105404877A | Improve predictive performance | Character and pattern recognition | Crucial point | Data set

The invention discloses a human face attribute prediction method and apparatus based on deep learning and multi-task learning. The face attribute prediction method mainly comprises: collecting human face images, labelling the categories corresponding to a plurality of attributes, and forming a training data set; detecting the human face and its key points, and aligning the face via a plurality of key points; encoding the sequential attributes in the categories; constructing a deep neural network; using the training data set to train the deep neural network; deploying the neural network model obtained via training; and finally, using the neural network model to predict the human face attributes in a picture. With the human face attribute prediction method provided by the invention, a plurality of attributes are trained jointly, so they can be predicted simultaneously with only one deep network, and the prediction results are markedly improved.

Owner:SENSETIME GRP LTD

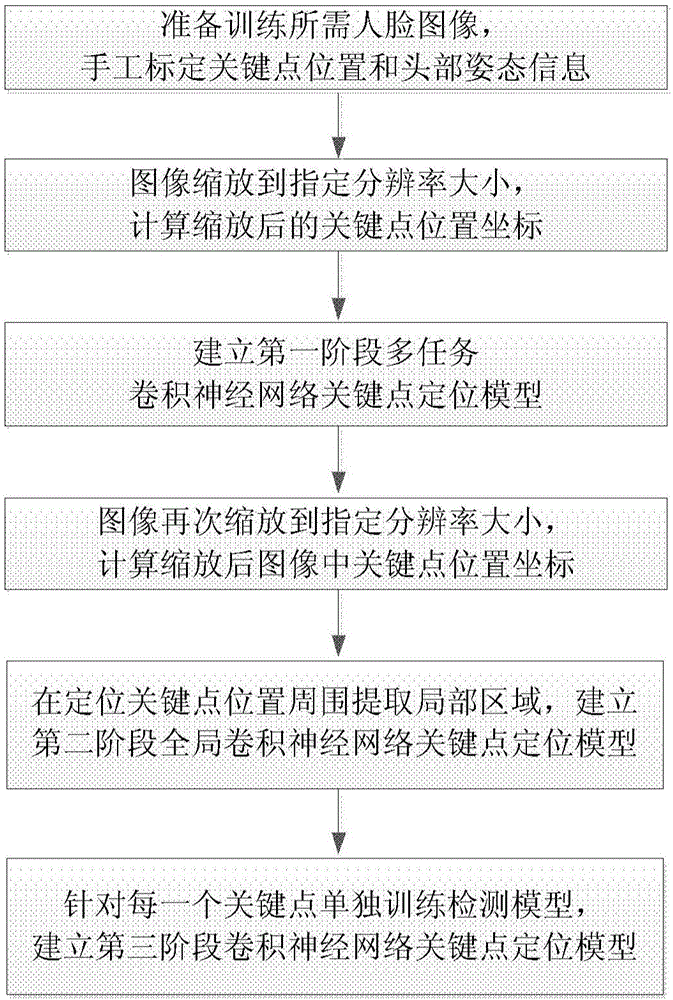

Method and apparatus for positioning face key points

Active | CN106599830A | Good generalization performance | Improve robustness | Character and pattern recognition | Crucial point | Nerve network

The invention discloses a method and apparatus for positioning face key points. The method includes the following steps: conducting rough positioning through a multi-task convolutional neural network, determining substantial positions of the face key points; extracting local regions in the peripheries of the key points, fusing the local regions extracted from the peripheries of the key points through the global cascade convolutional neural network, performing cascade positioning; finally, independently training the convolutional neural network at each key point and performing precise and fine positioning. According to the invention, the neural network has fewer total numbers and has excellent positioning effects.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

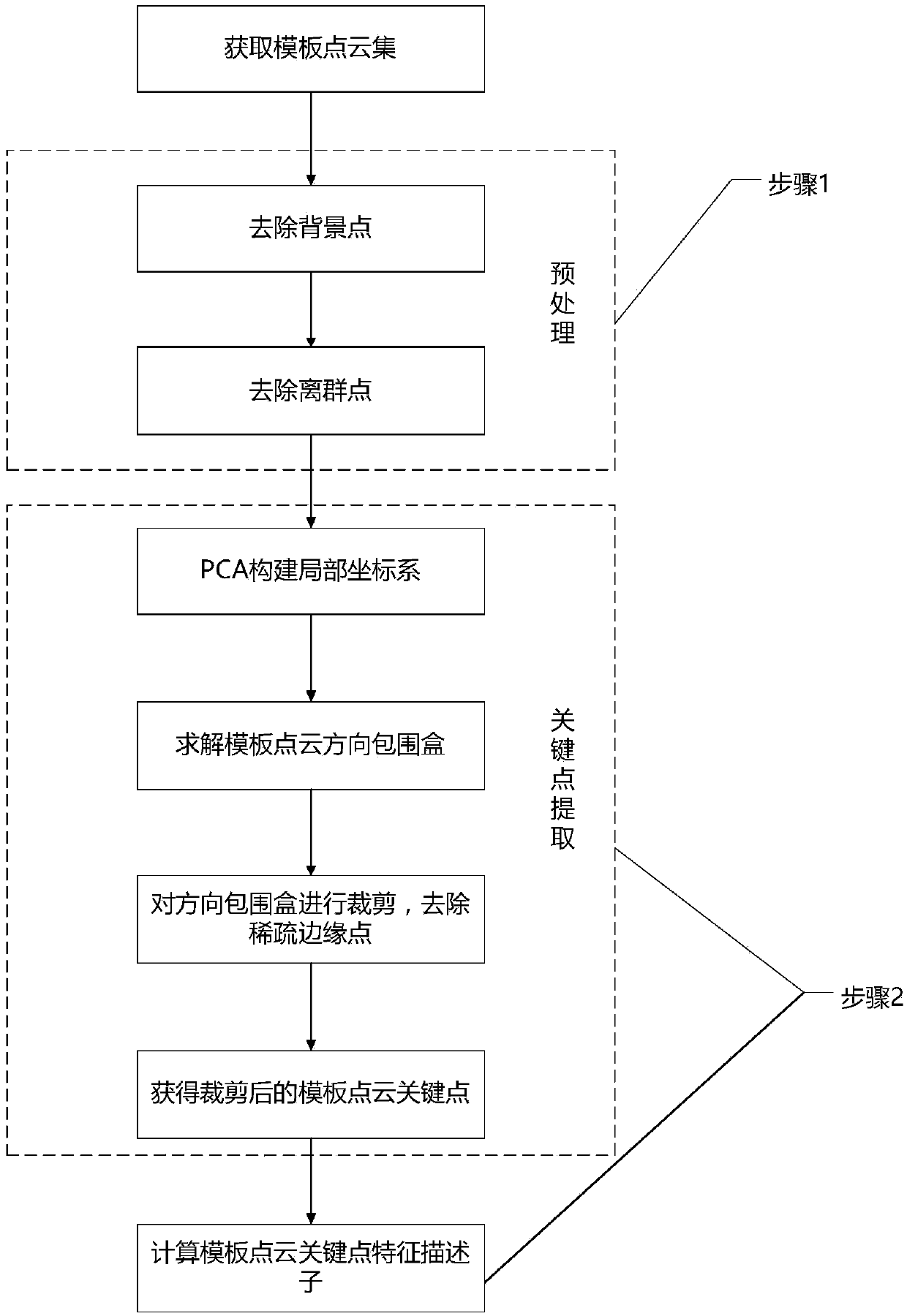

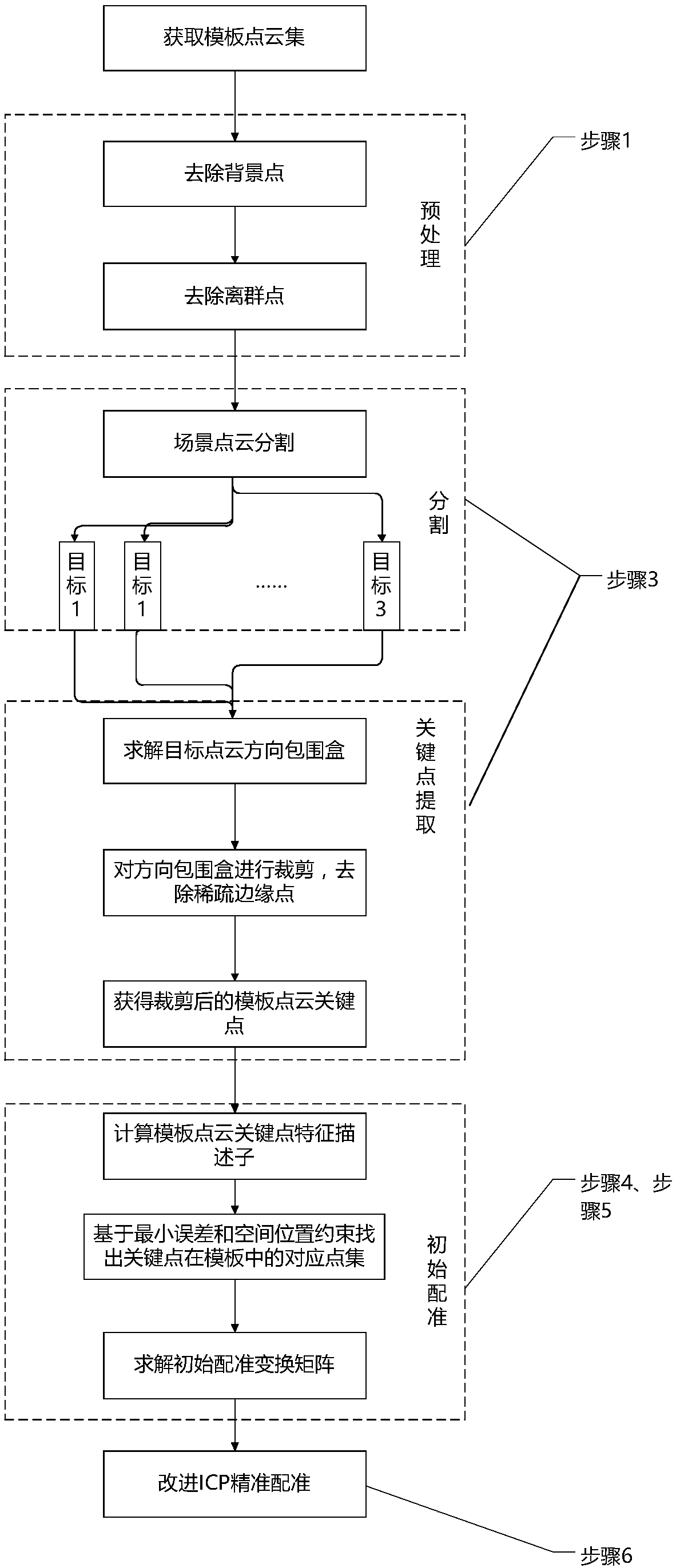

Scattered workpiece recognition and positioning method based on point cloud processing

Inactive | CN108830902A | Achieve a unique description | Reduce the probability of falling into a local optimum | Image enhancement | Image analysis | Local optimum | Pattern recognition

The invention discloses a scattered workpiece recognition and positioning method based on point cloud processing, and the method is used for solving the problem of pose estimation of scattered workpieces in a random bin-picking process. The method comprises two parts: offline template library building and online feature registration. A template point cloud data set and a scene point cloud are obtained through a 3D point cloud acquisition system. The feature information of a template point cloud, extracted offline, can be used for the preprocessing, segmentation and registration of the scene point cloud, thereby improving the operation speed of the algorithm. The point cloud registration is divided into two stages: initial registration and precise registration. A feature descriptor which integrates geometrical and statistical characteristics is proposed at the stage of initial registration, thereby achieving a unique description of the features of a key point. The points whose feature descriptions are most similar to those of the feature points are searched for in the template library as corresponding points, thereby obtaining a corresponding point set and achieving the calculation of an initial transformation matrix. At the stage of precise registration, geometrical constraints are added for selecting the corresponding points, thereby reducing the number of iterations of the precise registration and reducing the probability that the algorithm falls into a local optimum.

Owner:JIANGNAN UNIV +1

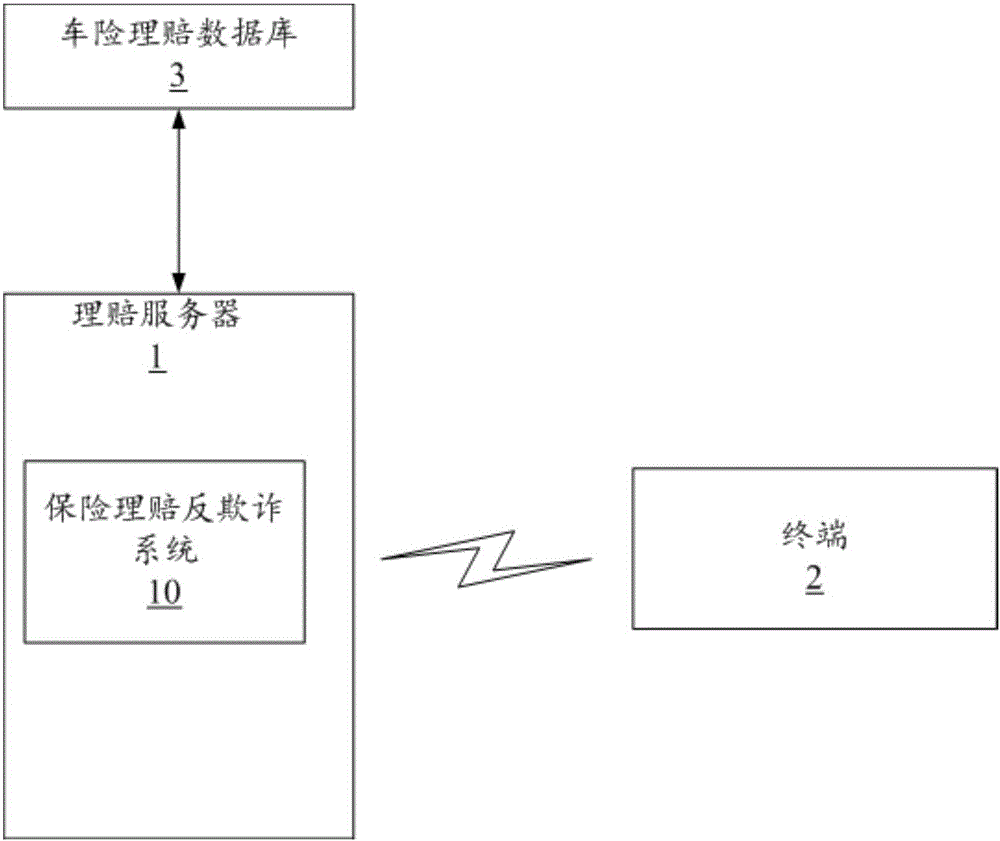

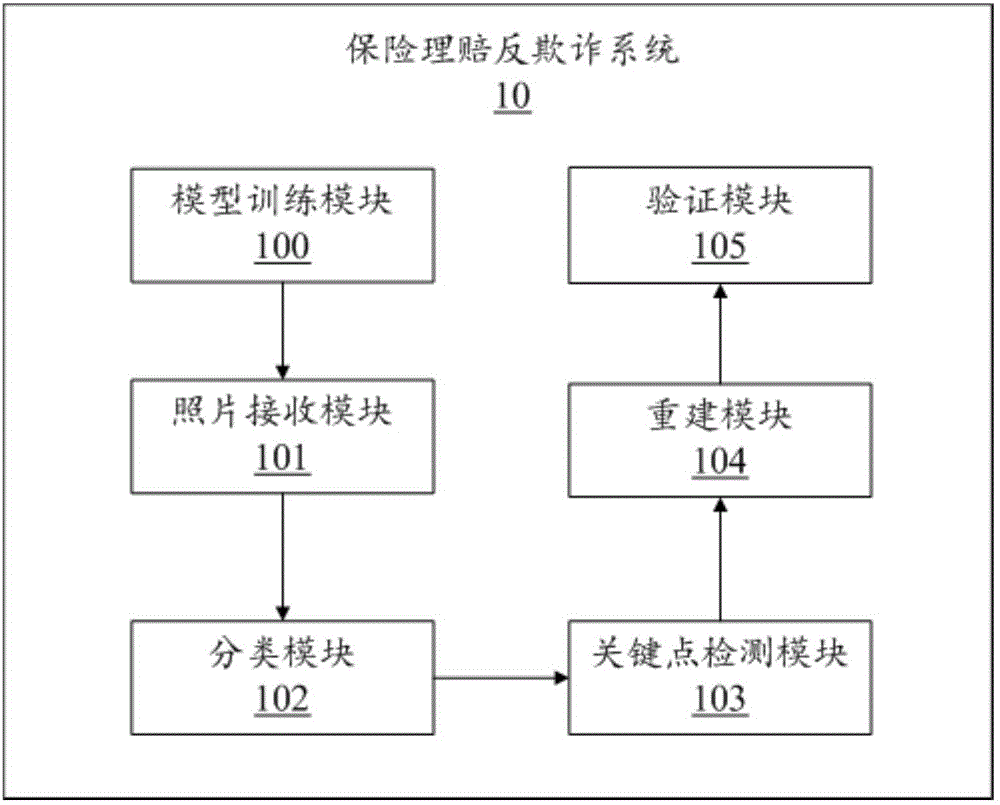

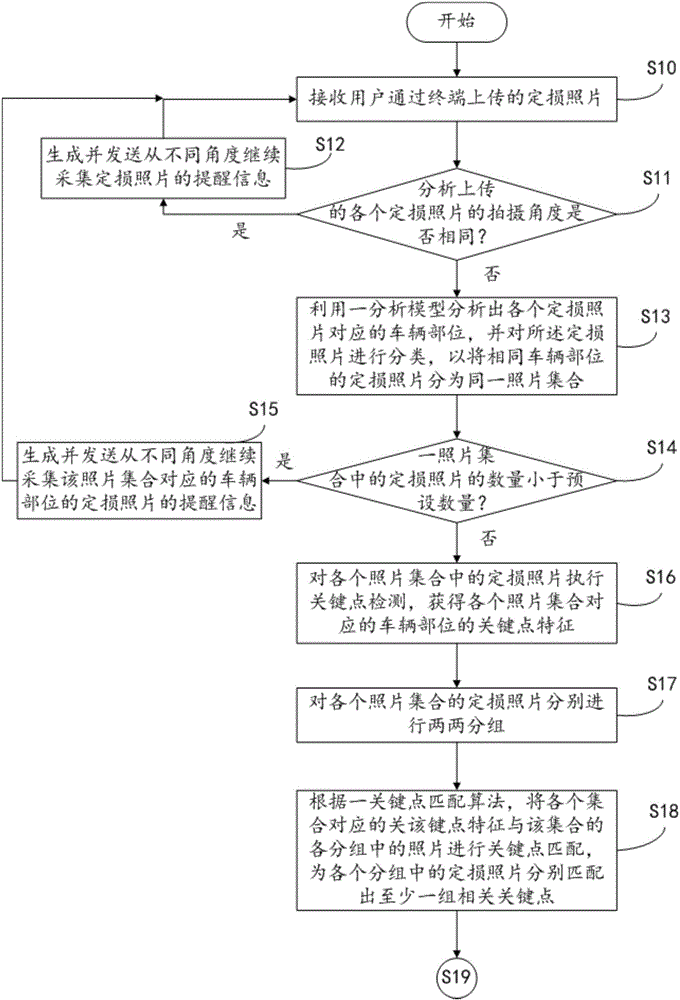

Method and server for achieving insurance claim anti-fraud based on consistency of multiple pictures

Active | CN105719188A | Prevent insurance fraud by exaggerating the degree of loss | Image enhancement | Image analysis | Crucial point | Feature parameter

The invention discloses a method for achieving insurance claim anti-fraud based on consistency of multiple pictures. The method comprises the steps of dividing damage assessment pictures of the same position of a vehicle into the same set; obtaining key point features of all sets, grouping the assessment pictures of all the picture sets, and matching multiple related key points with the assessment pictures in each group; according to the related key points of each group, calculating a feature point transformation matrix of each group, and converting one of pictures in each group into a to-be-verified picture having the same shooting angle as another picture in the group through the corresponding feature point transformation matrix; conducting feature parameter matching on each to-be-verified picture and another picture in the corresponding group; when feature parameters are not matched, generating reminding information so as to remind that fraud practice exists in the received picture. The invention further provides a server applicable to the method. By means of the method and server for achieving insurance claim anti-fraud based on consistency of the multiple pictures, fraud insurance claim practice can be identified automatically.

Owner:PING AN TECH (SHENZHEN) CO LTD

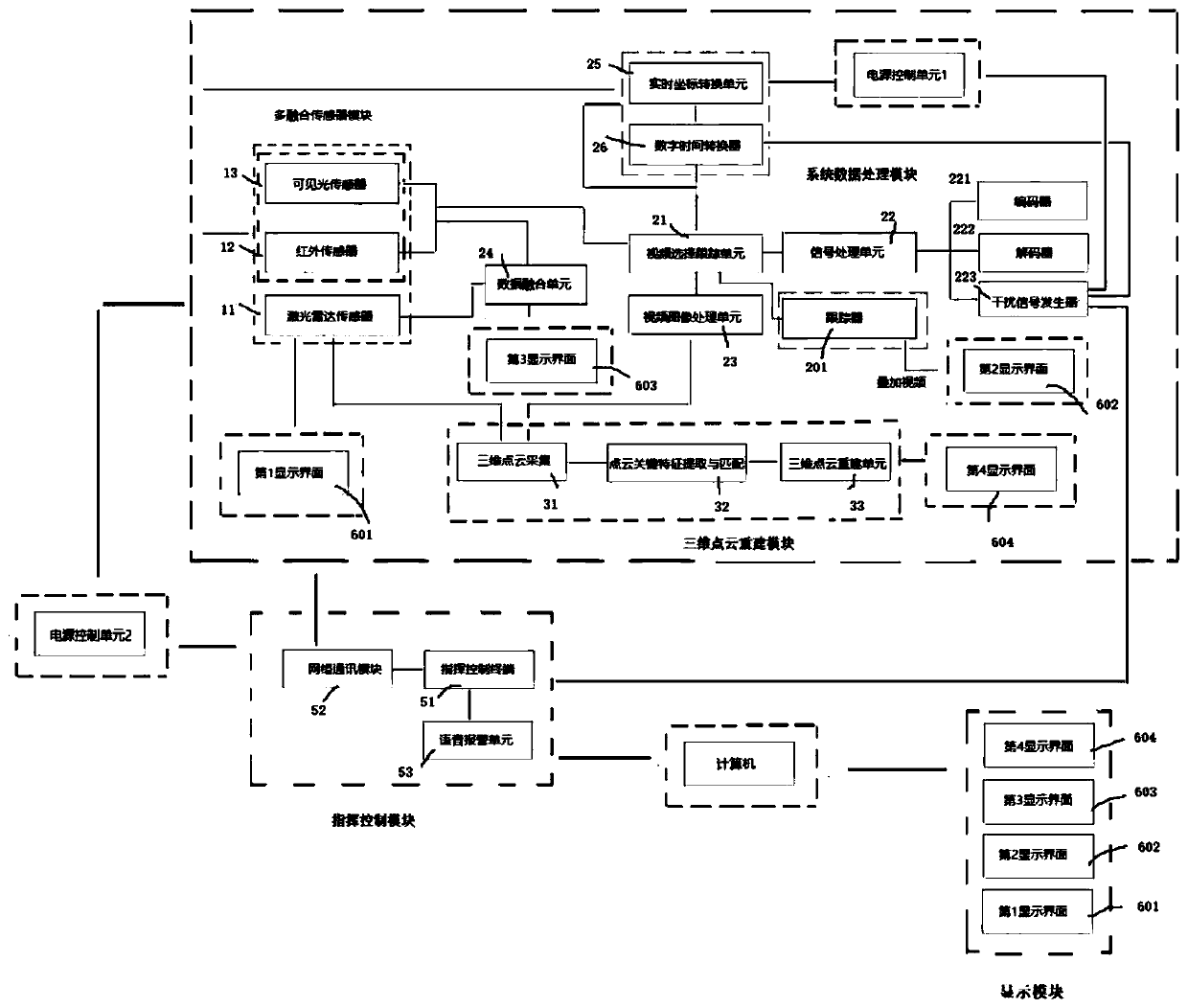

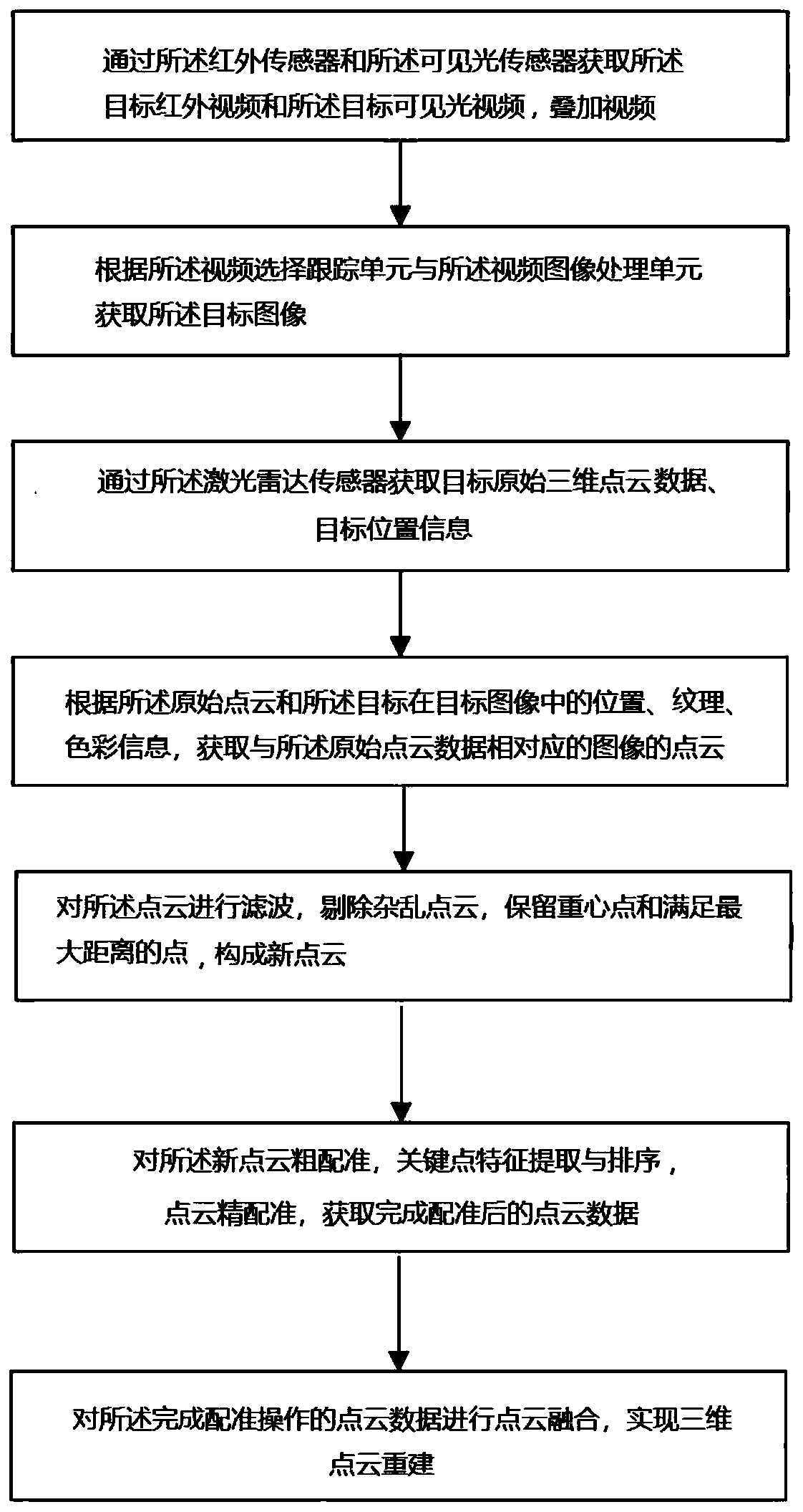

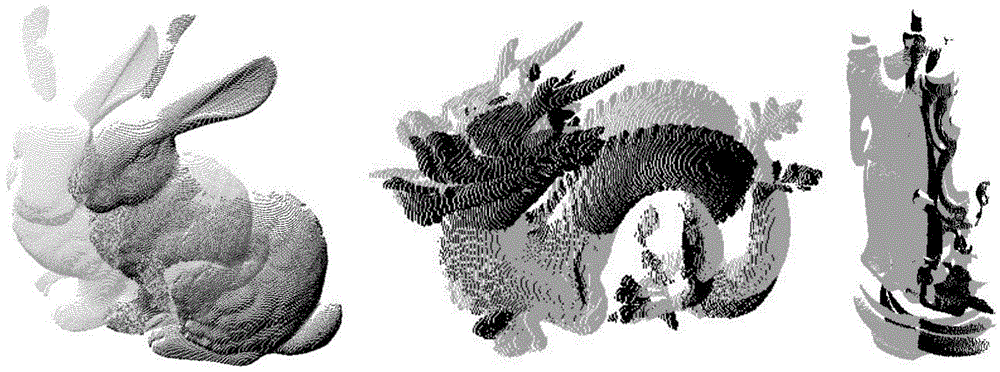

Three-dimensional point cloud reconstruction device and method based on multi-fusion sensor

Active | CN110415342A | Achieve reconstruction | Accurate real-time 3D reconstruction | Details involving processing steps | 3D modelling | Crucial point | Reconstruction method

The invention discloses a three-dimensional point cloud reconstruction device and a three-dimensional point cloud reconstruction method based on a multi-fusion sensor. The device comprises a multi-fusion sensor module, a system data processing module and a three-dimensional point cloud reconstruction module. The method comprises the following steps: acquiring point cloud data and a video image by using a multi-fusion sensor device, and processing the video image to obtain a target image including target pose, position and texture information; obtaining the target point cloud from the original point cloud according to the pose and position information in the original point cloud and the target image; filtering the point clouds to remove noisy points; performing coarse point cloud registration, which includes key point quality analysis; performing fine point cloud registration; and inputting the registered point cloud into a point cloud reconstruction unit to realize three-dimensional point cloud fusion and reconstruction. In the method, feature points of the point cloud reconstructed from the target image, corresponding to the original three-dimensional point cloud, are extracted to obtain the three-dimensional spatial position, texture and color information of the target. The point cloud registration precision is therefore improved, and the probability of false identification and tracking loss is effectively reduced.

Owner:SHENZHEN WEITESHI TECH

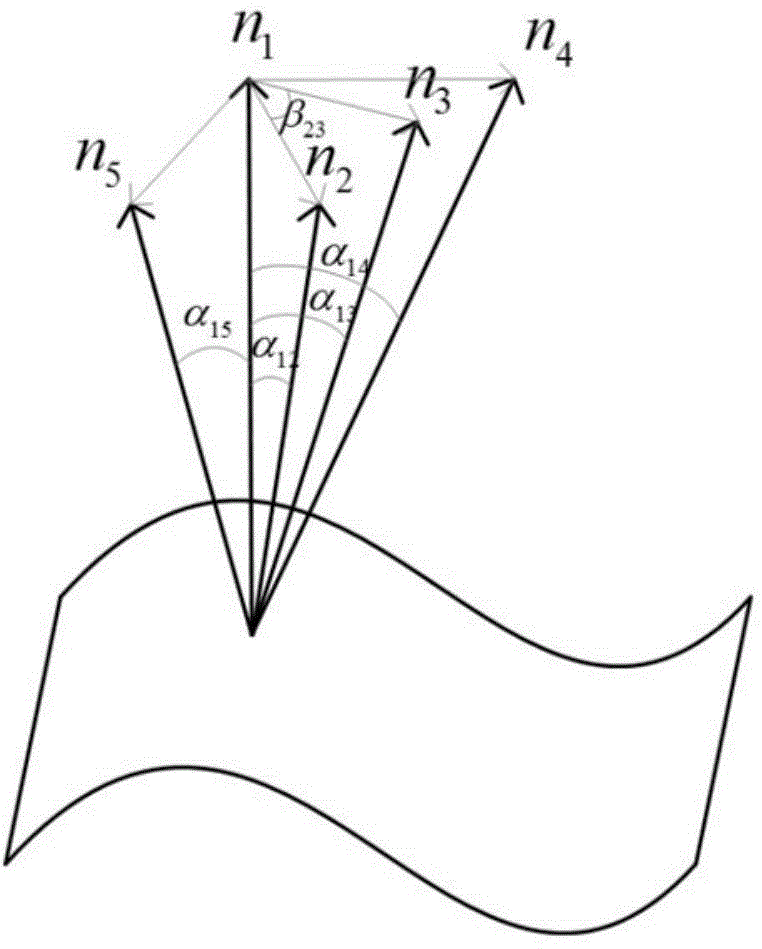

Multi-scale normal feature point cloud registering method

Active | CN104143210A | Evenly distributed | Recognizable | 3D-image rendering | Singular value decomposition | Feature vector

The invention relates to a multi-scale normal feature point cloud registering method, characterized by the following steps: point clouds from two viewing angles, a target point cloud and a source point cloud, collected by a point cloud acquisition device are read in; for each point, the curvature within radius neighborhoods at three scales is calculated, and key points are extracted from the target and source point clouds according to an objective function; the normal vector angular deviation and the curvature of each key point within the radius neighborhoods at the different scales are calculated and used as feature components to form the feature descriptor of that key point, which yields a target point cloud key point feature vector set and a source point cloud key point feature vector set; according to the similarity of the key point feature descriptors, the correspondences between target and source key points are preliminarily determined; wrong correspondences are eliminated to obtain accurate correspondences; the accurate correspondences are simplified with a clustering method to obtain evenly distributed correspondences; and singular value decomposition is carried out on the final correspondences to obtain a rigid body transformation matrix.
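
The final step, recovering a rigid body transformation from point correspondences by singular value decomposition, is commonly done with the Kabsch procedure. The sketch below uses that standard procedure with NumPy; it is an assumption that the patent uses this particular formulation, and the function name `rigid_transform_svd` is hypothetical.

```python
import numpy as np

def rigid_transform_svd(source, target):
    """Least-squares rotation R and translation t with target ~ R @ source + t.

    source, target: (N, 3) arrays of corresponding points (N >= 3,
    not all collinear). Standard Kabsch procedure.
    """
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    src_c = src.mean(axis=0)
    tgt_c = tgt.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (src - src_c).T @ (tgt - tgt_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t

# Toy check: rotate 90 degrees about z, then translate.
R_true = np.array([[0.0, -1.0, 0.0],
                   [1.0, 0.0, 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([1.0, 2.0, 3.0])
src = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
tgt = src @ R_true.T + t_true
R_est, t_est = rigid_transform_svd(src, tgt)
```

The determinant check guards against a reflection being returned when the correspondences are noisy or nearly planar.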

Owner:HARBIN ENG UNIV

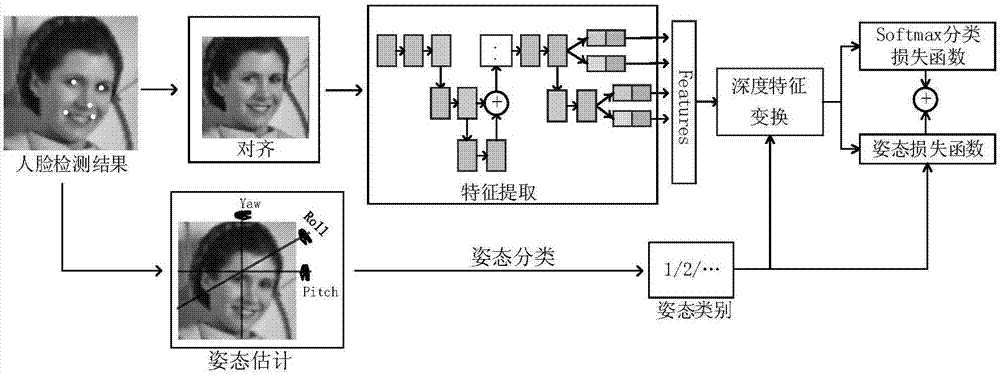

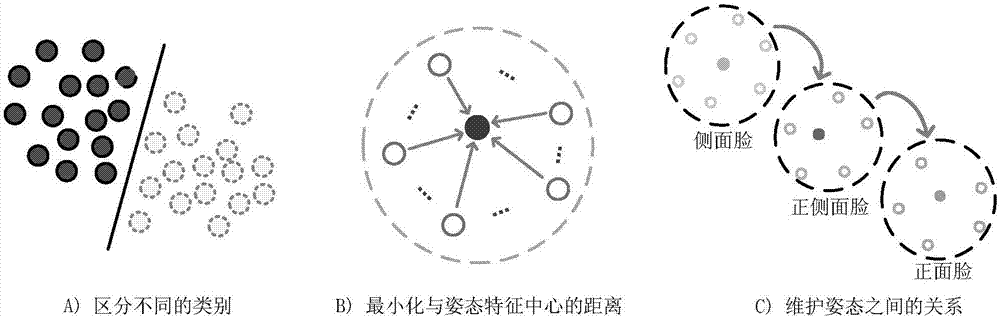

Face recognition method based on deep transformation learning in unconstrained scene

Active | CN107506717A | Enhancing Feature Transformation Learning | Improve robustness | Character and pattern recognition | Neural learning methods | Crucial point | Characteristic space

The invention discloses a face recognition method based on deep transformation learning in an unconstrained scene. The method comprises the following steps: obtaining a face image and detecting face key points; transforming the face image through face alignment, where the alignment minimizes the distance between the detected key points and predefined key points; estimating the face pose and classifying the pose estimation results; separating the face poses of multiple samples into different classes; carrying out pose transformation, converting non-frontal face features into frontal face features and calculating the pose transformation loss; and updating the network parameters through a deep transformation learning method until the threshold requirements are met, and then stopping. The method proposes feature transformation within a neural network and transforms the features of different poses into a shared linear feature space; by calculating the pose loss and learning the pose centers and pose transformation, a simple class change is obtained; and the method can enhance feature transformation learning and improve robustness and the differentiable deep function.
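
The alignment step, which minimizes the distance between detected and predefined key points, can be illustrated with a closed-form least-squares 2D similarity fit. The abstract does not say which transformation family is used, so the similarity transform (rotation, uniform scale, translation) and the names `fit_similarity` and `align` are assumptions.

```python
def fit_similarity(detected, reference):
    """Least-squares 2D similarity transform mapping detected -> reference.

    Points are treated as complex numbers z; the transform is w = a*z + b,
    where a encodes rotation and uniform scale and b the translation.
    Requires at least two distinct detected points.
    """
    z = [complex(x, y) for x, y in detected]
    w = [complex(x, y) for x, y in reference]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    num = sum((zi - z_mean).conjugate() * (wi - w_mean) for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    a = num / den
    b = w_mean - a * z_mean
    return a, b

def align(points, a, b):
    """Apply the fitted transform to a list of (x, y) points."""
    out = []
    for x, y in points:
        p = a * complex(x, y) + b
        out.append((p.real, p.imag))
    return out

# Toy key points: reference is the detected set rotated 90 degrees,
# scaled by 0.5 and shifted by (3, 1).
detected = [(0.0, 0.0), (2.0, 0.0), (1.0, 2.0)]
reference = [(3.0, 1.0), (3.0, 2.0), (2.0, 1.5)]
a, b = fit_similarity(detected, reference)
aligned = align(detected, a, b)
```

In practice the same transform would be applied to the whole image rather than only to the key points.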

Owner:唐晖

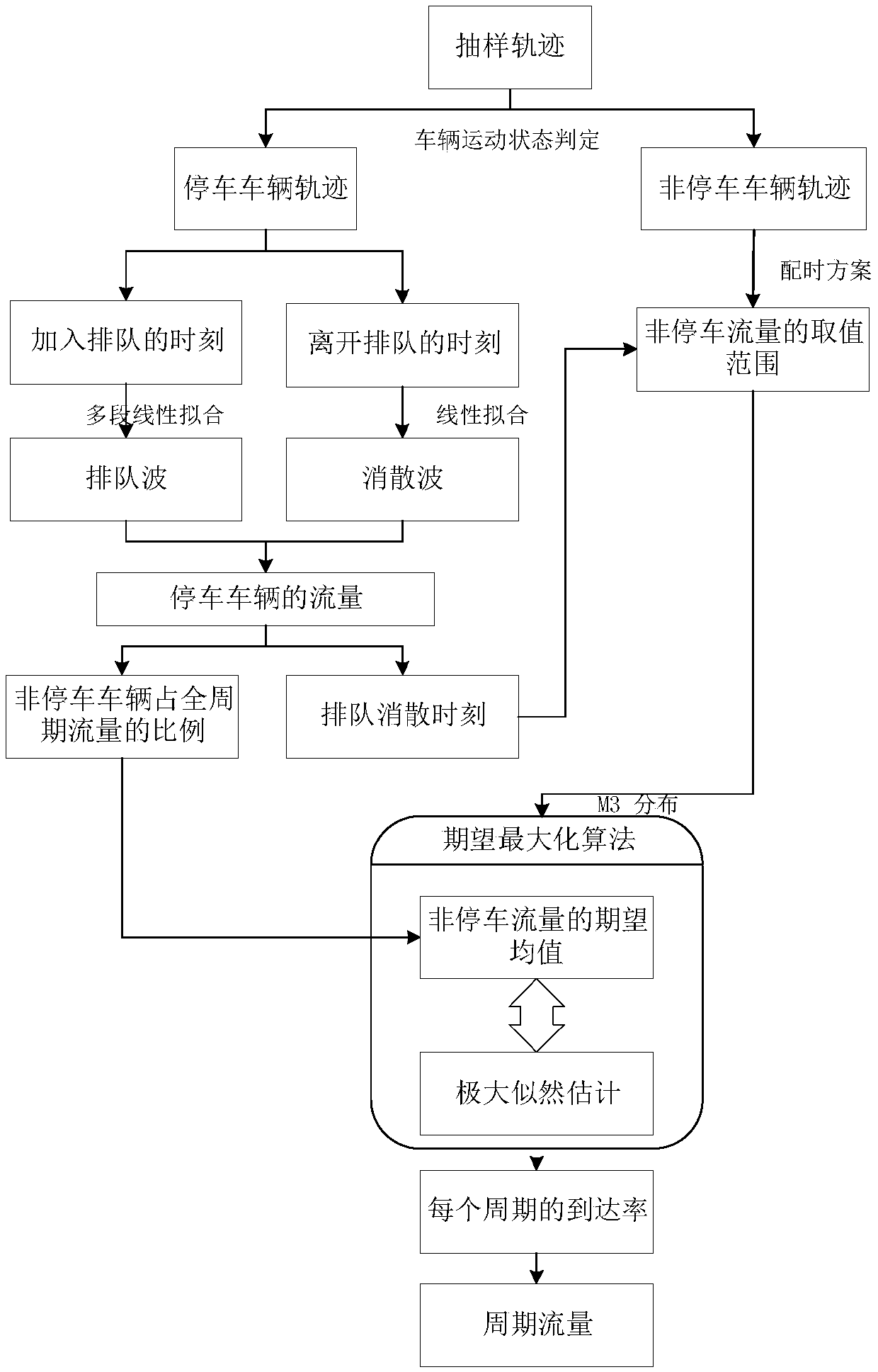

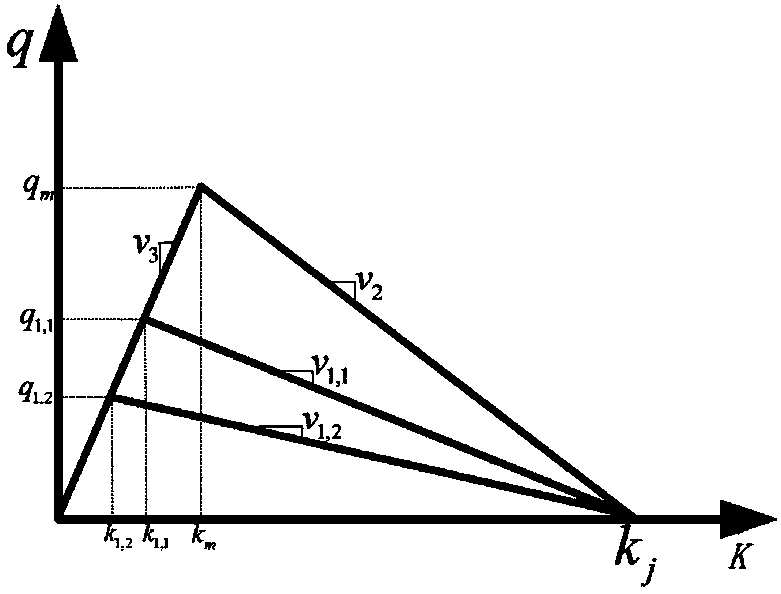

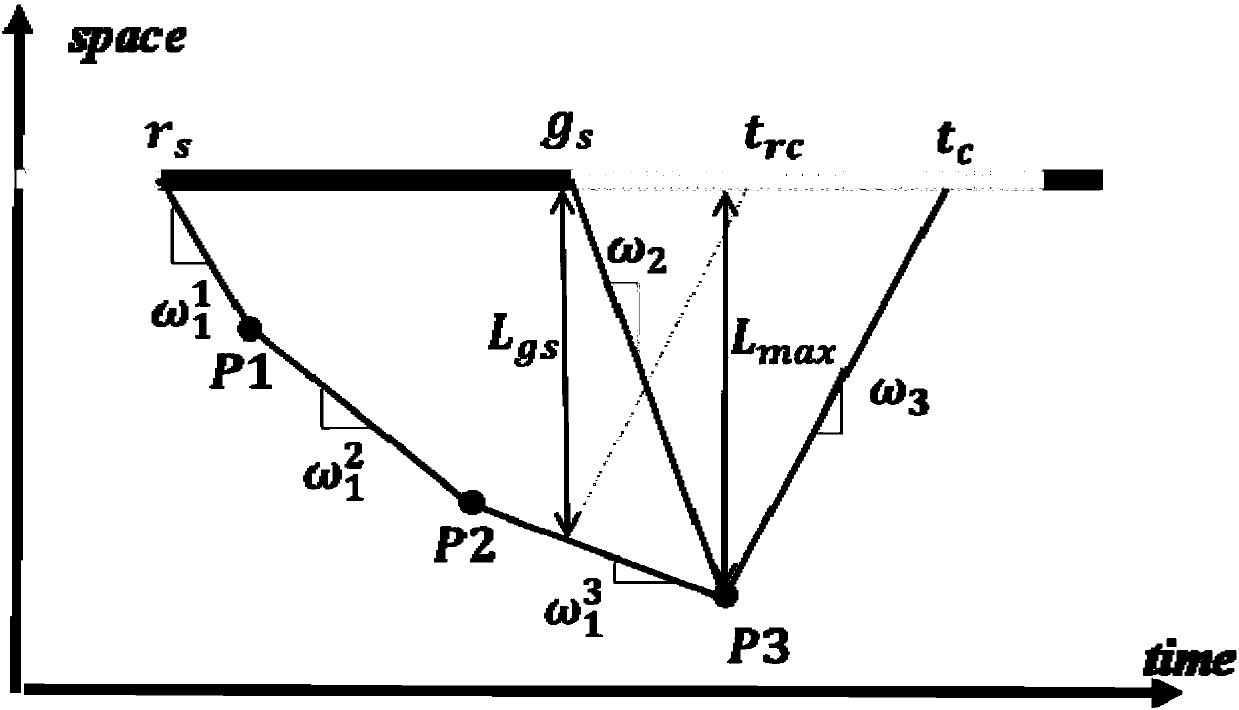

Trajectory data-based signal intersection periodic flow estimation method

Active | CN108053645A | Improve estimation accuracy | Increase profit | Detection of traffic movement | Full cycle | Intersection of a polyhedron with a line

The invention relates to a trajectory data-based method for estimating the periodic flow at a signalized intersection. The method comprises the following steps: 1) the trajectory point data of sampled vehicles are acquired, and the key point information of the vehicles entering and leaving a queue is obtained; 2) a fitting method is adopted to estimate the queuing waves and evanescent waves of vehicle queuing, and the intersection point of the queuing waves and the evanescent waves is taken as the flow estimated value of the queuing vehicles; 3) the density distribution function of the full-cycle flow is obtained according to the flow estimated value and the proportion of non-stop vehicles in the full-cycle flow; and 4) according to the density distribution function of the full-cycle flow, the full-cycle flow estimation problem is transformed into a parameter estimation problem based on the Poisson distribution and the M3 distribution of the non-queuing vehicles, maximum likelihood estimation is used, and the problem is solved with the maximum-likelihood expectation-maximization method to obtain the estimated value of the arrival flow of each cycle. Compared with the prior art, the method has the advantages of fusing model analysis with statistical analysis, making full use of trajectory information, wide applicability and the like.
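
Step 2 can be sketched as two straight-line fits in the time-space plane followed by their intersection. The abstract only says that a fitting method is adopted, so the ordinary least-squares line fit, the linear wave shape and the names `fit_line` and `wave_intersection` are assumptions; key points are taken here as (time in seconds, distance from the stop line in metres).

```python
def fit_line(points):
    """Ordinary least-squares fit x = slope * t + intercept to (t, x) points."""
    n = len(points)
    t_mean = sum(t for t, _ in points) / n
    x_mean = sum(x for _, x in points) / n
    s_tt = sum((t - t_mean) ** 2 for t, _ in points)
    s_tx = sum((t - t_mean) * (x - x_mean) for t, x in points)
    slope = s_tx / s_tt
    return slope, x_mean - slope * t_mean

def wave_intersection(join_points, leave_points):
    """Intersect the fitted queuing wave (vehicles joining the queue)
    with the fitted evanescent wave (vehicles leaving the queue).

    Returns (time, distance) of the intersection, i.e. where the queue
    would be fully dissipated under the linear-wave assumption.
    """
    s1, c1 = fit_line(join_points)
    s2, c2 = fit_line(leave_points)
    if s1 == s2:
        raise ValueError("waves are parallel; no intersection")
    t = (c2 - c1) / (s1 - s2)
    return t, s1 * t + c1

# Toy key points: queue grows at 2 m/s from t = 0 and
# starts discharging at t = 30 s with a 5 m/s wave.
join_points = [(0.0, 0.0), (10.0, 20.0), (20.0, 40.0)]
leave_points = [(30.0, 0.0), (34.0, 20.0), (38.0, 40.0)]
t_end, max_queue = wave_intersection(join_points, leave_points)
```

Converting the intersection into a vehicle count would additionally need a queue spacing per vehicle, which is not given in the abstract and is left out here.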

Owner:TONGJI UNIV

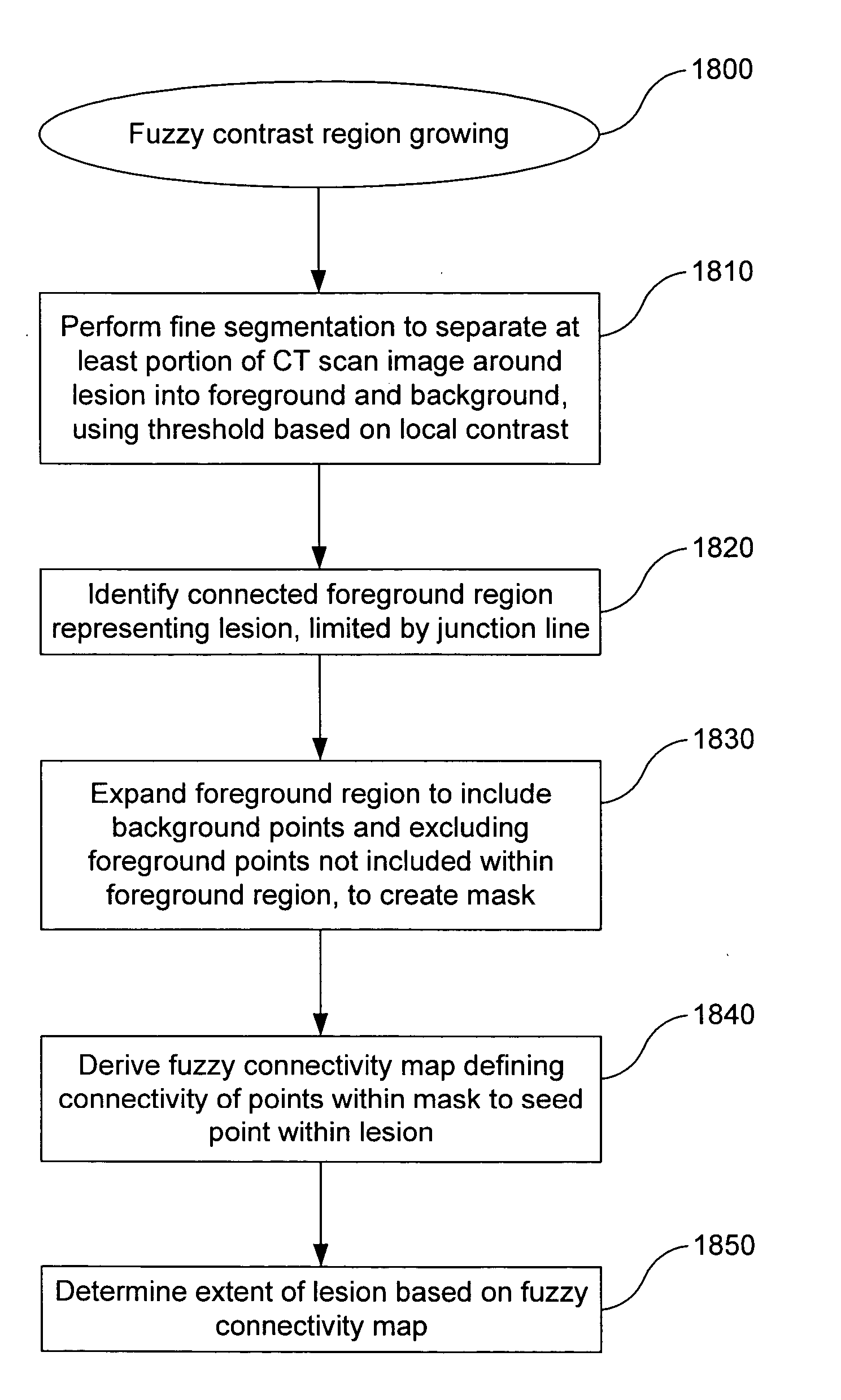

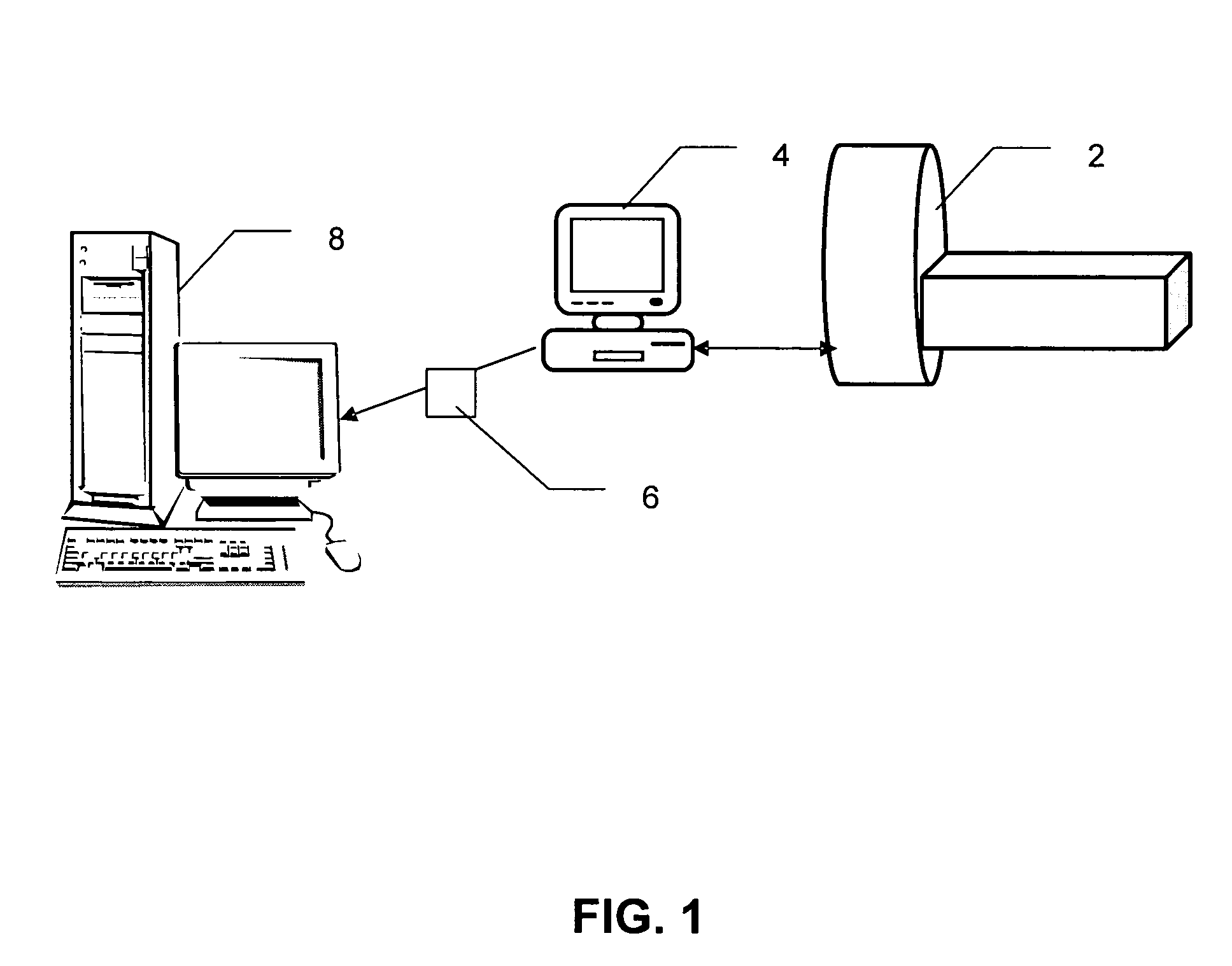

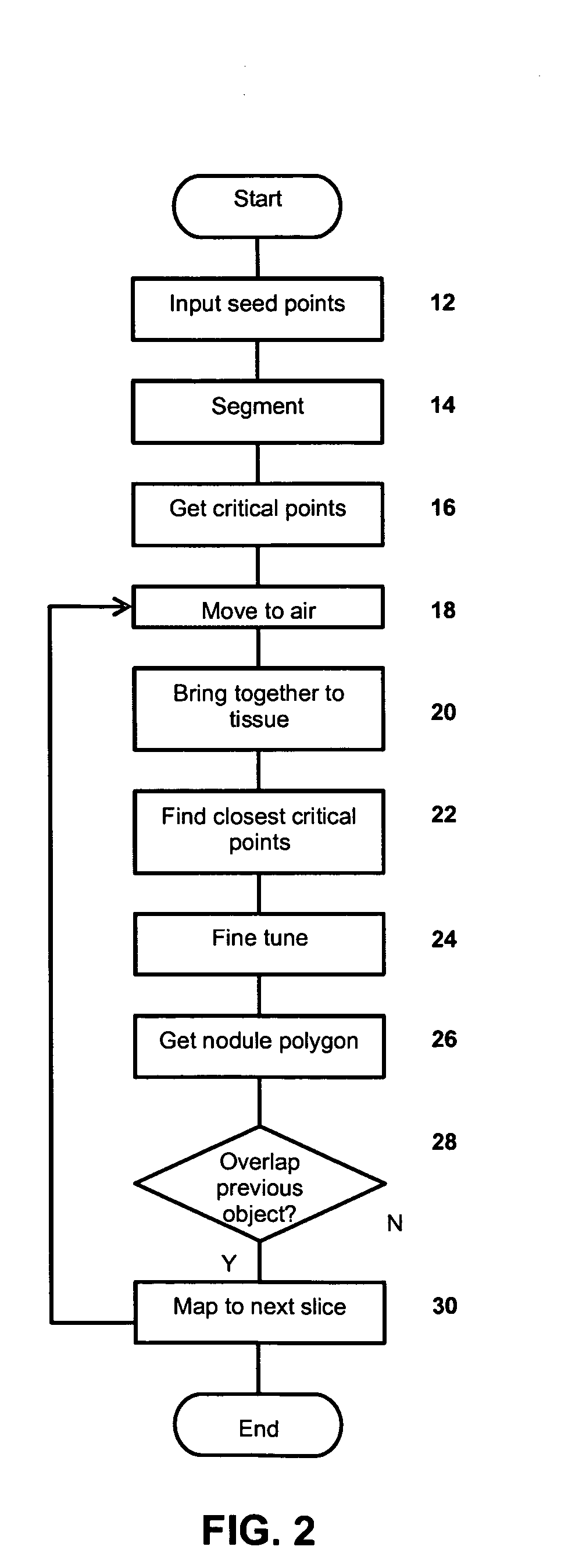

Lesion boundary detection

Inactive | US20050286750A1 | Accurately determine | Image enhancement | Image analysis | Computed tomography | Crucial point

A method of detecting a junction between a lesion and a wall in a CT scan image may include determining the boundary (B) of the wall to an internal space (L), identifying critical points (c1, c2) along the boundary, and selecting one critical point at either side of the lesion as a junction point between the wall and the lesion. The critical points may be points of maximum local curvature and / or points of transition between straight and curved sections of the boundary. The critical points may be selected by receiving first and second seed points (p1, p2) at either side of the lesion, moving the seed points to the boundary if they are not already located on the boundary, and finding the closest critical points to the seed points. The seed points may be determined by displacing the determined junction points (j1, j2) from an adjacent slice of the image into the current slice.
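
The critical point selection can be sketched on a boundary stored as an ordered list of 2D points. Here the turning angle at each vertex stands in for local curvature, and the threshold `min_angle` and all function names are hypothetical; the abstract does not state how curvature is computed.

```python
import math

def turning_angles(boundary):
    """Absolute turning angle (radians) at each interior vertex of an
    open polyline; the two end points get 0."""
    angles = [0.0] * len(boundary)
    for i in range(1, len(boundary) - 1):
        (x0, y0), (x1, y1), (x2, y2) = boundary[i - 1], boundary[i], boundary[i + 1]
        v1 = (x1 - x0, y1 - y0)
        v2 = (x2 - x1, y2 - y1)
        cross = v1[0] * v2[1] - v1[1] * v2[0]
        dot = v1[0] * v2[0] + v1[1] * v2[1]
        angles[i] = abs(math.atan2(cross, dot))
    return angles

def critical_points(boundary, min_angle=0.3):
    """Indices whose turning angle is a local maximum of at least min_angle."""
    ang = turning_angles(boundary)
    return [i for i in range(1, len(boundary) - 1)
            if ang[i] >= min_angle and ang[i] >= ang[i - 1] and ang[i] >= ang[i + 1]]

def closest_critical_point(boundary, crit_idx, seed):
    """Index of the critical point nearest to a seed point."""
    return min(crit_idx, key=lambda i: math.dist(boundary[i], seed))

# Toy wall boundary with a rectangular bump standing in for the lesion.
boundary = [(0, 0), (1, 0), (2, 0), (2, 1), (3, 1),
            (4, 1), (4, 0), (5, 0), (6, 0)]
crit = critical_points(boundary)
j1 = closest_critical_point(boundary, crit, (1.5, -0.2))  # seed p1
j2 = closest_critical_point(boundary, crit, (4.6, 0.1))   # seed p2
```

With seeds placed on either side of the bump, the selected indices mark where the bump meets the flat wall, which is the role the junction points play in the abstract.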

Owner:SAMSUNG ELECTRONICS CO LTD
