Real-time three-dimensional spatial positioning method based on 3D point cloud FPFH features

A positioning method in the field of three-dimensional spatial technology, applied in image data processing, instruments, computing, etc. It addresses the problems of inaccurate positioning results, errors, and high space-time complexity, and achieves the effect of reducing space-time complexity.

Detailed Description of Embodiments

[0018] The present invention will be further described below.

[0019] A real-time three-dimensional spatial positioning method based on 3D point cloud FPFH features, comprising the steps of:

[0020] 1) Obtain 3D point cloud data from a depth camera;

[0021] 2) Point cloud key frame selection: take the first frame as a key frame; each remaining key frame is selected by applying a threshold to the number of corresponding points matched after accurate registration of the point cloud;

[0022] 3) Point cloud preprocessing: first, segment the point cloud; segmentation yields all possible planes in the point cloud accurately and in real time. Then, down-sample and filter each plane with the grid down-sampling method. Finally, filter the regions, eliminating regions with few key points;

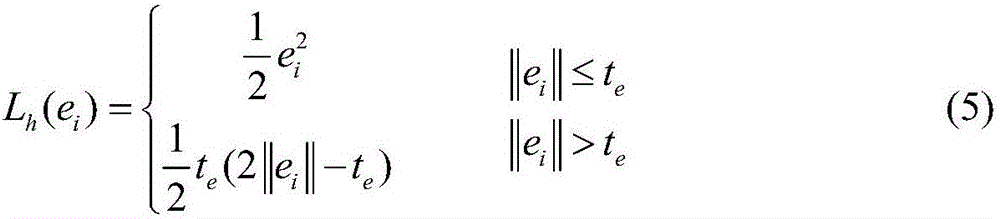

[0023] 4) Feature description: use the ISS (Intrinsic Shape Signatures) algorithm to obtain the key points of the point cloud, and obtain the FPFH features of...
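For step 1), many depth cameras deliver a depth image rather than a ready-made point cloud. A minimal NumPy sketch of the usual pinhole back-projection follows; the intrinsics `fx`, `fy`, `cx`, `cy`, the `depth_scale` (raw depth units per metre) and the function name are assumptions for illustration, not values or names from the patent.

```python
import numpy as np


def depth_to_point_cloud(depth, fx, fy, cx, cy, depth_scale=1000.0):
    """Back-project an H x W depth image into an N x 3 point cloud (camera frame).

    Assumes a pinhole camera model; fx, fy, cx, cy (pixels) and depth_scale
    are hypothetical calibration parameters.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column / row indices
    z = depth.astype(np.float64) / depth_scale       # raw depth -> metres
    valid = z > 0                                    # 0 means "no measurement"
    x = (u[valid] - cx) * z[valid] / fx
    y = (v[valid] - cy) * z[valid] / fy
    return np.column_stack((x, y, z[valid]))
```

Pixels with zero depth are skipped, so the output has one row per valid measurement, in row-major pixel order.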
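Step 2) can be sketched as below, assuming that a new key frame is declared when the number of correspondences between the current frame and the latest key frame, counted after fine registration, drops below a threshold (the direction of the threshold test is an interpretation of the text). `count_correspondences`, `select_key_frames`, `max_dist` and `min_correspondences` are hypothetical names, and the nearest-neighbour search is brute force for clarity.

```python
import numpy as np


def count_correspondences(source, target, max_dist):
    """Number of source points whose nearest target point lies within max_dist.

    Brute force O(N*M) for clarity; a KD-tree would be used for real-time work.
    Both N x 3 / M x 3 clouds must already be in the same frame (after registration).
    """
    d = np.linalg.norm(source[:, None, :] - target[None, :, :], axis=2)
    return int(np.count_nonzero(d.min(axis=1) <= max_dist))


def select_key_frames(frames, count_fn, min_correspondences):
    """Indices of key frames: frame 0, then every frame whose correspondence
    count with the latest key frame falls below the (hypothetical) threshold."""
    if len(frames) == 0:
        return []
    keys = [0]  # the first frame is always a key frame
    for i in range(1, len(frames)):
        if count_fn(frames[keys[-1]], frames[i]) < min_correspondences:
            keys.append(i)  # overlap with the last key frame has become too small
    return keys
```

With registered clouds, `count_fn` could be `lambda a, b: count_correspondences(a, b, 0.02)`, where 0.02 is an arbitrary example distance in the cloud's units.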
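Step 3) does not name a segmentation algorithm. The sketch below substitutes iterative RANSAC plane fitting, a common choice but an assumption here, followed by voxel-grid down-sampling of each plane (all points in one voxel replaced by their centroid) and a region filter that drops regions with fewer than `min_region_points` points. All function names and thresholds are hypothetical.

```python
import numpy as np


def ransac_plane(points, dist_thresh, iterations, rng):
    """Boolean inlier mask of the best plane found by RANSAC."""
    best = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        p0, p1, p2 = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        length = np.linalg.norm(normal)
        if length < 1e-12:  # collinear sample, no unique plane
            continue
        inliers = np.abs((points - p0) @ (normal / length)) < dist_thresh
        if inliers.sum() > best.sum():
            best = inliers
    return best


def extract_planes(points, dist_thresh=0.01, min_points=50, max_planes=10,
                   iterations=200, seed=0):
    """Repeatedly fit and remove planes until no plane has min_points inliers."""
    rng = np.random.default_rng(seed)
    rest = np.asarray(points, dtype=float)
    planes = []
    while len(planes) < max_planes and len(rest) >= max(3, min_points):
        mask = ransac_plane(rest, dist_thresh, iterations, rng)
        if mask.sum() < min_points:
            break
        planes.append(rest[mask])
        rest = rest[~mask]
    return planes


def voxel_downsample(points, voxel_size):
    """Replace all points falling in the same voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # keep a flat index array across NumPy versions
    counts = np.bincount(inverse)
    sums = np.zeros((len(counts), points.shape[1]))
    np.add.at(sums, inverse, points)
    return sums / counts[:, None]


def preprocess(points, voxel_size=0.02, min_region_points=20, **plane_kwargs):
    """Plane segmentation -> per-plane voxel down-sampling -> drop sparse regions."""
    planes = extract_planes(points, **plane_kwargs)
    regions = [voxel_downsample(p, voxel_size) for p in planes]
    return [r for r in regions if len(r) >= min_region_points]
```

Down-sampling each plane separately keeps points from different planes from being averaged into the same voxel centroid.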
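Step 4) is truncated in the source, but ISS keypoints and FPFH descriptors are standard techniques. The NumPy sketch below follows their usual definitions under simplifying assumptions: brute-force radius search, an unweighted ISS scatter matrix, PCA normals oriented towards a viewpoint at the origin, and 11 bins per angle (33 values per descriptor). Thresholds, `radius` and all function names are hypothetical; production code would normally rely on a library implementation such as PCL's `pcl::ISSKeypoint3D` and `pcl::FPFHEstimation`.

```python
import numpy as np


def radius_neighbors(points, radius):
    """Brute-force radius search (O(N^2)); excludes the query point itself."""
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    return [np.flatnonzero((row > 0) & (row <= radius)) for row in d]


def iss_keypoints(points, nbrs, gamma21=0.975, gamma32=0.975, min_neighbors=5):
    """ISS: eigenvalue-ratio test on each neighbourhood scatter matrix (unweighted
    variant), then non-maximum suppression on the smallest eigenvalue."""
    lam3 = np.full(len(points), -np.inf)
    for i, idx in enumerate(nbrs):
        if len(idx) < min_neighbors:
            continue
        diff = points[idx] - points[i]
        l3, l2, l1 = np.linalg.eigvalsh(diff.T @ diff / len(idx))  # ascending order
        if l2 > 0 and l2 / l1 < gamma21 and l3 / l2 < gamma32:
            lam3[i] = l3
    return np.array([i for i, idx in enumerate(nbrs)
                     if np.isfinite(lam3[i]) and np.all(lam3[i] >= lam3[idx])], dtype=int)


def estimate_normals(points, nbrs, viewpoint=(0.0, 0.0, 0.0)):
    """PCA normals (eigenvector of the smallest eigenvalue), flipped towards viewpoint."""
    vp = np.asarray(viewpoint, dtype=float)
    normals = np.zeros_like(points)
    for i, idx in enumerate(nbrs):
        if len(idx) < 2:
            continue  # too few neighbours: leave a zero normal
        n = np.linalg.eigh(np.cov(points[np.append(idx, i)].T))[1][:, 0]
        normals[i] = n if n @ (vp - points[i]) >= 0 else -n
    return normals


def pair_features(ps, ns, pt, nt):
    """Darboux-frame angles (alpha, phi, theta) of one point pair, as in PFH/FPFH."""
    dp = (pt - ps) / np.linalg.norm(pt - ps)
    if abs(ns @ dp) < abs(nt @ dp):  # source = point whose normal is closer to the line
        ns, nt, dp = nt, ns, -dp
    v = np.cross(ns, dp)
    if np.linalg.norm(v) < 1e-12:
        return None  # normal parallel to the connecting line, or zero normal
    v = v / np.linalg.norm(v)
    w = np.cross(ns, v)
    return v @ nt, ns @ dp, np.arctan2(w @ nt, ns @ nt)


def spfh(points, normals, nbrs, bins=11):
    """Simplified PFH: three normalised angle histograms per point, concatenated."""
    hist = np.zeros((len(points), 3 * bins))
    ranges = [(-1.0, 1.0), (-1.0, 1.0), (-np.pi, np.pi)]
    for i, idx in enumerate(nbrs):
        feats = [f for j in idx
                 if (f := pair_features(points[i], normals[i], points[j], normals[j])) is not None]
        for k, (col, lohi) in enumerate(zip(np.array(feats).T, ranges)):
            hist[i, k * bins:(k + 1) * bins] = np.histogram(col, bins=bins, range=lohi)[0] / len(feats)
    return hist


def fpfh(points, normals, nbrs, indices, bins=11):
    """FPFH(p) = SPFH(p) + (1/k) * sum_i SPFH(p_i) / ||p - p_i||, for p in indices."""
    s = spfh(points, normals, nbrs, bins)
    out = np.zeros((len(indices), 3 * bins))
    for r, i in enumerate(indices):
        idx = nbrs[i]
        out[r] = s[i]
        if len(idx):
            w = np.linalg.norm(points[idx] - points[i], axis=1)
            out[r] += (s[idx] / w[:, None]).mean(axis=0)
    return out


def describe(points, radius, bins=11):
    """ISS keypoint indices and their FPFH descriptors (len(keys) x 3*bins)."""
    points = np.asarray(points, dtype=float)
    nbrs = radius_neighbors(points, radius)
    keys = iss_keypoints(points, nbrs)
    return keys, fpfh(points, estimate_normals(points, nbrs), nbrs, keys, bins)
```

Computing descriptors only at ISS keypoints, rather than at every point, is what keeps the matching stage small; the SPFH pass still runs over all points because each keypoint's FPFH reuses its neighbours' SPFH histograms.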
