Patented technology related to "Transformation matrix" (2566 results)

In linear algebra, linear transformations can be represented by matrices. If T is a linear transformation mapping ℝⁿ to ℝᵐ and x is a column vector with n entries, then T(x) = Ax for some m×n matrix A, called the transformation matrix of T. Note that A has m rows and n columns, whereas the transformation T is from ℝⁿ to ℝᵐ. Some authors prefer alternative expressions of transformation matrices that use row vectors.
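
As a minimal sketch of the definition (the map `T` and the helper names below are hypothetical, chosen only for illustration), the m×n matrix of a linear map can be built column by column from the images T(e_j) of the standard basis vectors, after which T(x) and Ax agree for any x.

```python
# Illustrative helpers (hypothetical names), standard library only.

def matvec(A, x):
    """Multiply an m x n matrix (a list of m rows) by a length-n vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def matrix_of(T, n):
    """Build the m x n matrix of a linear map T: R^n -> R^m.

    Column j is T(e_j), the image of the j-th standard basis vector.
    """
    columns = [T([1.0 if i == j else 0.0 for i in range(n)]) for j in range(n)]
    m = len(columns[0])
    # Transpose the list of columns into a list of rows.
    return [[columns[j][i] for j in range(n)] for i in range(m)]


# Hypothetical linear map from R^3 to R^2.
def T(v):
    x, y, z = v
    return [2 * x - y, y + 3 * z]


A = matrix_of(T, 3)
print(A)                   # [[2.0, -1.0, 0.0], [0.0, 1.0, 3.0]]: 2 rows, 3 columns
x = [1.0, 2.0, -1.0]
print(matvec(A, x), T(x))  # both [0.0, -1.0]
```

The shape check is the point: a map from ℝ³ to ℝ² yields 2 rows and 3 columns.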

View projection matrix based high performance low latency display pipeline

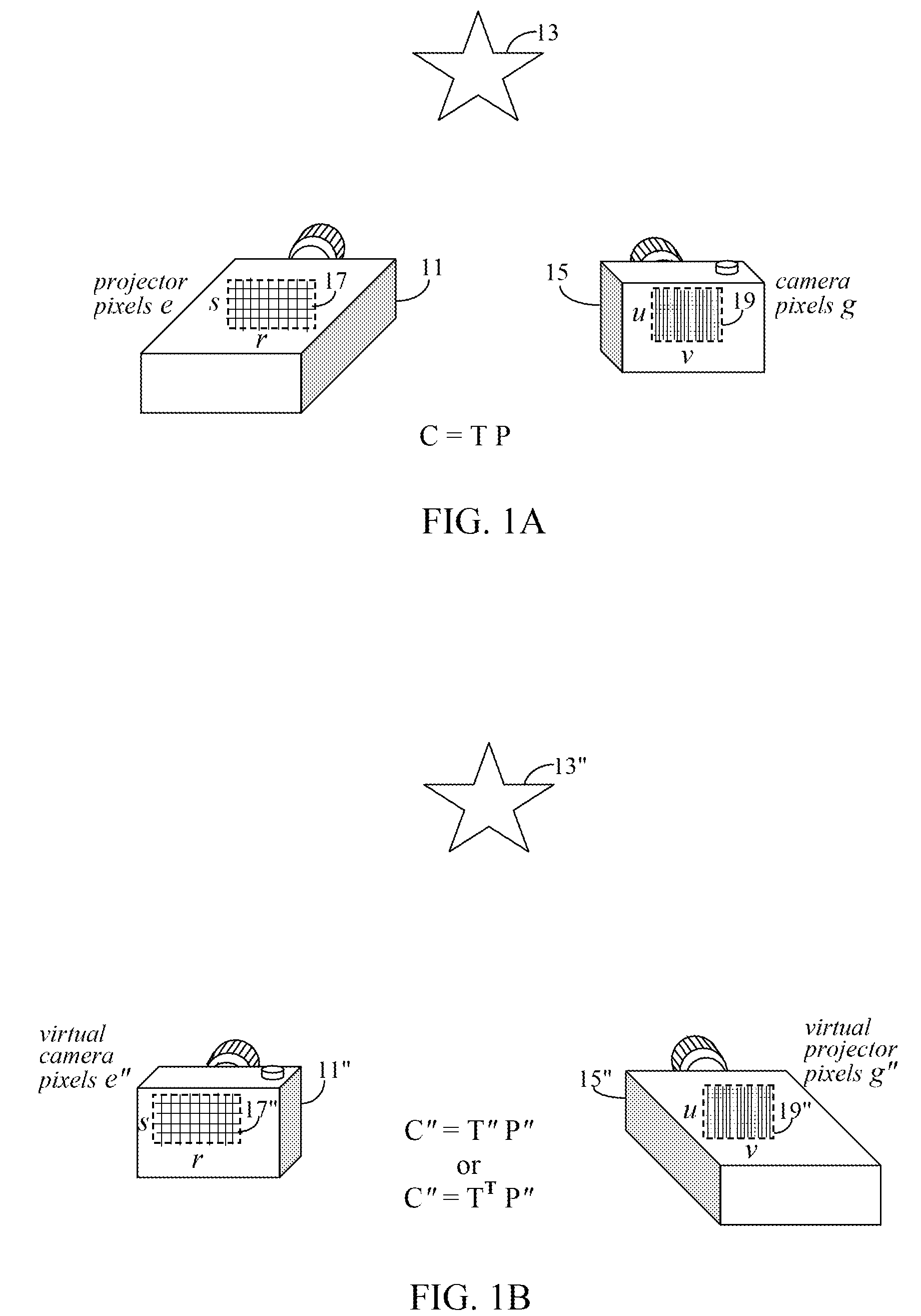

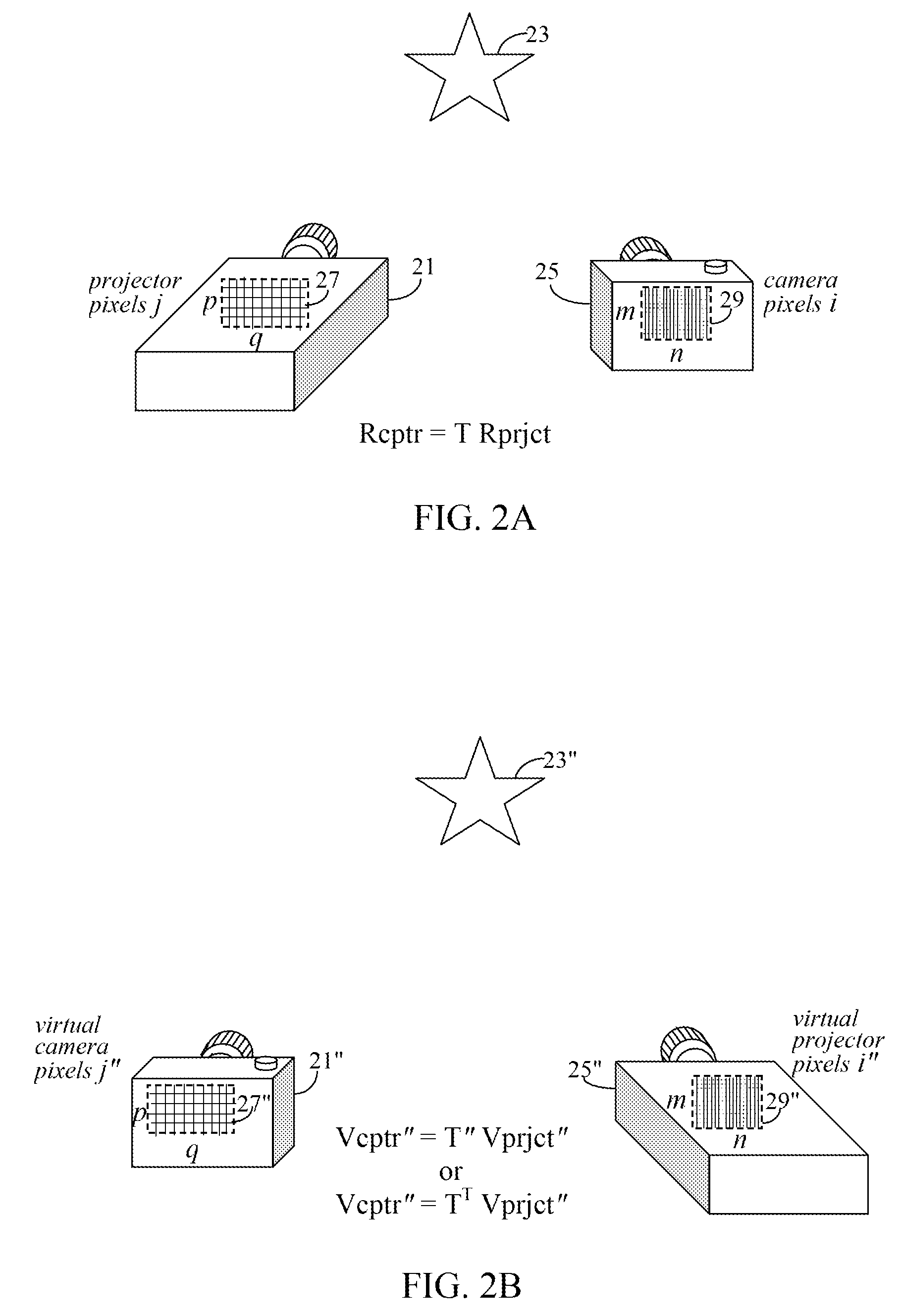

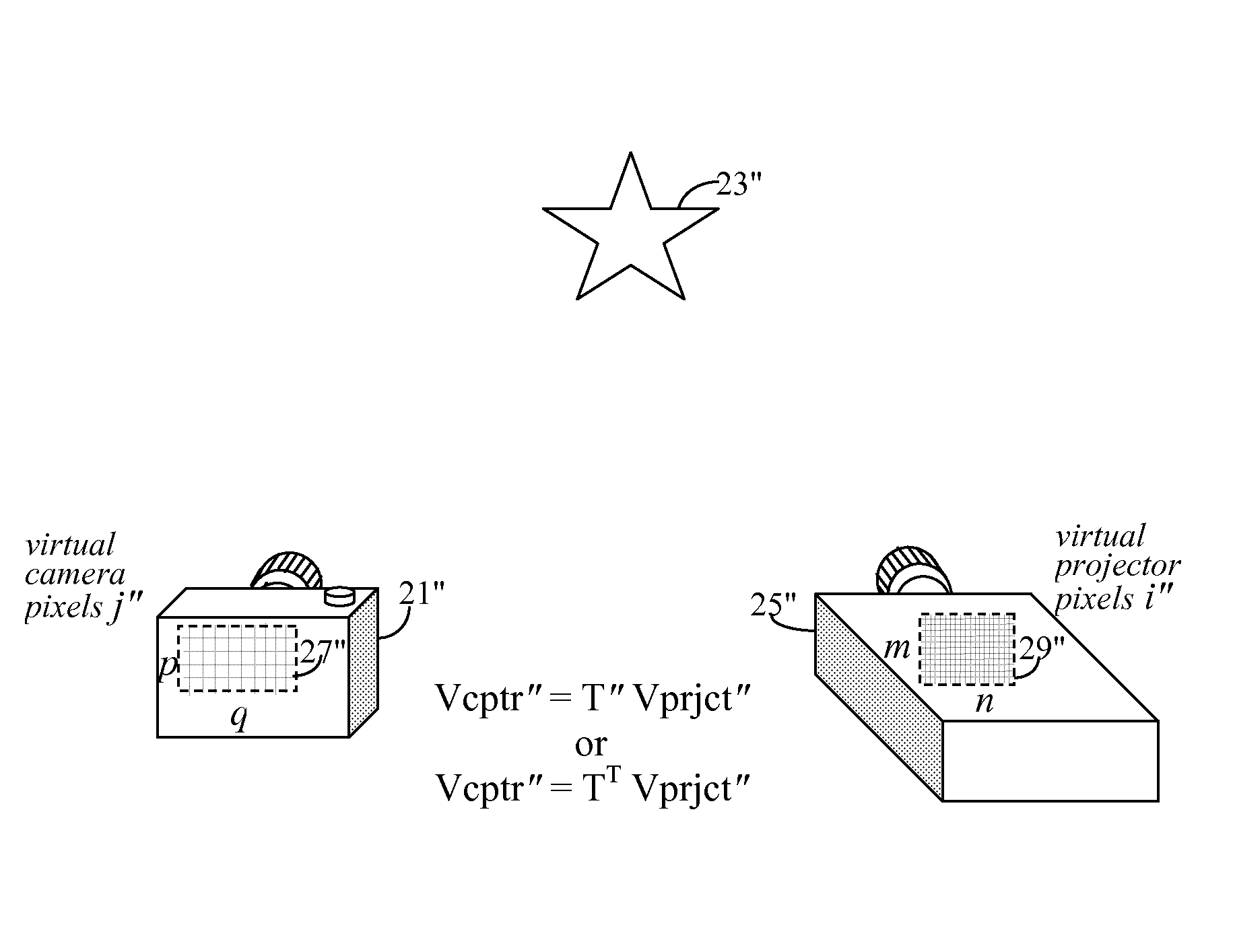

Inactive · US8013904B2 · Tags: Easy to ship; Easy to implement; Television system details; Picture reproducers using projection devices; Hat matrix; Imaging processing

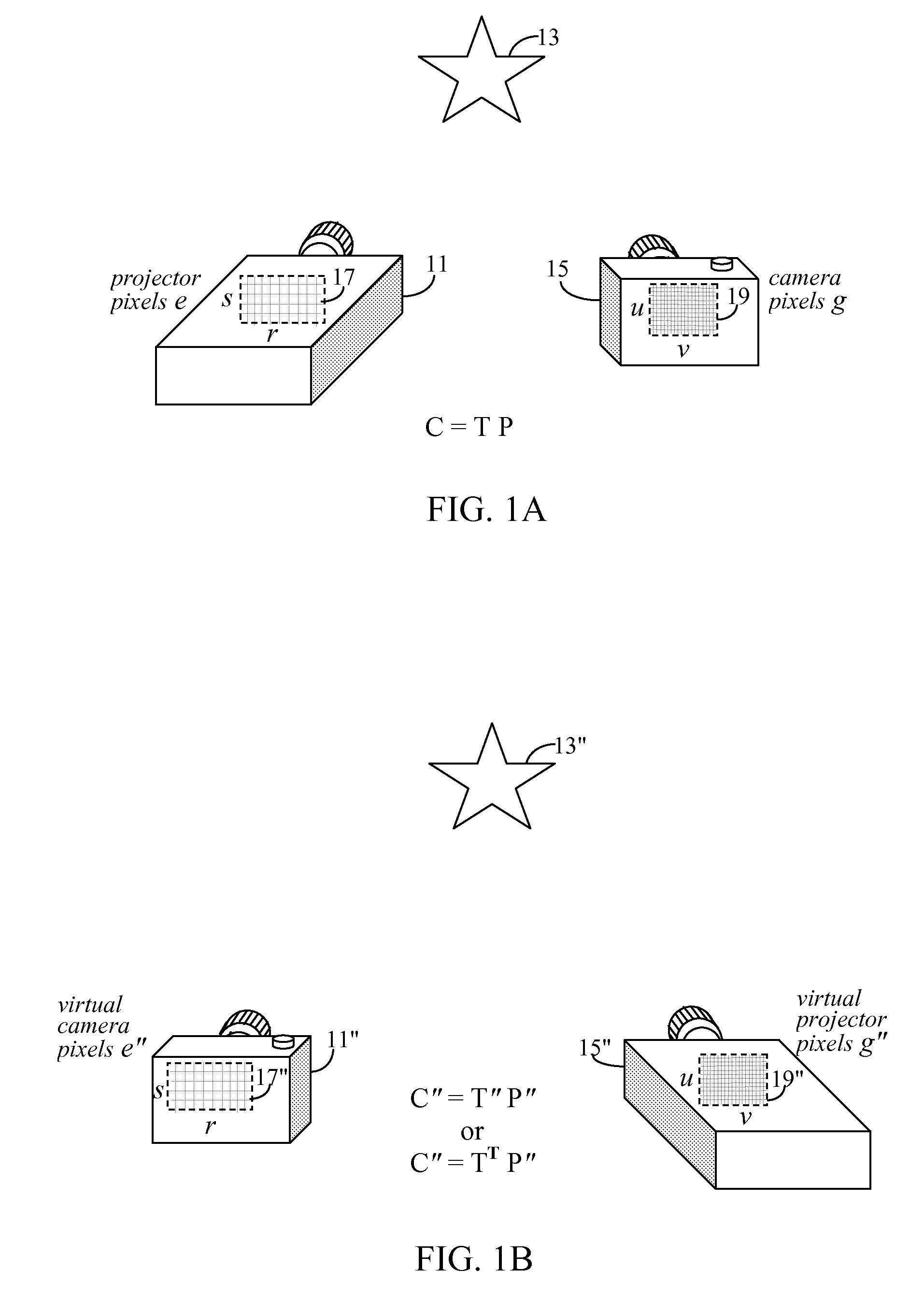

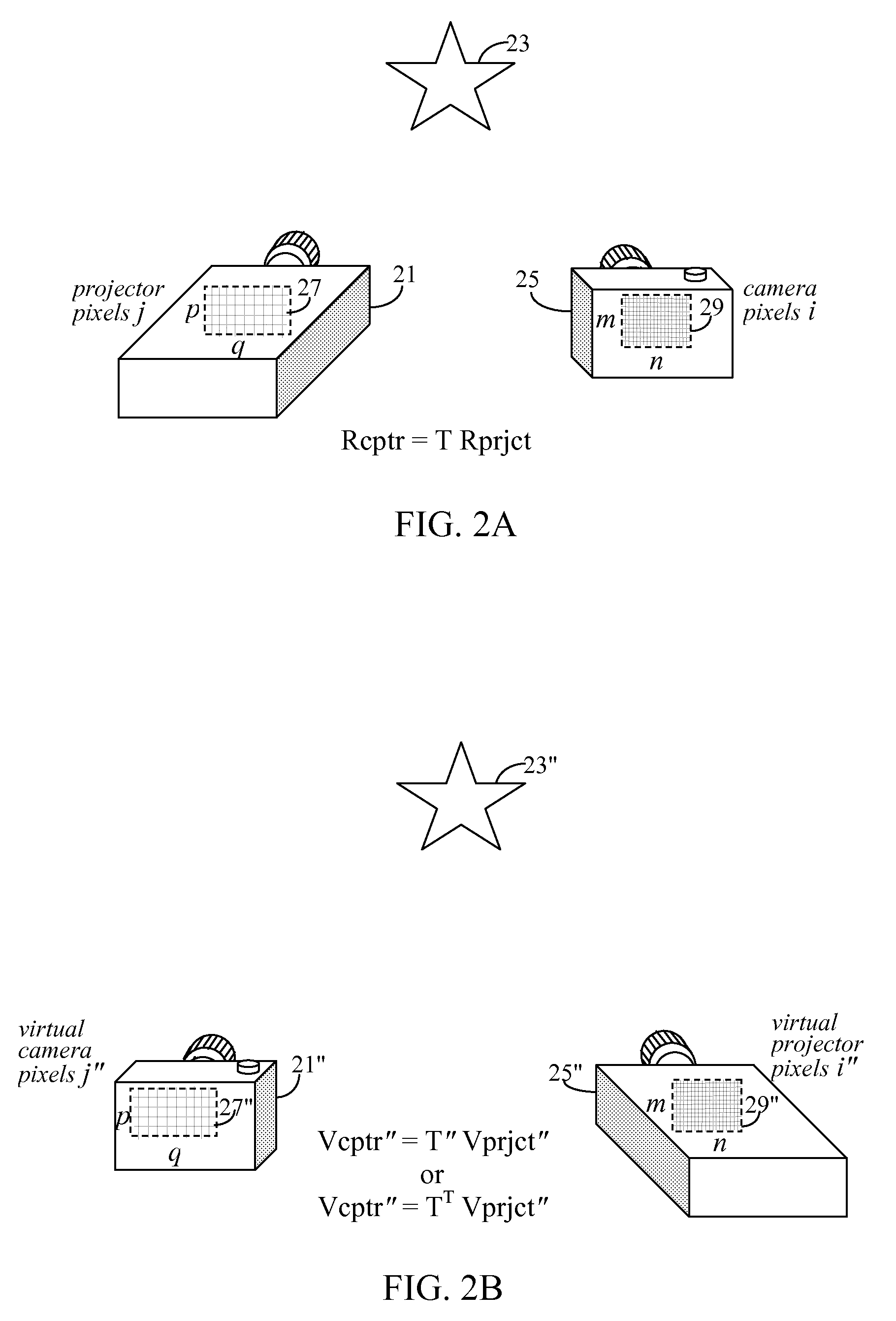

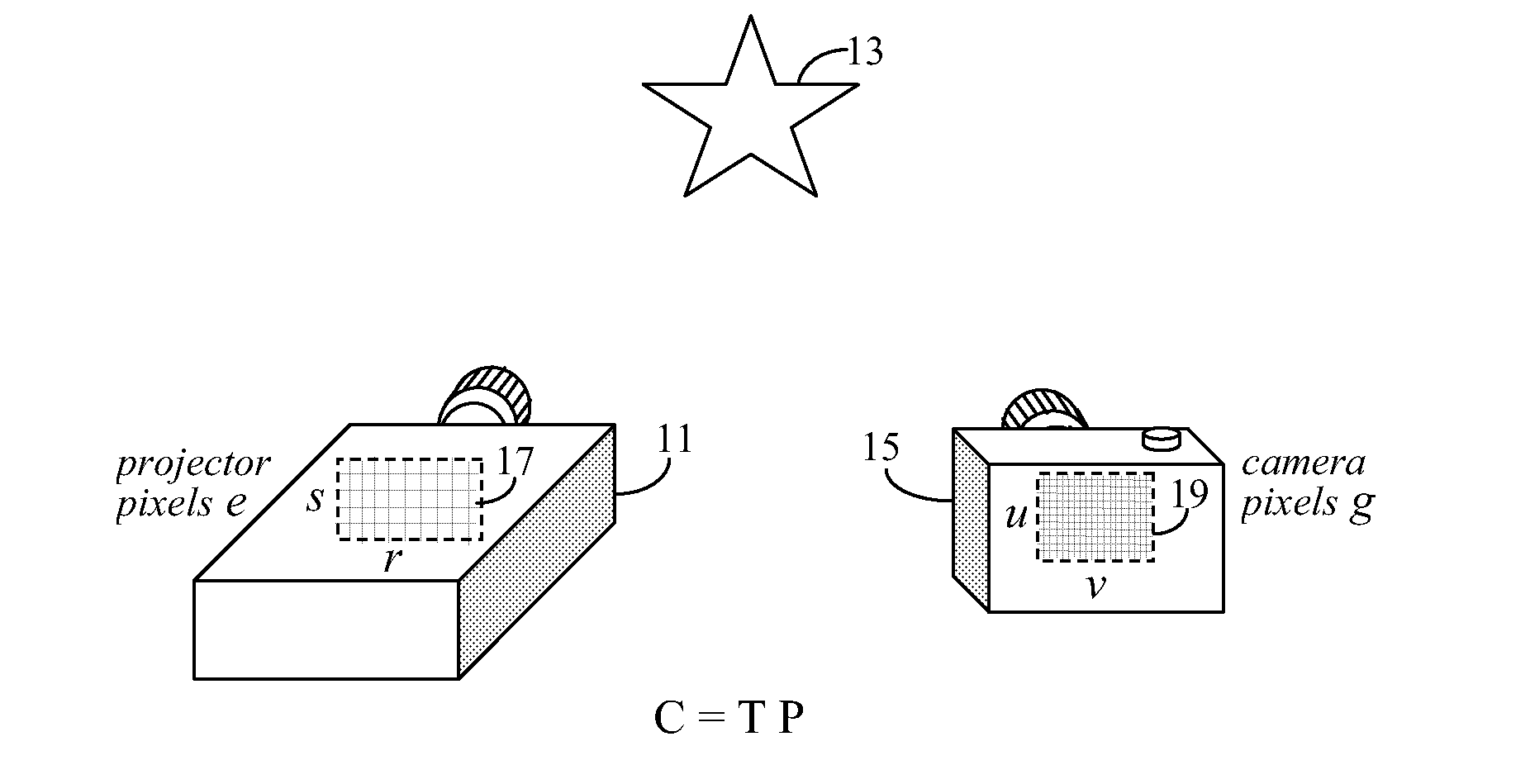

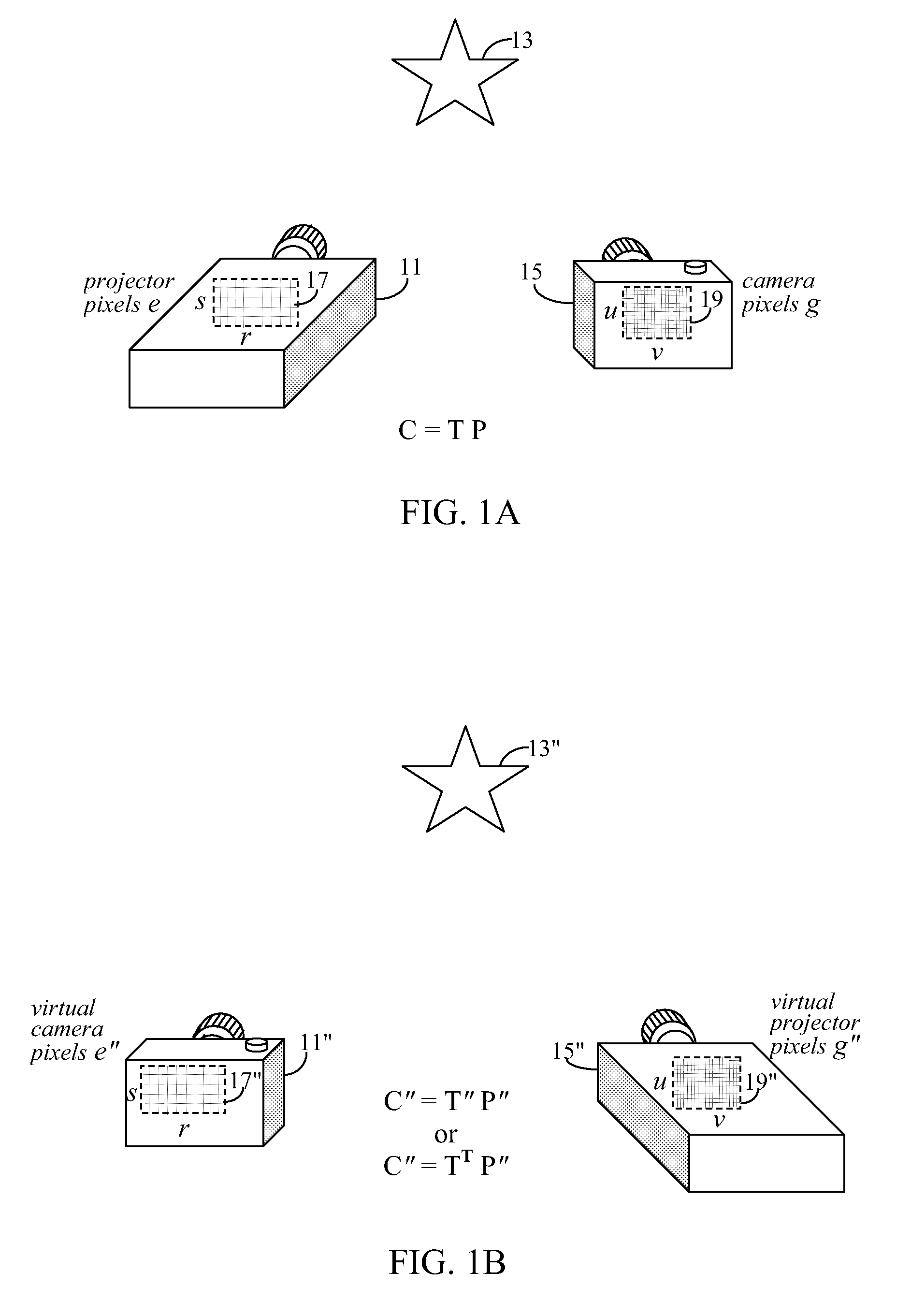

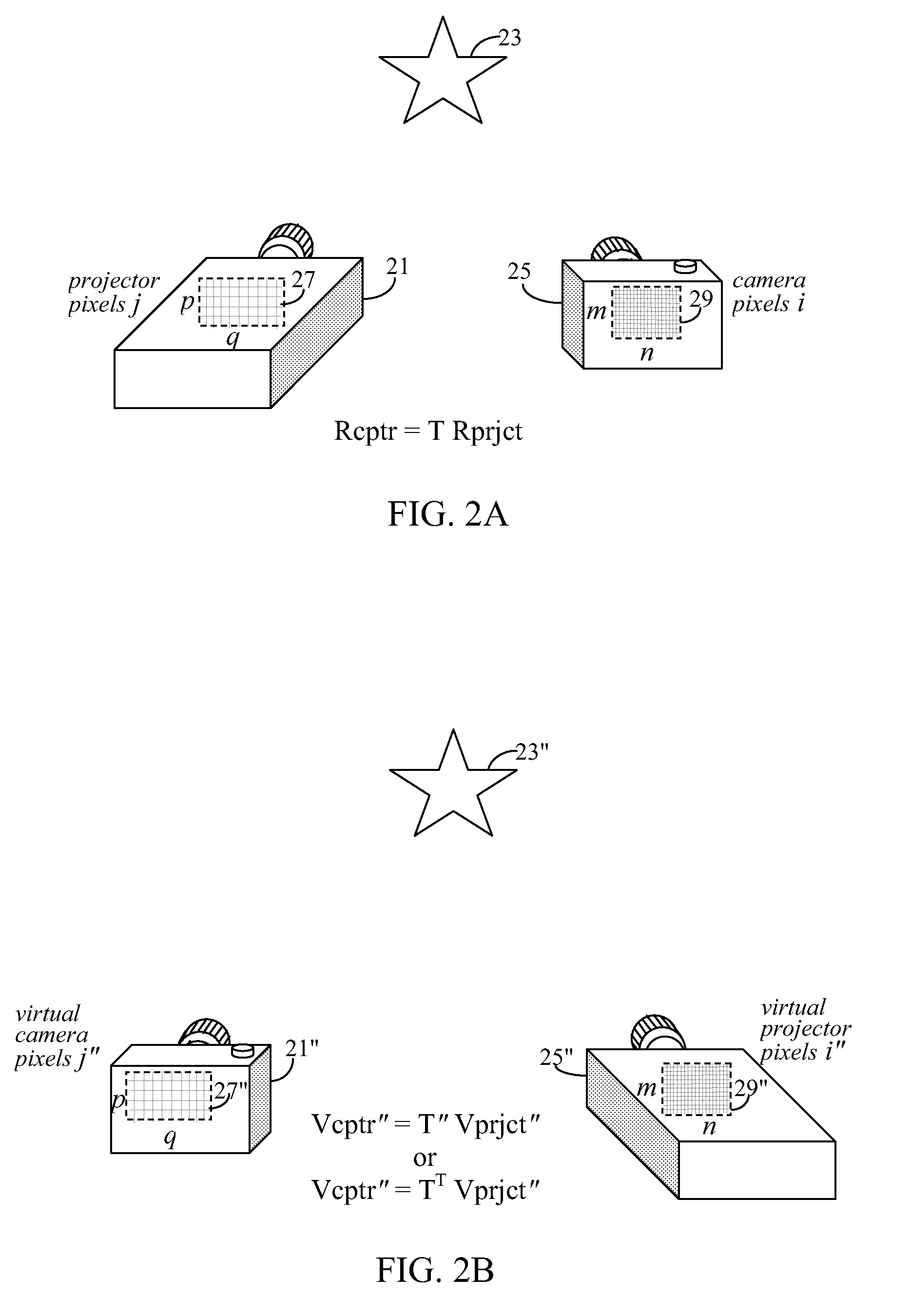

A projection system uses a transformation matrix to transform a projection image p so as to compensate for surface irregularities on a projection surface. The transformation matrix makes use of properties of light transport relating a projector to a camera. A display pipeline of user-supplied image modification processing modules is reduced by first representing the processing modules as individual matrix operations. All the matrix operations are then combined with (that is, multiplied into) the transformation matrix to create a modified transformation matrix. The modified transformation matrix is then used in place of the original one to achieve, in a single step, both the image transformation and any pre- and post-processing defined by the image modification processing modules.

Owner:SEIKO EPSON CORP
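
Because every stage of the Epson pipeline is a matrix, associativity lets it be collapsed ahead of time: applying a pre-processing matrix P, then T, then a post-processing matrix Q to p gives the same result as applying T′ = Q·T·P once. This is a minimal sketch assuming each user-supplied module is linear in the pixel vector; P, Q, and the tiny integer T are hypothetical stand-ins, not the patent's light-transport matrix.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


# Hypothetical 3-pixel stand-ins; integer entries keep the comparison exact.
T = [[3, 1, 0],      # compensating transformation matrix
     [0, 2, 1],
     [1, 0, 3]]
P = [[2, 0, 0],      # pre-processing module: gain of 2
     [0, 2, 0],
     [0, 0, 2]]
Q = [[0, 0, 1],      # post-processing module: reverse pixel order
     [0, 1, 0],
     [1, 0, 0]]

# Fold the whole pipeline into one matrix once, offline.
T_mod = matmul(Q, matmul(T, P))

p = [10, 20, 30]
staged = matvec(Q, matvec(T, matvec(P, p)))   # three products per frame
combined = matvec(T_mod, p)                   # one product per frame
print(staged, combined)                       # [200, 140, 100] [200, 140, 100]
```

The per-frame cost stays at one matrix-vector product however many linear stages are folded in; non-linear modules (clipping, gamma) could not be folded this way.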

Small memory footprint light transport matrix capture

Active · US8106949B2 · Tags: Easy to ship; Easy to implement; Television system details; Television system scanning details; Imaging processing; Projection image

Owner:SEIKO EPSON CORP

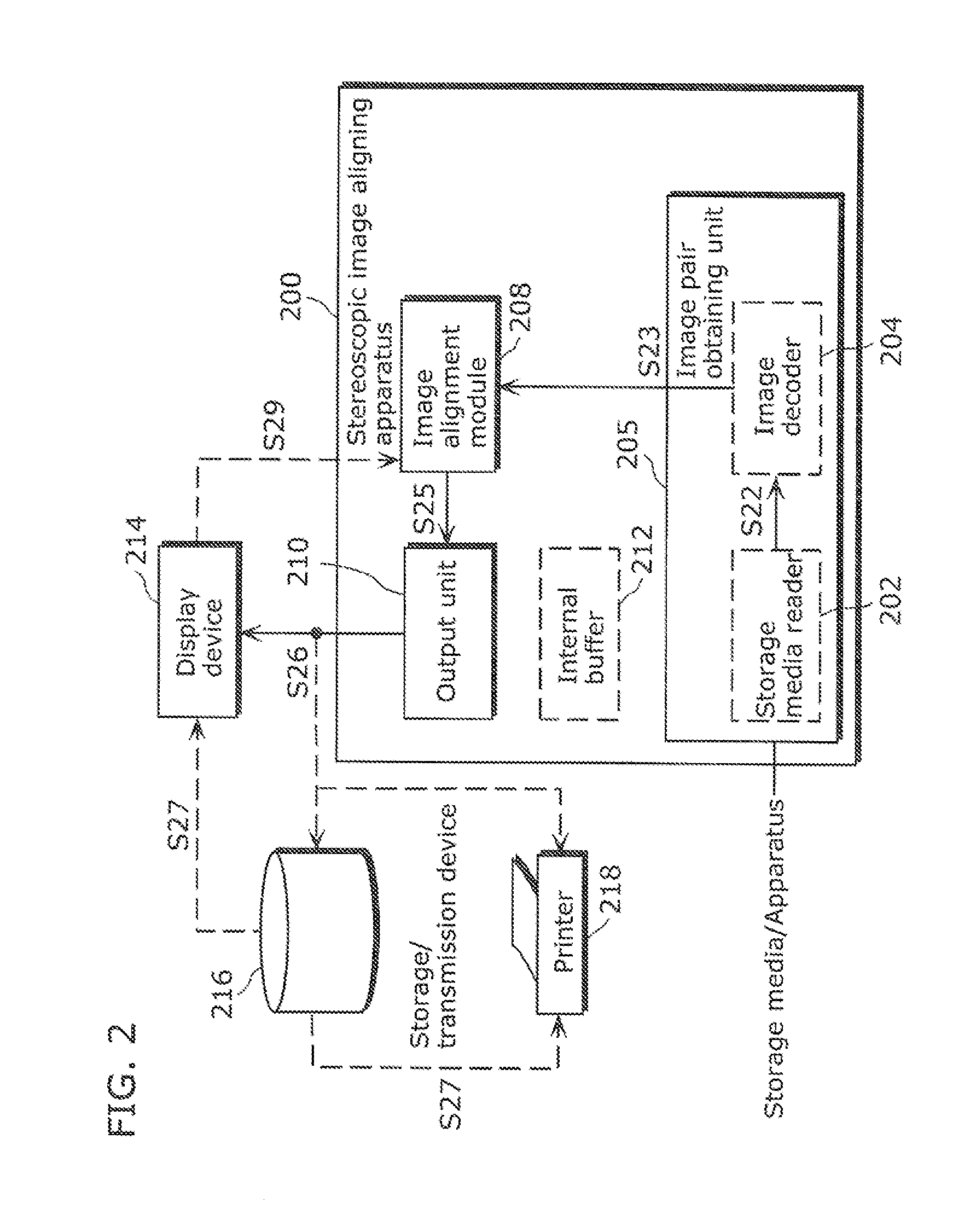

Stereoscopic image aligning apparatus, stereoscopic image aligning method, and program of the same

Inactive · US20120147139A1 · Tags: Short amount of time; For automatic alignment; Stereoscopic photography; Stereoscopic systems; Parallax; Image pair

A stereoscopic image aligning apparatus (200) automatically aligns image pairs for stereoscopic viewing in less time than conventional apparatuses, is applicable to image pairs captured by a single-sensor camera or a variable-baseline camera, and does not rely on camera parameters. The stereoscopic image aligning apparatus (200) includes: an image pair obtaining unit (205) that obtains an image pair consisting of a left-eye image and a corresponding right-eye image; a corresponding point detecting unit (252) that detects a corresponding point, i.e., a first point in a first image (one image of the pair) together with the matching second point in the second image (the other image of the pair); a first matrix computing unit (254) that computes a homography transformation matrix for transforming the first point such that the vertical parallax between the first and second points is minimized and an epipolar constraint is satisfied; a transforming unit (260) that transforms the first image using the homography transformation matrix; and an output unit (210) that outputs a third image, which is the transformed first image, together with the second image.

Owner:PANASONIC CORP
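
The core operation of the transforming unit, applying a 3×3 homography to points in homogeneous coordinates, can be sketched as follows; the residual vertical parallax is then the y-difference between each warped left point and its right-image match. The homographies and point pairs are hypothetical, and estimating H under the epipolar constraint, as the patent does, is not shown.

```python
def apply_homography(H, point):
    """Map (x, y) through a 3x3 homography using homogeneous coordinates."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / w, v / w)


def vertical_parallax(H, pairs):
    """Mean absolute y-difference after warping each left point by H."""
    total = 0.0
    for left, right in pairs:
        _, y_warped = apply_homography(H, left)
        total += abs(y_warped - right[1])
    return total / len(pairs)


# Hypothetical correspondences (left point, right point): the right image sits 4 px lower.
pairs = [((10, 20), (7, 24)), ((50, 80), (46, 84)), ((120, 30), (115, 34))]
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
shift_down = [[1, 0, 0], [0, 1, 4], [0, 0, 1]]   # translate y by +4

print(vertical_parallax(identity, pairs))    # 4.0
print(vertical_parallax(shift_down, pairs))  # 0.0
```

Horizontal offsets are left untouched on purpose: they carry the depth information that stereoscopic viewing needs.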

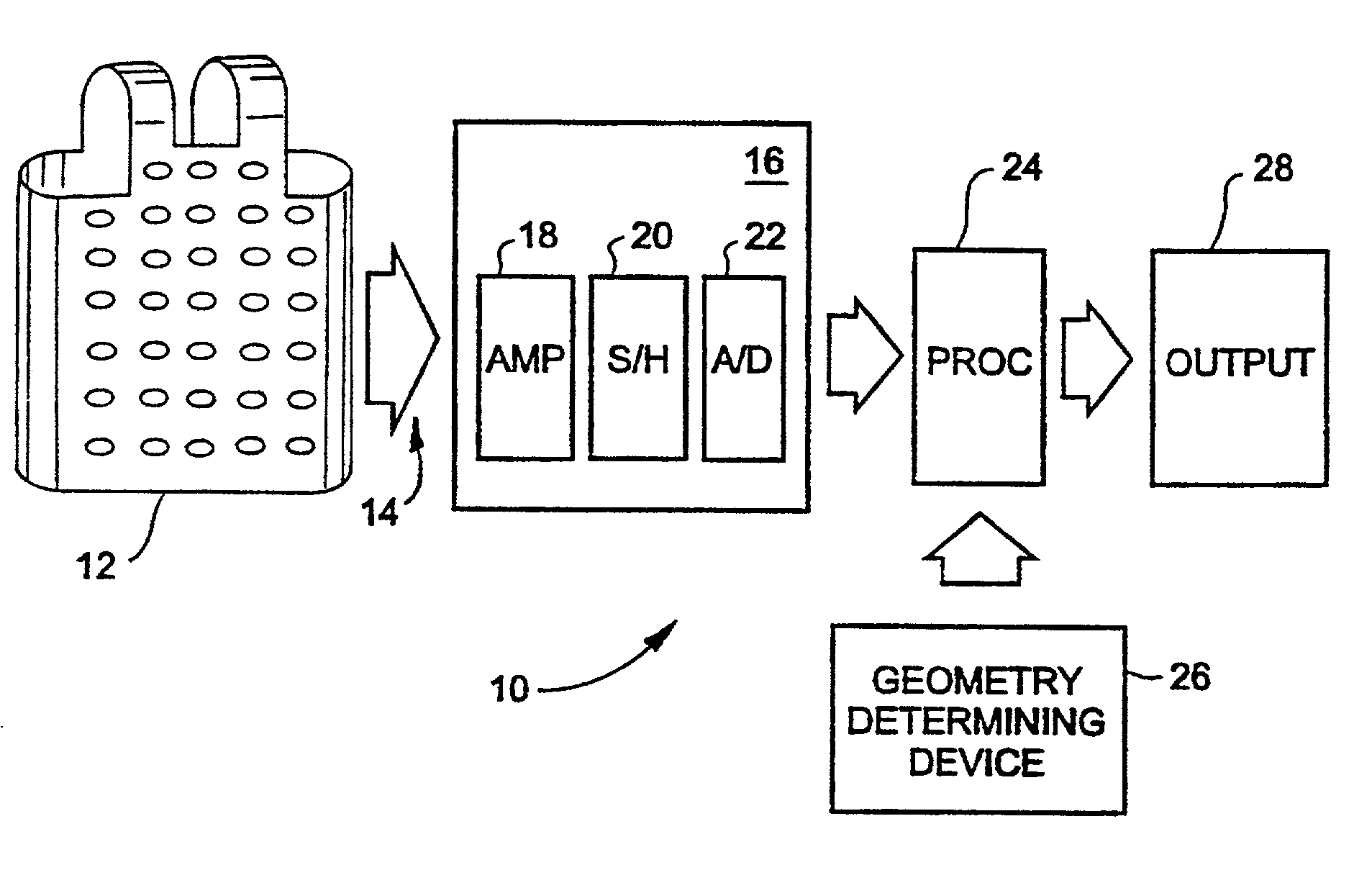

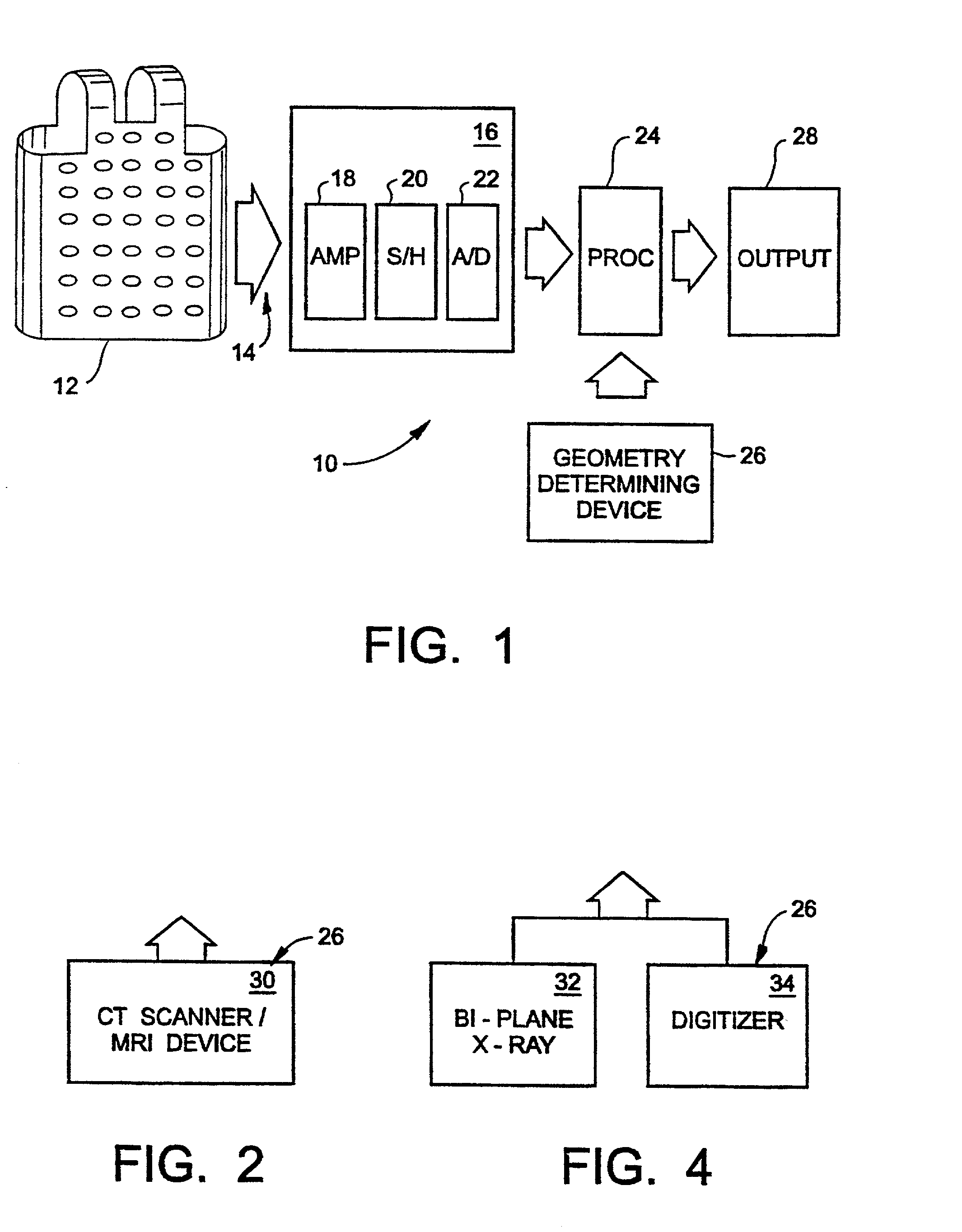

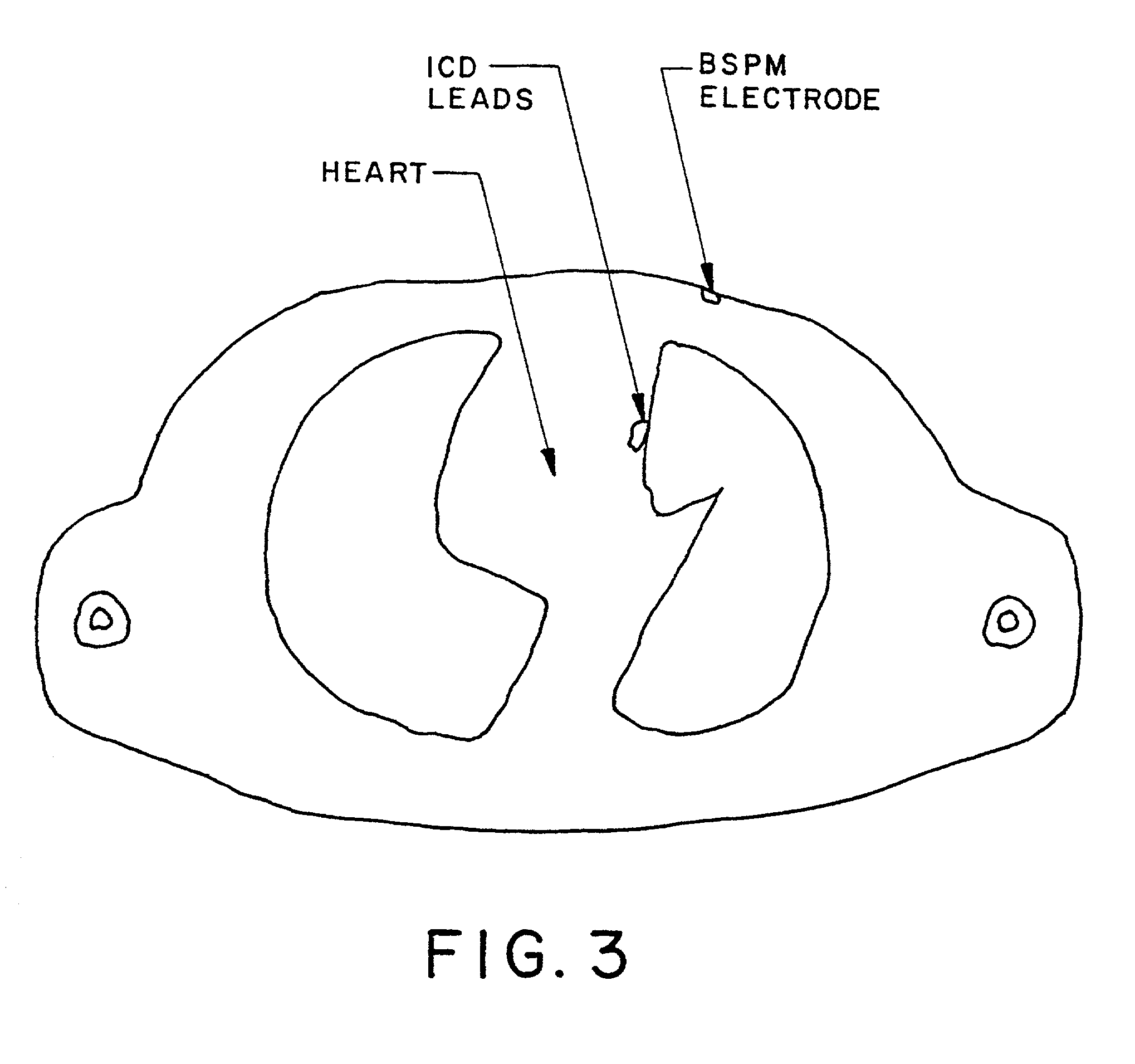

System and method for non-invasive electrocardiographic imaging

A system and method are provided for non-invasively determining the electrical activity of the heart of a human being. Electrical potentials are measured on the body surface via an electrode vest (12), and a body surface potential map is generated. A transformation matrix based on the geometry of the torso and the heart, the locations of the electrodes, and the position of the heart within the torso is also determined with the aid of a processor (24) and a geometry determining device (26). The electrical potential distribution over the epicardial surface of the heart is then determined from a regularized transformation matrix and the body surface potential map. From the epicardial potential distributions, epicardial electrograms and isochrones are also reconstructed and displayed via an output device (28).

Owner:CASE WESTERN RESERVE UNIV
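
The abstract says only that the transformation matrix is "regularized". A common choice for this inverse problem is zero-order Tikhonov regularization, which solves (AᵀA + λI)x = Aᵀb. The sketch below assumes that choice and uses a tiny made-up forward matrix A (epicardial sites to body-surface electrodes) and a synthetic body-surface vector b; real systems use a much larger, ill-conditioned A and pick λ with care (for example with an L-curve).

```python
def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def solve(M, y):
    """Solve the square system M z = y by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [[float(v) for v in row] + [float(y[i])] for i, row in enumerate(M)]
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        for r in range(c + 1, n):
            f = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= f * aug[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][k] * z[k] for k in range(r + 1, n))
        z[r] = (aug[r][n] - s) / aug[r][r]
    return z


def tikhonov(A, b, lam):
    """Minimize ||A x - b||^2 + lam * ||x||^2 via (A^T A + lam I) x = A^T b."""
    At = transpose(A)
    lhs = matmul(At, A)
    for i in range(len(lhs)):
        lhs[i][i] += lam
    rhs = [sum(a * bi for a, bi in zip(row, b)) for row in At]
    return solve(lhs, rhs)


# Hypothetical forward model: 4 body-surface electrodes, 2 epicardial sites.
A = [[1.0, 0.5], [0.4, 1.0], [0.9, 0.8], [0.2, 0.1]]
x_true = [2.0, -1.0]
b = [sum(a * x for a, x in zip(row, x_true)) for row in A]

print(tikhonov(A, b, 0.0))   # recovers x_true up to rounding
print(tikhonov(A, b, 0.5))   # shrinks the estimate toward zero
```

With noisy b and a poorly conditioned A, λ > 0 trades a small bias for much lower sensitivity to measurement noise.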

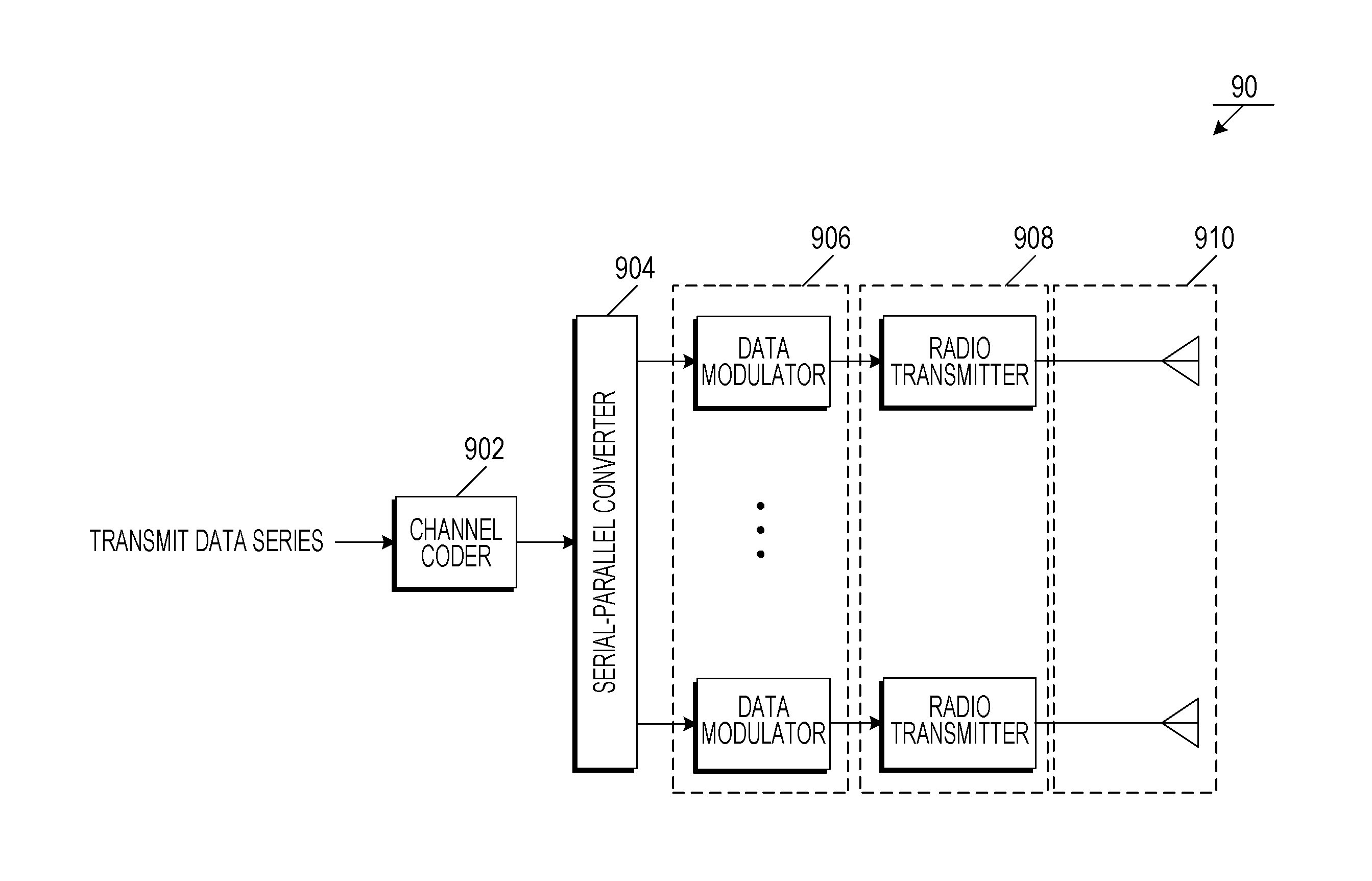

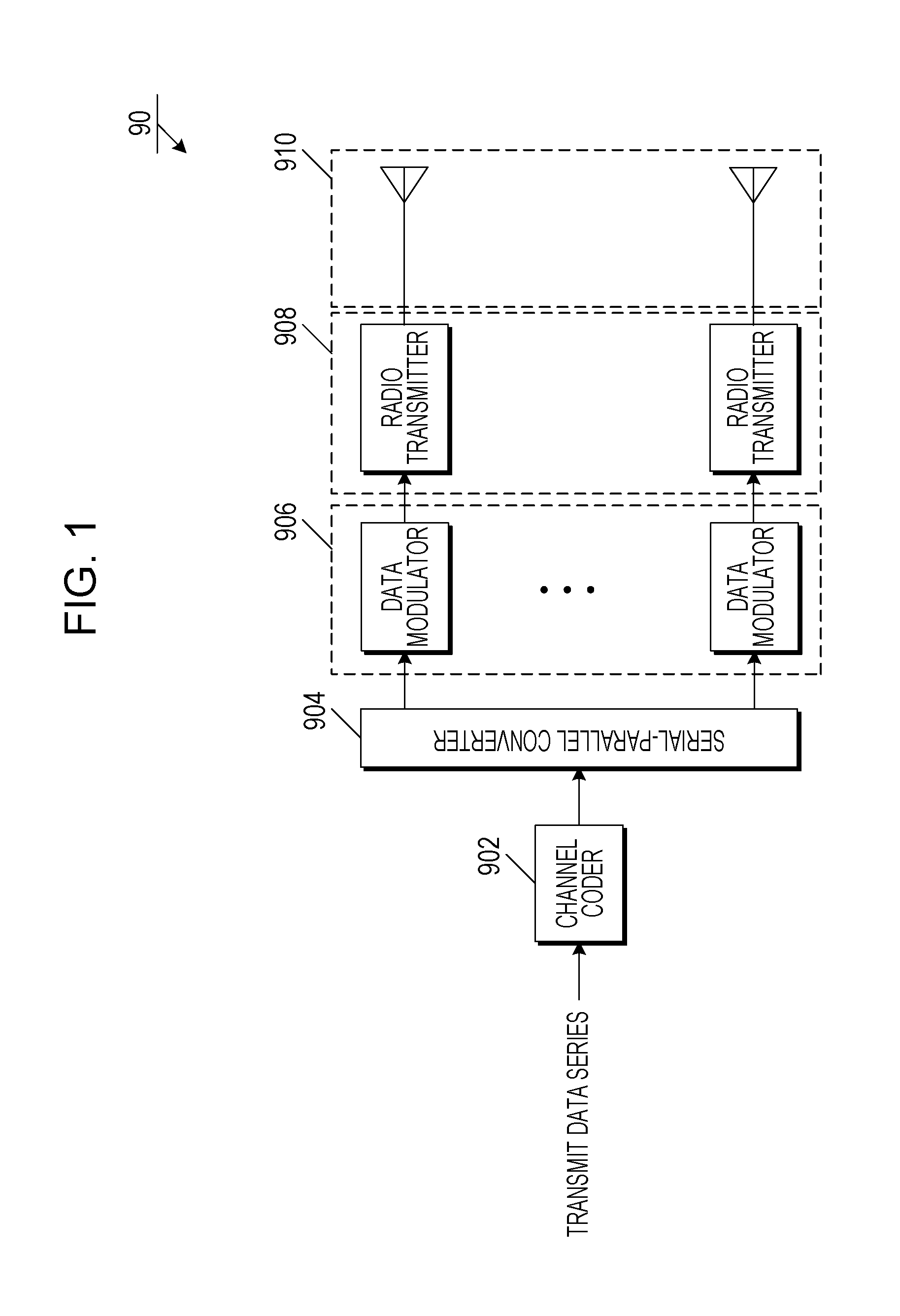

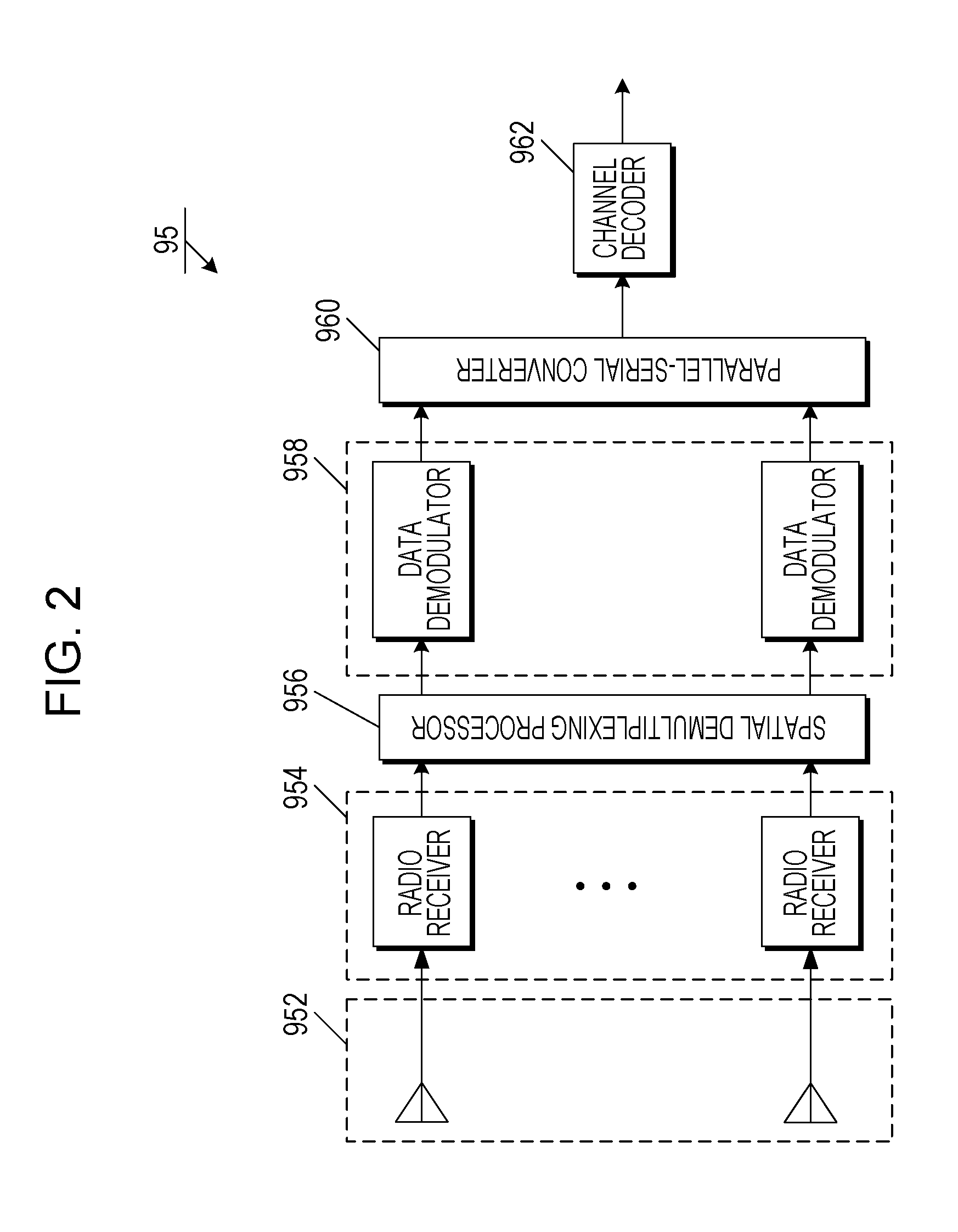

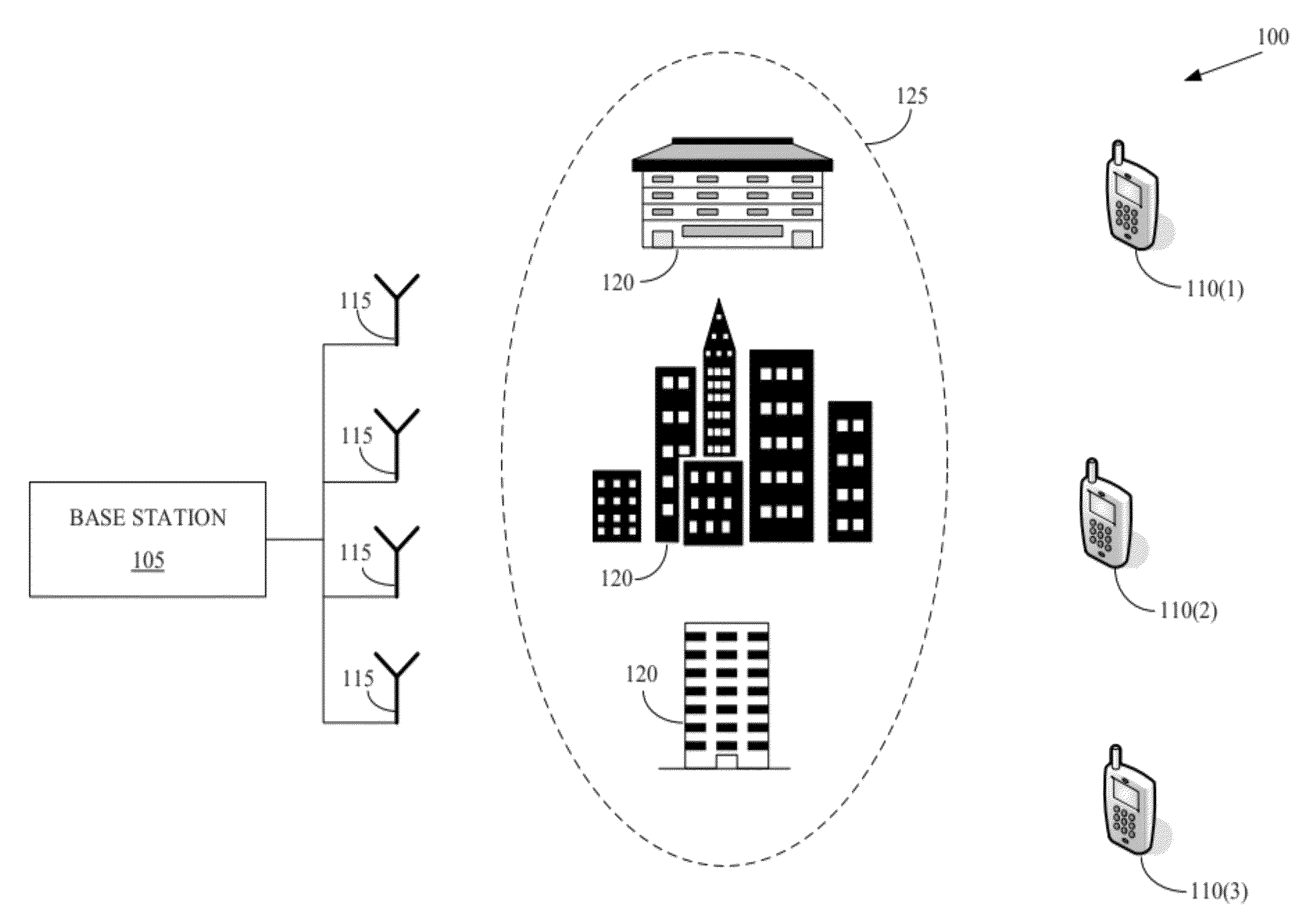

Wireless communication system, base station device, and terminal device

Active · US20130336418A1 · Tags: Frequency utilization efficiency can be improved; Increase frequency; Spatial transmit diversity; Error prevention/detection by diversity reception; Channel state information; Communications system

A terminal device notifies a base station device of control information associated with the terminal device's receive quality. Based on this control information, the base station device modulates multiple pieces of transmit data for the terminal device, each with a different data modulation scheme, spatially multiplexes the modulated data over a single wireless resource, and transmits the spatially multiplexed signal. The terminal device receives this signal and detects the desired transmit data from it using a first channel matrix, which represents the channel state information between the base station device and the terminal device, and a second channel matrix obtained by multiplying the first channel matrix by a transform matrix. Adaptive modulation can thus be applied even to MIMO spatial multiplexing transmission that uses lattice reduction (LR), providing a wireless communication system and related devices that can improve frequency utilization efficiency.

Owner:SHARP KK

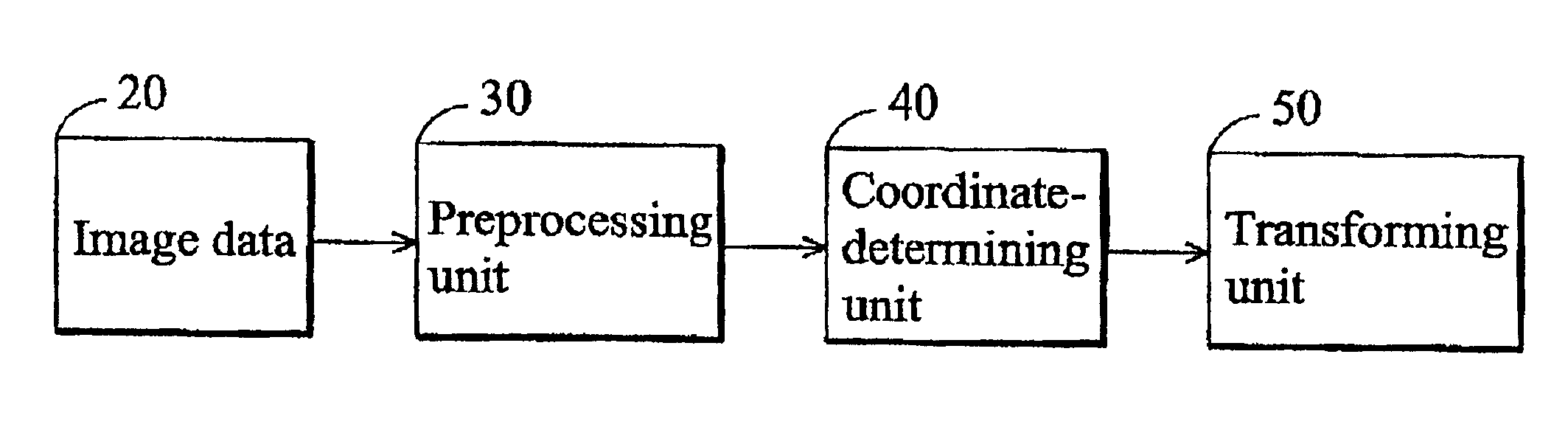

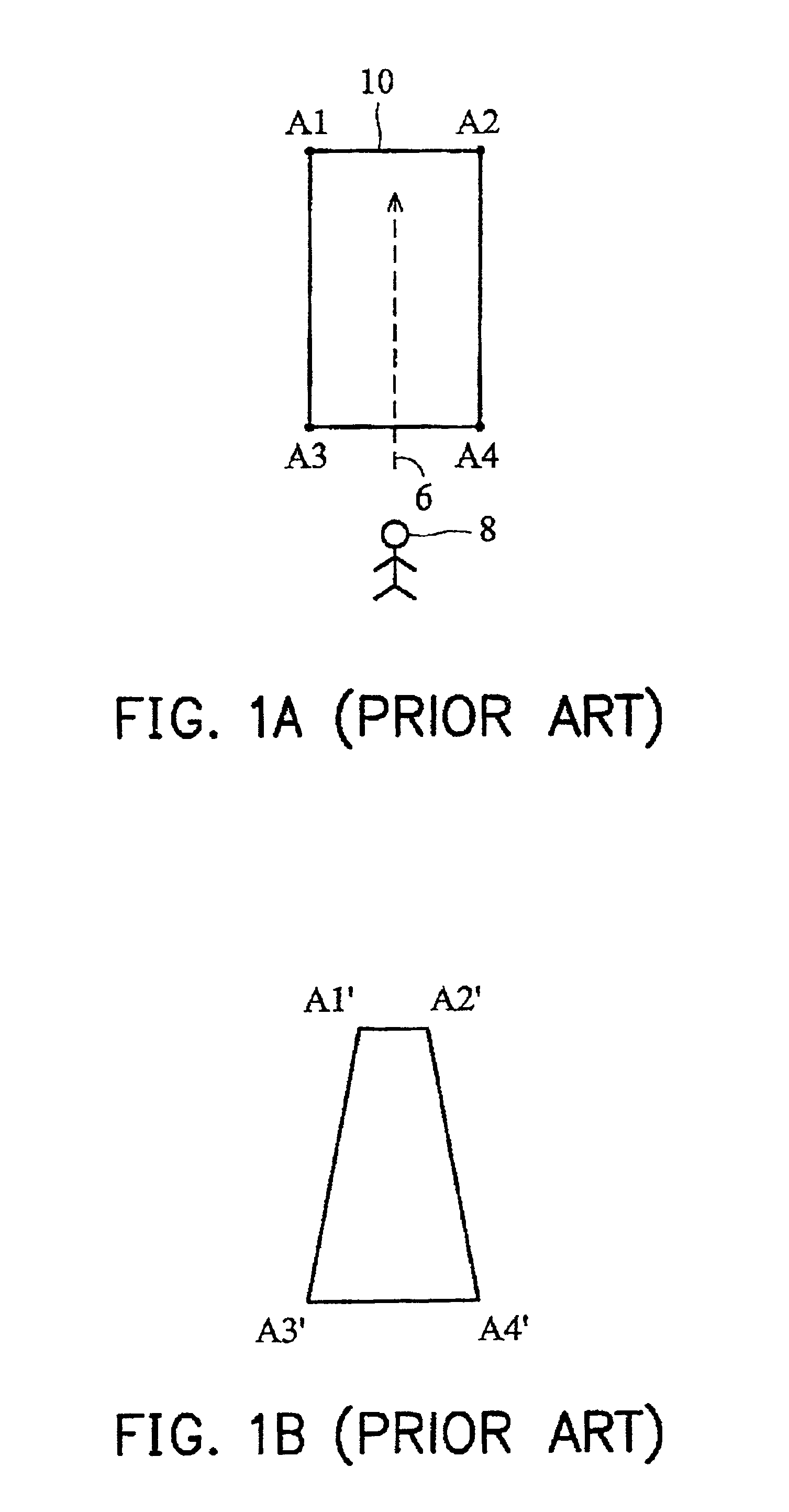

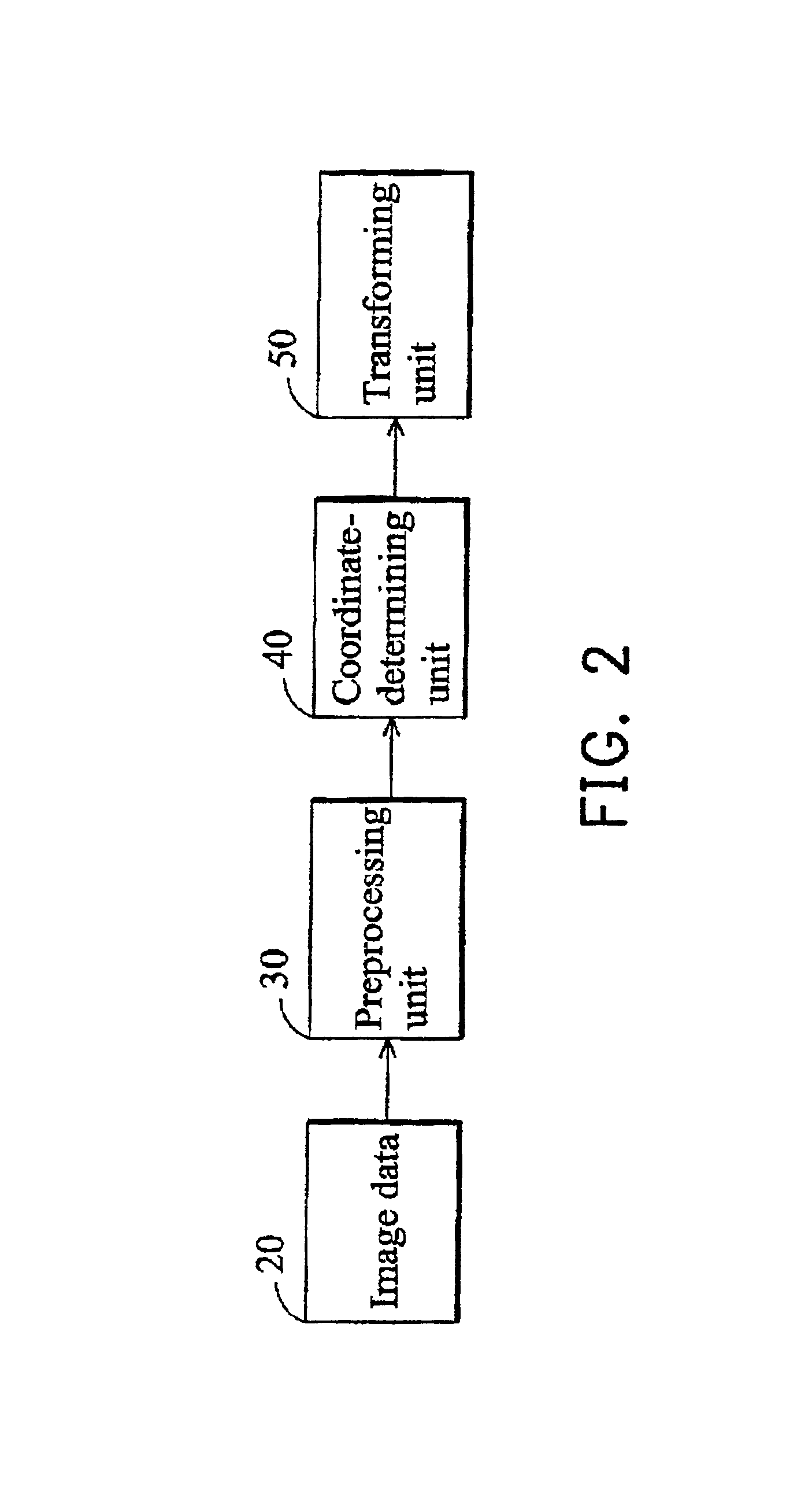

Method of correcting an image with perspective distortion and producing an artificial image with perspective distortion

A method of correcting an image with perspective distortion and of creating an artificial image with perspective distortion is disclosed. First, image data including a distorted object image are received. Next, the user inputs an operational instruction that sets several distortion parameters for the image data: a distorted side of the distorted object image, a central line, and a distortion ratio. The coordinates of the corners of the distorted object image are then calculated from these distortion parameters, and a transformation matrix is determined from the calculated corner coordinates. Finally, the distorted object image is transformed into a corrected object image according to the transformation matrix.

Owner:COREL CORP +1
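
The abstract does not say how the transformation matrix is obtained from the four corners. One standard way, assumed here, is the direct linear method: fix h₃₃ = 1 and solve the 8×8 linear system that the four corner correspondences give. The corner coordinates are hypothetical.

```python
def solve(M, y):
    """Solve the square system M z = y by Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [[float(v) for v in row] + [float(y[i])] for i, row in enumerate(M)]
    for c in range(n):
        pivot = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[pivot] = aug[pivot], aug[c]
        for r in range(c + 1, n):
            f = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= f * aug[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][k] * z[k] for k in range(r + 1, n))
        z[r] = (aug[r][n] - s) / aug[r][r]
    return z


def homography_from_corners(src, dst):
    """3x3 matrix H (with H[2][2] = 1) mapping each src corner onto its dst corner."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0 x + h1 y + h2) / (h6 x + h7 y + 1), rearranged to be linear in h.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        # v = (h3 x + h4 y + h5) / (h6 x + h7 y + 1), likewise.
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = solve(rows, rhs) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def warp(H, point):
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)


# Hypothetical corners of a keystoned object and the rectangle they should become.
distorted = [(12, 8), (188, 20), (200, 150), (0, 140)]
corrected = [(0, 0), (200, 0), (200, 150), (0, 150)]
H = homography_from_corners(distorted, corrected)
for src, dst in zip(distorted, corrected):
    print(warp(H, src), dst)   # each warped corner matches its target up to rounding
```

To render the corrected image, one would typically loop over output pixels, map each back through the inverse of H, and sample the distorted image there, which avoids holes in the output.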

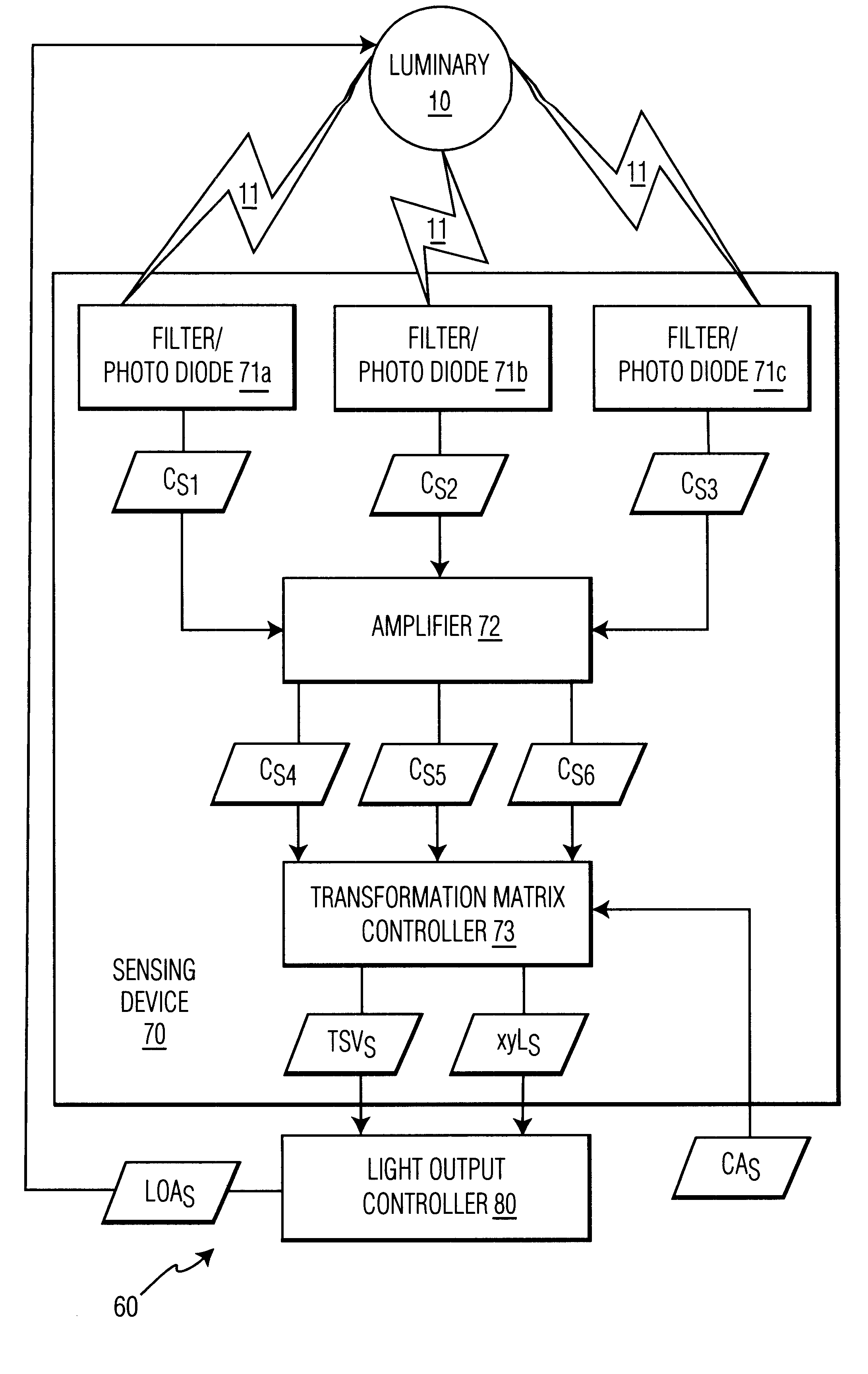

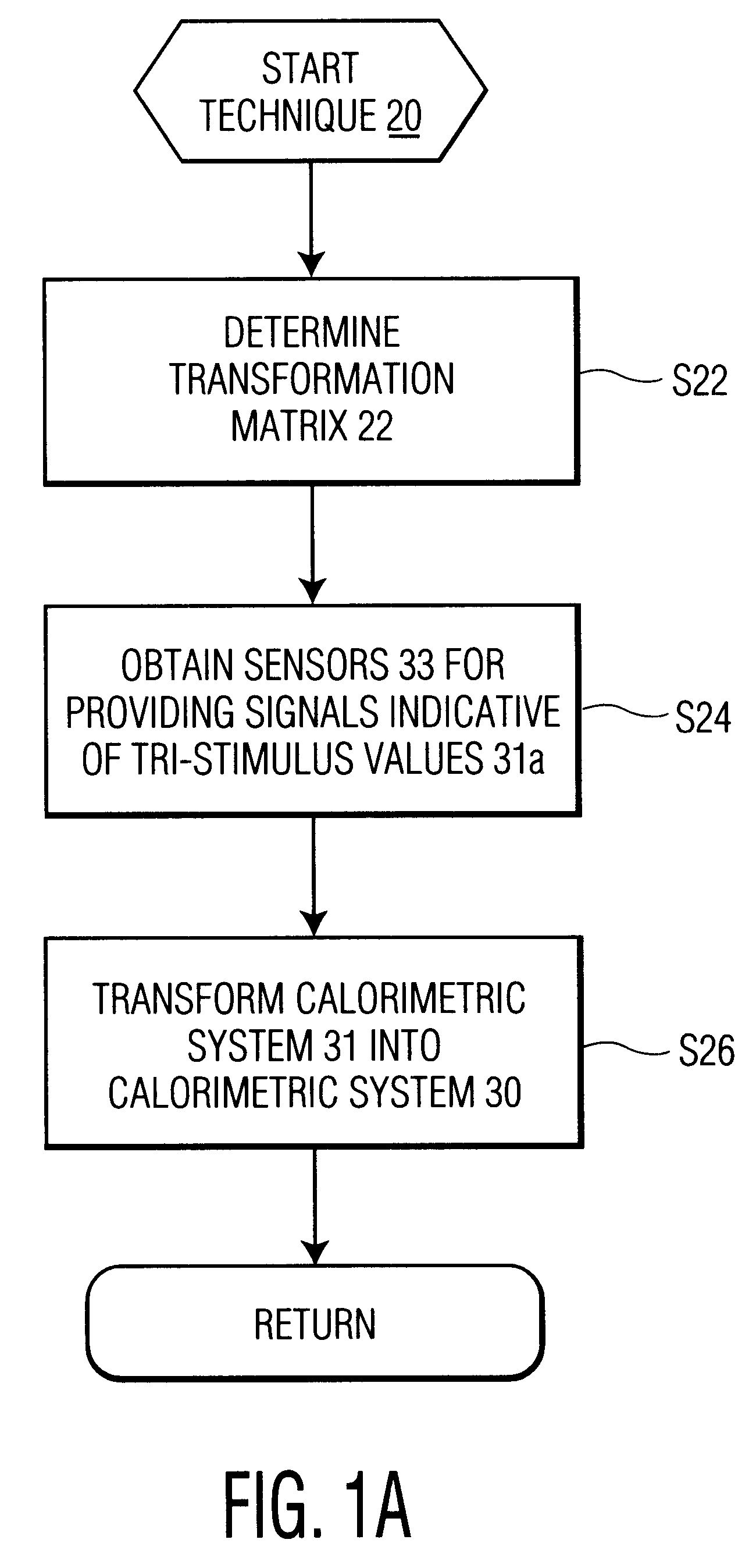

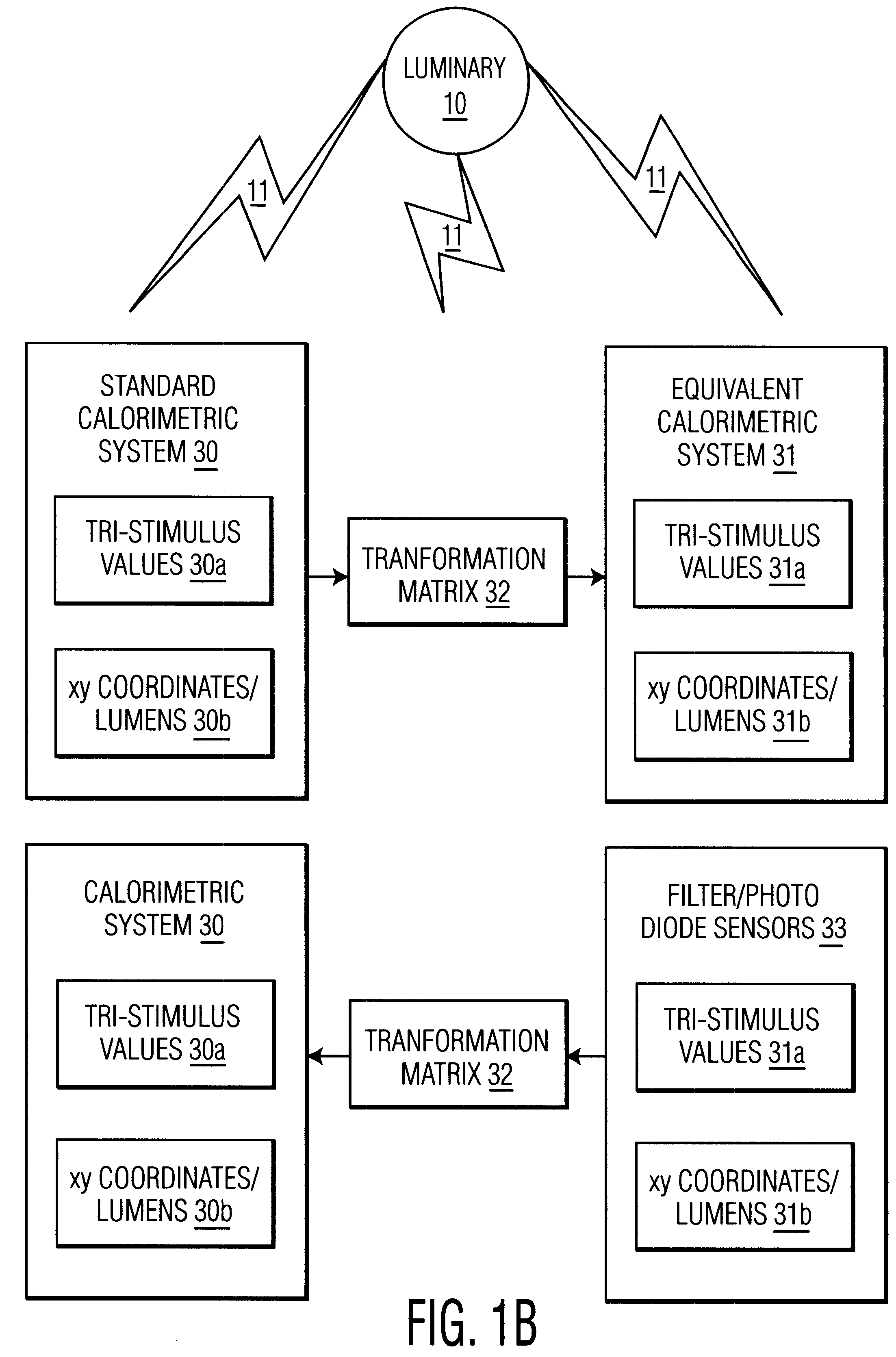

Method and system for controlling a light source

A light output control system implementing a method of sensing tri-stimulus values to control the light output of an LED-based luminaire is disclosed. The system comprises one or more filter/photodiode sensors that sense a first set of tri-stimulus values of the light output and provide signals indicative thereof. These signals are transformed using a transformation matrix to obtain a second set of tri-stimulus values, and the system controls the light output as a function of the second set of tri-stimulus values.

Owner:KONINK PHILIPS ELECTRONICS NV
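
The transformation step in the Philips system amounts to a 3×3 matrix applied to each set of sensor readings. The sketch below assumes the second set is CIE XYZ and then computes chromaticity coordinates, a typical quantity to regulate; the matrix entries are illustrative values only, not taken from the patent.

```python
# Hypothetical calibration matrix from raw filter/photodiode readings to CIE XYZ.
# Real values would come from calibrating the specific sensors against a reference meter.
SENSOR_TO_XYZ = [
    [0.49, 0.31, 0.20],
    [0.18, 0.81, 0.01],
    [0.00, 0.01, 0.99],
]


def to_xyz(sensor):
    """Apply the 3x3 transformation matrix to one set of sensor readings."""
    return [sum(m * s for m, s in zip(row, sensor)) for row in SENSOR_TO_XYZ]


def chromaticity(xyz):
    """CIE 1931 chromaticity coordinates (x, y) from tristimulus values."""
    X, Y, Z = xyz
    total = X + Y + Z
    return X / total, Y / total


xyz = to_xyz([0.8, 0.6, 0.3])
print(xyz, chromaticity(xyz))
```

A controller would compare (x, y) and Y with a set point and adjust the LED drive levels; that feedback law is outside this sketch.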

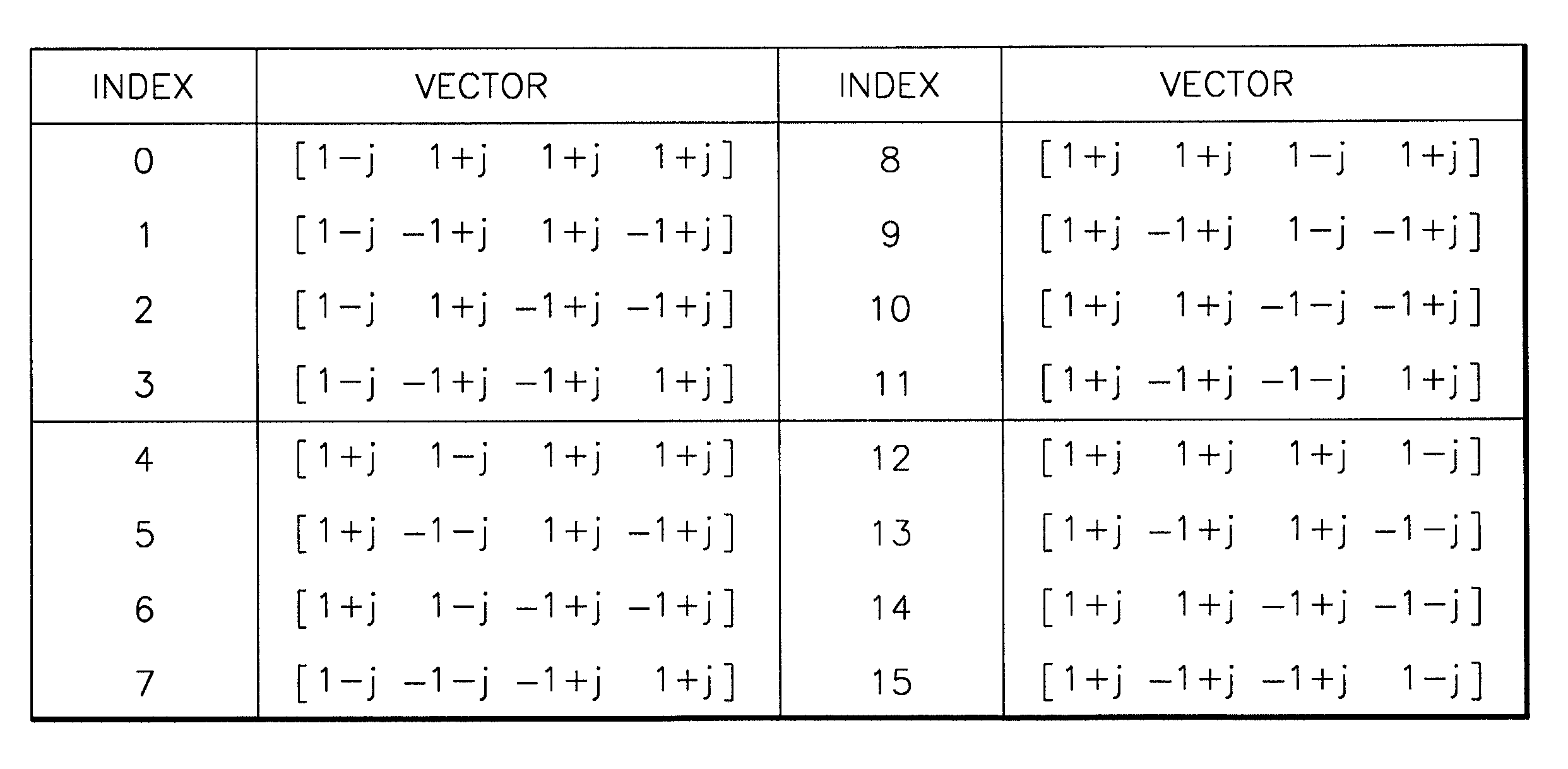

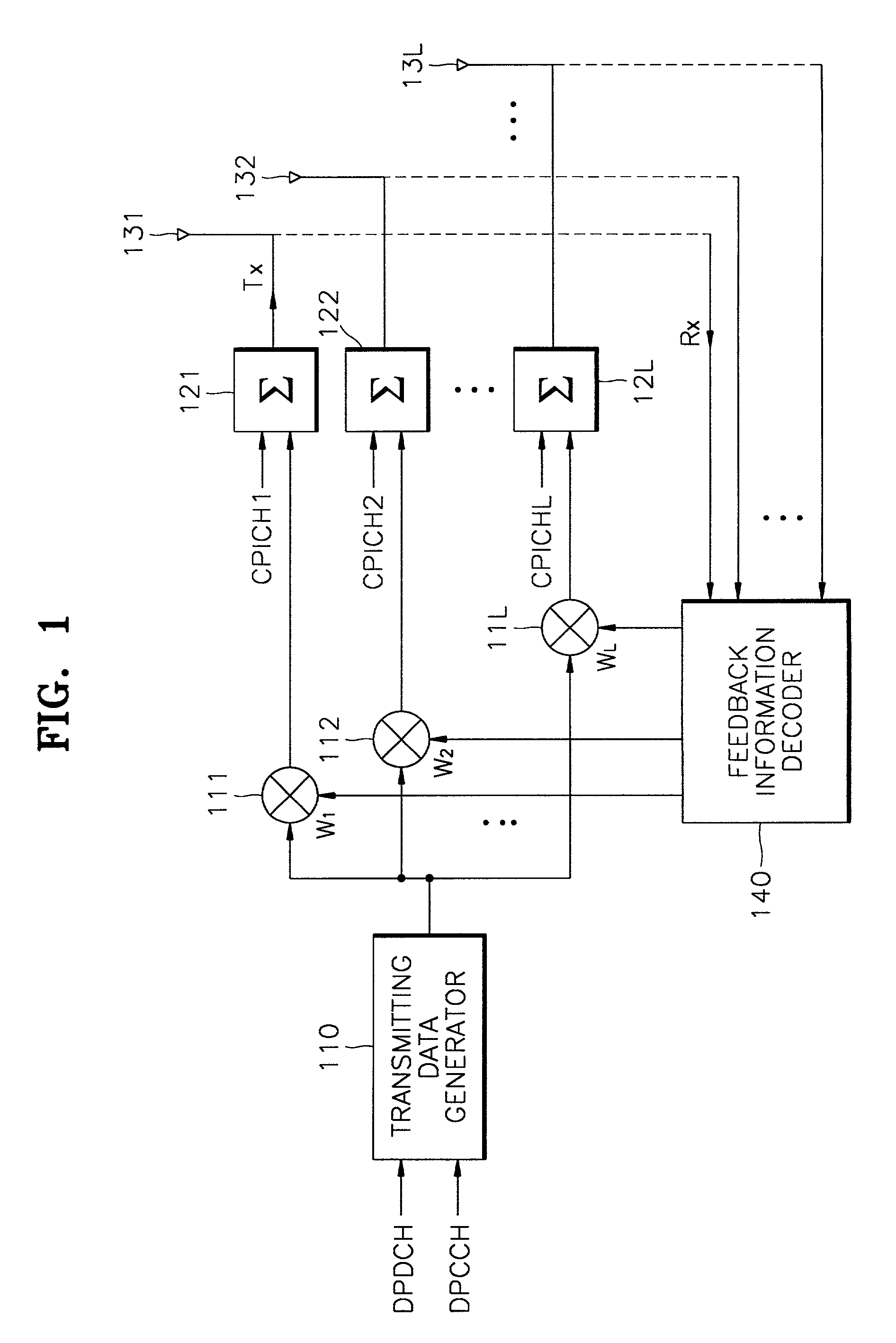

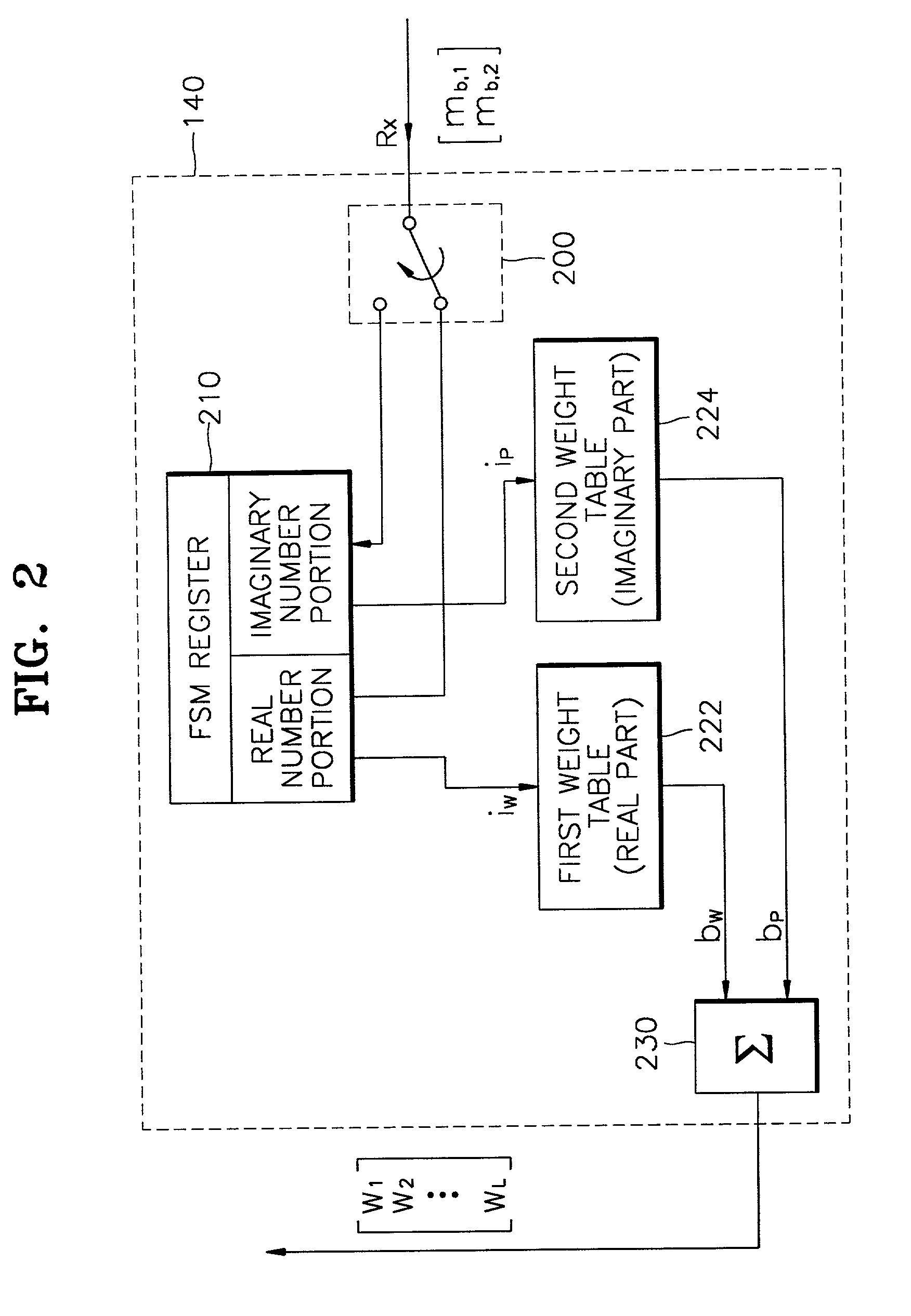

Closed loop transmit diversity method and apparatus using complex basis vector sets for antenna selection

Inactive · US7116723B2 · Tags: Overcome imbalance; Spatial transmit diversity; Antenna arrays; Low speed; Communications system

A transmission antenna diversity method, and a base station apparatus and a mobile station apparatus therefor in a mobile communication system are provided. In the transmission antenna diversity method, channel information is measured from signals received through the plurality of antennas used in the base station, and a channel information matrix is output. The channel information matrix is transformed according to a transform matrix composed of a complex basis vector set. Reception power with respect to the plurality of antennas is calculated based on the transformed channel information matrix. Antenna selection information obtained based on the calculated reception power is transmitted to the base station as feedback information for controlling transmission antenna diversity. Therefore, power is equally distributed to transmitting antennas, excellent performance is maintained at a high speed of movement, and reliable channel adaptation is accomplished at a low speed of movement of the mobile station.

Owner:SAMSUNG ELECTRONICS CO LTD

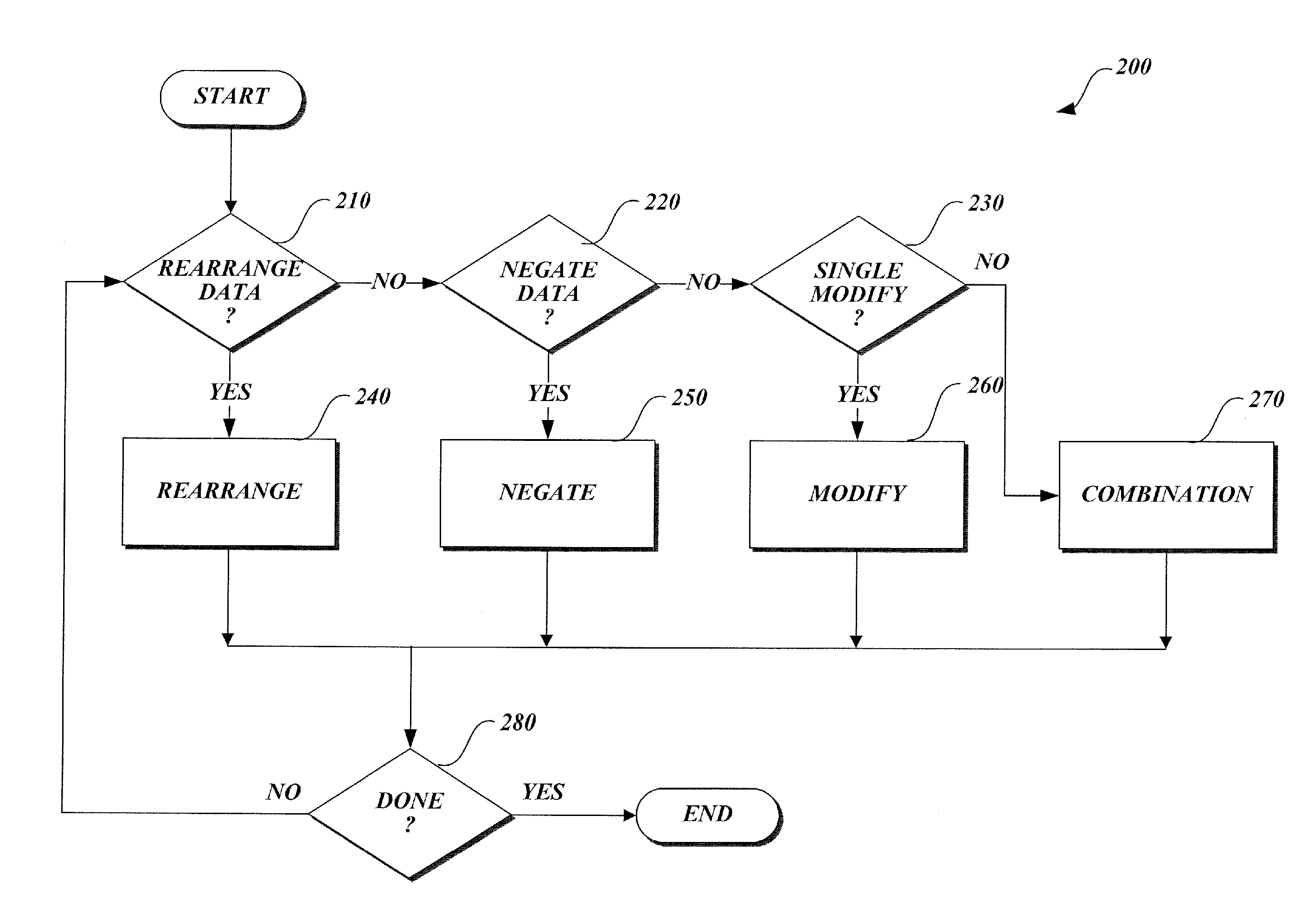

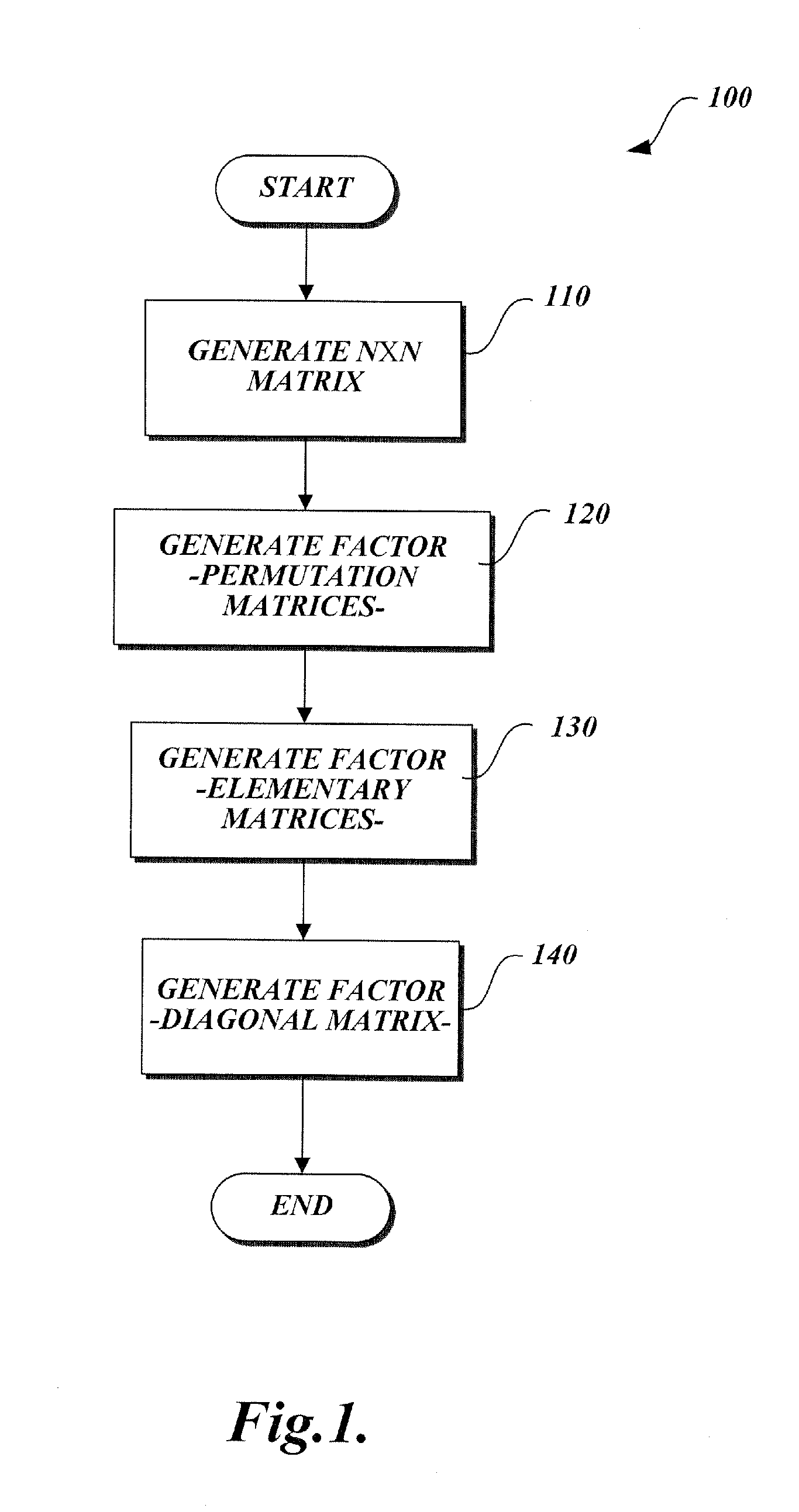

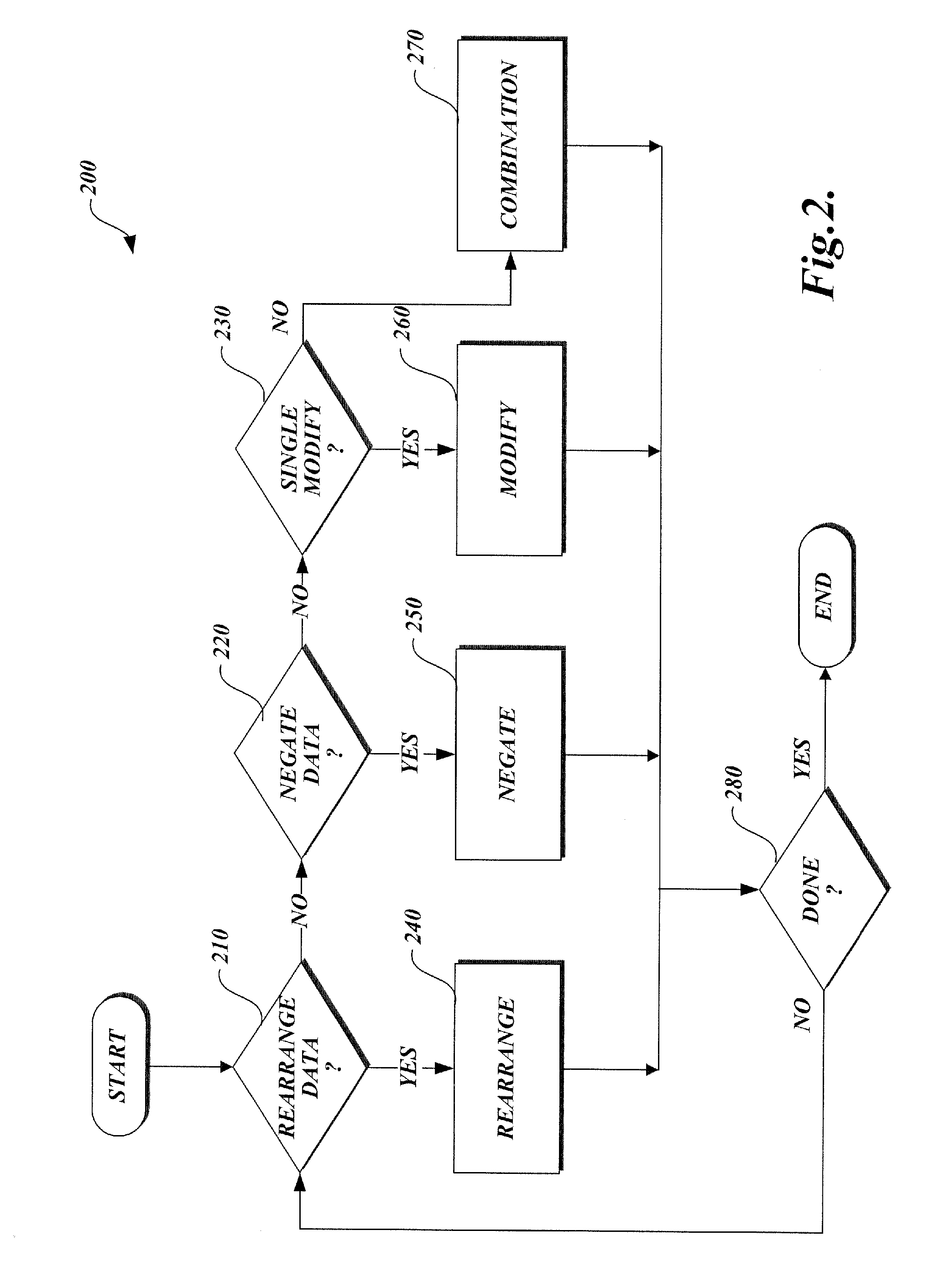

Method of generating matrix factors for a finite-dimensional linear transform

Inactive · US20070211952A1 · Tags: Character and pattern recognition; Digital video signal modification; Partition of unity; LU decomposition

A method of generating matrix factors for a finite-dimensional linear transform using a computer. The linear transform is represented by data values stored in a linear transformation matrix having a nonzero determinant. In one aspect, a first LU decomposition is applied to the linear transformation matrix. Four matrices are generated from the LU decomposition: a first permutation matrix, a second permutation matrix, a lower triangular matrix having a unit diagonal, and a first upper triangular matrix. Additional elements include a third matrix Â, a signed permutation matrix Π such that A = ΠÂ, a permuted linear transformation matrix A′, and a second upper triangular matrix U₁ satisfying Â = U₁A′. The permuted linear transformation matrix is factored into a product including a lower triangular matrix L and an upper triangular matrix U. The linear transformation matrix is expressed as a product of the matrix factors.

Owner:CELARTEM
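
For orientation, the first step the Celartem abstract mentions, an LU decomposition with permutation, can be sketched as Doolittle elimination with partial pivoting, giving PA = LU with L unit lower triangular. The abstract lists two permutation matrices, which suggests row and column pivoting; for brevity this sketch uses row pivoting only, and the patent's further factors (Â, Π, U₁, A′) are not reproduced.

```python
def lu_decompose(A):
    """Doolittle LU with partial pivoting.

    Returns (perm, L, U) such that row i of L @ U equals row perm[i] of A,
    with L unit lower triangular and U upper triangular.
    """
    n = len(A)
    U = [[float(v) for v in row] for row in A]
    L = [[0.0] * n for _ in range(n)]
    perm = list(range(n))
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(U[r][c]))
        if U[p][c] == 0.0:
            raise ValueError("matrix is singular")
        # Swap rows in U, in the multipliers already stored in L, and in perm.
        U[c], U[p] = U[p], U[c]
        L[c], L[p] = L[p], L[c]
        perm[c], perm[p] = perm[p], perm[c]
        for r in range(c + 1, n):
            f = U[r][c] / U[c][c]
            L[r][c] = f
            U[r][c] = 0.0
            for k in range(c + 1, n):
                U[r][k] -= f * U[c][k]
    for i in range(n):
        L[i][i] = 1.0
    return perm, L, U


A = [[2, 1, 1], [4, -6, 0], [-2, 7, 2]]
perm, L, U = lu_decompose(A)
print(perm)  # [1, 0, 2]
print(L)     # [[1.0, 0.0, 0.0], [0.5, 1.0, 0.0], [-0.5, 1.0, 1.0]]
print(U)     # [[4.0, -6.0, 0.0], [0.0, 4.0, 1.0], [0.0, 0.0, 1.0]]
```

Pivoting on the largest available entry keeps the multipliers in L bounded by 1 in magnitude, which is what makes the factorization numerically stable in practice.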

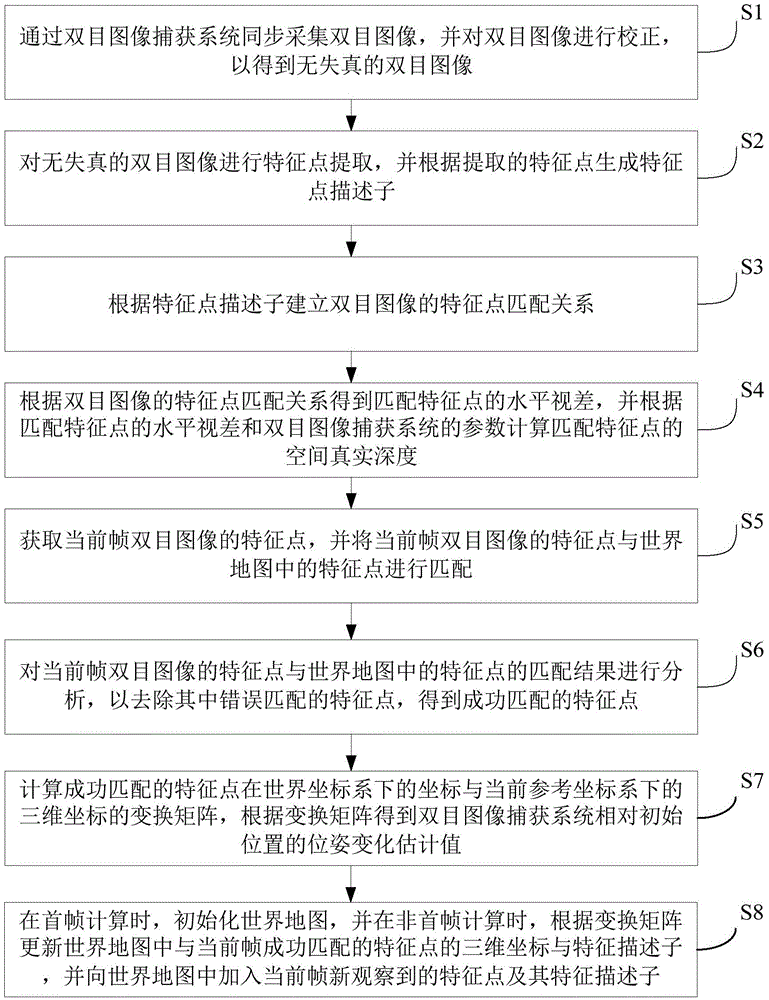

Visual ranging-based simultaneous localization and map construction method

Active · CN105469405A · Tags: Reduce computational complexity; Eliminate accumulation; Image enhancement; Image analysis; Simultaneous localization and mapping; Computation complexity

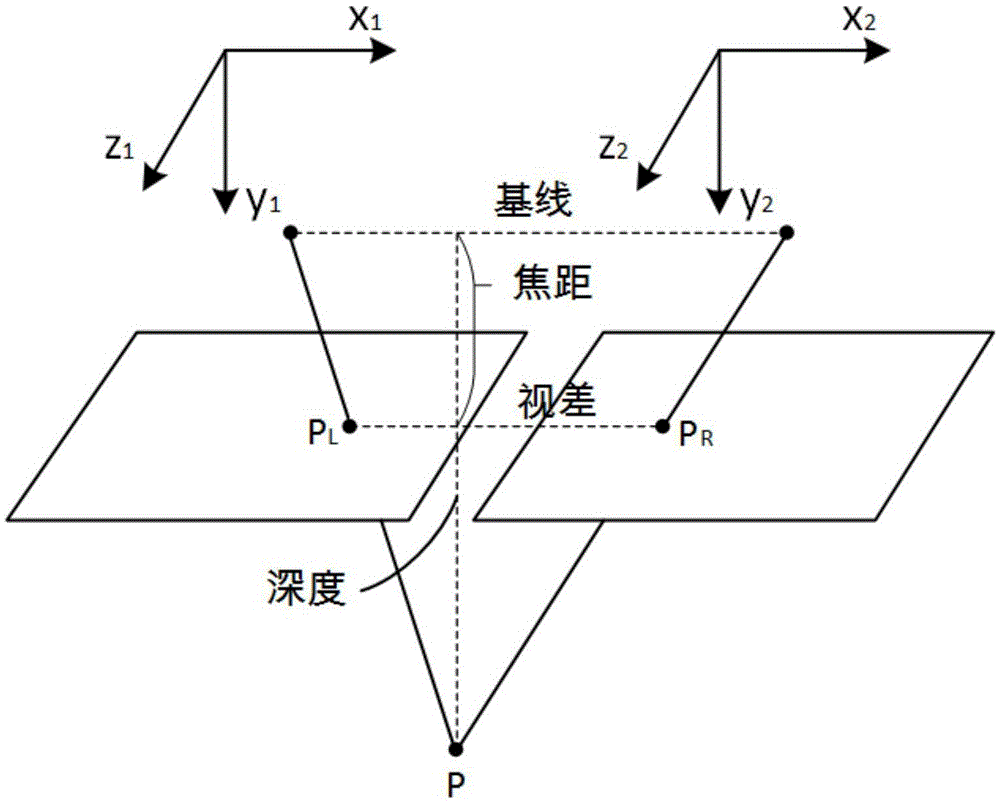

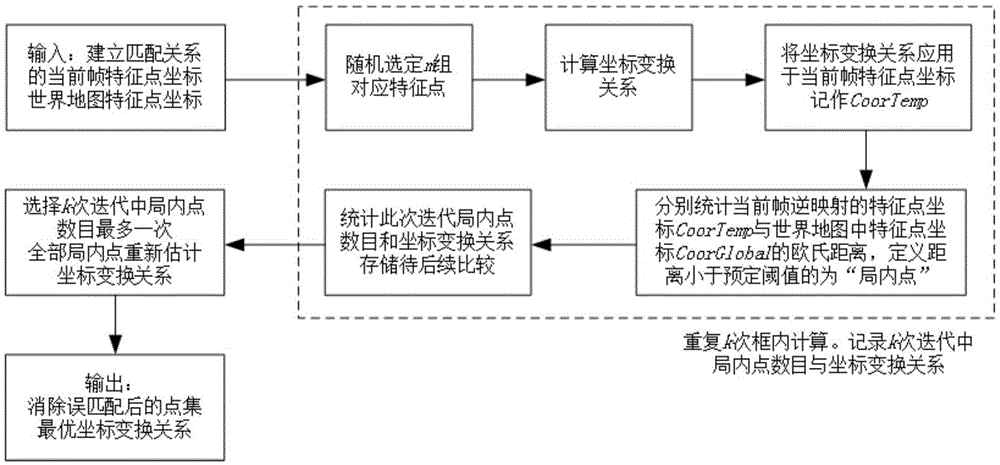

The invention provides a visual-ranging-based simultaneous localization and map construction method. The method includes the following steps: a binocular image is acquired and corrected to obtain a distortion-free binocular image; features are extracted from the distortion-free binocular image to generate feature point descriptors; feature point matches between the two views of the binocular image are established; the horizontal parallax of the matched feature points is obtained from these matches, and the real-space depth is calculated from the parameters of the binocular image capture system; the feature points of the current frame are matched against feature points in the world map; wrongly matched feature points are removed, leaving the successfully matched ones; a transform matrix between the world-coordinate-system coordinates of the successfully matched feature points and their three-dimensional coordinates in the current reference coordinate system is calculated, and from it an estimate of the pose change of the binocular image capture system relative to its initial position is obtained; and the world map is established and updated. The method has low computational complexity, centimeter-level positioning accuracy, and unbiased position estimates.

Owner:北京超星未来科技有限公司 (Beijing Chaoxing Weilai Technology Co., Ltd.)
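
The key SLAM step above is estimating a rigid transform from matched point pairs. The patent works with 3-D points, where the usual closed-form solution (Kabsch/Umeyama) needs an SVD; to stay within the standard library, this sketch shows the planar analogue, which has a closed form via `atan2`. The point sets, rotation, and shift are hypothetical.

```python
import math


def rigid_transform_2d(src, dst):
    """Least-squares rotation + translation mapping src points onto dst points (2-D).

    Returns a 3x3 homogeneous matrix [[c, -s, tx], [s, c, ty], [0, 0, 1]].
    """
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot_sum = cross_sum = 0.0
    for (x, y), (u, v) in zip(src, dst):
        x, y, u, v = x - sx, y - sy, u - dx, v - dy
        dot_sum += x * u + y * v      # weight on cos(theta)
        cross_sum += x * v - y * u    # weight on sin(theta)
    theta = math.atan2(cross_sum, dot_sum)
    c, s = math.cos(theta), math.sin(theta)
    # The translation carries the rotated source centroid onto the destination centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return [[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]]


# Hypothetical matches: map points, and the same points after a 30-degree turn
# and a (1.0, 2.0) shift.
world = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (1.0, 5.0)]
a = math.radians(30)
current = [(math.cos(a) * x - math.sin(a) * y + 1.0,
            math.sin(a) * x + math.cos(a) * y + 2.0) for x, y in world]
T = rigid_transform_2d(world, current)
print(math.degrees(math.atan2(T[1][0], T[0][0])), T[0][2], T[1][2])  # approximately 30, 1, 2
```

Outlier removal (the "wrongly matched feature points" step) matters here: a single bad match shifts both centroids and biases the estimate, which is why such pipelines usually wrap this solver in RANSAC or a similar scheme.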

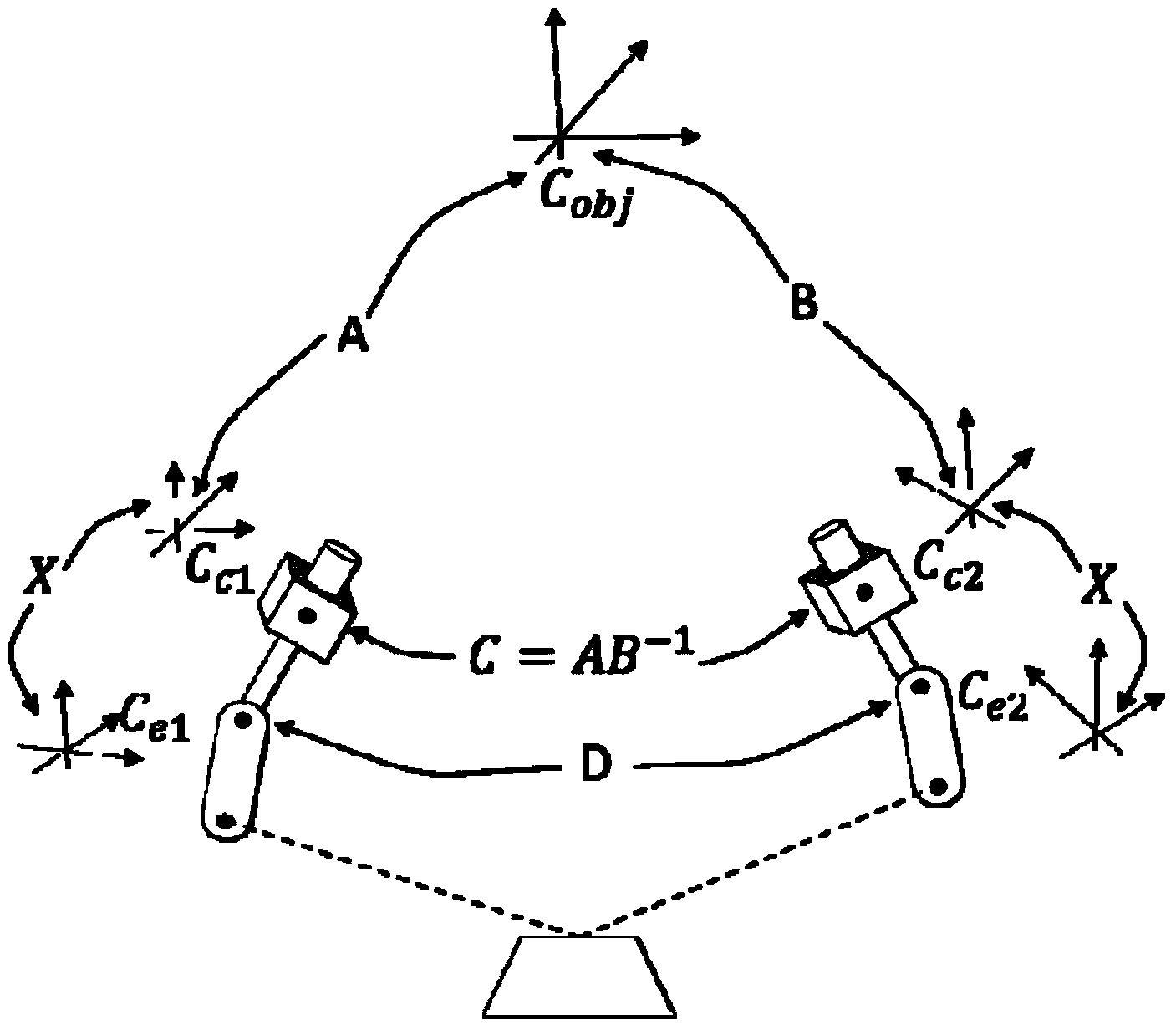

Systematic calibration method of welding robot guided by line structured light vision sensor

Active · CN102794763A · Tags: Improve tracking accuracy; Increase flexibility; Programme-controlled manipulator; Welding/cutting auxiliary devices; Quaternion; Engineering

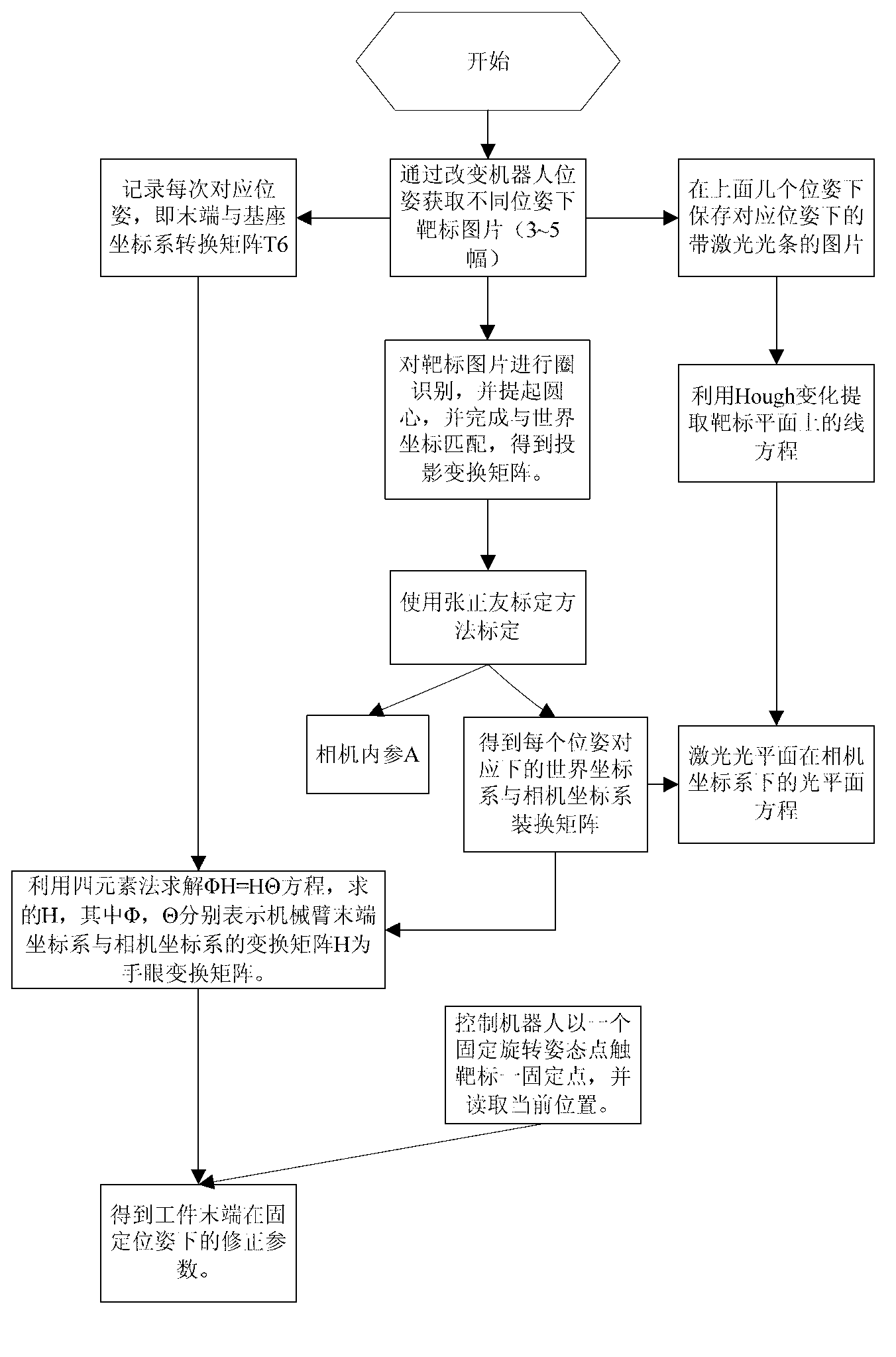

The invention relates to a systematic calibration method for a welding robot guided by a line-structured-light vision sensor, comprising the following steps: first, the mechanical arm is moved through different poses, images of a circular target are captured with the camera, the target images are matched to world coordinates, and the camera's internal parameter matrix and external parameter matrix RT are obtained; second, the line equation of the laser stripe is solved by a Hough transform, and the external parameter matrix RT from the first step is used to obtain the equation of the laser plane in the camera coordinate system; third, the transformation matrix between the end-effector coordinate system and the base coordinate system of the mechanical arm is calculated using a quaternion method; and fourth, the coordinates of the end point of the welding workpiece are calculated in the mechanical arm's coordinate system, and the offset of the workpiece is then calculated by combining these coordinates with the pose of the mechanical arm. The method is flexible, simple, and fast, with high precision and generality, good stability and timeliness, and a small amount of computation.

Owner:JIANGNAN UNIV +1
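
In step three of the welding-robot method, the quaternion method yields the rotation part of the end-effector-to-base transformation; how the patent solves for the quaternion is not described. This sketch shows only the conversion of a unit quaternion (w, x, y, z), in the Hamilton convention (assumed), to a rotation matrix, and packing it with a translation into a 4×4 homogeneous transformation matrix. The pose values are hypothetical.

```python
import math


def quaternion_to_matrix(w, x, y, z):
    """Rotation matrix of the quaternion w + xi + yj + zk (normalized first)."""
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / norm, x / norm, y / norm, z / norm
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def homogeneous(R, t):
    """Pack a 3x3 rotation and a translation into a 4x4 homogeneous matrix."""
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def transform_point(T, p):
    v = list(p) + [1.0]
    return [sum(T[i][j] * v[j] for j in range(4)) for i in range(3)]


# Hypothetical end-effector pose: 90 degrees about z, offset (0.1, 0.0, 0.2) m.
half = math.radians(90) / 2
T_base_end = homogeneous(quaternion_to_matrix(math.cos(half), 0.0, 0.0, math.sin(half)),
                         [0.1, 0.0, 0.2])
print(transform_point(T_base_end, [1.0, 0.0, 0.0]))  # approximately [0.1, 1.0, 0.2]
```

Normalizing first means a slightly non-unit quaternion from a numerical solver still yields a proper rotation matrix.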

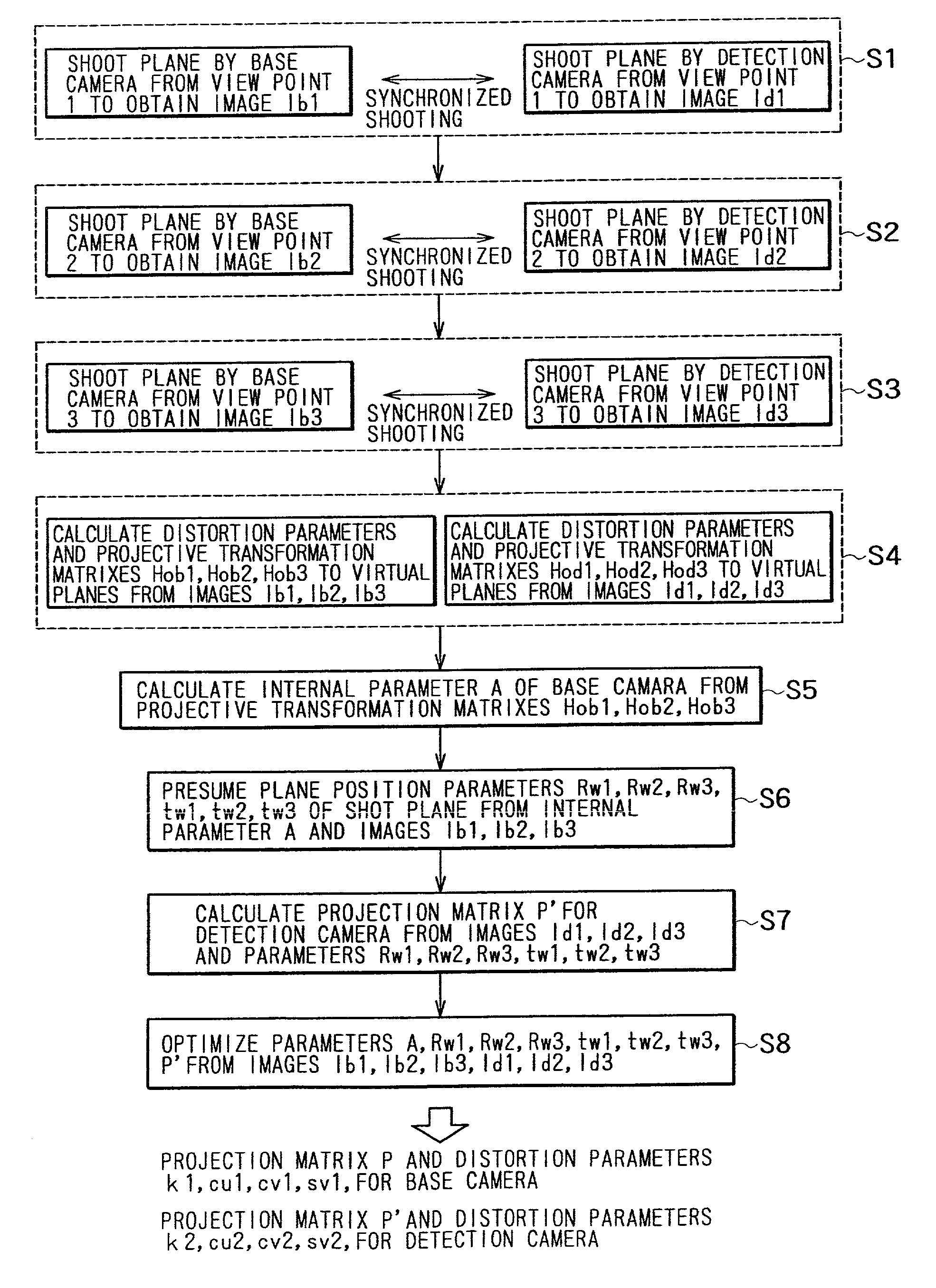

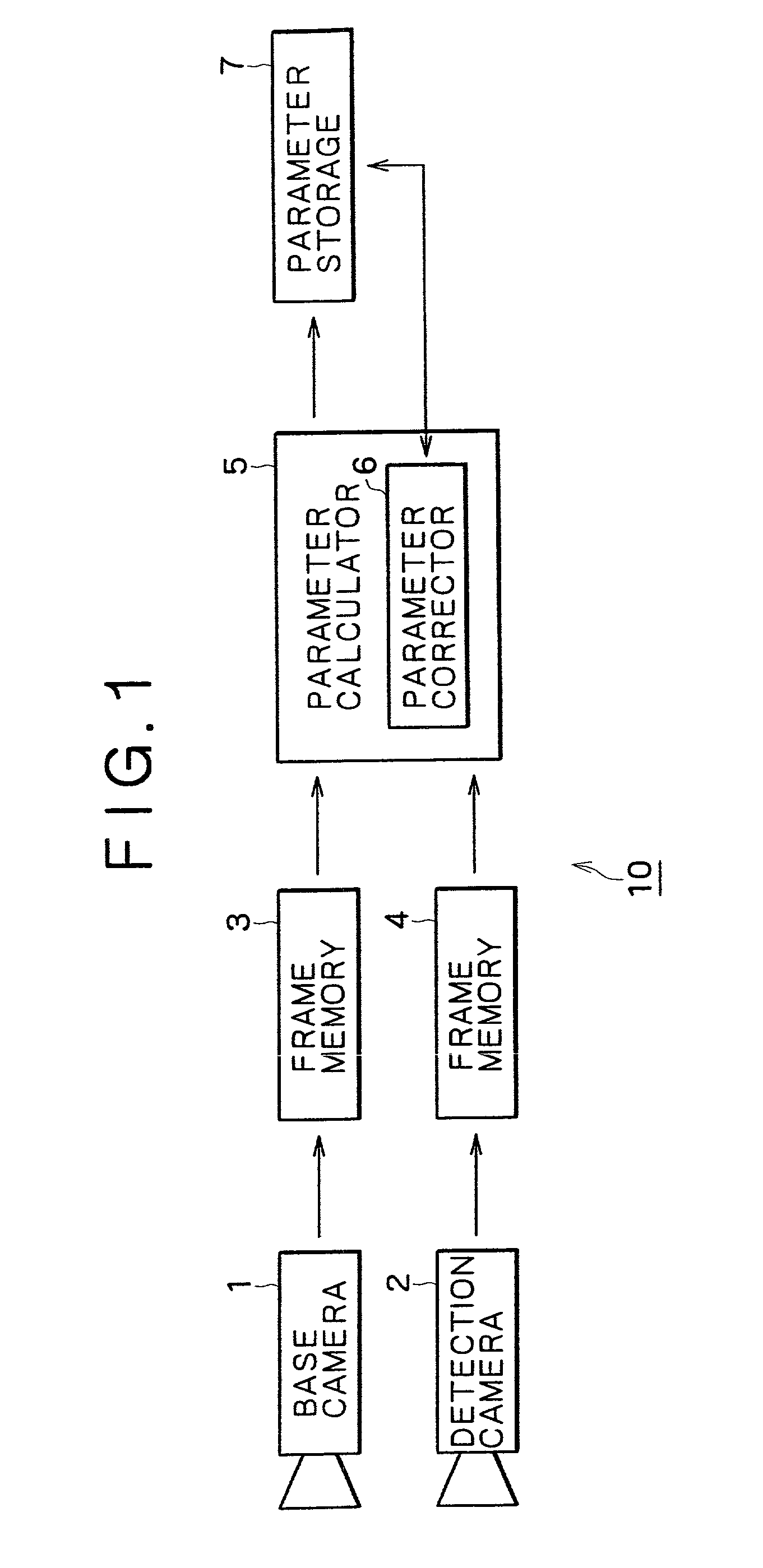

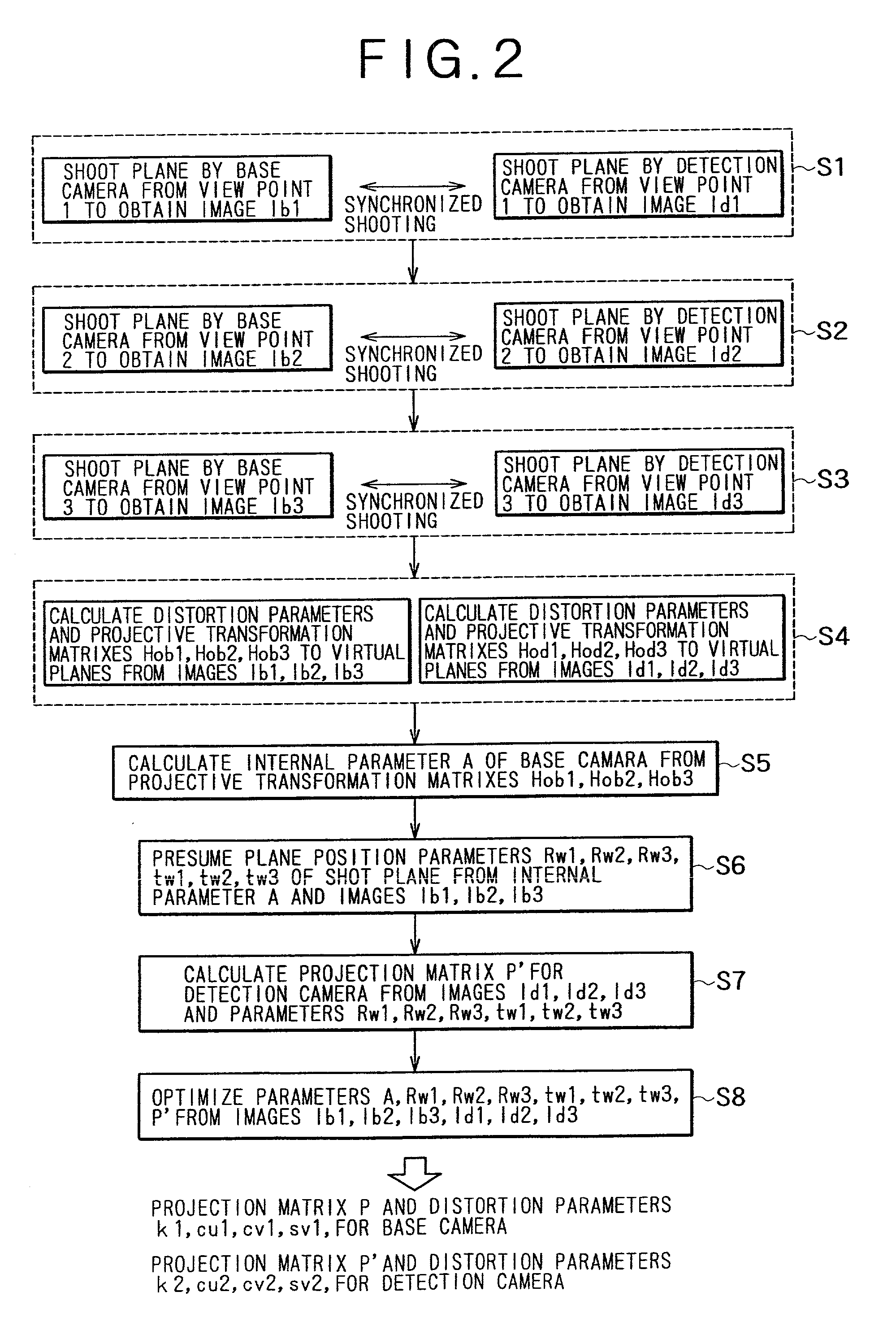

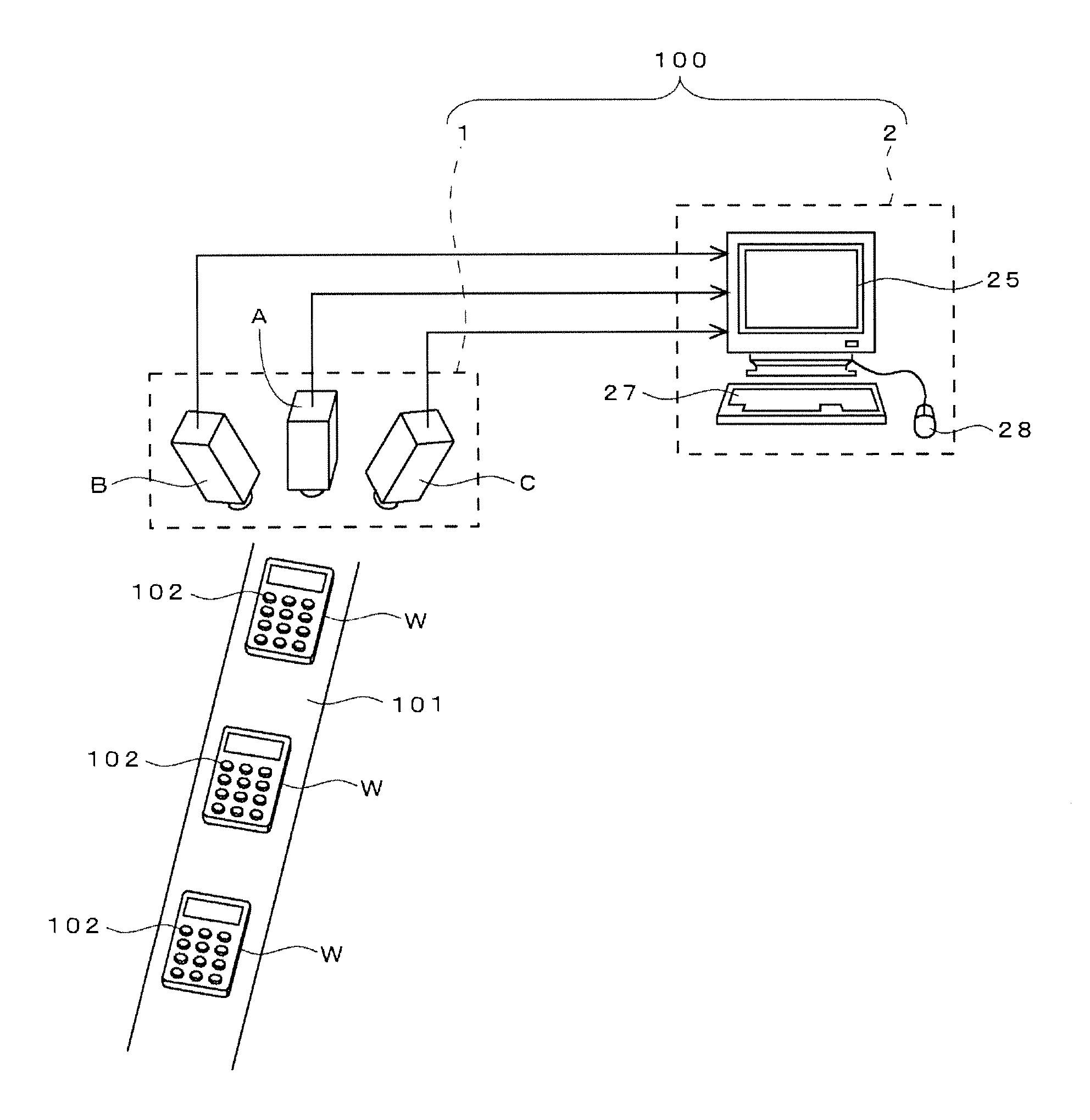

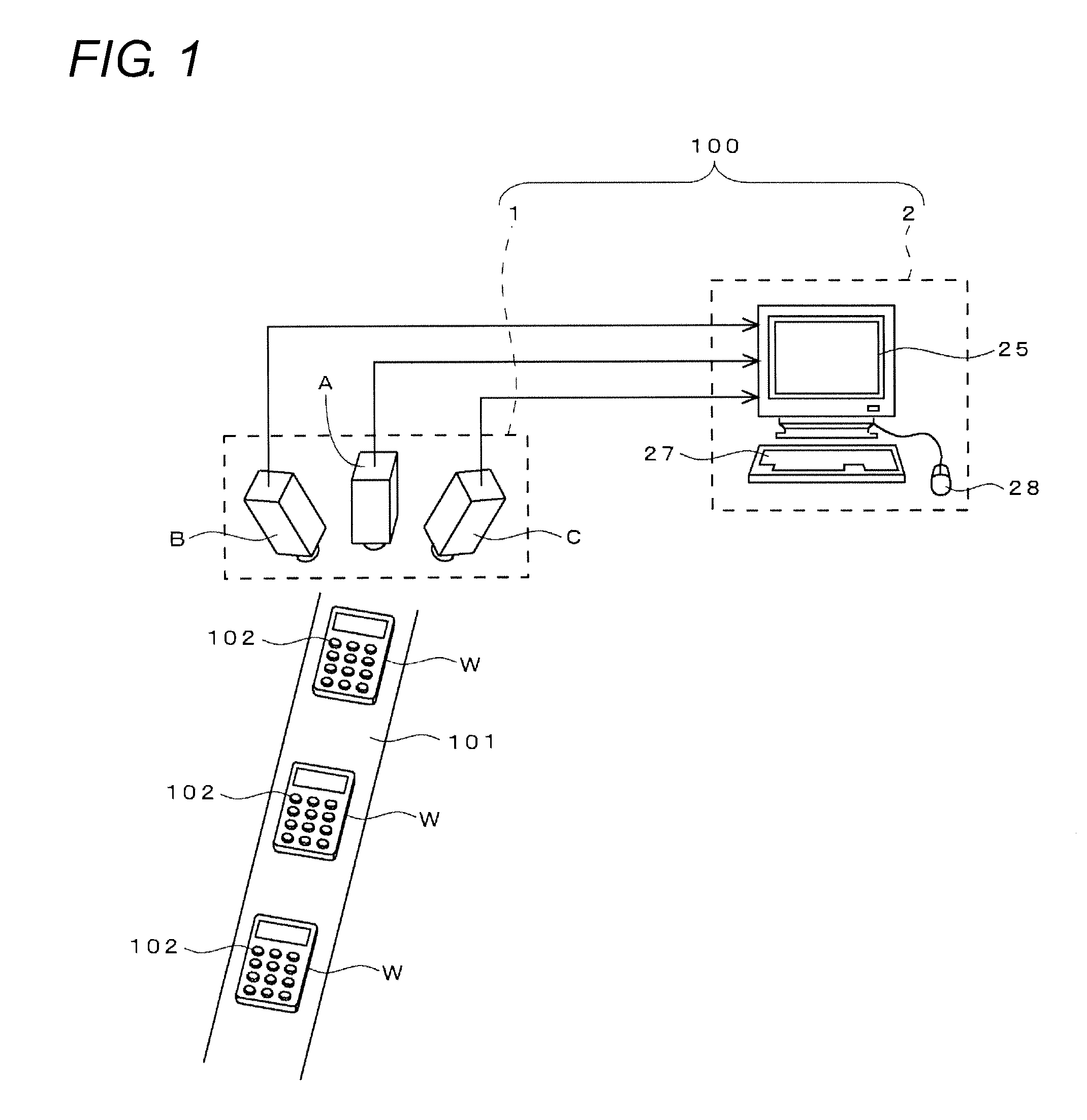

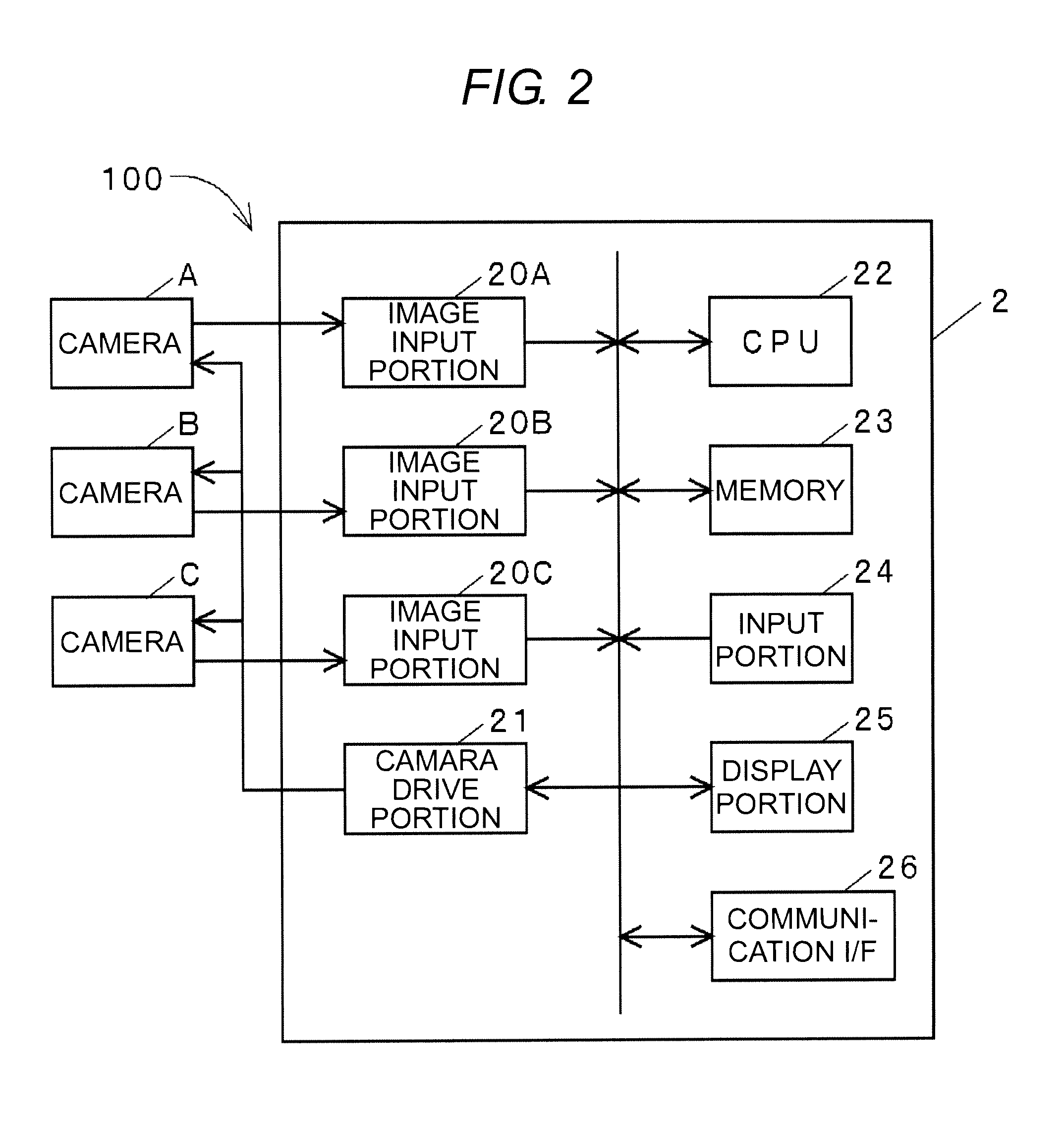

Camera calibration device and method, and computer system

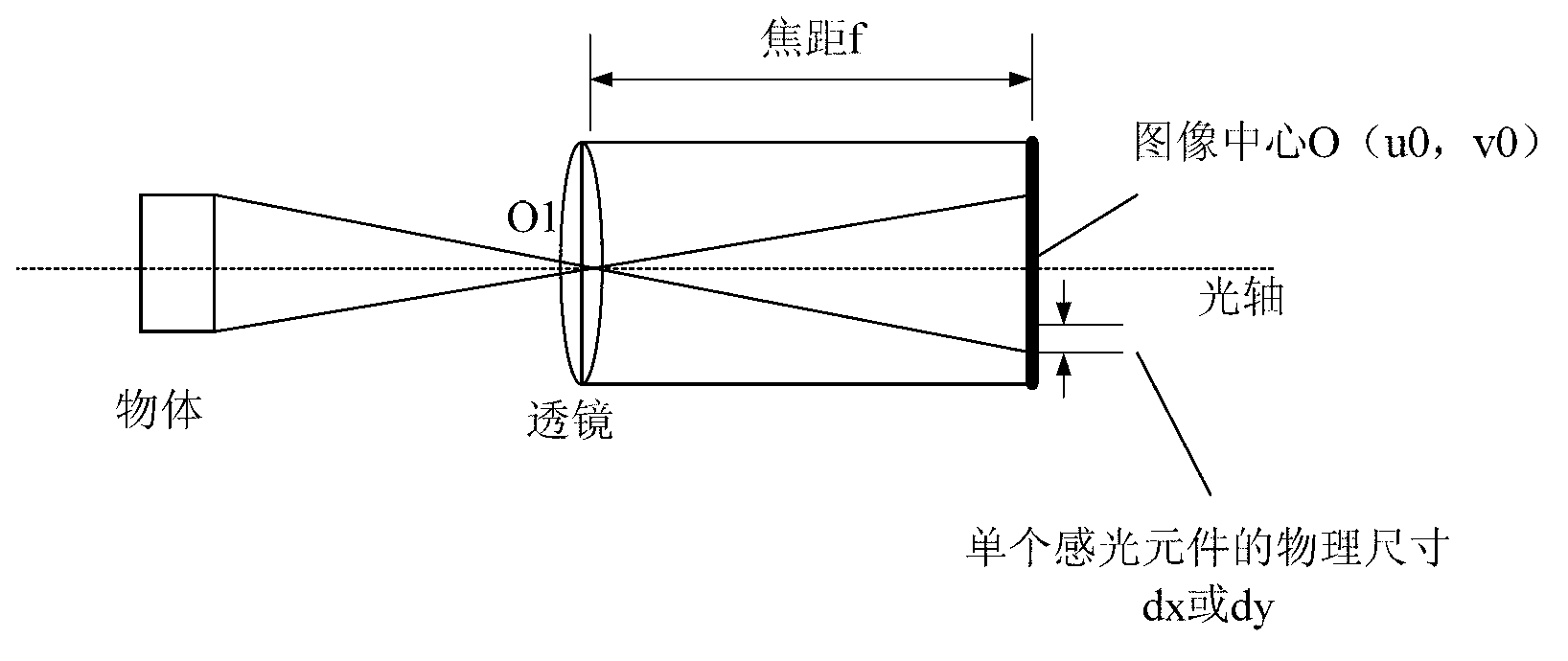

Inactive · US20050185049A1 · Tags: Easy Calibration; Easy to limit; Image analysis; Optical rangefinders; Hat matrix; Camera lens

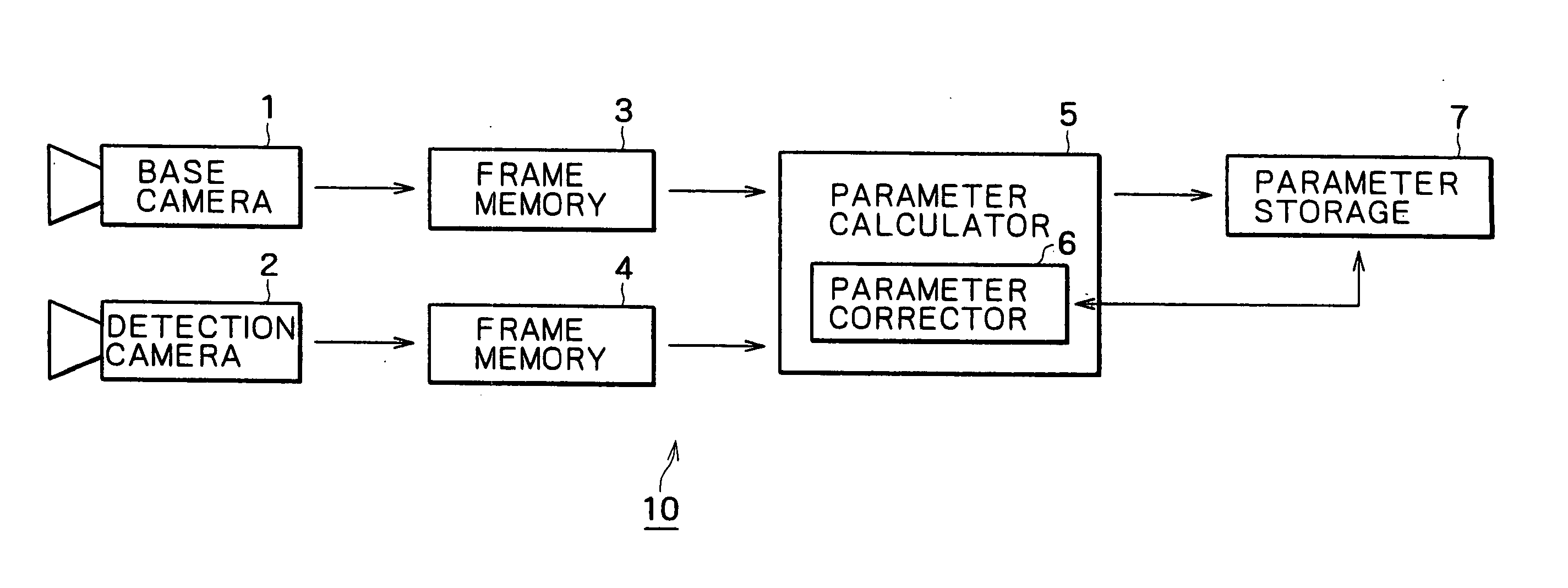

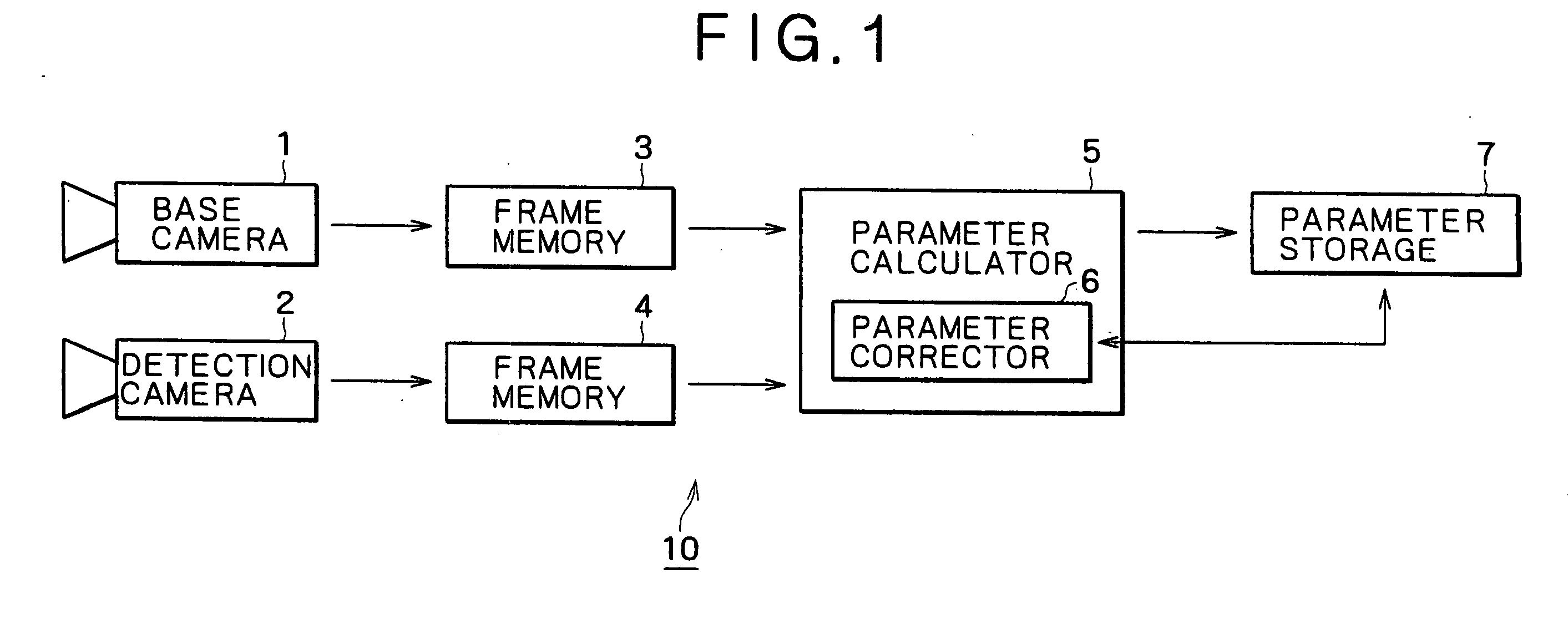

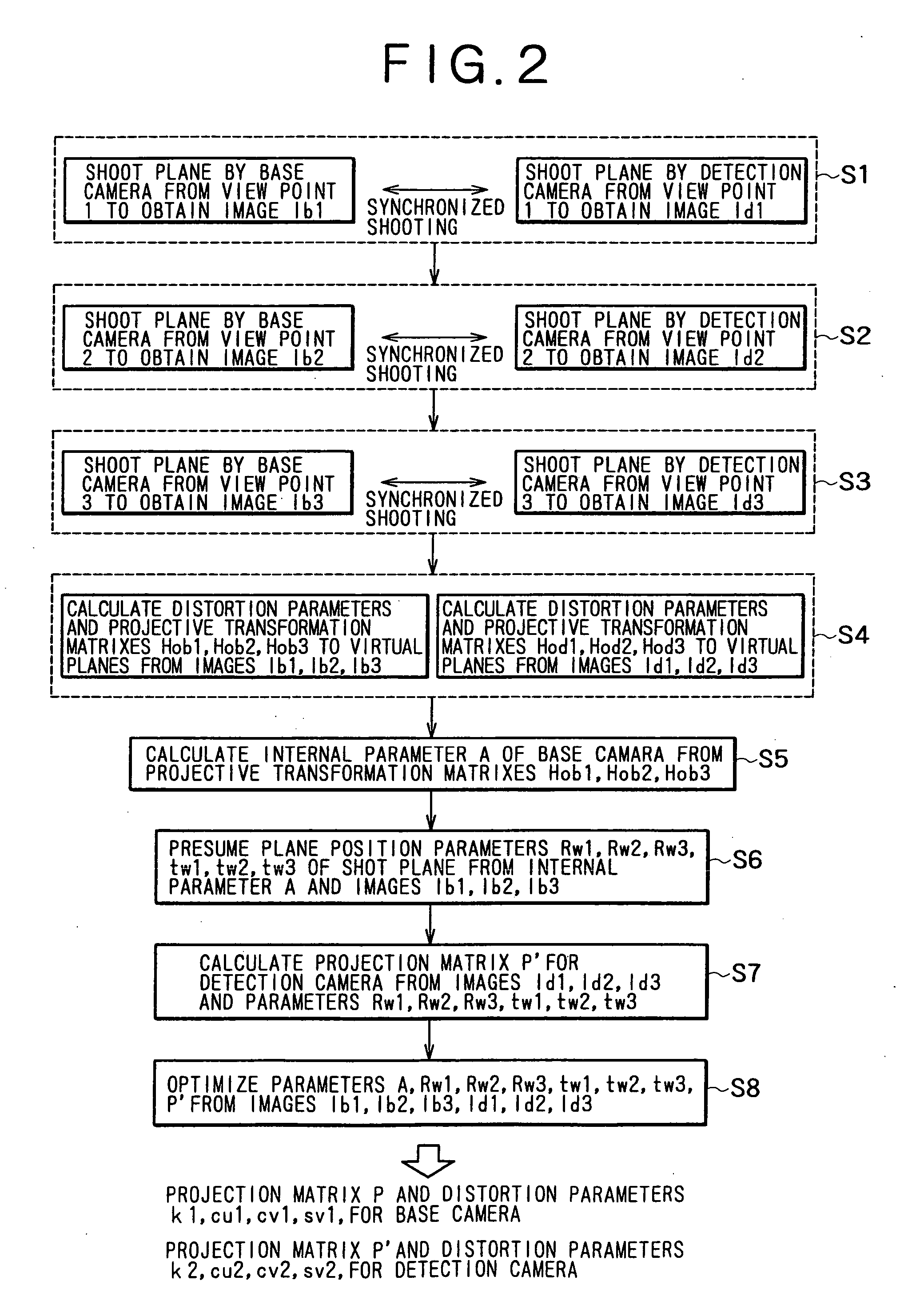

A camera calibration device capable of simply calibrating a stereo system consisting of a base camera and a detection camera. First, the distortion parameters of the two cameras needed for distance measurement are estimated from images obtained by shooting a patterned object plane with the base camera and the reference camera from three or more viewpoints, without any spatial positional restriction, and projective transformation matrices for projecting the images onto predetermined virtual planes are calculated. The internal parameters of the base camera are then calculated from the projective transformation matrices for the images obtained from the base camera. Next, the position of the photographed plane is estimated from the internal parameters of the base camera and the images obtained from it, and projection matrices for the detection camera are calculated from the plane position parameters and the images obtained from the detection camera. With this device, calibration can be carried out simply and stably without any dedicated apparatus.

Owner:SONY CORP

Resolution Scalable View Projection

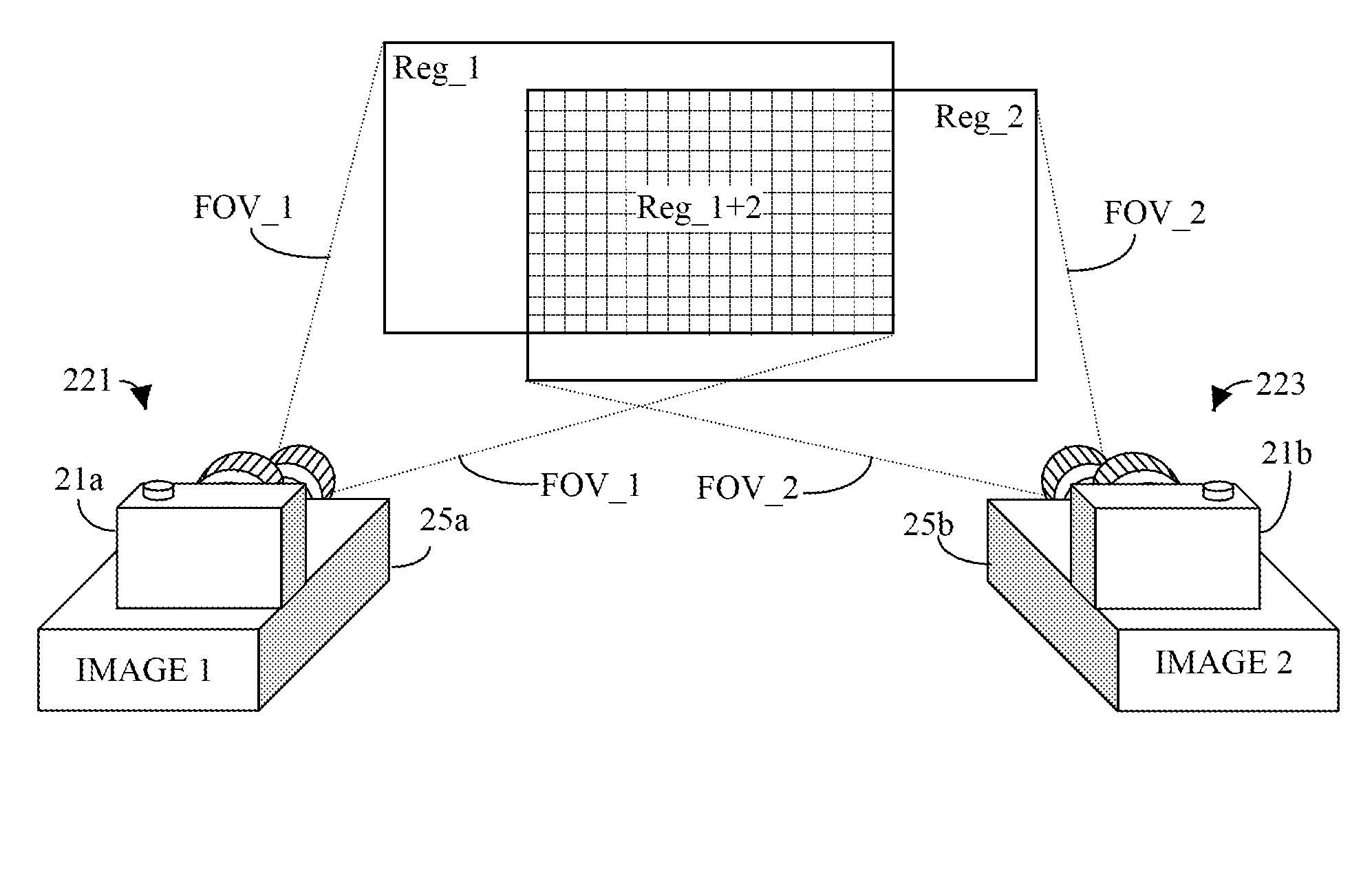

Inactive · US20100245684A1 · Tags: Simplified generation; Easy to use; Television system details; Television system scanning details; Projection image; Image resolution

A projection system uses a transformation matrix to transform a projection image p so as to compensate for surface irregularities on a projection surface. The transformation matrix makes use of properties of light transport relating a projector to a camera. If the resolution of the camera is lower than that of the projector in the projection system, the transformation matrix will have holes where image data corresponding to a projector pixel have been lost. In this case, new image data are generated to fill in the holes.

Owner:SEIKO EPSON CORP

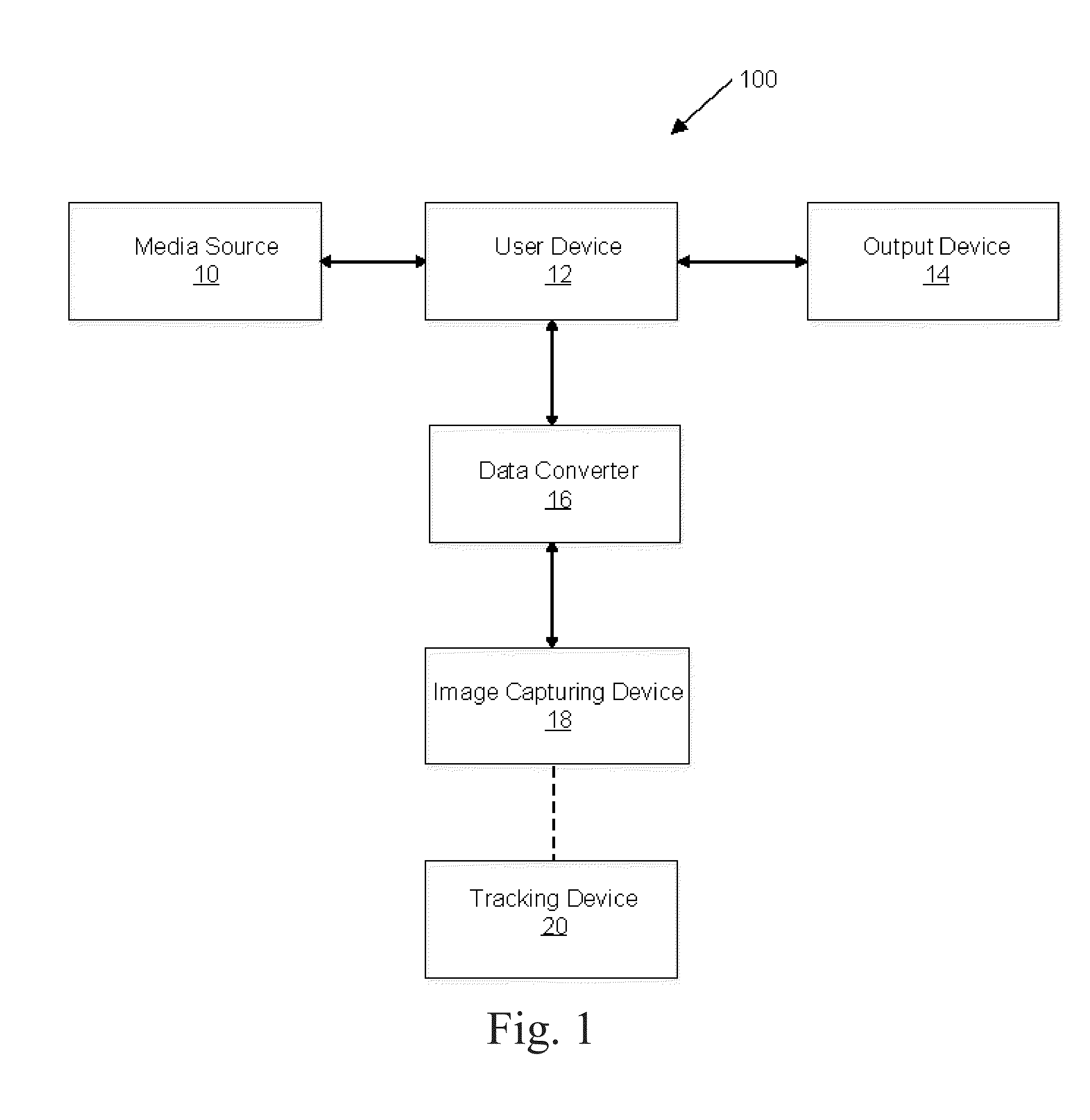

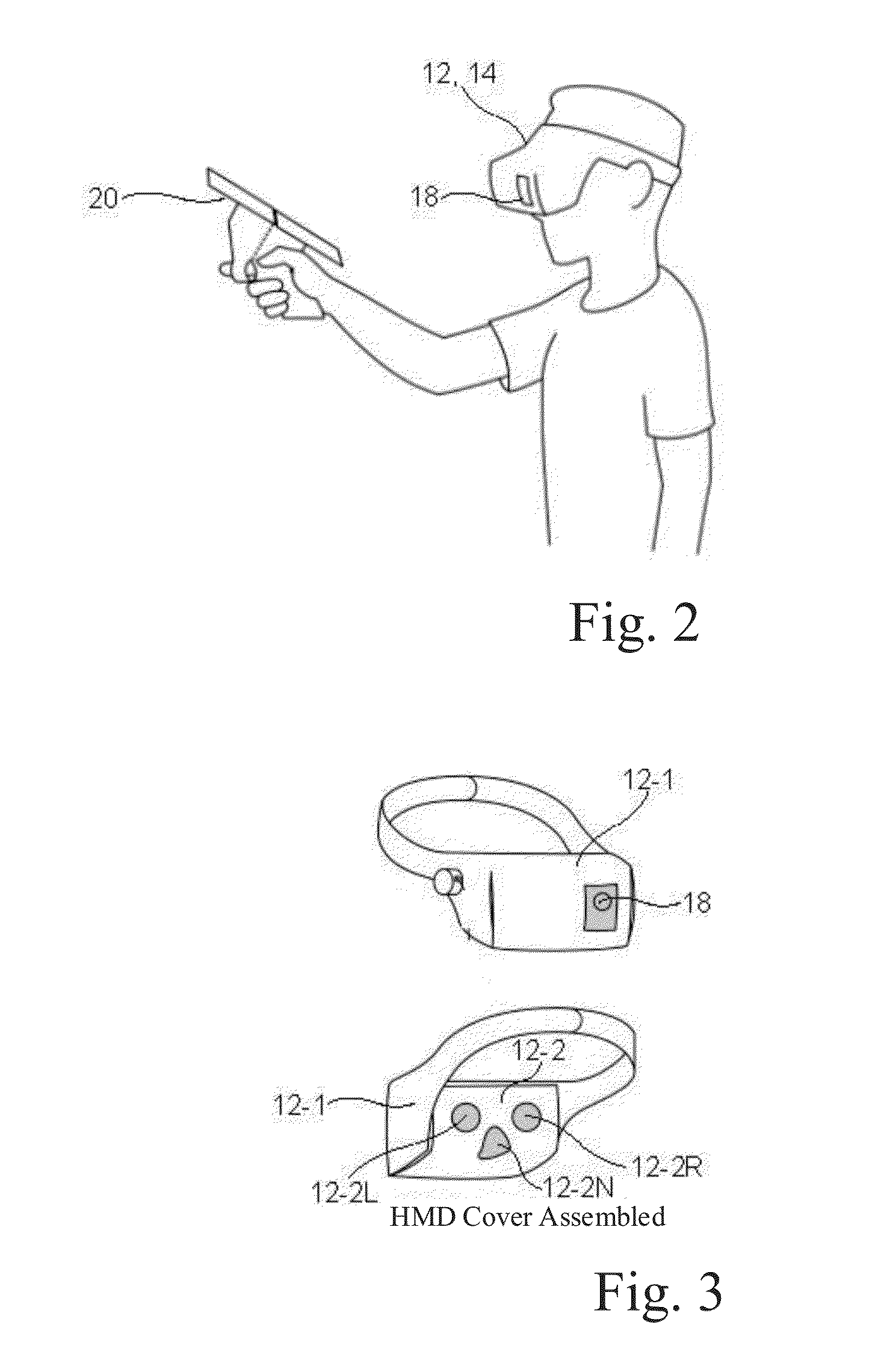

Virtual reality and augmented reality control with mobile devices

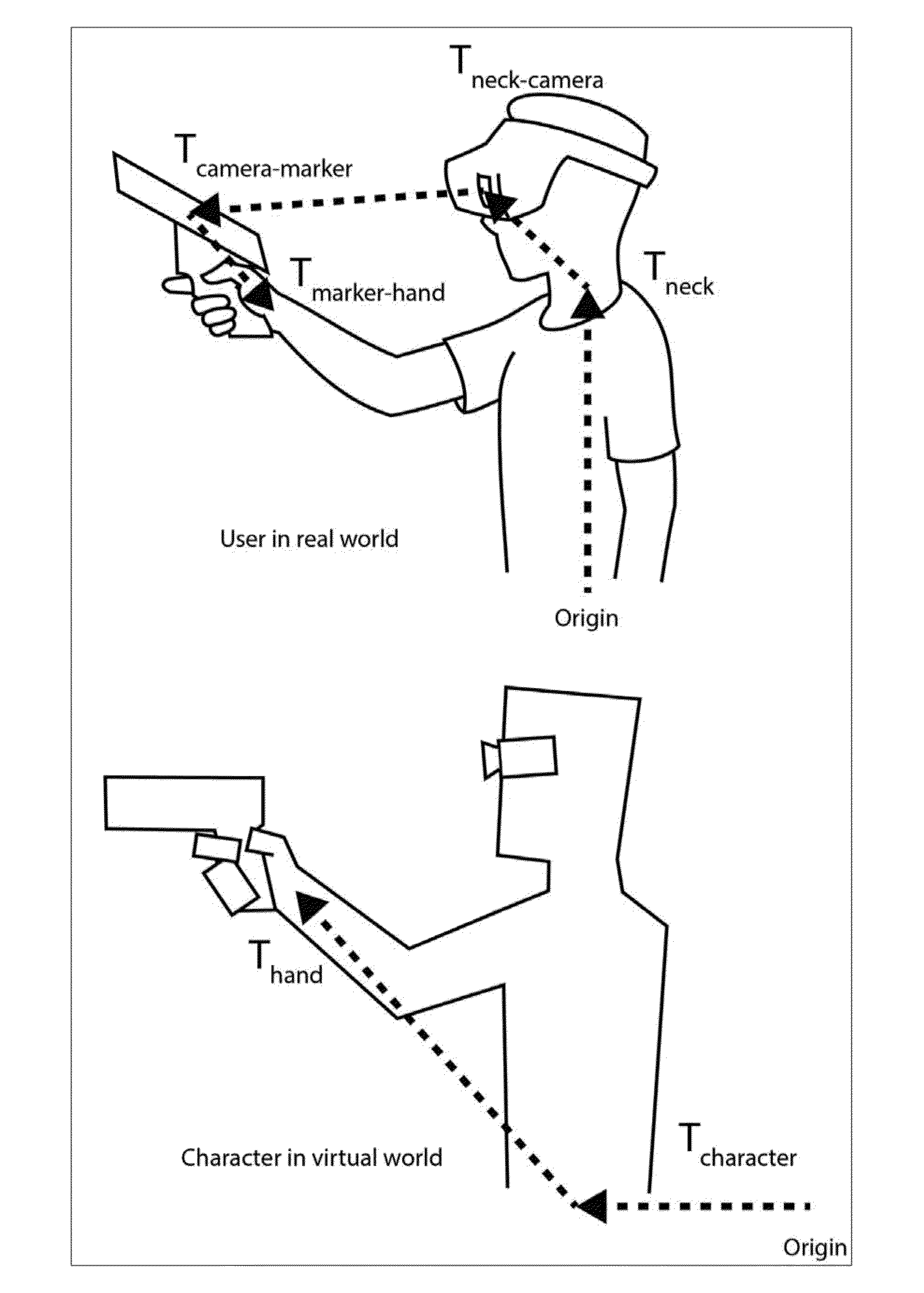

Inactive · US20160232715A1 · Tags: Input/output for user-computer interaction; Image enhancement; Display device; Eyewear

Systems and methods for generating an action in a virtual reality or augmented reality environment based on position or movement of a mobile device in the real world are disclosed. A particular embodiment includes: displaying an optical marker on a display device of a motion-tracking controller; receiving a set of reference data from the motion-tracking controller; receiving captured marker image data from an image capturing subsystem of an eyewear system; comparing reference marker image data with the captured marker image data, the reference marker image data corresponding to the optical marker; generating a transformation matrix using the reference marker image data and the captured marker image data, the transformation matrix corresponding to a position and orientation of the motion-tracking controller relative to the eyewear system; and generating an action in a virtual world, the action corresponding to the transformation matrix.

Owner:LEE FANGWEI

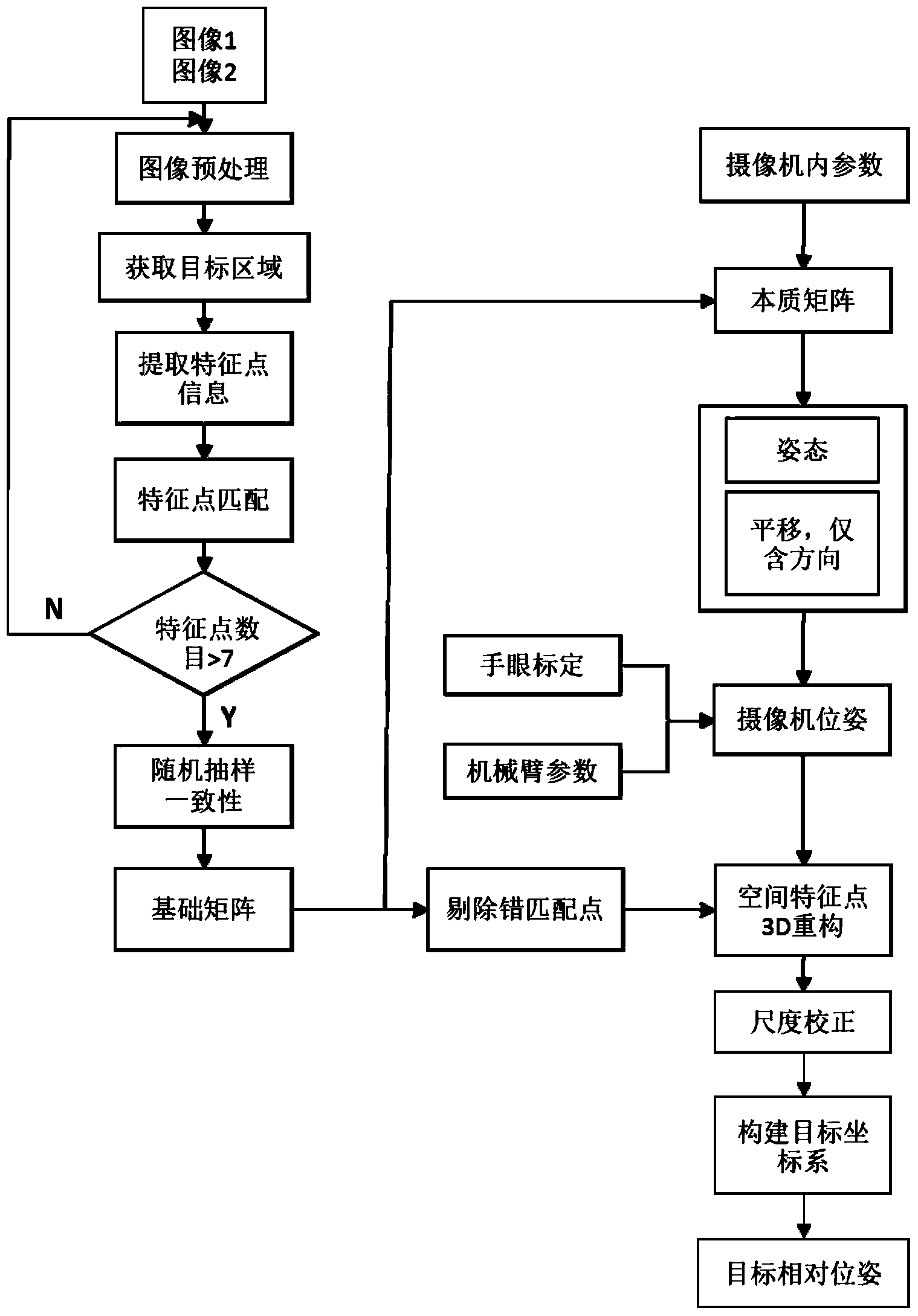

Dynamic target position and attitude measurement method based on monocular vision at tail end of mechanical arm

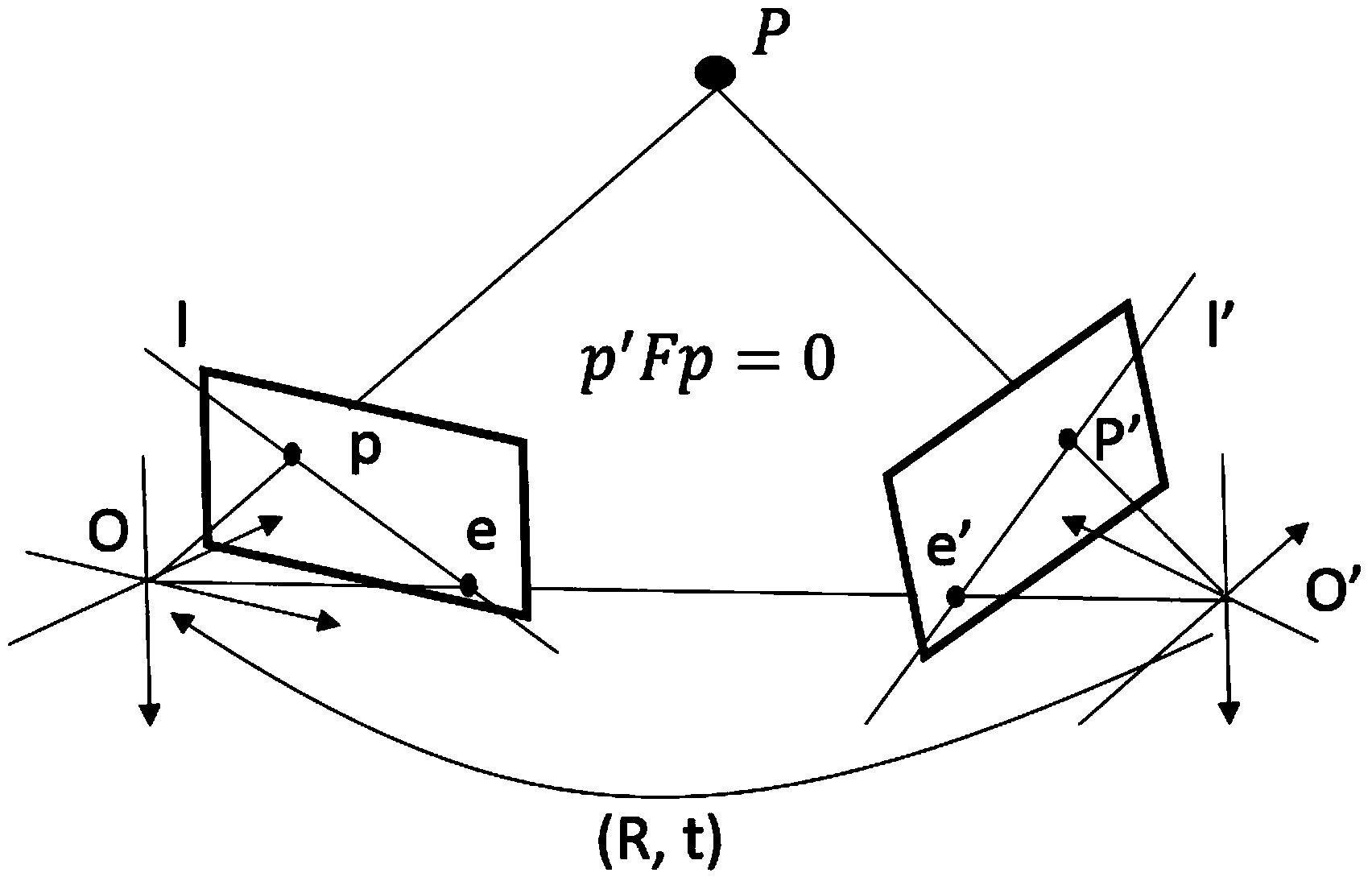

Active · CN103759716A · Tags: Simplified Calculation Process Method; Overcome deficiencies; Picture interpretation; Essential matrix; Feature point matching

The invention relates to a method for measuring the position and attitude of a dynamic target based on monocular vision at the end of a mechanical arm, and belongs to the field of vision measurement. The method comprises the following steps: first, camera calibration and hand-eye calibration are performed; then two pictures are taken with the camera, spatial feature points in the target regions of the pictures are extracted with a scale-invariant feature extraction method, and the feature points are matched; the fundamental matrix between the two pictures is solved using the epipolar geometry constraint to obtain the essential matrix, from which the rotation transformation matrix and the translation transformation matrix of the camera are solved; three-dimensional reconstruction and scale correction are then performed on the feature points; and finally, a target coordinate system is constructed from the reconstructed feature points to obtain the position and attitude of the target relative to the camera. Because the method uses monocular vision, the calculation process is simplified, and hand-eye calibration simplifies the elimination of erroneous solutions when measuring the camera's position and attitude. The method is suitable for measuring the relative positions and attitudes of stationary and low-dynamic targets.

Owner:TSINGHUA UNIV

Camera calibration device and method, and computer system

Inactive · US6985175B2 · Tags: Simply carrying out camera calibration; Image analysis; Optical rangefinders; Hat matrix; Camera lens

A camera calibration device capable of simply calibrating a stereo system consisting of a base camera and detection camera. First, the distortion parameters of the two cameras needed for distance measurement are estimated from images obtained by shooting a patterned object plane with the base camera and the reference camera from three or more viewpoints, without any spatial positional restriction, and projective transformation matrices for projecting the images onto predetermined virtual planes are calculated. The internal parameters of the base camera are then calculated from the projective transformation matrices for the images obtained from the base camera. Next, the position of the photographed plane is estimated from the internal parameters of the base camera and the images obtained from it, and projection matrices for the detection camera are calculated from the plane position parameters and the images obtained from the detection camera. With this device, calibration can be carried out simply and stably without any dedicated apparatus.

Owner:SONY CORP

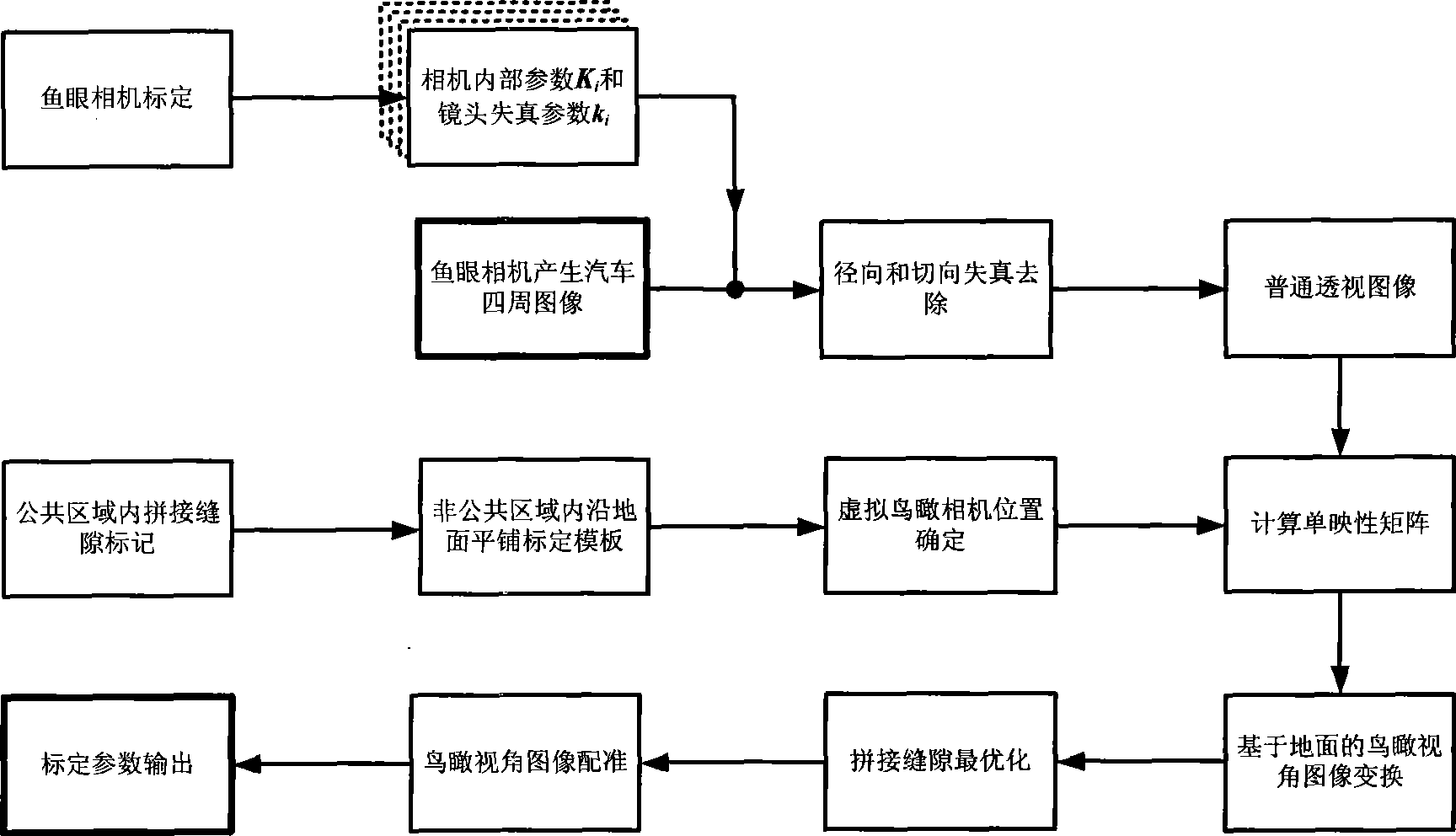

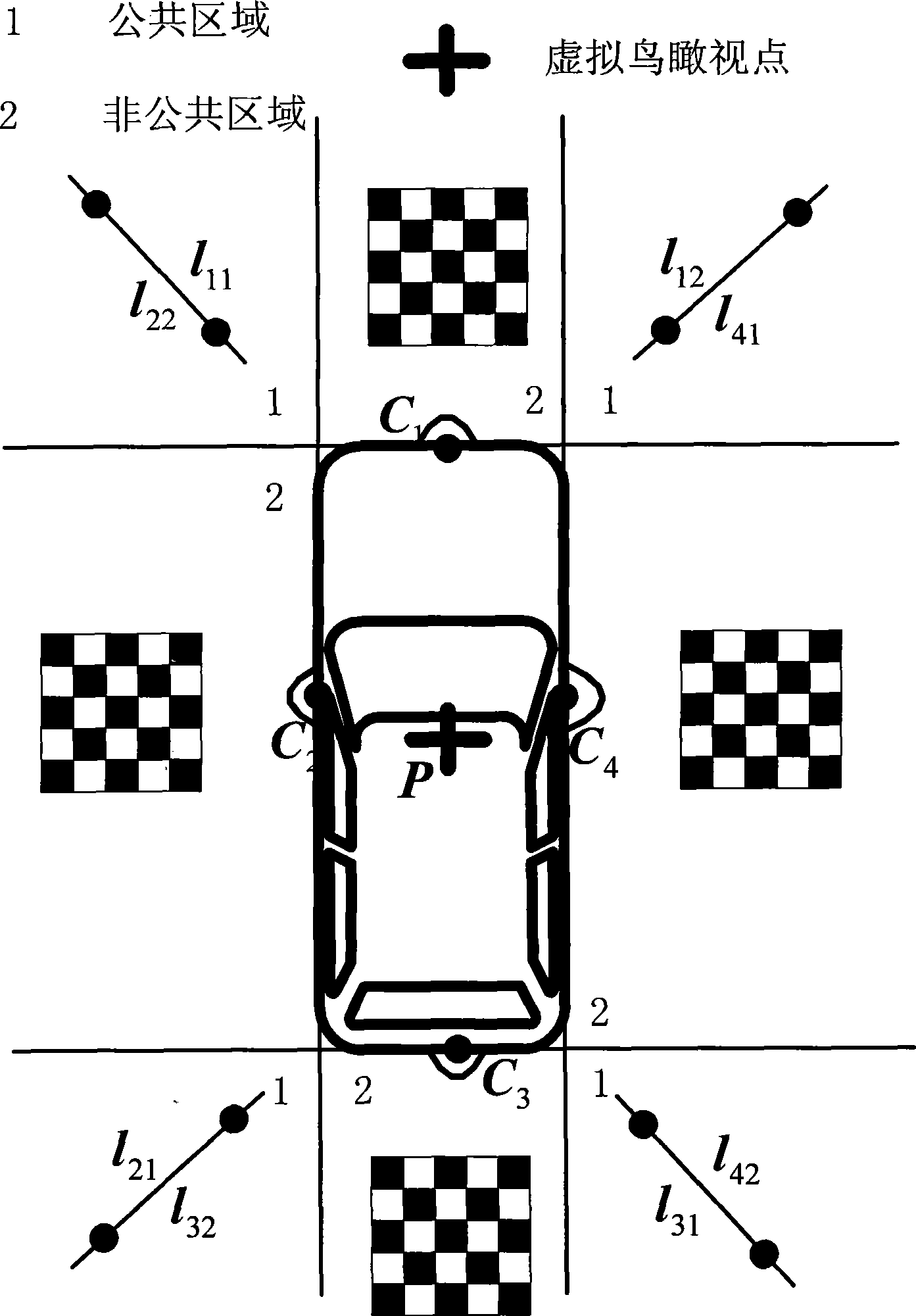

Calibration method for a panoramic-view vision-assisted parking system

Inactive · CN101425181A · Tags: Good panoramic bird's eye view effect; Simple method; Image analysis; System integration; View camera

The invention discloses a calibration method for a panoramic vision-assisted parking system, which uses images from at least two wide-angle fisheye cameras mounted around the periphery of a car to generate a virtual bird's-eye-view image as seen from a certain height above the car. The method comprises the following steps: the model parameters of the wide-angle fisheye cameras are calculated; a straight line in the common (overlapping) field of view is chosen as the stitching seam; the position of the world coordinate system in the non-overlapping field of view is determined; the position of the virtual bird's-eye-view camera is fixed; a homography (single-mapping) transformation matrix based on the ground plane is calculated; the seam positions are globally optimized; and the position parameters of all images relative to the virtual bird's-eye-view camera are calculated. The method has low equipment requirements and a simple procedure, does not depend on the relative positions of the cameras or on accurate attitude parameters, and reduces the complexity of system integration; a single calibration provides the parameters needed during real-time operation; and the method yields a good panoramic bird's-eye view of the car's surroundings.

Owner:ZHEJIANG UNIV

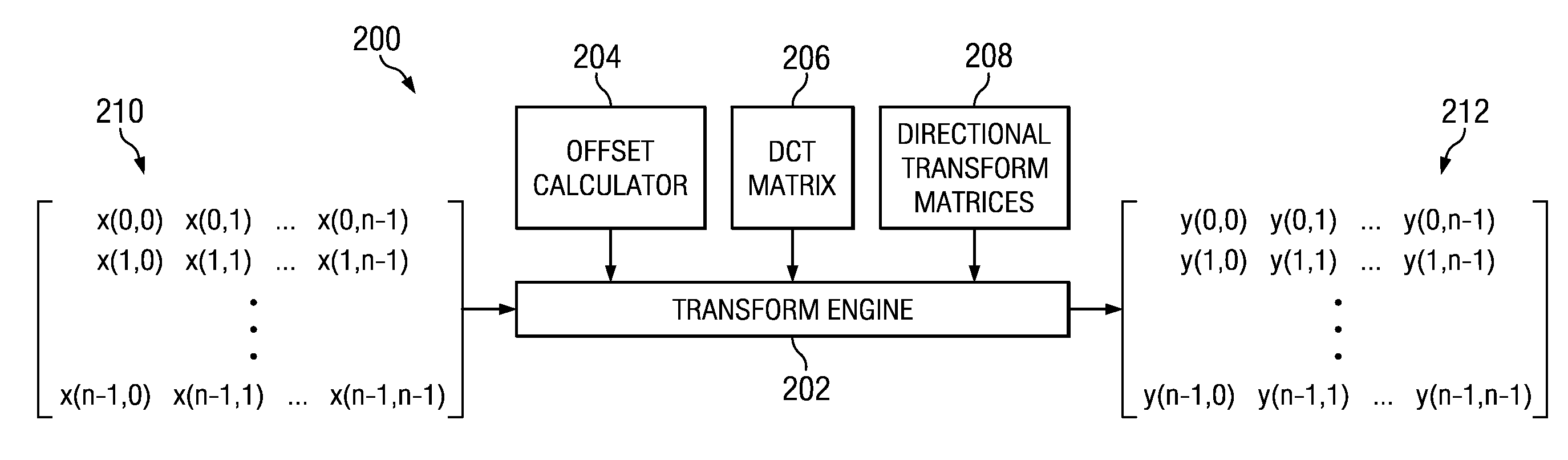

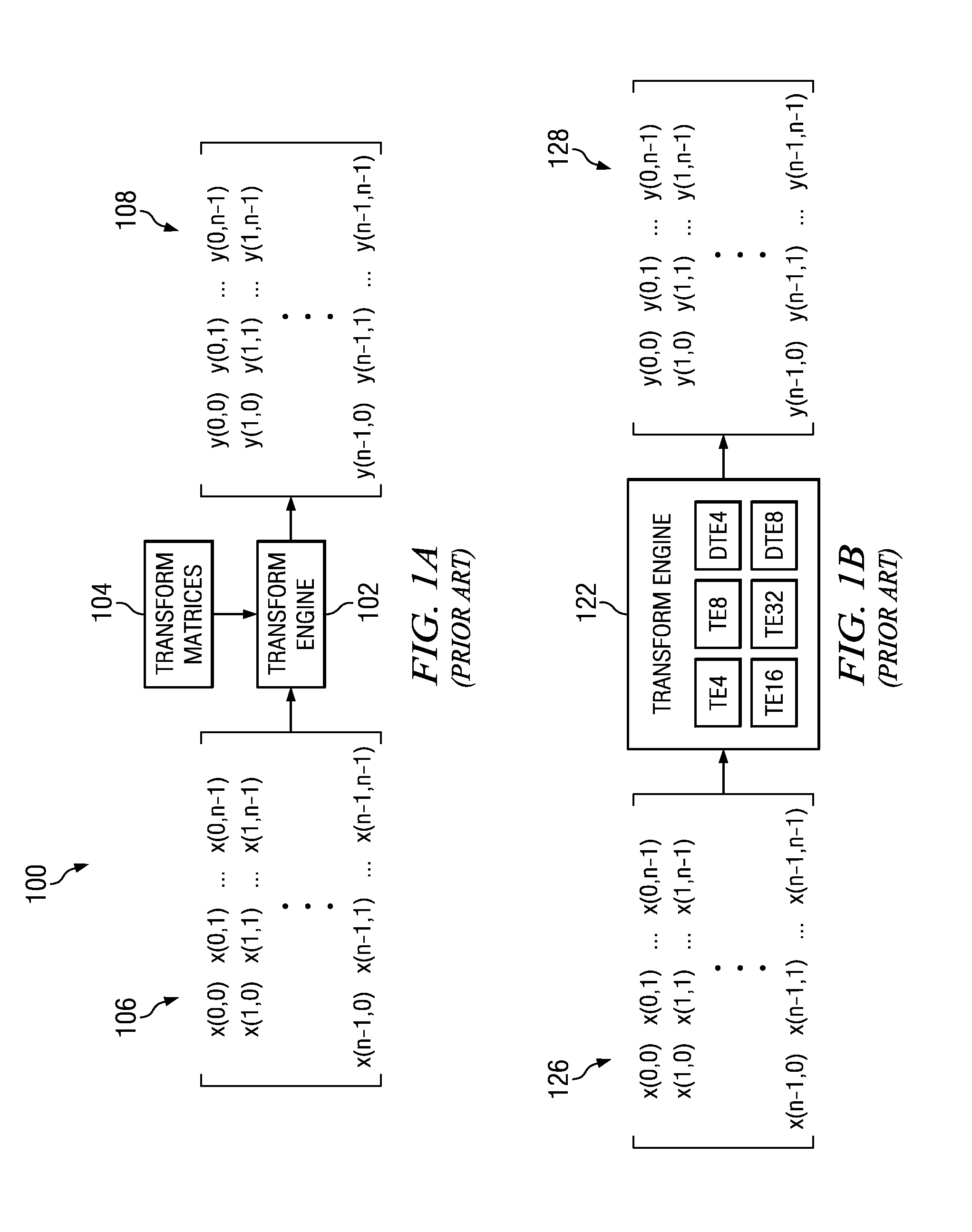

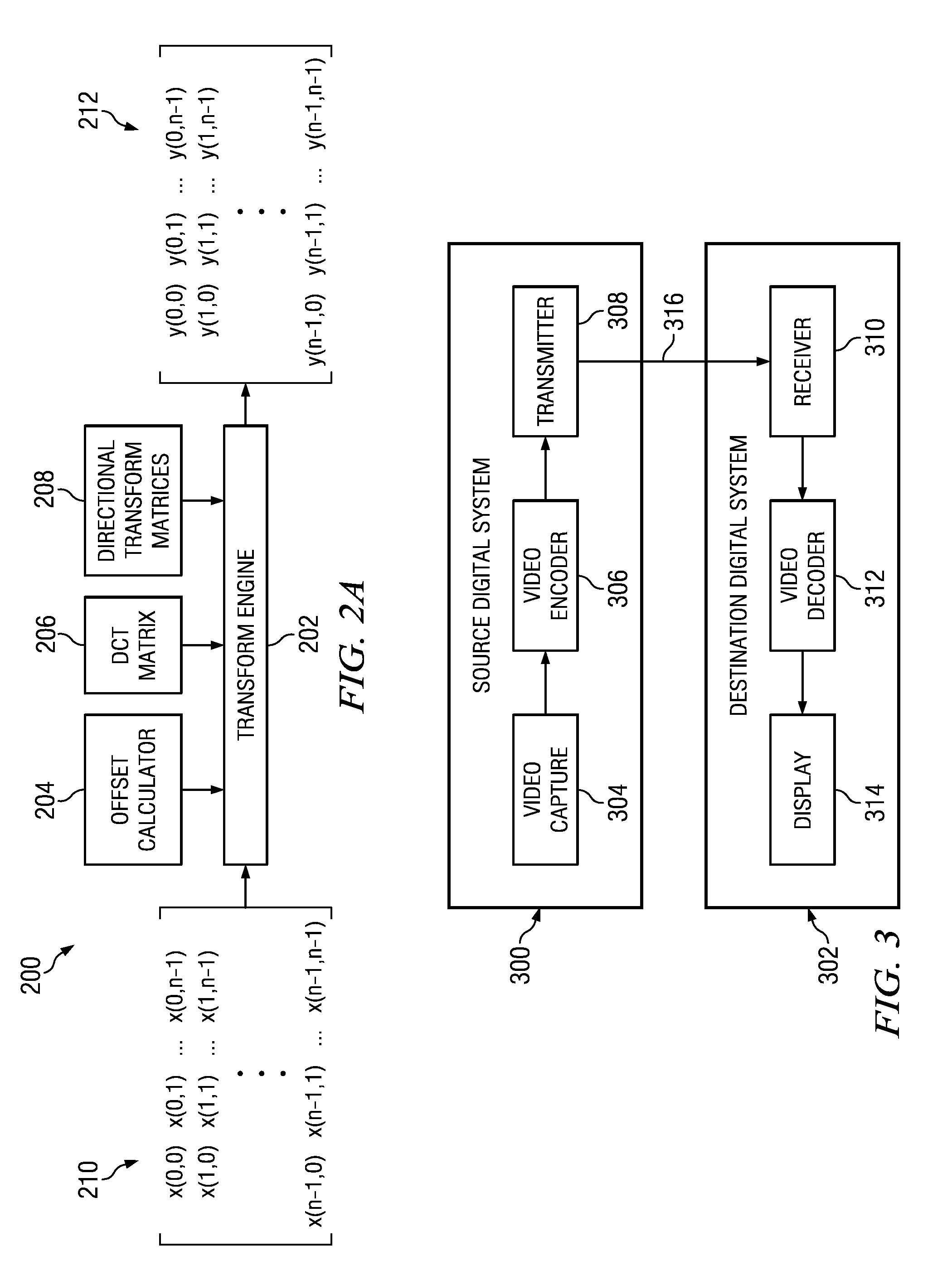

Transform and Quantization Architecture for Video Coding and Decoding

Active · US20120082212A1 · Tags: Color television with pulse code modulation; Color television with bandwidth reduction; Computer graphics (images); Video encoding

A method of encoding a video stream in a video encoder is provided that includes computing an offset into a transform matrix based on a transform block size, wherein a size of the transform matrix is larger than the transform block size, and wherein the transform matrix is one selected from a group consisting of a DCT transform matrix and an IDCT transform matrix, and transforming a residual block to generate a DCT coefficient block, wherein the offset is used to select elements of rows and columns of a DCT submatrix of the transform block size from the transform matrix.

Owner:TEXAS INSTR INC
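
The property the Texas Instruments abstract relies on, that a smaller DCT matrix sits inside a larger one, can be checked numerically. With the unnormalized DCT-II basis C_N[k][n] = cos(π(2n+1)k / 2N), the N-point matrix equals every (M/N)-th row and the first N columns of the M-point matrix. This sketch uses floating-point cosines; the row/column selection below is an illustrative assumption, not the patent's exact offset addressing, and integer codec transforms apply the same idea to scaled integer entries.

```python
import math


def dct_basis(size):
    """Unnormalized DCT-II basis: entry (k, n) is cos(pi * (2n + 1) * k / (2 * size))."""
    return [[math.cos(math.pi * (2 * n + 1) * k / (2 * size)) for n in range(size)]
            for k in range(size)]


def sub_transform(big, block_size):
    """Select the block_size-point basis from a larger one.

    Row offset: every (M / N)-th row; column offset: the first N columns.
    """
    step = len(big) // block_size
    return [big[k * step][:block_size] for k in range(block_size)]


def max_abs_diff(A, B):
    return max(abs(a - b) for ra, rb in zip(A, B) for a, b in zip(ra, rb))


big = dct_basis(32)   # one stored 32 x 32 matrix
for n in (4, 8, 16):
    print(n, max_abs_diff(sub_transform(big, n), dct_basis(n)))  # tiny rounding differences
```

The orthonormal DCT differs from this basis only by a per-size scale factor (√(2/N), or √(1/N) for k = 0), which can be folded into quantization, so storing one large matrix serves every block size.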

Three-dimensional vision sensor

Inactive · US20100232681A1 · Tags: Avoid performance; Cancel noise; Image enhancement; Image analysis; Transformation parameter; Three dimensional measurement

An object of the present invention is to enable height recognition processing in which the height of an arbitrary plane is set to zero for convenience of the recognition processing. A parameter for three-dimensional measurement is calculated and registered through calibration; thereafter, a stereo camera captures an image of the plane that should be recognized as having zero height in actual recognition processing. Three-dimensional measurement using the registered parameter is then performed on characteristic patterns (marks m1, m2 and m3) included in this plane. Three or more three-dimensional coordinates are obtained through this measurement, and an equation expressing the plane containing these coordinates is derived. Based on the positional relationship between the plane defined as having zero height through the calibration and the plane expressed by this equation, a transformation parameter (a homogeneous transformation matrix) for displacing points in the former plane into the latter plane is determined, and the registered parameter is updated using the transformation parameter.

Owner:OMRON CORP
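
The geometric core of the OMRON method can be sketched by building, from three measured marks, a homogeneous transformation matrix that takes world points into a frame whose z = 0 plane passes through the marks, so that the z-coordinate becomes the height above the chosen plane. The choice of m1 as origin and m1→m2 as x-axis is a hypothetical convention, and the patent's update of the registered calibration parameter is not shown.

```python
import math


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def normalize(v):
    length = math.sqrt(dot(v, v))
    return [c / length for c in v]


def world_to_plane(m1, m2, m3):
    """4x4 homogeneous matrix taking world points into a frame whose z = 0 plane
    passes through the three marks (m1 is the origin, m1->m2 the x-axis)."""
    ex = normalize(sub(m2, m1))
    ez = normalize(cross(sub(m2, m1), sub(m3, m1)))
    ey = cross(ez, ex)
    # Rows are the new axes in world coordinates; the last column moves m1 to the origin.
    return [ex + [-dot(ex, m1)], ey + [-dot(ey, m1)], ez + [-dot(ez, m1)],
            [0.0, 0.0, 0.0, 1.0]]


def apply(T, p):
    v = list(p) + [1.0]
    return [sum(T[i][j] * v[j] for j in range(4)) for i in range(3)]


# Hypothetical marks measured on the tilted plane z = x.
T = world_to_plane([0.0, 0.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0])
print(apply(T, [2.0, 3.0, 2.0])[2])   # on the plane: height about 0
print(apply(T, [2.0, 3.0, 3.0])[2])   # 1/sqrt(2), about 0.7071
```

The third row of the matrix is exactly the plane equation n·p + d = 0 that the abstract mentions, and the sign of the height depends on the order in which the marks are listed.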

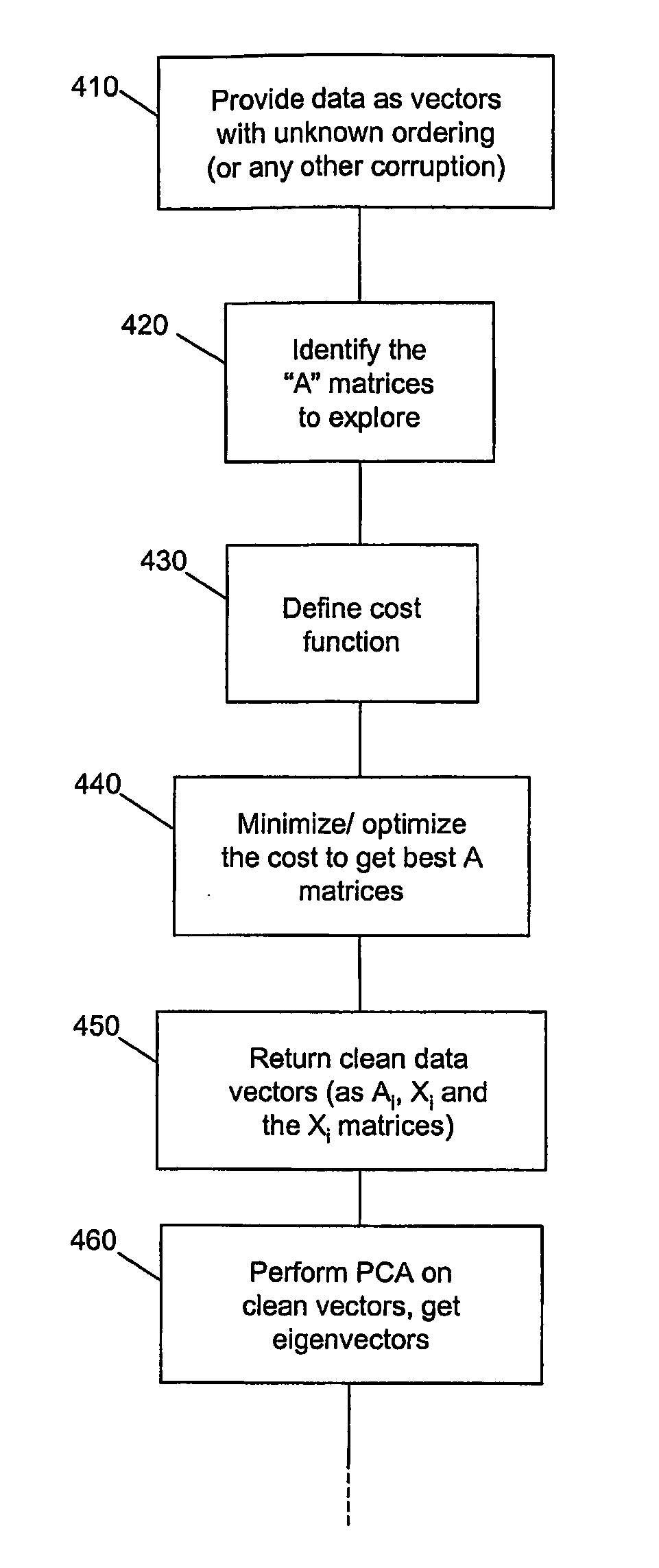

Ordered data compression system and methods

Methods and systems are provided for encoding, transmission and decoding of vectorized input data, for example, video or audio data. A convex invariance learning framework is established for processing input data of a given data type. Each input vector is associated with a variable transformation matrix that acts on the vector to invariantly permute the vector elements. Joint invariance and model learning is performed on a training set of invariantly transformed vectors over a constrained space of transformation matrices using maximum likelihood analysis. The maximum likelihood analysis reduces the data volume to a linear subspace volume in which the training data can be modeled by a reduced number of variables. Principal component analysis is used to identify a set of N eigenvectors that span the linear subspace. The set of N eigenvectors is used as a basis set to encode input data and to decode compressed data.

Owner:THE TRUSTEES OF COLUMBIA UNIV IN THE CITY OF NEW YORK

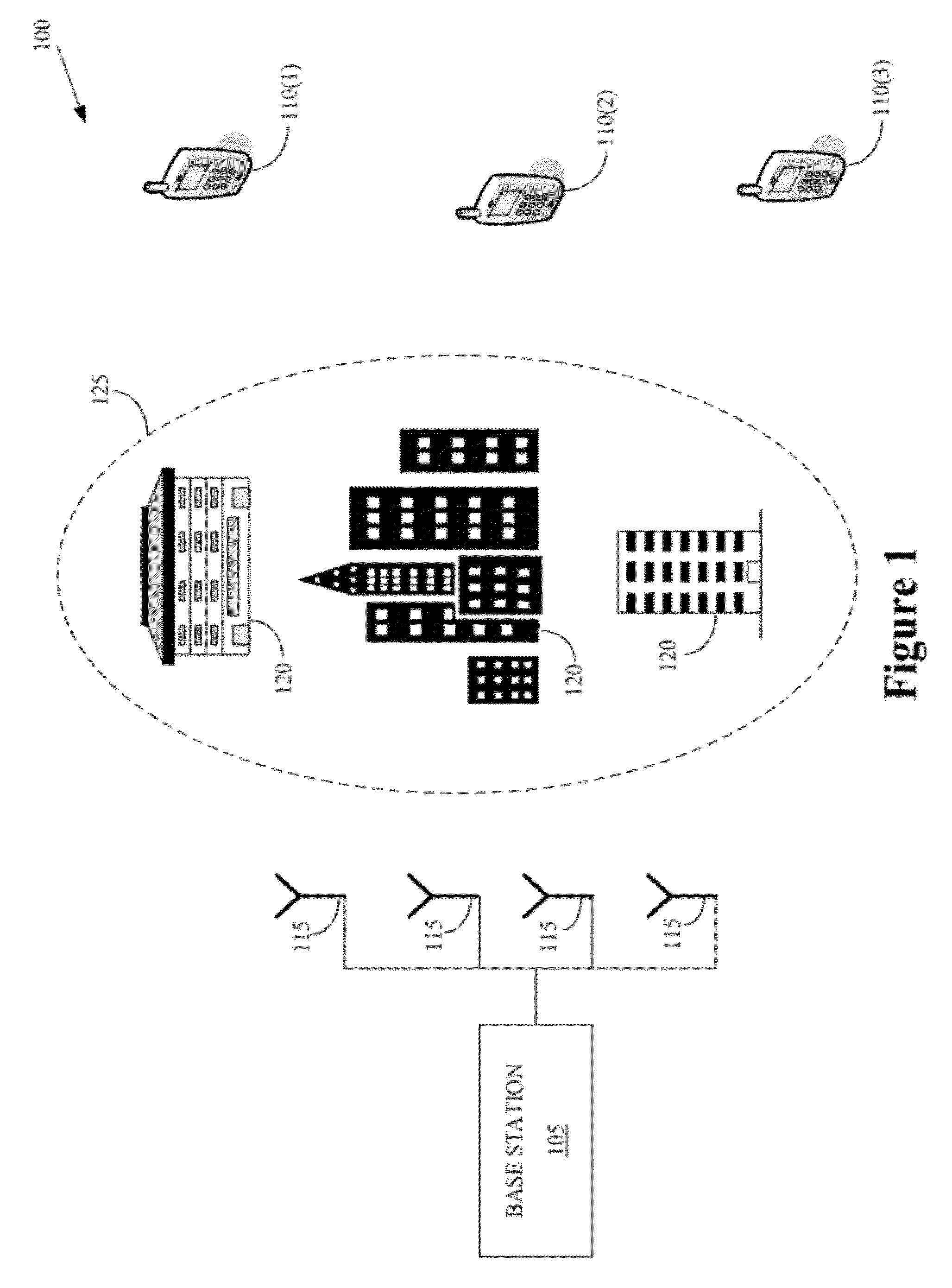

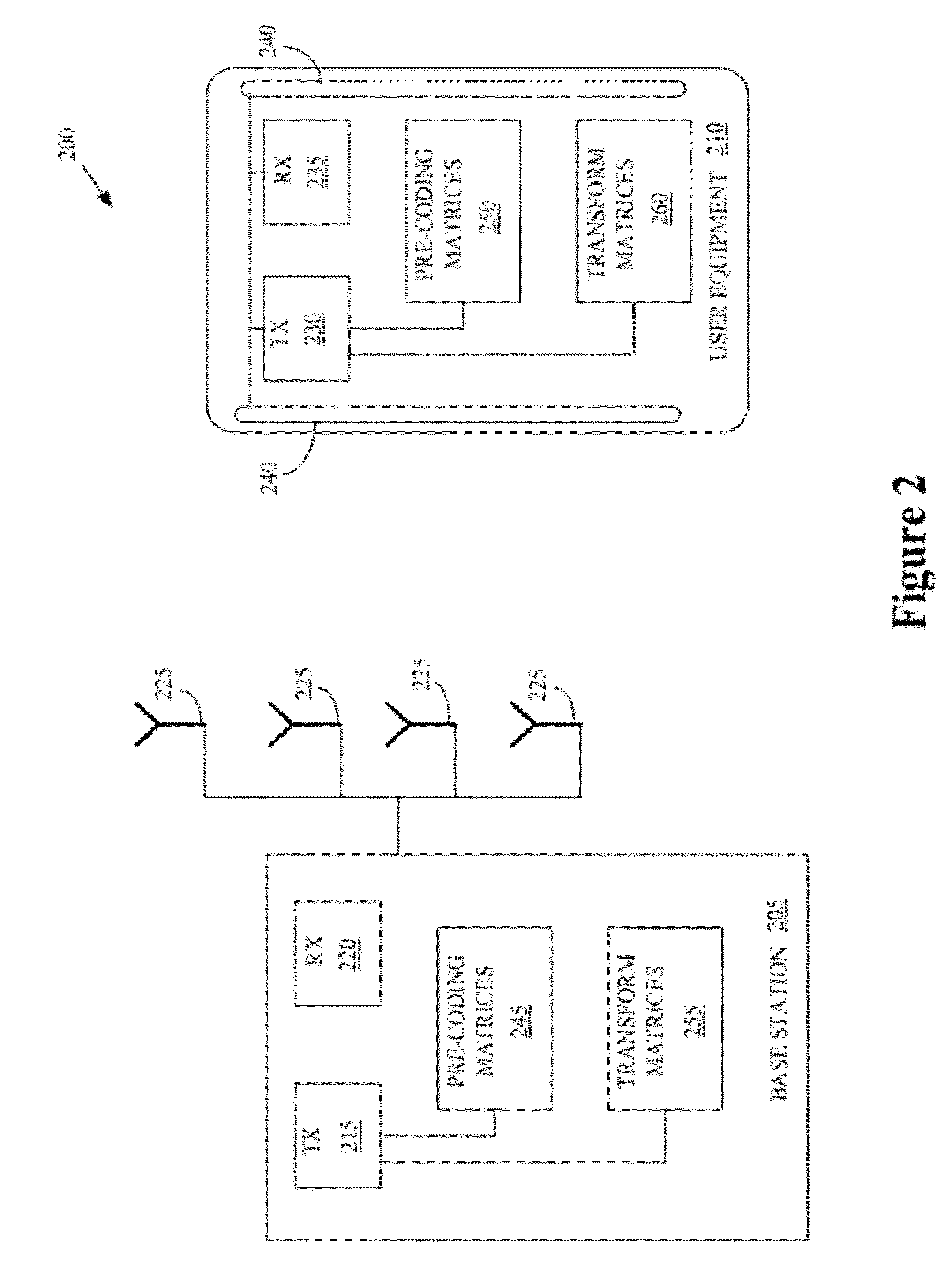

Method of transforming pre-coded signals for multiple-in-multiple-out wireless communication

Active · US20120281783A1 · Tags: Well formed; Modulated-carrier systems; Polarisation/directional diversity; Communications system; Air interface

The present invention provides a method of transforming pre-coded signals for transmission over an air interface in a MIMO wireless communication system. Embodiments of the method may include applying, at a transmitter, a transform matrix and a pre-coding matrix to a signal prior to transmitting the signal using a plurality of antennas deployed in a first antenna configuration. The pre-coding matrix is selected from a codebook defined for a second antenna configuration deployed in a non-scattering environment. The transform matrix is defined based on the first antenna configuration and a scattering environment associated with the transmitter.

Owner:WSOU INVESTMENTS LLC

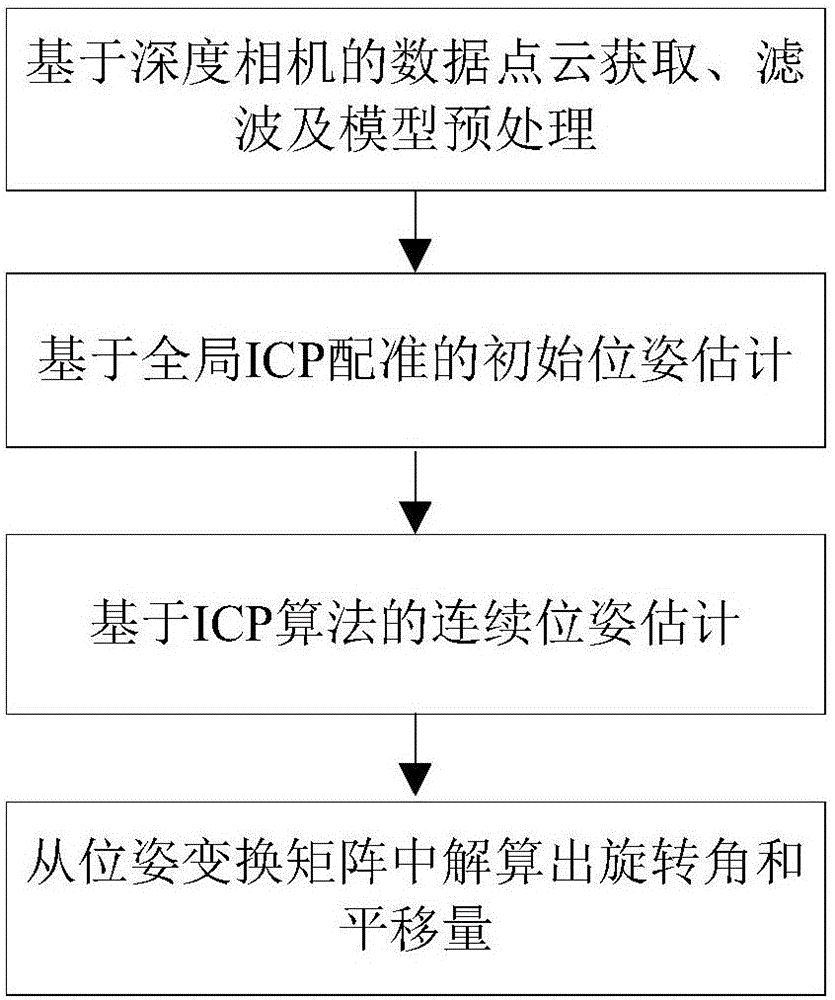

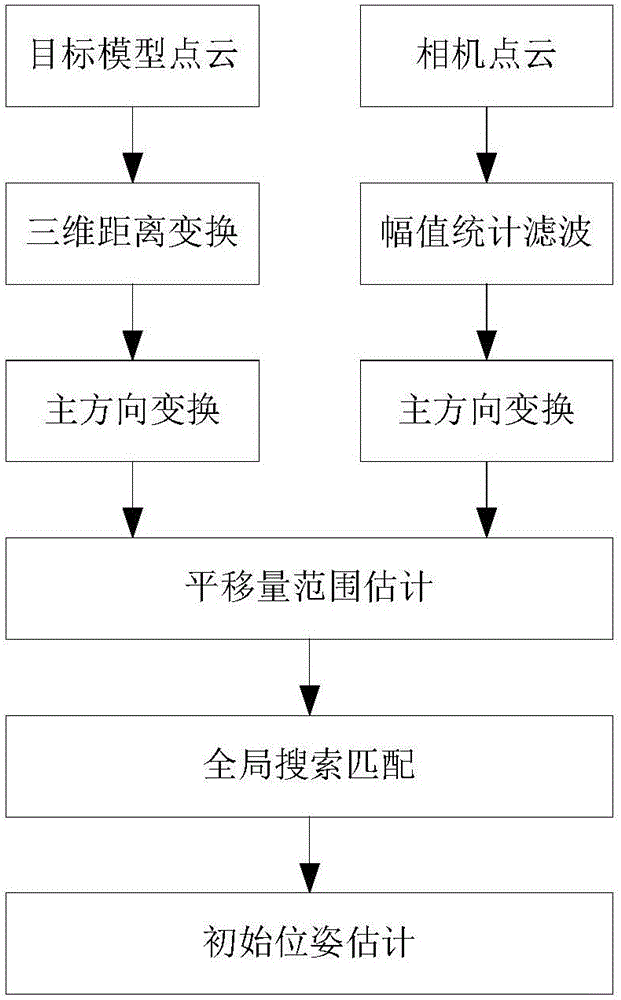

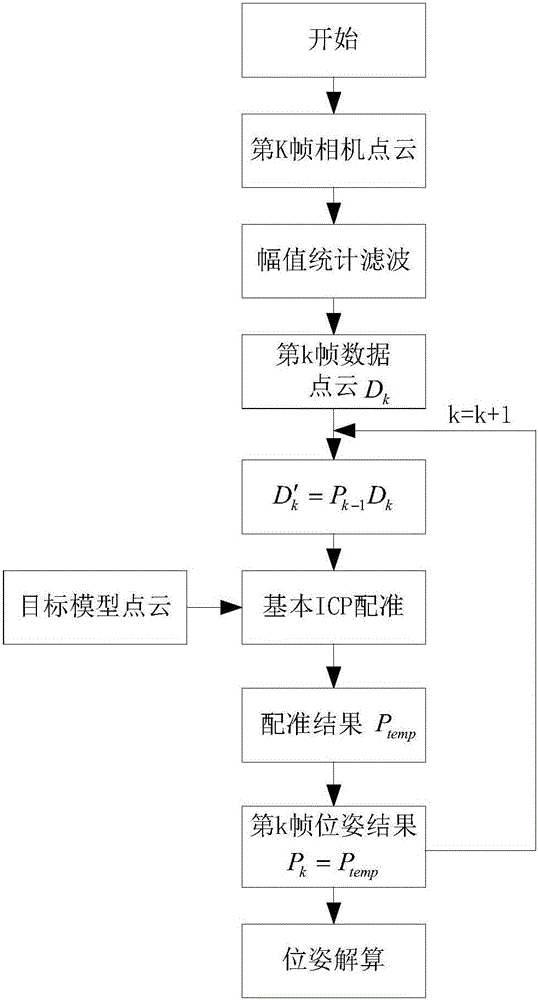

Spatial non-cooperative target pose estimation method based on model and point cloud global matching

The invention discloses a pose estimation method for spatial non-cooperative targets based on global matching between a model and a point cloud. The method comprises the following steps: a target scene point cloud is acquired with a depth camera and, after filtering, serves as the data point cloud to be registered, and a three-dimensional distance transform is applied to the target model point cloud; a deblurring main-direction transformation is applied to the initial data point cloud to be registered and to the target model point cloud, a translation domain is determined, and search and registration are carried out over the translation domain and the rotation domain using a global ICP algorithm to obtain the initial transformation matrix from the model coordinate system to the camera coordinate system, i.e., the initial pose of the target; the pose transformation matrix of the previous frame is applied to the data point cloud of the current frame, which is then registered with the model using the ICP algorithm to obtain the pose of the current frame; and a rotation angle and a translation amount are calculated from the pose transformation matrix. The method is robust to noise and can output the target pose in real time; it does not need to compute geometric features such as the normals and curvature of the data point cloud, and its registration is fast and precise.

Owner:NANJING UNIV OF SCI & TECH
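
Two small steps from the point-cloud method can be sketched directly: applying the previous frame's 4×4 pose matrix to the current cloud as an ICP initial guess, and reading a rotation angle and translation amount off a pose matrix. "Rotation angle" is assumed here to mean the axis-angle magnitude, recovered from the trace as θ = arccos((tr R − 1)/2); the patent may report Euler angles instead. The pose and points are hypothetical, and the ICP loop itself is not shown.

```python
import math


def apply_pose(T, points):
    """Transform 3-D points by a 4x4 homogeneous pose matrix."""
    return [[sum(T[i][j] * v for j, v in enumerate(list(p) + [1.0])) for i in range(3)]
            for p in points]


def rotation_angle_and_translation(T):
    """Axis-angle rotation magnitude (radians) and translation length of a rigid pose."""
    trace = T[0][0] + T[1][1] + T[2][2]
    # Clamp to guard acos against rounding just outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.acos(cos_angle), math.sqrt(T[0][3] ** 2 + T[1][3] ** 2 + T[2][3] ** 2)


# Hypothetical pose from the previous frame: 90 degrees about z, shift (3, 4, 0).
prev_pose = [[0.0, -1.0, 0.0, 3.0],
             [1.0, 0.0, 0.0, 4.0],
             [0.0, 0.0, 1.0, 0.0],
             [0.0, 0.0, 0.0, 1.0]]

# Pre-align the current frame's cloud with the previous pose before running ICP.
cloud = [(1.0, 0.0, 0.0), (0.0, 2.0, 1.0)]
print(apply_pose(prev_pose, cloud))      # [[3.0, 5.0, 0.0], [1.0, 4.0, 1.0]]

angle, shift = rotation_angle_and_translation(prev_pose)
print(math.degrees(angle), shift)        # approximately 90, and 5.0
```

Seeding ICP with the previous pose works because consecutive frames differ only slightly, which keeps ICP inside its narrow basin of convergence.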

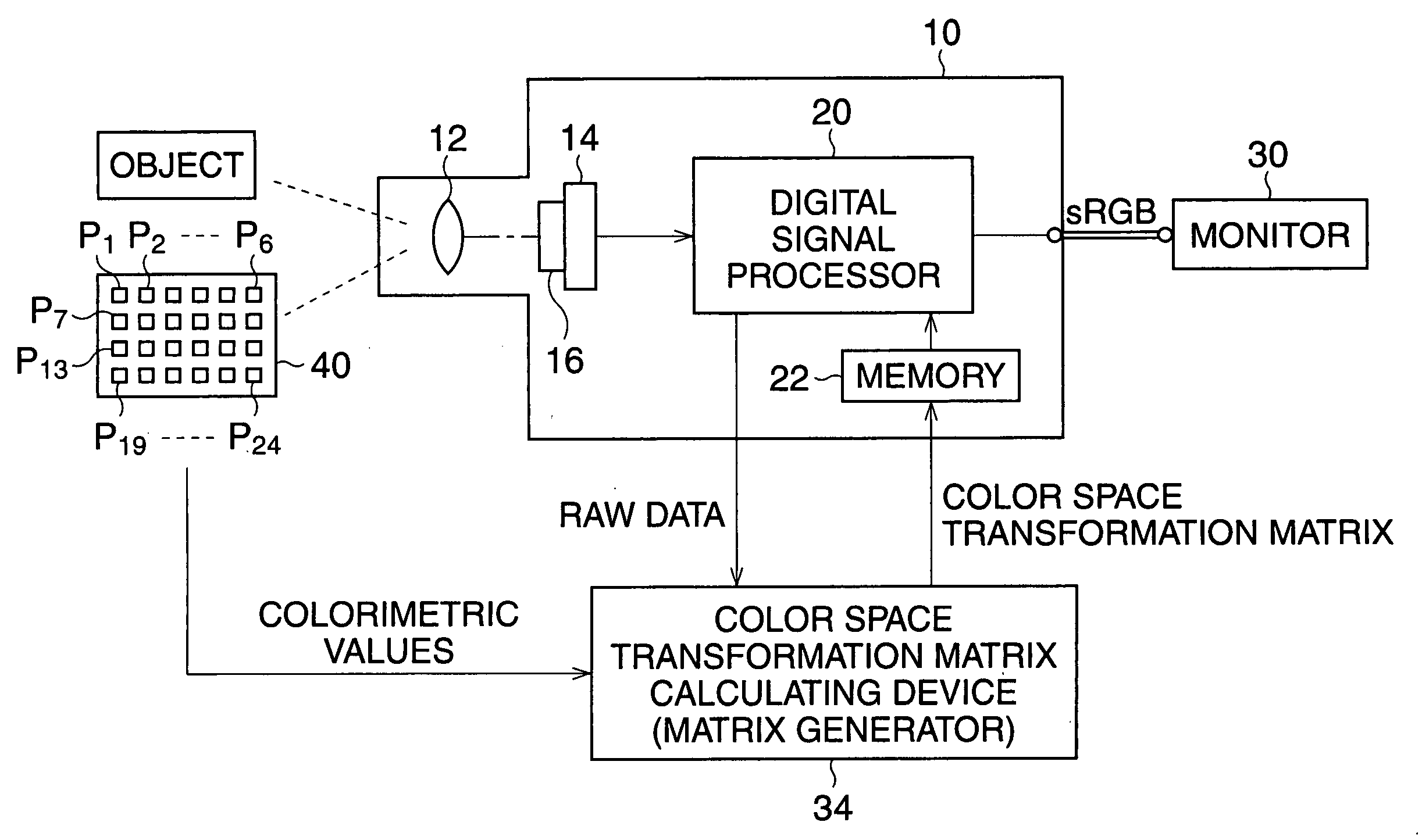

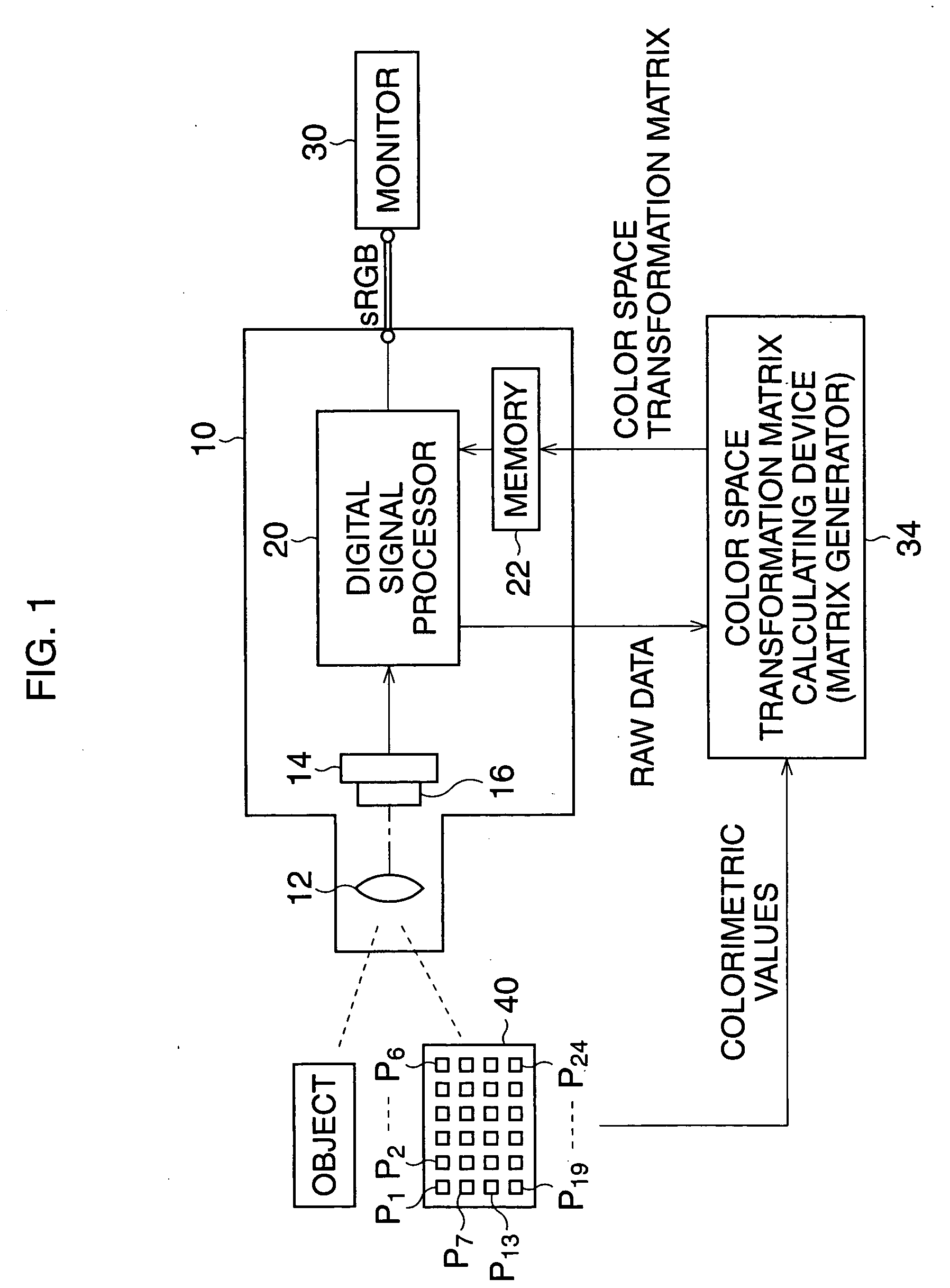

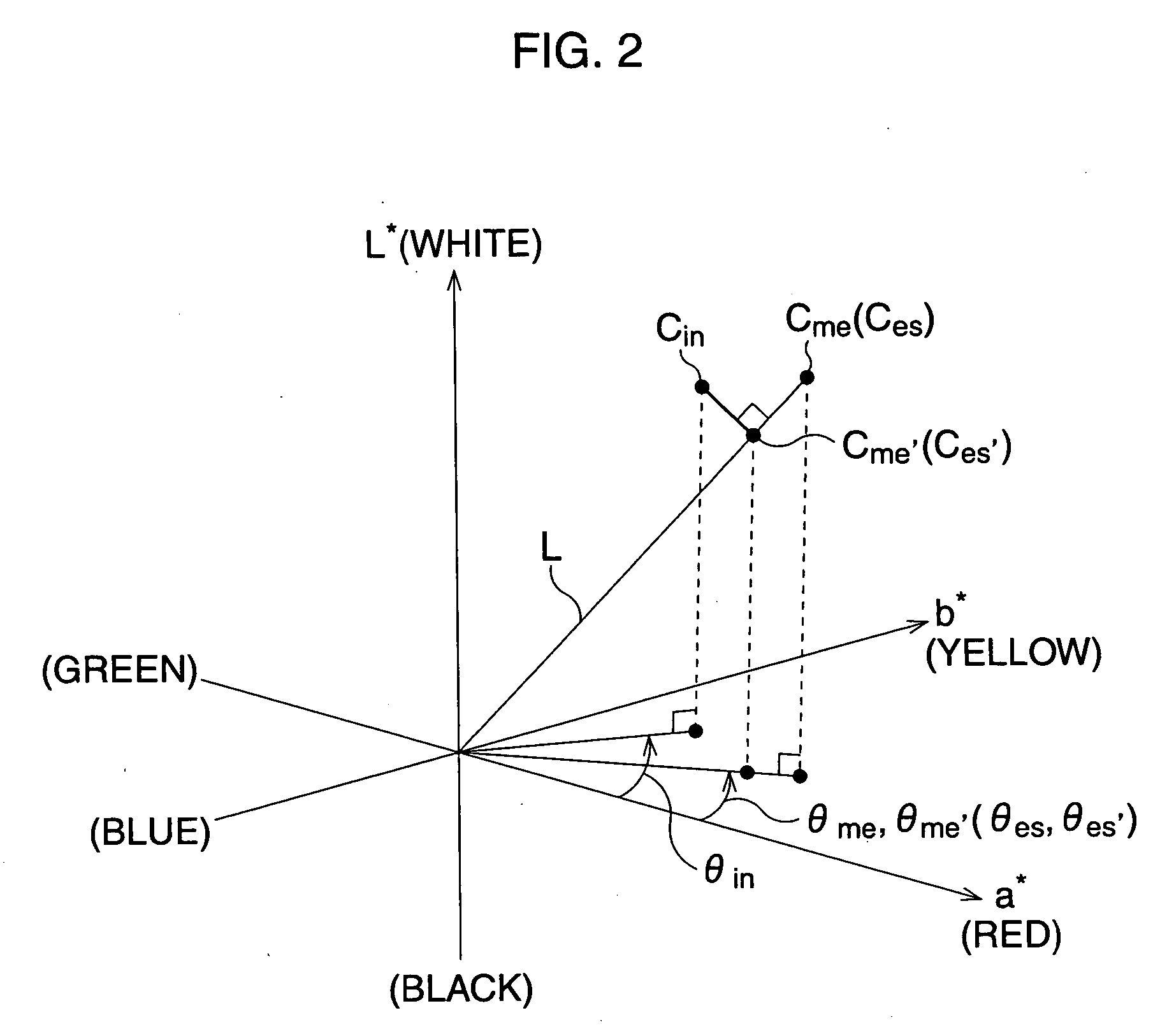

Color-space transformation-matrix calculating system and calculating method

Inactive · US20050018226A1 · Tags: True color; Digitally marking record carriers; Digital computer details; Pattern recognition; Color transformation

A color space transformation matrix calculating system is provided that optimizes a color space transformation matrix. The matrix is obtained as the product of a first and a second matrix and transforms colors in a first color space into colors in a second color space. The system comprises first and second optimizers that calculate the elements of the first matrix and the second matrix by multiple linear regression analysis. The input colors, which correspond to color patches, and the hue-corrected colors, obtained from the first matrix and the input colors, are set as explanatory variables. First and second goal colors in the second color space, relating respectively to hue and saturation and corresponding to the color patches, are set as criterion variables. The elements of the matrices are set as partial regression coefficients.

Owner:HOYA CORP
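
To show the regression machinery in the HOYA system, this sketch simplifies the patent's two-stage product (a hue matrix followed by a saturation matrix) to a single 3×3 matrix fitted by ordinary least squares: each output channel is regressed on the three input channels, and the partial regression coefficients form one row of the matrix. The patch colors and the "true" matrix used to generate goal colors are hypothetical.

```python
def det3(M):
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


def solve3(M, y):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(M)
    result = []
    for col in range(3):
        Mc = [row[:] for row in M]
        for r in range(3):
            Mc[r][col] = y[r]
        result.append(det3(Mc) / d)
    return result


def fit_color_matrix(inputs, targets):
    """Least-squares 3x3 matrix M with M @ input close to target for every patch.

    Row c of M solves the normal equations (X^T X) m = X^T y_c for output channel c.
    """
    XtX = [[sum(p[i] * p[j] for p in inputs) for j in range(3)] for i in range(3)]
    M = []
    for channel in range(3):
        Xty = [sum(p[i] * t[channel] for p, t in zip(inputs, targets)) for i in range(3)]
        M.append(solve3(XtX, Xty))
    return M


# Hypothetical patches; goal colors generated from a known matrix so the fit can be checked.
true_M = [[1.2, -0.1, -0.1], [-0.05, 1.1, -0.05], [0.0, -0.2, 1.2]]
patches = [(0.9, 0.2, 0.1), (0.2, 0.8, 0.1), (0.1, 0.2, 0.7),
           (0.5, 0.5, 0.5), (0.7, 0.6, 0.2)]
goals = [[sum(m * c for m, c in zip(row, p)) for row in true_M] for p in patches]
print(fit_color_matrix(patches, goals))   # close to true_M
```

With real, noisy measurements the fit is no longer exact; more patches spread across the gamut make the normal equations better conditioned.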

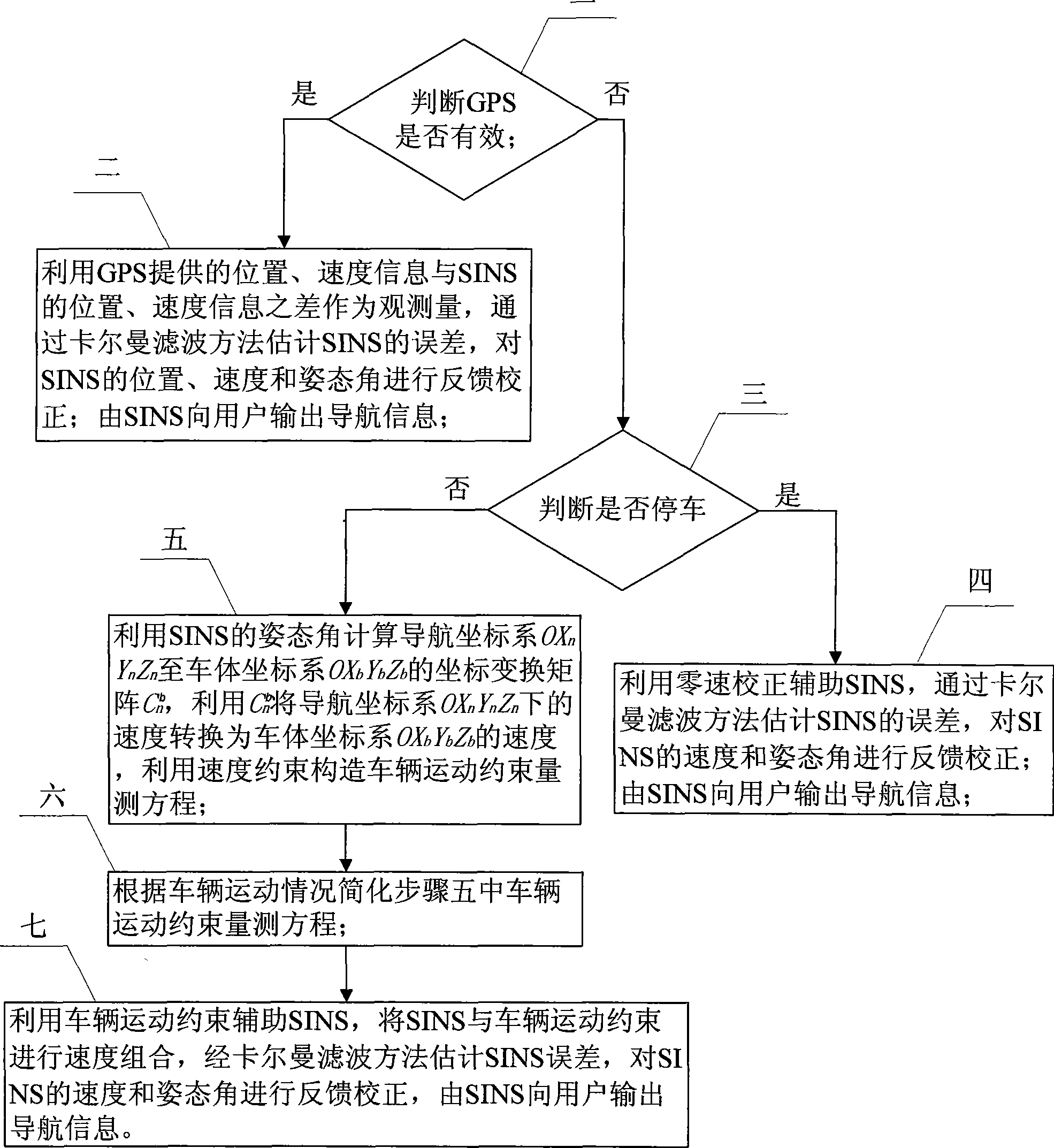

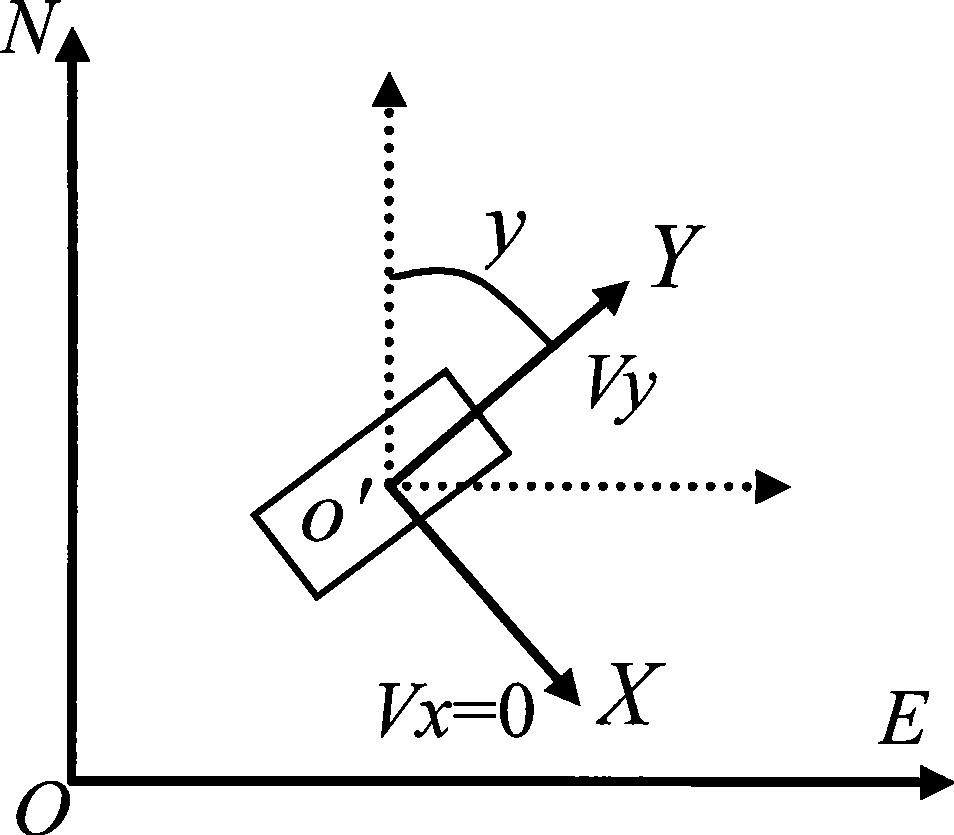

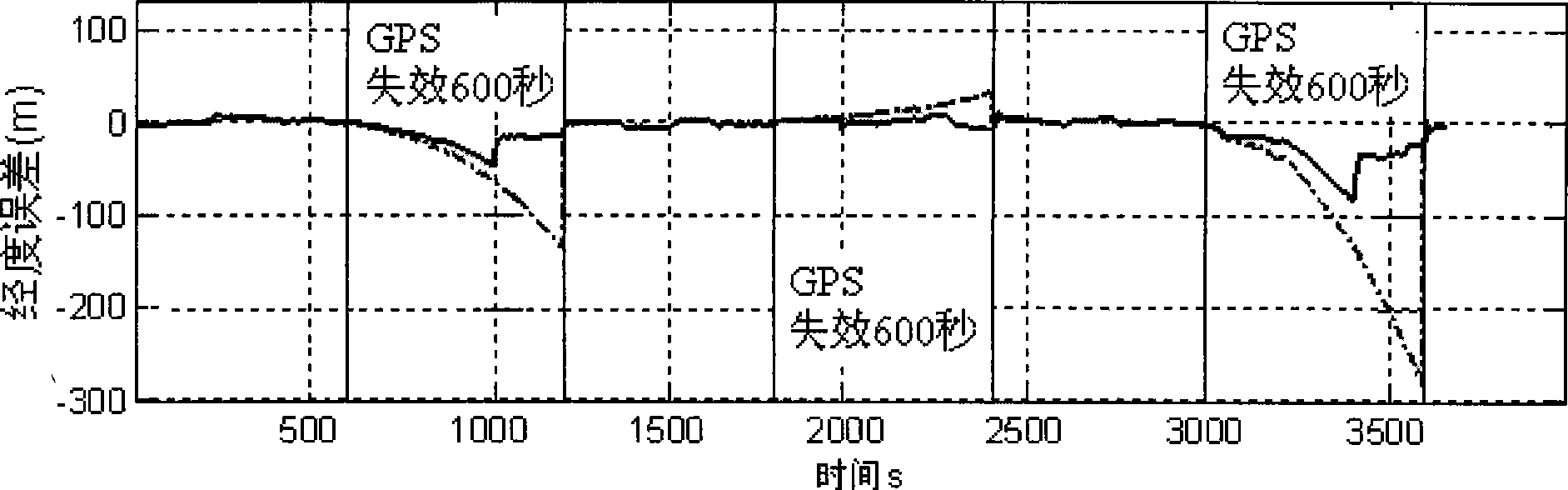

Vehicle-mounted SINS/GPS combined navigation system performance reinforcement method

Inactive · CN101476894A · Tags: High precision; Improve reliability; Instruments for road network navigation; Position fixation; Navigation system; Marine navigation

The invention discloses a method for enhancing the performance of a vehicle-mounted SINS/GPS combined navigation system. It relates to the field of navigation technology and addresses the low precision and low reliability of existing vehicle-mounted SINS/GPS combined navigation systems caused by temporary GPS failure. The method comprises the following steps: first, determine whether the GPS is valid; if it is, estimate and correct the SINS error with a Kalman filter, using the difference between the position and velocity provided by the GPS and those of the SINS as the observation; if the GPS is not valid, determine whether the vehicle is stationary; if it is, correct the SINS error using zero-velocity-update-aided SINS; if it is not, calculate the coordinate transformation matrix Cn from the navigation coordinate system to the vehicle body coordinate system using the SINS attitude angles, convert the velocity in the navigation coordinate system into the velocity in the vehicle body coordinate system using Cn, and build a vehicle motion constraint measurement equation from the velocity constraints; simplify the equation according to the vehicle motion; and combine the SINS velocity with the vehicle motion constraint and apply the correction using vehicle-motion-constraint-aided SINS. The method improves the precision and reliability of vehicle-mounted SINS/GPS combined navigation systems.

Owner:HARBIN INST OF TECH
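
The branching and the velocity-constraint step in the abstract above can be sketched as below. This is an illustration under assumptions the abstract does not state: a north-east-down (NED) navigation frame, ZYX (yaw-pitch-roll) Euler angles, and a body frame with x forward, y right, z down. The aiding labels and function names are hypothetical. The abstract's Cn (navigation to body) corresponds here to the transpose of the body-to-navigation matrix.

```python
import math

# Sketch of the GPS-outage aiding logic. Assumptions (not from the abstract):
# NED navigation frame, ZYX Euler angles, body x forward / y right / z down.


def dcm_body_to_nav(roll, pitch, yaw):
    """C_b^n = Rz(yaw) @ Ry(pitch) @ Rx(roll) for ZYX Euler angles."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cp * cy, sr * sp * cy - cr * sy, cr * sp * cy + sr * sy],
        [cp * sy, sr * sp * sy + cr * cy, cr * sp * sy - sr * cy],
        [-sp, sr * cp, cr * cp],
    ]


def nav_to_body_velocity(v_nav, roll, pitch, yaw):
    """v_b = C_n^b @ v_n, where C_n^b (the abstract's Cn) is the transpose of C_b^n."""
    c = dcm_body_to_nav(roll, pitch, yaw)
    return [sum(c[j][i] * v_nav[j] for j in range(3)) for i in range(3)]


def motion_constraint_residual(v_nav, roll, pitch, yaw):
    """A land vehicle that neither skids nor leaves the road has ~zero lateral (y)
    and vertical (z) body velocity; these predicted components are compared
    against a zero pseudo-measurement to drive the SINS correction."""
    v_b = nav_to_body_velocity(v_nav, roll, pitch, yaw)
    return v_b[1], v_b[2]


def choose_aiding(gps_valid, stationary):
    """Decision flow from the abstract (labels are hypothetical)."""
    if gps_valid:
        return "gps_kalman"         # GPS-minus-SINS position/velocity as observation
    if stationary:
        return "zupt"               # zero-velocity update
    return "motion_constraint"      # vehicle motion constraint aiding
```

For example, a vehicle heading east (yaw of 90°) with NED velocity `[0, 10, 0]` has body velocity of about `[10, 0, 0]`, so the lateral and vertical residuals are near zero.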

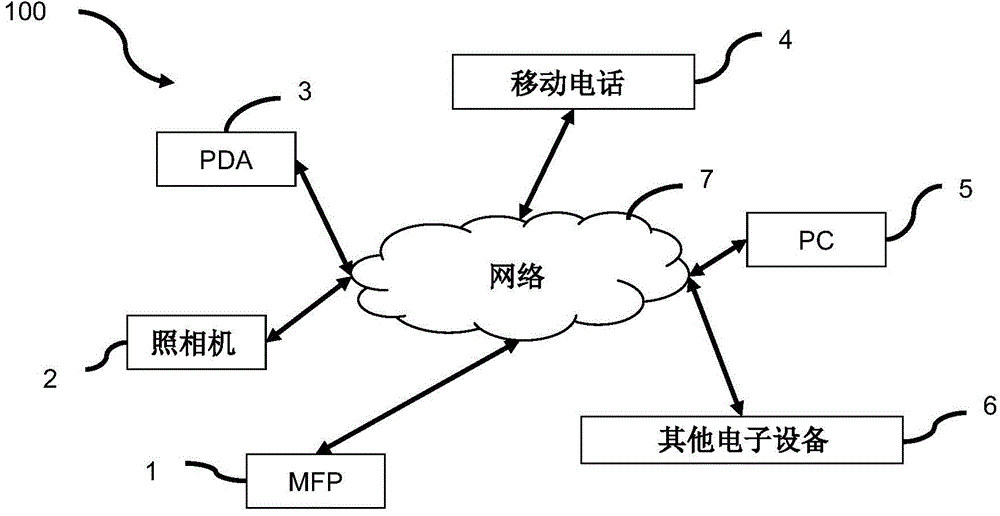

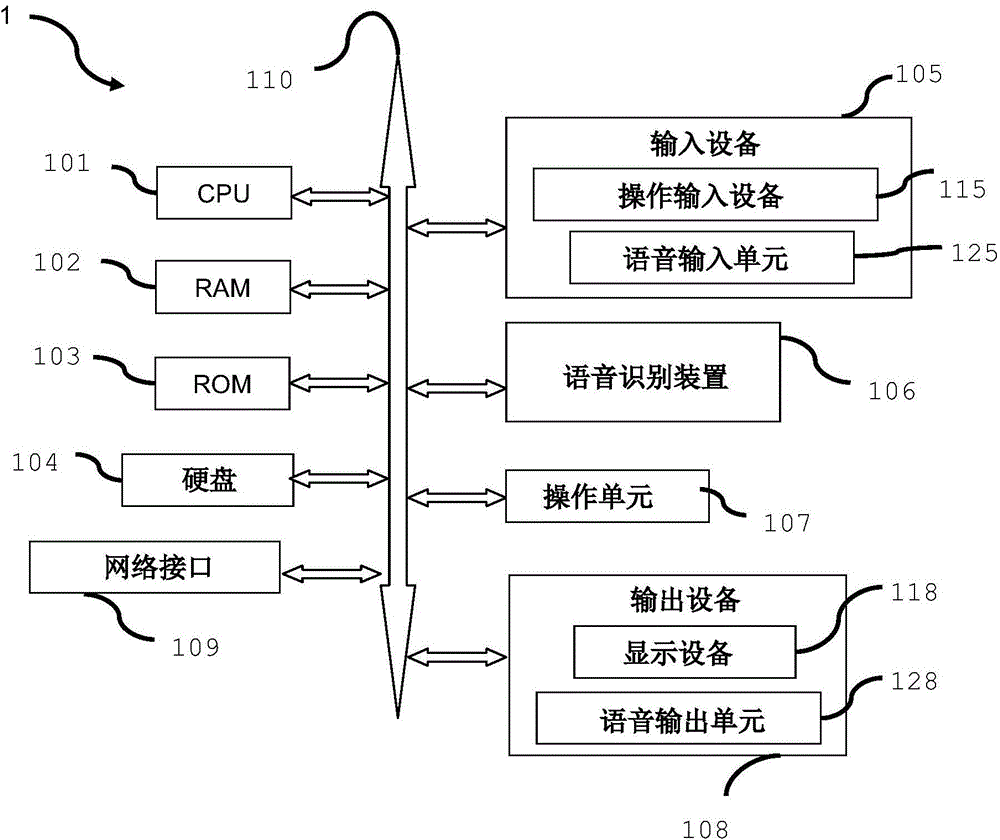

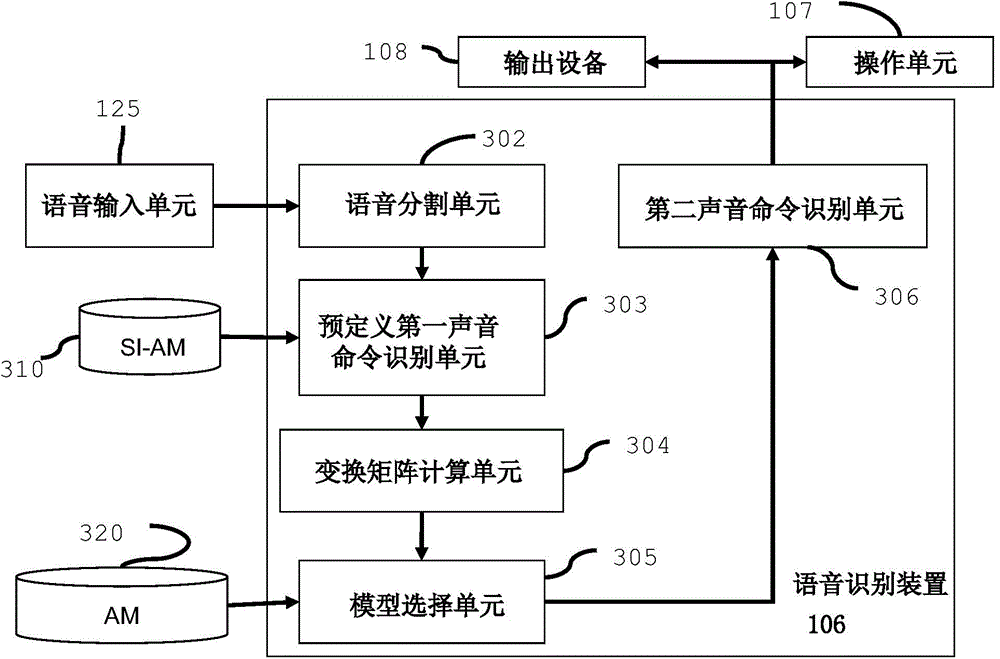

Speech recognition device and speech recognition method

Inactive · CN105869641A · Improve speech recognition performance · Speech recognition · Speech identification · Acoustic model

The invention discloses a speech recognition device and a speech recognition method. The speech recognition device comprises: a unit configured to obtain speech input by the current user; a unit configured to split the obtained speech and output at least two voice command segments; a unit configured to recognize a first predefined voice command among the voice command segments using a speaker-independent acoustic model; a unit configured to calculate a transformation matrix for the current user based on the voice command segment recognized as the first predefined voice command; a unit configured to select an acoustic model for the current user from the acoustic models registered in the speech recognition device based on the calculated transformation matrix; and a unit configured to recognize a second voice command among the voice command segments using the selected acoustic model. With this device and method, speech recognition performance is improved by using the selected acoustic model (AM).

Owner:CANON KK
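
The abstract above does not say how the calculated transformation matrix is used to pick a registered acoustic model. One plausible reading, shown here only as a hypothetical sketch, is to store a transformation matrix alongside each registered model and select the model whose stored matrix is nearest to the current user's in Frobenius norm. The distance measure, the registry layout, and the model names are all assumptions.

```python
import math

# Hypothetical sketch: nearest-transform acoustic model selection.
# The Frobenius-distance criterion and registry layout are assumptions.


def frobenius_distance(a, b):
    """Frobenius norm of (a - b) for equally sized matrices given as lists of rows."""
    return math.sqrt(sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)))


def select_acoustic_model(user_transform, registered):
    """Return the name of the registered model whose stored transform is closest.

    registered: dict mapping a (hypothetical) model name to the transformation
    matrix recorded when that model was registered.
    """
    return min(registered, key=lambda name: frobenius_distance(user_transform, registered[name]))
```

The selected model would then be used for the second recognition pass over the remaining voice command segments.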

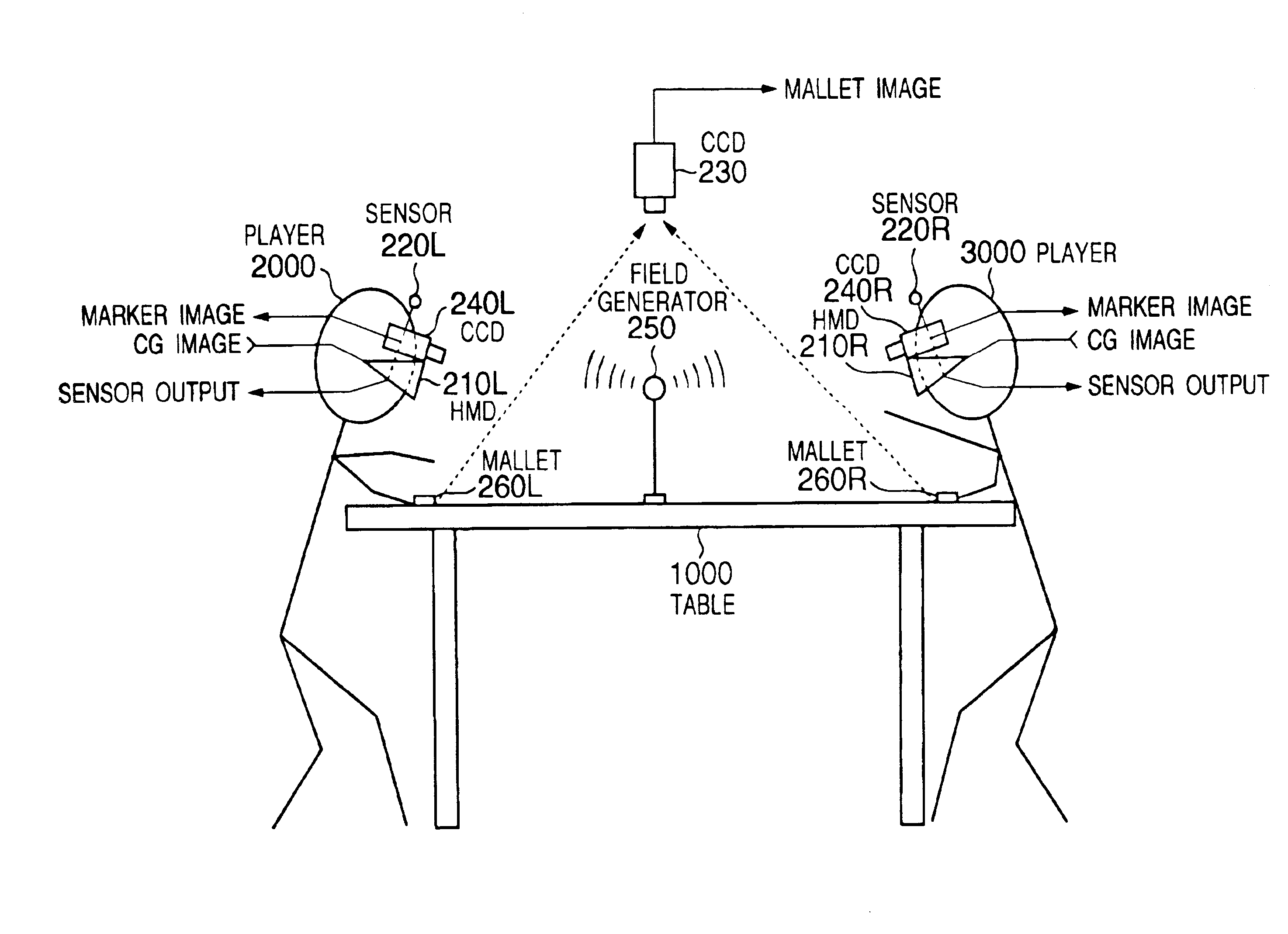

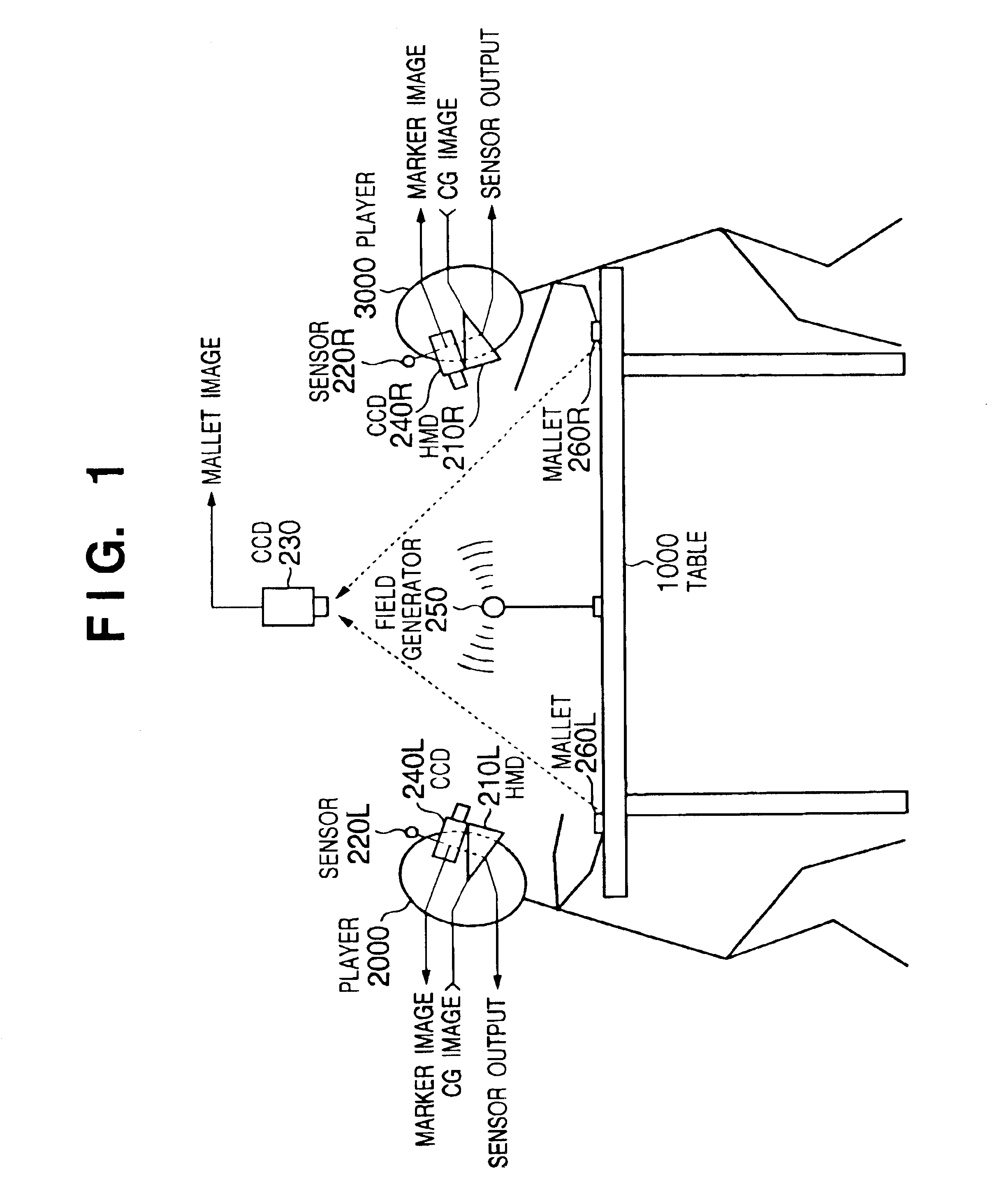

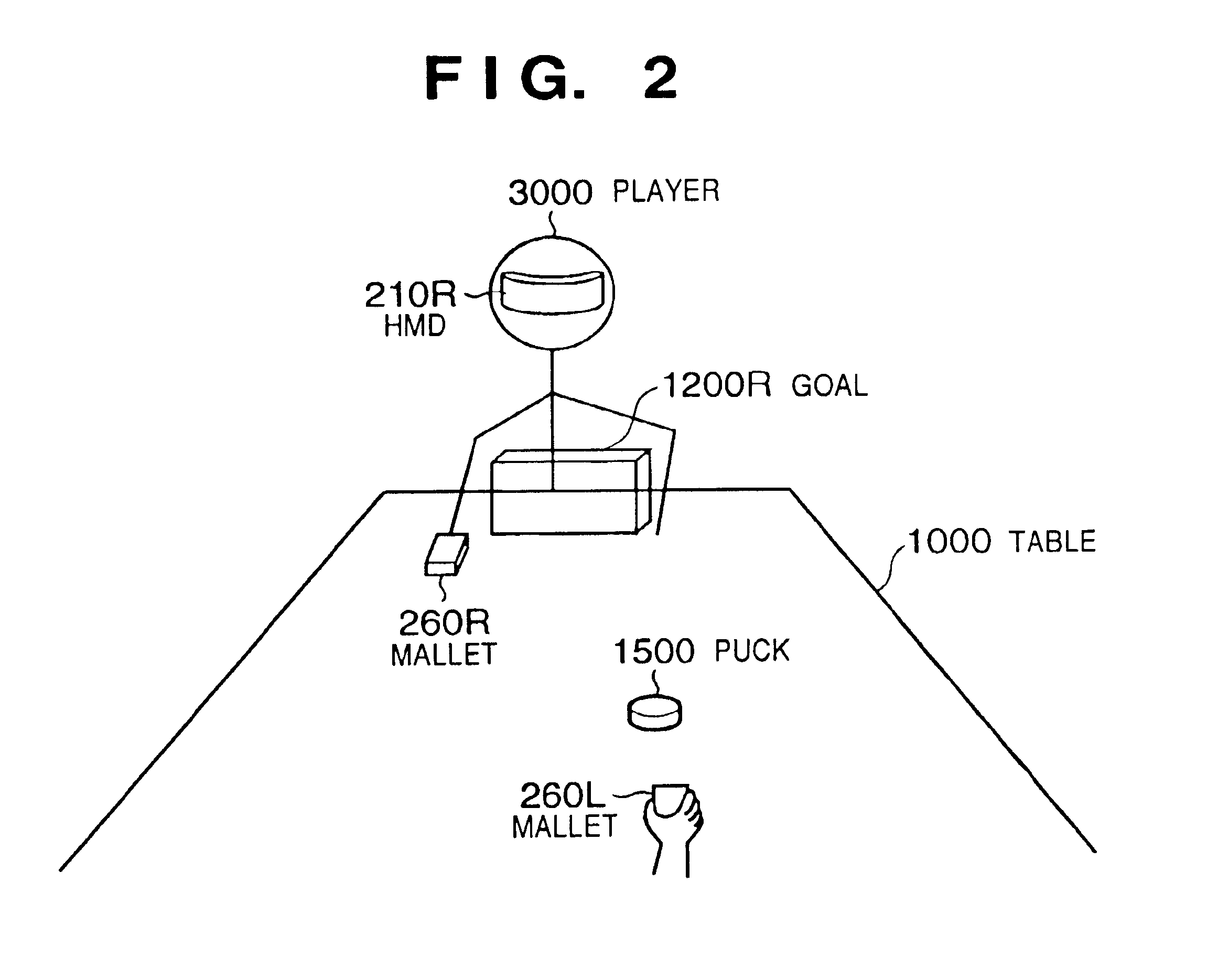

Information processing apparatus, mixed reality presentation apparatus, method thereof, and storage medium

Inactive · US6853935B2 · Input/output for user-computer interaction · Image analysis · Information processing · Mixed reality

A view transformation matrix that represents the position/attitude of an HMD is generated based on a signal that represents the position/attitude of the HMD (S602). Meanwhile, landmarks and their locations are detected in a captured picture (S604), and a calibration matrix ΔMc is generated from the detected landmark locations (S605). The position/attitude of the HMD is calibrated using the view transformation matrix and the calibration matrix ΔMc generated by the above processes (S606); a picture of a virtual object is generated based on external parameters that represent the calibrated position/attitude of the HMD, and a mixed reality picture is generated (S607). The generated mixed reality picture is displayed on the display section (S609).

Owner:CANON KK
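
The calibration step (S606) in the abstract above combines two matrices, but the abstract does not give the composition order. The sketch below assumes both are 4x4 homogeneous transforms and that ΔMc corrects the sensor-derived view matrix by left-multiplication; the function names and the z-axis-only pose helper are illustrative assumptions.

```python
import math

# Sketch: correcting a sensor-based view matrix with a landmark-derived
# calibration matrix. Left-multiplication by delta_mc is an assumed convention.


def pose_matrix(yaw, tx, ty, tz):
    """4x4 homogeneous transform: rotation about z by yaw, then translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, tx], [s, c, 0.0, ty], [0.0, 0.0, 1.0, tz], [0.0, 0.0, 0.0, 1.0]]


def matmul4(a, b):
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(m, p):
    """Apply a 4x4 homogeneous matrix to a 3D point."""
    v = list(p) + [1.0]
    out = [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]
    return [out[i] / out[3] for i in range(3)]


def calibrated_view(view_from_sensor, delta_mc):
    """Calibrated view matrix = delta_mc @ view_from_sensor (assumed order)."""
    return matmul4(delta_mc, view_from_sensor)
```

The calibrated matrix would then supply the external parameters used to render the virtual object (S607).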

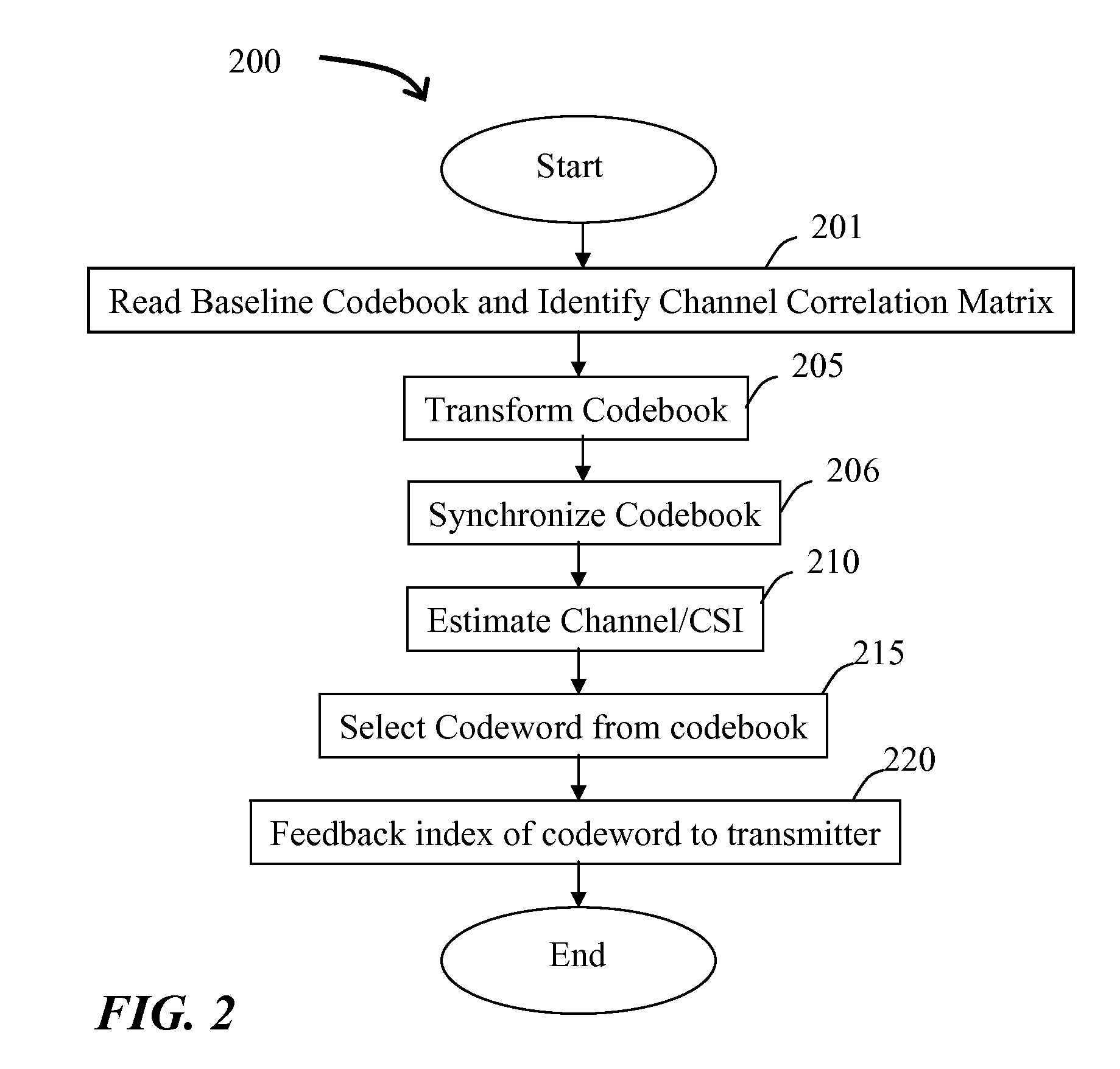

Adaptive Precoding Codebooks for Wireless Communications

Active · US20100238913A1 · Easy to use · Diversity/multi-antenna systems · Link quality based transmission modification · Telecommunications · Precoding matrix

Adaptive precoding codebooks are described. In one embodiment, the method of wireless communication includes reading at least a rank-2 baseline codebook having codewords representing precoding matrices. An adaptive codebook is generated by multiplying a first column of the codeword with a first transform matrix calculated from a channel correlation matrix, and multiplying a second column of the codeword with a second transform matrix calculated from the channel correlation matrix. The first and the second transform matrices are orthogonal.

Owner:FUTUREWEI TECH INC
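
The column-wise transformation described in the abstract above can be sketched as follows. The abstract does not say how the two transform matrices are derived from the channel correlation matrix, so this sketch assumes one hypothetical choice: T1 is the projector onto the principal eigenvector u of R (found by power iteration), and T2 = I − T1 is its complement. Because T1 is Hermitian and idempotent, T1ᴴ·T2 = 0, which is one way to satisfy the orthogonality property. Unit-norm column normalization is also an assumption.

```python
import math

# Hypothetical sketch of an adaptive rank-2 codeword. T1 = u u^H and
# T2 = I - T1 (u = principal eigenvector of R) are assumed choices.


def principal_eigenvector(r, iters=200):
    """Dominant eigenvector of a Hermitian matrix r via power iteration."""
    n = len(r)
    v = [complex(1.0)] * n
    for _ in range(iters):
        w = [sum(r[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(abs(x) ** 2 for x in w))
        v = [x / norm for x in w]
    return v


def projectors(u):
    """P = u u^H and Q = I - P; P^H Q = P - P^2 = 0 because P is idempotent."""
    n = len(u)
    p = [[u[i] * u[j].conjugate() for j in range(n)] for i in range(n)]
    q = [[(1.0 if i == j else 0.0) - p[i][j] for j in range(n)] for i in range(n)]
    return p, q


def apply_and_normalize(t, col):
    """Multiply a codeword column by t and rescale it to unit norm."""
    y = [sum(t[i][j] * col[j] for j in range(len(col))) for i in range(len(t))]
    norm = math.sqrt(sum(abs(x) ** 2 for x in y))
    return [x / norm for x in y]


def adaptive_rank2_codeword(col1, col2, r):
    """Transform each column of a rank-2 baseline codeword with its own matrix."""
    t1, t2 = projectors(principal_eigenvector(r))
    return apply_and_normalize(t1, col1), apply_and_normalize(t2, col2)


def inner(a, b):
    """Hermitian inner product a^H b."""
    return sum(x.conjugate() * y for x, y in zip(a, b))
```

With this choice the two transformed columns are mutually orthogonal. The sketch fails (division by zero) if a baseline column lies entirely in the null space of its transform, so a real design would need a fallback.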

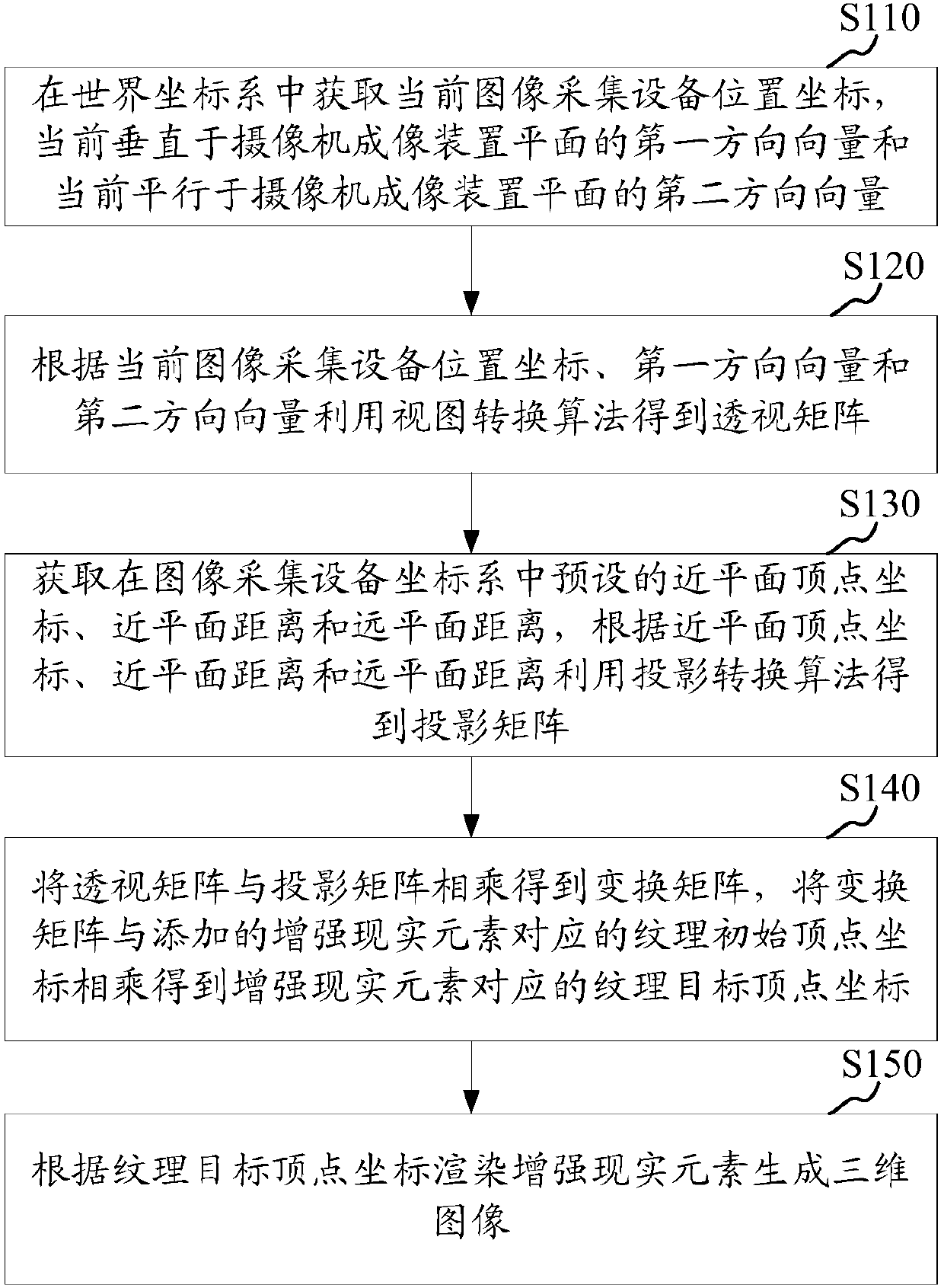

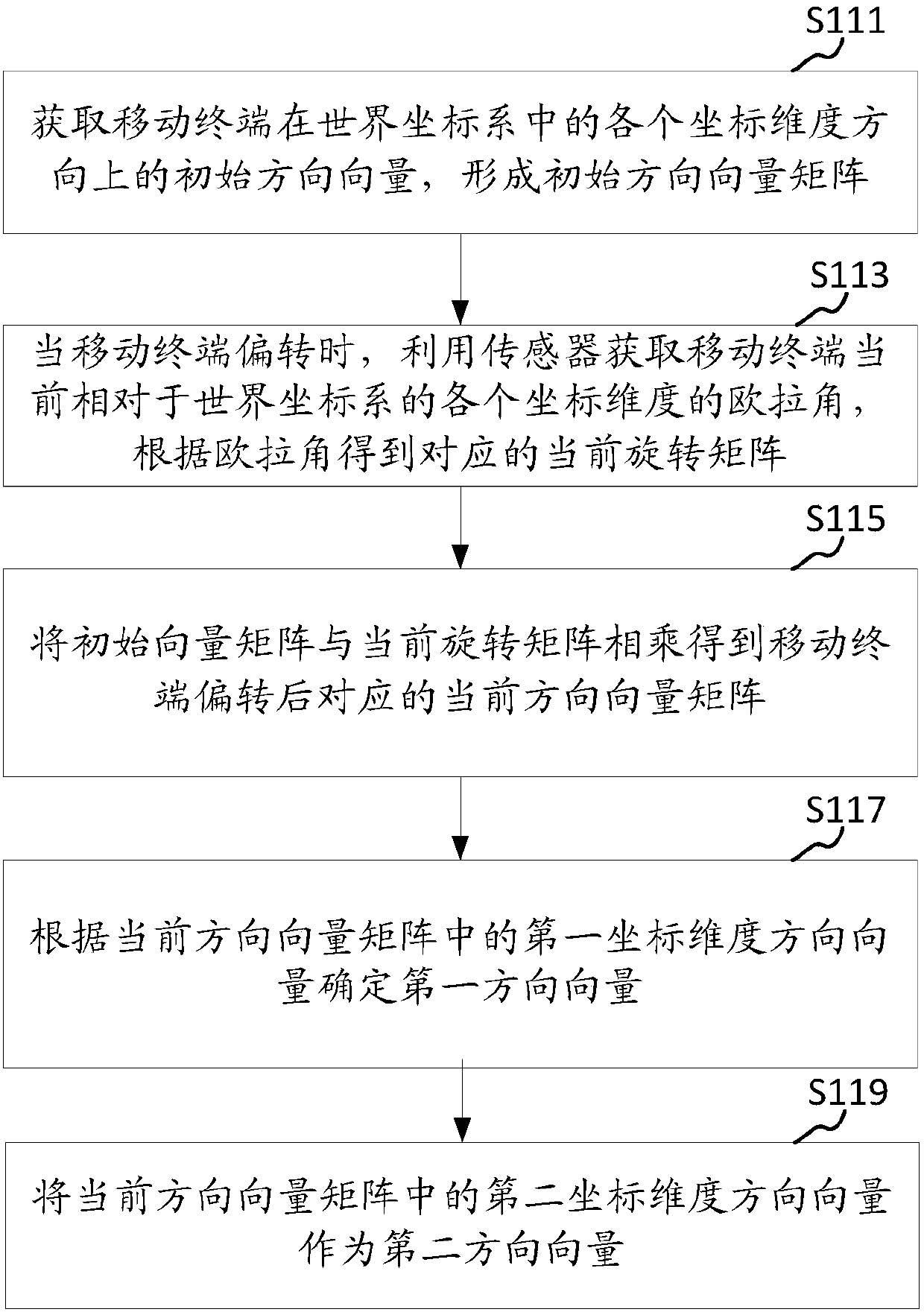

Three-dimension image processing method, device, storage medium and computer equipment

Active · CN107564089A · Easy to integrate · Increase authenticity · Image enhancement · 3D-image rendering · Hat matrix · Imaging processing

The invention provides a three-dimensional image processing method comprising the following steps: obtaining the current position coordinates of the image acquisition device in the world coordinate system, obtaining a first direction vector and a second direction vector, and applying a view transformation algorithm to obtain a view matrix; obtaining preset near-plane vertex coordinates, a near-plane distance, and a far-plane distance in the image acquisition device coordinate system, and applying a projection transformation algorithm to obtain a projection matrix; multiplying the view matrix by the projection matrix to obtain a transformation matrix; multiplying the transformation matrix by the initial texture vertex coordinates matched with the added augmented reality elements to obtain the target texture vertex coordinates matched with those elements; and rendering the augmented reality elements with the target texture vertex coordinates to form a three-dimensional image. Rotating the mobile terminal rotates the image acquisition device, so the three-dimensional image of the augmented reality elements rotates correspondingly. This improves how well the three-dimensional image blends with the real background image and makes the image more realistic. The invention also provides a three-dimensional image processing device, a storage medium, and computer equipment.

Owner:TENCENT TECH (SHENZHEN) CO LTD
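
The view, projection, and combined transformation steps in the abstract above follow the standard rendering pipeline, sketched below. The sketch assumes OpenGL conventions, which the abstract does not state: a right-handed look-at view matrix with the camera looking down −z, a `glFrustum`-style projection built from the near-plane bounds and the near/far distances, column vectors (so the combined matrix is P · V), and the two direction vectors interpreted as "forward" and "up". Function names are hypothetical.

```python
import math

# Sketch: look-at view matrix, frustum projection, and their product.
# OpenGL-style conventions and the forward/up interpretation are assumptions.


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def view_matrix(eye, forward, up):
    """Right-handed look-at matrix; the camera looks down its own -z axis."""
    f = normalize(forward)
    s = normalize(cross(f, up))
    u = cross(s, f)
    return [
        [s[0], s[1], s[2], -dot(s, eye)],
        [u[0], u[1], u[2], -dot(u, eye)],
        [-f[0], -f[1], -f[2], dot(f, eye)],
        [0.0, 0.0, 0.0, 1.0],
    ]


def projection_matrix(left, right, bottom, top, near, far):
    """Perspective frustum from near-plane bounds (same layout as glFrustum)."""
    return [
        [2 * near / (right - left), 0.0, (right + left) / (right - left), 0.0],
        [0.0, 2 * near / (top - bottom), (top + bottom) / (top - bottom), 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def matmul4(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_vertex(m, p):
    """Apply the combined matrix to a vertex, then divide by w (normalized device coords)."""
    v = list(p) + [1.0]
    clip = [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]
    return [clip[i] / clip[3] for i in range(3)]
```

Usage: `transform_vertex(matmul4(projection_matrix(-1, 1, -1, 1, 1, 10), view_matrix([0, 0, 0], [0, 0, -1], [0, 1, 0])), [1, 1, -1])` maps a corner of the near plane to roughly `[1, 1, -1]`. Recomputing the view matrix as the device rotates is what makes the rendered elements rotate with it.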

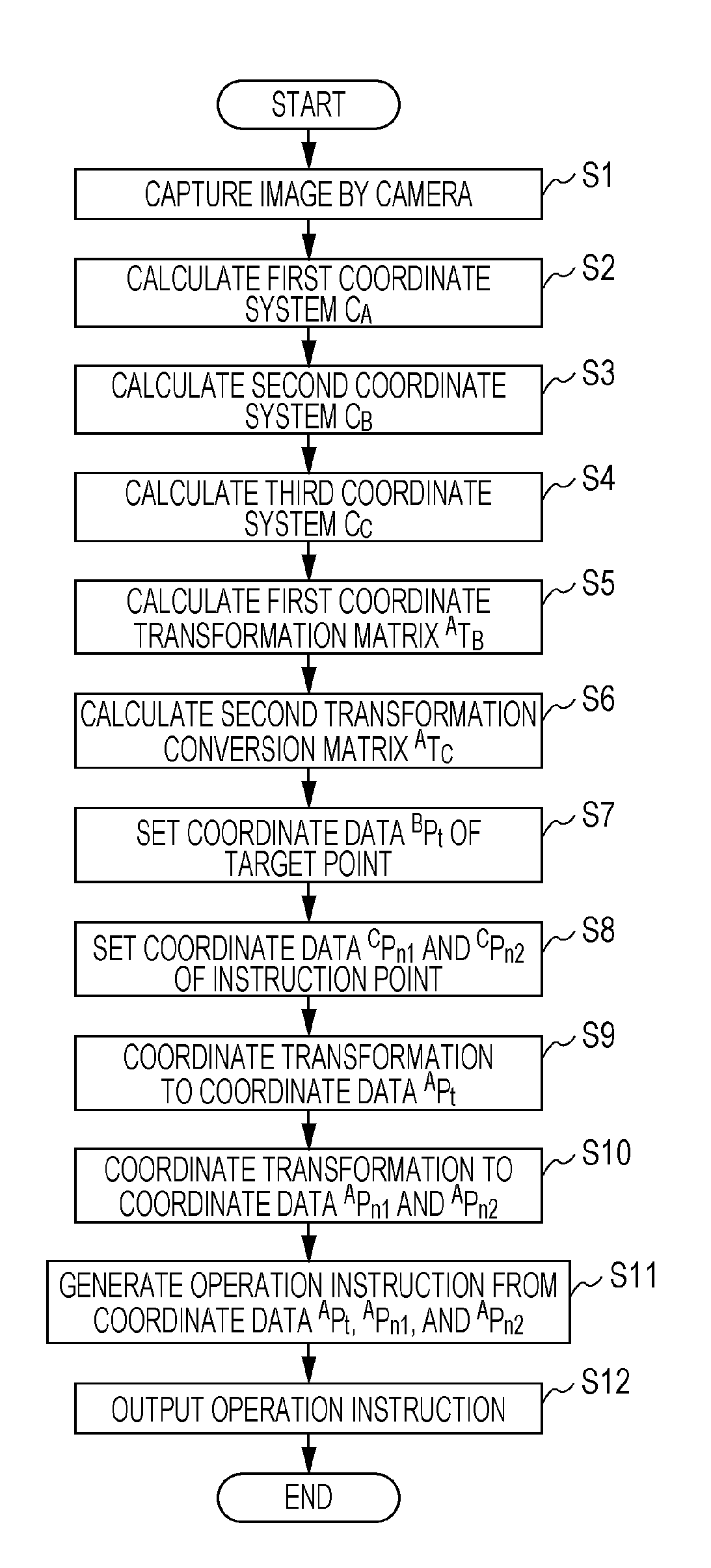

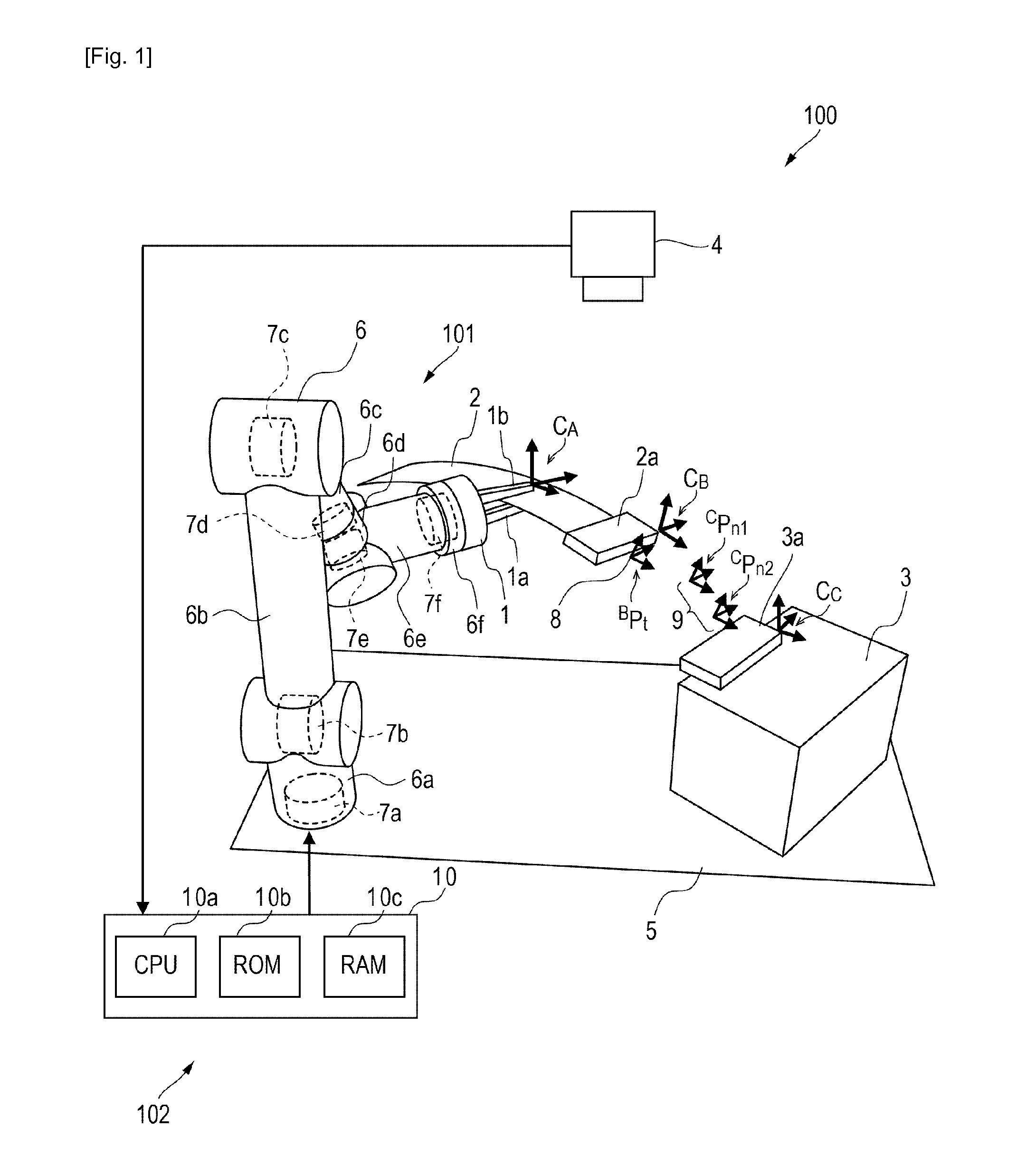

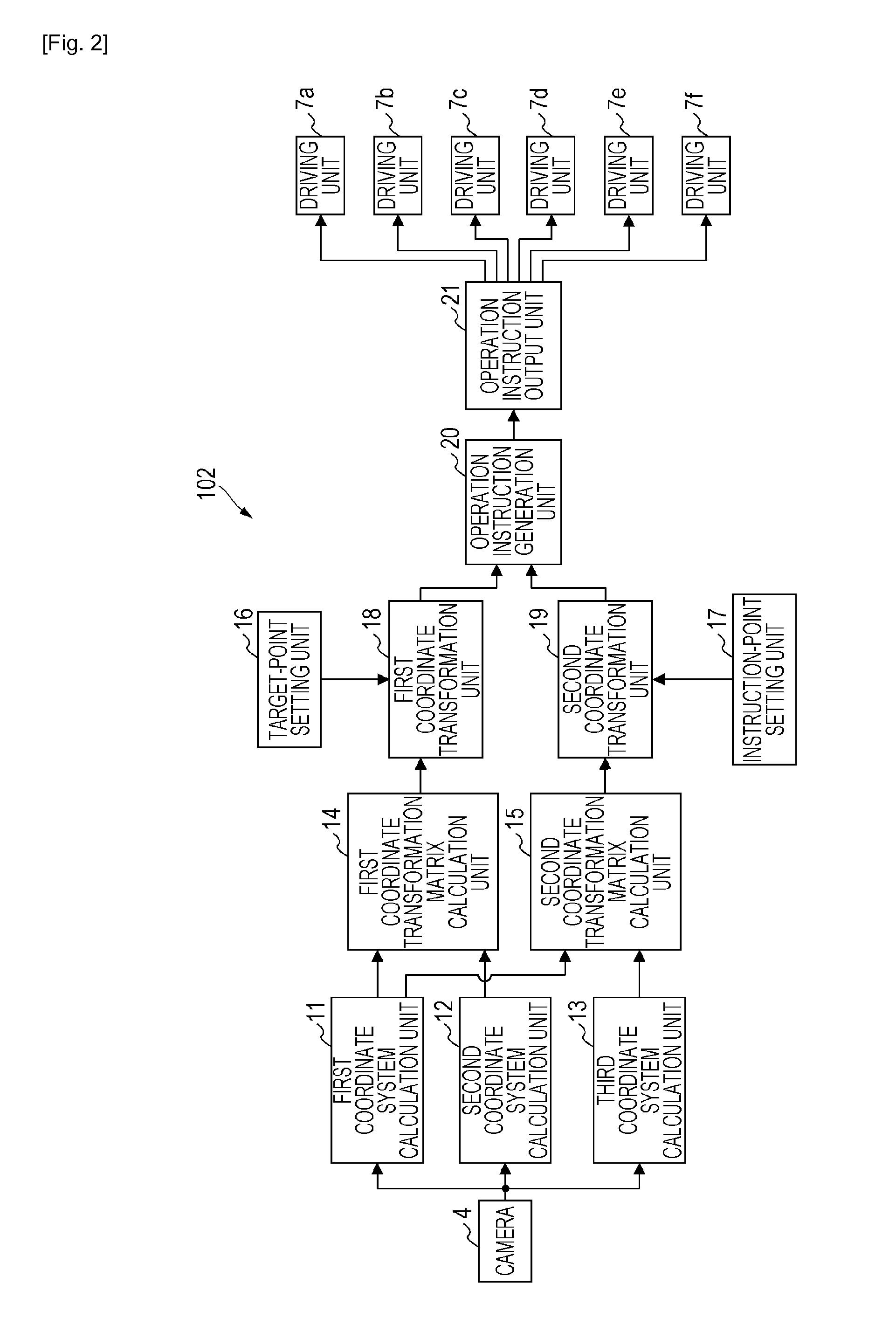

Robot control apparatus, robot control method, program, and recording medium

Inactive · US20140012416A1 · Assembly precision · Programme-controlled manipulator · Computer control · Computer graphics (images) · Robot control

A first coordinate system CA of the hand unit, a second coordinate system CB of the first workpiece, and a third coordinate system CC of a second workpiece in a camera coordinate system are calculated (S2, S3, and S4). First and second coordinate transformation matrices ATB and ATC are calculated (S5 and S6). Coordinate data of a target point is set in the coordinate system of the first workpiece (S7). Coordinate data of an instruction point is set in the coordinate system of the second workpiece (S8). The coordinate data of the target point is subjected to coordinate transformation using the first coordinate transformation matrix ATB (S9). The coordinate data of the instruction point is subjected to coordinate transformation using the second coordinate transformation matrix ATC (S10). Operation instructions are generated using the converted coordinate data (S11).

Owner:CANON KK
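
Steps S5, S6, S9, and S10 in the abstract above can be illustrated with homogeneous transforms. The sketch assumes, beyond what the abstract states, that CA, CB, and CC are 4x4 rigid transforms giving each frame's pose in the camera frame. Then ATB = CA⁻¹ · CB (and likewise ATC = CA⁻¹ · CC) expresses a workpiece frame in the hand frame, and a target point given in workpiece coordinates maps into hand coordinates. Function names are hypothetical.

```python
# Sketch: relative transform between frames measured in a camera frame.
# Assumes CA, CB, CC are 4x4 rigid transforms [R p; 0 1] in camera coordinates.


def rigid_inverse(t):
    """Inverse of a rigid transform [R p; 0 1] is [R^T  -R^T p; 0 1]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    q = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [q[0]], rt[1] + [q[1]], rt[2] + [q[2]], [0.0, 0.0, 0.0, 1.0]]


def matmul4(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def relative_transform(c_a, c_b):
    """ATB: frame B expressed in frame A, from both poses in the camera frame."""
    return matmul4(rigid_inverse(c_a), c_b)


def transform_point(t, p):
    """Map a point from the source frame of t into its target frame."""
    return [sum(t[i][k] * p[k] for k in range(3)) + t[i][3] for i in range(3)]
```

For example, with the hand frame rotated 90° about z at camera position (1, 0, 0) and the first workpiece at (1, 2, 0), a target point at (0, 0, 0.5) in workpiece coordinates maps to about (2, 0, 0.5) in hand coordinates. The instruction point is handled the same way with ATC.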

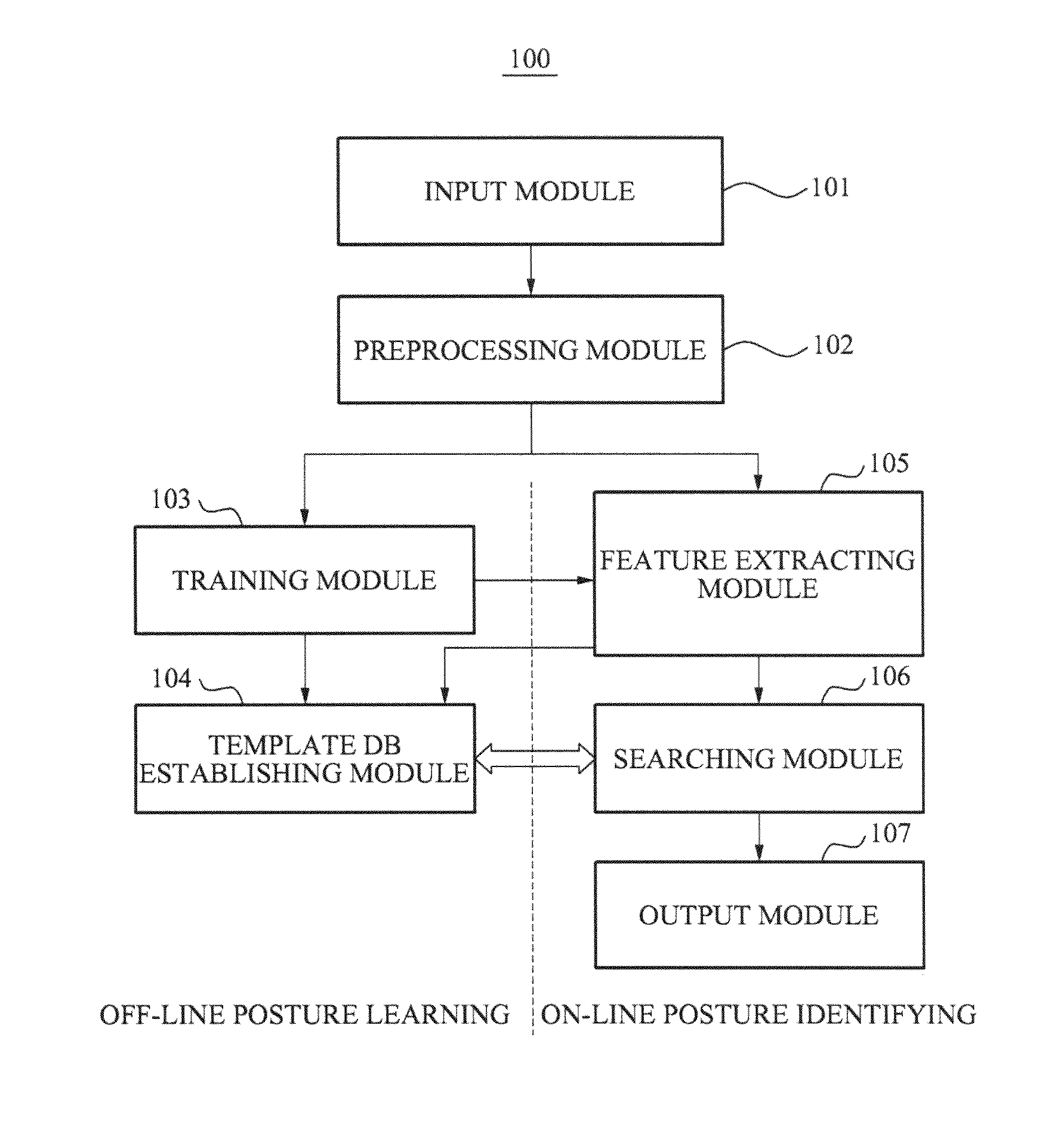

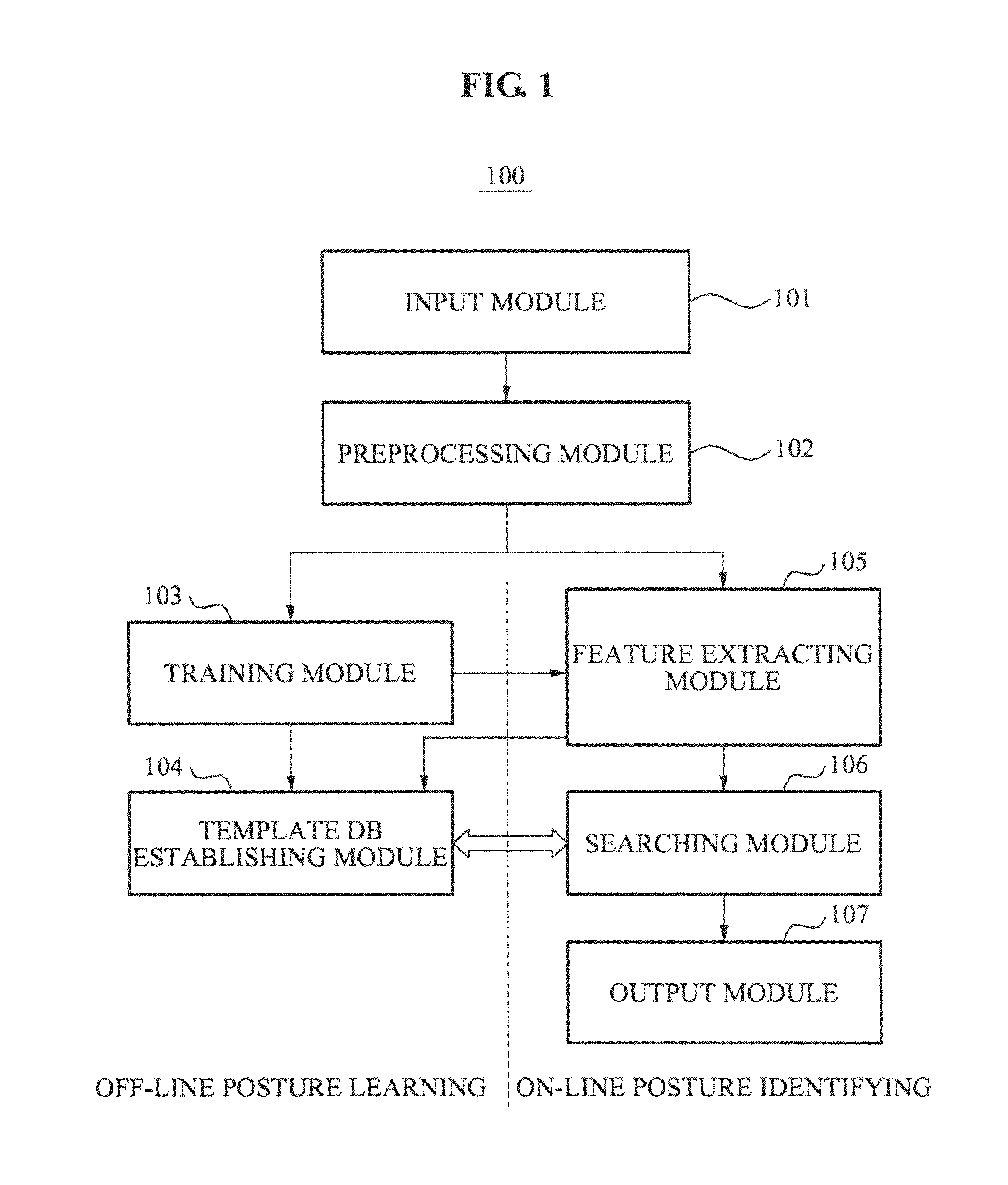

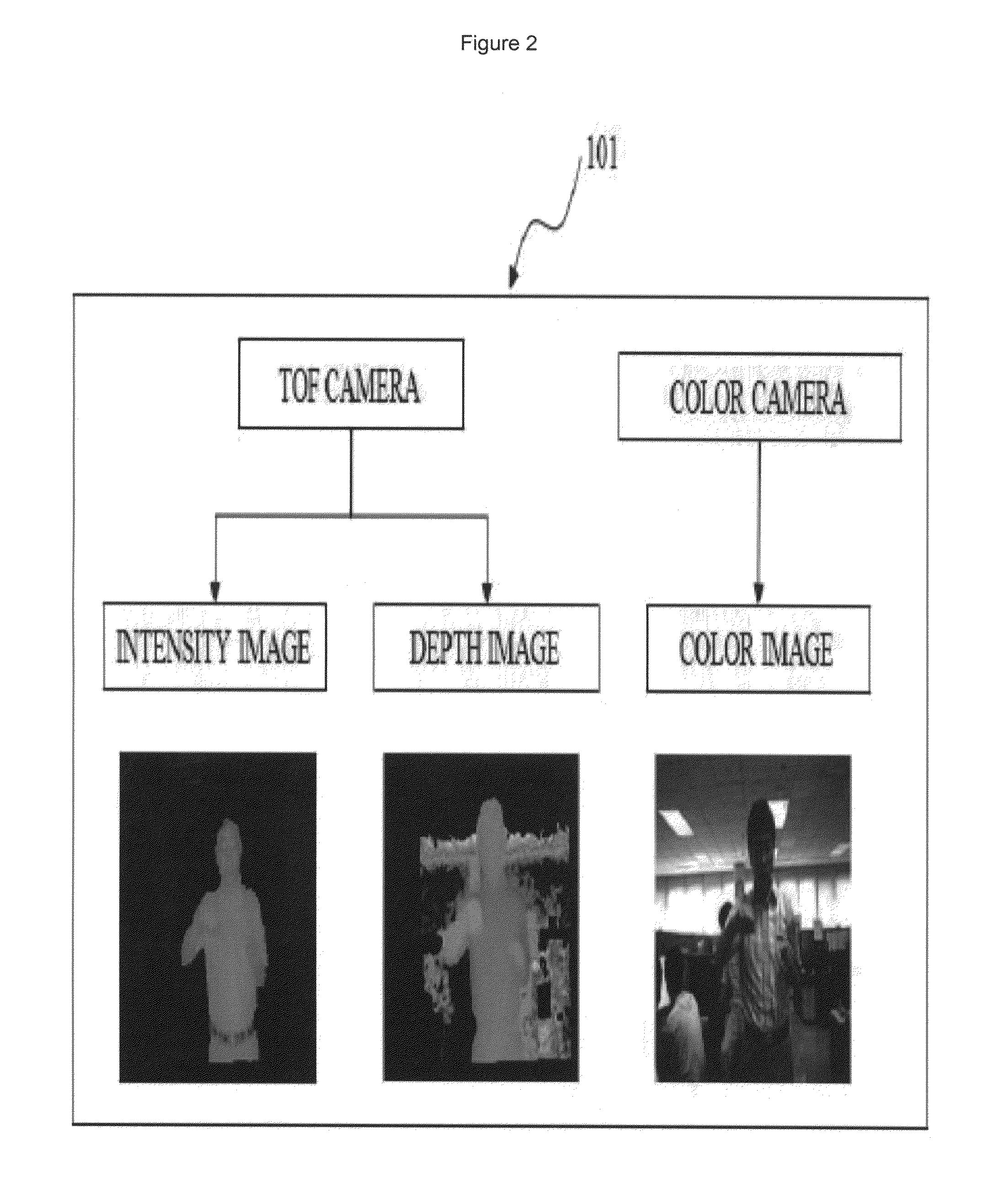

Method and apparatus of identifying human body posture

Inactive · US20110025834A1 · Efficient identification · Image enhancement · Image analysis · Human body · Feature extraction

Disclosed are a human body posture identifying method and apparatus. The apparatus may include an input module including a depth camera and a color camera; a preprocessing module to preprocess the input and generate posture samples; a training module to calculate a projective transformation matrix and establish an NNC; a feature extracting module to extract distinguishing posture features; a template database establishing module to establish a posture template database; a searching module to perform human body posture matching; and an output module to output the best-matching posture and relocate a virtual human body model.

Owner:SAMSUNG ELECTRONICS CO LTD
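
The training, template-database, and searching modules in the abstract above can be sketched as a project-then-match pipeline. This assumes that NNC denotes a nearest-neighbour classifier and that matching uses squared Euclidean distance in the projected space; the abstract specifies neither. The projection matrix is passed in directly, since the abstract does not say how the training module computes it. Labels and function names are hypothetical.

```python
# Hypothetical sketch: projected nearest-neighbour posture matching.
# NNC is assumed to mean a nearest-neighbour classifier; w is supplied by the caller.


def project(w, x):
    """Project a feature vector x with the projection matrix w (one row per output dim)."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def build_templates(w, samples):
    """samples: list of (posture_label, raw_feature) pairs -> projected template database."""
    return [(label, project(w, x)) for label, x in samples]


def match_posture(w, feature, templates):
    """Return the label of the template nearest to the projected query feature."""
    q = project(w, feature)
    return min(templates, key=lambda t: sum((a - b) ** 2 for a, b in zip(q, t[1])))[0]
```

Projecting before matching keeps the search in a lower-dimensional space in which, ideally, the distinguishing posture features dominate.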


© 2025 PatSnap. All rights reserved.