Spatial non-cooperative target pose estimation method based on model and point cloud global matching

A pose estimation method for spatial non-cooperative targets, based on global matching between a model and a point cloud. It addresses three problems of existing approaches: the target coordinate system cannot be guaranteed, reliability is poor, and the amount of calculation is large. The method achieves a high frame rate, high reliability, and fast processing.

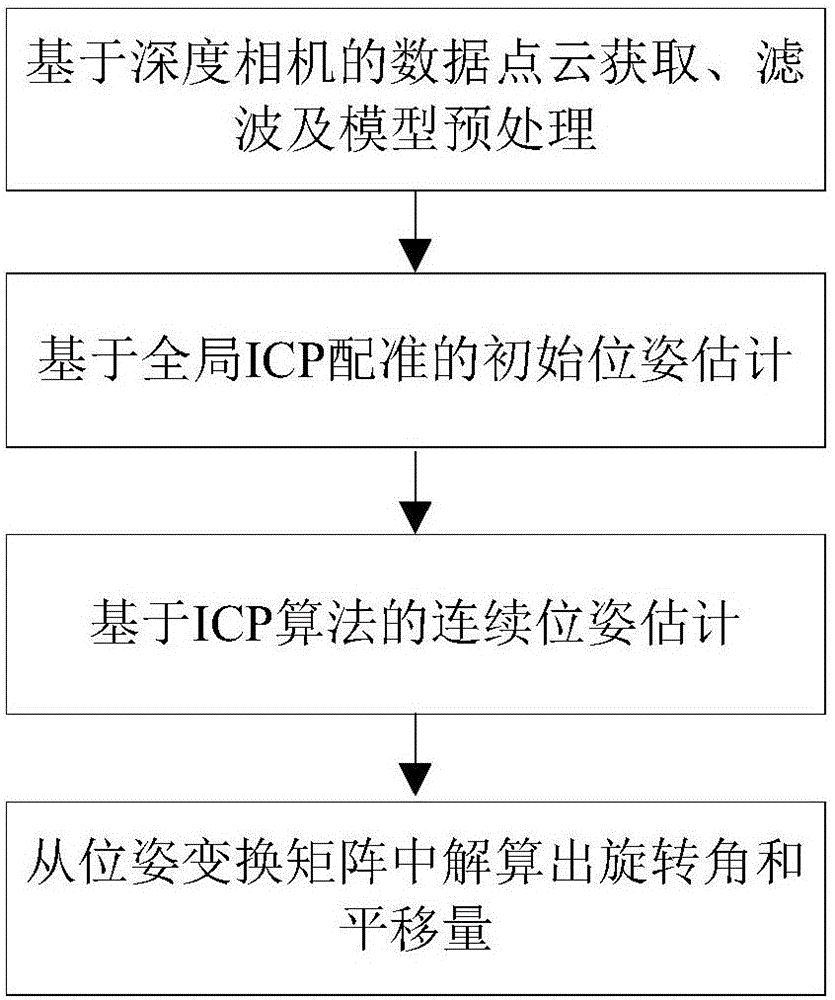

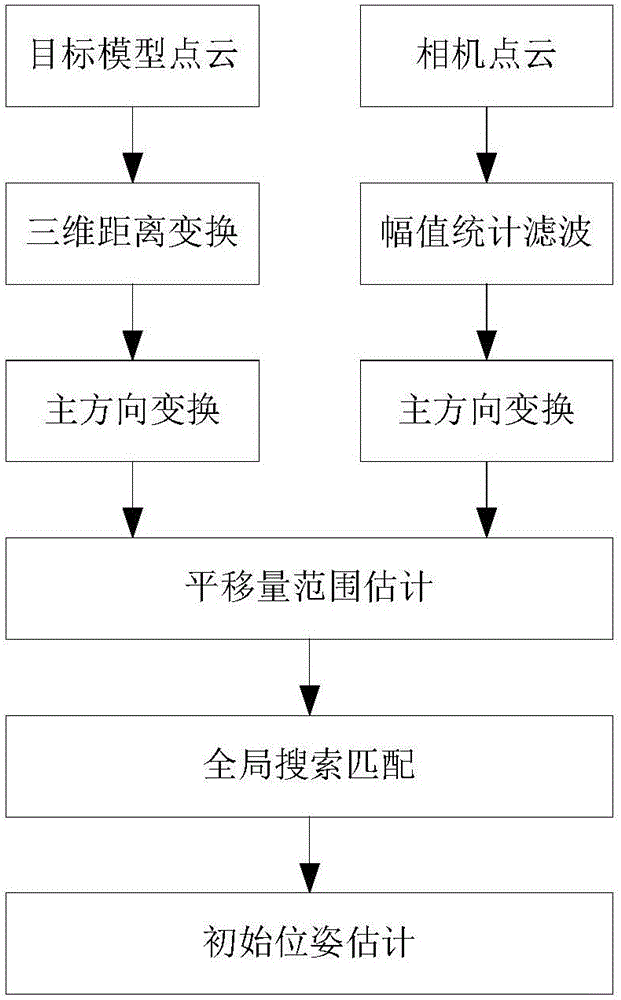

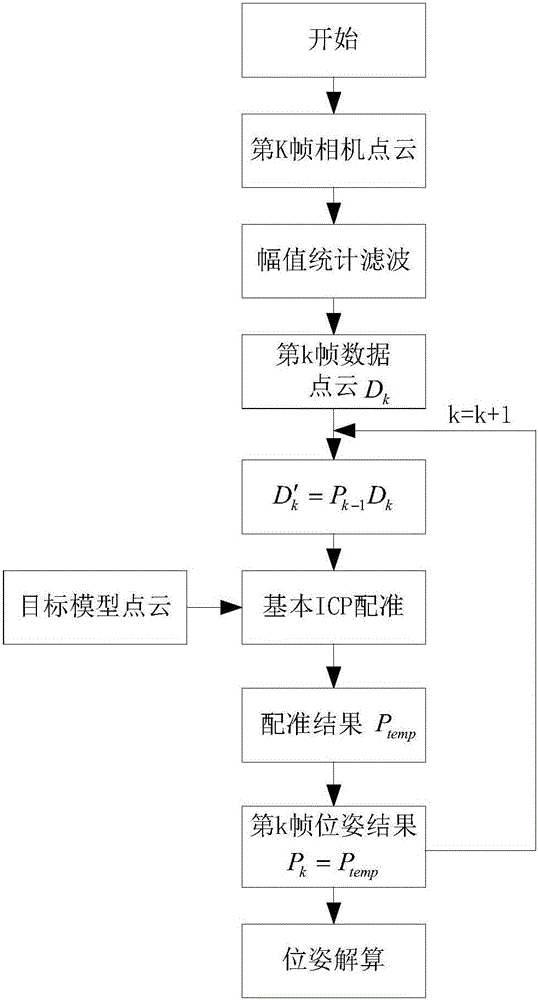

Embodiment

[0106] To demonstrate that the method obtains the target pose continuously and accurately, and that it adapts to the camera's low resolution and noise, the following pose measurement experiment was carried out:

[0107] (1) Experimental initial conditions and parameter settings

[0108] The simulation experiment uses a virtual camera to shoot the model point cloud and generate a data point cloud. The parameters of the virtual camera match those of the actual SR4000 camera: the resolution is 144×176, the focal length is 10 mm, and the pixel size is 0.04 mm. Shooting of the model point cloud starts at a distance of 10 m. The pose of the camera point cloud is set as follows: a translation of 10 m along the Z axis, a rotation about the Z axis from -180° to 180° in steps of 10°, and all remaining pose components equal to 0.
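The experimental setup in [0108] can be illustrated with a minimal sketch of a pinhole virtual camera and the yaw sweep. This is not the patent's implementation. The function names (`rot_z`, `transform`, `project`, `yaw_sweep`), the principal point at the image center, and the pure pinhole model without noise or lens distortion are all assumptions made for illustration; only the resolution, focal length, pixel size, 10 m offset, and 10° step come from the text.

```python
import math

# Camera parameters quoted in the text for the virtual SR4000-like camera.
ROWS, COLS = 144, 176             # resolution as stated: 144 x 176
FOCAL_MM = 10.0                   # focal length
PIXEL_MM = 0.04                   # pixel size
FOCAL_PX = FOCAL_MM / PIXEL_MM    # focal length expressed in pixels

# Assumption: principal point at the image center (not stated in the source).
CX, CY = COLS / 2.0, ROWS / 2.0


def rot_z(deg):
    """3x3 rotation matrix for a rotation of `deg` degrees about the Z axis."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def transform(point, yaw_deg, t=(0.0, 0.0, 10.0)):
    """Rotate a model point about Z, then translate it (default: 10 m along Z)."""
    r = rot_z(yaw_deg)
    return tuple(sum(r[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))


def project(p_cam):
    """Project a camera-frame point (metres) to (row, col); None if not visible."""
    x, y, z = p_cam
    if z <= 0.0:
        return None
    col = int(math.floor(FOCAL_PX * x / z + CX))
    row = int(math.floor(FOCAL_PX * y / z + CY))
    if 0 <= row < ROWS and 0 <= col < COLS:
        return row, col
    return None


def yaw_sweep(start=-180, stop=180, step=10):
    """Yaw angles in degrees, from start to stop inclusive."""
    return list(range(start, stop + 1, step))


if __name__ == "__main__":
    model_point = (1.0, 0.0, 0.0)  # hypothetical model point, 1 m off-axis
    for yaw in yaw_sweep():
        print(yaw, project(transform(model_point, yaw)))
```

Under these assumptions the focal length is 250 pixels, so at the 10 m starting distance one pixel covers about 0.04 m laterally, which gives a sense of how coarse the simulated data point cloud is.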

[0109] The error is calculated as follows: the parameters of the camera point cloud generated by simulation are used as the real v...
