943 results about "Hat matrix" patented technology

In statistics, the projection matrix (𝐏), sometimes also called the influence matrix or hat matrix (𝐇), maps the vector of response values (dependent variable values) to the vector of fitted values (or predicted values). It describes the influence each response value has on each fitted value. The diagonal elements of the projection matrix are the leverages, which describe the influence each response value has on the fitted value for that same observation.
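For ordinary least squares with a design matrix X of full column rank, the hat matrix is H = X(XᵀX)⁻¹Xᵀ, so the fitted values are ŷ = Hy and the leverages are the diagonal entries h_ii. The sketch below computes these quantities with NumPy. The library choice and the toy data are our own illustration and are not taken from any patent listed here.

```python
import numpy as np

def hat_matrix(X: np.ndarray) -> np.ndarray:
    """H = X (X^T X)^{-1} X^T for a design matrix X with full column rank."""
    # Solve (X^T X) B = X^T instead of forming an explicit inverse.
    return X @ np.linalg.solve(X.T @ X, X.T)

# Toy data: intercept column plus one predictor; x = 10 lies far from the others.
x = np.array([1.0, 2.0, 3.0, 4.0, 10.0])
y = np.array([1.1, 1.9, 3.2, 3.9, 10.5])
X = np.column_stack([np.ones_like(x), x])

H = hat_matrix(X)
y_hat = H @ y            # fitted values: y_hat = H y
leverages = np.diag(H)   # h_ii: influence of y_i on its own fitted value
# The outlying observation x = 10 has leverage 0.92; the others lie between 0.20 and 0.38.
```

H is symmetric and idempotent (HH = H), so its trace equals the number of fitted parameters. Here the leverages therefore sum to 2.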

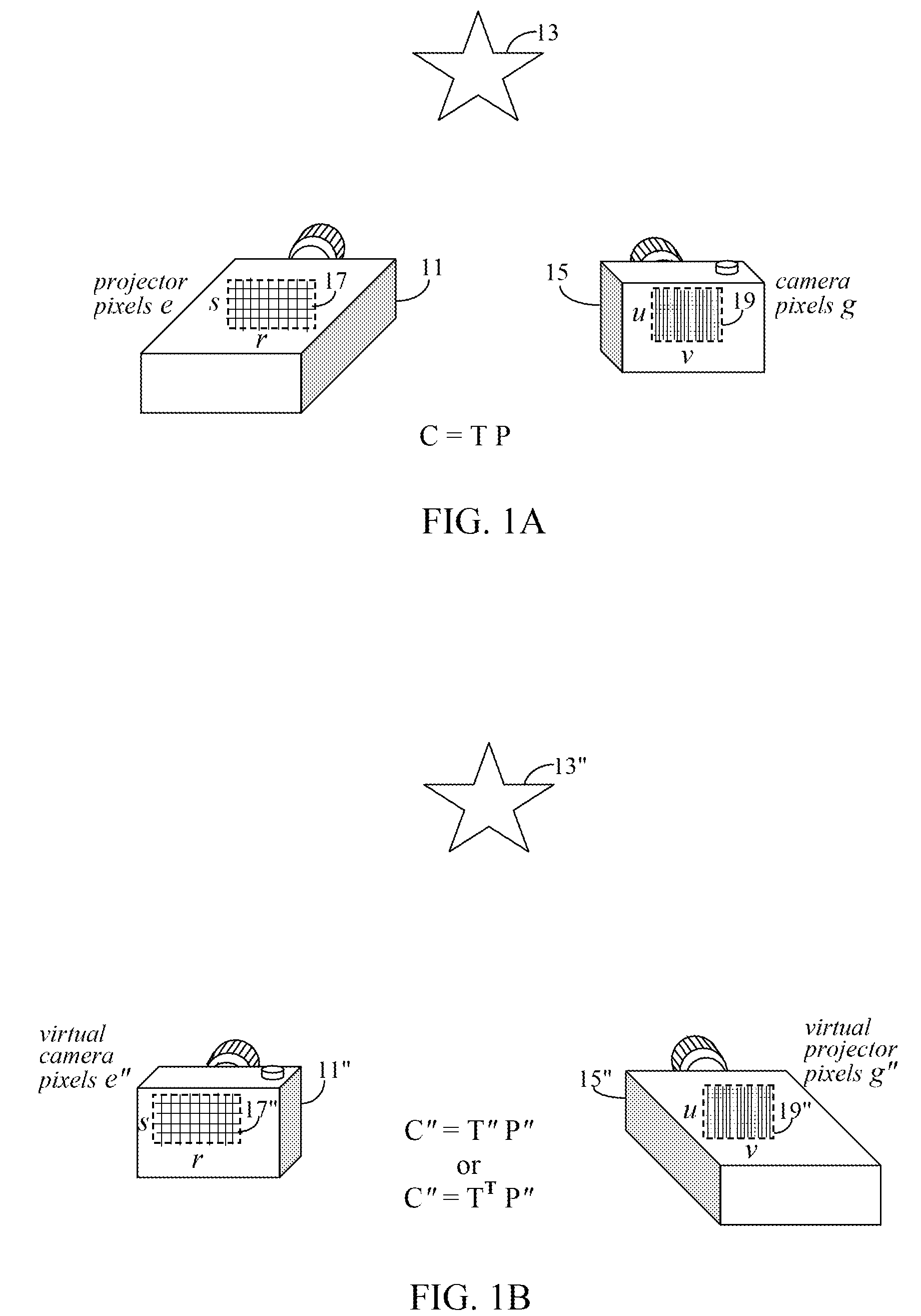

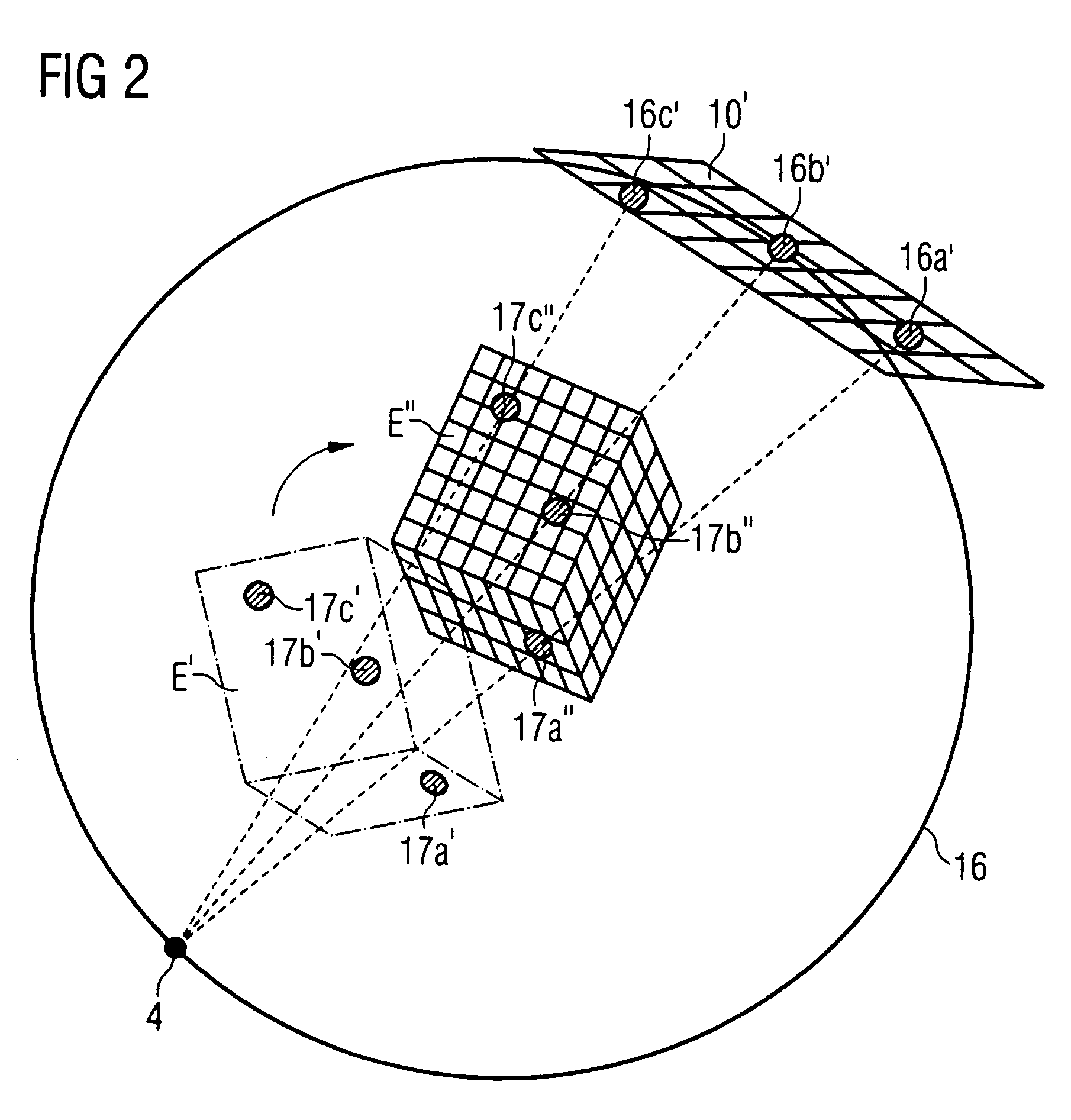

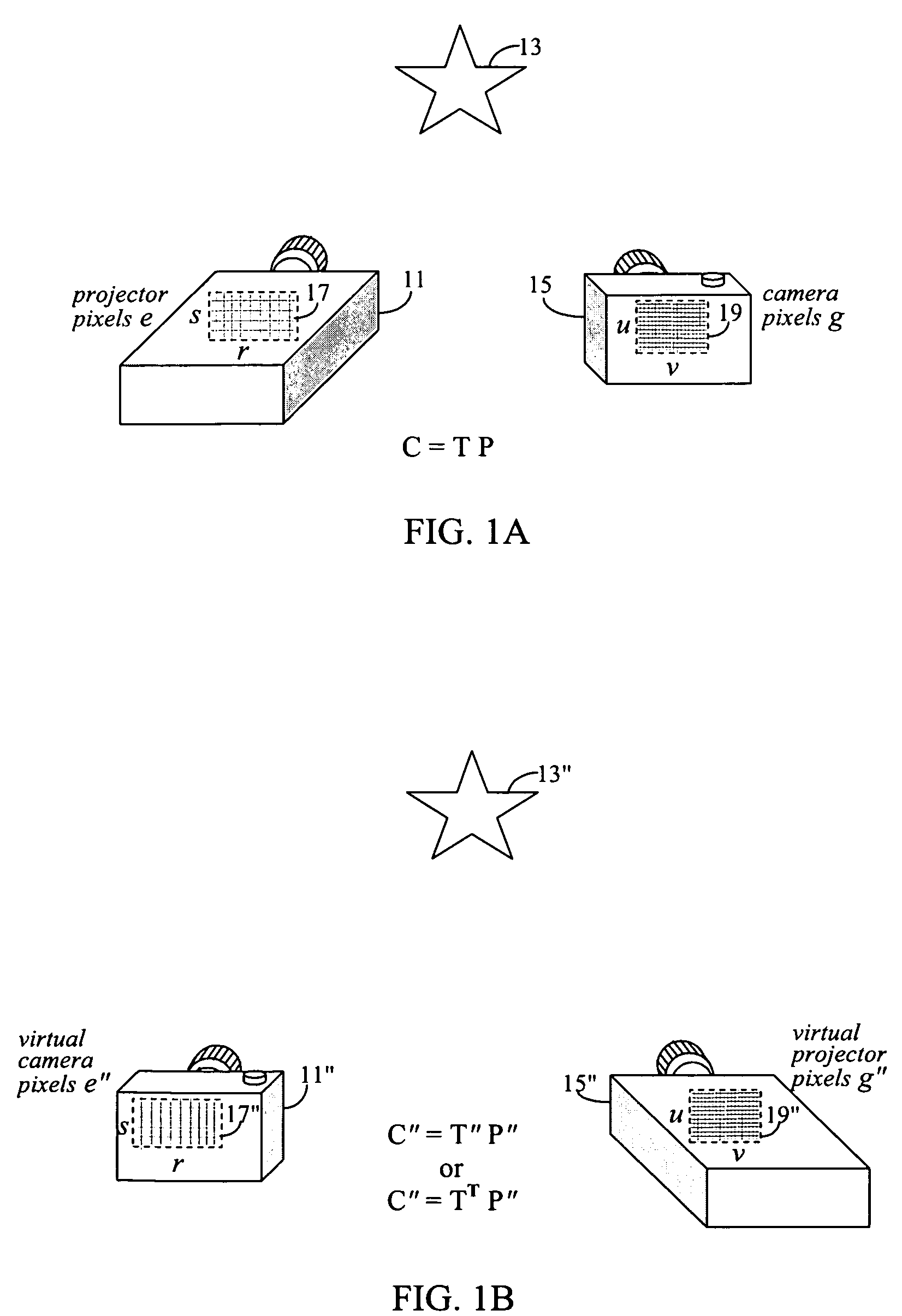

View projection matrix based high performance low latency display pipeline

Inactive | US8013904B2 | Easy to ship | Easy to implement | Television system details | Picture reproducers using projection devices | Hat matrix | Imaging processing

A projection system uses a transformation matrix to transform a projection image p so as to compensate for surface irregularities on a projection surface. The transformation matrix makes use of properties of light transport relating a projector to a camera. A display pipeline of user-supplied image-modification processing modules is reduced by first representing the processing modules as multiple individual matrix operations. All the matrix operations are then combined with (i.e., multiplied into) the transformation matrix to create a modified transformation matrix. The modified transformation matrix is then used in place of the original one to achieve, in a single step, both the image transformation and any pre- and post-processing defined by the image-modification processing modules.

Owner:SEIKO EPSON CORP
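The folding step in the abstract above works because matrix multiplication is associative. The following is a minimal sketch that assumes a flattened toy image, a random stand-in for the light-transport-derived transformation matrix, and two hypothetical linear modules (a brightness gain and a neighbour average). None of these are the patent's actual modules.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 16  # a toy 4x4 image, flattened to a 16-element vector

# Hypothetical stand-in for the light-transport-derived transformation matrix.
T = rng.random((n, n))

# Pre-processing module: uniform brightness gain of 1.2, written as a diagonal matrix.
pre = 1.2 * np.eye(n)

# Post-processing module: average each pixel with the next one (cyclically) in flattened order.
post = 0.5 * (np.eye(n) + np.roll(np.eye(n), 1, axis=1))

# Fold the whole pipeline into a single matrix once, ahead of time.
combined = post @ T @ pre

p = rng.random(n)                       # desired projection image, flattened
step_by_step = post @ (T @ (pre @ p))   # three matrix-vector products per frame
single_pass = combined @ p              # one matrix-vector product per frame
```

Only linear modules can be folded in this way. A nonlinear step such as gamma correction cannot be written as a matrix and would have to remain outside the combined matrix.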

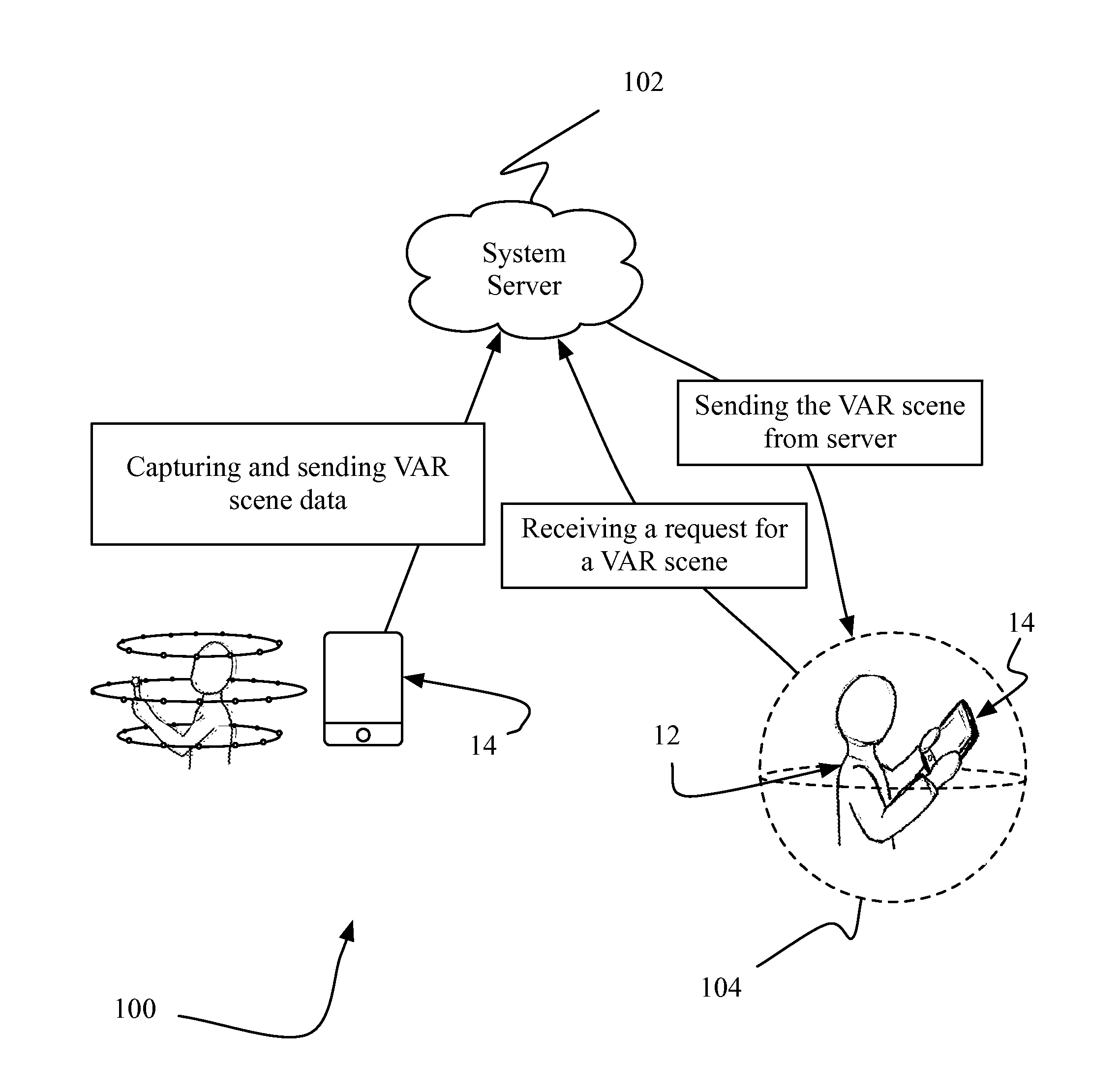

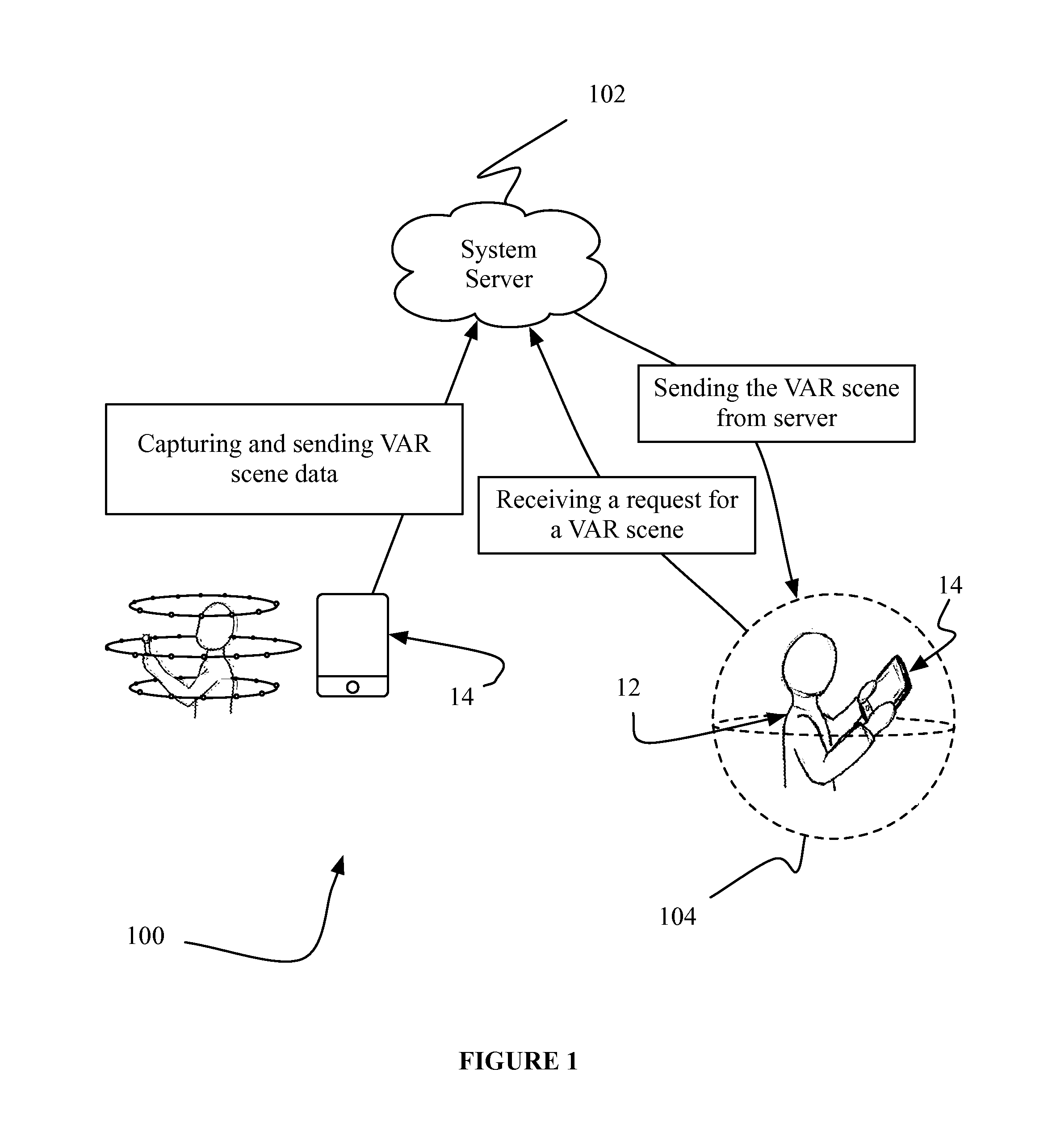

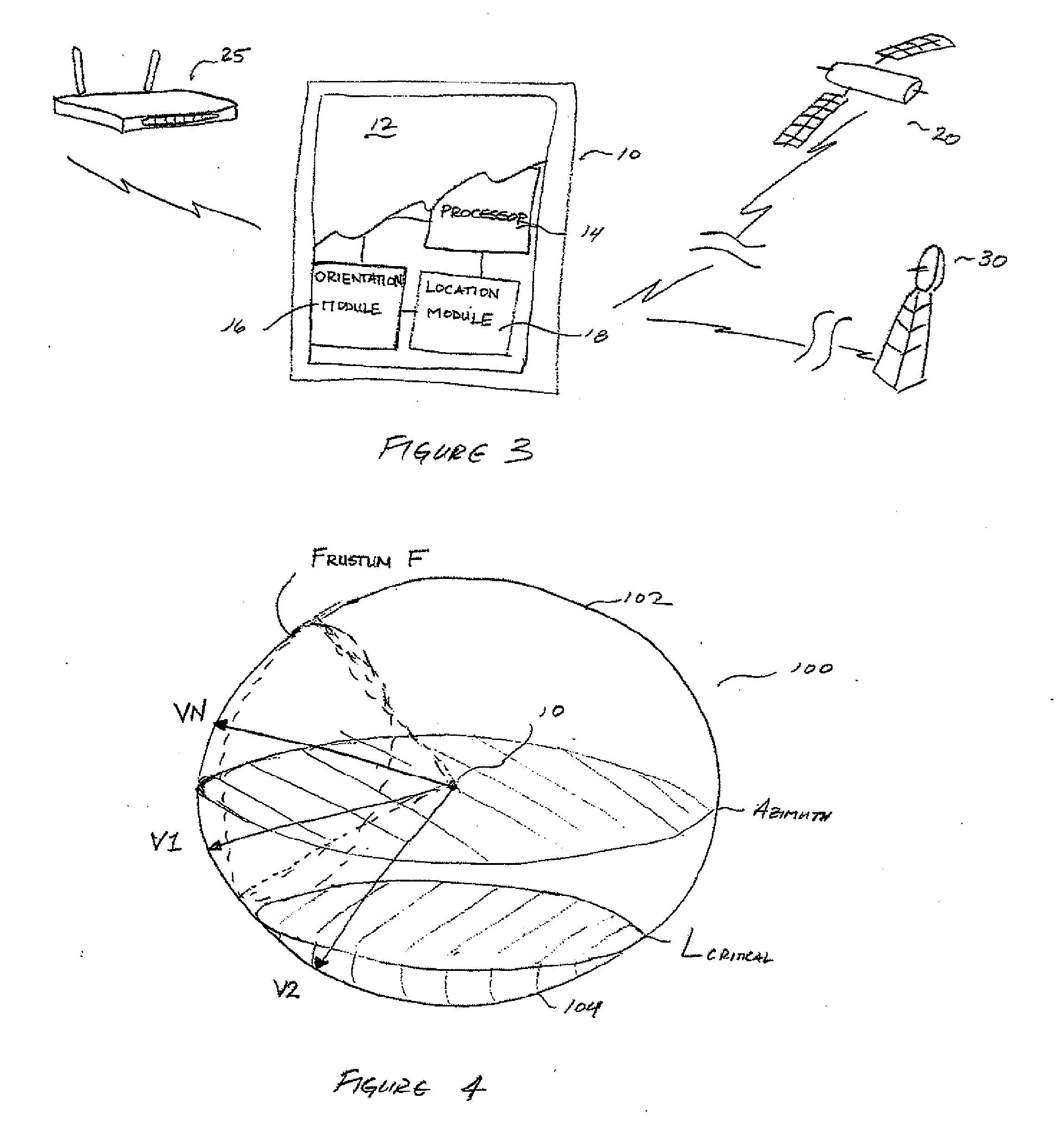

System and method for sharing virtual and augmented reality scenes between users and viewers

Active | US20120242798A1 | Television system details | Color television details | Hat matrix | Computer graphics (images)

A preferred method for sharing user-generated virtual and augmented reality scenes can include receiving at a server a virtual and/or augmented reality (VAR) scene generated by a user mobile device. Preferably, the VAR scene includes visual data and orientation data, which includes a real orientation of the user mobile device relative to a projection matrix. The preferred method can also include compositing the visual data and the orientation data into a viewable VAR scene; locally storing the viewable VAR scene at the server; and in response to a request received at the server, distributing the processed VAR scene to a viewer mobile device.

Owner:ARIA GLASSWORKS

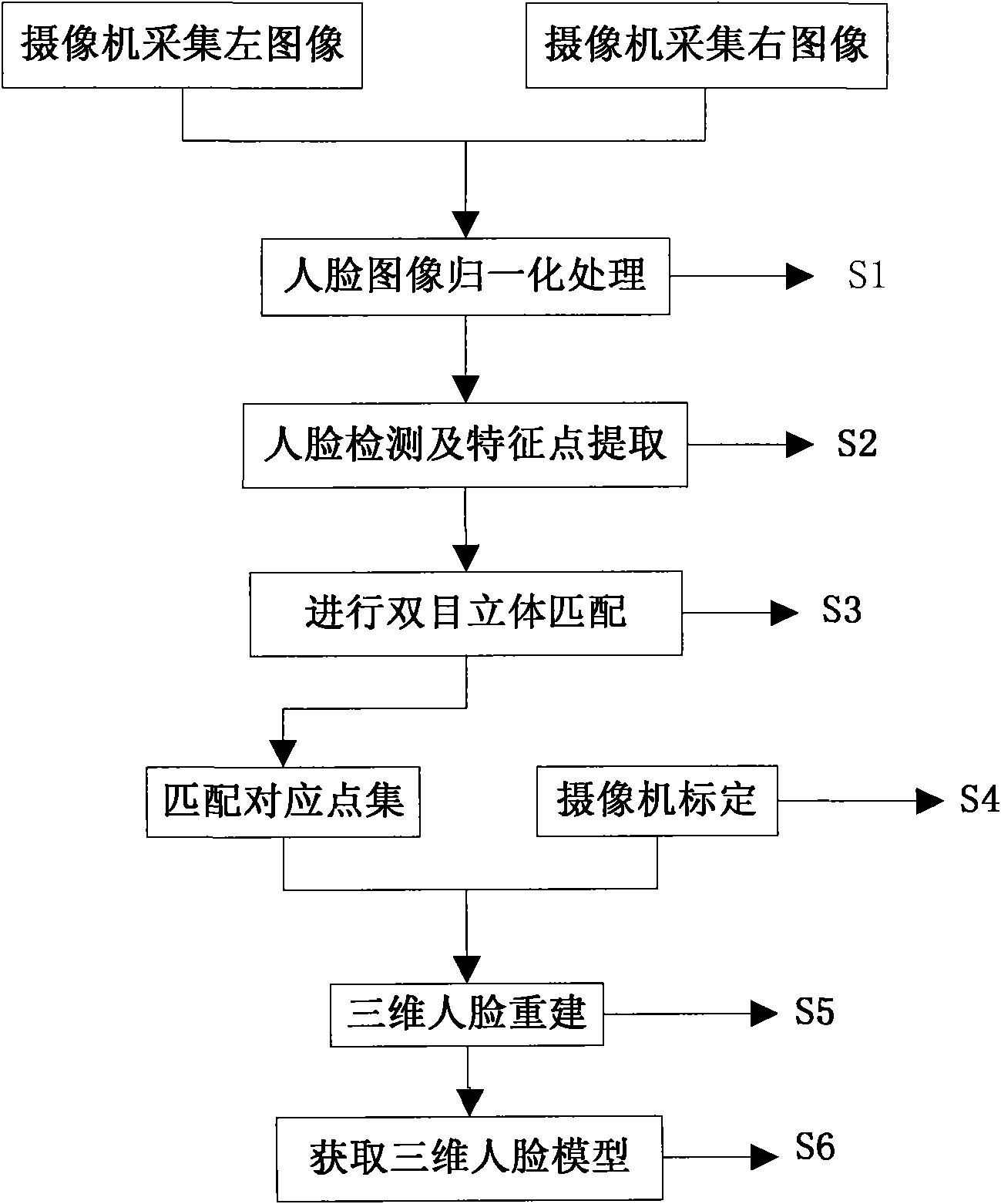

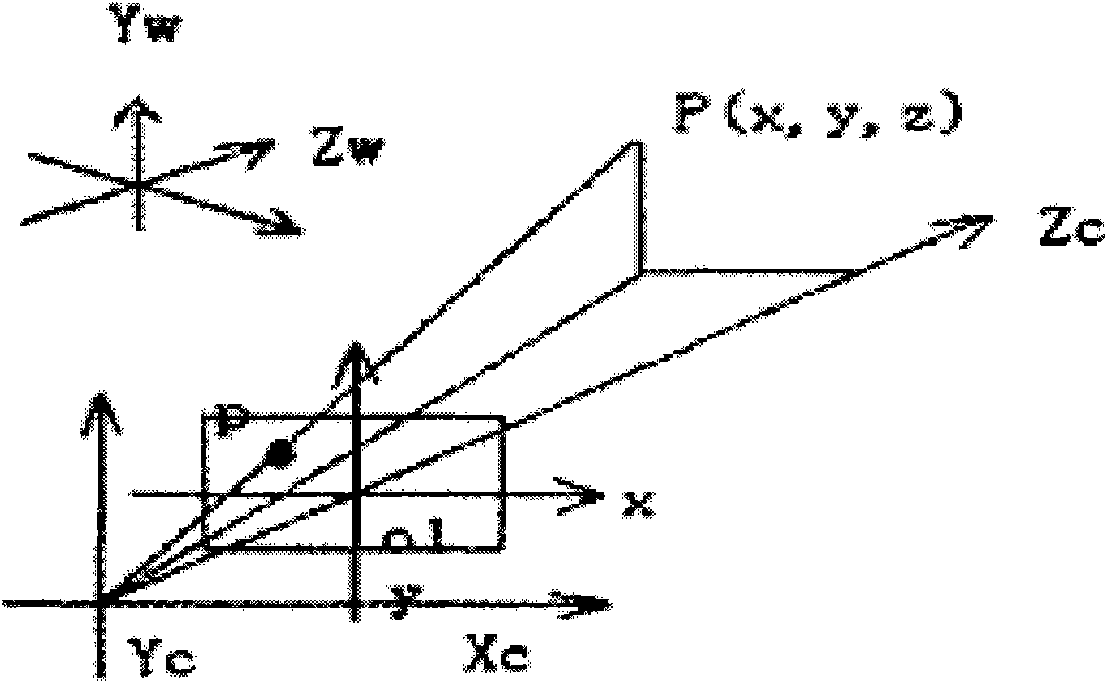

Binocular stereo vision based intelligent three-dimensional human face rebuilding method and system

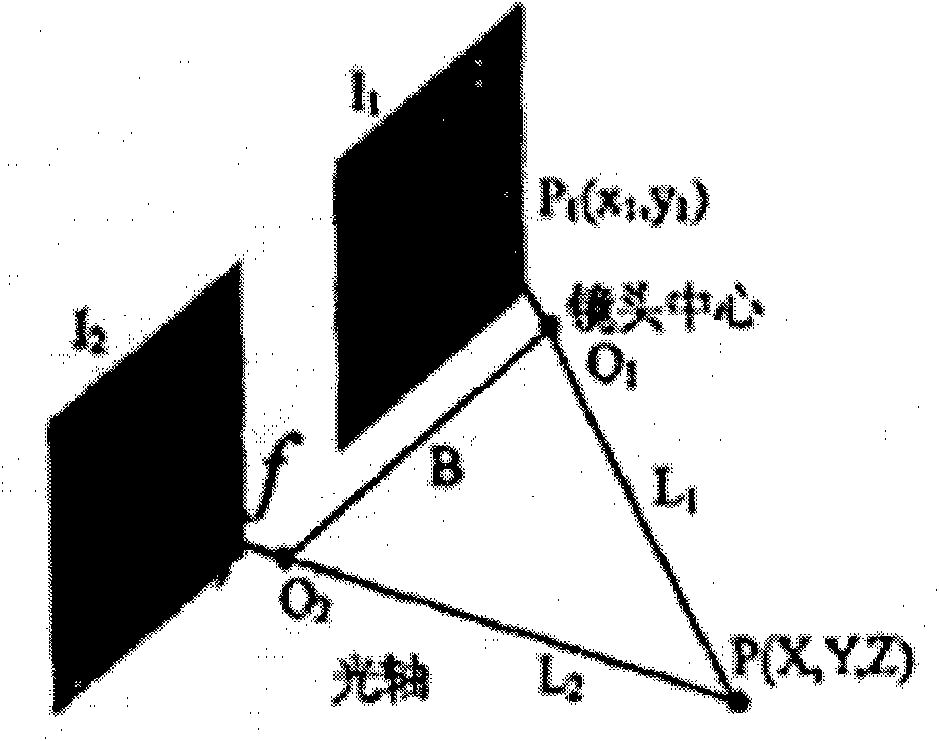

The invention discloses a binocular stereo vision based intelligent three-dimensional face reconstruction method and system. The method comprises: carrying out preprocessing operations, including image normalization, brightness normalization and image correction, on a face image; obtaining the face region in the preprocessed image and extracting facial feature points; computing the projection matrix to obtain the intrinsic and extrinsic parameters of the cameras (camera calibration); based on the facial feature points, extending gray-level cross-correlation matching operators to color information, and computing a disparity map by stereo matching under constraints including the epipolar constraint, the face-region constraint and facial geometric conditions; and computing the three-dimensional coordinates of a scattered point cloud of the face from the camera calibration result and the disparity map, so as to generate a three-dimensional face model. With these steps, the invention reconstructs a smoother and more realistic three-dimensional face model.

Owner:BEIJING JIAOTONG UNIV
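Recovering 3D points from two calibrated cameras is usually done by linear (DLT) triangulation. The sketch below illustrates that generic technique and does not reproduce the patent's own reconstruction step. It assumes a hypothetical rectified stereo rig with identical intrinsics and a 0.1 m baseline.

```python
import numpy as np

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen at pixel x1 in camera 1 and x2 in camera 2."""
    # Each view contributes two rows from u * (p3 . X) - p1 . X = 0 and v * (p3 . X) - p2 . X = 0.
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The homogeneous 3D point is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point with a 3x4 projection matrix; returns pixel (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical rectified rig: same intrinsics K, second camera shifted 0.1 m along x.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

X_true = np.array([0.05, -0.02, 1.5])
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
```

For a rectified pair like this one, depth also satisfies Z = f·B/d, where d = u1 − u2 is the horizontal disparity. This relation is what converts a disparity map into depth.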

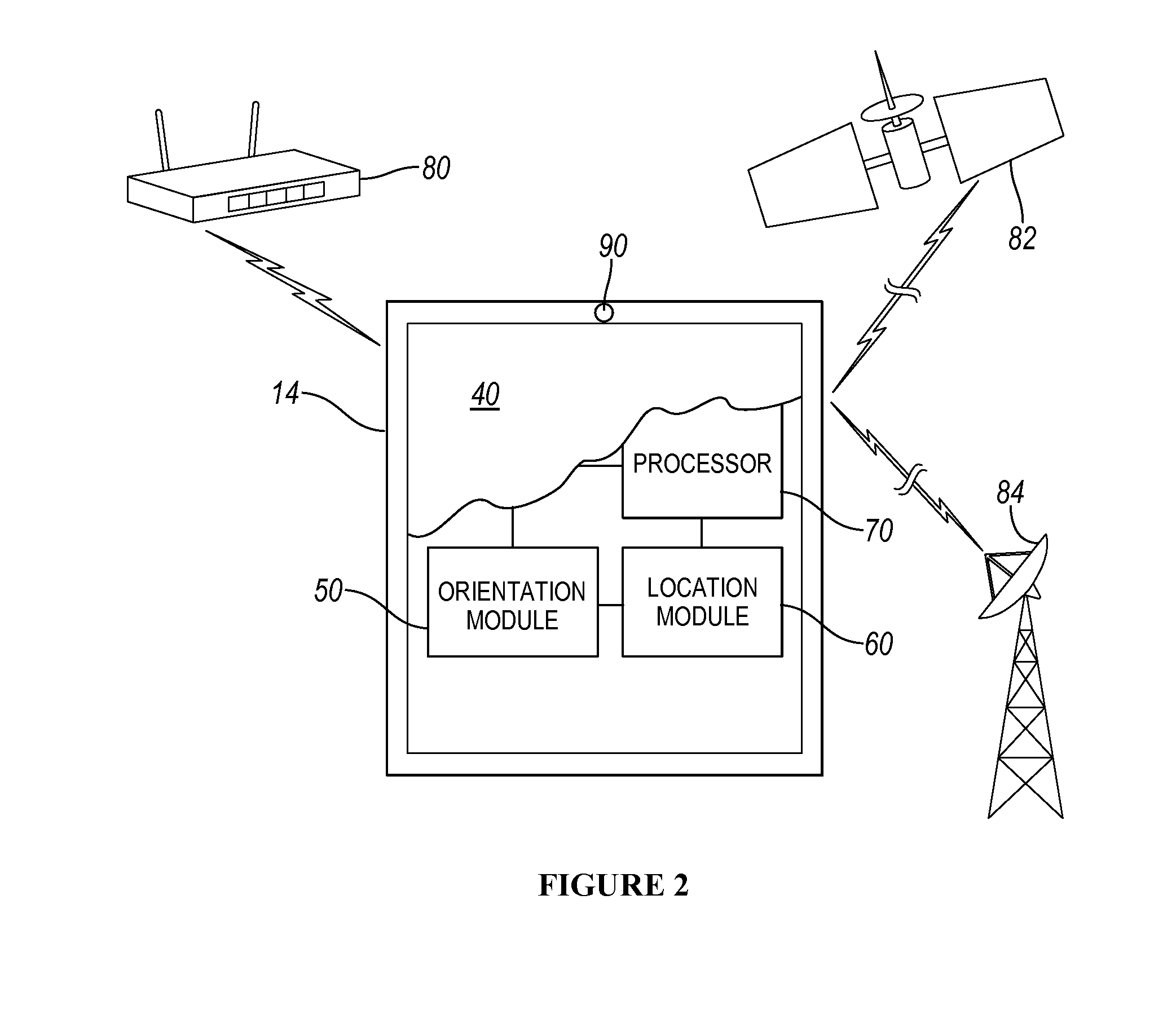

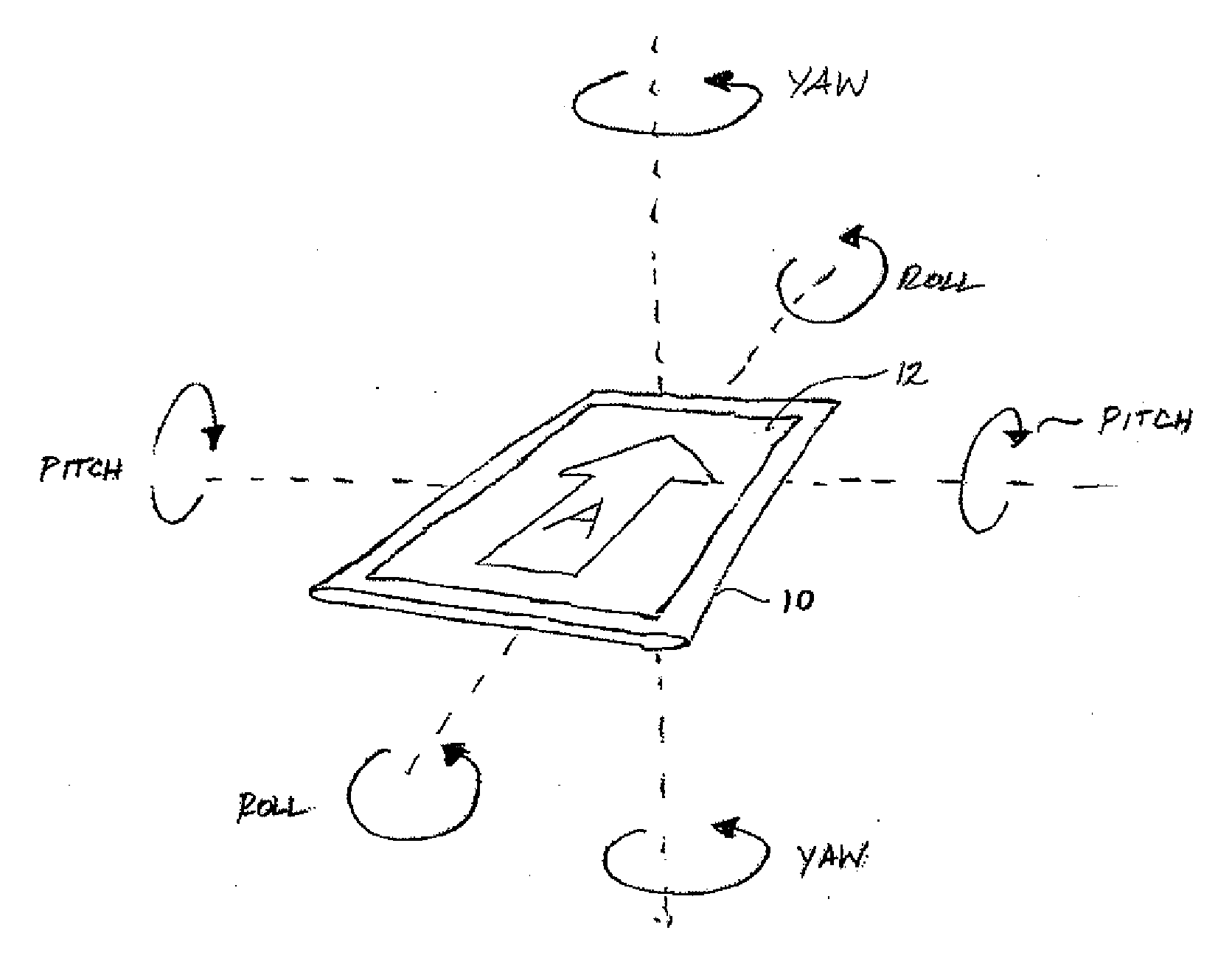

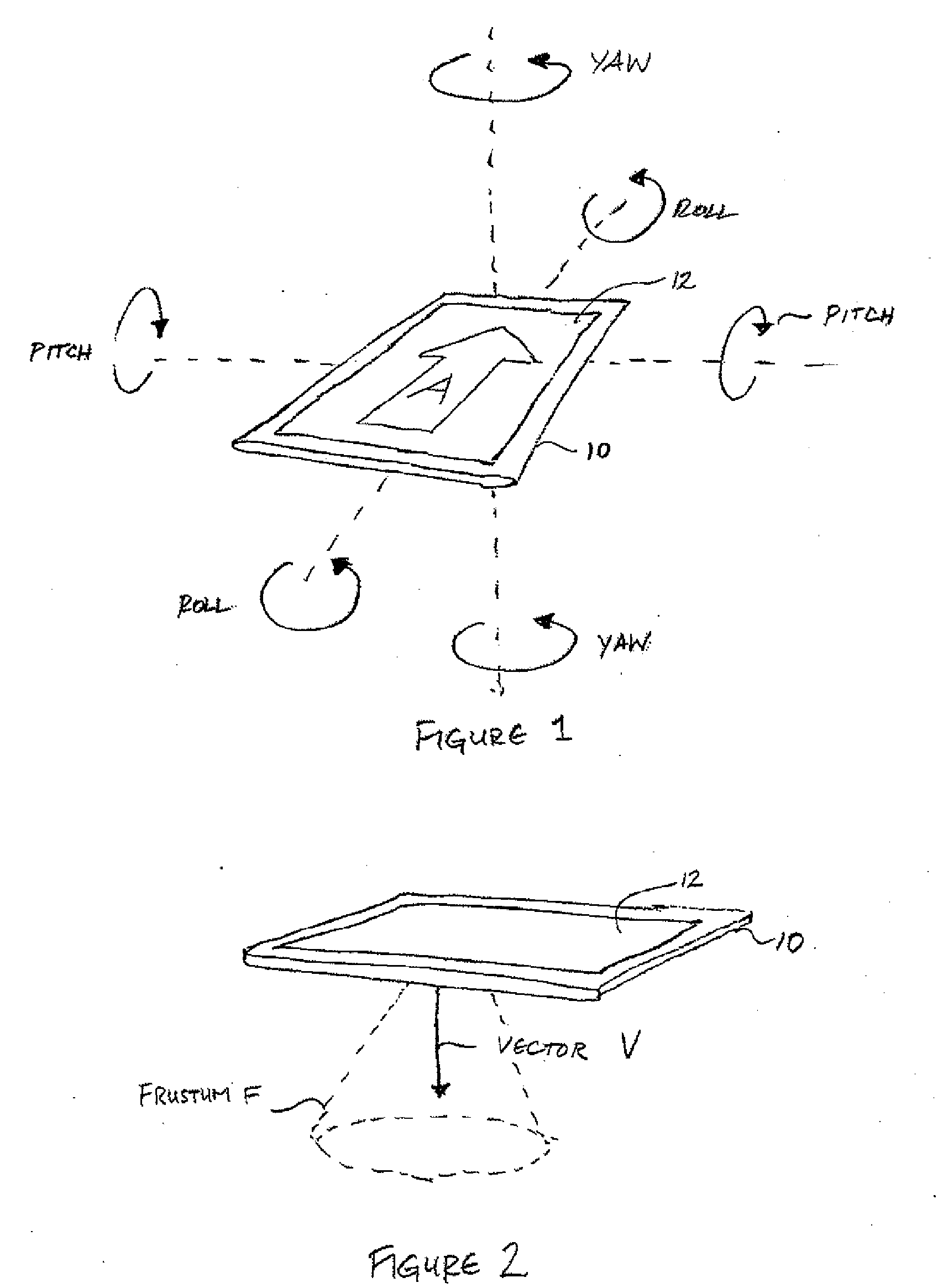

System and method for presenting virtual and augmented reality scenes to a user

Inactive | US20120212405A1 | Improve usability | Cathode-ray tube indicators | Input/output processes for data processing | Hat matrix | Computer graphics (images)

A method according to a preferred embodiment can include providing an embeddable interface for a virtual or augmented reality scene, determining a real orientation of a viewer representative of a viewing orientation relative to a projection matrix, and determining a user orientation of a viewer representative of a viewing orientation relative to a nodal point. The method of the preferred embodiment can further include orienting the scene within the embeddable interface and displaying the scene within the embeddable interface on a device.

Owner:ARIA GLASSWORKS

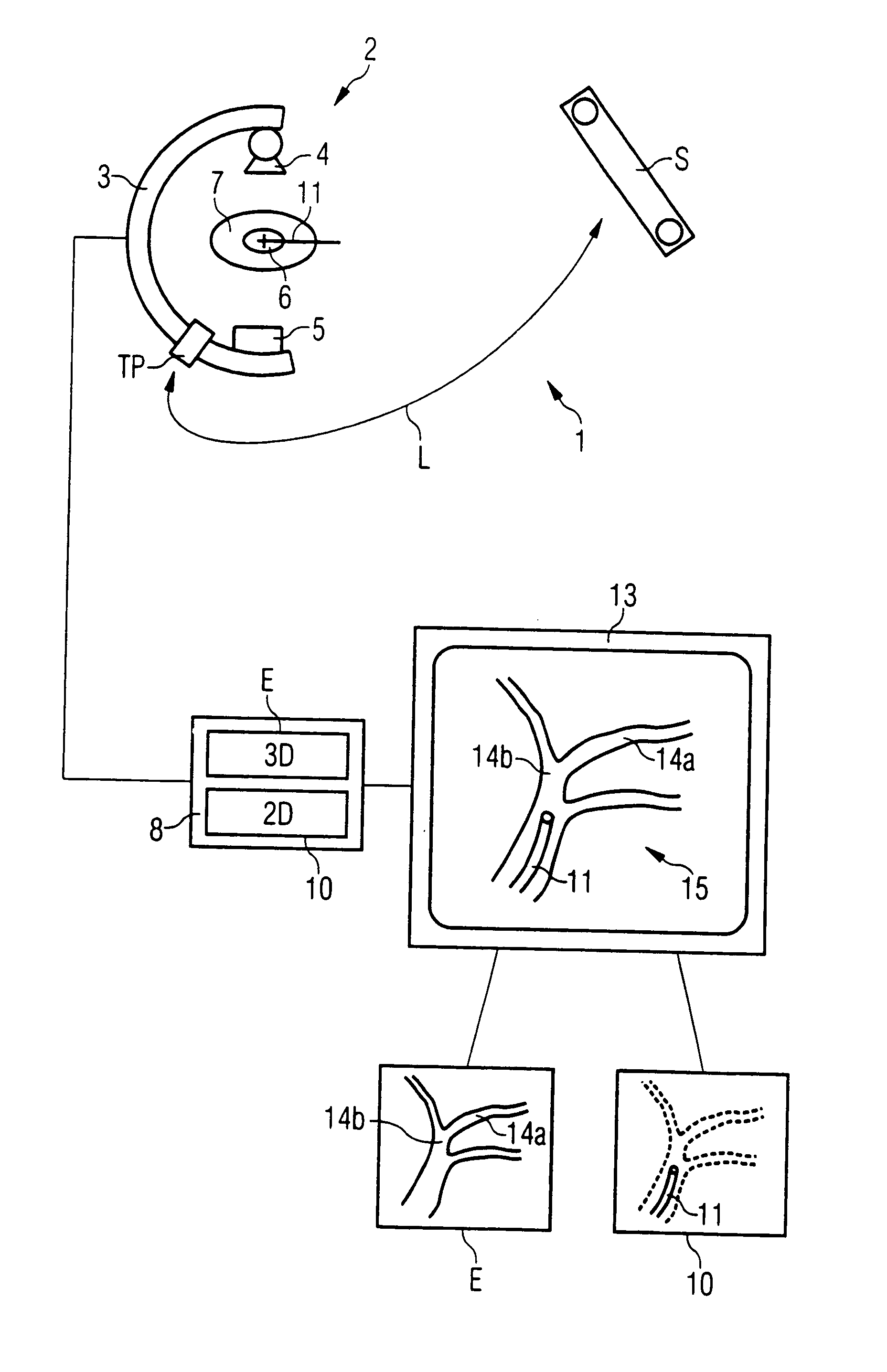

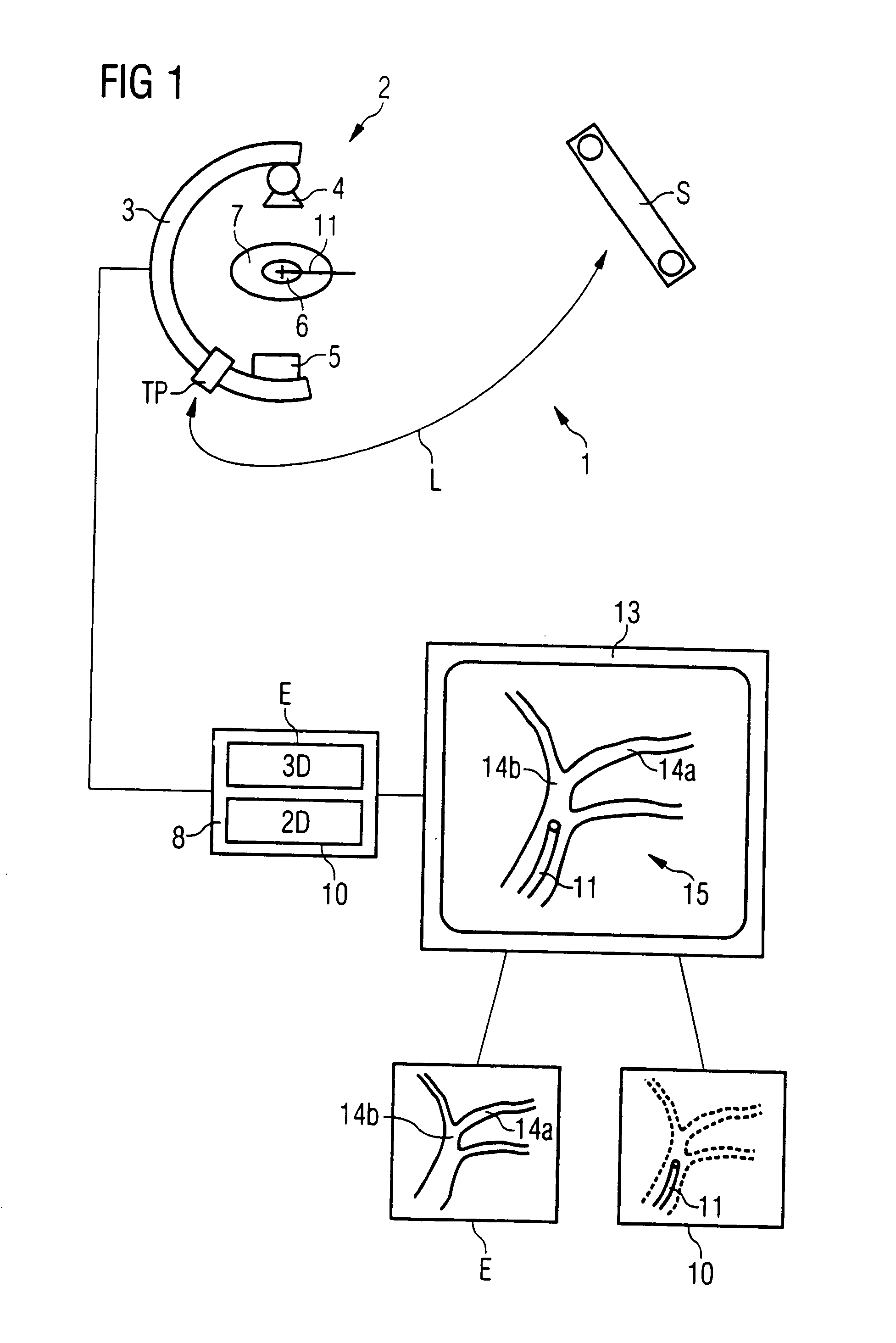

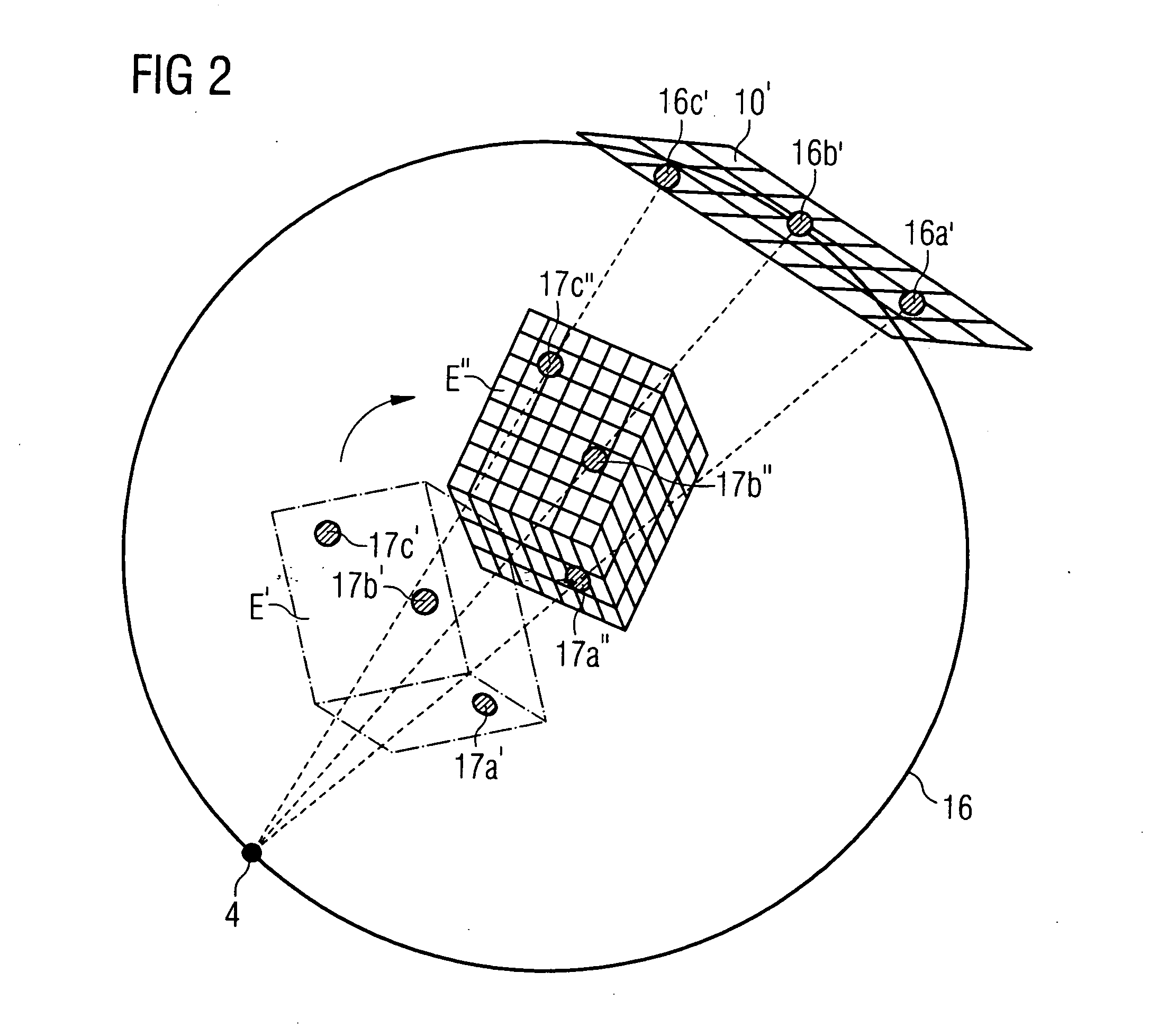

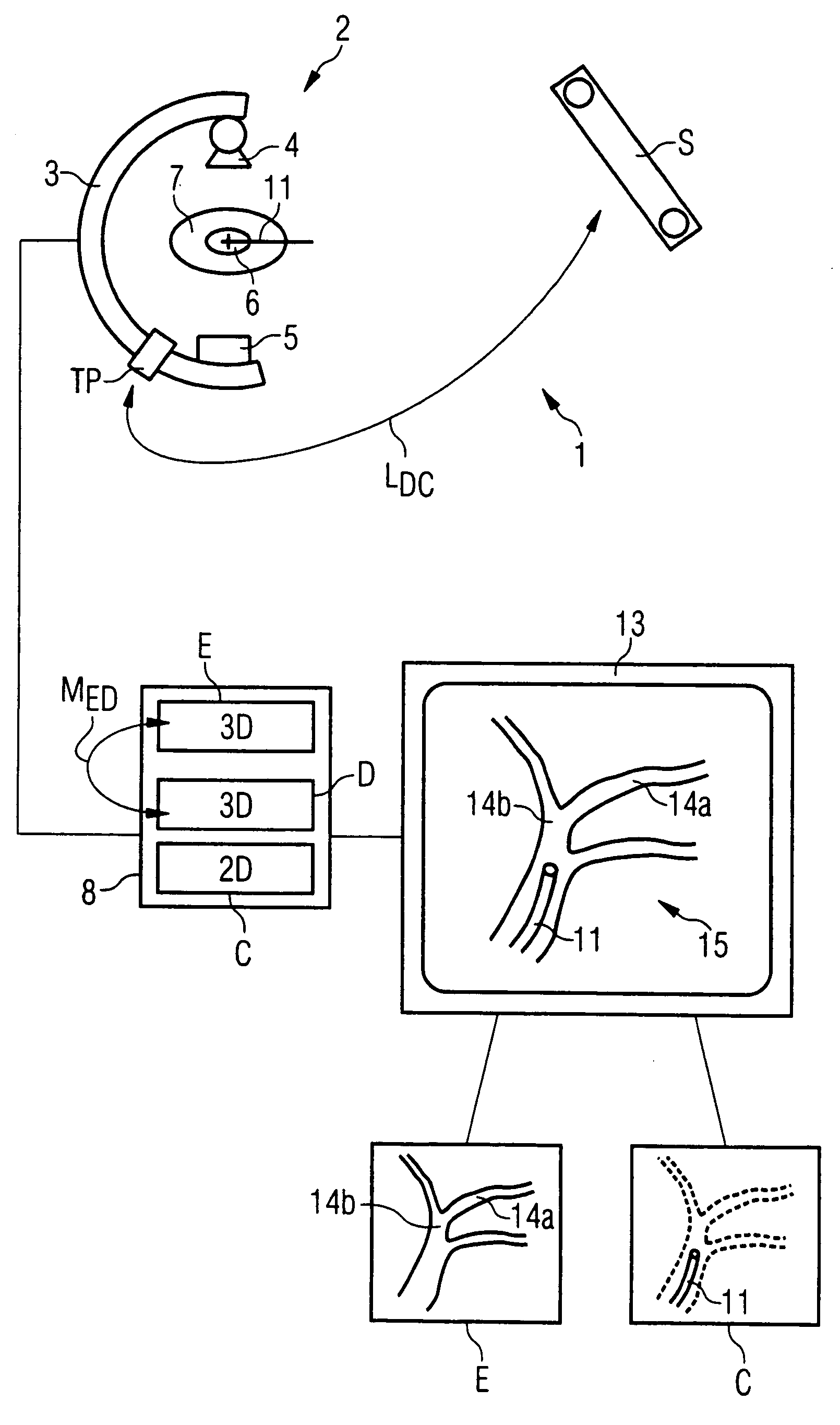

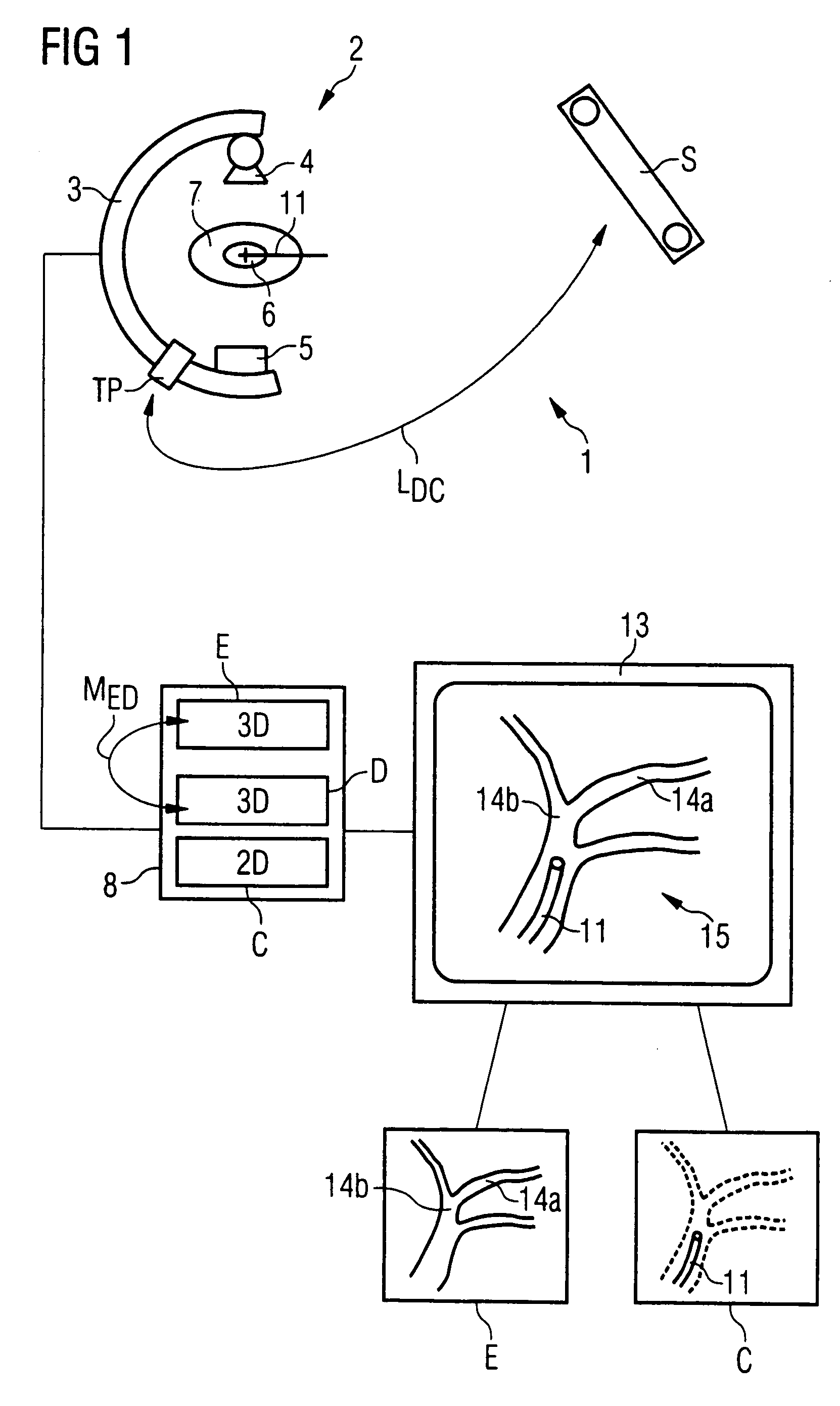

Method for automatically merging a 2D fluoroscopic C-arm image with a preoperative 3D image with one-time use of navigation markers

In a method and apparatus for the automatic merging of 2D fluoroscopic C-arm images with preoperative 3D images with a one-time use of navigation markers, markers in a marker-containing preoperative 3D image are registered relative to a navigation system, a tool plate fixed on the C-arm system is registered in a reference position relative to the navigation system, a 2D C-arm image (2D fluoroscopic image) that contains the image of at least one medical instrument is obtained in an arbitrary C-arm position, a projection matrix for a 2D-3D merge is determined on the basis of the tool plate and the reference position relative to the navigation system, and the 2D fluoroscopic image is superimposed with the 3D image on the basis of the projection matrix.

Owner:SIEMENS AG

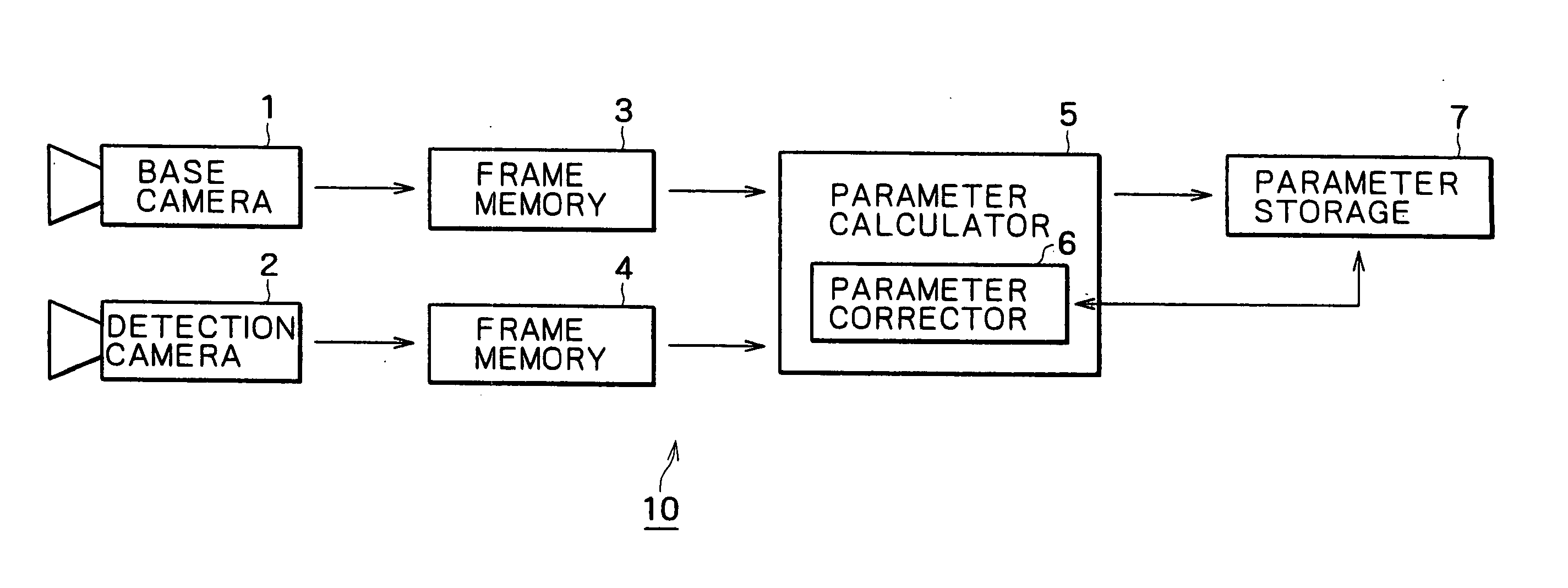

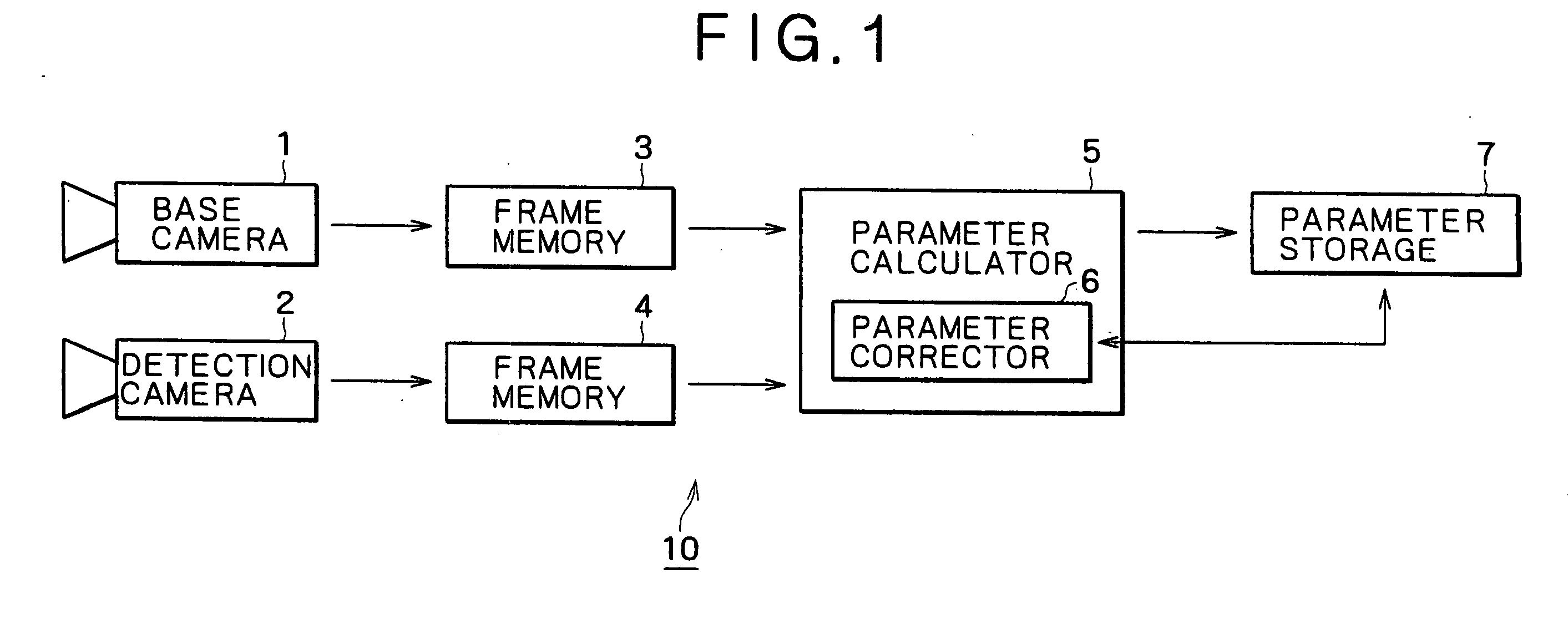

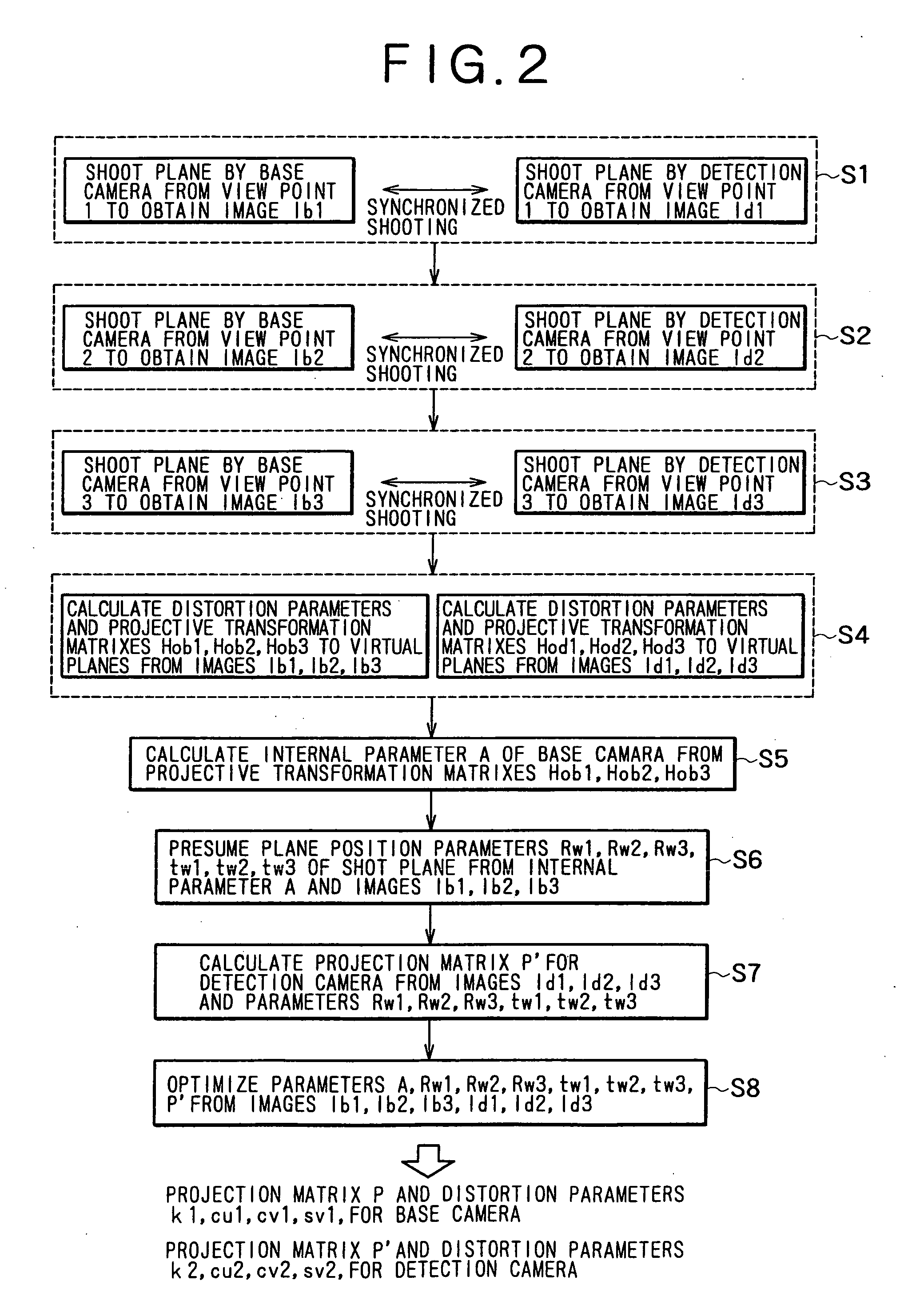

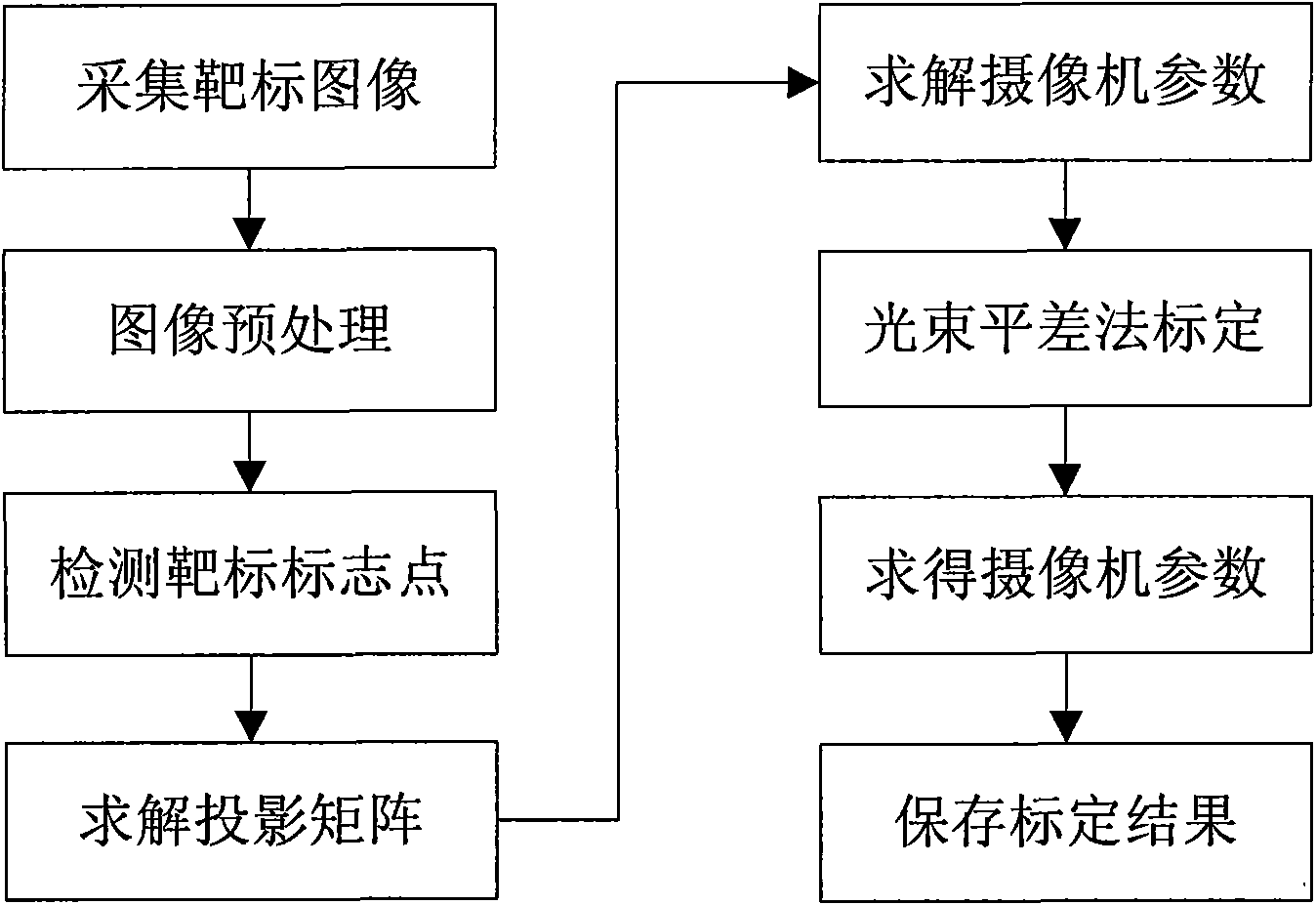

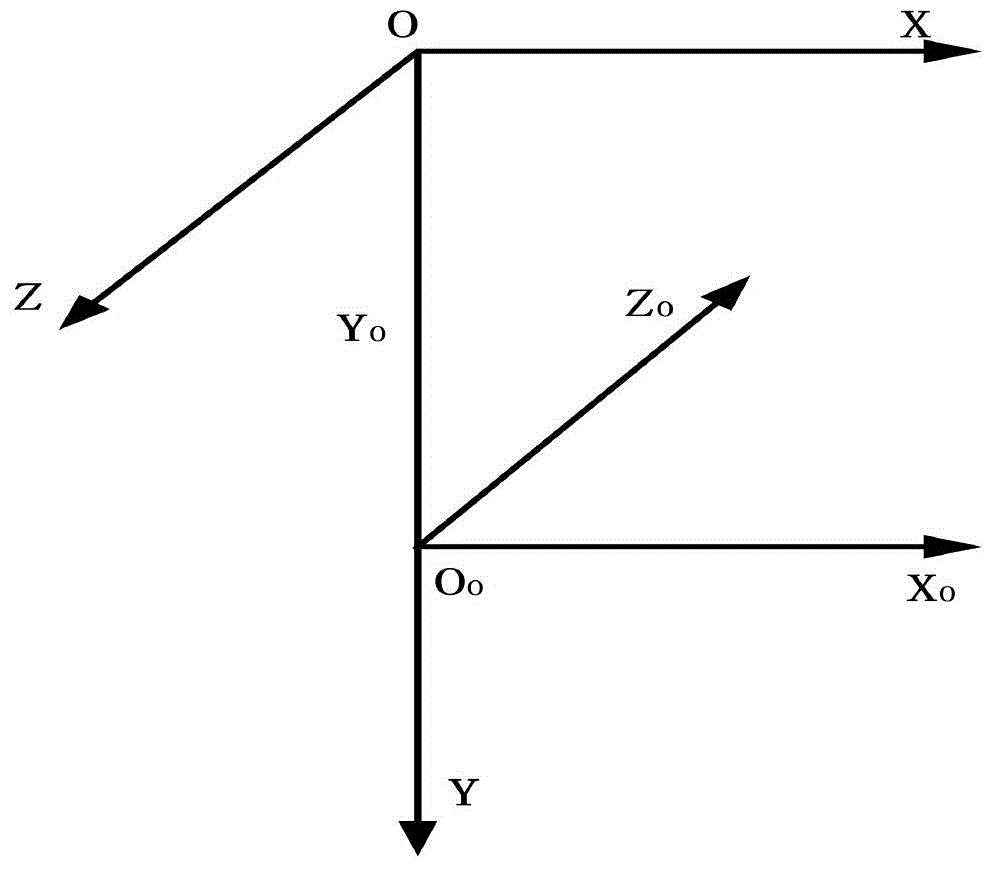

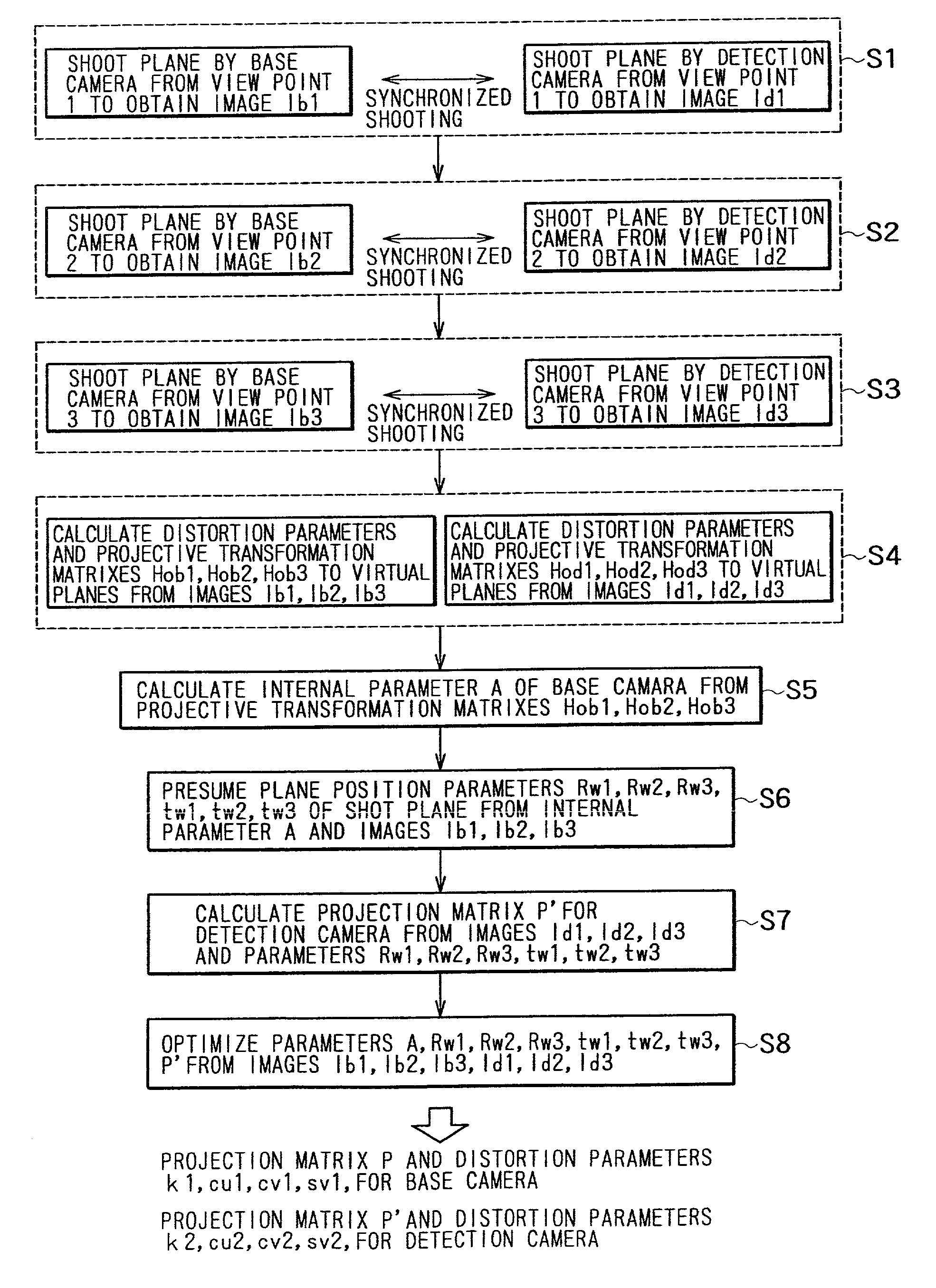

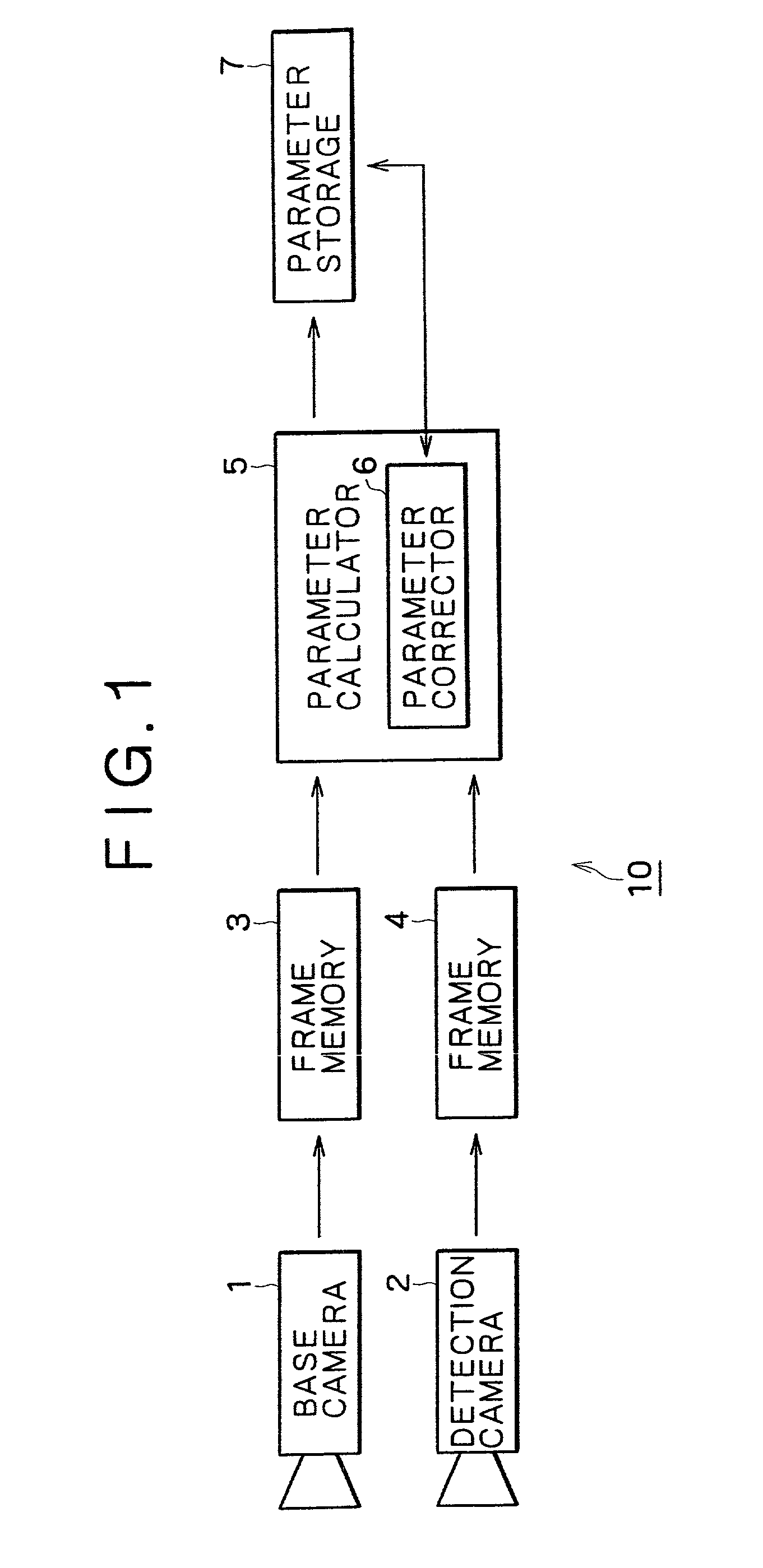

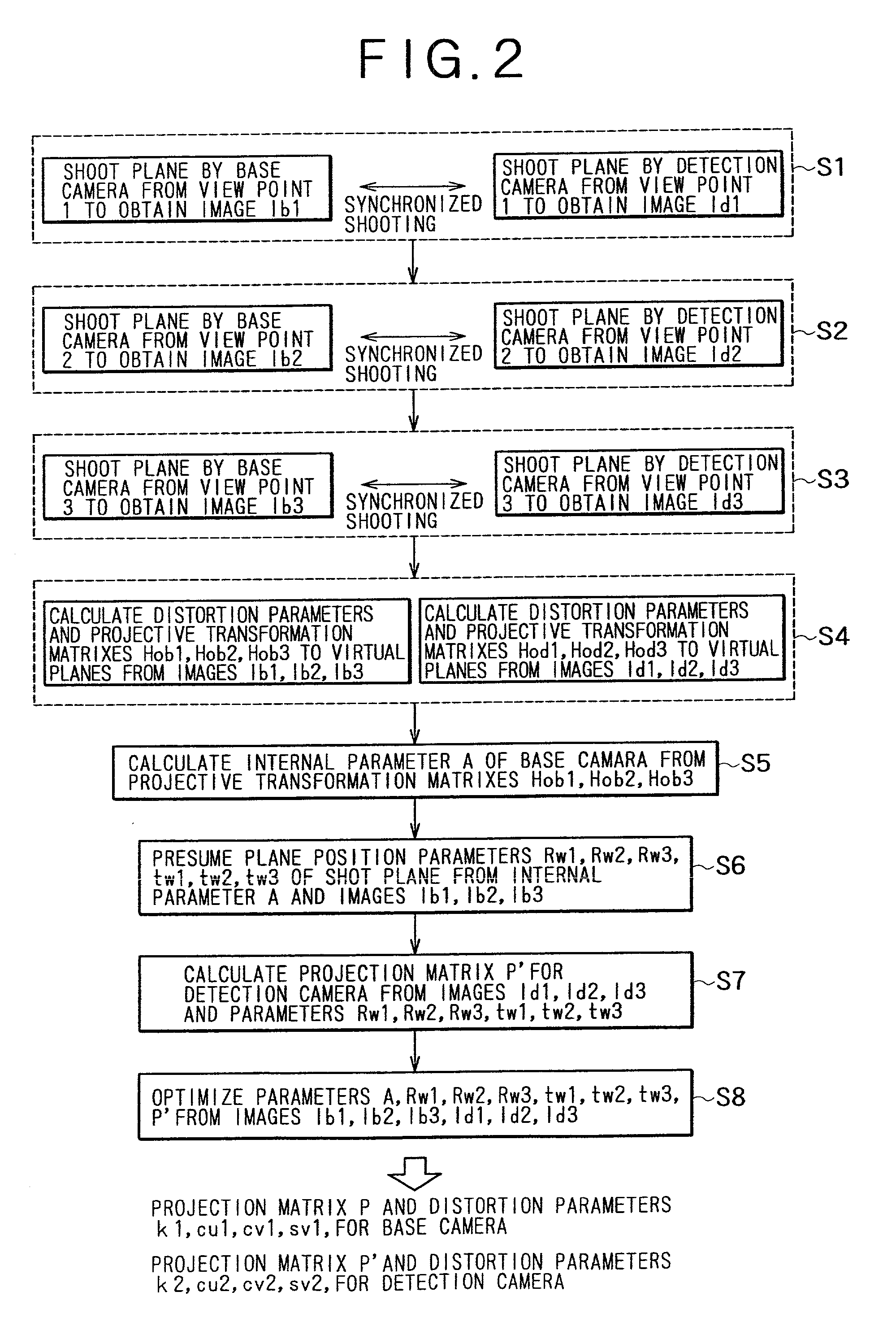

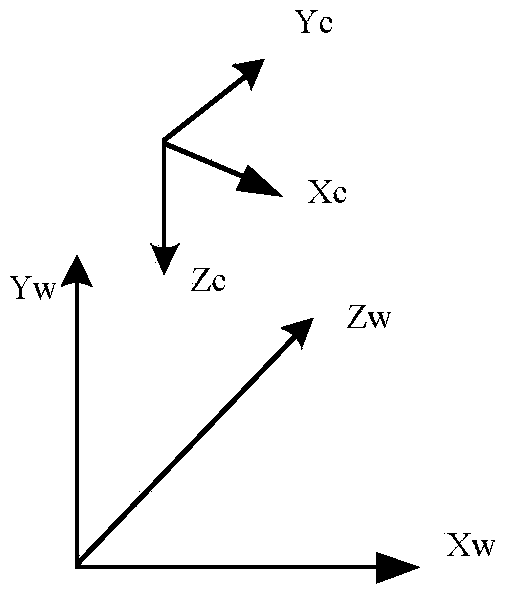

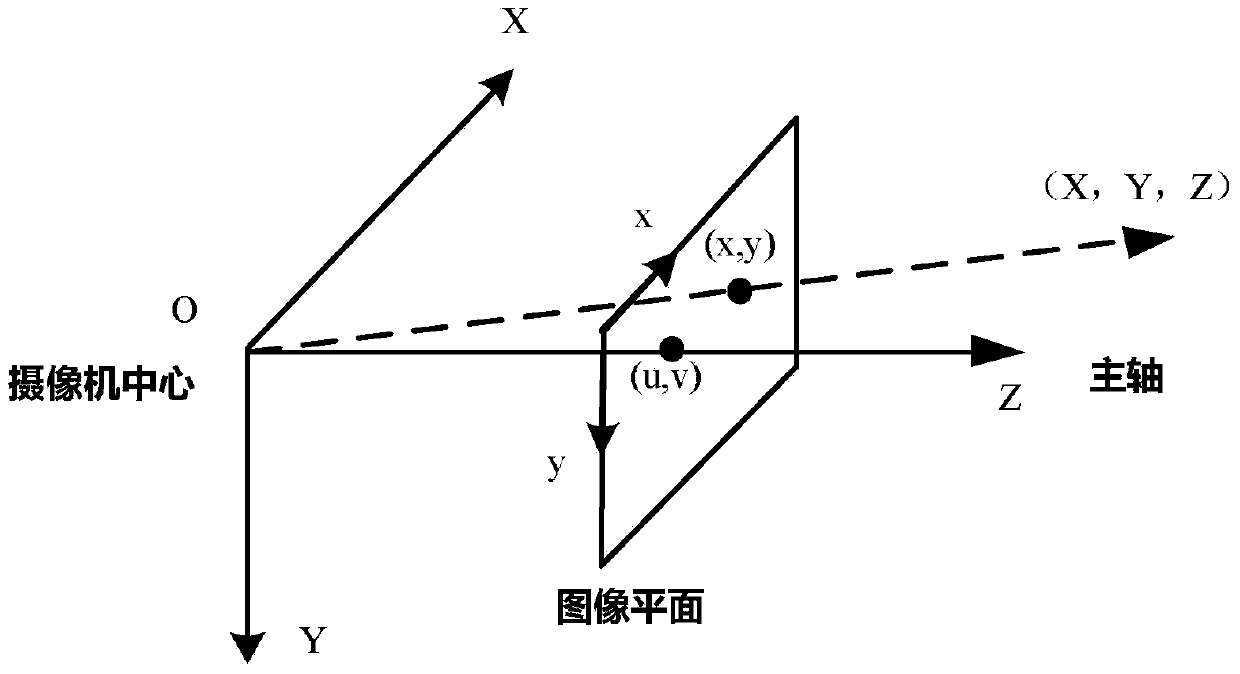

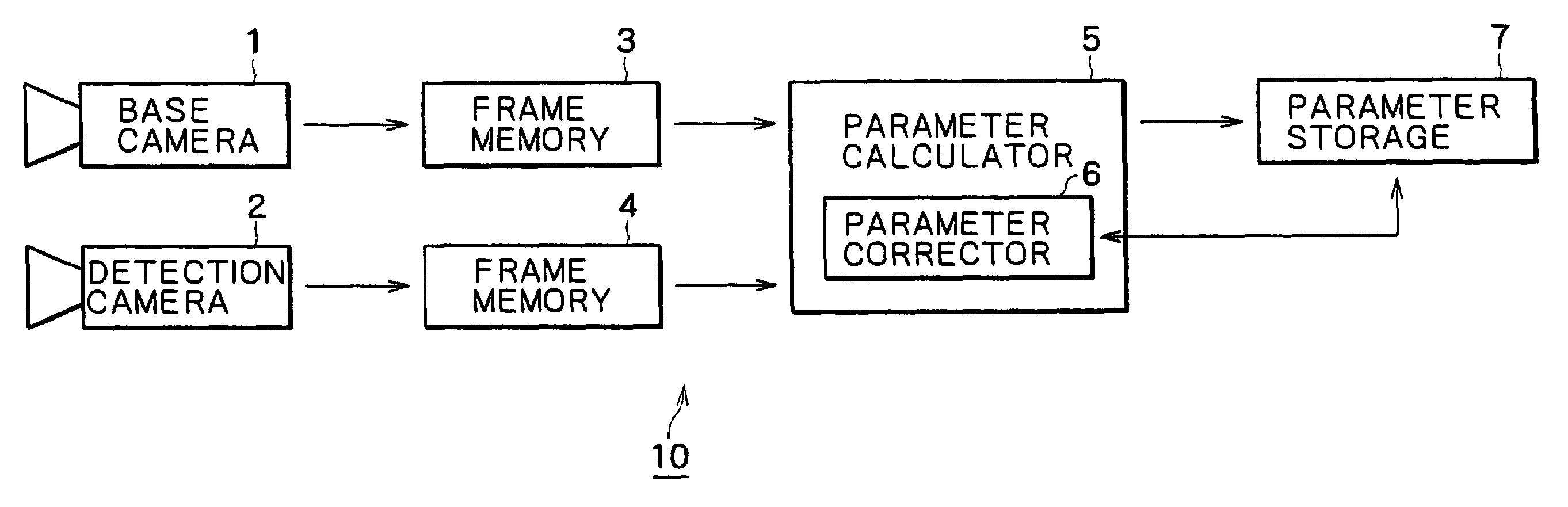

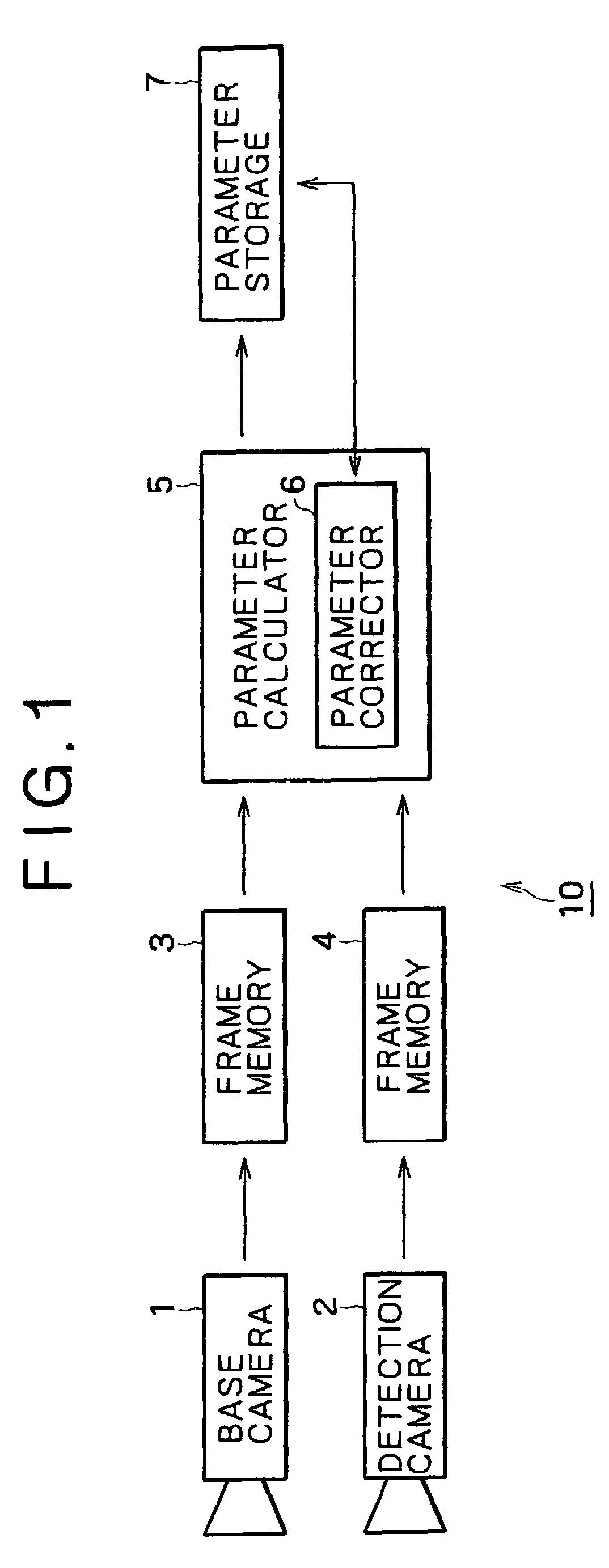

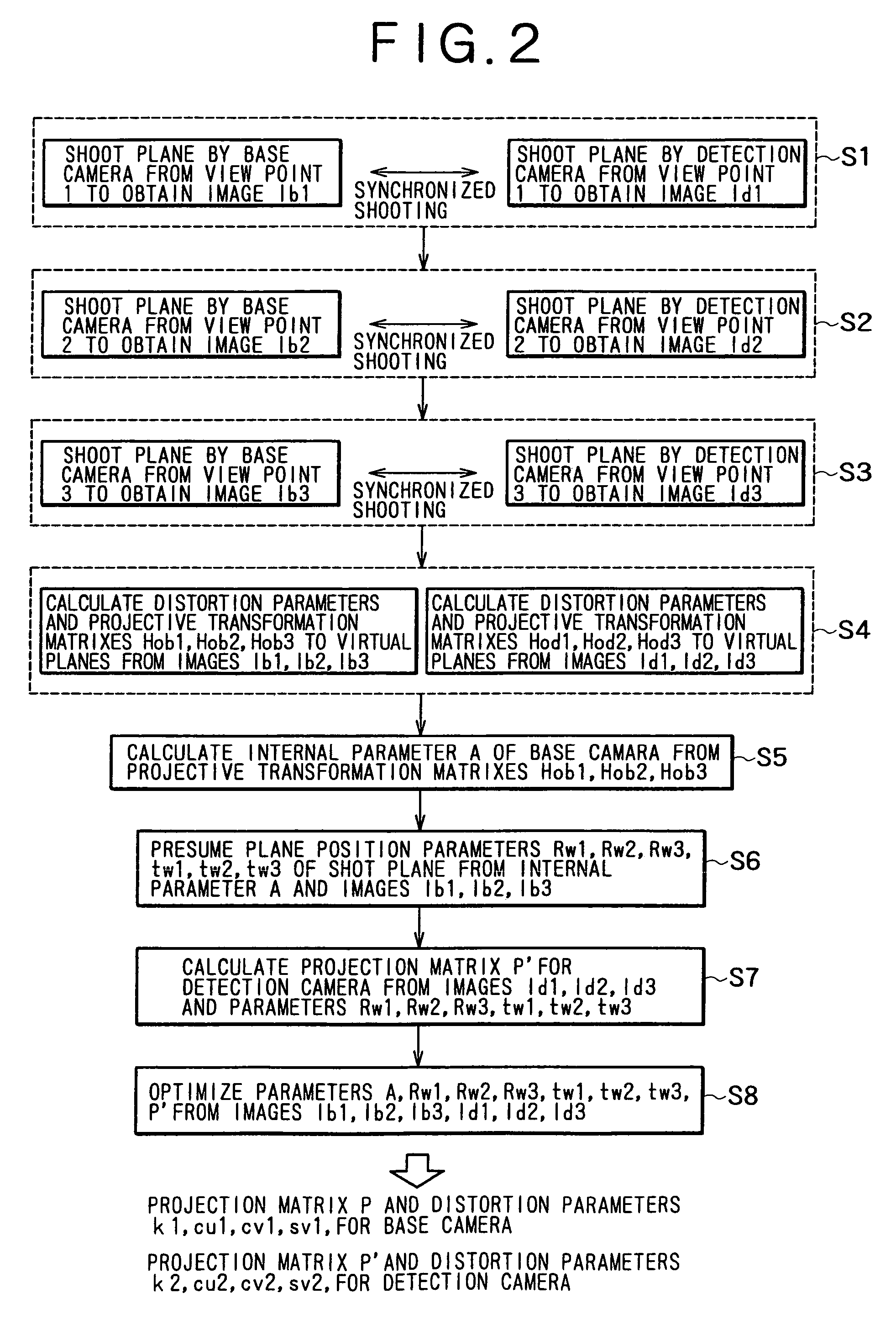

Camera calibration device and method, and computer system

Inactive | US20050185049A1 | Easy Calibration | Easy to limit | Image analysis | Optical rangefinders | Hat matrix | Camera lens

A camera calibration device capable of simply calibrating a stereo system consisting of a base camera and a detection camera. First, distortion parameters of the two cameras necessary for distance measurement are presumed by the use of images obtained by shooting a patterned object plane with the base camera and the reference camera at three or more view points free from any spatial positional restriction, and projective transformation matrixes for projecting the images respectively onto predetermined virtual planes are calculated. Then internal parameters of the base camera are calculated on the basis of the projective transformation matrixes relative to the images obtained from the base camera. Subsequently the position of the shot plane is presumed on the basis of the internal parameter of the base camera and the images obtained therefrom, whereby projection matrixes for the detection camera are calculated on the basis of the plane position parameters and the images obtained from the detection camera. According to this device, simplified calibration can be achieved stably without the necessity of any exclusive appliance.

Owner:SONY CORP
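The "projective transformation matrices" between a planar pattern and its image are homographies. A common way to estimate them is the direct linear transform (DLT) over four or more point pairs, which is also the first step of Zhang-style planar calibration. The sketch below shows that generic step only, not Sony's procedure. It uses noise-free synthetic data and a made-up homography. With real pixel data, the points should first be normalized (translated and scaled, as Hartley recommends) for numerical stability; that step is omitted here.

```python
import numpy as np

def estimate_homography(src, dst):
    """DLT estimate of H (3x3) with dst ~ H @ src, from N >= 4 point pairs (Nx2 arrays)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # h is the right singular vector for the smallest singular value of the 2N x 9 system.
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    H = Vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map Nx2 points through H and dehomogenize."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

# Synthetic check: a 3x3 grid on the pattern plane viewed through a made-up homography.
H_true = np.array([[1.2, 0.1, 5.0], [0.05, 0.9, -3.0], [0.001, 0.002, 1.0]])
grid = np.array([[i, j] for i in range(3) for j in range(3)], dtype=float) * 10.0
H_est = estimate_homography(grid, apply_homography(H_true, grid))
```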

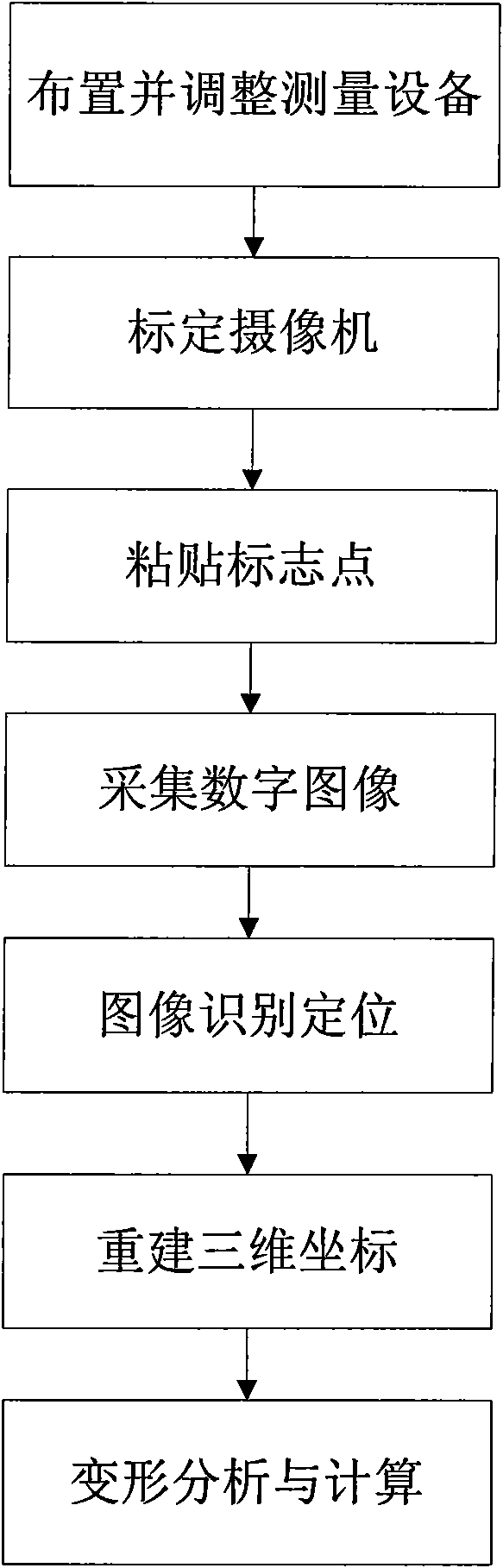

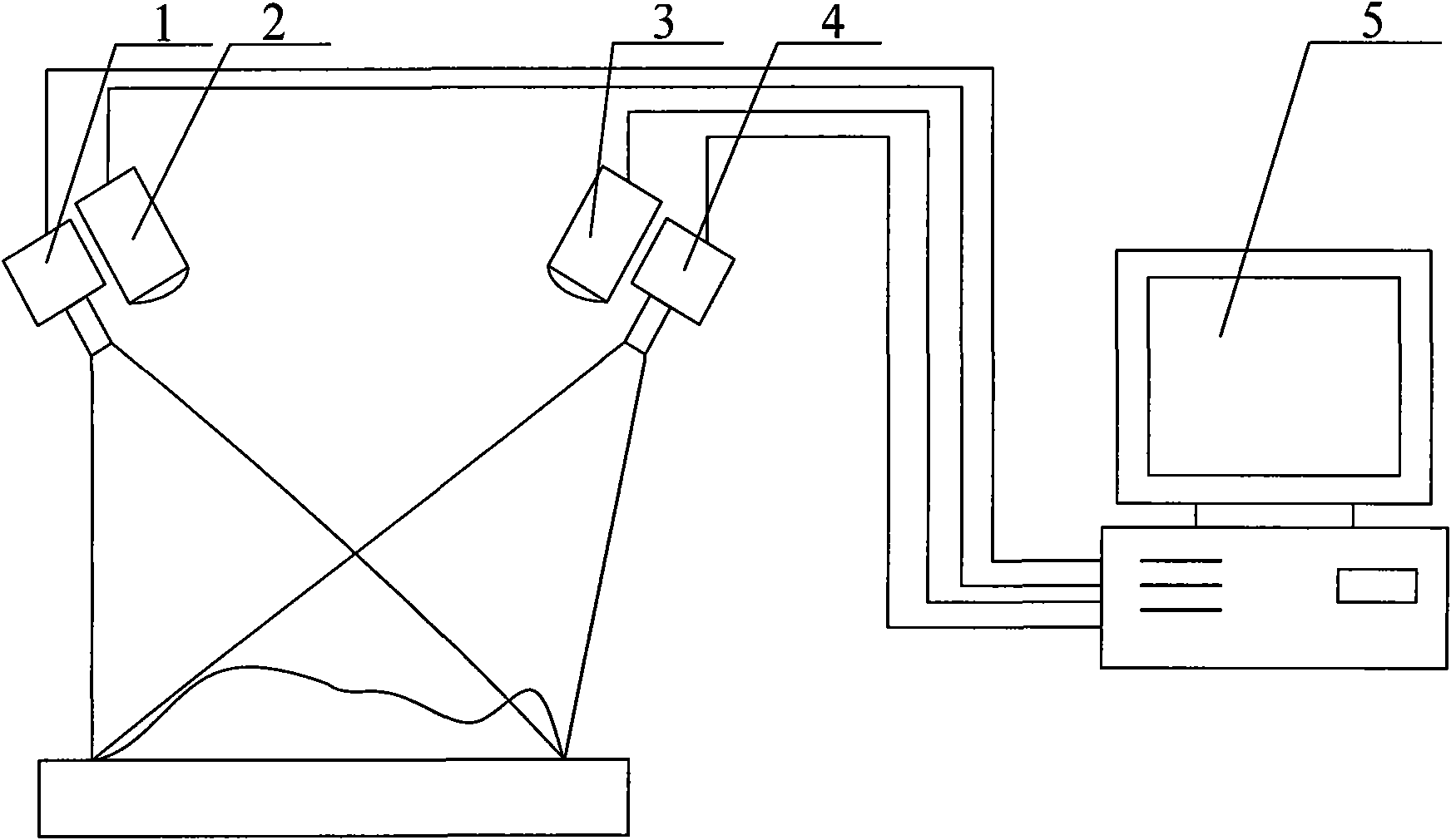

Method for measuring object deformation in real time

The invention discloses a method for measuring object deformation in real time, comprising the following steps. First, the measuring equipment is arranged and adjusted: two cameras and corresponding LED illumination lamps are placed symmetrically above the measured object, so that the optical axes of the two cameras intersect about 1 meter in front of the cameras. Then the cameras are calibrated, and marker points are firmly attached to the surface of the object according to its size and shape and the measurement requirements. Next, the marked object is placed under the two cameras at about 1 meter, or the whole measuring equipment is moved so that the object enters the measurement range and each camera can capture the marker points on its surface; the two cameras are started to shoot image sequences and acquire digital images. The markers are then identified and located in the images; the projection matrices are solved from the camera calibration results and combined with the image coordinates of the located marker points in both cameras' images to reconstruct the three-dimensional coordinates of the markers. Finally, deformation analysis and calculation are carried out for the marker points on the surface of the measured object.

Owner:XI AN JIAOTONG UNIV
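The abstract does not say how the final deformation analysis is carried out. One simple possibility, sketched below under that assumption, compares the reconstructed marker coordinates from a reference epoch with those from the current epoch. It reports per-marker displacement and the relative change in every pairwise marker distance. The function name and the data are hypothetical.

```python
import numpy as np

def deformation(ref, cur):
    """Compare Nx3 marker coordinates from a reference epoch and a current epoch.

    Returns per-marker displacement magnitudes and, for every marker pair (i < j),
    the relative change in their separation (a simple strain-like measure).
    """
    disp = np.linalg.norm(cur - ref, axis=1)
    i, j = np.triu_indices(len(ref), k=1)
    d_ref = np.linalg.norm(ref[i] - ref[j], axis=1)
    d_cur = np.linalg.norm(cur[i] - cur[j], axis=1)
    strain = (d_cur - d_ref) / d_ref
    return disp, strain

# Hypothetical markers (mm), about 1 m from the cameras.
ref = np.array([[0.0, 0.0, 1000.0], [100.0, 0.0, 1000.0], [0.0, 100.0, 1000.0]])
cur = ref.copy()
cur[1, 0] += 1.0   # marker 1 moves 1 mm along x
disp, strain = deformation(ref, cur)
```

Displacement alone cannot separate deformation from rigid motion of the whole object. The pairwise distance changes can, because they stay at zero under a rigid move.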

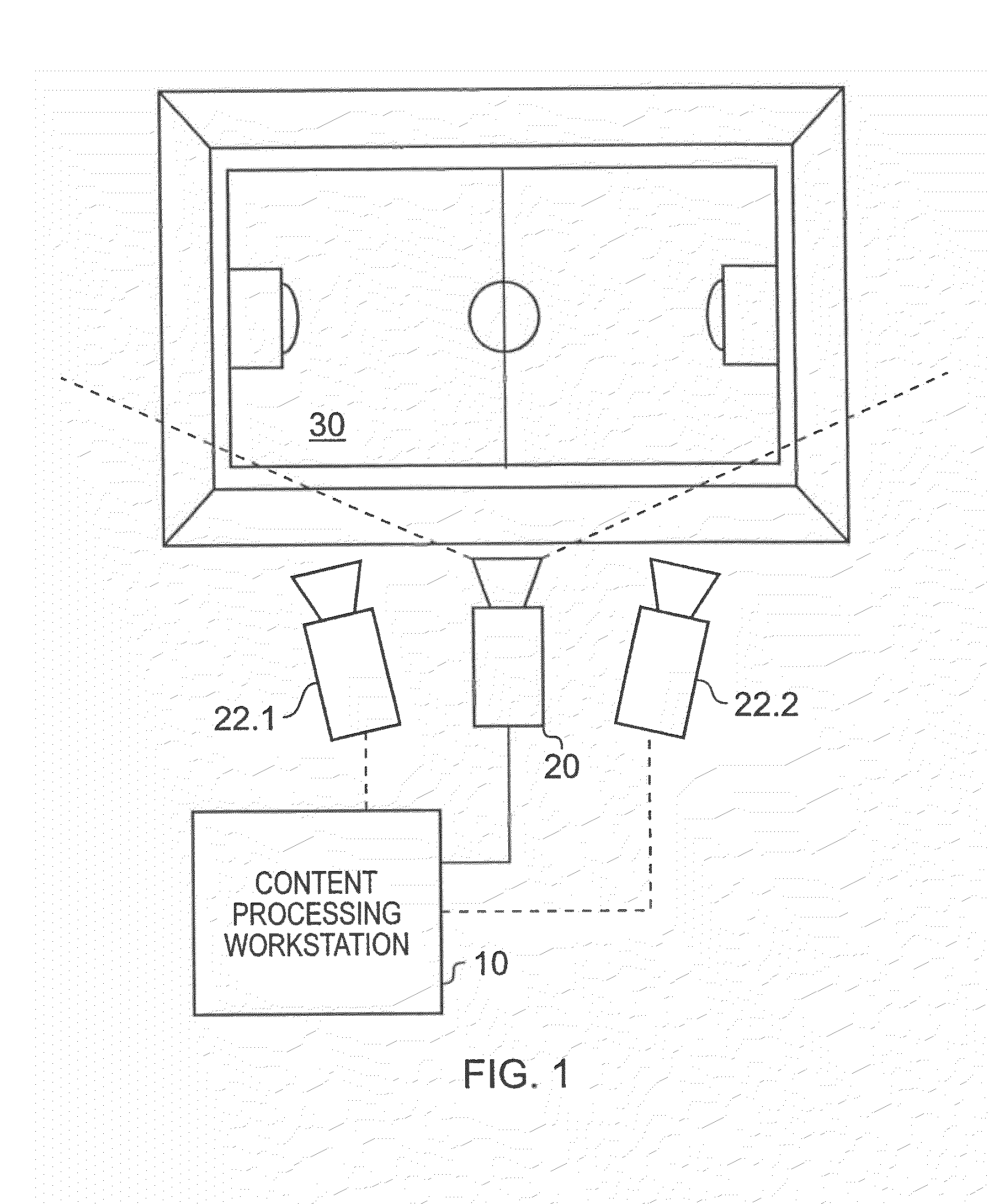

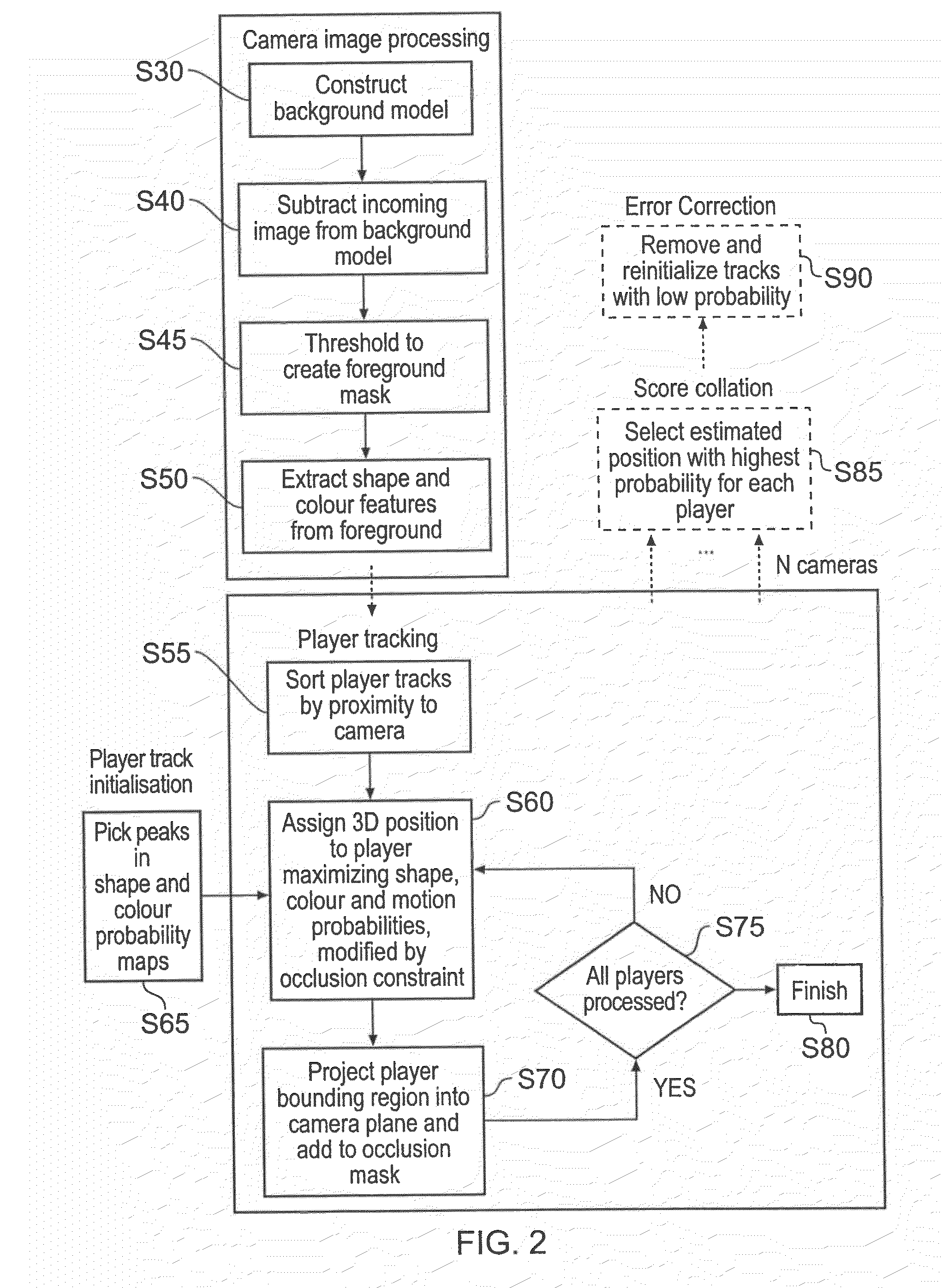

Image processing apparatus and method

Inactive | US20090066784A1 | Realistic representation | Image enhancement | Image analysis | Hat matrix | Imaging processing

An image processing apparatus and method generate a three-dimensional representation of a scene that includes a plurality of objects disposed on a plane. The representation is generated from one or more video images of the scene, captured by a video camera, that show the objects on the plane. The method comprises processing the captured video images to extract one or more image features from each object, comparing these features with sample image features from a predetermined set of possible example objects that the video images may contain, and identifying the objects from this comparison. The method also includes generating object path data, which includes object identification data identifying each object and provides the position of each object on the plane in the video images over time. The method further includes calculating a projection matrix for projecting the position of each object, according to the object path data, from the plane into a three-dimensional model of the plane. In this way, a three-dimensional representation of the scene, including a synthesised representation of each object on the plane, can be produced by projecting the object positions from the object path data into the plane of the three-dimensional model using the projection matrix and a predetermined assumption about the height of each object. Accordingly, a three-dimensional representation of a live video image of, for example, a football match can be generated, or tracking information can be included on the live video images.
As such, the relative view of the generated three-dimensional representation can be changed, so that the scene can be shown from a viewpoint at which no camera is actually present to capture video images of the live scene.

Owner:SONY CORP

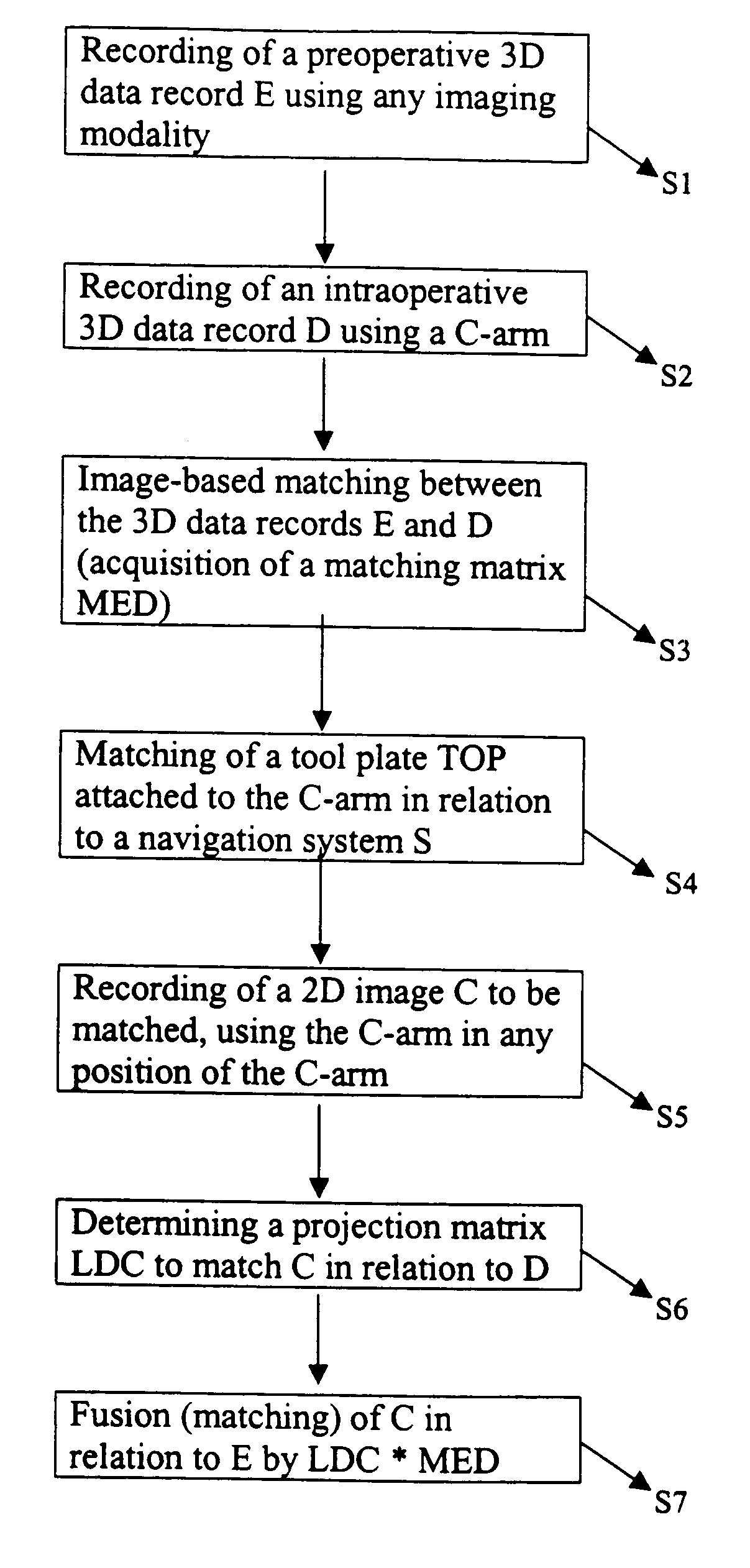

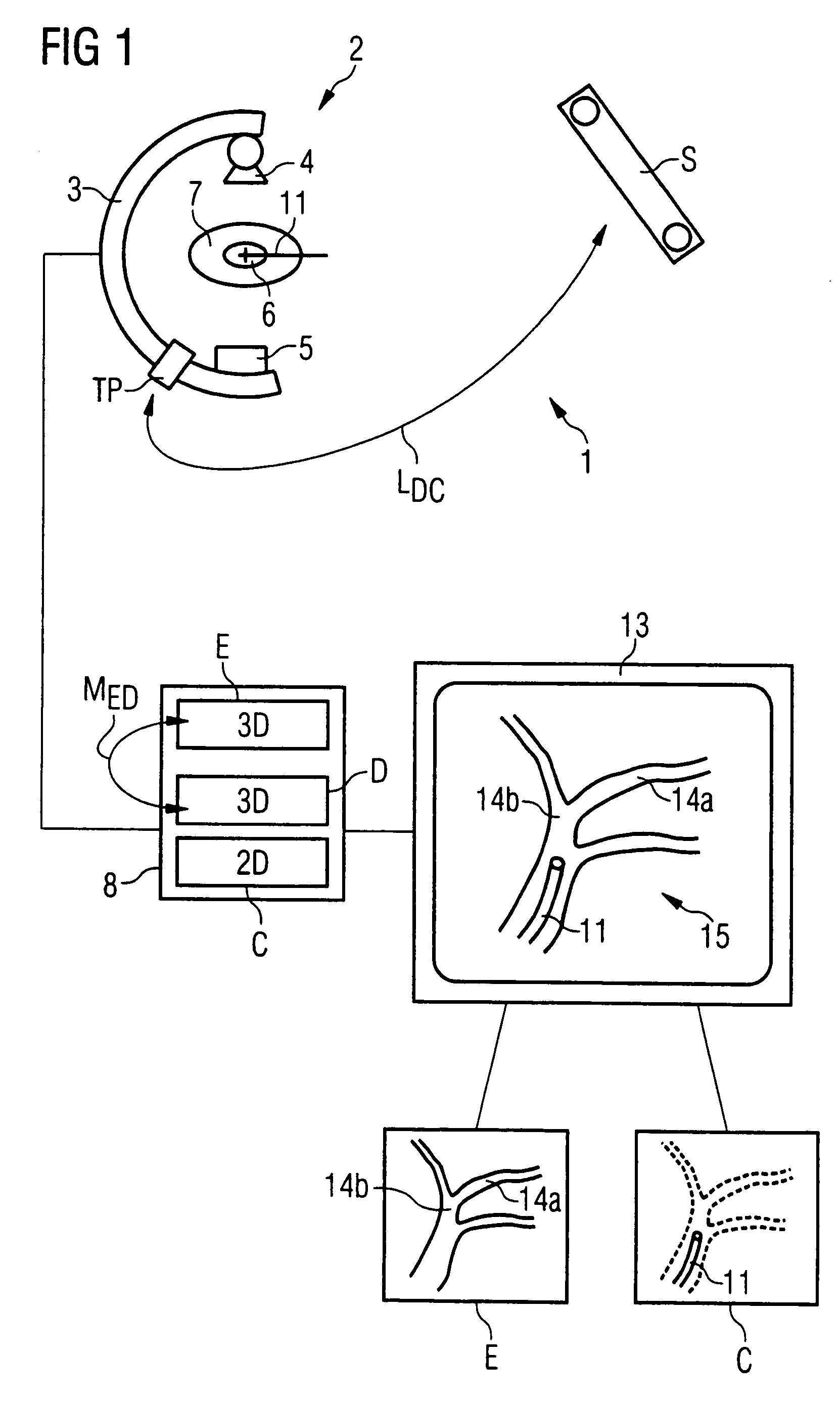

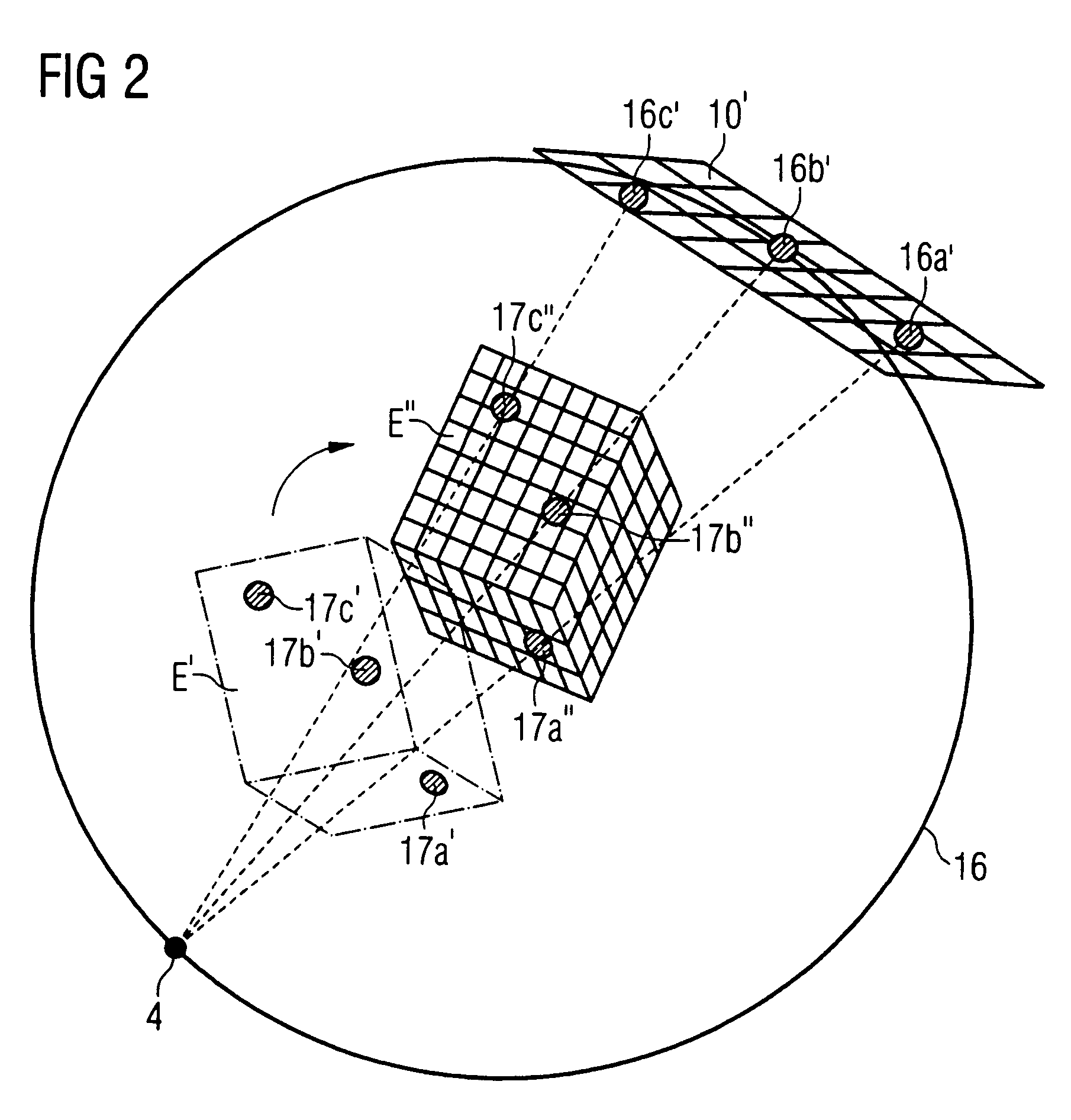

Method for marker-free automatic fusion of 2-D fluoroscopic C-arm images with preoperative 3D images using an intraoperatively obtained 3D data record

In a method and apparatus for automatic marker-free fusion (matching) of 2D fluoroscopic C-arm images with preoperative 3D images using an intraoperatively acquired 3D data record, an intraoperative 3D image is obtained using a C-arm x-ray system; image-based matching of an existing preoperative 3D image in relation to the intraoperative 3D image is undertaken, which generates a matching matrix; a tool plate attached to the C-arm system is matched in relation to a navigation system; a 2D fluoroscopic image to be matched is obtained with the C-arm of the C-arm system in any arbitrary location; a projection matrix for matching the 2D fluoroscopic image in relation to the 3D image is obtained; and the 2D fluoroscopic image is fused (matched) with the preoperative 3D image on the basis of the matching matrix and the projection matrix.

Owner:SIEMENS HEALTHCARE GMBH
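Fusing "on the basis of the matching matrix and the projection matrix" amounts to composing a 4x4 registration with a 3x4 projection, so that preoperative voxels map straight into the fluoroscopic image. The sketch below illustrates that composition. The rotation, translation, intrinsics and source distance are invented values, not Siemens calibration data.

```python
import numpy as np

def rigid(R, t):
    """4x4 homogeneous rigid transform from rotation R (3x3) and translation t (3,)."""
    M = np.eye(4)
    M[:3, :3] = R
    M[:3, 3] = t
    return M

def project(P, pts):
    """Project Nx3 points with a 3x4 projection matrix; returns Nx2 pixel coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    x = homog @ P.T
    return x[:, :2] / x[:, 2:3]

# Hypothetical matching (registration) matrix: preoperative 3D image -> intraoperative frame.
theta = np.deg2rad(10.0)
Rz = np.array([[np.cos(theta), -np.sin(theta), 0.0],
               [np.sin(theta),  np.cos(theta), 0.0],
               [0.0, 0.0, 1.0]])
M_match = rigid(Rz, [5.0, -3.0, 0.0])

# Hypothetical C-arm projection matrix for the current pose (mm -> pixels).
K = np.array([[1000.0, 0.0, 512.0], [0.0, 1000.0, 512.0], [0.0, 0.0, 1.0]])
P = K @ np.hstack([np.eye(3), np.array([[0.0], [0.0], [800.0]])])

# One combined 3x4 matrix maps preoperative voxels directly into the 2D image.
P_total = P @ M_match

voxels = np.array([[0.0, 0.0, 0.0], [10.0, 20.0, 30.0]])
overlay_px = project(P_total, voxels)
```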

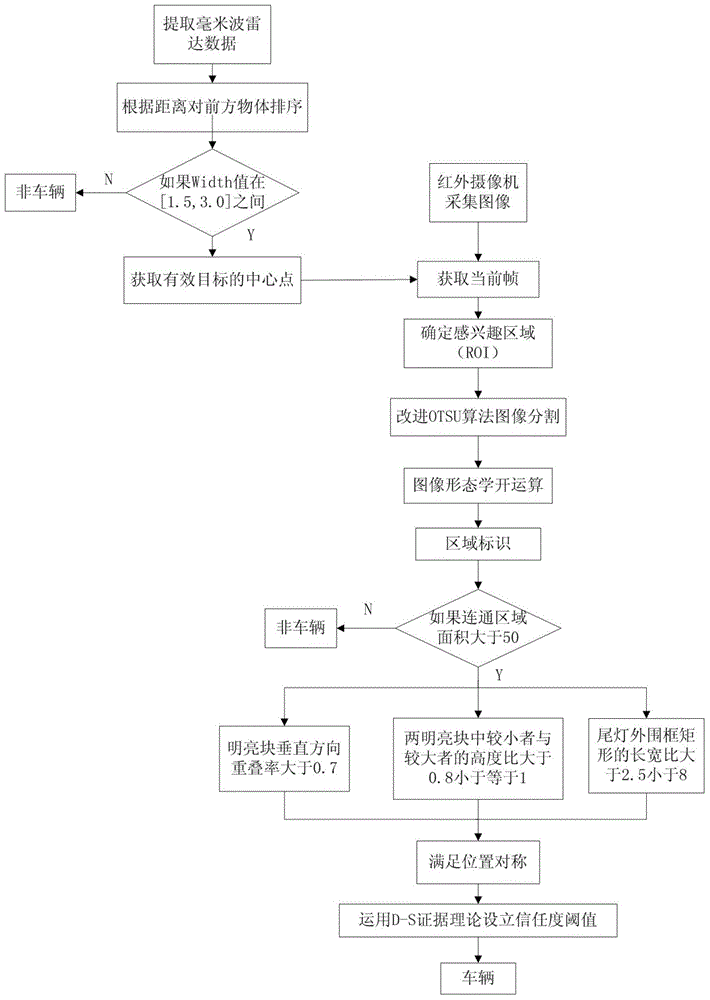

Night preceding vehicle detection method based on millimeter-wave radar and machine vision

Inactive | CN104637059A | Accurately obtain depth information | Improve accuracy | Image analysis | Character and pattern recognition | Hat matrix | Vehicle detection

The invention relates to a night-time preceding-vehicle detection method based on millimeter-wave radar and machine vision, in the field of vehicle control. The method comprises the following steps: (1) performing camera calibration to obtain a projection matrix from world coordinates to image pixel coordinates, establishing the transformation between the radar coordinate system and the world coordinate system, and thereby converting radar coordinates into image pixel coordinates; (2) parsing the received millimeter-wave radar data, excluding false targets and determining valid targets through data processing, and synchronously collecting camera images; (3) projecting the radar scan points from the world coordinate system into the image pixel coordinate system, and establishing a region of interest (ROI) on the images around the projected points; (4) detecting whether a vehicle is present in the region of interest using an image processing method. Compared with the prior art, the method fuses millimeter-wave radar and machine vision and offers high real-time performance and high accuracy.

Owner:JILIN UNIV
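Steps (1) and (3) can be sketched as follows: convert a radar return (range, azimuth) to a world point, project it with the projection matrix, and size an ROI from an assumed vehicle width and height at that depth. For simplicity, this sketch assumes that the world frame is aligned with the camera and that the radar sits 0.5 m below it. A real setup would use the calibrated radar-to-world rotation and translation. All numbers and names are hypothetical.

```python
import numpy as np

# Hypothetical setup (all values assumed): world frame aligned with the camera
# (x right, y down, z forward, origin at the camera centre); radar 0.5 m below it.
K = np.array([[700.0, 0.0, 640.0], [0.0, 700.0, 360.0], [0.0, 0.0, 1.0]])
P = K @ np.hstack([np.eye(3), np.zeros((3, 1))])   # world -> pixel projection matrix
RADAR_OFFSET = np.array([0.0, 0.5, 0.0])

def radar_to_world(r, azimuth_deg):
    """Range (m) and azimuth (deg, positive to the right) -> world point in the radar plane."""
    a = np.deg2rad(azimuth_deg)
    return RADAR_OFFSET + np.array([r * np.sin(a), 0.0, r * np.cos(a)])

def roi_for_target(r, azimuth_deg, width_m=2.0, height_m=1.6):
    """Pixel box (u0, v0, u1, v1) of an assumed vehicle size, centred on the projected target."""
    Xw = radar_to_world(r, azimuth_deg)
    x = P @ np.append(Xw, 1.0)
    u, v = x[:2] / x[2]
    half_w = 0.5 * K[0, 0] * width_m / Xw[2]    # metric size -> pixels at depth Xw[2]
    half_h = 0.5 * K[1, 1] * height_m / Xw[2]
    return (u - half_w, v - half_h, u + half_w, v + half_h)

box = roi_for_target(20.0, 0.0)   # a target 20 m straight ahead
```

Because the box shrinks in proportion to 1/depth, distant targets get proportionally smaller ROIs. This keeps the image-processing step in (4) cheap.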

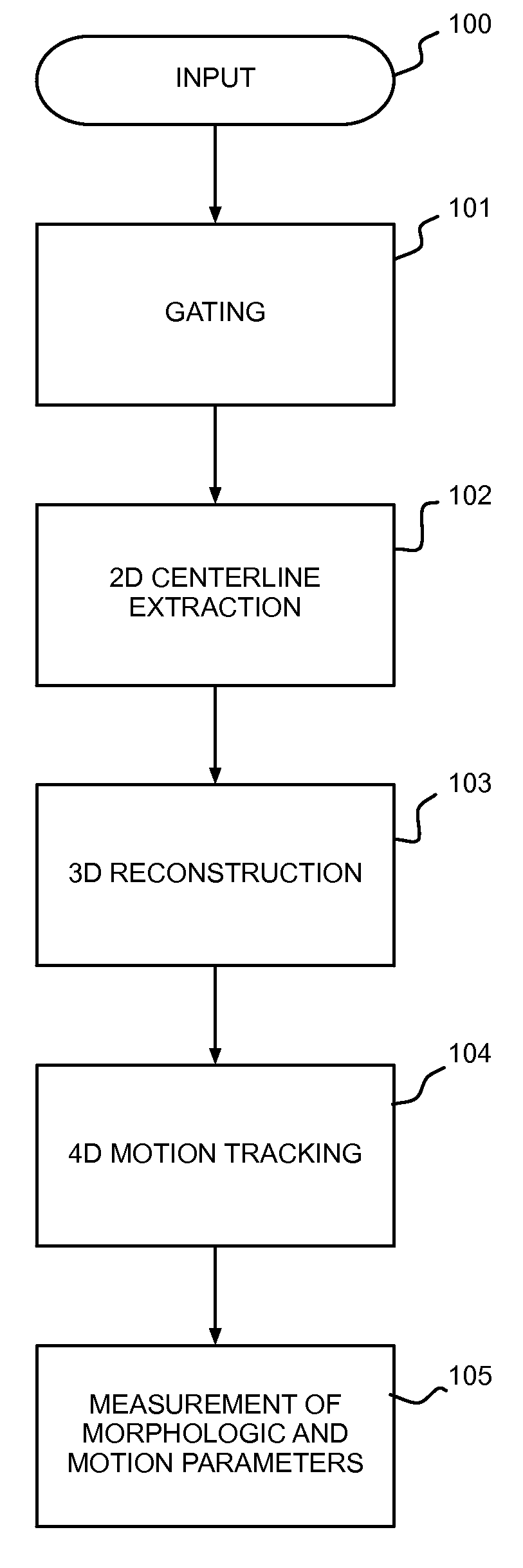

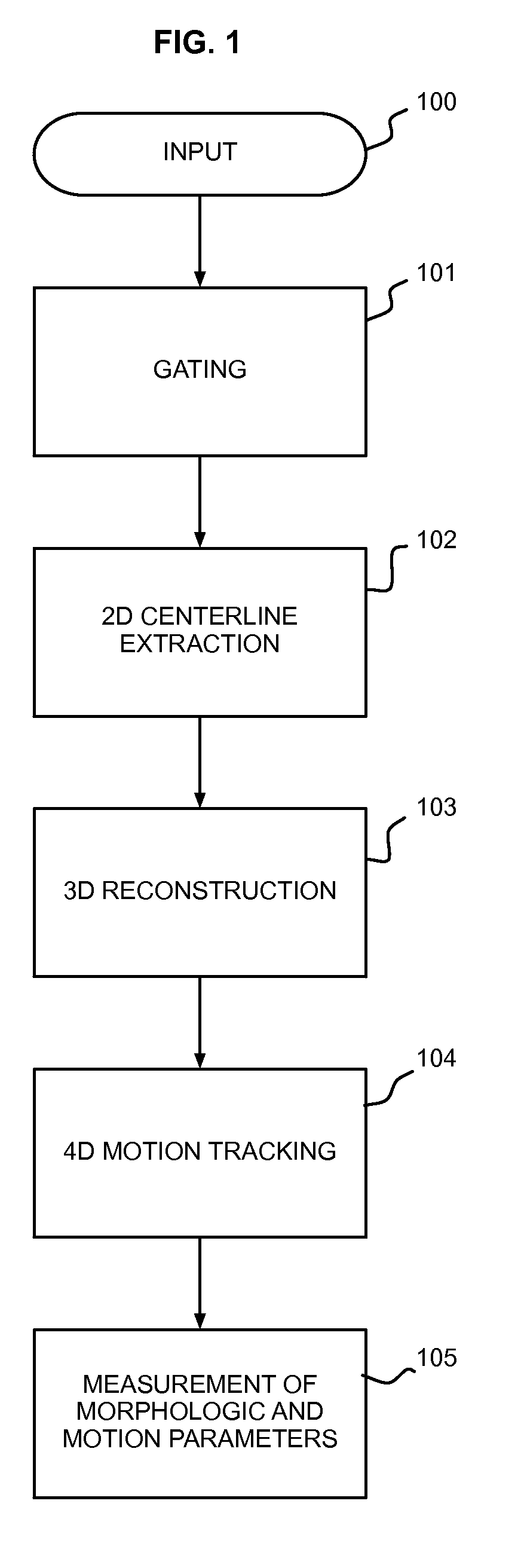

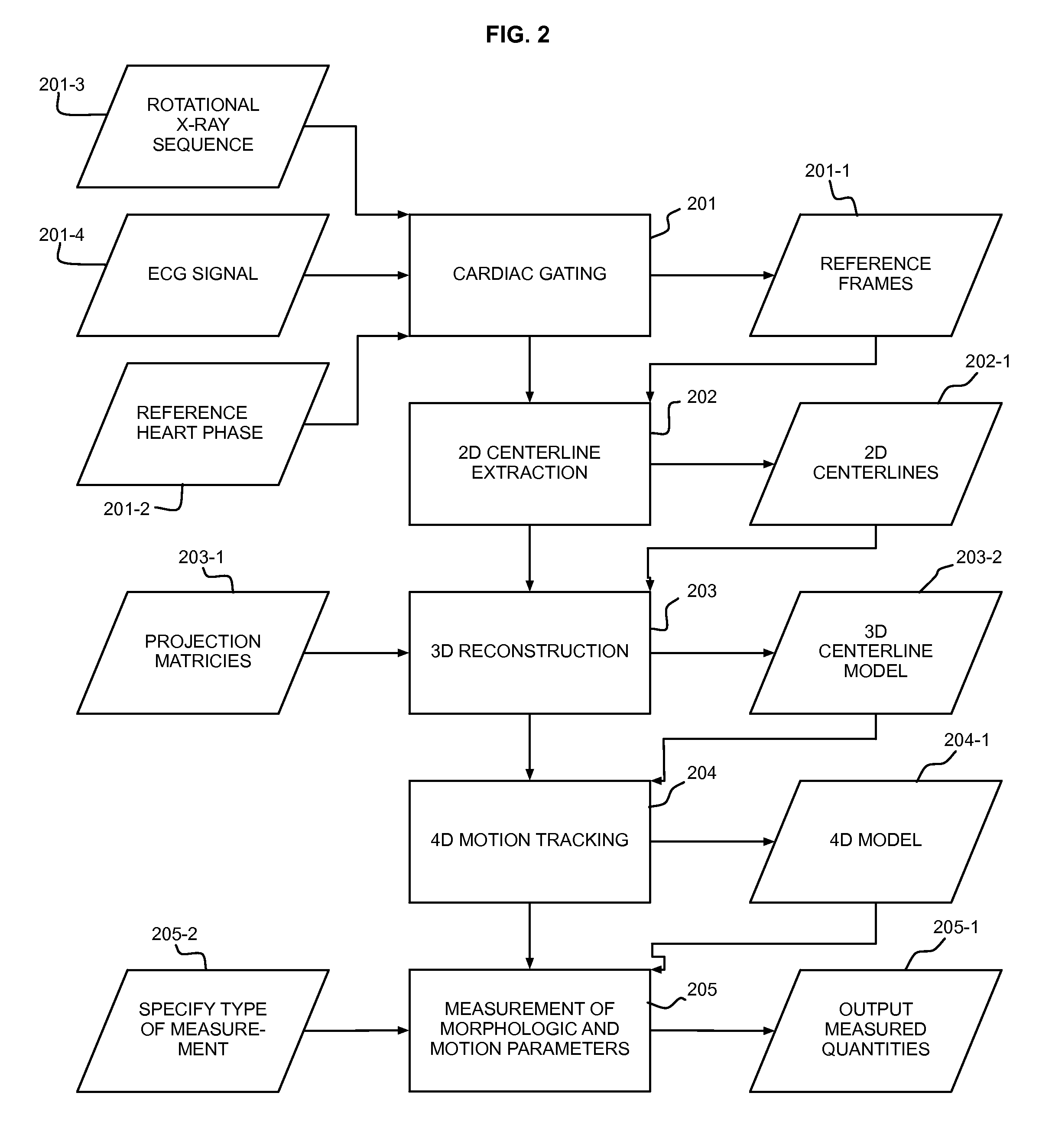

Automatic Measurement of Morphometric and Motion Parameters of the Coronary Tree From A Rotational X-Ray Sequence

Automatic measurement of morphometric and motion parameters of a coronary target includes extracting reference frames from input data of a coronary target at different phases of a cardiac cycle, extracting a three-dimensional centerline model for each phase of the cardiac cycle based on the reference frames and projection matrices of the coronary target, tracking a motion of the coronary target through the phases based on the three-dimensional centerline models, and determining a measurement of morphologic and motion parameters of the coronary target based on the motion.

Owner:SIEMENS HEALTHCARE GMBH

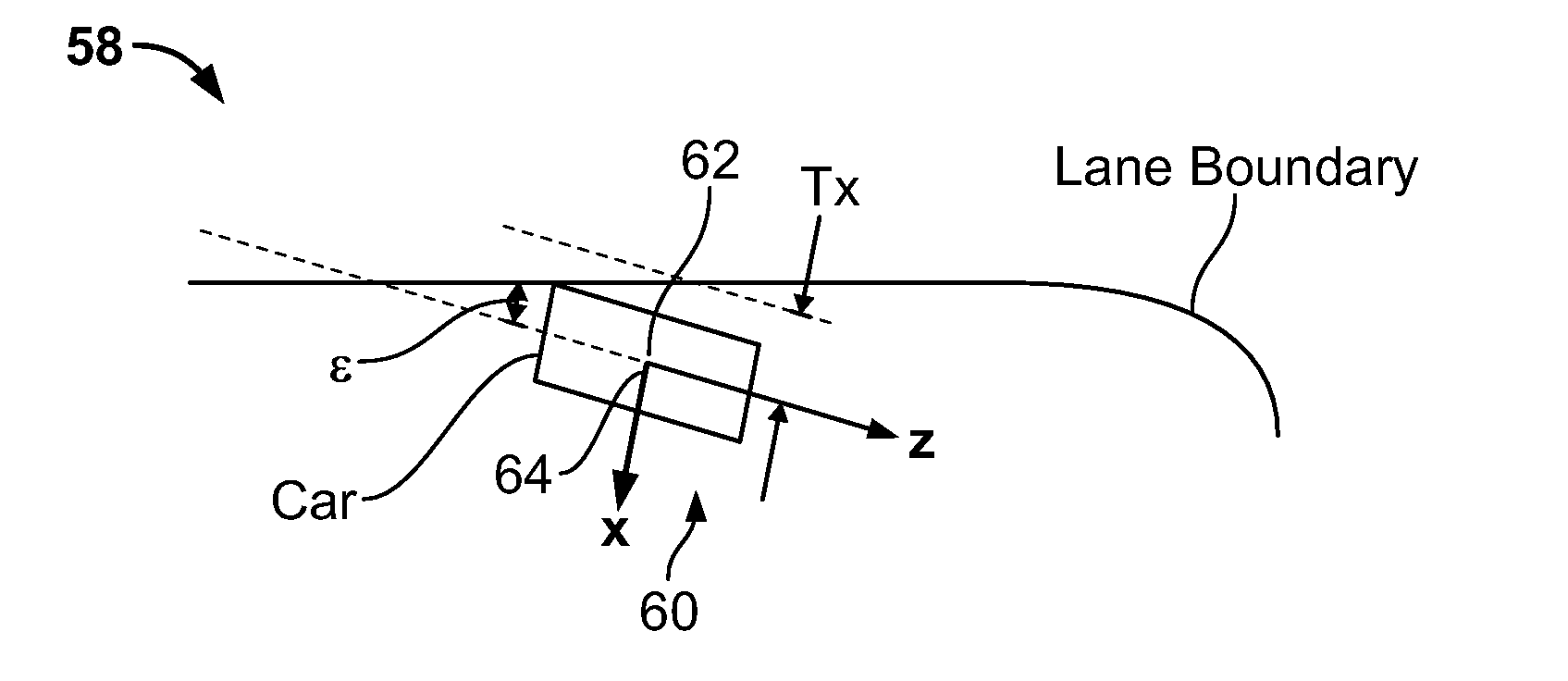

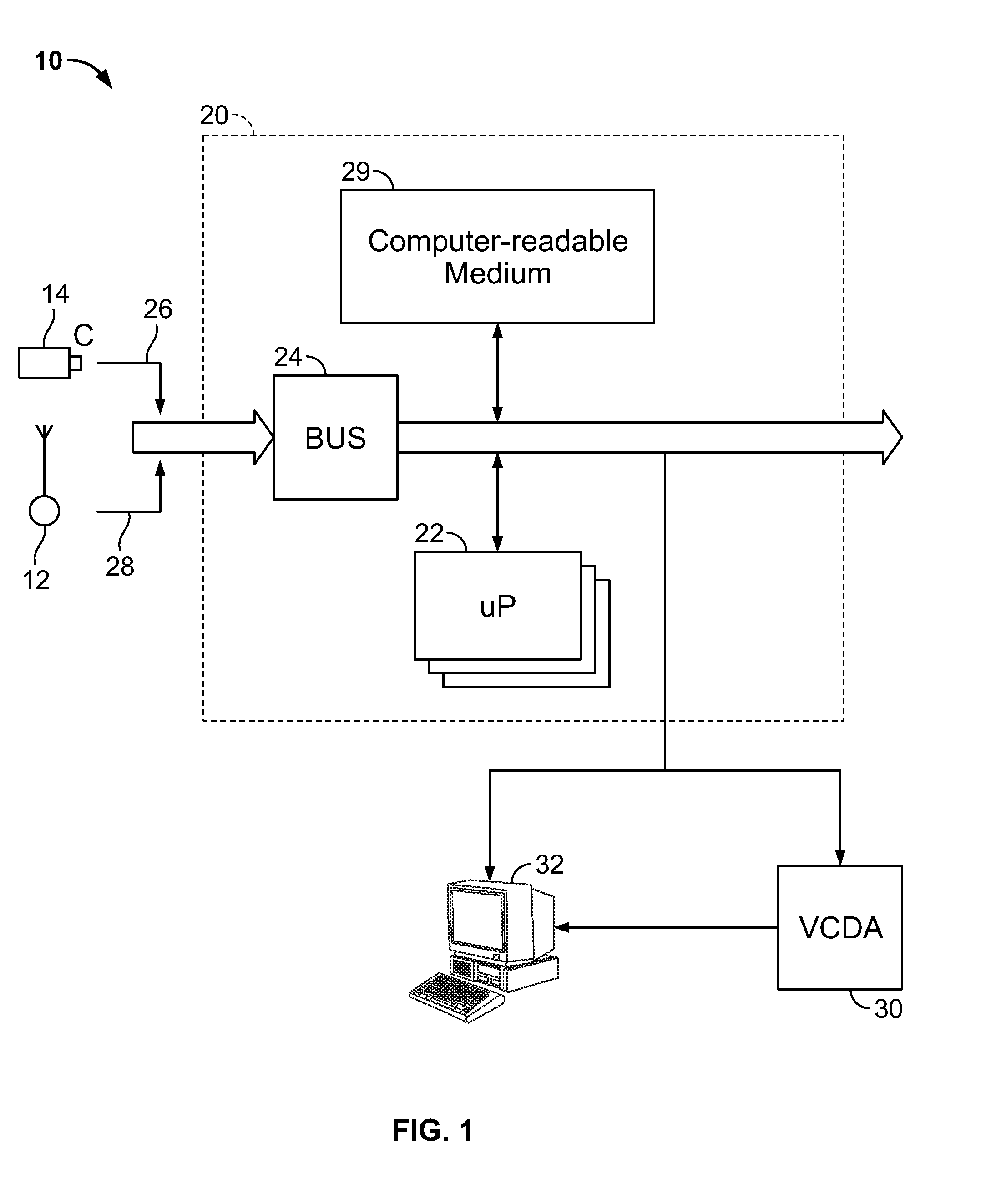

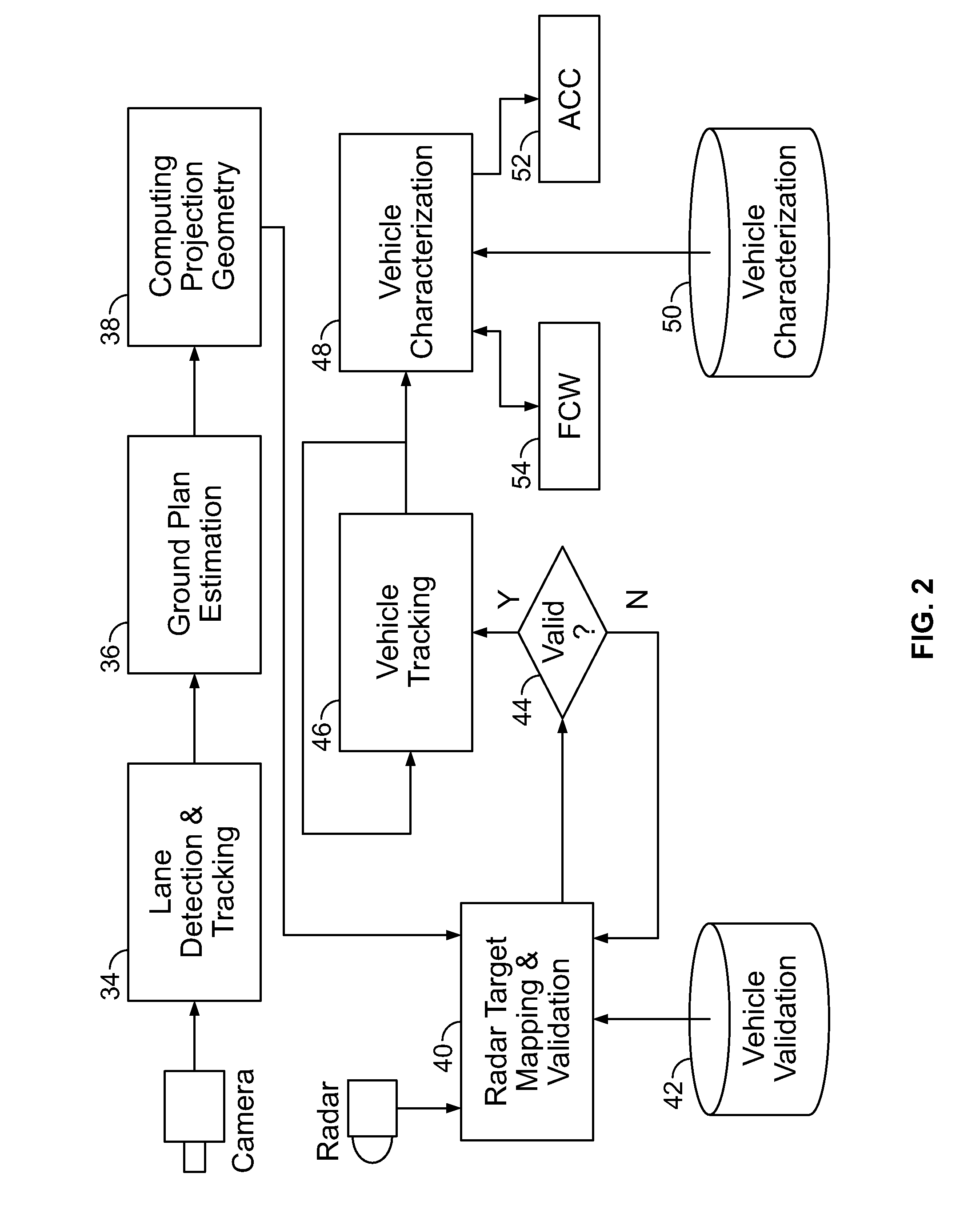

Radar guided vision system for vehicle validation and vehicle motion characterization

Inactive | US20090067675A1 | Character and pattern recognition | Radio wave reradiation/reflection | Hat matrix | Computer graphics (images)

A method for determining, using radar data and image data, whether a target vehicle in front of a host vehicle intends to change lanes is disclosed, comprising the steps of: processing the image data to detect the boundaries of the host vehicle's lane; estimating a ground plane by determining a projected vanishing point of the detected lane boundaries; using a camera projection matrix to map the target vehicle from the radar data to image coordinates; and determining the lane-change intentions of the target vehicle based on its moving trajectory and appearance change. The trajectory-based determination uses the vehicle's motion trajectory relative to the center of the lane, checking whether the target vehicle's distance from the lane center follows a predetermined trend. The appearance-based determination uses a template that tracks changes in the appearance of the rear of the target vehicle due to rotation.

Owner:SRI INTERNATIONAL +1
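The vanishing point of two lane boundaries is the intersection of their image lines. In homogeneous coordinates, both the line through two points and the intersection of two lines are cross products. The minimal sketch below shows only this step, with made-up pixel coordinates for two straight lane markings. The patent's ground-plane estimation is not reproduced.

```python
import numpy as np

def line_through(p, q):
    """Homogeneous line through two pixel points (cross product of their homogeneous forms)."""
    return np.cross([*p, 1.0], [*q, 1.0])

def vanishing_point(left_lane, right_lane):
    """Intersection of two image lines, each given as a pair of pixel points."""
    vp = np.cross(line_through(*left_lane), line_through(*right_lane))
    if abs(vp[2]) < 1e-12:
        raise ValueError("lines are parallel in the image; no finite vanishing point")
    return vp[:2] / vp[2]

# Hypothetical lane markings, each described by two pixel points.
left = ((200.0, 700.0), (500.0, 400.0))
right = ((1100.0, 700.0), (800.0, 400.0))
vp = vanishing_point(left, right)   # intersection of y = -x + 900 and y = x - 400
```

The row coordinate of the vanishing point approximates the horizon line for a flat road. This is the quantity that a ground-plane estimate builds on.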

Camera calibration device and method, and computer system

Inactive | US6985175B2 | Simply carrying out camera calibration | Image analysis | Optical rangefinders | Hat matrix | Camera lens

A camera calibration device capable of simply calibrating a stereo system consisting of a base camera and a detection camera. First, the distortion parameters of the two cameras needed for distance measurement are estimated from images obtained by shooting a patterned object plane with the base camera and the reference camera from three or more viewpoints, without any restriction on their spatial positions, and projective transformation matrices for projecting the images onto predetermined virtual planes are calculated. Then the internal parameters of the base camera are calculated from the projective transformation matrices for the images obtained from the base camera. Subsequently, the position of the photographed plane is estimated from the internal parameters of the base camera and the images obtained from it, and projection matrices for the detection camera are calculated from the plane position parameters and the images obtained from the detection camera. With this device, simplified calibration can be achieved stably without any dedicated apparatus.

Owner:SONY CORP

Vehicle detection method fusing radar and CCD camera signals

Active | CN103559791A | Improve reliability | High precision | Detection of traffic movement | Character and pattern recognition | Hat matrix | Computer graphics (images)

The invention discloses a vehicle detection method fusing radar and CCD camera signals. The steps are: inputting the radar and CCD camera signals; calibrating the camera to obtain a projection matrix for road-plane coordinates and a projection matrix for image coordinates; converting road-plane world coordinates into image-plane coordinates; building positive and negative sample sets suitable for a vehicle HOG descriptor; extracting features from the vehicle sample sets in batches to build a HOG sample set; training a linear support vector machine (SVM) classifier; extracting regions of interest in the video image around obstacles detected by the radar; feeding the regions of interest into the SVM classifier to judge the target category and outputting the recognition result; and measuring, with the radar, the distance to targets judged to be vehicles. Joint detection with radar and CCD camera signals obtains the depth information of the vehicle while also detecting its outline well, improving the reliability and accuracy of vehicle detection and positioning.

Owner:BEIJING UNION UNIVERSITY

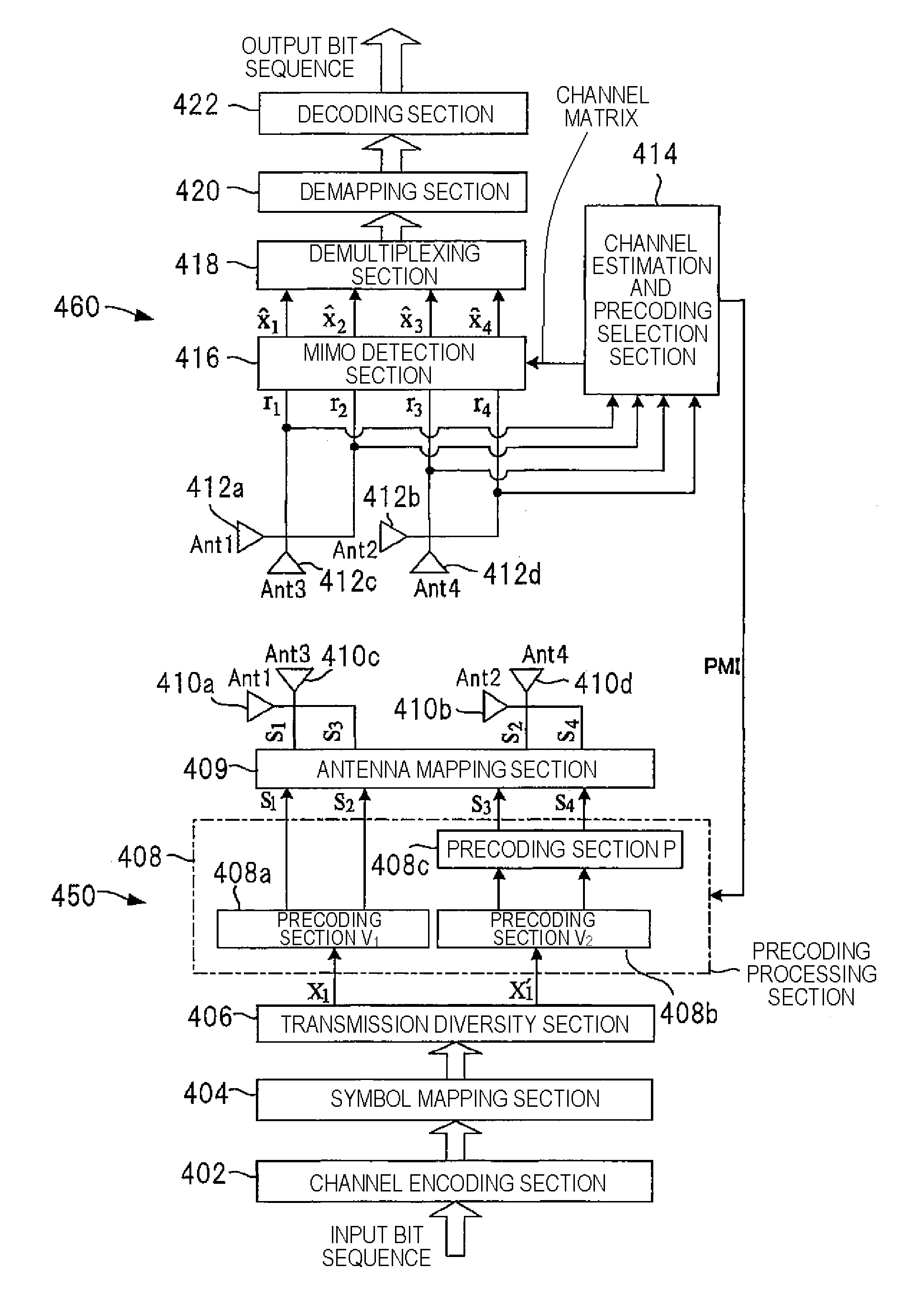

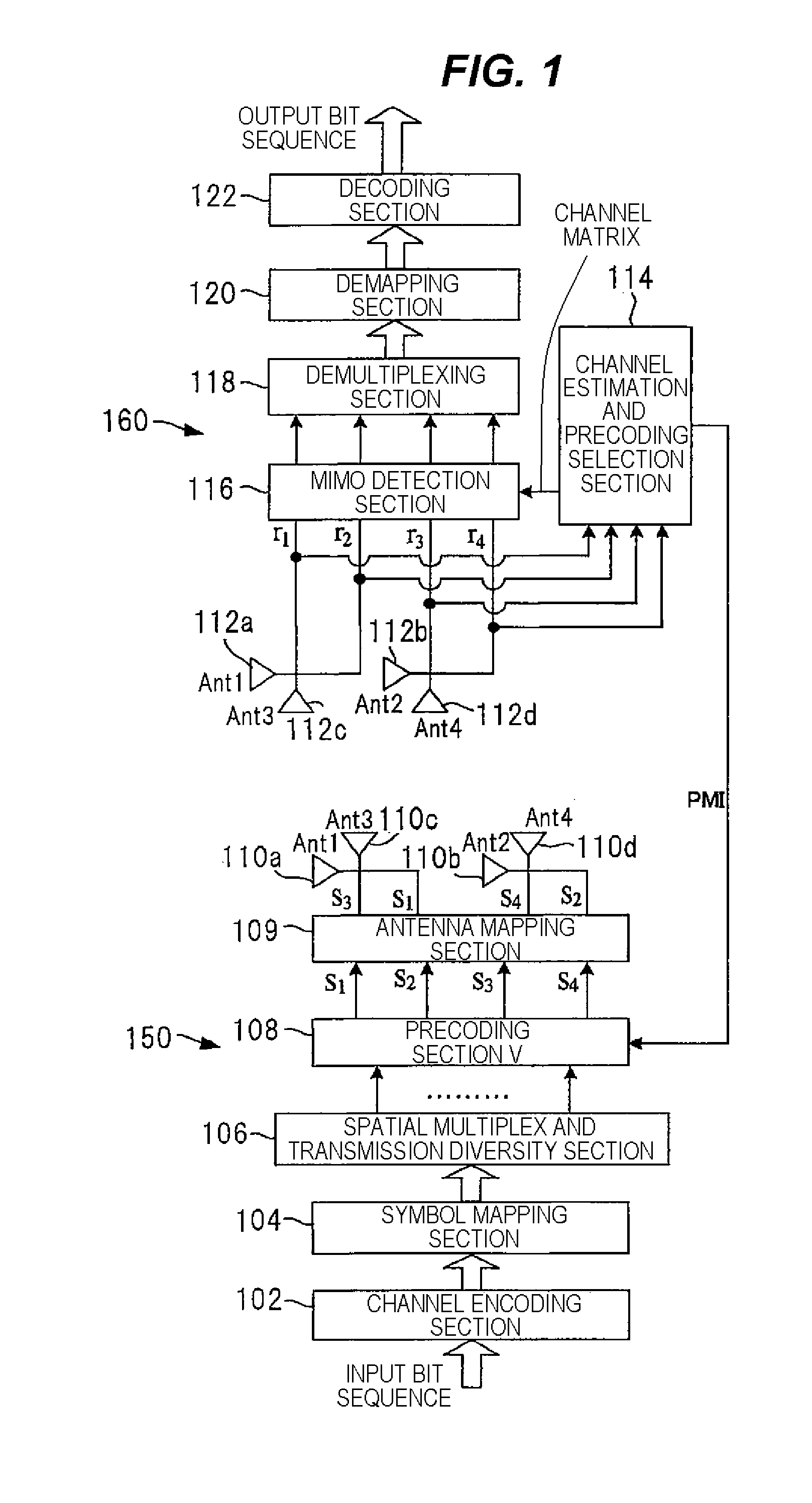

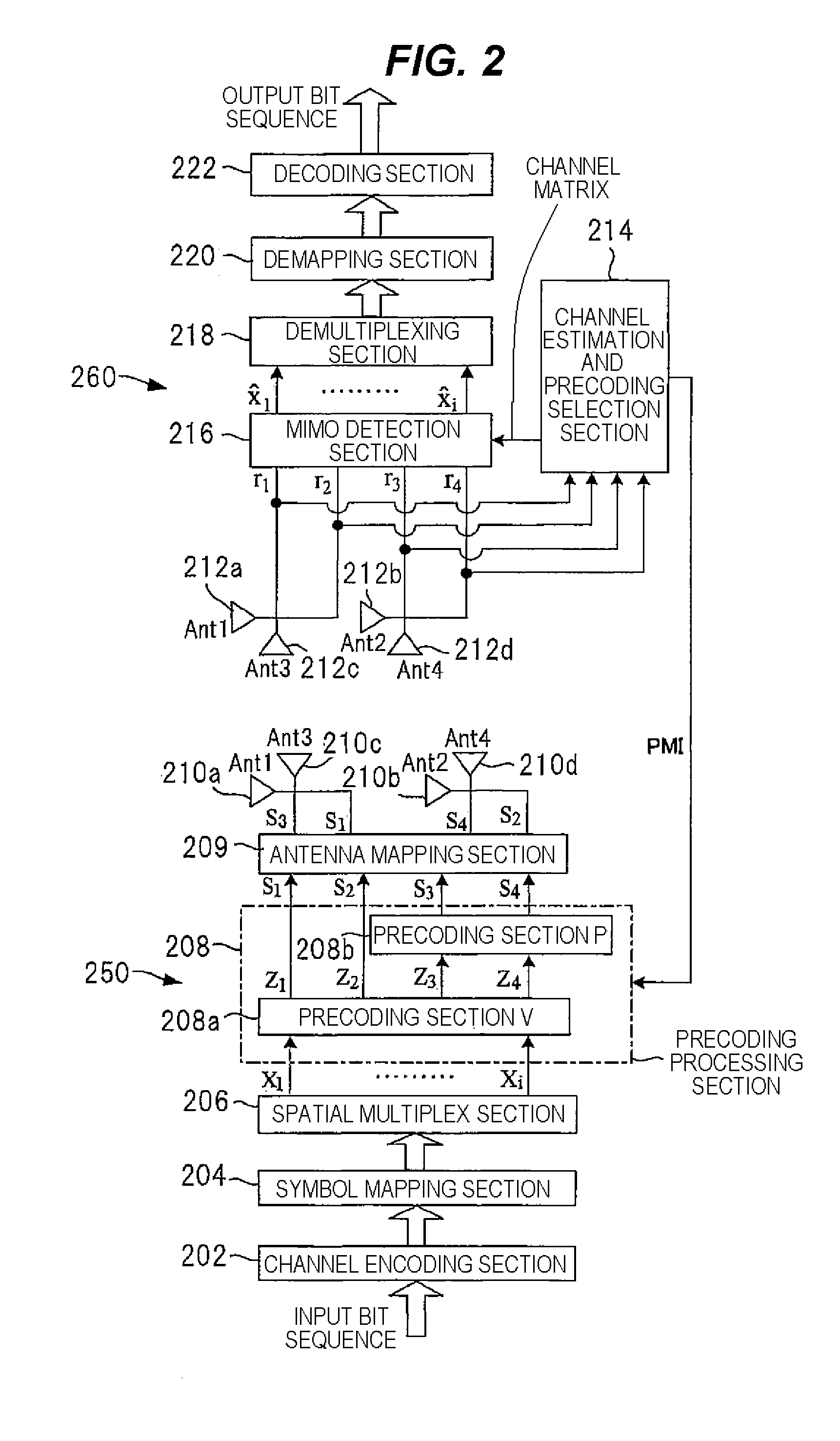

Wireless communication apparatus, wireless communication system and wireless communication method

Active | US20110261894A1 | Reduce distractions | Effective precoding | Depolarization effect reduction | Polarisation/directional diversity | Hat matrix | Communications system

In a MIMO system using a cross-polarized antenna structure, interference between differently polarized waves can be reduced, allowing effective precoding, even when no ideal XPD can be obtained. When MIMO communication is performed between a transmitter (250) and a receiver (260) that each use a cross-polarized antenna structure, a channel estimating and precoding selection section (214) of the receiver (260) estimates the MIMO channels from the transmitter to the receiver, determines a precoding matrix (P), namely a projection matrix that makes the channel response matrices of the different polarized waves mutually orthogonal or substantially orthogonal, and feeds the determined precoding matrix (P) back to the transmitter (250). In the transmitter (250), a precoding processing section (208) applies the precoding matrix (P) to the spatial stream corresponding to one of the polarized waves, so that the transmitter (250) transmits the polarized waves with their orthogonality maintained.

Owner:SUN PATENT TRUST
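The linear algebra behind "a projection matrix for mutually orthogonalizing" channel responses is the orthogonal projector onto the complement of a subspace, P = I − A(AᴴA)⁻¹Aᴴ. This is the complex counterpart of I − H, where H is the hat matrix defined at the top of this page. The sketch below shows only that algebra on two hypothetical random channel vectors. The patent's actual rule for selecting the precoder and feeding it back is not reproduced.

```python
import numpy as np

def complement_projector(A):
    """Orthogonal projector onto the orthogonal complement of the column space of A.

    P = I - A (A^H A)^{-1} A^H, i.e. the identity minus the (complex) hat matrix of A.
    """
    n = A.shape[0]
    return np.eye(n) - A @ np.linalg.solve(A.conj().T @ A, A.conj().T)

rng = np.random.default_rng(2)
# Hypothetical 4x1 channel responses for the two polarizations (random complex draws).
h_v = rng.normal(size=(4, 1)) + 1j * rng.normal(size=(4, 1))
h_h = rng.normal(size=(4, 1)) + 1j * rng.normal(size=(4, 1))

P = complement_projector(h_v)
h_h_orth = P @ h_h   # component of h_h orthogonal to h_v
```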

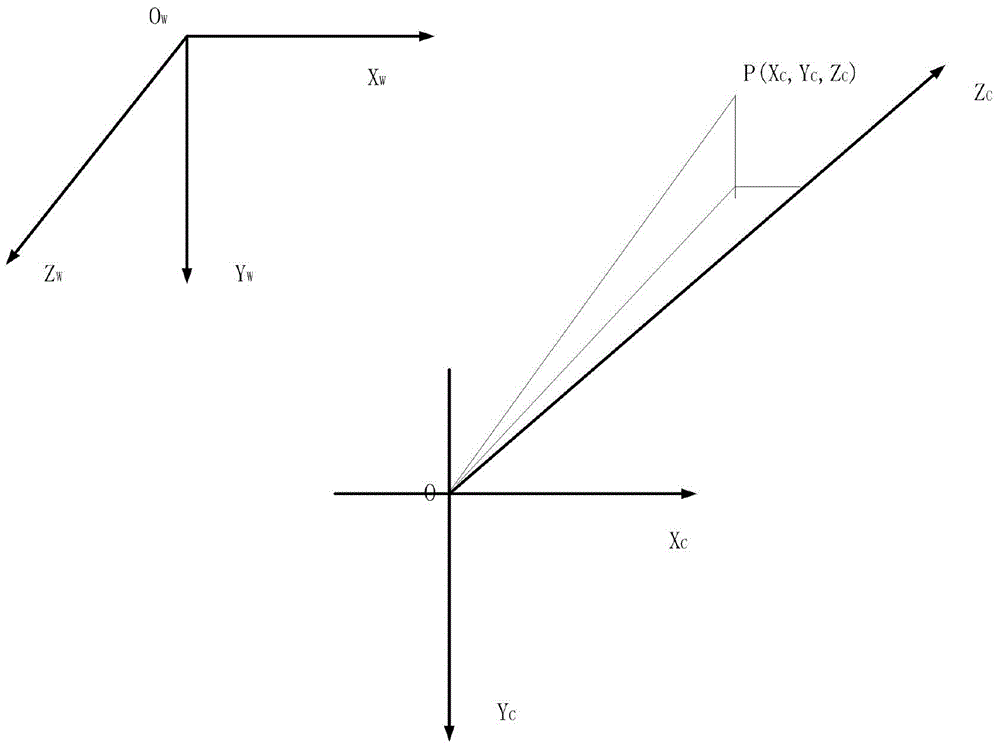

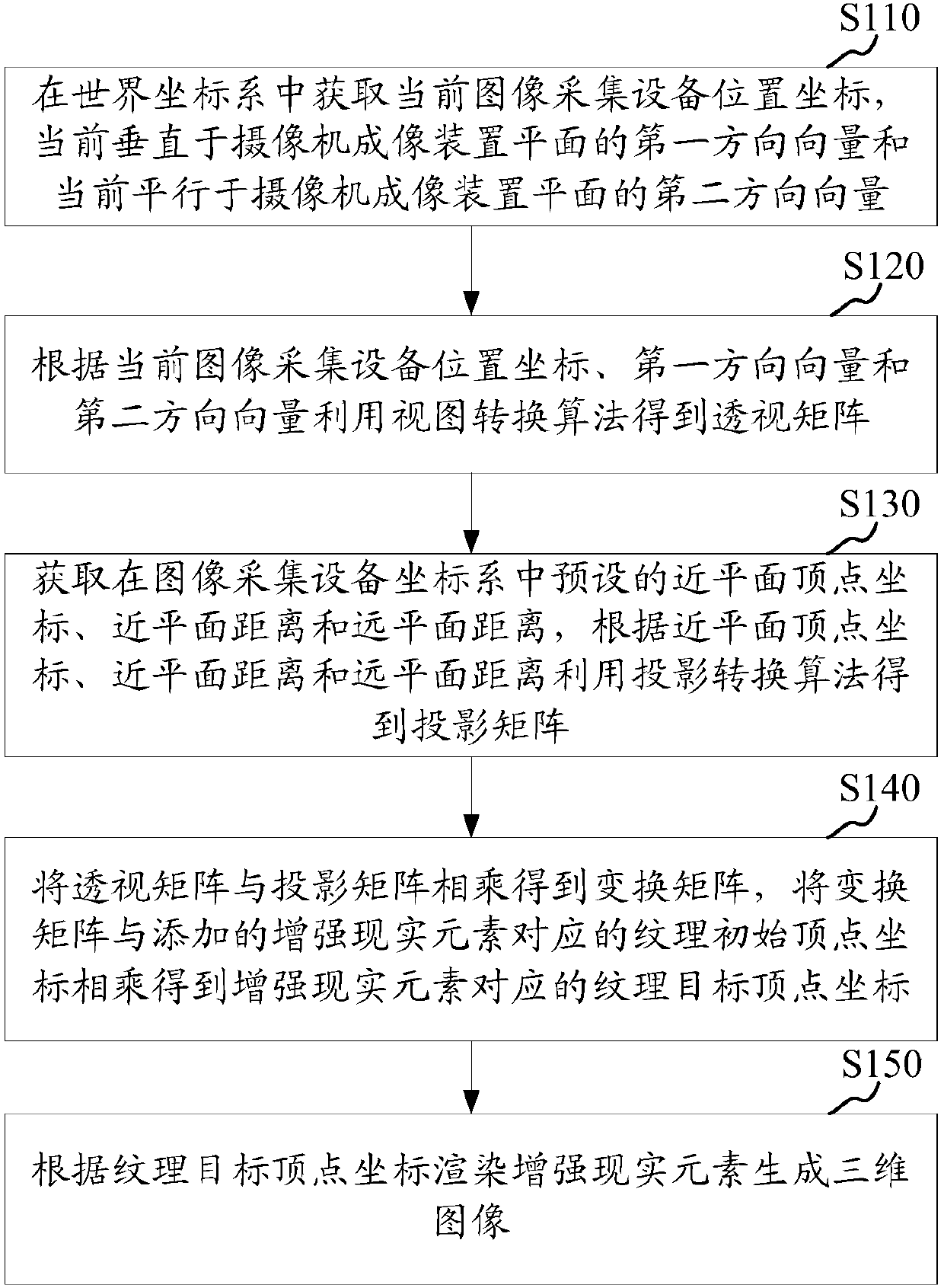

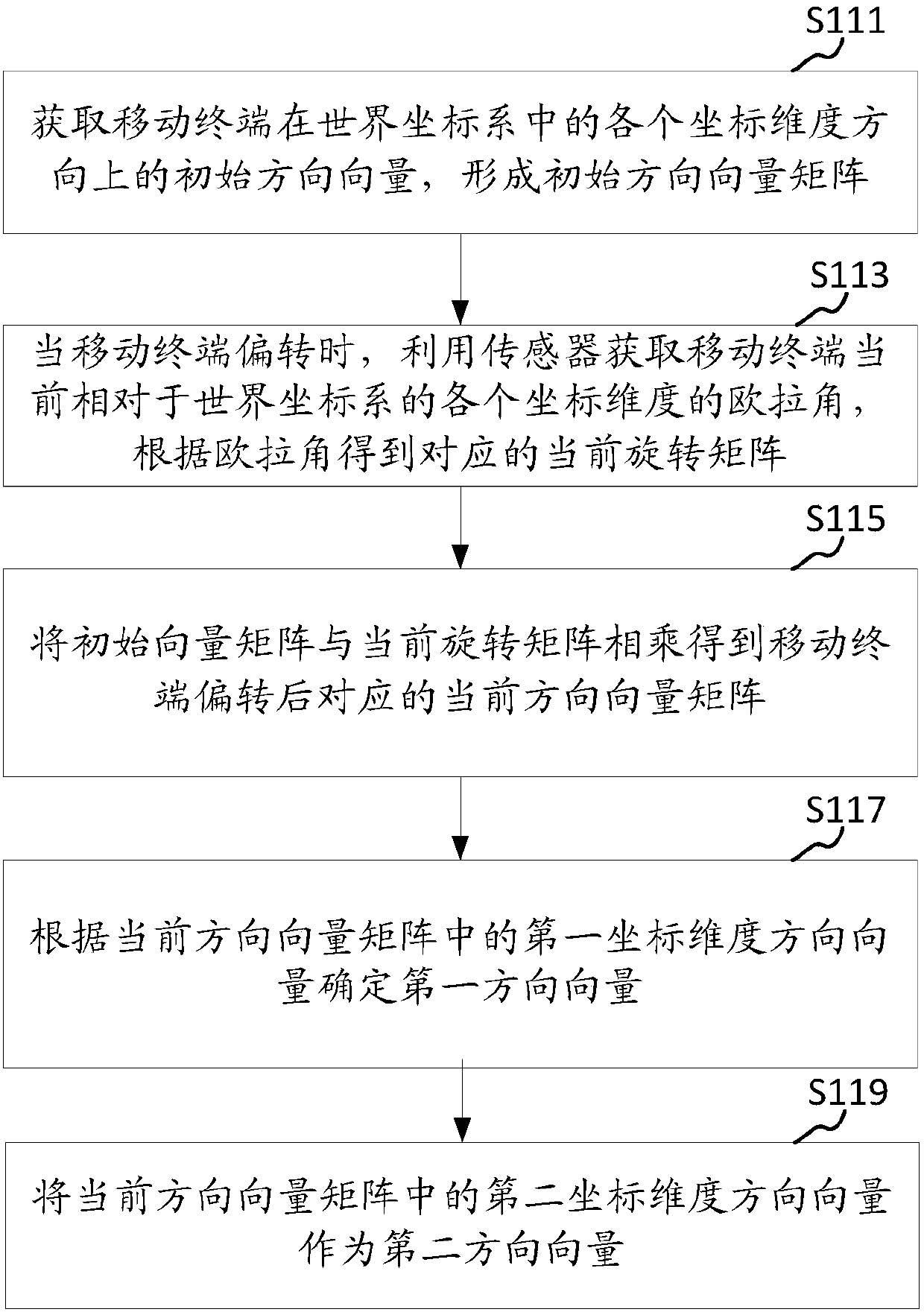

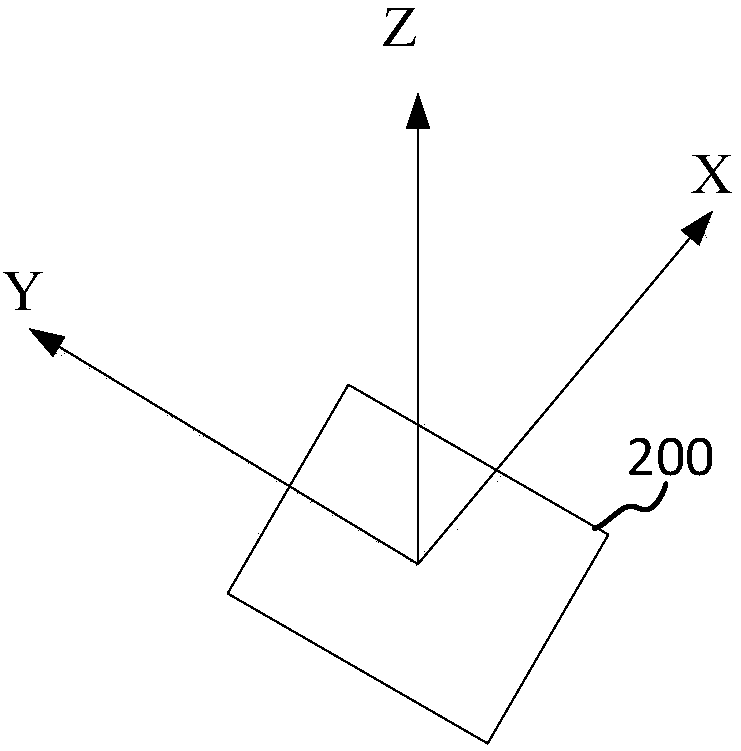

Three-dimension image processing method, device, storage medium and computer equipment

Active | CN107564089A | Easy to integrate | Increase authenticity | Image enhancement | 3D-image rendering | Hat matrix | Imaging processing

The invention provides a three-dimensional image processing method comprising the following steps: obtaining the current position coordinates of the image acquisition device in a world coordinate system, obtaining a first direction vector and a second direction vector, and using a view transformation algorithm to obtain a transparent matrix; obtaining preset near-plane vertex coordinates, a near-plane distance and a far-plane distance in the image acquisition device's coordinate system, and using a projection transformation algorithm to obtain a projection matrix; multiplying the transparent matrix by the projection matrix to obtain a transformation matrix; multiplying the transformation matrix by the initial texture vertex coordinates matched with the added augmented reality elements to obtain the target texture vertex coordinates for those elements; and using the target texture vertex coordinates to render the augmented reality elements to form a three-dimensional image. When the mobile terminal rotates, it rotates the image acquisition device, and the three-dimensional image of the augmented reality elements rotates correspondingly; this improves the fusion between the three-dimensional image and the real background image and makes the image more realistic. The invention also provides a three-dimensional image processing device, a storage medium and computer equipment.

Owner:TENCENT TECH (SHENZHEN) CO LTD
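The view/projection pipeline in the abstract above resembles the standard graphics construction. A look-at view matrix is built from the camera position and two direction vectors, a perspective matrix from near and far distances, and the two are multiplied into one transformation. What the abstract calls the "transparent matrix" appears to play the role of the view matrix; this is our reading, not confirmed by the source. The sketch below follows the OpenGL `gluLookAt`/`gluPerspective` conventions with column vectors, so the combined matrix is `P @ V`. Under a row-vector convention, the order would be reversed.

```python
import numpy as np

def look_at(eye, target, up):
    """Right-handed view matrix following the OpenGL gluLookAt convention."""
    f = target - eye
    f = f / np.linalg.norm(f)
    s = np.cross(f, up)
    s = s / np.linalg.norm(s)
    u = np.cross(s, f)
    V = np.eye(4)
    V[:3, :3] = np.vstack([s, u, -f])
    V[:3, 3] = -V[:3, :3] @ eye
    return V

def perspective(fovy_deg, aspect, near, far):
    """Perspective projection matrix following the OpenGL gluPerspective convention."""
    c = 1.0 / np.tan(np.deg2rad(fovy_deg) / 2.0)
    return np.array([
        [c / aspect, 0.0, 0.0, 0.0],
        [0.0, c, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ])

def to_ndc(M, p):
    """Transform a 3D vertex by M and apply the perspective divide."""
    clip = M @ np.append(p, 1.0)
    return clip[:3] / clip[3]

# Hypothetical camera 5 units from the origin, looking at it, with near = 1 and far = 10.
eye = np.array([0.0, 0.0, 5.0])
V = look_at(eye, np.zeros(3), np.array([0.0, 1.0, 0.0]))
Pm = perspective(60.0, 16 / 9, 1.0, 10.0)
M = Pm @ V   # column-vector convention: clip = P @ V @ vertex
```

Vertices on the near plane map to NDC depth −1 and vertices on the far plane map to +1. As the device rotates, recomputing `V` alone updates where the augmented reality elements land.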

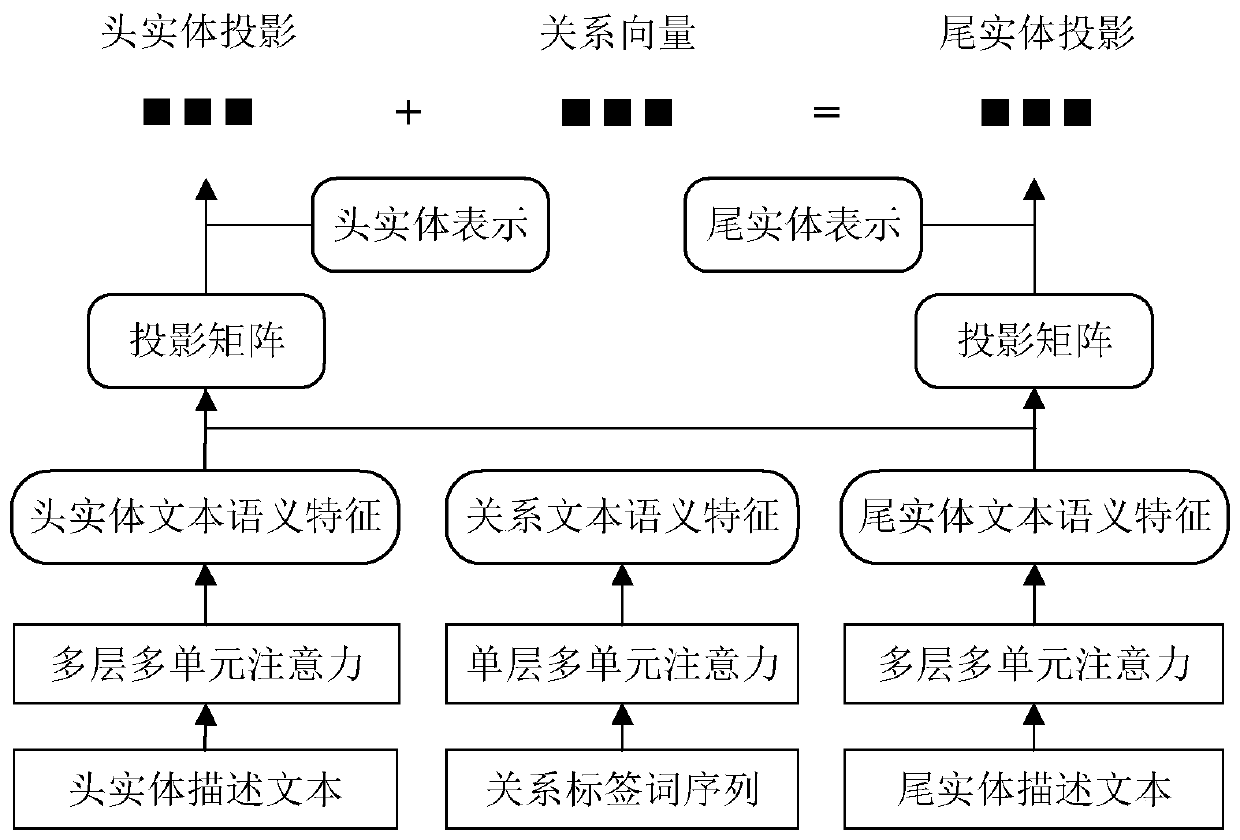

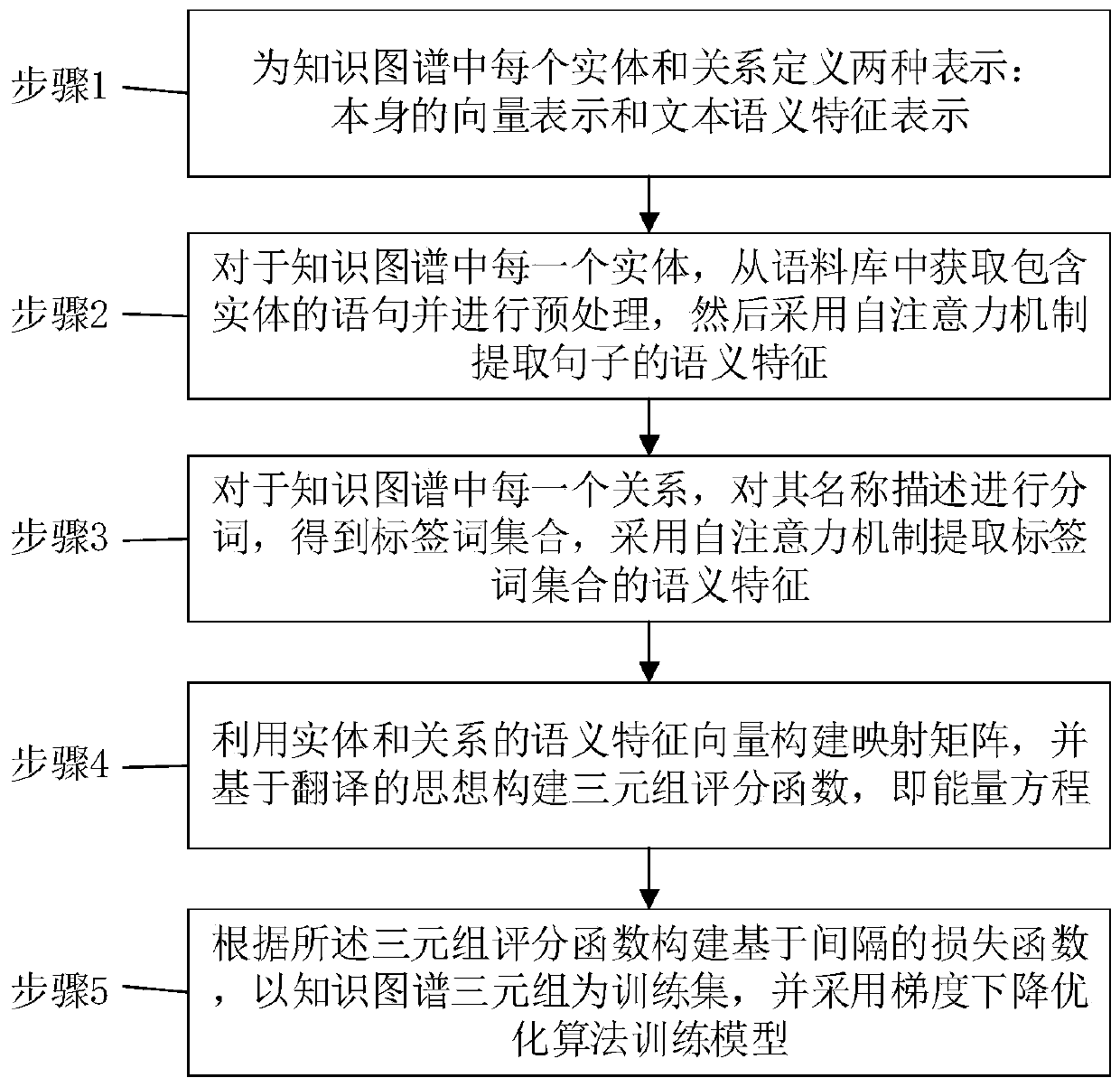

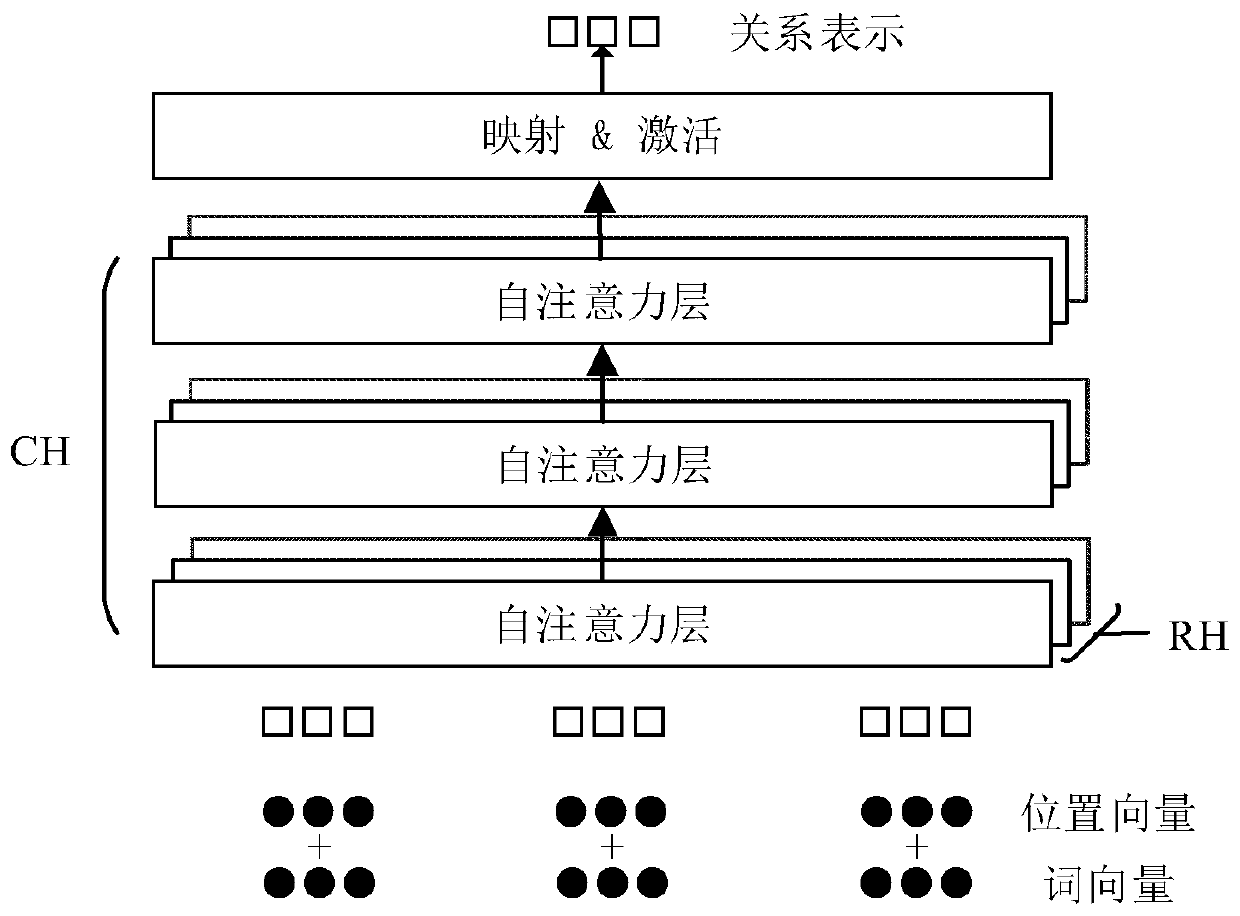

Knowledge graph representation learning method for integrating text semantic features based on attention mechanism

Active | CN110334219A | Rich semantic features | Quality improvement | Internal combustion piston engines | Special data processing applications | Hat matrix | Process description

The invention relates to knowledge graphs and discloses a knowledge graph representation learning method that integrates text semantic features based on an attention mechanism. The method addresses three problems: translation models do not use the description texts of entities and relations, so their semantic features are insufficient; multi-source information embedding methods cannot fuse semantic features into entities and relations at the same time; and text extraction performs poorly. The method first obtains and processes the description texts of entities and relations to obtain their text semantic features, then constructs a projection matrix for the entities from the semantic features of the entities and relations, projects the entity vectors into the relation space, and performs representation learning in that space using the translation idea, so as to model complex many-to-many relations. The method is suitable for representation learning of knowledge graphs.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
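"Projecting entity vectors into a relationship space" and then applying the translation idea matches the generic TransR-style score ‖M_r h + r − M_r t‖. A lower score means a more plausible triple (h, r, t). The sketch below uses a random matrix as a stand-in for the projection matrix that the patent builds from text semantic features. That construction and the attention mechanism are not reproduced, and the dimensions are arbitrary.

```python
import numpy as np

def transr_score(h, t, r, M_r):
    """Translation distance in relation space: ||M_r h + r - M_r t||_2 (lower = more plausible)."""
    return np.linalg.norm(M_r @ h + r - M_r @ t)

rng = np.random.default_rng(3)
d_e, d_r = 8, 4                     # entity and relation embedding sizes (arbitrary)
M_r = rng.normal(size=(d_r, d_e))   # stand-in for the text-derived projection matrix
h = rng.normal(size=d_e)            # head entity embedding
t = rng.normal(size=d_e)            # tail entity embedding
r = M_r @ t - M_r @ h               # a relation vector that fits (h, r, t) exactly
t_wrong = rng.normal(size=d_e)      # an unrelated tail entity
```

Because each relation has its own M_r, the same entity can land in different places in different relation spaces. This is what lets translation models handle many-to-many relations.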

Method for marker-free automatic fusion of 2-D fluoroscopic C-arm images with preoperative 3D images using an intraoperatively obtained 3D data record

Active | US20050004454A1 | Simple way | Image enhancement | Material analysis using wave/particle radiation | Hat matrix | Fluoroscopic image

In a method and apparatus for automatic marker-free fusion (matching) of 2D fluoroscopic C-arm images with preoperative 3D images using an intraoperatively acquired 3D data record, an intraoperative 3D image is obtained using a C-arm x-ray system; image-based matching of an existing preoperative 3D image in relation to the intraoperative 3D image is undertaken, which generates a matching matrix; a tool plate attached to the C-arm system is matched in relation to a navigation system; a 2D fluoroscopic image to be matched is obtained with the C-arm of the C-arm system in any arbitrary location; a projection matrix for matching the 2D fluoroscopic image in relation to the 3D image is obtained; and the 2D fluoroscopic image is fused (matched) with the preoperative 3D image on the basis of the matching matrix and the projection matrix.

Owner:SIEMENS HEALTHCARE GMBH

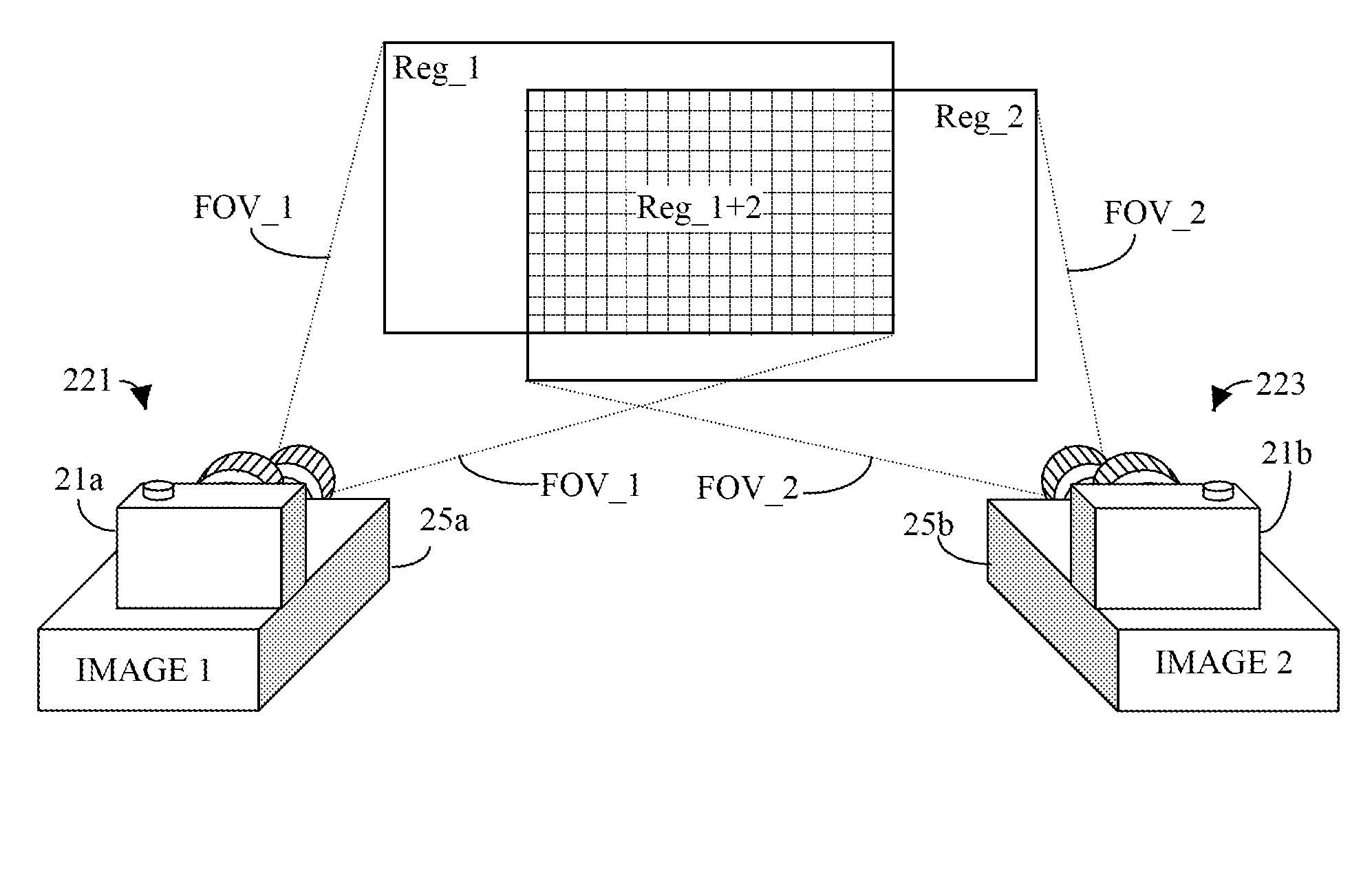

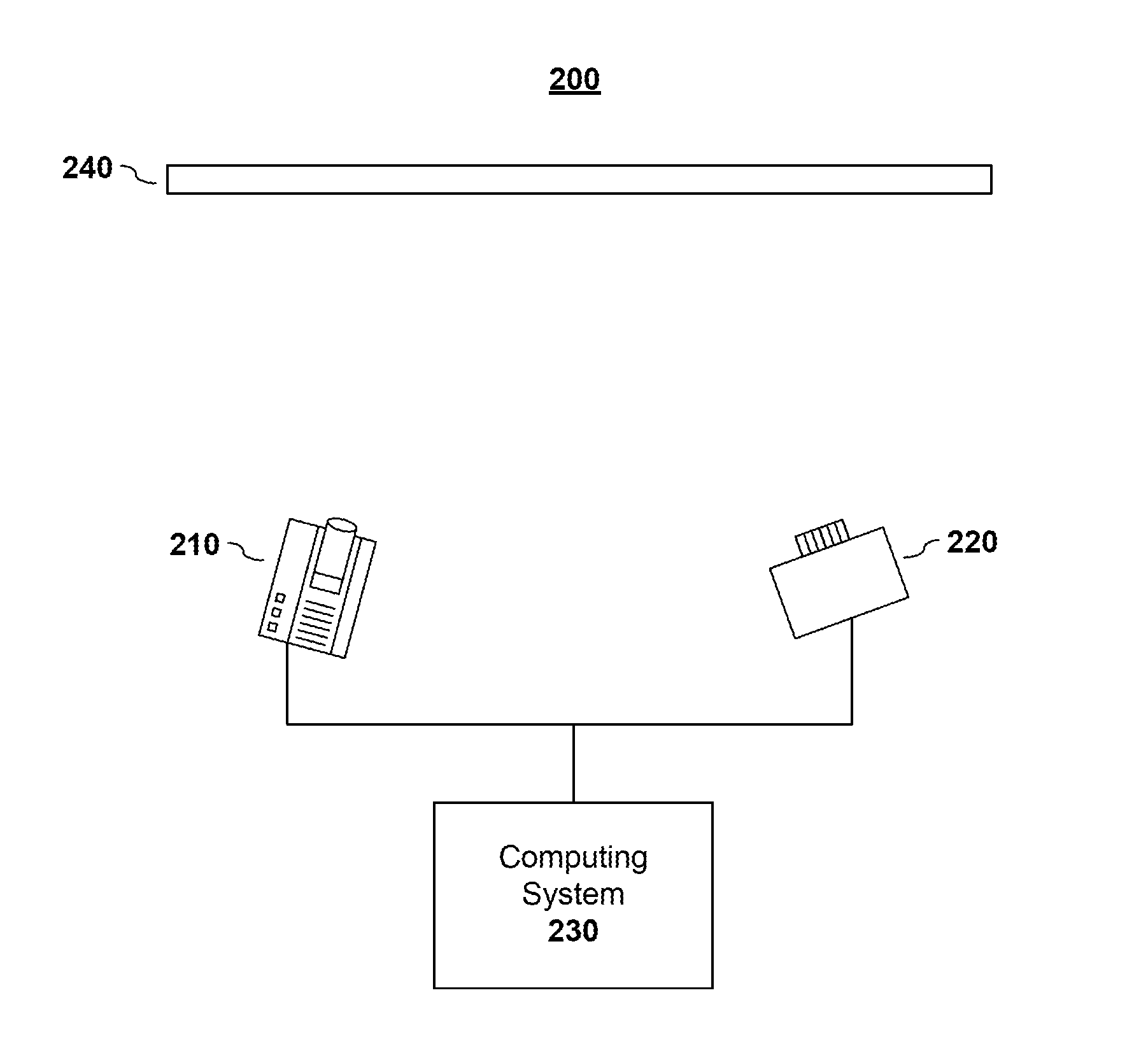

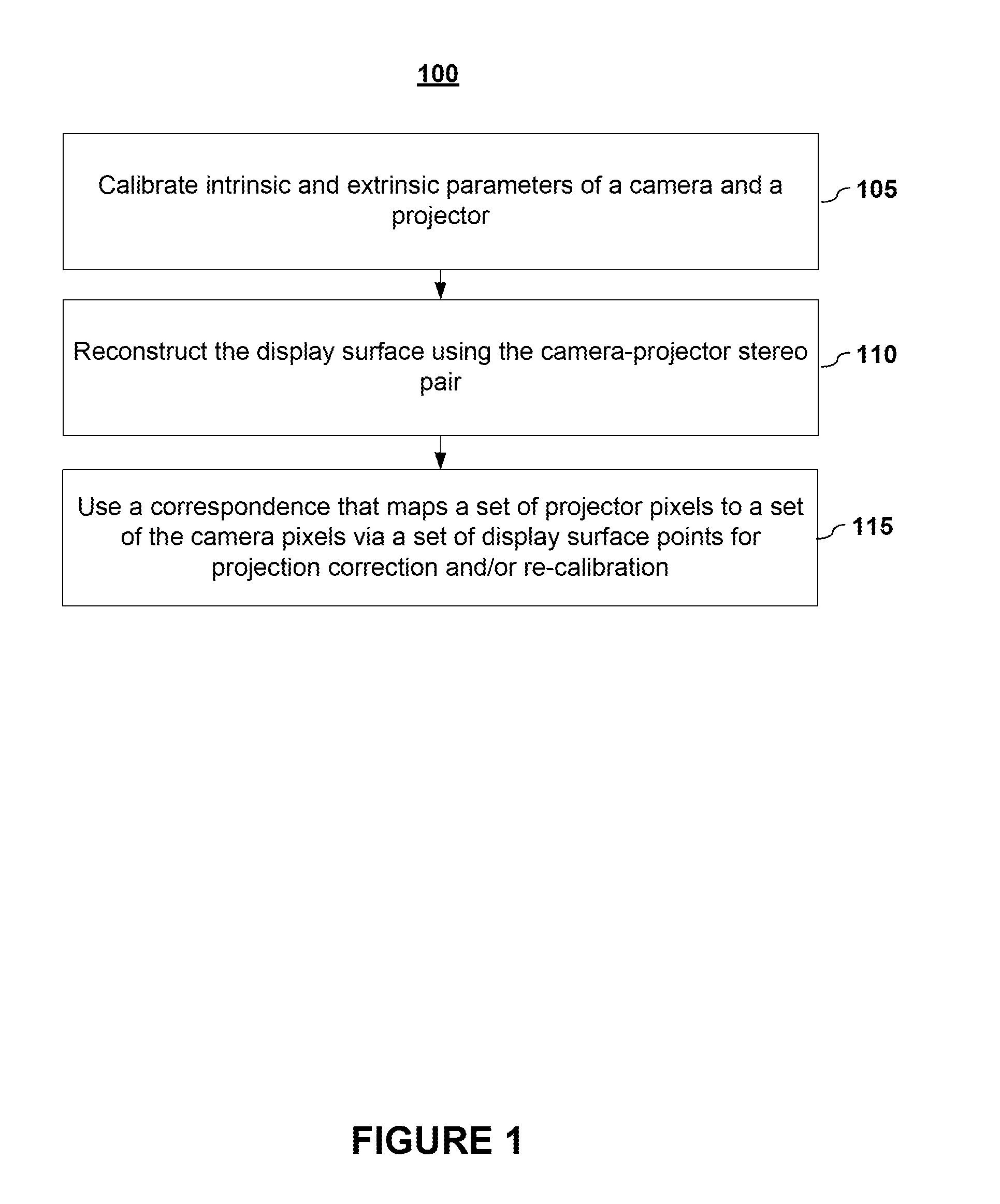

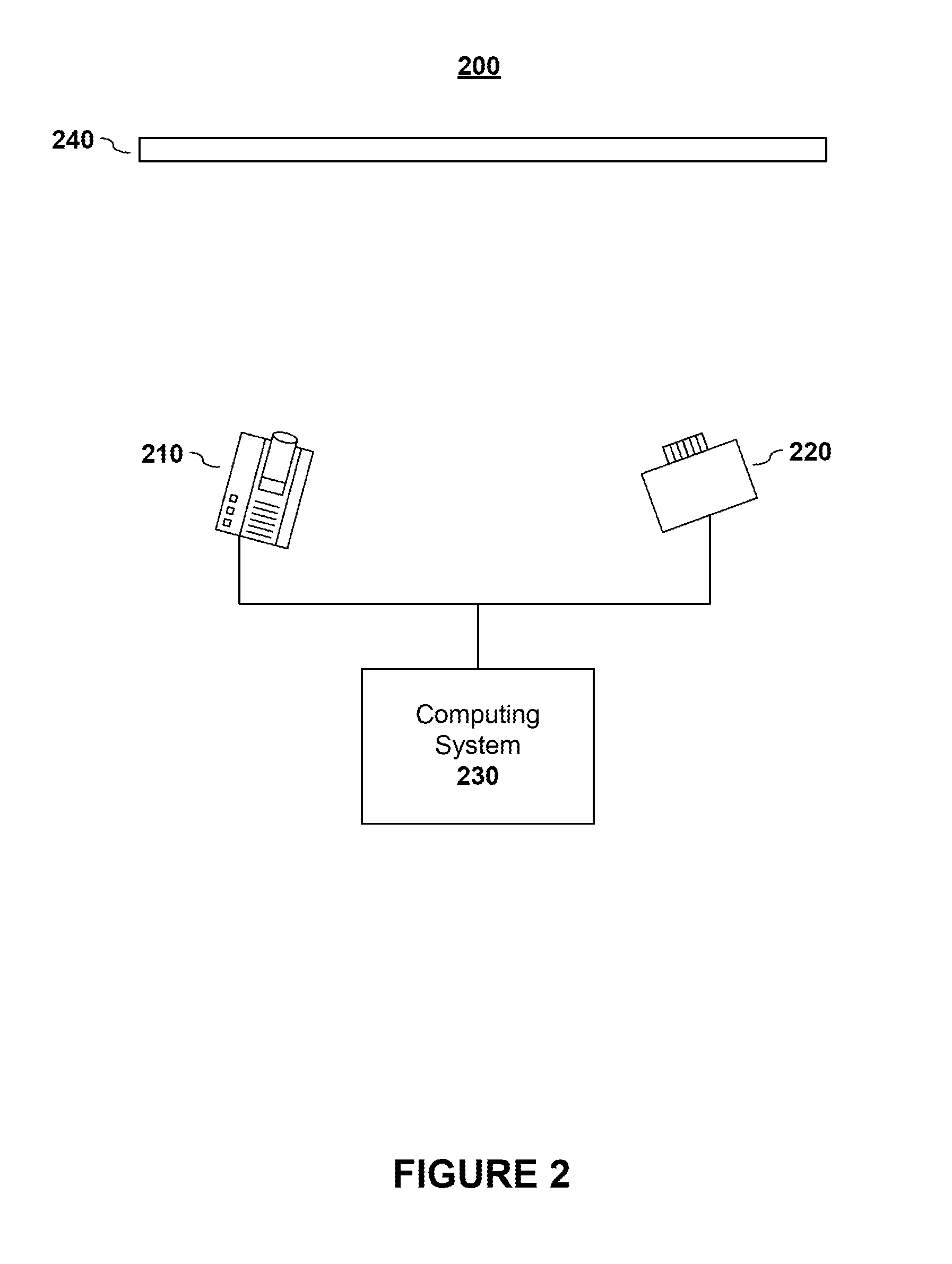

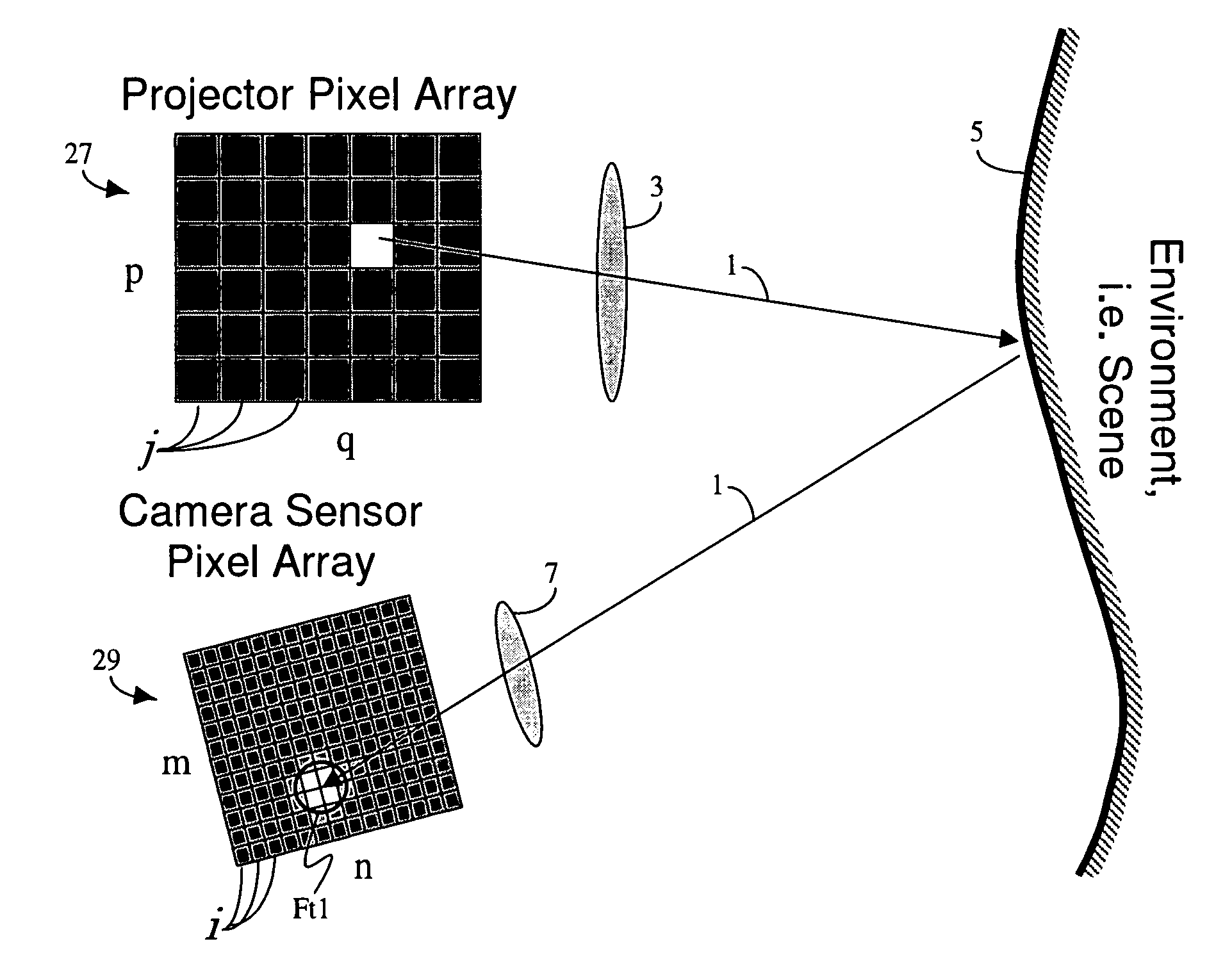

Real-Time Geometry Aware Projection and Fast Re-Calibration

Inactive | US20110176007A1 | Reduce restrictions | Image enhancement | Image analysis | Hat matrix | Projector camera systems

Aspects of the present invention include systems and methods for recalibrating projector-camera systems. In embodiments, the systems and methods can automatically recalibrate a projector with arbitrary intrinsics and pose, and can render for an arbitrary desired viewpoint. In contrast to previous methods, the methods disclosed herein use the observing camera and the projector as a stereo pair. Structured light is used to perform fine, pixel-level reconstruction of the display surface. In embodiments, the geometric warping is implemented as a direct texture-mapping problem. As a result, recalibration after projector movement is performed by simply computing the new projection matrix and setting it as the camera matrix. To recalibrate for a new viewpoint, the texture mapping is modified according to the new camera matrix.

Owner:SEIKO EPSON CORP
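"Simply computing the new projection matrix" can be done generically by camera resection: solve for a 3x4 matrix from six or more correspondences between known 3D surface points and their pixel positions, using the DLT. The sketch below illustrates that generic technique, not Seiko Epson's exact procedure. It uses a made-up matrix and eight random, non-coplanar points. The result is defined only up to scale, so the check compares reprojections and scale-normalized matrices.

```python
import numpy as np

def resect(world, image):
    """DLT estimate of a 3x4 projection matrix from N >= 6 world (Nx3) / pixel (Nx2) pairs."""
    rows = []
    for (X, Y, Z), (u, v) in zip(world, image):
        Xh = [X, Y, Z, 1.0]
        # From u * (p3 . X) - p1 . X = 0 and v * (p3 . X) - p2 . X = 0.
        rows.append(Xh + [0.0] * 4 + [-u * c for c in Xh])
        rows.append([0.0] * 4 + Xh + [-v * c for c in Xh])
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    return Vt[-1].reshape(3, 4)   # defined only up to scale

def project(P, pts):
    """Project Nx3 points with a 3x4 matrix; returns Nx2 pixel coordinates."""
    x = np.hstack([pts, np.ones((len(pts), 1))]) @ P.T
    return x[:, :2] / x[:, 2:3]

# Synthetic check with a made-up projection matrix and 8 non-coplanar points.
K = np.array([[1000.0, 0.0, 640.0], [0.0, 1000.0, 400.0], [0.0, 0.0, 1.0]])
P_true = K @ np.hstack([np.eye(3), np.array([[0.1], [-0.2], [3.0]])])
world = np.random.default_rng(1).uniform(-1.0, 1.0, size=(8, 3))
P_est = resect(world, project(P_true, world))
```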

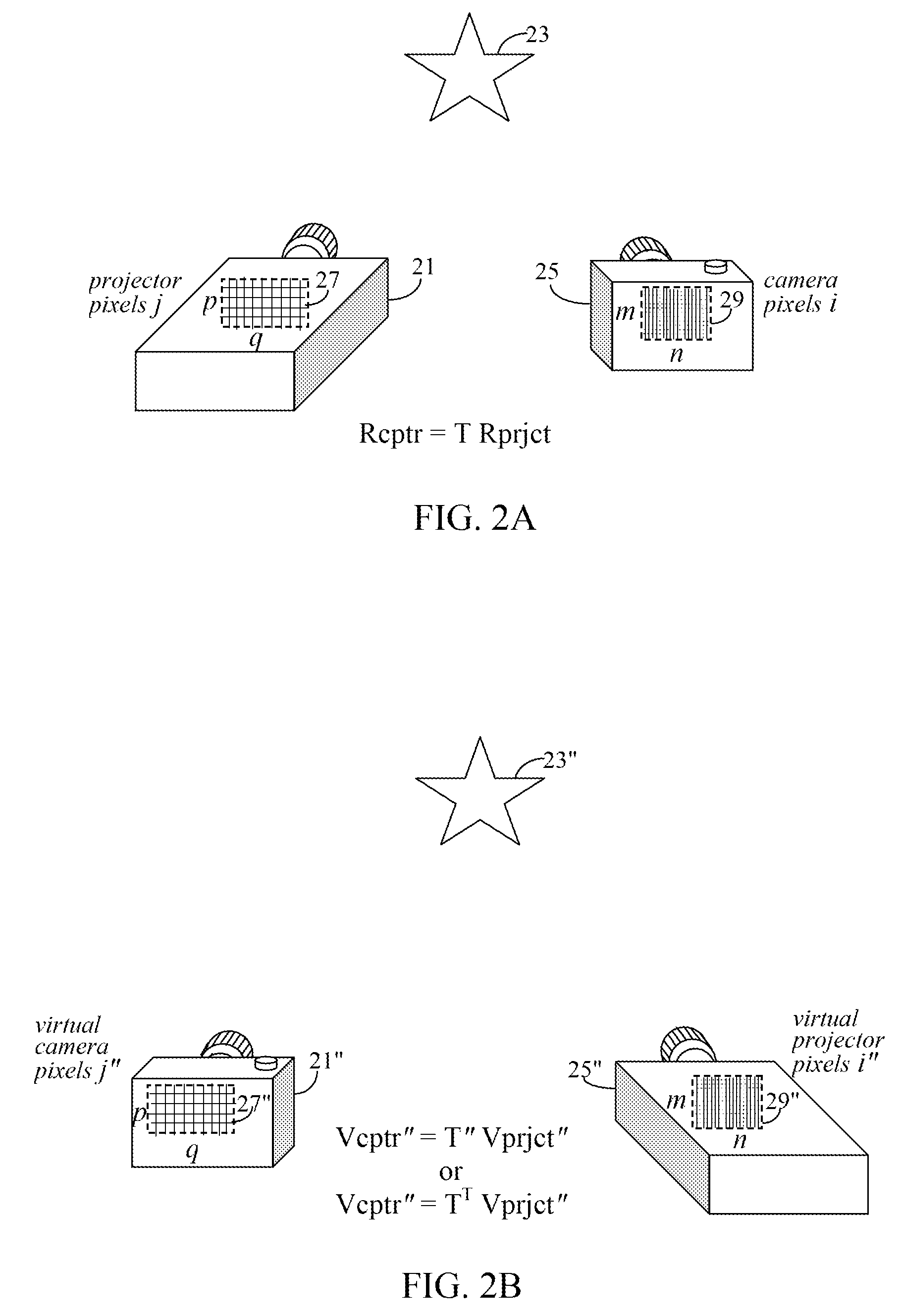

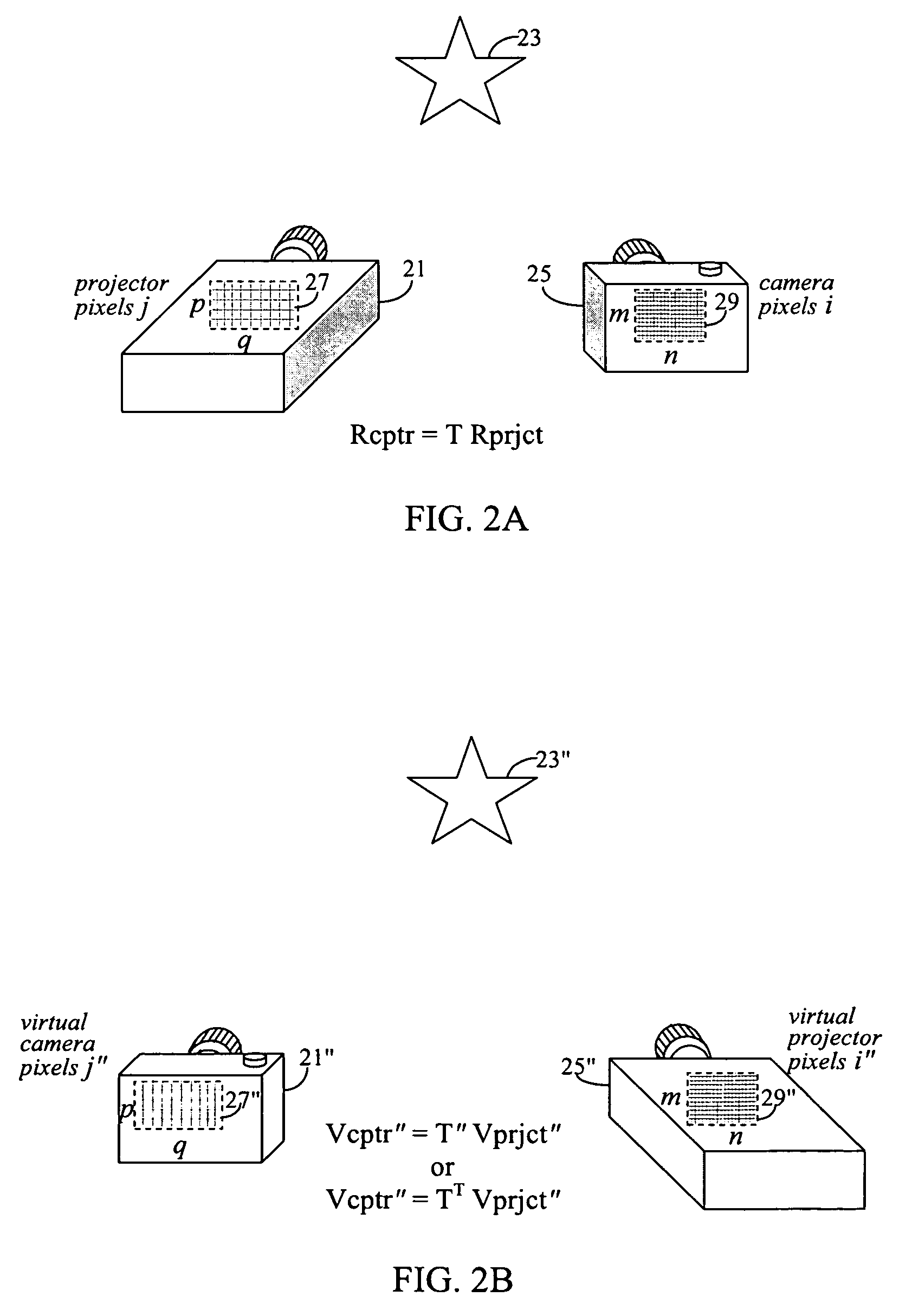

View Projection for Dynamic Configurations

Inactive | US20090073324A1 | Easy to ship | Simple process | Television system details | Television system scanning details | Hat matrix | Projection image

A method and system for compensating for a moving object placed between a projector and a projection scene are shown. The method/system divides the movement pattern of the moving object into N discrete position states and, for each of the N position states, determines a corresponding view projection matrix. While an image is projected within any of the N position states, the desired projection image is multiplied by the corresponding view projection matrix.

Owner:SEIKO EPSON CORP

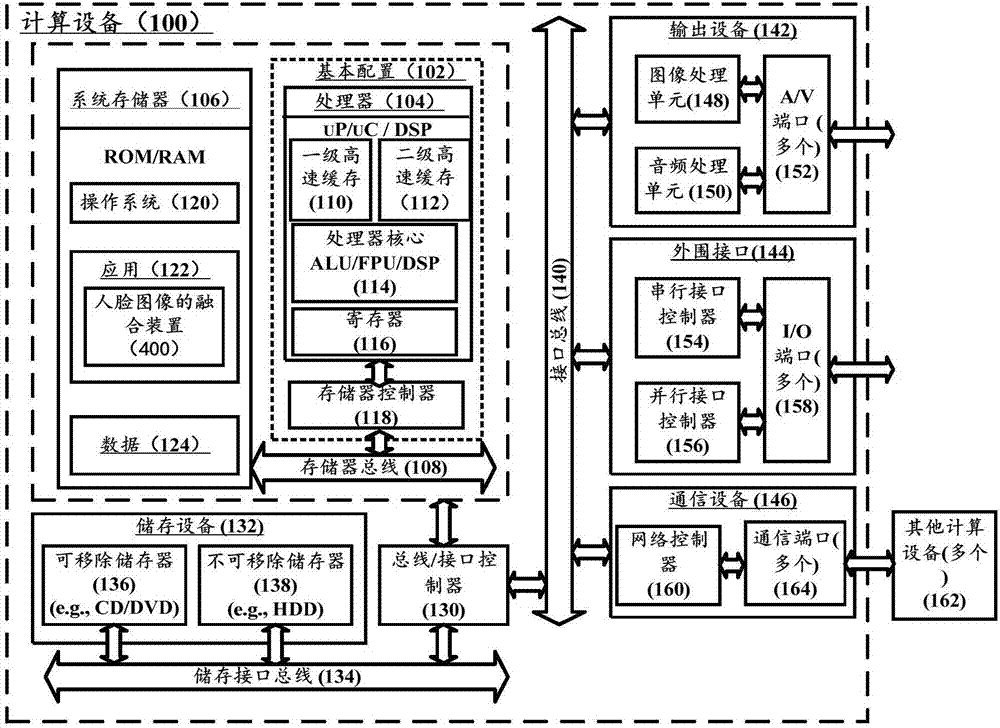

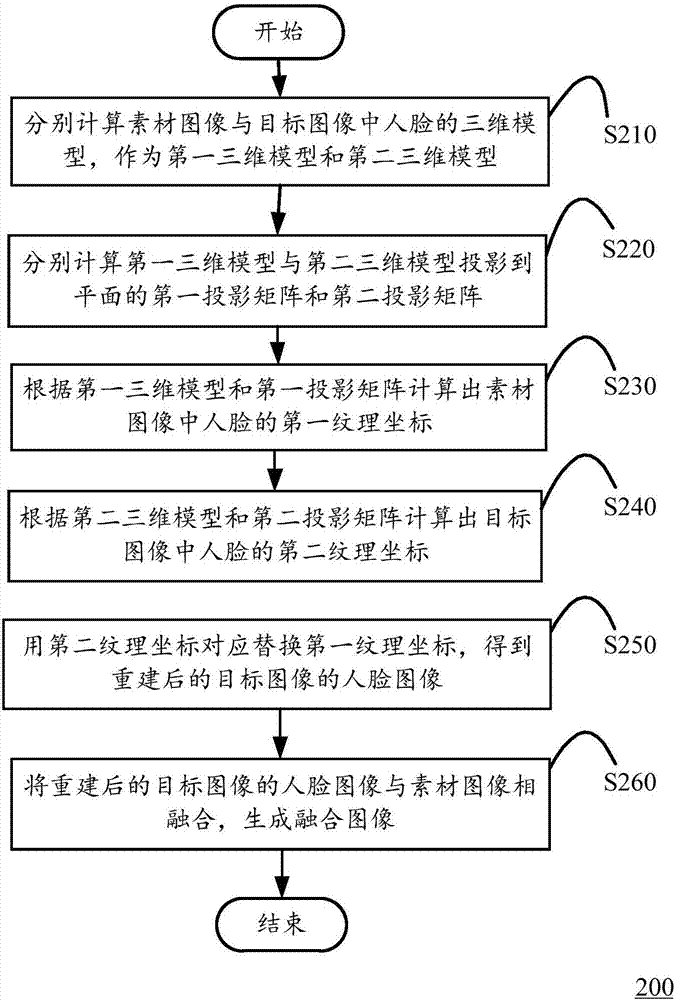

Face image fusion method and device and calculation device

Active | CN107146199A | Solve the problem of changing face deformation | Attitude difference improvement | Image enhancement | Image analysis | Hat matrix | Pattern recognition

A face image fusion method disclosed by the present invention is suitable for fusing the face of a target image into the face of a material image, and comprises the steps of respectively calculating the three dimensional models of the faces in the material image and the target image as a first three dimensional model and a second three dimensional model; respectively calculating a first projection matrix and a second projection matrix projected by the first and second three dimensional models to a plane; according to the first three dimensional model and the first projection matrix, calculating a first texture coordinate of the face in the material image; according to the second three dimensional model and the second projection matrix, calculating a second texture coordinate of the face in the target image; using the second texture coordinate to replace the first texture coordinate correspondingly to obtain a reconstructed face image of the target image; and fusing the reconstructed face image of the target image and the material image to generate a fused image. The present invention also discloses a corresponding face image fusion device and a calculation device.

Owner:Xiamen Meitu Yifu Technology Co., Ltd. (厦门美图宜肤科技有限公司)

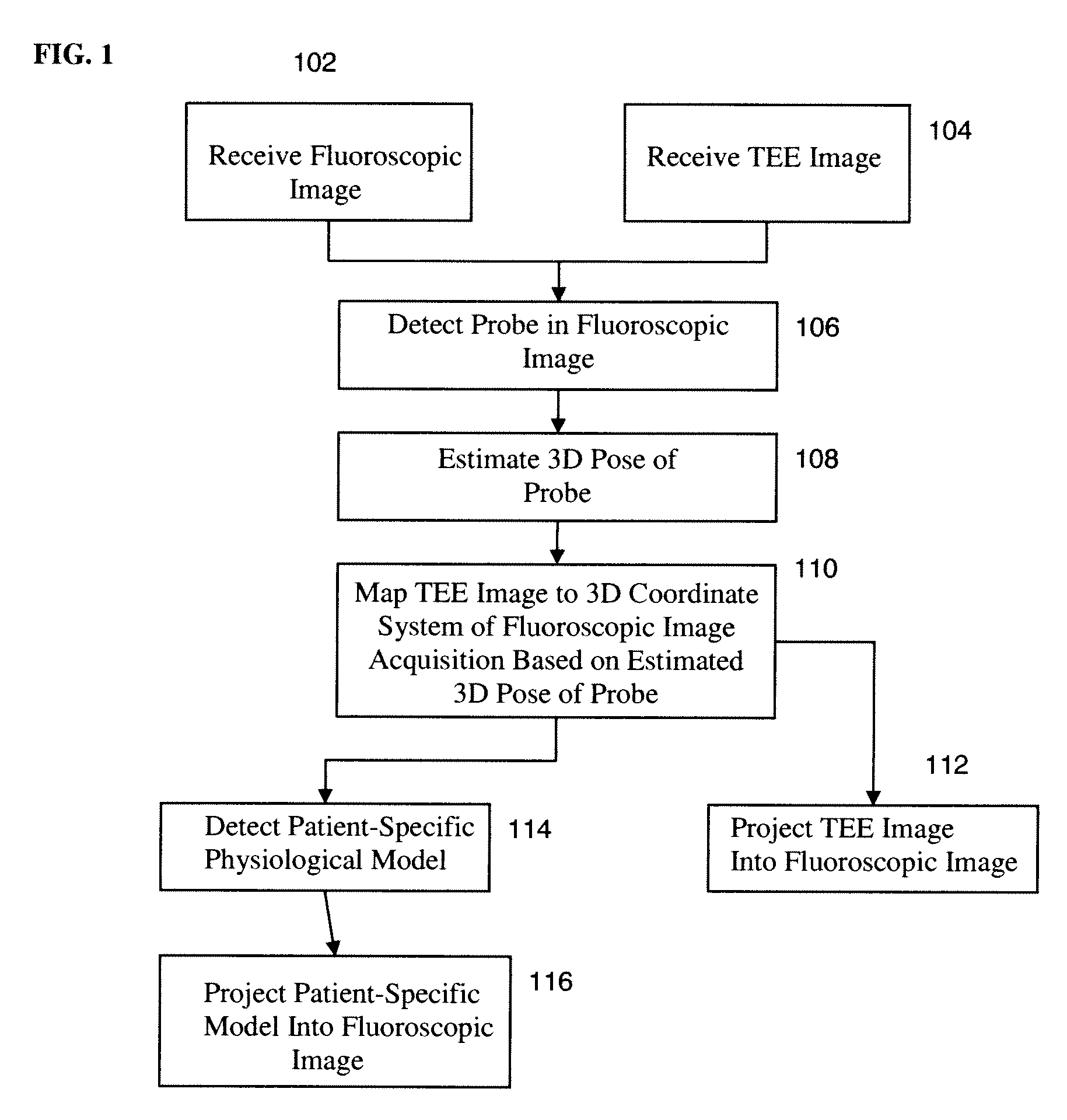

Method and System for Registration of Ultrasound and Physiological Models to X-ray Fluoroscopic Images

A method and system for registering ultrasound images and physiological models to x-ray fluoroscopy images is disclosed. A fluoroscopic image and an ultrasound image, such as a Transesophageal Echocardiography (TEE) image, are received. A 2D location of an ultrasound probe is detected in the fluoroscopic image. A 3D pose of the ultrasound probe is estimated based on the detected 2D location of the ultrasound probe in the fluoroscopic image. The ultrasound image is mapped to a 3D coordinate system of a fluoroscopic image acquisition device used to acquire the fluoroscopic image based on the estimated 3D pose of the ultrasound probe. The ultrasound image can then be projected into the fluoroscopic image using a projection matrix associated with the fluoroscopic image. A patient specific physiological model can be detected in the ultrasound image and projected into the fluoroscopic image.

Owner:SIEMENS HEALTHCARE GMBH

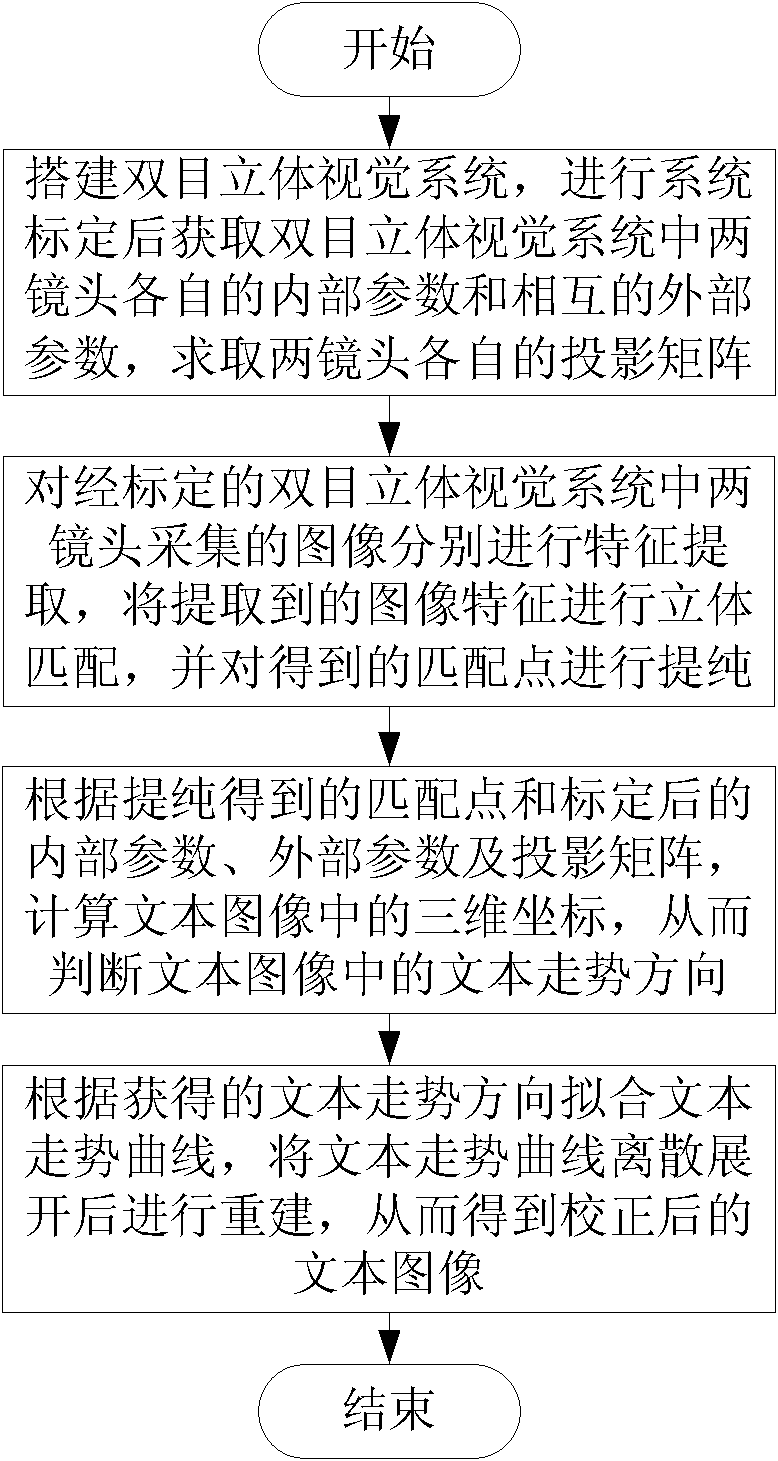

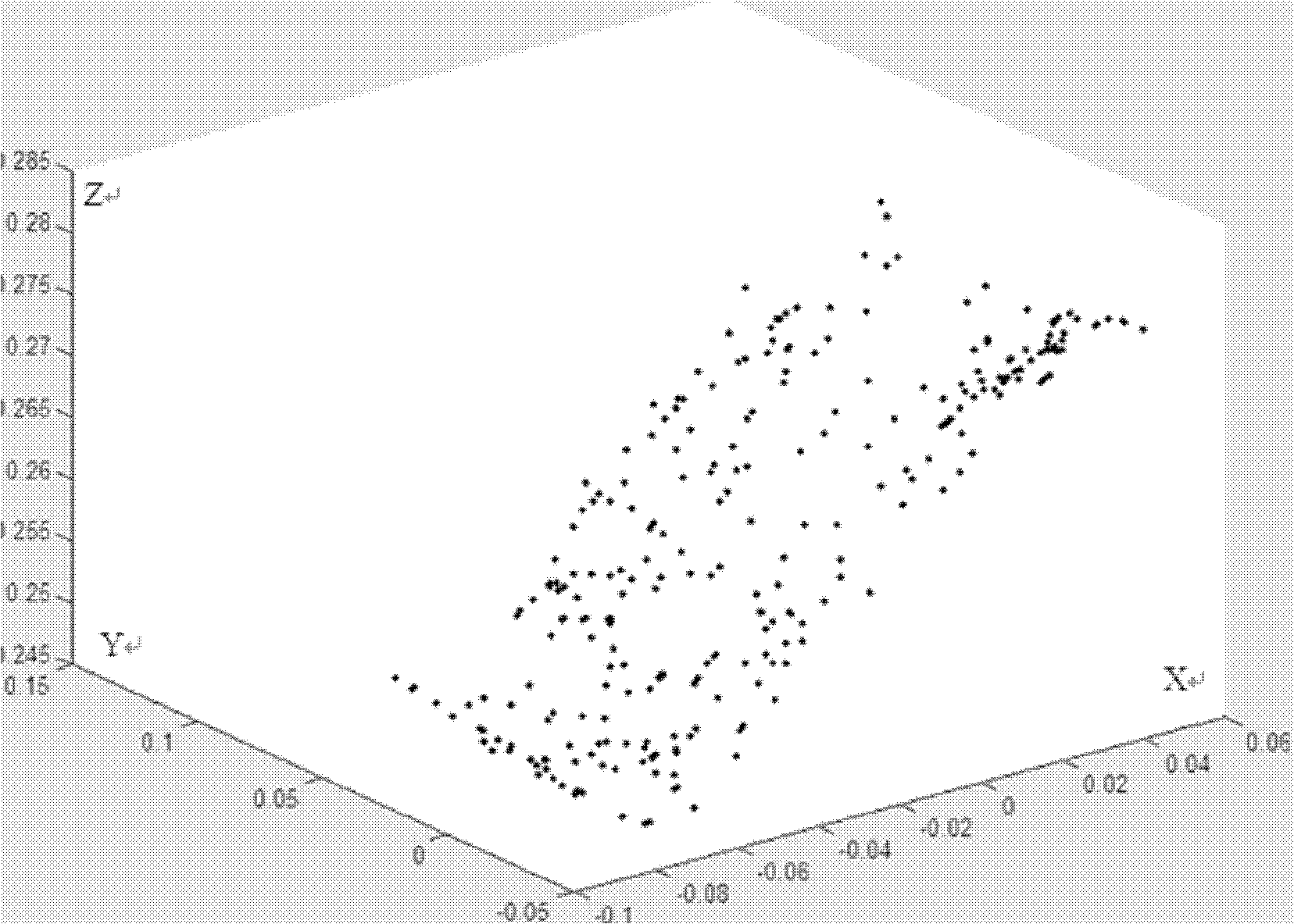

Geometrical correction method, device and binocular stereoscopic vision system of text image

The invention discloses a geometric correction method and device for text images, and a binocular stereo vision system, in the field of image processing. The geometric correction method comprises: constructing a binocular stereo vision system, obtaining the internal parameters of each of its two lenses and their mutual external parameters, and obtaining the projection matrix of each lens; performing feature extraction on the images acquired by each of the two lenses, performing stereo matching, and filtering the resulting matching points; calculating the three-dimensional coordinates of corresponding points in the text image from the matching points, and determining the direction of the text lines in the text image; and fitting a text-line curve along that direction, discretely unrolling the curve, and reconstructing a corrected text image. The method is not affected by text content or typesetting, so it is especially suitable for correcting geometric distortion in texts with complex layouts, and it can process many types of text images, greatly broadening the applicability of geometric distortion correction.

Owner:HANVON CORP

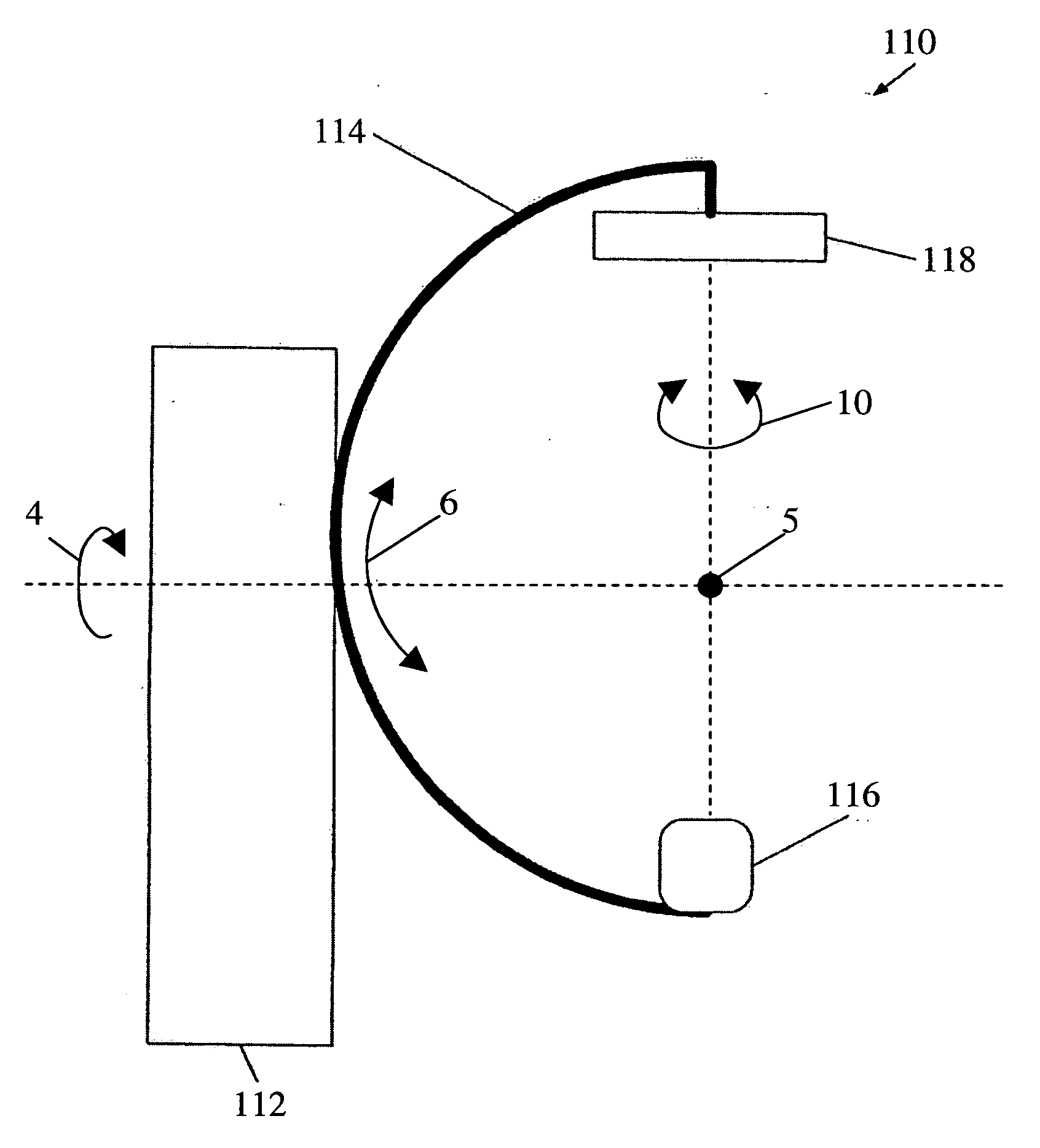

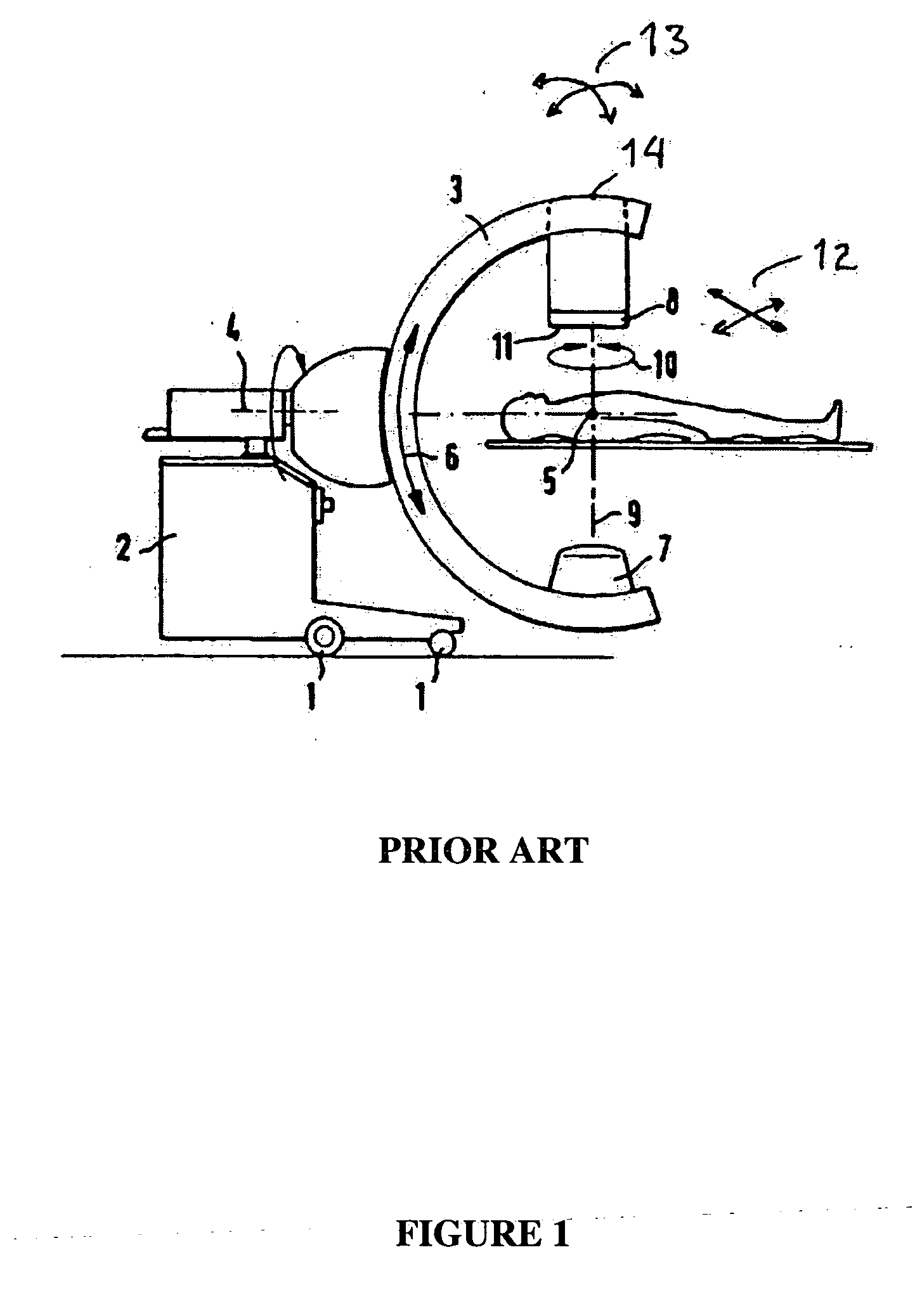

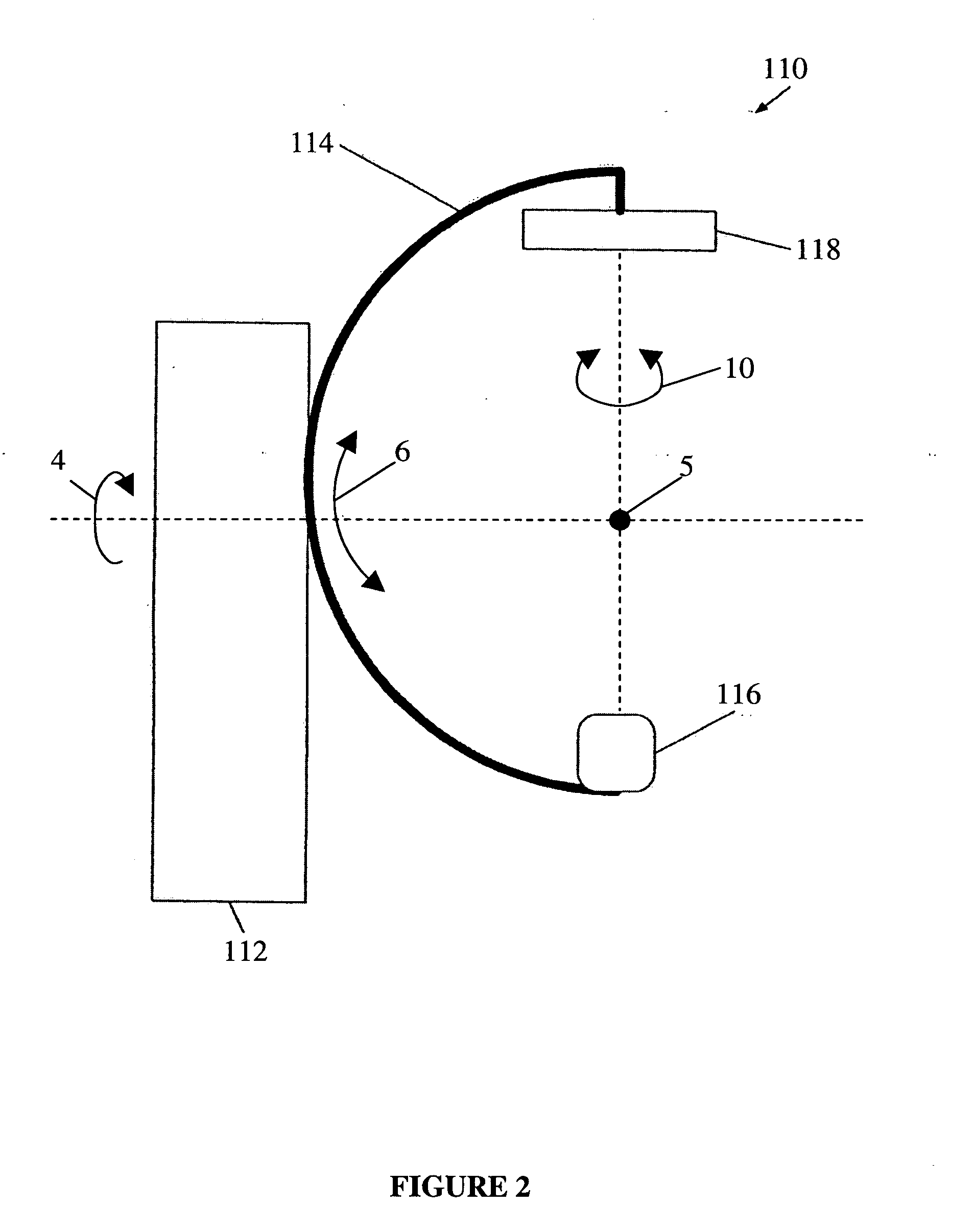

C-arm device with adjustable detector offset for cone beam imaging involving partial circle scan trajectories

Inactive | US20060039537A1 | Reduce and eliminate truncated projection | Facilitates image reconstruction | Reconstruction from projection | Computerised tomographs | Hat matrix | Data set

A C-arm x-ray imaging system enhances reconstructed volumes internal to a patient. Truncation artifacts in reconstructed volume data sets may be reduced by creating an effective x-ray detector of greater size. The x-ray detector may include a movable stage and a detector mount. The movable stage may be movable within the x-ray detector mount. A first partial circular scan may be performed with the movable stage at a first position. The movable stage may be repositioned to a second position before a second partial circular scan is performed. Performing two partial circular scans with the movable stage located at different positions may increase the effective size of the x-ray detector. The associated views acquired with the detector in opposite offset positions are combined and used for 3D reconstruction. A method of calibration may include generating first and second projection matrices associated with first and second transform parameters, respectively.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC
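The first and second projection matrices for the two detector positions can be sketched with a pinhole model in which the lateral stage offset shifts the principal point. This is an assumed, simplified model for illustration (no detector tilt, fixed source geometry); the patented calibration and its transform parameters are not described in the abstract, and `projection_matrix`, `project` and all numeric values are hypothetical.

```python
import numpy as np


def projection_matrix(focal_px, u0, v0, R, t, detector_offset_px=0.0):
    """Pinhole projection matrix P = K [R | t] for one detector position.

    detector_offset_px shifts the principal point along the detector's u axis,
    a simple (assumed) model of moving the detector stage sideways; the sign
    convention is chosen for illustration only.
    """
    K = np.array([
        [focal_px, 0.0, u0 + detector_offset_px],
        [0.0, focal_px, v0],
        [0.0, 0.0, 1.0],
    ])
    return K @ np.hstack([R, np.reshape(t, (3, 1))])


def project(P, X):
    """Project a 3D point X (world coordinates) to detector pixels (u, v)."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Hypothetical geometry: 1000 px focal length, stage offset of 100 px either side.
R, t = np.eye(3), np.zeros(3)
P_first = projection_matrix(1000.0, 512.0, 512.0, R, t, detector_offset_px=+100.0)
P_second = projection_matrix(1000.0, 512.0, 512.0, R, t, detector_offset_px=-100.0)
X = np.array([10.0, 0.0, 1000.0])
u_first, _ = project(P_first, X)
u_second, _ = project(P_second, X)
```

The same point lands 200 px apart on the two stage positions, so each scan covers a different lateral portion of the field of view, which is what lets the combined views act like a wider detector.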

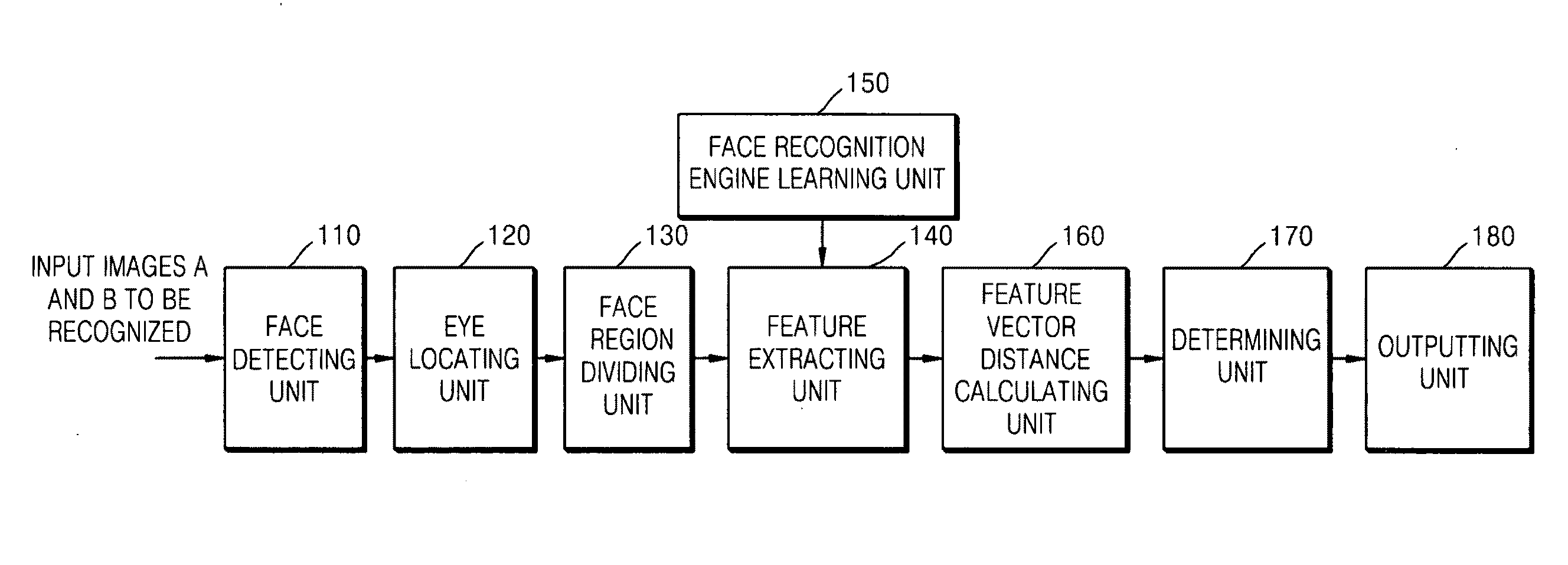

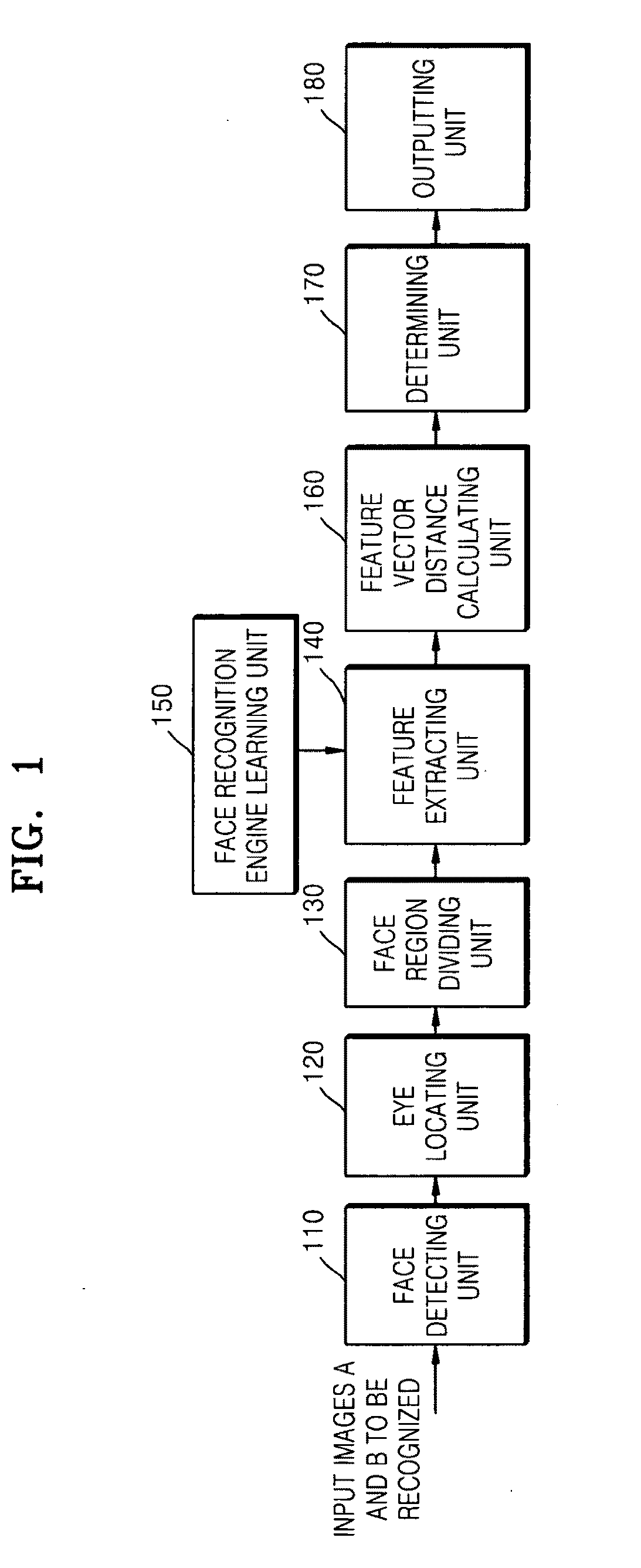

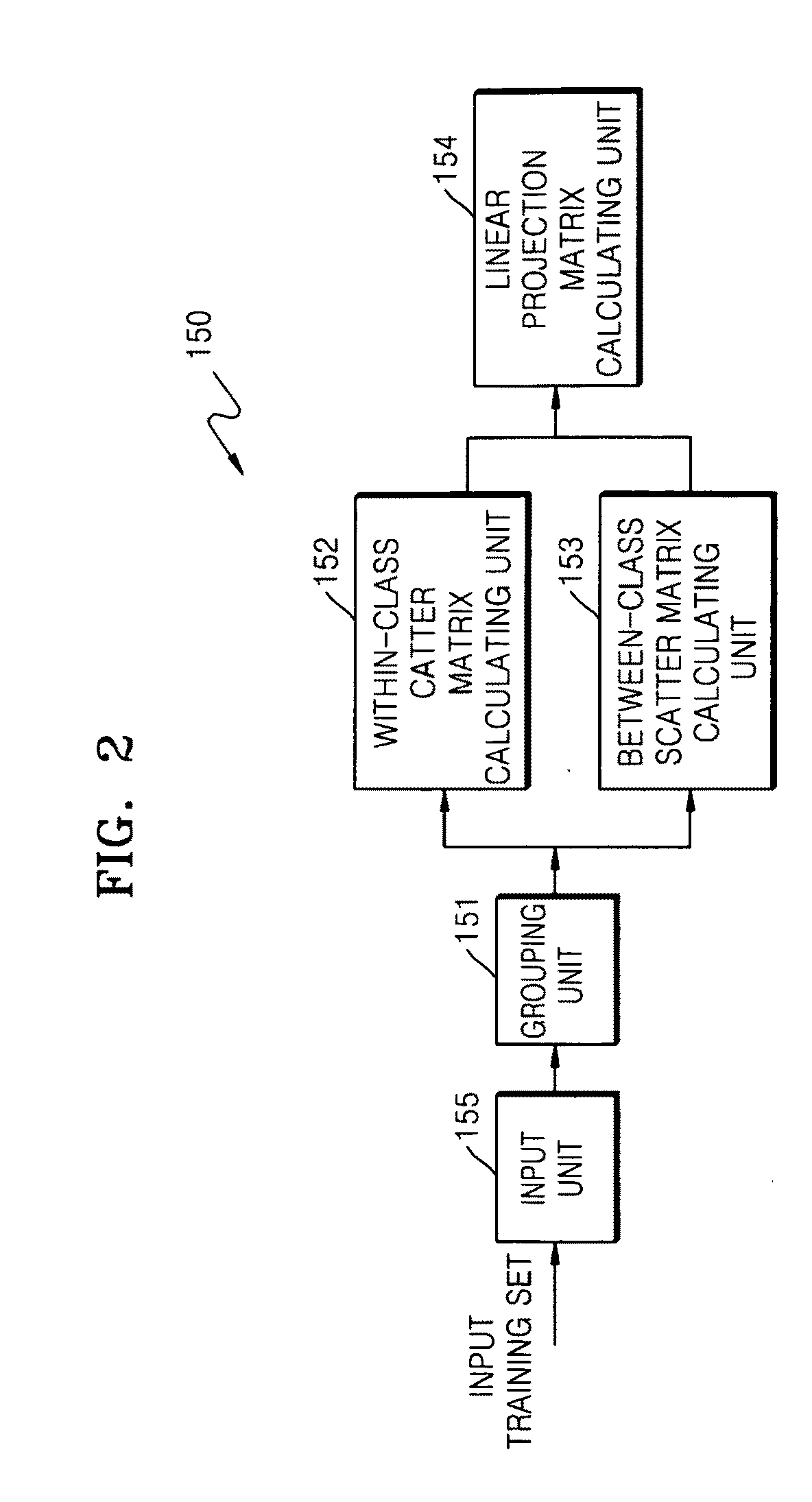

Multi-view face recognition method and system

A multi-view face recognition method and system are provided. In the method, two images to be recognized are input; a linear projection matrix is calculated from grouped images in a training set; two feature vectors corresponding to the two input images are extracted using the linear projection matrix; the distance between the two feature vectors is calculated; and whether the two input images belong to the same person is determined from that distance.

Owner:SAMSUNG ELECTRONICS CO LTD +1
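The project-then-compare pipeline can be sketched as follows. PCA is swapped in as a stand-in for the projection matrix, since the abstract does not say how the matrix is derived from the grouped training images; `fit_projection`, `same_person` and the threshold are hypothetical.

```python
import numpy as np


def fit_projection(train, k):
    """Learn a linear projection matrix W (d x k) and the mean face.

    train: (n_samples, d) array of flattened training images.
    PCA is a stand-in here; the abstract does not say how the patented
    method derives its matrix from the grouped training images.
    """
    mean = train.mean(axis=0)
    # Rows of vt are orthonormal principal directions, by decreasing variance.
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    return mean, vt[:k].T


def same_person(img_a, img_b, mean, W, threshold):
    """Project both images with W and threshold the Euclidean feature distance."""
    feat_a = W.T @ (img_a - mean)
    feat_b = W.T @ (img_b - mean)
    distance = float(np.linalg.norm(feat_a - feat_b))
    return distance < threshold, distance
```

A method that actually uses the group labels (for example a discriminant projection that separates identities) would replace only `fit_projection`; the feature extraction and distance test stay the same.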

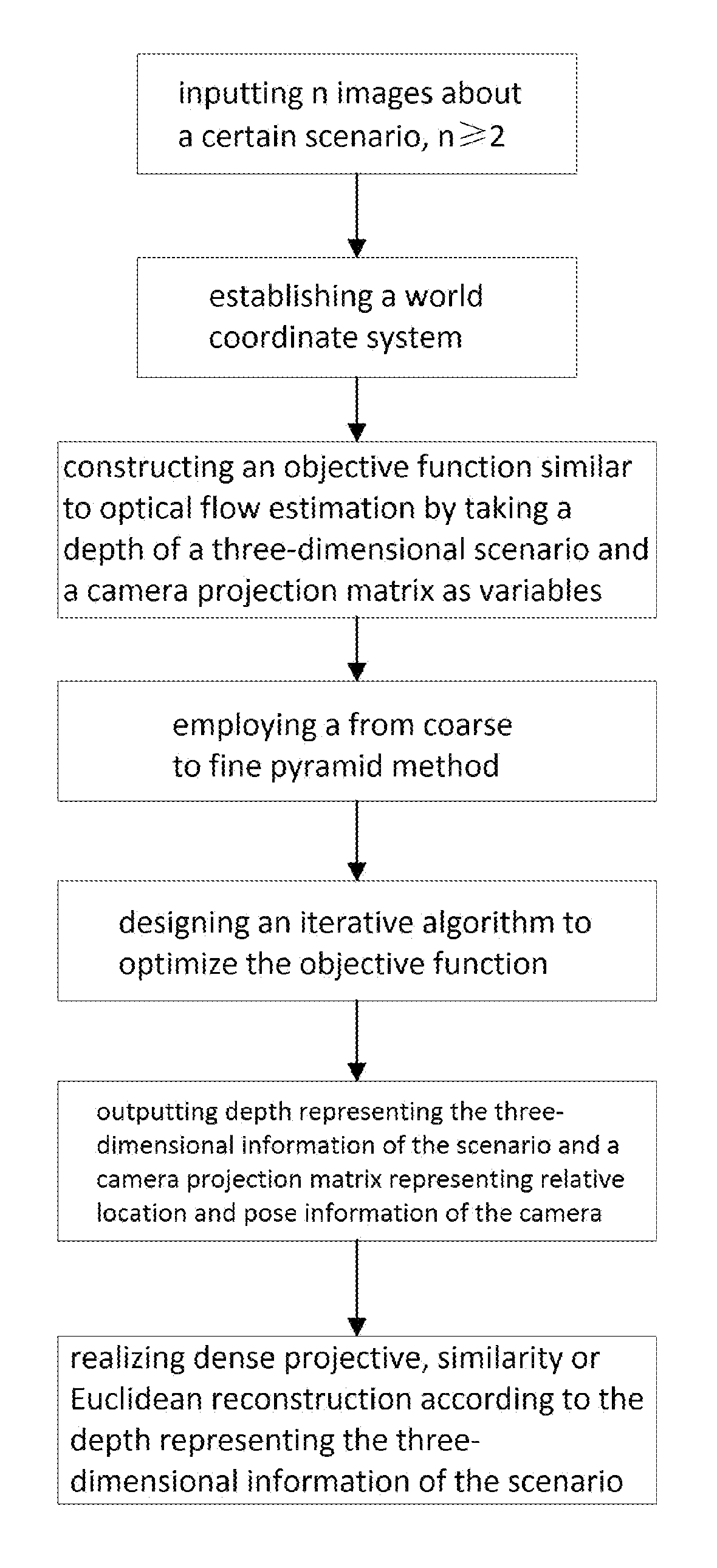

Non-feature extraction-based dense SFM three-dimensional reconstruction method

The present invention discloses a dense SFM three-dimensional reconstruction method that does not rely on feature extraction, comprising: inputting n images of a scene, n ≥ 2; establishing a world coordinate system that coincides with the coordinate system of one of the cameras; constructing an objective function, similar to that used in optical flow estimation, whose variables are the depth of the three-dimensional scene and the camera projection matrices; adopting a coarse-to-fine pyramid scheme; designing an iterative algorithm to optimize the objective function; outputting the depth, which represents the three-dimensional information of the scene, and the camera projection matrices, which represent the relative positions and poses of the cameras; and performing dense projective, similarity or Euclidean reconstruction from the depth. The invention accomplishes dense SFM three-dimensional reconstruction in one step. Because the dense three-dimensional information is estimated by a single optimization that uses the objective function as its criterion, an optimal or at least locally optimal solution can be obtained; the invention claims a significant improvement over existing methods, which has been preliminarily verified by experiments.

Owner:SUN YAT SEN UNIV
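An objective "similar to optical flow estimation" with depth and projection matrices as variables can take the form of a photometric (brightness-constancy) residual. The sketch below computes one plausible data term, assuming a shared intrinsic matrix `K`, camera 1 as the world frame, and nearest-neighbour sampling; the patent's actual objective, regularization and coarse-to-fine optimizer are not given in the abstract, and `photometric_residual` is hypothetical.

```python
import numpy as np


def photometric_residual(I1, I2, depth, K, R, t):
    """Brightness-constancy residual I2(warp(x)) - I1(x) for every pixel x of I1.

    Camera 1 is the world frame (P1 = K [I | 0]); camera 2 has P2 = K [R | t].
    Each pixel of I1 is back-projected with its depth, projected into camera 2
    and sampled (nearest neighbour) from I2. Pixels that land outside I2 or
    behind camera 2 get NaN.
    """
    h, w = I1.shape
    v, u = np.mgrid[0:h, 0:w]
    pix = np.stack([u.ravel(), v.ravel(), np.ones(h * w)])  # 3 x N homogeneous pixels
    # Back-project: X = depth * K^-1 [u, v, 1]^T
    X = (np.linalg.inv(K) @ pix) * depth.ravel()
    # Project into camera 2 with P2 = K [R | t].
    x2 = K @ (R @ X + np.reshape(t, (3, 1)))
    z = x2[2]
    front = z > 1e-12
    z_safe = np.where(front, z, 1.0)  # avoid dividing by zero behind the camera
    u2 = np.rint(x2[0] / z_safe).astype(int)
    v2 = np.rint(x2[1] / z_safe).astype(int)
    inside = front & (u2 >= 0) & (u2 < w) & (v2 >= 0) & (v2 < h)
    res = np.full(h * w, np.nan)
    res[inside] = I2[v2[inside], u2[inside]] - I1.ravel()[inside]
    return res.reshape(h, w)
```

A data term such as `np.nansum(res ** 2)` would then be minimized over depth and the pose in `P2`, typically on a coarse image first and refined at finer pyramid levels.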

Camera calibration device and method, and computer system

Inactive | US7023473B2 | Simply carrying out camera calibration | Image analysis | Optical rangefinders | Camera lens | Hat matrix

A camera calibration device capable of simply calibrating a stereo system consisting of a base camera and a detection camera. First, the distortion parameters of the two cameras needed for distance measurement are estimated from images of a patterned planar object shot by the base camera and the detection camera from three or more viewpoints, with no constraint on their spatial positions, and projective transformation matrices that project the images onto predetermined virtual planes are calculated. Next, the internal parameters of the base camera are calculated from the projective transformation matrices of the base-camera images. The position of the photographed plane is then estimated from the base camera's internal parameters and its images, and the projection matrices of the detection camera are calculated from these plane-position parameters and the detection camera's images. With this device, simplified calibration can be achieved stably without any dedicated equipment.

Owner:SONY GRP CORP
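A projective transformation between a planar pattern and an image plane is a 3x3 homography. The sketch below shows the standard direct linear transform (DLT) estimate from point correspondences; it is not necessarily the estimator the patent uses, and it omits the coordinate normalization and lens-distortion handling a practical calibration would need. `estimate_homography` and `apply_homography` are hypothetical names.

```python
import numpy as np


def estimate_homography(src, dst):
    """Direct linear transform (DLT) estimate of H with dst ~ H @ src.

    src, dst: (n, 2) arrays of corresponding points, n >= 4, no three collinear.
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h1 x + h2 y + h3) / (h7 x + h8 y + h9), and likewise for v.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    A = np.asarray(rows, dtype=float)
    # h is the right singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map (n, 2) points through H, including the perspective division."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = pts_h @ H.T
    return mapped[:, :2] / mapped[:, 2:3]
```

Homographies from several views of the pattern are the usual input for recovering a camera's internal parameters, which matches the order of steps in the abstract.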

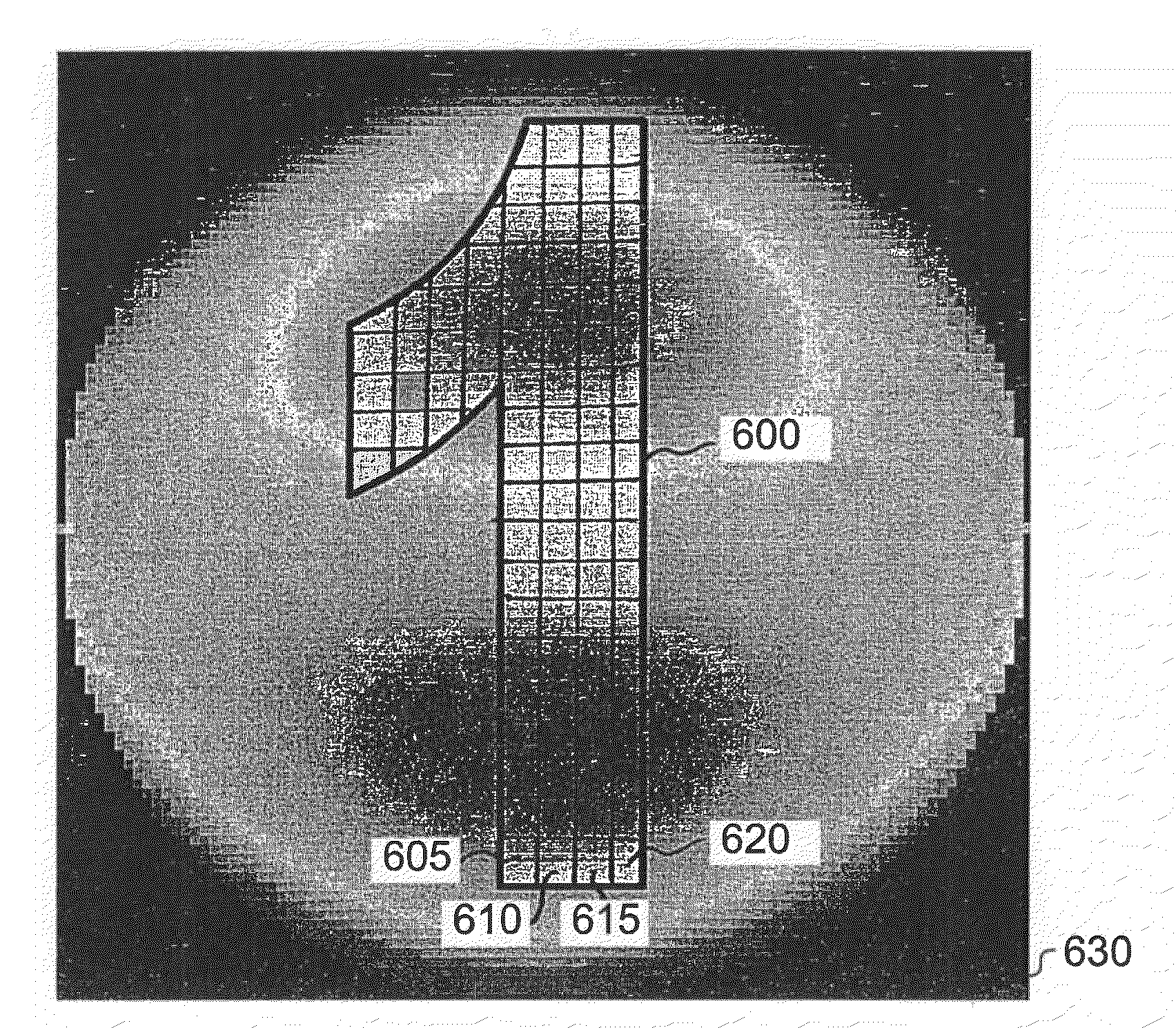

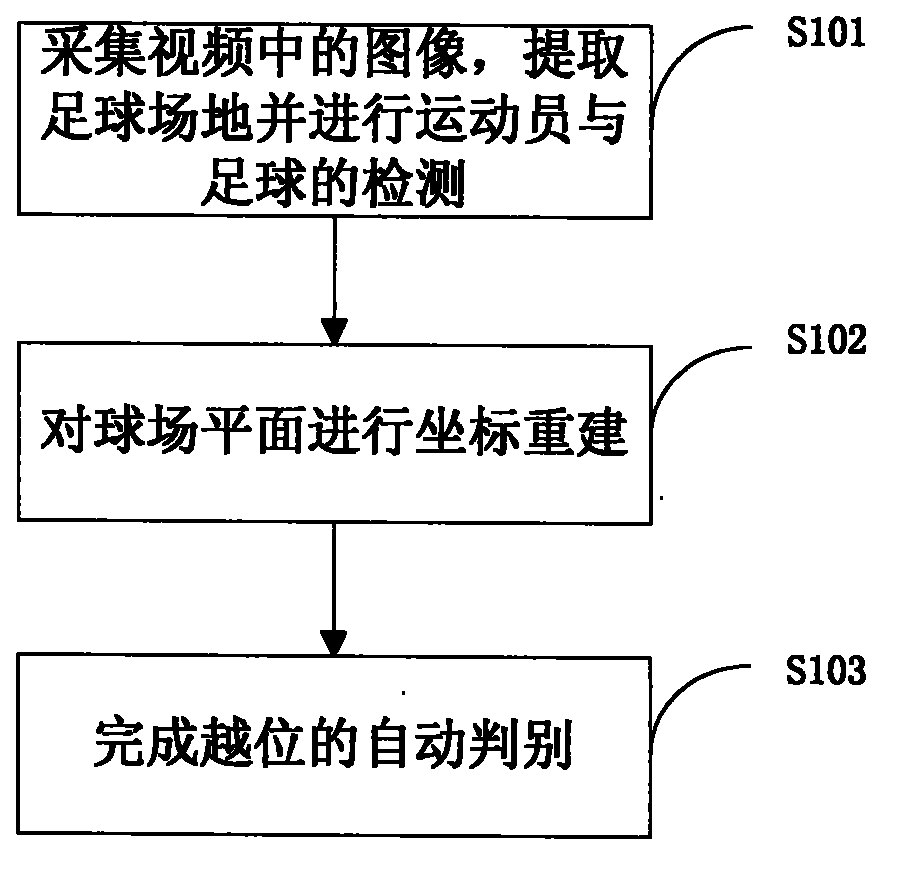

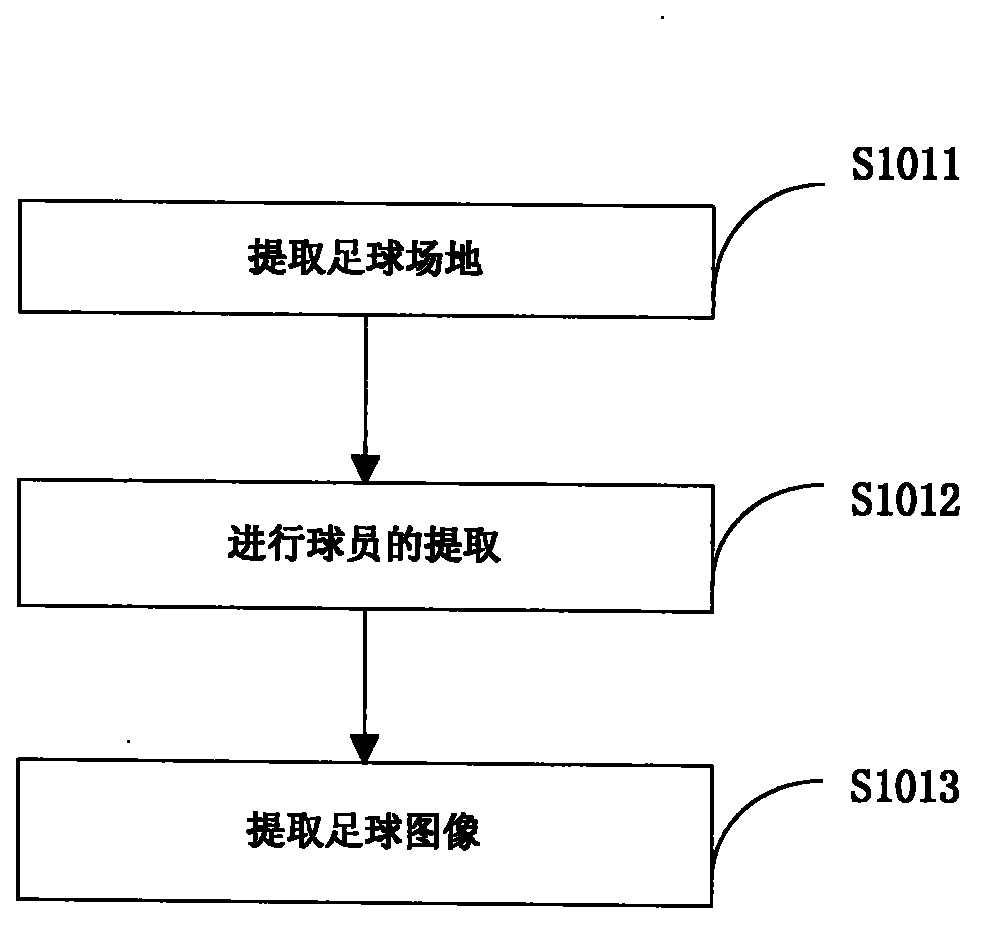

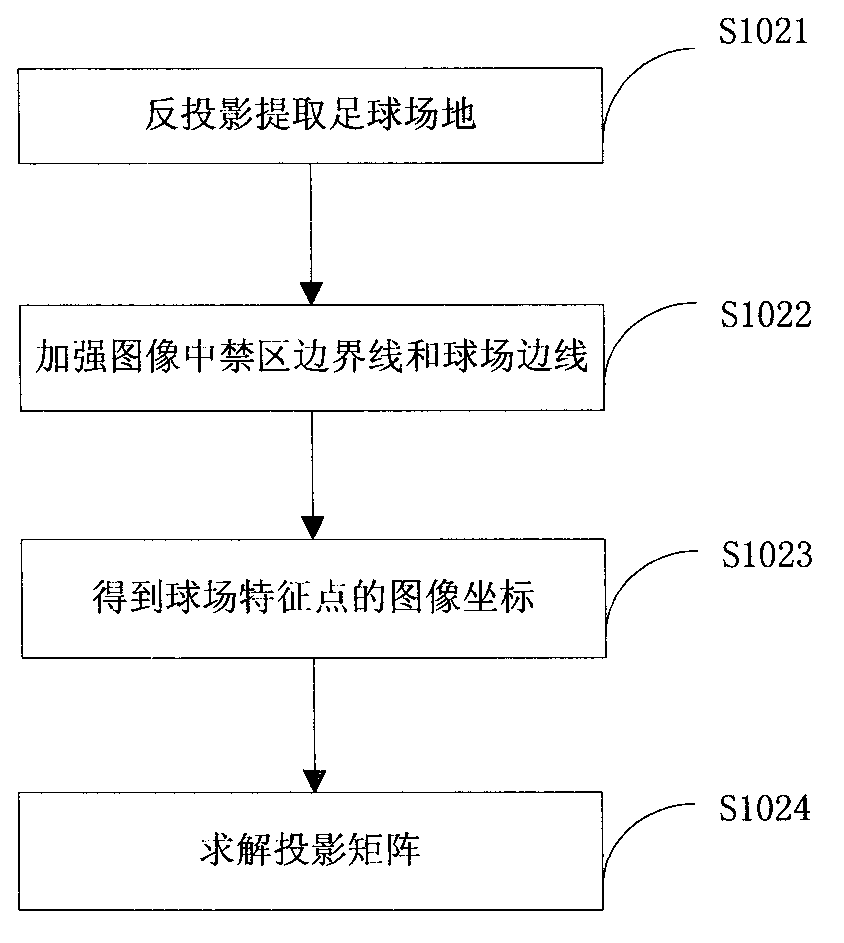

Automatic identification system and method for offside of football based on video analysis

Inactive | CN102819749A | Reduce workload | Reduce mistakes | Television system details | Color signal processing circuits | Hat matrix | Imaging processing

The invention discloses an automatic identification system and method for offside in football based on video analysis. The method comprises the steps of: capturing images from a video, extracting the football field, and detecting the players and the ball; extracting characteristic lines of the field and reconstructing the coordinates of the field plane; and automatically identifying offside in the match video. Because offside is identified by computer image processing, the system and method are fast and accurate, can substantially reduce the workload and errors of assistant referees, largely remove human factors from officiating, and improve the reliability of decisions. The offside detection algorithm works on the players' plane coordinates, which can be recovered with a single camera by solving for a projection matrix, avoiding the lengthy computation required to analyze multiple cameras. The method allows offside in international football matches to be monitored more effectively, assisting refereeing decisions and reducing referees' workload and errors.

Owner:XIAN INST OF PHYSICAL EDUCATION
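Because the pitch is a plane, a single camera's projection between image and pitch reduces to a 3x3 homography. The sketch below assumes such a homography `H` is already known (for example, estimated from the field's line markings) and that pitch x increases toward the defending team's goal line; it maps foot positions to the pitch and applies a simplified offside-position rule. The function names are hypothetical, and the rule ignores body parts other than the feet, the exact moment of the pass, and involvement in play.

```python
import numpy as np


def image_to_pitch(H, pixels):
    """Map (n, 2) image points, e.g. players' feet, to pitch-plane coordinates."""
    pts = np.hstack([np.asarray(pixels, dtype=float), np.ones((len(pixels), 1))])
    mapped = pts @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


def offside_flags(attackers_x, defenders_x, ball_x, halfway_x):
    """Simplified offside-position test along the pitch length.

    Assumes pitch x grows toward the defending team's goal line and at least
    two defenders (goalkeeper included) are visible. An attacker is flagged
    when strictly beyond the halfway line, the ball, and the second-last defender.
    """
    defenders = np.sort(np.asarray(defenders_x, dtype=float))[::-1]
    second_last = defenders[1]  # defenders[0] is the one nearest the goal line
    a = np.asarray(attackers_x, dtype=float)
    return (a > halfway_x) & (a > ball_x) & (a > second_last)
```

Strict comparisons are used because a player level with the second-last defender or the ball is not in an offside position.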

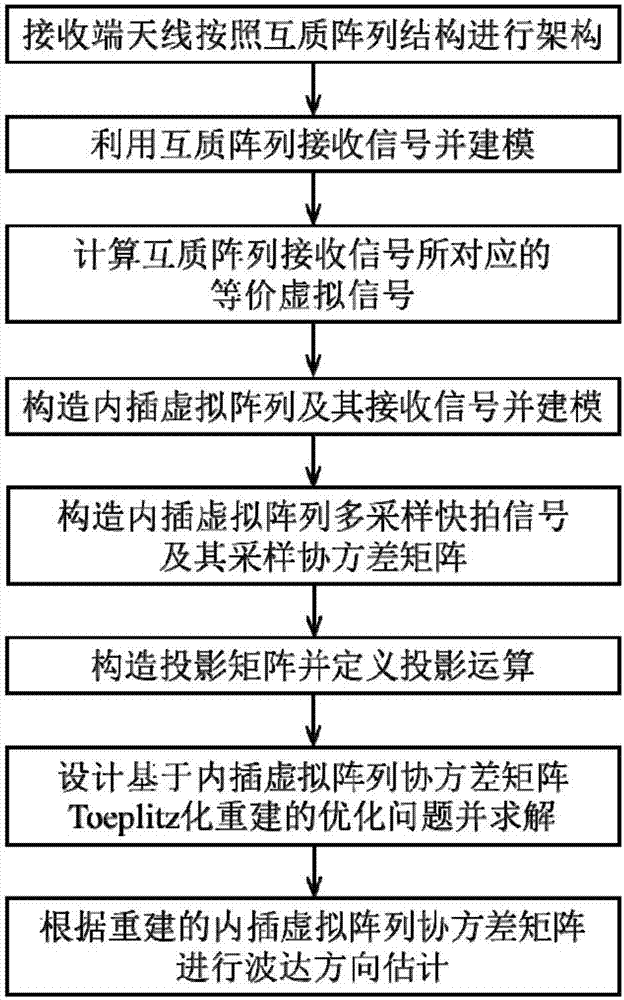

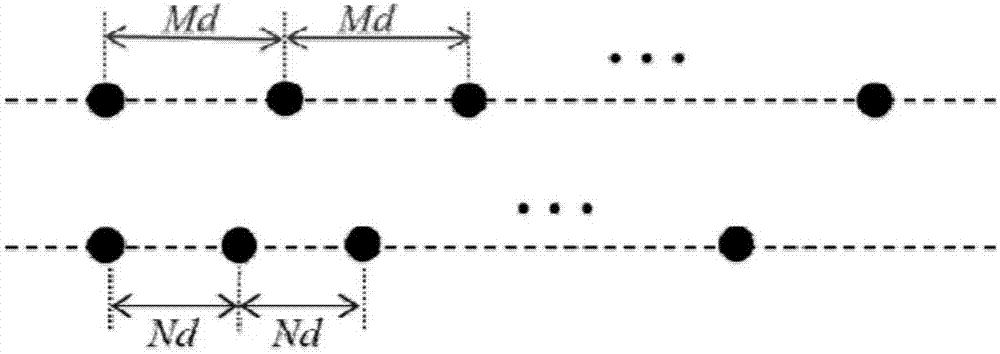

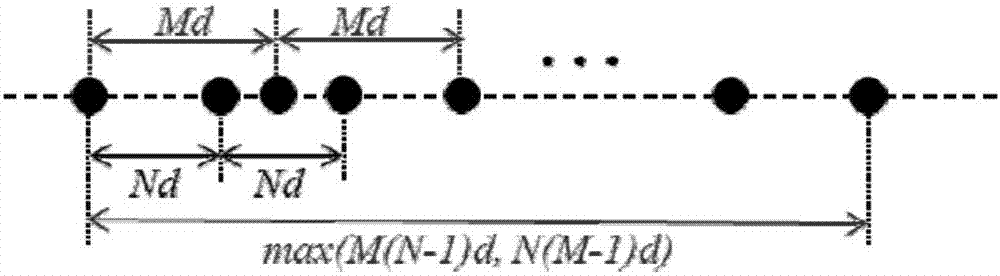

Co-prime array DOA (direction of arrival) estimation method based on interpolation virtual array covariance matrix Toeplitz reconstruction

Active | CN107329108A | Increase freedom | High resolution | Radio wave direction/deviation determination systems | Direction/deviation determining electromagnetic systems | Hat matrix | Image resolution

The invention discloses a co-prime array DOA (direction of arrival) estimation method based on Toeplitz reconstruction of an interpolated virtual array covariance matrix, mainly addressing the information loss caused by the non-uniformity of the virtual array in the prior art. The implementation steps are: constructing a co-prime array at the receiving end; receiving incident signals with the co-prime array and modeling them; calculating the equivalent virtual signal corresponding to the co-prime array's received signal; constructing and modeling an interpolated virtual array; constructing the multi-snapshot signals of the interpolated virtual array and their sample covariance matrix; constructing a projection matrix and defining the associated projection operation; constructing a reference covariance matrix from all the information in the original virtual array, formulating an optimization problem based on Toeplitz reconstruction of the interpolated virtual array covariance matrix, and solving it; and performing DOA estimation with the reconstructed interpolated virtual array covariance matrix. The method improves the degrees of freedom and resolution of DOA estimation and can be used for passive localization and target detection.

Owner:ZHEJIANG UNIV
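Two building blocks behind this method can be sketched: locating the holes (missing lags) in a co-prime array's virtual difference coarray, and projecting a matrix onto the set of Toeplitz matrices. The array layout below is an assumed common co-prime configuration, and the diagonal-averaging projection is the standard Frobenius-norm projection, not necessarily the patent's projection matrix; the interpolation and optimization steps are not reproduced, and all function names are hypothetical.

```python
import numpy as np


def coprime_positions(M, N):
    """Sensor positions (in units of the unit spacing d) of a co-prime array.

    Assumes a common layout: N sensors spaced M*d and 2M sensors spaced N*d,
    sharing the sensor at the origin (M and N co-prime).
    """
    return np.union1d(M * np.arange(N), N * np.arange(2 * M))


def coarray_holes(positions):
    """Non-negative lags of the difference coarray and the holes among them."""
    diffs = np.abs(positions[:, None] - positions[None, :])
    lags = np.unique(diffs)
    holes = np.setdiff1d(np.arange(lags.max() + 1), lags)
    return lags, holes


def toeplitz_projection(R):
    """Nearest Toeplitz matrix in Frobenius norm: average each diagonal of R."""
    n = R.shape[0]
    T = np.zeros(R.shape, dtype=np.result_type(R.dtype, float))
    for k in range(-(n - 1), n):
        # Row indices i with 0 <= i < n and 0 <= i + k < n (the k-th diagonal).
        idx = np.arange(max(0, -k), min(n, n - k))
        T[idx, idx + k] = np.diagonal(R, offset=k).mean()
    return T
```

For M = 2 and N = 3 the sensors sit at 0, 2, 3, 4, 6 and 9, and the coarray misses lag 8; filling such holes by interpolation and then enforcing Toeplitz structure is what the abstract's reconstruction step addresses.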

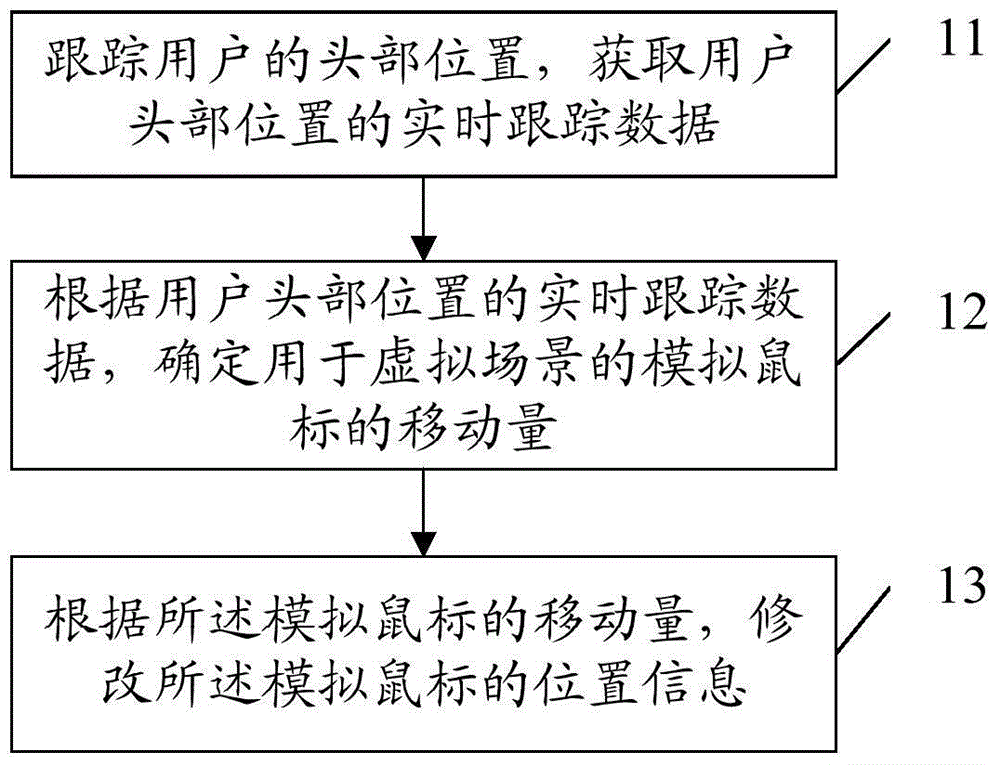

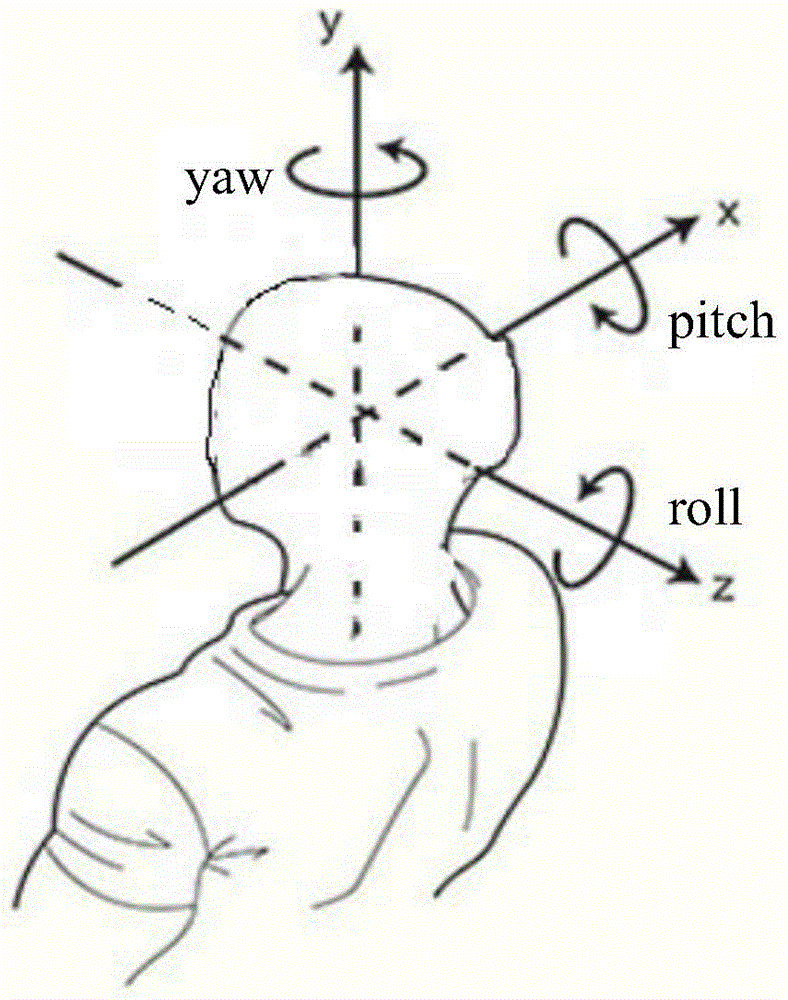

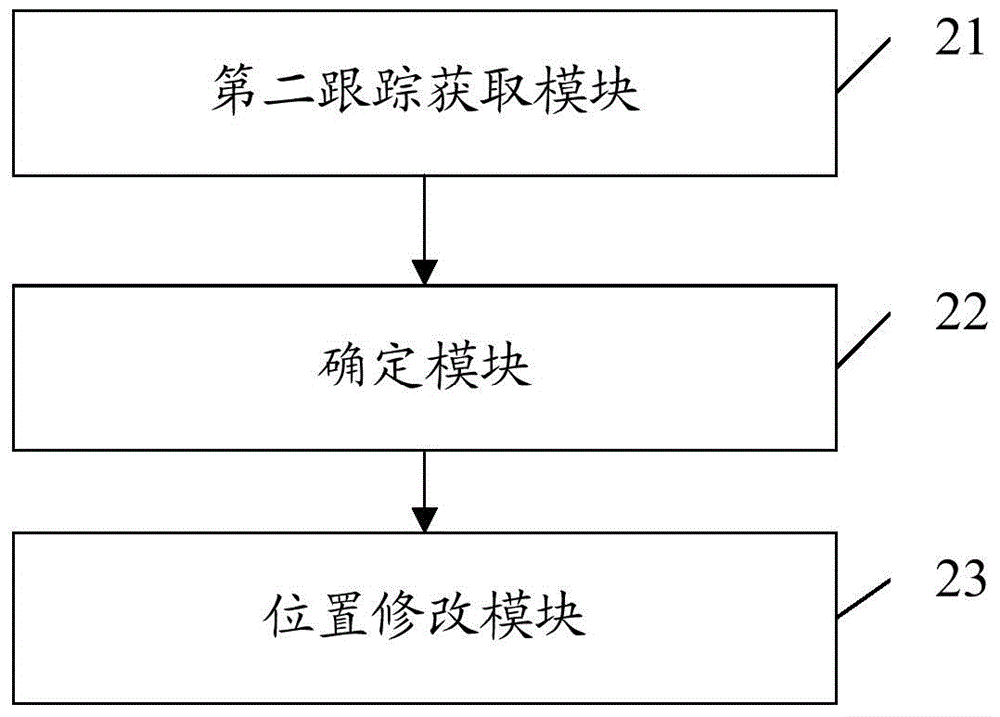

Stereoscopic display method, device and electronic equipment used for virtual and reality scene

Active | CN105704468A | Synchronization of viewing angles | Less discomfort | Stereoscopic systems | Hat matrix | Observation matrix

The invention provides a stereoscopic display method, device and electronic equipment for virtual reality scenes. The method comprises: acquiring real-time tracking data of the user's head position; when the head position changes, transforming the virtual scene's original observation (view) matrix or original projection matrix according to the tracking data to obtain a new observation matrix or projection matrix; and constructing and displaying a stereoscopic image of the virtual scene from the new matrix. This changes the viewing angle in the virtual scene so that it stays synchronized with the user's viewing angle after the head moves. The method, device and electronic equipment can be applied to 3D display devices.

Owner:SUPERD CO LTD
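Updating the observation (view) matrix from a tracked head position can be sketched with an OpenGL-style look-at construction and a parallel-axis stereo pair. This is a minimal sketch assuming a right-handed coordinate system with the camera looking down -z, a fixed gaze target, and a hypothetical interpupillary distance of 0.064 m; the patent's actual matrix transformation is not given in the abstract, and `look_at` and `stereo_views` are hypothetical names.

```python
import numpy as np


def look_at(eye, target, up=(0.0, 1.0, 0.0)):
    """Right-handed 4x4 view matrix (OpenGL-style: camera looks down -z)."""
    eye = np.asarray(eye, dtype=float)
    f = np.asarray(target, dtype=float) - eye
    f /= np.linalg.norm(f)           # forward
    s = np.cross(f, up)
    s /= np.linalg.norm(s)           # right
    u = np.cross(s, f)               # true up
    V = np.eye(4)
    V[0, :3], V[1, :3], V[2, :3] = s, u, -f
    V[:3, 3] = -V[:3, :3] @ eye      # move the eye to the origin
    return V


def stereo_views(head_pos, target, ipd=0.064):
    """Left/right view matrices for eyes offset by +-ipd/2 along the head's right axis."""
    head = np.asarray(head_pos, dtype=float)
    target = np.asarray(target, dtype=float)
    right = look_at(head, target)[0, :3]  # camera x axis in world coordinates
    half = 0.5 * ipd * right
    # Shifting eye and target together keeps the two optical axes parallel.
    return look_at(head - half, target - half), look_at(head + half, target + half)
```

Calling `stereo_views` again whenever new tracking data arrives re-aims both virtual cameras, which is one way to keep the virtual viewing angle synchronized with the user's head.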

© 2025 PatSnap. All rights reserved.