1514 results about "Svm classifier" patented technology

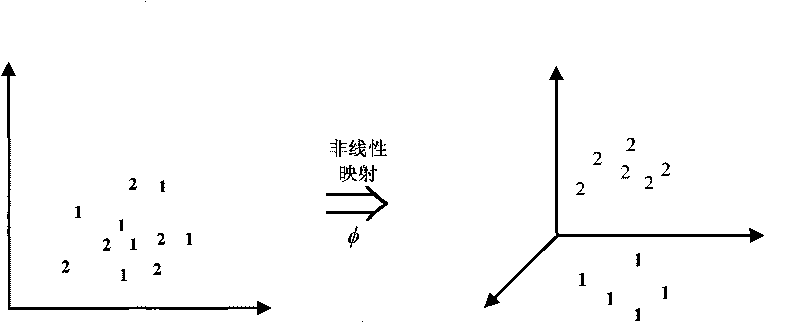

The SVM classifier is a powerful supervised classification method. It is well suited for segmented raster input but can also handle standard imagery. It is a classification method commonly used in the research community.

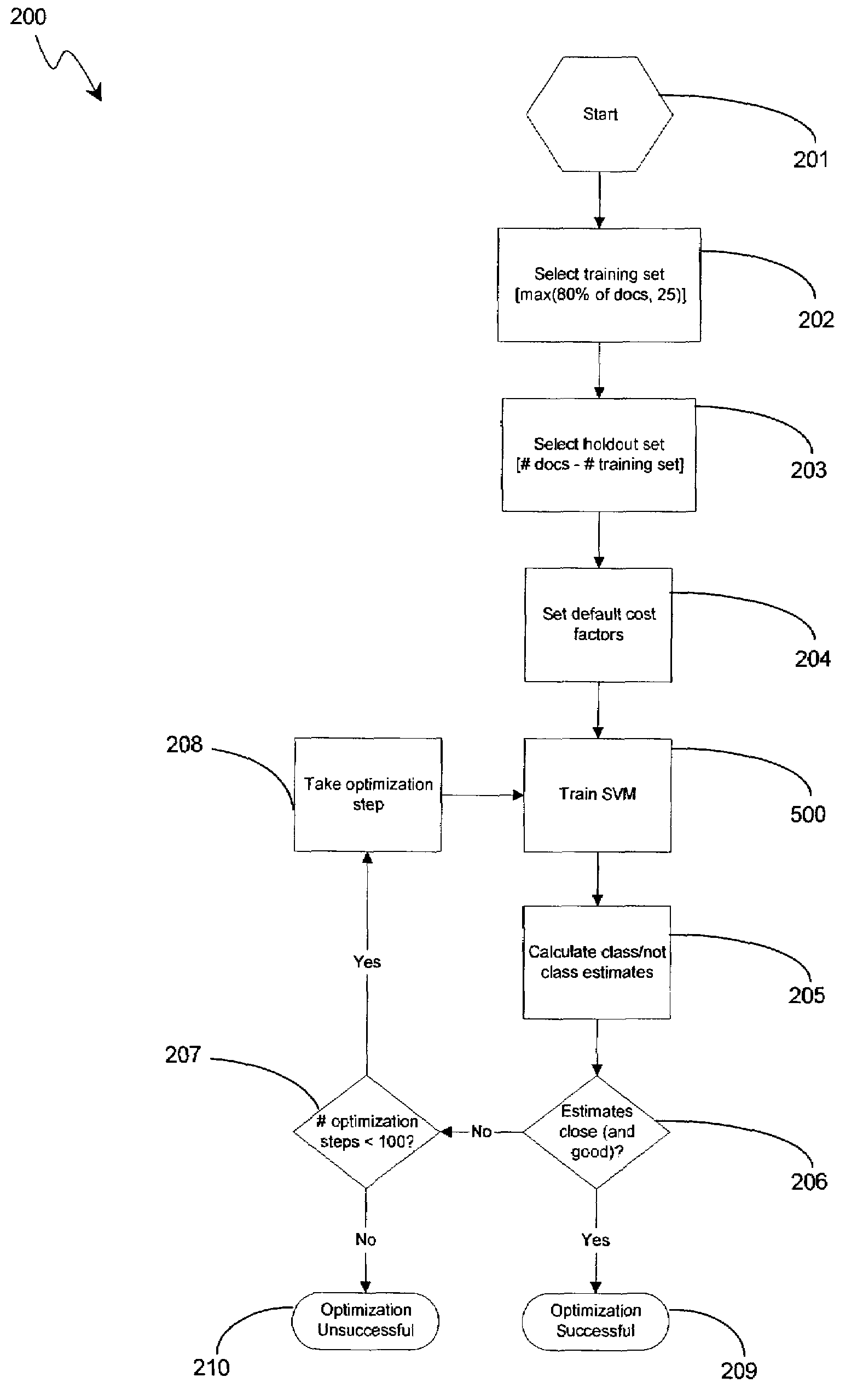

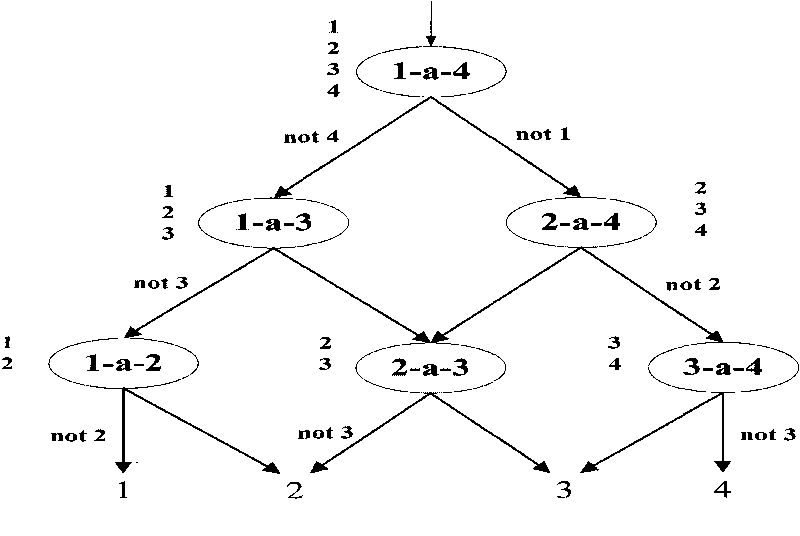

Effective multi-class support vector machine classification

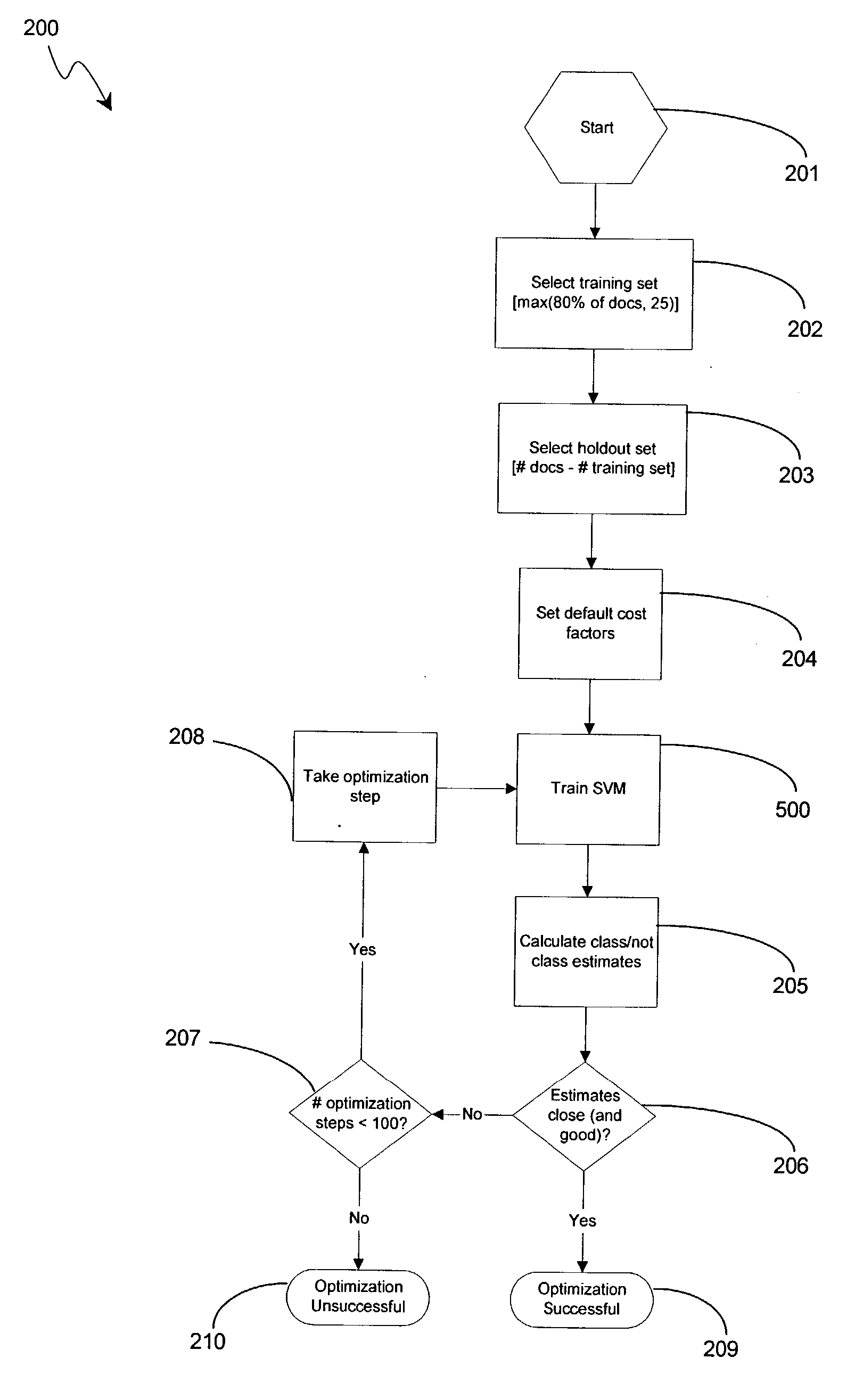

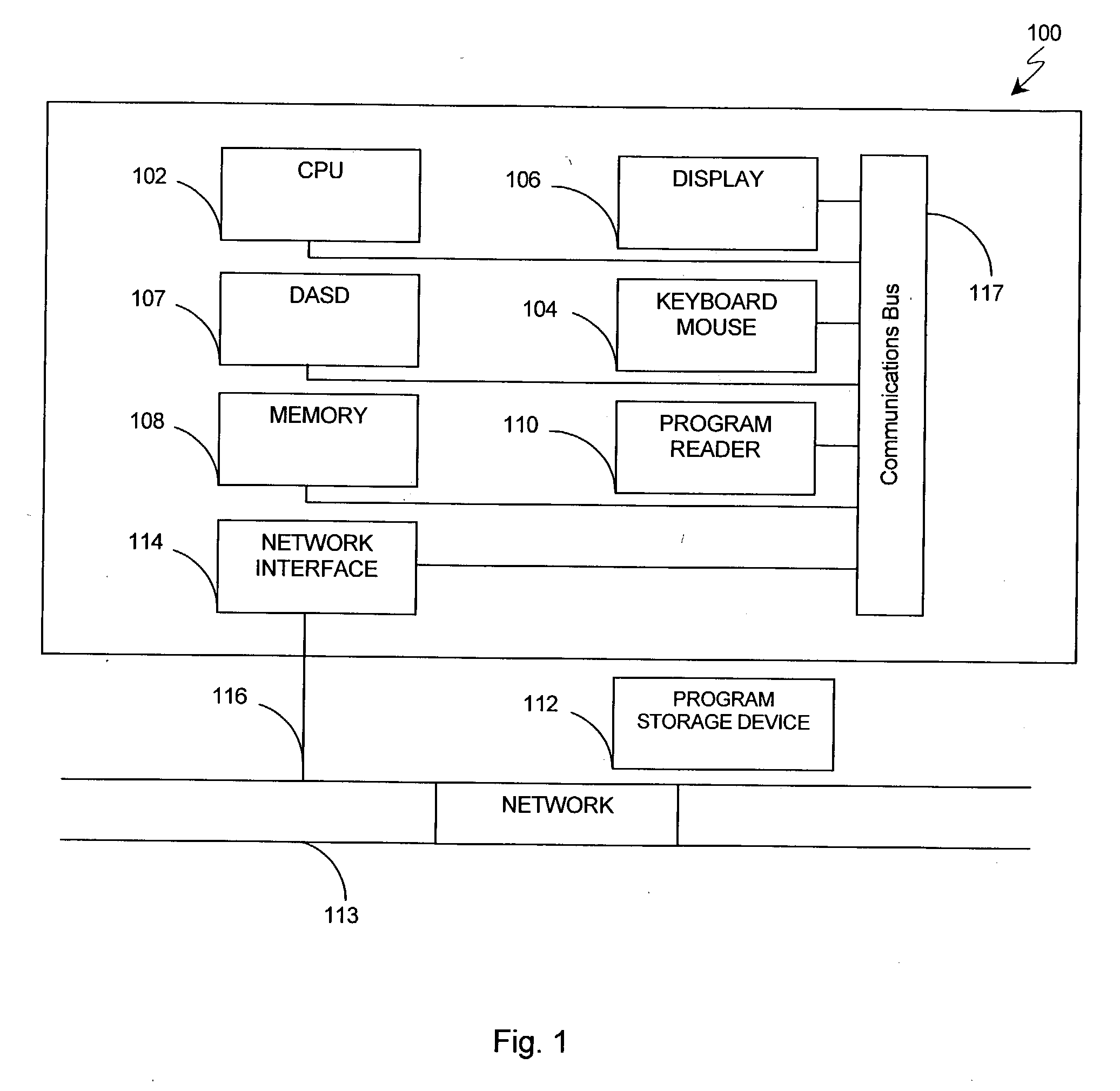

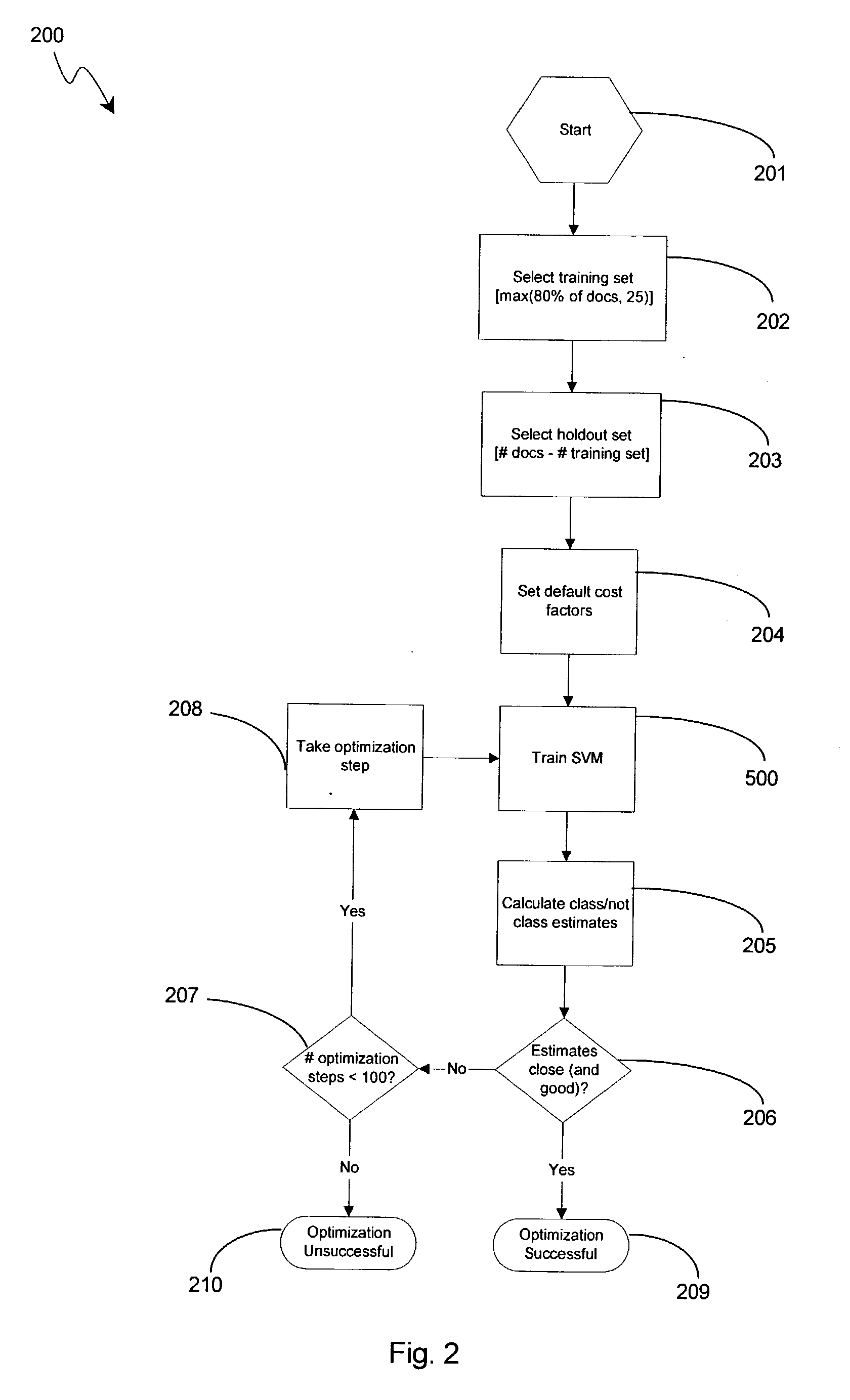

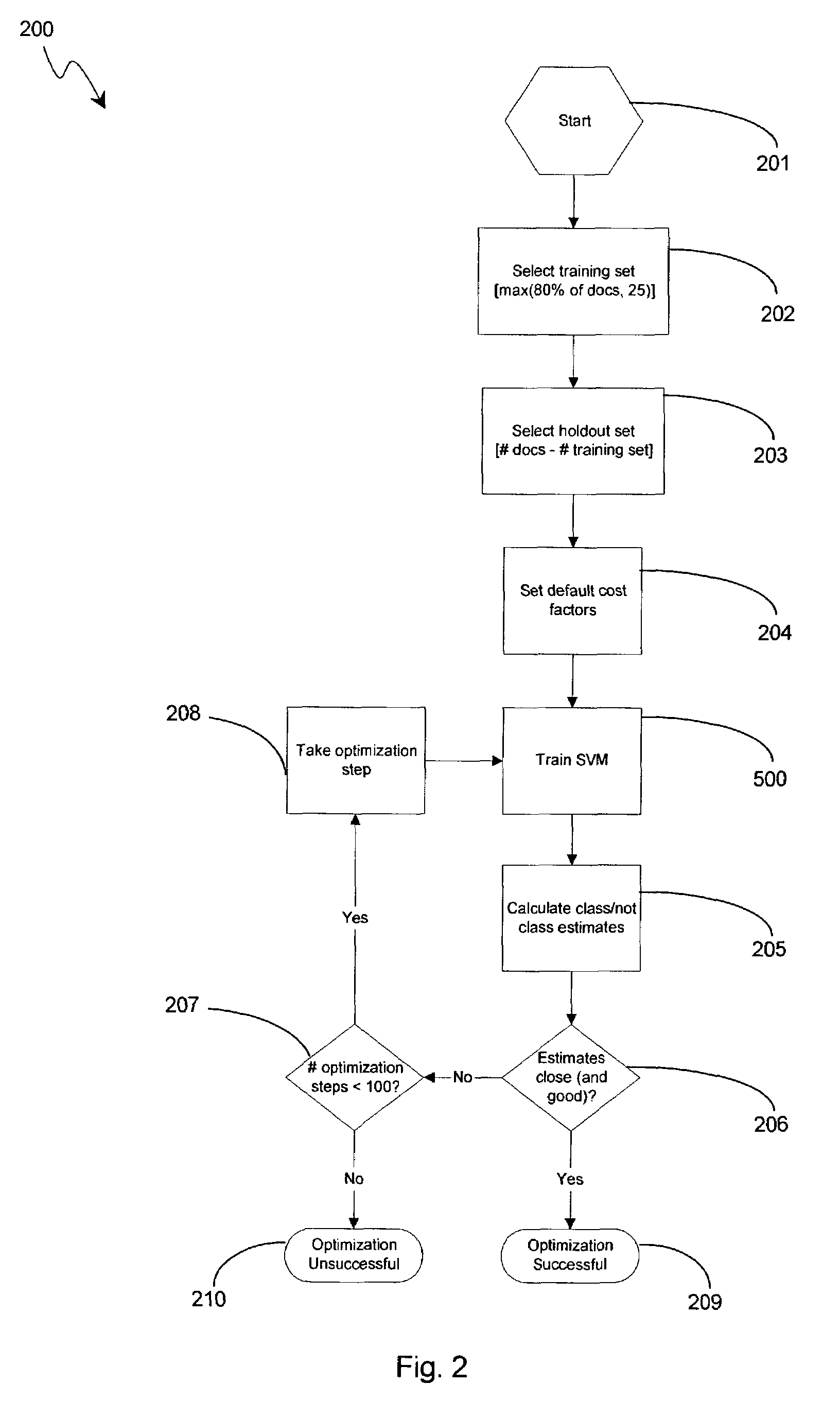

An improved method of classifying examples into multiple categories using a binary support vector machine (SVM) algorithm. In one preferred embodiment, the method includes the following steps: storing a plurality of user-defined categories in a memory of a computer; analyzing a plurality of training examples for each category so as to identify one or more features associated with each category; calculating at least one feature vector for each of the examples; transforming each of the at least one feature vectors so as reflect information about all of the training examples; and building a SVM classifier for each one of the plurality of categories, wherein the process of building a SVM classifier further includes: assigning each of the examples in a first category to a first class and all other examples belonging to other categories to a second class, wherein if any one of the examples belongs to another category as well as the first category, such examples are assigned to the first class only; optimizing at least one tunable parameter of a SVM classifier for the first category, wherein the SVM classifier is trained using the first and second classes; and optimizing a function that converts the output of the binary SVM classifier into a probability of category membership.

Owner:KOFAX

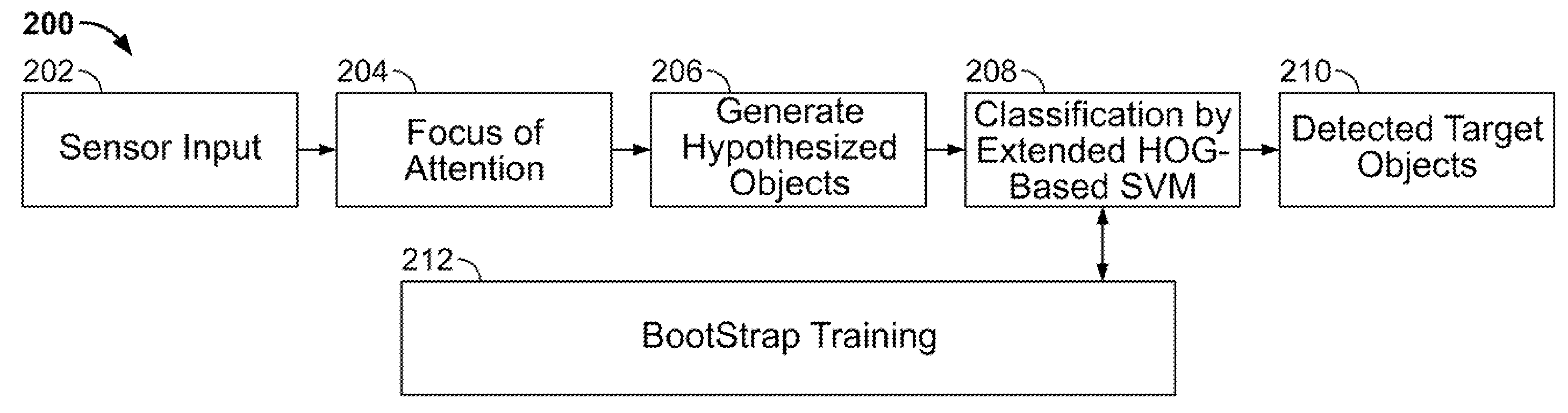

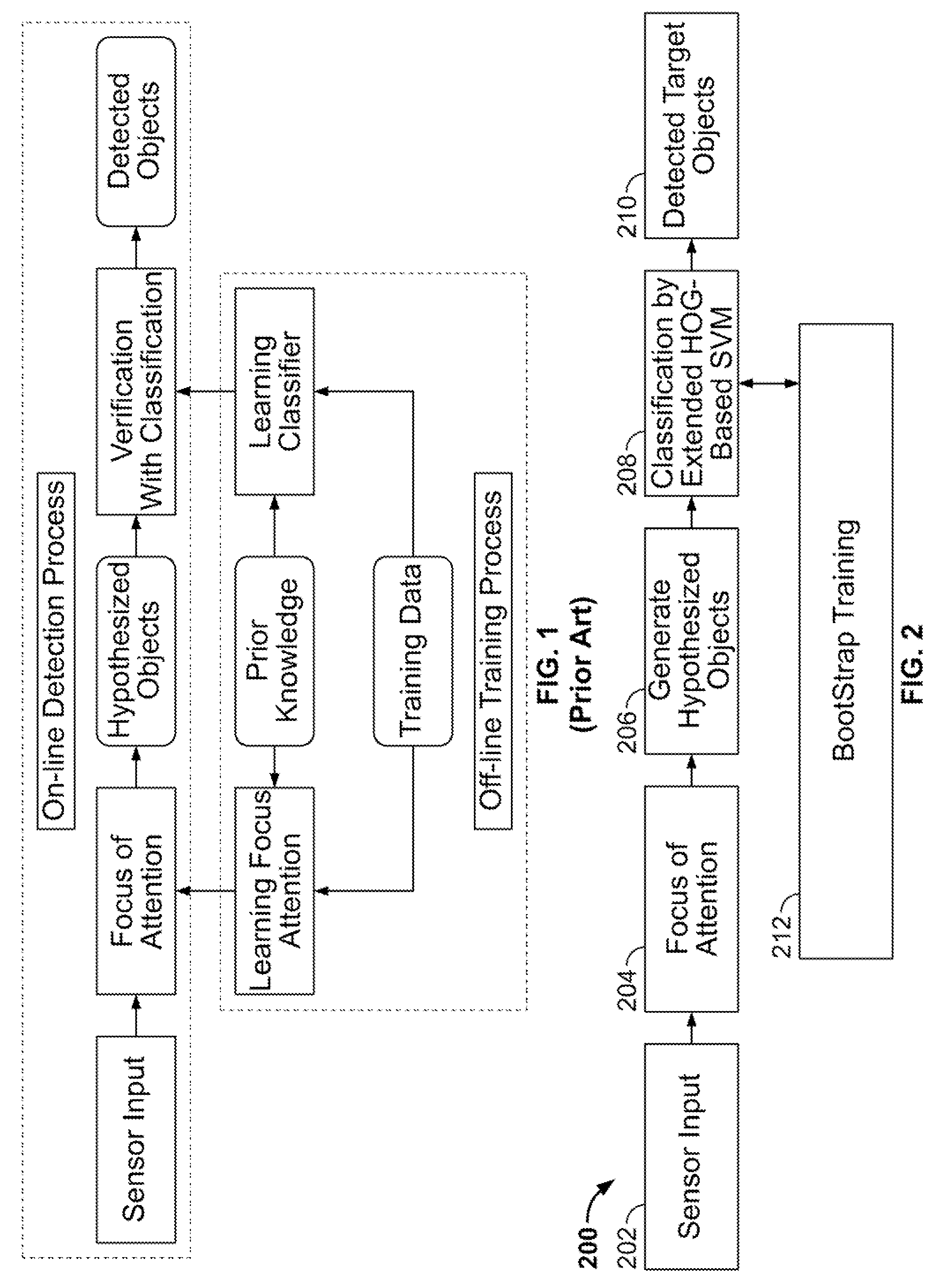

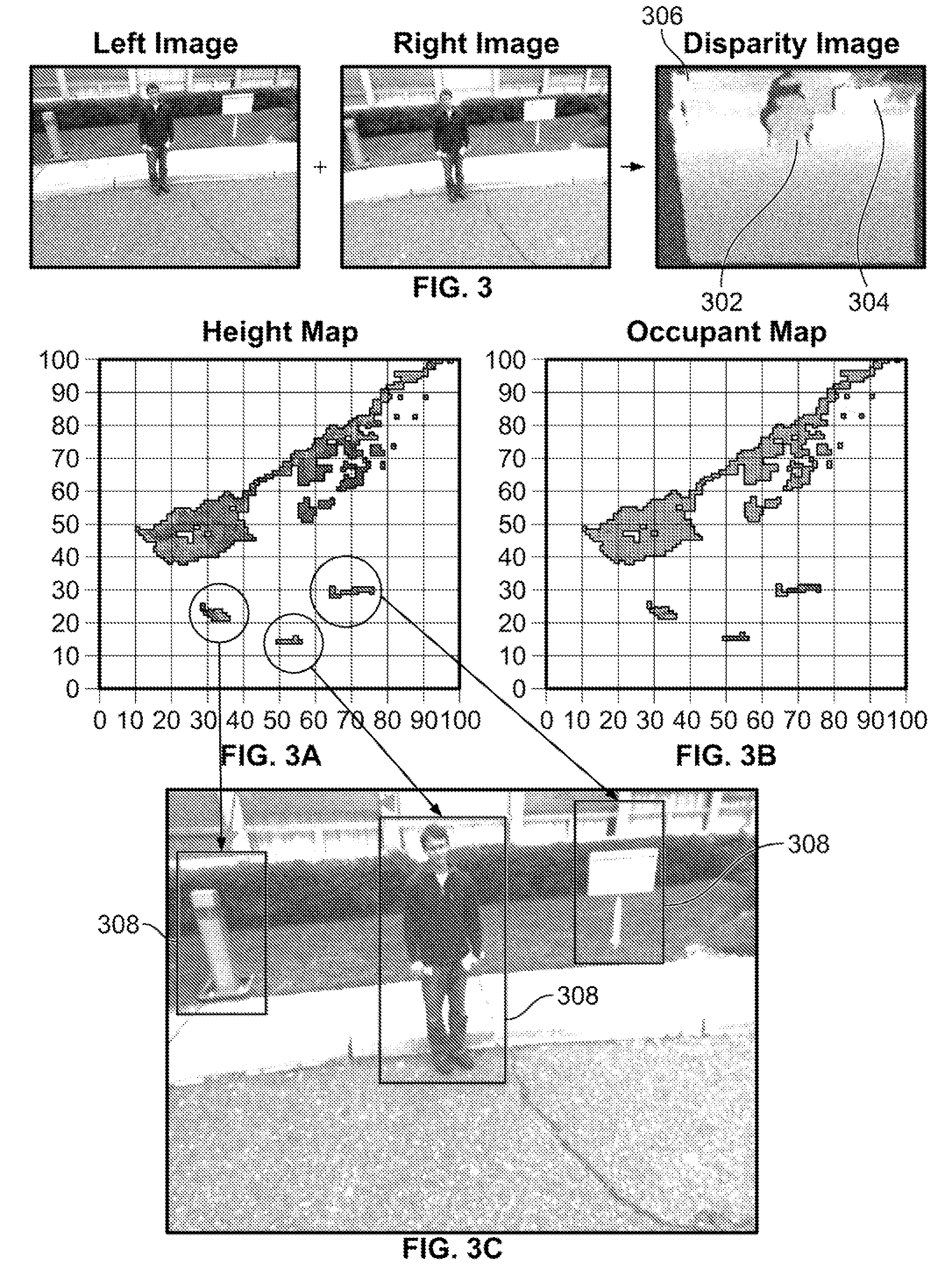

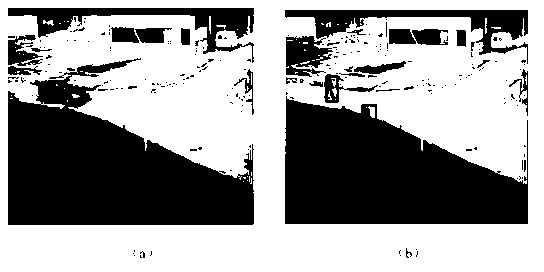

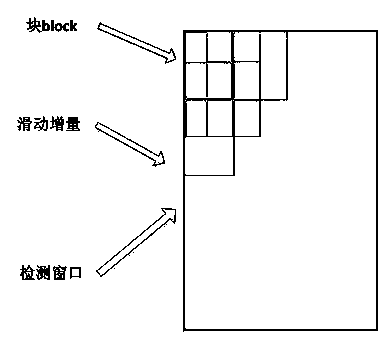

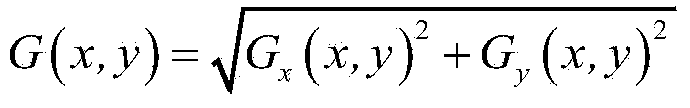

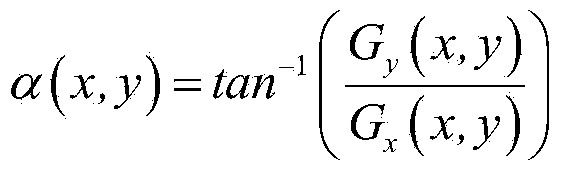

System and method for detecting still objects in images

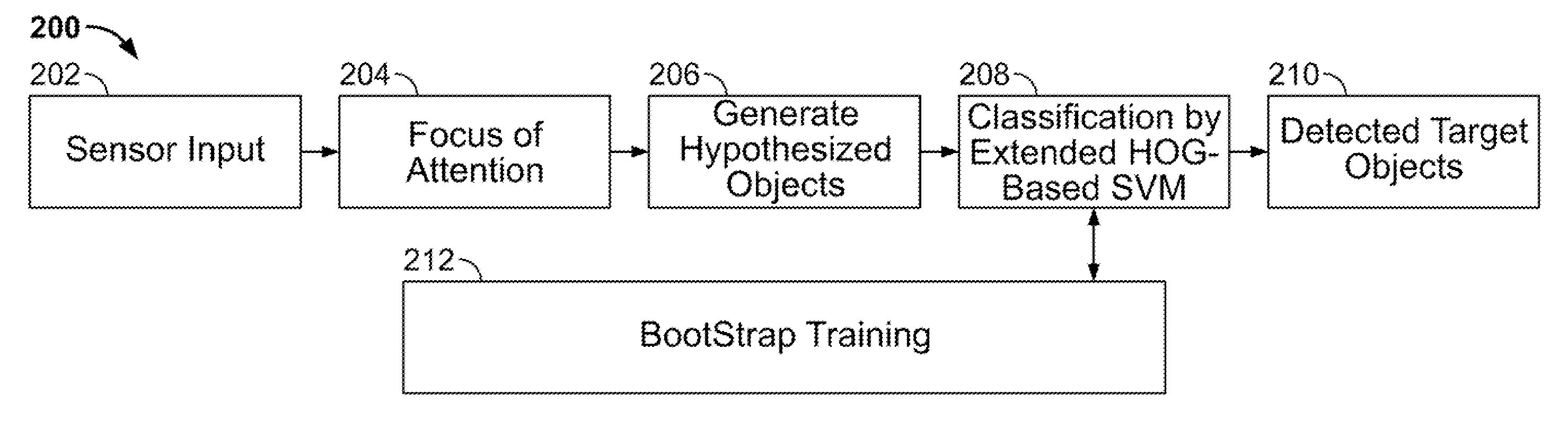

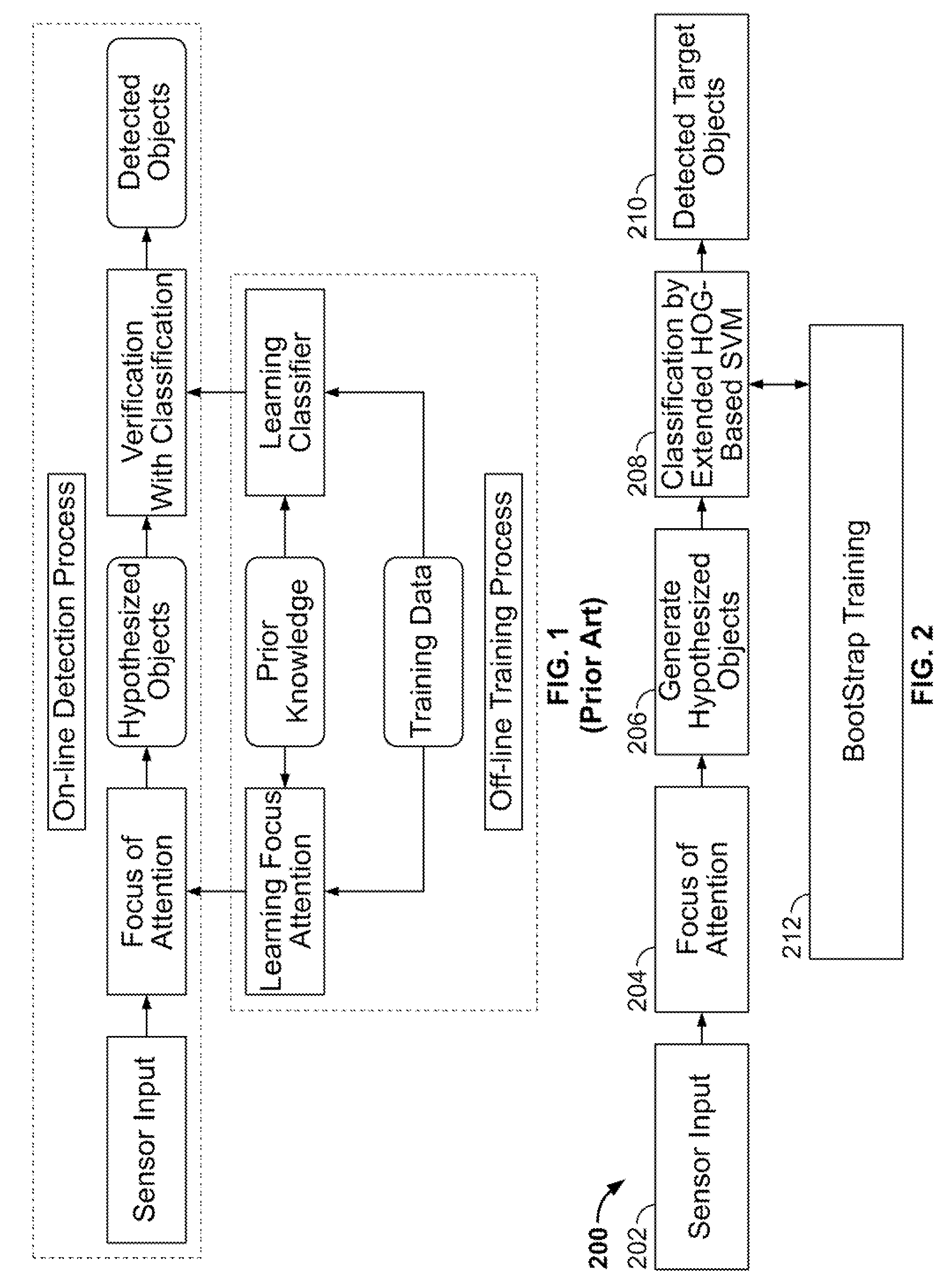

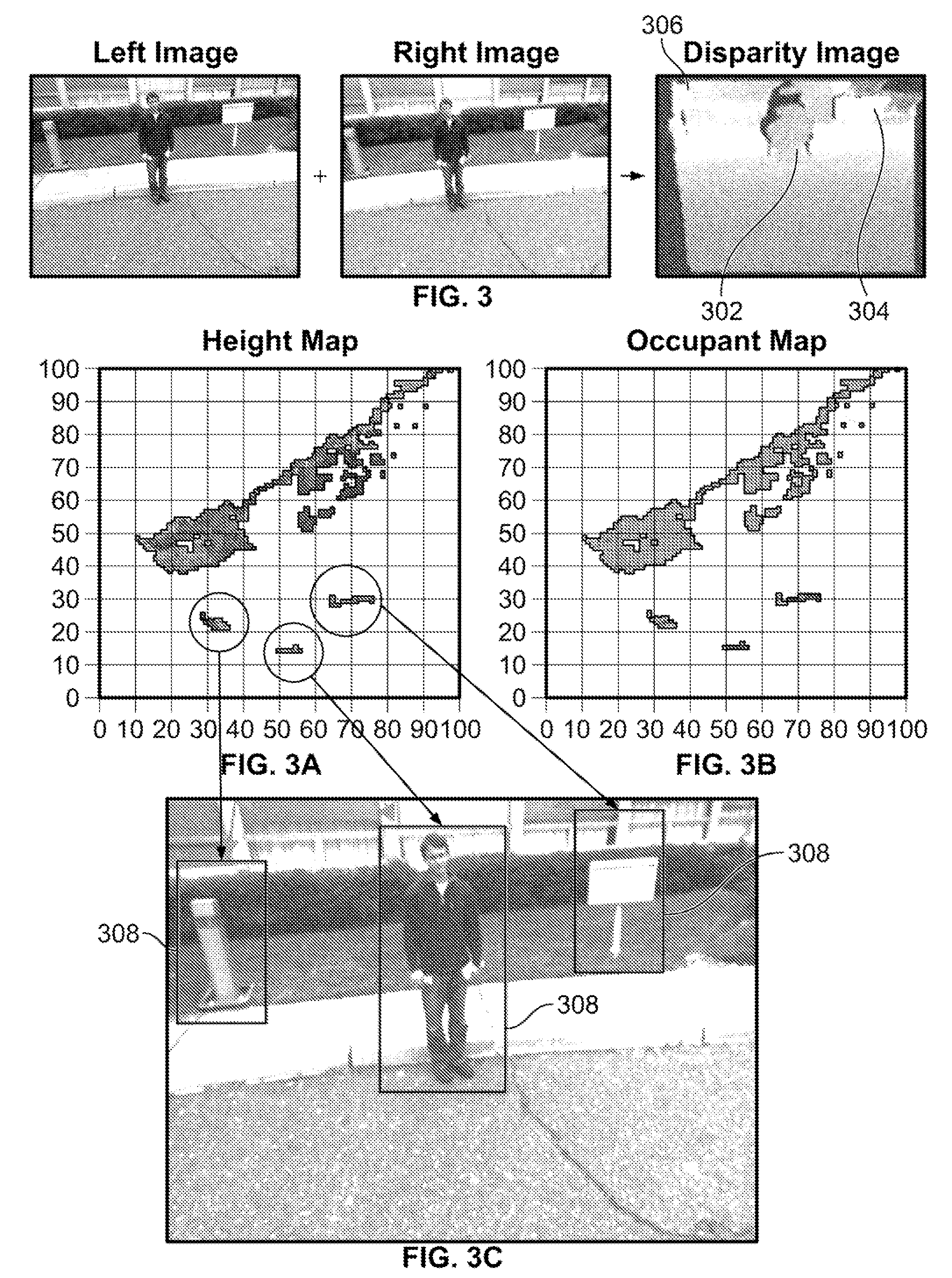

The present invention provides an improved system and method for object detection using a histogram of oriented gradients (HOG) based support vector machine (SVM). Specifically, the system provides a computational framework to stably detect still (non-moving) objects over a wide range of viewpoints. The framework takes images from a sensor as input and passes them to a “focus of attention” mechanism, which identifies the regions in the image that potentially contain the target objects. These regions are further processed to generate hypothesized objects, specifically selected regions containing the target object hypotheses with respect to their positions. Thereafter, these selected regions are verified by an extended HOG-based SVM classifier to generate the detected objects.

Owner:SRI INTERNATIONAL
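
To make the HOG feature mentioned above more concrete, here is a minimal sketch of the orientation histogram for a single image cell. It is not the patented "extended HOG" method: the function name, the 9-bin unsigned-orientation layout, and the central-difference gradient are all assumptions chosen for illustration.

```python
import math

def cell_orientation_histogram(cell, bins=9):
    """Unsigned gradient-orientation histogram for one grayscale cell.

    `cell` is a list of rows of pixel intensities. Border pixels are
    skipped because central differences need both neighbours.
    """
    height, width = len(cell), len(cell[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]   # horizontal gradient
            gy = cell[y + 1][x] - cell[y - 1][x]   # vertical gradient
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # Each pixel votes for its orientation bin, weighted by magnitude.
            hist[int(angle // bin_width) % bins] += magnitude
    return hist

# A vertical edge: intensity rises from left to right in every row.
cell = [[0, 0, 10, 10] for _ in range(4)]
hist = cell_orientation_histogram(cell)
```

In a full detector, histograms like this one would be computed for every cell, normalised over blocks, concatenated into a feature vector, and passed to the SVM.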

Effective multi-class support vector machine classification

Active · US7386527B2 · Easy to handle · Accurate understanding · Digital data processing details · Digital computer details · Feature vector · Algorithm

An improved method of classifying examples into multiple categories using a binary support vector machine (SVM) algorithm. In one preferred embodiment, the method includes the following steps: storing a plurality of user-defined categories in a memory of a computer; analyzing a plurality of training examples for each category so as to identify one or more features associated with each category; calculating at least one feature vector for each of the examples; transforming each of the at least one feature vectors so as to reflect information about all of the training examples; and building an SVM classifier for each one of the plurality of categories, wherein the process of building an SVM classifier further includes: assigning each of the examples in a first category to a first class and all other examples belonging to other categories to a second class, wherein if any one of the examples belongs to another category as well as the first category, such examples are assigned to the first class only; optimizing at least one tunable parameter of an SVM classifier for the first category, wherein the SVM classifier is trained using the first and second classes; and optimizing a function that converts the output of the binary SVM classifier into a probability of category membership.

Owner:KOFAX
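
The class assignment and the score-to-probability step described above can be sketched as follows. This is an illustration under assumptions, not the patented implementation: the abstract does not name the conversion function, so a sigmoid in the style of Platt scaling is assumed, and the function names and the parameters `a` and `b` are hypothetical (in practice they would be fitted on held-out SVM scores).

```python
import math

def one_vs_rest_labels(example_categories, target):
    """Label each example +1 if it belongs to `target`, else -1.

    An example that belongs to several categories, including `target`,
    goes to the positive class only, as the abstract describes.
    """
    return [1 if target in cats else -1 for cats in example_categories]

def sigmoid_probability(score, a, b):
    """Map a raw SVM decision score to a probability (assumed sigmoid form)."""
    return 1.0 / (1.0 + math.exp(a * score + b))

# Three examples; the second belongs to two categories at once.
examples = [{"invoice"}, {"invoice", "receipt"}, {"letter"}]
labels = one_vs_rest_labels(examples, "invoice")

# Hypothetical fitted parameters; a negative `a` makes higher scores more probable.
p_boundary = sigmoid_probability(0.0, a=-2.0, b=0.0)
p_confident = sigmoid_probability(2.0, a=-2.0, b=0.0)
```

One binary classifier and one pair of sigmoid parameters would be produced per category, so the per-category probabilities can be compared directly.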

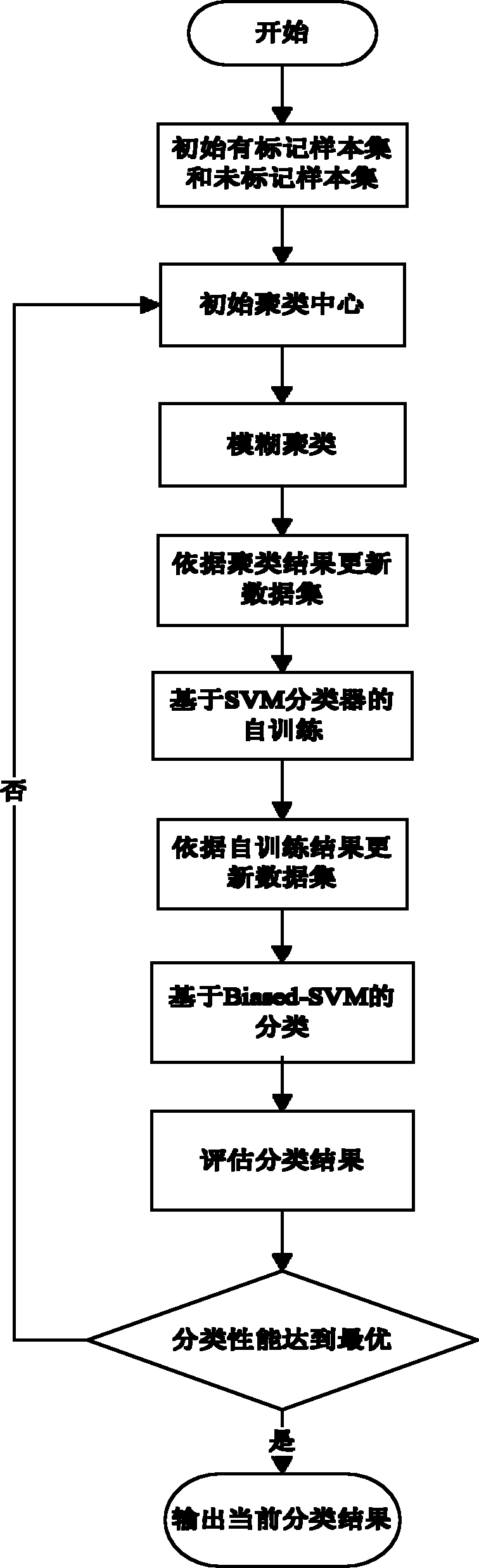

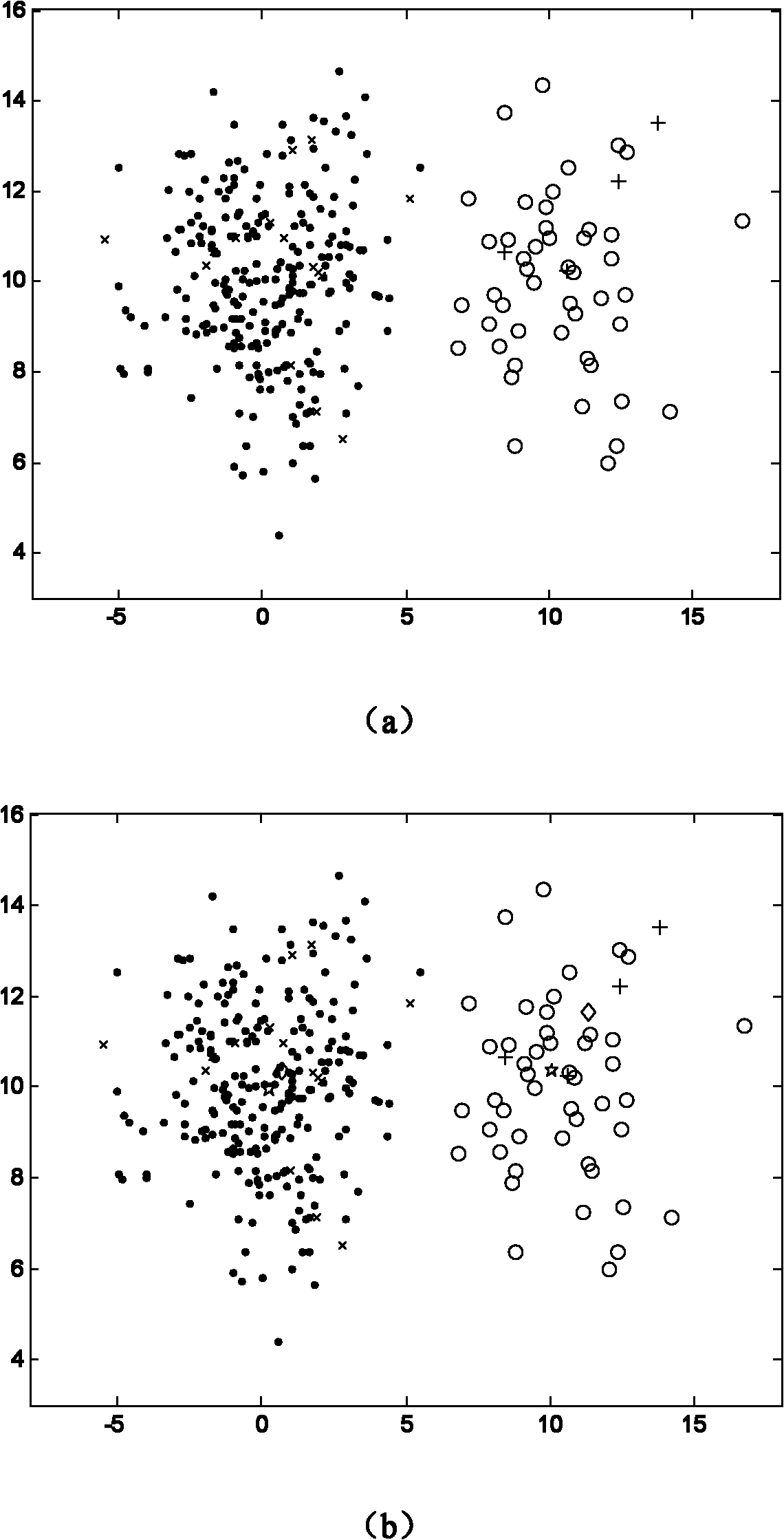

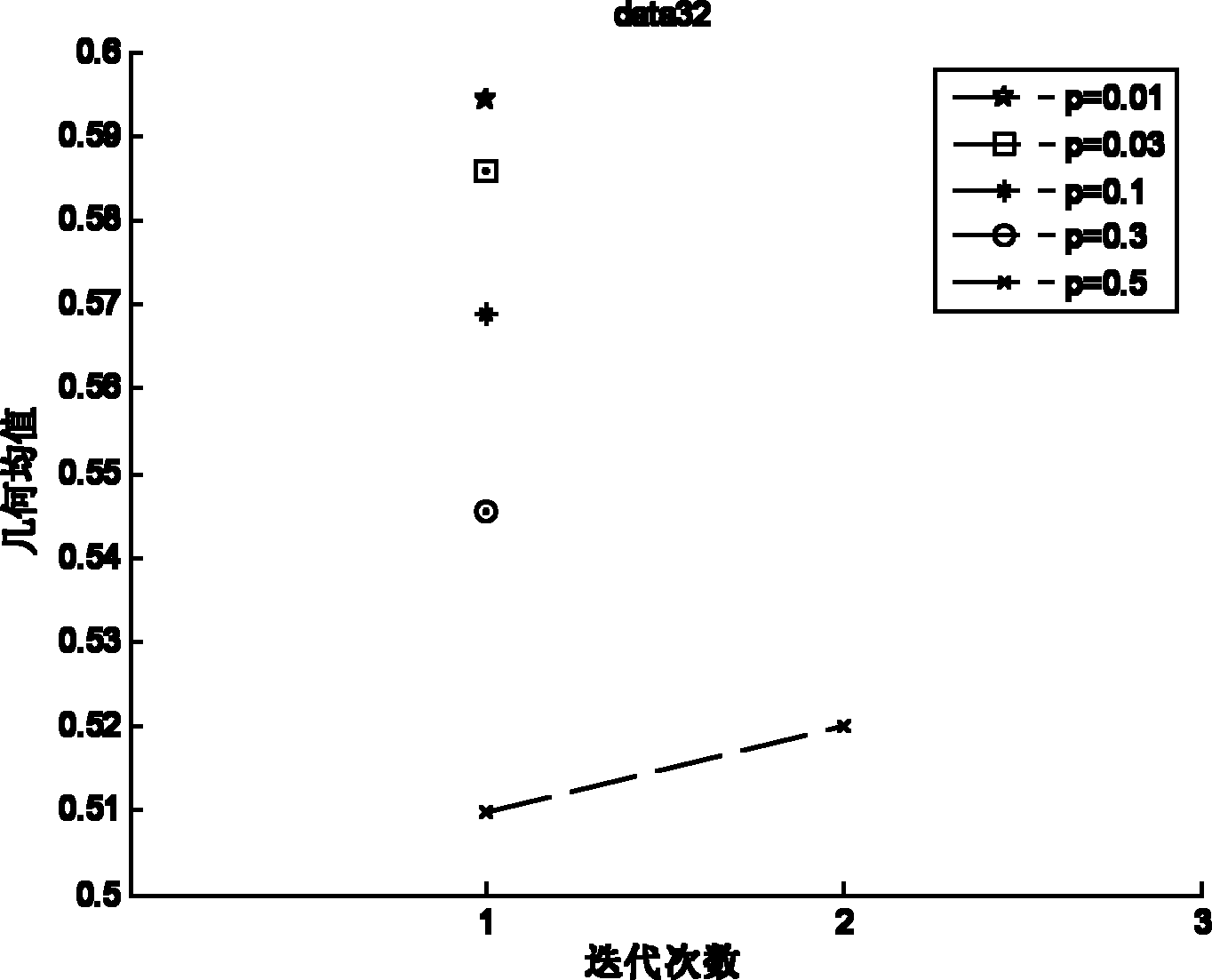

Semi-supervised classification method of unbalance data

Inactive · CN101980202A · Improve generalization ability · Tedious and time-consuming labeling work · Special data processing applications · Self training · Algorithm

The invention discloses a semi-supervised classification method for imbalanced data, which mainly addresses the low classification precision that prior-art methods achieve on minority-class data when there are few labeled samples and the degree of imbalance is high. The method is implemented by the following steps: (1) initializing a labeled sample set and an unlabeled sample set; (2) initializing the cluster centers; (3) performing fuzzy clustering; (4) updating the labeled and unlabeled sample sets according to the clustering result; (5) performing self-training based on a support vector machine (SVM) classifier; (6) updating the labeled and unlabeled sample sets according to the self-training result; (7) performing classification using a Biased-SVM, a support vector machine based on penalty parameters; and (8) evaluating the classification result and outputting it. For imbalanced data with few labeled samples, the method improves the classification precision on the minority class. It can be used for classifying and identifying imbalanced data having few training samples.

Owner:XIDIAN UNIV
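
Step (7) relies on penalty parameters to counter class imbalance. A common way to do this, assumed here because the abstract gives no formula, is to weight the hinge loss of each class with its own penalty. The sketch below evaluates such an objective for a linear model; the function name and the parameters `c_pos` and `c_neg` are hypothetical.

```python
def biased_hinge_objective(w, b, samples, labels, c_pos, c_neg):
    """Linear SVM objective with a separate penalty for each class.

    Returns 0.5 * ||w||^2 plus the hinge loss of every sample, scaled by
    c_pos for positive samples and c_neg for negative ones.
    """
    regulariser = 0.5 * sum(wi * wi for wi in w)
    loss = 0.0
    for x, y in zip(samples, labels):
        margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
        penalty = c_pos if y == 1 else c_neg
        loss += penalty * max(0.0, 1.0 - margin)
    return regulariser + loss

# The minority (positive) class gets a ten times larger penalty.
objective = biased_hinge_objective(
    w=[1.0], b=0.0,
    samples=[[0.5], [-2.0]], labels=[1, -1],
    c_pos=10.0, c_neg=1.0,
)
```

Raising `c_pos` relative to `c_neg` makes margin violations on minority samples more expensive, which pushes the decision boundary away from the minority class.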

Method for the real-time identification of seizures in an electroencephalogram (EEG) signal

Active · US20120101401A1 · Highly accurate real-time seizure identification · Accurate classification · Electroencephalography · Medical data mining · Feature vector · Algorithm

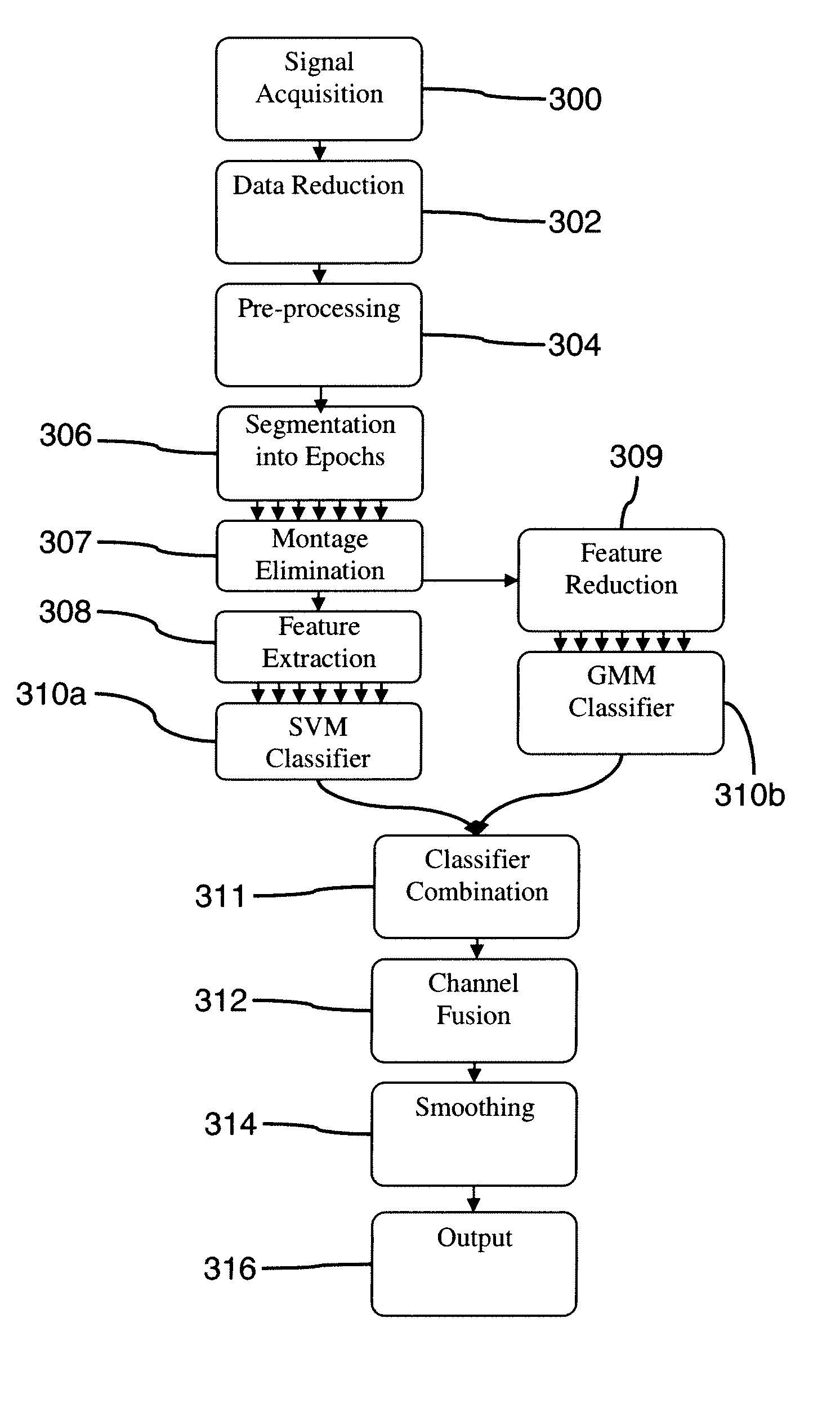

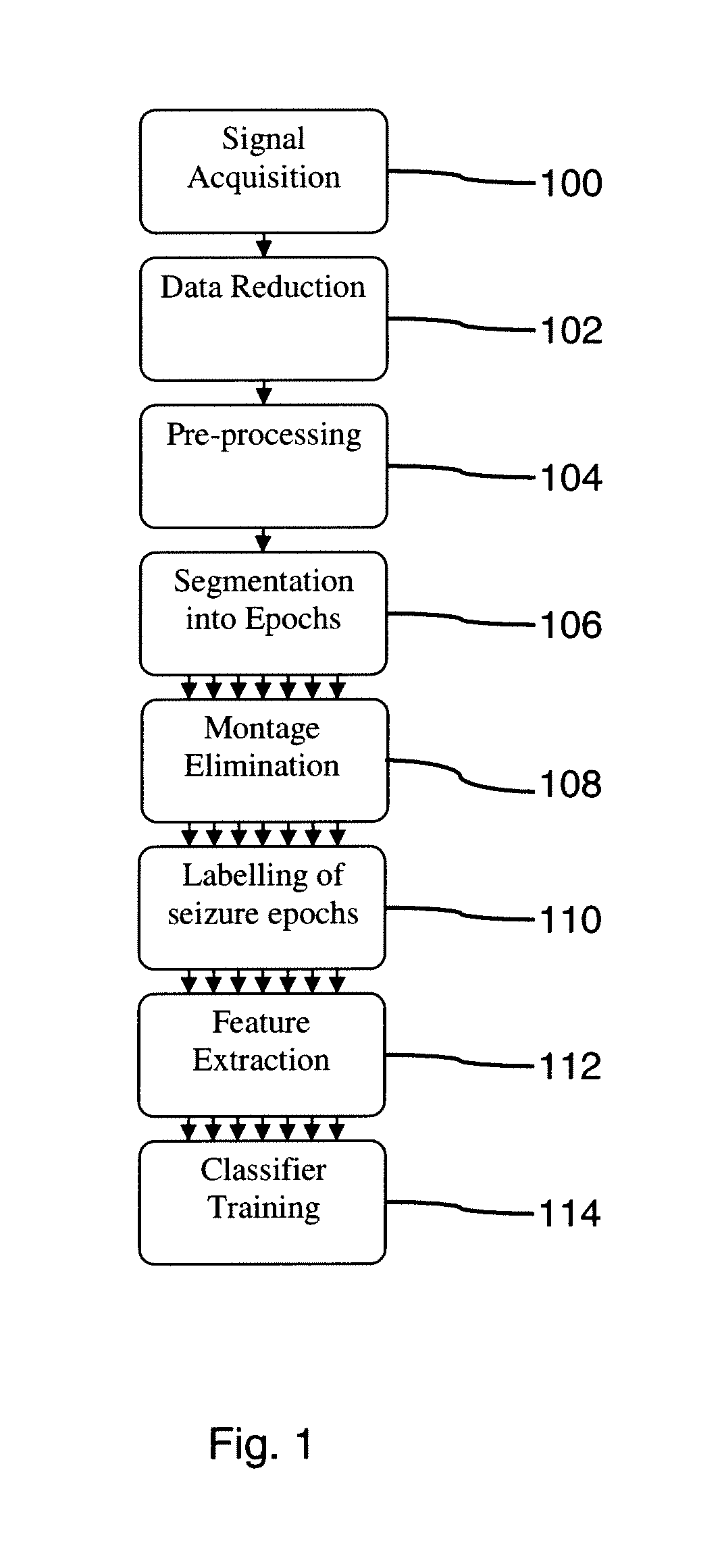

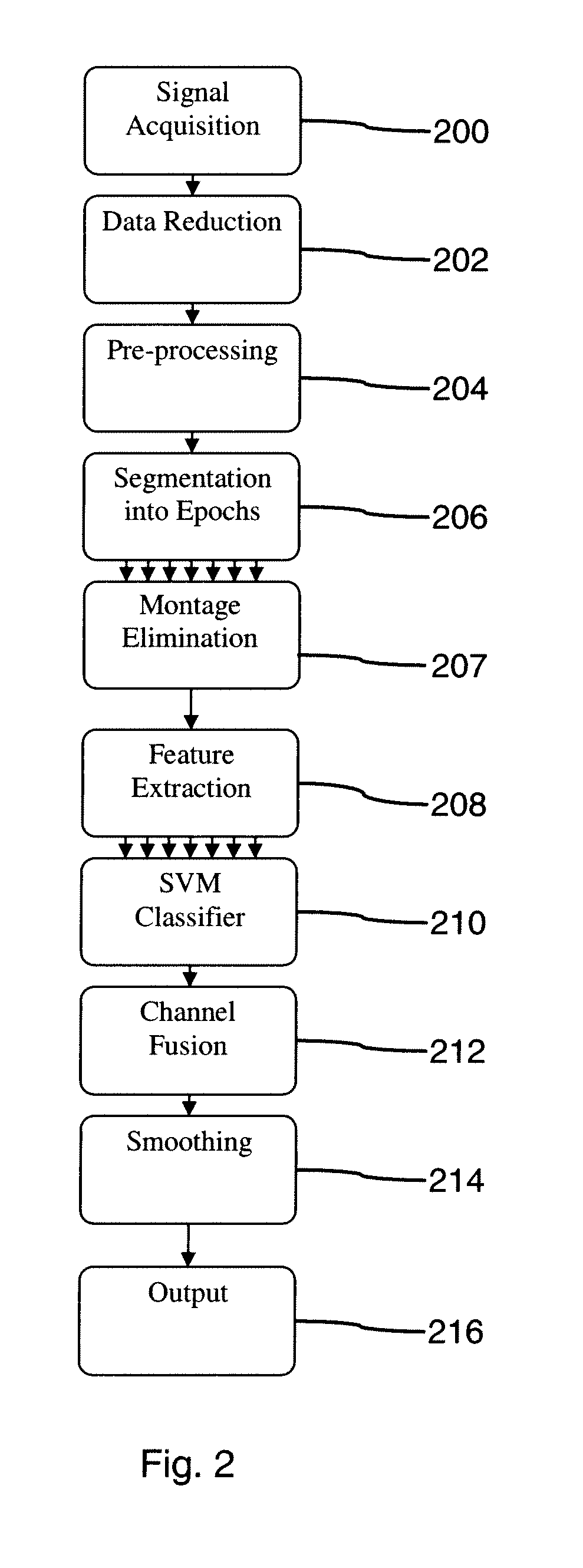

The present invention relates to a method for the real-time identification of seizures in an Electroencephalogram (EEG) signal. The method provides for patient-independent seizure identification by use of a multi-patient trained generic Support Vector Machine (SVM) classifier. The SVM classifier operates on a large feature vector combining features from a wide variety of signal processing and analysis techniques. The method operates sufficiently accurately to be suitable for use in a clinical environment. The method may also be combined with additional classifiers, such as a Gaussian Mixture Model (GMM) classifier, for improved robustness, and one or more dynamic classifiers such as an SVM using sequential kernels for improved temporal analysis of the EEG signal.

Owner:NATIONAL UNIVERSITY OF IRELAND

Vehicle detection method fusing radar and CCD camera signals

Active · CN103559791A · Improve reliability · High precision · Detection of traffic movement · Character and pattern recognition · Hat matrix · Computer graphics (images)

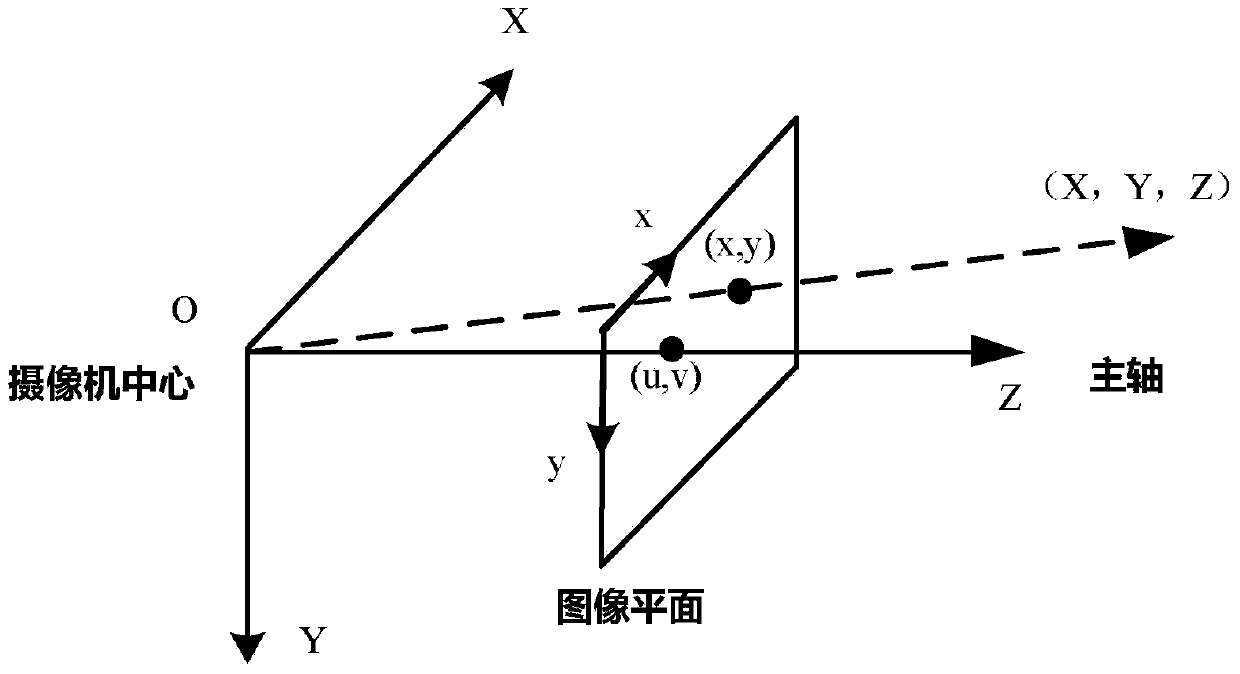

The invention discloses a vehicle detection method fusing radar and CCD camera signals. The method includes the steps of: inputting the radar and CCD camera signals; calibrating the camera to obtain a projection matrix of the road-plane coordinates and a projection matrix of the image coordinates; converting road-plane world coordinates into image-plane coordinates; building positive and negative sample sets suitable for a vehicle HOG descriptor; carrying out batch feature extraction on the vehicle sample sets to build an HOG sample set; building a linear support vector machine (SVM) classification model and training the SVM; extracting, in the video image, regions of interest for the obstacles detected by the radar; inputting the regions of interest into the SVM classifier to judge the target category; outputting the identification result; and measuring, by means of the radar, the distance of any target judged to be a vehicle. By combining the radar and CCD camera signals for detection, the method obtains depth information for the vehicle while also detecting its profile well, which improves the reliability and accuracy of vehicle detection and positioning.

Owner:BEIJING UNION UNIVERSITY
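
The coordinate conversion step above can be illustrated with a planar projective transform. The sketch assumes that the calibration yields a 3x3 homography `H` from road-plane coordinates to pixel coordinates. The abstract does not give the matrix form, so both the function name and the example matrix are hypothetical.

```python
def road_to_image(H, x, y):
    """Project a road-plane point (x, y) to pixel coordinates (u, v).

    `H` is a 3x3 homography given as nested lists. The point is lifted to
    homogeneous coordinates, multiplied by H, then divided by the scale.
    """
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    s = H[2][0] * x + H[2][1] * y + H[2][2]
    if s == 0:
        raise ValueError("point maps to infinity under this homography")
    return (u / s, v / s)

# Hypothetical calibration result: scale by 2 and shift by (100, 50) pixels.
H = [[2.0, 0.0, 100.0],
     [0.0, 2.0, 50.0],
     [0.0, 0.0, 1.0]]
pixel = road_to_image(H, 10.0, 20.0)
```

A radar detection at a known road position could be mapped this way to the pixel around which a region of interest is cropped for the SVM.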

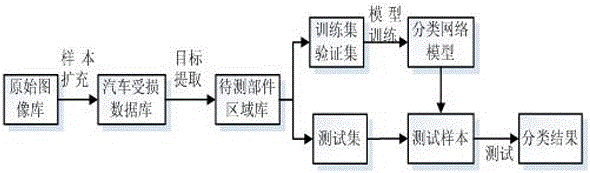

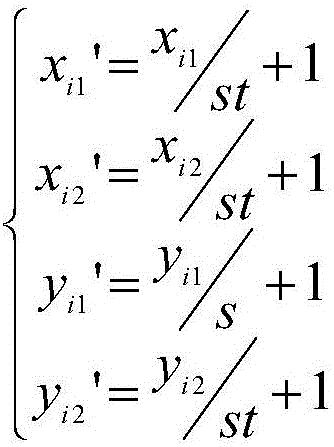

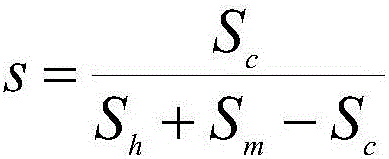

Automobile surface damage classification method and device based on deep learning

Active · CN106127747A · Improve anti-interference ability · Verify validity · Image enhancement · Image analysis · Feature vector · Regioselectivity

The invention relates to the field of image detection, and in particular to an automobile surface damage classification method and device based on deep learning, provided to address problems in the prior art. Feature learning and classification are carried out on the input images to be detected. Specifically, candidate regions are extracted from the images to be detected using a selective search algorithm, and the location information of the candidate regions is recorded; the images to be detected are input into a feature map extraction network model without an output layer, thereby extracting the feature vectors of the candidate regions; the feature vectors of the candidate regions are input into an SVM classifier to find the target feature vectors; the locations of the corresponding candidate regions on the images to be detected, namely the target regions, are found according to the locations of the target feature vectors in the feature map; and the target regions are input into an optimal classification network model, which outputs the probabilities of the regions for each damage level.

Owner:高前文

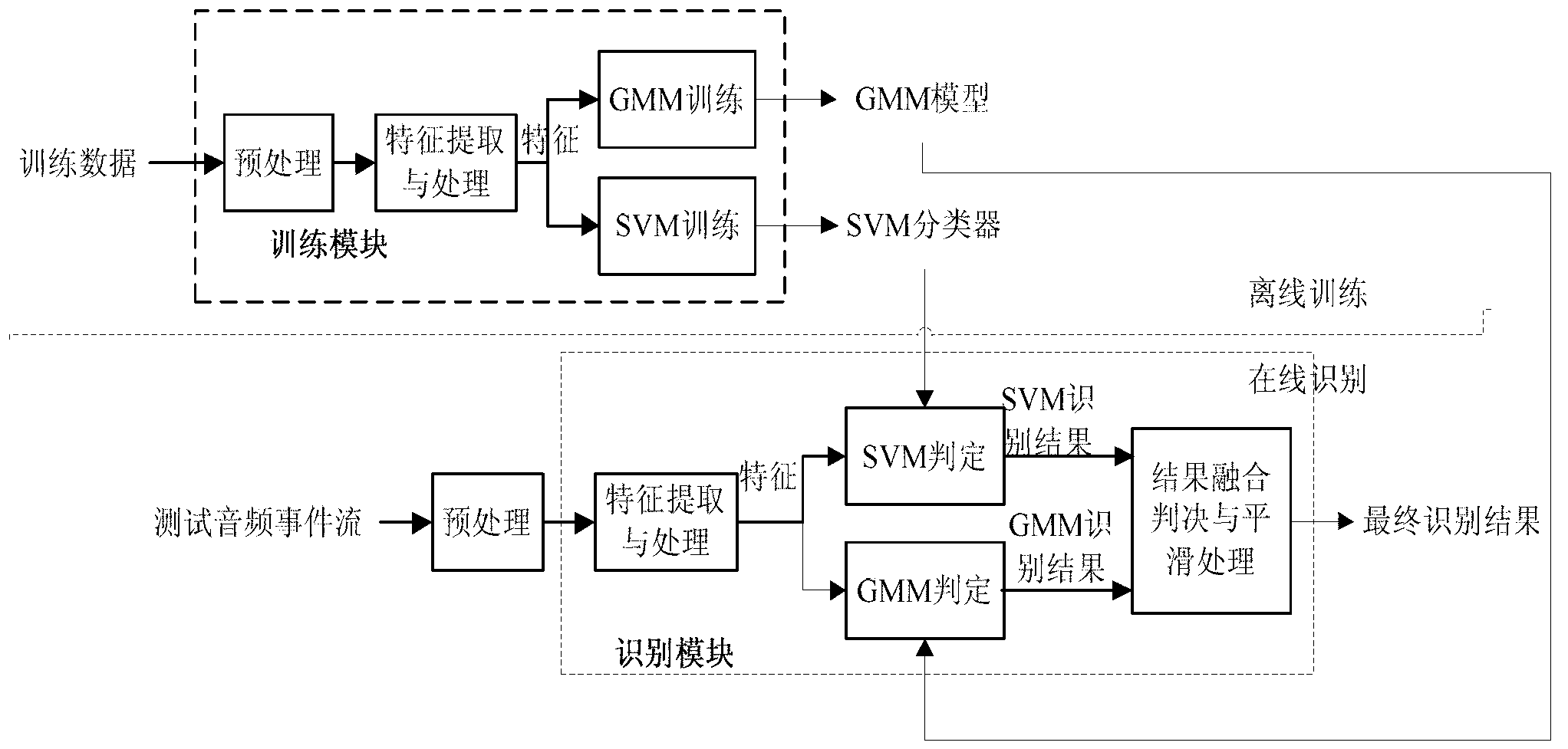

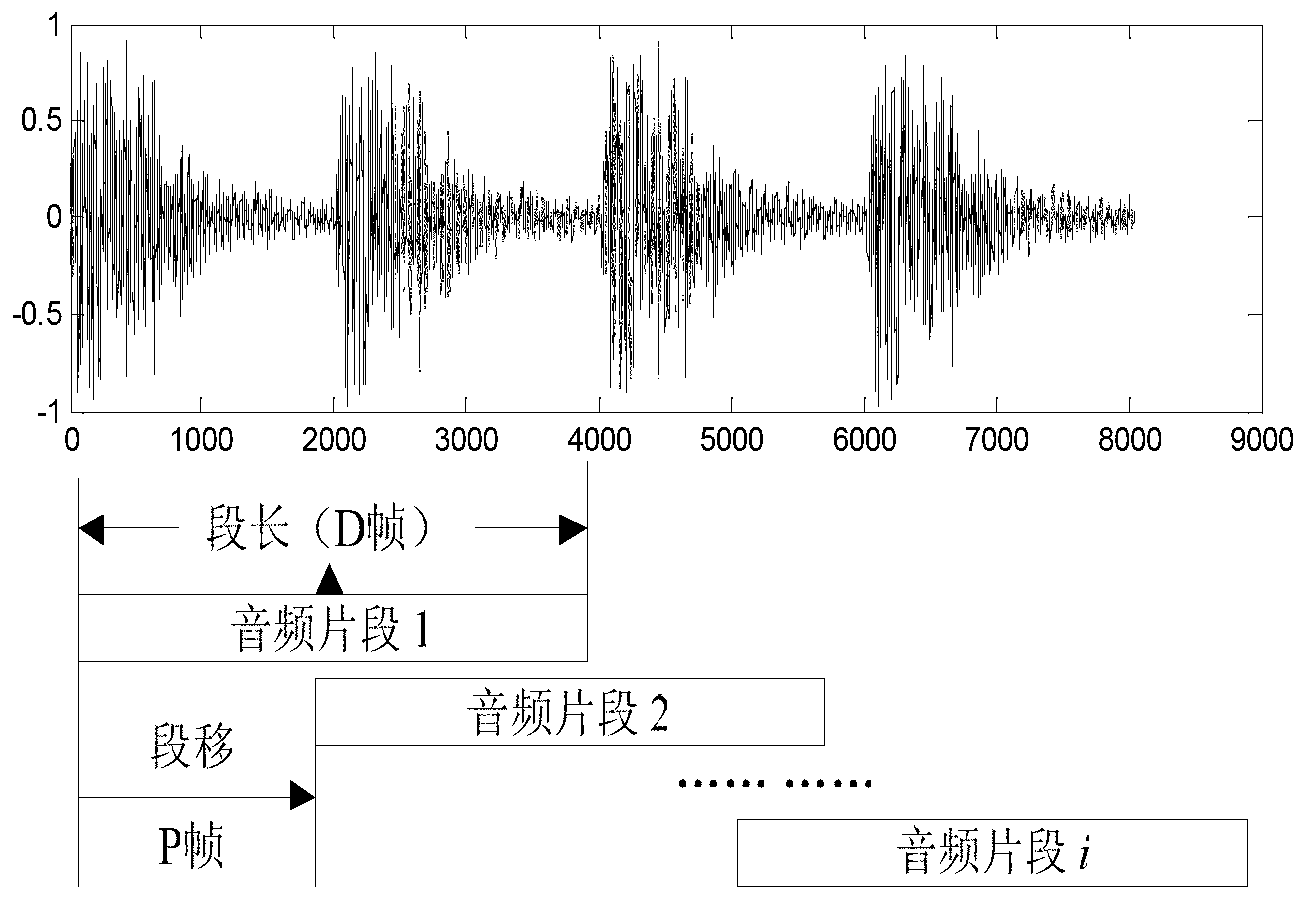

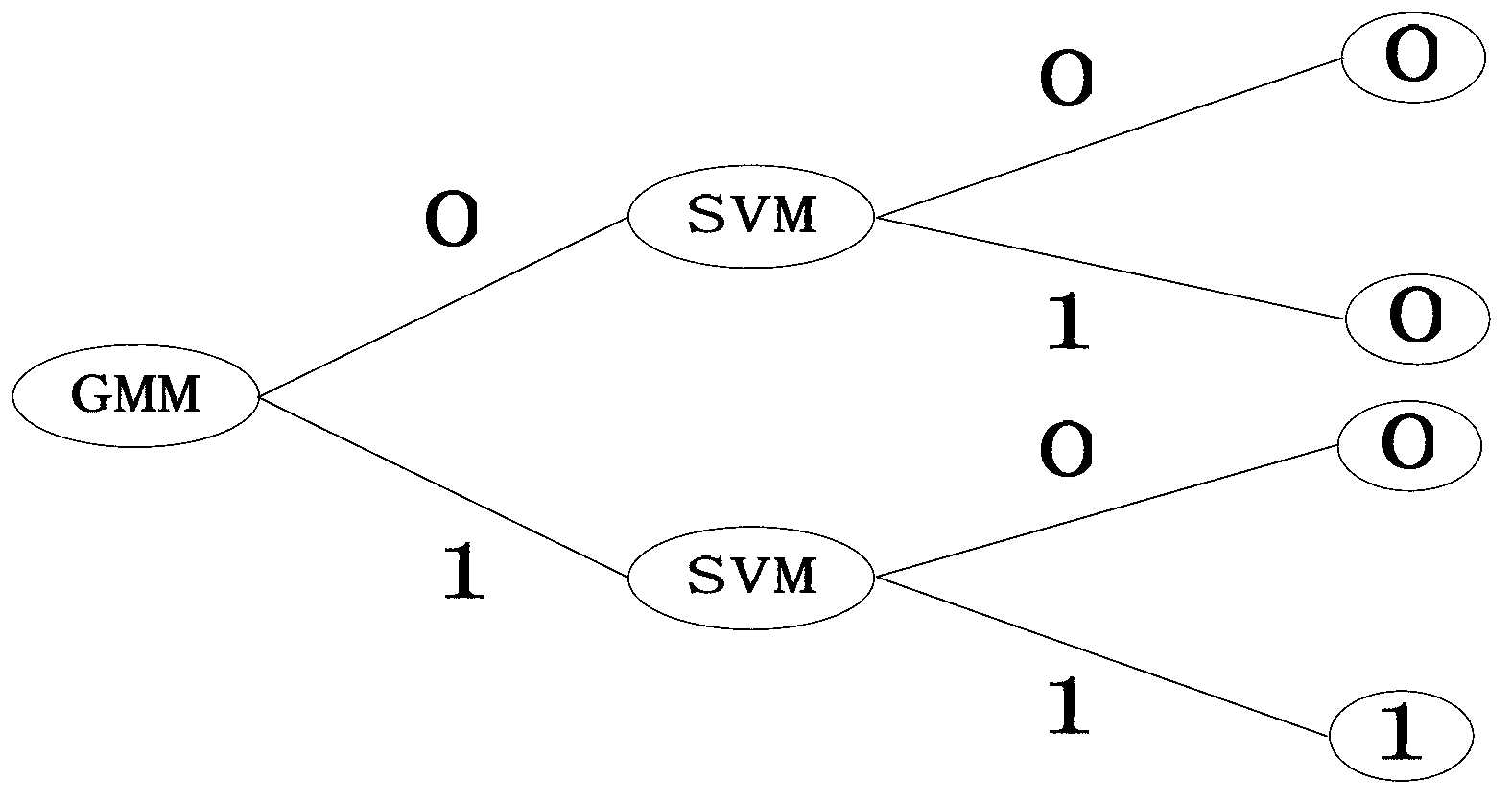

Special audio event layered and generalized identification method based on SVM (Support Vector Machine) and GMM (Gaussian Mixture Model)

Inactive · CN102799899A · Reduce distractions · Easy extraction · Character and pattern recognition · Svm classifier · Data mining

The invention relates to a special audio event layered and generalized identification method based on a combination of an SVM (Support Vector Machine) and a GMM (Gaussian Mixture Model), and belongs to the technical field of computers and audio event identification. The method comprises the following steps: first, obtaining the audio feature vector files of the training samples; second, carrying out model training on a large number of training-sample audio feature vector files of various types, using the GMM method and the SVM method respectively, so as to obtain a GMM model with generalization capability and an SVM classifier, which completes offline training; and finally, carrying out layered identification on the audio feature vector files to be identified using the GMM model and the SVM classifier. The method addresses the problems of conventional special audio event identification, namely low identification efficiency on continuous audio streams and a high probability of missing audio events of very short duration. The method can be applied to content-based searching for special audio and monitoring of network audio.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

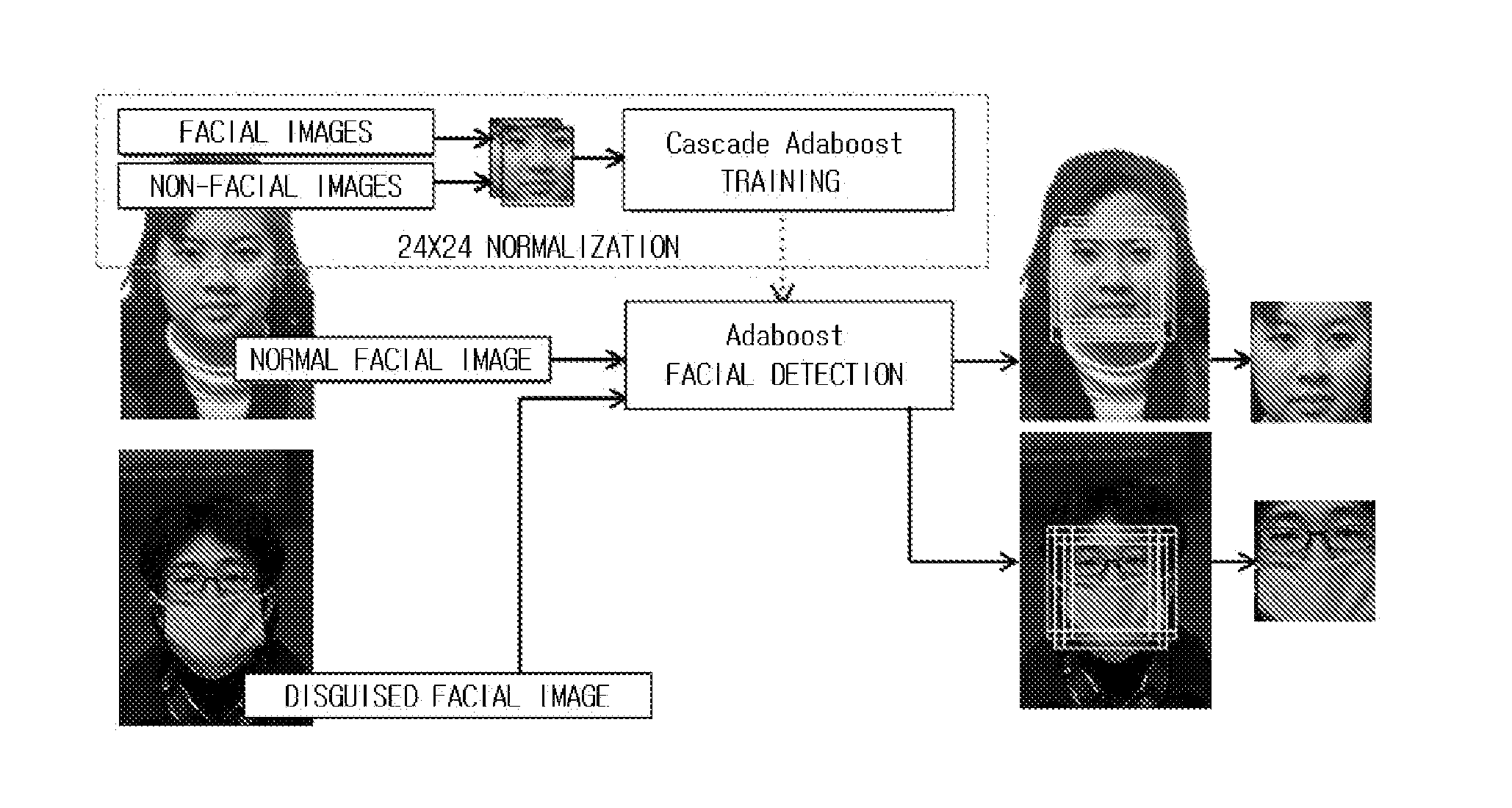

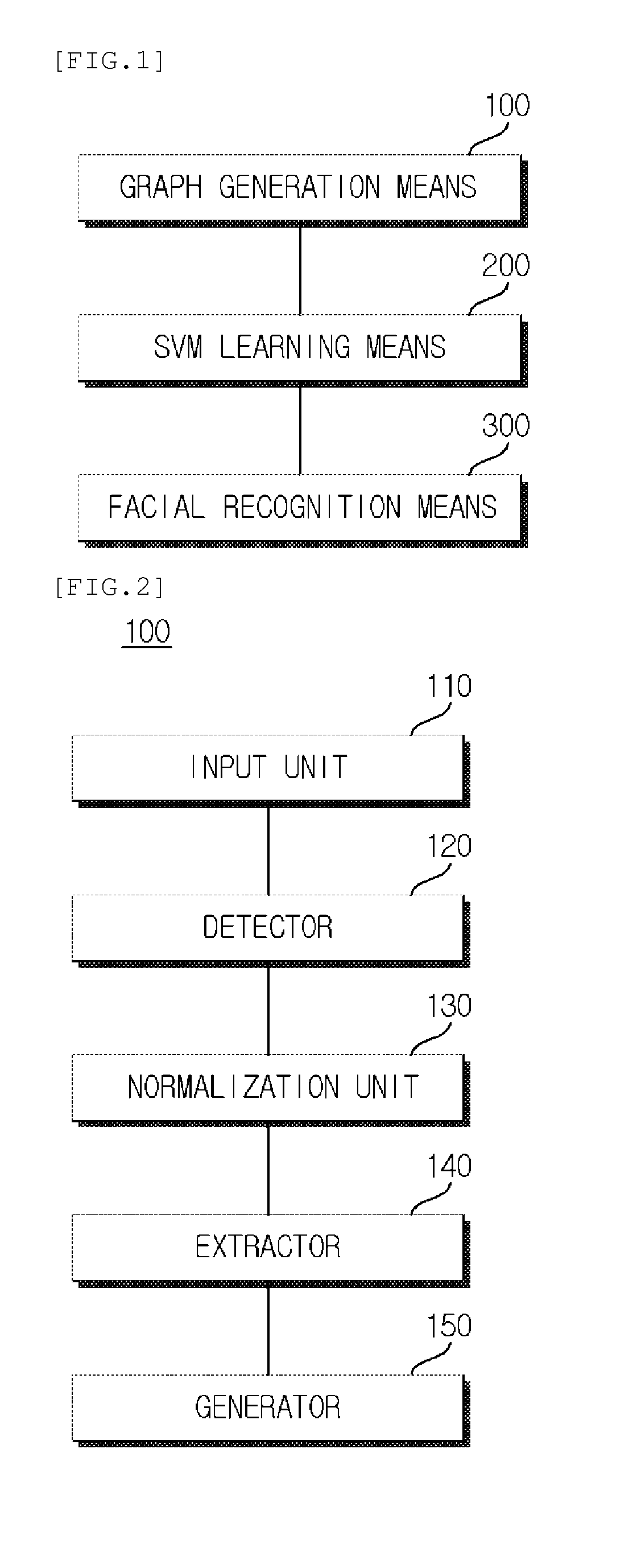

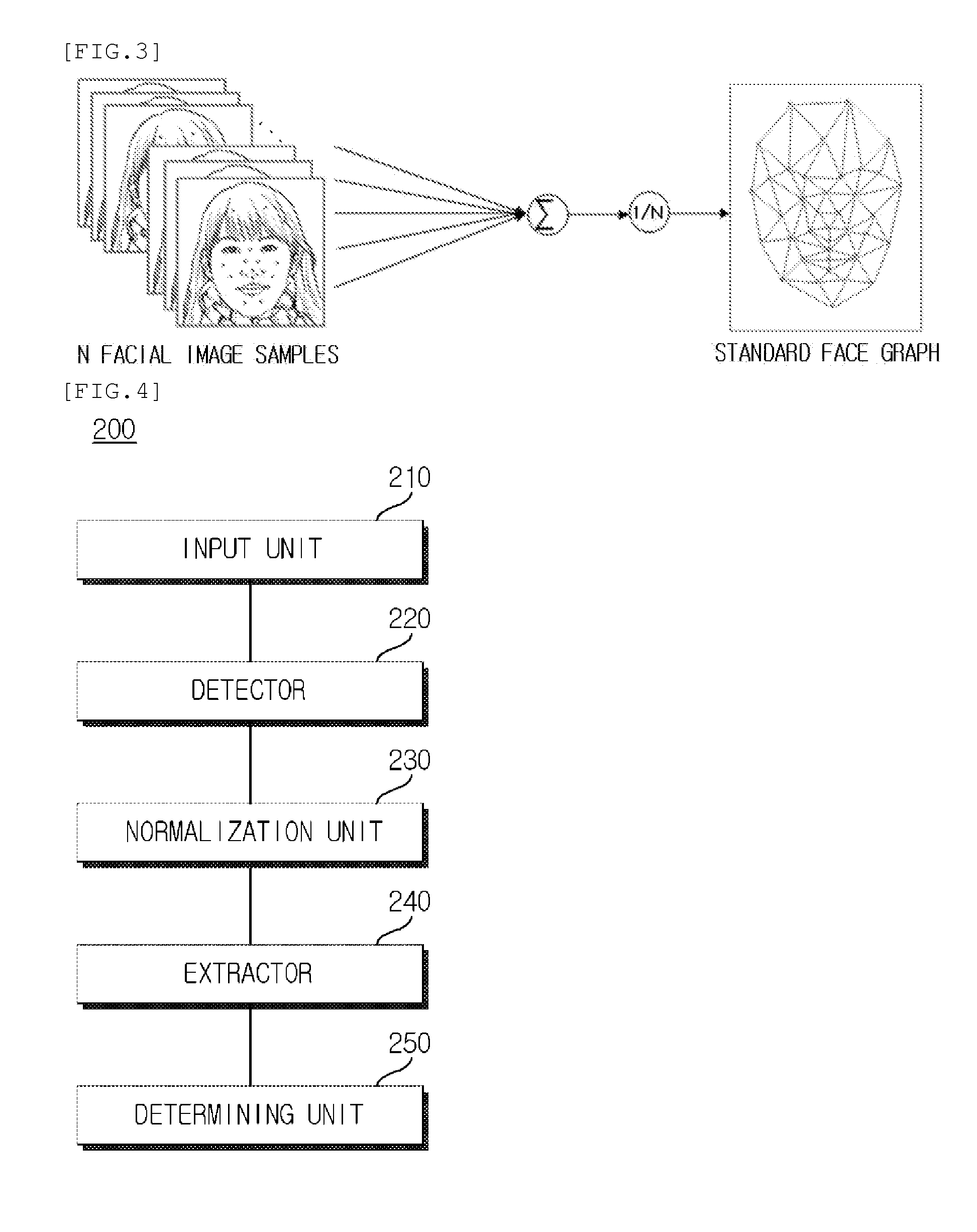

System for recognizing disguised face using gabor feature and svm classifier and method thereof

Disclosed are a system and a method for recognizing a disguised face using a Gabor feature and a support vector machine (SVM) classifier according to the present invention. The system for recognizing a disguised face includes: a graph generation means to generate a single standard face graph from a plurality of facial image samples; a support vector machine (SVM) learning means to determine an optimal classification plane for discriminating a disguised face from the plurality of facial image samples and disguised facial image samples; and a facial recognition means to determine whether an input facial image is disguised using the standard face graph and the optimal classification plane when the facial image to be recognized is input.

Owner:ELECTRONICS & TELECOMM RES INST

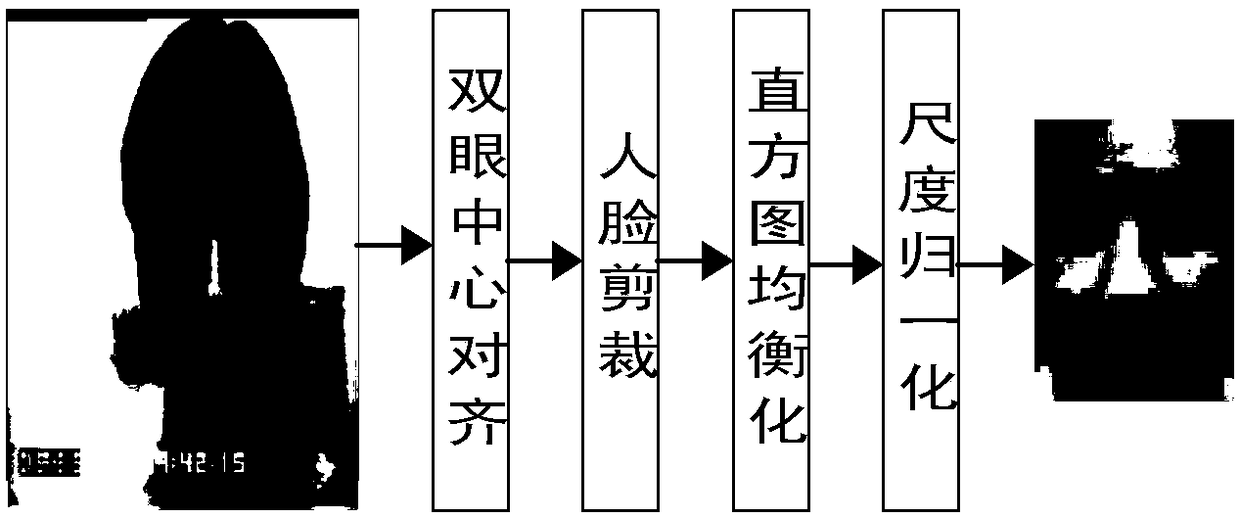

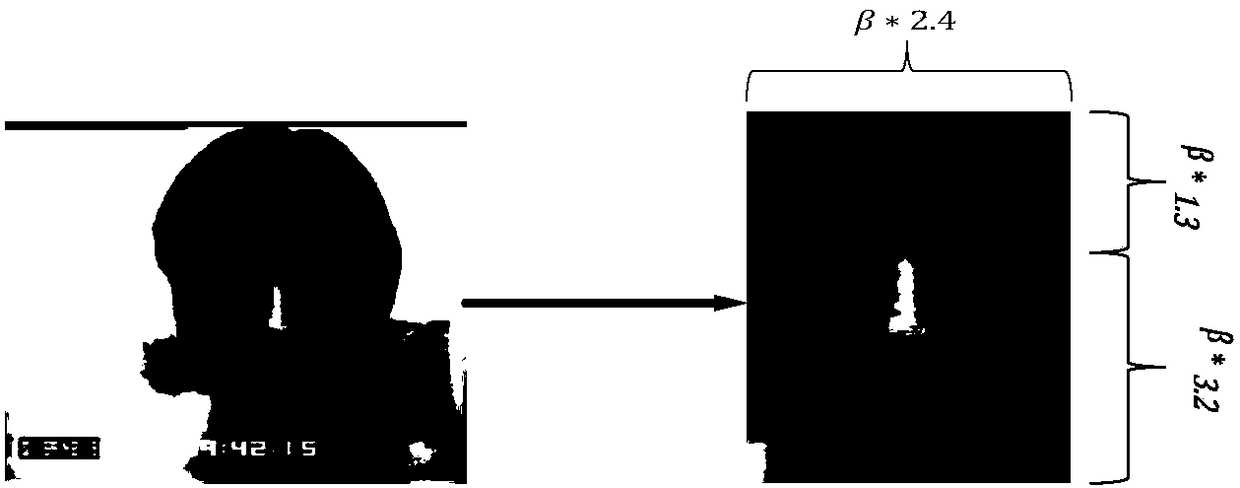

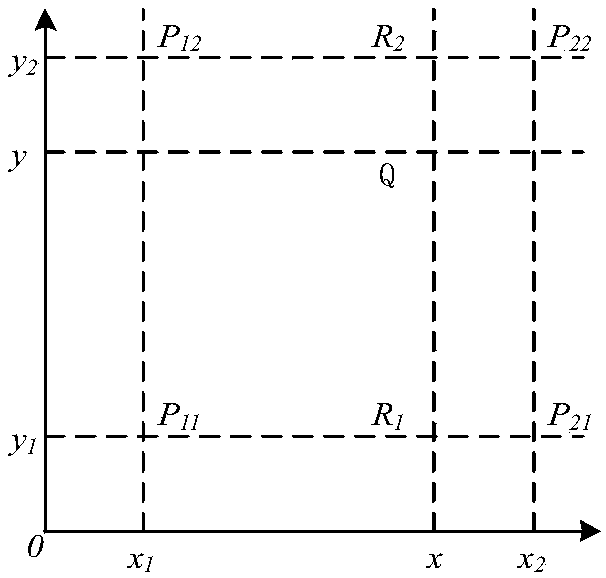

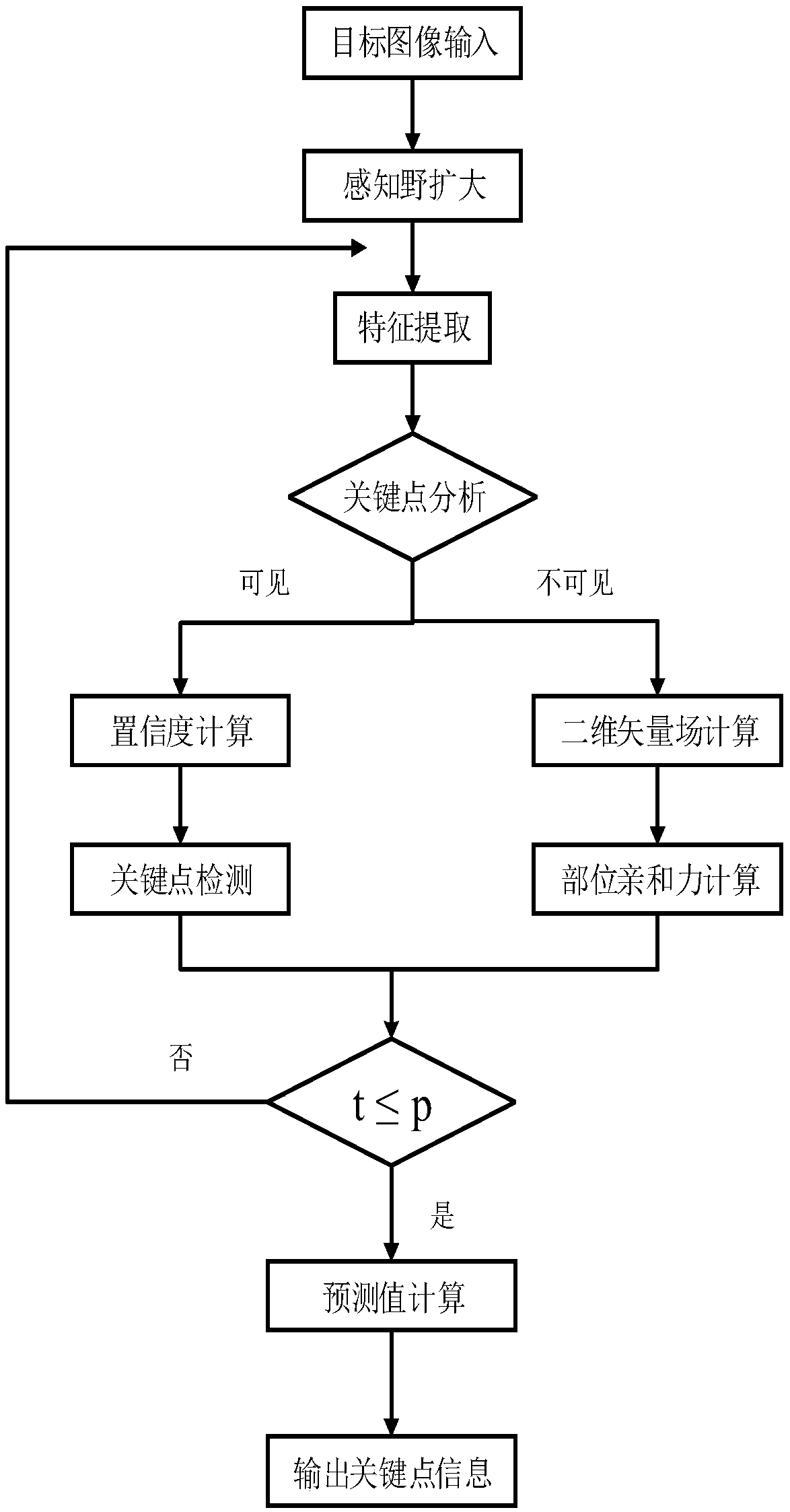

Improved CNN-based facial expression recognition method

Inactive · CN108108677A · Reduce training parameters · Small amount of calculation · Image enhancement · Image analysis · Face detection · Svm classifier

The invention provides an improved CNN-based facial expression recognition method, and relates to the field of image classification and identification. The method comprises the following steps: s1, acquiring a facial expression image from a video stream using the JDA algorithm, which integrates face detection and alignment; s2, correcting the face pose captured in a real environment according to the facial expression image obtained in step s1, removing background information irrelevant to the expression, and applying scale normalization; s3, training the convolutional neural network model, before extracting features from the normalized facial expression image obtained in step s2, to obtain and store the optimal network parameters; s4, loading a CNN model with the optimal network parameters obtained in step s3, and performing feature extraction on the normalized facial expression images obtained in step s2; s5, classifying and recognizing the facial expression features obtained in step s4 using an SVM classifier. The method has high robustness and good generalization performance.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

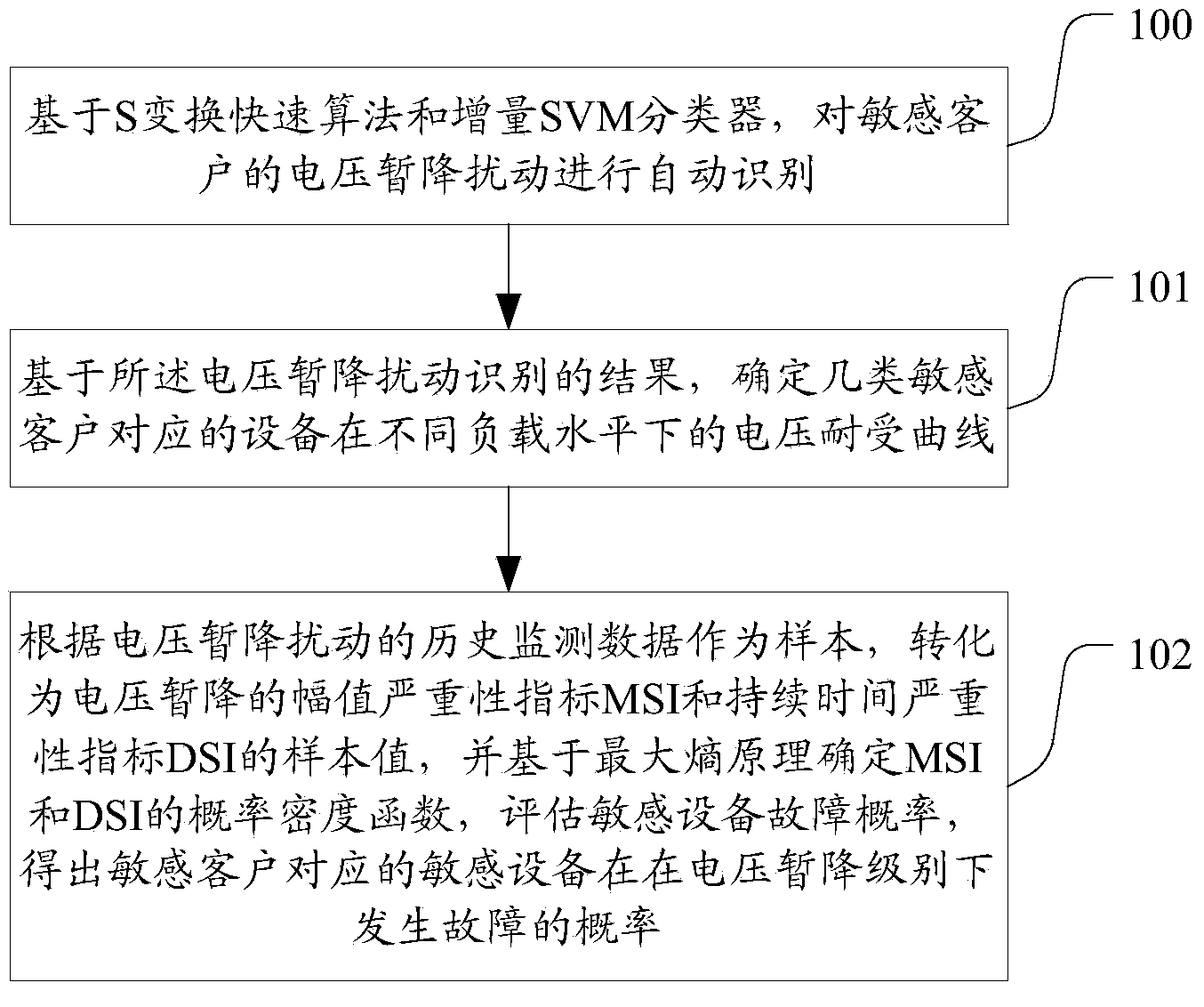

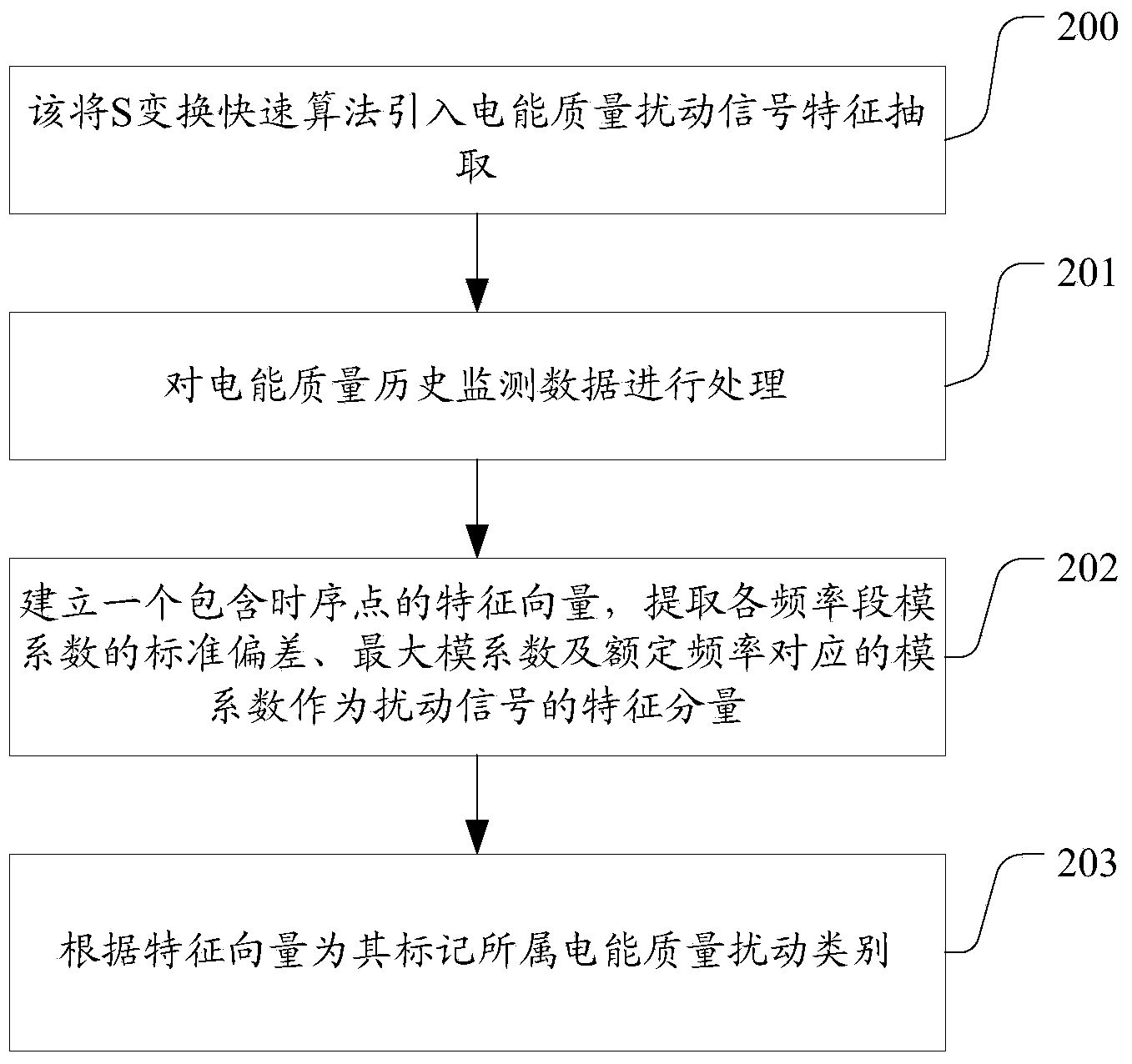

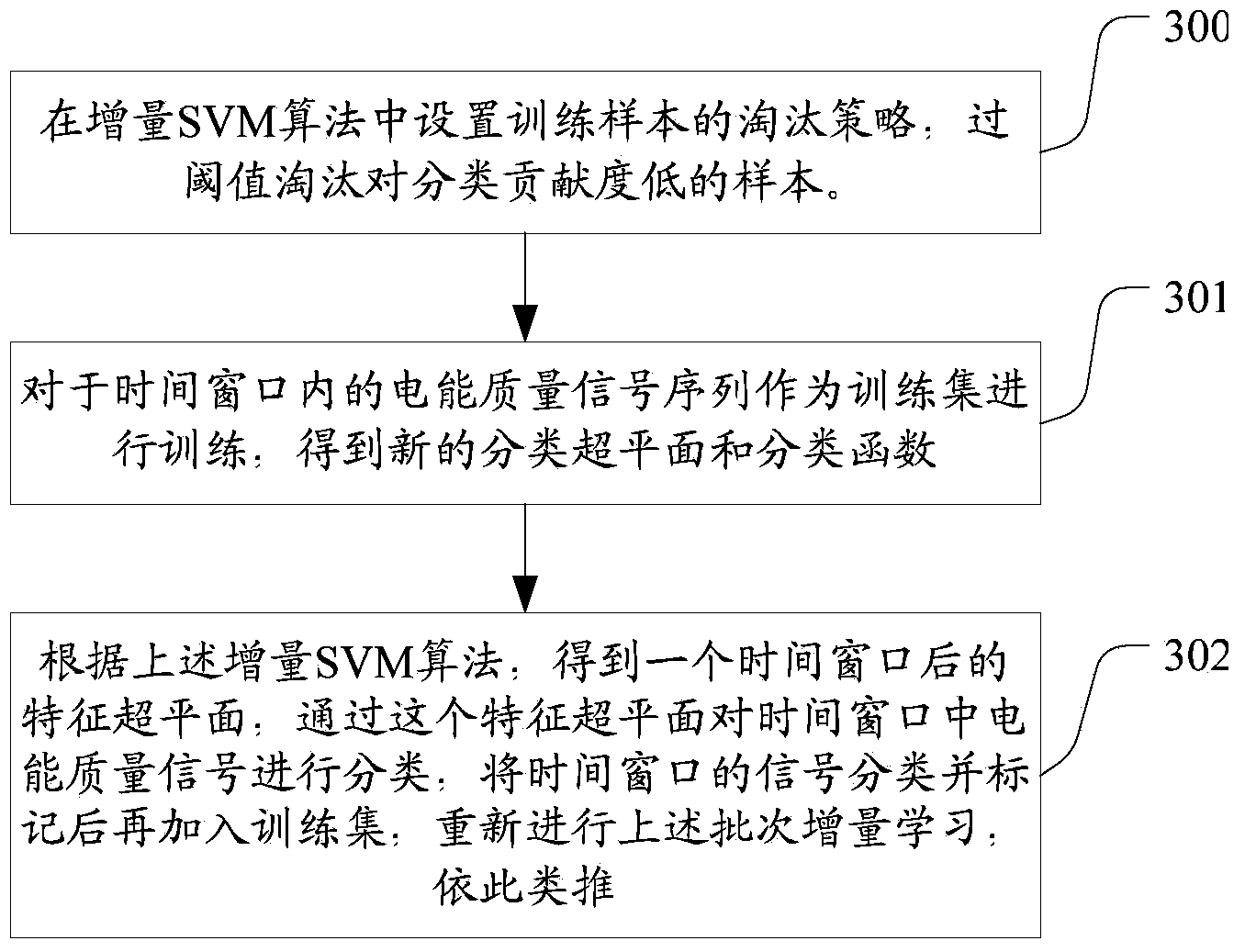

Method for early warning of sensitive client electric energy experience quality under voltage dip disturbance

Inactive · CN103487682A · Reduce the risk of electricity supply and use · Accurately monitor power quality disturbances · Electrical testing · Normal density · Svm classifier

The invention provides a method for early warning of sensitive client electric energy experience quality under voltage dip disturbance. The method comprises the following steps: voltage dip disturbances of sensitive clients are automatically identified based on a fast S-transform algorithm and an incremental SVM classifier; based on the identification results, voltage tolerance curves are determined for the devices of multiple types of sensitive clients at different load levels; historical monitoring data of the voltage dip disturbances serve as samples, which are converted into sample values of a voltage dip magnitude severity index (MSI) and a duration severity index (DSI); a probability density function of the MSI and the DSI is determined on the basis of the maximum entropy principle; and the fault probability of the sensitive devices is evaluated, giving the fault probabilities of the devices of each sensitive client at the given voltage dip level. With this method, power quality disturbances can be accurately monitored, whether a client load is affected by a disturbance can be determined according to the load sensitivity of each client, and potential risks to load operation can be found.

Owner:SHENZHEN POWER SUPPLY BUREAU +1

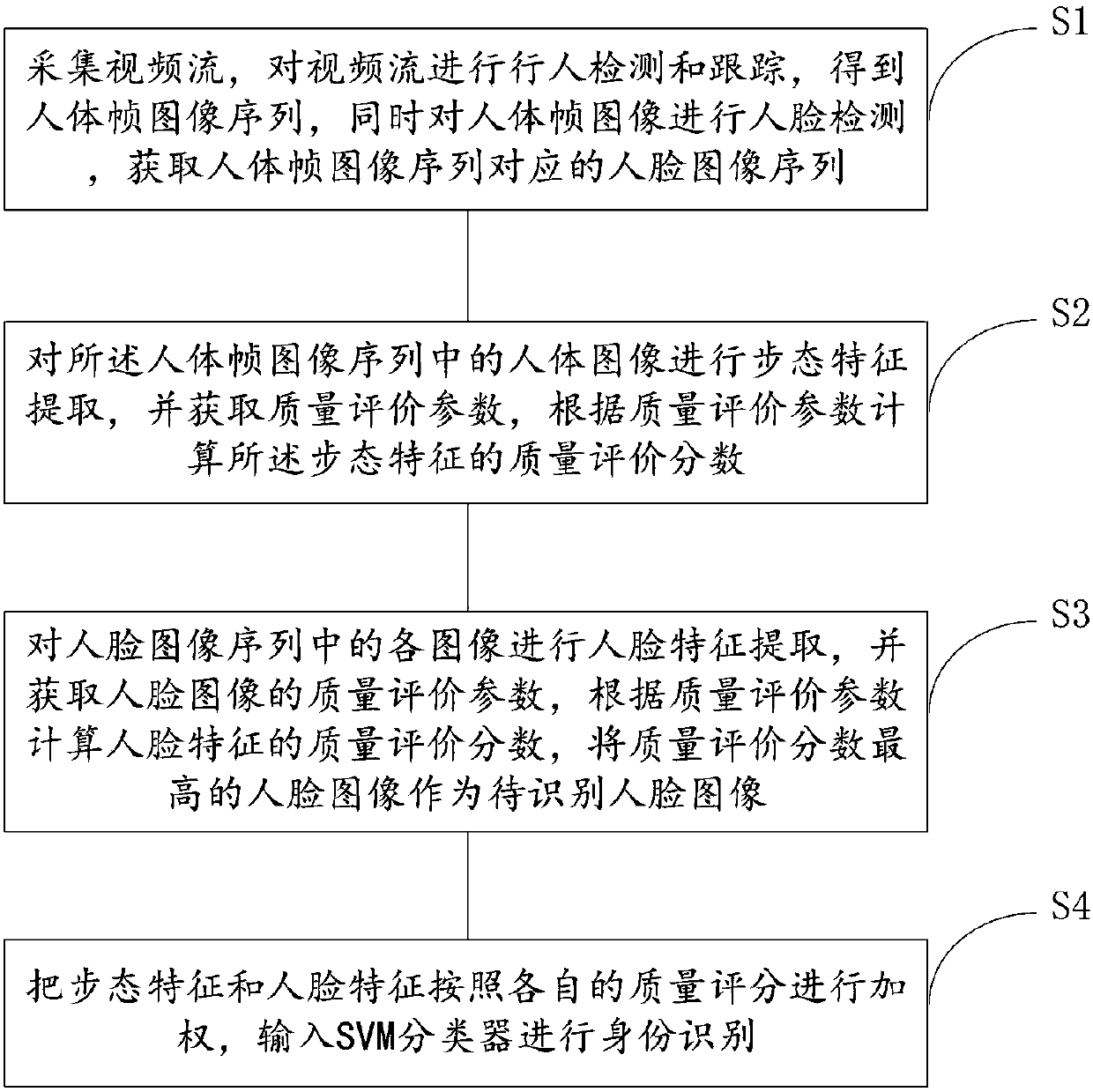

Identity identification method and apparatus based on combination of gait and face

Inactive · CN107590452A · Improve robustness · Improve accuracy · Character and pattern recognition · Human body · Face detection

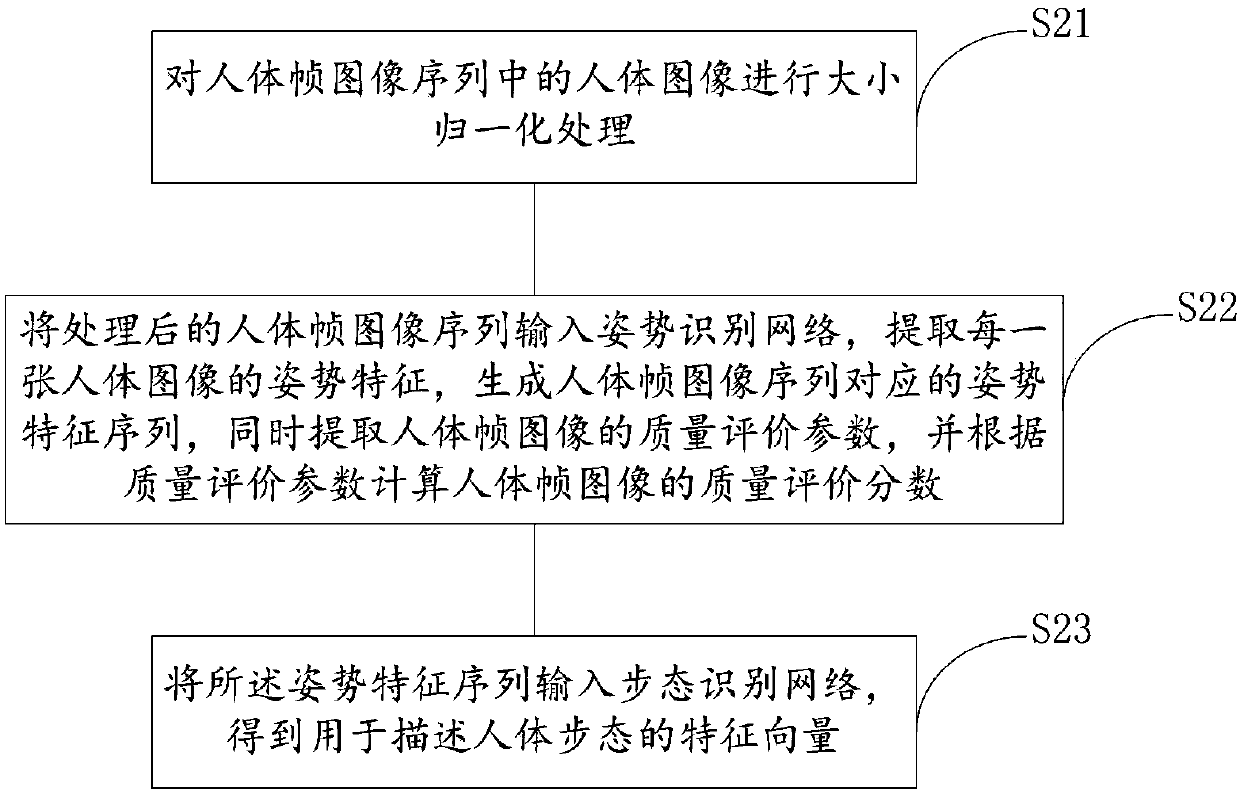

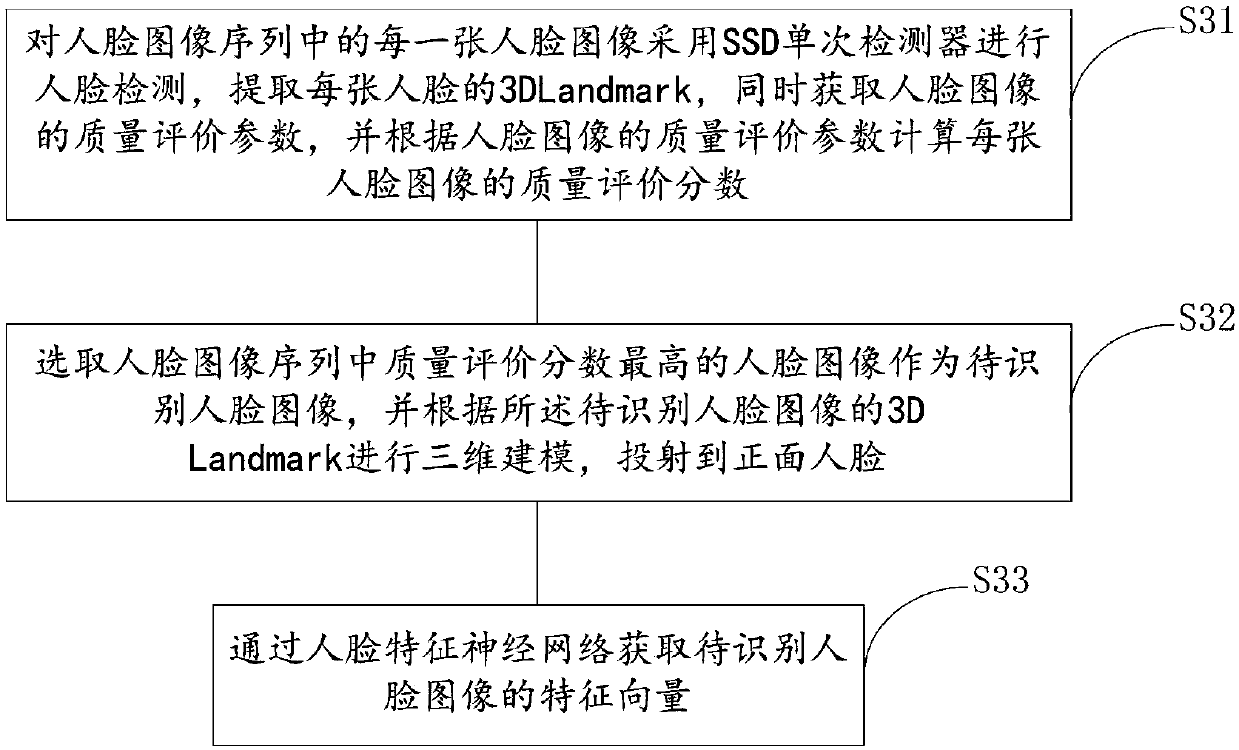

The invention relates to an identity identification method and apparatus combining gait and face, based on deep learning. The apparatus comprises a video acquisition and preprocessing module, a gait feature extraction module, a face feature extraction module and an identification module. The method includes: acquiring a video stream, and performing pedestrian detection and tracking and face detection on the video stream; performing gait feature extraction on human body images, and calculating quality evaluation scores of the gait features; performing face feature extraction on face images, calculating quality evaluation scores of the face features, and taking the face image with the highest quality evaluation score as the face image to be identified; and weighting the gait features and the face features according to their respective quality scores, and inputting the weighted features into an SVM classifier for identity identification. Because the quality evaluation scores of the gait features and the face features are calculated and used as weights, the method and apparatus combine the advantages of face identification and gait identification so that the two technologies complement each other, which increases the robustness of the identification system and improves the accuracy of identity identification.

Owner:武汉神目信息技术有限公司

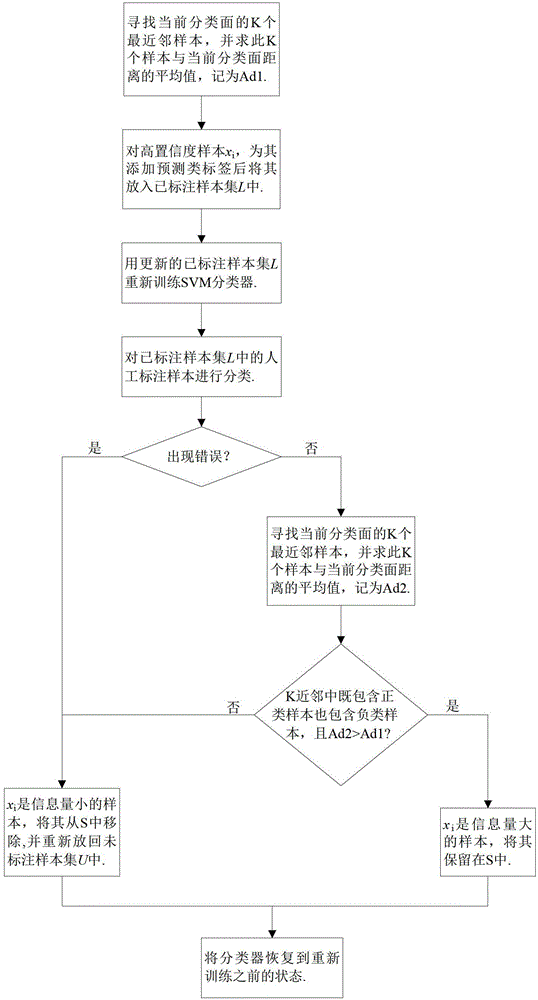

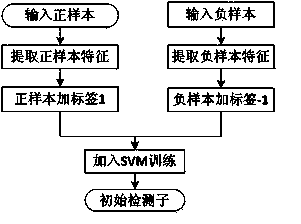

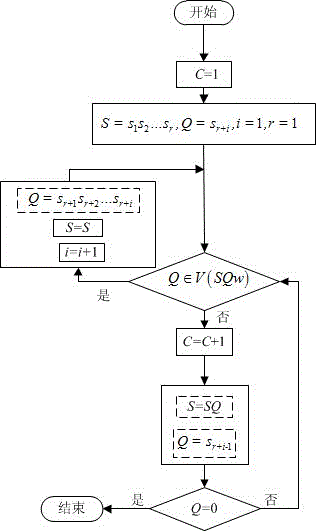

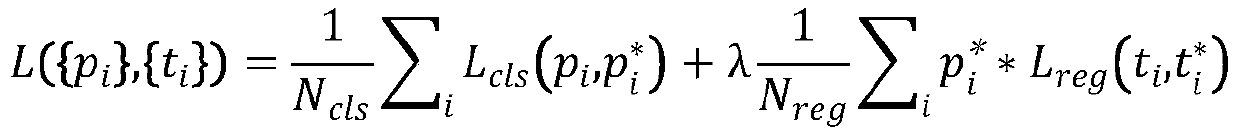

Training method of SVM (Support Vector Machine) classifier based on semi-supervised learning

Inactive · CN103150578A · Reduce workload · Fast convergence · Digital computer details · Character and pattern recognition · Svm classifier · Support vector machine classifier

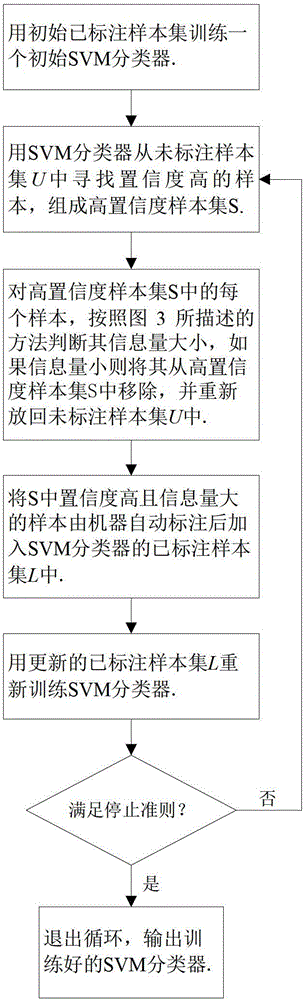

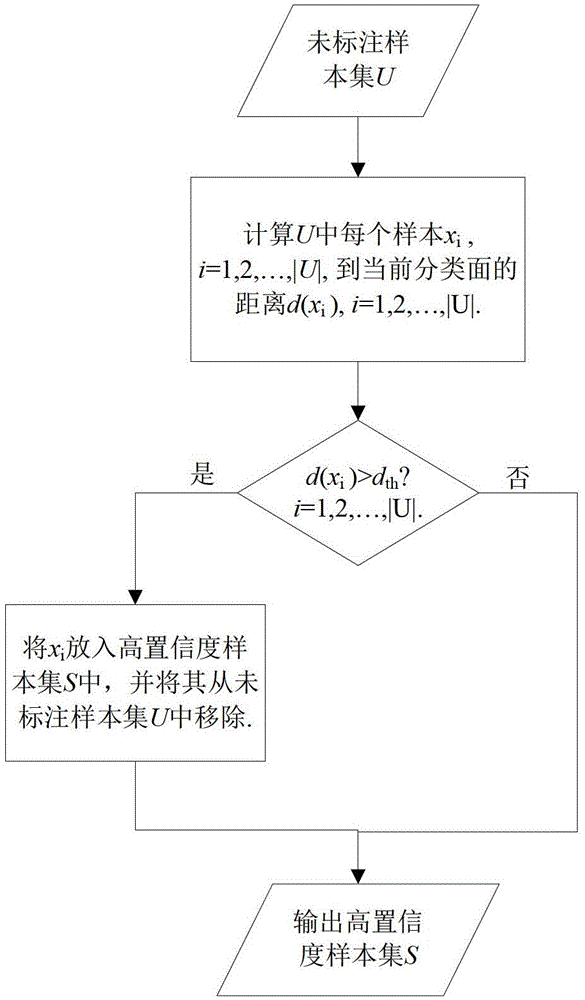

The invention discloses a training method for an SVM (Support Vector Machine) classifier based on semi-supervised learning. The training method comprises the following steps: step 1, training an initial SVM classifier on an initial labeled sample set; step 2, searching an unlabeled sample set U for samples with high classification confidence to constitute a high-confidence sample set S; step 3, judging the amount of information of each sample in the high-confidence sample set S according to the method described in FIG. 3, removing the samples from S if the amount of information is large, and placing them back into the unlabeled sample set U; step 4, adding the samples in S that have high confidence and a large amount of information, after they are automatically labeled by the machine, to the labeled sample set L of the SVM classifier; step 5, using the updated labeled sample set L to retrain the SVM classifier; and step 6, judging, according to a stopping criterion, whether the SVM classifier exits the loop or continues iterating.

Owner:SHANDONG NORMAL UNIV
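
One round of the self-training loop above can be sketched as follows. The sketch covers only confidence-based selection and automatic labeling (steps 2 and 4). The information-amount filter of step 3 is omitted because the abstract does not define it. The function name, the `threshold` parameter, and the use of the absolute decision score as confidence are assumptions, and `decision_fn` stands in for a trained SVM.

```python
def self_training_round(labeled, unlabeled, decision_fn, threshold):
    """Move confidently classified samples from the unlabeled set to the labeled set.

    `labeled` is a list of (sample, label) pairs, `unlabeled` a list of samples.
    A sample counts as confident when |decision_fn(sample)| >= threshold.
    Returns the enlarged labeled set and the remaining unlabeled samples.
    """
    newly_labeled, remaining = [], []
    for sample in unlabeled:
        score = decision_fn(sample)
        if abs(score) >= threshold:
            newly_labeled.append((sample, 1 if score > 0 else -1))
        else:
            remaining.append(sample)
    return labeled + newly_labeled, remaining

# Hypothetical stand-in for a trained linear SVM decision function.
decision_fn = lambda sample: sample[0] - 1.0

labeled = [([3.0], 1), ([-1.0], -1)]
unlabeled = [[2.5], [1.1], [-0.5]]
labeled, unlabeled = self_training_round(labeled, unlabeled, decision_fn, threshold=1.0)
```

In the full procedure the classifier would be retrained on the enlarged labeled set after each round, and the loop would repeat until the stopping criterion is met.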

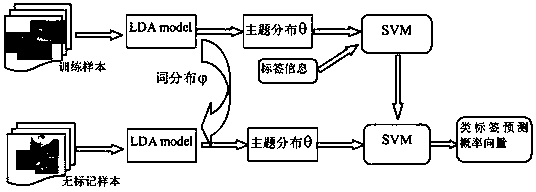

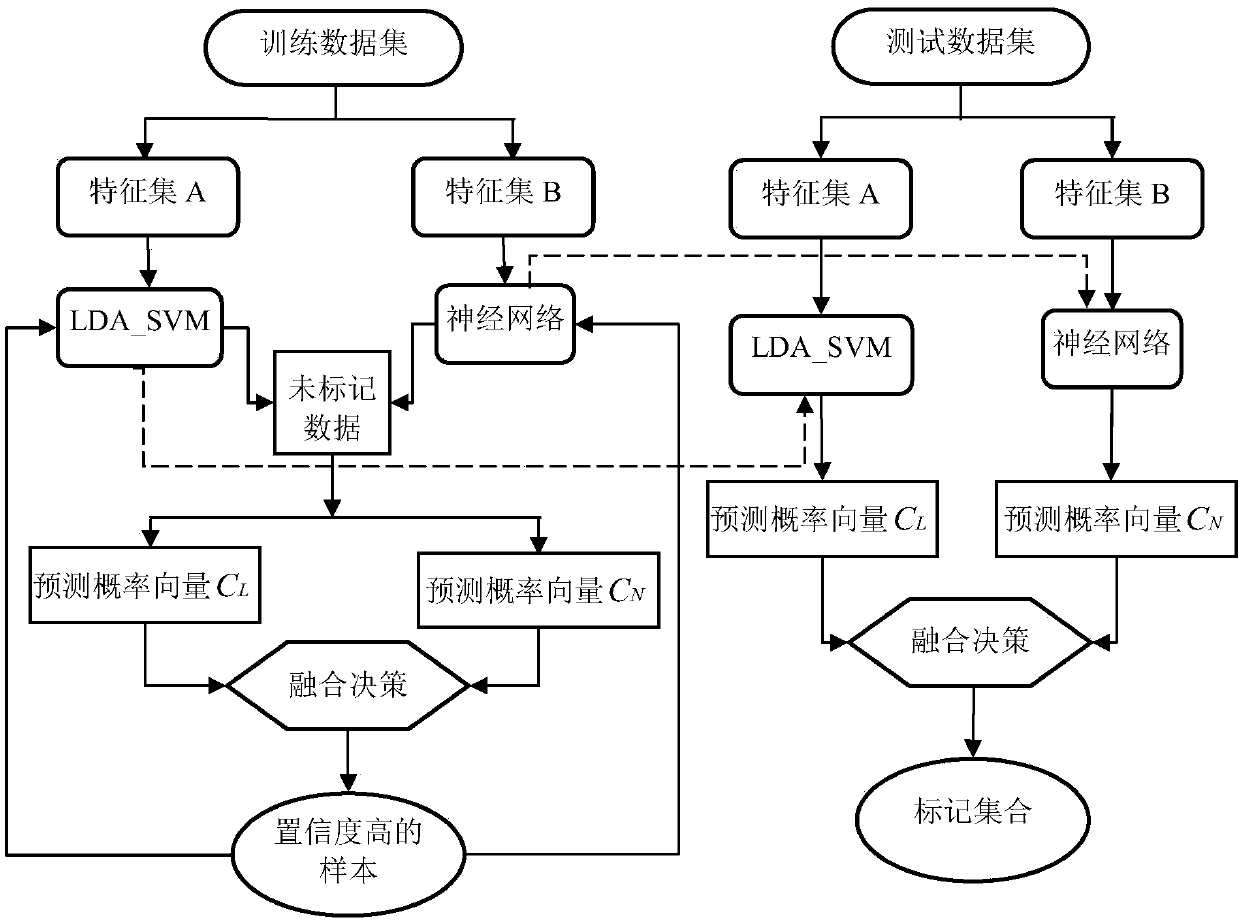

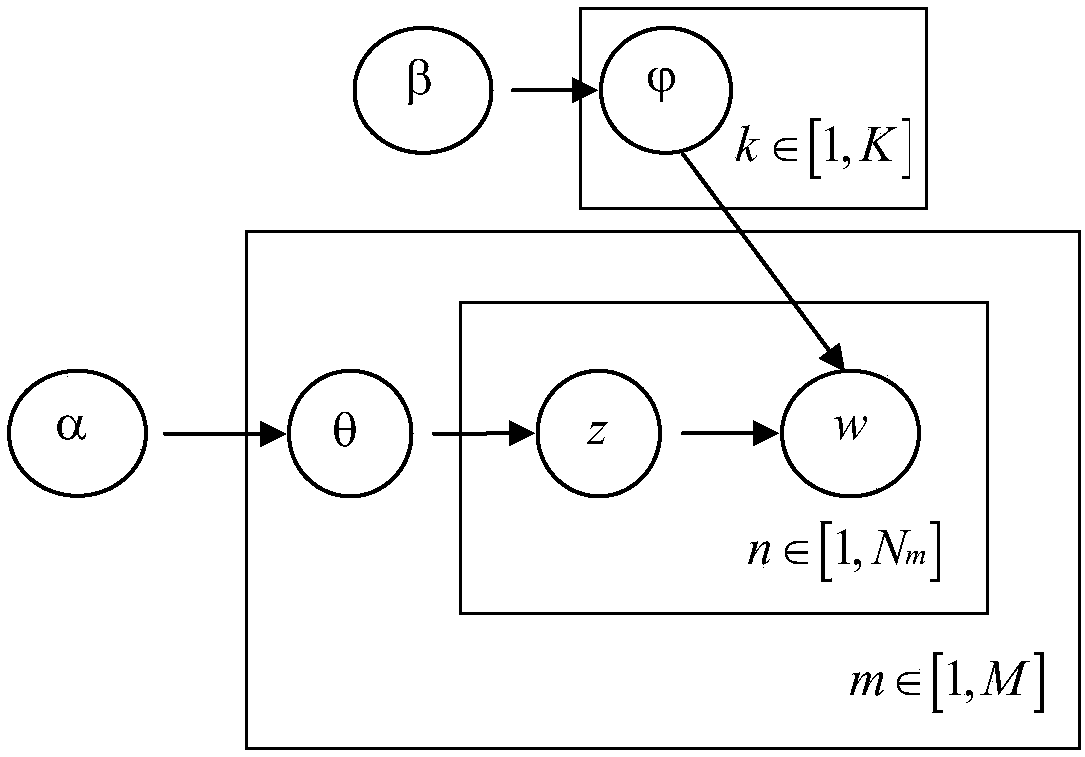

Automatic image annotation method based on semi-supervised learning

Inactive · CN107644235A · High expression · Effective dimensionality reduction · Character and pattern recognition · Data set · Supervised learning

The invention discloses an automatic image annotation method based on semi-supervised learning. The method comprises the following steps: a data set is divided into a training data set, an unlabeled data set and a test set; the SIFT and HOG features of the training samples are extracted to train an LDA_SVM classifier; color and texture features are extracted to train a neural network; the unlabeled data are used so that the two classifiers label and predict the same unlabeled sample simultaneously; according to the contribution of each classifier to the classification accuracy on unlabeled samples, the classification results of the two classifiers are weighted and fused by an adaptive weighted fusion policy, so as to obtain the final predicted label probability vector of the sample; and finally the two classifiers are updated with the high-confidence samples and their predicted labels until the preset maximum number of iterations is reached. The method makes full use of unlabeled samples to mine the inherent patterns of the image features, effectively reduces the number of annotated samples required for classifier training, and achieves good annotation results.

Owner:GUANGXI NORMAL UNIV
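
The weighted fusion of the two classifiers' outputs can be illustrated as below. The abstract does not specify the adaptive weighting policy, so this sketch assumes the simplest variant: weights proportional to each classifier's estimated accuracy. The function and parameter names are hypothetical.

```python
def fuse_predictions(probs_a, probs_b, accuracy_a, accuracy_b):
    """Fuse two label-probability vectors with accuracy-proportional weights.

    The weights sum to one, so the result is again a probability vector
    whenever both inputs are.
    """
    weight_a = accuracy_a / (accuracy_a + accuracy_b)
    weight_b = 1.0 - weight_a
    return [weight_a * pa + weight_b * pb for pa, pb in zip(probs_a, probs_b)]

# Classifier A is assumed to be three times as accurate as classifier B.
fused = fuse_predictions([0.8, 0.2], [0.4, 0.6], accuracy_a=0.75, accuracy_b=0.25)
predicted_label = max(range(len(fused)), key=fused.__getitem__)
```

Samples whose fused probability is high enough would then be added, with their predicted labels, to the training data of both classifiers.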

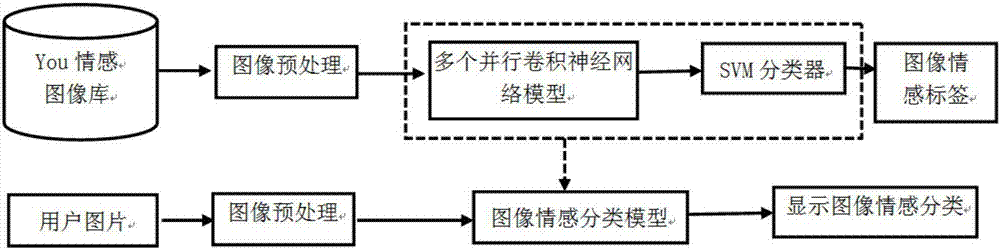

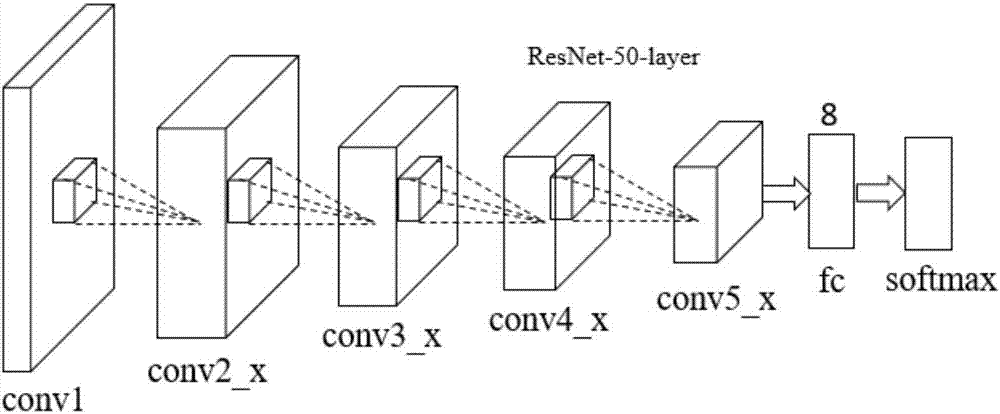

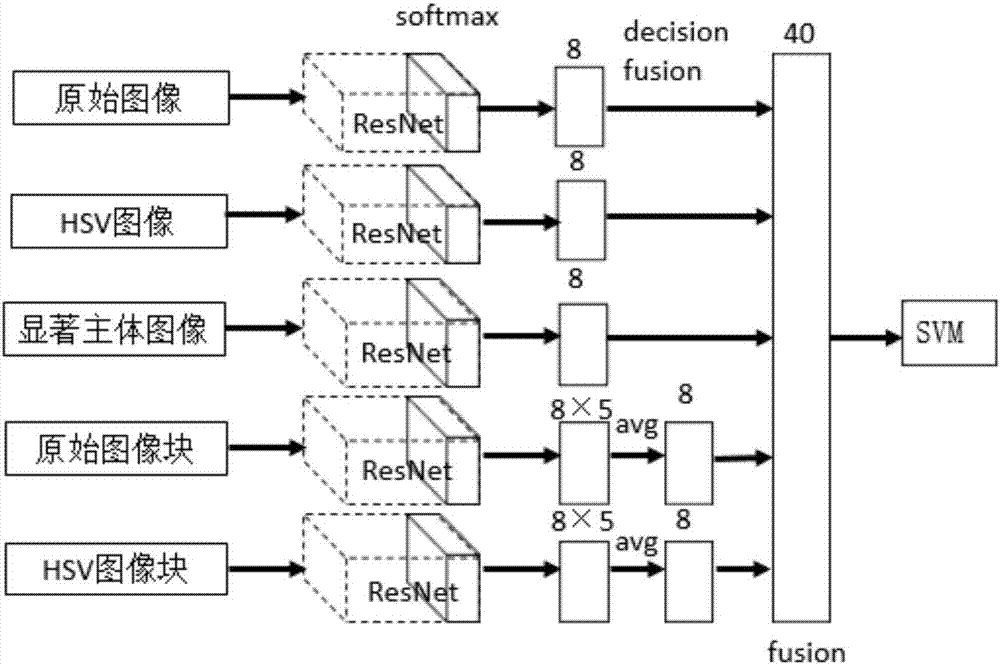

Multi-aspect deep learning expression-based image emotion classification method

Inactive · CN107341506A · Improve robustness · Shorten the time · Character and pattern recognition · Svm classifier · Support vector machine classifier

The invention discloses a multi-aspect deep learning expression-based image emotion classification method. The method comprises the following steps: (1) designing an image emotion classification model, which comprises a parallel convolutional neural network model and a support vector machine classifier used for decision fusion of the network features; (2) designing a parallel convolutional neural network structure, which comprises 5 networks with the same structure, each comprising 5 convolutional layer groups, a fully connected layer and a softmax layer; (3) carrying out salient subject extraction and HSV format conversion on the original image; (4) training the convolutional neural network model; (5) fusing the image emotion features learned and expressed by the plurality of convolutional neural networks, and training the SVM classifier to carry out decision fusion on the image emotion features; and (6) classifying user images using the trained image emotion classification model so as to realize image emotion classification. The image emotion classification results obtained by the method accord with human emotion standards, and the judgement process requires no human involvement, so that fully automatic machine-based image emotion classification is realized.

Owner:SOUTH CHINA UNIV OF TECH

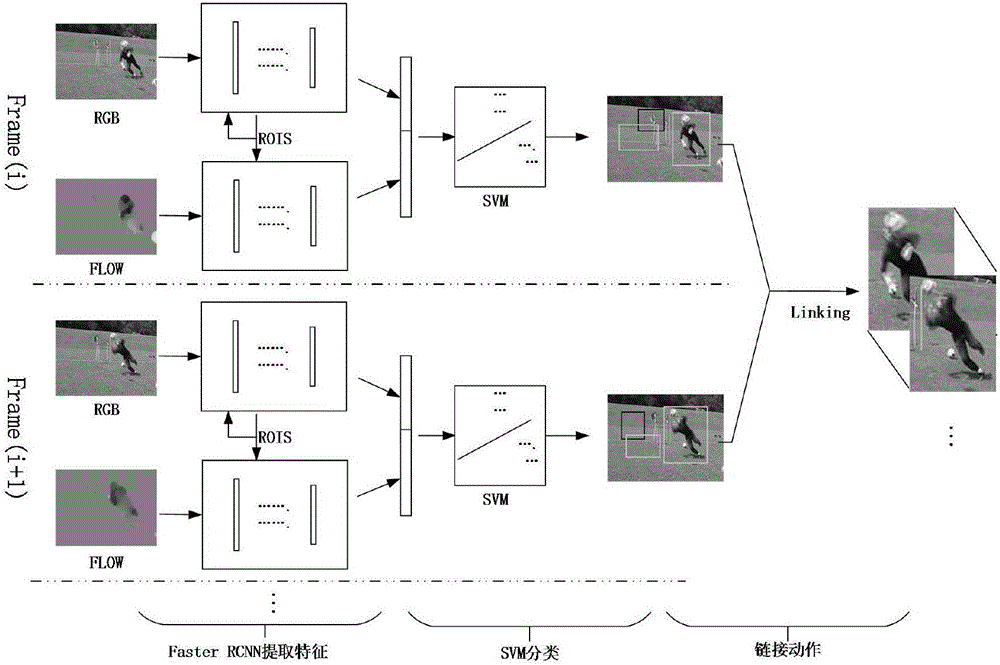

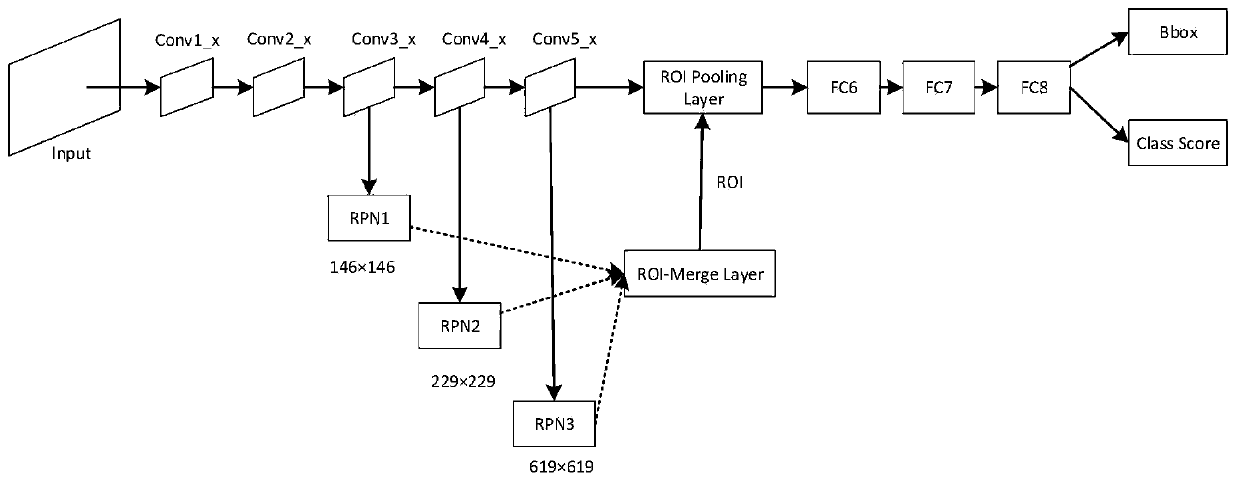

Action detection model based on convolutional neural network

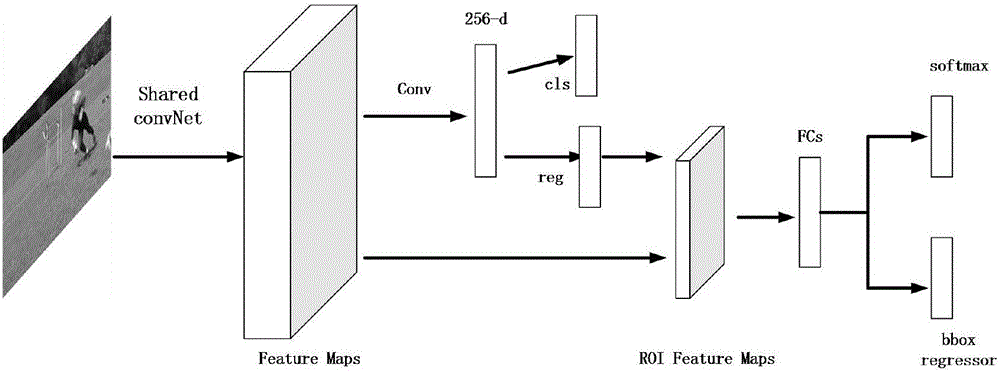

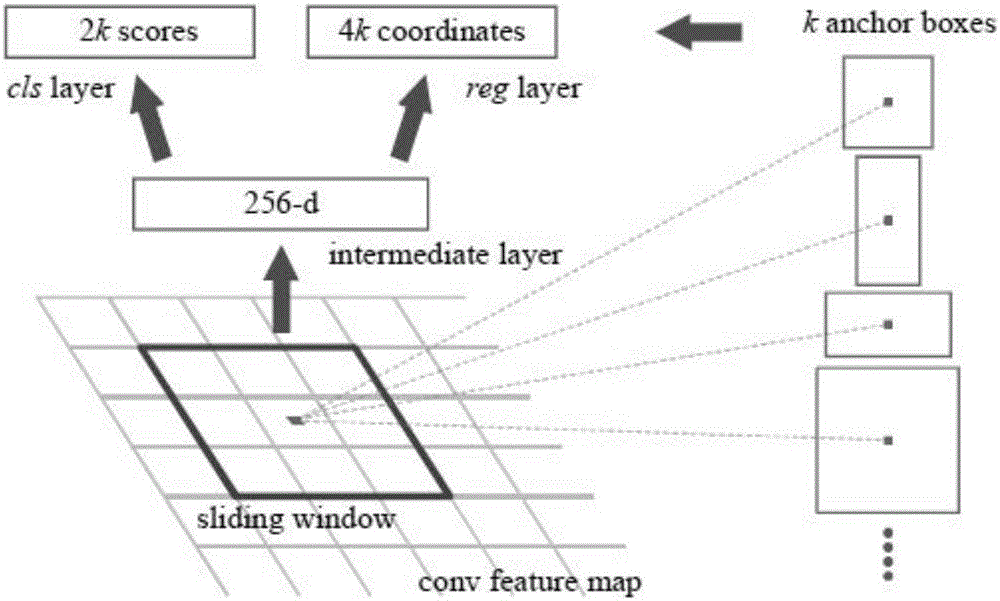

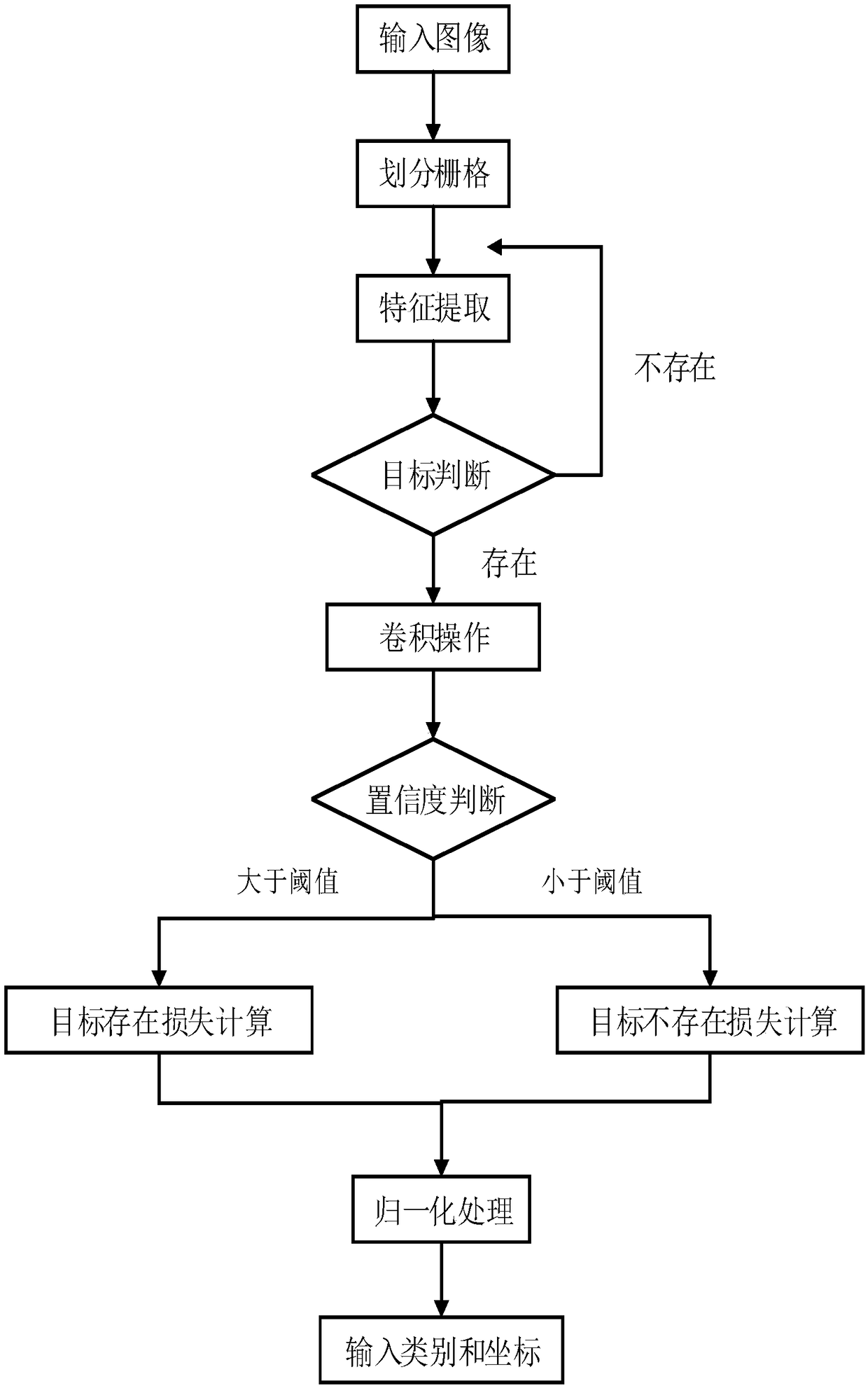

The invention discloses an action detection model based on a convolutional neural network, and belongs to the field of computer vision research. An efficient action detection model is constructed using convolutional neural networks from deep learning, so as to recognize an action in video and to detect and localize it. The action detection model is composed of a Faster RCNN (Regional Convolutional Neural Network), an SVM (Support Vector Machine) classifier and an action pipeline, each of which completes its own operation. The Faster RCNN acquires a plurality of regions of interest from each frame, and extracts a feature from each region of interest. The detection model extracts the features with a two-channel model, namely a Faster RCNN channel based on the frame picture and a Faster RCNN channel based on the optical flow picture, which extract an appearance feature and an action feature respectively. The two features are then fused into a spatio-temporal feature, which is input to the SVM classifier, and the SVM classification gives an action type prediction for the corresponding region. Finally, the action pipeline gives the final action detection result at the level of the whole video.

Owner:BEIJING UNIV OF TECH

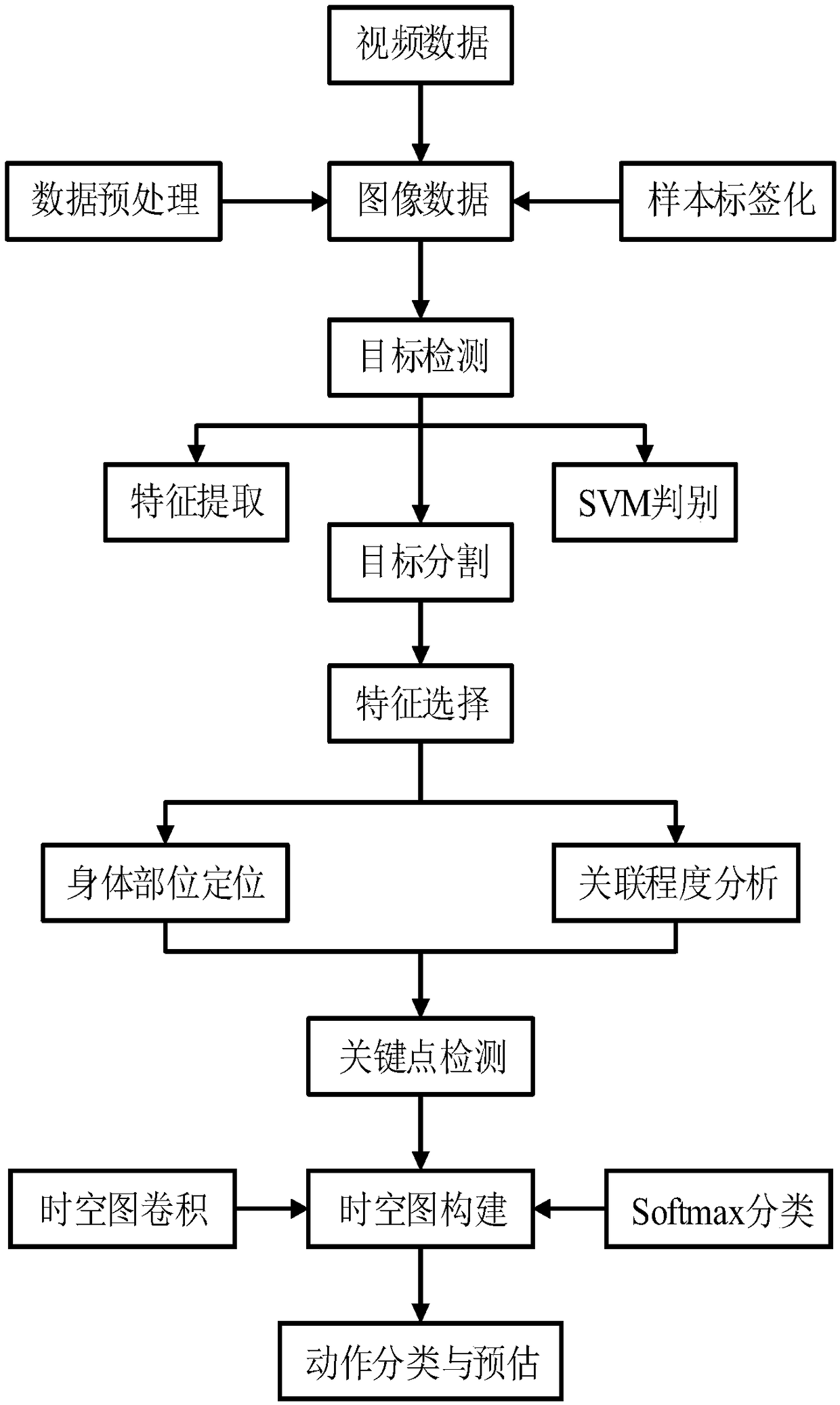

Human body action recognition method based on a TP-STG framework

Active · CN109492581A · Reduce prediction loss · Prevent early divergence · Character and pattern recognition · Internal combustion piston engines · Human body · Svm classifier

The invention discloses a human body action recognition method based on a TP-STG framework, which comprises the following steps: taking video information as input, adding prior knowledge to an SVM classifier, and providing a posterior discrimination criterion to remove non-person targets; segmenting the person targets with a target localization and detection algorithm, outputting them as target boxes with coordinate information, and thereby providing input data for human body key point detection; using an improved pose recognition algorithm to carry out body part localization and correlation analysis so as to extract all human body key point information and form a key point sequence; and constructing a spatio-temporal graph on the key point sequence with an action recognition algorithm, applying multi-layer spatio-temporal graph convolution to it, and carrying out action classification with a Softmax classifier, so that human body action recognition in a complex scene is achieved. The method is the first to address the real-world setting of the offshore platform: the proposed TP-STG framework is the first attempt to identify worker activities on an offshore drilling platform using target detection, pose recognition and spatio-temporal graph convolution.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)

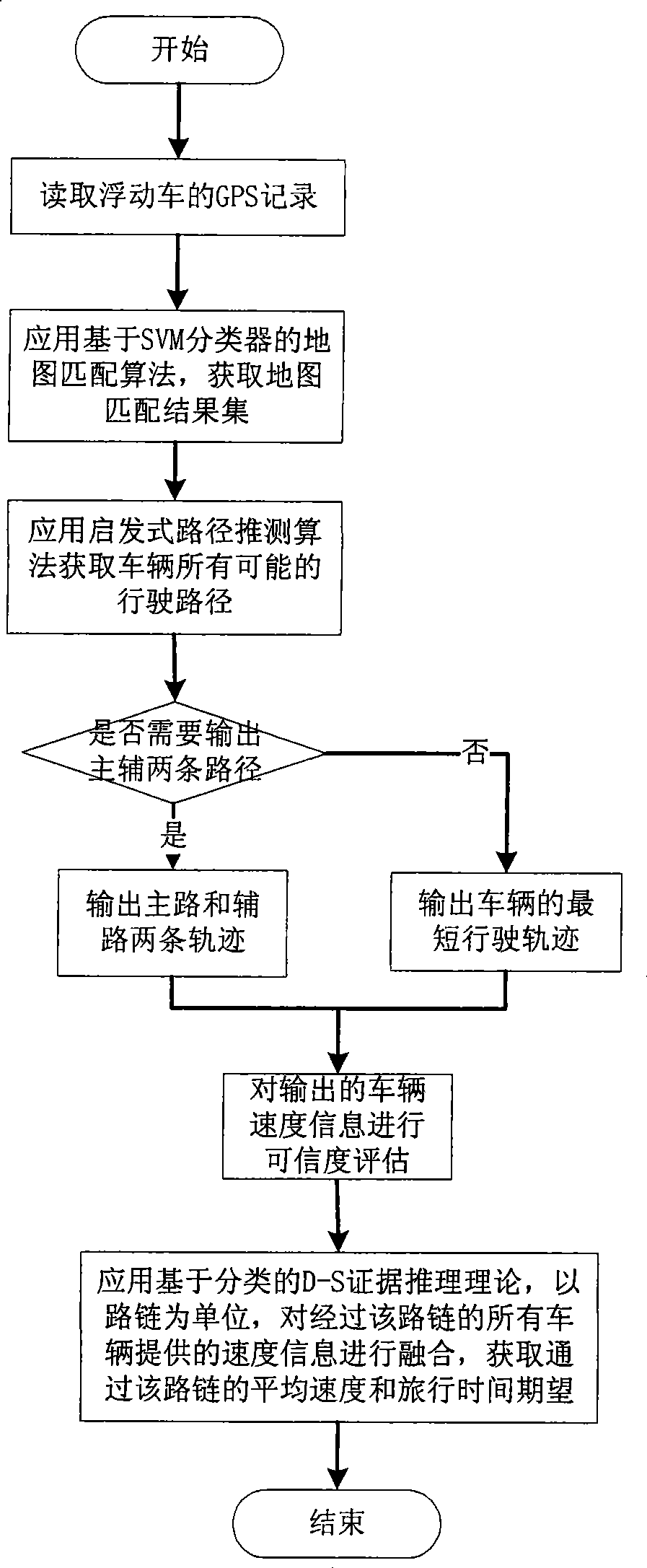

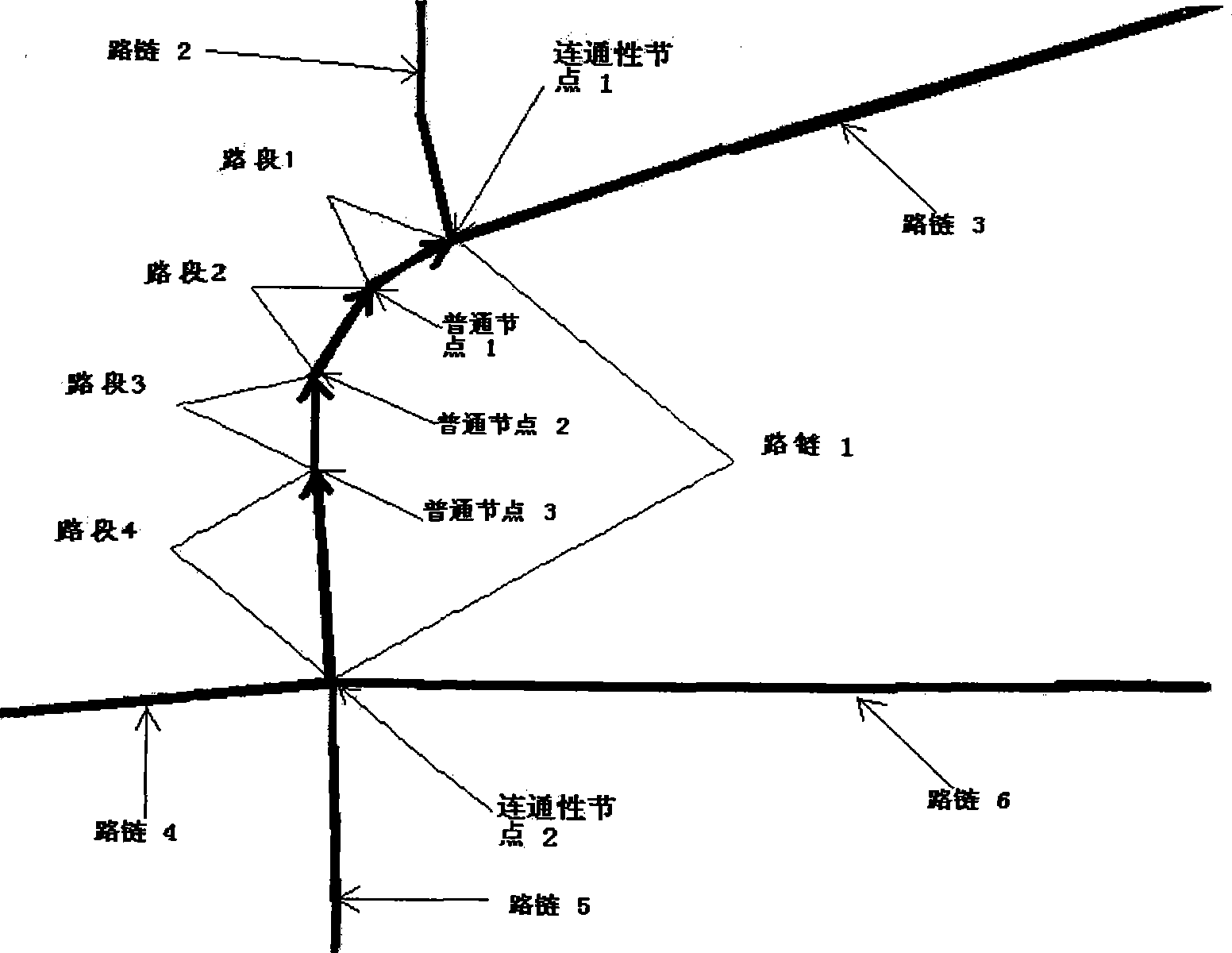

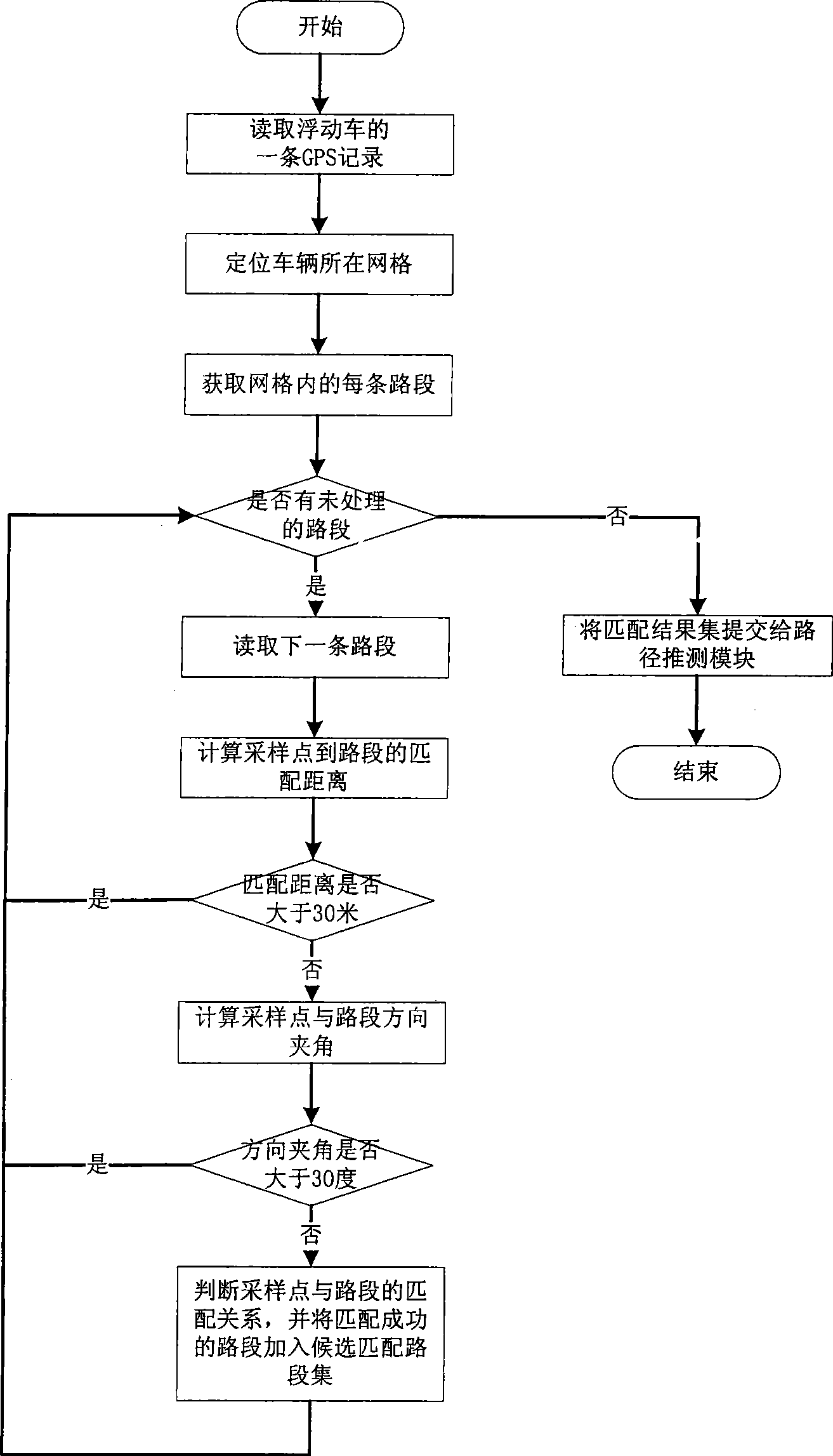

Floating vehicle information processing method under parallel road network structure

Inactive · CN101383090A · High precision · Improve processing accuracy · Instruments for road network navigation · Road vehicles traffic control · Information processing · Evidence reasoning

The invention relates to a method for processing floating vehicle information under a parallel road network structure, which is mainly applied in the field of intelligent dynamic traffic information services. The method comprises the following steps: step 1, an SVM (Support Vector Machine) classifier is used to judge the matching relationship between sampling points and roads, and map matching is carried out; step 2, according to the map matching information, a heuristic route deduction algorithm is used to deduce the possible running routes of a vehicle, and the properties of the road links contained in the routes are used to judge whether the vehicle is running in a parallel road network structure; depending on the case, either the shortest running route or a main and a subsidiary running route are output, and the reliability of the average running speed information provided by the vehicle is estimated; step 3, classification-based D-S (Dempster-Shafer) evidence reasoning theory is used to merge the road condition information provided by all the floating vehicles passing a given road link in the current period, taking the reliability of that information into account during merging, so as to obtain the mathematical expectations of the average speed and the travel time of the floating vehicles passing the road link in the current period. While keeping the data quality of existing floating vehicles unchanged, the invention realizes the acquisition of real-time dynamic traffic information under a parallel road network structure.

Owner:BEIHANG UNIV
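
Step 3 builds on Dempster-Shafer evidence combination. The sketch below implements the standard Dempster rule for two sources; it does not reproduce the classification-based variant or the reliability handling of the patent, which the abstract does not detail. The traffic states and mass values are made up for illustration.

```python
from itertools import product

def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function maps a frozenset of hypotheses to its mass. Products
    of masses whose focal sets do not intersect count as conflict and are
    normalised away.
    """
    combined = {}
    conflict = 0.0
    for (set_a, mass_a), (set_b, mass_b) in product(m1.items(), m2.items()):
        intersection = set_a & set_b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    return {focal: mass / (1.0 - conflict) for focal, mass in combined.items()}

FREE = frozenset({"free"})
CONGESTED = frozenset({"congested"})
EITHER = FREE | CONGESTED  # mass left on the whole frame expresses ignorance

# Hypothetical evidence from two vehicles that passed the same road link.
vehicle_1 = {FREE: 0.6, EITHER: 0.4}
vehicle_2 = {FREE: 0.5, CONGESTED: 0.3, EITHER: 0.2}
fused = dempster_combine(vehicle_1, vehicle_2)
```

Evidence from further vehicles could be folded in by calling `dempster_combine` again on the fused result.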

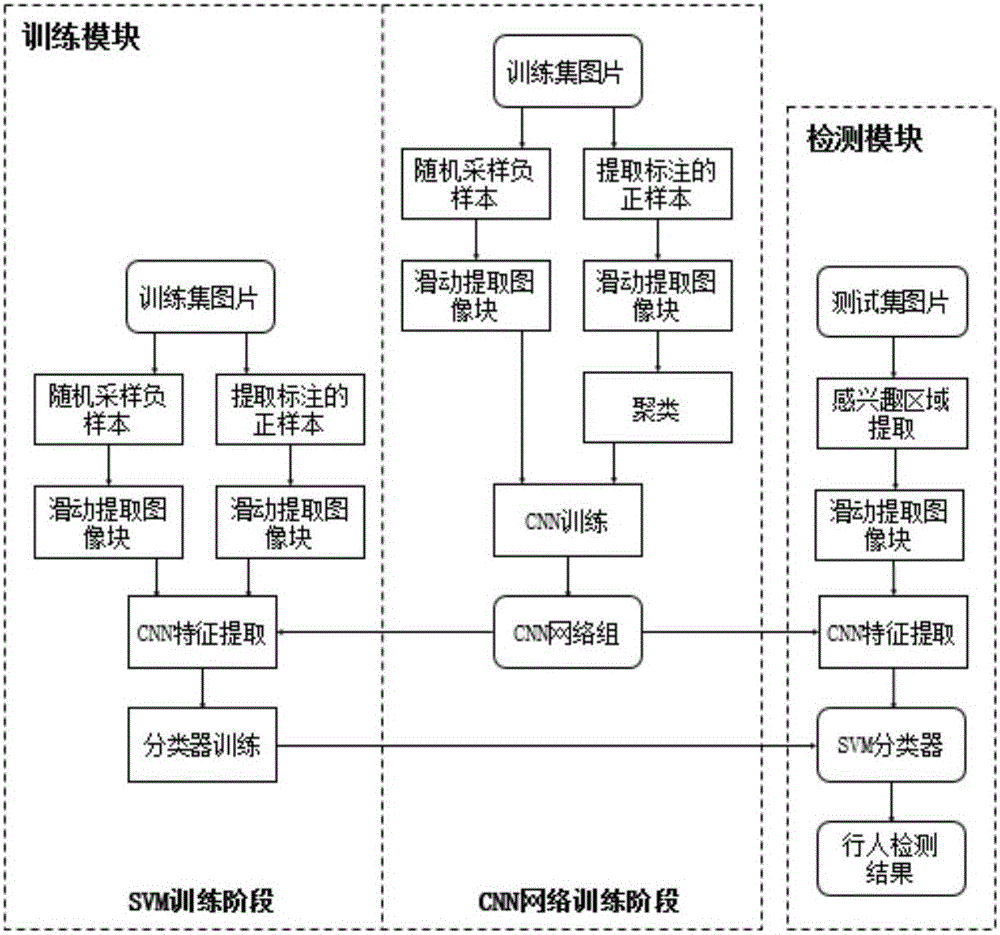

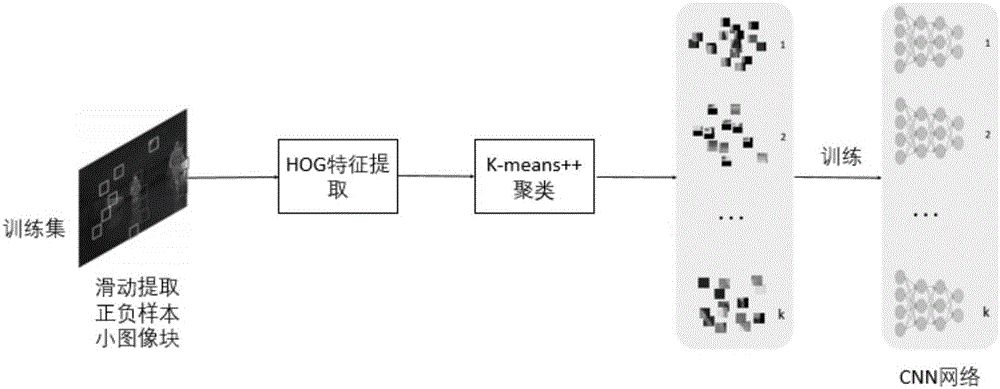

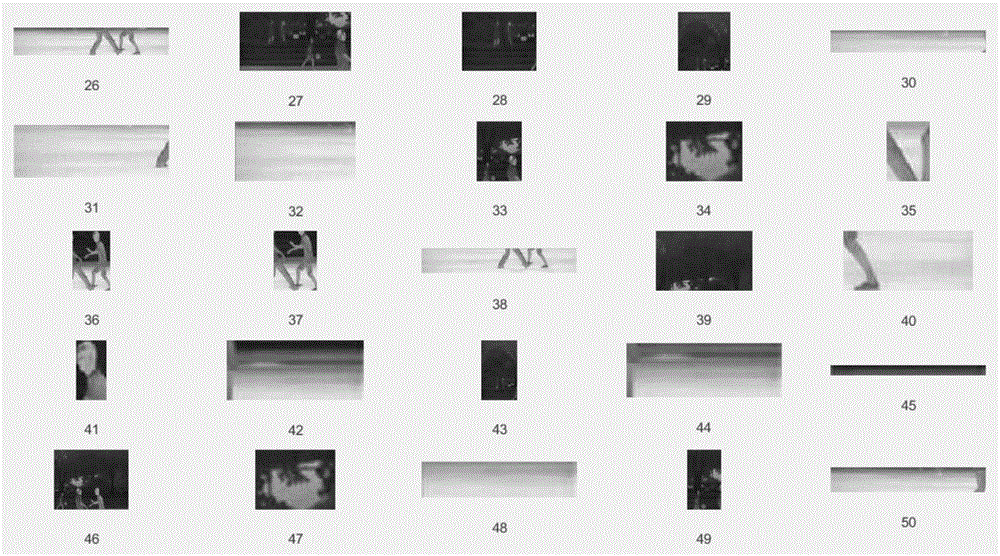

Image block deep learning characteristic based infrared pedestrian detection method

Active · CN106096561A · Addresses poor selection algorithm performance · Sufficient data · Character and pattern recognition · Visual technology · Data set

The invention relates to an infrared pedestrian detection method based on deep learning features of image blocks, and belongs to the technical fields of image processing and computer vision. According to the method, a data set is divided into a training set and a test set. In the training stage, small image blocks are first extracted in a sliding manner from the positive and negative samples of the infrared pedestrian data set and clustered, and one convolutional neural network is trained for each cluster of image blocks; feature extraction is then carried out on the positive and negative samples using the trained group of convolutional neural networks, and an SVM classifier is trained. In the test stage, regions of interest are first extracted from a test image, feature extraction is then carried out on each region of interest using the trained group of convolutional neural networks, and finally prediction is performed using the SVM classifier. The method detects pedestrians by deciding whether each region of interest belongs to a pedestrian region, so that pedestrians in an infrared image can be detected accurately even when the scene is complicated, the ambient temperature is high, and the pedestrians vary greatly in scale and pose. The method provides support for research in related follow-up fields such as intelligent video.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

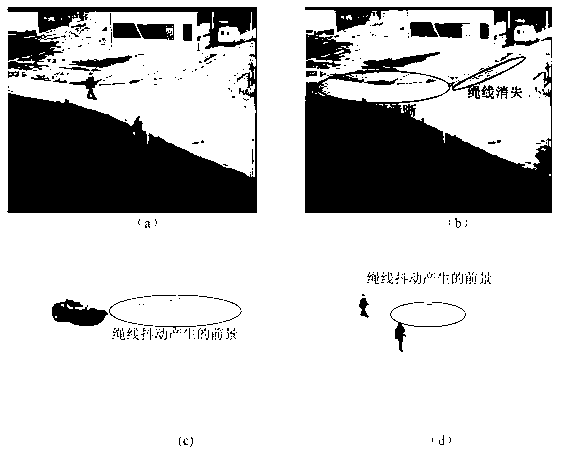

Pedestrian detection method based on video processing

Inactive · CN103324955A · Improve detection accuracy · Improve real-time performance · Image analysis · Character and pattern recognition · High dimensionality · Svm classifier

The invention relates to a pedestrian detection method based on video processing. The method comprises the steps of: (1) extracting the foreground image, in which the moving object image of each video frame is extracted, marked and stored in sequence, and a background model, here a Gaussian mixture model, is used to extract the background; (2) conducting preliminary screening of the foreground, in which the shape features of a pedestrian are used for identification; and (3) accurately identifying the foreground, in which HOG features are extracted from the foreground image that passed preliminary screening, and a low-dimensional soft-output SVM pedestrian classifier then classifies it to judge whether a pedestrian is present. The method further comprises the step of (4) conducting error correction in a secondary thread: for foreground images whose soft output from the low-dimensional SVM pedestrian classifier is ambiguous as to class, a high-dimensional SVM classifier is called in the secondary thread for recognition. The method improves detection accuracy and has good real-time performance.

Owner:ZHEJIANG ZHIER INFORMATION TECH
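
The two-stage decision in steps (3) and (4) of the abstract above can be sketched roughly as follows. This is only an illustration under stated assumptions: the Platt-style sigmoid used as the "soft output", the ambiguity band of 0.3 to 0.7, the toy linear weights, and all function names are hypothetical, and the secondary thread is replaced by a plain function call.

```python
import math
from typing import Callable, Sequence, Tuple

Scorer = Callable[[Sequence[float]], float]


def soft_output(margin: float, a: float = -1.0, b: float = 0.0) -> float:
    """Platt-style sigmoid mapping an SVM margin to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(a * margin + b))


def linear_svm(weights: Sequence[float], bias: float) -> Scorer:
    """Return a scorer computing w . x + bias (stand-in for a trained SVM)."""
    return lambda x: sum(w * v for w, v in zip(weights, x)) + bias


def classify(low_feat: Sequence[float], high_feat: Sequence[float],
             low_svm: Scorer, high_svm: Scorer,
             ambiguous: Tuple[float, float] = (0.3, 0.7)) -> Tuple[bool, str]:
    """Use the cheap classifier first; escalate only ambiguous cases."""
    p = soft_output(low_svm(low_feat))
    lo, hi = ambiguous
    if lo <= p <= hi:
        # Ambiguous soft output: defer to the high-dimensionality classifier.
        return high_svm(high_feat) > 0.0, "high"
    return p > 0.5, "low"


# Hypothetical toy models with hand-picked weights, not trained classifiers.
low_svm = linear_svm([1.0, -1.0], 0.0)
high_svm = linear_svm([0.5, 0.5, -1.0, 1.0], -0.2)

confident = classify([3.0, 0.0], [0.0, 0.0, 0.0, 0.0], low_svm, high_svm)
escalated = classify([0.2, 0.0], [1.0, 1.0, 0.0, 0.0], low_svm, high_svm)
rejected = classify([-3.0, 0.0], [0.0, 0.0, 0.0, 0.0], low_svm, high_svm)
```

Only inputs that land inside the band pay for the second classifier, which is one plausible reading of how the method keeps real-time performance while correcting uncertain decisions.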

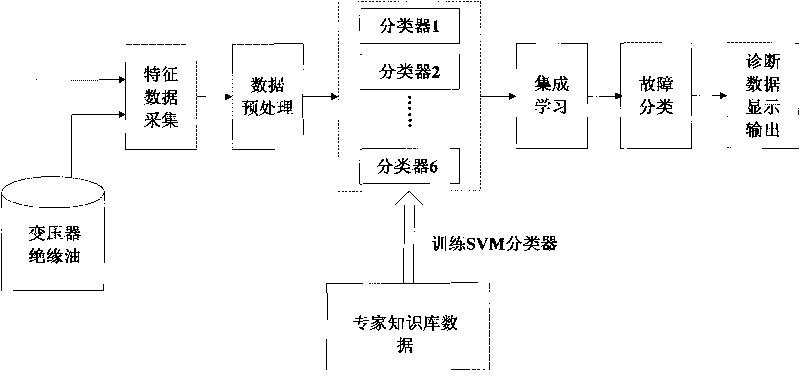

On-line transformer fault diagnosis method based on SVM and DGA

Inactive | CN101701940A | Overcoming the problem of blind division | Convenient real-time intelligent fault diagnosis | Component separation | Computing models | Transformer | Svm classifier

The invention discloses an on-line transformer fault diagnosis method based on support vector machines (SVM) and dissolved gas analysis (DGA), which belongs to the field of transformer fault diagnosis. The method comprises the following steps: acquiring the concentrations of the fault feature gases H2, CH4, C2H2, C2H4 and C2H6 in transformer insulating oil by gas chromatographic analysis; normalizing the data in a preprocessing system; sending the data to a classification diagnosis system that is assembled by an ensemble learning method and consists of six SVM classifiers arranged according to a decision process; classifying the measurement data; judging the running state of the transformer; and finally outputting the diagnosis result. The method uses support vector machines to analyze the gases dissolved in the oil, so that the relation between gas components and the running state of the transformer is reflected objectively, which effectively improves the accuracy of fault diagnosis.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

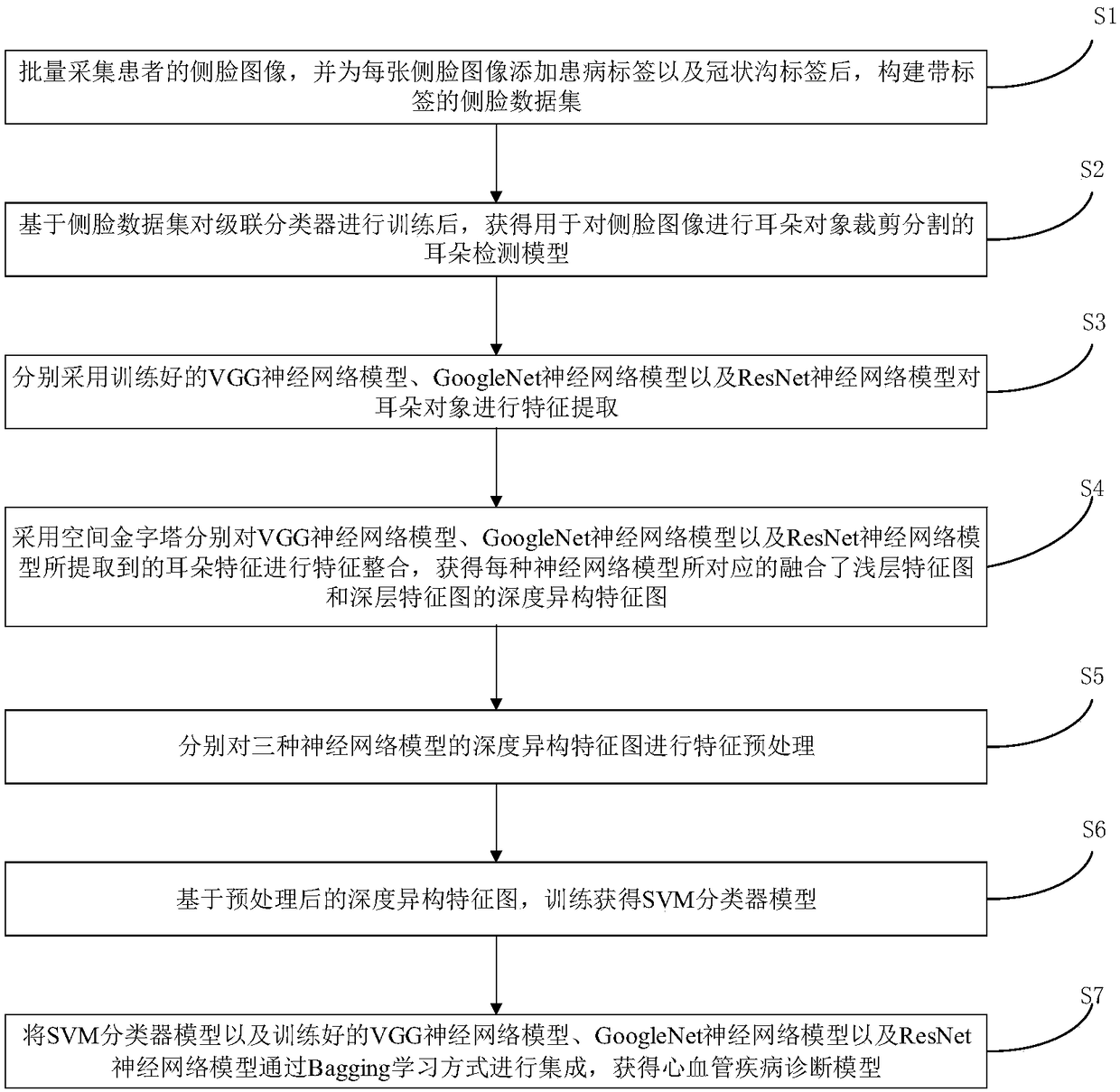

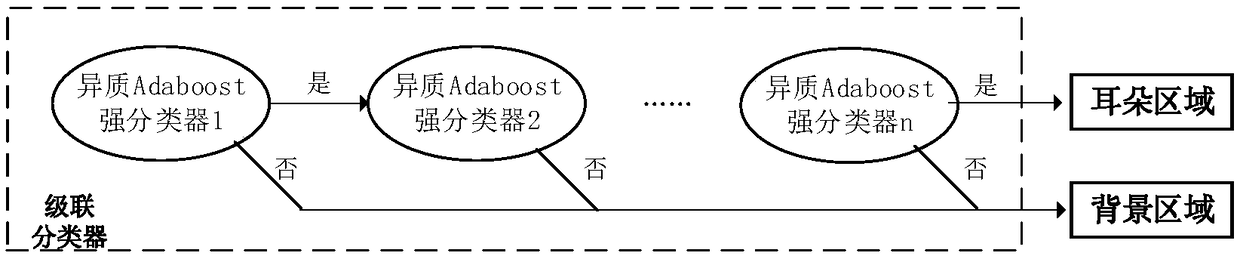

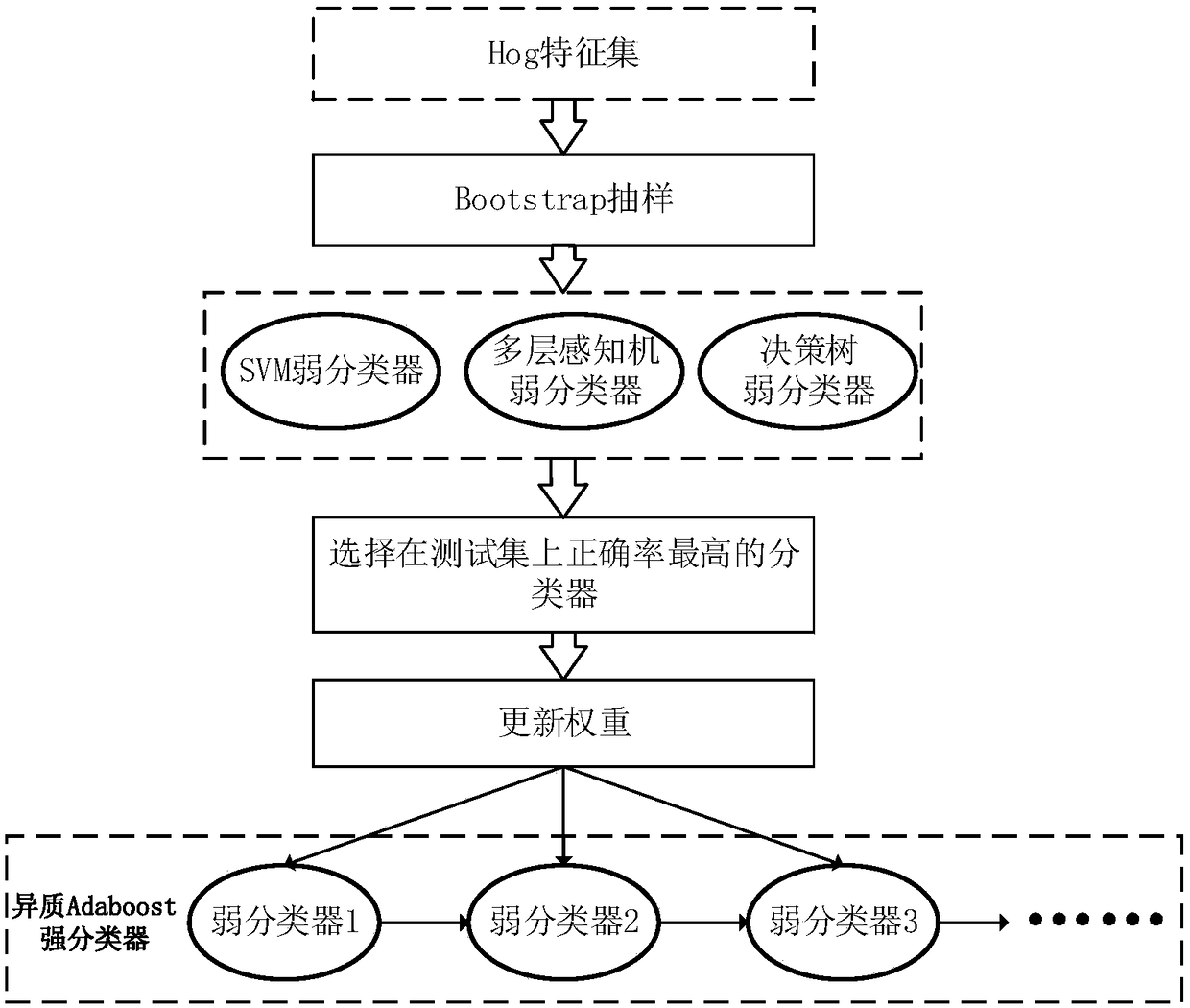

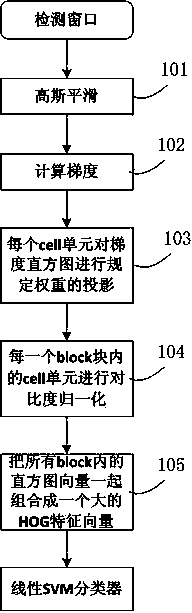

Method and system for constructing cardiovascular disease diagnostic model, and diagnostic model thereof

Active | CN109300121A | Extract comprehensive | Comprehensive diagnosis | Image enhancement | Image analysis | Data set | Svm classifier

The invention discloses a method and a system for constructing a cardiovascular disease diagnosis model, and the diagnosis model itself. A cascade classifier is trained to obtain an ear detection model. VGG, GoogleNet and ResNet neural network models are used to extract ear features. A spatial pyramid integrates the ear features extracted by each neural network model to obtain a deep heterogeneous feature map for each model. The deep heterogeneous feature maps are preprocessed, and an SVM classifier model is then obtained by training. The SVM classifier model and the three trained neural network models are integrated by Bagging learning to obtain the cardiovascular disease diagnosis model. The model constructed by the invention can diagnose and predict cardiovascular disease comprehensively and with high precision, and can be widely applied in the field of automatic processing of medical data.

Owner:SOUTH CHINA UNIV OF TECH
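
The final integration step in the cardiovascular abstract above combines the SVM model with the three neural network models. A common way to aggregate such an ensemble is a majority vote, sketched below; the abstract does not state the aggregation rule, so the vote, the function name, and the example predictions are all assumptions.

```python
from collections import Counter
from typing import Sequence


def majority_vote(predictions: Sequence[int]) -> int:
    """Return the most common label among the per-model predictions."""
    counts = Counter(predictions)
    return counts.most_common(1)[0][0]


# Hypothetical per-model outputs for one patient (1 = disease, 0 = healthy),
# in the order: SVM, VGG, GoogleNet, ResNet.
model_outputs = [1, 1, 0, 1]
diagnosis = majority_vote(model_outputs)
```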

Dressing safety detection method for worker on working site of electric power facility

Active | CN103745226A | Avoid safety accidents | Eliminate potential safety hazards | Data processing applications | Character and pattern recognition | Transformer | Histogram of oriented gradients

The invention discloses a dressing safety detection method for a worker on a working site of an electric power facility. An SVM (support vector machine) classifier is trained on HOG (histogram of oriented gradients) features to identify workers on the working site, and whether each worker is properly dressed is judged from the identification result. The method comprises the following steps: detecting a worker target appearing on the working site by training a HOG-feature-based classifier; and judging, for the identified worker target, whether the worker's dressing and equipment meet the safety requirements of the working site. The safety items checked mainly include whether a helmet is worn, whether safety clothes are completely worn (without exposed skin), and whether a worker on a pole transformer correctly wears a safety belt. With this method, the dressing of a worker can be checked before the worker enters the working site, and no additional supervisor needs to be deployed; in addition, if the dressing does not conform to the norms, the worker is warned and prompted, so that safety accidents caused by nonstandard dressing are avoided and potential safety hazards are eliminated.

Owner:STATE GRID CORP OF CHINA +6
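
The HOG features that feed the SVM classifier in the abstract above are built from per-cell histograms of gradient orientation. The sketch below computes one such histogram in plain Python, assuming central-difference gradients, unsigned orientations over 0 to 180 degrees, and 9 bins; these are common HOG defaults, not values taken from the patent, and block normalization is left out.

```python
import math
from typing import List


def hog_cell_histogram(cell: List[List[float]], n_bins: int = 9) -> List[float]:
    """Magnitude-weighted histogram of unsigned gradient orientations."""
    height, width = len(cell), len(cell[0])
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins
    # Skip the border so that central differences are always defined.
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % n_bins] += magnitude
    return hist


# An intensity ramp along x has purely horizontal gradients (orientation 0).
horizontal_ramp = [[float(x) for x in range(8)] for _ in range(8)]
hist = hog_cell_histogram(horizontal_ramp)
```

In a full detector, the histograms of all cells in a detection window would be concatenated into the feature vector passed to the classifier.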

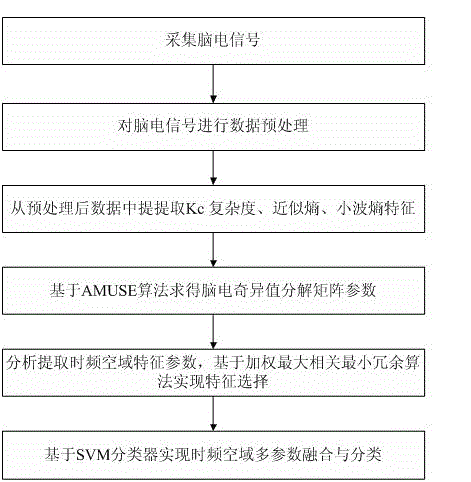

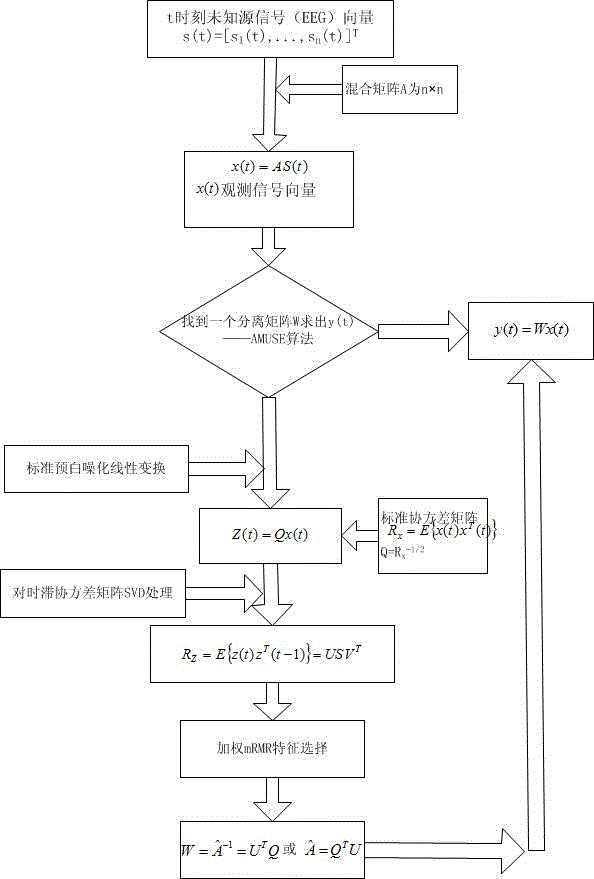

Method for extracting and fusing time, frequency and space domain multi-parameter electroencephalogram characters

Active | CN104586387A | Efficient extraction | All-round extraction | Diagnostic recording/measuring | Sensors | Singular value decomposition | Functional disturbance

The invention relates to a method for extracting and fusing multi-parameter electroencephalogram (EEG) features in the time, frequency and space domains, which comprises the following steps: 1) collecting an EEG signal; 2) pre-processing the EEG signal; 3) extracting Kc complexity, approximate entropy and wavelet entropy from the pre-processed data; 4) acquiring an EEG singular value decomposition matrix parameter based on the AMUSE algorithm; 5) performing feature selection on the extracted time, frequency and space domain parameters, namely the Kc complexity, approximate entropy, wavelet entropy and EEG singular value decomposition matrix parameter; and 6) using an SVM classifier to fuse and classify the four selected parameters. With this method, the Kc complexity, the approximate entropy, the wavelet entropy and the EEG singular value decomposition matrix parameter together present EEG feature information comprehensively, and their subsequent fusion can support early diagnosis and assessment of brain function disorders such as Alzheimer's disease and mild cognitive impairment.

Owner:秦皇岛市惠斯安普医学系统股份有限公司 +1
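
Of the features listed in step 3) of the abstract above, approximate entropy is the easiest to show compactly. The sketch below follows the standard definition (template length `m`, tolerance `r`, Chebyshev distance, self-matches included); the parameter values and the use of an absolute rather than standard-deviation-scaled tolerance are assumptions, since the abstract gives no settings.

```python
import math
from typing import Sequence


def approximate_entropy(series: Sequence[float], m: int = 2, r: float = 0.2) -> float:
    """ApEn(m, r) = phi(m) - phi(m + 1) for a 1-D signal."""
    n = len(series)

    def phi(length: int) -> float:
        templates = [series[i:i + length] for i in range(n - length + 1)]
        total = 0.0
        for a in templates:
            # Count templates within tolerance r (Chebyshev distance).
            matches = sum(
                1 for b in templates
                if max(abs(u - v) for u, v in zip(a, b)) <= r
            )
            total += math.log(matches / len(templates))
        return total / len(templates)

    return phi(m) - phi(m + 1)


flat_signal = [1.0] * 30          # perfectly predictable
alternating = [0.0, 1.0] * 10     # regular oscillation
apen_flat = approximate_entropy(flat_signal)
apen_regular = approximate_entropy(alternating)
```

Regular signals give values near zero, while irregular signals give larger values, which is why the measure can serve as one input to the SVM fusion step.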

Safety helmet identification method integrating HOG human body target detection and SVM classifier

Active | CN104063722A | The principle is simple | Accurate monitoring | Character and pattern recognition | Video monitoring | Human body

The invention relates to the field of video monitoring, in particular to a safety helmet identification method integrating HOG human body target detection and an SVM classifier. The method includes the steps of: obtaining the HOG features of positive and negative samples, the SVM classification function, and the parameter value sigma of the Gaussian kernel function; extracting a monitoring box; detecting a moving target; and conducting HOG feature matching to judge whether a safety helmet is worn. The method can accurately monitor whether staff on a construction site wear safety helmets as required. Its algorithm principle is simple, it runs in real time with a high accuracy rate, it effectively distinguishes human body targets from non-human-body targets, it overcomes interference factors in the background, it adapts to changing outdoor light conditions and to changes in helmet color, and it is highly robust.

Owner:STATE GRID CORP OF CHINA +1
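
The abstract above mentions an SVM classification function with a Gaussian kernel and its parameter sigma. In the standard formulation, the kernel is K(x, y) = exp(-||x - y||^2 / (2 sigma^2)) and the decision value is a weighted sum of kernel evaluations against the support vectors. The sketch below shows that textbook form; the support vectors, coefficients, and sigma are made-up toy values, not parameters from the patent.

```python
import math
from typing import Sequence


def gaussian_kernel(x: Sequence[float], y: Sequence[float], sigma: float) -> float:
    """K(x, y) = exp(-||x - y||^2 / (2 * sigma^2))."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2.0 * sigma ** 2))


def svm_decision(x: Sequence[float],
                 support_vectors: Sequence[Sequence[float]],
                 coeffs: Sequence[float],
                 bias: float,
                 sigma: float) -> float:
    """f(x) = sum_i coeff_i * K(sv_i, x) + bias, where coeff_i = alpha_i * y_i."""
    return sum(
        c * gaussian_kernel(sv, x, sigma)
        for sv, c in zip(support_vectors, coeffs)
    ) + bias


# Toy model: one positive ("helmet") and one negative support vector.
support_vectors = [[0.0, 0.0], [4.0, 4.0]]
coeffs = [1.0, -1.0]
near_positive = svm_decision([0.5, 0.5], support_vectors, coeffs, 0.0, sigma=1.0)
near_negative = svm_decision([3.5, 3.5], support_vectors, coeffs, 0.0, sigma=1.0)
```

A smaller sigma makes each support vector influence only a tight neighborhood, while a larger one smooths the decision boundary.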

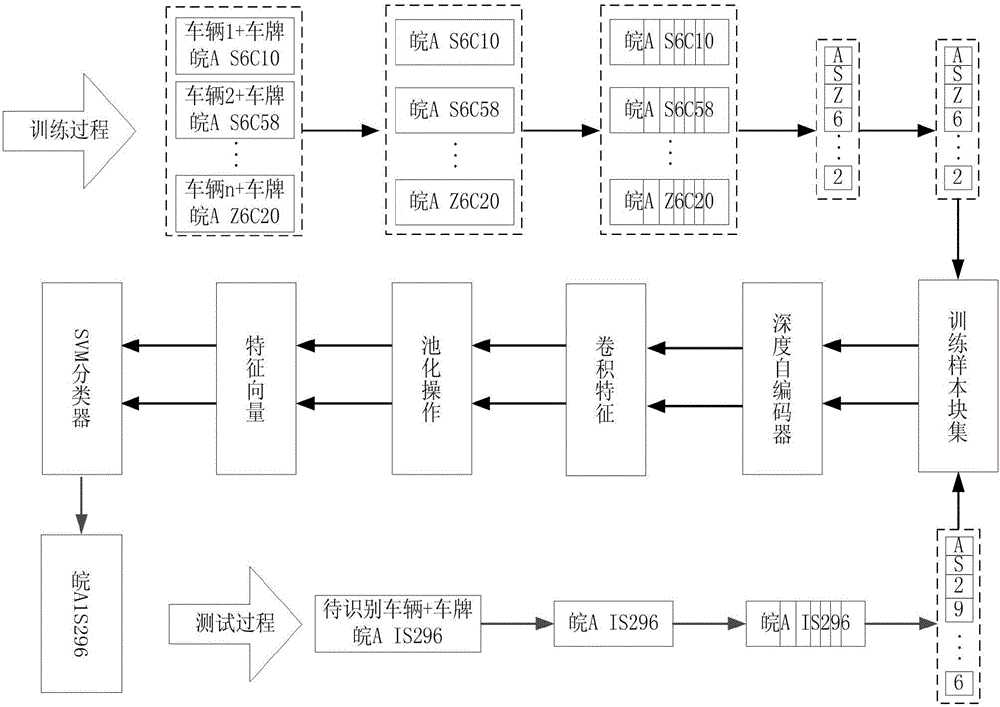

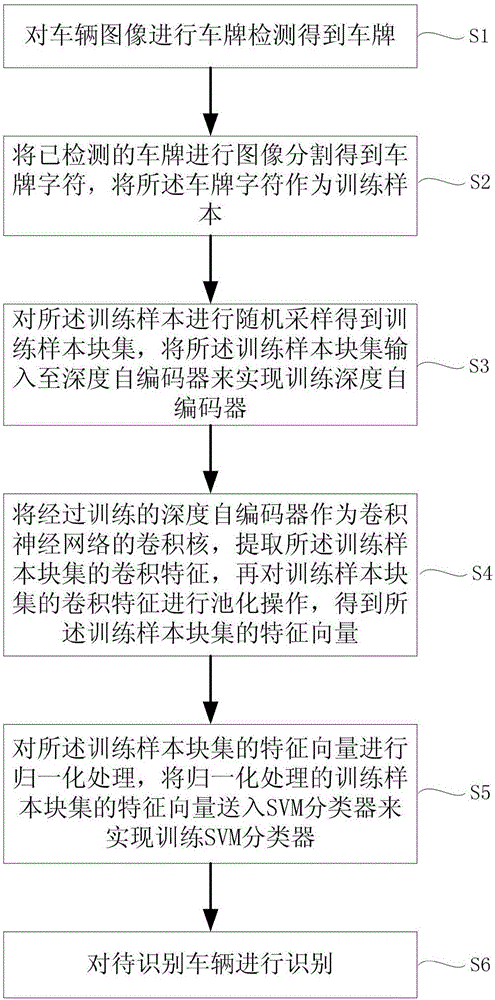

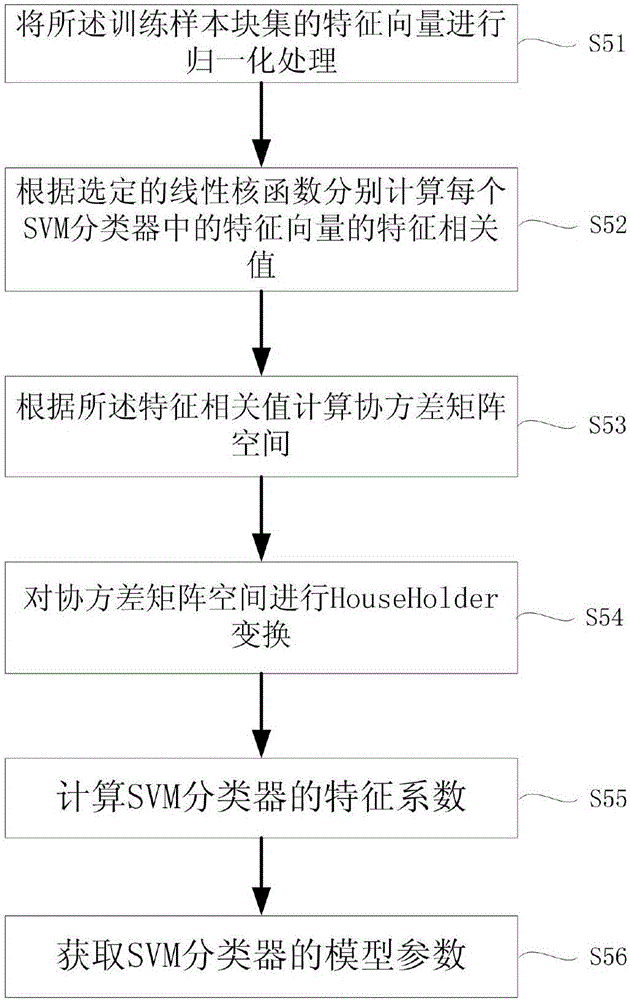

License plate recognition method based on deep convolutional neural network

Inactive | CN106446895A | Improve accuracy | Easy to identify | Character and pattern recognition | Feature vector | Imaging processing

The invention belongs to the technical field of image processing and pattern recognition and particularly relates to a license plate recognition method based on a deep convolutional neural network. The method includes: performing license plate detection on vehicle images; segmenting the detected license plates to obtain license plate characters; using the license plate characters as training samples to obtain a training sample block set; inputting the training sample block set into a deep auto-encoder to train it; using the trained deep auto-encoder as the convolution kernel of the convolutional neural network; extracting the convolution features of the training sample block set; pooling the convolution features to obtain feature vectors; normalizing the feature vectors; loading the normalized feature vectors into an SVM classifier to train it; and testing the vehicles to be recognized. The method can increase license plate recognition accuracy, and can increase the character recognition rate and robustness when the license plate characters are in harsh environments.

Owner:ANHUI SUN CREATE ELECTRONICS
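
The convolution, pooling, and normalization steps in the license plate abstract above can be illustrated with a small pure-Python sketch. In the patent the convolution kernel comes from a trained deep auto-encoder; here a hand-picked 2x2 kernel stands in for it, and the function names, pooling size, and L2 normalization are assumptions.

```python
import math
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding) 2-D cross-correlation of image with kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + u][j + v] * kernel[u][v]
                for u in range(kh) for v in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping size x size max pooling."""
    return [
        [
            max(fmap[i + u][j + v] for u in range(size) for v in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def l2_normalize(vec: List[float]) -> List[float]:
    """Scale the vector to unit Euclidean length (unchanged if all zeros)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


# Toy 5x5 character patch and a placeholder kernel (not a learned one).
patch = [[float(5 * i + j) for j in range(5)] for i in range(5)]
kernel = [[1.0, 0.0], [0.0, 1.0]]
pooled = max_pool(conv2d_valid(patch, kernel))
feature_vector = l2_normalize([v for row in pooled for v in row])
```

The resulting `feature_vector` is the kind of input that would be handed to the SVM classifier for training.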

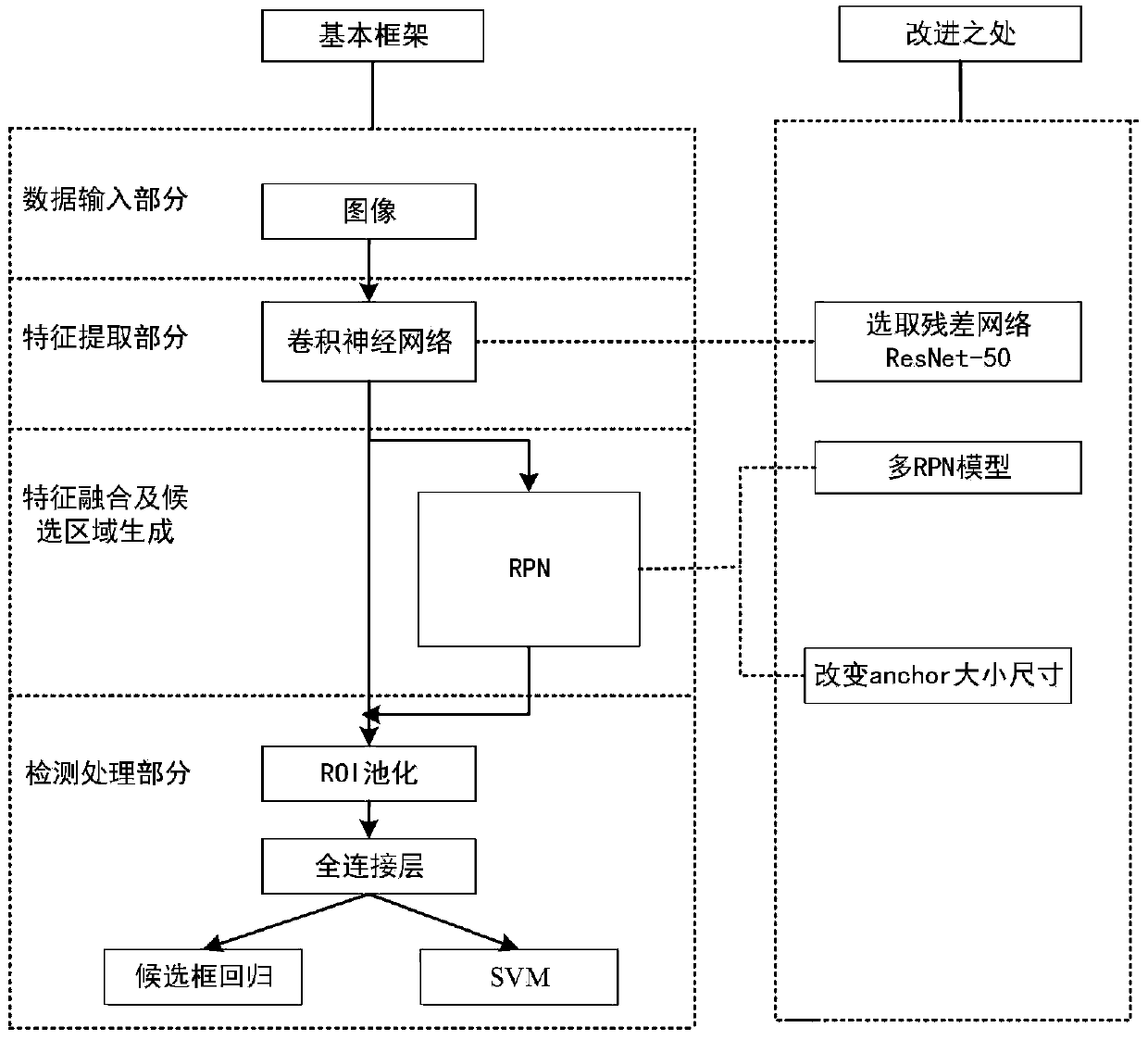

Crack image detection method based on Faster R-CNN parameter migration

Active | CN110211097A | Improve accuracy | Optimizing Performance for Deep Learning | Image analysis | Character and pattern recognition | Svm classifier | Image detection

The invention discloses a crack image detection method based on Faster R-CNN parameter migration. The method comprises the following steps: 1) feature extraction: inputting a picture into a ResNet-50 network to extract features; 2) feature fusion and candidate region generation: inputting the obtained feature map into a multi-task enhanced RPN model whose anchor box sizes have been adjusted to improve detection and recognition precision, and generating candidate regions; and 3) detection processing: sending the feature map and the candidate regions to a region of interest (ROI) pooling layer, feeding the result to a fully connected (FC) layer, and then connecting the FC layer output to a bounding-box regressor and an SVM classifier respectively to obtain the category and the position of the target. The method addresses the problem of insufficient dam crack image samples, and is suitable for detecting dam cracks of different lengths under different illumination conditions.

Owner:HOHAI UNIV +2
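
Step 2) of the abstract above depends on the anchor boxes used by the RPN. As a rough illustration of what adjusting anchor sizes involves, the sketch below generates the anchors for one feature-map location from a set of scales and aspect ratios. The scale and ratio values are the usual Faster R-CNN defaults, used here only as placeholders; the patent's adjusted values are not given in the abstract.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def make_anchors(cx: float, cy: float,
                 scales: Sequence[float] = (64.0, 128.0, 256.0),
                 ratios: Sequence[float] = (0.5, 1.0, 2.0)) -> List[Box]:
    """Anchors centred at (cx, cy); each has area scale^2 and height/width = ratio."""
    anchors = []
    for scale in scales:
        for ratio in ratios:
            width = scale / math.sqrt(ratio)
            height = scale * math.sqrt(ratio)
            anchors.append((cx - width / 2, cy - height / 2,
                            cx + width / 2, cy + height / 2))
    return anchors


anchors = make_anchors(100.0, 100.0)
```

Changing `scales` and `ratios` is the knob such a method would tune so that the candidate boxes match the shapes of the targets being detected.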

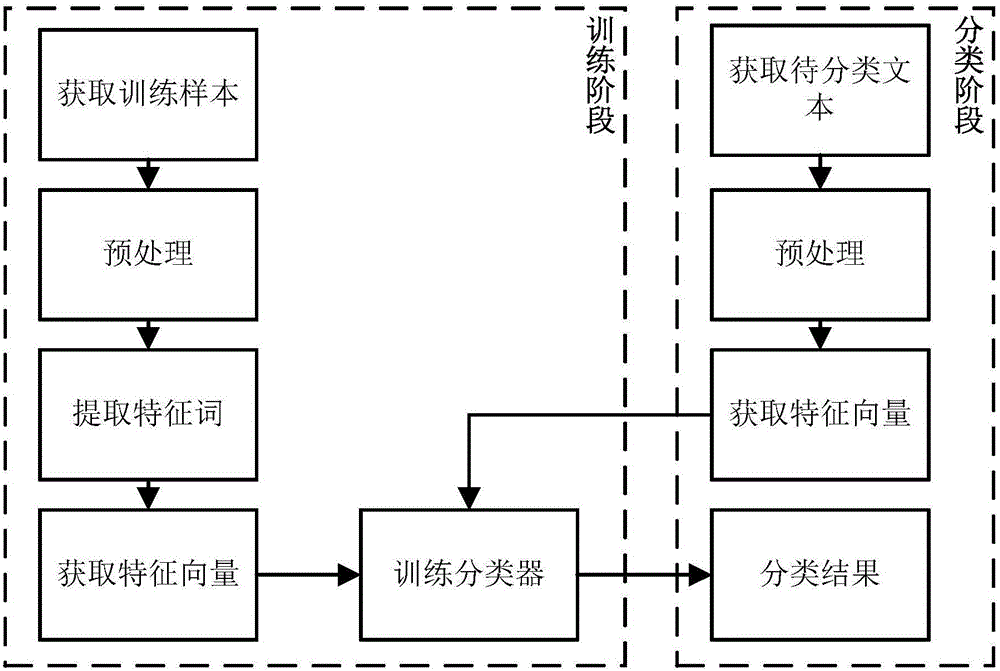

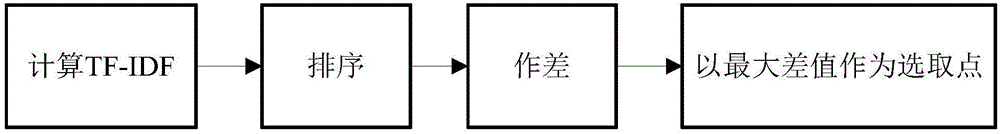

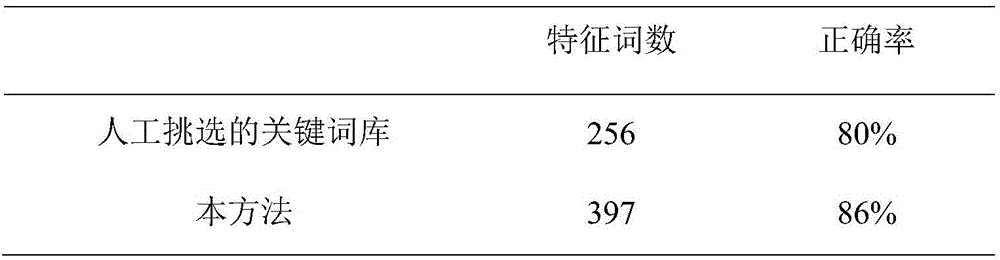

Text classification method

Inactive | CN106095996A | Realize automatic extraction | Reduce dimensionality | Special data processing applications | Text database clustering/classification | Feature vector | Text categorization

The invention relates to a text classification method. The method comprises the steps of: obtaining text sets pre-labeled by category as training samples, and preprocessing the texts in the training samples to obtain feature word sets for training; extracting feature words to obtain a feature word dictionary; generating feature vectors of the texts in the training samples according to the feature word dictionary to obtain the feature vector sets of the training samples; training an SVM classifier with the feature vectors; preprocessing the texts to be classified to obtain their feature word sets; generating the feature vectors of the texts to be classified according to the feature word dictionary; and inputting these feature vectors into the trained SVM classifier to obtain the categories of the texts to be classified.

Owner:量子云未来(北京)信息科技有限公司 +1
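
The dictionary and feature-vector steps in the text classification abstract above can be sketched as a simple bag-of-words pipeline. Whitespace tokenization, raw term counts, and the function names below are assumptions for illustration; the patent's actual preprocessing and feature word selection are not described in the abstract.

```python
from collections import Counter
from typing import Dict, List, Sequence


def tokenize(text: str) -> List[str]:
    """Very simple preprocessing: lowercase and split on whitespace."""
    return text.lower().split()


def build_dictionary(texts: Sequence[str], max_words: int = 1000) -> Dict[str, int]:
    """Map the most frequent words to vector indices (alphabetical order)."""
    counts = Counter(word for text in texts for word in tokenize(text))
    words = [word for word, _ in counts.most_common(max_words)]
    return {word: index for index, word in enumerate(sorted(words))}


def to_vector(text: str, dictionary: Dict[str, int]) -> List[int]:
    """Count dictionary words in the text; unknown words are ignored."""
    vector = [0] * len(dictionary)
    for word in tokenize(text):
        index = dictionary.get(word)
        if index is not None:
            vector[index] += 1
    return vector


training_texts = [
    "cheap pills offer",
    "meeting agenda attached",
    "cheap offer today",
]
dictionary = build_dictionary(training_texts)
vector = to_vector("cheap cheap offer unknown", dictionary)
```

The same dictionary is reused at prediction time, so training and test vectors share one index layout before they reach the SVM classifier.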

Polarized SAR (synthetic aperture radar) image classification method based on depth PCA (principal component analysis) network and SVM (support vector machine)

Inactive | CN104331707A | High accuracy of results | Improve classification accuracy | Character and pattern recognition | Synthetic aperture radar | Principal component analysis

The invention discloses a polarized SAR (synthetic aperture radar) image classification method based on a deep PCA (principal component analysis) network and an SVM (support vector machine) classifier. The method includes: filtering a polarized SAR image, extracting a shape feature parameter, a scattering feature parameter, a polarization feature parameter and the independent elements of the covariance matrix C, and combining and normalizing them into new high-dimensional features that serve as the data for the next step; randomly selecting, according to the actual ground-truth labels, 10% of the labeled data of each class as training samples; whitening the training samples and using them as input to train the first layer of the network, taking the result as input to train the second layer, and performing binarization and histogram statistics on the output; using the output of the deep PCA network as the final learned features to train the SVM classifier; whitening the test samples and inputting them into the trained network framework to predict and compute accuracy; and coloring and displaying the classified image and outputting the final result.

Owner:XIDIAN UNIV
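
The sampling step in the abstract above, which randomly picks 10% of the labeled data from each class as training samples, might be sketched as follows. The function name, the fixed seed, and the rule of keeping at least one sample per class are assumptions added for illustration.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def sample_per_class(labels: Sequence[Hashable],
                     fraction: float = 0.1,
                     seed: int = 0) -> List[int]:
    """Return sorted indices of a random `fraction` of the samples of each class."""
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    chosen: List[int] = []
    for label in sorted(by_class):
        members = by_class[label]
        count = max(1, round(fraction * len(members)))
        chosen.extend(rng.sample(members, count))
    return sorted(chosen)


# Toy label map: 50 pixels of class 0, 30 of class 1, 20 of class 2.
labels = [0] * 50 + [1] * 30 + [2] * 20
train_indices = sample_per_class(labels)
```

Sampling within each class keeps rare ground classes represented in the training set, which a single global random draw would not guarantee.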
