398 results about "Histogram of oriented gradients" patented technology

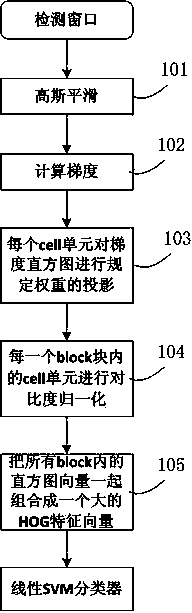

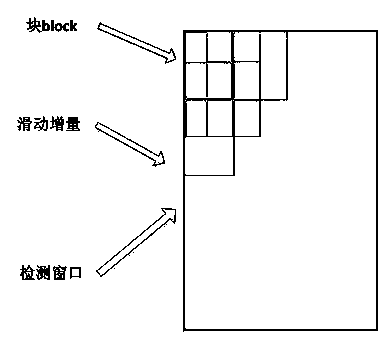

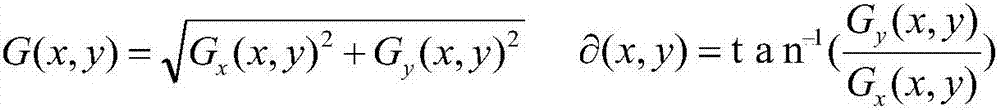

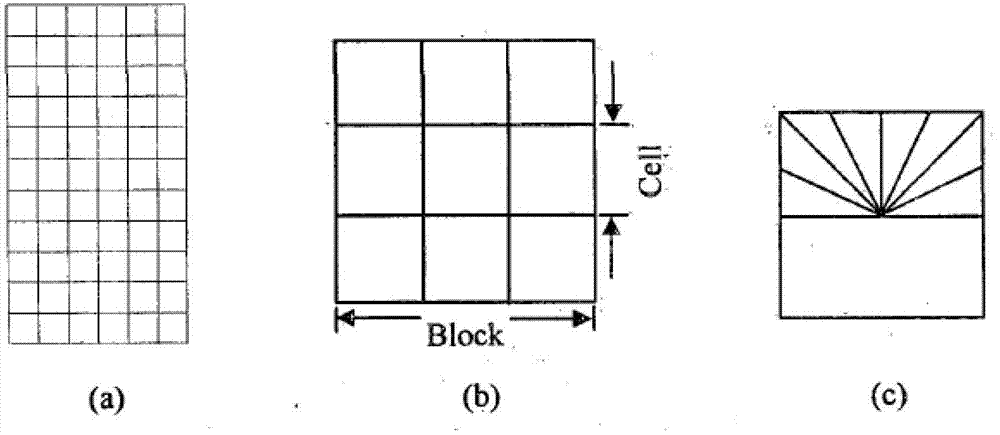

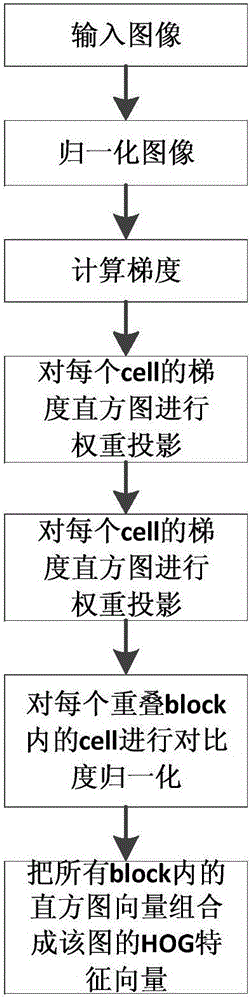

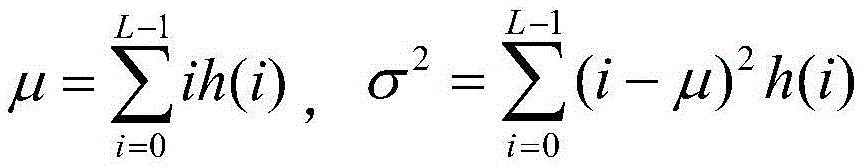

The histogram of oriented gradients (HOG) is a feature descriptor used in computer vision and image processing for the purpose of object detection. The technique counts occurrences of gradient orientation in localized portions of an image. This method is similar to that of edge orientation histograms, scale-invariant feature transform descriptors, and shape contexts, but differs in that it is computed on a dense grid of uniformly spaced cells and uses overlapping local contrast normalization for improved accuracy.
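The description above can be illustrated with a minimal sketch in plain Python. It assumes a grayscale image given as a list of rows, unsigned orientations over 0 to 180 degrees, hard binning without interpolation, and L2 block normalization; the function name `hog` and the parameter defaults are illustrative choices, not taken from any patent listed here.

```python
import math

def hog(image, cell=4, block=2, n_bins=9):
    """Simplified HOG: per-cell orientation histograms, then overlapping
    block-wise L2 normalization. `image` is a list of rows of numbers."""
    h, w = len(image), len(image[0])
    cells_y, cells_x = h // cell, w // cell
    bin_width = 180.0 / n_bins
    hists = [[[0.0] * n_bins for _ in range(cells_x)] for _ in range(cells_y)]

    for y in range(cells_y * cell):
        for x in range(cells_x * cell):
            # Central differences, clamped at the image border.
            gx = image[y][min(x + 1, w - 1)] - image[y][max(x - 1, 0)]
            gy = image[min(y + 1, h - 1)][x] - image[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = int(angle / bin_width) % n_bins
            # Each pixel votes for one orientation bin, weighted by magnitude.
            hists[y // cell][x // cell][b] += magnitude

    features = []
    # Blocks slide one cell at a time, so neighbouring blocks overlap.
    for by in range(cells_y - block + 1):
        for bx in range(cells_x - block + 1):
            vec = [v
                   for dy in range(block)
                   for dx in range(block)
                   for v in hists[by + dy][bx + dx]]
            norm = math.sqrt(sum(v * v for v in vec) + 1e-12)
            features.extend(v / norm for v in vec)
    return features
```

For an 8x8 image with `cell=4` and `block=2` there is a single block of four cells, so the descriptor has 4 x 9 = 36 entries.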

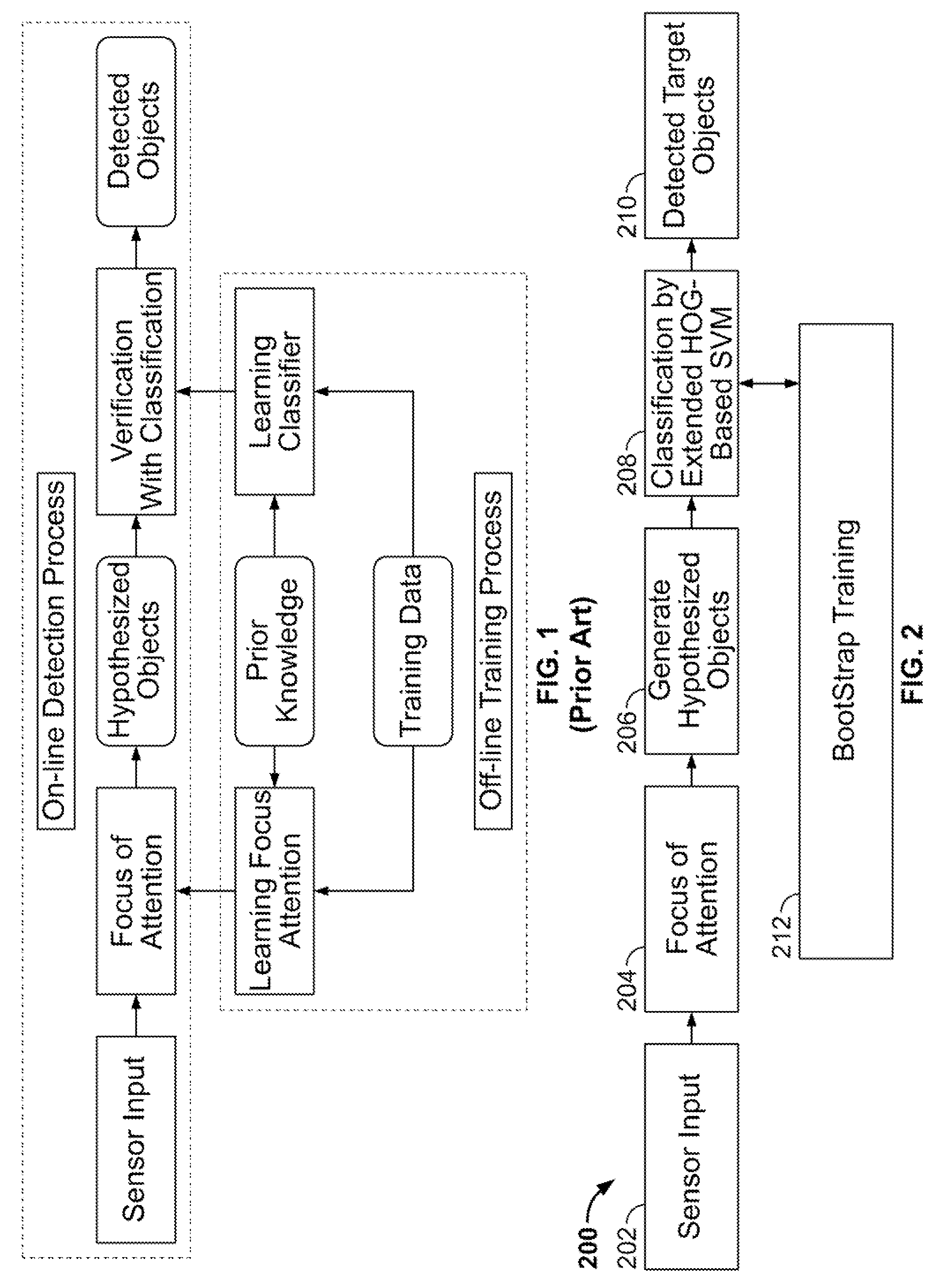

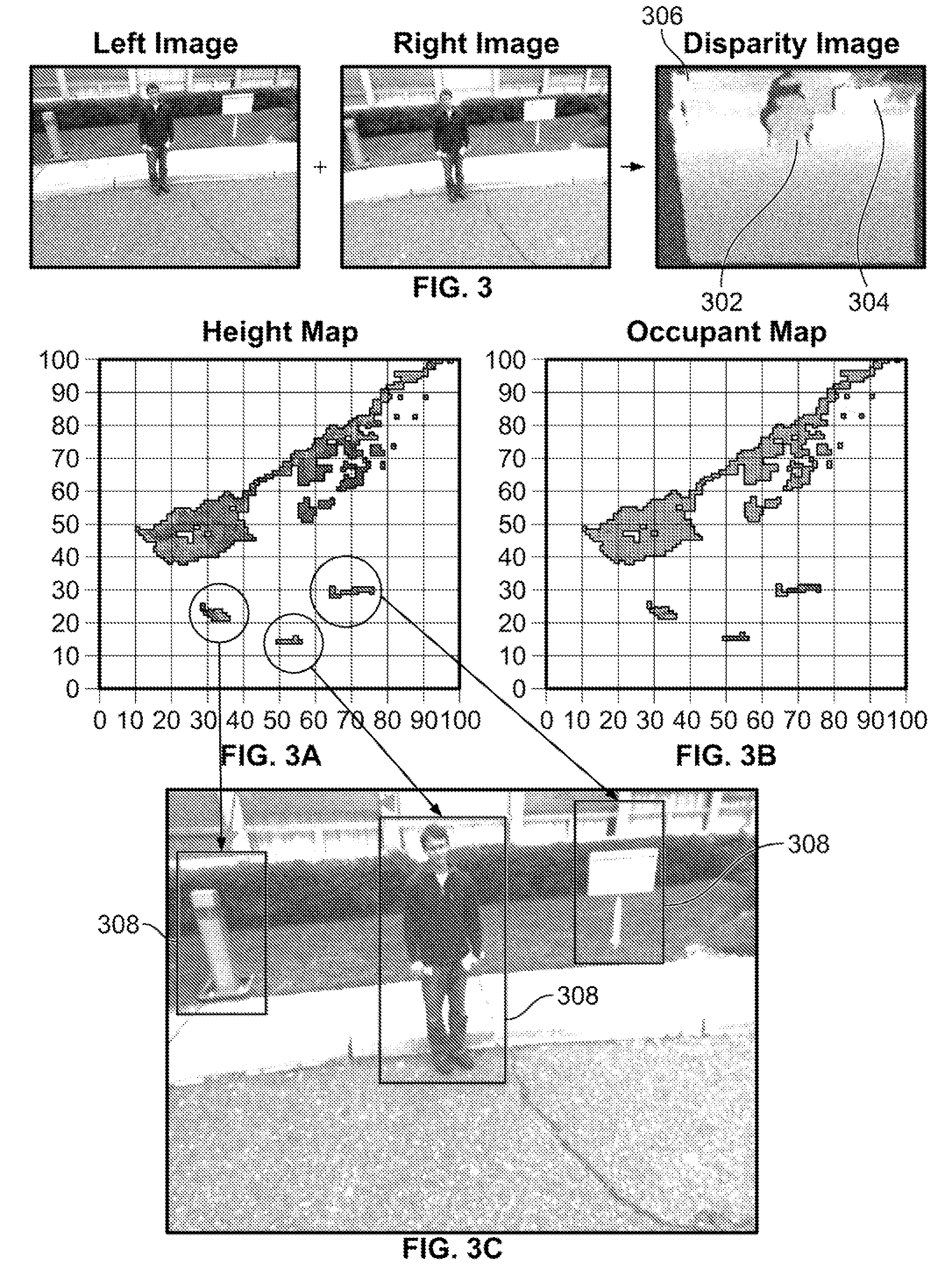

System and method for detecting still objects in images

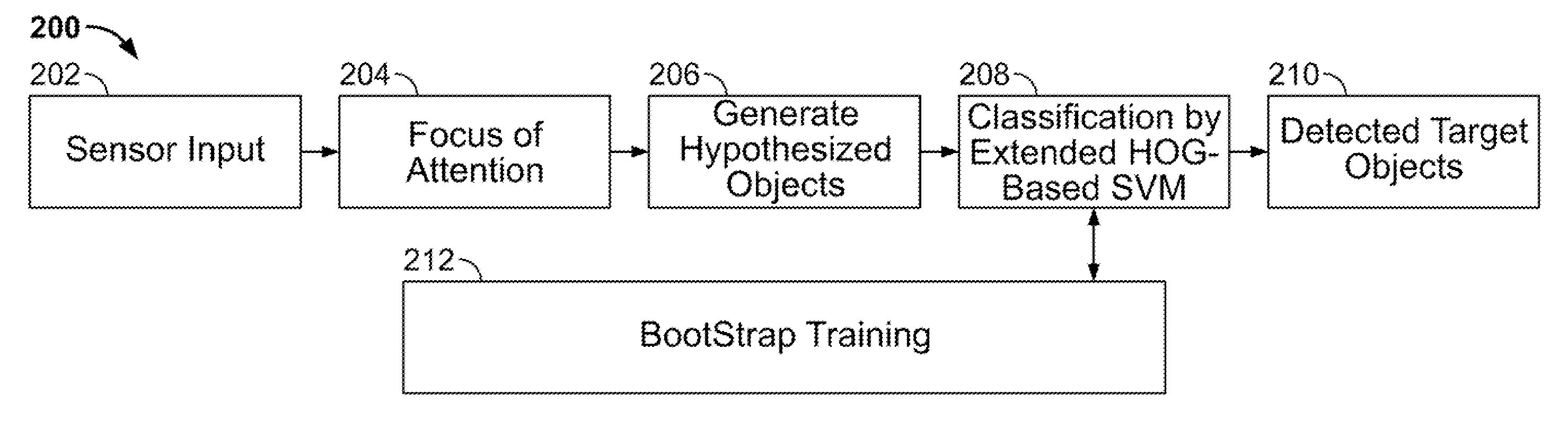

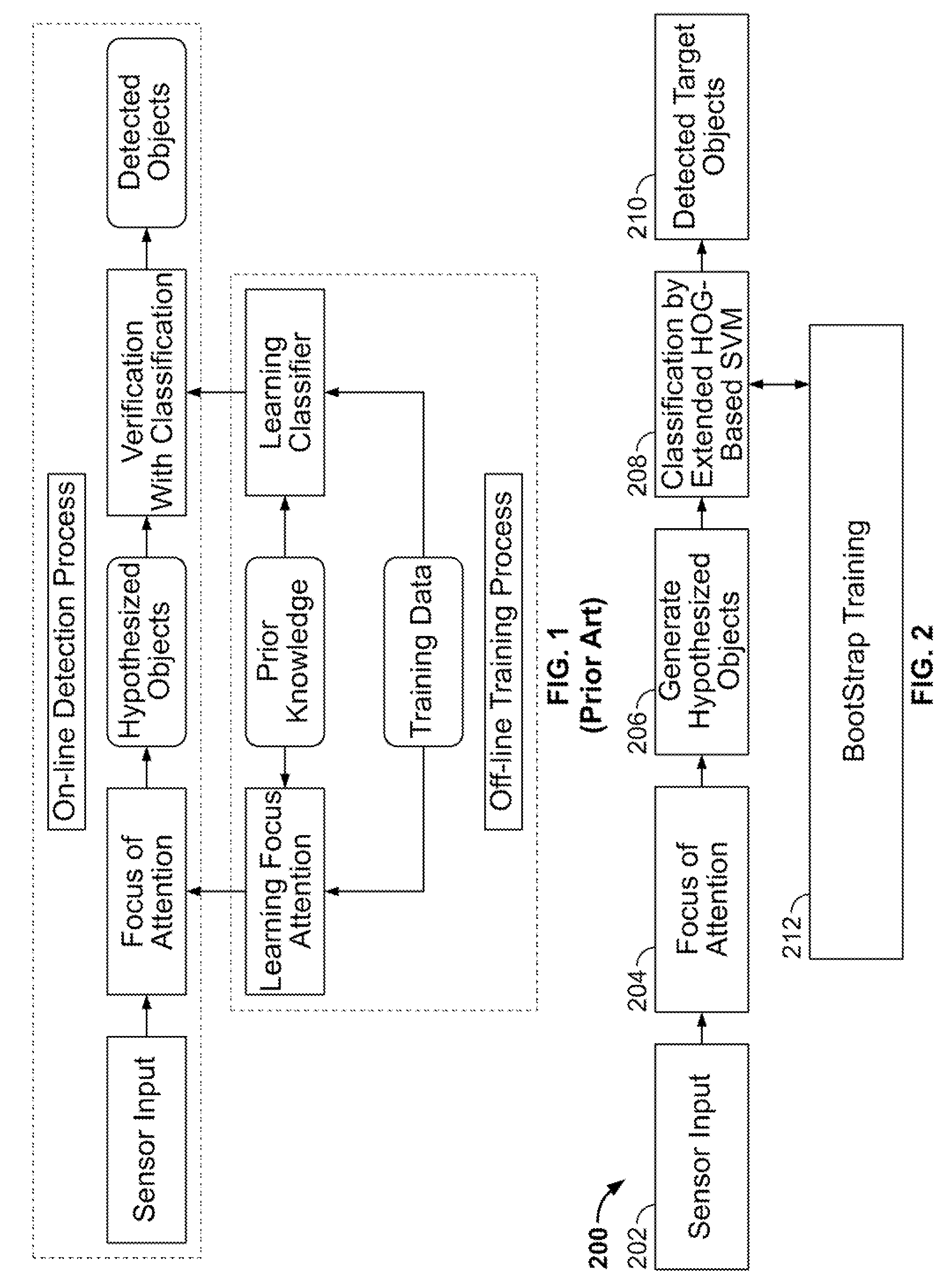

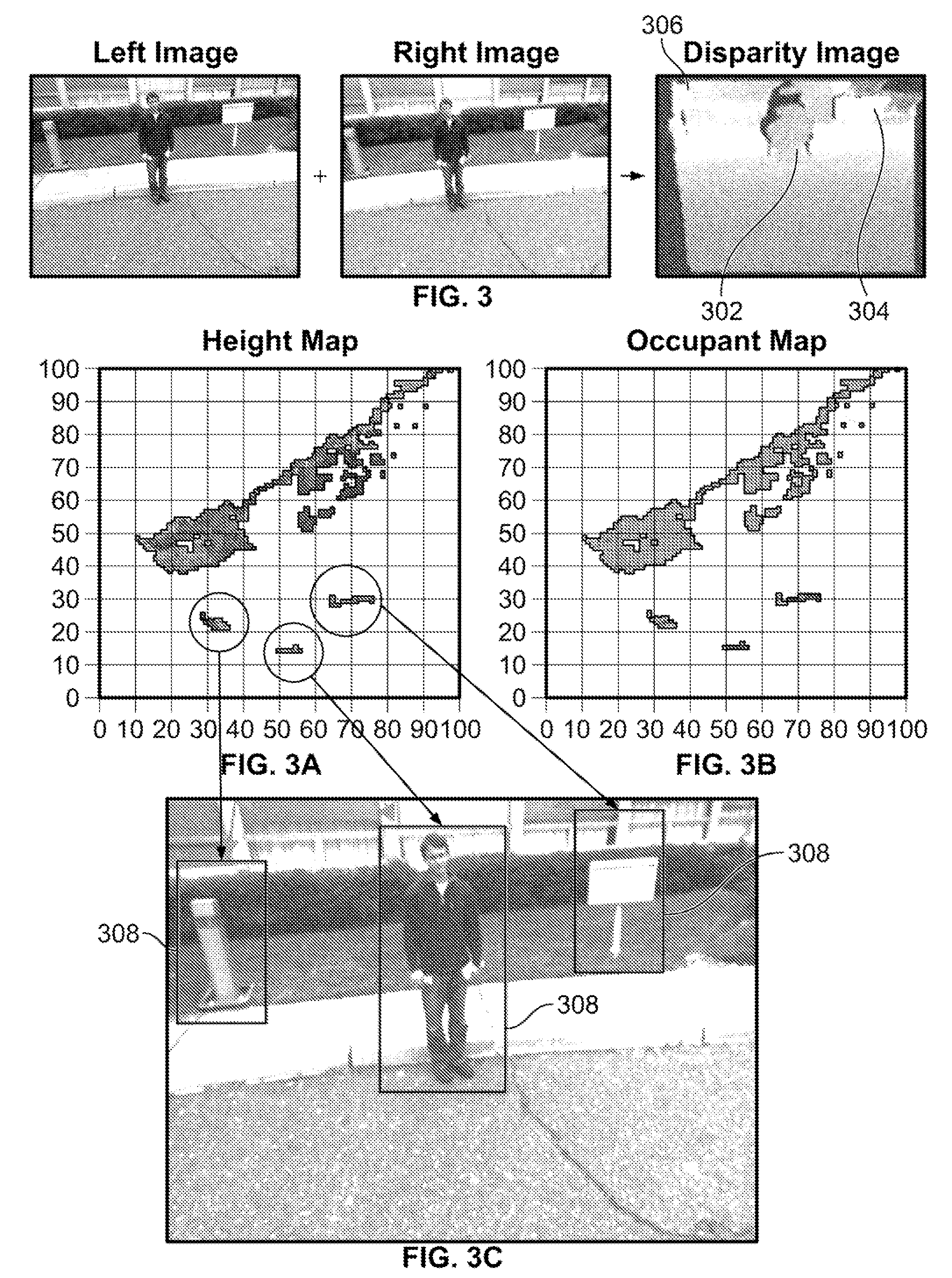

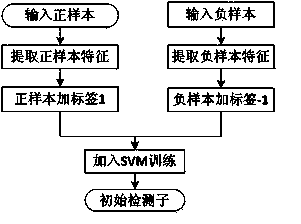

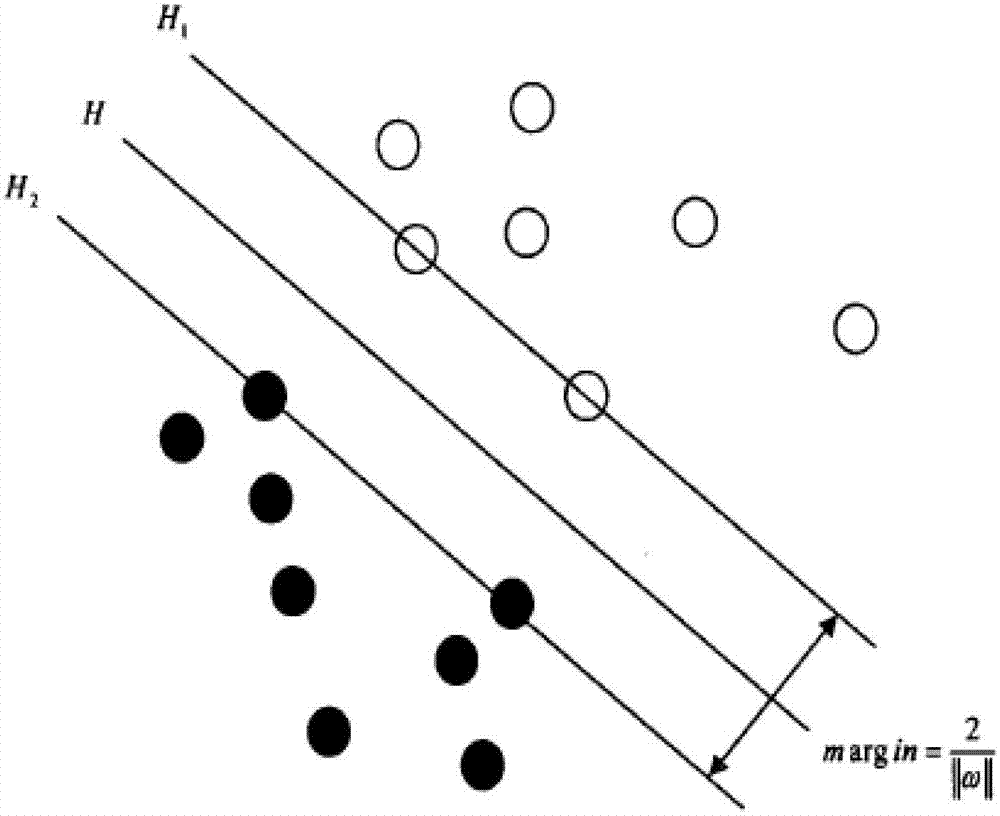

The present invention provides an improved system and method for object detection using a support vector machine (SVM) based on histograms of oriented gradients (HOG). Specifically, the system provides a computational framework to stably detect stationary objects over a wide range of viewpoints. The framework takes sensor images as input and passes them to a “focus of attention” mechanism that identifies the regions of the image that potentially contain the target objects. These regions are processed further to generate object hypotheses, specifically selected regions that contain a hypothesized target object together with its position. Thereafter, these selected regions are verified by an extended HOG-based SVM classifier to generate the detected objects.

Owner:SRI INTERNATIONAL
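The verification stage in the abstract above (candidate regions checked by a HOG-based SVM) might look roughly like the following sketch. It assumes a linear SVM whose weights and bias are already trained; `extract_features`, `weights`, `bias` and `threshold` are hypothetical names, and the patent's "extended" classifier is not reproduced here.

```python
def svm_score(features, weights, bias):
    """Linear SVM decision value: w . x + b."""
    return sum(w * f for w, f in zip(weights, features)) + bias

def verify_regions(regions, extract_features, weights, bias, threshold=0.0):
    """Keep only the hypothesized regions that the classifier accepts.

    `extract_features` is any callable mapping a region to a feature
    vector (for example a HOG descriptor of the cropped region).
    """
    detections = []
    for region in regions:
        score = svm_score(extract_features(region), weights, bias)
        if score > threshold:
            detections.append((region, score))
    return detections
```

The focus-of-attention step is represented only by the incoming `regions` list; how those regions are proposed is outside this sketch.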

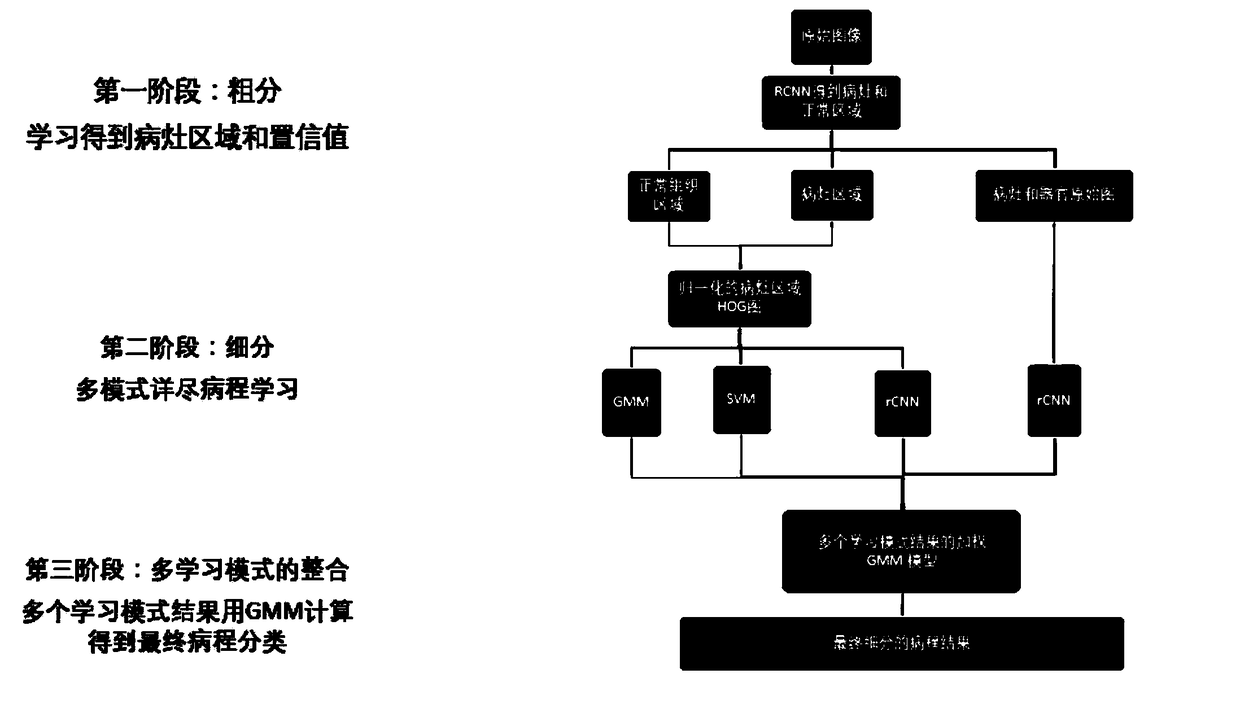

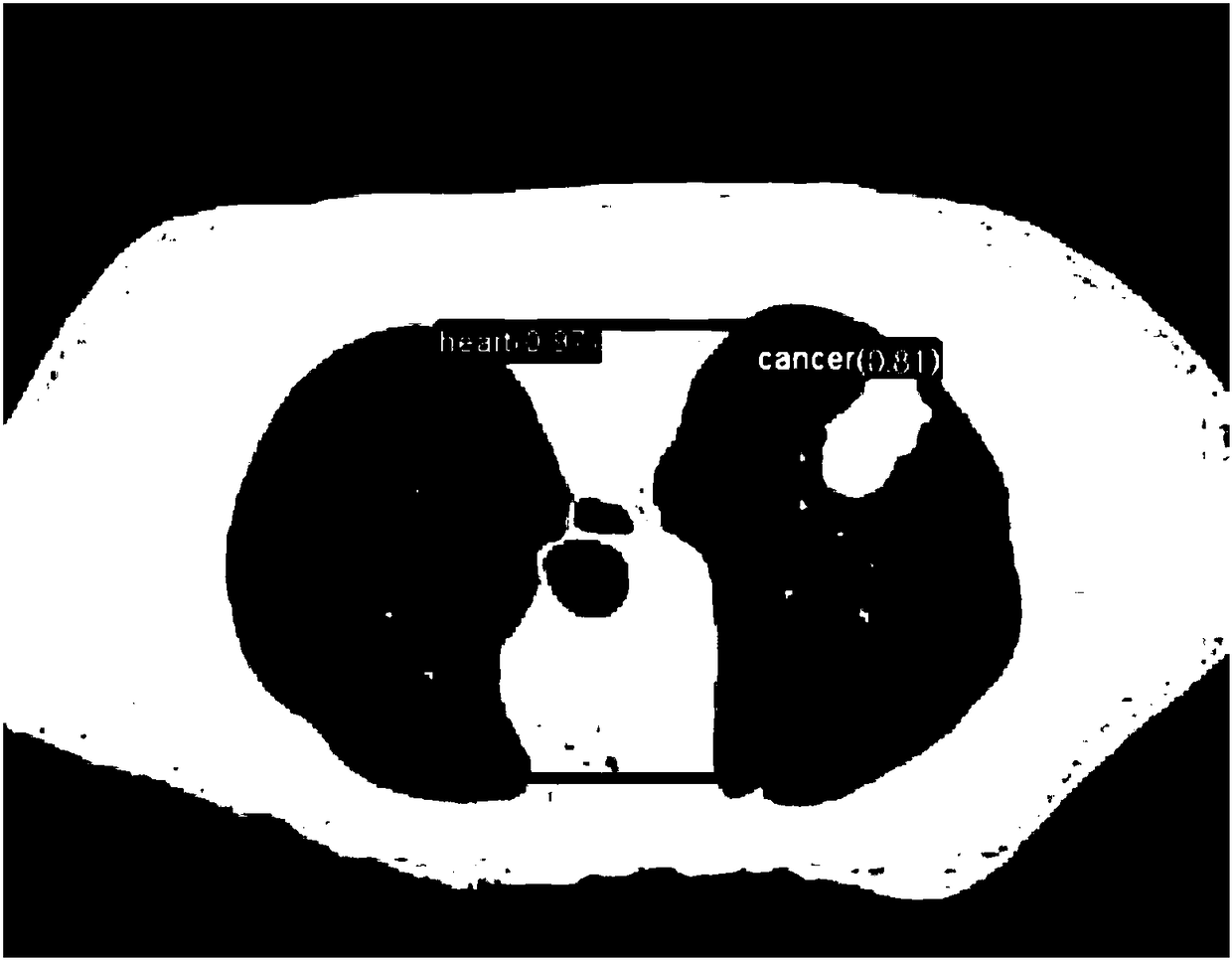

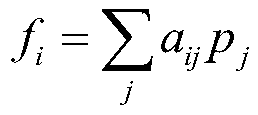

Multi-mode deep learning based medical image classification device and construction method thereof

Active | CN108364006A | Reduce complexity | Requirements for reducing the amount of training sample data | Recognition of medical/anatomical patterns | Histogram of oriented gradients | Confidence score

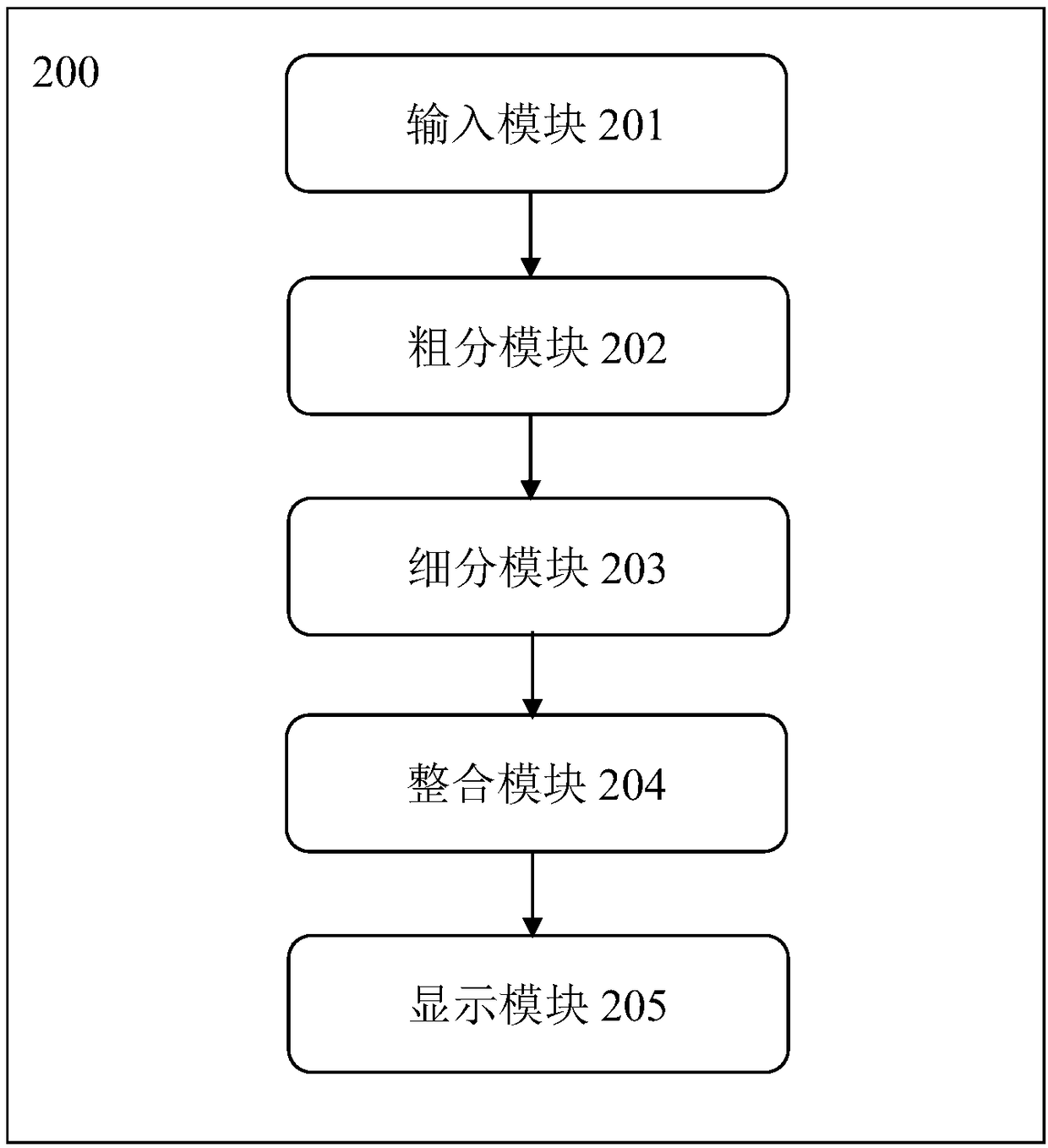

The invention discloses a deep learning based medical image classification device and a construction method thereof. The device comprises an input module, a coarse classification module, a fine classification module, an integration module and a display module; the coarse classification module comprises a regional convolutional neural network RCNN; the fine classification module comprises a first cyclic convolutional neural network rCNN1 for identifying an original image, a HOG (Histogram of Oriented Gradient) model which converts an image to an HOG, a support vector machine (SVM) for identifying the HOG, a Gaussian mixture model (GMM) and a second cyclic convolutional neural network rCNN2; and the integration module comprises an integrated classifier as a GMM, integrates identification confidence scores of different areas output by the four classifiers into one input vector, inputs the input vector after weighting, and obtains a final identification confidence score of the different areas.

Owner:超凡影像科技股份有限公司

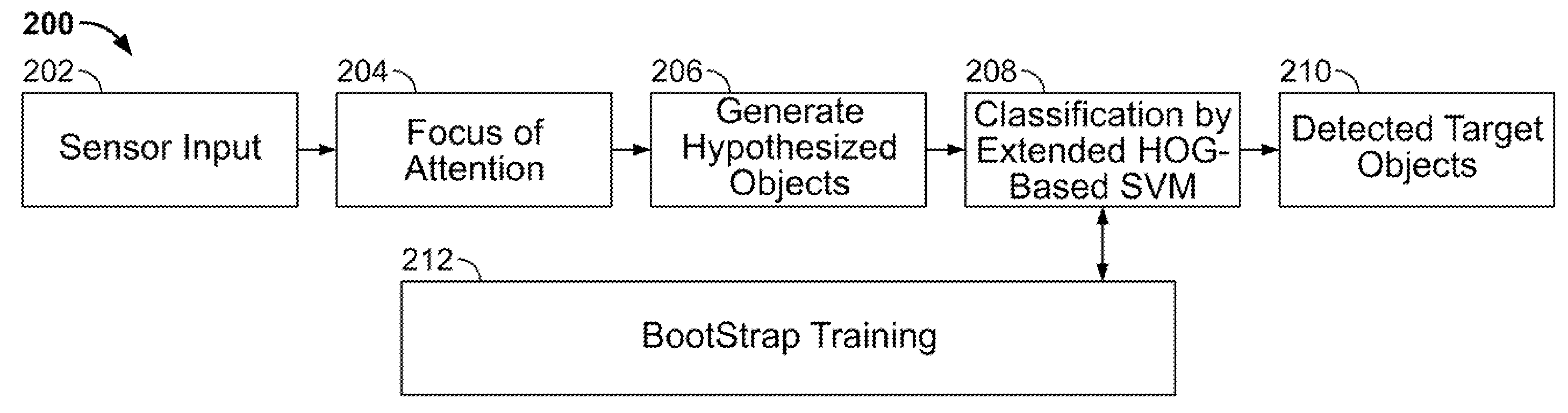

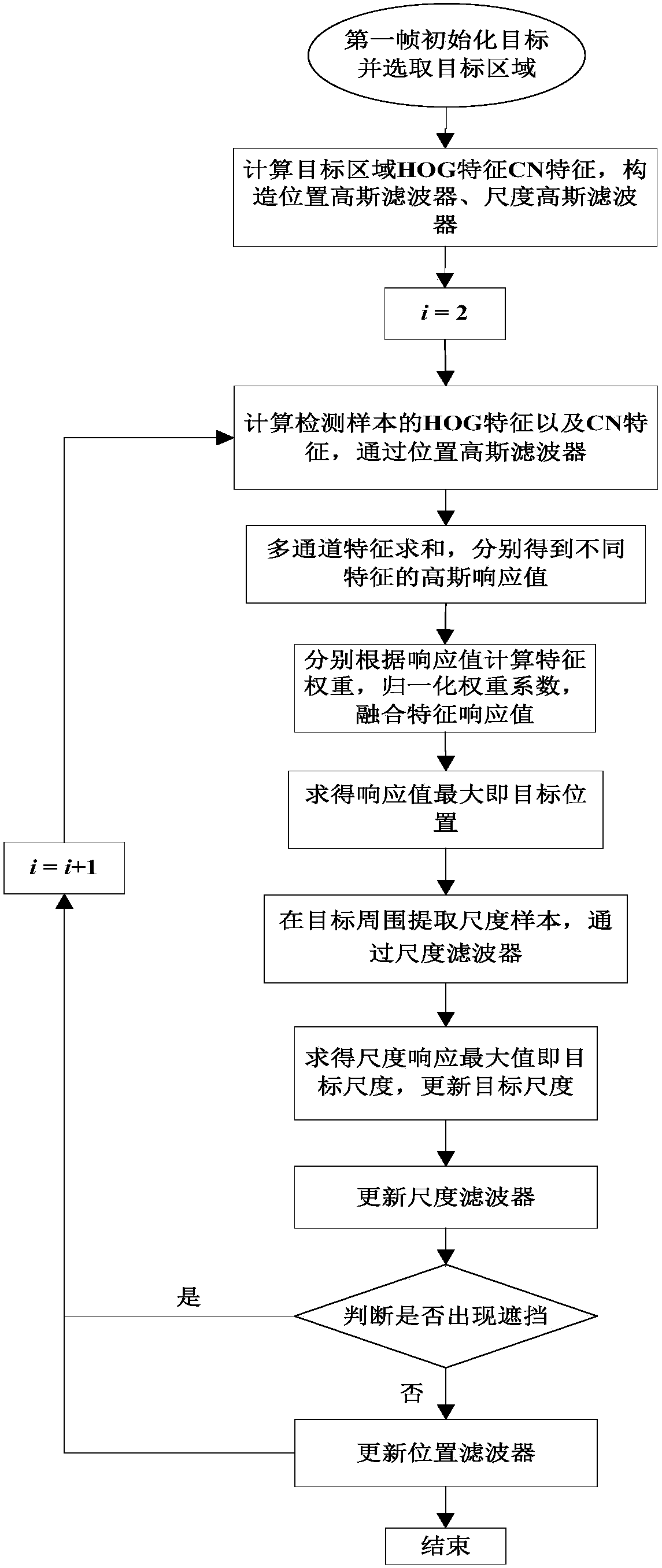

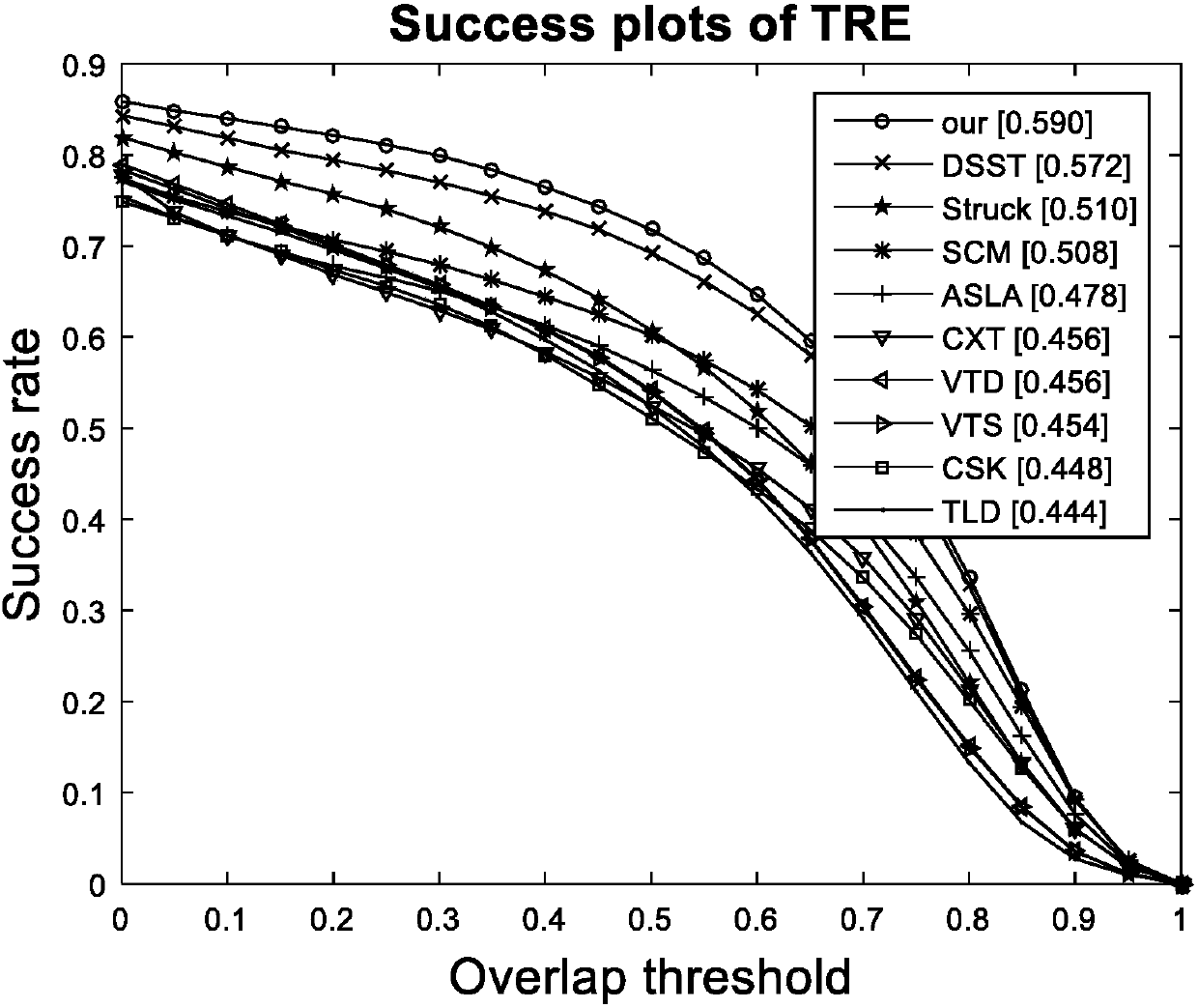

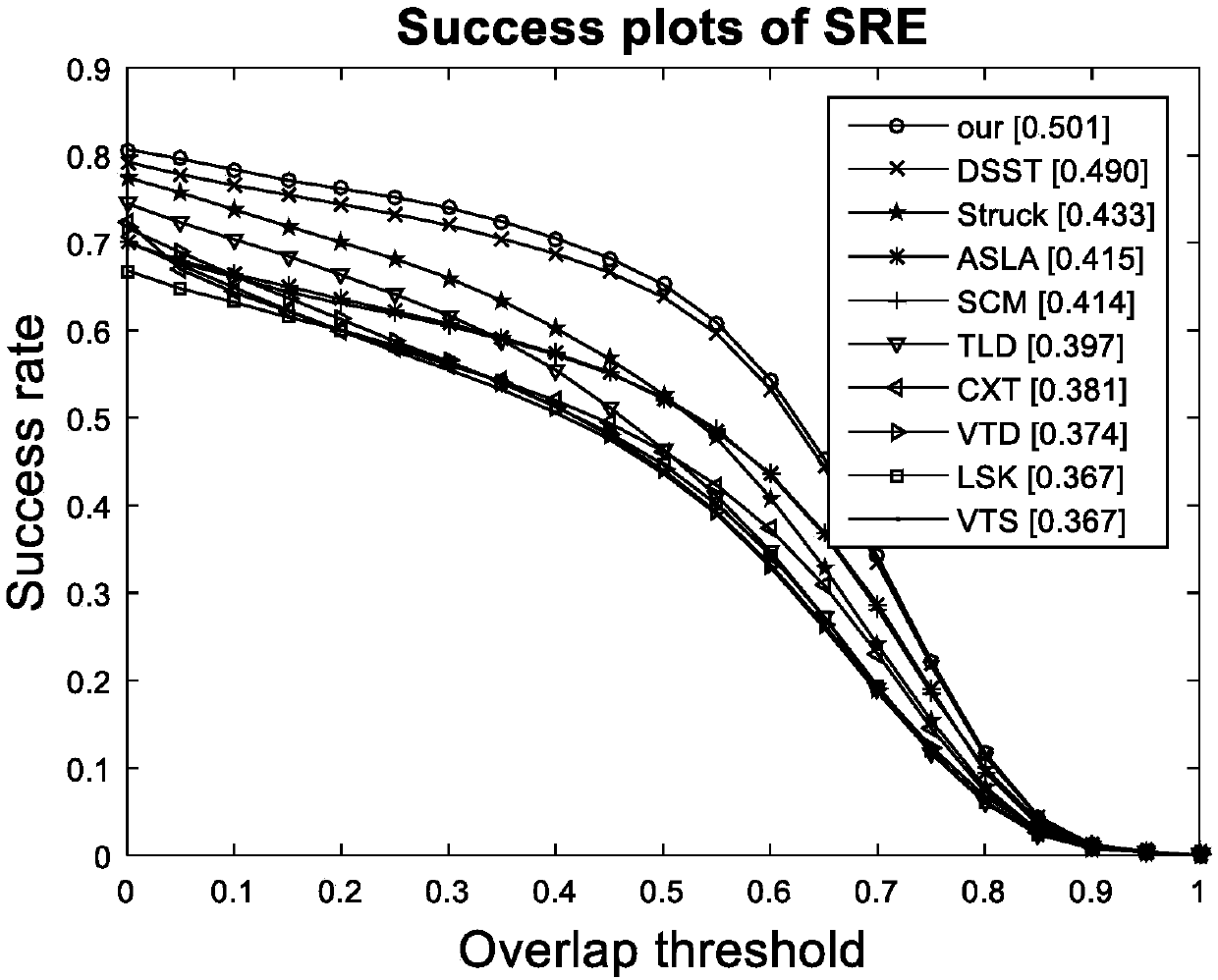

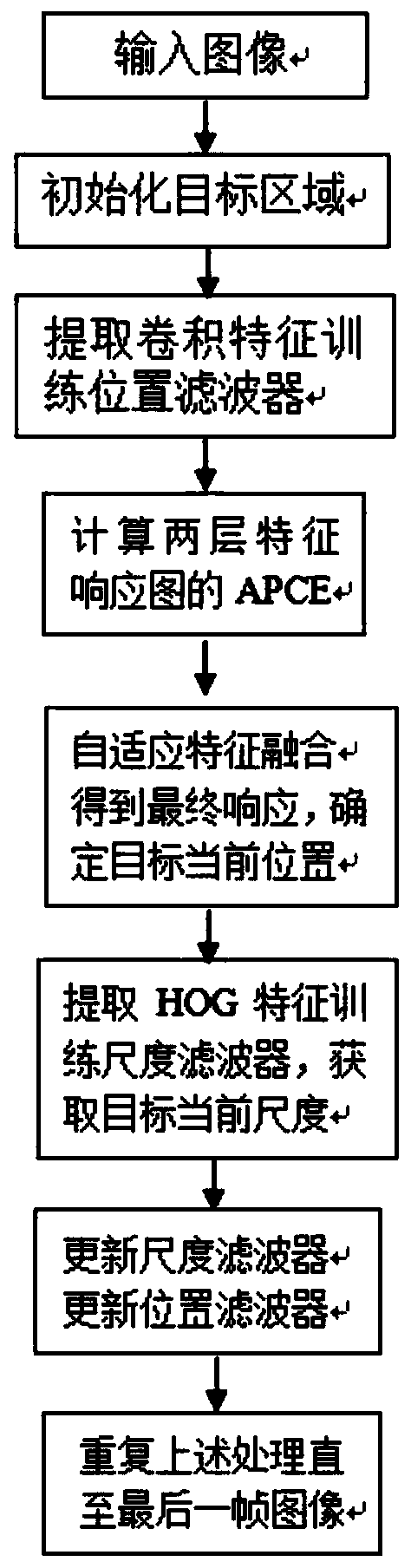

Target tracking based on adaptive feature fusion

Inactive | CN107644430A | Accurate tracking | Improve tracking accuracy | Image analysis | Character and pattern recognition | Target Response | Weight coefficient

The invention provides an adaptive feature fusion target tracking method. The method comprises: in the first frame image, initializing a target area and constructing a location filter and a scale filter; extracting detection samples around the target, calculating an HOG (Histogram of Oriented Gradient) feature and a CN (Color Name) feature for each, and obtaining response values through the location filter; calculating the feature weights from the response values, normalizing the weight coefficients, fusing the feature response values, and selecting the point with the largest response value as the central location of the target; judging from the target response whether occlusion has occurred and, when the target is occluded, updating only the scale filter without updating the target location filter; and repeating this process to obtain the target location in each frame. The method provides an adaptive feature fusion scheme and a model updating strategy based on APCE (Average Peak-to-Correlation Energy), which can greatly improve tracking precision and robustness under occlusion.

Owner:ANHUI UNIVERSITY

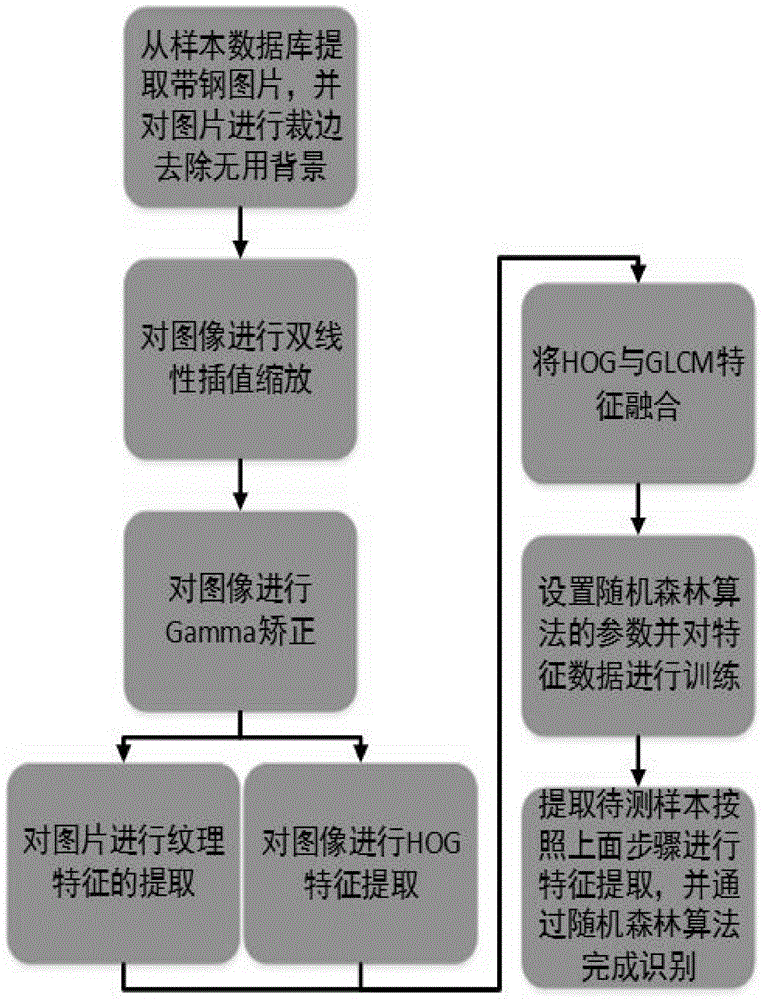

Strip steel surface area type defect identification and classification method

Active | CN104866862A | Easy to handle | Accurate identification | Character and pattern recognition | Feature set | Histogram of oriented gradients

The invention discloses a strip steel surface area type defect identification and classification method which comprises the following steps: extracting strip steel surface pictures from a training sample database, removing useless backgrounds and saving the category of each picture to a corresponding label matrix; rescaling the pictures by bilinear interpolation; normalizing the color space of the rescaled pictures by Gamma correction; extracting histogram of oriented gradients (HOG) features from the corrected pictures; extracting textural features from the corrected pictures using a gray-level co-occurrence matrix; combining the HOG features and textural features into a feature set, comprising these two main kinds of features, as a training database; training an improved random forest classification algorithm on the feature data; applying bilinear interpolation rescaling, Gamma correction, HOG feature extraction and textural feature extraction in sequence to the strip steel defect pictures to be identified; and then inputting the feature data into the improved random forest classifier to complete identification.

Owner:CENT SOUTH UNIV
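Two of the preprocessing and texture steps named in the abstract above, Gamma correction and the gray-level co-occurrence matrix, can be sketched as follows. This is an illustration under stated assumptions: pixel values are integers already quantized to `levels` gray levels for the co-occurrence matrix, only a single pixel offset is used, and "contrast" stands in for whichever texture statistics the method actually uses. The patent's improved random forest is not shown.

```python
def gamma_correct(image, gamma=0.5, max_value=255.0):
    """Map each pixel to (v / max_value) ** gamma, giving values in [0, 1]."""
    return [[(v / max_value) ** gamma for v in row] for row in image]

def glcm(image, levels, dx=1, dy=0):
    """Normalized gray-level co-occurrence matrix for one pixel offset."""
    counts = [[0] * levels for _ in range(levels)]
    h, w = len(image), len(image[0])
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                counts[image[y][x]][image[ny][nx]] += 1
    total = sum(map(sum, counts)) or 1
    return [[c / total for c in row] for row in counts]

def glcm_contrast(p):
    """Contrast statistic: sum of (i - j)^2 * p[i][j]."""
    return sum((i - j) ** 2 * v
               for i, row in enumerate(p)
               for j, v in enumerate(row))

def combine_features(hog_features, texture_features):
    """Feature set = gradient features followed by texture features."""
    return list(hog_features) + list(texture_features)
```

The combined vector would then be passed to whatever classifier is trained on the feature set.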

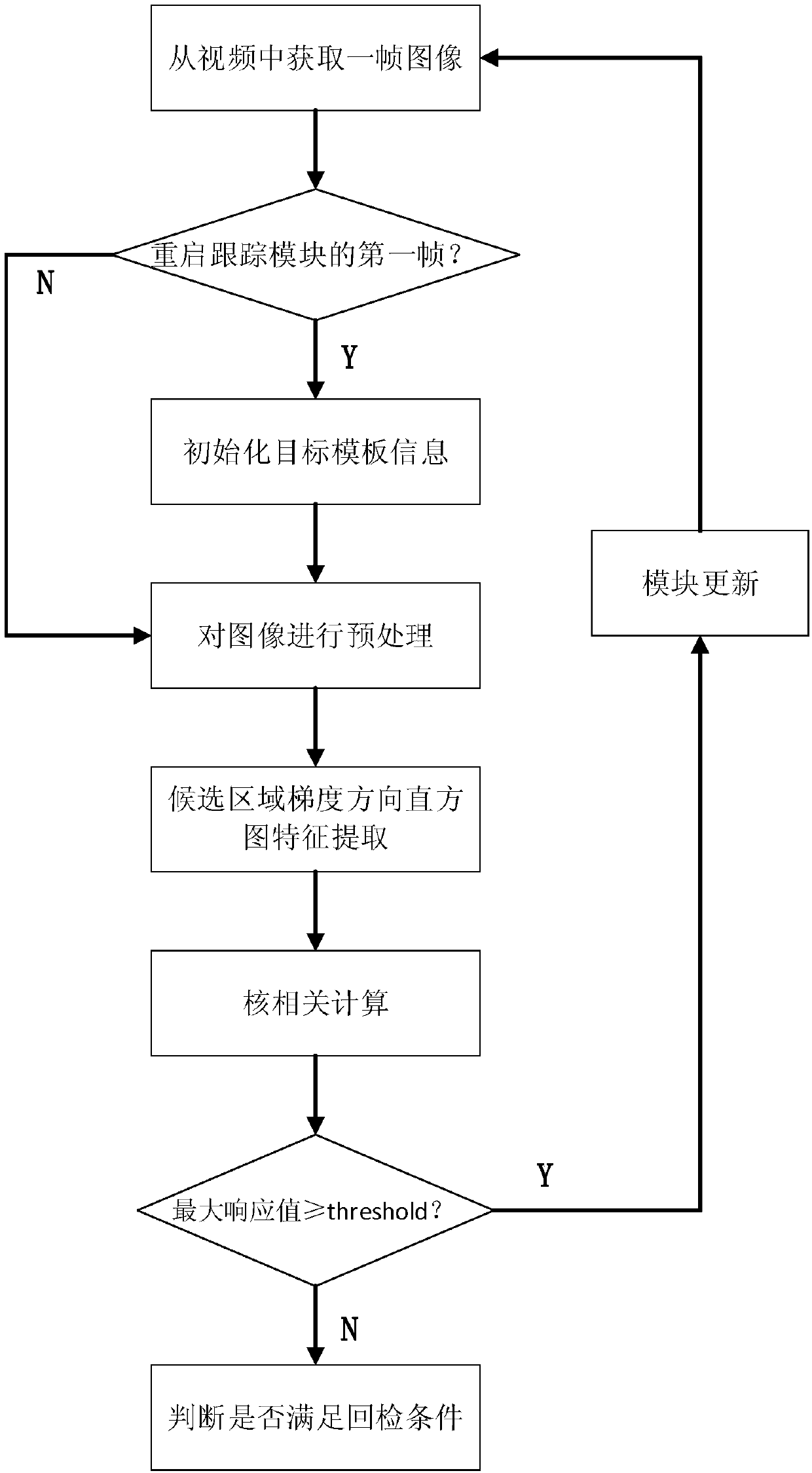

Multi-target pedestrian detecting and tracking method in monitoring video

Inactive | CN107564034A | High precision | Improve robustness | Image analysis | Character and pattern recognition | Tracking model | Histogram of oriented gradients

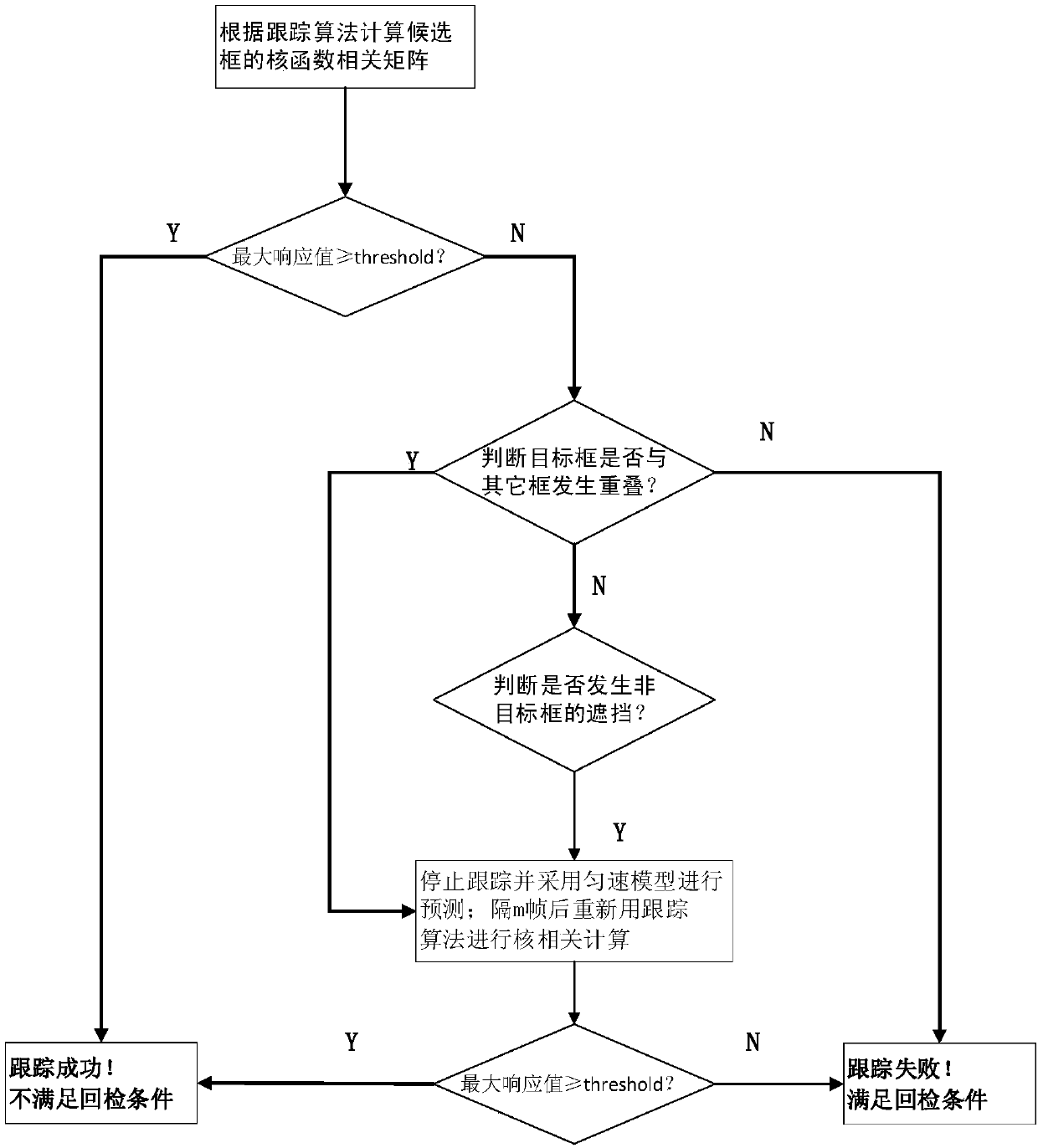

The invention discloses a multi-target pedestrian detecting and tracking method in monitoring video, comprising the following steps: a target detection network based on deep learning is used to detect pedestrians in the first frame image, yielding an initial rectangular area for each of one or more pedestrian targets; based on the initial target area information, the histogram of oriented gradients (HOG) feature of each target is extracted, kernel autocorrelation is computed in the Fourier domain, and the tracking model is initialized from the result; starting from the second frame image, a multi-scale pyramid is constructed based on the target area information of the tracking model, and the HOG feature matrix is extracted and the kernel autocorrelation is computed in the Fourier domain at each scale of the pedestrian rectangular area; the re-check condition is evaluated, and identity re-verification and updating of the tracking model are carried out for each pedestrian target that requires a re-check. The invention is advantageous in that the model drift problem is resolved, a more accurate pedestrian moving track is obtained, and real-time performance is good.

Owner:SOUTH CHINA UNIV OF TECH

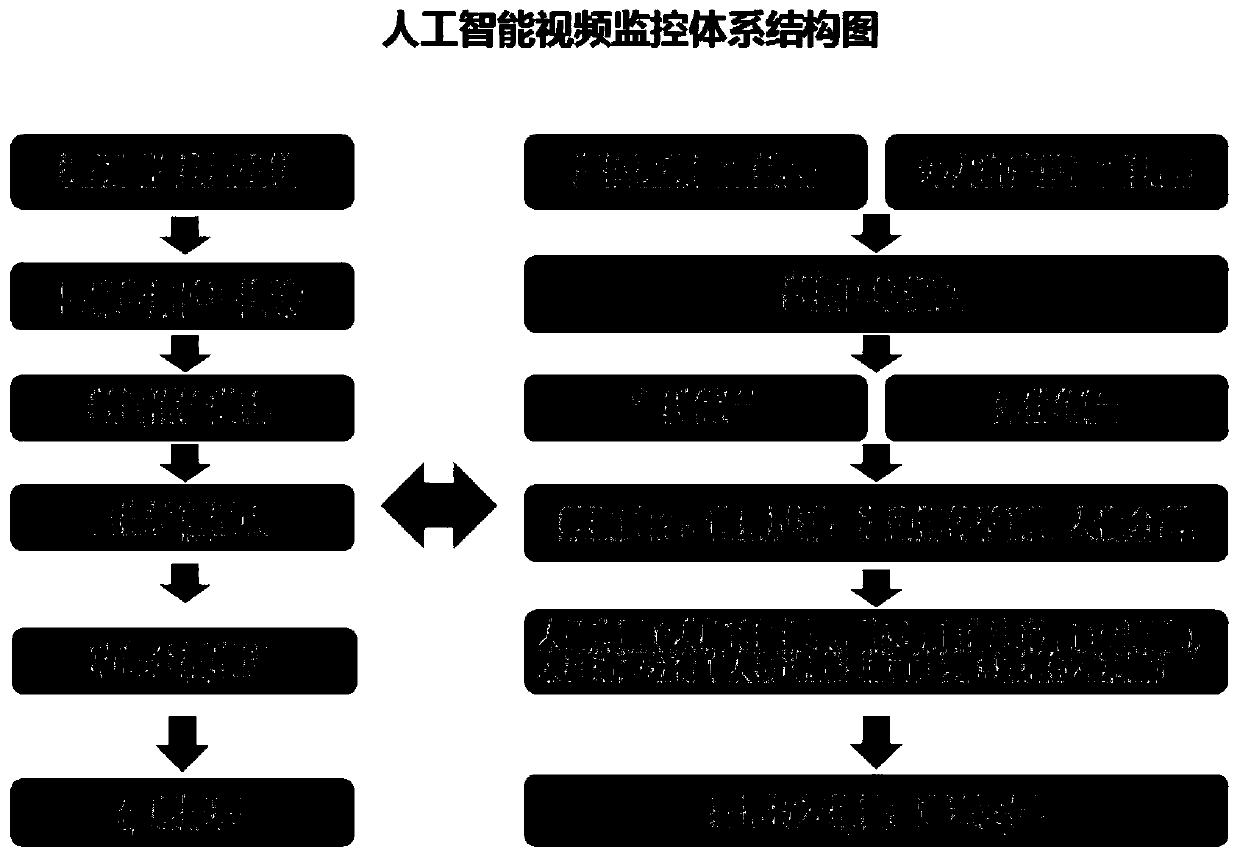

A dense crowd security and protection monitoring management method based on artificial intelligence dynamic monitoring

Active | CN109819208A | Realize security monitoring management | Realize full monitoring coverage | Television system details | Character and pattern recognition | Video monitoring | Monitoring system

The invention provides a dense crowd security and protection monitoring management method based on artificial intelligence dynamic monitoring. The method comprises the following steps: screening and marking sensitive personnel based on dynamic face recognition at entrances and exits, wherein the face recognition comprises rapid primary screening and accurate secondary screening; if necessary, using the unmanned aerial vehicle monitoring system to assist in face tracking and monitoring; performing dynamic crowd density monitoring based on video analysis, wherein the dynamic crowd density is calculated using a histogram of oriented gradients (HOG) human body detection algorithm; detecting and warning of abnormal group behaviors of the dense crowd under video monitoring; and performing unmanned aerial vehicle assisted monitoring in video monitoring blind areas. The method combines key-area monitoring with comprehensive dynamic monitoring, assisted by mobile monitoring from an unmanned aerial vehicle system, and achieves full monitoring coverage of dense crowd occasions.

Owner:JIANGSU POLICE INST

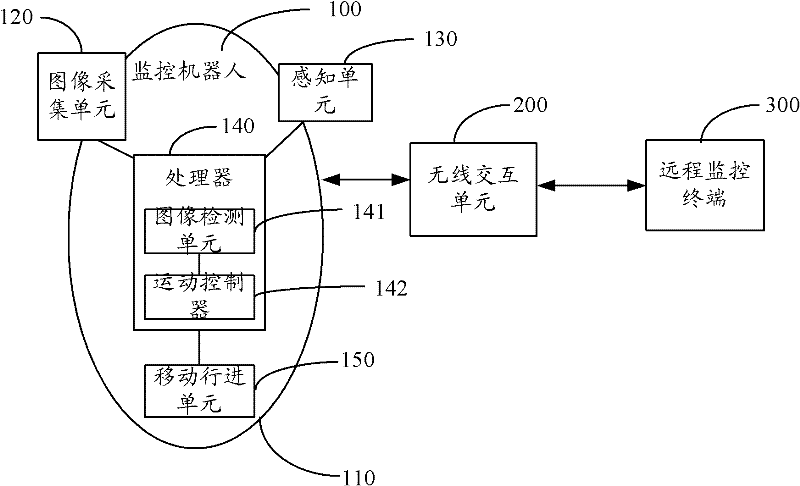

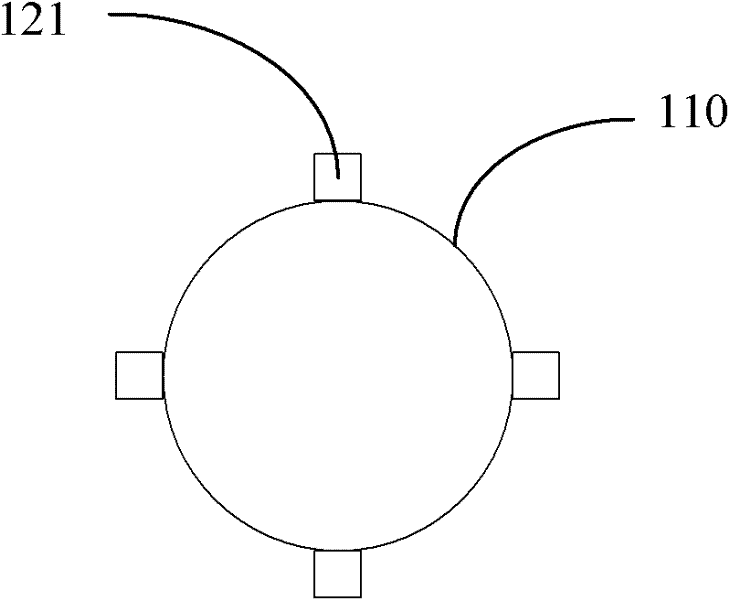

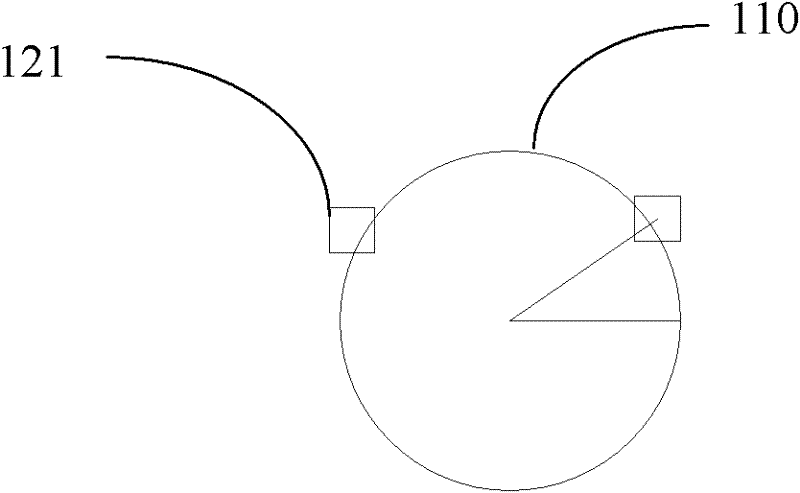

Full-view monitoring robot system and monitoring robot

Active | CN102161202A | Realization of panoramic monitoring | Improve monitoring efficiency | Programme-controlled manipulator | Human body | Command and control

The invention discloses a full-view monitoring robot system, which comprises a monitoring robot, a wireless interaction unit and a remote monitoring terminal, wherein the monitoring robot comprises a robot housing, an image acquisition unit, a sensing unit, a processor and a moving unit; the image acquisition unit comprises a plurality of cameras which surround the robot housing at intervals for acquiring all-around images on the four sides of the monitoring robot; the sensing unit comprises a sensor network on the robot housing; the processor comprises an image detection unit and a motion controller, wherein the image detection unit extracts histogram of oriented gradients (HOG) features from the images acquired by the image acquisition unit, classifies them with a linear support vector machine, detects human body images according to the classification result and generates a control command when a human body image is detected; and the motion controller receives the control command and controls the moving unit to travel accordingly. The system can perform 360 degree full-view monitoring and improve monitoring efficiency. In addition, the invention provides a monitoring robot for use in the full-view monitoring robot system.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

Dressing safety detection method for worker on working site of electric power facility

Active | CN103745226A | Avoid safety accidents | Eliminate potential safety hazards | Data processing applications | Character and pattern recognition | Transformer | Histogram of oriented gradients

The invention discloses a dressing safety detection method for a worker on a working site of an electric power facility. An SVM (support vector machine) classifier is trained based on HOG (histogram of oriented gradients) characteristics to identify the worker on the working site of the electric power facility and judge whether the worker is neatly dressed or not based on a worker identification result. The method comprises the following steps of detecting a worker target appearing on the working site of the electric power facility by training a HOG-characteristic-based classifier, and judging whether dressing and equipment of the worker target meet safety requirements on the working site or not based on the identified worker target, mainly comprising safety items such as whether a helmet is worn or not, whether safety clothes are completely worn (without exposed skin) or not and whether the worker on a pole transformer correctly wears a safety belt or not. According to the method, the dressing of the worker can be detected in advance before the worker enters the working site, and an additional worker for supervision is not required to be deployed; in addition, if the dressing of the worker is inconsistent with norms, the worker is early-warned and prompted, so that safety accidents caused by nonstandard dressing are avoided, and potential safety hazards are eliminated.

Owner:STATE GRID CORP OF CHINA +6

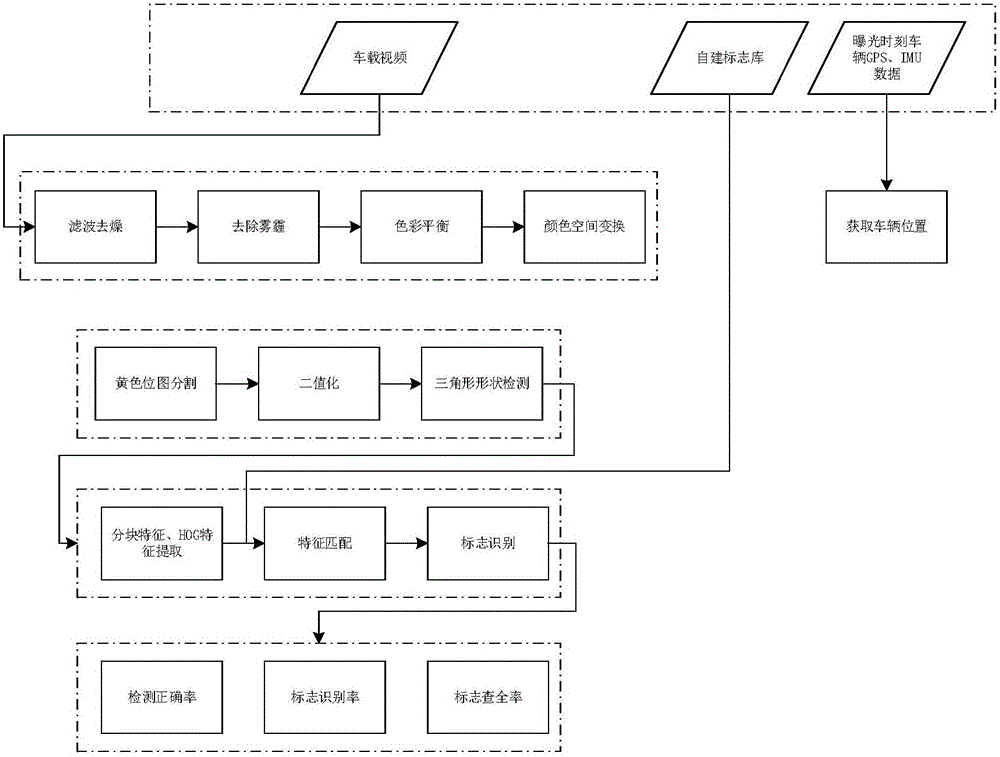

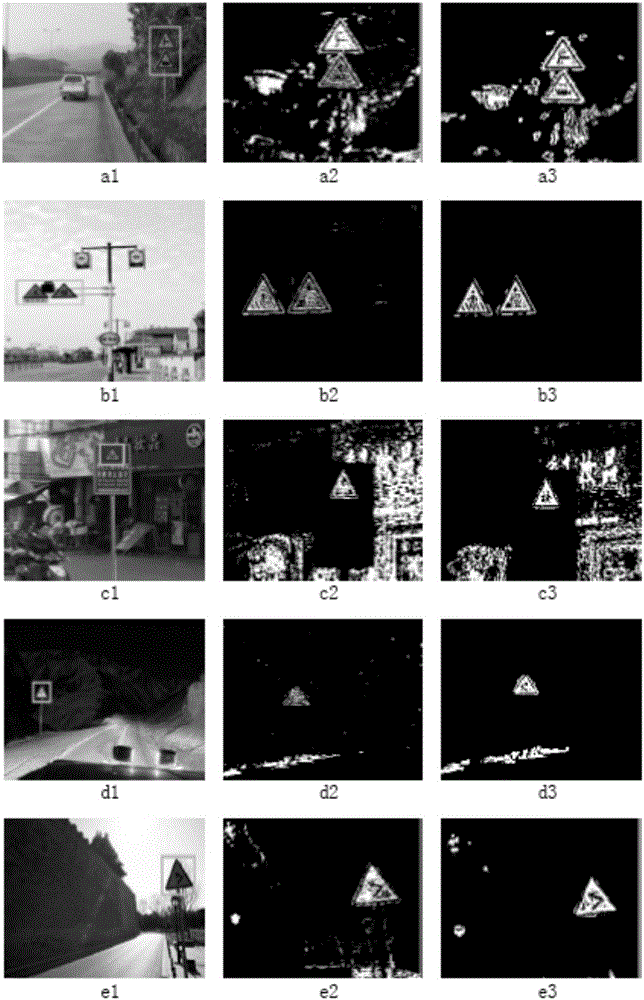

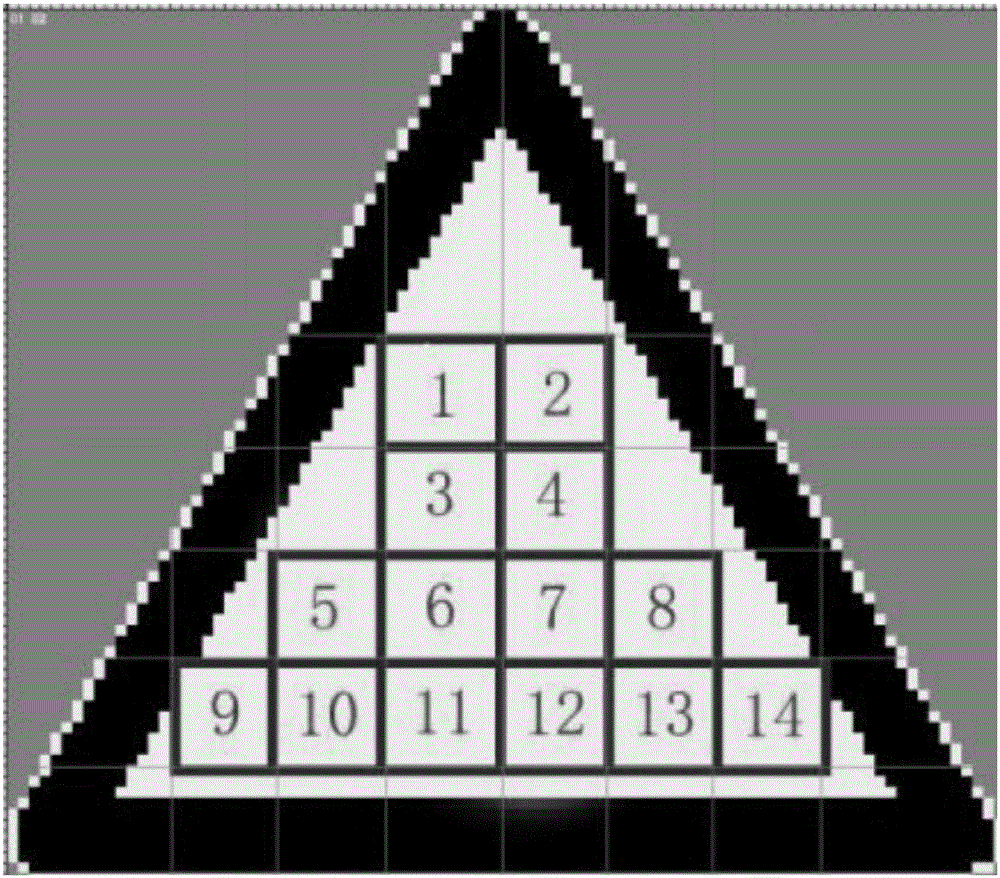

Road warning mark detection and recognition method based on block recognition

Active | CN105809138A | High saturation | Easy to detect | Character and pattern recognition | Pattern recognition | Sign detection

The invention discloses a road warning mark detection and recognition method based on block recognition. The method comprises the following steps: converting vehicle-mounted video data to the HSV (Hue, Saturation and Value) color space and binarizing it; extracting contour information from the binarized image and judging shapes according to their geometrical characteristics so as to detect a warning mark region; extracting block features and HOG (Histogram of Oriented Gradient) features of the warning mark region; matching the type of the mark to be recognized against a mark library used as the standard; and obtaining the mark detection result. The method ensures a high mark recognition accuracy and effectively reduces missed detections, so that a better mark detection result is obtained. The method has good application value in the field of intelligent traffic.

Owner:北京图迅丰达信息技术有限公司

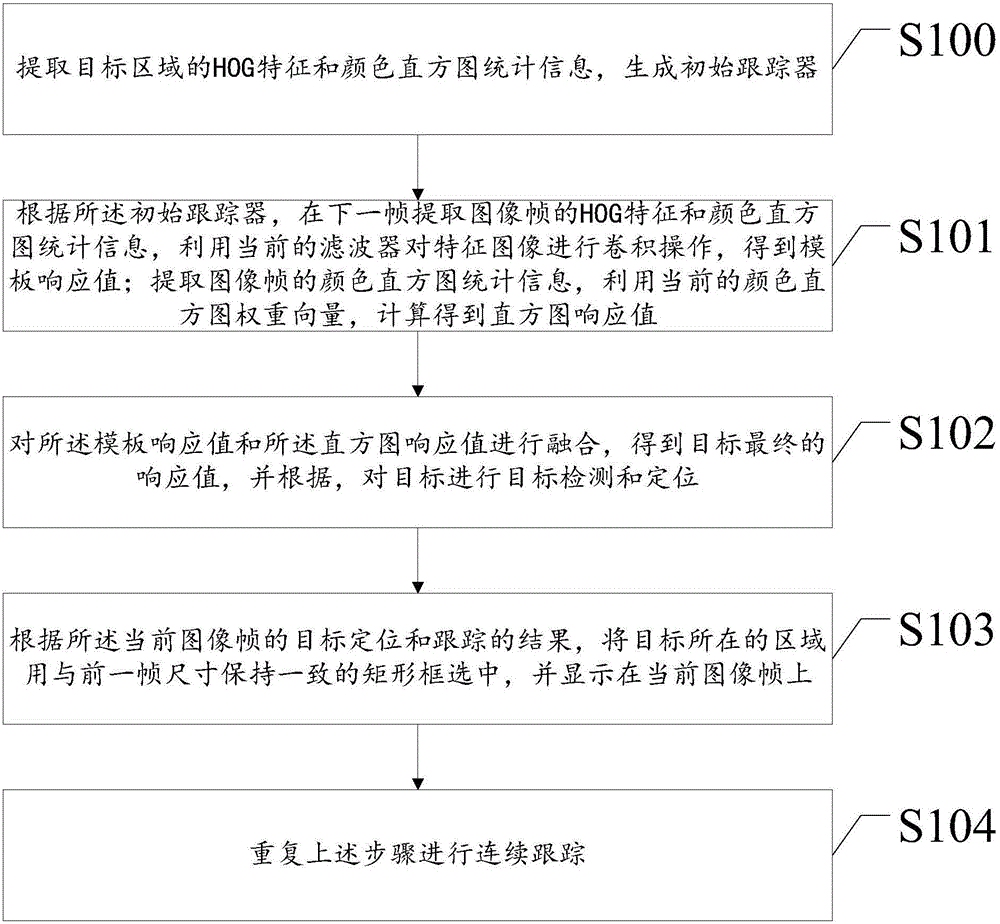

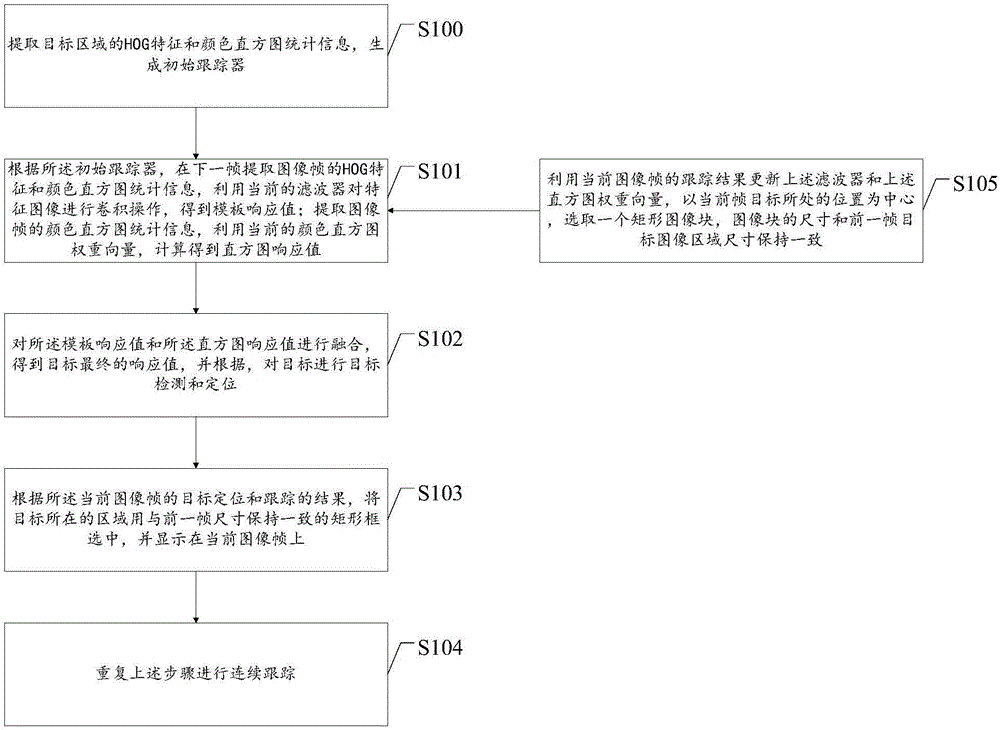

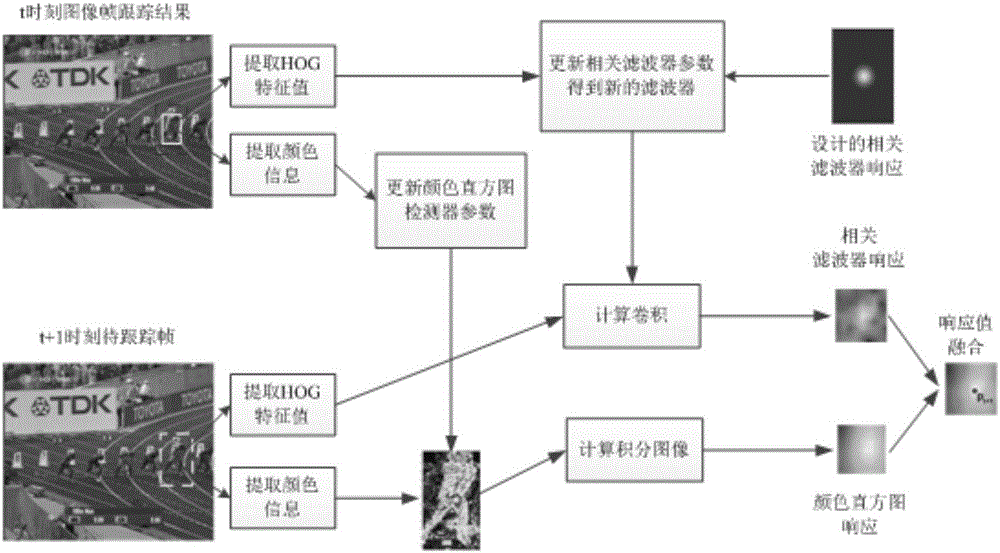

Target tracking method based on correlation filtering and color histogram statistics and ADAS (Advanced Driving Assistance System)

Inactive | CN106651913A | Guaranteed real-time | Guaranteed accuracy | Image enhancement | Image analysis | Histogram of oriented gradients | Track algorithm

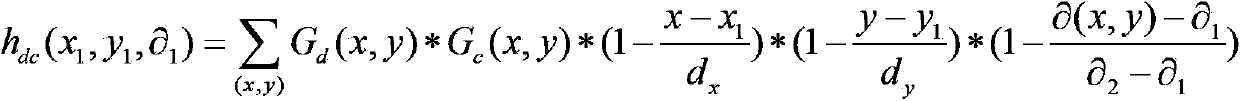

The invention discloses a target tracking method based on correlation filtering and color histogram statistics and an ADAS (Advanced Driving Assistance System). The target tracking method comprises the steps of extracting HOG (Histogram of Oriented Gradients) features and color histogram statistical information of a target region, and generating an initial tracker; extracting HOG features and color histogram statistical information of the next image frame according to the initial tracker, and performing convolution operation on a feature image by using a current filter h<t> to acquire a template response value f<tmpl>(x); extracting the color histogram statistical information of the image frame, and calculating a histogram response value f<hist>(x) by using a current color histogram weight vector beta<t>; fusing the template response value f<tmpl>(x) and the histogram response value f<hist>(x) to acquire a final response value f(x) of a target, and performing target detection and positioning on the target according to the final response value f(x). According to the invention, the complementarity of two tracking algorithms is utilized, the speed and the accuracy of a tracking algorithm can be simultaneously ensured, a condition of drifting of the tracking target is greatly reduced, and the target tracking method has good application prospects in a driving assistance system.

Owner:开易(北京)科技有限公司
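The fusion step in the abstract above combines the template response f<tmpl>(x) with the histogram response f<hist>(x) into a final response f(x). The abstract does not state the fusion rule, so the sketch below assumes a simple linear blend with a hypothetical merge factor `gamma`; both response maps are assumed to be lists of rows of equal size.

```python
def fuse_responses(f_tmpl, f_hist, gamma=0.3):
    """Assumed rule: f(x) = (1 - gamma) * f_tmpl(x) + gamma * f_hist(x)."""
    return [[(1.0 - gamma) * t + gamma * h
             for t, h in zip(row_t, row_h)]
            for row_t, row_h in zip(f_tmpl, f_hist)]

def locate_target(response):
    """Return (row, column) of the largest value in the fused response."""
    positions = ((y, x)
                 for y in range(len(response))
                 for x in range(len(response[0])))
    return max(positions, key=lambda p: response[p[0]][p[1]])
```

With a small `gamma` the correlation-filter template dominates; raising it gives the color histogram more say when the target deforms.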

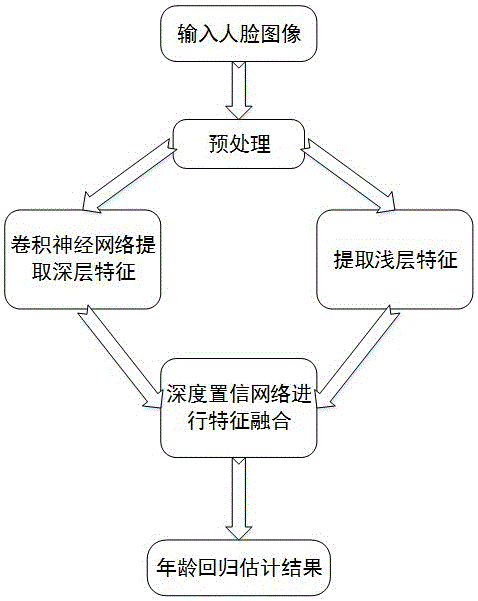

Human face age estimation method based on fusion of deep characteristics and shallow characteristics

Active | CN106778584A | Improve reliability | Simple structure | Character and pattern recognition | Deep belief network | Data set

The invention discloses a human face age estimation method based on the fusion of deep characteristics and shallow characteristics. The method comprises the following steps: preprocessing each human face sample image in a human face sample dataset; training a constructed initial convolutional neural network, and selecting a convolutional neural network used for human face recognition; using a human face dataset with age tag values to fine-tune the selected convolutional neural network, obtaining a plurality of convolutional neural networks used for age estimation; extracting the multi-level age characteristics corresponding to the human face and outputting them as the deep characteristics; extracting the HOG (Histogram of Oriented Gradient) characteristic and the LBP (Local Binary Pattern) characteristic of each human face image as the shallow characteristics; constructing a deep belief network to fuse the deep characteristics and the shallow characteristics; and carrying out age regression estimation of the human face image according to the fused characteristics in the deep belief network to output an age estimation result. By use of the method, age estimation accuracy is improved.

Owner:NANJING UNIV OF POSTS & TELECOMM

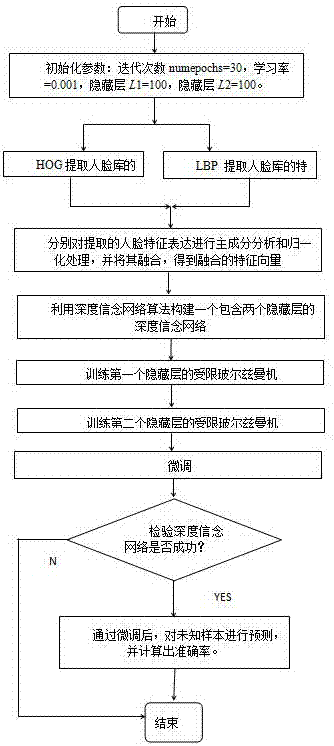

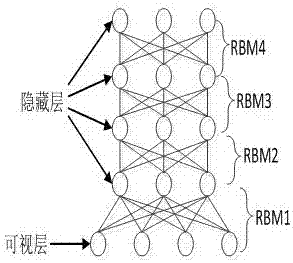

Multi-feature fusion-based deep learning face recognition method

Inactive | CN107578007A | Improve accuracy | Reduce operation | Character and pattern recognition | Neural architectures | Deep belief network | Feature vector

The invention discloses a multi-feature fusion-based deep learning face recognition method. The method comprises: performing feature extraction on images in the ORL (Olivetti Research Laboratory) database using a local binary pattern algorithm and a histogram of oriented gradients algorithm; fusing the acquired texture features and gradient features by concatenating the two feature vectors into one feature vector; and recognizing the feature vector with a deep belief network, taking the fused features as the network input to train the deep belief network layer by layer and complete face recognition. By fusing multiple features, the method can improve accuracy, algorithm stability and applicability to complex scenes.

Owner:HANGZHOU DIANZI UNIV
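The texture half of the fusion described above can be sketched with a basic local binary pattern (LBP) histogram, followed by the concatenation step. This assumes the plain 8-neighbour LBP on a grayscale image given as a list of rows; the abstract does not say which LBP variant is used, and the deep belief network itself is not shown.

```python
# Clockwise 8-neighbourhood, starting at the top-left pixel.
_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
            (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_histogram(image):
    """Normalized 256-bin histogram of basic 8-neighbour LBP codes."""
    h, w = len(image), len(image[0])
    hist = [0] * 256
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            code = 0
            for bit, (dy, dx) in enumerate(_OFFSETS):
                # A neighbour at least as bright as the centre sets its bit.
                if image[y + dy][x + dx] >= image[y][x]:
                    code |= 1 << bit
            hist[code] += 1
    total = sum(hist) or 1
    return [c / total for c in hist]

def fuse_features(texture_features, gradient_features):
    """Connect the two feature vectors into one, as the abstract describes."""
    return list(texture_features) + list(gradient_features)
```

The fused vector is what would be fed to the network as input.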

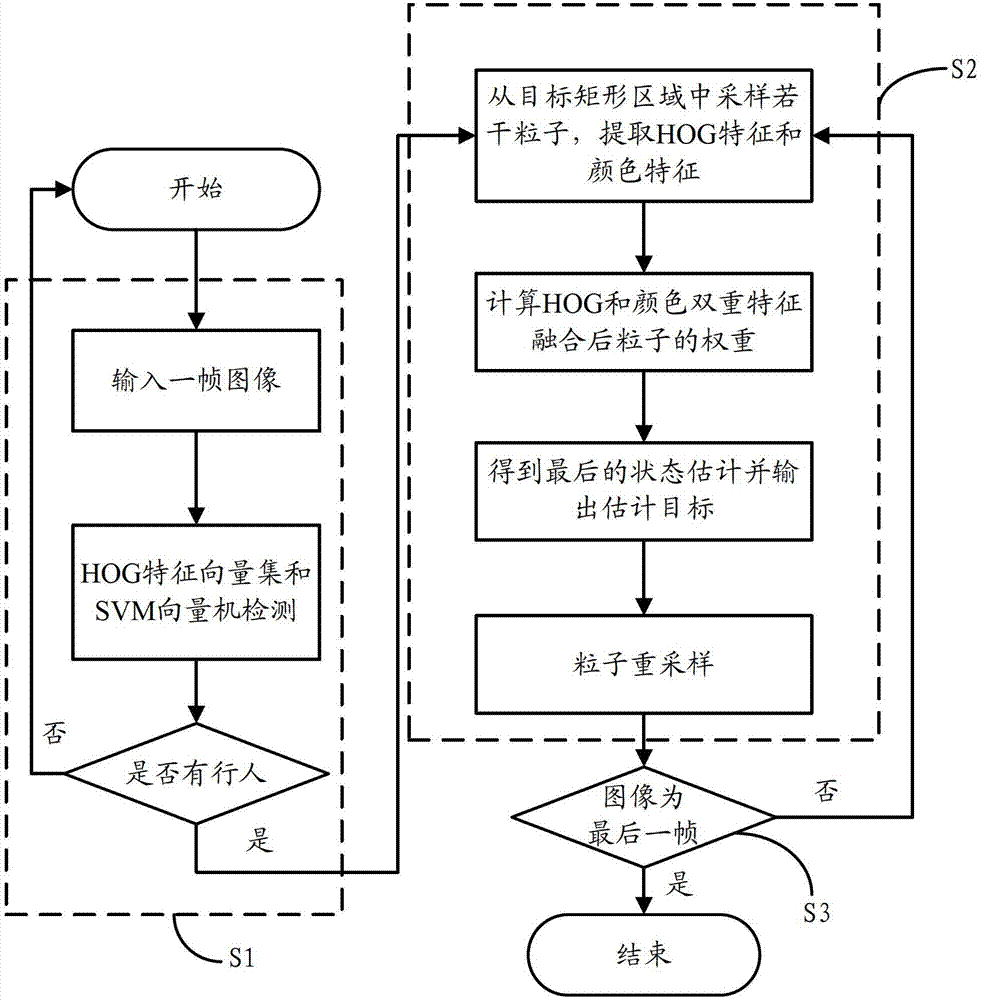

Method and system for automatically tracking moving pedestrian video based on particle filtering

Inactive | CN102831409A | Improve robustness | Reduce instability | Character and pattern recognition | Feature vector | Histogram of oriented gradients

The invention discloses a method and a system for automatically tracking a moving pedestrian video based on particle filtering. The method comprises the following steps: inputting one frame of images and carrying out detection with an HOG (Histogram of Oriented Gradient) feature vector set and an SVM (Support Vector Machine); to realize particle filtering tracking based on double HOG and color features, first obtaining an initial rectangular area of the target pedestrian, sampling a plurality of particles from the target rectangular area, extracting an HOG feature and a color feature, computing the weight of the particles after the double HOG and color features are fused, obtaining the final state estimate through a minimum mean square error estimator, outputting the estimated target and then resampling; and keeping the tracked target pedestrian closely locked. The method extracts the double HOG and color features to increase the robustness of the particle filtering likelihood model and eliminate instability in the tracking process; it combines the HOG feature to build a better likelihood model through a weighted-mean fusion strategy, so that the robustness of the tracking algorithm is greatly increased and stable tracking is achieved.

Owner:SUZHOU UNIV
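The weighting, estimation and resampling steps in the abstract above can be sketched as follows. The assumptions are labeled in the code: per-particle HOG and color likelihoods are given as plain lists, the weighted-mean fusion uses a hypothetical mixing factor `alpha`, and systematic resampling is chosen here because the abstract does not name a resampling scheme.

```python
import random

def fuse_likelihoods(hog_like, color_like, alpha=0.5):
    """Weighted mean of the two likelihoods, normalized to sum to 1."""
    fused = [alpha * h + (1.0 - alpha) * c
             for h, c in zip(hog_like, color_like)]
    total = sum(fused)
    if total <= 0:
        return [1.0 / len(fused)] * len(fused)
    return [f / total for f in fused]

def mmse_estimate(particles, weights):
    """Minimum mean square error estimate: the weighted mean state."""
    dims = len(particles[0])
    return tuple(sum(w * p[d] for p, w in zip(particles, weights))
                 for d in range(dims))

def systematic_resample(particles, weights, rng=random):
    """Systematic resampling (an assumed choice of scheme)."""
    n = len(particles)
    start = rng.random() / n
    resampled, j, cumulative = [], 0, weights[0]
    for i in range(n):
        position = start + i / n
        while position > cumulative and j < n - 1:
            j += 1
            cumulative += weights[j]
        resampled.append(particles[j])
    return resampled
```

Each particle here is a tuple such as `(x, y)`; a real tracker would also carry scale and velocity in the state.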

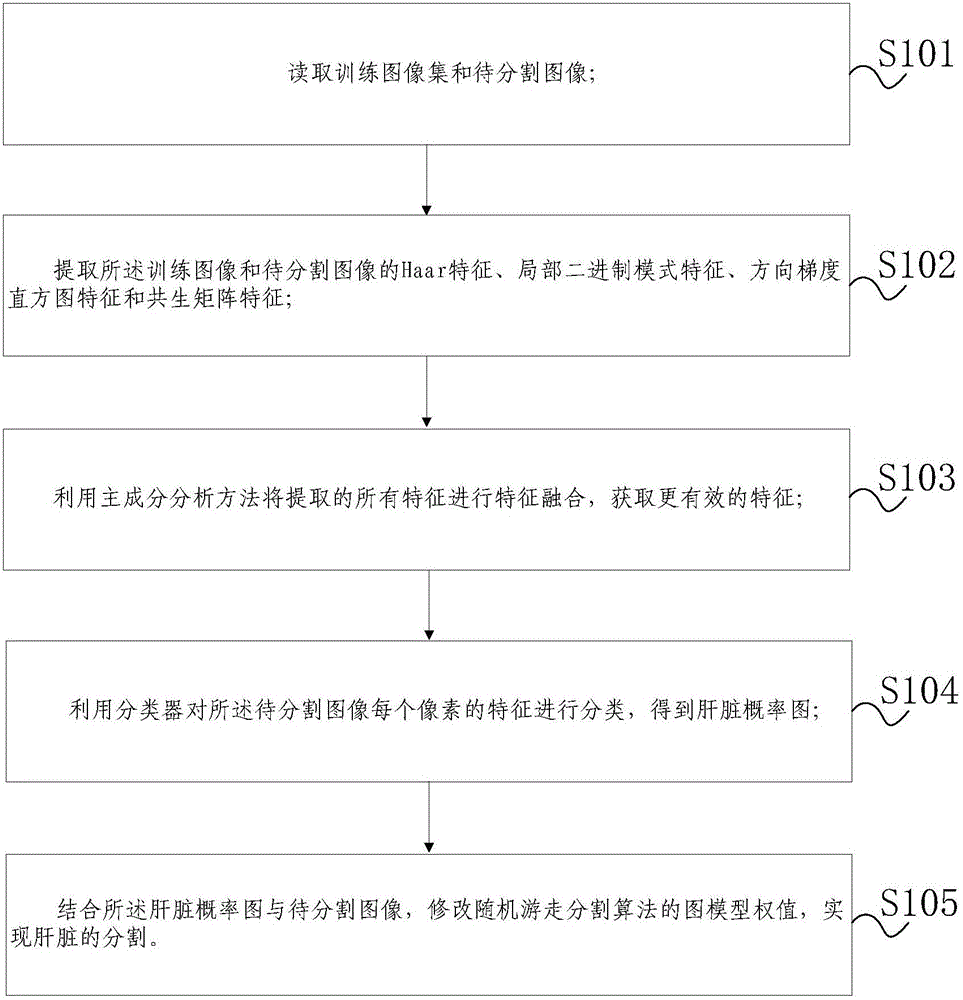

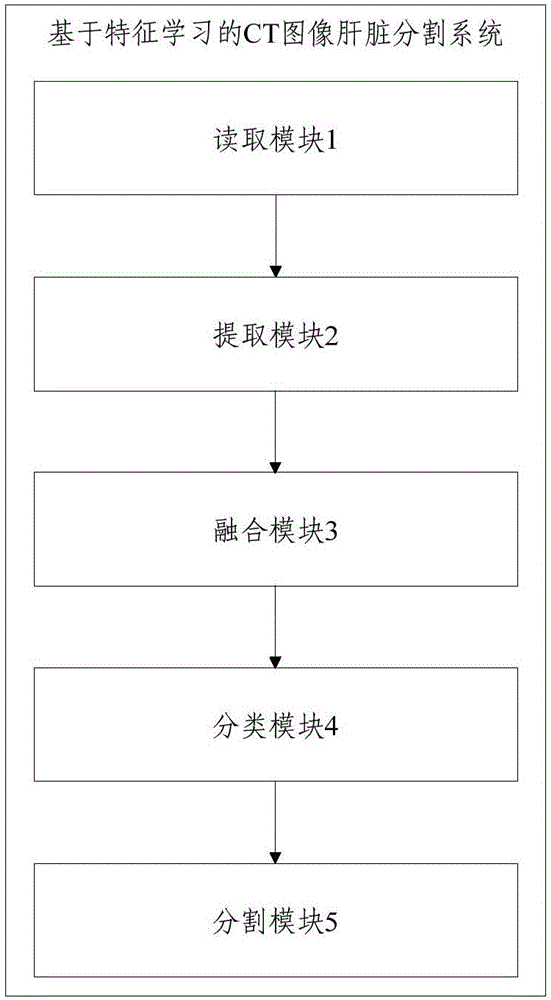

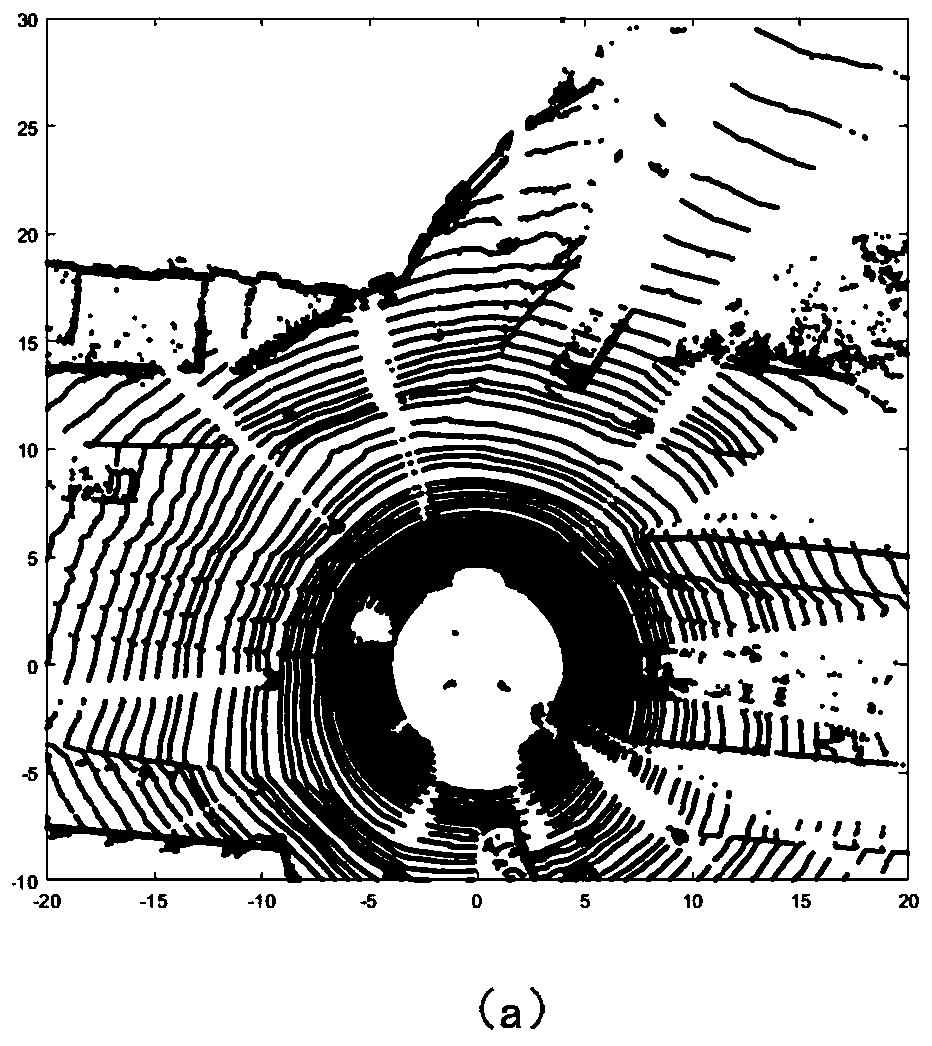

CT image liver segmentation method and system based on characteristic learning

Active | CN105894517A | Improve Segmentation Accuracy | Good segmentation result | Image enhancement | Image analysis | Principal component analysis | Histogram of oriented gradients

The invention discloses a CT image liver segmentation method and system based on characteristic learning, which can effectively improve the segmentation precision of the liver in a CT image. The method comprises the steps: S101: reading a training image set and an image to be segmented, wherein the training images in the training image set and the image to be segmented are abdominal CT images; S102: extracting the Haar characteristics, local binary pattern characteristics, histogram of oriented gradients characteristics and co-occurrence matrix characteristics of the training images and the image to be segmented; S103: using principal component analysis to fuse all the extracted characteristics so as to acquire more effective characteristics; S104: using a classifier to classify the characteristics of each pixel of the image to be segmented to obtain a liver probability graph; and S105: combining the liver probability graph with the image to be segmented and modifying the graph model weights of a random walk segmentation algorithm to segment the liver.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

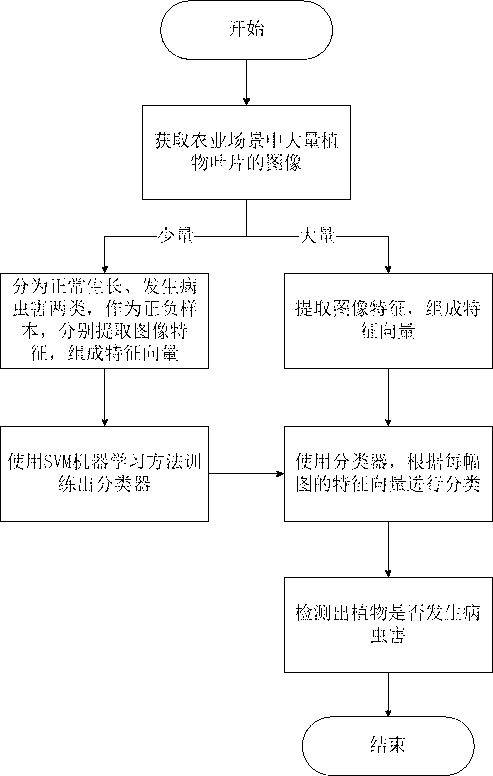

Plant disease and pest detection method based on SVM (support vector machine) learning

Inactive | CN102915446A | Improve efficiency | Realize continuous computing | Character and pattern recognition | Disease | Feature vector

The invention belongs to the technical field of digital image processing and pattern recognition and particularly relates to a plant disease and pest detection method based on SVM (support vector machine) learning. The method comprises the following steps: acquiring a large number of images of normally grown plant leaves and of plant leaves with diseases and pests from a large number of monitoring videos of agricultural scenes; extracting a part of these pictures as samples; extracting characteristics of each leaf picture, including the color characteristic, HSV (hue, saturation, value) characteristic, edge characteristic and HOG (histogram of oriented gradient) characteristic; combining the characteristics into characteristic vectors; training on the characteristic vectors of each leaf picture with an SVM learning method; forming a classifier after training; and applying the classifier to large numbers of plant leaf pictures to detect whether diseases or pests occur on the plant leaves. Compared with biological plant disease and pest detection methods, the method offers better real-time performance and is easier to implement, and it overcomes the drawback of having to detect plant diseases and pests by working in the fields.

Owner:FUDAN UNIV

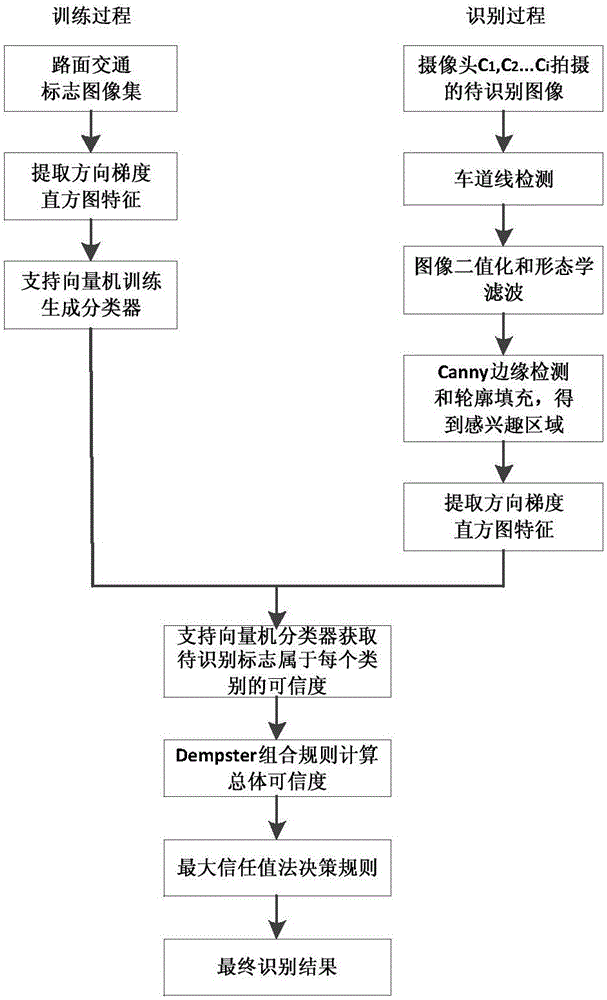

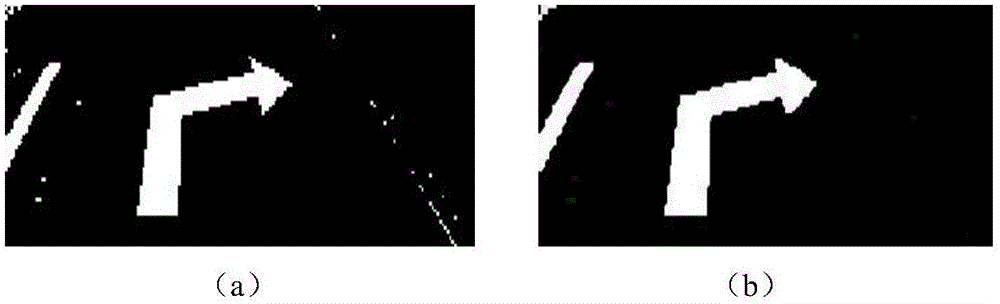

Road traffic sign identification method with multiple-camera integration based on DS evidence theory

Active | CN105930791A | Efficient identification | Recognition is stable and efficient | Character and pattern recognition | Imaging processing | Histogram of oriented gradients

The present invention relates to a road traffic sign identification method with multiple-camera integration based on the DS evidence theory, belonging to the technical field of image processing. The method mainly identifies five types of road traffic indication signs: going straight, turning left, turning right, going straight and turning left, and going straight and turning right. It is divided into two parts, training and testing. In the training stage, the histogram of oriented gradients (HOG) feature of each training sample is extracted, and the sample features and category labels are fed into a support vector machine for classification training. In the testing stage, a region of interest is obtained through image pre-processing, and the HOG feature of the region of interest is extracted and sent to the classifier for classification; the classifier yields the credibility of the sign to be identified belonging to each category, and the final sign identification result is determined by combining these credibilities with a DS evidence theory data integration method and a maximum credibility decision rule. Because the information from multiple cameras is integrated on the basis of the DS evidence theory to obtain the final identification result, road traffic signs can be identified stably and efficiently.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
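The multi-camera integration described above can be illustrated with Dempster's rule of combination. This sketch makes a simplifying assumption that is not stated in the abstract: each camera's classifier assigns mass only to single sign categories (no mass on sets of categories), in which case the rule reduces to a normalized product. The function names and the example category labels are illustrative.

```python
from functools import reduce

def combine_masses(m1, m2):
    """Dempster's rule for mass functions over singleton hypotheses.

    m1 and m2 map category -> mass, each summing to 1. Products of
    different categories are conflict and are normalized away.
    """
    categories = set(m1) | set(m2)
    agreement = {c: m1.get(c, 0.0) * m2.get(c, 0.0) for c in categories}
    total = sum(agreement.values())
    if total == 0:
        raise ValueError("sources are in total conflict")
    return {c: v / total for c, v in agreement.items()}

def decide(camera_masses):
    """Fuse all cameras, then apply the maximum credibility rule."""
    fused = reduce(combine_masses, camera_masses)
    label = max(fused, key=fused.get)
    return label, fused[label]
```

Two cameras that both lean towards the same category reinforce each other, so the fused credibility exceeds either individual value.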

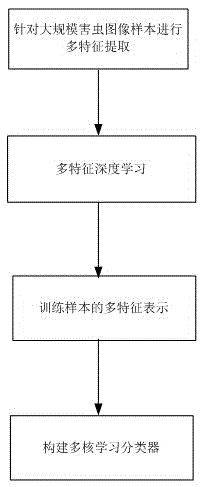

Agricultural pest image recognition method based on multi-feature deep learning technology

Active | CN105488536A | Improve accuracy | Improve recognition rate | Character and pattern recognition | Scale-invariant feature transform | Histogram of oriented gradients

The invention relates to an agricultural pest image recognition method based on a multi-feature deep learning technology. Compared with the prior art, it overcomes the poor pest image recognition performance under complex environmental conditions. The method comprises the following steps: carrying out multi-feature extraction on large-scale pest image samples, extracting their color features, texture features, shape features, scale-invariant feature transform (SIFT) features and histogram of oriented gradients (HOG) features; carrying out multi-feature deep learning by performing unsupervised dictionary training on each type of feature to obtain sparse representations of the different types of features; carrying out multi-feature representation of the training samples by combining the different types of features into a multi-feature sparse coding histogram for each pest image sample; and constructing a multiple kernel learning classifier by learning from the sparse coding histograms of positive and negative pest image samples, in order to classify pest images. The method improves the accuracy of pest recognition.

Owner:HEFEI INSTITUTES OF PHYSICAL SCIENCE - CHINESE ACAD OF SCI

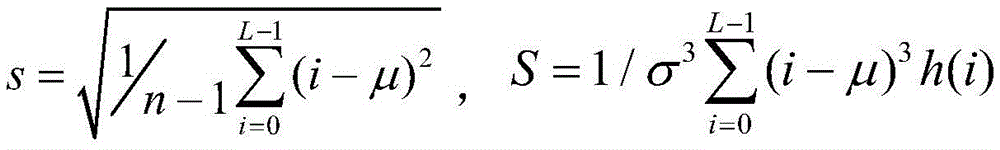

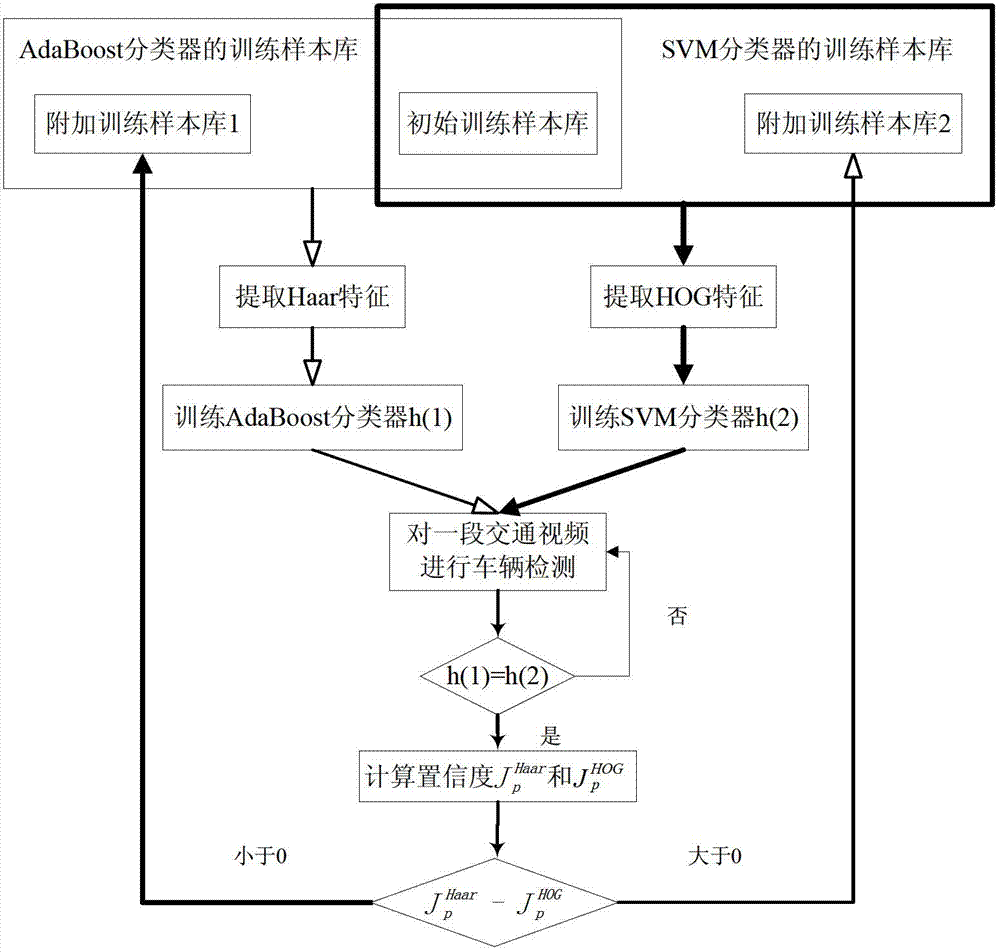

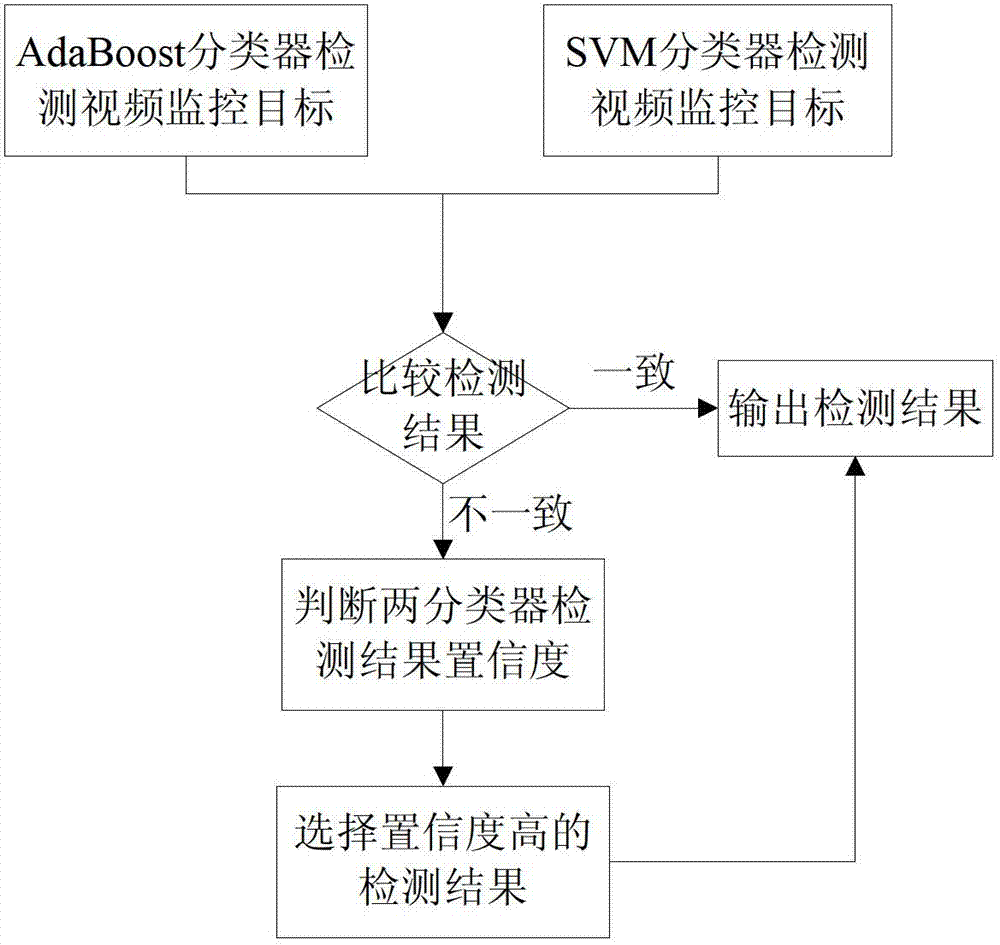

Fast adaptation method for traffic video monitoring target detection based on machine vision

Inactive | CN103208008A | Shorten the training process | Robust and accurate object detection results | Character and pattern recognition | Video monitoring | Histogram of oriented gradients

The invention belongs to the field of machine vision and intelligent control and achieves fast self-adaptation of traffic video monitoring target detection. The method comprises the steps of building an initial training sample bank; training an AdaBoost classifier based on Haar characteristics and a support vector machine (SVM) classifier based on histogram of oriented gradients (HOG) characteristics; and detecting monitoring images frame by frame using the two classifiers. For each frame, the detection process comprises: predicting the sub-images in a detection frame with each of the two classifiers; comparing the confidence of the predicted results; adding each sub-image, together with the prediction label of the more confident classifier, to the additional training sample bank of the less confident classifier, until the size of the detection frame reaches half the size of the detected image; retraining the two classifiers with the updated training sample banks; and proceeding to the next frame until all images are detected. The final classifiers can be used for detecting targets such as vehicles and pedestrians in actual traffic scenes.

Owner:HUNAN HUANAN OPTO ELECTRO SCI TECH CO LTD
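The sample exchange between the two classifiers described above resembles co-training, and one round of it can be sketched as below. The predictors are assumed to be callables returning a `(label, confidence)` pair; `predict_a`, `predict_b` and the bank lists are hypothetical names, and the detection-frame growth and retraining loop are left out.

```python
def exchange_samples(sub_images, predict_a, predict_b, bank_a, bank_b):
    """One exchange round between two classifiers.

    Whichever classifier is more confident about a sub-image donates
    its predicted label to the other classifier's additional training
    bank. Ties add nothing. Returns the number of samples added.
    """
    added = 0
    for image in sub_images:
        label_a, conf_a = predict_a(image)
        label_b, conf_b = predict_b(image)
        if conf_a > conf_b:
            bank_b.append((image, label_a))
            added += 1
        elif conf_b > conf_a:
            bank_a.append((image, label_b))
            added += 1
    return added
```

After a round, each classifier would be retrained on its original bank plus the samples it received.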

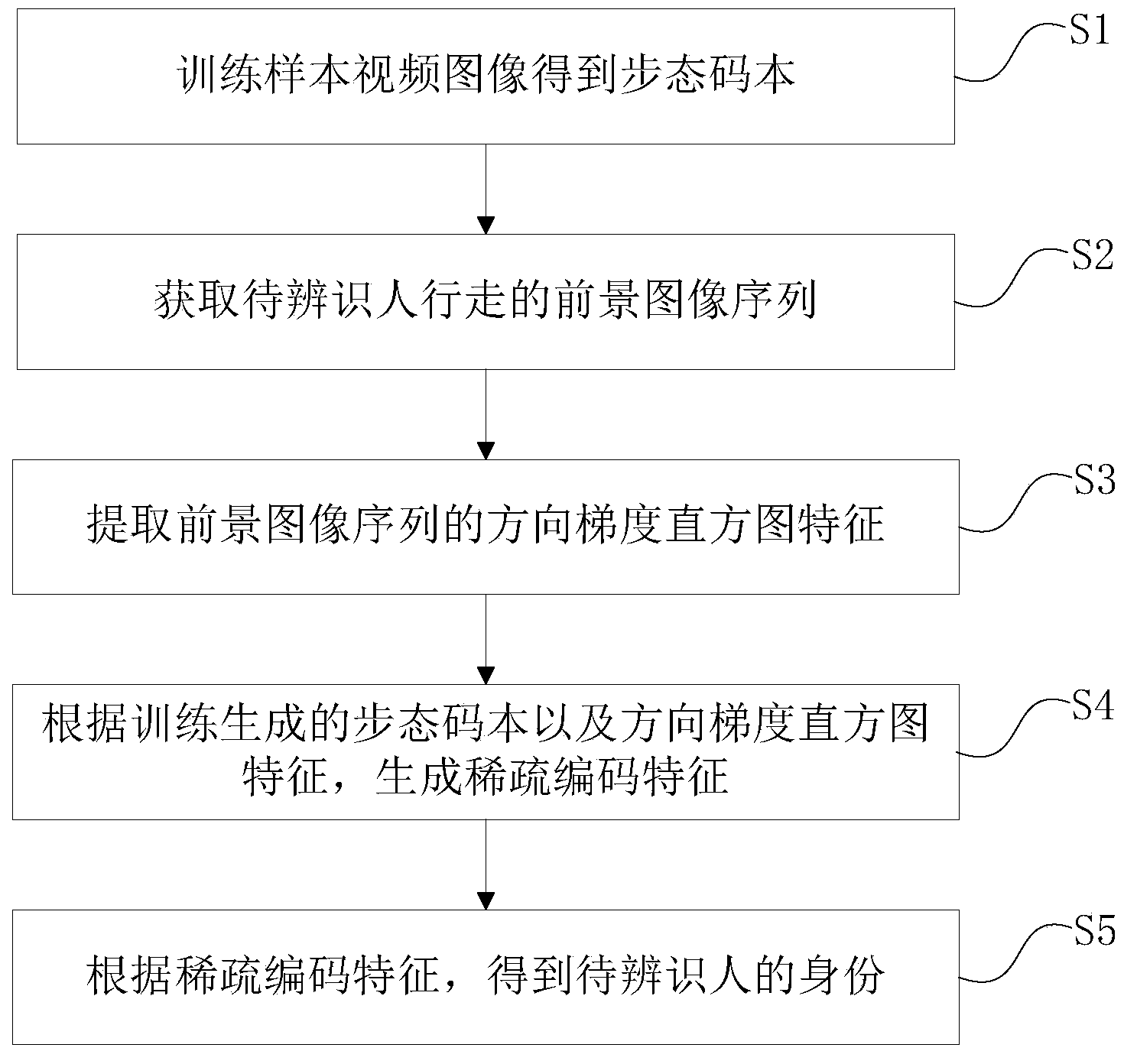

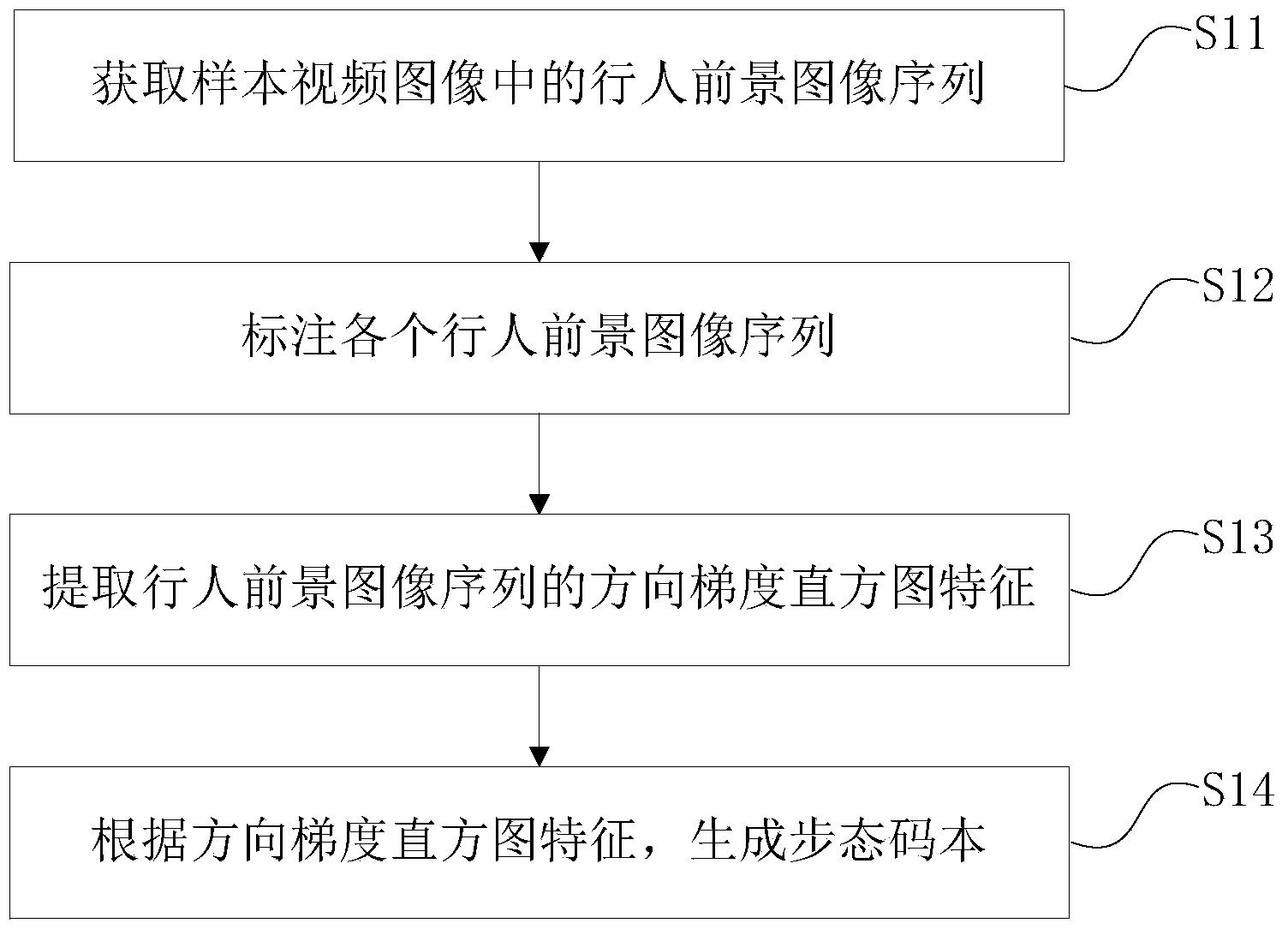

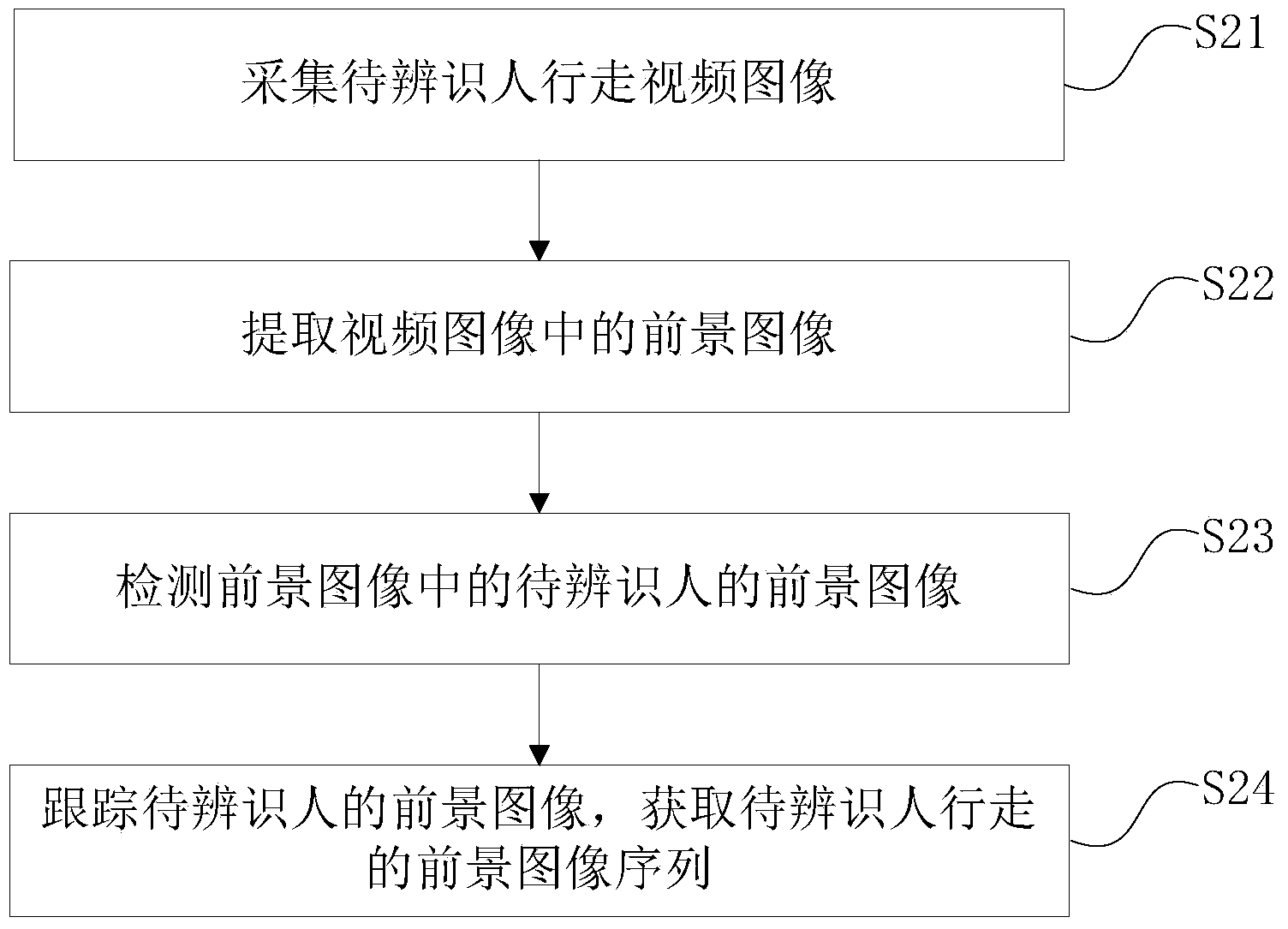

Gait recognition method and device

Active | CN103473539A | Accurate identification | Will not affect the recognition result | Character and pattern recognition | Histogram of oriented gradients | Video image

The invention provides a gait recognition method and device and belongs to the technical field of pattern recognition. The gait recognition method comprises the steps of training on sample video images to obtain a gait codebook, obtaining foreground image sequences of a person to be recognized during walking, extracting histogram of oriented gradients (HOG) characteristics of the foreground image sequences, generating sparse coding characteristics from the trained gait codebook and the HOG characteristics, and obtaining the identity of the person to be recognized according to the sparse coding characteristics. The method and device can accurately recognize the identity of the person even when the person is occluded in some video frames or appears in the video only for a short period of time.

Owner:深圳中智卫安机器人技术有限公司

A multi-layer convolution feature self-adaptive fusion moving target tracking method

Active | CN109816689A | High expression | Improve adaptability | Image analysis | Character and pattern recognition | Adaptive weighting | Correlation filter

The invention relates to a multi-layer convolution feature self-adaptive fusion moving target tracking method, and belongs to the field of computer vision. The method comprises the following steps: first, initializing a target area in the first frame image, using a trained deep network framework VGG-19 to extract the first-layer and fifth-layer convolution features of the target image block, and obtaining two templates through learning and training of a correlation filter; second, extracting features of a detection sample in the next frame at the position and scale predicted from the target in the previous frame, and convolving these features with the two templates of the previous frame to obtain a response graph for each of the two feature layers; calculating the weight of each response graph according to the APCE measure, and adaptively weighting and fusing the response graphs to determine the final position of the target; and, after the position is determined, estimating the optimal target scale by extracting histogram of oriented gradients (HOG) features of the target at multiple scales. With this method the target is positioned more accurately and the tracking precision is improved.

Owner:KUNMING UNIV OF SCI & TECH
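The adaptive fusion step in the abstract above can be sketched as follows. APCE (average peak-to-correlation energy) is computed here with its usual definition, the squared peak-to-minimum range divided by the mean squared deviation from the minimum. The abstract does not give the exact weighting rule, so normalizing the two APCE values to sum to one is an assumption, as are the function names.

```python
def apce(response):
    """Average peak-to-correlation energy of a 2-D response map (list of rows)."""
    values = [v for row in response for v in row]
    f_max, f_min = max(values), min(values)
    energy = sum((v - f_min) ** 2 for v in values) / len(values)
    return (f_max - f_min) ** 2 / energy if energy > 0 else 0.0


def fuse_responses(resp_a, resp_b):
    """Weight two response maps by their normalized APCE (an assumed rule)
    and return the fused map together with the (row, col) of its peak."""
    wa, wb = apce(resp_a), apce(resp_b)
    total = wa + wb
    wa, wb = (wa / total, wb / total) if total > 0 else (0.5, 0.5)
    fused = [[wa * a + wb * b for a, b in zip(row_a, row_b)]
             for row_a, row_b in zip(resp_a, resp_b)]
    cells = ((r, c) for r in range(len(fused)) for c in range(len(fused[0])))
    peak = max(cells, key=lambda rc: fused[rc[0]][rc[1]])
    return fused, peak
```

A sharply peaked map gets a high APCE and therefore dominates the fused result, while a flat or multi-peaked map contributes little.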

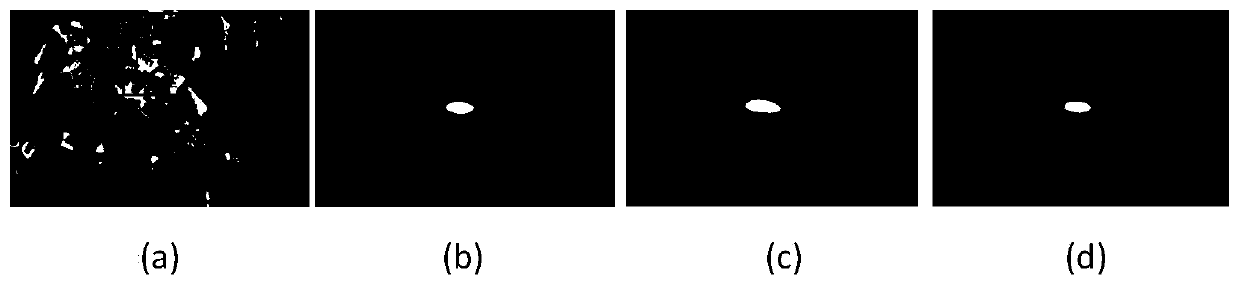

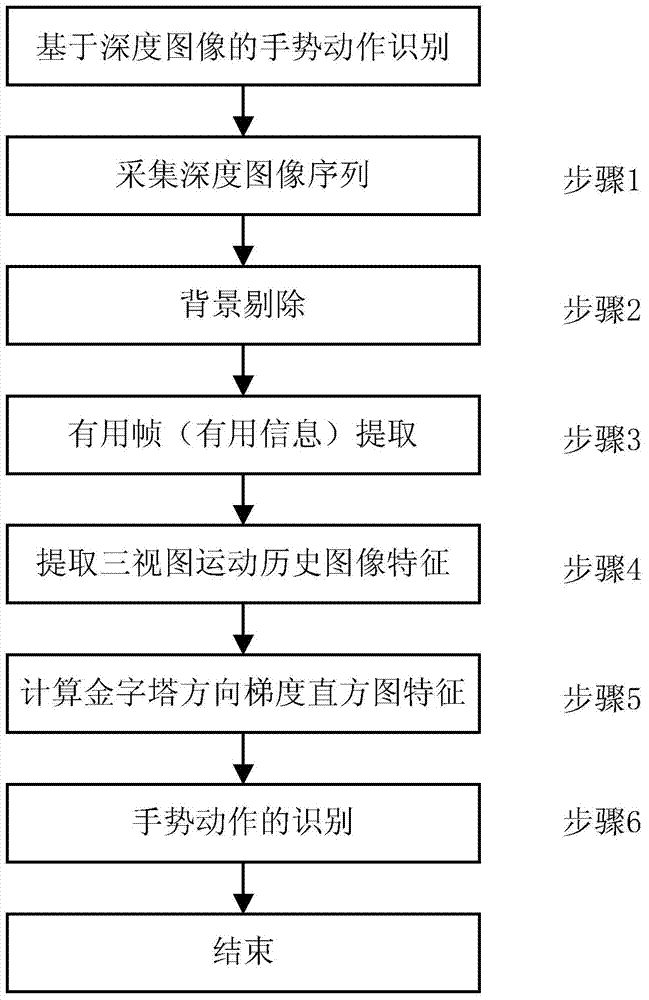

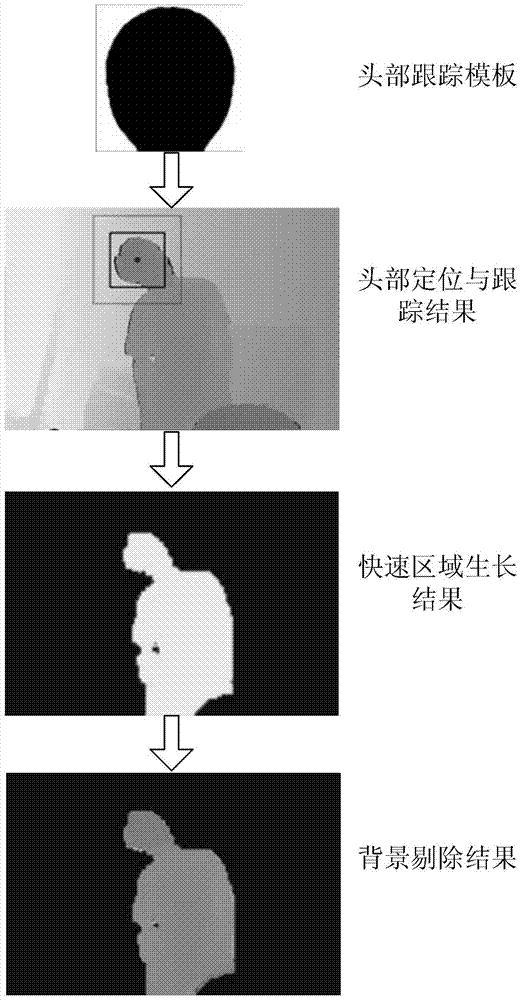

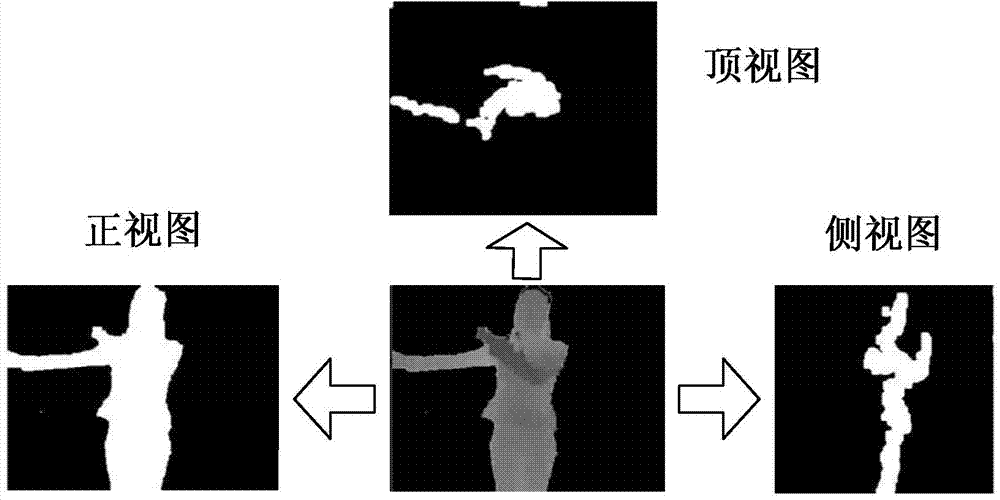

Three-dimensional gesture action recognition method based on depth images

Inactive | CN103679154A | Improve recognition rate | Disambiguation | Image analysis | Character and pattern recognition | Object motion | Histogram of oriented gradients

The invention provides a three-dimensional gesture action recognition method based on depth images. The method comprises the steps of: acquiring depth images containing gesture actions; segmenting the human body region corresponding to the gesture actions from the images through tracking and positioning based on quick template tracking and oblique plane matching, to obtain a depth image sequence with the background removed; extracting the useful frames of the gesture actions from the background-removed depth images; calculating motion history images of the gesture actions in three views (the front-view, top-view and side-view projection directions) from the extracted useful frames; extracting the histogram of oriented gradients features corresponding to the three-view motion history images; calculating the correlation between the resulting combined features of the gesture action and the gesture action templates stored in a predefined gesture action library; and using the template with the largest correlation as the recognition result for the current gesture action. Three-dimensional gesture action recognition can thus be achieved, and the method can also be applied to recognizing the movement of simple objects.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
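As an illustration of the motion history images mentioned in the abstract above, the following sketch applies the classic update rule: moving pixels are set to a maximum value and all other pixels decay over time. It covers a single view only; the three projection views, the `tau` and `threshold` values, and the use of simple frame differencing to detect motion are assumptions rather than details from the patent.

```python
def update_mhi(mhi, prev_frame, frame, tau=5, threshold=10):
    """One motion-history-image update step.

    Pixels whose value changed by more than `threshold` between the two
    frames are set to `tau`; all other pixels decay by 1 toward 0.
    `tau` and `threshold` are illustrative values only.
    """
    return [
        [tau if abs(cur - prev) > threshold else max(0, old - 1)
         for old, prev, cur in zip(mhi_row, prev_row, cur_row)]
        for mhi_row, prev_row, cur_row in zip(mhi, prev_frame, frame)
    ]


def motion_history(frames, tau=5, threshold=10):
    """Fold a sequence of equally sized frames into a single MHI."""
    mhi = [[0] * len(frames[0][0]) for _ in frames[0]]
    for prev, cur in zip(frames, frames[1:]):
        mhi = update_mhi(mhi, prev, cur, tau, threshold)
    return mhi
```

Recent motion ends up brighter than older motion, so the gradient of the resulting image encodes the direction of movement, which is what makes a HOG descriptor of it informative.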

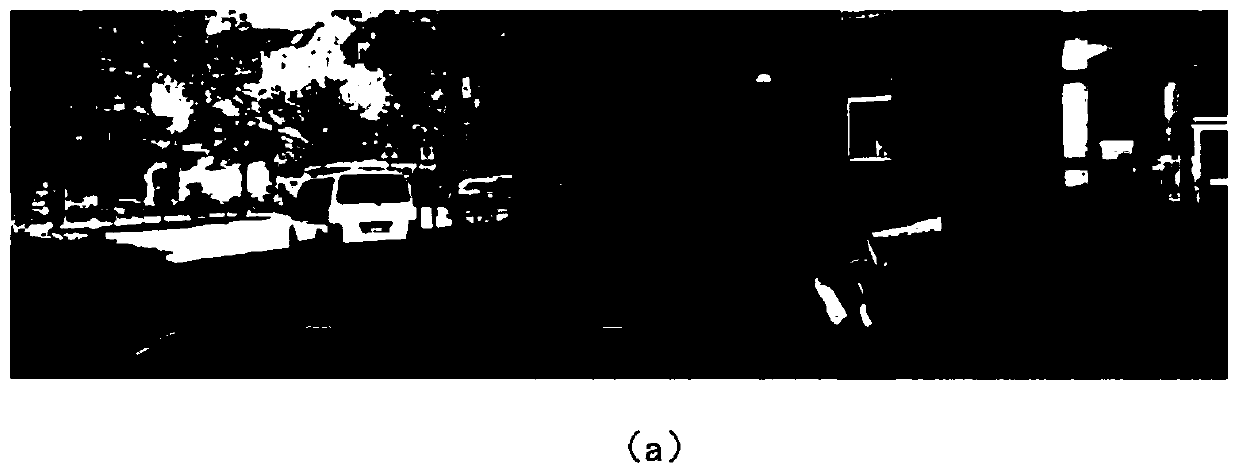

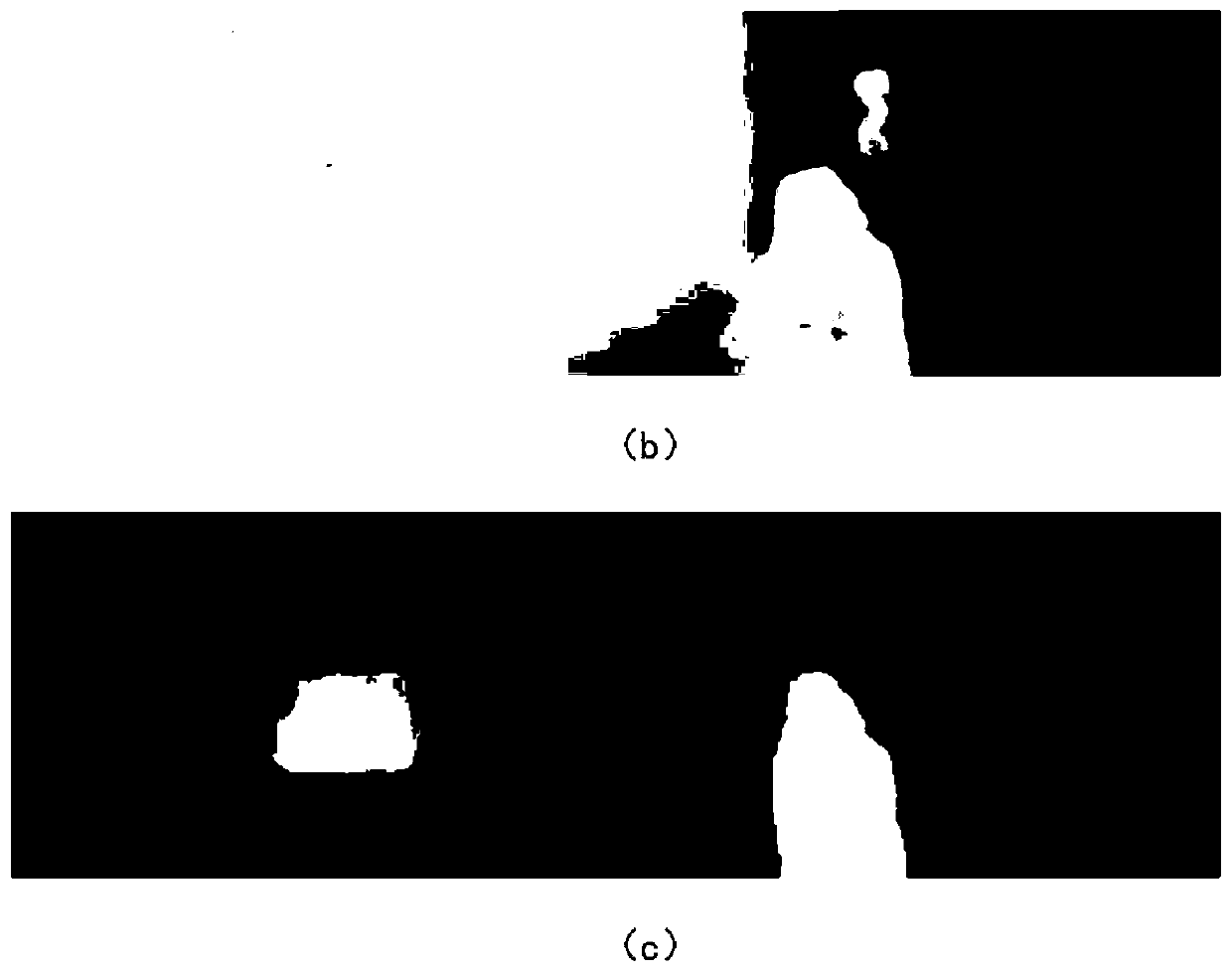

Traveling vehicle vision detection method combining laser point cloud data

Active | CN110175576A | Avoid the problem of difficult access to spatial geometric information | Realize 3D detection | Image enhancement | Image analysis | Histogram of oriented gradients | Vehicle detection

The invention discloses a traveling vehicle vision detection method combining laser point cloud data, belongs to the field of unmanned driving, and solves the problems of prior-art vehicle detection with a laser radar as the core. The method comprises the following steps: first, completing joint calibration of a laser radar and a camera, and then performing time alignment; calculating an optical flow grey-scale map between two adjacent frames in the calibrated video data, and performing motion segmentation based on the optical flow grey-scale map to obtain a motion region, namely a candidate region; searching, in the time-aligned point cloud data corresponding to each image frame, for the point cloud data corresponding to the vehicle within the conical space corresponding to the candidate region, to obtain a three-dimensional bounding box of the moving object; extracting a histogram of oriented gradients feature from each image frame based on the candidate region; extracting features of the point cloud data in the three-dimensional bounding box; and carrying out feature-level fusion of the obtained features based on a genetic algorithm, and classifying the motion regions after fusion to obtain the final traveling vehicle detection result. The method is used for visual detection of traveling vehicles.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

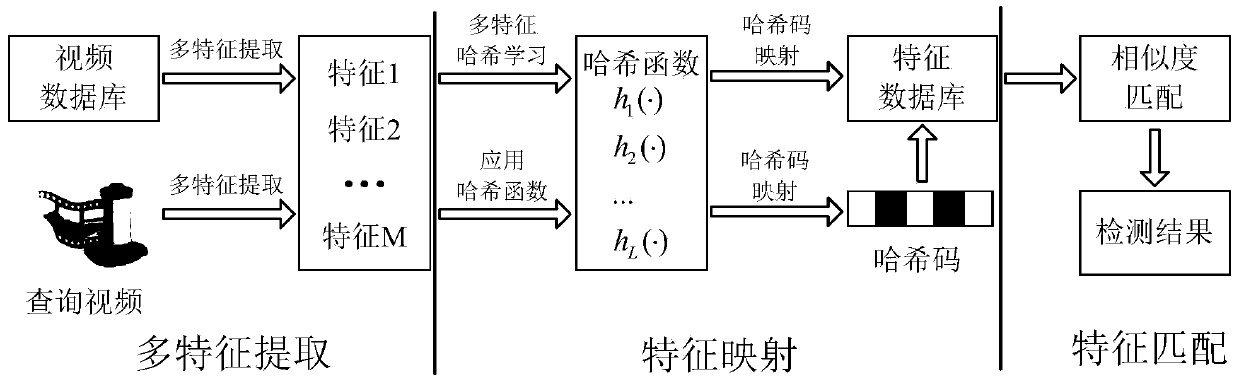

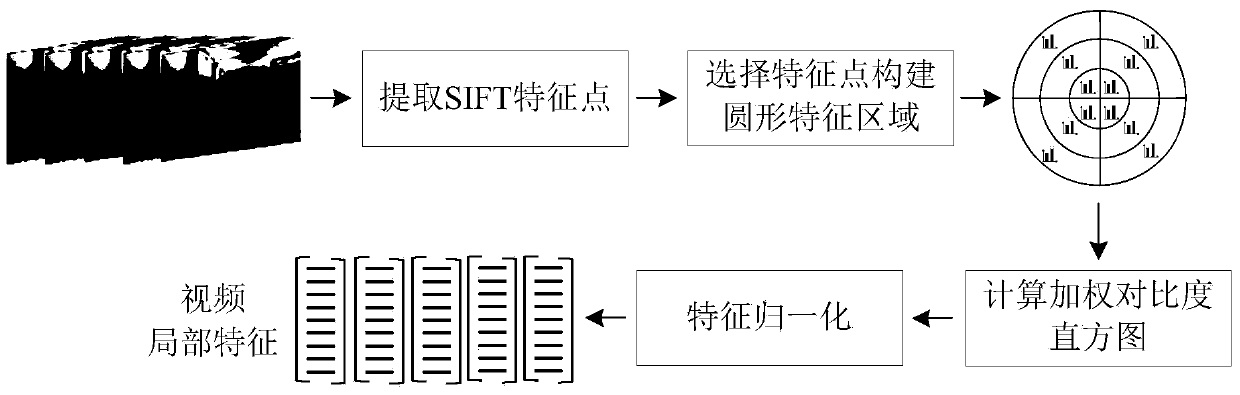

Video copy detection method based on multi-feature Hash

Inactive | CN103744973A | High precision | Improve robustness | Character and pattern recognition | Special data processing applications | Digital video | Hash function

The invention discloses a video copy detection method based on multi-feature hashing, which mainly solves the problem that existing video copy detection algorithms cannot effectively balance detection efficiency and detection accuracy. The method comprises the following steps: (1) extracting the pyramid histogram of oriented gradients (PHOG) of a key frame as the global feature of the key frame; (2) extracting a weighted contrast histogram based on the scale-invariant feature transform (SIFT) of the key frame as the local feature of the key frame; (3) establishing an objective function with the similarity-preserving multi-feature hash learning (SPM2H) algorithm, and obtaining L hash functions by solving the optimization; (4) mapping the key frames of the database videos and the key frames of the query video into L-dimensional hash codes by means of the L hash functions; (5) judging whether the query video is a copy through feature matching. The method is robust to multiple attacks and can be used for copyright protection, copy control and data mining for digital videos on the Internet.

Owner:XIDIAN UNIV
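Steps (4) and (5) of the abstract above can be sketched as below. The abstract does not give the form of the learned hash functions or the matching rule, so this sketch assumes linear hash functions thresholded at zero and a simple Hamming-distance vote; `projections`, `offsets`, `max_dist` and `min_fraction` are hypothetical placeholders for whatever SPM2H training and the patent's matching stage actually produce.

```python
def hash_code(feature, projections, offsets):
    """Map a feature vector to an L-bit code: bit l is 1 when the l-th
    (assumed linear) hash function w_l . x + b_l is non-negative."""
    return [1 if sum(w * x for w, x in zip(w_l, feature)) + b_l >= 0 else 0
            for w_l, b_l in zip(projections, offsets)]


def hamming(a, b):
    """Number of bit positions in which two equal-length codes differ."""
    return sum(x != y for x, y in zip(a, b))


def is_copy(query_codes, db_codes, max_dist=1, min_fraction=0.5):
    """Flag the query video as a copy when at least `min_fraction` of its
    key-frame codes lie within `max_dist` bits of some database code."""
    matched = sum(1 for q in query_codes
                  if any(hamming(q, d) <= max_dist for d in db_codes))
    return matched / len(query_codes) >= min_fraction
```

Comparing short binary codes by Hamming distance is what gives hashing its efficiency advantage over matching the raw PHOG and SIFT-based features directly.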

Method and system for detecting pedestrian in front of vehicle

Inactive | CN105260712A | Improve accuracy | Improve security | Biometric pattern recognition | Driver/operator | Histogram of oriented gradients

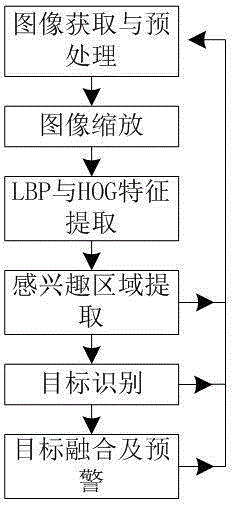

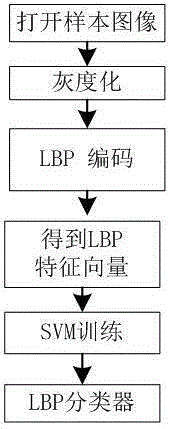

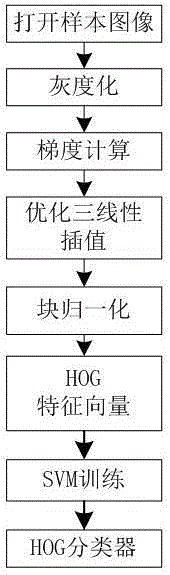

The invention discloses a method and a system for detecting a pedestrian in front of a vehicle. The method comprises the steps of image acquisition and preprocessing, image scaling, LBP (Local Binary Pattern) and HOG (Histogram of Oriented Gradients) feature extraction, region of interest extraction, target identification, and target fusion and early warning, so that the driver is reminded in time when a pedestrian is present in front of the vehicle. The system comprises three parts: an image acquisition unit, an SOPC (System on Programmable Chip) unit and an ASIC (Application Specific Integrated Circuit) unit. The image acquisition unit is a camera unit; the SOPC unit comprises an image preprocessing unit, a region of interest extraction unit, a target identification unit, and a target fusion and early warning unit; and the ASIC unit comprises an image scaling unit, an LBP feature extraction unit and an HOG feature extraction unit. LBP features and HOG features are used jointly, and the two-level detection improves the overall accuracy of pedestrian detection; HOG feature extraction is dynamically adjusted according to the classification results of an LBP-based SVM (Support Vector Machine), which reduces the amount of calculation, increases the calculation speed, and improves the driving safety of the vehicle.

Owner:SHANGHAI UNIV
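One plausible reading of the two-level detection in the abstract above is a cascade in which the more expensive HOG stage runs only on windows that the LBP-based SVM does not reject. The sketch below illustrates that reading; the patent does not spell out the gating rule, so the zero thresholds, the linear SVM form, and all function and parameter names are assumptions.

```python
def linear_svm_score(weights, bias, features):
    """Decision value of a linear SVM: w . x + b."""
    return sum(w * f for w, f in zip(weights, features)) + bias


def two_level_detect(window, lbp_extract, hog_extract, lbp_svm, hog_svm):
    """Return (is_pedestrian, hog_was_computed) for one candidate window.

    `lbp_extract` and `hog_extract` are caller-supplied feature functions;
    `lbp_svm` and `hog_svm` are (weights, bias) pairs. All are hypothetical.
    """
    w1, b1 = lbp_svm
    if linear_svm_score(w1, b1, lbp_extract(window)) < 0:
        return False, False  # rejected early; HOG is never extracted
    w2, b2 = hog_svm
    return linear_svm_score(w2, b2, hog_extract(window)) >= 0, True
```

Because most windows in a road scene contain no pedestrian, rejecting them at the first stage is where a saving in calculation would come from under this reading.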

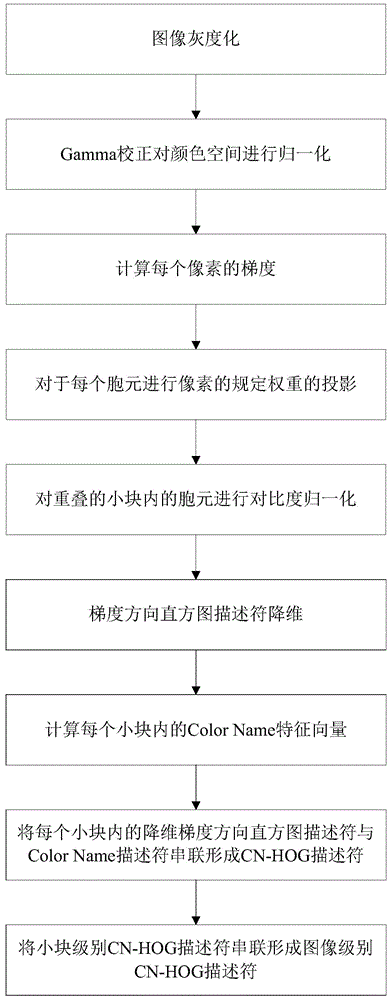

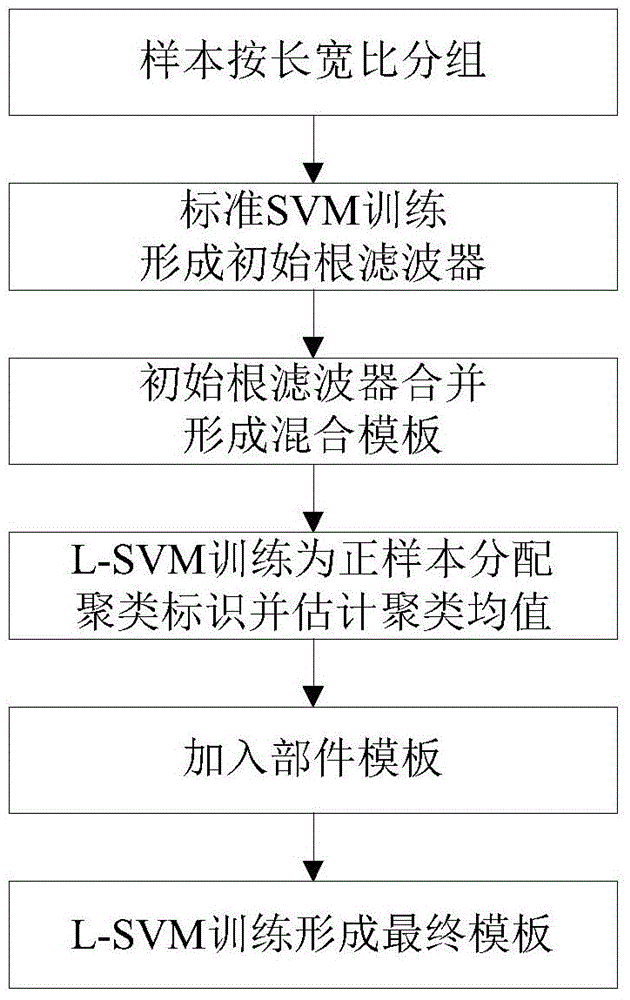

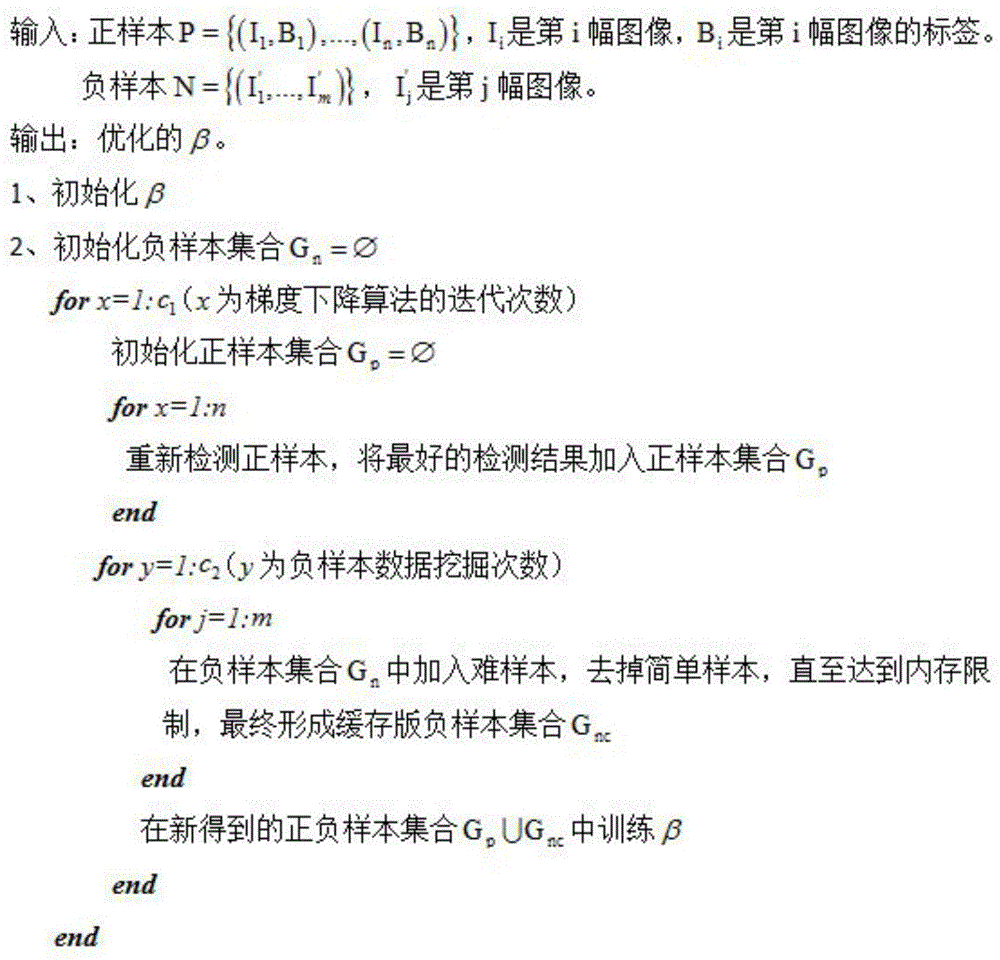

Deformable part model object detection method based on color description

Active | CN104134071A | Confidence | Eliminate false detections | Character and pattern recognition | Morphing | Slide window

The invention discloses a deformable part model object detection method based on color description, and belongs to the technical field of image target detection. The invention provides an intelligent object detection method that combines shape and color features. A deformable part model is used as the underlying framework, and a linguistics-based Color Name color descriptor is added to the original histogram of oriented gradients feature space when a template is trained, to obtain a shape template and a color template for a specific object type. In the detection stage, an object is detected by a sliding window method that matches both templates, i.e. the histogram of oriented gradients shape template and the Color Name color template. This overcomes the false detections that arise in traditional methods because the object is described by a single feature.

Owner:BEIJING UNIV OF TECH
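The dual-template sliding window in the abstract above can be sketched in a simplified form. The sketch scores only a single rigid template (no parts and no deformation cost, unlike a full deformable part model) and assumes that each cell of the feature maps already holds a HOG vector and a Color Name vector; all names are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def window_score(hog_map, color_map, hog_tpl, color_tpl, top, left):
    """Sum of shape and color template responses for the window whose
    top-left cell is (top, left). Maps and templates are grids of vectors."""
    score = 0.0
    for r, (hog_row, color_row) in enumerate(zip(hog_tpl, color_tpl)):
        for c, (h_t, c_t) in enumerate(zip(hog_row, color_row)):
            score += dot(h_t, hog_map[top + r][left + c])      # shape term
            score += dot(c_t, color_map[top + r][left + c])    # color term
    return score


def best_window(hog_map, color_map, hog_tpl, color_tpl):
    """Slide the template pair over the maps; return the best (top, left)."""
    rows = len(hog_map) - len(hog_tpl) + 1
    cols = len(hog_map[0]) - len(hog_tpl[0]) + 1
    positions = ((r, c) for r in range(rows) for c in range(cols))
    return max(positions, key=lambda rc: window_score(
        hog_map, color_map, hog_tpl, color_tpl, *rc))
```

When two windows have the same shape response, the color term breaks the tie, which is the kind of single-feature ambiguity the abstract says the method removes.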

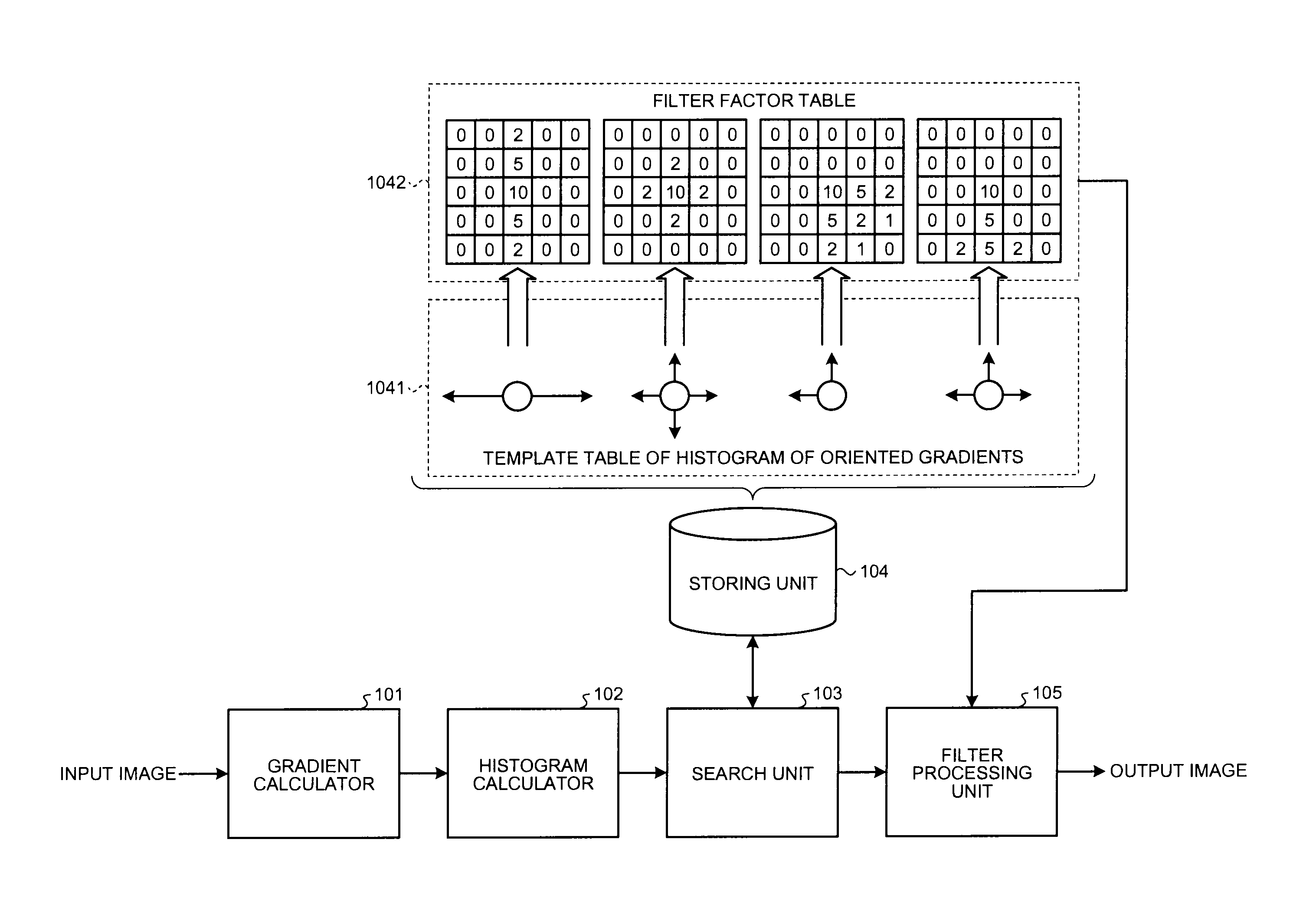

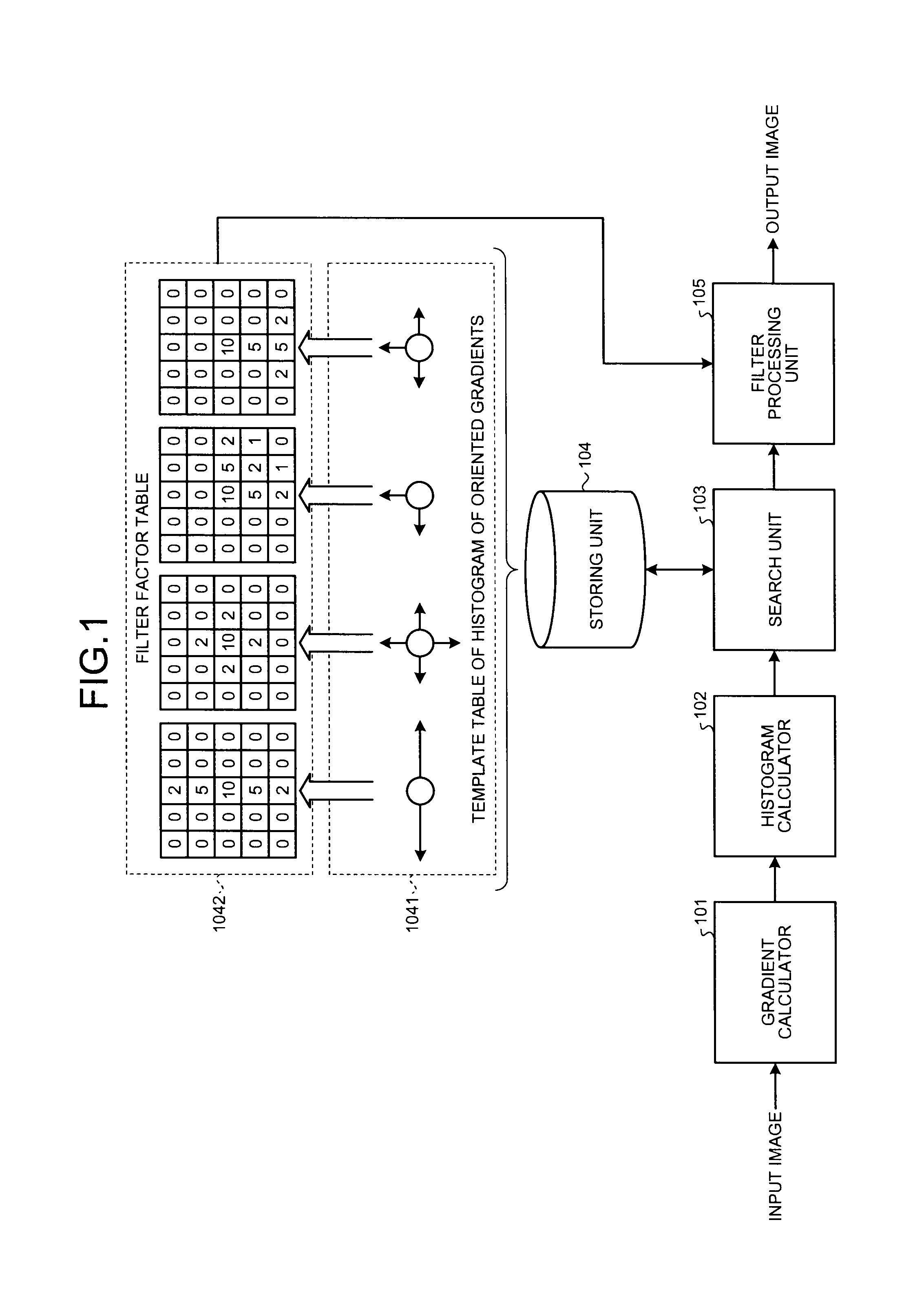

Image processing apparatus and image processing method

Active | US20100232697A1 | Image enhancement | Image analysis | Imaging processing | Histogram of oriented gradients

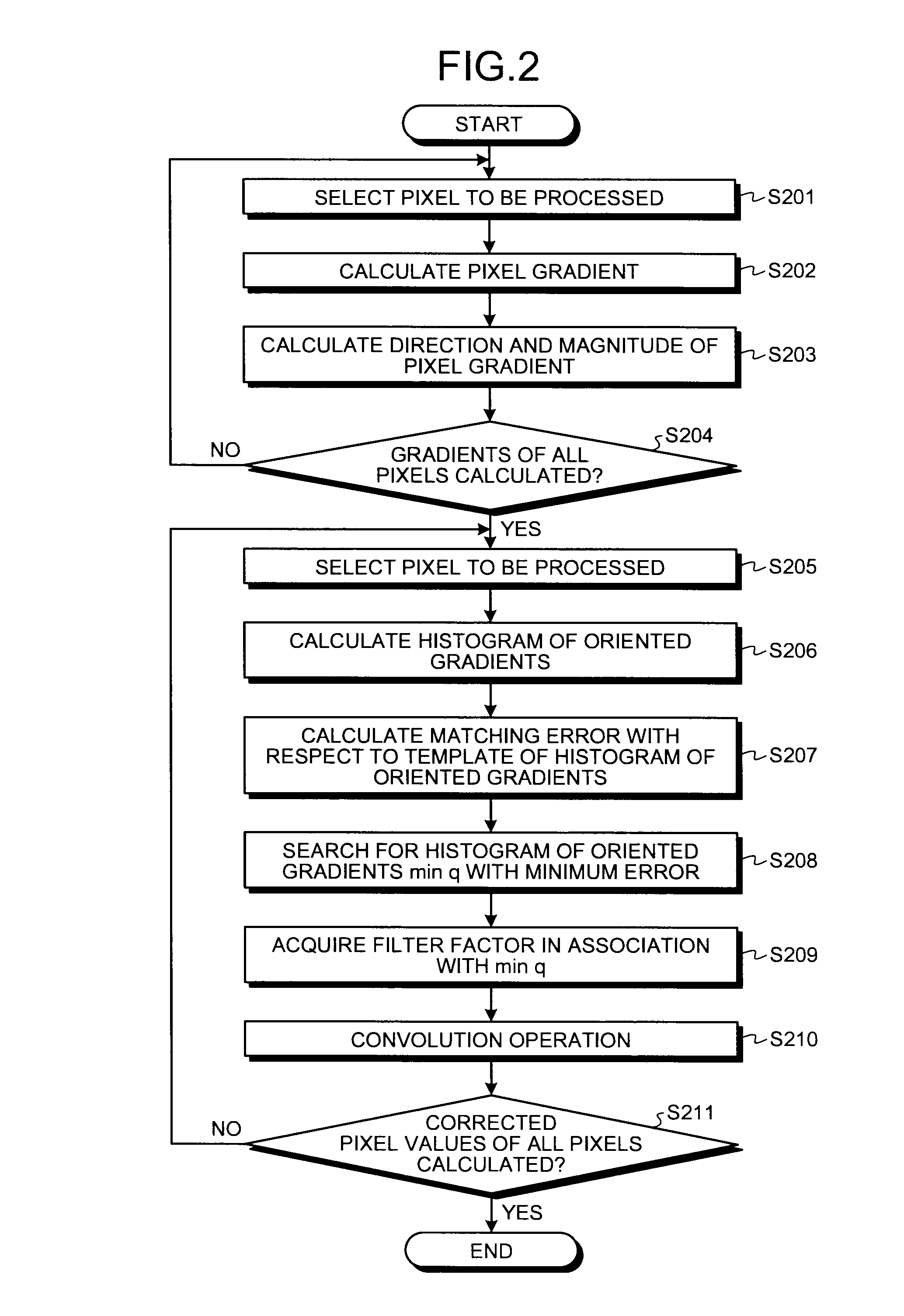

An image processing apparatus includes a gradient calculator that calculates a direction and a magnitude of a gradient of each pixel in an input image using neighboring pixel values; a histogram calculator that calculates a Histogram of Oriented Gradients containing plural sampled directions from the directions and the magnitudes of the gradients calculated for the pixels in a region including the pixel being processed; a storing unit that stores plural smoothing filters and associated Histograms of Oriented Gradients; a search unit that calculates errors between the Histogram of Oriented Gradients calculated for the pixel being processed and the Histograms of Oriented Gradients stored in the storing unit and searches for the stored Histogram of Oriented Gradients that has the minimum error; and a filter processing unit that acquires the smoothing filter stored in association with the Histogram of Oriented Gradients having the minimum error and determines a corrected pixel value of the pixel being processed by filter processing with the acquired smoothing filter.

Owner:MAXELL HLDG LTD
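The gradient calculator, histogram calculator and search unit described in the abstract above can be sketched as follows. The central-difference gradient, the four unsigned orientation bins, the L1 normalization and the squared-error measure are illustrative choices, not details from the patent, and the filters in the lookup table may be any objects the caller likes.

```python
import math


def gradient(img, r, c):
    """Central-difference gradient at (r, c) with clamped borders.
    Returns (magnitude, unsigned direction in [0, pi))."""
    h, w = len(img), len(img[0])
    gx = img[r][min(c + 1, w - 1)] - img[r][max(c - 1, 0)]
    gy = img[min(r + 1, h - 1)][c] - img[max(r - 1, 0)][c]
    return math.hypot(gx, gy), math.atan2(gy, gx) % math.pi


def region_hog(img, r, c, radius=1, bins=4):
    """Magnitude-weighted orientation histogram over the square region
    around (r, c), normalized to sum to 1 when any gradient is present."""
    hist = [0.0] * bins
    for rr in range(max(r - radius, 0), min(r + radius, len(img) - 1) + 1):
        for cc in range(max(c - radius, 0), min(c + radius, len(img[0]) - 1) + 1):
            mag, ang = gradient(img, rr, cc)
            hist[min(int(ang / math.pi * bins), bins - 1)] += mag
    total = sum(hist)
    return [v / total for v in hist] if total > 0 else hist


def pick_filter(hist, table):
    """`table` is a list of (stored_histogram, filter) pairs; return the
    filter whose stored histogram has the smallest squared error to `hist`."""
    return min(table, key=lambda entry: sum(
        (a - b) ** 2 for a, b in zip(entry[0], hist)))[1]
```

Applying the selected filter to the pixel is an ordinary convolution and is omitted here.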

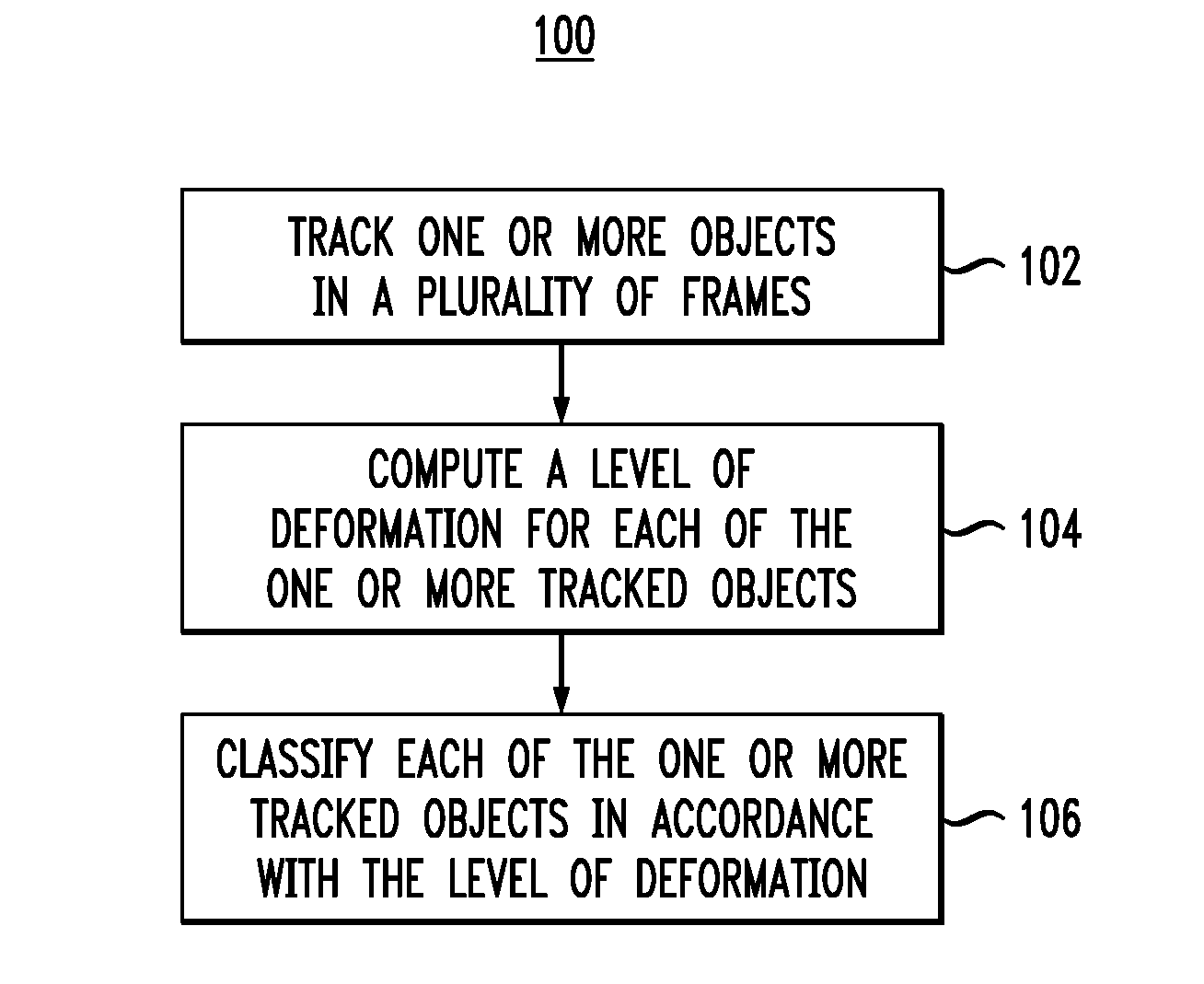

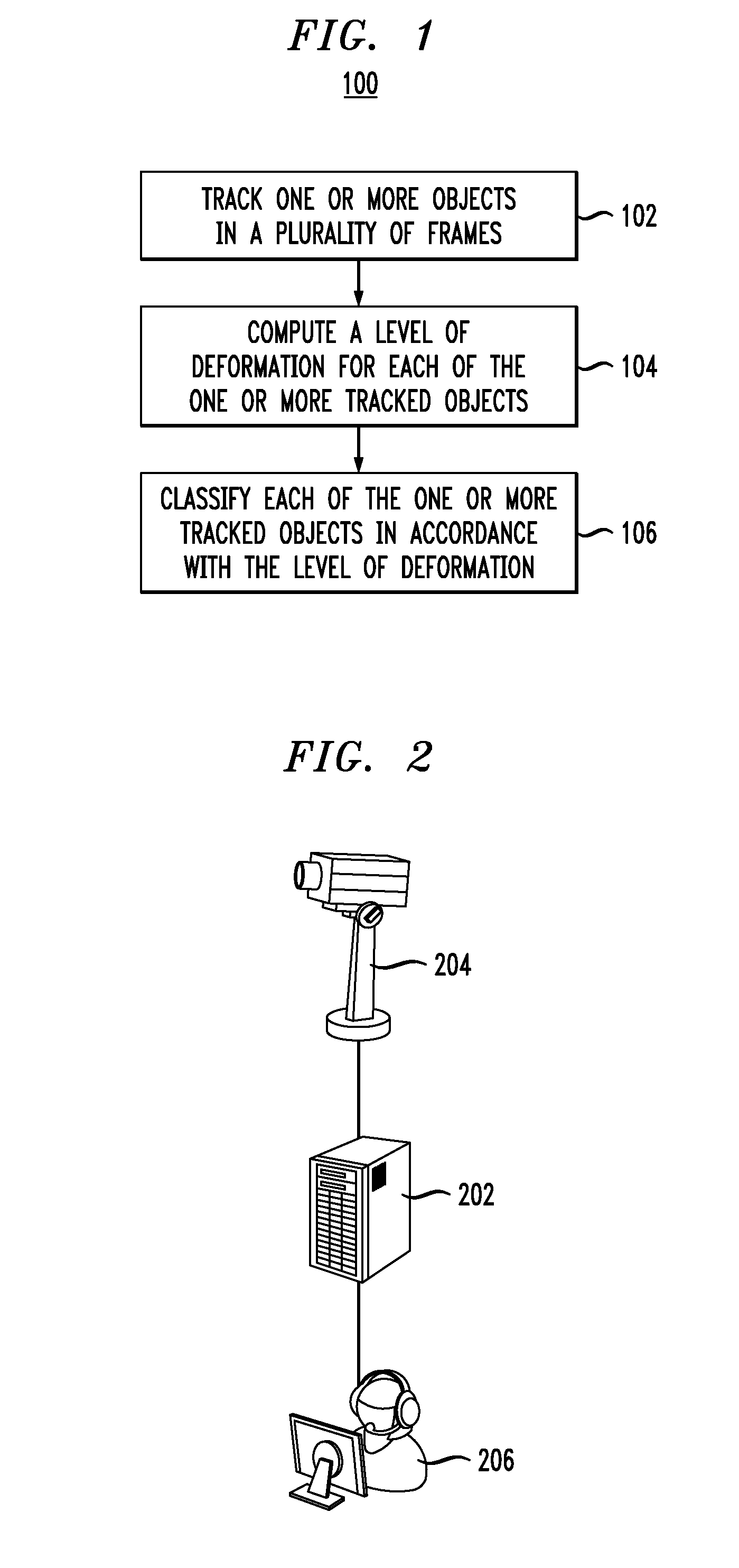

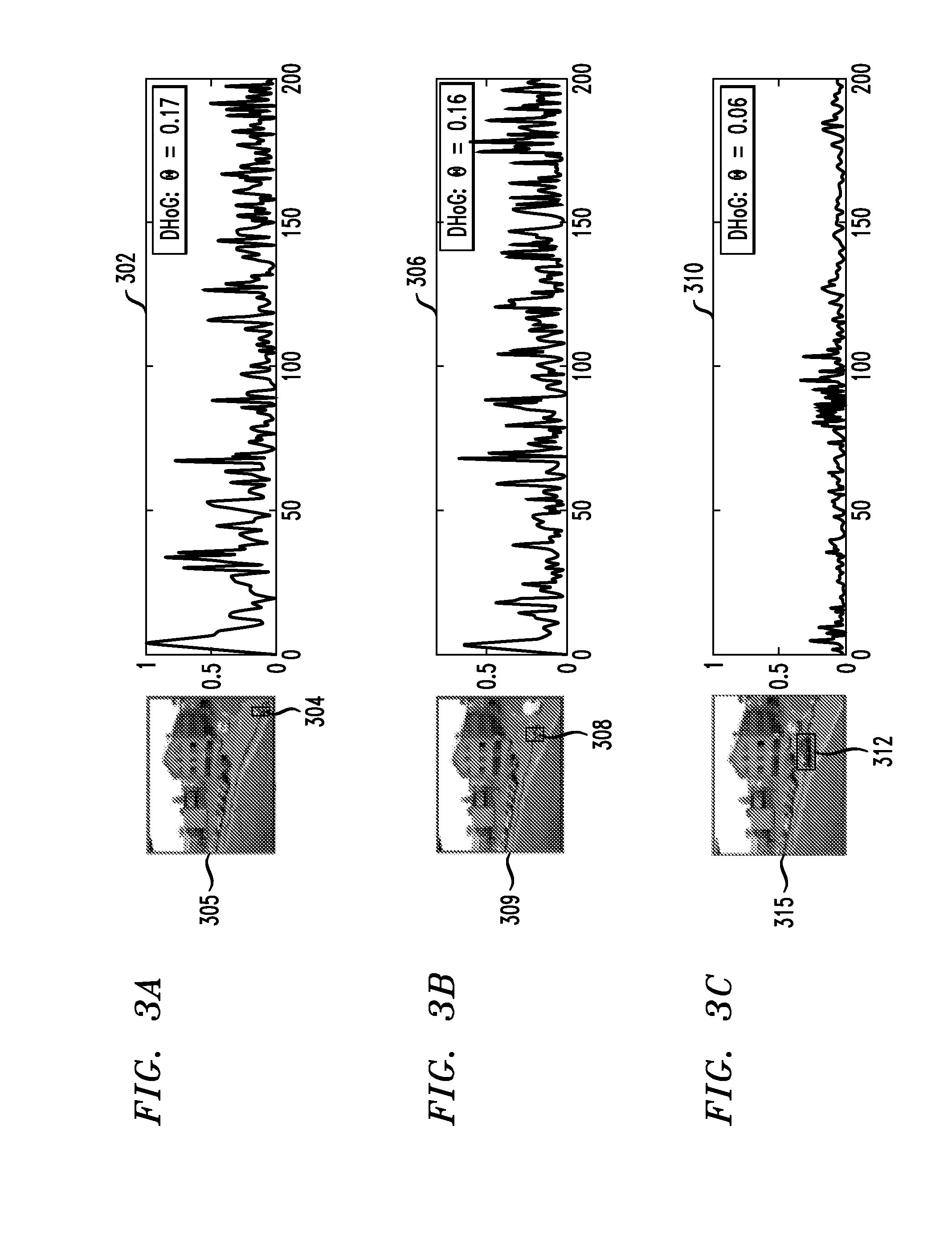

Video Object Classification

Inactive | US20100054535A1 | Improve accuracy | Overcomes drawback | Character and pattern recognition | Histogram of oriented gradients | Computer vision

Owner:KYNDRYL INC

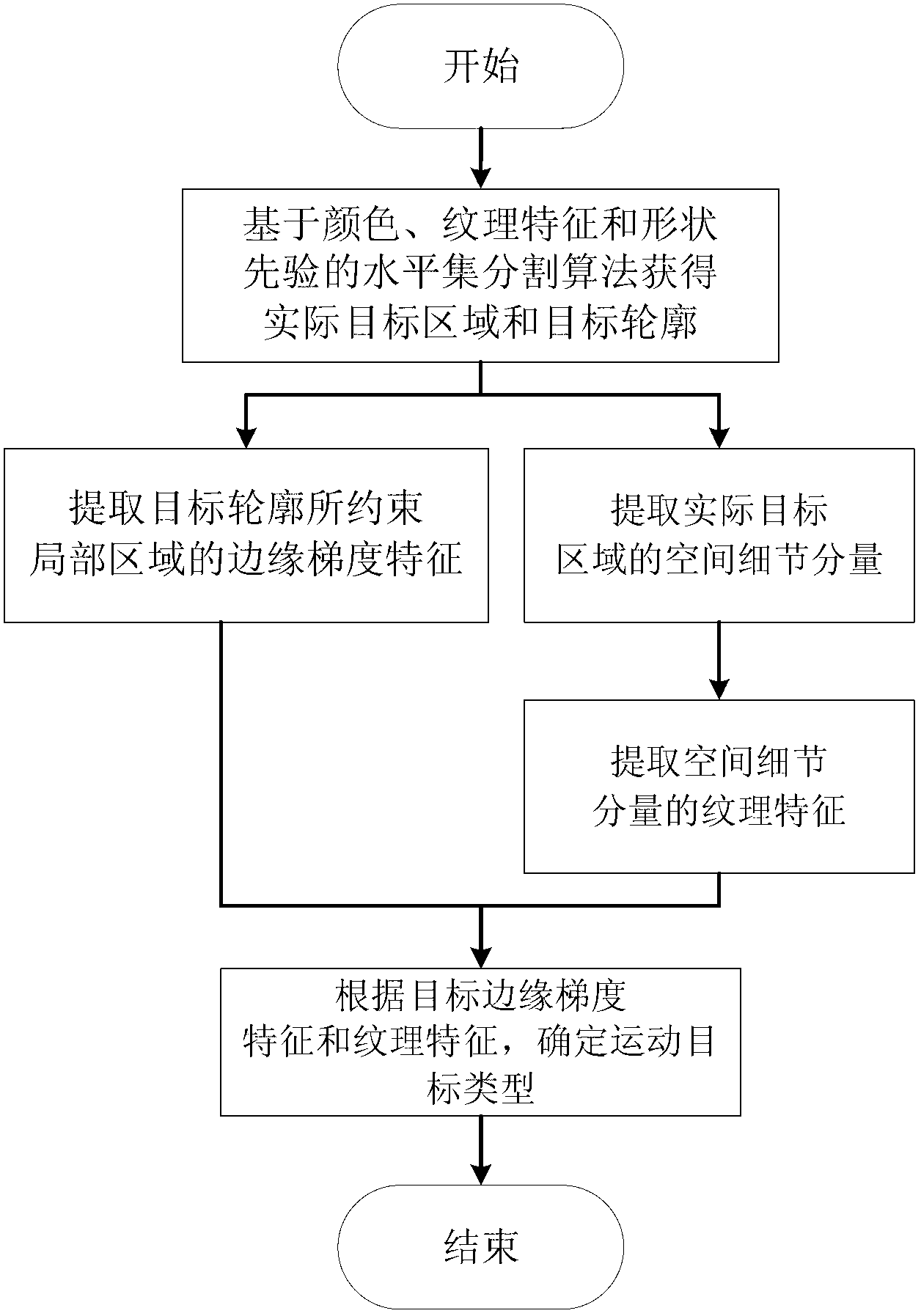

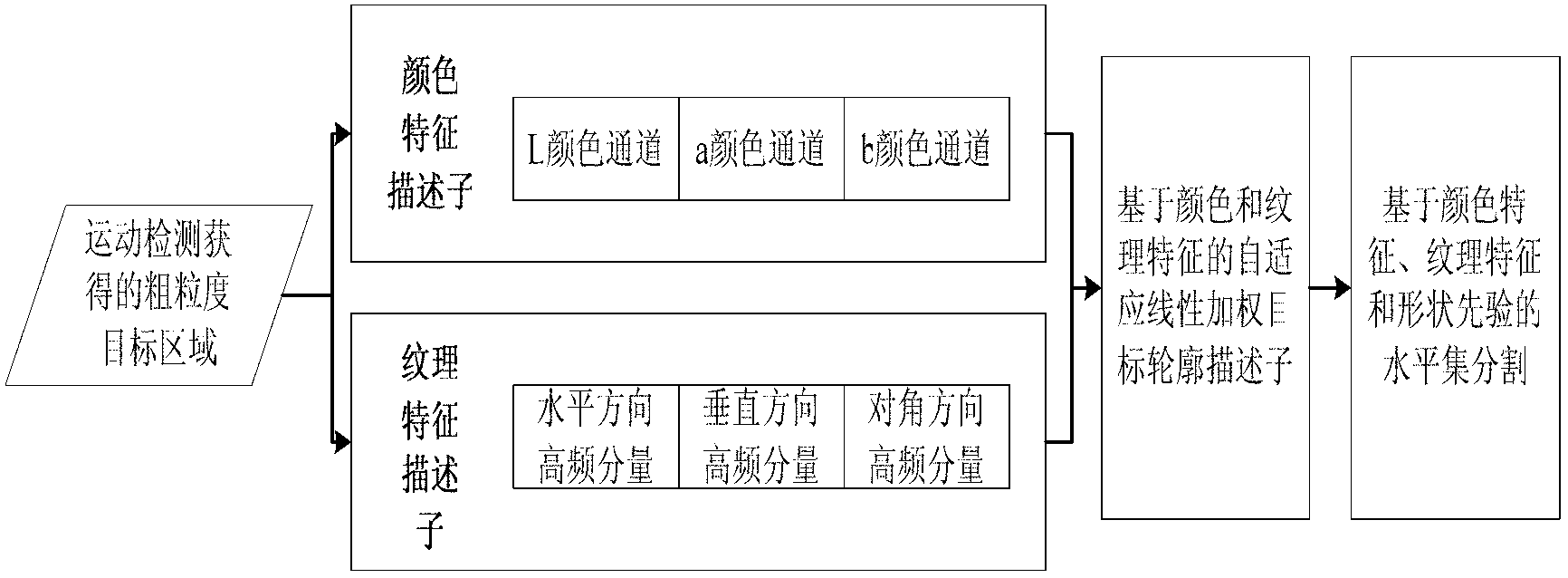

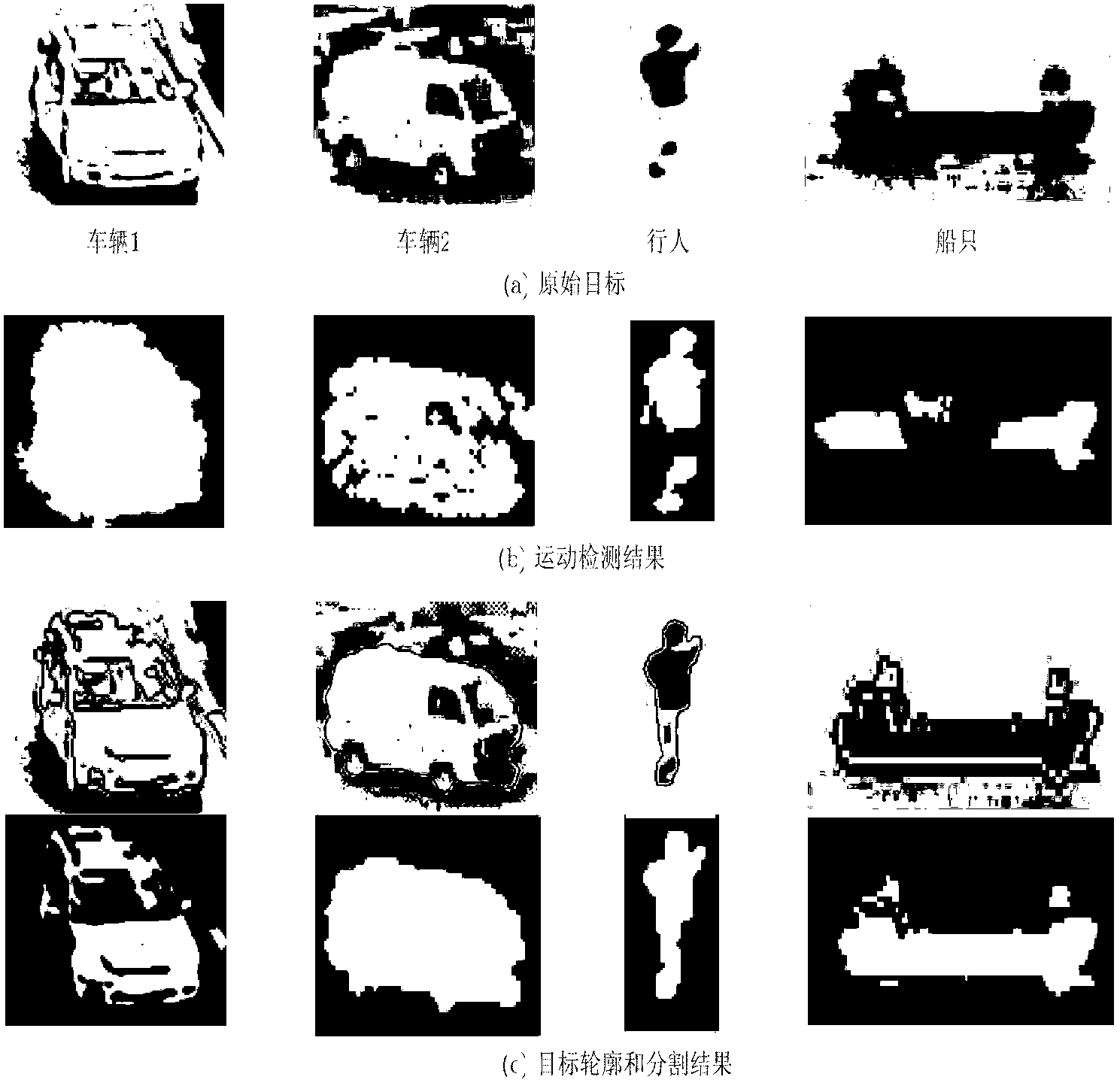

Video moving target classification and identification method based on outline constraint

Active | CN103218831A | Improve recognition accuracy | Reduce redundant features | Image analysis | Local binary pattern histogram | Histogram of oriented gradients

The invention provides a video moving target classification and identification method based on outline constraint. The method includes the steps of: (1) obtaining a realistic target region and a target outline through a level set segmentation algorithm based on color features, texture features and a shape prior constraint; (2) convolving the realistic target region with a Gaussian filter to obtain the spatial detail component of the target; (3) extracting a local binary pattern histogram of the spatial detail component to obtain the texture features of the target; (4) extracting a histogram of oriented gradients from the outline-constrained local region within the realistic target region to obtain the edge gradient features of the target; (5) extracting the texture features and the edge gradient features of training sample targets and training on them with a machine learning method to obtain a target classification model; and (6) extracting the texture features and the edge gradient features of a target to be identified, inputting them into the classification model, and determining the type of the target. The method improves classification accuracy under complex outdoor conditions.

Owner:BEIHANG UNIV
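Step (3) of the abstract above relies on a local binary pattern histogram. A minimal sketch of the basic 3x3 LBP operator is given below; the neighbor ordering, the `>=` comparison and the 256-bin layout follow the common textbook form and are not taken from the patent, which may use a different LBP variant.

```python
def lbp_code(img, r, c):
    """8-bit local binary pattern of the 3x3 neighborhood centered at (r, c):
    each neighbor contributes a 1 bit when it is >= the center pixel."""
    center = img[r][c]
    # Neighbors visited clockwise, starting at the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img):
    """Normalized 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist
```

The resulting histogram serves as the texture feature vector; how it is combined with the edge gradient features before training is not specified in the abstract.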

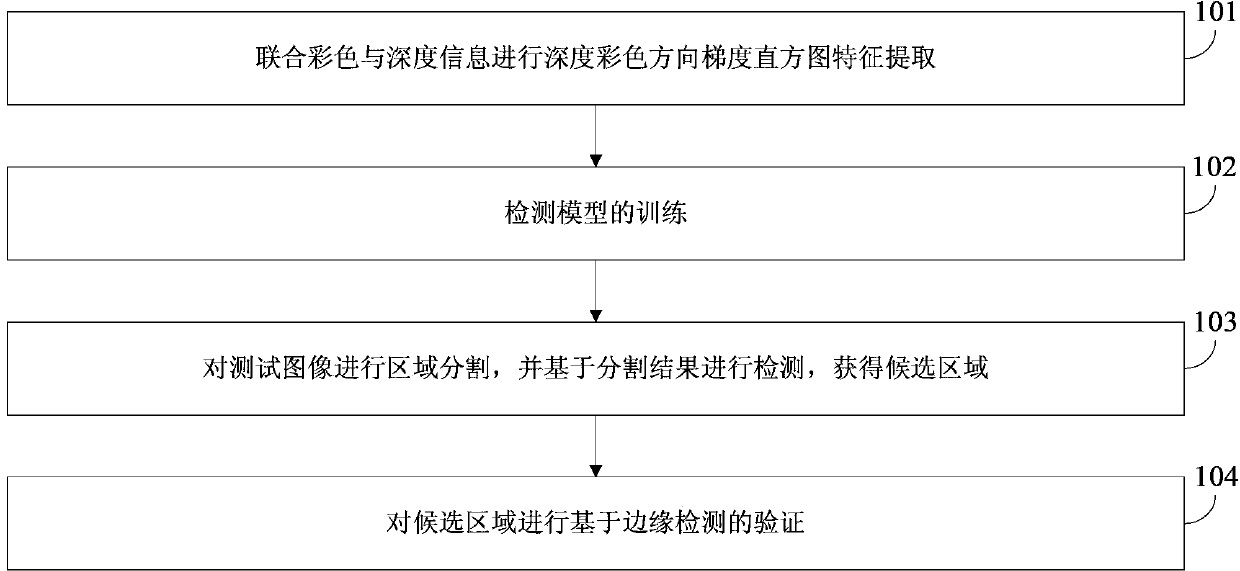

Human detection method

Active | CN103473571A | Overcome the shortcomings of low precision | Representative | Character and pattern recognition | Acquired characteristic | Validation methods

The invention discloses a human detection method. The method includes the following steps: extracting oriented gradient histogram features from color and depth by combining color information with depth information; training detection models; performing region segmentation on test images and detecting the images based on the segmentation result to obtain a candidate region (SR); and verifying the candidate region (SR) based on edge detection. Human detection based on both color information and depth information is thereby achieved: because the feature extraction combines the two, the resulting features contain both color and depth information, outline edge information is enhanced, and the features are more representative. Detection strategies based on different features and different classification models are used for different parts, which overcomes the low accuracy of depth cameras on distant objects. Verification based on edge information further improves the accuracy of the detection result.

Owner:TIANJIN UNIV
