Target tracking based on adaptive feature fusion.

A feature fusion and target tracking technology in the fields of image processing and computer vision. It addresses problems such as target scale variation, occlusion, and the difficulty of achieving real-time tracking, and improves tracking accuracy.

Detailed Description of the Embodiments

[0102] To make the objectives, technical solution, and beneficial effects of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings and specific embodiments.

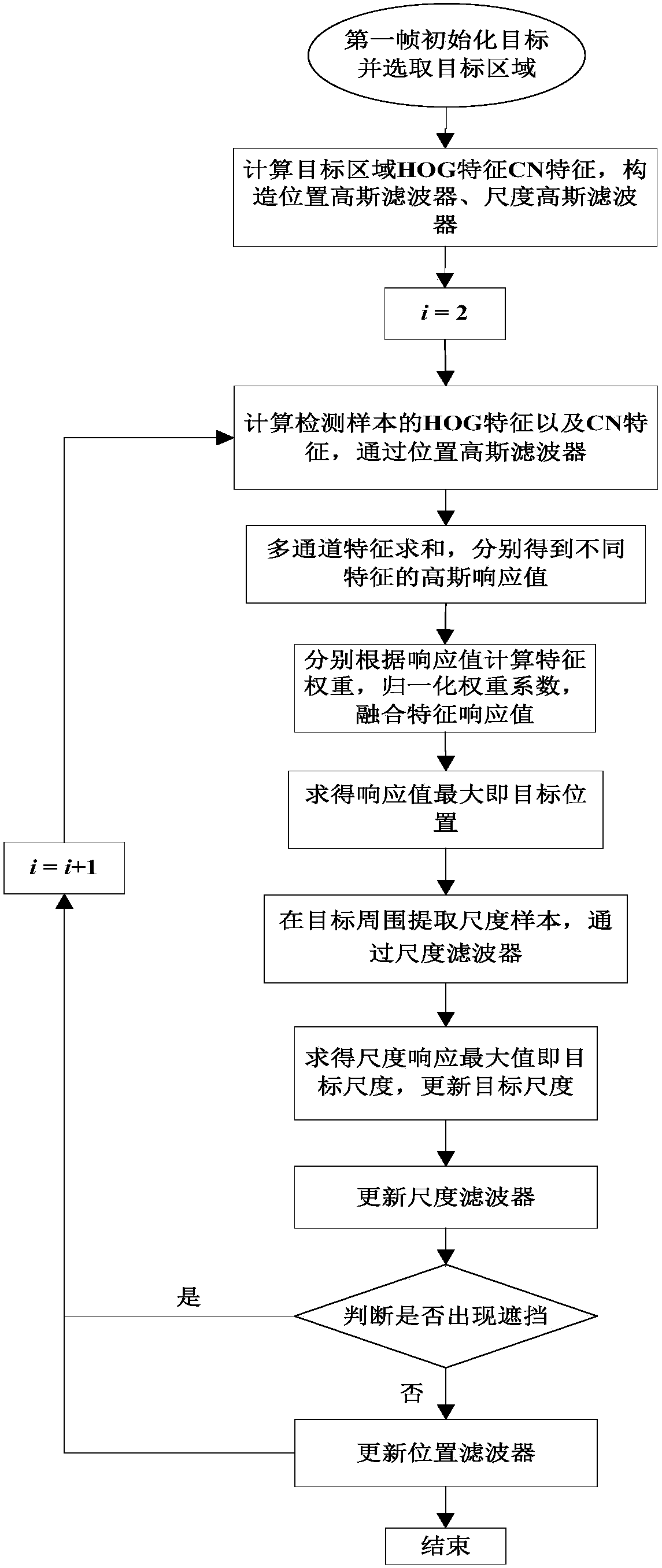

[0103] As shown in Figure 1, the target tracking method based on adaptive feature fusion is implemented through the following steps:

[0104] Step 1: Initialize the target and select the target area;

[0105] Specific steps:

[0106] The initial position of the tracked target, given in the first frame, is $p = [x, y, w, h]$.

[0107] Here, x and y are the horizontal and vertical coordinates of the target's center point, and w and h are the width and height of the target bounding box, respectively.
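Because p stores the box center rather than a corner, code that draws or crops the box usually converts it to corner coordinates first. The sketch below is illustrative only: the `TargetBox` type and its `corners` method are hypothetical names, and it assumes standard image coordinates (origin at the top-left, y increasing downward).

```python
from typing import NamedTuple, Tuple


class TargetBox(NamedTuple):
    """Target state p = [x, y, w, h], where (x, y) is the box center (hypothetical helper)."""
    x: float
    y: float
    w: float
    h: float

    def corners(self) -> Tuple[float, float, float, float]:
        """Return (left, top, right, bottom), assuming y grows downward."""
        half_w, half_h = self.w / 2.0, self.h / 2.0
        return (self.x - half_w, self.y - half_h, self.x + half_w, self.y + half_h)


# Example: a 40x20 target centered at (100, 50) in the first frame
p = TargetBox(x=100.0, y=50.0, w=40.0, h=20.0)
print(p.corners())  # (80.0, 40.0, 120.0, 60.0)
```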

[0108] The target area $P_t$ is a rectangular region centered on the target's center point and twice the size of the target.
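A minimal sketch of extracting the target area $P_t$ from a frame, written with NumPy. Several details are assumptions not stated in the text: "twice the size" is read as doubling both the width and the height, coordinates are rounded to whole pixels, and any part of the area that falls outside the frame is filled by replicating the nearest border pixel. The function name `crop_target_area` is hypothetical.

```python
import numpy as np


def crop_target_area(frame: np.ndarray, p, scale: float = 2.0) -> np.ndarray:
    """Crop a region centered on (x, y) whose width and height are `scale`
    times the target's (assumption: "twice the size" = 2x in each dimension).
    Out-of-frame pixels replicate the nearest border pixel (assumption)."""
    x, y, w, h = p
    area_w = int(round(w * scale))
    area_h = int(round(h * scale))
    # Top-left corner of the area, from the center point
    left = int(round(x - area_w / 2.0))
    top = int(round(y - area_h / 2.0))
    # Row/column indices of the area, clipped to valid frame coordinates
    rows = np.clip(np.arange(top, top + area_h), 0, frame.shape[0] - 1)
    cols = np.clip(np.arange(left, left + area_w), 0, frame.shape[1] - 1)
    # np.ix_ selects the rows x cols grid; trailing dims (e.g. color) are kept
    return frame[np.ix_(rows, cols)]


frame = np.arange(100 * 200).reshape(100, 200)  # toy 100x200 grayscale frame
area = crop_target_area(frame, (100.0, 50.0, 40.0, 20.0))
print(area.shape)  # (40, 80)
```

Border replication is only one common choice; zero padding or shrinking the area at the frame edge would also be reasonable, and the source does not specify which is used.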

[0109] Step 2: Select samples in the target area to calculate HOG features and CN...
