182 results about "Level fusion" patented technology

How Spinal Fusion Surgery Works. Each level of the spine consists of the disc in front and two facets (joints) in the back. These structures work together to define a motion segment. A fusion across one motion segment, for example L4 to L5, is considered a one-level fusion.

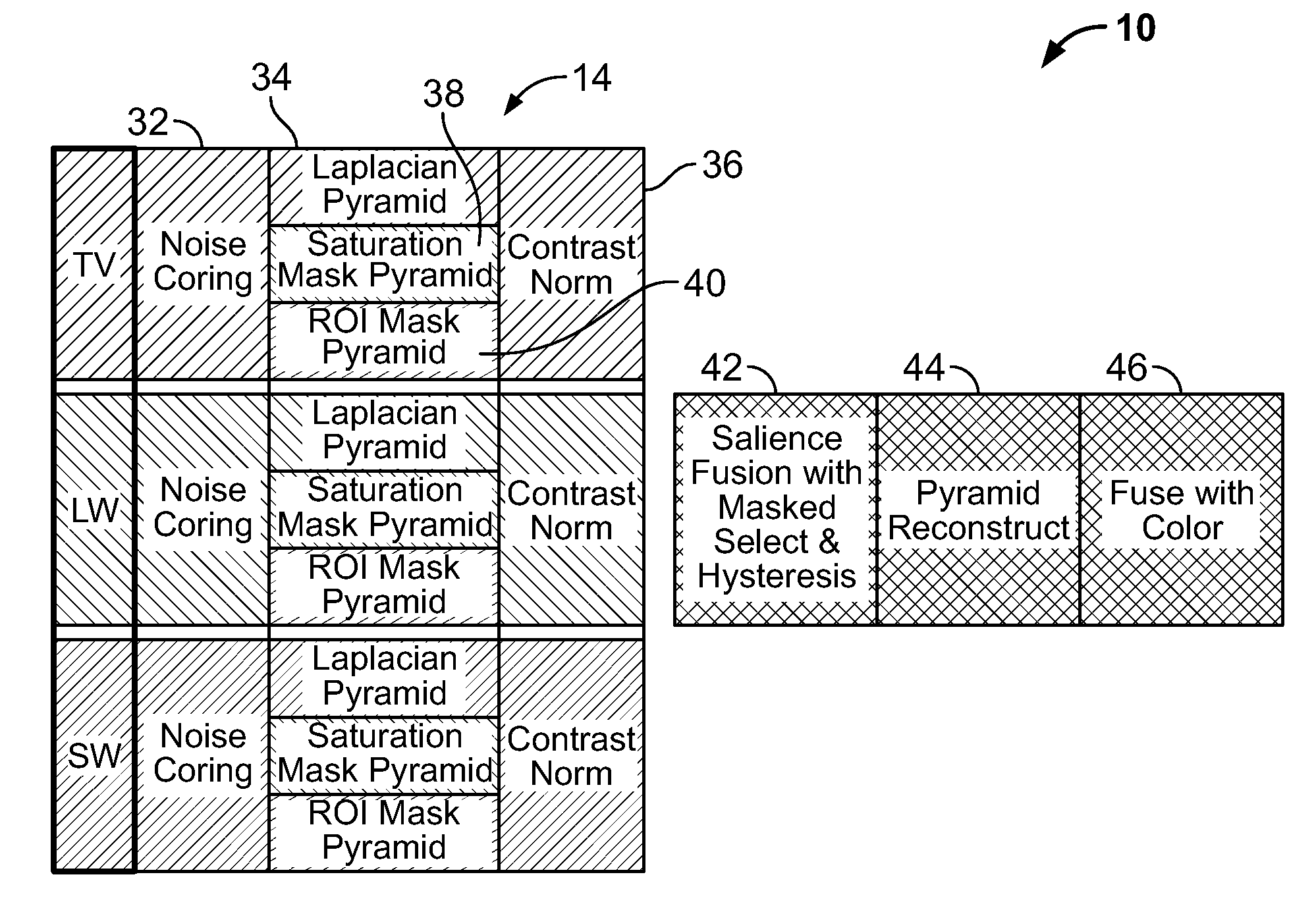

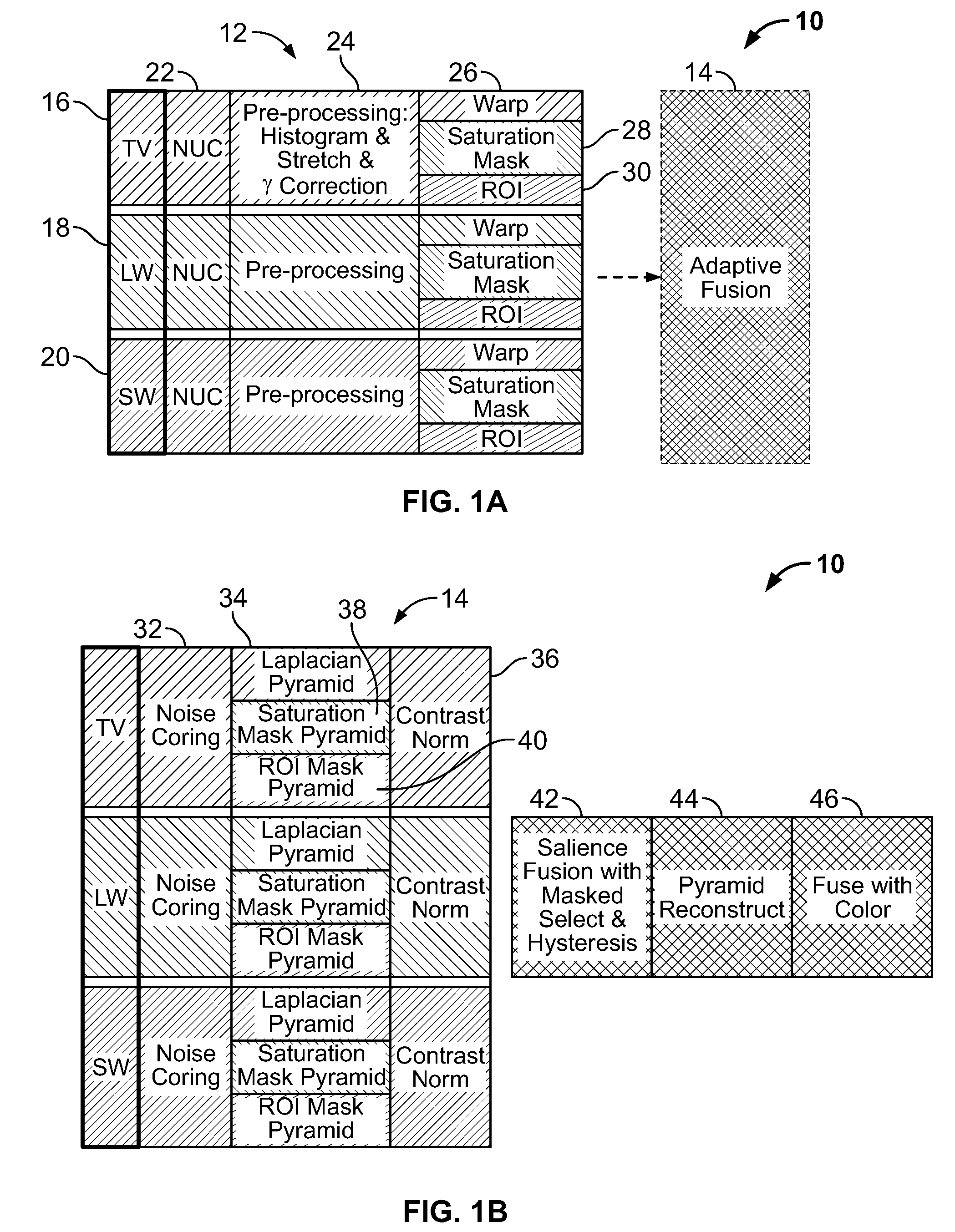

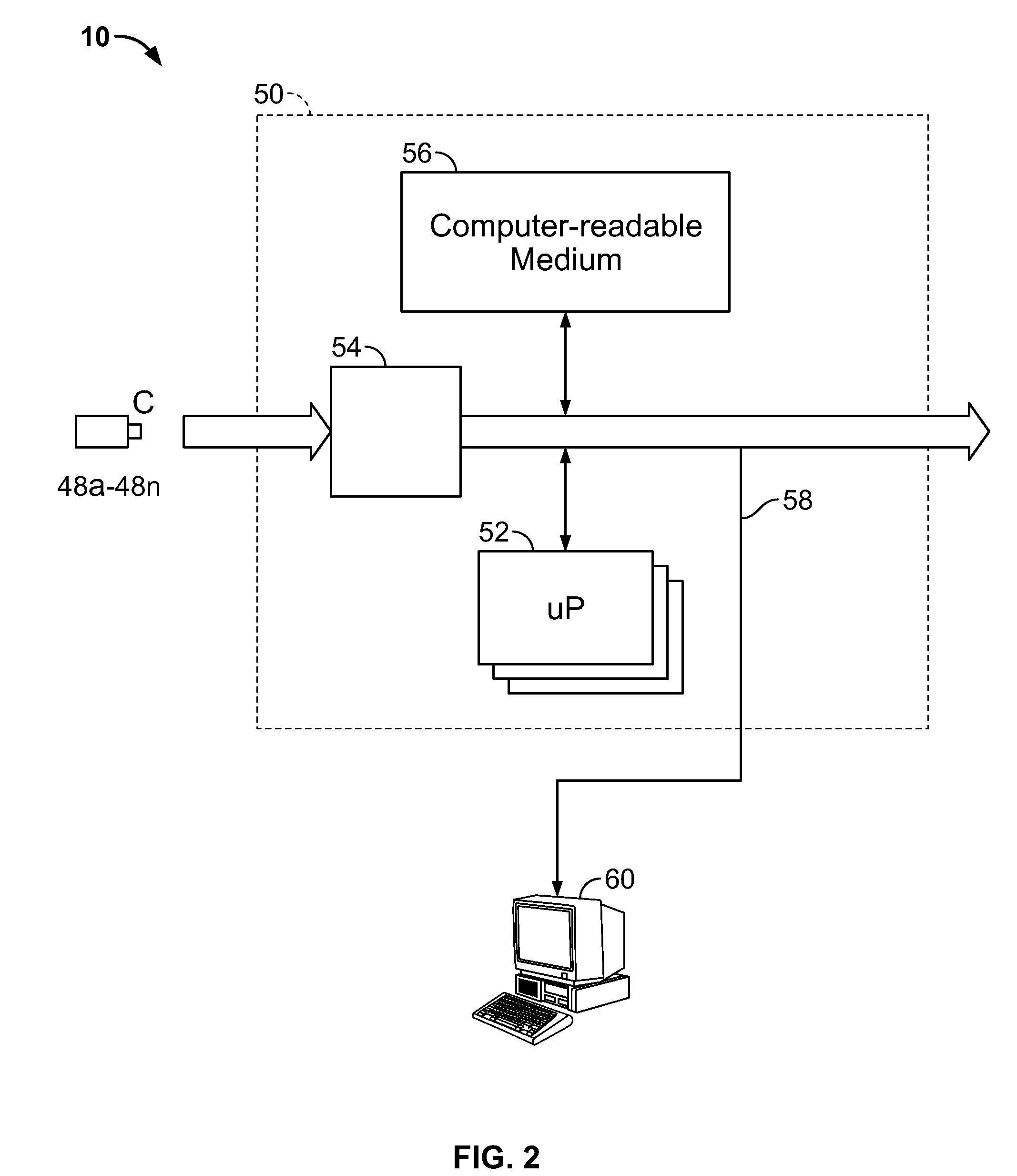

Multi-scale multi-camera adaptive fusion with contrast normalization

Active | US20090169102A1 | Reduce flickering artifact | Reduce artifacts | Television system details | Image enhancement | Multi camera | Energy based

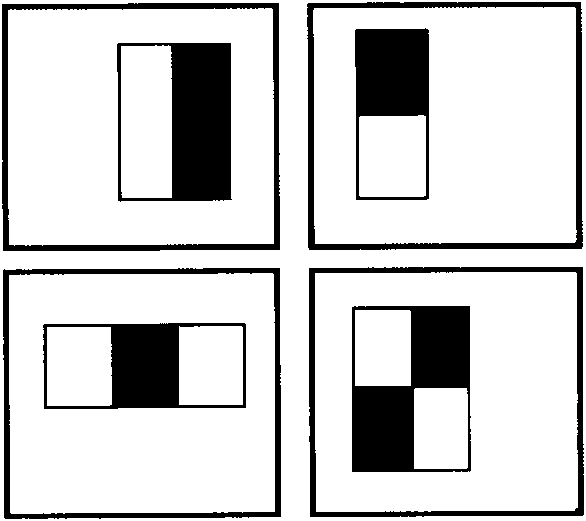

A computer-implemented method for fusing images taken by a plurality of cameras is disclosed, comprising the steps of: receiving a plurality of images of the same scene taken by the plurality of cameras; generating Laplacian pyramid images for each source image of the plurality of images; applying contrast normalization to the Laplacian pyramid images; performing pixel-level fusion on the Laplacian pyramid images based on a local salience measure that reduces aliasing artifacts to produce one salience-selected Laplacian pyramid image for each pyramid level; and combining the salience-selected Laplacian pyramid images into a fused image. Applying contrast normalization further comprises, for each Laplacian image at a given level: obtaining an energy image from the Laplacian image; determining a gain factor that is based on at least the energy image and a target contrast; and multiplying the Laplacian image by the gain factor to produce a normalized Laplacian image.

Owner:SRI INTERNATIONAL
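The contrast-normalization and salience-selection steps in the abstract above can be illustrated with a minimal Python sketch for a single pyramid level. It is not the patented implementation: the 3x3 RMS energy window, the `max_gain` cap, and the use of coefficient magnitude as the salience measure are assumptions chosen for brevity, and all function names are hypothetical.

```python
import math


def energy_image(lap):
    """Local energy: RMS of coefficients in a 3x3 window (assumed definition)."""
    h, w = len(lap), len(lap[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [lap[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = math.sqrt(sum(v * v for v in vals) / len(vals))
    return out


def normalize_contrast(lap, target_contrast, max_gain=4.0, eps=1e-6):
    """Multiply each coefficient by a gain derived from energy and target contrast."""
    energy = energy_image(lap)
    return [[v * min(max_gain, target_contrast / (e + eps))
             for v, e in zip(row, erow)]
            for row, erow in zip(lap, energy)]


def fuse_level(levels):
    """Per pixel, keep the coefficient with the largest magnitude across cameras."""
    h, w = len(levels[0]), len(levels[0][0])
    return [[max((lvl[y][x] for lvl in levels), key=abs) for x in range(w)]
            for y in range(h)]
```

In a full pipeline, each camera's Laplacian level would be passed through `normalize_contrast` before `fuse_level`, and the fused levels would then be collapsed back into one image.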

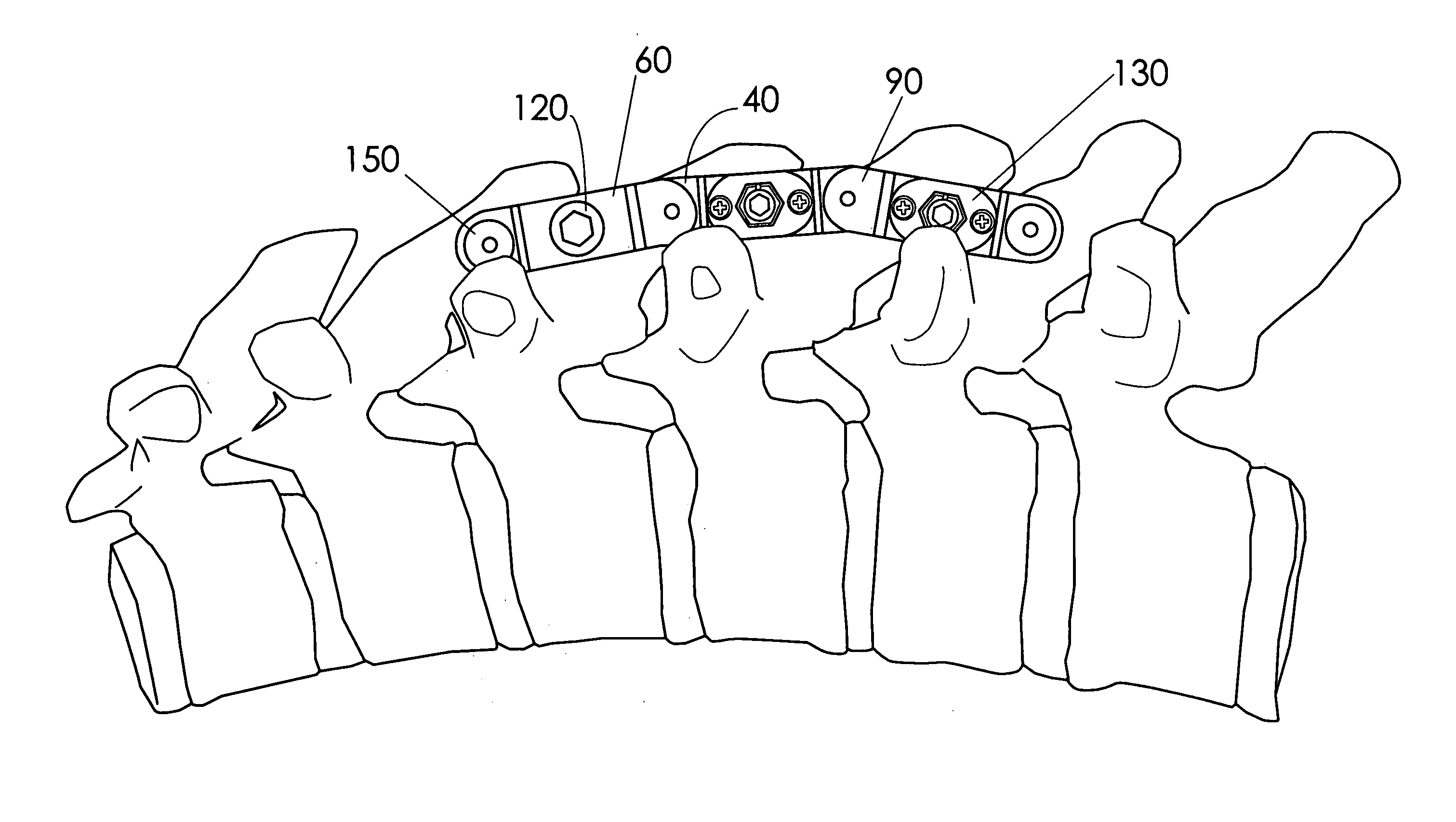

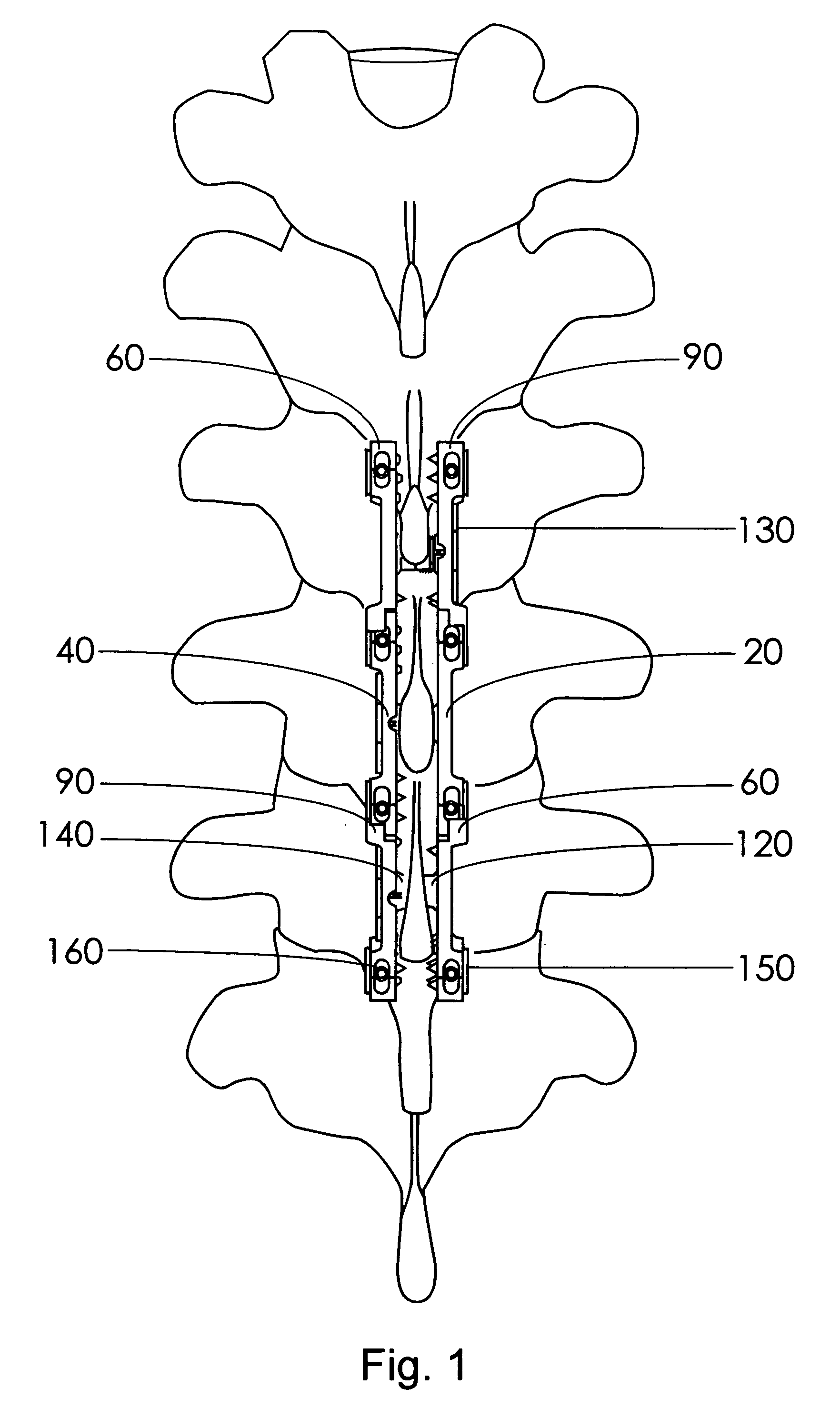

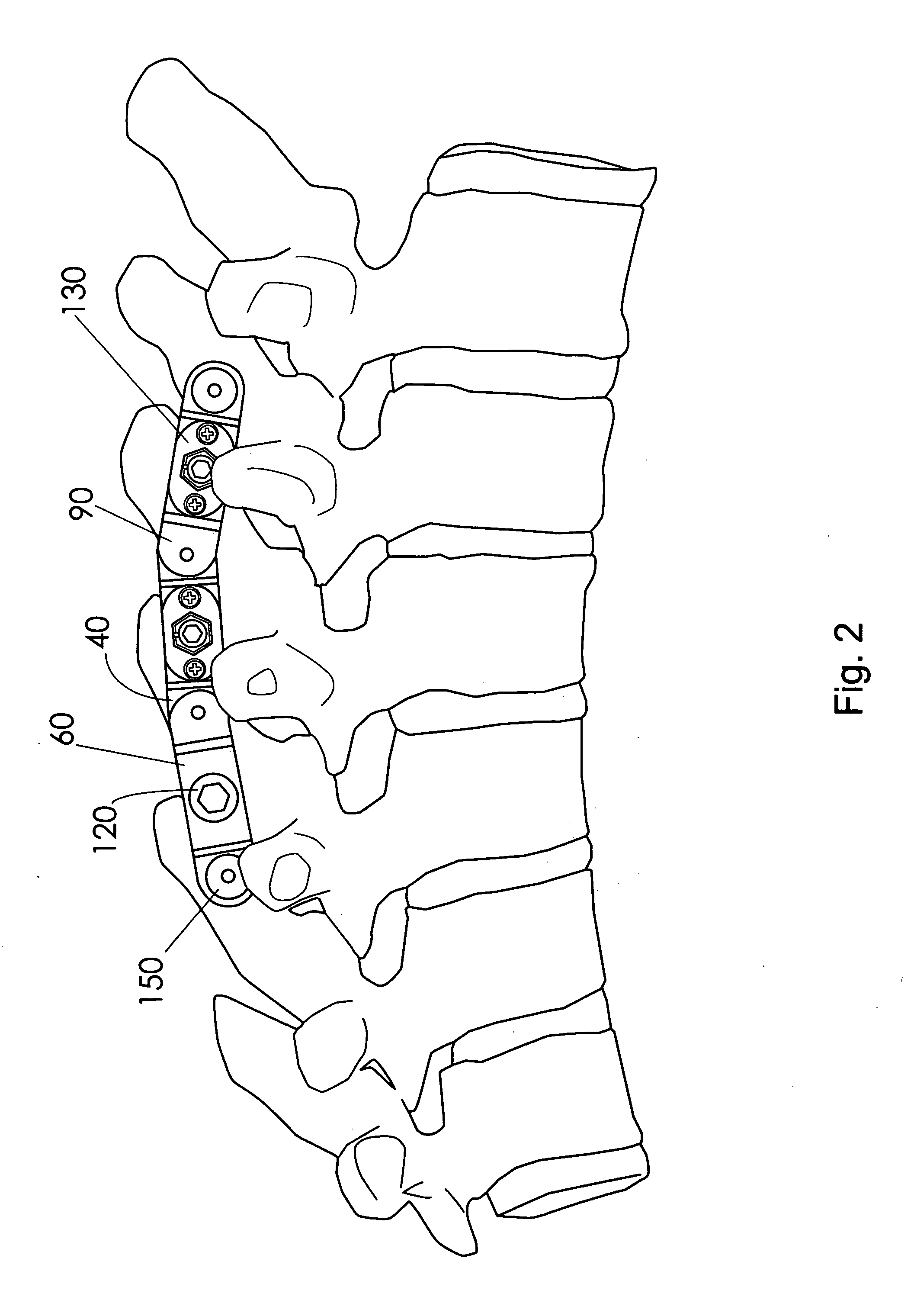

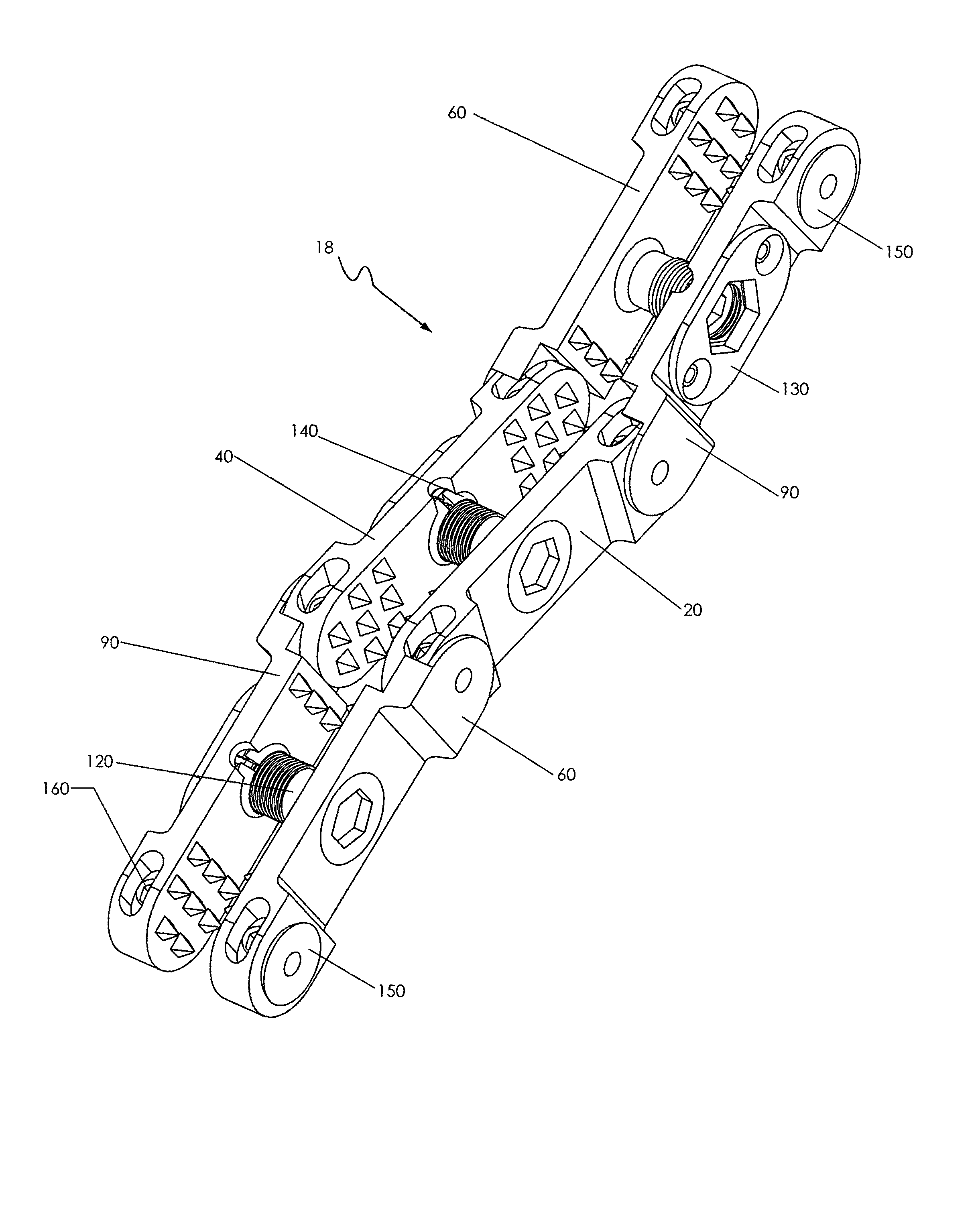

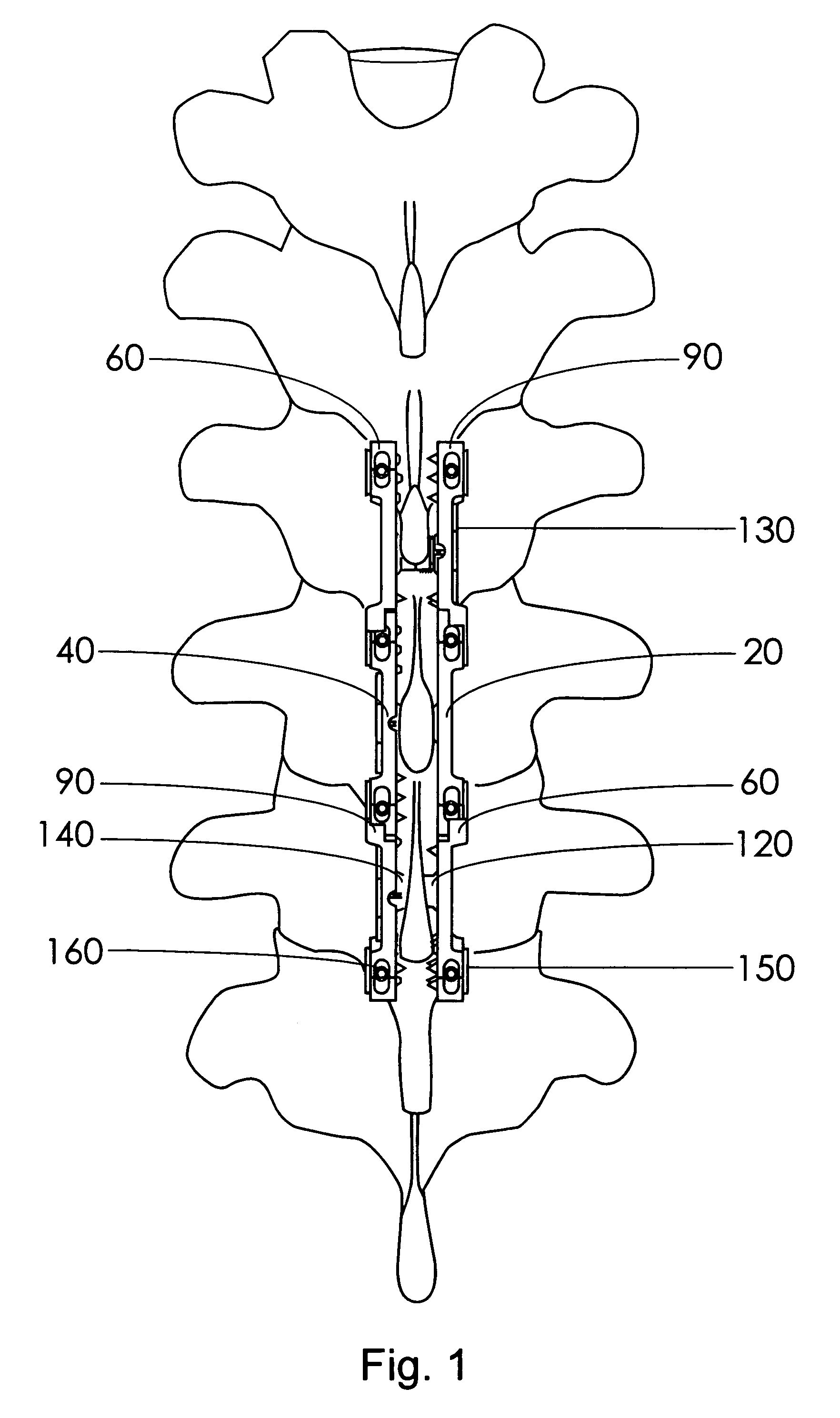

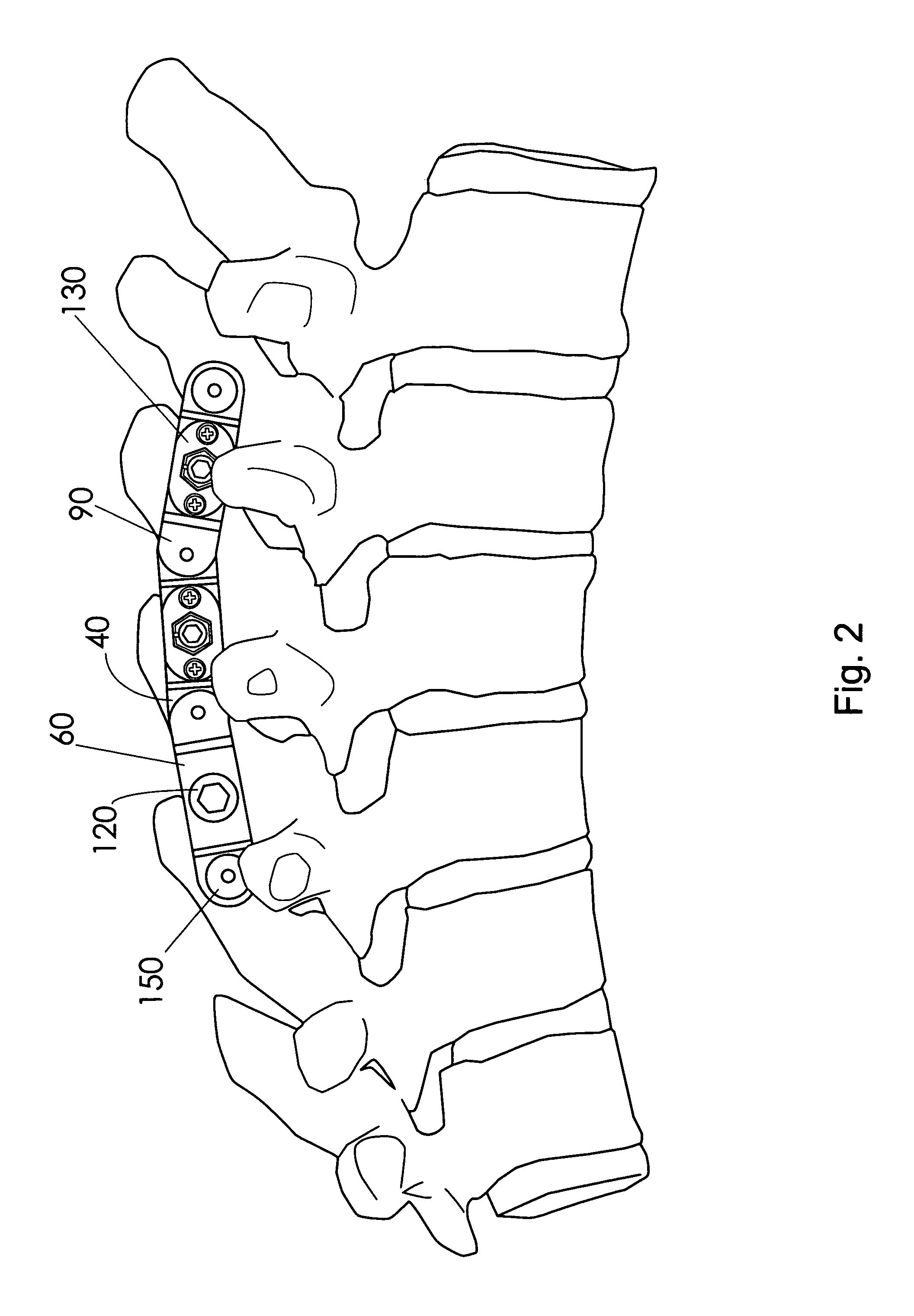

Spinous process stabilization device and method

Inactive | US20090264927A1 | Easy to integrate | Internal osteosynthesis | Joint implants | Coronal plane | Embedded teeth

A fixation device to immobilize a spinal motion segment and promote posterior fusion, used as stand-alone instrumentation or as an adjunct to an anterior approach. The device functions as a multi-level fusion system including modular single-level implementations. At a single level, the implant includes a pair of plates spanning two adjacent vertebrae with embedding teeth on the medially oriented surfaces directed into the spinous processes or laminae. The complementary plates at a single level are connected via a cross-post with a hemi-spherical base and cylindrical shaft passed through the interspinous process gap and ratcheted into an expandable collar. The expandable collar's spherical profile, contained within the opposing plate, allows the ratcheting mechanism to be correctly engaged, creating a uni-directional lock that secures the implant to the spine when a medially directed force is applied to both complementary plates using a specially designed compression tool. The freedom of rotational motion of both the cross-post and collar enables the complementary plates to be connected at a range of angles in the axial and coronal planes, accommodating varying morphologies of the posterior elements in the cervical, thoracic and lumbar spine. To achieve multi-level fusion, the single-level implementation can be connected in series using an interlocking mechanism fixed by a set-screw.

Owner:GINSBERG HOWARD JOESEPH +2

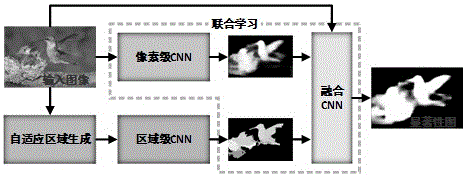

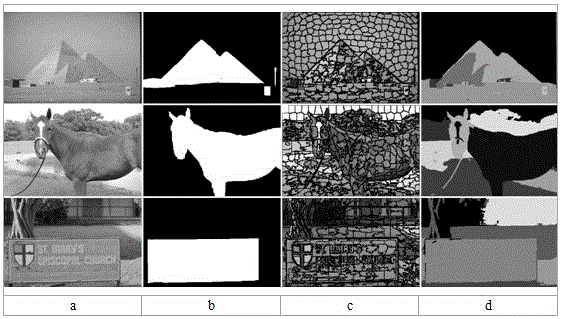

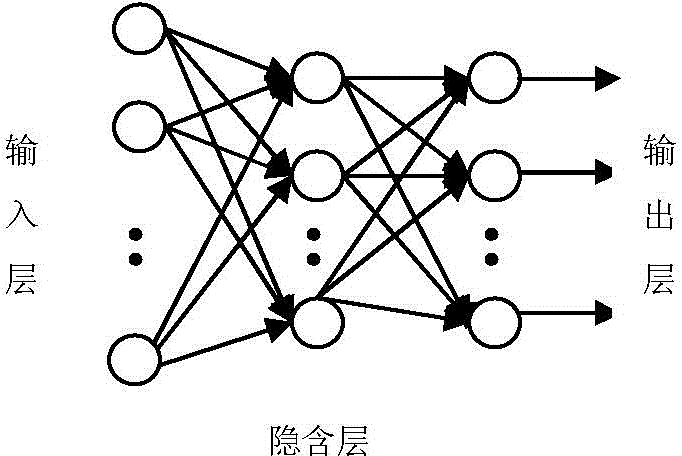

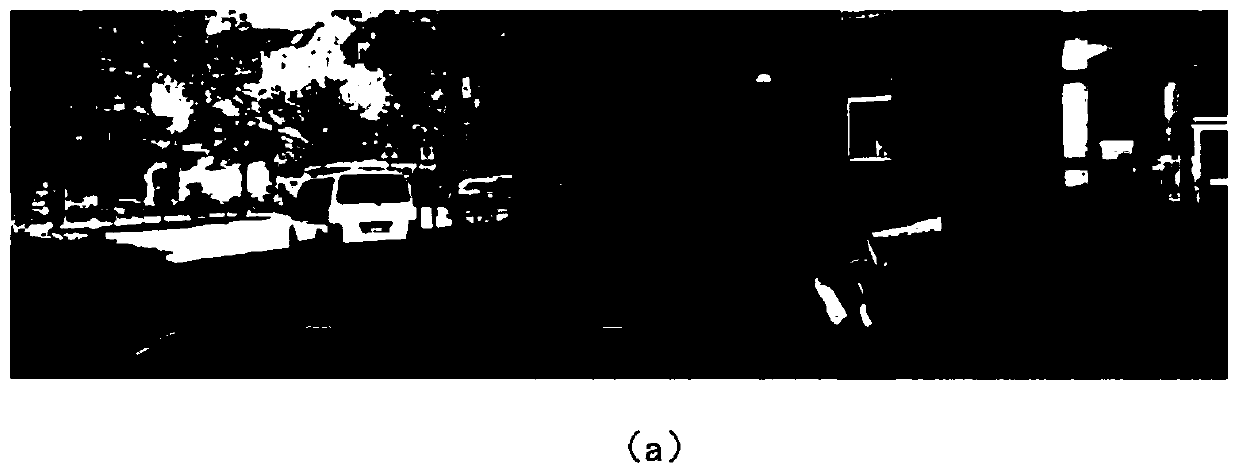

Region-level and pixel-level fusion saliency detection method based on convolutional neural networks (CNN)

Active | CN106157319A | Good saliency detection performance | Guaranteed edge | Image enhancement | Image analysis | Saliency map | Research Object

The invention discloses a region-level and pixel-level fusion saliency detection method based on convolutional neural networks (CNN). The method takes a static image with arbitrary content as its research object, and its goal is to find the targets in the image that attract a viewer's attention and assign different saliency values to them. It proposes an adaptive region generation technique and designs two CNN structures, used for pixel-level saliency prediction and saliency fusion respectively. Both CNN models are trained with the image as input and the ground truth of the image as the supervisory signal, and output a saliency map of the same size as the input image. With this method, region-level saliency estimation and pixel-level saliency prediction can be carried out effectively to obtain two saliency maps, and the two saliency maps and the original image are then fused by the saliency-fusion CNN to obtain the final saliency map.

Owner:HARBIN INST OF TECH

Spinous process stabilization device and method

A fixation device is provided to immobilize a spinal motion segment and promote posterior fusion, used as stand-alone instrumentation or as an adjunct to an anterior approach. The device functions as a multi-level fusion system including modular single-level implementations. At a single level, the implant includes a pair of plates spanning two adjacent vertebrae with embedding teeth on the medially oriented surfaces directed into the spinous processes or laminae. The complementary plates at a single level are connected via a cross-post passed through the interspinous process gap. The freedom of rotational motion of both the cross-post and collar enables the complementary plates to be connected at a range of angles in the axial and coronal planes, accommodating varying morphologies of the posterior elements in the cervical, thoracic and lumbar spine. To achieve multi-level fusion, the single-level implementation can be connected in series using an interlocking mechanism fixed by a set-screw.

Owner:GINSBERG HOWARD JOESEPH +2

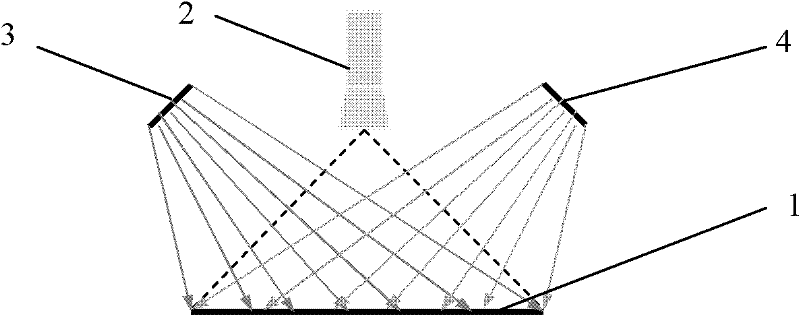

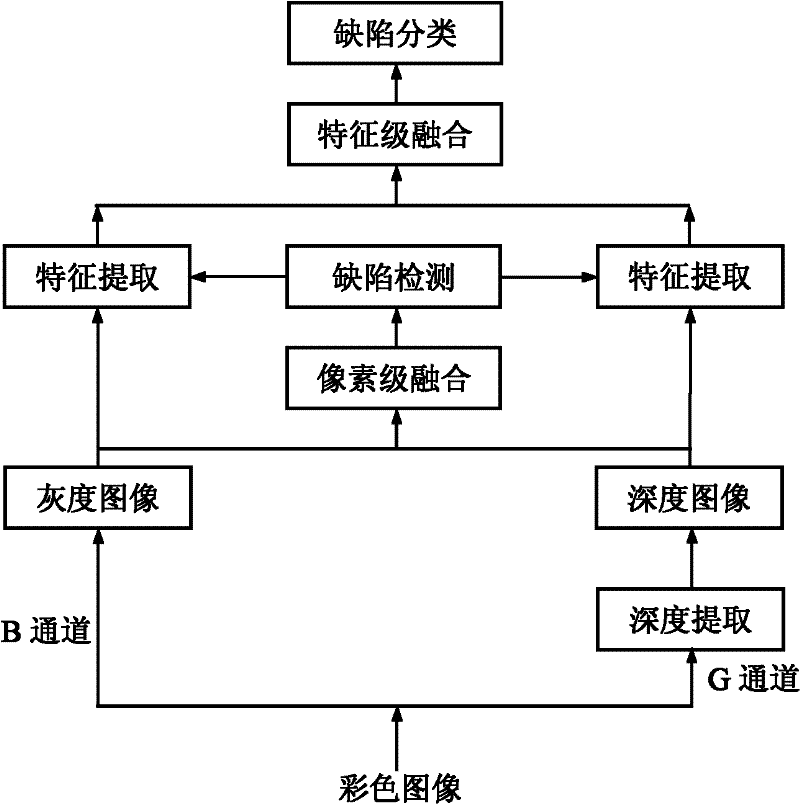

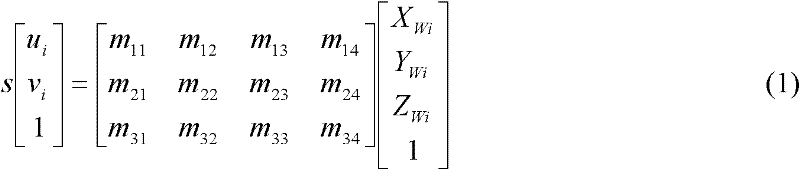

A Surface Defect Detection Method Based on Fusion of Gray Level and Depth Information

Active | CN102288613A | Improve accuracy | Accurate detection | Image enhancement | Optically investigating flaws/contamination | Edge extraction | Surface structure

The invention relates to an on-line method for detecting surface defects of an object and a device for realizing the method. Fusing grey-level and depth information improves the accuracy of defect detection and classification, and the method and device can be applied to objects with complicated shapes and surfaces. A grey image and a depth image of the object surface are collected using a single color area-array CCD (charge-coupled device) camera combined with a plurality of light sources of different colors, with the depth information obtained by surface structured light. Image segmentation and defect edge extraction are carried out through pixel-level fusion of the depth image and the grey image, so that the area where the defects are located can be detected more accurately. For each detected defect area, the grey-level, texture, and two-dimensional geometric features of the defects are extracted from the grey image, and the three-dimensional geometric features are extracted from the depth image; feature-level fusion is then carried out, and the fused feature vector is used as the input of a classifier to classify the defects.

Owner:UNIV OF SCI & TECH BEIJING

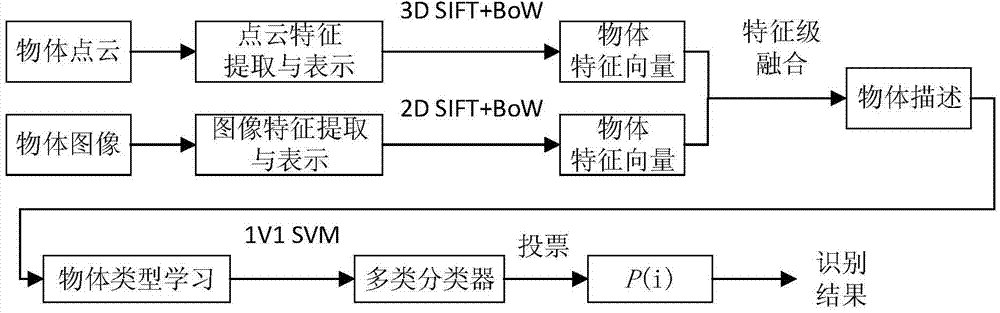

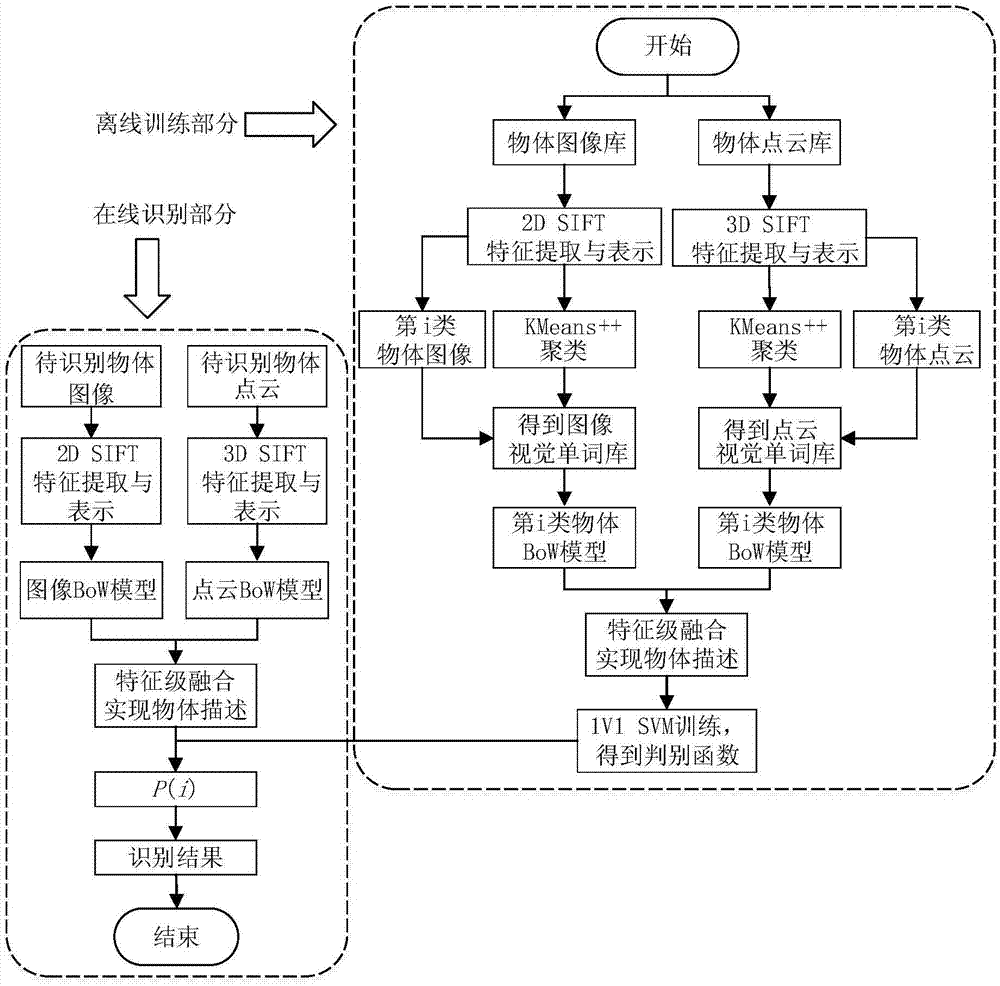

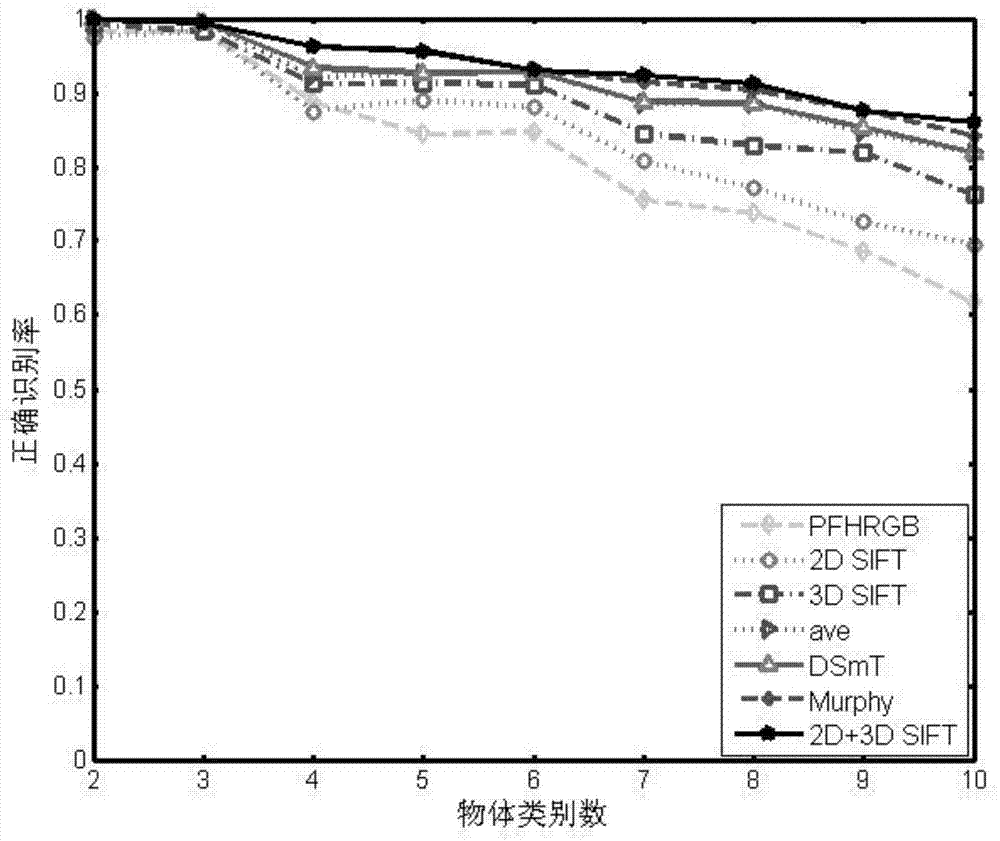

Ordinary object recognizing method based on 2D and 3D SIFT feature fusion

Active | CN104715254A | Solve the problem of low single feature recognition rate | Character and pattern recognition | Support vector machine | Feature vector

The invention discloses an ordinary object recognizing method based on 2D and 3D SIFT feature fusion, which aims to increase the accuracy of ordinary object recognition. A 3D SIFT feature descriptor based on a point cloud model is derived from the Scale Invariant Feature Transform (SIFT, here 2D SIFT), and the recognizing method is built on both descriptors. The method comprises the following steps: 1, 2D and 3D feature descriptors are extracted from a two-dimensional image and a three-dimensional point cloud of an object; 2, feature vectors of the object are obtained by means of a BoW (Bag of Words) model; 3, the two feature vectors are fused by feature-level fusion to describe the object; 4, classification is carried out by a support vector machine (SVM), a supervised classifier, and a final recognition result is given.

Owner:SOUTHEAST UNIV
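Step 3 of the abstract above, feature-level fusion of the 2D and 3D Bag-of-Words vectors, is commonly realized by normalizing and concatenating the two vectors. The sketch below assumes that approach; the abstract does not state the exact fusion rule, and the function names are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so neither modality dominates by magnitude."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else list(vec)


def fuse_features(bow_2d, bow_3d):
    """Feature-level fusion by concatenation (assumed rule, not from the patent)."""
    return l2_normalize(bow_2d) + l2_normalize(bow_3d)


# Hypothetical BoW histograms for one object.
fused = fuse_features([3, 4], [0, 2])
```

The fused vector would then serve as the input to the SVM classifier named in step 4.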

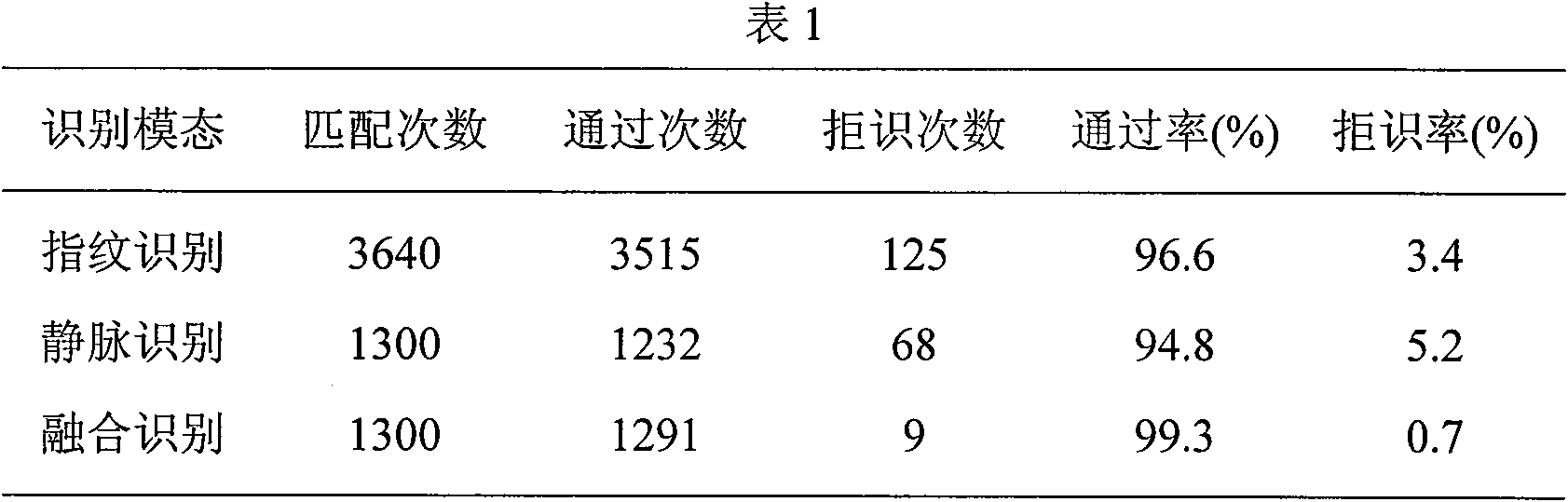

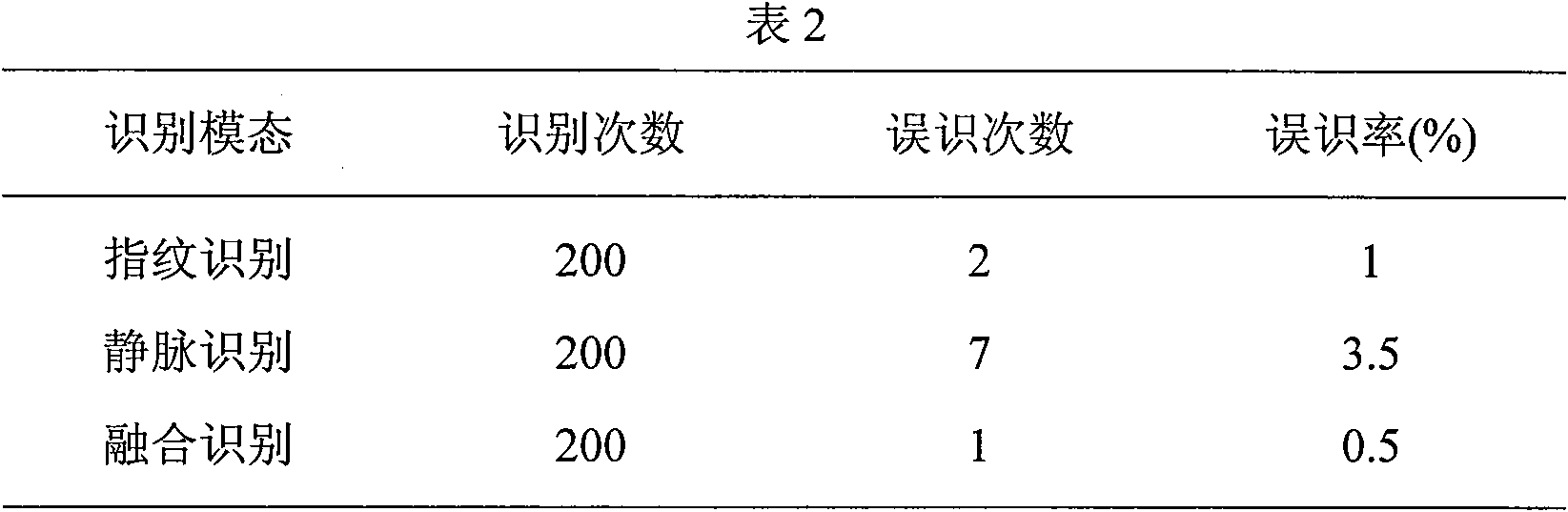

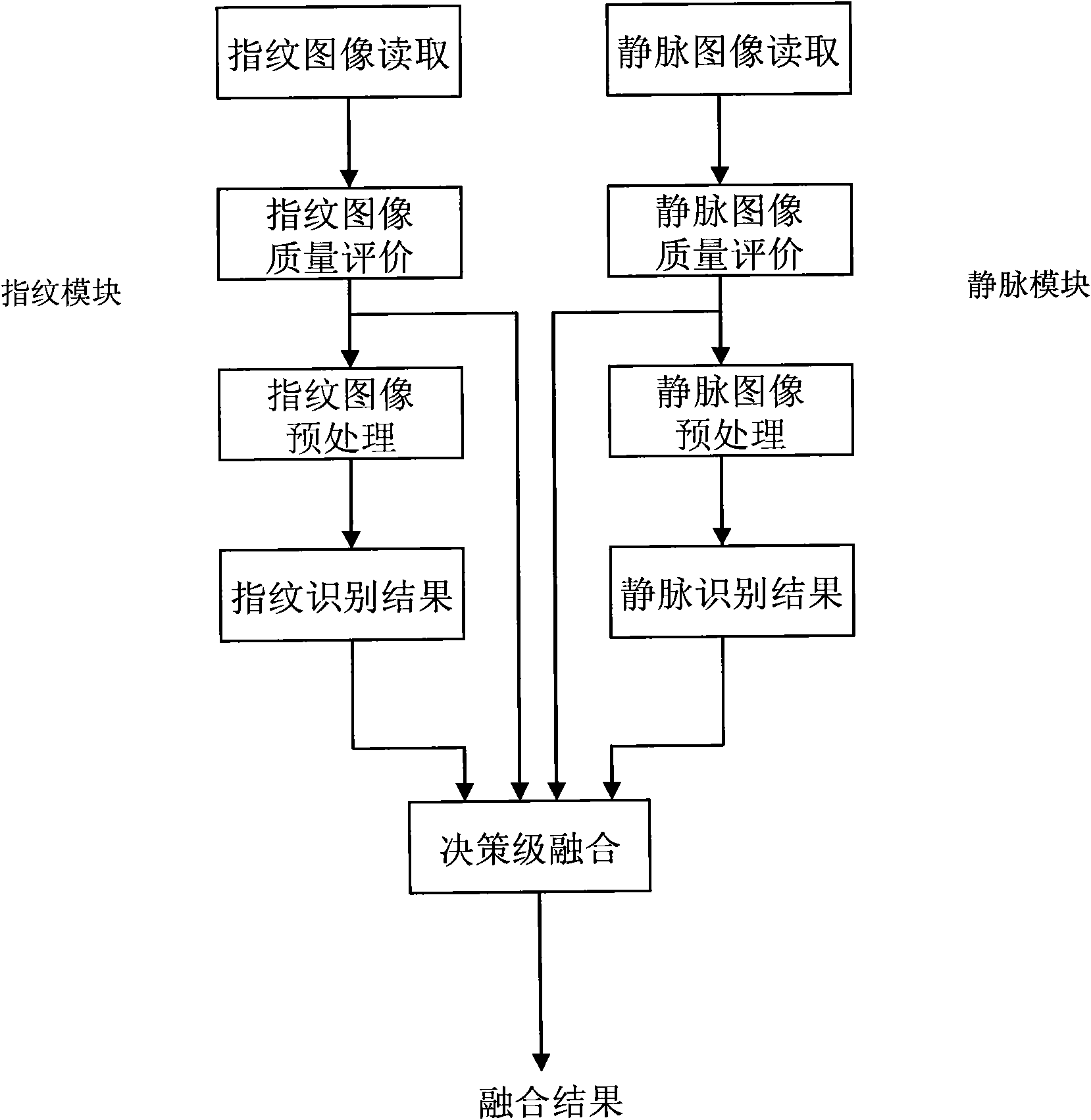

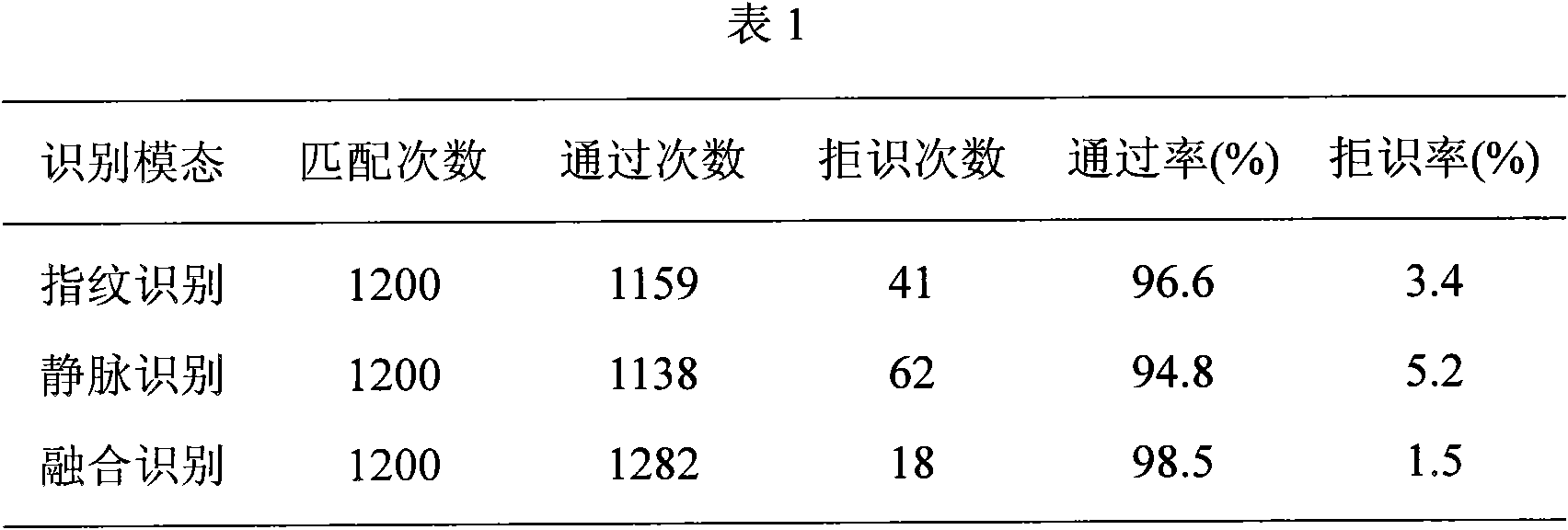

Fingerprint and finger vein bimodal recognition decision level fusion method

Inactive | CN101901336A | Identification helps | The recognition result is reliable | Character and pattern recognition | Pattern recognition | Vein

The invention provides a fingerprint and finger vein bimodal recognition decision level fusion method. Two modules, namely a fingerprint module and a vein module are included. The fingerprint module and the vein module read fingerprint images and vein images; the read fingerprint images and vein images are subjected to image quality evaluation according to respective image characteristics to acquire quality scores; the fingerprint images and vein images are preprocessed and recognized, wherein the fingerprint recognition adopts a minutiae-based matching method and the vein recognition adopts an improved Hausdorff distance mode, and respective recognition results are obtained; and finally, a weight is designed according to image quality scores of the two modes, and the recognition results are subjected to decision level fusion according to the weight and a final recognition result is obtained. Based on the fact that the performance of the system after fusion is superior to that of a single fingerprint recognition or finger vein recognition system, the method has strong practicability.

Owner:HARBIN ENG UNIV
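The final step of the abstract above, weighting each modality by its image quality score before fusing the recognition results, might look like the following sketch. The proportional weighting rule and the per-identity score dictionaries are assumptions for illustration; the abstract only says that a weight is designed from the two quality scores.

```python
def quality_weights(q_fingerprint, q_vein):
    """Weights proportional to image quality (assumed rule)."""
    total = q_fingerprint + q_vein
    if total <= 0:
        return 0.5, 0.5
    return q_fingerprint / total, q_vein / total


def fuse_decisions(fp_scores, vein_scores, q_fingerprint, q_vein):
    """Combine per-identity match scores from both modules and pick the best identity."""
    w_fp, w_vein = quality_weights(q_fingerprint, q_vein)
    identities = set(fp_scores) | set(vein_scores)
    fused = {
        ident: w_fp * fp_scores.get(ident, 0.0) + w_vein * vein_scores.get(ident, 0.0)
        for ident in identities
    }
    return max(fused, key=fused.get), fused
```

With a poor fingerprint image and a clean vein image, the vein module's result carries most of the weight, which is the behavior the abstract describes.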

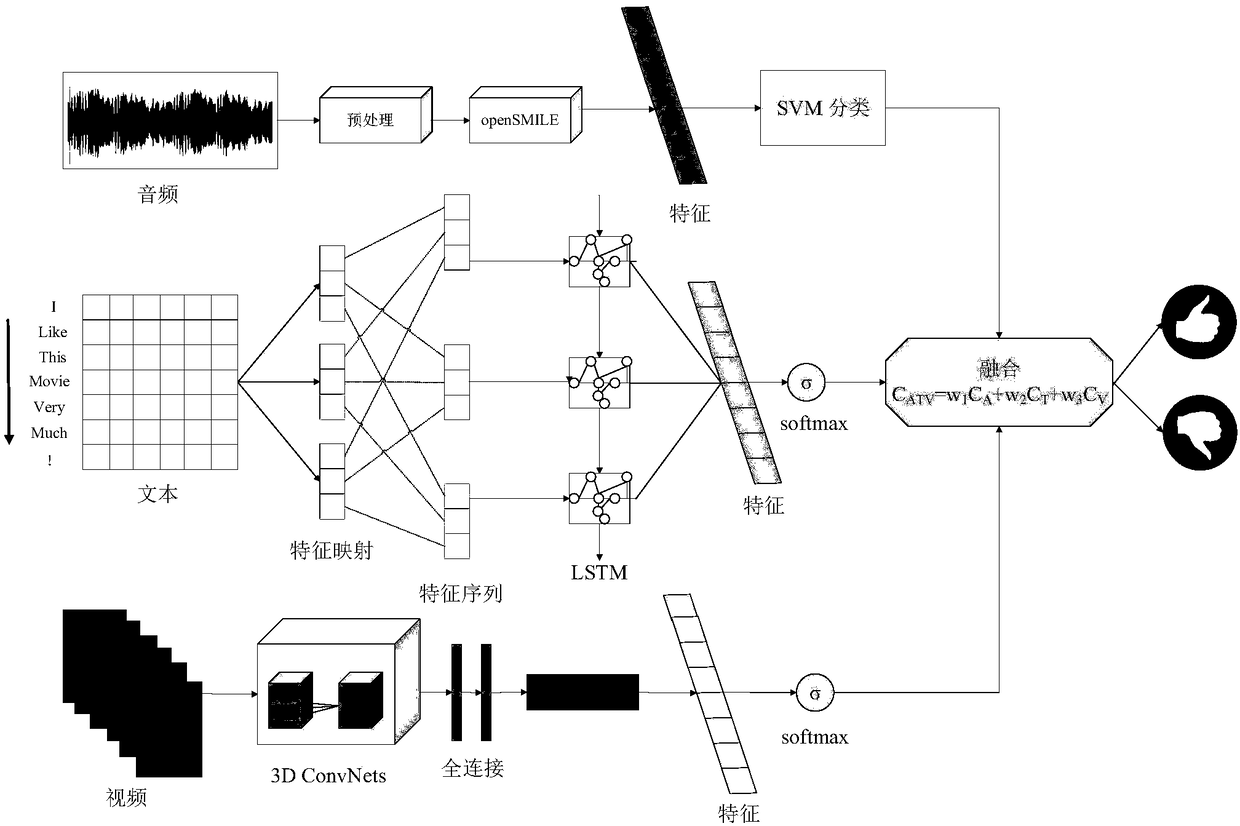

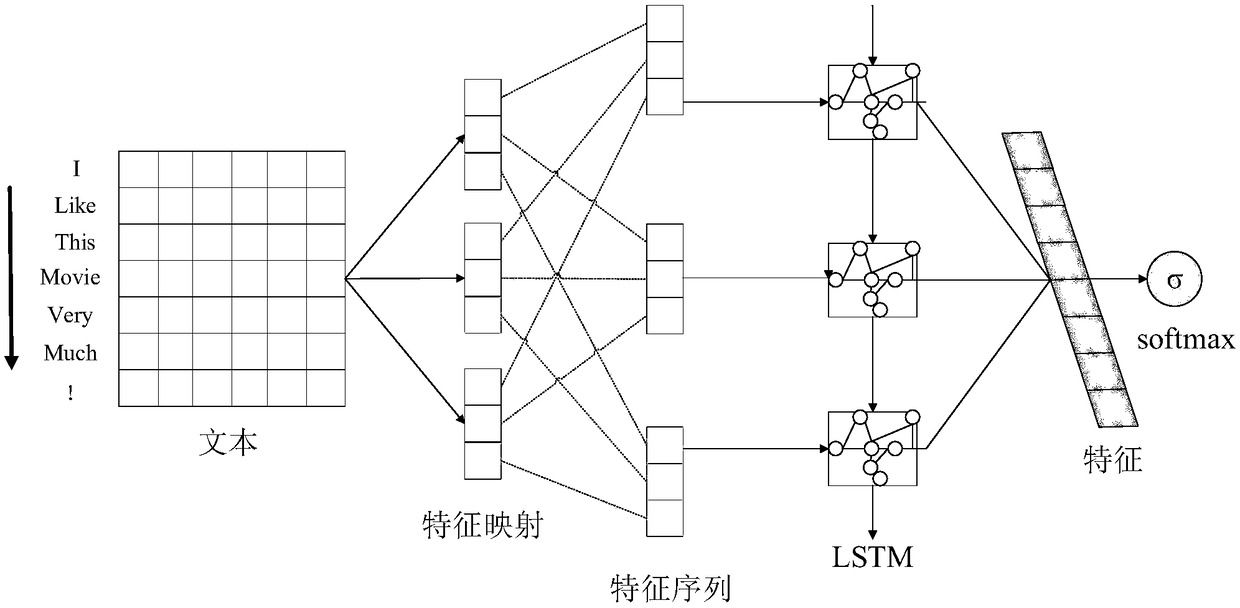

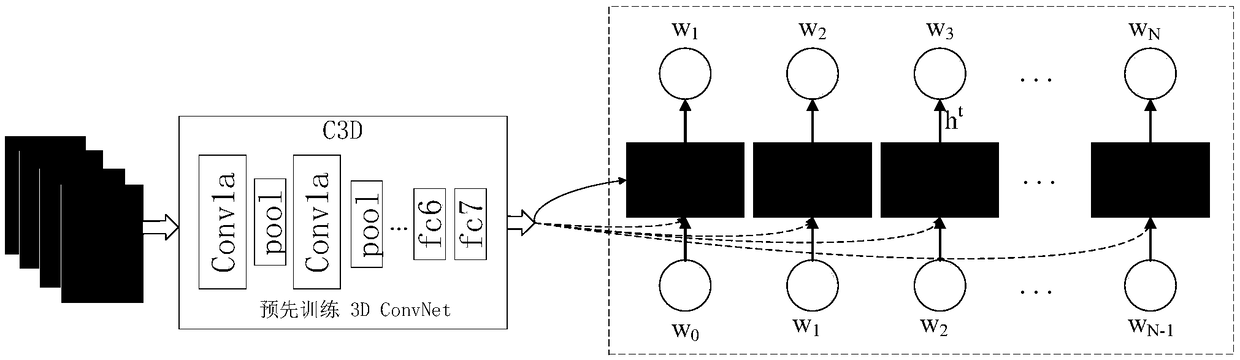

A socio-emotional classification method based on multimodal fusion

Inactive | CN109508375A | Efficient extraction | Improve accuracy | Semantic analysis | Neural architectures | Short-term memory | Classification methods

The invention provides a social emotion classification method based on multimodal fusion, which relates to information in audio, visual and text form. Most affective computing research only extracts affective information by analyzing single-mode information, ignoring the relationship between information sources. For video information, the invention proposes a 3D CNN ConvLSTM model that establishes spatio-temporal information for emotion recognition tasks through a cascade combination of a three-dimensional convolutional neural network (C3D) and a convolutional long short-term memory recurrent neural network (ConvLSTM). For text messages, a CNN-RNN hybrid model is used to classify text emotion. Heterogeneous fusion of vision, audio and text is performed by decision-level fusion. The deep spatio-temporal features learned by the invention effectively model visual appearance and motion information, and after the text and audio information are fused, the accuracy of the emotion analysis is effectively improved.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

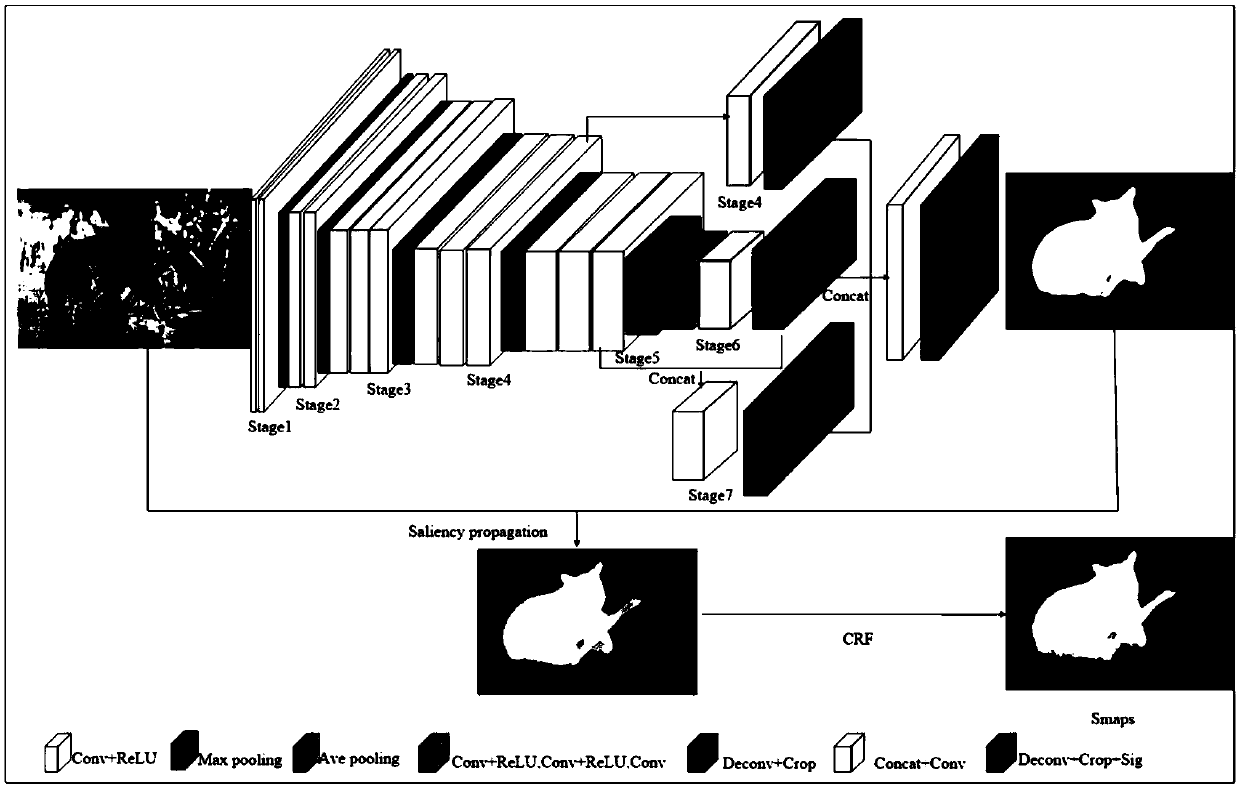

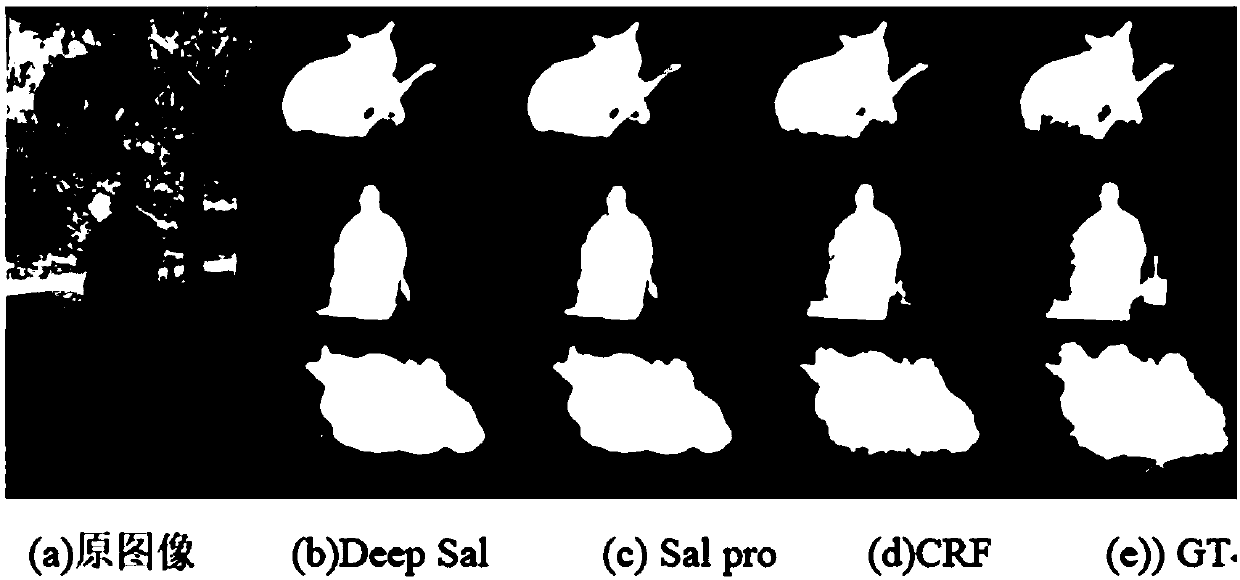

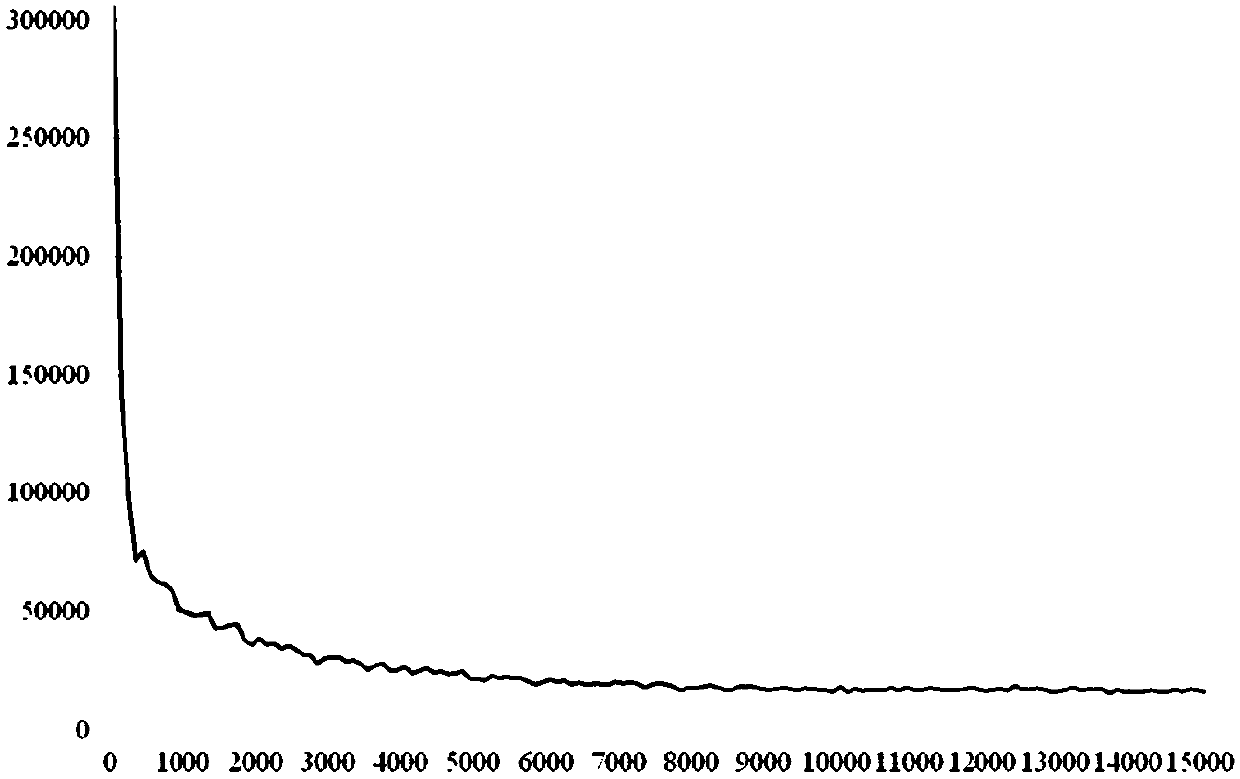

Image salient target detection method combined with deep learning

Inactive | CN108898145A | Improve robustness | Easy to integrate | Character and pattern recognition | Conditional random field | Saliency map

The invention provides an image salient target detection method combined with deep learning. The method is based on an improved RFCN deep convolutional neural network with cross-level feature fusion, and the network model comprises two parts: basic feature extraction and cross-level feature fusion. The method comprises: firstly, using the improved deep convolutional network model to extract features of an input image, and using a cross-level fusion framework for feature fusion, to generate a preliminary saliency map of high-level semantic features; then, fusing the preliminary saliency map with low-level image features to perform saliency propagation and obtain structure information; finally, using a conditional random field (CRF) to optimize the saliency propagation result to obtain a final saliency map. In the PR curves obtained by the method, the F value and MAE are better than those obtained by nine other algorithms. The method can improve the integrity of salient target detection, and has the characteristics of less background noise and high algorithm robustness.

Owner:SOUTHWEST JIAOTONG UNIV

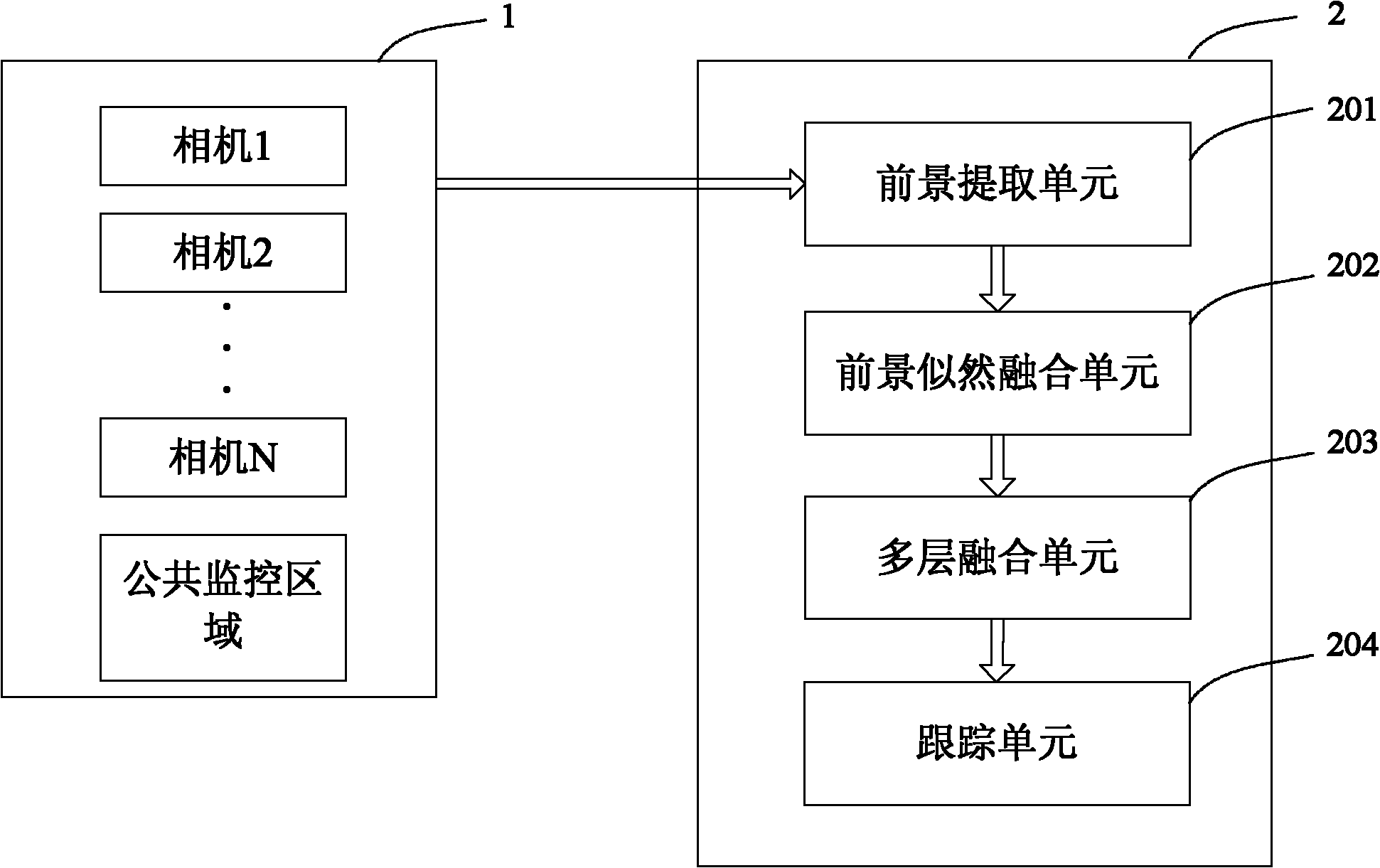

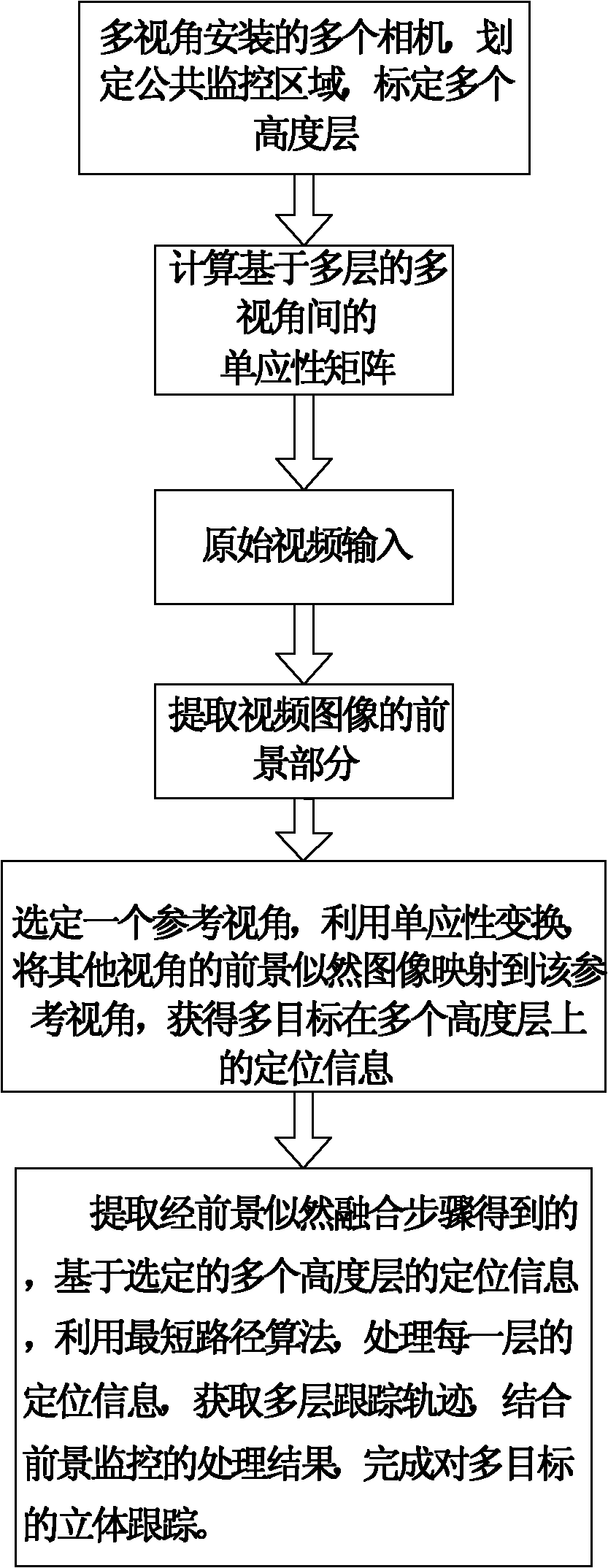

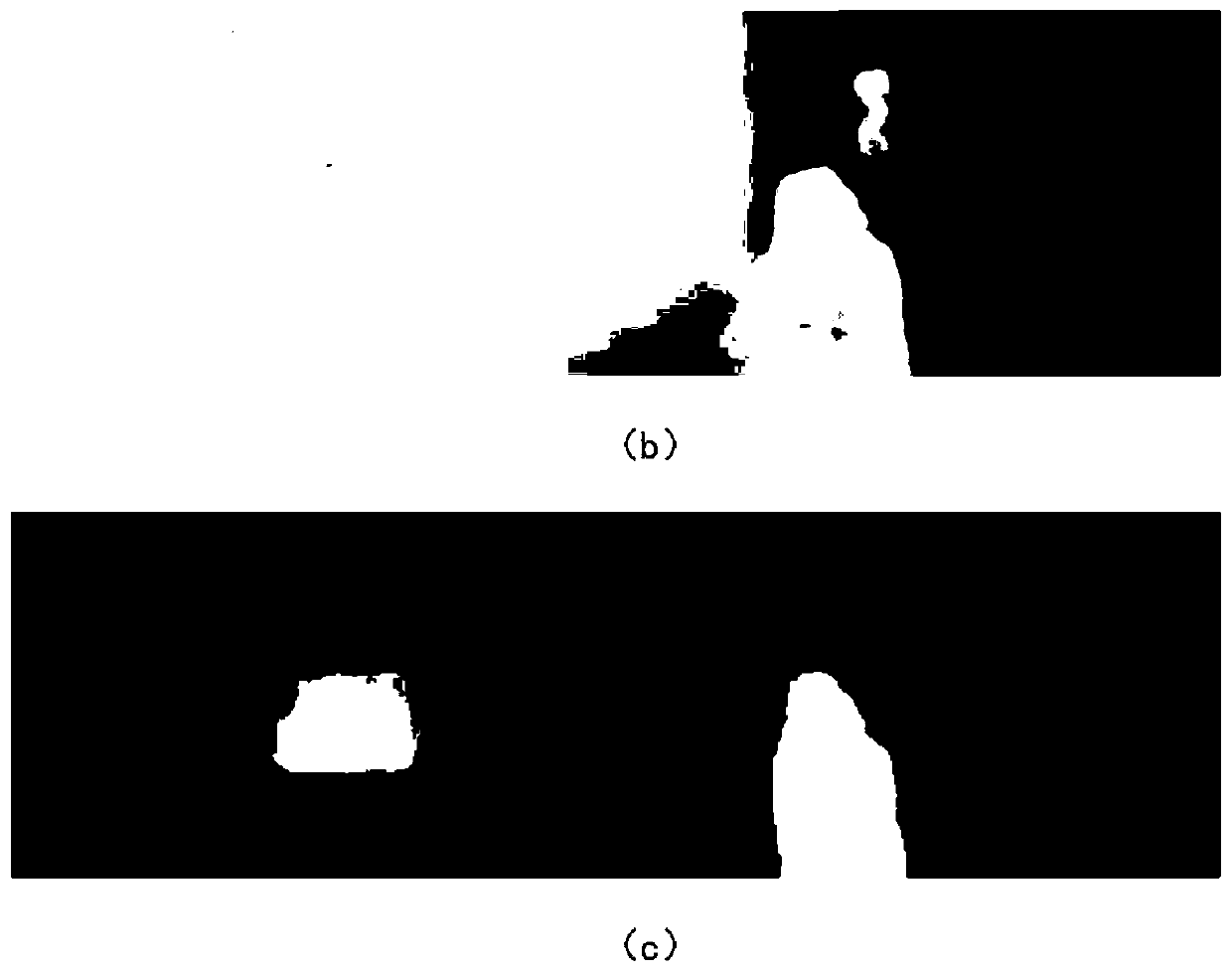

Multi-camera-based multi-objective positioning tracking method and system

Inactive | CN102243765A | Improve accuracy | High precision | Image analysis | Closed circuit television systems | Multi camera | Short path algorithm

The invention discloses a multi-camera-based multi-objective positioning tracking method. The method is characterized by comprising the following steps: first installing a plurality of cameras at a plurality of visual angles, planning a public surveillance area for the cameras, and calibrating a plurality of height levels; sequentially implementing the steps of foreground extraction, homography matrix calculation, foreground likelihood fusion and multi-level fusion; extracting the positioning information obtained in the foreground likelihood fusion step for the selected plurality of height levels; processing the positioning information of each level by using the shortest path algorithm so as to obtain the tracking paths of the levels; and, after combining with the processing results of foreground extraction, completing the multi-objective three-dimensional tracking. With the disclosed method, the vanishing points of the plurality of cameras need not be calculated during tracking, and a codebook model is introduced for the first time to solve the multi-objective tracking problem, thereby improving the accuracy of tracking; the method has the characteristics of good stability, good real-time performance and high precision.

Owner:DALIAN NATIONALITIES UNIVERSITY

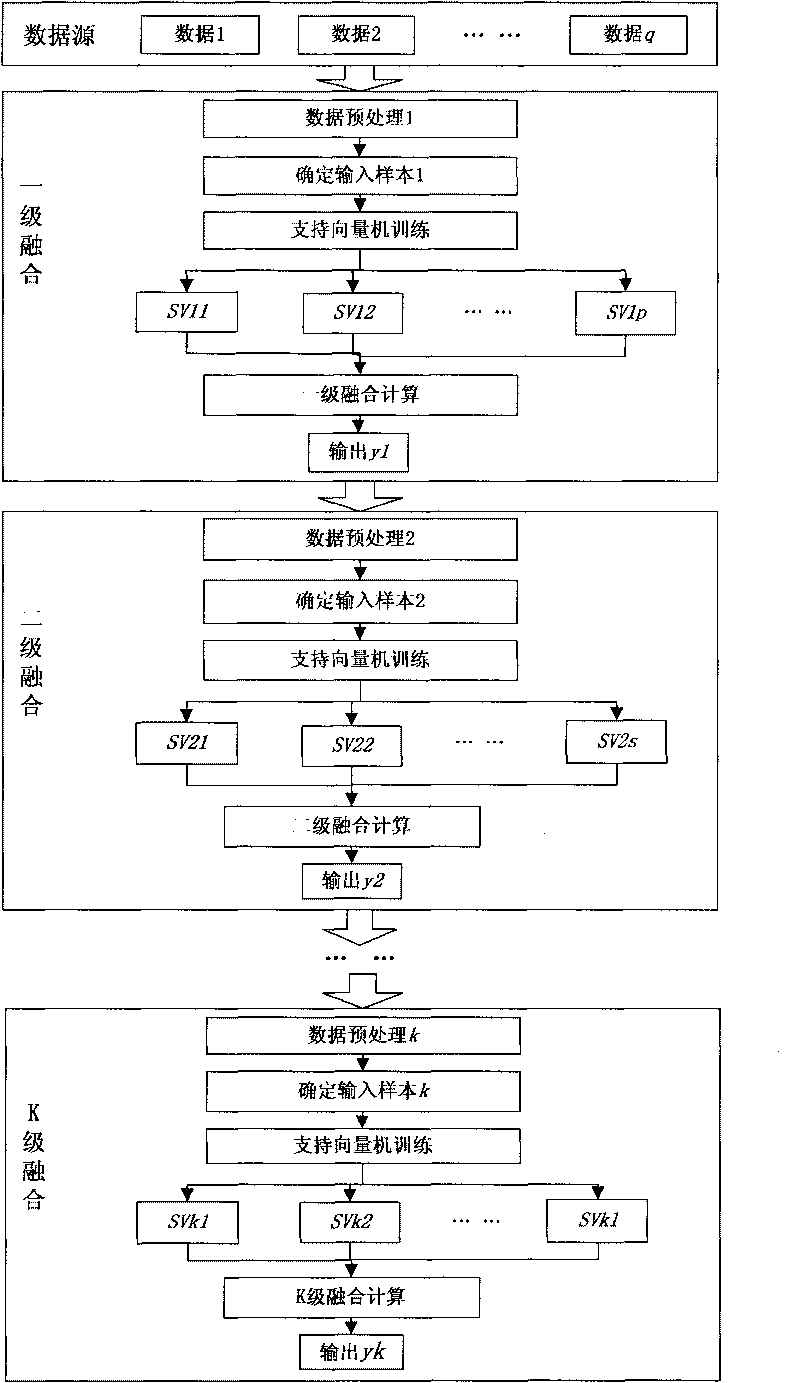

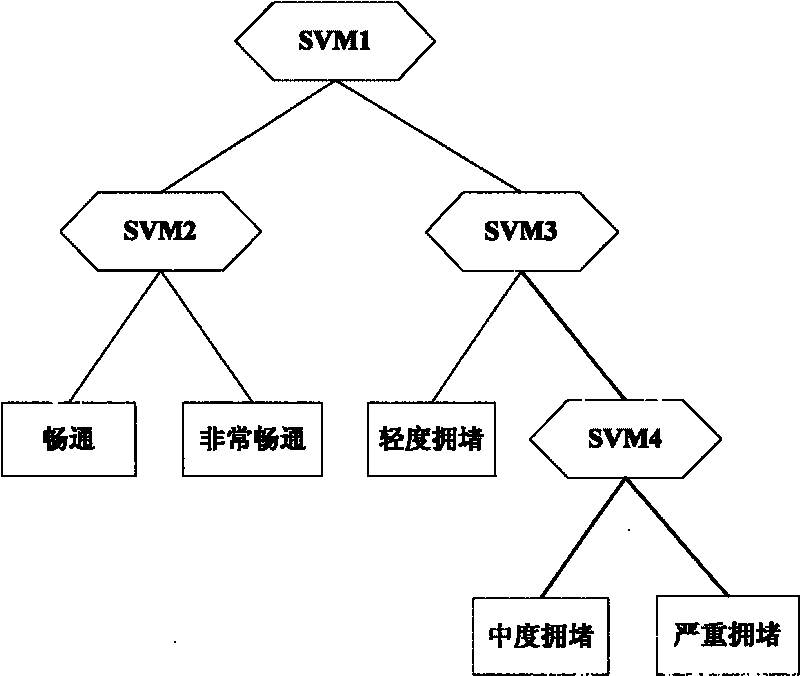

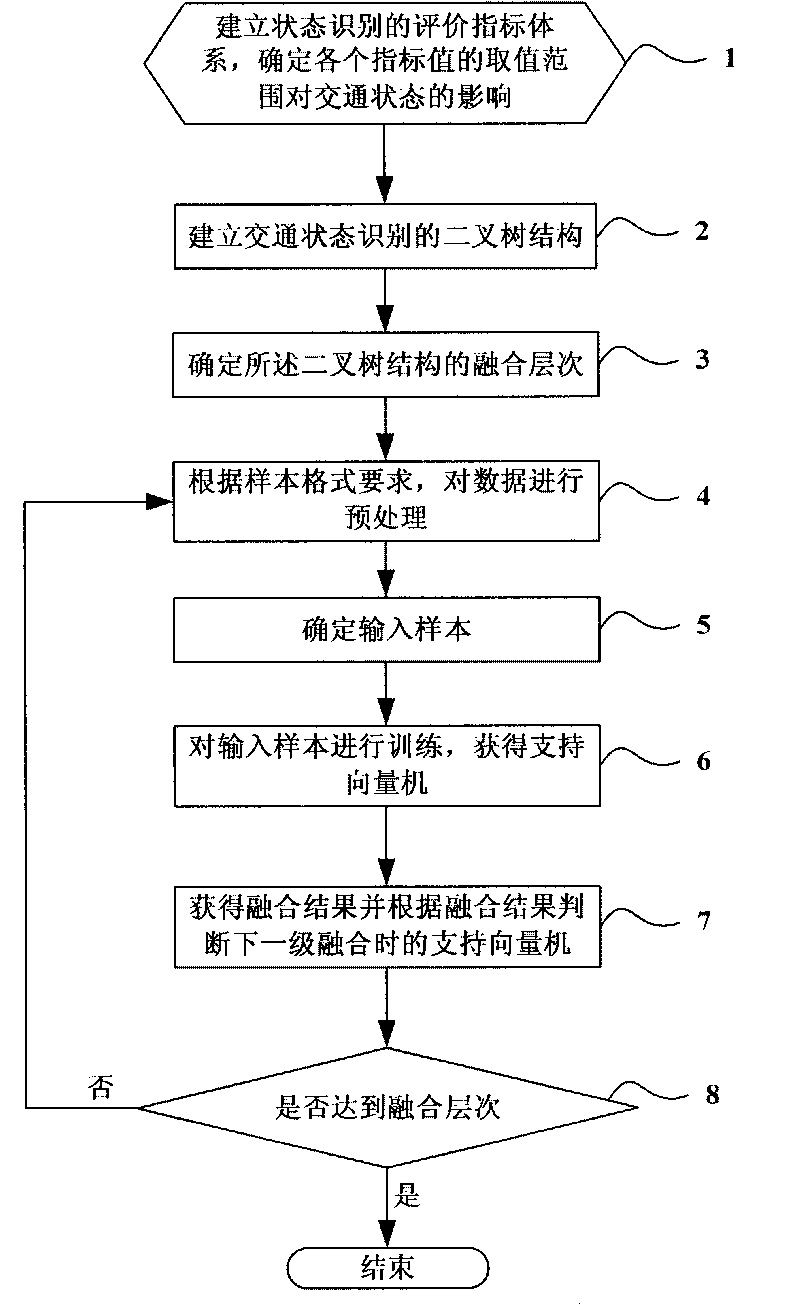

Method for identifying traffic status of express way based on information fusion

Inactive | CN101706996A | Solve the problem of low accuracy of traffic status recognition | Reliable decision support | Detection of traffic movement | Support vector machine | Binary tree

The invention discloses a method for identifying the traffic status of an express way based on information fusion, belonging to the technical field of traffic information fusion. The method comprises the steps of: selecting traffic parameters and establishing an evaluation index system for status identification; establishing a binary tree structure for traffic status identification according to a decision tree algorithm; determining the number of fusion layers K of the binary tree structure, wherein K is greater than or equal to 2, and setting i equal to 1; preprocessing the data of the ith layer according to the sample format requirements of the ith layer, and determining an input sample of the ith layer; training on the input sample of the ith layer with the support vector machine learning method to obtain a support vector machine; carrying out data fusion with the support vector machine to obtain a fusion result, and selecting the support vector machine for the next level of fusion according to the fusion result; and setting i equal to i plus 1 and judging whether i is less than or equal to K; if so, returning to step 4 and executing the data fusion at the next layer, and if not, ending the process. The method inherits the advantages of the traditional support vector machine information fusion method and solves the problem of low accuracy of traffic status identification on the express way.

Owner:BEIJING JIAOTONG UNIV
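The layer-by-layer procedure in the abstract above, where each layer's binary result selects the classifier used at the next layer, can be sketched as a walk down a binary tree. This is an illustration only: simple threshold functions stand in for the trained support vector machines, and the parameter names, thresholds, and status labels are all hypothetical.

```python
class Node:
    """One binary classifier in the tree; children are Nodes or final status labels."""

    def __init__(self, classifier, if_true, if_false):
        self.classifier = classifier
        self.if_true = if_true
        self.if_false = if_false


def identify_status(sample, root):
    """Apply one classifier per layer until a final status label is reached."""
    node, layers_used = root, 0
    while isinstance(node, Node):
        node = node.if_true if node.classifier(sample) else node.if_false
        layers_used += 1
    return node, layers_used


# Hypothetical two-layer tree (K = 2); thresholds stand in for trained SVMs.
tree = Node(
    lambda s: s["speed_kmh"] < 40,
    Node(lambda s: s["occupancy"] > 0.5, "blocked", "congested"),
    Node(lambda s: s["speed_kmh"] < 80, "steady", "free flow"),
)

status, layers_used = identify_status({"speed_kmh": 30, "occupancy": 0.7}, tree)
```

Each sample passes through exactly K classifiers in a balanced tree, so a K-layer tree distinguishes up to 2^K traffic states.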

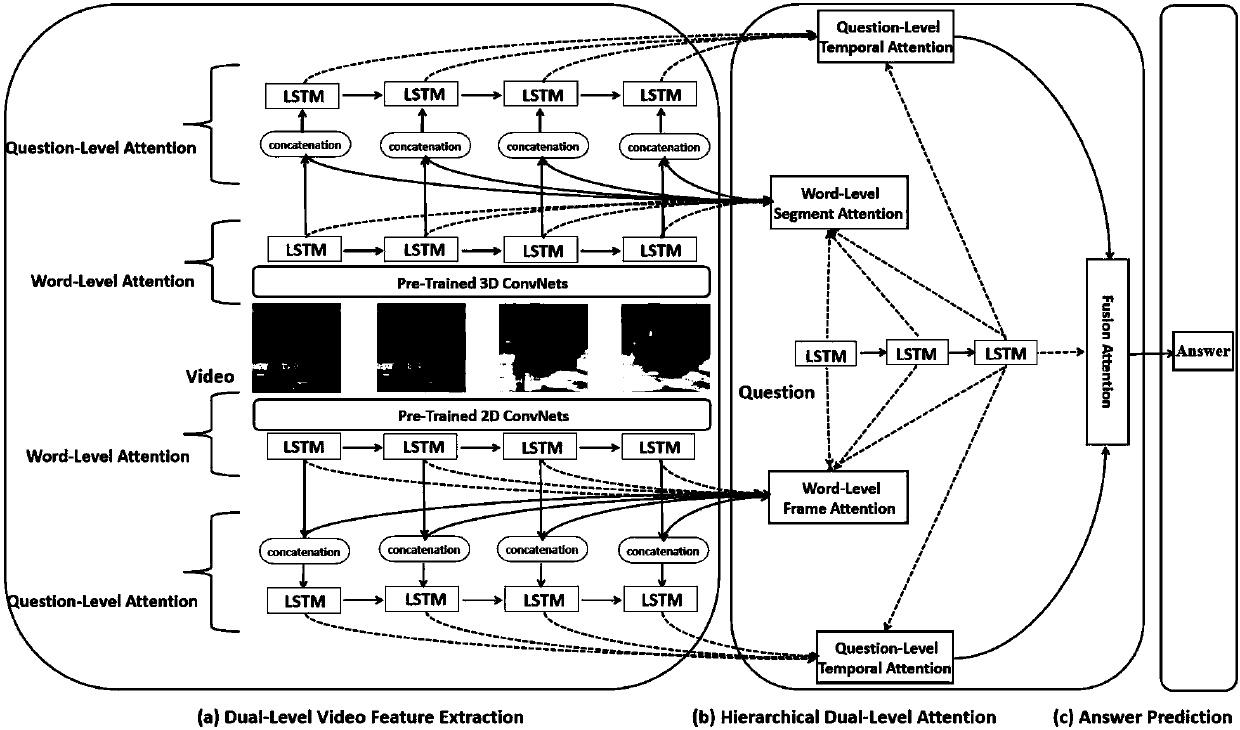

Method of using multi-layer attention network mechanism to solve video question answering

Active | CN107766447A | Character and pattern recognition | Special data processing applications | Question answer | Attention network

The invention discloses a method of utilizing a multi-layer attention network mechanism to solve video question answering. The method mainly includes the following steps: 1) for a group of videos, utilizing a pre-trained convolutional neural network to obtain frame-level and segment-level video expressions; 2) using a question-word-level attention network mechanism to obtain frame-level and segment-level video expressions for a question word level; 3) using a question-level time attention mechanism to obtain frame-level and segment-level video expressions related to a question; 4) utilizing a question-level fusion attention network mechanism to obtain joint video expressions related to the question; and 5) utilizing the obtained joint video expressions to acquire answers to the question asked for the videos. Compared with general video question answering solutions, the method utilizes the multi-layer attention mechanism, reflects video and question characteristics more accurately, and generates better-matched answers. Compared with traditional methods, the method achieves a better effect in video question answering.

Owner:ZHEJIANG UNIV

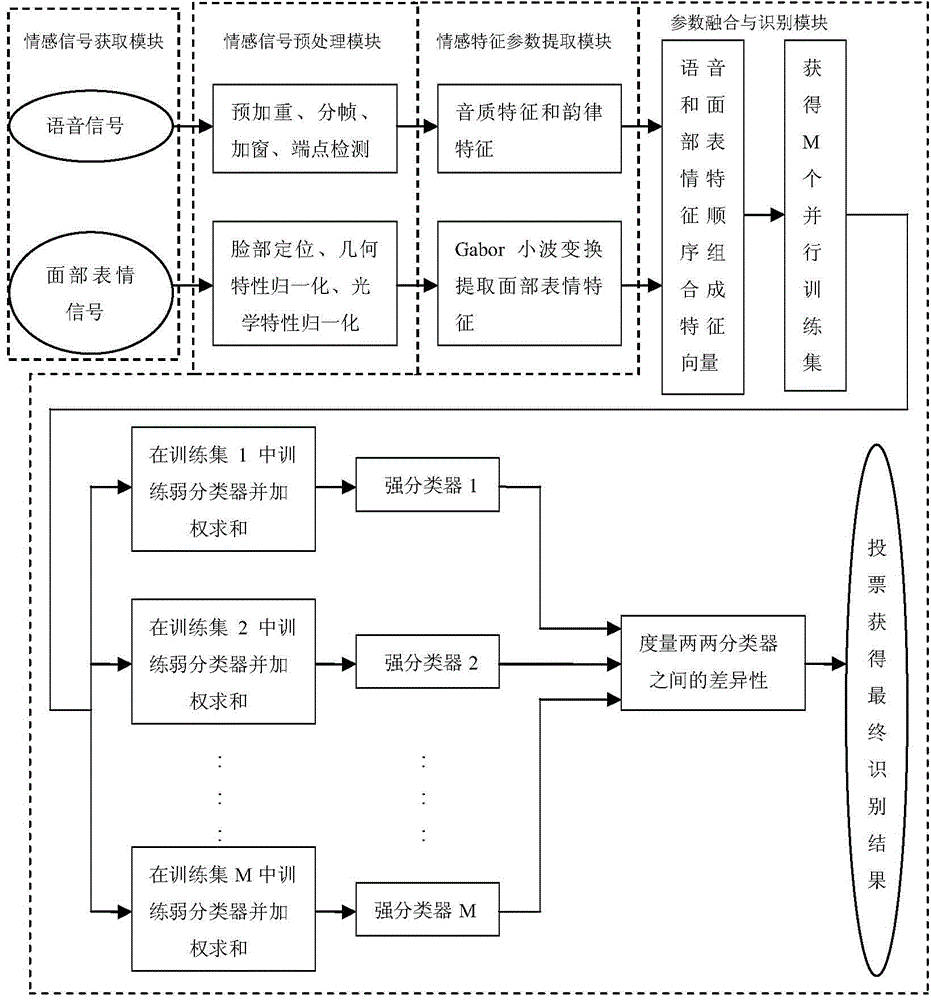

Serial-parallel combined multi-mode emotion information fusion and identification method

Inactive | CN104835507A | Overcome limitations | Improve accuracy | Character and pattern recognition | Speech recognition | Feature vector | Adaboost algorithm

The present invention discloses a serial-parallel combined multi-mode emotion information fusion and identification method belonging to the emotion identification technology field. The method mainly comprises obtaining an emotion signal; pre-processing the emotion signal; extracting emotion characteristic parameters; and fusing and identifying the characteristic parameters. First, the extracted voice signal and facial expression signal characteristic parameters are fused to obtain a serial characteristic vector set; then M parallel training sample sets are obtained by sampling with replacement, and sub-classifiers are obtained by AdaBoost training; then the difference between every two classifiers is measured by a dual error difference selection strategy; and finally, a vote is carried out using the majority vote principle, thereby obtaining a final identification result that identifies the five basic human emotions of pleasure, anger, surprise, sadness and fear. The method takes full advantage of both decision-level fusion and feature-level fusion, and brings the fusion process of the whole emotion information closer to human emotion identification, thereby improving the emotion identification accuracy.

Owner:BOHAI UNIV
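Two of the steps in the abstract above, drawing M training sets by sampling with replacement and combining sub-classifier outputs by majority vote, are sketched below. The AdaBoost training and the classifier-difference selection are omitted; the sub-classifiers here are plain callables, and all names are hypothetical.

```python
import random
from collections import Counter


def bootstrap_sets(samples, m, seed=0):
    """Draw m training sets of the original size by sampling with replacement."""
    rng = random.Random(seed)
    n = len(samples)
    return [[samples[rng.randrange(n)] for _ in range(n)] for _ in range(m)]


def majority_vote(predictions):
    """Return the label predicted by the most sub-classifiers."""
    return Counter(predictions).most_common(1)[0][0]


def ensemble_predict(classifiers, x):
    """Decision-level fusion: every sub-classifier votes on the fused feature vector x."""
    return majority_vote([clf(x) for clf in classifiers])
```

In the described method, each set from `bootstrap_sets` would train one sub-classifier on the serially fused voice and facial-expression features before the vote is taken.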

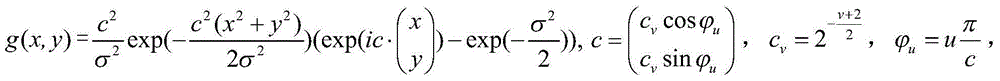

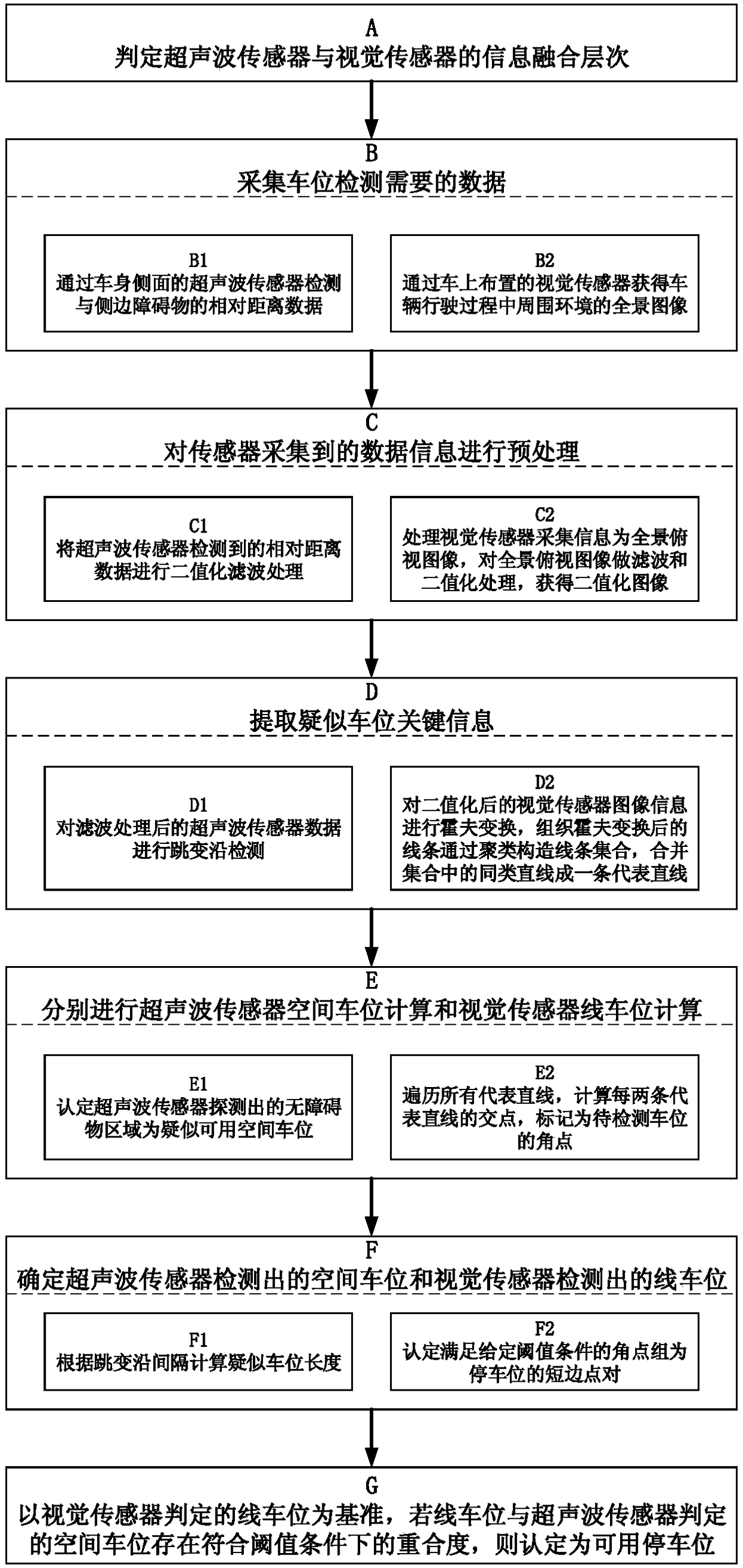

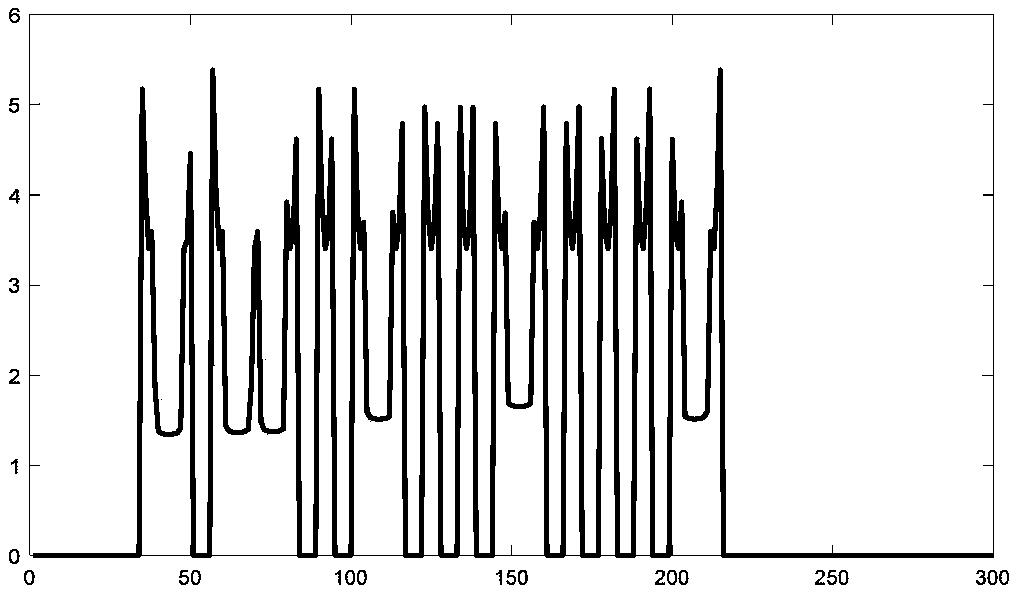

Parking space detection method based on ultrasonic and visual sensor fusion

Inactive | CN108281041A | Avoid undetectability | Avoid the flaw of not being able to accurately detect specific parking locations | Anti-collision systems | Acoustic wave reradiation | Ultrasonic sensor | Parking space

The invention relates to a parking space detection method based on ultrasonic and visual sensor fusion, comprising: determining that information fusion level of two sensors is decision fusion level, acquiring data required for parking space detection, synchronously preprocessing the acquired data, extracting suspected parking space key information of the two sensors separately, performing ultrasonic sensor space parking space calculation and visual sensor line parking space calculating, performing parking space judging and decision-level data fusion for the two sensors, and finally judging parking space condition. The parking space detection method based on ultrasonic and visual sensor fusion has the advantages that spatial parking spaces are determined via the ultrasonic sensor, line parking spaces are judged via visual sensor, detection results of the two sensors are subjected to decision-level fusion, parking space recognition accuracy reaches 99% and above, and the parking space detection method based on ultrasonic and visual sensor fusion is widely applicable to the field of parking space detection.

Owner:SOUTHEAST UNIV

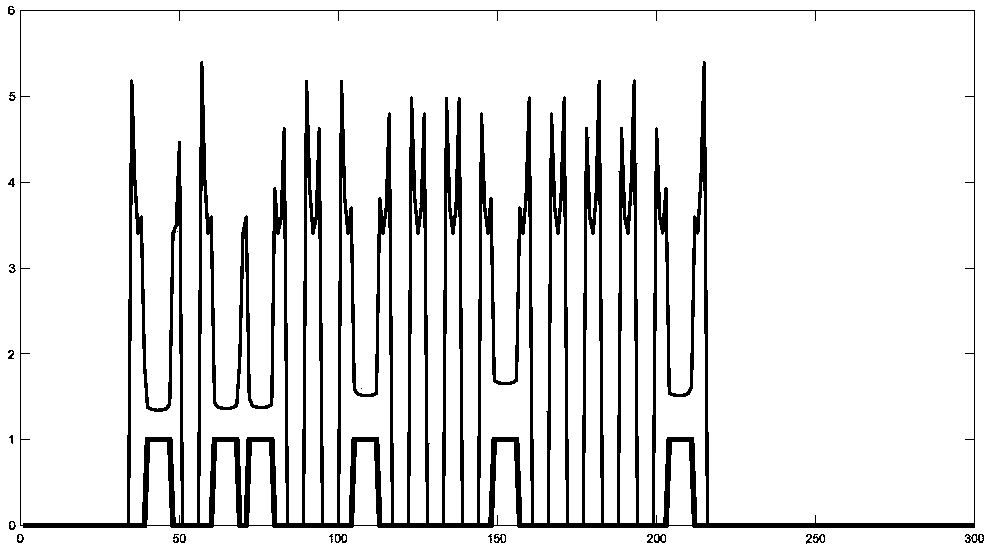

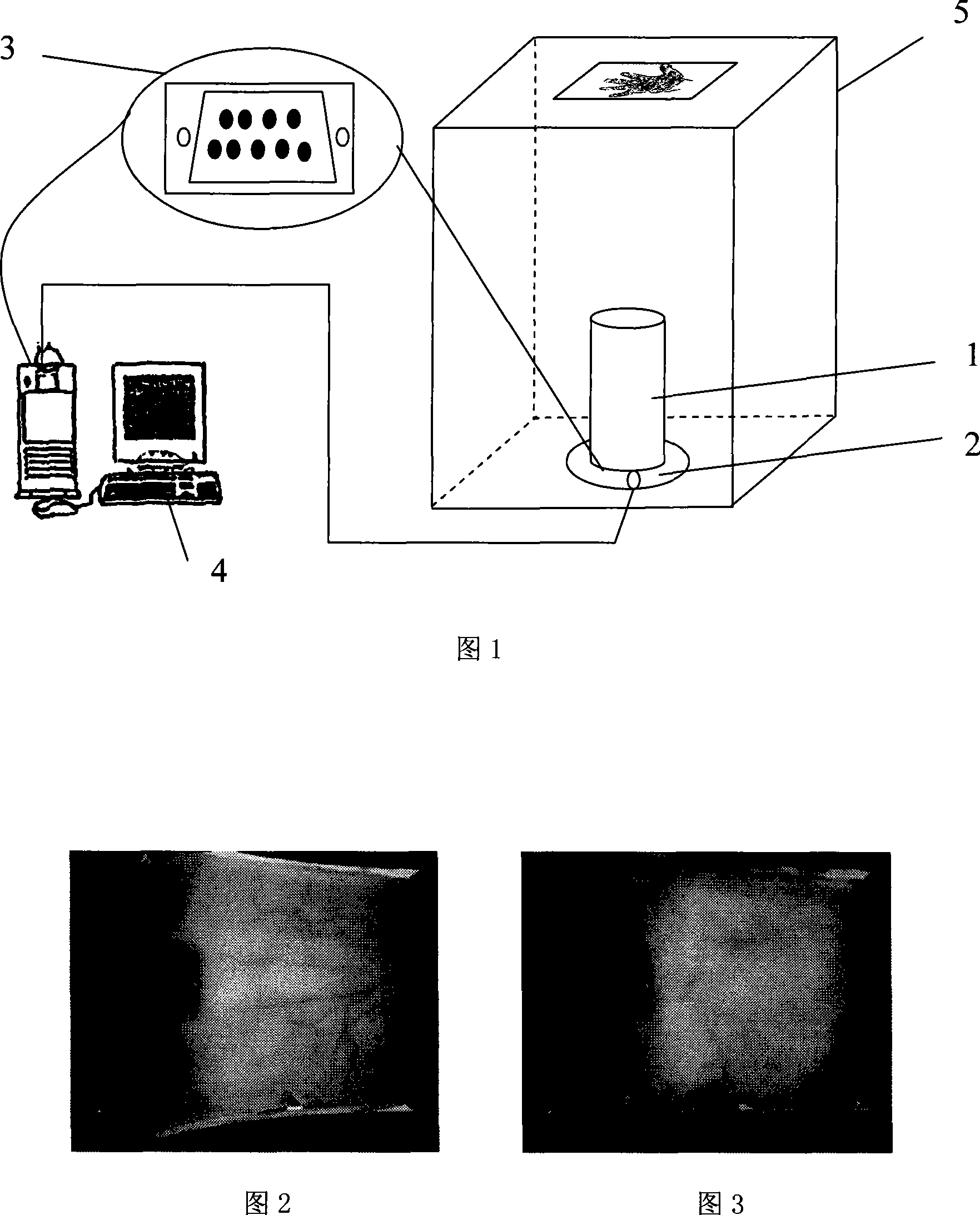

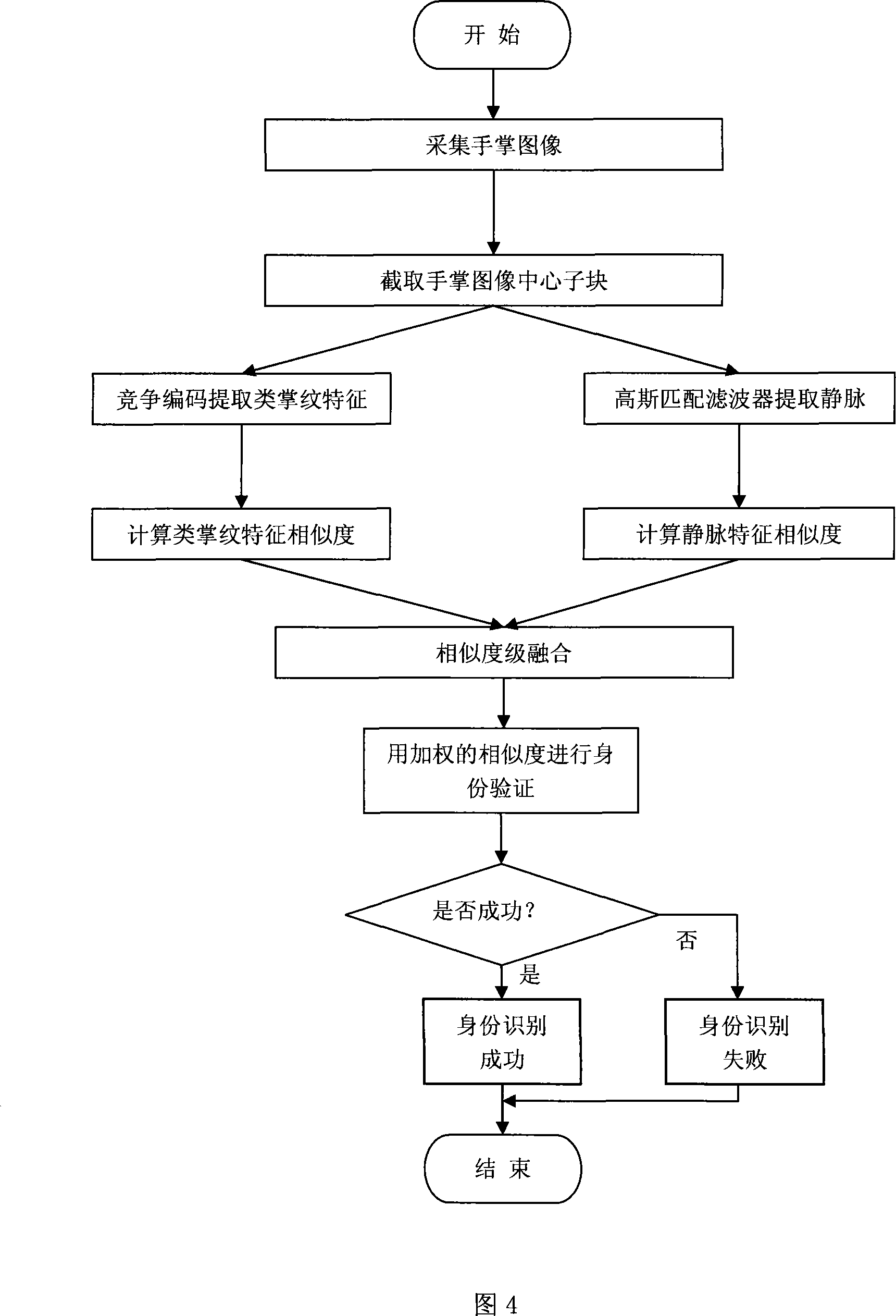

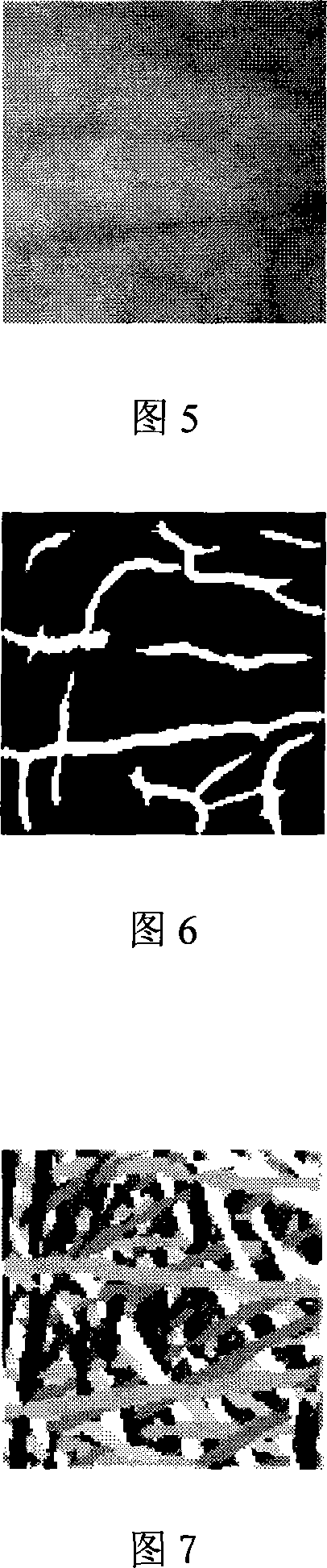

Personal identification method and near-infrared image forming apparatus based on palm vein and palm print

The invention provides a near-infrared imaging device and an identification method based on the palm vein and the palm print. Firstly, a palm image is obtained through a near-infrared imaging device, and the central sub-block sample to be processed is extracted. The sub-block is input into two feature extraction modules: genus palm print information code extraction and vein structure extraction. The two features are then matched separately, and the similarity of each is calculated using a different similarity evaluation method. The optimized weight array of the genus palm print and the vein vessel structure is obtained from training samples, and the two similarities then undergo similarity-level fusion. The fused similarity is compared with a preset threshold to make the decision, and the final determination is obtained from the fused match. The device and method overcome the disadvantages of few image features and single-mode processing, and improve the identification rate and the stability of the system.

Owner:深圳市中识健康科技有限公司
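The similarity-level fusion in the abstract above, with a weight learned from training samples and a decision against a preset threshold, can be sketched as follows. The linear weighting and the grid search over the weight are assumptions made for illustration; the abstract does not specify how the optimized weights are found, and the function names are hypothetical.

```python
def fuse_similarity(print_sim, vein_sim, w_print, threshold):
    """Weighted sum of the two similarities, then accept or reject by threshold."""
    fused = w_print * print_sim + (1.0 - w_print) * vein_sim
    return fused, fused >= threshold


def fit_weight(training, threshold, steps=10):
    """Grid-search the palm print weight that classifies the most training pairs correctly.

    training: list of (print_sim, vein_sim, is_genuine) tuples.
    """
    best_w, best_correct = 0.0, -1
    for k in range(steps + 1):
        w = k / steps
        correct = sum(
            fuse_similarity(p, v, w, threshold)[1] == genuine
            for p, v, genuine in training
        )
        if correct > best_correct:
            best_w, best_correct = w, correct
    return best_w


# Hypothetical training pairs: (palm print similarity, vein similarity, genuine?).
training = [(0.9, 0.2, True), (0.8, 0.3, True), (0.2, 0.9, False), (0.3, 0.7, False)]
weight = fit_weight(training, threshold=0.5)
```

In this toy data the palm print similarity is the more reliable cue, so the search settles on a weight above one half.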

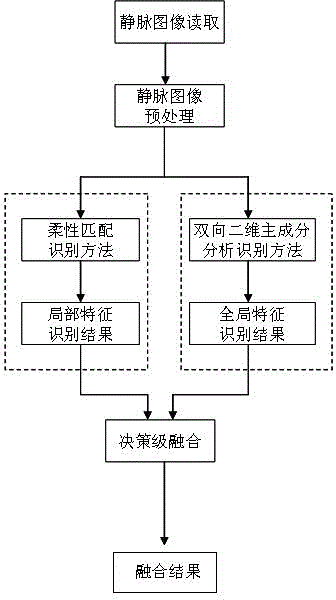

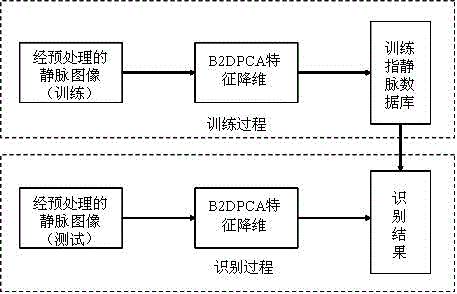

Finger vein recognition method fusing local features and global features

Inactive | CN103336945A | Identification helps | Overcome limitations | Character and pattern recognition | Vein | Finger vein recognition

The invention discloses a finger vein recognition method fusing local features and global features. At present, a number of vein recognition methods adopt the local features of a vein image, so that the recognition precision of the vein recognition methods is greatly affected by the quality of the image; the phenomena of rejection and false recognition are liable to appear. The finger vein recognition method provided by the invention comprises the following steps: firstly, performing pretreatment operations such as finger area extraction of a read-in finger vein image, binarization and the like; then, according to the point set of extracted detail features, realizing the matching of the local features within a certain angle and a certain radius by virtue of a flexible matching-based local feature recognition module; using a global feature recognition module for vein image recognition to realize the matching of the global features as the global feature recognition module is used for analyzing bidirectional two-dimensional principal components and can better display a two-dimensional image data set on the whole; finally, designing weights according to the correct recognition rates of the two recognition methods, performing decision-level fusion to the results of two classifiers, and taking the fused result as a final recognition result. The method is applied to finger vein recognition.

Owner:HEILONGJIANG UNIV

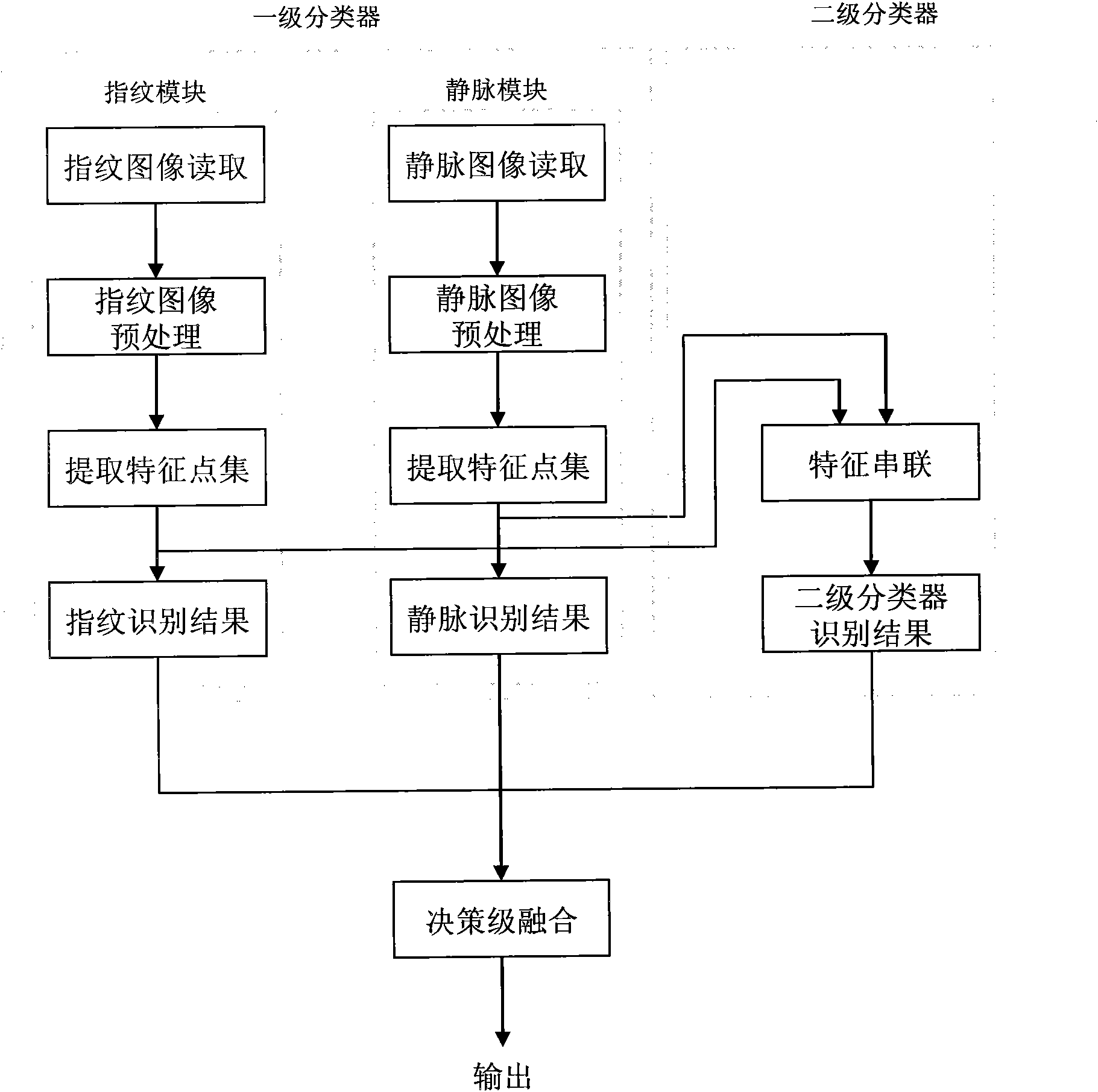

Secondary classification fusion identification method for fingerprint and finger vein bimodal identification

Inactive | CN101847208A | Identification helps | Improve accuracy | Character and pattern recognition | Vein | Point match

The invention provides a secondary classification fusion identification method for fingerprint and finger vein bimodal identification. A fingerprint module and a vein module are used as primary classifiers, and a secondary decision module is used as a secondary classifier. The method comprises the following steps of: reading a fingerprint image and a vein image through the fingerprint module and the vein module; pre-processing the read images respectively and extracting characteristic point sets of the both; performing identification on the images respectively to obtain respective identification results, wherein the fingerprint identification adopts a detail point match-based method, and the vein identification uses an improved Hausdorff distance mode to perform identification; forming a new characteristic vector by using the extracted fingerprint and vein characteristic point sets in a characteristic series mode through the secondary decision module so as to form the secondary classifier and obtain an identification result; and finally, performing decision-level fusion on the three identification results. The method has the advantages of making full use of identification information of fingerprints and finger veins, and effectively improving the accuracy of an identification system, along with high identification rate.

Owner:HARBIN ENG UNIV

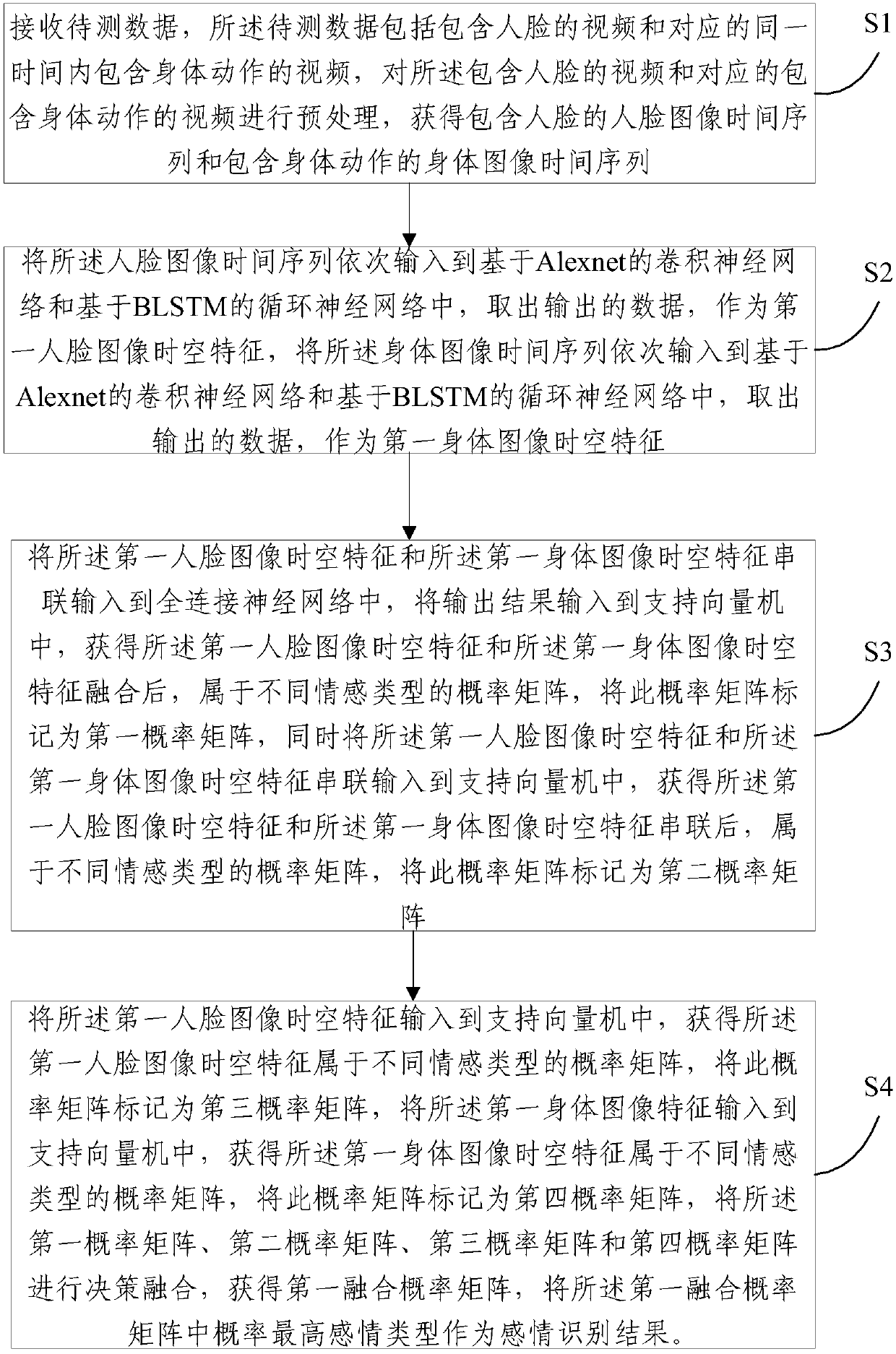

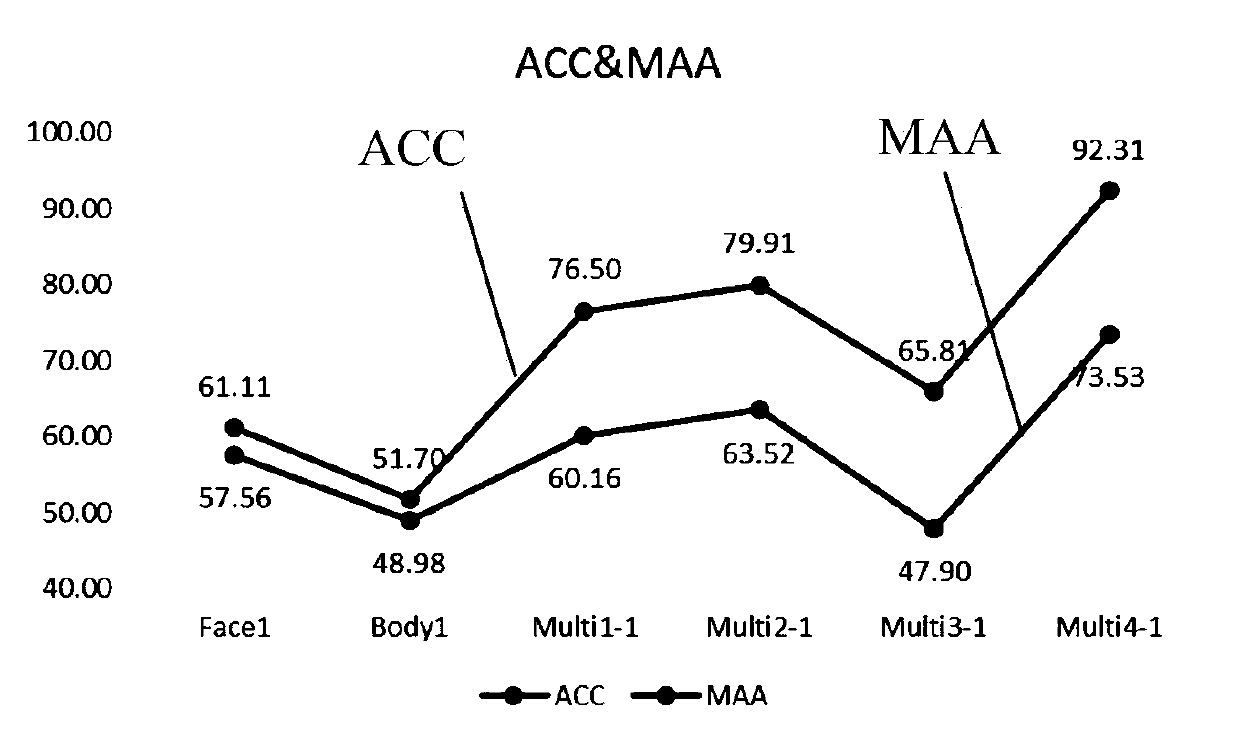

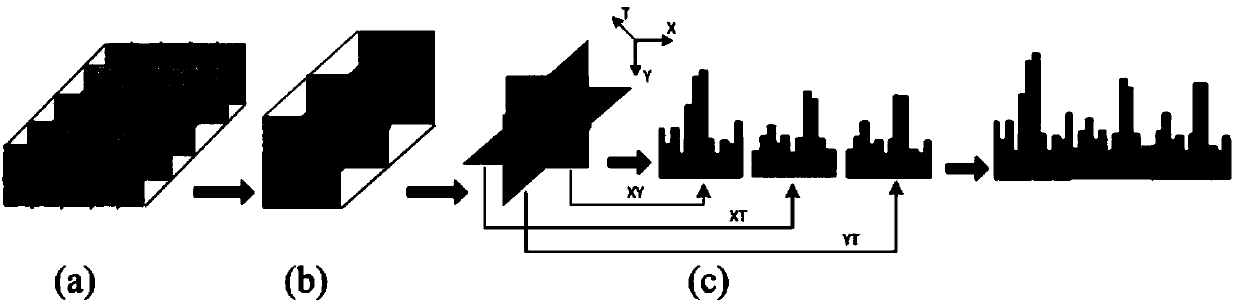

Multi-mode emotion recognition and classification method

Active | CN107808146A | Improve accuracy | Improve fusion efficiency | Acquiring/recognising facial features | Classification methods | Spacetime

The invention provides a multi-mode emotion recognition and classification method. The method comprises the following steps of: processing a to-be-detected video comprising a face and a corresponding video comprising a body action at the same time, so as to convert the videos into an image time sequence which consists of image frames; extracting time features and space features in the image time sequence; carrying out multi-feature level fusion on the features on the basis of the obtained multilayer deep time and space features; and carrying out decision level fusion on a classification result so as to recognize an emotion type of a task in the to-be-detected video from multi-mode. According to the method, effective information in each mode is sufficiently utilized and the emotion recognition rate is enhanced.

Owner:BEIJING NORMAL UNIVERSITY

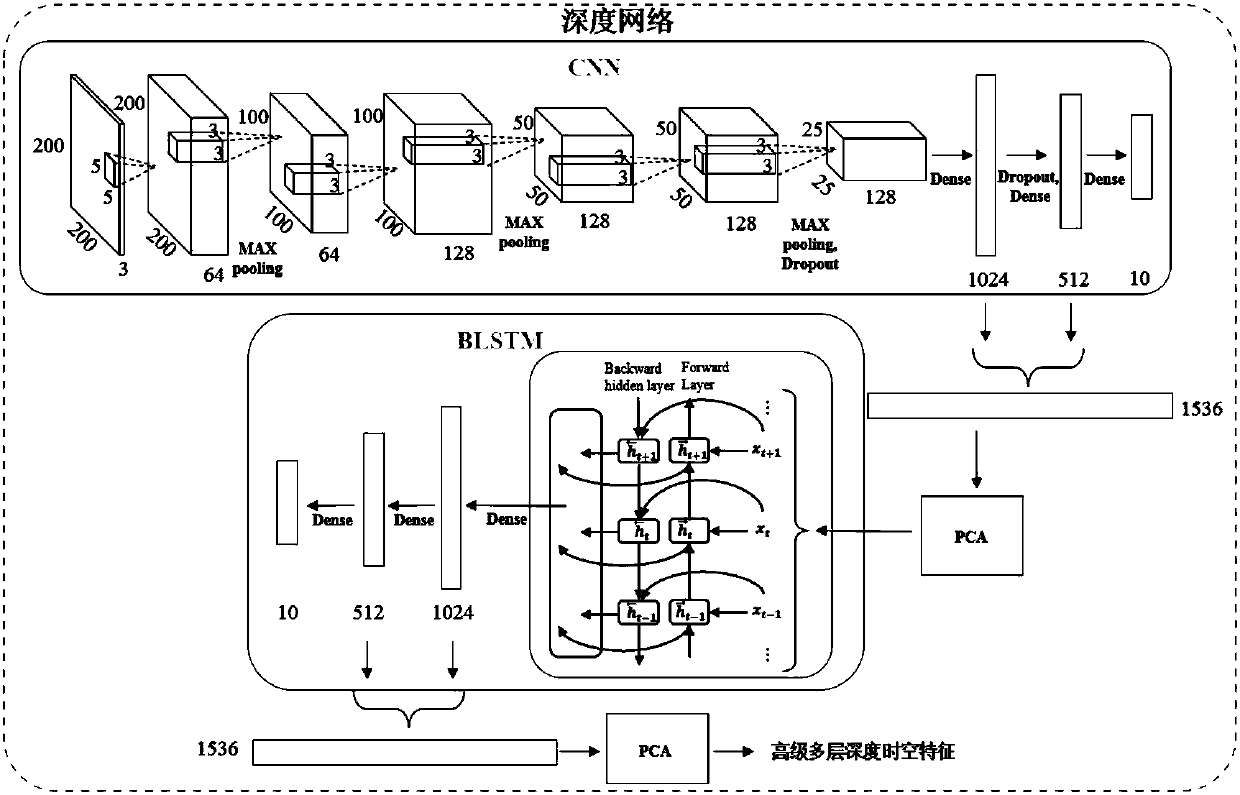

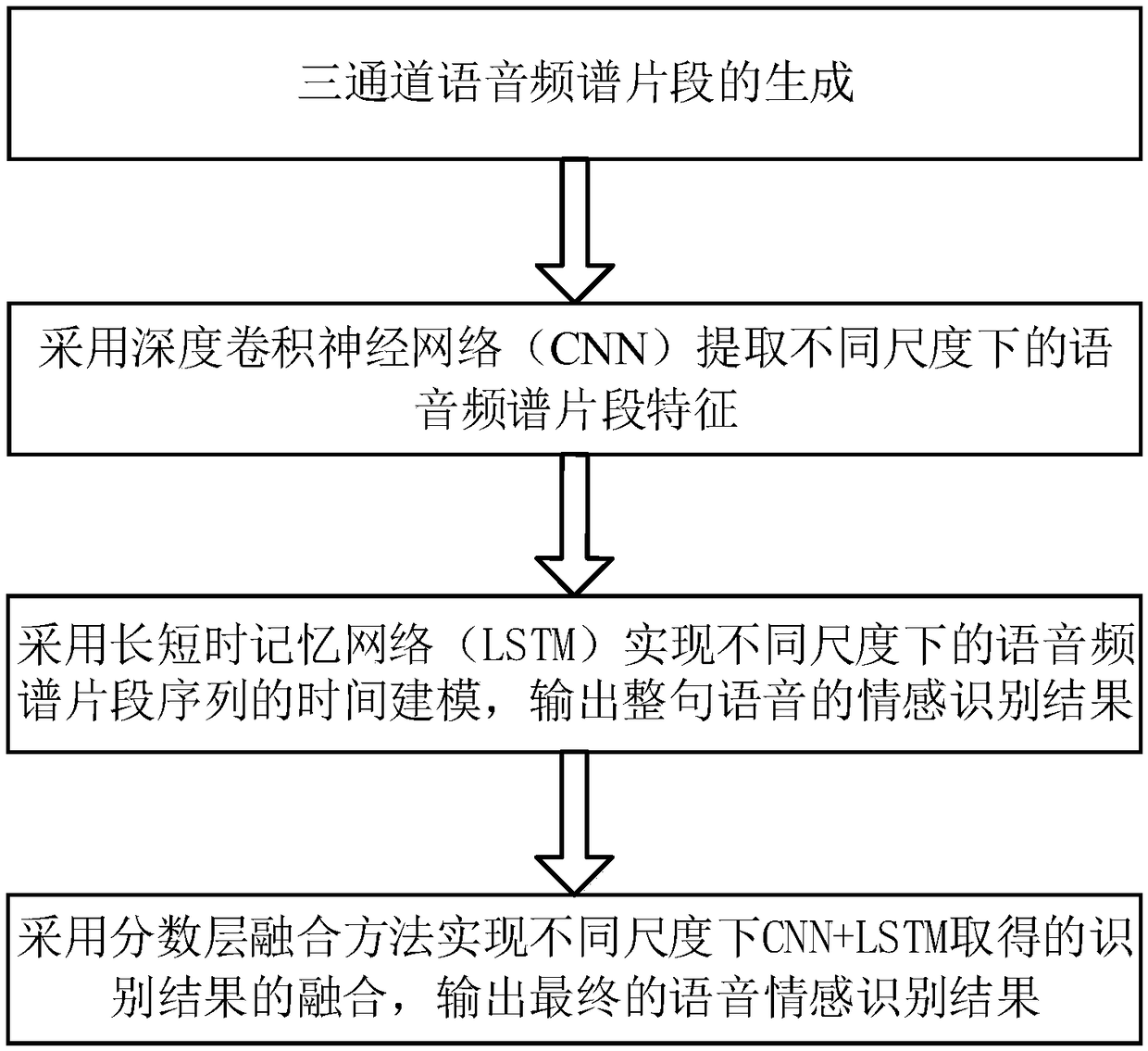

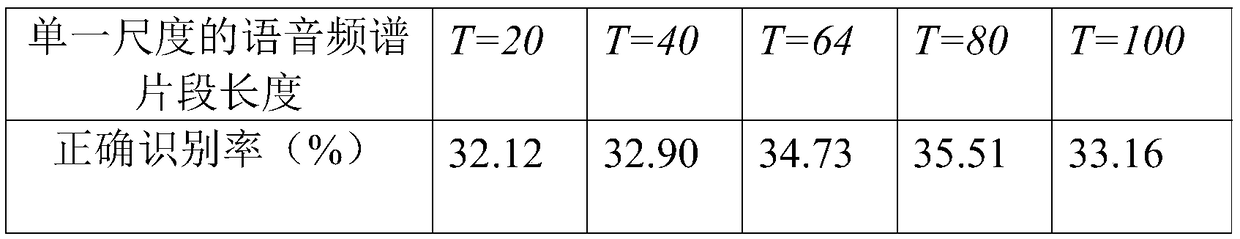

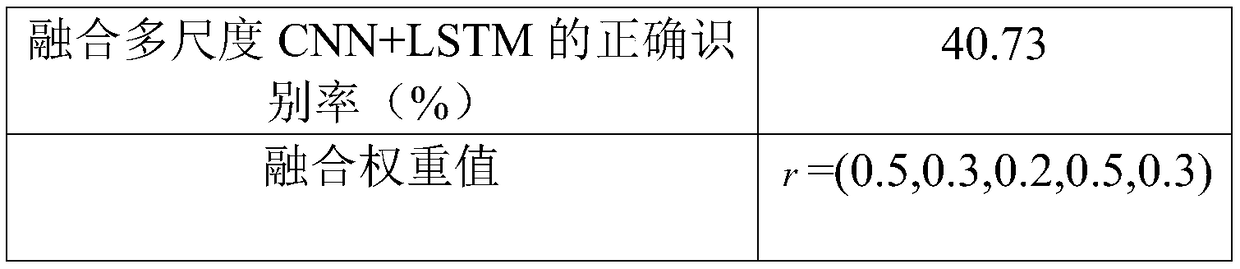

Speech emotion recognition method based on multi-scale deep convolution recurrent neural network

Active | CN108717856A | Alleviate the problem of insufficient samples | Speech analysis | Pattern recognition | Frequency spectrum

The invention discloses a speech emotion recognition method based on a multi-scale deep convolution recurrent neural network. The method comprises the steps that (1), three-channel speech spectrum segments are generated; (2), speech spectrum segment features under different scales are extracted by adopting a convolutional neural network (CNN); (3), time modeling of the speech spectrum segment sequence under different scales is achieved by adopting a long short-term memory (LSTM) network, and an emotion recognition result for the whole sentence of speech is output; (4), the recognition results obtained by CNN+LSTM under different scales are fused by adopting a score level fusion method, and the final speech emotion recognition result is output. By means of the method, natural speech emotion recognition performance under actual environments can be effectively improved, and the method can be applied to the fields of artificial intelligence, robot technologies, natural human-computer interaction technologies and the like.

Owner:TAIZHOU UNIV

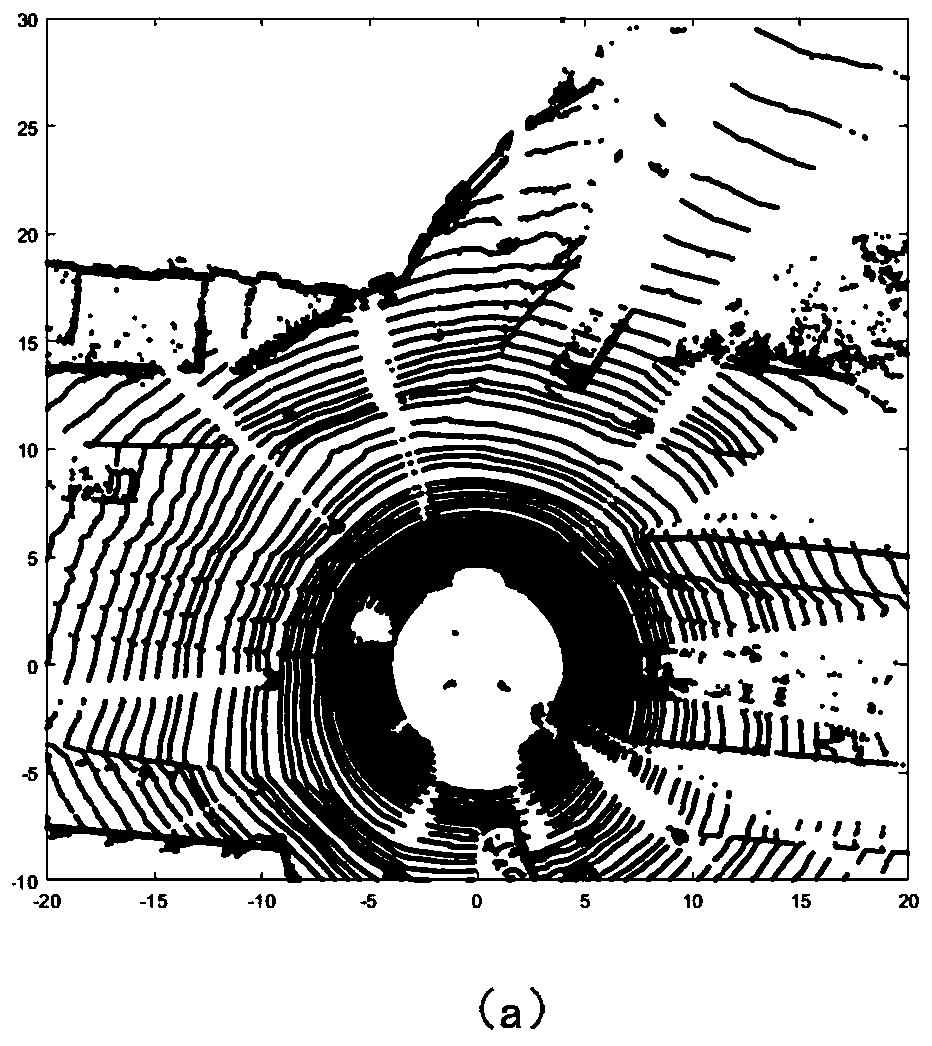

Traveling vehicle vision detection method combining laser point cloud data

Active | CN110175576A | Avoid the problem of difficult access to spatial geometric information | Realize 3D detection | Image enhancement | Image analysis | Histogram of oriented gradients | Vehicle detection

The invention discloses a traveling vehicle vision detection method combining laser point cloud data, belongs to the field of unmanned driving, and solves the problems in vehicle detection with a laser radar as a core in the prior art. The method comprises the following steps: firstly, completing combined calibration of a laser radar and a camera, and then performing time alignment; calculating an optical flow grey-scale map between two adjacent frames in the calibrated video data, and performing motion segmentation based on the optical flow grey-scale map to obtain a motion region, namely a candidate region; searching point cloud data corresponding to the vehicle in a conical space corresponding to the candidate area based on the point cloud data after time alignment corresponding to each frame of image to obtain a three-dimensional bounding box of the moving object; based on the candidate region, extracting a histogram of oriented gradients (HOG) feature from each frame of image; extracting features of the point cloud data in the three-dimensional bounding box; and based on a genetic algorithm, carrying out feature level fusion on the obtained features, and classifying the motion areas after fusion to obtain a final driving vehicle detection result. The method is used for visual inspection of the driving vehicle.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

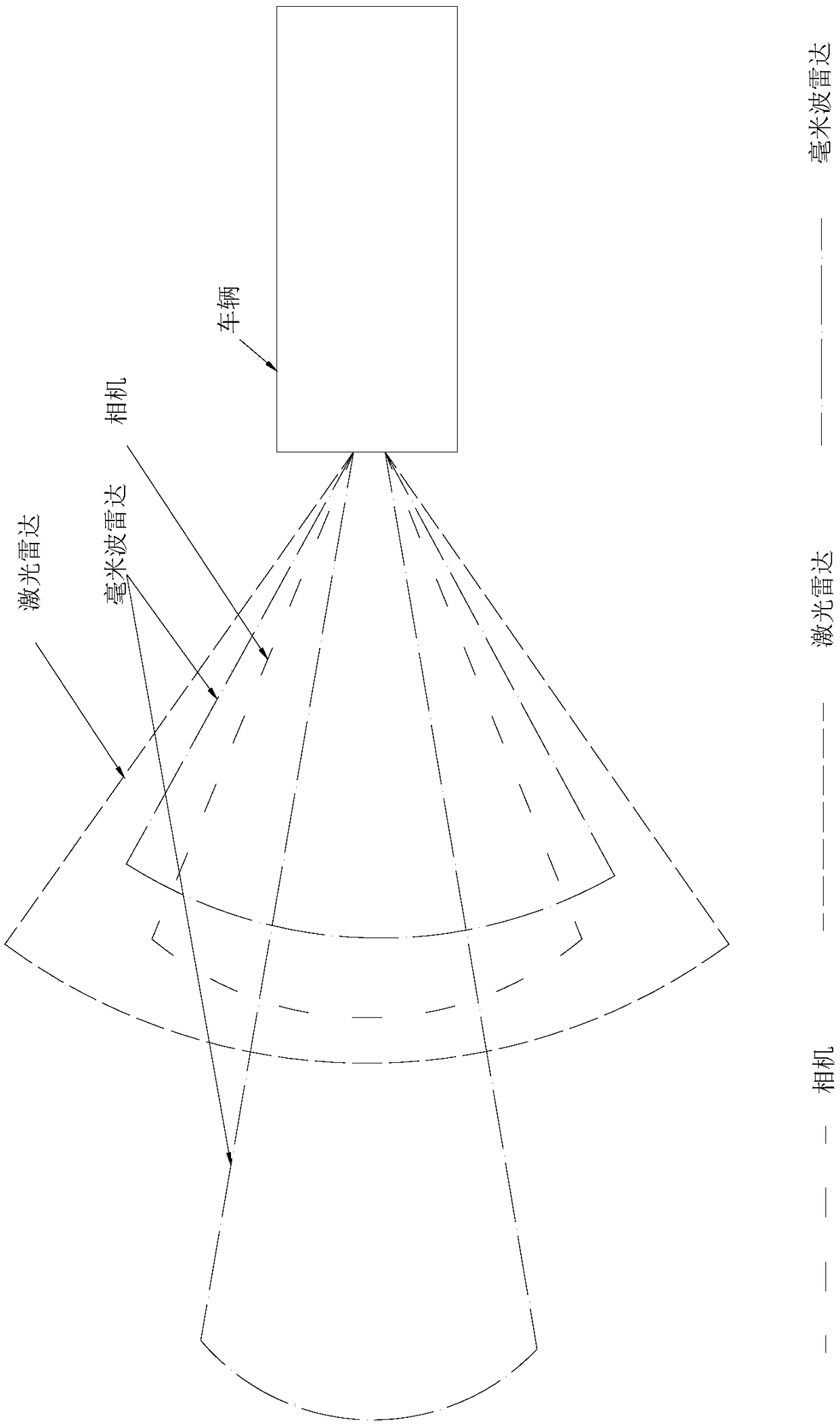

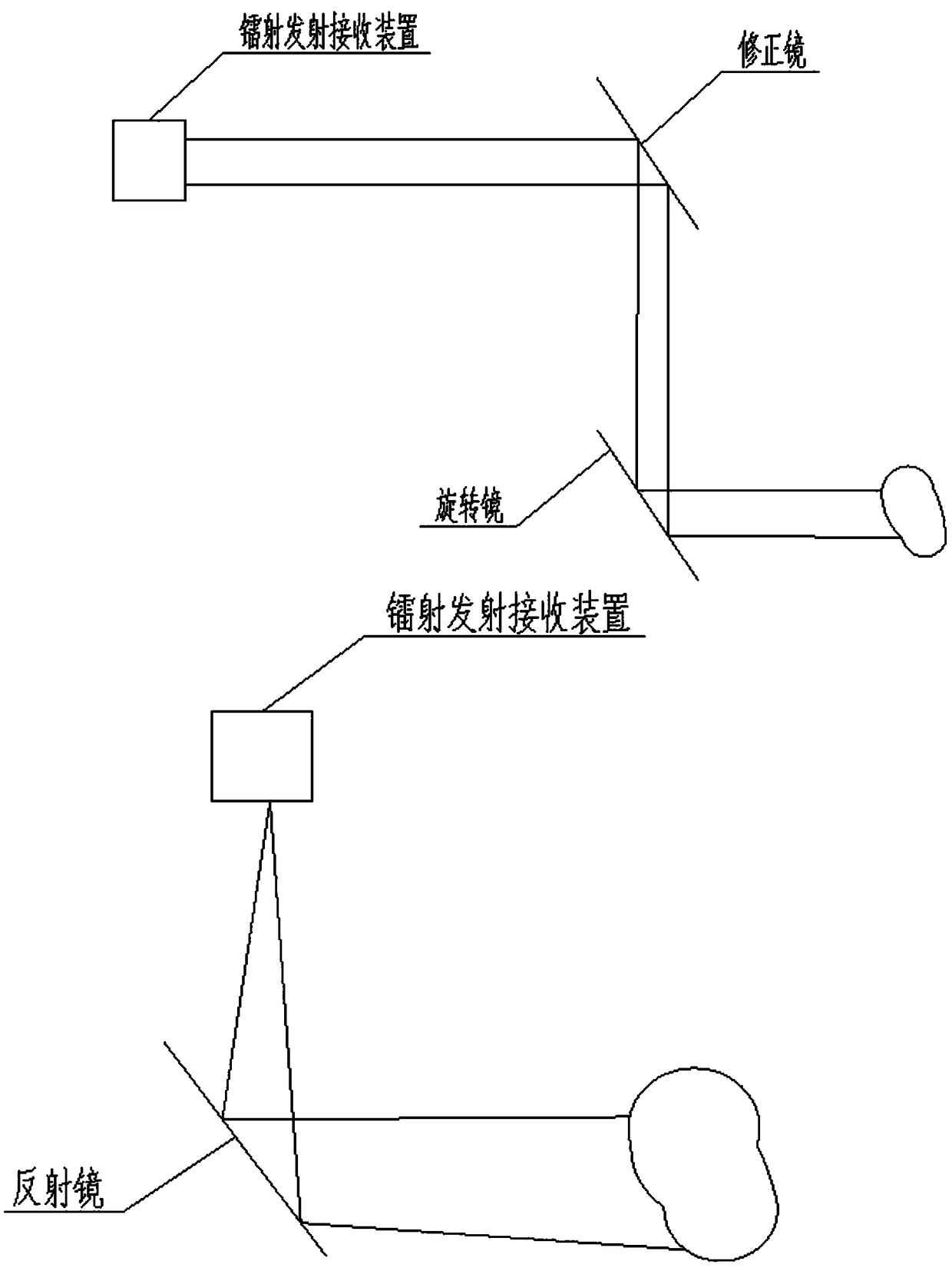

Track and road obstacle detecting method

Active | CN109298415A | Realize early warning | Fulfillment requirements | Electromagnetic wave reradiation | Radio wave reradiation/reflection | Sequence analysis | Radar systems

The invention discloses a track and a road obstacle detecting method. The method comprises the following steps: A. obtaining an image of the front of a vehicle through a monocular camera or multiple cameras; B. transmitting and receiving an optical signal by a millimeter-wave device and a laser device to obtain a 2D point cloud image and a 2D spectrogram; C. performing a multi-look processing on the image, taking a video system as a medium, and analyzing and matching a laser radar with the video system by using a focal plane array; for a millimeter-wave radar, matching the video system with a millimeter-wave radar system by a data preprocessing and an edge matching method to complete the pixel level fusion of the laser radar, the millimeter-wave radar and the video system; performing sequence analysis on the acquired image, filtering a multi-radar system and the video system, and matching images acquired by the millimeter-wave radar and the laser radar with an original image; and D. marking obstacles. The track and the road obstacle detecting method can accurately identify the obstacles, has certain early warning functions, and can simultaneously ensure accuracy and early warning functions.

Owner:ZHUZHOU ELECTRIC LOCOMOTIVE CO

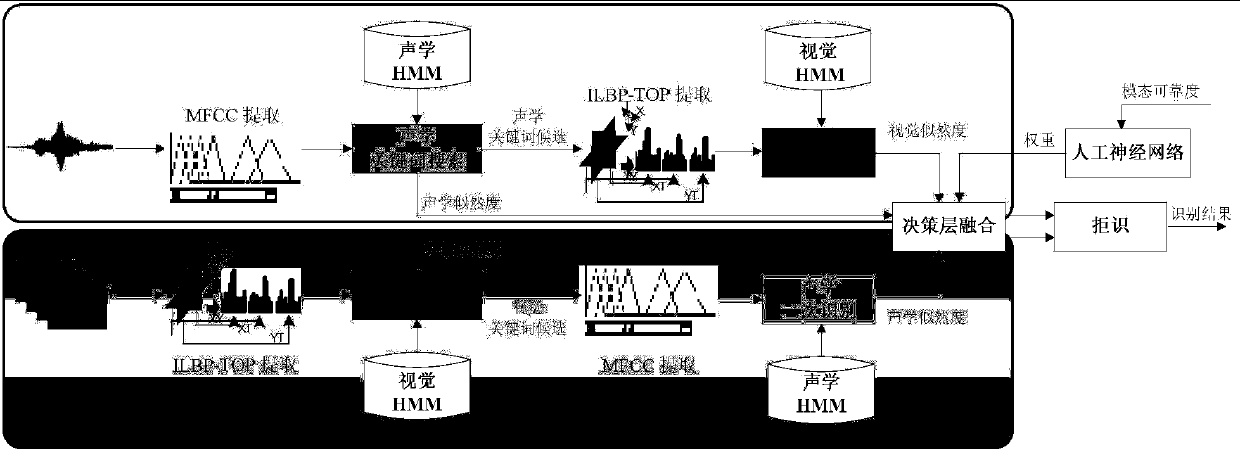

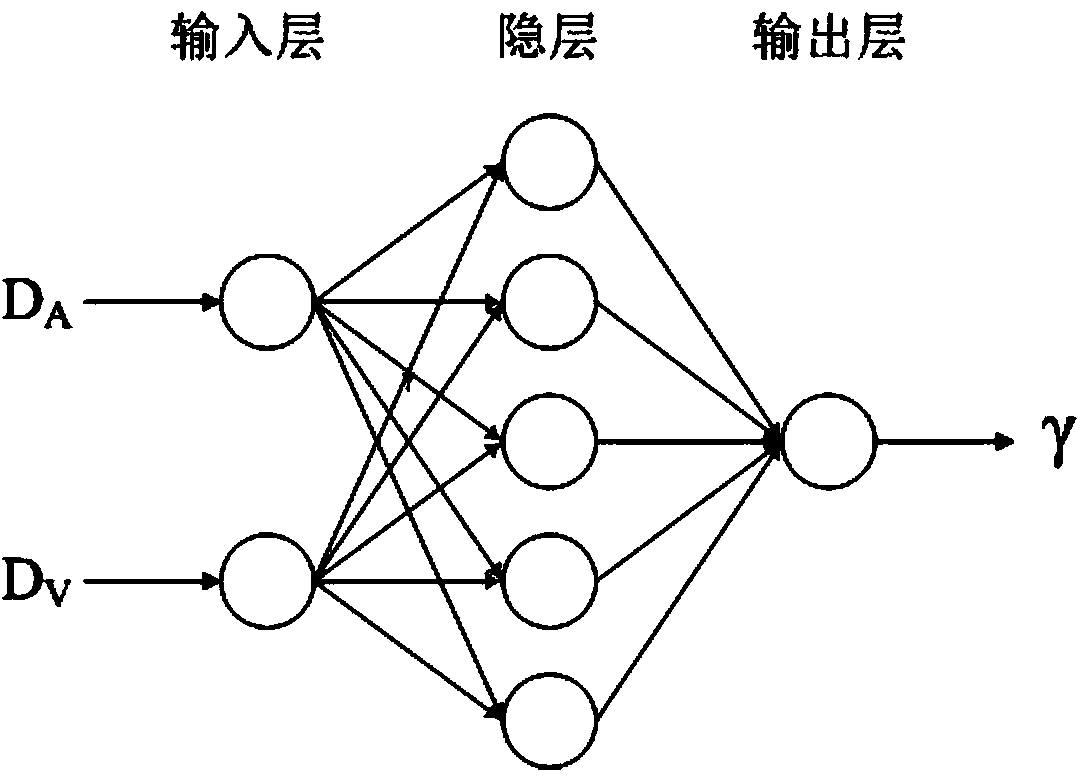

Audio/video keyword identification method based on decision-making level fusion

Active | CN103943107A | Easy to identify | Valid description | Speech recognition | Special data processing applications | Visual function | Feature vector

The invention relates to an audio/video keyword identification method based on decision-making level fusion. The method mainly includes the following steps: (1) a keyword audio/video is recorded, and keyword and non-keyword acoustic feature vector sequences and visual feature vector sequences are obtained, from which keyword and non-keyword acoustic templates and visual templates are trained; (2) acoustic likelihood and visual likelihood are obtained from the audio/video in different acoustic noise environments, yielding the acoustic mode reliability, the visual mode reliability and the optimal weight, with which an artificial neural network is trained; (3) secondary parallel keyword identification based on the acoustic mode and the visual mode is conducted on the audio/video to be detected according to the acoustic template, the visual template and the artificial neural network. In this method, the acoustic function and the visual function are fused at the decision-making level, and secondary parallel keyword identification based on the two modes is conducted on the audio/video to be detected, so the contribution of visual information in acoustically noisy environments is fully utilized and identification performance is improved.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL
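
As a rough illustration of fusing the acoustic and visual modes at the decision level, the sketch below combines per-mode log-likelihoods with a single weight. In the abstract the weight comes from a trained artificial neural network; here it is simply passed in as a number. The function names and the example scores are hypothetical.

```python
def fuse_log_likelihoods(acoustic_ll, visual_ll, acoustic_weight):
    """Weighted sum of the two modes' log-likelihoods.

    acoustic_weight is assumed to lie in [0, 1]; the visual mode receives
    the remaining weight.
    """
    if not 0.0 <= acoustic_weight <= 1.0:
        raise ValueError("acoustic_weight must be between 0 and 1")
    return acoustic_weight * acoustic_ll + (1.0 - acoustic_weight) * visual_ll


def is_keyword(acoustic, visual, acoustic_weight):
    """Each argument is a (keyword_ll, non_keyword_ll) pair for one mode."""
    keyword_score = fuse_log_likelihoods(acoustic[0], visual[0], acoustic_weight)
    other_score = fuse_log_likelihoods(acoustic[1], visual[1], acoustic_weight)
    return keyword_score > other_score


# Noisy audio slightly favours "non-keyword"; the video clearly favours "keyword".
acoustic_scores = (-120.0, -118.0)
visual_scores = (-40.0, -55.0)

trusting_audio = is_keyword(acoustic_scores, visual_scores, acoustic_weight=0.9)
trusting_video = is_keyword(acoustic_scores, visual_scores, acoustic_weight=0.3)
```

With a high acoustic weight the noisy audio dominates and the keyword is missed; lowering the weight lets the visual evidence decide, which is the effect the abstract attributes to reliability-dependent weighting.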

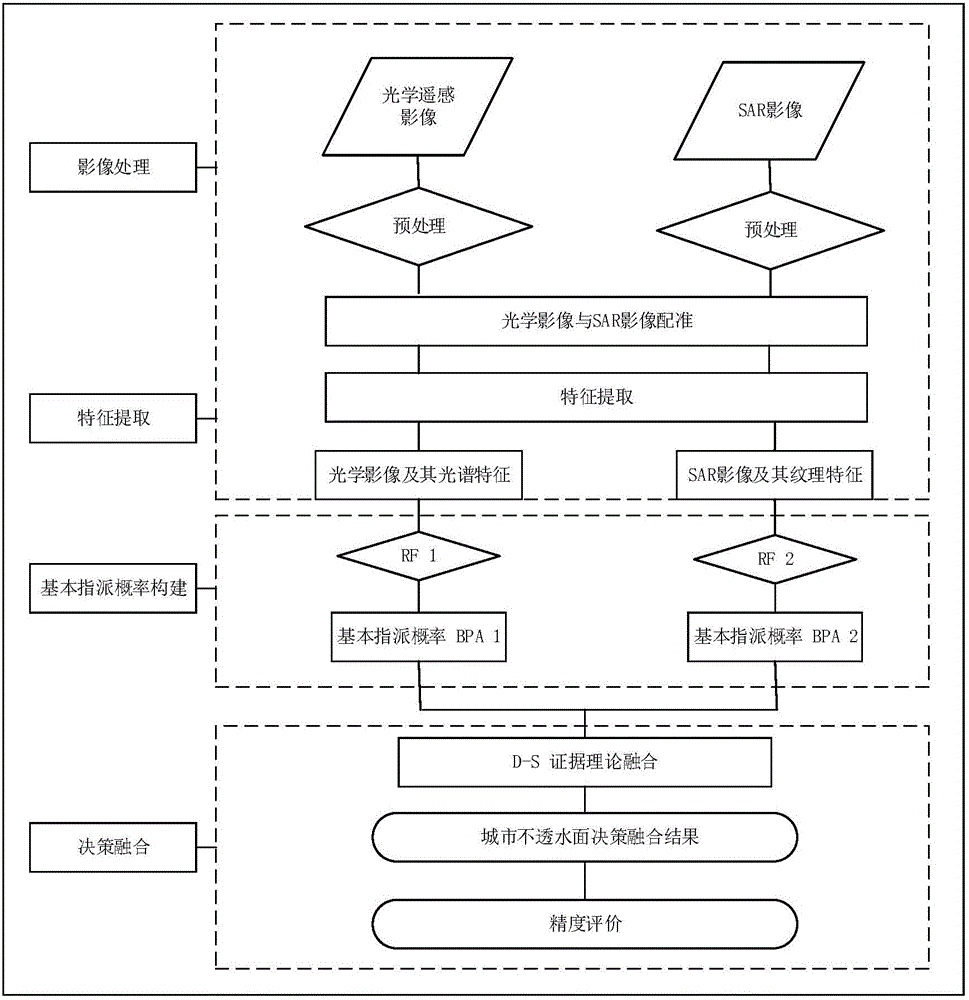

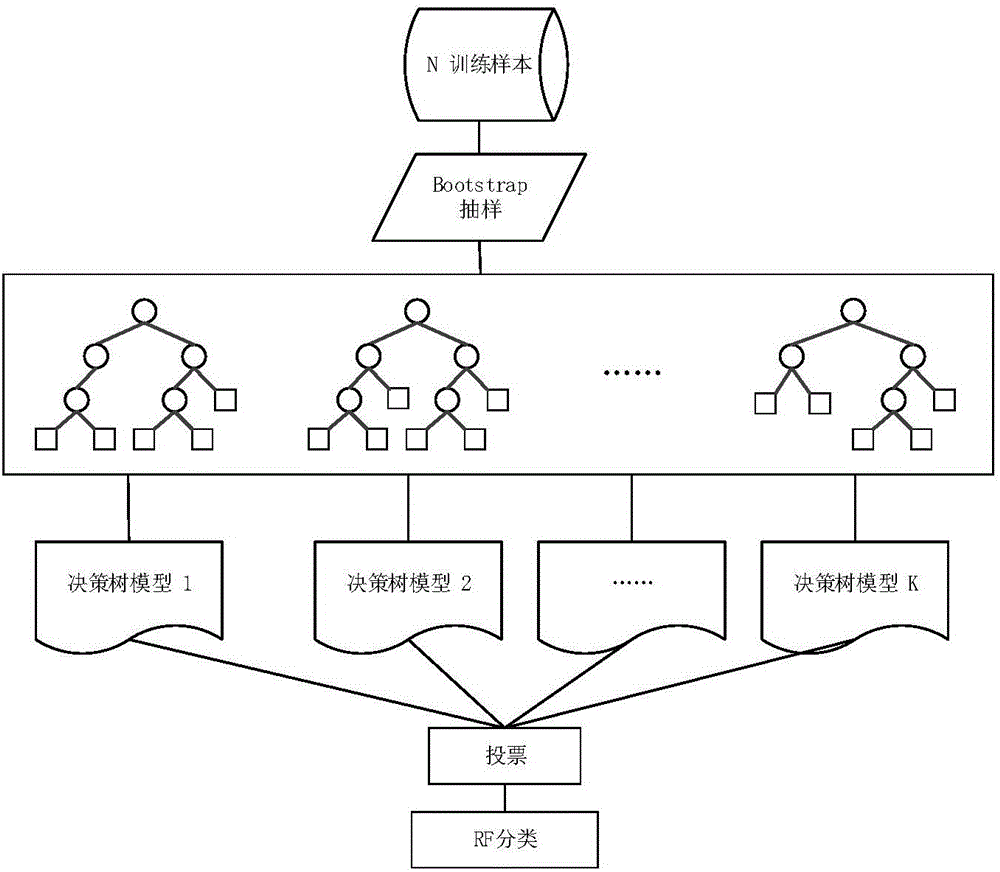

City impervious surface extraction method based on fusion of SAR image and optical remote sensing image

Inactive | CN105930772A | High precision | Character and pattern recognition | Remote sensing image fusion | Data source

Provided is a city impervious surface extraction method based on fusion of an SAR image and an optical remote sensing image. The method comprises that a general sample set formed by samples of a research area is selected in advance, and a classifier training set, a classifier test set and a precision verification set of impervious surface extraction results are generated from the general sample set in a random sampling method; the optical remote sensing image is configured with the SAR image of the research area, and features are extracted from the optical remote sensing image and the SAR image; training is carried out, the city impervious surface is extracted preliminarily on the basis of a random forest classifier, and optimal remote sensing image data, SAR image data and an impervious surface RF preliminary extraction result are obtained; decision level fusion is carried out by utilizing a D-S evidence theory synthesis rule, and a final impervious surface extraction result of the research area is obtained; and the precision of each extraction result is verified via the precision verification set. Advantages of the optical remote sensing image and SAR image data sources are utilized fully, the SAR image and optical remote sensing image fusion method based on the RF and D-S evidence theory is provided, and the impervious surface of higher precision in the city is obtained.

Owner:WUHAN UNIV
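
The decision-level fusion step in the abstract above relies on the D-S (Dempster-Shafer) evidence theory synthesis rule. A minimal sketch of Dempster's rule of combination for two sources follows. The two-class frame, the mass values, and the idea that each mass function comes from one classifier's output are illustrative assumptions, not details from the patent.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two mass functions with Dempster's rule.

    Each mass function maps a frozenset of hypotheses to a mass in [0, 1];
    the masses in each function are assumed to sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, mass_a), (b, mass_b) in product(m1.items(), m2.items()):
        intersection = a & b
        if intersection:
            combined[intersection] = combined.get(intersection, 0.0) + mass_a * mass_b
        else:
            # Mass assigned to contradictory hypotheses is treated as conflict.
            conflict += mass_a * mass_b
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; the rule is undefined")
    return {h: m / (1.0 - conflict) for h, m in combined.items()}


IMPERVIOUS = frozenset({"impervious"})
PERVIOUS = frozenset({"pervious"})
EITHER = IMPERVIOUS | PERVIOUS  # mass left on "don't know"

# Hypothetical per-pixel beliefs derived from the optical and SAR classifiers.
optical = {IMPERVIOUS: 0.6, PERVIOUS: 0.3, EITHER: 0.1}
sar = {IMPERVIOUS: 0.5, PERVIOUS: 0.2, EITHER: 0.3}

fused = dempster_combine(optical, sar)
label = max((IMPERVIOUS, PERVIOUS), key=lambda h: fused.get(h, 0.0))
```

For these inputs the conflict mass is 0.27, so the fused belief in "impervious" is 0.53 / 0.73, which is higher than either source alone.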

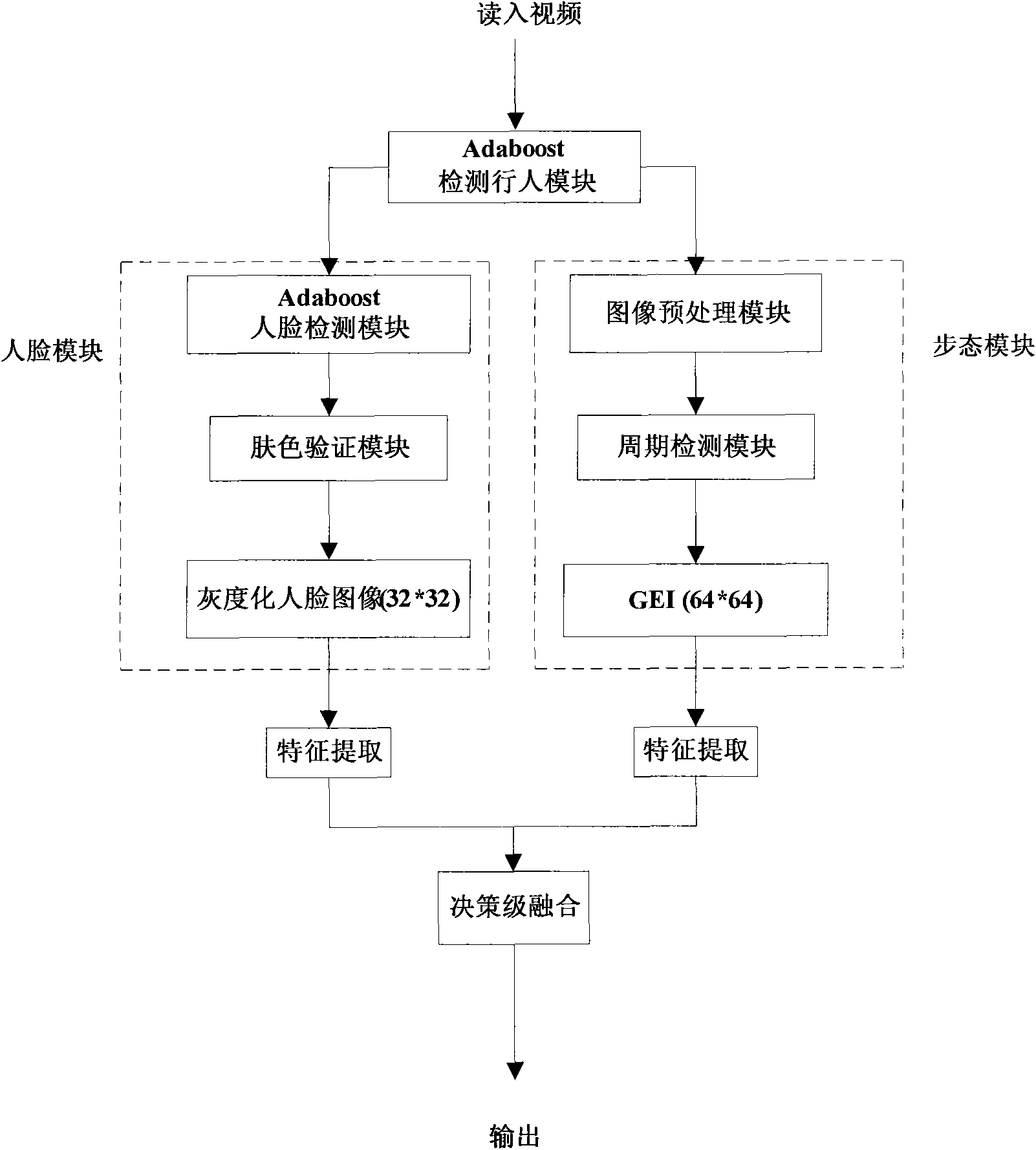

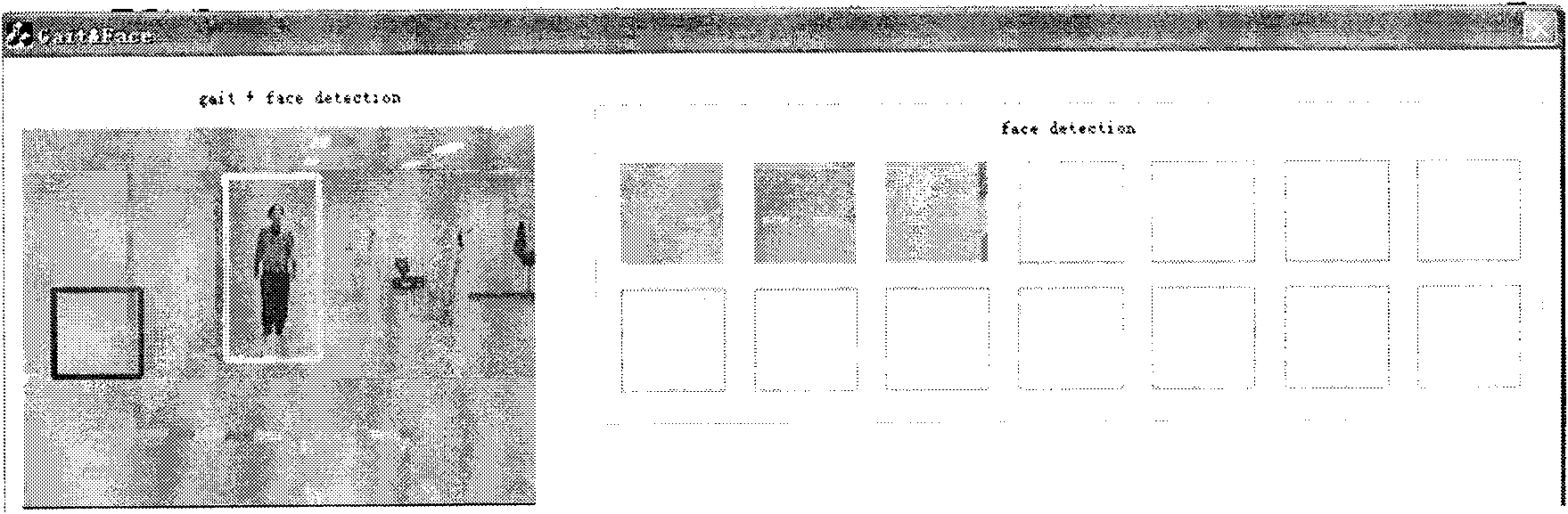

Front face human body automatic identity recognition method under long-distance video

Inactive | CN101661554A | Identification helps | Improve recognition accuracy | Person identification | Character and pattern recognition | Human body | Kernel principal component analysis

The invention provides a front face human body automatic identity recognition method under a long-distance video. The method comprises a gait module and a human face module. It first reads a video file and uses the AdaBoost method to detect pedestrians; if pedestrians are detected, it automatically starts the human face module and the gait module, which apply kernel principal component analysis to the human face and the gait respectively for feature extraction; finally, it performs recognition with a decision-making level fusion method in which the human face features assist the gait features. The method offers a new approach to long-distance identity recognition. Because the human face features assist single-sample gait recognition, the method has the advantage that even when there is only a single gait training sample, multiple human face images effectively expand the number of training samples, which benefits identity recognition; fusion with the human face features improves the recognition precision by 2.4%.

Owner:HARBIN ENG UNIV

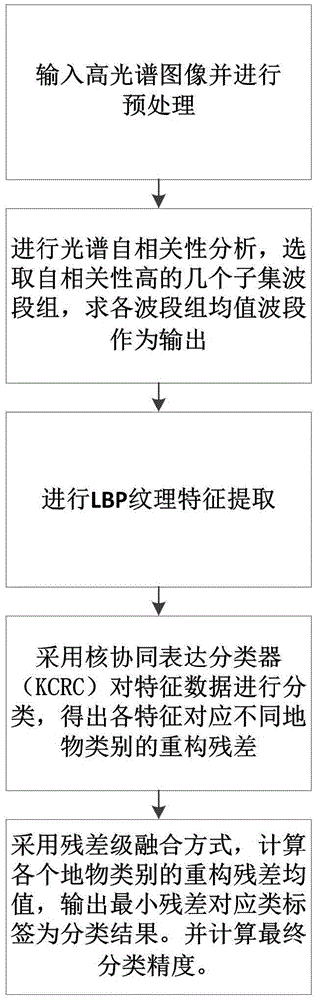

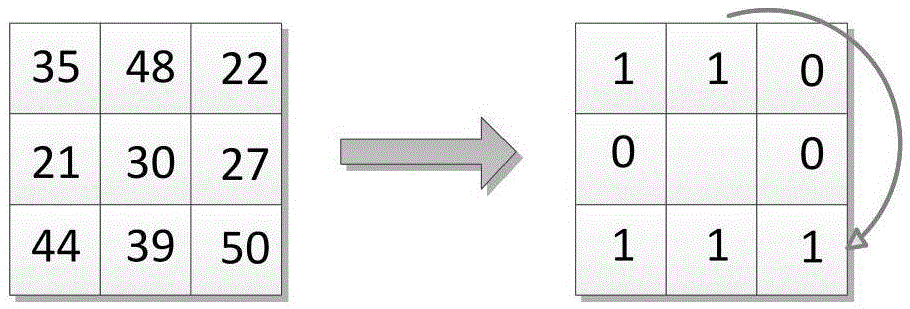

Nuclear coordinated expression-based hyperspectral image classification method

Active | CN105608433A | Good divisibility | Rotation invariant | Scene recognition | Operability | Hyperspectral image classification

The invention discloses a nuclear coordinated expression-based hyperspectral image classification method. The method comprises the following steps: carrying out feature selection by adopting a waveband selection strategy with strong operability; carrying out local binary-pattern space feature extraction on the basis of selected different feature groups; carrying out nuclear coordinated expression classification; and finally fusing classification results corresponding to the groups of features and a residual-level fusion strategy and obtaining the final high-precision classification result. According to the method, the textural features of data extracted by a LBP operator are combined, the LBP has the remarkable advantages of rotation invariance and grey level invariance, and the calculation is simple, so that the robustness of the features to be classified is further increased. Finally, a nuclear coordinated expression classifier is used for classification, the calculation efficiency is better than that of the traditional sparse manner and the nonlinear space data can be classified, so that the application range is wider and the application performance is more excellent.

Owner:BEIJING UNIV OF CHEM TECH

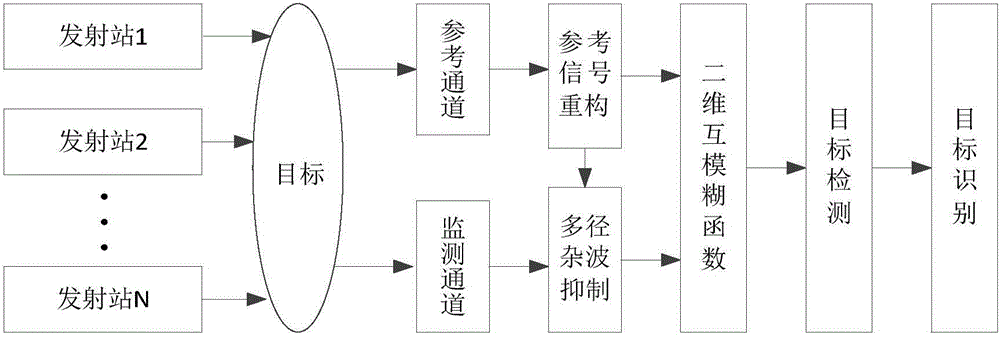

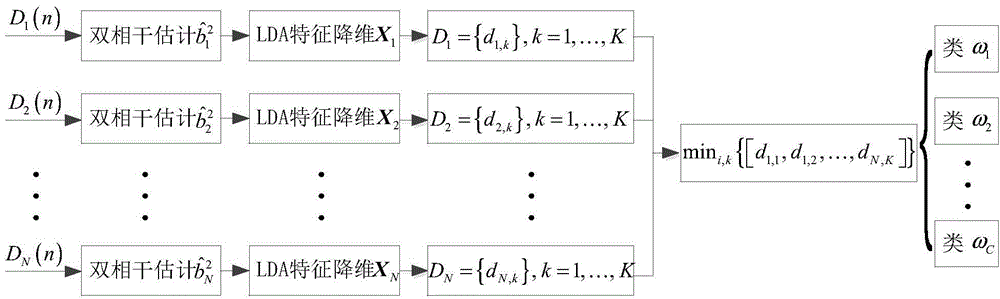

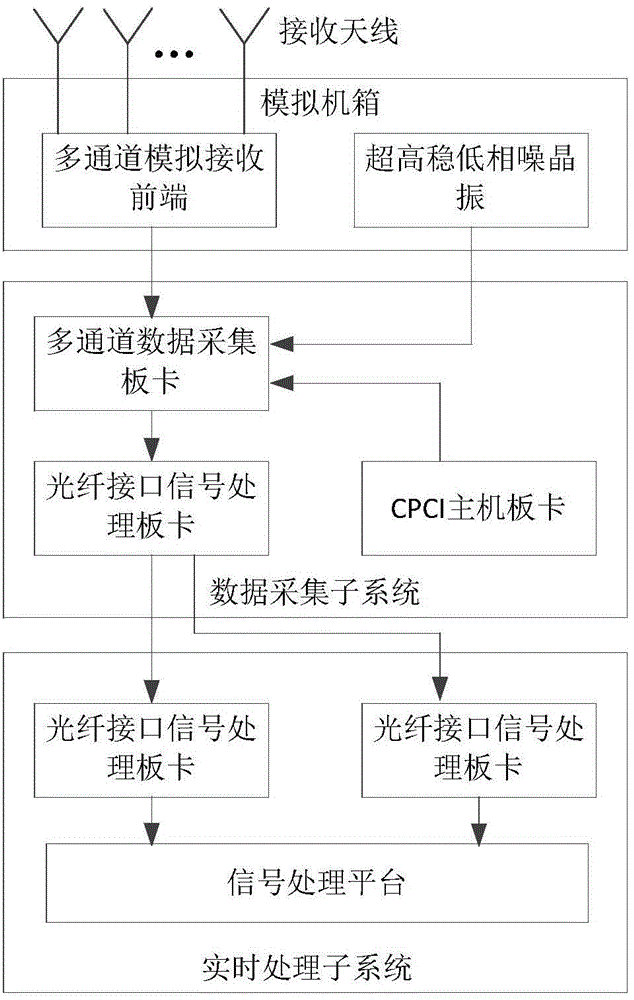

Aircraft target recognition method based on single frequency network passive radar

Active | CN104865569A | Realize multi-angle feature fusion recognition | Reduce Posture Sensitivity | Radio wave reradiation/reflection | Passive radar | Jet engine

The invention discloses an aircraft target recognition method based on a single frequency network passive radar. Micro Doppler modulation characteristics generated by an aircraft target rotating part (such as a rotor, a propeller or a jet engine) are used for target recognition. Firstly, two-dimensional cross correlation processing is carried out on signals of a reference channel and a monitoring channel, and thus target detection is realized; then, micro Doppler characteristics corresponding to each transmitting station are extracted from different distance unit positions on a range-Doppler spectrum; and finally, information fusion technology is used for carrying out decision level fusion recognition on multi-angle characteristics. Micro Doppler characteristics provided by each transmitting station in the single frequency network at the same time are combined, attitude sensitivity of the target micro Doppler characteristics is reduced, and recognition accuracy is thus improved.

Owner:WUHAN UNIV

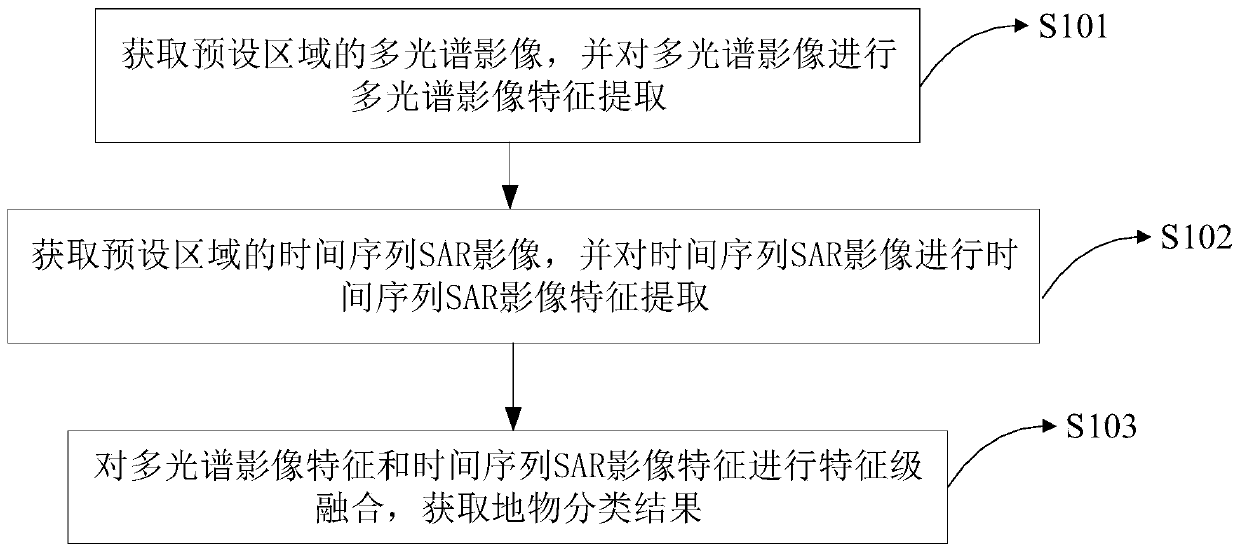

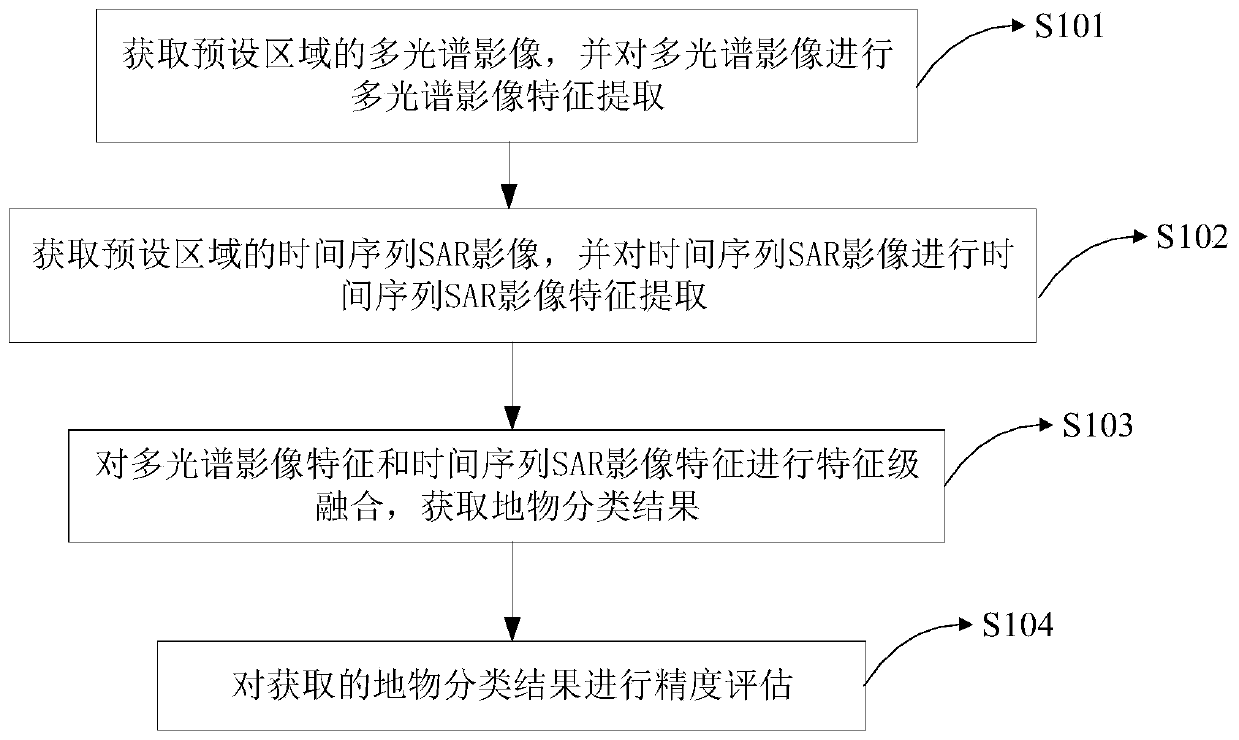

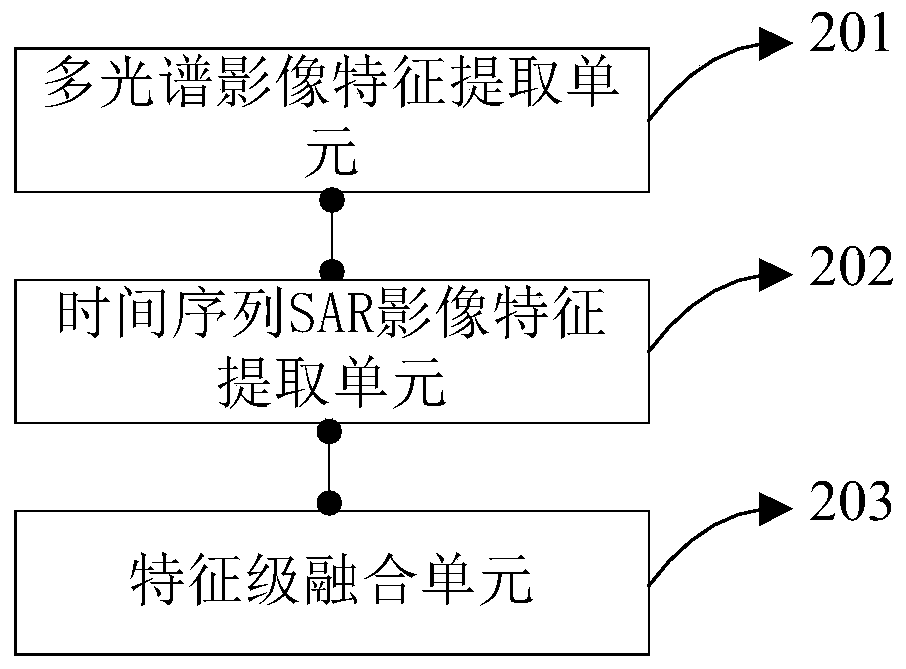

A ground object classification method and device based on a multispectral image and an SAR image

Active | CN109711446A | Short revisit cycle | Increase feature dimension | Character and pattern recognition | Feature Dimension | Synthetic aperture radar

The invention relates to the field of ground object classification, in particular to a ground object classification method and device based on a multispectral image and an SAR image. The method comprises the steps of: obtaining a multispectral image of a preset area, and extracting multispectral image features from it; obtaining a time sequence SAR image of the preset area, and extracting time sequence SAR image features from it; and performing feature-level fusion on the multispectral image features and the time sequence SAR image features to obtain a ground object classification result. The method and device use the all-day, all-weather operation and short revisit period of synthetic aperture radar (SAR) to obtain a long time sequence SAR image, which increases the input feature dimension. Feature-level fusion is carried out on the multispectral images and SAR images so that, while the multispectral information is fully utilized, ground feature interpretation is assisted by the ground feature structures, textures and electromagnetic scattering characteristics reflected in the time sequence SAR images.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI
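
One common way to realize feature-level fusion is to standardize each modality's features and concatenate them per sample before classification. The sketch below shows only that fusion step under this assumption; the abstract does not say which fusion operator the patent uses, and the function names and sample values are hypothetical.

```python
from statistics import mean, pstdev


def standardize(rows):
    """Z-score each feature column across samples (constant columns are left at 0)."""
    columns = list(zip(*rows))
    means = [mean(col) for col in columns]
    stds = [pstdev(col) or 1.0 for col in columns]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def fuse_features(multispectral_rows, sar_rows):
    """Concatenate standardized multispectral and SAR features sample by sample."""
    if len(multispectral_rows) != len(sar_rows):
        raise ValueError("both modalities must describe the same samples")
    ms = standardize(multispectral_rows)
    sar = standardize(sar_rows)
    return [m + s for m, s in zip(ms, sar)]


# Three samples: two multispectral features and a three-date SAR time series each.
multispectral = [[0.1, 0.4], [0.3, 0.2], [0.5, 0.6]]
sar_series = [[-12.0, -9.0, -10.5], [-15.0, -14.0, -14.5], [-8.0, -7.5, -9.0]]

fused = fuse_features(multispectral, sar_series)
```

Each fused row has five values, so adding more SAR acquisition dates widens the feature vector, which matches the abstract's point about increasing the input feature dimension.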

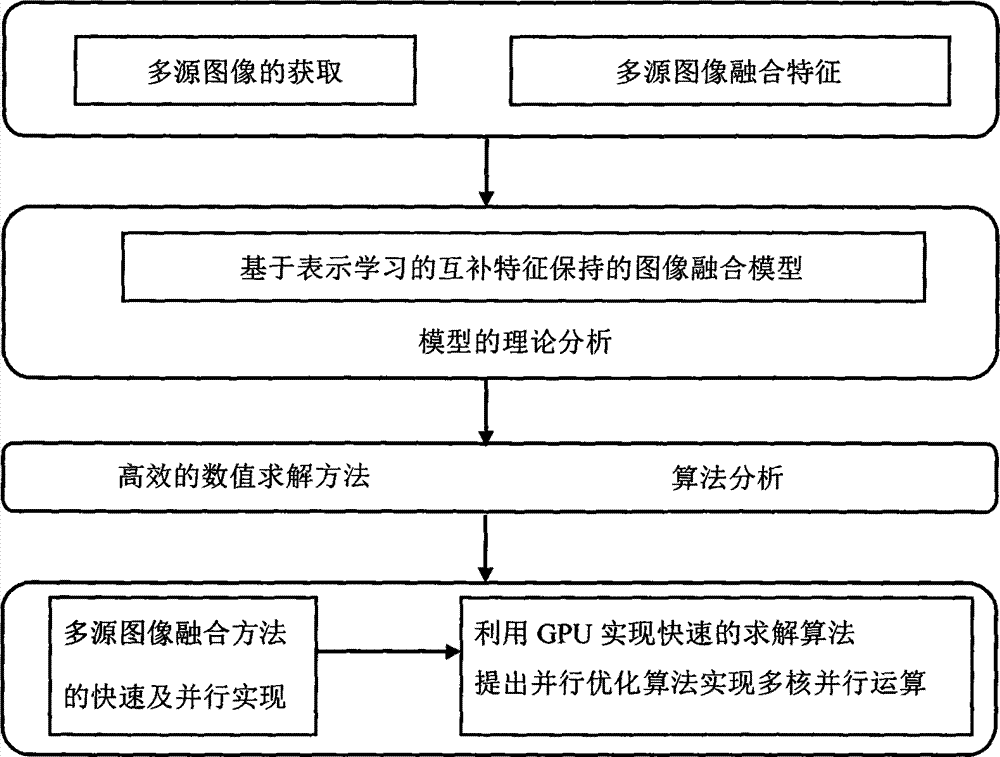

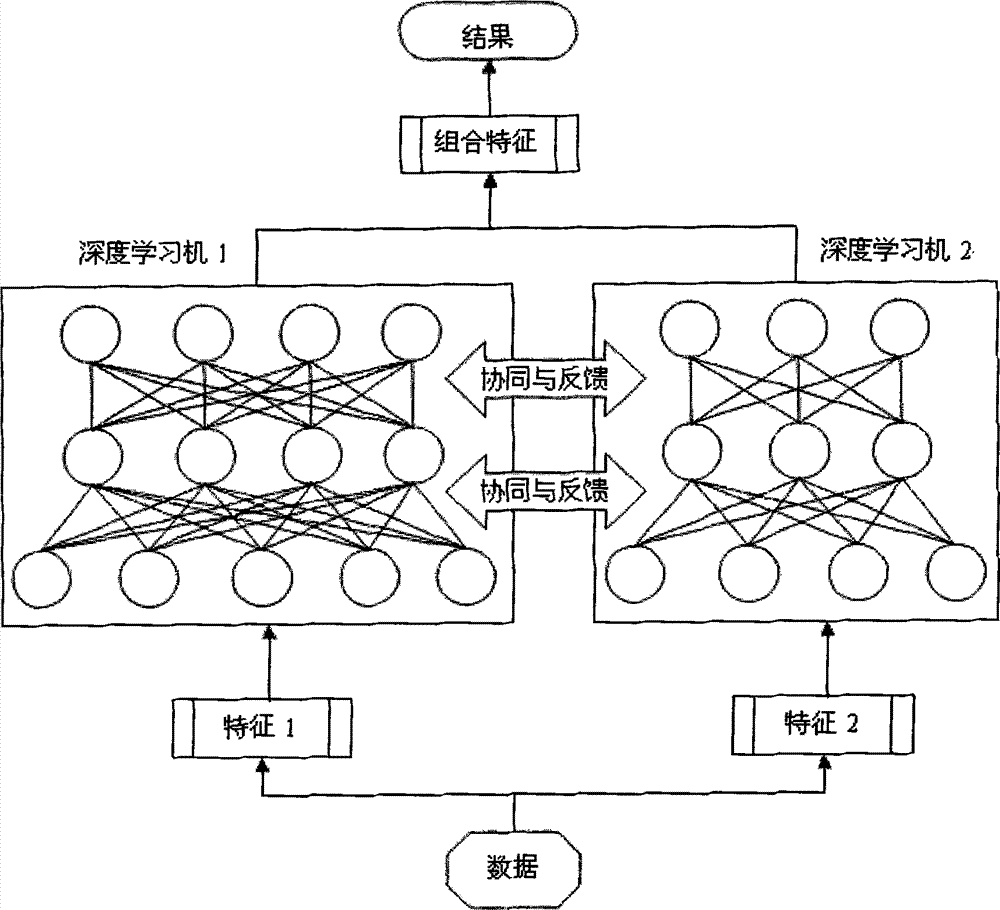

Method for image fusion based on representation learning

Inactive | CN104851099A | Implement image fusion technology | Fast solution | Image enhancement | Image analysis | Image fusion | Boltzmann machine

The invention discloses a method for image fusion based on representation learning, which comprises the steps of acquiring a multi-source image, learning features of the multi-source image through a learning framework of a deep neural network formed by a sparse adaptive encoder, a deep confidence network formed by a Boltzmann machine and a deep convolutional neural network, completing fusion of the multi-source image by using the automatically learned features, and establishing an image fusion model; studying a convex optimization problem of the image fusion model, and carrying out initialization on the networks by using unsupervised pre-training in deep learning, thereby enabling the networks to find an optimal solution quickly in the training process; and establishing a deep learning network for cooperative training according to the features of the multi-source image through two or more deep learning networks, thereby realizing an image fusion technology of representation learning. The method disclosed by the invention studies feature-level fusion of the image by using artificial intelligence and a deep learning based feature representation method. Compared with a traditional pixel-level fusion method, the method disclosed by the invention can better understand image information, and thus further improves the quality of image fusion.

Owner:ZHOUKOU NORMAL UNIV +1

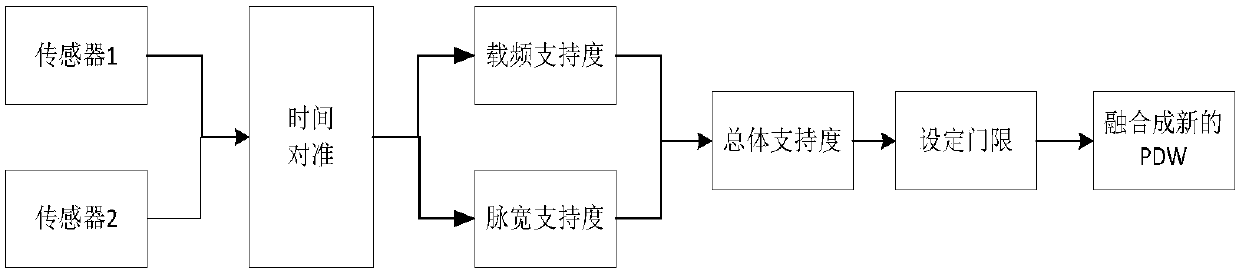

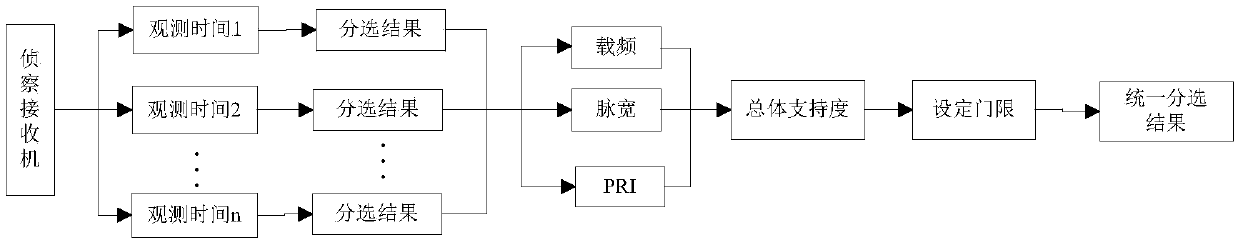

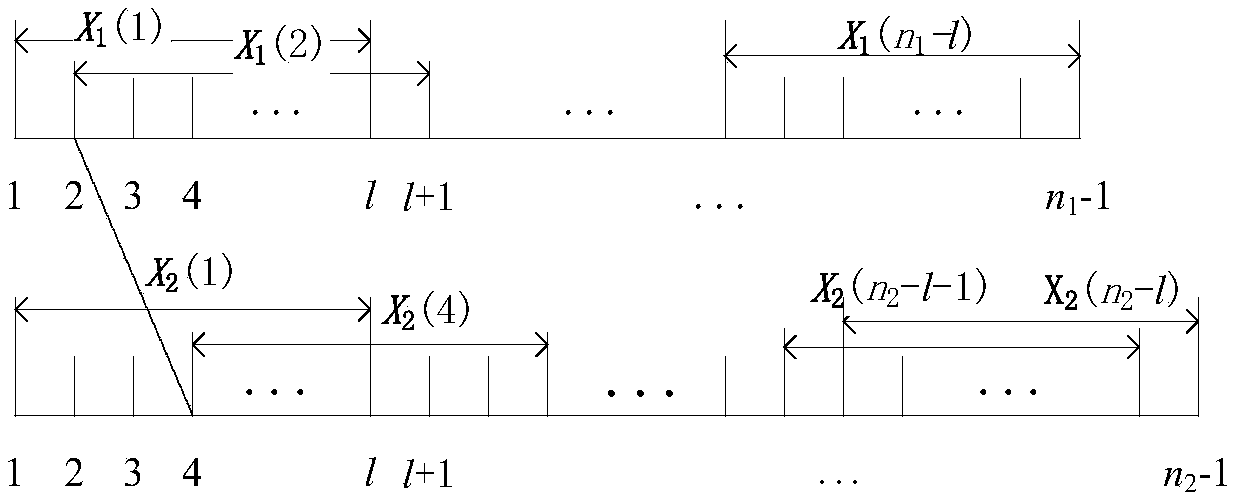

Method for applying information fusion to radar signal sorting

Active | CN107656245A | Solve the problem of sorting failure | Solve the problem that the description parameters are not exactly the same | Wave based measurement systems | Radar signal processing | Time alignment

The invention discloses a method for applying information fusion to radar signal sorting and belongs to the radar signal processing field. The method carries out data-level fusion on pulse description words (PDW) before radar signal sorting, and carries out feature-level fusion on the sorting results after sorting. In the data-level fusion, a time alignment method based on first-level pulse time-of-arrival (TOA) difference matching is provided, and correlation judgment is carried out on pulses arriving at the same time through the D-S evidence theory to obtain new PDW. In the feature-level fusion, the parameters describing the same radar are unified and the sorting results are ranked by reliability. The method addresses possible sorting failure caused by pulse loss at a single reception device, as well as the problem that the parameters describing the same radar are not exactly the same after sorting. Simulation results show that the data-level fusion effectively improves the success rate of radar signal sorting, and that the feature-level fusion yields more concise and intuitive radiation source information.

Owner:HARBIN ENG UNIV
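
The abstract does not spell out how TOA difference matching is done. The sketch below is one plausible reading, offered only as an illustration: it takes the first-order differences of each receiver's TOA sequence, looks for the longest run of matching differences, and reads the clock offset from the aligned pulses. It does not handle pulses missing in the middle of a run, and all names and values are hypothetical.

```python
def first_differences(toas):
    """Intervals between consecutive pulse arrival times."""
    return [later - earlier for earlier, later in zip(toas, toas[1:])]


def estimate_offset(toas_a, toas_b, tolerance):
    """Estimate receiver B's time offset relative to receiver A.

    Every pairing (pulse i at A, pulse j at B) is tried; the pairing followed
    by the longest run of matching TOA differences determines the offset.
    """
    diffs_a = first_differences(toas_a)
    diffs_b = first_differences(toas_b)
    best_matches, best_offset = -1, None
    for i in range(len(toas_a)):
        for j in range(len(toas_b)):
            k = 0
            while (
                i + k < len(diffs_a)
                and j + k < len(diffs_b)
                and abs(diffs_a[i + k] - diffs_b[j + k]) <= tolerance
            ):
                k += 1
            if k > best_matches:
                best_matches, best_offset = k, toas_b[j] - toas_a[i]
    return best_offset


# Receiver B misses the first pulse and its clock runs 3.0 time units ahead.
receiver_a = [0.0, 10.0, 25.0, 45.0, 50.0]
receiver_b = [13.0, 28.0, 48.0, 53.0]

offset = estimate_offset(receiver_a, receiver_b, tolerance=0.01)
aligned_b = [t - offset for t in receiver_b]
```

Once the two streams are aligned, pulses with the same corrected arrival time can be passed to a correlation judgment step such as the D-S combination the abstract mentions.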

Multi-source traffic data fusion method

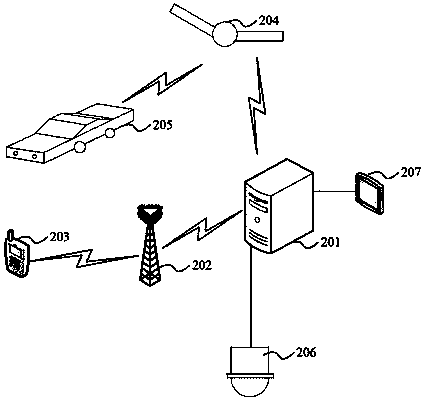

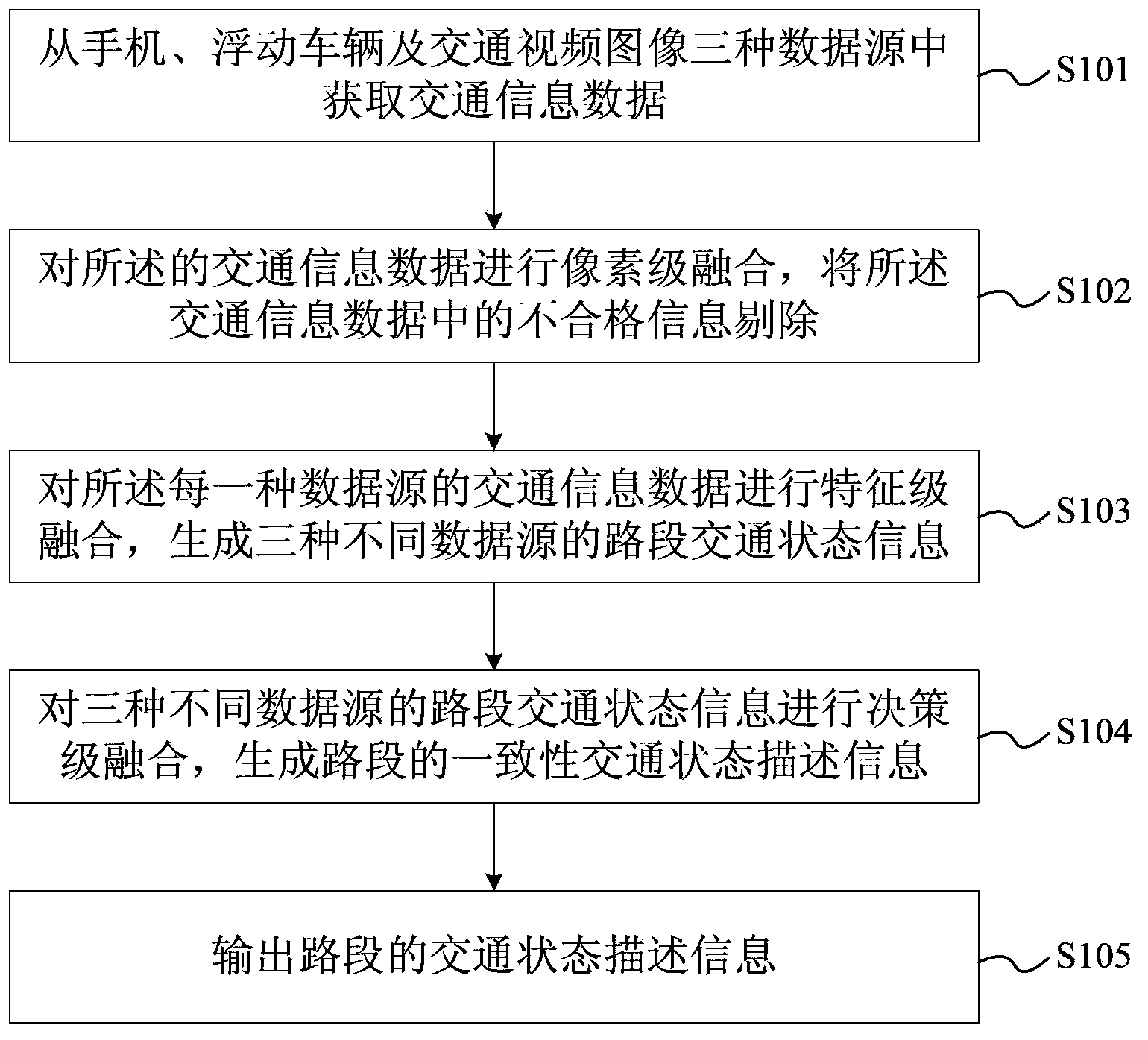

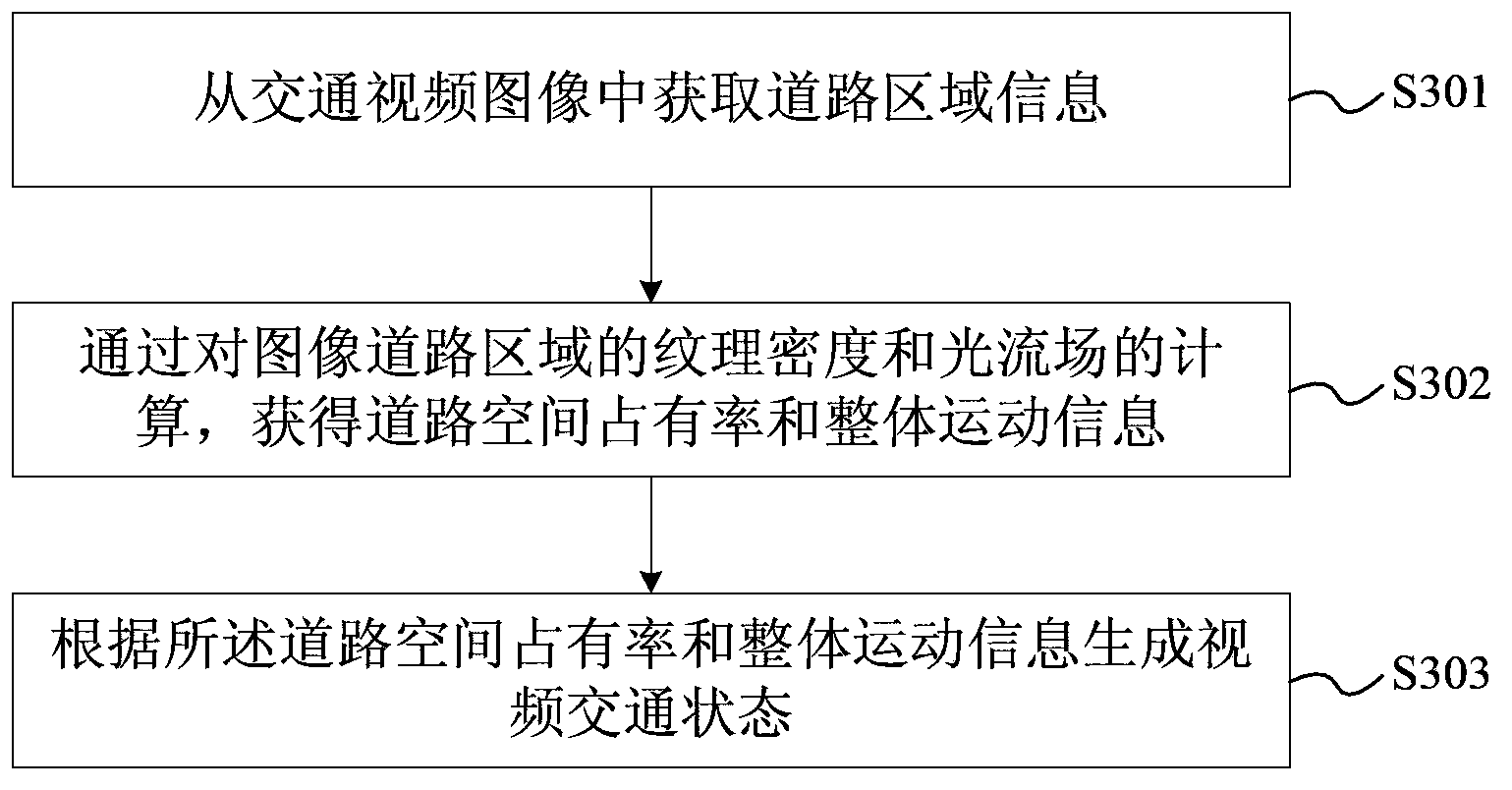

Active | CN103838772A | Determine traffic conditions | Detection of traffic movement | Special data processing applications | Three level | Data source

The invention provides a multi-source traffic data fusion method. The method includes the following steps: traffic information data are acquired from three data sources, namely mobile phones, floating vehicles and traffic video images; pixel-level fusion is conducted on the traffic information data, and disqualified information in the traffic information data is removed; feature-level fusion is conducted on the traffic information data of each data source to generate road segment traffic state information for the three different data sources; decision-level fusion is conducted on the road segment traffic state information of the three data sources to generate a consistent traffic state description for each road segment; and the traffic state description of the road segments is output. Because the traffic information data are acquired from multiple data sources and pass through three levels of fusion before the final traffic states of the road segments are generated, the traffic state of the road surface can be determined more accurately.

Owner:HONG KONG PRODUCTIVITY COUNCIL
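
The three fusion levels described above can be sketched as a small pipeline: filter out disqualified readings, summarize each source into a road segment state, then combine the per-source states with a weighted vote. The speed thresholds, the source weights, and the use of speed as the only measurement are assumptions made for illustration; the patent abstract specifies none of them.

```python
from collections import defaultdict
from statistics import mean


def remove_disqualified(speeds_kmh, max_speed=150.0):
    """First level: drop physically implausible speed readings."""
    return [s for s in speeds_kmh if 0.0 <= s <= max_speed]


def segment_state(speeds_kmh):
    """Second level: turn one source's readings into a traffic state."""
    average = mean(speeds_kmh)
    if average < 20.0:
        return "congested"
    if average < 40.0:
        return "slow"
    return "free"


def fuse_decisions(states, weights):
    """Third level: weighted vote over the per-source states."""
    votes = defaultdict(float)
    for source, state in states.items():
        votes[state] += weights[source]
    return max(votes, key=votes.get)


readings = {
    "mobile_phone": [18.0, 22.0, -5.0, 25.0],
    "floating_vehicle": [15.0, 17.0, 19.0, 300.0],
    "traffic_video": [16.0, 19.0, 21.0],
}
weights = {"mobile_phone": 0.2, "floating_vehicle": 0.5, "traffic_video": 0.3}

states = {}
for source, speeds in readings.items():
    valid = remove_disqualified(speeds)
    if valid:  # skip a source with no usable readings
        states[source] = segment_state(valid)

fused_state = fuse_decisions(states, weights)
```

In this toy run the mobile phone data alone suggests "slow", but the two other sources outweigh it and the fused state is "congested".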

© 2025 PatSnap. All rights reserved.