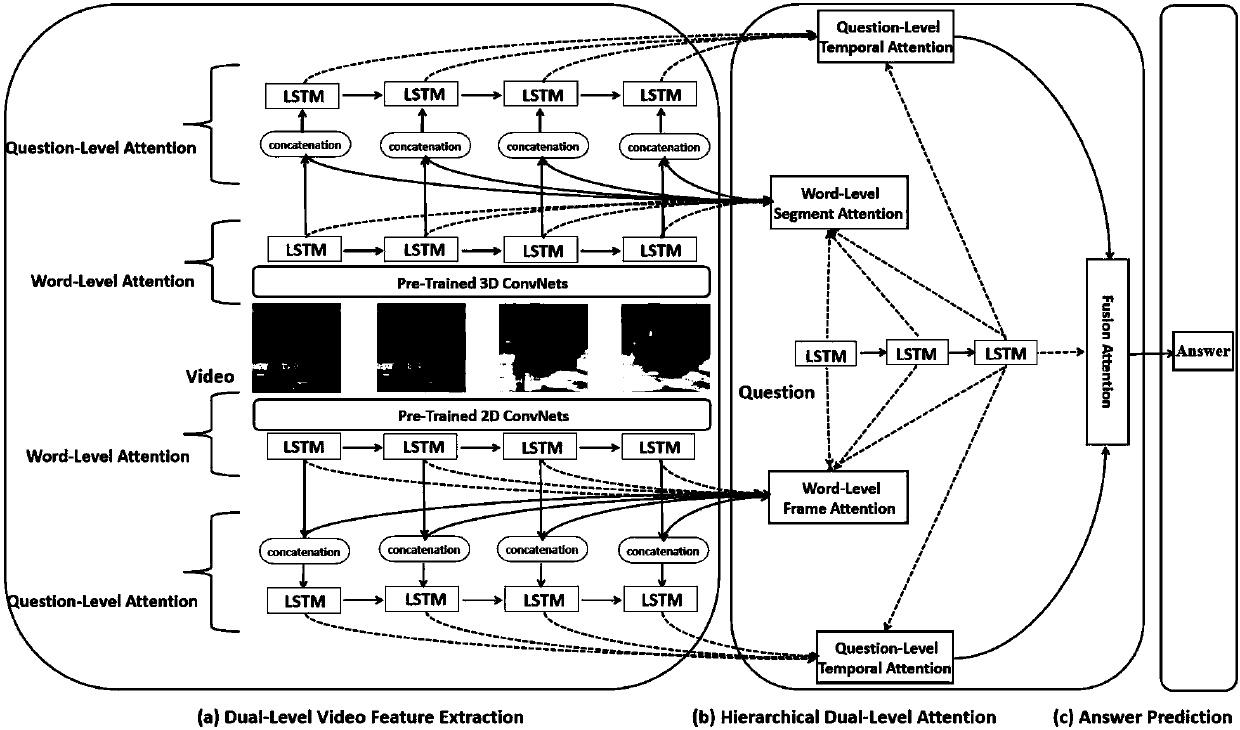

Method for solving video question answering using a multi-layer attention network mechanism

An attention-based video technology, applied in fields such as computer components, special data processing applications, and instruments, that addresses problems such as the lack of modeling of temporal dynamic information.



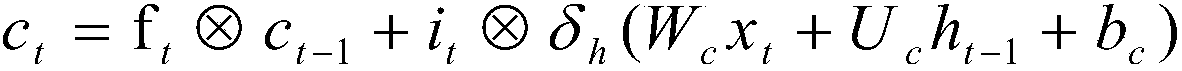


Embodiment

[0129] The present invention is experimentally verified on two self-constructed data sets: the YouTube2Text data set and the VideoClip data set. The YouTube2Text data set contains 1987 video clips and 122708 text descriptions, and the VideoClip data set contains 201068 video clips and 287933 text descriptions. The present invention generates corresponding question-answer pairs from the text descriptions in the two data sets. For the YouTube2Text data set, it generates four types of question-answer pairs, related respectively to the object, number, location, and person in the video; for the VideoClip data set, it generates four types of question-answer pairs, related respectively to the object, number, color, and location in the video. The present invention then performs the following preprocessing on the constructed video question answering data sets:

[0130] 1) Take 60 frames...
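The data set composition stated in [0129] can be summarized in a small sketch. It only restates the clip counts, description counts, and question types given above and derives the average number of text descriptions per clip. The class name `VideoQADataset`, its field names, and the `descriptions_per_clip` helper are hypothetical and chosen for illustration; they are not part of the patent.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class VideoQADataset:
    """Hypothetical container for the data set statistics quoted in [0129]."""
    name: str
    num_clips: int
    num_descriptions: int
    question_types: Tuple[str, ...]

    def descriptions_per_clip(self) -> float:
        # Average number of text descriptions available for each video clip.
        return self.num_descriptions / self.num_clips


# Figures and question types are copied from paragraph [0129].
DATASETS = [
    VideoQADataset("YouTube2Text", 1987, 122708,
                   ("object", "number", "location", "person")),
    VideoQADataset("VideoClip", 201068, 287933,
                   ("object", "number", "color", "location")),
]

if __name__ == "__main__":
    for ds in DATASETS:
        print(f"{ds.name}: {ds.descriptions_per_clip():.2f} descriptions per clip; "
              f"question types: {', '.join(ds.question_types)}")
```

On these figures the two data sets differ sharply in density: YouTube2Text has far more descriptions per clip than VideoClip, which has many clips with few descriptions each.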
