988 results about "Attention network" patented technology

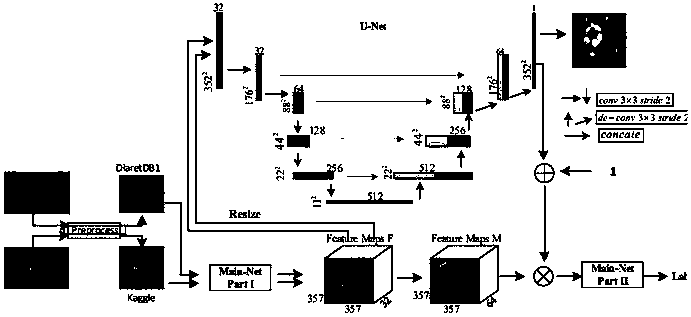

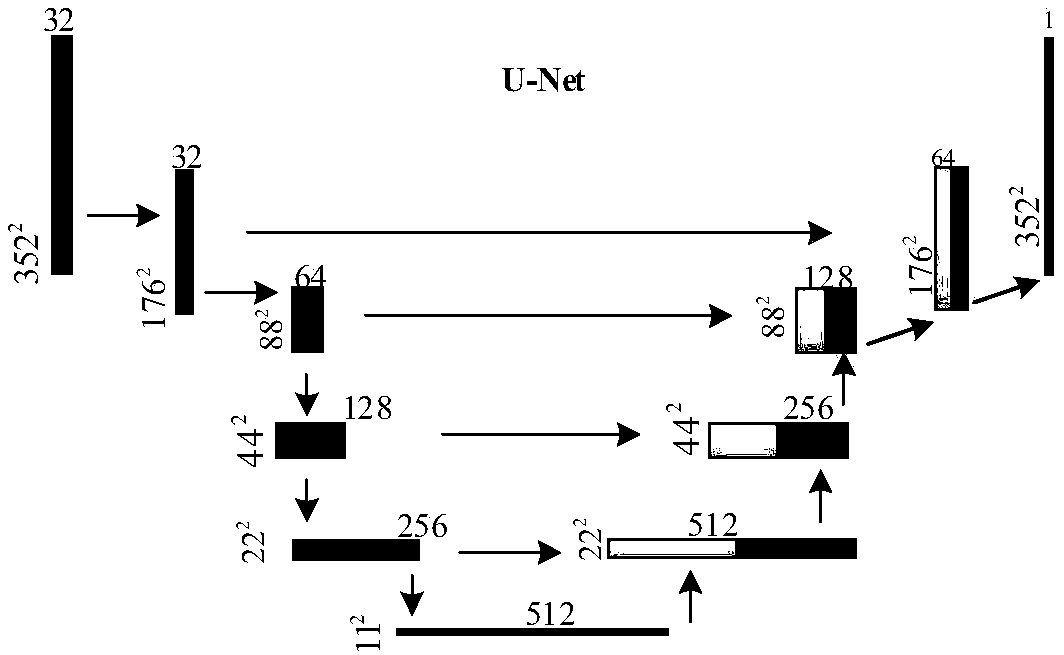

Attention mechanism-based in-depth learning diabetic retinopathy classification method

Active · CN108021916A · Enhanced feature information · Improving Lesion Diagnosis Results · Image enhancement · Image analysis · Nerve network · Diabetes retinopathy

The invention discloses an attention mechanism-based deep learning method for classifying diabetic retinopathy, comprising the following steps: a series of fundus images is chosen as the original data samples and normalized in a preprocessing step; the preprocessed samples are cropped and divided into a training set and a testing set; the parameters of a main neural network are initialized and fine-tuned, and the training-set images are input into the main neural network for training, generating a feature map; the parameters of the main neural network are then fixed, and the training-set images are used to train an attention network, which outputs lesion candidate region degree maps that are normalized to obtain an attention map; the attention map is multiplied by the feature map to obtain the attention mechanism output, which is input into the main neural network; the network is trained again on the training-set images, and a diabetic retinopathy grade classification model is finally obtained. The method introduces an attention mechanism trained on a diabetic retinopathy lesion-region data set, so that the feature information of the lesion regions is enhanced while the original network features are preserved.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
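
The attention step in the abstract above (normalize the attention network's output, then multiply it with the feature map) can be sketched in NumPy as follows. This is only an illustration: the function name `apply_attention`, the min-max normalization, and the tensor shapes are assumptions, since the abstract does not specify them.

```python
import numpy as np

def apply_attention(feature_map, region_scores, eps=1e-8):
    """Reweight a (C, H, W) feature map with an (H, W) candidate-region score map.

    Assumption: min-max normalization; the abstract only says "normalized".
    """
    lo, hi = region_scores.min(), region_scores.max()
    # Attention map with values in [0, 1].
    attention = (region_scores - lo) / (hi - lo + eps)
    # Broadcast the attention map over the channel axis and multiply element-wise.
    return feature_map * attention[None, :, :], attention

rng = np.random.default_rng(0)
features = rng.normal(size=(4, 8, 8))   # hypothetical main-network feature map
scores = rng.normal(size=(8, 8))        # hypothetical attention-network output
attended, attention = apply_attention(features, scores)
print(attended.shape)
```

Positions with a high candidate-region score keep most of their feature magnitude, while low-scoring positions are damped toward zero.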

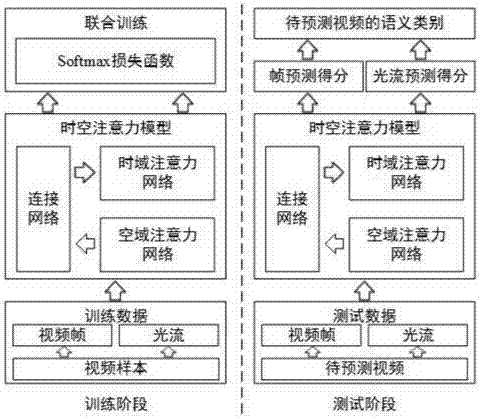

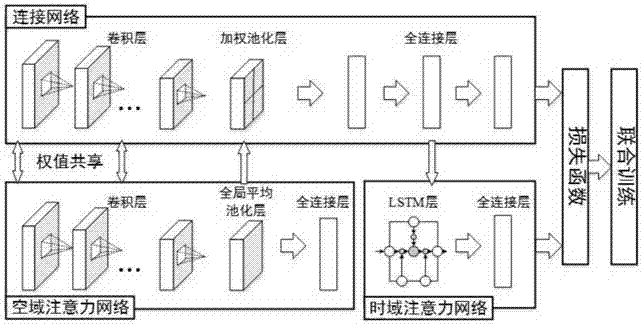

Space-time attention based video classification method

Active · CN107330362A · Improve classification performance · Time-domain saliency information is accurate · Character and pattern recognition · Attention model · Time domain

The invention relates to a space-time attention based video classification method, which comprises the steps of: extracting frames and optical flows from the training videos and the video to be predicted, and stacking a plurality of optical flows into a multi-channel image; building a space-time attention model comprising a space-domain attention network, a time-domain attention network and a connection network; training the three components of the space-time attention model jointly, so that the space-domain attention and the time-domain attention improve together and the resulting model accurately captures space-domain saliency and time-domain saliency and is suitable for video classification; and using the learned space-time attention model to extract the space-domain saliency and the time-domain saliency of the frames and optical flows of the video to be predicted, performing prediction, and integrating the prediction scores of the frames and the optical flows to obtain the final semantic category of the video. The method models space-domain attention and time-domain attention simultaneously and makes full use of their cooperation through joint training, thereby learning more accurate space-domain and time-domain saliency and improving the accuracy of video classification.

Owner:PEKING UNIV

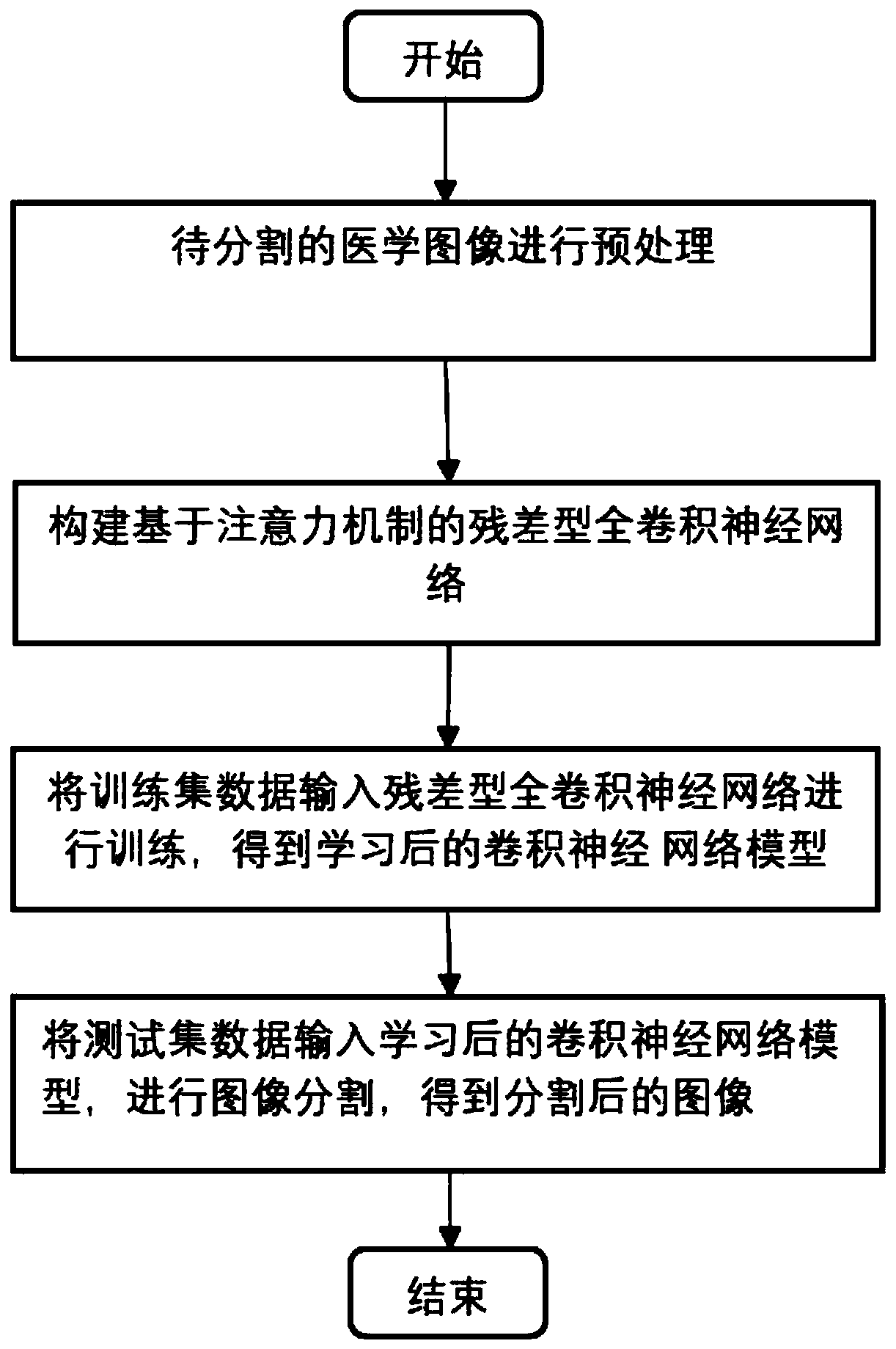

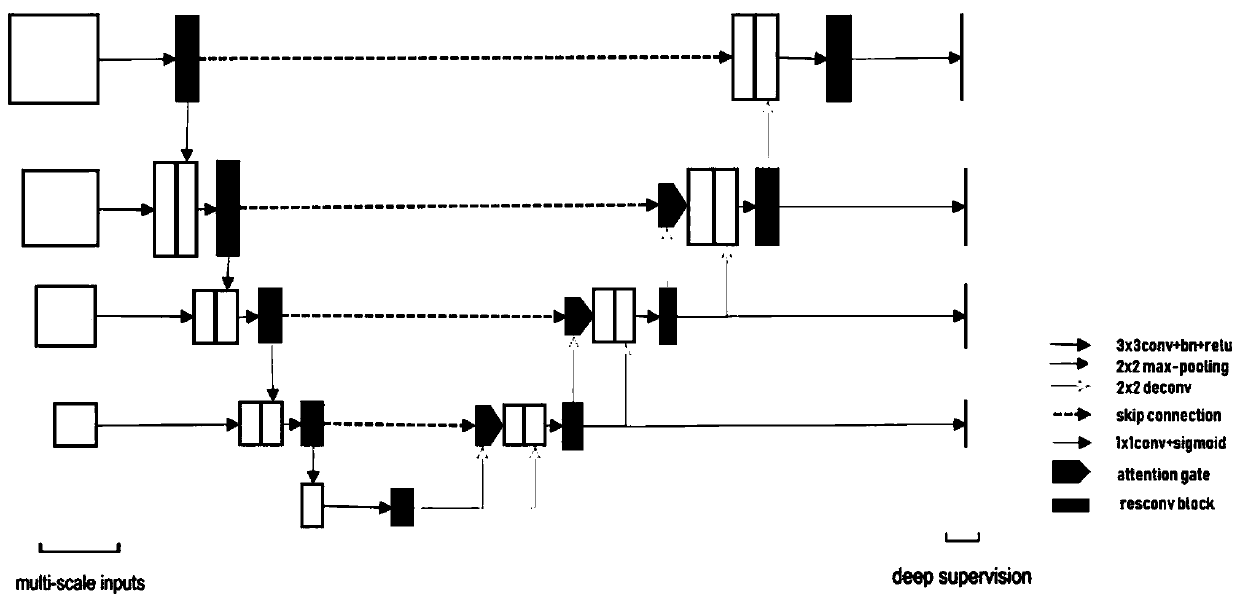

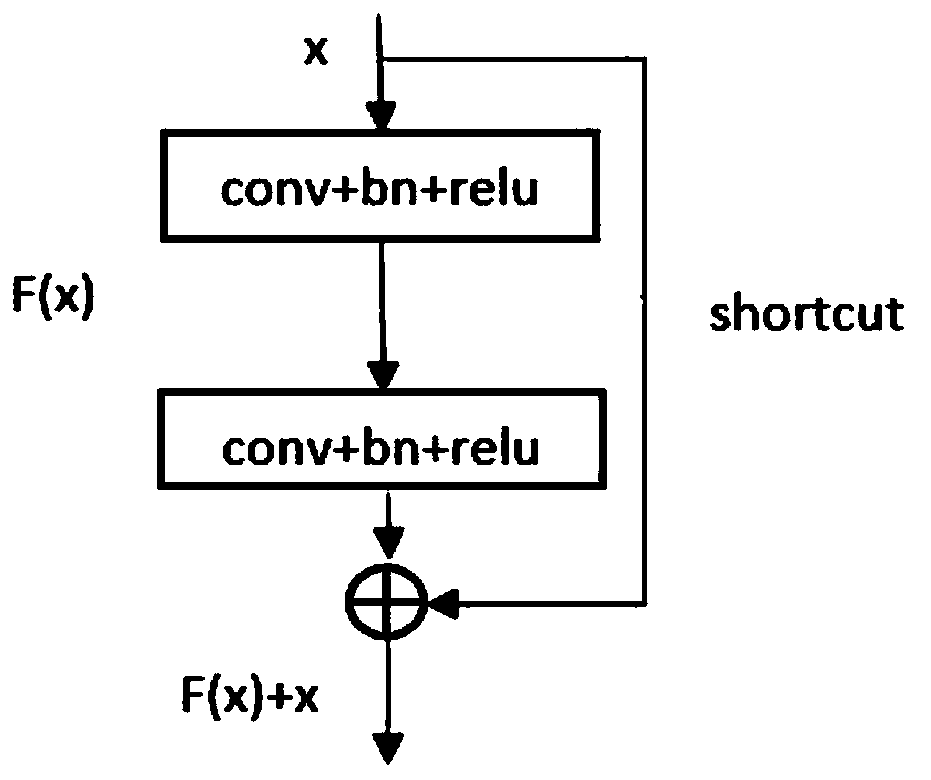

Medical image segmentation method of residual full convolutional neural network based on attention mechanism

Active · CN110189334A · Solve the problem of lack of spatial features of images · Reduce redundancy · Image enhancement · Image analysis · Image segmentation · Imaging Feature

The invention provides a medical image segmentation method using a residual fully convolutional neural network based on an attention mechanism. The method comprises the steps of: preprocessing the medical image to be segmented; constructing a residual fully convolutional neural network based on the attention mechanism, comprising a feature map contraction network, an attention network and a feature map expansion network group; inputting the training set data into the residual fully convolutional neural network for training to obtain a learned convolutional neural network model; and inputting the test set data into the learned model and performing image segmentation to obtain segmented images. The attention network effectively transmits the image features extracted by the feature map contraction network to the feature map expansion network, which addresses the loss of spatial image features during deconvolution. The attention network also suppresses image regions in the low-level feature maps that are irrelevant to the segmentation target, which reduces image redundancy and improves segmentation accuracy.

Owner:NANJING UNIV OF POSTS & TELECOMM

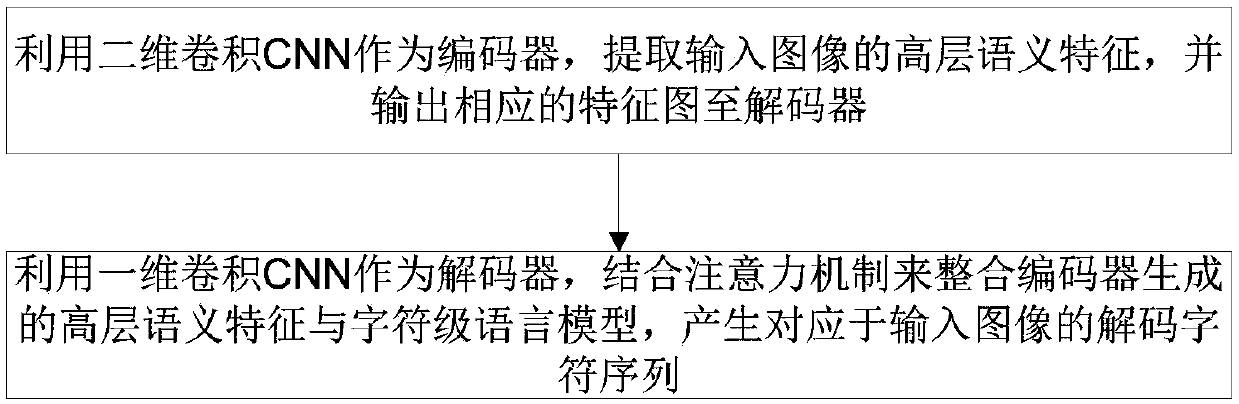

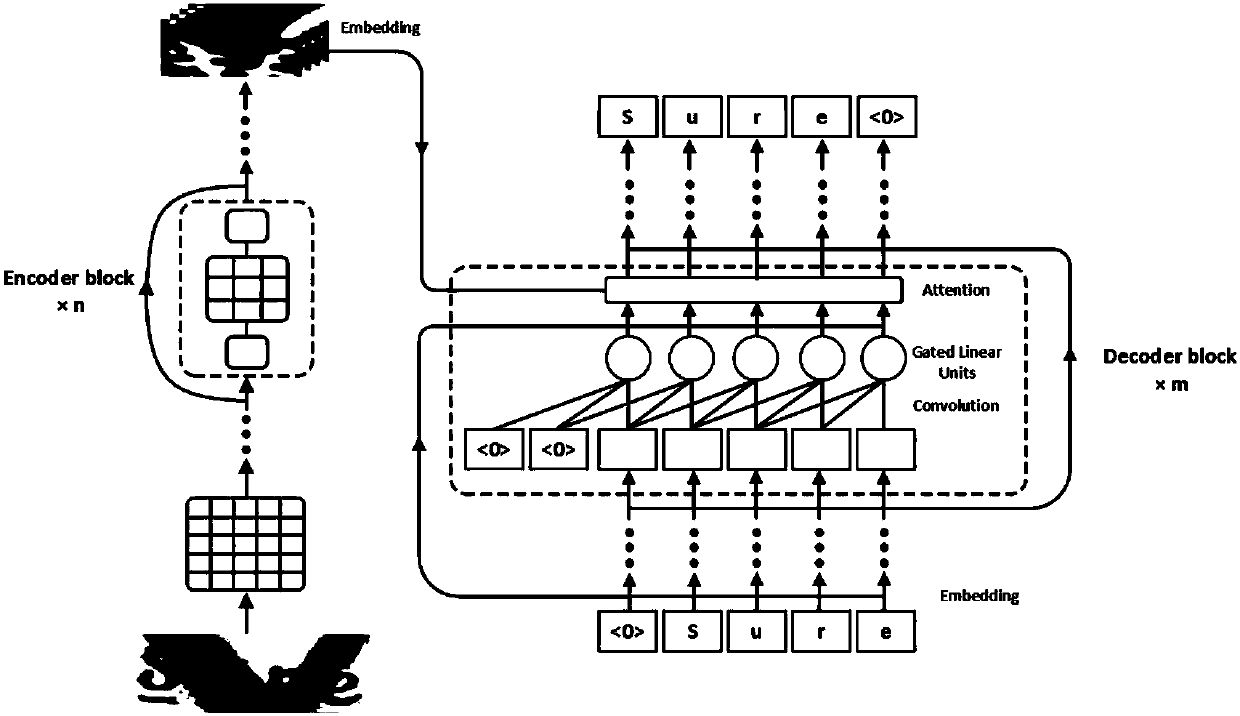

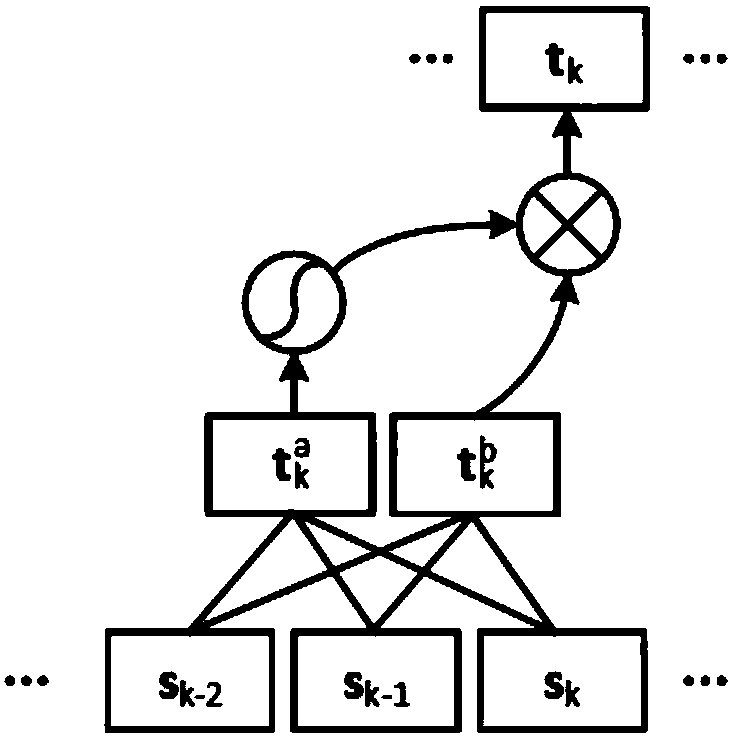

Natural scene text identification method based on convolution attention network

Active · CN108615036A · Easy to parallelize · Reduce complexity · Neural architectures · Neural learning methods · Pattern recognition · Convolution theorem

The invention discloses a natural scene text identification method based on a convolution attention network. The method comprises the following steps: using a two-dimensional CNN as an encoder to extract high-level semantic features of an input image and output the corresponding feature map to a decoder; and using a one-dimensional CNN as the decoder, which combines an attention mechanism to integrate the high-level semantic features produced by the encoder with a character-level language model, thus forming the decoded character sequence corresponding to the input image. For a sequence of length n, the method models the character sequence with a CNN whose convolution kernel size is s, so only O(n/s) operations are needed to capture long-term dependencies, which greatly reduces the algorithm complexity. In addition, because of the nature of the convolution operation, a CNN parallelizes better than an RNN and so makes fuller use of GPU resources. More importantly, a deep model can be obtained by stacking convolution layers, which yields higher-level abstract representations and improves model accuracy.

Owner:UNIV OF SCI & TECH OF CHINA
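
One way to read the O(n/s) claim in the abstract above is through receptive-field growth: each stacked stride-1 convolution with kernel size s widens the receptive field by s - 1 positions. The sketch below works through that arithmetic. It assumes plain, non-dilated, stride-1 layers, which the abstract does not state, and the function names are hypothetical.

```python
import math

def receptive_field(layers, s):
    """Receptive field of `layers` stacked stride-1 convolutions with kernel size s."""
    return 1 + layers * (s - 1)

def layers_to_cover(n, s):
    """Smallest number of stacked layers whose receptive field spans n positions."""
    if n < 1 or s < 2:
        raise ValueError("need n >= 1 and s >= 2")
    # Solve 1 + layers * (s - 1) >= n for the smallest integer `layers`.
    return math.ceil((n - 1) / (s - 1))

# A 25-character sequence with kernel size 5 needs 6 layers: 1 + 6 * 4 = 25.
print(layers_to_cover(25, 5), receptive_field(6, 5))
```

The layer count grows roughly as n/s, whereas a recurrent model needs n sequential steps to connect the two ends of the sequence.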

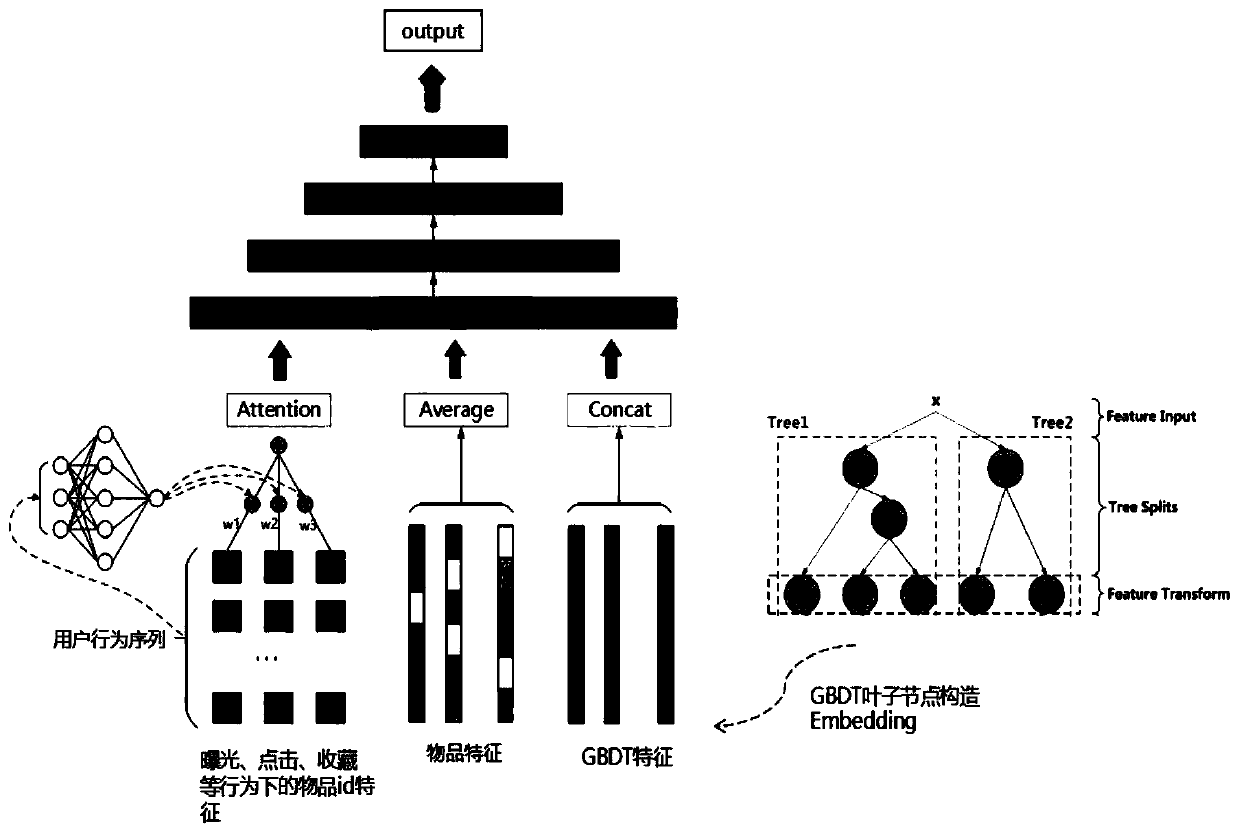

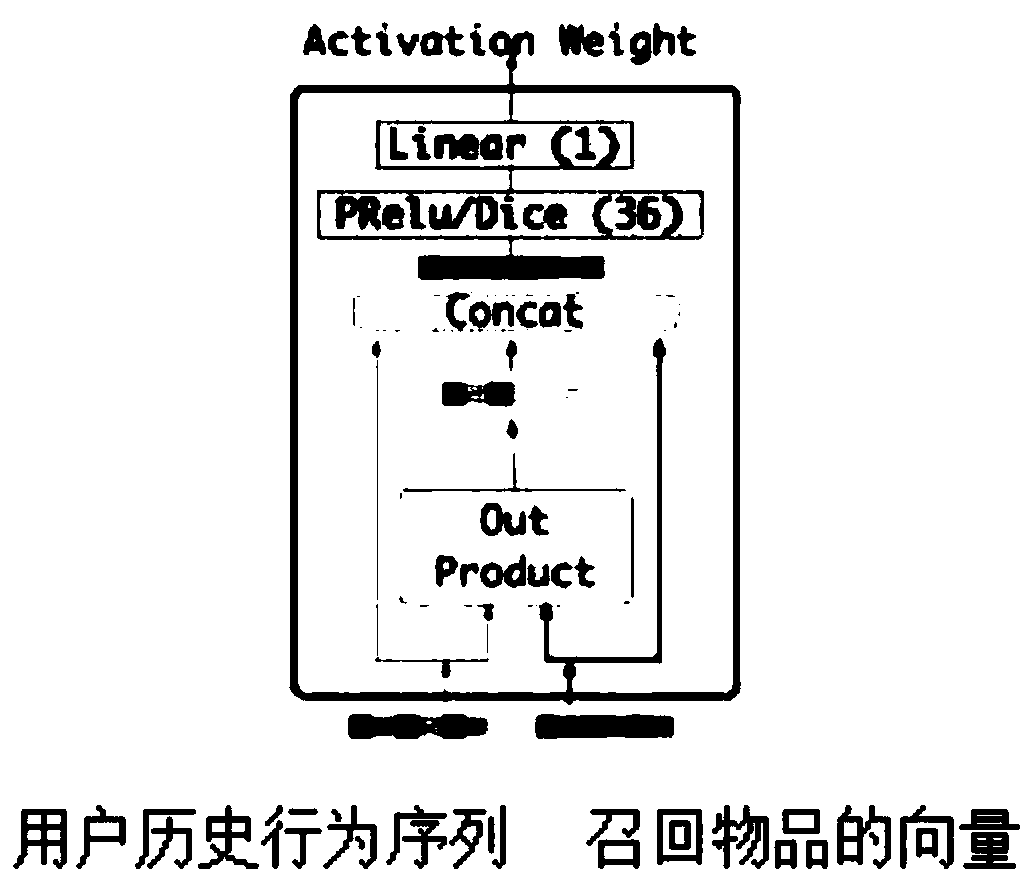

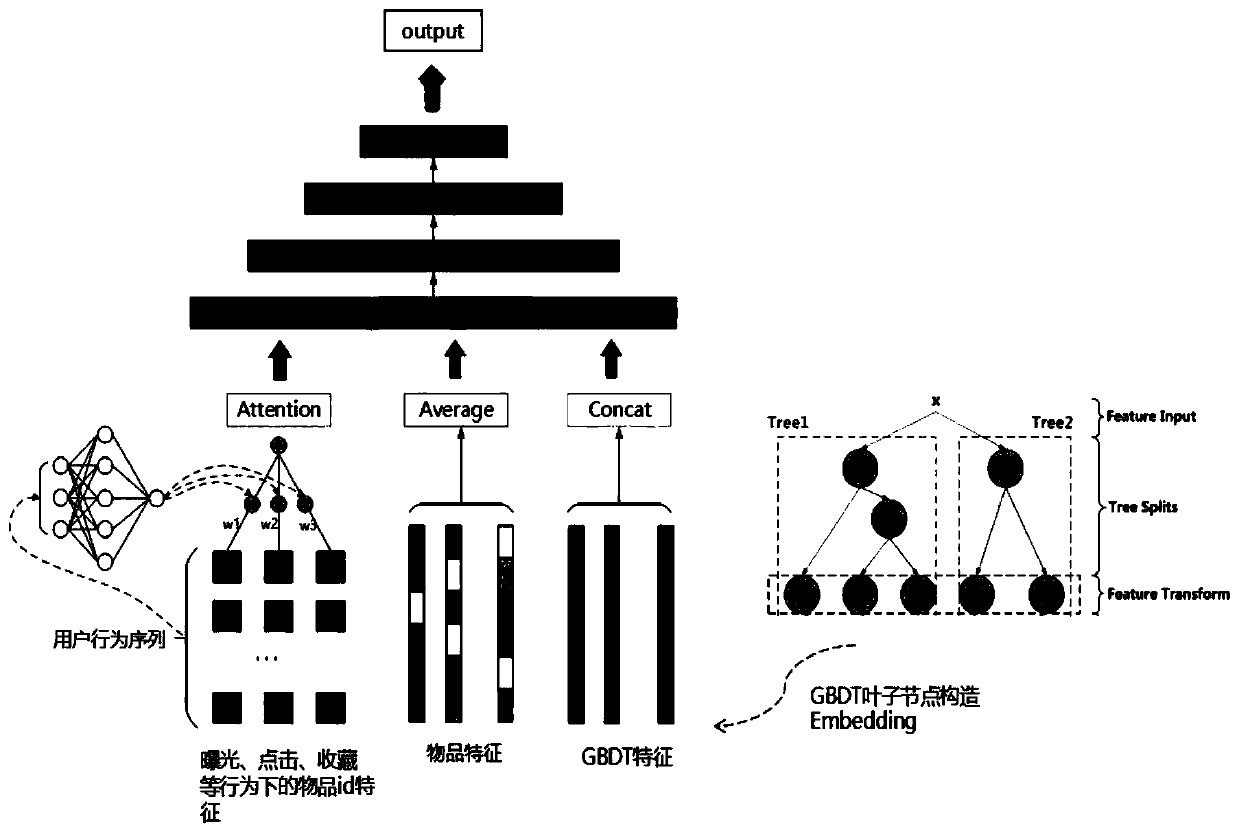

Recommendation system click rate prediction method based on deep neural network

Active · CN109960759A · High degree of generalization · Improve scalability · Digital data information retrieval · Forecasting · Hidden layer · Feature vector

The invention discloses a recommendation system click rate prediction method based on a deep neural network. The method comprises the steps of: collecting user click behavior as samples, extracting the numerical features of the samples that have a numerical relation, and inputting them into a GBDT tree model for training to obtain a GBDT leaf node matrix E1; inputting the behavior sequences formed by the articles clicked by all users in the samples into an Attention network to obtain an interest intensity matrix E2 of all users in the samples for the articles; summing and averaging the feature vectors of the articles each user has clicked to obtain the corresponding click interaction matrix E3; and splicing E1, E2 and E3 and inputting the result into a deep neural network model with three hidden layers and one output layer to output a prediction result. The method decomposes user click behavior into attribute features, combines a GBDT tree model, an Attention network and a deep neural network model for nonlinear fitting to construct the click rate prediction model, and obtains the prediction result through model training. Its advantages are deep mining of users' recent interests, a high degree of generalization and high scalability.

Owner:SUN YAT SEN UNIV
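
The final stage described in the abstract above (splice E1, E2 and E3, then pass them through three hidden layers and one output layer) can be sketched as a forward pass in NumPy. The matrix widths, ReLU activations, sigmoid output and random weights below are assumptions for illustration only. The GBDT and Attention stages that would produce E1 and E2 are replaced by random placeholders.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def predict_ctr(e1, e2, e3, weights, biases):
    """Concatenate the three feature matrices and run an MLP forward pass."""
    x = np.concatenate([e1, e2, e3], axis=1)          # splice E1, E2, E3 per sample
    for w, b in zip(weights[:-1], biases[:-1]):       # three hidden layers
        x = relu(x @ w + b)
    logits = x @ weights[-1] + biases[-1]             # one output layer
    return sigmoid(logits).ravel()                    # click probability per sample

rng = np.random.default_rng(0)
batch = 5
e1 = rng.integers(0, 2, size=(batch, 6)).astype(float)  # placeholder for GBDT leaf encoding
e2 = rng.normal(size=(batch, 4))                        # placeholder for interest intensities
e3 = rng.normal(size=(batch, 3))                        # placeholder for averaged item vectors
sizes = [6 + 4 + 3, 16, 8, 4, 1]
weights = [rng.normal(scale=0.1, size=(a, b)) for a, b in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(b) for b in sizes[1:]]
ctr = predict_ctr(e1, e2, e3, weights, biases)
print(ctr.shape)
```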

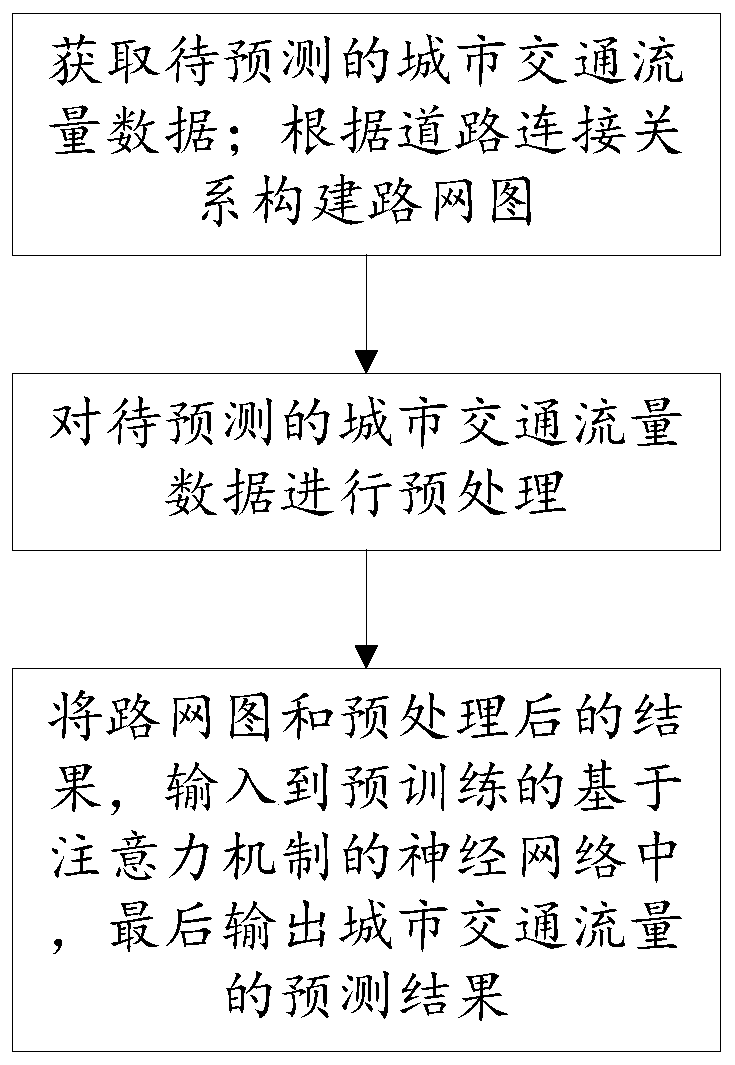

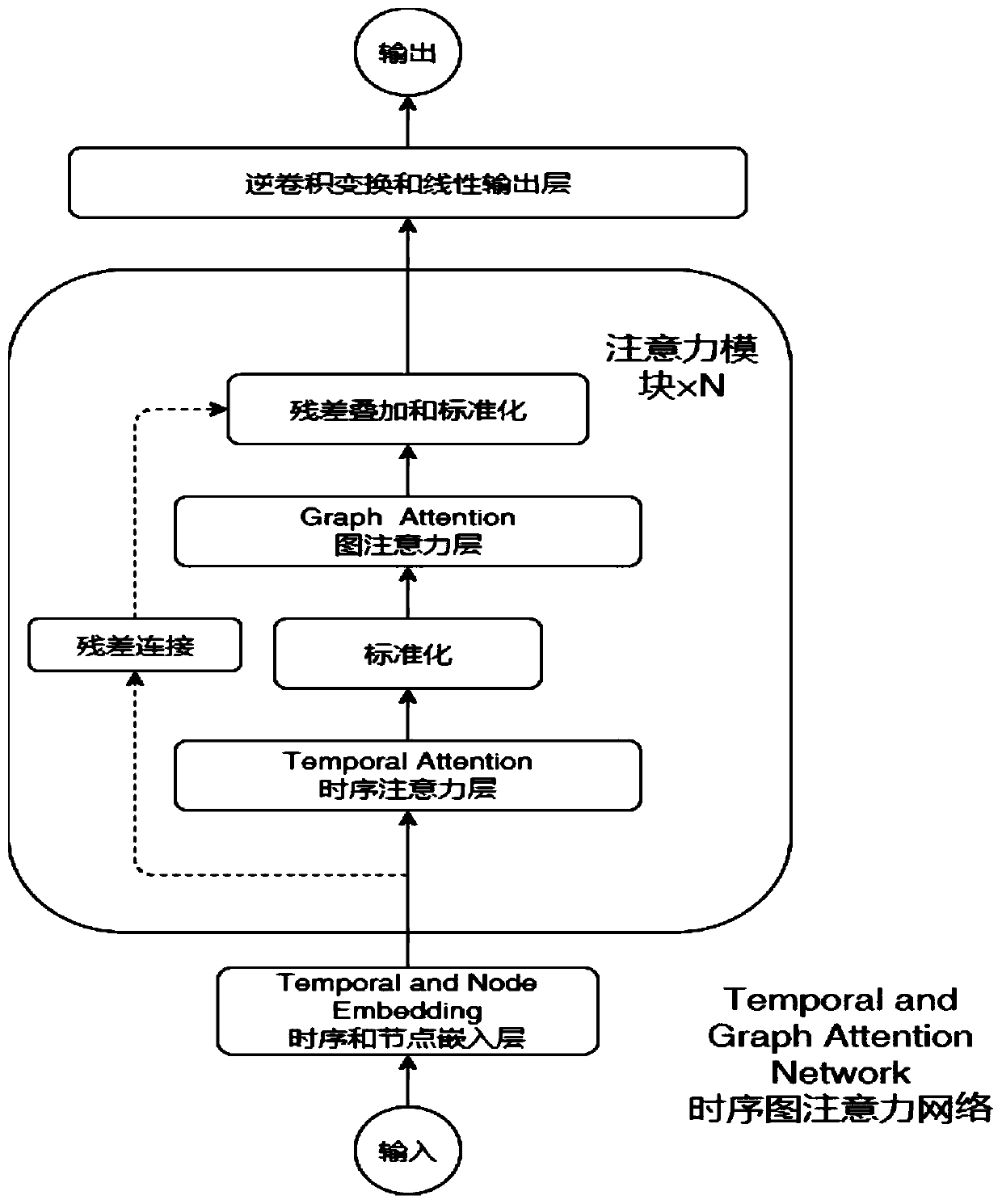

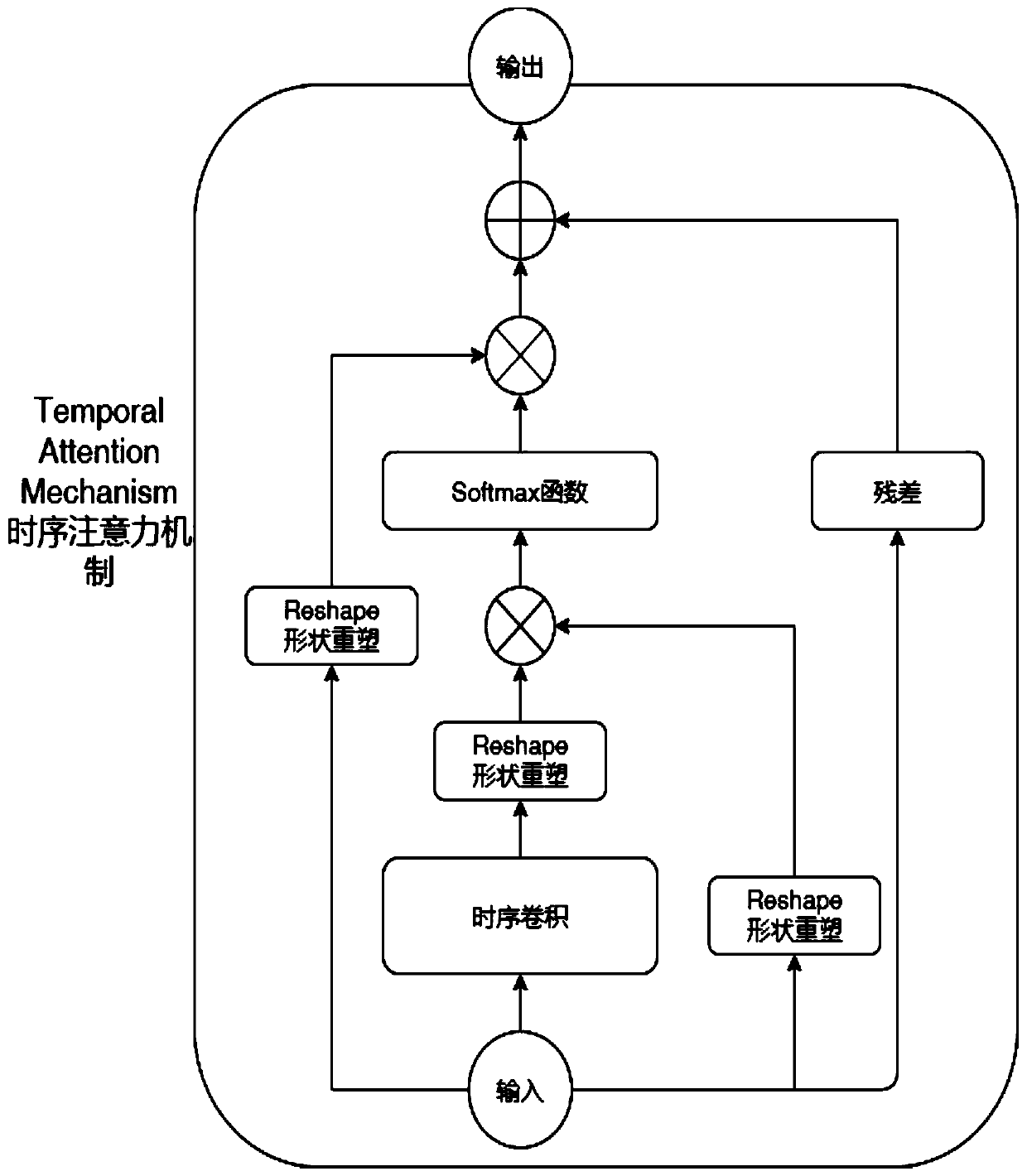

Graph neural network traffic flow prediction method and system based on attention mechanism

The invention discloses a graph neural network traffic flow prediction method and system based on an attention mechanism. The method comprises the steps of: obtaining the urban traffic flow data to be predicted; constructing a road network graph according to the road connection relationships; preprocessing the urban traffic flow data to be predicted; and inputting the road network graph and the preprocessed result into a pre-trained neural network based on an attention mechanism, which outputs the prediction result for the urban traffic flow. Roads and checkpoints are encoded according to the road network information, and a road network graph structure is established according to the upstream and downstream relationships of the roads. Vehicle passing data at the checkpoints is counted under different time dimensions and summarized to form a road network traffic flow data table. A graph neural network formed by stacking multiple layers of attention modules is constructed, in which a time sequence attention mechanism and the graph attention network model the traffic flow in the whole road network, and the future traffic flow at a specified checkpoint is predicted.

Owner:SHANDONG UNIV
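
To illustrate the graph attention component mentioned in the abstract above, the following is a minimal single-head graph attention layer in NumPy, written in the commonly used GAT formulation. It is not taken from the patent: the scoring function, the added self-loops, the toy four-checkpoint road graph and all parameter shapes are assumptions.

```python
import numpy as np

def graph_attention_layer(h, adj, w, a, slope=0.2):
    """Single-head graph attention: h is (N, F), adj is (N, N), w is (F, F2), a is (2*F2,)."""
    z = h @ w                                           # project node features
    f = z.shape[1]
    # Score e[i, j] = a . [z_i || z_j], split into a source part and a target part.
    e = (z @ a[:f])[:, None] + (z @ a[f:])[None, :]
    e = np.where(e > 0, e, slope * e)                   # LeakyReLU
    mask = (adj + np.eye(len(adj))) > 0                 # neighbors plus self-loops
    e = np.where(mask, e, -np.inf)                      # ignore non-neighbors
    e = e - e.max(axis=1, keepdims=True)                # stabilize the softmax
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)    # attention over each node's neighbors
    return alpha @ z, alpha

# Toy road graph: four checkpoints connected in a chain 0-1-2-3.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
h = rng.normal(size=(4, 3))      # hypothetical per-checkpoint traffic features
w = rng.normal(size=(3, 2))
a = rng.normal(size=4)
out, alpha = graph_attention_layer(h, adj, w, a)
print(out.shape)
```

Each checkpoint's new representation is a weighted mix of its own features and those of its directly connected checkpoints, with the weights learned rather than fixed by the road topology.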

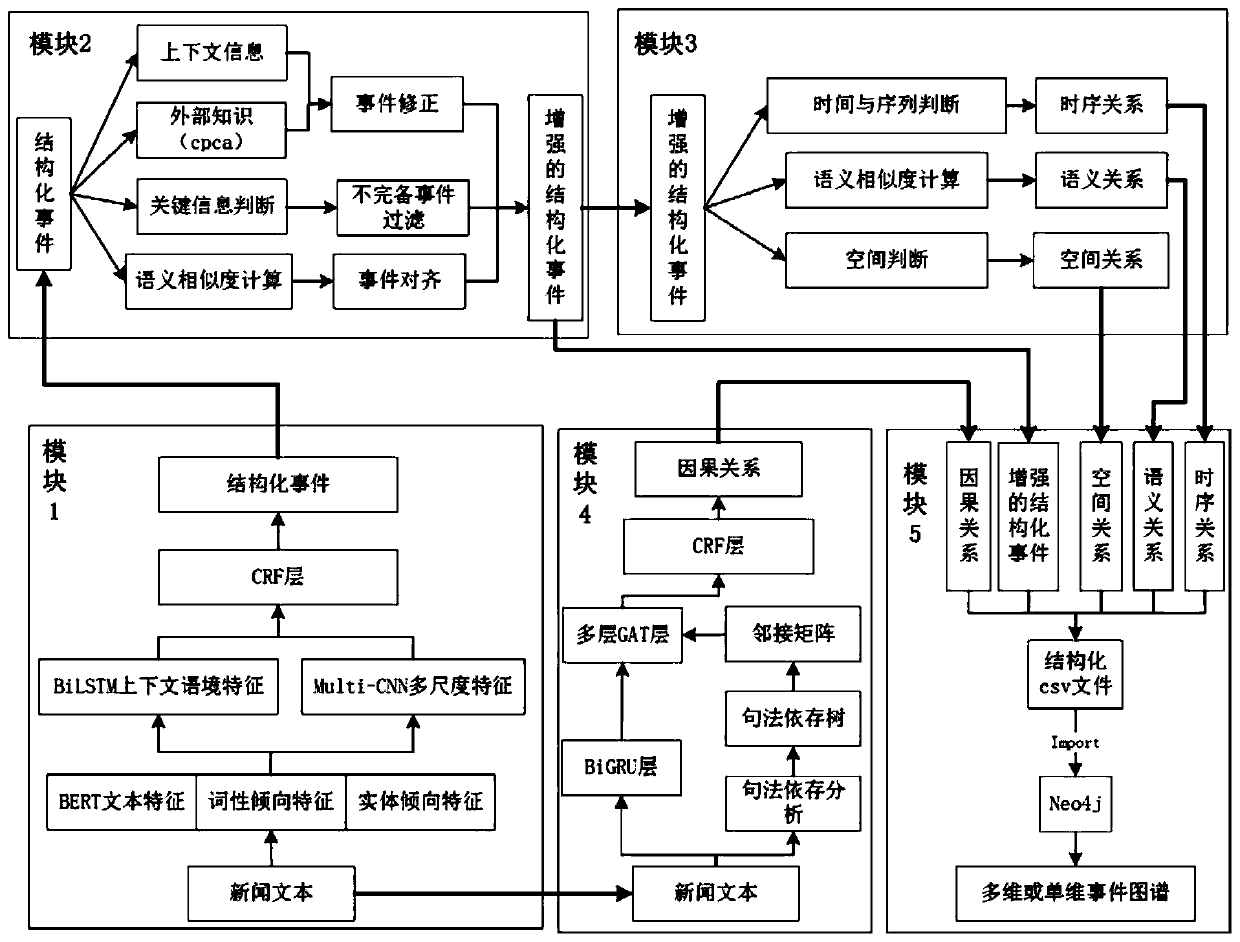

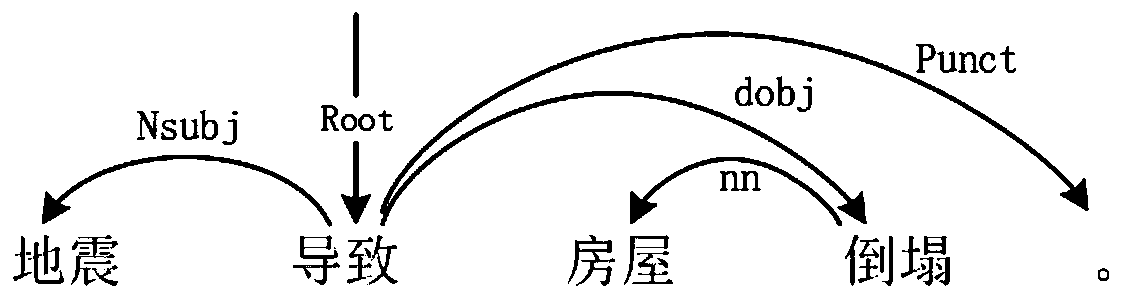

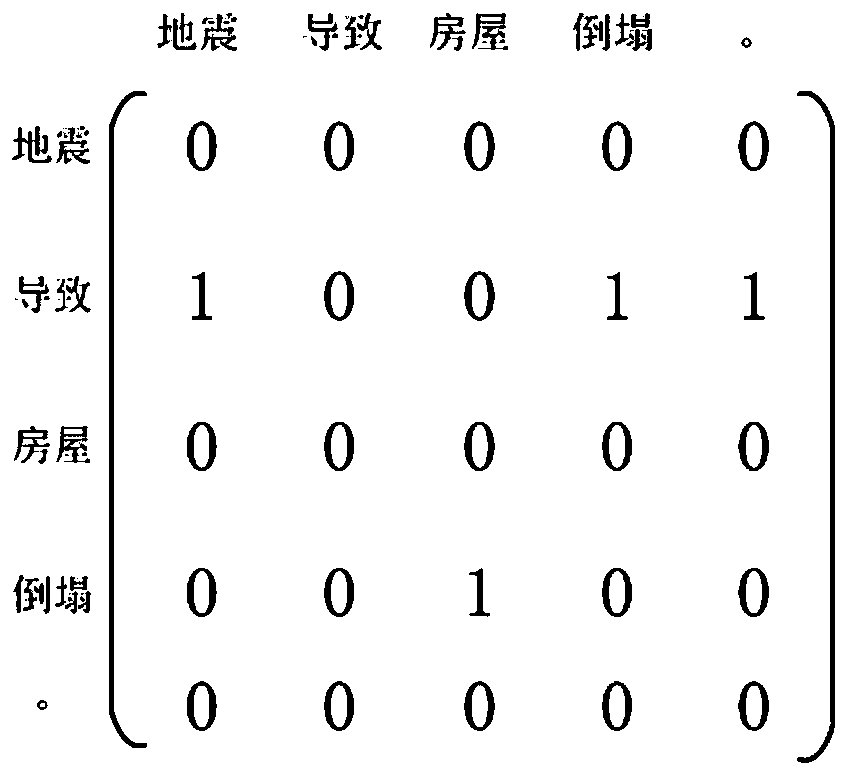

Event atlas construction system and method based on multi-dimensional feature fusion and dependency syntax

Active · CN111581396A · Overcoming the defects of the impact of the build · Improve the extraction effect · Semantic analysis · Neural architectures · Event graph · Engineering

The invention discloses an event atlas construction system and method based on multi-dimensional feature fusion and dependency syntax. The event graph construction method is realized through joint learning of event extraction, event correction and alignment based on multi-dimensional feature fusion, relationship extraction based on enhanced structured events, causal relationship extraction based on dependency syntax and a graph attention network, and an event graph generation module. The event graph is constructed from the quintuple information of the enhanced structured events and the relations between events in four dimensions. This overcomes the defects of the prior art, in which event representation is simple and depends on an NLP tool, the event relation is single, and the influence of the relations between events on event graph construction is not considered. With the provided method, the relationships among events in the four dimensions can be freely combined according to different downstream tasks, and the structural features of the event atlas are learned and associated with latent knowledge to assist downstream applications.

Owner:XI AN JIAOTONG UNIV

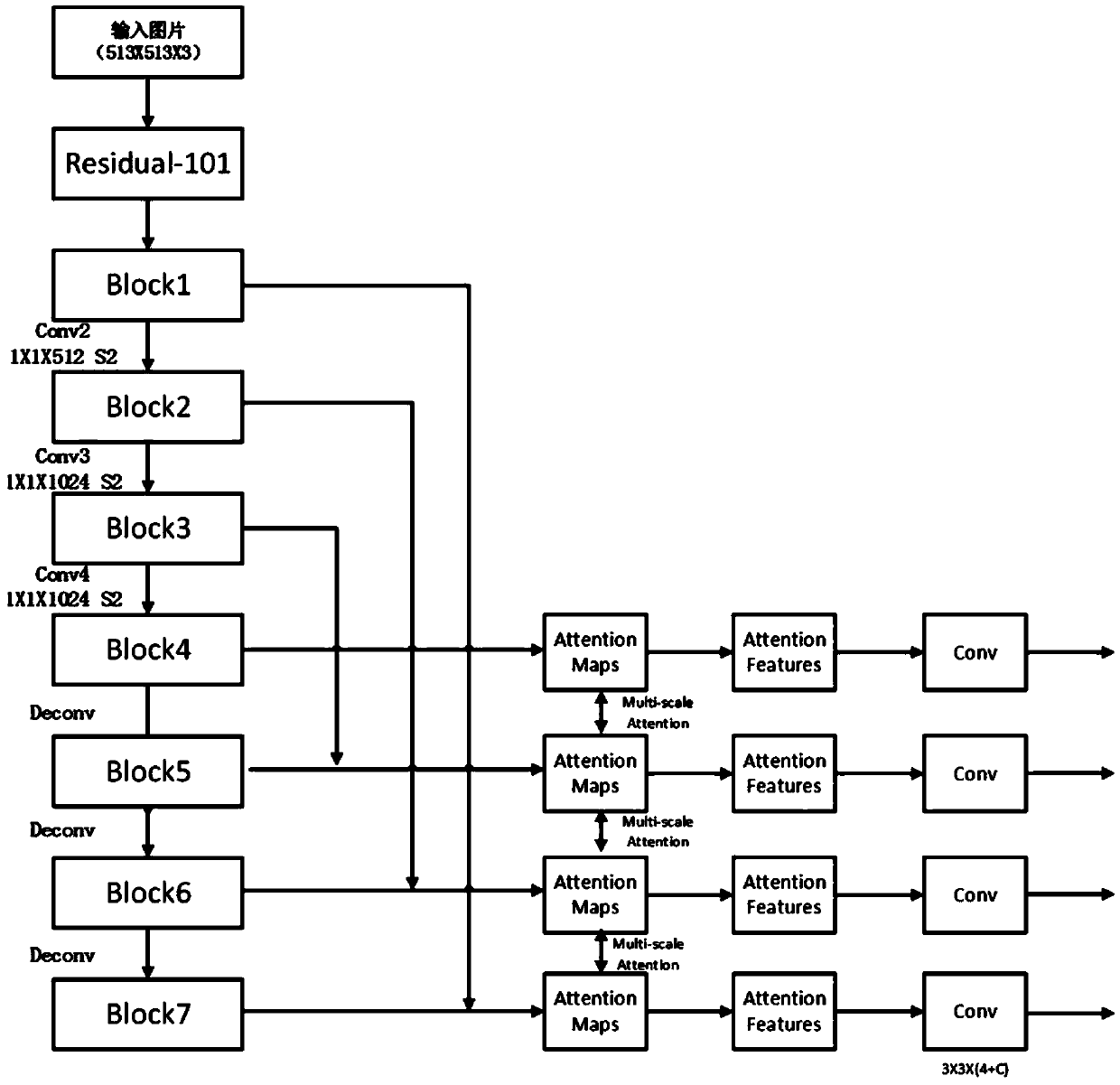

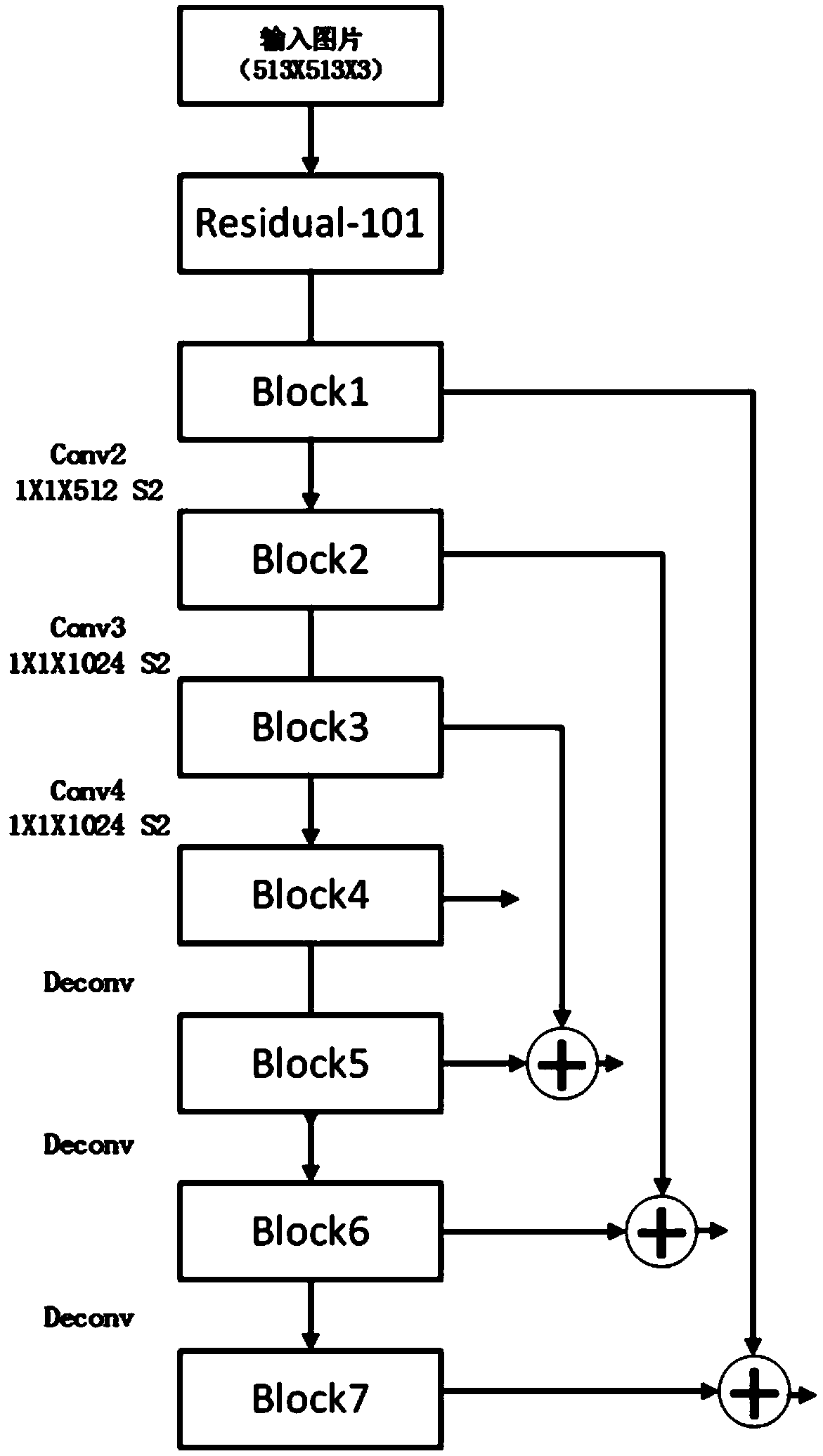

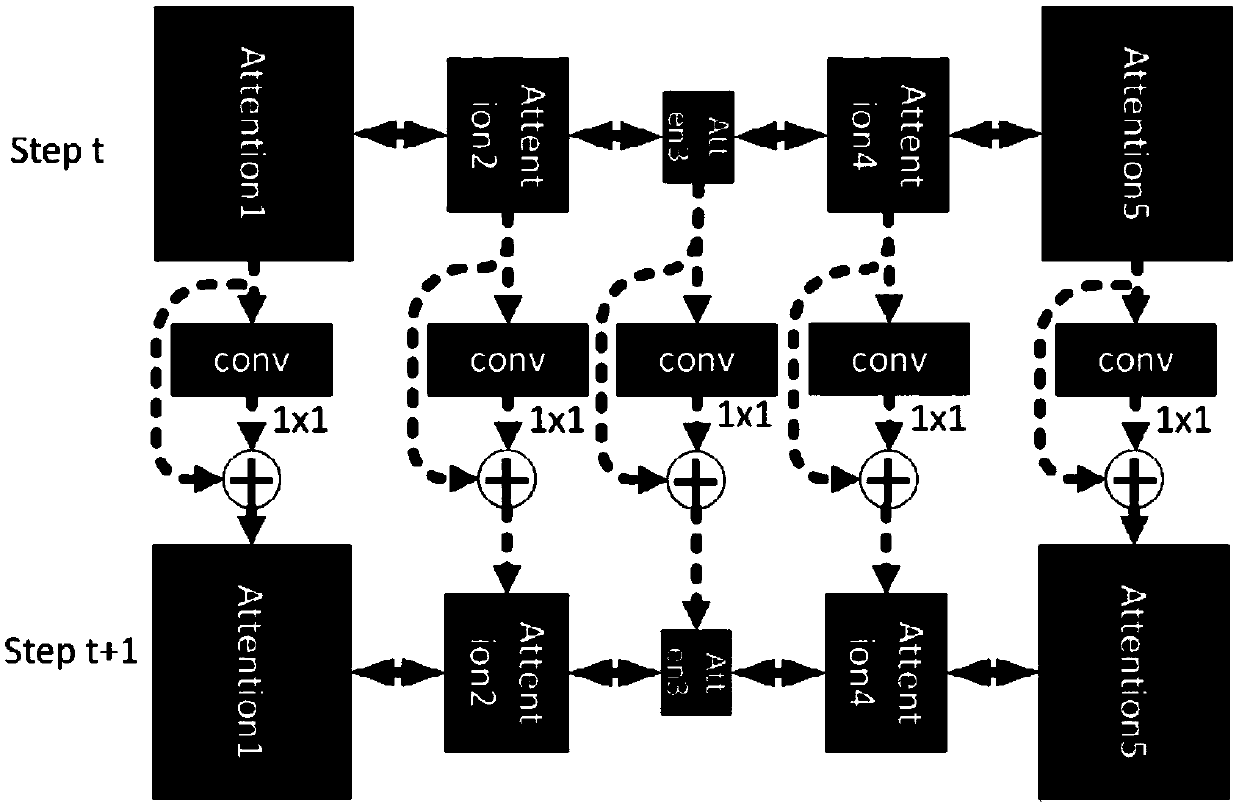

Traffic identifier detection method based on multi-scale circulation attention network

Active · CN108647585A · Improve detection results · Internal combustion piston engines · Character and pattern recognition · Compound a · Feature extraction

The invention discloses a traffic identifier detection method based on a multi-scale circulation attention network. The method comprises the following steps: first, building a traffic identifier detection model composed of a convolutional neural network feature extraction model, which extracts image features, and a multi-scale circulation attention network model, which improves small-target detection accuracy; then training the traffic identifier detection model with suitable training samples to obtain a trained model; and, during testing, inputting the images to be detected into the trained model to obtain the detection result. The method uses an encoder/decoder structure to enhance the acquired features, detects small targets with a multi-scale attention structure, and draws on a residual structure to address vanishing and exploding gradients. The method is competitive with other advanced traffic identifier detection methods.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

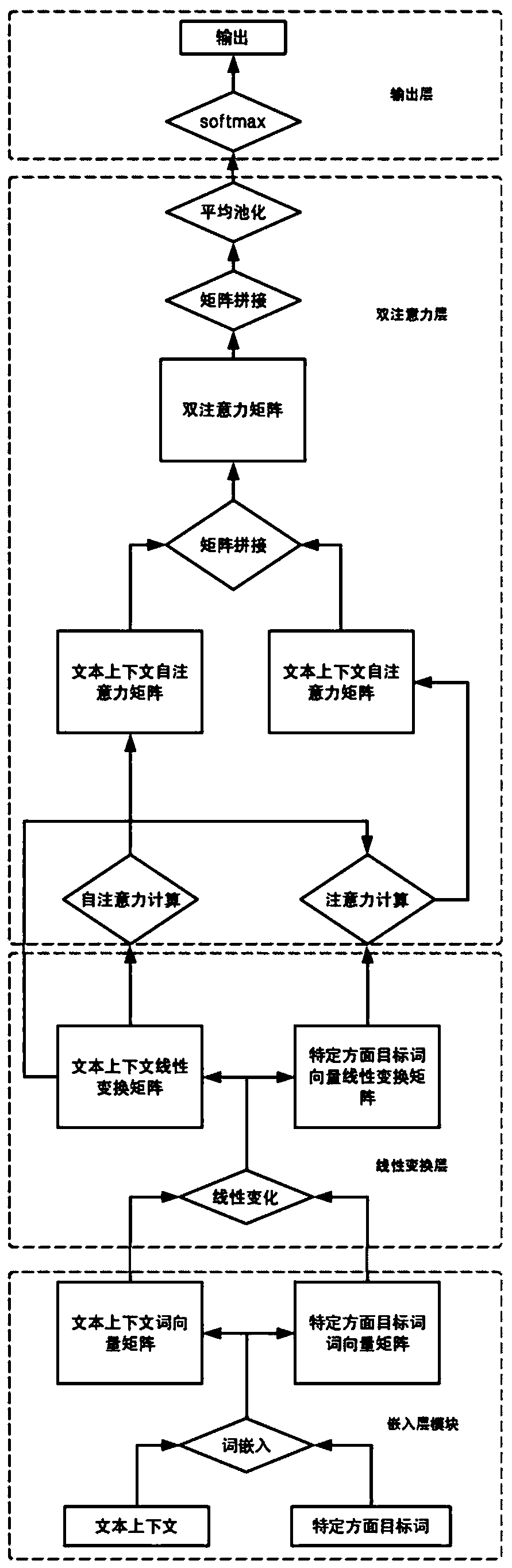

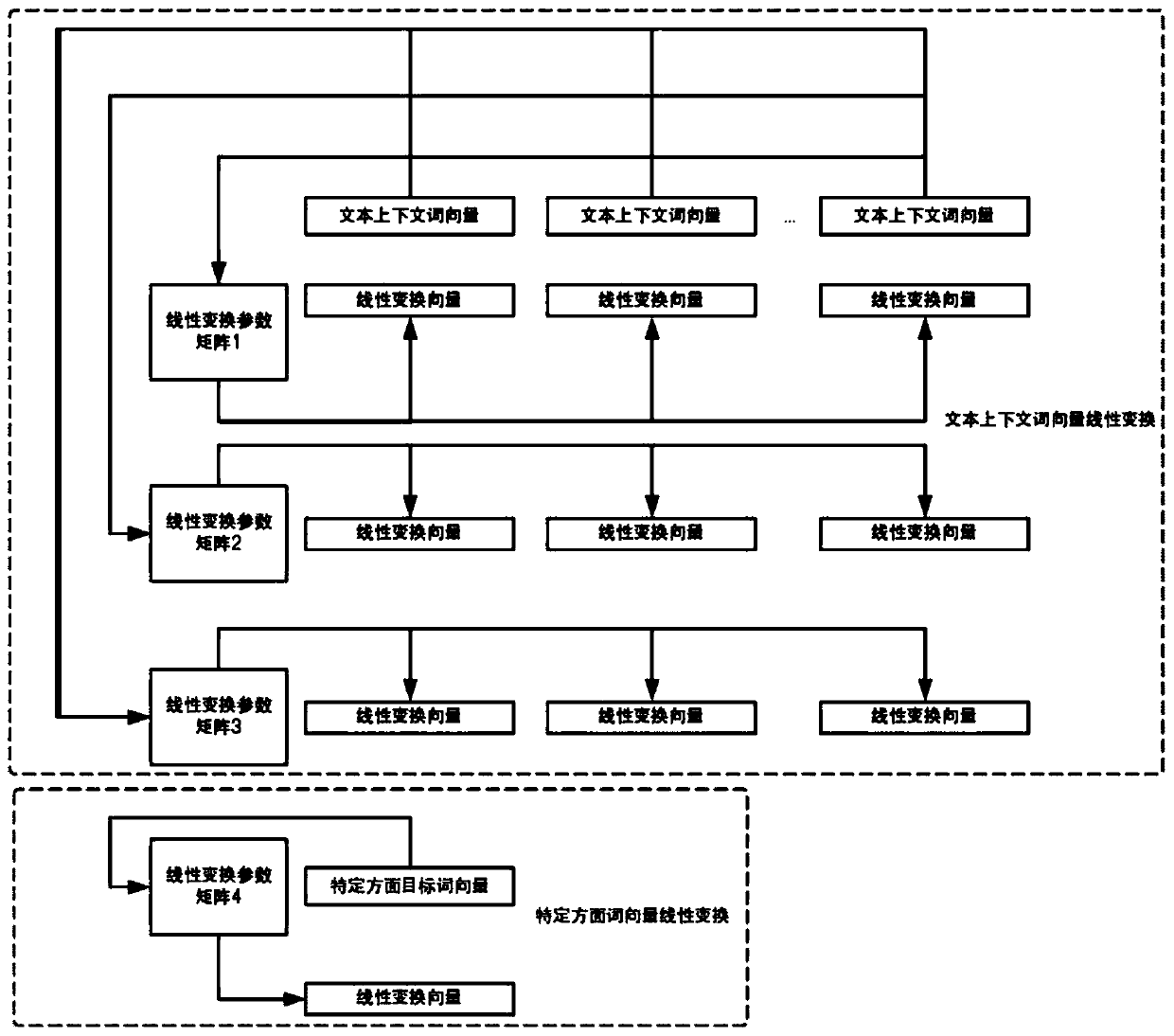

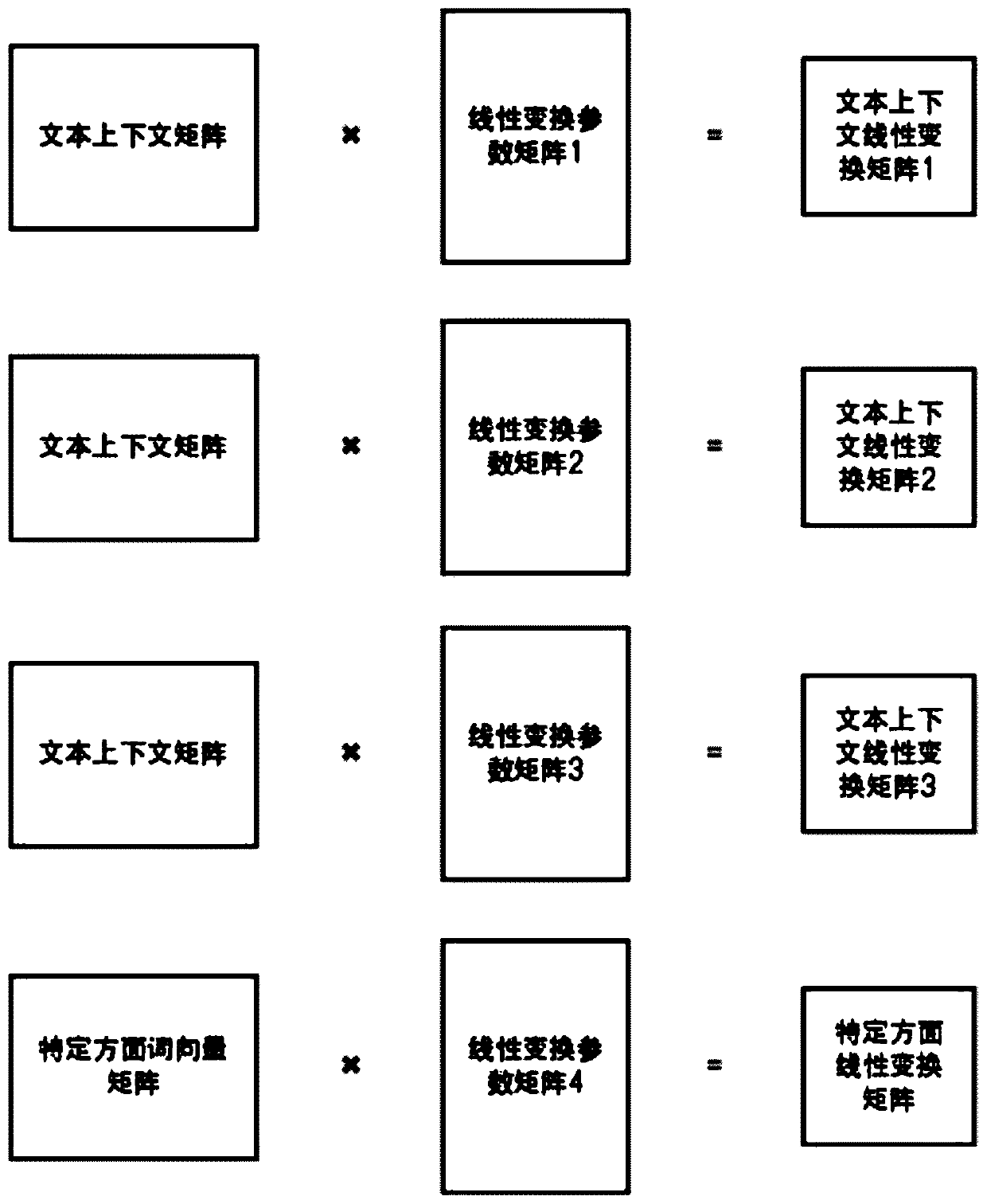

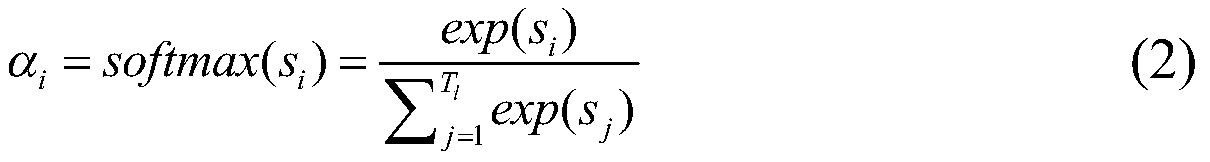

A fine-grained emotion polarity prediction method based on a hybrid attention network

Active · CN109948165A · Accurate prediction · Make up for the shortcoming that it is difficult to obtain global structural information · Special data processing applications · Self attention · Algorithm

The invention discloses a fine-grained emotion polarity prediction method based on a hybrid attention network, which aims to overcome problems in the prior art such as lack of flexibility, insufficient precision, difficulty in obtaining global structure information, low training speed and single attention information. The method comprises the following steps: 1, determining a text context sequence and a specific aspect target word sequence from a comment text sentence; 2, mapping the sequences into two multi-dimensional continuous word vector matrices through log word embedding; 3, performing multiple different linear transformations on the two matrices to obtain the corresponding transformation matrices; 4, calculating a text context self-attention matrix and a specific aspect target word vector attention matrix from the transformation matrices, and splicing the two to obtain a double-attention matrix; 5, splicing the double-attention matrices obtained from the different linear transformations, and applying a further linear transformation to obtain the final attention representation matrix; and 6, applying an average pooling operation and passing the result through a fully connected layer into a softmax classifier to obtain the emotion polarity prediction result.

Owner:JILIN UNIV

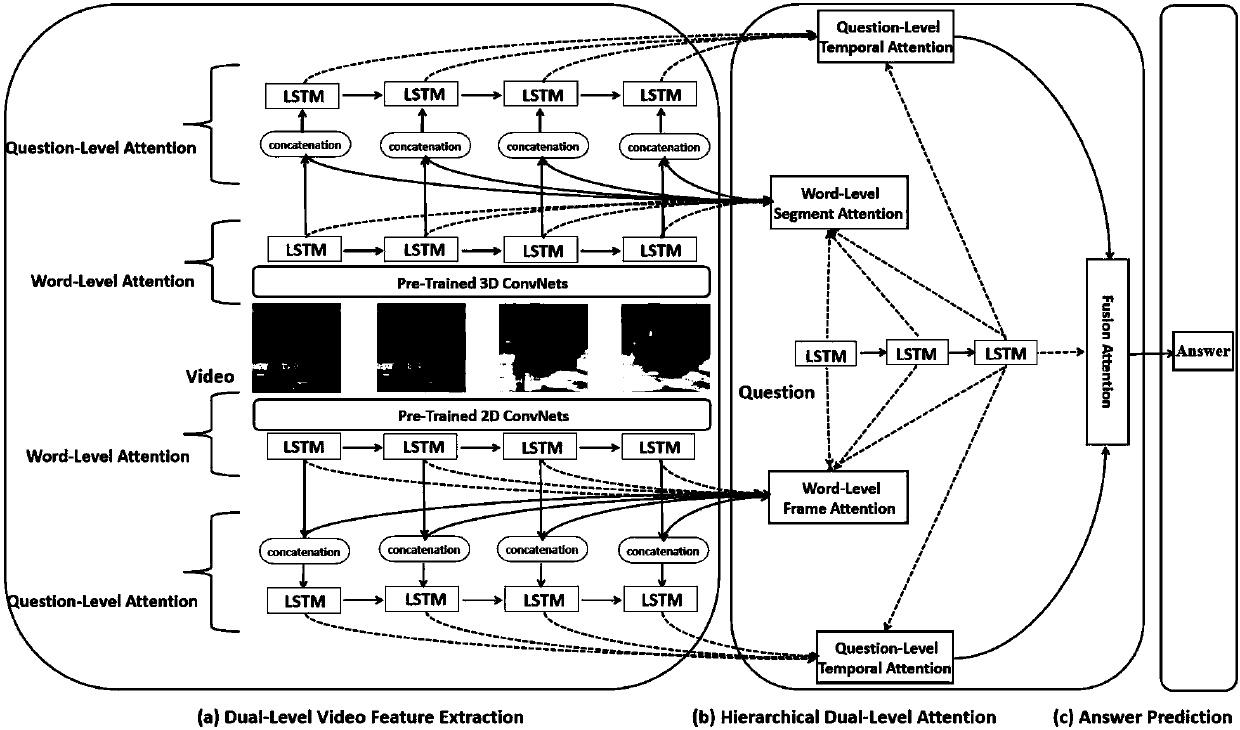

Method of using multi-layer attention network mechanism to solve video question answering

Active · CN107766447A · Character and pattern recognition · Special data processing applications · Question answer · Attention network

The invention discloses a method of utilizing a multi-layer attention network mechanism to solve video question answering. The method mainly includes the following steps: 1) for a group of videos, utilizing a pre-trained convolutional neural network to obtain frame-level and segment-level video expressions; 2) using a question-word-level attention network mechanism to obtain frame-level and segment-level video expressions for the question word level; 3) using a question-level time attention mechanism to obtain frame-level and segment-level video expressions related to the question; 4) utilizing a question-level fusion attention network mechanism to obtain joint video expressions related to the question; and 5) utilizing the obtained joint video expressions to acquire answers to the question asked about the videos. Compared with general video question answering solutions, the method uses a multi-layer attention mechanism, reflects video and question characteristics more accurately, and generates more fitting answers. Compared with traditional methods, the method achieves a better effect in video question answering.

Owner:ZHEJIANG UNIV

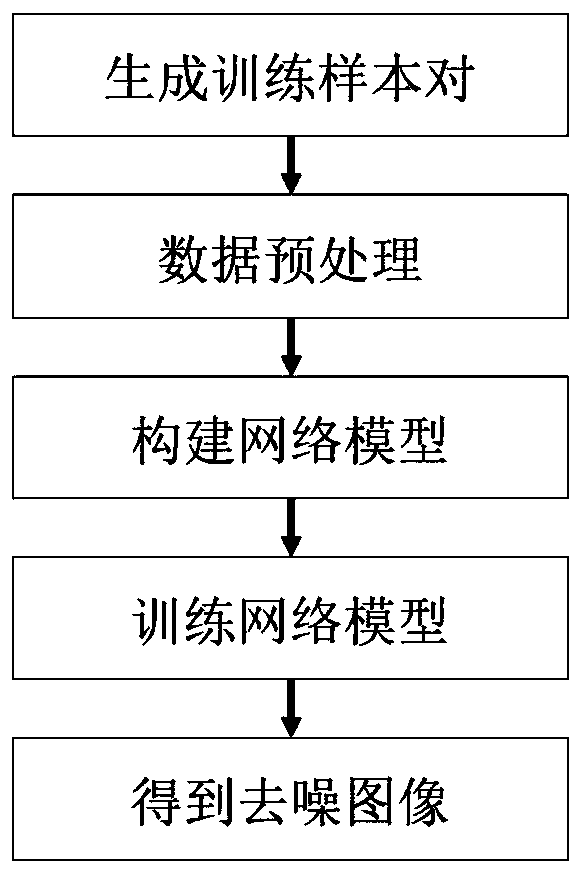

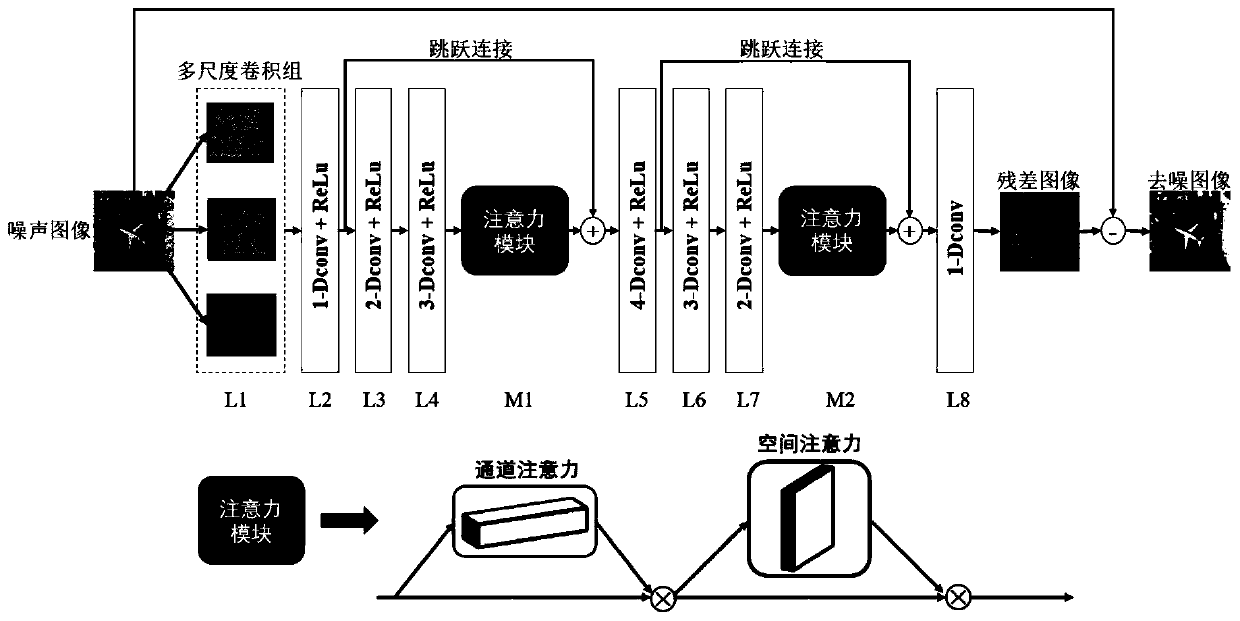

SAR image denoising method based on multi-scale cavity residual attention network

Pending · CN110120020A · Keep details · Good removal effect · Image enhancement · Image analysis · Pattern recognition · Image denoising

The invention relates to an SAR image denoising method based on a multi-scale dilated (hole) residual attention network. The method comprises the following steps: extracting features of the image at different scales through a multi-scale convolution group; broadening the convolution kernel receptive field with dilated convolution to extract more context information from the image; transmitting shallow-layer feature information to the deep convolutional layers through skip connections to keep image details; adding an attention mechanism to concentrate on extracting noise-related features; and automatically learning the distribution of SAR image speckle noise with a residual learning strategy in order to remove the speckle noise. Experimental results show that, compared with traditional SAR image denoising methods, the method removes speckle noise well, introduces few artifacts, keeps the detail information of the image, and runs faster when using a GPU.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

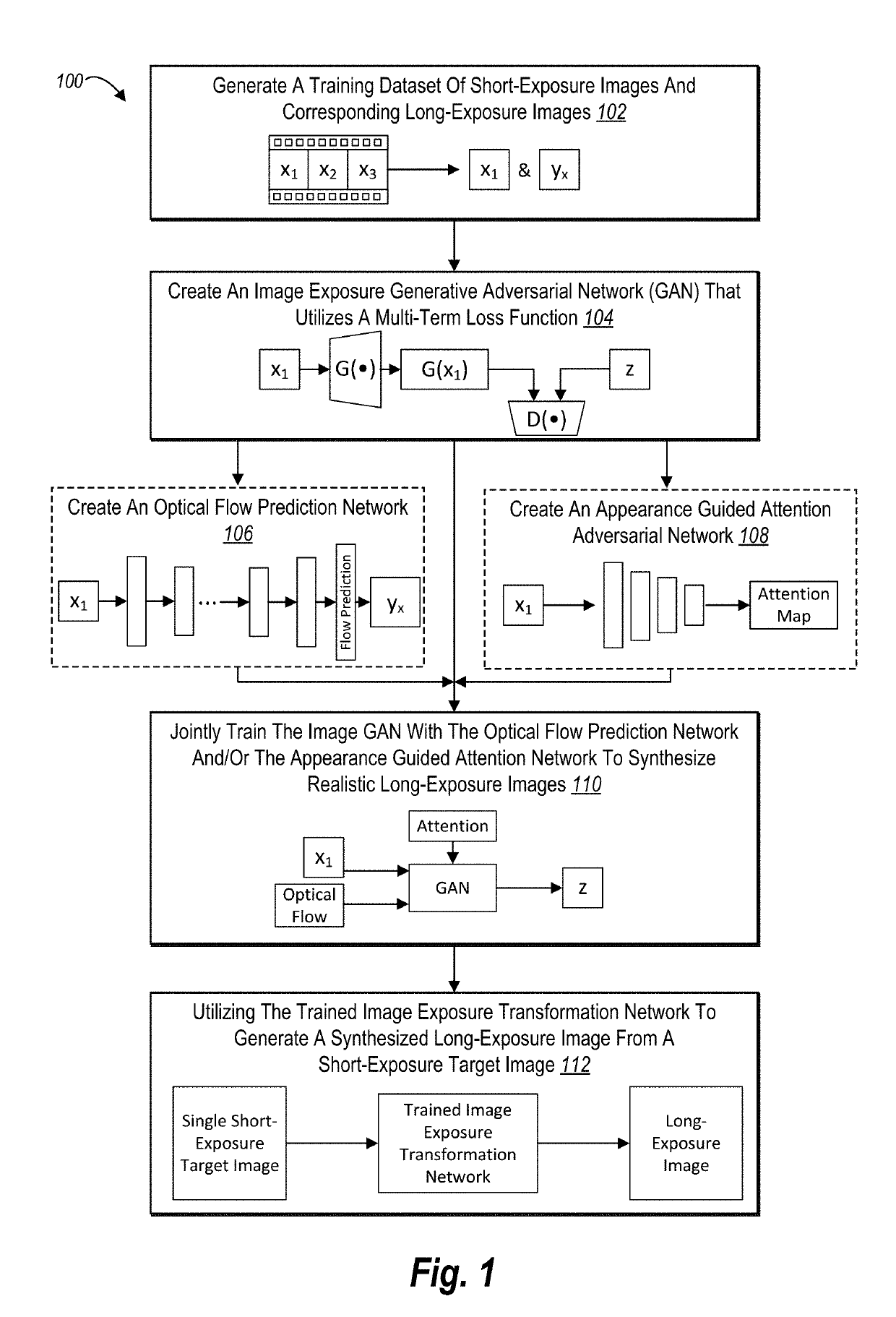

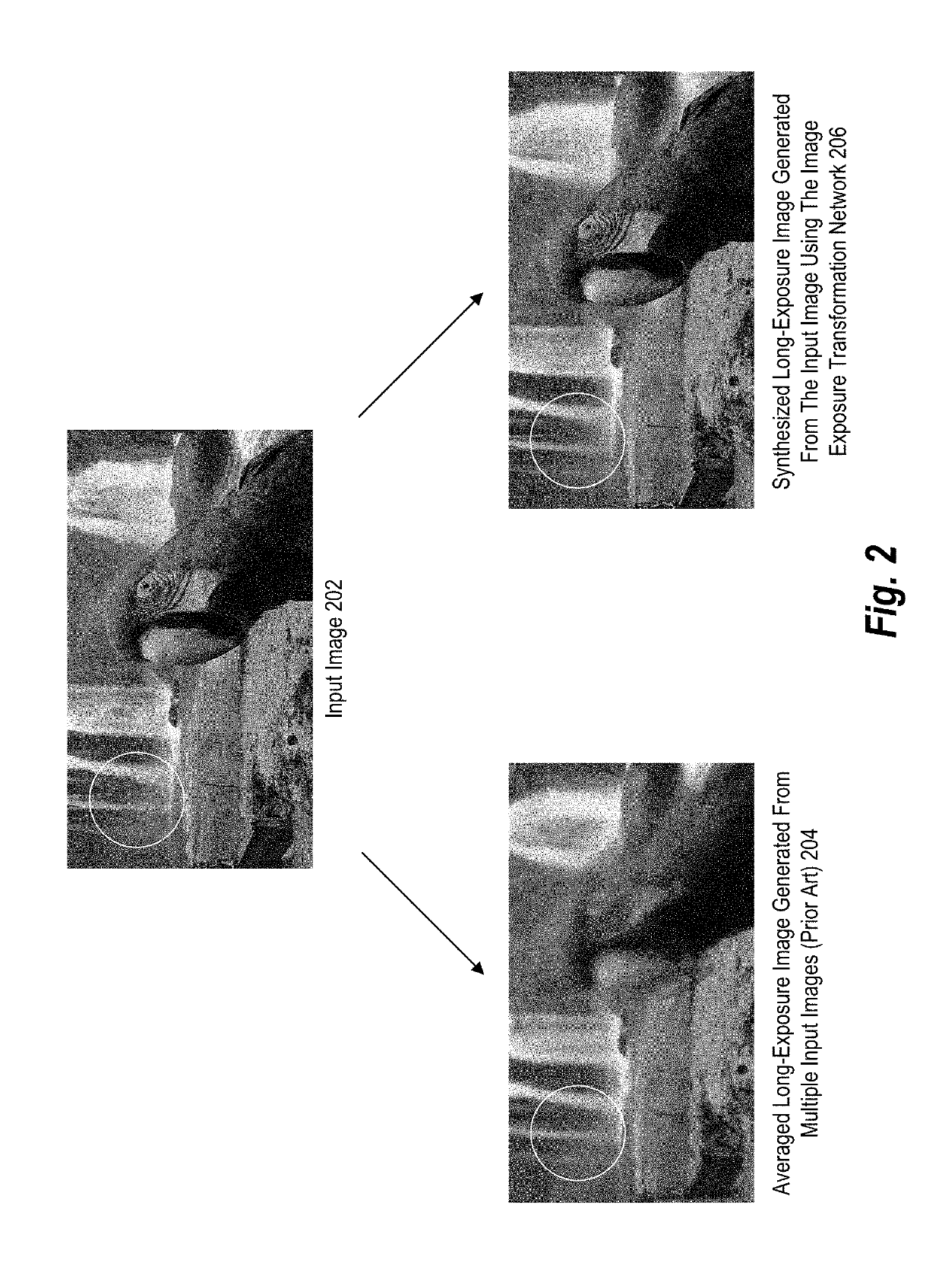

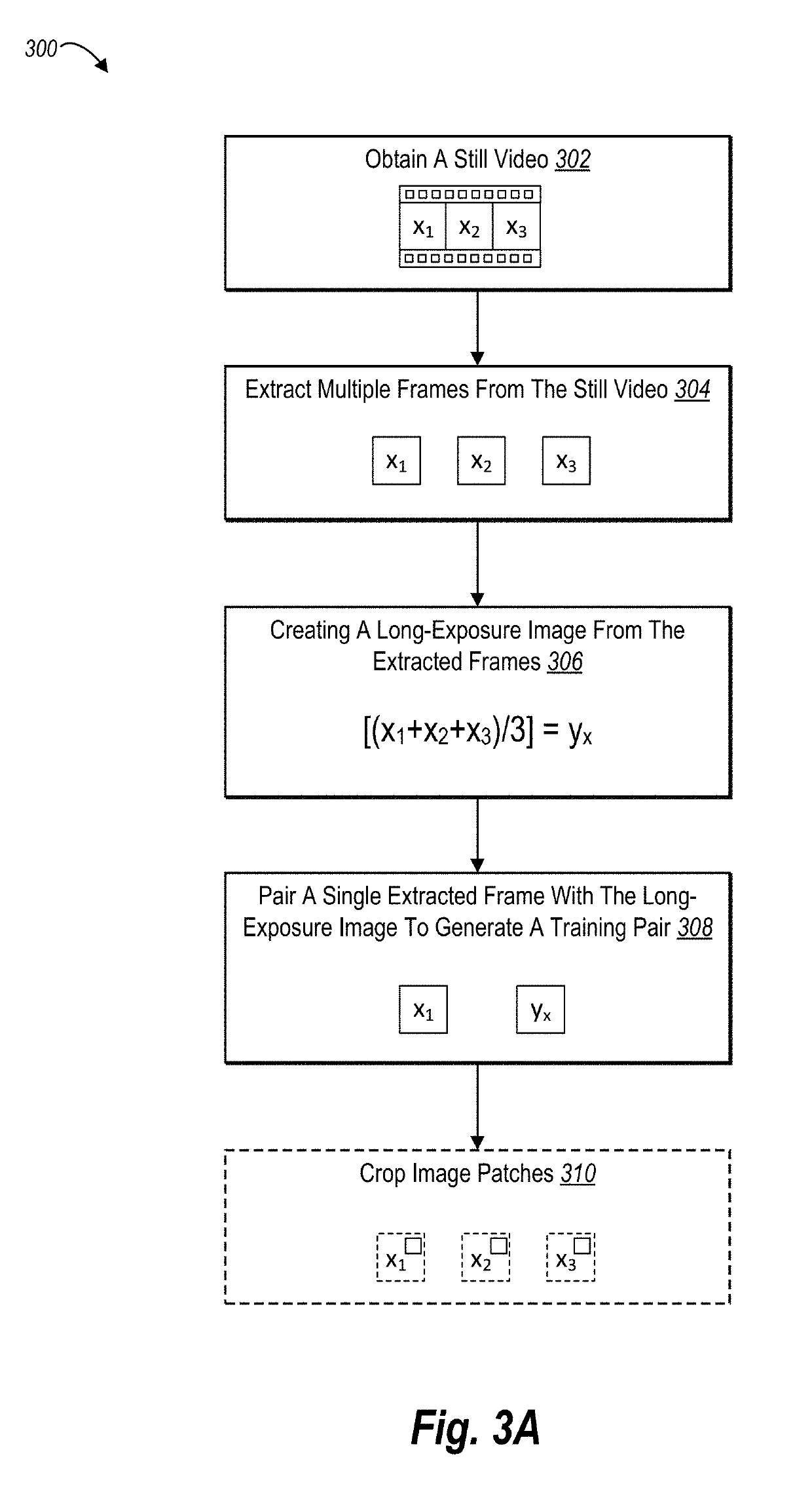

Training and utilizing an image exposure transformation neural network to generate a long-exposure image from a single short-exposure image

Active · US20190333198A1 · Accurate exposure · Realistic long-exposure · Image enhancement · Image analysis · Ground truth · Optical flow

The present disclosure relates to training and utilizing an image exposure transformation network to generate a long-exposure image from a single short-exposure image (e.g., still image). In various embodiments, the image exposure transformation network is trained using adversarial learning, long-exposure ground truth images, and a multi-term loss function. In some embodiments, the image exposure transformation network includes an optical flow prediction network and / or an appearance guided attention network. Trained embodiments of the image exposure transformation network generate realistic long-exposure images from single short-exposure images without additional information.

Owner:ADOBE INC

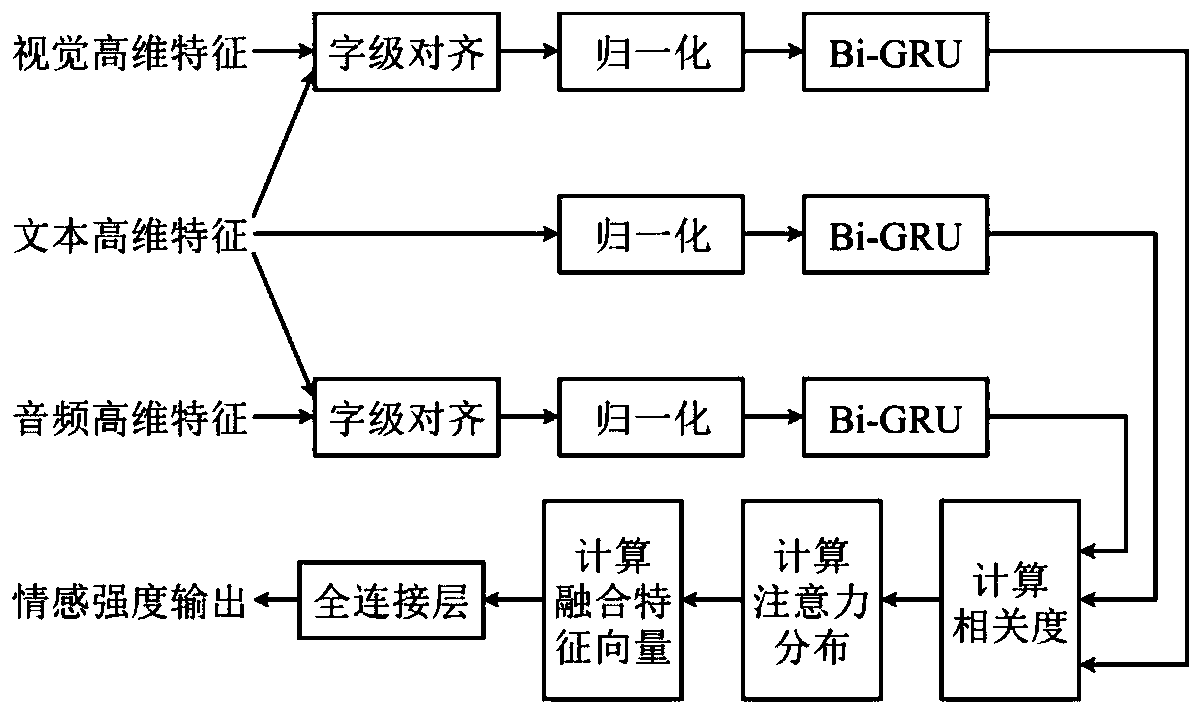

Multi-modal emotion recognition method based on fusion attention network

Active · CN110188343A · Improve accuracy · Overcoming Weight Consistency Issues · Character and pattern recognition · Natural language data processing · Diagnostic Radiology Modality · Feature vector

The invention discloses a multi-modal emotion recognition method based on a fusion attention network. The method comprises: extracting high-dimensional features of the three modalities of text, vision and audio, and aligning and normalizing them at the word level; inputting the features into bidirectional gated recurrent unit (GRU) networks for training; extracting the state information output by the bidirectional GRU networks in the three single-modality sub-networks and calculating the degree of correlation of the state information among the modalities; calculating the attention distribution over the modalities at each moment, which serves as the weight parameters of the state information at each moment; weighting and averaging the state information of the three modality sub-networks with the corresponding weight parameters to obtain a fusion feature vector as the input of a fully connected network; and inputting the text, vision and audio to be identified into the trained bidirectional GRU network of each modality to obtain the final emotion intensity output. The method solves the problem of weight consistency across modalities during multi-modal fusion and improves emotion recognition accuracy under multi-modal fusion.

Owner:ZHEJIANG UNIV OF TECH
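
The fusion step in the abstract above (per-moment attention weights over the modalities, followed by a weighted average of their states) might look like the NumPy sketch below. The correlation score used here, the mean dot product of a modality's state with the other modalities' states, is an assumption chosen for illustration, because the abstract does not give the formula. Random arrays stand in for the GRU outputs.

```python
import numpy as np

def fuse_modalities(states):
    """Fuse per-modality states of shape (M, T, D) into (T, D) with per-step weights (T, M)."""
    m = states.shape[0]
    # Pairwise dot products between modalities at every time step: (T, M, M).
    sim = np.einsum('mtd,ntd->tmn', states, states)
    off_diag = sim * (1.0 - np.eye(m))                  # drop each modality's self-similarity
    scores = off_diag.sum(axis=2) / (m - 1)             # mean correlation with the others
    scores = scores - scores.max(axis=1, keepdims=True) # stabilize the softmax
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    fused = np.einsum('tm,mtd->td', weights, states)    # weighted average over modalities
    return fused, weights

rng = np.random.default_rng(0)
states = rng.normal(size=(3, 6, 4))   # text, vision, audio; 6 time steps; 4-dim states
fused, weights = fuse_modalities(states)
print(fused.shape, weights.shape)
```

Because the weights are recomputed at every time step, a modality can dominate at one moment and contribute little at another, which is the behavior the abstract contrasts with fixed, identical modality weights.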

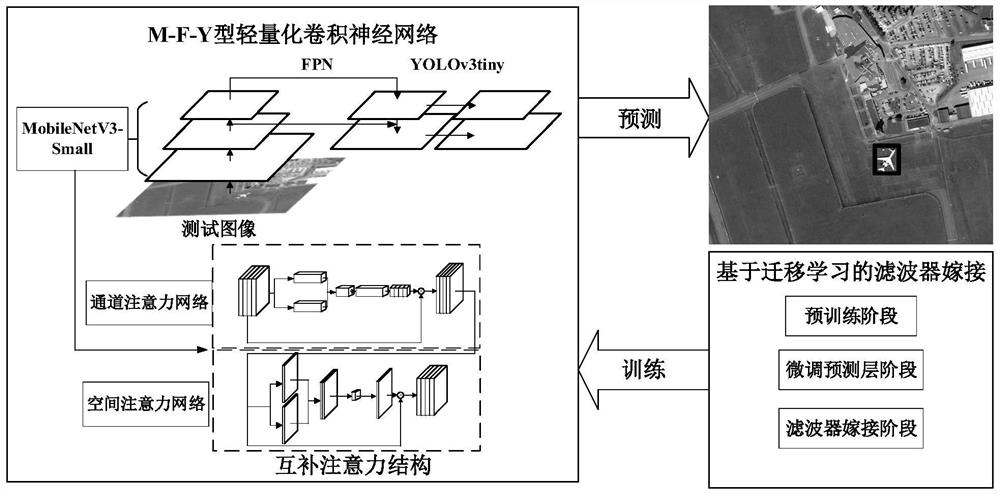

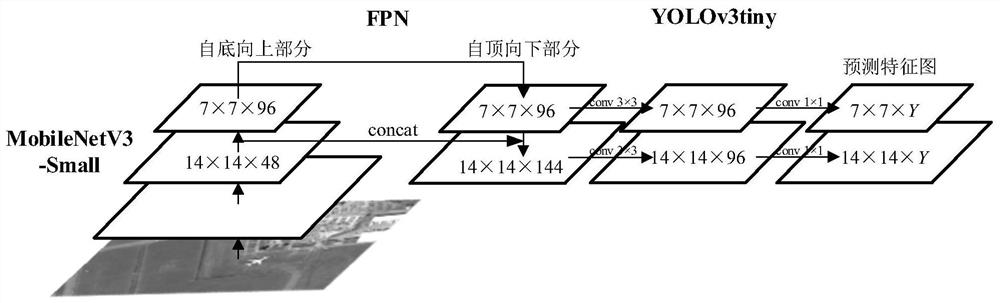

High-resolution remote sensing image target detection method of M-F-Y type lightweight convolutional neural network

Active · CN111666836A · Improve performance · Reduce the amount of parameters · Scene recognition · Neural architectures · Image resolution · Network structure

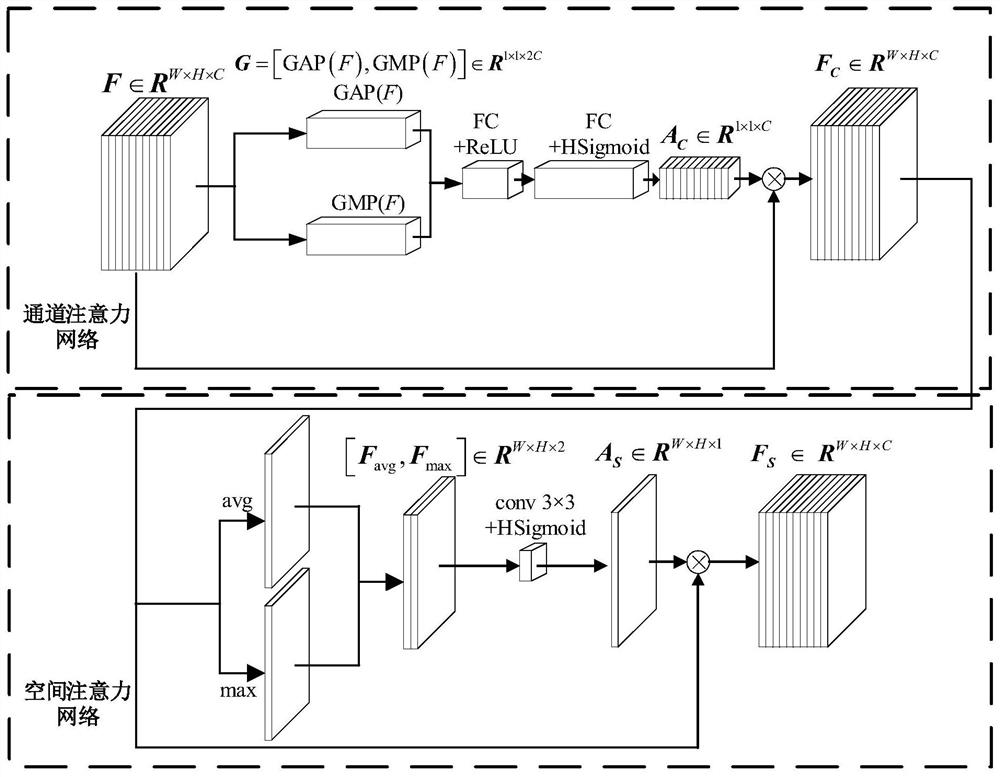

The invention discloses a high-resolution remote sensing image target detection method of an M-F-Y type lightweight convolutional neural network, and belongs to the field of remote sensing. The method comprises the following steps: first, constructing a feature pyramid network (FPN) structure on the basis of the lightweight convolutional neural network (CNN) model MobileNetV3-Small to extract and fuse multi-scale deep features of the high-resolution remote sensing image, and combining it with the YOLOv3-tiny target detection framework to construct an M-F-Y type lightweight convolutional neural network; then, constructing a complementary attention network structure to improve attention to the spatial position information of the target while suppressing the complex background; and finally, training the model with a filter grafting strategy based on transfer learning to realize high-resolution remote sensing image target detection. With fewer parameters and lower latency, the method improves target detection accuracy on high-resolution remote sensing images while reducing the demand on the platform's high-speed computing power, and can provide technical accumulation for the practical application of target detection in high-resolution remote sensing images.

Owner:BEIJING UNIV OF TECH

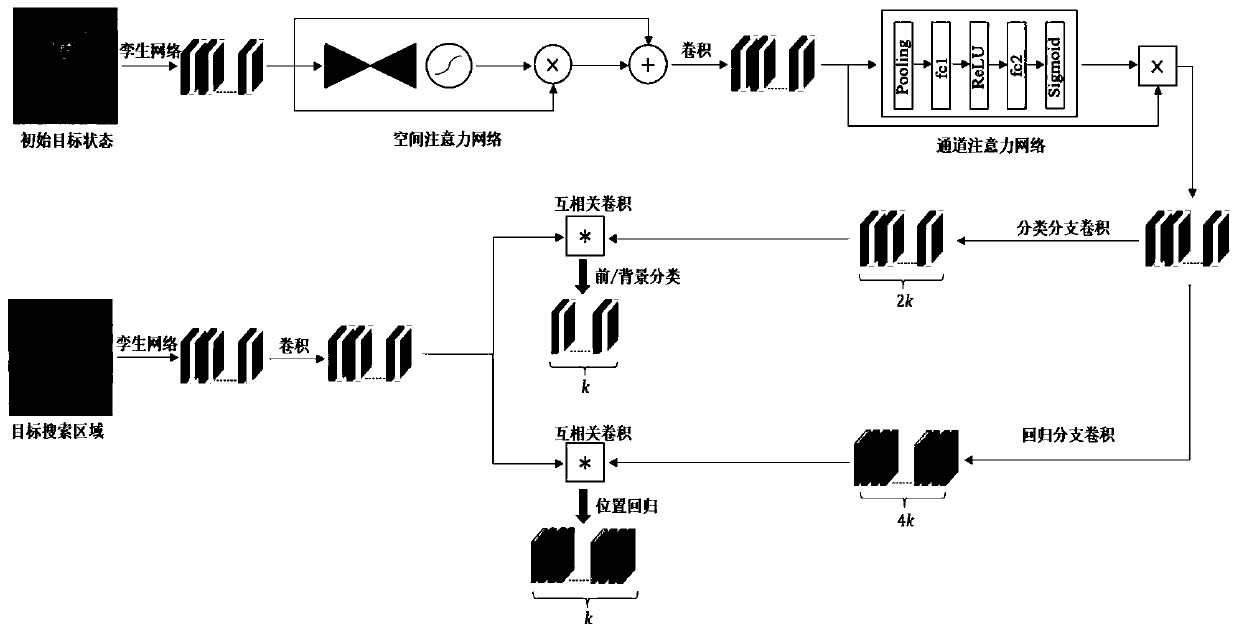

Twin candidate region generation network target tracking method based on attention mechanism

Active · CN110335290A · Improve discrimination ability · Improve accuracy · Image enhancement · Image analysis · Pattern recognition · Imaging processing

The invention relates to a twin candidate region generation network target tracking method based on an attention mechanism, and belongs to the technical field of image processing. The method comprises the following steps: 1, extracting initial target template features and target search region features with a twin (Siamese) network; 2, constructing a spatial attention network to enhance the target template foreground and suppress the semantic background; 3, constructing a channel attention network to activate the strongly correlated features of the target template and eliminate redundancy; and 4, constructing a candidate region generation network to realize multi-scale target tracking. The method uses the attention mechanism to construct an adaptive target appearance feature model: the target foreground is enhanced, the semantic background is suppressed, the features that distinguish the target foreground from the interfering background are highlighted, and redundant information is removed. This yields an efficient appearance feature representation and effectively alleviates the target drift problem.

Owner:DALIAN UNIV OF TECH

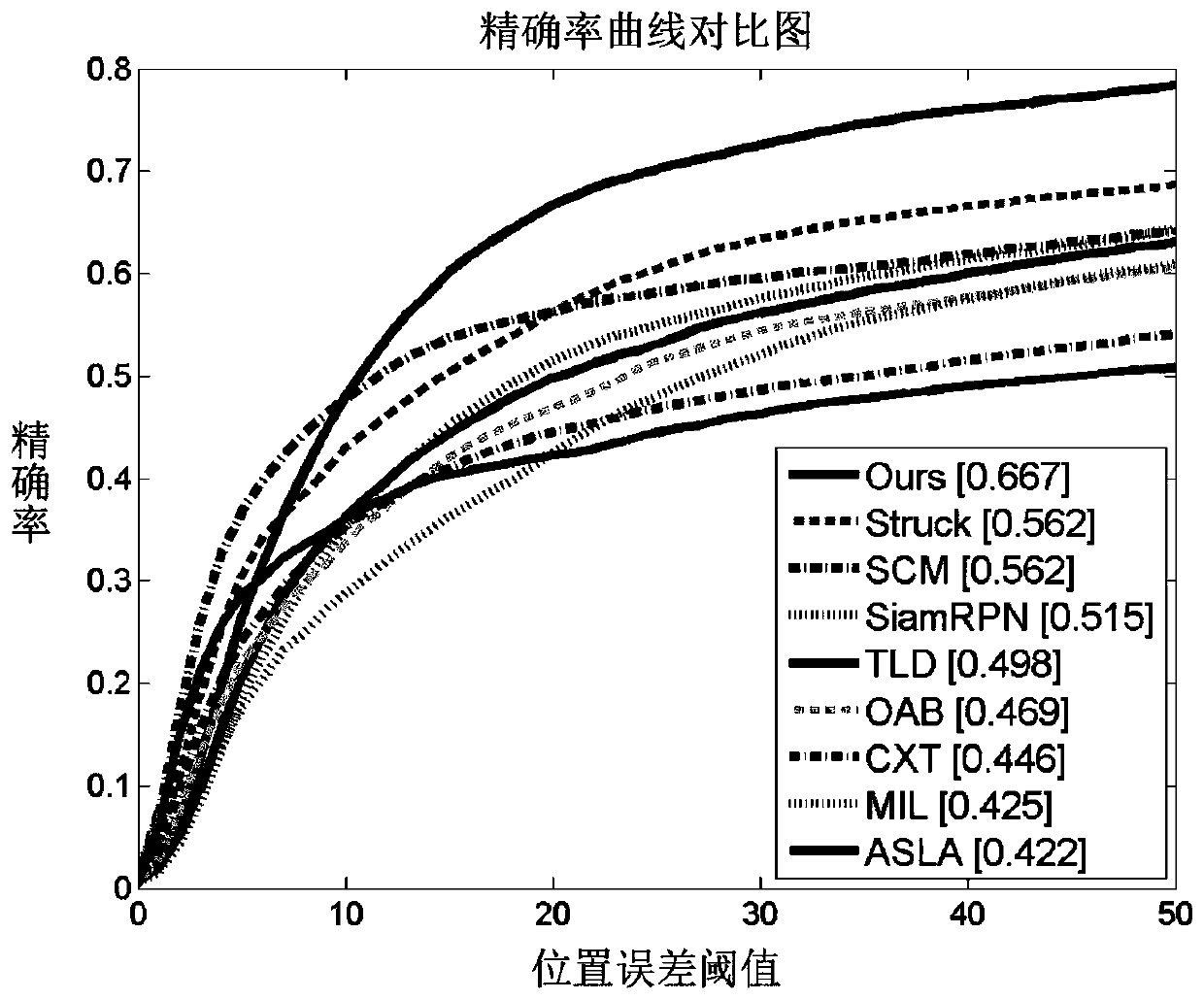

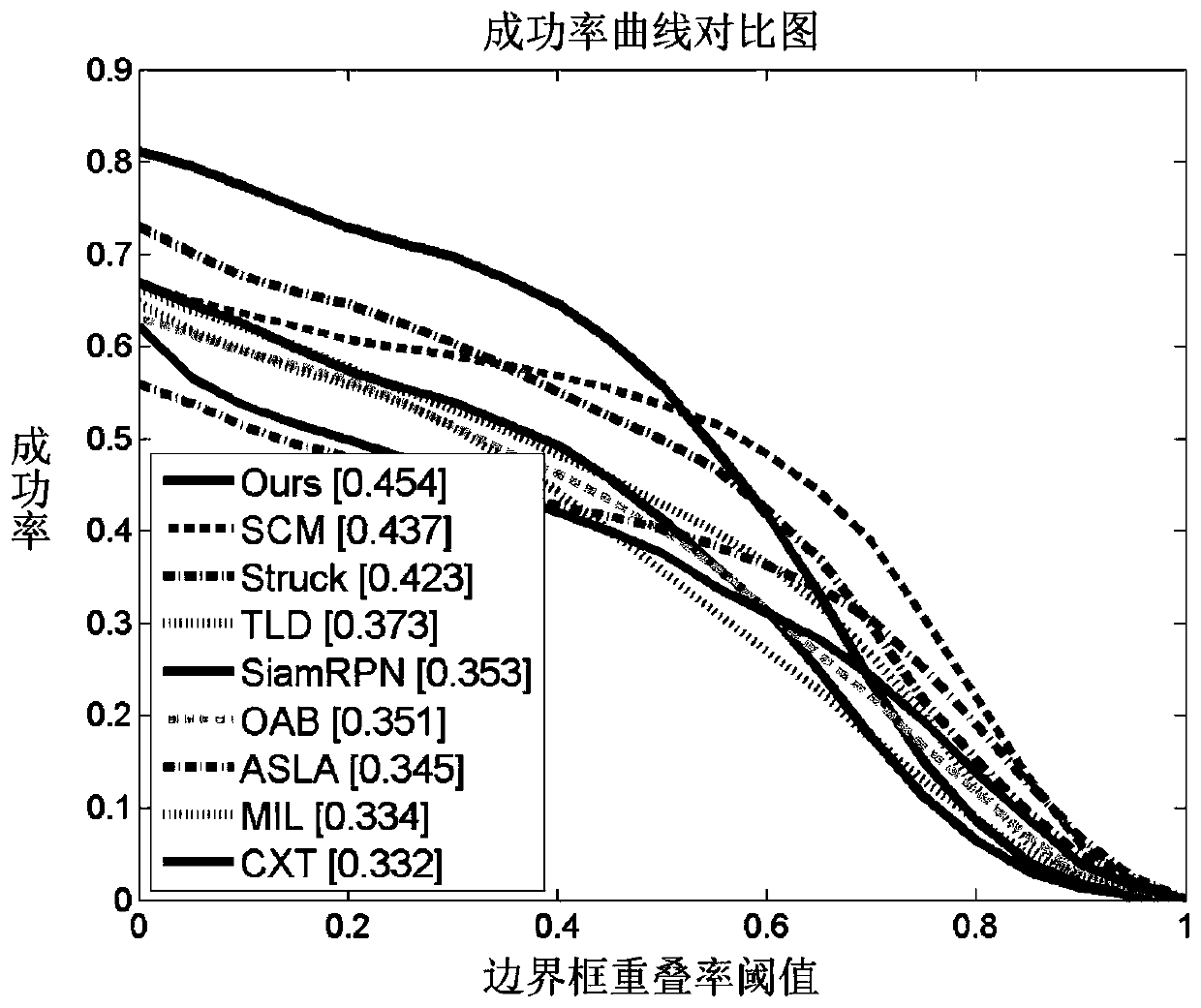

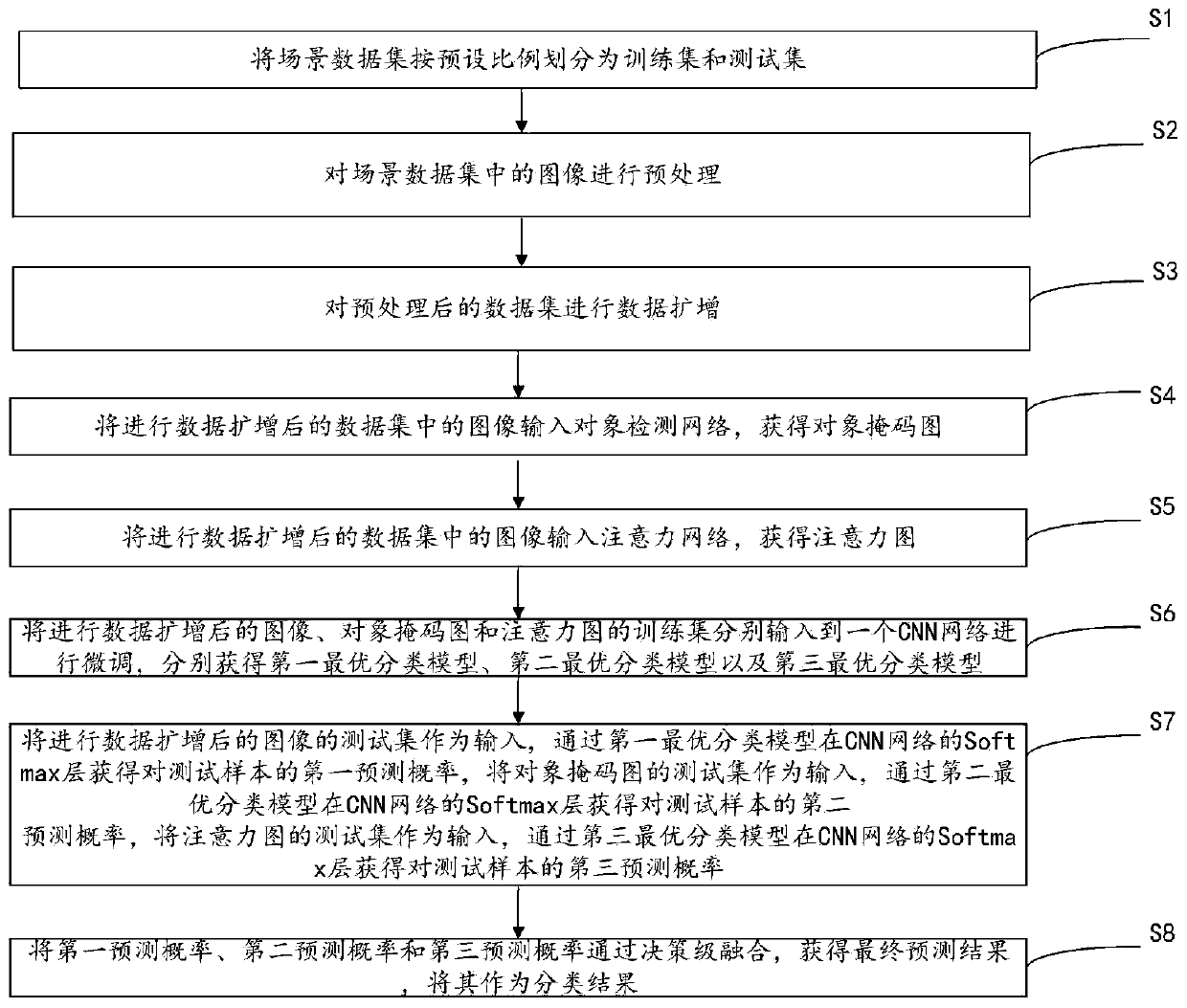

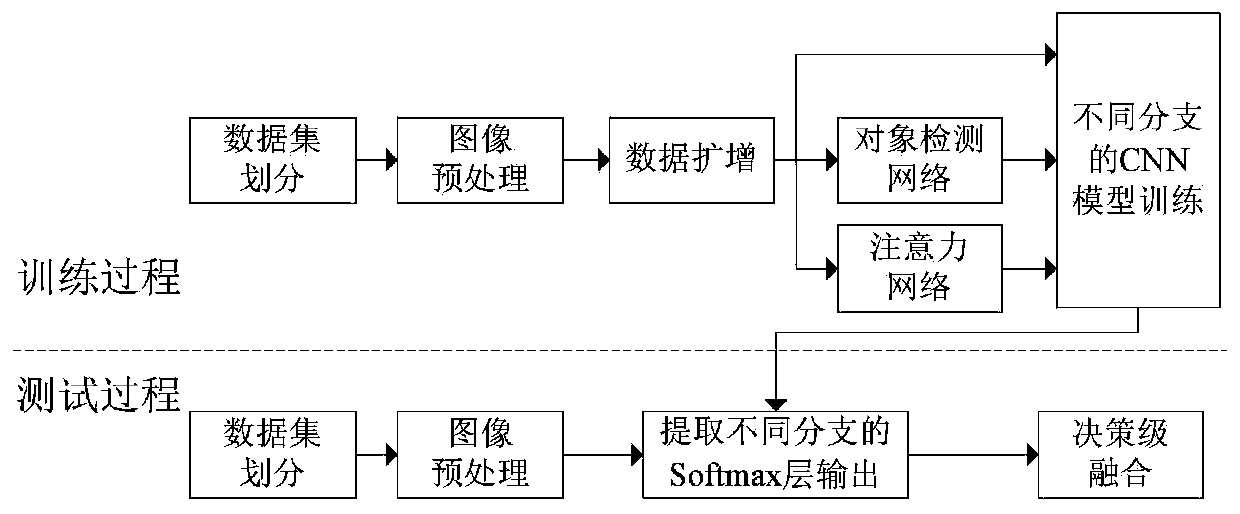

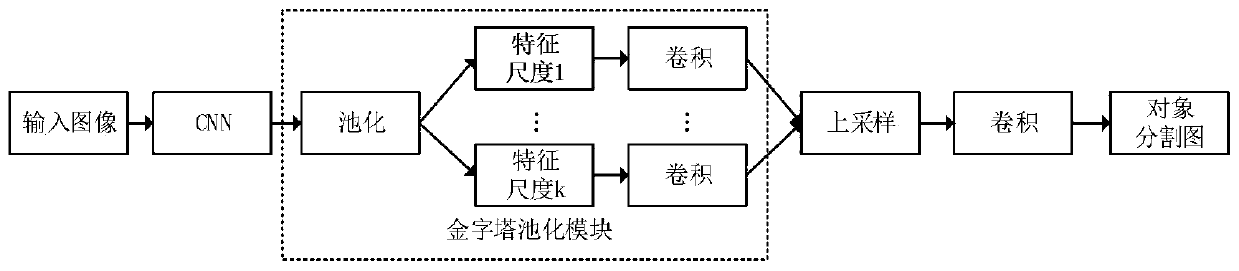

Remote sensing image scene classification method based on multi-branch convolutional neural network fusion

Active · CN110443143A · Improve classification performance · Easy to detect · Scene recognition · Data set · Classification methods

The invention discloses a remote sensing image scene classification method based on multi-branch convolutional neural network fusion. The method comprises the following steps: firstly, randomly dividing a scene data set into a training set and a test set in proportion; performing preprocessing and data amplification on the data set; obtaining an object mask graph and an attention graph according to the processed data through an object detection network and an attention network respectively; respectively inputting the original image, the object mask image and the attention image training set into a CNN network for fine adjustment; obtaining three groups of test sets, respectively obtaining optimal classification models, respectively obtaining outputs of Softmax layers through the optimal classification models by taking the three groups of test sets as inputs, and finally obtaining a final prediction result by fusing the outputs of the three groups of Softmax layers through a decision-making level. The classification accuracy and the classification effect can be improved.

Owner:WUHAN UNIV OF SCI & TECH
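
The last step in the abstract above fuses the Softmax outputs of the three branches (original image, object mask image, attention image) at the decision level. The abstract does not name the fusion rule, so the sketch below assumes a simple weighted average of class probabilities followed by an argmax. The probability values and the function name `fuse_predictions` are made up for illustration.

```python
import numpy as np

def fuse_predictions(*branch_probs, weights=None):
    """Average per-branch class probabilities of shape (N, K) and pick the top class."""
    stacked = np.stack(branch_probs)                     # (B, N, K)
    if weights is None:
        # Equal weight for every branch unless told otherwise.
        weights = np.full(len(branch_probs), 1.0 / len(branch_probs))
    fused = np.tensordot(weights, stacked, axes=1)       # weighted sum over branches: (N, K)
    return fused.argmax(axis=1), fused

# Hypothetical Softmax outputs for two test images and three scene classes.
original = np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]])
mask     = np.array([[0.5, 0.4, 0.1], [0.1, 0.3, 0.6]])
attn     = np.array([[0.3, 0.6, 0.1], [0.2, 0.2, 0.6]])
labels, fused = fuse_predictions(original, mask, attn)
print(labels)  # [0 2]
```

In the second image the original-image branch alone would pick class 1, but the other two branches outvote it, which shows how decision-level fusion can correct a single branch's error.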

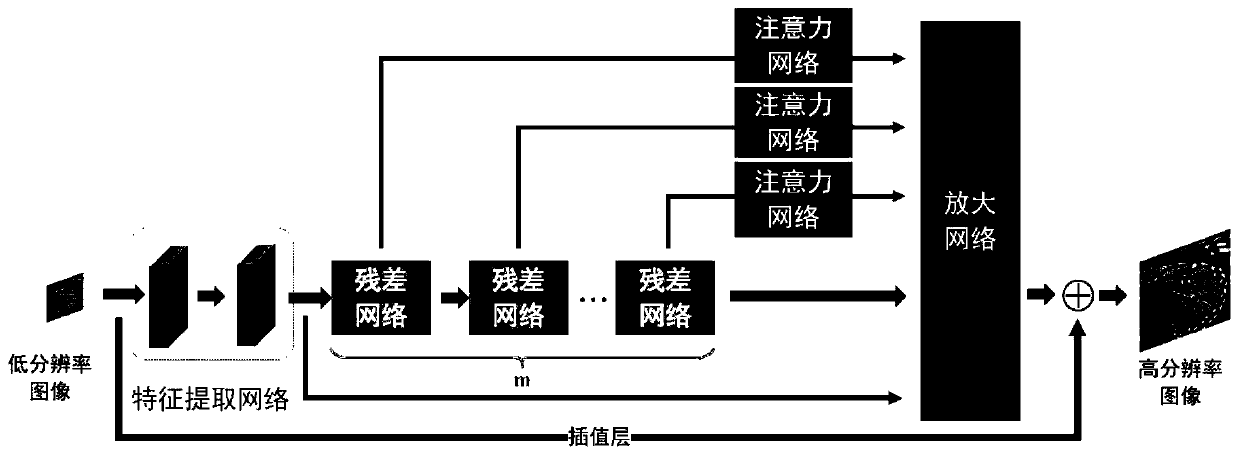

Image reconstruction model training method and image super-resolution reconstruction method and device

Inactive · CN110033410A · Improve visual effects · Easy extraction · Geometric image transformation · Neural architectures · Feature extraction · Image resolution

The invention discloses an image reconstruction model training method and an image super-resolution reconstruction method and device, and belongs to the technical field of image super-resolution. The method comprises the steps of: obtaining a sample set by preprocessing images; establishing an image reconstruction model for image super-resolution reconstruction; and using the sample set to train and test the image reconstruction model. In the image reconstruction model, a feature extraction network performs feature extraction on a low-resolution image and feeds the result into the first residual network; m cascaded residual networks each perform feature extraction on the output image of the previous network and then superpose the result with that image; m attention networks each extract region-of-interest images from the output images of the m residual networks; an amplification network fuses and amplifies the output images of the attention networks and the m residual networks; and a first fusion layer fuses the amplified output with the image amplified by bicubic interpolation. The invention can effectively improve the visual effect of the reconstructed image.

Owner:HUAZHONG UNIV OF SCI & TECH

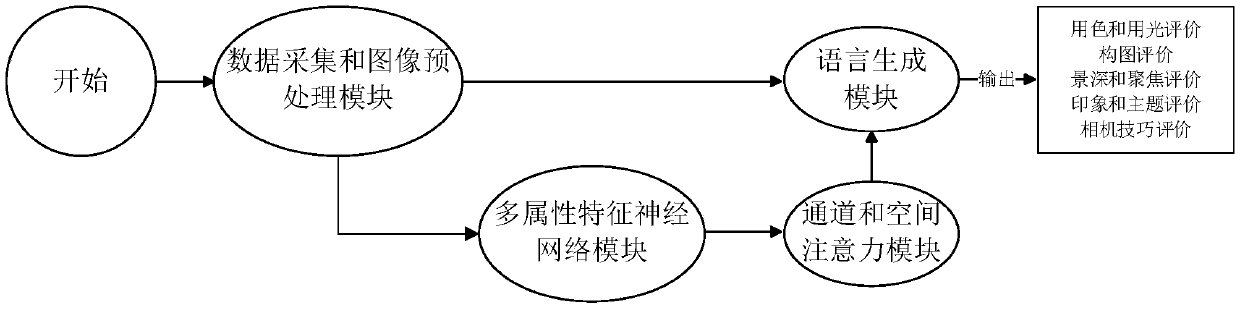

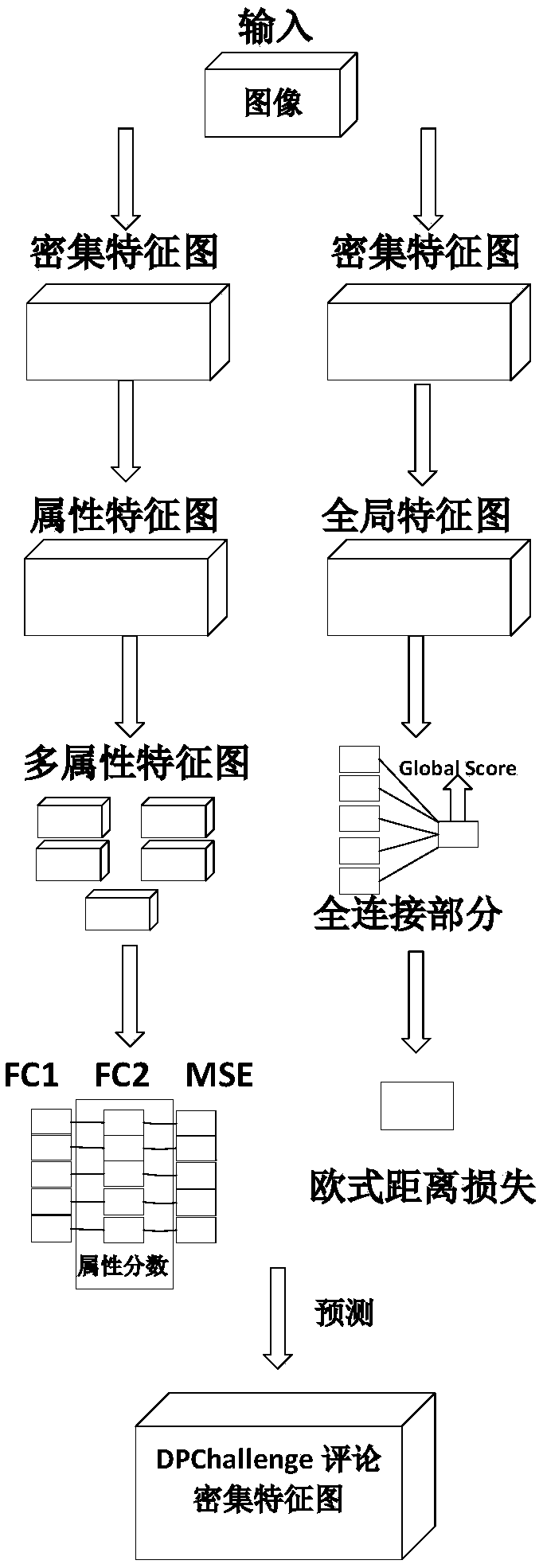

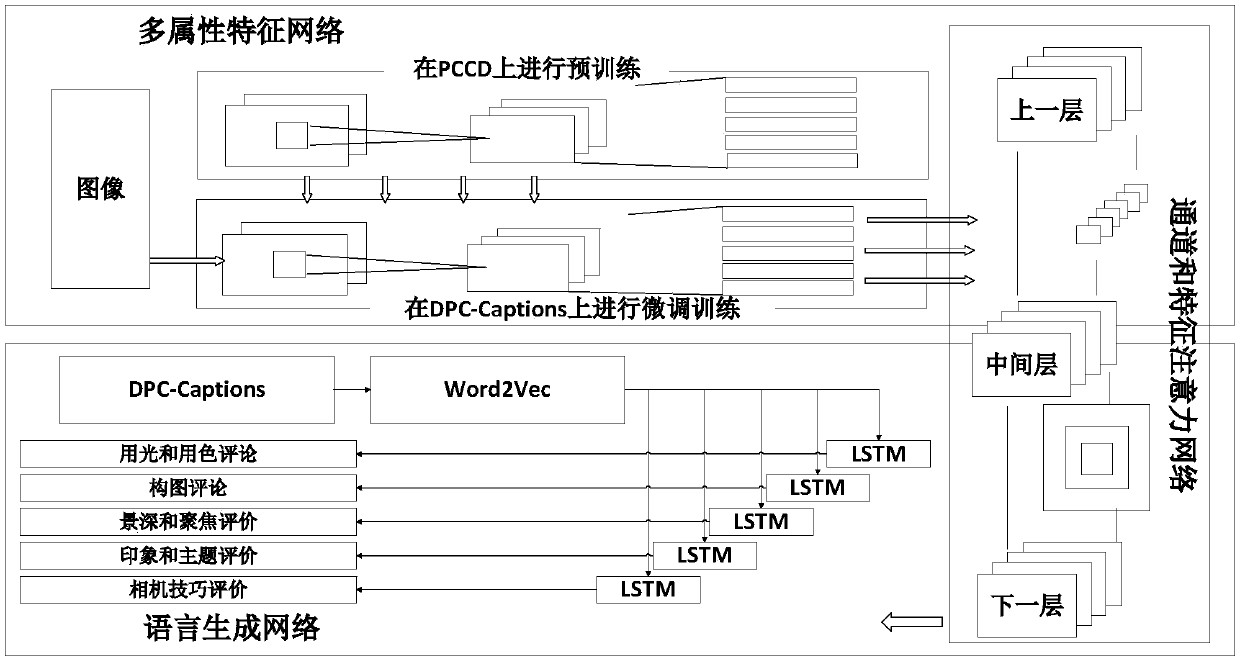

A multi-attribute image aesthetics evaluation system based on attention mechanism

Active · CN109544524A · Language evaluation is objective and comprehensive · Grammatical · Image enhancement · Image analysis · Pattern recognition · Data set

The invention provides a multi-attribute image aesthetics evaluation system based on an attention mechanism. Using machine learning methods, a composite neural network model is trained on a large-scale photograph dataset and the corresponding comment information. The model effectively extracts multi-attribute aesthetic features of an image by convolution: the image features are extracted by the multi-attribute feature extraction network of the model, the features are further processed in the channel and spatial attention network, and the final comments are generated in the language generation network through long short-term memory (LSTM) network units. The model can automatically output comments on different attributes according to the image characteristics. When an image is input, the model considers the characteristics of the image from different attributes and evaluates its aesthetic quality in natural language. The method is easily realized in software and can be widely applied to computer vision, image evaluation and the like.

Owner:Electronic Science and Technology Institute of the General Office of the CPC Central Committee (中共中央办公厅电子科技学院)

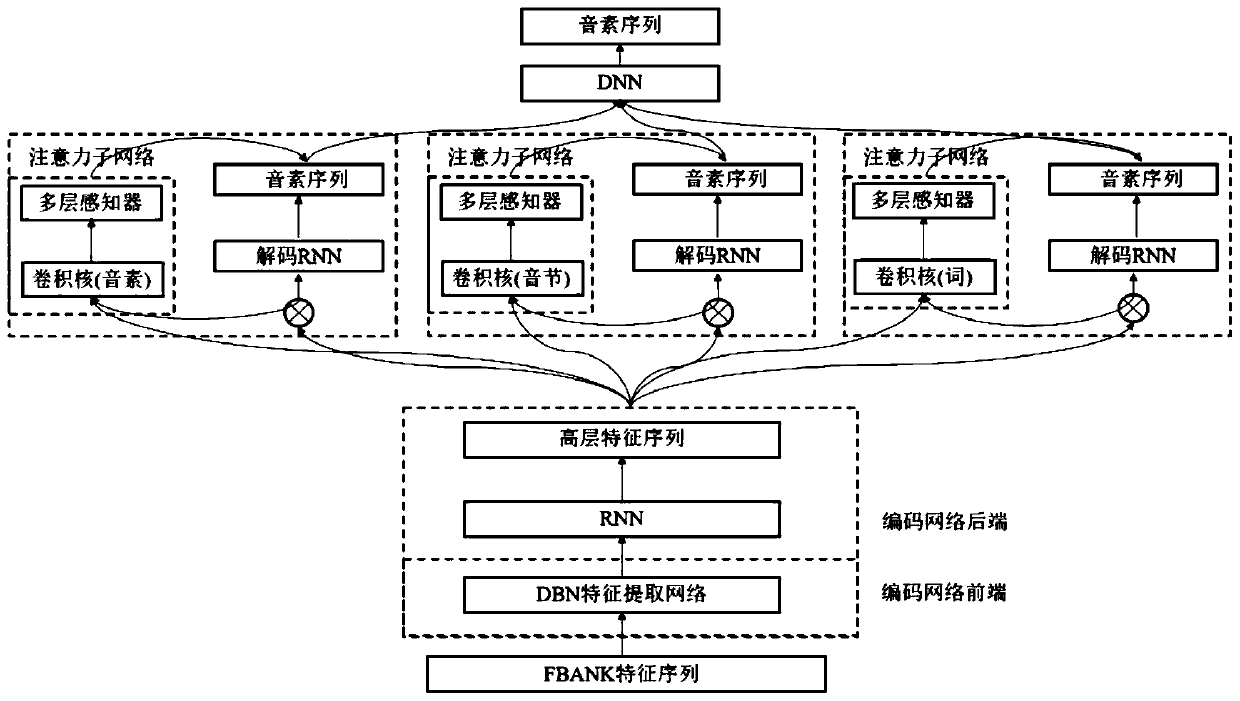

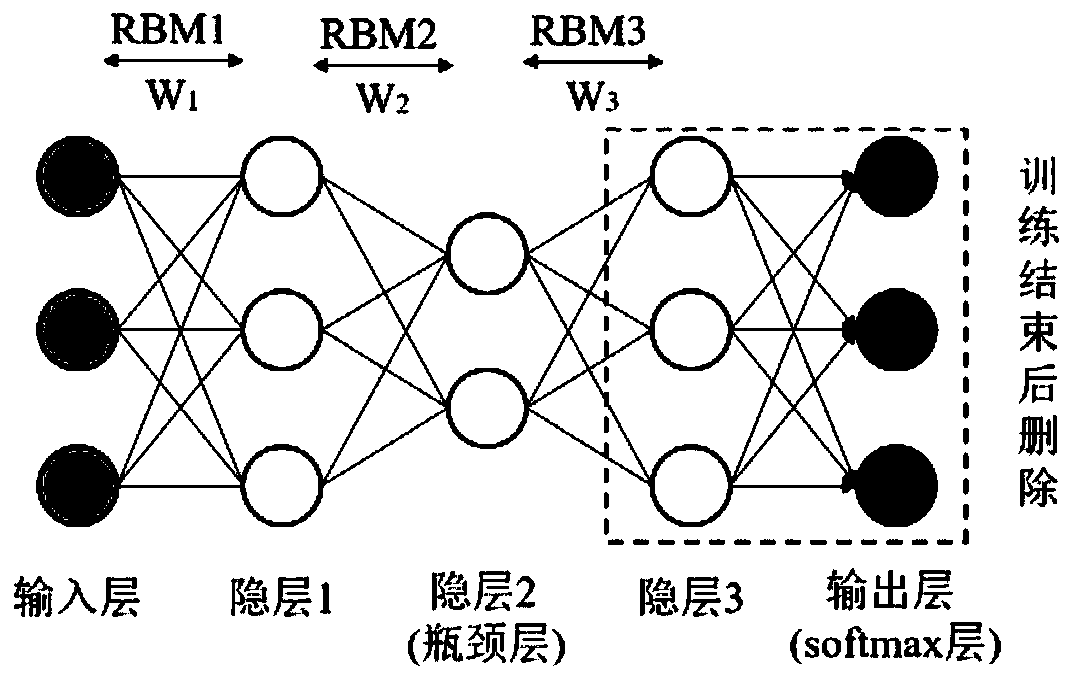

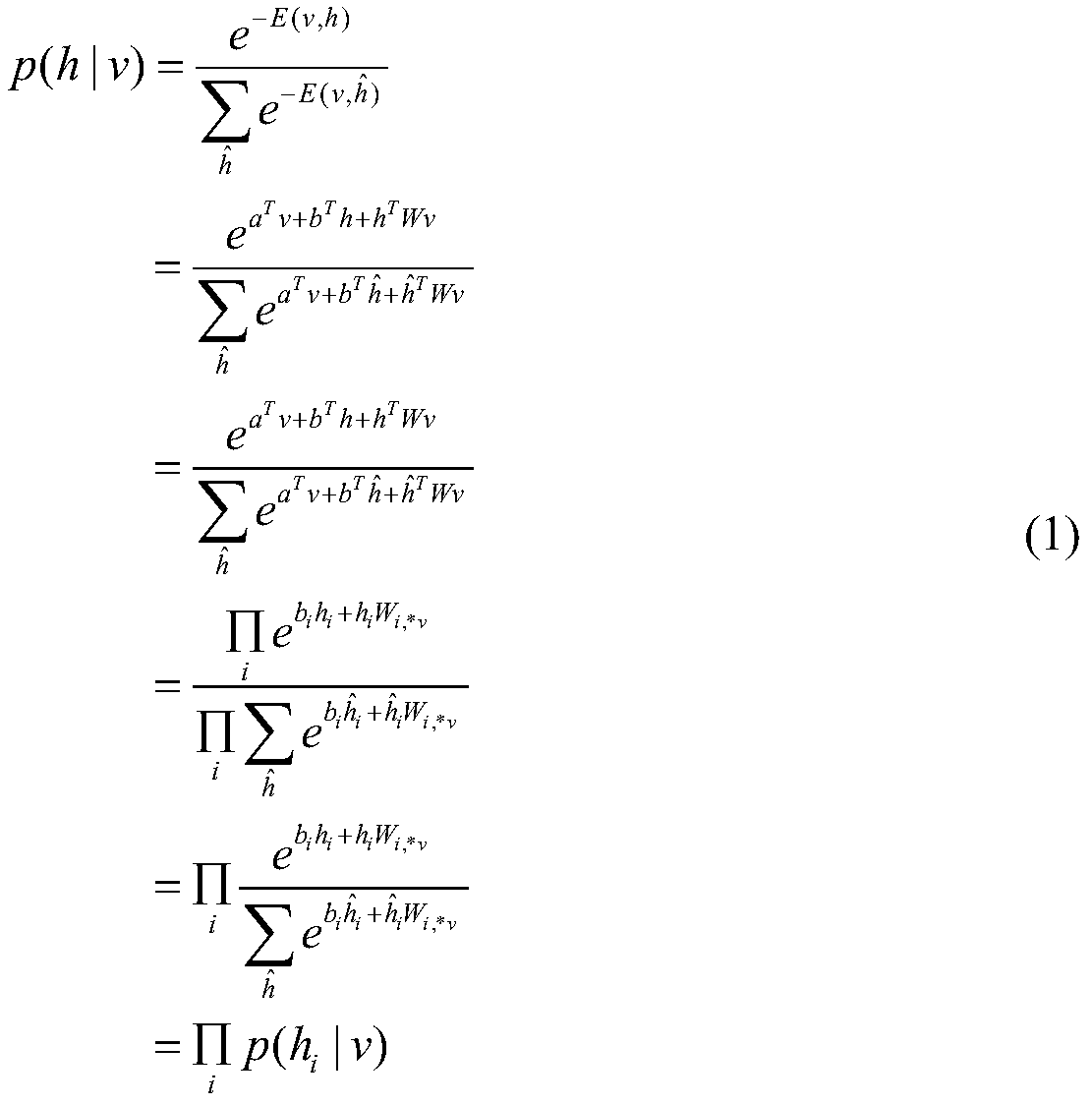

Speech recognition model establishing method based on bottleneck characteristics and multi-scale and multi-headed attention mechanism

The invention provides a speech recognition model establishing method based on bottleneck characteristics and a multi-scale and multi-headed attention mechanism, and belongs to the field of model establishing methods. Traditional attention models suffer from poor recognition performance and a single attention scale. In the method, bottleneck characteristics extracted through a deep belief network serve as the front end, which can improve the robustness of the model. A multi-scale and multi-headed attention model built from convolution kernels of different scales serves as the back end; it models speech elements at the levels of phoneme, syllable, word and the like, and calculates the recurrent neural network hidden layer state sequences and output sequences one by one. The elements at each position of the output sequences are calculated through the decoding networks corresponding to the attention networks of all heads, and finally all the output sequences are integrated into a new output sequence. The method can improve the recognition performance of a speech recognition system.

Owner:HARBIN INST OF TECH

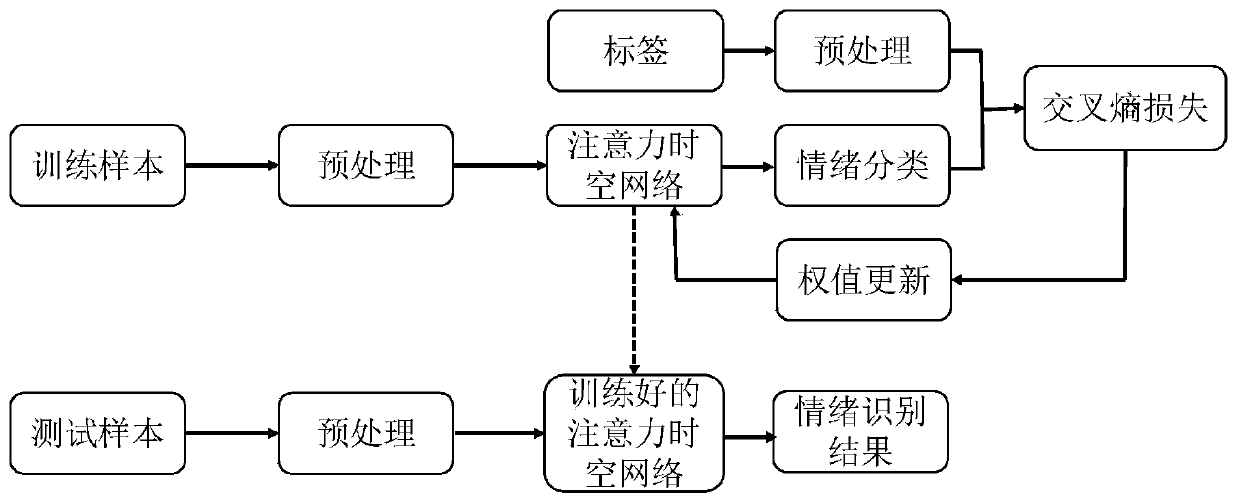

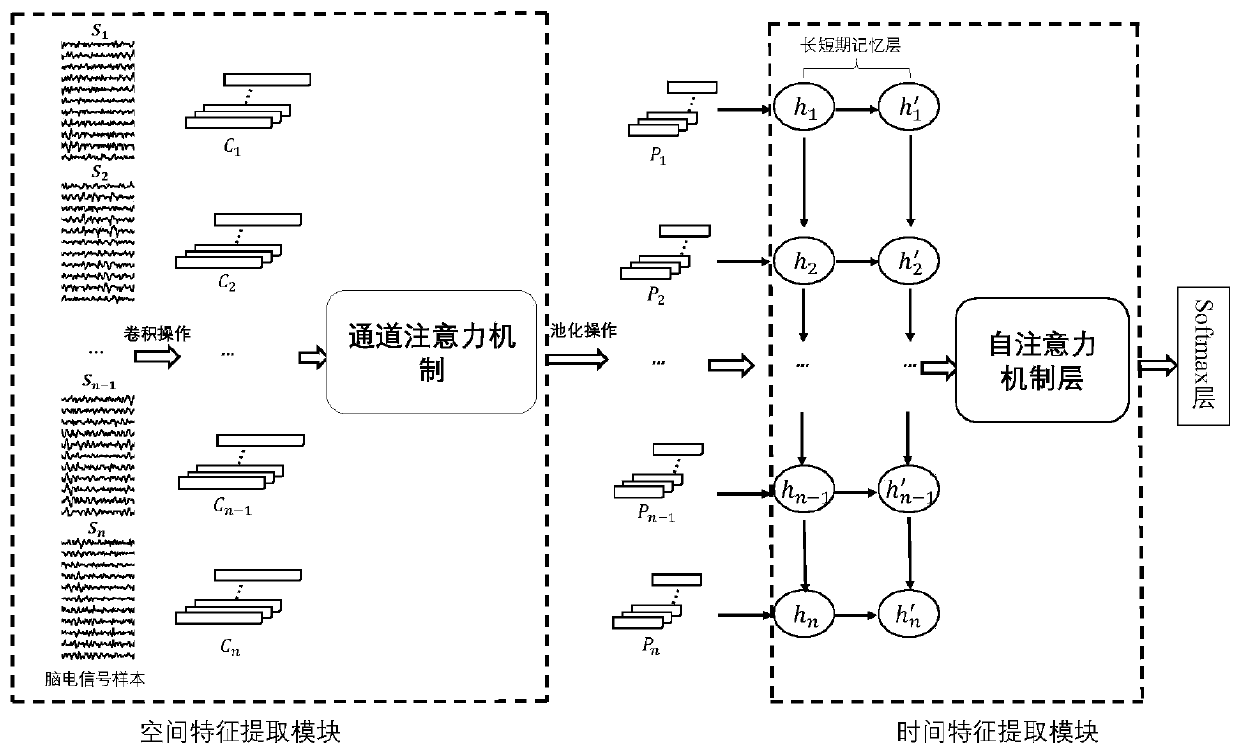

Electroencephalogram emotion recognition method based on attention mechanism

Active · CN110610168A · Solve the characteristics · Solve problems such as extraction · Character and pattern recognition · Sensors · Data set · Eeg data

The invention discloses an electroencephalogram (EEG) signal emotion recognition method based on an attention mechanism. The method comprises the steps of: 1, preprocessing the original EEG data by removing the baseline and segmenting it into fragments; 2, establishing a space-time attention neural network model; 3, training the established convolutional recurrent attention network model on a public data set using ten-fold cross-validation; and 4, performing the emotion classification task with the established model. The invention achieves high-precision emotion recognition, thereby improving the recognition rate.

Owner:HEFEI UNIV OF TECH

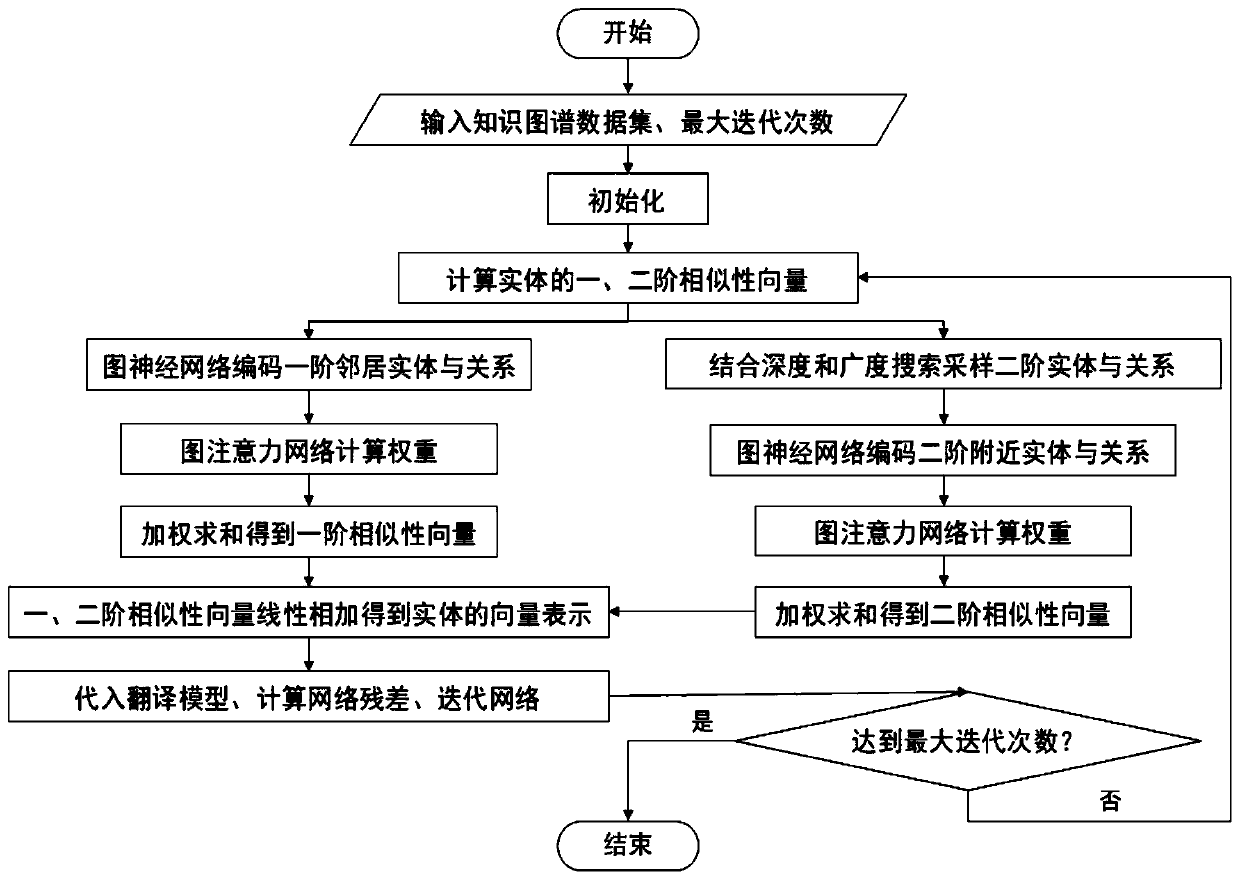

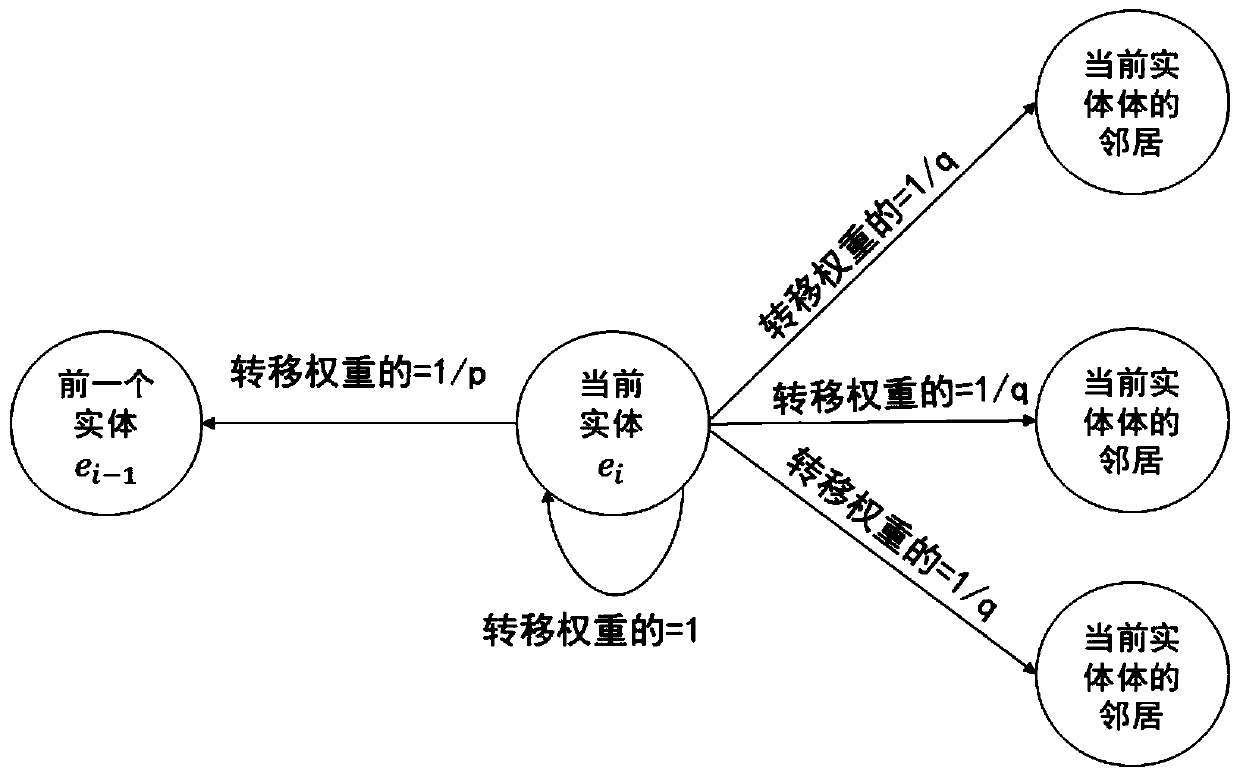

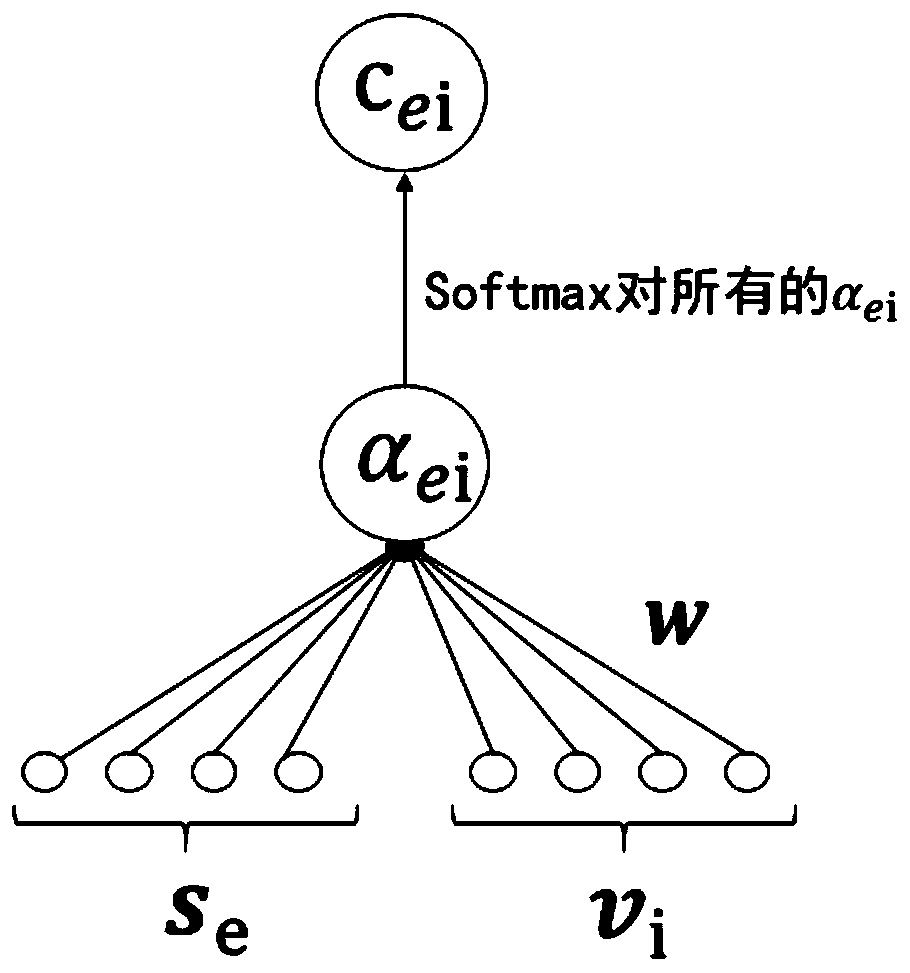

Knowledge graph entity semantic space embedding method based on graph second-order similarity

Active · CN109829057A · Vector representation good · Solving the Semantic Space Embedding Problem · Neural learning methods · Semantic tool creation · Data set · Graph spectra

The invention discloses a knowledge graph entity semantic space embedding method based on graph second-order similarity. The method comprises the steps of: (1) inputting a knowledge graph data set and a maximum number of iterations; (2) computing first-order and second-order similarity vector representations through first-order and second-order similarity feature embedding, taking the relations between entities into account through a graph attention mechanism, to obtain first-order and second-order similarity semantic space embedding representations; (3) computing a weighted sum of the final first-order similarity vector and the final second-order similarity vector of each entity to obtain the final vector representation of the entity, inputting it into a translation model to calculate a loss value and obtain the residuals of the graph attention network and the graph neural network, and iterating the network model; and (4) performing link prediction and classification tests on the network model. The method mines the relations between entities with a graph attention mechanism for the first time, and it achieves relatively good results in application fields such as link prediction and classification on knowledge graphs.

Owner:SUN YAT SEN UNIV

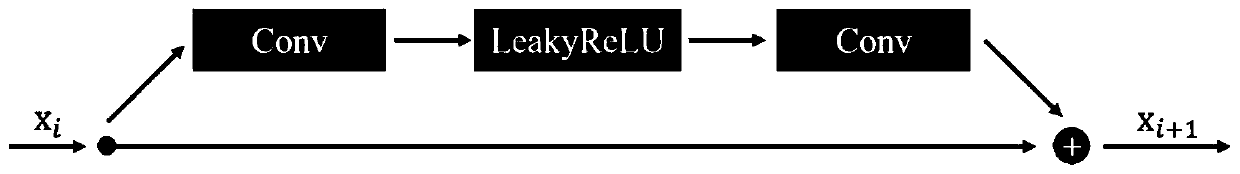

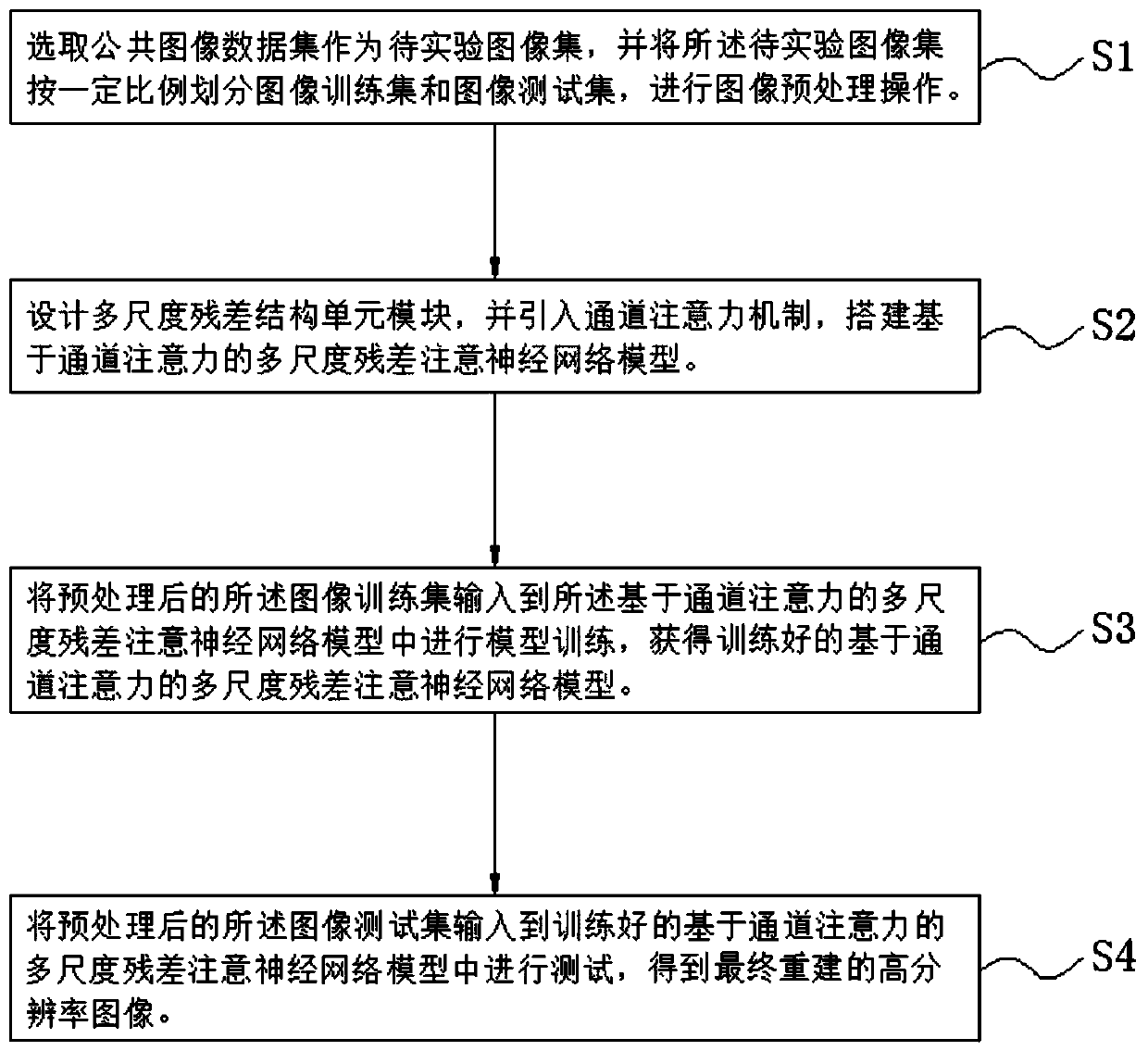

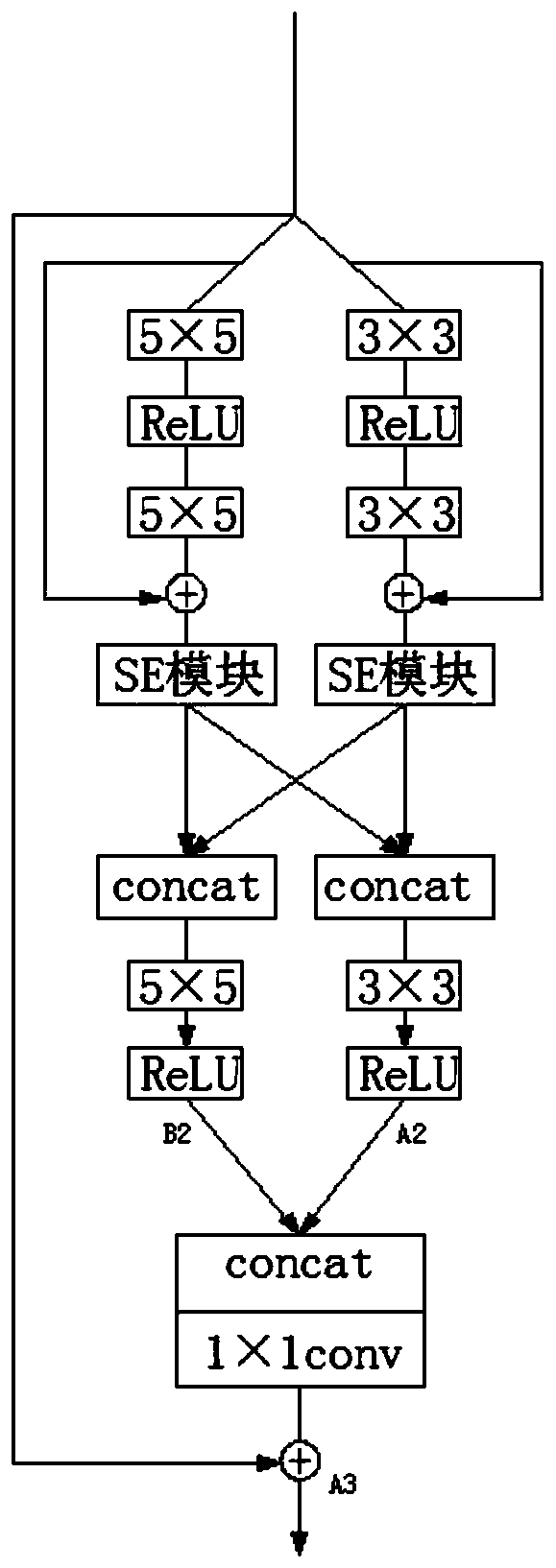

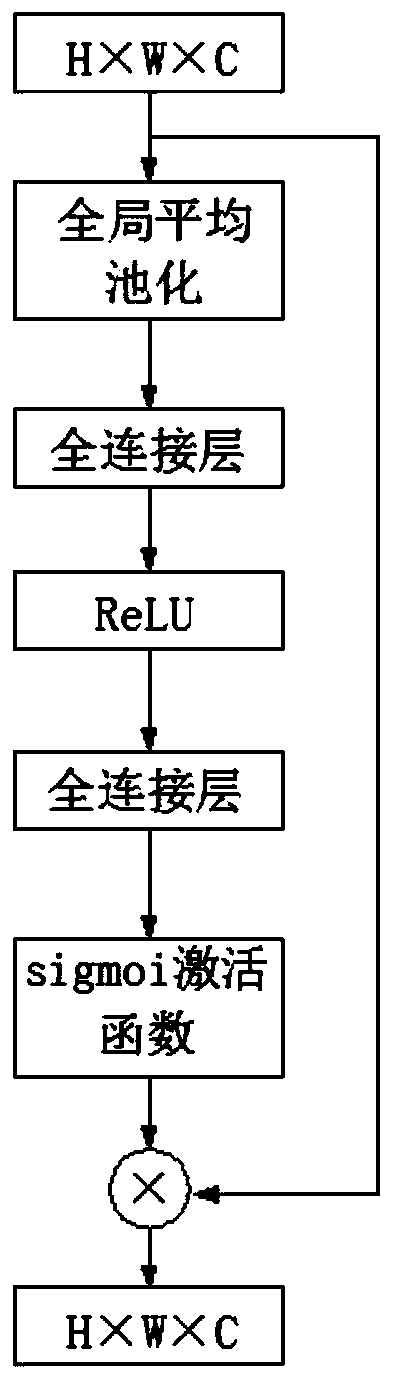

Multi-scale residual attention network image super-resolution reconstruction method based on attention

InactiveCN110992270AExtract focusEasy extractionGeometric image transformationCharacter and pattern recognitionImage resolutionReconstruction method

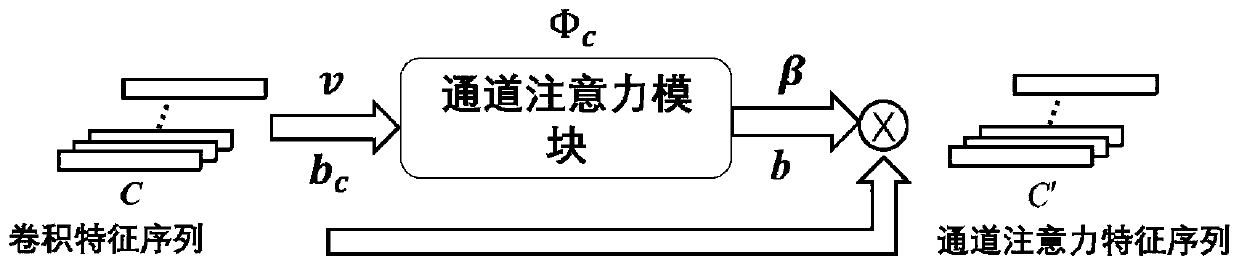

The invention belongs to the technical field of image super-resolution reconstruction, and discloses a multi-scale residual attention network image super-resolution reconstruction method based on attention. The method comprises the steps of: selecting a common image data set as the experimental image set, dividing it into an image training set and an image test set, and performing image preprocessing; designing a multi-scale residual structure unit module, introducing a channel attention mechanism, and building a multi-scale residual attention neural network model based on channel attention; inputting the preprocessed image training set into this model for training; and inputting the preprocessed image test set into the trained model for testing to obtain the final reconstructed high-resolution image. According to the method, the basic unit focuses on extracting high-frequency information, important feature map information in a channel is better highlighted, important information in an image is better extracted, and reconstruction errors are reduced.
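
The channel attention mechanism mentioned above can be sketched as follows. The abstract does not give the layer details, so this uses a squeeze-and-excitation style gate as an assumed stand-in; the weight matrices `w1` and `w2` and the flat-list feature layout are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feature_maps, w1, w2):
    """Rescale each channel by a gate computed from global statistics.

    feature_maps: list of C channels, each a flat list of pixel values.
    w1: R x C reduction matrix, w2: C x R expansion matrix (both hypothetical).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    pooled = [sum(ch) / len(ch) for ch in feature_maps]
    # Excite: bottleneck with ReLU, then a sigmoid gate per channel.
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: multiply every pixel of a channel by that channel's gate.
    scaled = [[g * v for v in ch] for g, ch in zip(gates, feature_maps)]
    return scaled, gates


features = [[1.0, 3.0], [0.0, 0.0]]      # two channels, two pixels each
w1 = [[1.0, 1.0]]                        # reduce 2 channels to 1 hidden unit
w2 = [[1.0], [-1.0]]                     # expand back to 2 channel gates
scaled, gates = channel_attention(features, w1, w2)
```

Channels with larger gates are emphasized and the rest are suppressed, which is the sense in which important feature map information is highlighted.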

Owner:SOUTHWEST PETROLEUM UNIV

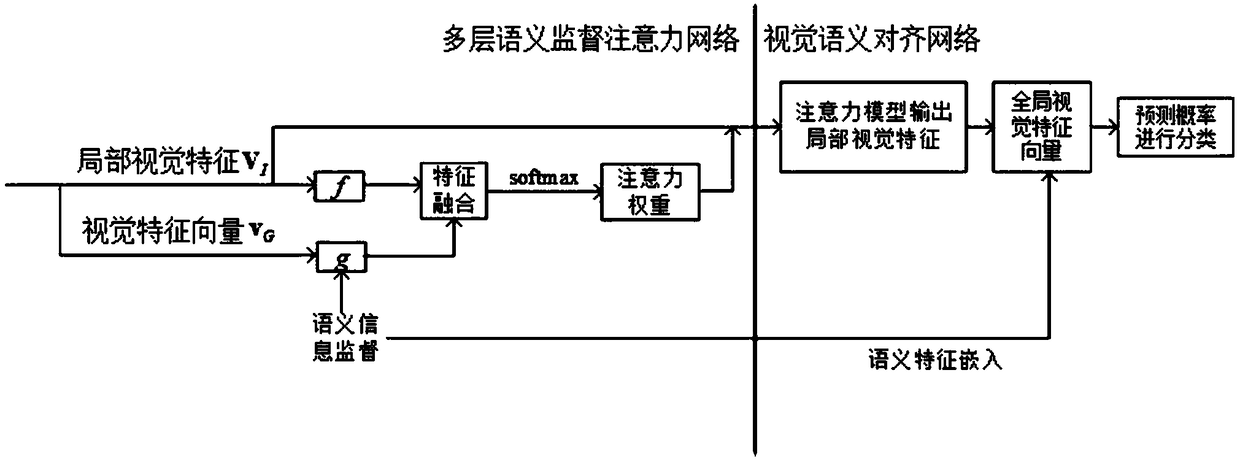

Fine-grained zero-sample classification method based on multi-layer semantic supervised attention model

InactiveCN109447115AEasy to classifyCharacter and pattern recognitionNeural architecturesAttention modelNetwork output

The present invention relates to a fine-grained zero-sample classification method based on a multi-layer semantic supervised attention model. The local visual features of fine-grained images are extracted by a convolutional neural network; the text description information of the categories serves as category semantic features to supervise these local visual features, which are weighted gradually. The loss function of the multi-layer semantic supervised attention model is obtained by mapping the class semantic features to the hidden-space local visual features. The global visual features of fine-grained images are combined with the local visual features weighted by the multi-layer semantic supervised attention model as the new visual features of images. The category semantic features are embedded into the new visual feature space, the visual features and semantic features output by the multi-layer semantic supervised attention network are aligned, and the softmax function is used for classification. The method takes the extracted visual features and category semantic features as input and outputs the classification result of the image.

Owner:TIANJIN UNIV

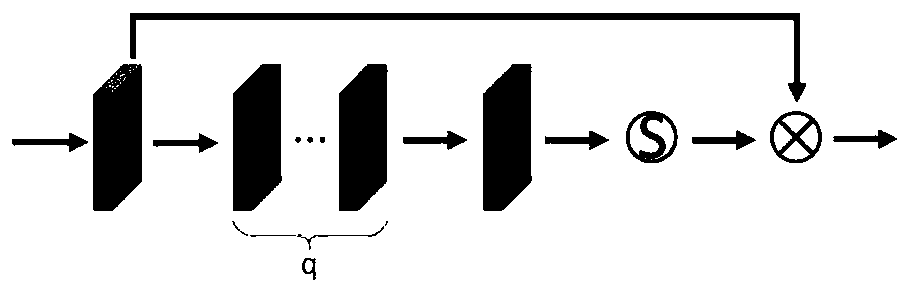

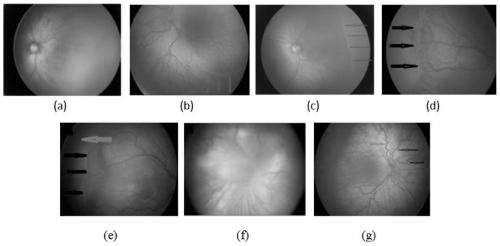

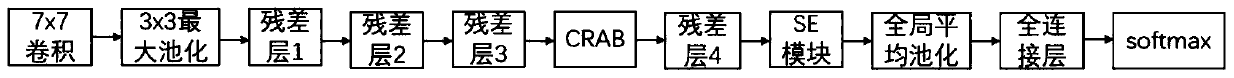

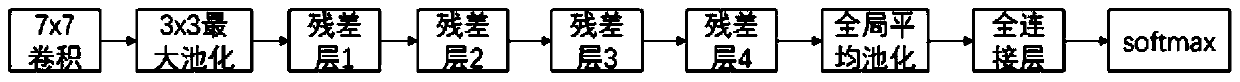

Premature infant retinal image classification method and device based on attention mechanism

ActiveCN111259982AImprove accuracyImprove recognition efficiencyNeural architecturesRecognition of medical/anatomical patternsOphthalmologyNetwork model

The invention discloses a premature infant retinal image classification method and device based on an attention mechanism, and the method comprises the steps: carrying out the preprocessing of a to-be-recognized two-dimensional retinal fundus image, and obtaining a preprocessed two-dimensional retinal fundus image; inputting the preprocessed two-dimensional retinal fundus image into a pre-trained deep attention network model, and outputting the classification result of the image to identify a premature infant retinopathy ROP image, wherein the deep attention network model is formed by respectively adding a complementary residual attention module and a channel attention SE module behind a third residual layer and a fourth residual layer of the original ResNet18 network. According to the method, rich and important global and local information can be obtained, so that the network can learn correct lesion features, the classification network can better solve the problem of great data imbalance between lesions and backgrounds, and the classification performance of the deep attention network model is improved.

Owner:SUZHOU UNIV

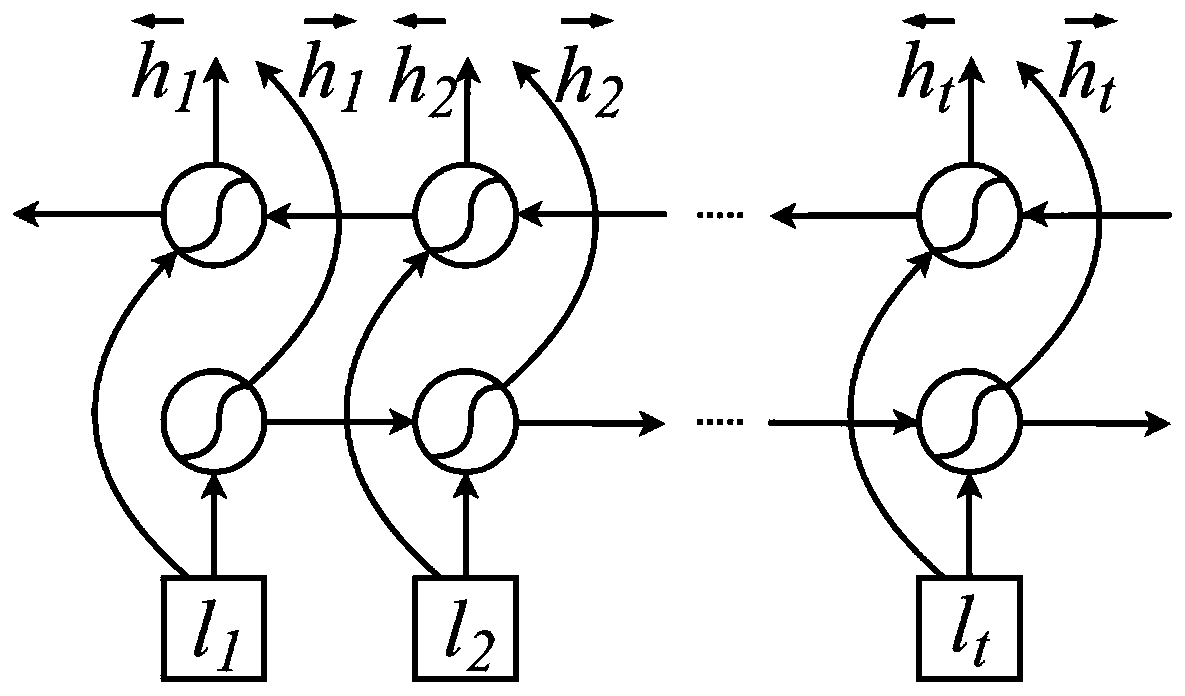

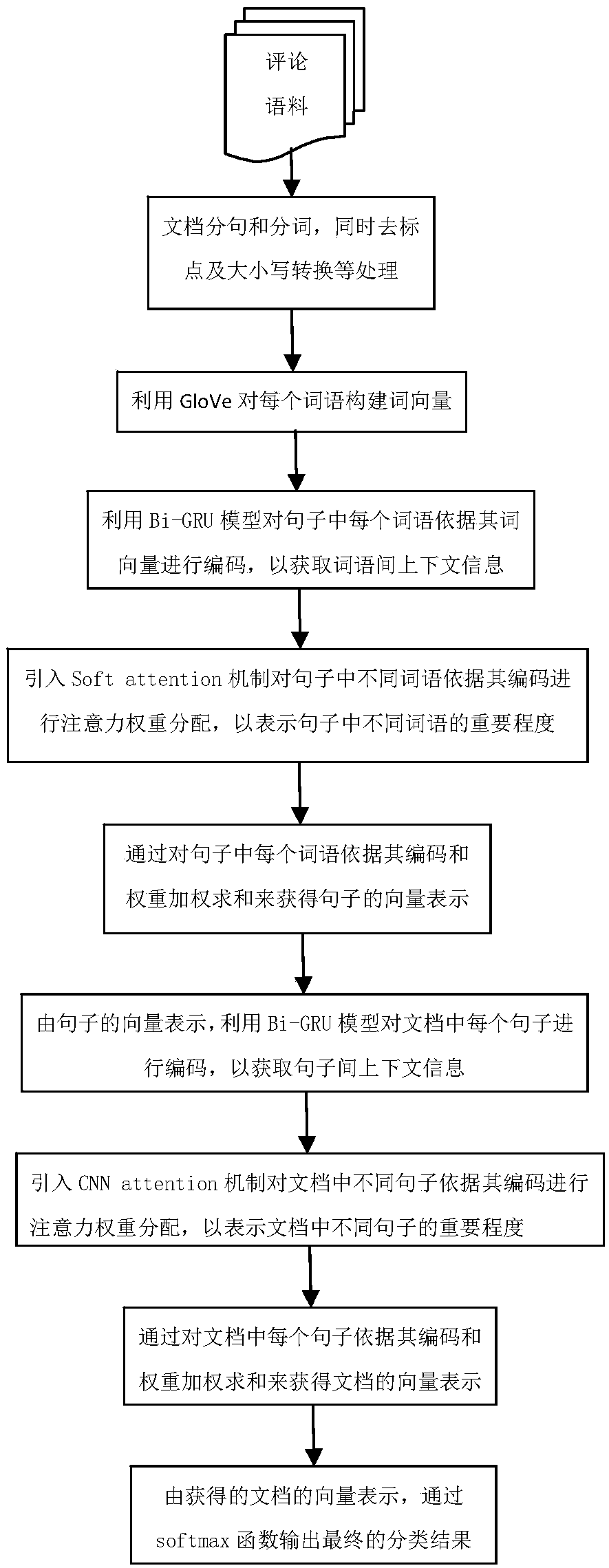

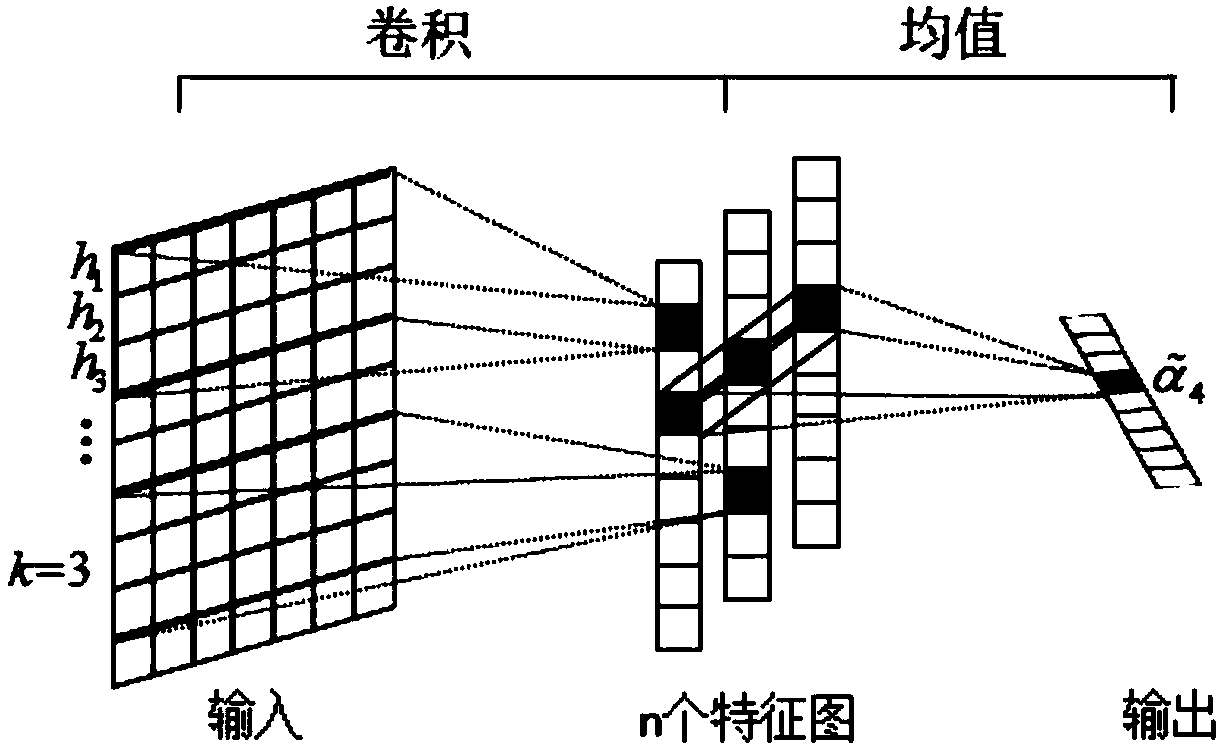

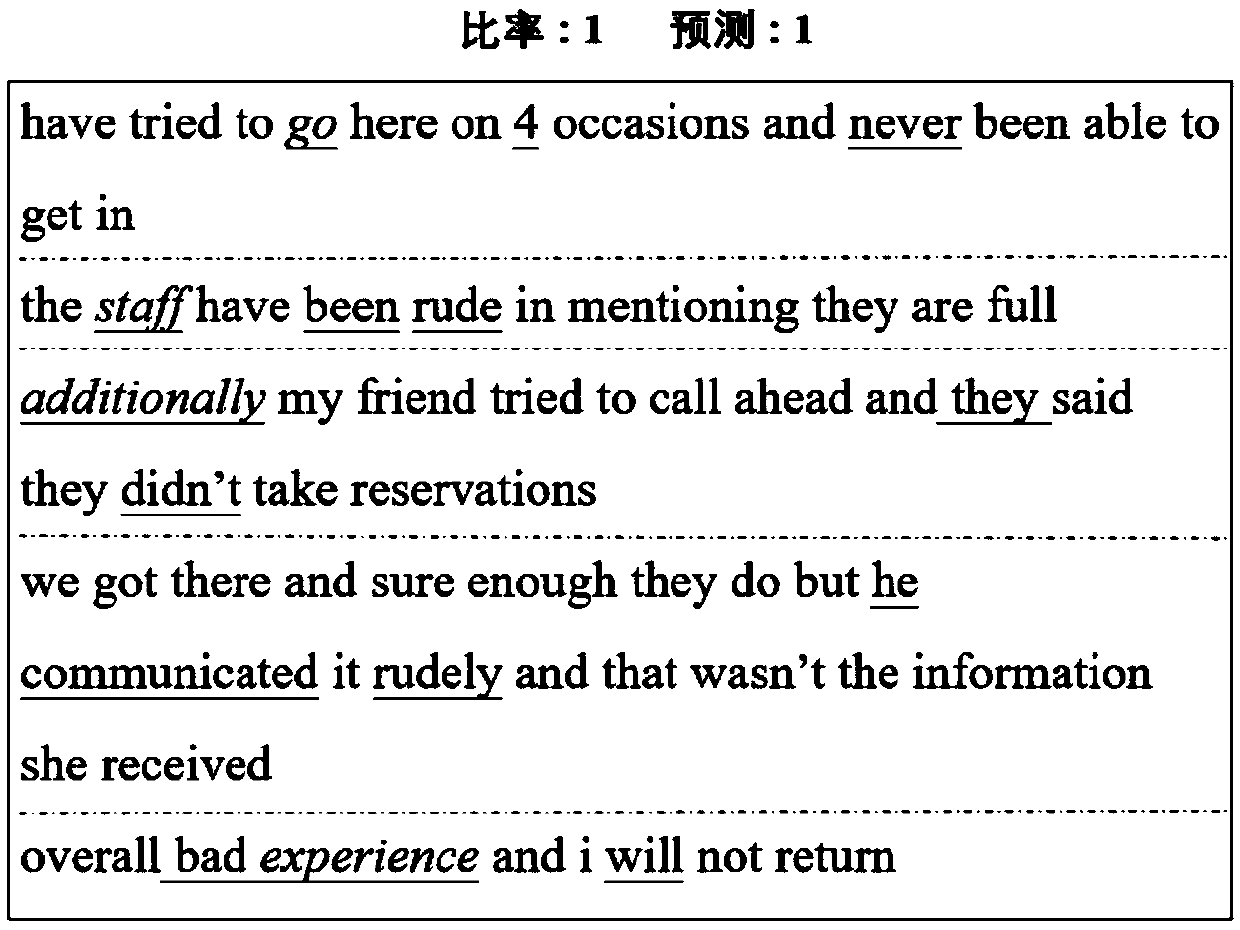

Document classification method based on hierarchical multi-attention network

InactiveCN109558487APreserve contextual informationReasonable distribution of attention weightNatural language data processingNeural architecturesDocument modelingSequence model

The invention discloses a document classification method based on a hierarchical multi-attention network. The method comprises the following steps: utilizing a Bi-GRU sequence model to carry out word-to-sentence and sentence-to-document modeling of the document; using the Bi-GRU sequence model to encode each word, obtaining the context information in the sentence, and using soft attention to distribute a weight to each word; and, for the step from sentences to the document, introducing CNN attention, in which a CNN model obtains the local relevant characteristics between the sentences in a window so that the attention weight of each sentence is further obtained. Modeling can be carried out from words to sentences and from sentences to documents according to document characteristics, and the hierarchical structure of the documents is fully considered. Meanwhile, different attention mechanisms are adopted at the word level and the sentence level to properly distribute the weights of the related contents, so that the document classification accuracy is improved.
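
The word-level soft attention step can be sketched as below. The scoring function is an assumption: a plain dot product with a context vector is used here for brevity, and the word encodings are stand-ins for Bi-GRU outputs rather than real ones.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(hidden_states, context):
    """Pool a sequence of vectors into one, weighting each by its match to `context`."""
    scores = [sum(h * c for h, c in zip(state, context)) for state in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    pooled = [sum(w * state[d] for w, state in zip(weights, hidden_states))
              for d in range(dim)]
    return pooled, weights


# Three word encodings (stand-ins for Bi-GRU outputs) and a hypothetical context vector.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
sentence_vec, word_weights = soft_attention(words, context=[2.0, 0.0])
```

The weights sum to one, so the sentence vector is a convex combination of the word encodings, dominated by the words that best match the context vector.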

Owner:SOUTH CHINA NORMAL UNIVERSITY

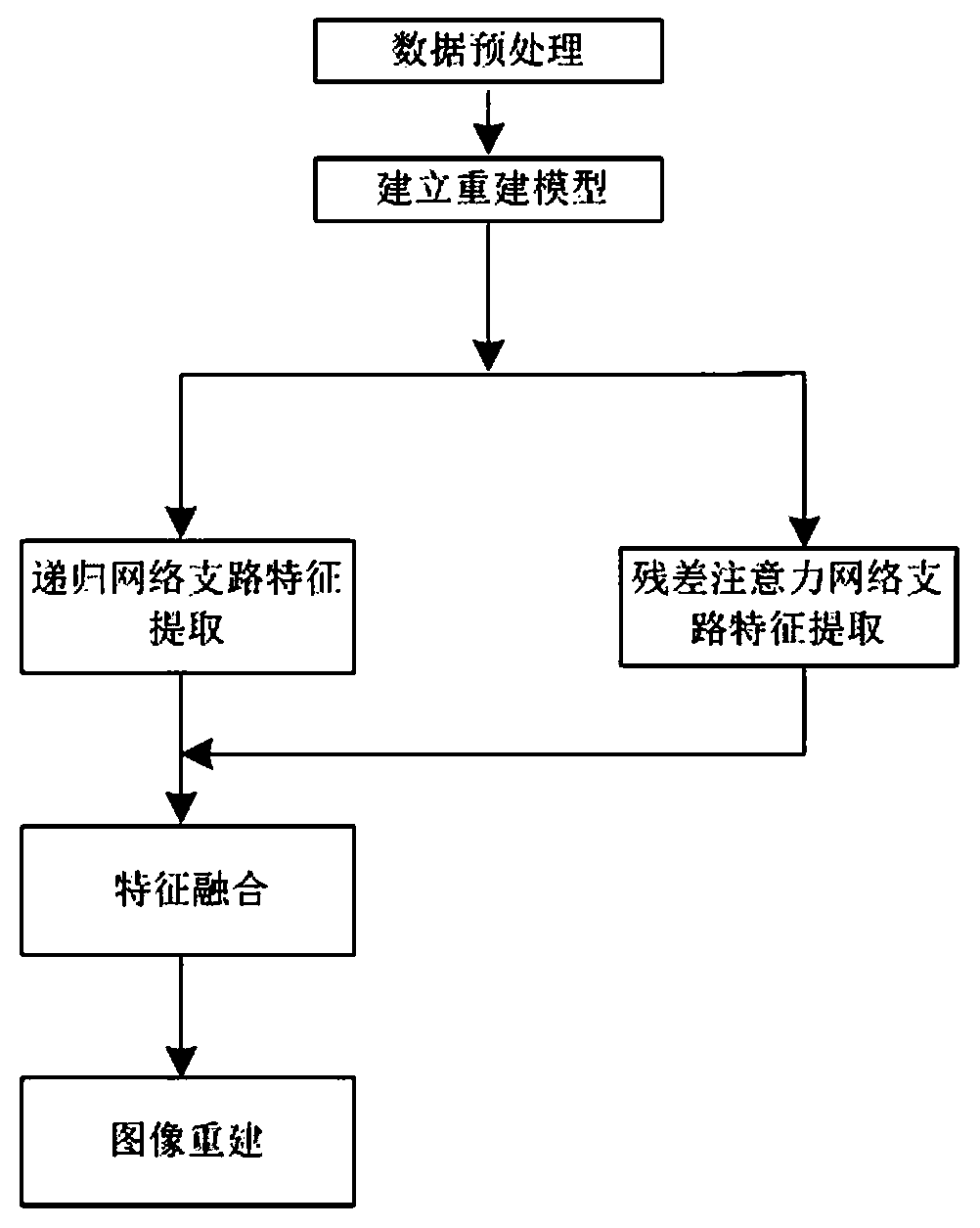

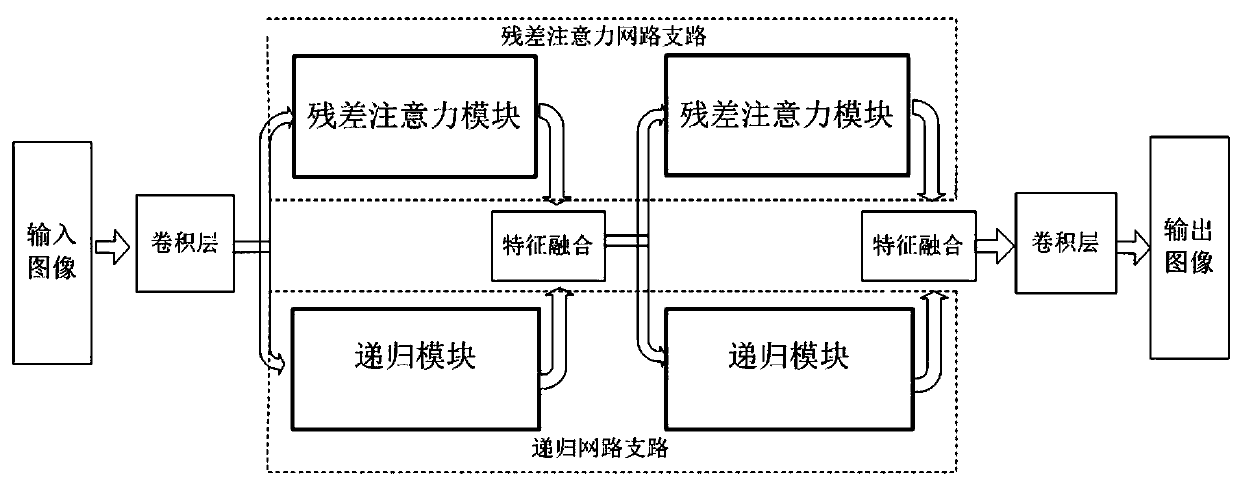

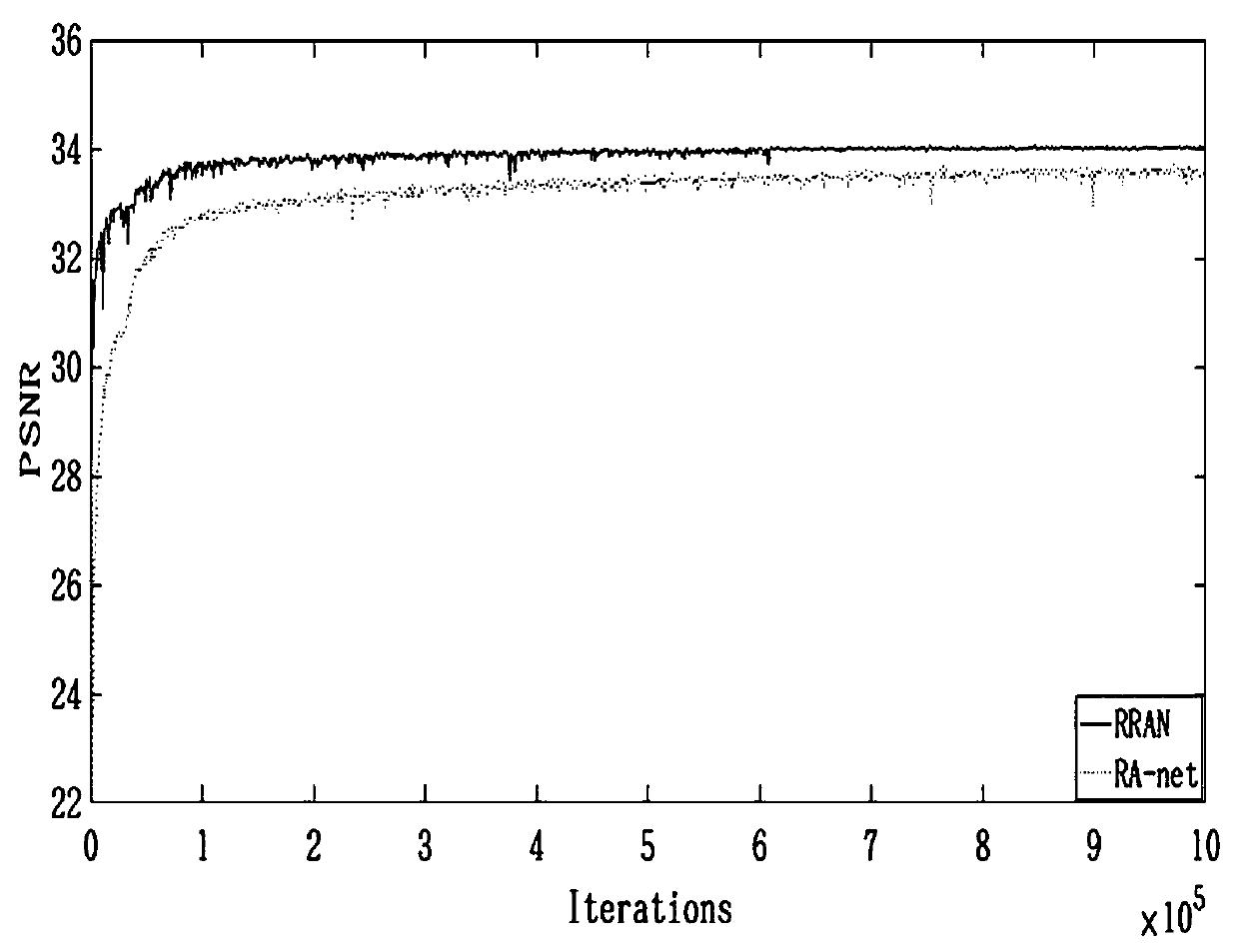

Recursive residual attention network-based image super-resolution reconstruction method

ActiveCN108765296AEnhance feature detailsBroad captureGeometric image transformationFeature extractionReconstruction method

The invention discloses a recursive residual attention network-based image super-resolution reconstruction method. The method is characterized by comprising the following steps: 1) preprocessing data; 2) establishing a reconstruction model; 3) extracting features with a first residual attention module of a residual attention network branch; 4) extracting features with a first recursive module of a recursive network branch; 5) fusing the features; and 6) reconstructing an image. According to the method, noise introduced by preprocessing can be addressed, more high-frequency information can be obtained to enrich the image details, and the number of network parameters can be reduced, since no new parameters are added as layers are added; the precision of super-resolution reconstruction can thereby be improved.
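
The claim that depth grows without new parameters follows from weight sharing in the recursive branch. A toy sketch of that idea, assuming a scalar residual unit in place of the real convolutional layers (all names and values here are hypothetical):

```python
def residual_unit(x, weight, bias):
    """One toy residual unit: x + relu(weight * x + bias), applied elementwise."""
    return [v + max(0.0, weight * v + bias) for v in x]


def recursive_block(x, weight, bias, depth):
    """Apply the same unit `depth` times; the parameters are shared across steps."""
    for _ in range(depth):
        x = residual_unit(x, weight, bias)
    return x


params = {"weight": 0.5, "bias": 0.0}     # hypothetical scalar parameters
shallow = recursive_block([1.0, -2.0], depth=1, **params)
deep = recursive_block([1.0, -2.0], depth=3, **params)
```

Going from one recursion to three changes the output but leaves `params` at the same two values, which is the mechanism behind the constant parameter count.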

Owner:GUILIN UNIV OF ELECTRONIC TECH

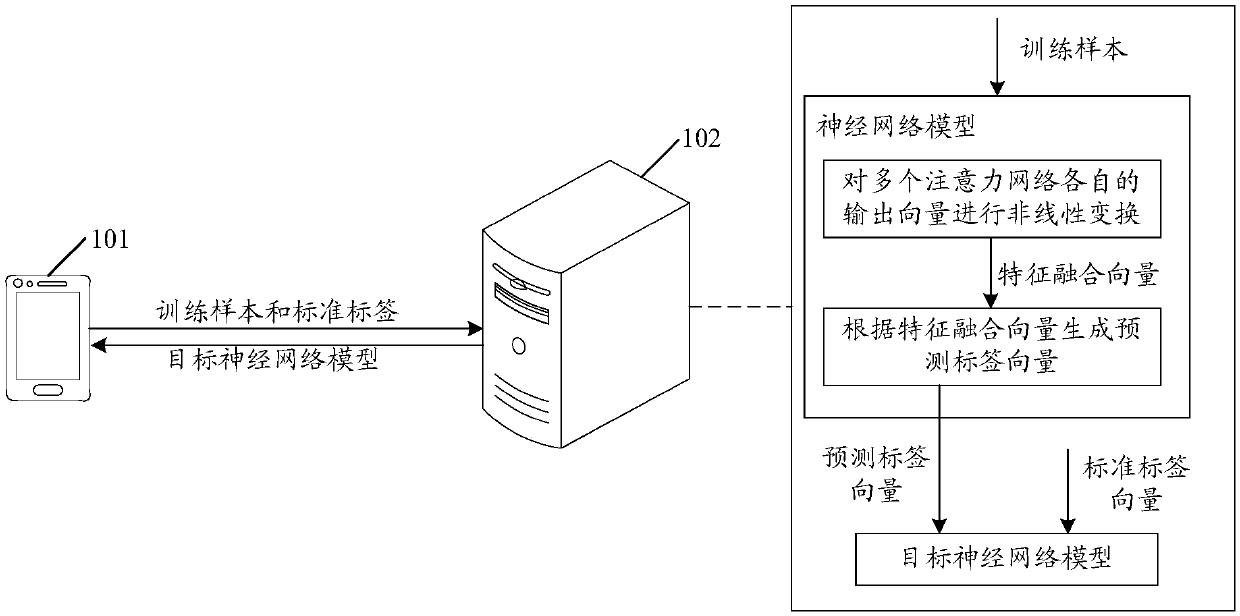

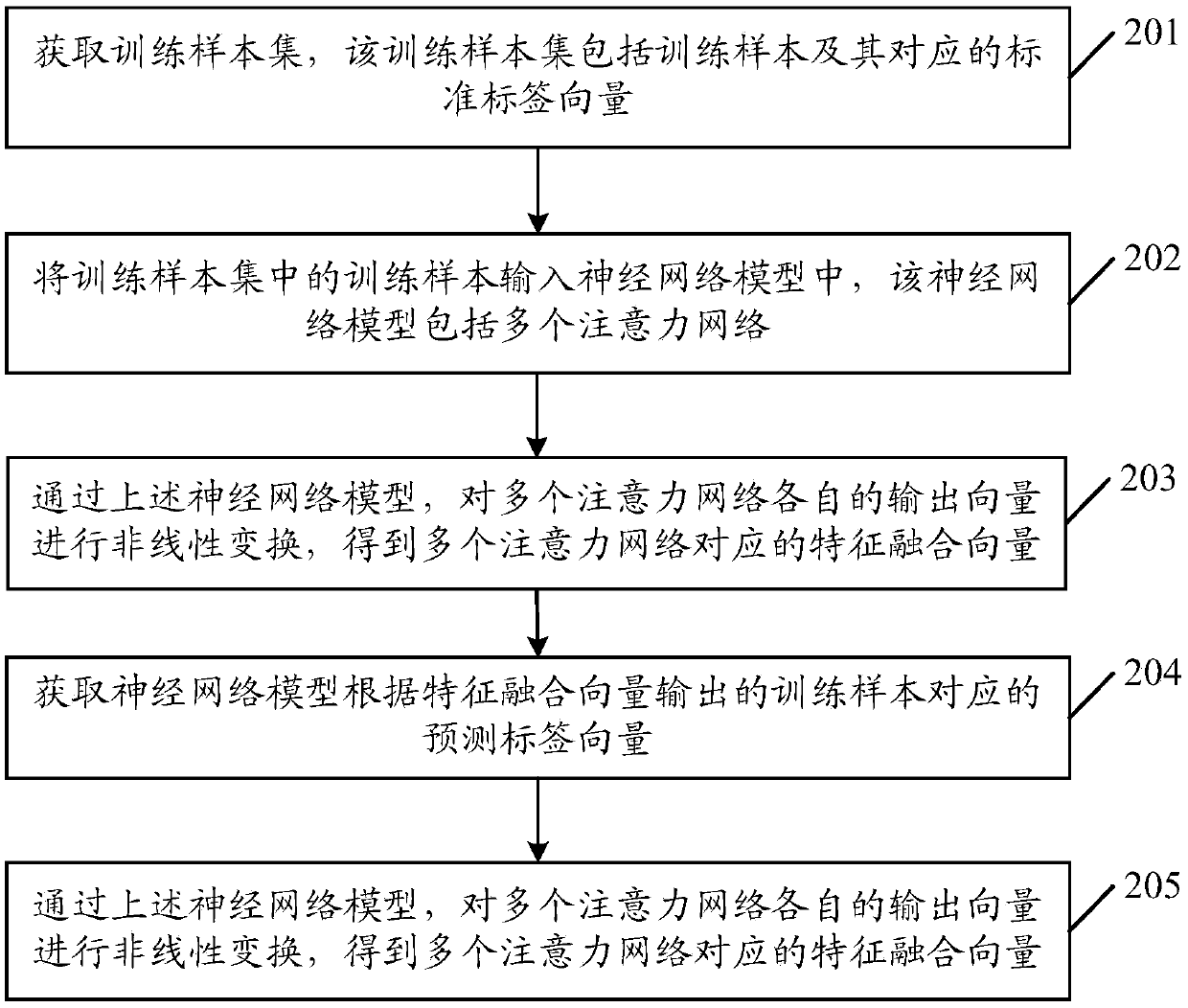

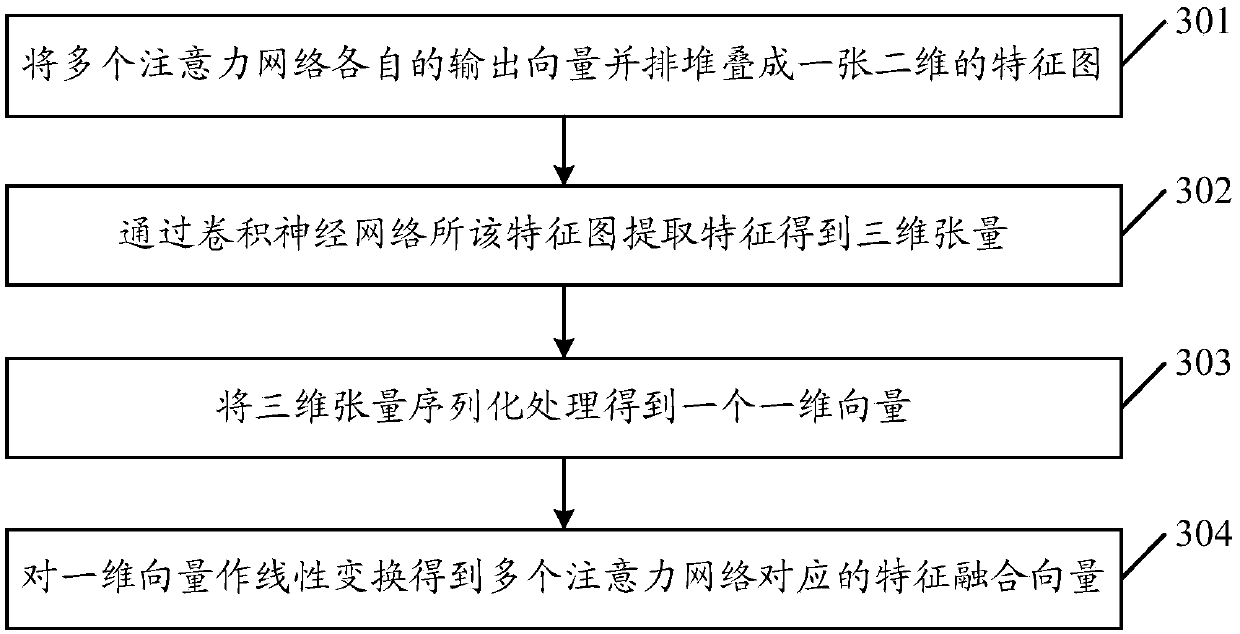

Model training method, machine translation method and related devices and equipment

ActiveCN110162799AImprove learning abilityAchieve interactionNatural language translationSemantic analysisFeature vectorNetwork model

The embodiment of the invention discloses a neural network model training method, device, equipment and medium. The method comprises the steps of: acquiring a training sample set comprising training samples and standard label vectors corresponding to the training samples; inputting the training samples into a neural network model comprising a plurality of attention networks; performing a nonlinear transformation on the respective output vectors of the attention networks through the neural network model to obtain feature fusion vectors corresponding to the attention networks; and obtaining the prediction label vector output by the neural network model according to the feature fusion vectors, and adjusting the model parameters of the neural network model according to a comparison of the prediction label vector with the standard label vector until a convergence condition is met, thereby obtaining a target neural network model. Because the output vectors of all the attention networks are fused by a nonlinear transformation, they interact fully, a feature fusion vector carrying more information is generated, and a better final output representation is ensured.
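
The fusion step can be sketched as follows. The abstract only specifies "nonlinear transformation"; concatenating the attention outputs and applying a single `tanh` layer is an assumption, and the matrix `W` and bias `b` are hypothetical values chosen for illustration.

```python
import math


def fuse(head_outputs, weights, bias):
    """Concatenate the attention outputs, then apply tanh(W x + b).

    A single tanh layer is an assumed form of the nonlinear transformation.
    """
    concat = [v for head in head_outputs for v in head]
    return [math.tanh(sum(w * v for w, v in zip(row, concat)) + b)
            for row, b in zip(weights, bias)]


heads = [[1.0, 0.0], [0.0, -1.0]]            # outputs of two attention networks
W = [[1.0, 0.0, 0.0, 1.0],                   # hypothetical 2 x 4 fusion matrix
     [1.0, 0.0, 0.0, 0.0]]
b = [0.0, 0.0]
fused = fuse(heads, W, b)
```

Unlike plain concatenation, each fused component here mixes values from both attention networks, which is one way the outputs can interact.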

Owner:TENCENT TECH (SHENZHEN) CO LTD

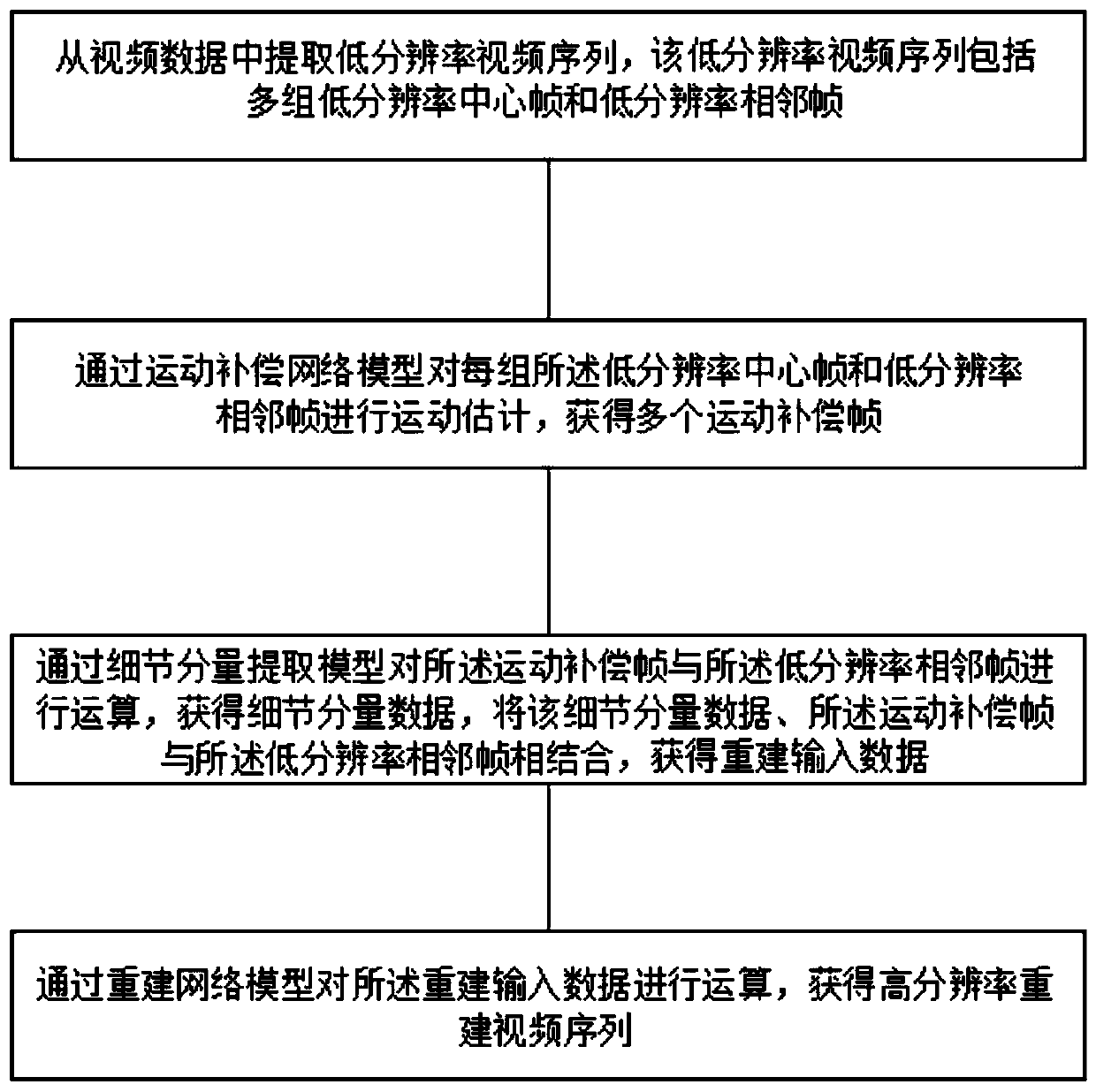

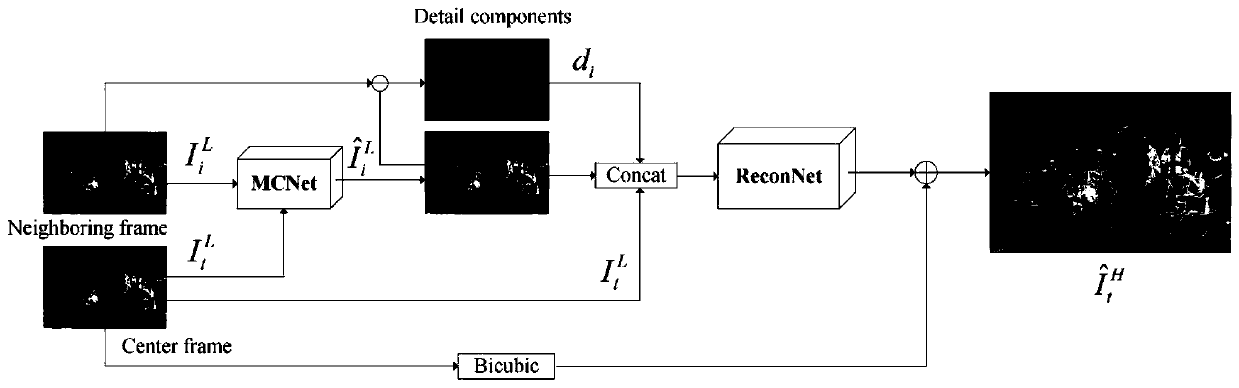

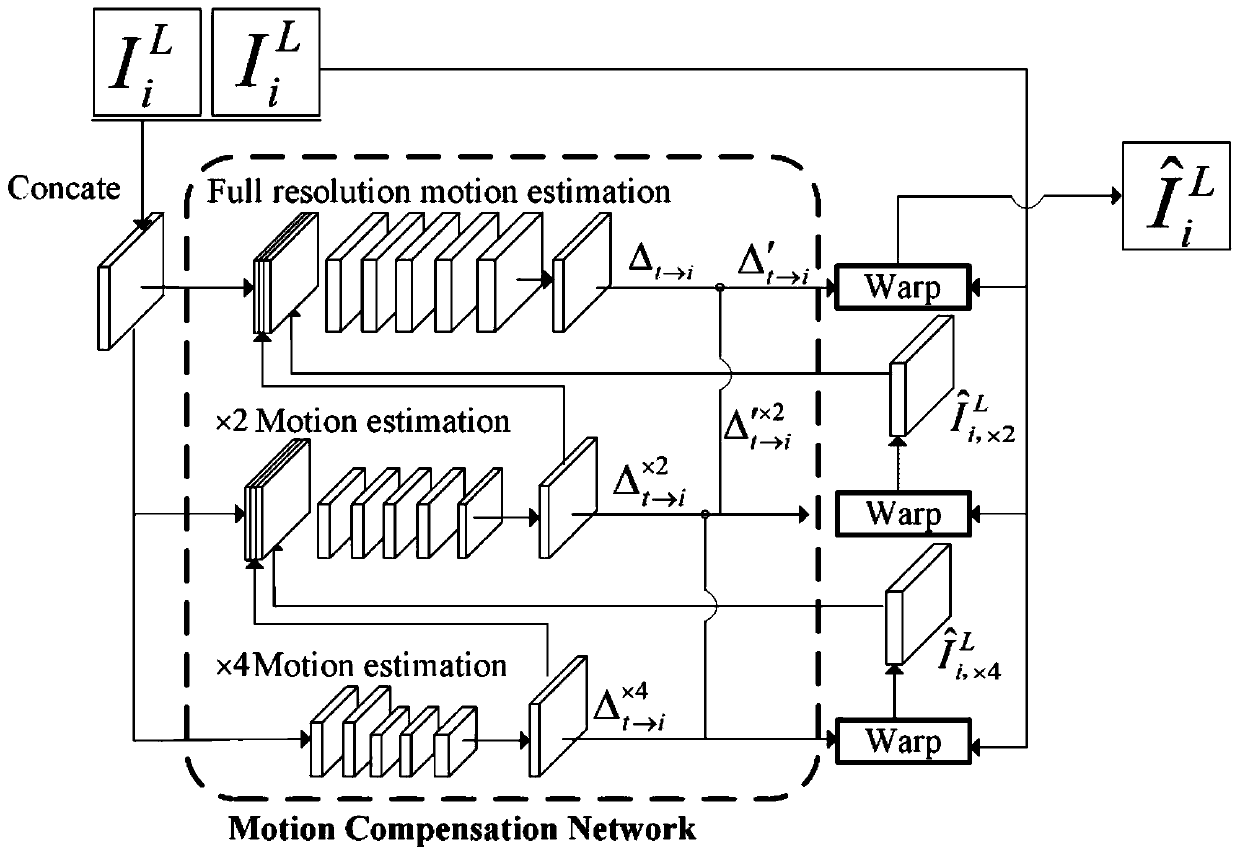

Video super-resolution reconstruction method based on deep dual attention network

ActiveCN110969577AAccurate super-resolution reconstructionImprove performanceImage enhancementGeometric image transformationTemporal informationComputer graphics (images)

According to the video super-resolution reconstruction method based on the deep dual attention network provided by the invention, a cascaded motion compensation network model and reconstruction network model are loaded, and accurate video super-resolution reconstruction is realized by fully utilizing spatial-temporal information characteristics. The motion compensation network model can progressively learn, from coarse to fine, optical flow representations that synthesize the multi-scale motion information of adjacent frames. A dual attention mechanism is utilized in the reconstruction network model to form a residual attention unit that focuses on intermediate information features, so that image details can be better recovered. Compared with the prior art, the method achieves excellent performance in both quantitative and qualitative evaluation.

Owner:BEIJING JIAOTONG UNIV

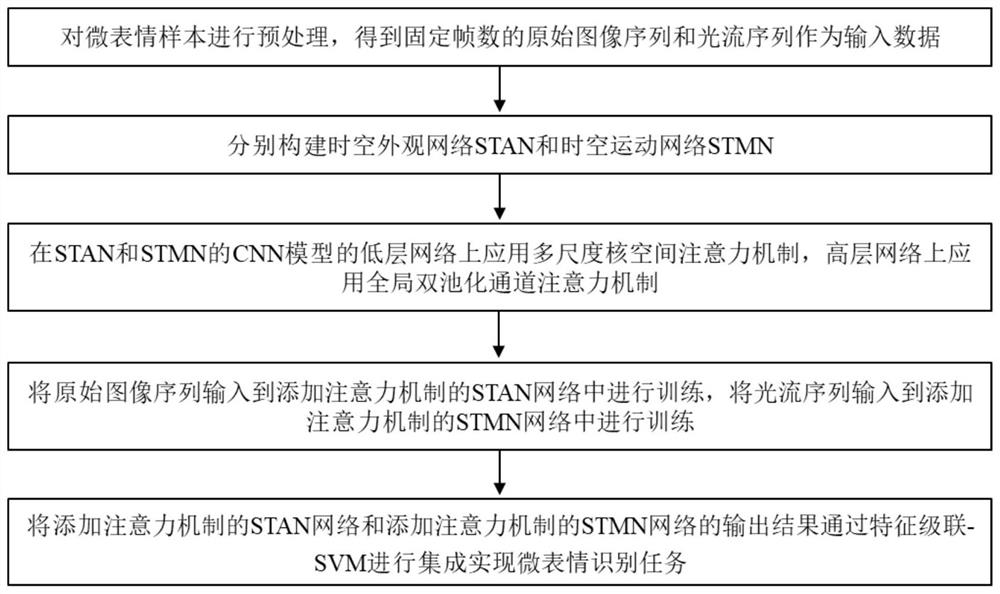

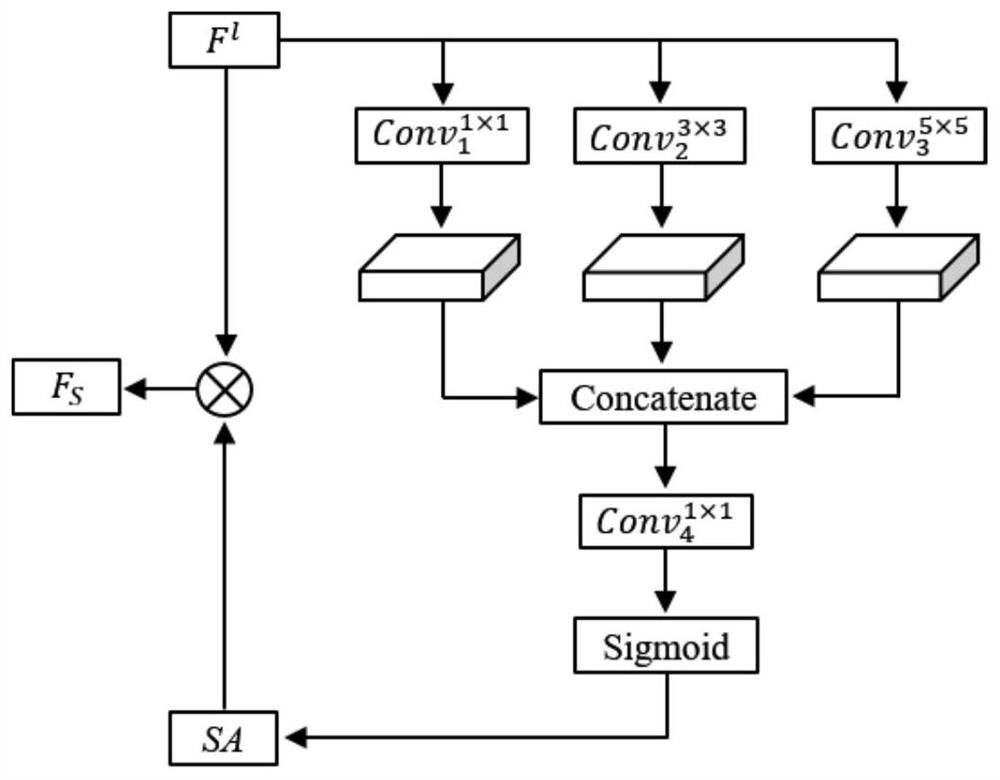

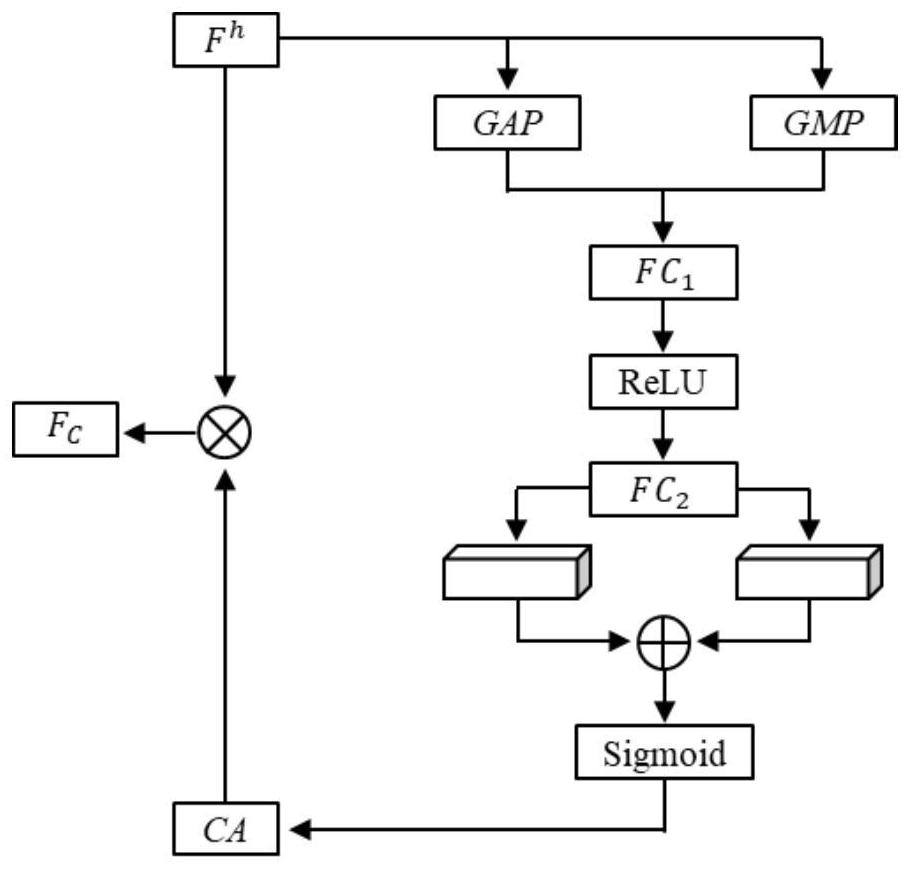

Micro-expression recognition method based on space-time appearance movement attention network

ActiveCN112307958ASuppression identifies features with small contributionsTake full advantage of complementarityCharacter and pattern recognitionNeural architecturesPattern recognitionNetwork on

The invention relates to a micro-expression recognition method based on a space-time appearance movement attention network, and the method comprises the following steps: preprocessing a micro-expression sample to obtain an original image sequence and an optical flow sequence with a fixed number of frames; constructing a space-time appearance motion network which comprises a space-time appearance network (STAN) and a space-time motion network (STMN), designing the STAN and the STMN with a CNN-LSTM structure, learning the spatial features of micro-expressions with a CNN model, and learning the temporal features of the micro-expressions with an LSTM model; introducing hierarchical convolution attention mechanisms into the CNN models of the STAN and the STMN, applying a multi-scale kernel spatial attention mechanism to the low-level network and a global double-pooling channel attention mechanism to the high-level network, thereby obtaining an STAN and an STMN each augmented with the attention mechanism; and inputting the original image sequence into the attention-augmented STAN for training, inputting the optical flow sequence into the attention-augmented STMN for training, and integrating the output results for the original image sequence and the optical flow sequence through a feature-cascade SVM to achieve the micro-expression recognition task and improve the accuracy of micro-expression recognition.

Owner:HEBEI UNIV OF TECH +2

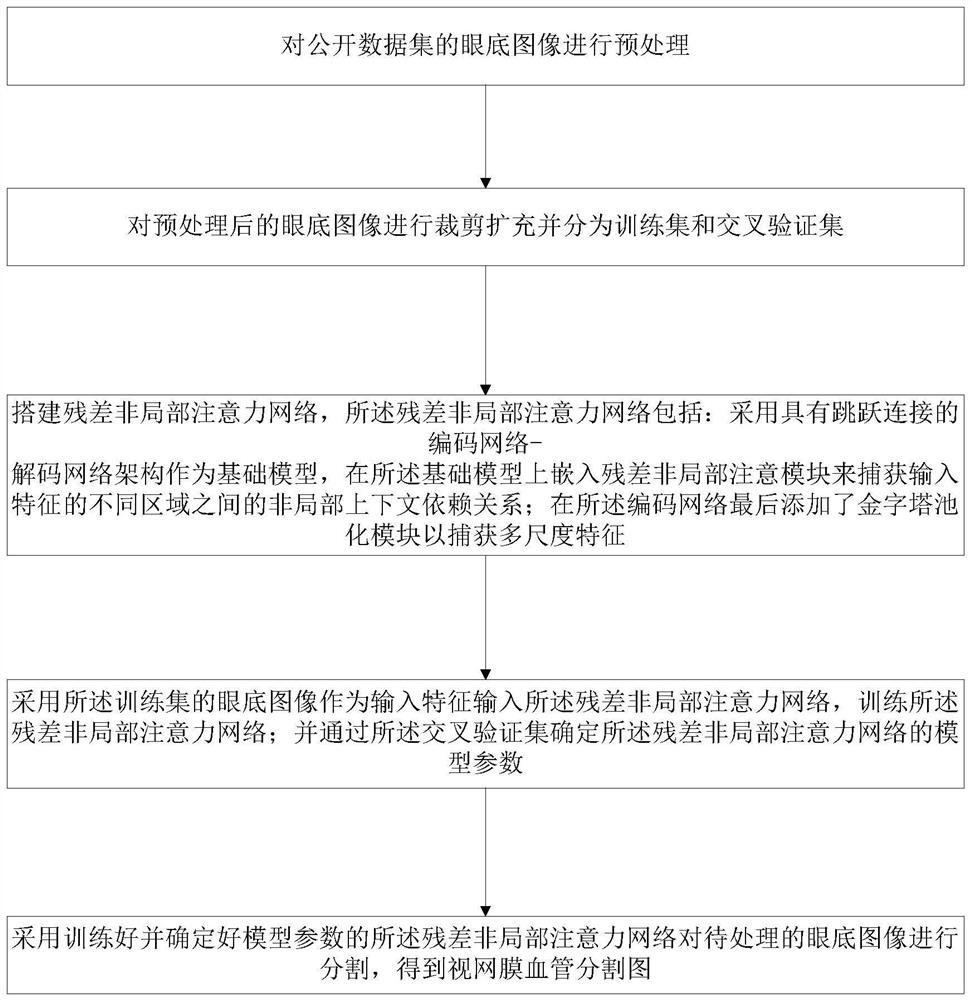

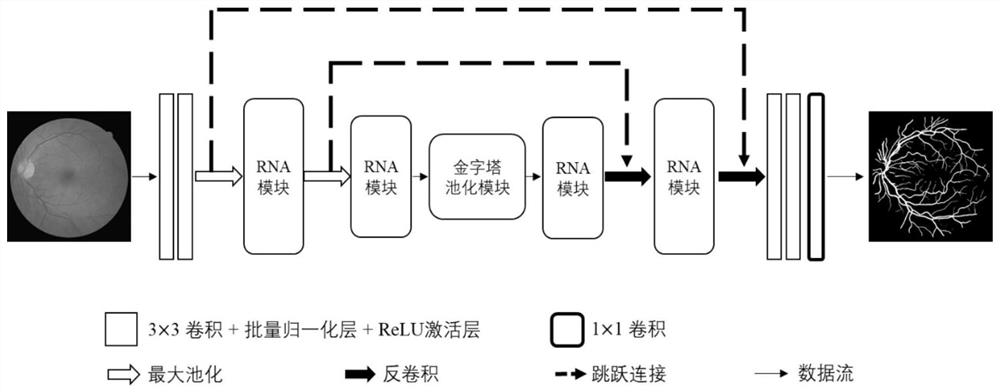

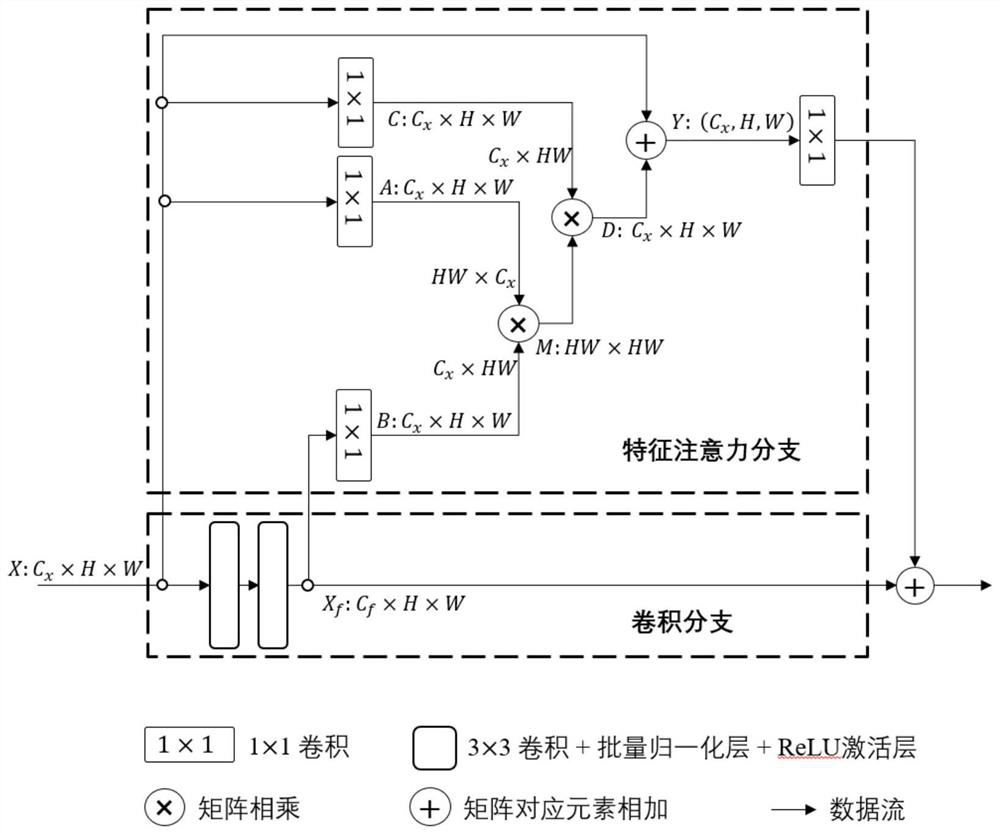

Retinal vessel segmentation method in fundus image and computer readable storage medium

InactiveCN112233135AEasy to divide and locatePrecise Segmentation and PositioningImage enhancementImage analysisData setNetwork architecture

The invention provides a retinal vessel segmentation method in a fundus image and a computer readable storage medium. The method comprises the steps of: preprocessing the fundus images of a public data set; cropping and augmenting the preprocessed fundus images and dividing them into a training set and a cross-validation set; building a residual non-local attention network, which involves adopting an encoder-decoder network architecture with skip connections as the basic model and embedding a residual non-local attention module to capture the non-local context dependencies between different areas of the input features; adding a pyramid pooling module to the encoding network to capture multi-scale features; training the residual non-local attention network with the training set; determining the model parameters of the residual non-local attention network through the cross-validation set; and using the trained residual non-local attention network to segment the fundus image to be processed, so that a retinal vessel segmentation image is obtained. Segmentation performance is improved.
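
The idea behind a residual non-local attention module can be sketched as below. This is a simplified illustration under stated assumptions: positions are compared by a plain dot product, there are no learned projections, and the feature map is flattened into a list of per-position vectors. The module in the patent may differ.

```python
import math


def non_local(features):
    """Residual non-local attention over positions (no learned projections)."""
    out = []
    for query in features:
        # Similarity of this position to every position, including distant ones.
        scores = [sum(q * k for q, k in zip(query, key)) for key in features]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Aggregate all positions, then add the input back (residual connection).
        context = [sum(w * f[d] for w, f in zip(weights, features))
                   for d in range(len(query))]
        out.append([x + c for x, c in zip(query, context)])
    return out


# Three positions with 2-D features; positions 0 and 2 are far apart but similar.
feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
result = non_local(feats)
```

Because every position attends to every other one, similar regions reinforce each other regardless of distance, which is what lets the module capture context that a local convolution would miss.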

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
