Document classification method based on hierarchical multi-attention network

A document classification technology based on attention mechanisms, applied in text database clustering/classification, biological neural network models, unstructured text data retrieval, etc.; it addresses problems such as weighting.

Detailed Description of the Embodiments

[0044] The implementation of the invention is further described below in conjunction with the accompanying drawings and examples, but the implementation and protection of the present invention are not limited thereto. Where a process or symbol is not described in detail below, those skilled in the art can refer to the prior art to understand or implement it.

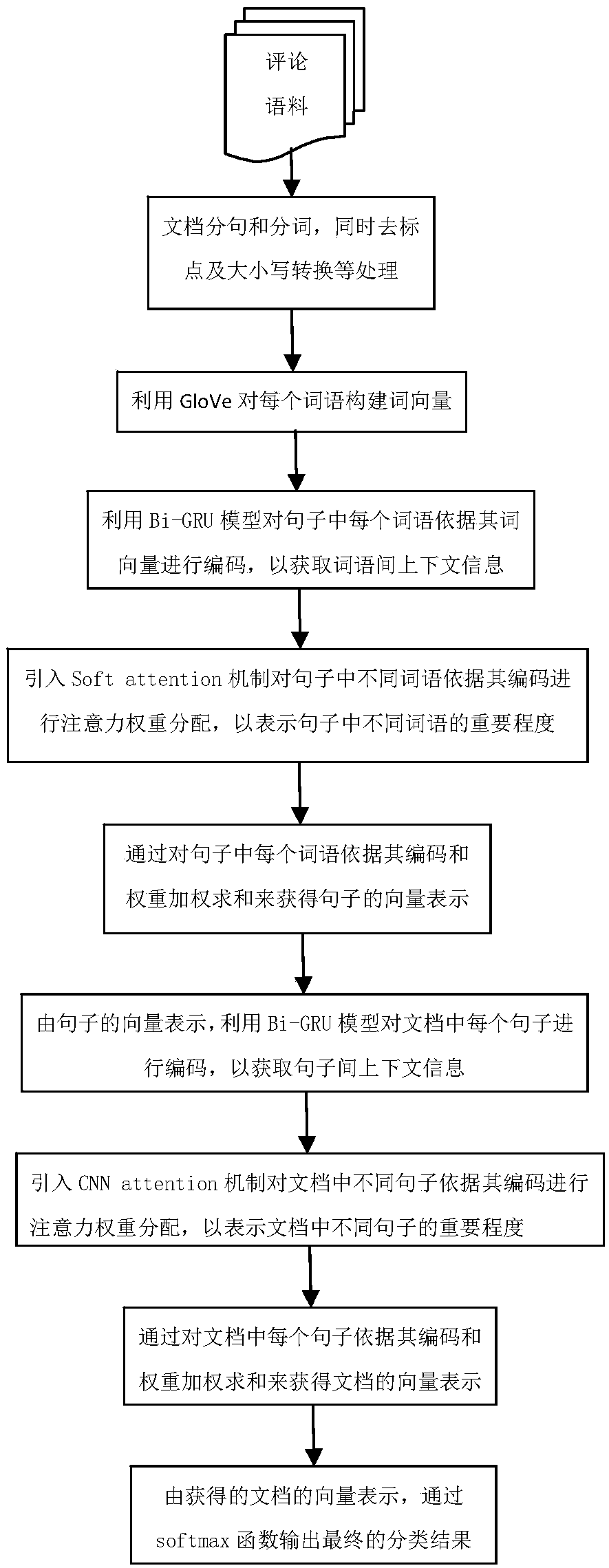

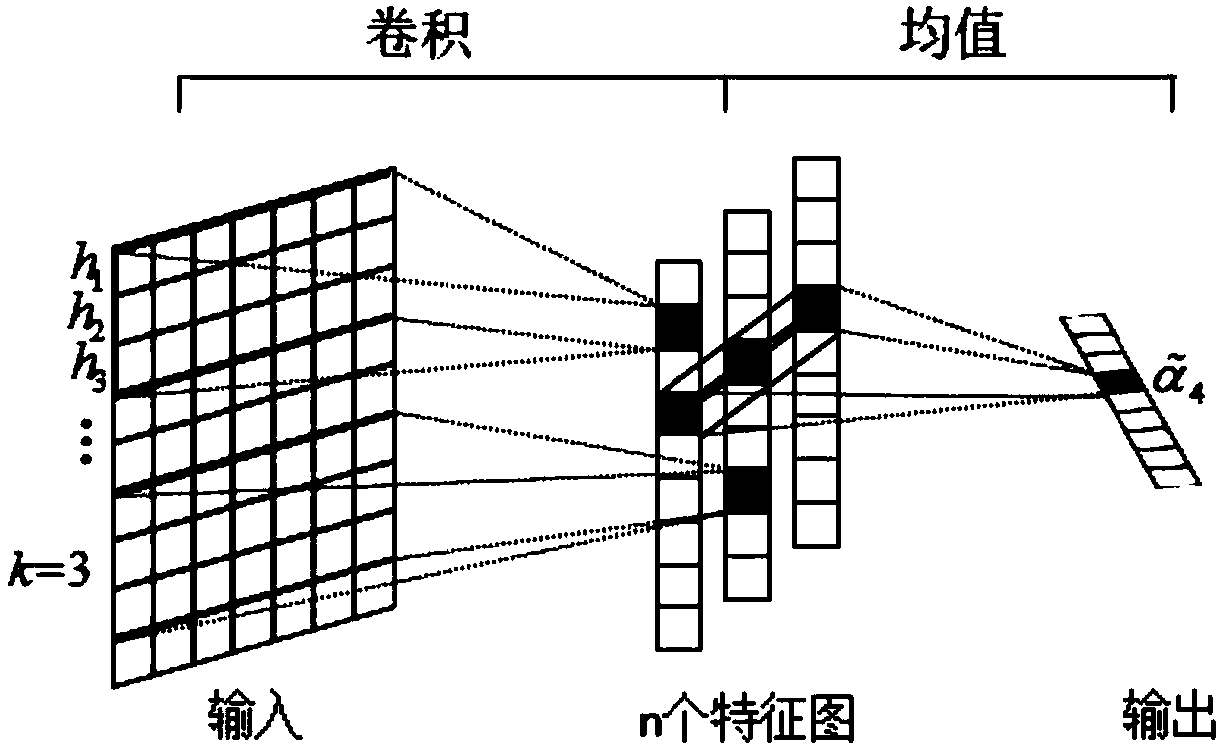

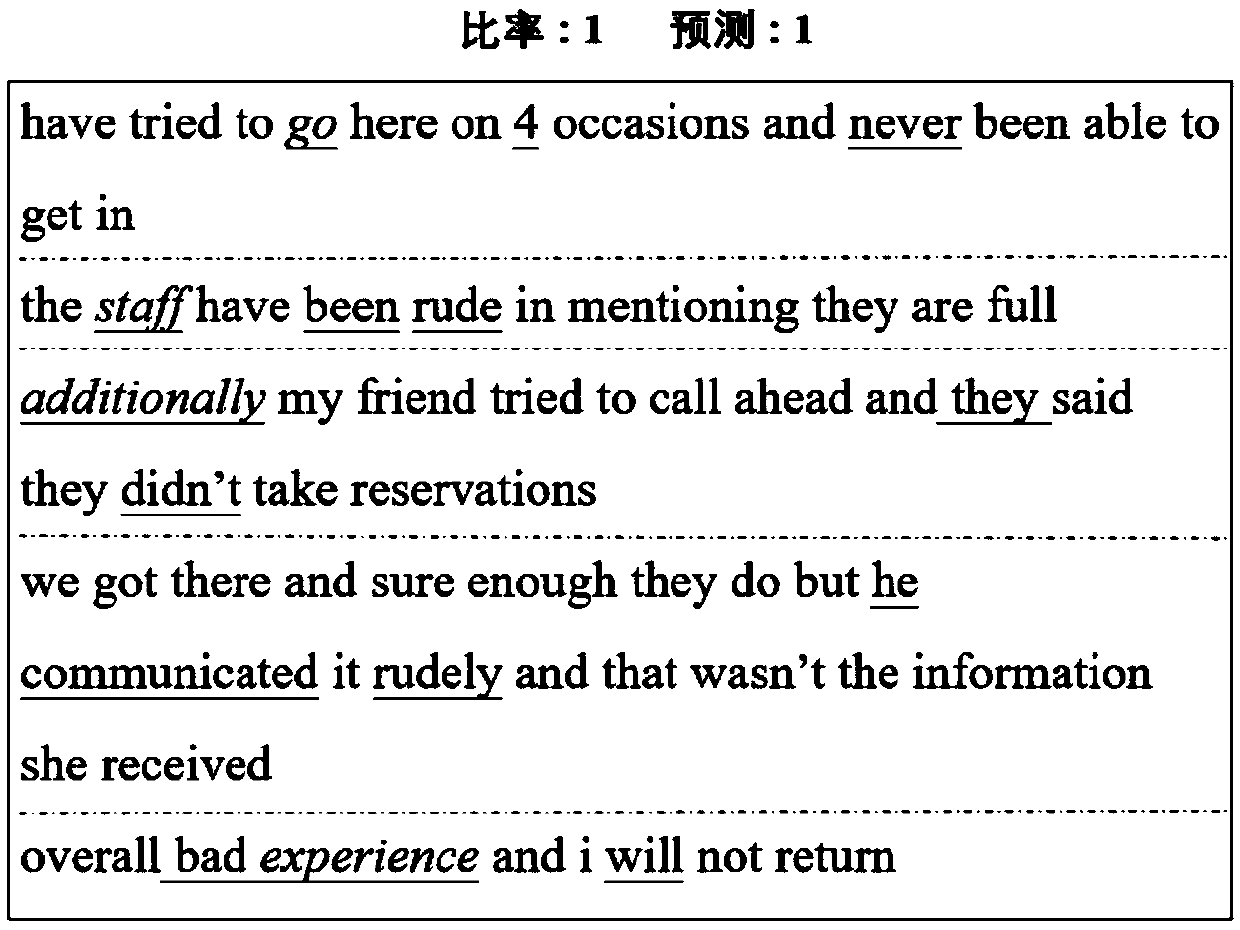

[0045] The document classification method based on a hierarchical multi-attention network of this example comprises the following steps: (1) according to the modeling characteristics of documents in text classification, a bidirectional GRU sequence model is used to model the document from words to sentences and from sentences to the document, so that the model fully reflects the hierarchical structure of documents; (2) for the word-to-sentence stage, in order to accurately express the importance of different words in a sentence, the present invention utilizes a bidirectional GRU sequence model for each Wor...
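The word-to-sentence and sentence-to-document modeling described in [0045] can be sketched roughly as follows. This is a minimal, untrained sketch in plain Python. It assumes the widely used hierarchical attention network formulation: a bidirectional GRU encoder followed by additive attention with a learned context vector at each level. The paragraph above is truncated before it gives its attention equations, so the attention form, the dimensions, the omission of GRU biases, and all names (`BiGRU`, `Attention`, `HierarchicalAttentionClassifier`, `ctx`) are hypothetical and should not be read as the patented design.

```python
import math
import random

def matvec(W, x):
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]

def vadd(*vs):
    return [sum(t) for t in zip(*vs)]

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-t)) for t in v]

def tanh(v):
    return [math.tanh(t) for t in v]

def softmax(v):
    m = max(v)  # subtract max for numerical stability
    e = [math.exp(t - m) for t in v]
    s = sum(e)
    return [t / s for t in e]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

class GRU:
    """One-direction GRU (biases omitted for brevity, an assumption):
    z = sig(Wz x + Uz h), r = sig(Wr x + Ur h),
    h~ = tanh(Wh x + Uh (r*h)), h' = (1 - z)*h + z*h~."""
    def __init__(self, in_dim, hid, rng):
        self.hid = hid
        self.Wz, self.Wr, self.Wh = (rand_matrix(hid, in_dim, rng) for _ in range(3))
        self.Uz, self.Ur, self.Uh = (rand_matrix(hid, hid, rng) for _ in range(3))

    def run(self, xs):
        h, out = [0.0] * self.hid, []
        for x in xs:
            z = sigmoid(vadd(matvec(self.Wz, x), matvec(self.Uz, h)))
            r = sigmoid(vadd(matvec(self.Wr, x), matvec(self.Ur, h)))
            rh = [ri * hi for ri, hi in zip(r, h)]
            h_tilde = tanh(vadd(matvec(self.Wh, x), matvec(self.Uh, rh)))
            h = [(1 - zi) * hi + zi * ti for zi, hi, ti in zip(z, h, h_tilde)]
            out.append(h)
        return out

class BiGRU:
    """Concatenates forward and backward GRU states at each position."""
    def __init__(self, in_dim, hid, rng):
        self.fwd, self.bwd = GRU(in_dim, hid, rng), GRU(in_dim, hid, rng)

    def run(self, xs):
        f = self.fwd.run(xs)
        b = self.bwd.run(xs[::-1])[::-1]  # re-align backward states with inputs
        return [fi + bi for fi, bi in zip(f, b)]  # list concatenation

class Attention:
    """Additive attention: u_t = tanh(W h_t + b), alpha = softmax(u_t . ctx)."""
    def __init__(self, dim, rng):
        self.W, self.b = rand_matrix(dim, dim, rng), [0.0] * dim
        self.ctx = [rng.uniform(-0.1, 0.1) for _ in range(dim)]  # hypothetical context vector

    def pool(self, hs):
        scores = [sum(u * c for u, c in zip(tanh(vadd(matvec(self.W, h), self.b)), self.ctx))
                  for h in hs]
        alpha = softmax(scores)  # importance weight of each position
        vec = [sum(a * h[k] for a, h in zip(alpha, hs)) for k in range(len(hs[0]))]
        return vec, alpha

class HierarchicalAttentionClassifier:
    """Words -> sentence vectors -> document vector -> class probabilities."""
    def __init__(self, emb_dim, hid, n_classes, seed=0):
        rng = random.Random(seed)
        self.word_enc, self.word_att = BiGRU(emb_dim, hid, rng), Attention(2 * hid, rng)
        self.sent_enc, self.sent_att = BiGRU(2 * hid, hid, rng), Attention(2 * hid, rng)
        self.Wc = rand_matrix(n_classes, 2 * hid, rng)

    def predict_proba(self, doc):
        # doc: list of sentences; each sentence is a list of word embedding vectors
        sent_vecs = [self.word_att.pool(self.word_enc.run(s))[0] for s in doc]
        doc_vec, _ = self.sent_att.pool(self.sent_enc.run(sent_vecs))
        return softmax(matvec(self.Wc, doc_vec))

# Usage: a toy document of three sentences (5, 3 and 4 random "word embeddings").
rng = random.Random(42)
emb_dim = 4
doc = [[[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(n)] for n in (5, 3, 4)]
model = HierarchicalAttentionClassifier(emb_dim, hid=3, n_classes=2)
probs = model.predict_proba(doc)
print(probs)
```

Because the weights are random, the printed probabilities carry no meaning. In practice the GRU, attention, and classifier parameters would be trained jointly on labeled documents. The word-level attention weights `alpha` are the quantities that express "the importance of different words in sentences".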