193 results about "Receptive field" patented technology

The receptive field of a sensory neuron is the region of sensory space in which a stimulus will alter that neuron's firing. A sensory space can be the space surrounding an animal, such as an area of auditory space that is fixed in a reference frame based on the ears and therefore moves with the animal as it moves, or a fixed location in space that is largely independent of the animal's location (as with place cells). Receptive fields have been identified for neurons of the auditory system, the somatosensory system, and the visual system.

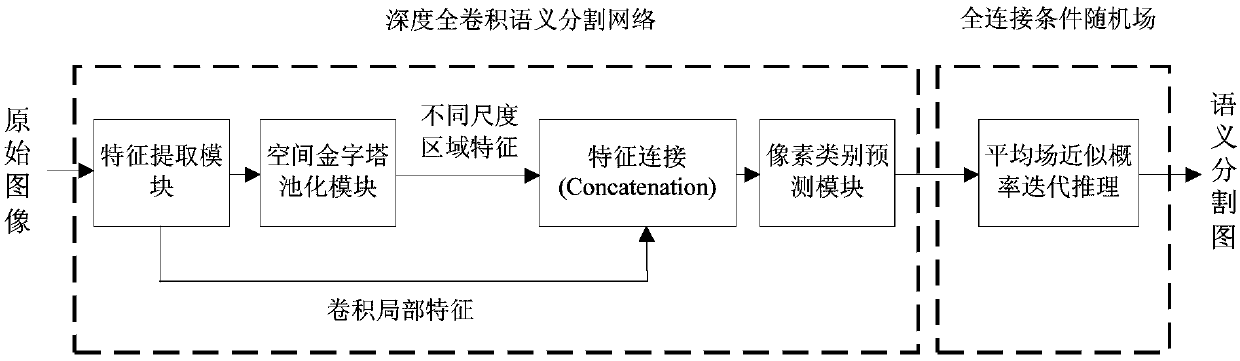

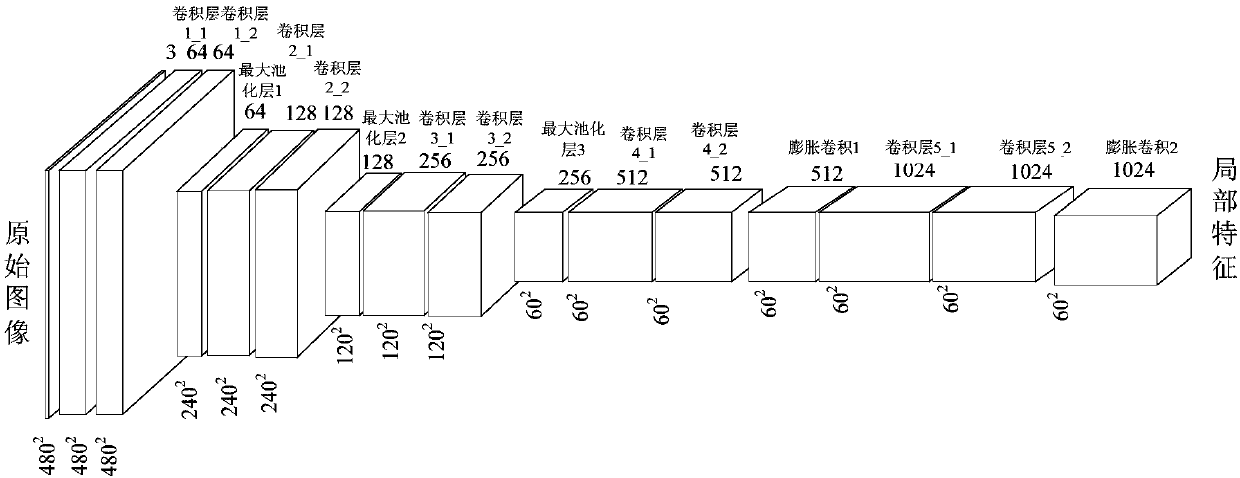

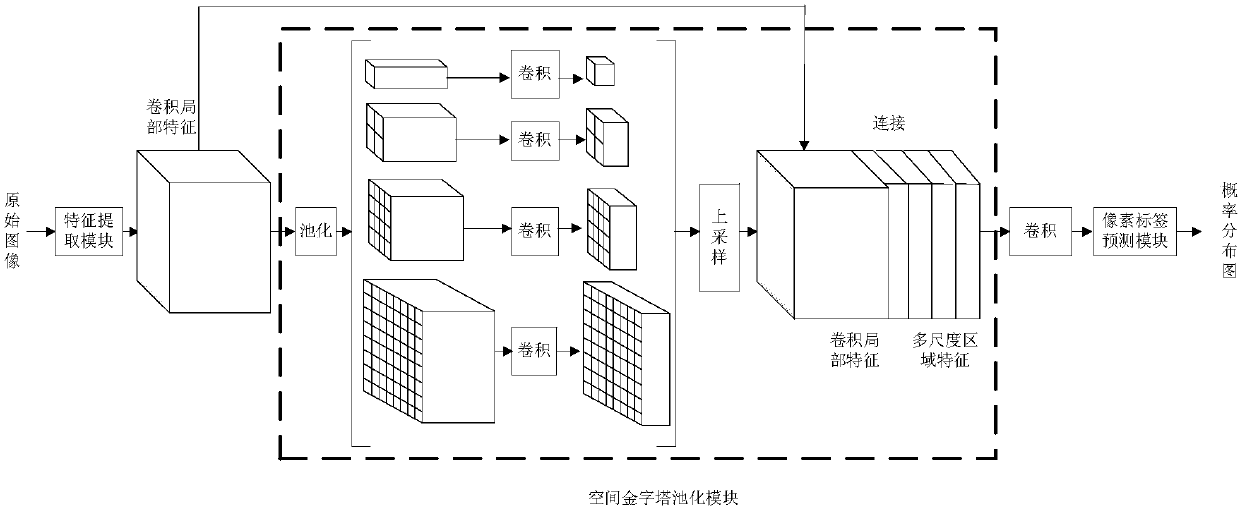

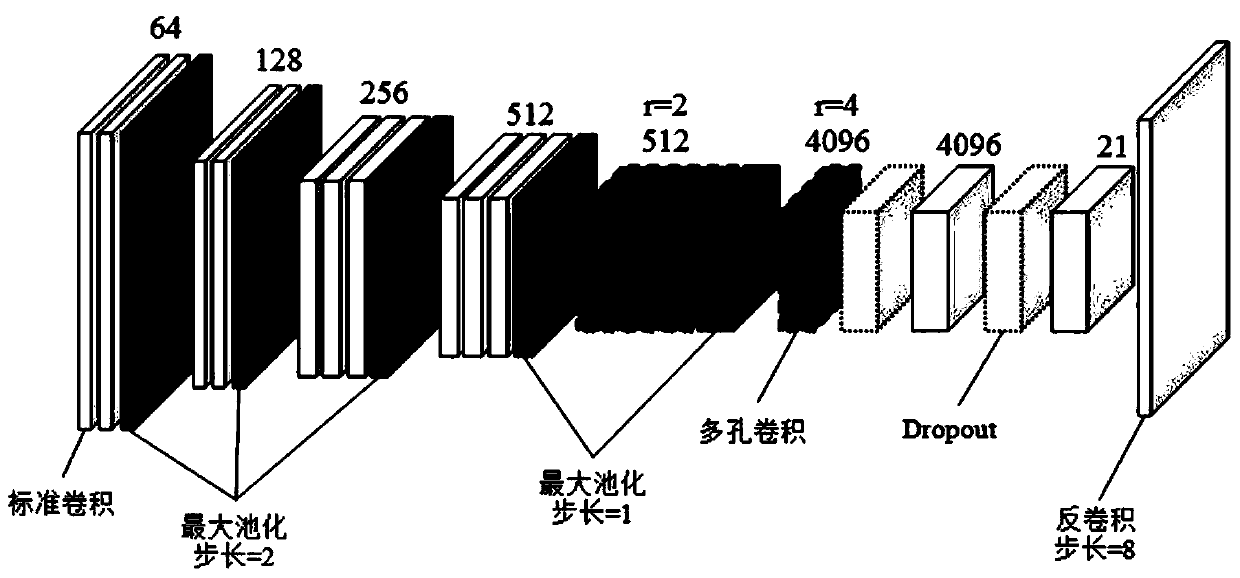

Image semantic division method based on depth full convolution network and condition random field

Status: Inactive | Publication: CN108062756A | Tags: Does not reduce dimensionality, High-resolution, Image enhancement, Image analysis, Conditional random field, Image resolution

The invention provides an image semantic segmentation method based on a deep fully convolutional network and a conditional random field. The method comprises the following steps: establishing a deep fully convolutional semantic segmentation network model; performing structured prediction of pixel labels with a fully connected conditional random field; and carrying out model training, parameter learning and image semantic segmentation. Dilated (atrous) convolution and a spatial pyramid pooling module are introduced into the deep fully convolutional network, and the label prediction map output by the network is further refined by the conditional random field. The dilated convolution enlarges the receptive field while keeping the resolution of the feature map unchanged; the spatial pyramid pooling module extracts contextual features of regions at different scales from the local convolutional feature map, supplying the label prediction with the relationships between different objects and between objects and regional features at different scales; and the fully connected conditional random field further optimizes the pixel labels according to the similarity of pixel intensities and positions, so that a high-resolution semantic segmentation map with accurate boundaries and good spatial continuity is generated.

Owner:CHONGQING UNIV OF TECH
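
The abstract's claim that dilated convolution enlarges the receptive field without changing feature-map resolution can be checked with a small one-dimensional sketch. It is not taken from the patent: `receptive_field` and `dilated_conv1d` are illustrative names, and the configuration (three 3-tap layers with dilation rates 1, 2 and 4) is an assumed example.

```python
def receptive_field(kernel_sizes, dilations, strides=None):
    """Receptive field (in input samples) of stacked conv layers along one axis."""
    if strides is None:
        strides = [1] * len(kernel_sizes)
    rf, jump = 1, 1  # jump: distance in input samples between adjacent outputs
    for k, d, s in zip(kernel_sizes, dilations, strides):
        effective_k = d * (k - 1) + 1  # a dilated kernel spans this many samples
        rf += (effective_k - 1) * jump
        jump *= s
    return rf


def dilated_conv1d(x, w, dilation):
    """'Same'-padded 1D dilated cross-correlation; len(w) must be odd."""
    k = len(w)
    pad = dilation * (k - 1) // 2  # zero padding that keeps output length equal to input length
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [sum(w[j] * padded[i + j * dilation] for j in range(k)) for i in range(len(x))]


# Three 3-tap layers: plain convolution vs. dilation rates 1, 2, 4
print(receptive_field([3, 3, 3], [1, 1, 1]))  # 7
print(receptive_field([3, 3, 3], [1, 2, 4]))  # 15
```

With "same" padding of d*(k-1)/2 zeros, `dilated_conv1d` returns a sequence as long as its input, so resolution is preserved while the stacked dilations cover 15 input samples instead of 7.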

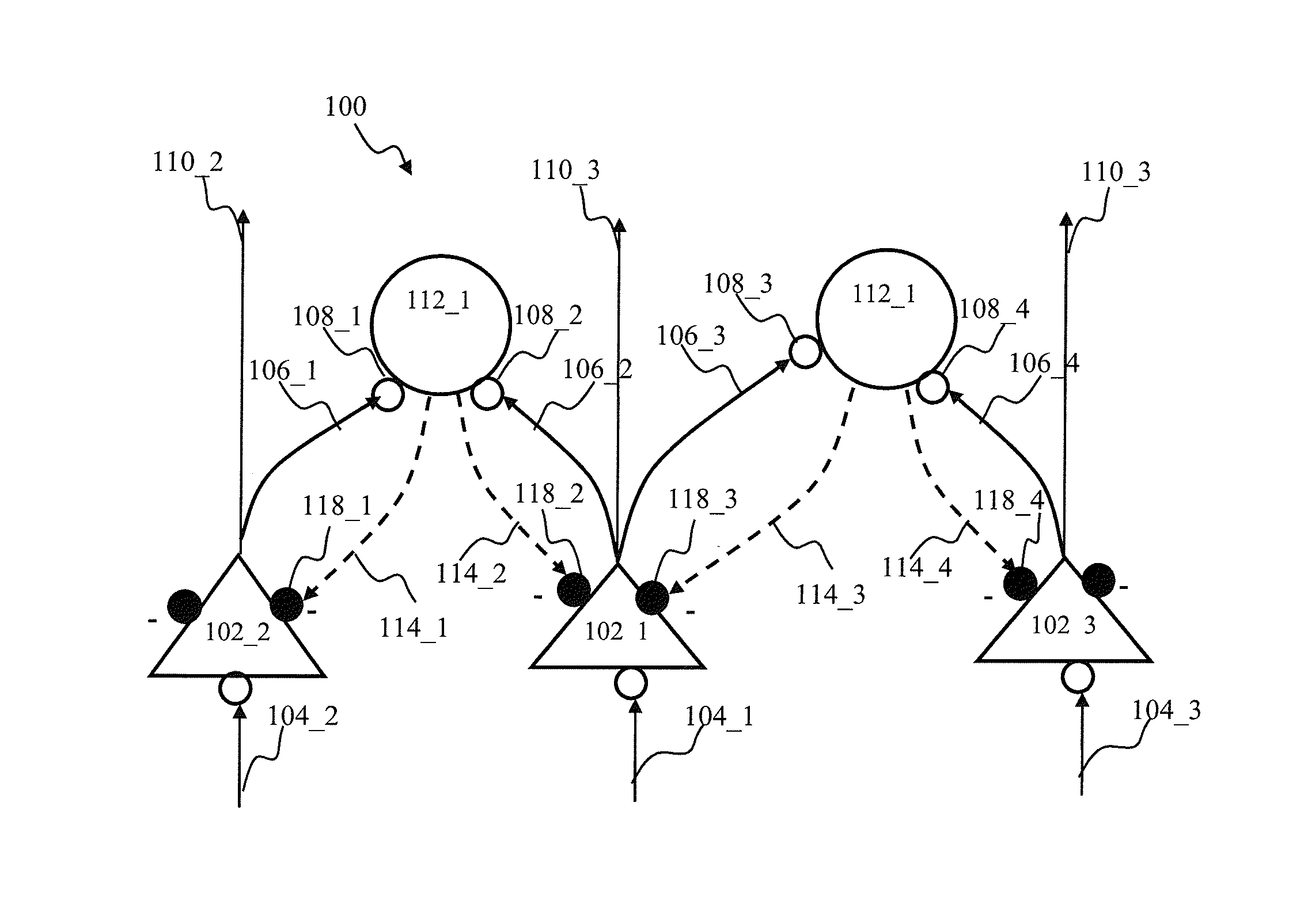

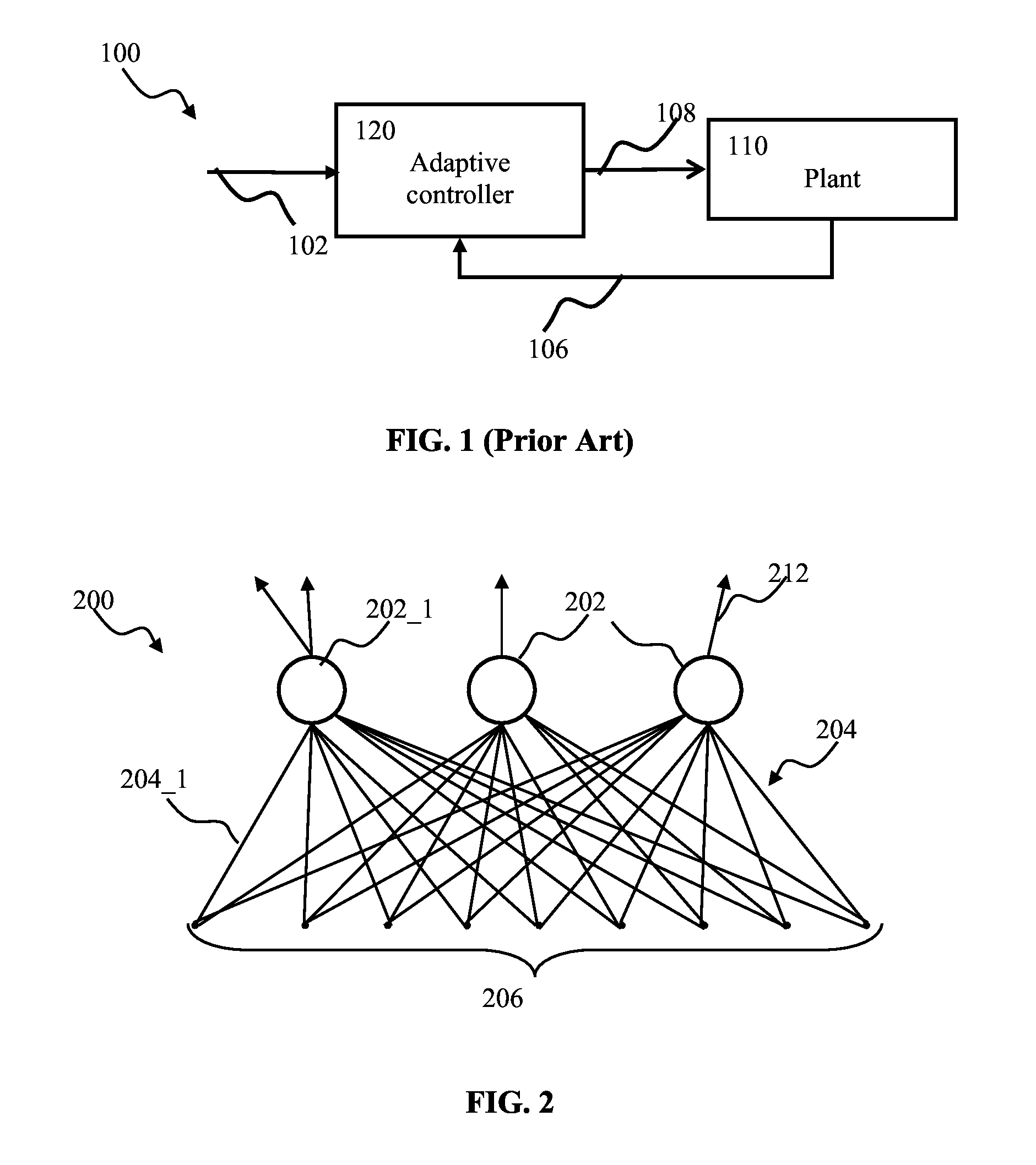

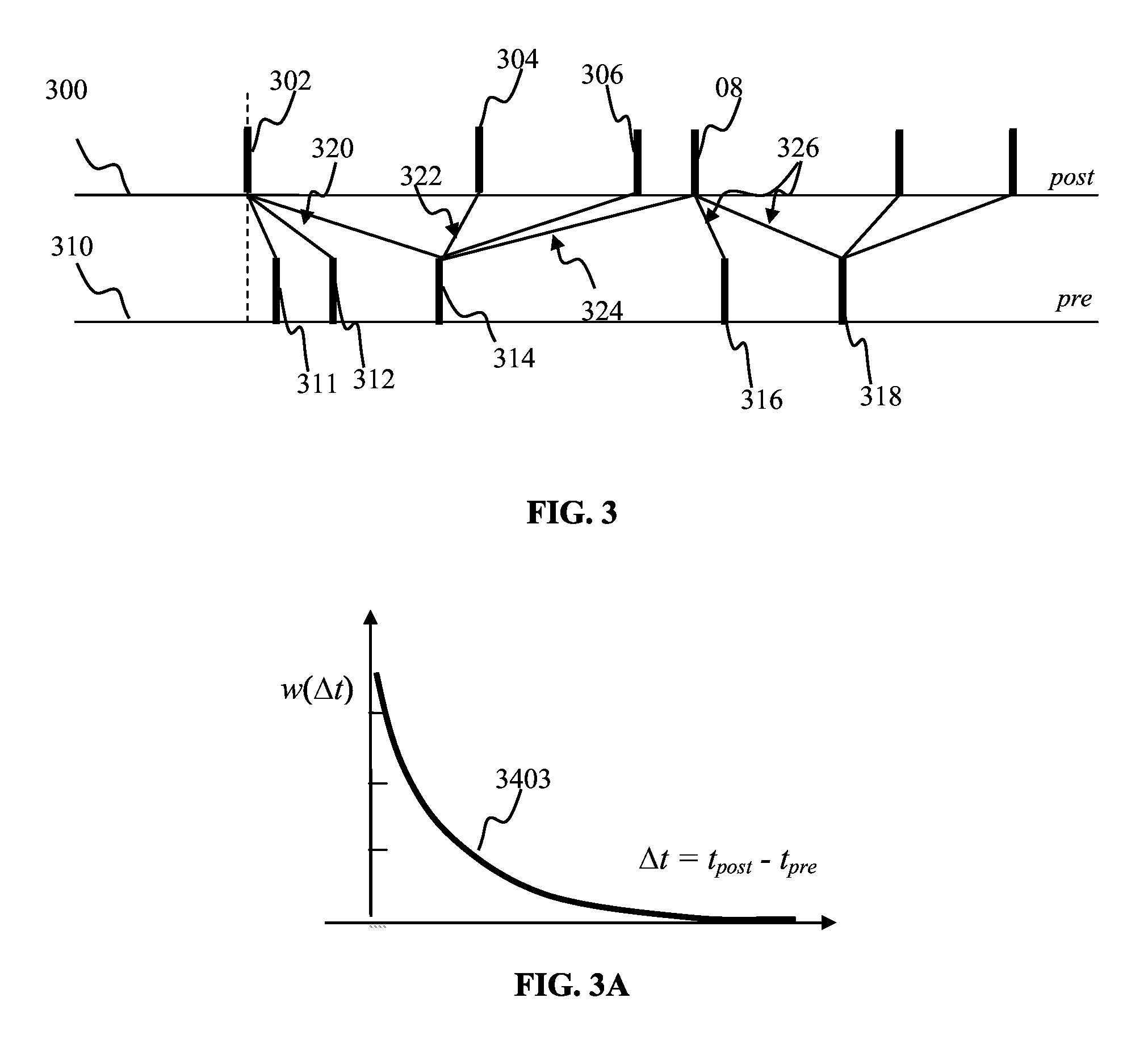

Rate stabilization through plasticity in spiking neuron network

Status: Active | Publication: US20140156574A1 | Tags: Stabilization of neuron firing rate, Firmly connected, Digital computer details, Digital data, Computer science, Receptive field

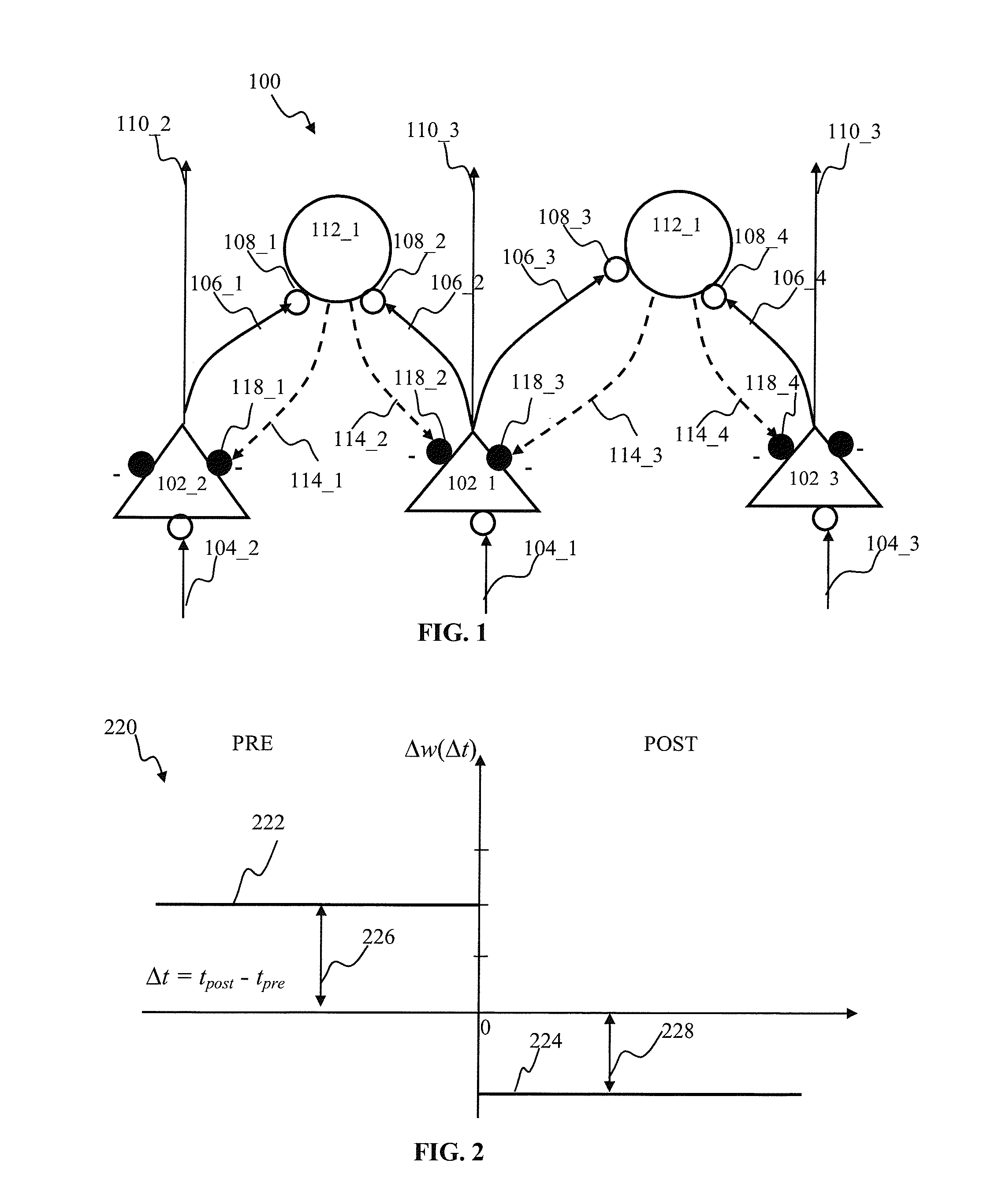

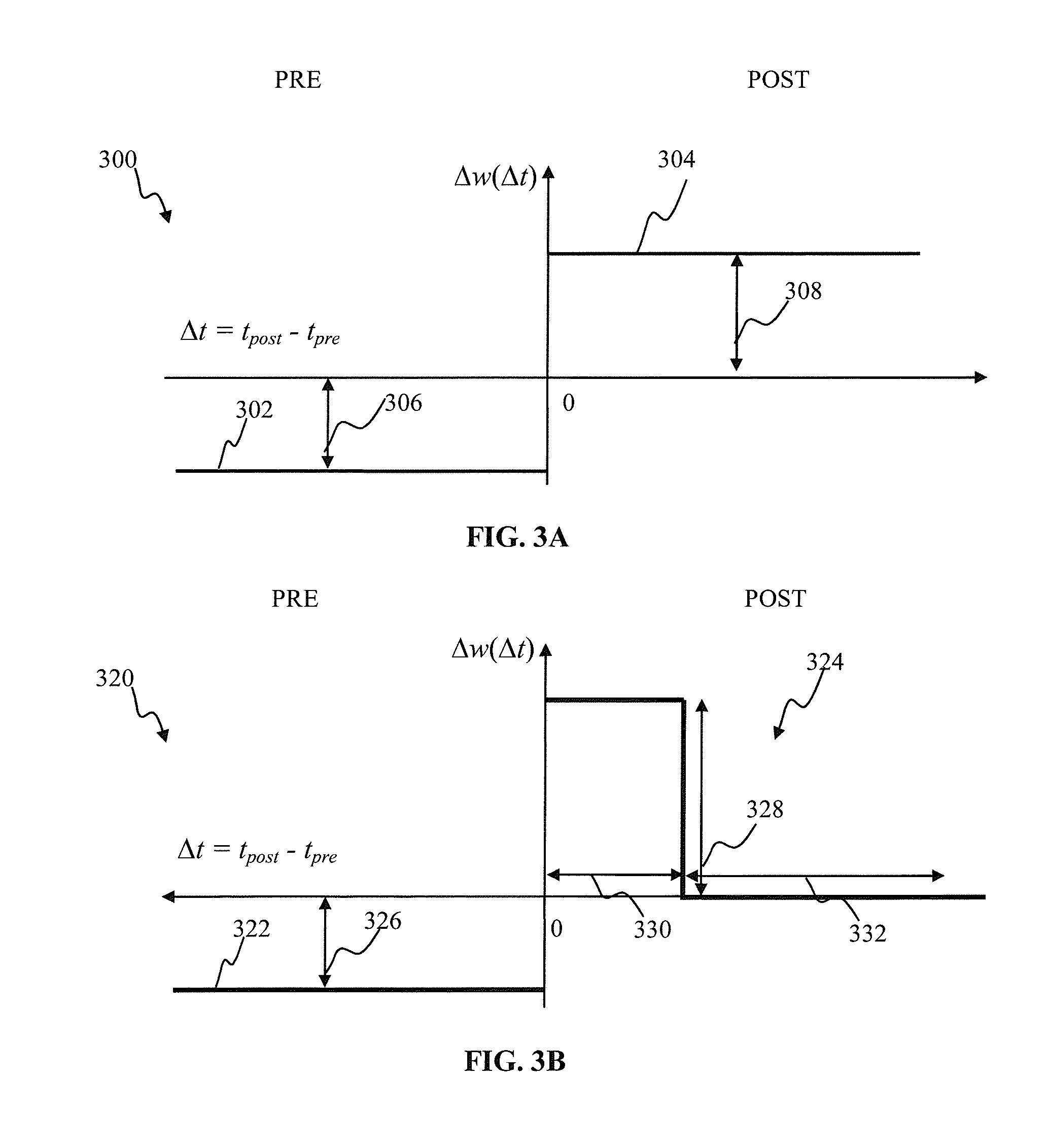

Apparatus and methods for activity-based plasticity in a spiking neuron network adapted to process sensory input. In one embodiment, the plasticity mechanism may be configured, for example, based on the activity of one or more neurons providing feed-forward stimulus and the activity of one or more neurons providing inhibitory feedback. When an inhibitory neuron generates an output, inhibitory connections may be potentiated. When an inhibitory neuron receives inhibitory input, the inhibitory connection may be depressed; the connection may also be depressed when the inhibitory input arrives after the neuron's response. When input features occur with uneven frequency, the plasticity mechanism can reduce the response rate of neurons that develop receptive fields to the more prevalent features. Such functionality may produce network output in which rarely occurring features are not drowned out by more widespread stimuli.

Owner:BRAIN CORP
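
The rate-stabilizing effect described above can be illustrated with a deliberately simplified mean-field model. This is a hypothetical sketch, not Brain Corp's mechanism: a single inhibitory weight `w` onto a neuron is potentiated in proportion to the neuron's output rate and depressed in proportion to the inhibitory input rate, and the constants `a`, `b`, `eta` and `inhib_rate` are assumed values.

```python
def simulate_rate(feature_prob, inhib_rate=0.2, a=1.0, b=0.5, eta=0.05, steps=2000):
    """Mean-field toy model of activity-dependent inhibitory plasticity (hypothetical).

    feature_prob: how often the neuron's preferred feature occurs (its drive).
    inhib_rate: rate of inhibitory input arriving at the neuron.
    Returns the neuron's output rate after the inhibitory weight has adapted.
    """
    w = 0.0  # inhibitory weight
    rate = feature_prob
    for _ in range(steps):
        # Output rate: excitatory drive minus inhibition, clipped to [0, 1]
        rate = min(1.0, max(0.0, feature_prob - w * inhib_rate))
        # Potentiate with the neuron's output, depress with incoming inhibition;
        # the weight is not allowed to become negative
        w = max(0.0, w + eta * (a * rate - b * inhib_rate))
    return rate


for p in (0.8, 0.3, 0.05):  # two common features and one rare feature
    print(p, round(simulate_rate(p), 3))
```

Whenever a feature is common enough, the weight settles where a*rate = b*inhib_rate, so neurons tuned to frequent features converge to the same rate (0.1 with these constants), while the neuron tuned to the rare feature keeps its lower rate because the weight cannot go below zero.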

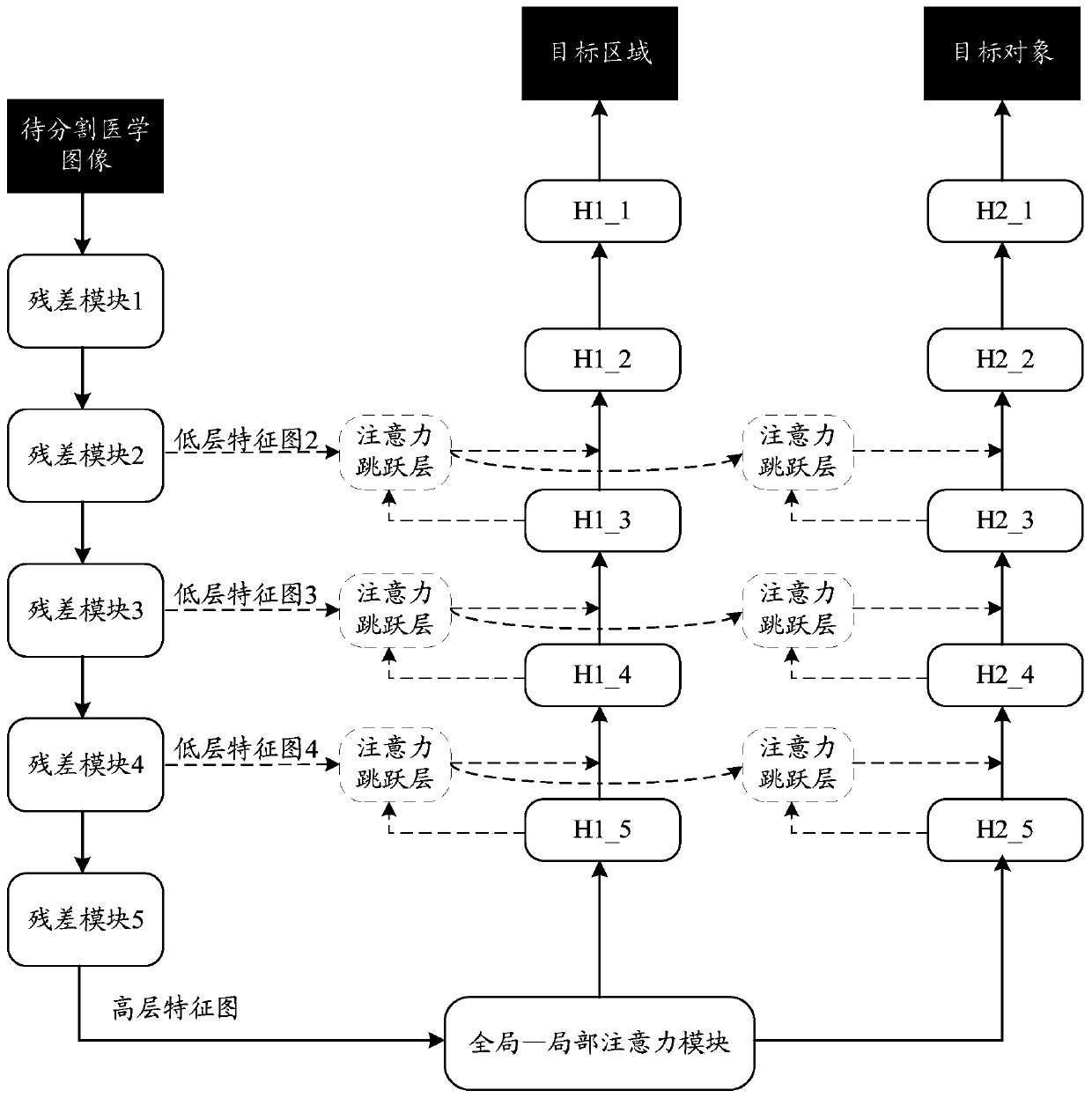

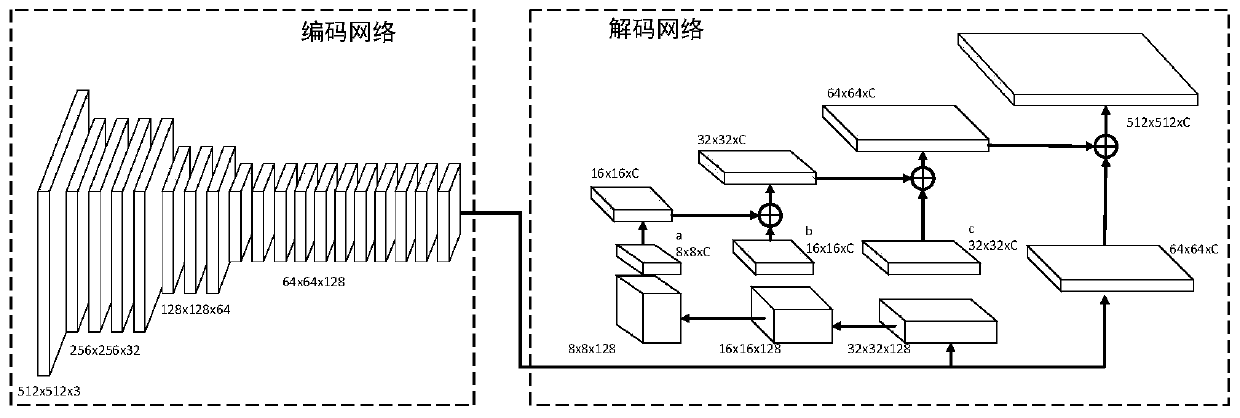

Medical image segmentation method and device and storage medium

Status: Active | Publication: CN109872306A | Tags: Good segmentation effect, Easy to divide, Image analysis, Feature extraction, Image segmentation

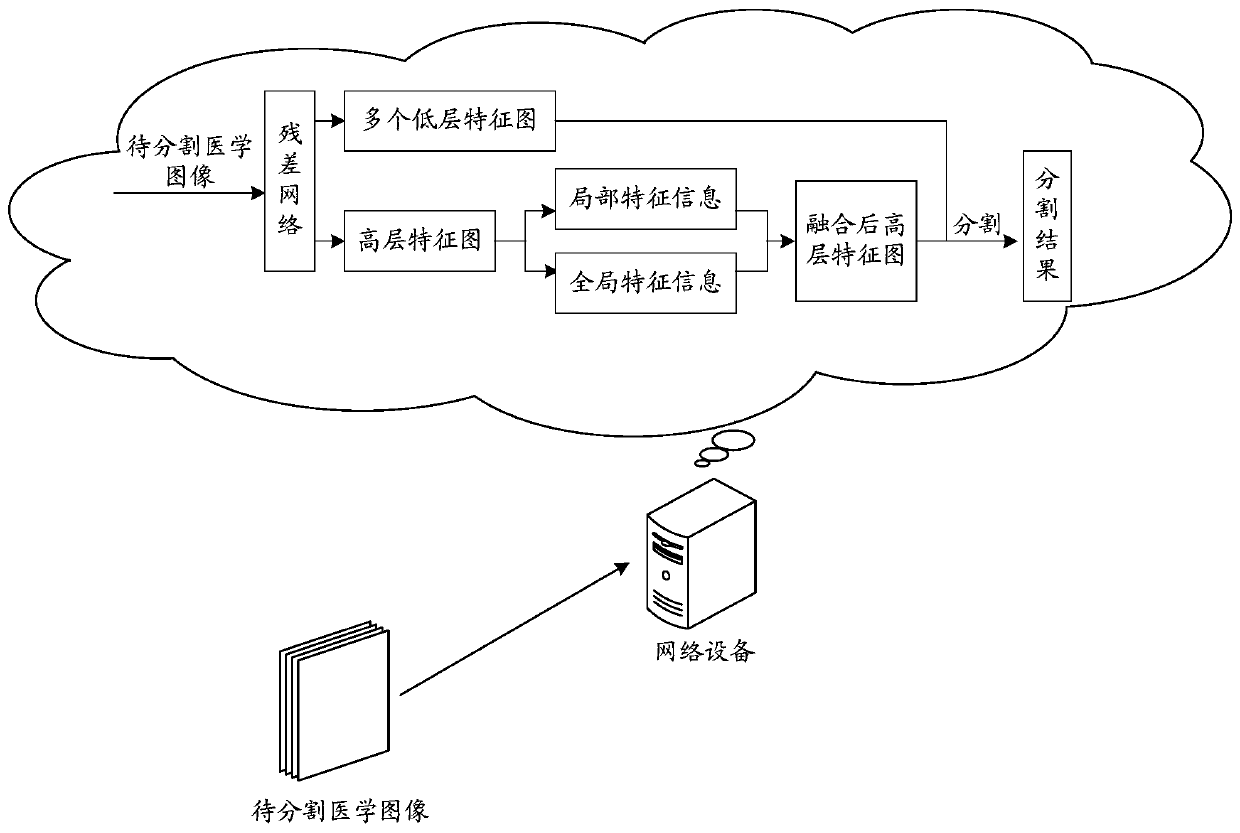

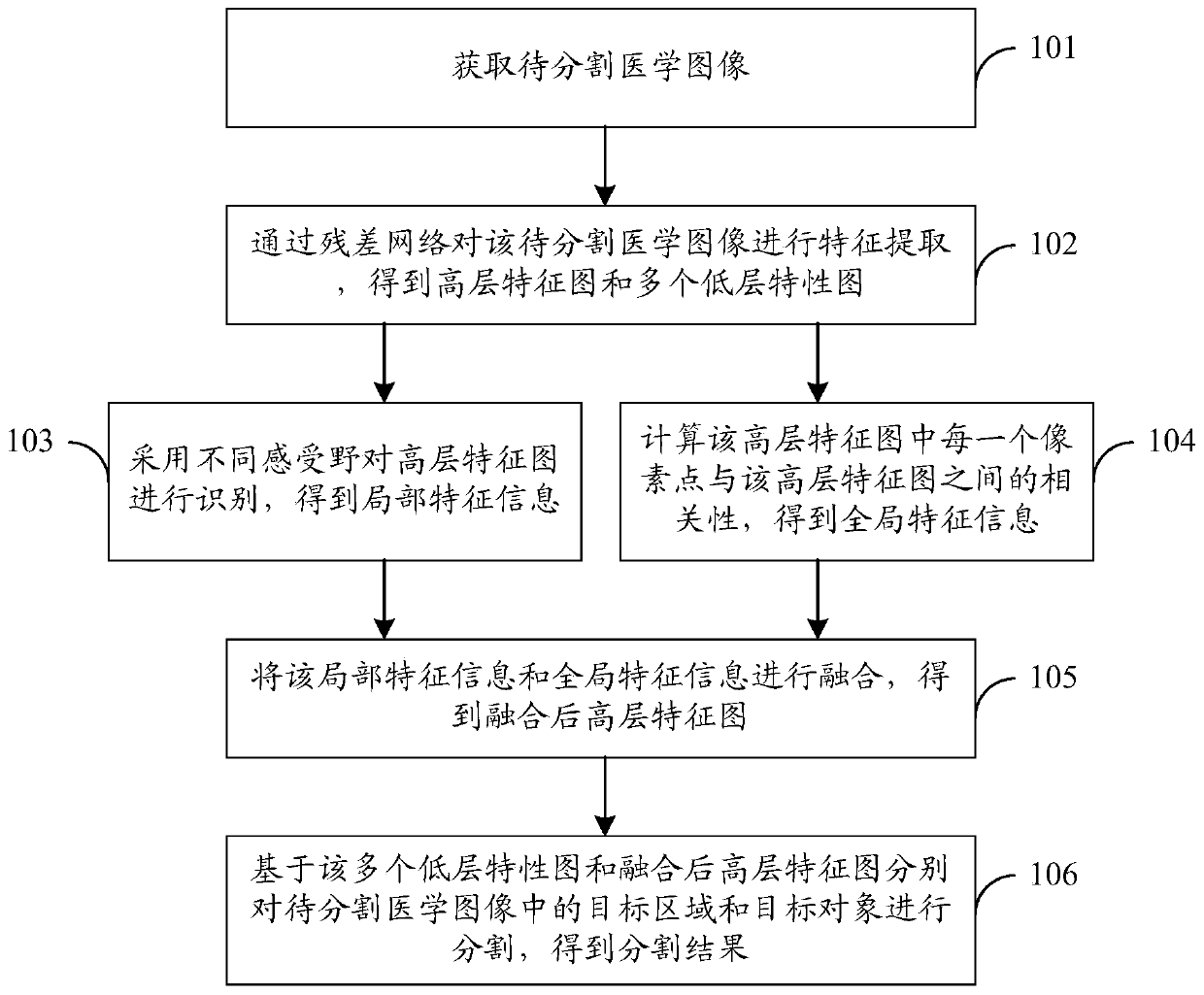

The embodiment of the invention discloses a medical image segmentation method and device and a storage medium. The method comprises: obtaining a medical image to be segmented; performing feature extraction on it through a residual network to obtain a high-level feature map and a plurality of low-level feature maps; on the one hand, processing the high-level feature map with different receptive fields to obtain local feature information; on the other hand, calculating the correlation between each pixel in the high-level feature map and the rest of that map to obtain global feature information; then fusing the local feature information with the global feature information, and segmenting the target region and target object in the medical image based on the fused high-level feature map and the plurality of low-level feature maps. The scheme can improve the accuracy of detail segmentation and the overall segmentation quality of the medical image.

Owner:TENCENT TECH (SHENZHEN) CO LTD
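
The abstract does not say how the correlation between each pixel and the whole feature map is computed. One common choice is dot-product similarity with softmax normalization, as used in non-local and self-attention blocks; it is sketched below without any learned projections. `global_context`, `fuse`, and the use of element-wise addition for fusion are assumptions, not Tencent's implementation.

```python
import math


def global_context(features):
    """Each pixel aggregates all pixel features, weighted by softmax(dot-product similarity).

    features: list of N feature vectors, one per pixel of a flattened H x W map.
    """
    out = []
    for fi in features:
        scores = [sum(a * b for a, b in zip(fi, fj)) for fj in features]
        m = max(scores)  # subtract the max before exp() for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * fj[d] for w, fj in zip(weights, features)) / total
                    for d in range(len(fi))])
    return out


def fuse(local, global_):
    """Element-wise sum of local and global features (one simple fusion choice)."""
    return [[l + g for l, g in zip(lv, gv)] for lv, gv in zip(local, global_)]


local_feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # three pixels, two channels
print(fuse(local_feats, global_context(local_feats)))
```

Pixels with similar features reinforce one another in the global term, which is what lets a pixel draw on evidence from anywhere in the map rather than only from its local receptive field.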

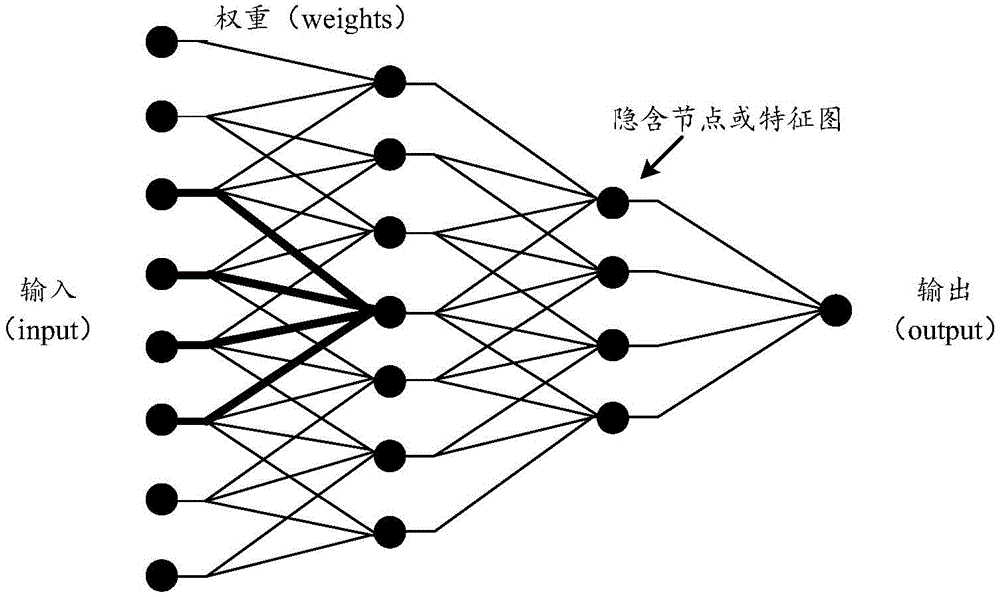

Convolutional neural network training method and target identification method and device

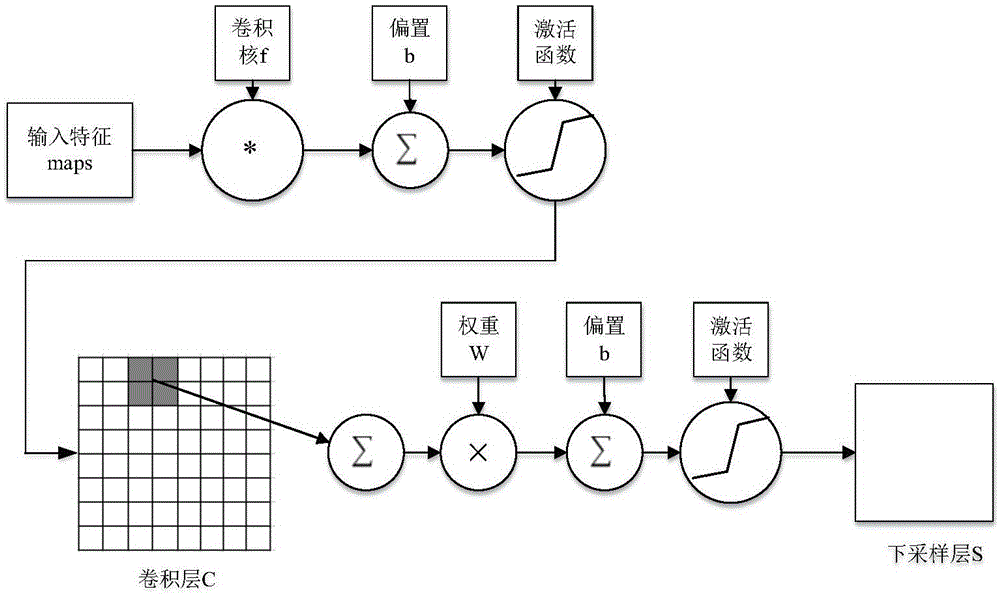

Status: Active | Publication: CN104809426A | Tags: Biological neural network models, Character and pattern recognition, Neuron, Computer science

The invention discloses a convolutional neural network training method and a target identification method and device. In the training method, on the one hand, the convolutional neural network convolves the data on each signal channel separately; because the signal channels differ, the trained convolution kernels differ from one another, so the recognition capability of the network is enhanced compared with the prior art. On the other hand, the network performs dropout on a per-signal-channel basis during forward propagation and during object recognition while the number of neurons stays unchanged, so that the data of all channels within the local receptive fields can be processed. The training method can therefore enhance the recognition capability of the convolutional neural network.

Owner:NEC CORP
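
Channel-based dropout, as described in the abstract, can be read as dropping whole signal channels instead of individual units. The sketch below follows that reading; the function name, the inverted-dropout scaling by 1/(1-p), and the list-of-lists data layout are assumptions.

```python
import random


def channel_dropout(channels, p, rng=None):
    """Drop whole signal channels rather than individual units.

    channels: list of channels, each a list of activations.
    p: probability of dropping each channel, 0 <= p < 1.
    Kept channels are scaled by 1/(1-p) (inverted dropout), so expected
    activations are unchanged; the number of units never changes.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if rng is None:
        rng = random.Random()
    scale = 1.0 / (1.0 - p)
    out = []
    for ch in channels:
        if rng.random() < p:
            out.append([0.0] * len(ch))  # the whole channel is silenced
        else:
            out.append([x * scale for x in ch])
    return out


print(channel_dropout([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], 0.5, random.Random(0)))
```

Because a channel is either kept intact or silenced as a unit, the network cannot rely on any single channel, while the layer's shape stays fixed.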

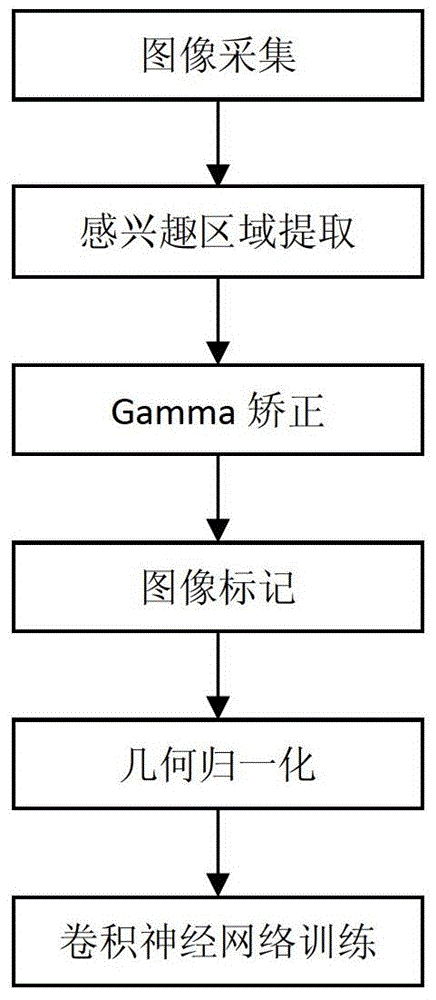

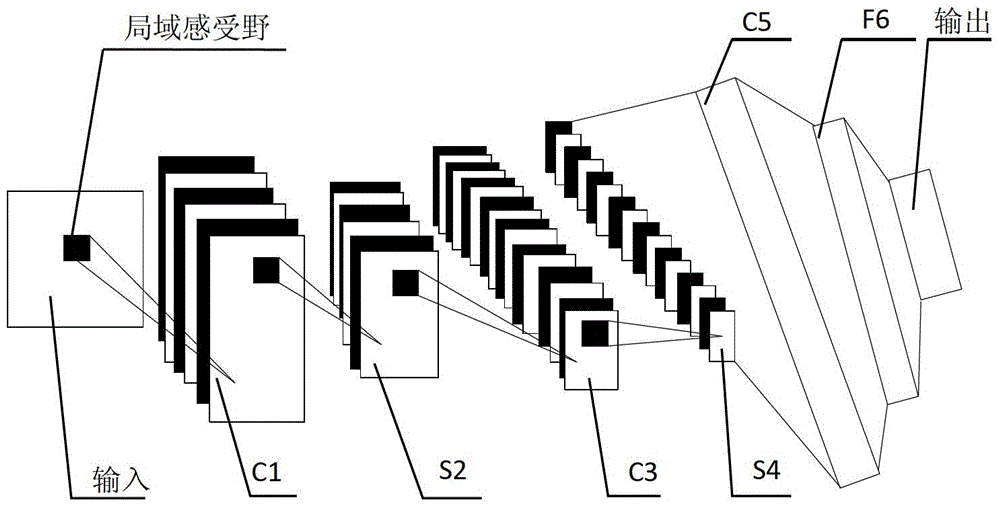

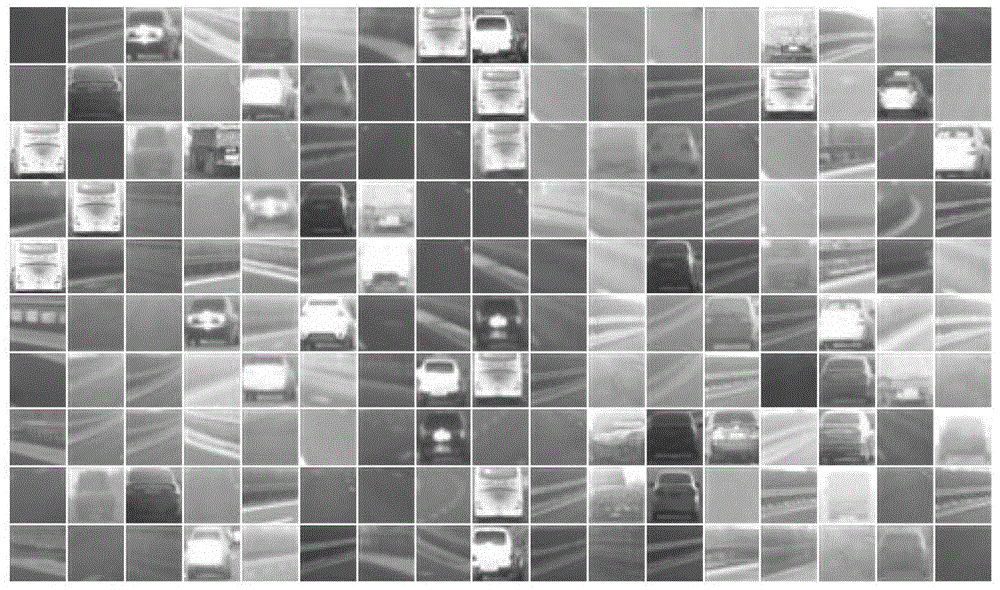

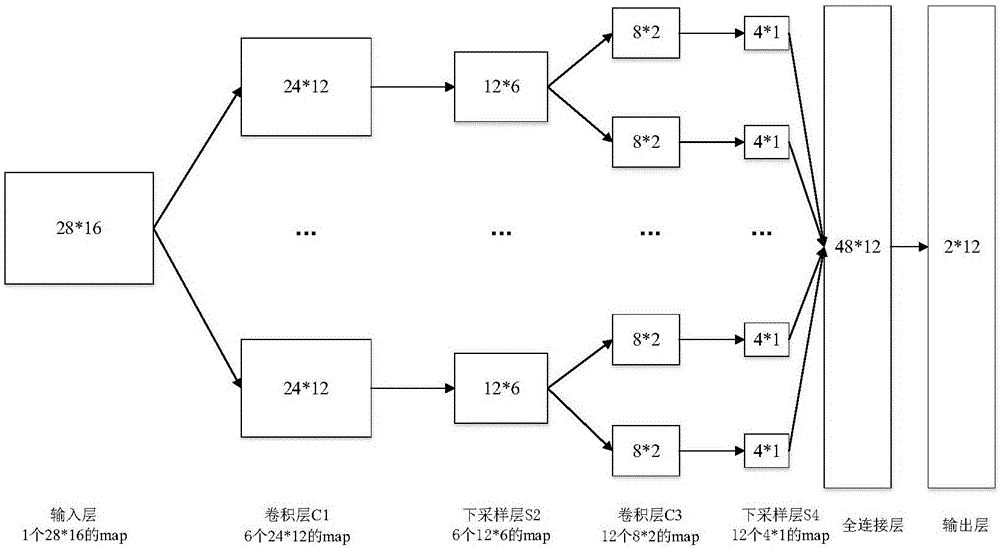

Vehicle front trafficability analyzing method based on convolution nerve network

Status: Inactive | Publication: CN103279759A | Tags: High-resolution, Avoid the effects of target recognition, Character and pattern recognition, Nerve network, Image resolution

The invention discloses a vehicle front trafficability analysis method based on a convolutional neural network. First, a camera mounted at the front of a vehicle collects a large number of real driving-environment images; the images are pre-processed with a gamma correction function; then the convolutional neural network is trained. Pre-processing the images with gamma correction superimposed on a nonlinear function avoids the influence of strongly changing illumination on object identification and improves the image resolution. A geometric normalization method reduces the resolution differences caused by objects being at different distances from the camera. The LeNet-5 convolutional neural network used in the method can extract implicit, class-discriminative features with a simple extraction process. LeNet-5 combines local receptive fields, weight sharing and subsampling to ensure robustness to simple geometric deformations, reduce the number of trainable parameters, and simplify the network structure.

Owner:DALIAN UNIV OF TECH
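
The parameter savings from local receptive fields and weight sharing can be worked out for LeNet-5's first convolutional layer (C1: a 32x32 grayscale input, six 5x5 kernels, six 28x28 output maps). These layer sizes come from the original LeNet-5 design rather than from this patent, and the helper names are illustrative.

```python
def conv_params(in_ch, out_ch, k):
    """Trainable parameters of a k x k conv layer: one shared kernel plus bias per output map."""
    return out_ch * (in_ch * k * k + 1)


def conv_connections(in_ch, out_ch, k, out_h, out_w):
    """Connections: every output position reuses the same weights over its local receptive field."""
    return out_h * out_w * conv_params(in_ch, out_ch, k)


def dense_params(n_in, n_out):
    """Parameters of a fully connected layer mapping n_in inputs to n_out outputs."""
    return n_out * (n_in + 1)


# LeNet-5 layer C1: 32x32 grayscale input, six 5x5 kernels, six 28x28 output maps
print(conv_params(1, 6, 5))                # 156
print(conv_connections(1, 6, 5, 28, 28))   # 122304
print(dense_params(32 * 32, 6 * 28 * 28))  # 4821600
```

Weight sharing lets C1 make 122,304 connections with only 156 trainable parameters, whereas a fully connected layer producing the same 4,704 outputs would need 4,821,600.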

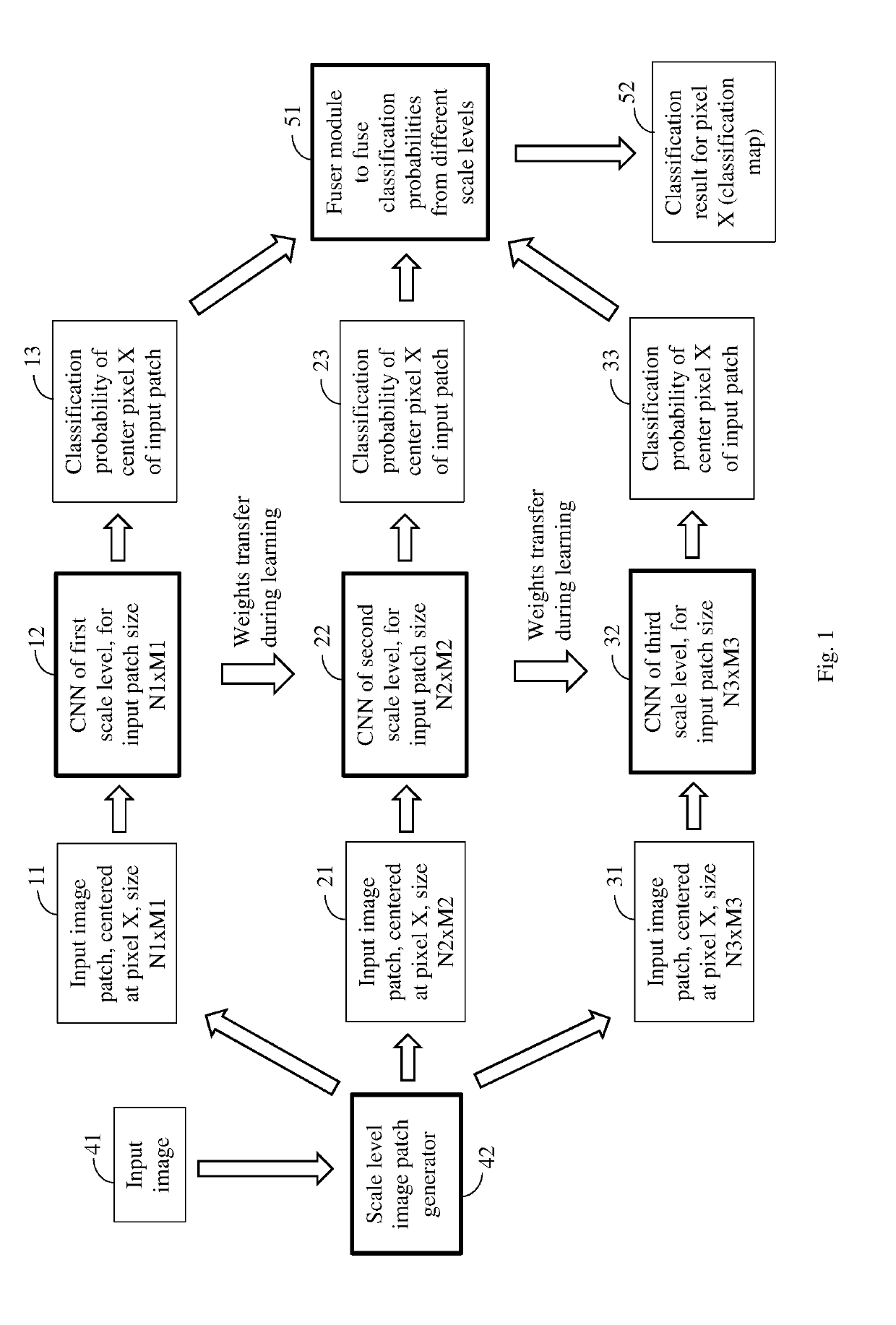

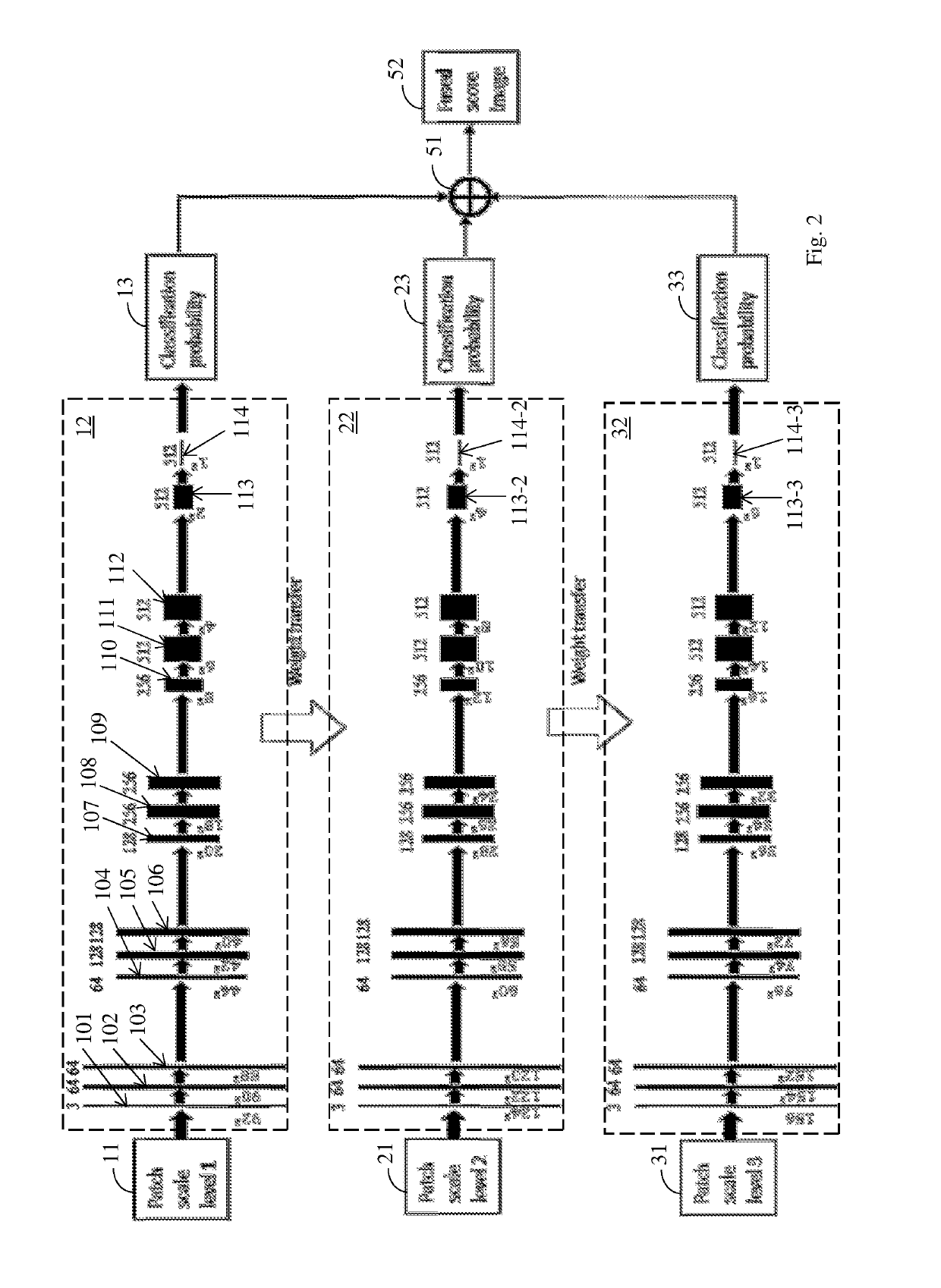

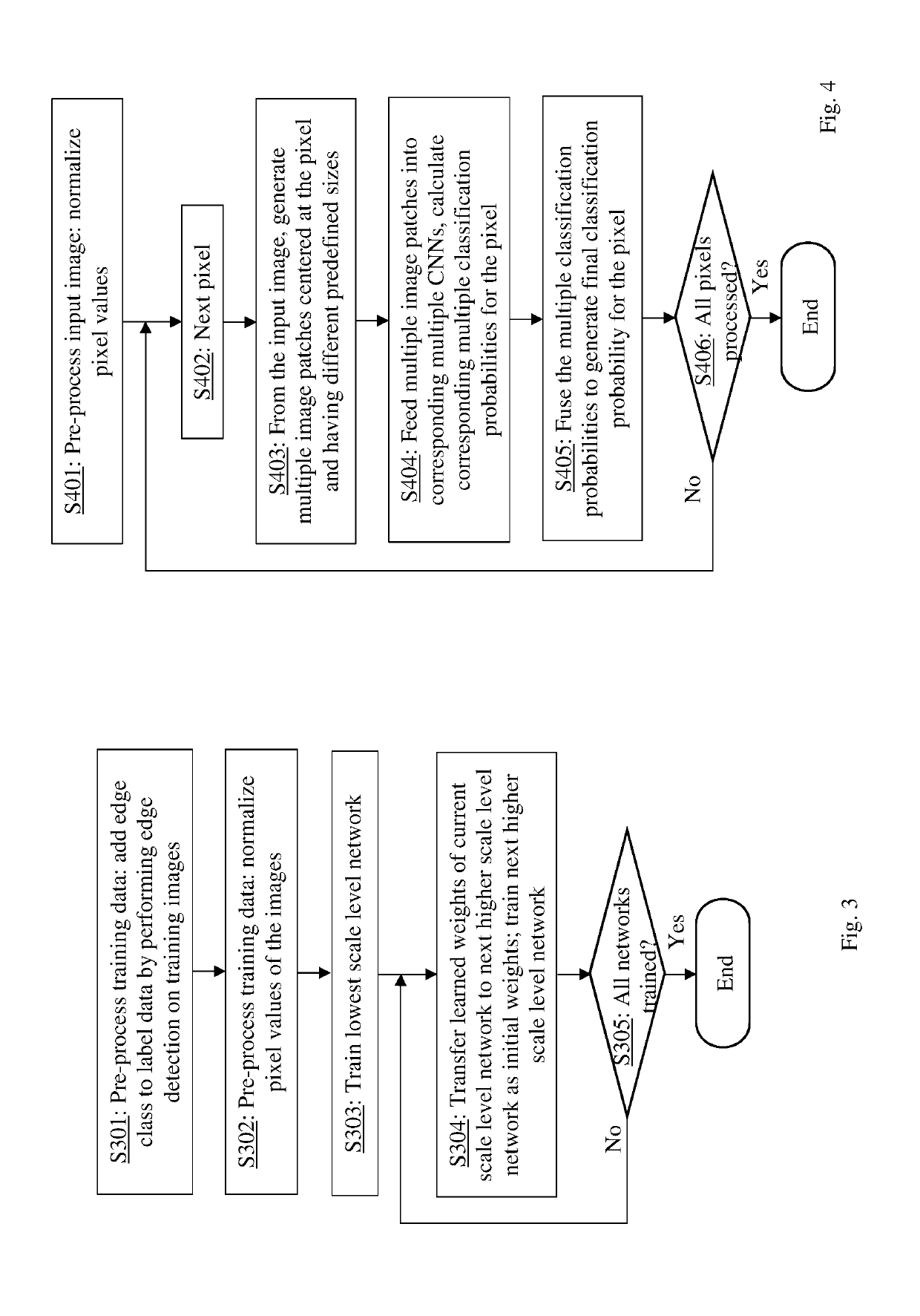

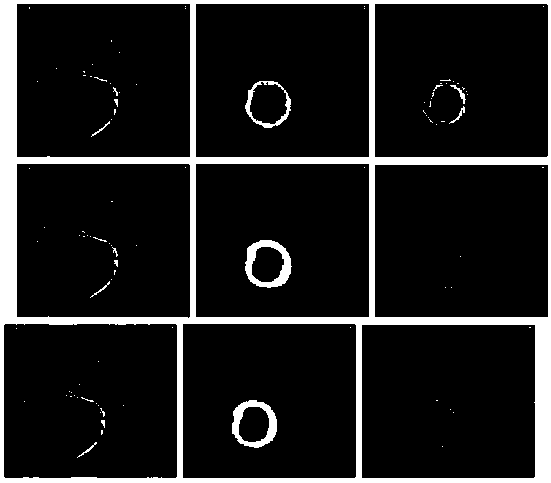

Method and system for multi-scale cell image segmentation using multiple parallel convolutional neural networks

Status: Active | Publication: US20190236411A1 | Tags: Take advantage of, Geometric image transformation, Character and pattern recognition, Cell image segmentation, Multiple input

An artificial neural network system for image classification, formed of multiple independent convolutional neural networks (CNNs), each configured to process an input image patch and calculate a classification for the center pixel of the patch. The CNNs have different receptive fields (fields of view) for processing image patches of different sizes centered at the same pixel. A final classification for the center pixel is calculated by combining the classification results from the multiple CNNs. An image patch generator produces the multiple input image patches of different sizes by cropping them from the original input image. The CNNs have similar configurations; during training, one CNN is trained first, its learned parameters are transferred to another CNN as initial parameters, and the other CNN is then trained further. The classification includes three classes: background, foreground, and edge.

Owner:KONICA MINOLTA LAB U S A INC
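
The patch-generation and combination steps can be sketched as follows. The abstract does not specify how the CNN outputs are combined, so averaging the per-class probabilities is an assumption, as are the function names and the zero padding for patches that extend past the image border; odd patch sizes are assumed so that the pixel sits exactly at the center.

```python
CLASSES = ("background", "foreground", "edge")


def crop_patch(image, cy, cx, size, pad_value=0.0):
    """Crop an odd-sized size x size patch centred on (cy, cx); pixels outside the image get pad_value."""
    half = size // 2
    h, w = len(image), len(image[0])
    return [[image[y][x] if 0 <= y < h and 0 <= x < w else pad_value
             for x in range(cx - half, cx + half + 1)]
            for y in range(cy - half, cy + half + 1)]


def multi_scale_patches(image, cy, cx, sizes):
    """One patch per CNN, all centred on the same pixel but with different fields of view."""
    return [crop_patch(image, cy, cx, s) for s in sizes]


def combine(prob_lists):
    """Average per-class probabilities from several CNNs; return (class name, averaged probs)."""
    n = len(prob_lists)
    avg = [sum(p[c] for p in prob_lists) / n for c in range(len(CLASSES))]
    best = max(range(len(avg)), key=avg.__getitem__)
    return CLASSES[best], avg


# Hypothetical outputs of two CNNs for the same centre pixel
print(combine([[0.2, 0.5, 0.3], [0.4, 0.4, 0.2]])[0])  # foreground
```

Each CNN sees the same center pixel with a different amount of surrounding context, and the combination step lets small-field and large-field evidence vote on the final label.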

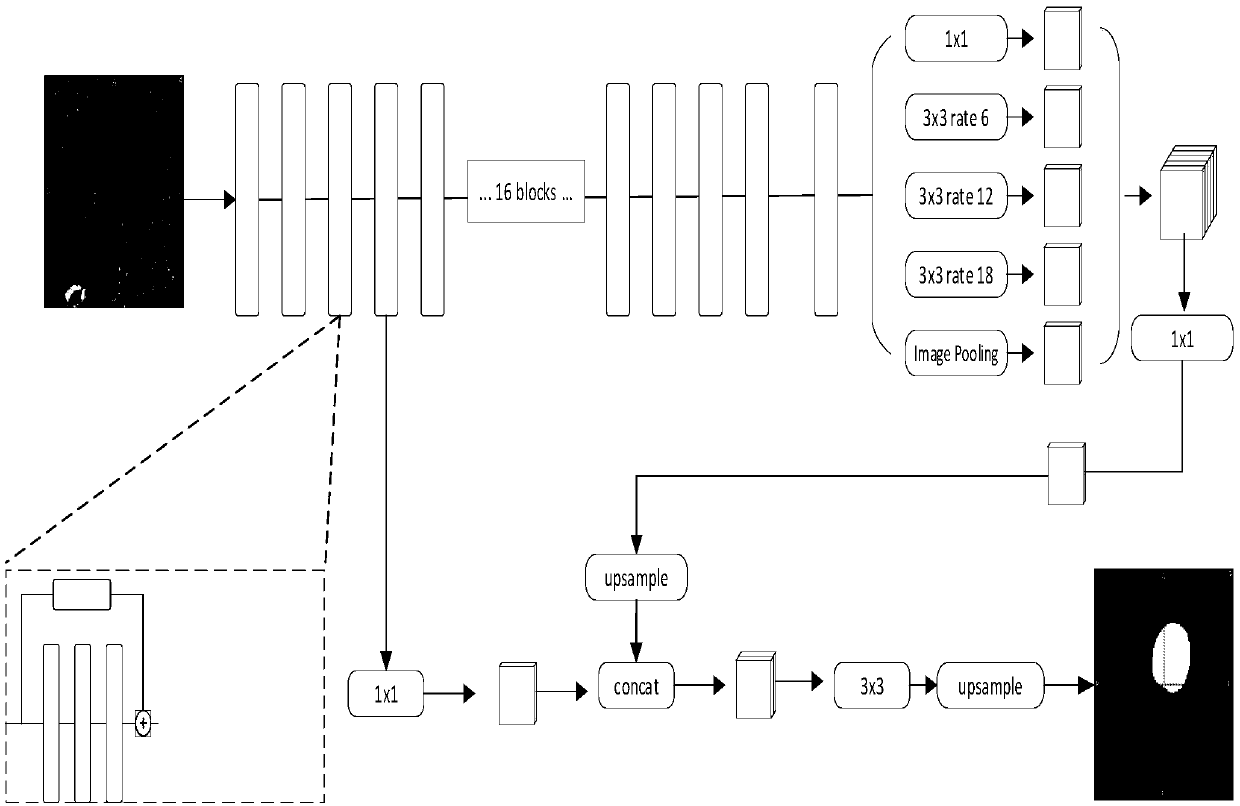

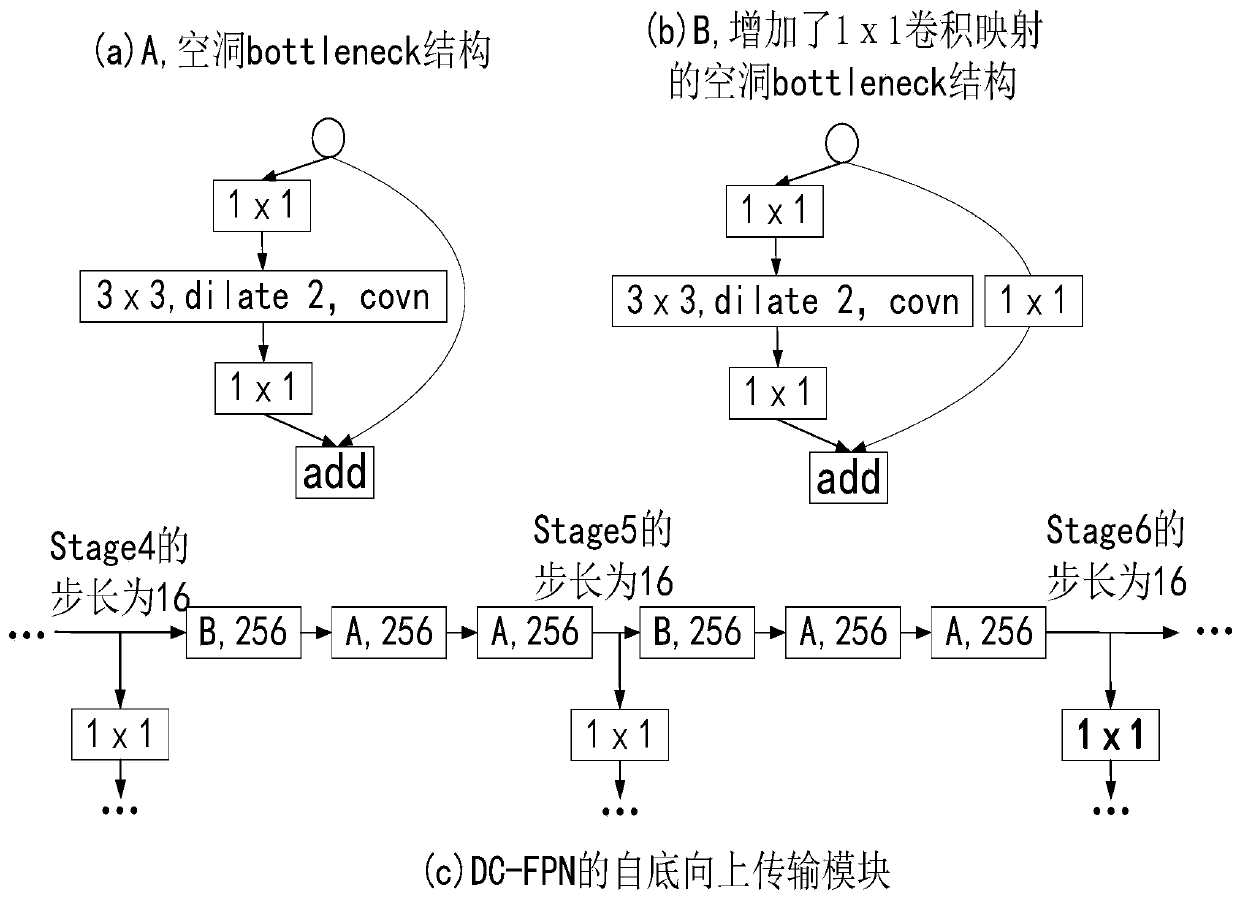

DCM myocardial diagnosis and treatment radiation image segmentation method based on a multi-scale feature pyramid

Status: Active | Publication: CN109584246A | Tags: Semantic rich, Full details, Image enhancement, Image analysis, Disease, Pattern recognition

The invention belongs to the technical field of medical image processing and discloses a DCM myocardial diagnosis and treatment radiation image segmentation method based on a multi-scale feature pyramid, used to segment cardiac MRI images and to completely segment anatomical structures such as the myocardium and the blood pool. Conventional convolution is replaced by dilated (atrous) convolution, which gives the convolution kernel a larger receptive field so that more context information can be obtained. Spatial pyramid pooling extracts information from the image at different scales to cope with its multi-scale characteristics, so that even very small objects can be captured effectively. An encoder-decoder structure recovers detail information by fusing shallow and high-level features, yielding a segmentation mask with rich semantics and complete details. The method reduces the workload of doctors and is of great significance for disease analysis, subsequent treatment planning and postoperative evaluation.

Owner:CHENGDU UNIV OF INFORMATION TECH

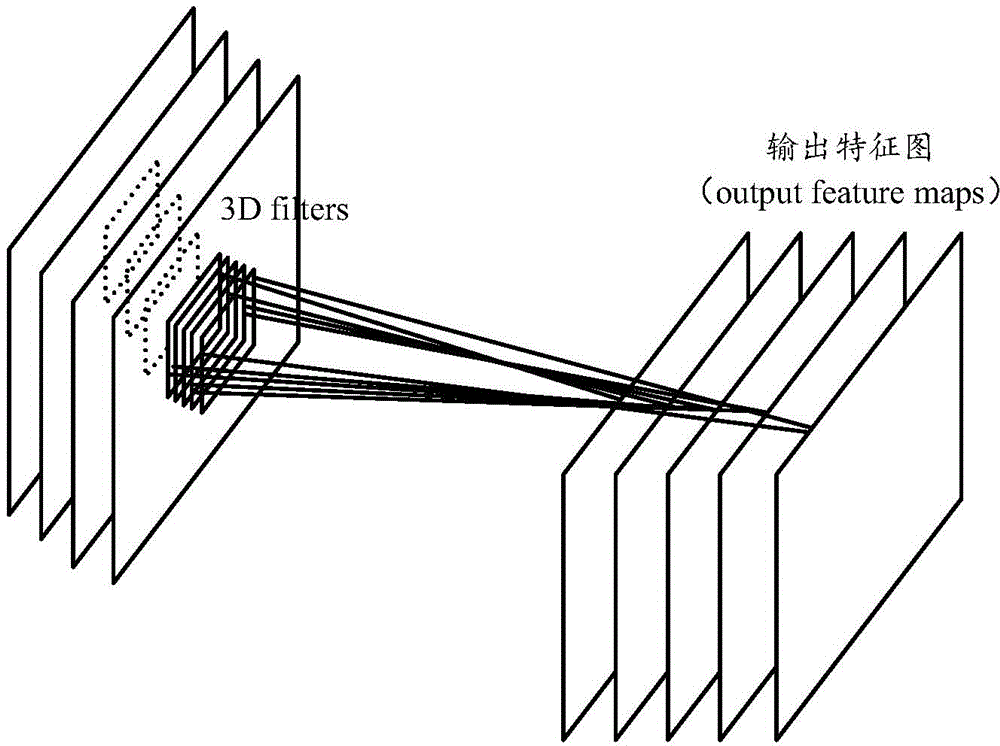

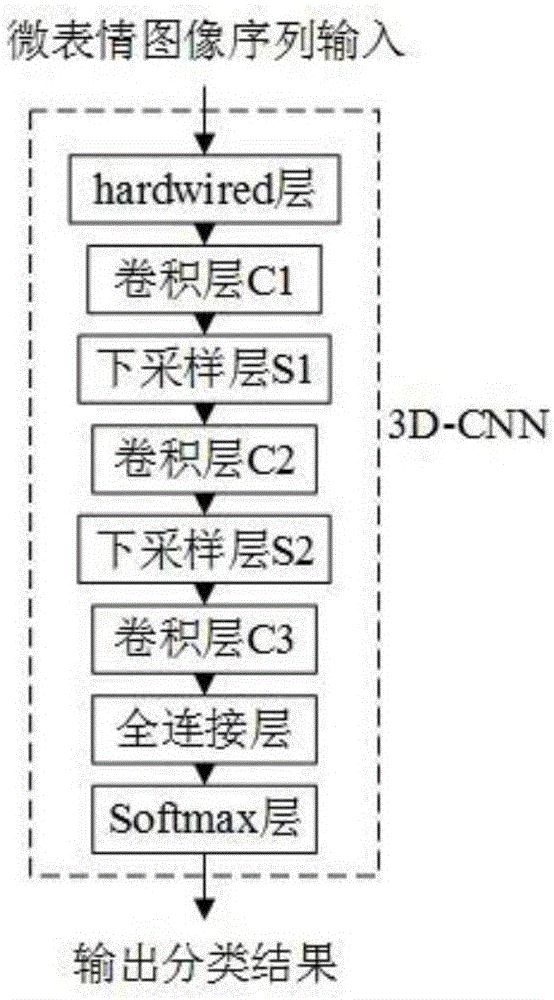

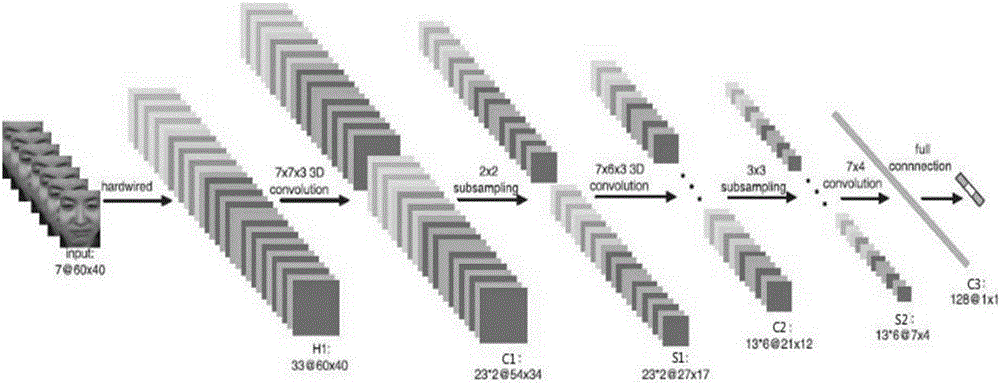

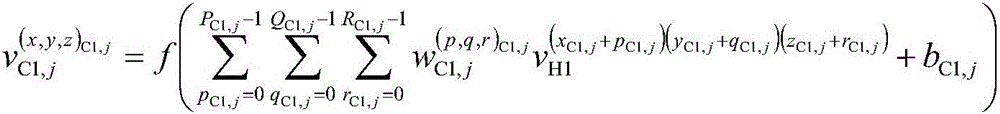

Micro expression recognition method based on 3D convolution neural network

Status: Active | Publication: CN106570474A | Tags: Improve robustness, Reduce complexity, Neural architectures, Acquiring/recognising facial features, Feature Dimension, Hybrid neural network

The invention relates to a micro-expression recognition method based on a 3D convolutional neural network. Based on a constructed 3D convolutional neural network (3D-CNN) model, happiness, disgust, depression, surprise and other micro-expressions (five classes in all) can be recognized effectively. The method is simple and efficient: there is no need for a separate pipeline of feature extraction, feature dimension reduction and classification on the sample data, which greatly reduces the difficulty of preprocessing. Local receptive fields and weight sharing reduce the number of parameters the network must train, greatly reducing the complexity of the algorithm. In addition, the down-sampling operation of the down-sampling layers enhances the robustness of the network, so that a certain degree of image distortion can be tolerated.

Owner:NANJING UNIV OF POSTS & TELECOMM
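
A 3D convolution extends the local receptive field across consecutive frames as well as across image space, with one kernel shared over all positions. The sketch below is a plain-Python illustration of that operation; it assumes a (frames, height, width) list-of-lists layout and "valid" (unpadded) convolution, neither of which the abstract specifies.

```python
def conv3d_valid(volume, kernel):
    """Valid 3D cross-correlation of a (T, H, W) volume with a (kt, kh, kw) kernel.

    The same kernel weights are reused at every spatio-temporal position
    (weight sharing); each output depends only on a kt x kh x kw local
    receptive field spanning several consecutive frames.
    """
    T, H, W = len(volume), len(volume[0]), len(volume[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                s = 0.0
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            s += kernel[dt][dy][dx] * volume[t + dt][y + dy][x + dx]
                row.append(s)
            plane.append(row)
        out.append(plane)
    return out


# A temporal-difference kernel (frame t+1 minus frame t) responds to change over time
clip = [[[float(t)] * 4 for _ in range(4)] for t in range(3)]  # brightness rises each frame
print(conv3d_valid(clip, [[[-1.0]], [[1.0]]])[0][0])
```

A 2D convolution applied frame by frame could not produce the temporal-difference response above, which is why a 3D kernel suits subtle, short-lived facial motion.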

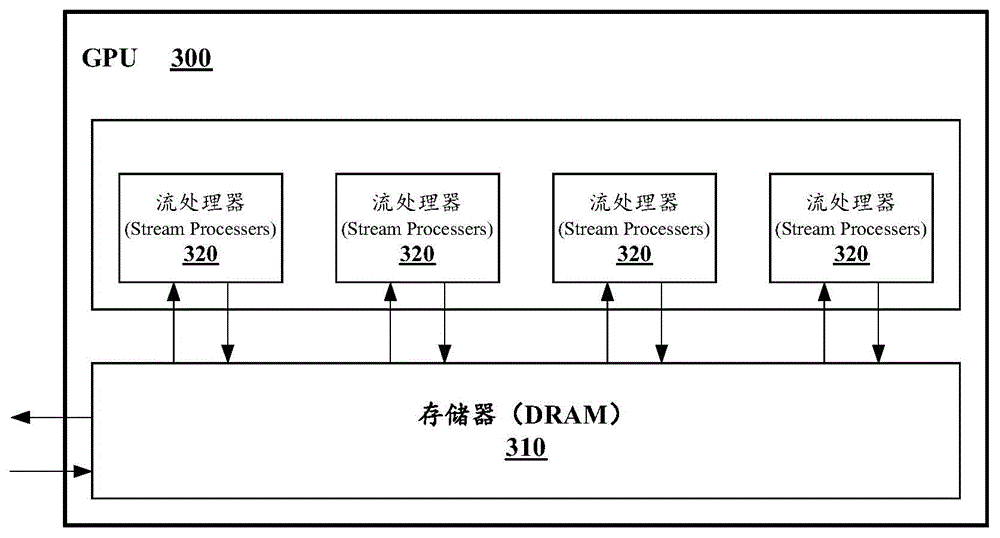

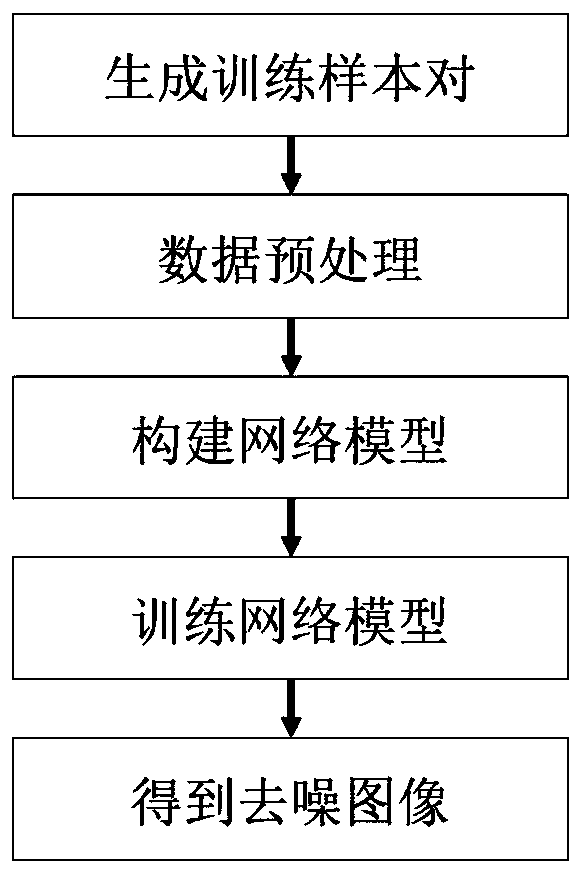

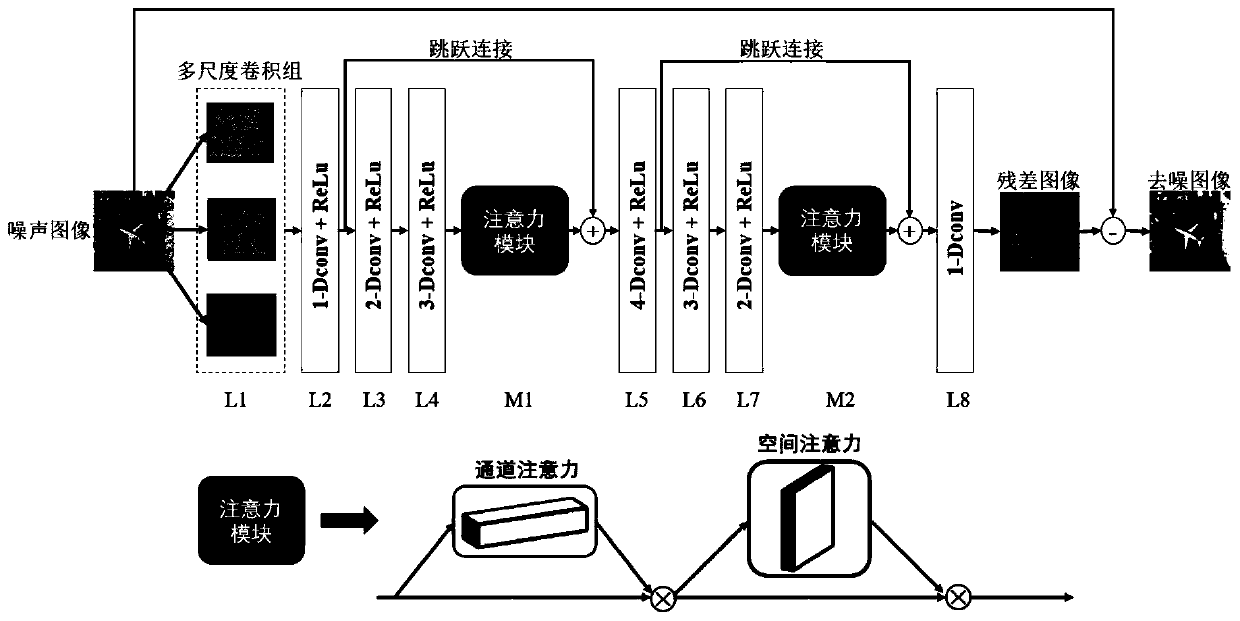

SAR image denoising method based on multi-scale cavity residual attention network

Status: Pending | Publication: CN110120020A | Tags: Keep details, Good removal effect, Image enhancement, Image analysis, Pattern recognition, Image denoising

The invention relates to an SAR image denoising method based on a multi-scale dilated (atrous) residual attention network. The method comprises the following steps: extracting features of the image at different scales through a multi-scale convolution group, using dilated convolution to broaden the receptive field of the convolution kernels and extract more context information from the image; passing shallow-layer feature information to deep convolutional layers through skip connections to preserve image details; adding an attention mechanism to concentrate on extracting noise-related features; and, combined with a residual learning strategy, automatically learning the distribution of SAR speckle noise so as to remove it. Experimental results show that, compared with traditional SAR image denoising methods, the method removes speckle noise well, introduces few artifacts, preserves image detail, and runs faster on a GPU.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

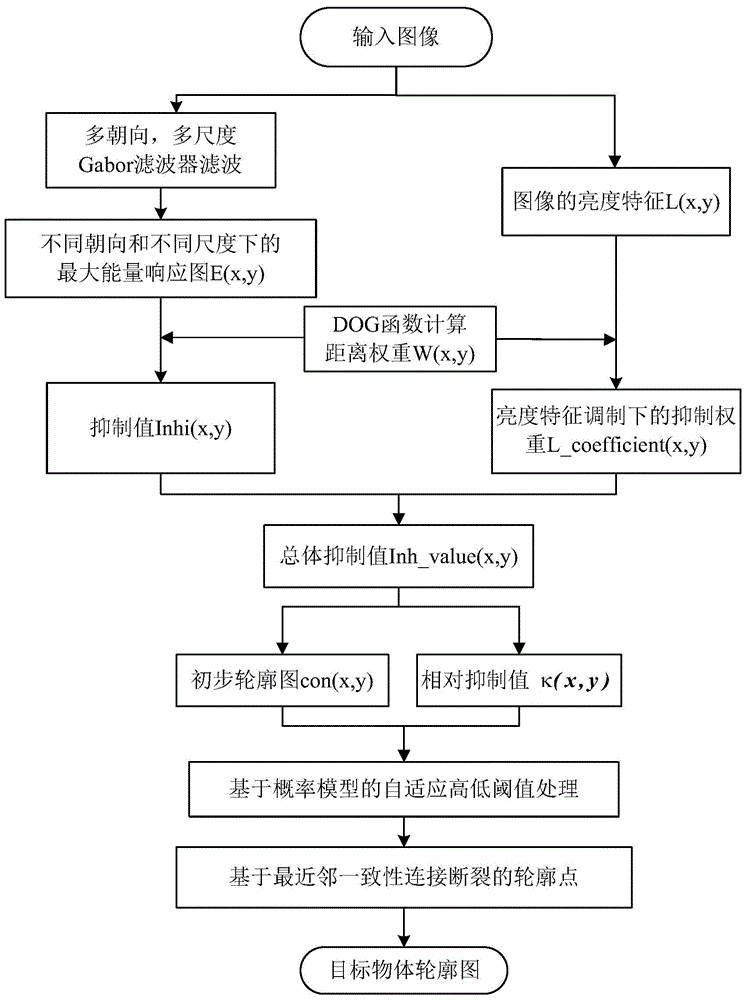

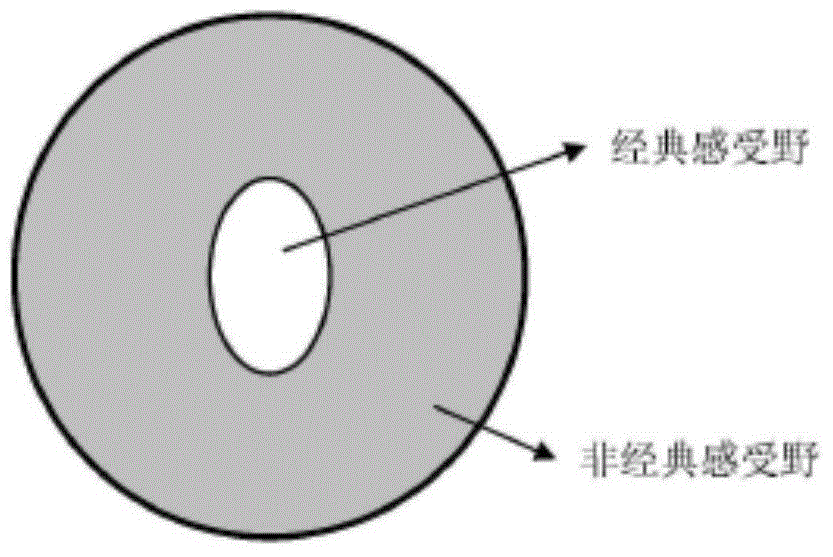

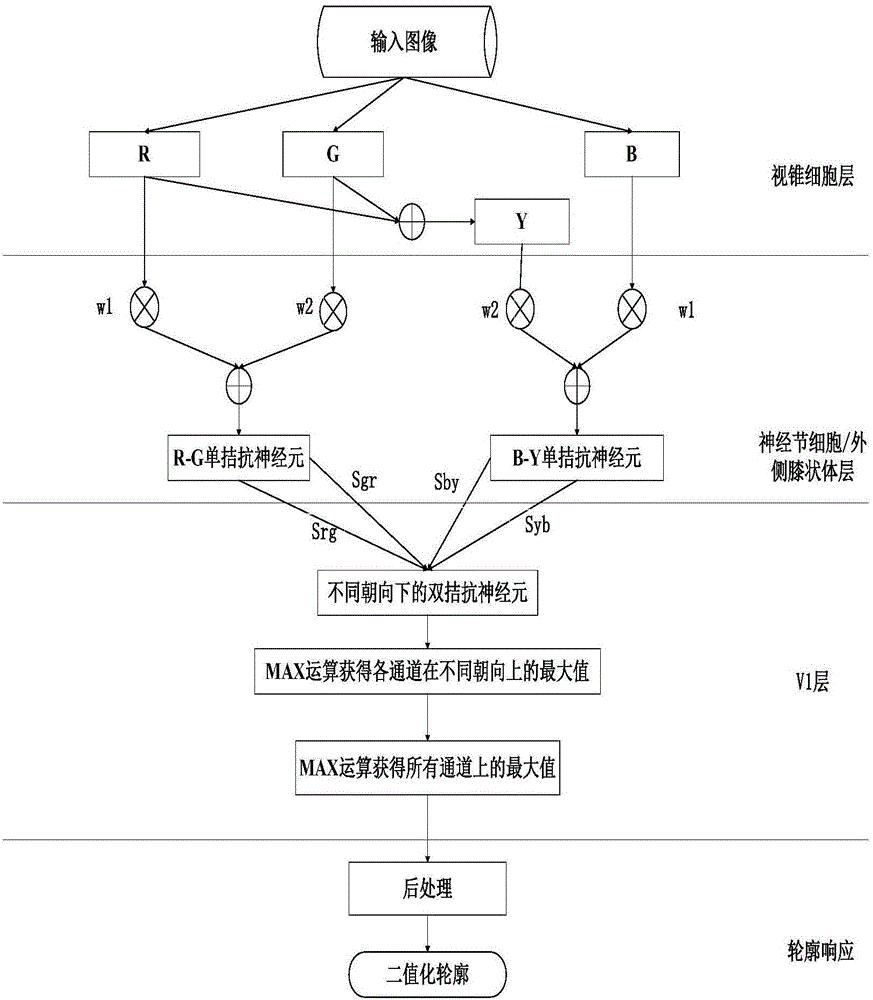

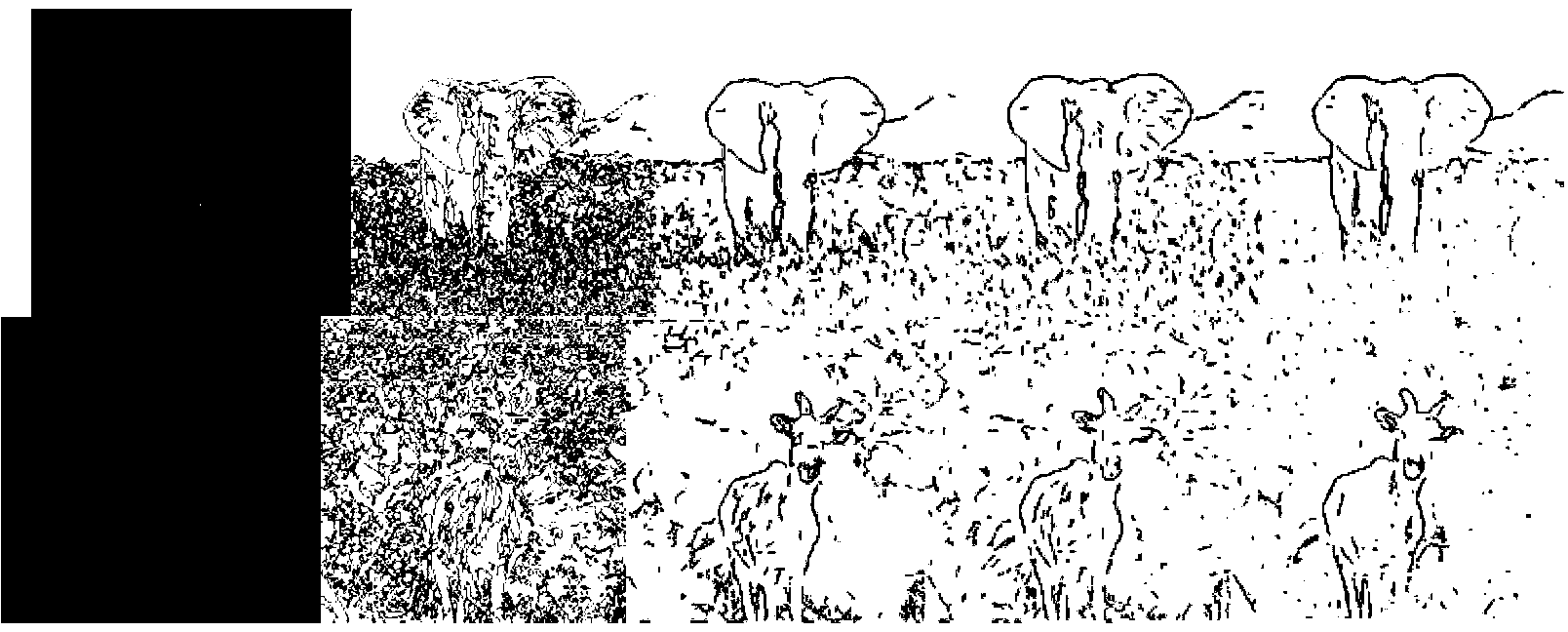

Contour extraction method based on brightness characteristic and contour integrity

Status: Inactive | Publication: CN104484667A | Tags: Effective filtering, Preserve preliminary profile information, Image enhancement, Image analysis, Pattern recognition, Near neighbor

The invention discloses a contour extraction method based on the brightness characteristic and contour integrity, in the interdisciplinary field of computer vision and pattern recognition. The method aims to extract the complete contour of an object from a complex background. It includes the steps of obtaining a maximum energy response map; applying non-classical receptive field suppression modulated by the brightness characteristic; extracting the object contour with post-processing such as probability-model-based high/low adaptive thresholding; and reconnecting broken contours according to nearest-neighbor orientation consistency, based on contour integrity. A Gabor filter simulates the classical receptive field response of human simple cells to obtain the maximum Gabor energy response map; the brightness characteristic of the image is used to suppress this map, rejecting texture and other non-target contours; probability-model-based high/low adaptive thresholding and similar post-processing are applied to the resulting target contour; and break points are reconnected according to nearest-neighbor orientation consistency. The object contour is extracted well, and a connected, complete contour map is obtained after processing.

Owner:HUAZHONG UNIV OF SCI & TECH
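
A Gabor filter is the standard model of the simple-cell classical receptive field referred to above. The sketch computes the Gabor energy (the magnitude of a quadrature pair of even and odd filters) at one location and takes the maximum over orientations; the parameter values (wavelength, `sigma`, aspect ratio `gamma`, eight orientations) and function names are assumptions, since the abstract gives none.

```python
import math


def gabor_kernel(size, wavelength, theta, sigma, gamma=0.5, psi=0.0):
    """Real Gabor kernel: a Gaussian envelope times a cosine carrier rotated by theta."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            xr = x * math.cos(theta) + y * math.sin(theta)   # axis along which the carrier oscillates
            yr = -x * math.sin(theta) + y * math.cos(theta)  # axis parallel to the filter's bars
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            row.append(envelope * math.cos(2 * math.pi * xr / wavelength + psi))
        kernel.append(row)
    return kernel


def gabor_energy(patch, size, wavelength, theta, sigma):
    """Energy of a quadrature pair (even psi=0, odd psi=-pi/2) applied at the patch centre."""
    def respond(kernel):
        return sum(k * p for k_row, p_row in zip(kernel, patch) for k, p in zip(k_row, p_row))
    even = respond(gabor_kernel(size, wavelength, theta, sigma, psi=0.0))
    odd = respond(gabor_kernel(size, wavelength, theta, sigma, psi=-math.pi / 2))
    return math.hypot(even, odd)


def max_gabor_energy(patch, size, wavelength, sigma, n_orientations=8):
    """One pixel of a maximum-energy map: the strongest response over evenly spaced orientations."""
    return max(gabor_energy(patch, size, wavelength, math.pi * i / n_orientations, sigma)
               for i in range(n_orientations))


edge = [[1.0 if x > 2 else 0.0 for x in range(5)] for _ in range(5)]  # vertical step edge
print(max_gabor_energy(edge, 5, 4.0, 2.0))
```

The quadrature pair makes the energy insensitive to whether the edge falls on the filter's even or odd phase, and taking the maximum over orientations yields the orientation-independent response map that the brightness-based suppression then acts on.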

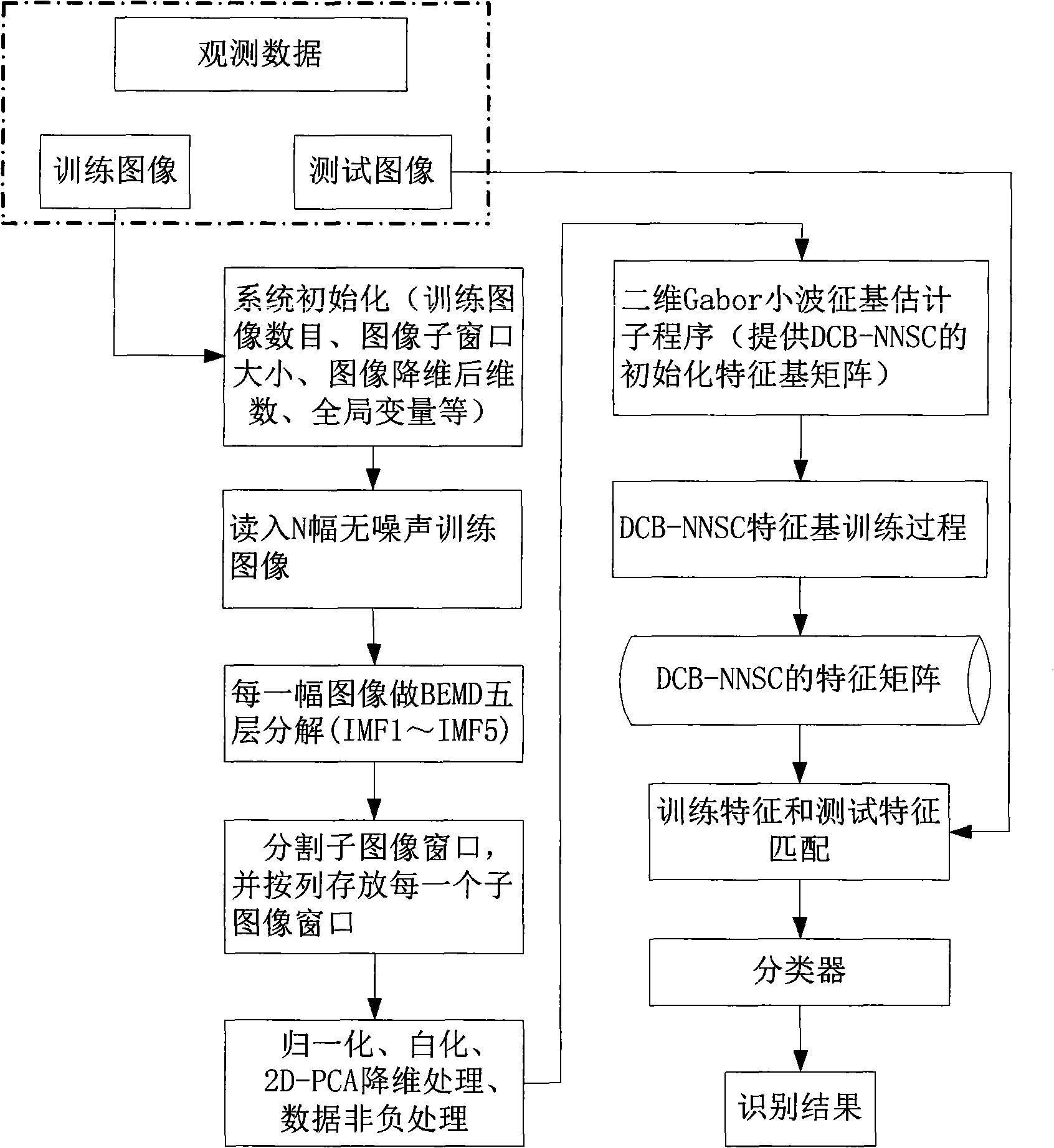

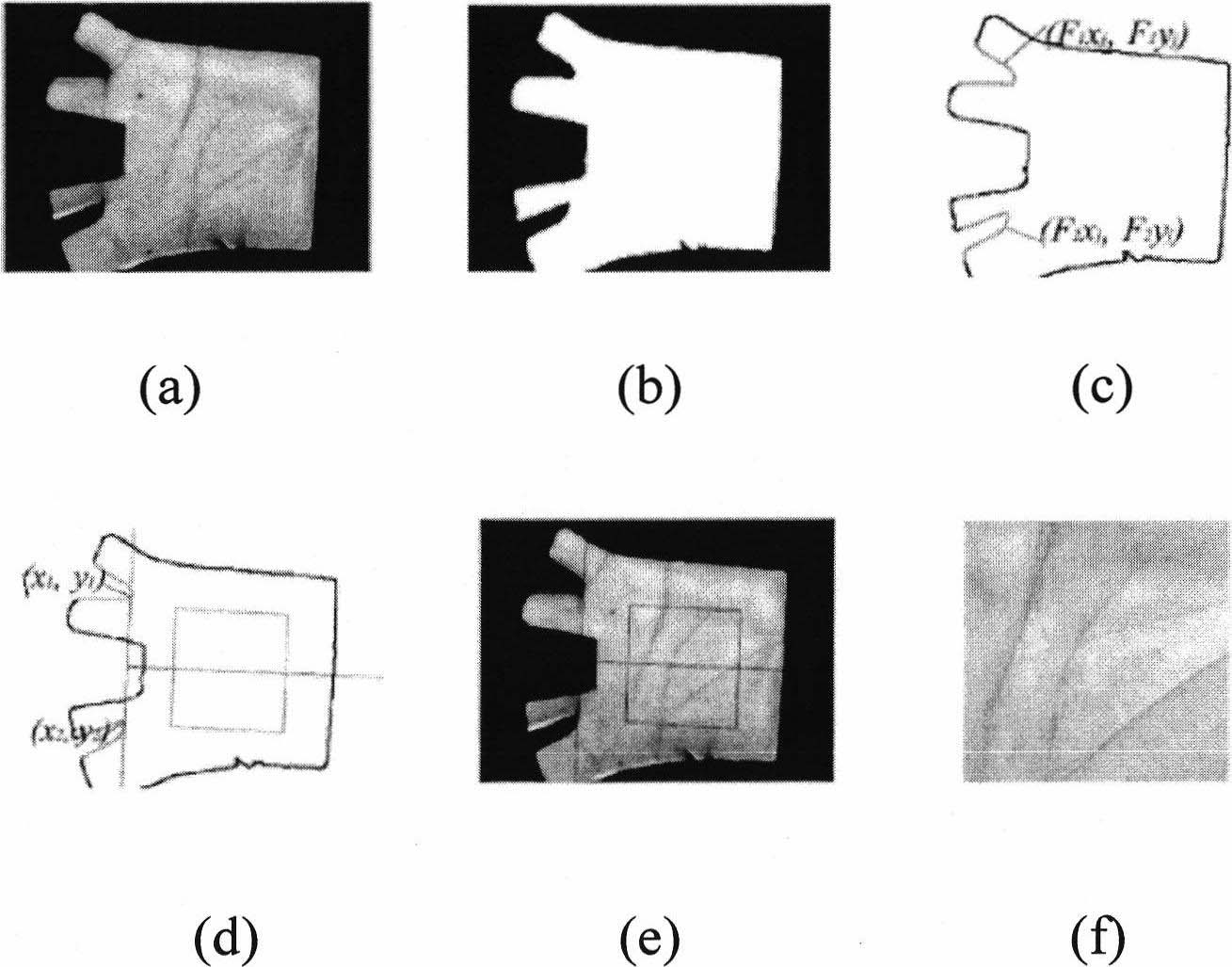

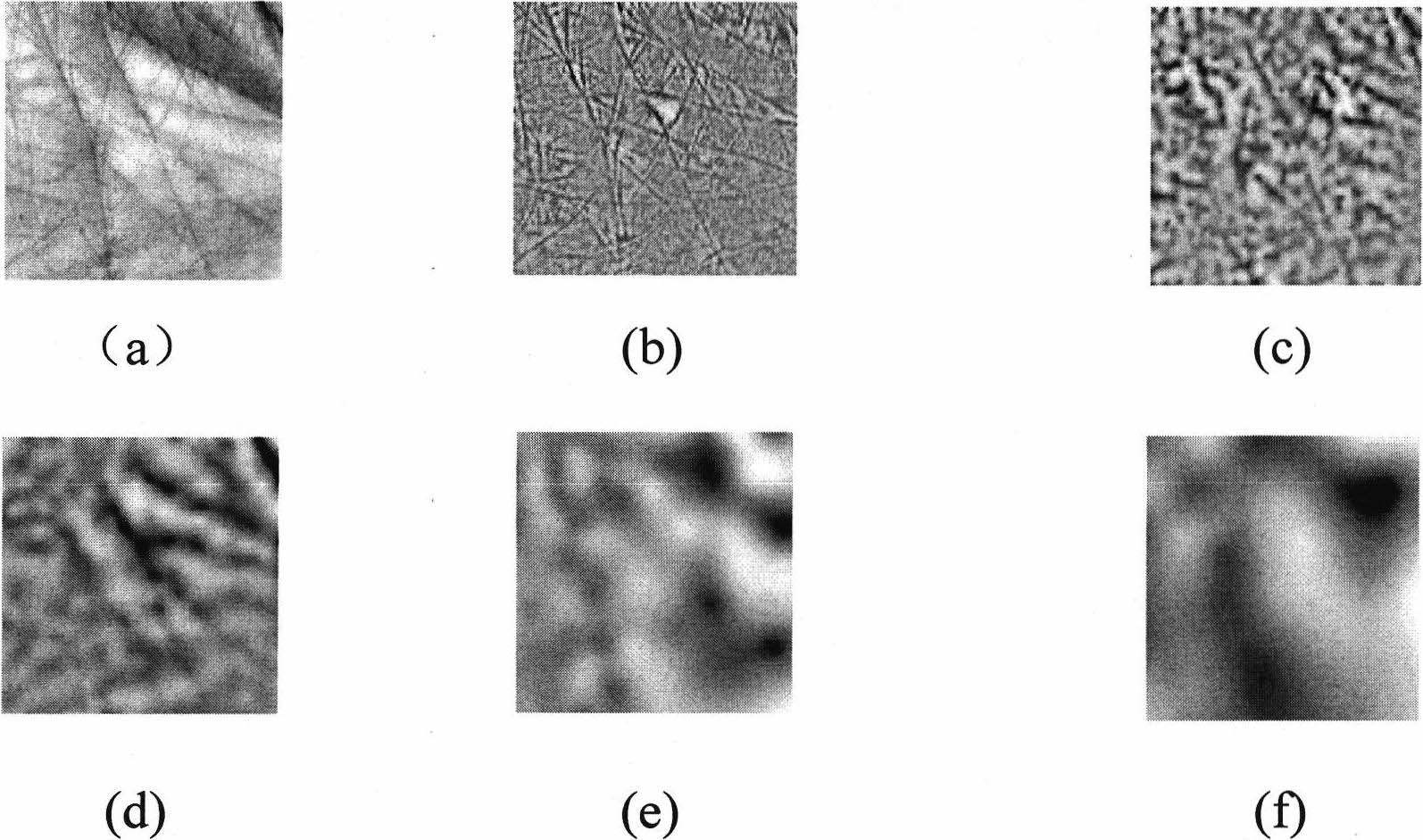

Method for extracting characteristic of natural image based on dispersion-constrained non-negative sparse coding

Status: Inactive | Publication: CN101866421A | Tags: Aggregation tight, Efficient extraction, Character and pattern recognition, Feature extraction, Nerve cells

The invention discloses a method for extracting features of natural images based on dispersion-constrained non-negative sparse coding. The steps include: partitioning an image into blocks; reducing dimensionality with 2D-PCA; making the image data non-negative; initializing the feature basis with 2D-Gabor wavelets; defining the ratio between the within-class dispersion and the between-class dispersion of the sparse coefficients; training the DCB-NNSC feature basis; and performing image identification based on the DCB-NNSC feature basis. The method can imitate the receptive field properties of V1 neurons in the human primary visual system to extract local image features effectively; compared with standard non-negative sparse coding, it extracts features with clearer directionality and edge characteristics; by minimizing the ratio of within-class to between-class dispersion of the sparse coefficients, it clusters same-class feature coefficients more tightly while increasing the between-class distance as much as possible; and it improves identification performance in image recognition.

Owner:SUZHOU VOCATIONAL UNIV

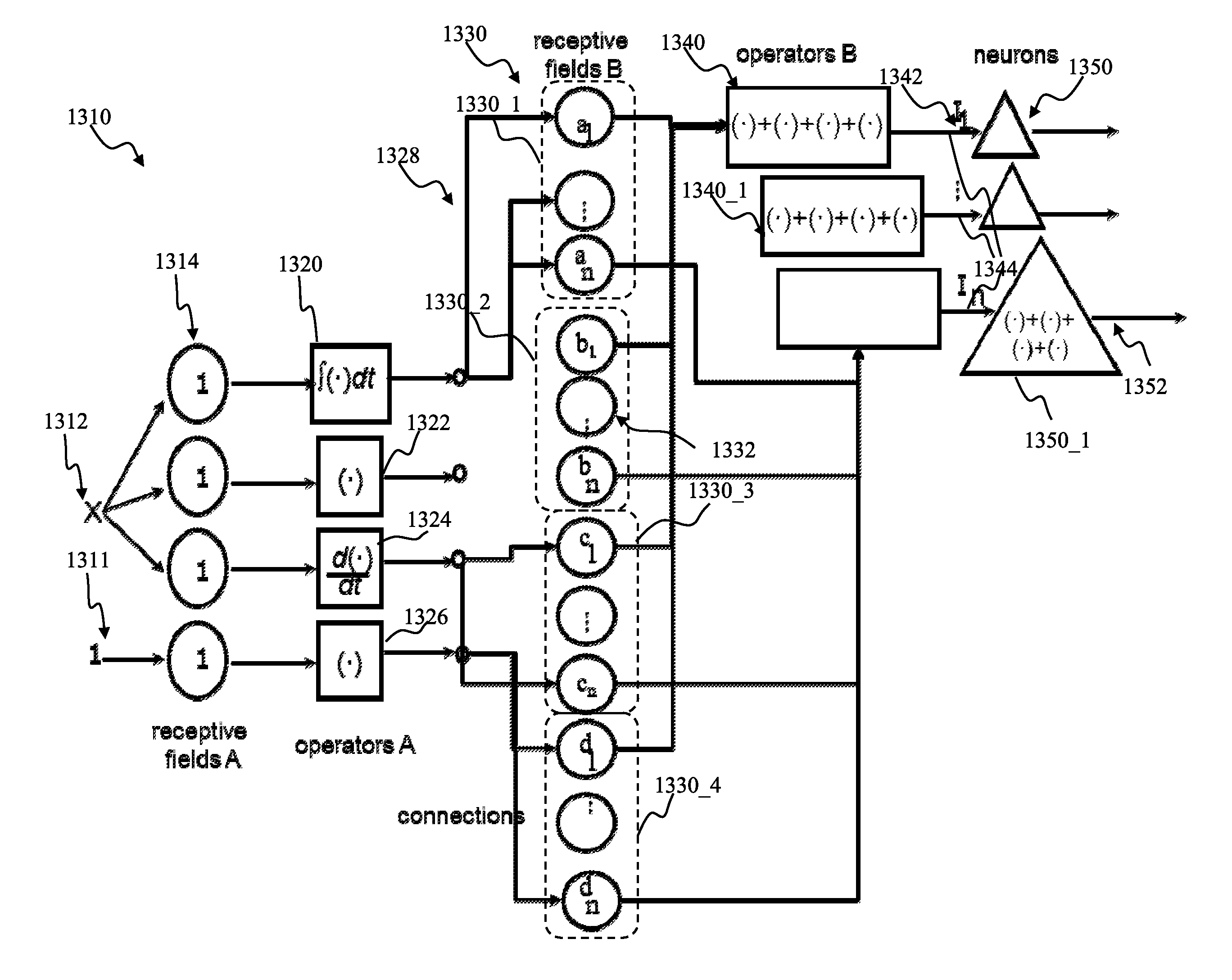

Proportional-integral-derivative controller effecting expansion kernels comprising a plurality of spiking neurons associated with a plurality of receptive fields

Status: Active | Publication: US9082079B1 | Tags: Improve connection efficiency, Easy to measure, Digital computer details, Neural architectures, Neuron network, Control engineering

An adaptive proportional-integral-derivative (PID) controller apparatus for a plant may be implemented. The controller may comprise an encoder block that uses a basis-function kernel expansion technique to encode an arbitrary combination of inputs into spike output. The basis-function kernel may comprise one or more operators configured to manipulate basis components. The controller may comprise a spiking neuron network operable according to a reinforcement learning process. The network may receive the encoder output via a plurality of plastic connections. The process may be configured to adaptively modify connection weights in order to maximize process performance associated with a target outcome. Features of the input may be identified and used to enable the controlled plant to achieve the target outcome.

Owner:BRAIN CORP
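
The abstract does not define its basis-function kernels. A common way to build an encoder from a plurality of receptive fields is population coding with Gaussian tuning curves, sketched below as an assumption; the function names, the spike-count conversion, and the population-vector decoder are illustrative, and the sketch covers only the encoding step, not the reinforcement-learning PID controller.

```python
import math


def gaussian_population_code(value, centers, width):
    """Activations of neurons whose Gaussian receptive fields are centred on `centers`."""
    return [math.exp(-((value - c) ** 2) / (2 * width ** 2)) for c in centers]


def to_spike_counts(rates, max_spikes=10):
    """Map activations in [0, 1] to integer spike counts per time window."""
    return [round(r * max_spikes) for r in rates]


def decode(rates, centers):
    """Population-vector estimate: activation-weighted mean of preferred values."""
    total = sum(rates)
    return sum(r * c for r, c in zip(rates, centers)) / total


centers = [-1.0, -0.5, 0.0, 0.5, 1.0]
code = gaussian_population_code(0.0, centers, 0.5)
print(to_spike_counts(code))  # [1, 6, 10, 6, 1]
```

Overlapping receptive fields mean that every input value activates several neurons, so downstream plastic connections see a smooth, distributed representation rather than a single active unit.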

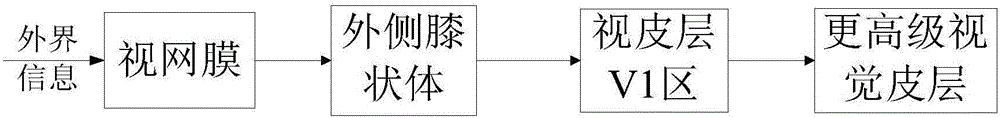

Contour and boundary detection algorithm based on visual color theory and homogeneity inhibition

The invention discloses a contour and boundary detection algorithm based on visual color theory and homogeneity inhibition. It belongs to the interdisciplinary field of computer vision and pattern recognition and aims to extract the contours and boundaries of targets from complex natural scenes. Drawing on research into the visual information processing mechanism of the human eye, the method mathematically models the receptive fields of neurons at each stage of the visual pathway and uses the modulating effect of the non-classical receptive field to suppress texture edges, thereby highlighting contours and boundaries. The innovation lies in introducing the human visual information processing mechanism into a contour and boundary detection model: color and brightness boundaries are detected by setting unbalanced cone inputs; contour integrity is maintained; the homogeneity of texture regions and the homogeneity-based inhibition that the non-classical receptive field exerts on the classical receptive field are taken into account; and this homogeneity inhibition is used to suppress texture edges and extract the contours and boundaries of natural images.

Owner:HUAZHONG UNIV OF SCI & TECH
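
Non-classical receptive field inhibition of this kind is often modeled by subtracting a weighted average of the responses in a surround annulus from each pixel's classical response. The sketch below uses that isotropic form as a stand-in; it is not the patent's homogeneity-based inhibition, and the square (Chebyshev) annulus, the radii, `alpha`, and the function name are assumptions.

```python
def surround_inhibition(energy, inner, outer, alpha=1.0):
    """Subtract alpha times the mean energy in an annular surround (the non-classical RF).

    energy: 2D list of classical-RF responses (e.g. Gabor energy).
    inner, outer: annulus radii in pixels (Chebyshev distance), inner < outer.
    """
    h, w = len(energy), len(energy[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-outer, outer + 1):
                for dx in range(-outer, outer + 1):
                    if max(abs(dy), abs(dx)) <= inner:
                        continue  # skip the classical receptive field itself
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += energy[yy][xx]
                        count += 1
            surround = total / count if count else 0.0
            row.append(max(0.0, energy[y][x] - alpha * surround))
        out.append(row)
    return out


line = [[1.0 if x == 4 else 0.0 for x in range(9)] for _ in range(9)]  # isolated contour
texture = [[1.0] * 9 for _ in range(9)]                              # uniform texture
print(surround_inhibition(line, 1, 3)[4][4], surround_inhibition(texture, 1, 3)[4][4])
```

A uniform texture field suppresses itself completely, whereas an isolated contour keeps most of its response because few of its own pixels fall in the surround.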

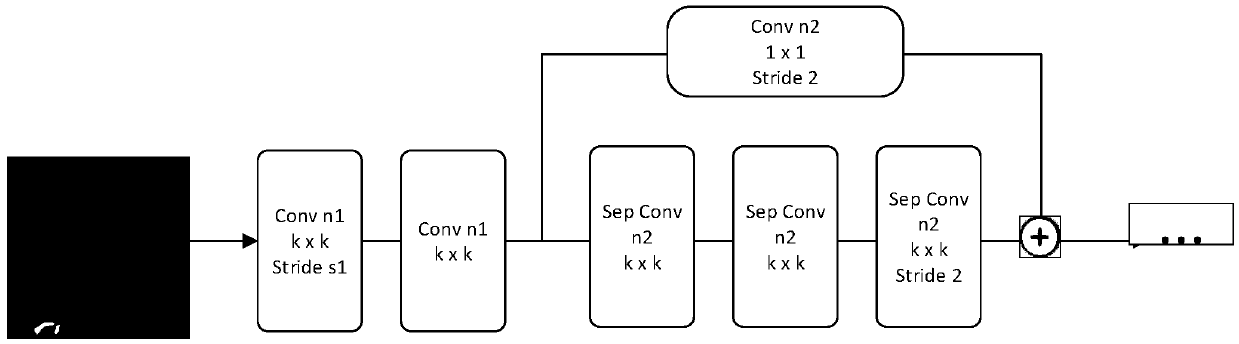

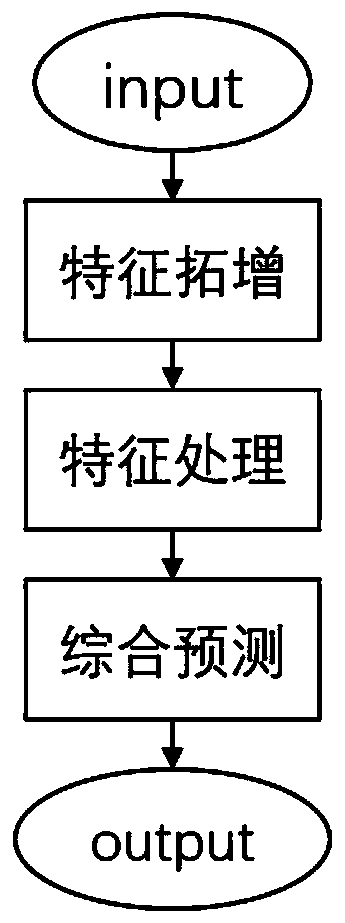

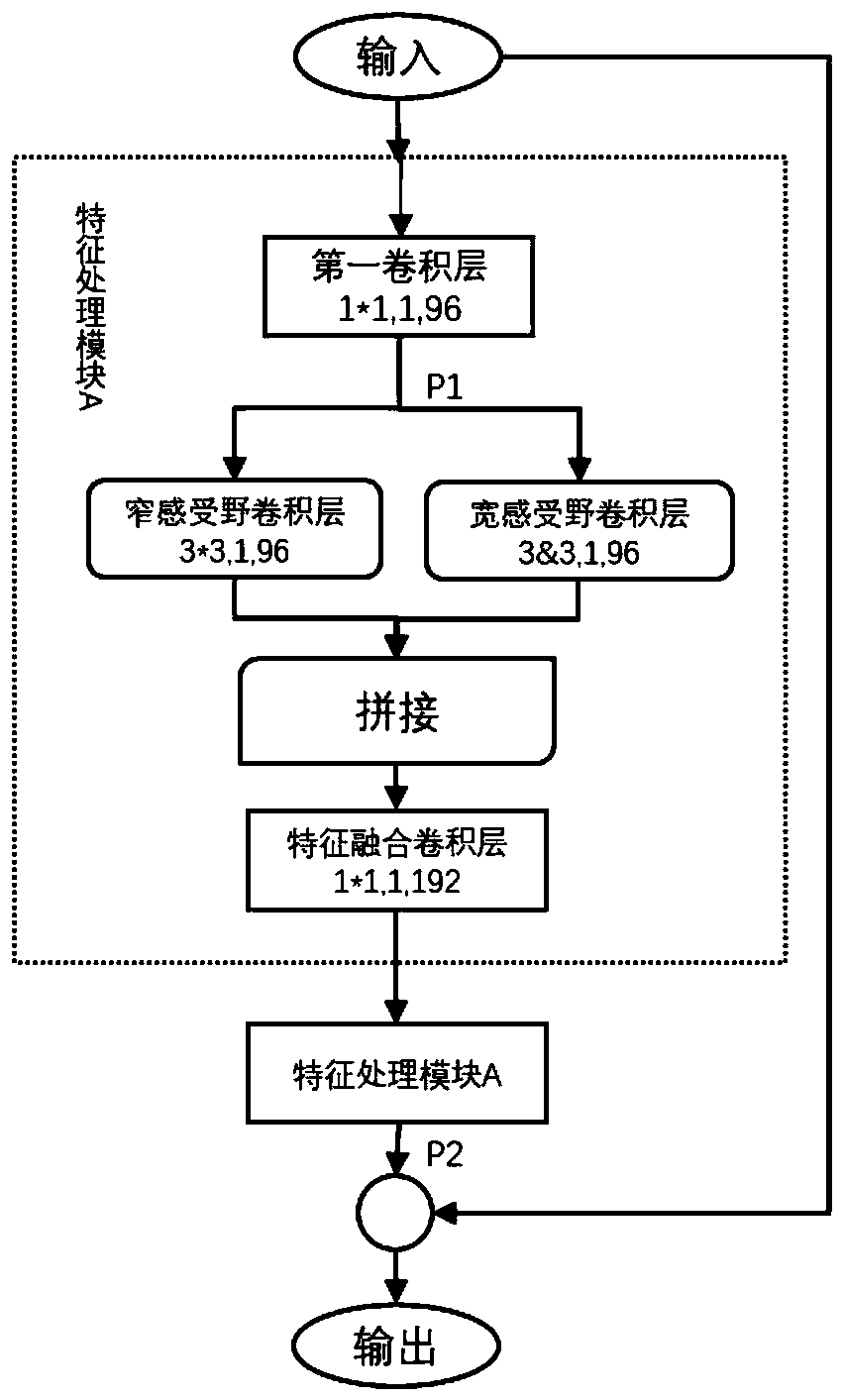

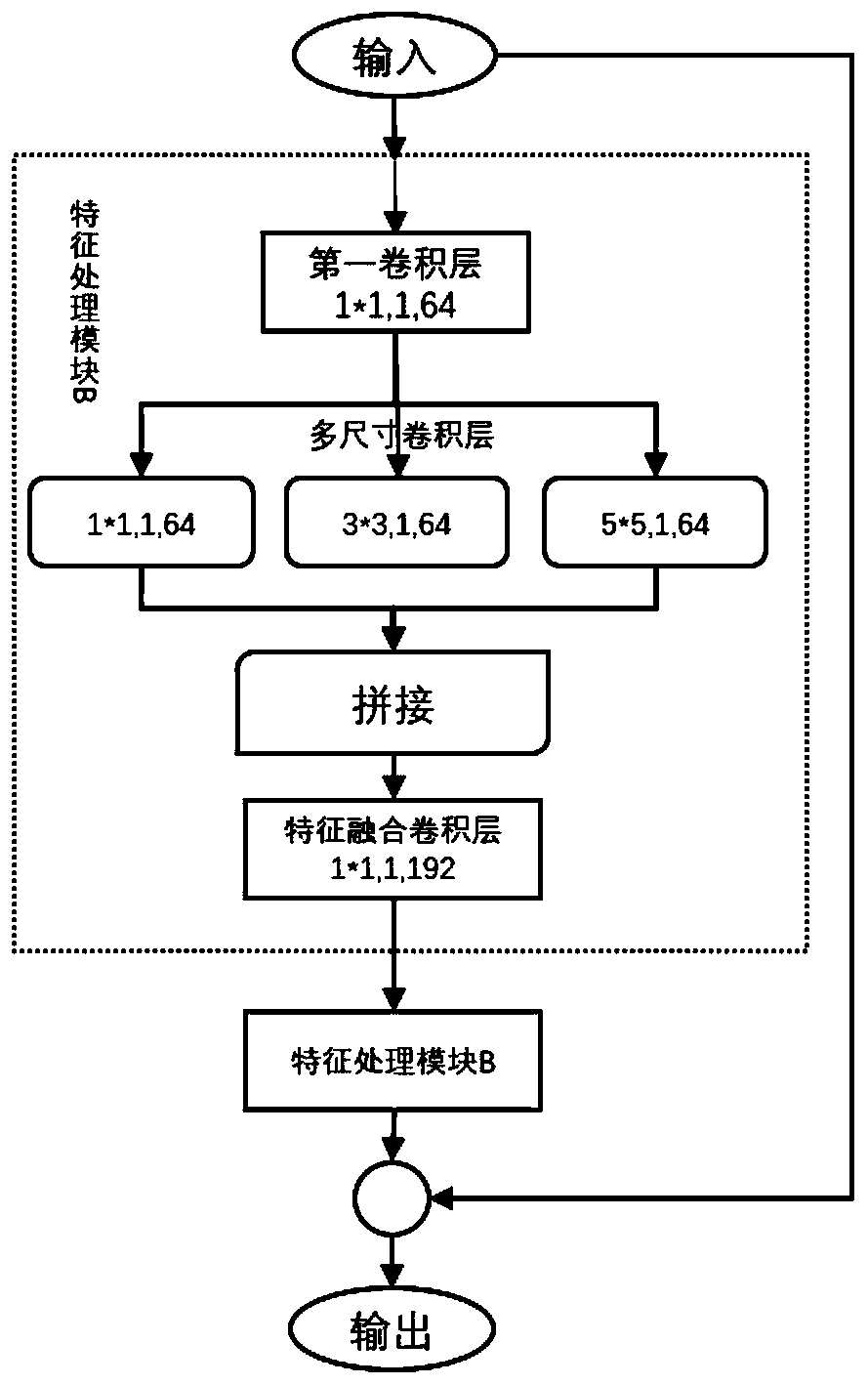

Real-time image semantic segmentation method based on lightweight full convolutional neural network

Status: Pending | Publication: CN110110692A | Tags: Reduce memory usage, Reduce the amount of calculation data, Character and pattern recognition, Data set, Algorithm

The invention discloses a real-time image semantic segmentation method based on a lightweight fully convolutional neural network. The method comprises: 1) constructing a fully convolutional network from the design elements of lightweight neural networks, the network comprising three stages (a feature extension stage, a feature processing stage and a comprehensive prediction stage), where the feature processing stage uses a multi-receptive-field feature fusion structure, a multi-size convolution fusion structure and a receptive field enlargement structure; 2) in the training stage, training the network on a semantic segmentation data set with a cross-entropy loss function and the Adam optimization algorithm, using an online hard-example retraining strategy; and 3) in the test stage, inputting the test image into the network to obtain the semantic segmentation result. By adjusting the network structure to suit the semantic segmentation task while controlling model size, the invention obtains a high-precision real-time semantic segmentation method suitable for running on mobile terminal platforms.

Owner:NANJING UNIV

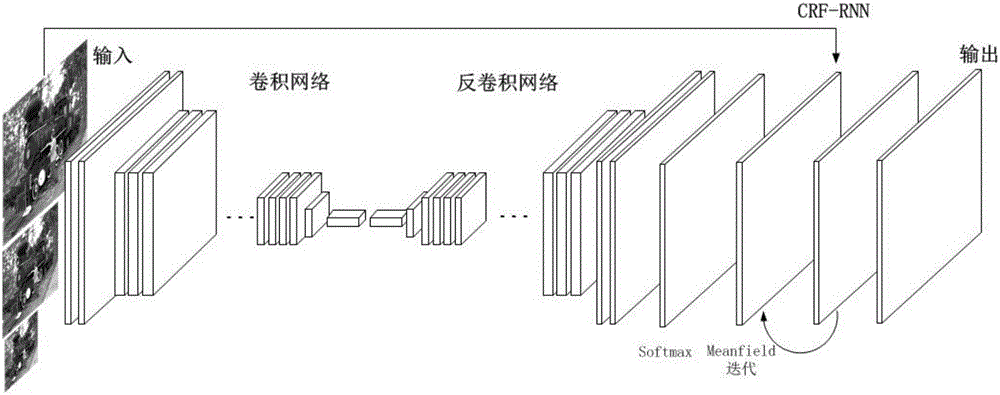

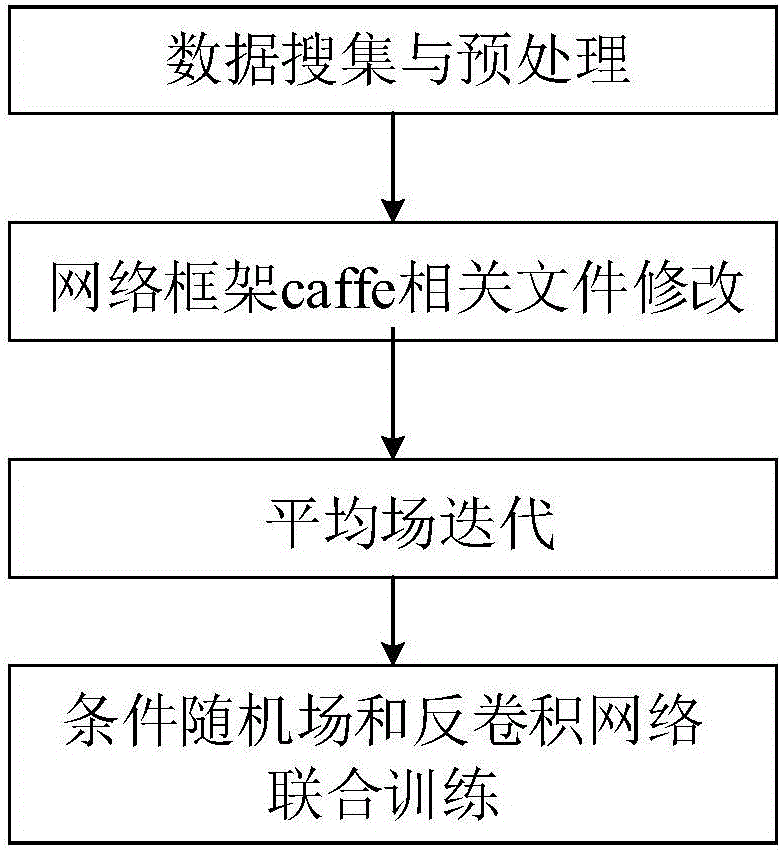

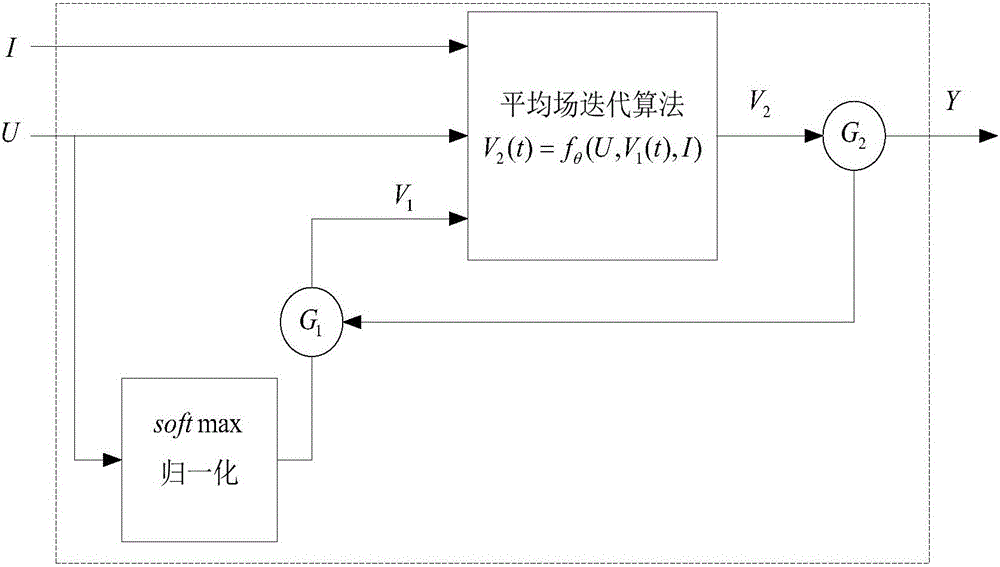

Deep learning network construction method and system applicable to semantic segmentation

Status: Inactive | Publication: CN107180430A | Tags: Deep learning network is good, Improve robustness, Image enhancement, Image analysis, Conditional random field, Learning network

The invention discloses a deep learning network construction method and system applicable to semantic segmentation. Building on deconvolution-based semantic segmentation, and considering that conditional random fields are good at refining edges, the conditional random field is formulated as a recurrent network, fused into the deconvolution network, and trained end to end, so that parameter learning in the convolutional network and the recurrent network interact and a better integrated network is trained. Joint training of the deconvolution network and the conditional random field yields accurate detail and shape information, addressing inaccurate segmentation at image edges. Combining multi-scale input with multi-scale pooling addresses the problem, caused by a single receptive field in semantic segmentation, that large targets are over-segmented or small targets are ignored. By extending the classic deconvolution network with joint training of the conditional random field and multi-feature information fusion, the accuracy of semantic segmentation is improved.

Owner:HUAZHONG UNIV OF SCI & TECH

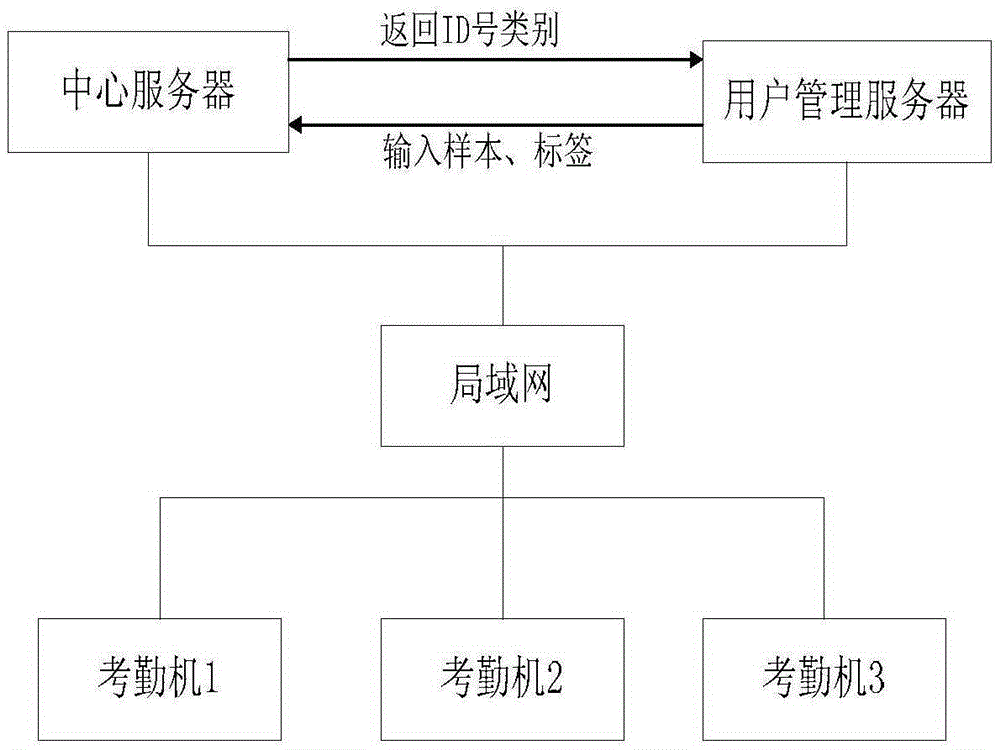

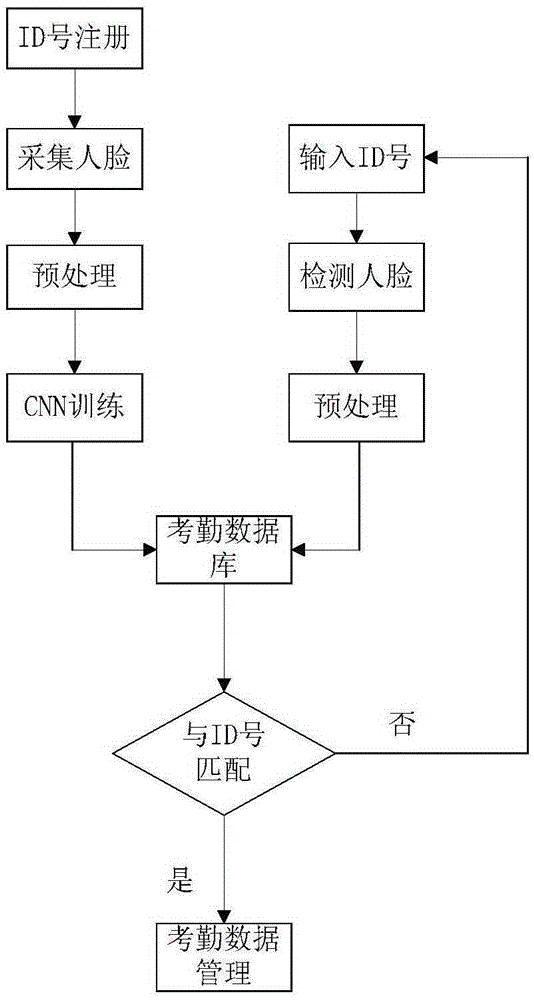

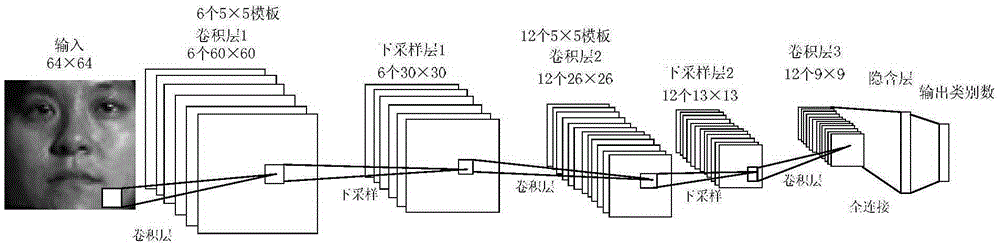

Face identification method and attendance system based on deep convolution neural network

Status: Inactive | Publication: CN105426875A | Tags: Accurate description, Guaranteed immutability, Registering/indicating time of events, Character and pattern recognition, Nerve network, Manual extraction

The invention relates to a face identification method and an attendance system based on a deep convolutional neural network. In the system, user information and face sample labels are entered into a user management server and then sent to a central server; in the central server, the pre-processed sample labels are used to build a face identification model based on the deep convolutional neural network; employees undergo online face identification by the trained neural network on attendance machines at each site, and the identification results are returned to the user management server over an internal local area network; and management staff can check, modify and otherwise manage attendance records on the user management server. The face identification algorithm based on a deep convolutional neural network avoids problems such as the incomplete and uncertain feature descriptions caused by traditional manual feature extraction, exploits the advantages of receptive fields and weight sharing, and increases the face identification rate, thereby increasing the accuracy of the attendance system.

Owner:WUHAN UNIV OF SCI & TECH

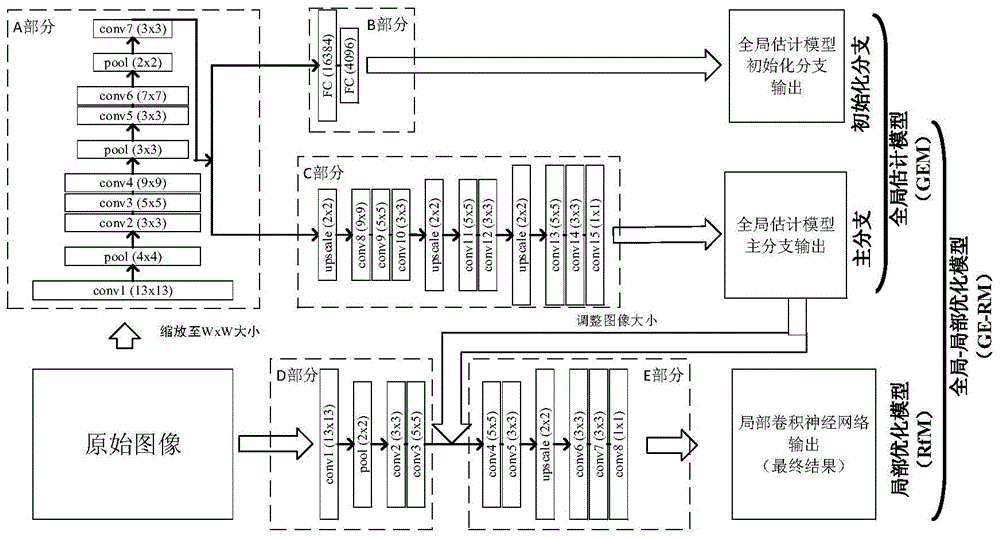

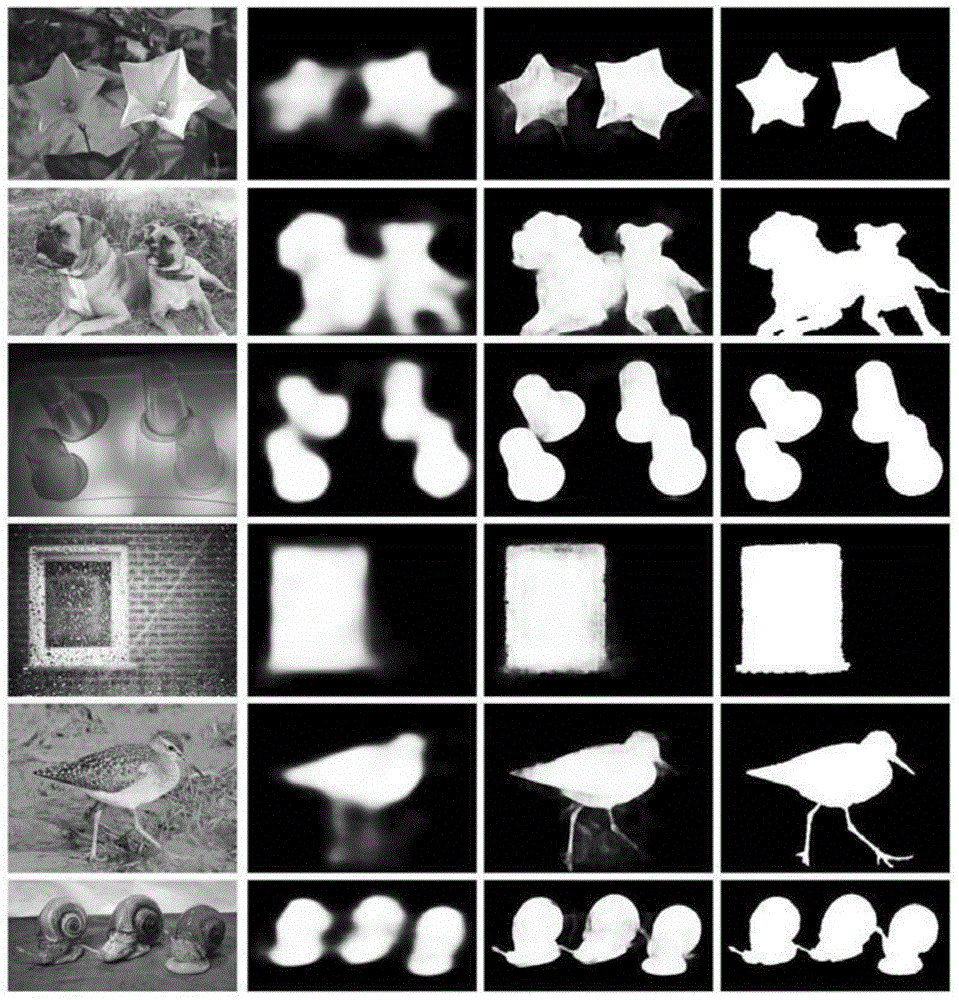

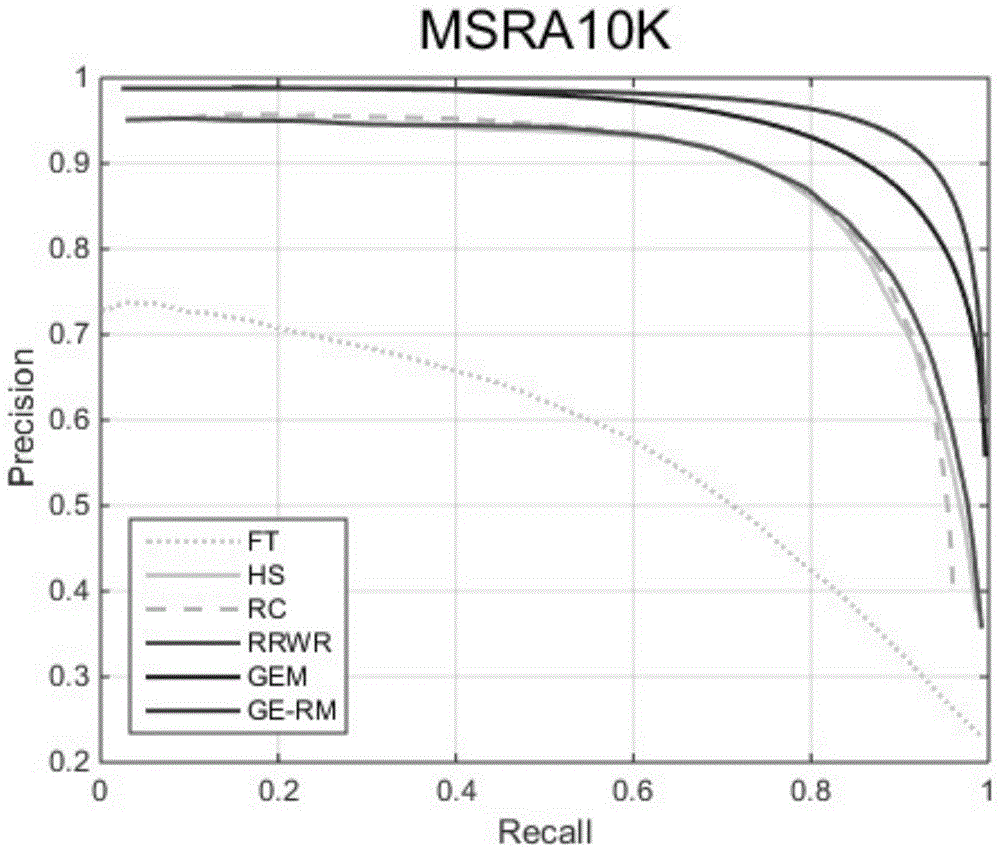

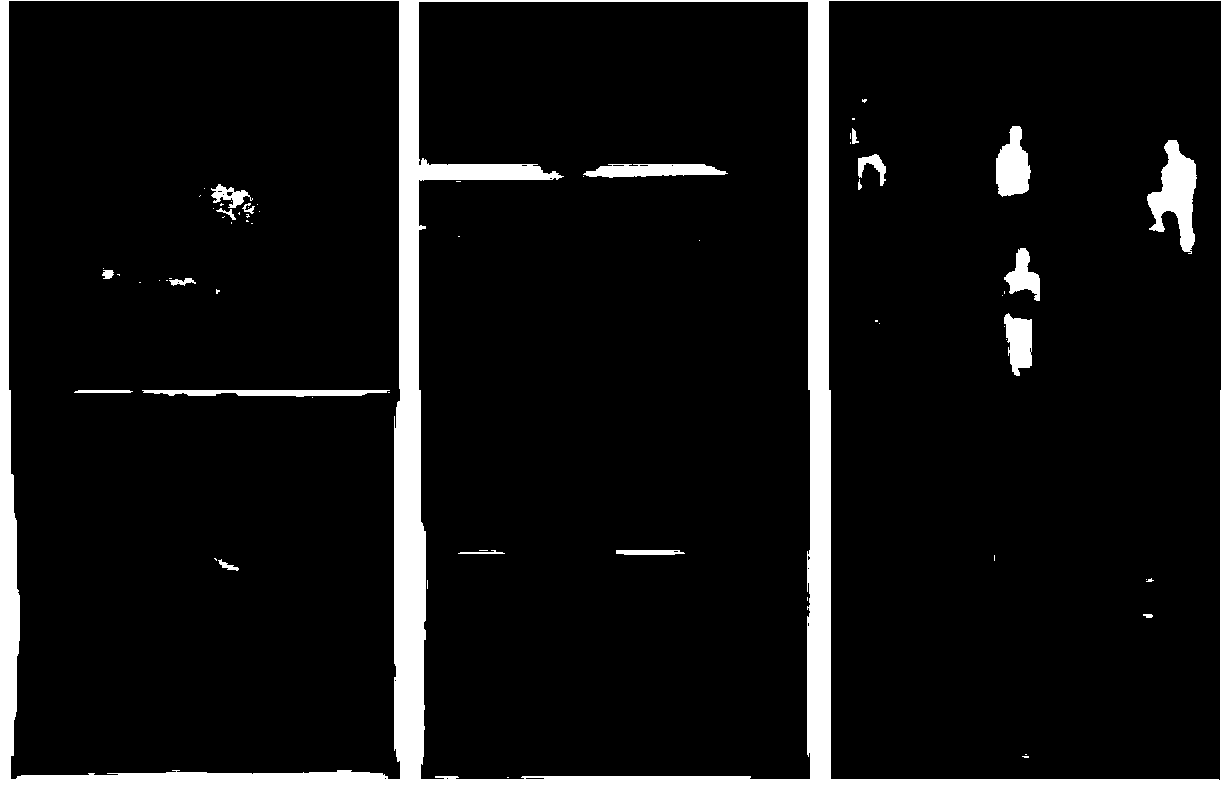

Global-local optimization model based on multistage convolution neural network and significant detection algorithm

The invention provides a saliency detection algorithm based on a multistage convolutional neural network. The algorithm comprises the following steps: performing global saliency estimation with a global estimation model that has a large receptive field; during training of the global estimation model, using a fully connected layer as the output layer to train and initialize part of the convolutional layer parameters; replacing the fully connected layer with several alternating convolution and upsampling layers and training them to obtain an optimal global saliency estimation map; and using a local convolutional neural network with a small receptive field and a large output size to fuse global and local information into a high-quality saliency map. The local convolutional neural network takes the original image as input, and the final output has the same size as the original input image and is sharp. Compared with traditional methods, the multistage algorithm achieves high accuracy, finding salient objects accurately while keeping their contours clear.

Owner:XI AN JIAOTONG UNIV

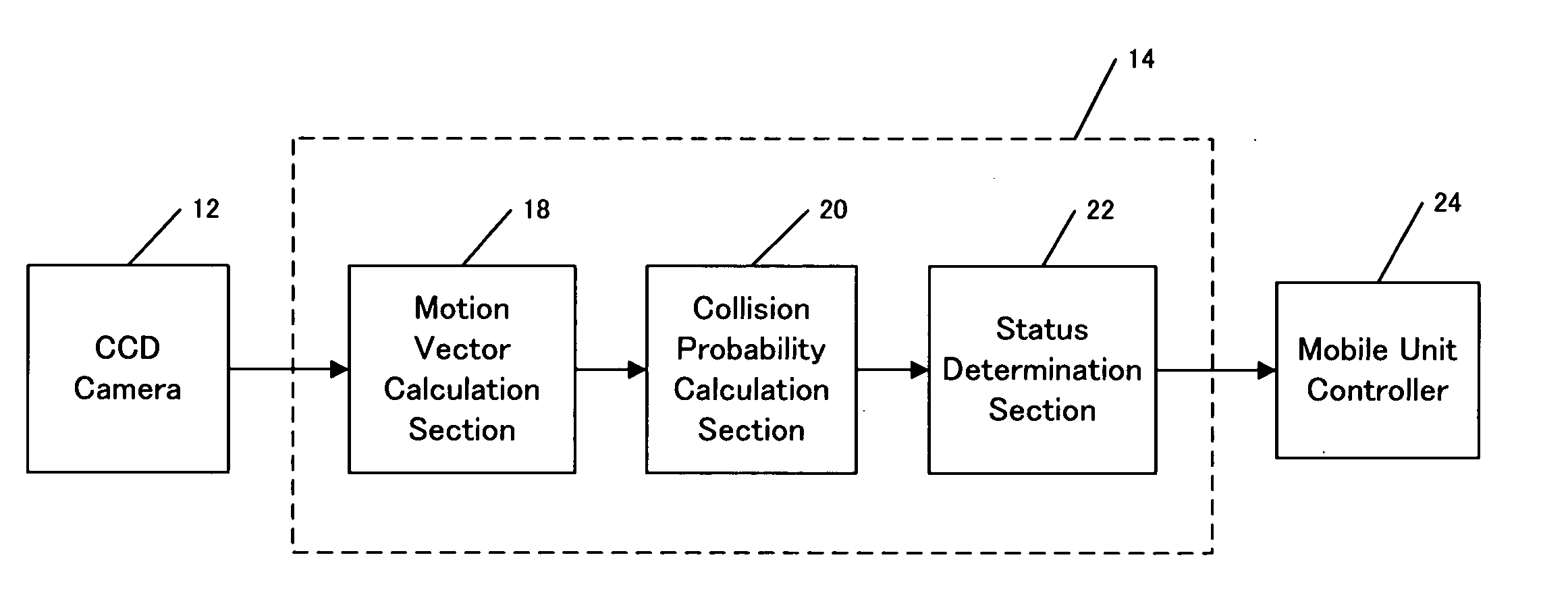

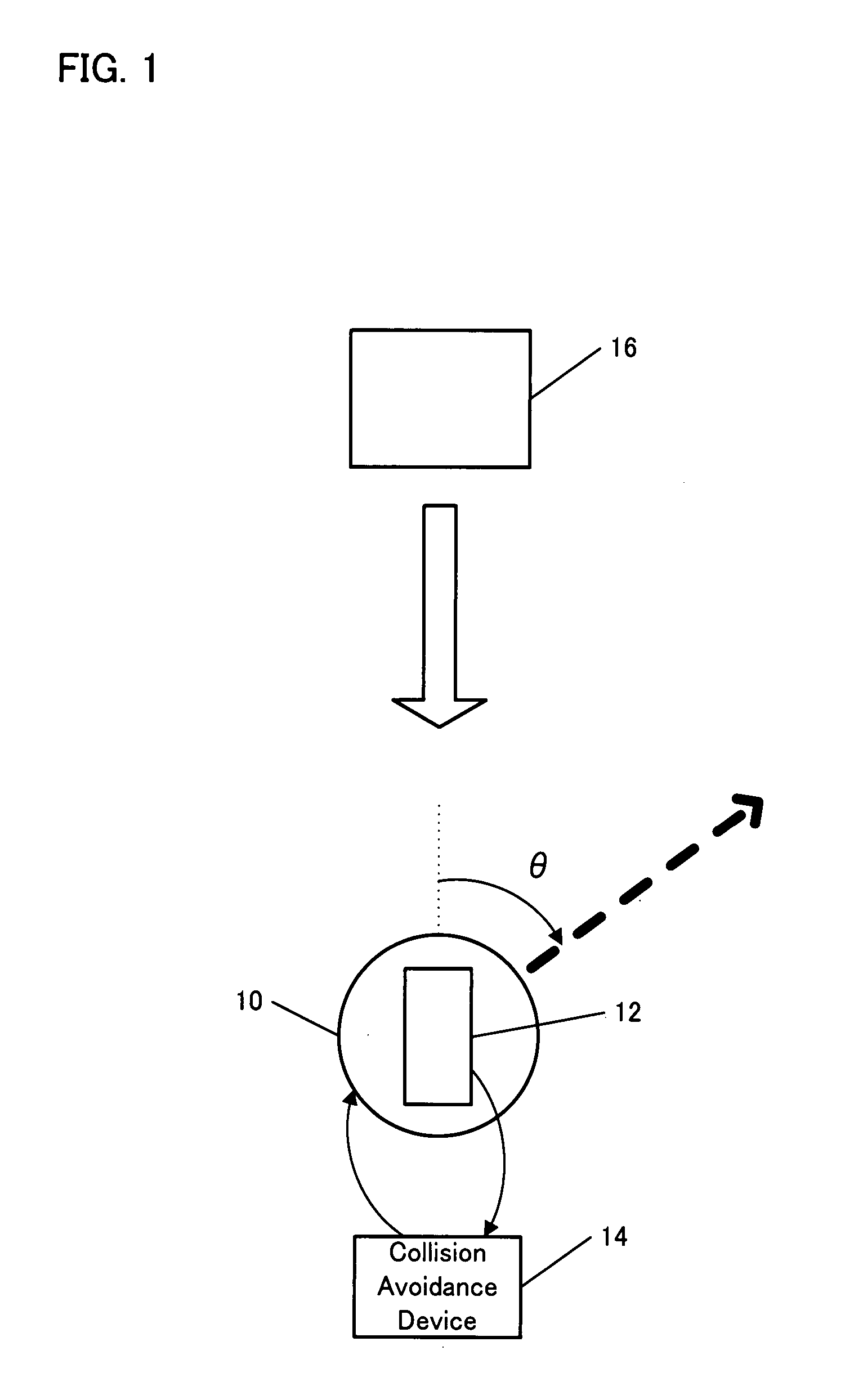

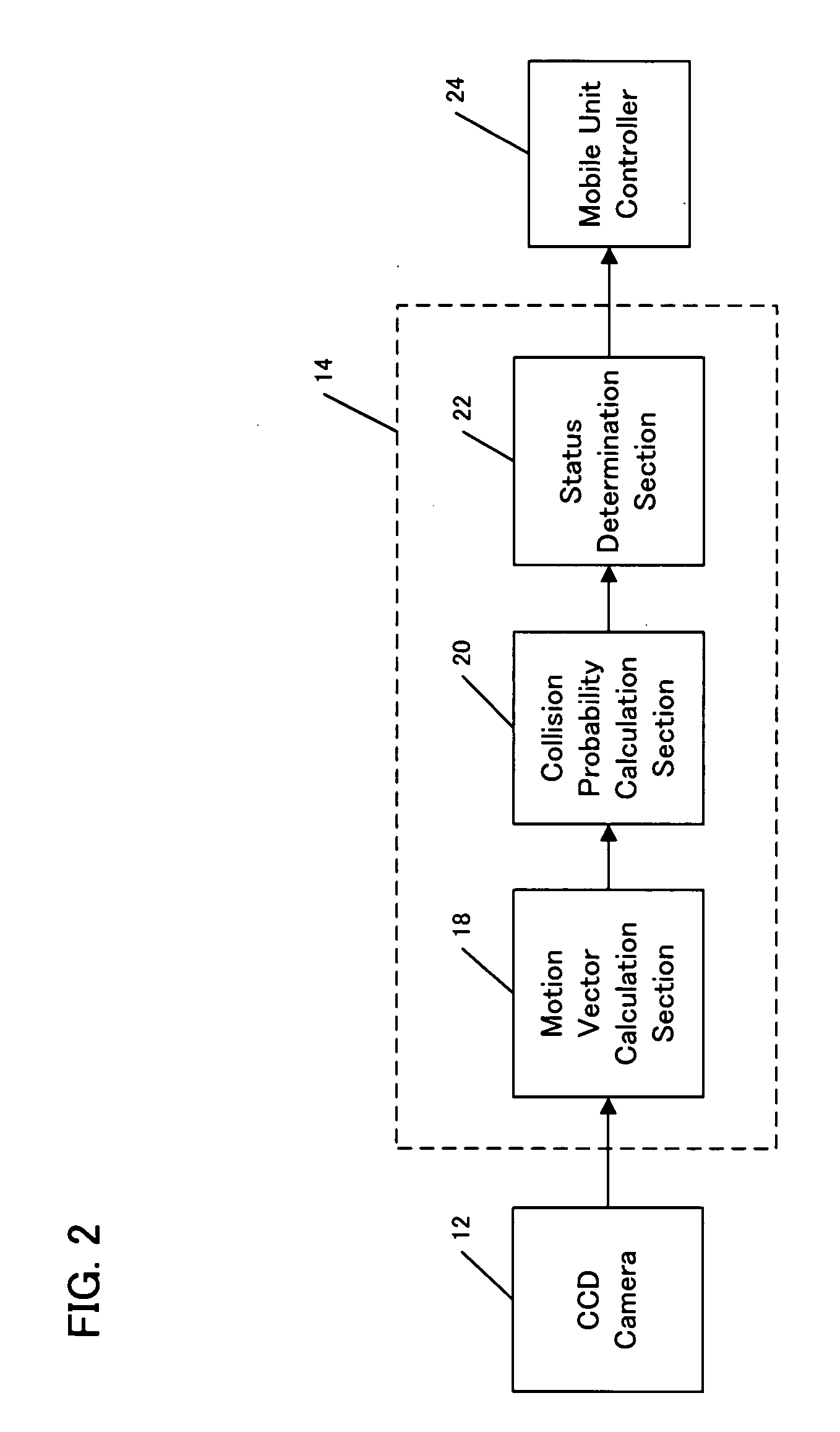

Collision avoidance of a mobile unit

Status: Inactive | Publication: US20080033649A1 | Tags: Avoid collision, Anti-collision systems, Scene recognition, Motion vector, Computer science

A collision avoidance system for a mobile unit includes an image capturing unit for capturing an image of the environment surrounding the mobile unit. Motion vectors are calculated from the captured image, and a collision probability is calculated based on these motion vectors. The system includes a plurality of receptive field units modeled on the optic lobe cells of flies. Each receptive field unit includes a filter that produces an excitatory response to motion vectors diverging from the center of the receptive field in its central area, and an inhibitory response to motion vectors converging toward the center in the areas around the central area. The outputs of the receptive field units are compared to determine the direction from which an obstacle approaches the mobile unit, and the mobile unit moves in a direction that avoids the collision.

Owner:HONDA MOTOR CO LTD
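
The center-surround receptive field unit can be sketched by splitting each motion vector into its radial component relative to the unit's center. The abstract does not give the filter's form, so the hard circular center and surround boundaries, the equal weighting of all vectors, and the function names are assumptions.

```python
import math


def rf_unit_response(vectors, center, r_center, r_surround):
    """Response of one looming-sensitive receptive field unit (illustrative model).

    vectors: iterable of (x, y, vx, vy) motion vectors from optical flow.
    Inside r_center, outward (diverging) motion excites the unit;
    in the annulus r_center < d <= r_surround, inward (converging) motion inhibits it.
    """
    cx, cy = center
    response = 0.0
    for x, y, vx, vy in vectors:
        dx, dy = x - cx, y - cy
        d = math.hypot(dx, dy)
        if d == 0 or d > r_surround:
            continue
        radial = (vx * dx + vy * dy) / d  # > 0 diverging, < 0 converging
        if d <= r_center:
            response += max(0.0, radial)
        else:
            response -= max(0.0, -radial)
    return response


def approach_direction(units):
    """Name of the receptive field unit with the strongest response."""
    return max(units, key=units.get)


grid = [(x, y) for x in range(-5, 6) for y in range(-5, 6)]
looming = [(x, y, 0.1 * x, 0.1 * y) for x, y in grid]  # expansion, as from an approaching object
print(rf_unit_response(looming, (0, 0), 2.0, 5.0))
```

Comparing the responses of units placed at different positions, as `approach_direction` does, indicates where in the image an obstacle is looming.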

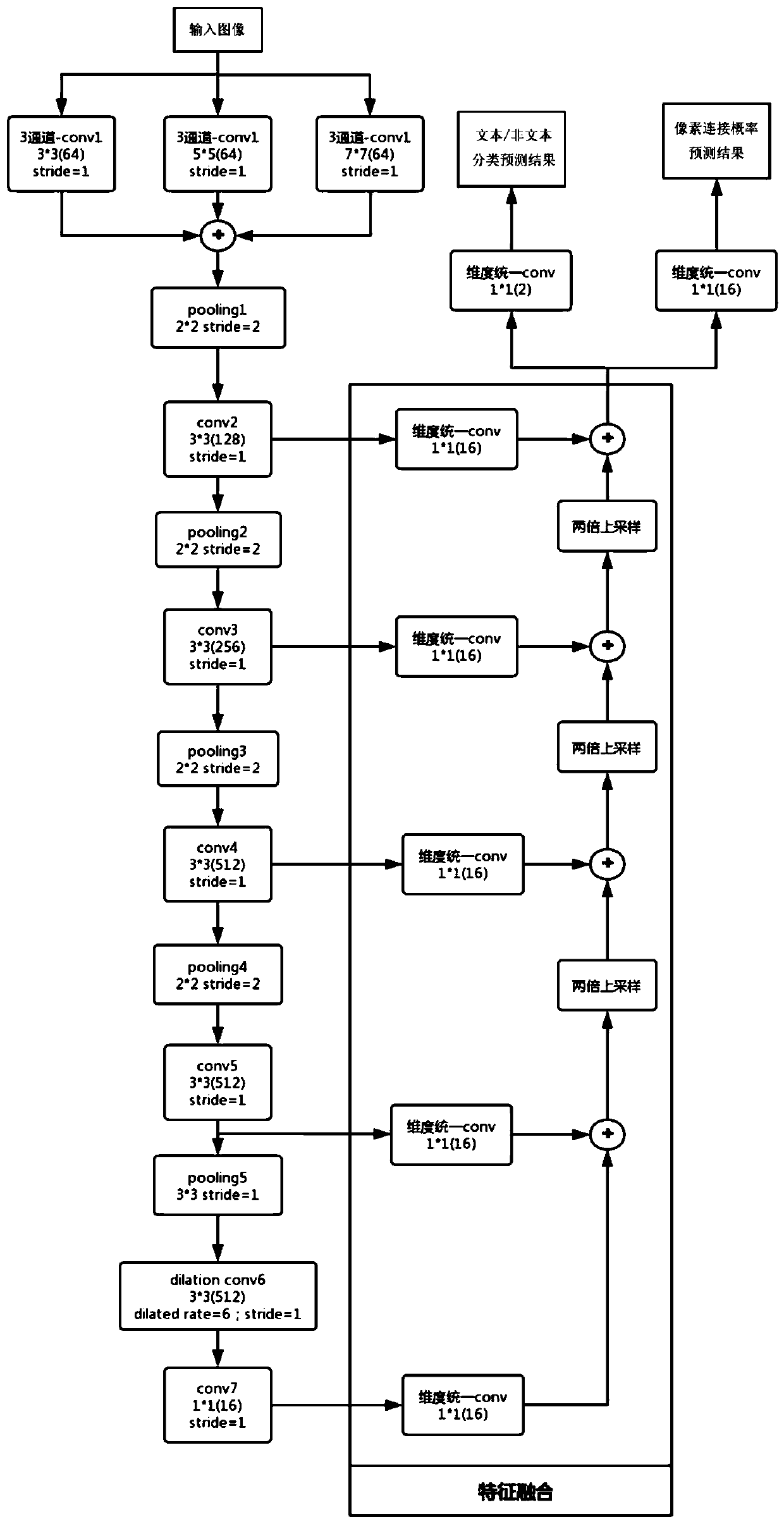

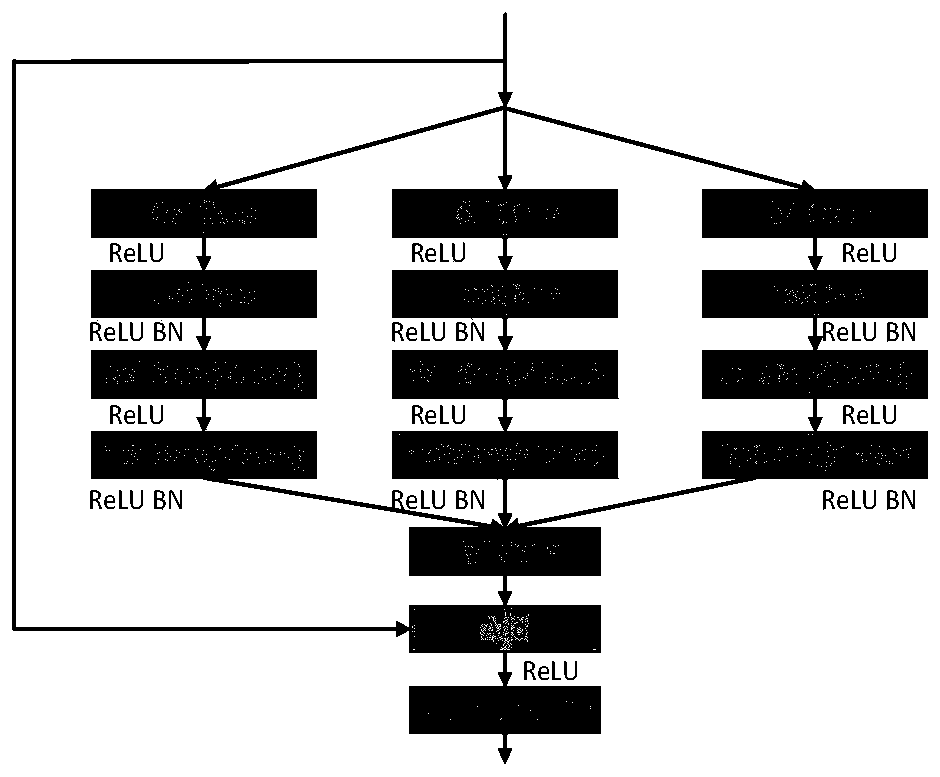

Text detection method, system and equipment based on multi-receptive field depth characteristics and medium

Status: Inactive | Publication: CN110020676A | Tags: Improve detection accuracy, Improve recall, Image analysis, Character and pattern recognition, Text detection, Truth value

The invention discloses a text detection method, system, device and medium based on multi-receptive-field deep features. The method comprises: obtaining a text detection database and using it as the network training database; building a multi-receptive-field deep network model; inputting natural-scene text images and the corresponding ground-truth text box coordinates from the training database into the model for training; computing a segmentation image mask with the trained model to obtain a segmentation result, and converting the segmented regions into regressed text box coordinates; and gathering statistics on text box sizes in the training database, designing text box filtering conditions, and selecting the target text boxes according to these conditions. The method makes full use of the feature learning and classification capabilities of deep network models together with the characteristics of image segmentation, achieving high detection accuracy, high recall and strong robustness, and it performs well for text detection in natural scenes.

Owner:SOUTH CHINA UNIV OF TECH

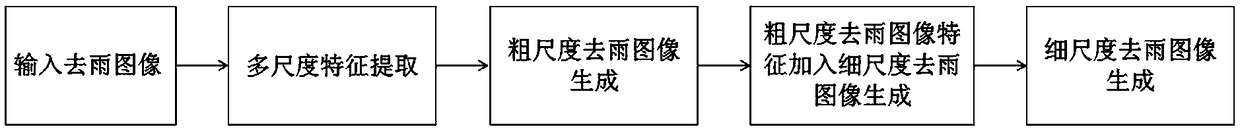

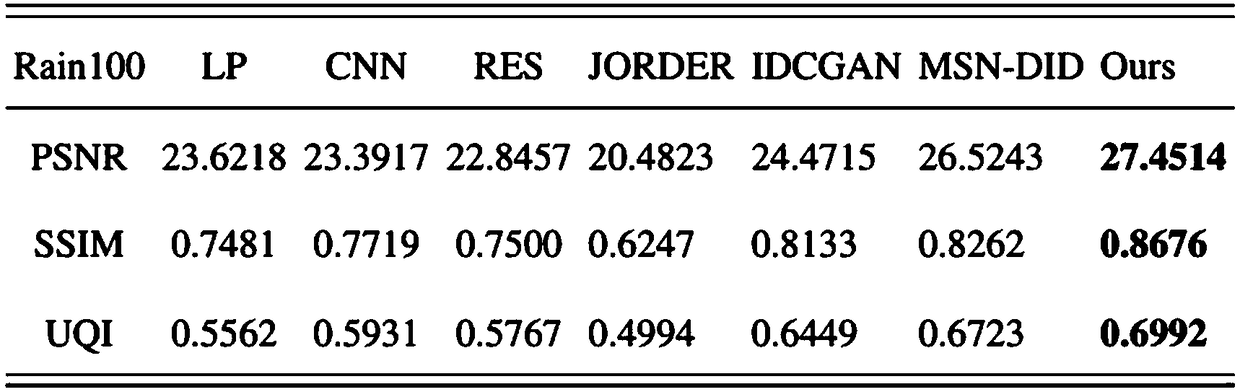

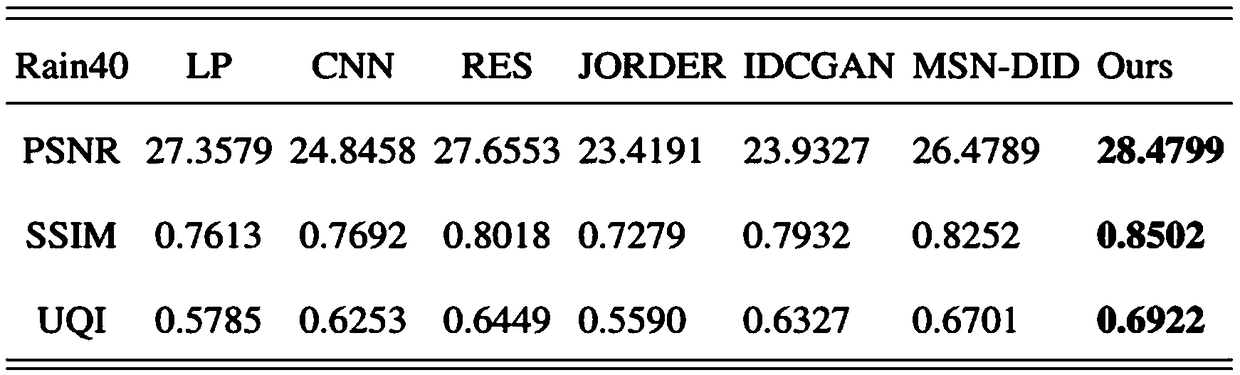

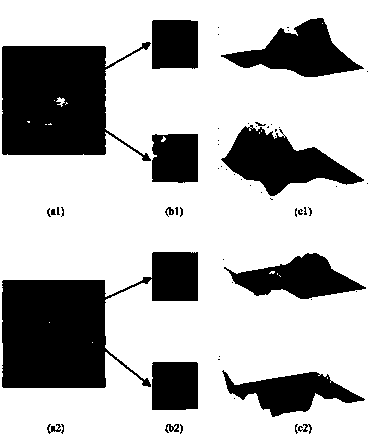

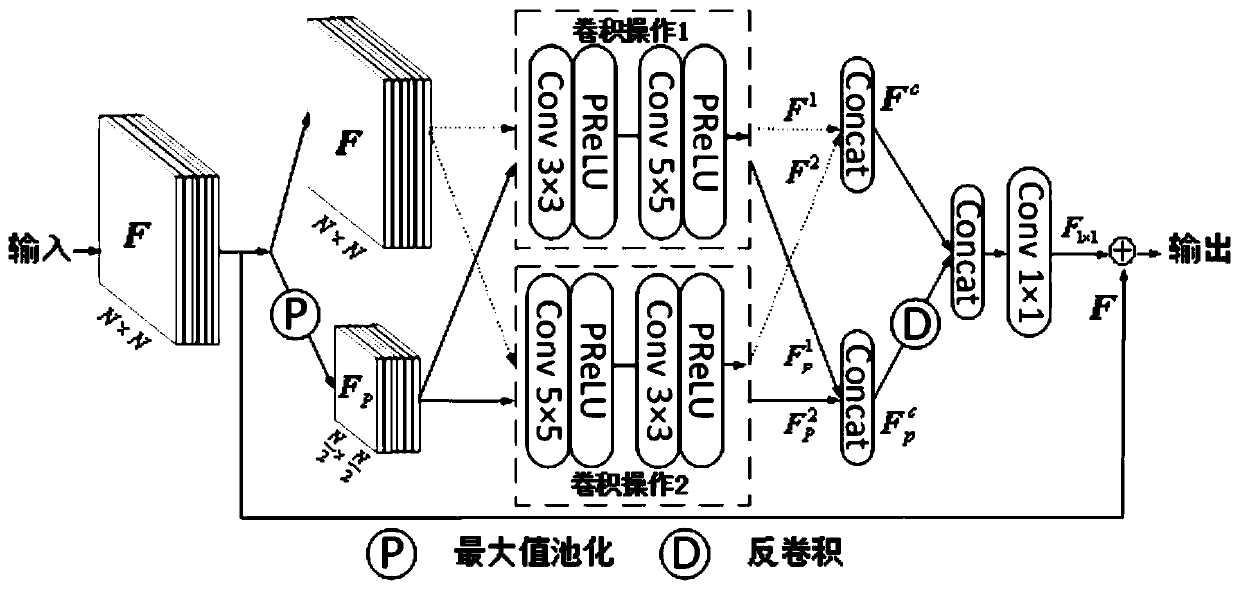

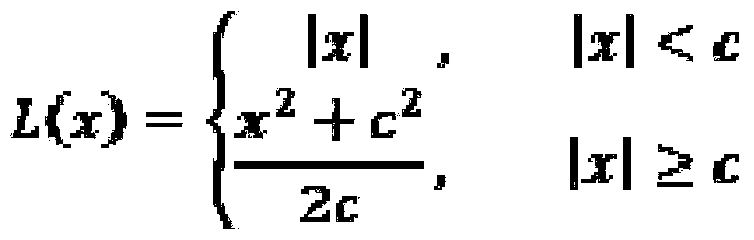

Single-frame rainfall removing method based on multi-scale feature fusion

Status: Active | Publication: CN109360155A | Tags: Good effect in removing rain, Universal, Image enhancement, Neural architectures, Peak value, Error function

The invention provides a single-frame image rain removal method based on multi-scale feature fusion. Features of the rain-free image are extracted with receptive fields of different scales, and a deconvolution operation then produces the rain-free result; combining coarse-scale and fine-scale features helps the rain-free image generated at the fine scale achieve the best deraining effect. Because rain streaks are removed at multiple scales, the algorithm applies to a variety of rain conditions and is more general. The invention combines an adversarial loss and a perceptual loss into a new loss function and trains the deraining model without any prior knowledge and without image pre-processing or post-processing, thus preserving the integrity of the overall structure. Results on several test sets show that the invention improves the peak signal-to-noise ratio on the luminance channel by 2-5 dB.

Owner:SHANGHAI JIAO TONG UNIV
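
The reported 2-5 dB gain is measured as PSNR on the luminance channel. A minimal sketch of that metric follows; the abstract does not say which luminance definition was used, so the ITU-R BT.601 luma weights, the 8-bit peak value of 255, and the function names are assumptions.

```python
import math


def luminance(rgb):
    """ITU-R BT.601 luma from an (R, G, B) triple in [0, 255]."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def psnr_y(reference, test, peak=255.0):
    """PSNR in dB on the luminance channel of two equally sized RGB images.

    Images are lists of rows of (R, G, B) tuples.
    """
    se, n = 0.0, 0
    for ref_row, test_row in zip(reference, test):
        for p, q in zip(ref_row, test_row):
            se += (luminance(p) - luminance(q)) ** 2
            n += 1
    mse = se / n
    if mse == 0:
        return math.inf  # identical luminance: PSNR is unbounded
    return 10 * math.log10(peak ** 2 / mse)


gray = [[(100, 100, 100)] * 4] * 4
brighter = [[(110, 110, 110)] * 4] * 4
print(round(psnr_y(gray, brighter), 2))  # 28.13
```

Because PSNR is logarithmic, a 2 dB gain corresponds to reducing the luminance mean squared error by a factor of about 1.58 (10^0.2), and a 5 dB gain to a factor of about 3.16 (10^0.5).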

Night vision image salient contour extracting method based on non-classical receptive field composite modulation

The invention discloses a night vision image salient contour extraction method based on non-classical receptive field composite modulation. A multi-scale iterative attention method is constructed from a non-classical receptive field composite modulation model: the scale factor of the composite modulation changes dynamically during iteration, and the composite modulation result of the input night vision image is calculated. In each iteration, for each pixel of the input image, an inhibition result is first calculated with a multi-dimensional feature contrast (MFC) weighted inhibition model, then a facilitation result is calculated with a grouping excitatory voting (GEV) facilitation model, and finally the non-classical receptive field composite modulation output is obtained. The method addresses noise and texture suppression in low-light and infrared images and the contour discontinuities caused by imaging characteristics, surround inhibition and noise interference.

Owner:NANJING UNIV OF SCI & TECH

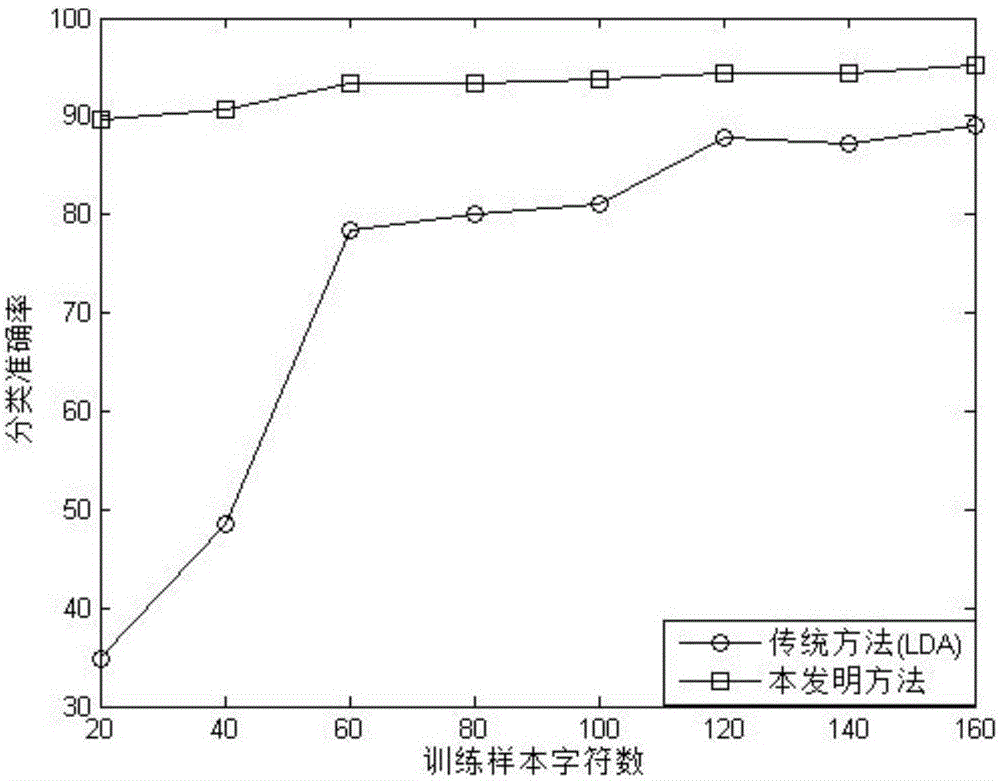

Method for detecting P300 electroencephalogram based on convolutional neural network

Status: Inactive | Publication: CN105068644A | Tags: Solve the small sample problem, Improve classification accuracy, Input/output for user-computer interaction, Character and pattern recognition, Computation complexity, Brain computer interfacing

The invention discloses a method for detecting P300 electroencephalogram (EEG) signals based on a convolutional neural network. It serves as a brain-computer interface classification algorithm and effectively addresses the small-sample problem of conventional classification algorithms while improving classification accuracy. Borrowing ideas from image recognition, the method makes full use of the local receptive fields and weight sharing of convolutional neural networks. It treats a typical P300 EEG acquisition sample as analogous to a feature image. Sample characteristics are extracted through successive convolutions, and feature mapping is performed through down-sampling. Alternating feature extraction and feature mapping progressively simplifies the sample characteristics. At the same time, local receptive fields and weight sharing greatly reduce the number of network weight parameters and the computational complexity, which makes the algorithm easier to adopt widely. Experimental results show that the method effectively improves classification accuracy, increases system stability, and has good application prospects.

Owner:SHANDONG UNIV
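A small parameter count makes the saving from local receptive fields and weight sharing concrete. The sketch below compares a fully connected layer with a temporal convolution over an EEG epoch. The sizes used in the example are hypothetical and are not taken from the patent: 64 electrodes, 240 samples, 10 units or filters, and a 13-sample kernel.

```python
def dense_layer_params(channels, samples, hidden_units):
    """Fully connected layer: every hidden unit has its own weight for every
    (channel, sample) input, plus one bias."""
    return channels * samples * hidden_units + hidden_units


def conv_layer_params(channels, kernel_length, num_filters):
    """Temporal convolution: each filter covers all channels over a local
    window of kernel_length samples (its local receptive field), and the same
    weights are reused at every time position (weight sharing)."""
    return num_filters * (channels * kernel_length + 1)


def conv_output_length(samples, kernel_length):
    """Number of time positions a 'valid' convolution is evaluated at."""
    return samples - kernel_length + 1


print(dense_layer_params(64, 240, 10))  # 153610
print(conv_layer_params(64, 13, 10))    # 8330
print(conv_output_length(240, 13))      # 228
```

With these sizes the dense layer needs 153,610 parameters and the convolutional layer 8,330, roughly 18 times fewer. This holds even though the convolution is evaluated at 228 time positions.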

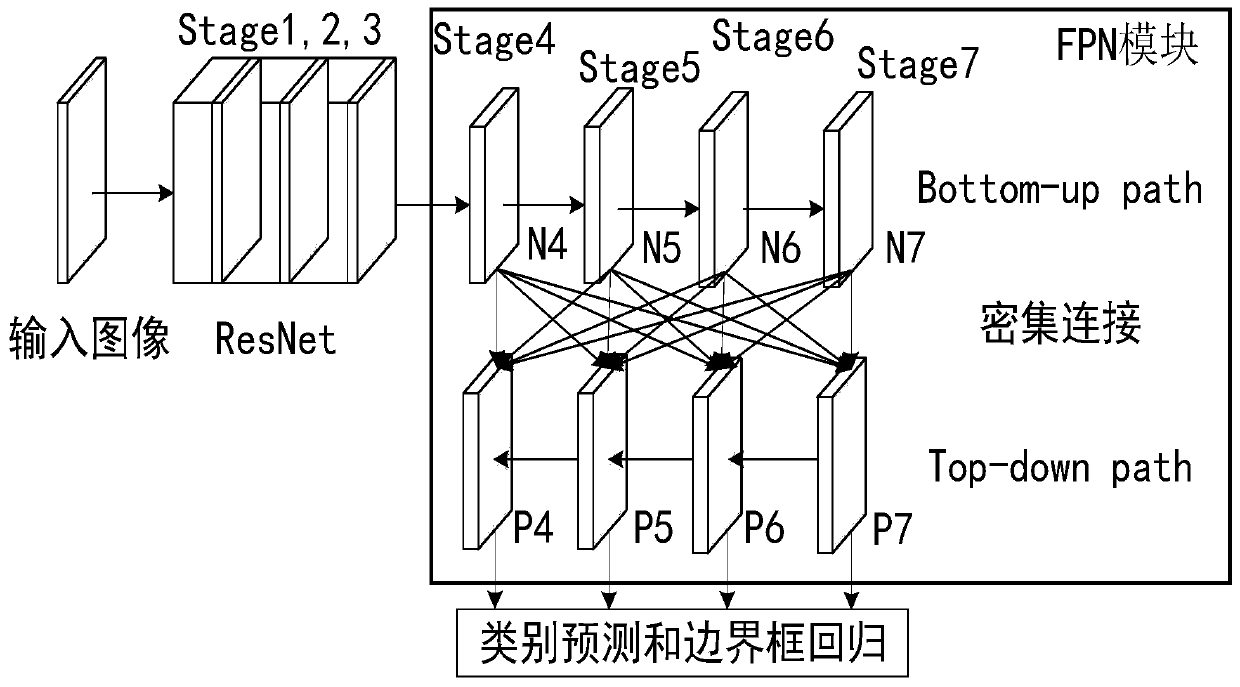

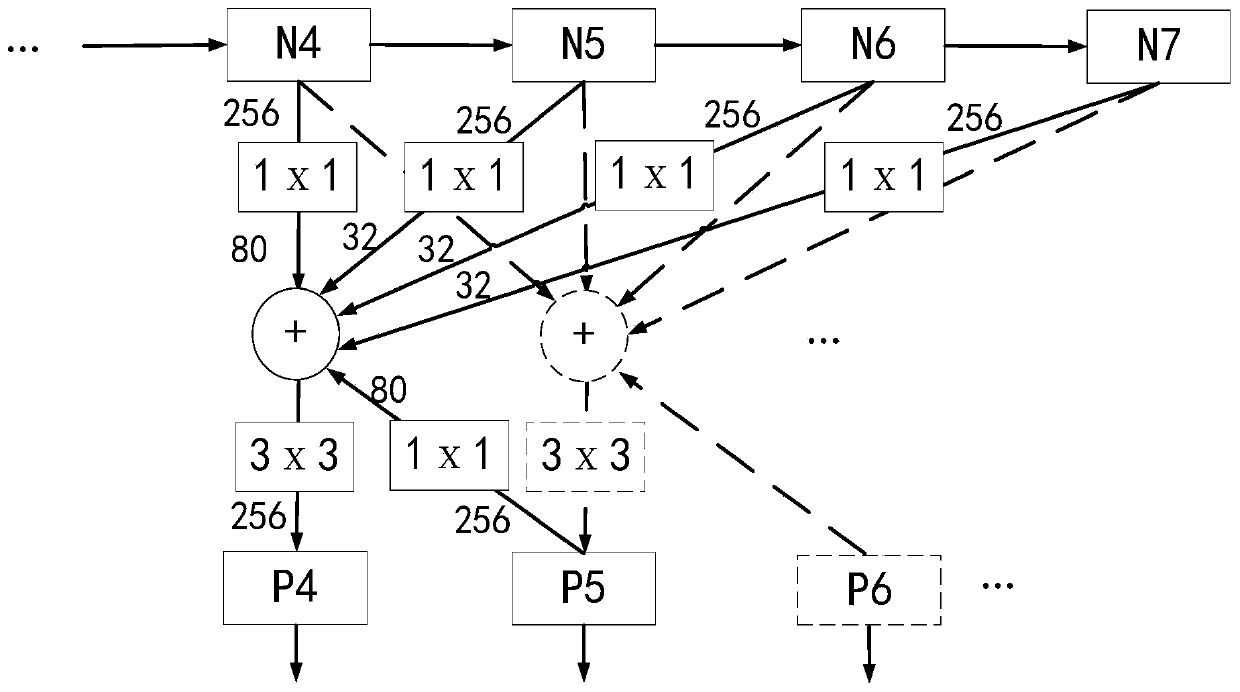

Target detection method and system based on fusion of different-scale receptive field feature layers, and medium

Active · CN110321923A · Improve features · Easy to detect · Character and pattern recognition · Neural architectures · Data set · Feature extraction

The invention provides a target detection method, system and medium based on fusing feature layers whose receptive fields have different scales. The method comprises two steps:

- Data augmentation: a labeled training data set is augmented to increase its volume, and the training images are resized to match the model input size, yielding the augmented training data set.
- Model building: a classic network model is used as the backbone of the target detector, and the lateral connections in the feature pyramid network (FPN) are replaced with dense connections to obtain a densely connected FPN target detection network model.

Existing target detection models use feature information from only some of the feature layers to detect target objects, and this method overcomes that shortcoming. Dense FPN connections fuse feature layers with several different receptive fields. This provides the feature information needed to detect objects across multiple scale ranges, improving the feature extraction capability and detection performance of the target detector.

Owner:SHANGHAI UNIV
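A standard FPN gives each pyramid level its own lateral connection plus the upsampled level directly above it. The dense variant described above instead lets every output level draw on all backbone levels. The sketch below shows that idea under several assumptions: 1-D single-channel feature maps, nearest-neighbour resizing and plain summation. A real detector would add 1×1 projections and learned convolutions, and the abstract does not specify the fusion operator.

```python
def resize_nearest(feature, target_length):
    """Nearest-neighbour resize of a 1-D feature map to target_length."""
    source_length = len(feature)
    return [feature[(i * source_length) // target_length]
            for i in range(target_length)]


def dense_fpn_fuse(levels):
    """Densely connected pyramid fusion (illustrative).

    levels: 1-D feature maps ordered fine (longest) to coarse (shortest).
    Every output level is the sum of all input levels resized to its length,
    so each level mixes features from every receptive-field size.
    """
    fused = []
    for target in levels:
        n = len(target)
        out = [0.0] * n
        for source in levels:
            resized = resize_nearest(source, n)
            out = [a + b for a, b in zip(out, resized)]
        fused.append(out)
    return fused


print(dense_fpn_fuse([[1, 2, 3, 4], [10, 20], [100]]))
# [[111.0, 112.0, 123.0, 124.0], [111.0, 123.0], [111.0]]
```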

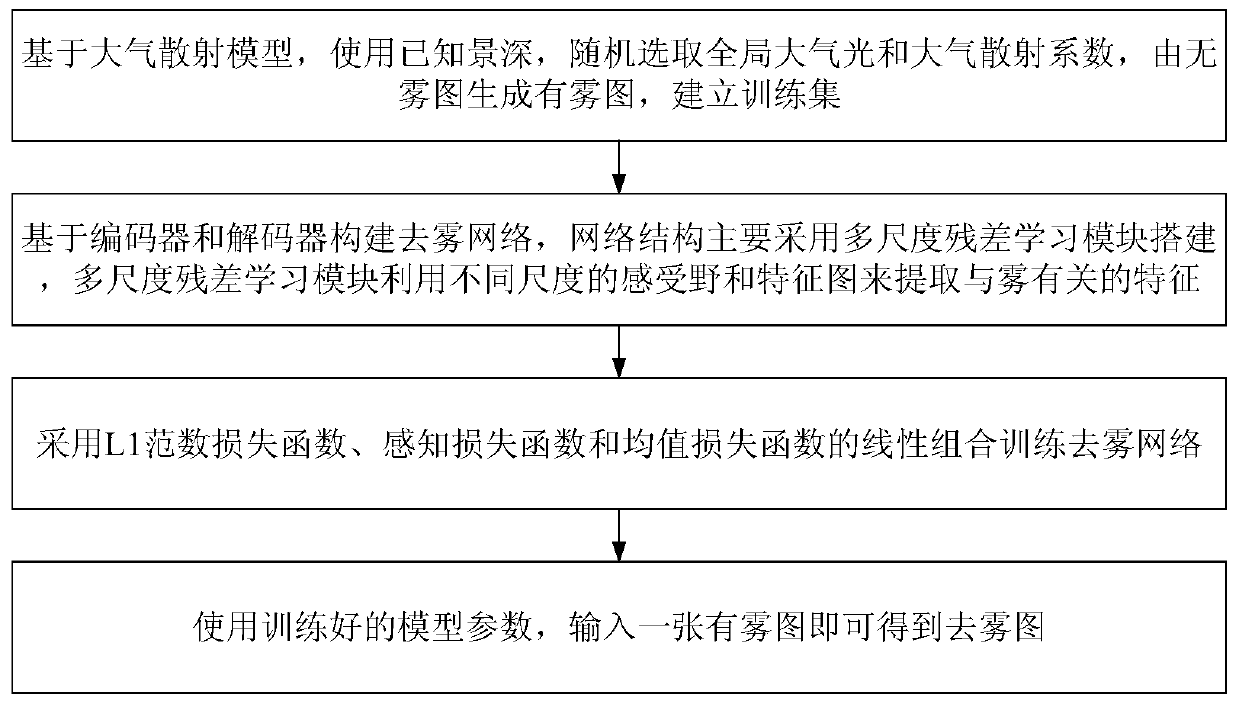

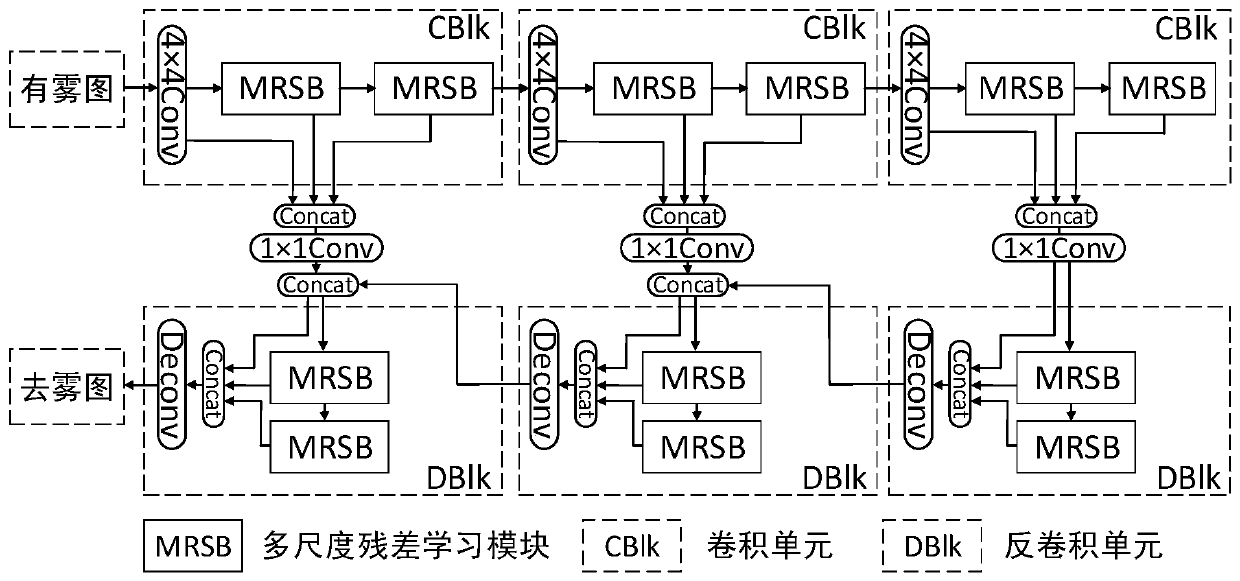

Image defogging method based on multi-scale residual learning

Pending · CN110570371A · Realistic Image Dehazing · Easy to implement · Image enhancement · Image analysis · Hypothesis · Network structure

The invention discloses an image defogging method based on multi-scale residual learning. The method comprises the following steps:

1. Build a training set from the atmospheric scattering model: with known scene depth, randomly select the global atmospheric light and the atmospheric scattering coefficient, and generate foggy images from fog-free images.
2. Create a defogging network with an encoder-decoder architecture built from multi-scale residual learning modules, which use receptive fields and feature maps of different scales to extract fog-related features.
3. Train the network with a linear combination of an L1-norm loss, a perceptual loss and a mean-value loss.
4. Feed a foggy image to the trained model to obtain a defogged image.

No complex hypotheses or priors are required, and the fog-free image can be recovered directly from a single foggy image. The method is therefore simple and easy to implement.

Owner:TIANJIN UNIV
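Building the training set relies on the atmospheric scattering model, I(x) = J(x)·t(x) + A·(1 - t(x)), with transmission t(x) = exp(-β·d(x)). Here J is the fog-free image, d the known depth, A the global atmospheric light and β the scattering coefficient. Below is a minimal grayscale sketch of generating one foggy training image. The sampling ranges for A and β are hypothetical defaults, not values from the patent.

```python
import math
import random


def synthesize_haze(clear, depth, airlight, beta):
    """Apply I = J*t + A*(1 - t), t = exp(-beta * d), pixel by pixel.

    clear: grayscale fog-free image as a list of rows with values in [0, 1].
    depth: scene depth for each pixel, same shape as clear.
    """
    hazy = []
    for clear_row, depth_row in zip(clear, depth):
        row = []
        for j, d in zip(clear_row, depth_row):
            t = math.exp(-beta * d)  # transmission along the line of sight
            row.append(j * t + airlight * (1.0 - t))
        hazy.append(row)
    return hazy


def random_training_pair(clear, depth, rng,
                         airlight_range=(0.7, 1.0), beta_range=(0.6, 1.8)):
    """Draw random A and beta (hypothetical ranges) and return (hazy, clear)."""
    airlight = rng.uniform(*airlight_range)
    beta = rng.uniform(*beta_range)
    return synthesize_haze(clear, depth, airlight, beta), clear


hazy, clear = random_training_pair([[0.2, 0.5]], [[1.0, 3.0]], random.Random(0))
```

At zero depth the transmission is 1 and the pixel is unchanged. As depth grows, the pixel tends toward the atmospheric light A.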

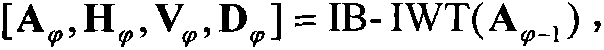

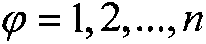

Remote sensing image region of interest detection method based on integer wavelets and visual features

Inactive · CN103247059A · Improve detection accuracy · Reduce computational complexity · Image analysis · Computation complexity · Goal recognition

The invention discloses a remote sensing image region of interest detection method based on integer wavelets and visual features, in the technical field of remote sensing image target identification. The method comprises the following steps:

1. Perform color synthesis, filtering and noise-reduction preprocessing on a remote sensing image.
2. Convert the preprocessed RGB remote sensing image into the CIE Lab color space to obtain brightness and color feature maps, and transform the L component with integer wavelets to obtain an orientation feature map.
3. Construct a difference-of-Gaussians filter that simulates the receptive field of the human retina, and combine its output across scales with a Gaussian pyramid to obtain brightness and color feature saliency maps. Screen the wavelet coefficients and combine them across scales to obtain an orientation feature saliency map.
4. Synthesize a main saliency map using a feature competition strategy.
5. Threshold the main saliency map to obtain the region of interest.

The method increases the detection accuracy of regions of interest in remote sensing images and lowers the computational complexity. It can be applied to environmental monitoring, urban planning, forestry surveys and similar fields.

Owner:BEIJING NORMAL UNIVERSITY
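Step 3 models the retinal receptive field with a difference-of-Gaussians (DoG) filter: a narrow excitatory centre Gaussian minus a wider inhibitory surround Gaussian. A minimal sketch of building such a kernel follows; the kernel size and the two sigmas are illustrative assumptions. Both Gaussians are normalised to unit sum, so the kernel sums to zero and responds to local contrast rather than to uniform brightness.

```python
import math


def gaussian_kernel(size, sigma):
    """size x size Gaussian kernel (size odd), normalised to sum to 1."""
    if size % 2 == 0:
        raise ValueError("size must be odd")
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
               for x in range(-half, half + 1)]
              for y in range(-half, half + 1)]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def difference_of_gaussians(size, sigma_center, sigma_surround):
    """ON-centre DoG: excitatory centre minus inhibitory surround."""
    center = gaussian_kernel(size, sigma_center)
    surround = gaussian_kernel(size, sigma_surround)
    return [[c - s for c, s in zip(c_row, s_row)]
            for c_row, s_row in zip(center, surround)]


dog = difference_of_gaussians(15, 1.0, 3.0)
# positive at the centre, negative in the far surround, sums to ~0
```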

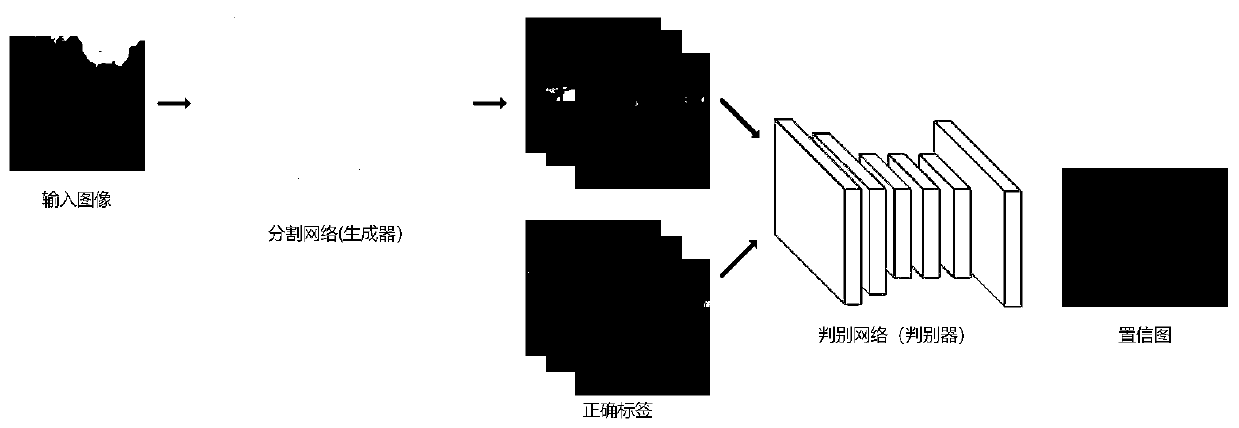

Adversarial-based lightweight network semantic segmentation method

Active · CN110490884A · Processing speed · Improve accuracy · Image enhancement · Image analysis · Pattern recognition · Network processing

Owner:BEIJING UNIV OF TECH

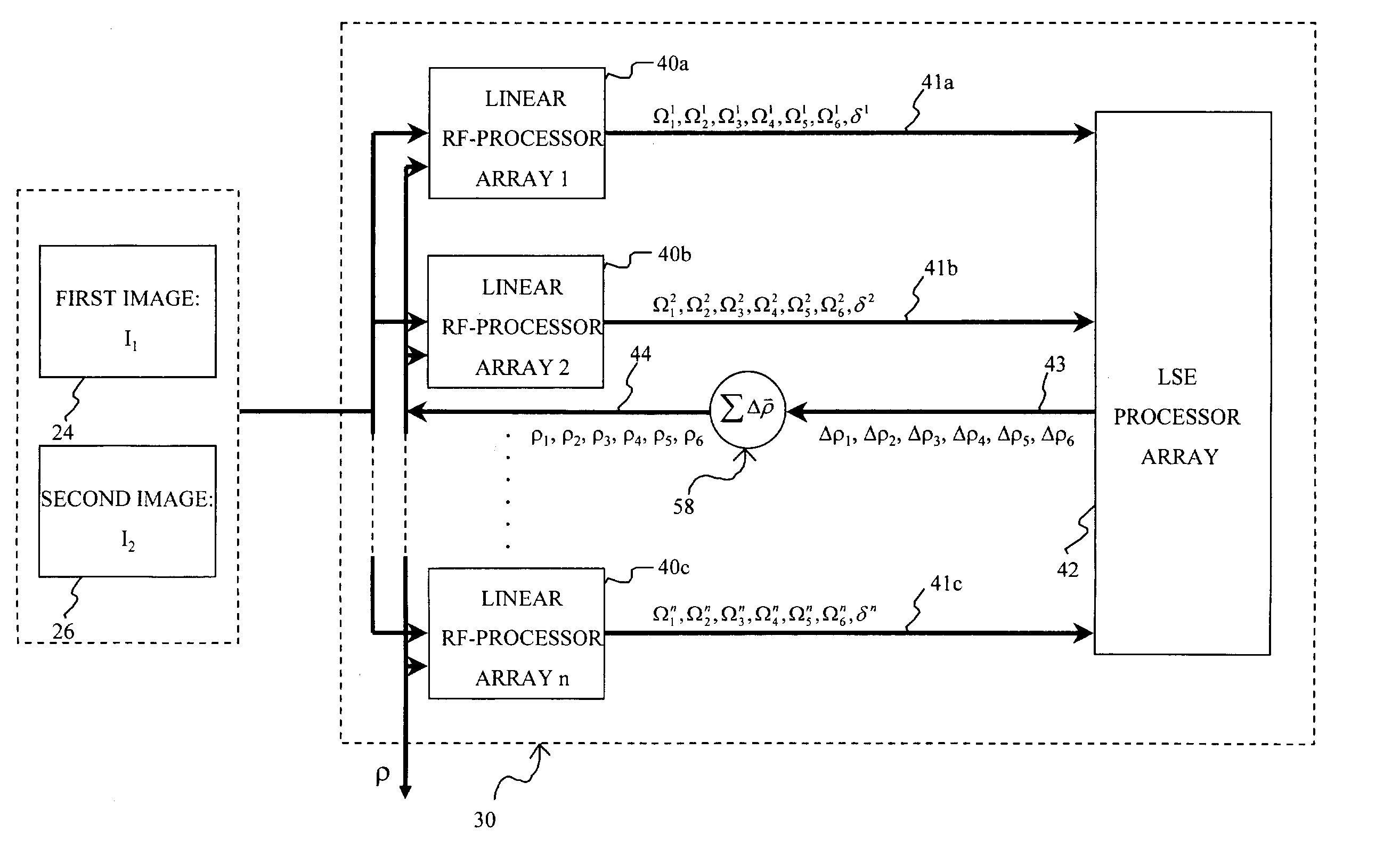

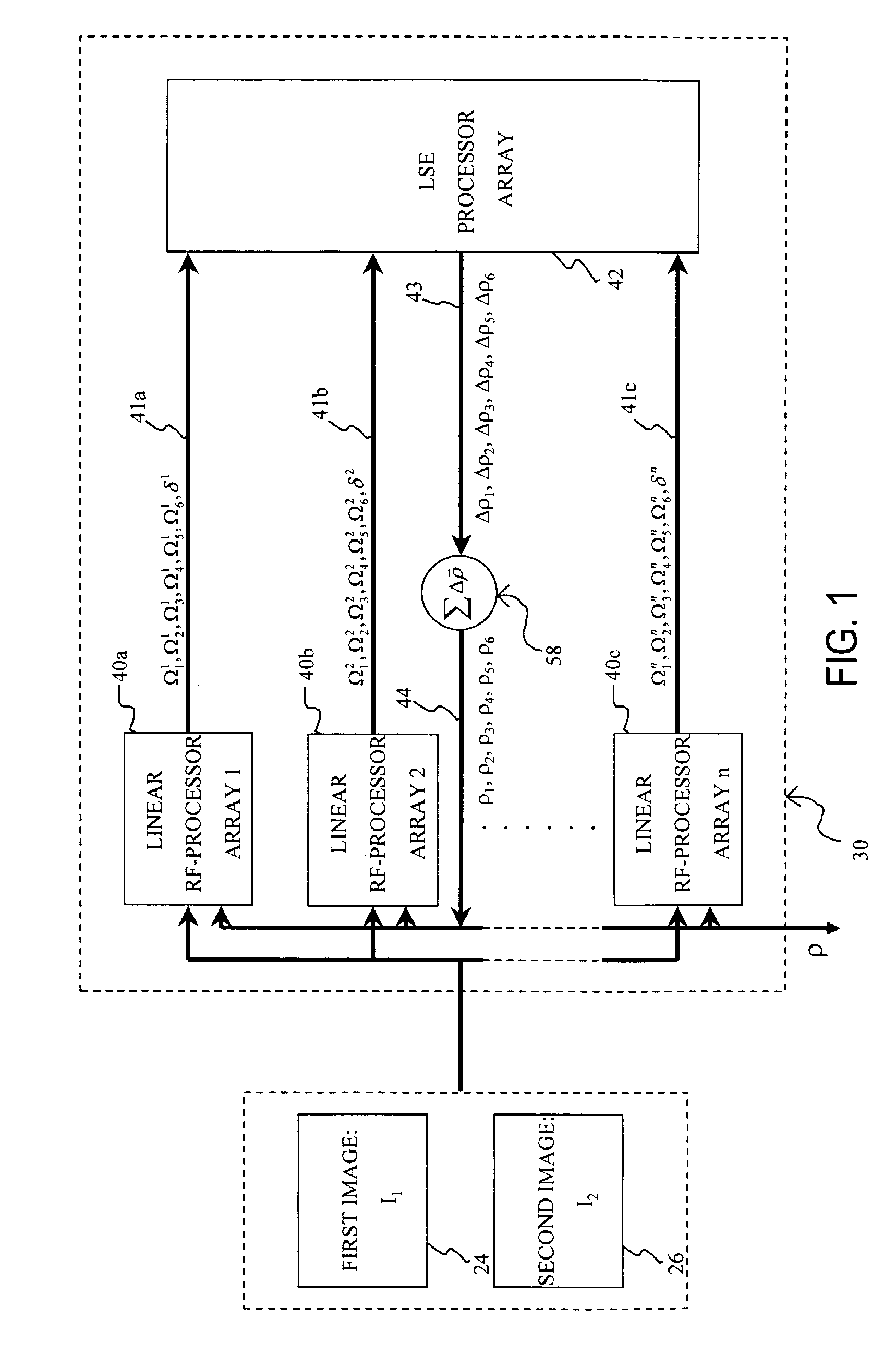

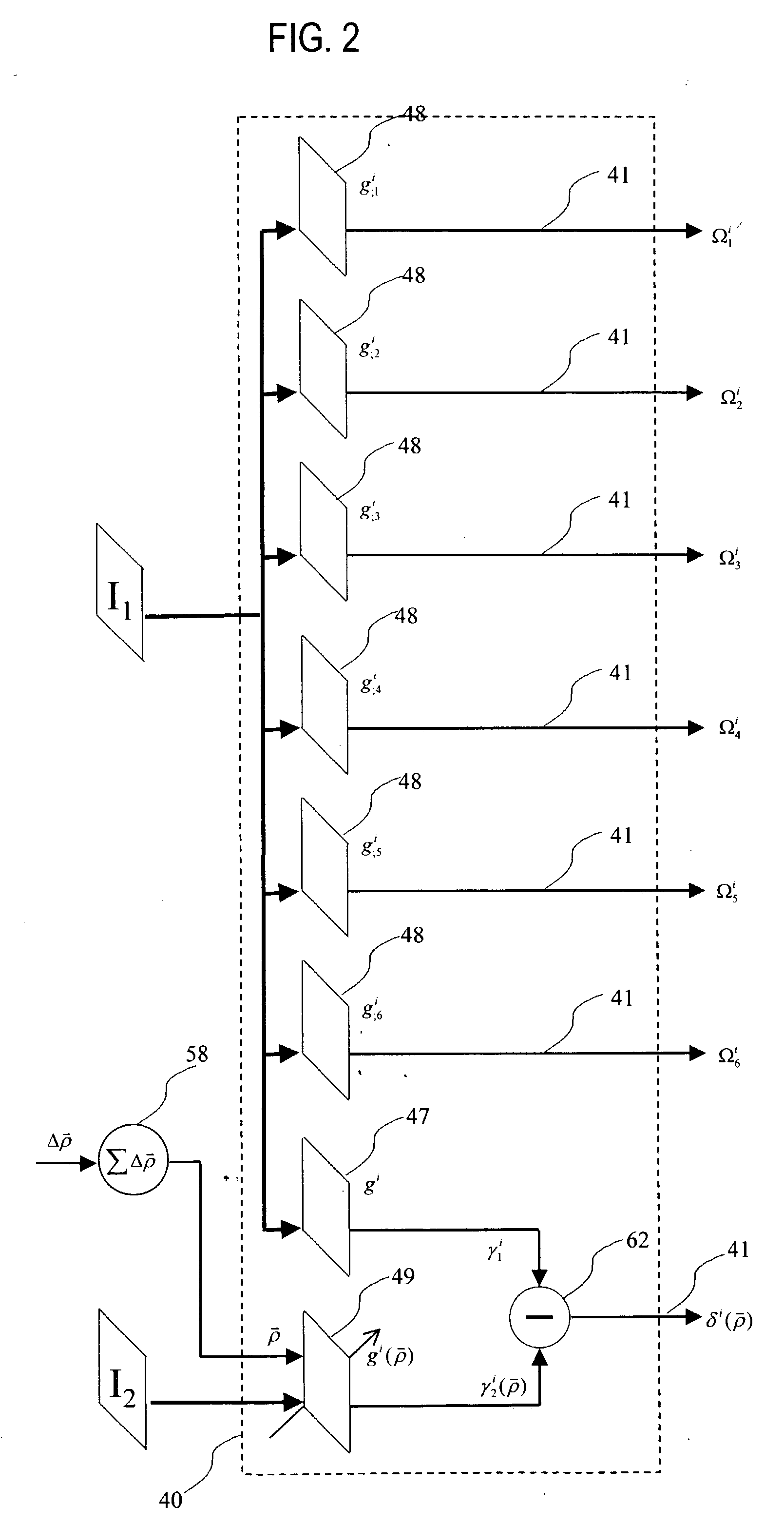

Affine transformation analysis system and method for image matching

Inactive · US20040175057A1 · Minimize the difference · Improve accuracy · Image analysis · General purpose stored program computer · Radio frequency · Visual perception

An affine transformation analysis system and method is provided for matching two images. The novel systolic-array image affine transformation analysis system is based on a Lie transformation group model of cortical visual motion and stereo processing. It comprises a linear receptive-field (rf) processing means, an affine parameter incremental updating means, and a least-squares-error fitting means. Image data is provided to a plurality of component linear rf-processing means, each comprising a Gabor receptive field, a dynamical Gabor receptive field, and six Lie germs. The Gabor coefficients of the images and the affine Lie derivatives are extracted from the responses of the linear receptive fields. Each parallel pipelined linear rf-processing component produces differences and affine Lie derivatives of these Gabor coefficients. These are then input to the least-squares-error fitting means, a systolic array comprising a QR decomposition means and a backward substitution means. The output of the least-squares-error fitting means is then used to update the affine parameters. Updating continues until the difference signals between the Gabor coefficients from the static and dynamical Gabor receptive fields are substantially reduced.

Owner:TSAO THOMAS +1
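The least-squares-error fitting stage pairs a QR decomposition with backward substitution. The patent implements this as a systolic array. The sketch below is only a sequential Python illustration of the same numerical step, using modified Gram-Schmidt for the QR factorisation. How the Gabor-coefficient differences and Lie derivatives map onto the matrix `a` and vector `b` is an assumption for illustration (for example, six columns for six affine parameters).

```python
import math


def qr_decompose(a):
    """Modified Gram-Schmidt QR of an m x n matrix (m >= n, full column rank).

    `a` is a list of rows. Returns (q_columns, r): the orthonormal columns of
    Q as a list of lists, and the n x n upper-triangular R as a list of rows.
    """
    m, n = len(a), len(a[0])
    q_columns = [[float(a[i][j]) for i in range(m)] for j in range(n)]
    r = [[0.0] * n for _ in range(n)]
    for j in range(n):
        v = q_columns[j]
        for k in range(j):
            # project the partially orthogonalised column onto q_k and remove it
            r[k][j] = sum(q_columns[k][i] * v[i] for i in range(m))
            v = [v[i] - r[k][j] * q_columns[k][i] for i in range(m)]
        r[j][j] = math.sqrt(sum(x * x for x in v))
        if r[j][j] == 0.0:
            raise ValueError("matrix does not have full column rank")
        q_columns[j] = [x / r[j][j] for x in v]
    return q_columns, r


def back_substitute(r, y):
    """Solve R x = y for upper-triangular R, starting from the last row."""
    n = len(y)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = y[i] - sum(r[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / r[i][i]
    return x


def least_squares(a, b):
    """Minimise ||A x - b||^2 via A = QR, then R x = Q^T b."""
    q_columns, r = qr_decompose(a)
    qt_b = [sum(q[i] * b[i] for i in range(len(b))) for q in q_columns]
    return back_substitute(r, qt_b)
```

For example, fit y = c0 + c1·x to the points (0, 1), (1, 3), (2, 5), (3, 8) by calling `least_squares([[1, 0], [1, 1], [1, 2], [1, 3]], [1, 3, 5, 8])`. This returns approximately [0.8, 2.3].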

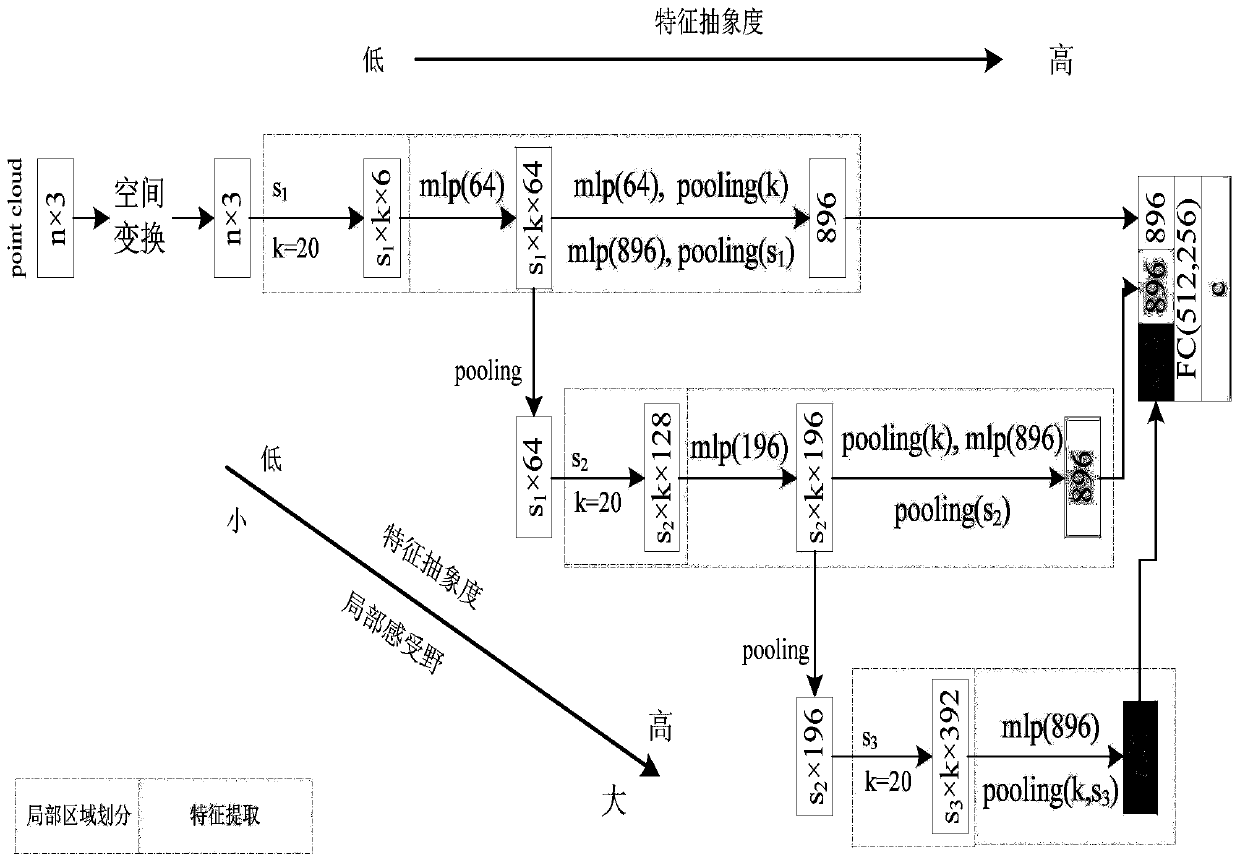

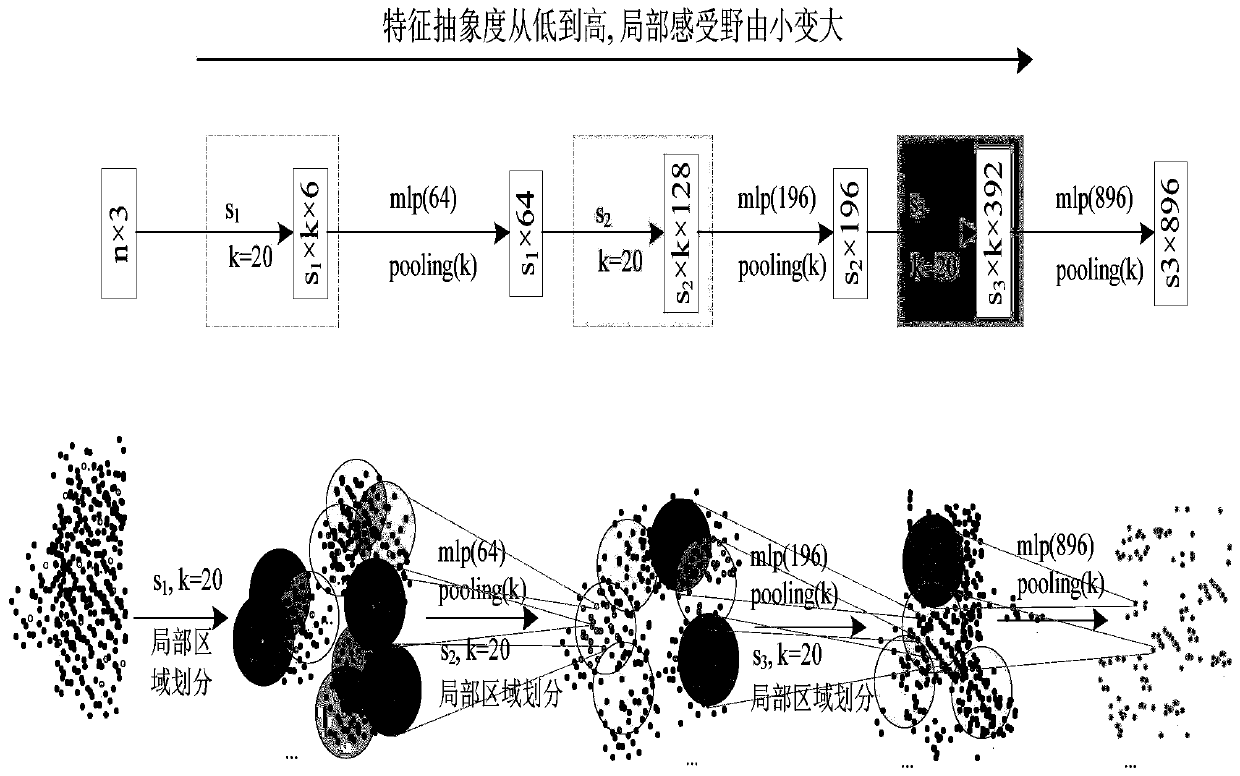

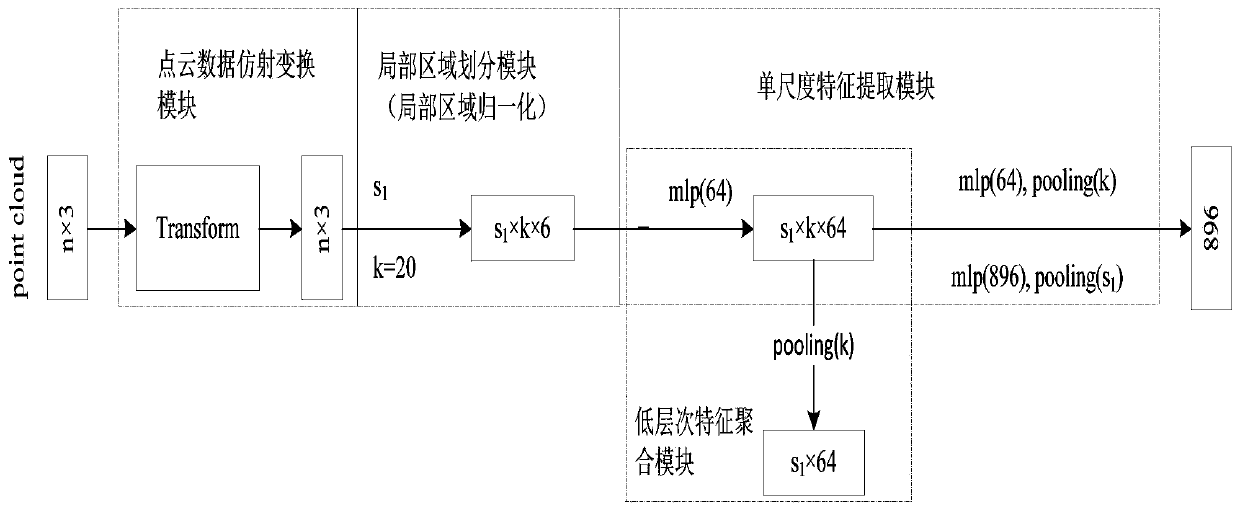

Point cloud data classification method based on deep learning

Active · CN110197223A · Guaranteed affine transformation invariance · Excellent division effect · Character and pattern recognition · Point cloud · Data set

The invention discloses a point cloud data classification method based on deep learning, built around a multi-scale point cloud classification network. First, a multi-scale local area division algorithm is proposed, designed around four requirements of local area division: completeness, adaptivity, overlap and multi-scale coverage. It takes the point cloud and features at different levels as input and produces multi-scale local areas. Then a multi-scale point cloud classification network is constructed. It includes a single-scale feature extraction module, a low-level feature aggregation module and a multi-scale feature fusion module, among others. The network closely mimics the working principle of convolutional neural networks. It shares their basic property: as network scale and depth increase, the local receptive field grows larger and the features become more abstract. The method achieves classification accuracies of 94.71% on the standard public data set ModelNet10 and 91.73% on ModelNet40. These results are leading or comparable among similar work, verifying the method's feasibility and effectiveness.

Owner:BEIFANG UNIV OF NATITIES
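The abstract does not spell out its multi-scale local area division algorithm. As a rough illustration of multi-scale, overlapping local regions, the sketch below runs a plain ball query at several radii around given centroids. This is similar in spirit to the multi-scale grouping in PointNet++. It is a stand-in, not the patented algorithm, and centroid selection is left out.

```python
import math


def multi_scale_neighborhoods(points, centroids, radii):
    """Ball query at several radii (illustrative stand-in).

    points, centroids: sequences of (x, y, z) tuples. radii: ascending.
    Returns, for each centroid, one list of point indices per radius. Larger
    radii give larger local regions, and regions of nearby centroids overlap.
    """
    groups = []
    for centre in centroids:
        per_scale = []
        for radius in radii:
            per_scale.append([i for i, p in enumerate(points)
                              if math.dist(p, centre) <= radius])
        groups.append(per_scale)
    return groups


pts = [(float(x), 0.0, 0.0) for x in range(5)]
print(multi_scale_neighborhoods(pts, [(0.0, 0.0, 0.0)], [1.0, 2.5, 10.0]))
# [[[0, 1], [0, 1, 2], [0, 1, 2, 3, 4]]]
```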

Semantic segmentation method based on improved full convolutional neural network

Inactive · CN108921196A · Precise Control of Resolution · Expand the receptive field · Character and pattern recognition · Stochastic gradient descent · Algorithm

The invention discloses a semantic segmentation method based on an improved fully convolutional neural network. The method comprises the following steps:

1. Acquire training image data.
2. Input the training image data into a porous (atrous) fully convolutional neural network, and obtain a size-reduced feature map through standard convolution and pooling layers.
3. Extract denser features through porous convolutional layers while keeping the feature map size unchanged.
4. Predict the feature map pixel by pixel to obtain a segmentation result.
5. Train the parameters of the porous convolutional neural network with stochastic gradient descent (SGD).
6. Acquire the image data to be semantically segmented, input it into the trained porous convolutional neural network, and obtain the corresponding semantic segmentation result.

In a fully convolutional network, the feature map recovered by the final upsampling loses sensitivity to image details; the invention mitigates this. It also effectively expands the receptive field of the filters without increasing the number of parameters or the amount of computation.

Owner:NANJING UNIV OF POSTS & TELECOMM
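The porous (atrous, or dilated) convolution in this abstract enlarges the receptive field by spacing the kernel taps apart, so the number of weights stays fixed. Below is a minimal 1-D sketch, with a helper for the receptive field of stacked stride-1 layers. The kernel sizes and dilation rates are illustrative.

```python
def dilated_conv1d(signal, kernel, dilation):
    """'Valid' 1-D dilated convolution, written as cross-correlation the way
    deep-learning frameworks usually do. Taps are `dilation` samples apart."""
    span = (len(kernel) - 1) * dilation + 1
    return [sum(kernel[k] * signal[i + k * dilation] for k in range(len(kernel)))
            for i in range(len(signal) - span + 1)]


def receptive_field(kernel_sizes, dilations):
    """Receptive field (in samples) of stacked stride-1 convolutions."""
    rf = 1
    for k, d in zip(kernel_sizes, dilations):
        rf += (k - 1) * d
    return rf


print(receptive_field([3, 3, 3], [1, 1, 1]))  # 7
print(receptive_field([3, 3, 3], [1, 2, 4]))  # 15
print(dilated_conv1d([0, 1, 2, 3, 4, 5, 6], [1, 1, 1], 2))  # [6, 9, 12]
```

Three 3-tap layers reach 7 samples with dilations (1, 1, 1) but 15 with (1, 2, 4). Both configurations use the same nine weights.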

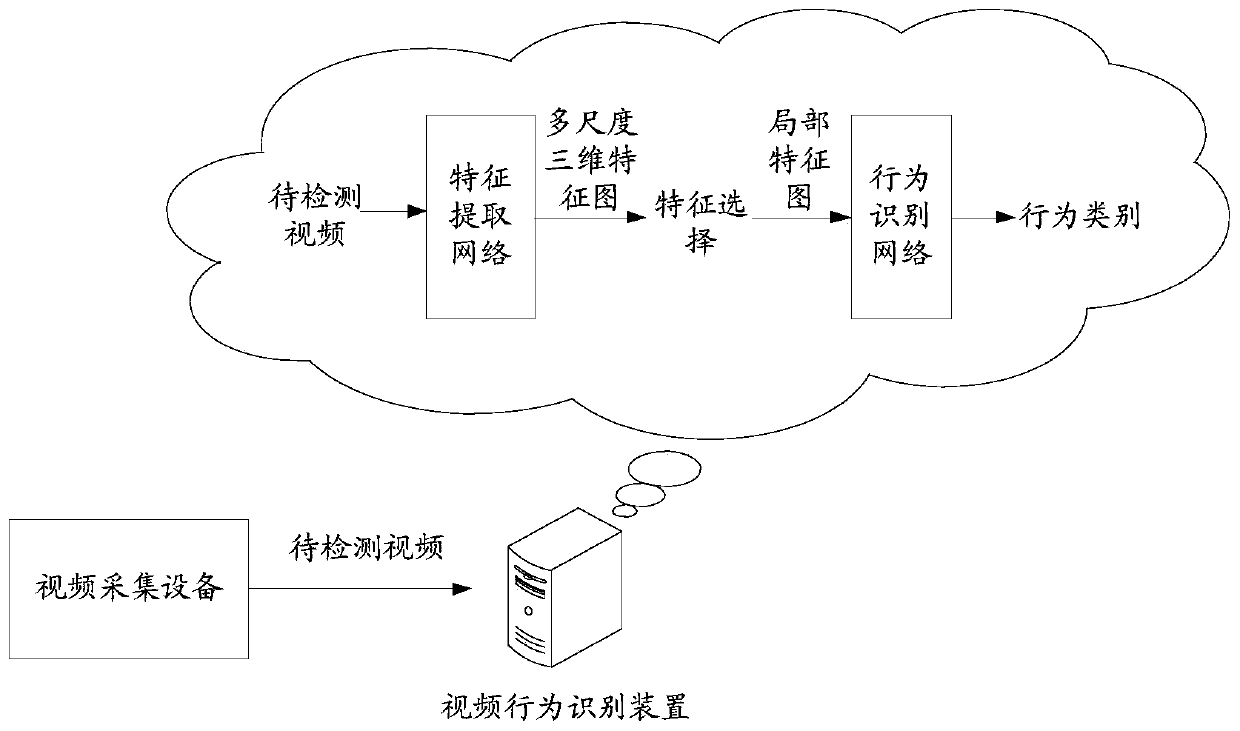

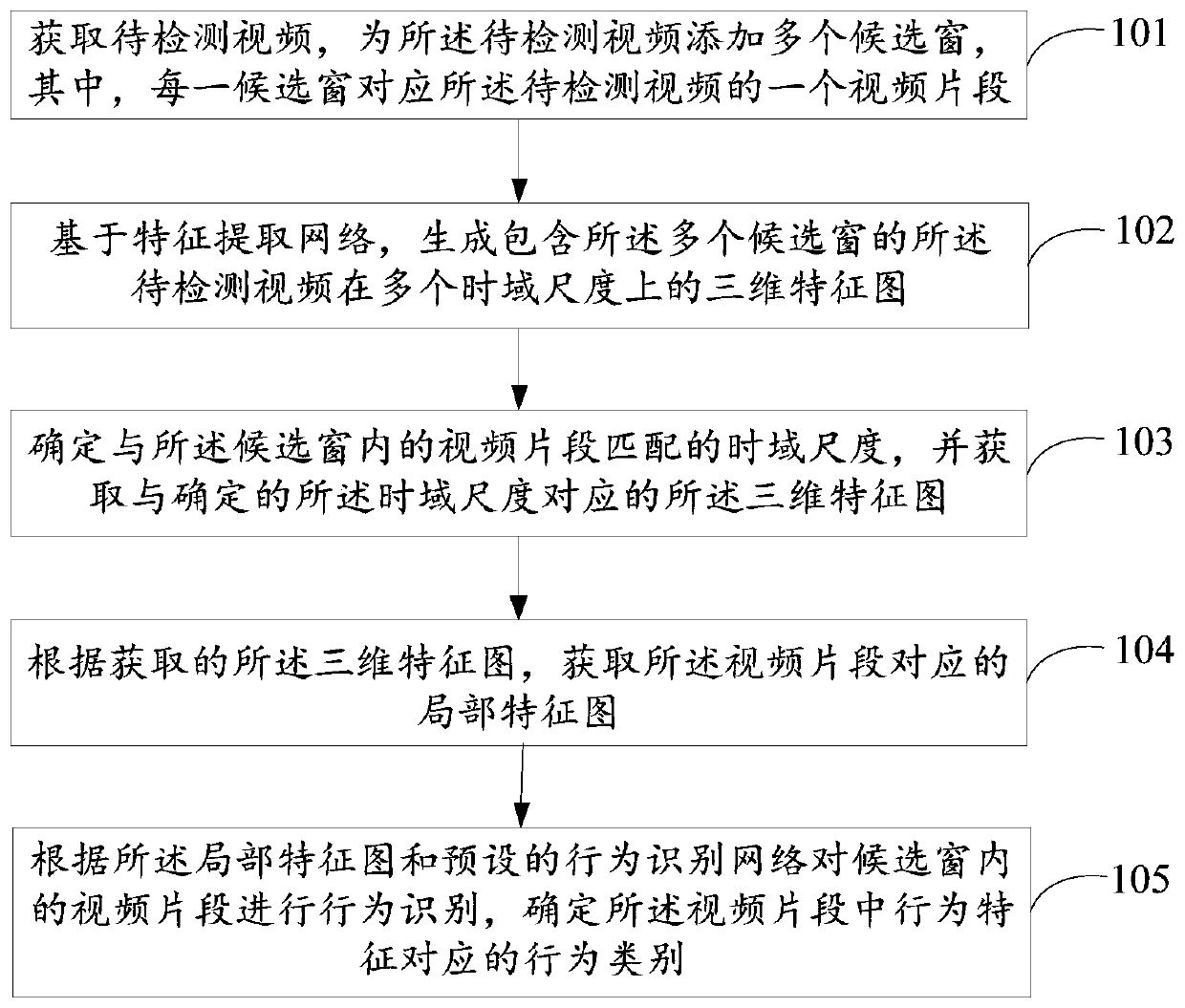

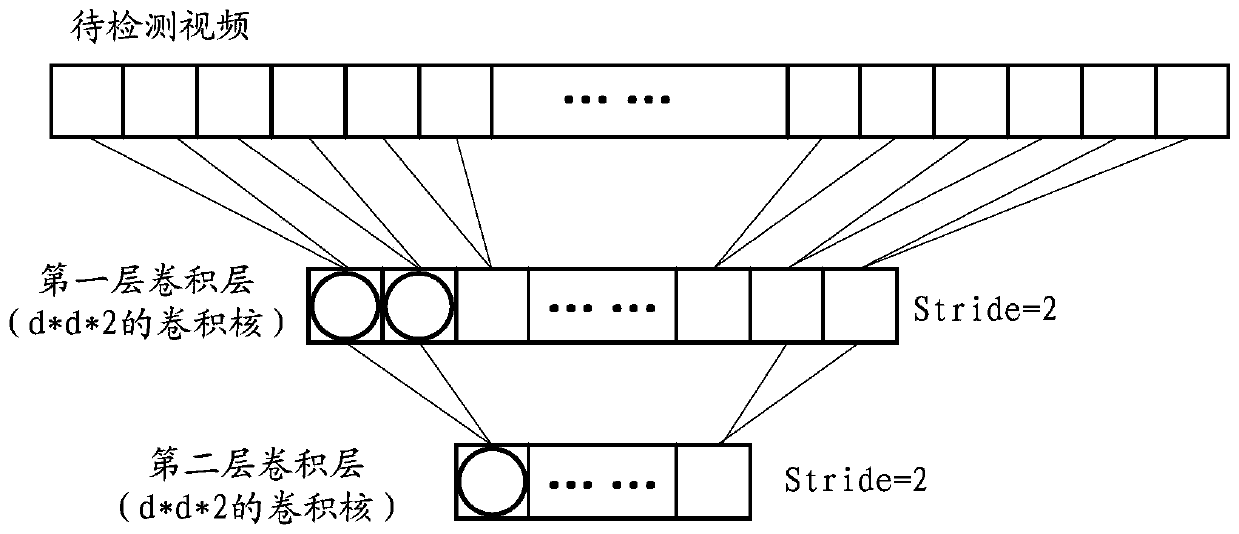

Behavior recognition method and device and storage medium

Active · CN109697434A · High precision · Character and pattern recognition · Receptive field · Behavior recognition

The invention discloses a behavior recognition method, device and storage medium. The scheme works as follows:

- A video to be detected is acquired, and a plurality of candidate windows are added to it.
- A feature extraction network generates three-dimensional feature maps of the video, including its candidate windows, on a plurality of time-domain scales.
- For the video clip in each candidate window, a matching time-domain scale is determined, and a local feature map for the clip is derived from the three-dimensional feature map at that scale.
- A preset behavior recognition network is applied to the local feature map to determine the behavior category of the behavior features in the clip.

Because the feature extraction network yields feature maps of the video on multiple time-domain scales, the receptive field of the classifier can adapt to behavior features of different durations. This improves the accuracy of behavior recognition across multiple time spans.

Owner:TENCENT TECH (SHENZHEN) CO LTD
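The abstract says a time-domain scale is matched to each candidate window, but not how. One hypothetical rule is sketched below. It picks the scale whose temporal receptive field (in frames) is closest to the window length on a log scale. It then crops that scale's feature map to the window using the scale's temporal stride. The function names, the matching rule and the per-step feature layout are all assumptions for illustration.

```python
import math


def select_temporal_scale(window_length, scale_receptive_fields):
    """Index of the scale whose temporal receptive field (frames) is closest
    to window_length in log ratio (hypothetical matching rule)."""
    return min(range(len(scale_receptive_fields)),
               key=lambda i: abs(math.log(scale_receptive_fields[i] / window_length)))


def crop_local_features(feature_map, stride, start_frame, end_frame):
    """Slice the time steps of one scale's feature map that cover frames
    [start_frame, end_frame); `stride` is frames per feature time step."""
    first = start_frame // stride
    last = max(first + 1, -(-end_frame // stride))  # ceiling division
    return feature_map[first:last]


print(select_temporal_scale(20, [8, 16, 32, 64]))         # 1
print(crop_local_features(list(range(10)), 4, 8, 20))     # [2, 3, 4]
```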

© 2025 PatSnap. All rights reserved.