47 patent results for "Feature saliency"

Improved visual attention model-based method of natural scene object detection

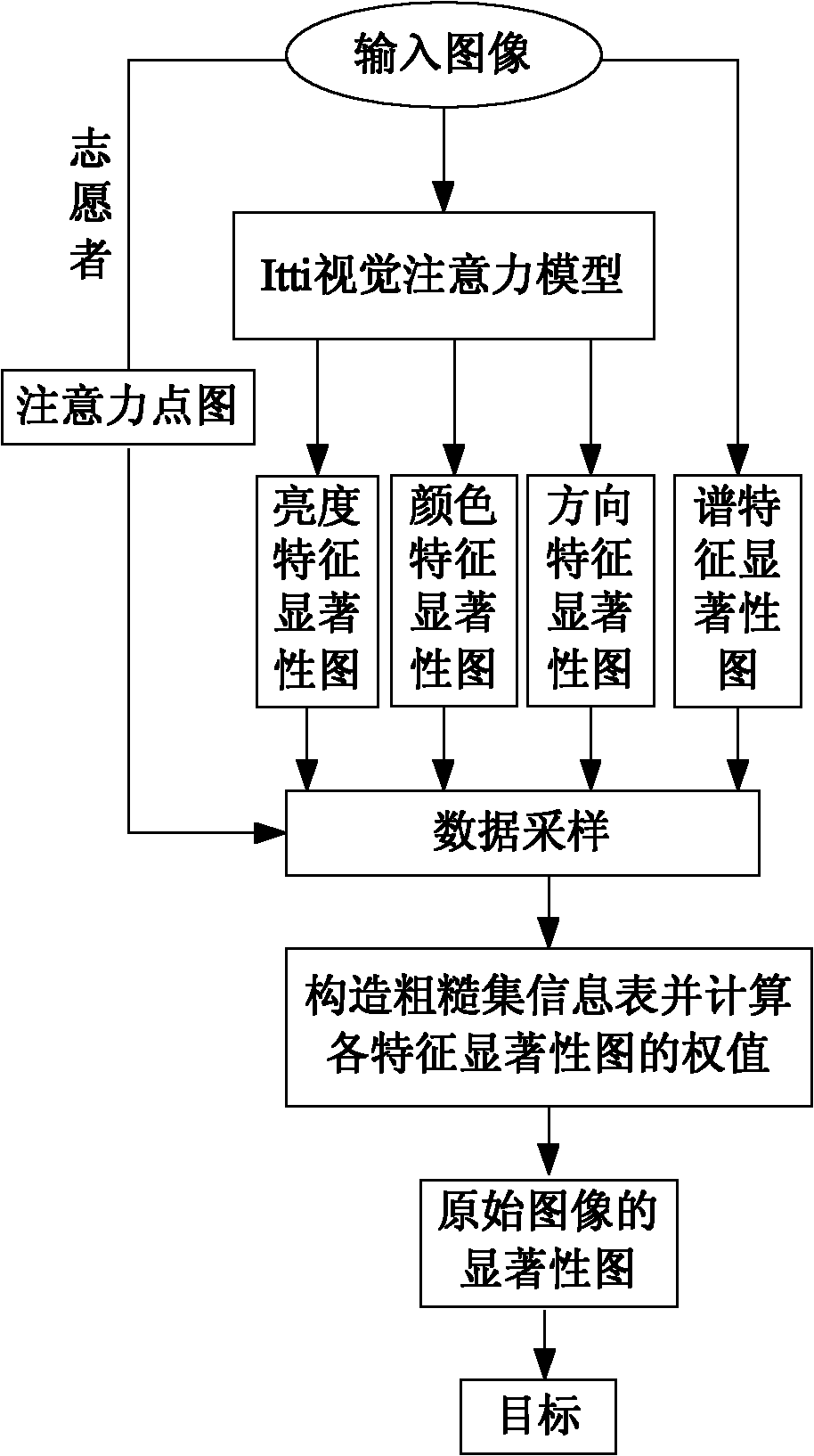

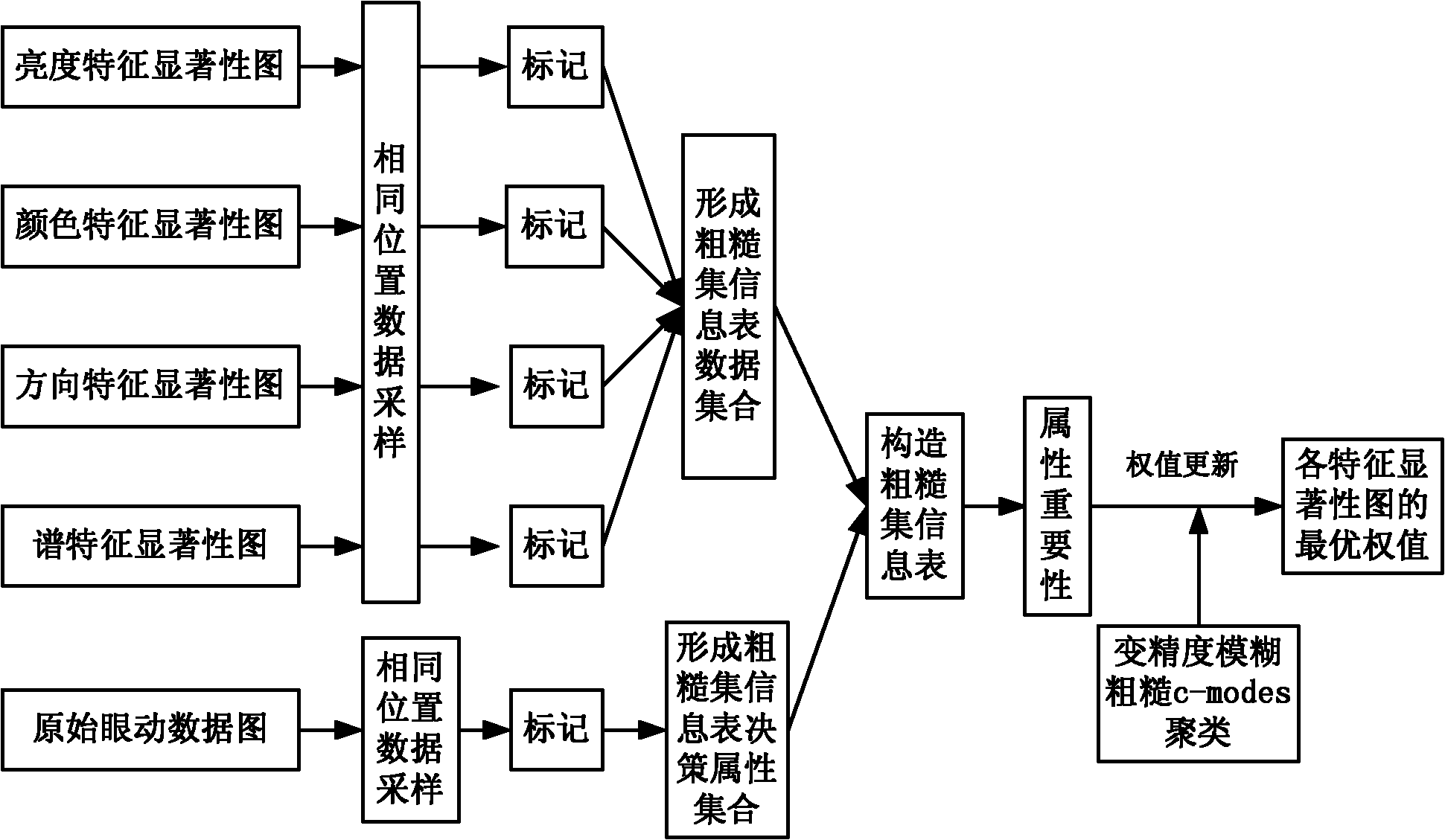

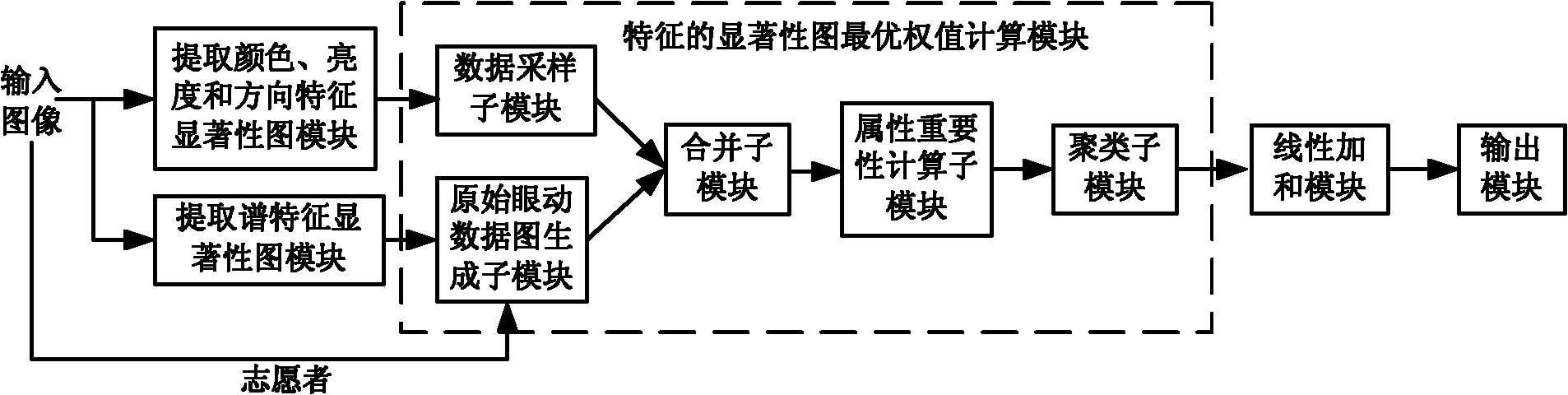

Status: Inactive | Publication: CN101980248A | Tags: Improve accuracy; Increase contribution; Image analysis; Character and pattern recognition; Image extraction; Model extraction

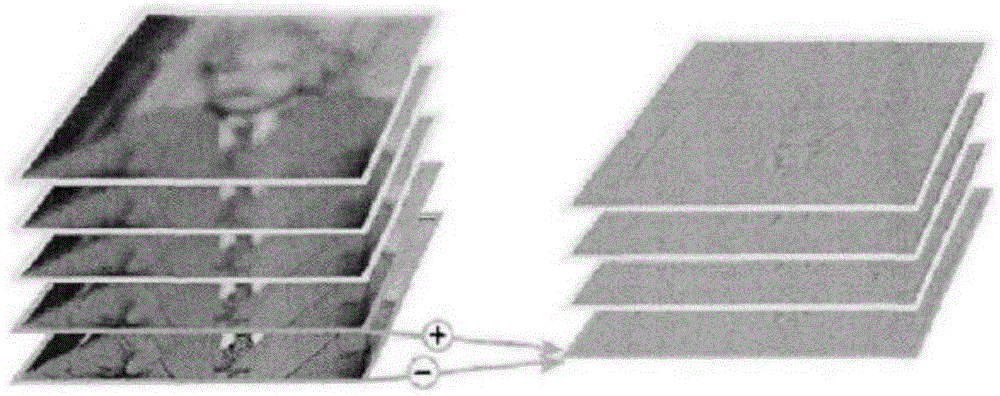

The invention discloses an improved visual attention model-based method for object detection in natural scenes, which mainly addresses the low detection accuracy and high false detection rate of conventional object detection based on visual attention models. The method comprises the following steps: (1) inputting an image to be detected and extracting brightness, color and orientation feature saliency maps with the Itti visual attention model; (2) extracting a spectral feature saliency map of the original image; (3) sampling and labeling the brightness, color, orientation and spectral feature saliency maps, together with an experimenter's attention map, to form the final rough set information table; (4) constructing attribute significance from the rough set information table and obtaining the optimal weights of the feature maps by clustering; and (5) weighting the feature sub-maps to obtain the saliency map of the original image, where the salient area of the saliency map is the target position area. The method detects visual attention areas in natural scenes more effectively and locates the objects within them.

Owner:XIDIAN UNIV
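
Step (5) of CN101980248A reduces to a weighted sum of per-feature saliency maps followed by locating the salient area. The NumPy sketch below assumes the feature maps are already computed and equally sized, and takes the weights as given (in the patent they come from the rough-set and clustering steps, which are not reproduced here); the min-max normalization, the function names and the 0.5 threshold are illustrative choices, not details from the patent.

```python
import numpy as np


def normalize_map(m: np.ndarray) -> np.ndarray:
    """Rescale a saliency map to [0, 1]; a constant map becomes all zeros."""
    m = m.astype(float)
    lo, hi = m.min(), m.max()
    if hi == lo:
        return np.zeros_like(m)
    return (m - lo) / (hi - lo)


def fuse_feature_maps(maps: dict, weights: dict) -> np.ndarray:
    """Weighted average of normalized feature saliency maps, rescaled to [0, 1]."""
    total = sum(weights[name] for name in maps)
    fused = sum(weights[name] * normalize_map(m) for name, m in maps.items()) / total
    return normalize_map(fused)


def target_mask(saliency: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Boolean mask of the salient (target) area."""
    return saliency >= threshold


# Hypothetical 2x2 maps: brightness peaks top-right, spectrum peaks bottom-right.
maps = {"brightness": np.array([[0.0, 1.0], [0.0, 0.0]]),
        "spectrum": np.array([[0.0, 0.0], [0.0, 2.0]])}
saliency = fuse_feature_maps(maps, {"brightness": 3.0, "spectrum": 1.0})
print(target_mask(saliency))  # only the top-right pixel is marked
```

Normalizing each map before weighting keeps a feature with a large raw range from dominating the fusion regardless of its weight.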

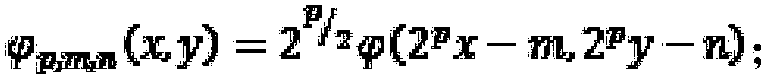

Remote sensing image region of interest detection method based on integer wavelets and visual features

Status: Inactive | Publication: CN103247059A | Tags: Improve detection accuracy; Reduce computational complexity; Image analysis; Computation complexity; Goal recognition

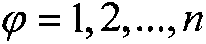

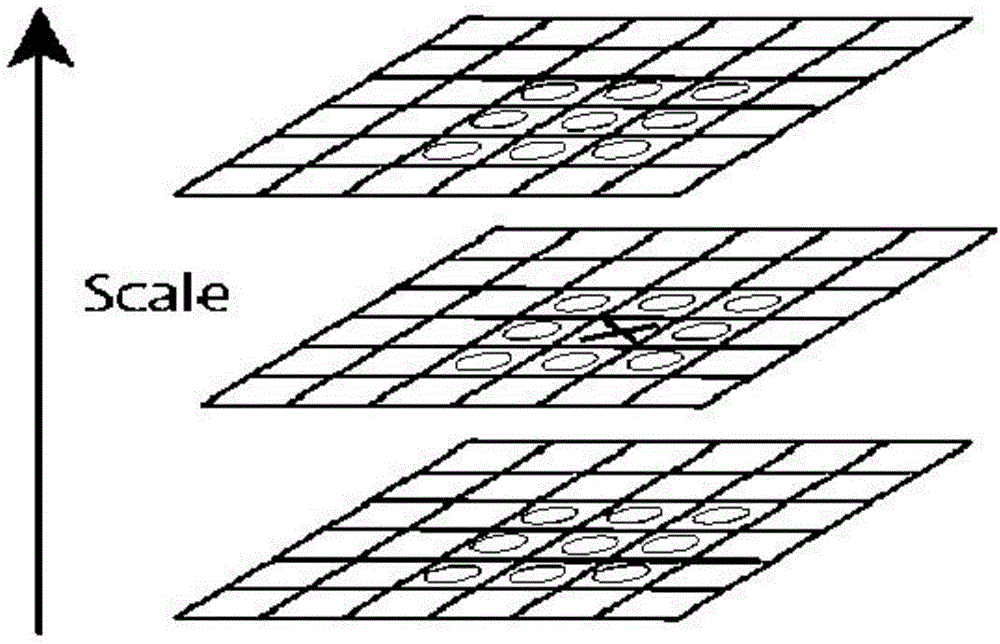

The invention discloses a method for detecting regions of interest in remote sensing images based on integer wavelets and visual features, and belongs to the technical field of remote sensing image target identification. The method comprises the following steps: 1, performing color synthesis, filtering and noise-reduction preprocessing on a remote sensing image; 2, converting the preprocessed RGB remote sensing image into the CIE Lab color space to obtain brightness and color feature maps, and applying an integer wavelet transform to the L component to obtain an orientation feature map; 3, constructing a difference-of-Gaussians filter that simulates the receptive field of the human retina and combining its output across scales with a Gaussian pyramid to obtain brightness and color feature saliency maps, and screening the wavelet coefficients and combining them across scales to obtain an orientation feature saliency map; 4, synthesizing a main saliency map with a feature competition strategy; and 5, applying threshold segmentation to the main saliency map to obtain the region of interest. The method increases the detection accuracy of regions of interest in remote sensing images while lowering computational complexity, and can be applied to environmental monitoring, urban planning, forestry surveys and similar fields.

Owner:BEIJING NORMAL UNIVERSITY
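
Steps 3 and 5 of CN103247059A combine a difference-of-Gaussians (DoG) center-surround response with threshold segmentation. The sketch below applies a single-scale DoG to one channel (for example the L component) in plain NumPy; the Gaussian pyramid, the wavelet branch and the feature competition strategy are omitted, and the sigmas and the adaptive threshold of twice the mean saliency are assumptions for illustration, not the patent's parameters.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """1-D Gaussian kernel truncated at 3 sigma and normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with edge padding; output has the input's shape."""
    k = gaussian_kernel(sigma)
    pad = len(k) // 2
    padded = np.pad(img.astype(float), pad, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)


def dog_saliency(channel: np.ndarray, sigma_center=1.0, sigma_surround=4.0) -> np.ndarray:
    """Center-surround response: |narrow blur - wide blur|."""
    return np.abs(gaussian_blur(channel, sigma_center) - gaussian_blur(channel, sigma_surround))


def region_of_interest(saliency: np.ndarray, factor: float = 2.0) -> np.ndarray:
    """Threshold segmentation with an adaptive threshold of factor * mean saliency."""
    return saliency > factor * saliency.mean()


# Hypothetical 21x21 luminance channel with a small bright target in the middle.
lum = np.zeros((21, 21))
lum[9:12, 9:12] = 1.0
roi = region_of_interest(dog_saliency(lum))
print(roi[10, 10], roi[0, 0])  # True False
```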

Image enhancement method based on low-level visual features

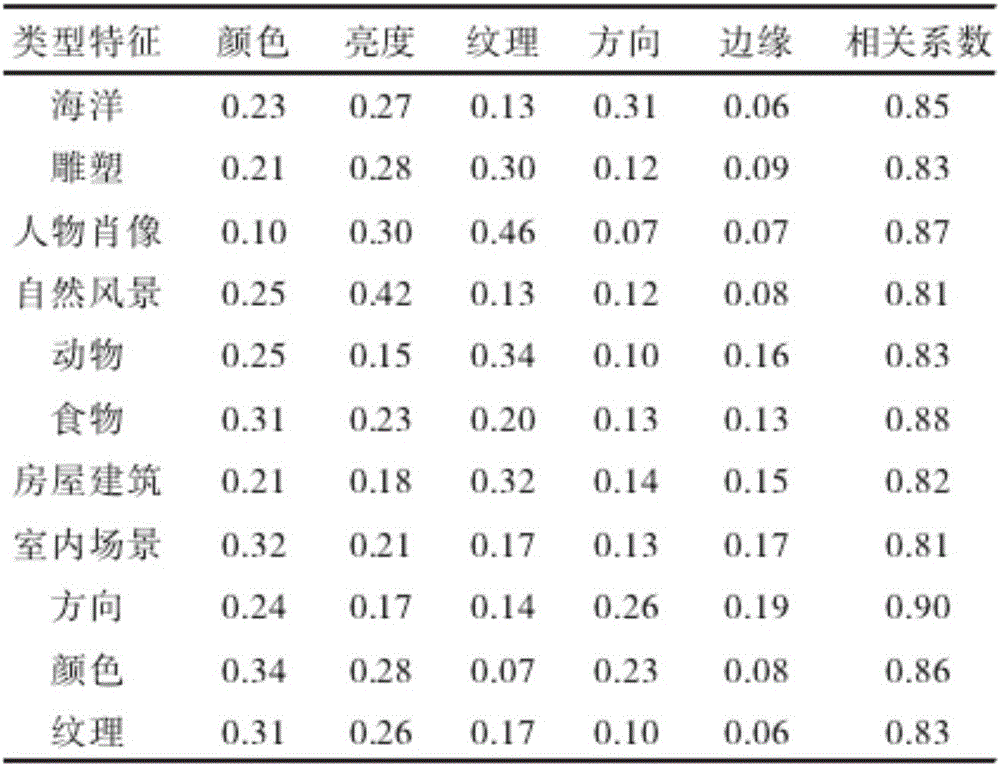

Status: Inactive | Publication: CN105023253A | Tags: Improve visual quality; Compatible with human visual perception; Image enhancement; Image analysis; Color image; Fixation point

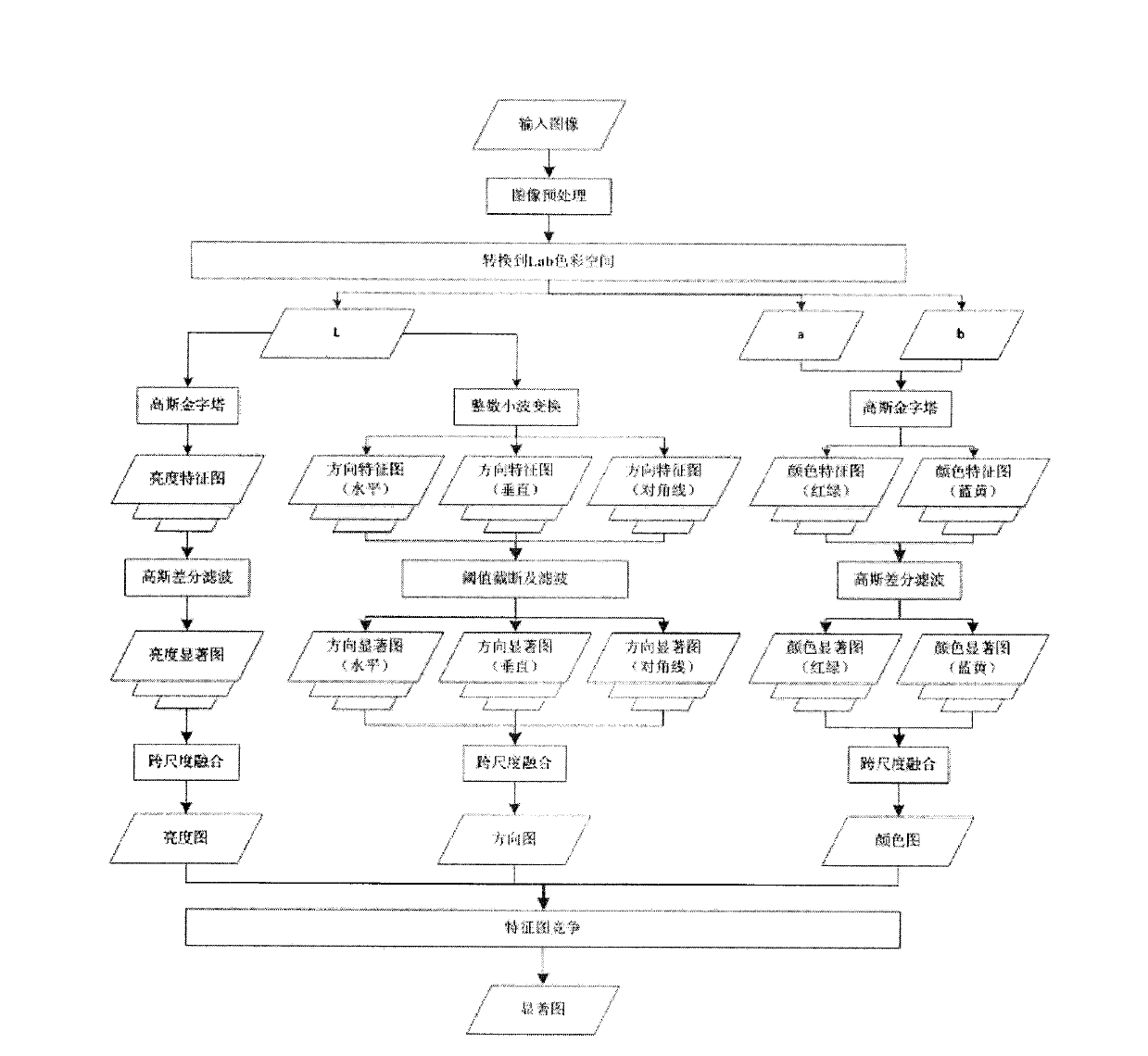

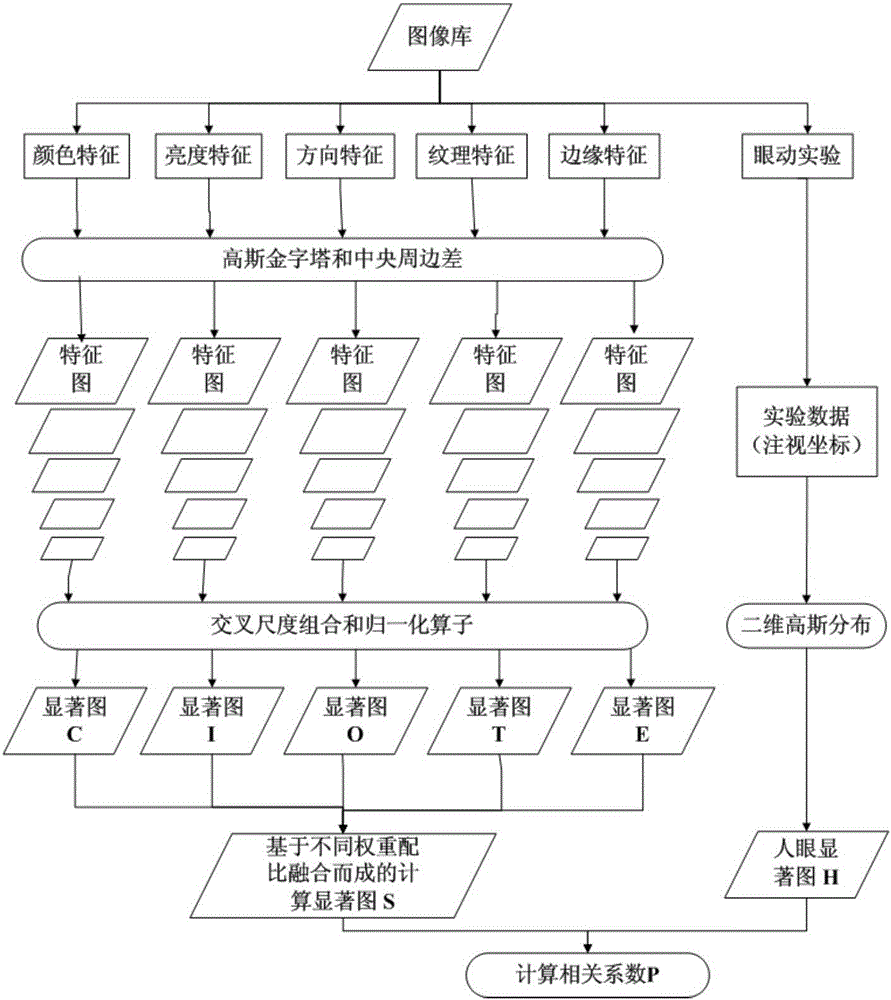

The invention relates to an image enhancement method based on low-level visual features. The method comprises the following steps: 1, extracting the low-level visual features of an image; 2, performing weighted fusion of these features to form a computed saliency map; 3, comparing the correlation coefficient between an eye-movement saliency map measured by an eye tracker and the computed saliency map to determine the optimal weights of the low-level visual features; and 4, selecting suitable image enhancement methods for different types of images according to the weights. The method combines an eye-movement saliency map based on eye fixation points with traditional image enhancement for the first time, and uses an Itti visual attention model optimized on low-level visual features to extract the computed feature saliency map of the image. As a result, it can improve the visual quality of color images re-displayed on a digital display device, highlight the important information in different types of images while weakening less important information, and produce a final enhancement that better matches human visual perception. The method has some application value in image enhancement.

Owner:UNIV OF SHANGHAI FOR SCI & TECH
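
Step 3 of CN105023253A picks the feature weights whose fused map correlates best with the eye-tracker saliency map. A minimal sketch, assuming the correlation coefficient is the Pearson correlation between the two maps (the usual CC saliency metric) and that the weights are found by an exhaustive grid search over weights summing to 1; the grid search and the function names are assumptions, since the abstract does not say how the optimum is found.

```python
import itertools

import numpy as np


def correlation_coefficient(a: np.ndarray, b: np.ndarray) -> float:
    """Pearson correlation between two saliency maps of equal shape."""
    a = a.astype(float).ravel()
    b = b.astype(float).ravel()
    a = (a - a.mean()) / a.std()
    b = (b - b.mean()) / b.std()
    return float(np.mean(a * b))


def best_weights(feature_maps, eye_map, step=0.1):
    """Grid-search weights (summing to 1) that maximize CC with the eye-movement map."""
    grid = np.arange(0.0, 1.0 + 1e-9, step)
    best_cc, best_w = -np.inf, None
    for w in itertools.product(grid, repeat=len(feature_maps)):
        if abs(sum(w) - 1.0) > 1e-6:
            continue  # only consider convex combinations
        fused = sum(wi * m for wi, m in zip(w, feature_maps))
        if np.std(fused) == 0:
            continue  # CC is undefined for a constant map
        cc = correlation_coefficient(fused, eye_map)
        if cc > best_cc:
            best_cc, best_w = cc, w
    return best_cc, best_w


# Hypothetical example: the eye-movement map follows the "color" feature only.
rng = np.random.default_rng(0)
intensity, color = rng.random((16, 16)), rng.random((16, 16))
cc, w = best_weights([intensity, color], eye_map=color)
print(round(cc, 3), [round(float(x), 1) for x in w])  # 1.0 [0.0, 1.0]
```

The grid grows exponentially with the number of features, so for more than a handful of features a continuous optimizer would be the more practical choice.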

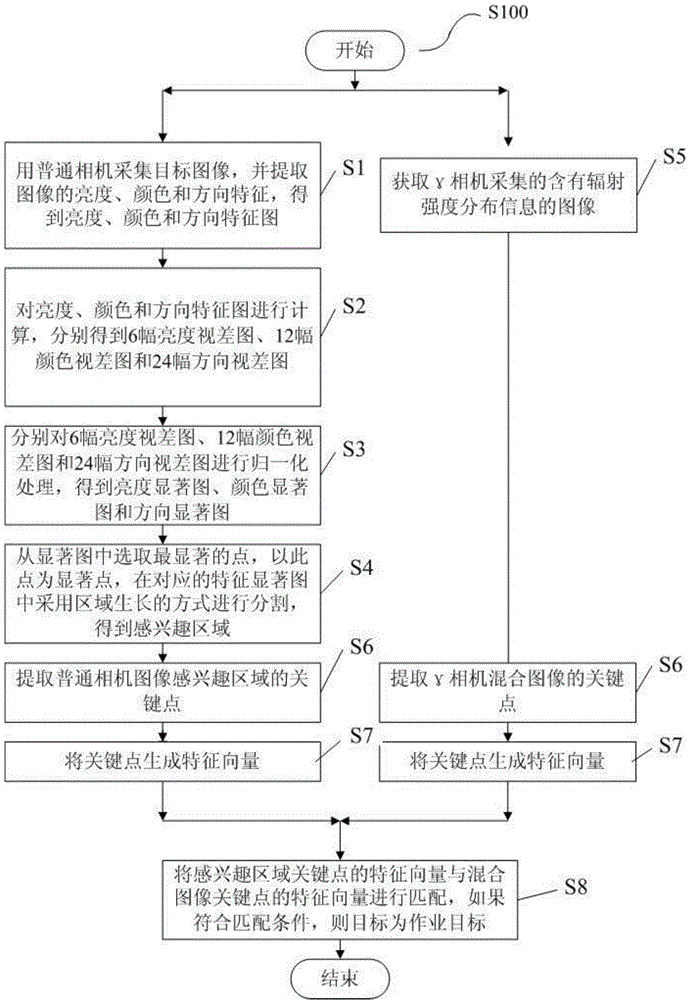

Visual attention mechanism-based method for detecting target in nuclear environment

The invention discloses a method, based on a visual attention mechanism, for detecting a target in a nuclear environment. The method includes: extracting brightness, color and orientation features from images collected by an ordinary camera to obtain three feature saliency maps; performing weighted fusion of these saliency maps to obtain a weighted saliency map; obtaining regions of interest from the weighted saliency map and extracting features from them; extracting features of the mixed image from a gamma camera; and using the SIFT method to fuse the regions of interest with the mixed image and detect the position of the target. The method uses a bottom-up, data-driven attention model to extract multiple regions of interest, which greatly reduces the computation of the subsequent matching process. It then combines these regions of interest with a top-down, task-driven attention model to establish a bidirectional visual attention model, which greatly improves the detection precision and processing efficiency for target areas in the images. The matching process also eliminates interference from unrelated regions in the scene, so that the extracted operation target is more robust and accurate.

Owner:四川核保锐翔科技有限责任公司 (Sichuan Hebao Ruixiang Technology Co., Ltd.)

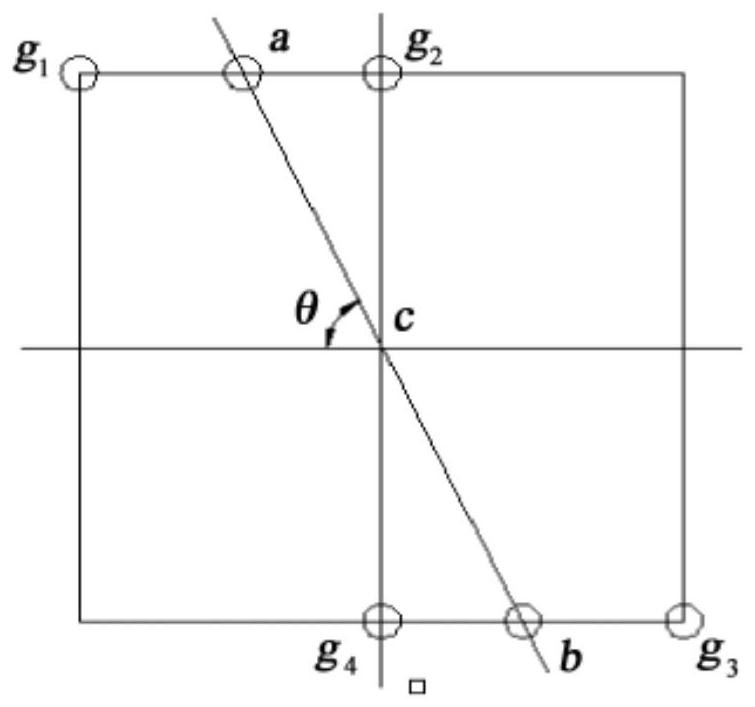

View-angle-invariant local region constraint-based slanted image linear feature matching method

Status: Active | Publication: CN107025449A | Tags: Improve matching accuracy; Improve consistency; Scene recognition; Reference image; Feature based

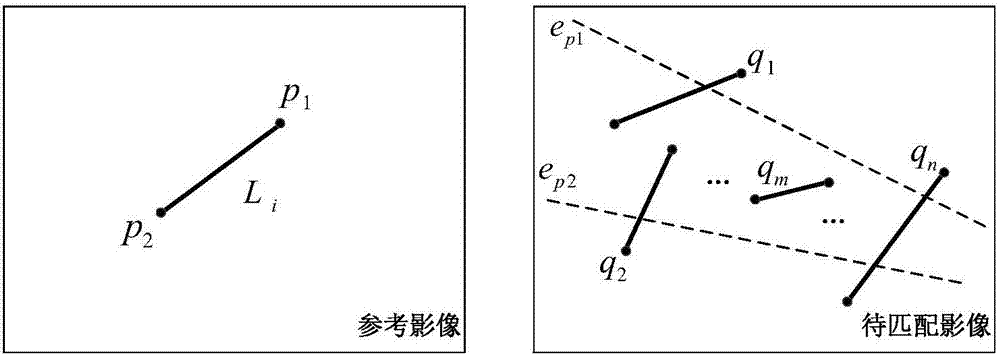

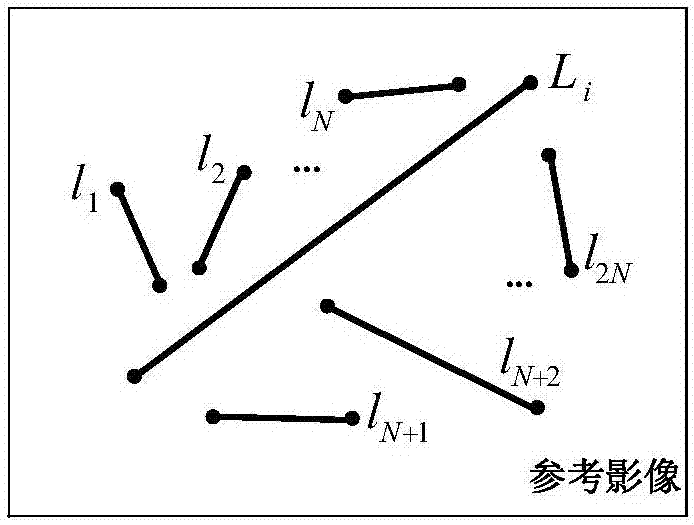

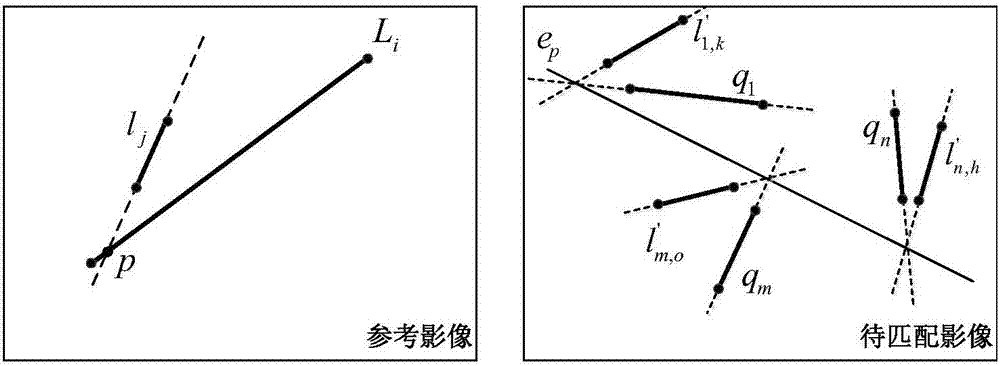

The invention relates to a linear feature matching method for oblique images based on view-angle-invariant local region constraints. The method comprises the following steps in sequence: extracting the linear features of a reference image and a to-be-matched image, and calculating the feature saliency of each linear feature; constructing a view-angle-invariant local region based on a polynomial mapping function, and calculating the feature region of each linear feature from it; calculating the phase congruency values and directions within the feature region of each linear feature, and constructing a phase congruency feature descriptor for each; ranking the linear features by feature saliency, taking the top t% as salient linear features and all the rest as non-salient linear features; matching the salient linear features; reclassifying salient linear features that fail to match as non-salient; using the successfully matched salient linear features in the reference image and in the to-be-matched image as cluster centers, and clustering the non-salient linear features around them; and matching the non-salient linear features with a non-exhaustive search.

Owner:SOUTHWEST JIAOTONG UNIV
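
The salient/non-salient split in CN107025449A is a ranking by feature saliency. A small sketch of that bookkeeping, assuming saliency scores are already computed for each line feature and that "top t%" rounds up to a whole number of features; the descriptor computation and the matching itself are not shown, and the function names are hypothetical.

```python
import math


def split_by_saliency(saliencies, t):
    """Return (salient, non_salient) feature indices; the top t% by saliency are salient."""
    order = sorted(range(len(saliencies)), key=lambda i: saliencies[i], reverse=True)
    k = math.ceil(len(saliencies) * t / 100)
    return sorted(order[:k]), sorted(order[k:])


def demote_unmatched(salient, non_salient, matched):
    """Move salient features that failed to match into the non-salient set."""
    still_salient = [i for i in salient if i in matched]
    demoted = [i for i in salient if i not in matched]
    return still_salient, sorted(non_salient + demoted)


salient, rest = split_by_saliency([0.2, 0.9, 0.5, 0.1, 0.7], t=40)
print(salient, rest)                                 # [1, 4] [0, 2, 3]
print(demote_unmatched(salient, rest, matched={1}))  # ([1], [0, 2, 3, 4])
```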

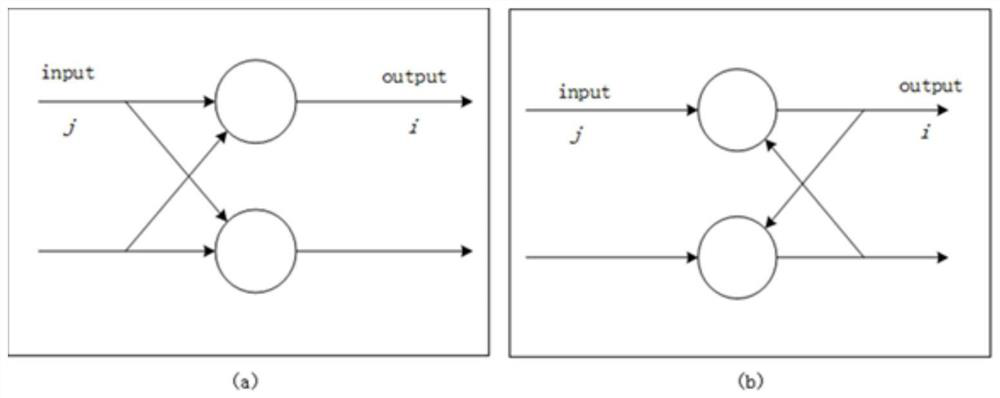

Method for detecting salient regions in sequence images based on improved visual attention model

Status: Active | Publication: CN104063872A | Tags: Real-time processing; Simple structure; Image analysis; Core function; Pattern recognition

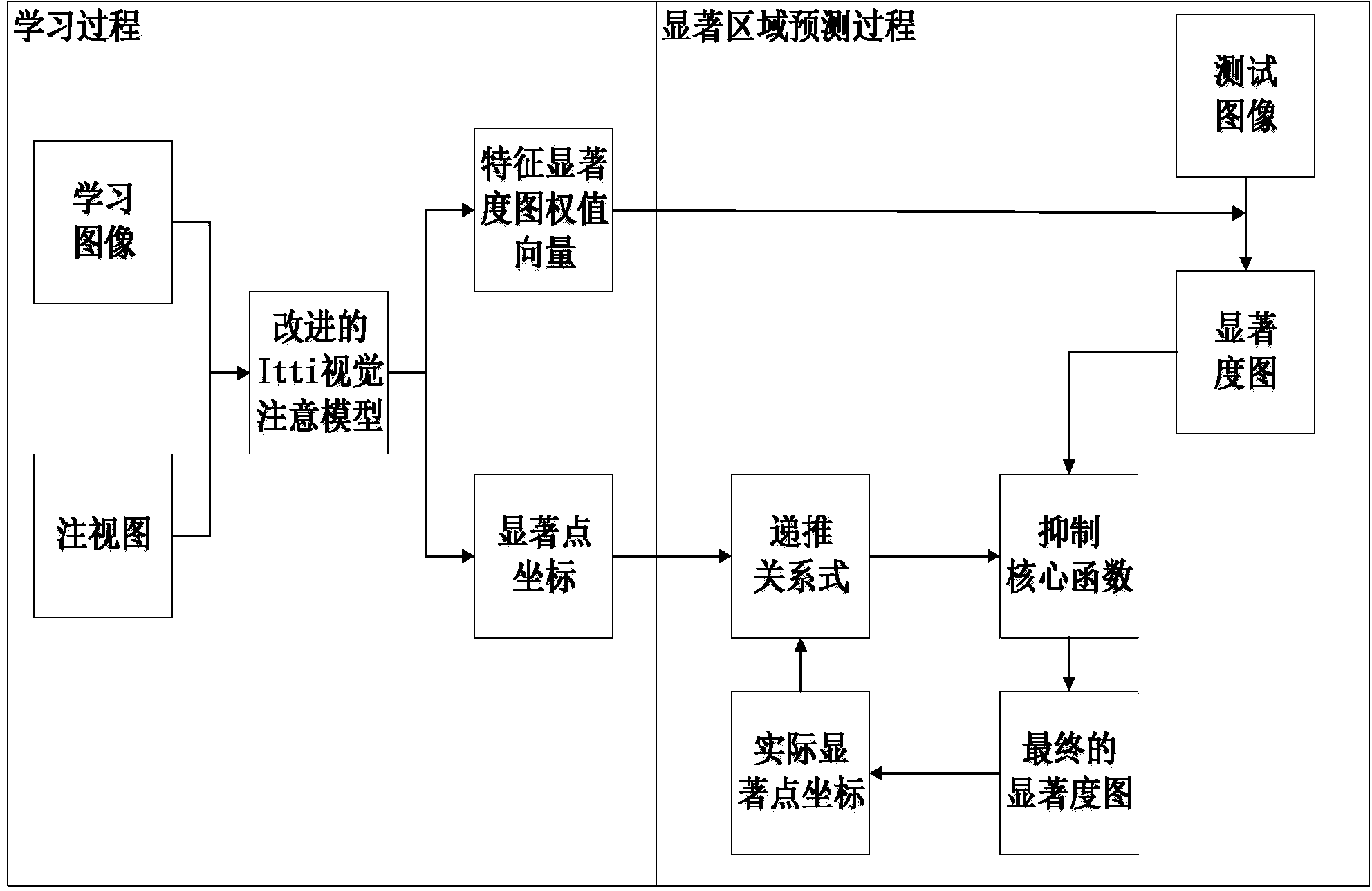

The invention discloses a method for detecting salient regions in image sequences based on an improved visual attention model. It mainly addresses the complex processing and poor real-time performance of existing salient-region detection methods based on visual attention models. The implementation steps are: firstly, generating a fixation map of the salient regions of a study image, generating feature saliency maps of the study image and their weight vectors, and recording the salient point coordinates; secondly, generating a saliency map of a test image, recursively predicting its salient point coordinates from those of the study image, and establishing a constraint kernel function to highlight the regions where the salient points are located; thirdly, updating the salient point coordinates; fourthly, predicting the salient regions of the next test image through the recurrence relation of the salient point coordinates and the constraint kernel function; and fifthly, detecting the salient regions of the image sequence by cyclically executing the third and fourth steps. The method detects salient regions in image sequences in real time, uses a simple and effective model, and can be applied to target recognition.

Owner:XIDIAN UNIV

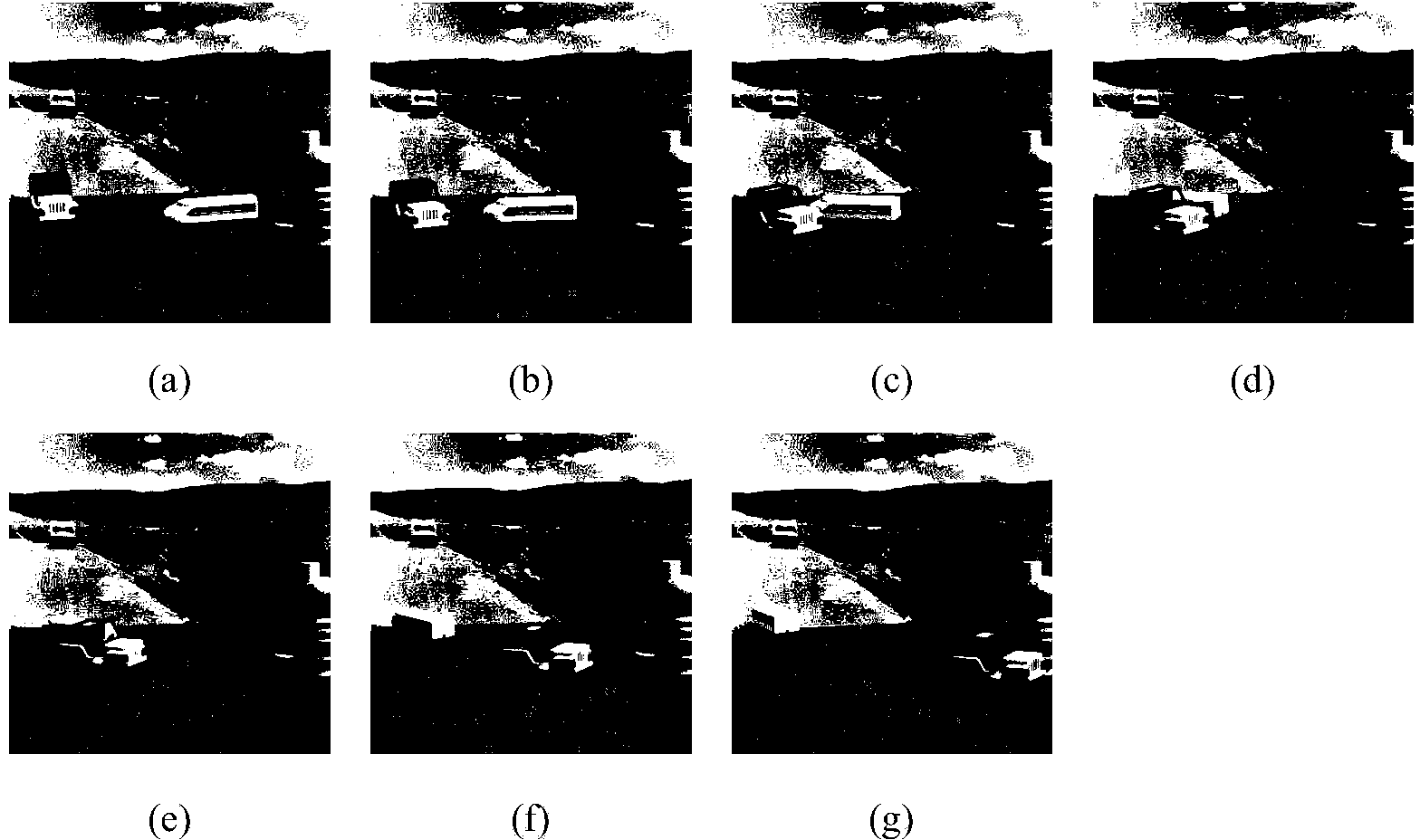

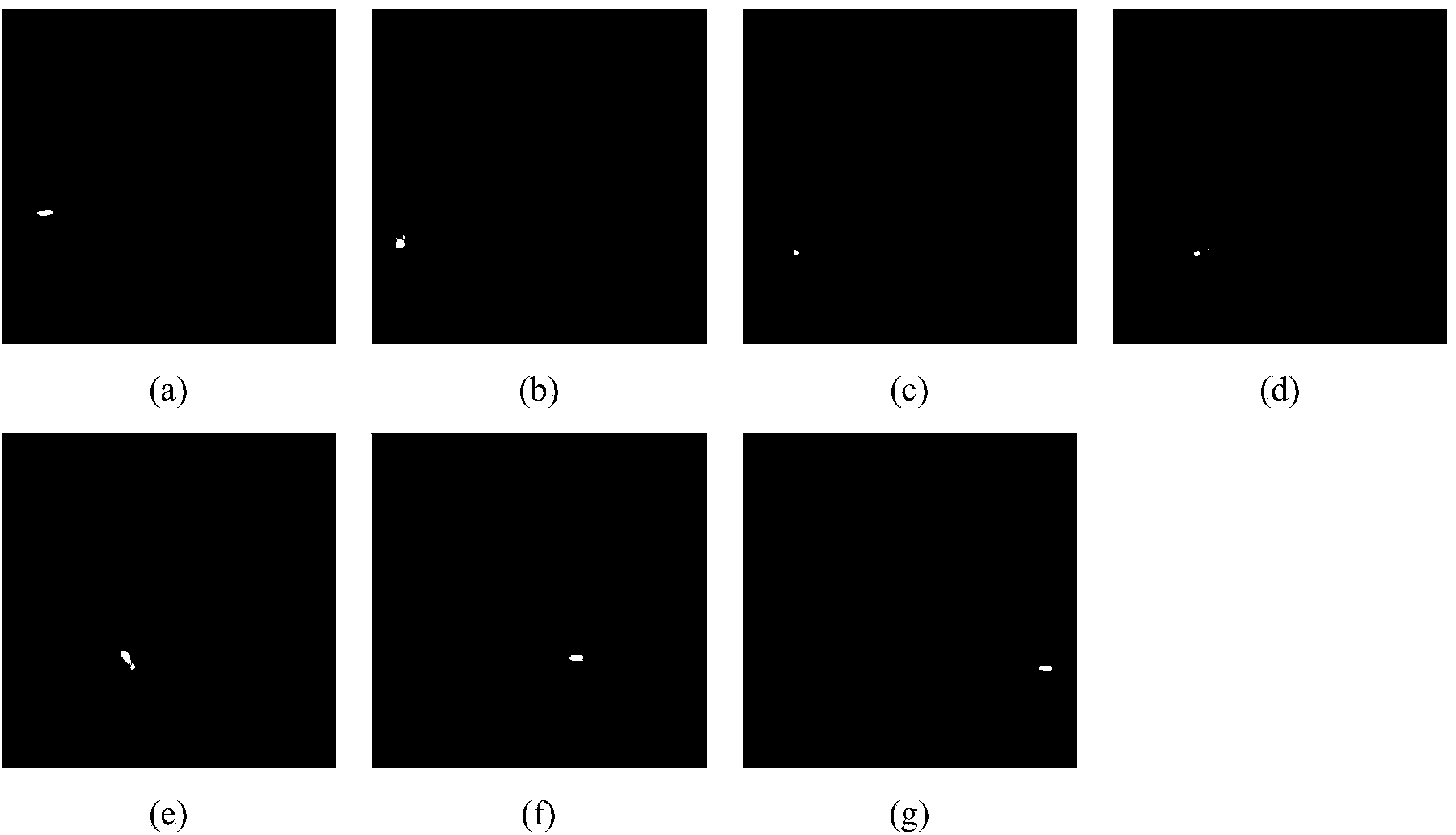

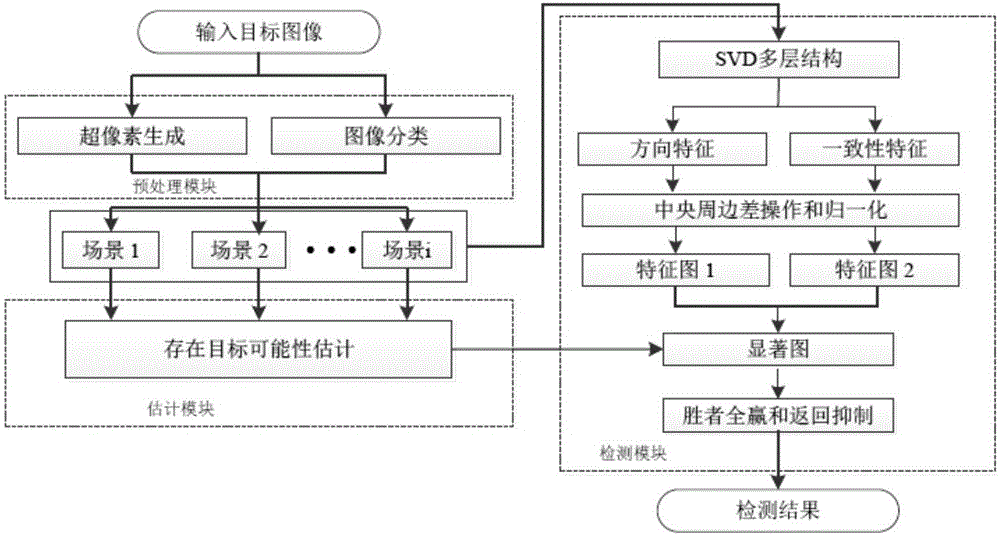

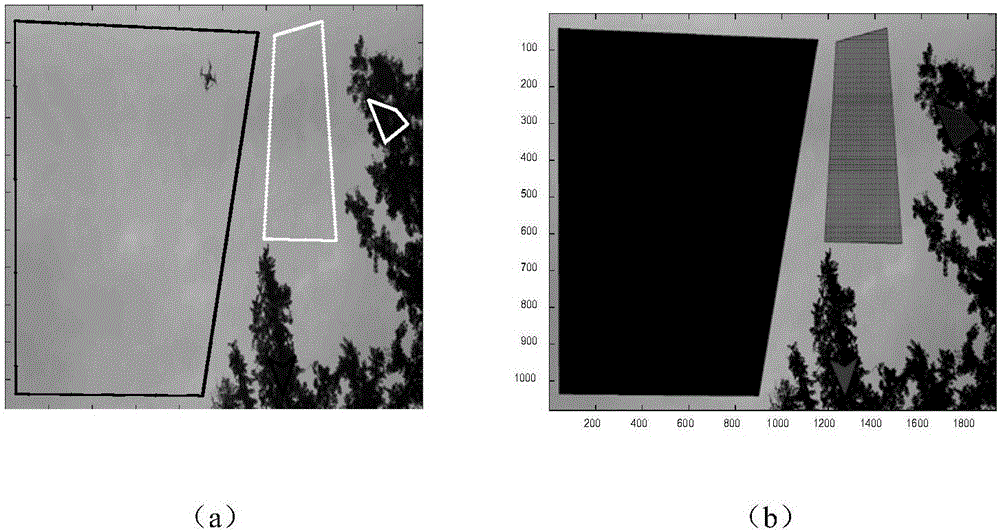

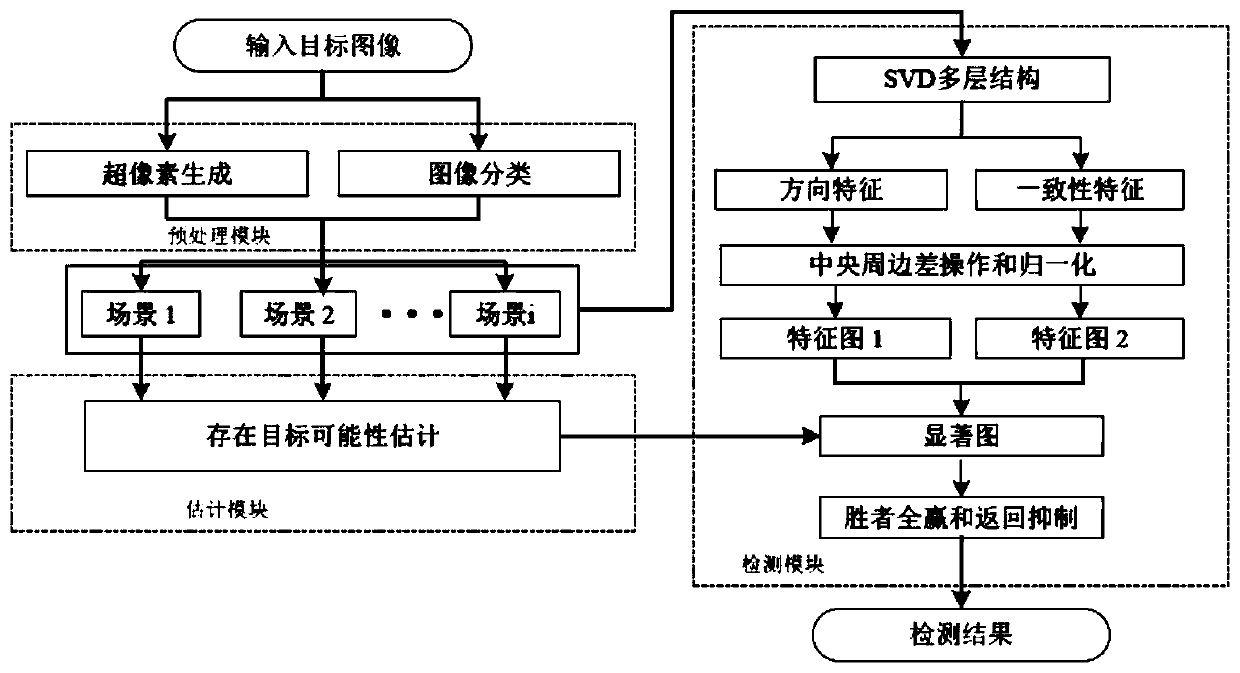

Method for detecting small unmanned aerial vehicle target based on super-pixels and scene prediction

Status: Active | Publication: CN106651937A | Tags: Avoid blur; Avoid problems that go away quickly; Image analysis; Imaging processing; Probability estimation

The invention belongs to the technical field of image processing and unmanned aerial vehicle (UAV) detection, and relates to a method for detecting small UAV targets based on superpixels and scene prediction. The method mainly comprises three steps: (a) preprocessing, (b) UAV target probability estimation, and (c) UAV detection. In preprocessing, superpixel generation and scene classification are performed on the optical image to be detected to obtain a superpixel-based scene classification image. In UAV target probability estimation, a significance depth value is estimated for each scene in the classification image obtained in step (a), and the probability that a UAV is present in each scene is calculated. In UAV detection, features of the image to be detected are extracted, feature saliency maps are obtained with an SVD-based multilayer pyramid structure, and the feature saliency maps are weighted to obtain a general saliency map; this map is mapped onto the superpixel classification image from step (a), and the UAV target detection result is obtained with a winner-take-all and inhibition-of-return mechanism, using the probabilities from step (b) as weights for the different scene areas. The method achieves higher detection accuracy than traditional techniques.

Owner:成都电科智达科技有限公司 (Chengdu Dianke Zhida Technology Co., Ltd.)
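
The detection stage of CN106651937A selects targets with winner-take-all and inhibition of return. The sketch below shows that selection loop in NumPy, assuming the per-scene UAV probabilities have already been expanded into a per-pixel weight map (`scene_prior`, a hypothetical name) that multiplies the general saliency map, and that inhibition of return suppresses a disk of fixed radius around each winner; both are illustrative assumptions.

```python
import numpy as np


def winner_take_all(saliency, n_targets, ior_radius, scene_prior=None):
    """Pick the n most salient locations, suppressing a disk around each winner."""
    s = saliency.astype(float)  # astype returns a copy, so the input is untouched
    if scene_prior is not None:
        s = s * scene_prior  # weight saliency by per-pixel scene probability
    rows, cols = np.indices(s.shape)
    winners = []
    for _ in range(n_targets):
        r, c = np.unravel_index(np.argmax(s), s.shape)  # winner-take-all
        winners.append((int(r), int(c)))
        # inhibition of return: the winner's neighbourhood cannot win again
        s[(rows - r) ** 2 + (cols - c) ** 2 <= ior_radius ** 2] = -np.inf
    return winners


sal = np.zeros((10, 10))
sal[2, 3], sal[2, 4], sal[7, 7] = 5.0, 4.0, 3.0
print(winner_take_all(sal, 2, ior_radius=2))  # [(2, 3), (7, 7)]
prior = np.ones((10, 10))
prior[7, 7] = 2.0
print(winner_take_all(sal, 2, ior_radius=2, scene_prior=prior))  # [(7, 7), (2, 3)]
```

Without inhibition of return the second pick would be the neighbouring pixel (2, 4), so the suppression radius effectively sets the minimum spacing between detections.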

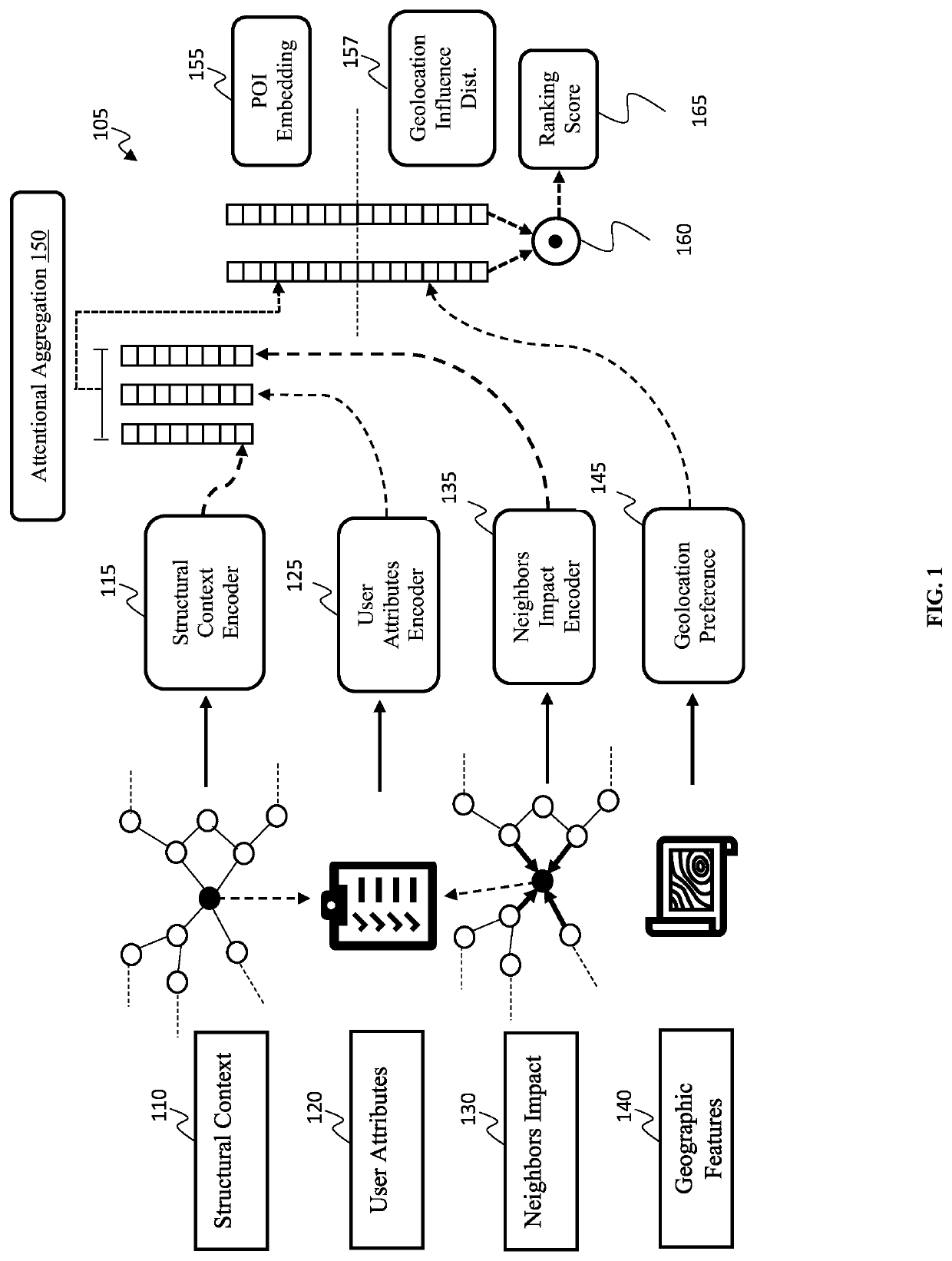

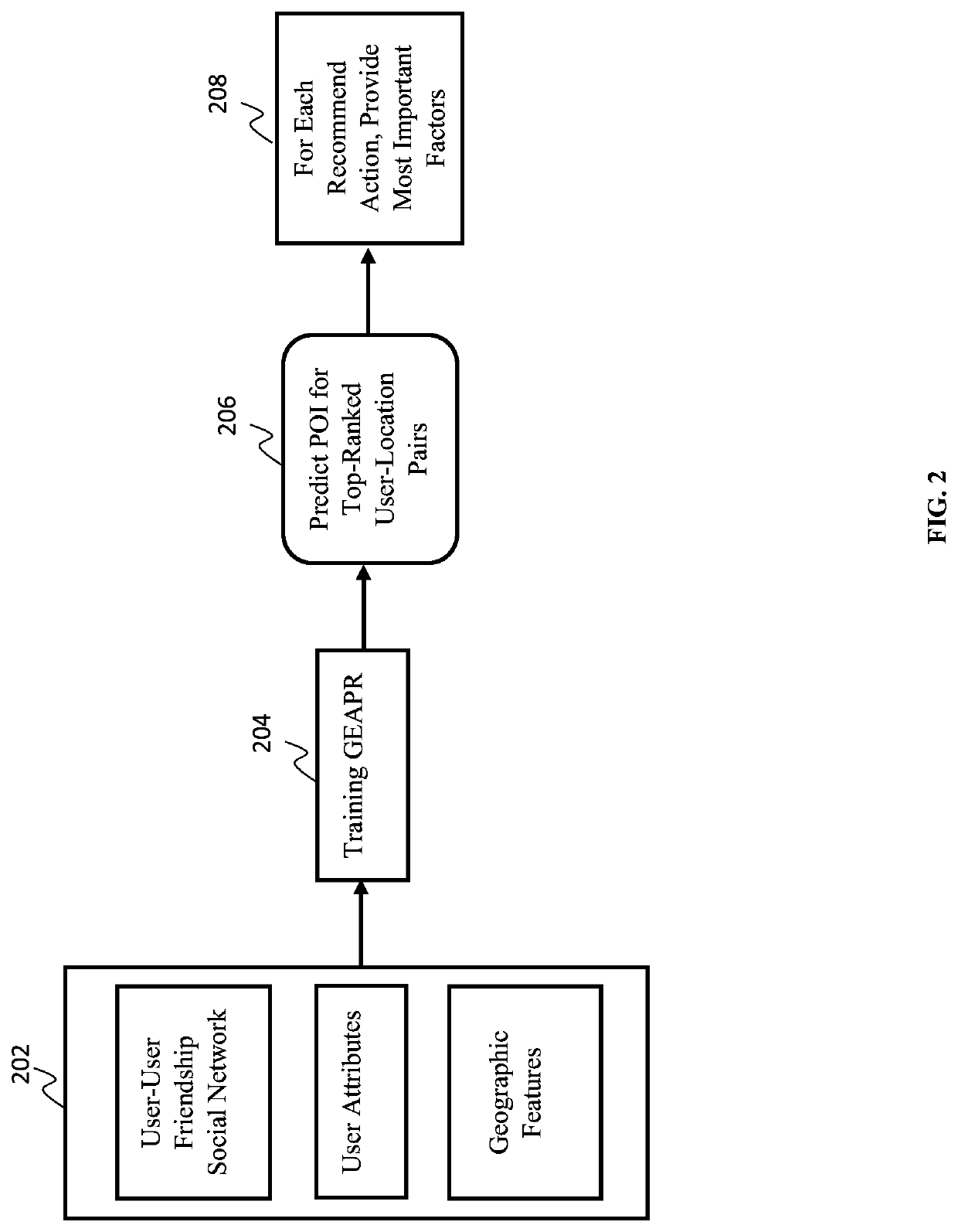

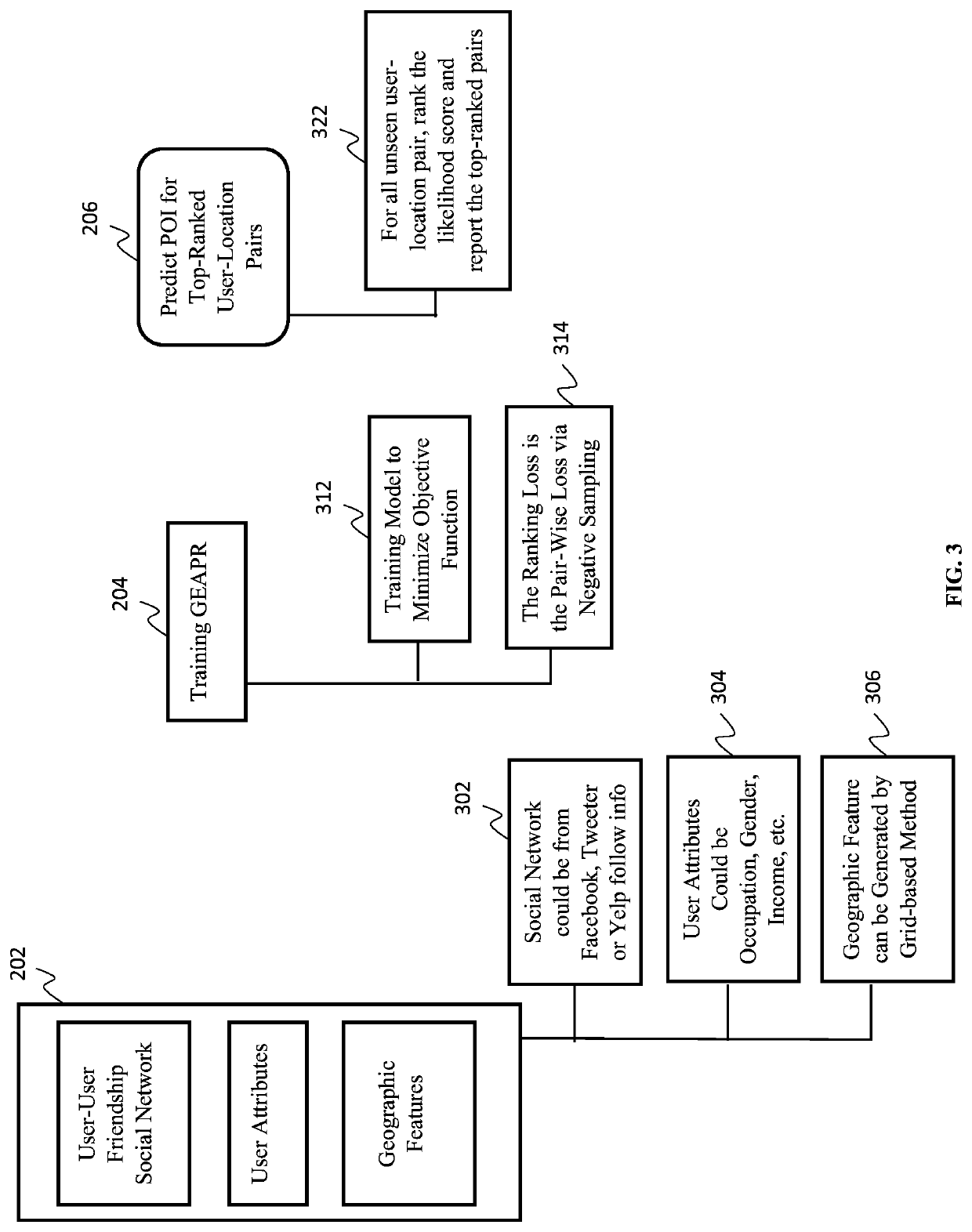

Graph enhanced attention network for explainable POI recommendation

A method for employing a graph enhanced attention network for explainable point-of-interest (POI) recommendation (GEAPR) is presented. The method includes interpreting POI prediction in an end-to-end fashion by adopting an adaptive neural network, learning user representations by aggregating a plurality of factors, the plurality of factors including structural context, neighbor impact, user attributes, and geolocation influence, and quantifying each of the plurality of factors by numeric values as feature salience indicators.

Owner:NEC LAB AMERICA

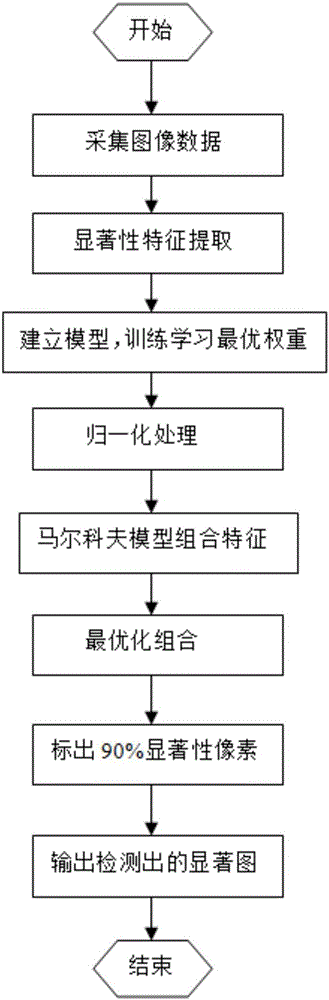

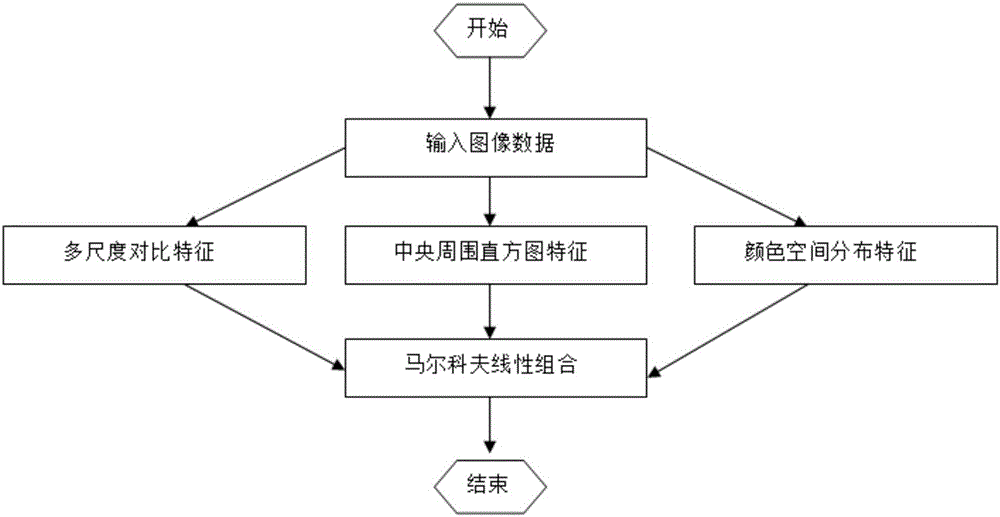

Multi-feature-based saliency detection method

Status: Inactive | Publication: CN106127210A | Tags: Overcome limitations; Improve binding; Character and pattern recognition; Estimation methods; Model parameters

The invention provides a multi-feature-based saliency detection method. First, separate feature saliency maps are calculated from three different saliency cues: multi-scale contrast, center-surround histogram, and color spatial distribution. Second, the weights of the separate feature saliency maps are learned with a Markov model, and the optimal model parameters are estimated by maximum likelihood estimation. Finally, a test image is detected with the Markov model.

Owner:SYSU CMU SHUNDE INT JOINT RES INST +1

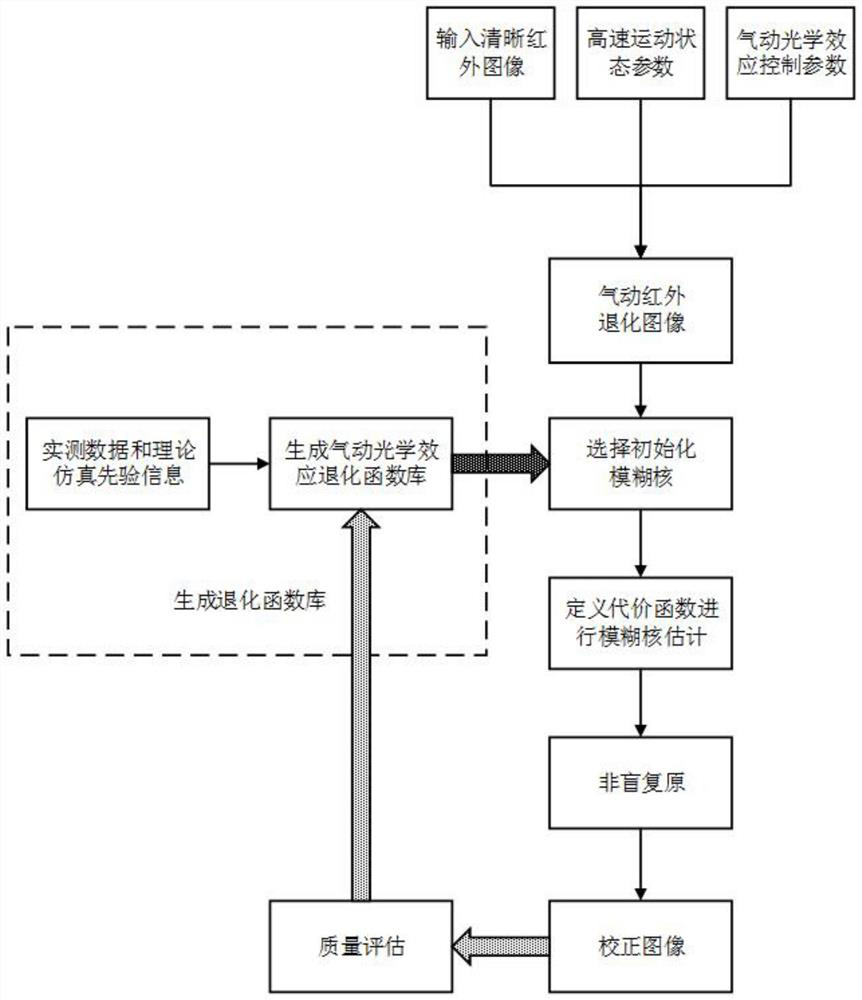

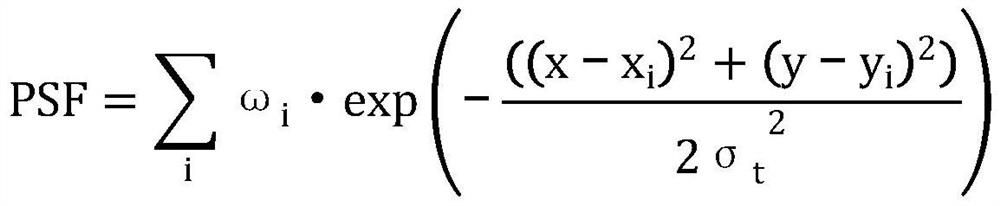

Infrared image blurring correction method for high-speed moving object

Status: Pending | Publication: CN113538374A | Tags: High precision; Real-time correction; Image enhancement; Image analysis; Pattern recognition; Computer graphics (images)

The invention discloses a blur correction method for infrared images of high-speed moving objects. The method comprises the following steps: synchronously acquiring a degraded image and the high-speed motion state parameters corresponding to it; selecting a prior degradation function from a prior degradation function library according to the motion state parameters; optimizing the feature-salient regions of the degraded image; defining a cost function, constrained by prior information, to be minimized for aero-optical effect correction; solving for the blur kernels at different scales with an image pyramid, constraining the estimate at each level with the prior blur kernel to obtain the estimated blur kernel at the full image scale; obtaining a corrected image from the estimated blur kernel with a non-blind image restoration algorithm; and evaluating the quality of the blur-kernel estimate and updating the prior blur kernel library. Based on an aerodynamic theoretical model and prior knowledge from test data, and combined with the pyramid principle, the invention achieves intelligent correction of images distorted by aero-optical effects.

Owner:SHANGHAI INST OF TECHNICAL PHYSICS - CHINESE ACAD OF SCI

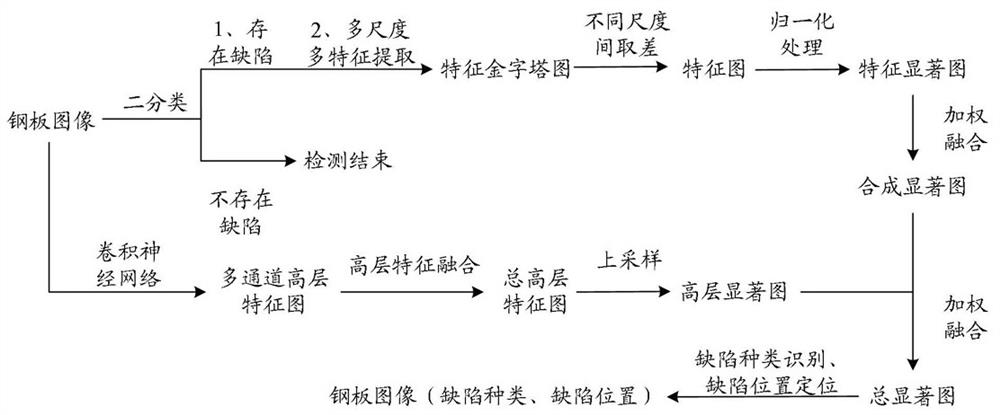

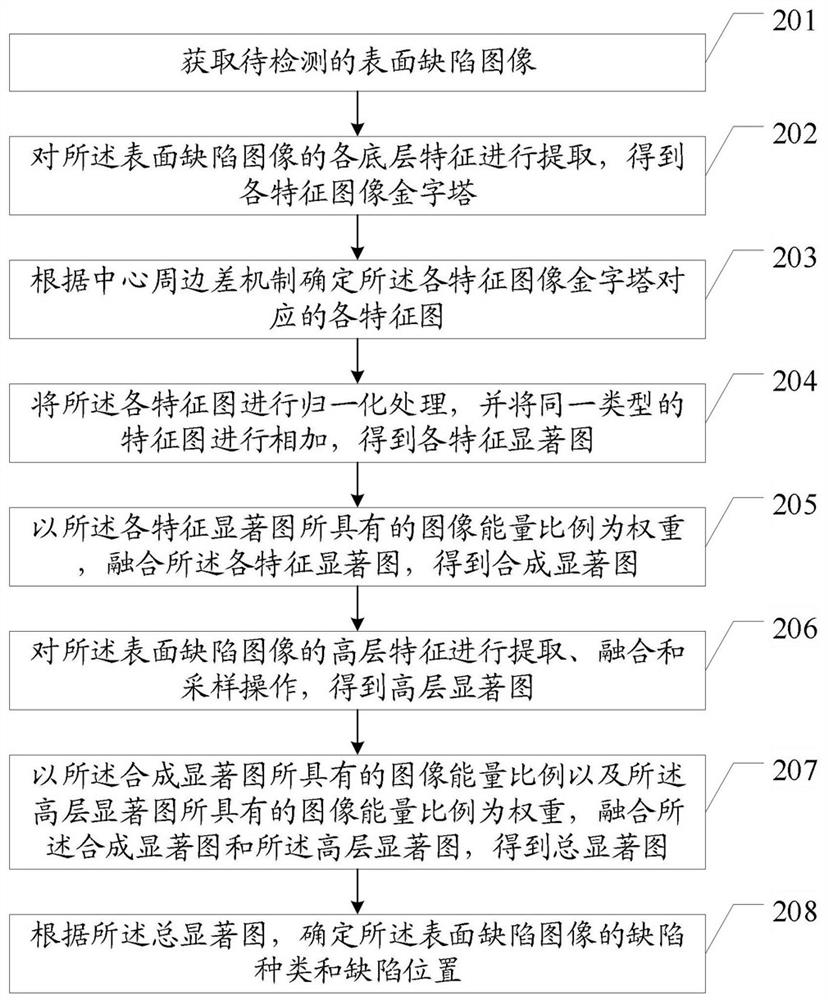

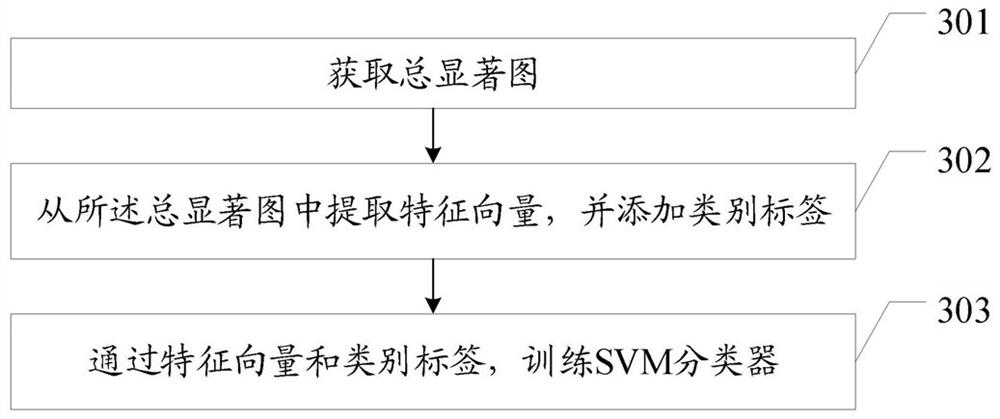

Surface defect detection method and device

Status: Active | Publication: CN112907595A | Tags: Improve recognition accuracy; Take advantage of; Image enhancement; Image analysis; Pattern recognition; Imaging processing

The invention relates to the technical field of image processing and provides a surface defect detection method and device. The method comprises the following steps: first, extracting each low-level feature of the surface defect image to be detected to build a feature image pyramid for each; determining the feature maps of each pyramid through a center-surround difference mechanism, normalizing them, and adding feature maps of the same type to obtain the feature saliency maps; fusing the feature saliency maps, weighted by the energy proportion of each, to obtain a synthetic saliency map; then extracting, fusing and sampling high-level features of the surface defect image to obtain a high-level saliency map; and finally, fusing the synthetic saliency map and the high-level saliency map, weighted by their respective energy proportions, to obtain a total saliency map, from which the defect types and positions in the surface defect image are determined. The invention improves the recognition precision of surface defect detection.

Owner:WUHAN UNIV OF SCI & TECH
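
CN112907595A weights each saliency map by its share of the total energy, at both fusion stages. A minimal sketch, assuming "energy" means the sum of squared pixel values (the abstract does not define it); the same hypothetical helper serves both fusions.

```python
import numpy as np


def energy(m: np.ndarray) -> float:
    """Energy of a saliency map, taken here as the sum of squared values (assumption)."""
    return float(np.sum(m.astype(float) ** 2))


def energy_weighted_fusion(maps):
    """Fuse saliency maps using each map's energy proportion as its weight."""
    energies = np.array([energy(m) for m in maps])
    weights = energies / energies.sum()
    fused = sum(w * m.astype(float) for w, m in zip(weights, maps))
    return fused, weights


# Stage 1: fuse feature saliency maps into a synthetic saliency map.
intensity = np.array([[1.0, 0.0], [0.0, 0.0]])
orientation = np.array([[0.0, 0.0], [0.0, 3.0]])
synthetic, w1 = energy_weighted_fusion([intensity, orientation])
print(w1)  # [0.1 0.9]
# Stage 2 would call energy_weighted_fusion([synthetic, high_level_map]) the same way.
```

Energy weighting favours maps with strong, concentrated responses; a map that is almost flat near zero contributes little to the fused result.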

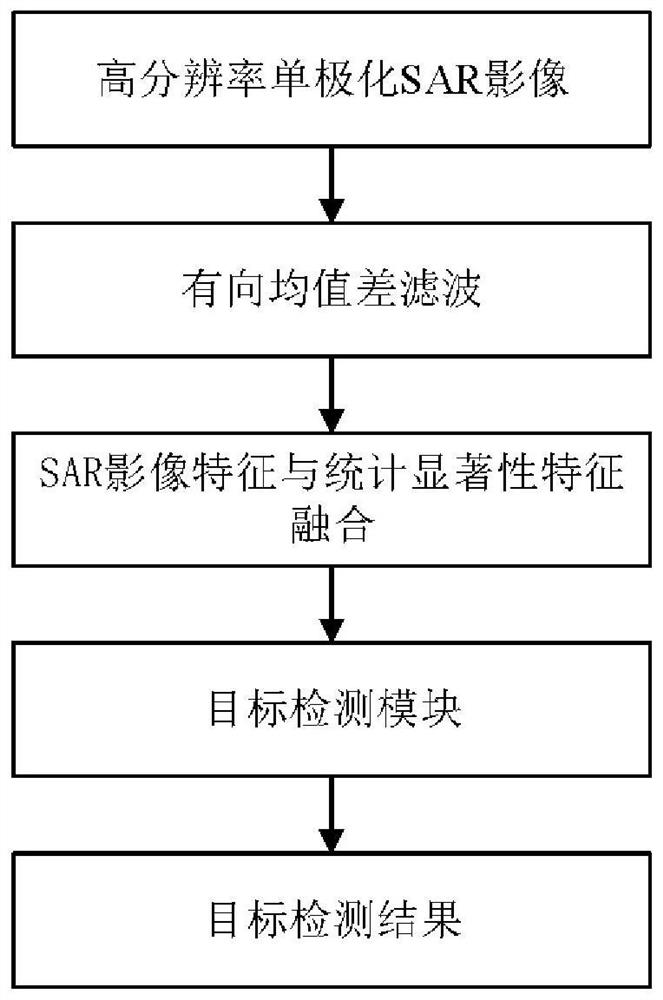

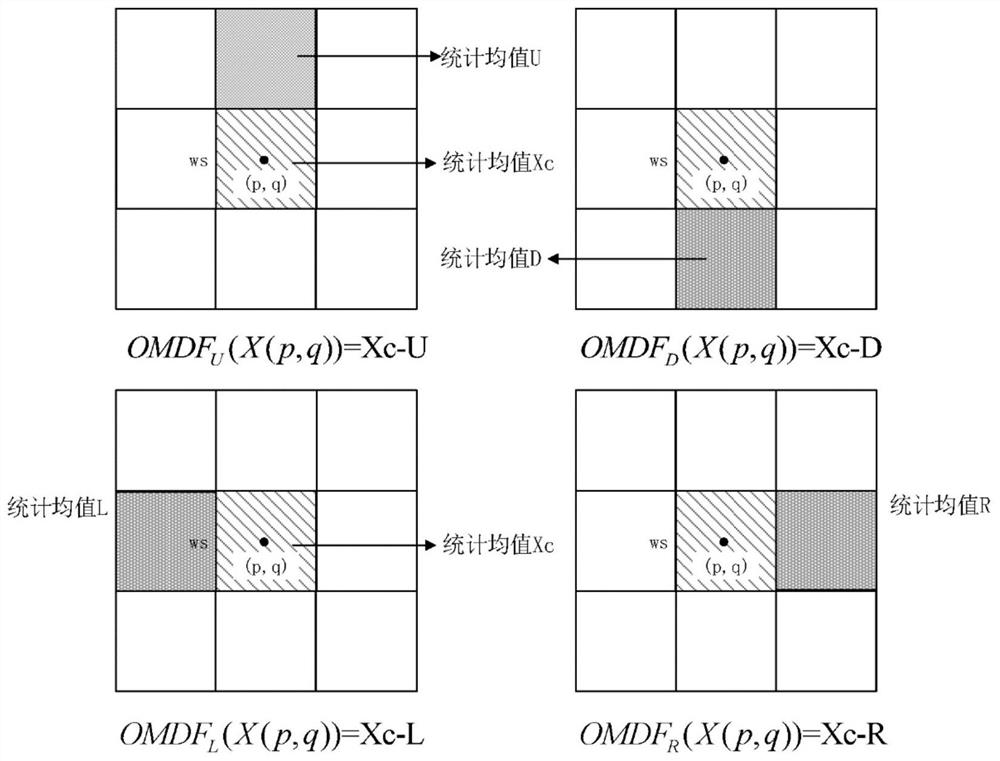

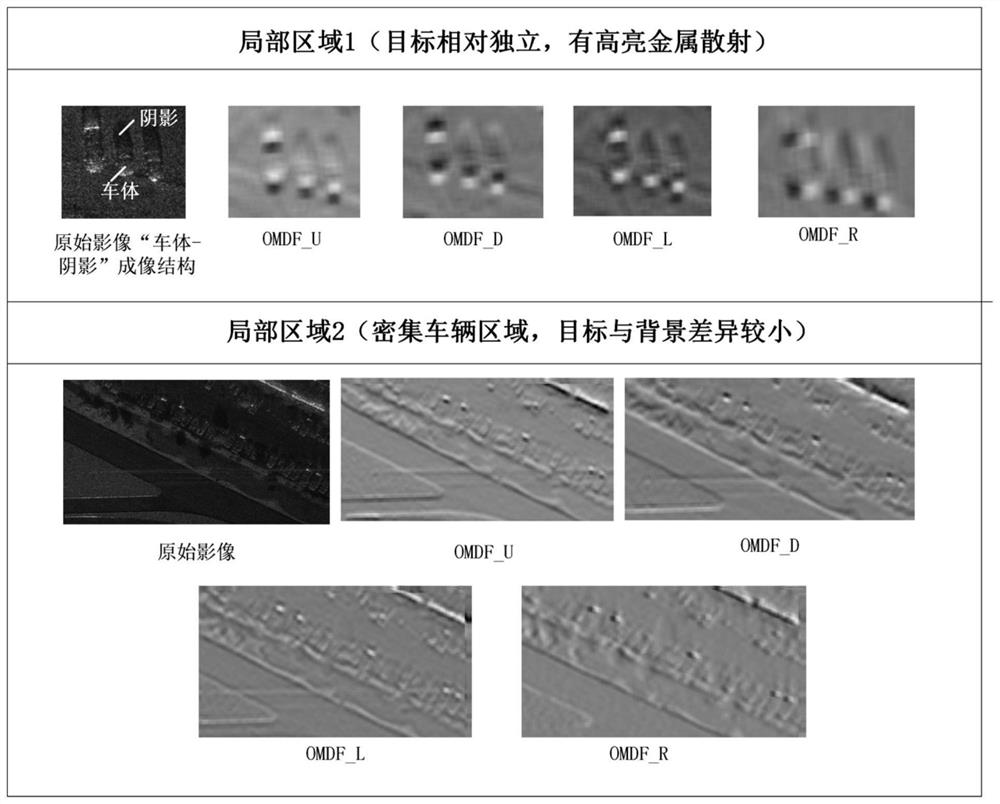

High-resolution SAR image vehicle target detection method integrating statistical significance

Status: Active | Publication: CN111666854A | Tags: Increase contrast; Reduce the impact of object detection; Scene recognition; Neural architectures; Pattern recognition; Imaging Feature

The invention provides a vehicle target detection method for high-resolution SAR images that fuses statistical saliency. The method comprises the following steps: reading a high-resolution single-polarization SAR image, and extracting regions with significant statistical features through a directed mean difference filter, based on the imaging characteristics of the vehicle body and shadow regions of a vehicle target; fusing the original SAR data with the statistical feature saliency map so that a deep neural network framework can detect vehicle targets, the feature fusion helping the detection model focus on areas with significant statistical features; training the target detection model, which includes selecting training samples and training with the fused features as input, where the model is an improved YOLOv3 whose modification is that the original SAR image and the directed mean difference filtering result are extracted and fused at the network input; and detecting vehicle targets in the high-resolution single-polarization SAR image to be examined with the trained model.

Owner:WUHAN UNIV

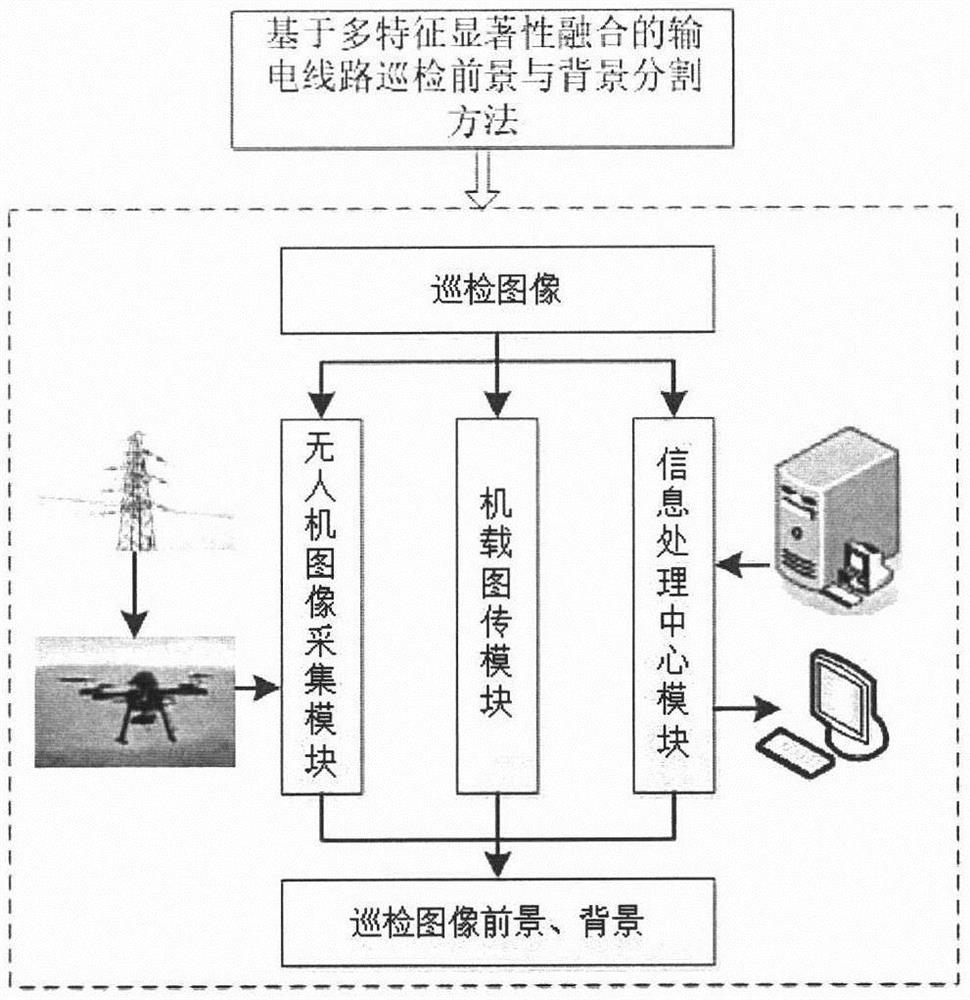

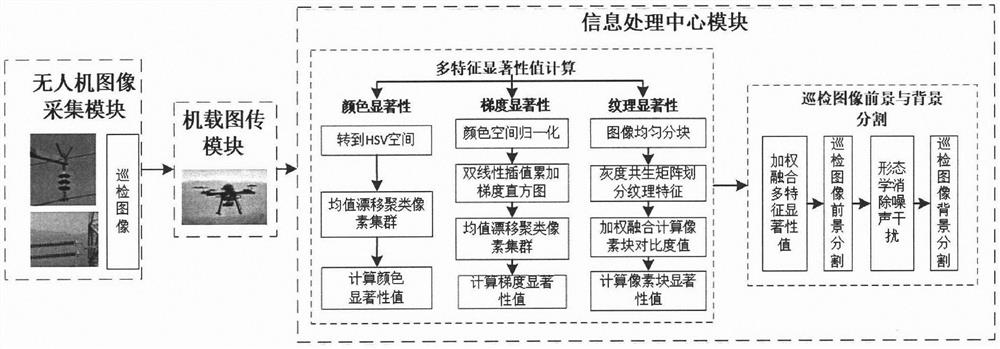

Power transmission line inspection foreground and background segmentation method based on multi-feature saliency fusion

Status: Inactive | Publication: CN112884795A | Tags: Improve Segmentation Accuracy; Avoid detection errors; Image enhancement; Image analysis; Information processing; Mean-shift

The invention discloses a foreground and background segmentation method for power transmission line inspection based on multi-feature saliency fusion. The system involved comprises an unmanned aerial vehicle image acquisition module, an airborne image transmission module and an information processing center module. The information processing center performs the following steps: first, dividing the image into different color intervals, clustering similar pixels with the mean-shift algorithm, and calculating a color saliency value; converting the image to grayscale, clustering similar pixels with the mean-shift algorithm, and calculating a gradient saliency value; calculating the texture contrast difference between pixel blocks to obtain a texture saliency value; weighting and fusing the multi-feature saliency values of each pixel block with a center-distance method to obtain a foreground segmentation result image; and finally, subtracting the foreground segmentation result image from the original image to obtain the image background, thereby separating foreground from background. The method enables foreground and background segmentation in power transmission line inspection and can help maintenance personnel quickly identify various power accessories.

Owner:JIANGSU ELECTRIC POWER CO +1

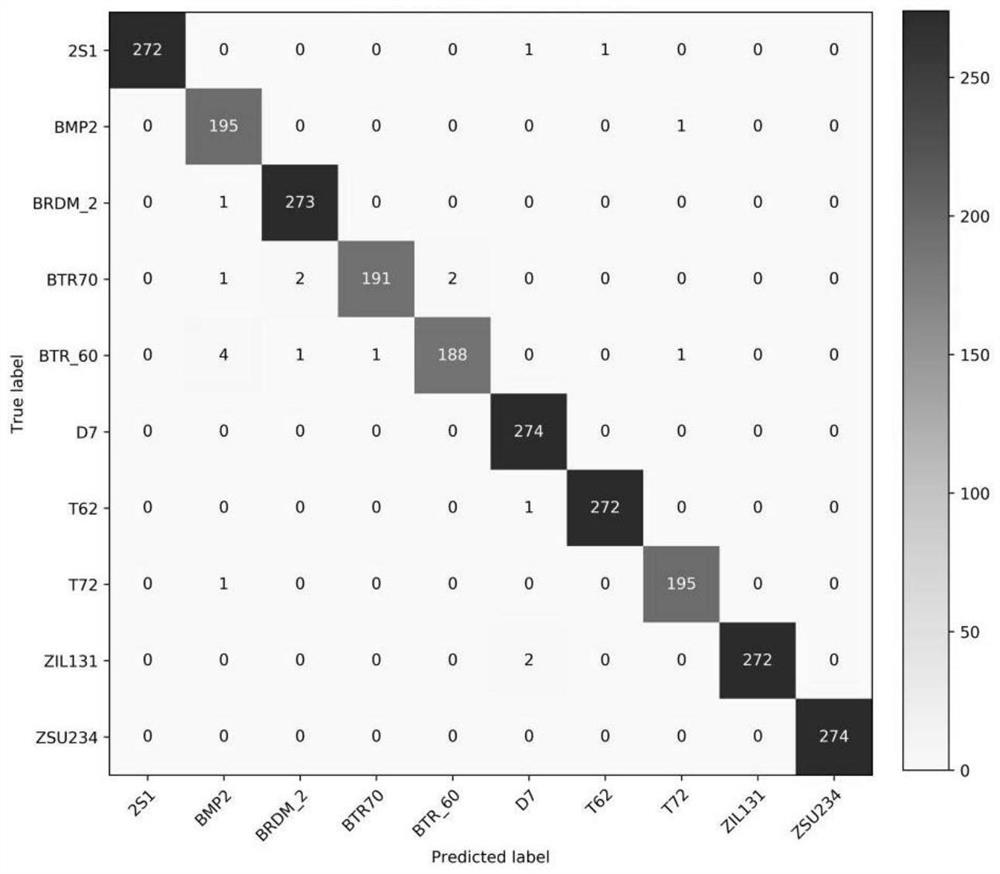

SAR target identification method based on multi-level features

Status: Active | Publication: CN112800980A | Tags: Make the most of synergies; Improve utilization; Kernel methods; Scene recognition; Feature vector; Saliency map

The invention discloses an SAR target recognition method based on multi-level features, comprising the following steps: first, establishing a pattern representation of SAR target image features, extracting feature vectors and a feature saliency map of the target, and fusing the extracted feature vectors to obtain shallow features; concatenating the original target image and the extracted feature saliency map along the channel dimension to form an input image, extracting features from it with a deep convolutional network, and taking the output of an intermediate convolutional layer as the middle-layer feature and the output of the final fully connected layer as the deep-layer feature; performing adaptive weighted fusion of the shallow, middle and deep features; and finally, classifying the fused features with a trained machine learning classifier to obtain the recognition result. The method combines the respective advantages of shallow, middle and deep features, fully exploits their synergy, and improves both the use of multi-level features and the precision of target recognition.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS +1

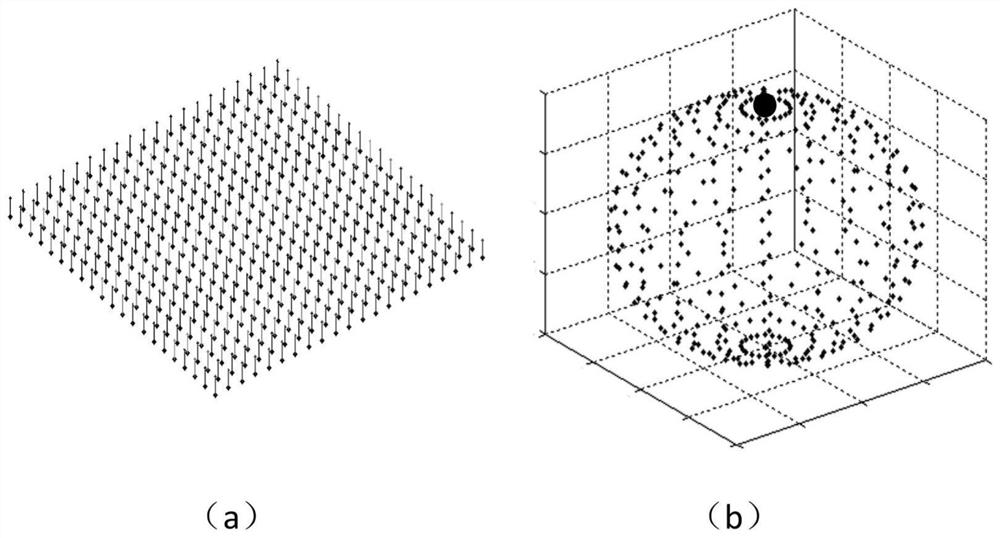

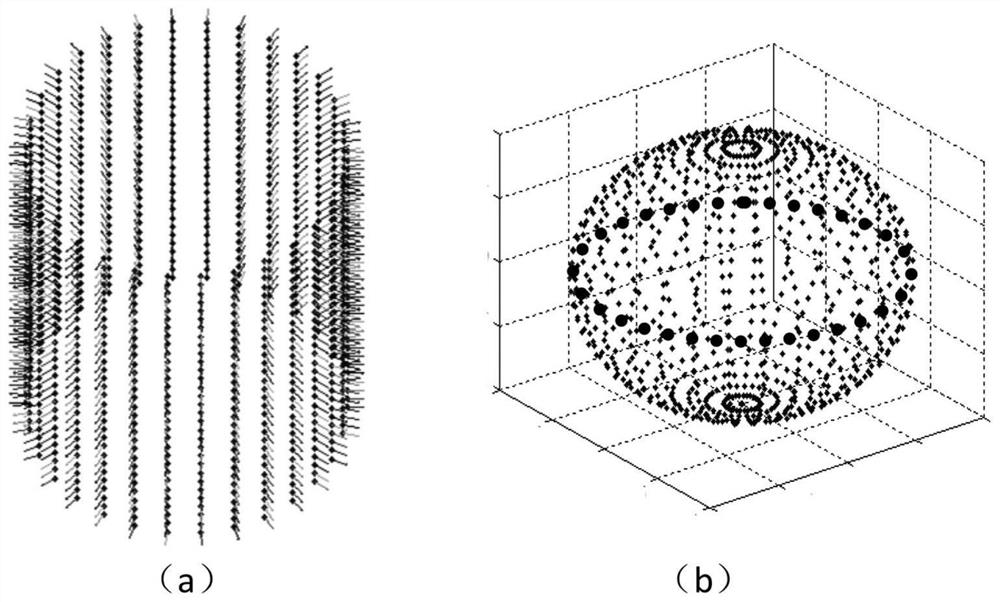

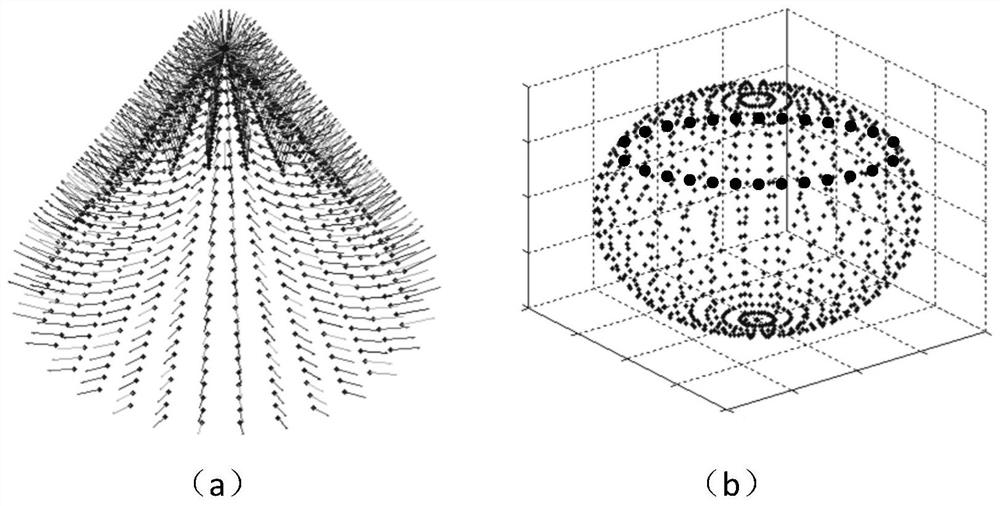

A panoramic image saliency map generation method and system fusing visual characteristics and behavioral characteristics

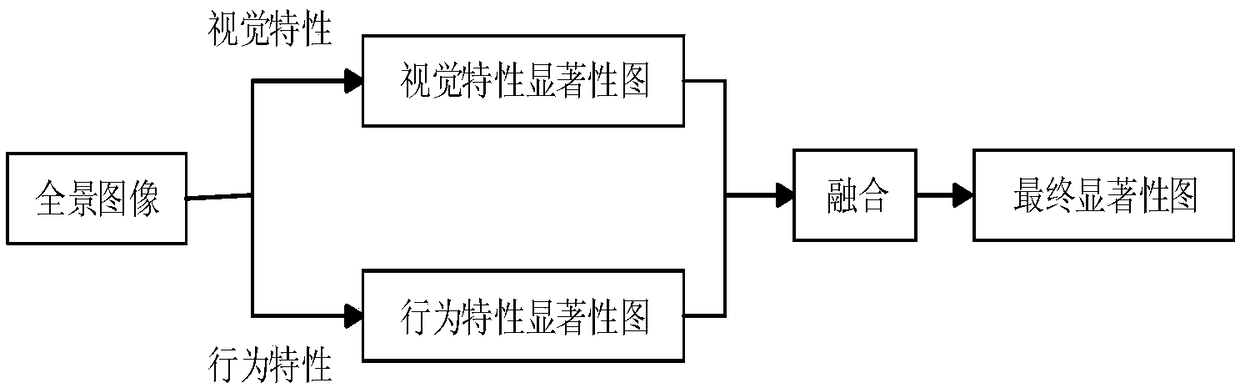

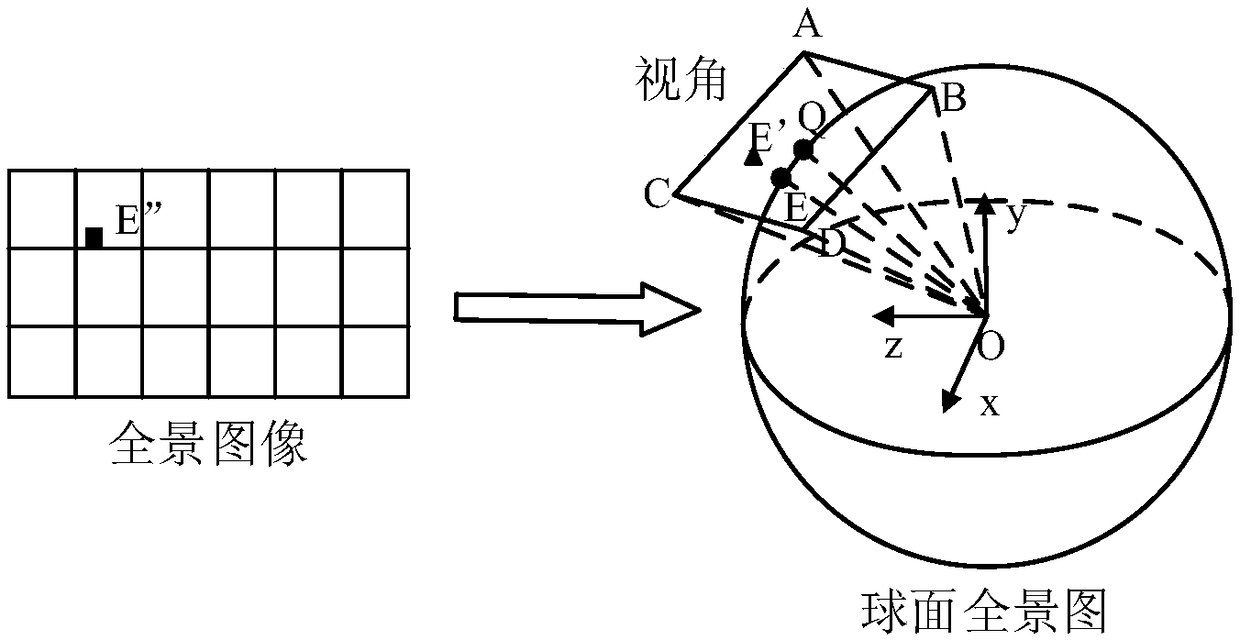

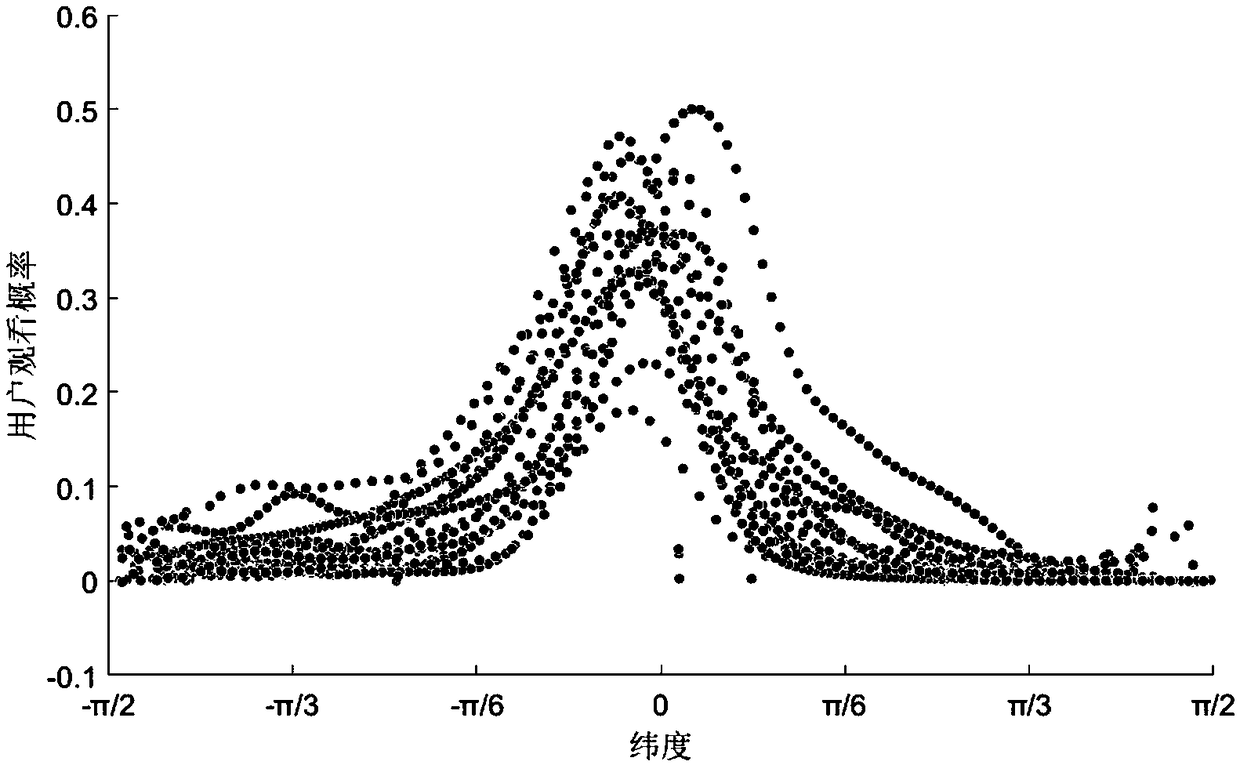

The invention discloses a panoramic image saliency map generation method and system that fuses visual characteristics and behavioral characteristics. The method comprises the following steps: 1) converting a panoramic image from the equirectangular domain to the spherical domain, and then from the spherical domain to the view-angle domain, to obtain the view-angle plane image corresponding to the panoramic image; 2) processing the view-angle plane image with a difference-of-Gaussians filter to obtain the DoG value of each pixel in visual space; 3) representing the visual feature saliency of each pixel over the whole panoramic image by the Euclidean distance between its DoG value and the average DoG value, thereby obtaining the visual feature saliency map SV of the panoramic image; 4) obtaining the behavioral feature saliency map Sb of users viewing the panoramic image from their actual head movement data; and 5) fusing SV and Sb to obtain the final panoramic image saliency map S. The method greatly improves the display effect of panoramic images.

Owner:INST OF INFORMATION ENG CHINESE ACAD OF SCI
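
Steps 2, 3 and 5 of the panoramic method above can be sketched for an image that has already been projected to the view-angle plane (the projection in step 1 and the head-movement map in step 4 are not shown). The code assumes the image may have several channels, so that each pixel's DoG "value" is a vector, and it fuses SV and Sb with a linear blend whose weight `alpha` is a hypothetical parameter; the abstract specifies neither the fusion rule nor the filter scales.

```python
import numpy as np


def blur(img, sigma):
    """Separable Gaussian blur (truncated at 3 sigma) with edge padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    k /= k.sum()
    p = np.pad(img, radius, mode="edge")
    p = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, p)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, p)


def visual_saliency(img, sigma1=1.0, sigma2=2.0):
    """SV: Euclidean distance of each pixel's DoG vector from the mean DoG vector."""
    img = img.astype(float)
    if img.ndim == 2:
        img = img[..., None]  # treat a gray image as one channel
    dog = np.stack([blur(img[..., ch], sigma1) - blur(img[..., ch], sigma2)
                    for ch in range(img.shape[-1])], axis=-1)
    mean = dog.reshape(-1, dog.shape[-1]).mean(axis=0)
    return np.linalg.norm(dog - mean, axis=-1)


def fuse(sv, sb, alpha=0.5):
    """Final map S as a linear blend of SV and Sb (assumed fusion rule)."""
    return alpha * sv + (1 - alpha) * sb


view = np.zeros((15, 15, 3))
view[6:9, 6:9] = [1.0, 0.5, 0.0]  # hypothetical colored object
sv = visual_saliency(view)
print(sv[7, 7] > sv[0, 0])  # True
```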

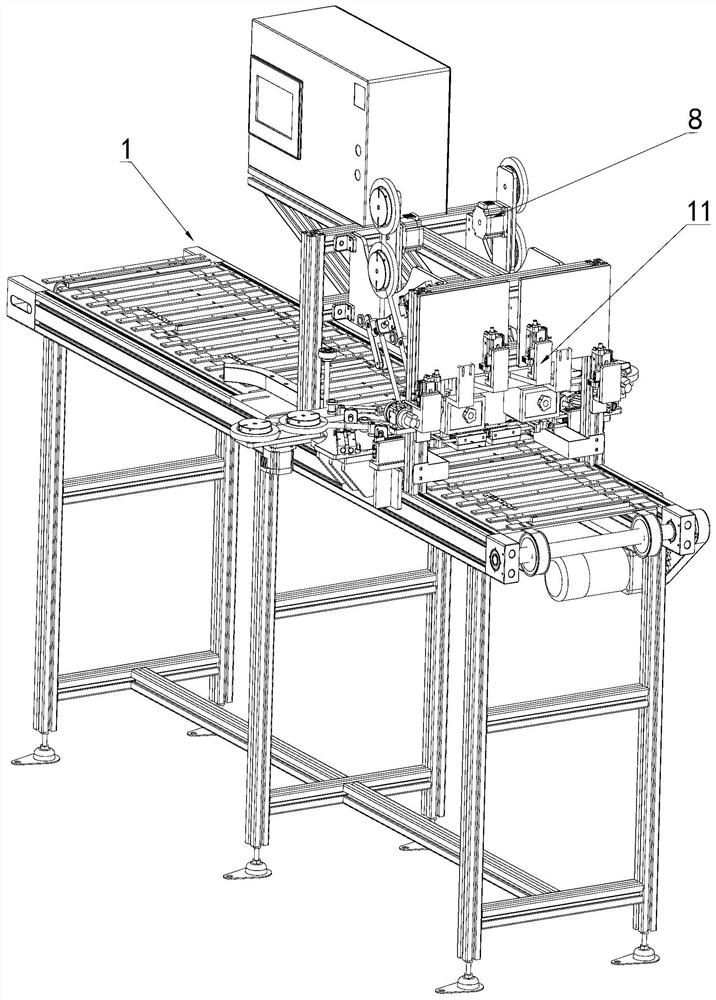

Medical mask production equipment and medical mask quality rapid detection method thereof

Status: Active | Publication: CN111642833A | Tags: Improve productivity levels; Reduce labor intensity; Detection of fluid at leakage point; Protective garment; Computer hardware; Engineering

The invention discloses medical mask production equipment comprising a rack and a conveying device arranged on it. Arranged on the rack along the conveying direction are a synthesis module for vertically stacking multiple cloth layers, a sewing module, a stacking module, an edge sealing module, a cutting module, a hot-press fixing module, a detection module and a sterilization module. The detection module comprises a physical detection unit, a flexible detection unit and a display unit, with the physical detection unit communicatively connected to the display unit. The physical detection unit comprises a camera and a processor; the processor comprises a preprocessing module for denoising the grayscale image of the finished medical mask, a stroke extraction module for edge detection on the image of the finished mask, and a feature saliency module for detecting the edge image and improving edge continuity. The invention further discloses a rapid quality detection method for medical masks, which is fast, simple to operate and easy to deploy widely.

Owner:王峰 (Wang Feng)

A Target Detection Method for Small UAVs Based on Superpixels and Scene Prediction

Status: Active | Publication: CN106651937B | Tags: Detection hardware requirements are not high; High precision; Image analysis; Probability estimation; Saliency map

Owner:成都电科智达科技有限公司 (Chengdu Dianke Zhida Technology Co., Ltd.)

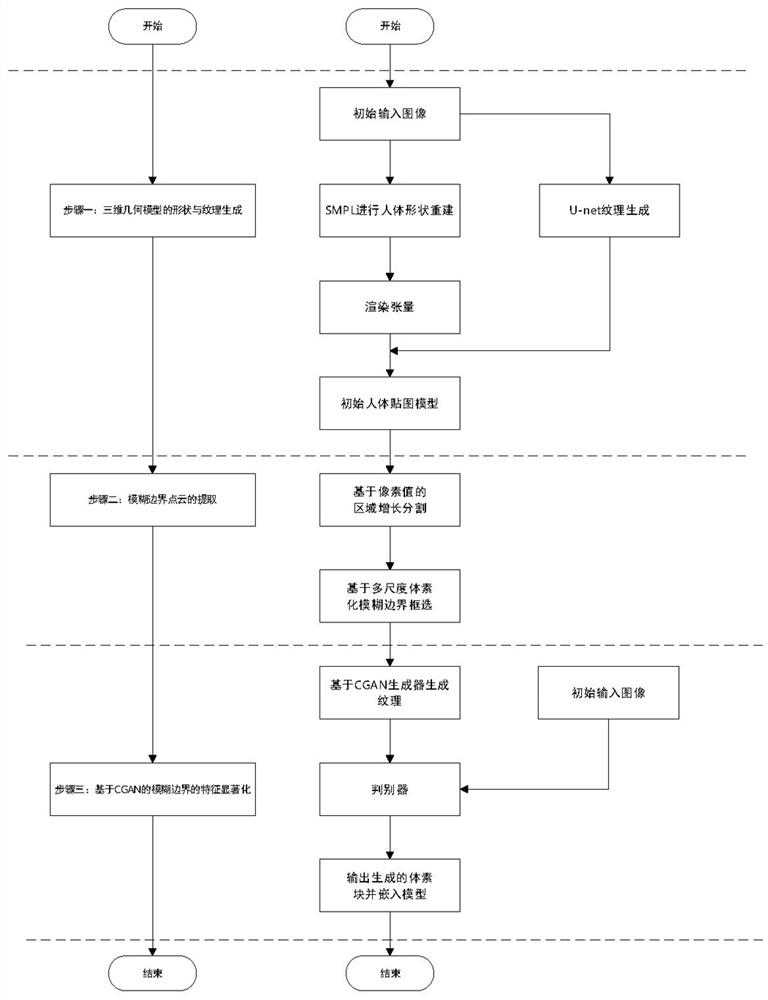

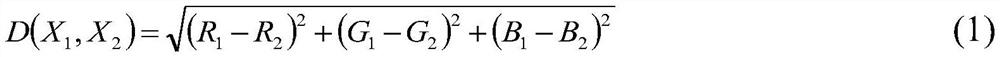

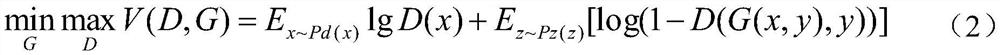

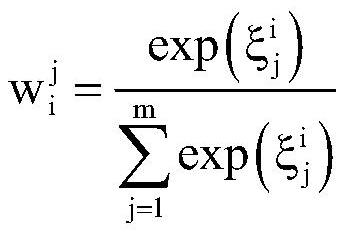

Conditional generative adversarial-based three-dimensional model fuzzy texture feature saliency method

Status: Pending | Publication: CN114119924A | Tags: Reduce computing time; Increased realism; Details involving 3D image data; Neural architectures; Voxel; Point cloud

The invention discloses a method, based on a conditional generative adversarial network, for making the fuzzy texture features of a three-dimensional model salient. The method comprises the following steps: 1) generating the shape and texture of a three-dimensional geometric model and obtaining its point cloud features; 2) extracting the point cloud of the fuzzy boundary; and 3) making the features of the fuzzy boundary salient with the conditional generative adversarial network. Based on multi-scale voxelized selection of fuzzy-boundary bounding boxes, the method generates textures with the conditional generative adversarial network for mapping and embeds the extracted voxel blocks into the three-dimensional geometric model. Global texture fidelity of the geometric model is achieved through multi-scale voxel segmentation, which turns global texture optimization into local texture optimization and reduces the amount of computation.

Owner:扬州大学江都高端装备工程技术研究所 (Yangzhou University Jiangdu High-end Equipment Engineering Technology Research Institute)

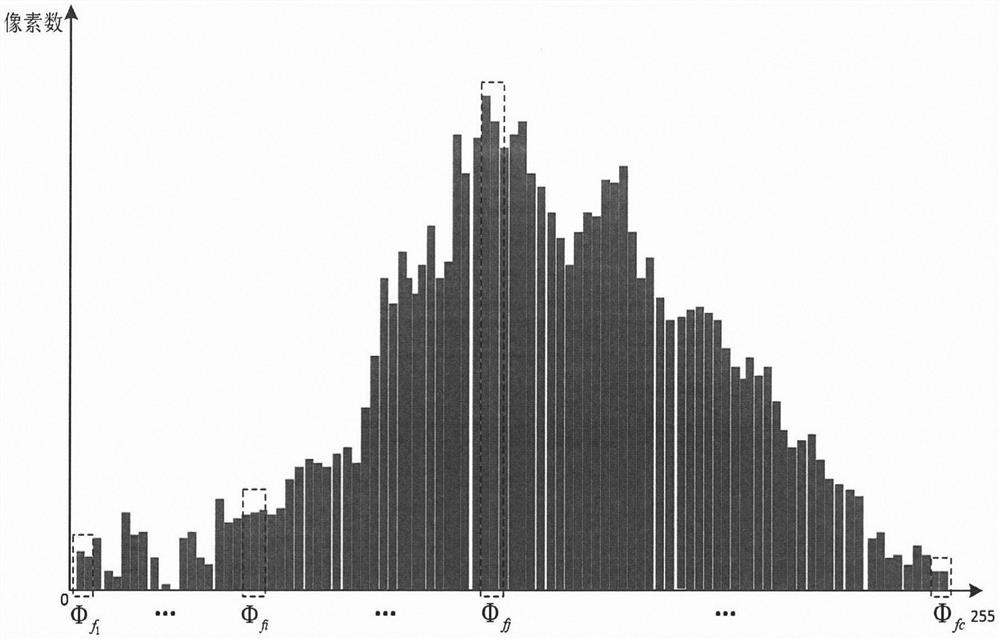

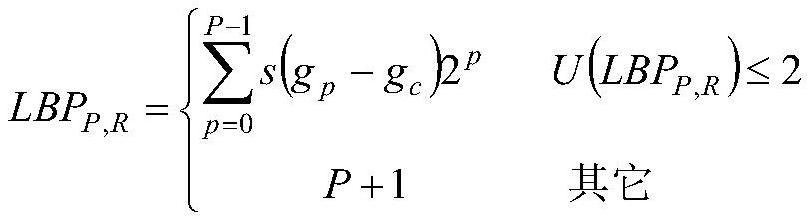

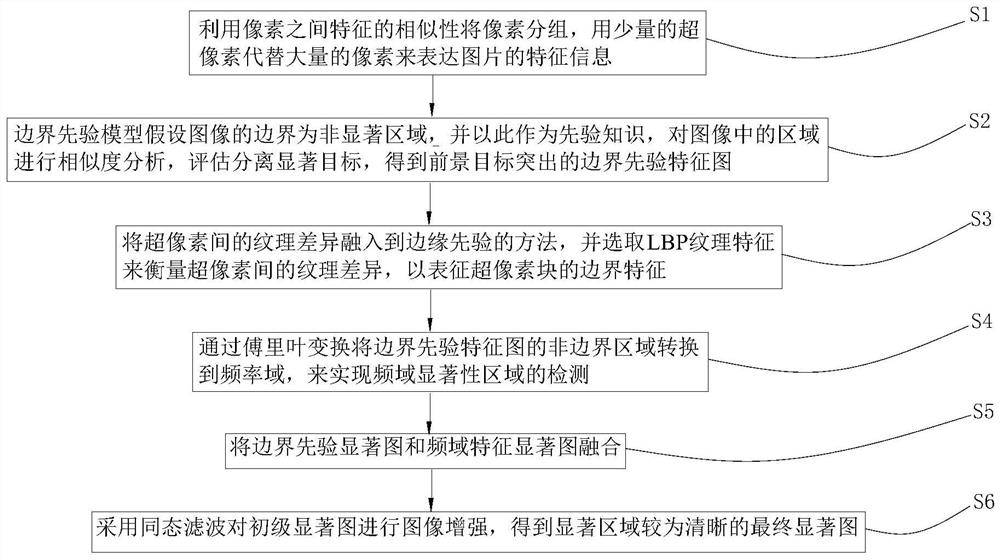

Salient target detection method fusing boundary prior and frequency domain information

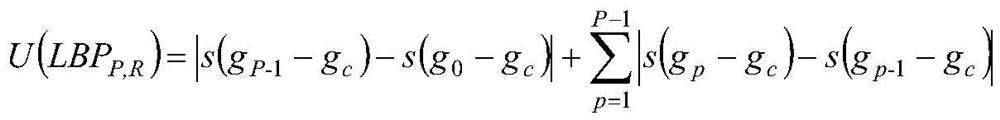

The invention discloses a salient target detection method fusing boundary prior and frequency-domain information. The method mainly comprises the following steps: grouping pixels by feature similarity so that a small number of superpixels replace a large number of pixels in representing the image's feature information; using a boundary prior model, which assumes that the image boundary is a non-salient region and takes this as prior knowledge; using LBP texture features to measure texture differences between superpixels and thereby represent the boundary features of superpixel blocks; transforming the non-boundary region of the boundary-prior feature map into the frequency domain with the Fourier transform to detect frequency-domain salient regions; fusing the boundary-prior saliency map with the frequency-domain feature saliency map; and enhancing the resulting preliminary saliency map with homomorphic filtering to obtain a final saliency map with a clear salient region. By combining boundary and frequency-domain information, the method can detect salient regions with more complete boundaries for salient targets in complex scenes.

Owner:LIAONING TECHNICAL UNIVERSITY
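
The frequency-domain step in the method above is not specified beyond "Fourier transform". As one well-known way to obtain a frequency-domain saliency map, the sketch below implements the spectral residual approach of Hou and Zhang in NumPy: the log-amplitude spectrum minus its local average is recombined with the original phase and transformed back. This is a swapped-in illustration, not necessarily the patent's method; the 3x3 averaging window is an assumption, the Gaussian smoothing of the output used in the original spectral residual method is omitted, and the boundary-prior masking and homomorphic filtering are not shown.

```python
import numpy as np


def local_mean(x: np.ndarray, size: int = 3) -> np.ndarray:
    """size x size box average with edge padding."""
    pad = size // 2
    p = np.pad(x, pad, mode="edge")
    out = np.zeros_like(x, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += p[dy:dy + x.shape[0], dx:dx + x.shape[1]]
    return out / size ** 2


def spectral_residual_saliency(gray: np.ndarray) -> np.ndarray:
    """Frequency-domain saliency map scaled to a maximum of 1 via the spectral residual."""
    spectrum = np.fft.fft2(gray.astype(float))
    log_amplitude = np.log(np.abs(spectrum) + 1e-9)
    phase = np.angle(spectrum)
    residual = log_amplitude - local_mean(log_amplitude)
    saliency = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    return saliency / saliency.max()


img = np.zeros((32, 32))
img[20, 12] = 1.0  # a single isolated bright pixel
sal = spectral_residual_saliency(img)
print(tuple(int(i) for i in np.unravel_index(np.argmax(sal), sal.shape)))  # (20, 12)
```

An isolated impulse has a flat amplitude spectrum, so its residual is essentially zero and the reconstruction returns the impulse itself; repetitive background texture, by contrast, produces spectral peaks that the local average largely cancels.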

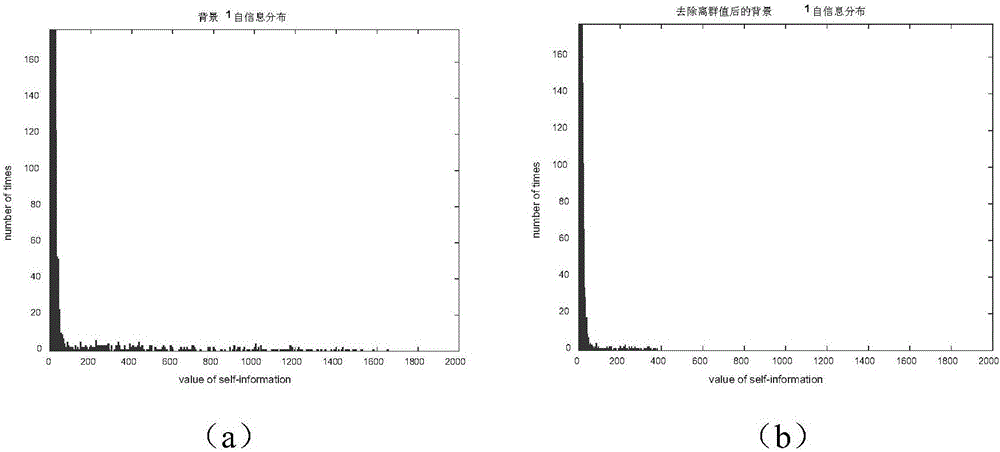

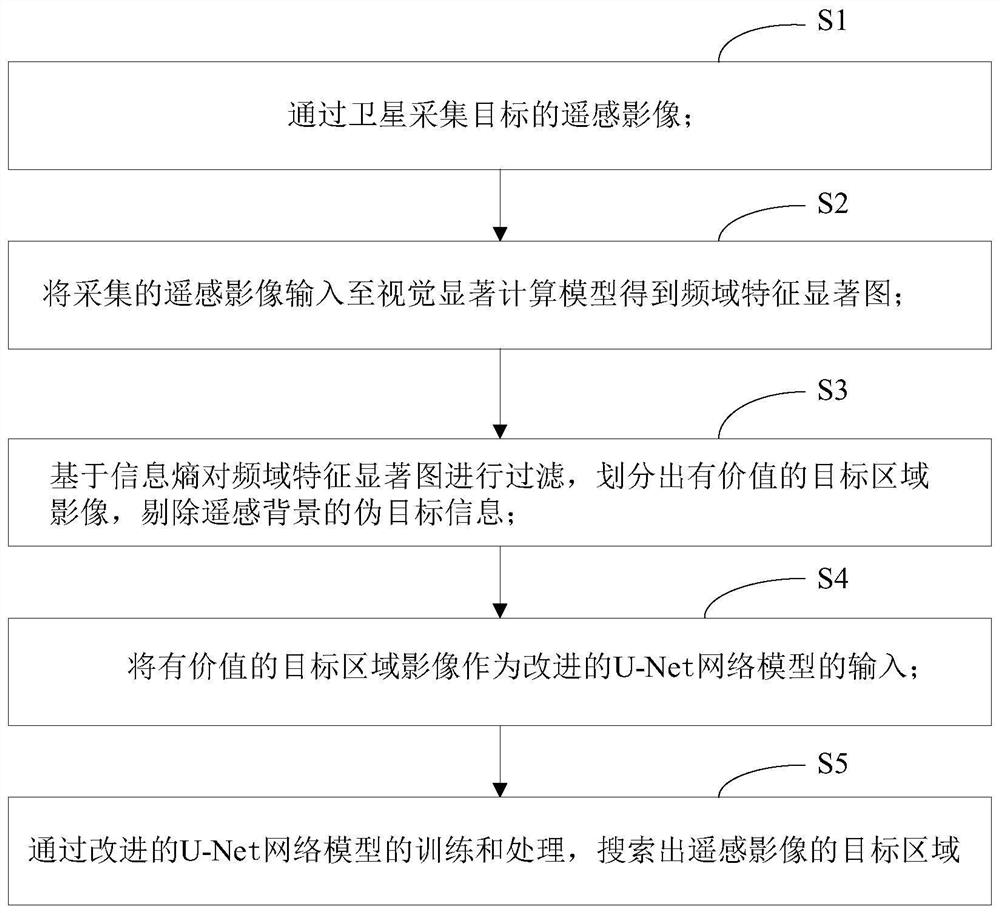

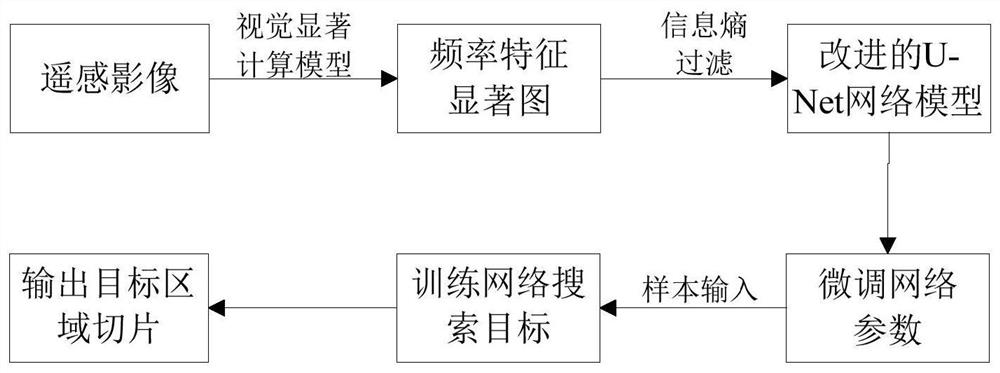

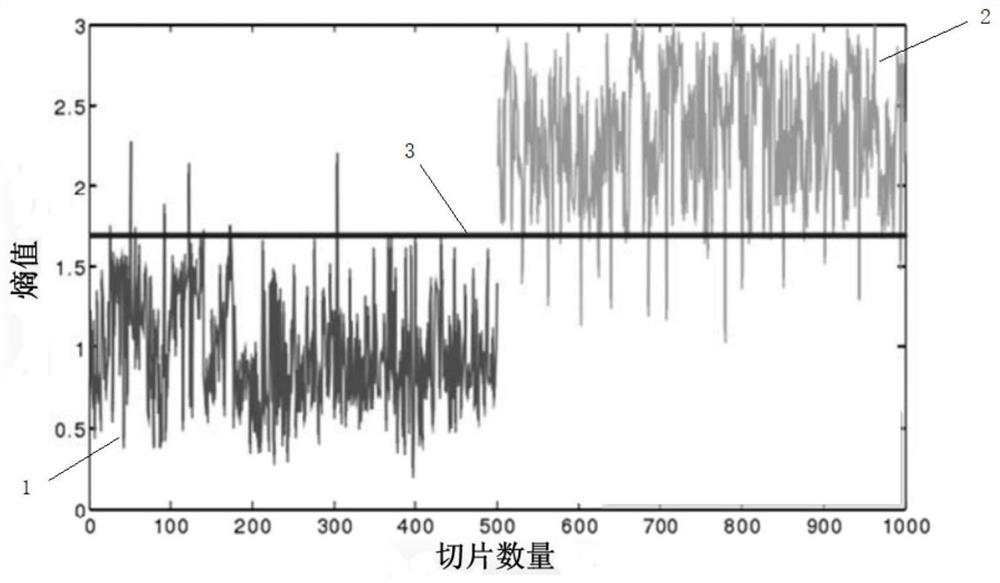

Satellite in-orbit application-oriented remote sensing image visual salient region intelligent search method

Status: Pending | Publication: CN111832502A | Tags: Easy extraction; Rich low frequency features; Scene recognition; Saliency map; Visual saliency

The invention discloses an intelligent search method for visually salient regions in remote sensing images, oriented to in-orbit satellite applications. The method comprises the following steps: S1, acquiring a remote sensing image of the target with a satellite; S2, feeding the acquired image into a visual saliency computation model to obtain a frequency-domain feature saliency map; S3, filtering the frequency-domain feature saliency map based on information entropy, segmenting the valuable target-area image, and removing pseudo-target information from the remote sensing background; S4, taking the valuable target-area image as the input of an improved U-Net network model; and S5, searching for target areas in the remote sensing image through training and processing with the improved U-Net model. The invention combines the visual saliency of remote sensing images with the improved U-Net model to search quickly for valuable target regions: the saliency map extracted by the visual saliency computation model serves as the input to the adjusted U-Net model, achieving lightweight, fast region search for valuable remote sensing targets.

Owner:PLA PEOPLES LIBERATION ARMY OF CHINA STRATEGIC SUPPORT FORCE AEROSPACE ENG UNIV

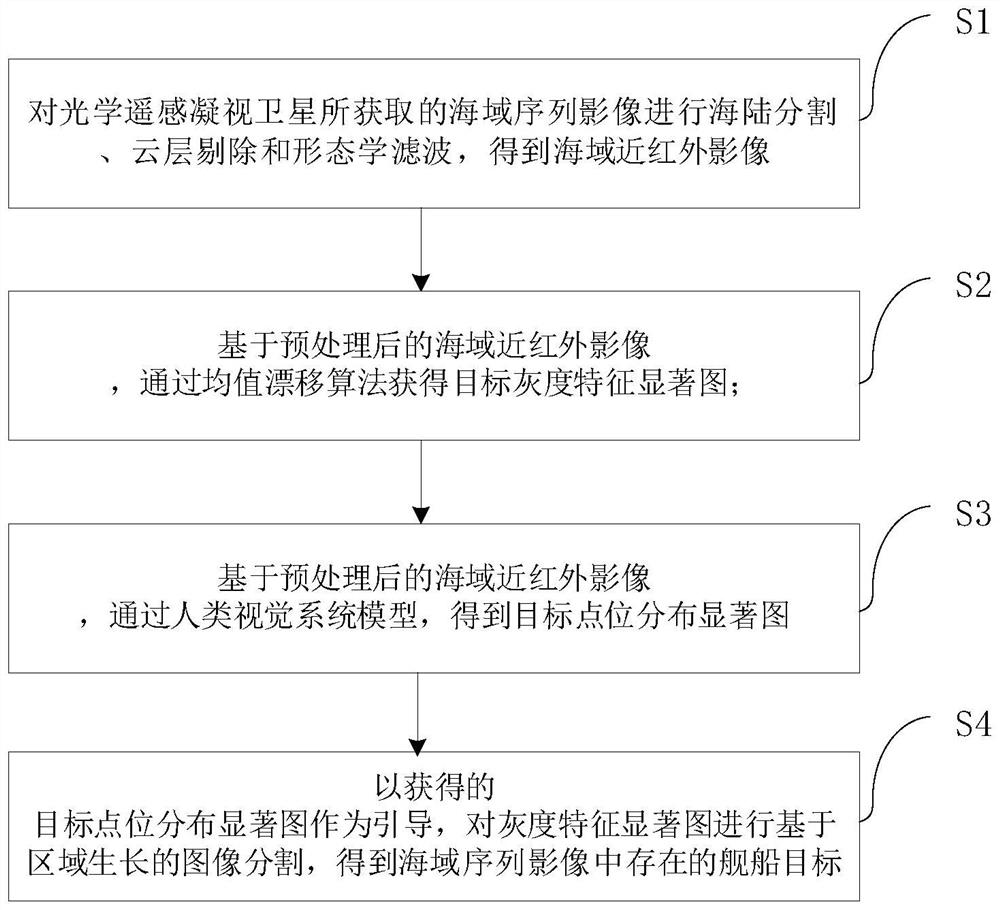

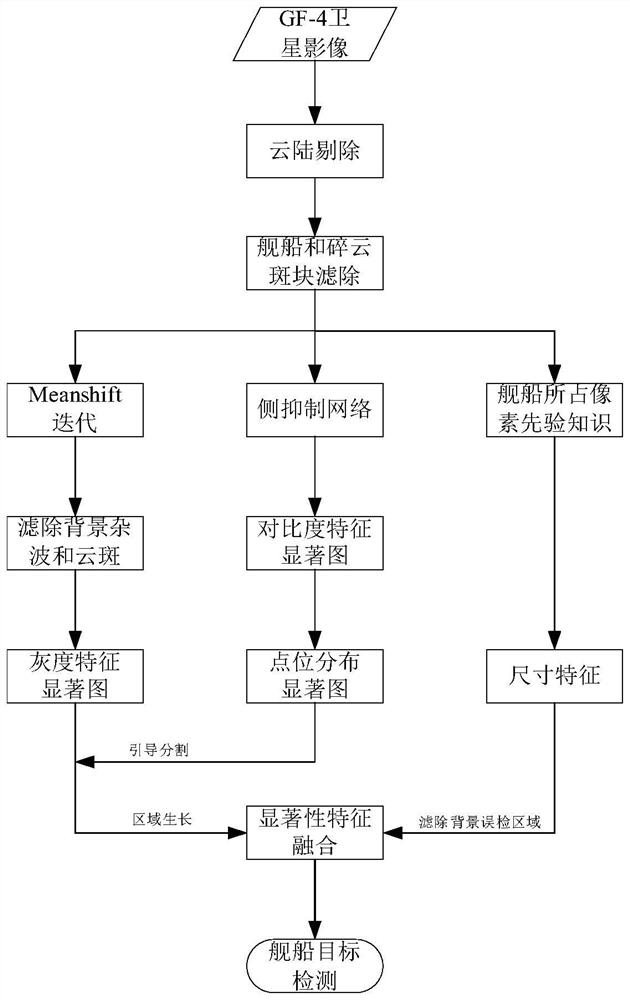

Weak and small ship target fusion detection method and device based on multi-vision salient features

Status: Pending | Publication: CN114764801A | Tags: Solve the problem of limited suppression ability; Accurate identification; Image enhancement; Image analysis; Human visual system model; Image segmentation

The invention provides a fusion detection method and device for weak and small ship targets based on multiple visual saliency features. The method comprises the following steps: performing sea-land segmentation, cloud removal and morphological filtering on a sea-area image sequence acquired by an optical remote sensing staring satellite to obtain a near-infrared image of the sea area; obtaining a target gray feature saliency map from this near-infrared image with the mean-shift algorithm; obtaining a target point-location distribution saliency map from the same image with a human visual system model; and, guided by the point-location distribution saliency map, performing region-growing image segmentation on the gray feature saliency map and extracting the ship targets present in the near-infrared image using ship size features. The method addresses the problems of prior-art weak and small target detection methods: high missed-alarm and false-alarm rates, low robustness and accuracy, and unsuitability for detecting multiple weak and small targets under low signal-to-noise ratio and sea clutter.

Owner:INST OF MICROELECTRONICS CHINESE ACAD OF SCI
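
The final step, region growing on the gray-level saliency map guided by the point-location map and filtered by ship size, can be sketched as follows. This is a minimal sketch under stated assumptions: the seeds are taken as given (for example, peaks of the point-location map), a pixel joins a region when its saliency is at least a hypothetical threshold `tau`, 4-connectivity is used, and the hypothetical limits `min_px`/`max_px` stand in for the patent's ship size features.

```python
from collections import deque

import numpy as np

def grow_regions(sal, seeds, tau, min_px, max_px):
    """Grow 4-connected regions from seeds over pixels with saliency >= tau.

    Returns the regions (lists of (row, col)) whose pixel count lies in
    [min_px, max_px]; the size limits stand in for ship size features.
    """
    h, w = sal.shape
    visited = np.zeros((h, w), dtype=bool)
    regions = []
    for r0, c0 in seeds:
        if visited[r0, c0] or sal[r0, c0] < tau:
            continue  # seed already absorbed or not salient enough
        region, queue = [], deque([(r0, c0)])
        visited[r0, c0] = True
        while queue:
            r, c = queue.popleft()
            region.append((r, c))
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < h and 0 <= nc < w and not visited[nr, nc] and sal[nr, nc] >= tau:
                    visited[nr, nc] = True
                    queue.append((nr, nc))
        if min_px <= len(region) <= max_px:
            regions.append(region)
    return regions

sal = np.zeros((20, 20))
sal[2:5, 3:9] = 0.9      # 18-pixel ship-like blob
sal[12, 12] = 0.8        # single-pixel speck, too small to be a ship
ships = grow_regions(sal, seeds=[(3, 4), (12, 12), (18, 18)], tau=0.5, min_px=5, max_px=100)
```

Here the speck is discarded by the size filter and the background seed never starts a region, leaving one candidate ship.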

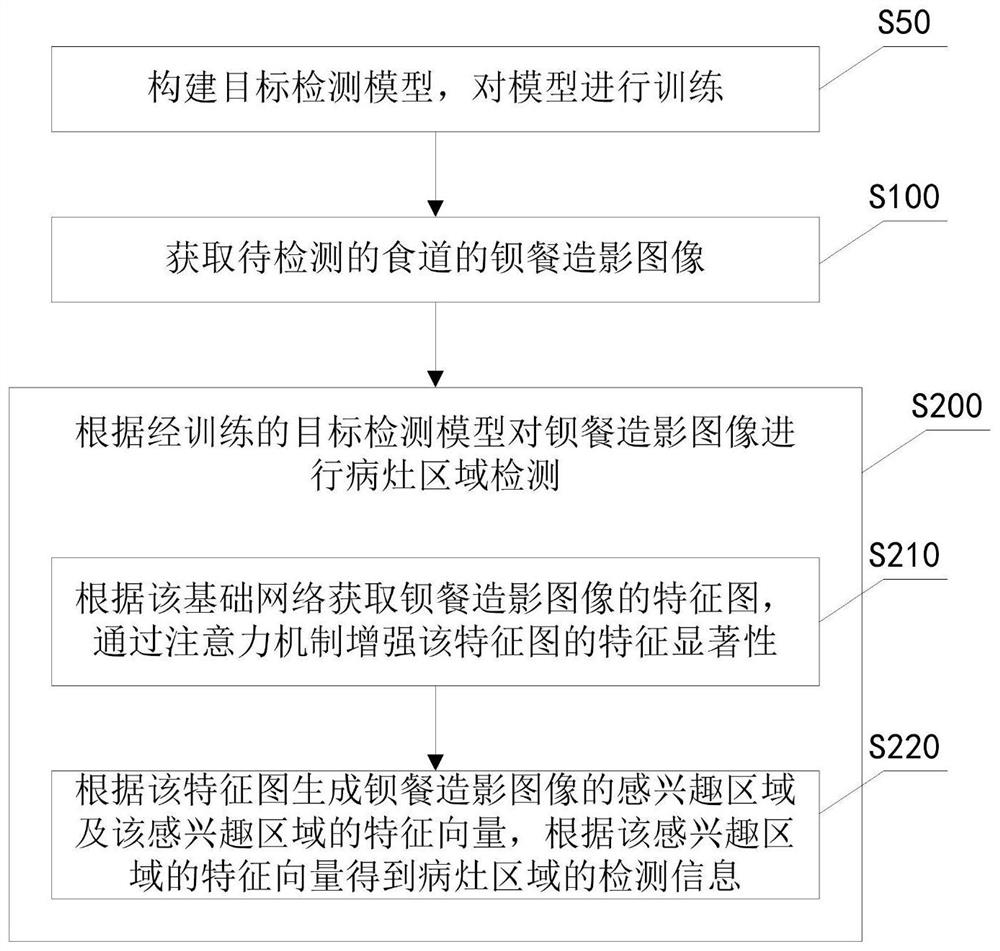

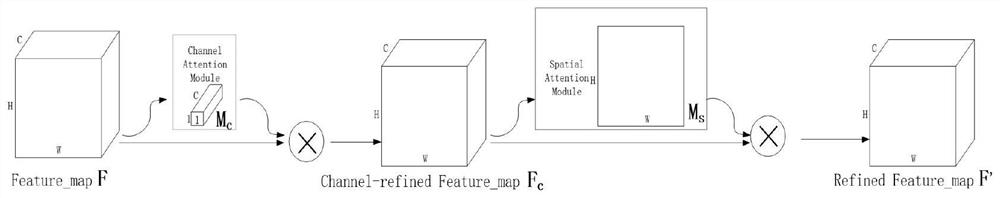

Esophageal cancer detection method and system based on barium meal radiography image

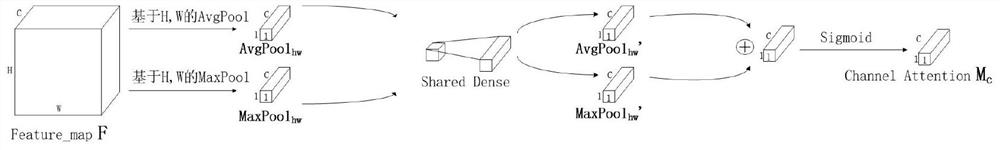

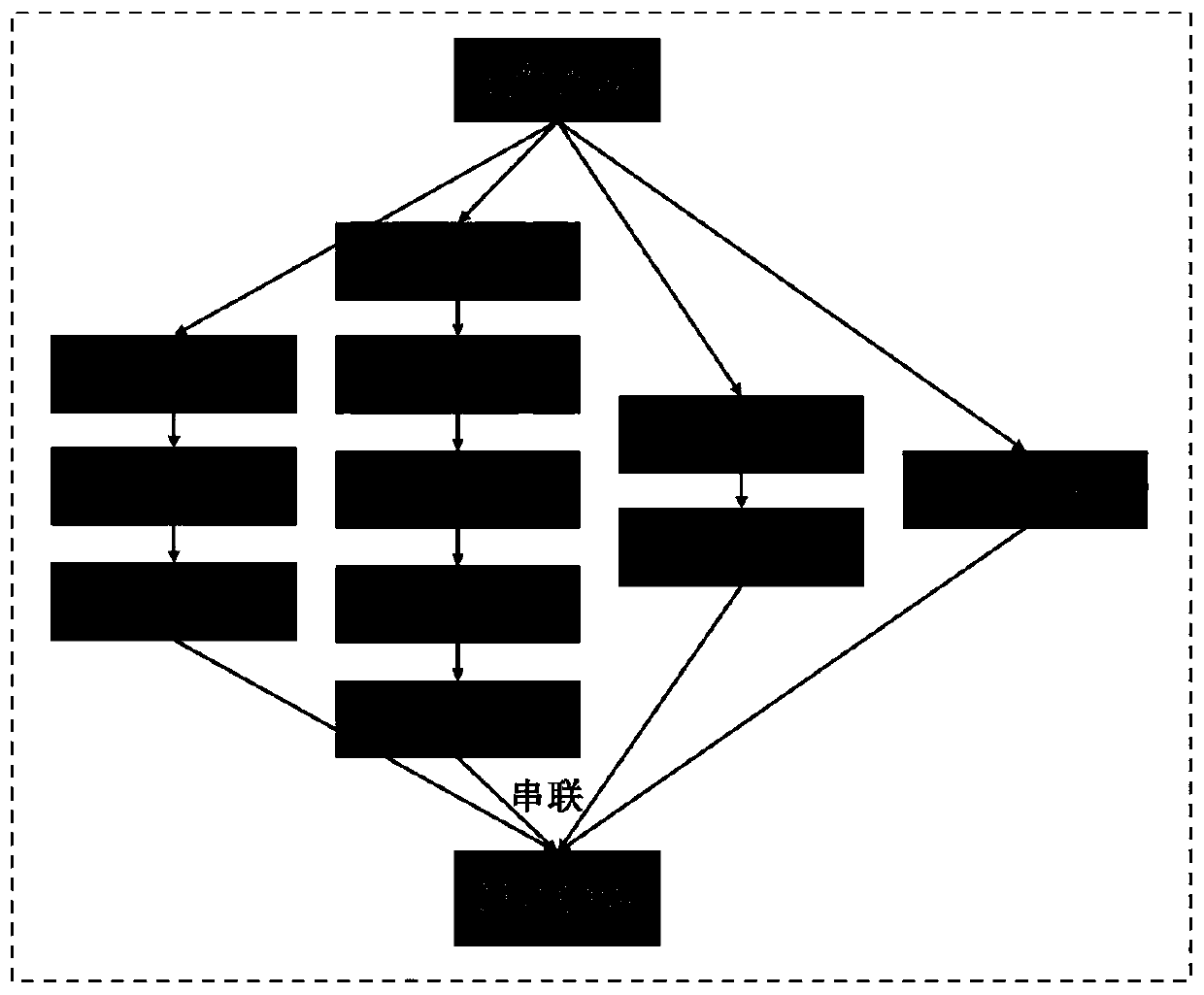

Pending | CN112950546A | Improve performance | Improve detection accuracy | Image enhancement | Image analysis | Feature vector | Esophago-esophageal

The invention provides an esophageal cancer detection method and system based on barium meal contrast images. The method comprises the following steps: acquiring a barium meal contrast image of the esophagus to be examined; and detecting lesion areas in the image with a trained target detection model. The target detection model is an improved Faster R-CNN whose backbone network is a convolutional neural network with an embedded attention mechanism: the backbone produces a feature map of the image, and the attention mechanism enhances the feature saliency of that map. Regions of interest and their feature vectors are then generated from the feature map, and detection information for the lesion regions is obtained from those feature vectors. Embedding the attention mechanism in the backbone improves the model's ability to capture region-of-interest features, and fusing detection information across multiple images and body positions improves the system's esophageal cancer detection accuracy.

Owner:SOUTH CENTRAL UNIVERSITY FOR NATIONALITIES
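
The abstract does not specify which attention mechanism the backbone uses. A squeeze-and-excitation (SE) channel attention block is one common way to re-weight a feature map so that informative channels stand out; the NumPy sketch below assumes it, with random matrices standing in for trained weights and a hypothetical reduction ratio `r`.

```python
import numpy as np

def se_attention(fmap, w1, w2):
    """Squeeze-and-excitation re-weighting of a (C, H, W) feature map (assumed mechanism)."""
    squeezed = fmap.mean(axis=(1, 2))              # squeeze: global average pool -> (C,)
    hidden = np.maximum(0.0, w1 @ squeezed)        # excitation FC + ReLU -> (C // r,)
    scale = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # FC + sigmoid -> per-channel weights in (0, 1)
    return fmap * scale[:, None, None], scale      # emphasize channels by their weights

rng = np.random.default_rng(0)
C, H, W, r = 8, 6, 6, 2
fmap = rng.normal(size=(C, H, W))
w1 = rng.normal(size=(C // r, C))  # placeholder for trained weights
w2 = rng.normal(size=(C, C // r))  # placeholder for trained weights
enhanced, scale = se_attention(fmap, w1, w2)
```

Because the weights come from a sigmoid, each channel is only attenuated or kept, never amplified, which keeps the block a pure re-weighting of the backbone output.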

Image feature extraction method and device

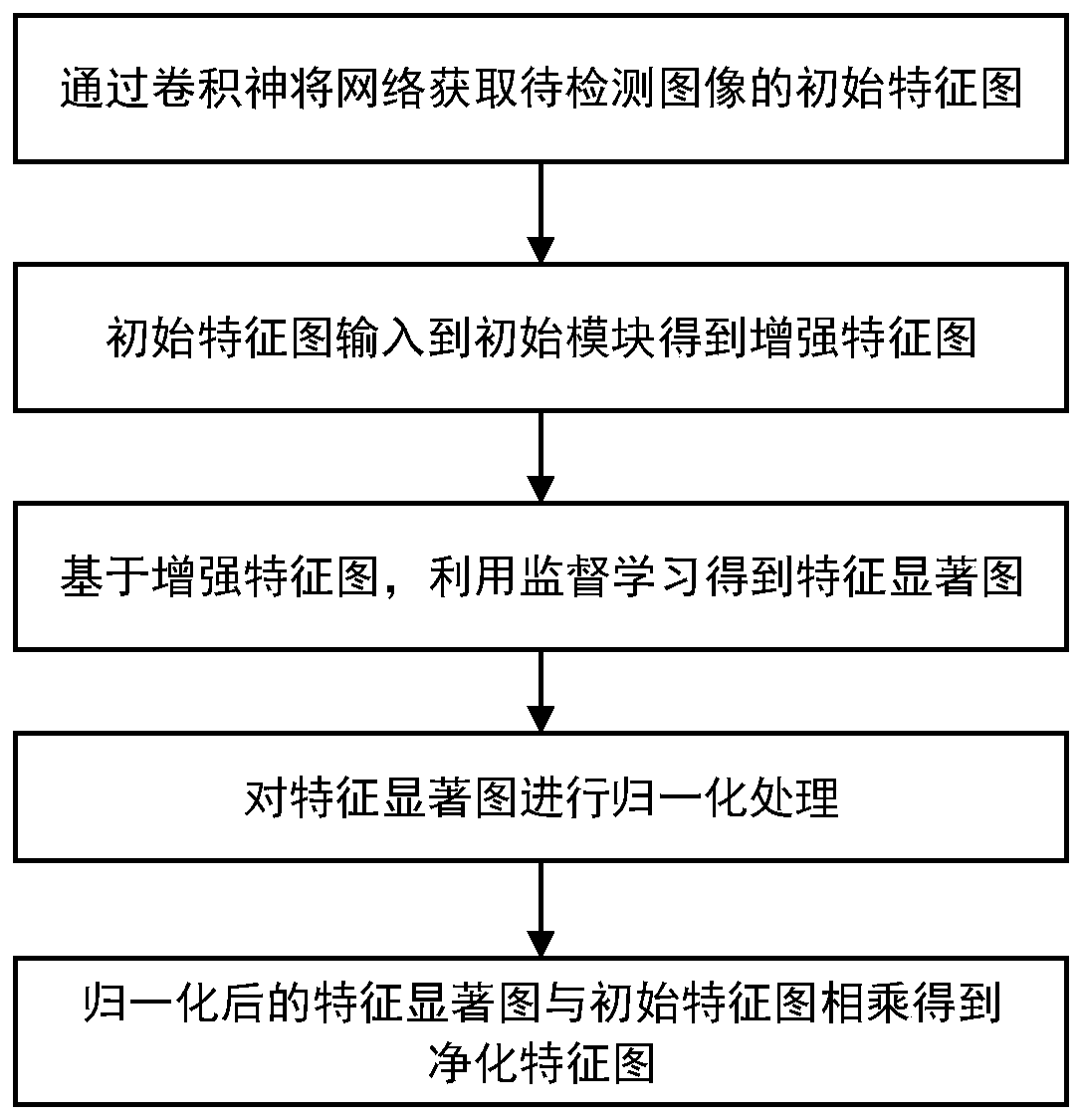

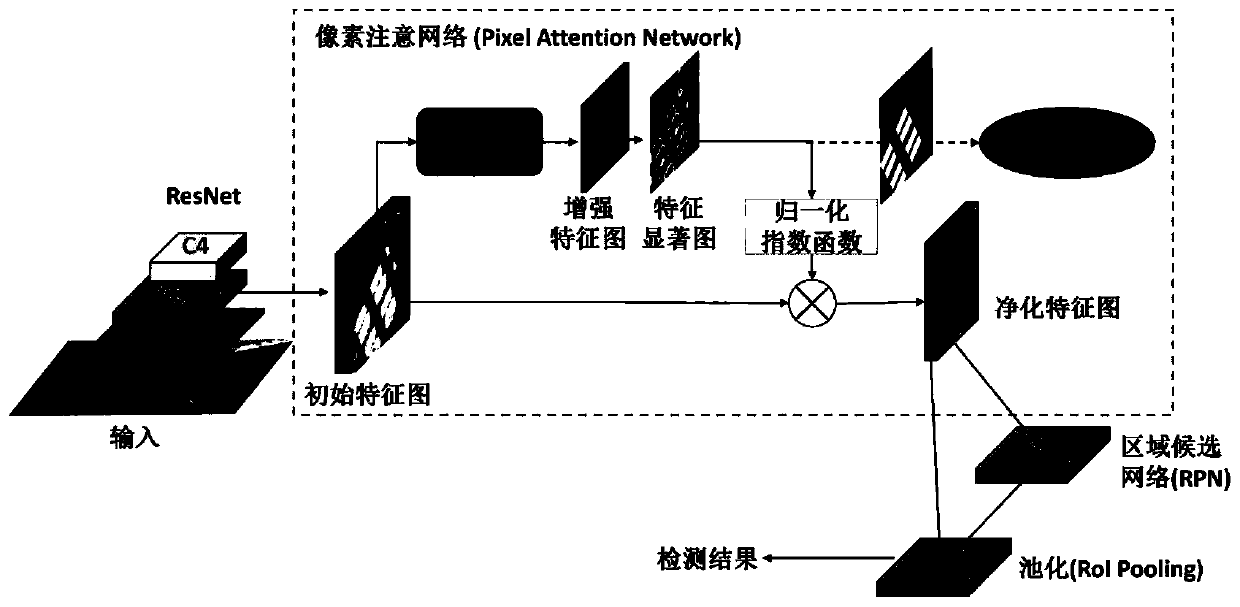

Inactive | CN109977947A | Increase diversity | Reduce noise | Character and pattern recognition | Neural architectures | Feature extraction | Imaging Feature

The invention discloses an image feature extraction method and device. The method comprises the following steps: S1, generating an initial feature map from the target image to be detected with a convolutional neural network; S2, feeding the initial feature map into an initial module that learns further high-order abstract feature information, yielding an enhanced feature map; S3, generating, by supervised learning, a feature saliency map with the same height and width as the enhanced feature map; S4, normalizing the feature saliency map; and S5, multiplying the initial feature map by the normalized feature saliency map to obtain a purified feature map. This suppresses noise in the feature map, highlights target information, and improves the model's ability to detect small targets.

Owner:CENT SOUTH UNIV
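
Steps S4 and S5 reduce to a normalization followed by a broadcast multiply. The sketch below assumes min-max normalization (the abstract only says "normalization processing") and a single-channel saliency map of shape (H, W) applied to a (C, H, W) feature map; the CNN, the enhancement module, and the supervised saliency head of S1 to S3 are not shown.

```python
import numpy as np

def purify(initial_fmap, saliency):
    """Scale a (C, H, W) feature map pixel-wise by a normalized (H, W) saliency map."""
    if saliency.shape != initial_fmap.shape[1:]:
        raise ValueError("saliency map must match the feature map's height and width")
    lo, hi = saliency.min(), saliency.max()
    # S4: min-max normalization (assumed); a constant map leaves the features unchanged.
    norm = (saliency - lo) / (hi - lo) if hi > lo else np.ones_like(saliency)
    # S5: broadcast the normalized map over every channel.
    return initial_fmap * norm[None, :, :]

fmap = np.arange(2 * 3 * 3, dtype=float).reshape(2, 3, 3)
sal = np.array([[0.0, 1.0, 2.0],
                [1.0, 4.0, 1.0],
                [2.0, 1.0, 0.0]])
purified = purify(fmap, sal)
```

Pixels where the saliency map is lowest are zeroed, while the most salient pixel keeps its original feature values across all channels.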

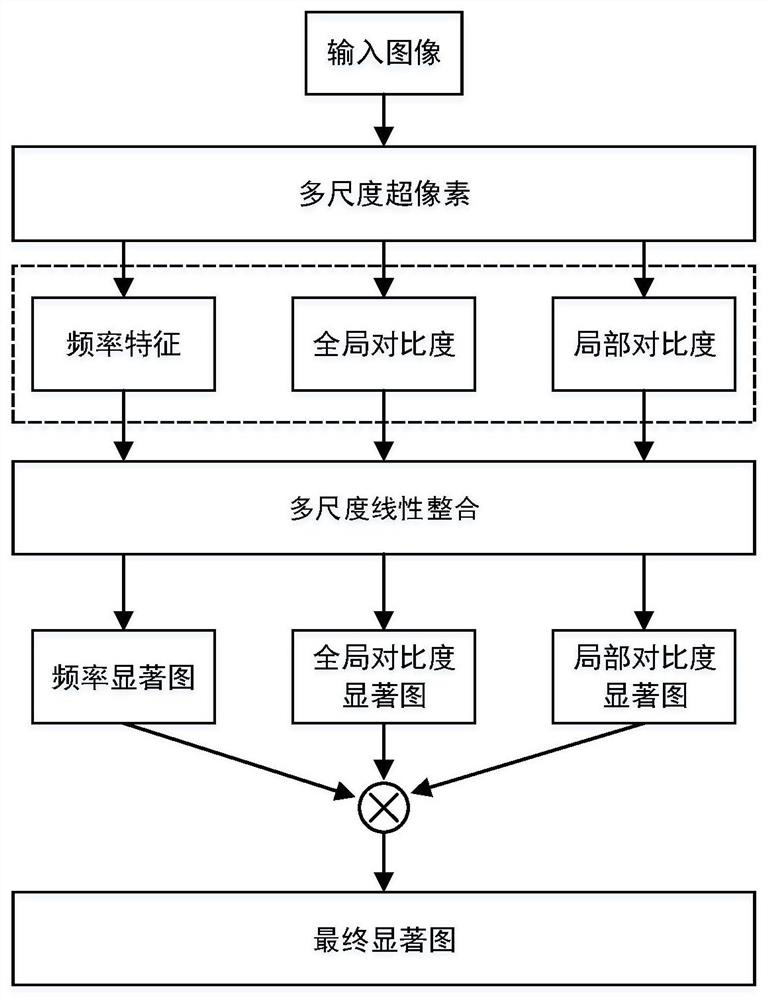

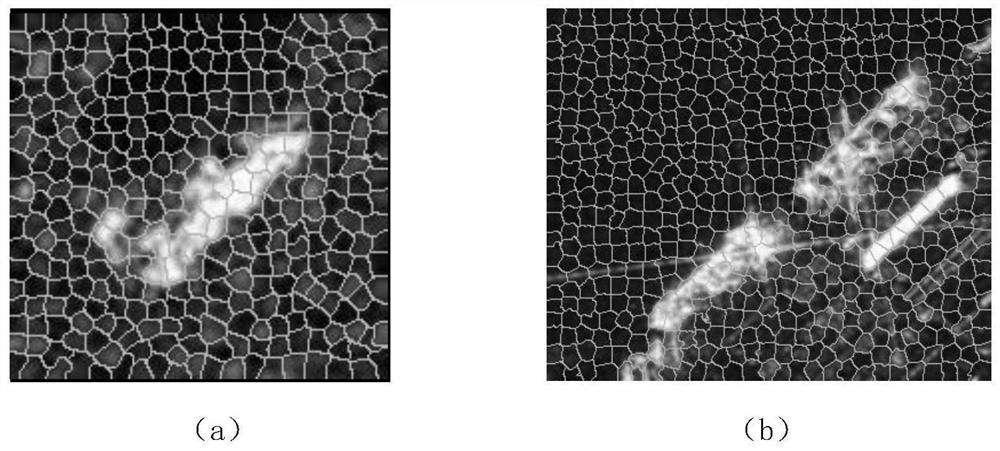

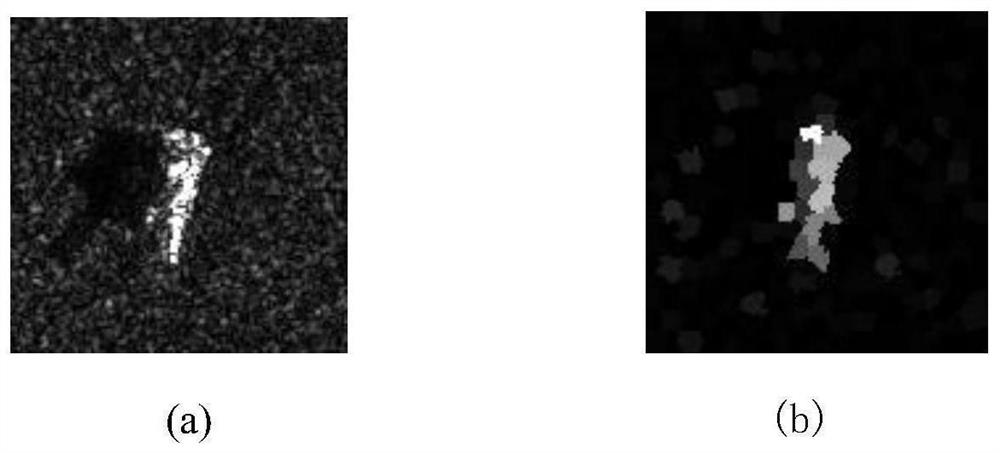

SAR image saliency map generation method based on multi-scale and super-pixel segmentation

Inactive | CN112766032A | Accurate depiction | Enhanced inhibitory effect | Scene recognition | Saliency map | Feature extraction

The invention provides a salient target detection method for SAR images based on multi-scale superpixels, in the field of signal processing and specifically synthetic aperture radar (SAR) image feature extraction. The method uses three superpixel-level features: frequency characteristics, local contrast, and global contrast. Each feature is linearly integrated across different scales to obtain a saliency map for that feature, which ensures that the superpixel features obtained at every scale take part in the fusion. The per-feature saliency maps are then multiplied to obtain the final salient target detection map. Simulation experiments on SAR images of different scenes, compared against several classic saliency detection algorithms and the two-parameter CFAR detector, show that the method outperforms the comparison algorithms in most cases and suppresses background clutter more strongly; overall, it achieves good salient target detection performance on SAR images.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
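
The fusion rule described above, linear integration of each feature across scales followed by multiplication across features, can be sketched on per-pixel maps. Assumptions: superpixel segmentation and feature computation are already done, each feature's per-scale maps have been projected back onto pixel grids of equal size, and equal scale weights are used because the abstract does not give them. The feature names in the example are illustrative.

```python
import numpy as np

def normalize(m):
    """Min-max normalize a map to [0, 1]."""
    lo, hi = m.min(), m.max()
    return (m - lo) / (hi - lo) if hi > lo else np.zeros_like(m)

def fuse_multiscale(features, scale_weights=None):
    """features: dict name -> list of (H, W) maps, one per scale.

    Each feature's scale maps are linearly combined (equal weights by default,
    an assumption), normalized, and the per-feature saliency maps are multiplied.
    """
    result = None
    for maps in features.values():
        if scale_weights is None:
            w = np.full(len(maps), 1.0 / len(maps))
        else:
            w = np.asarray(scale_weights, dtype=float)
        feat_sal = normalize(sum(wi * m for wi, m in zip(w, maps)))
        result = feat_sal if result is None else result * feat_sal
    return result

rng = np.random.default_rng(1)
shape = (16, 16)
target = np.zeros(shape)
target[6:10, 6:10] = 1.0
features = {
    name: [target + 0.1 * rng.random(shape) for _ in range(3)]  # three scales each
    for name in ("frequency", "local_contrast", "global_contrast")
}
sal = fuse_multiscale(features)
```

Multiplying the feature maps acts like a logical AND: a pixel scores high only if every feature marks it as salient, which is one reason such fusion tends to suppress clutter that triggers a single feature.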

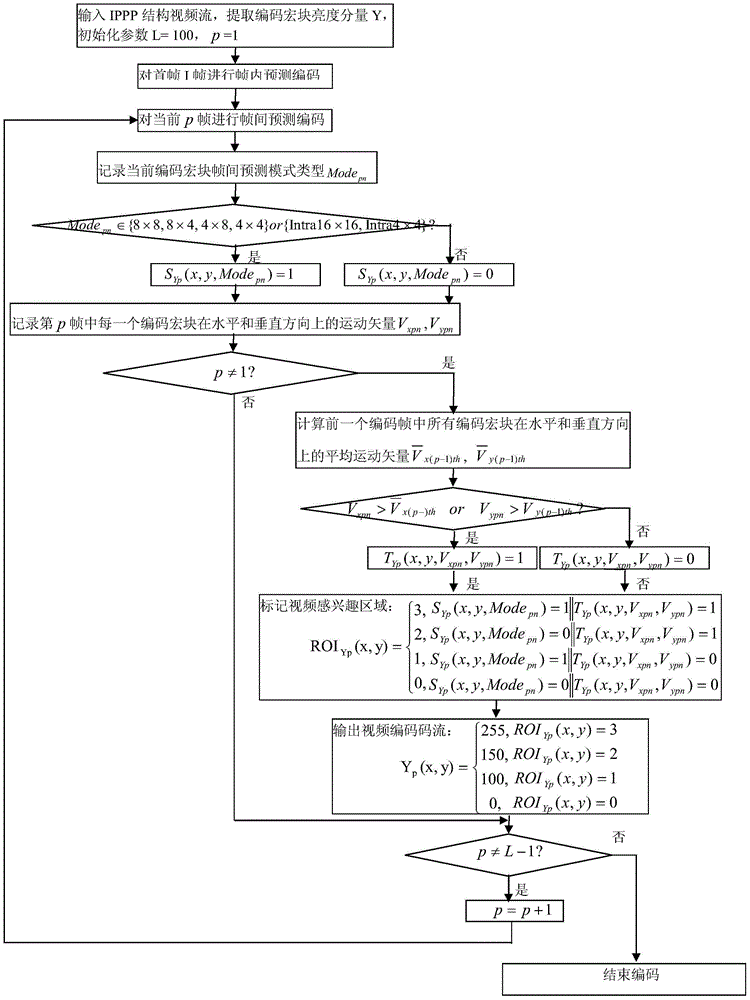

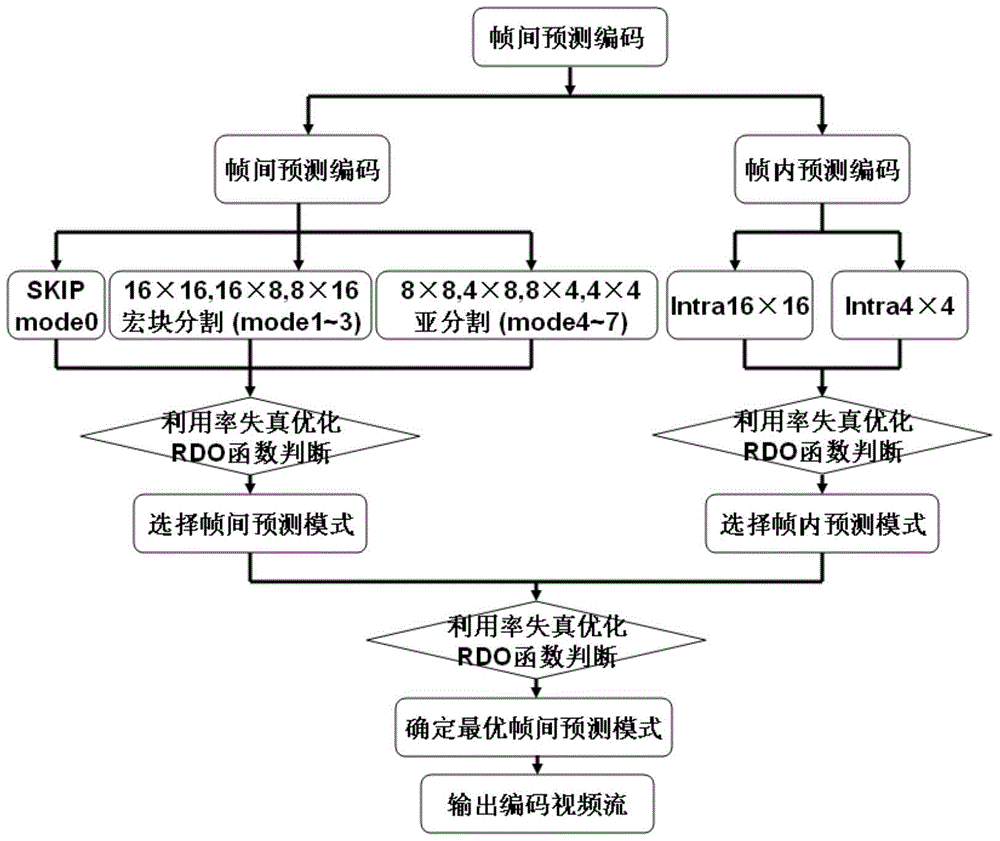

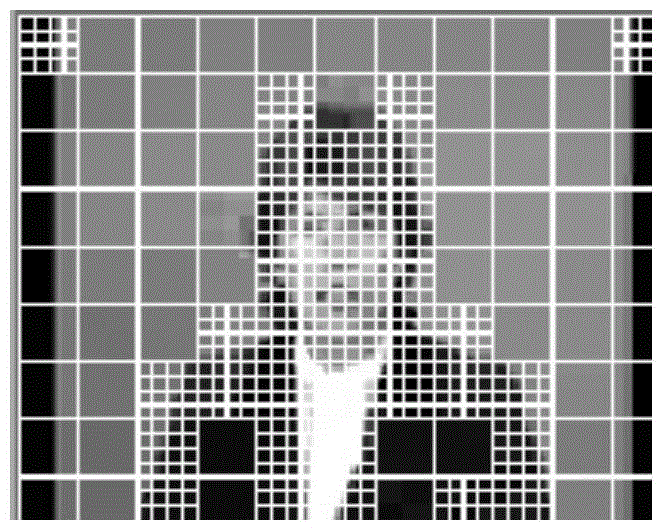

Extraction method of video region of interest based on coding information

Active | CN103618900B | Realize automatic extraction | Quick extraction | Digital video signal modification | Pattern recognition | Motion vector

The invention discloses a method for extracting video regions of interest based on visual perception features and coding information, in the field of video coding. The method comprises the following steps: first, extracting the luminance information of the current coded macroblock from the original video stream; next, identifying spatial-domain visual feature saliency regions from the inter-frame prediction mode type of the current coded macroblock; then, taking the average horizontal and vertical motion vectors of the coded macroblocks of the previous frame as dynamic dual thresholds, and identifying temporal-domain visual feature saliency regions by comparing the current macroblock's horizontal and vertical motion vectors against those thresholds; finally, combining the spatial and temporal identification results to define video interest priorities, which enables automatic extraction of video regions of interest. The method can provide an important coding basis for region-of-interest (ROI)-based video coding.

Owner:BEIJING UNIV OF TECH
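
The temporal step compares each macroblock's motion vector with thresholds derived from the previous frame. A minimal sketch, assuming the thresholds are the mean absolute horizontal and vertical motion-vector components of the previous frame's macroblocks, that a macroblock counts as temporally salient when either component exceeds its threshold, and a hypothetical three-level priority; the abstract fixes none of these details. Spatial saliency is taken as a precomputed boolean per macroblock (in the patent it comes from the inter-frame prediction mode).

```python
import numpy as np

def temporal_saliency(prev_mvs, cur_mvs):
    """prev_mvs, cur_mvs: (N, 2) arrays of (mv_x, mv_y) per macroblock."""
    tx, ty = np.abs(prev_mvs).mean(axis=0)  # dynamic dual thresholds from the previous frame
    cur = np.abs(cur_mvs)
    return (cur[:, 0] > tx) | (cur[:, 1] > ty)  # "either component" rule is an assumption

def roi_priority(spatial, temporal):
    """Hypothetical priority: 2 = spatial and temporal, 1 = either one, 0 = neither."""
    return spatial.astype(int) + temporal.astype(int)

prev = np.array([[2, 0], [0, 2], [2, 2], [0, 0]])  # thresholds become tx = ty = 1.0
cur = np.array([[4, 0], [0, 0], [1, 3], [1, 1]])
spatial = np.array([True, True, False, False])
temporal = temporal_saliency(prev, cur)
priority = roi_priority(spatial, temporal)  # -> [2, 1, 1, 0]
```

Recomputing the thresholds every frame lets the test adapt to global motion such as camera pans, instead of relying on a fixed motion threshold.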

Geometric shape recognition method and feature recognition method of basic primitive point cloud surface

Active | CN108010114B | Avoid blindness | Good orientation | Image rendering | 3D-image rendering | Point cloud | Algorithm

The invention provides a geometric shape recognition method and a feature recognition method for point-cloud surfaces of basic geometric primitives. The geometric shape recognition method comprises the following steps: based on Gaussian mapping, using the feature saliency of the Gaussian image to divide the basic primitives into a plane-cylinder-cone group and a sphere-torus group; identifying planes, cylinders, and cones within the plane-cylinder-cone group through Gaussian image feature analysis; and constructing the Laplace-Beltrami operator, computing its values, and using the mean and variance of those values to identify spheres and tori within the sphere-torus group. The feature recognition method comprises identifying the geometric shape of a basic-primitive point-cloud surface and then extracting its geometric shape parameters according to the recognized shape. The stated benefits are that the recognition method is simple and practical, well targeted, and provides better guidance for parameter extraction.

Owner:SOUTHWEST UNIV OF SCI & TECH
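
The Gaussian image maps each surface normal to a point on the unit sphere: a plane's normals collapse to one point, a cylinder's lie on a great circle (a plane through the origin), a cone's lie on a small circle (a plane offset from the origin), and a sphere's or torus's spread over a 2-D patch of the sphere. The sketch below classifies a patch from its unit normals on that basis. It illustrates Gaussian-image analysis in general, not the patent's own saliency measure, and the tolerance `tol` is a hypothetical parameter.

```python
import numpy as np

def classify_by_gaussian_image(normals, tol=1e-3):
    """Classify a surface patch from its normals (N, 3) via the Gaussian image."""
    n = normals / np.linalg.norm(normals, axis=1, keepdims=True)  # points on the unit sphere
    centroid = n.mean(axis=0)
    # Singular values of the centred normals measure their spread on the sphere.
    _, s, vt = np.linalg.svd(n - centroid, full_matrices=False)
    s = s / np.sqrt(len(n))                # RMS spread along each principal axis
    if s[0] < tol:
        return "plane"                     # all normals at one point
    if s[2] < tol:                         # normals coplanar, so they lie on a circle
        axis = vt[2]                       # normal of the circle's plane
        offset = abs(centroid @ axis)      # distance of that plane from the origin
        return "cylinder" if offset < tol else "cone"
    return "sphere_or_torus"               # normals cover a 2-D region

t = np.linspace(0.0, 2.0 * np.pi, 50, endpoint=False)
alpha = 0.4  # cone half-angle parameter for the synthetic example
cylinder = np.stack([np.cos(t), np.sin(t), np.zeros_like(t)], axis=1)
cone = np.stack([np.cos(alpha) * np.cos(t), np.cos(alpha) * np.sin(t),
                 np.full_like(t, np.sin(alpha))], axis=1)
plane = np.tile([0.0, 0.0, 1.0], (20, 1))
sphere = np.random.default_rng(0).normal(size=(200, 3))
```

Separating spheres from tori needs more than the Gaussian image, which is why the abstract turns to the Laplace-Beltrami operator for that group.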

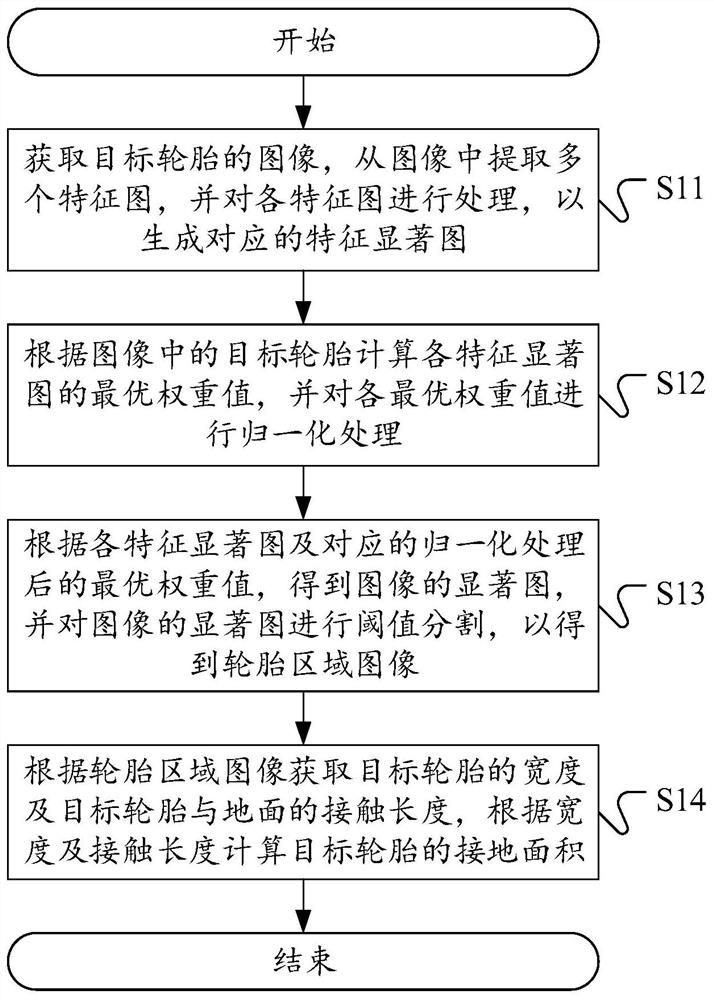

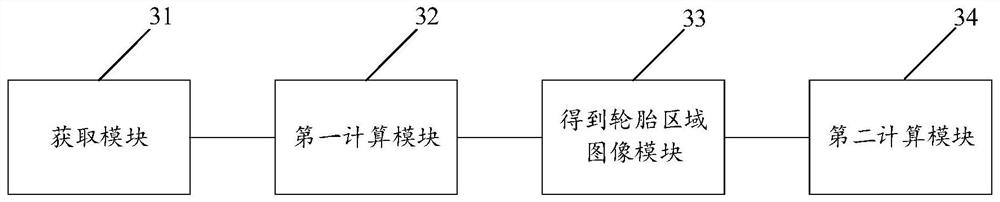

Tire grounding area measuring method and device based on image feature extraction

Pending | CN112767471A | Improve measurement efficiency | Improve measurement accuracy | Image analysis | Character and pattern recognition | Ground contact | Saliency map

The invention discloses a method, device, equipment, and computer-readable storage medium for measuring the tire grounding (contact) area based on image feature extraction. The method comprises the following steps: acquiring an image of the target tire, extracting feature maps from the image, and generating feature saliency maps; computing an optimal weight for each feature saliency map according to the target tire in the image, and normalizing the optimal weights; obtaining the saliency map of the image from the feature saliency maps and their normalized optimal weights, and applying threshold segmentation to the saliency map to obtain a tire-region image; and measuring, from the tire-region image, the width of the target tire and its contact length with the ground, then computing the grounding area from the width and contact length. By acquiring and processing an image of the target tire, the disclosed scheme measures the tire contact area automatically and without contact, improving the efficiency, accuracy, and safety of the measurement.

Owner:HUNAN UNIV
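
The fusion, segmentation, and area steps can be sketched as follows, assuming the feature saliency maps and their weights are already available, a fixed segmentation threshold, a known pixel size `mm_per_px` (a hypothetical calibration), and a rectangular approximation area = width × contact length. The abstract does not say how the optimal weights are found or which area formula is used; taking width along columns and contact length along rows of the mask's bounding box also assumes a particular image orientation.

```python
import numpy as np

def contact_area(feature_maps, weights, threshold, mm_per_px):
    """Fuse feature saliency maps, threshold them, and estimate the contact area in mm^2."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                                           # normalize the weights
    saliency = sum(wi * m for wi, m in zip(w, feature_maps))  # weighted fusion
    mask = saliency > threshold                               # threshold segmentation
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        return 0.0, mask
    length_mm = (rows[-1] - rows[0] + 1) * mm_per_px          # contact length (rows, assumed)
    width_mm = (cols[-1] - cols[0] + 1) * mm_per_px           # tire width (columns, assumed)
    return width_mm * length_mm, mask                         # rectangular approximation

m1 = np.zeros((10, 10)); m1[2:6, 3:8] = 1.0
m2 = np.zeros((10, 10)); m2[2:6, 3:8] = 0.8
area, mask = contact_area([m1, m2], weights=[3, 1], threshold=0.5, mm_per_px=2.0)
```

In the example the segmented region spans 4 rows and 5 columns, so with 2 mm pixels the estimate is 8 mm × 10 mm = 80 mm².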

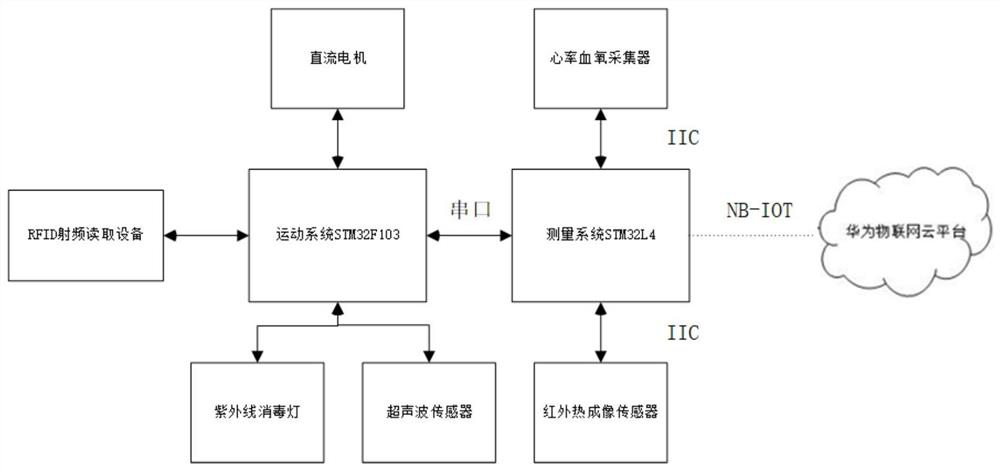

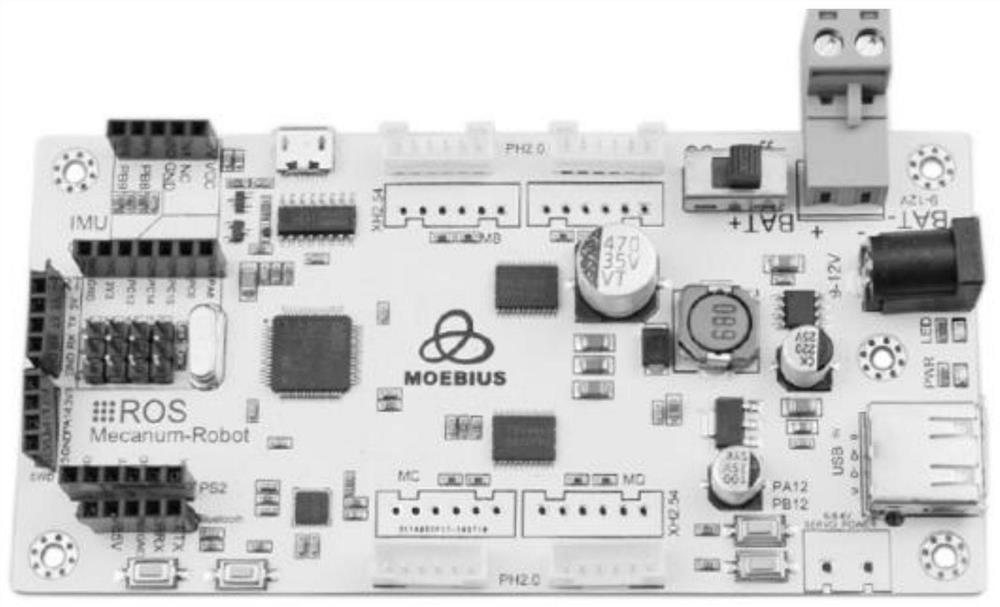

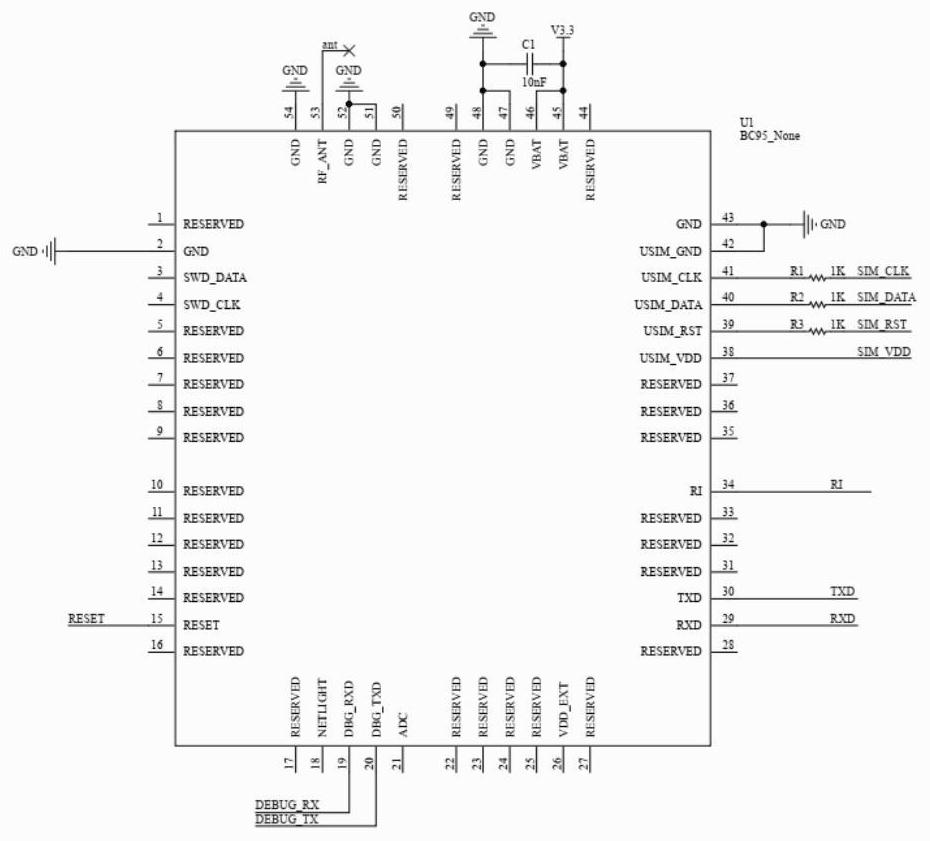

Disinfection robot design method based on multi-feature fusion and attention weight obstacle avoidance

Pending | CN113065470A | Solve problems such as dead angles and inconvenient steering | Monitor physical indicators | Image analysis | Lavatory sanitory | Physical medicine and rehabilitation | Medicine

The invention discloses a design method for a disinfection robot based on multi-feature fusion and attention-weighted obstacle avoidance. The method comprises the following steps: 1, designing the robot's hardware; 2, designing its steering mode; 3, constructing a map model; 4, controlling autonomous obstacle avoidance; and 5, having the robot monitor patients' physical indicators within its working area and disinfect specific locations in the hospital, using an ultraviolet disinfection lamp mounted on top of the robot. The robot uses Mecanum wheels, which address problems such as blind spots in narrow spaces and inconvenient steering, so it can take over some basic hospital work from medical staff. In addition, contour features and color features are fused through feature saliency monitoring and attention weighting, which improves the accuracy of the pedestrian detection model.

Owner:JINLING INST OF TECH

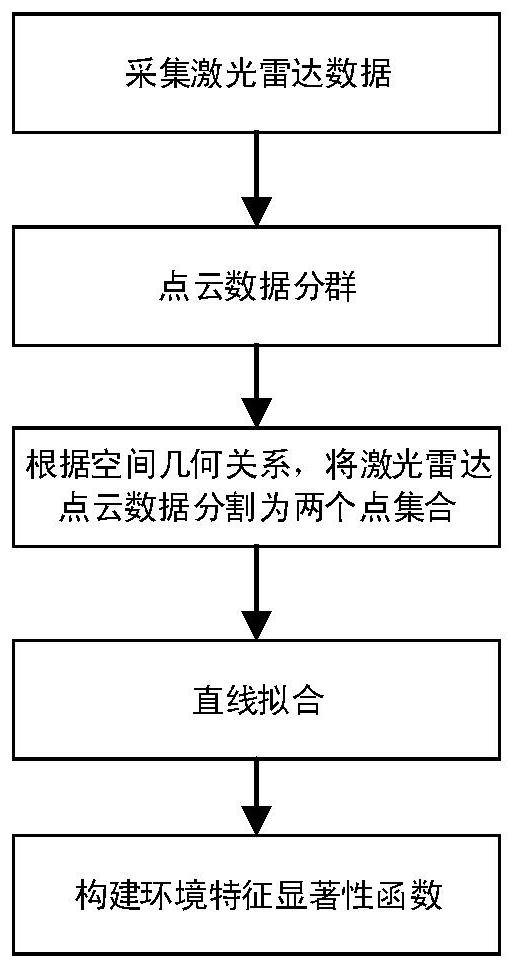

Feature saliency detection method based on lidar in line environment

Active | CN108445505B | Accurate identification | Navigational calculation instruments | Electromagnetic wave reradiation | Point cloud | Radar

The invention discloses a lidar-based feature saliency detection method for line environments, comprising the following steps: (1) periodically collecting lidar information S(k) at time k; (2) grouping the lidar point cloud data; (3) dividing the point cloud into two point sets according to the spatial geometric relationships of the points; (4) fitting a straight line to each of the two point sets obtained in step (3); and (5) constructing an environment-feature saliency function and using it to judge the saliency of environmental features. The invention completes feature saliency detection of the environment and can accurately identify environments whose features are inconspicuous.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
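
Steps (3) to (5) can be sketched for a single 2-D scan. The abstract specifies neither the splitting rule nor the saliency function, so the sketch makes hypothetical choices: points are split by the sign of their lateral coordinate (the two sides of a corridor-like line environment), each side is fitted with a total-least-squares line, and saliency is the mean RMS distance of the points to their fitted lines. A scan that two straight lines explain almost perfectly therefore scores near zero, matching the notion of an environment with inconspicuous features.

```python
import numpy as np

def fit_line_tls(pts):
    """Total-least-squares line fit; returns (centroid, unit direction, RMS residual)."""
    c = pts.mean(axis=0)
    _, s, vt = np.linalg.svd(pts - c, full_matrices=False)
    rms = s[1] / np.sqrt(len(pts))  # spread perpendicular to the fitted line
    return c, vt[0], rms

def feature_saliency(scan_xy):
    """Hypothetical saliency: mean line-fit residual of the left/right point sets."""
    left = scan_xy[scan_xy[:, 1] > 0]
    right = scan_xy[scan_xy[:, 1] <= 0]
    residuals = [fit_line_tls(side)[2] for side in (left, right) if len(side) >= 2]
    return float(np.mean(residuals)) if residuals else 0.0

x = np.linspace(0.0, 10.0, 50)
corridor = np.concatenate([np.stack([x, np.full_like(x, 1.5)], axis=1),    # left wall
                           np.stack([x, np.full_like(x, -1.5)], axis=1)])  # right wall
bumpy = corridor.copy()
bumpy[20:25, 1] += 0.8  # a pillar-like bump on the left wall adds a distinctive feature
```

A plain corridor scores essentially zero, while the bump raises the left wall's residual and hence the saliency.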

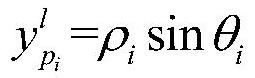

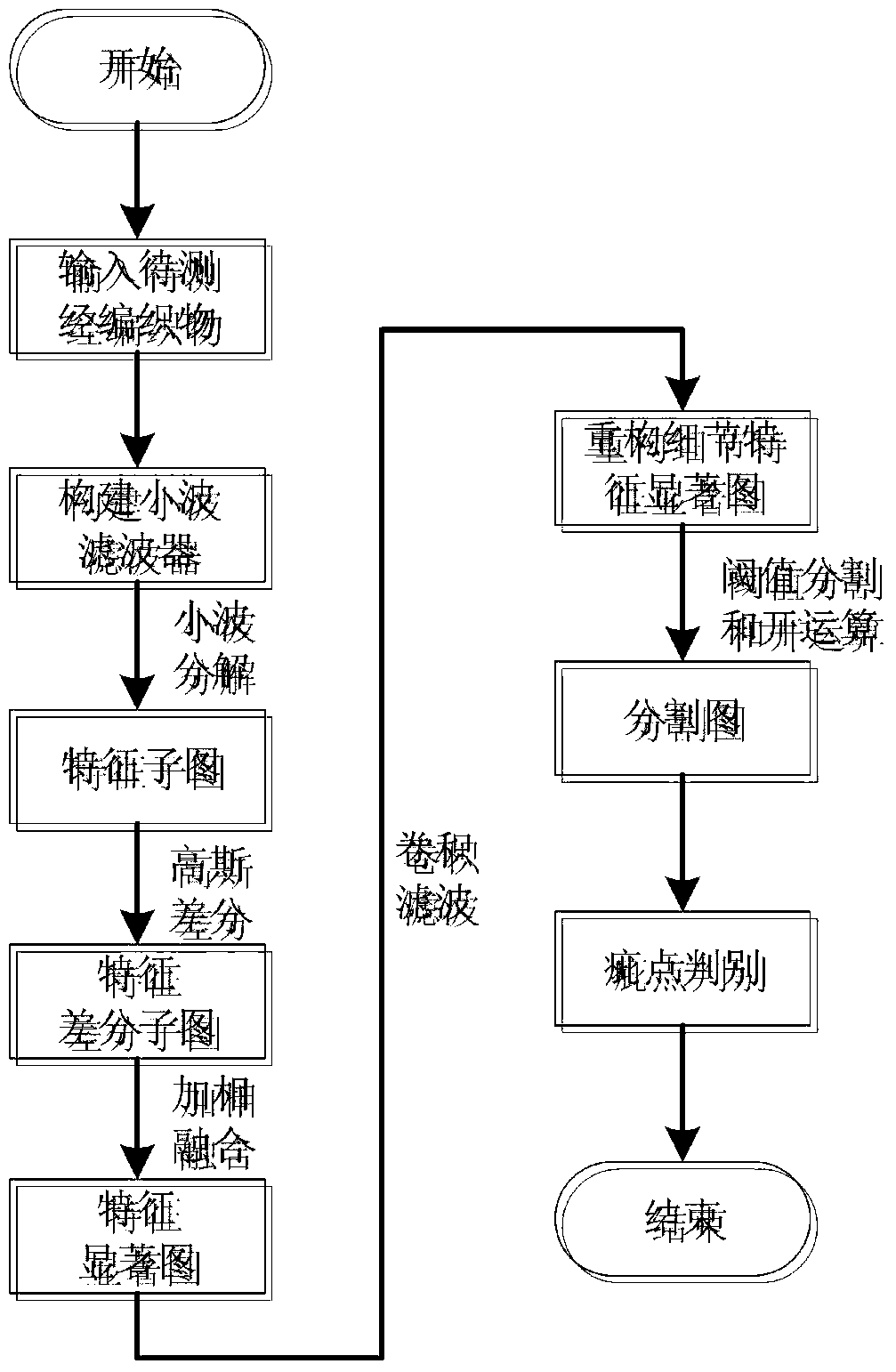

Warp Knitted Fabric Defect Detection Method Based on Wavelet Contourlet Transform and Visual Saliency

Active | CN105335972B | Avoid redundancy | Avoid visual errors | Image enhancement | Image analysis | Convolution filter | High energy

The invention relates to a warp-knitted fabric defect detection method based on the wavelet contourlet transform and visual saliency. The method comprises the following steps: selecting a mother wavelet and constructing the wavelet transform filters; performing wavelet decomposition of the warp-knitted fabric image under inspection to obtain approximation feature sub-images and detail feature sub-images; applying differences of Gaussians to the approximation and detail sub-images to obtain approximation and detail feature difference sub-images; normalizing the feature difference sub-images and then summing and averaging them to obtain approximation and detail feature saliency maps; convolving the detail feature saliency maps with a nonsubsampled directional filter bank to obtain directional sub-band coefficients of the detail features, and reconstructing the detail feature saliency maps from the higher-energy sub-band coefficients selected by an energy criterion; and segmenting the approximation feature saliency maps and the reconstructed detail feature saliency maps, processing and summing the segmented images, and then making the defect decision. The method improves defect detection accuracy.

Owner:JIANGNAN UNIV
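
The decomposition and difference-of-Gaussians stages can be sketched with NumPy alone. Assumptions: a one-level Haar wavelet stands in for the unspecified wavelet, the Gaussian scale pairs are hypothetical, and the directional filter bank reconstruction, segmentation, and defect decision are omitted.

```python
import numpy as np

def haar_dwt2(img):
    """One-level 2-D Haar decomposition -> approximation, (three detail sub-images)."""
    img = img[: img.shape[0] // 2 * 2, : img.shape[1] // 2 * 2].astype(float)
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    approx = (a + b + c + d) / 2.0
    details = ((a - b + c - d) / 2.0, (a + b - c - d) / 2.0, (a - b - c + d) / 2.0)
    return approx, details

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding; output has the input's shape."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x**2 / (2 * sigma**2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")

def dog_saliency(sub, pairs=((1.0, 2.0), (2.0, 4.0))):
    """Average of min-max-normalized |DoG| responses over hypothetical scale pairs."""
    maps = []
    for s1, s2 in pairs:
        d = np.abs(gaussian_blur(sub, s1) - gaussian_blur(sub, s2))
        rng = d.max() - d.min()
        maps.append((d - d.min()) / rng if rng > 0 else np.zeros_like(d))
    return np.mean(maps, axis=0)

def subband_saliency(img):
    """Approximation and (averaged) detail saliency maps."""
    approx, details = haar_dwt2(img)
    approx_sal = dog_saliency(approx)
    detail_sal = np.mean([dog_saliency(dt) for dt in details], axis=0)
    return approx_sal, detail_sal

fabric = np.zeros((32, 32))
fabric[14:18, 14:18] = 1.0  # synthetic defect on a flat background
approx_sal, detail_sal = subband_saliency(fabric)
```

On this synthetic image the approximation saliency peaks on the defect; the detail bands stay empty because the defect is aligned with the Haar 2x2 blocks, so a real fabric texture would be needed to exercise them.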

© 2025 PatSnap. All rights reserved.