11009 results about "Imaging Feature" patented technology

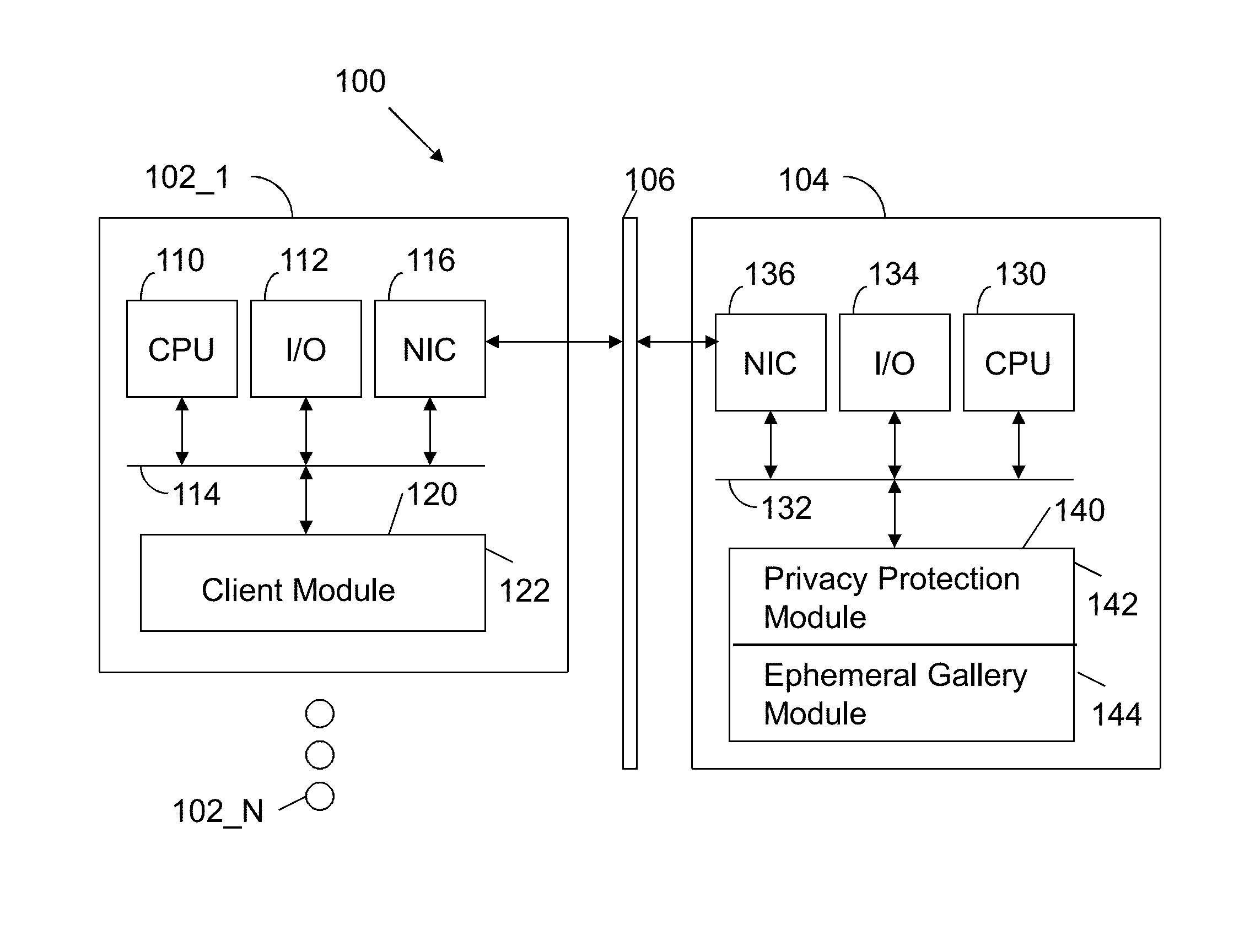

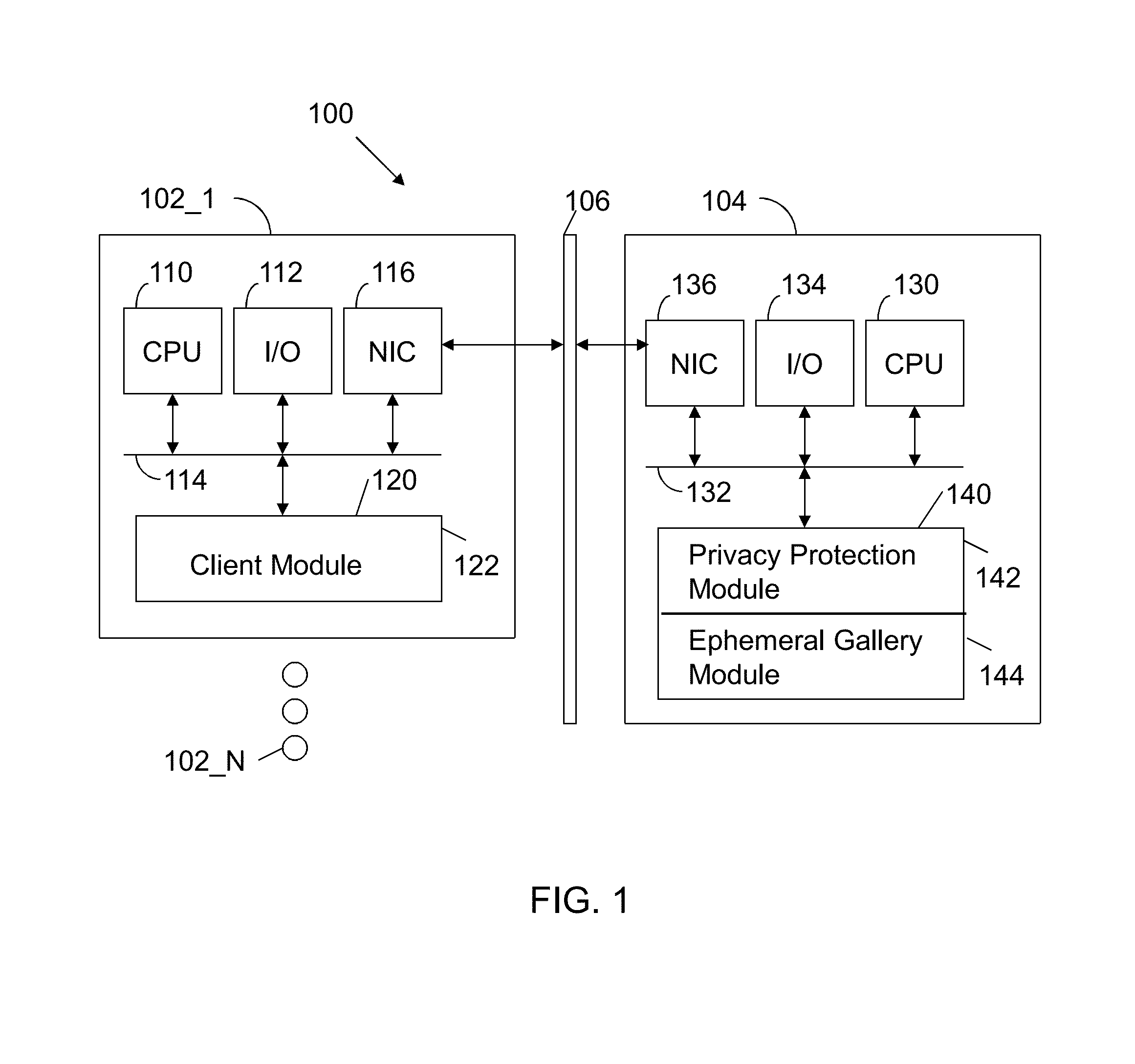

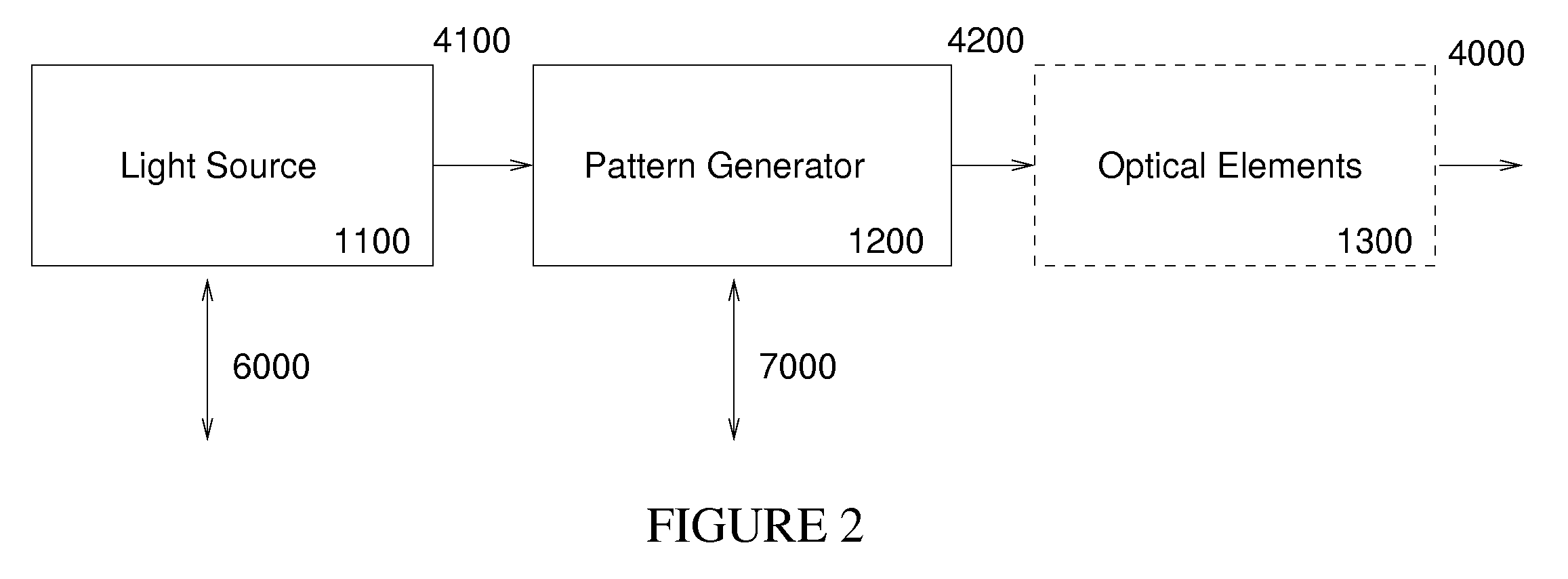

Apparatus and method for automated privacy protection in distributed images

Active · US9396354B1 · Data processing applications · Character and pattern recognition · Privacy rule · Privacy protection

A method executed by a computer includes receiving an image from a client device. A facial recognition technique is executed against an individual face within the image to obtain a recognized face. Privacy rules are applied to the image, where the privacy rules are associated with privacy settings for a user associated with the recognized face. A privacy protected version of the image is distributed, where the privacy protected version of the image has an altered image feature.

Owner:SNAP INC

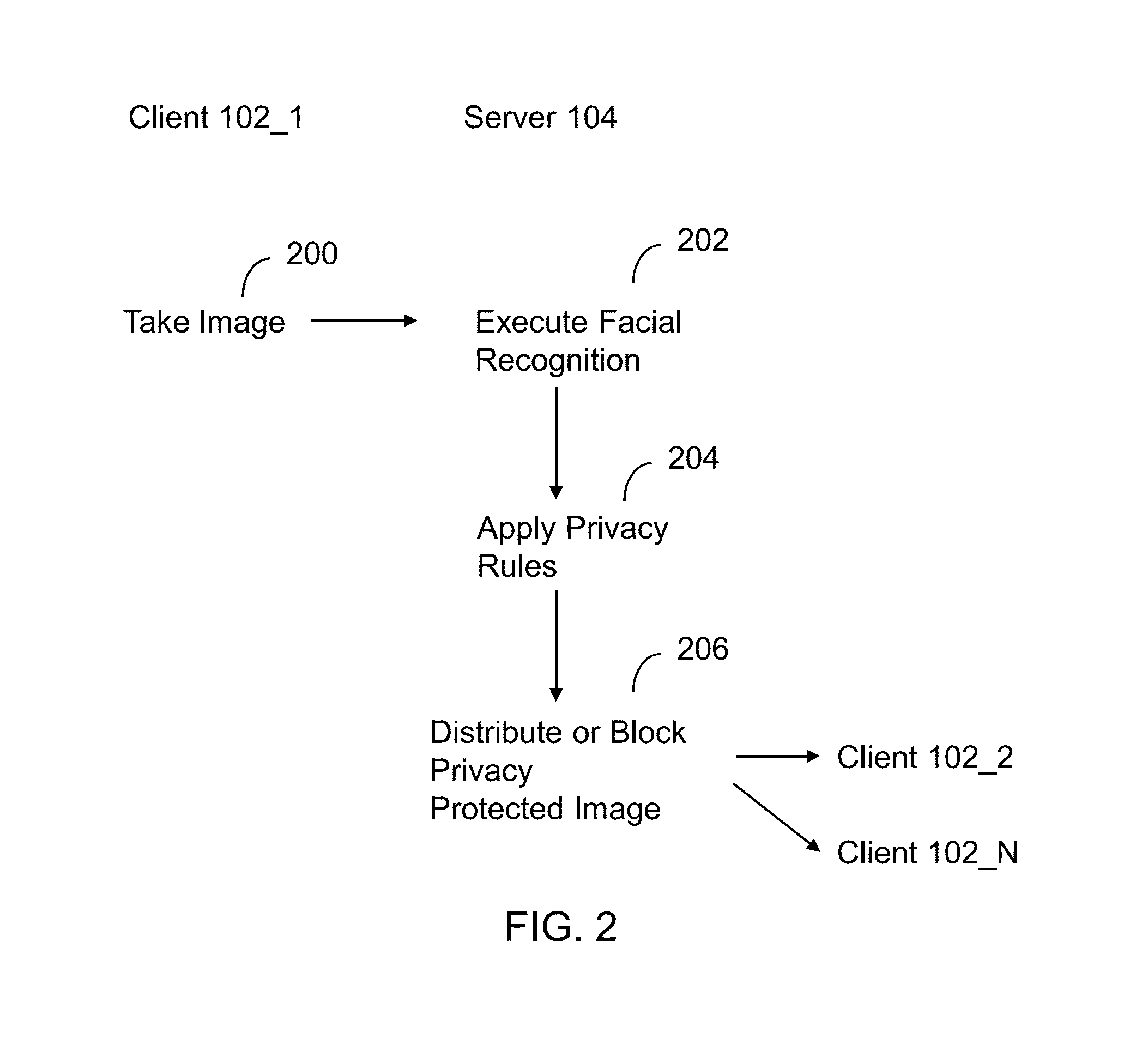

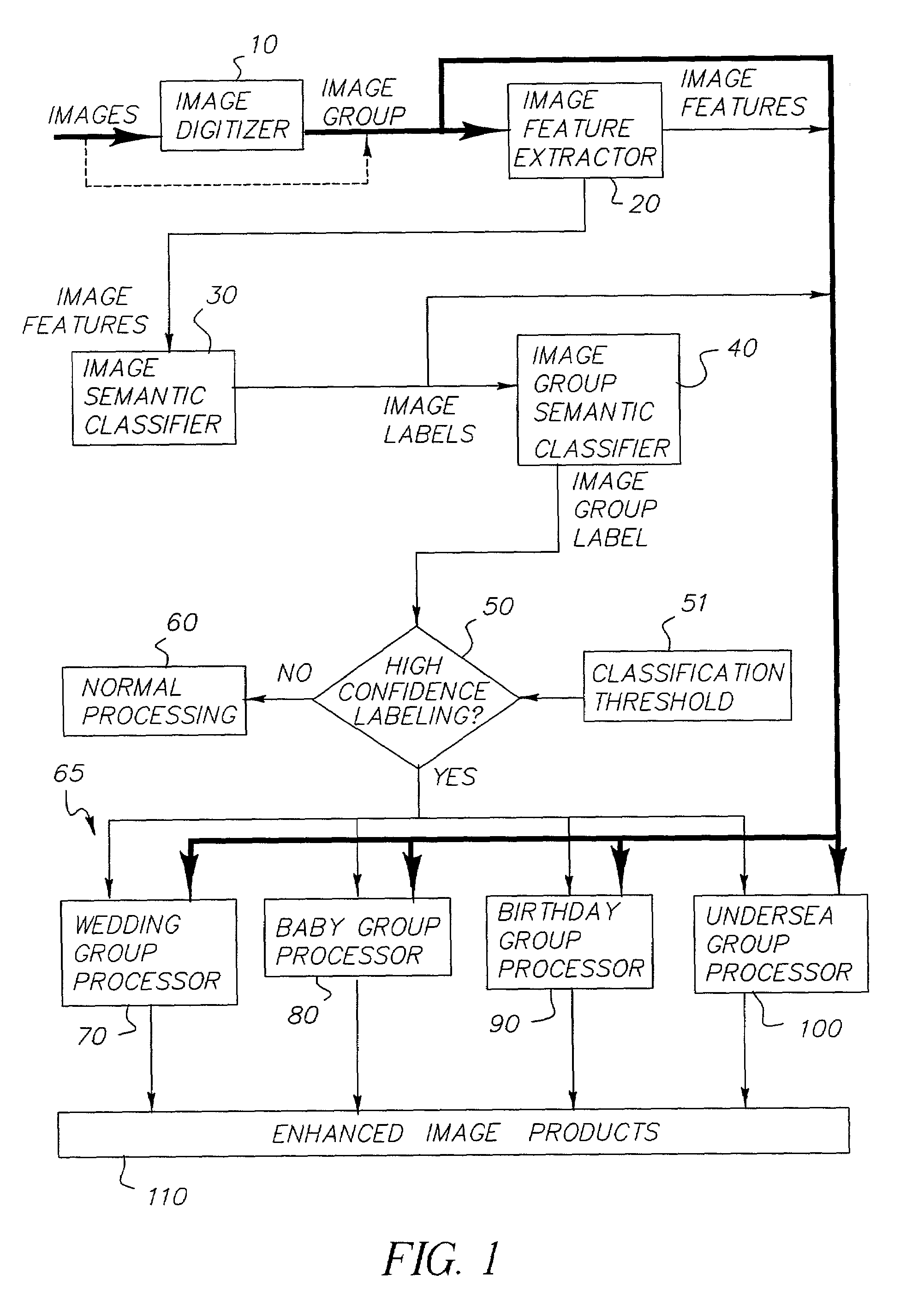

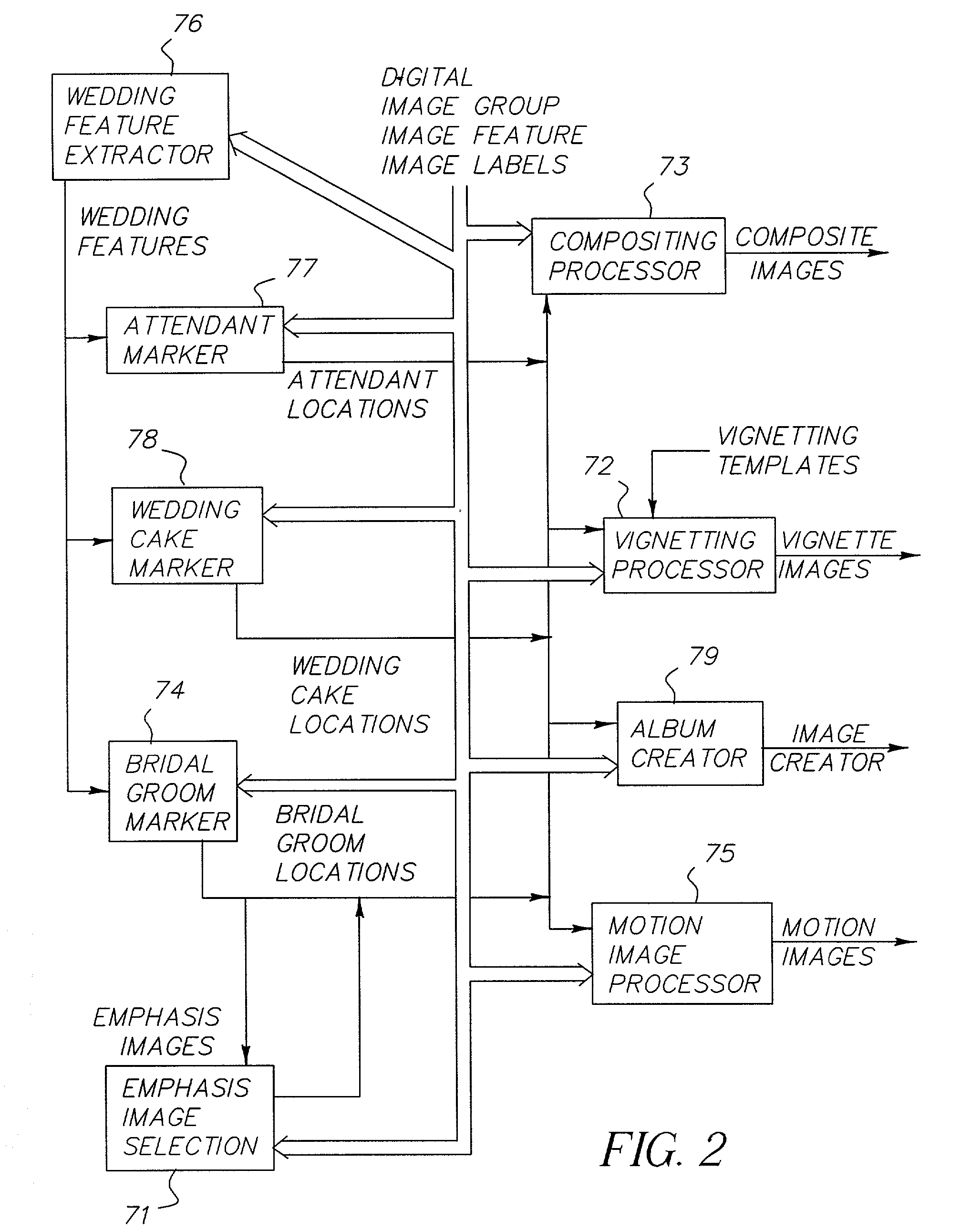

Method and system for processing images for themed imaging services

In a method for determining the general semantic theme of a group of images, whereby each digitized image is identified as belonging to a specific group of images, one or more image feature measurements are extracted from each of the digitized images in an image group, and then used to produce an individual image confidence measure that an individual image belongs to one or more semantic classifications. Then, the individual image confidence measures for the images in the image group are used to produce an image group confidence measure that the image group belongs to one or more semantic classifications, and the image group confidence measure is used to decide whether the image group belongs to one or to none of the semantic classifications, whereby the selected semantic classification constitutes the general semantic theme of the group of images. Additionally, a plurality of semantic theme processors, one for each semantic classification, are provided to produce enhanced value imaging services and products for image groups that fall into an appropriate semantic theme.

Owner:MONUMENT PEAK VENTURES LLC
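The two-stage decision in the abstract above (per-image confidence, then group confidence, then one theme or none) can be sketched roughly as follows. The abstract does not say how individual confidences are combined, so the mean used here, the `threshold` value, and the function name `group_theme` are all assumptions made for illustration.

```python
from statistics import mean


def group_theme(image_confidences, threshold=0.5):
    """Pick one semantic theme for an image group, or None.

    image_confidences: list of dicts, one per image, mapping a
    classification name to that image's confidence in [0, 1].
    """
    if not image_confidences:
        return None
    # Every classification that any image in the group was scored against.
    themes = set().union(*image_confidences)
    # Assumed combination rule: the group confidence for a theme is the
    # mean of the per-image confidences (missing scores count as 0).
    group_conf = {
        t: mean(img.get(t, 0.0) for img in image_confidences) for t in themes
    }
    best = max(group_conf, key=group_conf.get)
    # The group belongs to one theme, or to none if nothing is confident enough.
    return best if group_conf[best] >= threshold else None


group = [
    {"wedding": 0.9, "birthday": 0.2},
    {"wedding": 0.7},
    {"wedding": 0.8, "birthday": 0.4},
]
print(group_theme(group))
```

Returning `None` mirrors the "one or none" wording of the abstract; a real system would presumably use a learned or weighted combination rather than a plain mean.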

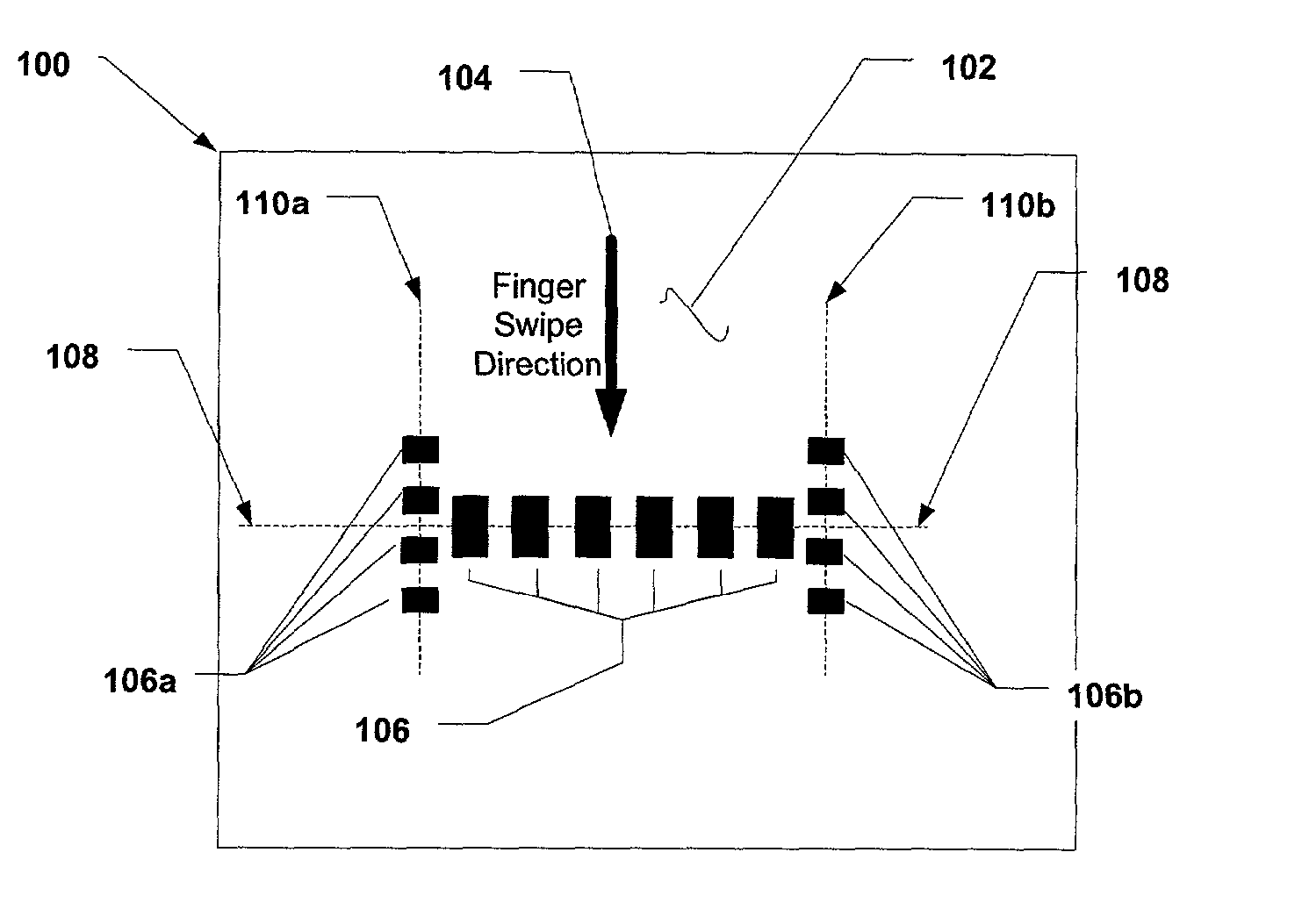

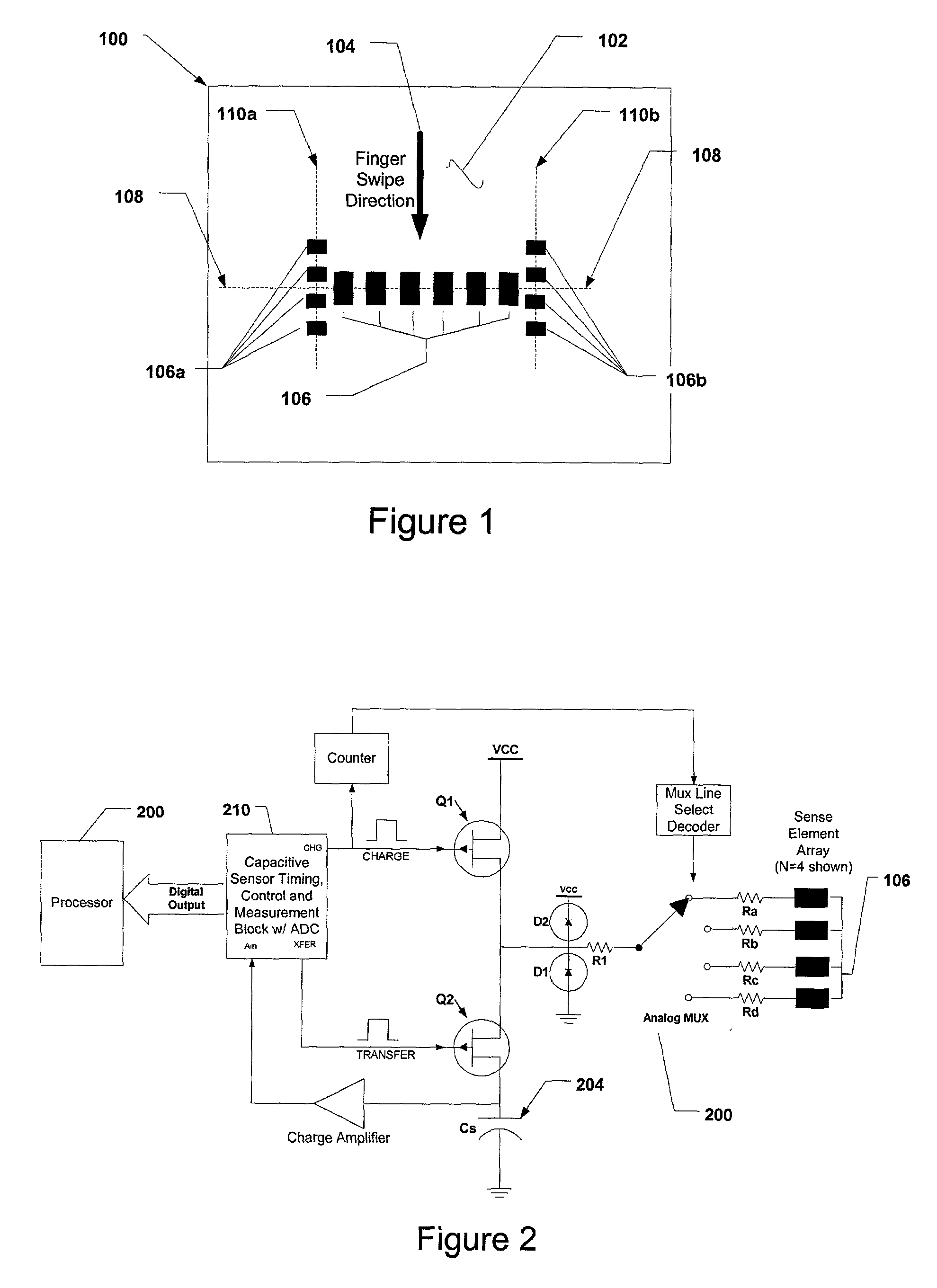

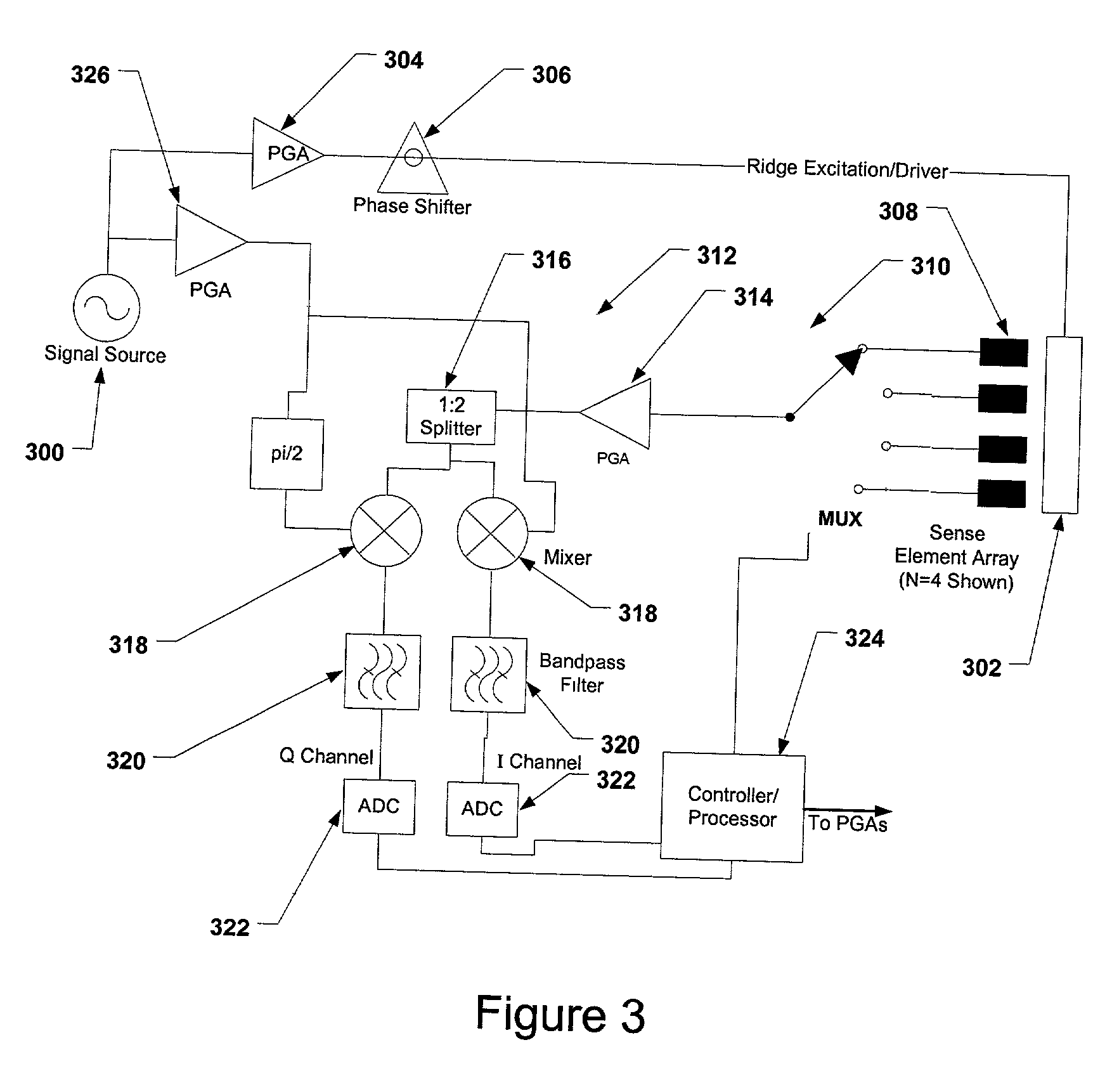

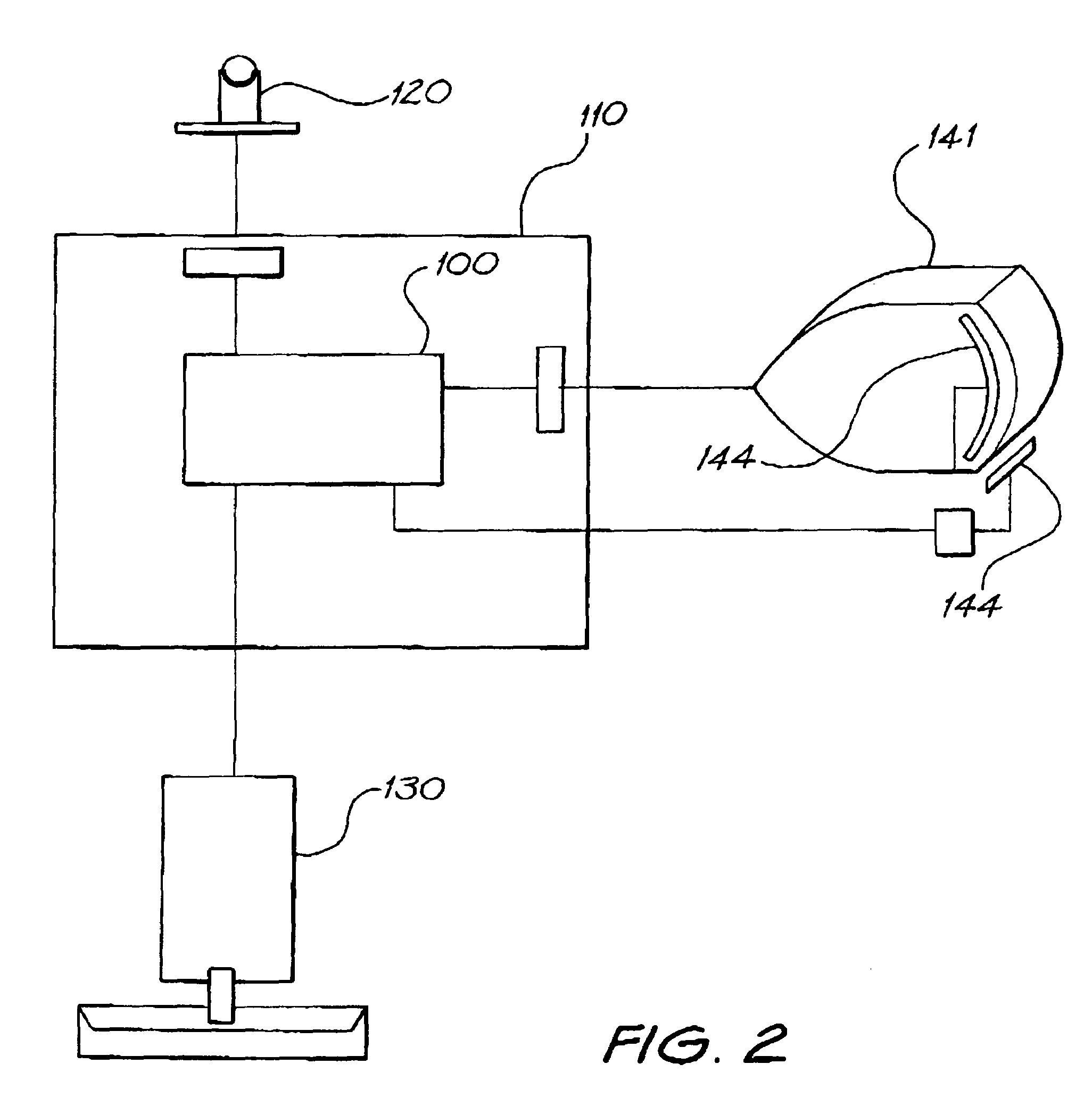

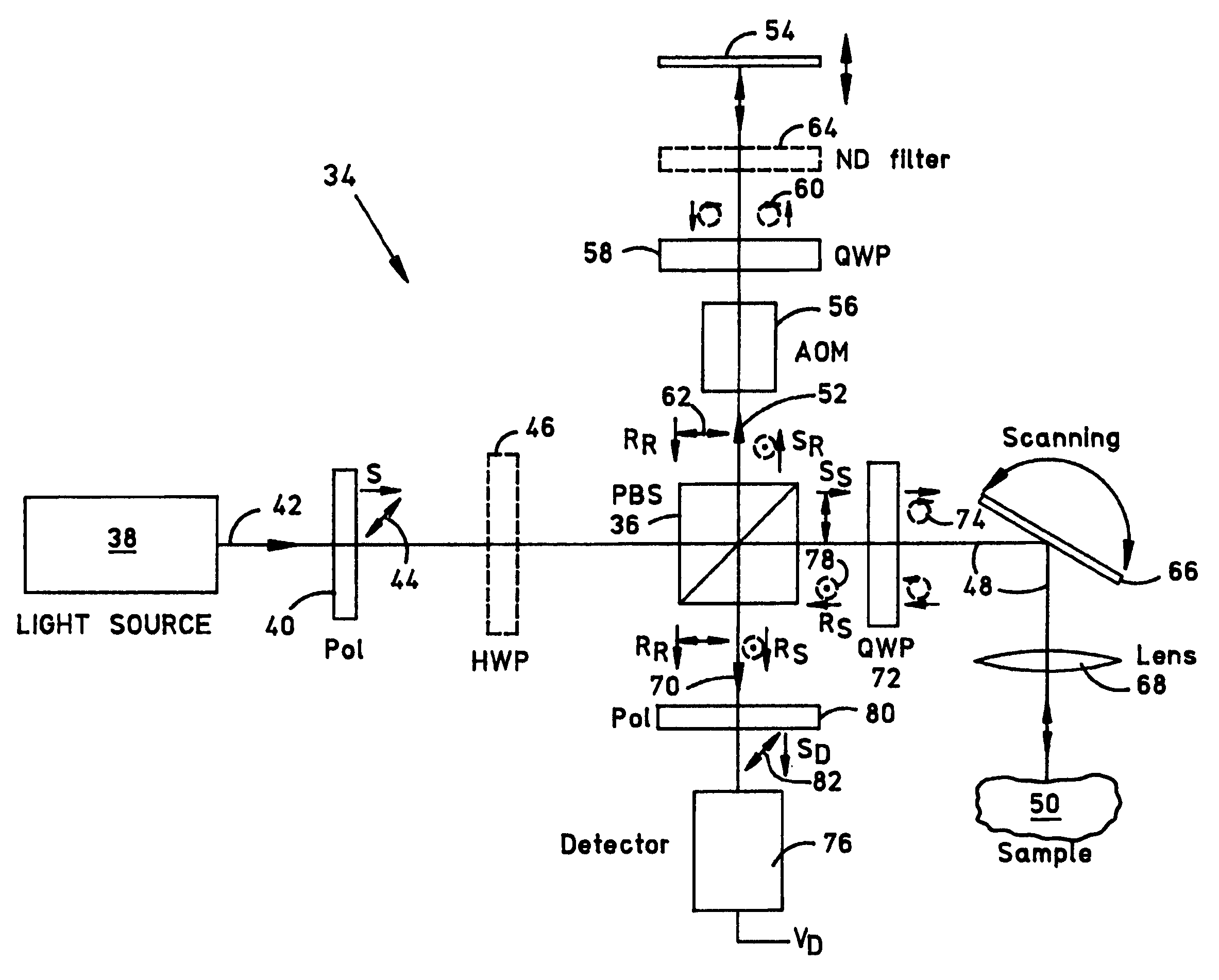

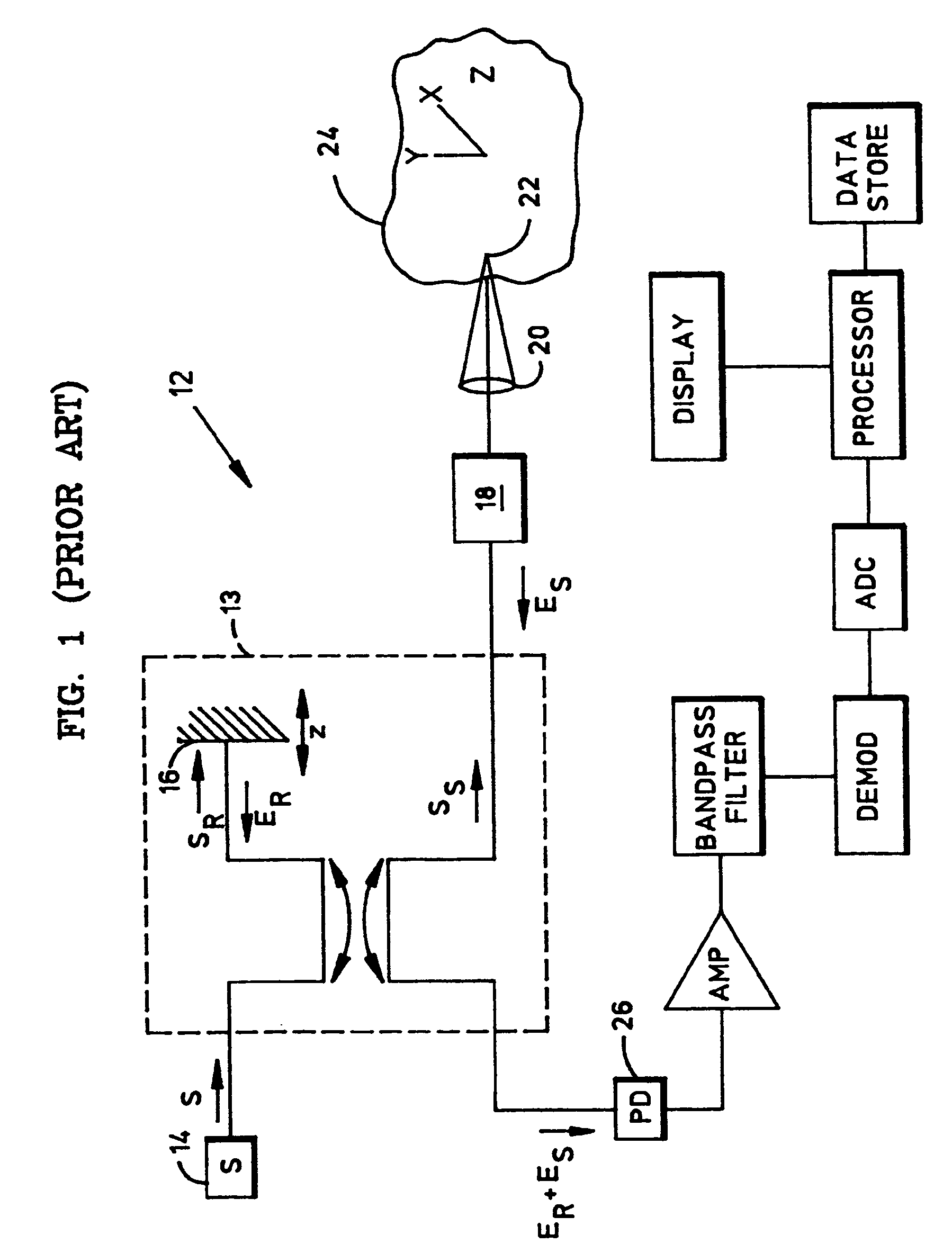

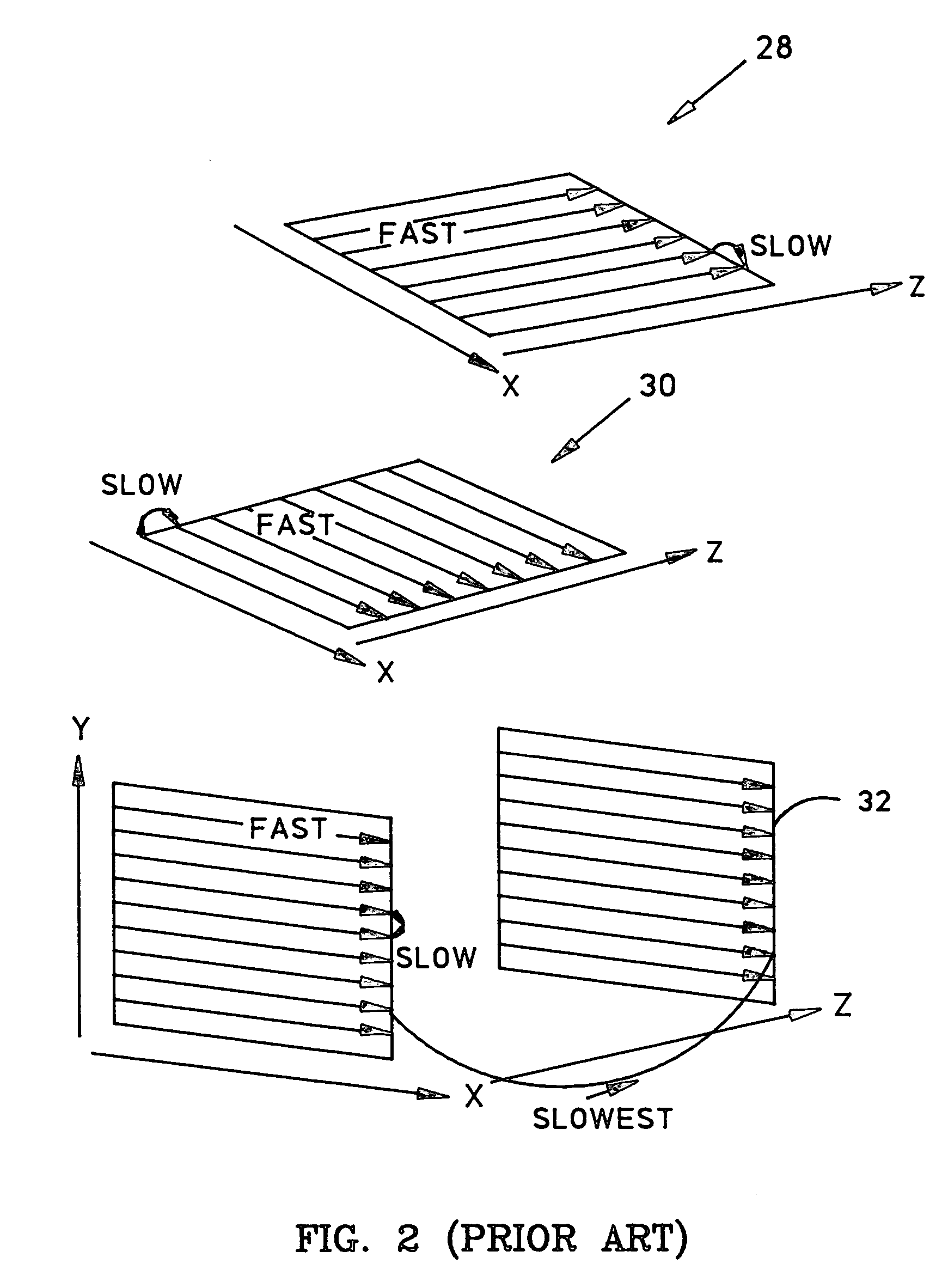

Sensor apparatus and method for use in imaging features of an object

A sensing device and method for use in imaging surface features of an object is provided. A surface along which an object can slide in a predetermined direction includes an array of contact sense elements configured to form a single array oriented transverse to the predetermined direction and at least one additional contact sense element located in spaced relation to the single array in a manner that enables a velocity measurement of the object in the predetermined direction. A scanning device is configured to provide a periodic scan of the array of contact sense elements, and a processor in circuit communication with the scanning device is configured to receive data from the scanning device and to produce image and velocity data related to the object. Preferably, the contact sense elements are electrically conductive elements disposed on a ceramic or polymeric substrate, using printed circuit board construction. A technique is described that enables the reconstruction of an object from such a device.

Owner:ARETE ASSOCIATES INC
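One plausible reading of the velocity measurement in the abstract above: the object's leading edge reaches the transverse array first and the spaced additional element slightly later, so velocity is spacing divided by delay. The sketch below assumes exactly that, plus a constant velocity during the swipe; the function names and units are hypothetical and not taken from the patent.

```python
def estimate_velocity(spacing_m, t_array_s, t_extra_s):
    """Velocity from the delay between the array and the extra element.

    spacing_m: distance between the array and the additional element.
    t_array_s / t_extra_s: times at which each first senses the object.
    """
    dt = t_extra_s - t_array_s
    if dt <= 0:
        raise ValueError("extra element must trigger after the array")
    return spacing_m / dt


def row_positions(num_scans, scan_period_s, velocity_m_s):
    """Position along the slide direction of each periodic scan row,
    assuming the velocity stays constant for the whole swipe."""
    return [i * scan_period_s * velocity_m_s for i in range(num_scans)]


v = estimate_velocity(spacing_m=0.002, t_array_s=0.10, t_extra_s=0.12)
rows = row_positions(num_scans=3, scan_period_s=0.001, velocity_m_s=v)
print(v, rows)
```

Knowing where each scan row falls along the slide direction is what lets the one-dimensional scans be stacked into a two-dimensional image with the correct aspect ratio.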

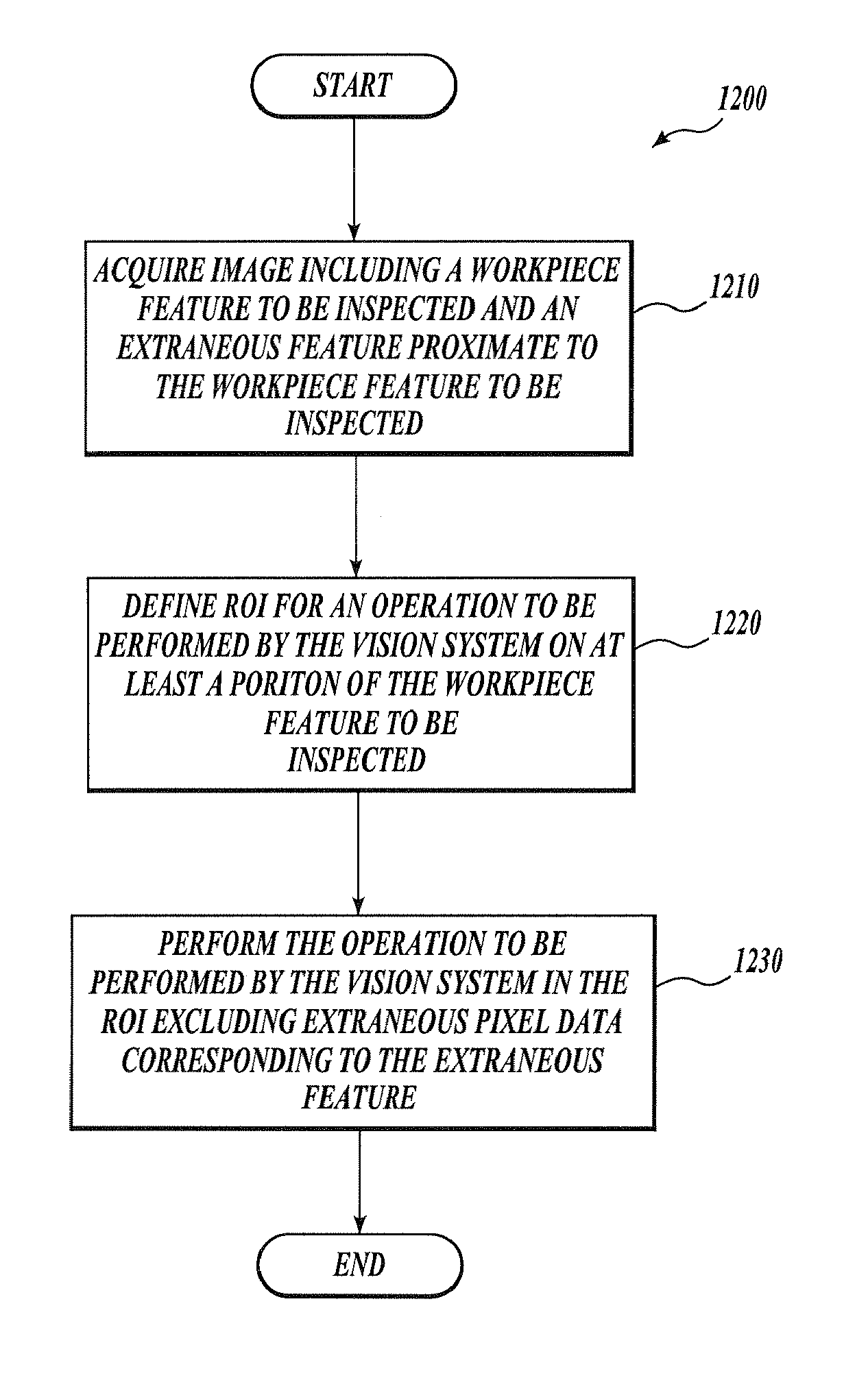

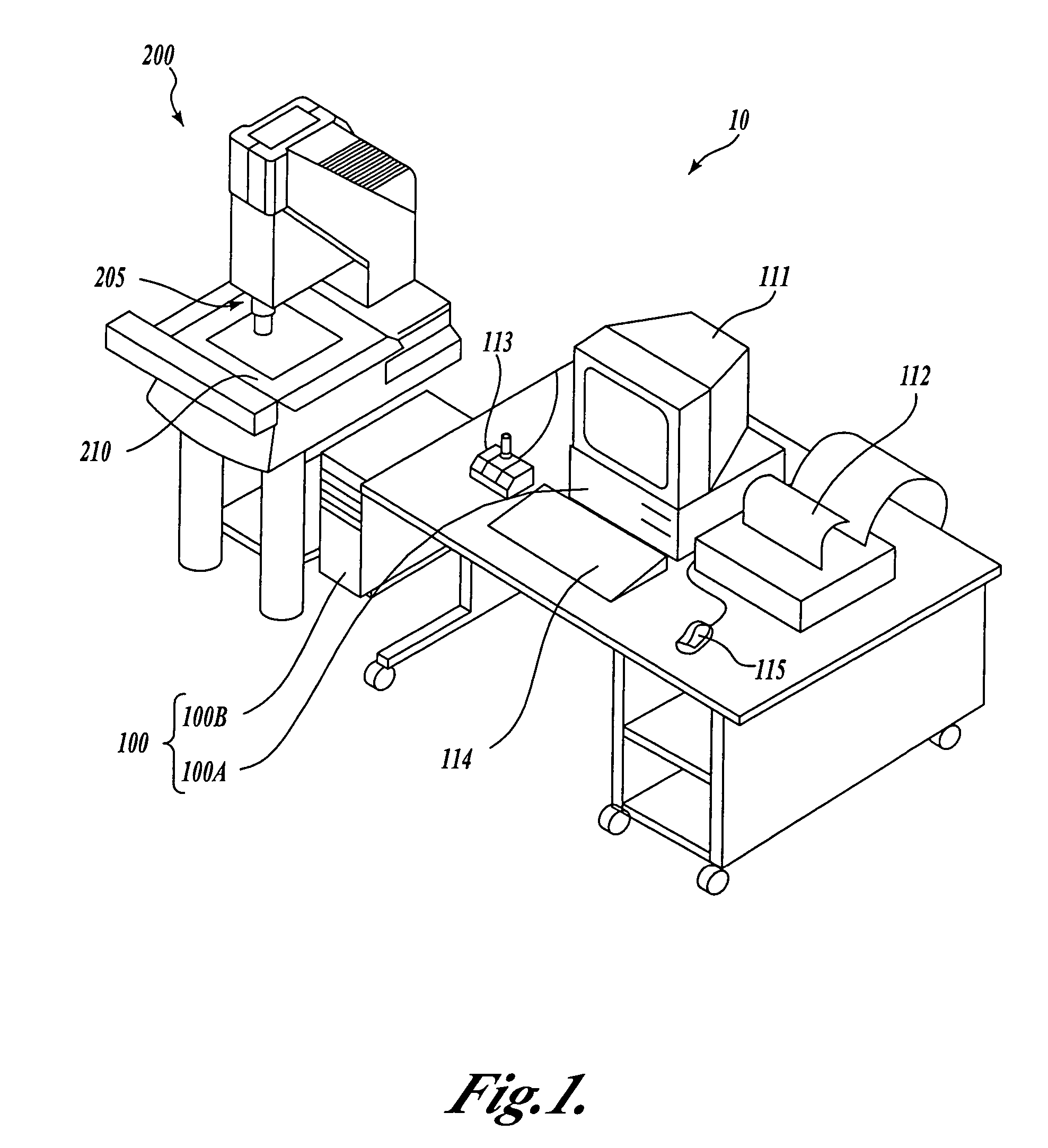

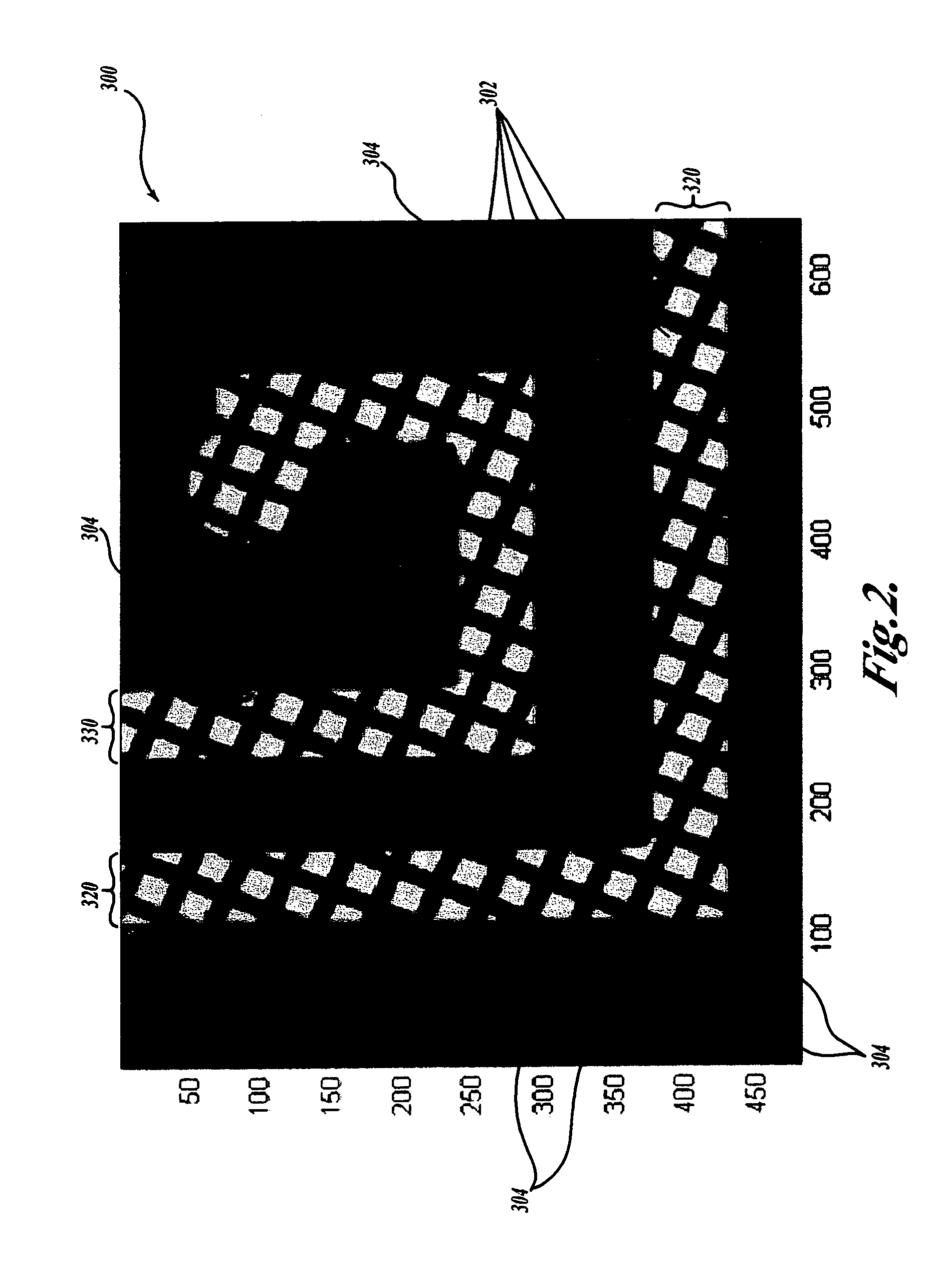

System and method for excluding extraneous features from inspection operations performed by a machine vision inspection system

Active · US7324682B2 · Easy to use · Variation in spacing · Image enhancement · Image analysis · Machine vision · Imaging Feature

Systems and methods are provided for excluding extraneous image features from inspection operations in a machine vision inspection system. The method identifies extraneous features that are close to image features to be inspected. No image modifications are performed on the “non-excluded” image features to be inspected. A video tool region of interest provided by a user interface of the vision system can encompass both the feature to be inspected and the extraneous features, making the video tool easy to use. The extraneous feature excluding operations are concentrated in the region of interest. The user interface for the video tool may operate similarly whether there are extraneous features in the region of interest, or not. The invention is of particular use when inspecting flat panel display screen masks having occluded features that are to be inspected.

Owner:MITUTOYO CORP

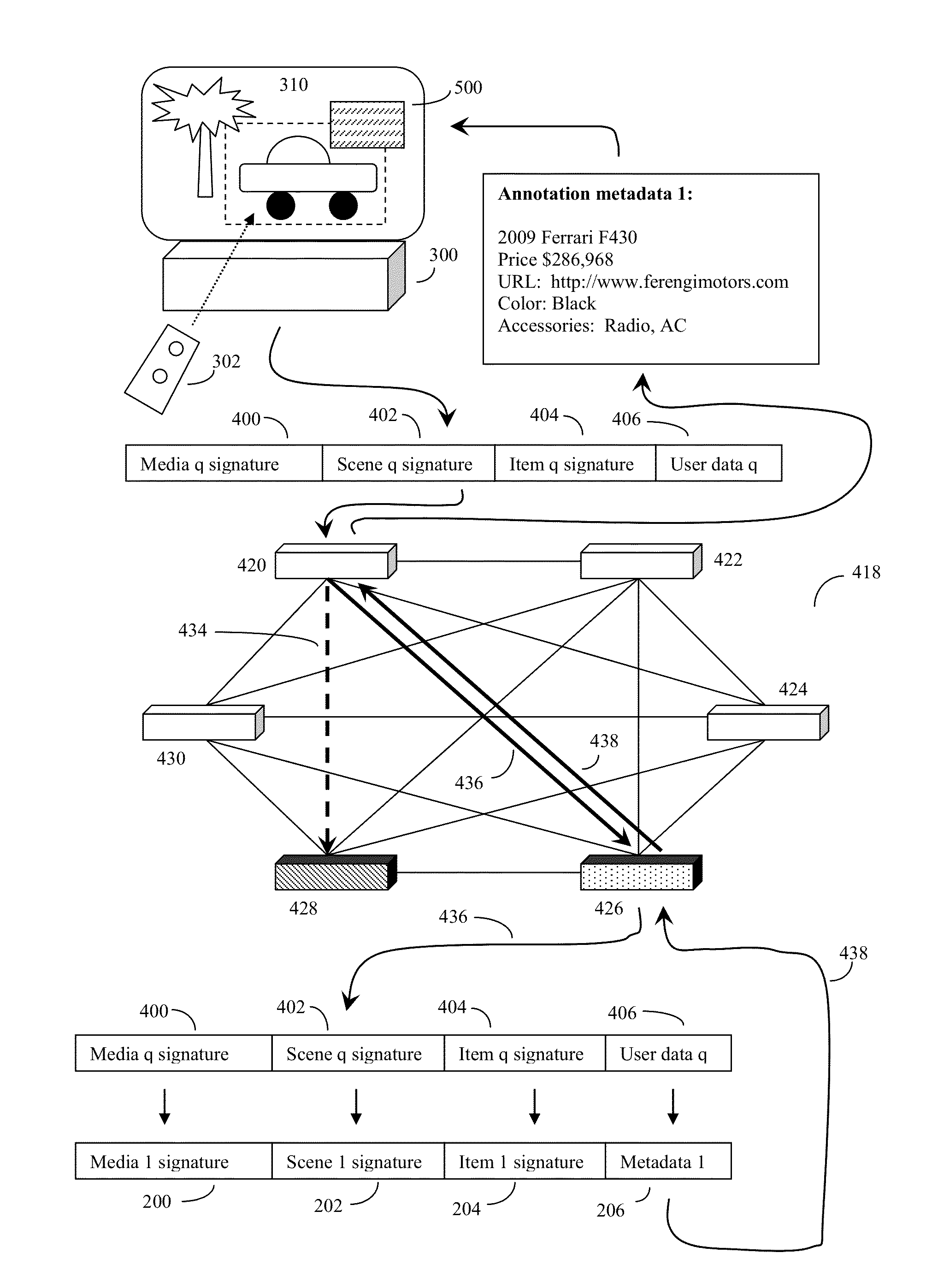

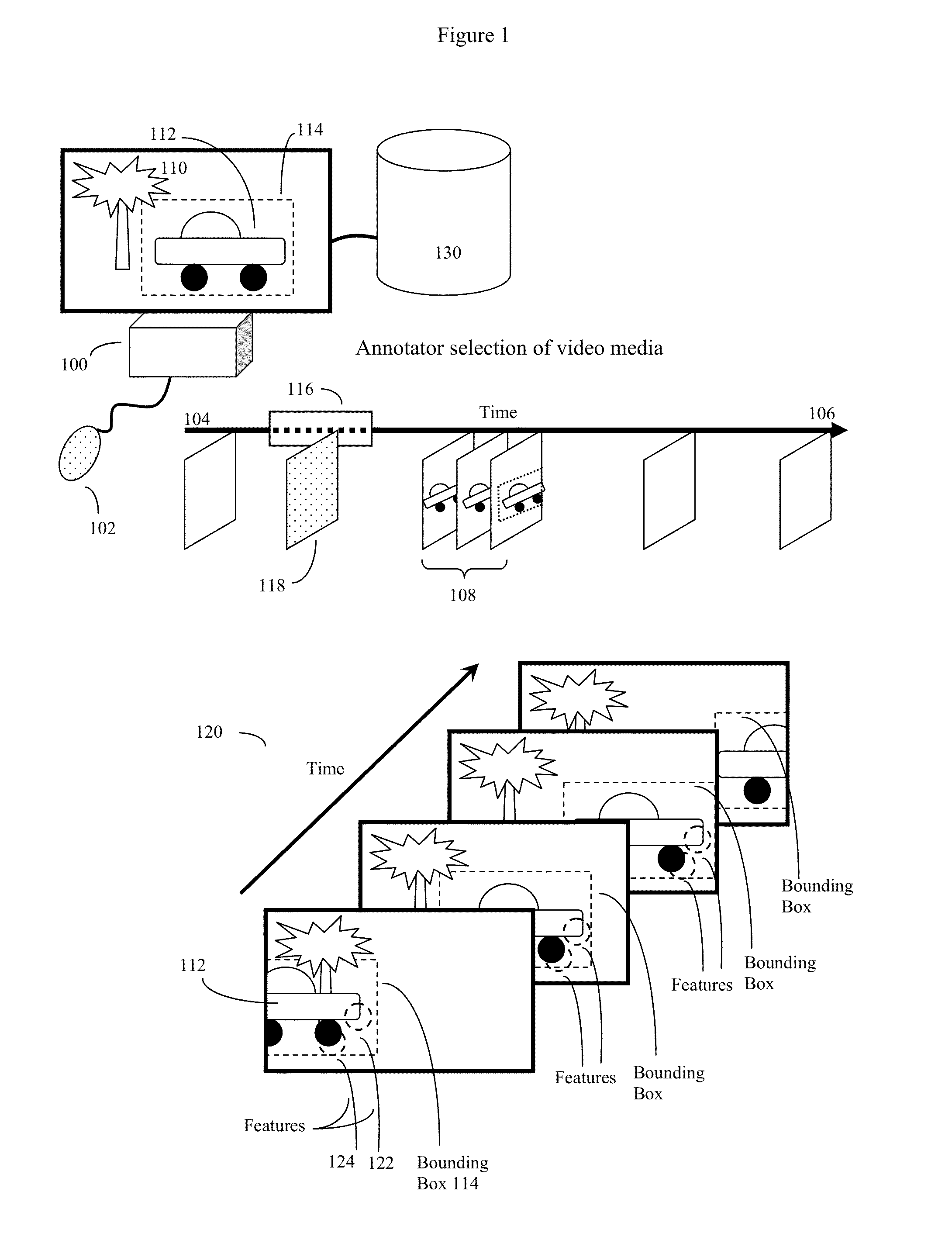

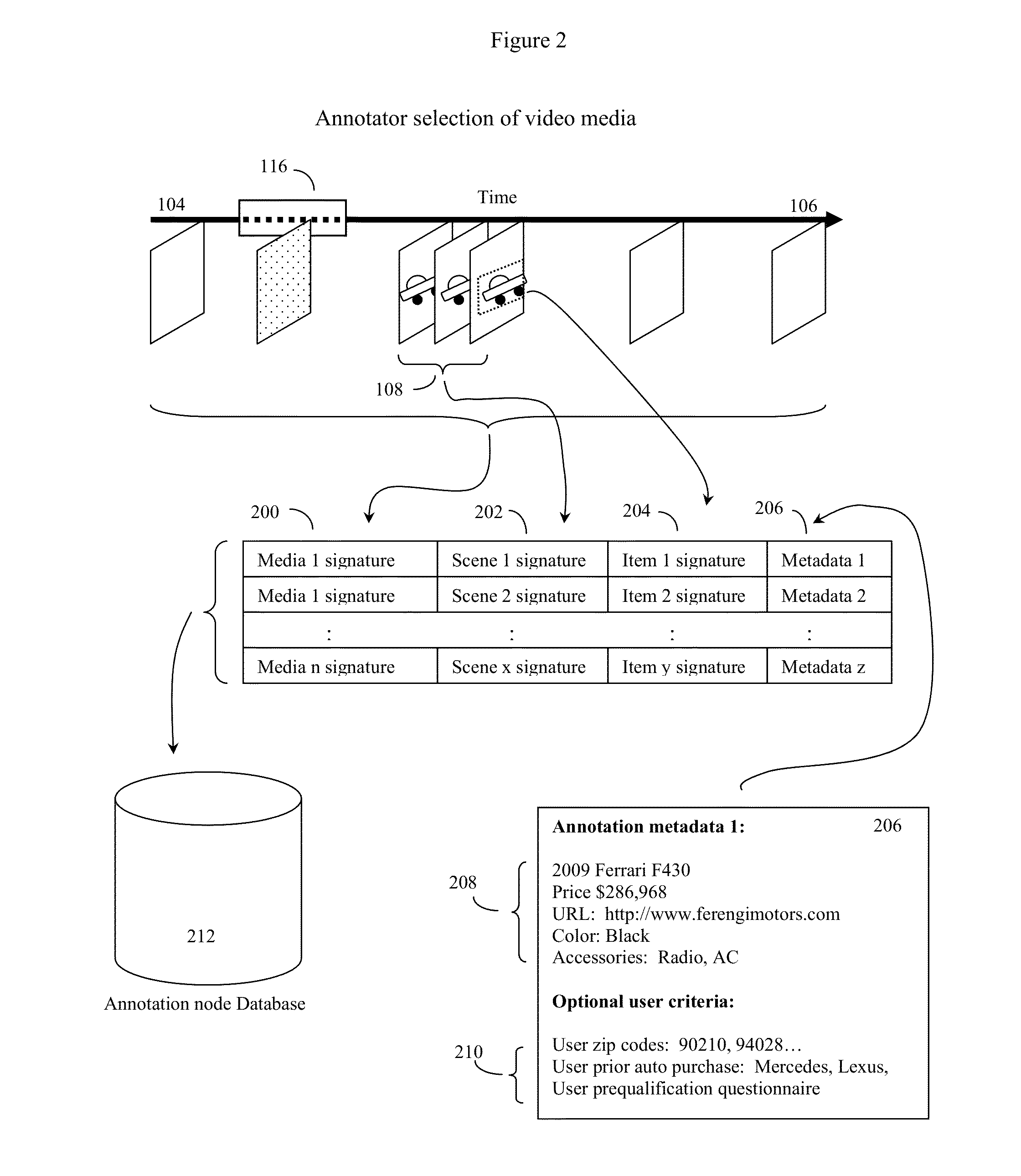

Retrieving video annotation metadata using a p2p network and copyright free indexes

Inactive · US20150046537A1 · Minimize barrier · Minimal cost · Digital data information retrieval · Using detectable carrier information · Third party · Computer graphics (images)

Video programs (media) are analyzed, often using computerized image feature analysis methods. Annotator index descriptors or signatures that are indexes to specific video scenes and items of interest are determined, and these in turn serve as an index to annotator metadata (often third party metadata) associated with these video scenes. The annotator index descriptors and signatures, typically chosen to be free from copyright restrictions, are in turn linked to annotator metadata, and then made available for download on a P2P network. Media viewers can then use processor equipped video devices to select video scenes and areas of interest, determine the corresponding user index, and send this user index over the P2P network to search for index linked annotator metadata. This metadata is then sent back to the user video device over the P2P network. Thus video programs can be enriched with additional content without transmitting any copyrighted video data.

Owner:NOVAFORA

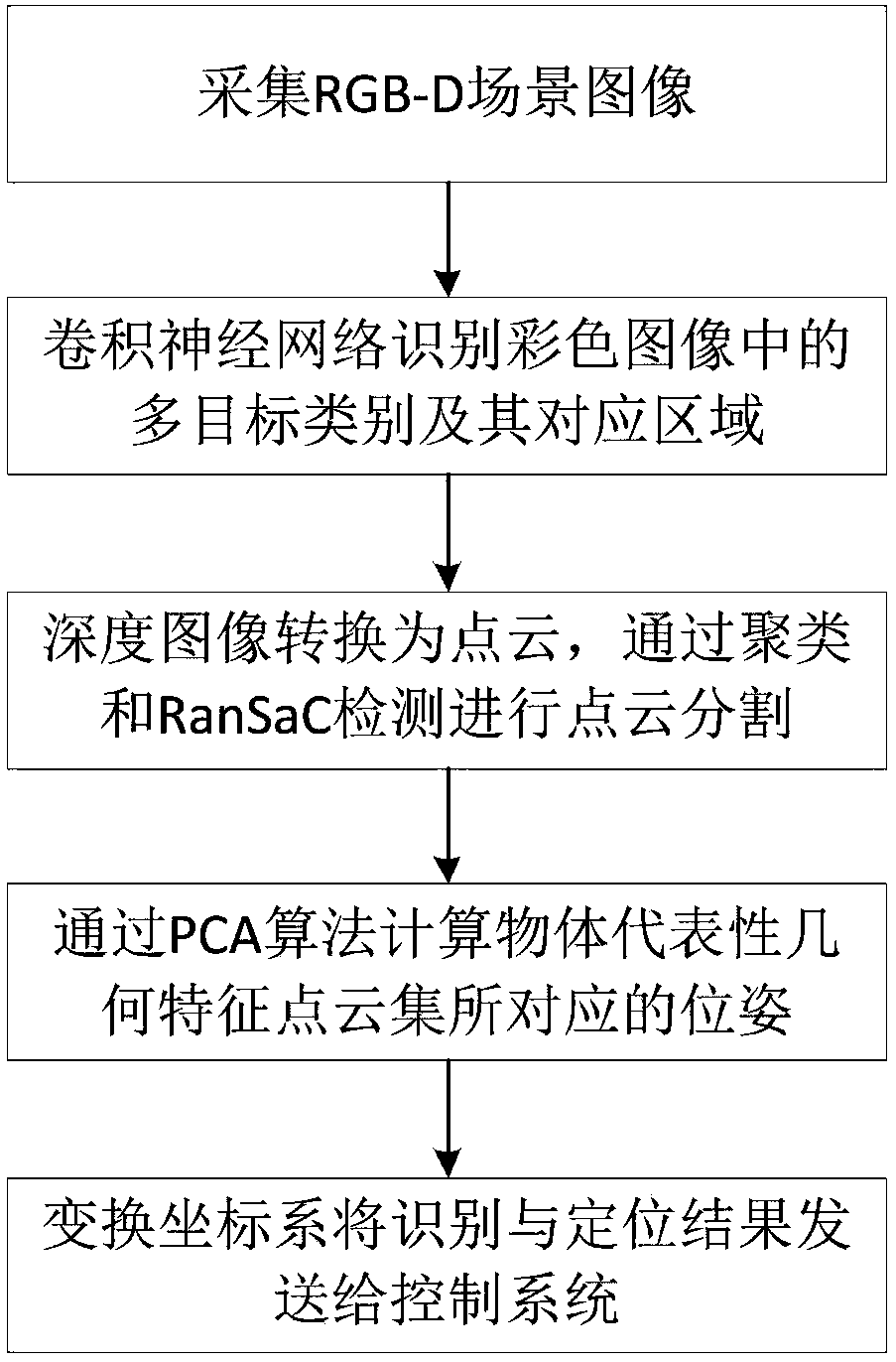

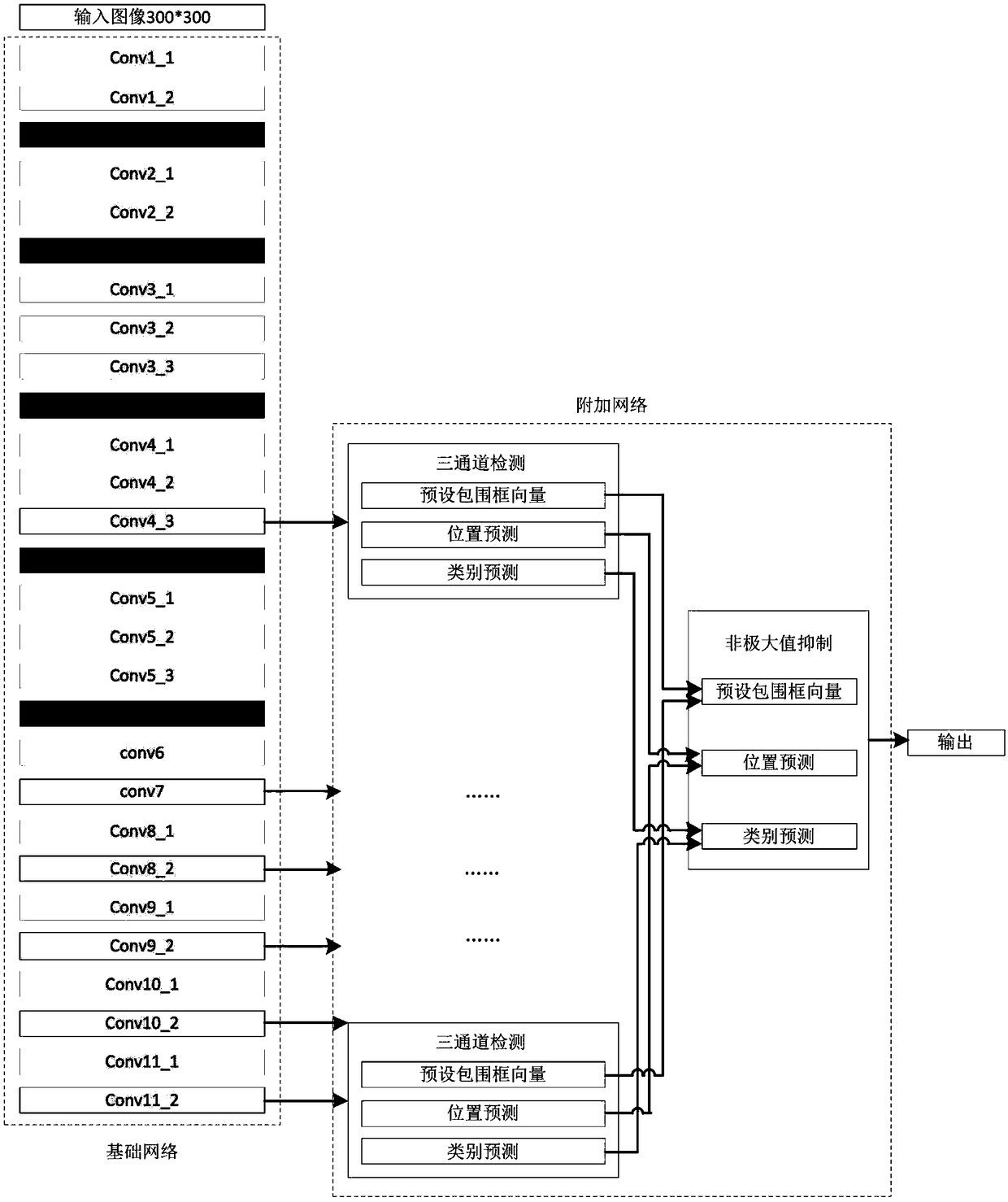

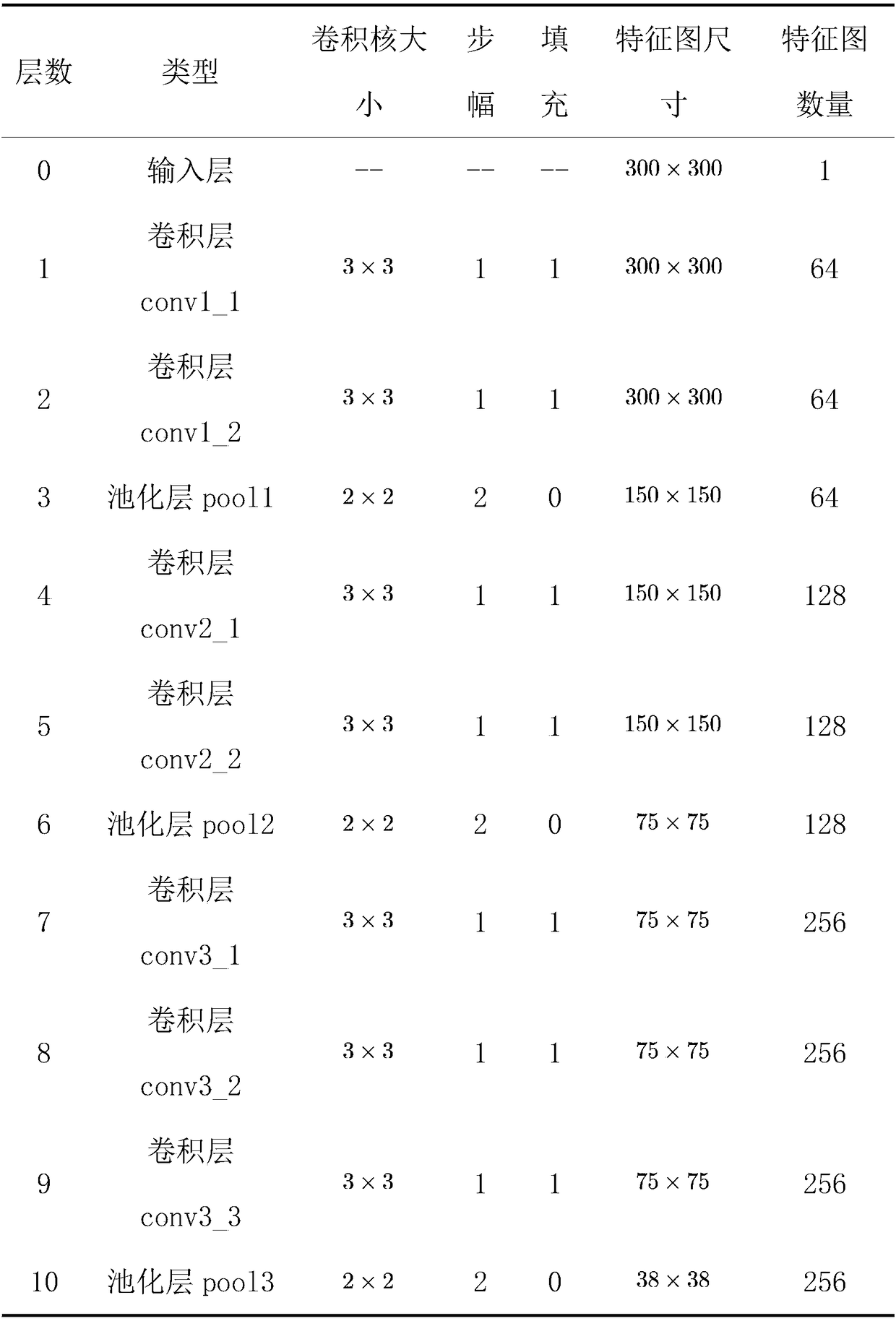

Visual recognition and positioning method for robot intelligent capture application

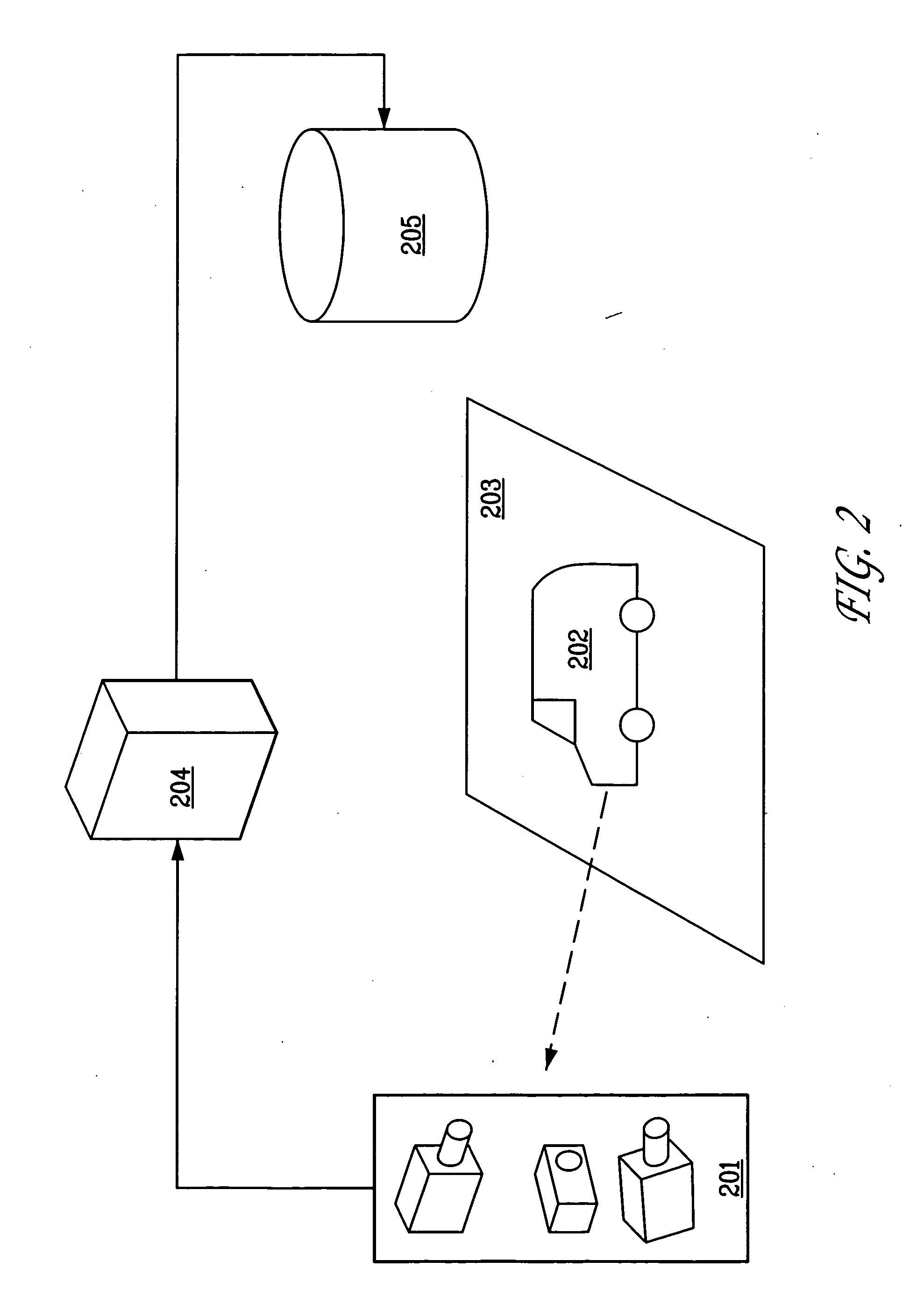

The invention relates to a visual recognition and positioning method for robot intelligent capture applications. An RGB-D scene image is collected, and a deep convolutional neural network trained with supervision recognizes the category of each target in the color image and its corresponding position region. The pose of the target is then analyzed in combination with the depth image, and the pose information needed by the controller is obtained through coordinate transformation, completing visual recognition and positioning. The method achieves both recognition and positioning with a single visual sensor, which simplifies the existing target detection process and reduces application cost. Because the deep convolutional neural network learns its image features, the method is robust to several kinds of environmental interference, such as random target placement, changes in viewing angle, and illumination and background interference, and it improves recognition and positioning accuracy under complicated working conditions. In addition, the positioning method can obtain exact pose information once the spatial distribution of object positions has been determined, which supports strategy planning for intelligent capture.

Owner:合肥哈工慧拣智能科技有限公司

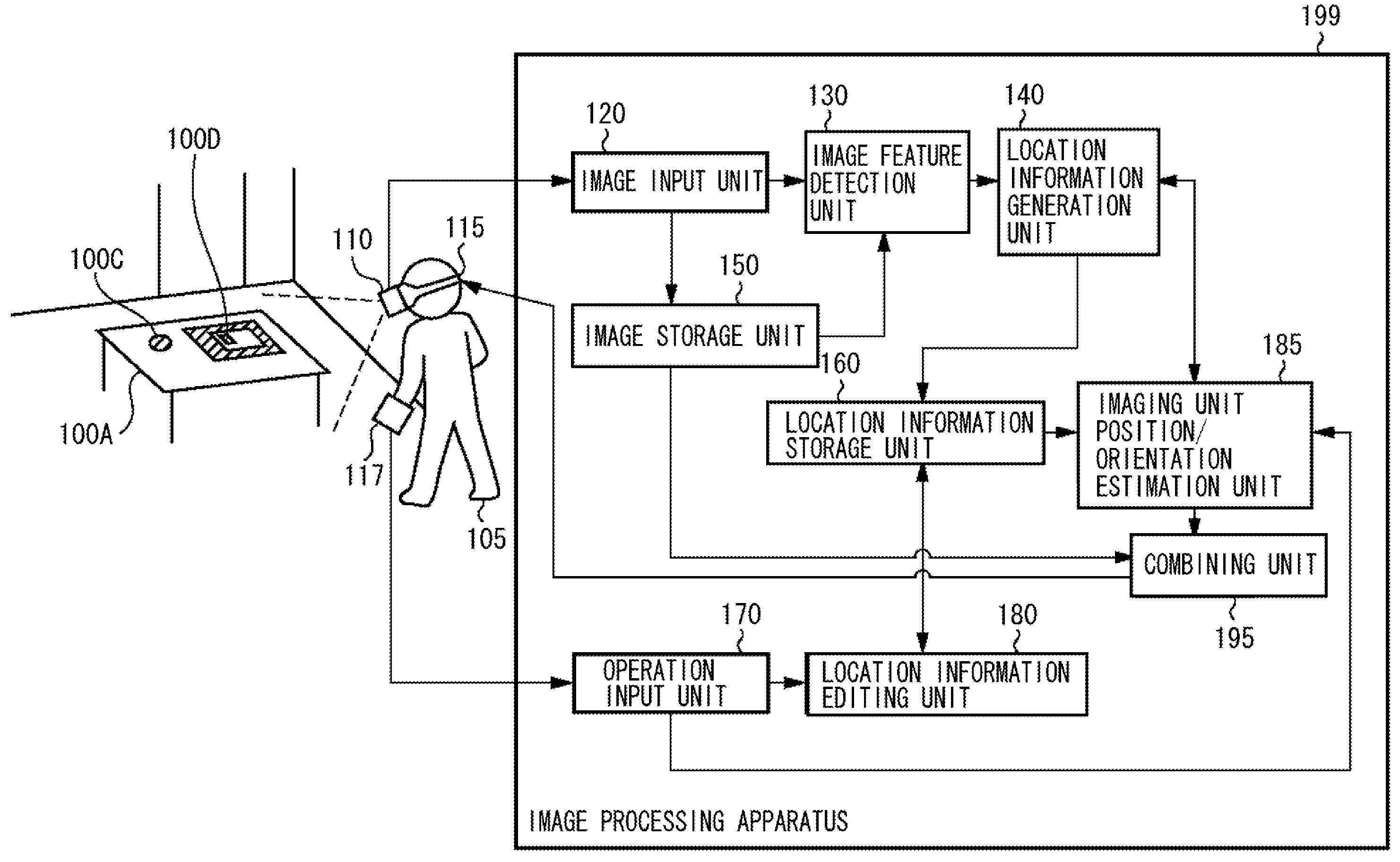

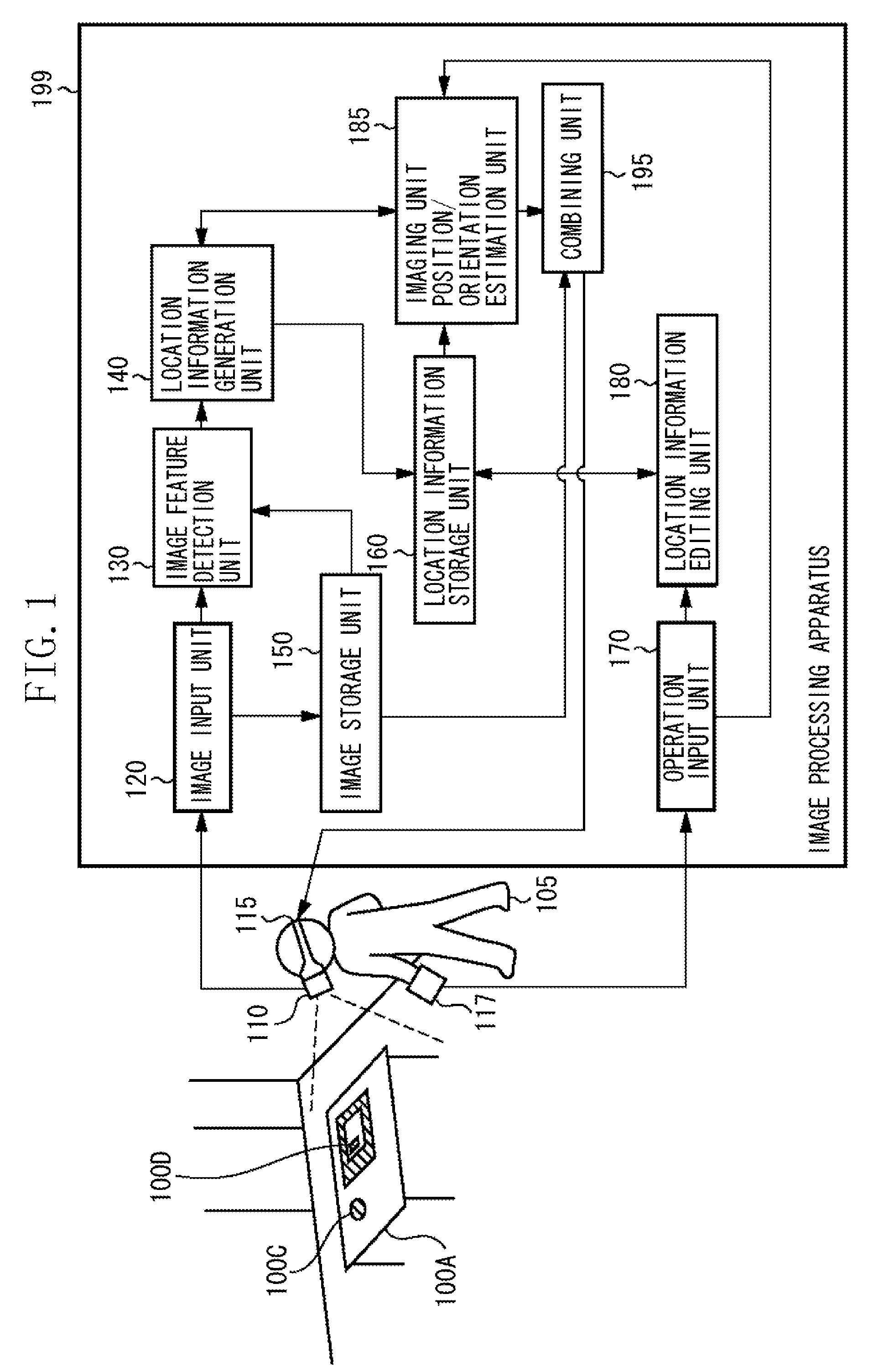

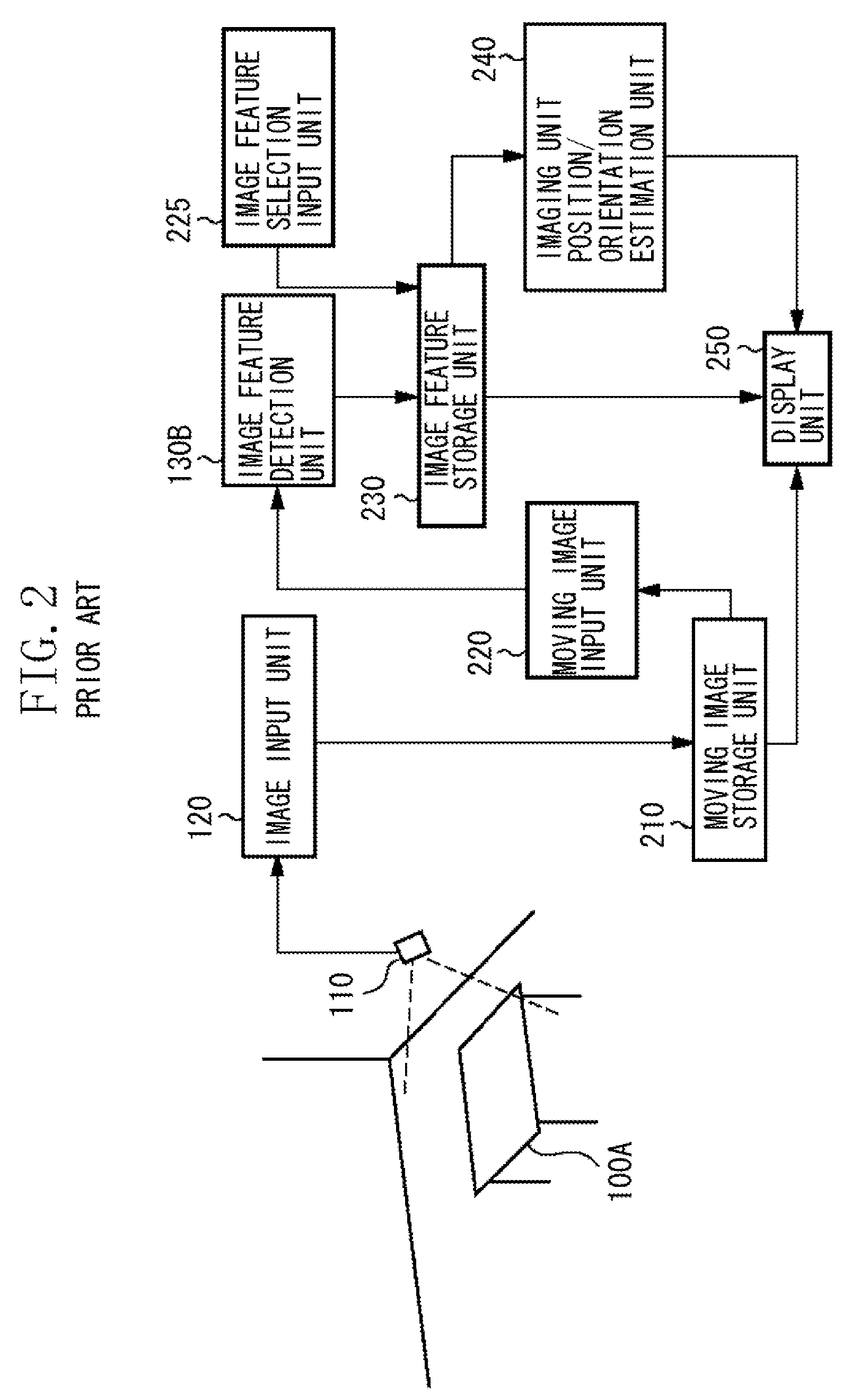

Image processing apparatus and method for obtaining position and orientation of imaging apparatus

Inactive · US20090110241A1 · Effective positioning · Image enhancement · Image analysis · Imaging processing · Imaging Feature

An image processing apparatus obtains location information of each image feature in a captured image based on image coordinates of the image feature in the captured image. The image processing apparatus selects location information usable to calculate a position and an orientation of the imaging apparatus among the obtained location information. The image processing apparatus obtains the position and the orientation of the imaging apparatus based on the selected location information and an image feature corresponding to the selected location information among the image features included in the captured image.

Owner:CANON KK

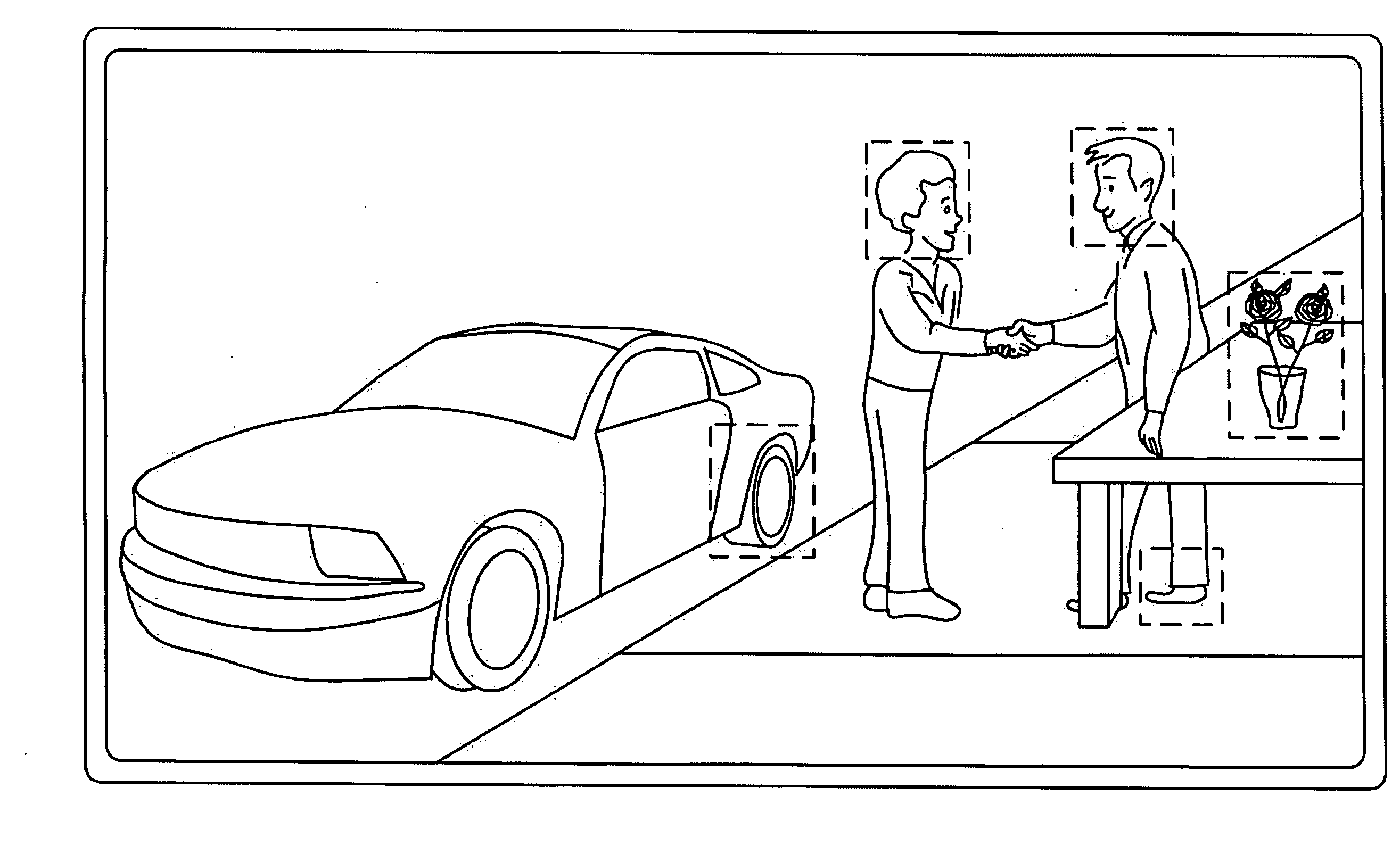

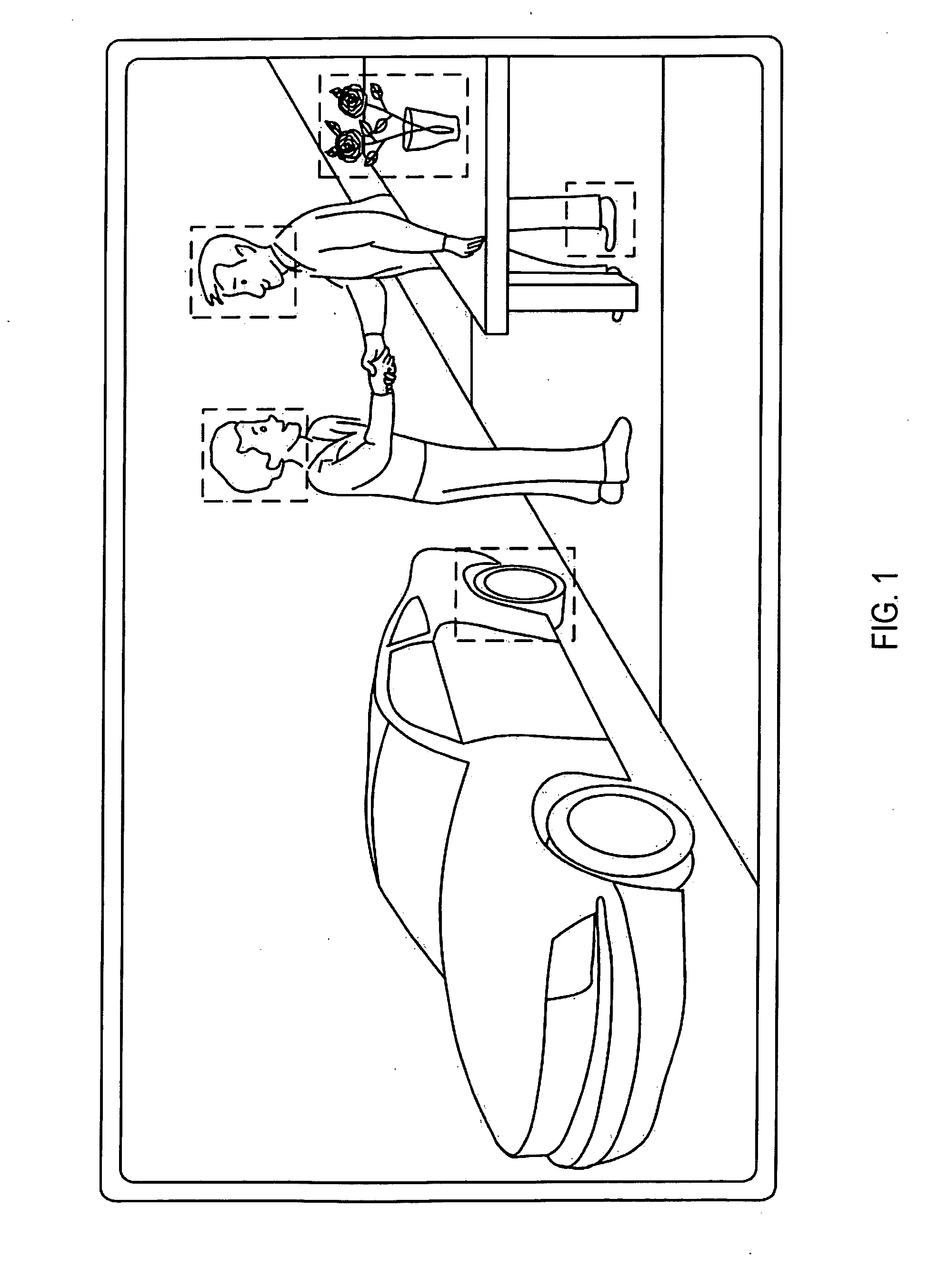

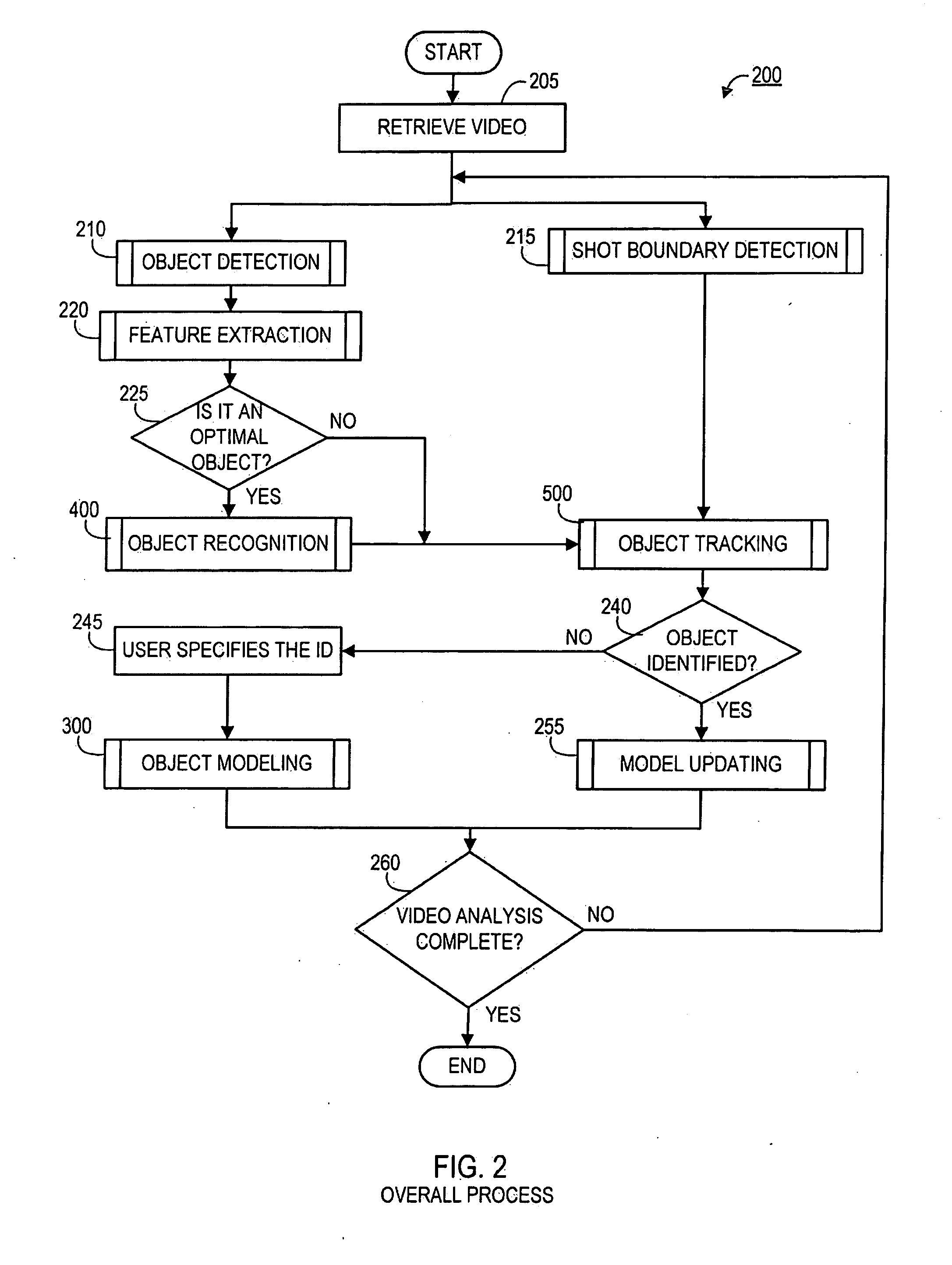

Integrated Systems and Methods For Video-Based Object Modeling, Recognition, and Tracking

The present disclosure relates to systems and methods for modeling, recognizing, and tracking object images in video files. In one embodiment, a video file, which includes a plurality of frames, is received. An image of an object is extracted from a particular frame in the video file, and a subsequent image is also extracted from a subsequent frame. A similarity value is then calculated between the extracted images from the particular frame and subsequent frame. If the calculated similarity value exceeds a predetermined similarity threshold, the extracted object images are assigned to an object group. The object group is used to generate an object model associated with images in the group, wherein the model is comprised of image features extracted from optimal object images in the object group. Optimal images from the group are also used for comparison to other object models for purposes of identifying images.

Owner:TIVO SOLUTIONS INC
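The grouping step in the abstract above (compare an object image with the one from the subsequent frame and group them when similarity exceeds a threshold) might look like the sketch below. The abstract does not name a similarity measure, so cosine similarity over feature vectors is an assumption, as are the threshold value and the function names.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def group_object_images(frame_features, threshold=0.9):
    """Group consecutive object images whose similarity exceeds threshold.

    frame_features: one feature vector per frame, in frame order.
    Returns a list of groups, each a list of feature vectors.
    """
    groups = []
    for feat in frame_features:
        # Compare with the most recent image of the current group.
        if groups and cosine_similarity(groups[-1][-1], feat) > threshold:
            groups[-1].append(feat)
        else:
            groups.append([feat])
    return groups


frames = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0]]
print([len(g) for g in group_object_images(frames)])
```

Each resulting group would then feed the object model described in the abstract; selecting the "optimal" images within a group is outside this sketch.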

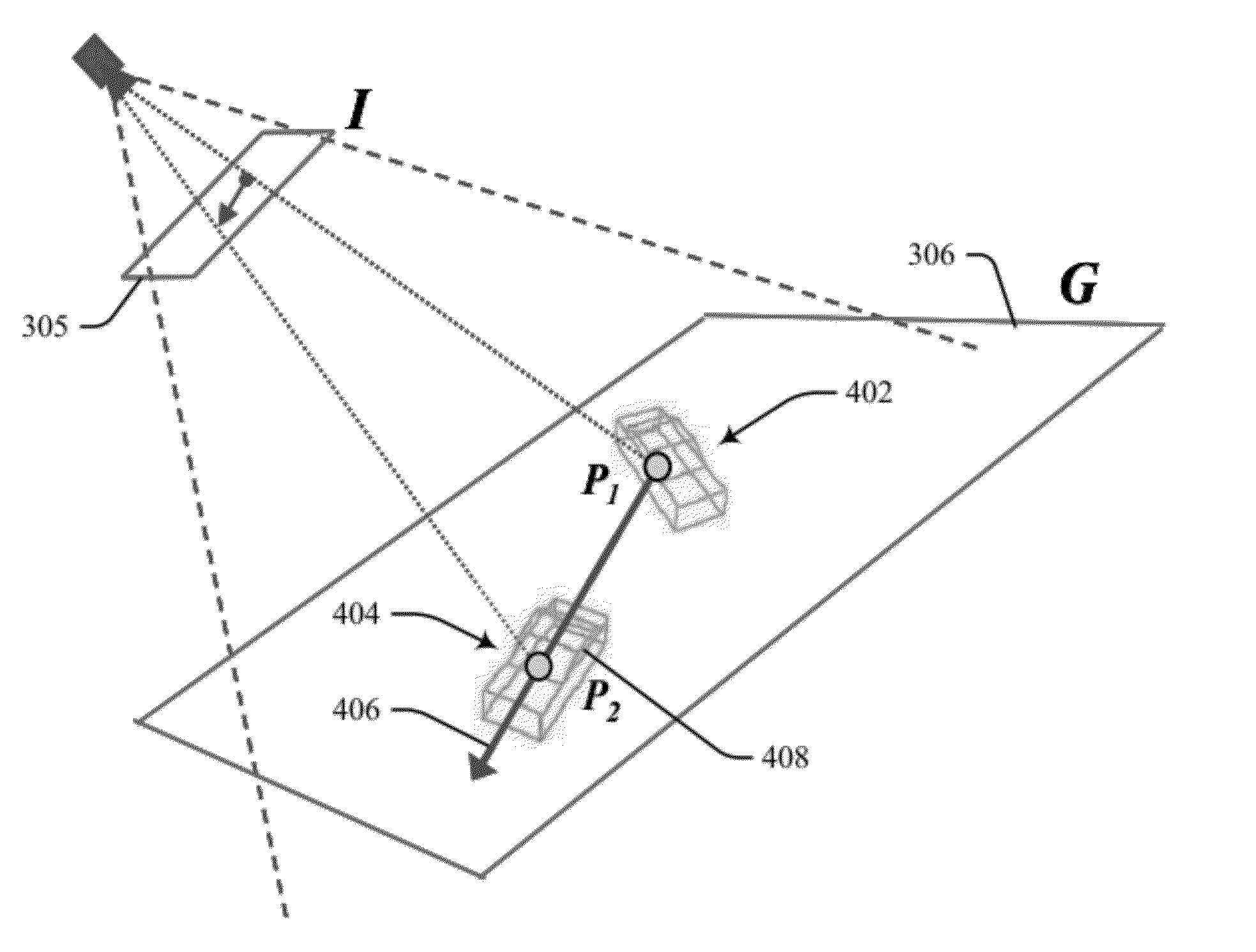

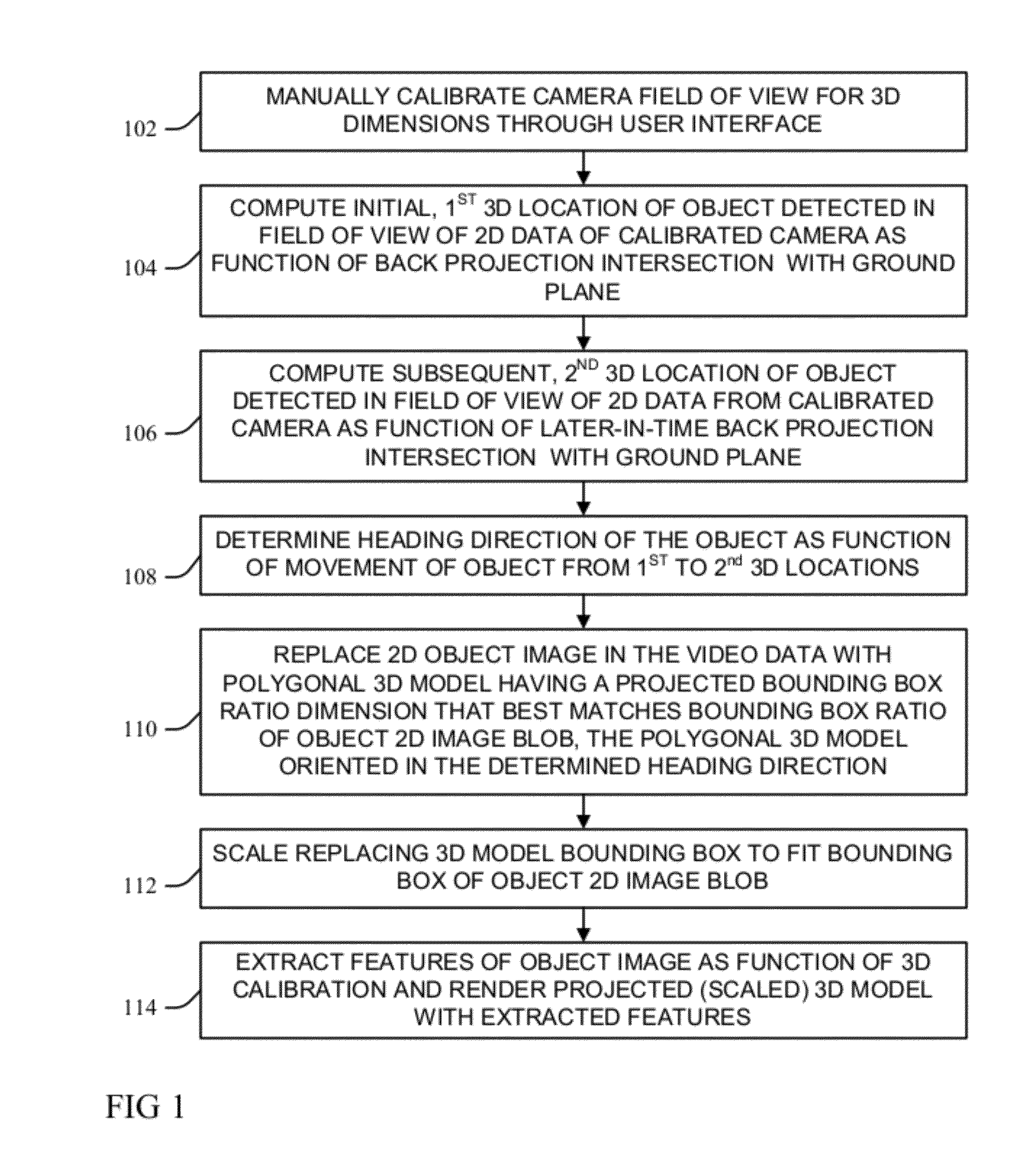

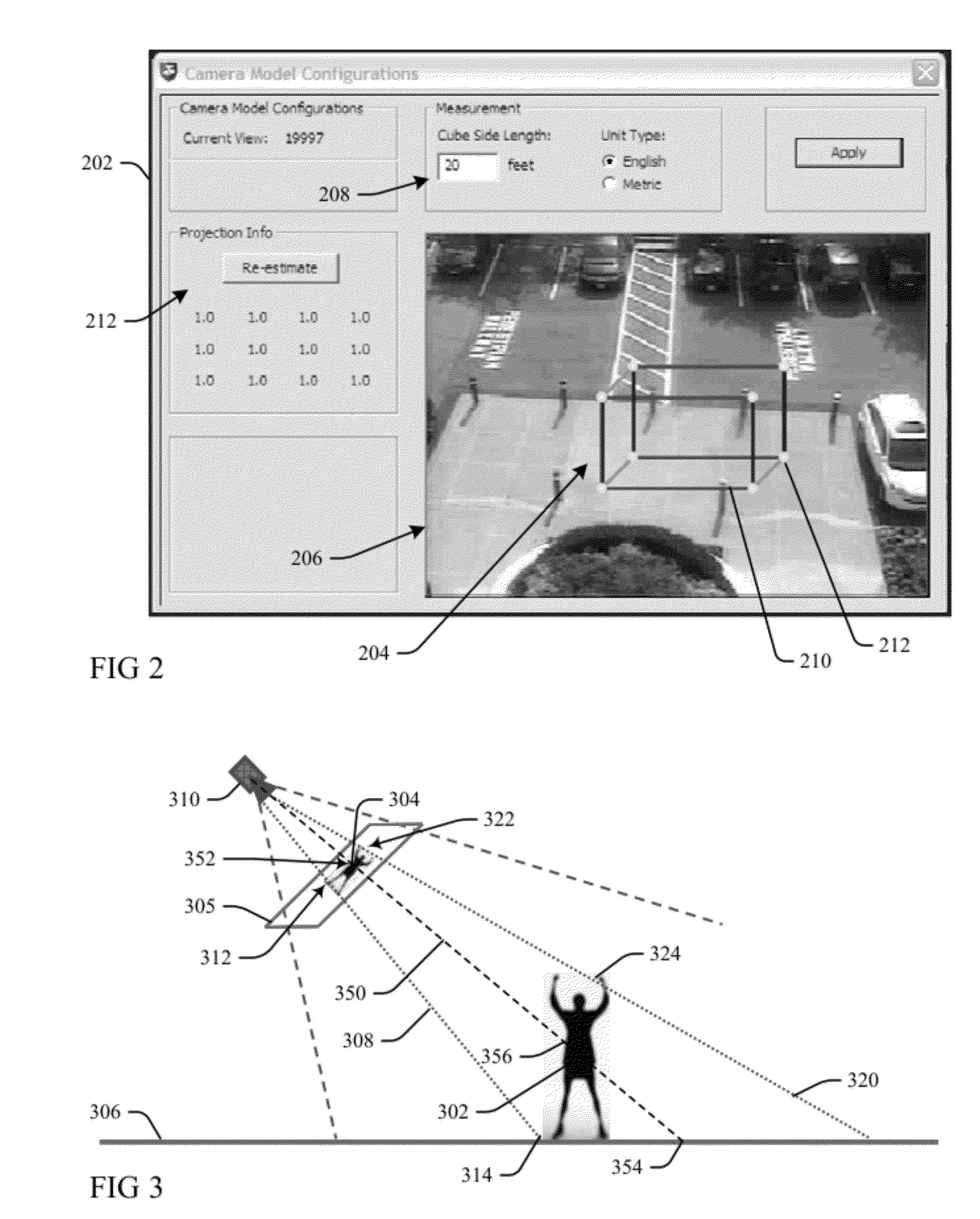

Estimation of object properties in 3D world

Objects within two-dimensional (2D) video data are modeled by three-dimensional (3D) models as a function of object type and motion through manually calibrating a 2D image to the three spatial dimensions of a 3D modeling cube. Calibrated 3D locations of an object in motion in the 2D image field of view of a video data input are computed and used to determine a heading direction of the object as a function of the camera calibration and determined movement between the computed 3D locations. The 2D object image is replaced in the video data input with an object-type 3D polygonal model having a projected bounding box that best matches a bounding box of an image blob, the model oriented in the determined heading direction. The bounding box of the replacing model is then scaled to fit the object image blob bounding box, and rendered with extracted image features.

Owner:KYNDRYL INC
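Two of the computations named in the abstract above are simple enough to sketch: a heading direction from two successive calibrated 3D locations, and the scale factors that fit a model's projected bounding box to the image blob's bounding box. The ground-plane convention (x and y horizontal), the angle convention, and both function names are assumptions made for illustration.

```python
import math


def heading_degrees(p_prev, p_curr):
    """Heading on the ground plane, in degrees in [0, 360),
    measured counter-clockwise from the +x axis (assumed convention)."""
    dx = p_curr[0] - p_prev[0]
    dy = p_curr[1] - p_prev[1]
    return math.degrees(math.atan2(dy, dx)) % 360.0


def scale_to_fit(model_box, blob_box):
    """Per-axis scale factors that map the model's projected
    (width, height) onto the blob's (width, height)."""
    (mw, mh), (bw, bh) = model_box, blob_box
    return bw / mw, bh / mh


print(heading_degrees((0.0, 0.0, 0.0), (1.0, 1.0, 0.0)))
print(scale_to_fit((40, 20), (60, 30)))
```

Choosing the best-matching model type and rendering it with the extracted image features are separate steps that this sketch does not attempt.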

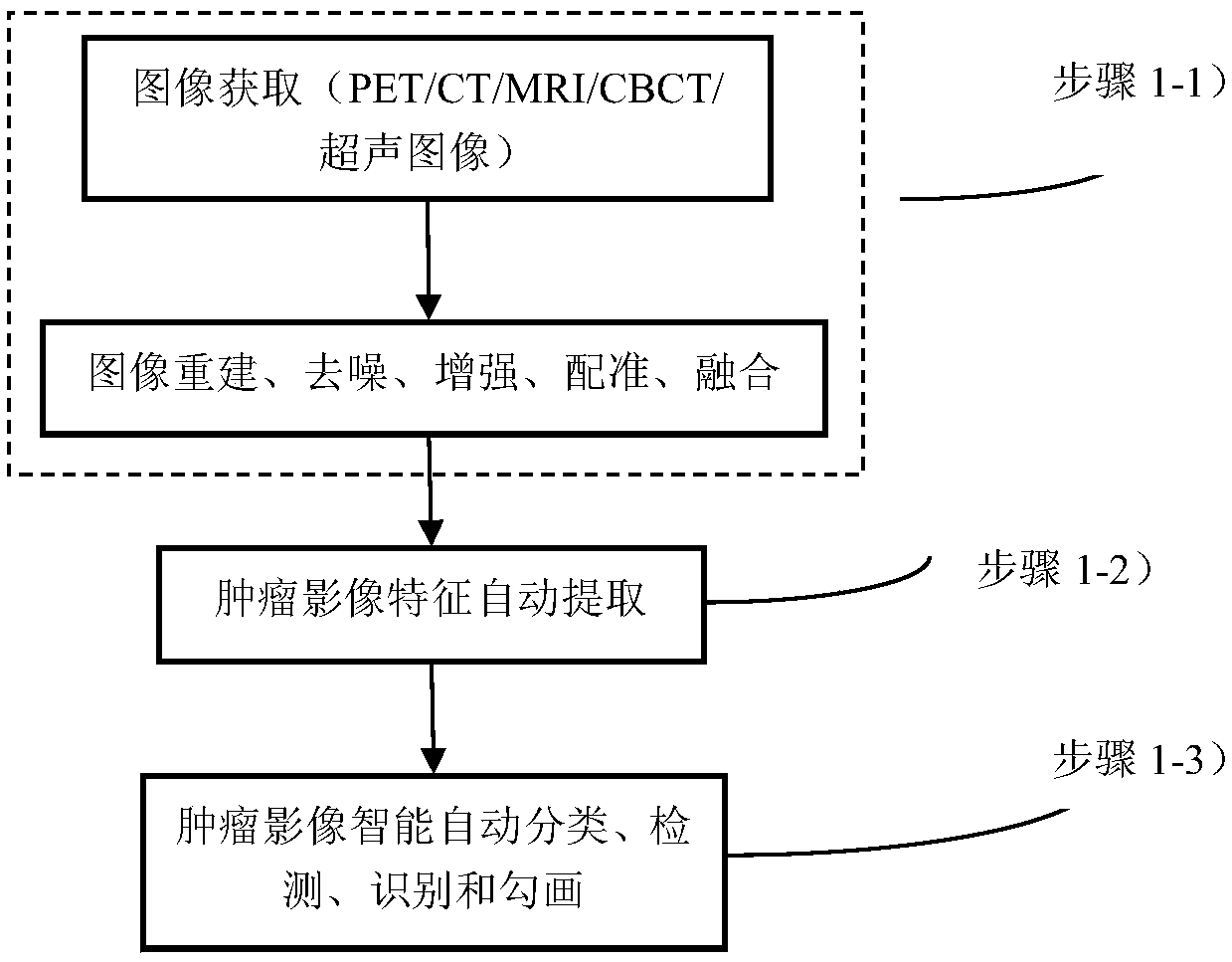

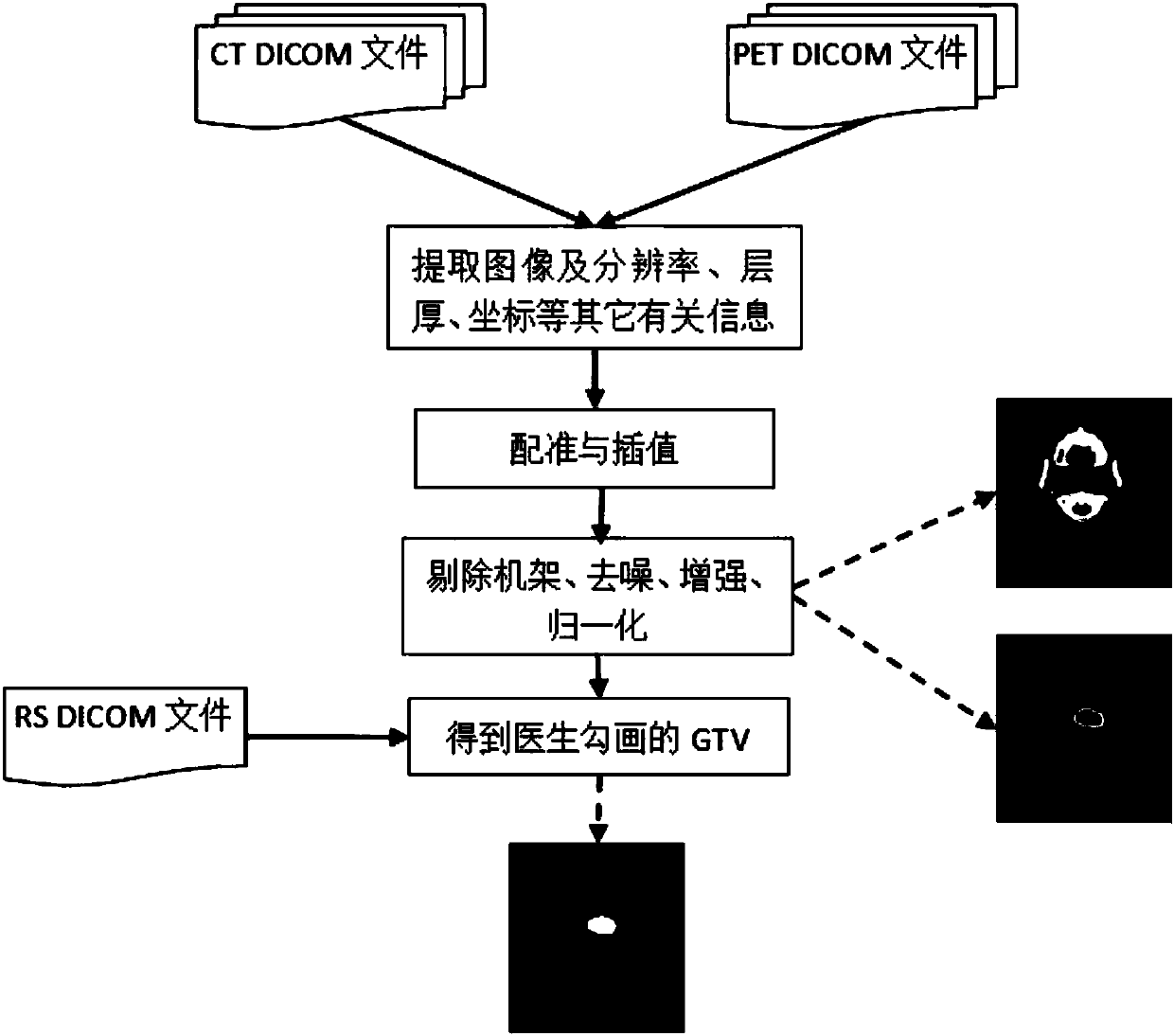

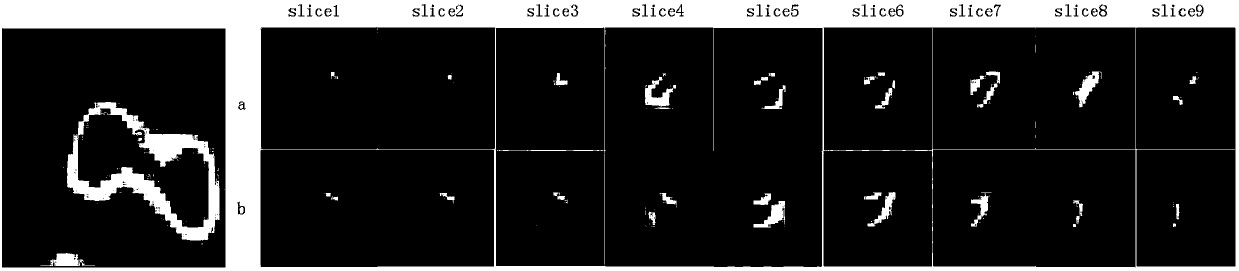

Intelligent and automatic delineation method for gross tumor volume and organs at risk

Inactive · CN107403201A · Smart sketch · Automatically sketch · Image enhancement · Image analysis · Sonification · Mathematical Graph

The invention relates to an intelligent and automatic delineation method for a gross tumor volume and organs at risk, which includes the following steps: (1) multi-modal tumor image reconstruction, de-noising, enhancement, registration, fusion and other preprocessing; (2) automatic extraction of tumor image features: automatically extracting one or more pieces of tumor image group (texture feature spectrum) information from CT, CBCT, MRI, PET and/or ultrasonic multi-modal tumor medical image data; and (3) intelligently and automatically delineating a gross tumor volume and organs at risk through deep learning, machine learning, artificial intelligence, region growing, graph theory (random walk), a geometric level set, and/or a statistical theory method. A gross tumor volume (GTV) and organs at risk (OAR) can be delineated with high precision.

Owner:强深智能医疗科技(昆山)有限公司

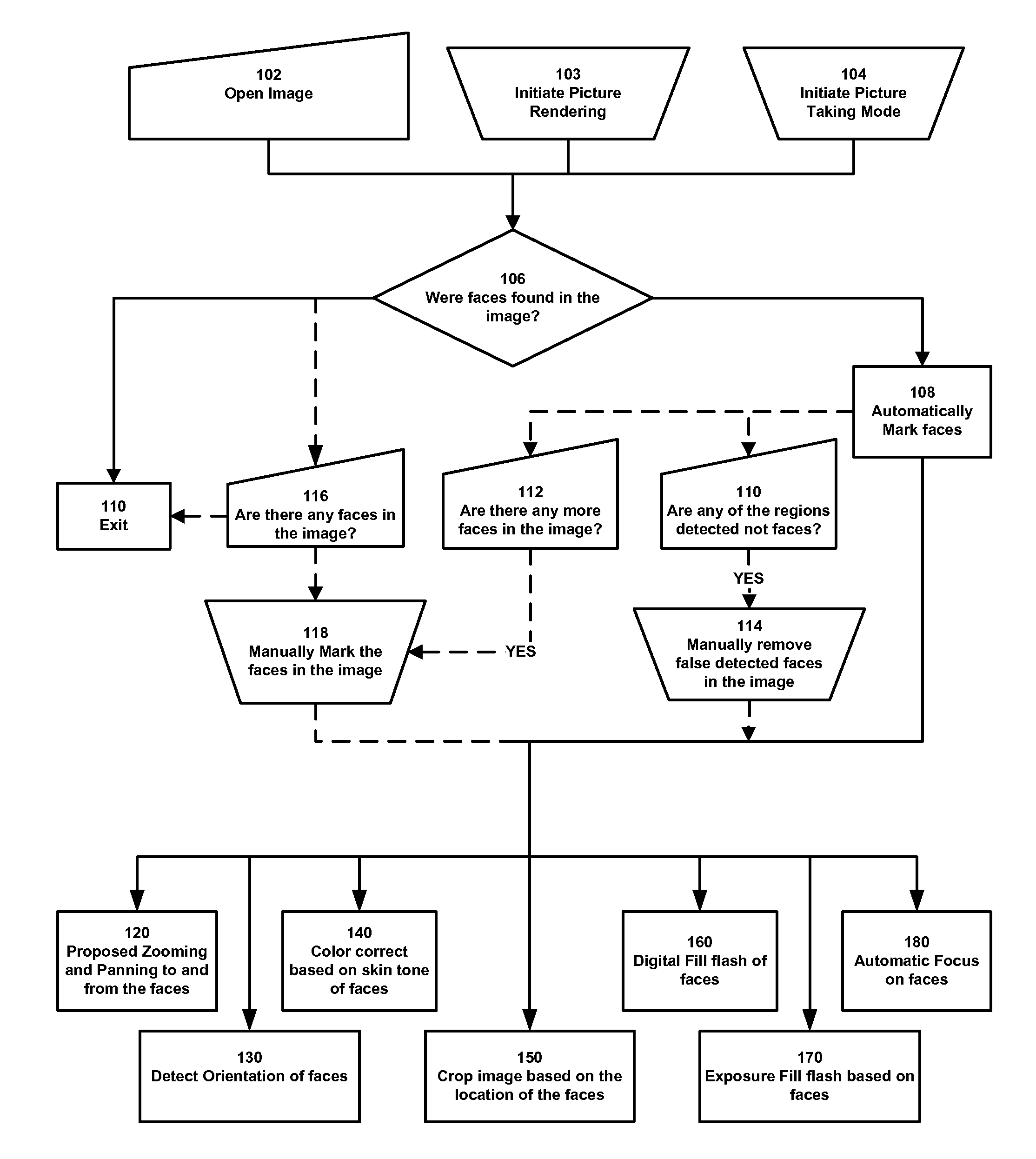

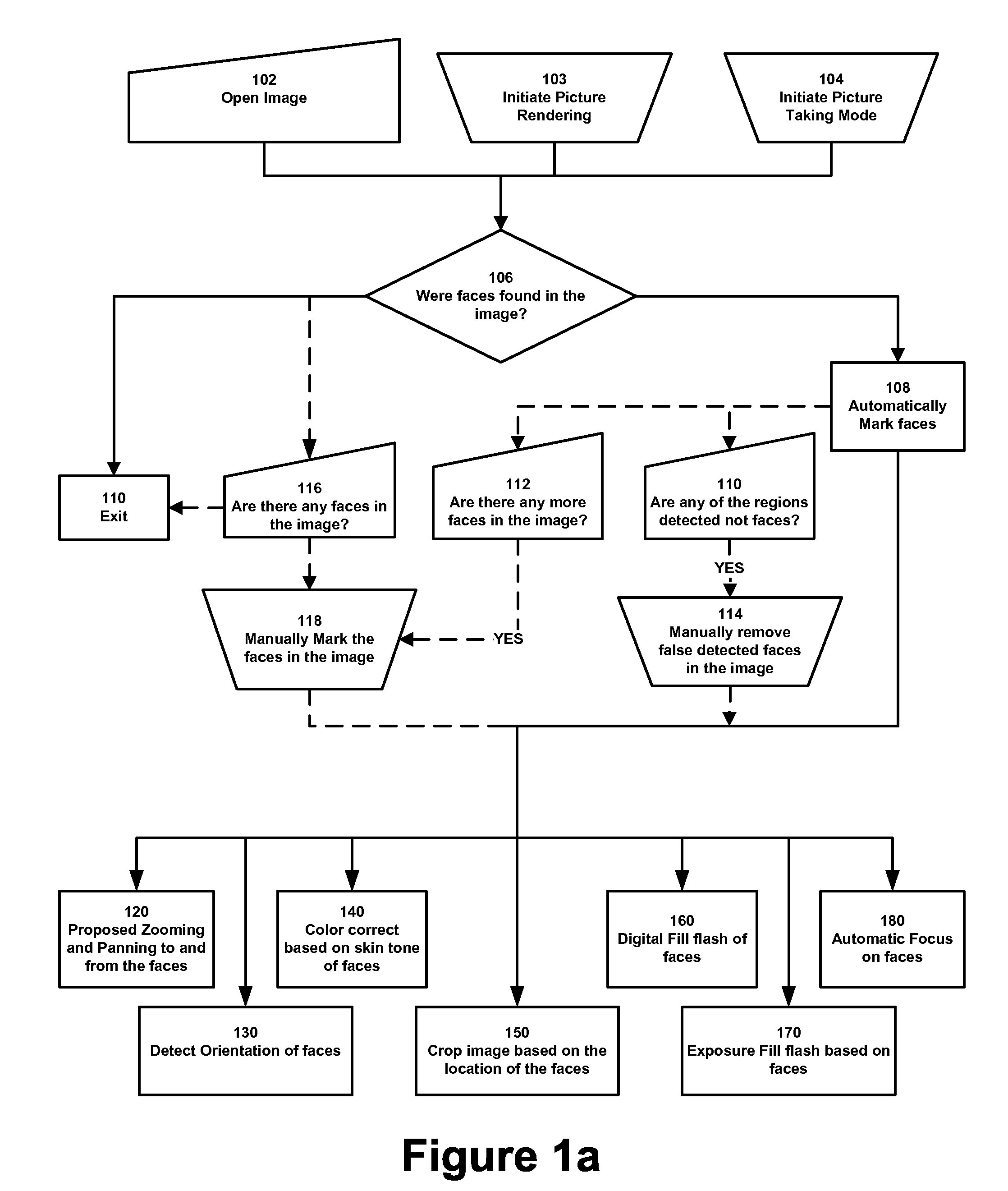

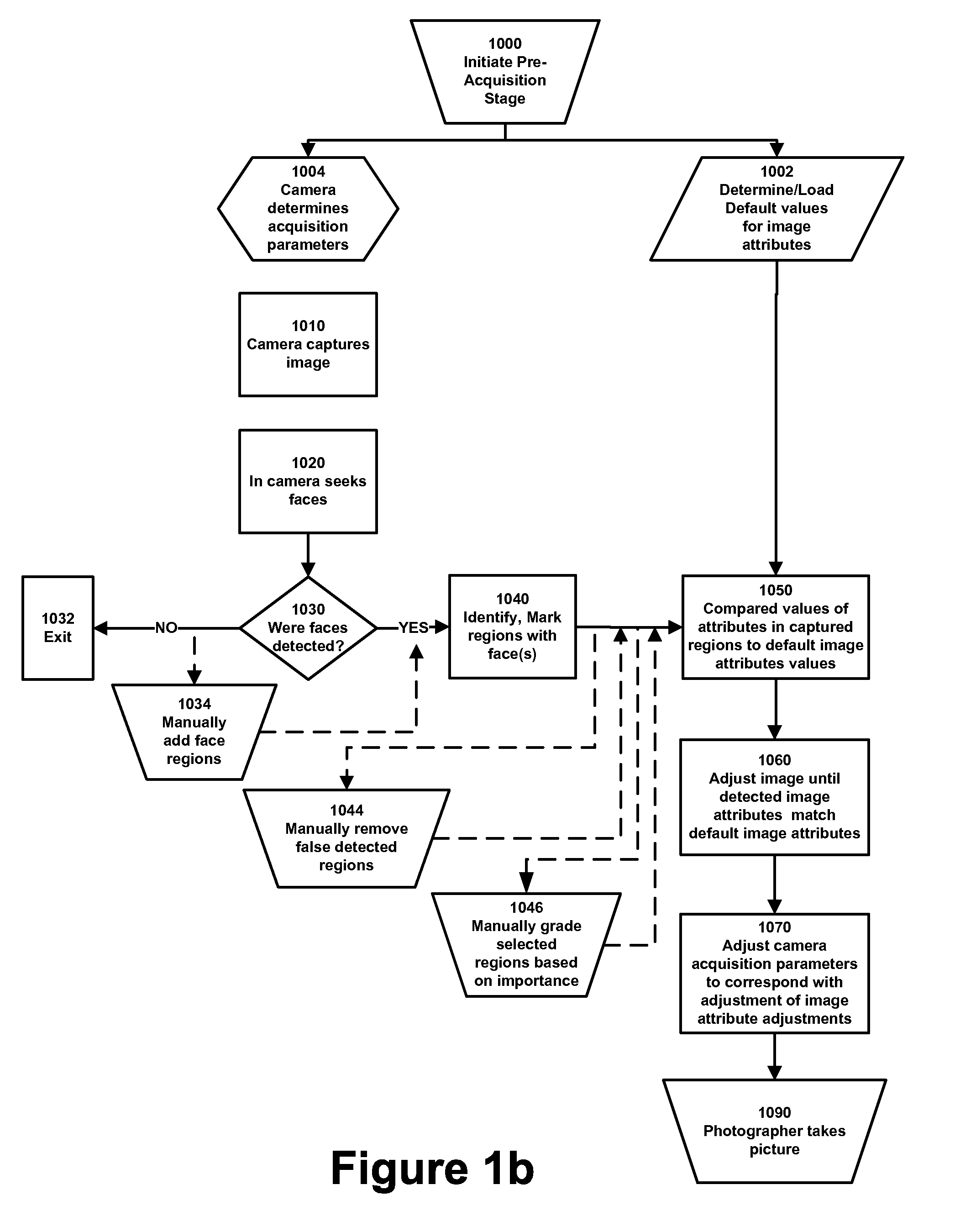

Modification of post-viewing parameters for digital images using image region or feature information

A method of generating one or more new digital images using an original digitally-acquired image including a selected image feature includes identifying within a digital image acquisition device one or more groups of pixels that correspond to the selected image feature based on information from one or more preview images. A portion of the original image is selected that includes the one or more groups of pixels. The technique includes automatically generating values of pixels of one or more new images based on the selected portion in a manner which includes the selected image feature within the one or more new images.

Owner:FOTONATION LTD

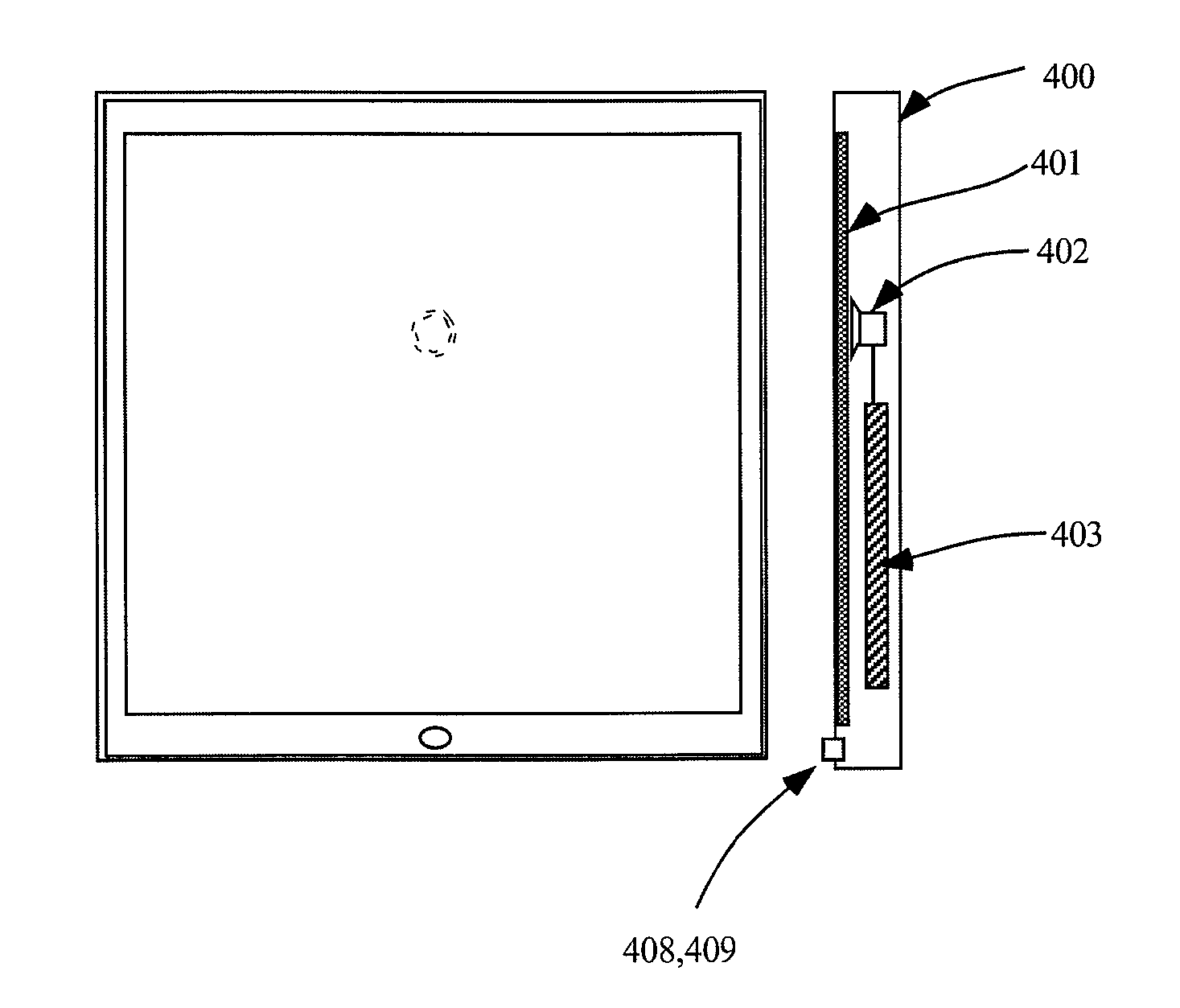

Digital mirror system with advanced imaging features and hands-free control

A digital mirror system is provided that emulates a traditional mirror by displaying real-time video imagery of a user who stands before it. The digital mirror system provides a plurality of digital mirror modes, including a traditional mirror mode and a third person mirror mode. The digital mirror system provides a plurality of digital mirroring features including an image freeze feature, an image zoom feature, and an image buffering feature. The digital mirror system provides a plurality of operational states including a digital mirroring state and an alternate state, the alternate state including a power-conservation state and/or a digital picture frame state. The digital mirror system provides a user sensor that automatically transitions between operational states in response to whether or not a user is detected before the digital mirror display screen for a period of time. The digital mirror system provides for hands-free user control using speech recognition, the speech recognition being employed to enable a user to selectively access one or more of the digital mirror modes or features in response to verbal commands relationally associated with those modes or features.

Owner:OUTLAND RES

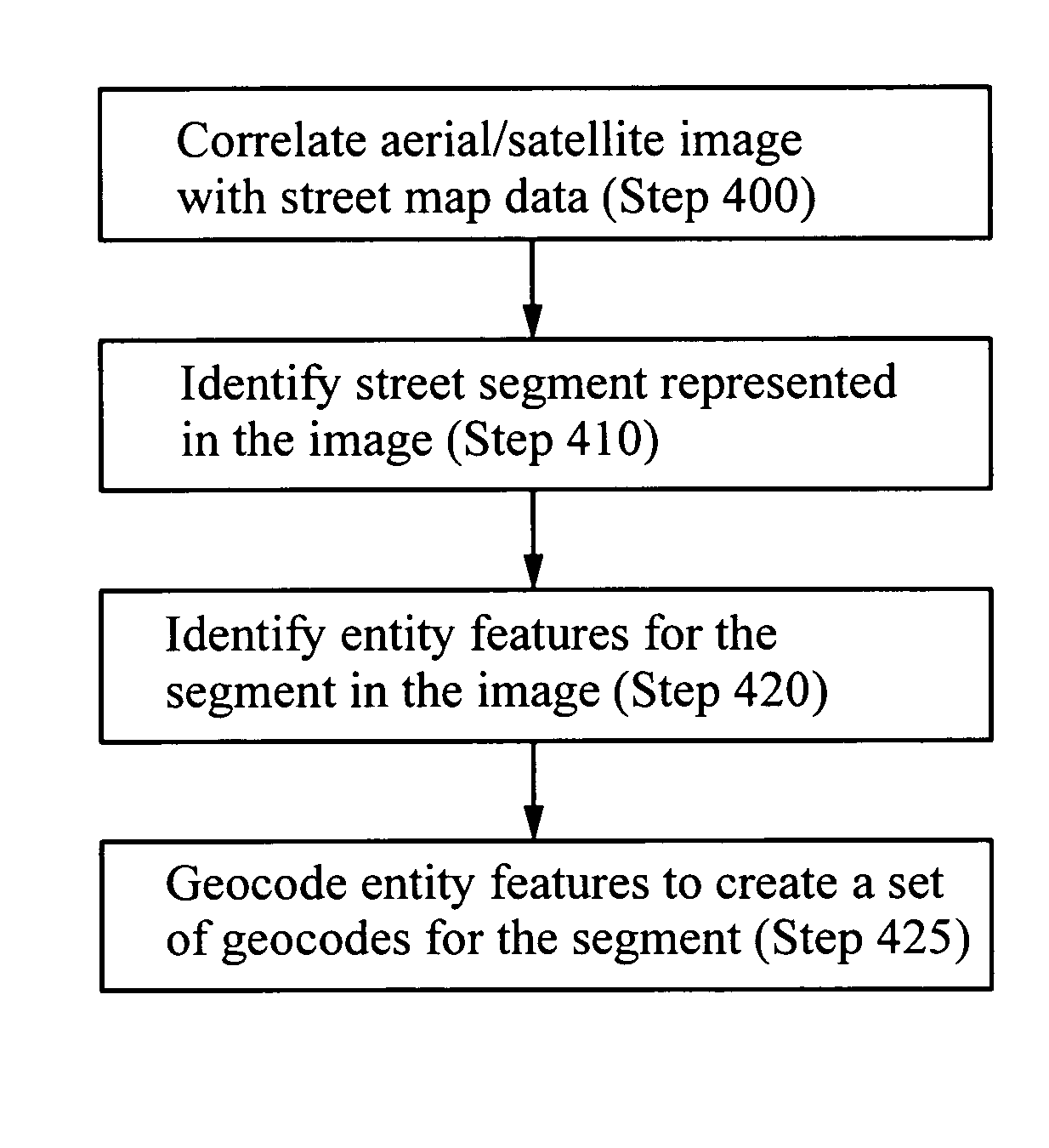

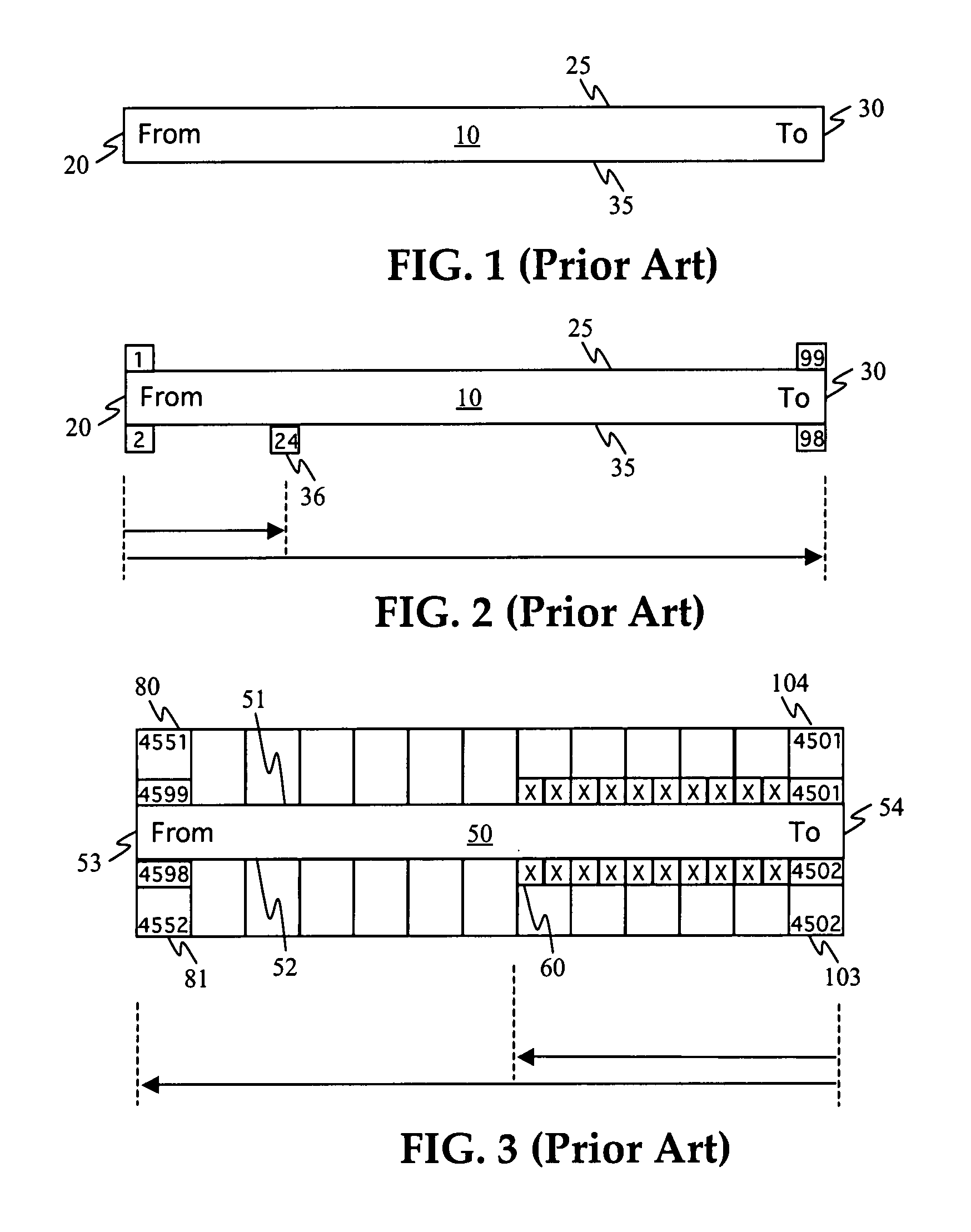

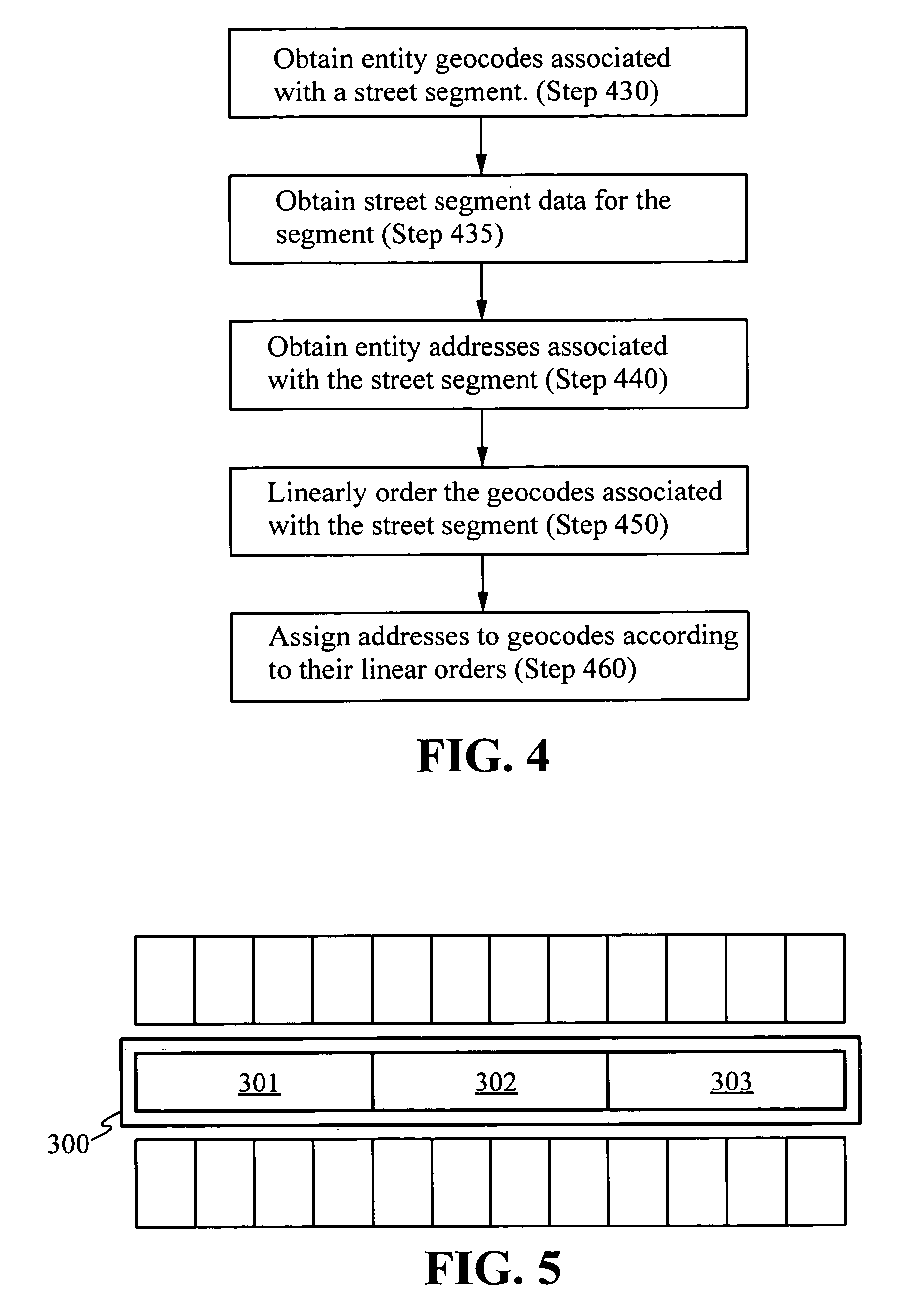

Methods for assigning geocodes to street addressable entities

Active · US7324666B2 · Improve accuracy · Instruments for road network navigation · Character and pattern recognition · Imaging Feature · Aerial photography

A method for assigning geocodes to addresses identifying entities on a street includes ordering a set of entity geocodes associated with a street segment based on ordering criteria such as the linear distance of the geocodes along the length of the street segment. Assignable addresses are matched with the ordered geocodes, creating a correspondence between a numerical order of the assignable addresses and a linear order of the ordered set of geocodes, consistent with address range direction data and street segment side data. The geocodes may be implicit or explicit, locally unique or globally unique. The set of entity geocodes may be obtained by identifying image features in aerial imagery corresponding to streets and buildings and correlating this information with data from a street map database.

Owner:TAMIRAS PER PTE LTD LLC
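The ordering-and-matching idea in the abstract above can be sketched for one side of one straight street segment: project each geocode onto the segment to get its linear distance, sort, and pair the sorted geocodes with the house numbers in numerical order. Planar coordinates, a straight segment, equal counts of addresses and geocodes, and the names `distance_along` and `assign_geocodes` are all simplifying assumptions.

```python
def distance_along(segment, point):
    """Linear distance of a point's projection from the segment start."""
    (ax, ay), (bx, by) = segment
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    # Fraction of the way along the segment, then converted to a distance.
    t = ((point[0] - ax) * dx + (point[1] - ay) * dy) / length_sq
    return t * length_sq ** 0.5


def assign_geocodes(segment, geocodes, addresses, ascending=True):
    """Match house numbers to geocodes for one side of a street segment.

    ascending: address range direction, True if numbers increase from
    the segment start toward its end.
    """
    ordered = sorted(geocodes, key=lambda p: distance_along(segment, p))
    numbers = sorted(addresses, reverse=not ascending)
    return dict(zip(numbers, ordered))


segment = ((0.0, 0.0), (100.0, 0.0))
geocodes = [(70.0, 5.0), (10.0, 5.0), (40.0, 5.0)]
print(assign_geocodes(segment, geocodes, [104, 100, 102]))
```

Handling both street sides would amount to splitting the geocodes by side (for example by the sign of their offset from the segment) and running the same matching once per side.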

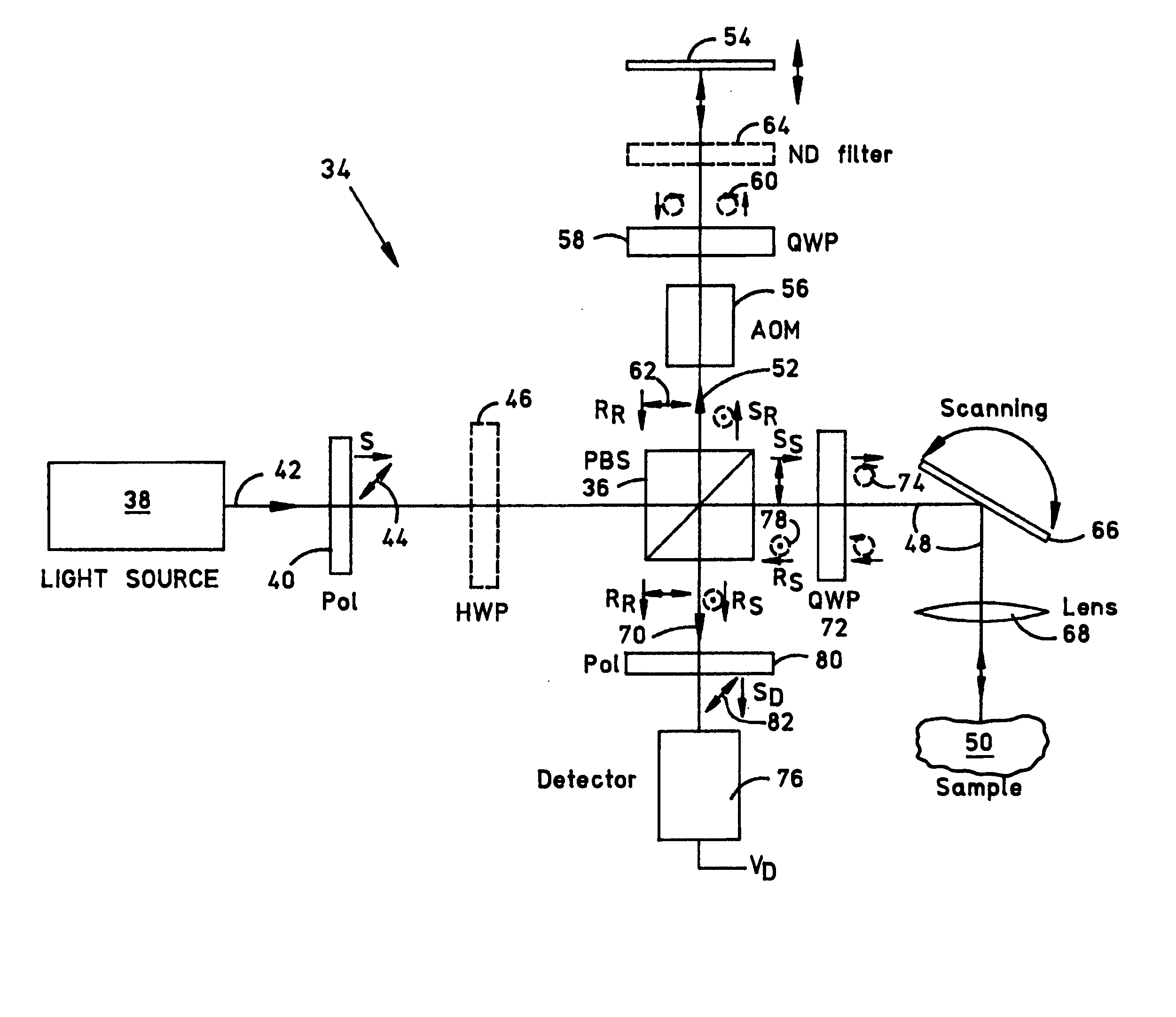

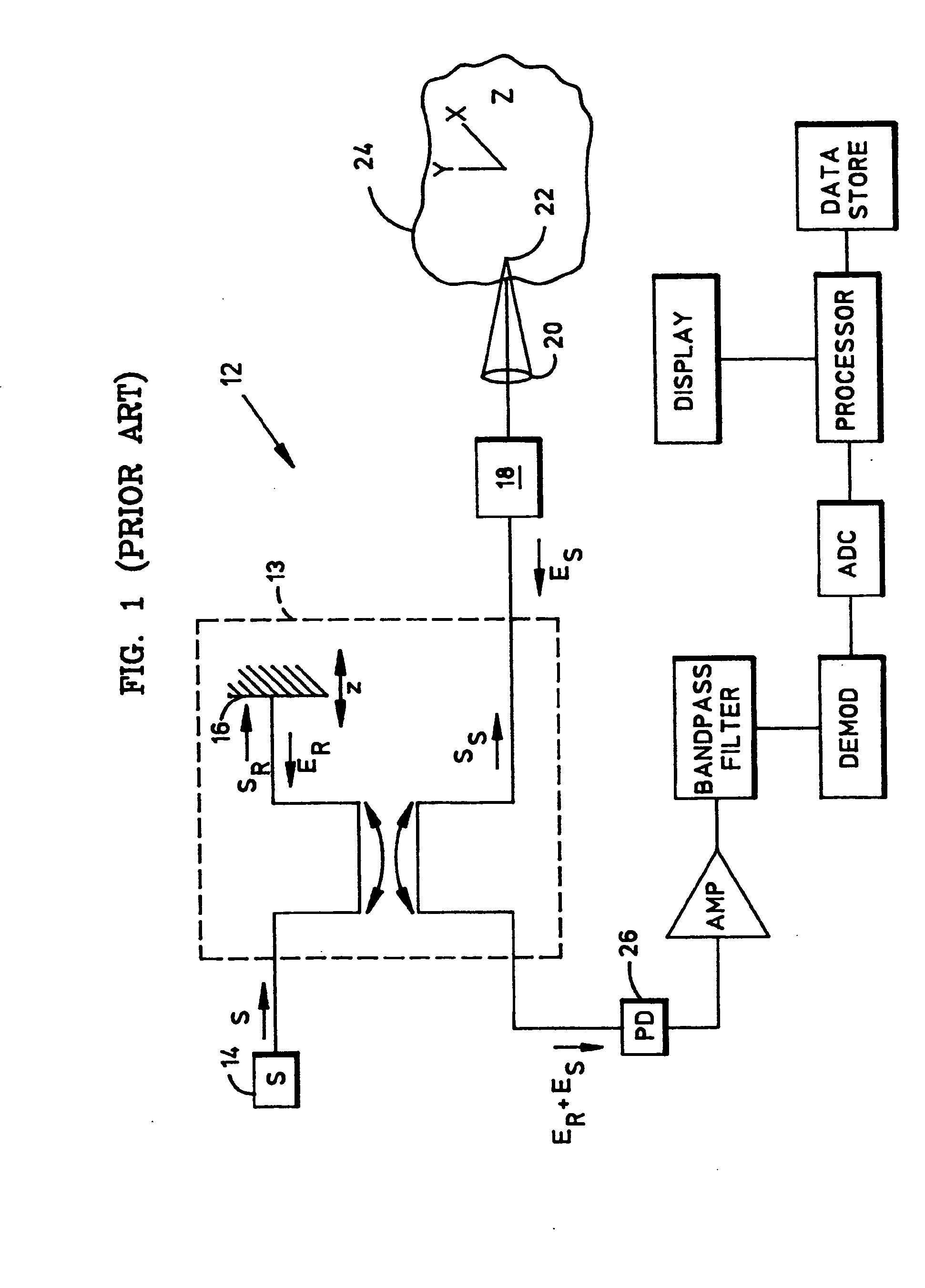

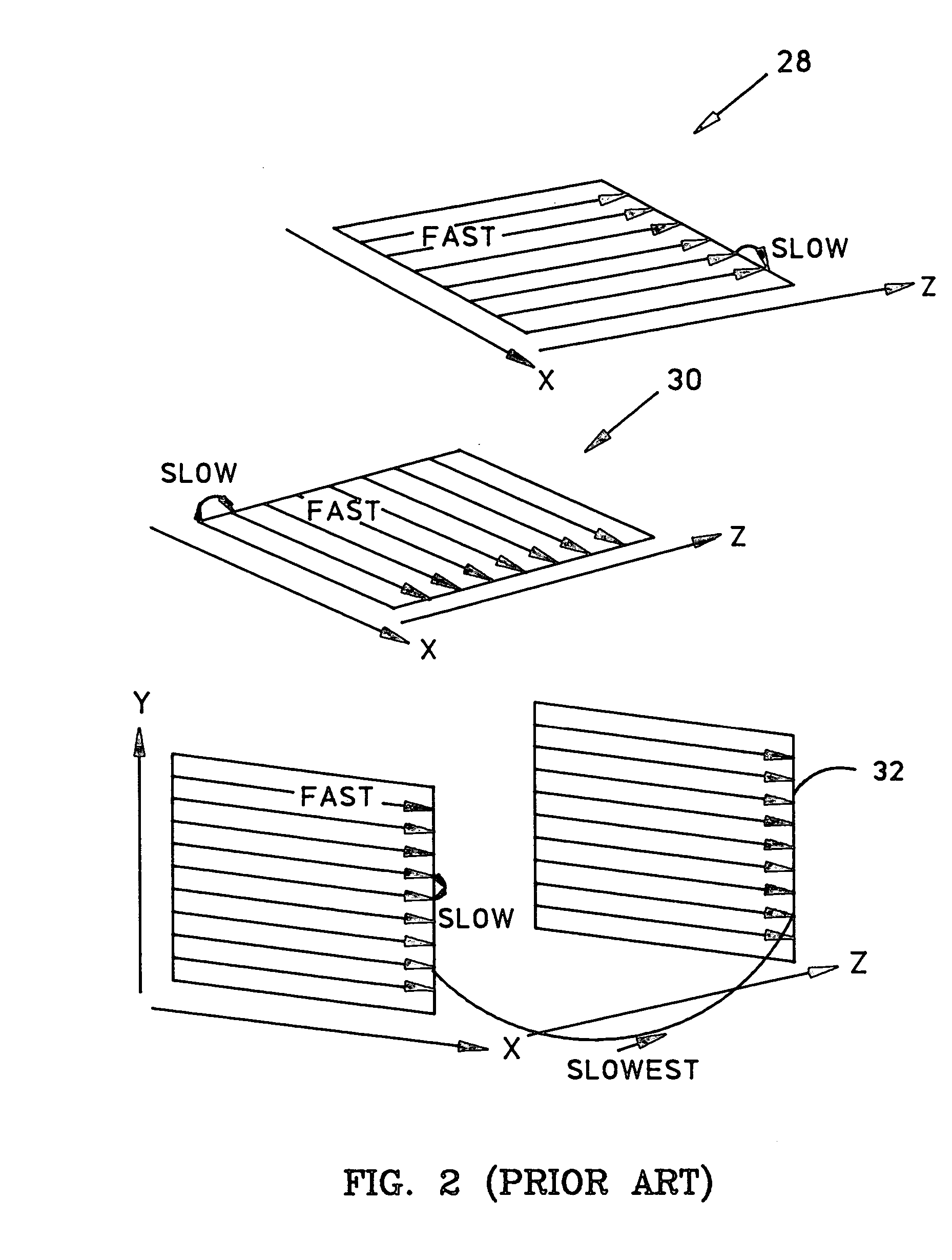

Efficient optical coherence tomography (OCT) system and method for rapid imaging in three dimensions

Inactive · US20050140984A1 · Enhanced OCT channel sensitivity · Improve accuracy · Scattering properties measurements · Using optical means · Rapid imaging · Data set

An optical coherence tomography (OCT) system including a polarizing splitter disposed to direct light in an interferometer such that the OCT detector operates in a noise-optimized regime. When scanning an eye, the system detector simultaneously produces a low-frequency component representing a scanning laser ophthalmoscope-like (SLO-like) image pixel and a high frequency component representing a two-dimensional (2D) OCT en face image pixel of each point. The SLO-like image is unchanging with depth, so that the pixels in each SLO-like image may be quickly realigned with the previous SLO-like image by consulting prominent image features (e.g., vessels) should lateral eye motion shift an OCT en face image during recording. Because of the pixel-to-pixel correspondence between the simultaneous OCT and SLO-like images, the OCT image pixels may be remapped on the fly according to the corresponding SLO-like image pixel remapping to create an undistorted 3D image data set for the scanned region.

Owner:CARL ZEISS MEDITEC INC

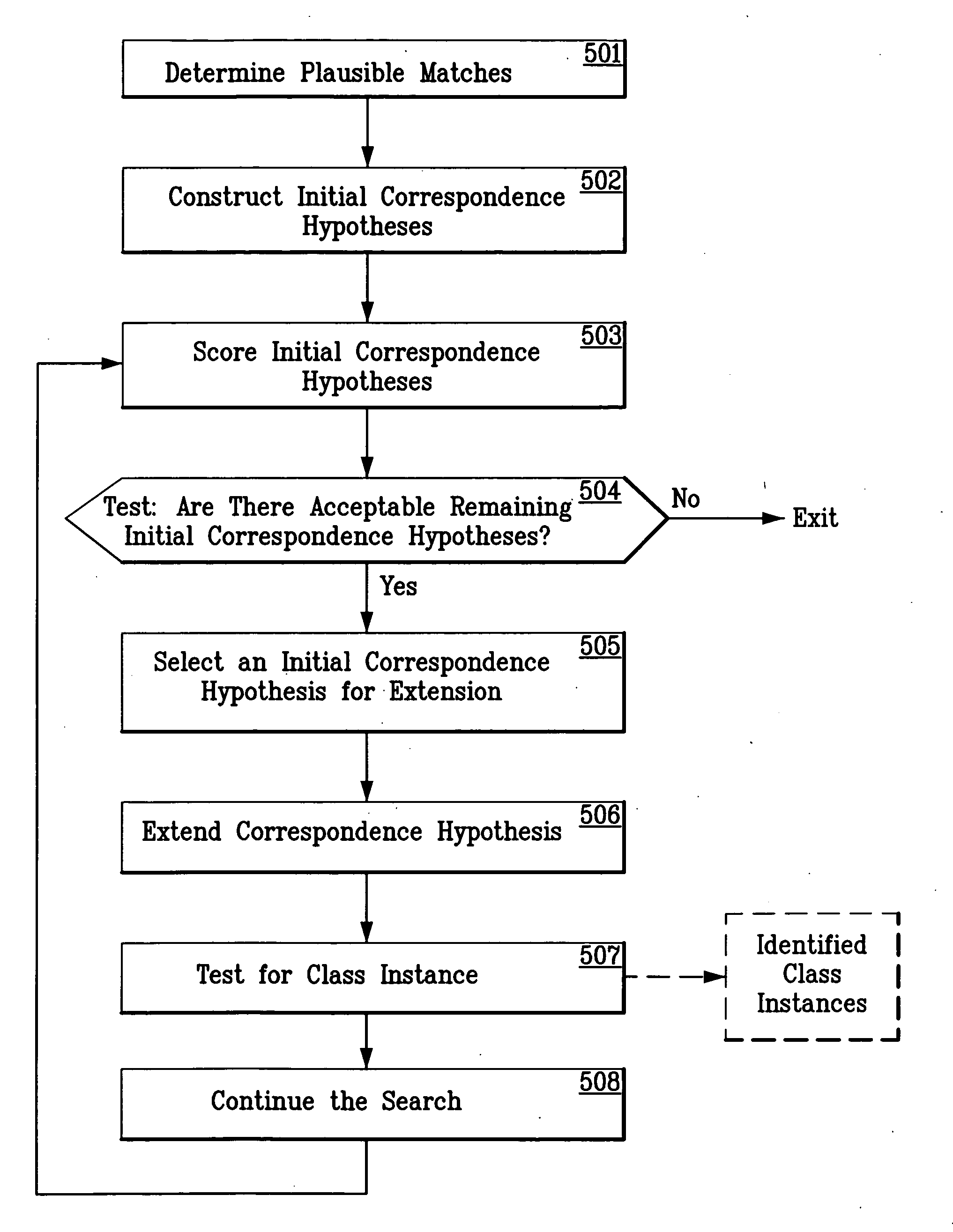

System and method for recognition in 2D images using 3D class models

Active · US20060285755A1 · Digital data information retrieval · Special data processing applications · Pattern recognition · Class model

A system and method for recognizing instances of classes in a 2D image using 3D class models and for recognizing instances of objects in a 2D image using 3D class models. The invention provides a system and method for constructing a database of 3D class models comprising a collection of class parts, where each class part includes part appearance and part geometry. The invention also provides a system and method for matching portions of a 2D image to a 3D class model. The method comprises identifying image features in the 2D image; computing an aligning transformation between the class model and the image; and comparing, under the aligning transformation, class parts of the class model with the image features. The comparison uses both the part appearance and the part geometry.

Owner:STRIDER LABS

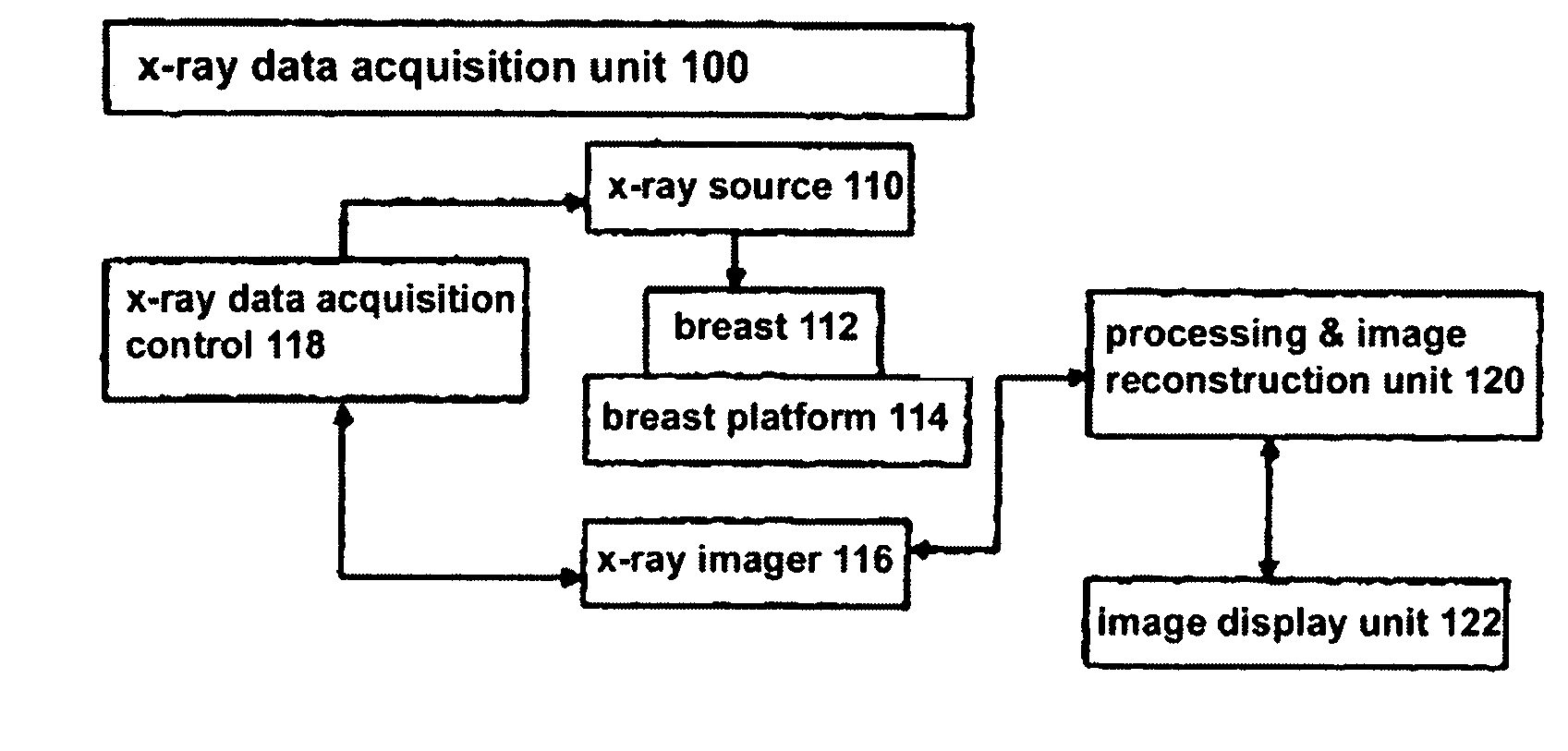

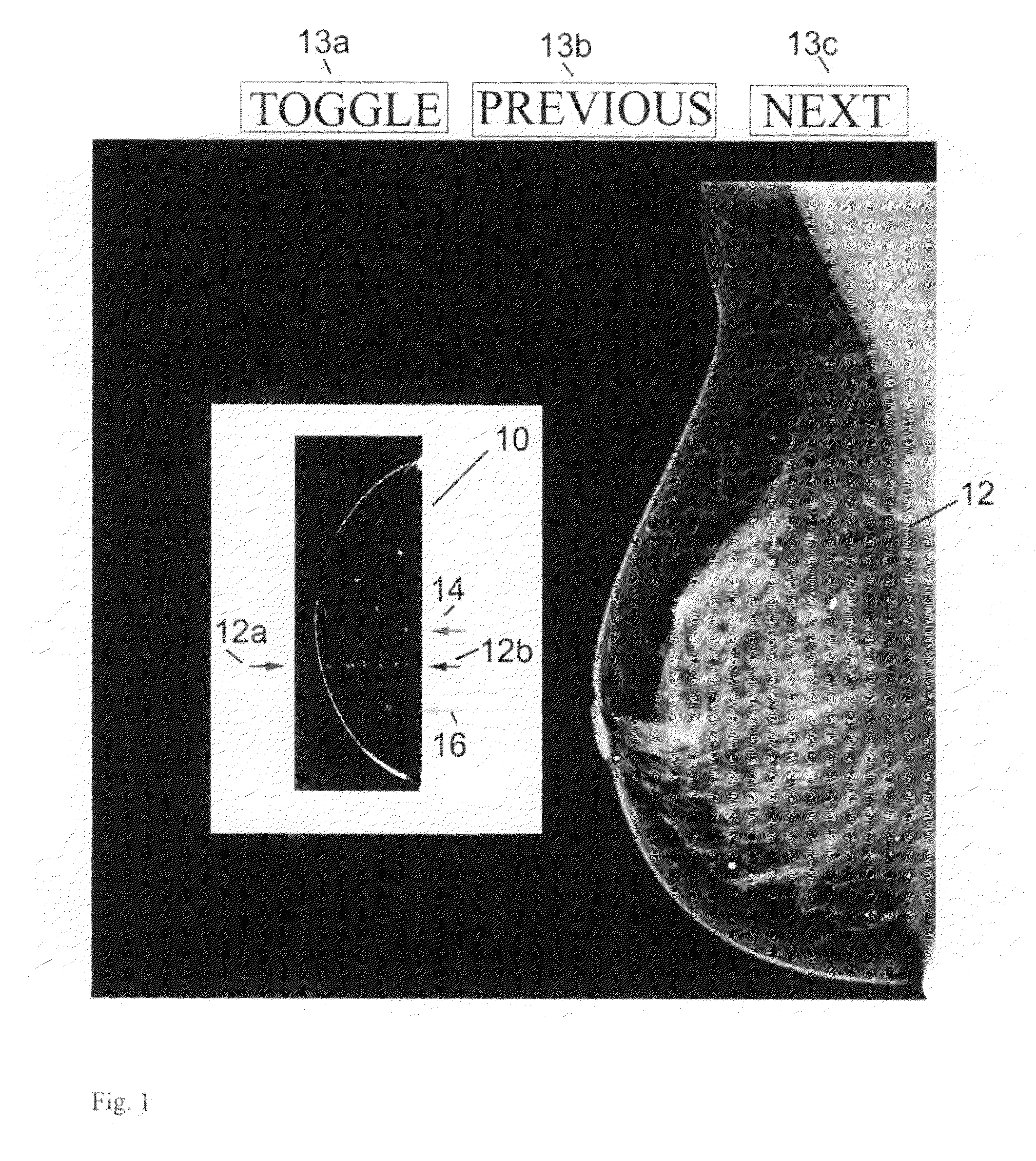

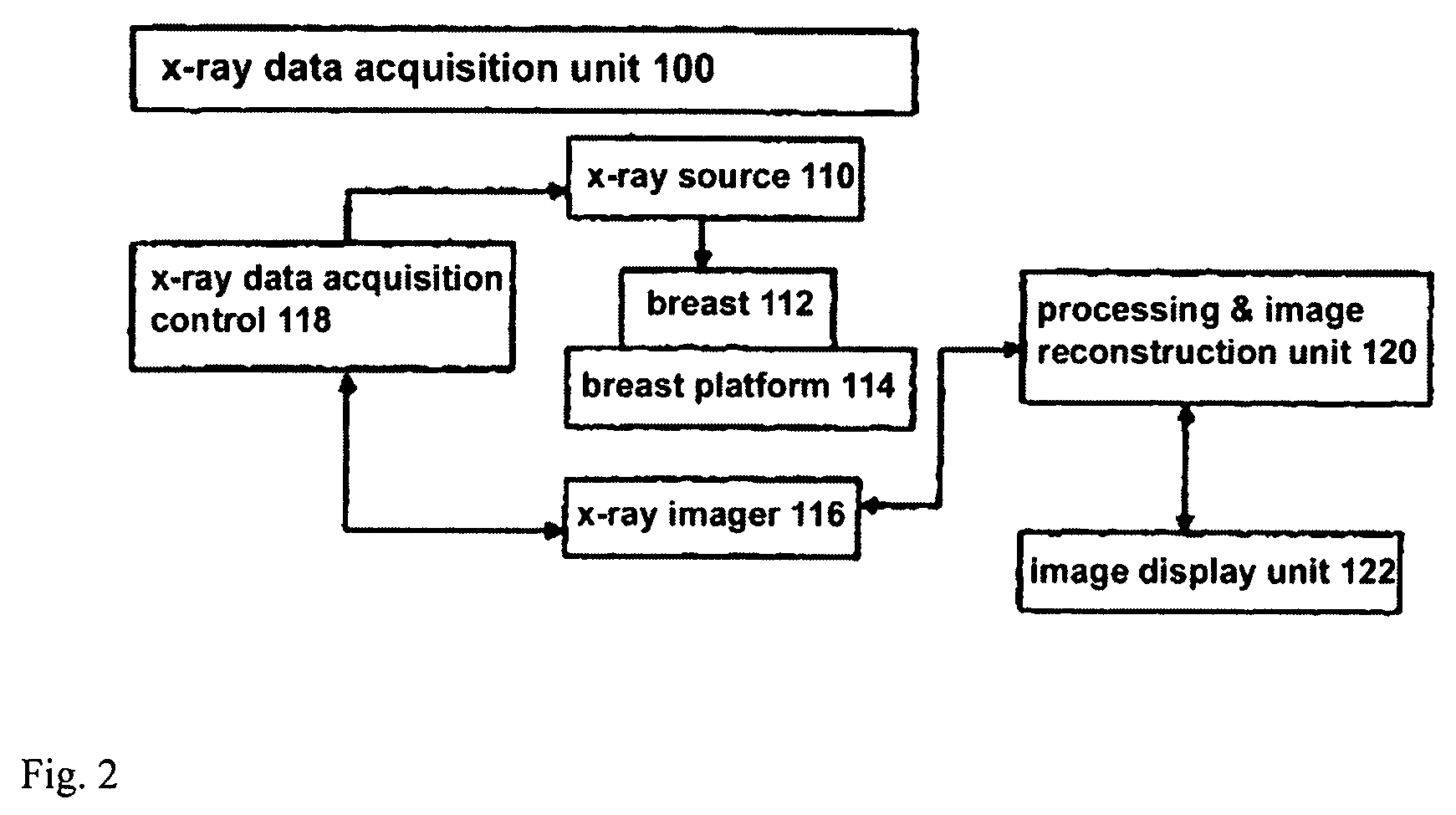

Breast tomosynthesis with display of highlighted suspected calcifications

Systems and methods that facilitate the presentation and assessment of selected features in projection and/or reconstructed breast images, such as calcifications that meet selected criteria of size, shape, presence in selected slice images, distribution of pixels that could be indicative of calcification relative to other pixels or of other image features of clinical interest.

Owner:HOLOGIC INC

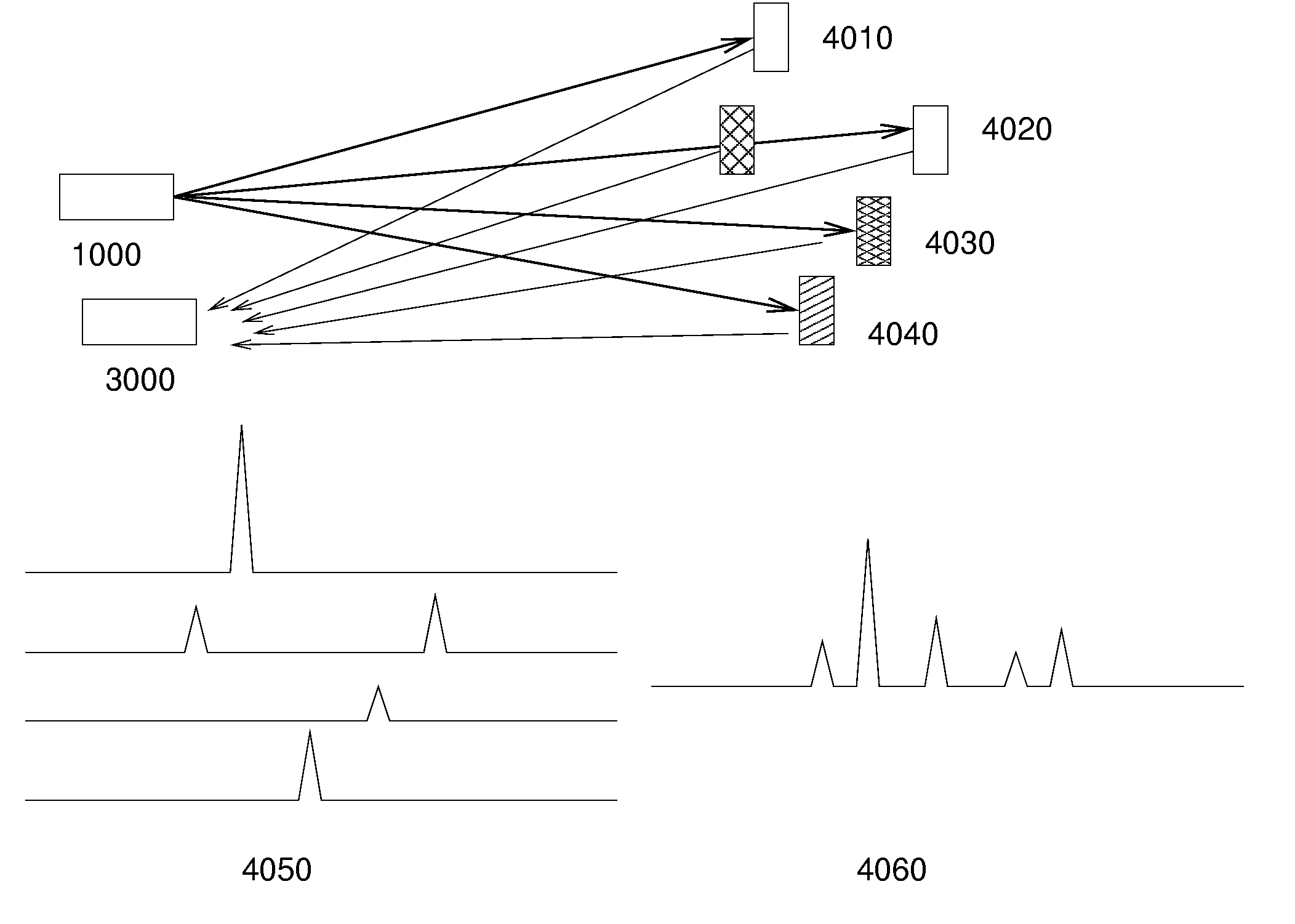

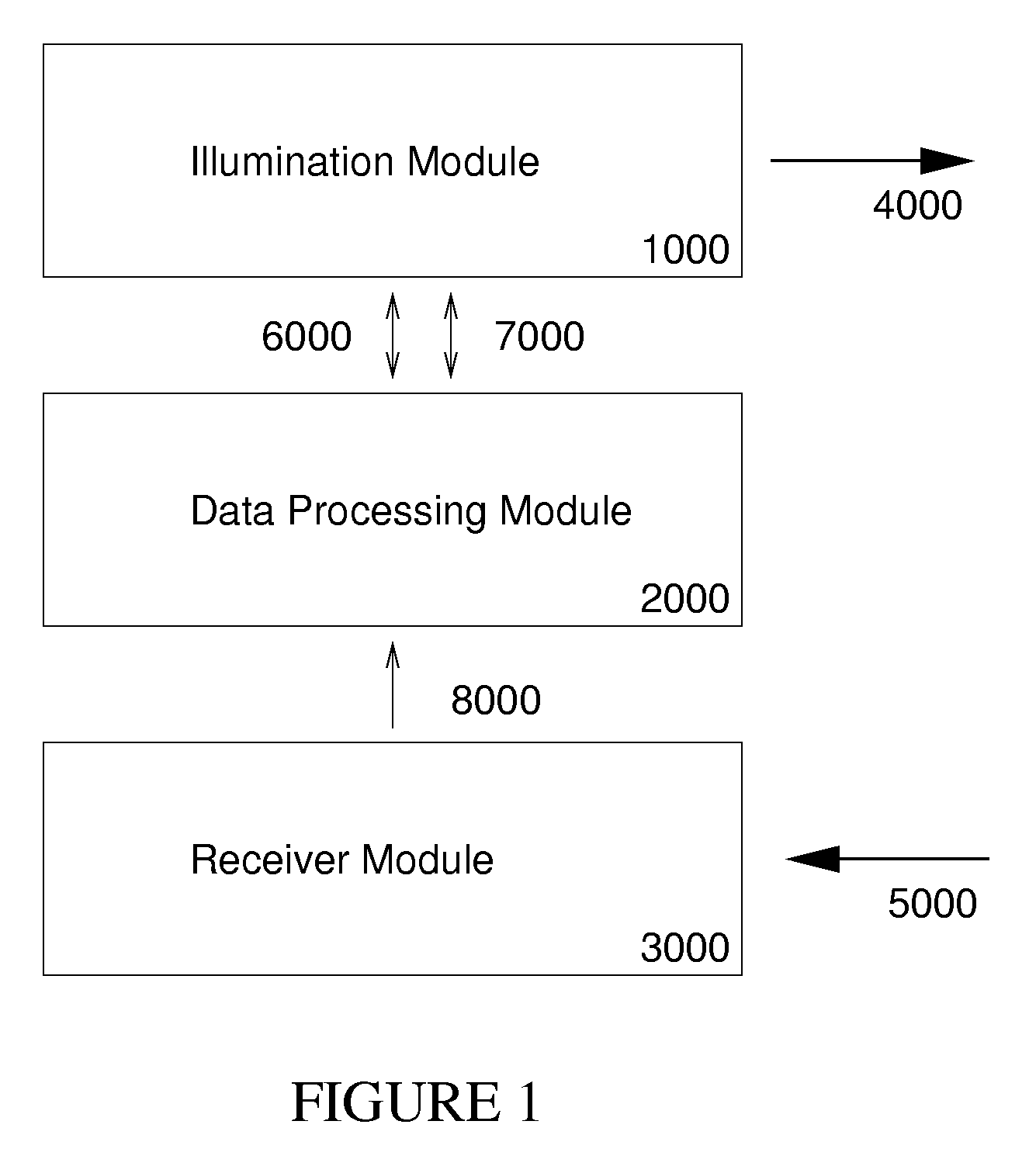

Multiplexed, spatially encoded illumination system for determining imaging and range estimation

Inactive · US20040213463A1 · Increased complexity · Increase diversity · Character and pattern recognition · Using optical means · Light equipment · Ophthalmology

An illumination device sequentially projects a selective set of spatially encoded intensity light pulses toward a scene. The spatially encoded patterns are generated by an array of diffractive optical or holographic elements on a substrate that is rapidly translated in the path of the light beam. Alternatively, addressable micromirror arrays or similar technology are used to manipulate the beam wavefront. Reflected light is collected onto an individual photosensor or a very small set of high performance photodetectors. A data processor collects a complete set of signals associated with the encoded pattern set. The sampled signals are combined by a data processing unit in a prescribed manner to calculate range estimates and imaging features for elements in the scene. The invention may also be used to generate three dimensional reconstructions.

Owner:MORRISON RICK LEE

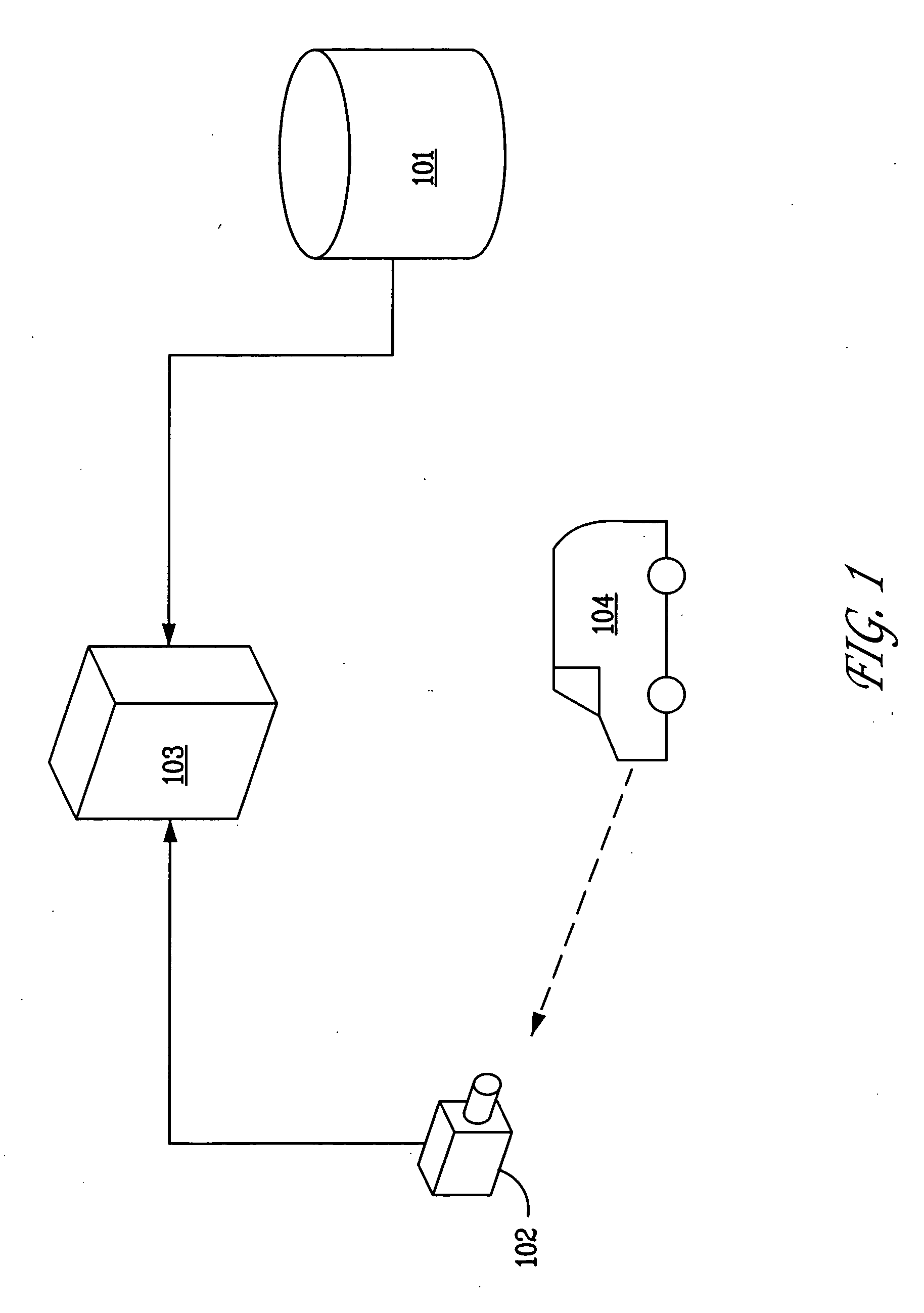

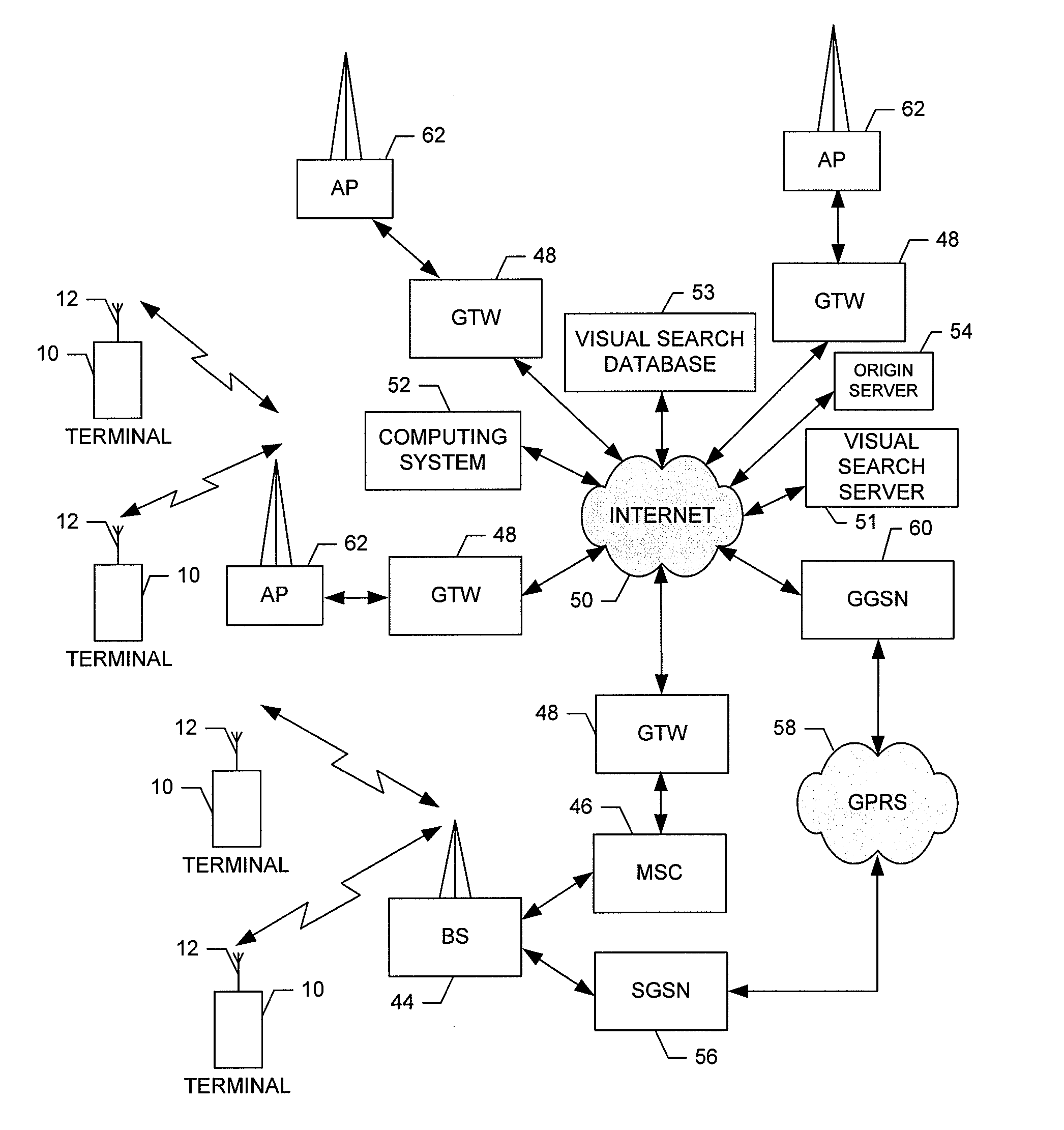

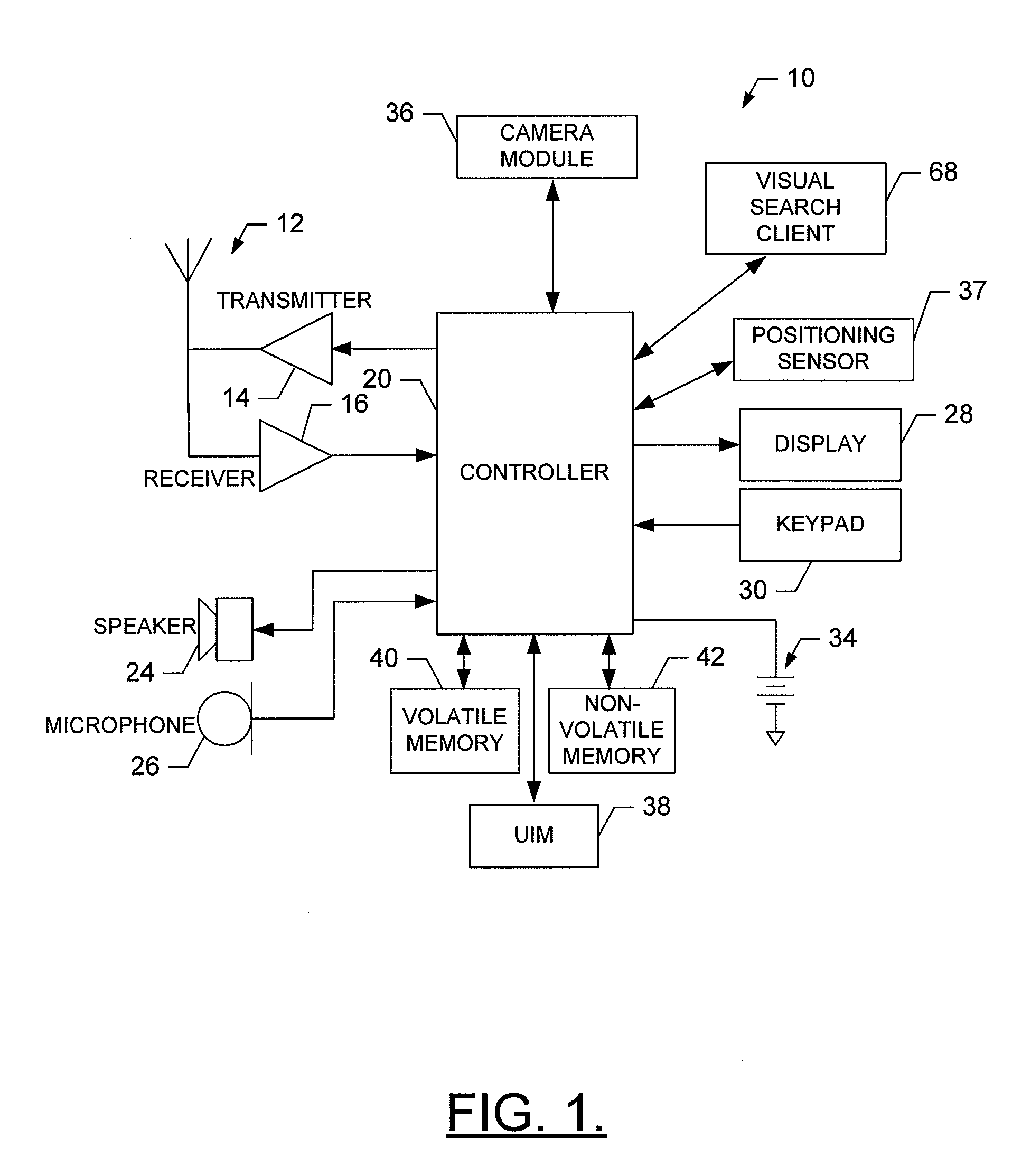

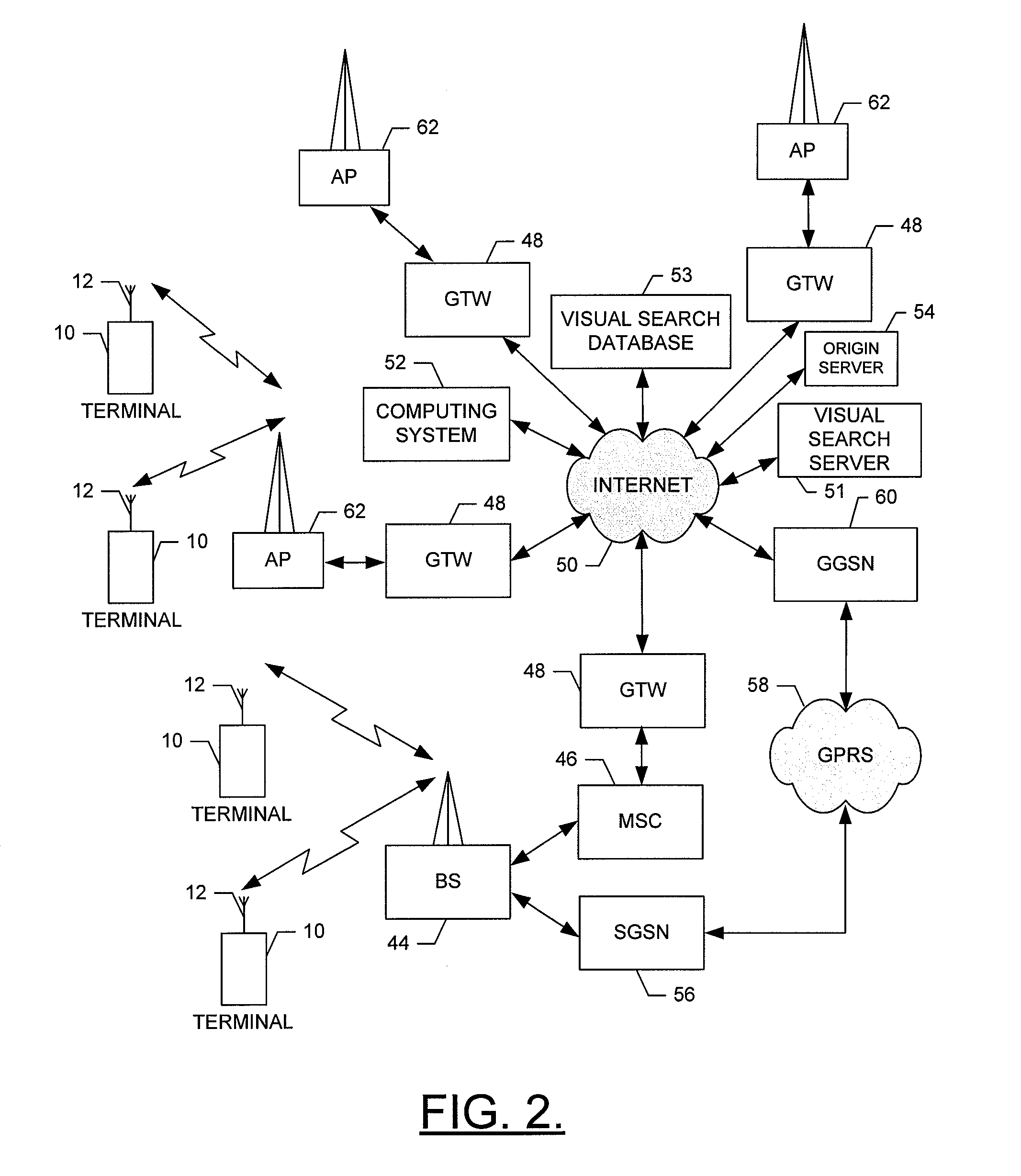

Method, Apparatus and Computer Program Product for Performing a Visual Search Using Grid-Based Feature Organization

Inactive · US20090083275A1 · Rapid and efficient manner · Fast and efficient · Digital data processing details · Geographical information databases · Feature set · Grid based

A method, apparatus and computer program product are provided for visually searching feature sets that are organized in a grid-like manner. As such, a feature set associated with a location-based grid area may be received. The location-based grid area may also be associated with the location of a device. After receiving query image features, a visual search may be performed by comparing the query image features with the feature set. The search results are then returned. By conducting the visual search within a feature set that is selected based upon the location of the device, the efficiency of the search can be enhanced and the search may potentially be performed by the device, such as a mobile device, itself.

Owner:NOKIA CORP
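A rough sketch of the location-scoped search described in the abstract above: map the device location to a grid cell, fetch only that cell's feature set, and compare the query image features against it. The cell size, the Euclidean distance test, the match-count scoring, and the names `grid_cell` and `visual_search` are assumptions; the abstract does not specify any of them.

```python
import math


def grid_cell(lat, lon, cell_deg=0.01):
    """Key of the location-based grid area containing (lat, lon)."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def visual_search(index, lat, lon, query_features, max_dist=0.5, cell_deg=0.01):
    """Rank the labels in the device's grid cell by matched query features.

    index: dict mapping a grid cell key to {label: [feature vectors]}.
    """
    candidates = index.get(grid_cell(lat, lon, cell_deg), {})
    scores = {}
    for label, features in candidates.items():
        # Count query features that have a close stored feature.
        matches = sum(
            1
            for q in query_features
            if any(math.dist(q, f) <= max_dist for f in features)
        )
        if matches:
            scores[label] = matches
    return sorted(scores, key=scores.get, reverse=True)


index = {
    grid_cell(60.1705, 24.9415): {
        "station": [(0.0, 0.0), (1.0, 1.0)],
        "cafe": [(5.0, 5.0)],
    }
}
print(visual_search(index, 60.1706, 24.9416, [(0.1, 0.0), (1.0, 0.9)]))
```

Because only one cell's features are scanned, the comparison cost depends on the local feature set rather than the whole database, which is the efficiency argument the abstract makes for running the search on the device.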

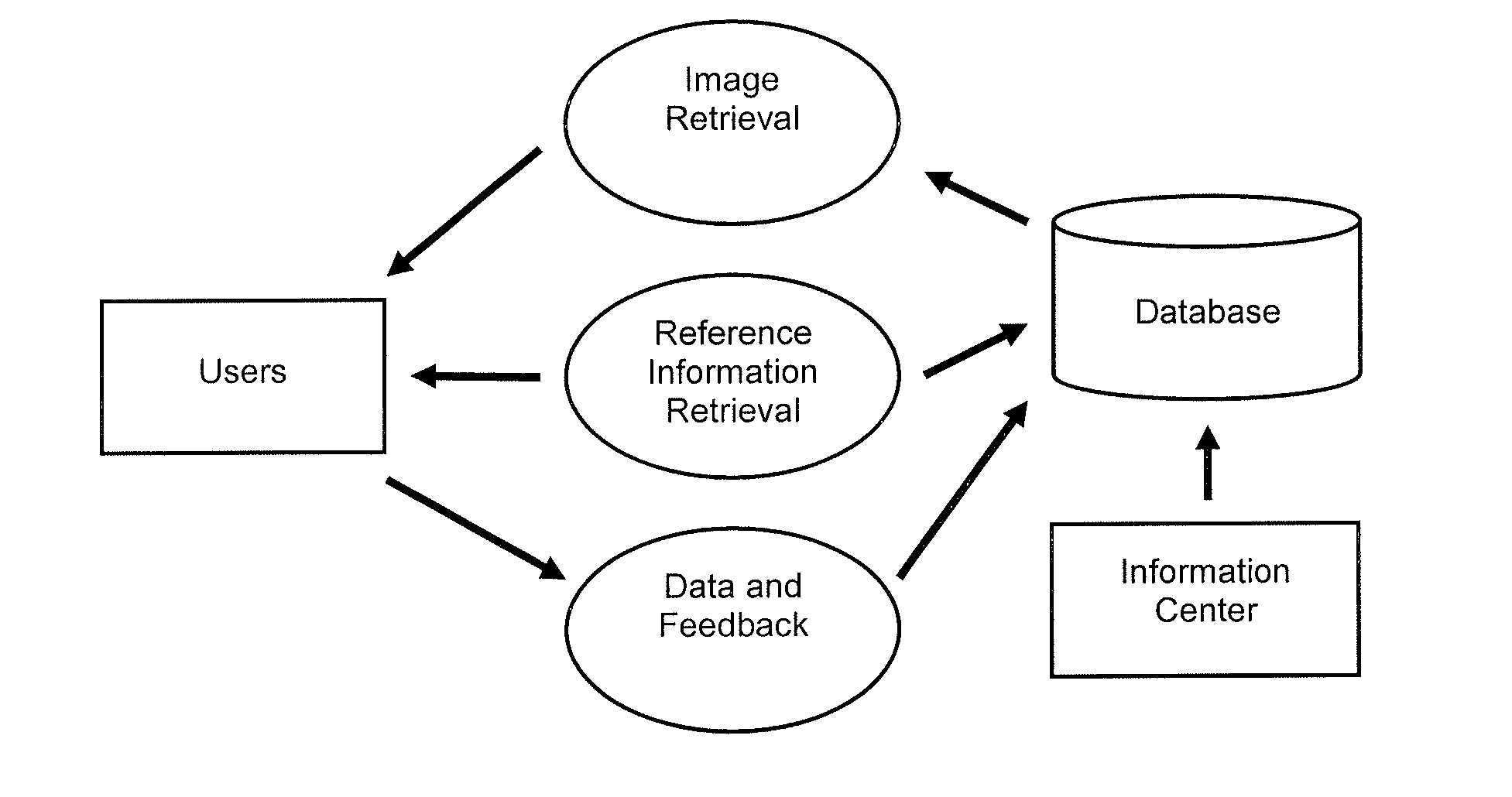

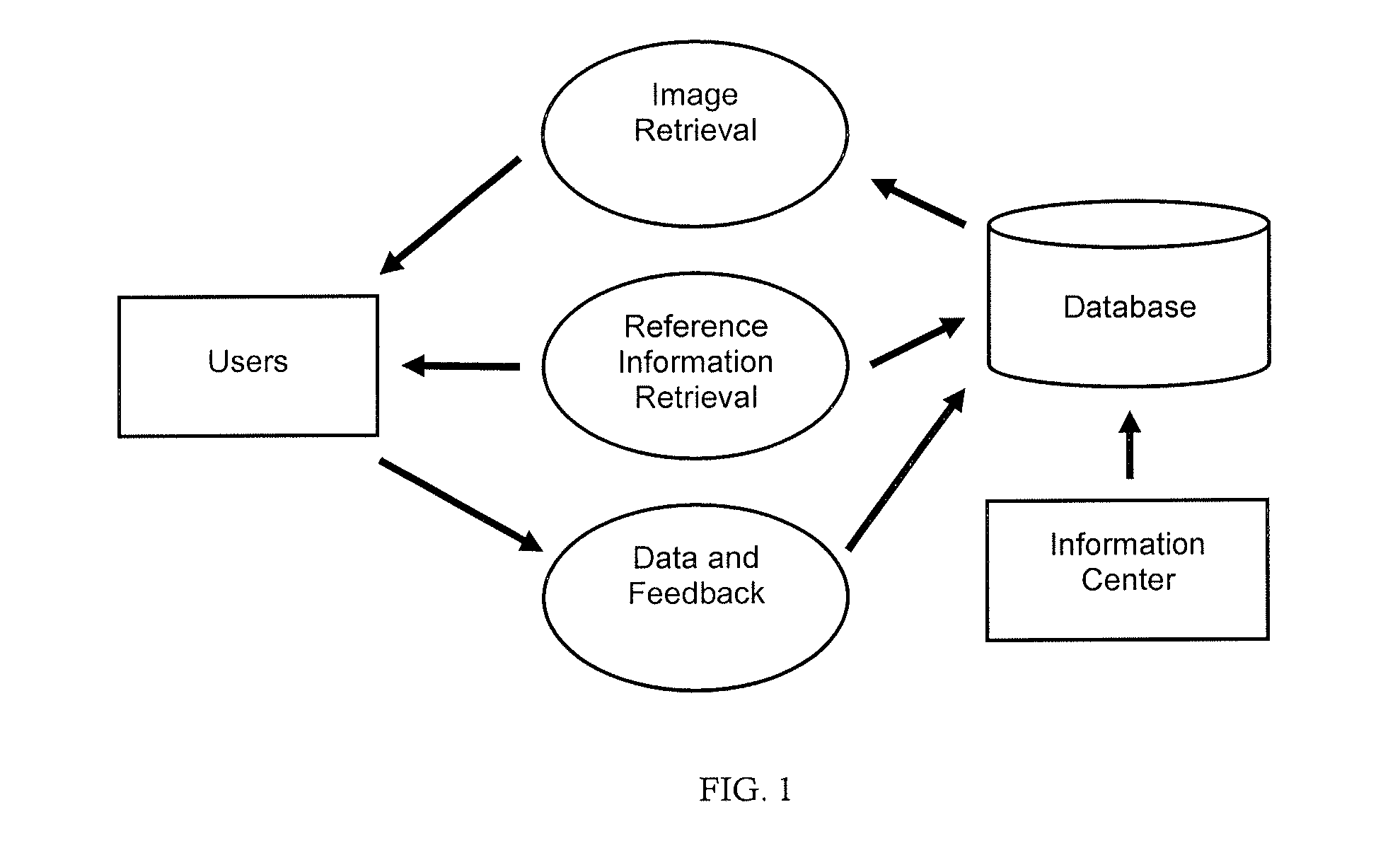

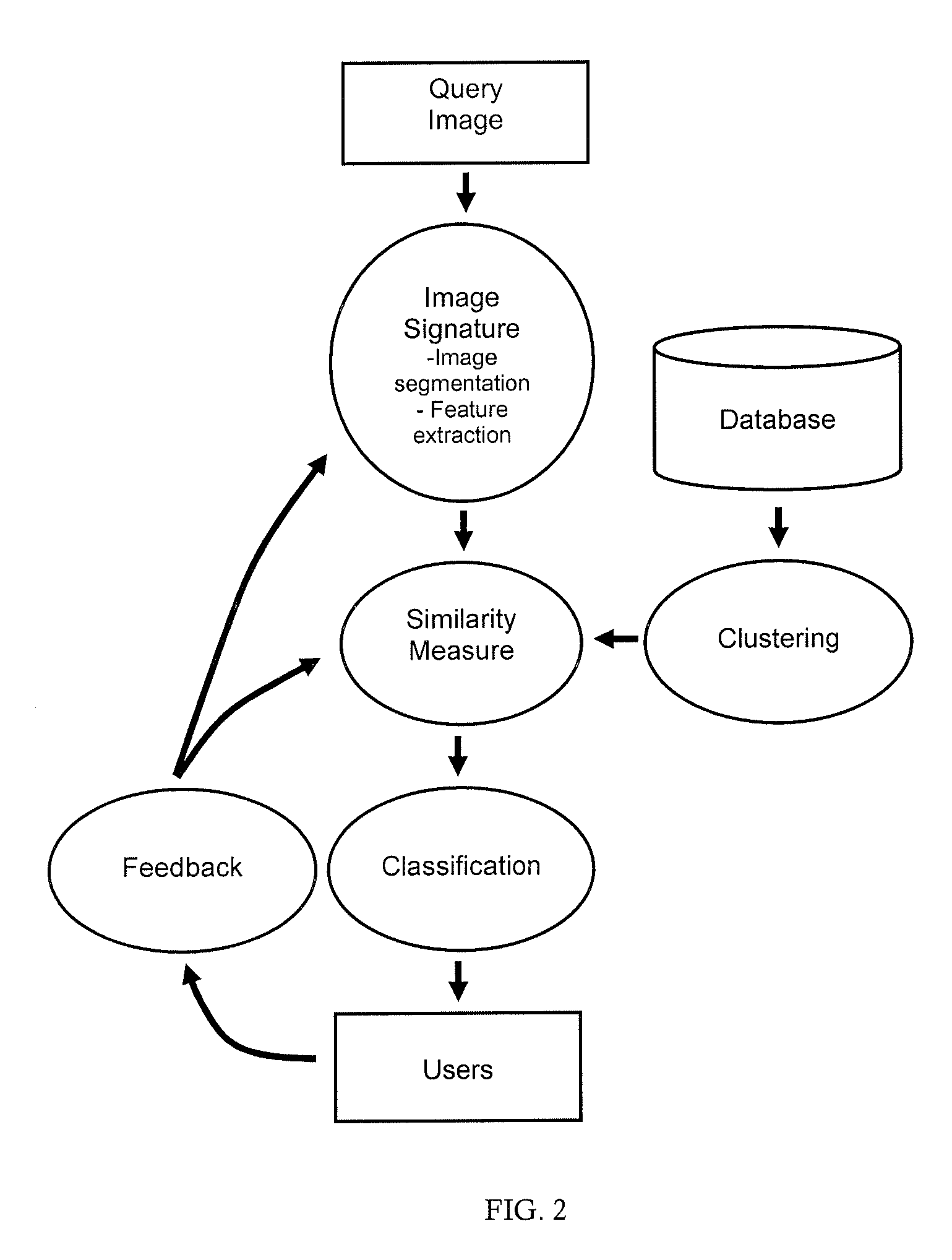

Diagnosis Support System Providing Guidance to a User by Automated Retrieval of Similar Cancer Images with User Feedback

Inactive · US20120283574A1 · Improve proficiency · Increase diagnostic power · Digital data information retrieval · Image analysis · Disease · Image retrieval

The present invention is a diagnosis support system providing automated guidance to a user by automated retrieval of similar disease images and user feedback. High resolution standardized labeled and unlabeled, annotated and non-annotated images of diseased tissue in a database are clustered, preferably with expert feedback. An image retrieval application automatically computes image signatures for a query image and a representative image from each cluster, by segmenting the images into regions and extracting image features in the regions to produce feature vectors, and then comparing the feature vectors using a similarity measure. Preferably the features of the image signatures are extended beyond shape, color and texture of regions, by features specific to the disease. Optionally, the most discriminative features are used in creating the image signatures. A list of the most similar images is returned in response to a query. Keyword query is also supported.

Owner:STI MEDICAL SYST

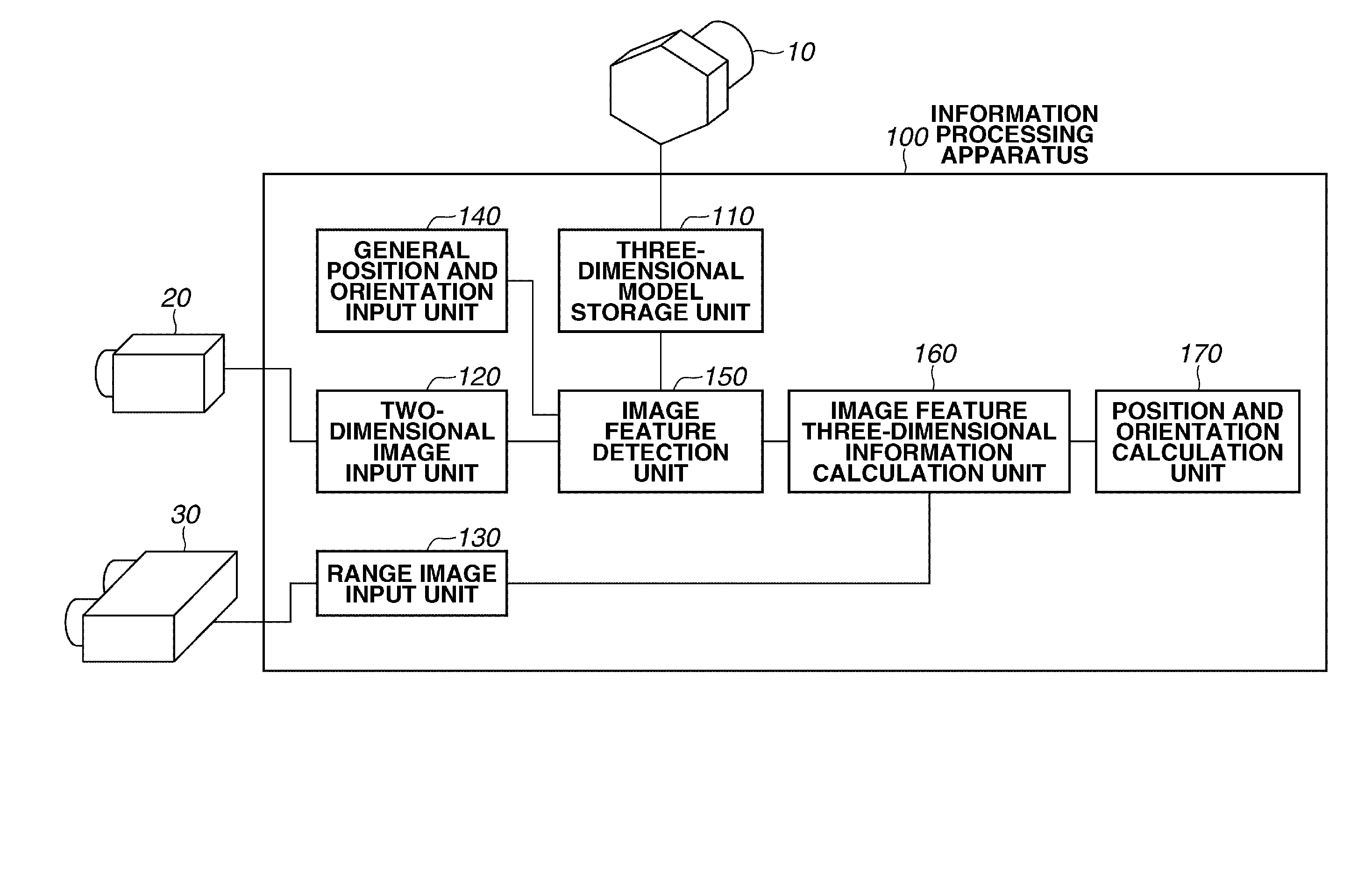

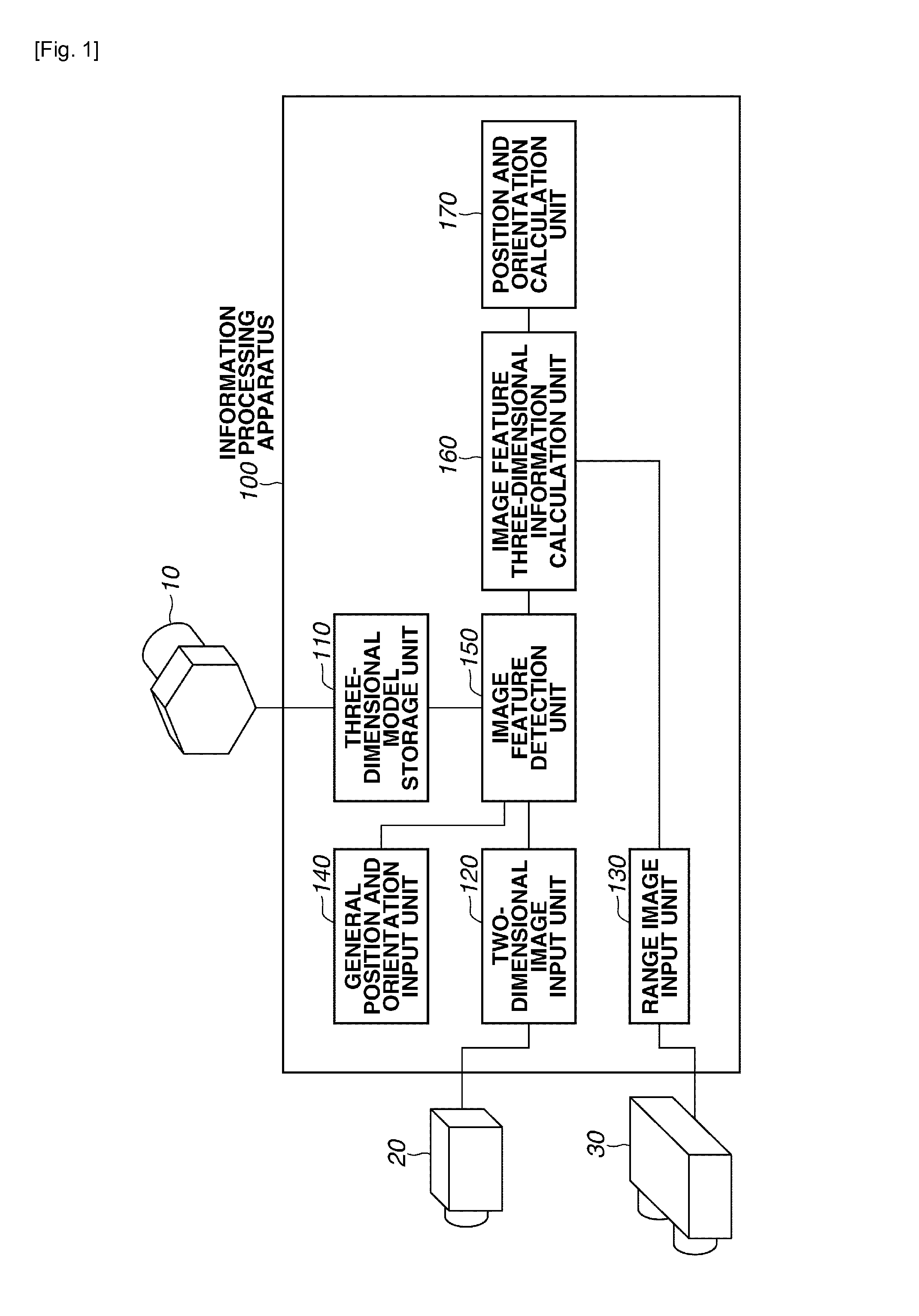

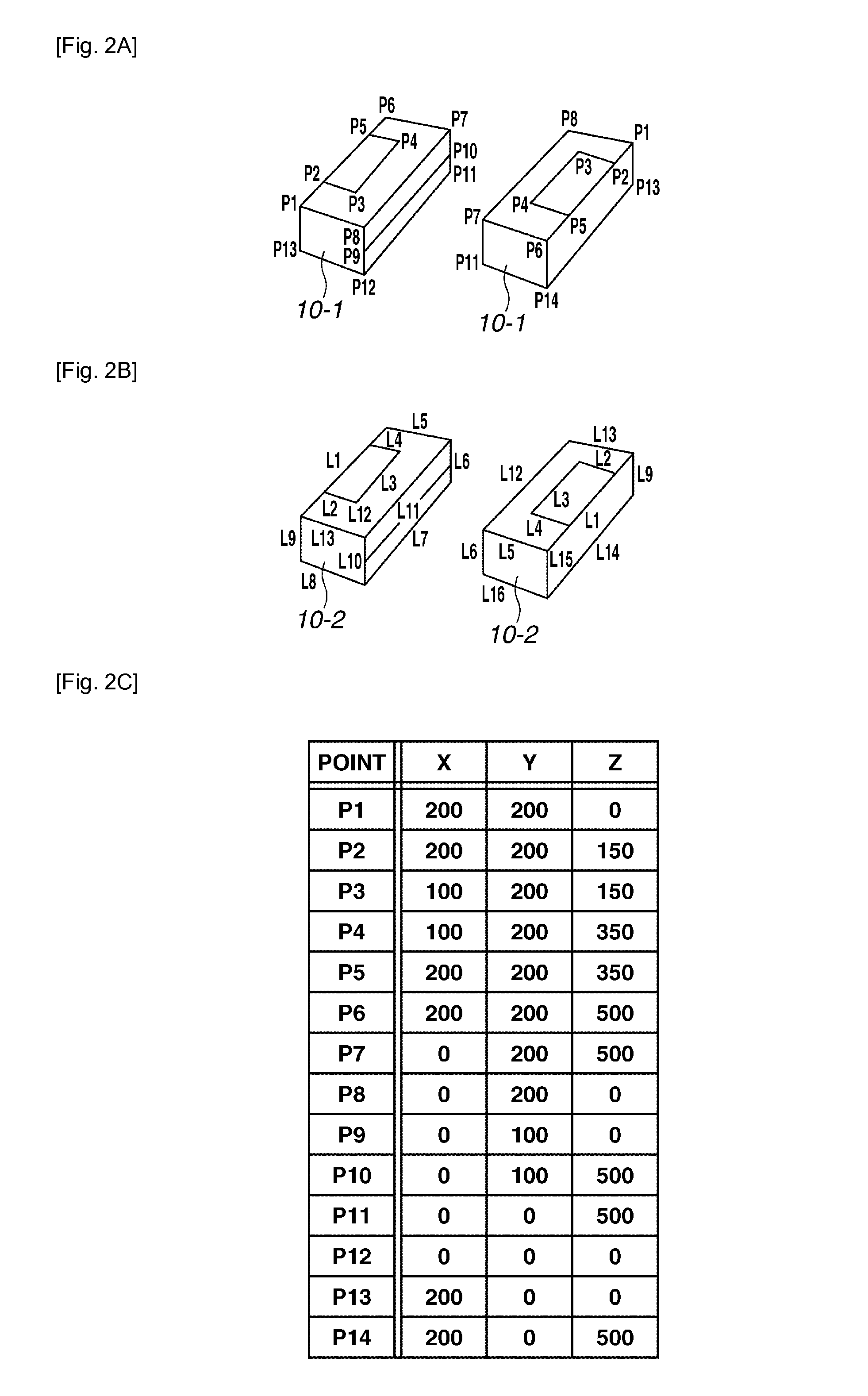

Information processing apparatus and information processing method

Inactive · US20130230235A1 · Less susceptible to noise · Image enhancement · Image analysis · Information processing · Imaging Feature

An information processing apparatus according to the present invention includes a three-dimensional model storage unit configured to store data of a three-dimensional model that describes a geometric feature of an object, a two-dimensional image input unit configured to input a two-dimensional image in which the object is imaged, a range image input unit configured to input a range image in which the object is imaged, an image feature detection unit configured to detect an image feature from the two-dimensional image input from the two-dimensional image input unit, an image feature three-dimensional information calculation unit configured to calculate three-dimensional coordinates corresponding to the image feature from the range image input from the range image input unit, and a model fitting unit configured to fit the three-dimensional model into the three-dimensional coordinates of the image feature.

Owner:CANON KK

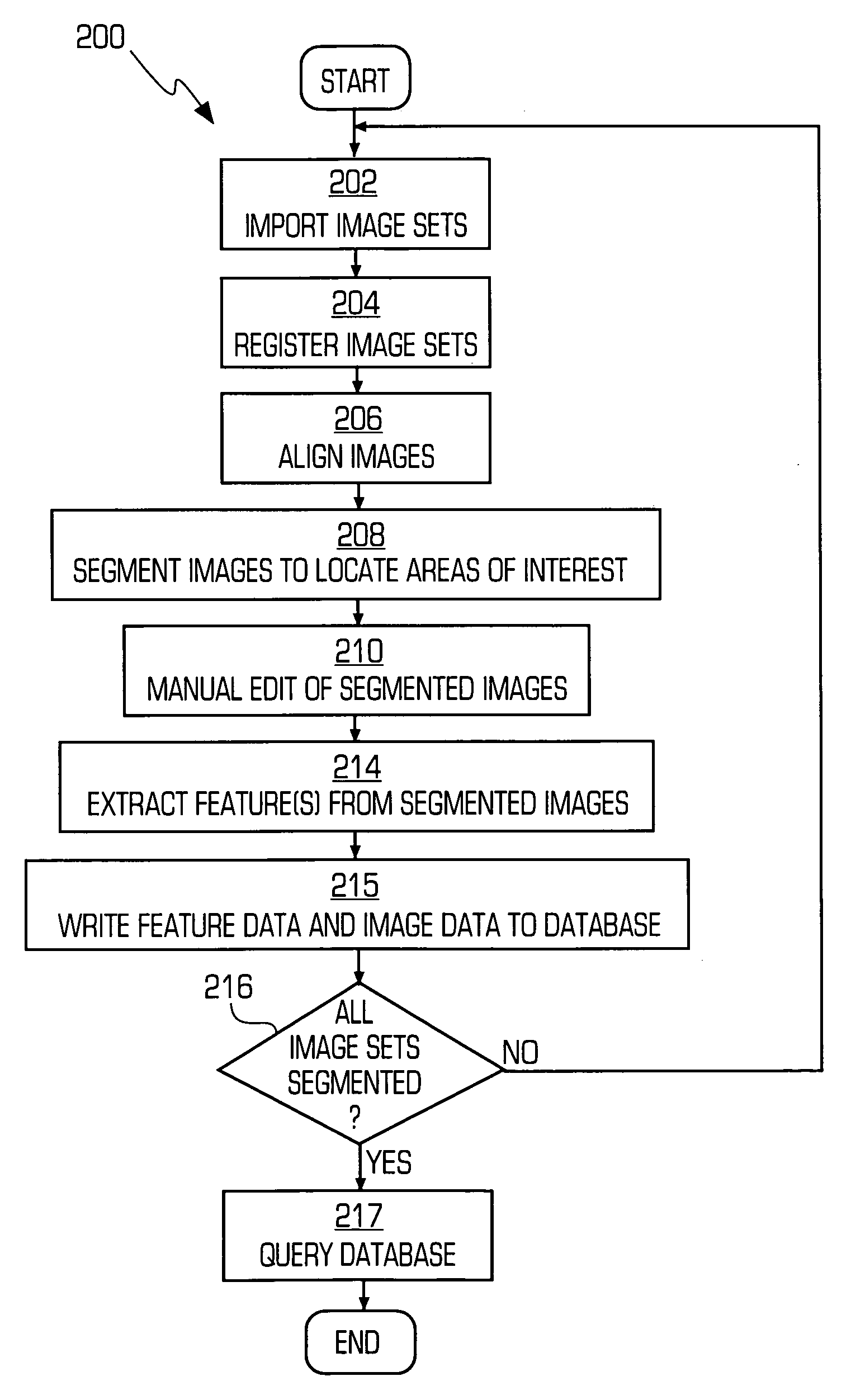

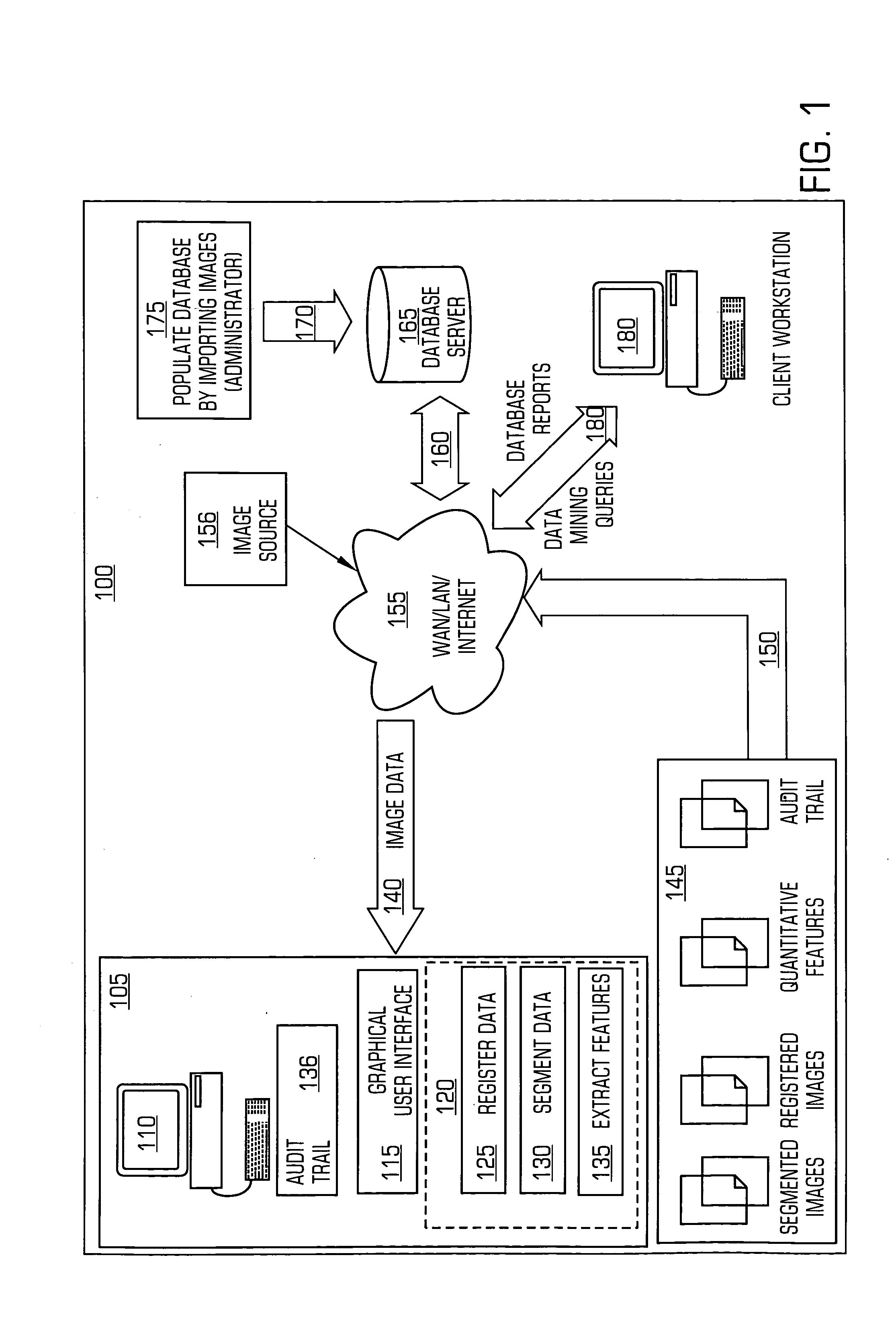

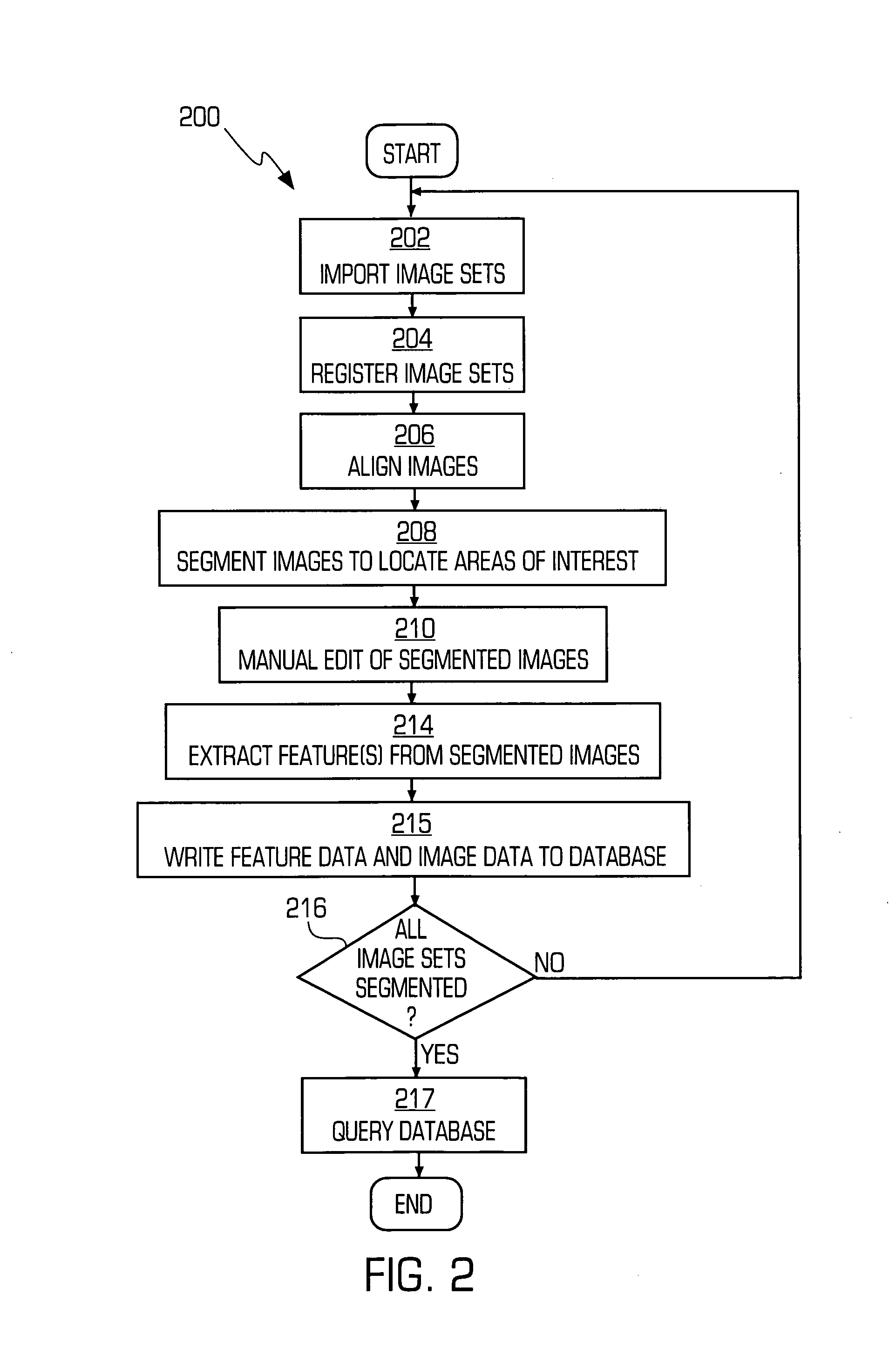

System and method for mining quantitive information from medical images

Active · US7158692B2 · Enhancing clinical diagnosis · Improve research · Medical data mining · Recognition of medical/anatomical patterns · Graphics · Feature extraction

A system for image registration and quantitative feature extraction for multiple image sets. The system includes an imaging workstation having a data processor and memory in communication with a database server. The data processor is capable of inputting and outputting data and instructions to peripheral devices, operates pursuant to a software product, and accepts instructions from a graphical user interface capable of interfacing with and navigating the imaging software product. The imaging software product is capable of instructing the data processor to register images, segment images, and extract features from images, and to provide instructions to store and retrieve one or more registered images, segmented images, quantitative image features, and quantitative image data from the database server.

Owner:CLOUD SOFTWARE GRP INC

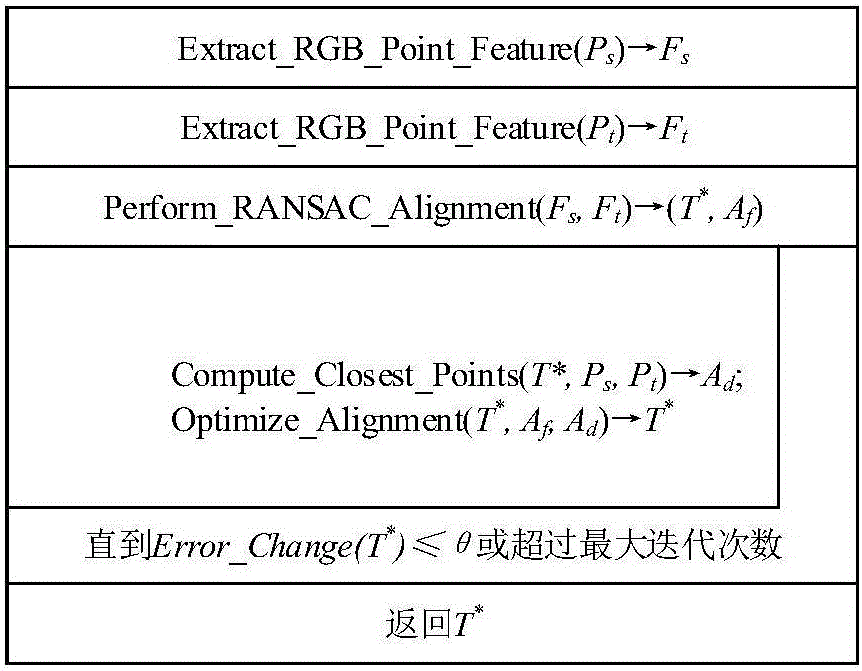

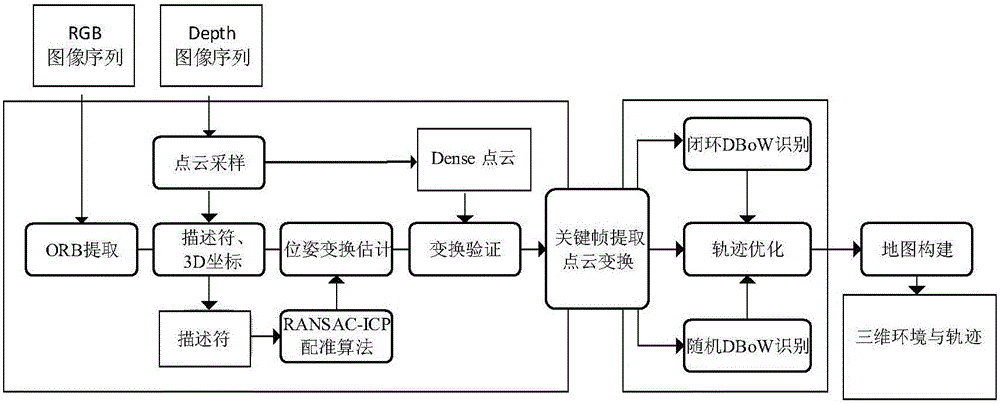

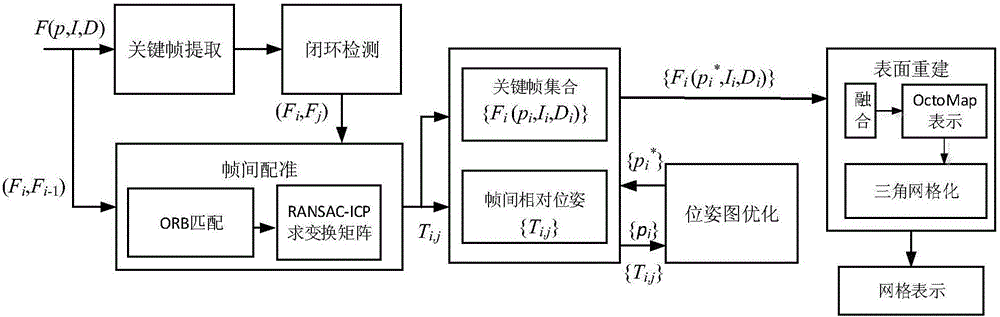

ORB key frame closed-loop detection SLAM method capable of improving consistency of position and pose of robot

Inactive · CN105856230A · Guaranteed positioning accuracy · Guaranteed accuracy · Programme control · Programme-controlled manipulator · Closed loop · Imaging Feature

The invention discloses an ORB key frame closed-loop detection SLAM method capable of improving the consistency of the position and the pose of a robot. The ORB key frame closed-loop detection SLAM method comprises the following steps of, firstly, acquiring color information and depth information of the environment by adopting an RGB-D sensor, and extracting the image features by using the ORB features; then, estimating the position and the pose of the robot by an algorithm based on RANSAC-ICP interframe registration, and constructing an initial position and pose graph; and finally, constructing BoVW (bag of visual words) by extracting the ORB features in a Key Frame, carrying out similarity comparison on the current key frame and words in the BoVW to realize closed-loop key frame detection, adding constraint of the position and pose graph through key frame interframe registration detection, and obtaining the global optimal position and pose of the robot. The invention provides the ORB key frame closed-loop detection SLAM method with capability of improving the consistency of the position and the pose of the robot, higher constructing quality of an environmental map and high optimization efficiency.

Owner:简燕梅
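The closed-loop detection step in the abstract above compares the current key frame against a bag of visual words built from earlier key frames. The sketch below assumes ORB descriptors have already been quantized into integer word ids (that step is not shown) and uses cosine similarity of word histograms with a fixed threshold; the similarity measure, the threshold, and the function names are assumptions rather than details from the patent.

```python
import math
from collections import Counter


def bow_similarity(words_a, words_b):
    """Cosine similarity between two bag-of-visual-words histograms."""
    ha, hb = Counter(words_a), Counter(words_b)
    dot = sum(ha[w] * hb[w] for w in ha.keys() & hb.keys())
    norm = math.sqrt(sum(v * v for v in ha.values())) * math.sqrt(
        sum(v * v for v in hb.values())
    )
    return dot / norm if norm else 0.0


def detect_loop(keyframes, current, threshold=0.8):
    """Index of the most similar earlier key frame, or None.

    keyframes: list of visual-word id lists, one per stored key frame.
    current: visual-word id list for the current key frame.
    """
    best_idx, best_sim = None, threshold
    for i, kf in enumerate(keyframes):
        sim = bow_similarity(kf, current)
        if sim >= best_sim:
            best_idx, best_sim = i, sim
    return best_idx


stored = [[1, 2, 3, 3], [7, 8, 9], [4, 5, 6]]
print(detect_loop(stored, [3, 1, 2, 3]))
```

A detected index would then be confirmed by the inter-frame registration the abstract mentions before a constraint is added to the pose graph; practical systems typically also skip the most recent key frames so that neighbours are not reported as loops.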

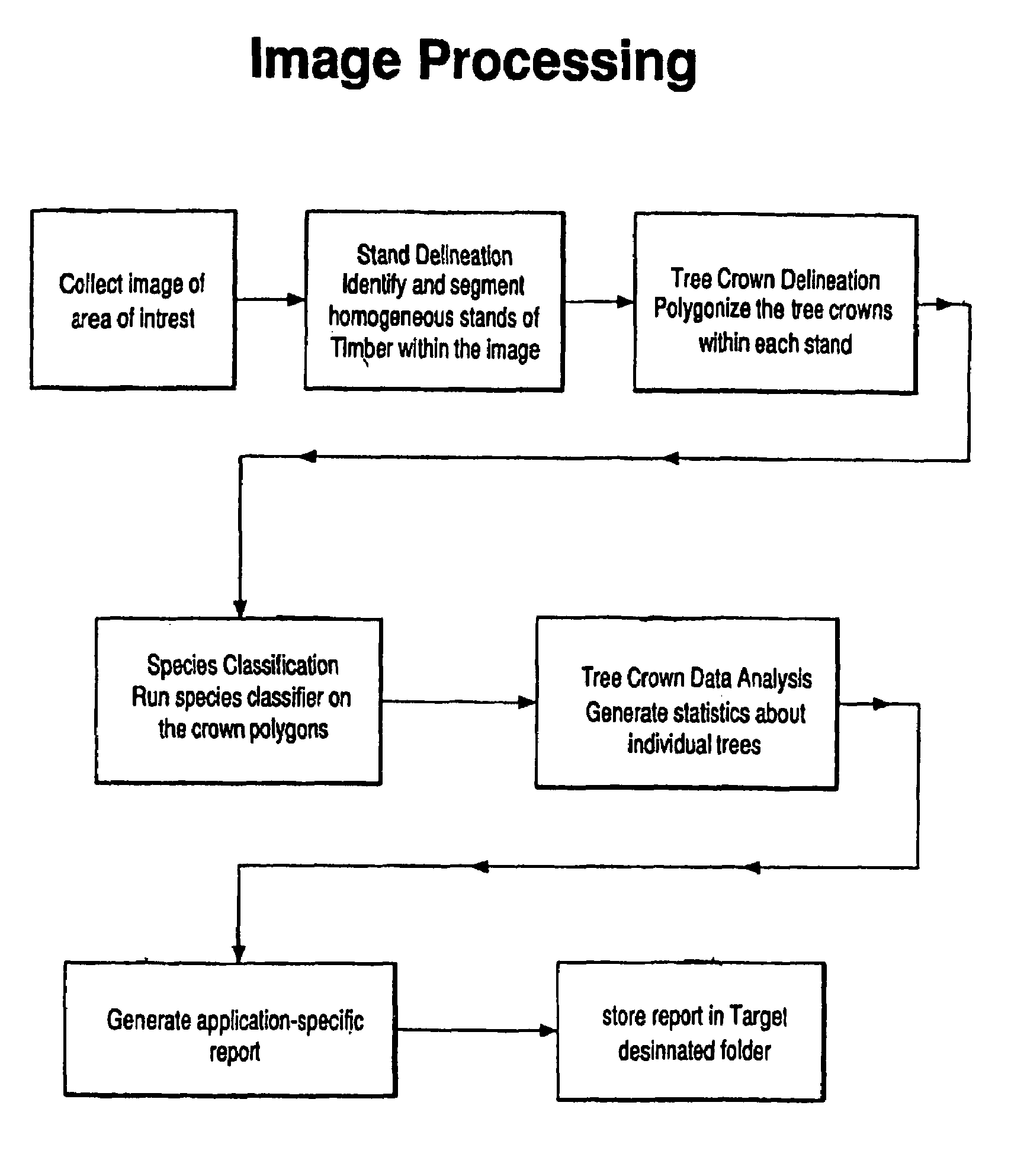

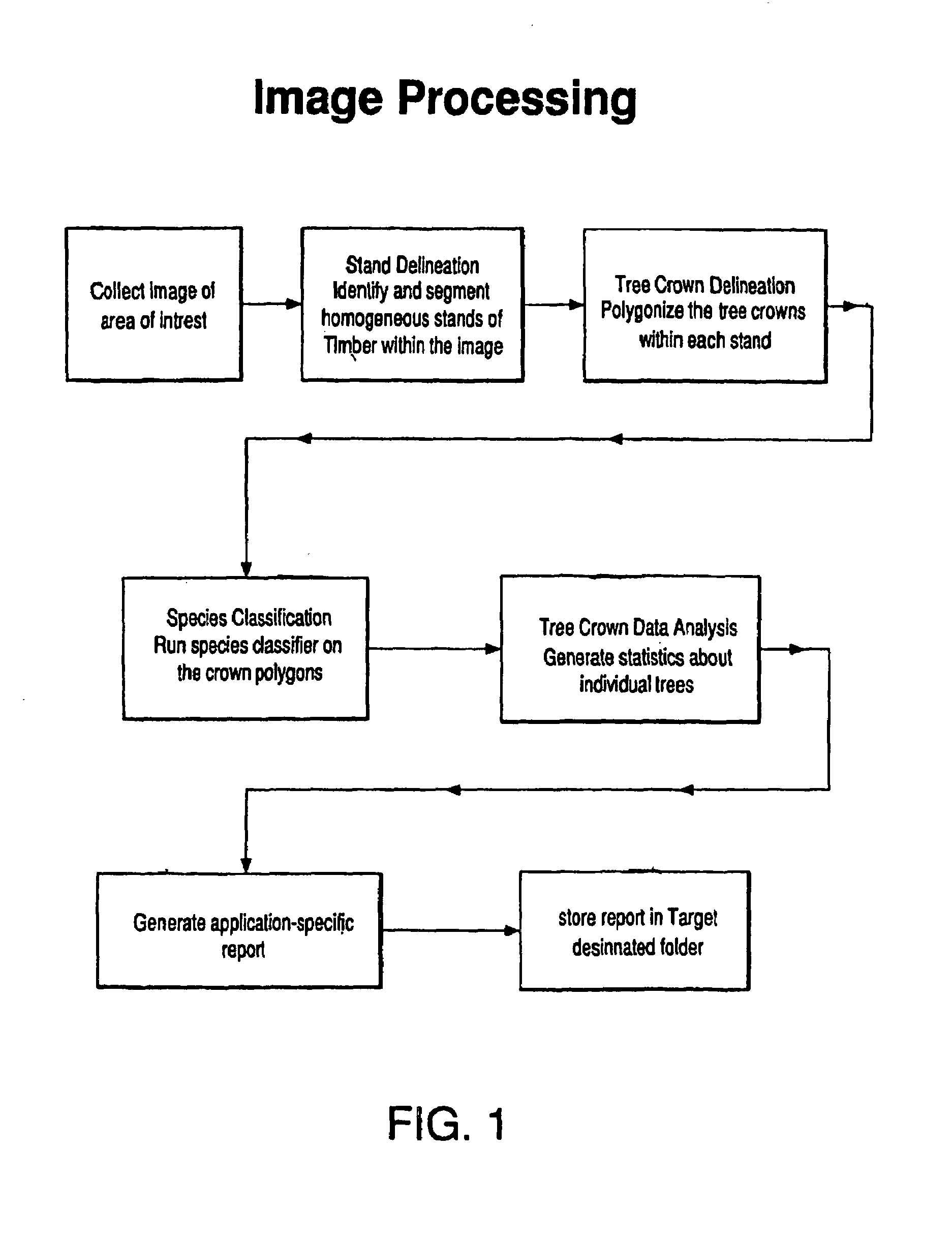

Method of feature identification and analysis

Active · US7212670B1 · Efficiently and accurately identifying · Efficiently and accurately analyzing · Image analysis · Scene recognition · Tree stand · Imaging Feature

A method for efficiently and accurately inventorying image features such as timber, including steps of segmenting digital images into tree stands, segmenting tree stands into tree crowns, each tree crown having a tree crown area, classifying tree crowns based on species, and analyzing the tree crown classification to determine information about the individual tree crowns and aggregate tree stands. The tree crown area is used to determine physical information such as tree diameter breast height, tree stem volume and tree height. The tree crown area is also used to determine the value of timber in tree stands and parcels of land using tree stem volume and market price of timber per species.

Owner:GEODIGITAL INT

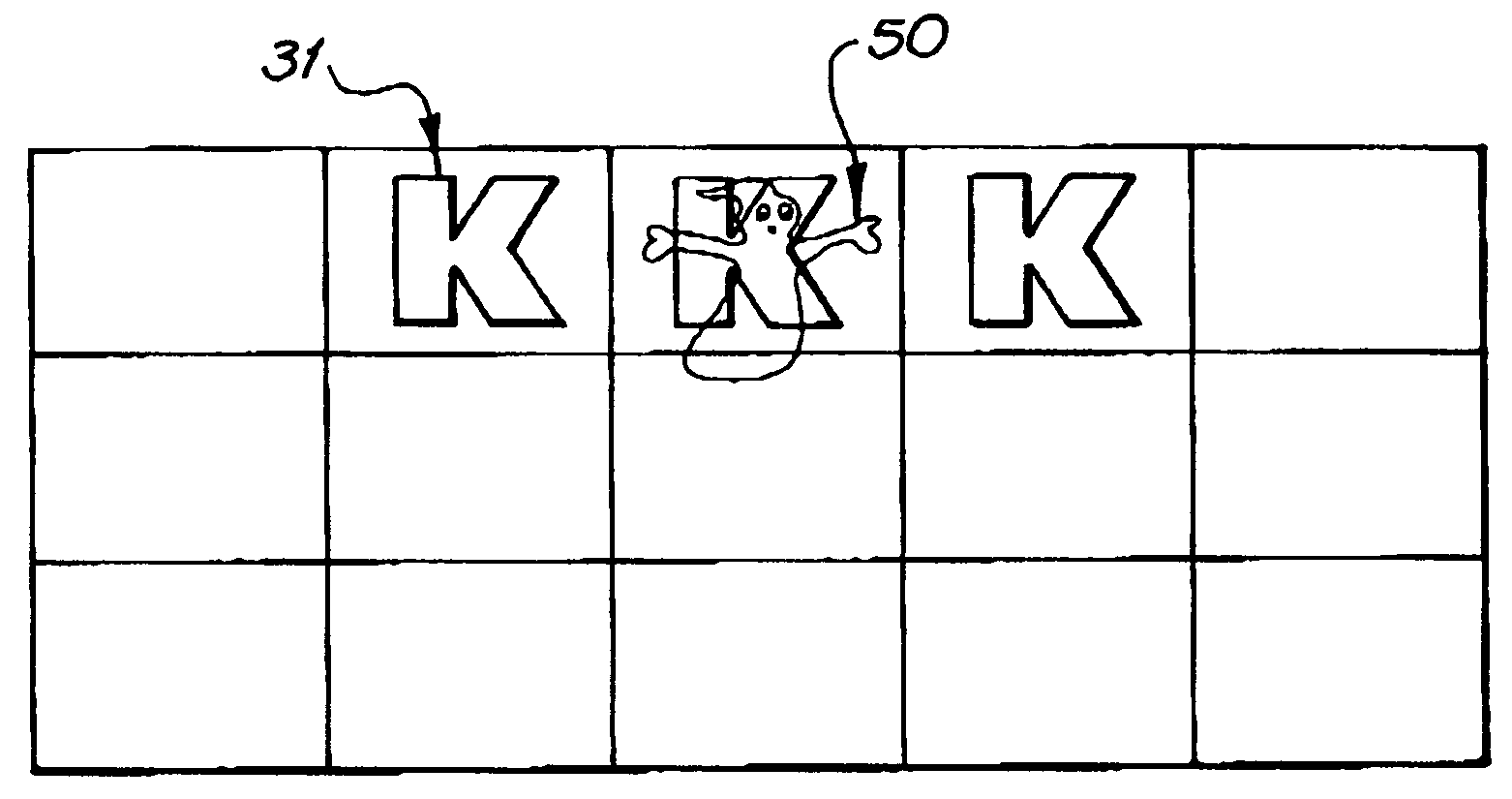

Gaming console with transparent sprites

Owner:ARISTOCRAT TECH AUSTRALIA PTY LTD

Efficient optical coherence tomography (OCT) system and method for rapid imaging in three dimensions

Inactive · US7145661B2 · Remove Motion Artifacts · Raise the ratio · Scattering properties measurements · Using optical means · Rapid imaging · Data set

Owner:CARL ZEISS MEDITEC INC

Image processing device and method

Inactive · US20100208942A1 · Promote production · Character and pattern recognition · Stereoscopic systems · Imaging processing · Display device

An image processing device comprises receiving means operable to receive, from a camera, a captured image corresponding to an image of a scene captured by the camera. The scene contains at least one object. The device comprises determining means operable to determine a distance between the object within the scene and a reference position defined with respect to the camera, and generating means operable to detect a position of the object within the captured image, and to generate a modified image from the captured image based on image features within the captured image which correspond to the object in the scene. The generating means is operable to generate the modified image by displacing the position of the captured object within the modified image with respect to the determined position of the object within the captured image by an object offset amount which is dependent on the distance between the reference position and the object in the scene so that, when the modified image and the captured image are viewed together as a pair of images on a display, the captured object appears to be positioned at a predetermined distance from the display.

Owner:SONY CORP

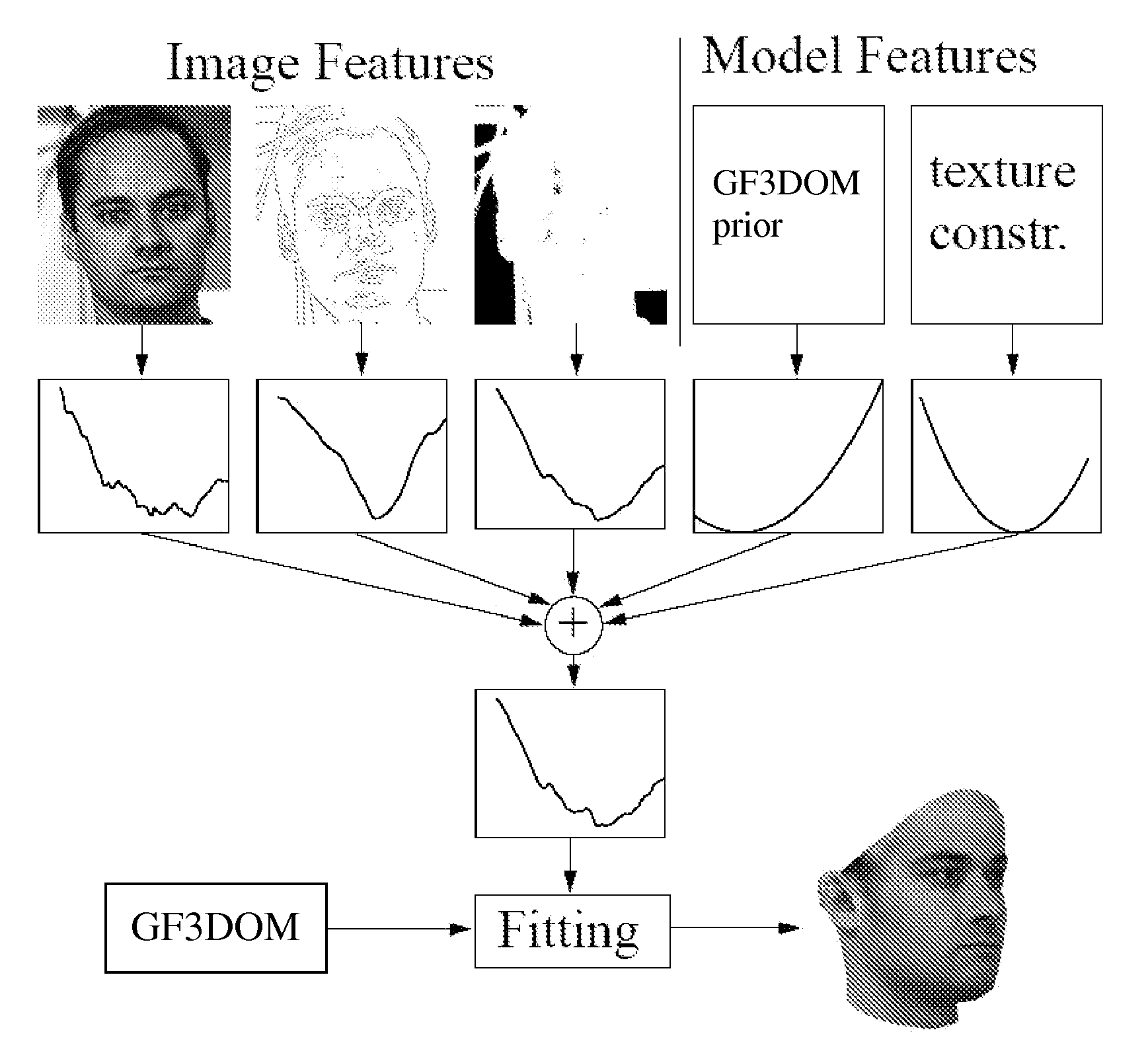

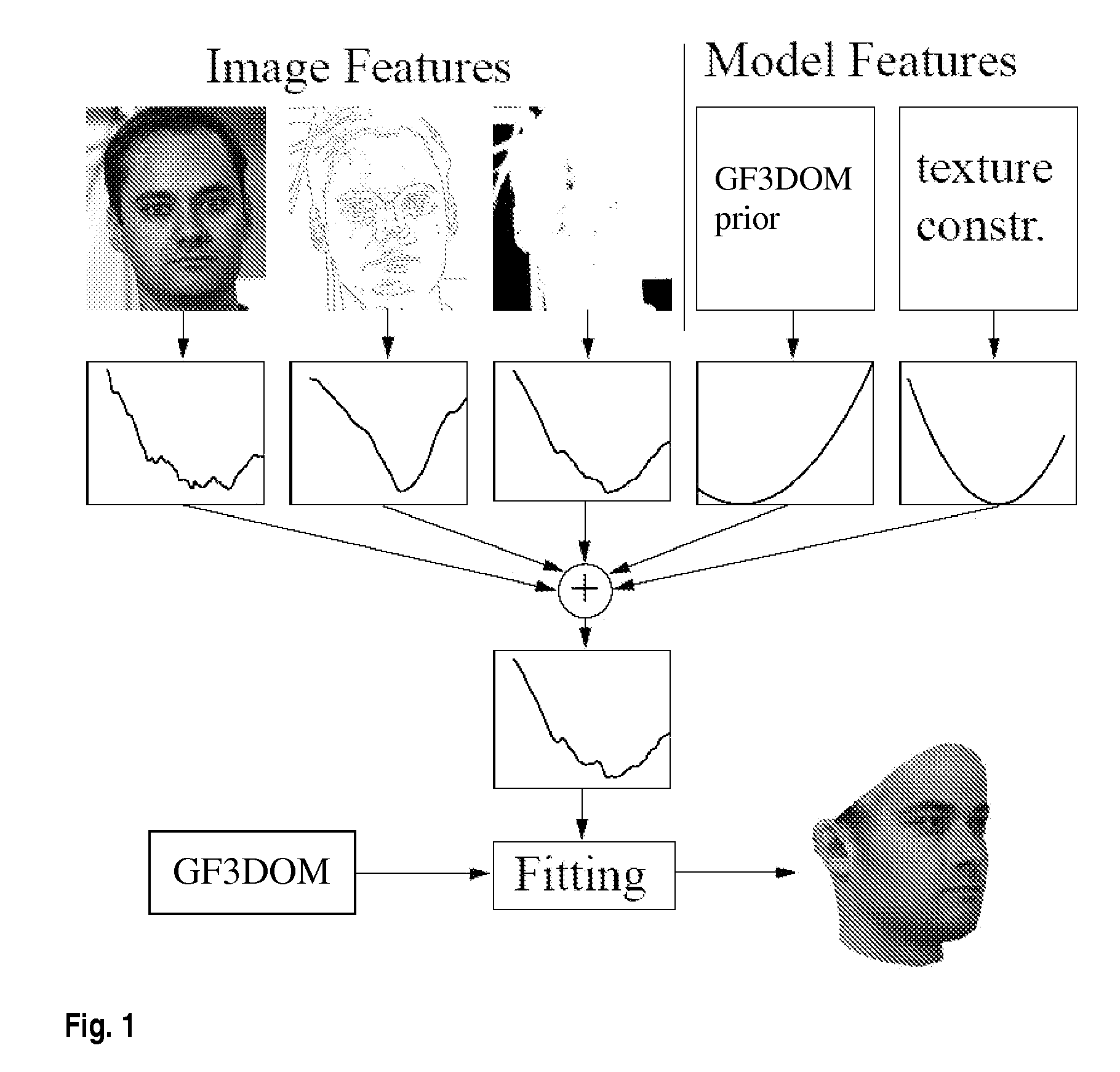

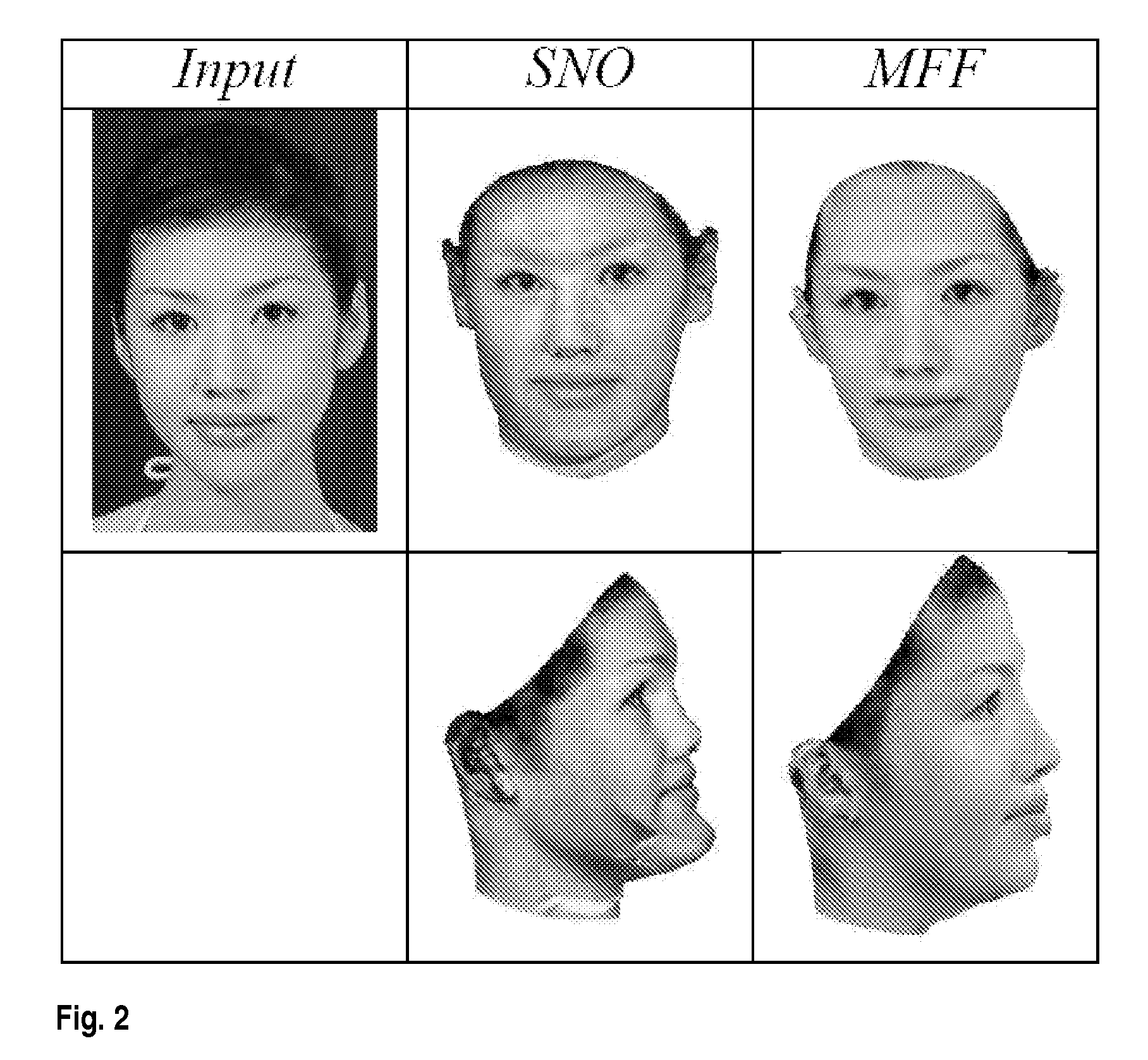

Estimating 3D shape and texture of a 3D object based on a 2d image of the 3D object

Inactive · US20070031028A1 · Maximizing posterior probability · Performance maximization · Additive manufacturing apparatus · Drawing from basic elements · 3d shapes · Object based

Disclosed is an improved algorithm for estimating the 3D shape of a 3-dimensional object, such as a human face, based on information retrieved from a single photograph by recovering parameters of a 3-dimensional model and methods and systems using the same. Beside the pixel intensity, the invention uses various image features in a multi-features fitting algorithm (MFF) that has a wider radius of convergence and a higher level of precision and provides thereby better results.

Owner:UNIVERSITY OF BASEL

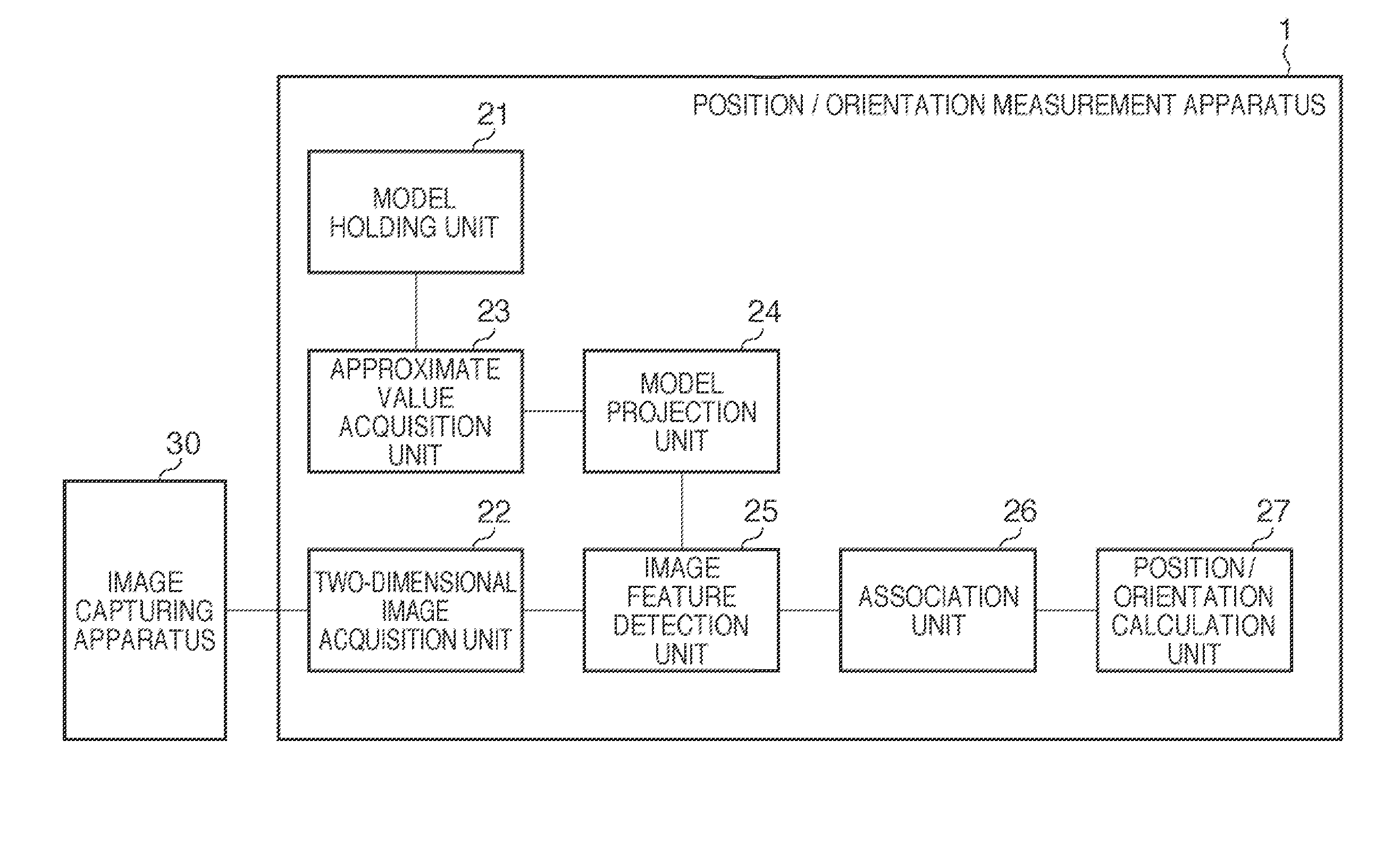

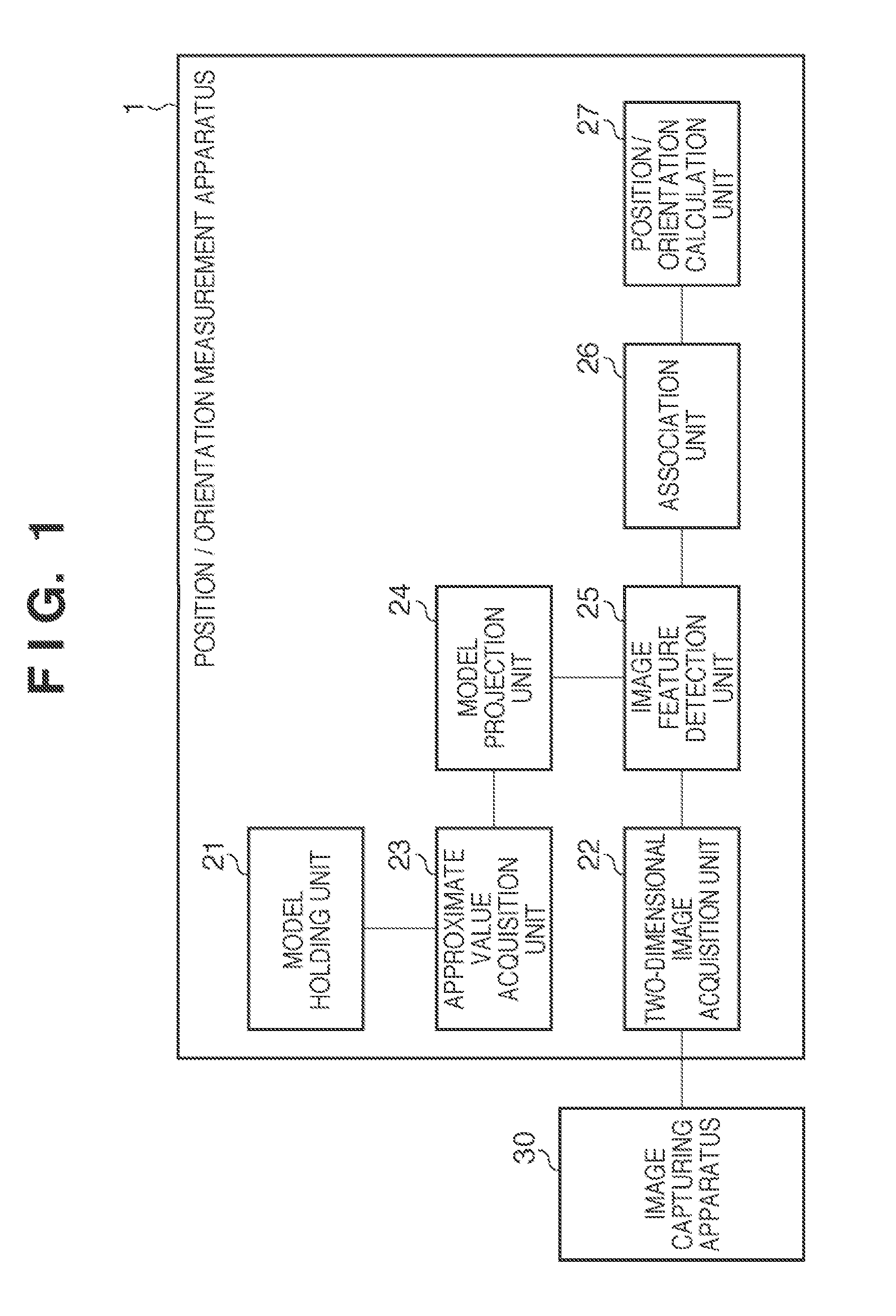

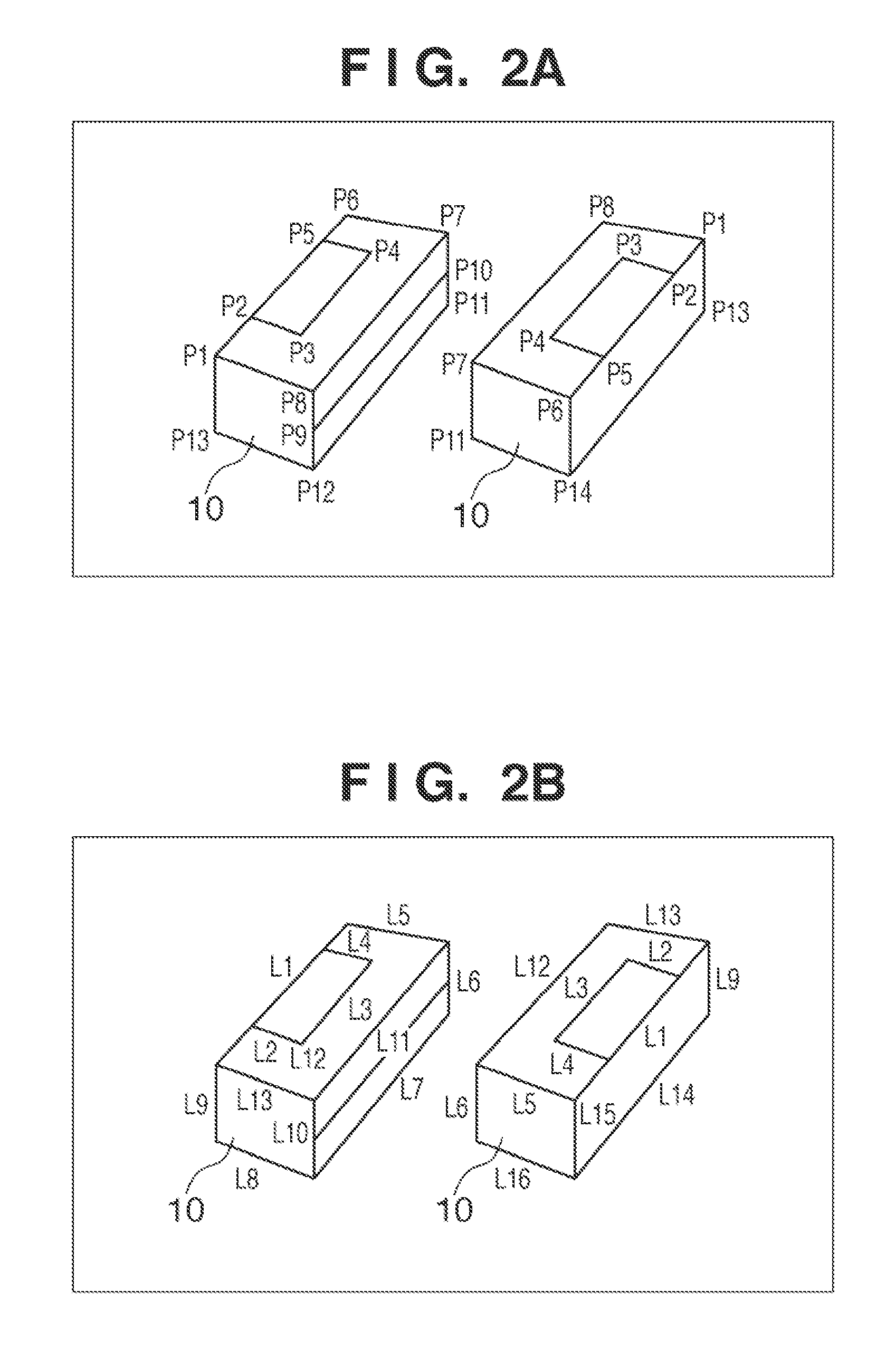

Position/orientation measurement apparatus, processing method therefor, and non-transitory computer-readable storage medium

A position/orientation measurement apparatus holds a three-dimensional shape model of an object, acquires an approximate value indicating a position and an orientation of the object, acquires a two-dimensional image of the object, projects a geometric feature of the three-dimensional shape model on the two-dimensional image based on the approximate value, calculates the direction of the geometric feature of the three-dimensional shape model projected on the two-dimensional image, detects an image feature based on the two-dimensional image, calculates the direction of the image feature, associates the image feature and the geometric feature by comparing the direction of the image feature calculated based on the two-dimensional image and the direction of the geometric feature calculated based on the three-dimensional shape model, and calculates the position and orientation of the object by correcting the approximate value based on the distance between the geometric feature and the image feature associated therewith.

Owner:CANON KK

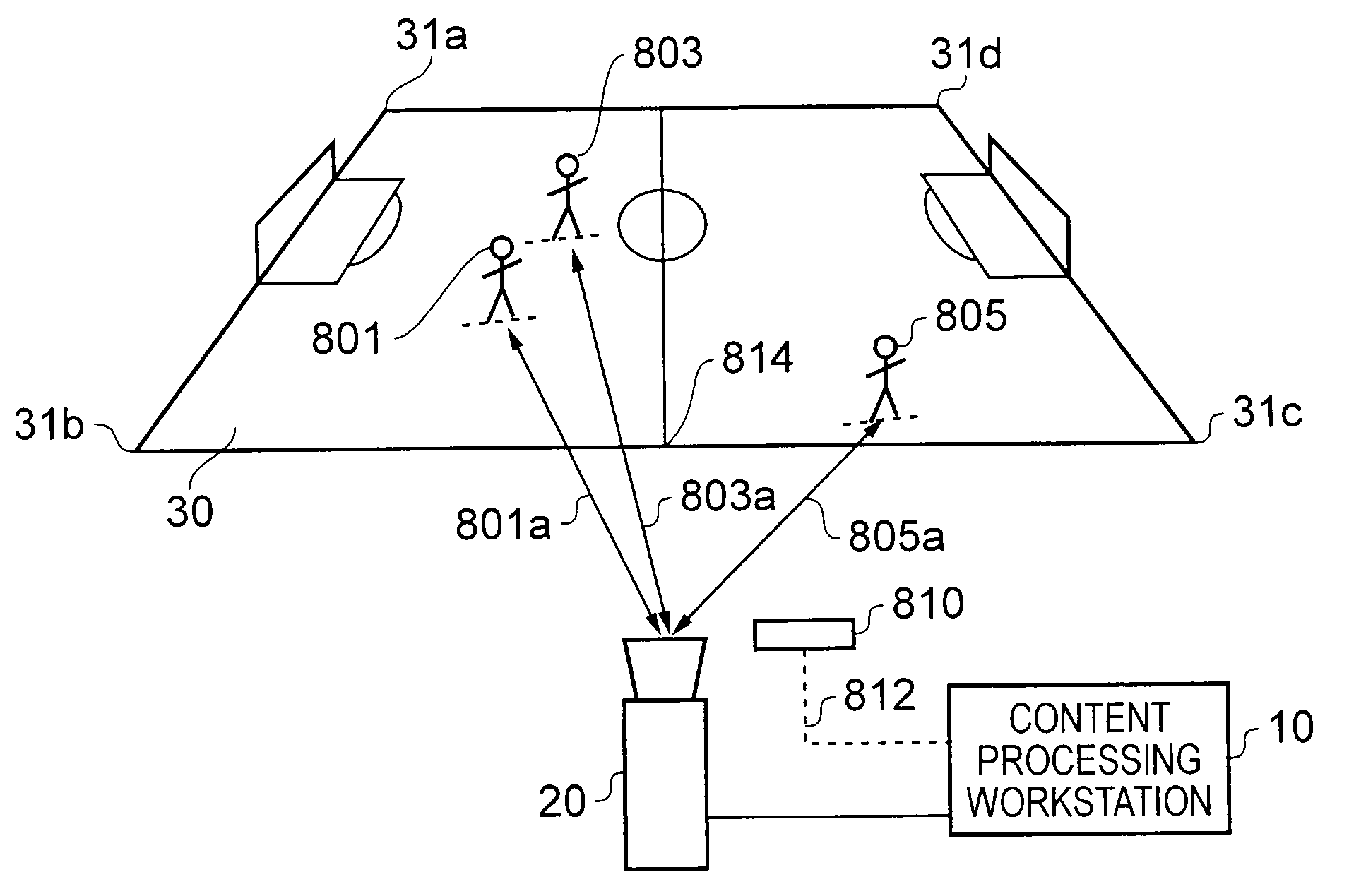

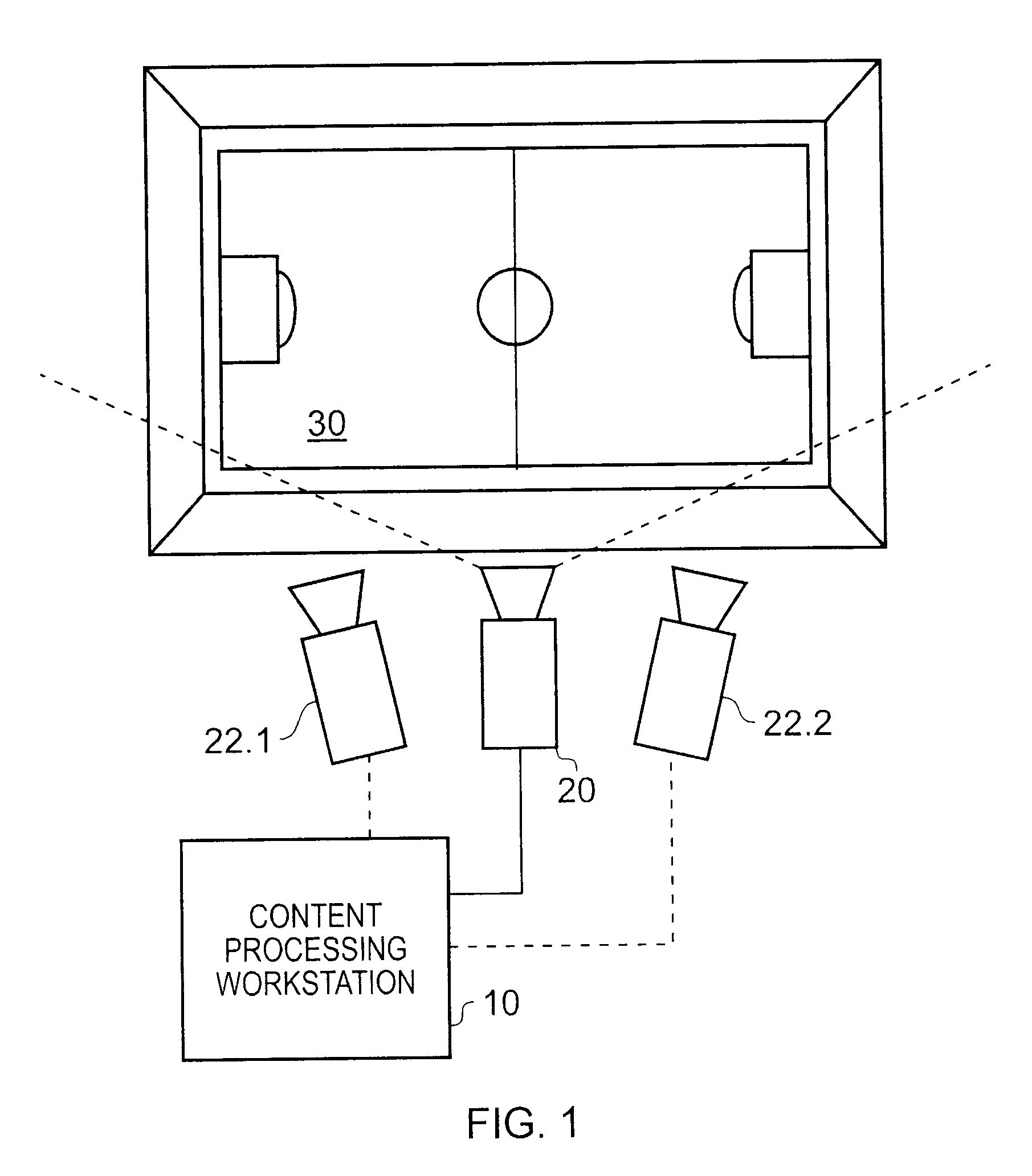

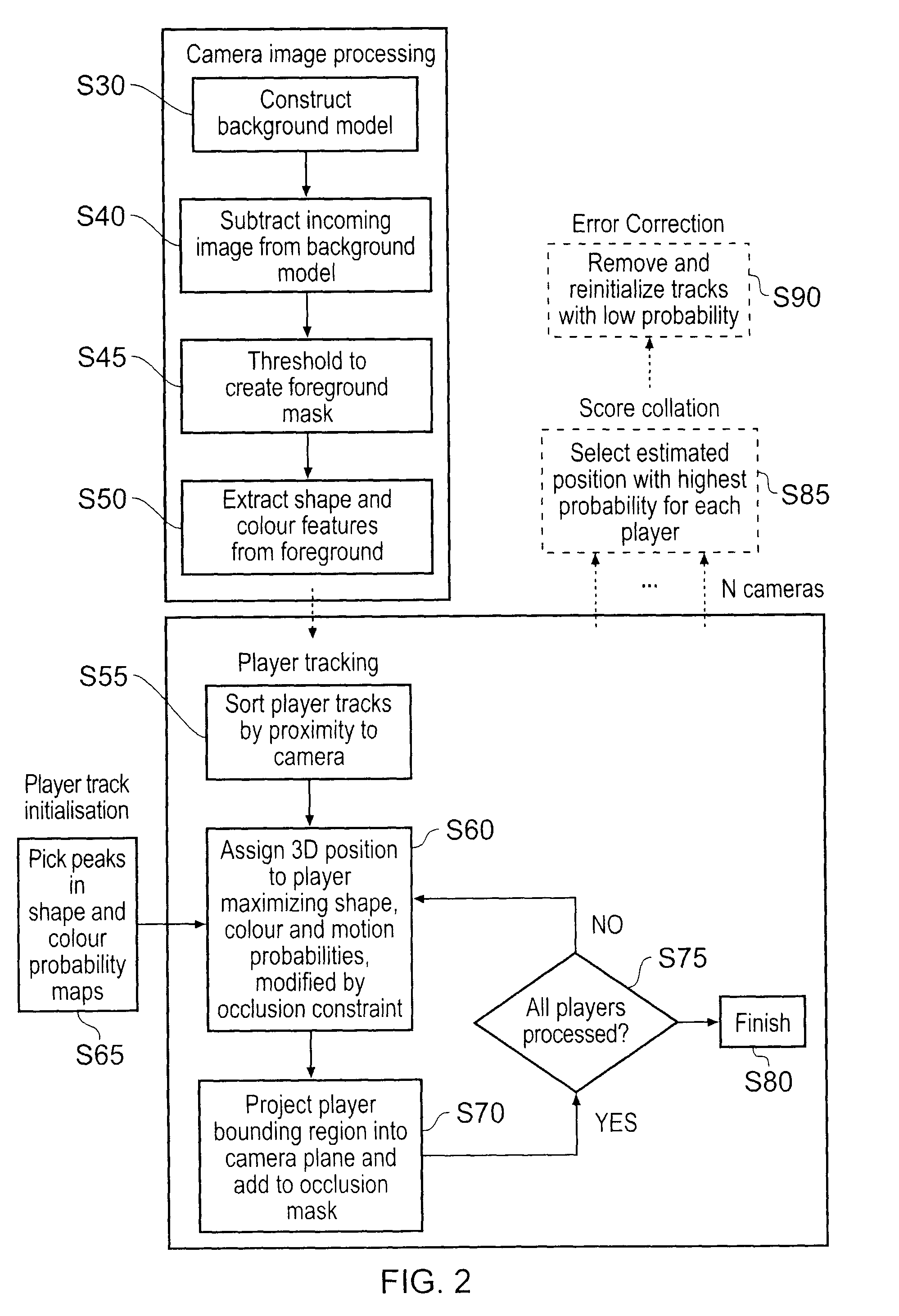

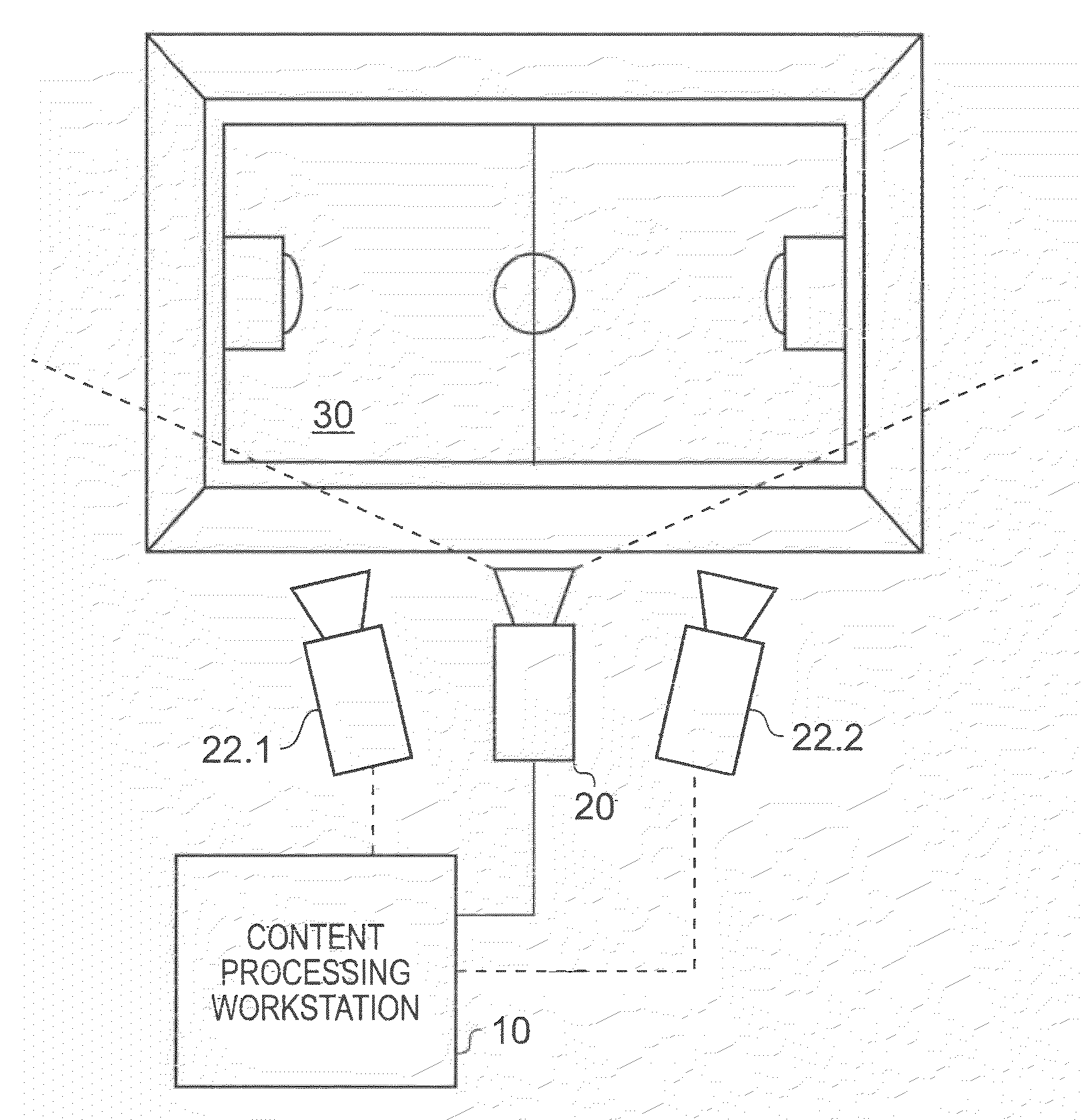

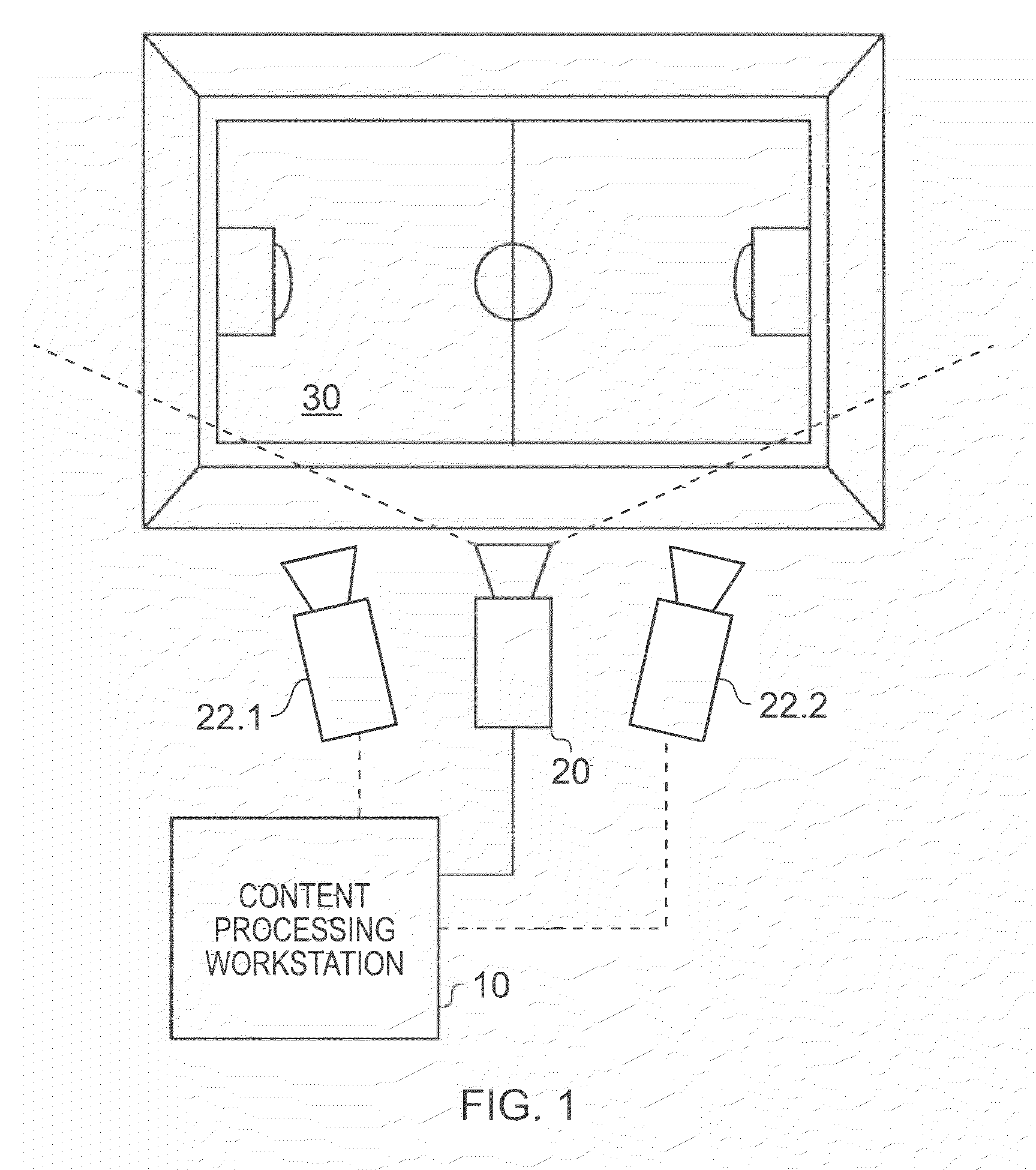

Apparatus and method of object tracking

Inactive · US20090059007A1 · Low cost · Reduce labor · Image enhancement · Television system details · Sample image · Imaging Feature

A method of tracking objects on a plane within video images of the objects captured by a video camera. The method includes processing the captured video images so as to extract one or more image features from each object, detecting each of the objects from a relative position of the objects on the plane as viewed from the captured video images by comparing the one or more extracted image features associated with each object with sample image features from a predetermined set of possible example objects which the captured video images may contain; and generating object identification data for each object, from the comparing, which identifies the respective object on the plane. The method further includes generating a three dimensional model of the plane and logging, for each detected object, the object identification data for each object which identifies the respective object on the plane together with object path data. The object path provides a position of the object on the three dimensional model of the plane from the video images with respect to time and relates to the path that each object has taken within the video images. The logging includes detecting an occlusion event in dependence upon whether a first image feature associated with a first of the objects obscures a whole or part of at least a second image feature associated with at least a second of the objects; and, if an occlusion event is detected, associating the object identification data for the first object and the object identification data for the second object with the object path data for both the first object and the second object respectively and logging the associations. 
The logging further includes identifying at least one of the objects involved in the occlusion event in dependence upon a comparison between the one or more image features associated with that object and the sample image features from the predetermined set of possible example objects, and updating the logged path data after the identification of at least one of the objects so that the respective path data is associated with the respective identified object.

Owner:SONY CORP
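The occlusion handling in the abstract above can be illustrated with a small sketch: when one object's image feature overlaps another's, both identities are logged against both paths until a later comparison resolves them. Representing image features as axis-aligned bounding boxes, the overlap test, and the names `boxes_overlap` and `log_frame` are assumptions; the re-identification step against sample image features is not shown.

```python
def boxes_overlap(a, b):
    """True if two (x1, y1, x2, y2) boxes overlap with non-zero area."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    return ax1 < bx2 and bx1 < ax2 and ay1 < by2 and by1 < ay2


def log_frame(path_log, frame_time, detections):
    """Append one frame of path data per object.

    detections: dict mapping object id to its bounding box.
    Each log entry is (time, box, candidate ids); during an occlusion
    event the candidate ids include every object involved.
    """
    ids = sorted(detections)
    candidates = {oid: {oid} for oid in ids}
    for i, first in enumerate(ids):
        for second in ids[i + 1:]:
            if boxes_overlap(detections[first], detections[second]):
                # Occlusion event: associate both ids with both paths.
                candidates[first].add(second)
                candidates[second].add(first)
    for oid in ids:
        path_log.setdefault(oid, []).append(
            (frame_time, detections[oid], sorted(candidates[oid]))
        )
    return path_log


log = log_frame(
    {}, 0.0, {"A": (0, 0, 10, 10), "B": (5, 5, 15, 15), "C": (50, 50, 60, 60)}
)
print(log["A"][0][2], log["C"][0][2])
```

Once an object is re-identified after the occlusion, the ambiguous entries could be rewritten so each path carries a single id, which corresponds to the path-data update the abstract describes.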

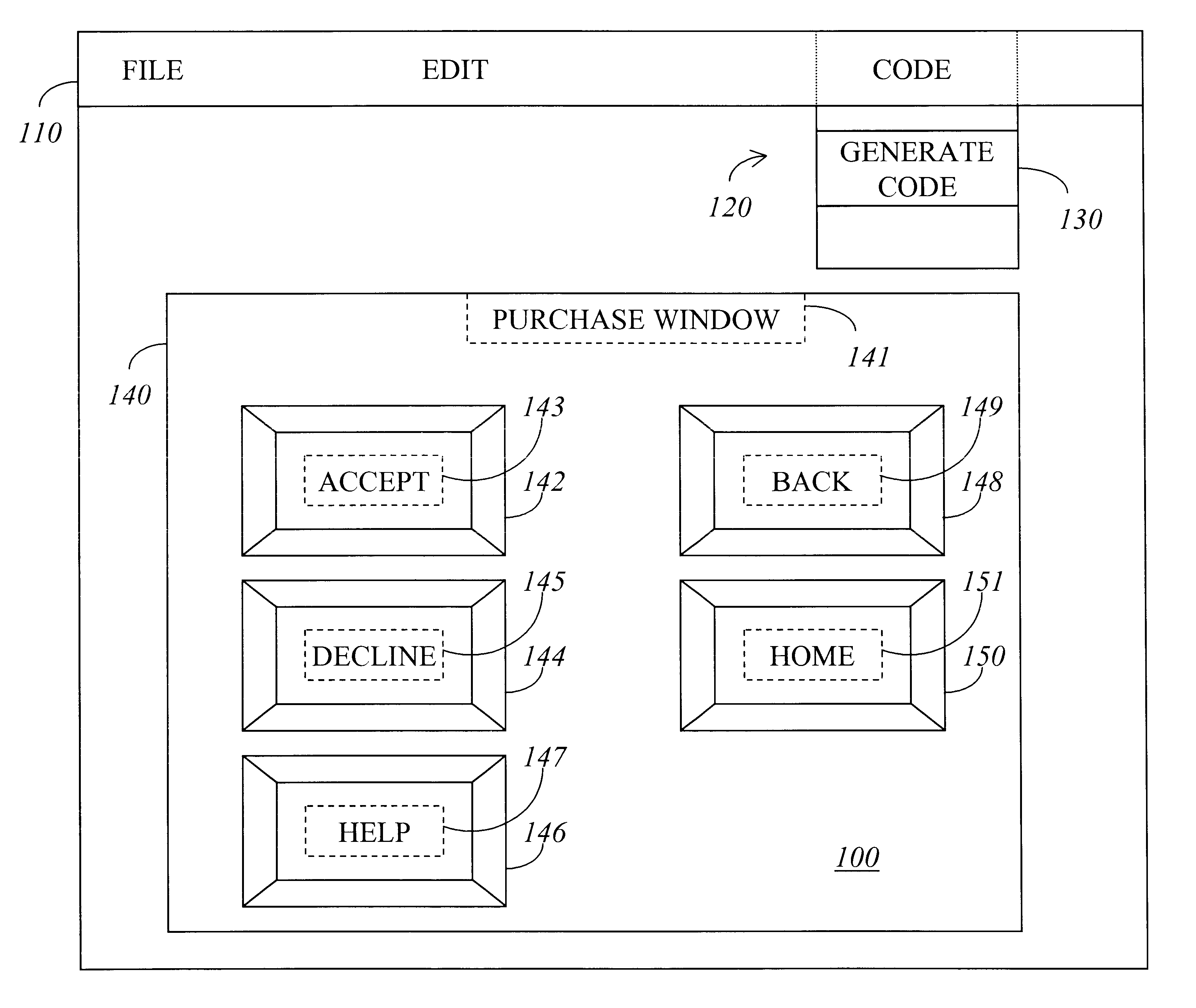

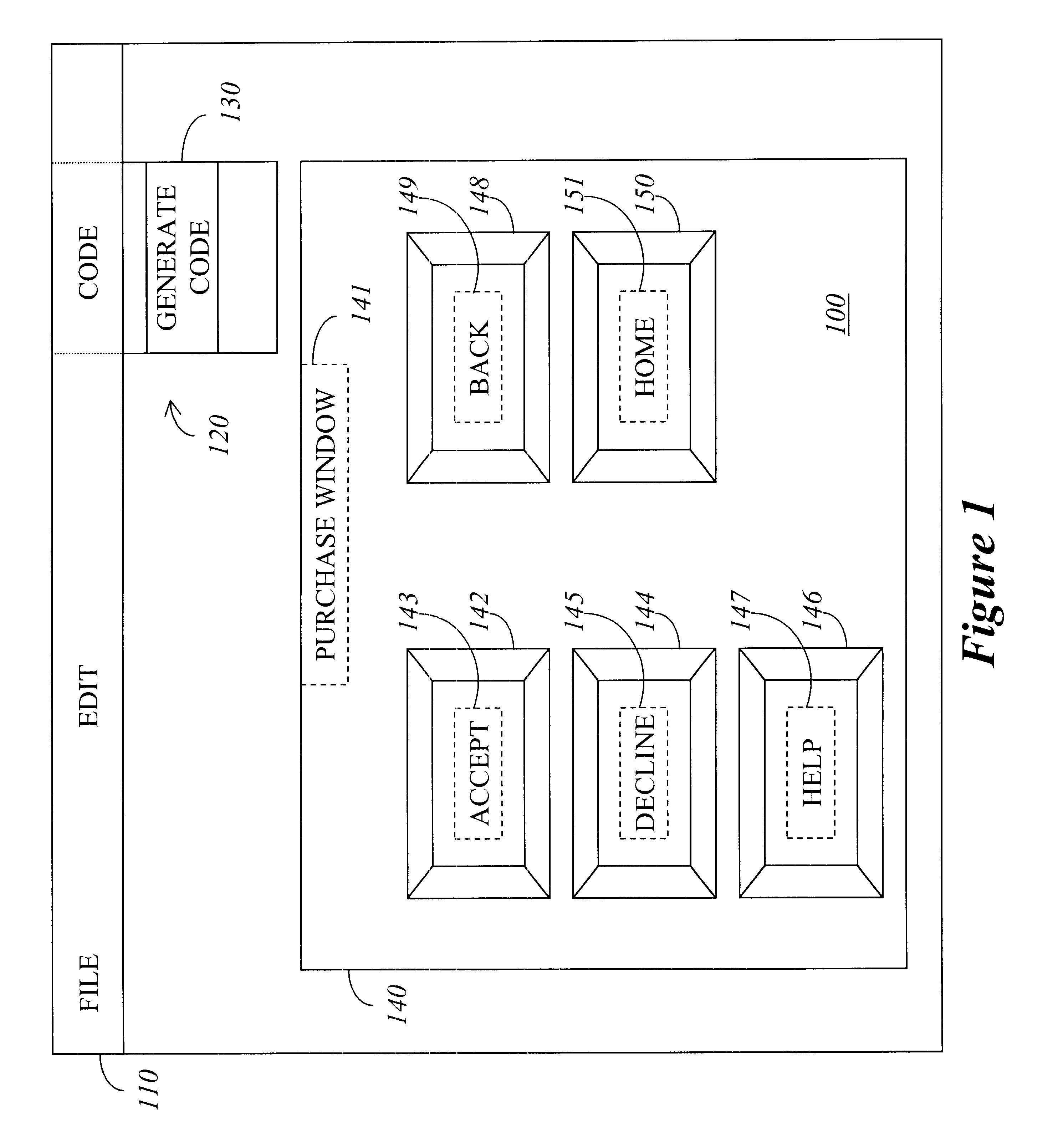

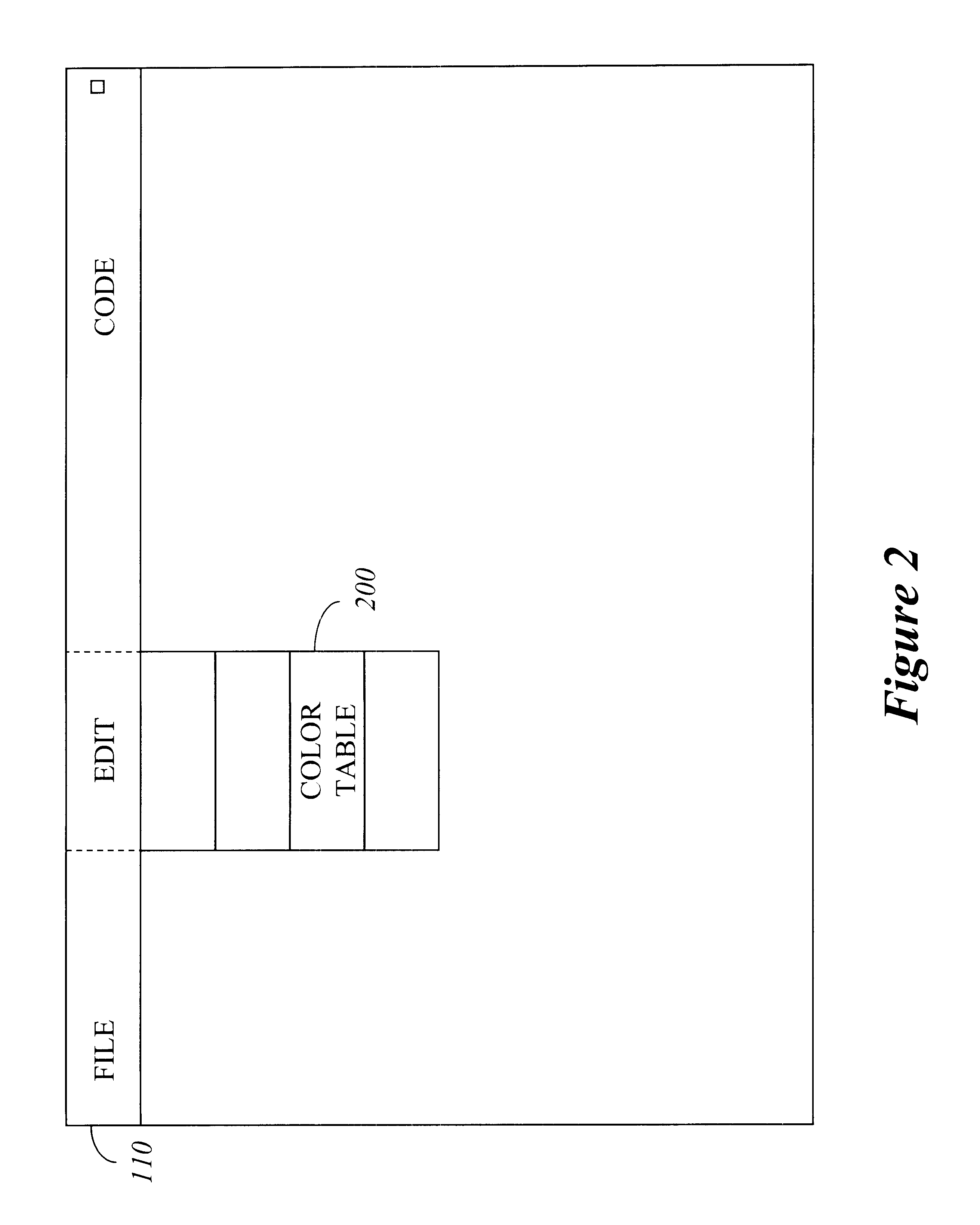

GUI resource editor for an embedded system

Inactive · US6429885B1 · Cathode-ray tube indicators · Image data processing details · Graphics · Graphical user interface

A technique for converting displayable objects of a graphics user interface (GUI) display into a code representation with minimal user intervention. The technique includes creation of the GUI display and analysis of each displayable object when prompted by the user to convert to a code representation. The analysis features accessing parameters of each displayable object to produce an object data file, a color data file storing colors used by the displayable objects of the GUI display and a bitmap data file to contain bitmaps of logos or images featured in the GUI display. These data files are compiled with an implementation file featuring a software library to generate an executable program having the code representation of the GUI display.

Owner:SONY CORP
