3007 results about "Aerial photography" patented technology

Aerial photography (or airborne imagery) is the taking of photographs from an aircraft or other flying object. Platforms for aerial photography include fixed-wing aircraft, helicopters, unmanned aerial vehicles (UAVs or "drones"), balloons, blimps and dirigibles, rockets, pigeons, kites, parachutes, stand-alone telescoping and vehicle-mounted poles. Mounted cameras may be triggered remotely or automatically; hand-held photographs may be taken by a photographer.

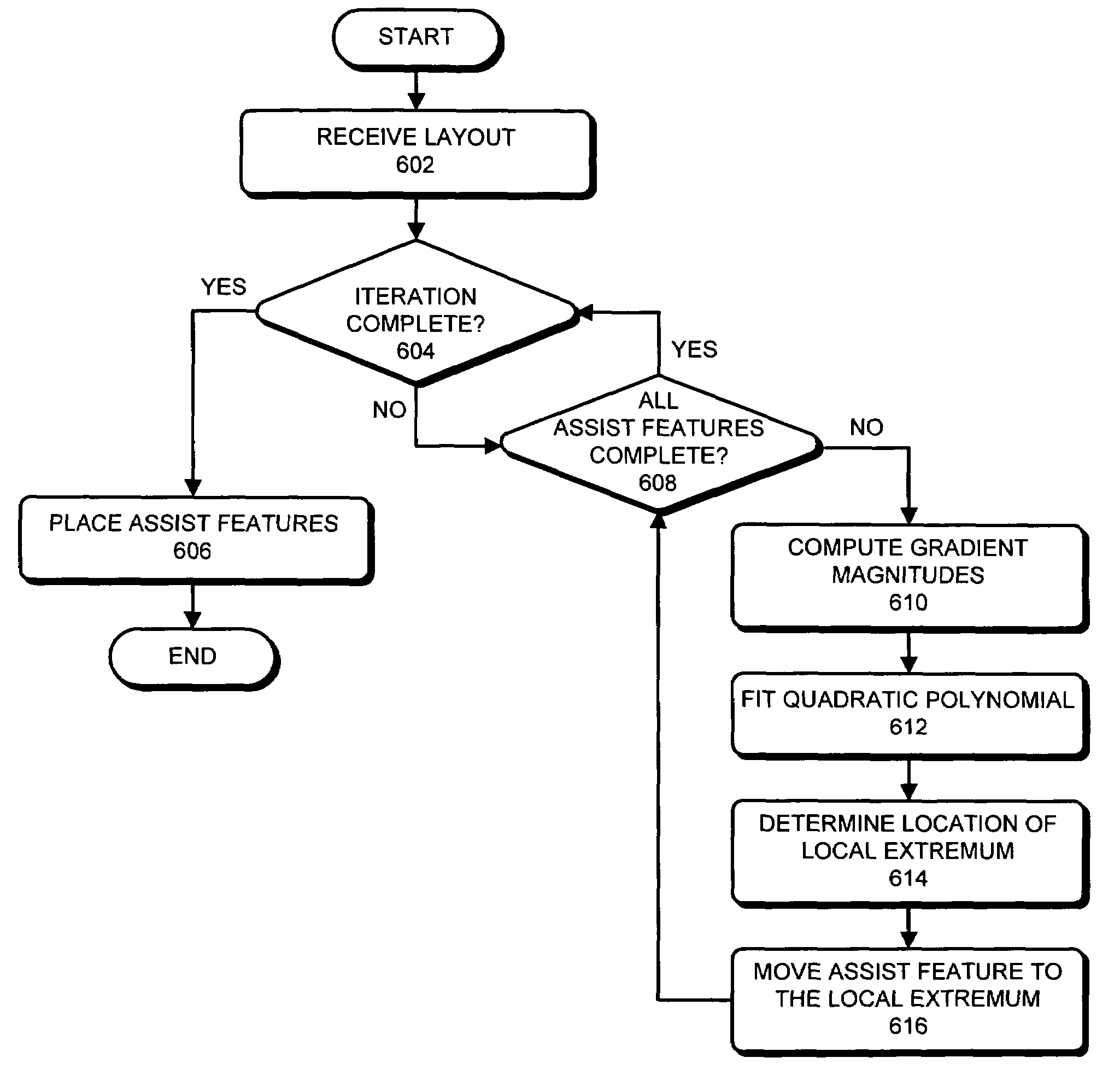

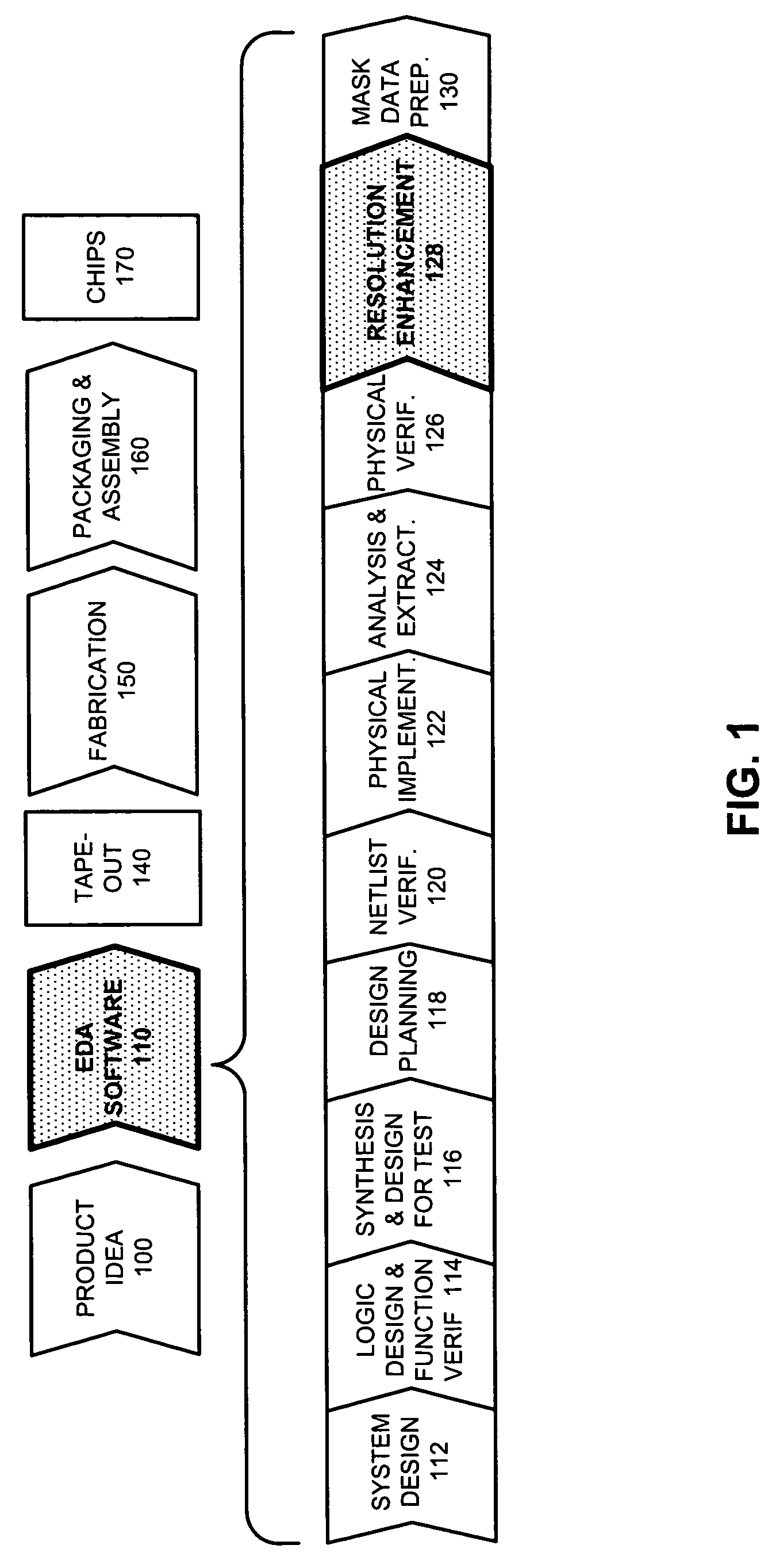

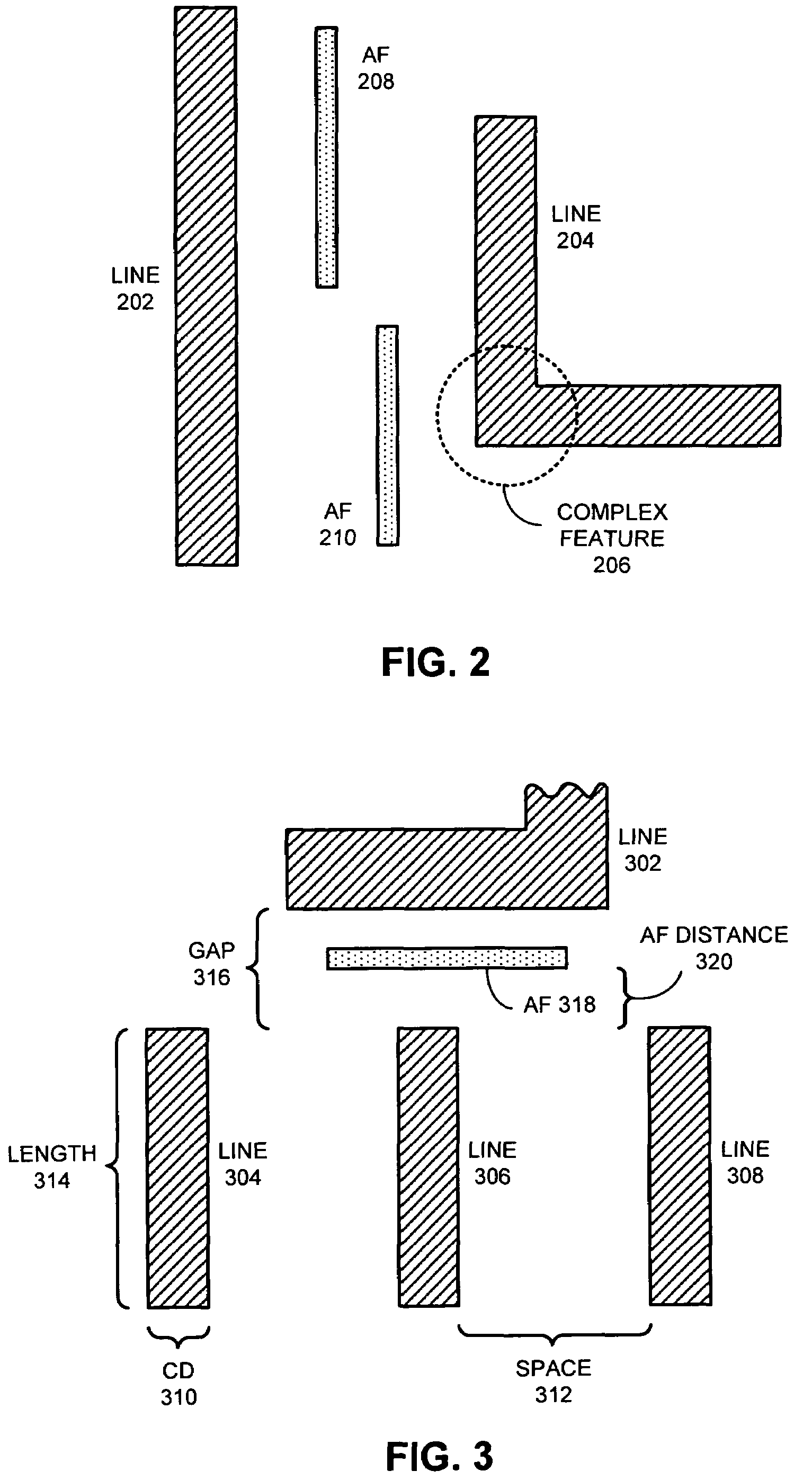

Method and apparatus for placing assist features by identifying locations of constructive and destructive interference

One embodiment of the present invention provides a system that determines a location in a layout to place an assist feature. During operation, the system receives a layout of an integrated circuit. Next, the system selects an evaluation point in the layout. The system then chooses a candidate location in the layout for placing an assist feature. Next, the system determines the final location in the layout to place an assist feature by iteratively (a) selecting perturbation locations for placing representative assist features in the proximity of the candidate location, (b) computing aerial images from an image intensity model and the layout, with representative assist features placed at the candidate location and at the perturbation locations, (c) calculating image-gradient magnitudes at the evaluation point based on the aerial images, and (d) updating the candidate location for the assist feature based on the image-gradient magnitudes.

Owner:SYNOPSYS INC
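The iterative loop in steps (a) to (d) can be sketched as a simple hill climb. This is a minimal illustration under stated assumptions, not Synopsys's method: the layout is reduced to one dimension, the image intensity model is a hypothetical coherent sinc kernel (so that neighboring features interfere constructively or destructively), and the helper names, step sizes and assist amplitude are made up for illustration.

```python
import math

def sinc(u: float) -> float:
    """Normalized sinc, used here as a toy coherent amplitude kernel (assumption)."""
    return 1.0 if u == 0 else math.sin(math.pi * u) / (math.pi * u)

def intensity(x: float, features: list[tuple[float, float]]) -> float:
    """Toy coherent aerial image: |sum of feature amplitudes|^2 at position x.

    Each feature is (center, amplitude); amplitudes add before squaring, so
    nearby features interfere.
    """
    field = sum(w * sinc(x - c) for c, w in features)
    return field * field

def gradient_magnitude(x, features, h=1e-4):
    """Central finite-difference estimate of |dI/dx| at x."""
    return abs(intensity(x + h, features) - intensity(x - h, features)) / (2 * h)

def place_assist_feature(main_features, eval_point, start, assist_amp=0.3,
                         step=0.5, min_step=1e-3, max_iter=1000):
    """Hill-climb the assist location to maximize the image gradient at eval_point."""
    def score(loc):
        return gradient_magnitude(eval_point, main_features + [(loc, assist_amp)])

    candidate, best = start, score(start)
    for _ in range(max_iter):
        if step < min_step:
            break
        # (a) perturbation locations around the current candidate;
        # (b) + (c) aerial image and gradient magnitude for each placement
        top_score, top_loc = max((score(p), p) for p in (candidate - step, candidate + step))
        # (d) move to the better location, or refine the step if none improves
        if top_score > best:
            best, candidate = top_score, top_loc
        else:
            step /= 2
    return candidate, best
```

For example, `place_assist_feature([(0.0, 1.0)], eval_point=0.5, start=2.0)` returns a location whose gradient at the evaluation point is at least as large as at the starting guess. A real implementation would work on 2D layouts with a calibrated lithography model.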

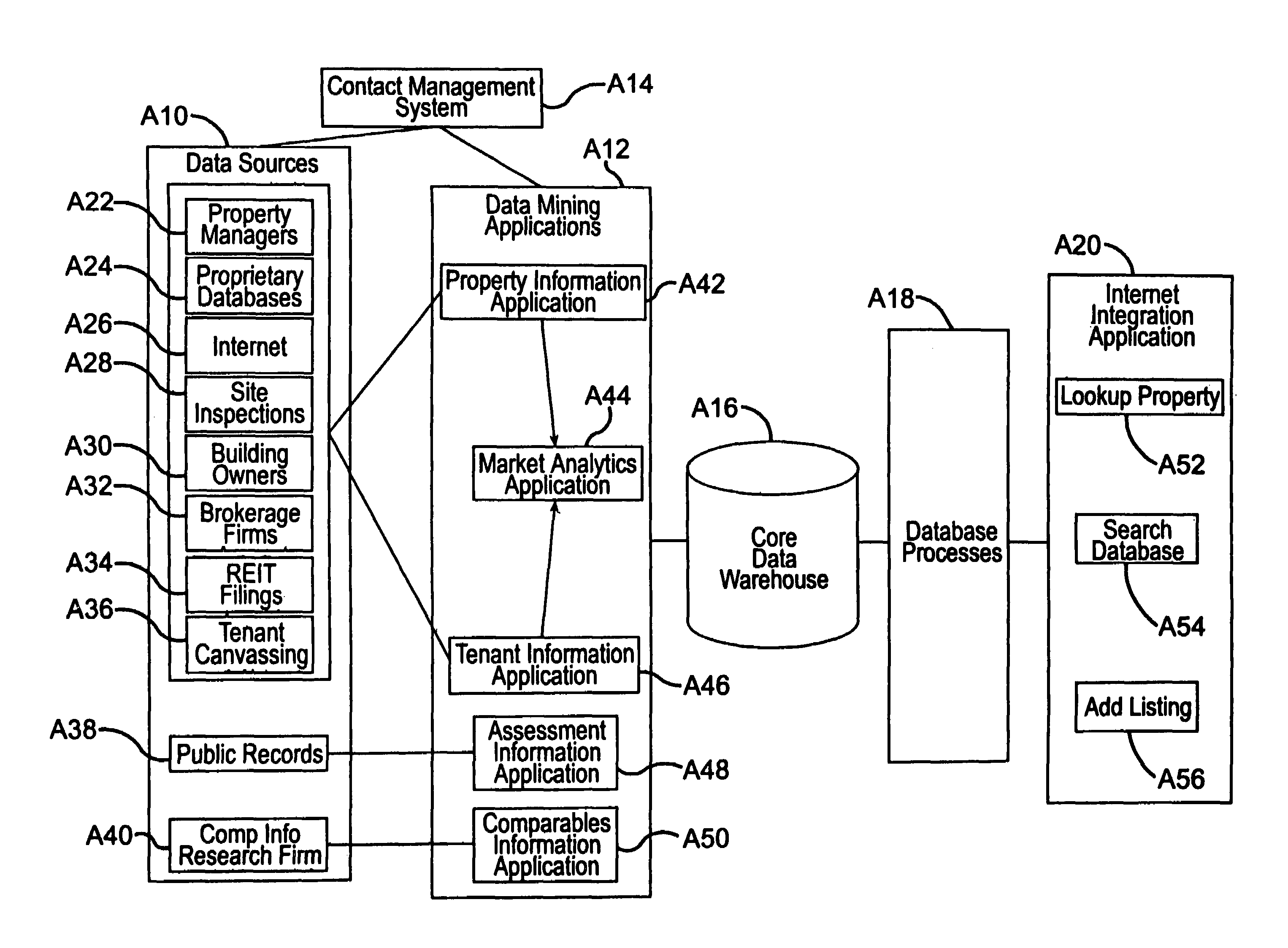

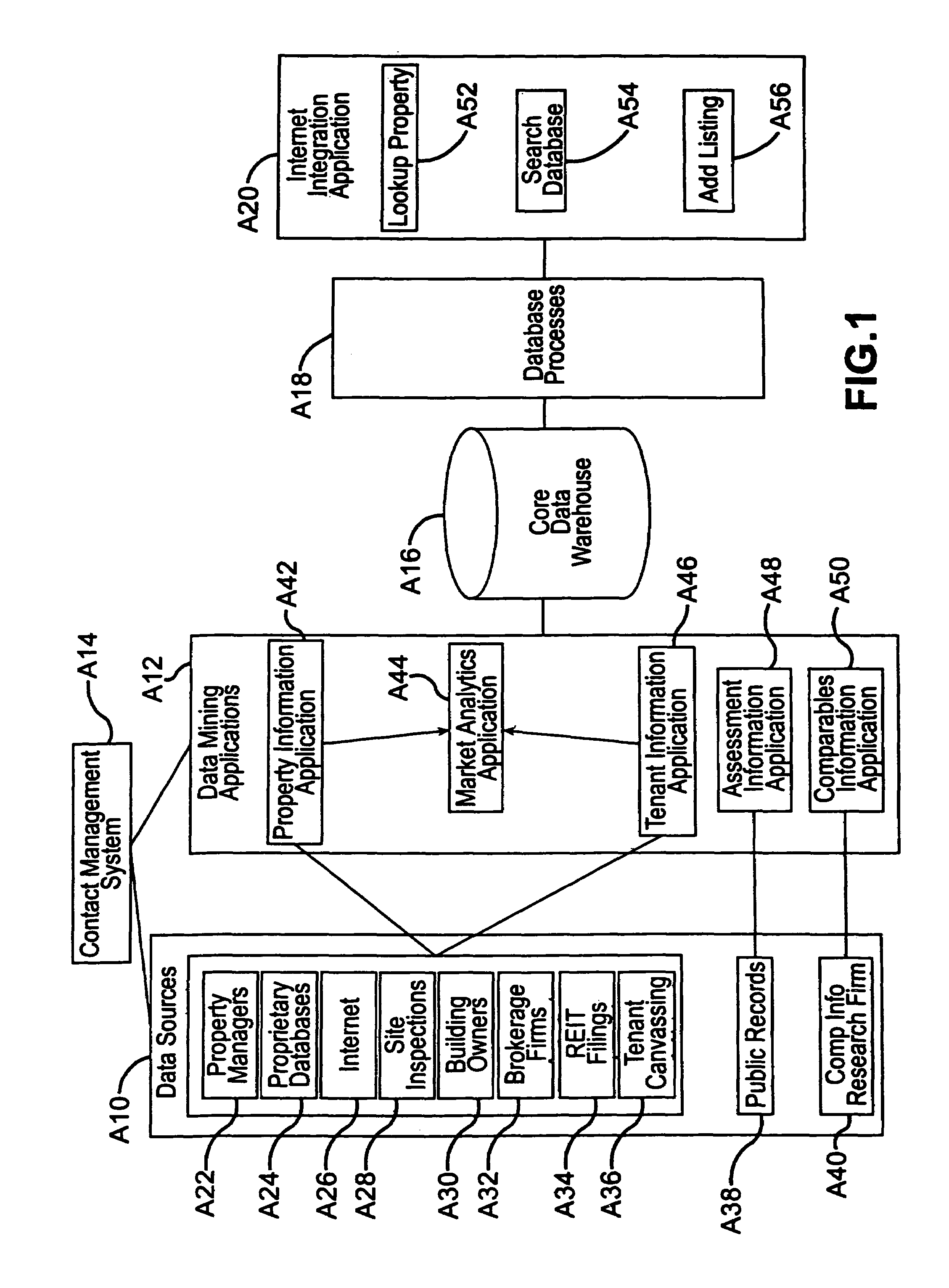

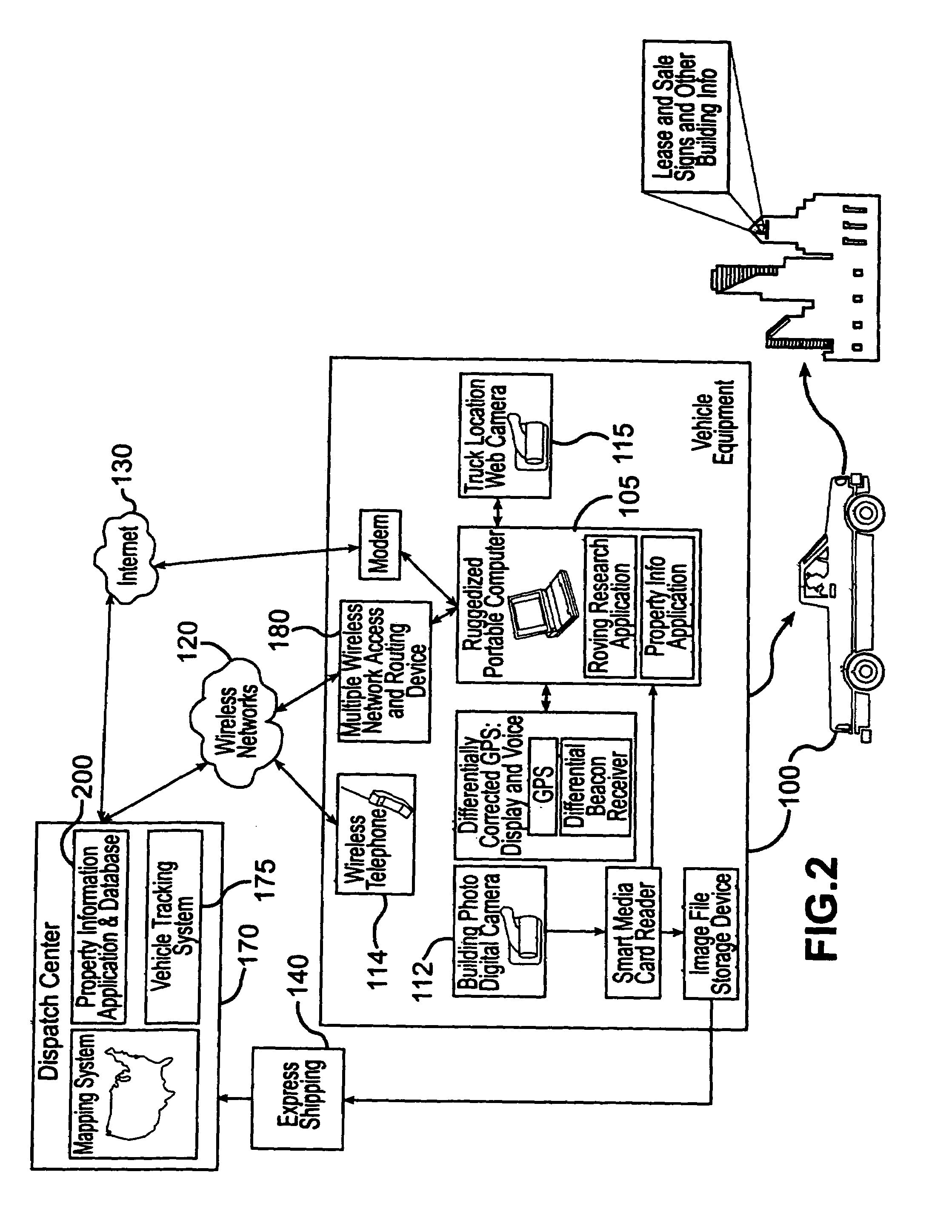

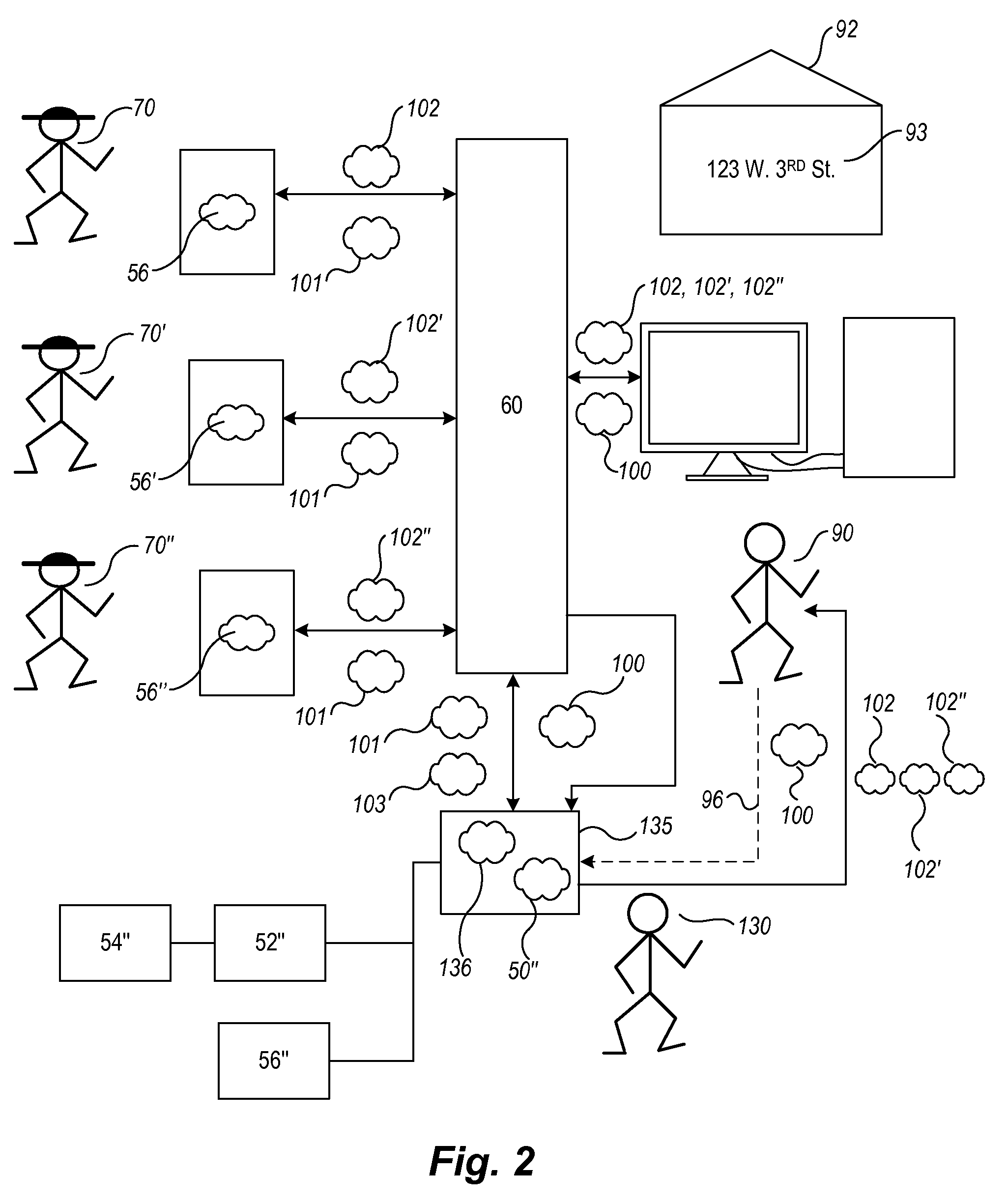

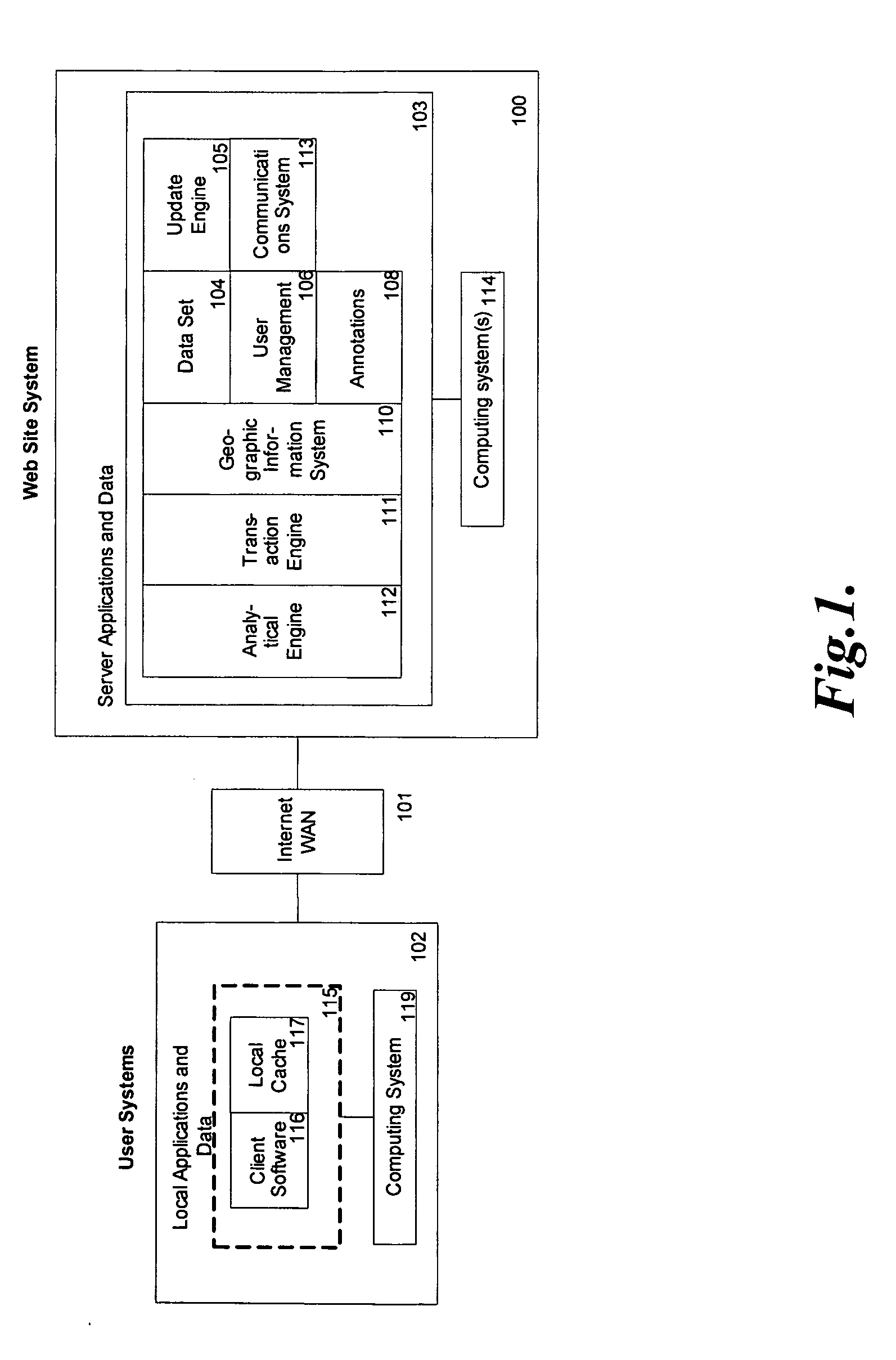

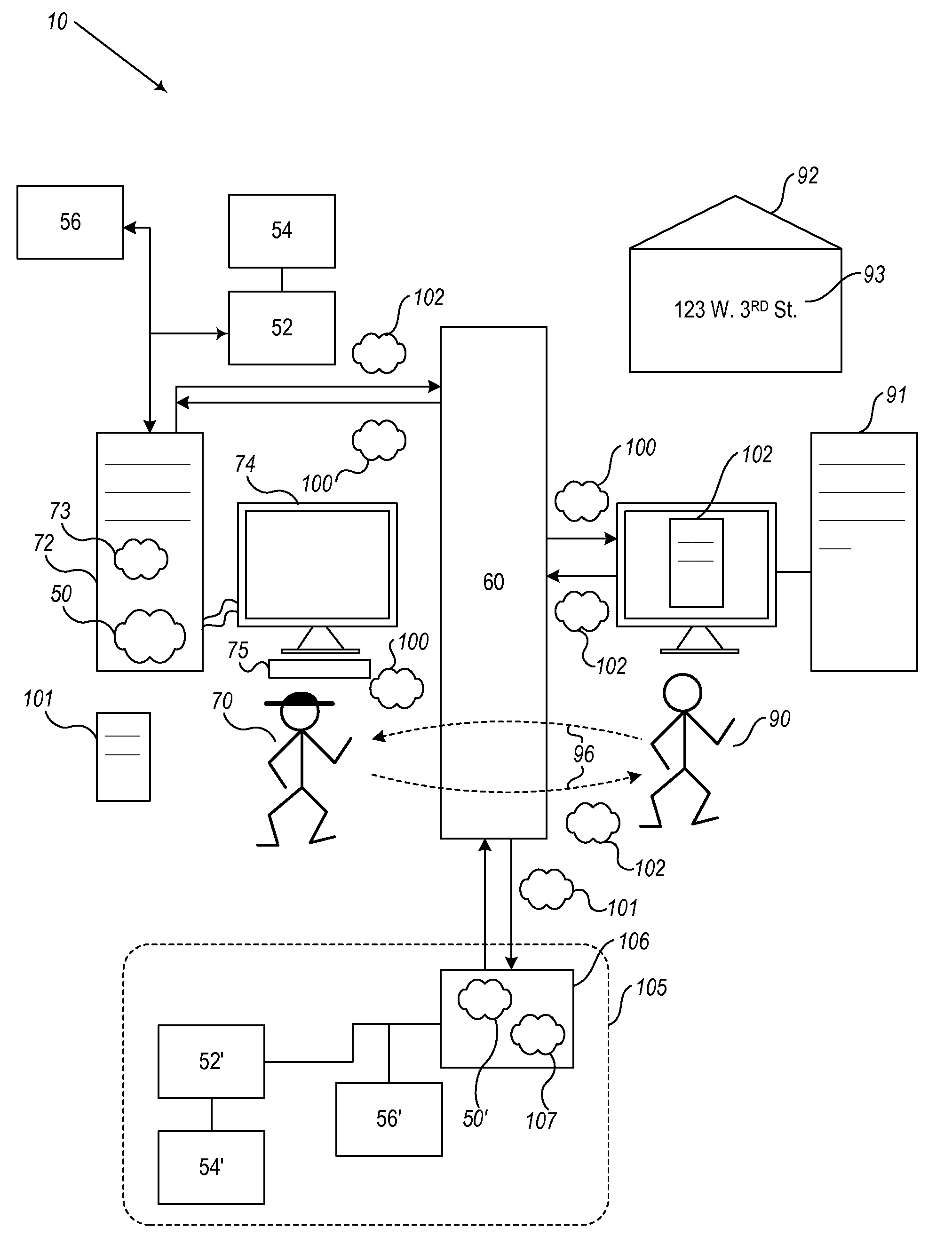

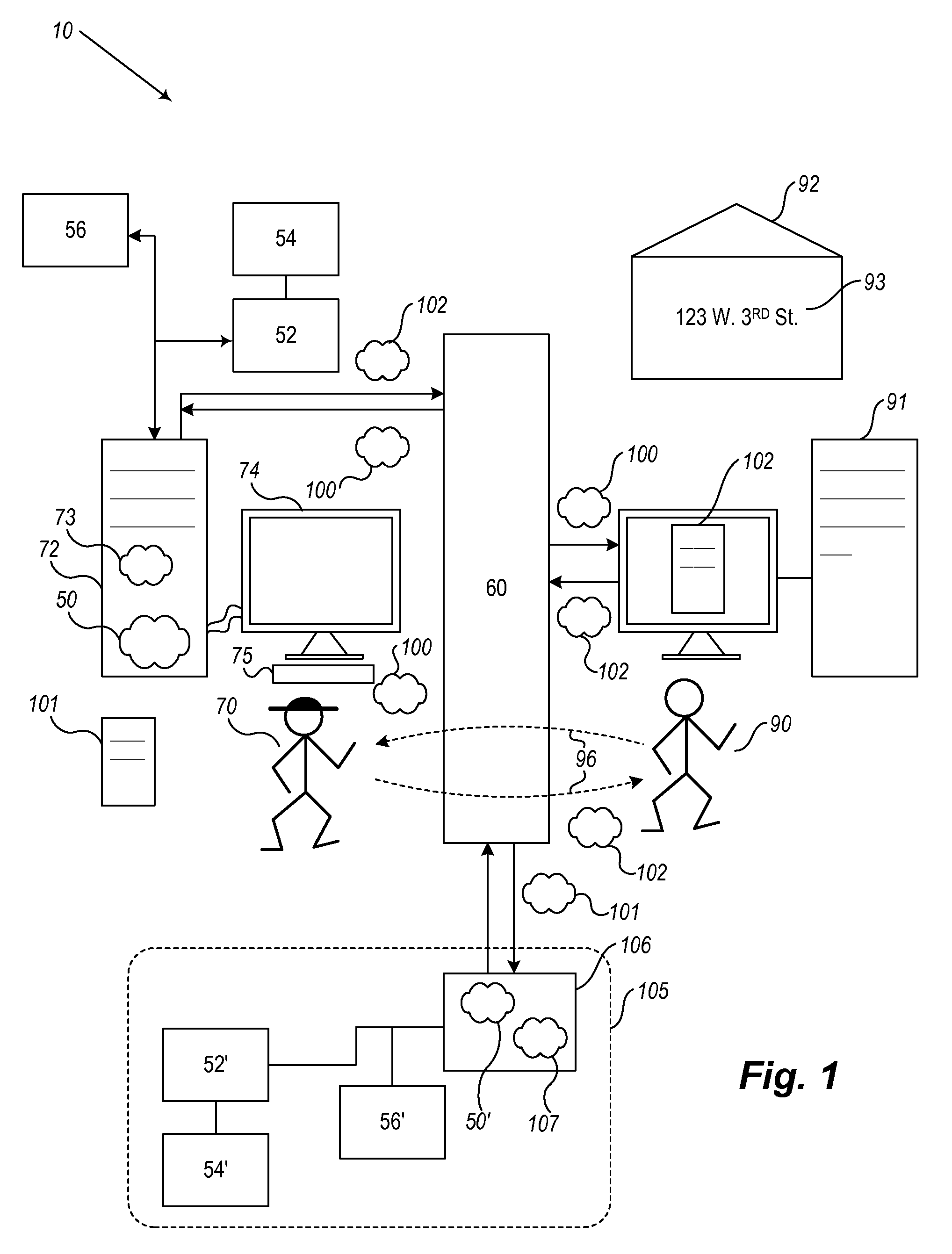

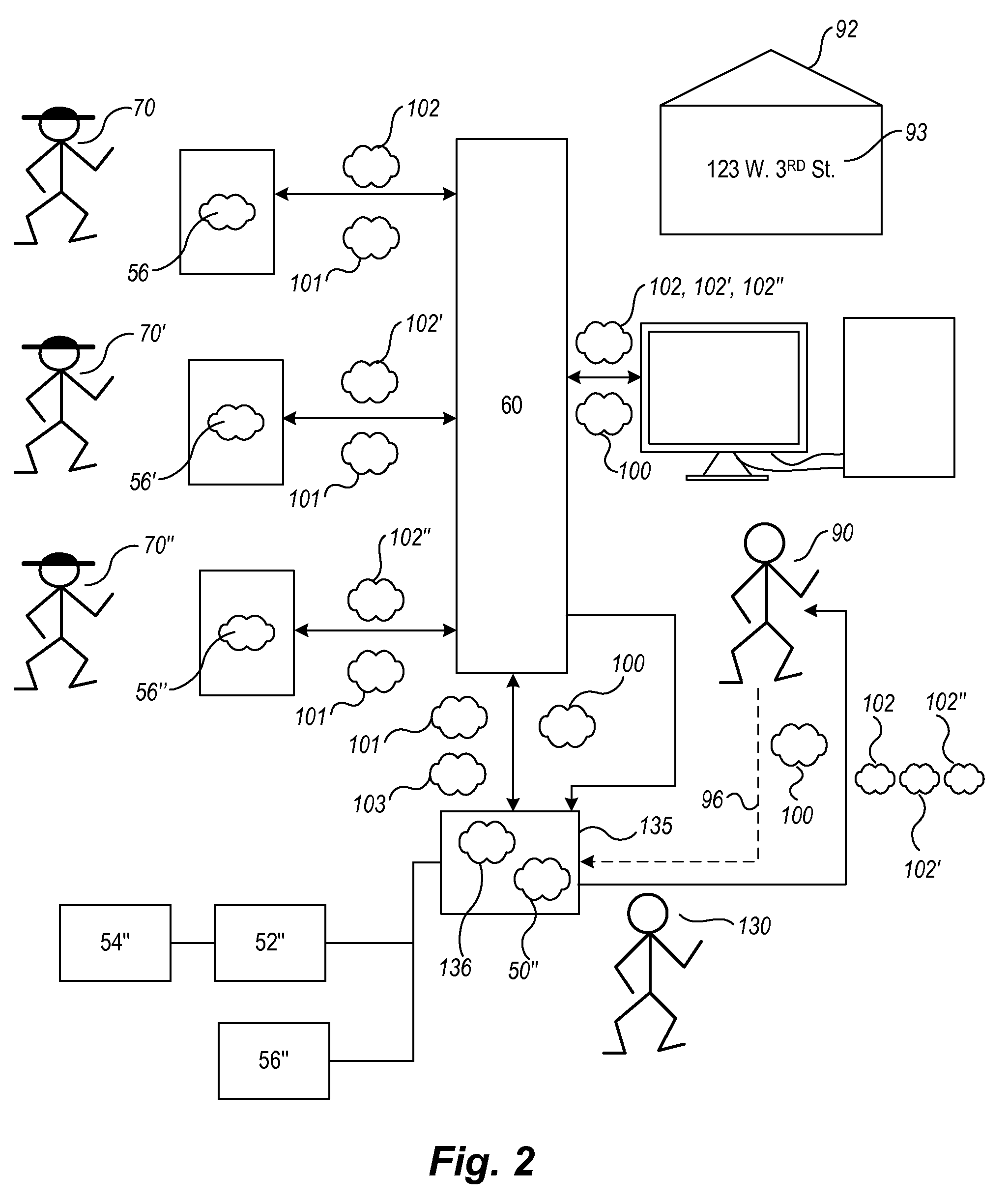

System and method for associating aerial images, map features, and information

Inactive · US7487114B2 · Tags: Easy to buy and sell; Efficient and secure buying and selling; Instruments for road network navigation; Road vehicles traffic control; Financial transaction; Aerial photography

Owner:COSTAR REALTY INFORMATION

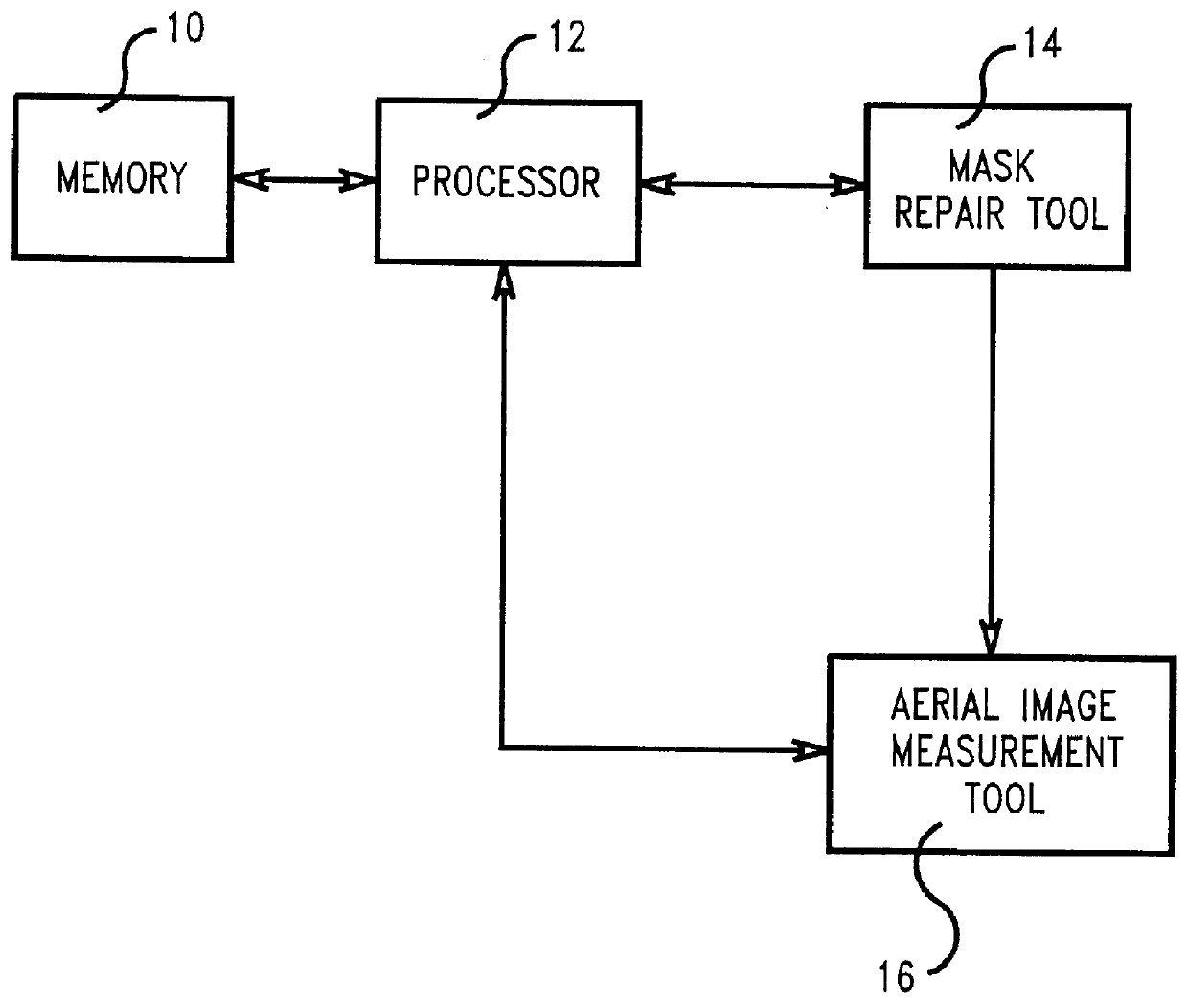

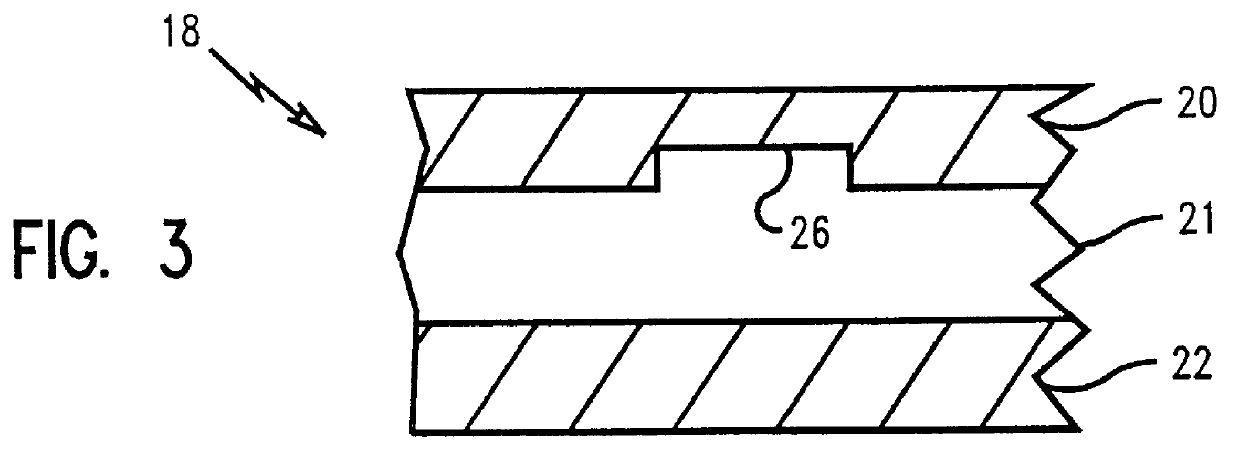

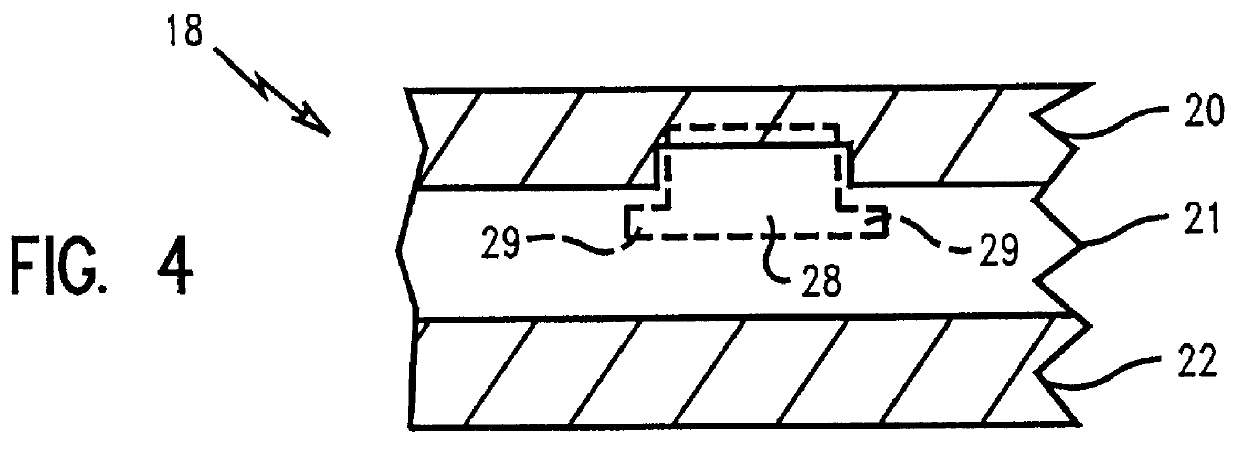

Feedback method to repair phase shift masks

Inactive · US6016357A · Tags: Photomechanical apparatus; Semiconductor/solid-state device manufacturing; Phase shifted; Spatial image

A method of repairing a semiconductor phase shift mask comprises first providing a semiconductor mask having a defect and then illuminating the mask to create an aerial image of the mask. Subsequently, the aerial image of the mask is analyzed and the defect in the mask is detected from the aerial image. An ideal mask image is defined and compared to the aerial image of the defective mask to determine the repair parameters. Unique parameters for repairing the mask defect are determined by utilizing the aerial image analysis and a look-up table having information on patch properties as a function of material deposition parameters. The mask is then repaired in accordance with the parameters to correct the mask defect. A patch of an attenuated material may be applied to the mask or a predetermined amount of material may be removed from the mask. The aerial image of the repair is analyzed to determine whether the repair sufficiently corrects the defect within predetermined tolerances.

Owner:GOOGLE LLC

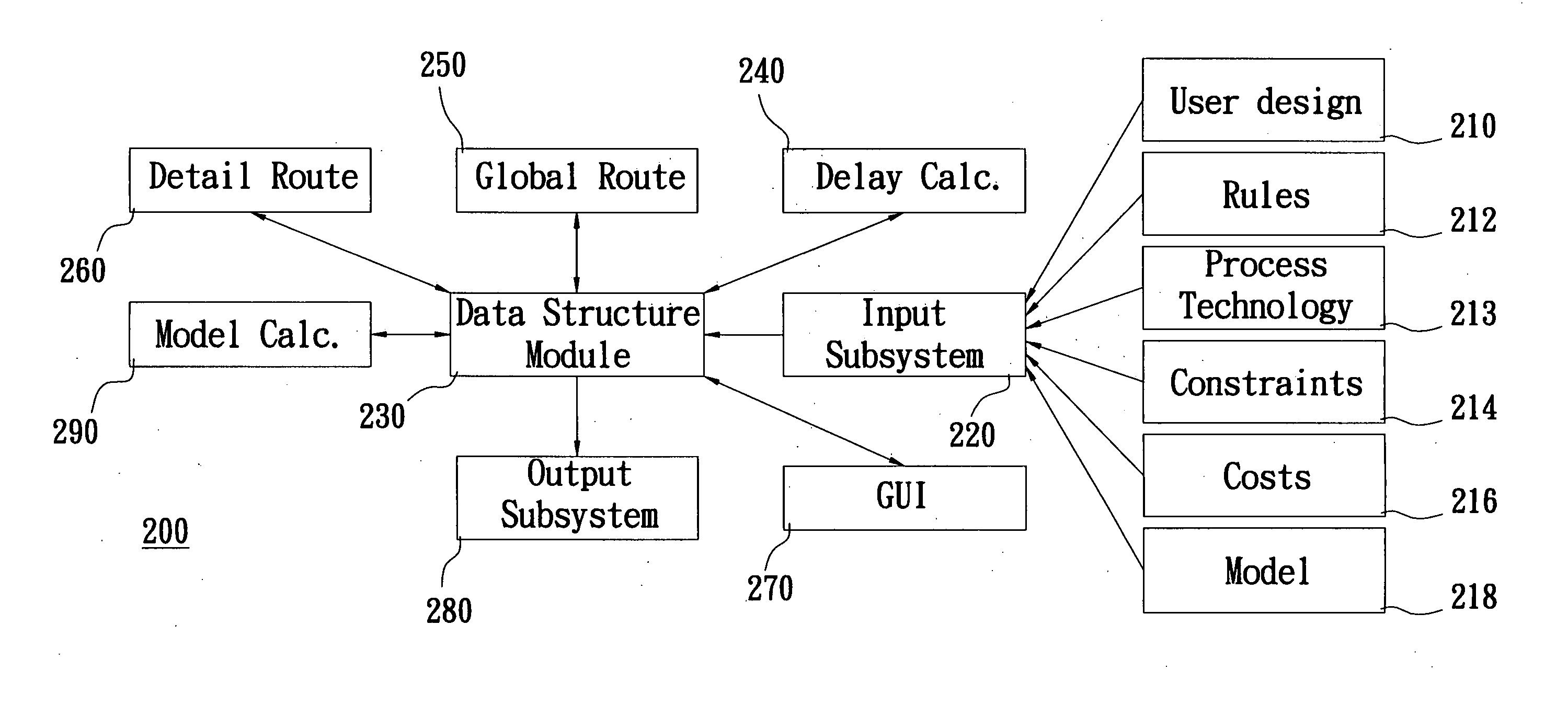

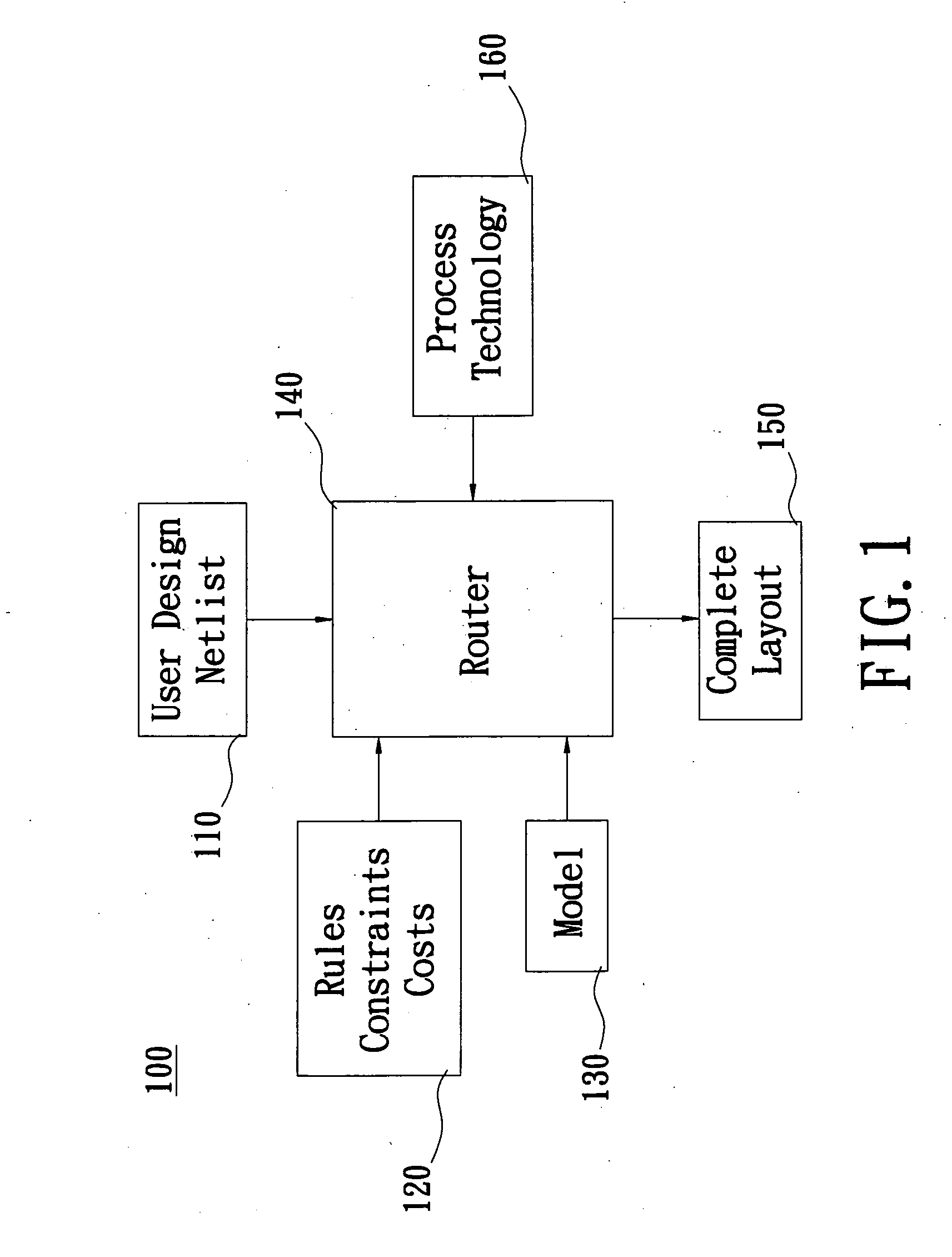

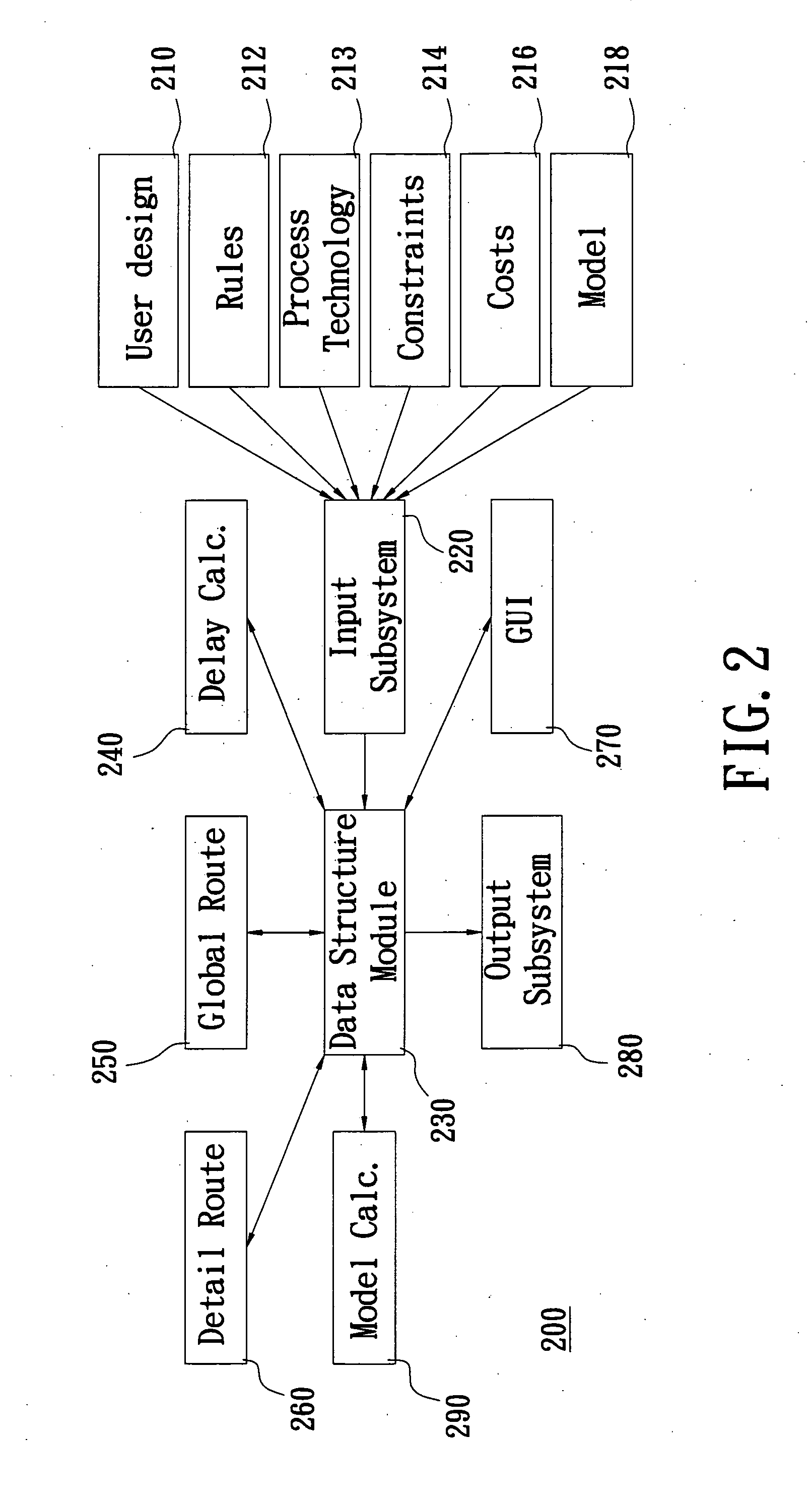

Apparatus for a routing system

Inactive · US20070106971A1 · Tags: Reduce violations; Reduce process; Computer programmed simultaneously with data introduction; Computer aided design; Algorithm; Grid based

The invention details methods and apparatus for a routing system, or router, that includes a model. The model can take many different forms, including but not limited to: resolution enhancement technologies such as OPC; lithography models, including but not limited to aerial image models; pattern-dependent functions; functions for timing / signal integrity / power; manufacturing process variations; and measured silicon data. In one embodiment, the model can be supplied as input to the system, and the model calculator can interact with the data structure of the detail router, its query engine, or both. The model calculator can accept a set of geometry descriptions as input and produce output that guides the query functions. An example technique called set intersection is disclosed herein to combine multiple models in the system. A preferred embodiment of this invention includes a manufacturability-aware, full-chip, grid-based router.

Owner:LIZOTECH
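The set-intersection idea can be sketched as follows, assuming each model is reduced to a function that returns the routing-grid cells it permits. The `Model` interface, the grid, and the example models are hypothetical, not Lizotech's API; the point is only that a cell survives when every model allows it.

```python
from typing import Callable, Iterable

Cell = tuple[int, int]
# Hypothetical interface: a model maps candidate cells to the subset it allows.
Model = Callable[[set[Cell]], set[Cell]]

def combine_models(models: Iterable[Model], cells: Iterable[Cell]) -> set[Cell]:
    """Set intersection: a cell is usable only if every model allows it."""
    candidates = set(cells)
    allowed = set(candidates)
    for model in models:
        allowed &= model(candidates)
        if not allowed:  # nothing left for the router; stop early
            break
    return allowed
```

A spacing rule and a lithography-hotspot list, for instance, would each be wrapped as one `Model`; the router's query functions then only ever see cells that pass both.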

Method and System for Remotely Inspecting Bridges and Other Structures

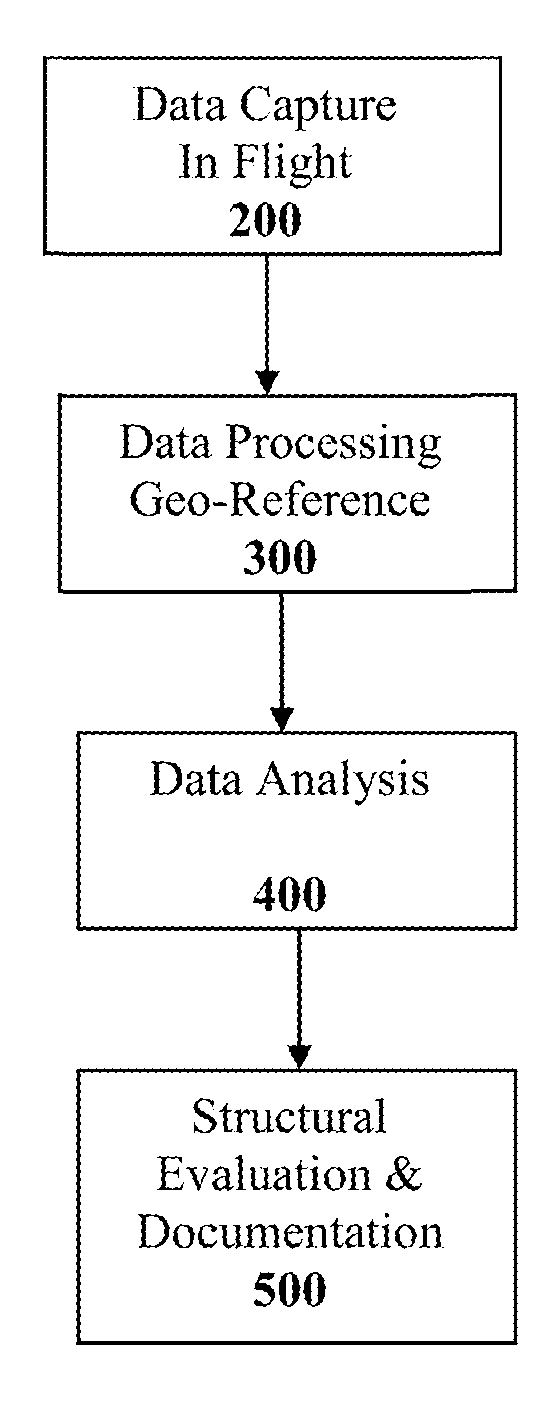

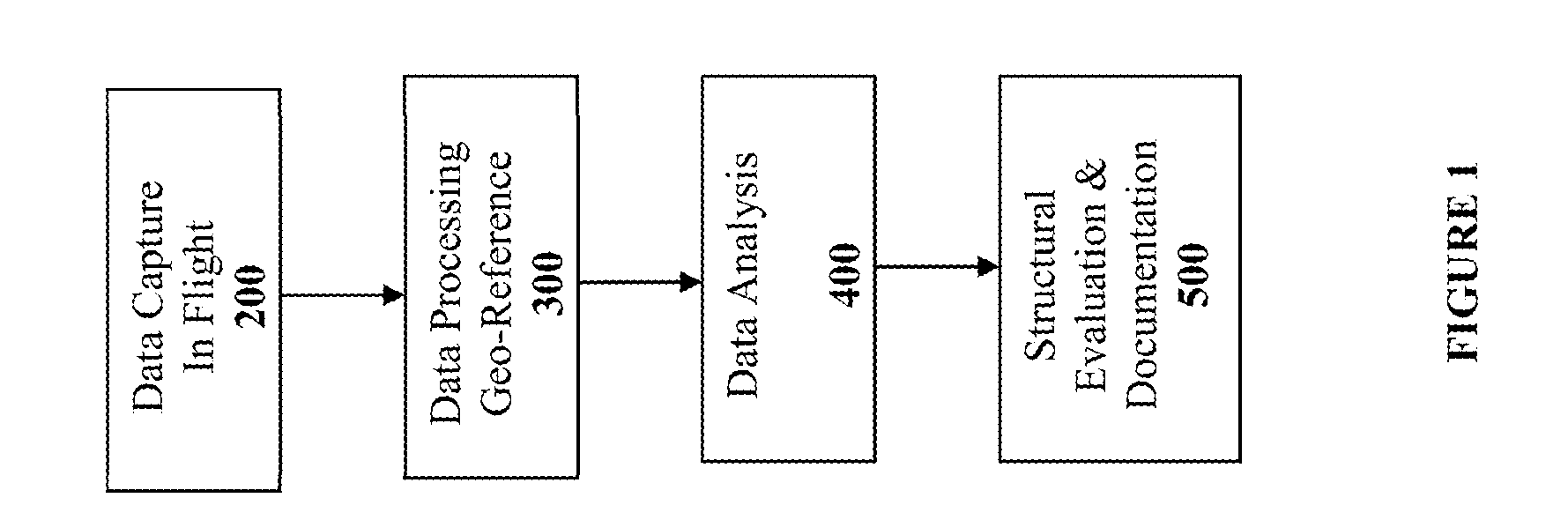

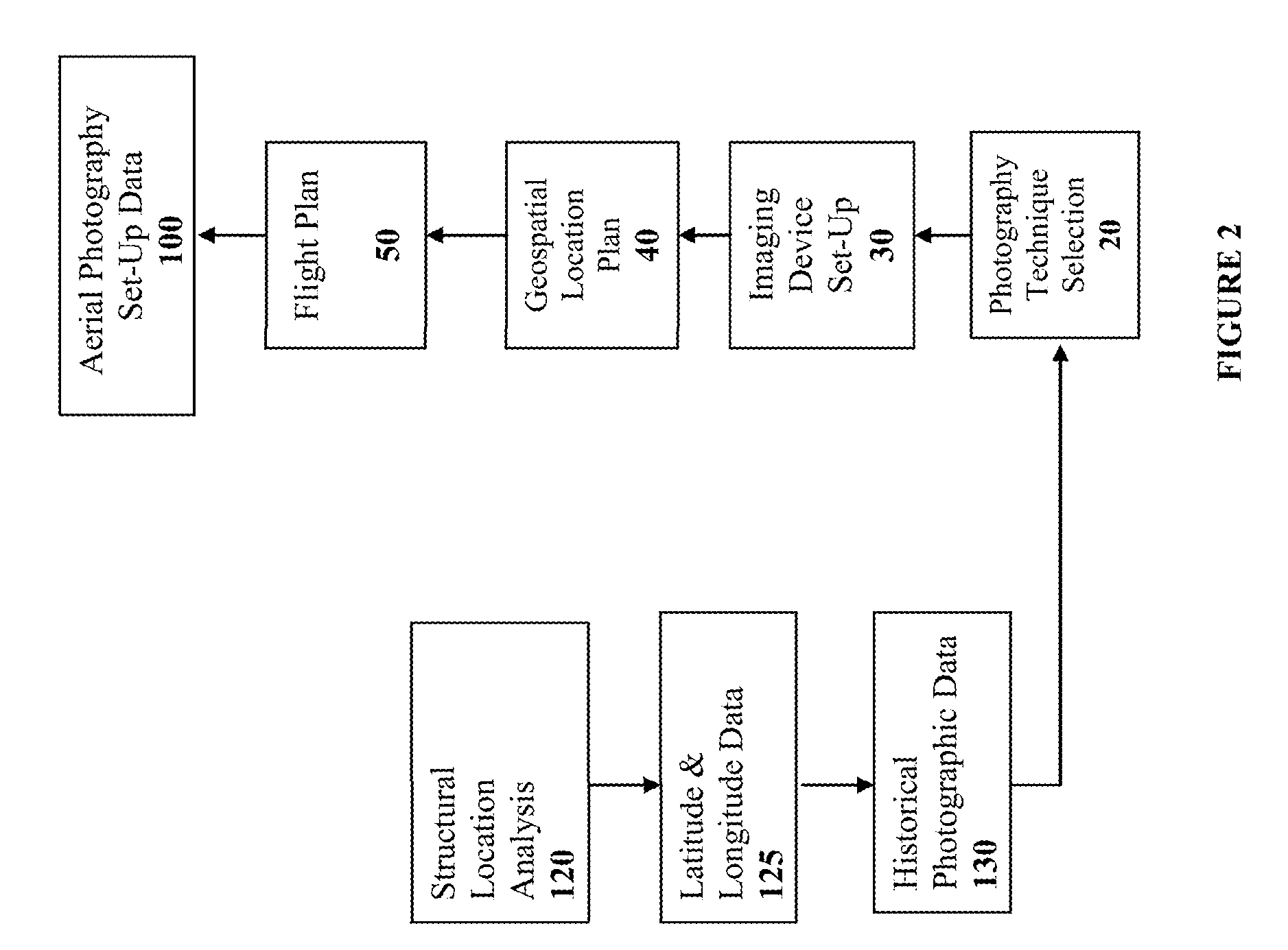

Active · US20130216089A1 · Tags: Facilitating spatial integration; Facilitating automated damage detection; Image enhancement; Image analysis; Jet aeroplane; On board

Spatially Integrated Small-Format Aerial Photography (SFAP) is one aspect of the present invention. It is a low-cost solution for bridge surface imaging and is proposed as a remote bridge inspection technique to supplement current visual bridge inspection. Flying at about 1,000 feet, the airplanes provide top-down views that allow visualization of sub-inch (large) cracks and joint openings on bridge decks or highway pavements. An onboard Global Positioning System (GPS) is used to help geo-reference the collected images and facilitate damage detection. Image analysis is performed to identify structural defects such as cracking. A deck condition rating technique based on large crack detection is used to quantify the condition of existing bridge decks.

Owner:THE UNIV OF NORTH CAROLINA AT CHAPEL HILL

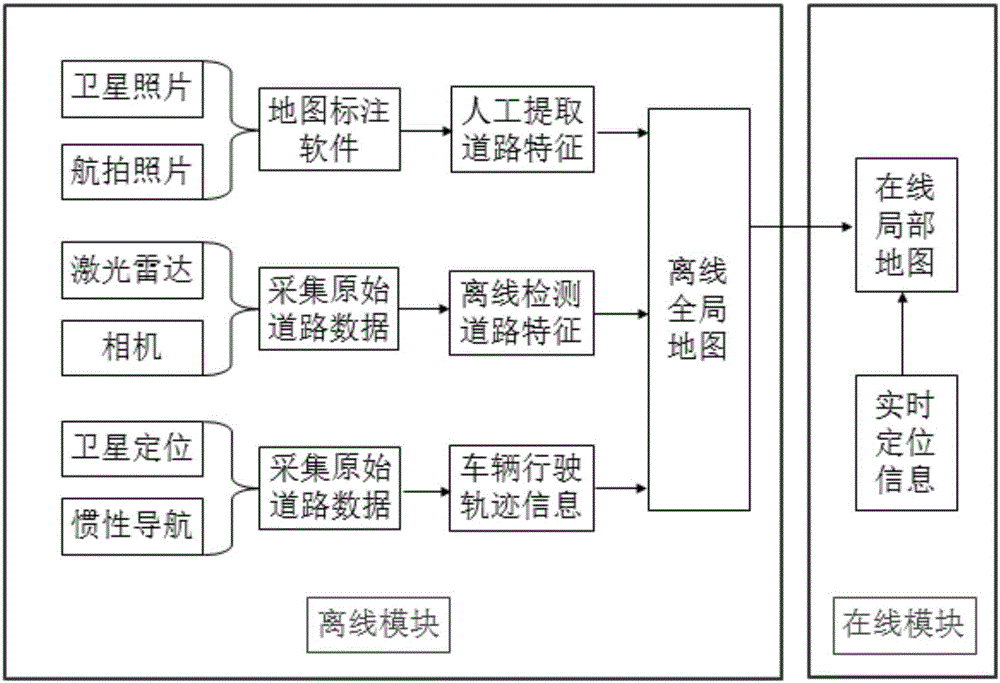

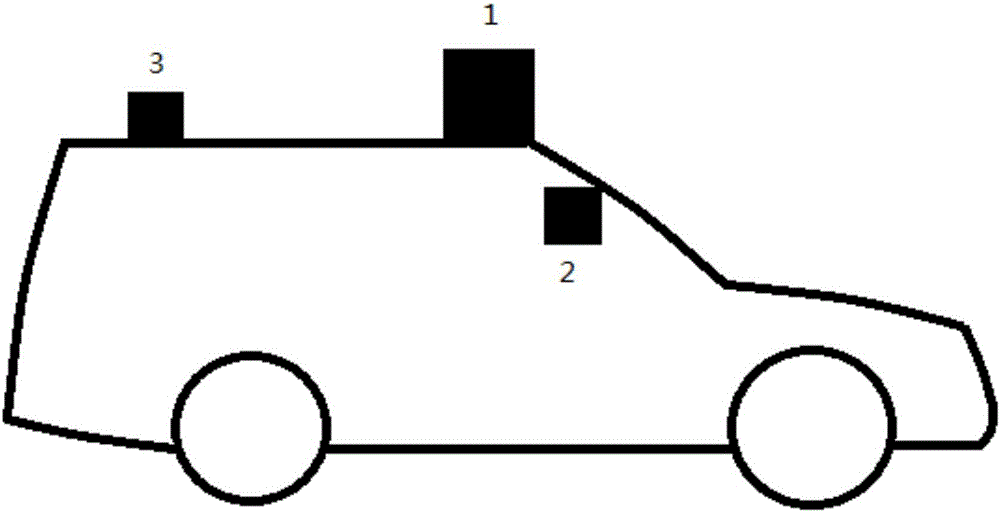

System and method for generating lane-level navigation map of unmanned vehicle

Active · CN106441319A · Tags: Wide applicability; Detailed and rich map data; Instruments for road network navigation; Global Positioning System; Positioning system

The invention relates to a system and method for generating a lane-level navigation map of an unmanned vehicle based on multi-source data. The lane-level navigation map comprises an offline global map part and an online local map part. In the offline module, original road data for the target region in which the unmanned vehicle operates is acquired from satellite photos (or aerial photos), vehicle sensors (lidar and a camera) and a high-precision integrated positioning system (a global positioning system and an inertial navigation system); the original road data is then processed offline, multiple kinds of road information are extracted, and the extraction results are finally fused to generate the offline global map. The offline global map is stored in a layered structure. In the online module, when the unmanned vehicle drives autonomously in the target region, road data is extracted from the offline global map according to real-time positioning information, and an online local map centered on the vehicle and covering a fixed distance range is drawn. The system and method can be applied to sensor fusion, high-precision positioning and intelligent decision-making for the unmanned vehicle.

Owner:Anhui Zhongke Xingchi Autonomous Driving Technology Co., Ltd. (安徽中科星驰自动驾驶技术有限公司)
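The online step (cutting a vehicle-centered window out of the global map) might look like the sketch below. It assumes the map points and the vehicle pose are already in a shared planar frame in meters; the `MapPoint` type and function names are hypothetical, and the layered storage and multi-source fusion of the offline module are not modeled.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class MapPoint:
    lane_id: str
    x: float  # global easting in meters (assumed planar frame)
    y: float  # global northing in meters

def extract_local_map(global_map, vx, vy, yaw, radius):
    """Return (lane_id, forward, left) for map points within `radius` of the vehicle.

    yaw is the vehicle heading in radians, measured counter-clockwise from +x.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    local = []
    for p in global_map:
        dx, dy = p.x - vx, p.y - vy
        if math.hypot(dx, dy) <= radius:
            # Rotate the offset into the vehicle frame (x forward, y to the left).
            local.append((p.lane_id, c * dx + s * dy, -s * dx + c * dy))
    return local
```

Re-running this on every positioning update keeps the local map centered on the vehicle without touching the stored global map.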

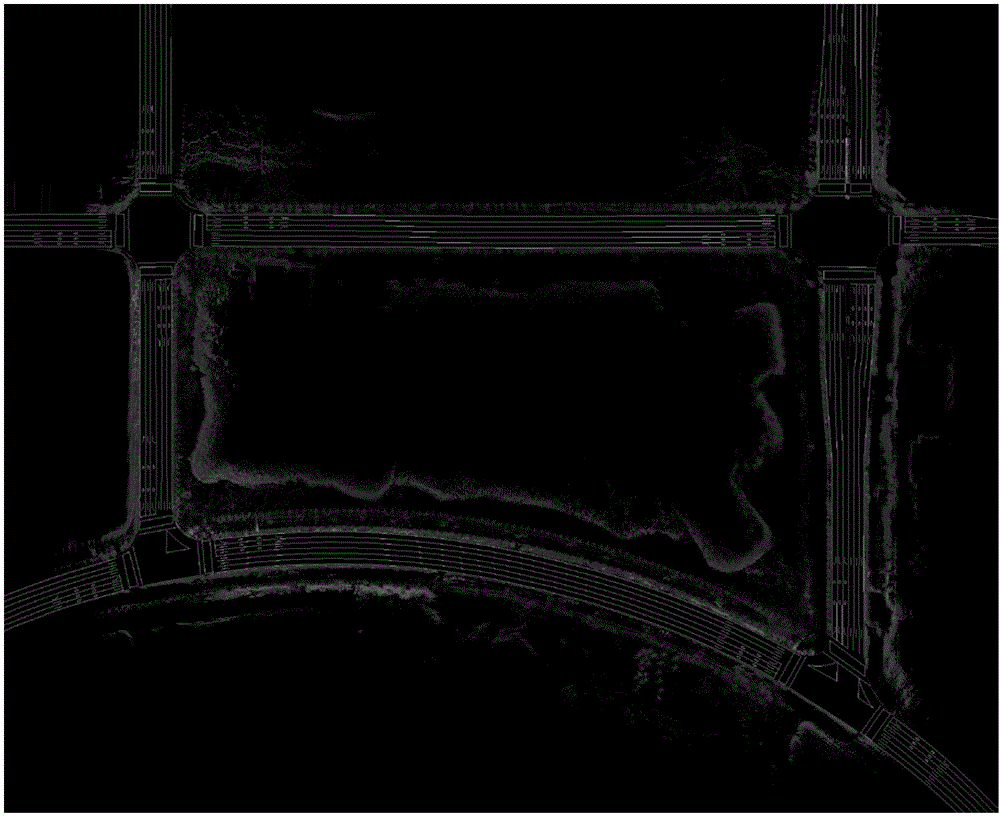

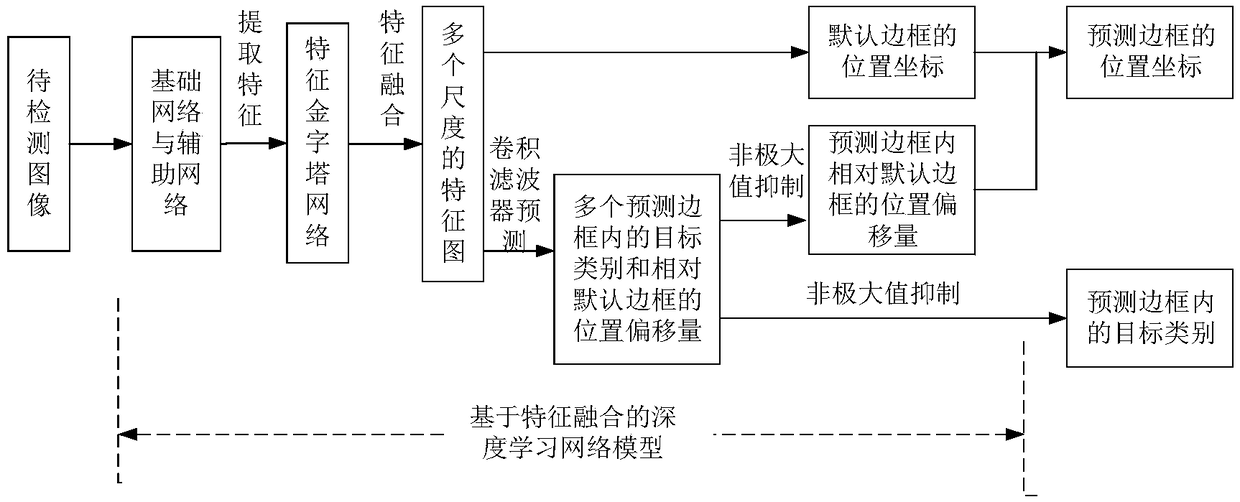

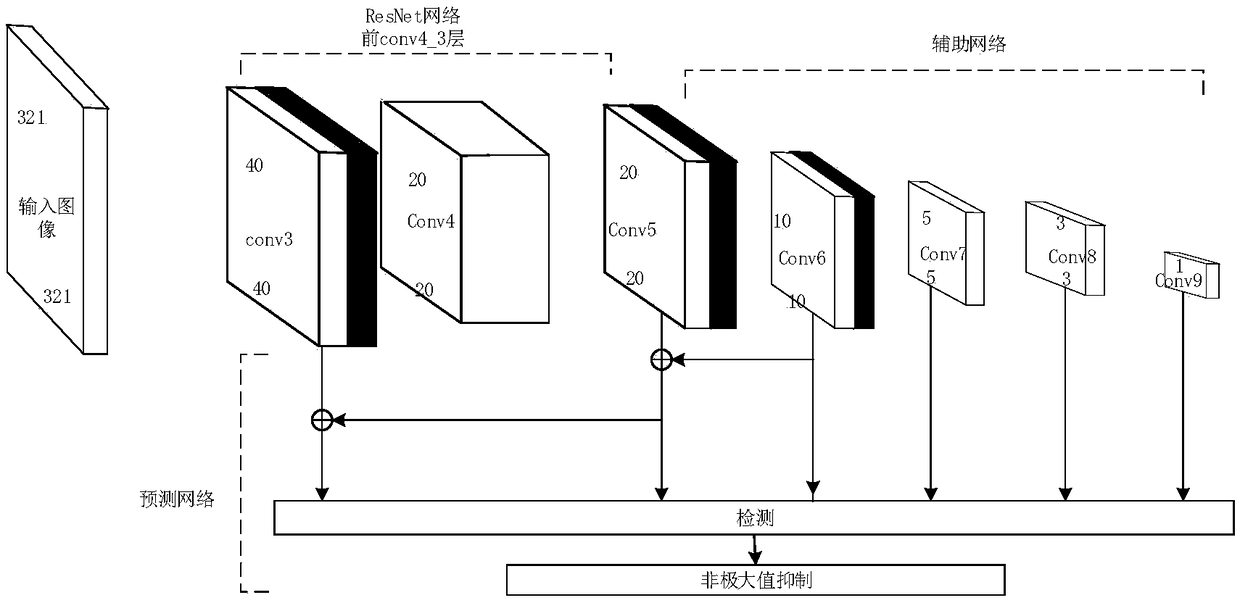

Small target detection method based on feature fusion and deep learning

Inactive · CN109344821A · Tags: Scaling; Rich information features; Character and pattern recognition; Network model; Feature fusion

The invention discloses a small target detection method based on feature fusion and deep learning, which addresses the poor detection accuracy and real-time performance of existing methods on small targets. The implementation scheme is as follows: a high-resolution feature map is extracted with the deeper, better-performing ResNet-101 network model; five successively smaller, low-resolution feature maps are extracted from auxiliary convolution layers to expand the range of feature-map scales; and a multi-scale feature map is obtained through a feature pyramid network. Within the feature pyramid network, deconvolution is used to fuse feature-map information from high-level semantic layers with feature-map information from shallow layers. Targets are then predicted from the fused feature maps at different scales, and non-maximum suppression is applied to the scores of the multiple predicted bounding boxes and categories to obtain the final bounding-box position and category of each target. The invention maintains high small-target detection precision while meeting real-time requirements, can quickly and accurately detect small targets in images, and can be used for real-time detection of targets in aerial photographs taken by unmanned aerial vehicles.

Owner:XIDIAN UNIV
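The final non-maximum suppression step is a standard technique; a minimal greedy, per-category version is sketched below. The box format (x1, y1, x2, y2) and the 0.5 IoU threshold are common conventions assumed here, not values taken from the patent.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, labels, iou_threshold=0.5):
    """Greedy per-category NMS; returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Drop box i if it overlaps an already-kept box of the same category too much.
        if all(labels[j] != labels[i] or iou(boxes[i], boxes[j]) <= iou_threshold
               for j in keep):
            keep.append(i)
    return keep
```

Because suppression is per category, two overlapping boxes with different labels both survive, which matches suppressing "borders and categories" together.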

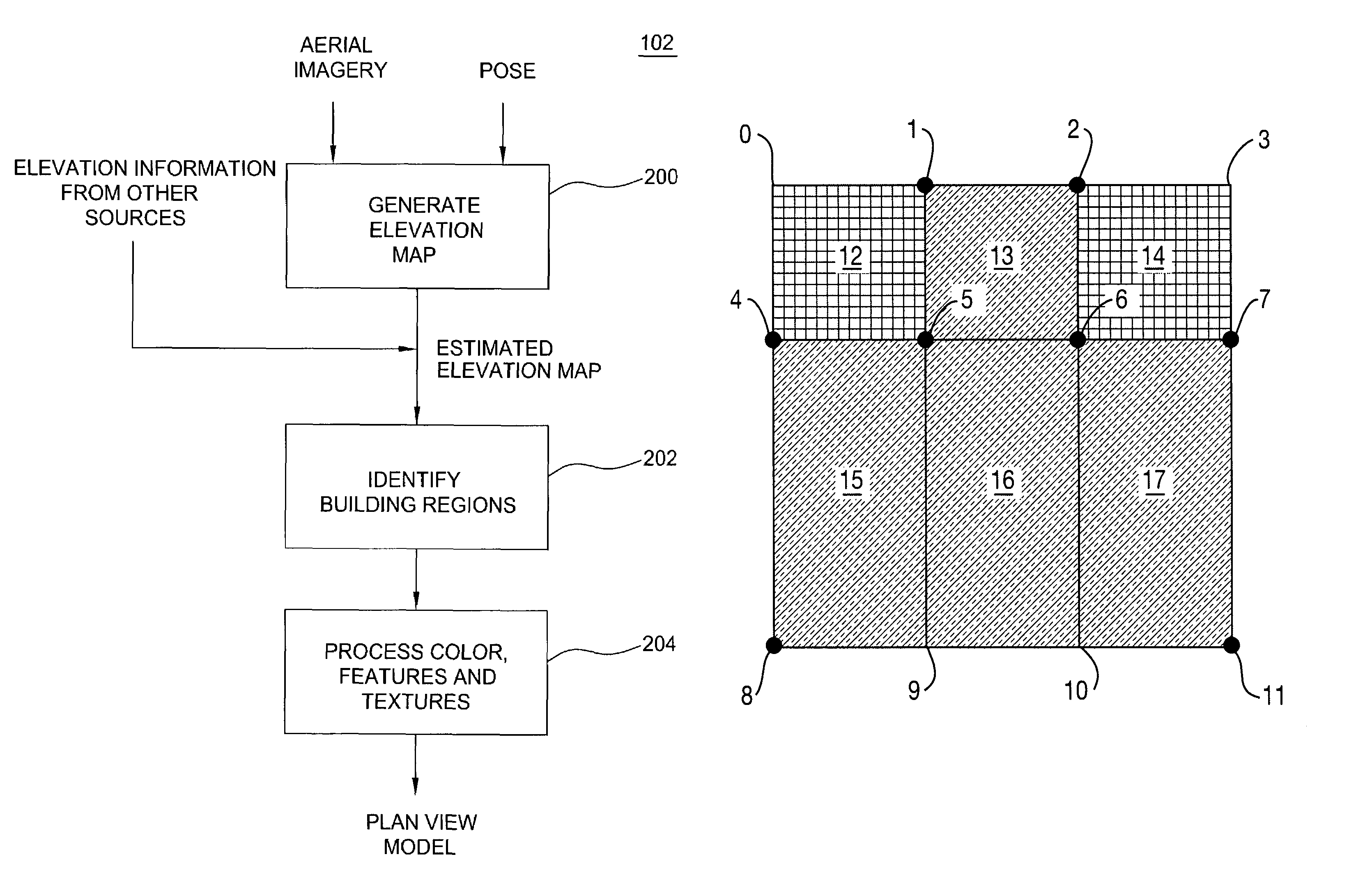

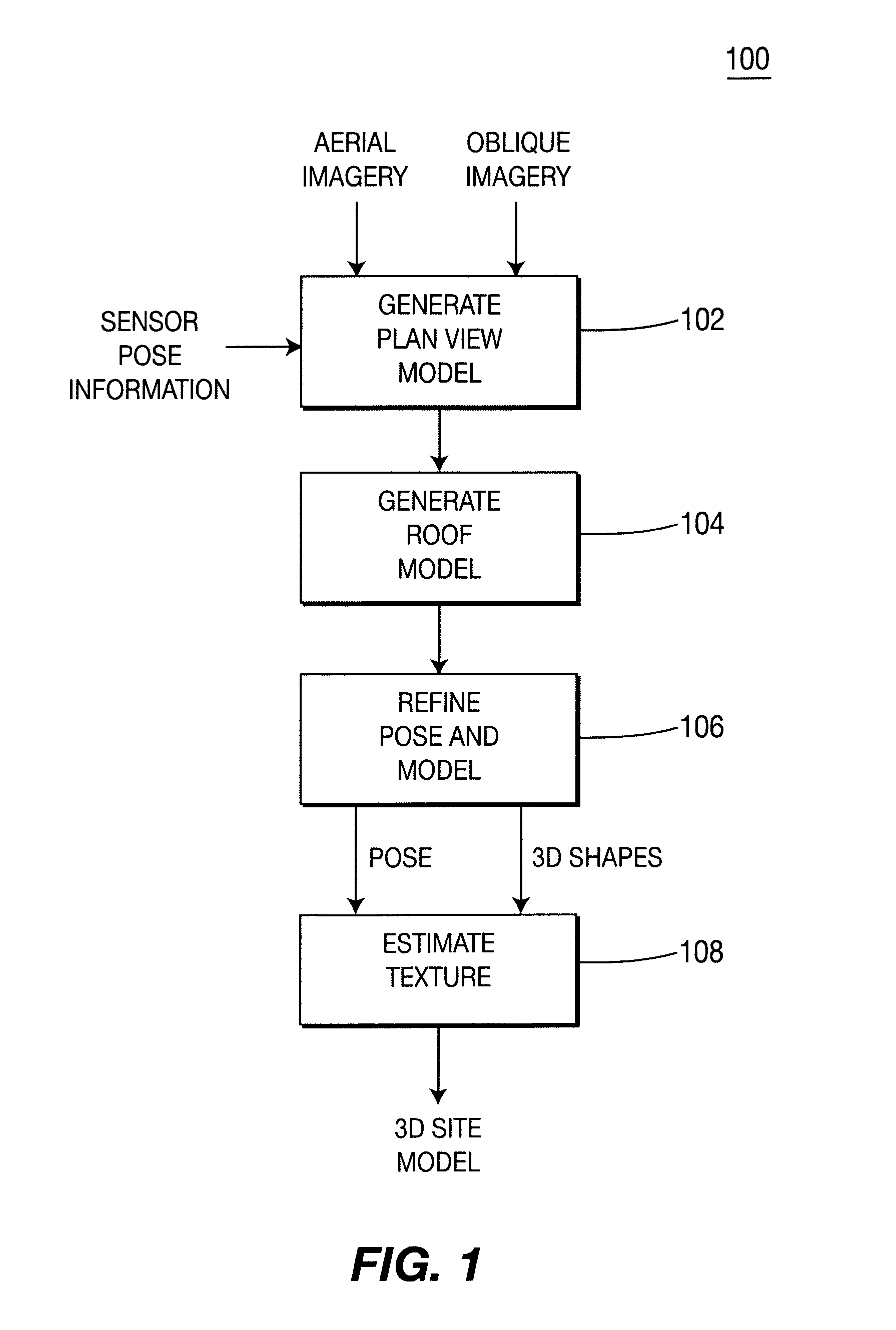

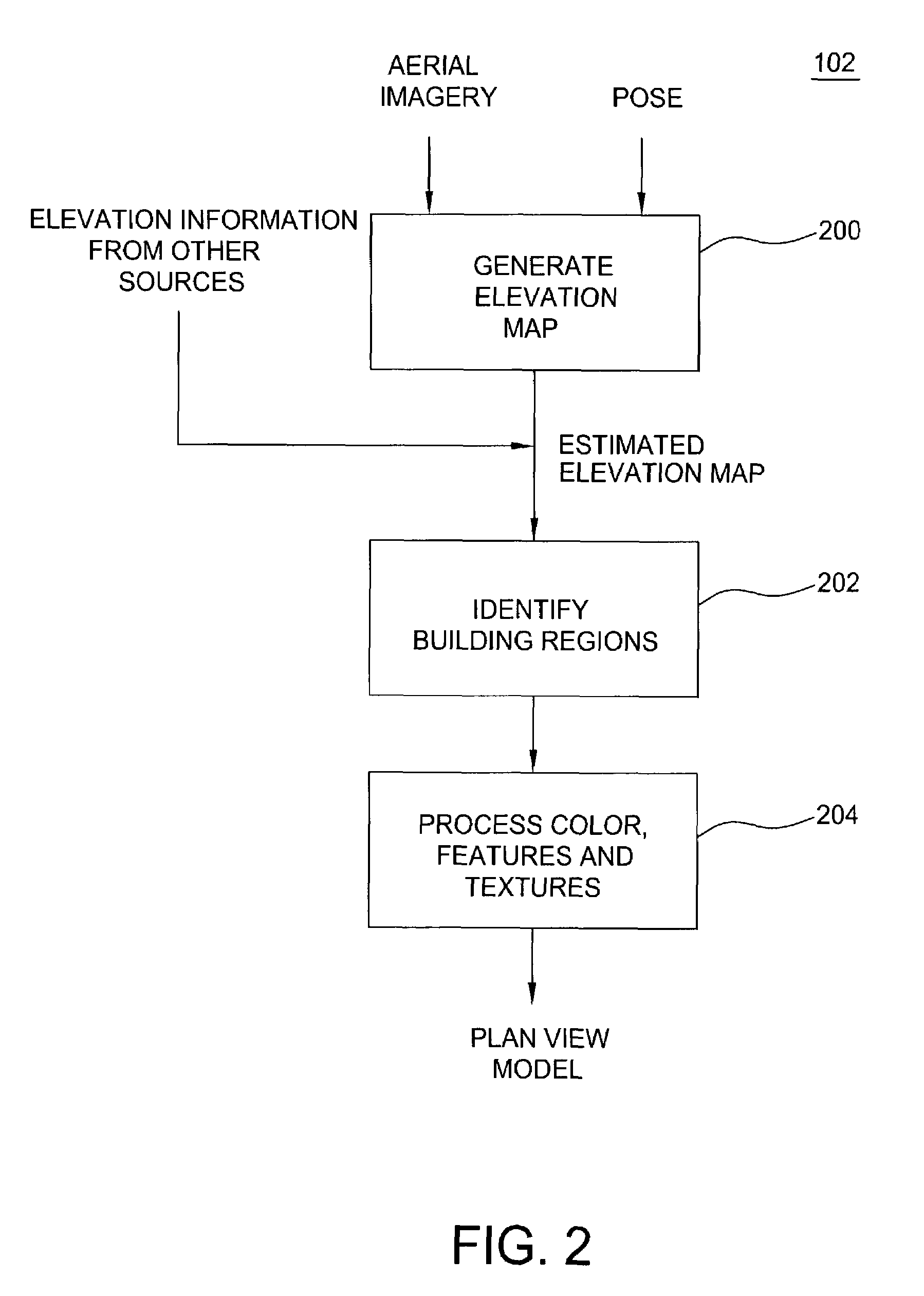

Method and apparatus for automatically generating a site model

Active · US7509241B2 · Tags: Overcome disadvantages; Precise definition; Geometric CAD; Details involving processing steps; Site model; View model

A method and apparatus for automatically combining aerial images and oblique images to form a three-dimensional (3D) site model. The apparatus or method is supplied with aerial and oblique imagery. The imagery is processed to identify building boundaries and outlines as well as to produce a depth map. The building boundaries and the depth map may be combined to form a 3D plan view model or used separately as a 2D plan view model. The imagery and plan view model are further processed to determine roof models for the buildings in the scene. The result is a 3D site model in which buildings are represented as rectangular boxes with accurately defined roof shapes.

Owner:SRI INTERNATIONAL

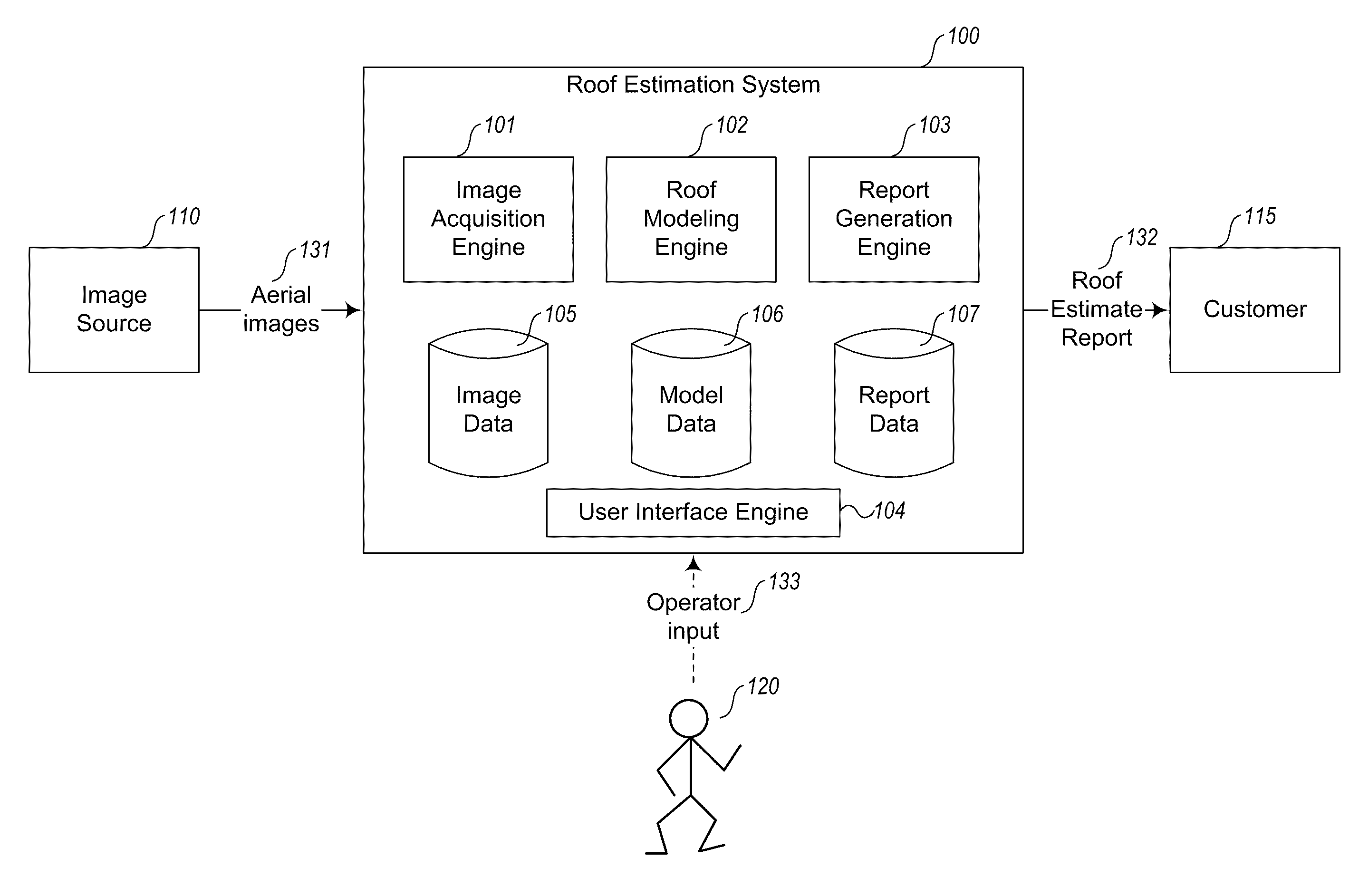

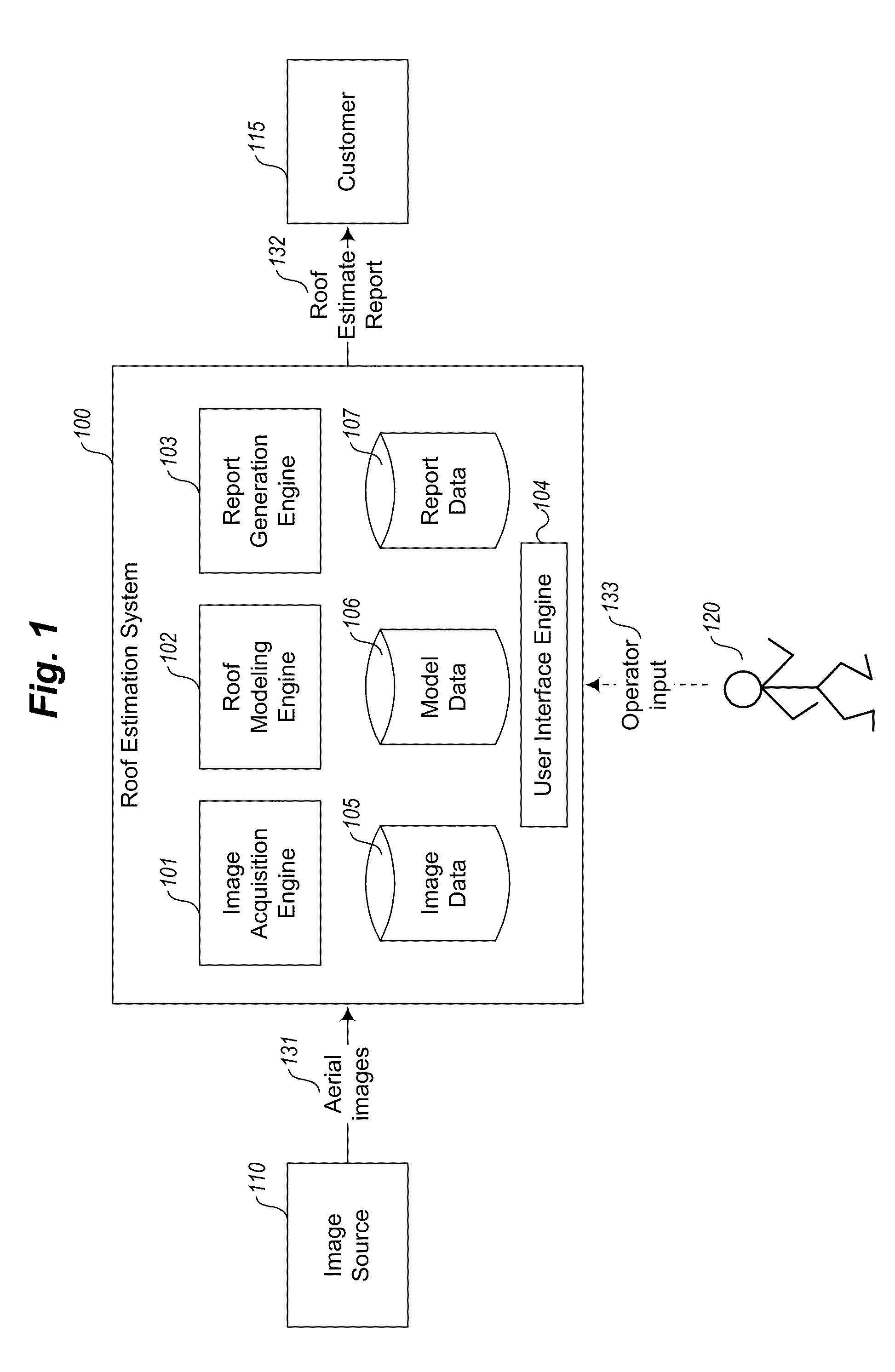

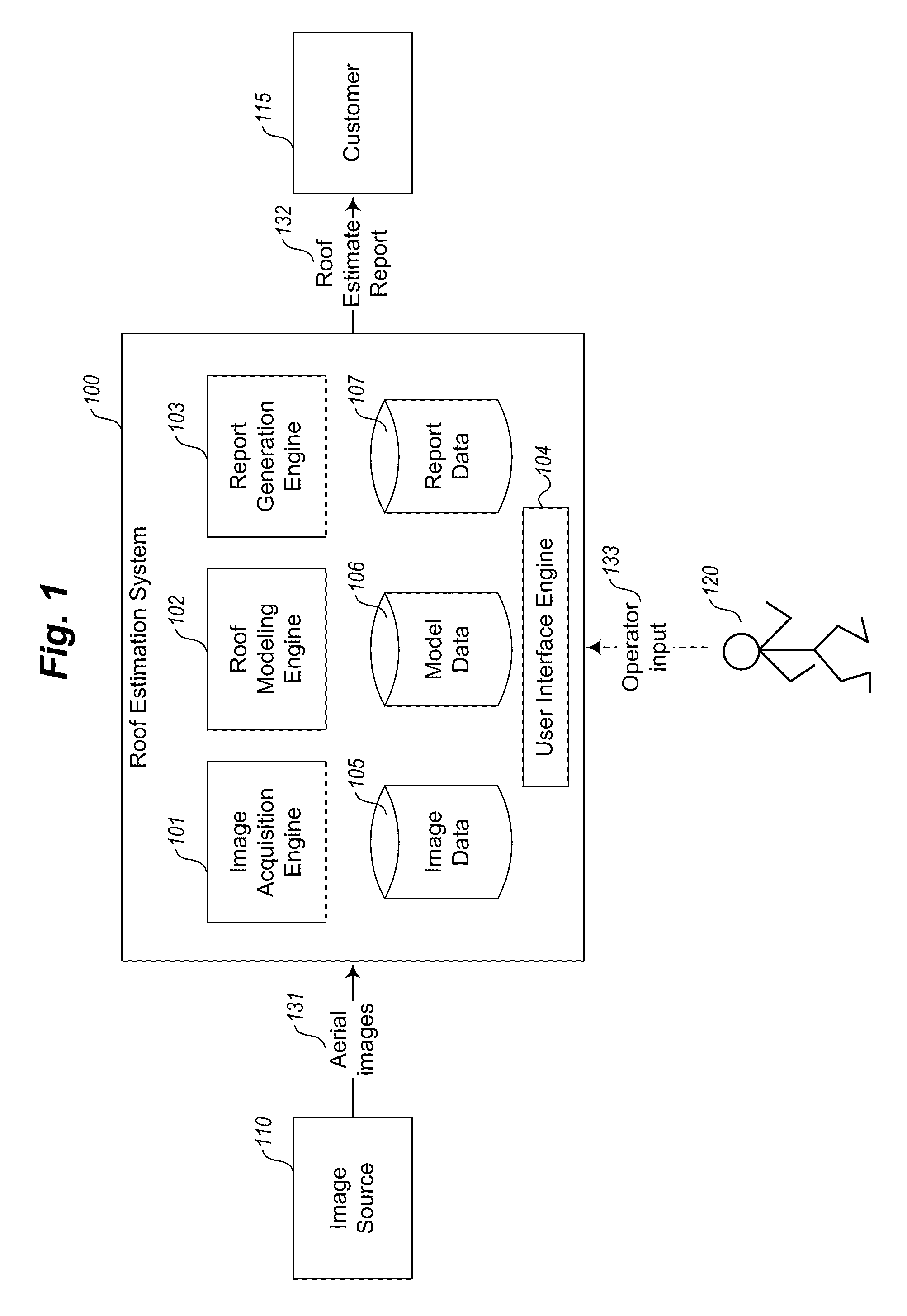

Aerial roof estimation system and method

Owner:EAGLEVIEW TECH INC

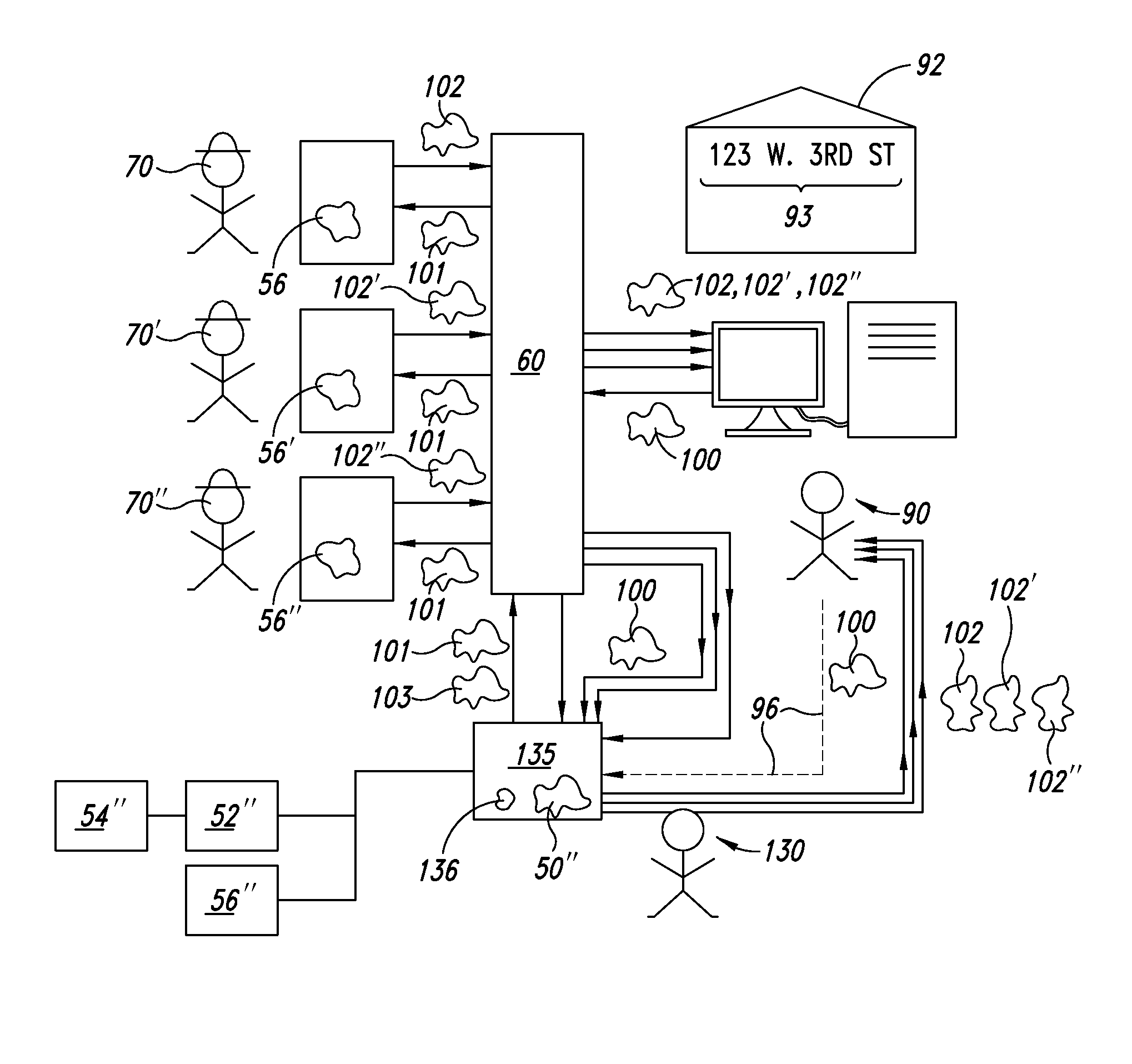

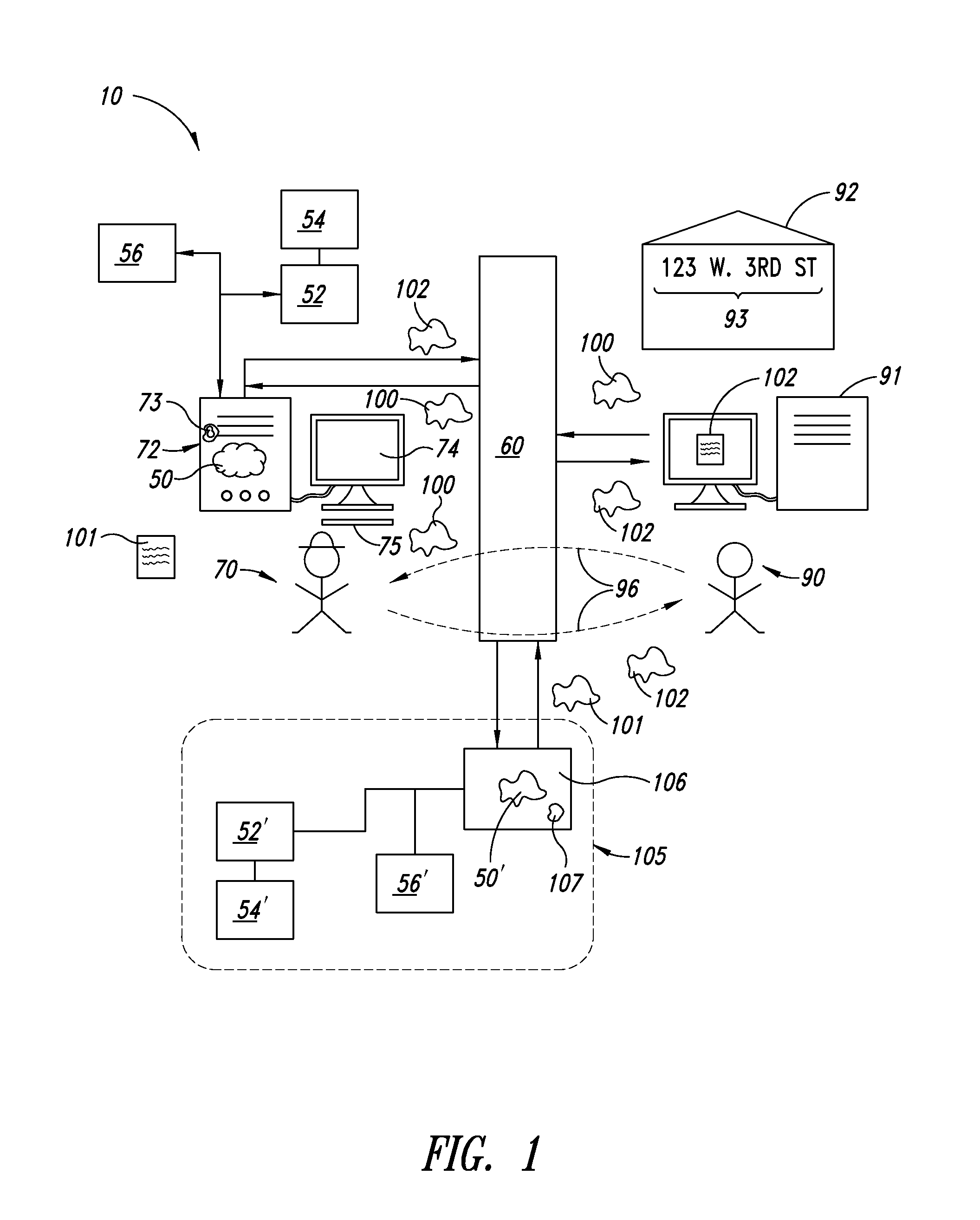

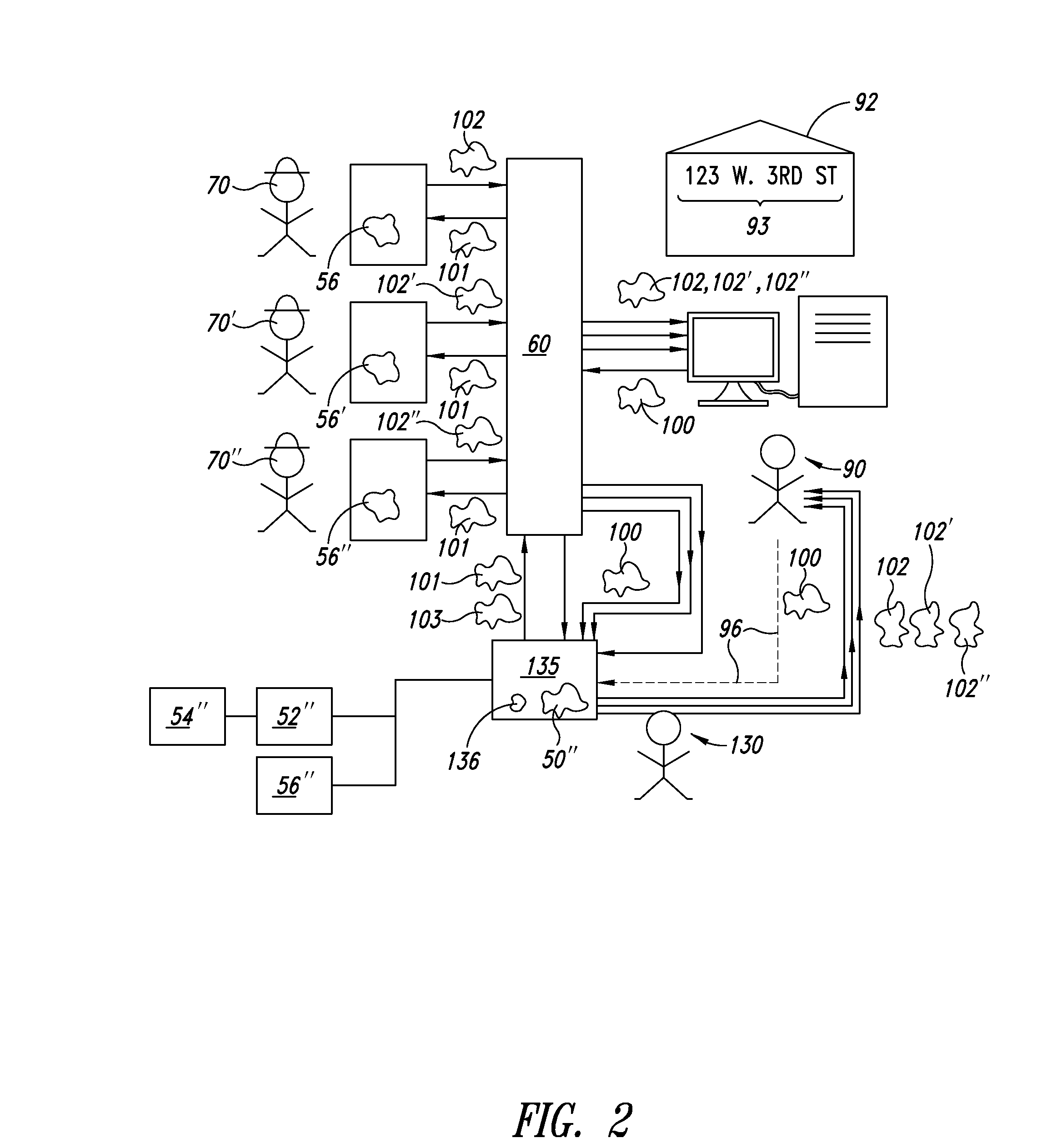

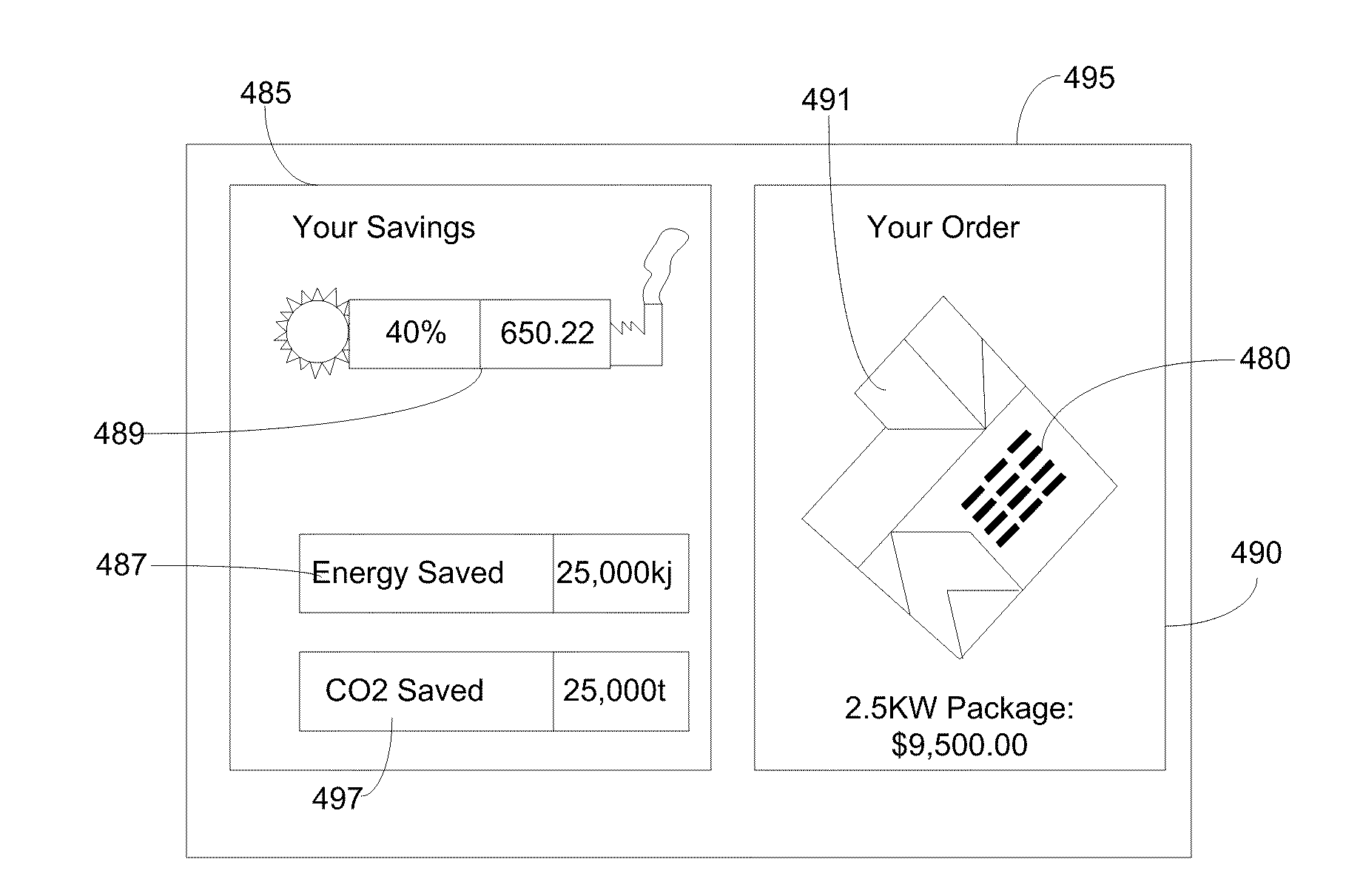

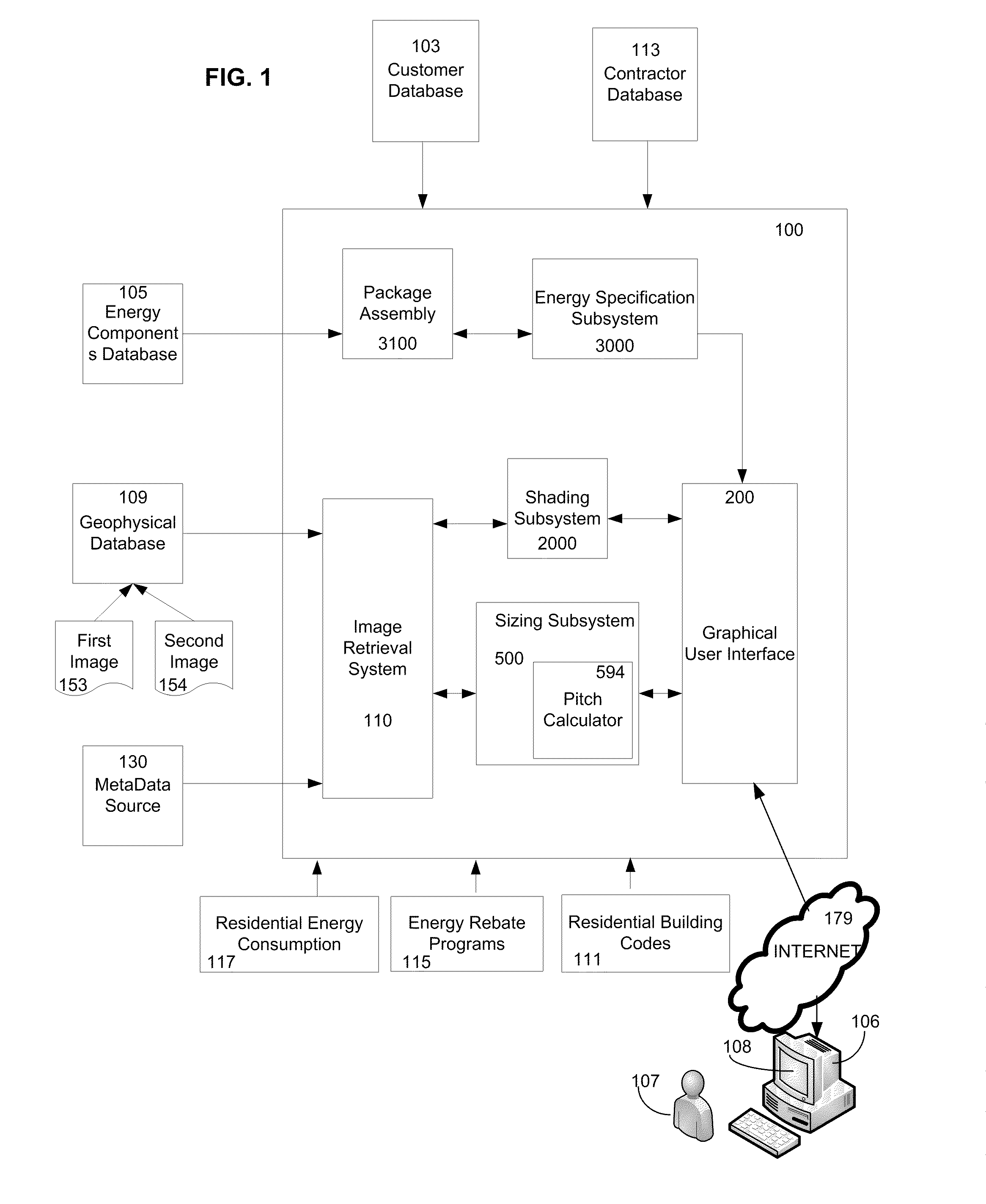

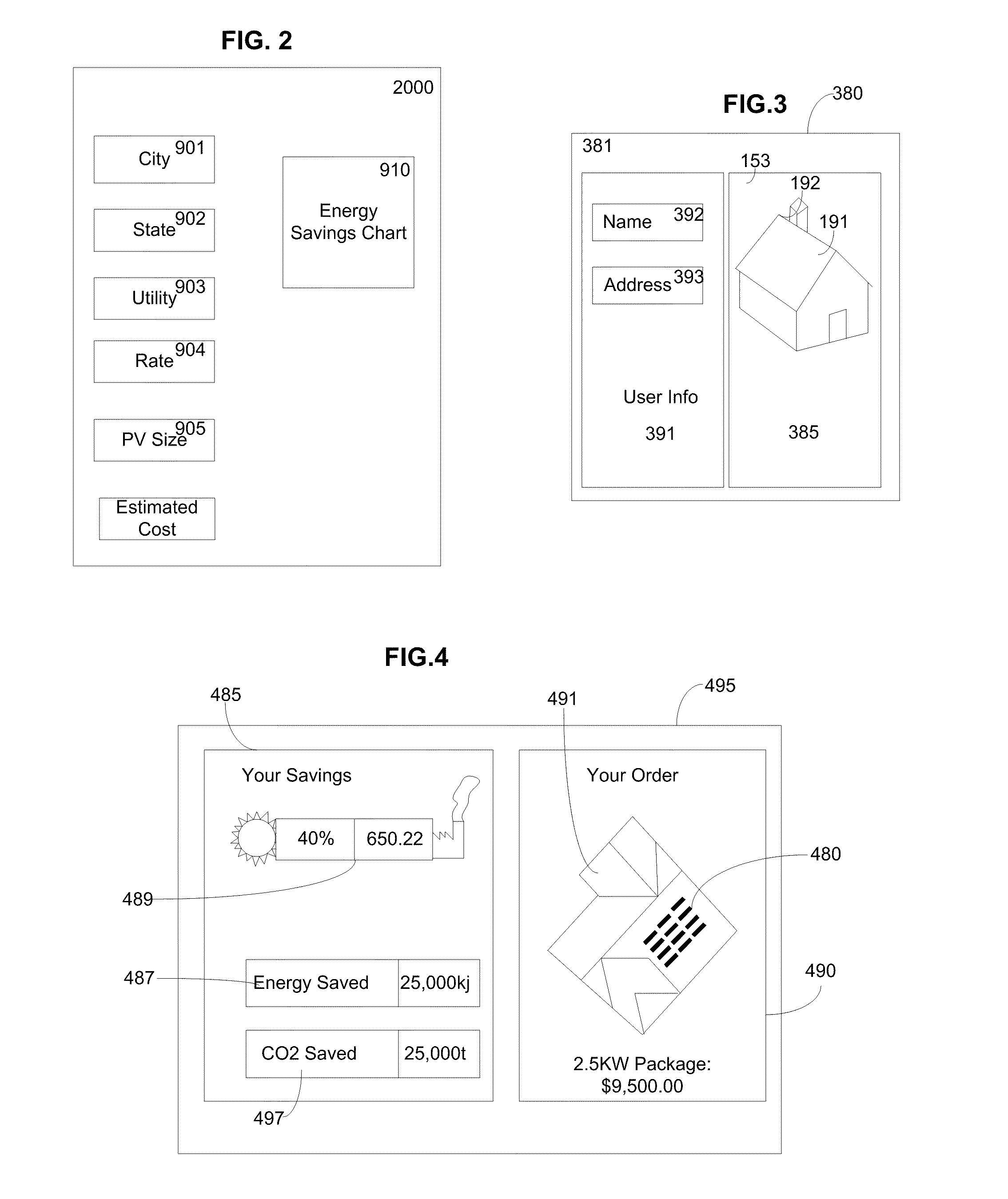

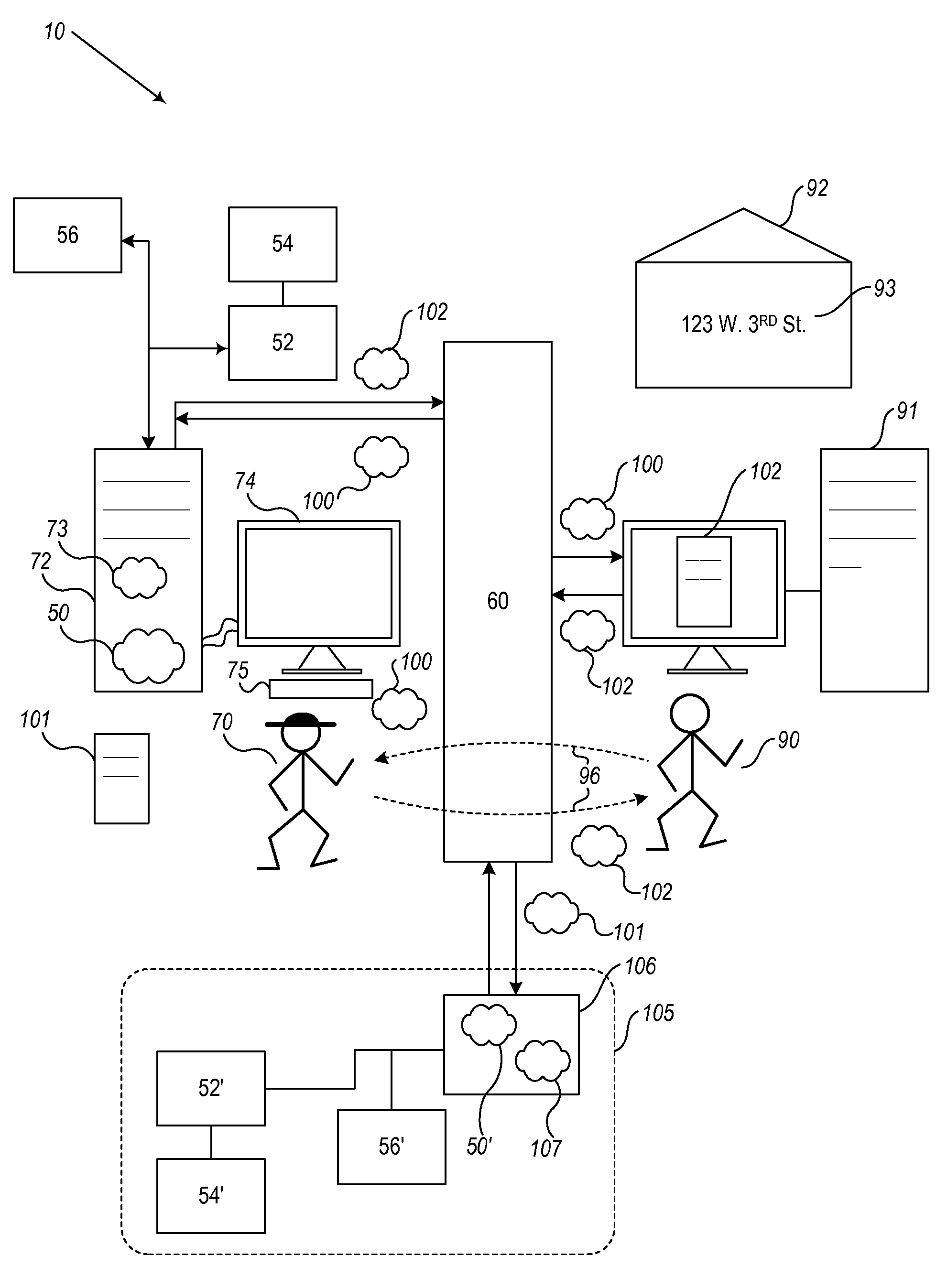

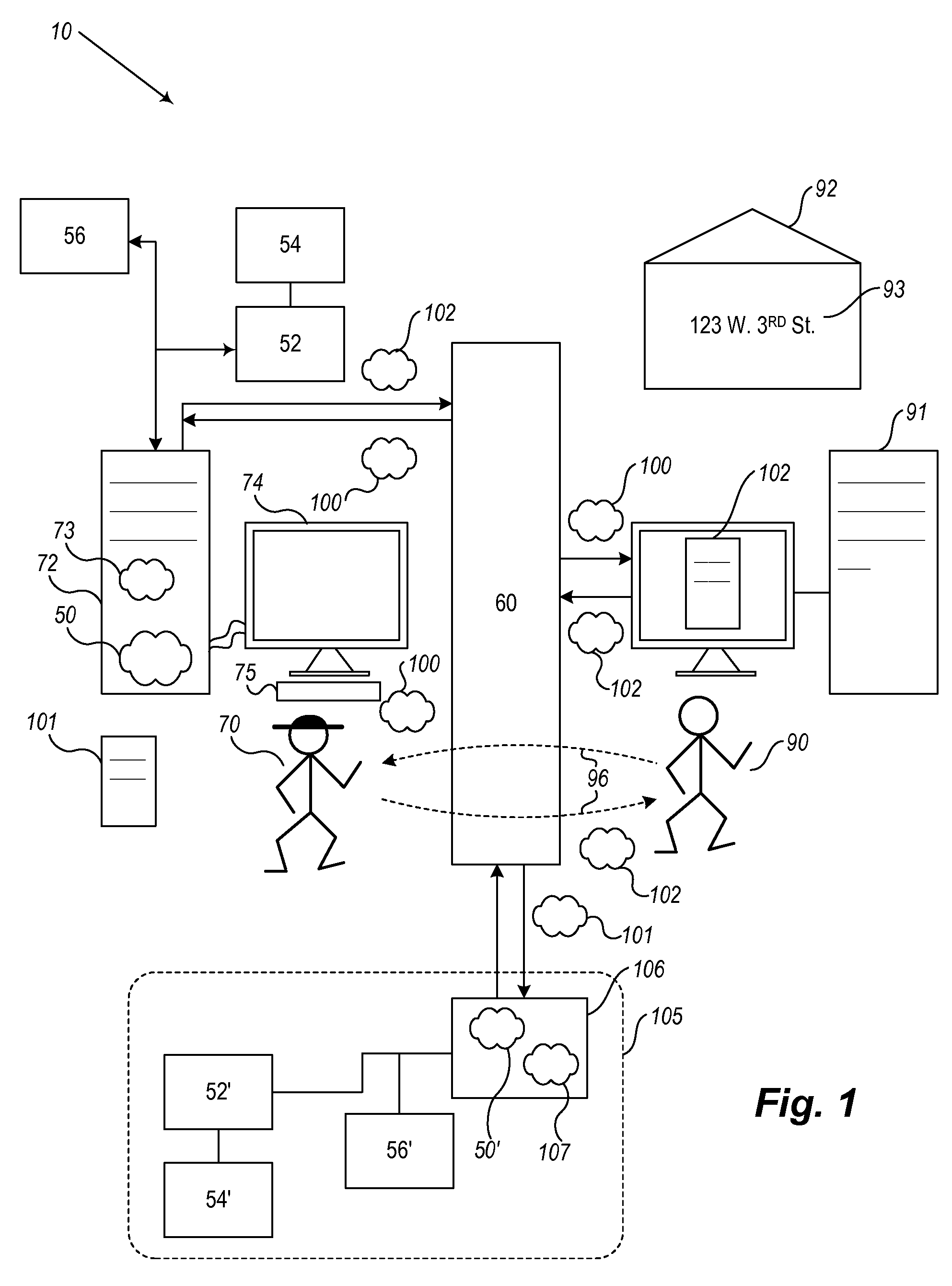

Methods and Systems for Provisioning Energy Systems

Active · US20090304227A1 · Tags: Solar heating energy; Solar heat simulation/prediction; Third party; Size determination

The invention provides consumers, private enterprises, government agencies, contractors and third-party vendors with tools and resources for gathering site-specific information related to the purchase and installation of energy systems. A system according to one embodiment of the invention remotely determines the measurements of a roof. An exemplary system comprises a computer including an input means, a display means and a working memory. An aerial image file database contains a plurality of aerial images of the roofs of buildings in a selected region. A roof estimating software program receives location information for a building in the selected region and then presents the aerial image files showing the roof sections of the building at that location. Some embodiments of the system include a sizing tool for determining the size, geometry, and pitch of the roof sections of the building being displayed.

Owner:SUNGEVITY
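A sizing tool that measures a roof section's footprint on a top-down aerial image still has to account for pitch, because the image shows horizontal (plan-view) area. The sketch below assumes a planar section and the US convention of stating pitch as rise per 12 units of run; the function names are hypothetical and this is not Sungevity's implementation. For example, a 6-in-12 section with a plan-view area of 1,000 sq ft has a true area of about 1,118 sq ft.

```python
import math

def slope_factor(rise: float, run: float = 12.0) -> float:
    """Ratio of sloped length to horizontal run for a pitch of rise:run."""
    return math.hypot(run, rise) / run

def roof_section_area(plan_area: float, rise: float, run: float = 12.0) -> float:
    """True area of a planar roof section from its plan-view (top-down) area."""
    return plan_area * slope_factor(rise, run)

def pitch_degrees(rise: float, run: float = 12.0) -> float:
    """Pitch expressed as an angle above horizontal, in degrees."""
    return math.degrees(math.atan2(rise, run))
```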

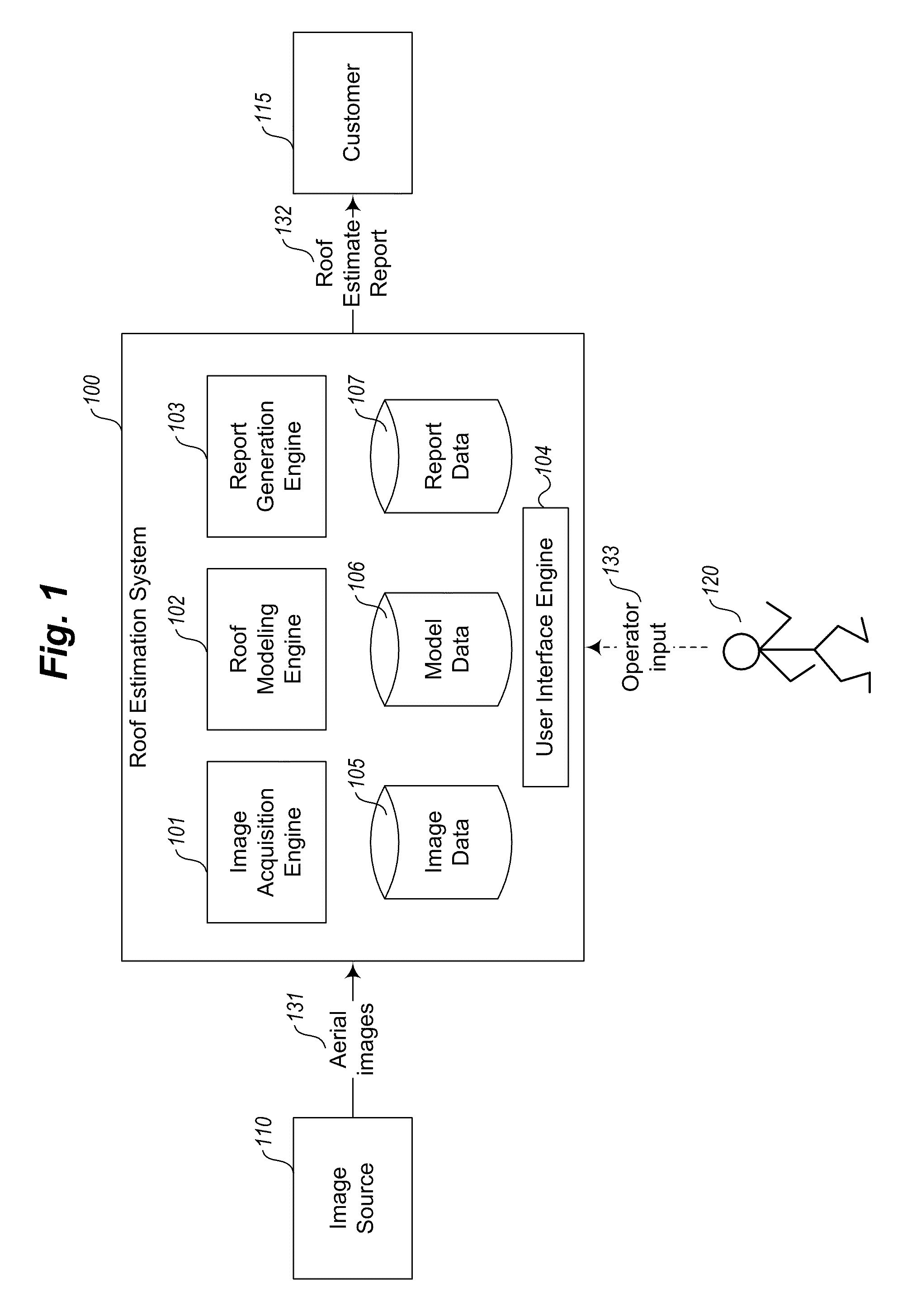

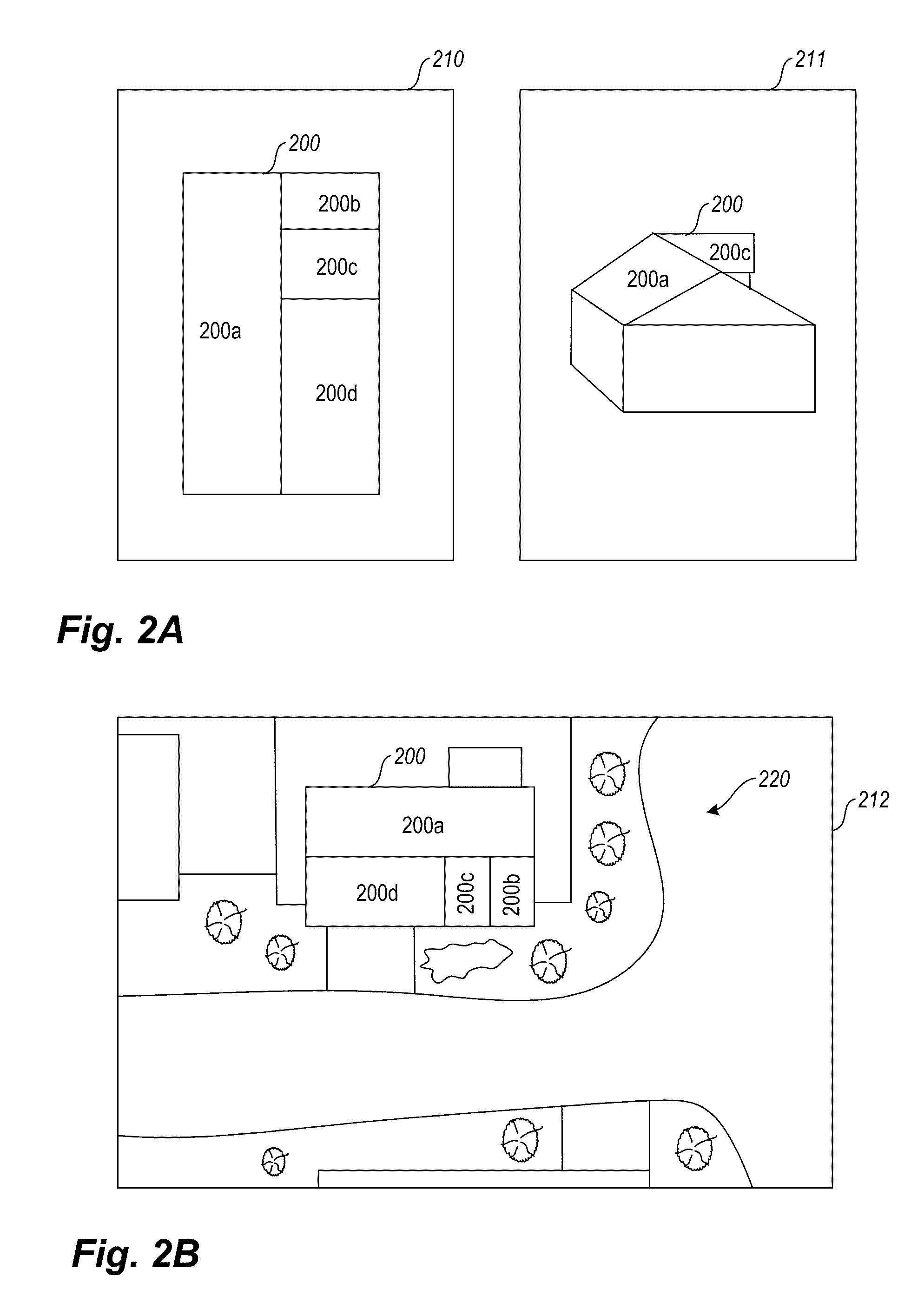

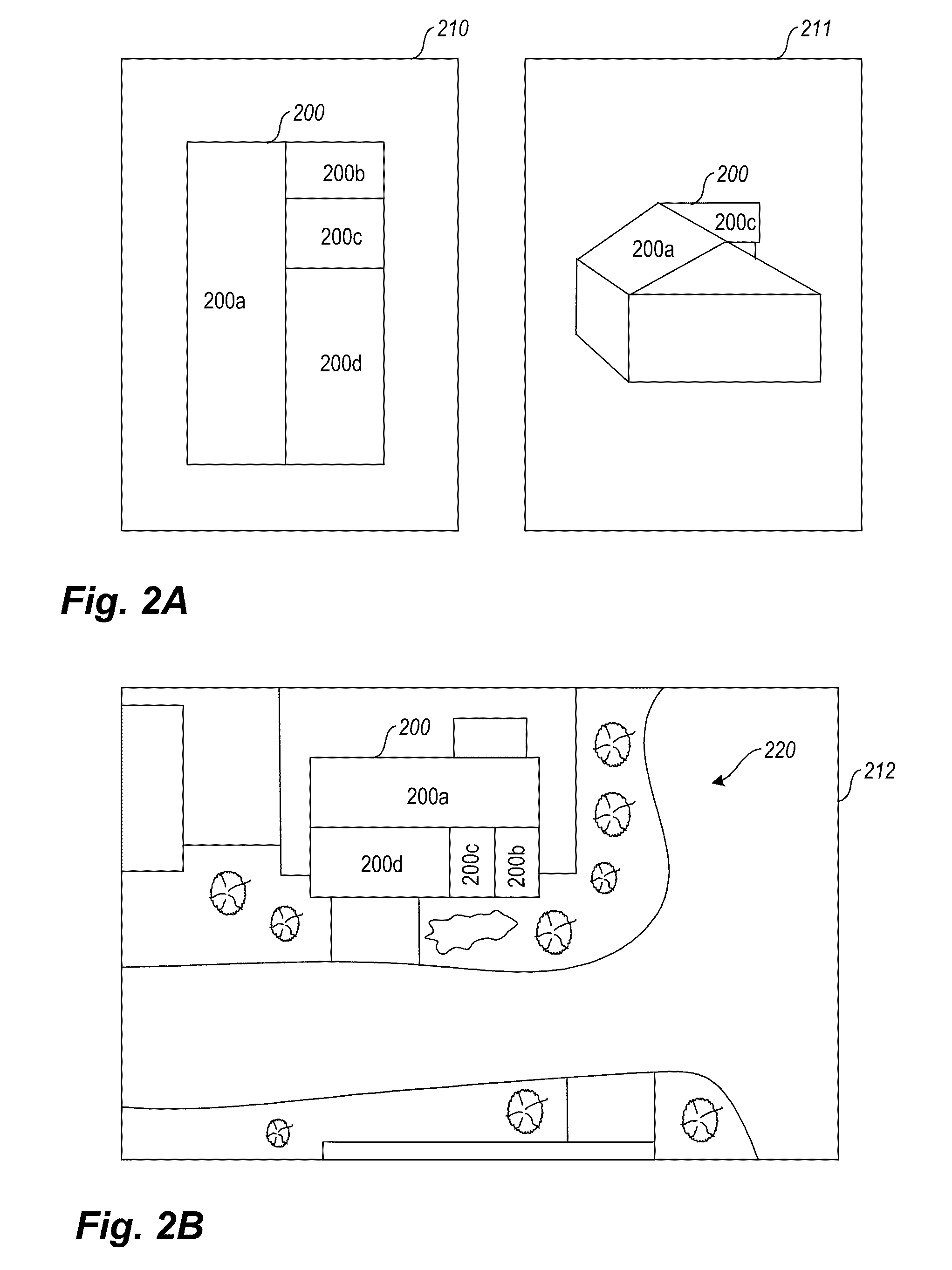

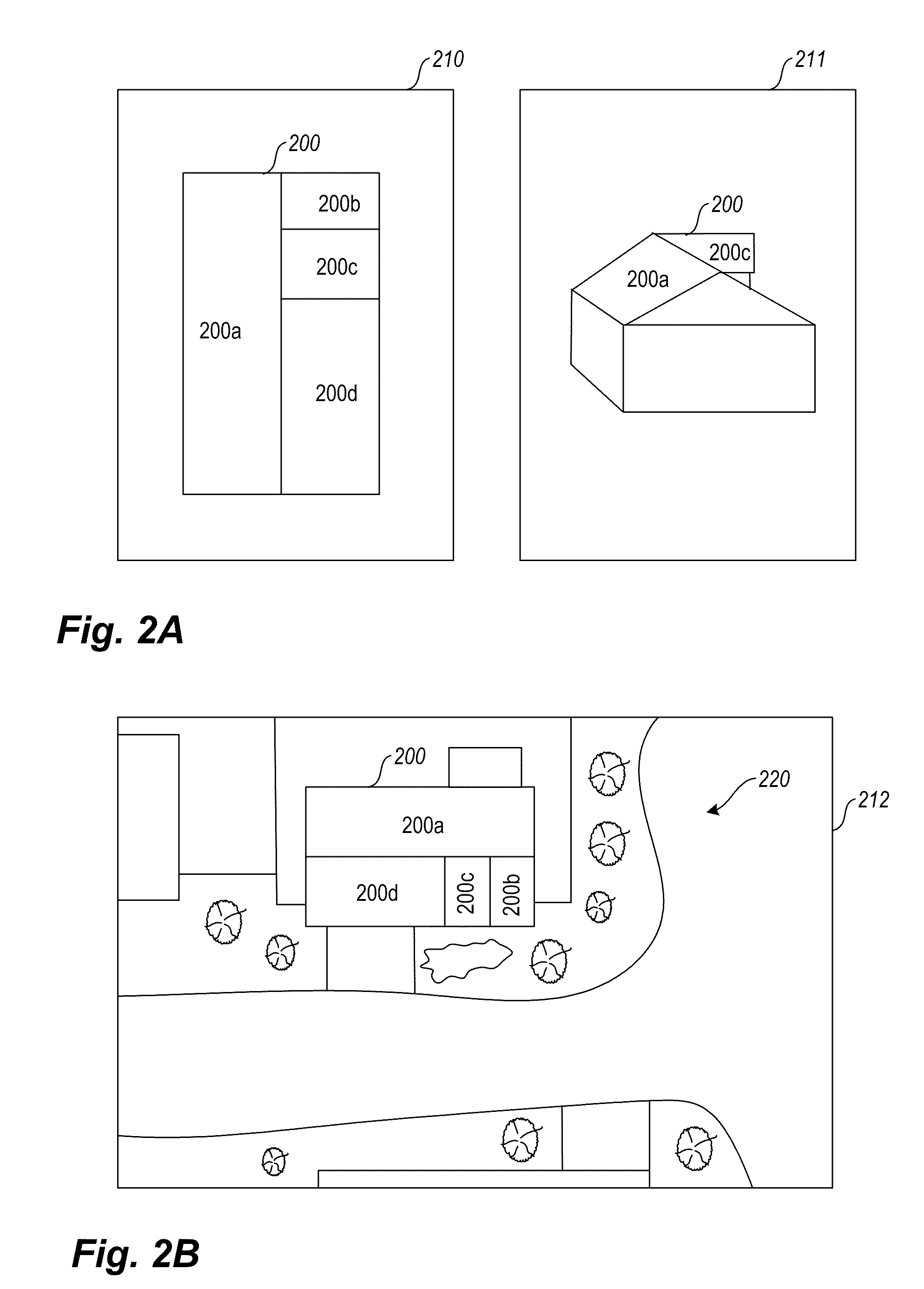

Aerial roof estimation systems and methods

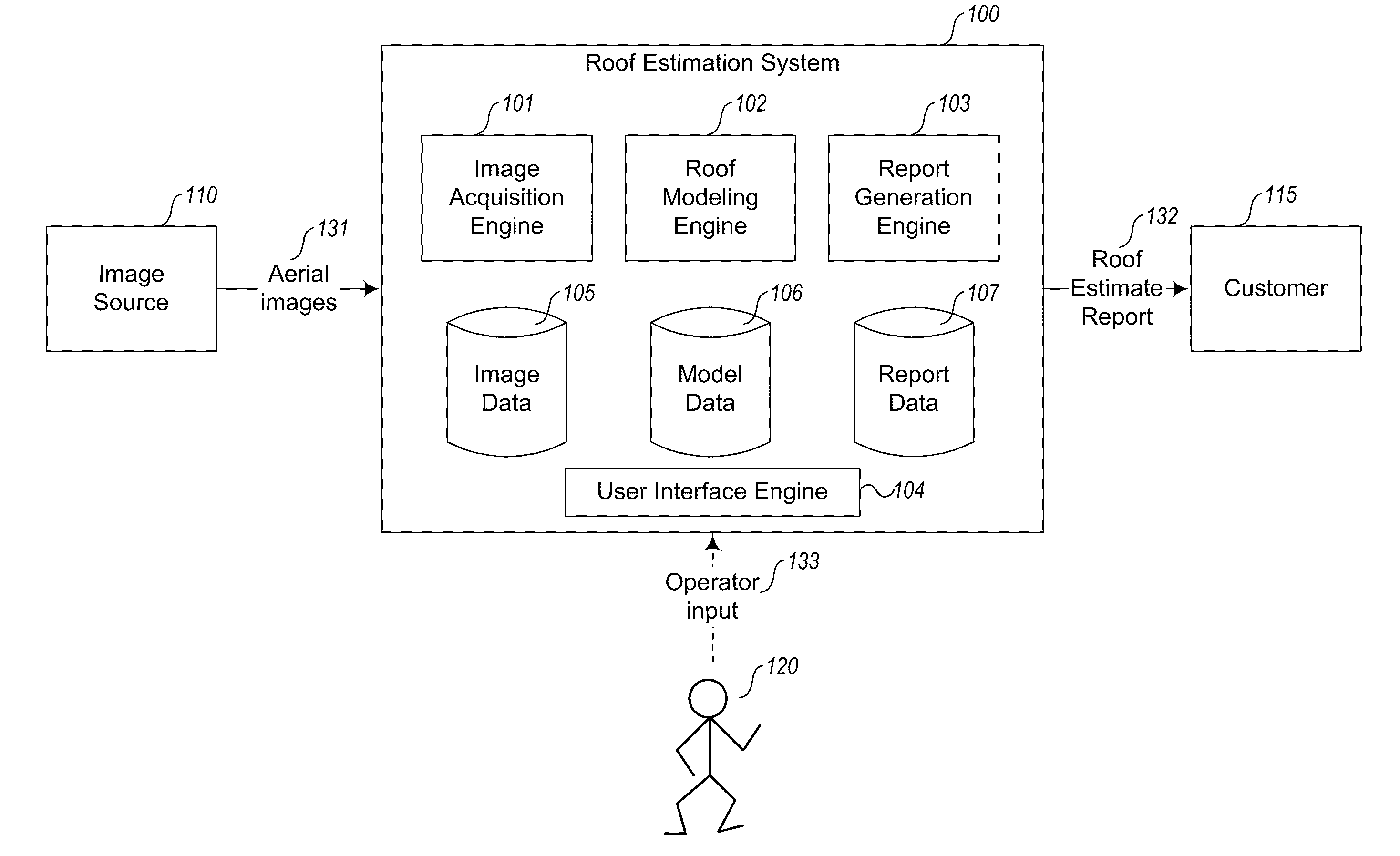

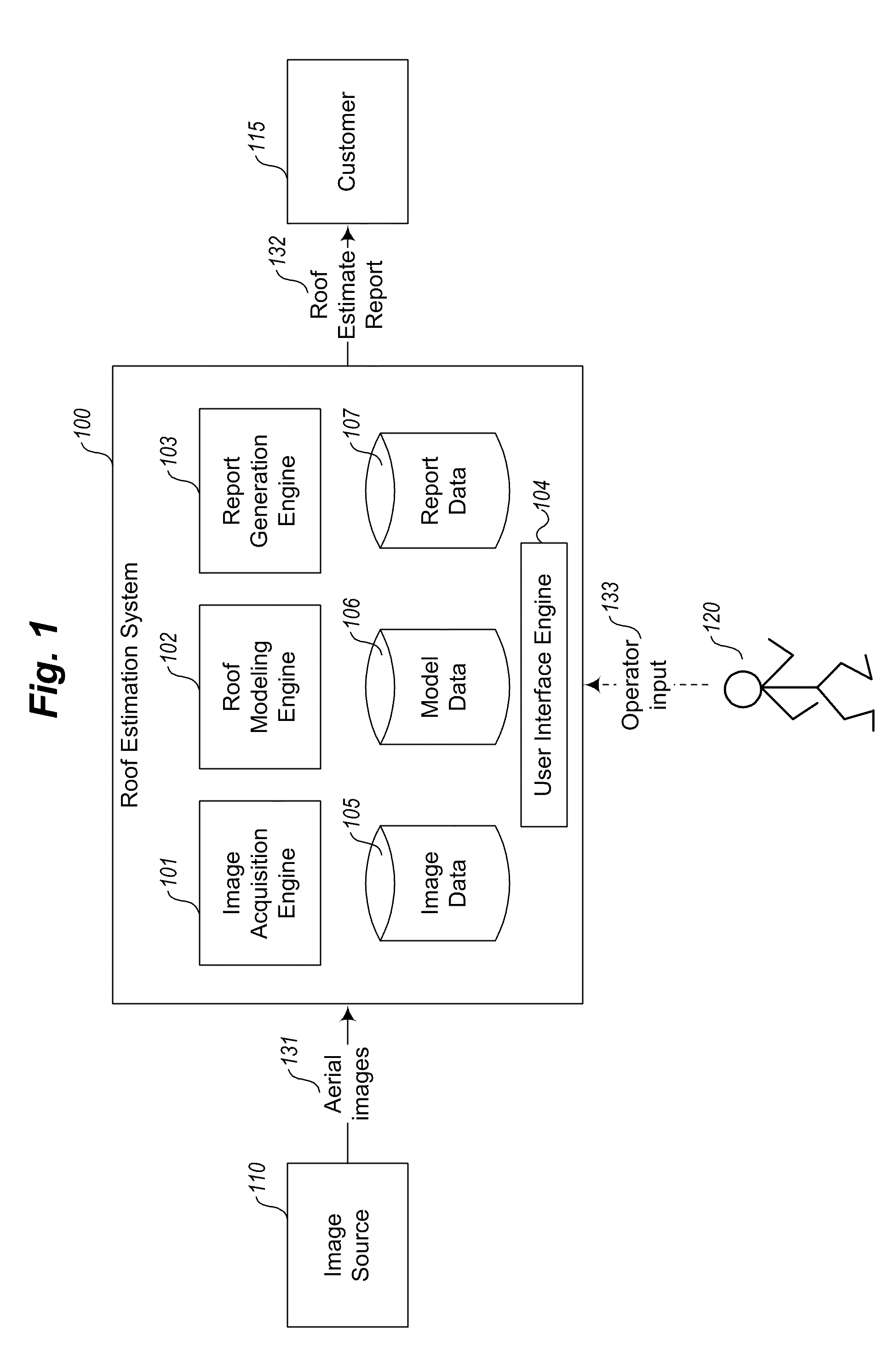

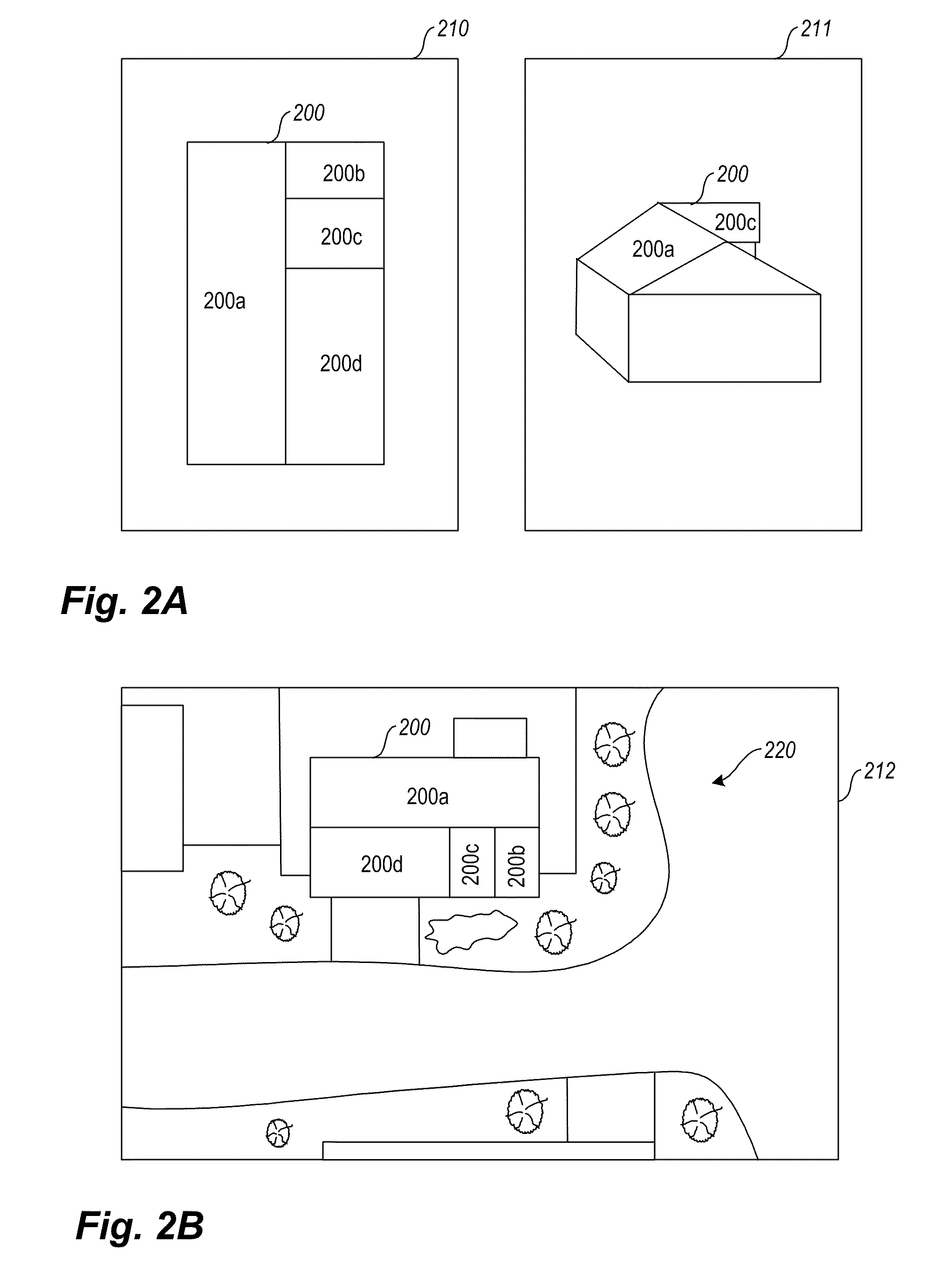

Methods and systems for roof estimation are described. Example embodiments include a roof estimation system, which generates and provides roof estimate reports annotated with indications of the size, geometry, pitch and / or orientation of the roof sections of a building. Generating a roof estimate report may be based on one or more aerial images of a building. In some embodiments, generating a roof estimate report of a specified building roof may include generating a three-dimensional model of the roof, and generating a report that includes one or more views of the three-dimensional model, the views annotated with indications of the dimensions, area, and / or slope of sections of the roof. This abstract is provided to comply with rules requiring an abstract, and it is submitted with the intention that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:EAGLEVIEW TECH

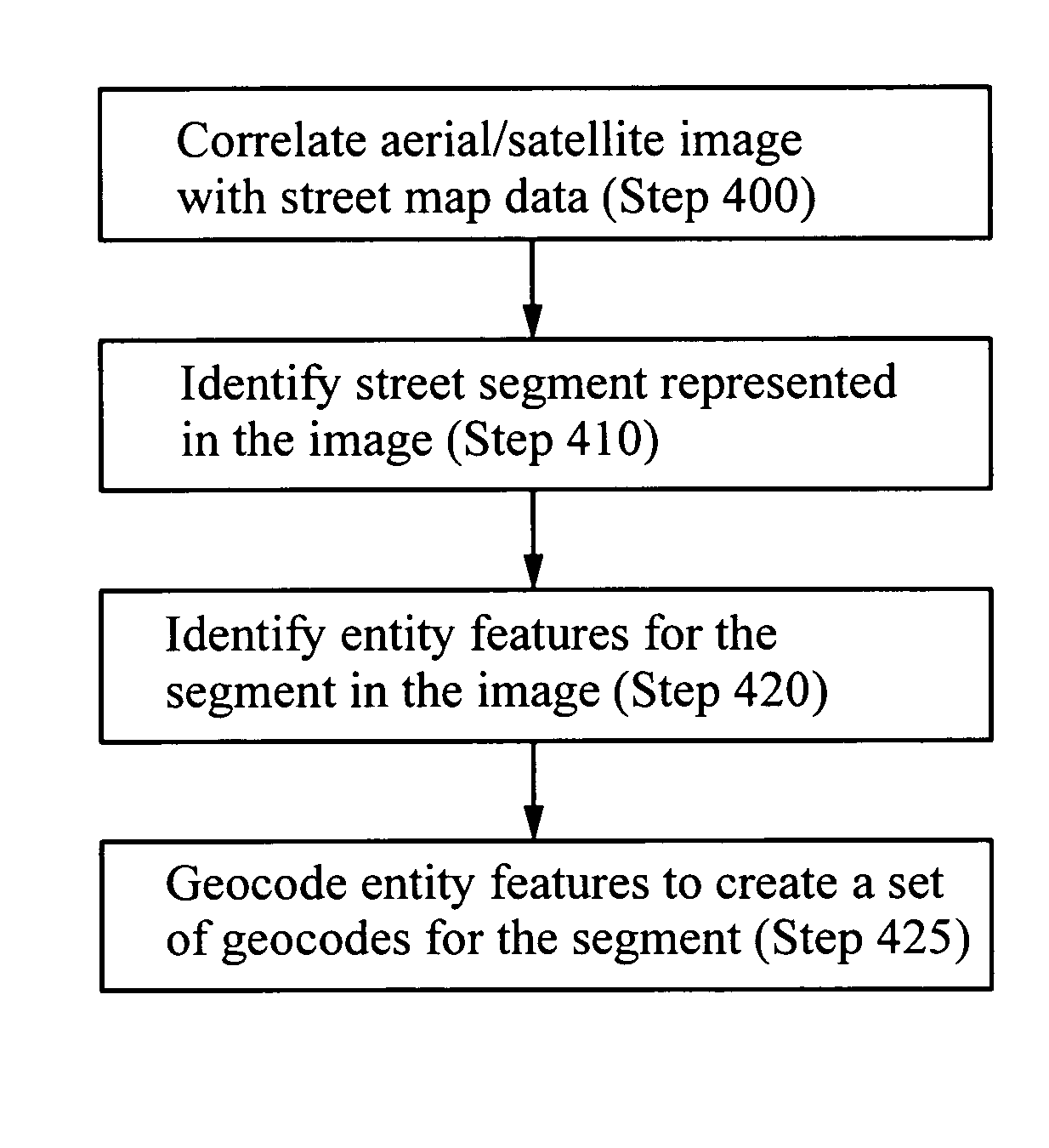

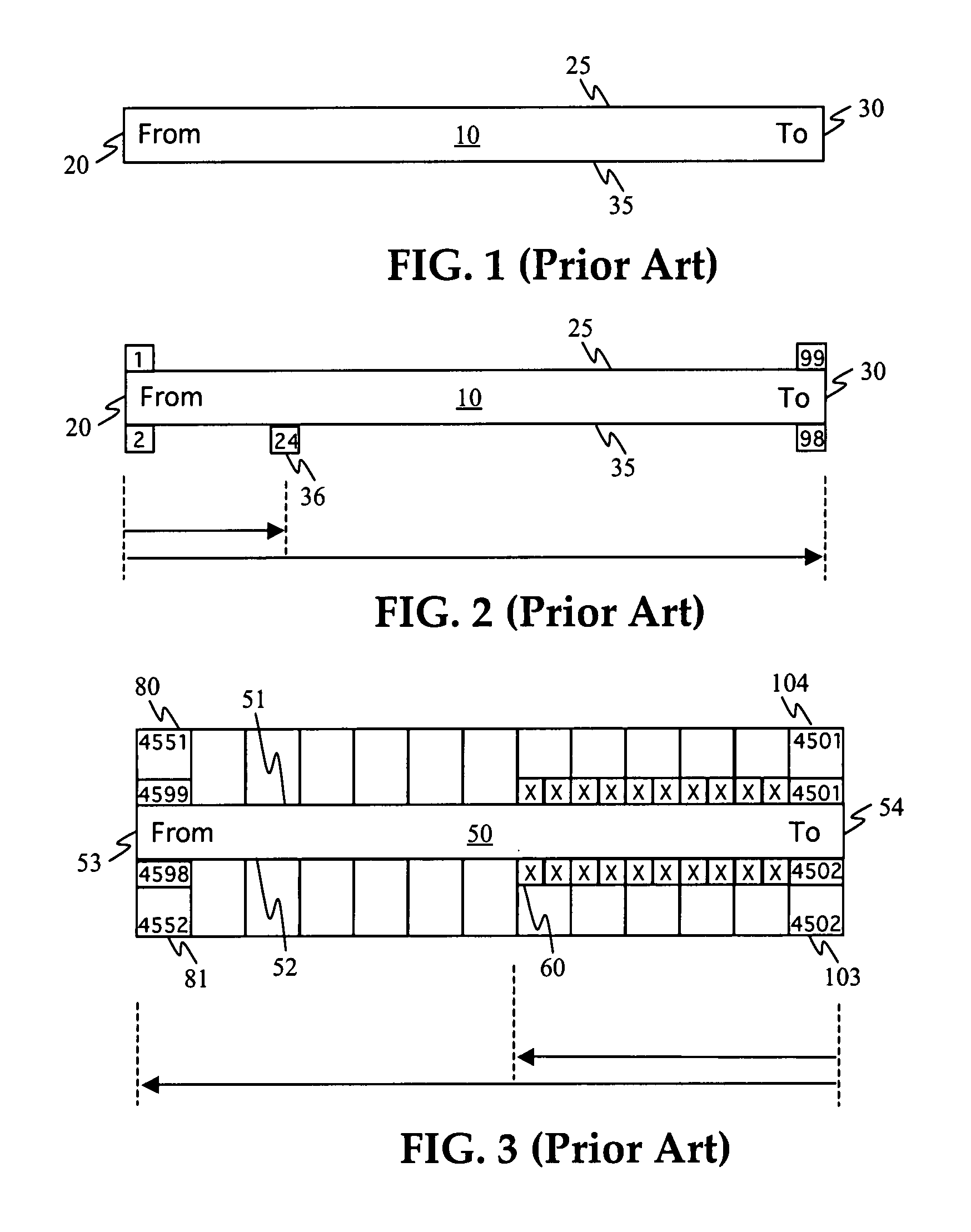

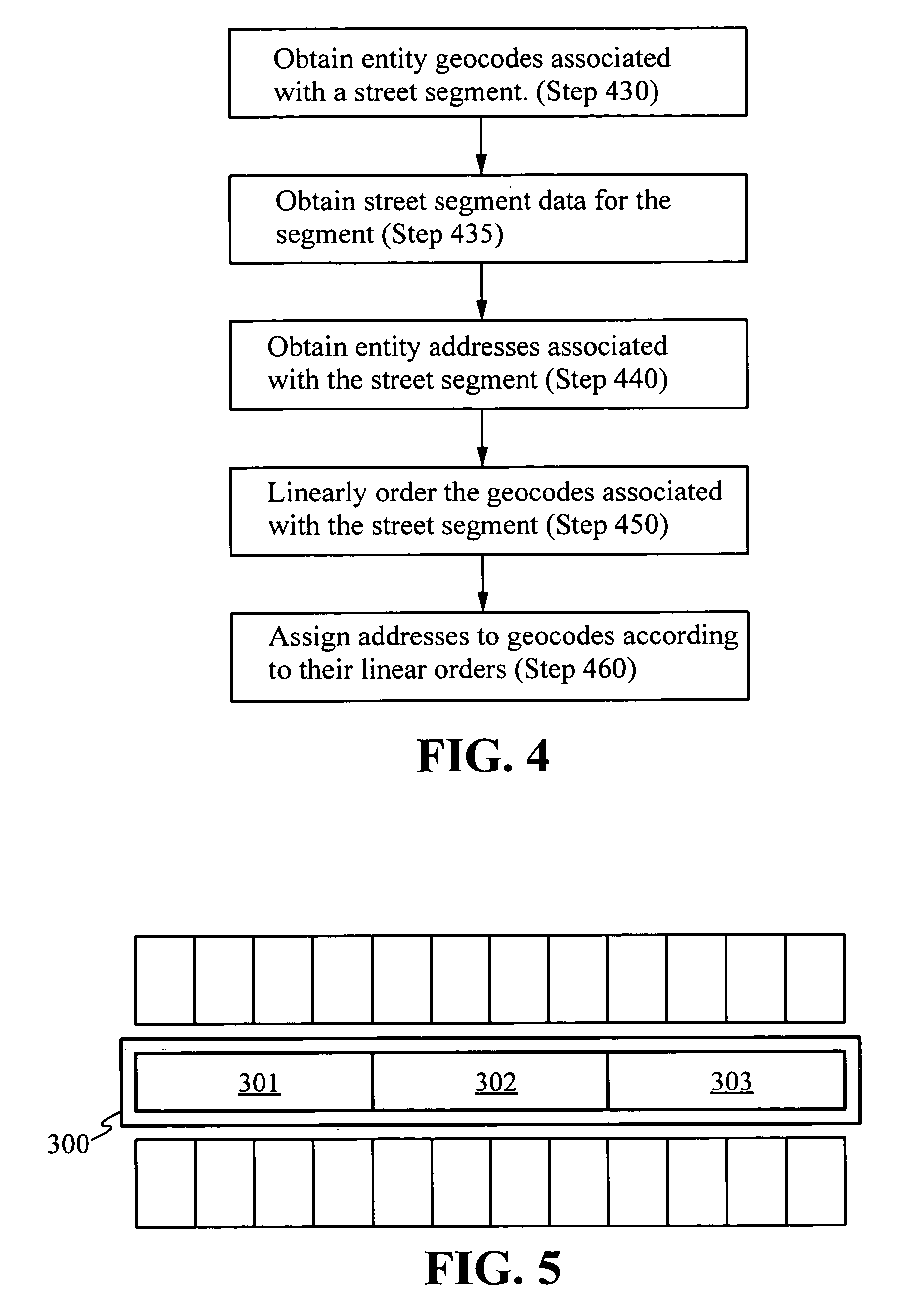

Methods for assigning geocodes to street addressable entities

Active · US7324666B2 · Tags: Improve accuracy; Instruments for road network navigation; Character and pattern recognition; Imaging Feature; Aerial photography

A method for assigning of geocodes to addresses identifying entities on a street includes ordering a set of entity geocodes associated with a street segment based on ordering criteria such as the linear distance of the geocodes along the length of the street segment. Assignable addresses are matched with the ordered geocodes creating a correspondence between a numerical order of the assignable addresses and a linear order of the ordered set of geocodes, consistent with address range direction data and street segment side data. The geocodes may be implicit or explicit, locally unique or globally unique. The set of entity geocodes may be obtained by identifying image features in aerial imagery corresponding to streets and buildings and correlating this information with data from a street map database.

Owner:TAMIRAS PER PTE LTD LLC
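The ordering-and-matching step can be sketched as below. It is a simplified illustration, assuming geocodes are planar (x, y) points, the street segment is a straight line, the side of the street is decided by the sign of a cross product, and the caller passes only the house numbers for the requested side; all names are hypothetical.

```python
def _along(p, a, b):
    """Position of p projected onto segment a->b, as a fraction of its length."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    return ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / (dx * dx + dy * dy)

def _side(p, a, b):
    """'left' or 'right' of the directed segment a->b (points on the line count as right)."""
    cross = (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])
    return "left" if cross > 0 else "right"

def assign_geocodes(geocodes, addresses, seg_start, seg_end, side, ascending=True):
    """Match house numbers on one side of a segment to entity geocodes by order."""
    ordered = sorted((g for g in geocodes if _side(g, seg_start, seg_end) == side),
                     key=lambda g: _along(g, seg_start, seg_end))
    # Address range direction: do numbers increase from seg_start toward seg_end?
    numbers = sorted(addresses, reverse=not ascending)
    if len(numbers) != len(ordered):
        raise ValueError("address count does not match geocode count on this side")
    return dict(zip(numbers, ordered))
```

Raising on a count mismatch is a design choice for the sketch; a production system would more likely flag the segment for review.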

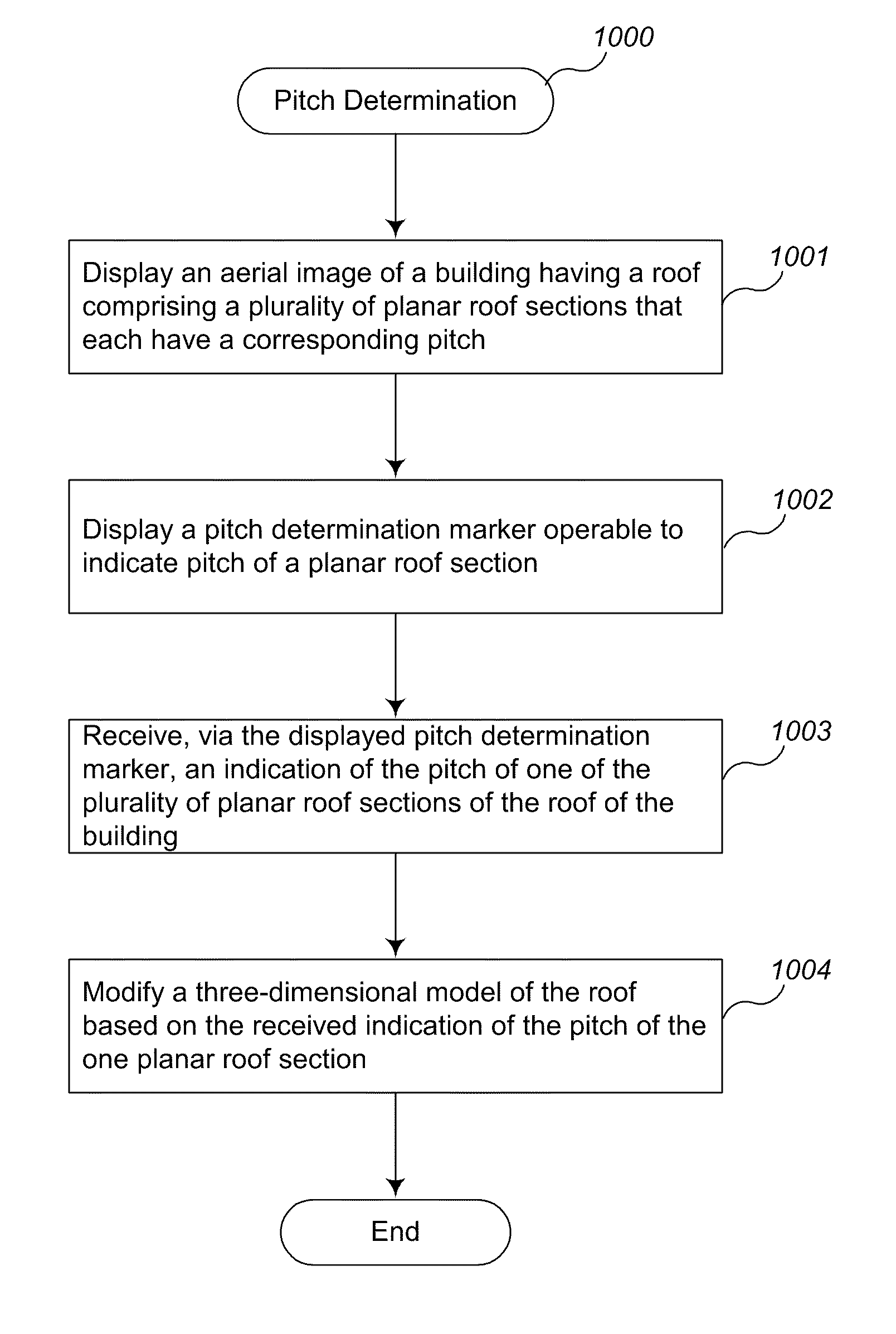

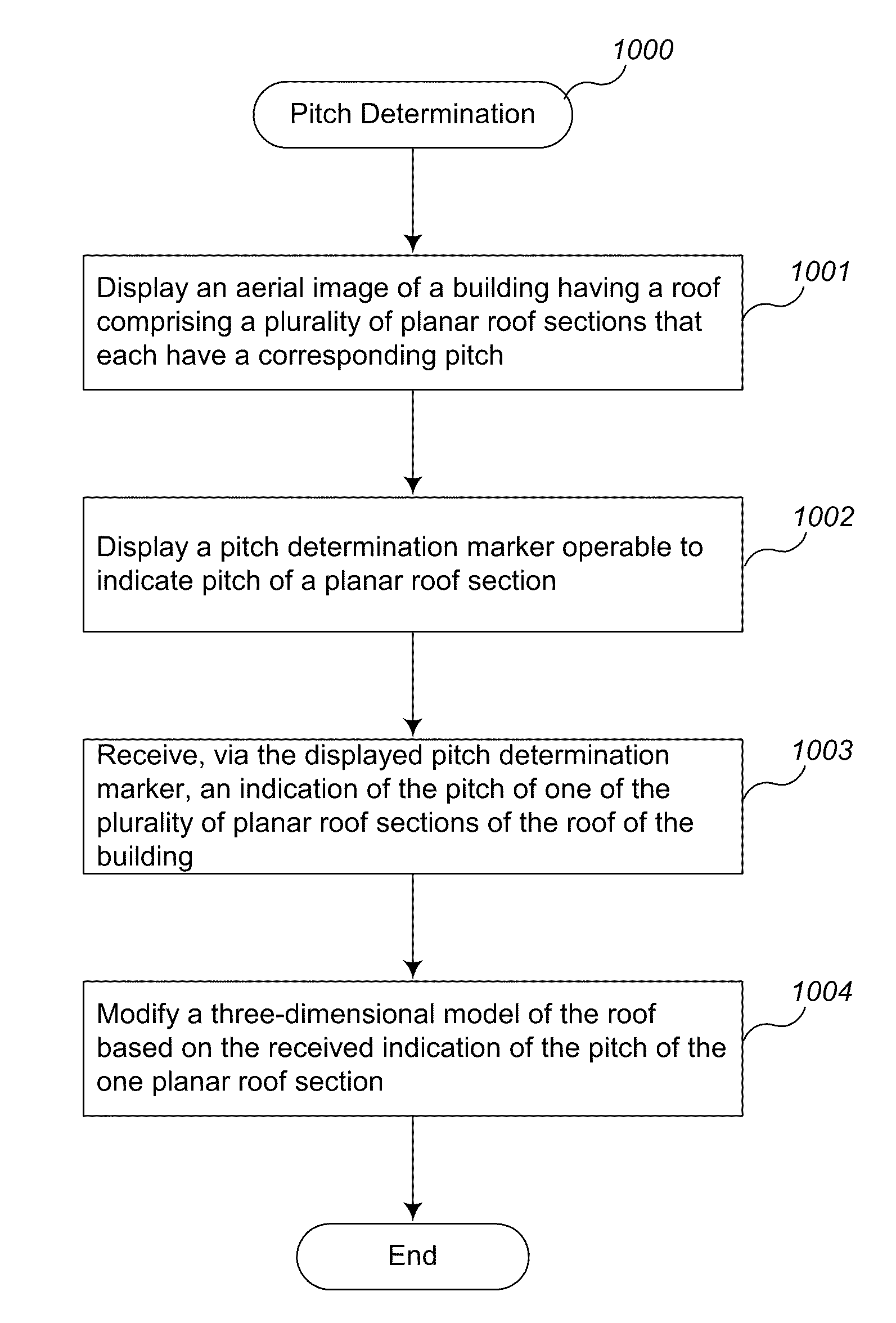

Pitch determination systems and methods for aerial roof estimation

User interface systems and methods for roof estimation are described. Example embodiments include a roof estimation system that provides a user interface configured to facilitate roof model generation based on one or more aerial images of a building roof. In one embodiment, roof model generation includes image registration, image lean correction, roof section pitch determination, wire frame model construction, and / or roof model review. The described user interface provides user interface controls that may be manipulated by an operator to perform at least some of the functions of roof model generation. In one embodiment, the user interface provides user interface controls that facilitate the determination of pitch of one or more sections of a building roof. This abstract is provided to comply with rules requiring an abstract, and it is submitted with the intention that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:EAGLEVIEW TECH

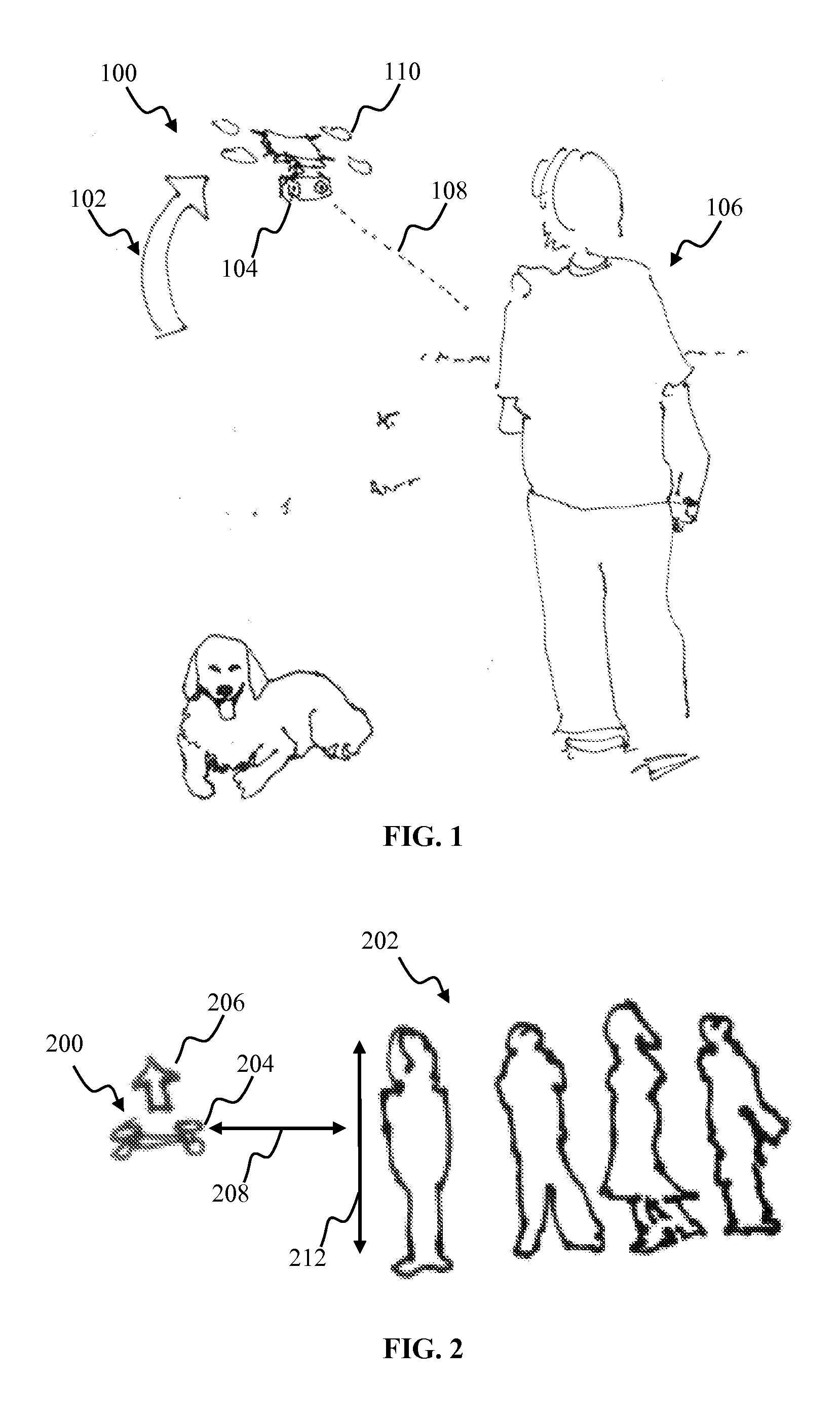

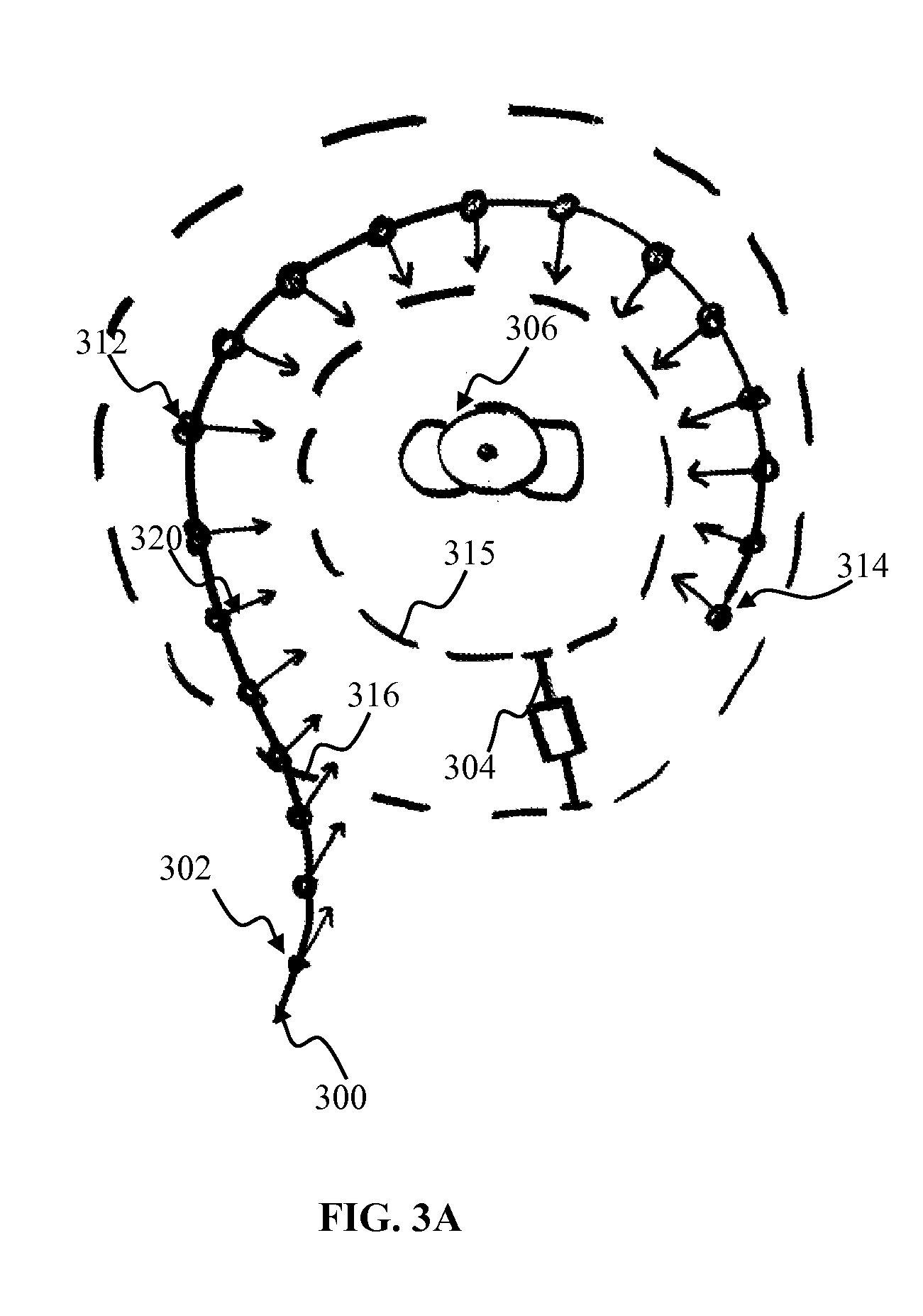

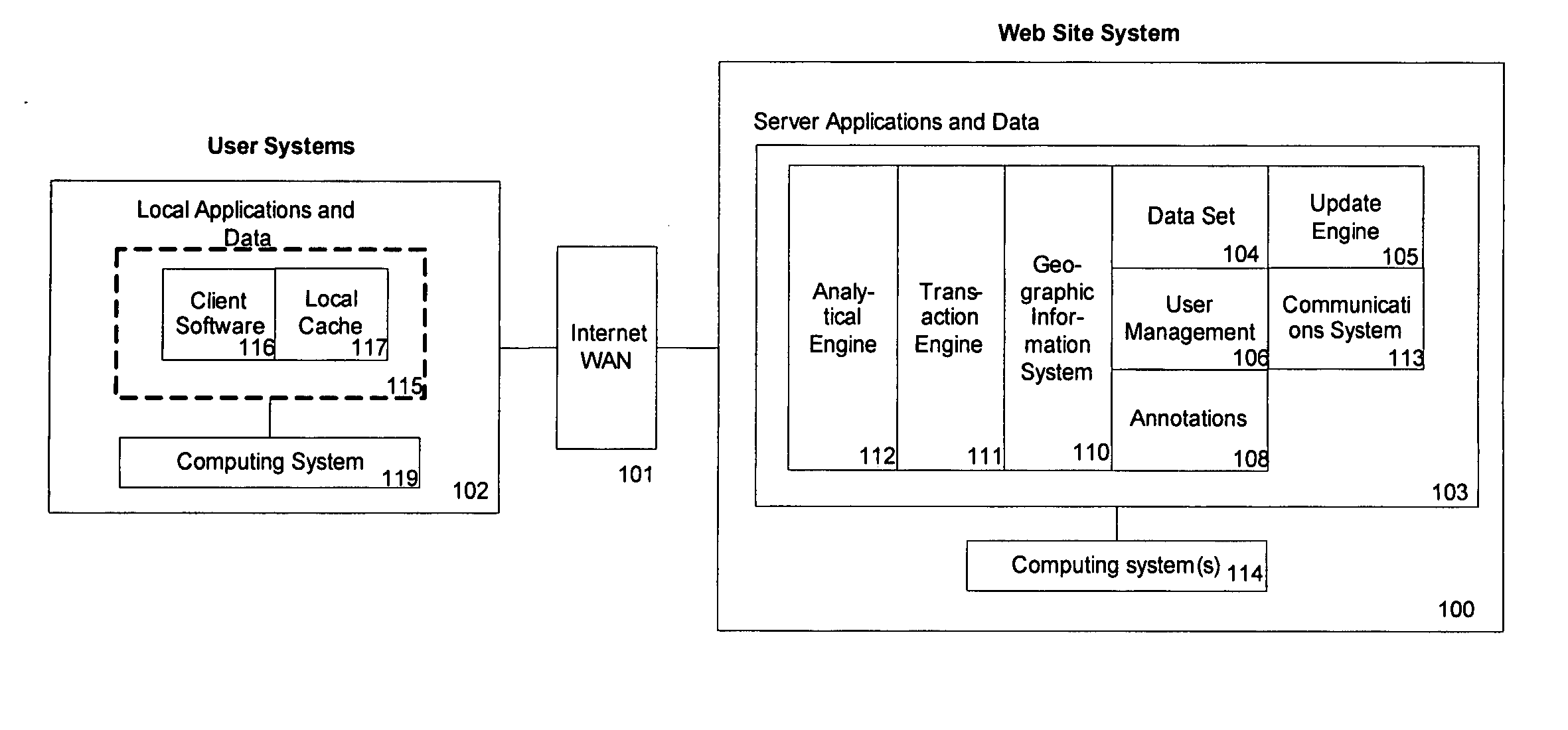

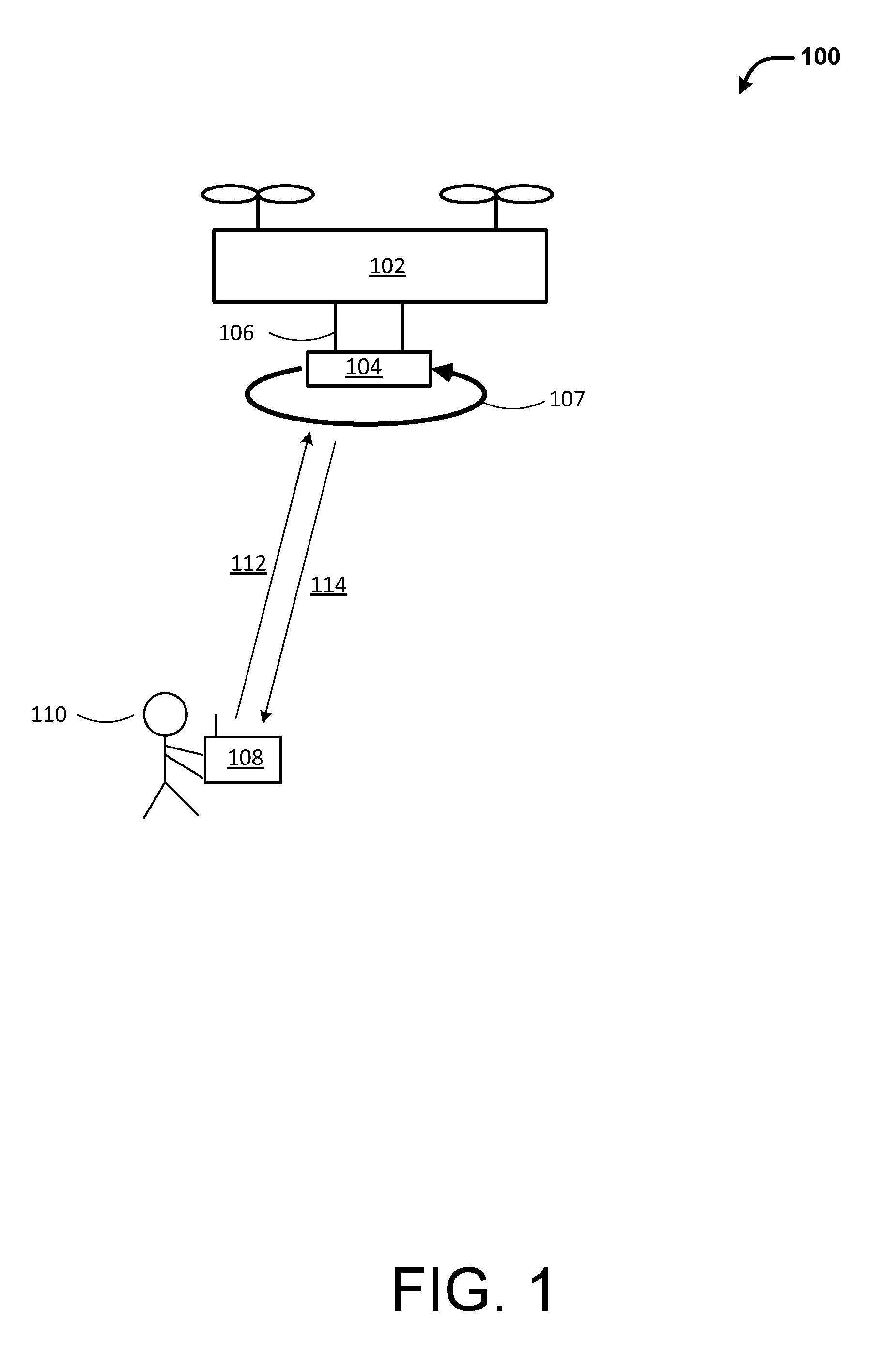

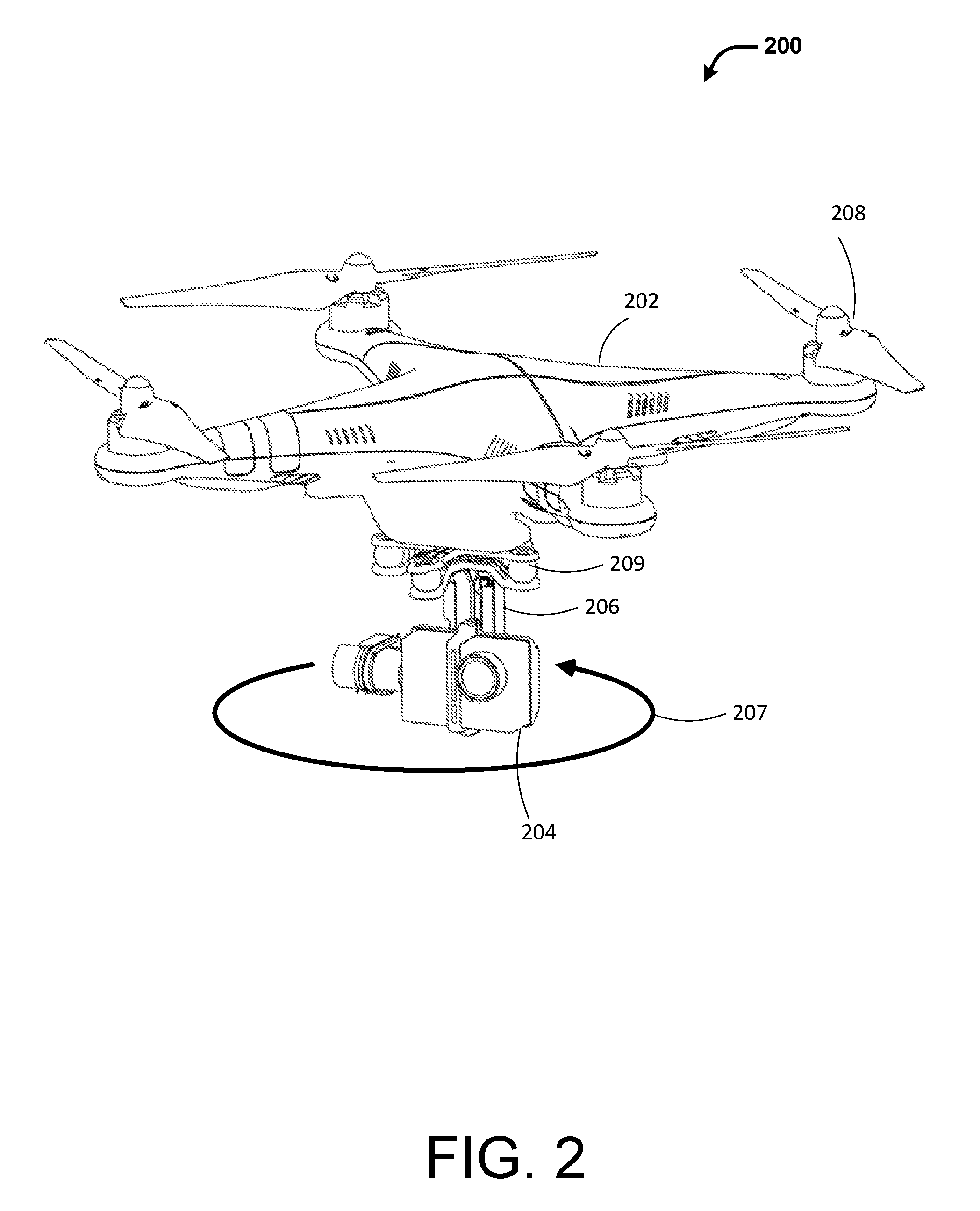

Apparatus and methods for tracking using aerial video

Inactive · US20150350614A1 · Tags: Television system details; Color television details; Indication of interest; Aerial video

In some implementations, a camera may be disposed on an autonomous aerial platform. A user may operate a smart wearable device adapted to configure and / or operate video data acquisition by the camera. The camera may be configured to produce a time stamp and / or a video snippet based on receipt of an indication of interest from the user. The aerial platform may comprise a controller configured to navigate a target trajectory space. In some implementations, a data acquisition system may enable the user to obtain video footage of the user performing an action, taken from the platform as it circles around the user.

Owner:GOPRO

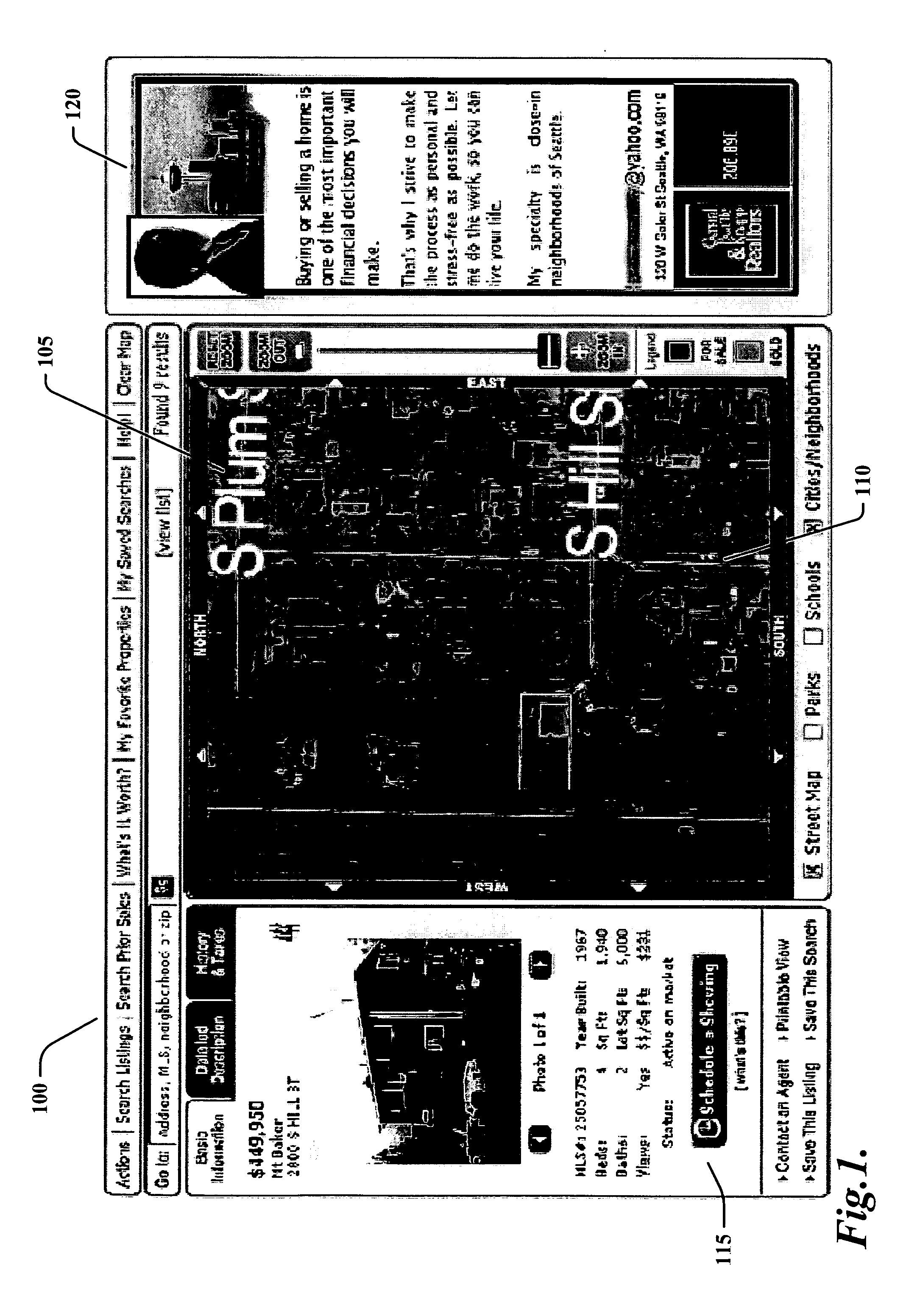

Web-based real estate mapping system

An innovative web-based tool displays visual information about real estate. In one embodiment, an aerial image is overlaid with various data layers to visually present real estate data. Data associated with various embodiments of the tool can include tax parcel information, historical sales information, Multiple Listing Service information, school information, neighborhood information, and park information.

Owner:REDFIN CORP

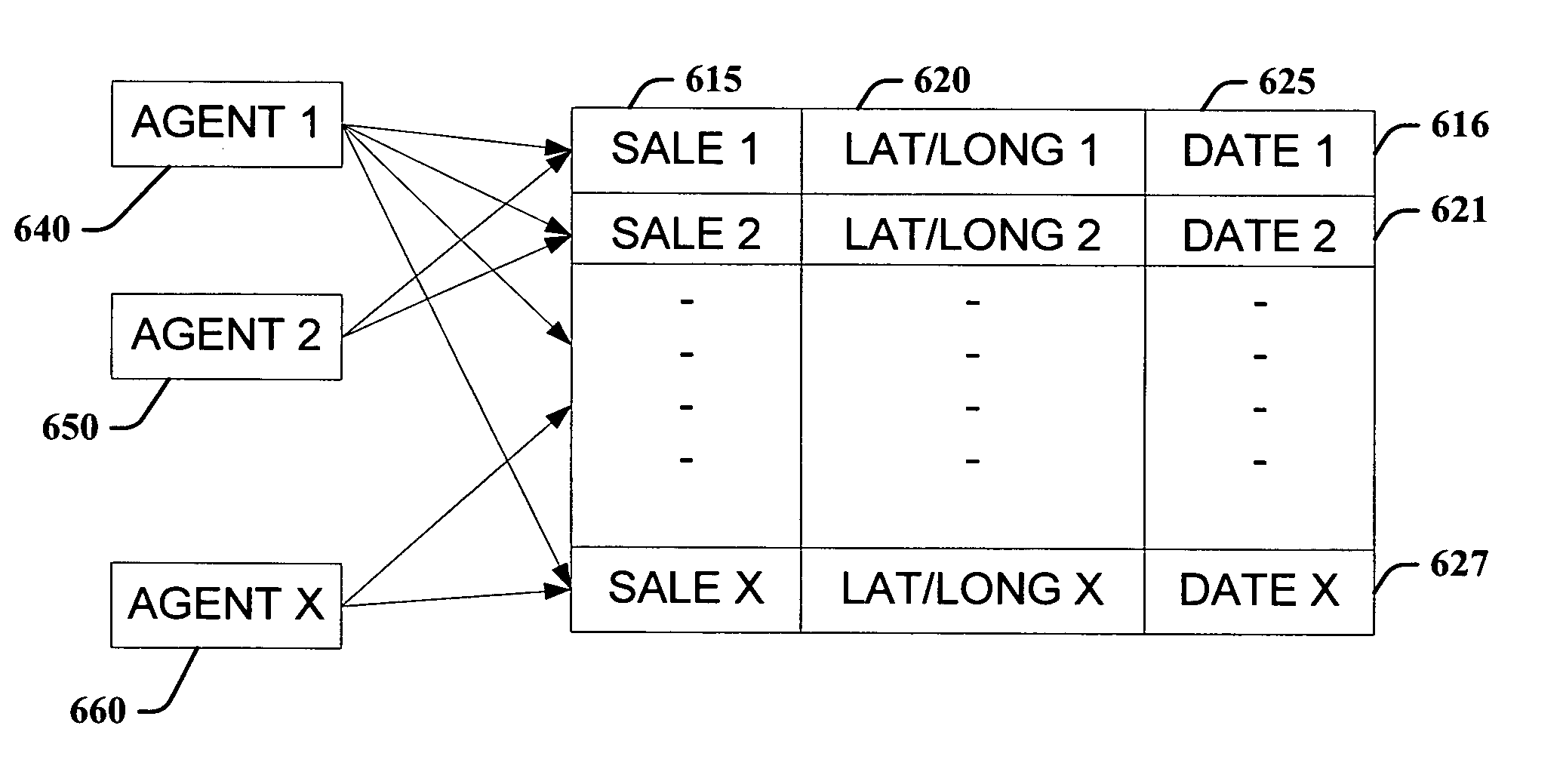

Map-based search for real estate service providers

Inactive · US20050288959A1 · Tags: Multiple digital computer combinations; Geographical information databases; Geographic regions; Service provision

A computer system for locating real estate service providers by navigating an aerial image map of a geographic region. The system determines a search region corresponding to the geographic region by use of geospatial information associated with the geographic region. Once the search region is determined, the system searches a database for real estate service providers that are associated with the search region and that satisfy certain predetermined criteria.

Owner:REDFIN CORP
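A minimal sketch of the search step, assuming the search region is simply the latitude/longitude box of the visible map and the "predetermined criteria" are a minimum rating. Both are assumptions, as are the type names; boxes that cross the antimeridian are not handled.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SearchRegion:
    """Latitude/longitude box derived from the visible map area."""
    south: float
    west: float
    north: float
    east: float

    def contains(self, lat: float, lon: float) -> bool:
        return self.south <= lat <= self.north and self.west <= lon <= self.east

@dataclass(frozen=True)
class Provider:
    name: str
    lat: float
    lon: float
    rating: float

def search_providers(providers, region, min_rating=0.0):
    """Providers inside the region that meet the (hypothetical) rating criterion."""
    return [p for p in providers
            if region.contains(p.lat, p.lon) and p.rating >= min_rating]
```

In practice the provider database would be queried with a spatial index rather than scanned linearly; the linear filter keeps the sketch self-contained.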

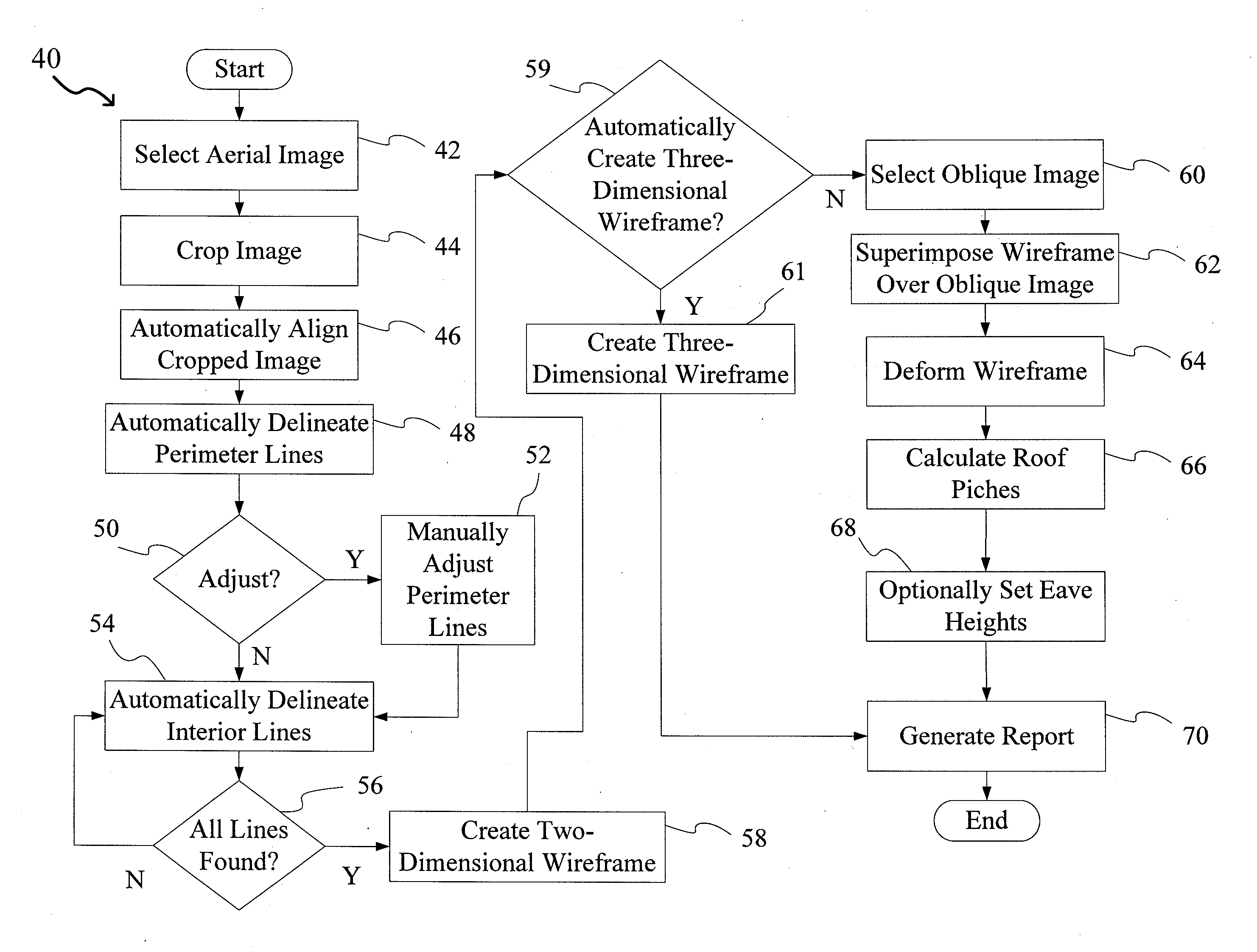

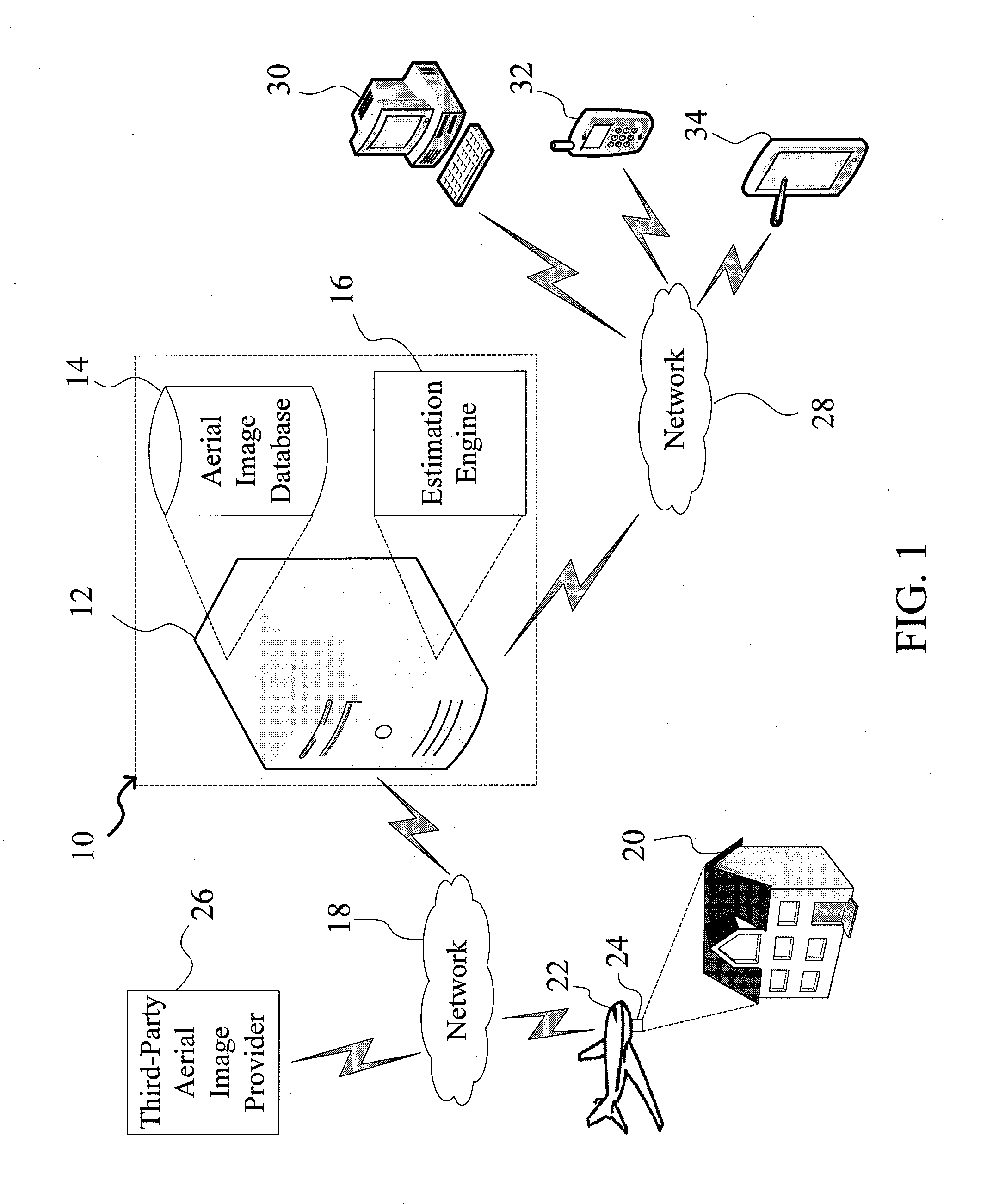

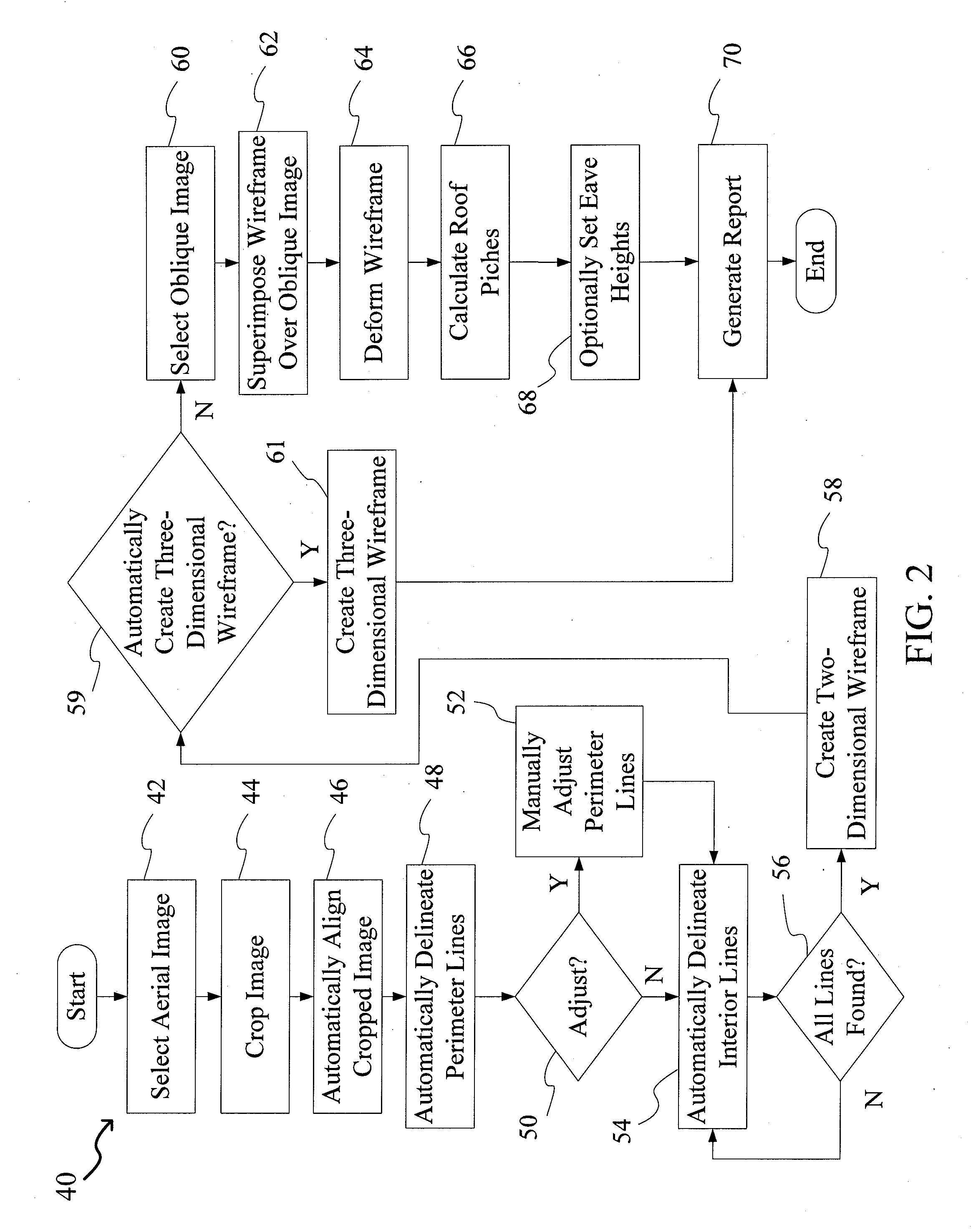

System and Method for Construction Estimation Using Aerial Images

A system and method for construction estimation using aerial images is provided. The system receives at least one aerial image of a building. An estimation engine processes the aerial image at a plurality of angles to automatically identify a plurality of lines (e.g., perimeter and interior lines) in the image corresponding to a plurality of features of the roof of the building. The estimation engine allows users to generate two-dimensional and three-dimensional models of the roof by automatically delineating various roof features, and generates a report including information about the roof of the building.

Owner:XACTWARE SOLUTIONS

Concurrent display systems and methods for aerial roof estimation

User interface systems and methods for roof estimation are described. Example embodiments include a roof estimation system that provides a user interface configured to facilitate roof model generation based on one or more aerial images of a building roof. In one embodiment, roof model generation includes image registration, image lean correction, roof section pitch determination, wire frame model construction, and / or roof model review. The described user interface provides user interface controls that may be manipulated by an operator to perform at least some of the functions of roof model generation. The user interface is further configured to concurrently display roof features onto multiple images of a roof. This abstract is provided to comply with rules requiring an abstract, and it is submitted with the intention that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:EAGLEVIEW TECH

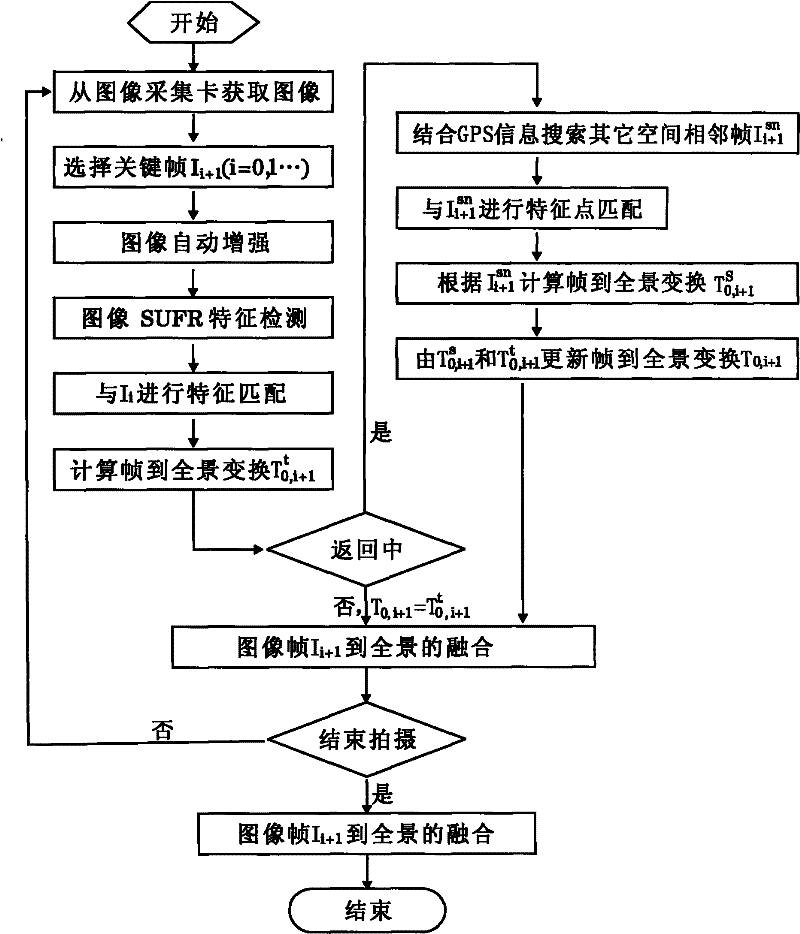

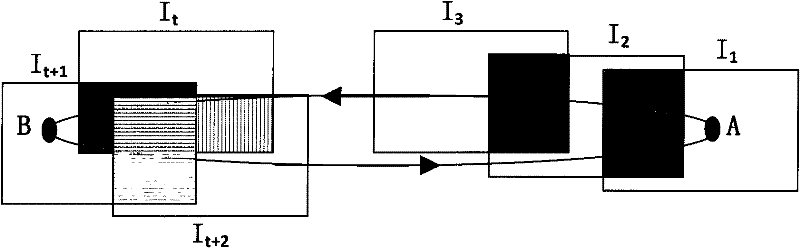

Real-time panoramic image stitching method of aerial videos shot by unmanned plane

Active · CN102201115A · Tags: Realize the transformation relationship; Quickly achieve registration; Television system details; Image enhancement; Global Positioning System; Time effect

The invention discloses a real-time panoramic image stitching method for aerial videos shot by an unmanned plane. A video acquisition card acquires the images that the unmanned plane transmits to a base station in real time over microwave channels; key frames are selected from the image sequence, and image enhancement is applied to them. During stitching, feature detection and inter-frame matching are first performed on the image frames using the robust SURF (speeded up robust features) detector. A frame-to-mosaic transformation is then used to reduce the errors that accumulate when successive frame-to-frame transformations are multiplied in series. Using the GPS (global positioning system) position information of the unmanned plane, images that are not adjacent in time but are adjacent in space along the flight path are identified, the frame-to-mosaic transformation is optimized, and the image overlap regions are determined. This achieves image fusion and panorama construction in real time, so that flying and stitching proceed simultaneously. During image transformation, information from frames adjacent in the field of view and frames adjacent in the airspace is used to optimize the transformation and obtain an accurate panoramic image. The stitching method runs in real time, is fast and accurate, and meets the requirements of applications in multiple fields.

Owner:HUNAN AEROSPACE CONTROL TECH CO LTD
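The GPS-based step that finds frames which are adjacent in space but not in time can be sketched as a pairwise distance check. This assumes the GPS fixes have already been projected to local planar coordinates in meters; the function name, distance threshold and index gap are illustrative, and the SURF matching and transform optimization are not shown.

```python
import math

def spatial_neighbor_pairs(positions, max_distance, min_index_gap=2):
    """Pairs (i, j) of frames that are close on the ground but not consecutive in time.

    positions: per-frame GPS fixes in a local planar frame (meters).
    """
    pairs = []
    for i, (xi, yi) in enumerate(positions):
        # Skip temporally adjacent frames; those are already matched frame to frame.
        for j in range(i + min_index_gap, len(positions)):
            xj, yj = positions[j]
            if math.hypot(xj - xi, yj - yi) <= max_distance:
                pairs.append((i, j))
    return pairs
```

On a back-and-forth survey path such as `[(0, 0), (50, 0), (100, 0), (100, 40), (50, 40), (0, 40)]` with a 45 m threshold, this returns `[(0, 5), (1, 4)]`: frames from adjacent flight lines that can add constraints to the frame-to-mosaic optimization.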

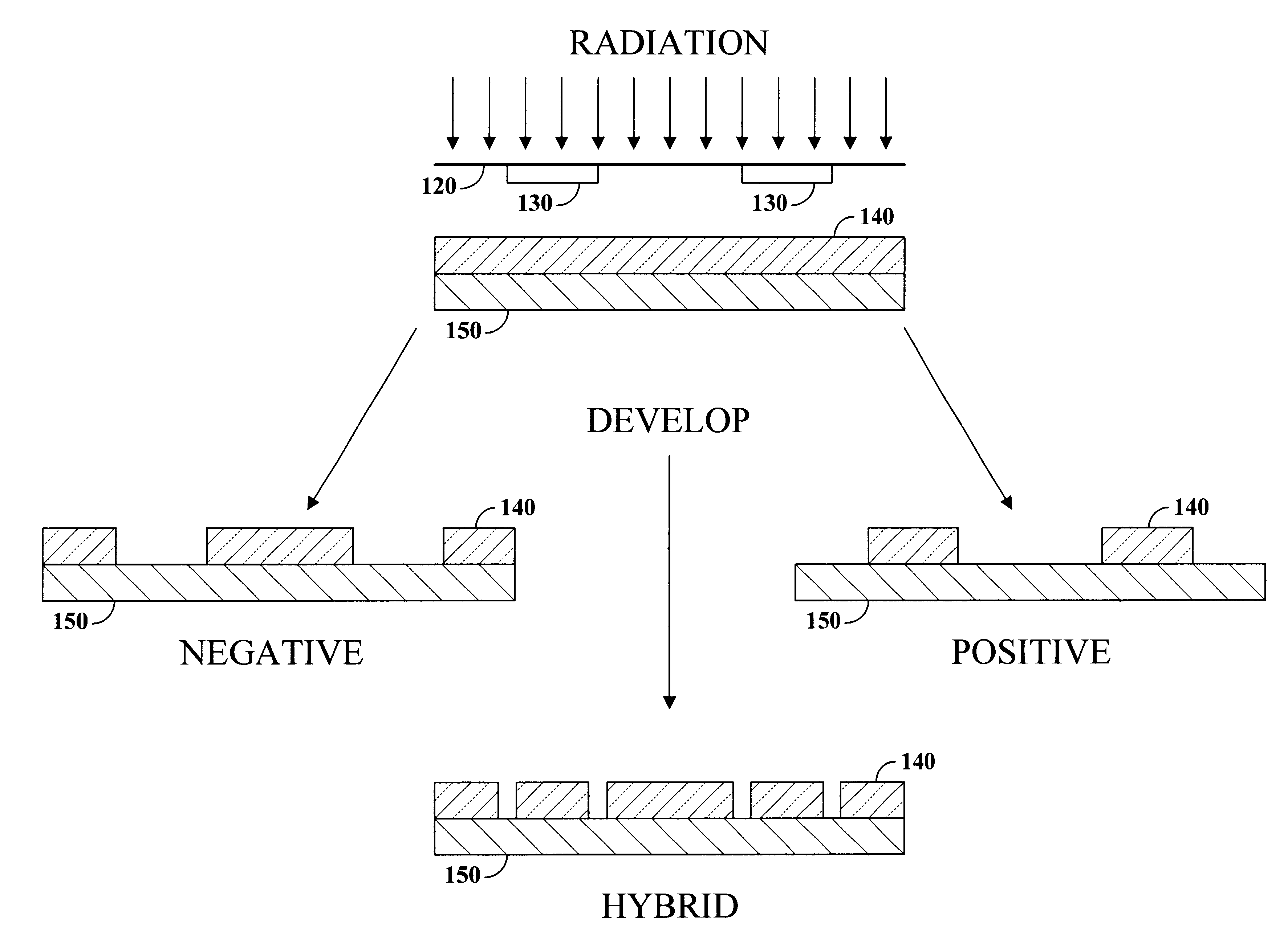

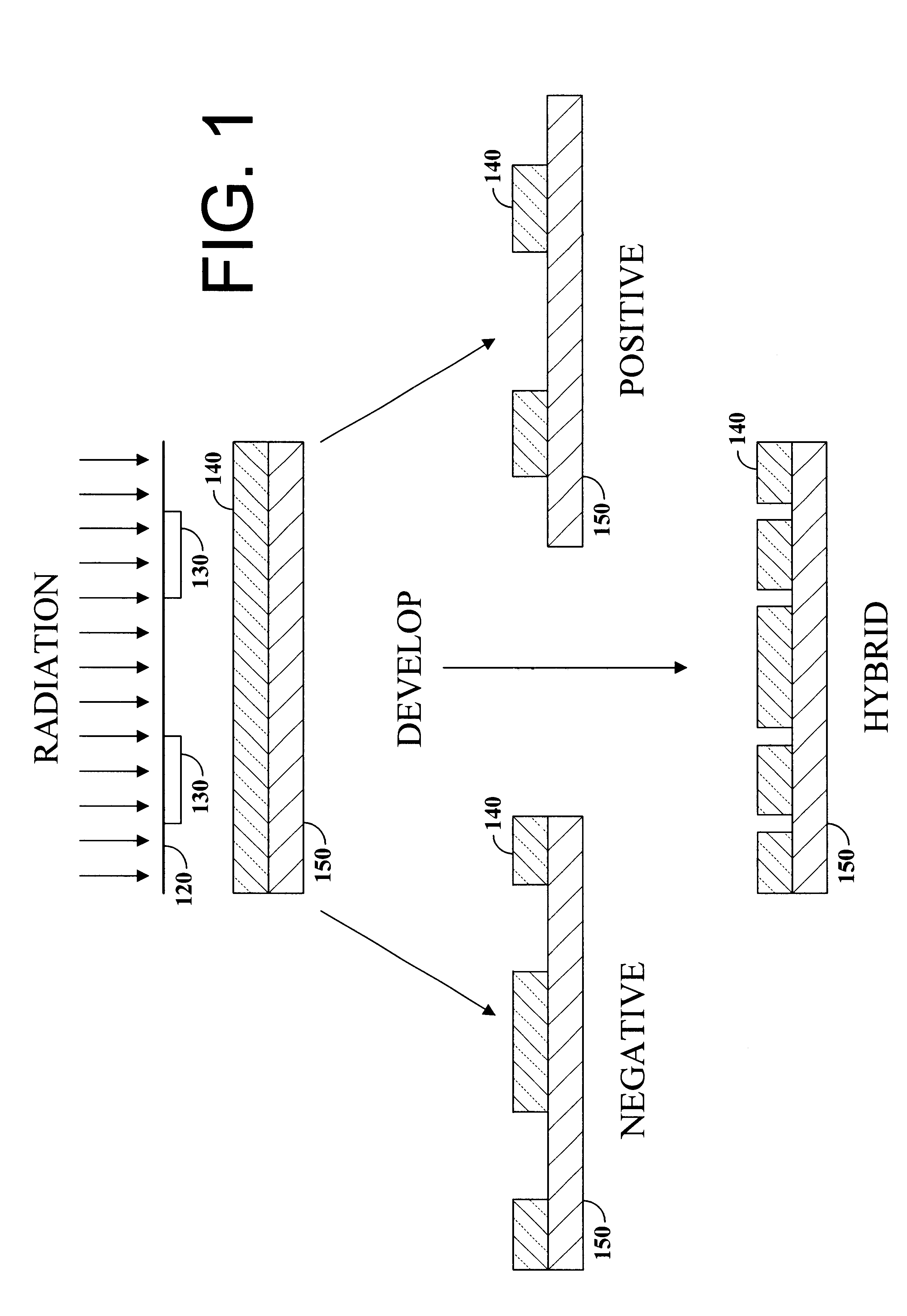

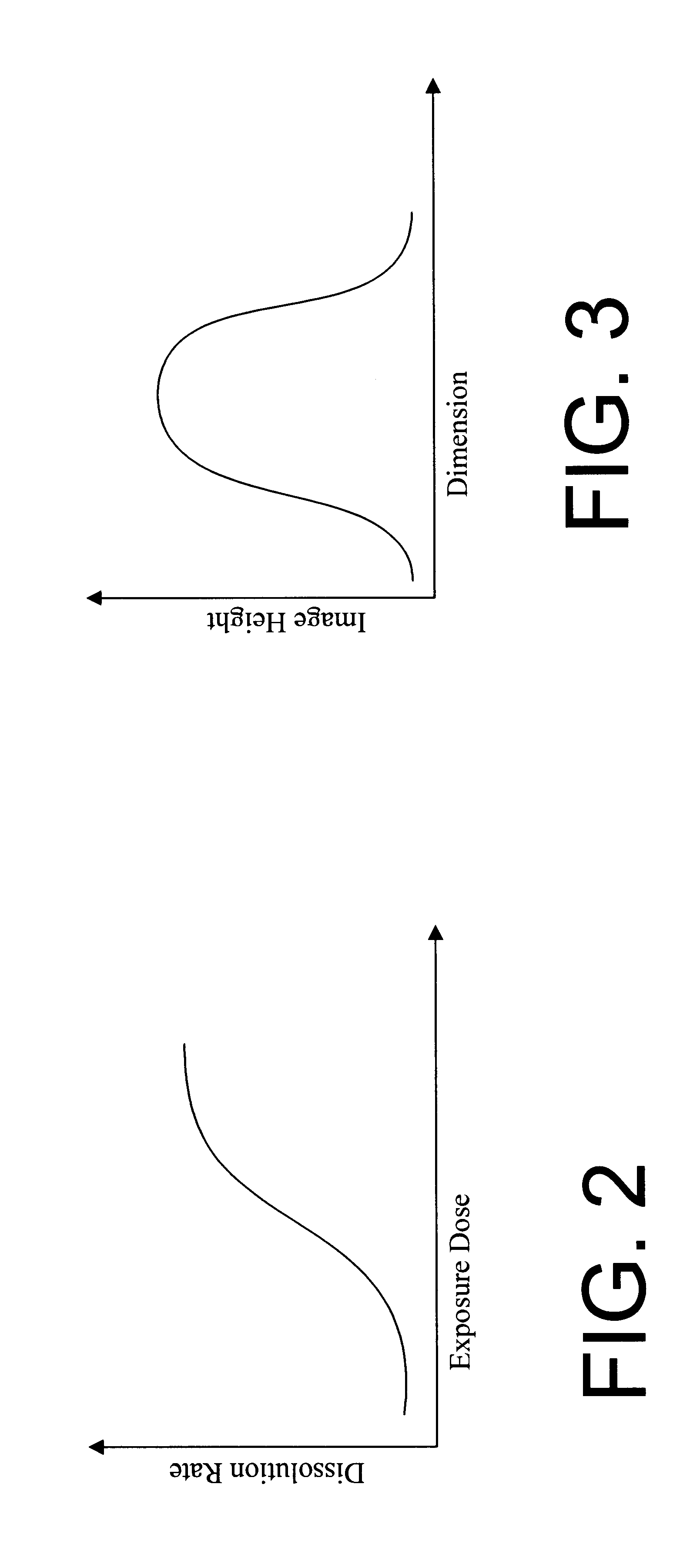

Hybrid resist based on photo acid/photo base blending

A photo resist composition contains a polymer resin, a first photo acid generator (PAG) requiring a first dose of actinic energy to generate a first photo acid, and a photo base generator (PBG) requiring a second dose of actinic energy, different from the first dose, to generate a photo base. The amounts and types of components in the photo resist are selected to produce a hybrid resist image. Either the first photo acid or the photo base acts as a catalyst for a chemical transformation in the resist to induce a solubility change. The other compound is formulated in material type and loading such that it acts as a quenching agent. The catalyst is formed at low doses to induce the solubility change, and the quenching agent is formed at higher doses to counterbalance the presence of the catalyst. Accordingly, the same frequency-doubling effect as in conventional hybrid resist compositions may be obtained; however, either a line or a space may be formed at the edge of an aerial image. Feature size may also be influenced by incorporating into the resist composition a quenching agent that does not require photo generation.

Owner:IBM CORP
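The dose-window behavior can be illustrated with a toy threshold model that ignores acid/base diffusion and resist contrast. The thresholds and the intensity profile are hypothetical. A single bright line in the aerial image produces two reacting bands, one on each edge, which is the frequency-doubling effect the abstract refers to.

```python
def hybrid_resist_response(doses, catalyst_dose, quench_dose):
    """Toy threshold model: True where the resist changes solubility.

    Below catalyst_dose too little catalyst forms; at or above quench_dose the
    quencher counterbalances the catalyst. Only the window in between reacts.
    """
    if not catalyst_dose < quench_dose:
        raise ValueError("catalyst dose must be lower than quench dose")
    return [catalyst_dose <= d < quench_dose for d in doses]
```

For the profile `[0.0, 0.2, 0.5, 0.9, 1.0, 0.9, 0.5, 0.2, 0.0]` with thresholds 0.4 and 0.8, only the two 0.5 samples on the flanks react. Whether those bands print as lines or spaces depends on whether the acid or the base is the catalyst.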

Pitch determination systems and methods for aerial roof estimation

Owner:EAGLEVIEW TECH

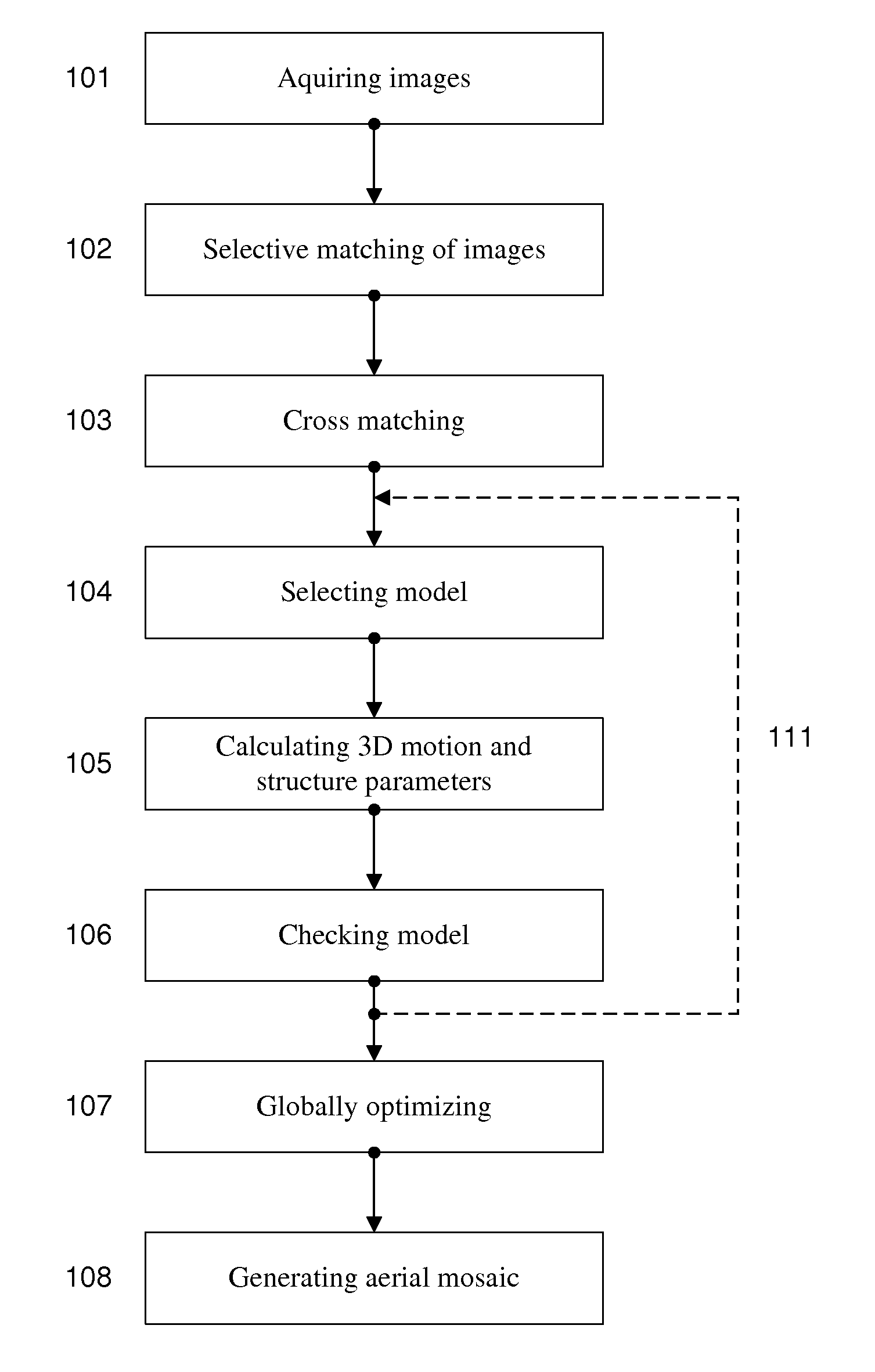

Generation of aerial images

Active · US20110090337A1 · Tags: Quality improvement; Robust and cheap and light; Photogrammetry/videogrammetry; Color television details; Single image; Aerial photography

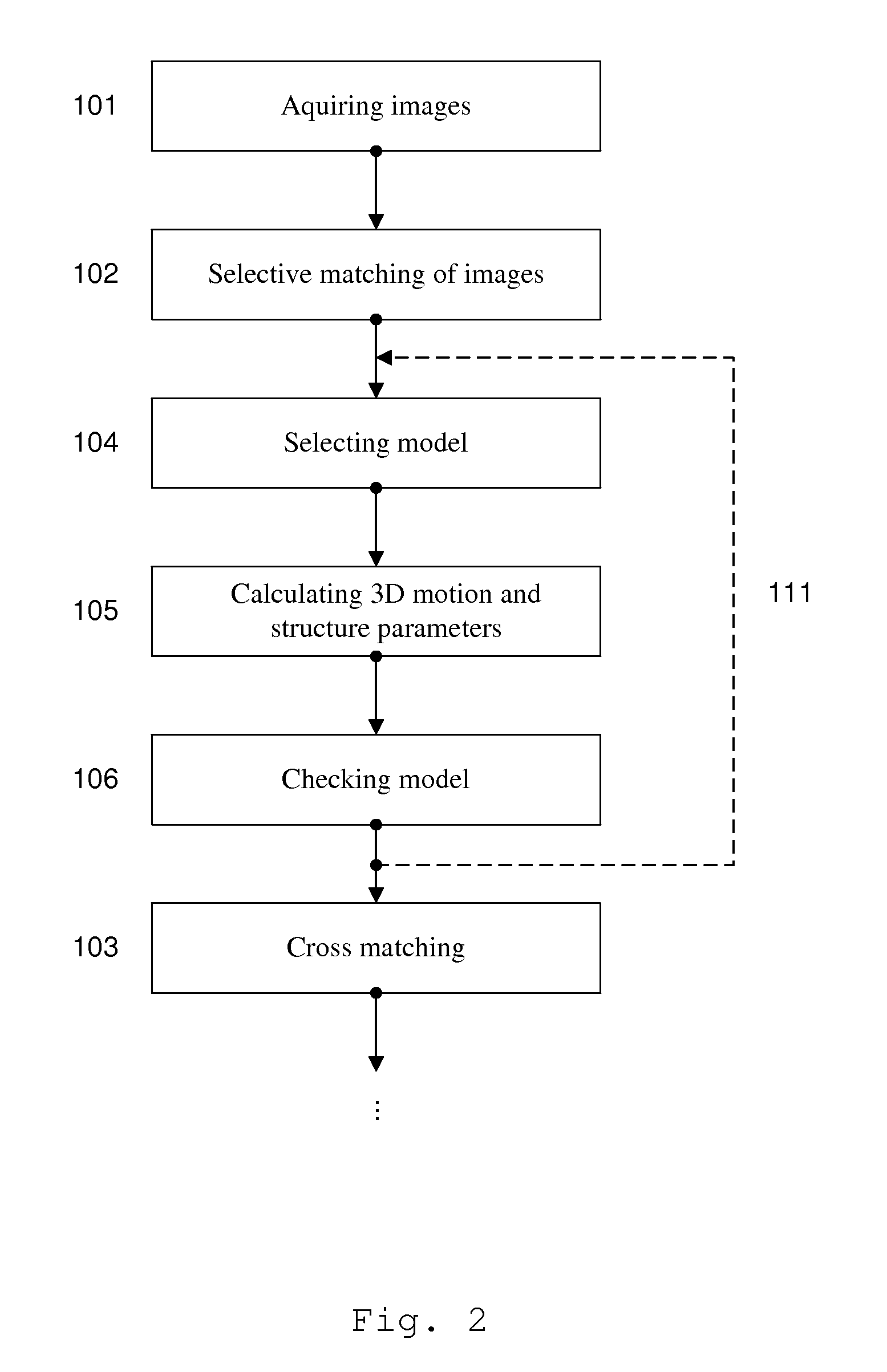

The method according to the invention generates an aerial image mosaic covering a larger area than a single camera image can provide, using a combination of computer vision and photogrammetry. The aerial image mosaic is based on a set of images acquired from a camera. Selective matching and cross matching of consecutive and non-consecutive images, respectively, are performed, and three-dimensional motion and structure parameters are calculated and applied to the model to check whether the model is stable. Thereafter the parameters are globally optimised, and based on these optimised parameters the serial image mosaic is generated. The set of images may be limited by removing old image data as new images are acquired. The method makes it possible to establish images in near real time using a system of low complexity and small size, and using only image information.

Owner:IMINT IMAGE INTELLIGENCE AB

Concurrent display systems and methods for aerial roof estimation

User interface systems and methods for roof estimation are described. Example embodiments include a roof estimation system that provides a user interface configured to facilitate roof model generation based on one or more aerial images of a building roof. In one embodiment, roof model generation includes image registration, image lean correction, roof section pitch determination, wire frame model construction, and / or roof model review. The described user interface provides user interface controls that may be manipulated by an operator to perform at least some of the functions of roof model generation. The user interface is further configured to concurrently display roof features onto multiple images of a roof. This abstract is provided to comply with rules requiring an abstract, and it is submitted with the intention that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:EAGLEVIEW TECH

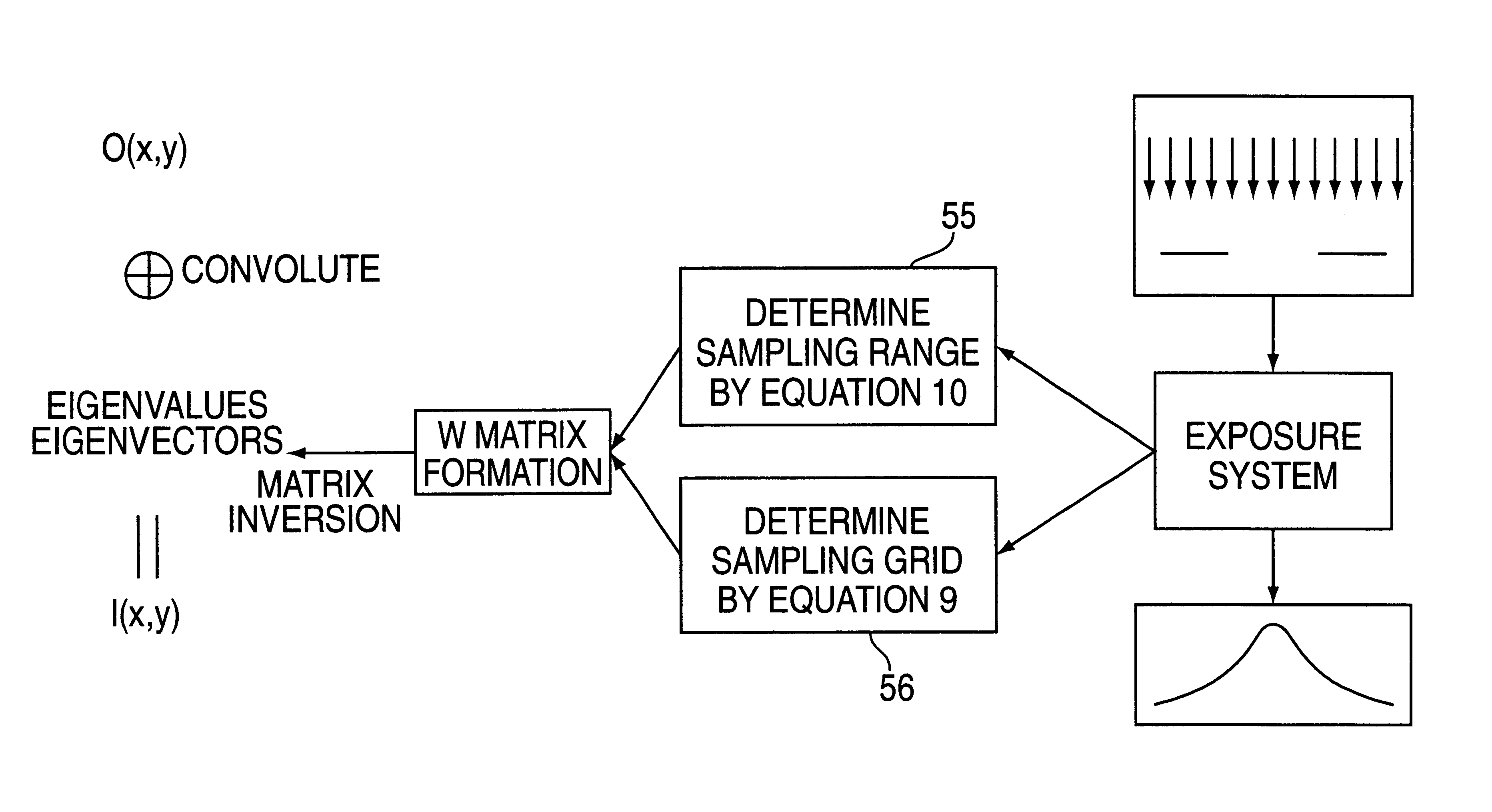

Kernel-based fast aerial image computation for a large scale design of integrated circuit patterns

Inactive · US6223139B1 · Tags: Advantageously produced; Computationally efficient; Pump components; Blade accessories; Feature vector; Decomposition

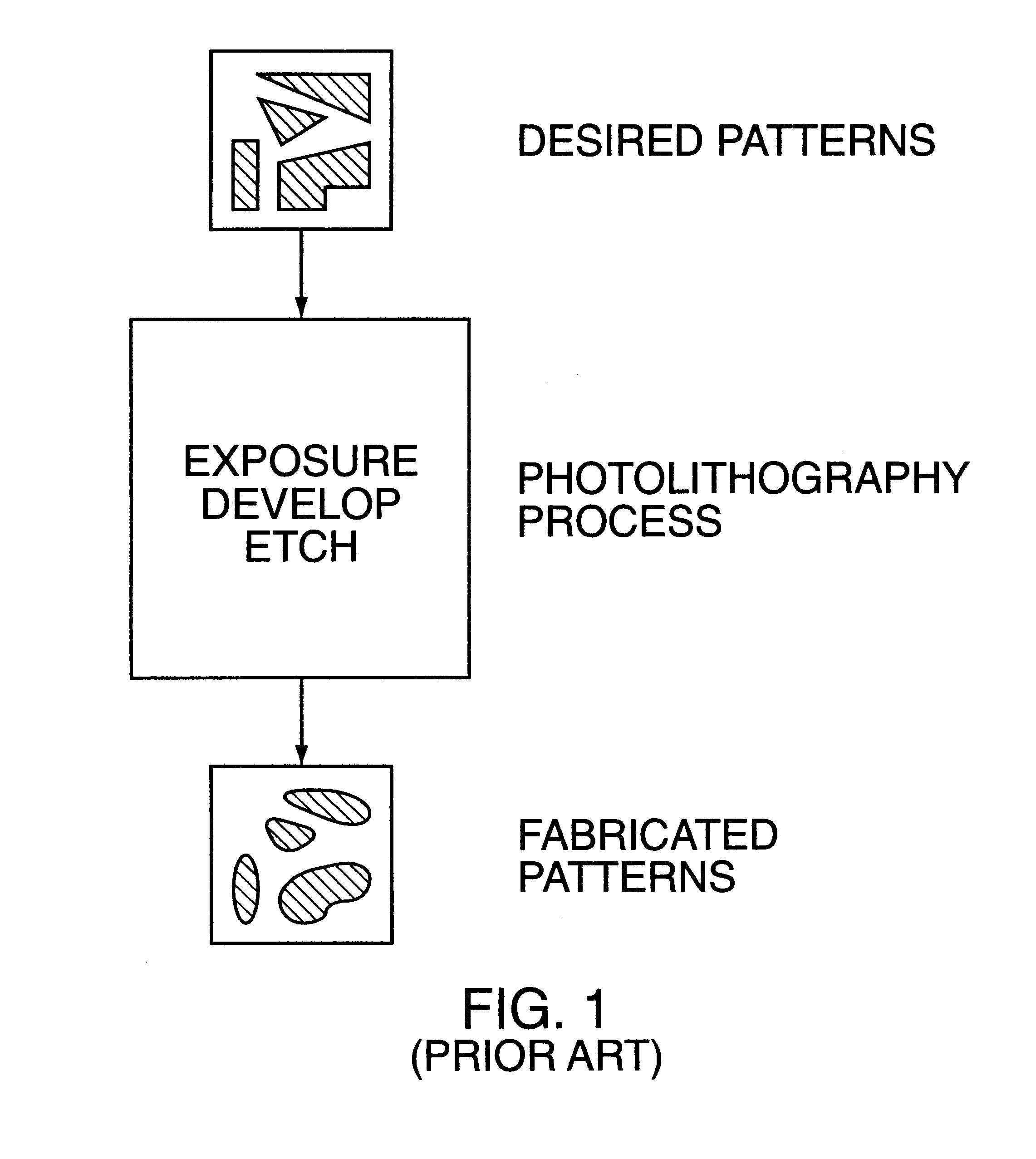

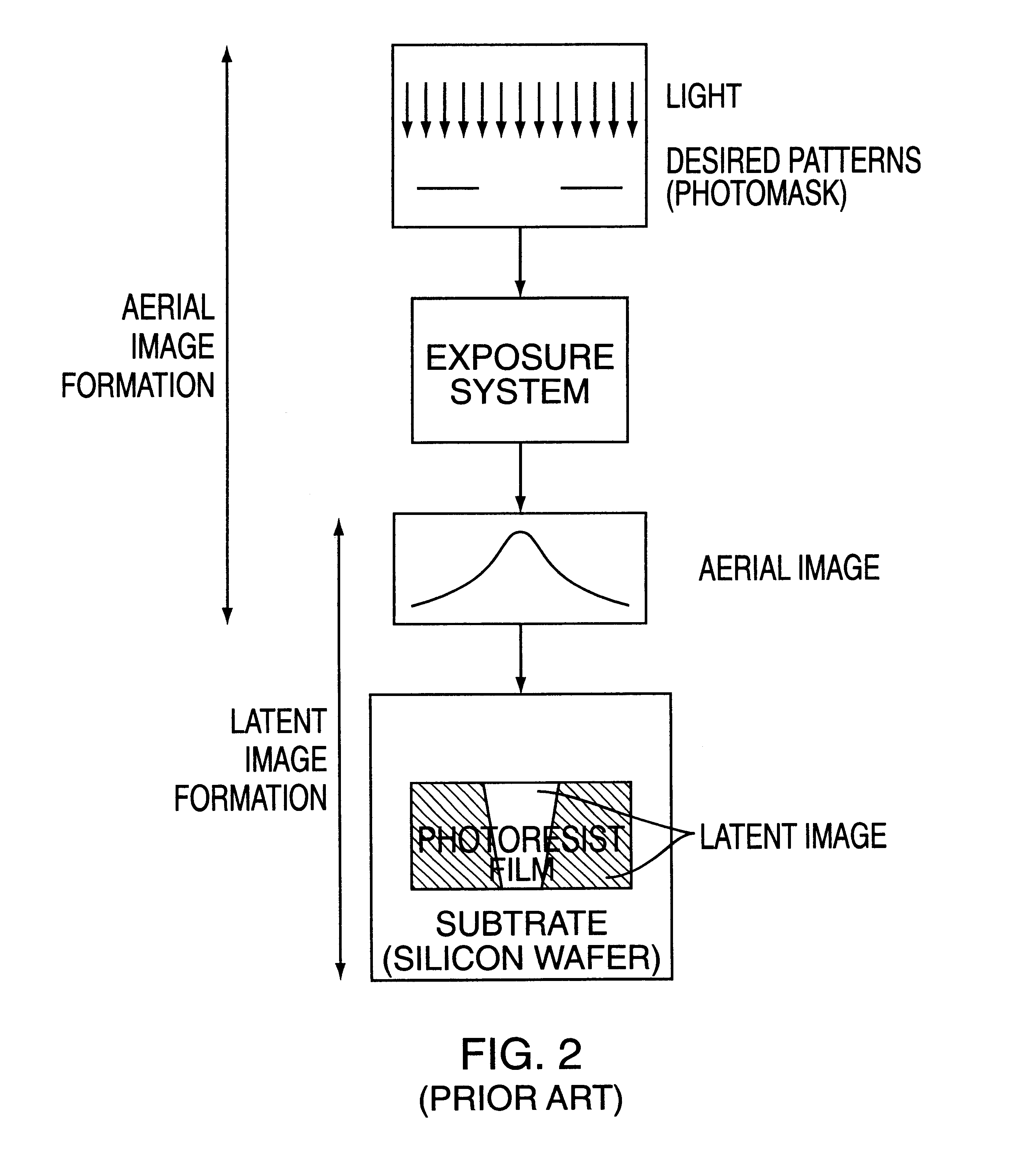

A method of simulating aerial images of large mask areas obtained during the exposure step of a photo-lithographic process when fabricating a semiconductor integrated circuit silicon wafer is described. The method includes the steps of defining mask patterns to be projected by the exposure system to create images of the mask patterns; determining an appropriate sampling range and sampling interval; generating a characteristic matrix describing the exposure system; inverting the matrix to obtain eigenvalues as well as the eigenvectors (or kernels) representing the decomposition of the exposure system; convolving the mask patterns with these eigenvectors; and weighting the resulting convolutions by the eigenvalues to form the aerial images. The method is characterized in that the characteristic matrix is precisely defined by the sampling range and the sampling interval, such that the sampling range is the shortest possible and the sampling interval the largest possible without sacrificing accuracy. The method of generating aerial images of patterns having large mask areas provides a speed improvement of several orders of magnitude over conventional approaches.

Owner:IBM CORP
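Once the kernels and eigenvalues are known, the image-forming step (convolve the mask with each kernel, then combine with eigenvalue weights) can be sketched in one dimension. In the usual sum-of-coherent-systems form, each eigenvalue weights the squared magnitude of its convolution, which is what this sketch assumes; the kernels and weights are made-up numbers, and building the characteristic matrix and its decomposition is omitted.

```python
def convolve_same(signal, kernel):
    """1D convolution with zero padding, output aligned to the kernel center."""
    n, half = len(signal), len(kernel) // 2
    out = []
    for i in range(n):
        acc = 0j  # complex accumulator so complex-valued kernels also work
        for j, k in enumerate(kernel):
            idx = i + half - j
            if 0 <= idx < n:
                acc += signal[idx] * k
        out.append(acc)
    return out

def aerial_image(mask, kernels, eigenvalues):
    """Sum of coherent systems: I(x) = sum_k lambda_k * |(mask * phi_k)(x)|^2."""
    intensity = [0.0] * len(mask)
    for lam, phi in zip(eigenvalues, kernels):
        field = convolve_same(mask, phi)
        for i, e in enumerate(field):
            intensity[i] += lam * abs(e) ** 2
    return intensity
```

With mask `[0, 1, 1, 0]`, kernels `[[1.0], [0.5, 1.0, 0.5]]` and eigenvalues `[0.8, 0.2]`, the result is approximately `[0.05, 1.25, 1.25, 0.05]`. Truncating to the few largest eigenvalues is where the speedup of such decompositions comes from.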

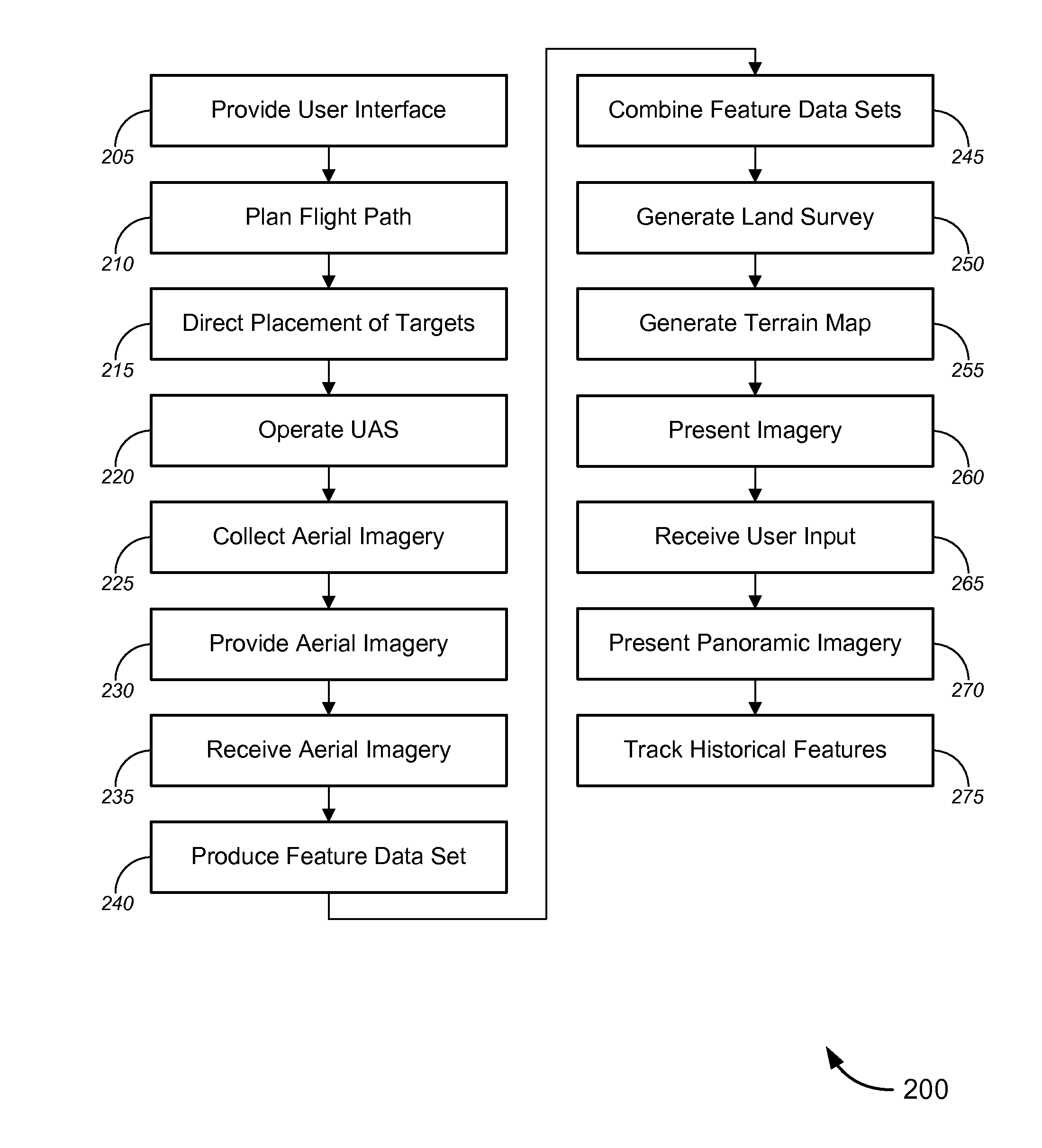

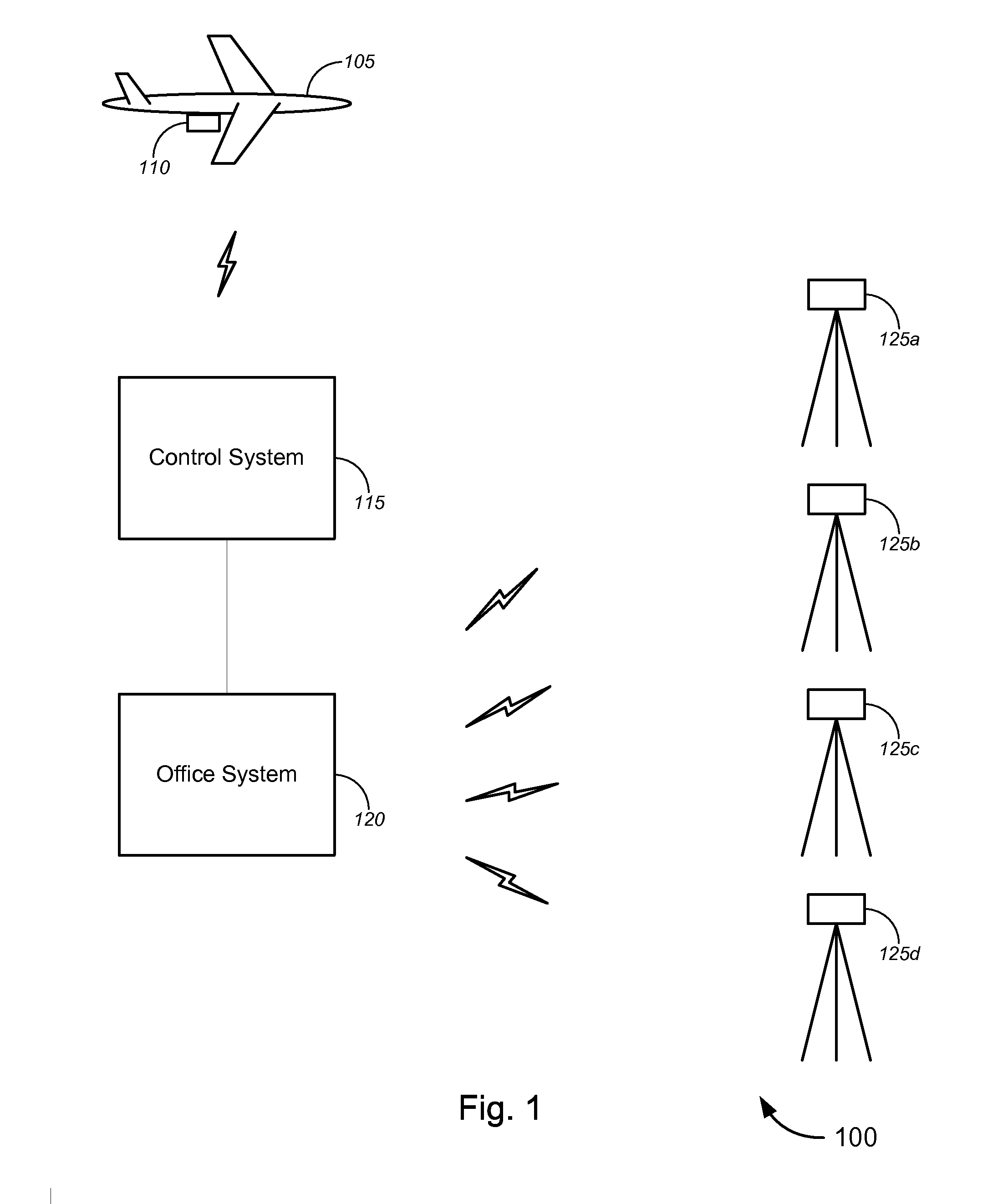

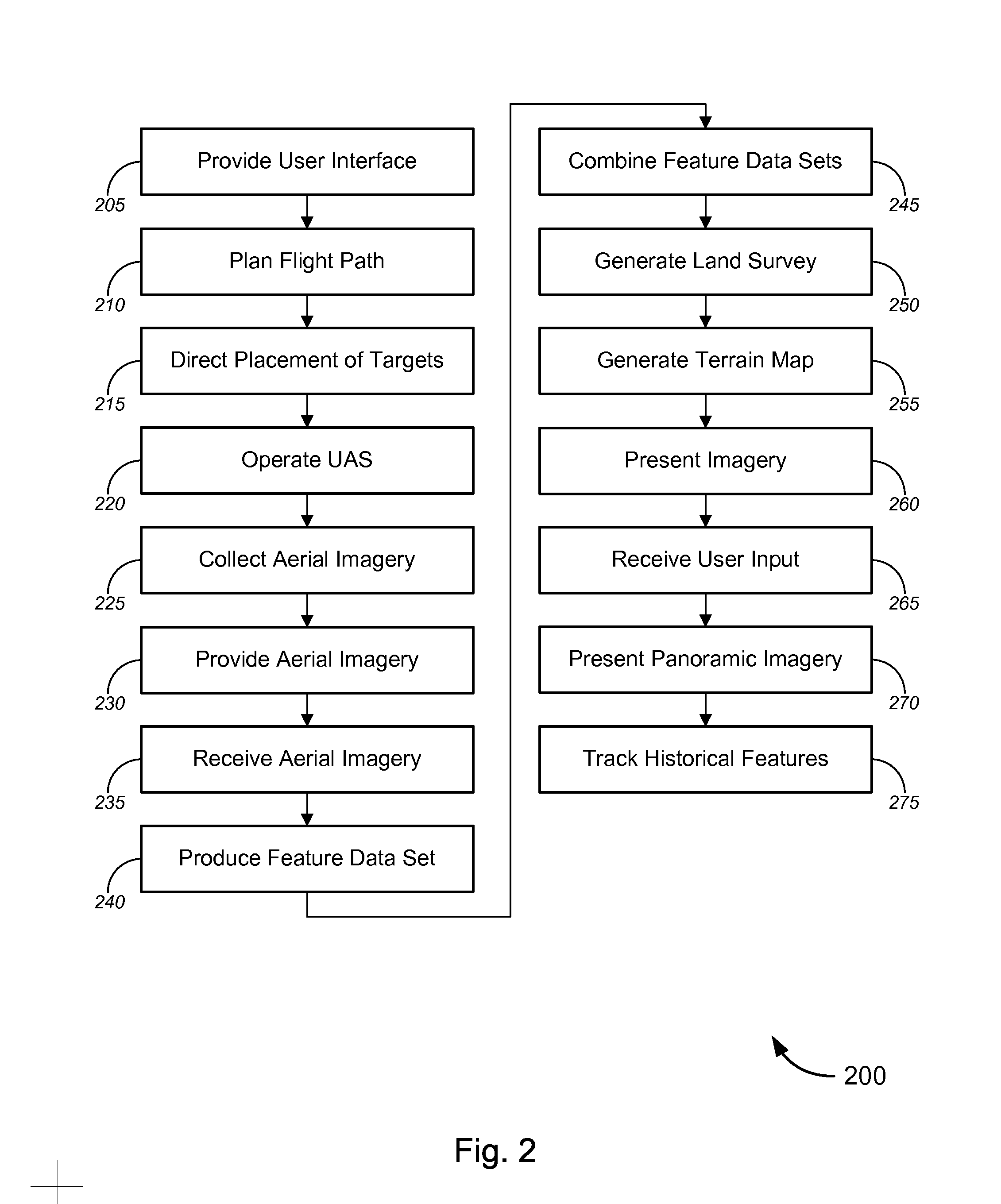

Integrated aerial photogrammetry surveys

Active · US9235763B2 · Tags: Low cost; Sufficient resolution; Surveying instruments; Scene recognition; Aviation; Engineering

Novel tools and techniques for generating survey data about a survey site. Aerial photography of at least part of the survey site can be analyzed using photogrammetric techniques. In some cases, an unmanned aerial system (UAS) can be used to collect site imagery. The use of a UAS can reduce the fiscal and chronological cost of a survey, compared to the use of other types of aerial imagery and / or conventional terrestrial surveying techniques used alone.

Owner:TRIMBLE INC

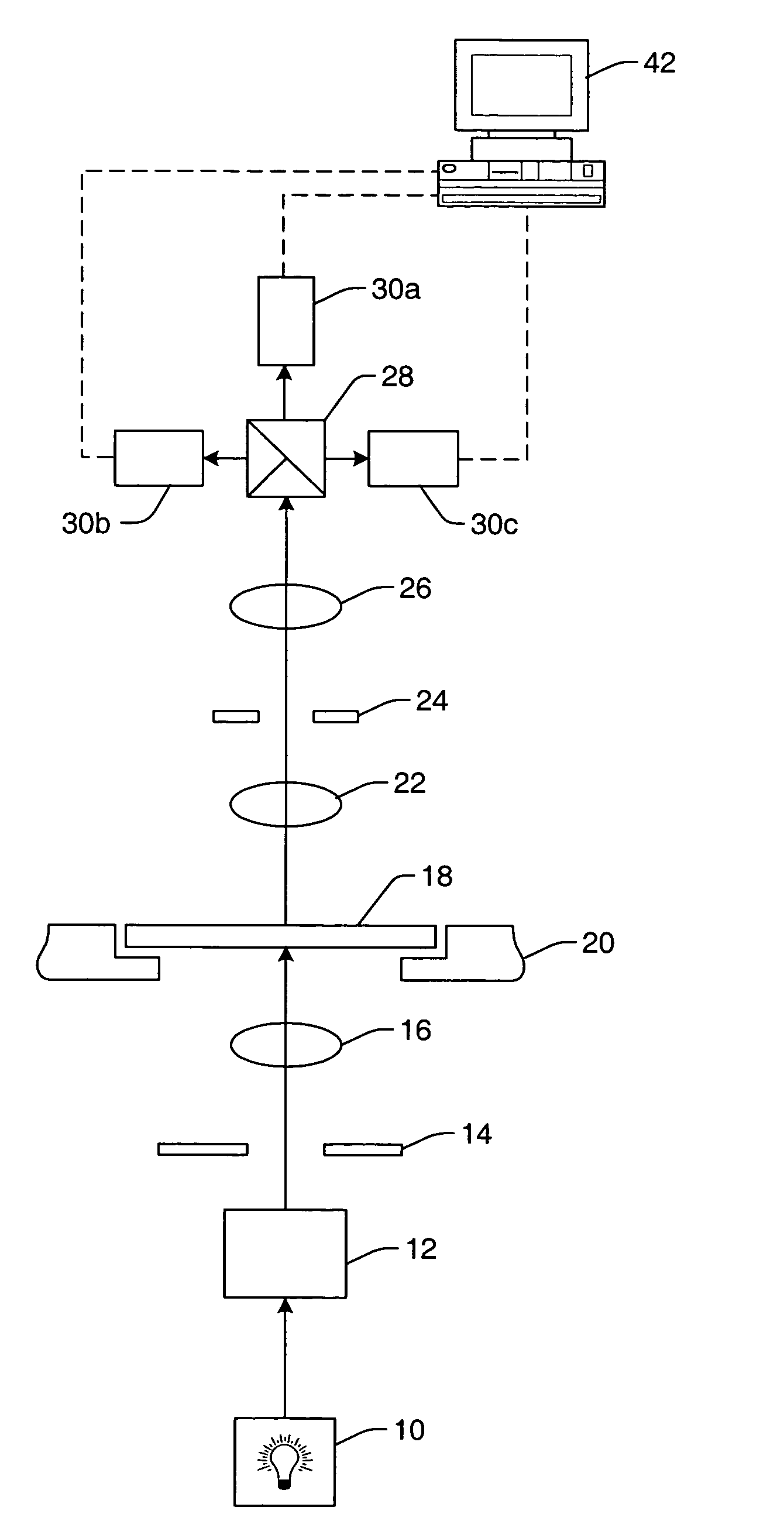

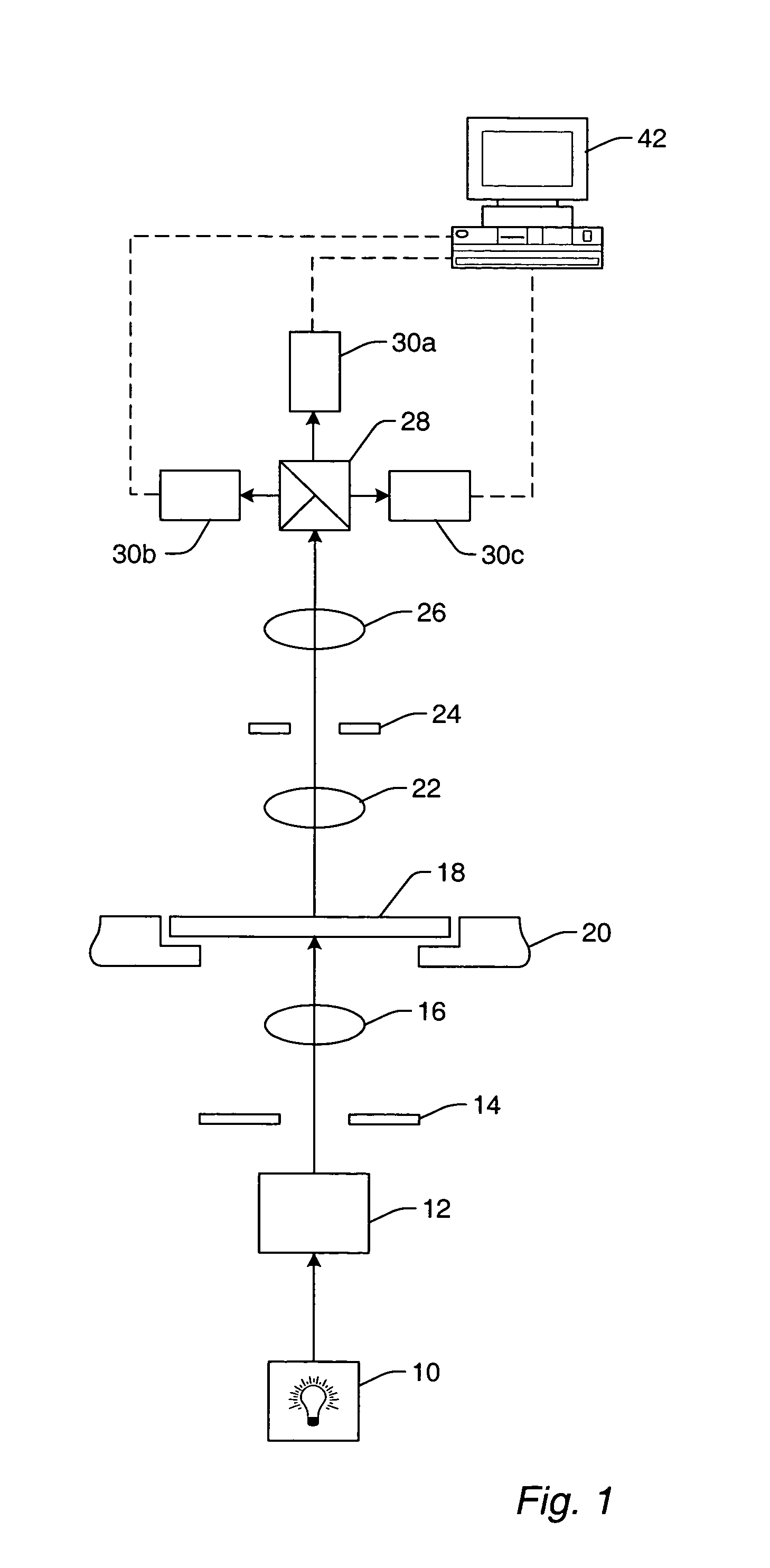

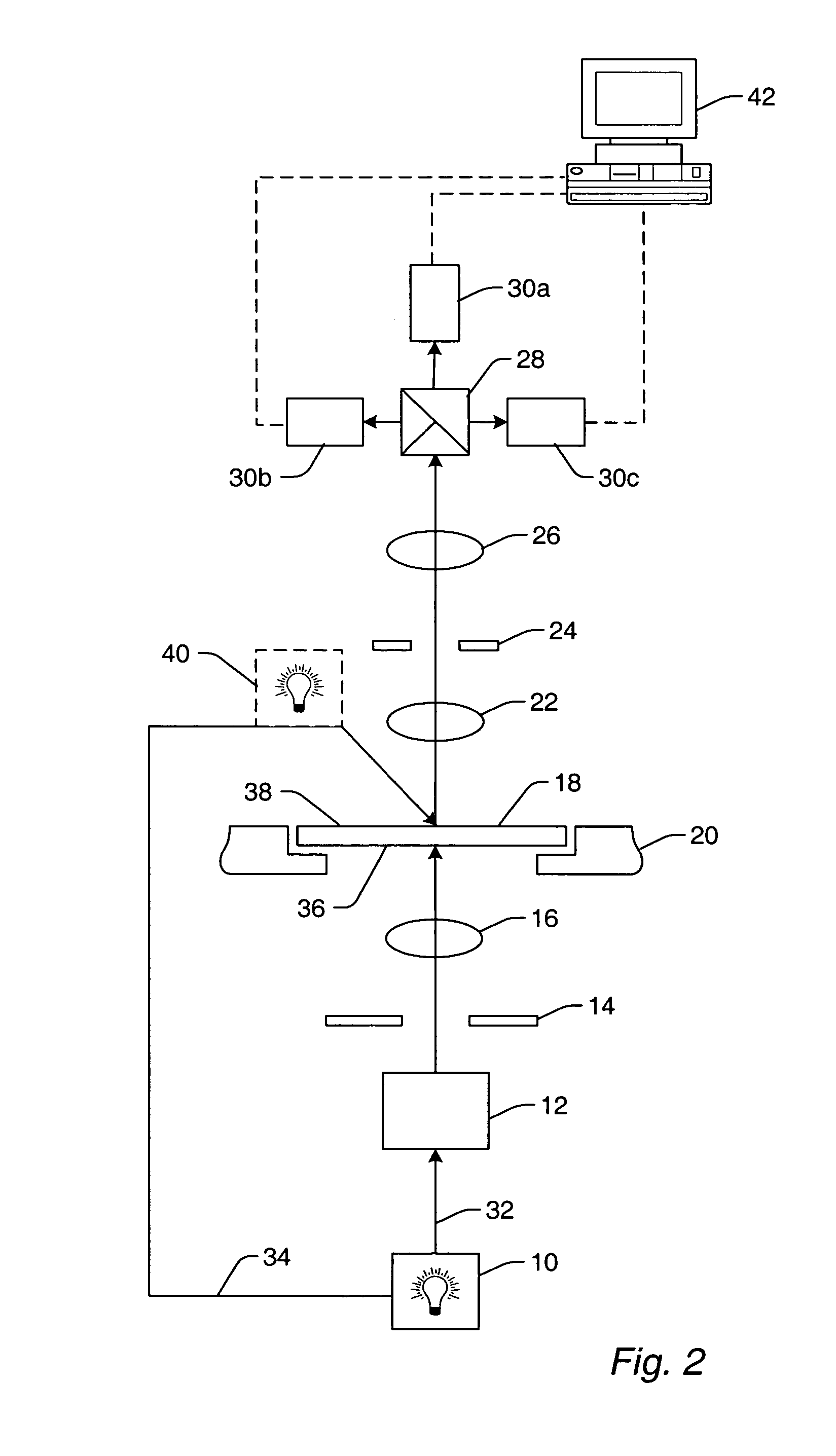

Methods and systems for inspecting reticles using aerial imaging at off-stepper wavelengths

Active · US7027143B1 · Tags: Material analysis by optical means; Character and pattern recognition; Aerial imaging; Spatial image

Methods and systems for inspecting a reticle are provided. A method may include forming an aerial image of the reticle with an inspection system at a wavelength different from a wavelength of an exposure system. The method may also include correcting the aerial image for differences between modulation transfer functions (MTF) of the inspection system and the exposure system. In this manner, the corrected aerial image may be substantially equivalent to an image of the reticle that would be printed onto a specimen by the exposure system at the wavelength of the exposure system. In addition, the method may include detecting defects on the reticle using the corrected aerial image. The detected defects may include approximately all of the defects that would be printed onto a specimen by the exposure system using the reticle.

Owner:KLA TENCOR TECH CORP
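One way to picture the MTF correction is to re-weight each spatial frequency of the inspection image by the ratio of the two MTFs. The sketch below assumes that approach, a 1D image, MTFs supplied as functions of spatial frequency, and a small floor to avoid dividing by a near-zero inspection response; it uses a naive O(n²) DFT to stay self-contained, and none of it is KLA-Tencor's implementation.

```python
import cmath

def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def mtf_samples(mtf, n):
    """Sample an MTF given as a function of |frequency| (cycles/sample) on DFT bins."""
    return [mtf(min(k, n - k) / n) for k in range(n)]

def correct_aerial_image(image, mtf_inspection, mtf_exposure, floor=1e-3):
    """Re-weight each spatial frequency by MTF_exposure / MTF_inspection."""
    spectrum = dft(image)
    corrected = [s * me / max(mi, floor)
                 for s, mi, me in zip(spectrum, mtf_inspection, mtf_exposure)]
    return [v.real for v in idft(corrected)]
```

Sampling the MTF symmetrically over the DFT bins (via `mtf_samples`) keeps the corrected image real-valued. The floor trades accuracy for noise robustness at frequencies the inspection optics barely pass.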

Aerial roof estimation systems and methods

Methods and systems for roof estimation are described. Example embodiments include a roof estimation system, which generates and provides roof estimate reports annotated with indications of the size, geometry, pitch and / or orientation of the roof sections of a building. Generating a roof estimate report may be based on one or more aerial images of a building. In some embodiments, generating a roof estimate report of a specified building roof may include generating a three-dimensional model of the roof, and generating a report that includes one or more views of the three-dimensional model, the views annotated with indications of the dimensions, area, and / or slope of sections of the roof. This abstract is provided to comply with rules requiring an abstract, and it is submitted with the intention that it will not be used to interpret or limit the scope or meaning of the claims.

Owner:EAGLEVIEW TECH

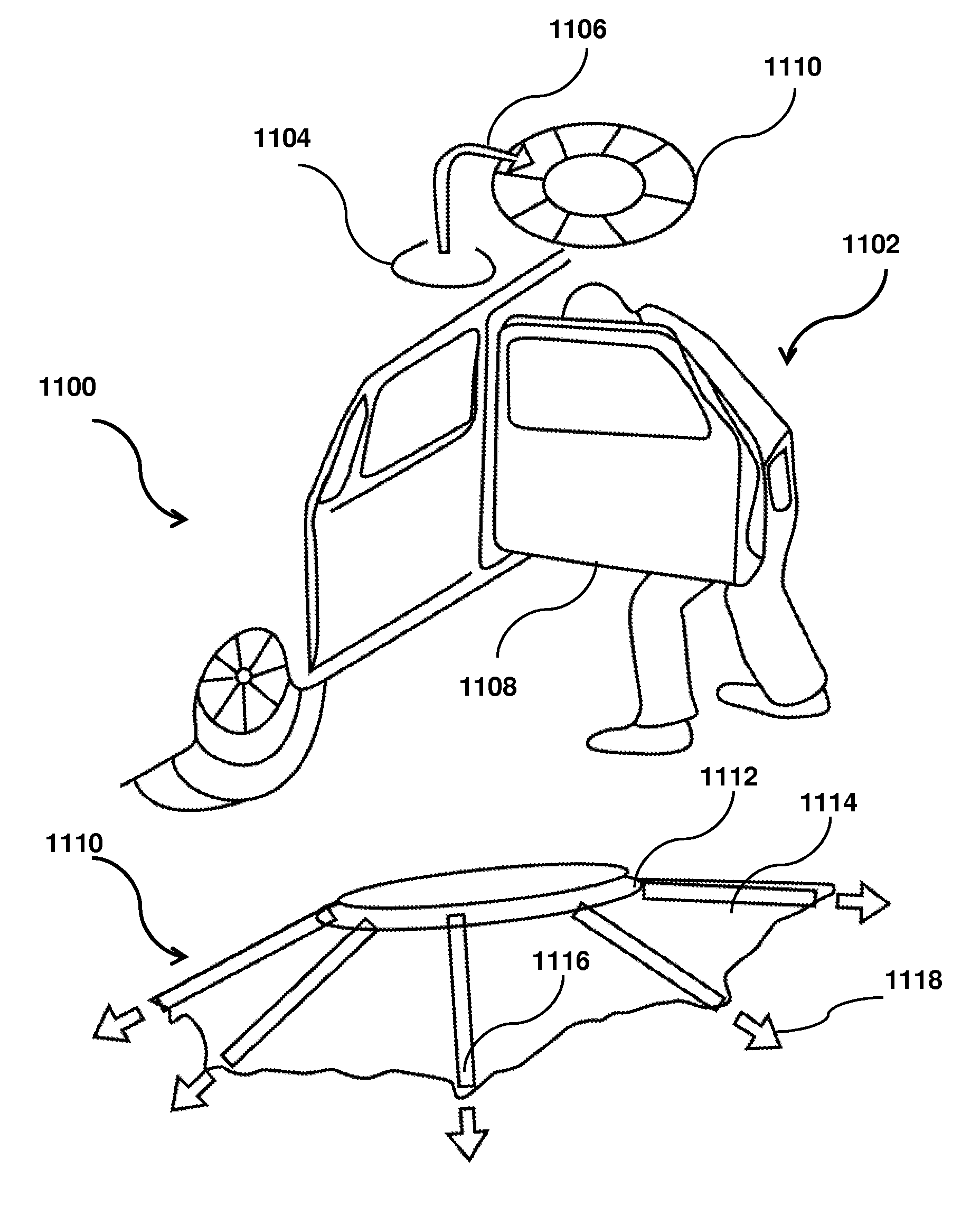

Aerial image collection

Active · US20140257595A1 · Tags: Aircraft control; Digital data processing details; Collection system; Aerial photography

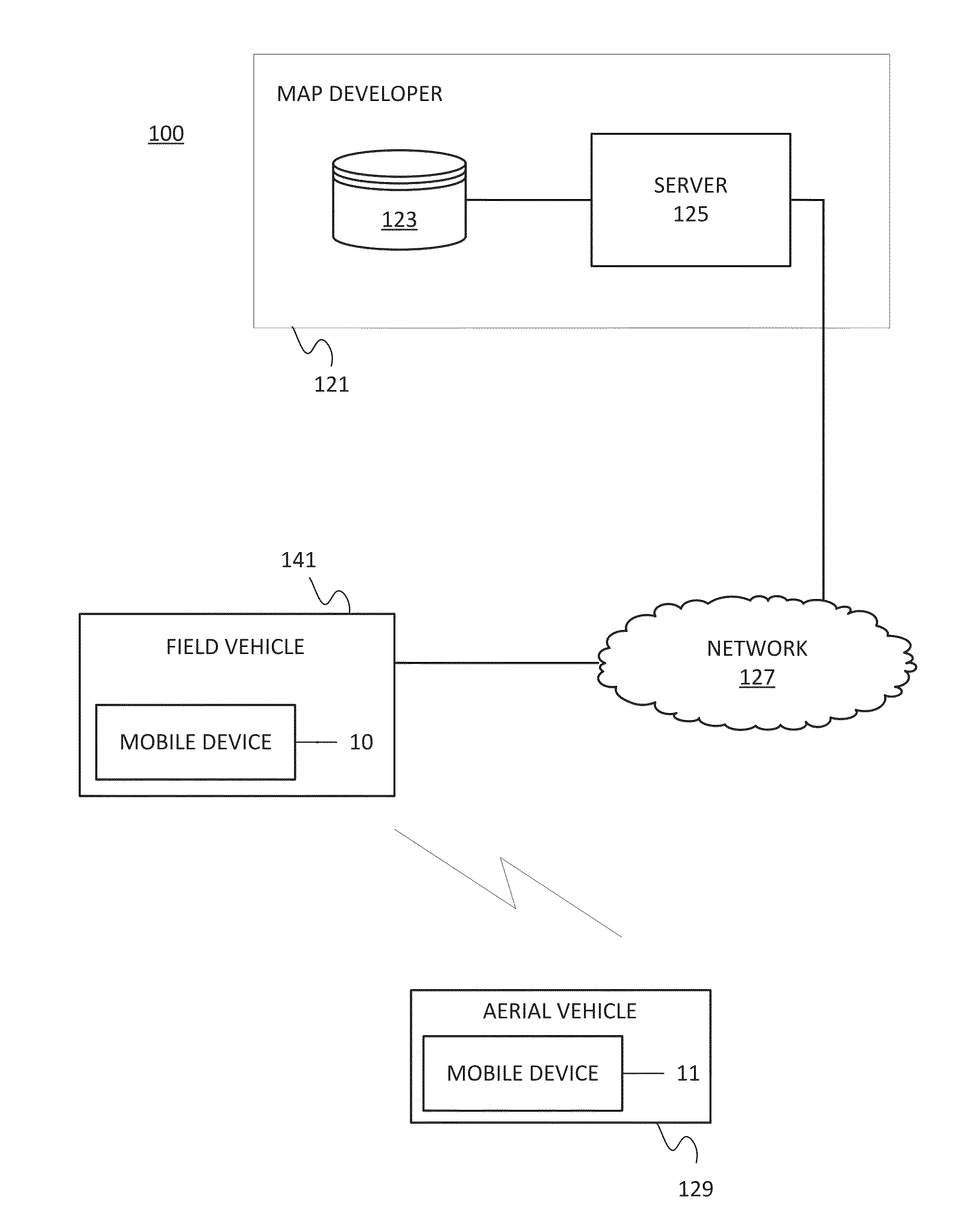

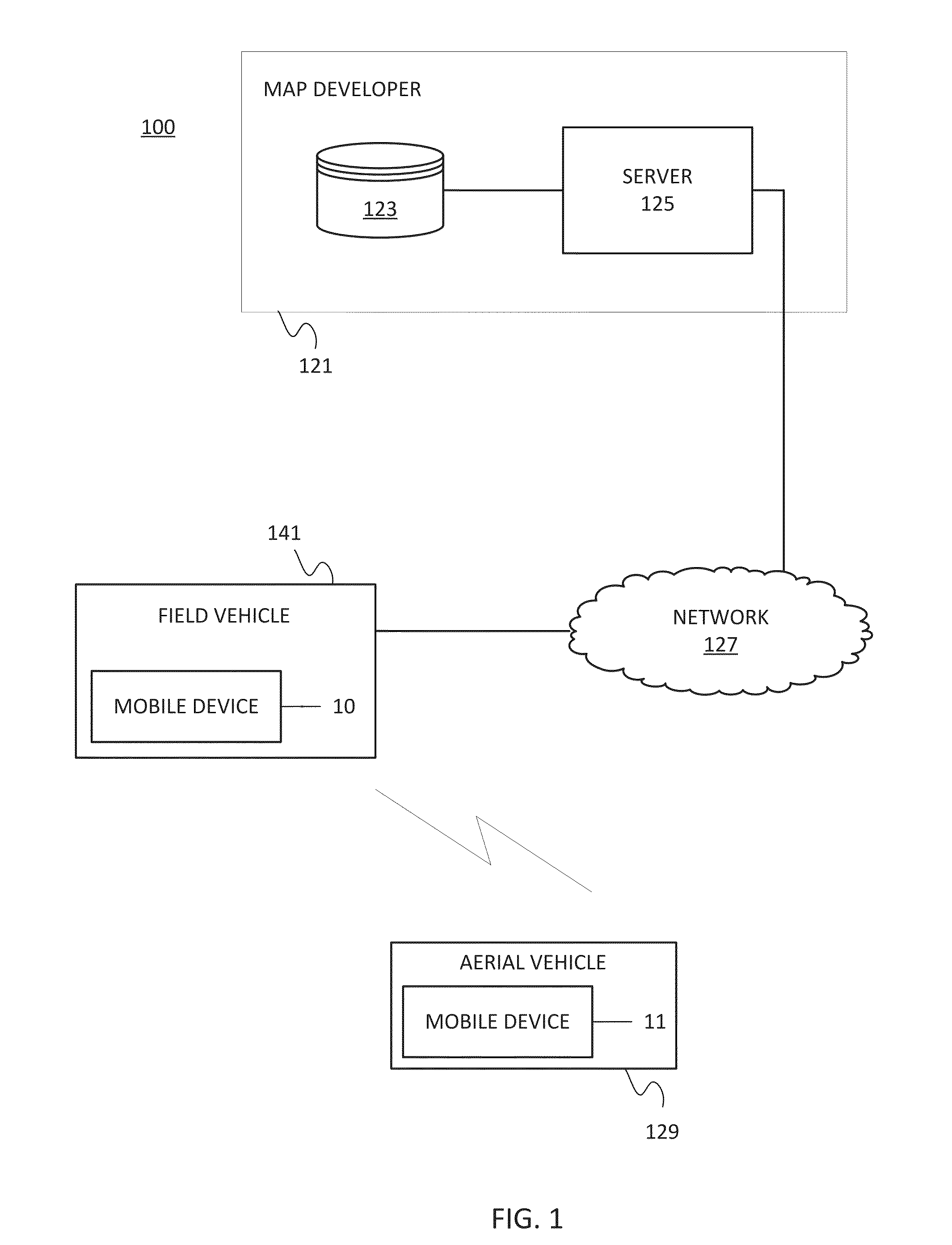

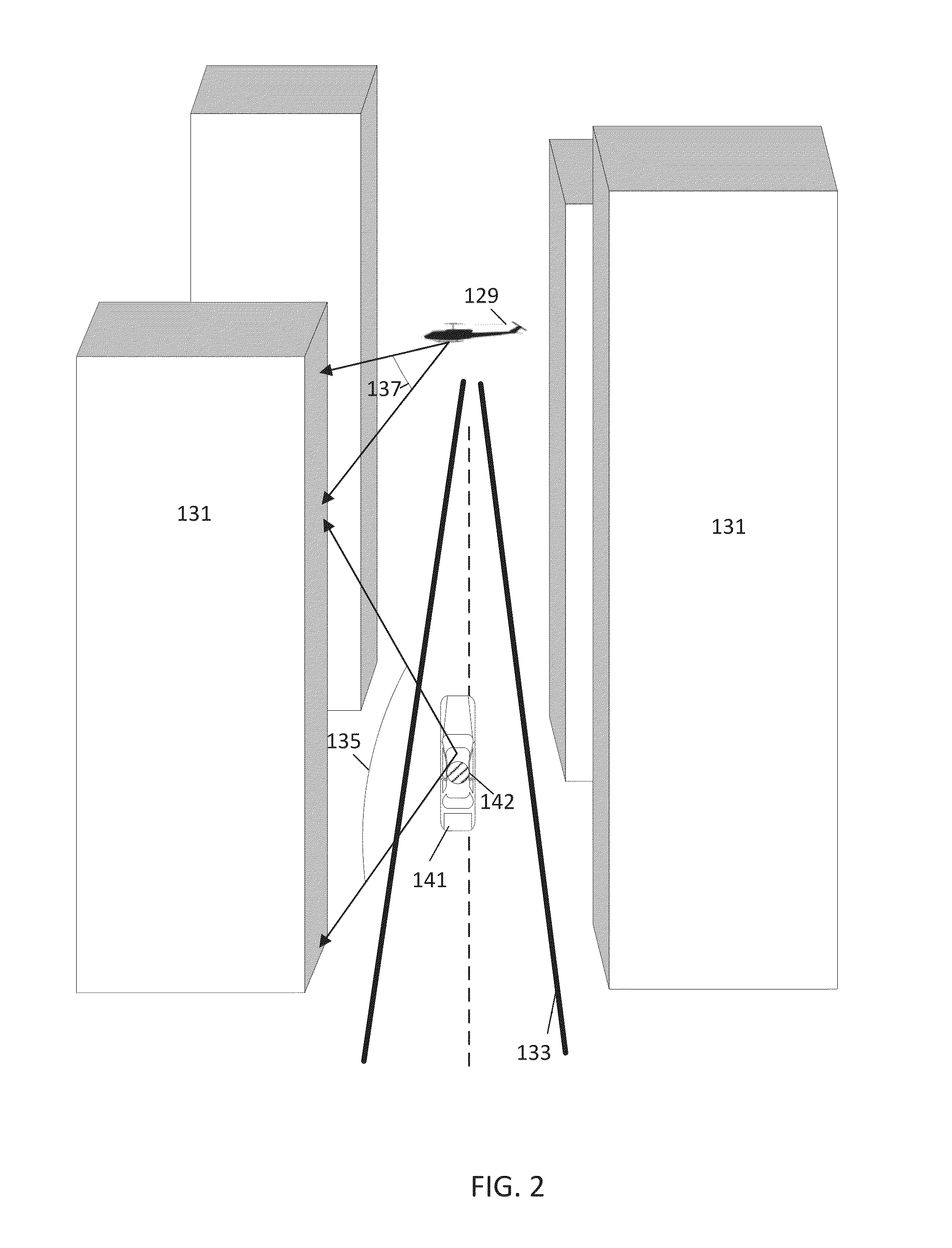

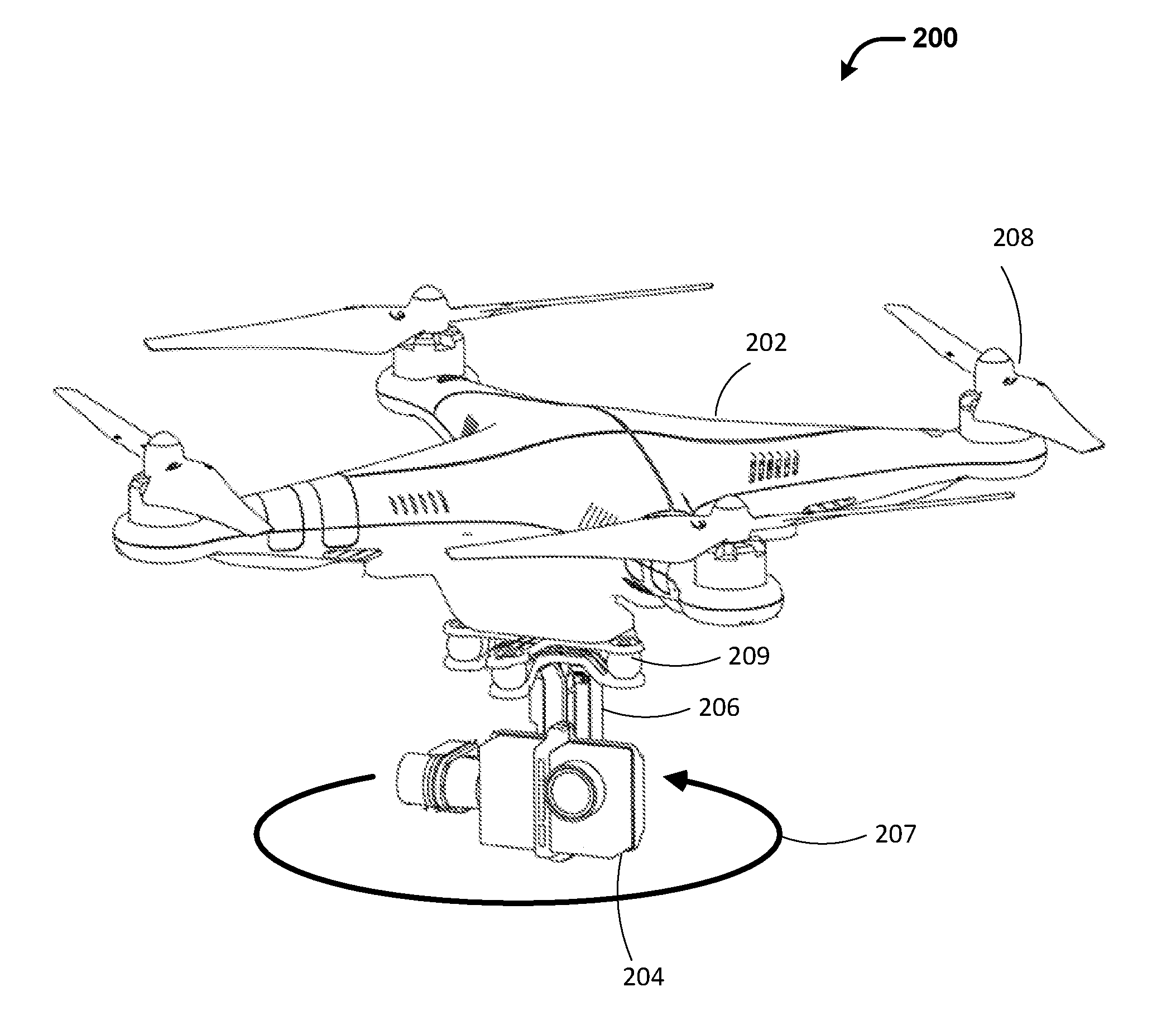

In one embodiment, an aerial collection system includes an image collection field vehicle that travels at street level and an image collection aerial vehicle that travels in the air above the street. The aerial vehicle collects image data including at least a portion of the field vehicle. The field vehicle includes a marker, which is identified from the collected image data. The marker is analyzed to determine an operating characteristic of the aerial vehicle. In one example, the operating characteristic in the marker includes information for a flight instruction for the aerial vehicle. In another example, the operating characteristic in the marker includes information for the three dimensional relationship between the vehicles. The three dimensional relationship is used to combine images collected from the air and images collected from the street level.

Owner:HERE GLOBAL BV

UAV panoramic imaging

Owner:SZ DJI OSMO TECH CO LTD

Method for aerial imagery acquisition and analysis

A method and system for multi-spectral imagery acquisition and analysis, the method including capturing preliminary multi-spectral aerial images according to pre-defined survey parameters at a pre-selected resolution, automatically performing preliminary analysis on site or location in the field using large scale blob partitioning of the captured images in real or near real time, detecting irregularities within the pre-defined survey parameters and providing an output corresponding thereto, and determining, from the preliminary analysis output, whether to perform a second stage of image acquisition and analysis at a higher resolution than the pre-selected resolution. The invention also includes a method for analysis and object identification including analyzing high resolution multi-spectral images according to pre-defined object parameters, when parameters within the pre-defined object parameters are found, performing blob partitioning on the images containing such parameters to identify blobs, and comparing objects confined to those blobs to pre-defined reference parameters to identify objects having the pre-defined object parameters.

Owner:AGROWING LTD
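The blob-partitioning step and the decision to run a second, higher-resolution pass can be sketched as a connected-component search over a per-pixel index map. Treating "irregular" as "value at or above a threshold" and triggering on a minimum blob area are assumptions made for illustration; the threshold, area and function names are hypothetical.

```python
from collections import deque

def find_blobs(grid, threshold):
    """4-connected components of cells whose value is >= threshold."""
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or grid[r][c] < threshold:
                continue
            seen[r][c] = True
            queue, blob = deque([(r, c)]), []
            while queue:  # breadth-first flood fill
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols and not seen[ny][nx]
                            and grid[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs

def needs_second_stage(grid, threshold, min_blob_area):
    """Trigger higher-resolution acquisition if any irregular blob is large enough."""
    return any(len(b) >= min_blob_area for b in find_blobs(grid, threshold))
```

Working on coarse, large-scale blobs first keeps the in-field analysis cheap enough for near real time; only flagged areas justify the second, higher-resolution flight.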

© 2025 PatSnap. All rights reserved.