3456 results about "Pyramid" patented technology

A pyramid (from Greek: πυραμίς pyramís) is a structure whose outer surfaces are triangular and converge to a single point at the top, making the shape roughly a pyramid in the geometric sense. The base of a pyramid can be triangular, quadrilateral, or any other polygon. As such, a pyramid has at least three outer triangular surfaces (at least four faces including the base). The square pyramid, with a square base and four triangular outer surfaces, is a common version.

Pyramid match kernel and related techniques

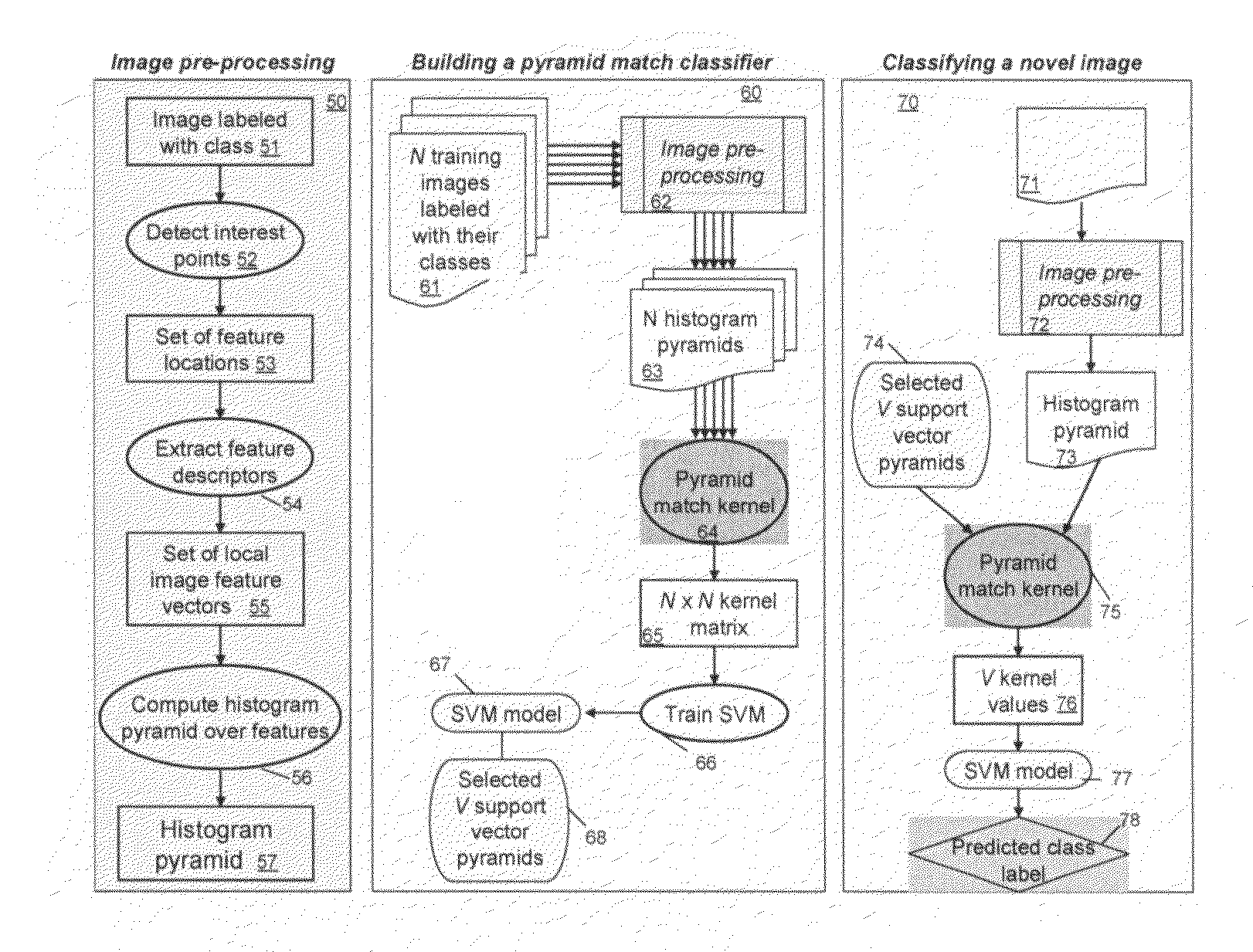

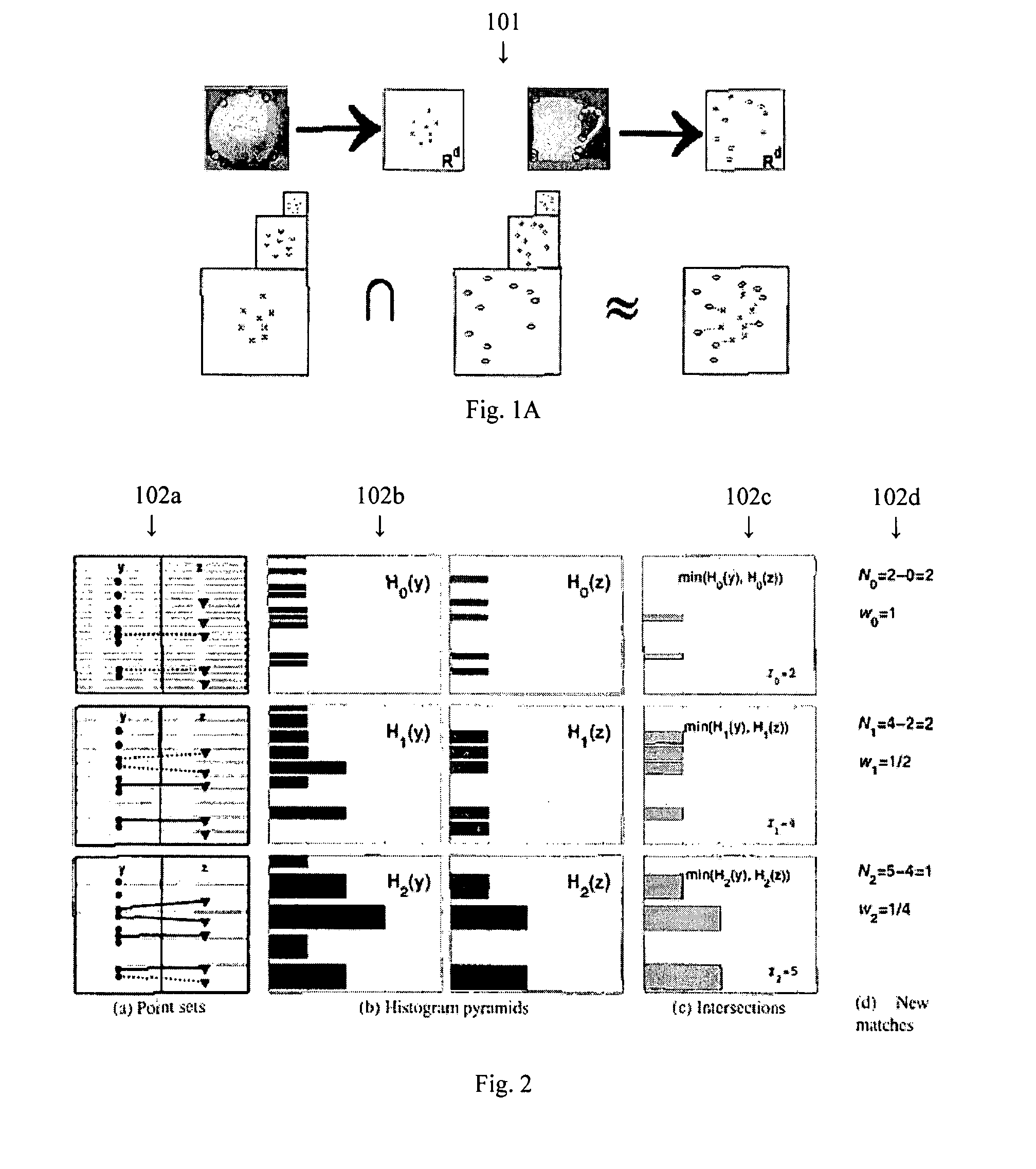

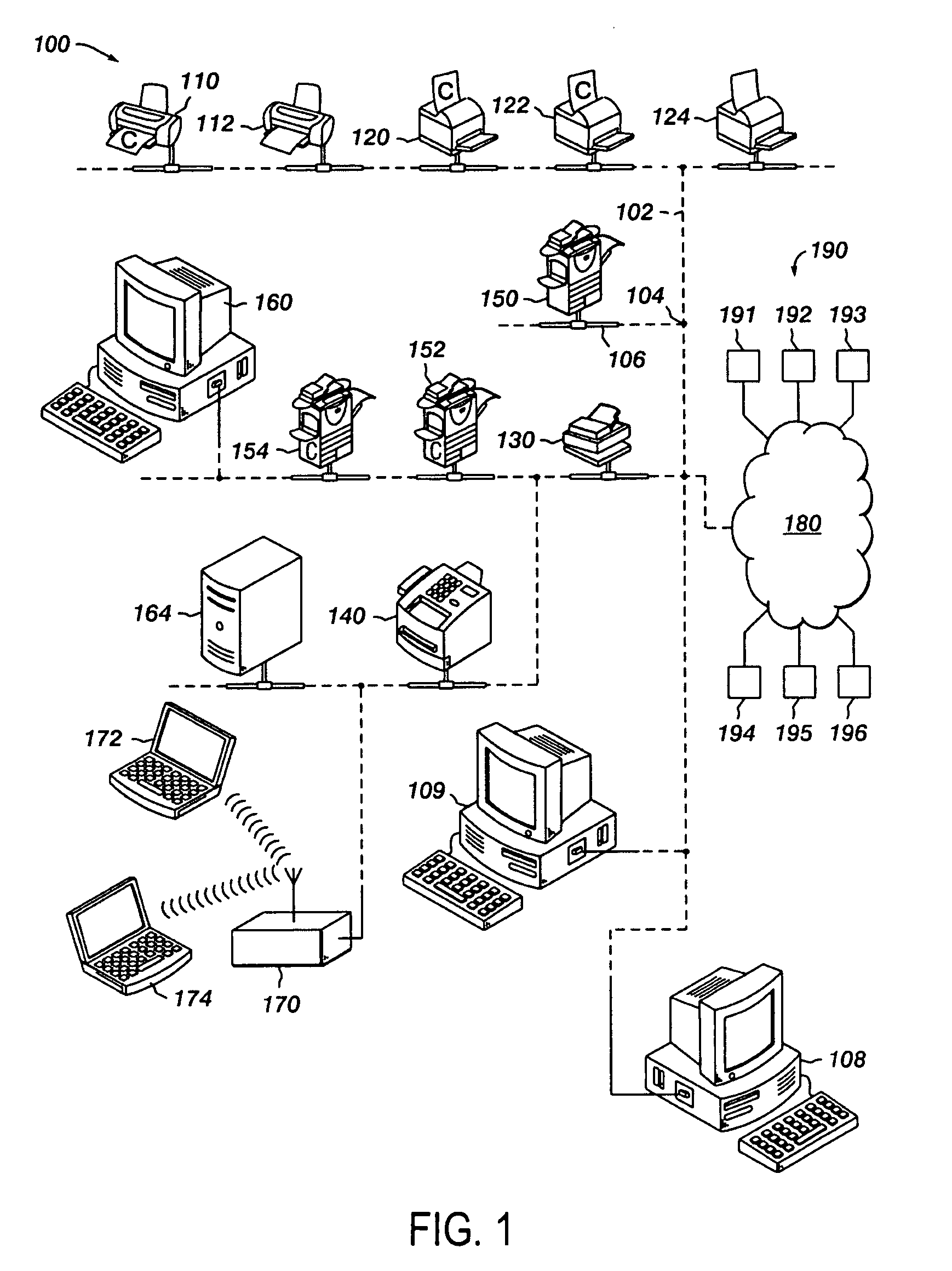

Status: Inactive | Publication: US20070217676A1 | Tags: Efficient and accurate; Finish quickly; Character and pattern recognition; Feature vector; High dimensional

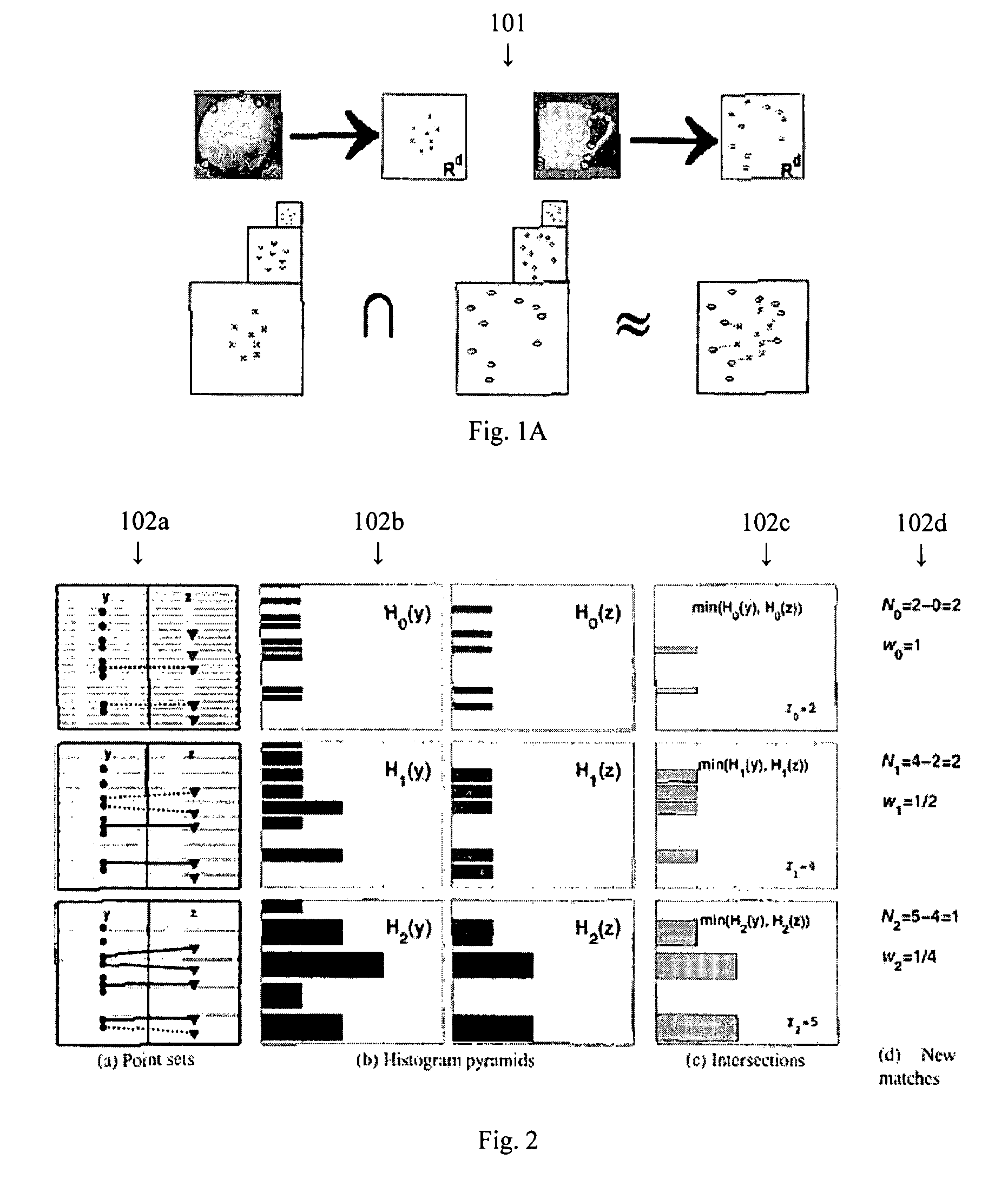

A method for classifying or comparing objects includes detecting points of interest within two objects, computing feature descriptors at said points of interest, forming a multi-resolution histogram over the feature descriptors of each object, and computing a weighted intersection of the two objects' multi-resolution histograms. An alternative embodiment includes a method for matching objects by defining a plurality of bins for multi-resolution histograms having various levels and a plurality of cluster groups, each group having a center; calculating, for each point of interest, a bin index, a bin count, and a maximal distance to the bin center; and providing a path vector indicative of the bins chosen at each level. Still another embodiment includes a method for matching objects comprising creating a set of feature vectors for each object of interest, mapping each set of feature vectors to a single high-dimensional vector to create an embedding vector, and encoding each embedding vector with a binary hash string.

Owner:MASSACHUSETTS INST OF TECH
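
The weighted multi-resolution histogram intersection described in the pyramid match kernel abstract above can be sketched for one-dimensional integer features. This is a minimal illustration, not the patented implementation: it assumes bins that double in width at each level and a weight of 1/2^i for matches first found at level i, and all function names are hypothetical.

```python
from collections import Counter


def histogram(points, bin_width):
    """Count how many 1-D integer features fall into each bin of the given width."""
    return Counter(p // bin_width for p in points)


def intersection(h1, h2):
    """Histogram intersection: the sum over bins of the smaller of the two counts."""
    return sum(min(count, h2[b]) for b, count in h1.items())


def pyramid_match(x, y, levels):
    """Weighted multi-resolution histogram intersection of two feature sets.

    Level i uses bins of width 2**i. Matches that first appear at level i,
    i.e. I_i - I_{i-1}, are weighted by 1 / 2**i, so coarser matches count less.
    """
    score, previous = 0.0, 0
    for i in range(levels):
        current = intersection(histogram(x, 2 ** i), histogram(y, 2 ** i))
        score += (current - previous) / 2 ** i
        previous = current
    return score


print(pyramid_match([0, 4, 9], [1, 4, 15], 5))  # prints 1.625
```

In this example the exact match at 4 counts with weight 1, while the looser matches found only at coarser levels contribute 0.5 and 0.125.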

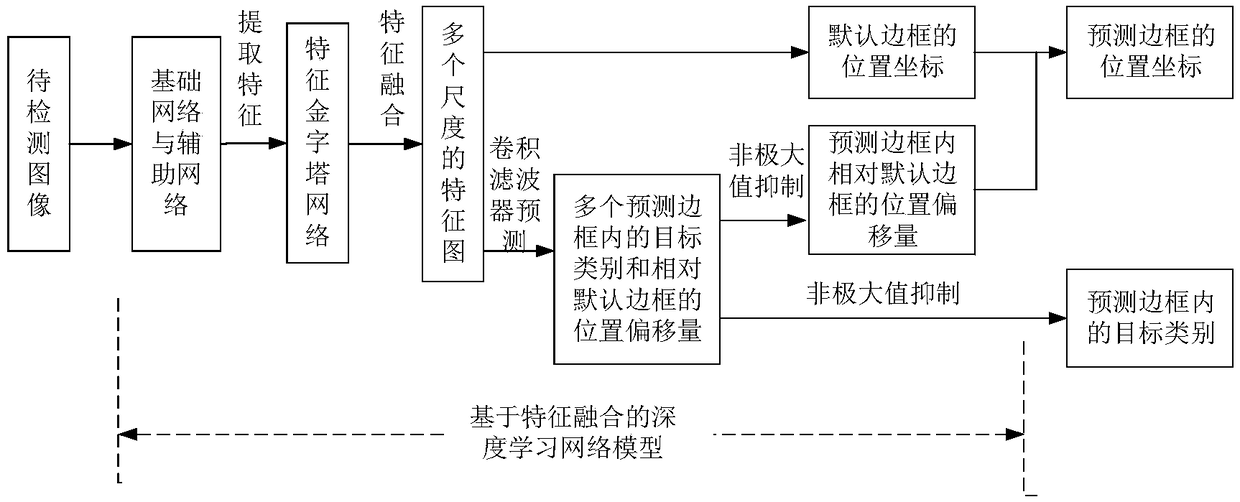

Small target detection method based on feature fusion and depth learning

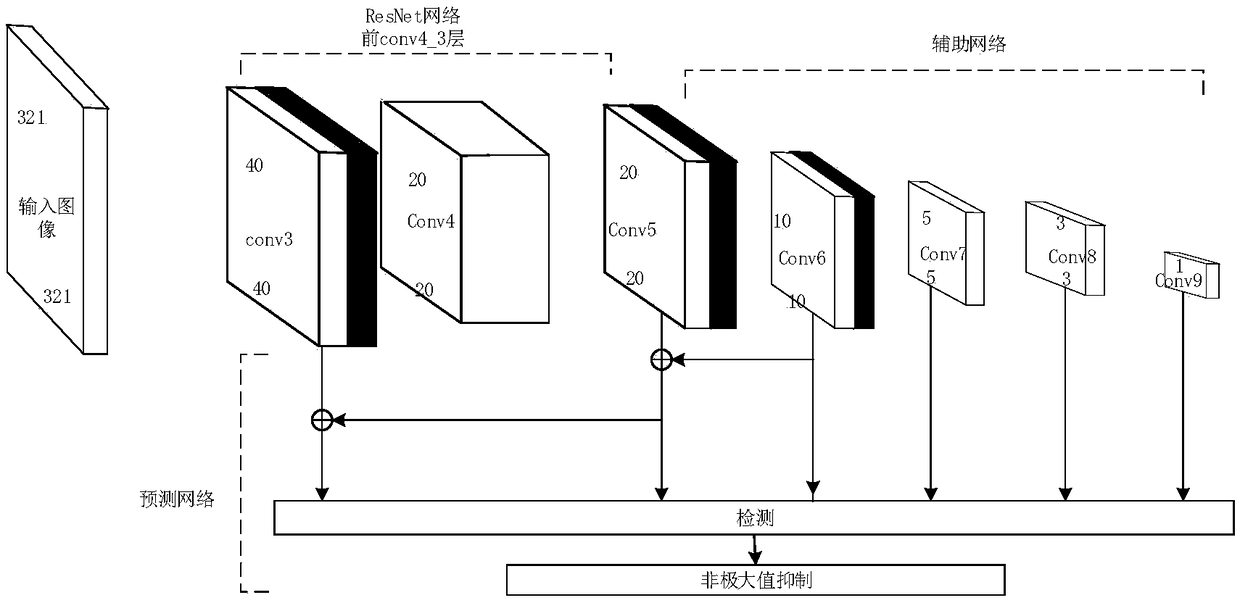

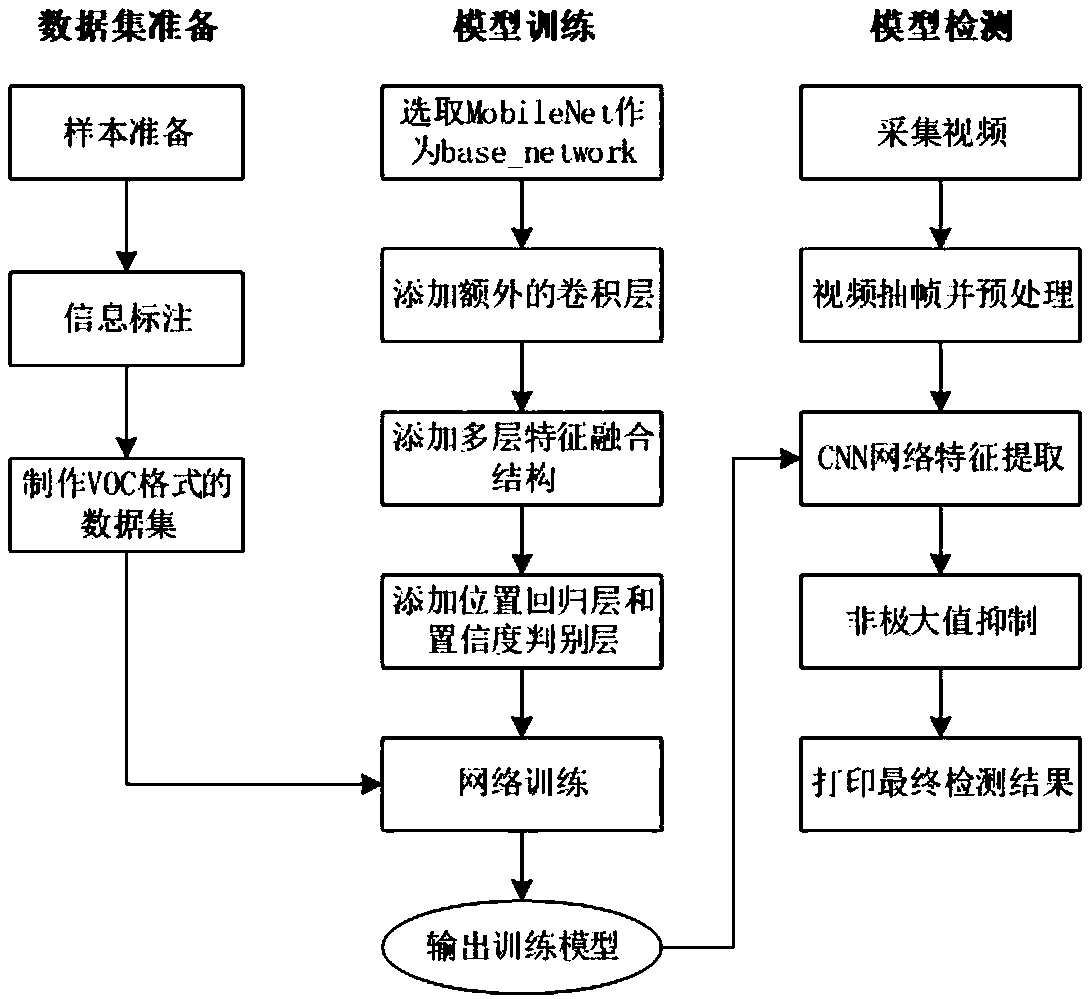

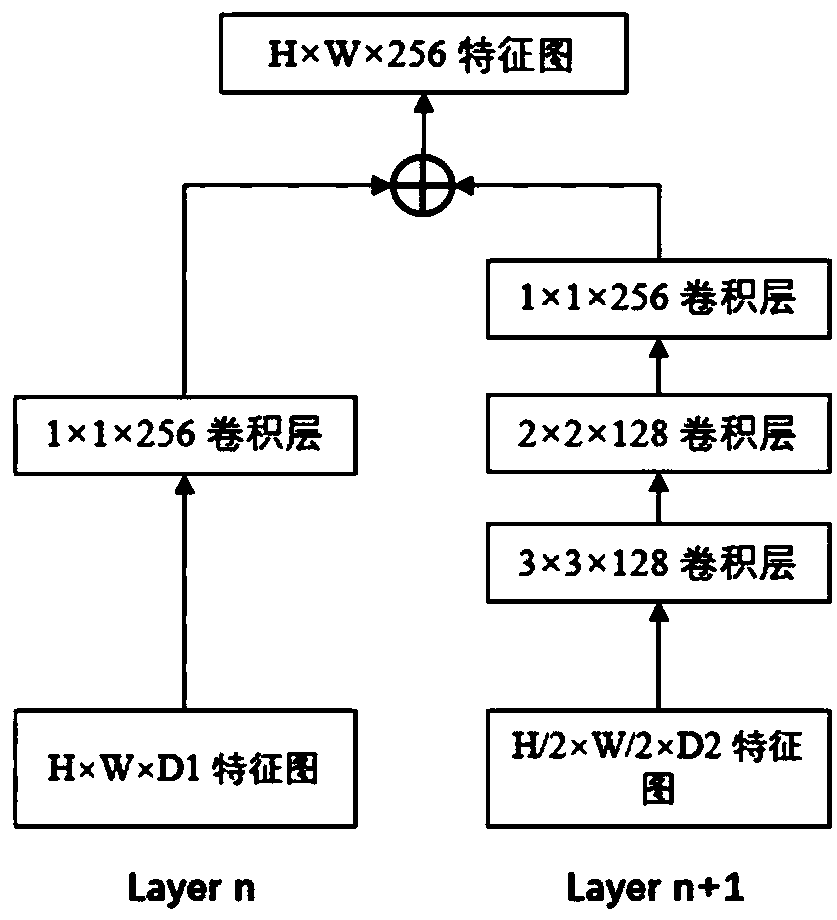

Status: Inactive | Publication: CN109344821A | Tags: Scaling; Rich information features; Character and pattern recognition; Network model; Feature fusion

The invention discloses a small target detection method based on feature fusion and deep learning, which addresses the poor detection accuracy and real-time performance for small targets. The implementation scheme is as follows: a high-resolution feature map is extracted with the deeper and stronger ResNet-101 network model; five successively smaller, lower-resolution feature maps are extracted from auxiliary convolution layers to extend the range of feature-map scales; and a multi-scale feature map is obtained by a feature pyramid network. Within the feature pyramid network, deconvolution is used to fuse the feature-map information of high-level semantic layers with that of shallow layers; target prediction is performed on the feature maps of different scales with fused features; and non-maximum suppression is applied to the scores of the multiple predicted bounding boxes and categories to obtain the bounding-box position and category of each final target. The invention maintains high small-target detection precision while meeting real-time requirements, can quickly and accurately detect small targets in images, and can be used for real-time target detection in aerial images captured by unmanned aerial vehicles.

Owner:XIDIAN UNIV
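
The top-down fusion step of the feature pyramid network in the abstract above can be sketched on single-channel maps stored as lists of rows. The patent fuses levels with deconvolution; this sketch swaps in nearest-neighbour 2x upsampling followed by element-wise addition and omits lateral 1x1 convolutions, so it shows only the data flow. All names are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a single-channel map (list of rows)."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each column
        out.append(wide)
        out.append(list(wide))                      # repeat each row
    return out


def add_maps(a, b):
    """Element-wise sum of two maps of equal size."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def top_down_fuse(pyramid):
    """pyramid: bottom-up maps, finest first, each level half the size of the previous.

    Returns fused maps (finest first): the coarsest level is kept as-is and every
    finer level adds its lateral map to the upsampled fused map from the level above.
    """
    fused = [pyramid[-1]]
    for lateral in reversed(pyramid[:-1]):
        fused.insert(0, add_maps(lateral, upsample2x(fused[0])))
    return fused


levels = [
    [[1] * 4 for _ in range(4)],   # 4x4, shallow, high resolution
    [[10] * 2 for _ in range(2)],  # 2x2
    [[100]],                       # 1x1, deepest, most semantic
]
fused = top_down_fuse(levels)
print(fused[0][0], fused[1][0], fused[2][0])  # [111, 111, 111, 111] [110, 110] [100]
```

Each fused level ends up carrying information from every coarser level, which is how the shallow, high-resolution map used for small targets gains semantic context.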

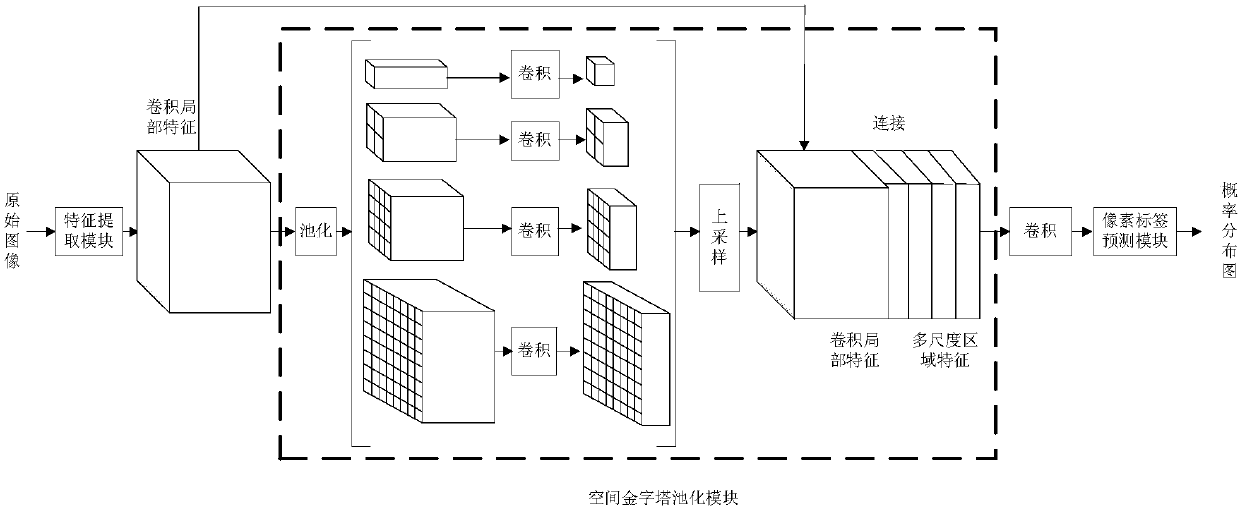

Image semantic division method based on depth full convolution network and condition random field

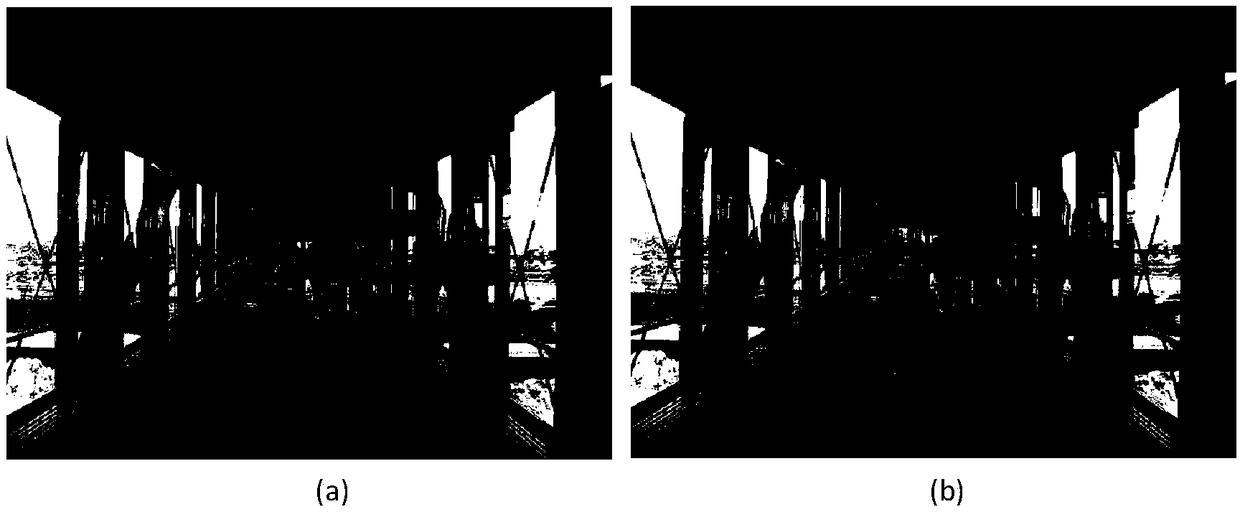

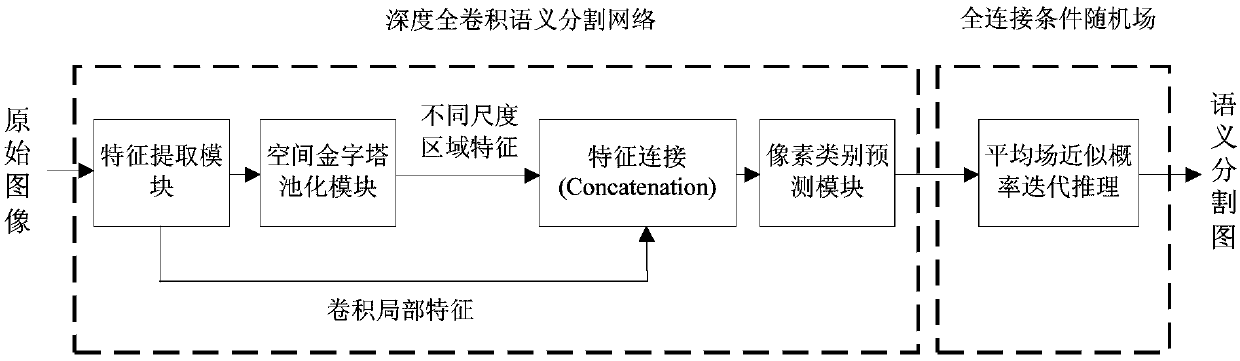

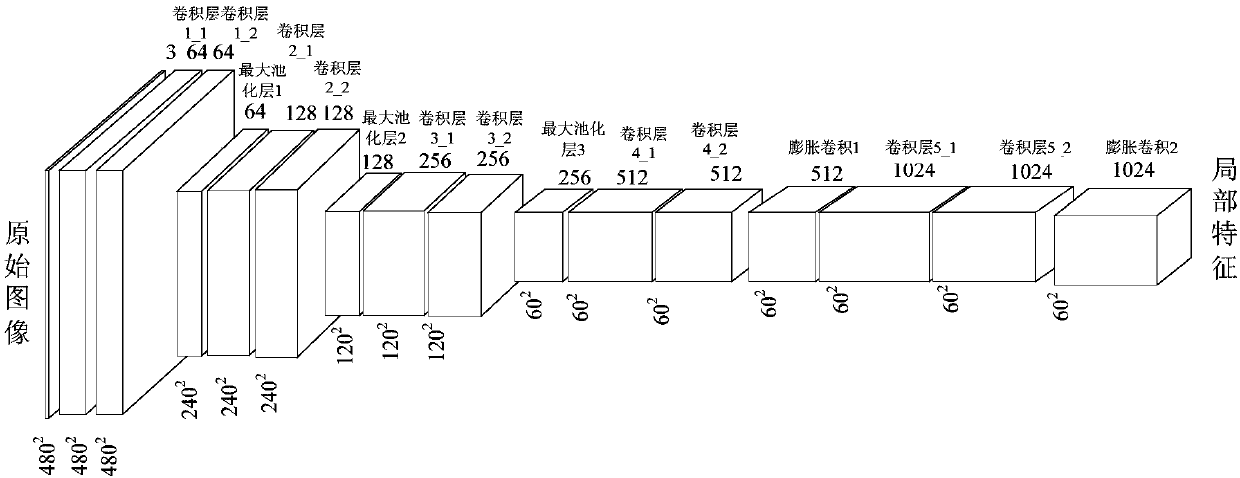

Status: Inactive | Publication: CN108062756A | Tags: Does not reduce dimensionality; High-resolution; Image enhancement; Image analysis; Conditional random field; Image resolution

The invention provides an image semantic segmentation method based on a deep fully convolutional network and a conditional random field. The method comprises the following steps: establishing a deep fully convolutional semantic segmentation network model; performing structured prediction of pixel labels based on a fully connected conditional random field; and carrying out model training, parameter learning, and image semantic segmentation. In this method, dilated convolution and a spatial pyramid pooling module are introduced into the deep fully convolutional network, and the label prediction map output by the network is further refined using the conditional random field. The dilated convolution enlarges the receptive field while keeping the resolution of the feature map unchanged; the spatial pyramid pooling module extracts contextual features of regions at different scales from the local convolutional feature map, supplying the label prediction with the relationships between different objects and between objects and the features of regions at different scales; and the fully connected conditional random field further optimizes the pixel labels according to the feature similarity of pixel intensities and positions, producing a high-resolution semantic segmentation map with accurate boundaries and good spatial continuity.

Owner:CHONGQING UNIV OF TECH
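
The key property of spatial pyramid pooling mentioned in the abstract above, a fixed-length output from a feature map of any size, can be sketched for a single channel. This assumes max pooling over 1x1, 2x2 and 4x4 grids, a common choice assumed here; the patent's module may use different pooling scales or operators. The function name is hypothetical.

```python
import math


def spp(fmap, levels=(1, 2, 4)):
    """Spatial pyramid pooling of one channel (list of rows) of any height and width.

    For each level n the map is split into an n x n grid of cells (neighbouring
    cells may share a row or column when the size is not divisible by n) and each
    cell is max-pooled, giving sum(n * n) outputs in total.
    """
    h, w = len(fmap), len(fmap[0])
    features = []
    for n in levels:
        for i in range(n):
            r0, r1 = math.floor(i * h / n), math.ceil((i + 1) * h / n)
            for j in range(n):
                c0, c1 = math.floor(j * w / n), math.ceil((j + 1) * w / n)
                features.append(max(fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)))
    return features


small = [[r * 5 + c for c in range(5)] for r in range(3)]  # 3 x 5 map
large = [[0.0] * 9 for _ in range(7)]                       # 7 x 9 map
print(spp(small, (1, 2)))                # [14, 7, 9, 12, 14]
print(len(spp(small)), len(spp(large)))  # 21 21
```

Both maps yield 21 values with the default levels, which is what allows a fixed-size layer to follow regardless of input size.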

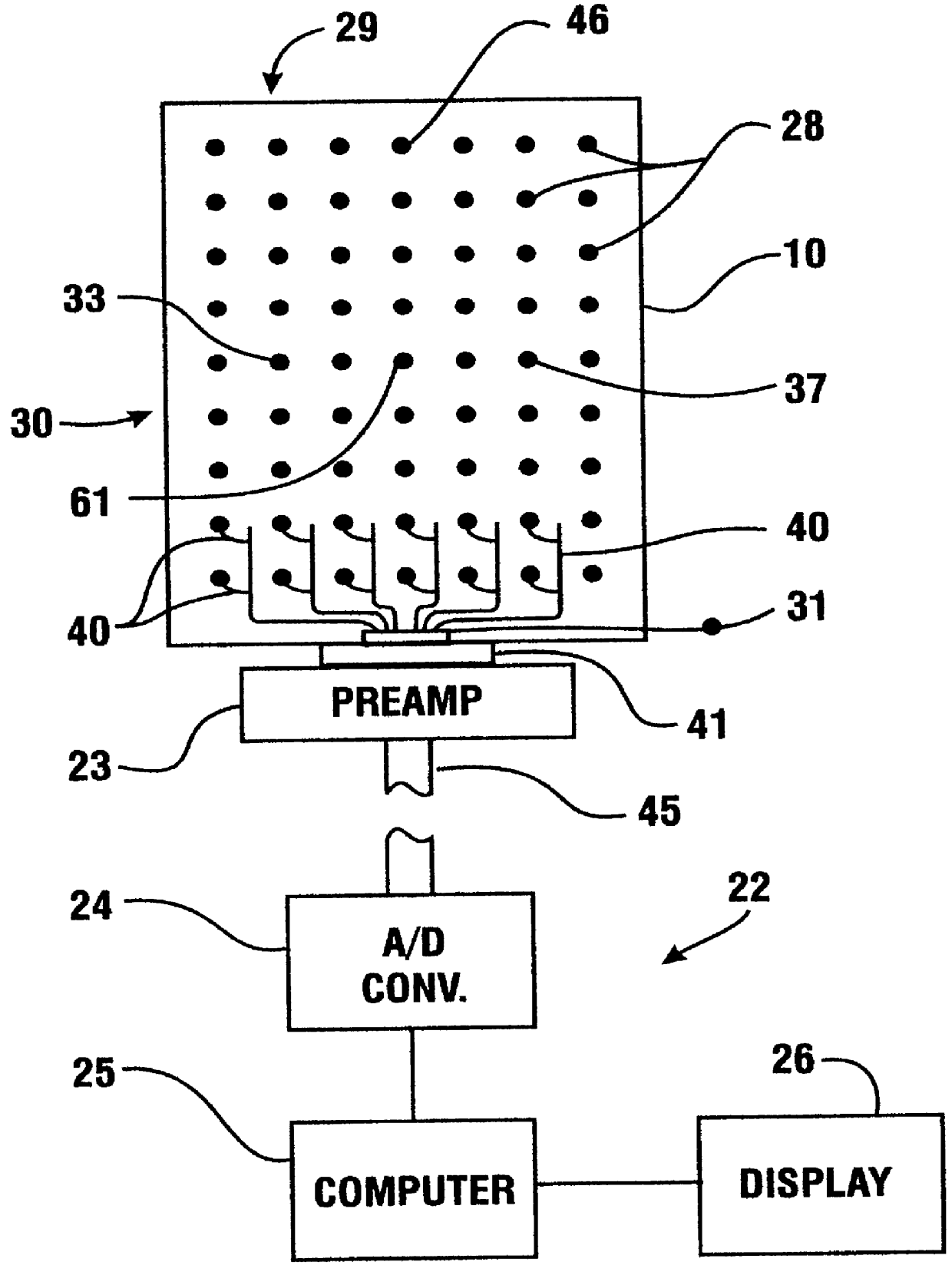

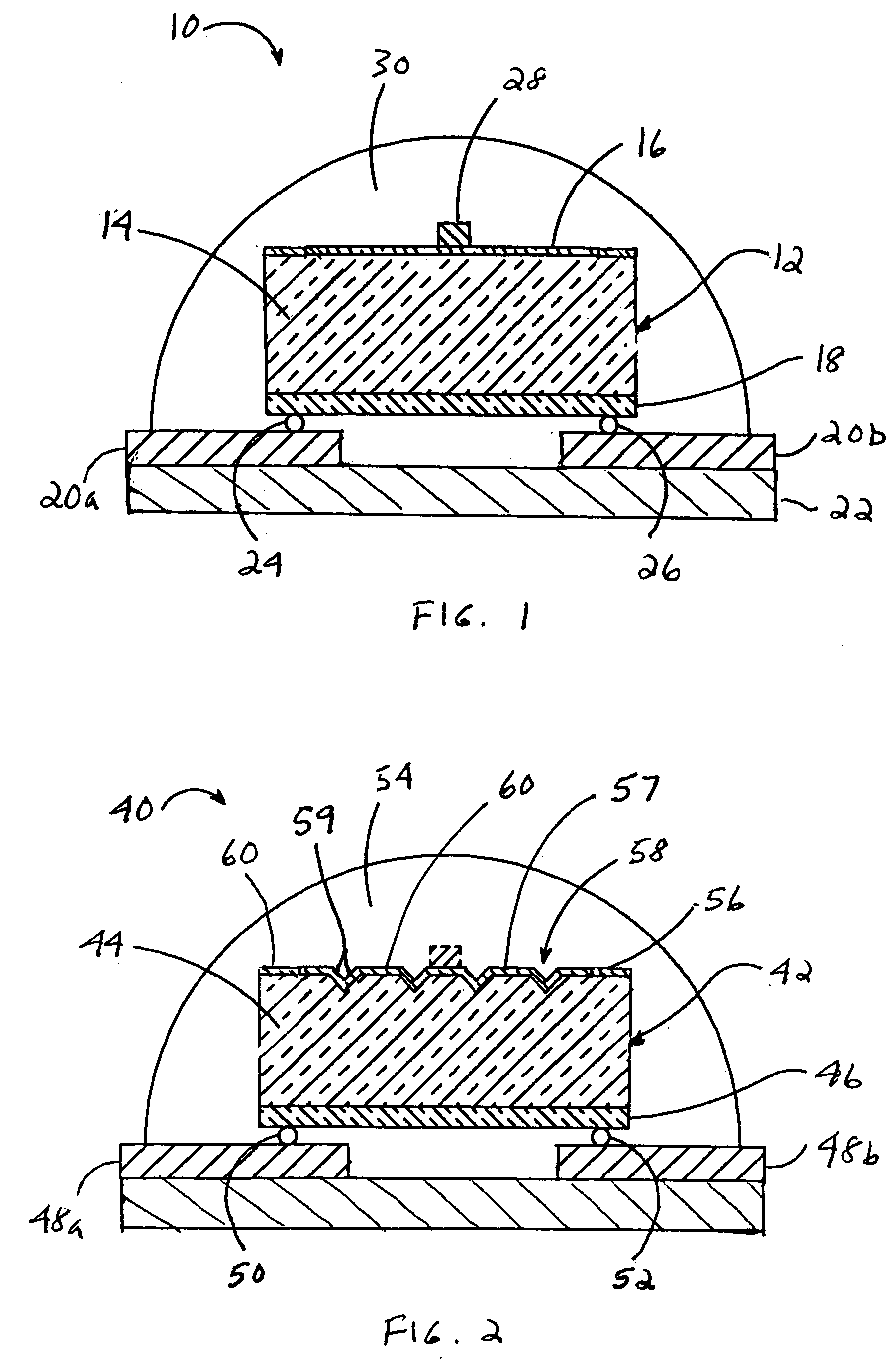

EMG electrode

An electrode for collecting surface electromyographic (EMG) signals consists of a generally bolt-shaped structure having a circular signal-gathering head and an integral shaft for support purposes and for transmission of electrical signals. The head of the electrode is configured as a disc having a plurality of pyramids distributed substantially evenly over the signal gathering surface with the tips of the pyramids projecting outwardly of the head for contact with the skin of a patient. Preferably, the electrode consists entirely of 316L stainless steel, the pyramids are formed by grinding or electromachining and the shaft is threaded for receipt of a clamp nut. The electrode is adapted for mounting in a hole in a thin, flexible support member by means of the shaft to maintain spacing among adjacent electrodes and to assure proper contact with the patient.

Owner:SPINEMATRIX

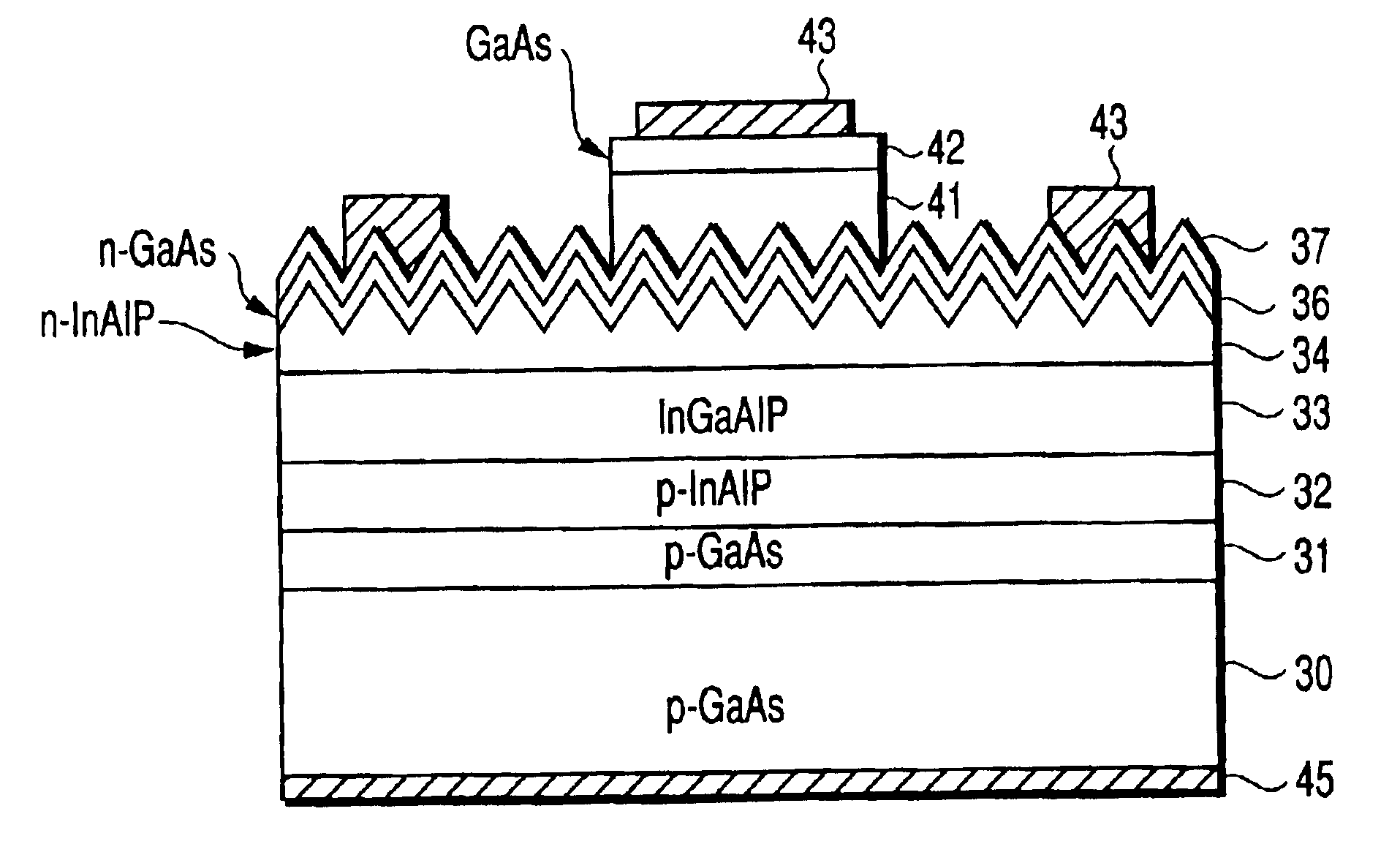

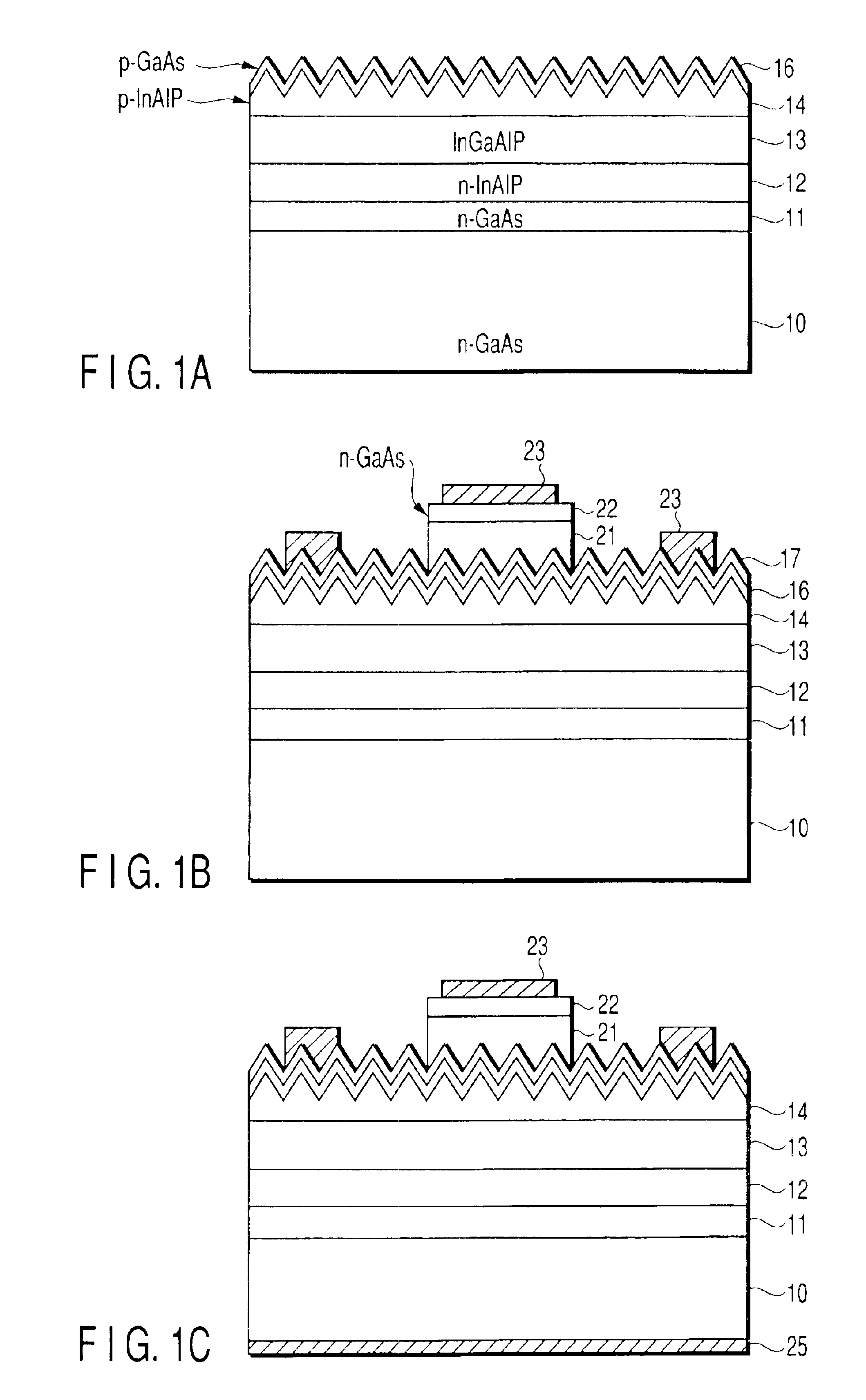

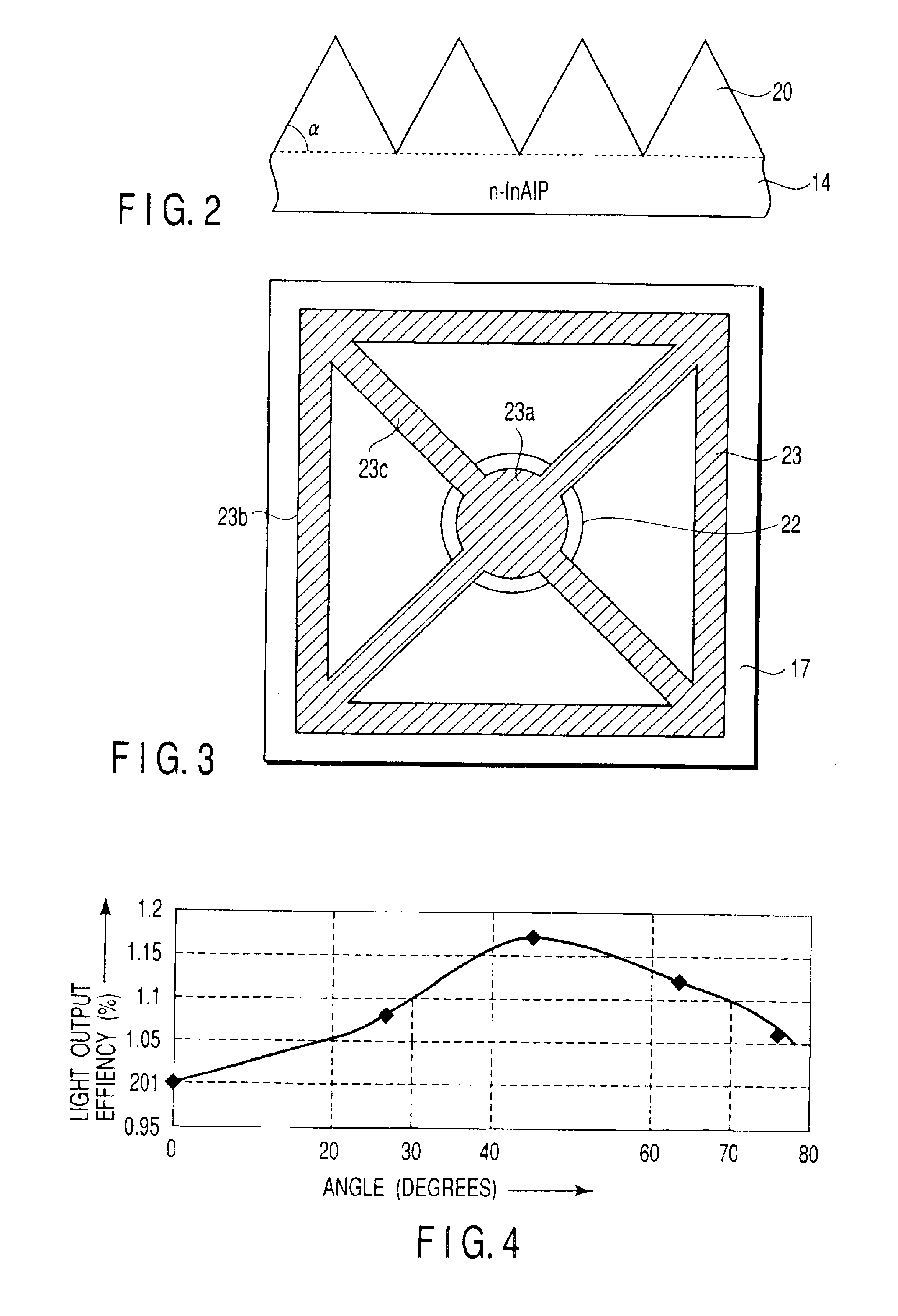

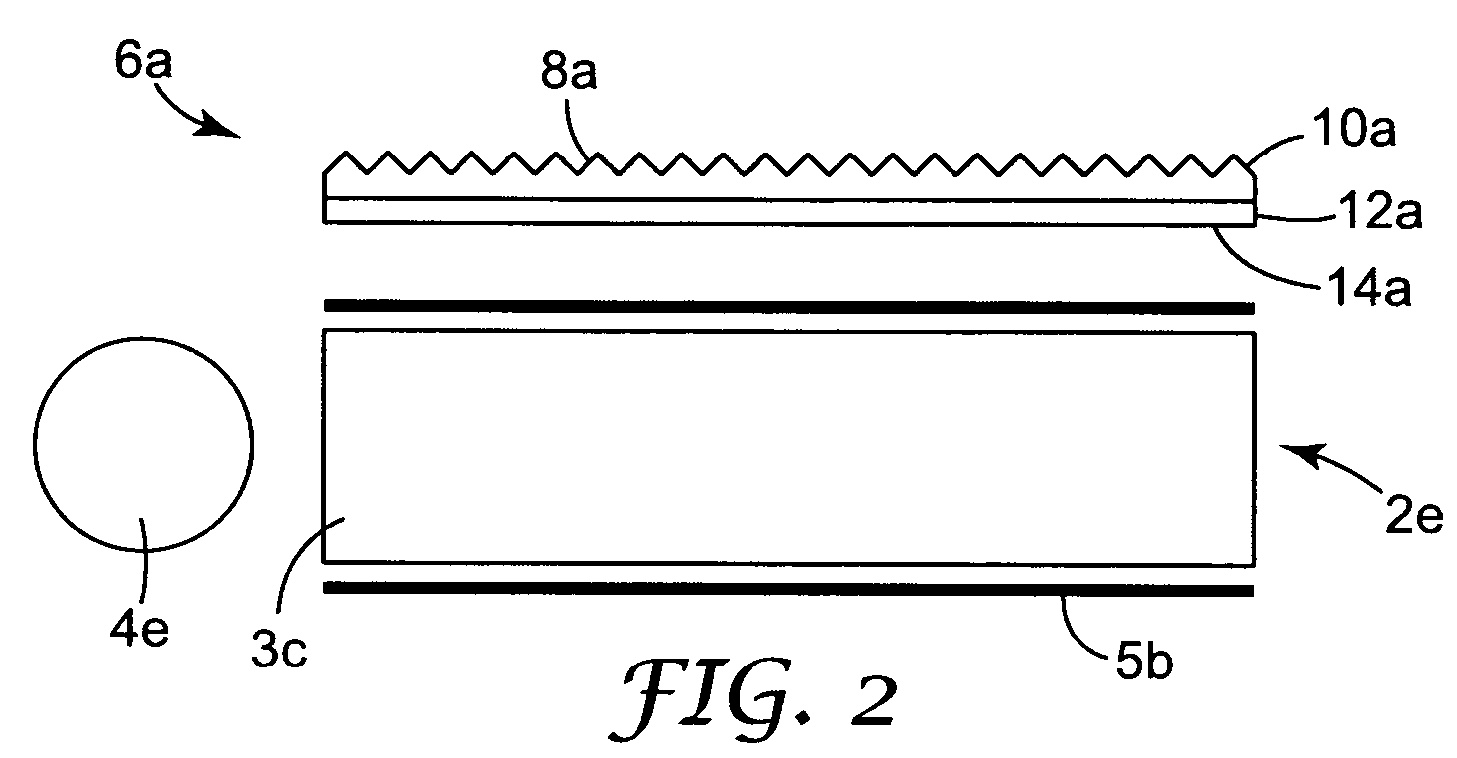

Surface-emitting semiconductor light device

A semiconductor light emitting device is disclosed in which a semiconductor multilayer structure including a light emitting layer is formed on a substrate and light is output from the opposite surface of the semiconductor multilayer structure from the substrate. The light output surface is formed with a large number of protrusions in the form of cones or pyramids. To increase the light output efficiency, the angle between the side of each protrusion and the light output surface is set to between 30 and 70 degrees.

Owner:KK TOSHIBA

Pyramid match kernel and related techniques

Status: Inactive | Publication: US7949186B2 | Tags: Search can be accomplished more quickly; Finish quickly; Character and pattern recognition; Feature vector; Cluster group

Owner:MASSACHUSETTS INST OF TECH

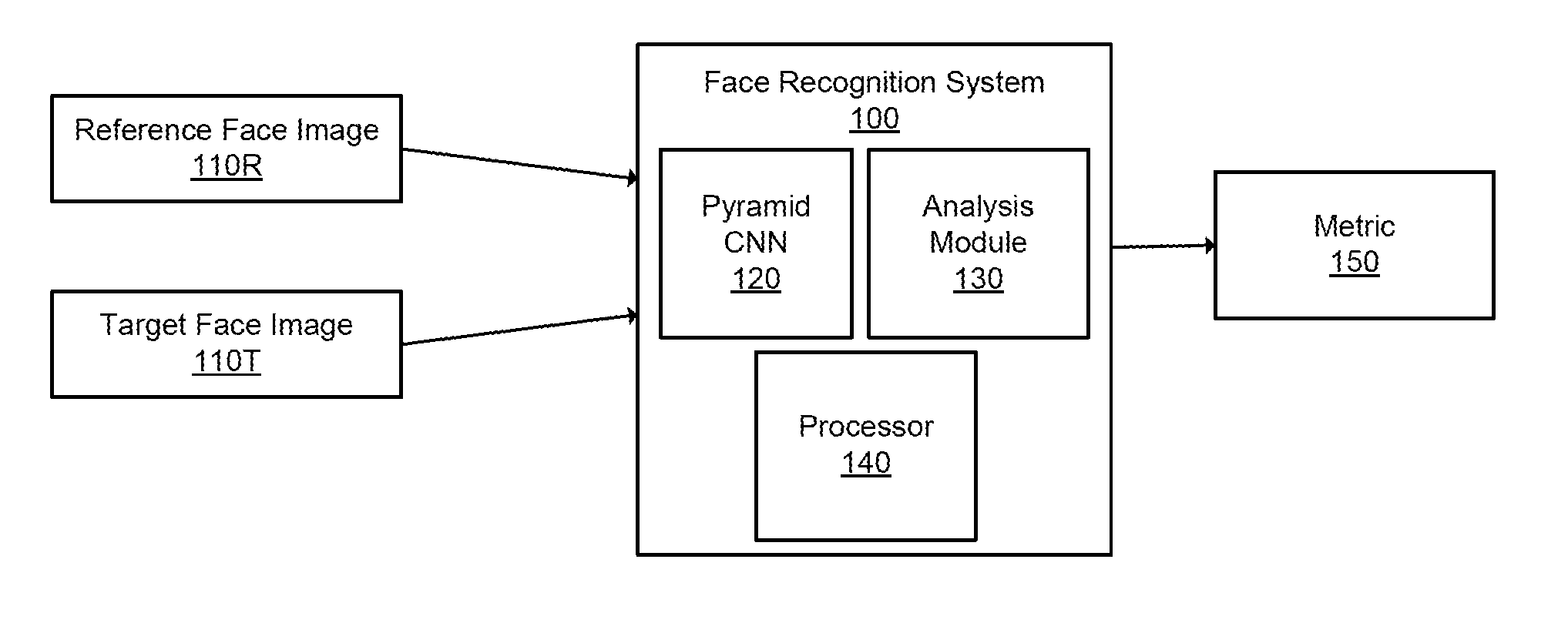

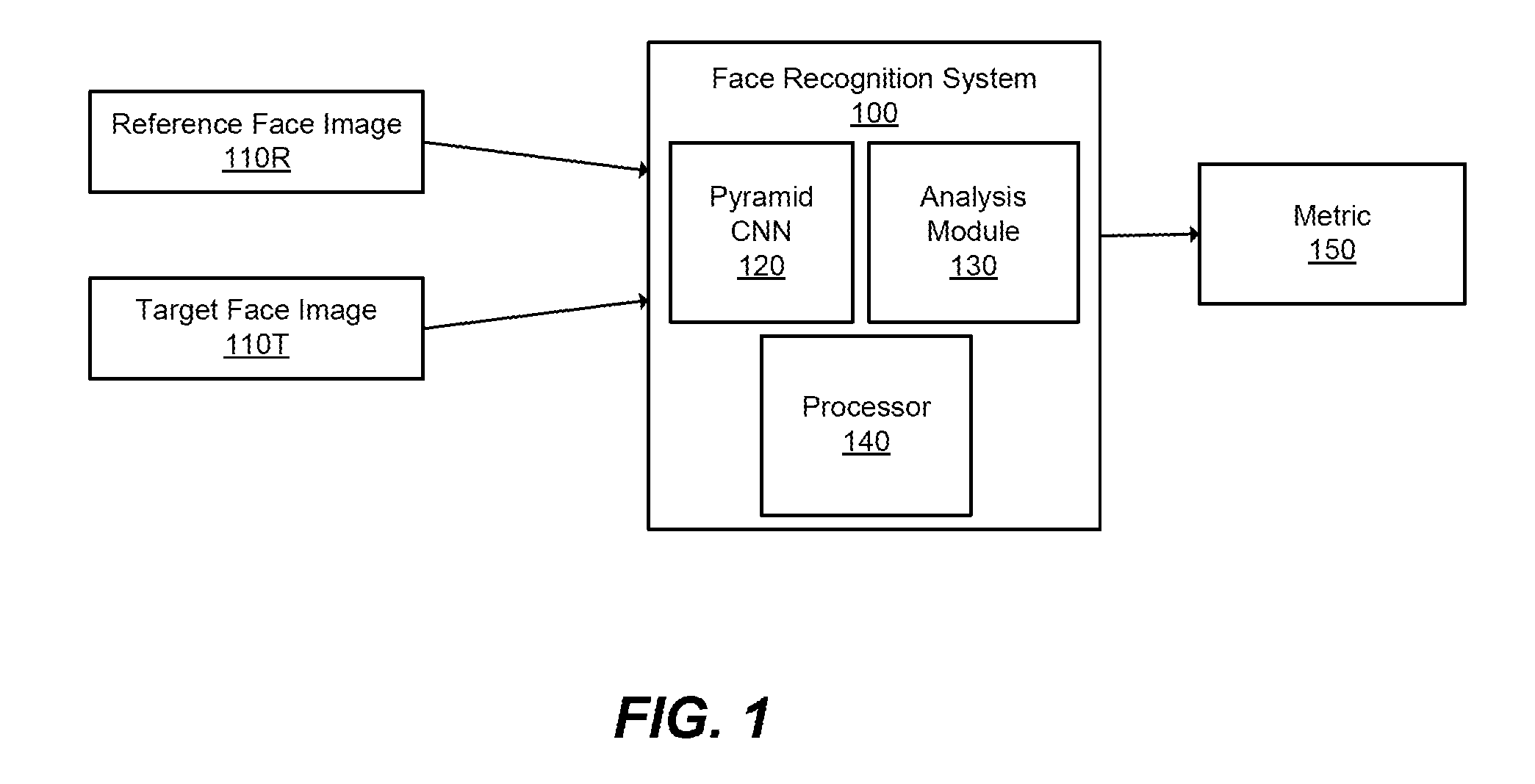

Learning Deep Face Representation

Status: Active | Publication: US20150347820A1 | Tags: Way fast; Overcome limitations; Character and pattern recognition; Neural learning methods; Learning based; Facial recognition system

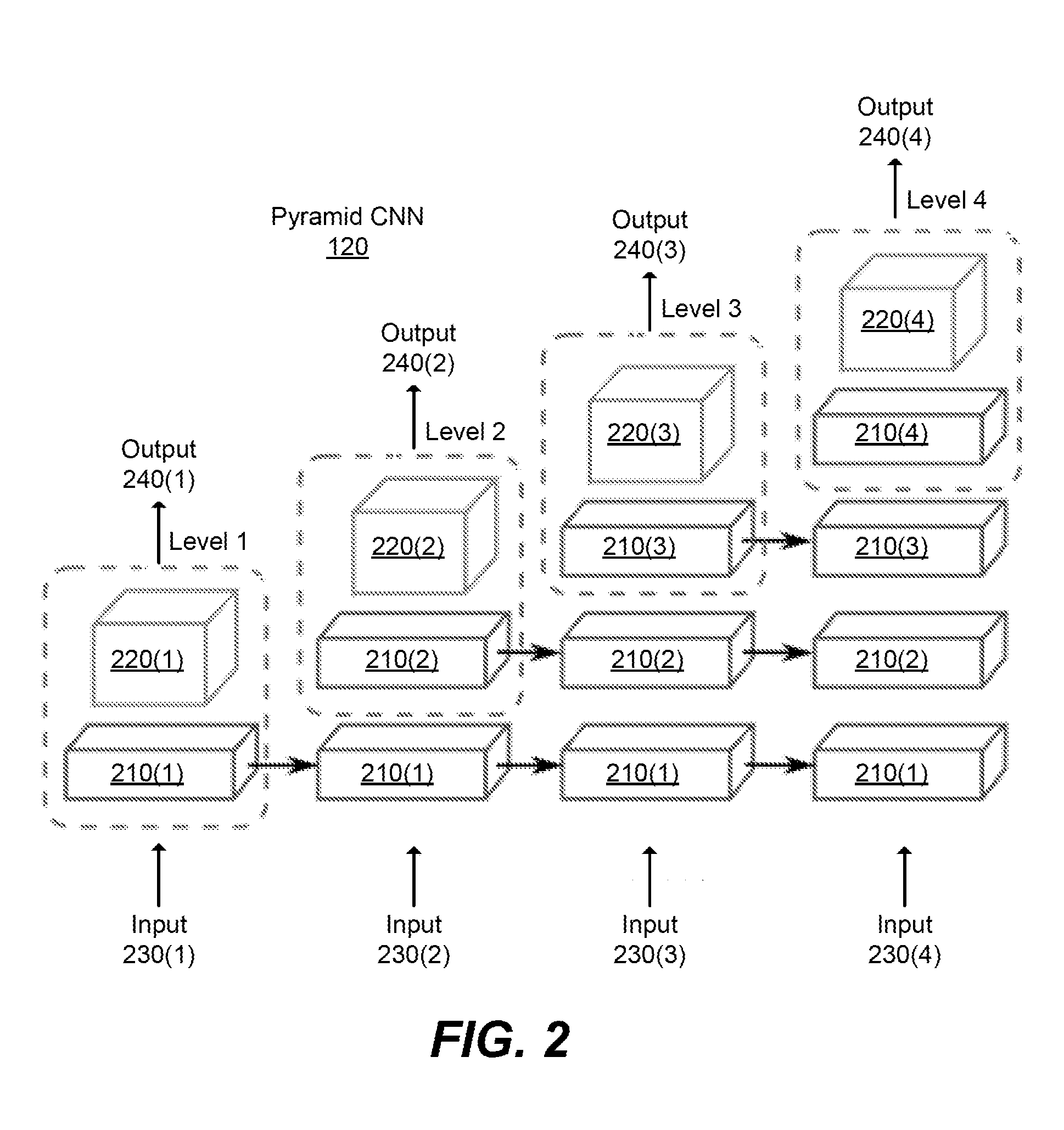

Face representation is a crucial step of face recognition systems. An optimal face representation should be discriminative, robust, compact, and very easy to implement. Although numerous hand-crafted and learning-based representations have been proposed, considerable room for improvement remains. A very easy-to-implement deep learning framework for face representation is presented. The framework is based on a pyramid convolutional neural network (CNN). The pyramid CNN adopts a greedy filter-and-down-sample operation, which makes the training procedure very fast and computationally efficient. In addition, the structure of the pyramid CNN naturally incorporates feature sharing across multi-scale face representations, increasing the discriminative ability of the resulting representation.

Owner:BEIJING KUANGSHI TECH

Multi-scale multi-camera adaptive fusion with contrast normalization

Status: Active | Publication: US20090169102A1 | Tags: Reduce flickering artifact; Reduce artifacts; Television system details; Image enhancement; Multi camera; Energy based

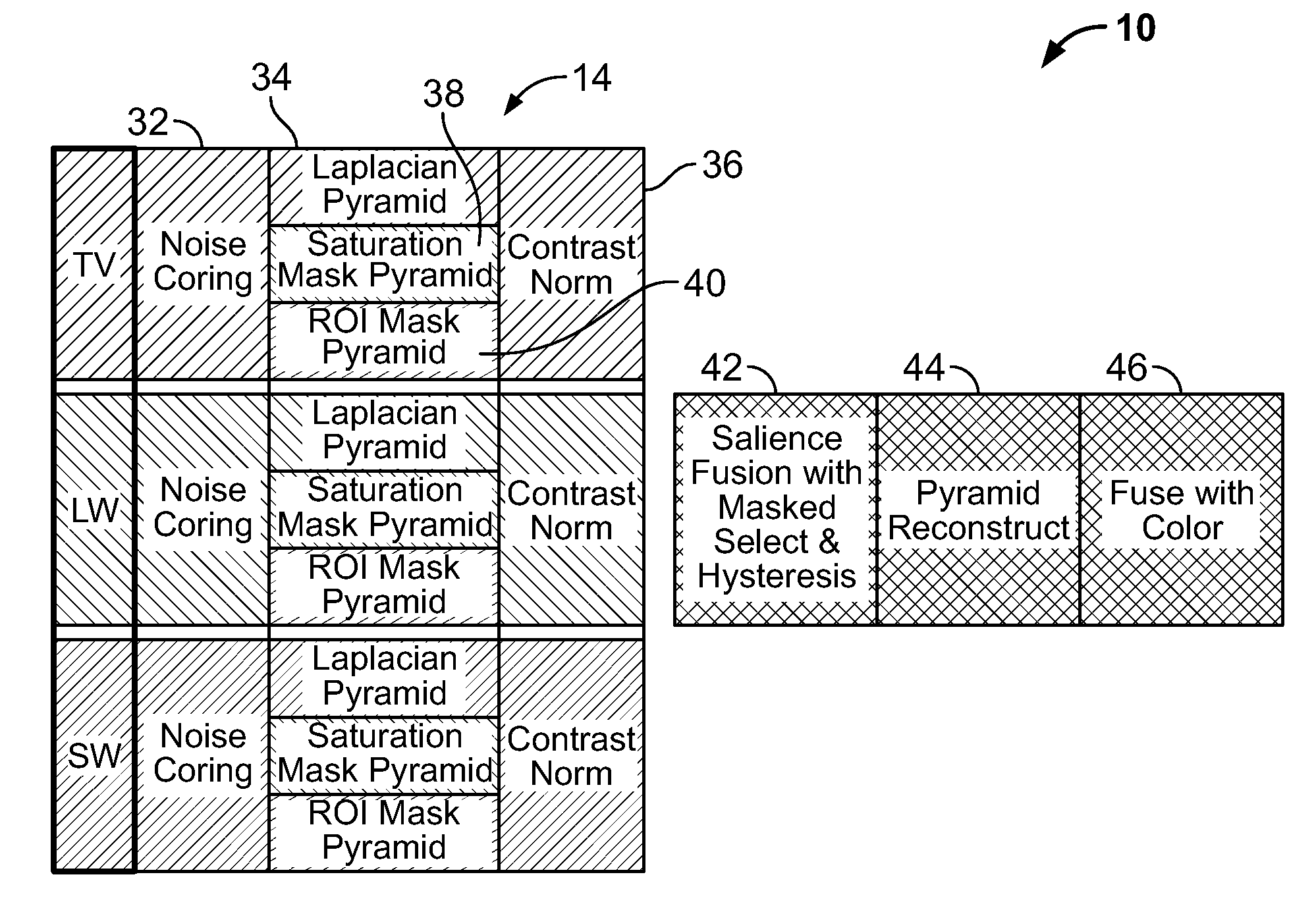

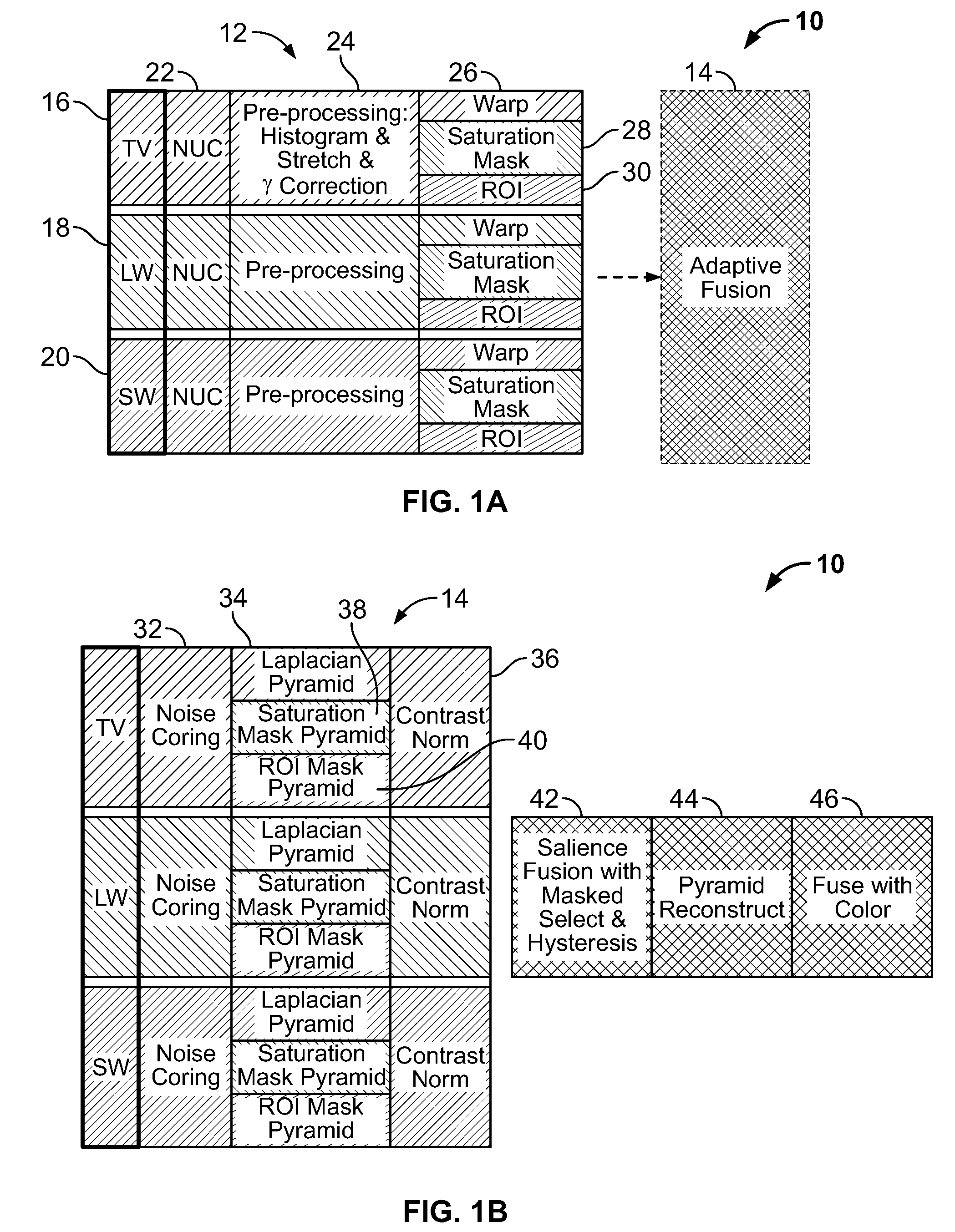

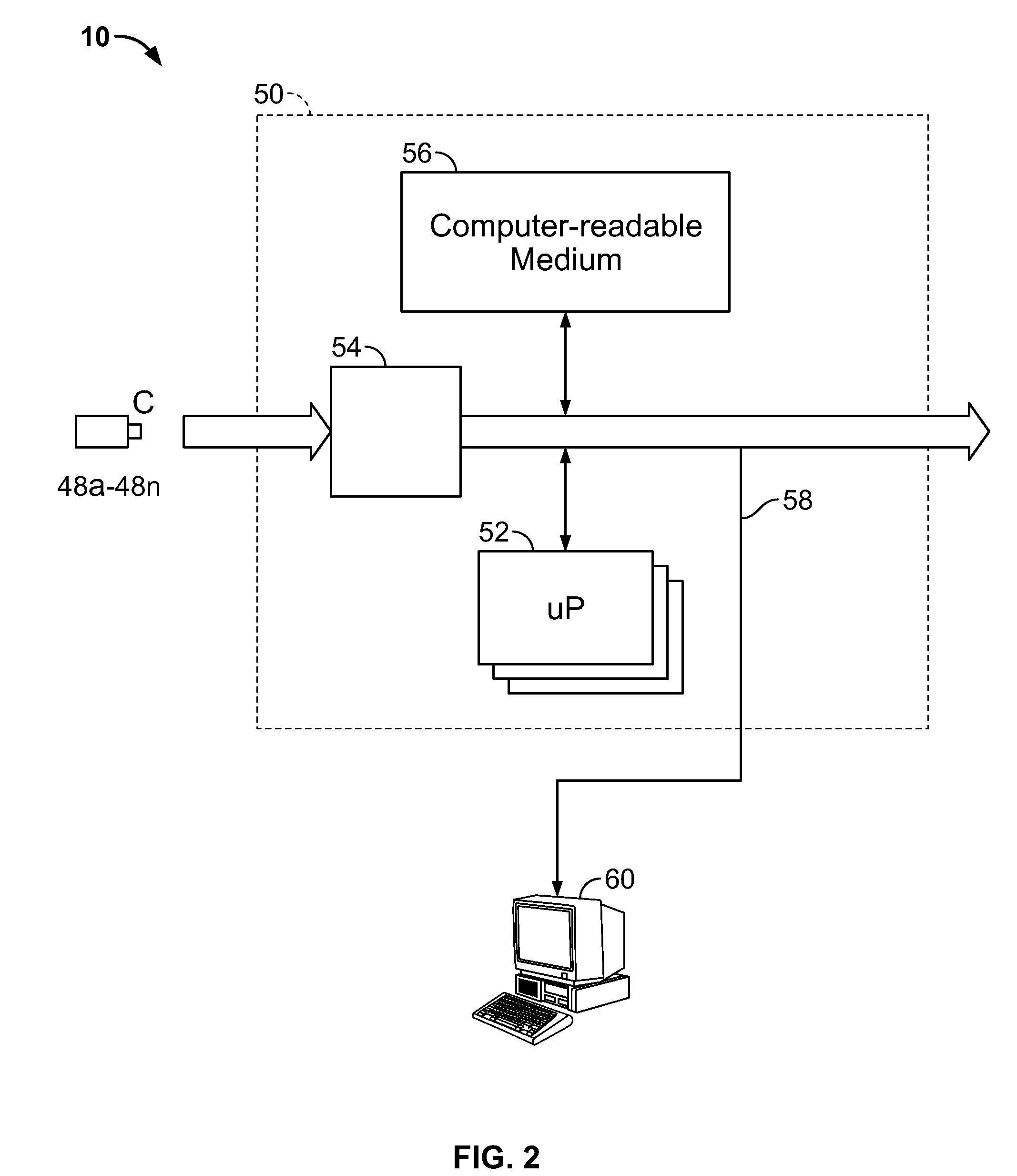

A computer-implemented method for fusing images taken by a plurality of cameras is disclosed, comprising the steps of: receiving a plurality of images of the same scene taken by the plurality of cameras; generating Laplacian pyramid images for each source image of the plurality of images; applying contrast normalization to the Laplacian pyramid images; performing pixel-level fusion on the Laplacian pyramid images based on a local salience measure that reduces aliasing artifacts, to produce one salience-selected Laplacian pyramid image for each pyramid level; and combining the salience-selected Laplacian pyramid images into a fused image. Applying contrast normalization further comprises, for each Laplacian image at a given level: obtaining an energy image from the Laplacian image; determining a gain factor based on at least the energy image and a target contrast; and multiplying the Laplacian image by the gain factor to produce a normalized Laplacian image.

Owner:SRI INTERNATIONAL
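
The fusion pipeline in the abstract above (Laplacian decomposition, contrast normalization, salience selection, recombination) can be sketched on 1-D signals whose length is a power of two. Several simplifications are assumptions of this sketch rather than the patent's method: pairwise averaging stands in for Gaussian filtering, the salience measure is the absolute coefficient value, contrast normalization uses one global RMS energy per level instead of a per-pixel energy image, and the low-pass residuals are simply averaged. All names are hypothetical.

```python
import math


def downsample(x):
    """Low-pass and decimate by averaging neighbouring pairs."""
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]


def upsample(x):
    """Expand by repeating each sample twice."""
    return [v for v in x for _ in range(2)]


def laplacian_pyramid(x, levels):
    """Band-pass detail levels (finest first) plus a final low-pass residual."""
    pyramid = []
    for _ in range(levels):
        low = downsample(x)
        pyramid.append([a - b for a, b in zip(x, upsample(low))])
        x = low
    pyramid.append(x)
    return pyramid


def collapse(pyramid):
    """Invert laplacian_pyramid: upsample the residual and add back each detail level."""
    x = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        x = [a + b for a, b in zip(detail, upsample(x))]
    return x


def normalize(band, target):
    """Scale a band so its RMS energy equals target (one global gain per band)."""
    energy = math.sqrt(sum(v * v for v in band) / len(band))
    return [v * target / energy for v in band] if energy > 0 else band


def fuse(signals, levels, target=None):
    pyramids = [laplacian_pyramid(s, levels) for s in signals]
    fused = []
    for lvl in range(levels):
        bands = [p[lvl] if target is None else normalize(p[lvl], target) for p in pyramids]
        # salience = |coefficient|: keep the most salient source at each sample
        fused.append([max(coeffs, key=abs) for coeffs in zip(*bands)])
    residuals = [p[-1] for p in pyramids]
    fused.append([sum(v) / len(v) for v in zip(*residuals)])  # average the low-pass levels
    return collapse(fused)


a = [0, 0, 0, 0]
b = [1, 3, 2, 8]
print(fuse([b, b], 2))  # identical inputs reconstruct the input: [1.0, 3.0, 2.0, 8.0]
print(fuse([a, b], 2))
```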

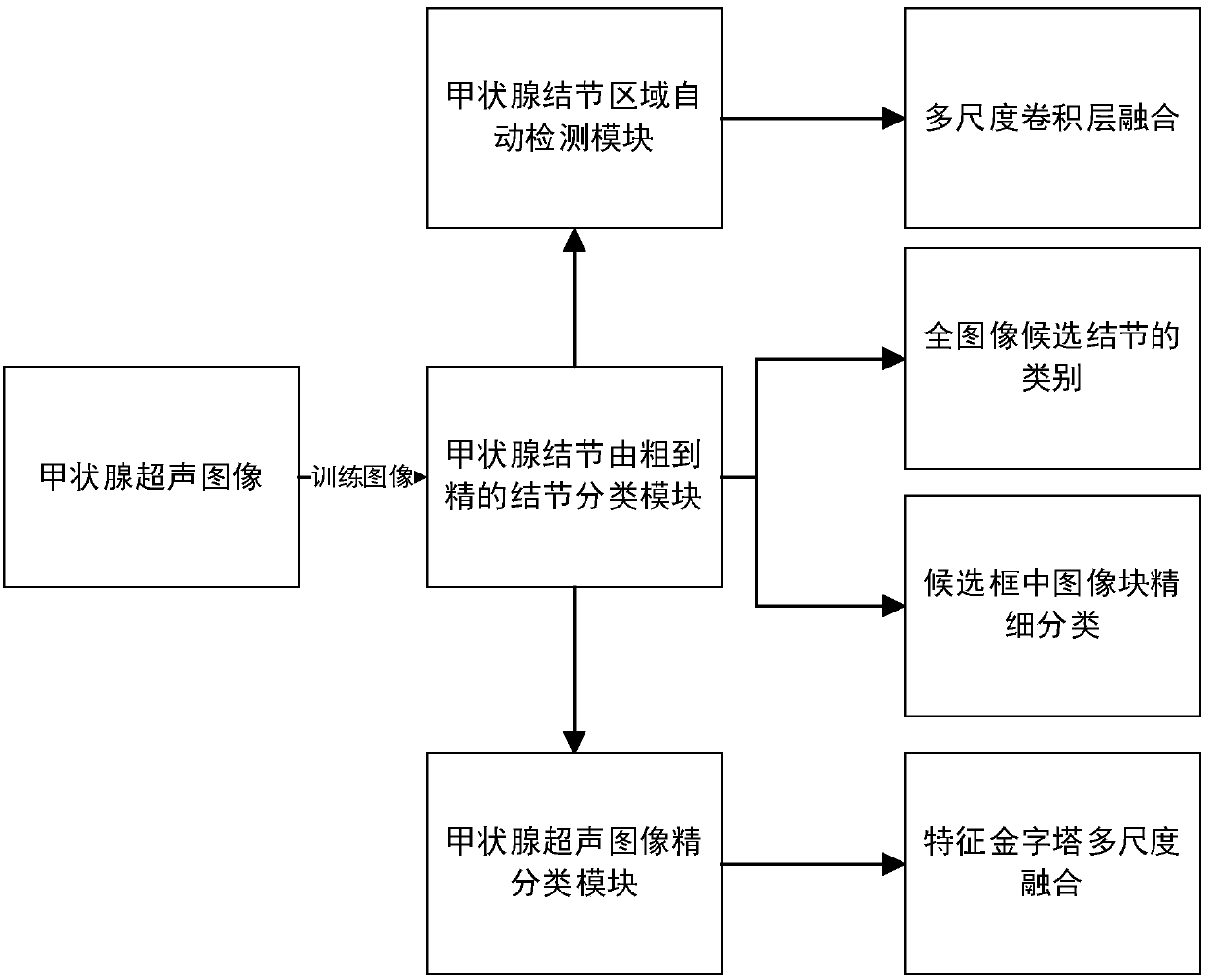

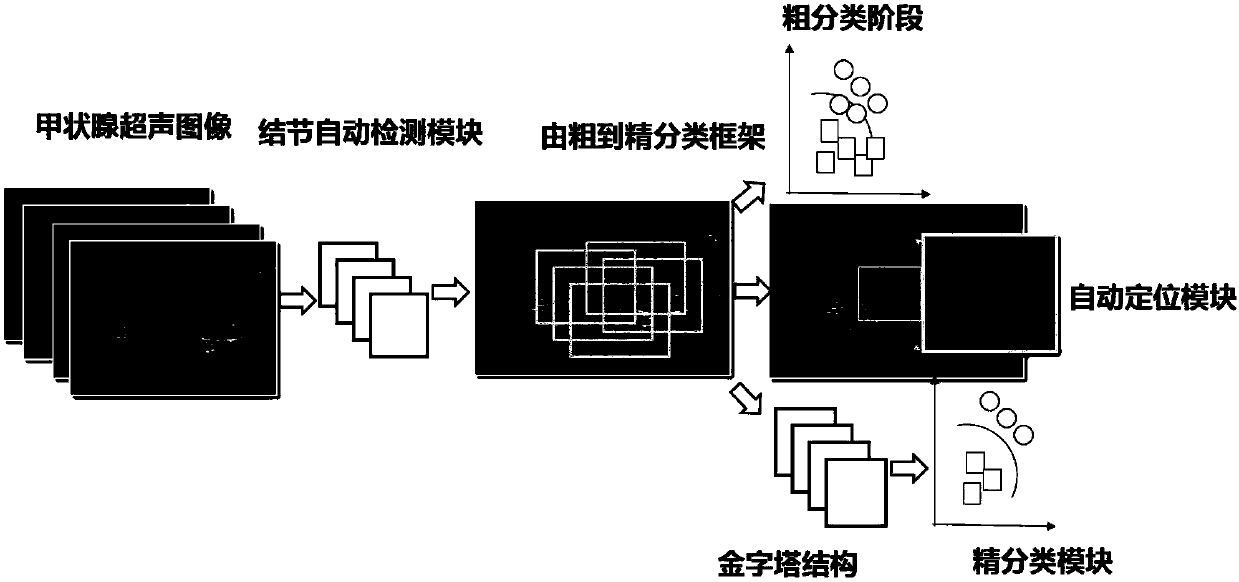

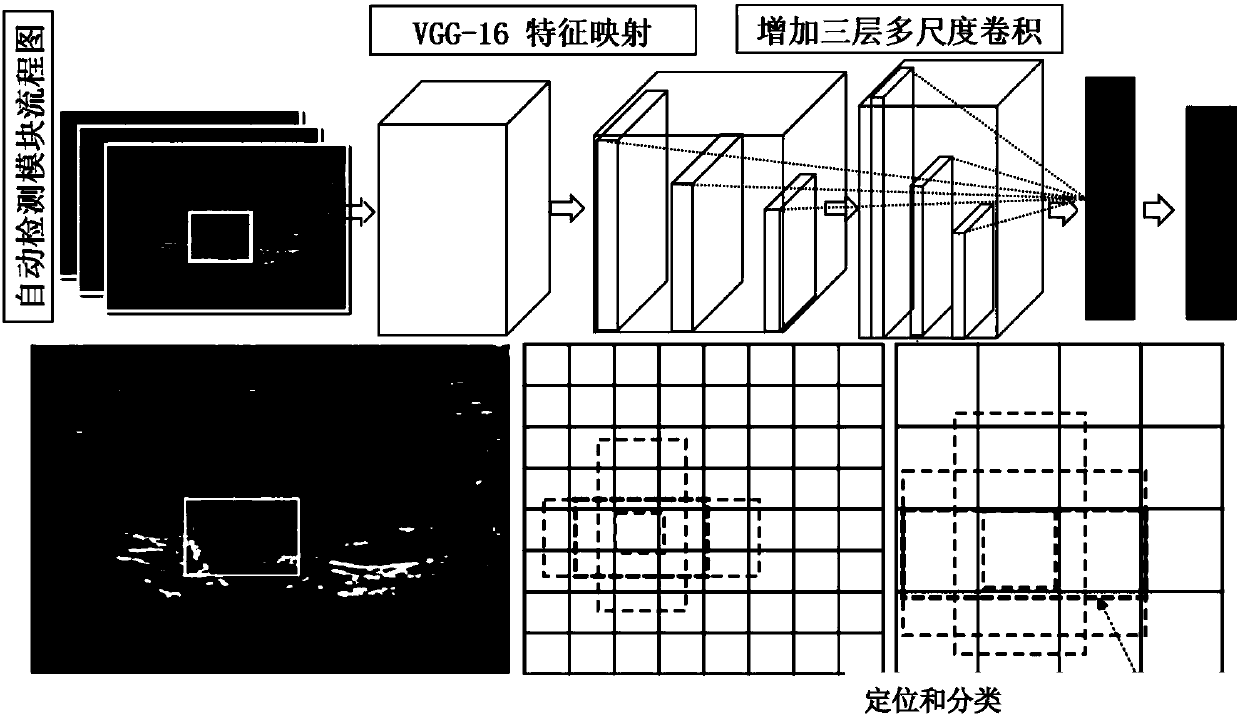

Thyroid ultrasound image nodule automatic diagnosis system based on multi-scale convolutional neural network

Status: Active | Publication: CN107680678A | Tags: Accurate detection; Adapt to polymorphic automatic detection; Image enhancement; Image analysis; Semantic feature; Global information

The invention provides a thyroid ultrasound image nodule automatic diagnosis system based on a multi-scale convolutional neural network. The system includes a thyroid nodule coarse-to-fine classification module, an automatic thyroid nodule region detection module, and a thyroid nodule fine classification module. Features of receptive fields of different sizes are extracted by a multi-scale feature-fusion convolutional neural network; the contextual semantic features of a thyroid nodule are then extracted from local and global information, and the nodule is located automatically. Through neural-network-based multi-scale coarse-to-fine feature extraction and a multi-scale fine-classification AlexNet with a pyramid structure, the position of a lesion and the probability that it is benign or malignant can be accurately predicted, assisting doctors in diagnosing thyroid lesions and improving the objectivity of diagnosis. The system offers good real-time performance and high accuracy.

Owner:BEIHANG UNIV

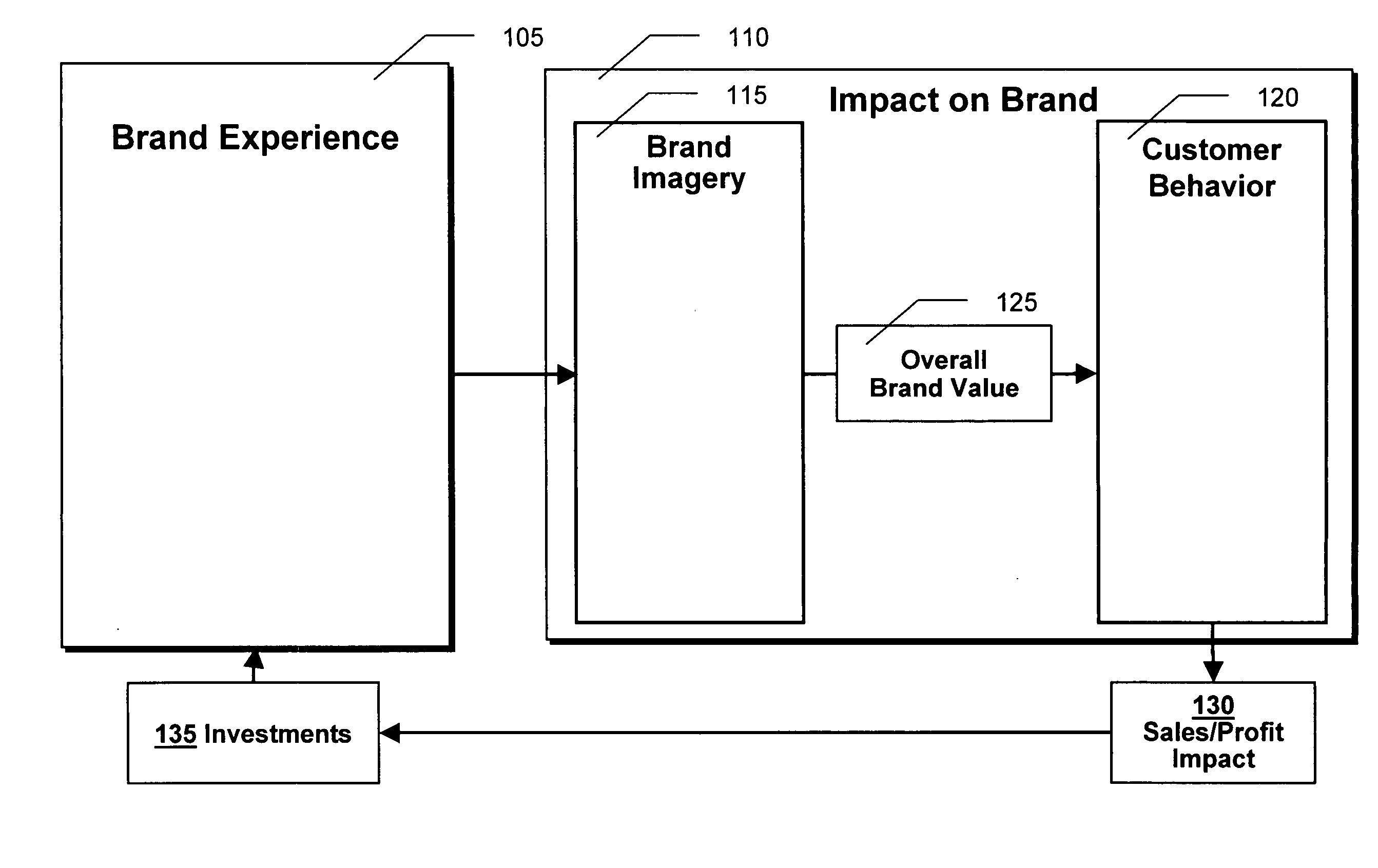

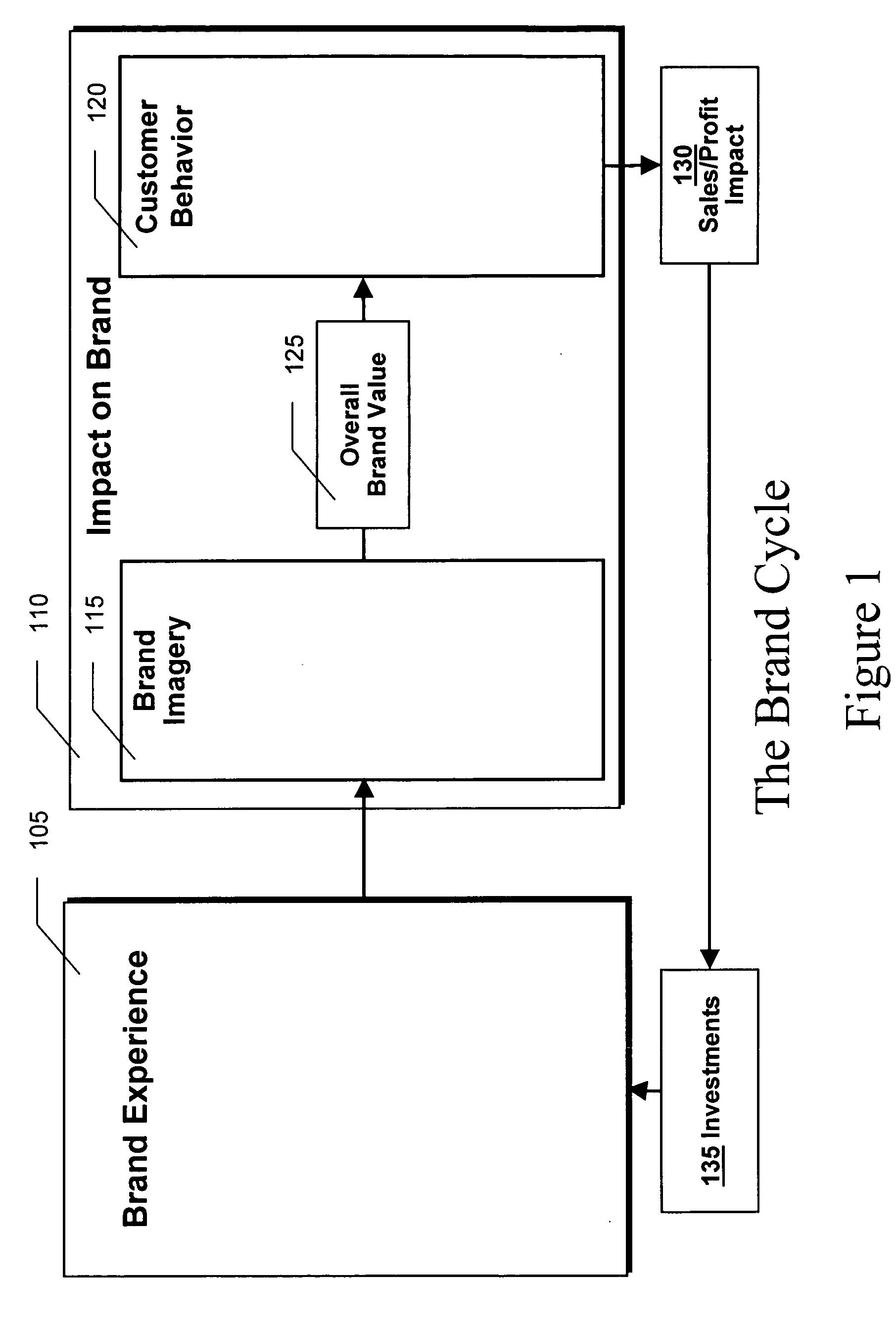

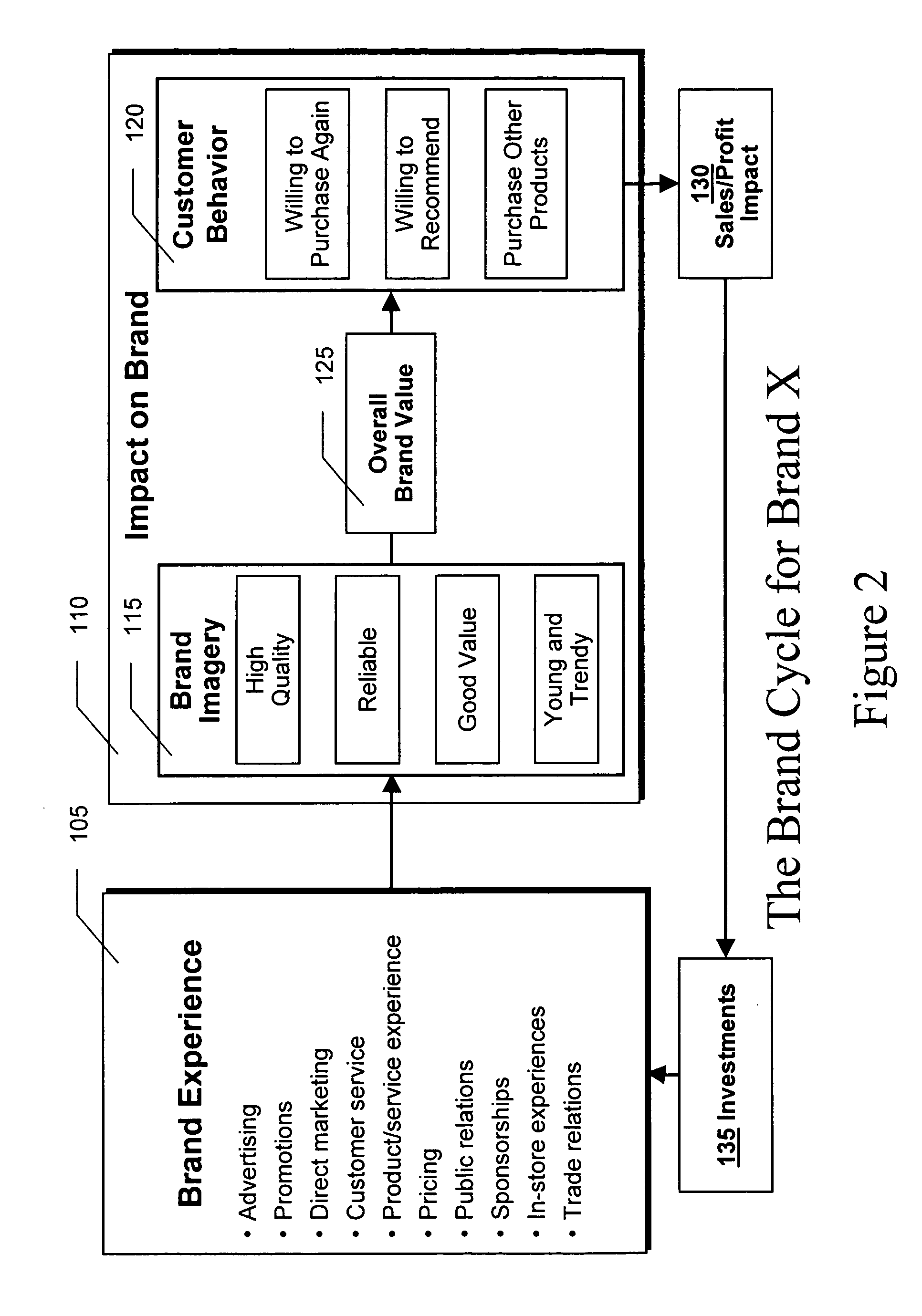

Brand value management

Status: Inactive | Publication: US20050209909A1 | Tags: Enhance brand value; Profit maximization; Market predictions; Finance; Trade offs; Program analysis

A combination of several analytical computer-assisted modeling techniques may be used to evaluate the value of a brand, the relative value of competitive brands and may identify the opportunities to increase brand value and the priority of those opportunities. Image / Attitudinal driver analysis, pyramid analysis, probability analysis, trade-off analysis, and other regression techniques may be used in novel combinations to quantify brand development, impacts and the overall estimate of brand value. For example: (1) image driver analysis may be applied to each level of a brand pyramid to understand how to most effectively move customers through to the next level in the pyramid; (2) probability analysis may be used to estimate the impact of each movement through the pyramid; and (3) tradeoff analysis may be used to improve the value customers perceive at any particular level of the pyramid.

Owner:ACCENTURE GLOBAL SERVICES LTD

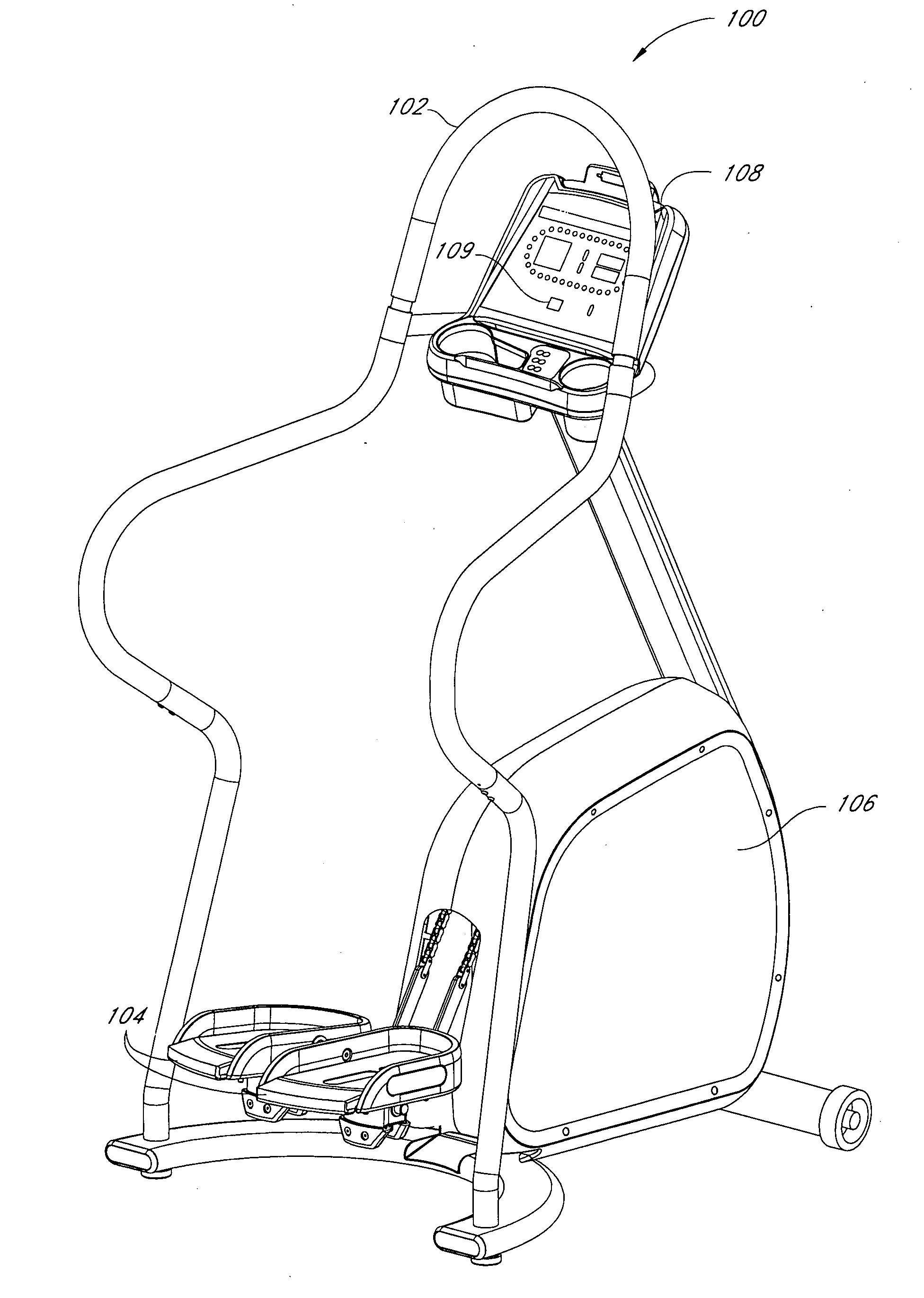

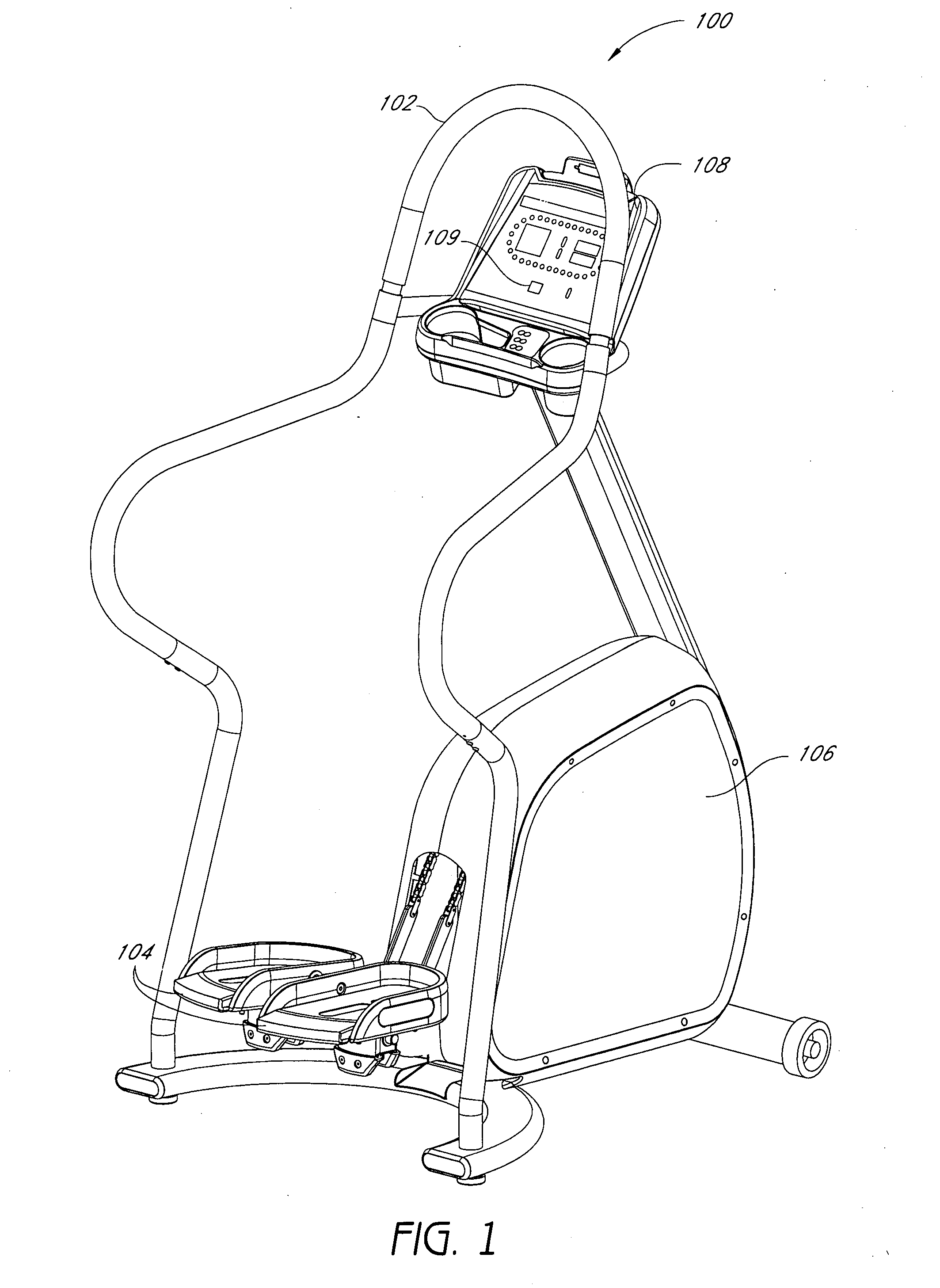

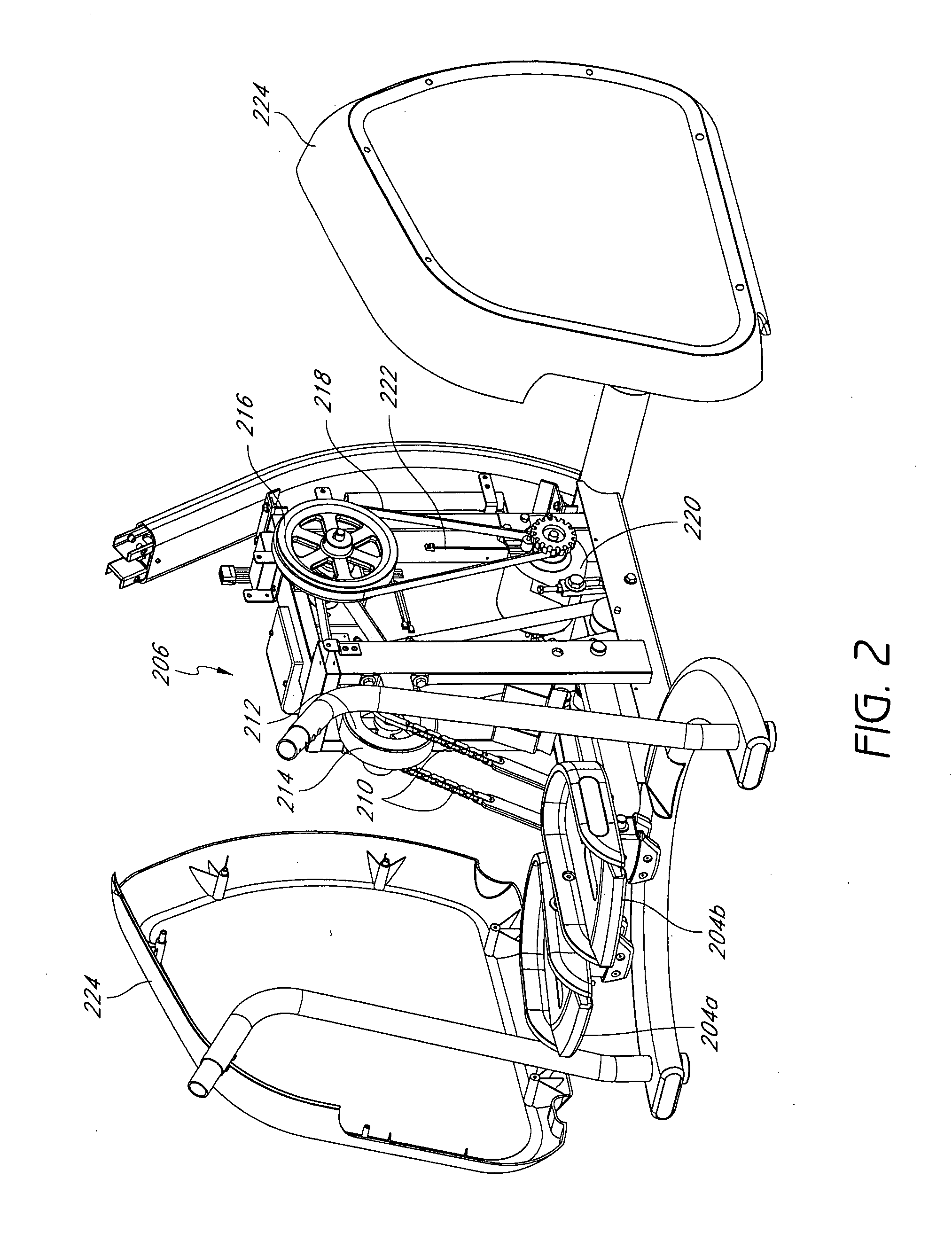

Motivational displays and methods for exercise machine

A stationary exercise machine includes a system for simulating a climb of a landmark or other selected geographic location or structure. The exercise machine includes an electronic control system that monitors pedal movement and that controls a display for depicting a progress in the simulated climb. For example, the control system may include a processor that determines the height of a selected landmark, determines the height of each selected step, monitors the number of steps in the simulated climb, and that outputs a signal for displaying the progress of the user's simulated climb. For instance, the display may include a silhouette of a block pyramid whose levels are filled in based on a percentage of the climb. In certain embodiments, the control system also adjusts a resistive load based on a current force of the user in pumping the pedals.

Owner:UNISEN INC DBA STAR TRAC
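
The progress computation in the simulated climb described above reduces to simple arithmetic. A minimal sketch, assuming heights in whole centimetres and a display whose pyramid levels fill in proportion to the fraction of steps completed; the function and parameter names are hypothetical, and resistive-load control is not shown.

```python
def climb_progress(landmark_height_cm, step_height_cm, steps_taken, pyramid_levels):
    """Return (total_steps, percent_complete, levels_filled) for a simulated climb.

    Integer centimetres avoid floating-point surprises in the step count.
    """
    total_steps = -(-landmark_height_cm // step_height_cm)  # ceiling division
    done = min(steps_taken, total_steps)                    # progress caps at the summit
    percent = 100.0 * done / total_steps
    levels_filled = pyramid_levels * done // total_steps    # whole levels only
    return total_steps, percent, levels_filled


print(climb_progress(14000, 20, 350, 10))  # (700, 50.0, 5)
```

Here a hypothetical 140 m landmark climbed in 20 cm steps needs 700 steps; after 350 the display would show 5 of 10 pyramid levels filled.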

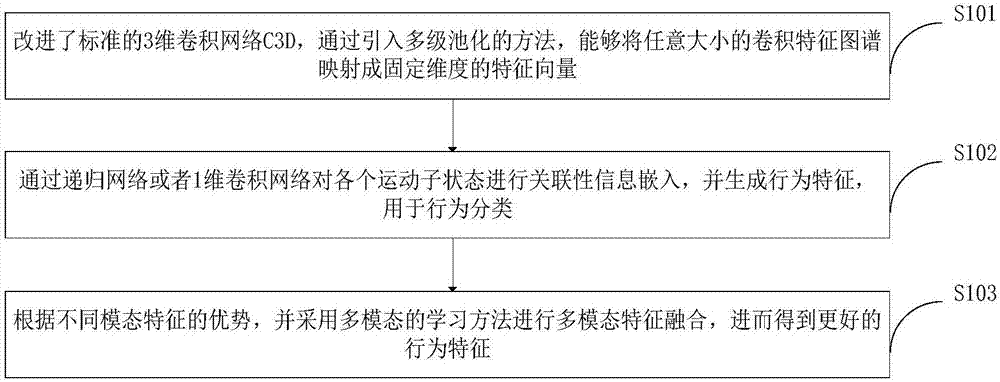

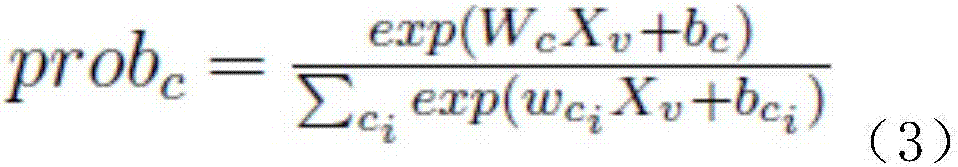

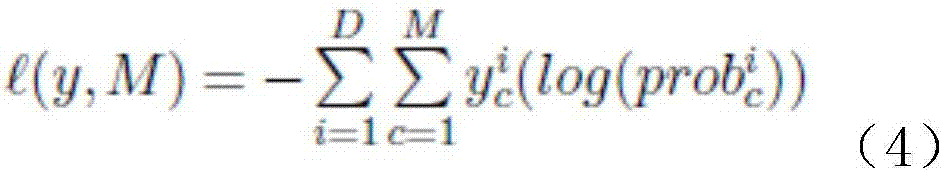

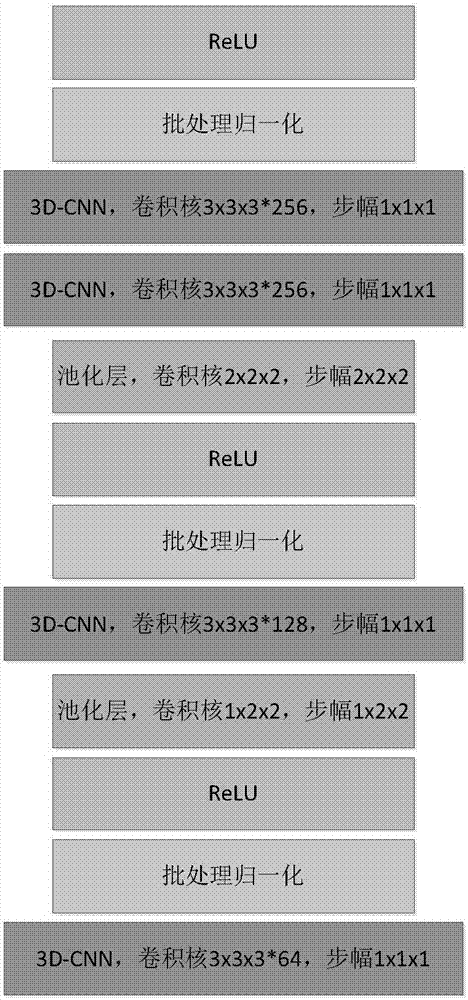

Human behavior identification method based on 3D deep convolutional network

Status: Active | Publication: CN107506712A | Tags: Improve robustness; Scale up; Character and pattern recognition; Neural architectures; Human behavior; Feature extraction

The invention belongs to the field of video action recognition in computer vision, and discloses a human behavior identification method based on a 3D deep convolutional network. The method comprises: first, dividing a video into a series of consecutive video segments; then, inputting the segments into a 3D neural network formed by convolutional layers and a space-time pyramid pooling layer to obtain features of the segments; and finally, computing global video features with a long short-term memory (LSTM) model and treating the global video features as the behavior pattern. By improving the standard C3D 3D convolutional network and introducing multi-level pooling, the method can extract features from video segments of arbitrary resolution and duration, greatly improves the model's robustness to behavior variation, helps enlarge the scale of video training data while maintaining video quality, and improves the completeness of behavior information by embedding correlation information according to motion sub-states.

Owner:CHENGDU KOALA URAN TECH CO LTD

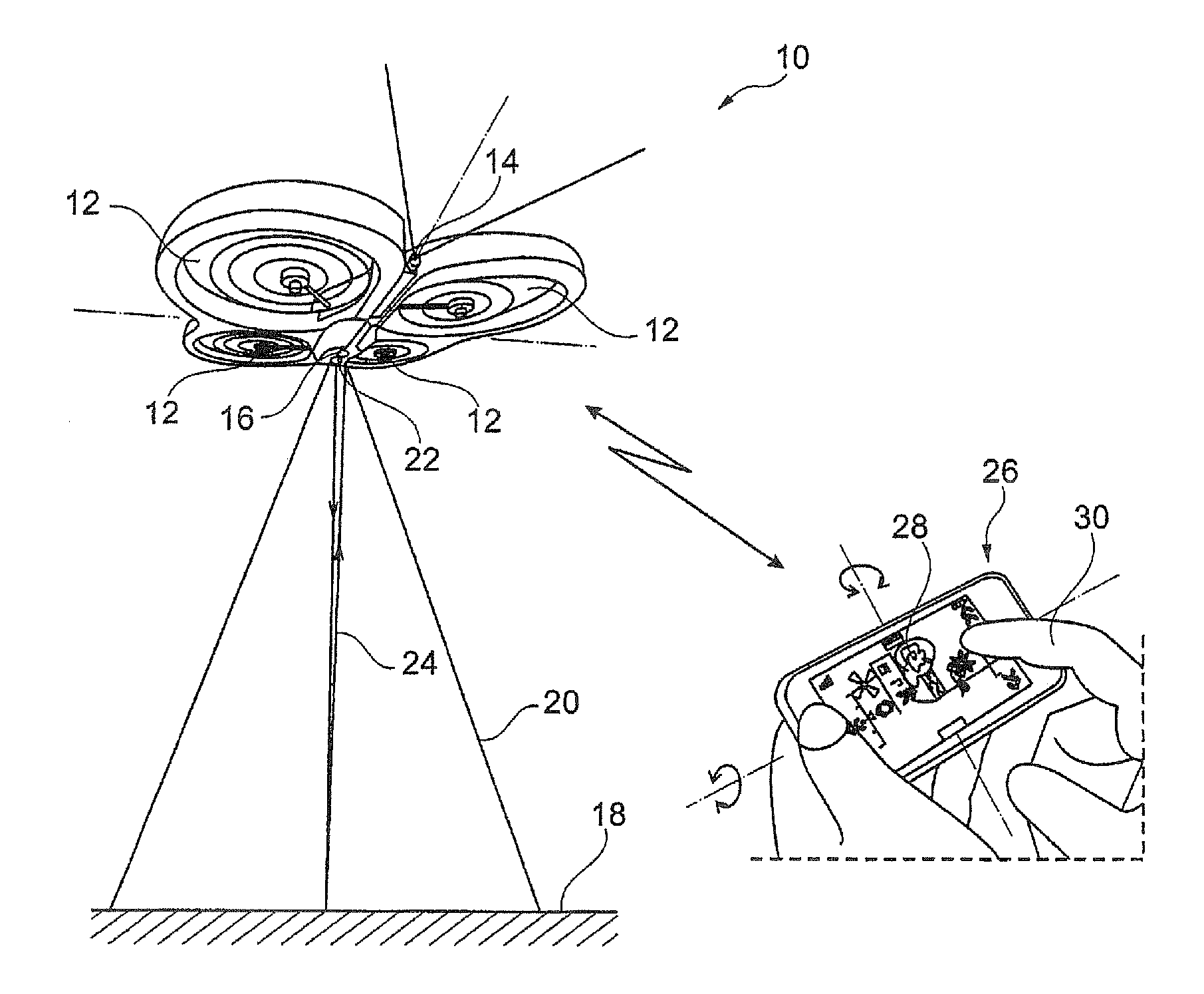

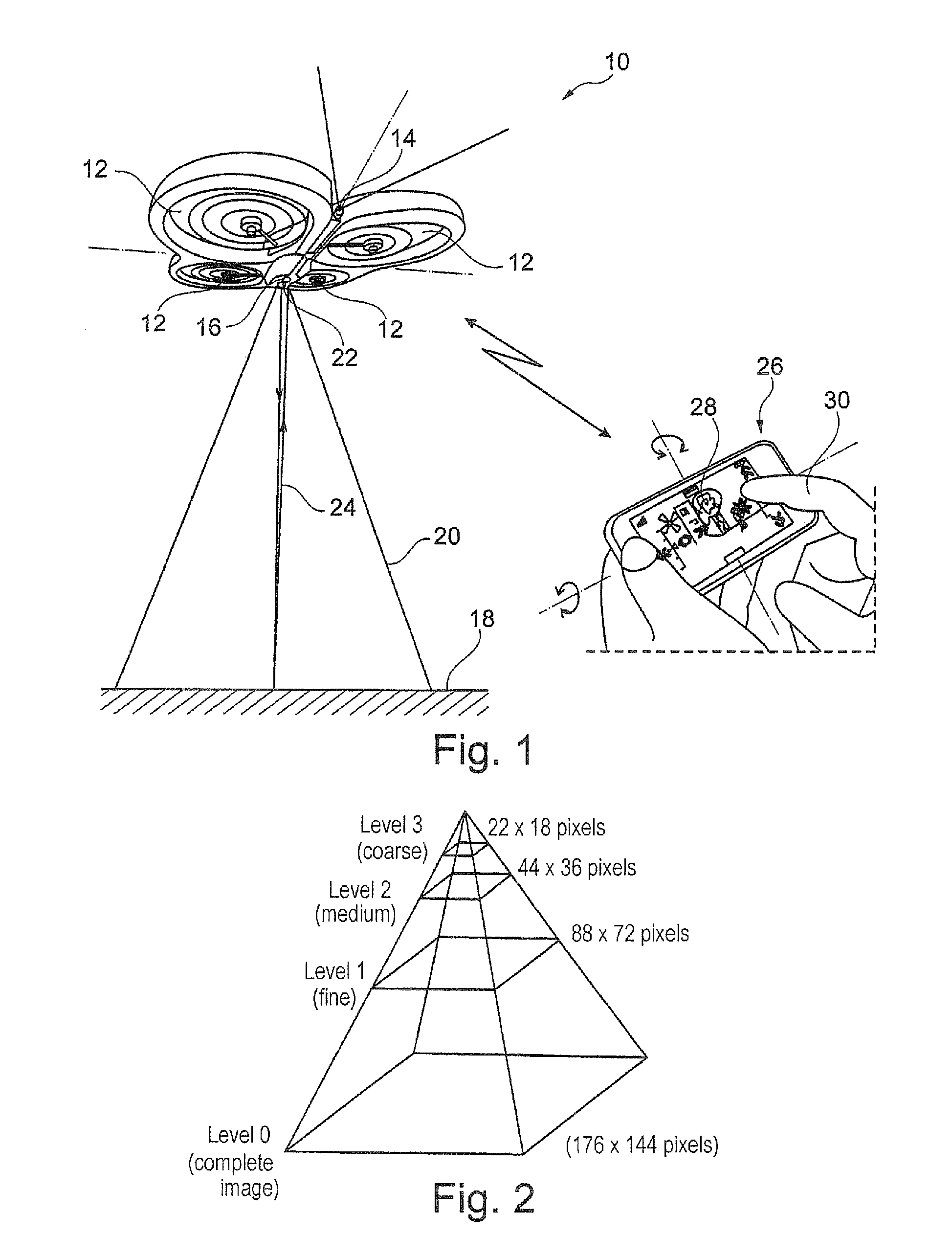

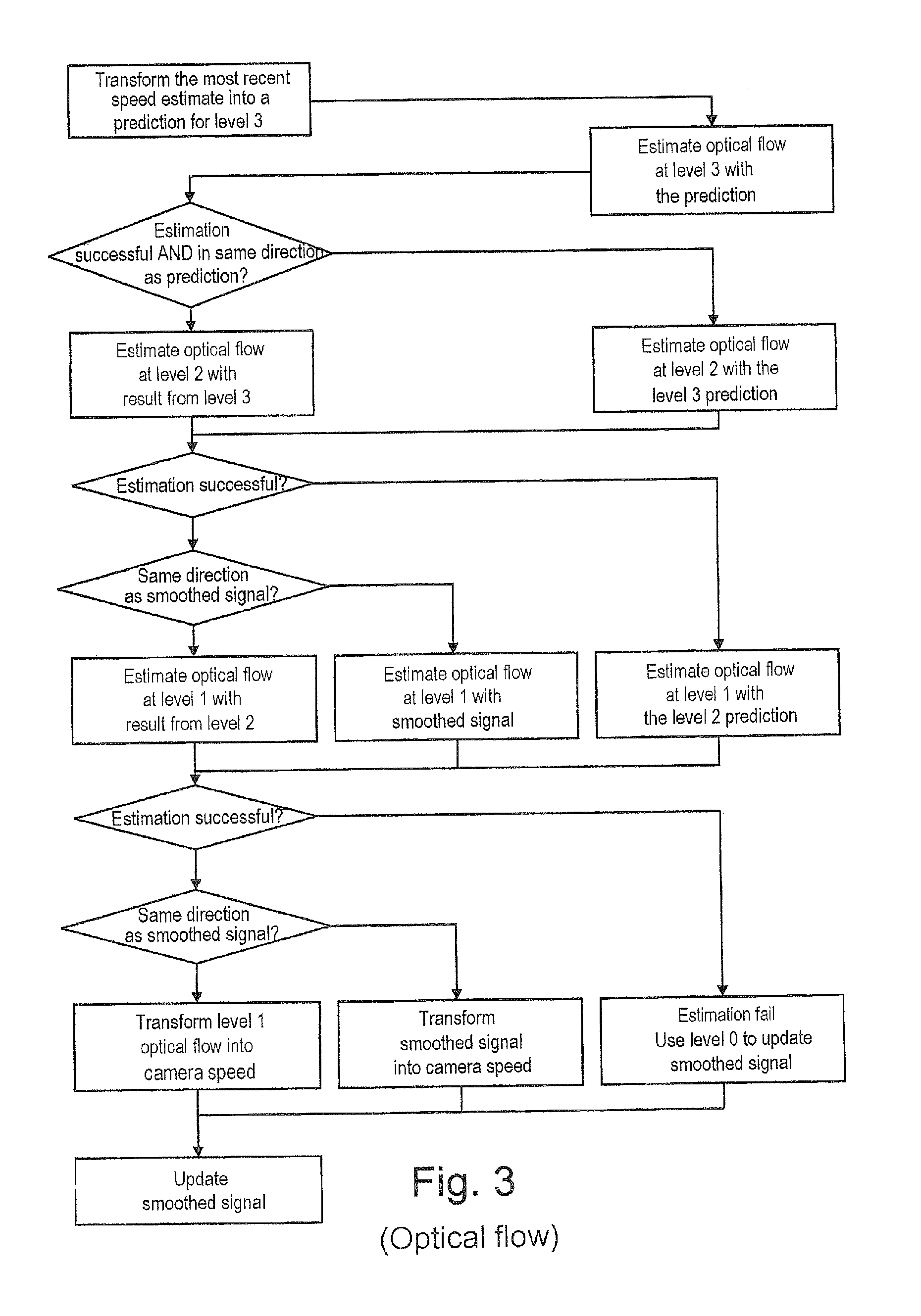

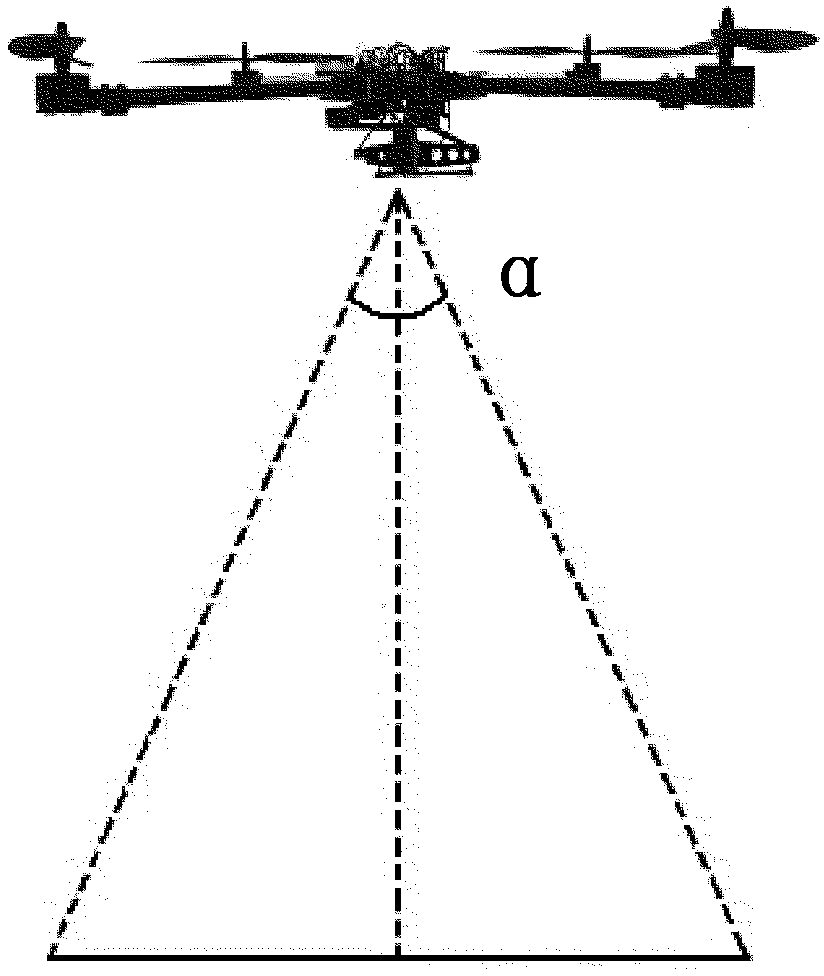

Method of evaluating the horizontal speed of a drone, in particular a drone capable of performing hovering flight under autopilot

Status: Active | Publication: US20110311099A1 | Tags: Reduce contrast; Improve noise; Image enhancement; Image analysis; Image resolution; Uncrewed vehicle

The method operates by estimating the differential movement of the scene picked up by a vertically oriented camera. Estimation includes periodically and continuously updating a multiresolution representation of the image-pyramid type, which models a given picked-up image of the scene at successively decreasing resolutions. For each new picked-up image, an iterative optical-flow algorithm is applied to this representation. The method also uses the data produced by the optical-flow algorithm to obtain at least one texture parameter representative of the level of micro-contrast in the picked-up scene, together with an approximation of the speed; a battery of predetermined criteria is then applied to these parameters. If the criteria are satisfied, the system switches from the optical-flow algorithm to a corner-detector algorithm.

Owner:PARROT

Gesture recognition method based on 3D-CNN and convolutional LSTM

Status: Inactive | Publication: CN107451552A | Tags: Shorten the time; Reduce overfitting; Character and pattern recognition; Neural architectures; Pattern recognition; Short terms

The invention discloses a gesture recognition method based on 3D-CNN and convolutional LSTM. In the method, the length of the video input to the 3D-CNN is normalized by a temporal jitter strategy; the normalized video is fed to the 3D-CNN to learn the short-term spatiotemporal features of a gesture; based on these short-term features, the long-term spatiotemporal features of the gesture are learned by a two-layer convolutional LSTM network to eliminate the influence of complex backgrounds on gesture recognition; the dimension of the extracted long-term features is reduced by a spatial pyramid pooling (SPP) layer, and the resulting multi-scale features are fed into the fully connected layer of the network; finally, using a late multimodal fusion method, the prediction results of the different networks are averaged and fused to obtain the final prediction score. By learning the spatiotemporal features of the gesture, the invention combines short-term and long-term spatiotemporal features through different networks, trains the network with batch normalization, and improves the efficiency and accuracy of gesture recognition.

Owner:BEIJING UNION UNIVERSITY

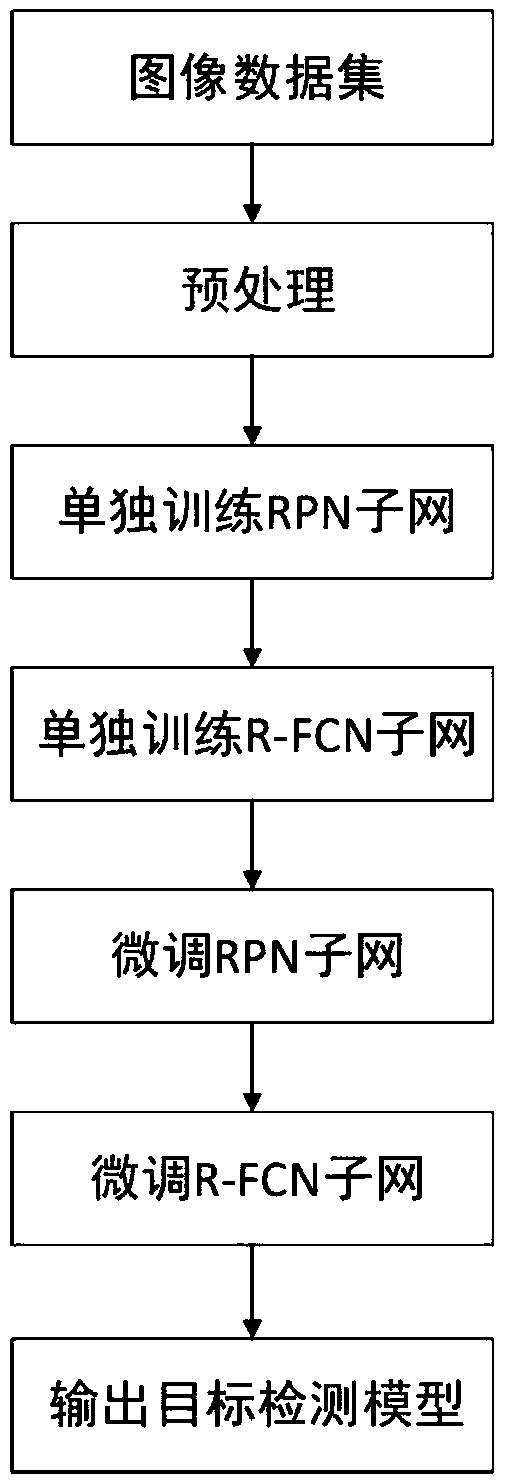

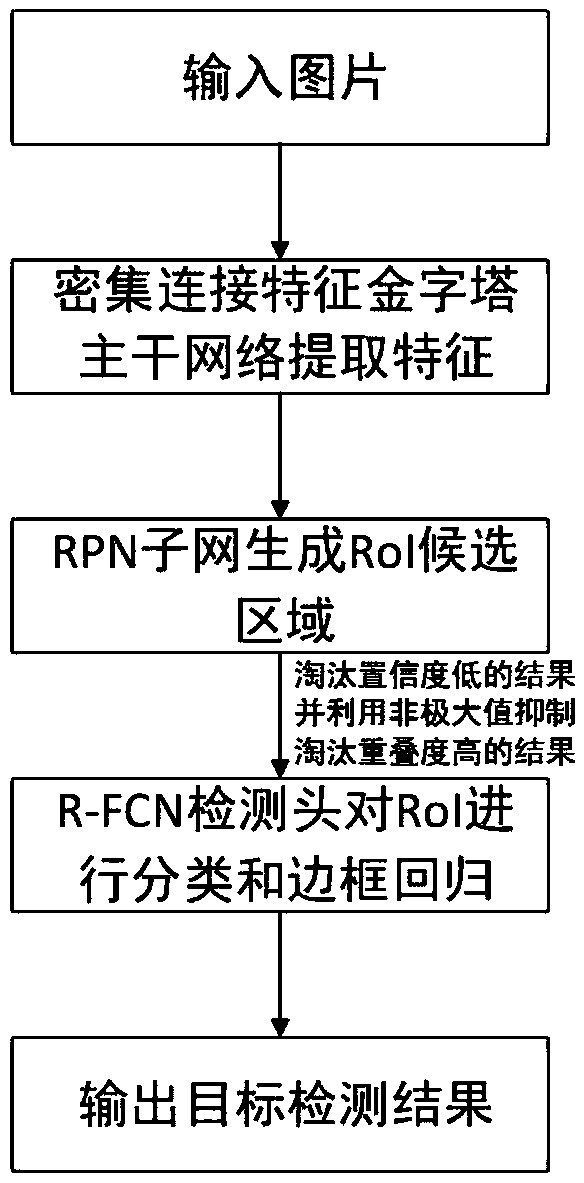

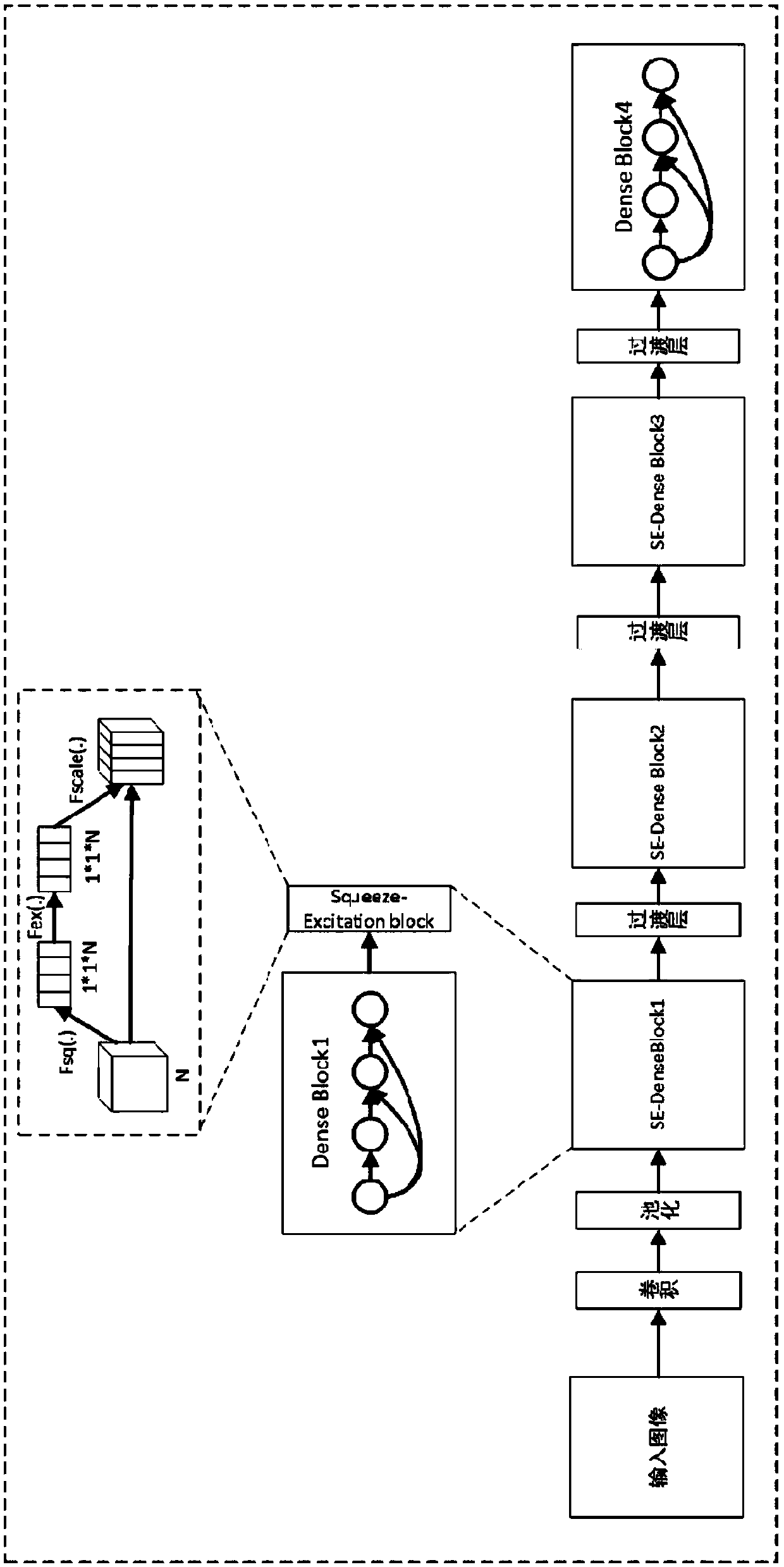

Target detection method based on a dense connection characteristic pyramid network

Status: Active | Publication: CN109614985A | Tags: Enhanced Representational Capabilities; Improve adaptability; Character and pattern recognition; Neural architectures; Data set; Imaging processing

The invention discloses a target detection method based on a densely connected feature pyramid network, relating to image processing and computer vision. The method comprises: collecting an image data set labeled with target bounding boxes and category information; constructing, as the feature-extraction backbone, a densely connected feature pyramid network containing a Squeeze-and-Excitation structure that learns the dependencies between feature channels; alternately training the RPN subnetwork and the R-FCN subnetwork to obtain a target detection model; and detecting specific targets in images with the model. Introducing the Squeeze-and-Excitation structure and dense connections into the backbone enhances the model's representational capability; the feature pyramid structure improves the model's adaptability to targets of different sizes; and the R-FCN detection head maximizes computation sharing across the whole network, saving computing resources and improving the performance of the whole target detection model.

Owner:SOUTH CHINA UNIV OF TECH
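
The Squeeze-and-Excitation structure named in the abstract above re-weights feature channels using the channels themselves. A minimal pure-Python sketch, assuming the usual squeeze (global average pooling), excitation (two fully connected layers with ReLU and sigmoid) and channel-wise scaling; biases are omitted and the weight matrices are supplied by the caller rather than learned. Names are hypothetical.

```python
import math


def matvec(weights, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weights]


def squeeze_excite(channels, w1, w2):
    """Re-weight C feature maps (each a list of rows) channel by channel.

    w1: (C/r) x C reduction weights; w2: C x (C/r) expansion weights (biases omitted).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in channels]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives a weight in (0, 1) per channel.
    hidden = [max(0.0, v) for v in matvec(w1, z)]
    s = [1.0 / (1.0 + math.exp(-v)) for v in matvec(w2, hidden)]
    # Scale: multiply every value of channel c by s[c].
    return [[[x * s_c for x in row] for row in ch] for ch, s_c in zip(channels, s)]


feature_maps = [[[2.0, 4.0]], [[-1.0, 1.0]]]  # two 1x2 channels
w1 = [[0.5, 0.5]]                             # reduction ratio r = 2
w2 = [[1.0], [-1.0]]
print(squeeze_excite(feature_maps, w1, w2))
```

With these illustrative weights the first channel is scaled by about 0.82 and the second by about 0.18, i.e. the block emphasizes the channel with the stronger average response.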

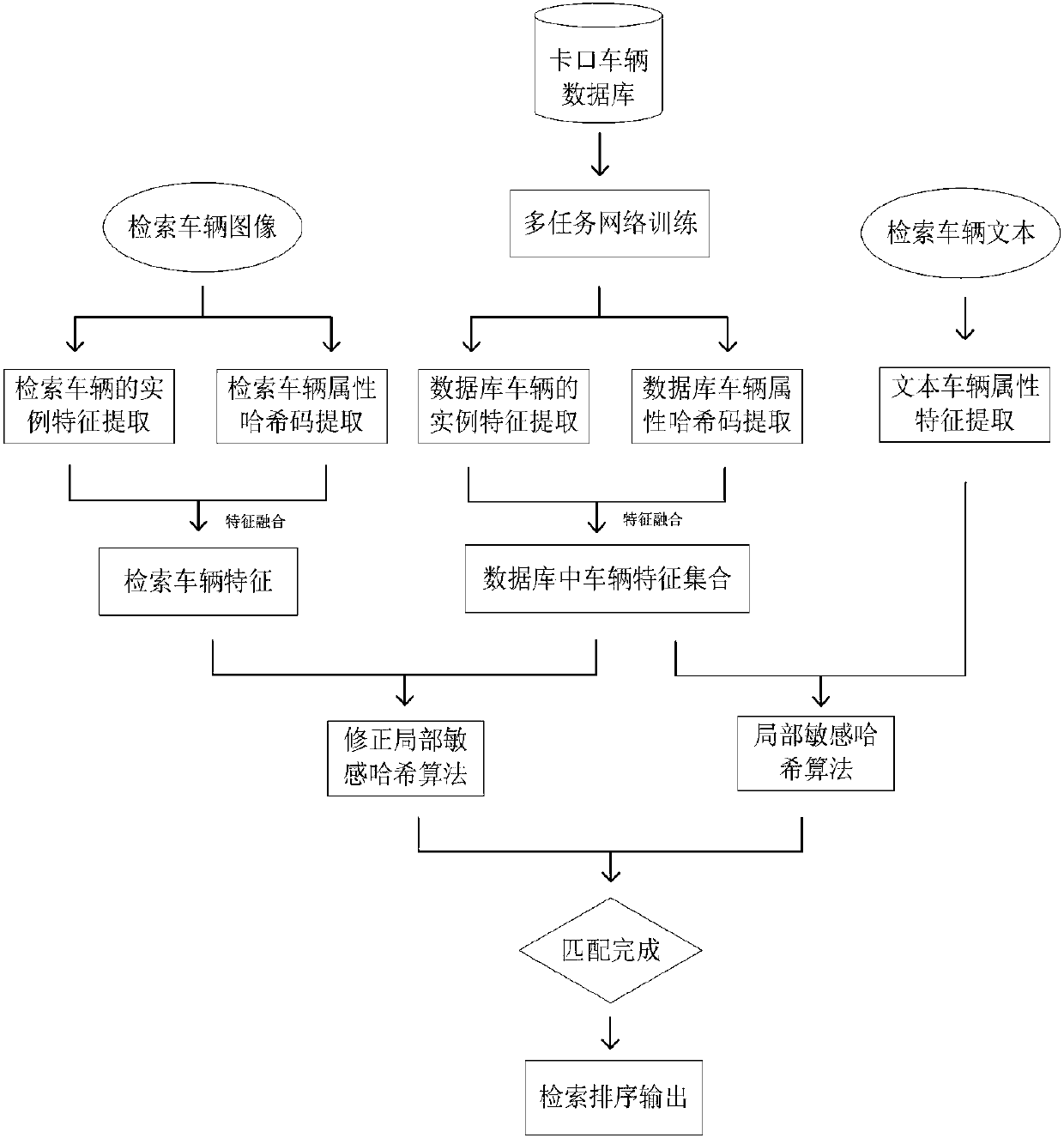

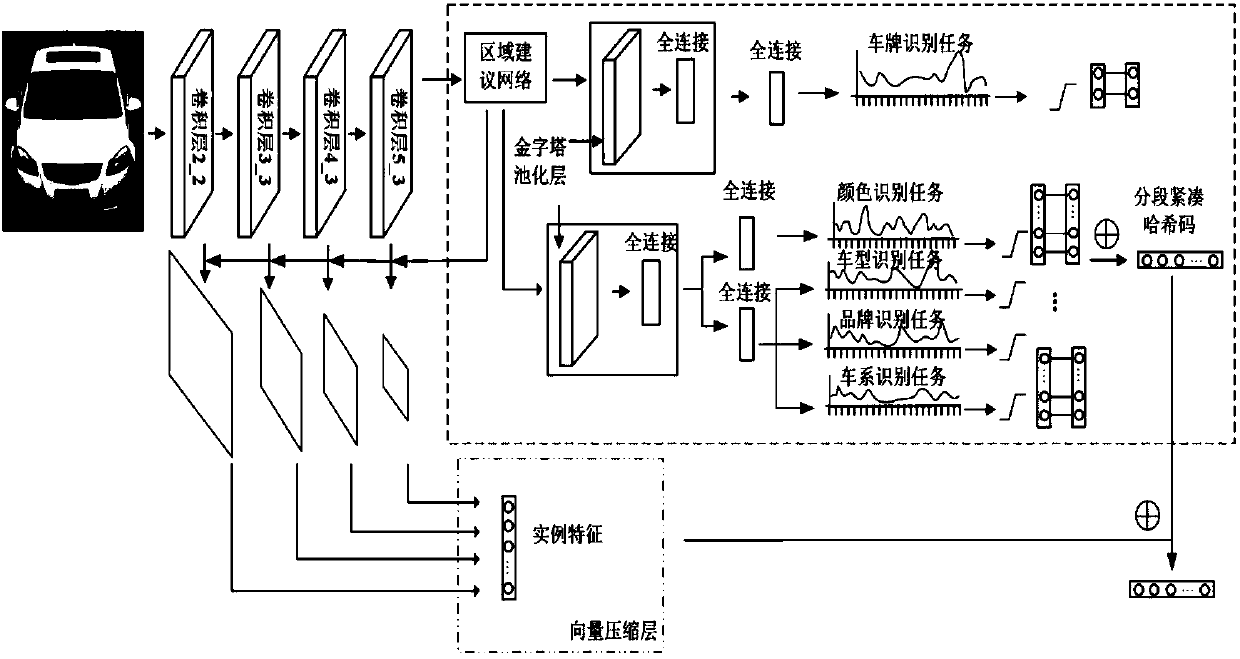

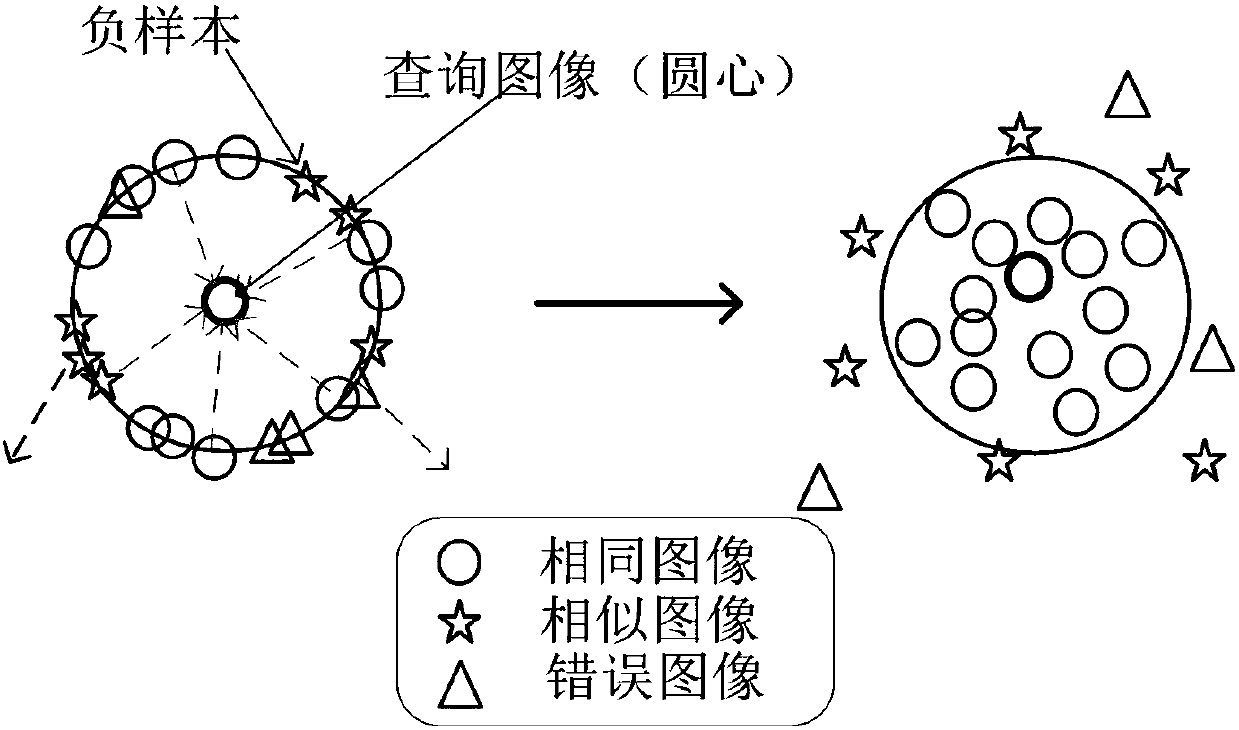

Modified local sensitive hash vehicle retrieval method based on multitask deep learning

Status: Active | Publication: CN108108657A | Tags: Achieve retrieval; Realize adaptive feature extraction; Character and pattern recognition; Neural architectures; Sorting algorithm; Algorithm

The invention discloses a modified locality-sensitive hashing vehicle retrieval method based on multitask deep learning. A multitask end-to-end convolutional neural network identifies the vehicle model, vehicle series, vehicle logo, color, and license plate simultaneously in a segmented, parallel manner. The method includes a network module that extracts instance features from vehicle images based on a feature pyramid, a modified locality-sensitive hashing ranking algorithm that sorts the vehicle features in a database, and a cross-modal text retrieval method for cases where a query vehicle image cannot be obtained. The multitask end-to-end network and the modified retrieval method effectively raise the automation and intelligence of vehicle retrieval, use little storage space, and meet the image retrieval needs of the big-data era with faster retrieval.

Owner:ZHEJIANG UNIV OF TECH
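
The abstract above does not detail its modified locality-sensitive hashing. As a stand-in, this sketch uses standard random-hyperplane hashing for cosine similarity: each feature vector becomes a short binary code, and database entries are ranked by Hamming distance to the query's code. The bit count, seed, example vectors and function names are all assumptions.

```python
import random


def make_hyperplanes(dim, n_bits, seed=0):
    """Random Gaussian hyperplanes through the origin, one per hash bit."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]


def hash_code(vec, planes):
    """Bit k is 1 if vec lies on the positive side of hyperplane k."""
    return tuple(int(sum(p * v for p, v in zip(plane, vec)) >= 0) for plane in planes)


def hamming(a, b):
    """Number of positions where two codes differ."""
    return sum(x != y for x, y in zip(a, b))


def rank(query, database, planes):
    """Database keys ordered by Hamming distance between their codes and the query's."""
    q = hash_code(query, planes)
    codes = {key: hash_code(vec, planes) for key, vec in database.items()}
    return sorted(codes, key=lambda key: hamming(q, codes[key]))


planes = make_hyperplanes(dim=3, n_bits=16)
database = {"car_a": [0.9, 0.1, 0.0], "car_b": [-0.8, 0.2, 0.1], "car_c": [0.7, 0.3, 0.1]}
print(rank([1.0, 0.2, 0.0], database, planes))
```

Comparing short binary codes instead of full feature vectors is what keeps storage small and ranking fast; the exact order printed depends on the random hyperplanes.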

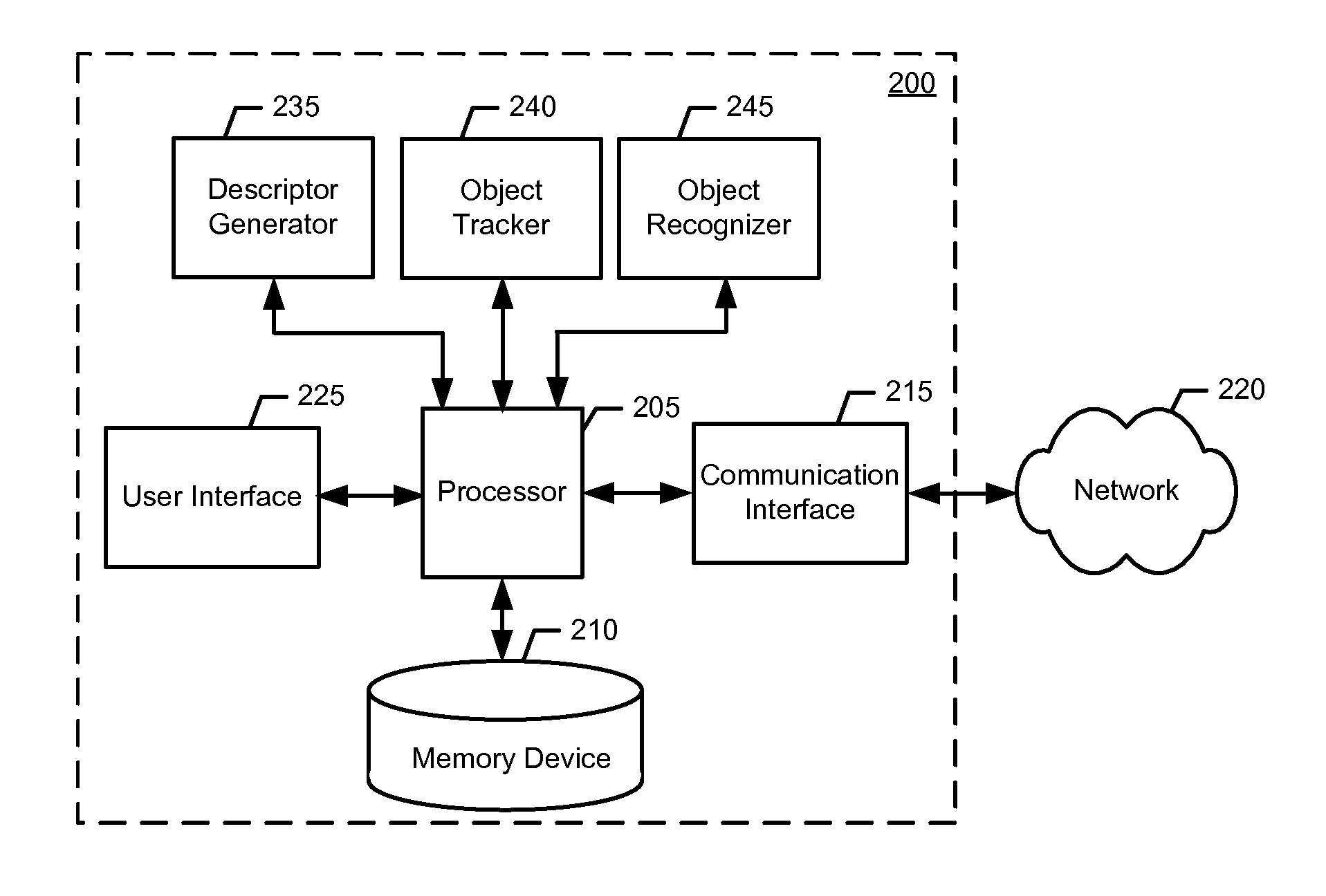

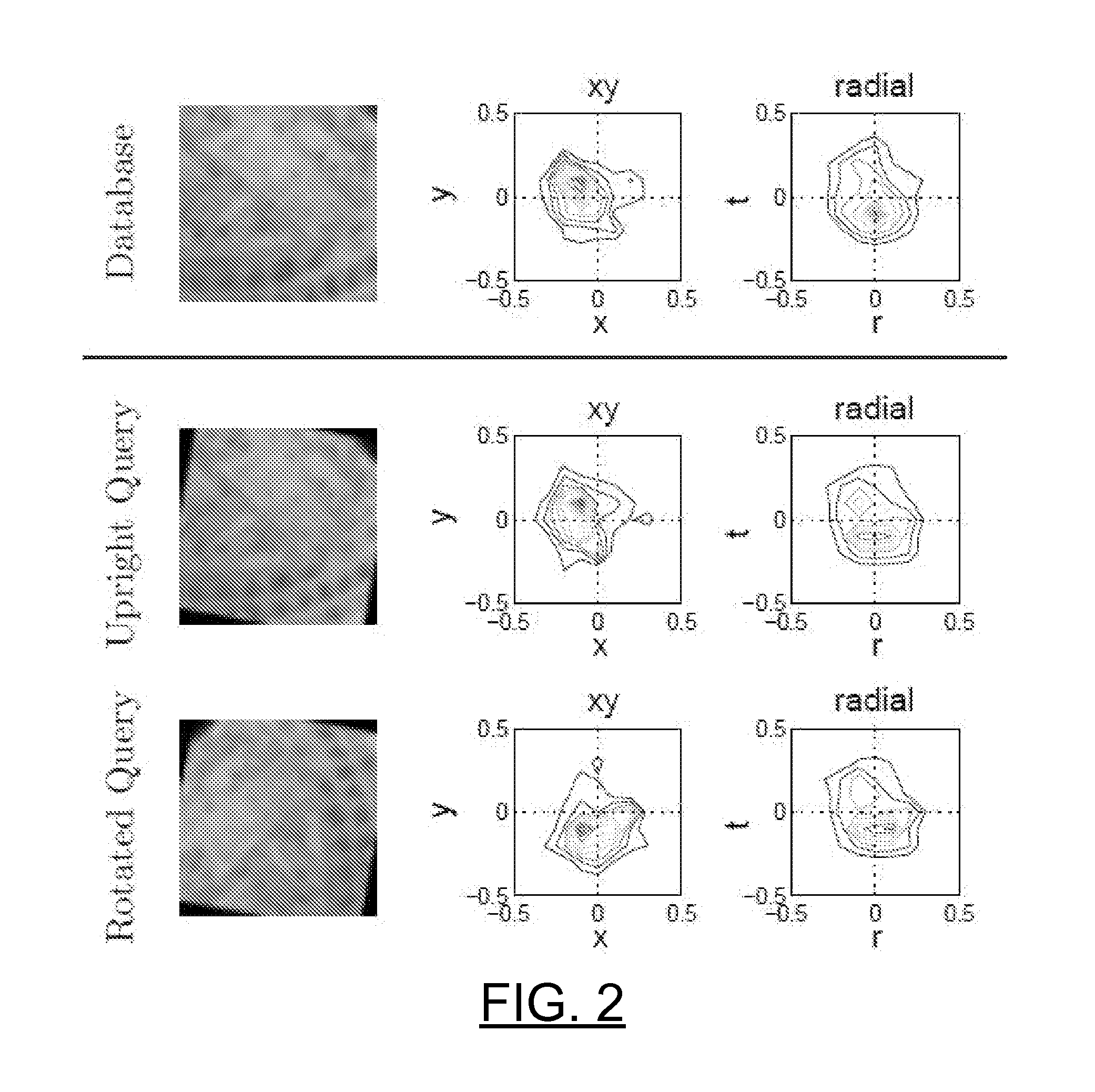

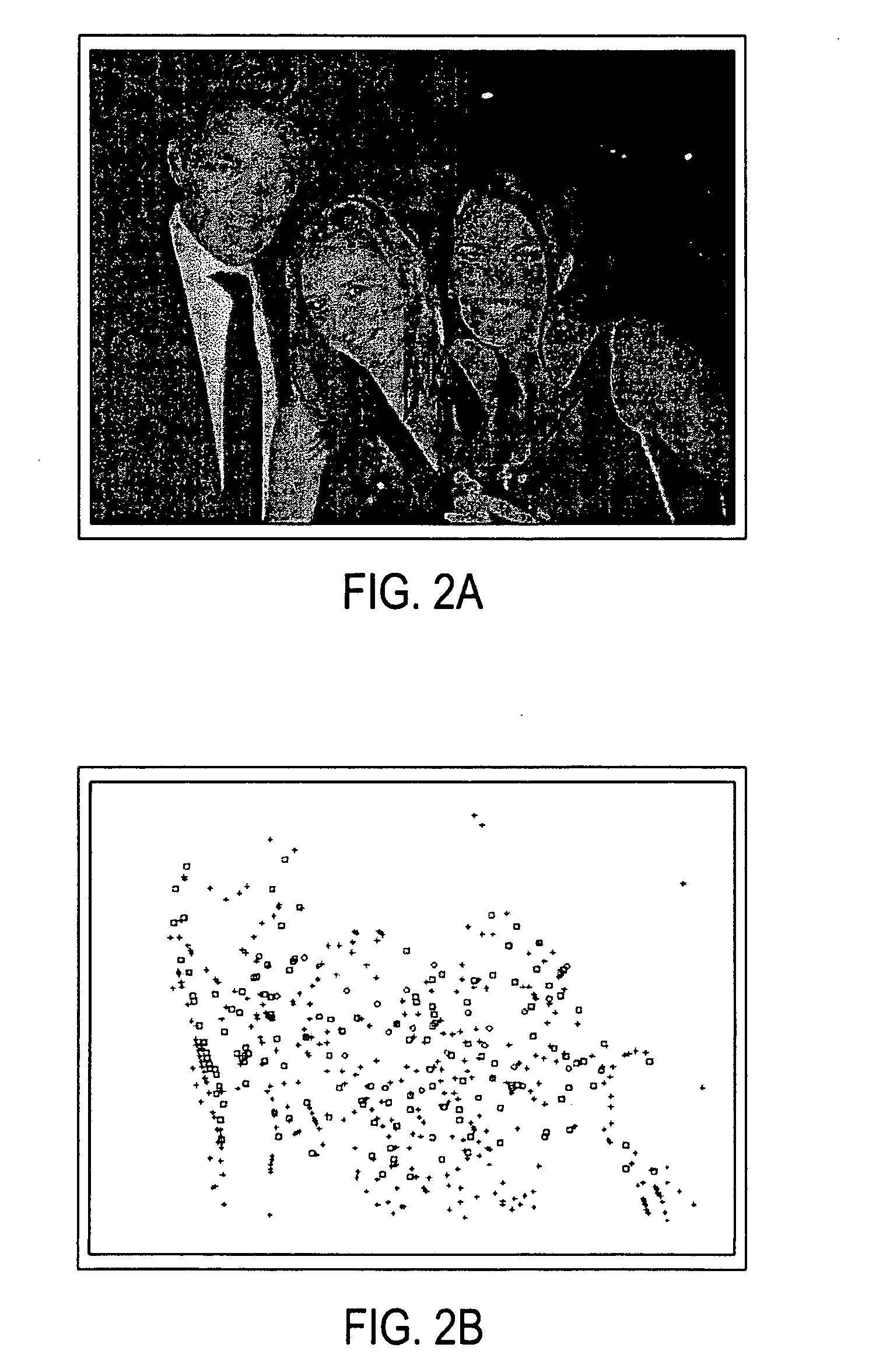

Method and apparatus for tracking and recognition with rotation invariant feature descriptors

Status: Active | Publication: US20110286627A1 | Tags: Sufficiently robust; Cheap computer; Image enhancement; Image analysis; Pyramid; Invariant feature

Various methods for tracking and recognition with rotation invariant feature descriptors are provided. One example method includes generating an image pyramid of an image frame, detecting a plurality of interest points within the image pyramid, and extracting feature descriptors for each respective interest point. According to some example embodiments, the feature descriptors are rotation invariant. Further, the example method may also include tracking movement by matching the feature descriptors to feature descriptors of a previous frame and performing recognition of an object within the image frame based on the feature descriptors. Related example methods and example apparatuses are also provided.

Owner:NOKIA CORP +1

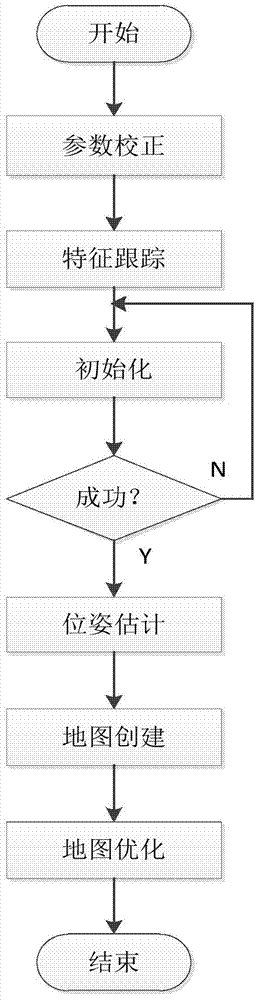

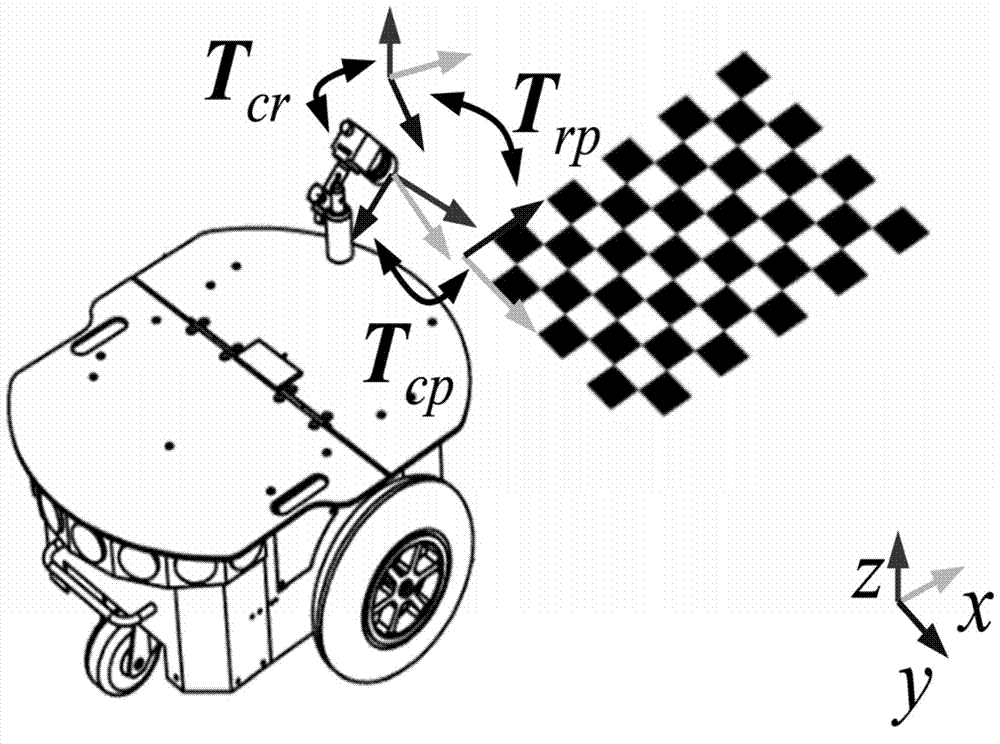

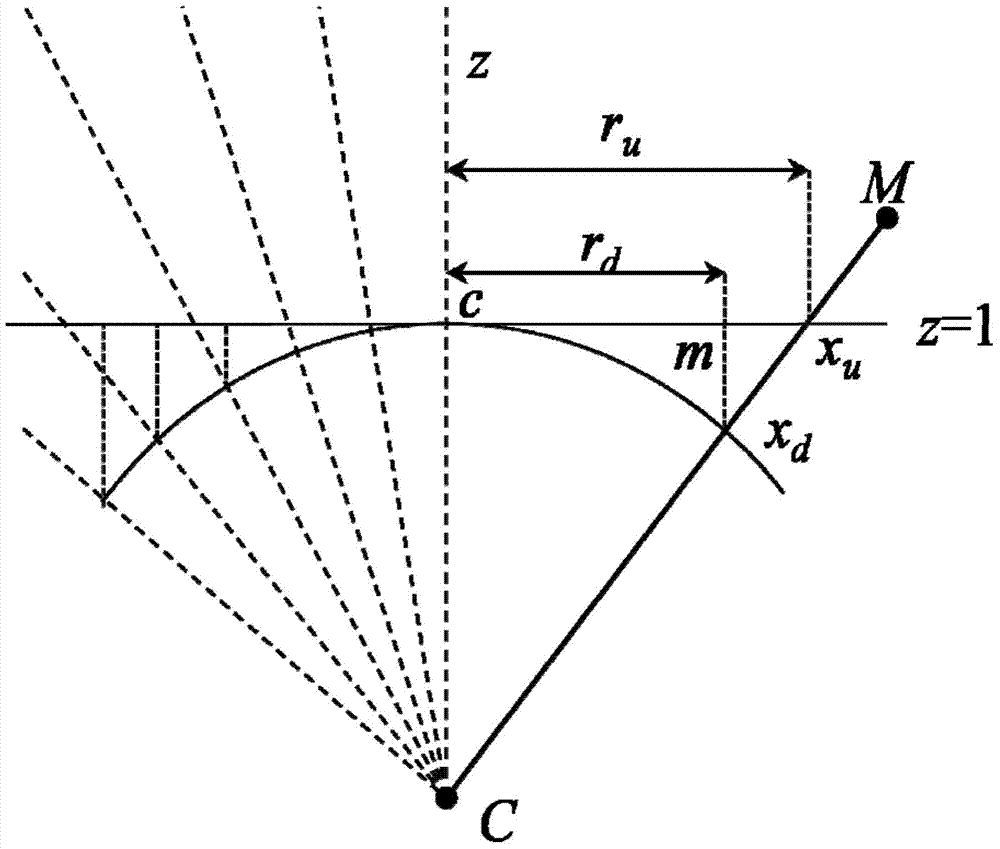

PTAM improvement method based on ground characteristics of intelligent robot

Status: Inactive | Publication: CN104732518A | Tags: Lift strict restrictions; Easy to initialize; Image analysis; Navigation instruments; Line search; Key frame

The invention discloses a PTAM (Parallel Tracking and Mapping) improvement method based on ground features for an intelligent robot. The method comprises the following steps. First, parameter correction is completed, including parameter definition and camera calibration. Second, texture information of the current environment is obtained with a camera, a four-layer Gaussian image pyramid is constructed, feature information in the current image is extracted with the FAST corner detection algorithm, data association between corner features is established, and a pose estimation model is then obtained. In the initial mapping stage, with the camera mounted on the mobile robot, two key frames are obtained; the mobile robot starts moving during initialization, and the camera captures corner information in the current scene while associations are established. After a three-dimensional sparse map is initialized, the key frames are updated, sub-pixel-precision correspondences between feature points are established by epipolar line search and block matching, and accurate camera relocalization is achieved based on the pose estimation model. Finally, the matched points are projected into space, establishing a three-dimensional map of the whole current environment.

Owner:BEIJING UNIV OF TECH
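
Constructing the four-layer Gaussian image pyramid mentioned above can be sketched as repeated blur-and-decimate. This sketch assumes a separable 5-tap binomial kernel (1, 4, 6, 4, 1)/16 as the Gaussian approximation and clamps indices at the image borders; FAST corner detection on each level is not shown, and the names are hypothetical.

```python
KERNEL = (1, 4, 6, 4, 1)  # 5-tap binomial approximation of a Gaussian; weights sum to 16


def blur_1d(line):
    """Convolve one row or column with KERNEL, clamping indices at the borders."""
    n = len(line)
    return [sum(k * line[min(max(i + off, 0), n - 1)] for k, off in zip(KERNEL, range(-2, 3))) / 16
            for i in range(n)]


def blur(img):
    """Separable 2-D blur: filter every row, then every column."""
    rows = [blur_1d(r) for r in img]
    cols = [blur_1d(list(c)) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]  # transpose back to rows


def gaussian_pyramid(img, levels=4):
    """Level 0 is the input; each further level is blurred and subsampled by 2."""
    pyramid = [img]
    for _ in range(levels - 1):
        smoothed = blur(pyramid[-1])
        pyramid.append([row[::2] for row in smoothed[::2]])
    return pyramid


frame = [[(x + y) % 16 for x in range(32)] for y in range(24)]
for level in gaussian_pyramid(frame):
    print(len(level), "x", len(level[0]))  # 24 x 32, 12 x 16, 6 x 8, 3 x 4
```

Blurring before subsampling suppresses aliasing, so corners detected on coarse levels correspond to larger-scale structure in the original frame.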

Driving scene target detection method based on deep learning and multi-layer feature fusion

Status: Inactive | Publication: CN108875595A | Tags: High quality feature extraction; Feature extraction quality improvement; Character and pattern recognition; Neural architectures; Data set; Imaging processing

The invention relates to the technical field of traffic image processing and discloses a driving scene target detection method based on deep learning and multi-layer feature fusion. The method comprises the following steps: 1) collecting video images as a training data set and preprocessing them; 2) building a training network; 3) initializing the training network to obtain a pre-trained model; 4) training on the training data set with the pre-trained model obtained in step 3) to obtain a trained model; 5) capturing an image of the scene ahead with a vehicle-mounted camera and inputting it into the trained model obtained in step 4) to obtain the detection result. A multi-layer feature fusion method based on a feature pyramid is adopted to enhance the semantic information of low-level feature maps, which improves the network's feature extraction quality and yields higher detection precision.

Owner:CHONGQING UNIV

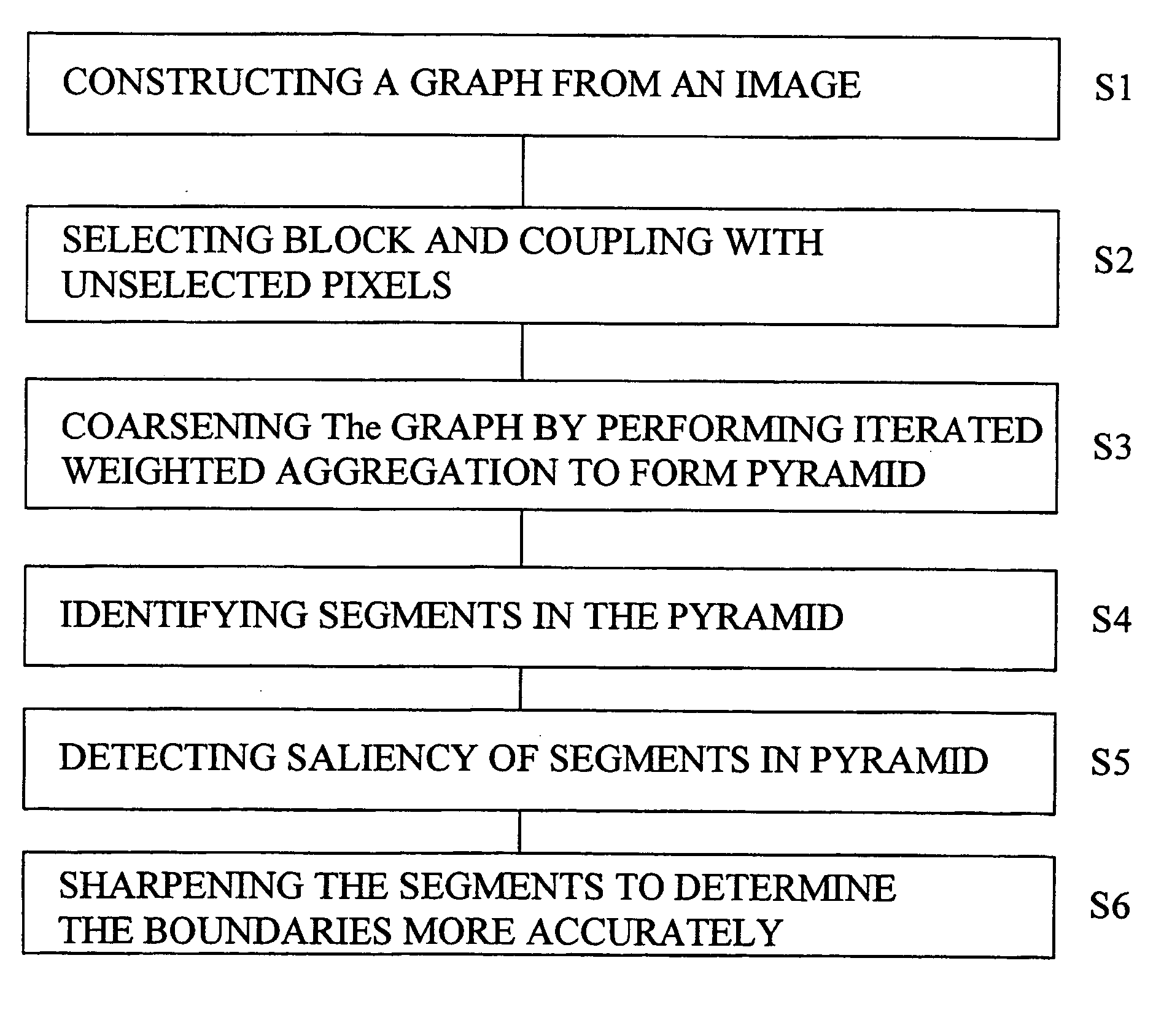

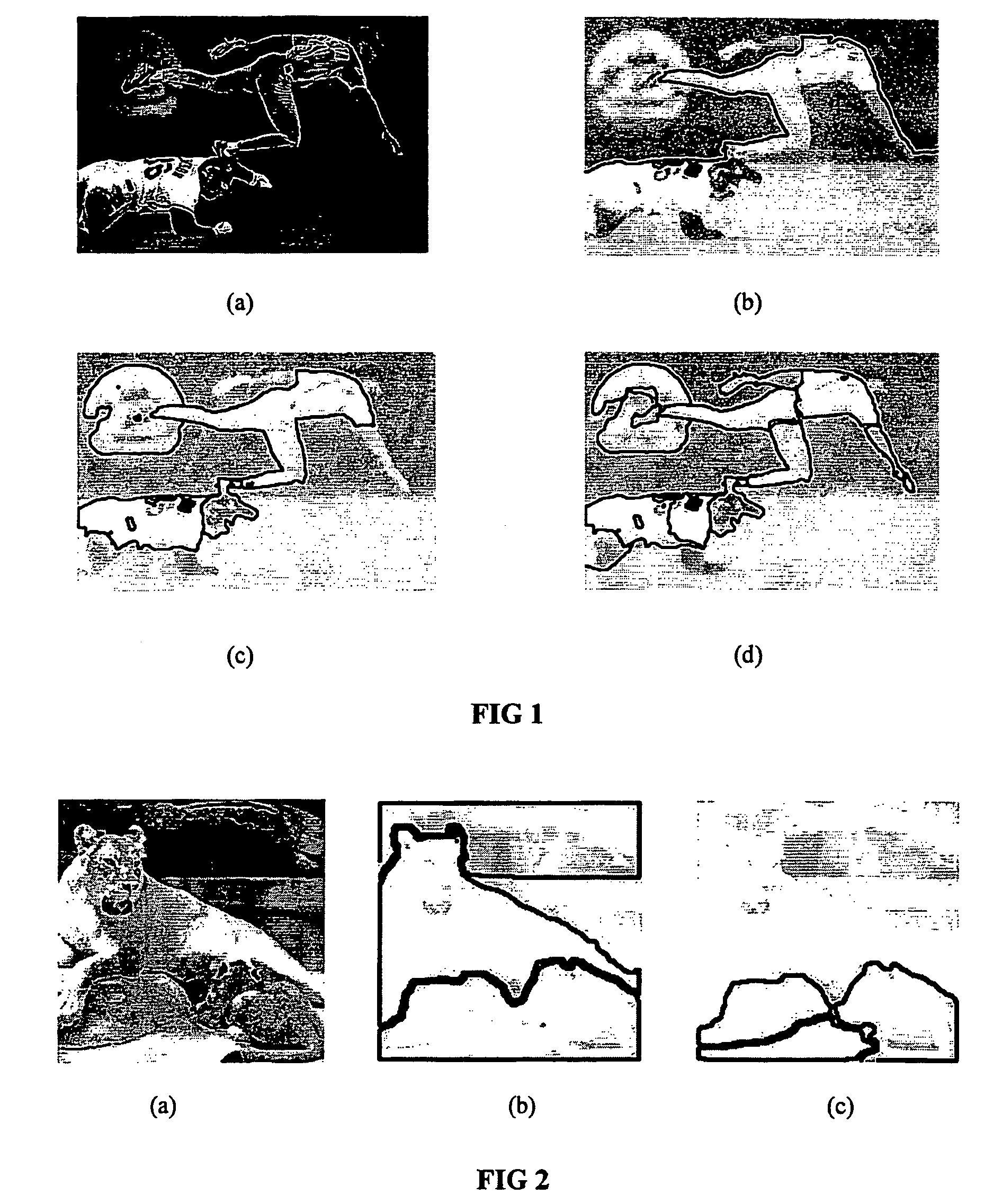

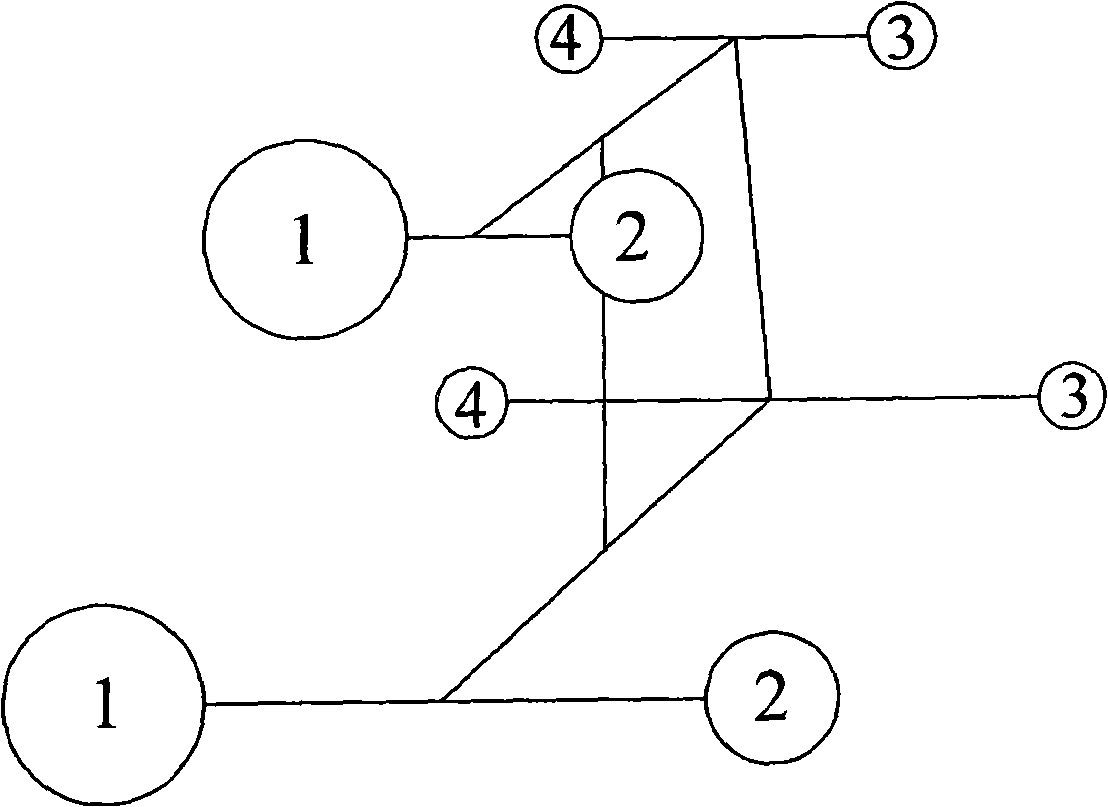

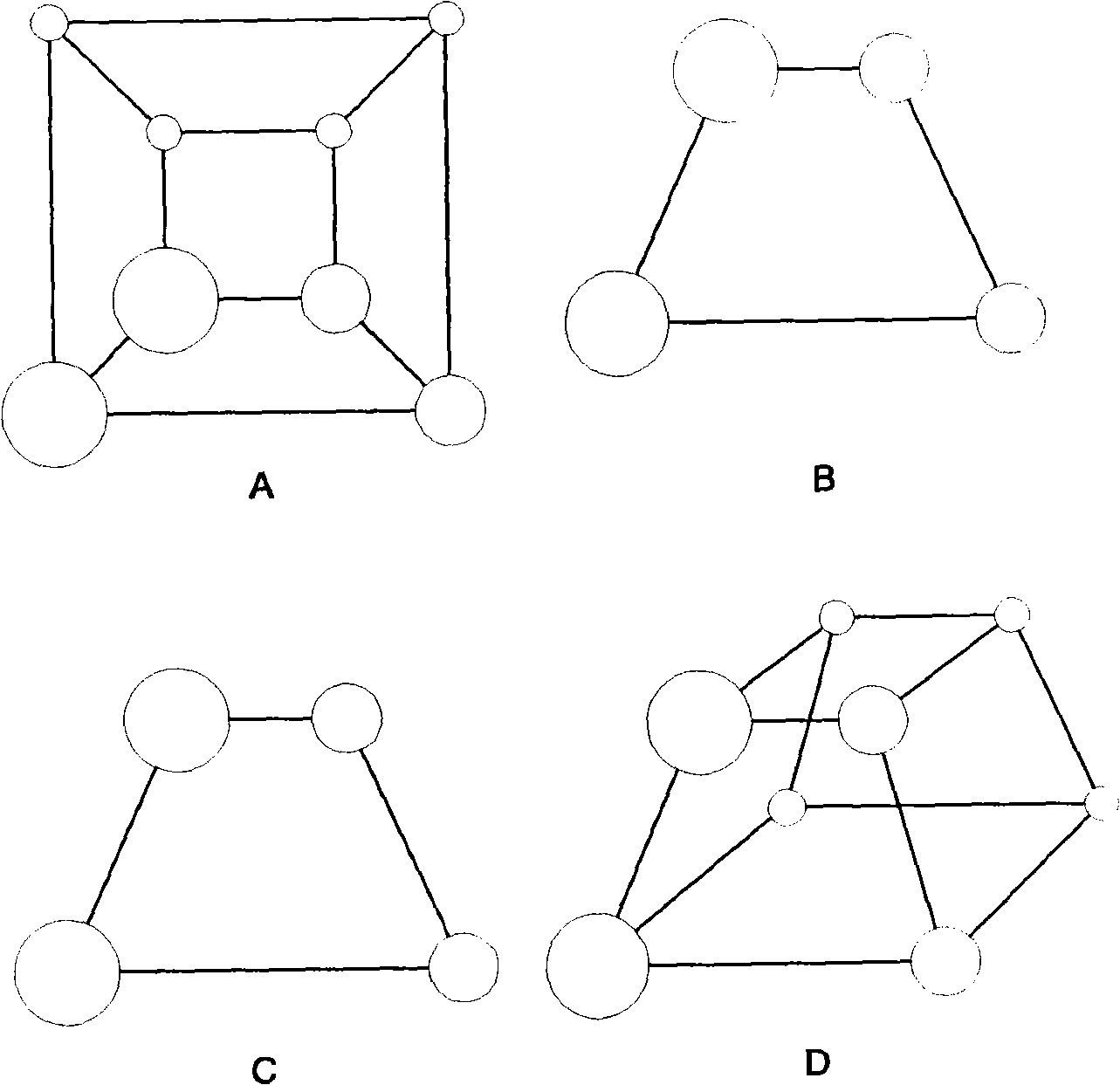

Method and apparatus for data clustering including segmentation and boundary detection

Status: Inactive | Publication: US20040013305A1 | Tags: Efficient construction; Quick extraction; Image enhancement; Image analysis; Graphics; Pattern recognition

A method and apparatus for clustering data, particularly image data, constructs a graph in which each node represents a pixel of the image and every two nodes representing neighboring pixels are associated by a coupling factor. Block pixels are selected, and unselected neighboring pixels coupled to a selected block are combined with it to form aggregates. The graph is coarsened recursively by iterated weighted aggregation, forming larger blocks (aggregates) and a hierarchical decomposition of the image, i.e., a pyramid structure over the image. Salient segments are detected in the pyramid, and the degree of attachment of every pixel to each block in the pyramid is computed recursively. The pyramid is scanned from coarse to fine, starting at the level where a segment is detected and proceeding to lower levels, and is rebuilt before continuing to the next higher level. Relaxation sweeps sharpen the boundaries of a segment.

Owner:YEDA RES & DEV CO LTD

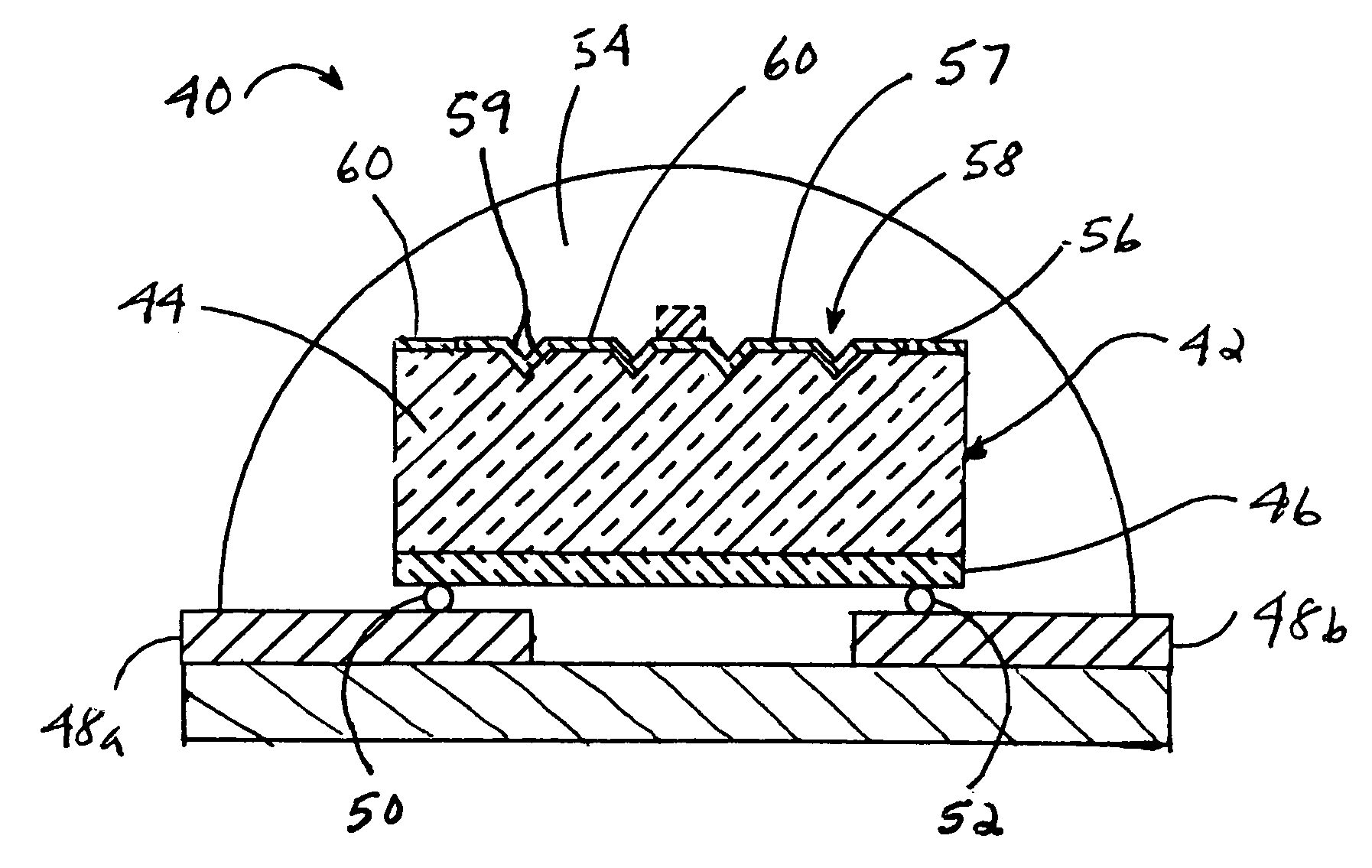

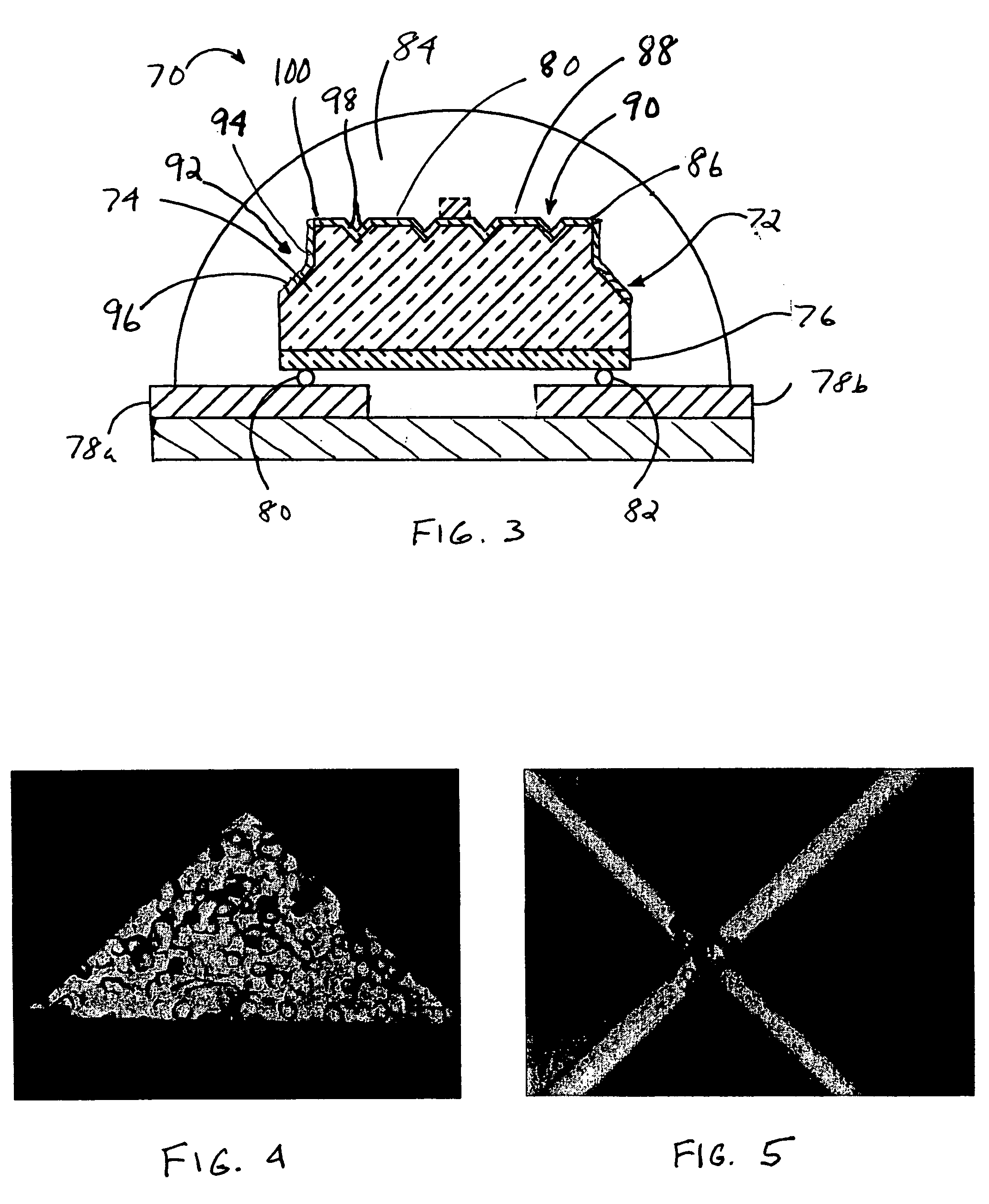

LED with substrate modifications for enhanced light extraction and method of making same

Status: Active | Publication: US20060001046A1 | Tags: Eliminate damage; Solid-state devices; Semiconductor/solid-state device manufacturing; Substrate modification; Optoelectronics

The surface morphology of an LED light emitting surface is changed by applying a reactive ion etch (RIE) process to the light emitting surface. Etched features, such as truncated pyramids, may be formed on the emitting surface, prior to the RIE process, by cutting into the surface using a saw blade or a masked etching technique. Sidewall cuts may also be made in the emitting surface prior to the RIE process. A light absorbing damaged layer of material associated with saw cutting is removed by the RIE process. The surface morphology created by the RIE process may be emulated using different, various combinations of non-RIE processes such as grit sanding and deposition of a roughened layer of material or particles followed by dry etching.

Owner:CREELED INC

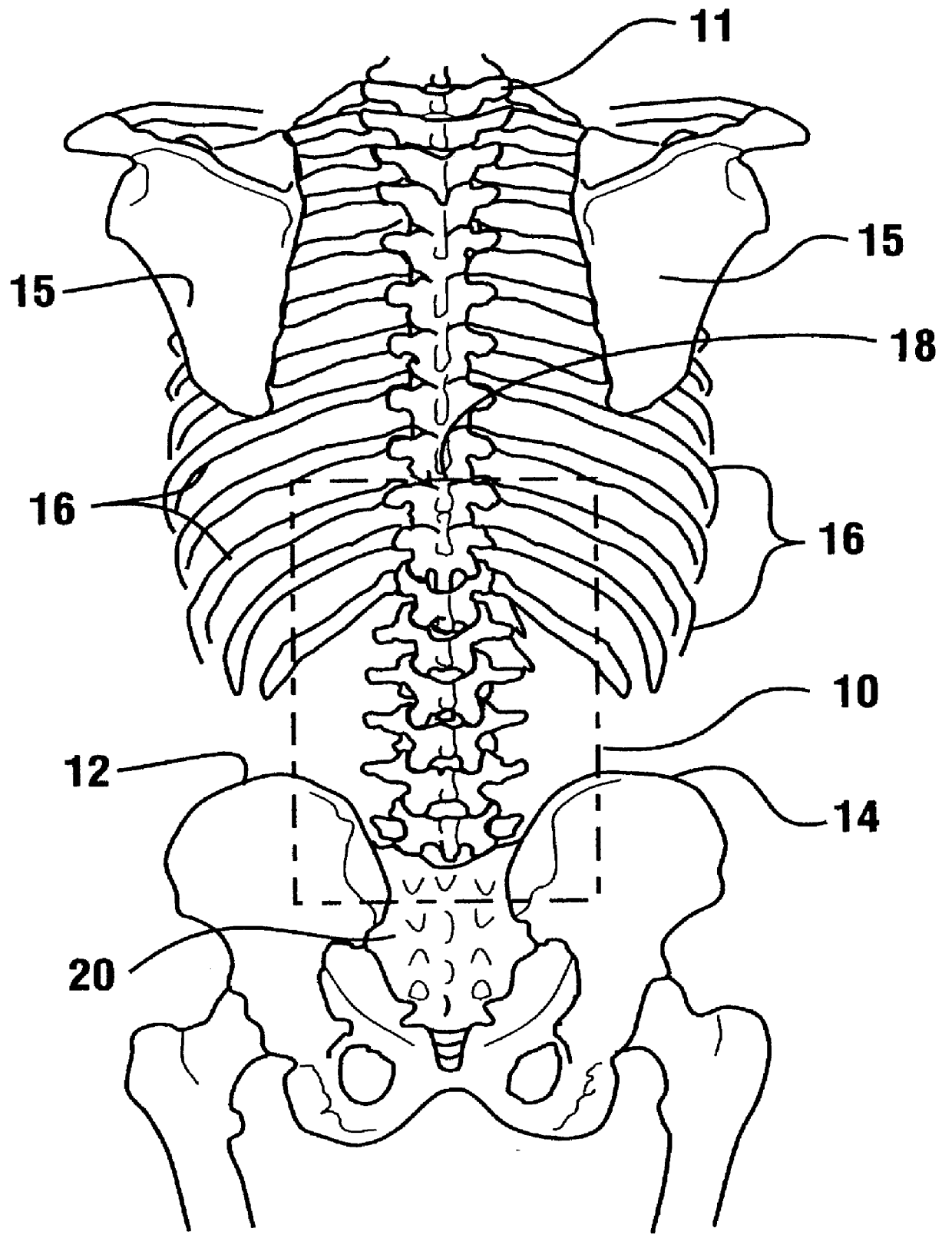

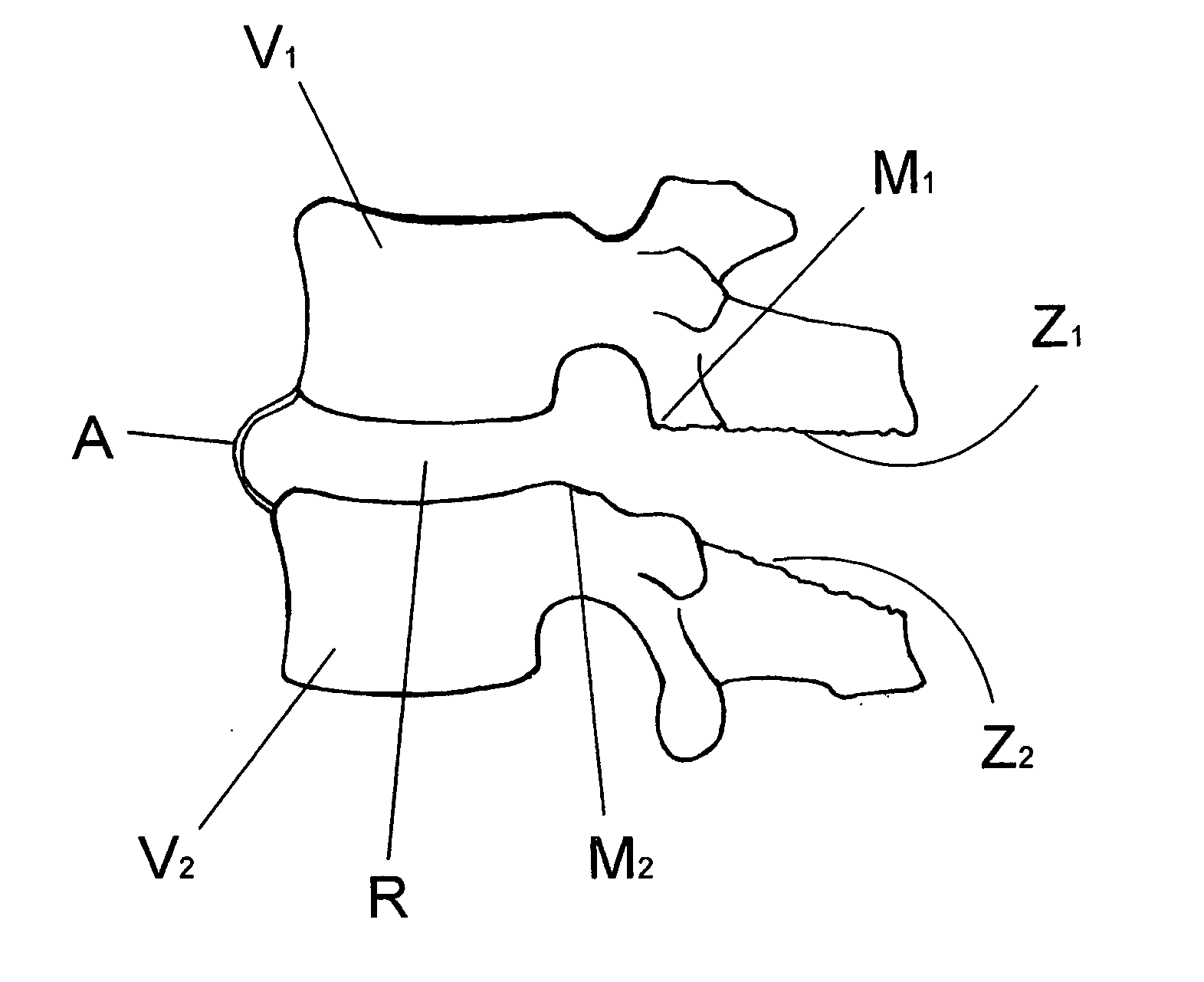

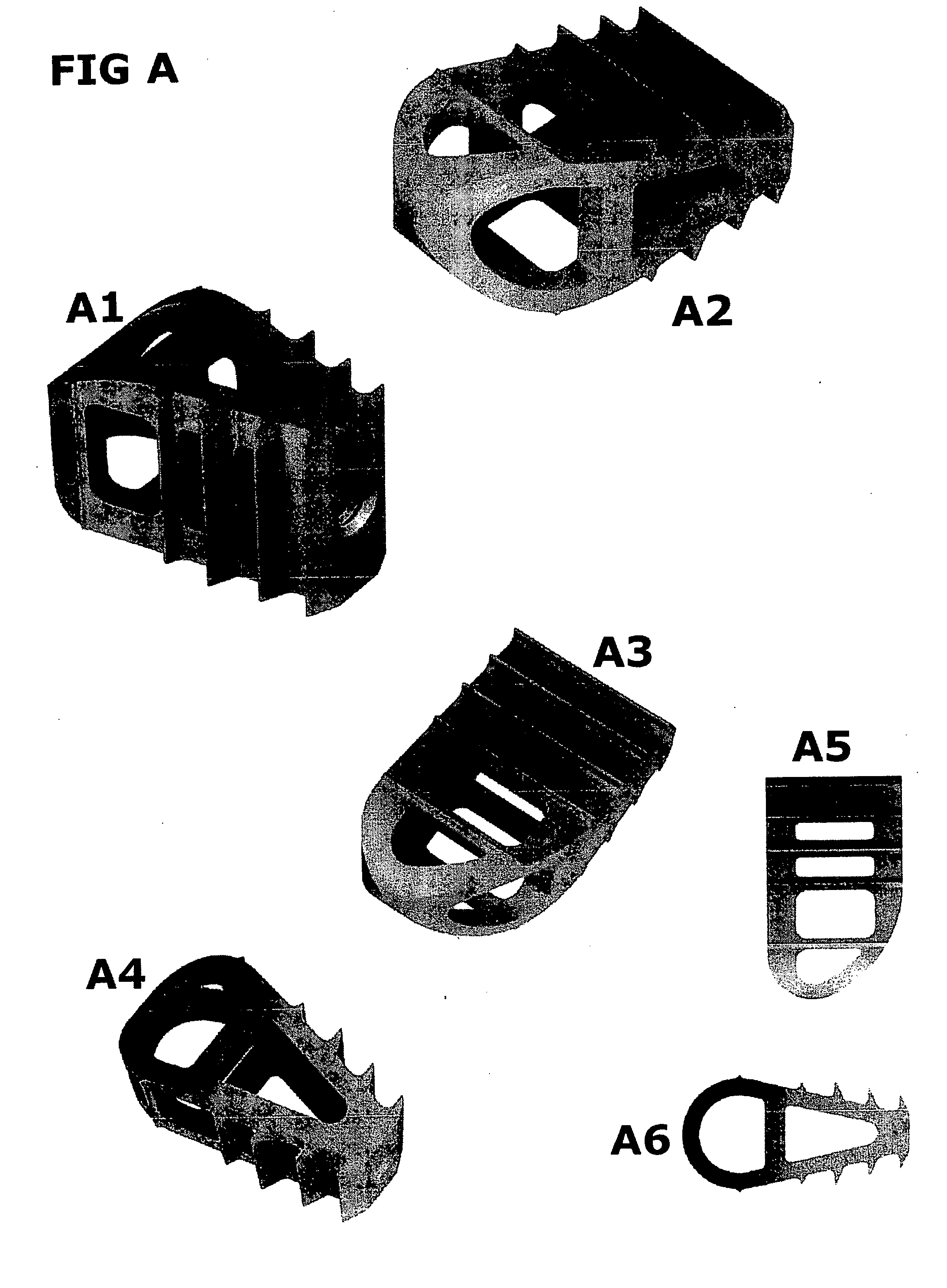

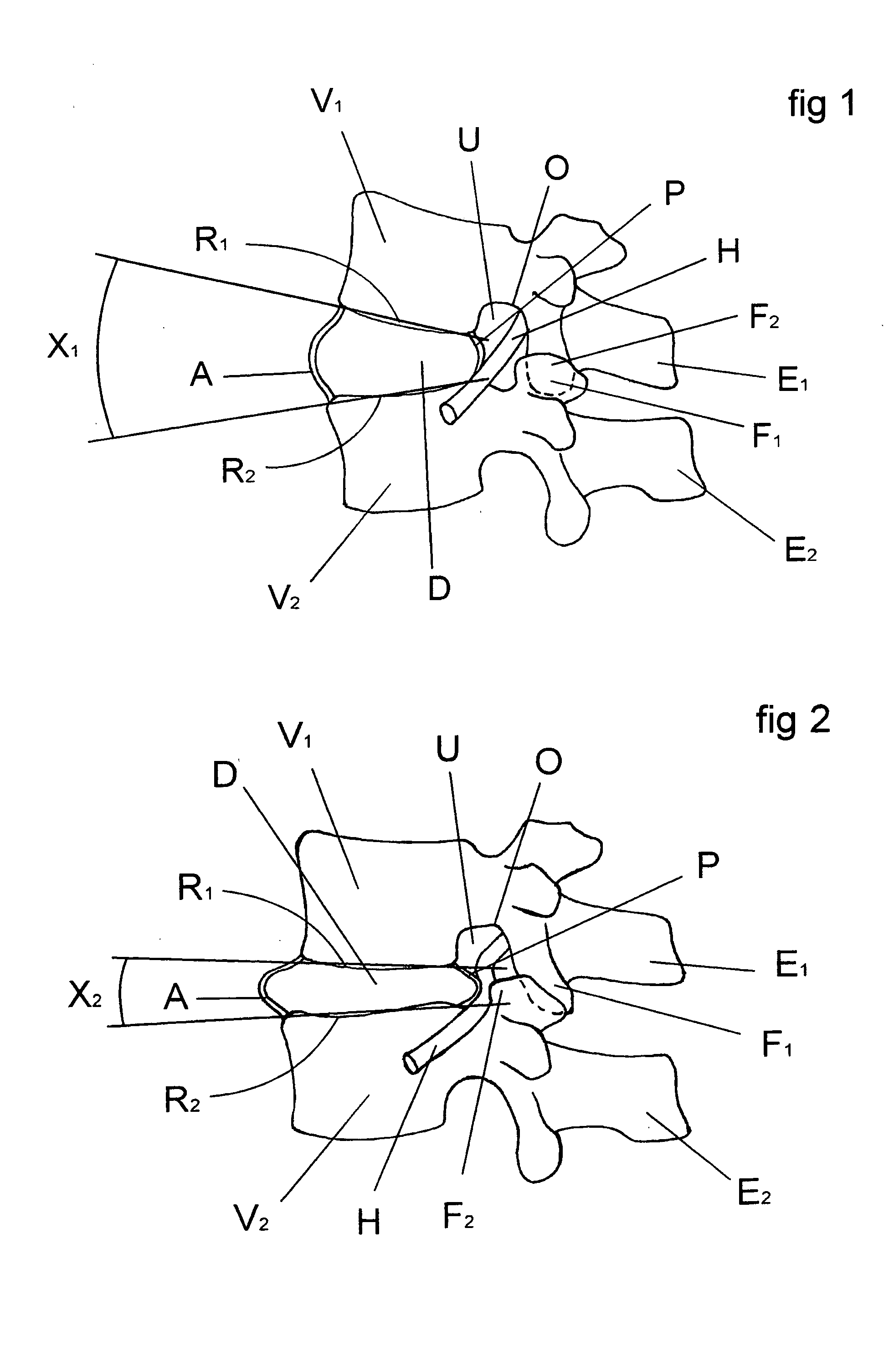

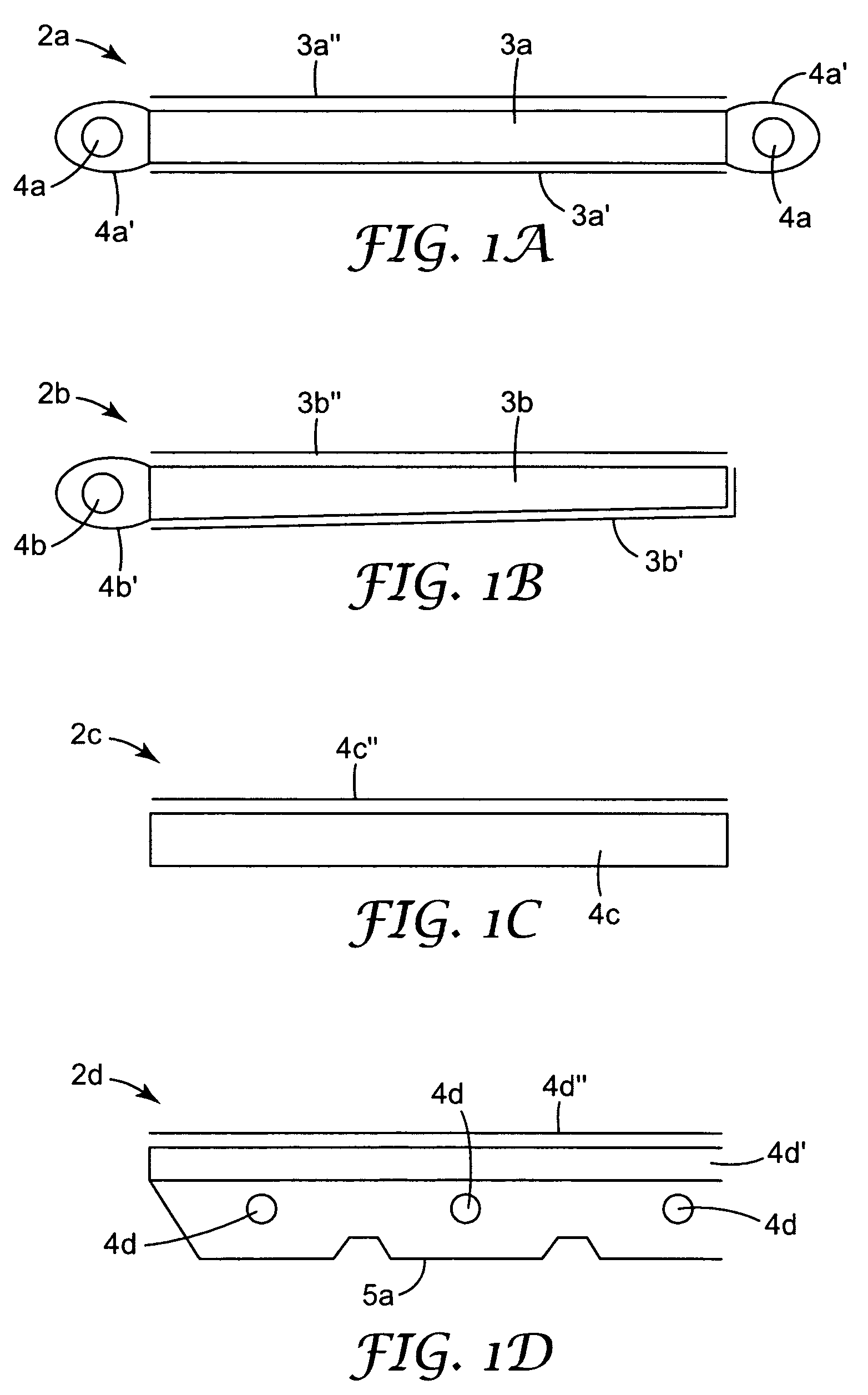

Method for correcting a deformity in the spinal column and its corresponding implant

Status: Inactive | Publication: US20050010292A1 | Tags: Correction of spinal deformity; Causing an increase in the spine curvature; Internal osteosynthesis; Bone implant; Spinal column; Base of the sacrum

This invention relates to an implant to be inserted in the disc space between two adjacent vertebrae to correct the curvature of the vertebral spine. In lateral view, the implant is basically a wedge or an acute-angled isosceles trapezoid, wherein the area opposite the shorter base or opposite the vertex is a rounded, pyramid-like surface, and the upper and lower surfaces of the trapezoid include protuberances for fixation to the vertebral plates of the adjacent vertebrae.

Owner:CARRASCO MAURICIO RODOLFO

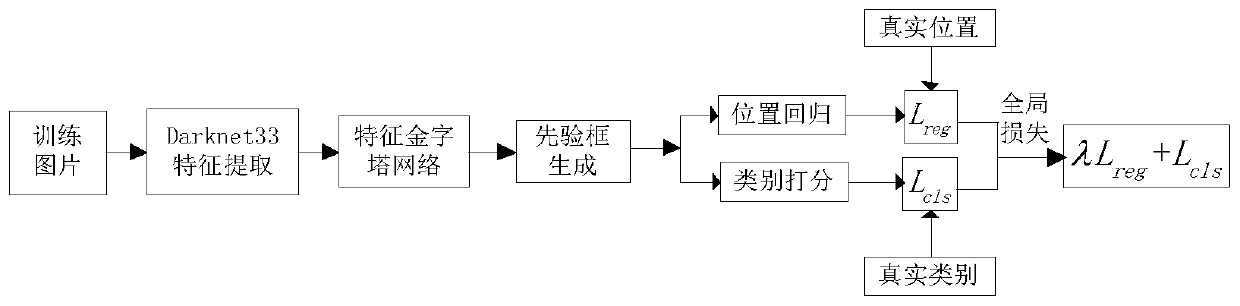

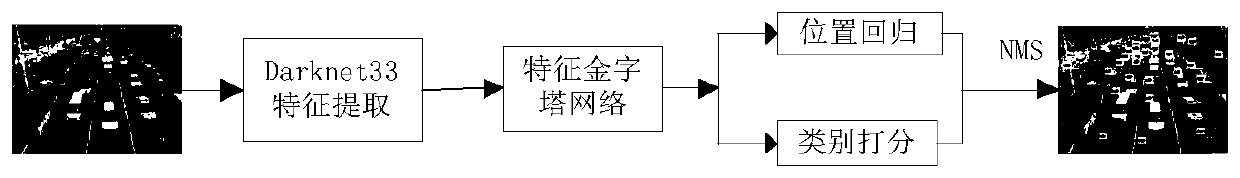

A pedestrian and vehicle detection method and system based on improved YOLOv3

Status: Active | Publication: CN109815886A | Tags: Detection match; High speed; Character and pattern recognition; Neural architectures; Multi-label classification; Vehicle detection

The invention discloses a pedestrian and vehicle detection method and system based on improved YOLOv3. In the method, an improved YOLOv3 network based on Darknet-33 is adopted as the backbone network to extract features; cross-layer fusion and reuse of multi-scale features in the backbone are carried out with a transferable feature-map scale-reduction method; and a feature pyramid network is then constructed with a scale-amplification method. In the training stage, K-means clustering is applied to the training set, with the intersection-over-union (IoU) between predicted boxes and ground-truth boxes as the similarity measure, to select prior (anchor) boxes; bounding-box (BBox) regression and multi-label classification are then performed according to the loss function. In the detection stage, non-maximum suppression is applied to all detection boxes to remove redundant ones according to their confidence scores and IoU values, and the optimal target objects are predicted. By using the Darknet-33 feature extraction network with feature-map scale-reduction fusion, constructing the feature pyramid through feature-map scale-amplification transfer fusion, and selecting prior boxes by clustering, the method improves the speed and precision of pedestrian and vehicle detection.

Owner:NANJING UNIV OF POSTS & TELECOMM
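
Selecting prior boxes by K-means with IoU as the similarity, as in the abstract above, can be sketched on (width, height) pairs. This assumes the common 1 - IoU distance with boxes aligned at a shared centre, mean-based centroid updates and a deterministic initialization from the first k boxes; these choices are illustrative, not taken from the patent, and the names are hypothetical.

```python
def iou_wh(a, b):
    """IoU of two boxes given as (width, height), placed at a common centre."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, k, iters=100):
    """Cluster (w, h) boxes with distance d = 1 - IoU; returns k prior boxes."""
    centroids = [tuple(map(float, b)) for b in boxes[:k]]  # deterministic init (assumed)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for b in boxes:
            # the nearest centroid under 1 - IoU is the one with the highest IoU
            best = max(range(k), key=lambda j: iou_wh(b, centroids[j]))
            clusters[best].append(b)
        new = [(sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else centroids[j]
               for j, c in enumerate(clusters)]
        if new == centroids:  # centroids stopped moving
            break
        centroids = new
    return centroids


boxes = [(10, 10), (100, 100), (12, 12), (98, 102), (11, 9), (101, 99)]
print([tuple(round(v, 2) for v in c) for c in kmeans_anchors(boxes, k=2)])
# [(11.0, 10.33), (99.67, 100.33)]
```

Using 1 - IoU rather than Euclidean distance keeps large boxes from dominating the clustering, since IoU is scale-relative.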

System and method for finding stable keypoints in a picture image using localized scale space properties

A method and system are provided for finding stable keypoints in a picture image using localized scale properties. An integral image of the input image is calculated. A scale-space pyramid layer representation of the input image is then constructed at multiple scales, wherein at each scale a set of specific filters is applied to the input image to produce an approximation of at least a portion of the input image. The filter outputs are combined to form a single function of scale and space. Stable keypoint locations are identified at each scale at the pixel locations where this single function attains a local peak value, and the identified stable keypoint locations are then stored in memory.

Owner:XEROX CORP
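
The integral image computed first in the method above makes the sum over any axis-aligned rectangle a constant-time operation, which is typically why it precedes the evaluation of box-shaped filters at several scales. A minimal sketch; the function names are hypothetical and the patent's specific filters are not shown.

```python
def integral_image(img):
    """ii[y][x] = sum of img[r][c] for r < y and c < x (one row/column of zero padding)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii, top, left, bottom, right):
    """Sum of img rows top..bottom-1 and columns left..right-1 using four look-ups."""
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
ii = integral_image(img)
print(box_sum(ii, 1, 1, 3, 3))  # 5 + 6 + 8 + 9 = 28
```

The cost of `box_sum` does not depend on the rectangle's size, so larger filters at coarser scales cost no more than small ones.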

Multiple video cameras synchronous quick calibration method in three-dimensional scanning system

A synchronous quick calibration method for a plurality of video cameras in a three-dimensional scanning system, which includes: (1) setting up a regular truncated rectangular pyramid calibration object, placing eight calibration balls at the vertices of the truncated rectangular pyramid, and placing two reference calibration balls on the upper and lower planes, respectively; (2) using the video cameras to capture the calibration object, applying a two-threshold segmentation method to obtain the circles corresponding to the upper and lower planes, extracting the circle centers, obtaining three groups of correspondences between circle centers in the image and calibration-ball centers in space, solving the homography matrix to obtain the intrinsic and extrinsic parameter matrices and the distortion coefficients, taking the solved camera parameters as initial values, and then applying a non-linear optimization method to obtain the optimal parameters of each single camera; (3) obtaining in turn the extrinsic parameter matrix between each of the cameras and a chosen reference camera, establishing an objective function to optimize using the epipolar geometric constraint of binocular stereo vision, and then applying a non-linear optimization method to obtain the optimal extrinsic parameter matrix between two cameras.

Owner:NANTONG TONGYANG MECHANICAL & ELECTRICAL MFR +1
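Step (3) builds its objective from the epipolar constraint. A minimal sketch of such an objective, assuming the circle centers are already in normalized image coordinates (intrinsics removed) and the convention X2 = R X1 + t, so that every matched pair satisfies x2^T E x1 = 0 with E = [t]x R. The helper names are hypothetical, and the patent's actual objective and optimizer are not specified in the abstract; this only shows the quantity a non-linear optimizer would drive toward zero over R and t.

```python
import math
from typing import List, Sequence, Tuple

Vec = List[float]
Mat = List[List[float]]


def mat_vec(m: Mat, v: Sequence[float]) -> Vec:
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def mat_mul(a: Mat, b: Mat) -> Mat:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def skew(t: Sequence[float]) -> Mat:
    """Cross-product matrix: skew(t) applied to v equals t x v."""
    return [[0.0, -t[2], t[1]], [t[2], 0.0, -t[0]], [-t[1], t[0], 0.0]]


def rot_y(theta: float) -> Mat:
    """Rotation about the camera y axis (used only to build test data)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def essential_matrix(R: Mat, t: Sequence[float]) -> Mat:
    """E = [t]x R for the convention X2 = R X1 + t."""
    return mat_mul(skew(t), R)


def normalized(X: Sequence[float]) -> Vec:
    """Project a camera-frame 3D point to normalized image coordinates (x/z, y/z, 1)."""
    return [X[0] / X[2], X[1] / X[2], 1.0]


def epipolar_cost(R: Mat, t: Sequence[float], pairs: List[Tuple[Vec, Vec]]) -> float:
    """Sum of squared algebraic epipolar residuals x2^T E x1 over matched centers."""
    E = essential_matrix(R, t)
    total = 0.0
    for x1, x2 in pairs:
        Ex1 = mat_vec(E, x1)
        total += sum(x2[i] * Ex1[i] for i in range(3)) ** 2
    return total
```

With the true (R, t) the cost is zero up to rounding, and a perturbed translation makes it positive. With raw pixel coordinates one would use the fundamental matrix F = K2^-T E K1^-1 instead, and practical systems often minimize a geometric error (such as the Sampson distance) rather than this algebraic residual.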

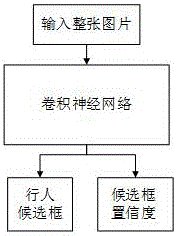

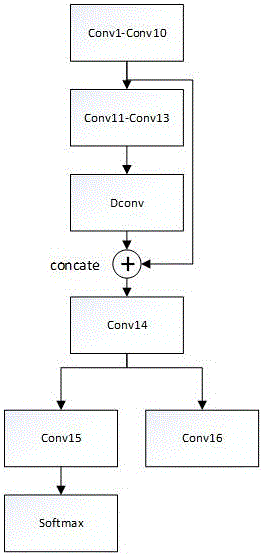

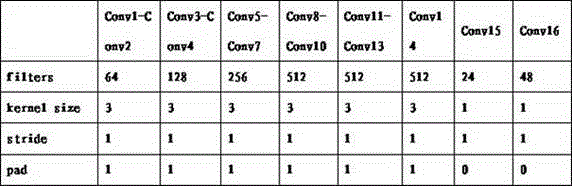

Pedestrian detection method based on end-to-end convolutional neural network

Inactive · CN106022237A · Easy to train and test · Improve recall · Character and pattern recognition · Algorithm · Confidence measures

The invention discloses a pedestrian detection method based on an end-to-end convolutional neural network, intended to overcome the low detection precision, algorithmic complexity, and difficult multi-module fusion of existing pedestrian detection algorithms. A novel end-to-end convolutional neural network is adopted: a labeled training sample set is constructed, and end-to-end training yields a convolutional neural network model that predicts pedestrian candidate boxes and the confidence of each box. At test time, a test image is fed into the trained model to obtain the pedestrian detection boxes and their confidences. Finally, non-maximum suppression and threshold screening are applied to obtain the optimal pedestrian regions. Compared with previous inventions, the method has two advantages. First, because training and testing are end-to-end, the whole model is easy to train and test. Second, pedestrian scale and aspect-ratio problems are handled by a candidate-box regression network, so the image pyramid technique used in previous inventions is unnecessary, which saves considerable computing resources.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
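The final post-processing stage (non-maximum suppression, then threshold screening) is a standard technique. A minimal sketch of greedy NMS followed by a confidence cut, assuming boxes as (x_min, y_min, x_max, y_max) tuples; the thresholds `iou_thresh=0.5` and `score_thresh=0.3` are illustrative values, not taken from the patent.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms_then_threshold(boxes: Sequence[Box], scores: Sequence[float],
                       iou_thresh: float = 0.5, score_thresh: float = 0.3) -> List[int]:
    """Greedy NMS: visit boxes by descending confidence and keep a box only if it does not
    overlap an already-kept box by more than iou_thresh. Surviving boxes whose confidence
    is below score_thresh are then screened out. Returns the indices of kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in kept):
            kept.append(i)
    return [i for i in kept if scores[i] >= score_thresh]
```

For example, two heavily overlapping detections of the same pedestrian with confidences 0.9 and 0.8 collapse to the 0.9 box, and an isolated low-confidence box (0.2) is removed by the threshold step.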

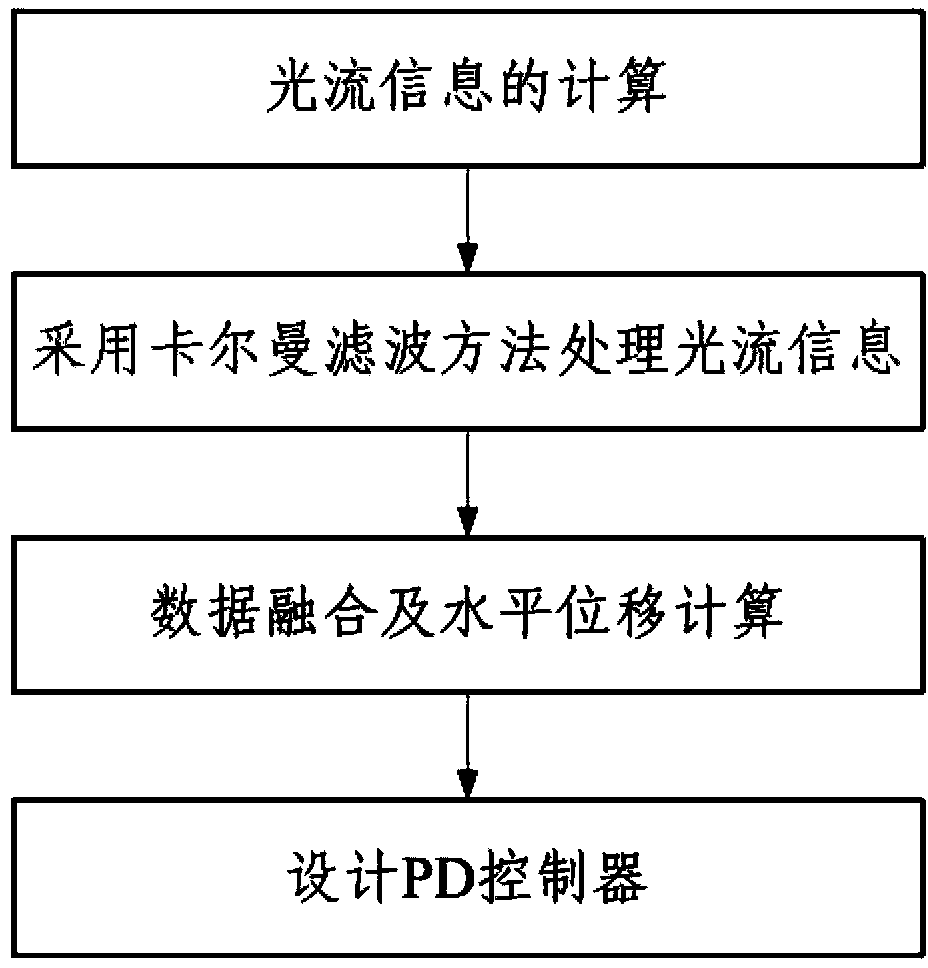

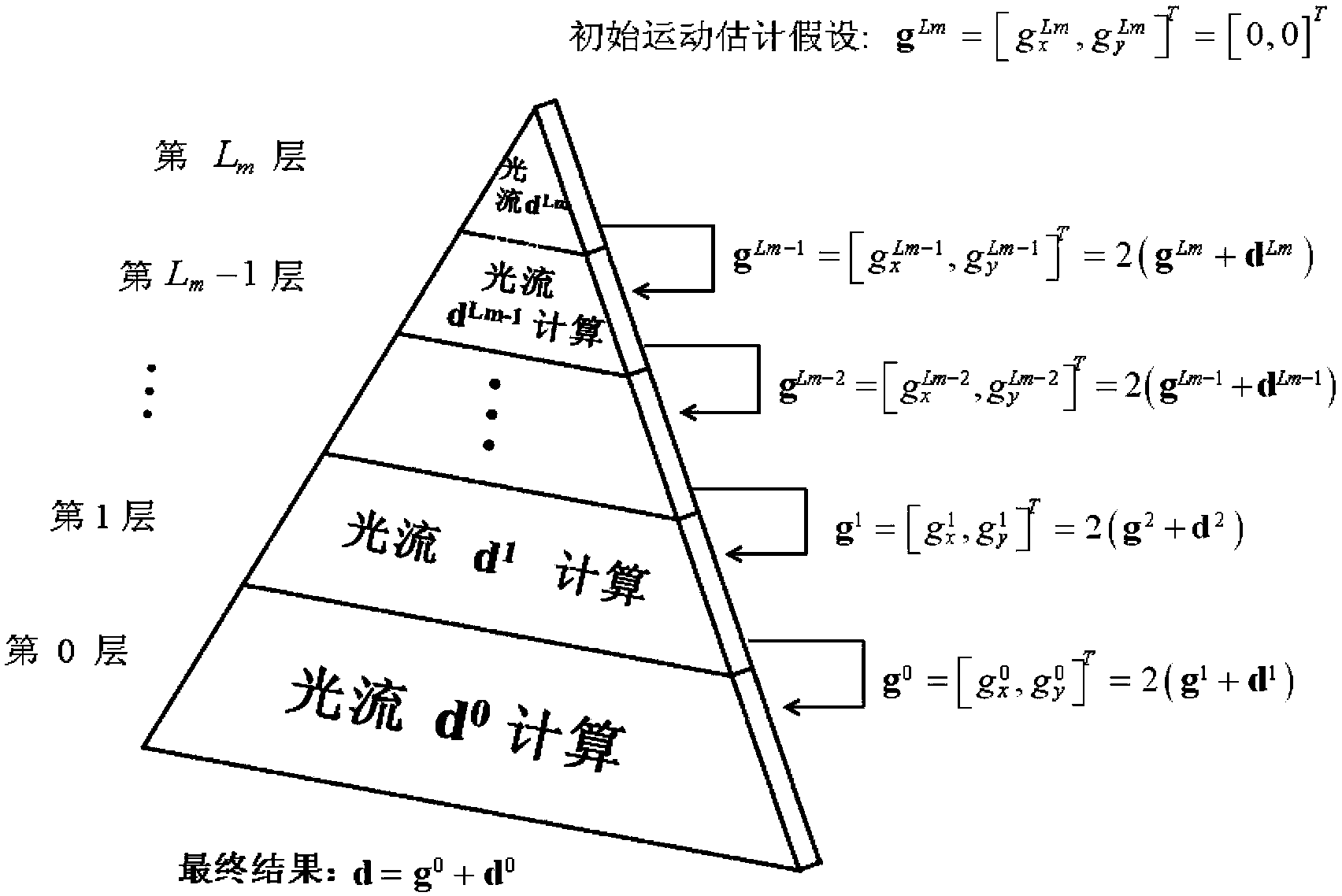

Optical flow-based four-rotor unmanned aerial vehicle flight control method

Active · CN103365297A · Reduce volume · Reduce weight · Position/course control in three dimensions · Vehicle dynamics · Proportional differential

The invention discloses an optical flow-based flight control method for a four-rotor unmanned aerial vehicle (UAV). The method comprises the following steps: calculating optical flow information with an image pyramid-based Lucas-Kanade method; processing the optical flow information with a Kalman filter; fusing the optical flow with the attitude angle to calculate the horizontal displacement of the UAV; and designing a proportional-derivative (PD) controller, which includes determining a dynamic model of the four-rotor UAV and designing the control algorithm. With this method, the horizontal position of the UAV is calculated by fusing image information acquired by an onboard camera with attitude angle information, and the position of the small UAV is controlled by using this horizontal position as the feedback signal of an outer-loop PD controller.

Owner:TIANJIN UNIV
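The filtering, fusion, and control steps can be sketched per axis as below. This is a hypothetical illustration, not the patented algorithm: the random-walk Kalman model, the noise values, and the fusion rule (subtract the rate of change of the attitude angle from the measured flow, then scale by height above ground, assuming a downward-facing camera and matching sign conventions) are all assumptions. The Lucas-Kanade flow computation itself is not shown.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScalarKalman:
    """1-D Kalman filter with a random-walk state model (hypothetical tuning values)."""
    q: float          # process-noise variance
    r: float          # measurement-noise variance
    x: float = 0.0    # state estimate, e.g. filtered flow rate in rad/s
    p: float = 1.0    # variance of the estimate

    def update(self, z: float) -> float:
        self.p += self.q                  # predict: state assumed constant plus noise
        k = self.p / (self.p + self.r)    # Kalman gain
        self.x += k * (z - self.x)        # correct with the new measurement
        self.p *= 1.0 - k
        return self.x


def horizontal_velocity(flow_rate: float, attitude_rate: float, height: float) -> float:
    """Hypothetical fusion step: remove the rotation-induced part of the flow
    (rate of change of the attitude angle, rad/s), then scale by height (m) to get m/s."""
    return (flow_rate - attitude_rate) * height


@dataclass
class PDController:
    """Outer-loop PD on horizontal position; the output would feed the attitude loop."""
    kp: float
    kd: float
    prev_error: Optional[float] = None

    def command(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        # Skip the derivative term on the first call to avoid a spurious kick.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.kd * derivative
```

In a loop, each frame would filter the flow with `ScalarKalman.update`, convert it with `horizontal_velocity`, integrate the velocity into a position estimate, and pass that position to `PDController.command` as the feedback signal.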

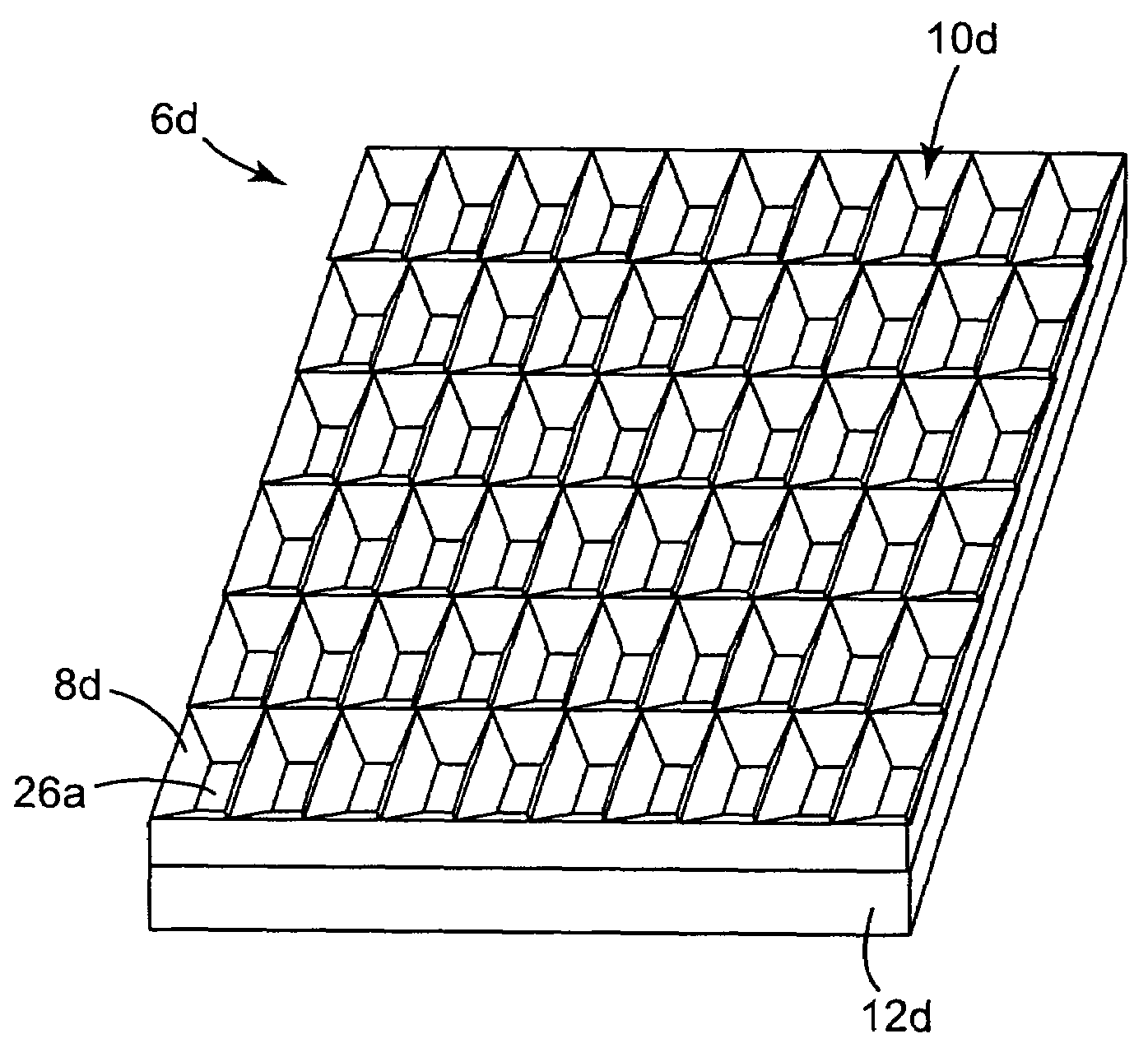

Optical film having a structured surface with concave pyramid-shaped structures

Optical films are disclosed, which have a structured surface including a plurality of concave pyramid-shaped structures, each structure having a base including at least two first sides and at least two second sides. In addition, optical devices are disclosed that incorporate the optical films, for example, such that a first surface of the optical film is disposed to receive light from a light source and the structured surface faces away from the light source.

Owner:3M INNOVATIVE PROPERTIES CO

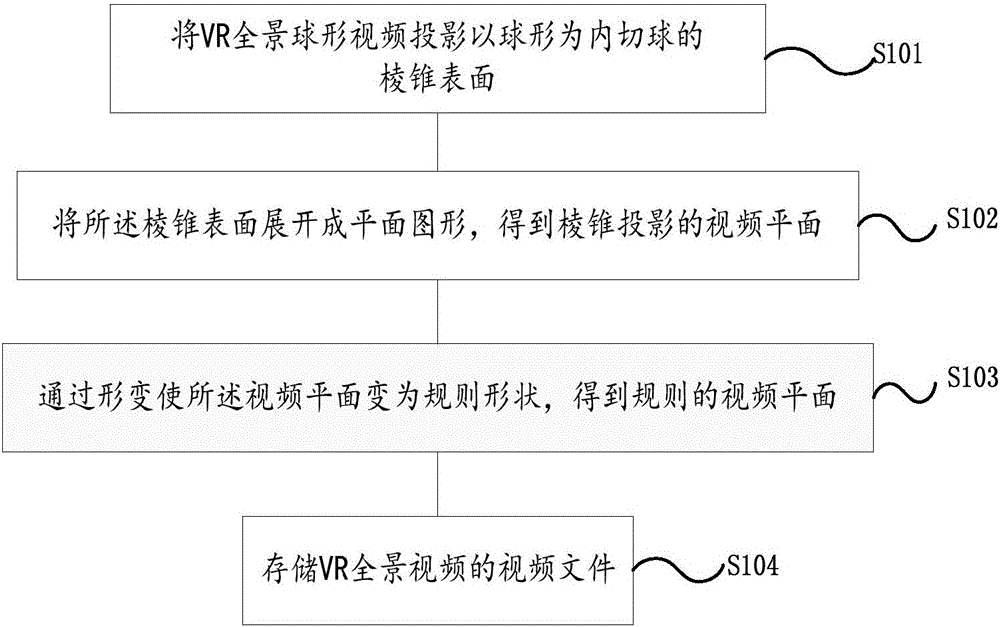

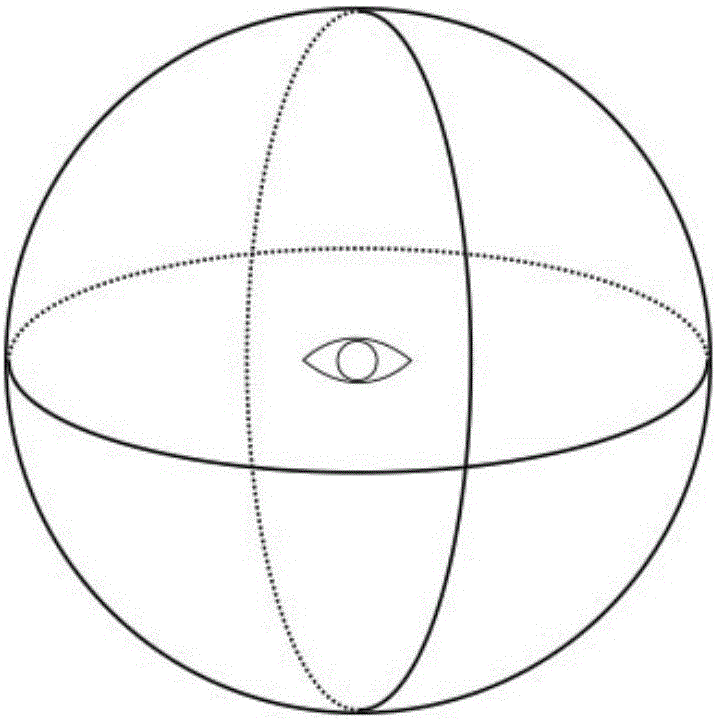

VR (Virtual Reality) panoramic video layout method and device and VR panoramic video presentation method and system capable of saving bandwidth

Active · CN105898254A · Save bandwidth · Small surface area · Input/output for user-computer interaction · Picture reproducers using projection devices · Graphics · Video player

The invention provides a VR (Virtual Reality) panoramic video layout method, device, and system. The method comprises the following steps: projecting a VR panoramic spherical video onto the surface of a pyramid in which the sphere is inscribed; unfolding the pyramid surface into a planar figure to obtain a pyramid-projected video plane; and deforming this video plane into a regular shape to obtain a regular video plane. The VR panoramic video layout system comprises a VR panoramic video layout processing device, a VR panoramic video streaming media server, and a VR panoramic video player, where the player plays video data from the streaming media server according to the user's viewing angle. The method, device, and system thus provide a pyramid-based projection. Compared with equirectangular projection, the pyramid-based projection reduces the surface area of the projected video by 80 percent; compared with existing VR video technology, it reduces the bandwidth of VR panoramic video transmission by 80 percent; and the panoramic video can be played according to the user's viewing angle.

Owner:Beijing Pyramid Virtual Reality Technology Co., Ltd. (北京金字塔虚拟现实科技有限公司)
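The first step, projecting the sphere onto a pyramid in which it is inscribed, reduces to a ray-plane intersection. A minimal sketch, assuming a square pyramid centred on the sphere whose base faces -z and whose four side faces are tilted by a hypothetical angle `tilt` from +z; every face plane is tangent to the sphere, so each viewing direction maps to the face its ray reaches first. The unfolding into a plane and the deformation into a regular shape are not shown, and the face layout is an illustrative choice, not the patent's.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def square_pyramid_normals(tilt: float) -> List[Vec3]:
    """Outward unit normals of a square pyramid around the origin: face 0 is the base (-z),
    faces 1-4 are the sides, each tilted `tilt` radians (0 < tilt < pi/2) away from +z."""
    s, c = math.sin(tilt), math.cos(tilt)
    return [(0.0, 0.0, -1.0), (s, 0.0, c), (-s, 0.0, c), (0.0, s, c), (0.0, -s, c)]


def project_to_pyramid(direction: Sequence[float], normals: List[Vec3],
                       radius: float = 1.0) -> Tuple[int, Vec3]:
    """Map a viewing direction from the sphere centre to (face index, 3D point on that face).
    Each face plane satisfies n . p = radius (tangent to the sphere). The ray p = t*u hits
    that plane at t = radius / (n . u), so the face reached first is the one with the
    largest n . u."""
    length = math.sqrt(sum(v * v for v in direction))
    u = [v / length for v in direction]
    dots = [sum(n[i] * u[i] for i in range(3)) for n in normals]
    face = max(range(len(normals)), key=lambda k: dots[k])
    t = radius / dots[face]
    return face, (t * u[0], t * u[1], t * u[2])
```

With `tilt = pi/3` and a unit sphere, looking straight down (-z) lands on the base at (0, 0, -1), and looking straight up reaches the apex at height 1/cos(pi/3) = 2. In practice each face's texture would be sampled by inverting this mapping per output pixel.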

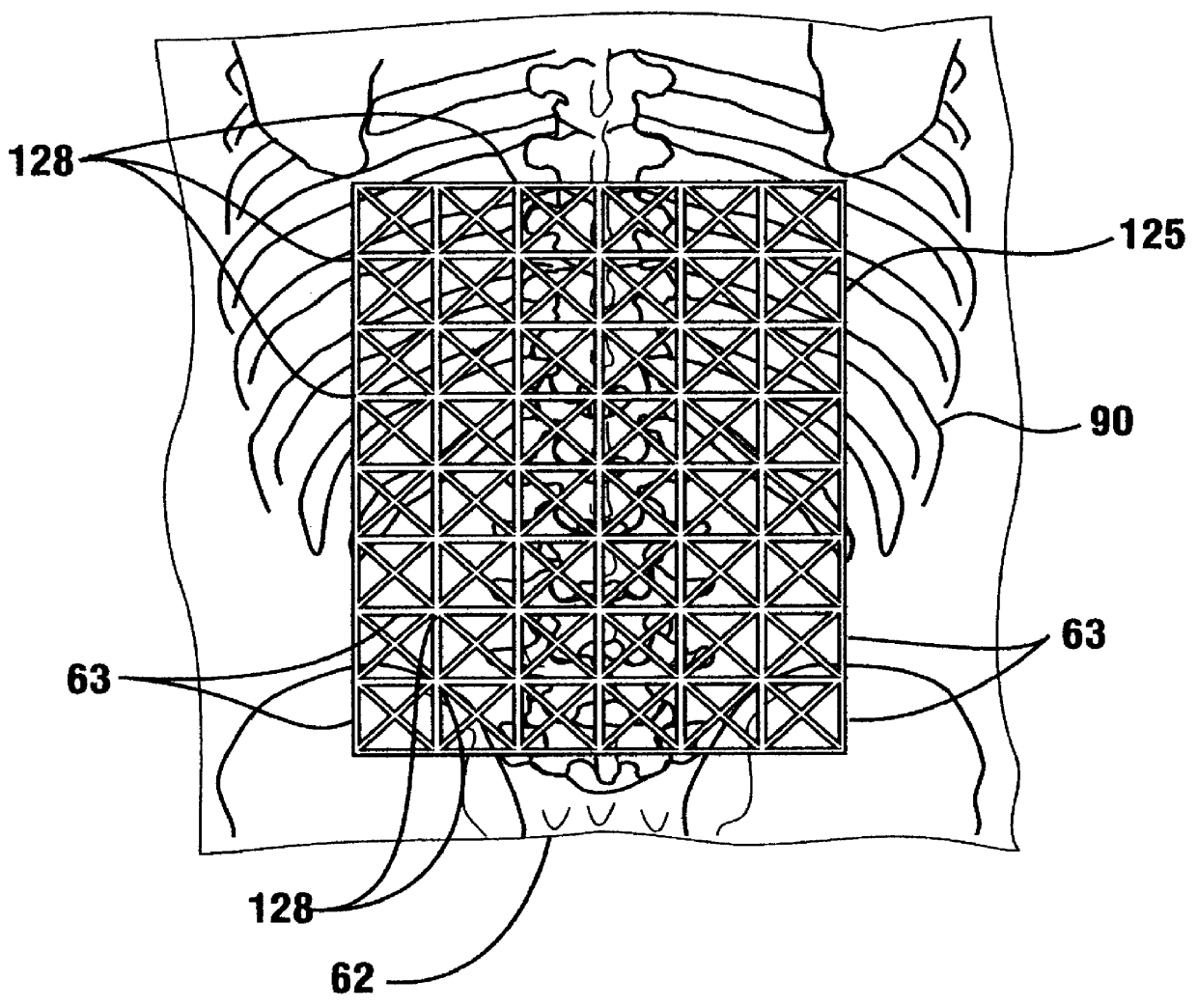

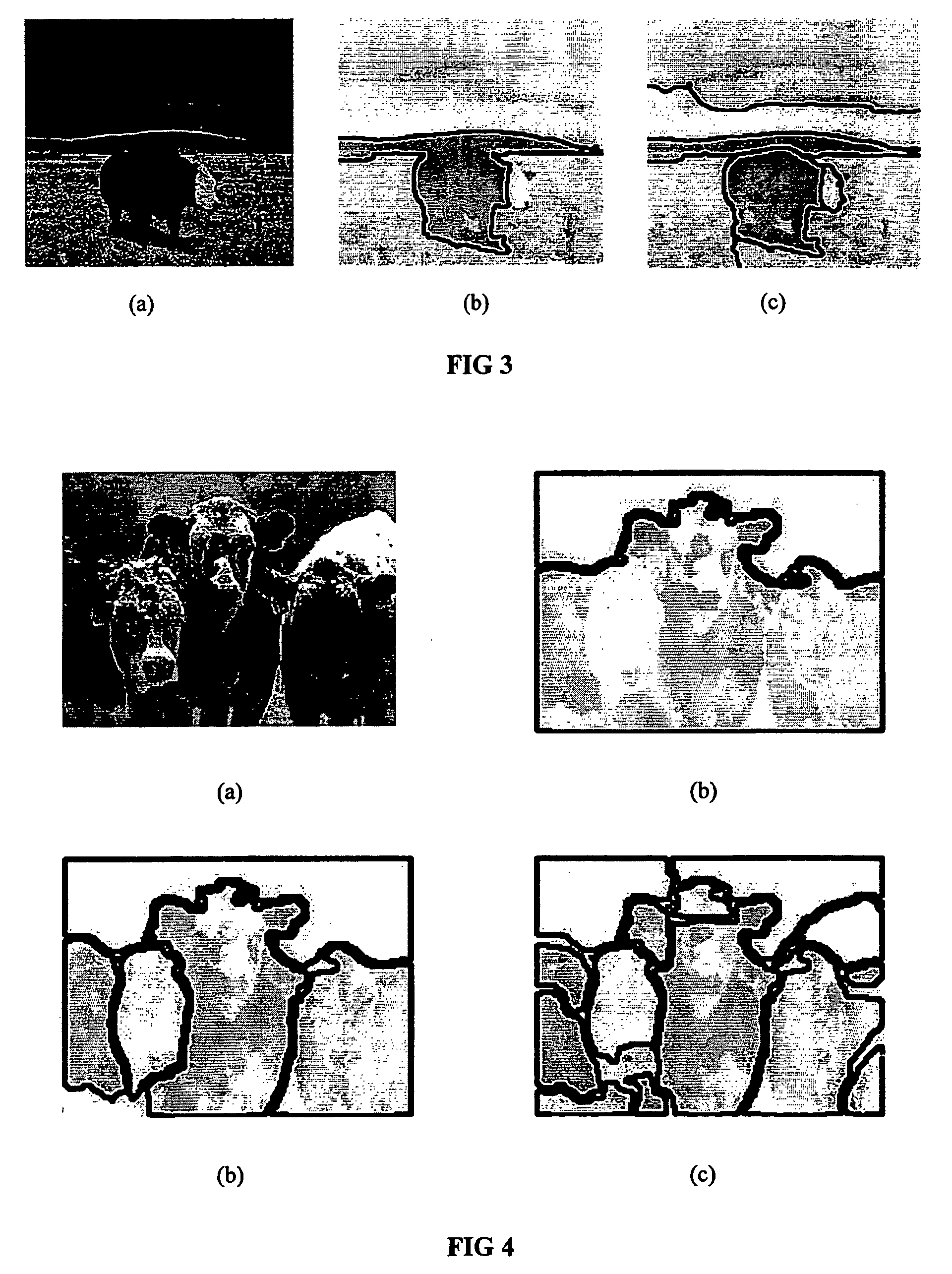

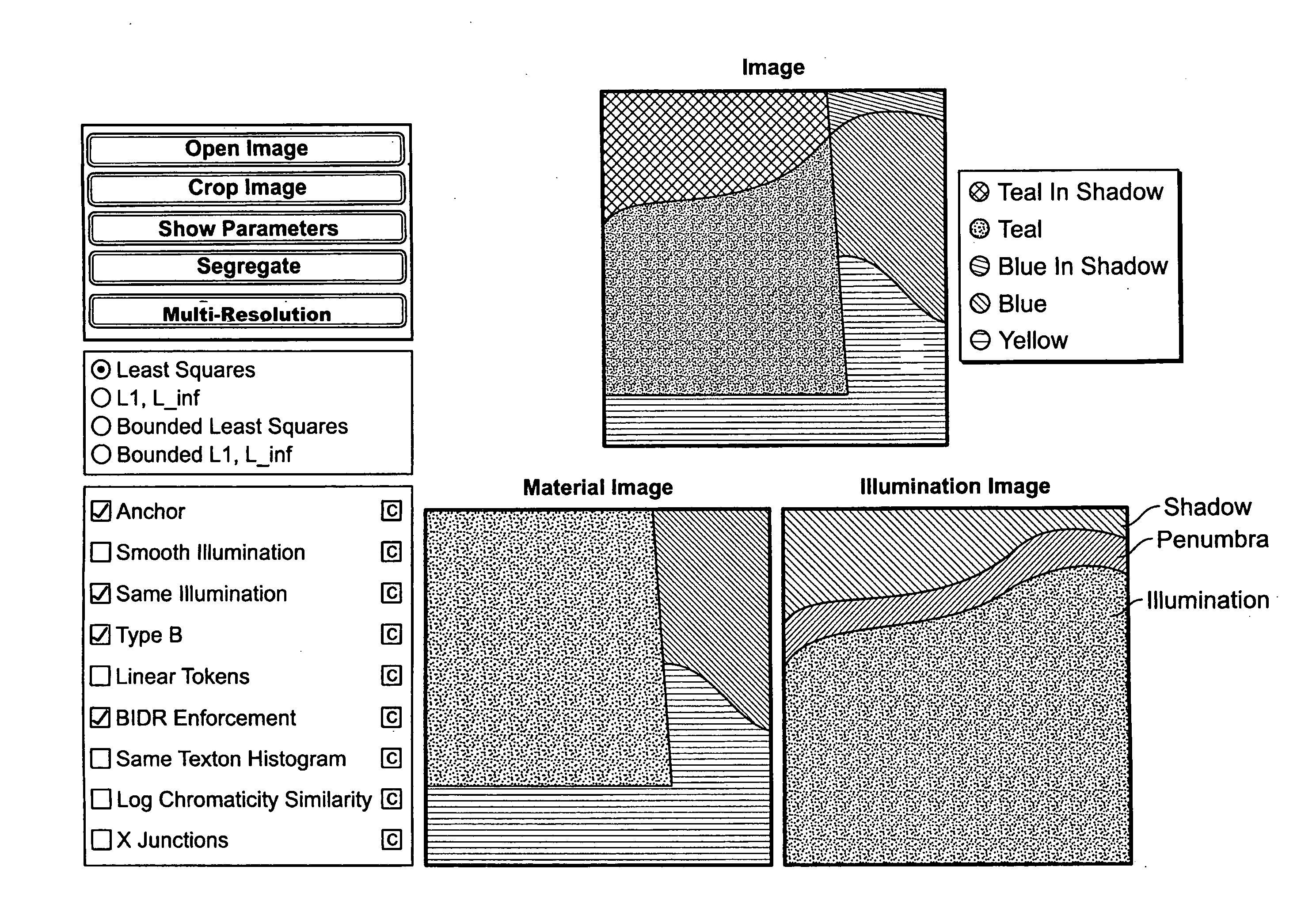

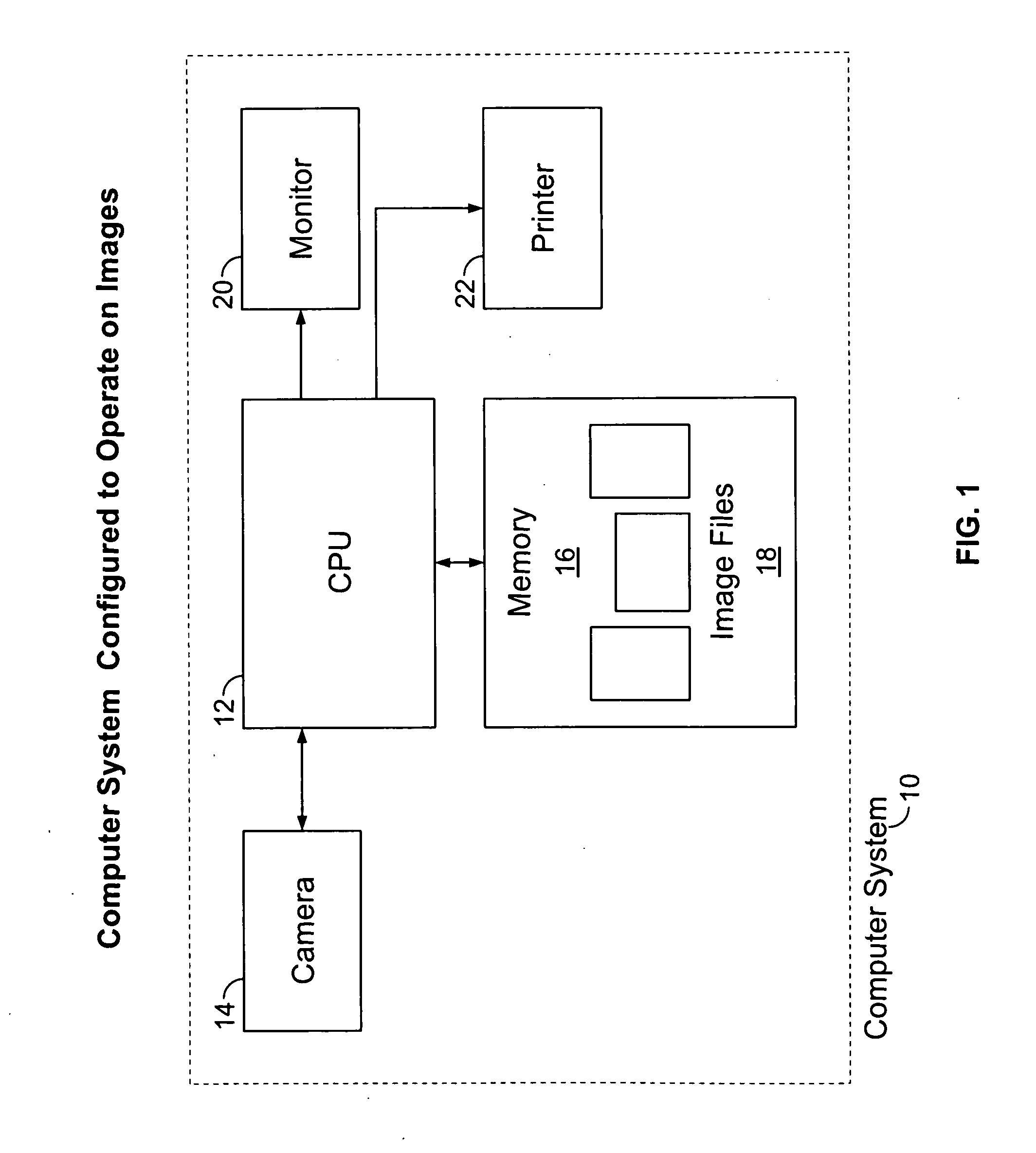

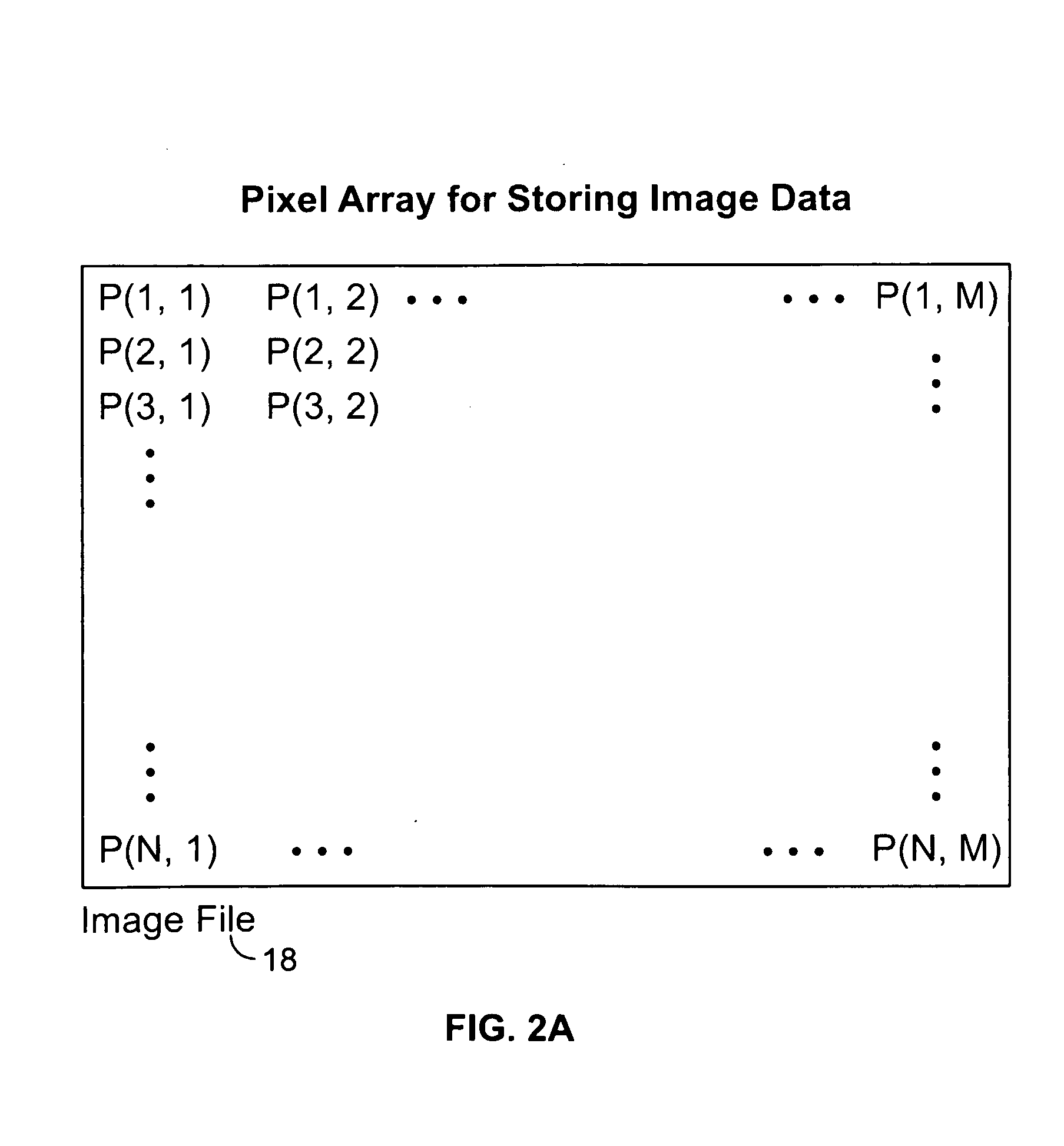

Multi-resolution analysis in image segregation

Active · US20100303348A1 · Accurately correctly identify · Image enhancement · Image analysis · Multi resolution analysis · Scale space

In a first exemplary embodiment of the present invention, an automated, computerized method is provided for processing an image. According to a feature of the present invention, the method comprises the steps of providing, in a computer memory, an image file depicting an image; forming a set of selectively varied representations of the image file; and performing an image segregation operation on at least one preselected representation of the image to generate intrinsic images corresponding to the image. According to a feature of the exemplary embodiment of the present invention, the selectively varied representations comprise multi-resolution representations such as a scale-space pyramid of representations. In a further feature of the exemplary embodiment of the present invention, the intrinsic images comprise a material image and an illumination image.

Owner:INNOVATION ASSET COLLECTIVE
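A minimal sketch of the "multi-resolution representations" step, assuming a simple averaging pyramid in which each level halves the resolution by averaging 2x2 blocks. This is one common construction, not necessarily the patent's (Gaussian pyramids, for instance, blur before subsampling). The segregation operation that produces material and illumination images is not reproduced; `segregate` is a hypothetical caller-supplied function applied to one preselected level.

```python
from typing import Callable, List, TypeVar

Image = List[List[float]]
T = TypeVar("T")


def downsample(img: Image) -> Image:
    """Halve each dimension by averaging non-overlapping 2x2 blocks (an odd last row/column is dropped)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(w)] for y in range(h)]


def build_pyramid(img: Image, levels: int) -> List[Image]:
    """Level 0 is the full-resolution image; each further level is half the size of the previous one."""
    pyramid = [img]
    while len(pyramid) < levels and len(pyramid[-1]) >= 2 and len(pyramid[-1][0]) >= 2:
        pyramid.append(downsample(pyramid[-1]))
    return pyramid


def segregate_at_level(img: Image, levels: int, level: int,
                       segregate: Callable[[Image], T]) -> T:
    """Run a caller-supplied (hypothetical) segregation routine on one preselected pyramid level."""
    return segregate(build_pyramid(img, levels)[level])
```

For a 4x4 image holding 0..15 in row-major order, level 1 is [[2.5, 4.5], [10.5, 12.5]] and level 2 is [[7.5]]. Running segregation on a coarse level is cheaper and suppresses fine texture, which is the motivation for choosing among several representations.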

© 2025 PatSnap. All rights reserved.